Visual guiding-based robot workpiece grabbing method

A visual guidance and robotics technology, applied in the field of robot visual recognition and positioning, that addresses increased computational load, reduced efficiency, and missed captures, and achieves reduced data volume, a low error rate, and elimination of interference.

Embodiment Construction

[0056] The present invention is described in further detail below with reference to the accompanying drawings:

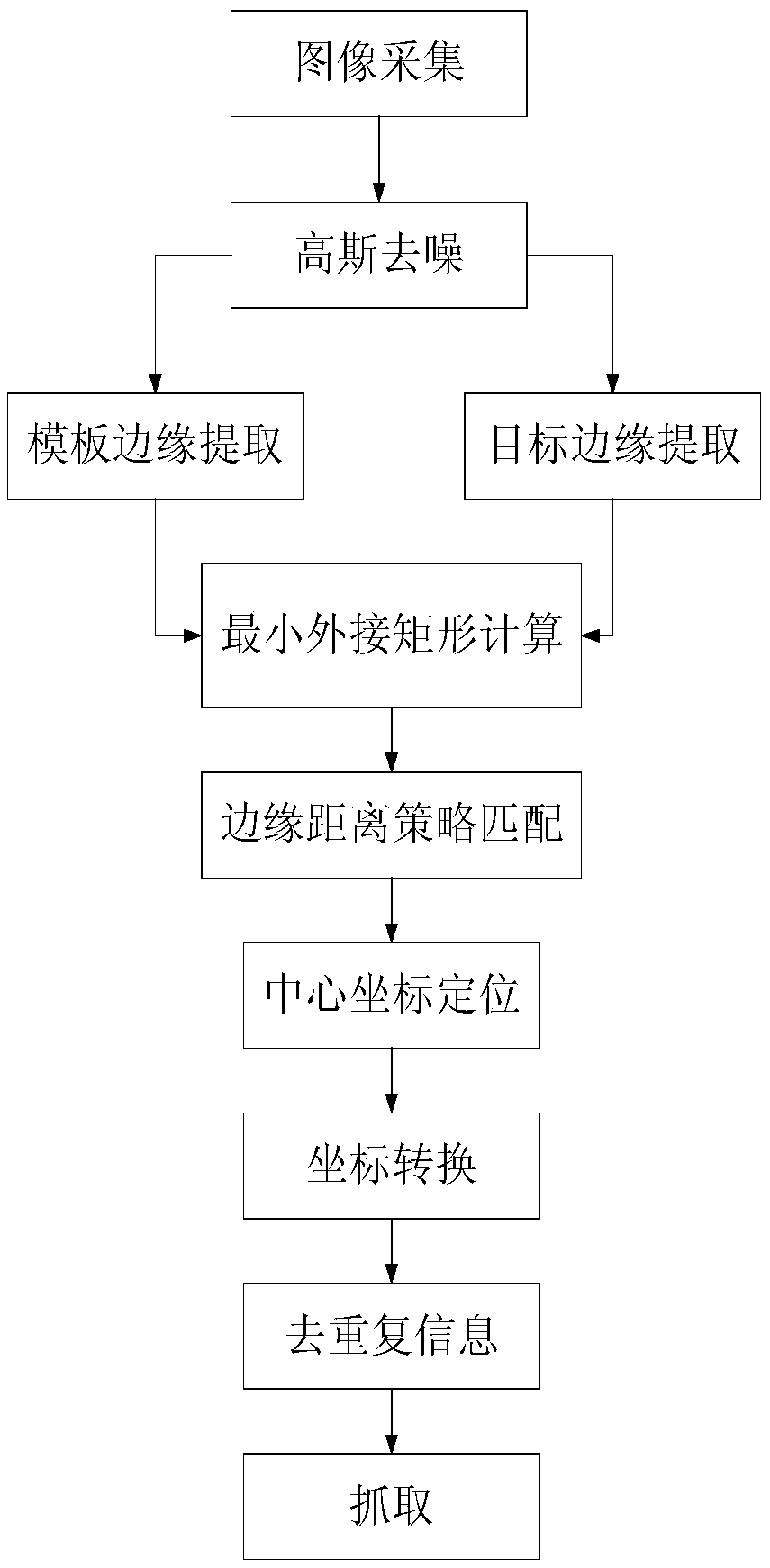

[0057] Illumination changes and rotation of the workpiece itself alter its gray-level distribution in the global image. The present invention therefore first uses an edge extraction operator to obtain continuous edge features of the target workpiece. To eliminate interference from noise and illumination changes, a search strategy based on edge-point distance is proposed that operates on the extracted edge feature image (as shown in Figure 5). To account for rotation, the minimum circumscribed rectangle of the workpiece is constructed, the possible deflection angles of the target are obtained from it, a template set is generated, and target recognition is completed through the search strategy. Finally, the conversion relationship between the vision system and the robot system is established, repetitive information is removed, and positioning and grasping of the target w...
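The edge extraction and edge-point-distance search can be illustrated with a minimal sketch. The source names neither the operator nor the exact distance measure, so this sketch assumes a Sobel operator for edge extraction and a chamfer-style score (mean distance from each template edge point to the nearest image edge, read from a city-block distance map). The functions `sobel_edges`, `distance_map`, `chamfer_score`, and `search` are hypothetical names, and images are plain lists of gray values.

```python
import math

def sobel_edges(img, thresh):
    """Binary edge map (0/1) from a grayscale image given as a list of rows (assumed Sobel)."""
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            if math.hypot(gx, gy) >= thresh:  # gradient magnitude test
                edges[y][x] = 1
    return edges

def distance_map(edges):
    """City-block distance from every pixel to the nearest edge pixel (two-pass transform)."""
    h, w = len(edges), len(edges[0])
    big = h + w  # larger than any city-block distance inside the image
    d = [[0 if edges[y][x] else big for x in range(w)] for y in range(h)]
    for y in range(h):  # forward pass: propagate from top and left neighbours
        for x in range(w):
            if y > 0:
                d[y][x] = min(d[y][x], d[y - 1][x] + 1)
            if x > 0:
                d[y][x] = min(d[y][x], d[y][x - 1] + 1)
    for y in range(h - 1, -1, -1):  # backward pass: propagate from bottom and right
        for x in range(w - 1, -1, -1):
            if y < h - 1:
                d[y][x] = min(d[y][x], d[y + 1][x] + 1)
            if x < w - 1:
                d[y][x] = min(d[y][x], d[y][x + 1] + 1)
    return d

def chamfer_score(dist, template_pts, ox, oy):
    """Mean distance from the shifted template edge points to the nearest image edge."""
    h, w = len(dist), len(dist[0])
    total = 0
    for tx, ty in template_pts:
        x, y = tx + ox, ty + oy
        if not (0 <= x < w and 0 <= y < h):
            return math.inf  # template partly outside the image: reject this offset
        total += dist[y][x]
    return total / len(template_pts)

def search(dist, template_pts, max_score):
    """Exhaustive translation search; returns (score, ox, oy) hits sorted best-first."""
    hits = []
    for oy in range(len(dist)):
        for ox in range(len(dist[0])):
            s = chamfer_score(dist, template_pts, ox, oy)
            if s <= max_score:
                hits.append((s, ox, oy))
    return sorted(hits)
```

Because the score depends only on where the edges lie, a brightness change that does not move the detected edges leaves it unchanged. An exhaustive translation search is used here for clarity; a practical system would likely restrict or coarsen it.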
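The rotation handling (minimum circumscribed rectangle, deflection angle, template set) might look like the sketch below. It assumes a brute-force angle sweep instead of an exact rotating-calipers algorithm. It also assumes that four templates spaced 90° apart are generated, because a rectangle's orientation is only defined modulo 90°. The functions `rotate`, `bbox_area`, `min_rect_angle`, and `template_set` are hypothetical.

```python
import math

def rotate(points, deg):
    """Rotate (x, y) points by `deg` degrees (counter-clockwise in a y-up frame) about their centroid."""
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [(cx + c * (x - cx) - s * (y - cy),
             cy + s * (x - cx) + c * (y - cy)) for x, y in points]

def bbox_area(points):
    """Area of the axis-aligned bounding box of the points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))

def min_rect_angle(points, step=1.0):
    """Sweep [0, 90) degrees; the angle whose de-rotation gives the smallest axis-aligned
    box approximates the orientation of the minimum circumscribed rectangle."""
    best_angle, best_area = 0.0, math.inf
    for i in range(int(round(90.0 / step))):
        a = i * step
        area = bbox_area(rotate(points, -a))
        if area < best_area - 1e-9:  # keep the first angle on (near-)ties
            best_angle, best_area = a, area
    return best_angle

def template_set(template_pts, angle):
    """Orientation is ambiguous modulo 90 degrees, so emit four rotated, pixel-rounded templates."""
    return {angle + k * 90.0: [(round(x), round(y))
                               for x, y in rotate(template_pts, angle + k * 90.0)]
            for k in range(4)}
```

Each candidate template would then be scored with the edge-point-distance search. A smaller `step` improves angular accuracy at the cost of more bounding-box evaluations.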
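The excerpt does not specify how the vision-to-robot conversion is computed or what "removing repetitive information" involves. Below is a minimal sketch under two assumptions. First, the workspace is planar, so a 2D affine map fitted by least squares to at least three non-collinear calibration points (pixel to robot coordinates) is adequate. Second, removing repetitive information means suppressing duplicate detections of the same workpiece within a pixel radius. The functions `solve3`, `fit_affine`, `to_robot`, and `suppress_duplicates` are hypothetical.

```python
def solve3(A, b):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with partial pivoting."""
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(3):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][3] / M[i][i] for i in range(3)]

def fit_affine(pixel_pts, robot_pts):
    """Least-squares fit of X = a*u + b*v + c and Y = d*u + e*v + f
    from >= 3 non-collinear (pixel, robot) point pairs via the normal equations."""
    rows = [(u, v, 1.0) for u, v in pixel_pts]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for k in range(2):  # k = 0 -> robot X, k = 1 -> robot Y
        atb = [sum(r[i] * t[k] for r, t in zip(rows, robot_pts)) for i in range(3)]
        coeffs.append(solve3(ata, atb))
    return coeffs

def to_robot(coeffs, u, v):
    """Map a pixel position (u, v) to robot-plane coordinates (X, Y)."""
    (a, b, c), (d, e, f) = coeffs
    return a * u + b * v + c, d * u + e * v + f

def suppress_duplicates(hits, radius):
    """Keep hits best-first (lower score is better) and drop any hit
    within `radius` pixels of one already kept. hits: list of (score, x, y)."""
    kept = []
    for s, x, y in sorted(hits):
        if all((x - kx) ** 2 + (y - ky) ** 2 > radius ** 2 for _, kx, ky in kept):
            kept.append((s, x, y))
    return kept
```

In this sketch, each surviving match position would be passed through `to_robot` to obtain a grasp point. An affine model ignores lens distortion and height variation, so a full hand-eye calibration may be needed in practice.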
