1887 results for "Frame sequence" patented technology

Method and Apparatus for Multi-Dimensional Content Search and Video Identification

Status: Active | Publication: US20080313140A1 | Tags: Improve accuracy; Confidence; Image enhancement; Video data indexing; Frame sequence; Video sequence

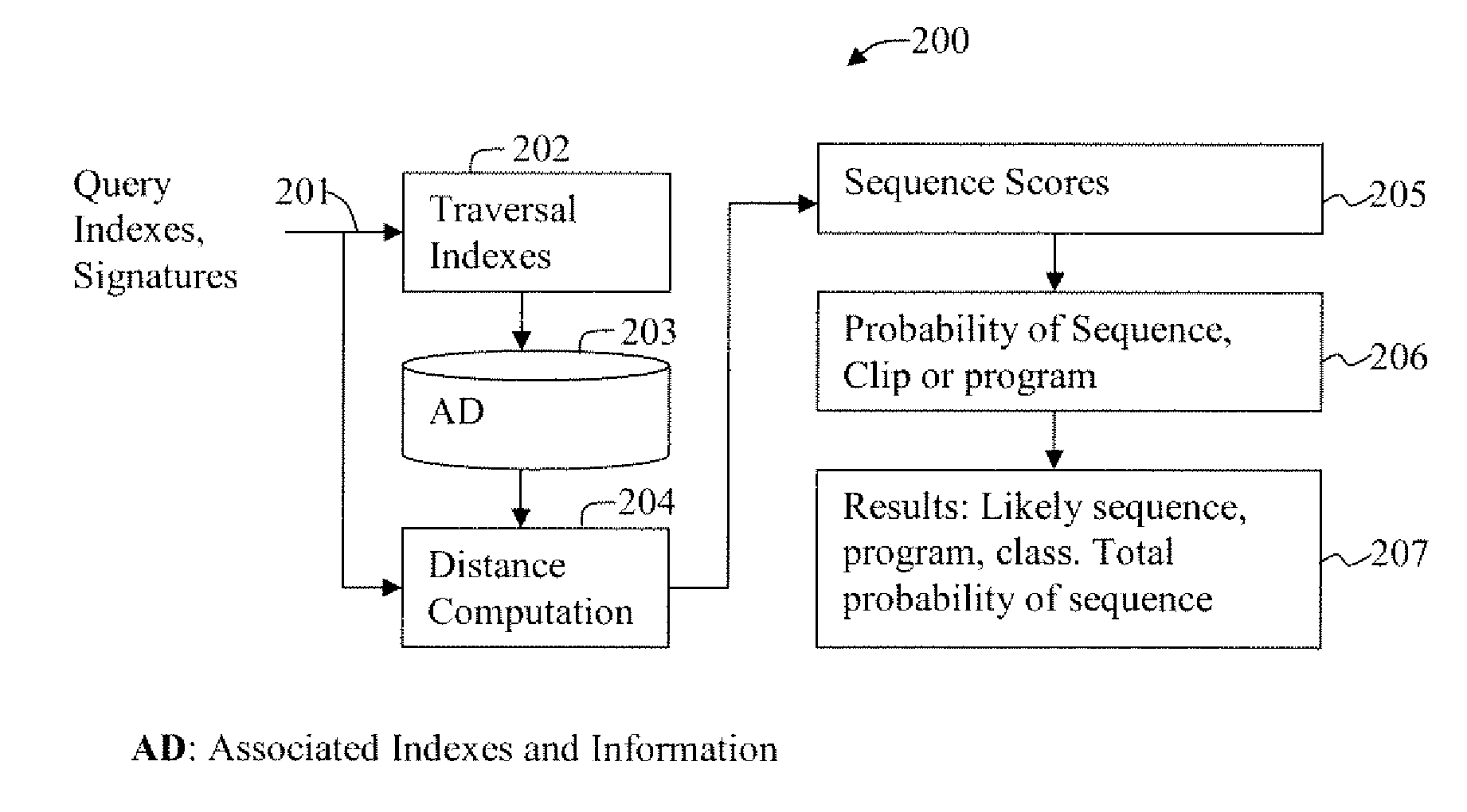

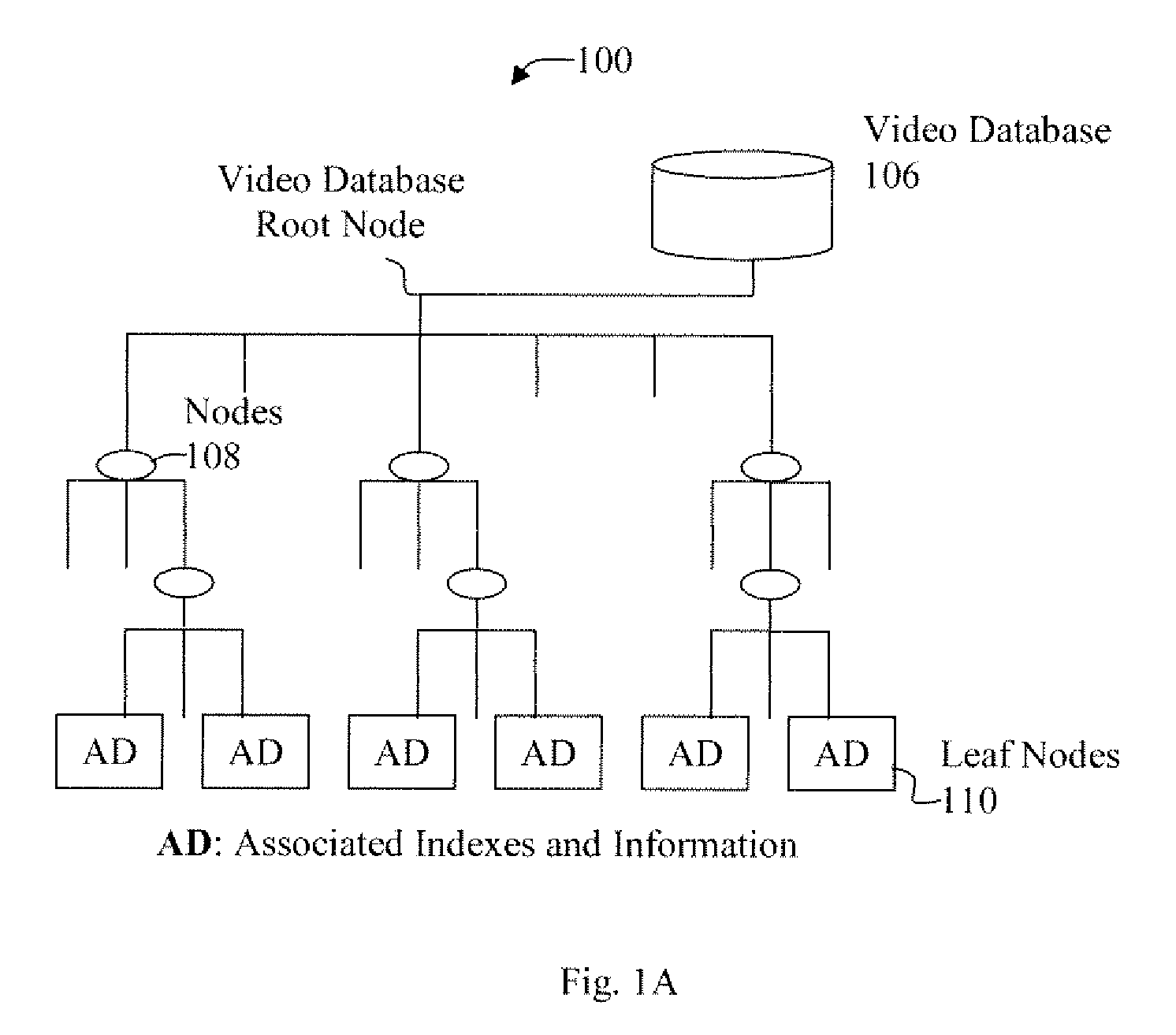

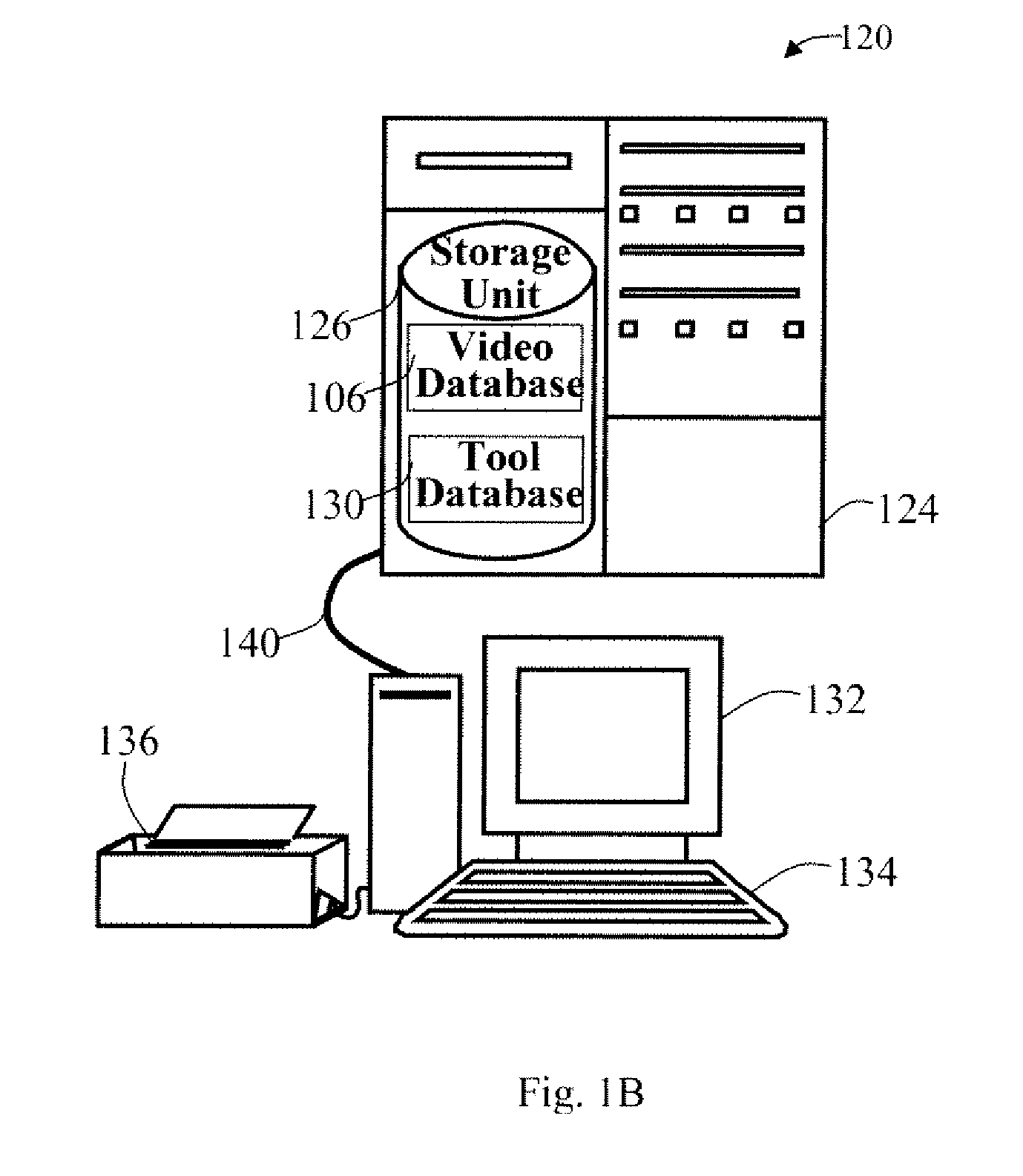

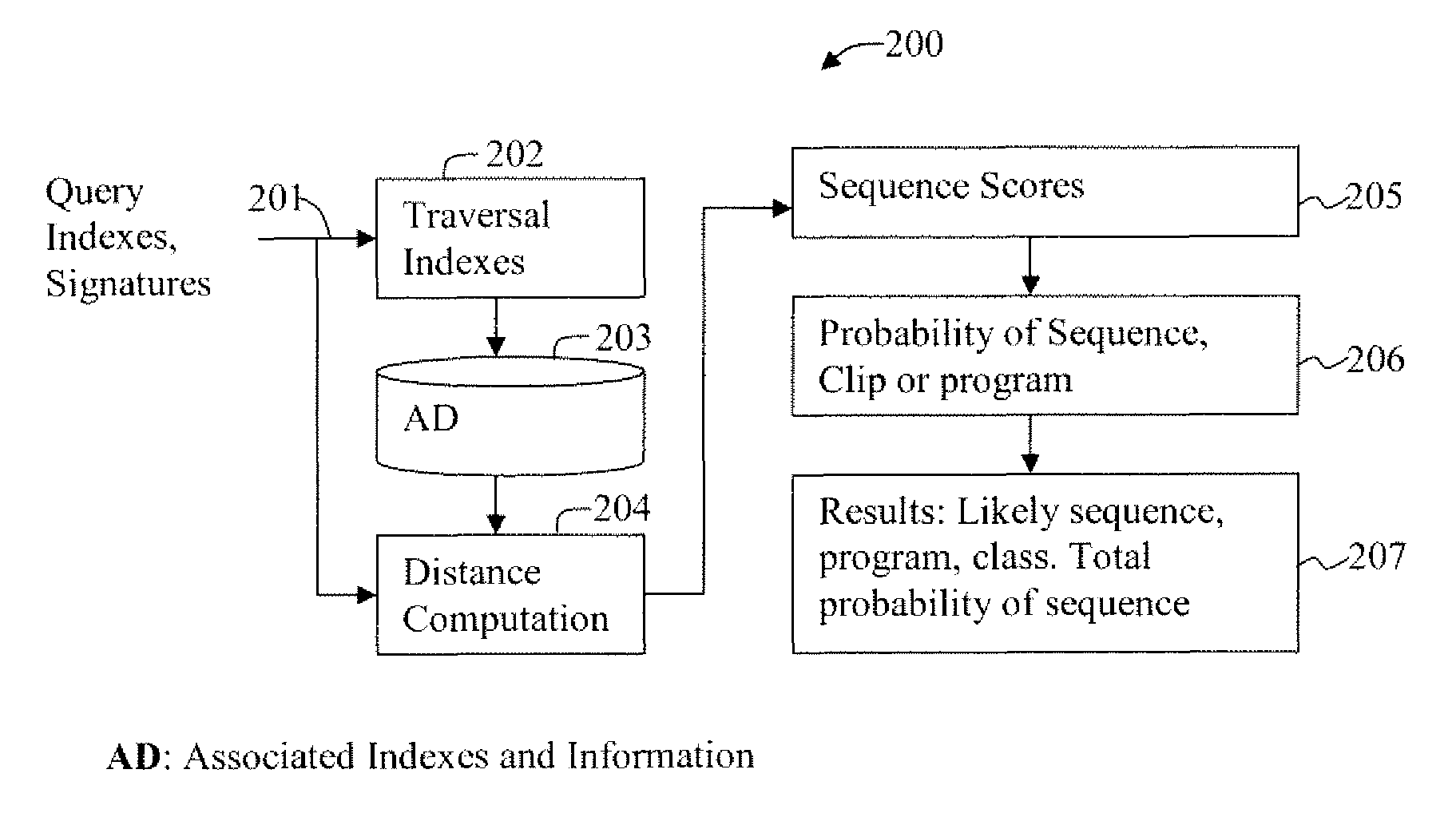

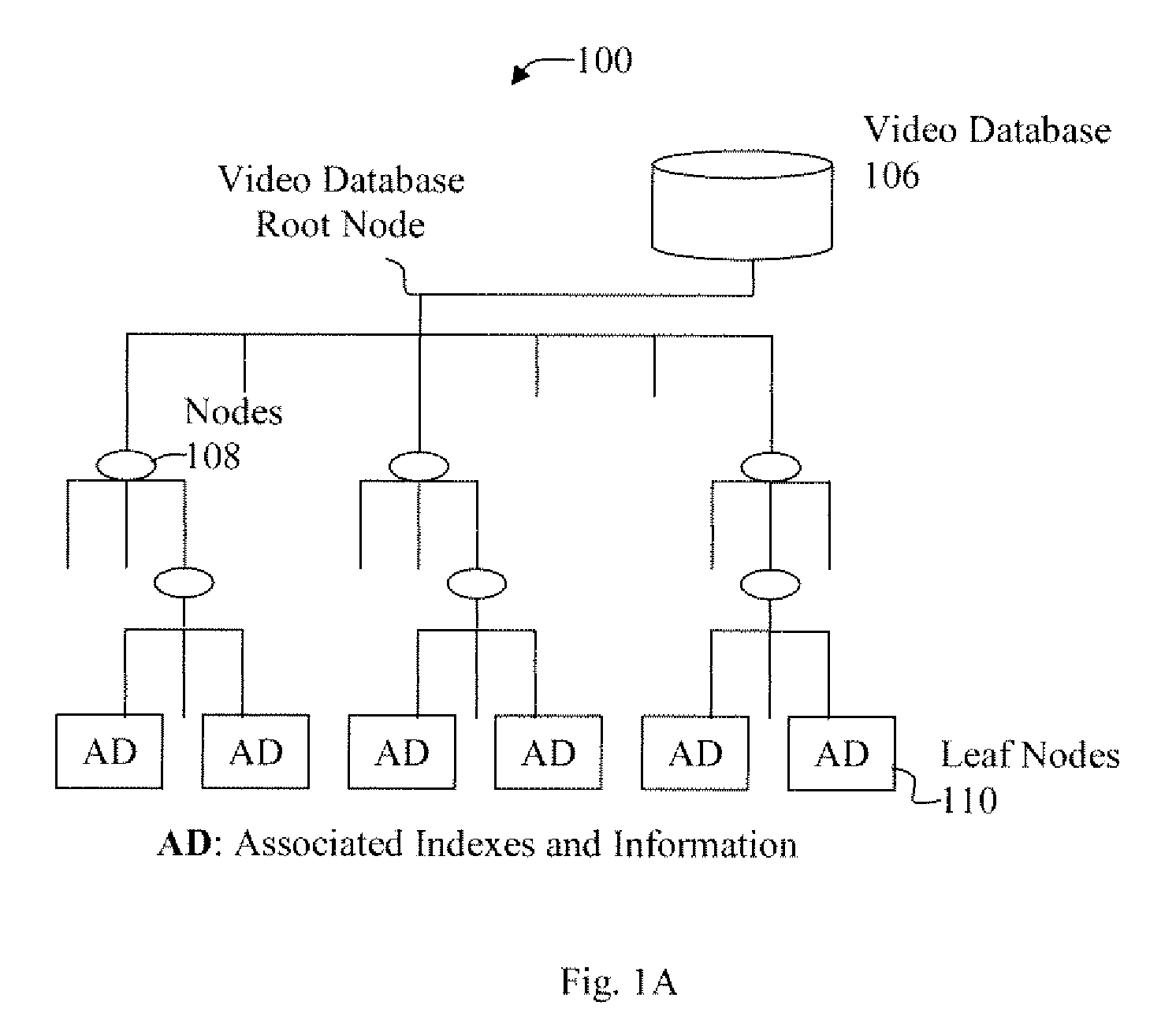

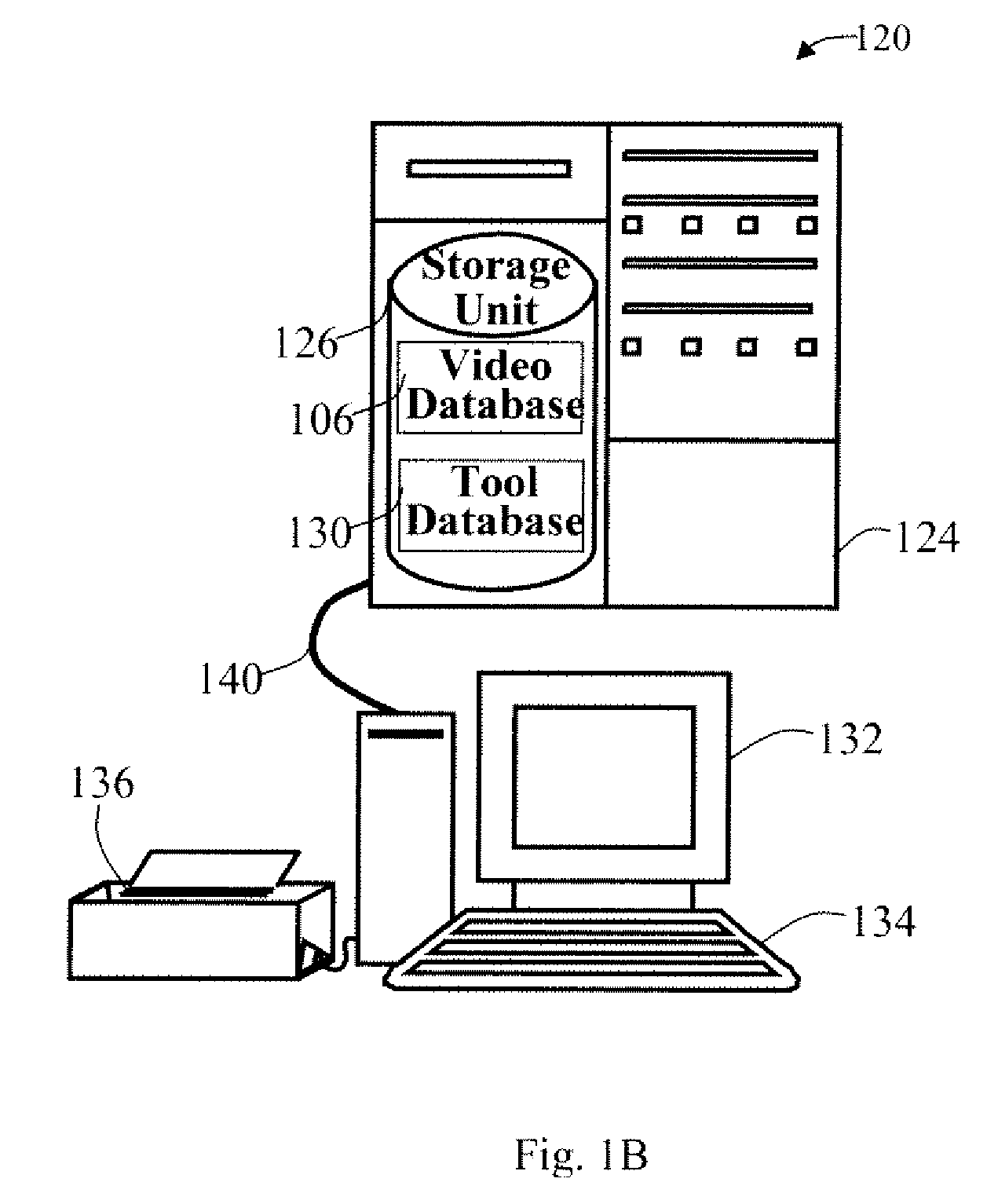

A multi-dimensional database, indexes, and operations on the multi-dimensional database are described, including video search applications and other similar sequence or structure searches. Traversal indexes use highly discriminative information about images and video sequences or about object shapes. Global and local signatures around keypoints are used for compact and robust retrieval and to capture the discriminative information content of images or video sequences of interest. For other objects or structures, relevant signatures of the pattern or structure are used for the traversal indexes. Traversal indexes are stored in leaf nodes along with distance measures and the occurrence of similar images in the database. During a sequence query, correlation scores are calculated for single frames, frame sequences, and video clips, or for other objects or structures.

Owner:ROKU INCORPORATED
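
The patent relies on traversal indexes for speed; the sketch below skips the index entirely and shows only the final step the abstract names, scoring a query frame sequence against a clip. It assumes each frame has already been reduced to a numeric signature vector and uses cosine similarity averaged over aligned frames as the correlation score. Both choices, and the names `sequence_score` and `best_match`, are illustrative assumptions, not the patented method.

```python
import math
from typing import List, Sequence, Tuple

Signature = Sequence[float]

def cosine(a: Signature, b: Signature) -> float:
    """Similarity of two frame signatures (1.0 = same direction, 0.0 = orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def sequence_score(query: List[Signature], clip: List[Signature], offset: int) -> float:
    """Mean per-frame similarity with the query aligned at `offset` inside the clip."""
    pairs = zip(query, clip[offset:offset + len(query)])
    return sum(cosine(q, c) for q, c in pairs) / len(query)

def best_match(query: List[Signature], clip: List[Signature]) -> Tuple[int, float]:
    """Slide the query along the clip; return (offset, score) of the best alignment."""
    if len(query) > len(clip):
        raise ValueError("query is longer than clip")
    candidates = [(o, sequence_score(query, clip, o))
                  for o in range(len(clip) - len(query) + 1)]
    return max(candidates, key=lambda t: t[1])
```

This brute-force slide costs one signature comparison per query frame per offset, which is the work an index over signatures is meant to prune.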

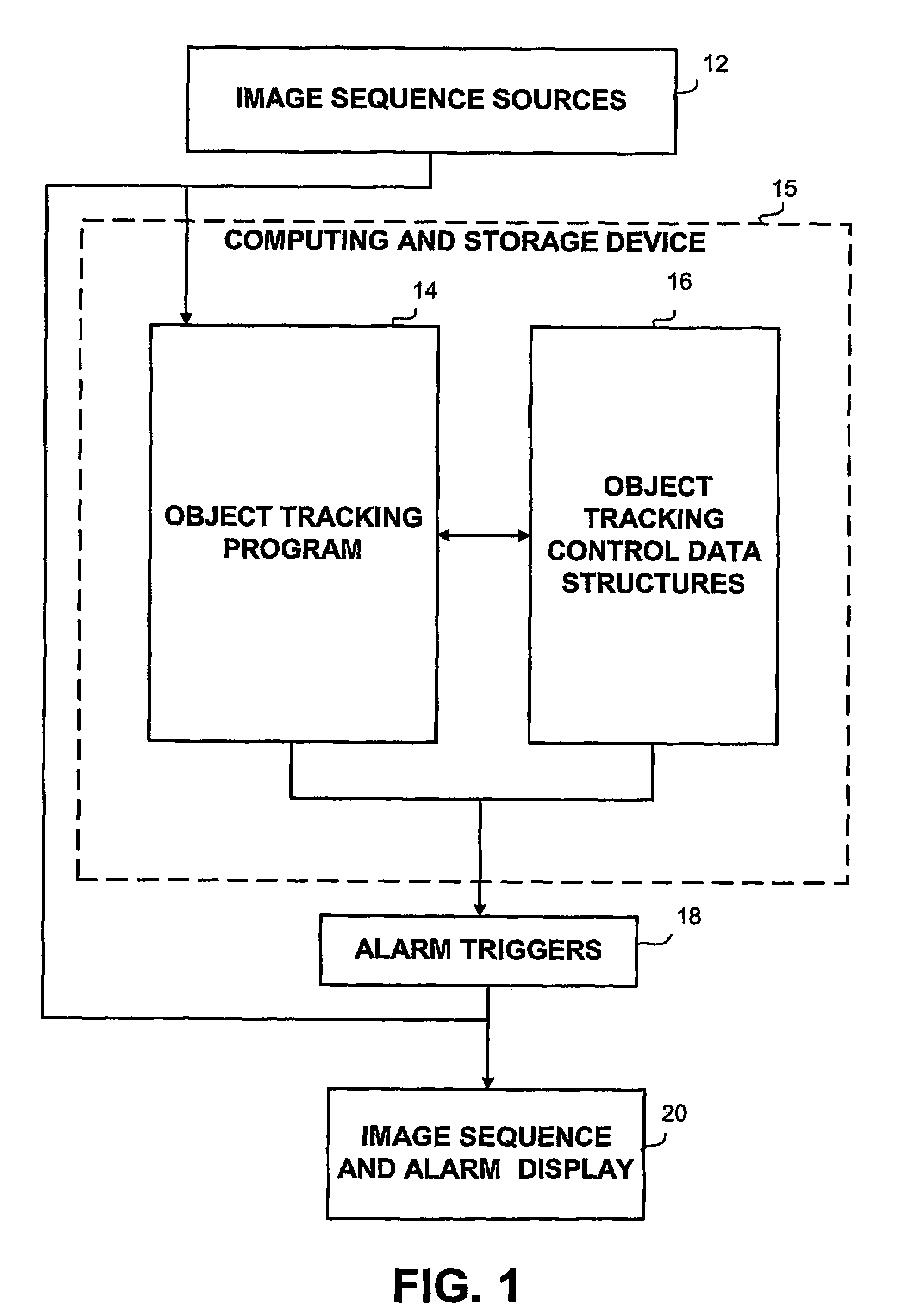

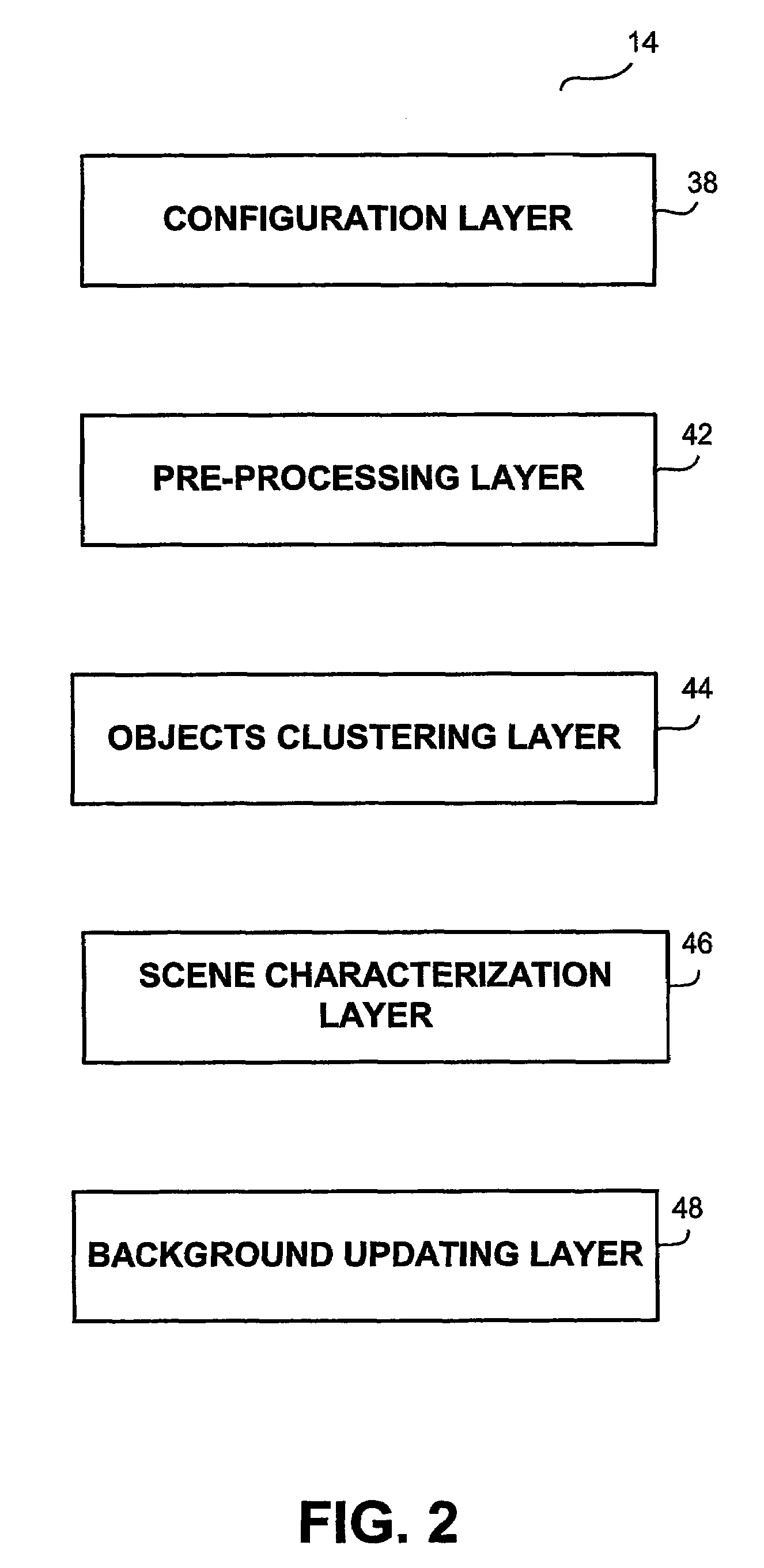

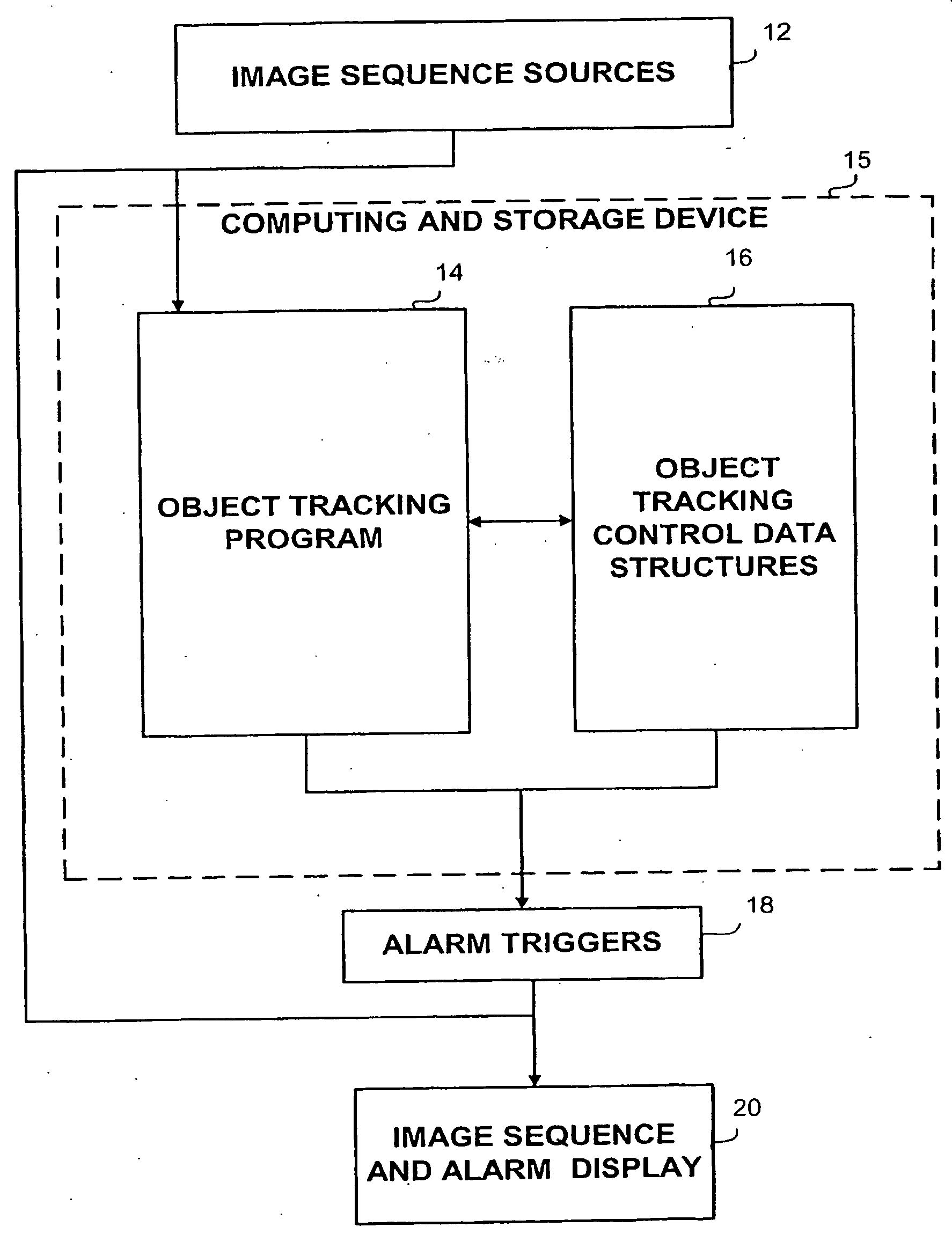

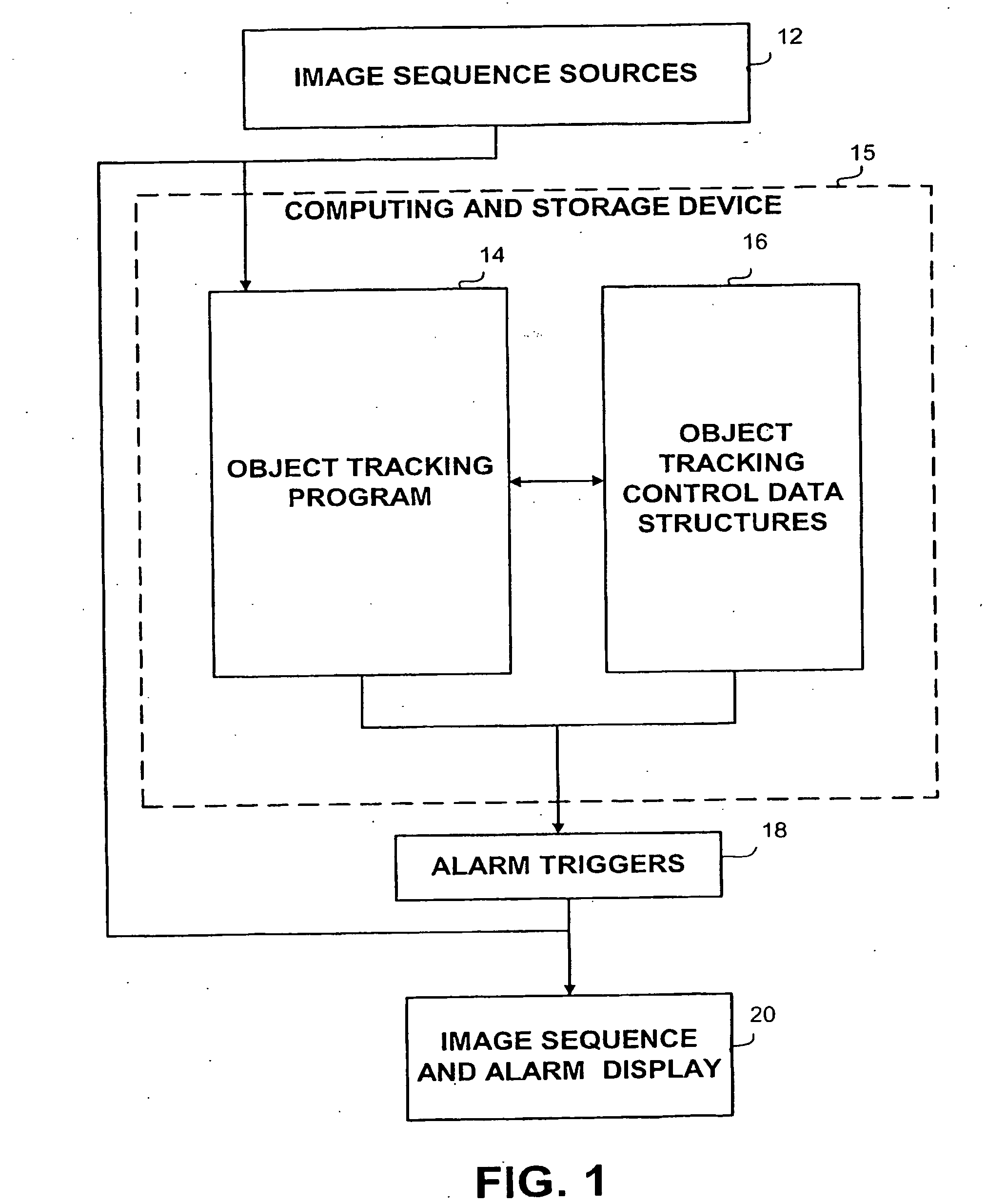

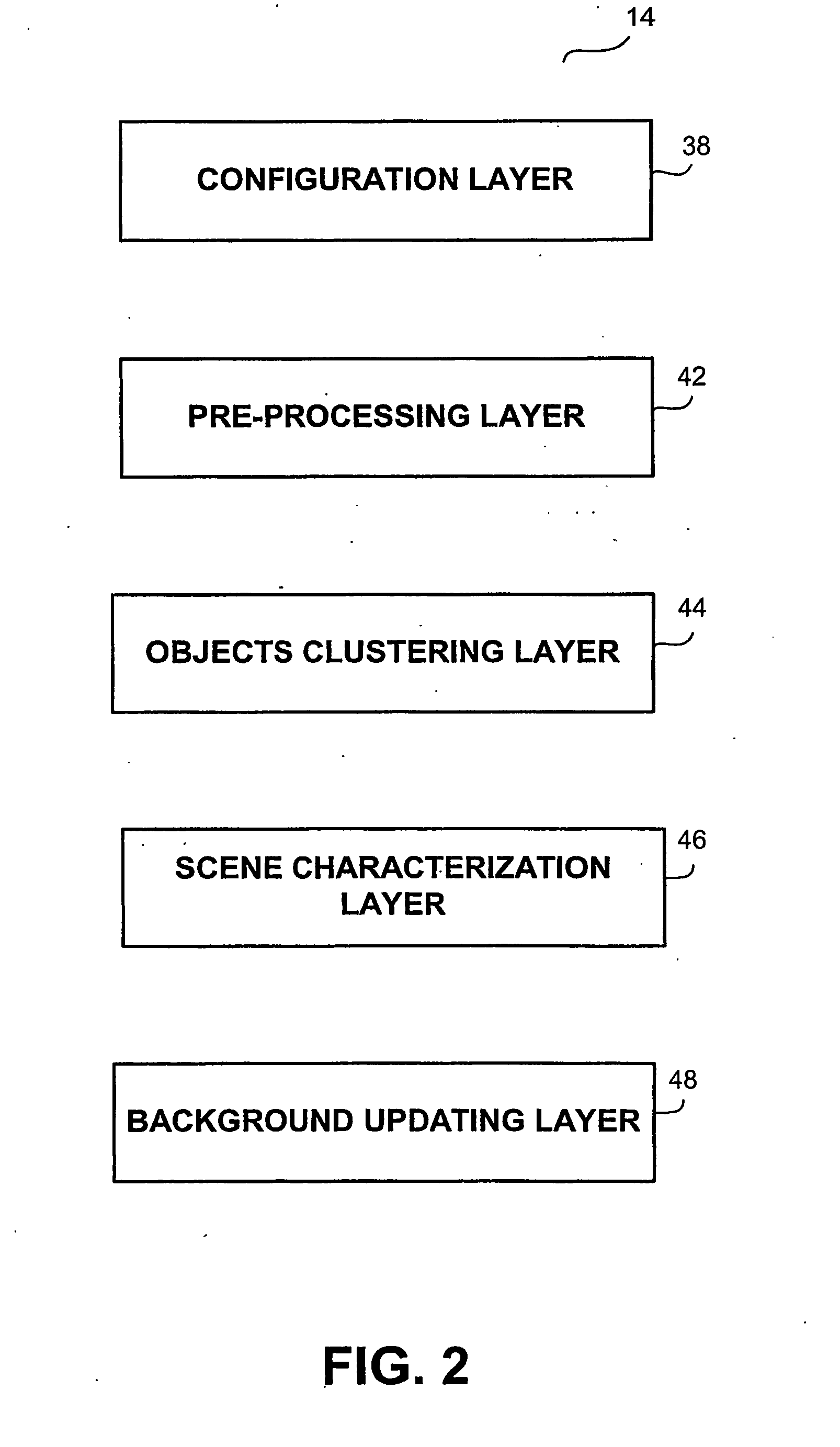

Method and apparatus for video frame sequence-based object tracking

Status: Active | Publication: US7436887B2 | Tags: Television system details; Picture reproducers using cathode ray tubes; Frame sequence; Pattern matching

Owner:QOGNIFY

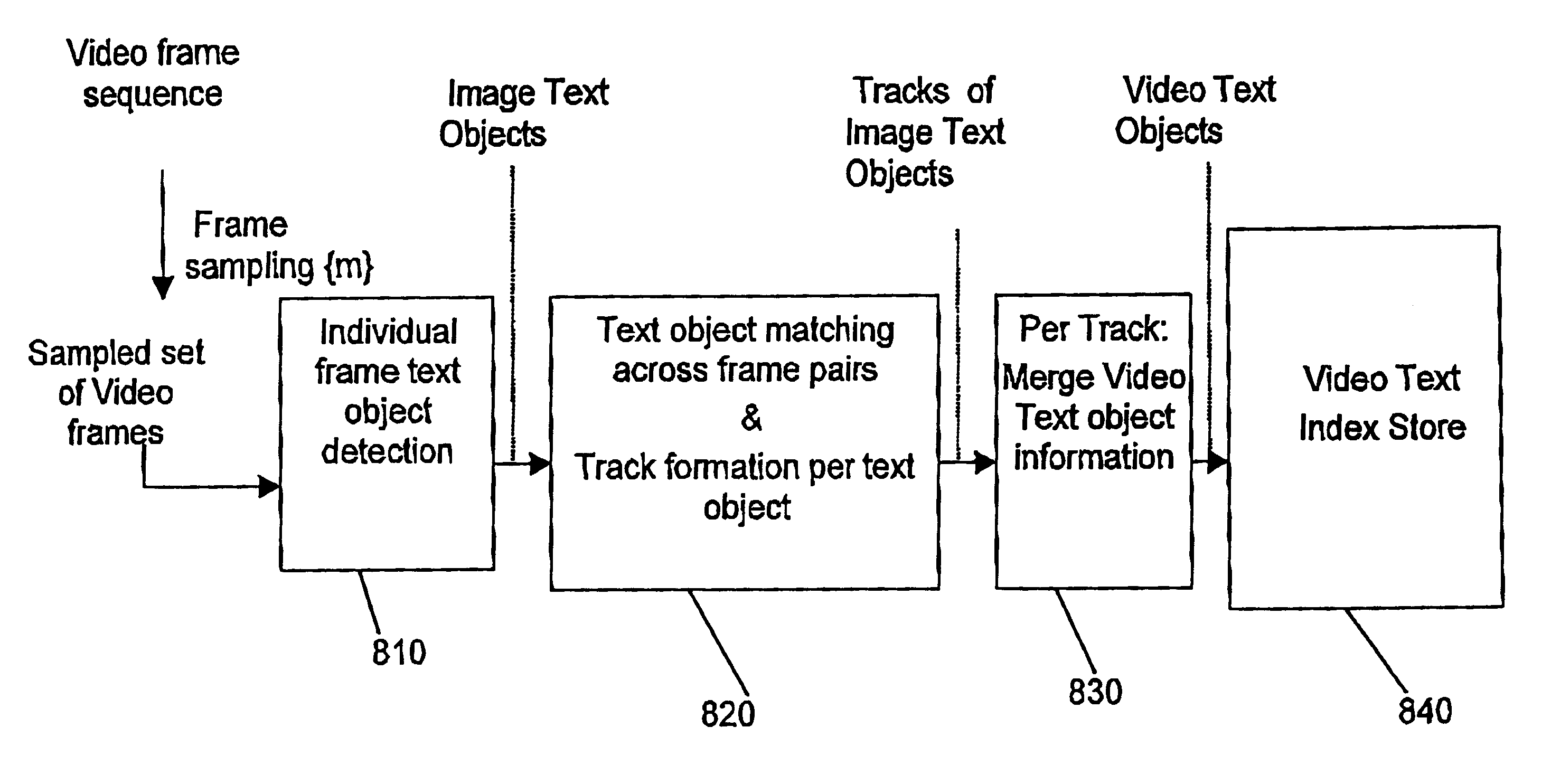

Method of indexing and searching images of text in video

Status: Inactive | Publication: US6937766B1 | Tags: Reducing online storage requirement; Reduce search time; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Frame sequence; Computer graphics (images)

A method for generating an index of the text of a video image sequence is provided. The method includes the steps of determining the image text objects in each of a plurality of frames of the video image sequence; comparing the image text objects in each of the plurality of frames of the video image sequence to obtain a record of frame sequences having matching image text objects; extracting the content for each of the similar image text objects in text string format; and storing the text string for each image text object as a video text object in a retrievable medium.

Owner:ANXIN MATE HLDG
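
The "record of frame sequences having matching image text objects" step can be sketched as run-length grouping. This minimal version assumes OCR has already turned each frame's text objects into strings and treats "matching" as exact string equality, which is stricter than comparing image text objects; `index_video_text` is a hypothetical name.

```python
from typing import Dict, List, Set, Tuple

def index_video_text(frames: List[Set[str]]) -> List[Tuple[str, int, int]]:
    """Return (text, first_frame, last_frame) for each run of frames showing the same text."""
    open_runs: Dict[str, int] = {}          # text -> frame where its current run began
    records: List[Tuple[str, int, int]] = []
    for i, texts in enumerate(frames):
        # Close runs whose text is no longer visible in this frame.
        for text in list(open_runs):
            if text not in texts:
                records.append((text, open_runs.pop(text), i - 1))
        # Start runs for text that has just appeared.
        for text in texts:
            open_runs.setdefault(text, i)
    last = len(frames) - 1
    records.extend((text, start, last) for text, start in open_runs.items())
    return sorted(records, key=lambda r: (r[1], r[0]))
```

Each record is what would be stored as a retrievable "video text object": the string plus the frame range in which it appears.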

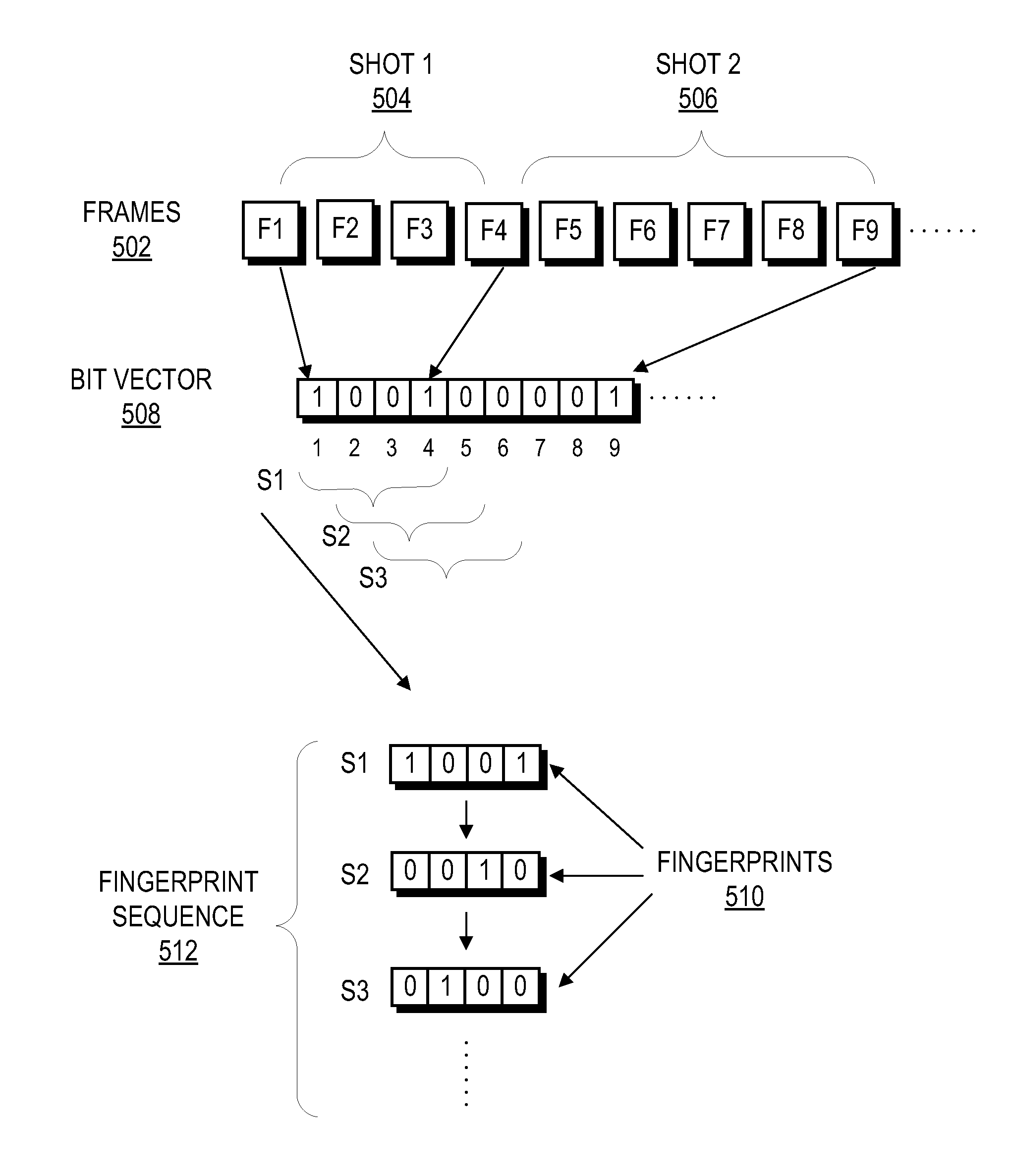

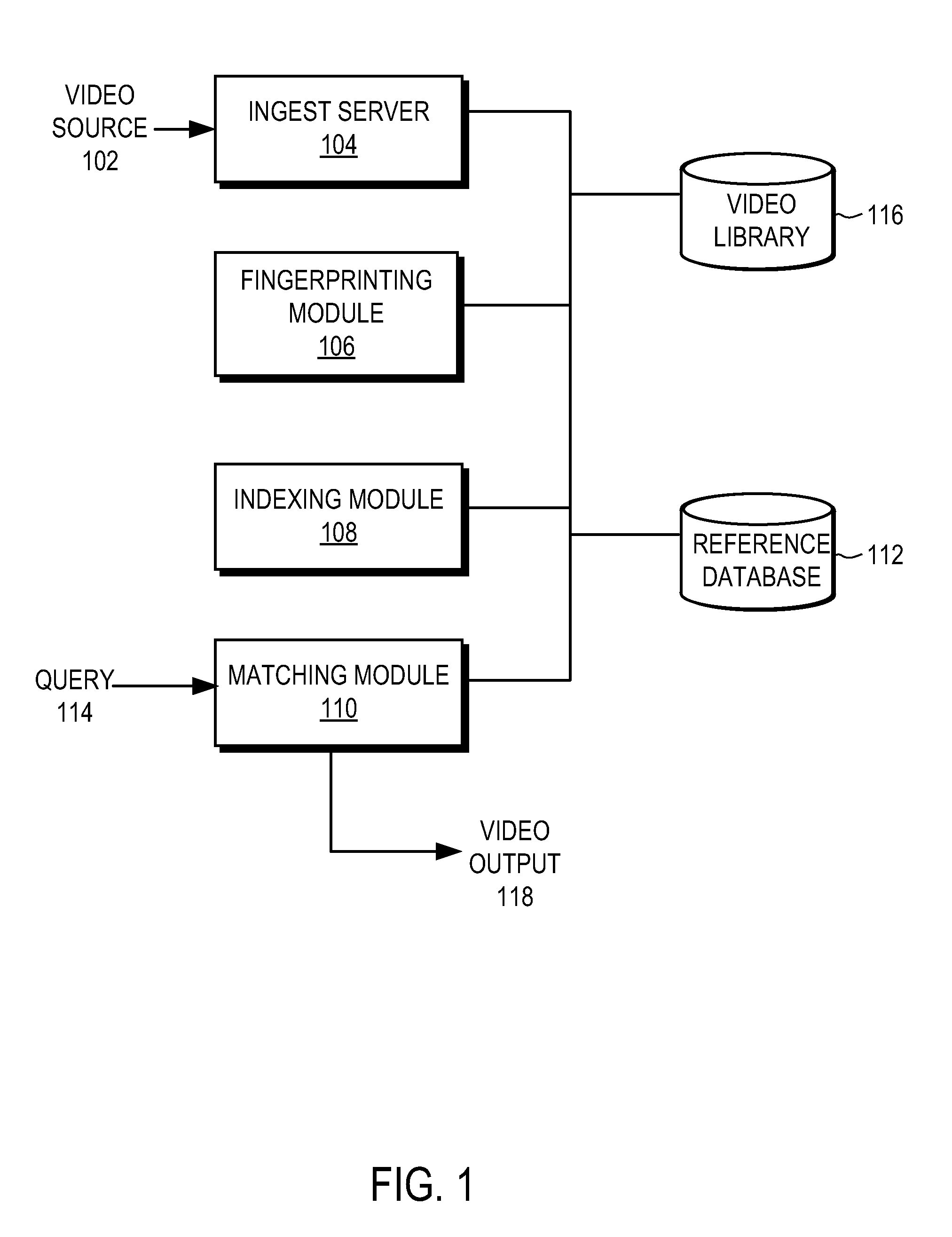

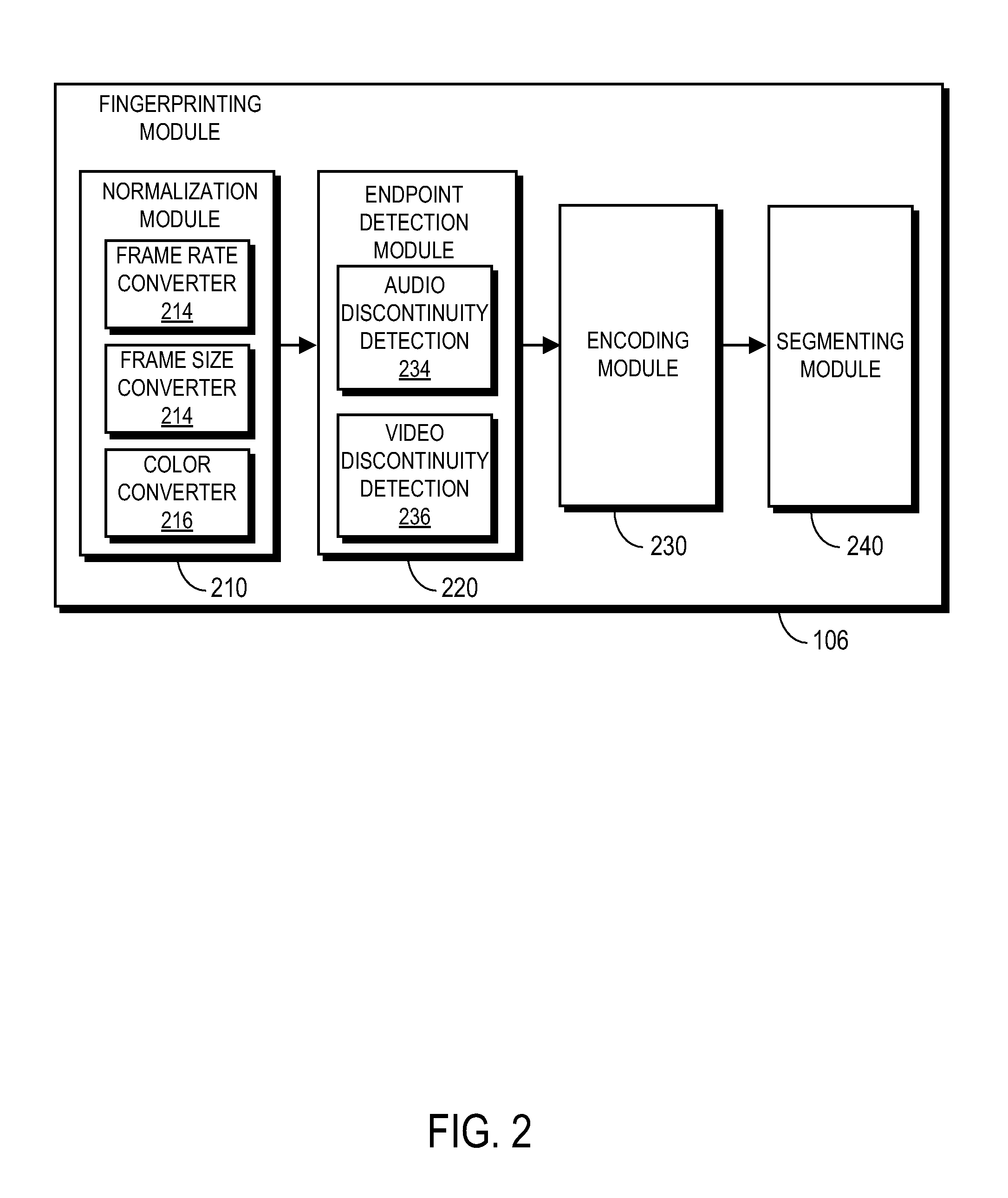

Endpoint based video fingerprinting

Status: Active | Publication: US8611422B1 | Tags: Quickly and efficiently identify; Improve the display effect; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Frame sequence; Computer graphics (images)

A method and system generates and compares fingerprints for videos in a video library. The video fingerprints provide a compact representation of the temporal locations of discontinuities in the video that can be used to quickly and efficiently identify video content. Discontinuities can be, for example, shot boundaries in the video frame sequence or silent points in the audio stream. Because the fingerprints are based on structural discontinuity characteristics rather than exact bit sequences, visual content of videos can be effectively compared even when there are small differences between the videos in compression factors, source resolutions, start and stop times, frame rates, and so on. Comparison of video fingerprints can be used, for example, to search for and remove copyright protected videos from a video library. Furthermore, duplicate videos can be detected and discarded in order to preserve storage space.

Owner:GOOGLE LLC
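
The reason endpoint fingerprints survive re-encoding can be shown with a minimal sketch: if the fingerprint is the list of intervals between discontinuities (for example shot cuts, in seconds), it does not change with resolution or compression, and it is unaffected by a shifted start time. The interval representation, the tolerance `tol`, and the names `fingerprint` and `match_score` are assumptions for illustration, not Google's implementation.

```python
from typing import List, Sequence

def fingerprint(boundaries: Sequence[float]) -> List[float]:
    """Intervals between successive discontinuities (e.g. shot cuts), in seconds."""
    b = sorted(boundaries)
    return [round(t2 - t1, 3) for t1, t2 in zip(b, b[1:])]

def match_score(query: Sequence[float], ref: Sequence[float], tol: float = 0.1) -> float:
    """Best fraction of query intervals that agree (within tol) with ref at some offset."""
    if not query or len(query) > len(ref):
        return 0.0
    best = 0
    for off in range(len(ref) - len(query) + 1):
        hits = sum(abs(q - r) <= tol for q, r in zip(query, ref[off:]))
        best = max(best, hits)
    return best / len(query)
```

For example, a trimmed copy whose cuts fall at 102.0, 105.5, 106.0 and 109.0 s matches a reference with cuts at 0, 2.0, 5.5, 6.0 and 9.0 s with a score of 1.0, because the intervals 3.5, 0.5 and 3.0 line up at offset 1.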

Method and apparatus for multi-dimensional content search and video identification

A multi-dimensional database and indexes and operations on the multi-dimensional database are described which include video search applications or other similar sequence or structure searches. Traversal indexes utilize highly discriminative information about images and video sequences or about object shapes. Global and local signatures around keypoints are used for compact and robust retrieval and discriminative information content of images or video sequences of interest. For other objects or structures relevant signature of pattern or structure are used for traversal indexes. Traversal indexes are stored in leaf nodes along with distance measures and occurrence of similar images in the database. During a sequence query, correlation scores are calculated for single frame, for frame sequence, and video clips, or for other objects or structures.

Owner:ROKU INCORPORATED

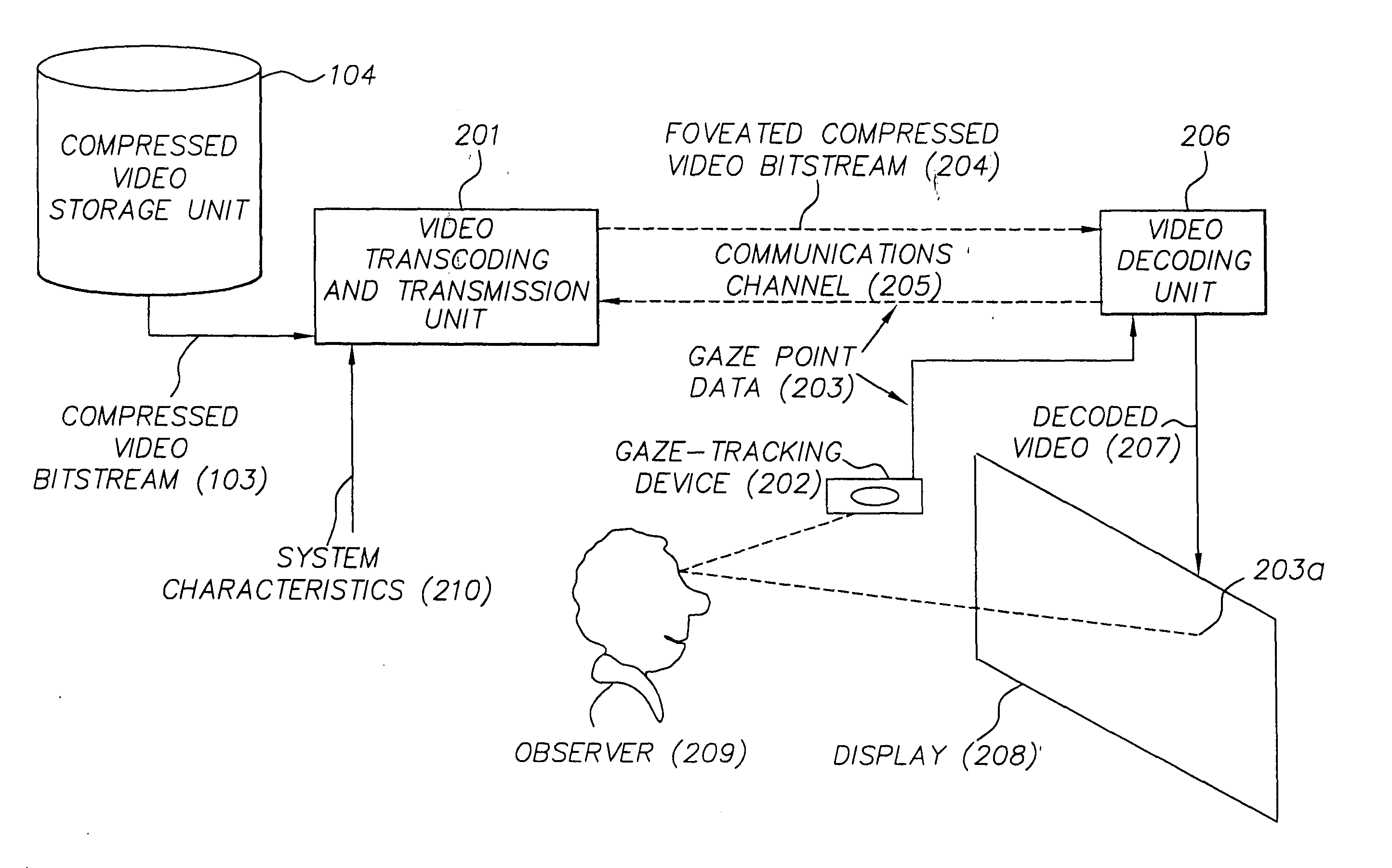

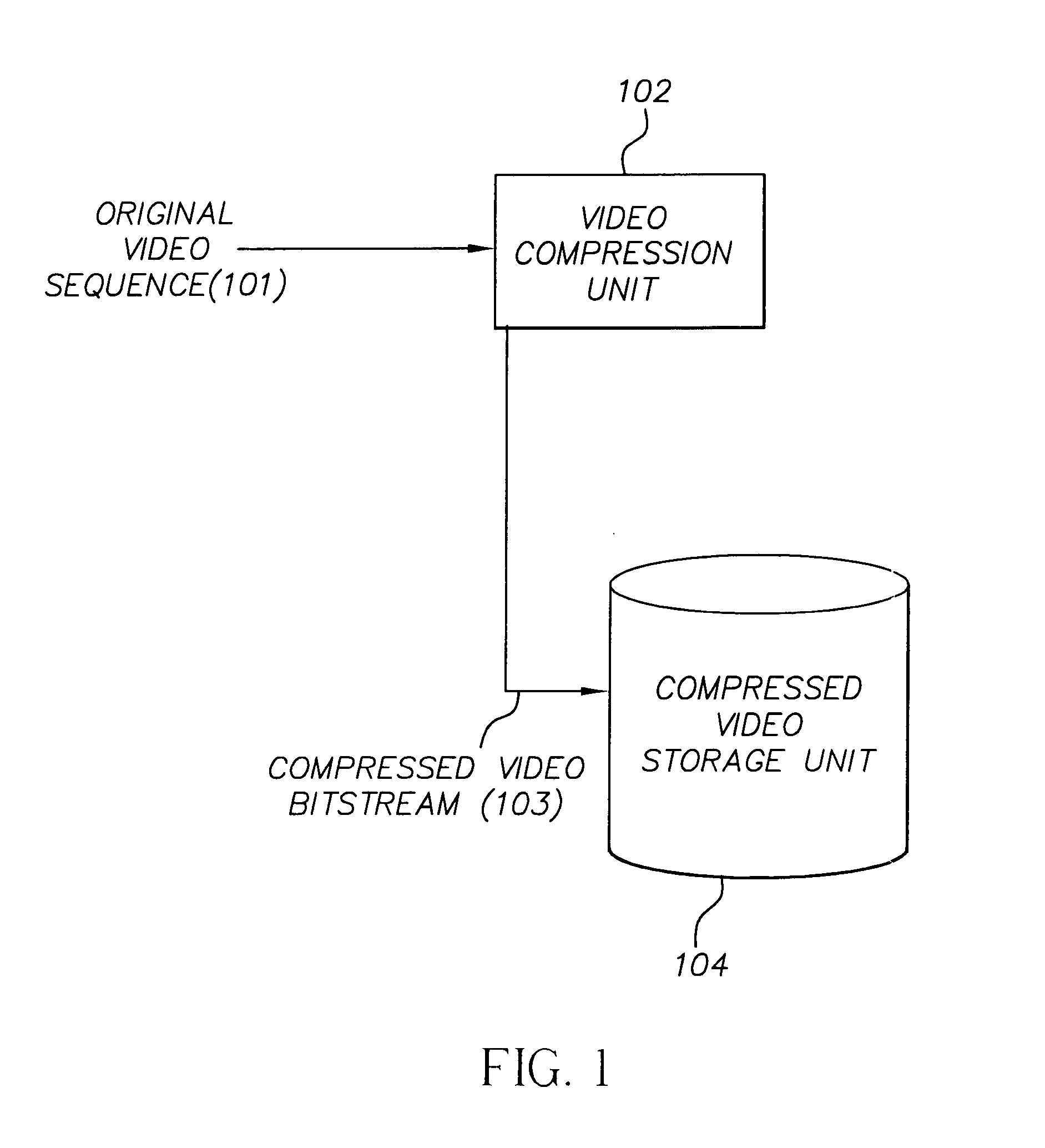

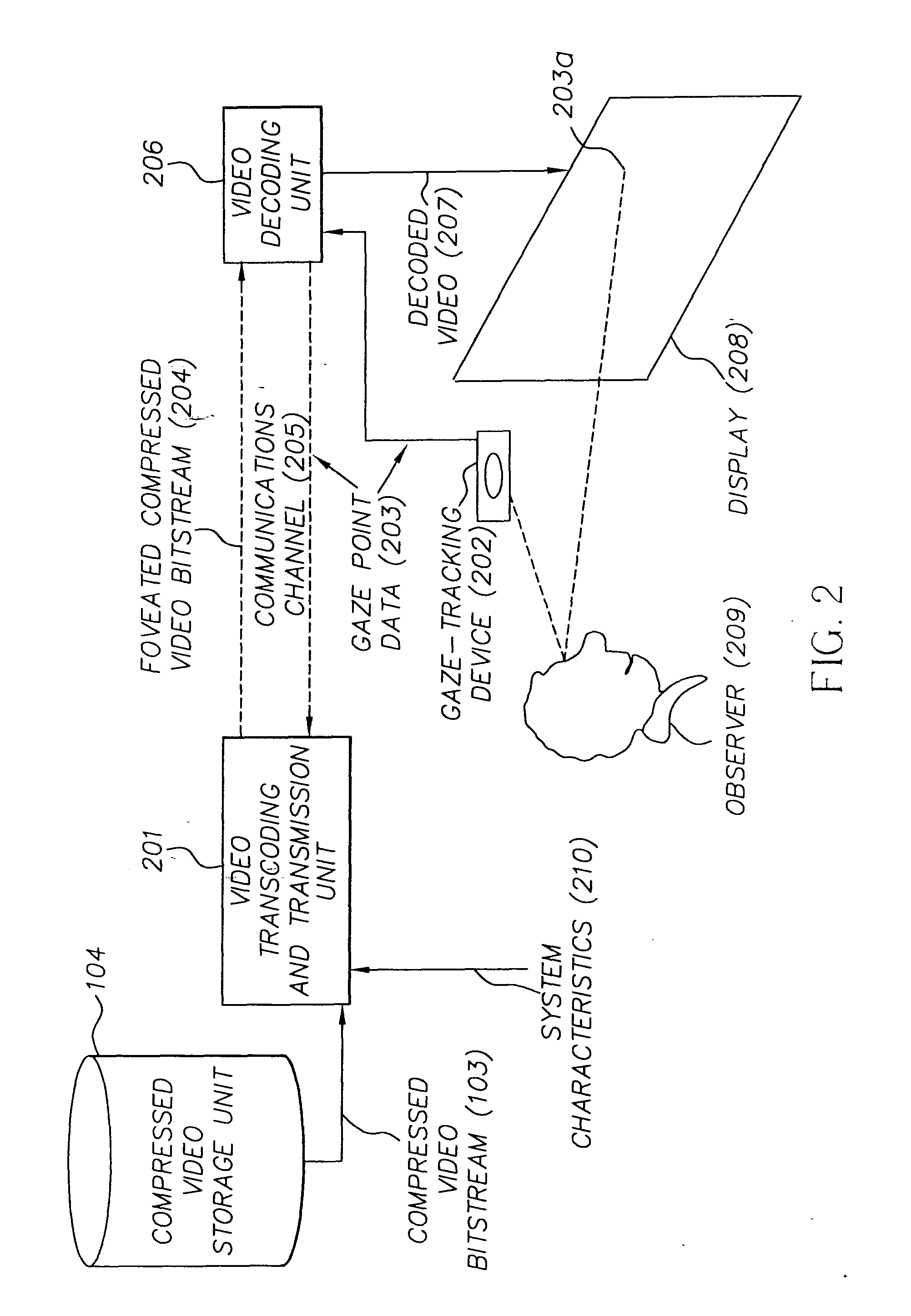

Foveated video coding system and method

Status: Inactive | Publication: US20050018911A1 | Tags: Reduce bandwidth requirements; Attenuation bandwidth; Character and pattern recognition; Television systems; Digital video; Display device

In transcoding a frequency transform-encoded digital video signal representing a sequence of video frames, a foveated, compressed digital video signal is produced and transmitted over a limited bandwidth communication channel to a display according to the following method: First, a frequency transform-encoded digital video signal having encoded frequency coefficients representing a sequence of video frames is provided, wherein the encoding removes temporal redundancies from the video signal and encodes the frequency coefficients as base layer frequency coefficients in a base layer and as residual frequency coefficients in an enhancement layer. Then, an observer's gaze point is identified on the display. The encoded digital video signal is partially decoded to recover the frequency coefficients, and the residual frequency coefficients are adjusted to reduce the high frequency content of the video signal in regions away from the gaze point. The frequency coefficients, including the adjusted residual frequency coefficients, are then recoded to produce a foveated, transcoded digital video signal, and the foveated, transcoded digital video signal is displayed to the observer.

Owner:EASTMAN KODAK CO
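
The abstract does not say how the residual coefficients are adjusted, so the following is only a minimal sketch of the idea: for an 8×8 block of enhancement-layer (residual) DCT coefficients, keep coefficients whose diagonal frequency index u+v is at or below a cutoff that shrinks as the block moves away from the gaze point, and zero the rest. The linear fall-off of one frequency step per `radius` pixels is a hypothetical rule; the base layer is not touched.

```python
import math
from typing import List, Sequence

Block = List[List[float]]  # residual DCT coefficients indexed [v][u]

def max_frequency(block_center: Sequence[float], gaze: Sequence[float],
                  radius: float = 64.0, n: int = 8) -> int:
    """Highest diagonal frequency (u + v) kept for a block; shrinks with distance from gaze."""
    dist = math.dist(block_center, gaze)
    keep = 2 * (n - 1) - int(dist / radius)   # hypothetical linear fall-off
    return max(0, keep)

def foveate_block(block: Block, block_center: Sequence[float], gaze: Sequence[float],
                  radius: float = 64.0) -> Block:
    """Zero residual coefficients above the allowed frequency; the (0, 0) term always survives."""
    limit = max_frequency(block_center, gaze, radius, len(block))
    return [[c if (u + v) <= limit else 0.0 for u, c in enumerate(row)]
            for v, row in enumerate(block)]
```

Because only residual coefficients are discarded, every block still decodes at base-layer quality; detail is lost only in the periphery.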

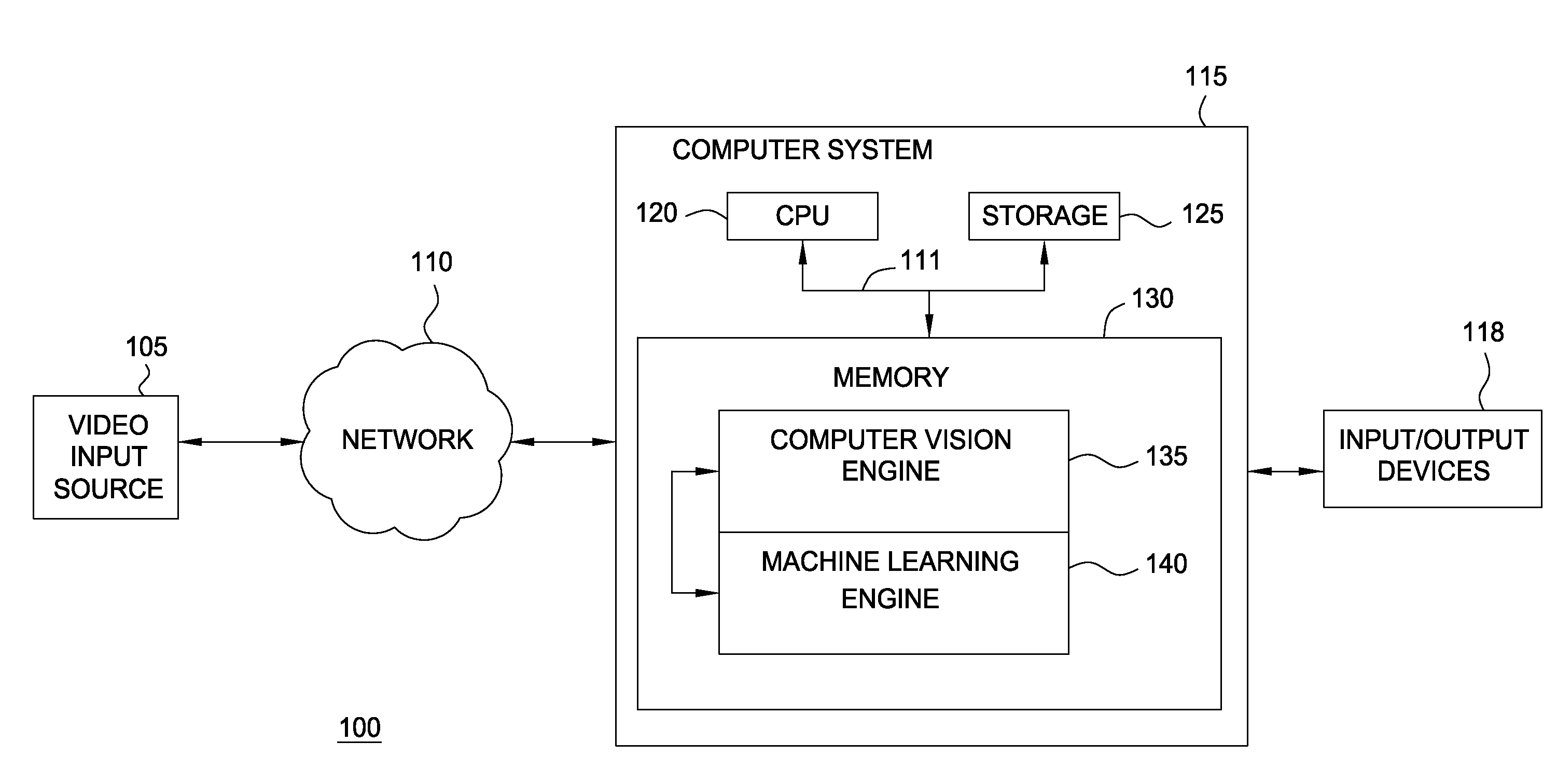

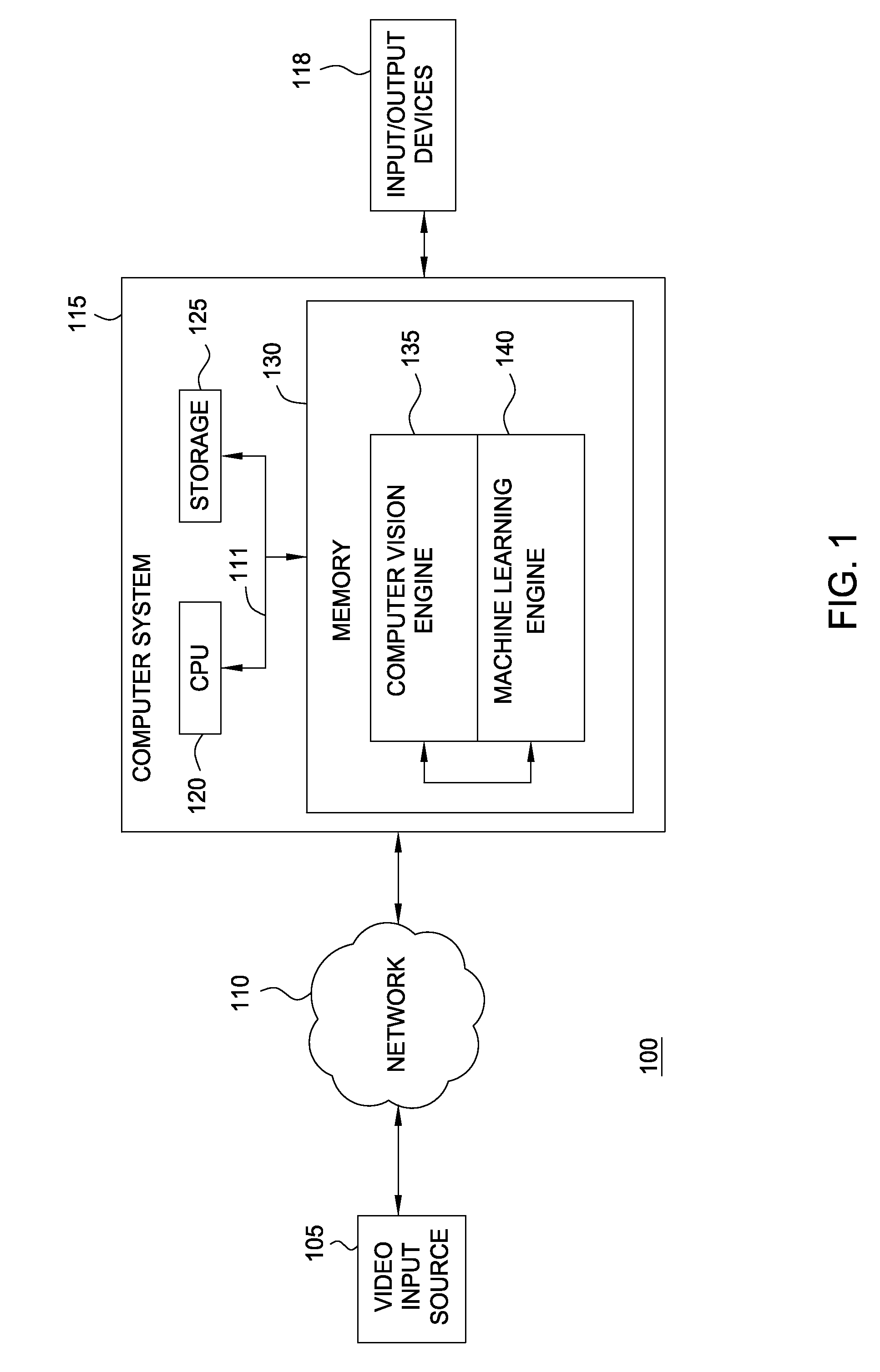

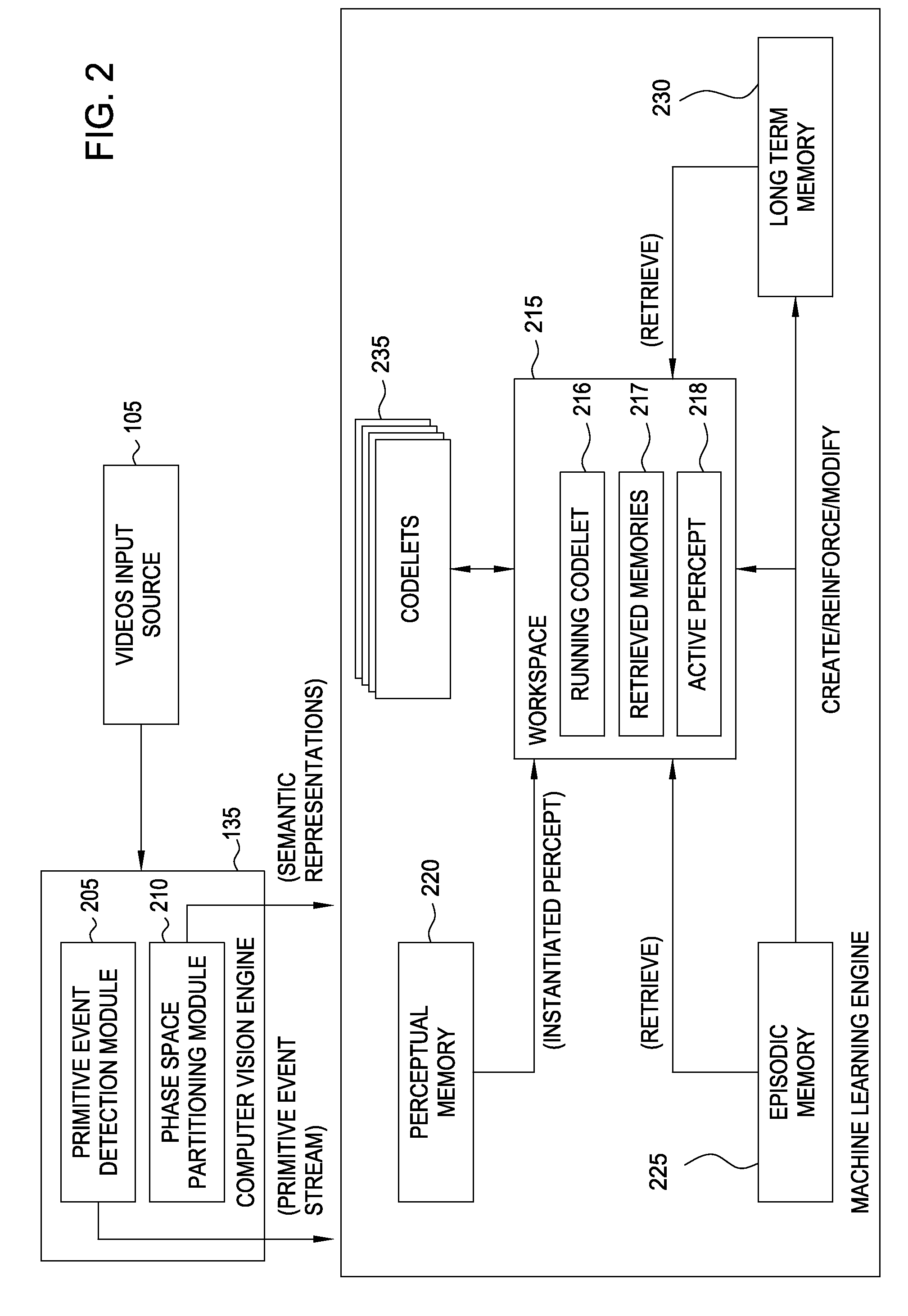

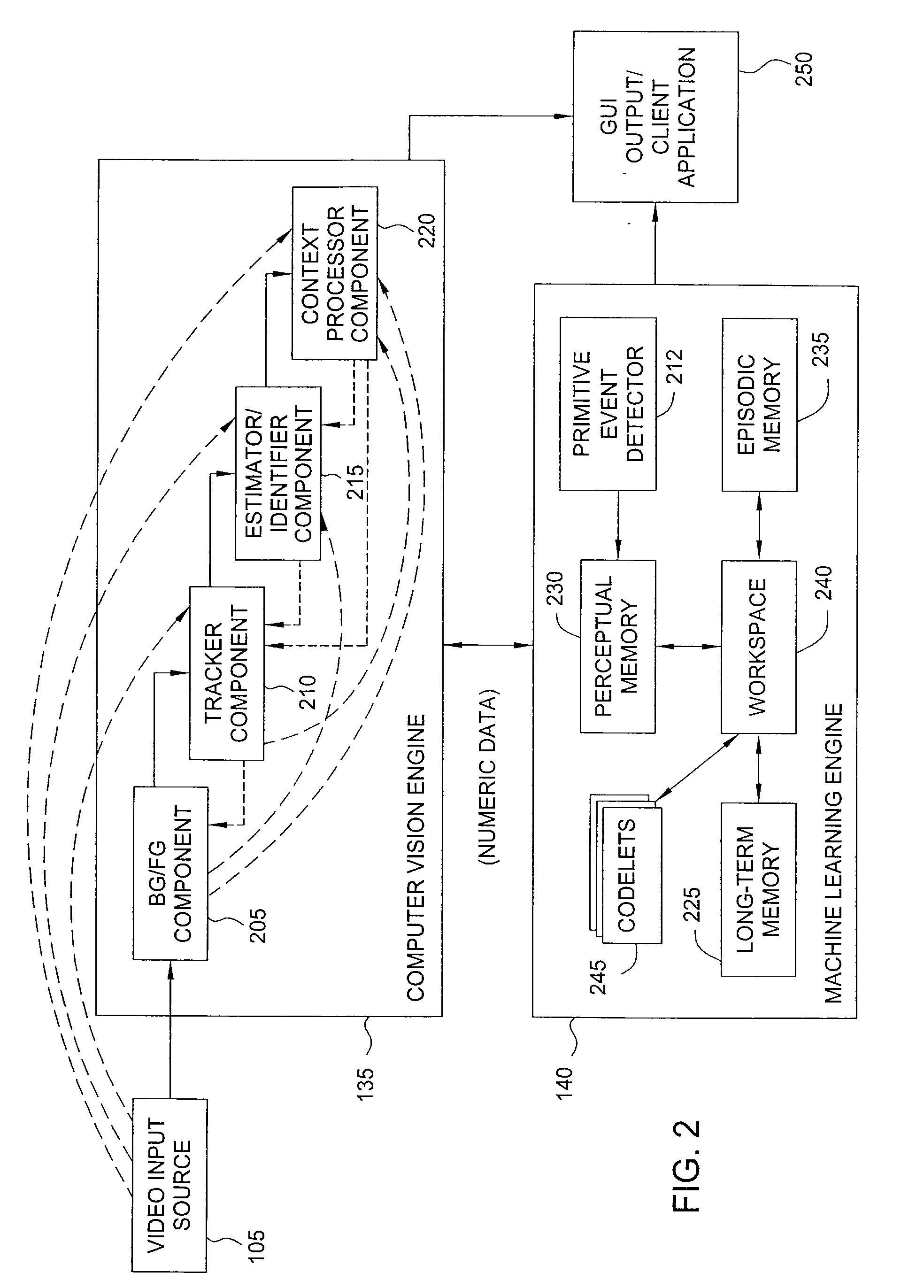

Long-term memory in a video analysis system

Status: Active | Publication: US20100063949A1 | Tags: Digital computer details; Character and pattern recognition; Long-term memory; Nerve network

A long-term memory used to store and retrieve information learned while a video analysis system observes a stream of video frames is disclosed. The long-term memory provides a memory with a capacity that grows in size gracefully, as events are observed over time. Additionally, the long-term memory may encode events, represented by sub-graphs of a neural network. Further, rather than predefining a number of patterns recognized and manipulated by the long-term memory, embodiments of the invention provide a long-term memory where the size of a feature dimension (used to determine the similarity between different observed events) may grow dynamically as necessary, depending on the actual events observed in a sequence of video frames.

Owner:MOTOROLA SOLUTIONS INC

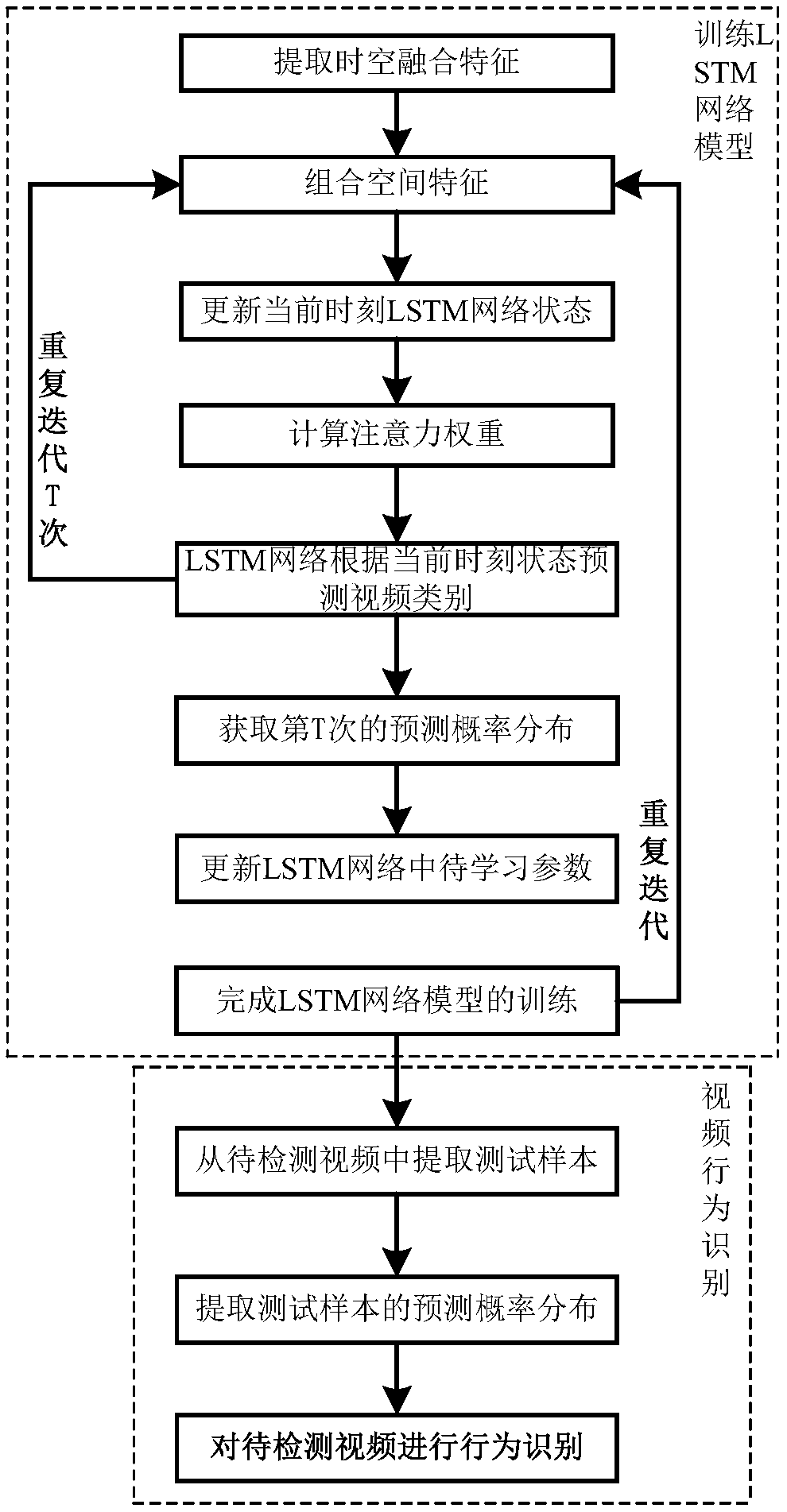

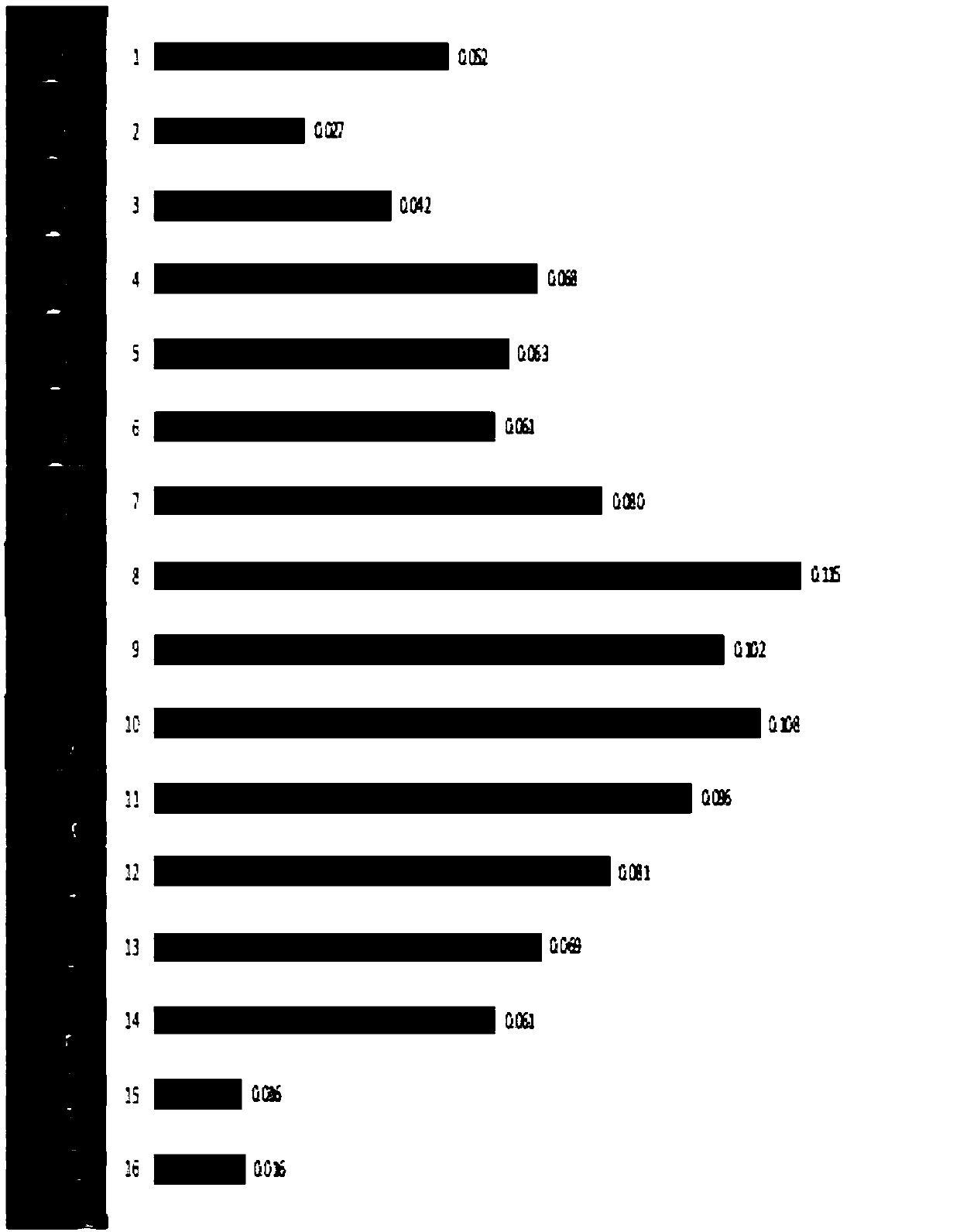

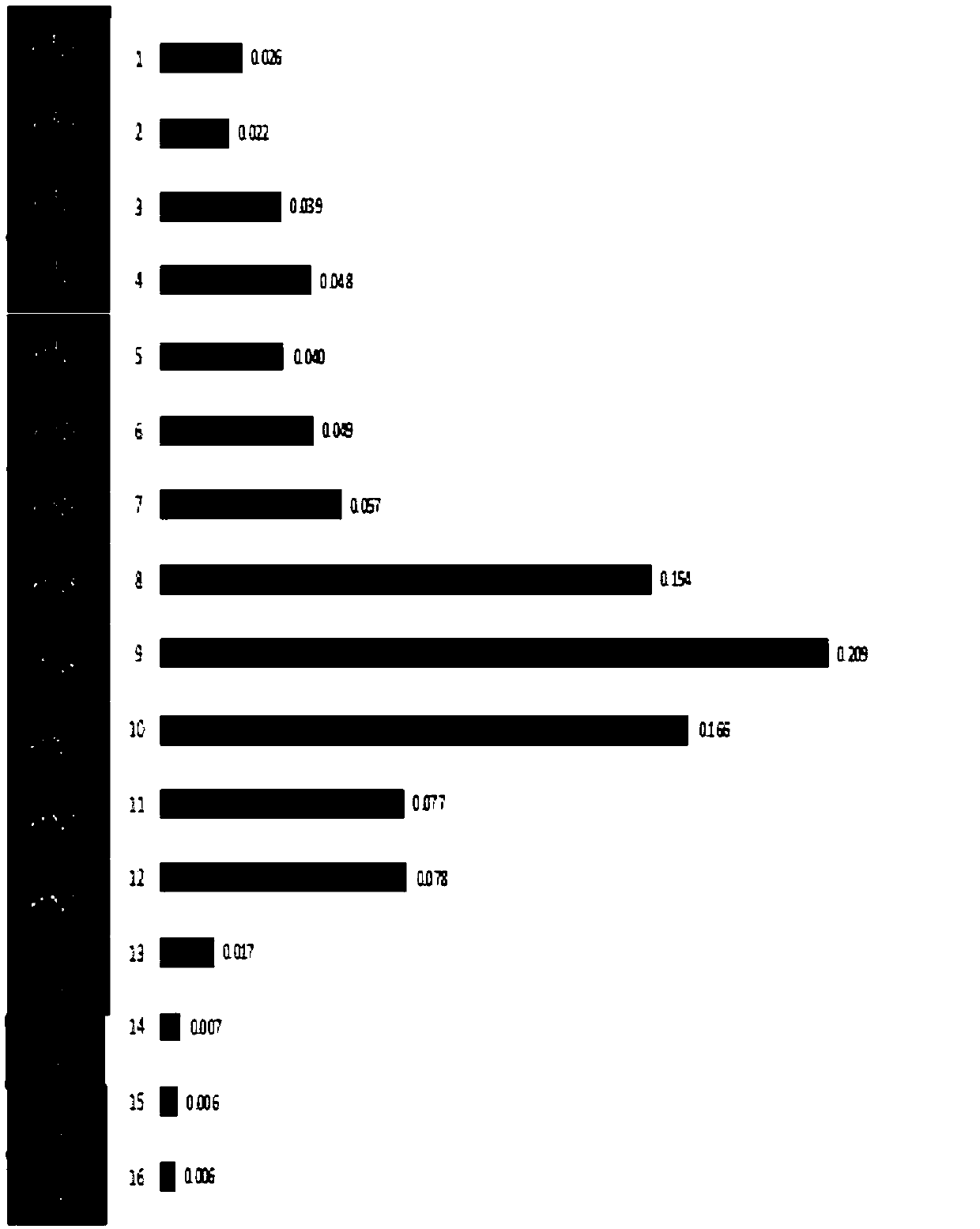

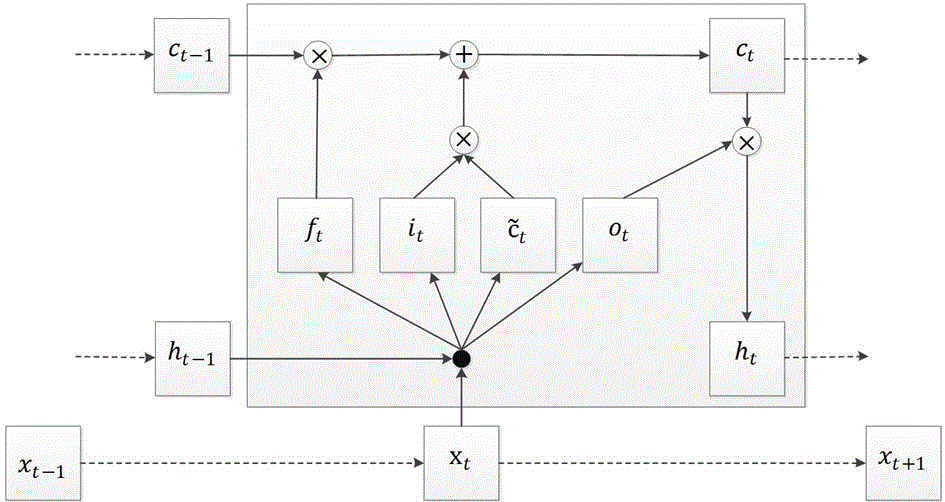

A video behavior recognition method based on spatio-temporal fusion features and attention mechanism

Status: Active | Publication: CN109101896A | Tags: Improve accuracy; Efficient use of; Character and pattern recognition; Neural architectures; Frame sequence; Key frame

The invention discloses a video behavior identification method based on spatio-temporal fusion features and an attention mechanism. The spatio-temporal fusion features of the input video are extracted by the convolutional neural network Inception V3; then, by combining these features with an attention mechanism modeled on the human visual system, the network can automatically allocate weights according to the video content, extract the key frames in the video frame sequence, and identify the behavior from the video as a whole. This eliminates the interference of redundant information on identification and improves the accuracy of video behavior identification.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
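
The "allocate weights according to the video content" step is, in its simplest form, softmax attention over per-frame features. This minimal sketch assumes per-frame feature vectors (which the patent obtains from Inception V3) and a scoring vector `w` that would normally be learned; here it is simply passed in. The frames with the largest weights play the role of key frames. `attention_pool` is a hypothetical name.

```python
import math
from typing import List, Sequence, Tuple

def attention_pool(frames: List[Sequence[float]],
                   w: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Softmax attention over frames: returns (per-frame weights, weighted video feature)."""
    scores = [sum(f_i * w_i for f_i, w_i in zip(f, w)) for f in frames]
    m = max(scores)                                   # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(frames[0])
    pooled = [sum(wt * f[d] for wt, f in zip(weights, frames)) for d in range(dim)]
    return weights, pooled
```

The pooled vector would then feed a classifier, so redundant frames with low weights contribute little to the behavior decision.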

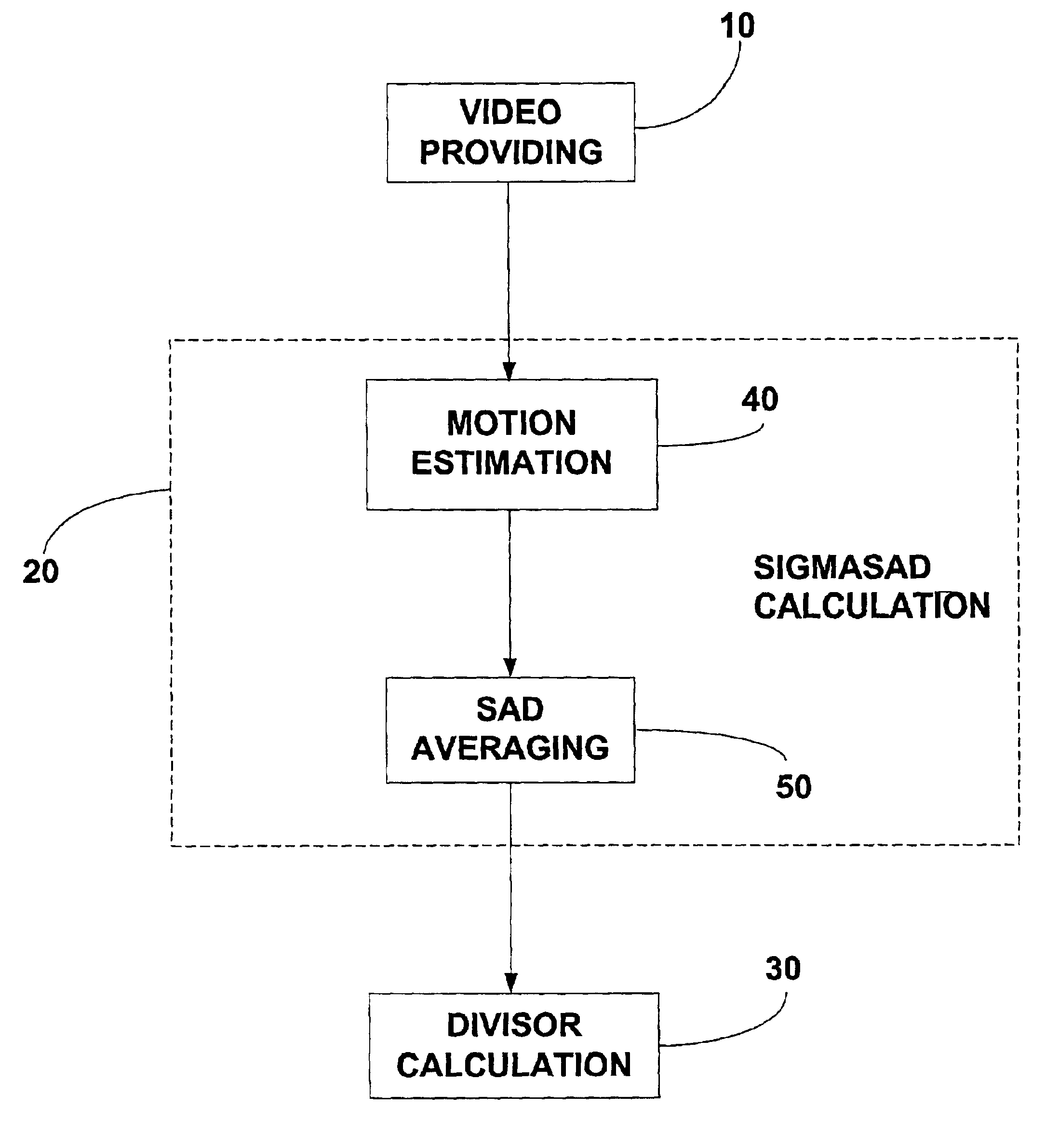

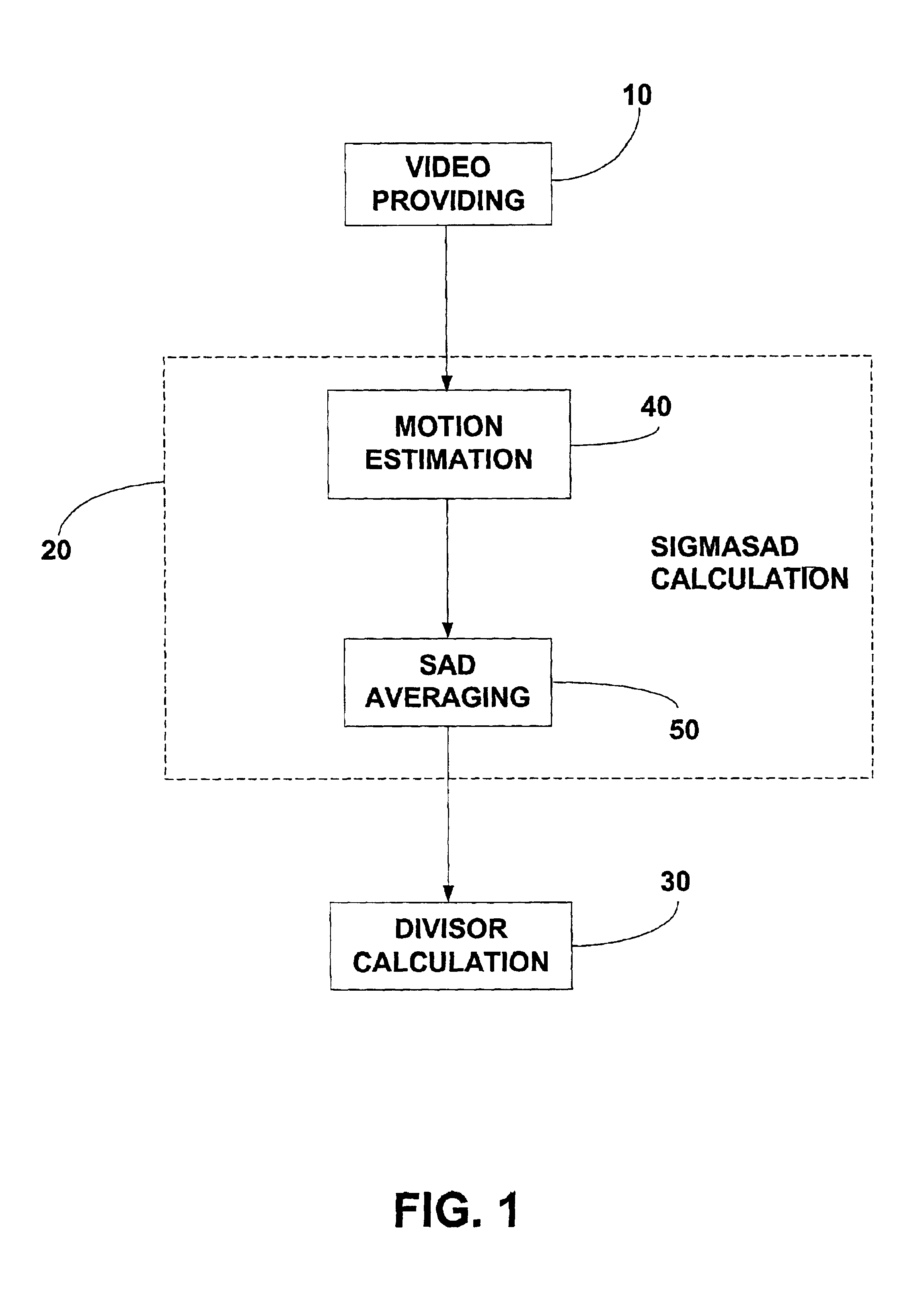

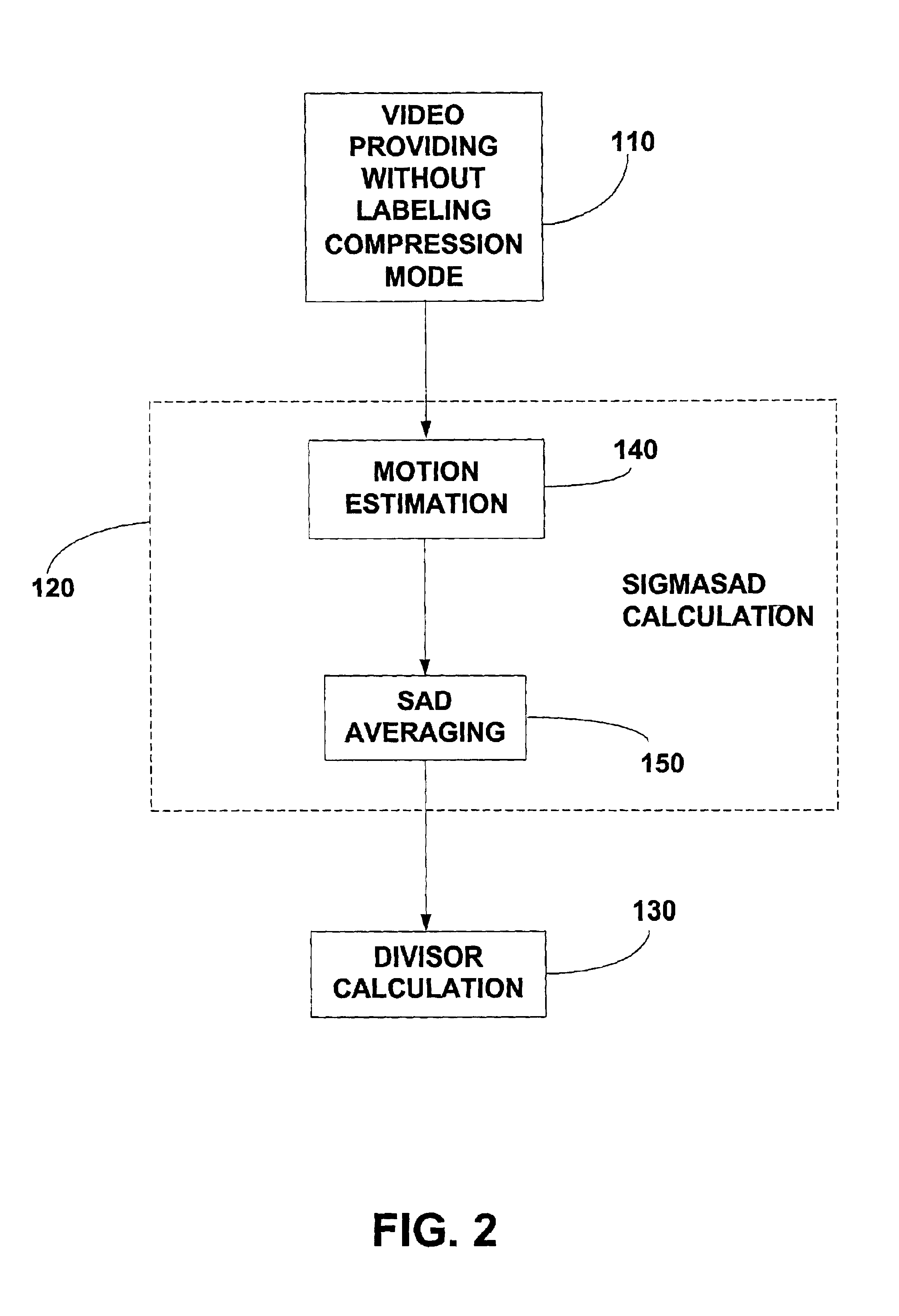

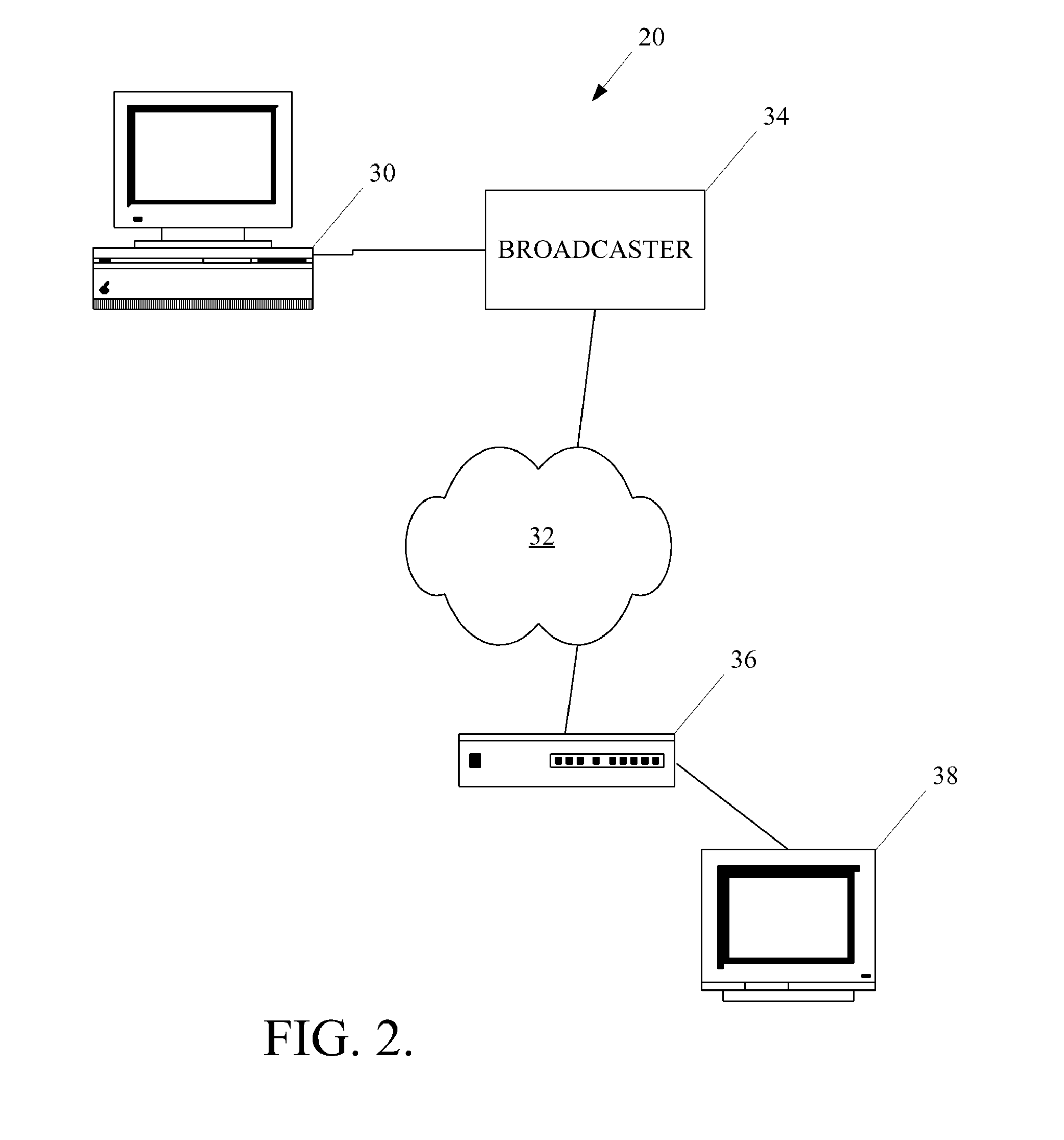

Method and system for predictive control for live streaming video/audio media

Status: Inactive | Publication: US6873654B1 | Tags: Color television with pulse code modulation; Color television with bandwidth reduction; Audio Media; Digital video

A technique for digital video processing in a predictive manner is provided. In one embodiment, a method for processing digital video signals for live video applications comprises providing video data that comprise a plurality of frames, identifying a first frame and a second frame in the frame sequence, and processing the information of the first frame and the information of the second frame to determine a quantization step for the second frame. The step of processing the information of the first frame and the information of the second frame further comprises calculating a sigmaSAD value for the second frame, calculating a divisor value for the second frame, and calculating the quantization step for the second frame. In another embodiment, a system for processing digital video signals for live video applications comprises a memory unit within which a computer program is stored. The computer program instructs the system to process digital video in a predictive manner. In yet another embodiment, a system for processing digital video signals for live video applications comprises various subsystems that together process digital video in a predictive manner.

Owner:LUXXON CORP

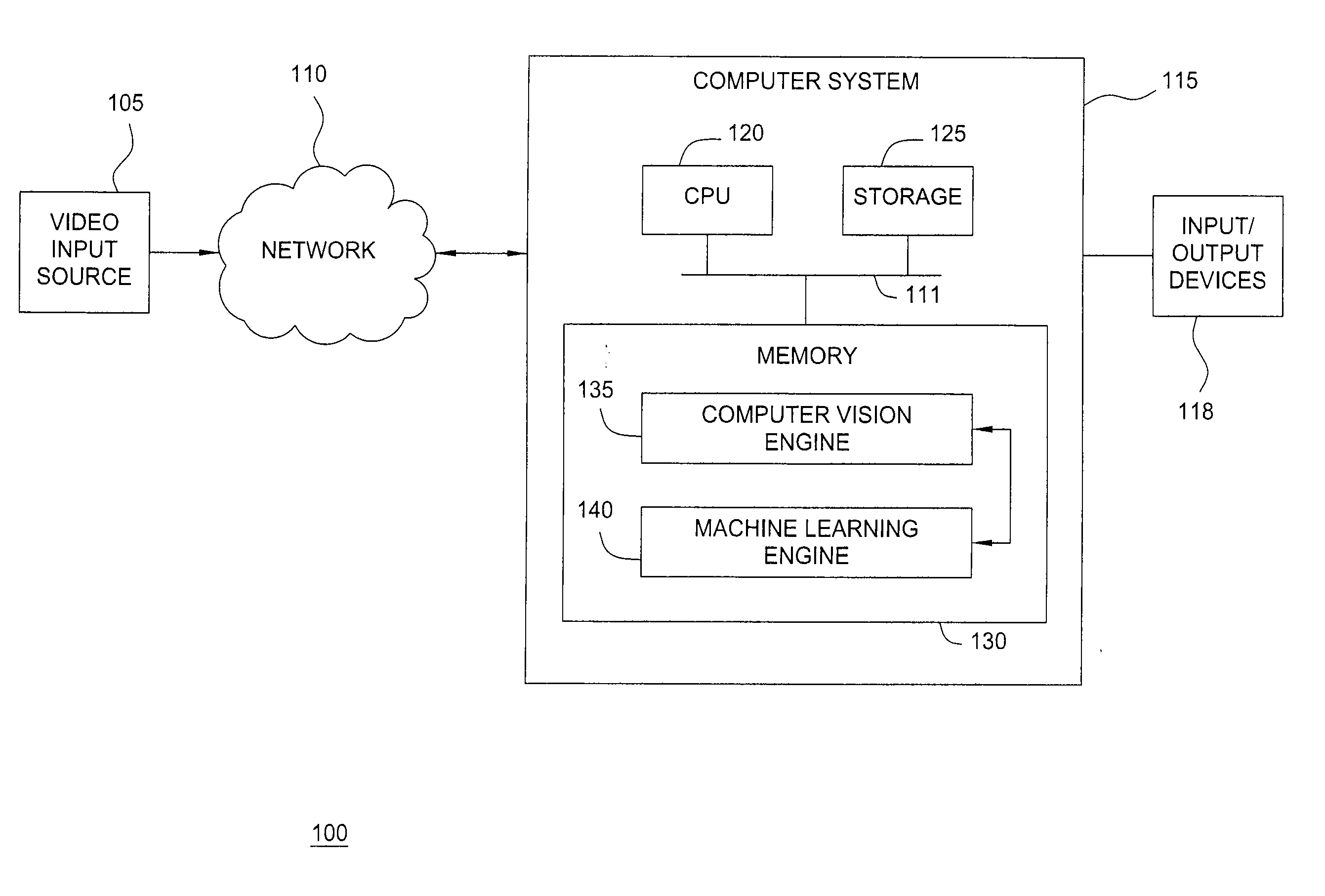

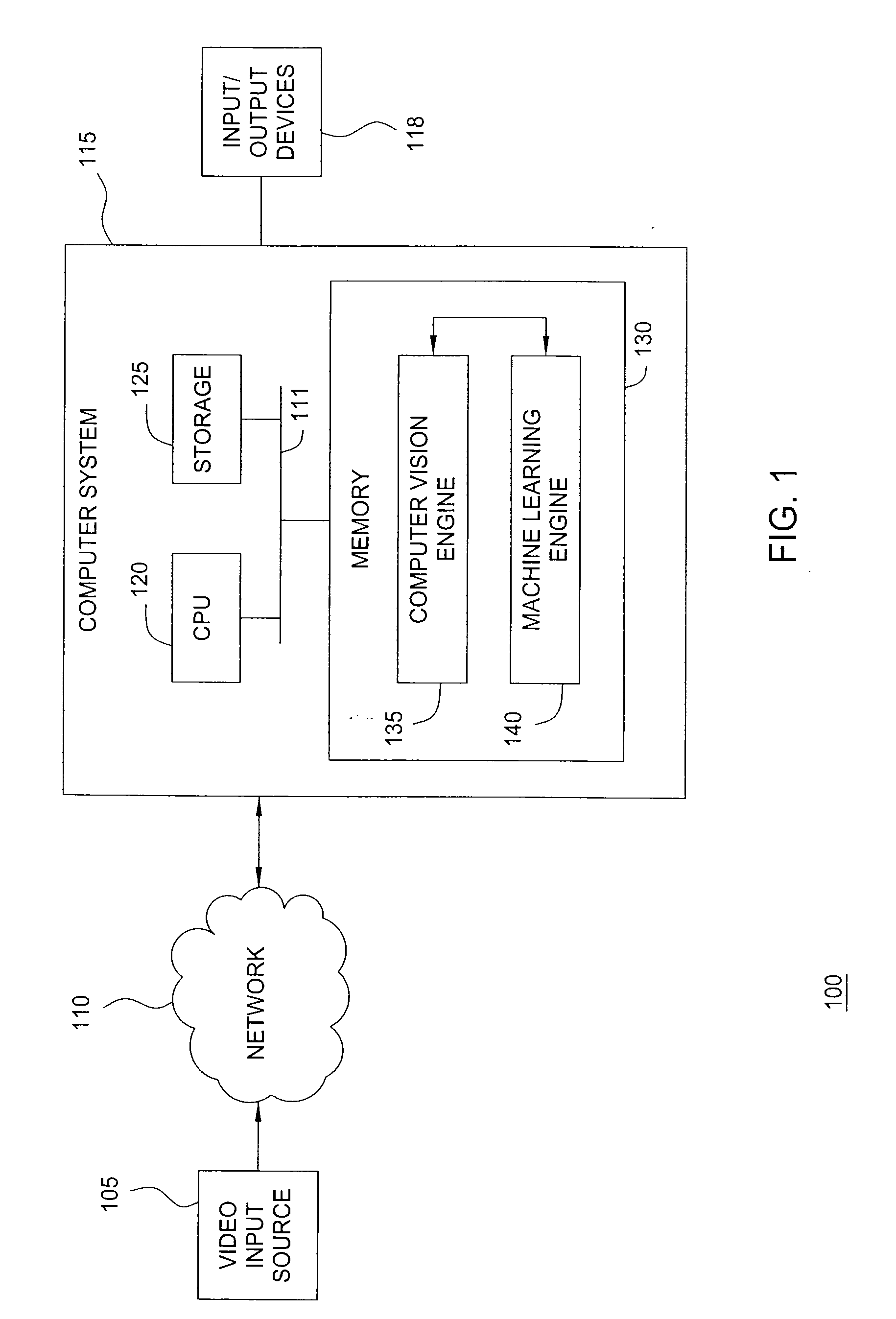

Adaptive update of background pixel thresholds using sudden illumination change detection

Techniques are disclosed for a computer vision engine to update both a background model and the thresholds used to classify pixels as depicting scene foreground or background in response to detecting that a sudden illumination change has occurred in a sequence of video frames. The threshold values may be used to specify how much a given pixel may differ from the corresponding values in the background model before being classified as depicting foreground. When a sudden illumination change is detected, the values of the pixels affected by the change may be used to update the background image to reflect each pixel's value following the change, as well as to update the threshold for classifying that pixel as foreground or background in subsequent frames of video.

Owner:PEPPERL FUCHS GMBH +1
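
A minimal sketch of the per-pixel update described above, under loudly stated assumptions: pixels are grayscale values in a flat list, a "sudden illumination change" is declared when at least `sudden_fraction` of pixels exceed their thresholds at once, and the new threshold is proportional to the new intensity (`rel_thr`, floored at `min_thr`). All three rules and the name `update_model` are hypothetical, not the patented detector.

```python
from typing import List, Tuple

def update_model(frame: List[float], bg: List[float], thr: List[float],
                 sudden_fraction: float = 0.6, rel_thr: float = 0.1,
                 min_thr: float = 8.0) -> Tuple[List[bool], bool]:
    """Classify pixels; on a sudden illumination change, re-seed background and thresholds in place.

    Returns (foreground mask, whether a sudden change was detected)."""
    fg = [abs(p - b) > t for p, b, t in zip(frame, bg, thr)]
    sudden = sum(fg) / len(frame) >= sudden_fraction
    if sudden:
        for i, p in enumerate(frame):
            if fg[i]:                                   # only pixels affected by the change
                bg[i] = p                               # background takes the post-change value
                thr[i] = max(min_thr, rel_thr * p)      # hypothetical intensity-relative threshold
                fg[i] = False                           # reclassify as background for this frame
    return fg, sudden
```

A real engine would also need to keep genuine foreground objects from being absorbed into the background during such a change; this sketch does not attempt that.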

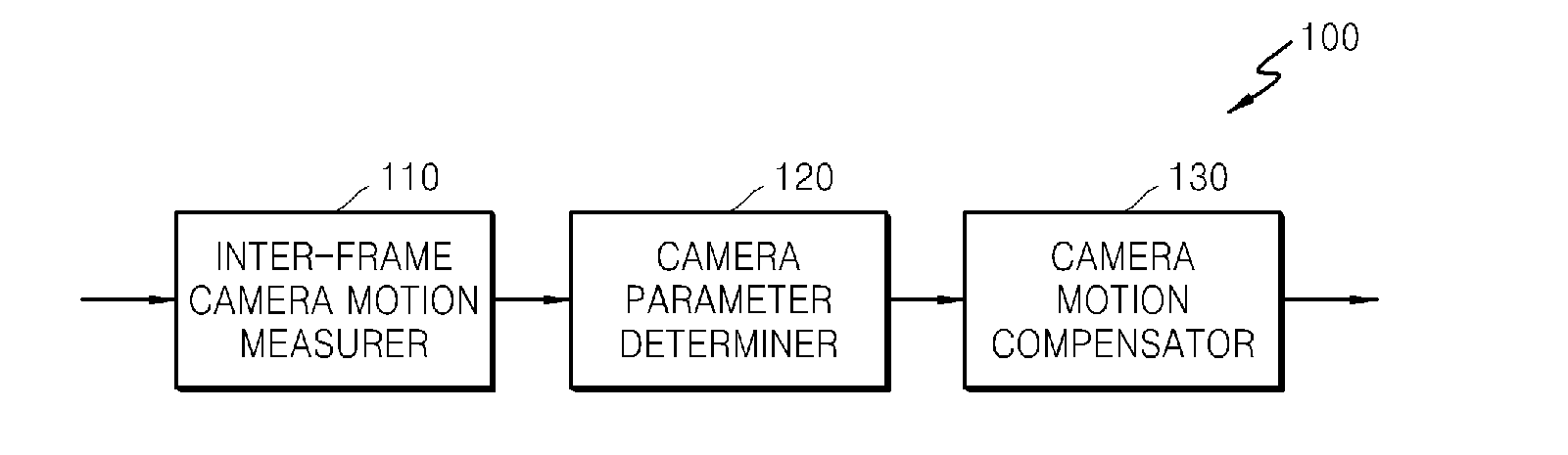

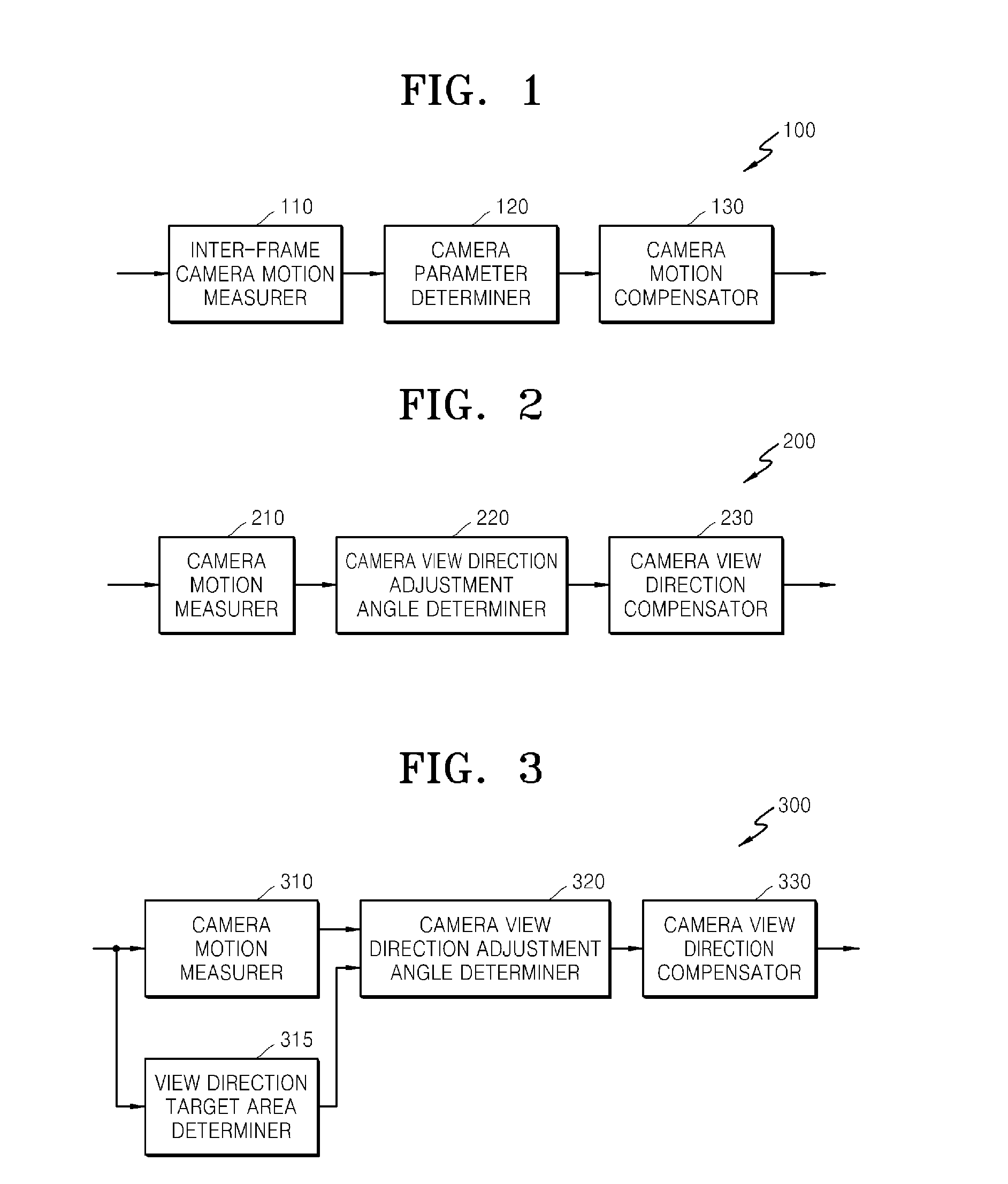

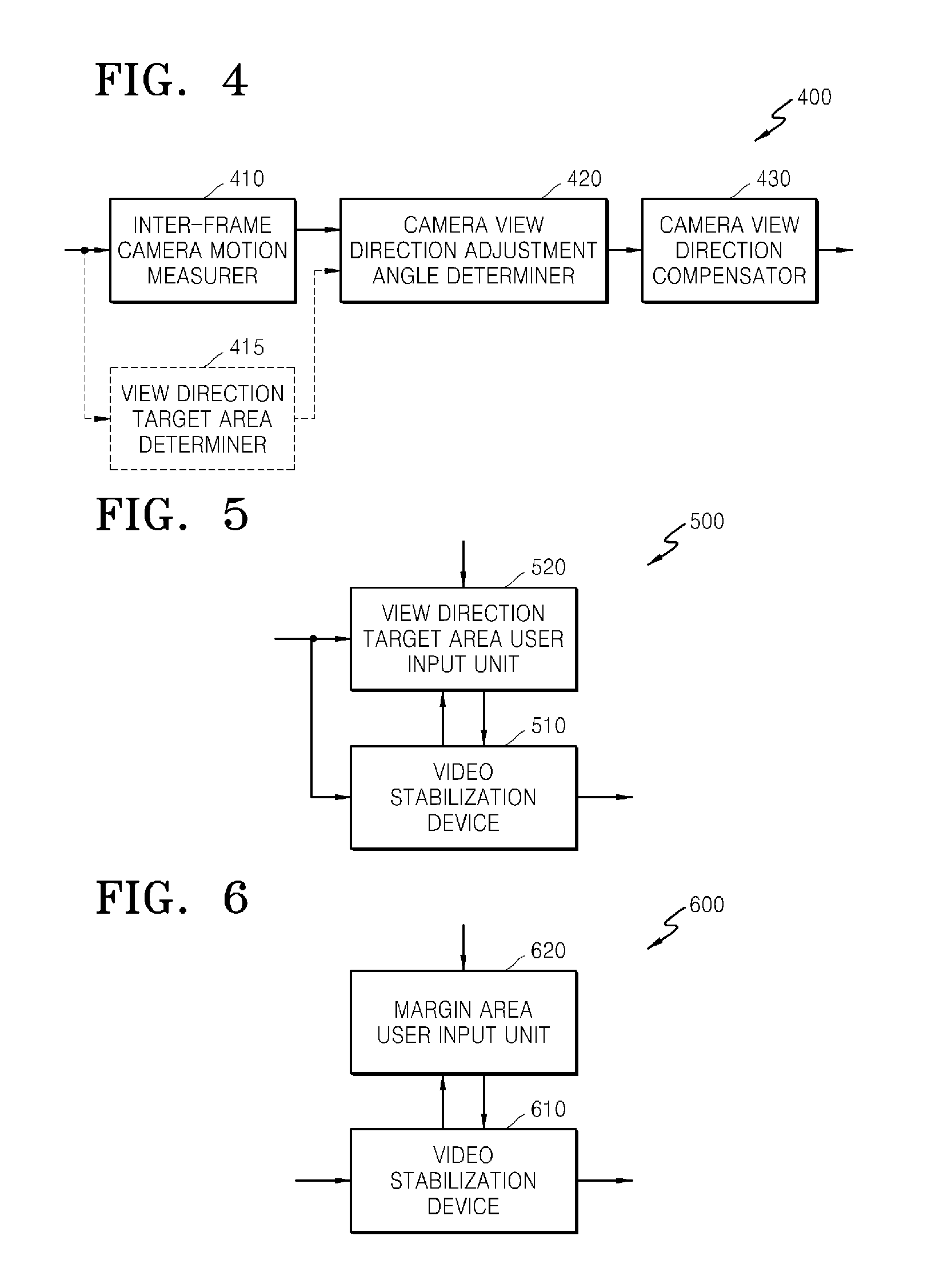

Method and apparatus for video stabilization by compensating for view direction of camera

Status: Inactive | Publication: US20120120264A1 | Tags: Television system details; Image analysis; Frame sequence; Computer graphics (images)

A video stabilization method includes: measuring an inter-frame camera motion based on a difference angle of a relative camera view direction in comparison with a reference camera view direction in each frame of a frame sequence of a video; generating a camera motion path of the frame sequence by using the inter-frame camera motion and determining a camera view direction adjustment angle based on a user's view direction by using the camera motion path; and compensating for the camera view direction by using the camera view direction adjustment angle in each frame.

Owner:SAMSUNG ELECTRONICS CO LTD
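
The three steps (measure inter-frame motion, build a camera motion path, derive a per-frame adjustment angle) can be sketched for a single view angle in degrees. The sketch assumes the "user's view direction" is estimated by a centered moving average of the path, a common smoothing choice but an assumption here; `camera_path` and `adjustment_angles` are hypothetical names.

```python
from typing import List, Sequence

def camera_path(inter_frame: Sequence[float]) -> List[float]:
    """Accumulate inter-frame view-direction changes (degrees) into an absolute path."""
    path, angle = [], 0.0
    for d in inter_frame:
        angle += d
        path.append(angle)
    return path

def adjustment_angles(path: Sequence[float], window: int = 2) -> List[float]:
    """Per-frame correction = smoothed (intended) direction minus measured direction."""
    out = []
    for i in range(len(path)):
        lo, hi = max(0, i - window), min(len(path), i + window + 1)
        intended = sum(path[lo:hi]) / (hi - lo)   # moving average as the intended view estimate
        out.append(intended - path[i])
    return out
```

A steady pan yields near-zero corrections in the interior frames, while a one-frame jolt of +2° is pulled back toward the surrounding frames; each frame is then rotated by its correction.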

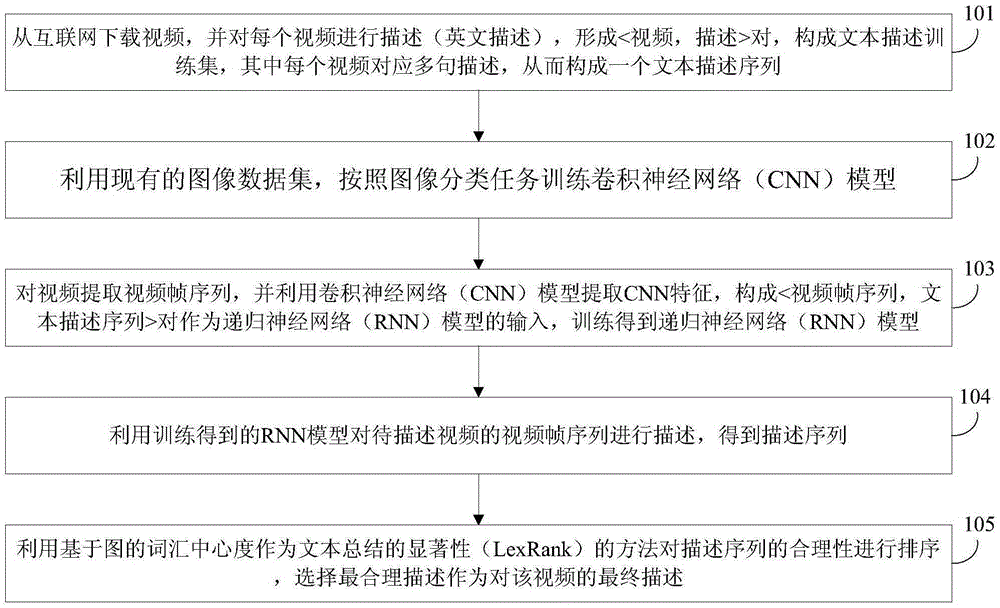

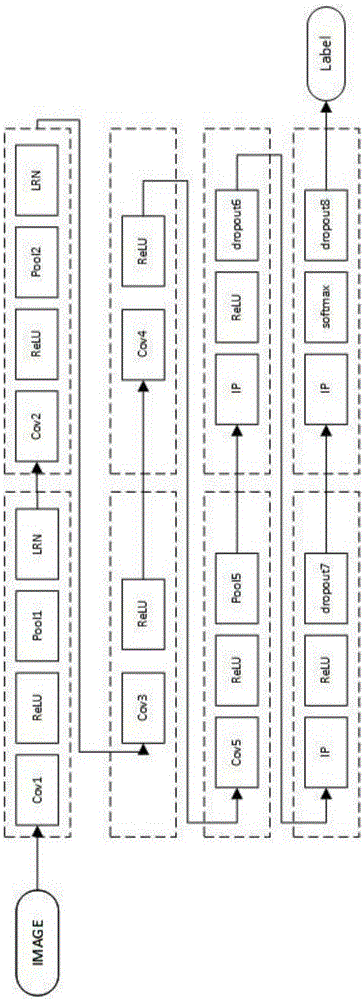

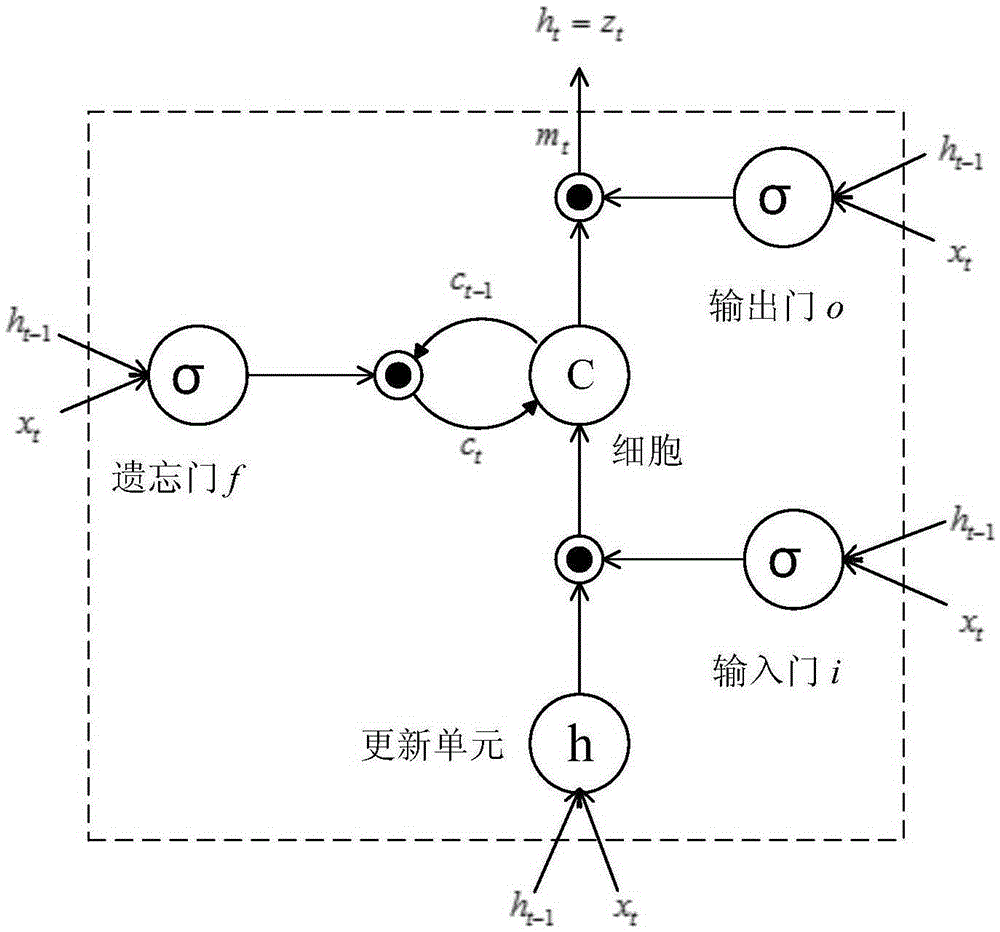

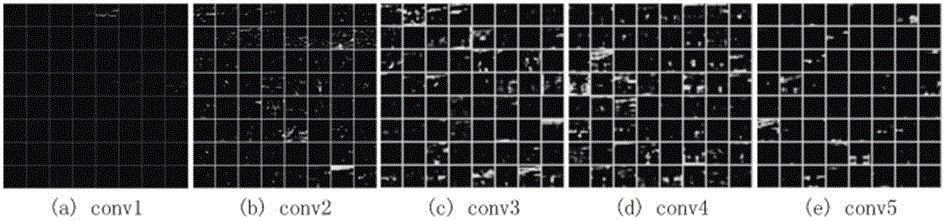

Video description method based on deep learning and text summarization

Status: Active | Publication: CN105279495A | Tags: Improve accuracy; Good variety; Character and pattern recognition; Neural learning methods; Frame sequence; Data set

The invention discloses a video description method based on deep learning and text summarization. The method comprises the following steps: training a convolutional neural network model on a traditional image data set for an image classification task; extracting the video frame sequence of a video and using the convolutional neural network model to extract convolutional neural network features, forming <video frame sequence, text description sequence> pairs that serve as the input for training a recurrent neural network model; describing the video frame sequence of the video to be described with the trained recurrent neural network model to obtain description sequences; and ranking the description sequences by a text summarization method that uses graph-based vocabulary centrality as the measure of significance, then outputting the final description of the video. An event that happens in a video, and the object attributes associated with that event, are described in natural language so that the video content is both described and summarized.

Owner:广州葳尔思克自动化科技有限公司

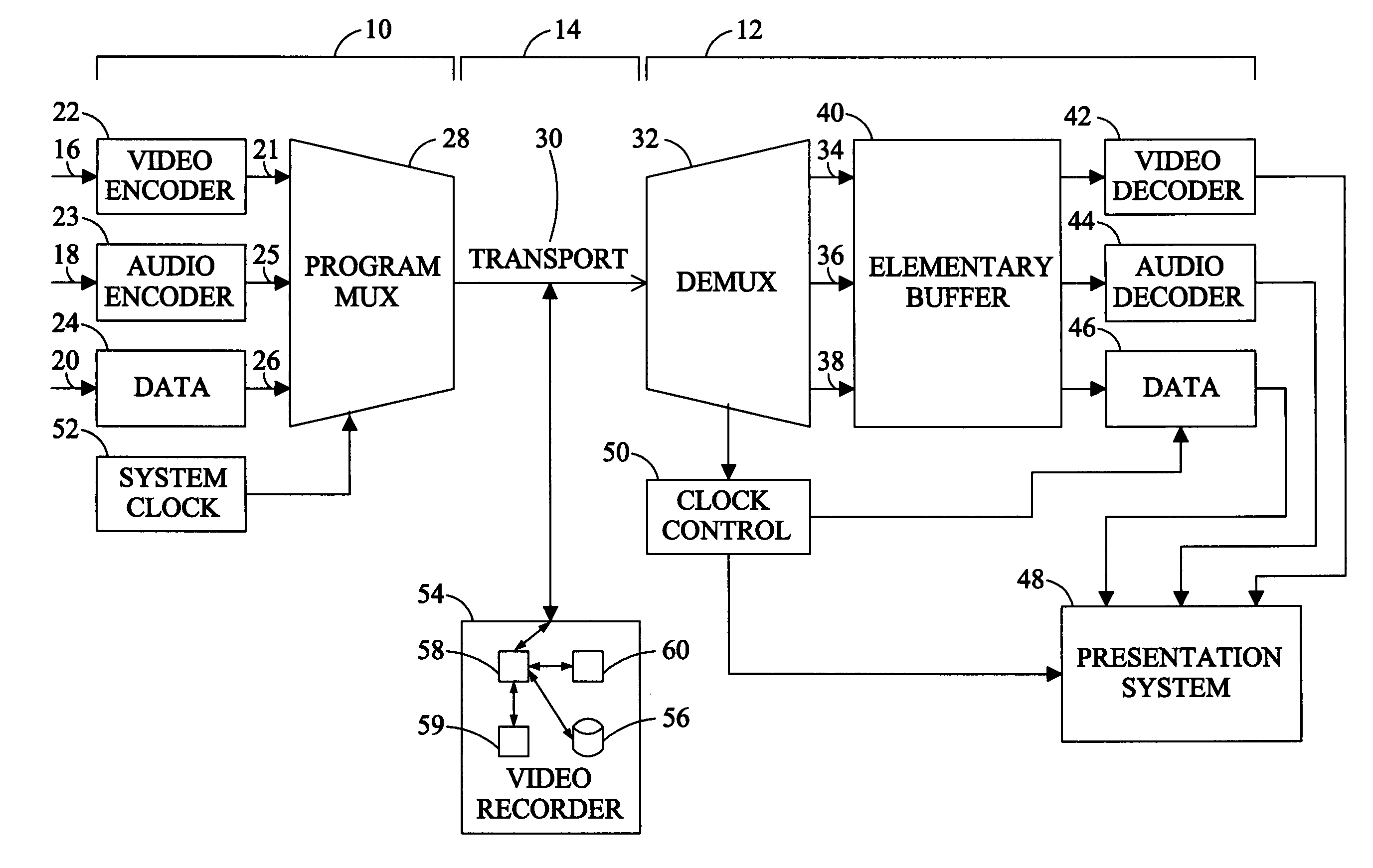

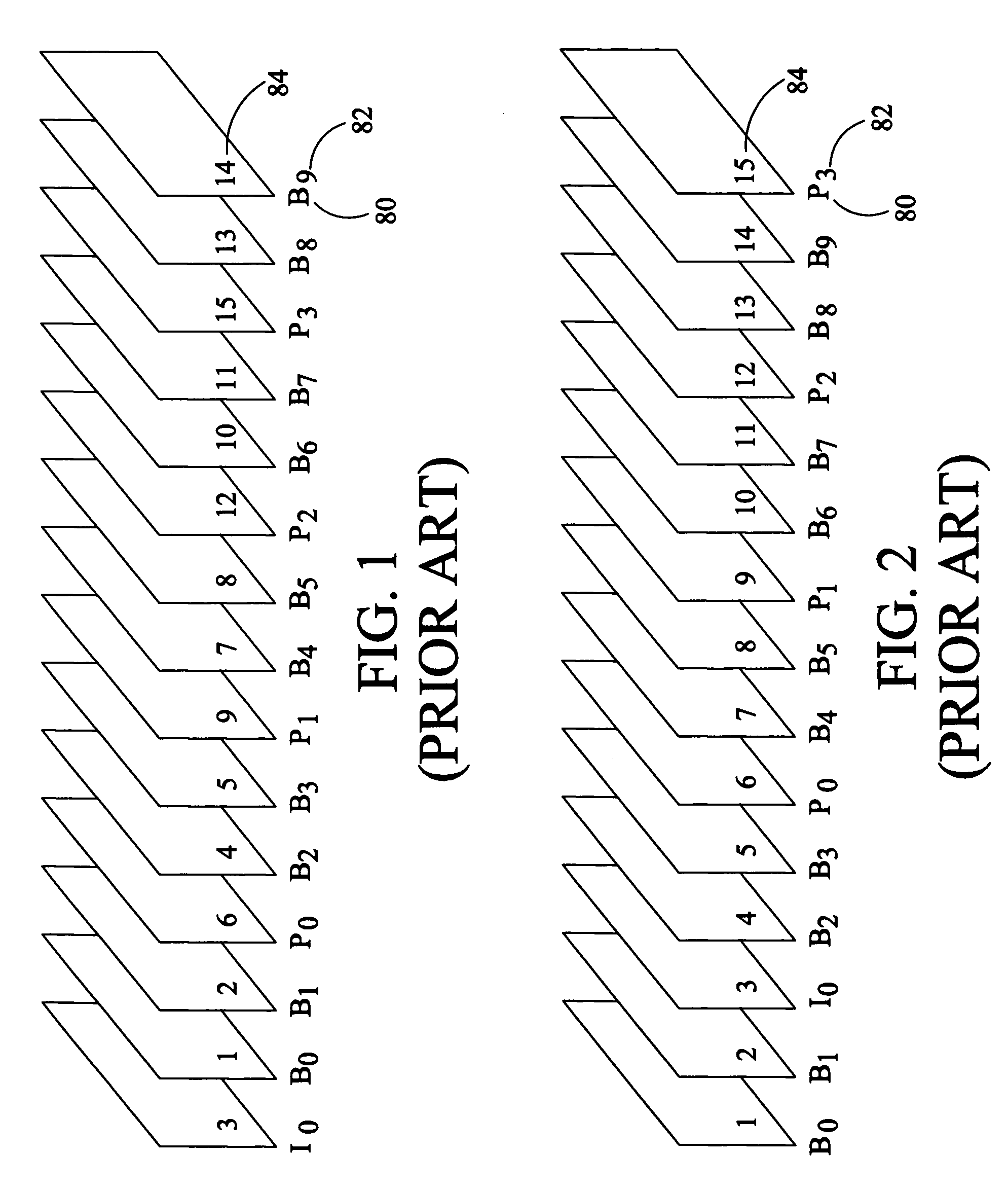

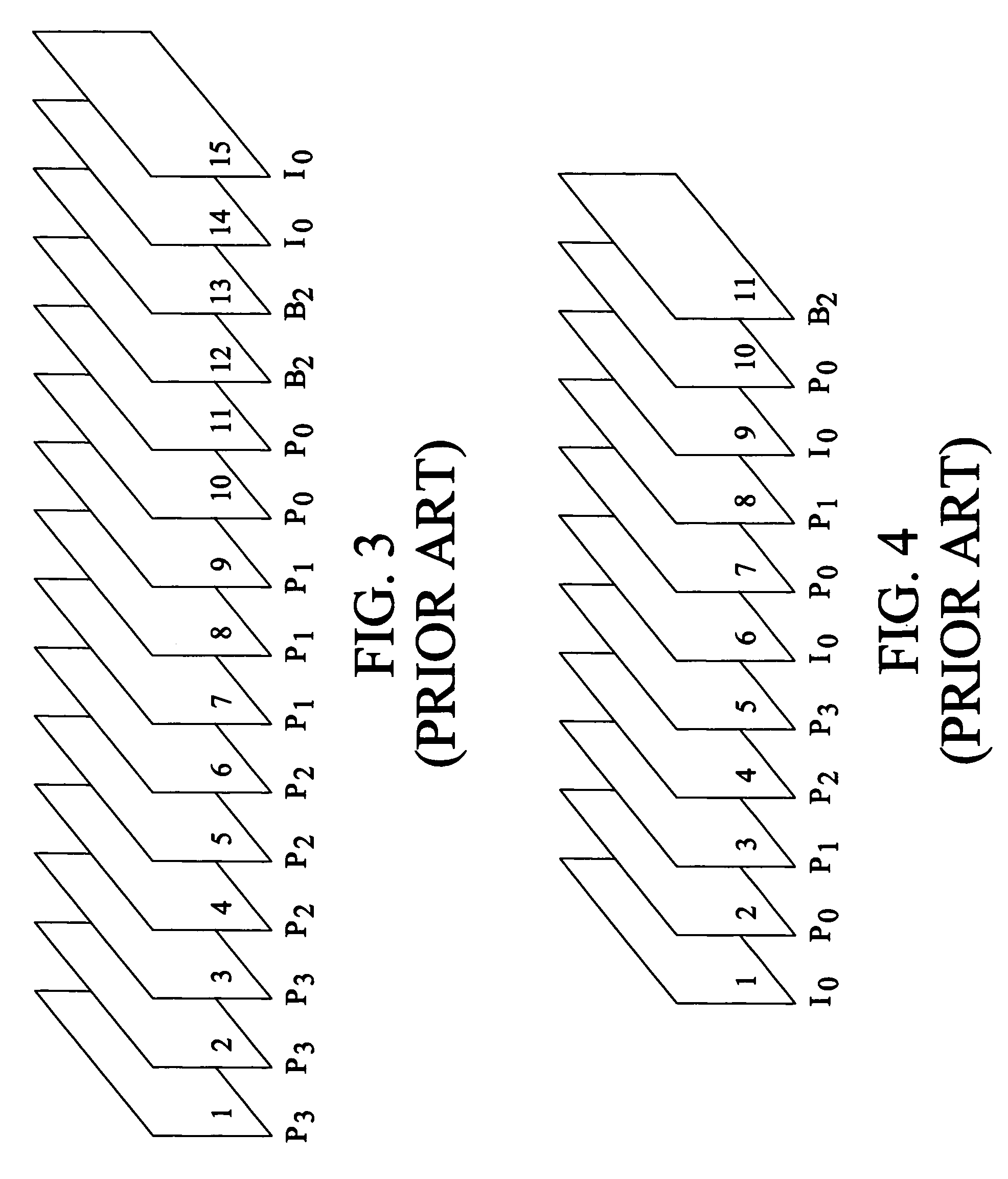

Method for efficient MPEG-2 transport stream frame re-sequencing

Status: Inactive | Publication: US7027713B1 | Tags: Television system details; Color television details; Digital video; Program clock reference

A method and apparatus for creating a trick play video display from an MPEG-2 digital video transport stream are described. A trick play transport stream frame sequence template for each transport stream frame sequence and trick play video display mode supported by a video recorder is stored in the recorder. When the recorder receives an input transport stream frame sequence, the sequence is identified, and the template corresponding to that sequence and the selected trick play display mode is selected. The template is used to identify the frames of the input transport stream to be appended to the trick play display transport stream frame sequence. When the trick play transport stream frame sequence is constructed, the program clock reference, presentation time stamps, and decoding time stamps associated with the frames are updated from information in the template.

Owner:SHARP KK
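
A minimal sketch of template-driven frame selection, assuming frames are listed in display order and that a template is just the set of picture types a mode keeps (I-frames only for fast forward; I- and P-frames for a slower mode, which stays decodable because P-frames reference only I/P pictures). Only the PTS is restamped, at 3003 ticks of the 90 kHz clock per frame (29.97 Hz); PCR and DTS handling, which the abstract also mentions, is omitted. `TEMPLATES` and `build_trick_play` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

@dataclass
class Frame:
    kind: str   # picture type: 'I', 'P' or 'B'
    pts: int    # presentation time stamp, 90 kHz ticks

# Hypothetical templates: picture types kept by each trick-play mode.
TEMPLATES: Dict[str, Set[str]] = {"ff_fast": {"I"}, "ff_slow": {"I", "P"}}

def build_trick_play(frames: List[Frame], mode: str, frame_ticks: int = 3003) -> List[Frame]:
    """Select frames per the mode's template and restamp PTS so they play back-to-back."""
    keep = [f for f in frames if f.kind in TEMPLATES[mode]]
    start = keep[0].pts if keep else 0
    return [Frame(f.kind, start + i * frame_ticks) for i, f in enumerate(keep)]
```

Storing the selection rule per mode, rather than re-parsing the stream for every request, is what lets the recorder build the trick-play stream cheaply.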

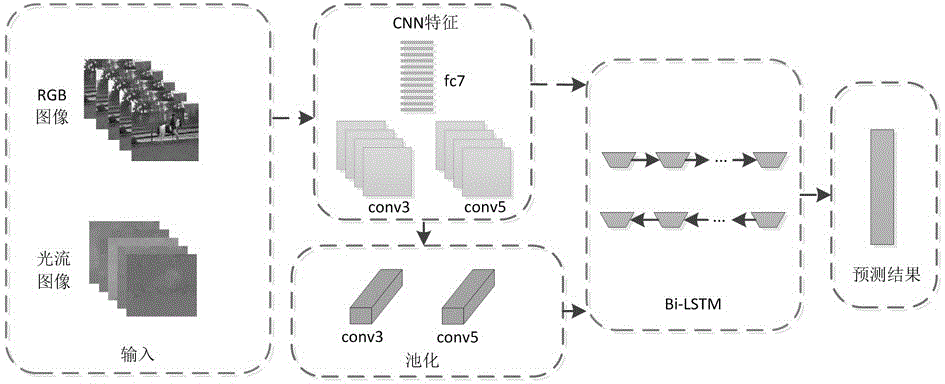

Bidirectional long short-term memory unit-based behavior identification method for video

Status: Inactive | Publication: CN106845351A | Tags: Improve accuracy; Guaranteed accuracy; Character and pattern recognition; Neural architectures; Time domain; Temporal information

Owner:SUZHOU UNIV

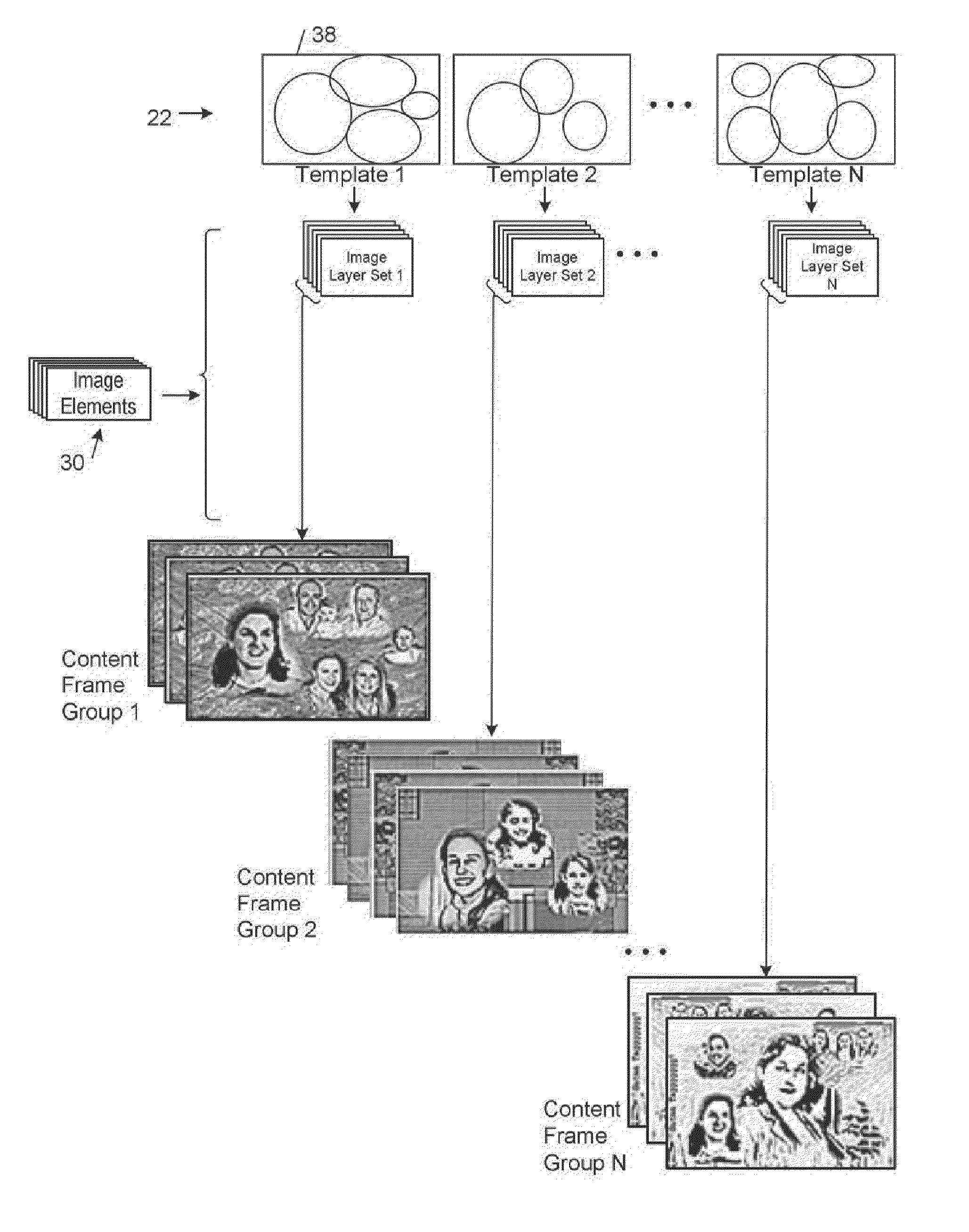

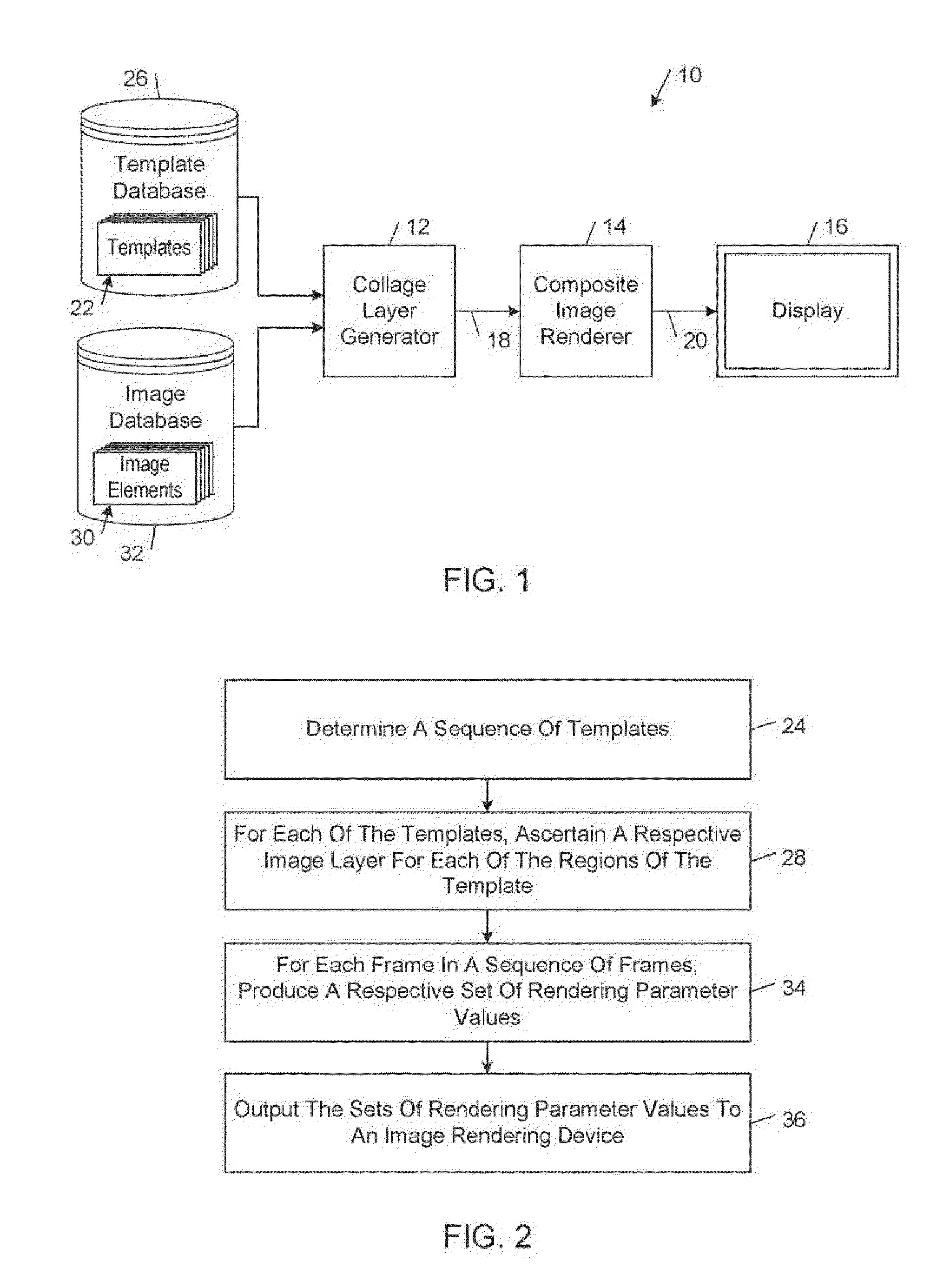

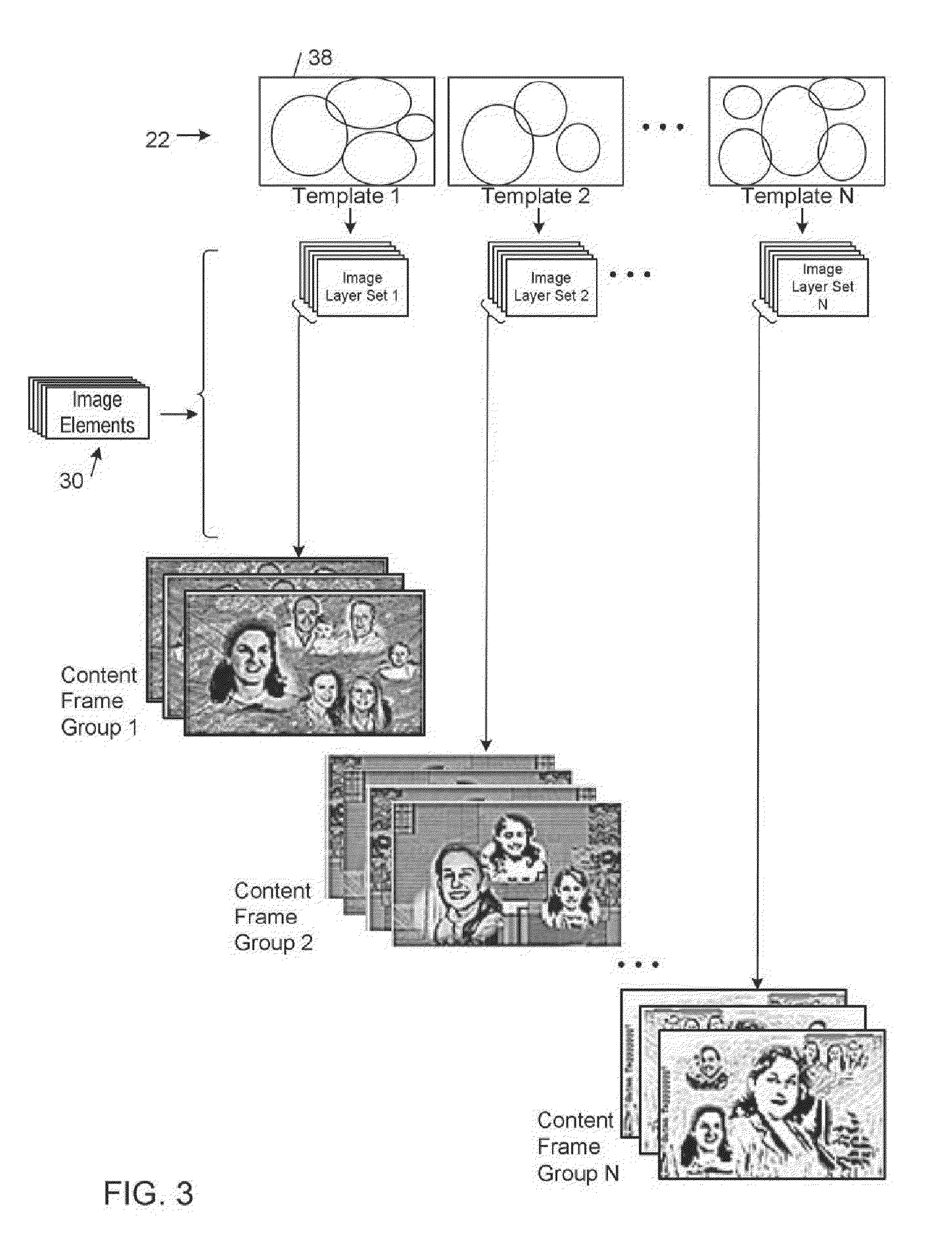

Dynamic Image Collage

Status: Inactive | Publication: US20110285748A1 | Tags: Cathode-ray tube indicators; Editing/combining figures or text; Pattern recognition; Frame sequence

A sequence of templates (22) is determined. Each of the templates (22) defines a spatiotemporal layout of regions in a display area (38). Each of the regions is associated with a set of one or more image selection criteria and a set of one or more temporal behavior attribute values. For each of the templates (22), an image layer for each of the regions of the template is ascertained. For each of the layers (18), an image element (30) is assigned to the region in accordance with the respective set of image selection criteria. For each frame (20) in a frame sequence, a set of rendering parameter values is produced, where each set specifies a composition of the respective frame in the display area (38) based on a respective set of the image layers (18) determined for the templates (22) and the respective sets of temporal behavior attribute values.

Owner:HEWLETT PACKARD DEV CO LP

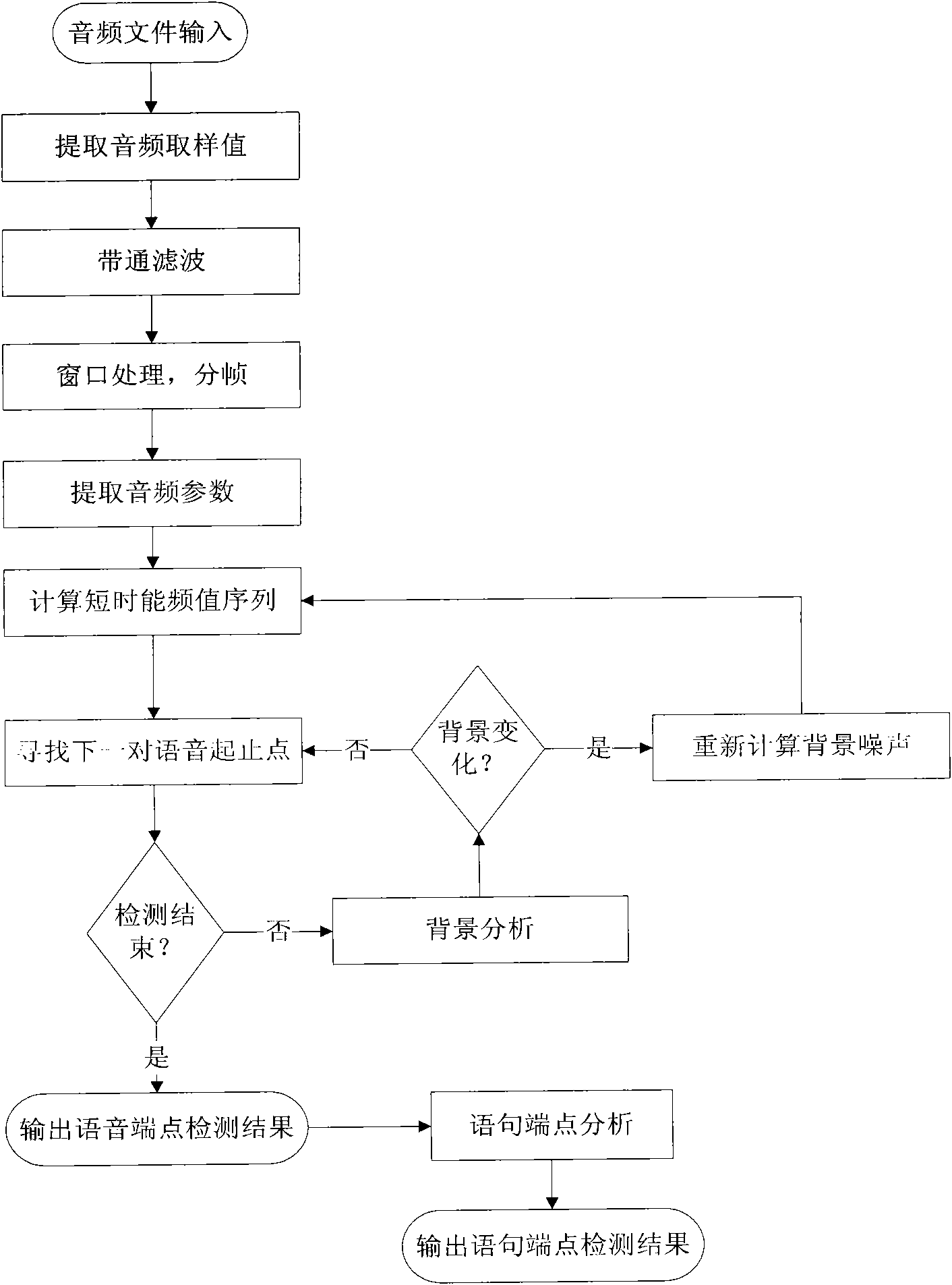

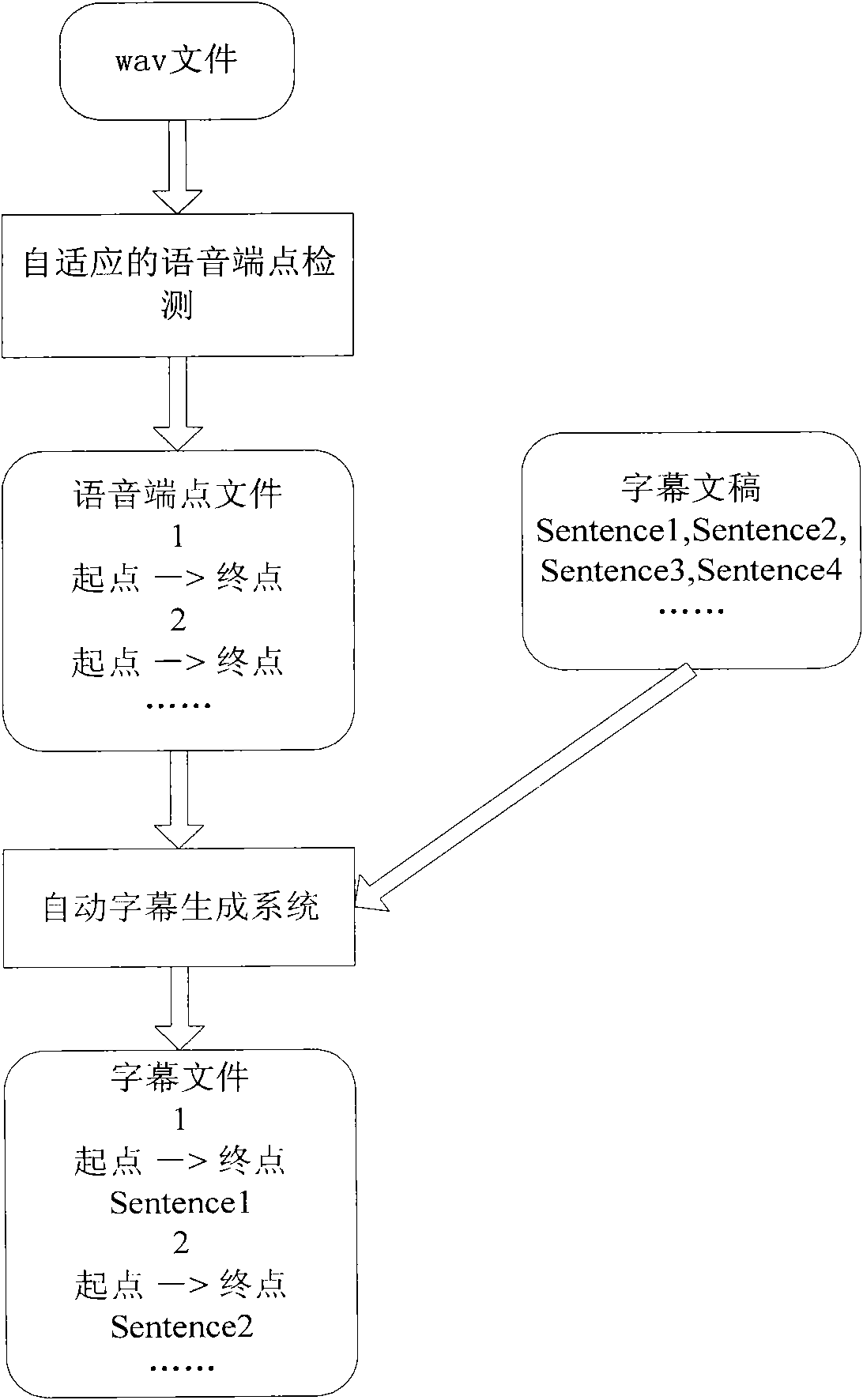

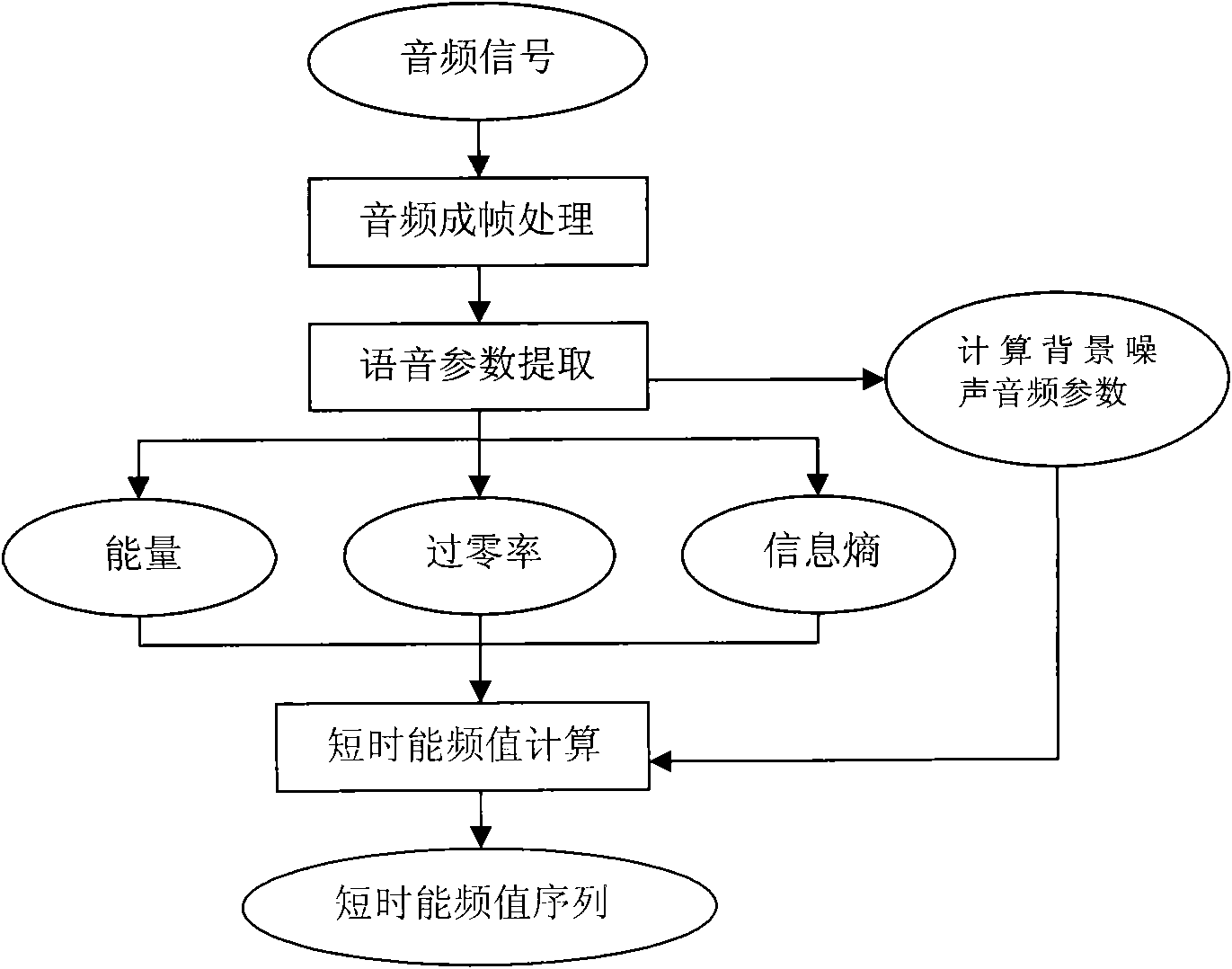

Self-adaptive voice endpoint detection method

Status: Inactive | Publication: CN101625857A | Tags: Improve detection efficiency; Quality improvement; Speech recognition; Time information; Frame sequence

The invention relates to voice detection technology in an automatic caption generation system, in particular to a self-adaptive voice endpoint detection method. The method comprises the following steps: dividing an audio sample sequence into fixed-length frames to form a frame sequence; extracting three audio feature parameters, namely short-time energy, short-time zero-crossing rate, and short-time information entropy, from the data of each frame; calculating a short-time energy-frequency value for each frame from these audio feature parameters to form a short-time energy-frequency value sequence; analyzing this sequence starting from the first frame to find a pair of voice start and end points; analyzing the background noise and, if it has changed, recalculating the audio feature parameters of the background noise and updating the short-time energy-frequency value sequence; and repeating these steps until detection is finished. The method can perform voice endpoint detection on continuous speech when the background noise changes frequently, improving voice endpoint detection efficiency against a complex noise background.

Owner:CHINA DIGITAL VIDEO BEIJING
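
A simplified sketch of the frame-based pipeline above. It computes short-time energy and zero-crossing rate per frame but omits the information-entropy feature, and it combines the two with a hypothetical score `energy × (1 + zcr)` because the abstract does not define the "energy-frequency value". Adaptivity is approximated by tracking the noise level with an exponential average over non-speech frames, and the first `noise_frames` frames are assumed to contain only noise. All names and constants are illustrative.

```python
from typing import List, Sequence, Tuple

def split_frames(samples: Sequence[float], size: int) -> List[Sequence[float]]:
    """Fixed-length, non-overlapping frames; a trailing partial frame is dropped."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]

def energy_zcr(frame: Sequence[float]) -> Tuple[float, float]:
    """Short-time energy and zero-crossing rate of one frame (frame length >= 2)."""
    energy = sum(x * x for x in frame)
    crossings = sum((a >= 0) != (b >= 0) for a, b in zip(frame, frame[1:]))
    return energy, crossings / (len(frame) - 1)

def detect_speech(samples: Sequence[float], size: int = 160, noise_frames: int = 5,
                  factor: float = 4.0, alpha: float = 0.1) -> List[Tuple[int, int]]:
    """Return (first_frame, last_frame) speech segments using an adaptive noise threshold."""
    scores = [e * (1.0 + z) for e, z in map(energy_zcr, split_frames(samples, size))]
    noise = sum(scores[:noise_frames]) / noise_frames   # assumes the clip starts with noise
    segments, start = [], None
    for i, s in enumerate(scores):
        is_speech = s > factor * noise
        if not is_speech:
            noise = (1 - alpha) * noise + alpha * s      # follow slowly changing background noise
        if is_speech and start is None:
            start = i                                    # voice starting point
        elif not is_speech and start is not None:
            segments.append((start, i - 1))              # voice ending point
            start = None
    if start is not None:
        segments.append((start, len(scores) - 1))
    return segments
```

Each returned pair corresponds to one voice start/end point pair, expressed in frame indices.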

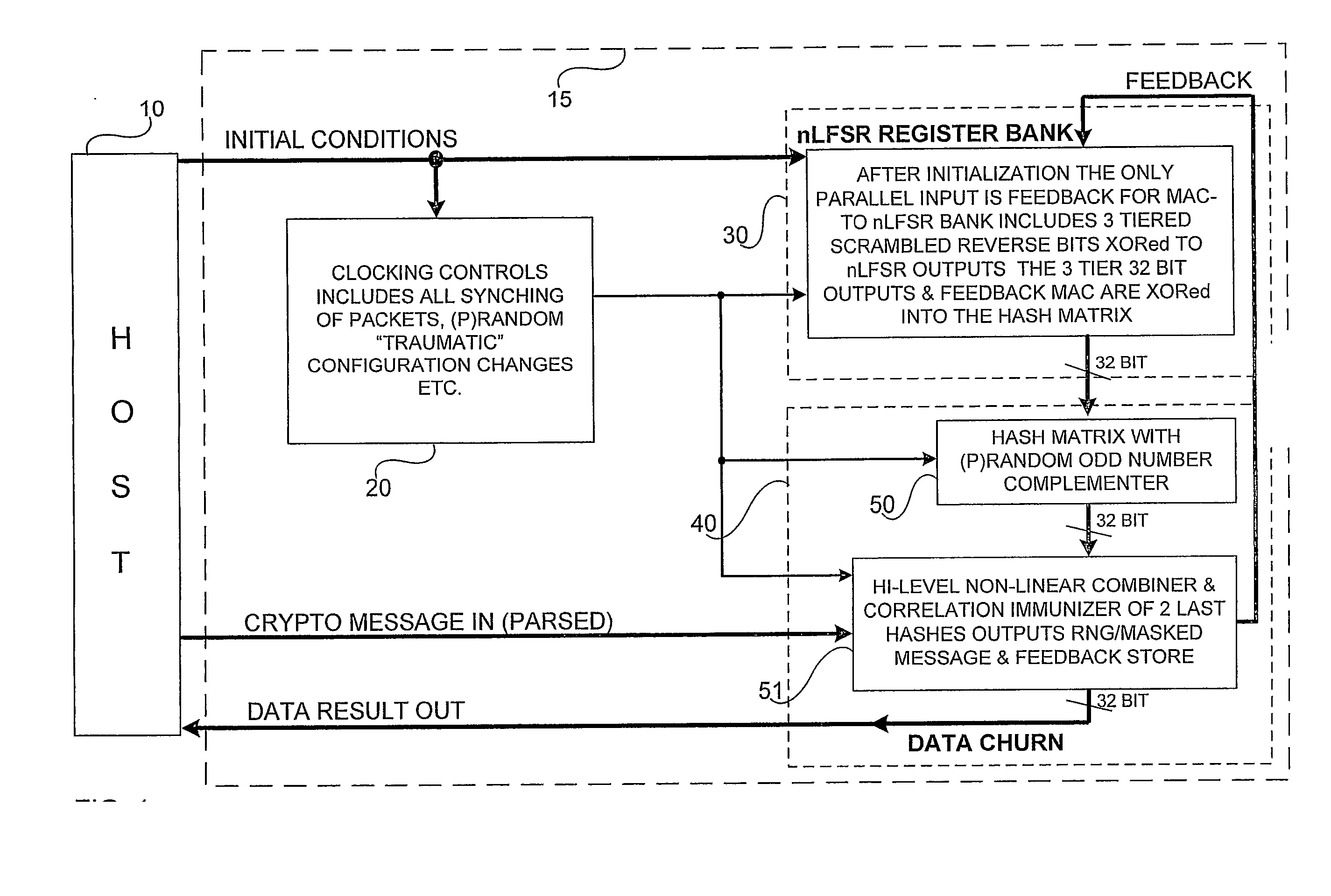

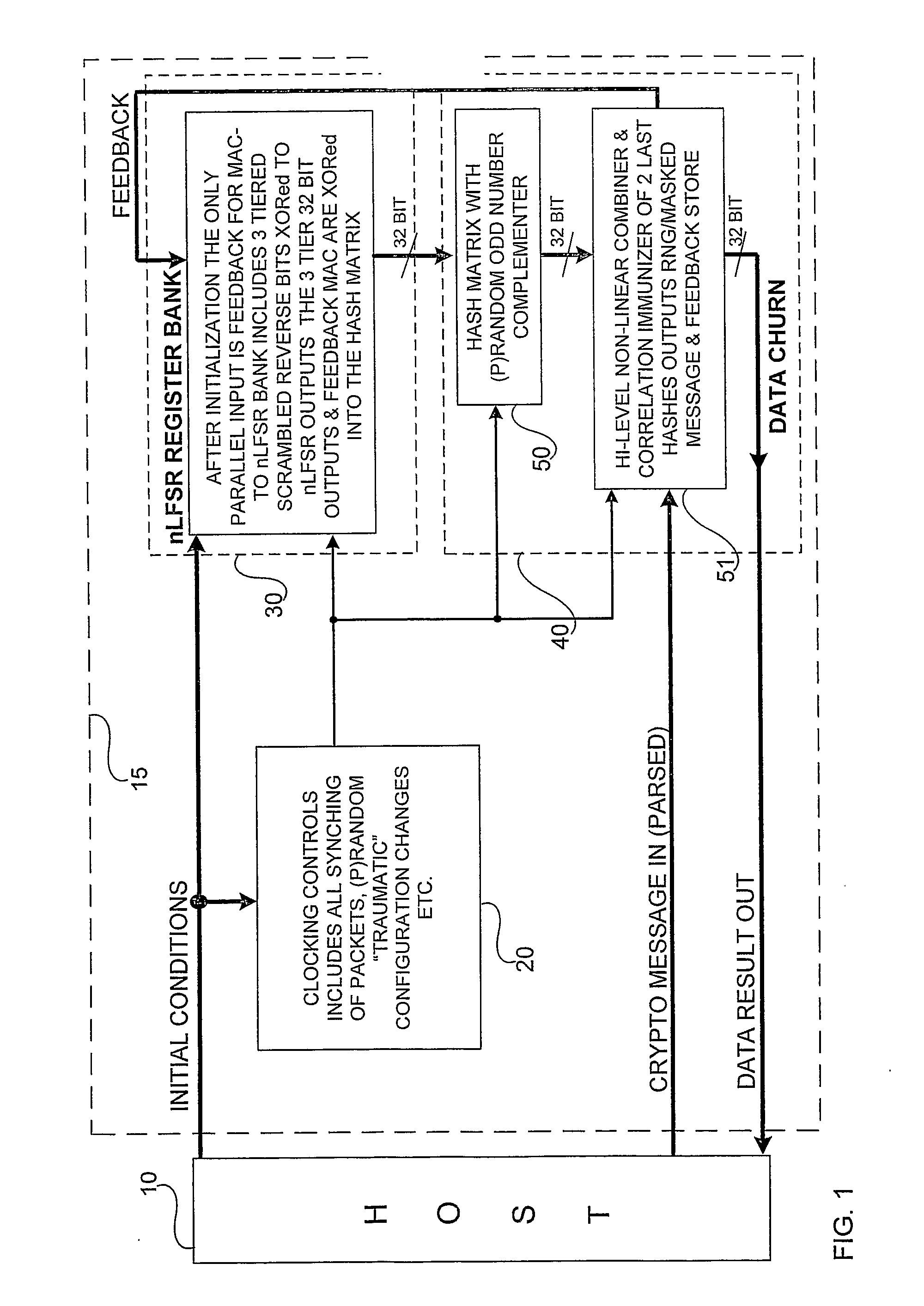

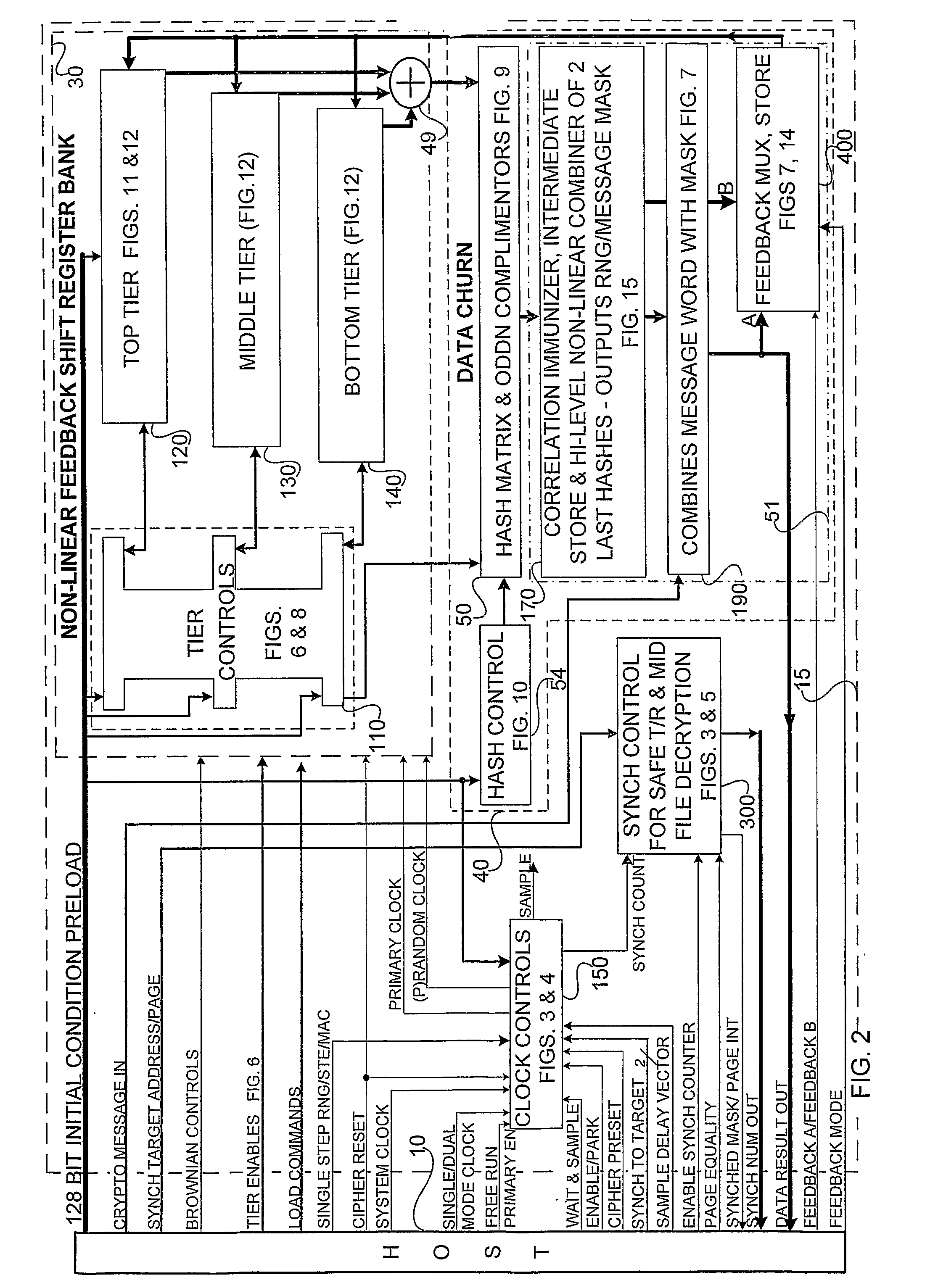

Accelerated Throughput Synchronized Word Stream Cipher, Message Authenticator and Zero-Knowledge Output Random Number Generator

Status: Active | Publication: US20070244951A1 | Tags: Small loss of entropy; Necessary number; Synchronising transmission/receiving encryption devices; Random number generators; Computer hardware; Frame sequence

Systems and methods are disclosed, especially designed for very compact hardware implementations, to generate random number strings with a high level of entropy at maximum speed. For immediate deployment of software implementations, certain permutations have been introduced that maintain the same level of unpredictability while being more amenable to high-level software programming, at the cost of a small time loss in hardware execution, typically when hardware devices communicate with software implementations. Particular attention has been paid to maintaining maximum correlation immunity and maximizing the non-linearity of the output sequence. Good stream ciphers are based on random generators that have a large number of secured internal binary variables, which leads to page-synchronized stream ciphering. The parsed page synchronization method presented here is especially valuable for Internet applications, where frame sequences are often mixed. The large number of internal variables, with fast diffusion of individual bits in which the masked message is fed back into the machine variables, is potentially ideal for message authentication procedures.

Owner:FORTRESS GB

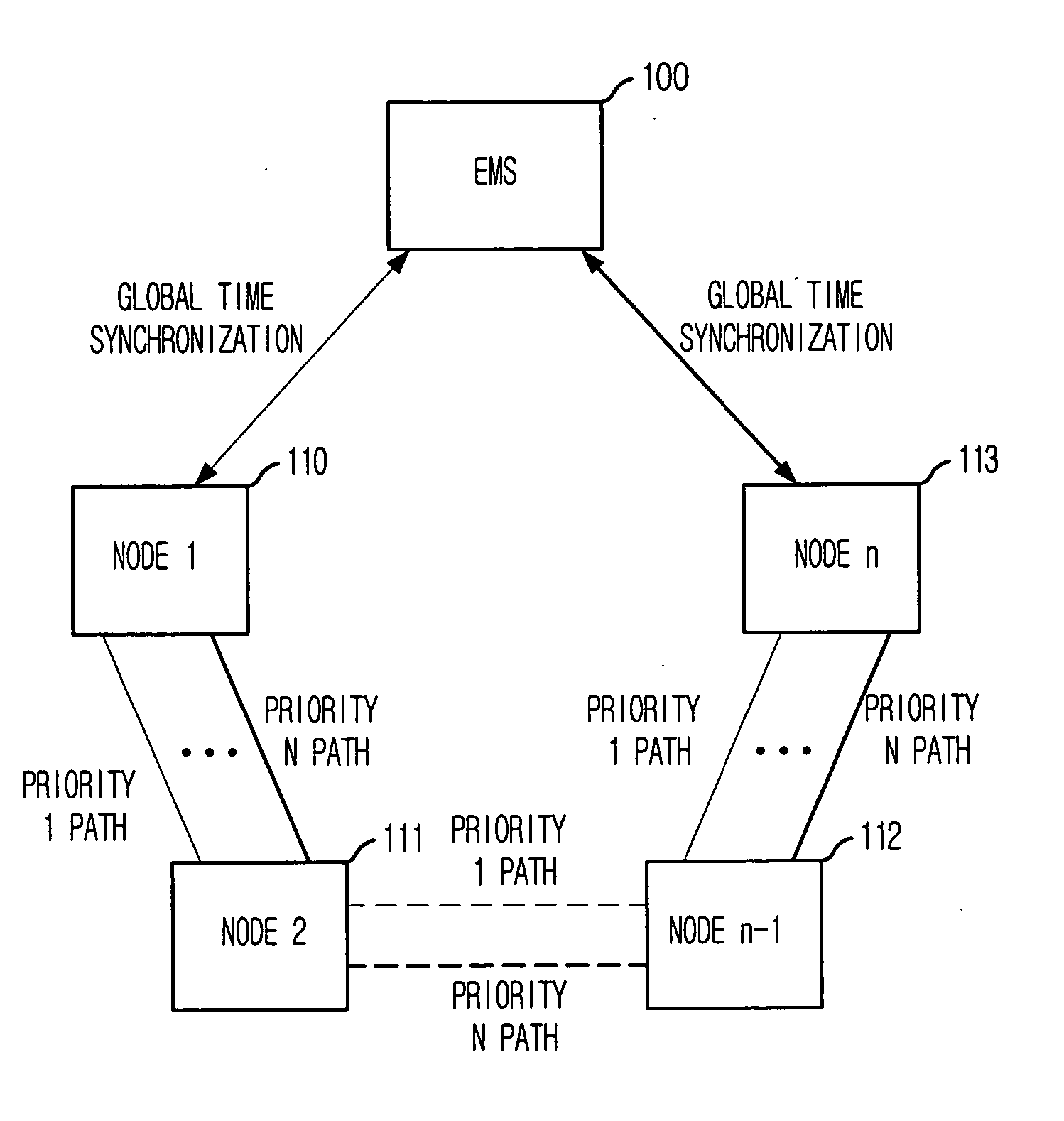

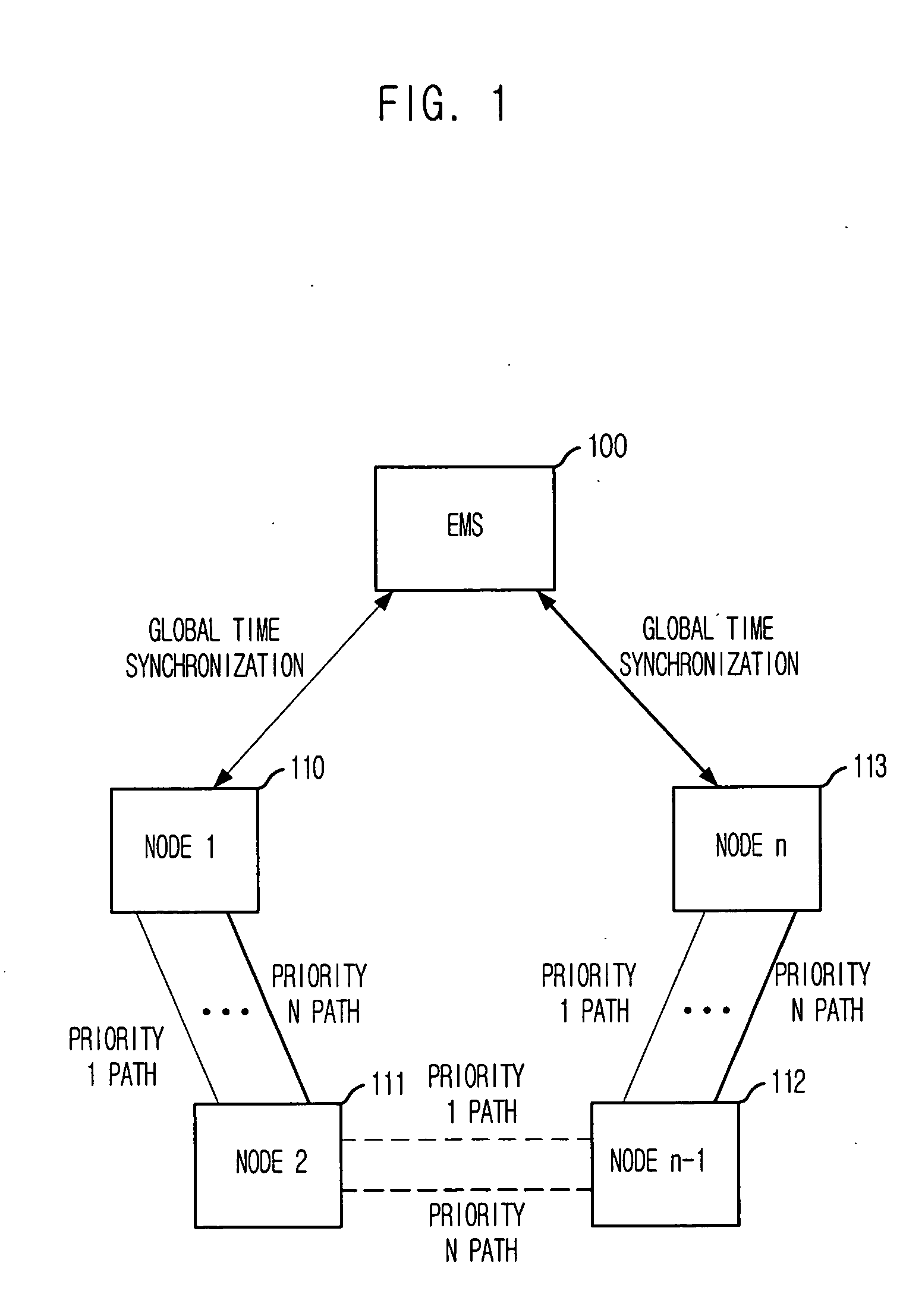

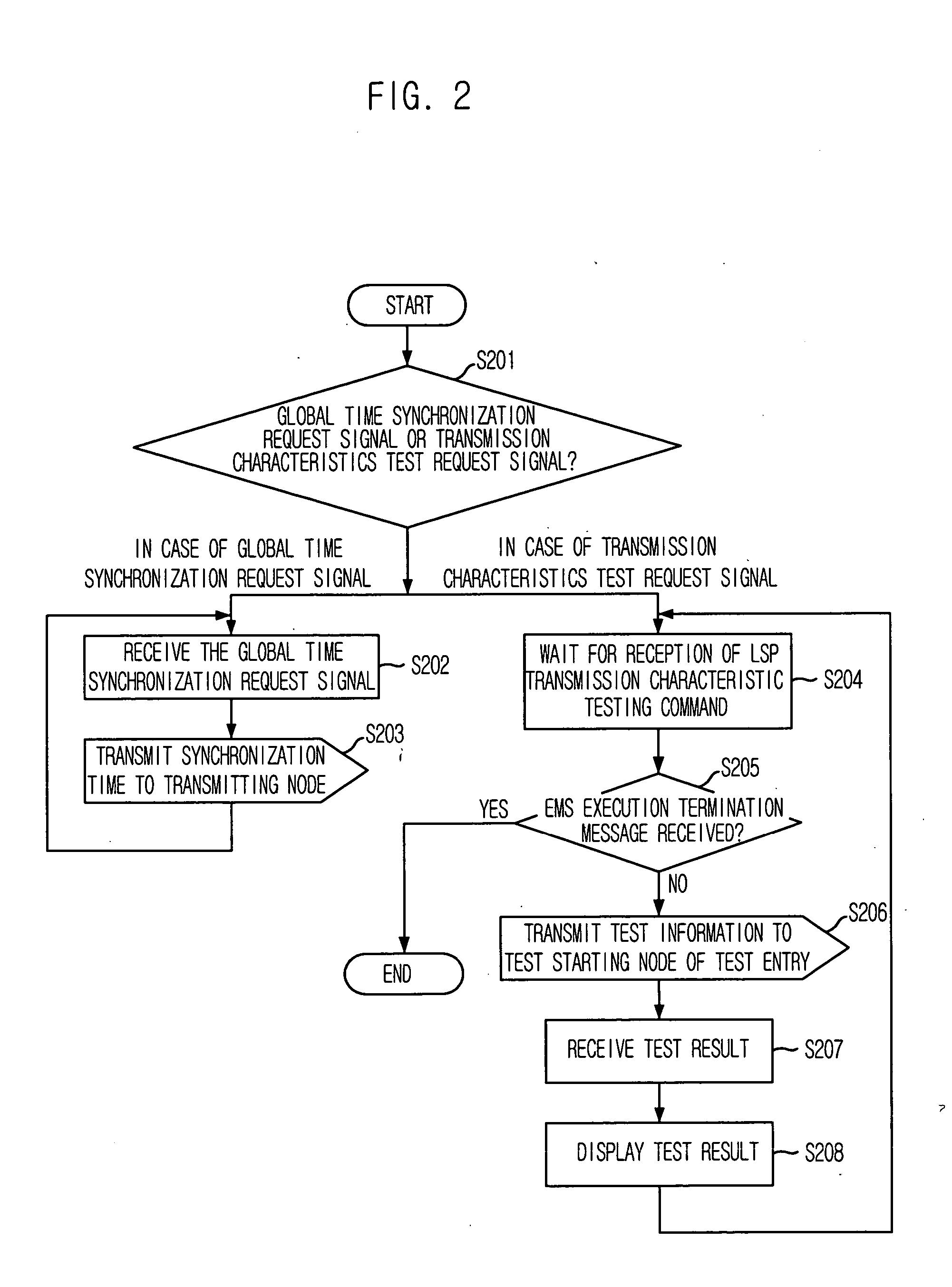

Method for measuring characteristics of path between nodes by using active testing packets based on priority

Provided are a method for measuring the characteristics of a path between nodes by using active testing packets based on priority, i.e., an inter-node path characteristic measuring method, which can measure and provide the characteristics of a generated node when an inter-node data transmission path is generated based on Multi-Protocol Label Switching (MPLS) to provide a path with the transmission delay, jitter, and packet loss required by a user, and a computer-readable recording medium for recording a program that implements the method. The method includes the steps of: a) synchronizing the system time of the nodes with a global standard time; b) forming each testing packet; c) registering the frame sequence and the global standard time during transmission; and d) calculating transmission delay time, jitter, and packet loss by using the time stamp and packet sequence information of the frames received by the destination node, and transmitting the result to the management system.

Owner:ELECTRONICS & TELECOMM RES INST
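
Step d) can be sketched once clocks are synchronized (step a), because one-way delay is then simply receive time minus send time. This minimal version defines jitter as the mean absolute difference between consecutive one-way delays (RFC 3550 uses a smoothed variant) and loss as the fraction of sent sequence numbers never received; `path_stats` and these definitions are assumptions for illustration.

```python
from typing import Dict, List, Tuple

def path_stats(sent_count: int, received: List[Tuple[int, float, float]]) -> Dict[str, float]:
    """received: (sequence number, send time, receive time) in seconds on synchronized clocks."""
    received = sorted(received)                     # order by sequence number
    delays = [rx - tx for _, tx, rx in received]    # one-way transmission delays
    jitter = (sum(abs(b - a) for a, b in zip(delays, delays[1:])) / (len(delays) - 1)
              if len(delays) > 1 else 0.0)
    unique = len({seq for seq, _, _ in received})   # duplicates do not count twice
    return {
        "mean_delay": sum(delays) / len(delays) if delays else 0.0,
        "jitter": jitter,
        "loss": 1.0 - unique / sent_count,
    }
```

With four packets sent and sequence number 2 missing, the loss is 0.25; the gaps in the sequence numbers are what make loss measurable at the destination.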

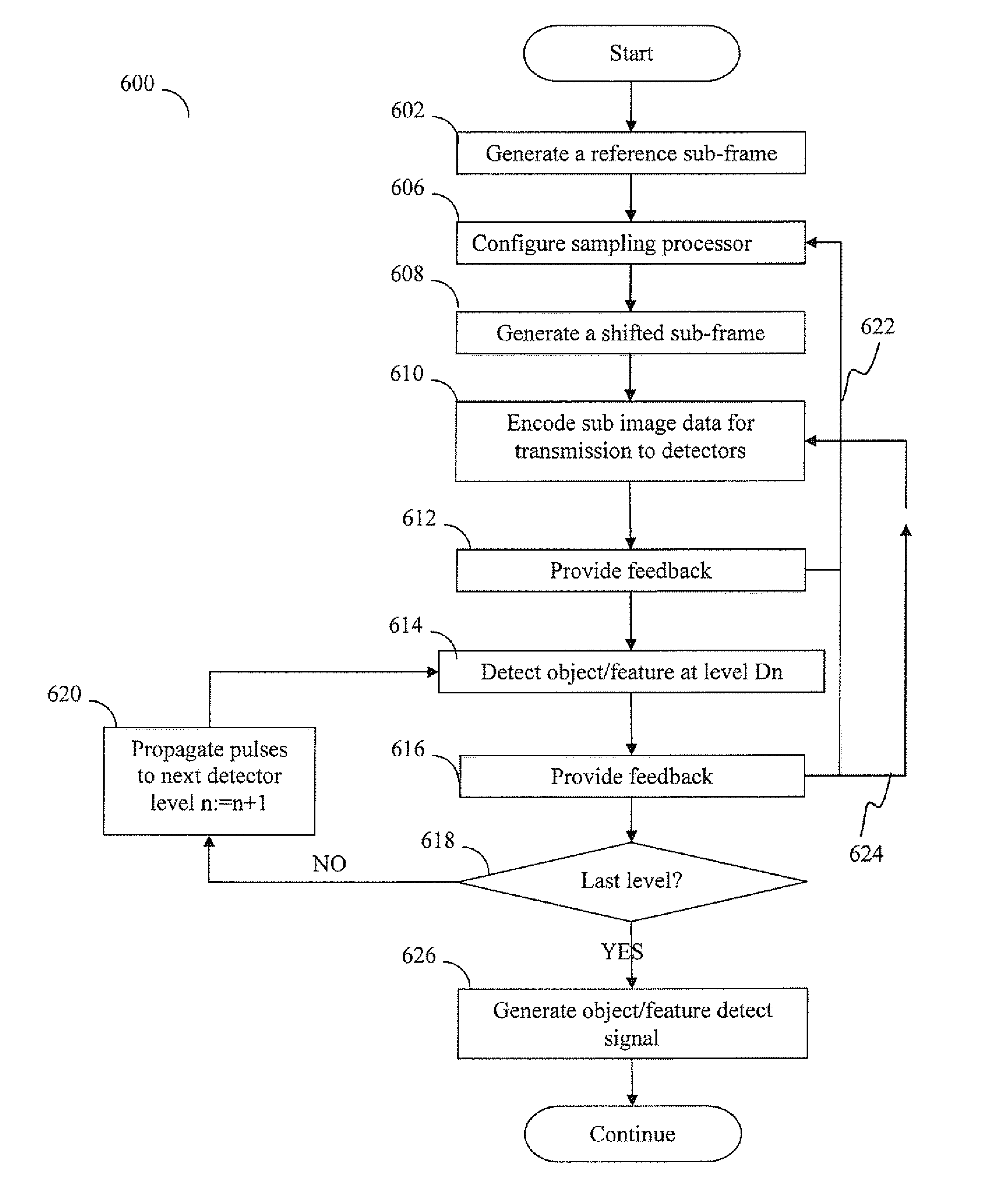

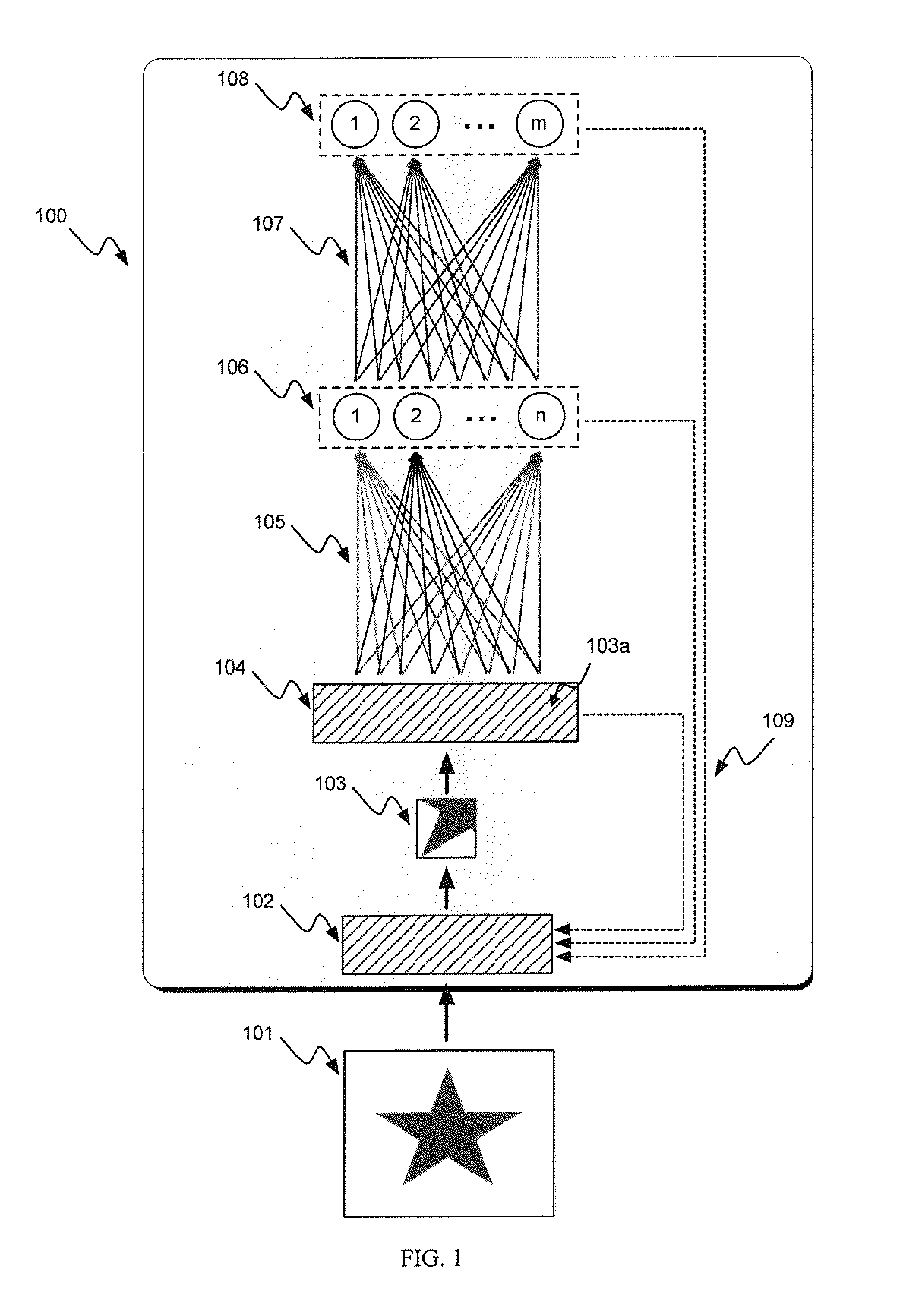

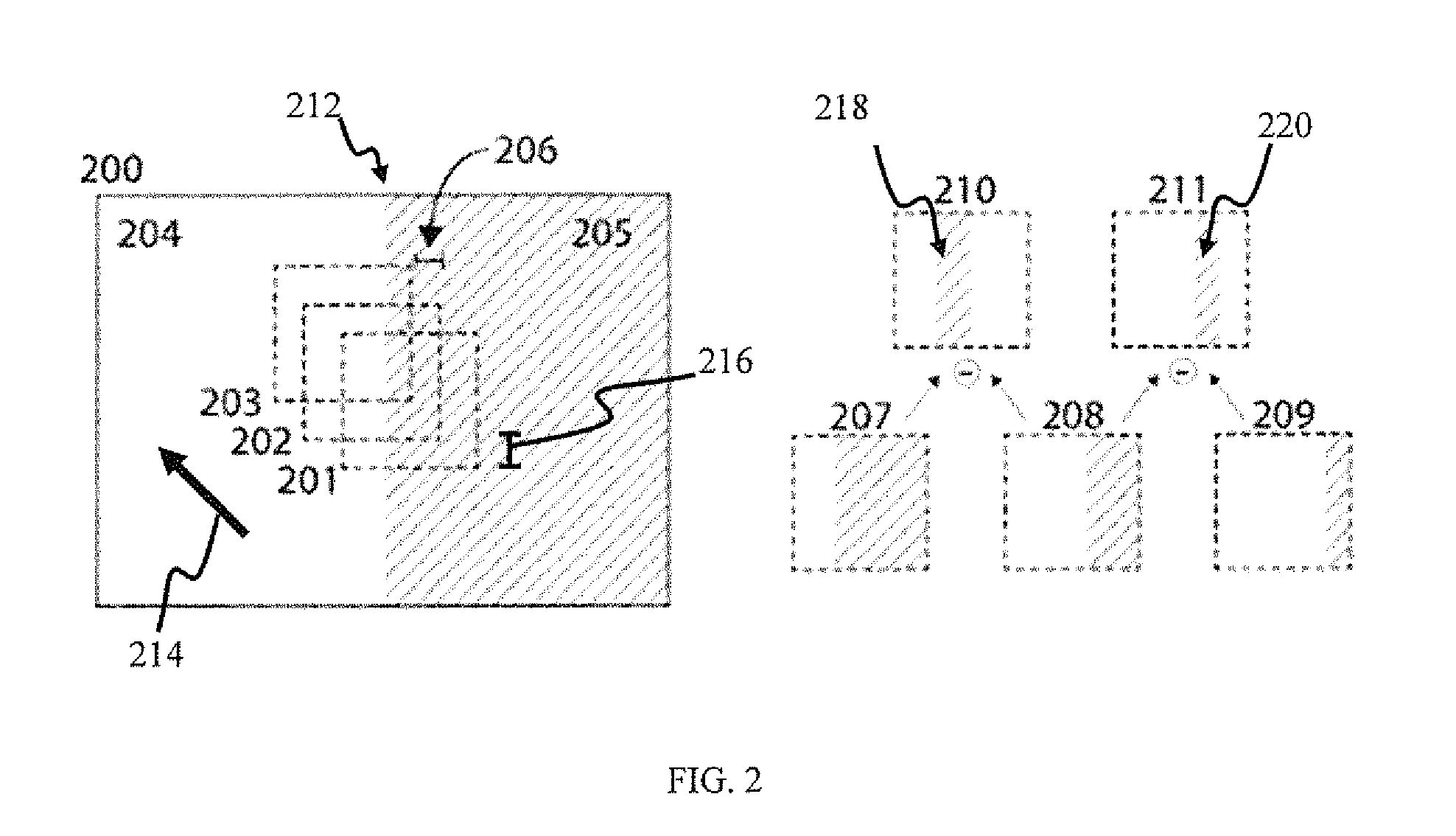

Sensory input processing apparatus and methods

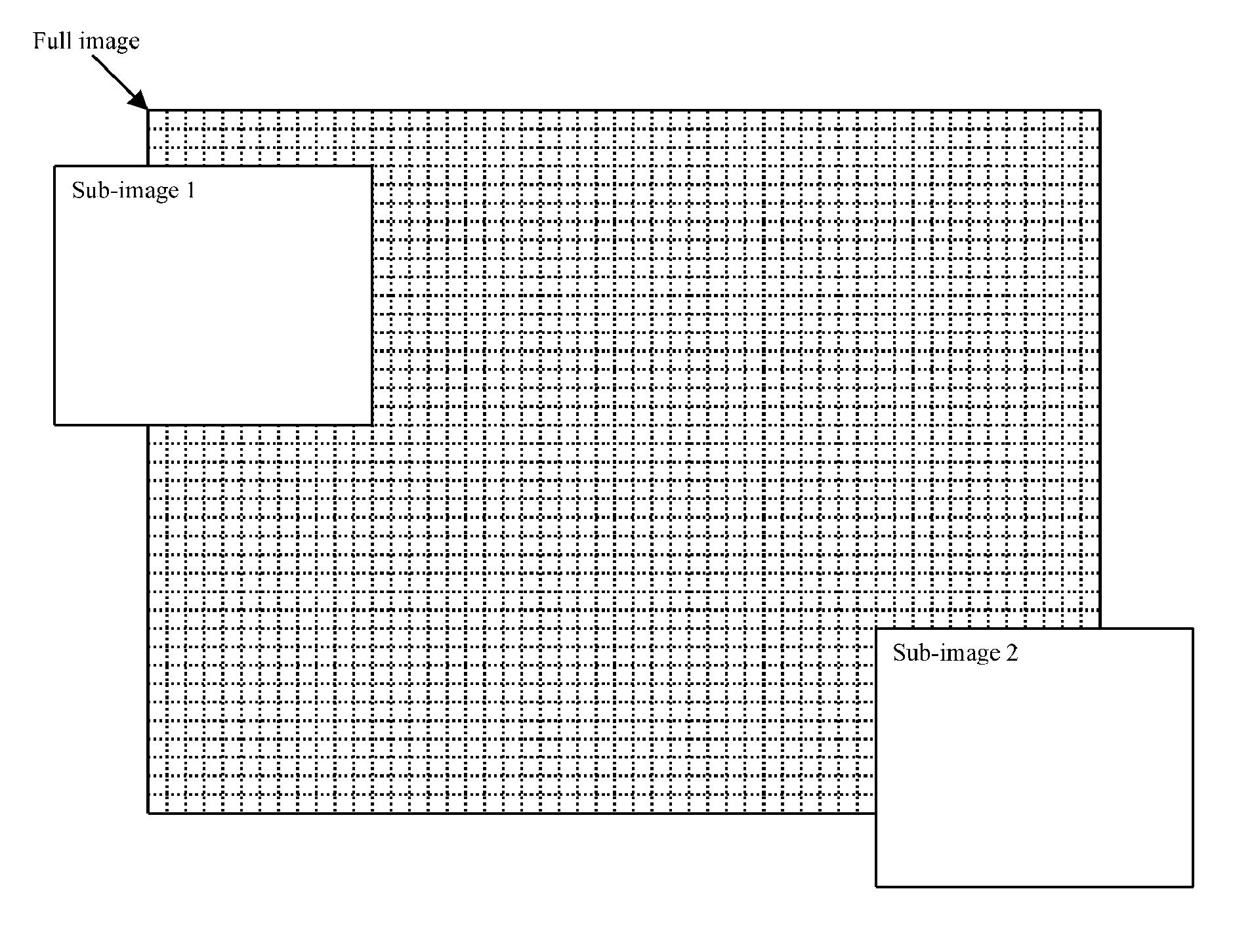

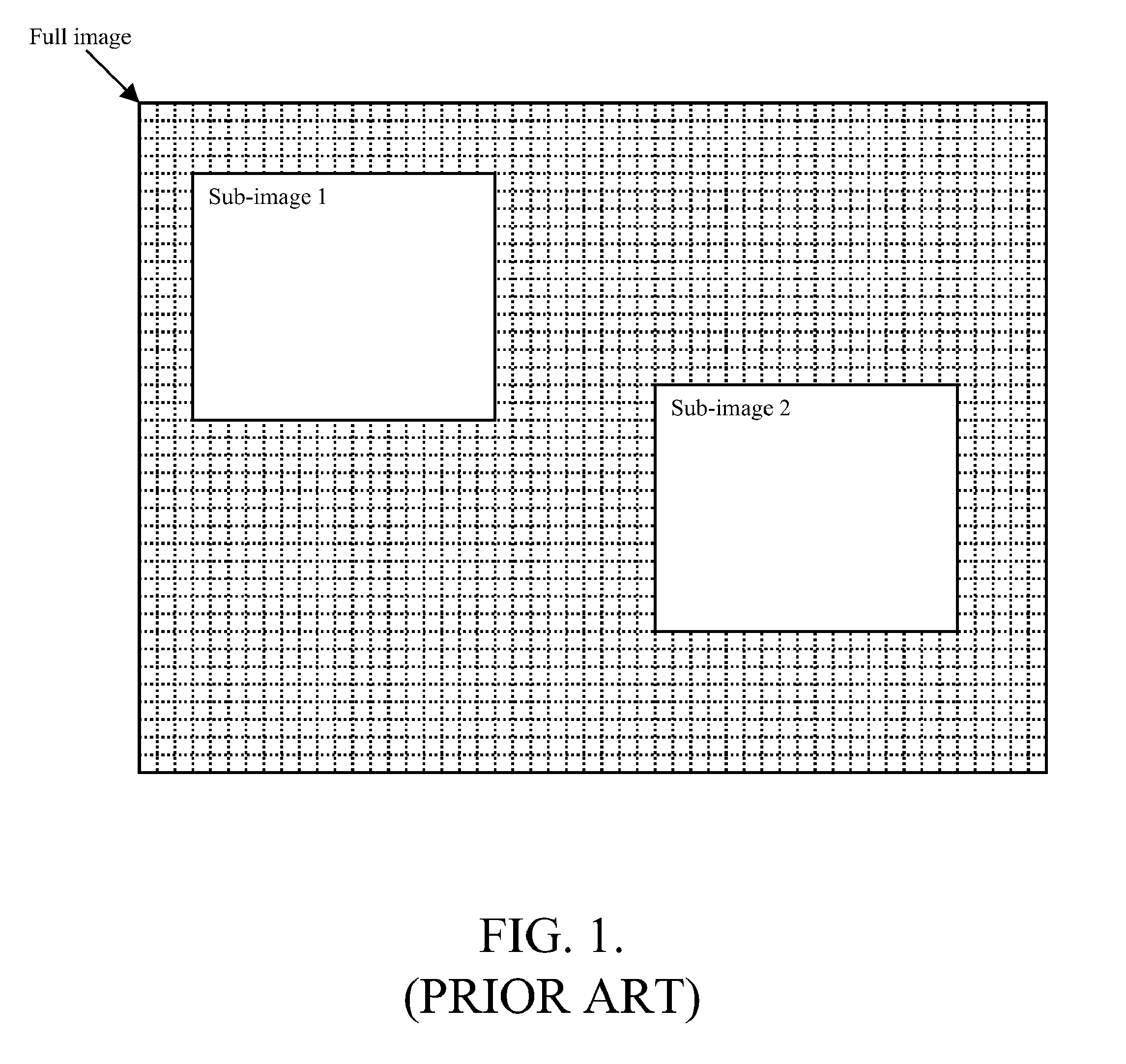

Status: Active | Publication: US20140064609A1 | Tags: Easy to detect; Character and pattern recognition; Neural architectures; Frame sequence; Adaptive encoding

Sensory input processing apparatus and methods useful for adaptive encoding and decoding of features. In one embodiment, the apparatus receives an input frame containing a representation of the object feature, generates a sequence of sub-frames that are displaced from one another (and correspond to different areas within the frame), and encodes the sub-frame sequence into groups of pulses. The patterns of pulses are directed via transmission channels to a detection apparatus configured to generate an output pulse upon detecting, within the received groups of pulses, a predetermined pattern that is associated with the feature. Upon detecting a particular pattern, the detection apparatus provides feedback to the displacement module in order to optimize sub-frame displacement for detecting the feature of interest. In another embodiment, the detection apparatus elevates its sensitivity (and/or channel characteristics) to that particular pulse pattern when processing subsequent pulse group inputs, thereby increasing the likelihood of feature detection.

Owner:BRAIN CORP

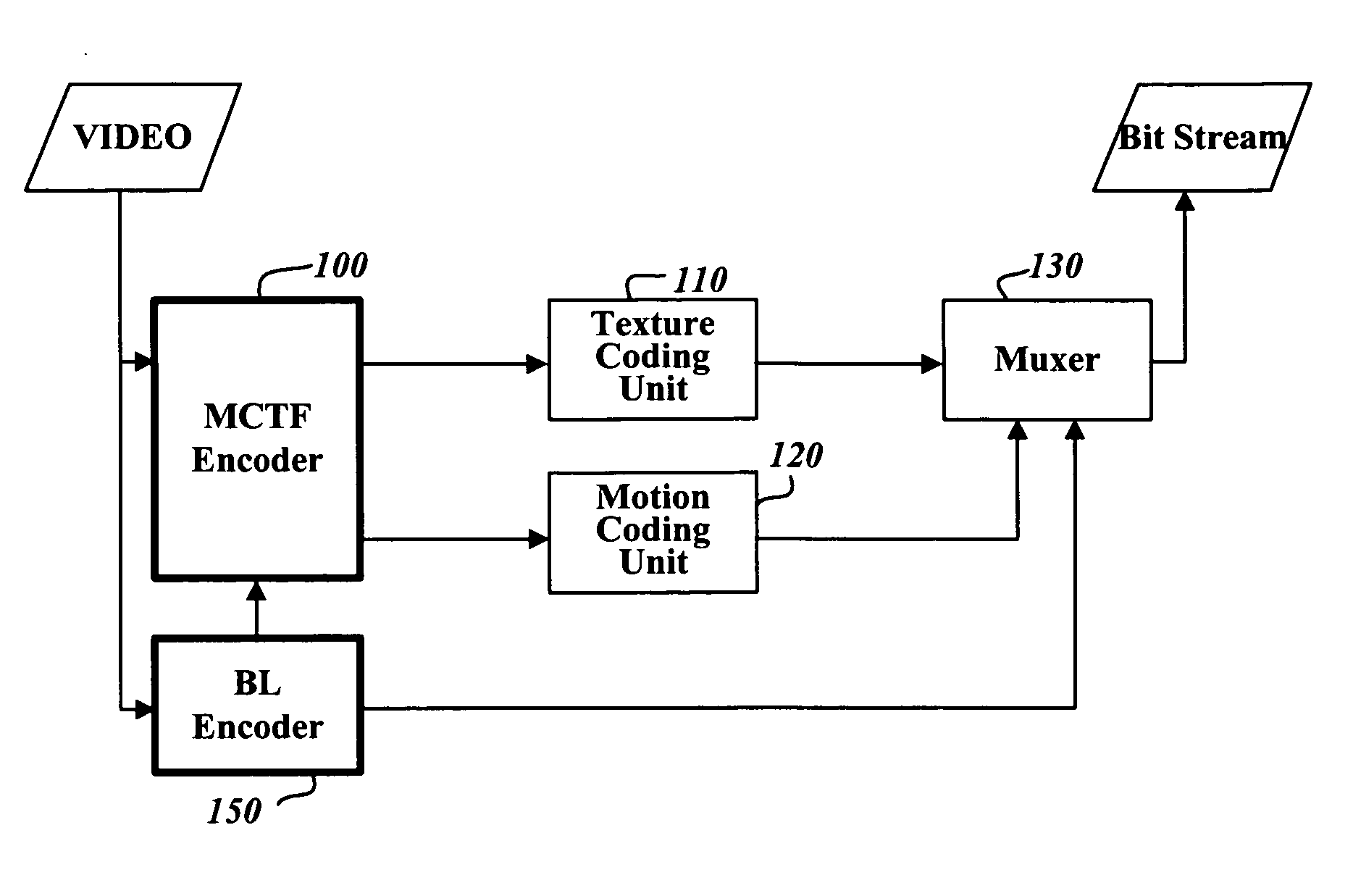

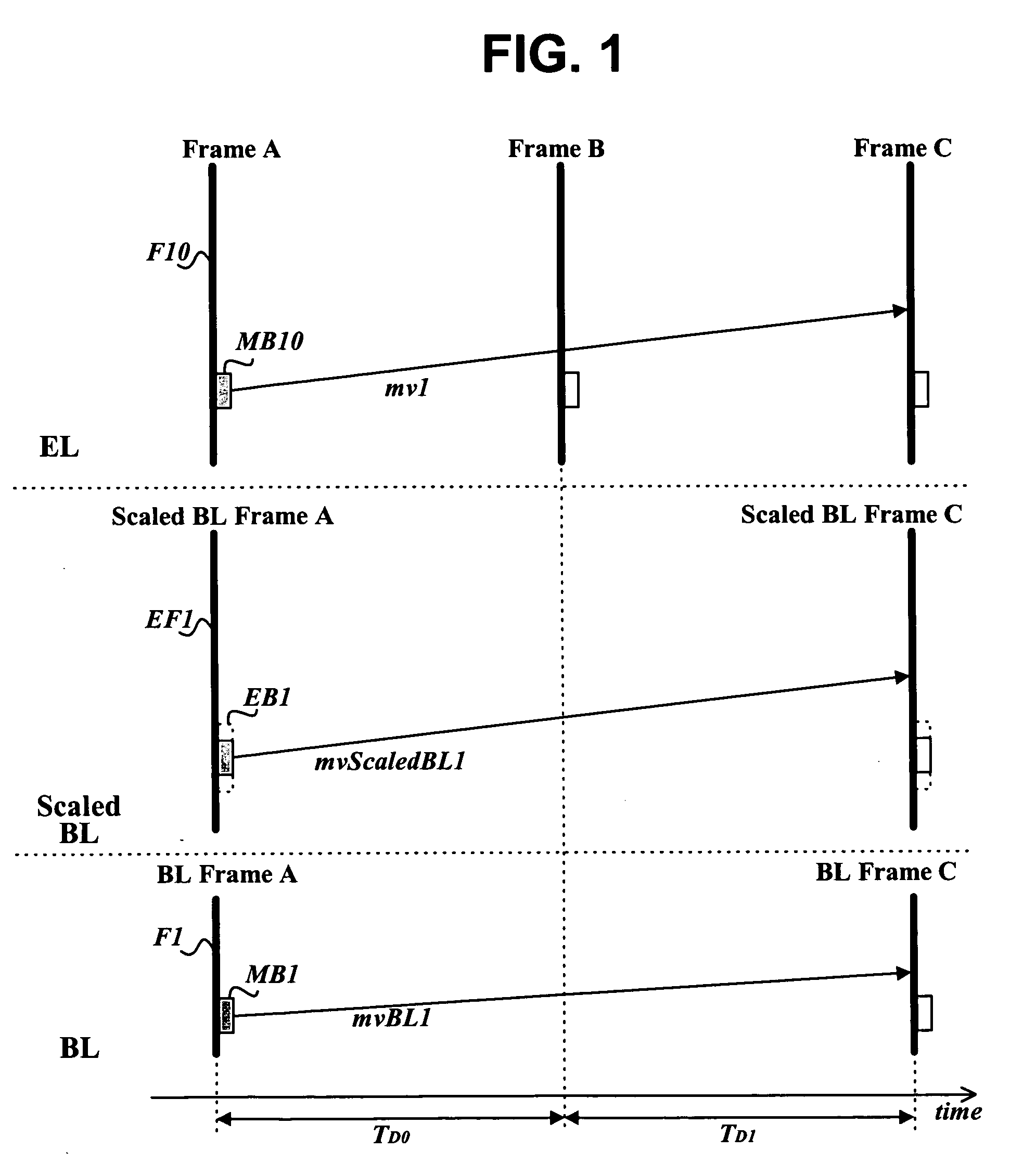

Method and apparatus for encoding/decoding video signal using motion vectors of pictures in base layer

Status: Inactive | Publication: US20060120454A1 | Tags: Color television with pulse code modulation; Color television with bandwidth reduction; Frame sequence; Computer graphics (images)

A method and apparatus for encoding video signals of a main layer using motion vectors of predictive image frames of an auxiliary layer, and for decoding such encoded video data, are provided. In the encoding method, a video signal is encoded in a scalable MCTF scheme to output an enhanced layer (EL) bitstream and encoded in another specified scheme to output a base layer (BL) bitstream. During MCTF encoding, information regarding the motion vector of an image block in an arbitrary frame of the video signal's frame sequence is recorded using the motion vector of the block at the corresponding position in an auxiliary frame that is present in the BL bitstream and temporally separated from the arbitrary frame. Using the correlation between the motion vectors of temporally adjacent frames in different layers reduces the amount of coded motion vector data.

Owner:LG ELECTRONICS INC

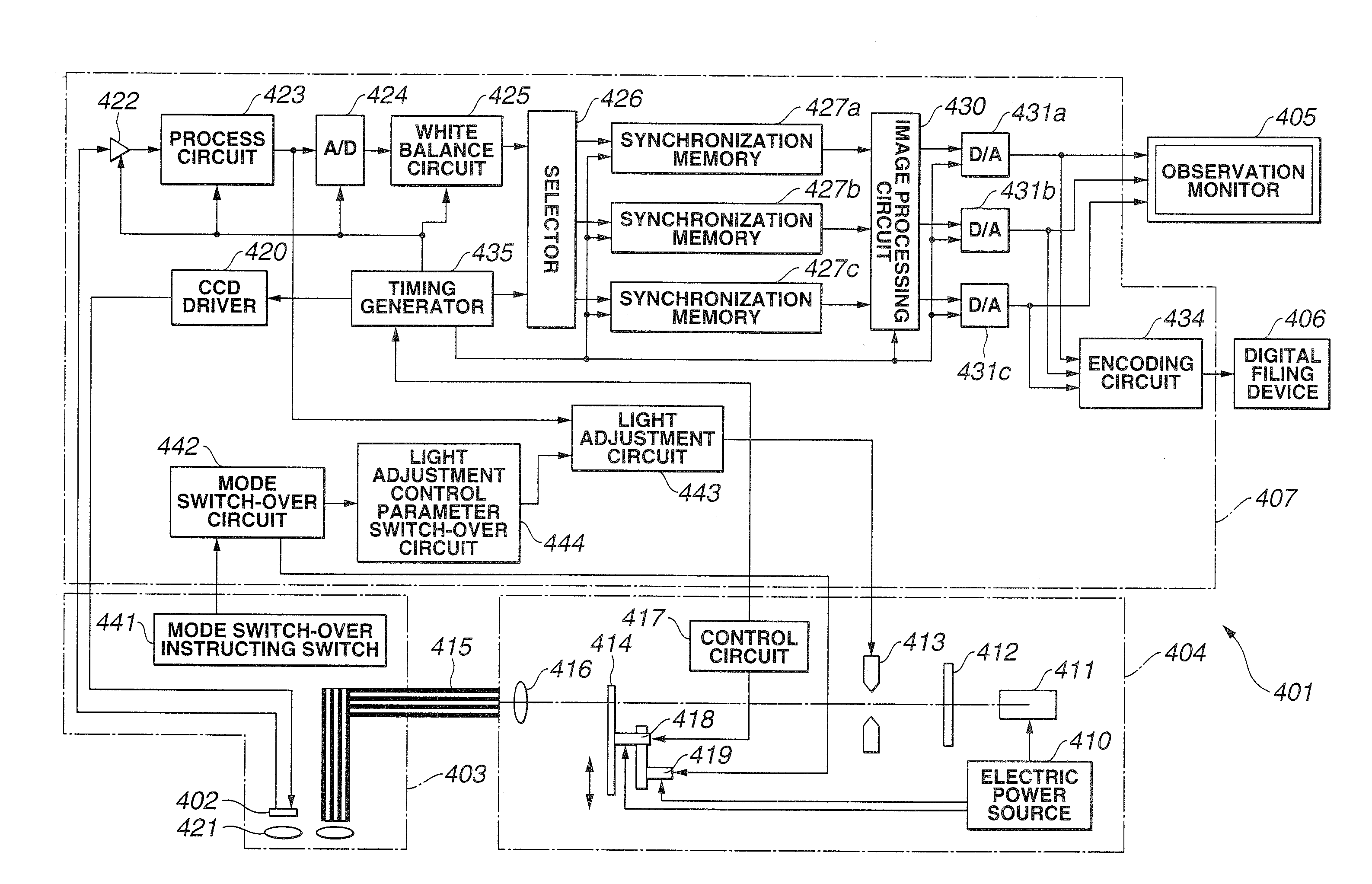

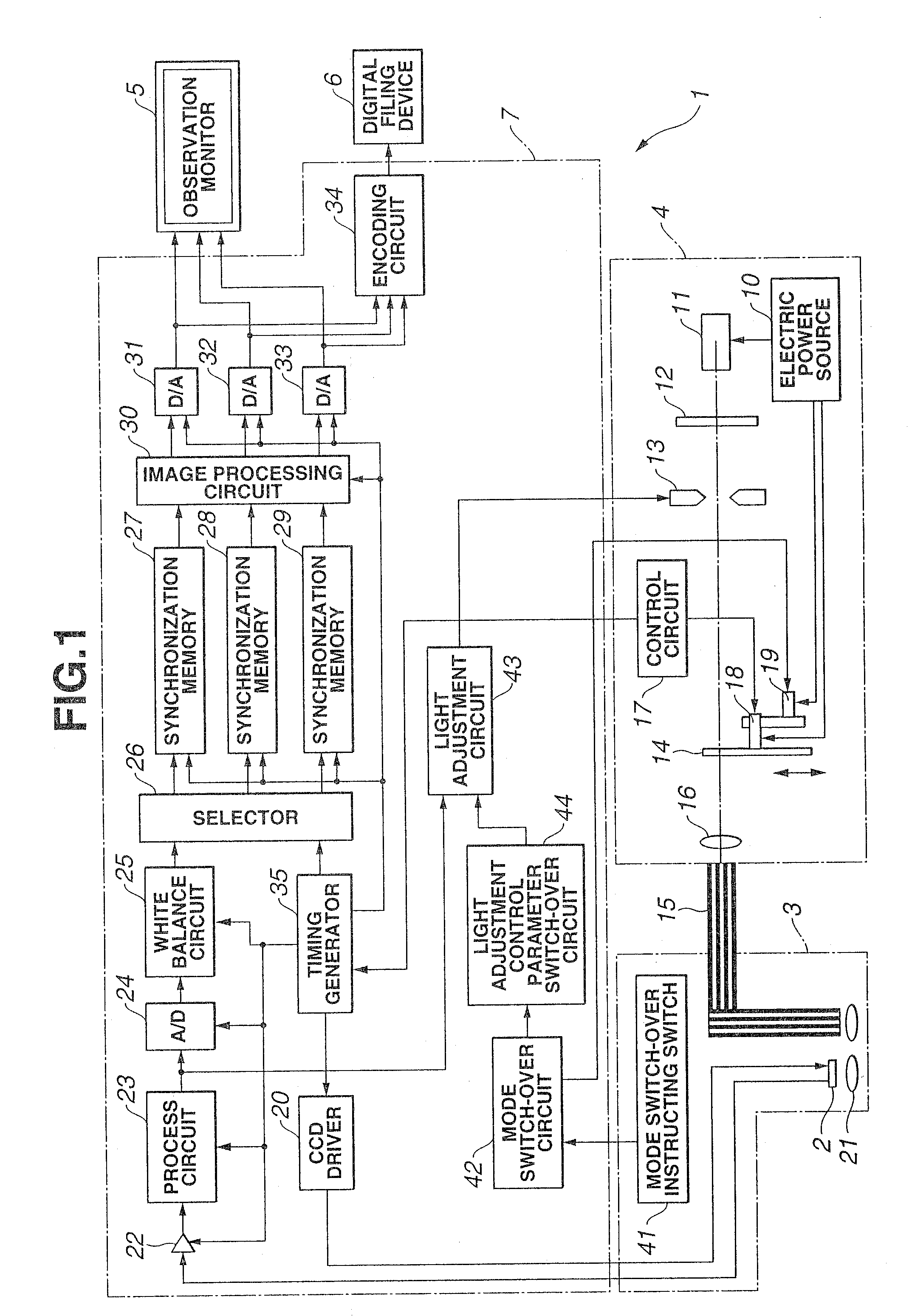

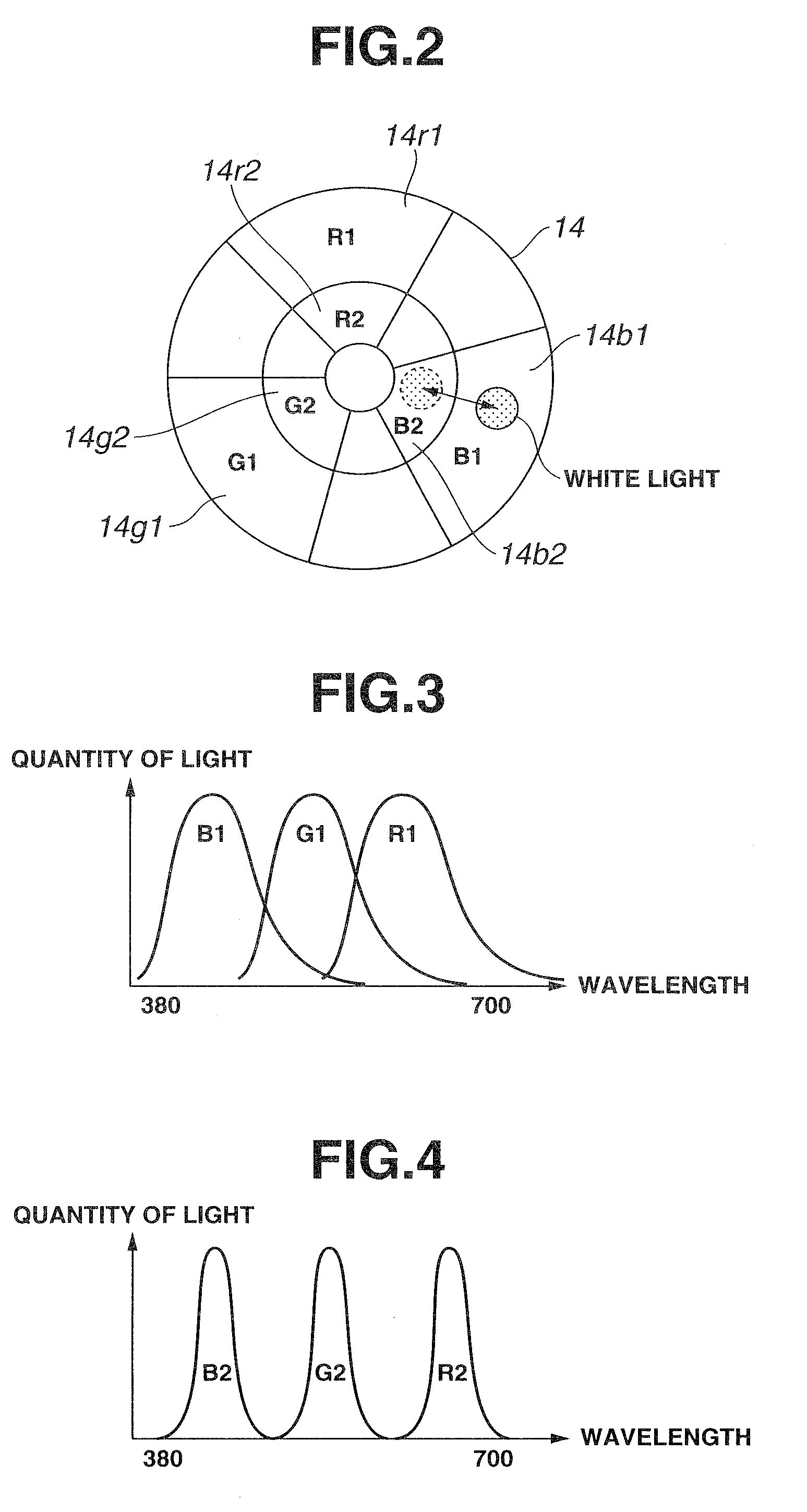

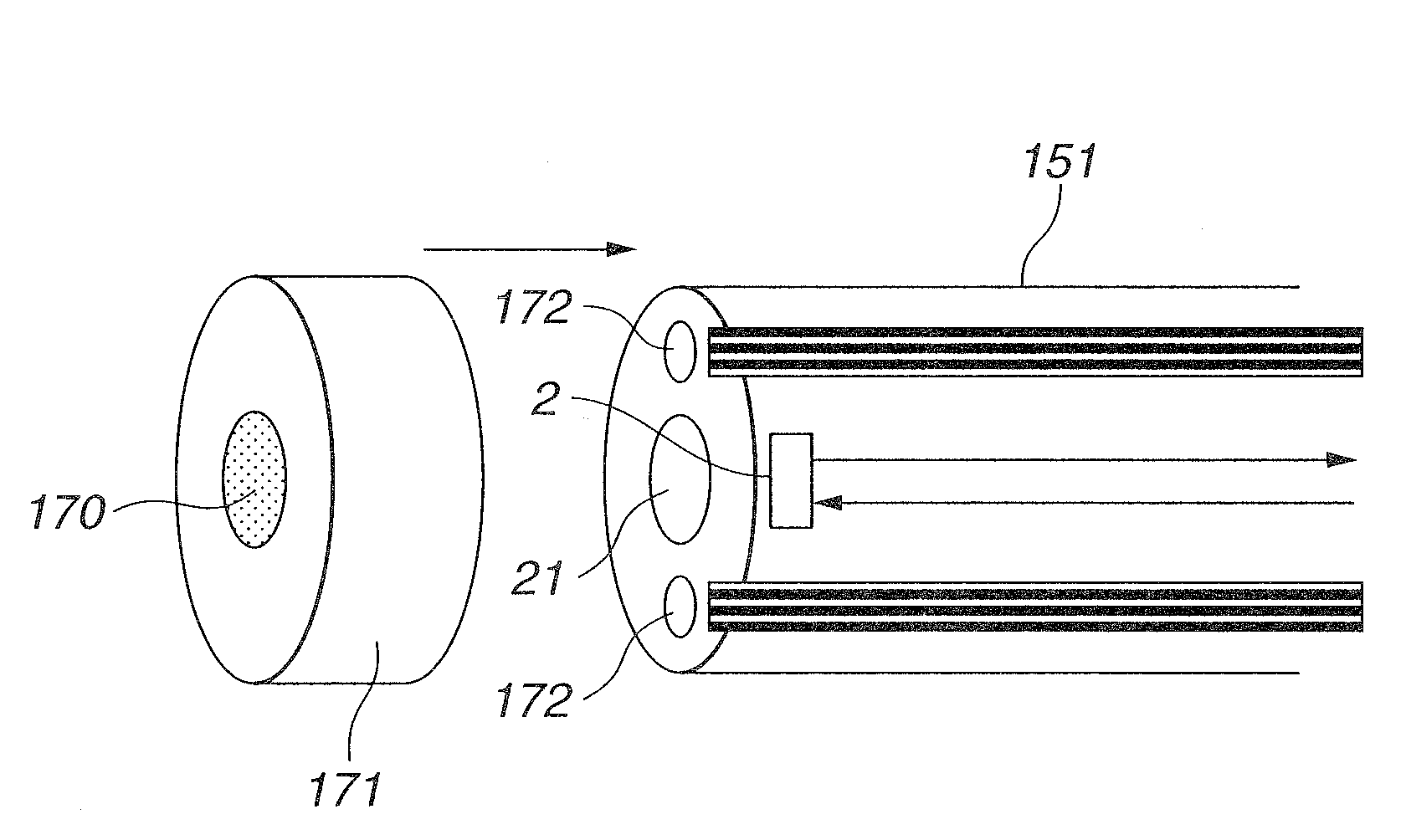

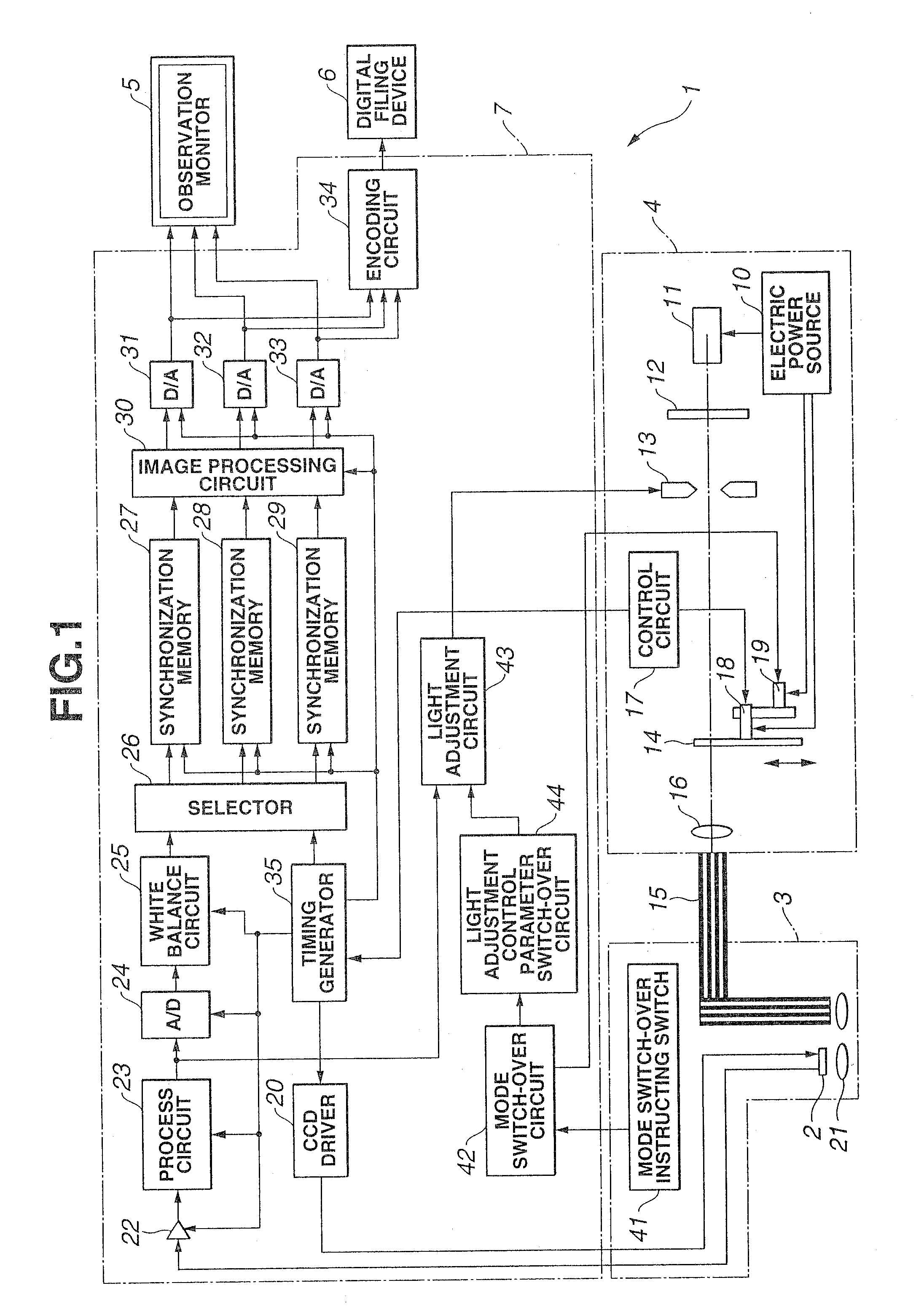

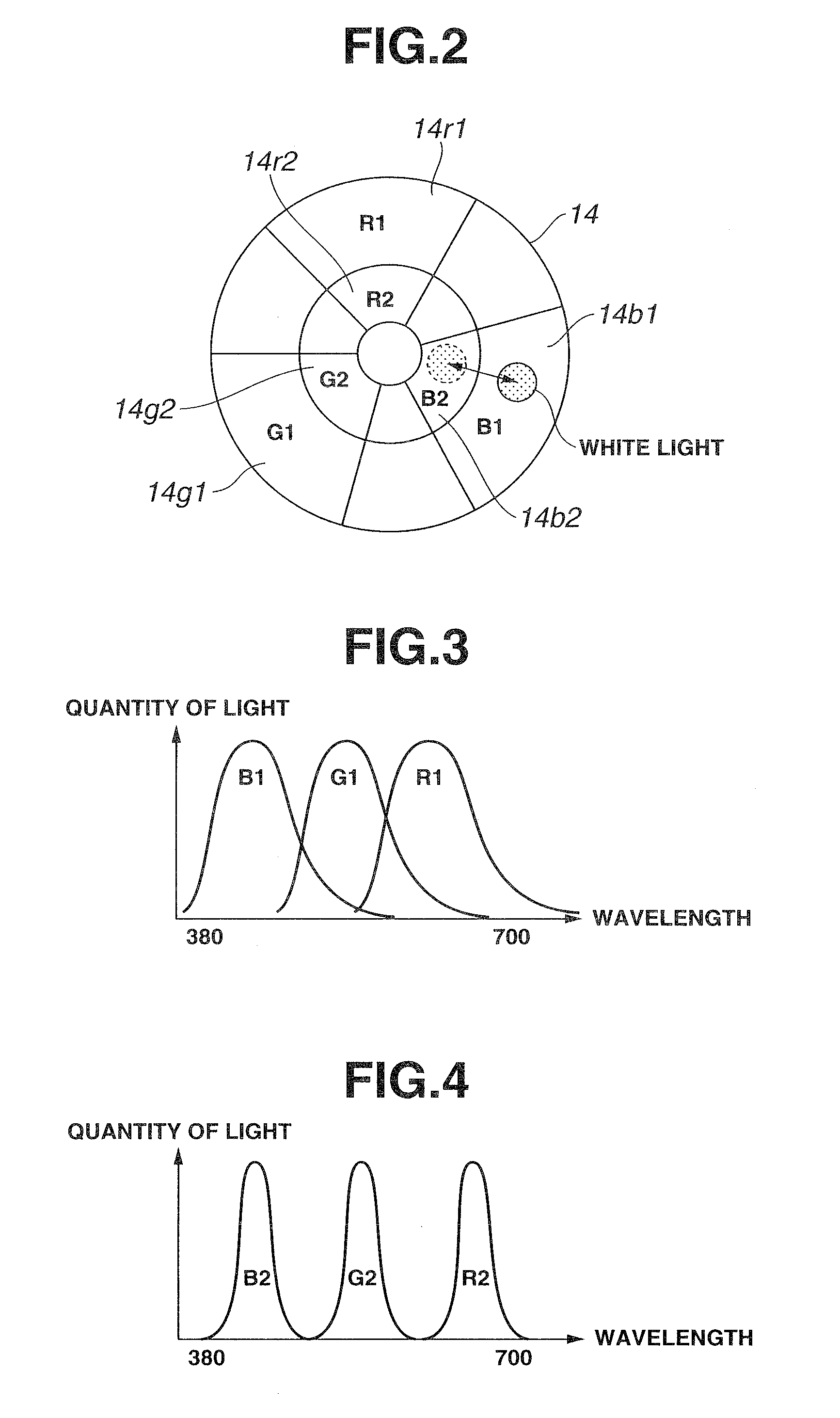

Endoscope device

An endoscope device obtains tissue information of a desired depth near the tissue surface. A xenon lamp (11) in a light source (4) emits illumination light. A diaphragm (13) controls a quantity of the light that reaches a rotating filter. The rotating filter has an outer sector with a first filter set, and an inner sector with a second filter set. The first filter set outputs frame sequence light having overlapping spectral properties suitable for color reproduction, while the second filter set outputs narrow-band frame sequence light having discrete spectral properties enabling extraction of desired deep tissue information. A condenser lens (16) collects the frame sequence light coming through the rotating filter onto the incident face of a light guide (15). The diaphragm controls the amount of the light reaching the filter depending on which filter set is selected.

Owner:OLYMPUS CORP

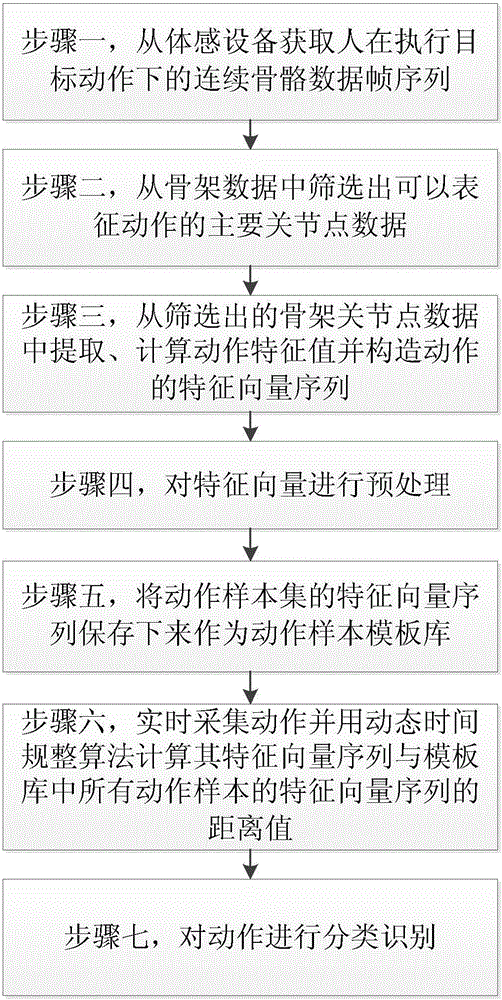

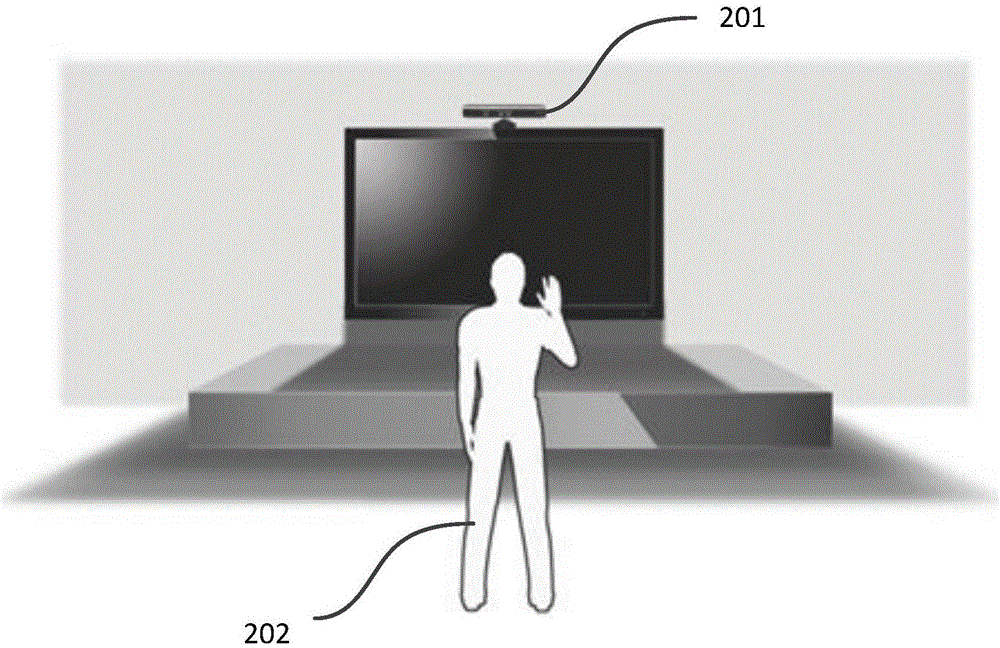

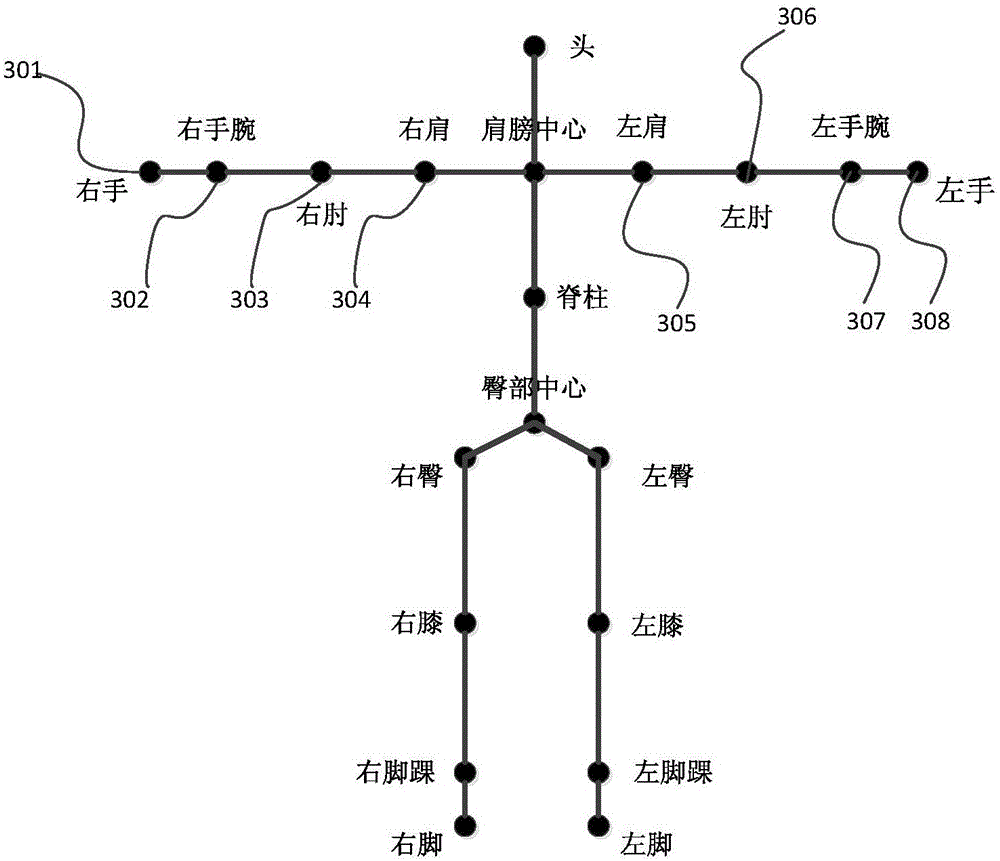

Human body skeleton-based action recognition method

Status: Active | Publication: CN105930767A | Tags: Improve versatility; Eliminate the effects of different relative positions; Character and pattern recognition; Human body; Feature vector

The invention relates to a human body skeleton-based action recognition method, characterized by the following basic steps: step 1, a continuous skeleton data frame sequence of a person performing target actions is obtained from a somatosensory device; step 2, the main joint point data that characterize the actions are selected from the skeleton data; step 3, action feature values are extracted and calculated from the main joint point data, and a feature vector sequence of the actions is constructed; step 4, the feature vectors are preprocessed; step 5, the feature vector sequences of an action sample set are saved as an action sample template library; step 6, actions are acquired in real time, and the distance between the feature vector sequence of each action and the feature vector sequences of all action samples in the template library is calculated using a dynamic time warping algorithm; and step 7, the actions are classified and recognized. The method offers high real-time performance, high robustness, high accuracy, and a simple, reliable implementation, and is suitable for real-time action recognition systems.

Owner:NANJING HUAJIE IMI TECH CO LTD
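
Steps 6 and 7 amount to nearest-template classification under dynamic time warping. The sketch below is the textbook DTW recurrence with a Euclidean frame cost; it assumes each skeleton frame has already been reduced to a feature vector (steps 2 to 4), and `dtw` and `classify` are illustrative names rather than the patent's code.

```python
import math
from typing import Dict, List, Sequence

Vec = Sequence[float]

def dtw(a: List[Vec], b: List[Vec]) -> float:
    """Classic dynamic time warping distance with Euclidean per-frame cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    d = [[inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            d[i][j] = cost + min(d[i - 1][j], d[i][j - 1], d[i - 1][j - 1])
    return d[n][m]

def classify(sample: List[Vec], templates: Dict[str, List[List[Vec]]]) -> str:
    """Label of the template sequence with the smallest DTW distance to the sample."""
    scored = ((label, dtw(sample, t)) for label, seqs in templates.items() for t in seqs)
    return min(scored, key=lambda x: x[1])[0]
```

DTW lets the same action performed at different speeds still match its template, which is why the sample and template sequences need not have the same length.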

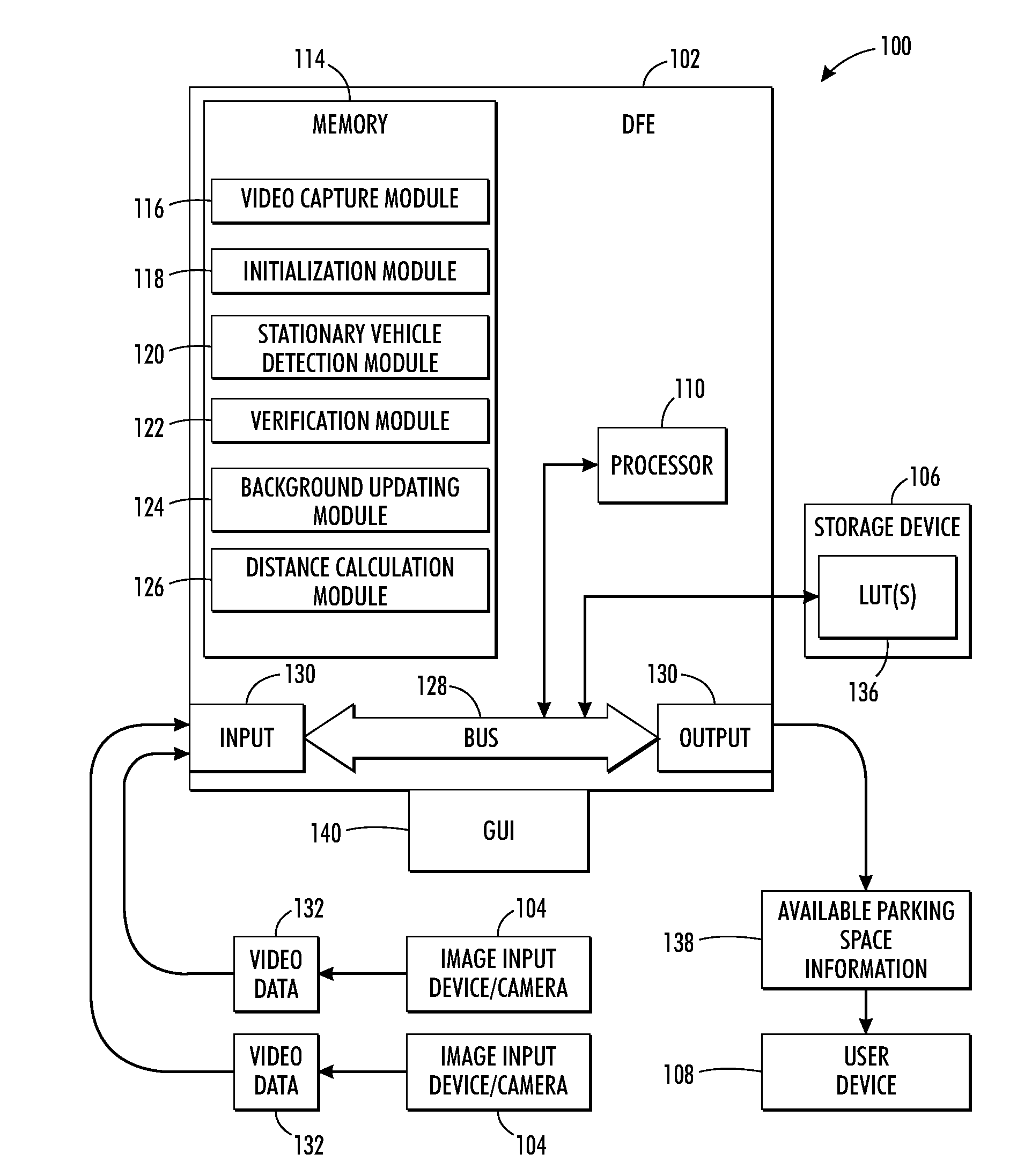

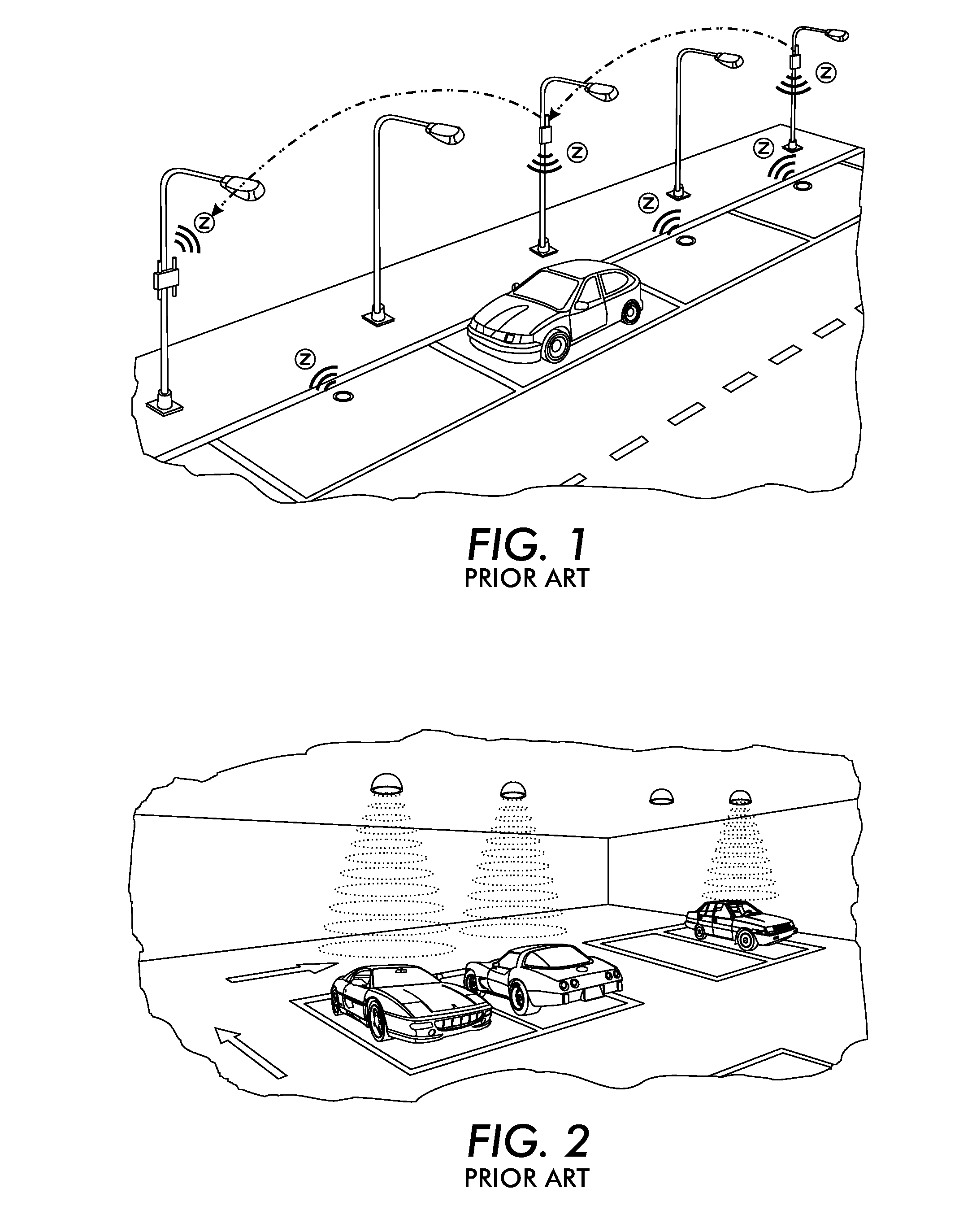

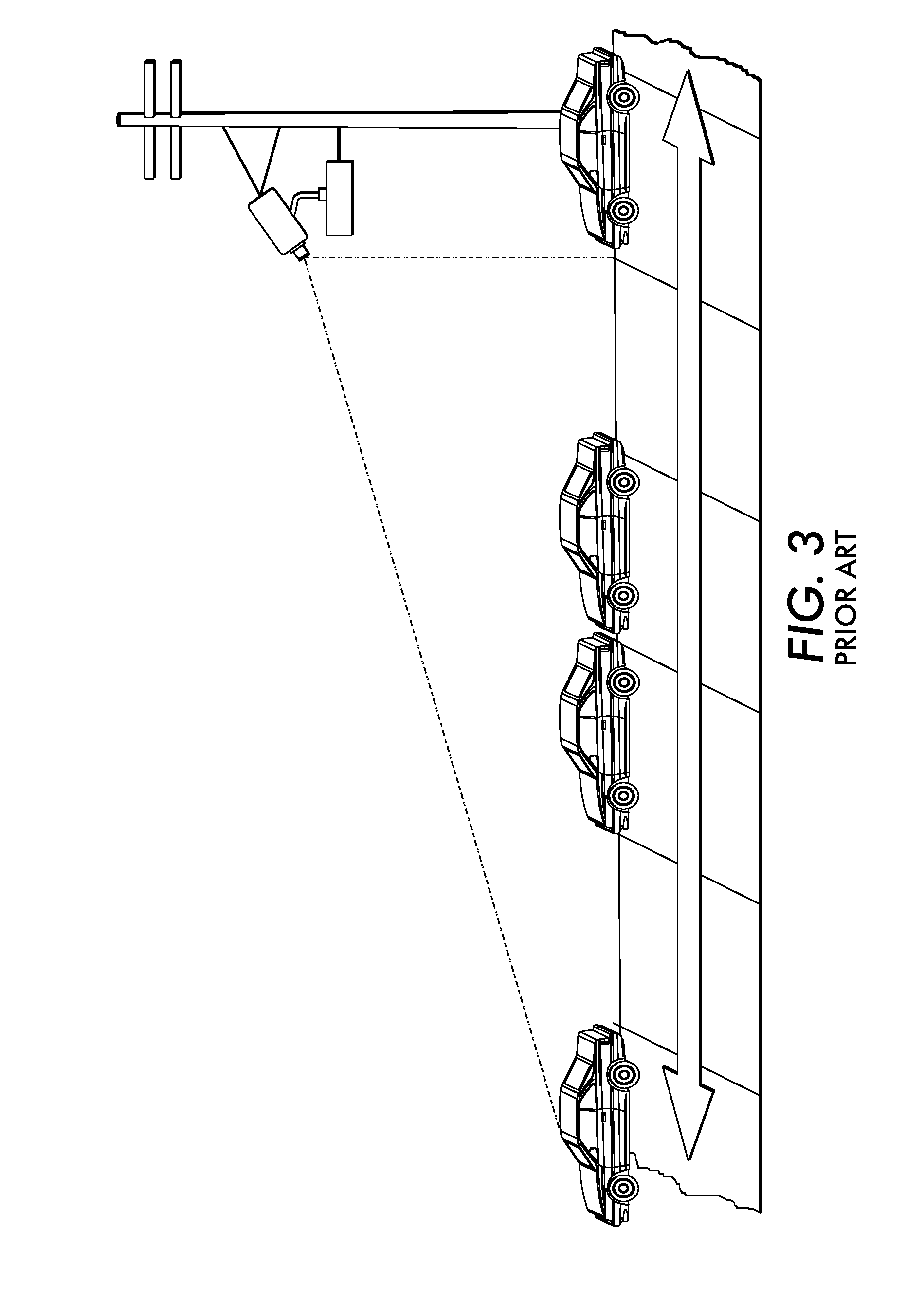

System and method for available parking space estimation for multispace on-street parking

Status: Inactive | Publication: US20130265419A1 | Tags: Road vehicles traffic control; Color television details; Parking area; Parking space

A method for determining parking availability includes receiving video data from a sequence of frames taken from an image capture device that is monitoring a parking area. The method includes determining background and foreground images in an initial frame of the sequence of frames. The method further includes updating the background and foreground images in each of the sequence of frames following the initial frame. The method also includes determining a length of a parking space using the determined background and foreground images. The determining includes computing a pixel distance between a foreground image and one of an adjacent foreground image and an end of the parking area. The determining further includes mapping the pixel distance to an actual distance for estimating the length of the parking space.

Owner:CONDUENT BUSINESS SERVICES LLC
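
The length-estimation step can be sketched in one dimension: treat the curb as a row of pixel columns marked occupied (foreground) or free (background), measure each free gap between vehicles or the ends of the parking area, and map pixels to metres. The constant `metres_per_pixel` ignores perspective, which a real system would correct with camera calibration, and the 5 m `car_length` is a hypothetical value; both functions are illustrative.

```python
from typing import List, Tuple

def free_gaps(occupied: List[bool]) -> List[Tuple[int, int]]:
    """(start, length) in pixels of each run of free columns along the parking area."""
    gaps, start = [], None
    for x, occ in enumerate(occupied + [True]):      # sentinel closes a trailing gap
        if not occ and start is None:
            start = x
        elif occ and start is not None:
            gaps.append((start, x - start))
            start = None
    return gaps

def available_spaces(occupied: List[bool], metres_per_pixel: float,
                     car_length: float = 5.0) -> int:
    """Count vehicles that fit in the free gaps once pixel lengths are mapped to metres."""
    return sum(int(length * metres_per_pixel // car_length)
               for _, length in free_gaps(occupied))
```

On multispace on-street parking there are no painted stalls, so availability depends on gap length rather than on counting empty marked spaces.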

Endoscope device

An endoscope device obtains tissue information of a desired depth near the tissue surface. A xenon lamp (11) in a light source (4) emits illumination light. A diaphragm (13) controls a quantity of the light that reaches a rotating filter. The rotating filter has an outer sector with a first filter set, and an inner sector with a second filter set. The first filter set outputs frame sequence light having overlapping spectral properties suitable for color reproduction, while the second filter set outputs narrow-band frame sequence light having discrete spectral properties enabling extraction of desired deep tissue information. A condenser lens (16) collects the frame sequence light coming through the rotating filter onto the incident face of a light guide (15). The diaphragm controls the amount of the light reaching the filter depending on which filter set is selected.

Owner:OLYMPUS CORP

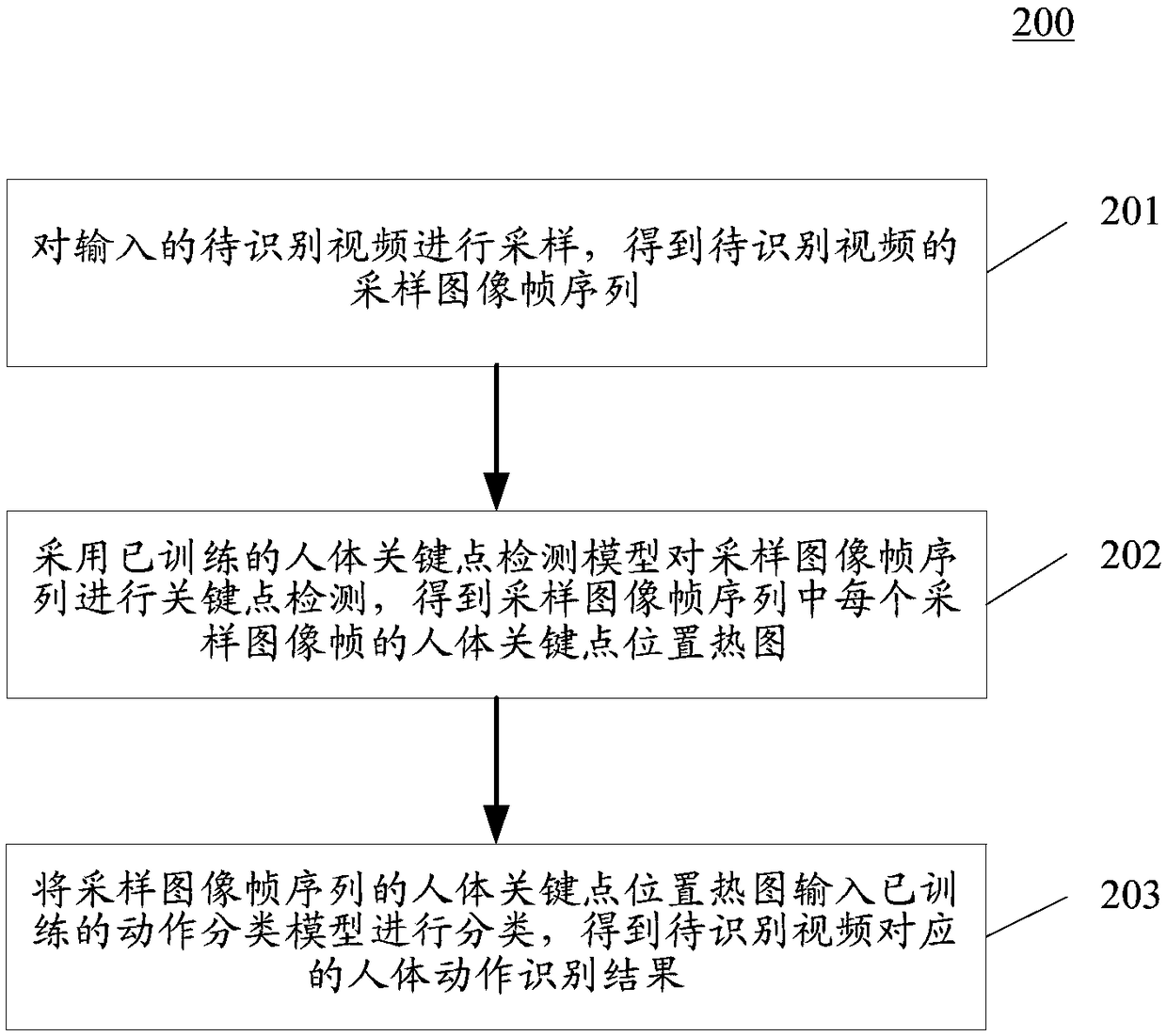

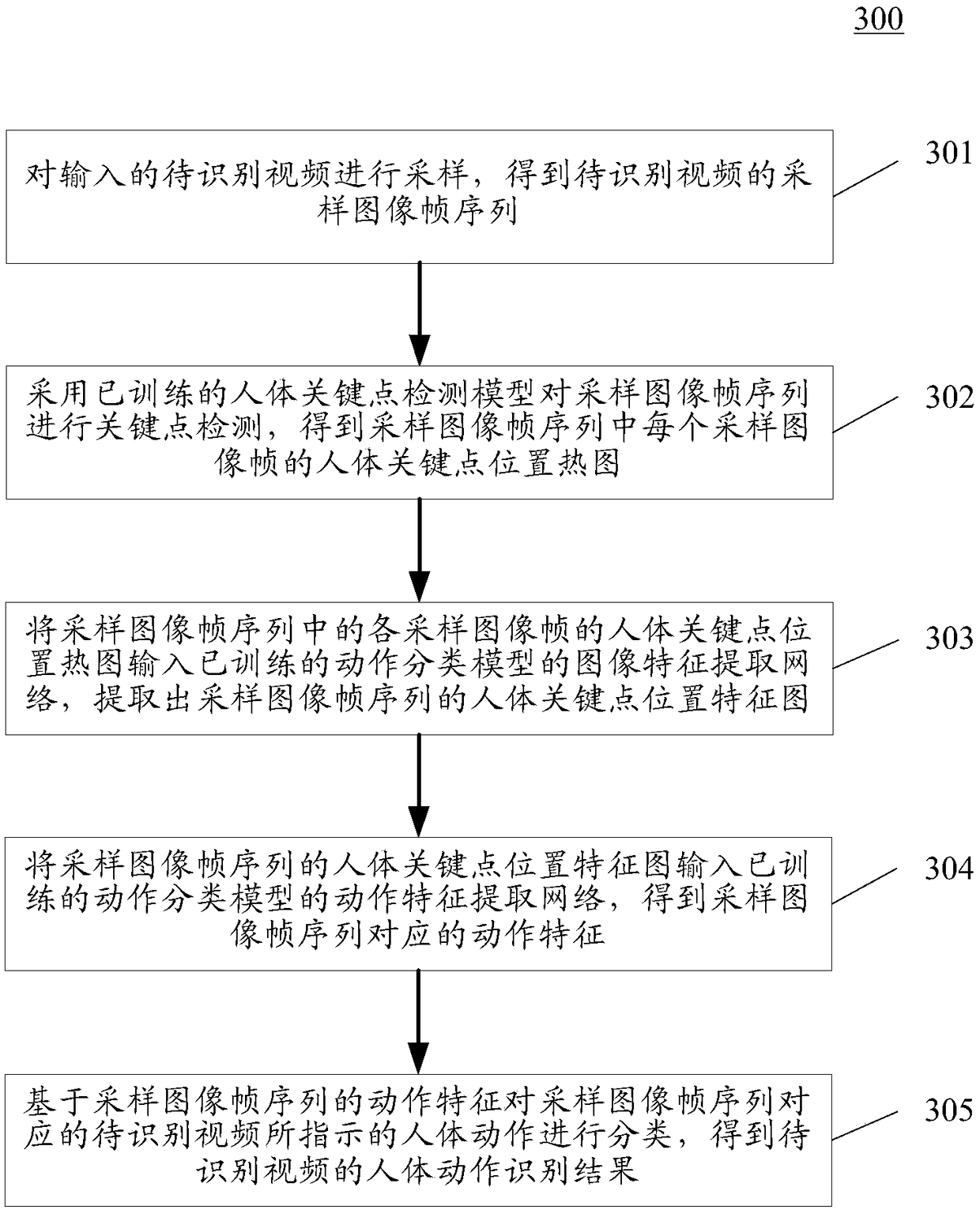

Human motion recognition method and device

Status: Active | Publication: CN108985259A | Tags: Improve recognition accuracy; Character and pattern recognition; Frame sequence; Human motion

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

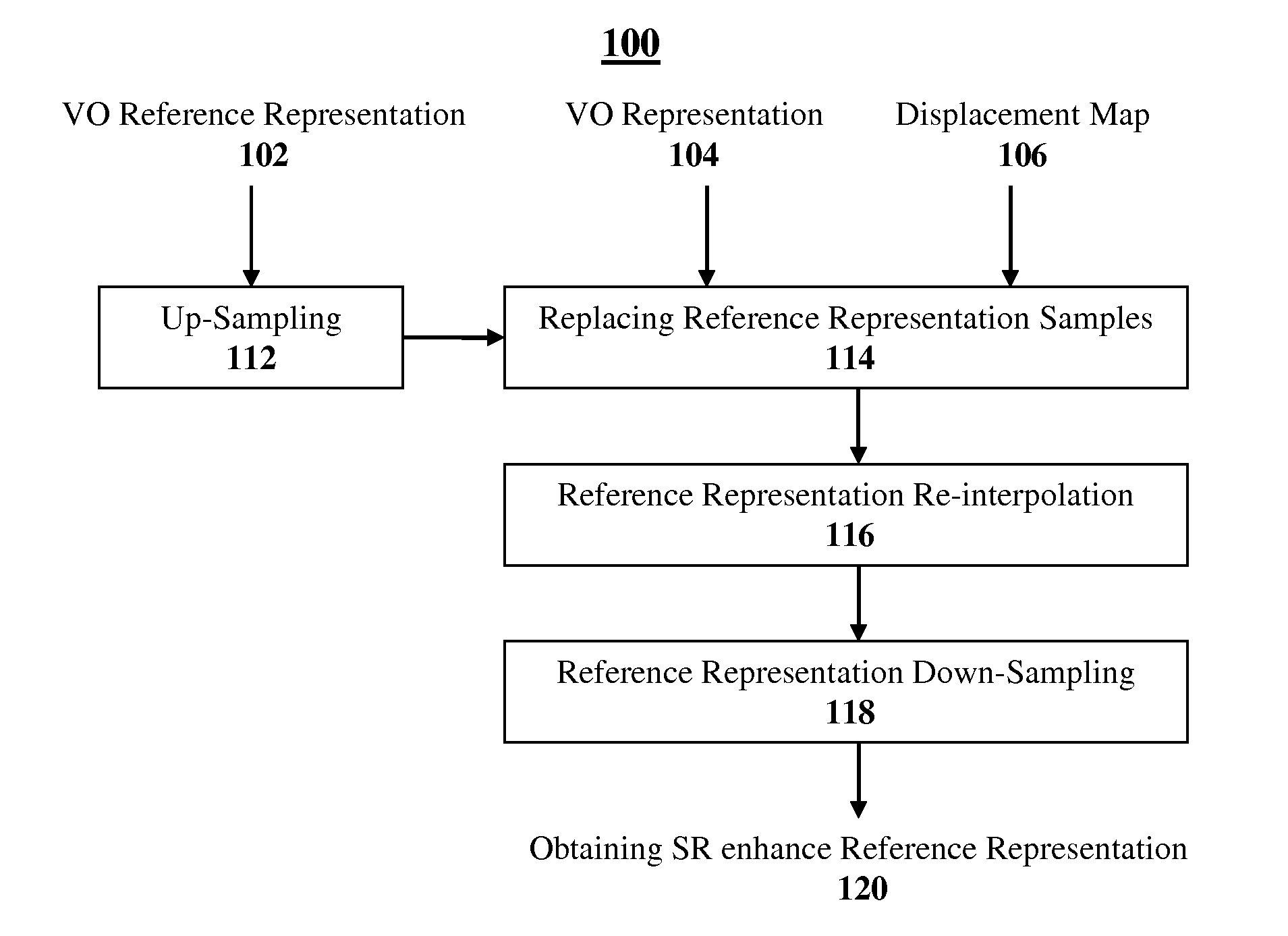

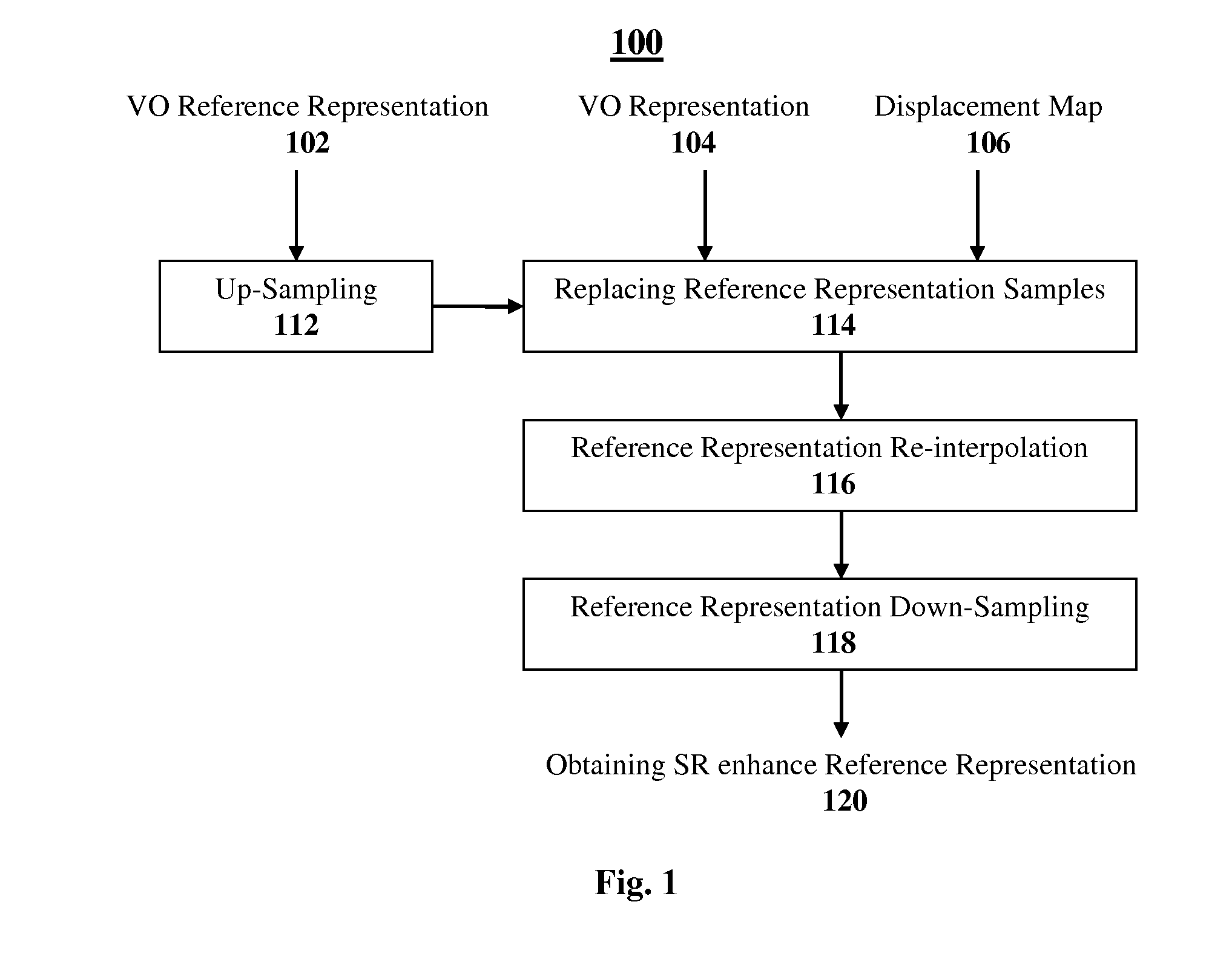

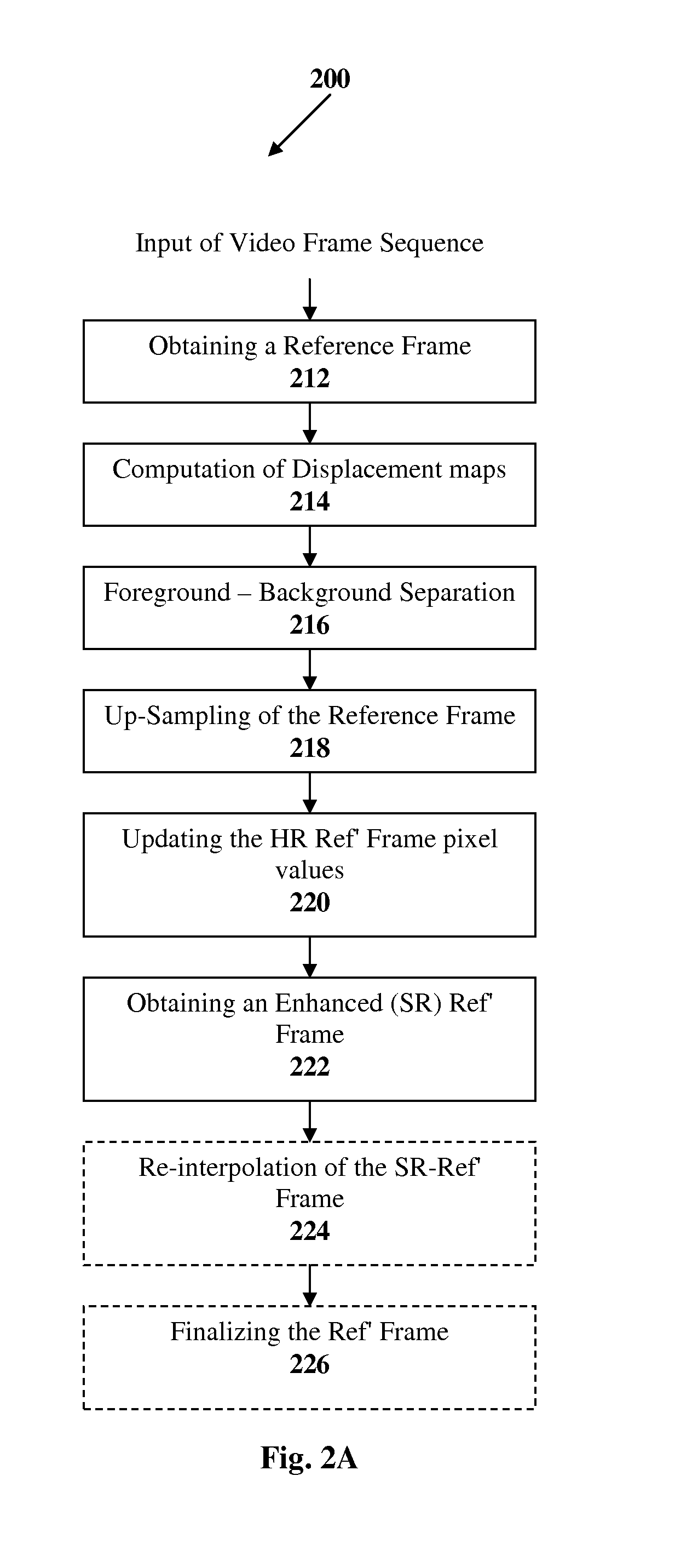

System and Method for Real-Time Super-Resolution

Status: Inactive | Publication: US20100272184A1 | Tags: Efficient and economical power requirement; Reduce image data; Color television with pulse code modulation; Color television with bandwidth reduction; Frame sequence; Motion vector

A method and system are presented for real time Super-Resolution image reconstruction. According to this technique, data indicative of a video frame sequence compressed by motion compensated compression technique is processed, and representations of one or more video objects (VOs) appearing in one or more frames of said video frame sequence are obtained. At least one of these representations is utilized as a reference representation and motion vectors, associating said representations with said at least one reference representation, are obtained from said data indicative of the video frame sequence. The representations and the motion vectors are processed, and pixel displacement maps are generated, each associating at least some pixels of one of the representations with locations on said at least one reference representation. The reference representation is re-sampled according to the sub-pixel accuracy of the displacement maps, and a re-sampled reference representation is obtained. Pixels of said representations are registered against the re-sampled reference representation according to the displacement maps, thereby providing super-resolved image of the reference representation of said one or more VOs.

Owner:RAMOT AT TEL AVIV UNIV LTD

Methods and systems for repositioning MPEG image content without recoding

Status: Inactive | Publication: US20060256868A1 | Tags: Color television with pulse code modulation; Color television with bandwidth reduction; Frame sequence; Computer graphics (images)

Methods, systems and computer-program products for encoding an image so that a portion of the image can be extracted without requiring modification of any of the encoded data. A valid MPEG I-frame sequence is generated from the portion of the image, then fed directly to an MPEG decoder for decompression and display.

Owner:ENSEQUENCE

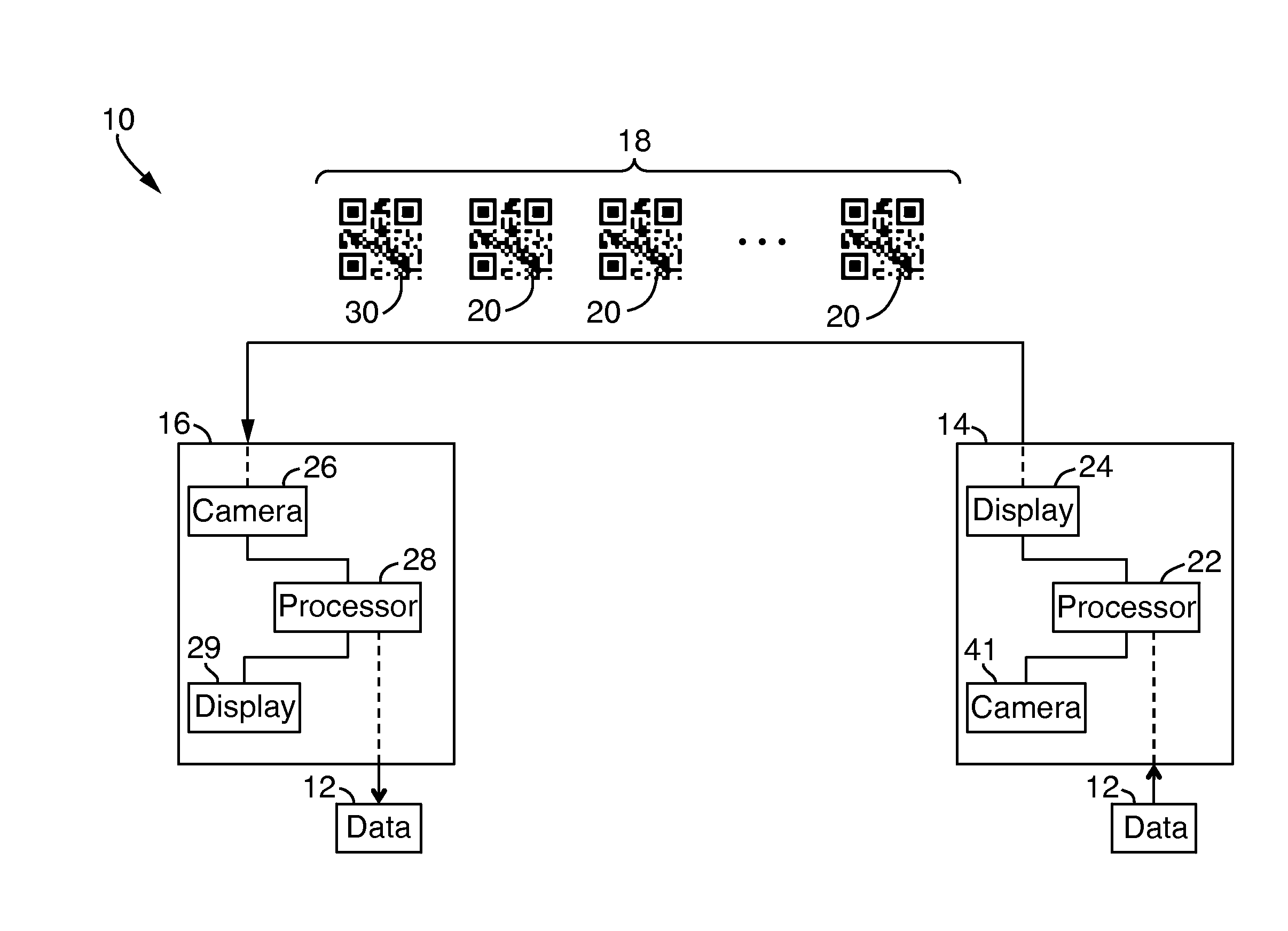

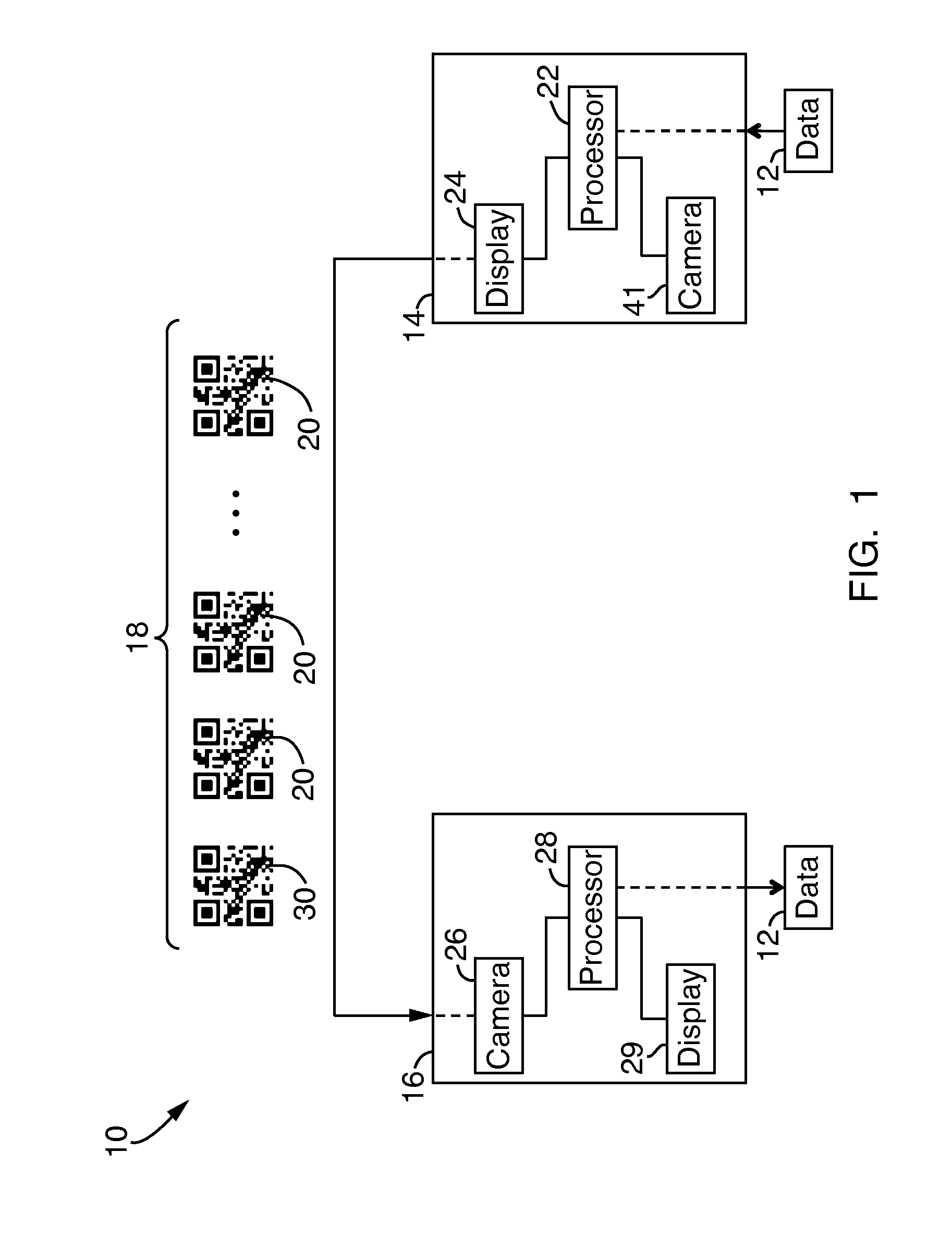

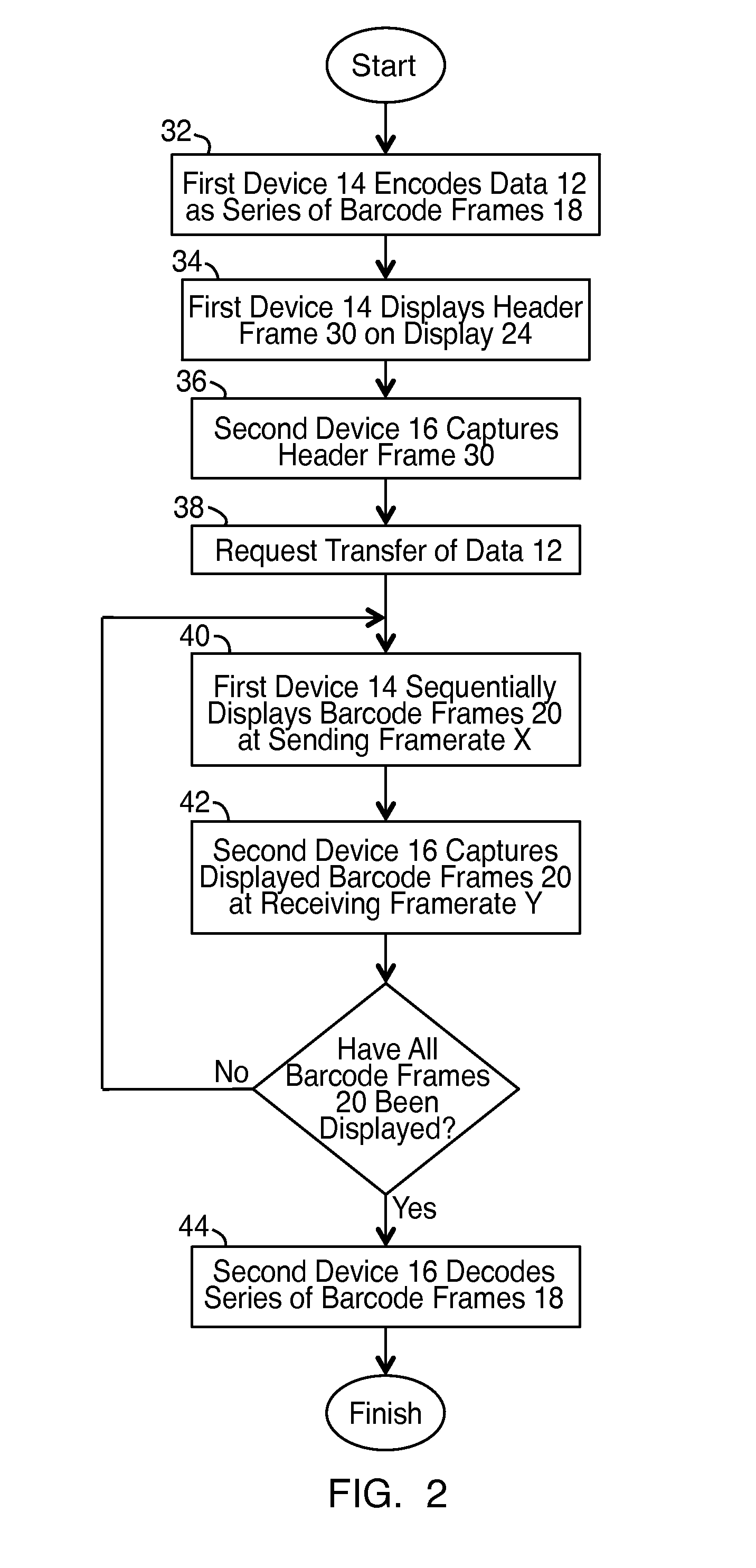

Data exchange using streamed barcodes

Status: Inactive | Publication: US20140084067A1 | Tags: Provides redundancy; Close-range type systems; Record carriers used with machines; Algorithm; Computer graphics (images)

A system and method for data exchange between a first device and a second device includes encoding data as a series of barcode frames on the first device and then successively displaying each barcode frame of the series of barcode frames on the first device. The second device captures the barcode frames being displayed by the first device to obtain the series of barcode frames and then decodes the captured series of barcode frames to complete the data exchange.

Owner:PIECE FUTURE PTE LTD
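
The framing side of streamed-barcode exchange can be sketched without any barcode library: split the data into payloads, prefix each with its index and the total count, and let the receiver reassemble frames captured in any order, possibly more than once. The 4-byte header (two big-endian unsigned 16-bit integers) is a hypothetical format, and rendering and capturing the barcodes themselves are not shown.

```python
import struct
from typing import Dict, Iterable, List, Optional

HEADER = struct.Struct(">HH")   # hypothetical header: frame index, total frames

def encode_frames(data: bytes, payload_size: int = 100) -> List[bytes]:
    """Split data into barcode payloads, each prefixed with (index, total)."""
    chunks = [data[i:i + payload_size] for i in range(0, len(data), payload_size)] or [b""]
    return [HEADER.pack(i, len(chunks)) + c for i, c in enumerate(chunks)]

def decode_frames(captured: Iterable[bytes]) -> bytes:
    """Reassemble payloads captured in any order, tolerating repeats; raise if any are missing."""
    parts: Dict[int, bytes] = {}
    total: Optional[int] = None
    for frame in captured:
        index, total = HEADER.unpack_from(frame)
        parts[index] = frame[HEADER.size:]        # a repeated frame just overwrites itself
    if total is None or len(parts) != total:
        raise ValueError("incomplete capture")
    return b"".join(parts[i] for i in range(total))
```

Because the sender can loop the series, a receiver that misses a frame simply waits for it to come around again; this is one way the repetition can provide redundancy.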

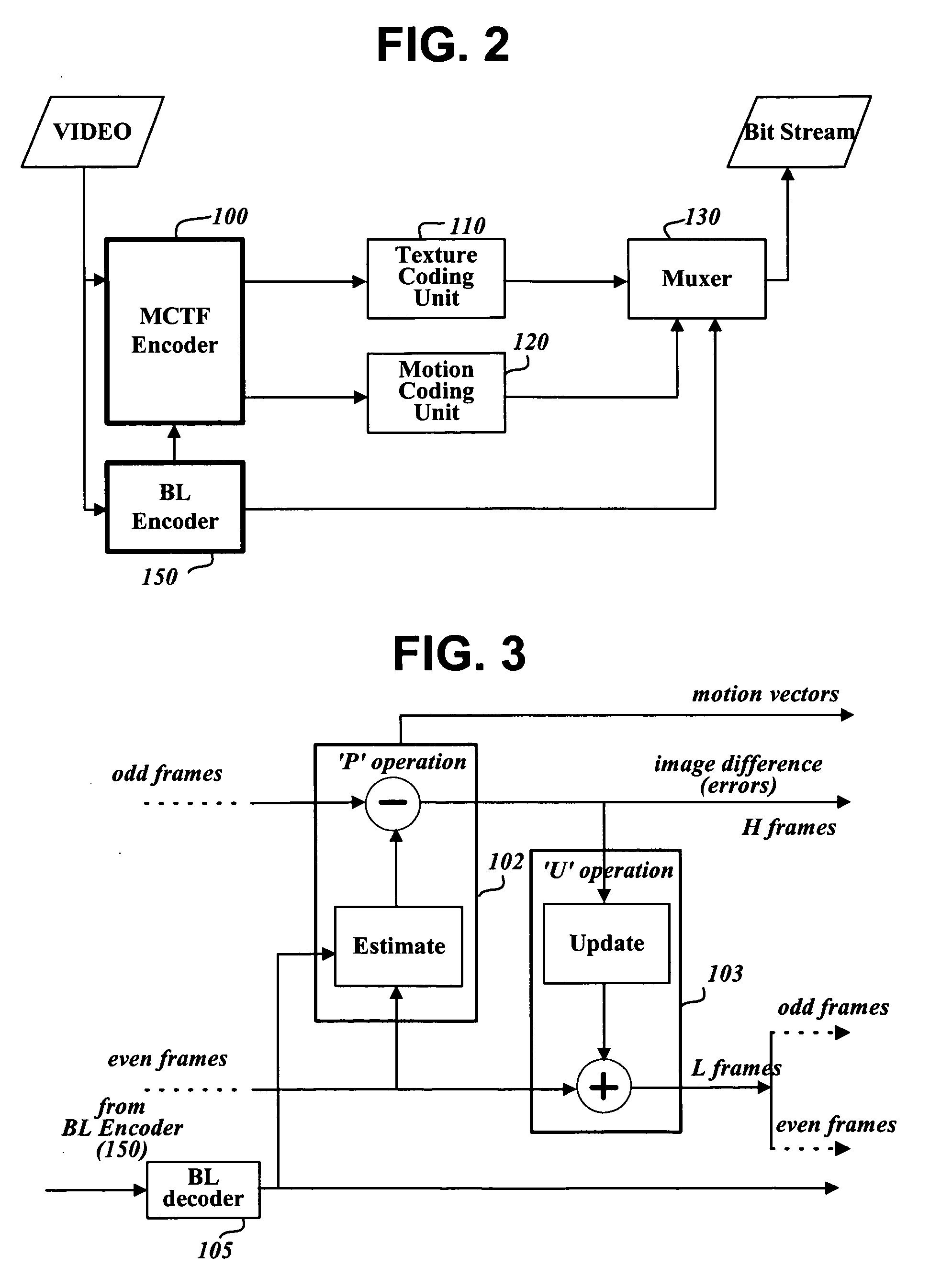

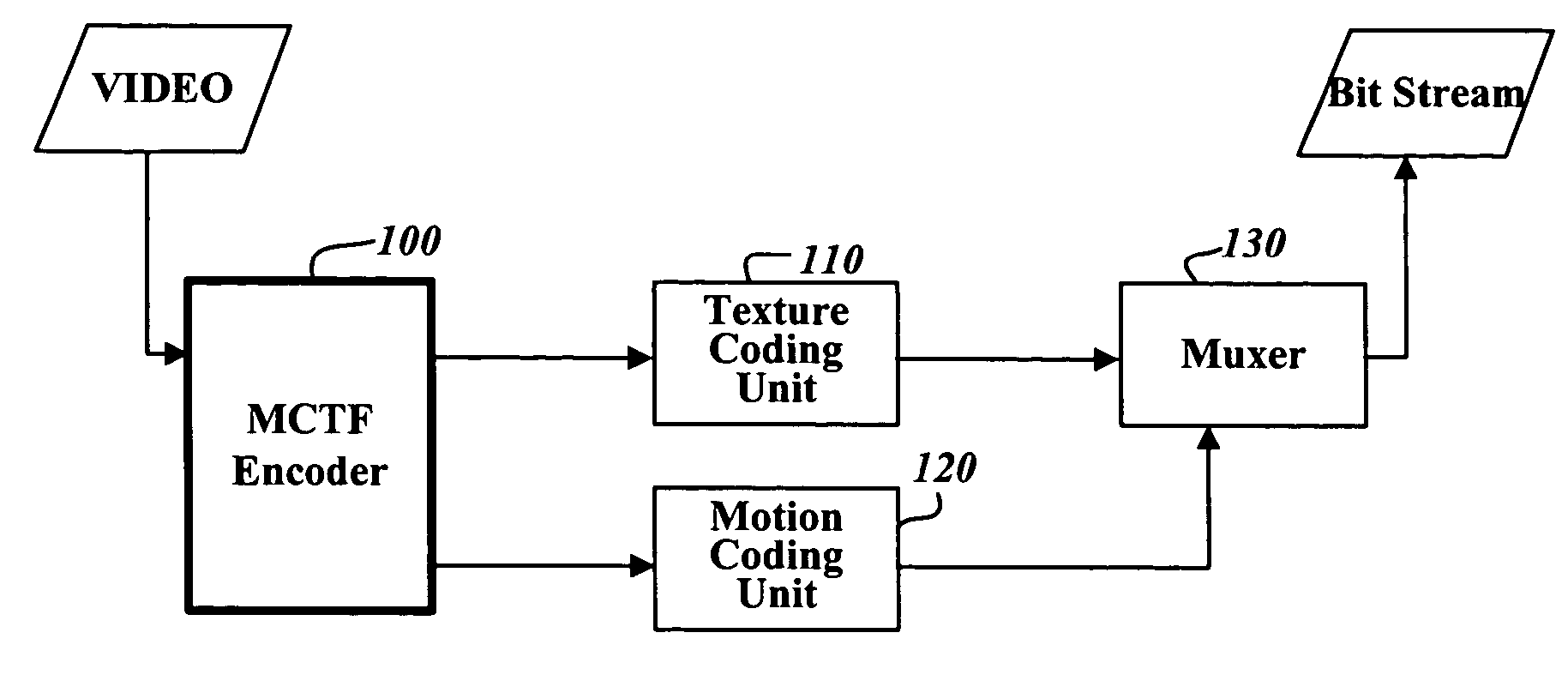

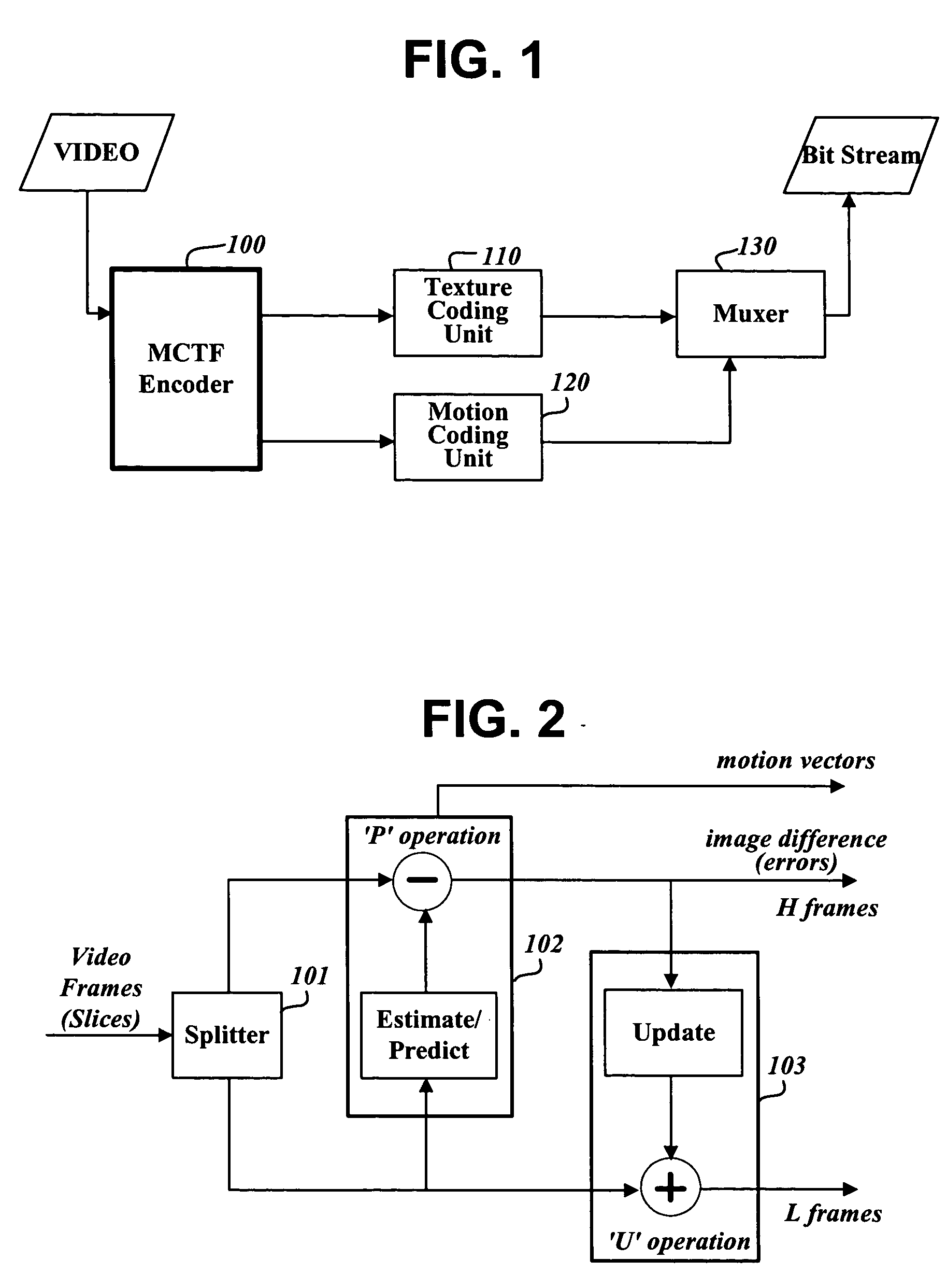

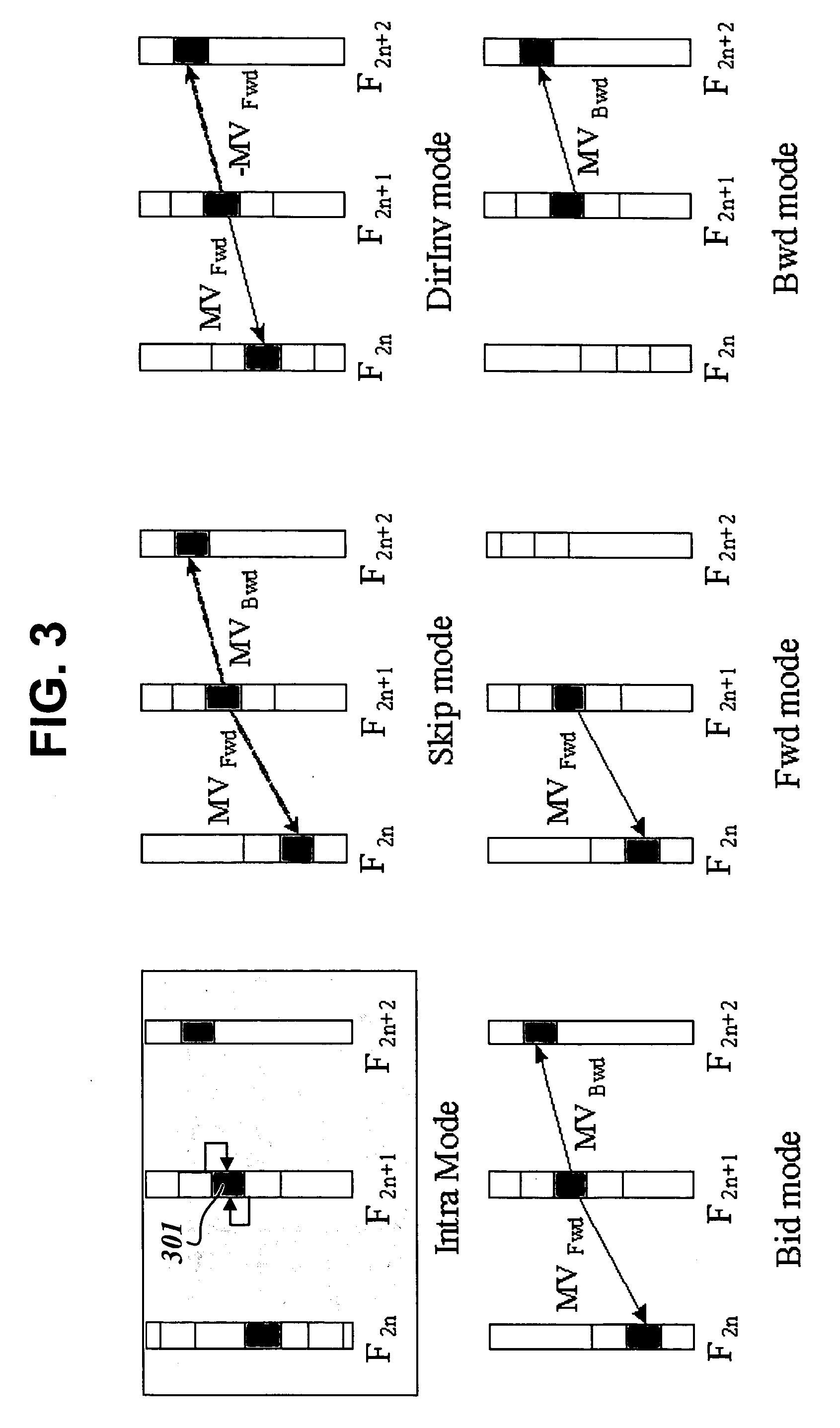

Method and device for encoding/decoding video signals using temporal and spatial correlations between macroblocks

Status: Inactive | Publication: US20060062299A1 | Tags: Reduce the amount required; Improve coding efficiency; Color television with pulse code modulation; Color television with bandwidth reduction; Pattern recognition; Spatial correlation

A method and a device for encoding / decoding video signals by motion compensated temporal filtering. Blocks of a video frame are encoded / decoded using temporal and spatial correlations according to a scalable Motion Compensated Temporal Filtering (MCTF) scheme. When a video signal is encoded using a scalable MCTF scheme, a reference block of an image block in a frame in a video frame sequence constituting the video signal is searched for in temporally adjacent frames. If a reference block is found, an image difference (pixel-to-pixel difference) of the image block from the reference block is obtained, and the obtained image difference is added to the reference block. If no reference block is found, pixel difference values of the image block are obtained based on at least one pixel adjacent to the image block in the same frame. Thus, the encoding procedure uses the spatial correlation between image blocks, improving the coding efficiency.

Owner:LG ELECTRONICS INC
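
The temporal-or-spatial decision in the last abstract can be sketched for the prediction step only (the MCTF update step, which adds the difference back to the reference block, is omitted). Assumptions: frames are 2D lists of luma values, a reference block "is found" if a full search within ±`rng` pixels reaches a SAD at or below `max_sad`, and the spatial fallback predicts each row from the pixel just left of the block. These rules and all function names are illustrative, not LG's codec.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]

def block(frame: Frame, y: int, x: int, n: int) -> Frame:
    """n x n sub-block whose top-left corner is (y, x)."""
    return [row[x:x + n] for row in frame[y:y + n]]

def sad(a: Frame, b: Frame) -> int:
    """Sum of absolute differences between two equal-size blocks."""
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))

def find_reference(cur: Frame, ref: Frame, y: int, x: int, n: int = 4, rng: int = 2,
                   max_sad: int = 64) -> Optional[Tuple[int, int]]:
    """Full search in a temporally adjacent frame; None if no block is close enough."""
    target = block(cur, y, x, n)
    best = None
    for dy in range(-rng, rng + 1):
        for dx in range(-rng, rng + 1):
            ry, rx = y + dy, x + dx
            if 0 <= ry <= len(ref) - n and 0 <= rx <= len(ref[0]) - n:
                cost = sad(target, block(ref, ry, rx, n))
                if best is None or cost < best[0]:
                    best = (cost, ry, rx)
    return (best[1], best[2]) if best is not None and best[0] <= max_sad else None

def residual(cur: Frame, ref: Frame, y: int, x: int, n: int = 4) -> Frame:
    """Temporal residual if a reference block exists, else a spatial residual."""
    target = block(cur, y, x, n)
    pos = find_reference(cur, ref, y, x, n)
    if pos is not None:
        r = block(ref, pos[0], pos[1], n)
        return [[p - q for p, q in zip(rt, rr)] for rt, rr in zip(target, r)]
    # Spatial fallback: predict each row from the pixel left of the block (0 at the frame edge).
    left = [cur[y + i][x - 1] if x > 0 else 0 for i in range(n)]
    return [[p - left[i] for p in row] for i, row in enumerate(target)]
```

When the scene content simply shifts between frames, the temporal residual is all zeros; when nothing similar exists in the adjacent frame, the spatial fallback still leaves only small residuals for smooth content, which is the coding-efficiency gain the abstract describes.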

© 2025 PatSnap. All rights reserved.