Human body skeleton-based action recognition method

A human-skeleton-based action recognition technique, applied in the fields of pattern recognition and human-computer interaction, that addresses problems such as the inability to measure the similarity between actions, unreasonable matching information (feature values), and increased complexity and difficulty.

Embodiment Construction

[0053] Specific embodiments of the present invention are described in further detail below with reference to the drawings and examples.

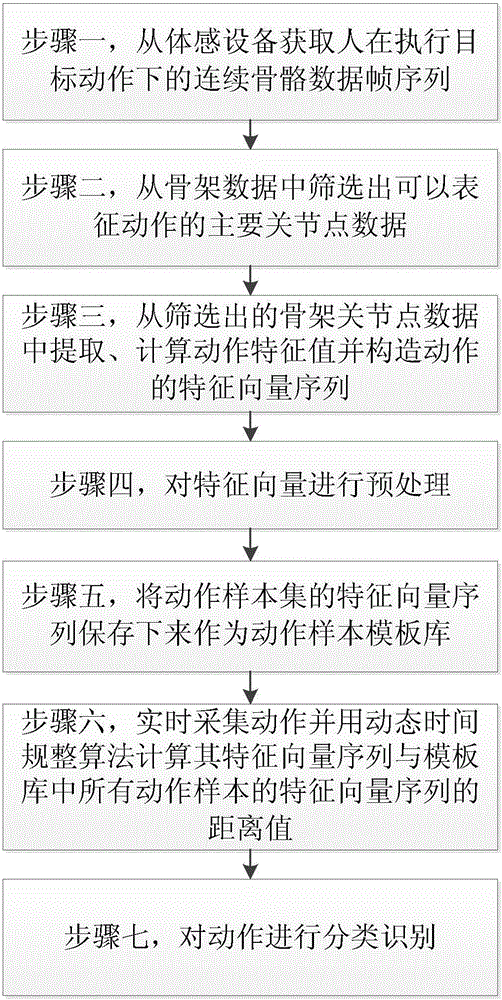

[0054] With reference to Figure 1, the human-skeleton-based action recognition method proposed by the present invention comprises the following specific steps:

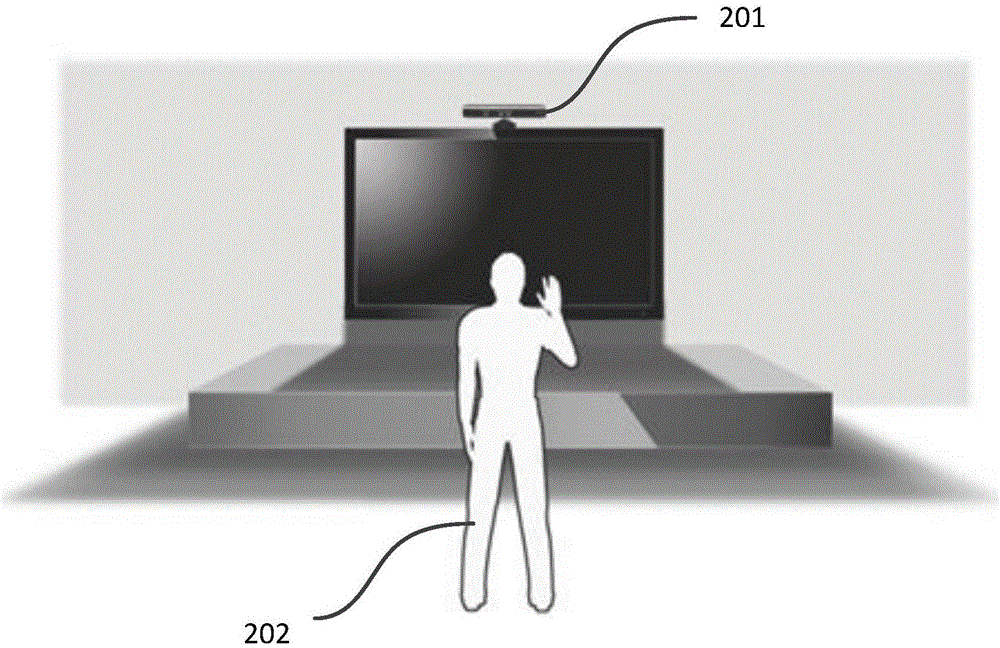

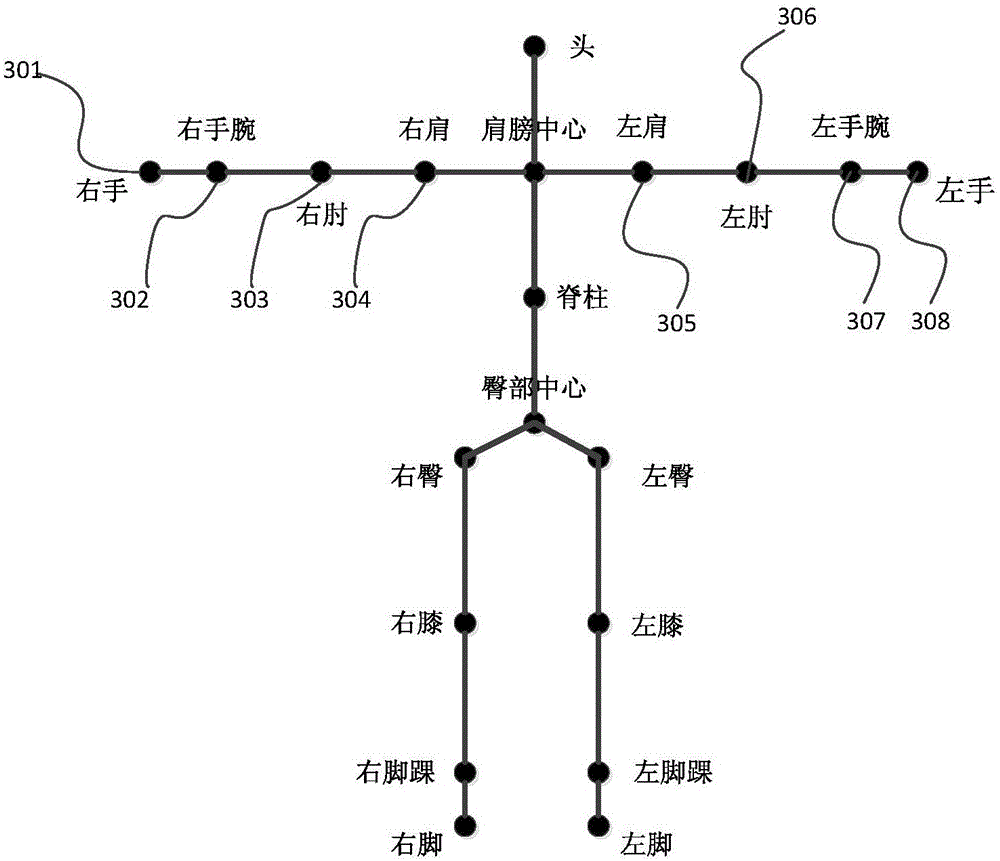

[0055] Step 1: acquire, from a somatosensory device, a continuous sequence of skeleton data frames of the person performing the target action. The somatosensory device is an acquisition device that can obtain at least the 3D spatial position information and angle information of each joint point of the human skeleton. The human skeleton data comprises the joint point data provided by the acquisition device;

[0056] Step 2: select from the skeleton data the main joint point data that can characterize the action, that is, the joint point data that plays a key role in action recognition;

[0057] Ste...
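Steps 1 and 2 above can be illustrated with a minimal Python sketch. It is not the patented implementation: the `Joint` record, the `SkeletonFrame` mapping, the joint names, and the `MAIN_JOINTS` tuple are all hypothetical, since the text names neither a device API nor the specific joints that count as main joints. The sketch only shows one plausible way to hold a frame sequence carrying per-joint position and angle information and to filter it down to a chosen joint subset.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Joint:
    """Data for one joint point in one frame (hypothetical layout)."""
    position: Vec3  # 3D spatial position; units depend on the device
    angle: Vec3     # angle information; a 3-tuple is an assumption


# One skeleton frame maps a joint name to its data (Step 1 output, per frame).
SkeletonFrame = Dict[str, Joint]

# Hypothetical main joints; the source does not say which joints are selected.
MAIN_JOINTS: Tuple[str, ...] = (
    "hand_left", "hand_right", "elbow_left", "elbow_right",
)


def select_main_joints(
    frames: Sequence[SkeletonFrame],
    main_joints: Sequence[str] = MAIN_JOINTS,
) -> List[SkeletonFrame]:
    """Step 2 sketch: keep only the chosen joints in every frame.

    Joints missing from a frame are skipped rather than raising an error.
    """
    return [
        {name: frame[name] for name in main_joints if name in frame}
        for frame in frames
    ]


if __name__ == "__main__":
    # Two made-up frames standing in for data read from a device.
    zero: Vec3 = (0.0, 0.0, 0.0)
    sequence: List[SkeletonFrame] = [
        {"head": Joint((0.0, 1.7, 2.0), zero),
         "hand_left": Joint((-0.3, 1.0, 2.0), zero)},
        {"head": Joint((0.0, 1.7, 2.0), zero),
         "hand_left": Joint((-0.3, 1.2, 1.9), zero)},
    ]
    for frame in select_main_joints(sequence):
        print(sorted(frame))
```

Keeping each frame as a name-to-joint mapping makes the selection a simple filter, and the later steps can then operate on a smaller, fixed set of joints per frame.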
