793 results about "Joint point" patented technology

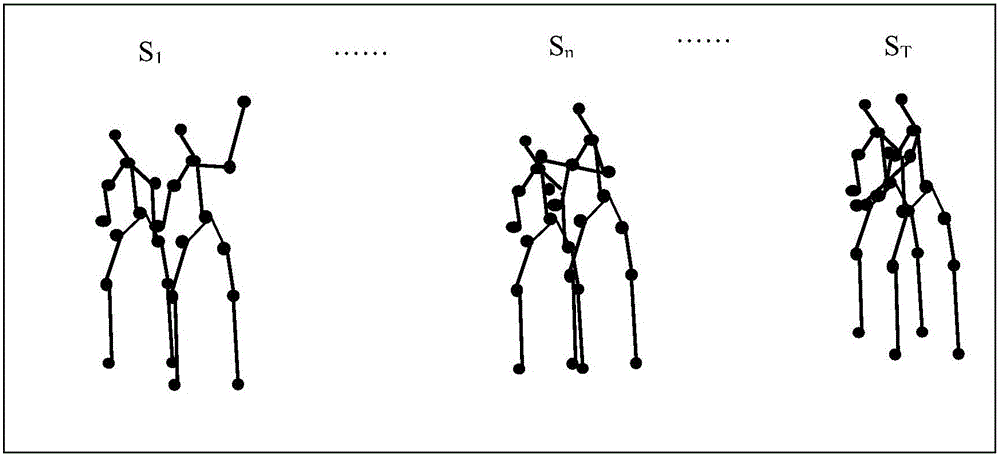

Human body skeleton-based action recognition method

Active | CN105930767A | Improve versatility | Eliminate the effects of different relative positions | Character and pattern recognition | Human body | Feature vector

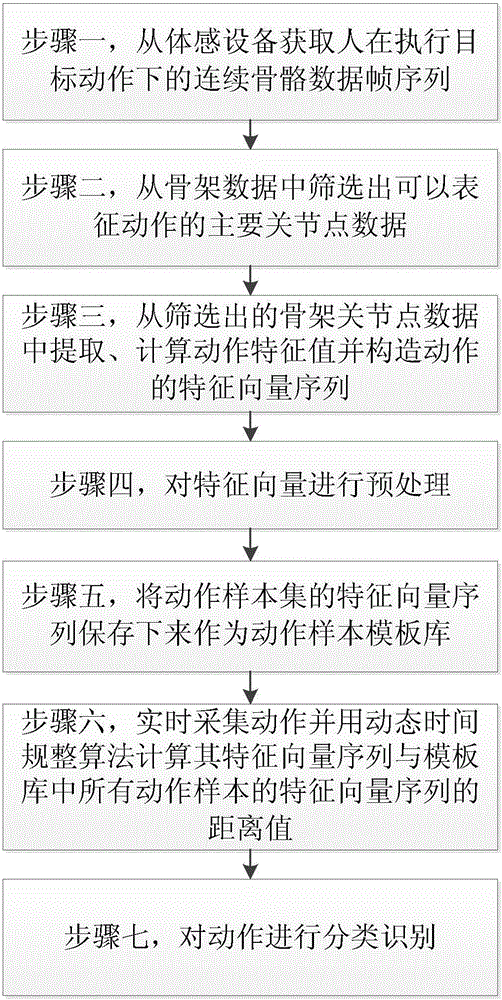

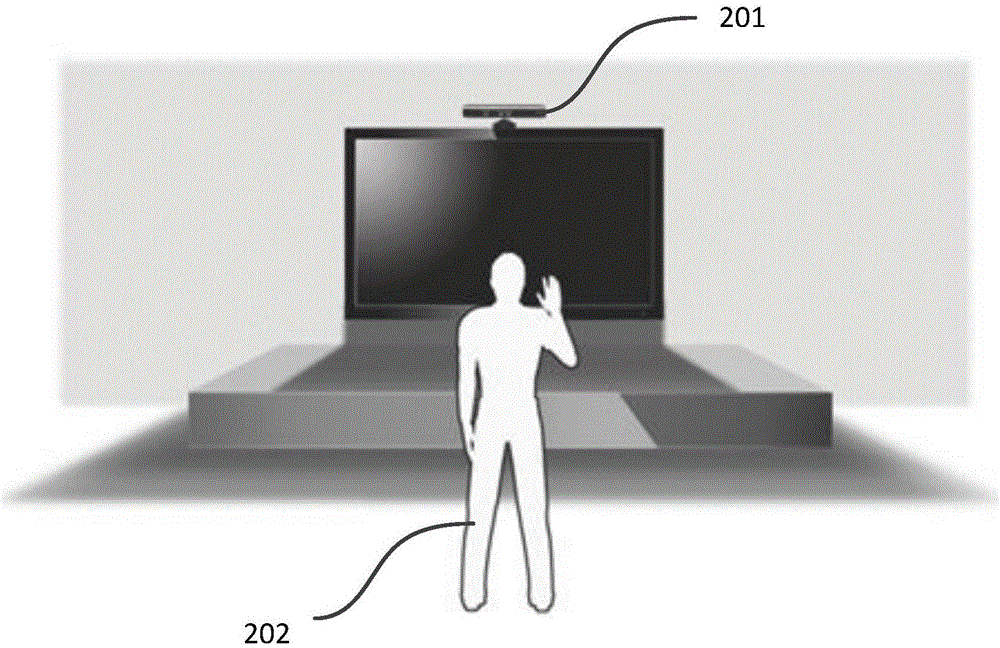

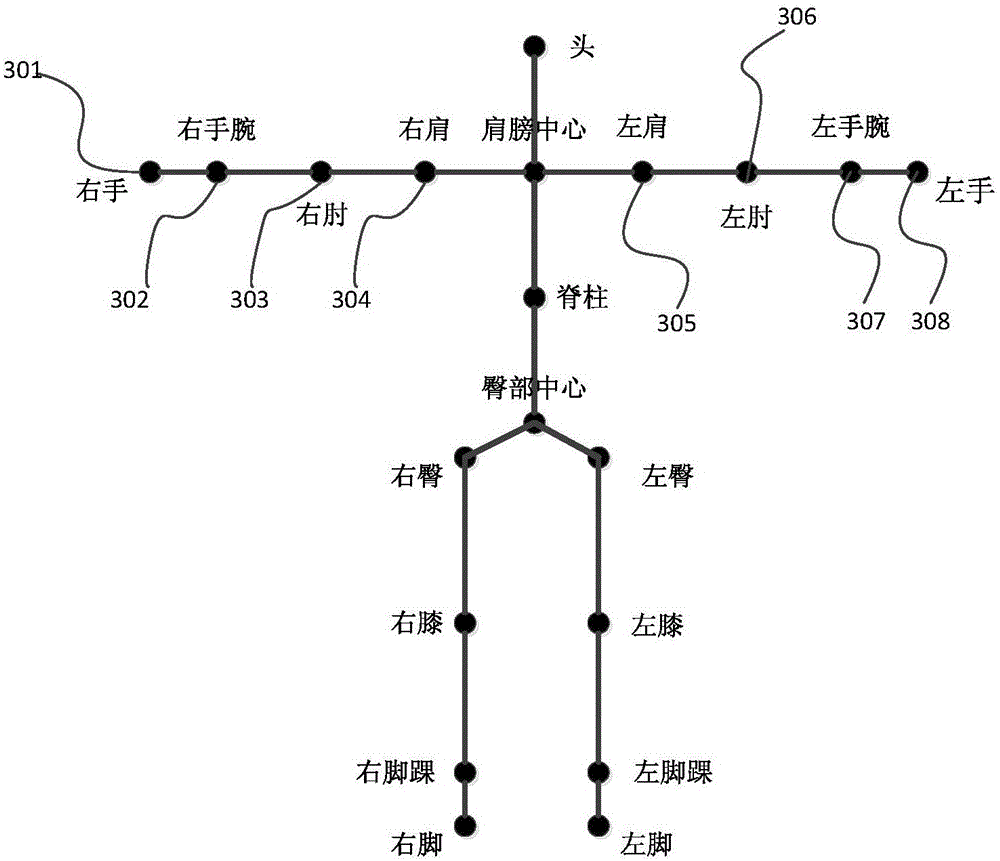

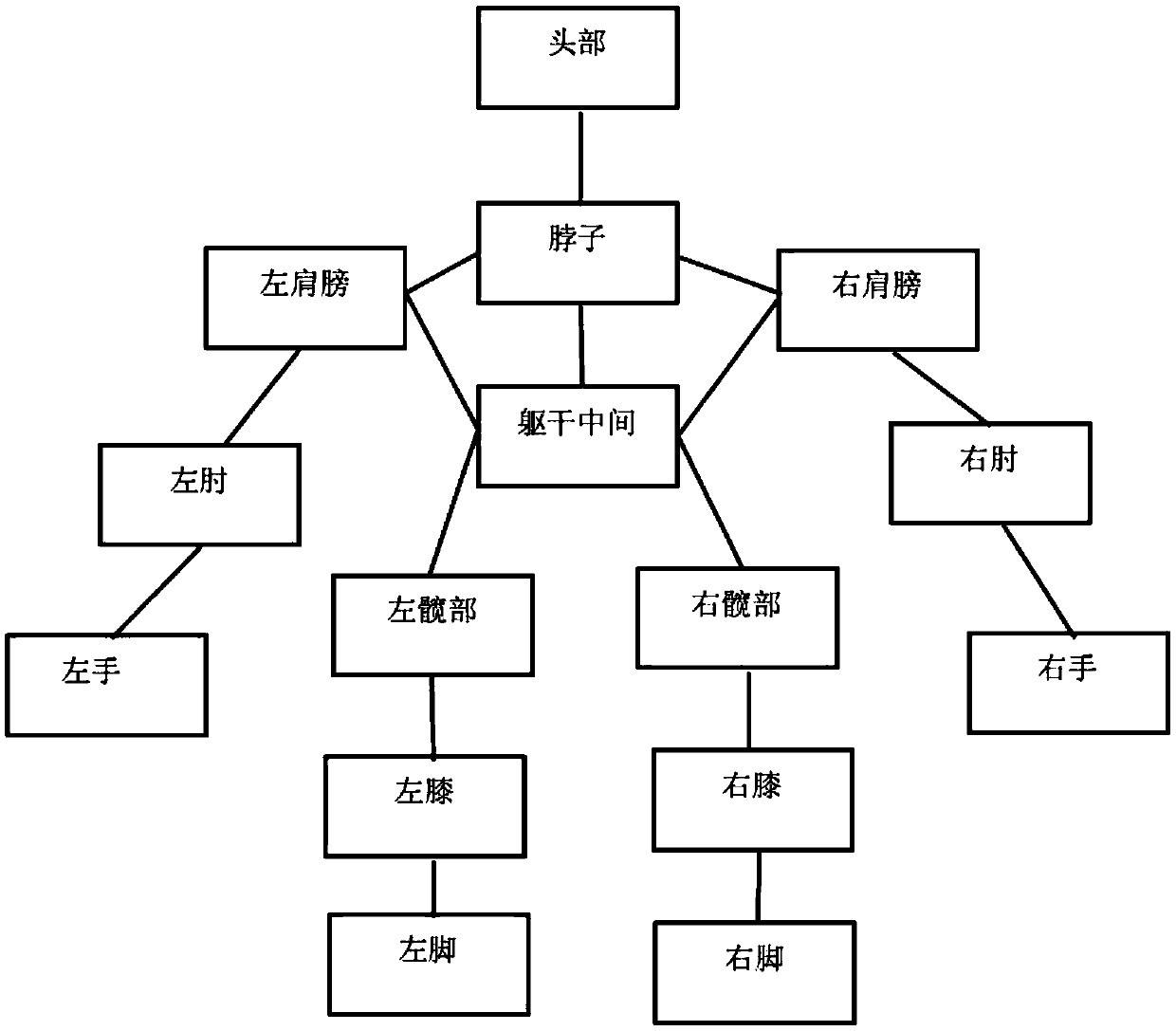

The invention relates to a human body skeleton-based action recognition method comprising the following basic steps: step 1, a continuous sequence of skeleton data frames of a person performing the target actions is obtained from a somatosensory device; step 2, the main joint point data that characterize the actions are selected from the skeleton data; step 3, action feature values are extracted and calculated from the main joint point data, and a feature vector sequence of the actions is constructed; step 4, the feature vectors are preprocessed; step 5, the feature vector sequences of an action sample set are saved as an action sample template library; step 6, actions are acquired in real time, and the distance between the feature vector sequence of each acquired action and the feature vector sequences of all action samples in the template library is calculated with a dynamic time warping algorithm; and step 7, the actions are classified and recognized. The method offers high real-time performance, high robustness, high accuracy, and simple and reliable implementation, and is suitable for real-time action recognition systems.

Owner:NANJING HUAJIE IMI TECH CO LTD
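
Steps 6 and 7 of the abstract above (template matching with dynamic time warping) can be illustrated with a minimal sketch. The abstract does not specify the frame distance, the template layout, or the decision rule, so the Euclidean frame distance, the one-template-per-label dictionary, and the nearest-template rule below are assumptions, as are all function names.

```python
import math


def frame_distance(a, b):
    """Euclidean distance between two feature vectors (an assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_distance(seq_a, seq_b):
    """Classic dynamic time warping distance between two vector sequences."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best accumulated cost aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # stretch seq_b
                                 cost[i][j - 1],      # stretch seq_a
                                 cost[i - 1][j - 1])  # advance both
    return cost[n][m]


def classify(query, templates):
    """Return the label whose template is closest to the query under DTW."""
    return min(templates, key=lambda label: dtw_distance(query, templates[label]))


# Hypothetical one-dimensional features, purely for illustration.
templates = {
    "raise_arm": [[0.0], [0.5], [1.0]],
    "lower_arm": [[1.0], [0.5], [0.0]],
}
query = [[0.0], [0.1], [0.5], [0.9], [1.0]]
print(classify(query, templates))  # prints "raise_arm"
```

The query has five frames and the templates three, which is the case DTW is meant for: sequences of the same action performed at different speeds can still be compared.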

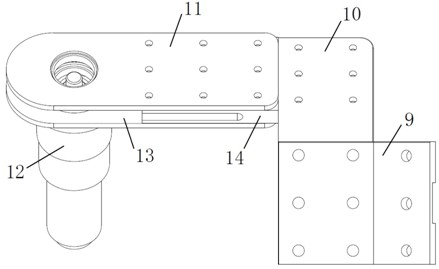

Wearable 7-degree-of-freedom upper limb movement rehabilitation training exoskeleton

Inactive | CN102119902A | Easy to analyze | Easy to formulate | Chiropractic devices | Medial rotation | Angular degrees

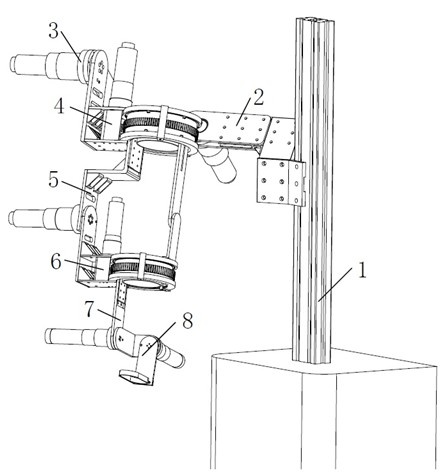

The invention provides a wearable 7-degree-of-freedom upper limb movement rehabilitation training exoskeleton which comprises a supporting rod and an exoskeleton training device which are fixed on a base, wherein the exoskeleton training device is formed by connecting a shoulder adduction / abduction joint, a shoulder flexion / extension joint, a shoulder medial rotation / lateral rotation joint, an elbow flexion / extension joint, an elbow medial rotation / lateral rotation joint, a wrist adduction / abduction joint and a wrist flexion / extension joint in series. The joints are directly driven by a motor, wherein the shoulder and elbow rotating joints are additionally provided with a spur-gear set, the structure is simple, and the response speed is high. Compared with the prior art, the exoskeleton provided by the invention has more degrees of freedom of movement and can adapt to standing and sitting training. The length of an exoskeleton rod can be adjusted according to the height of a patient, thereby ensuring the wearing comfort. A spacing structure is adopted by the joints, thereby improving the safety. Angle and moment sensors at the joint points can acquire kinematic and dynamic data of each joint in real time, thereby being convenient for physical therapists to subsequently analyze and establish a training scheme to achieve an optimal rehabilitation effect.

Owner:ZHEJIANG UNIV

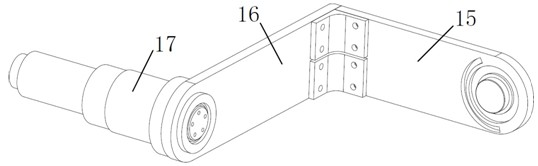

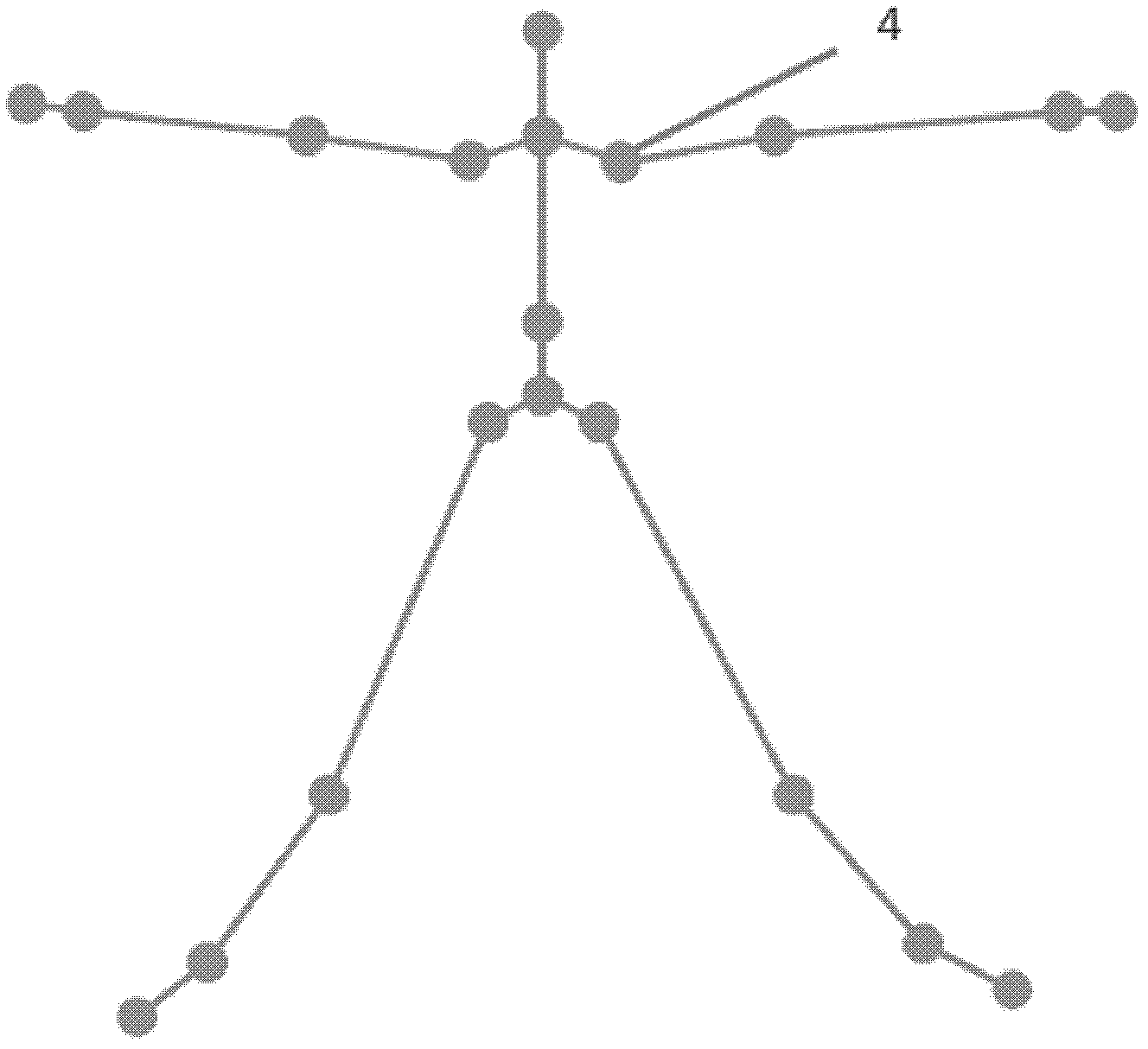

3D (three-dimensional) human posture capturing and simulating system

Inactive | CN102622591A | Real-time motion capture | Static indicating devices | Character and pattern recognition | Human body | Computer module

The invention relates to a 3D (three-dimensional) human posture capturing and simulating system, which comprises a human action capturing module, a human joint point recognizing and human posture processing module, and a human posture display module. The human action capturing module comprises an RGB (red, green, blue) camera, an infrared transmitter and an infrared receiver. The RGB camera acquires environmental information; the data frames it generates are transmitted via a communication module to the human joint point recognizing and human posture processing module to be processed, and a visible light image is generated accordingly. The infrared transmitter emits laser speckles in a fixed pattern. The infrared receiver receives infrared light; the infrared image data frames it generates are transmitted via the communication module to the human joint point recognizing and human posture processing module to be processed, and an infrared light image is generated accordingly. The system captures human actions in real time, displays them on a three-dimensional display, and simulates human postures.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

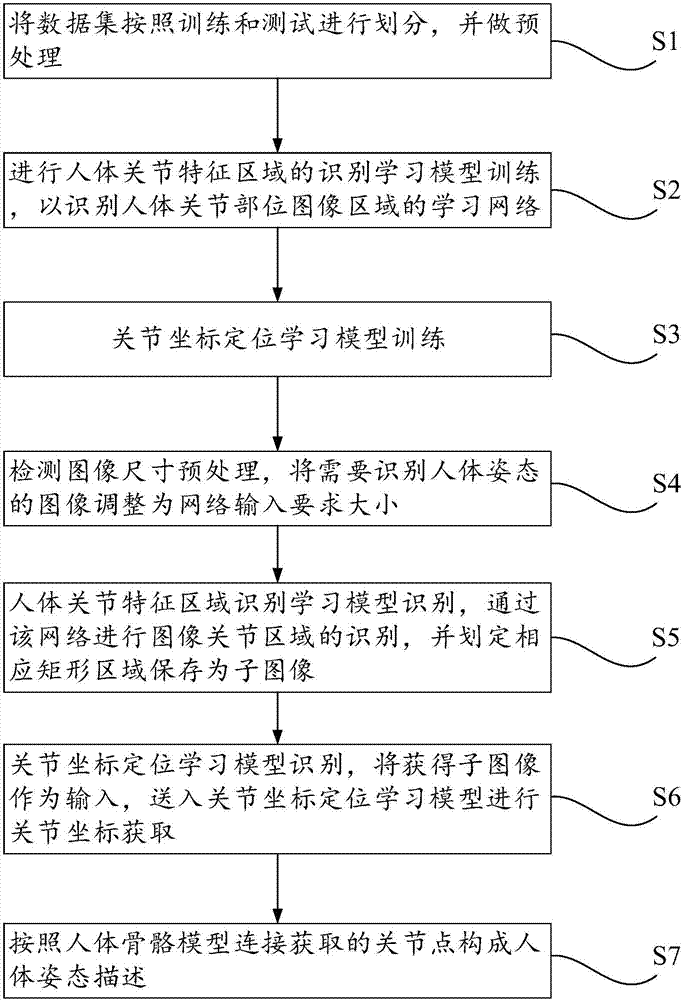

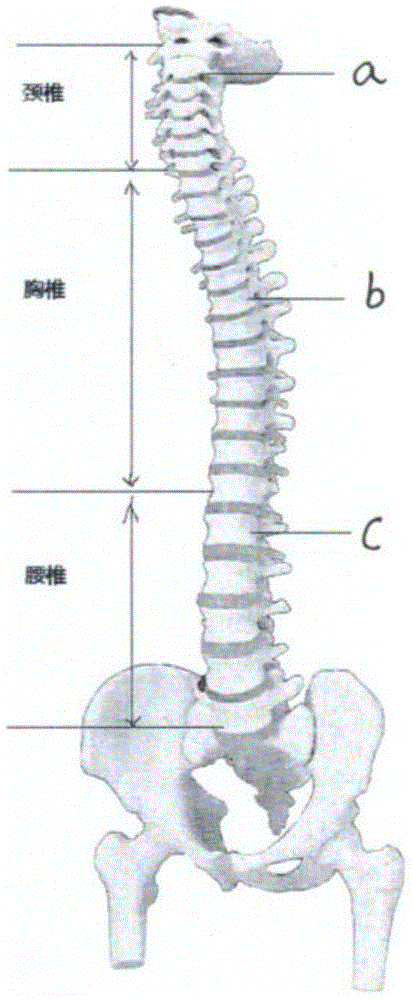

Attitude detection method using deep convolutional neural network and equipment

The invention discloses an attitude detection method using a deep convolutional neural network, and is suitable for being performed in computing equipment. The method comprises the steps that a data set is divided into training and testing and preprocessed; identification learning model training of human body joint feature areas is performed so as to identify the learning network of human body joint image areas; joint coordinate positioning learning model training is performed; detection image size preprocessing is performed, and the images requiring human body attitude identification are adjusted into the size of the network input requirements; identification of the image joint areas is performed through the network and corresponding rectangular areas are defined to be saved as sub-images; the acquired sub-images act as the input to be transmitted to the joint coordinate positioning learning model to perform joint coordinate acquisition; and the acquired joint points are connected according to the human skeleton model so as to form human body attitude description. The invention also provides storage equipment and a mobile terminal.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

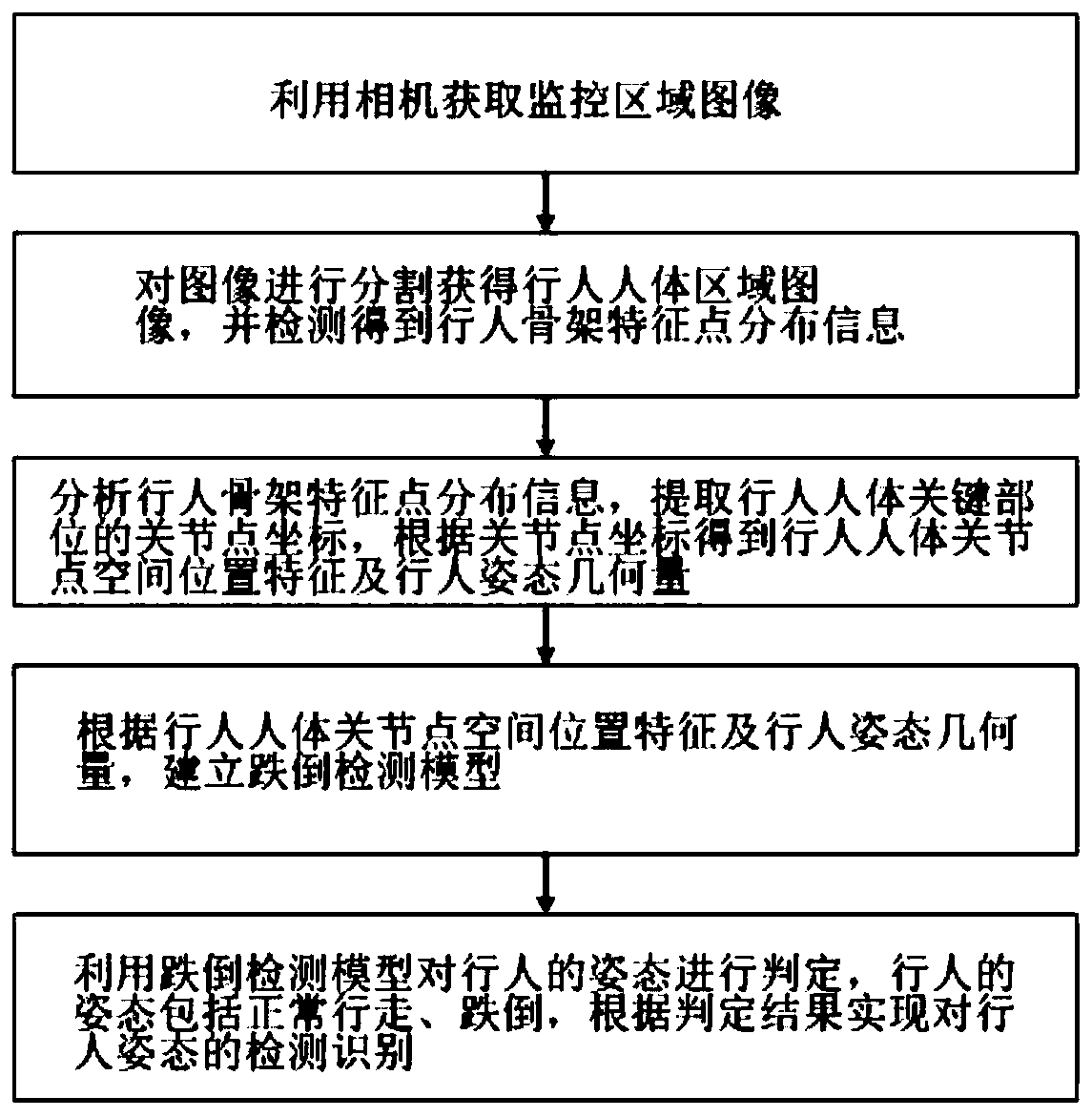

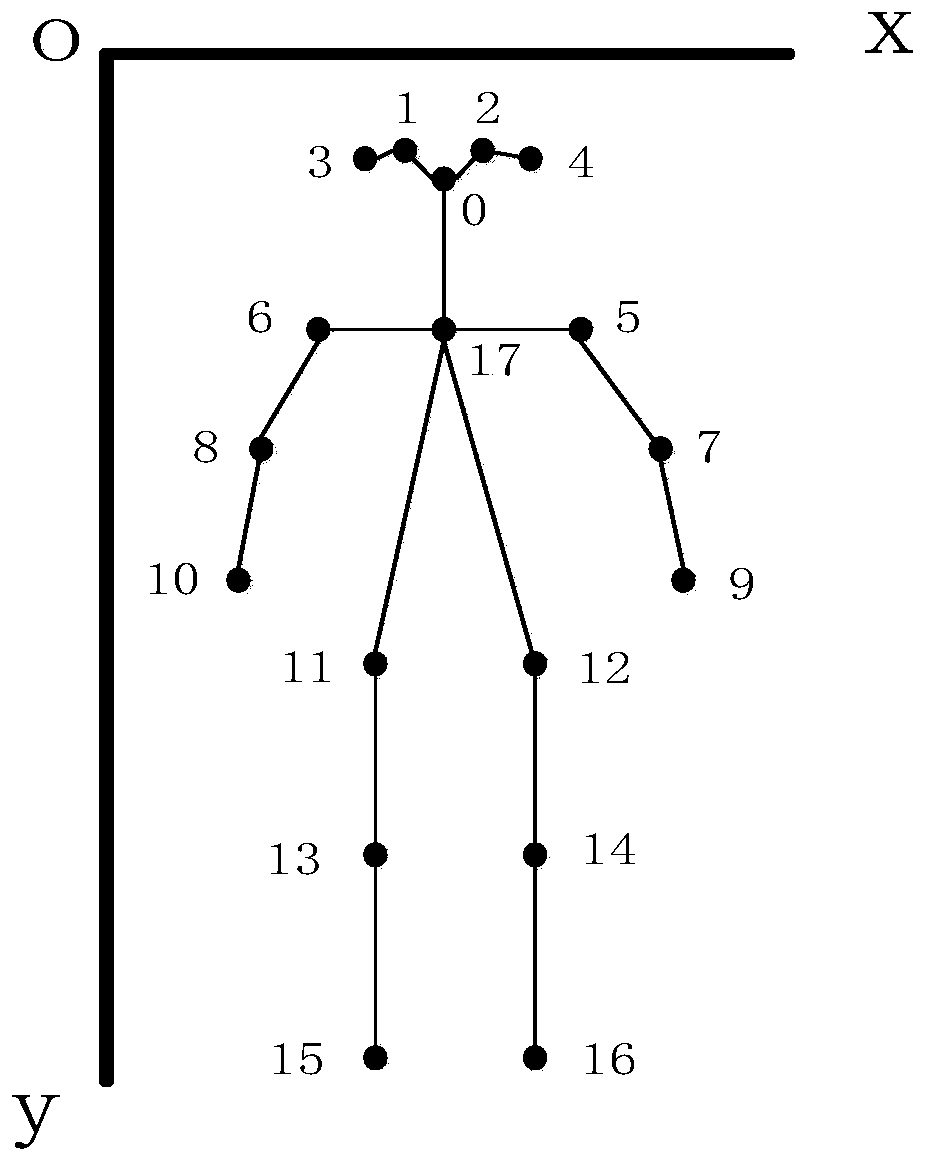

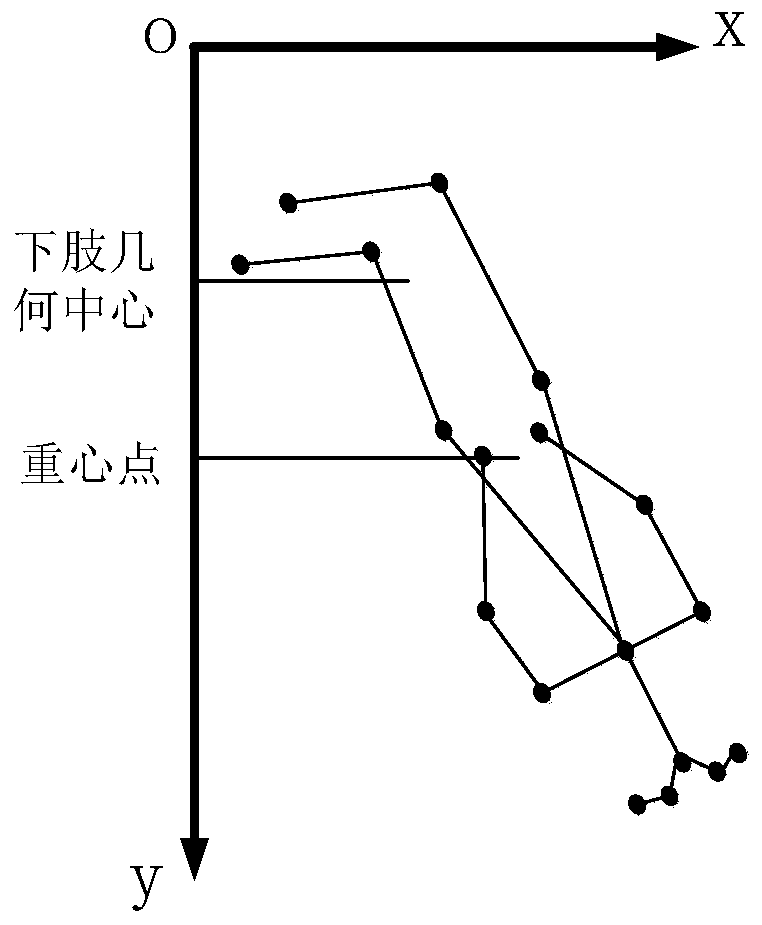

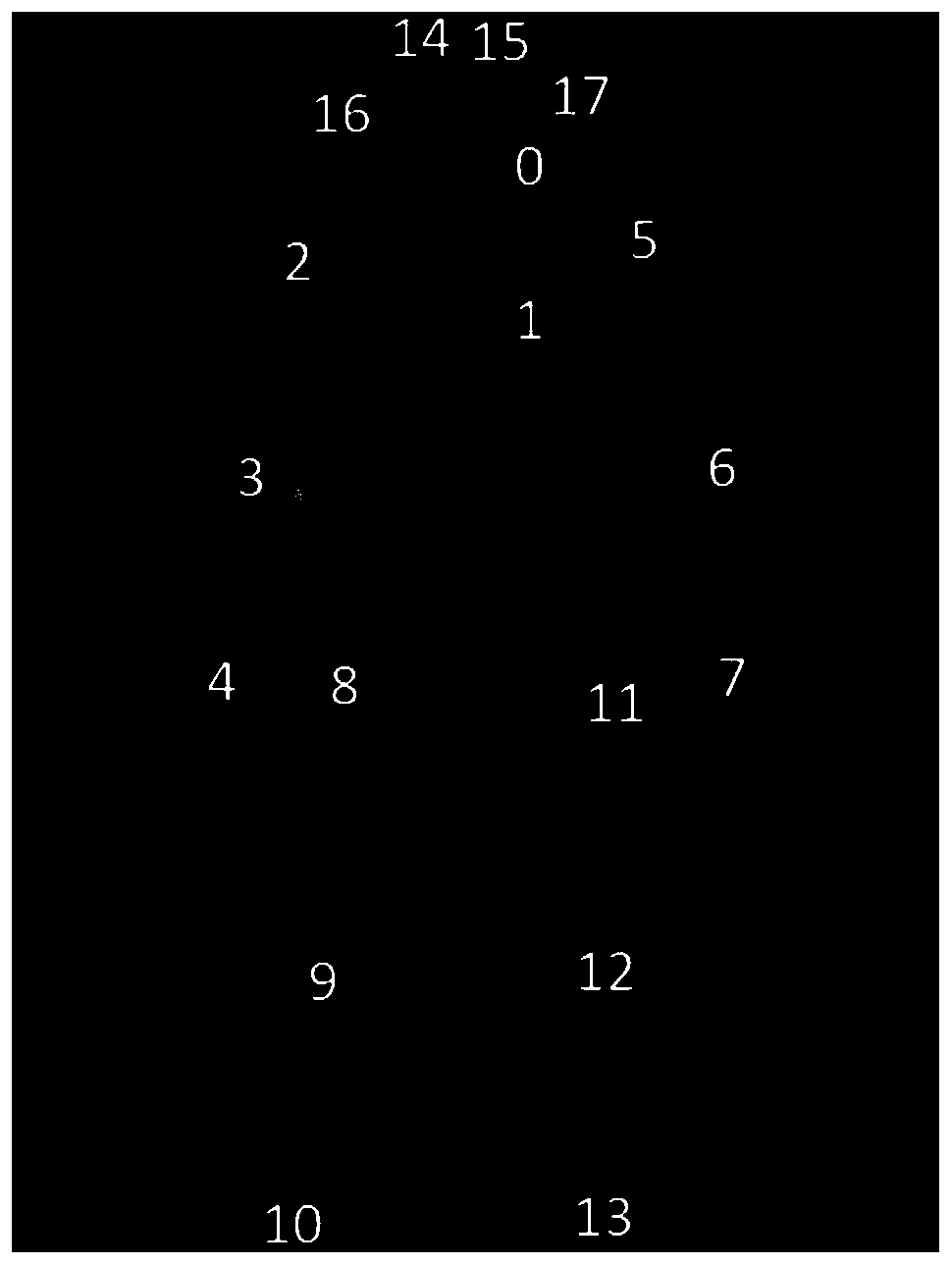

Pedestrian falling recognition method based on skeleton detection

Active | CN109919132A | Accurate fall detection | Guarantee the safety of life | Character and pattern recognition | Early warning system | Human body

The invention discloses a pedestrian fall recognition method based on skeleton detection. The method comprises the following steps: S1, acquiring an image of the monitored area with a camera; S2, segmenting the image to obtain an image of the pedestrian's body area, and detecting the distribution of the pedestrian's skeleton feature points; S3, analyzing the skeleton feature point distribution, extracting the joint point coordinates of key parts of the pedestrian's body (the key parts being preset parts of the human body), and deriving spatial position features of the joint points and geometric quantities of the pedestrian's posture from those coordinates; S4, establishing a fall detection model from the spatial position features of the joint points and the posture geometric quantities; and S5, judging the pedestrian's posture with the fall detection model, wherein the posture is either normal walking or a fall, and detecting and recognizing the posture according to the judgment result. The method can actively detect abnormal conditions such as a pedestrian falling in surveillance video and, combined with an early warning system, can improve the monitoring of pedestrian safety events.

Owner:INST OF INTELLIGENT MFG GUANGDONG ACAD OF SCI

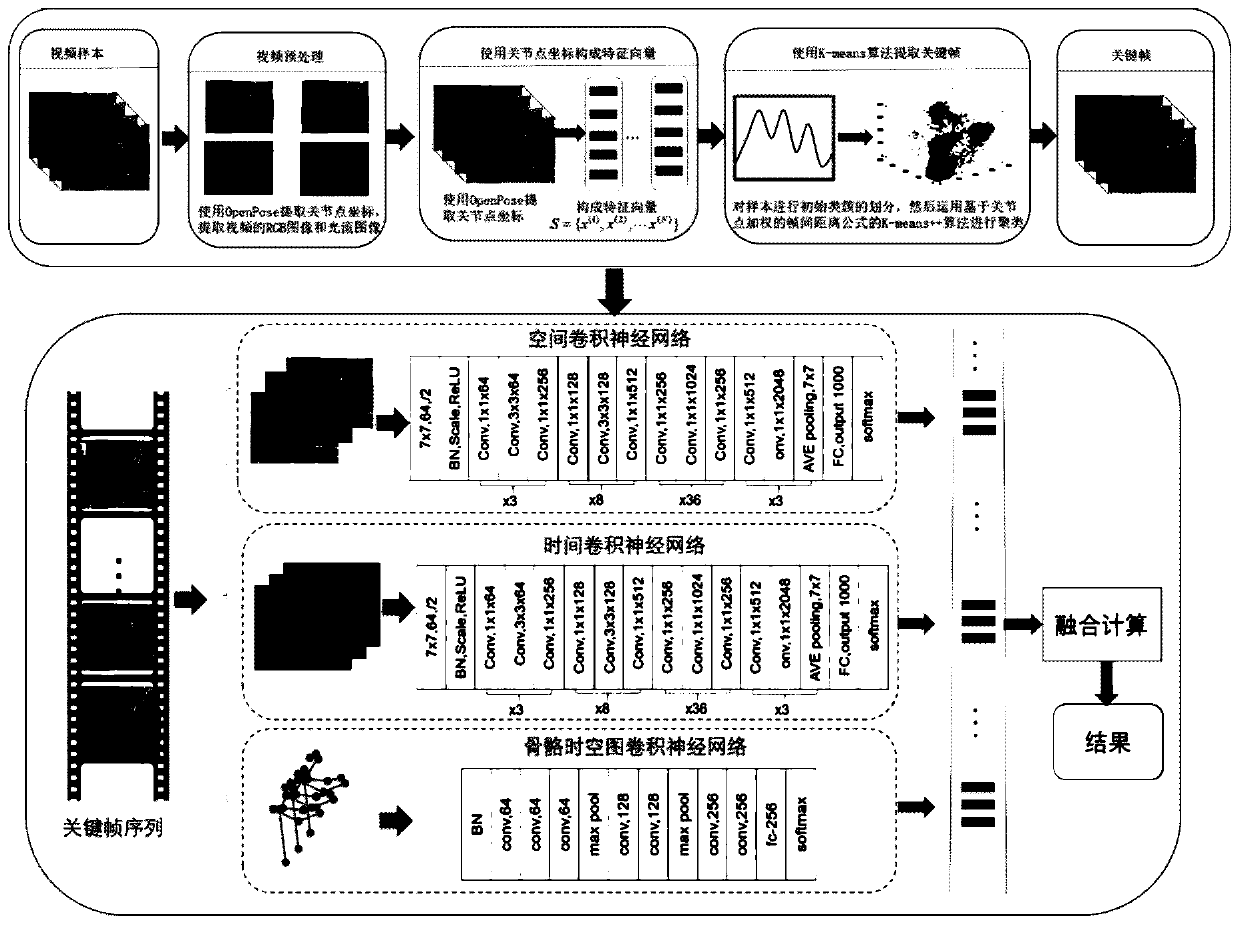

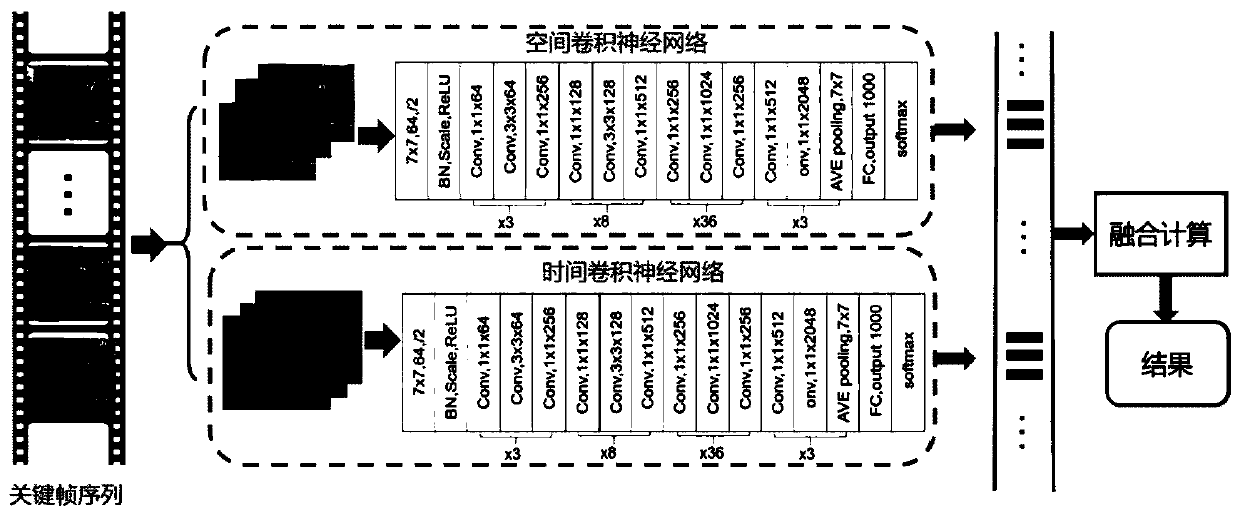

Multi-feature fusion behavior identification method based on key frame

Active | CN110096950A | Refine nuances | Improve accuracy | Character and pattern recognition | Feature vector | Optical flow

A multi-feature fusion behavior identification method based on key frames comprises the following steps: first, extracting the joint point feature vector x(i) of the human body in each video frame with the OpenPose human body posture extraction library to form a sequence S = {x(1), x(2), ..., x(N)}; second, using the K-means algorithm to obtain K final cluster centers c' = {c'_i | i = 1, 2, ..., K}, and extracting the frame closest to each cluster center as a key frame of the video, giving a key frame sequence F = {F_i | i = 1, 2, ..., K}; third, obtaining the RGB information, optical flow information and skeleton information of the key frames, processing this information, inputting the processed RGB and optical flow information into a two-stream convolutional network model to obtain their higher-level feature expression, and inputting the skeleton information into a spatio-temporal graph convolutional network model to construct the spatio-temporal graph features of the skeleton; and finally, fusing the softmax outputs of the networks to obtain the final identification result. This process avoids the time cost and accuracy loss caused by redundant frames and makes better use of the information in the video to express behaviors, so that recognition accuracy is further improved.

Owner:NORTHWEST UNIV(CN)
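
The key frame selection step in the abstract above (cluster the per-frame joint feature vectors with K-means, then keep the frame nearest each cluster center) can be sketched as follows. This is a minimal illustration, not the patented implementation: the evenly spaced initialization, the fixed iteration count, and all function names are assumptions, and the feature vectors are made-up one-dimensional values rather than OpenPose output.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def kmeans(points, k, iterations=20):
    """Plain K-means with a deterministic, evenly spaced initialization (assumed)."""
    step = len(points) / k
    centers = [list(points[int(i * step)]) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centers[c]))
            clusters[nearest].append(p)
        # Keep the old center if a cluster ends up empty.
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers


def key_frames(features, k):
    """Indices of the frames closest to each of the k cluster centers."""
    centers = kmeans(features, k)
    indices = {
        min(range(len(features)), key=lambda i: sq_dist(features[i], center))
        for center in centers
    }
    return sorted(indices)


# Six frames forming two obvious groups of poses.
features = [[0.0], [0.1], [0.2], [5.0], [5.1], [5.2]]
print(key_frames(features, 2))  # prints [1, 4]
```

Returning frame indices rather than cluster centers matters here: the later stages need real frames (RGB, optical flow, skeleton), and a cluster center is generally not an actual frame.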

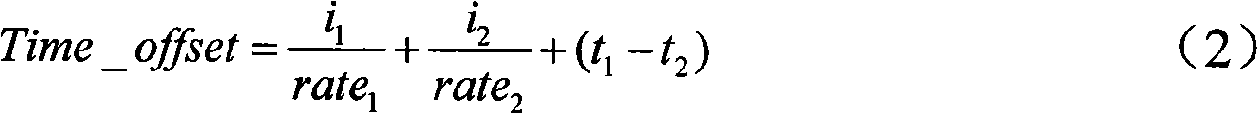

Method for processing multimedia video object

Active | CN101409831A | Simple and seamless splicing scheme | Avoid false detection | Image enhancement | Image analysis | Time domain | Computer graphics (images)

The invention discloses a multimedia video object processing method comprising the following steps: (1) dividing an MPEG video into scenes based on macroblock information; (2) pre-reading the videos to be joined, obtaining their information and searching for a suitable joint scene; (3) searching for the inlet point and outlet point of the joint and adjusting the information of the accessed video; (4) selecting a suitable audio joint point to achieve seamless audio-video joining; (5) setting a video buffer area and unifying the code rate of the videos to be joined; (6) coarsely extracting the moving object in the video in the time domain; (7) applying watershed processing to the coarse extraction result and merging spatial regions to obtain an accurately segmented object. The invention is characterized by a simple and efficient algorithm, low system resource consumption, fast processing and high accuracy.

Owner:ZHEJIANG BOXSAM ELECTRONICS

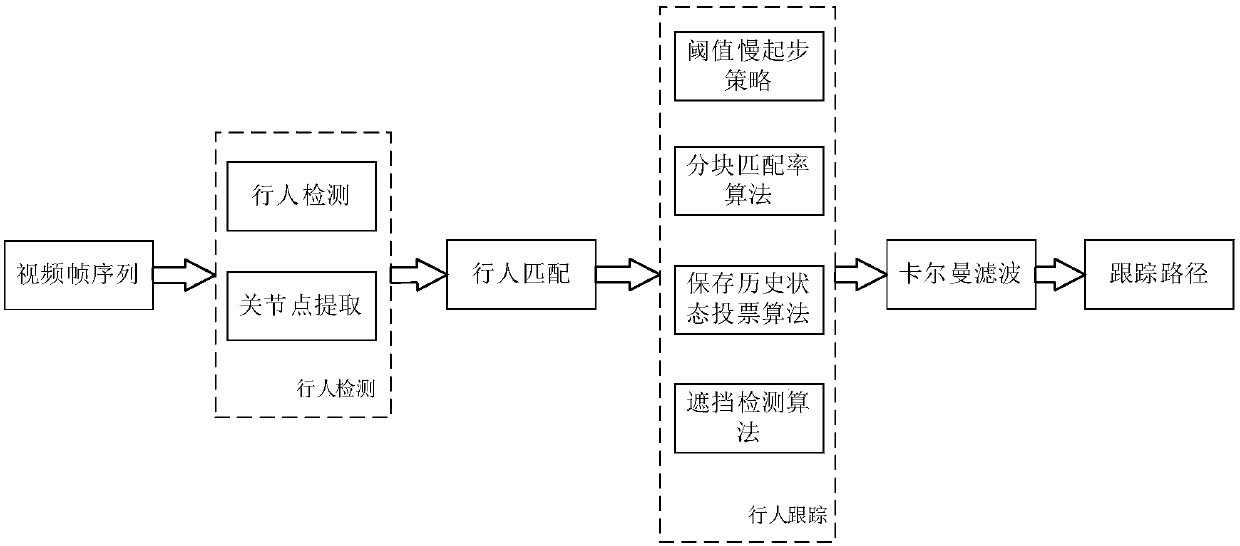

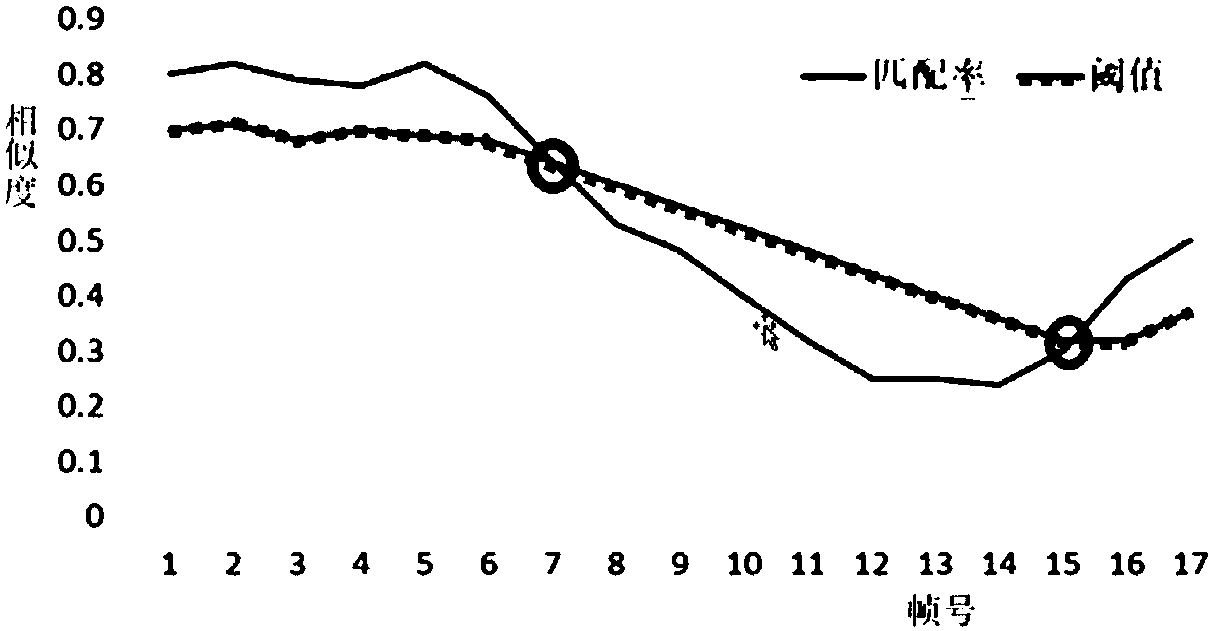

Deep-learning-based multi-target pedestrian detection and tracking method

Active | CN107563313A | Good compatibility | The matching effect is stable | Character and pattern recognition | Neural architectures | Feature extraction | Tracking model

The invention discloses a deep-learning-based multi-target pedestrian detection and tracking method. The method comprises the following steps: step one, carrying out multi-target pedestrian detection and joint point extraction on an input video and storing the obtained position information and joint point information as inputs to the next stage; step two, selecting a key frame at an interval of a certain number of frames and extracting appearance features of the pedestrians in the key frame; specifically, according to the obtained position information and joint point information, extracting upper-body posture features and color histogram features, respectively, for pedestrian association between key frames; and step three, continuously tracking the pedestrians in the key frames, using a threshold slow-start strategy, a block matching rate model detection algorithm, a historical-state-keeping voting algorithm and an occlusion detection method to improve tracking, then returning to step one after tracking ends and detecting the key frames again to ensure the stability of the method.

Owner:BEIHANG UNIV

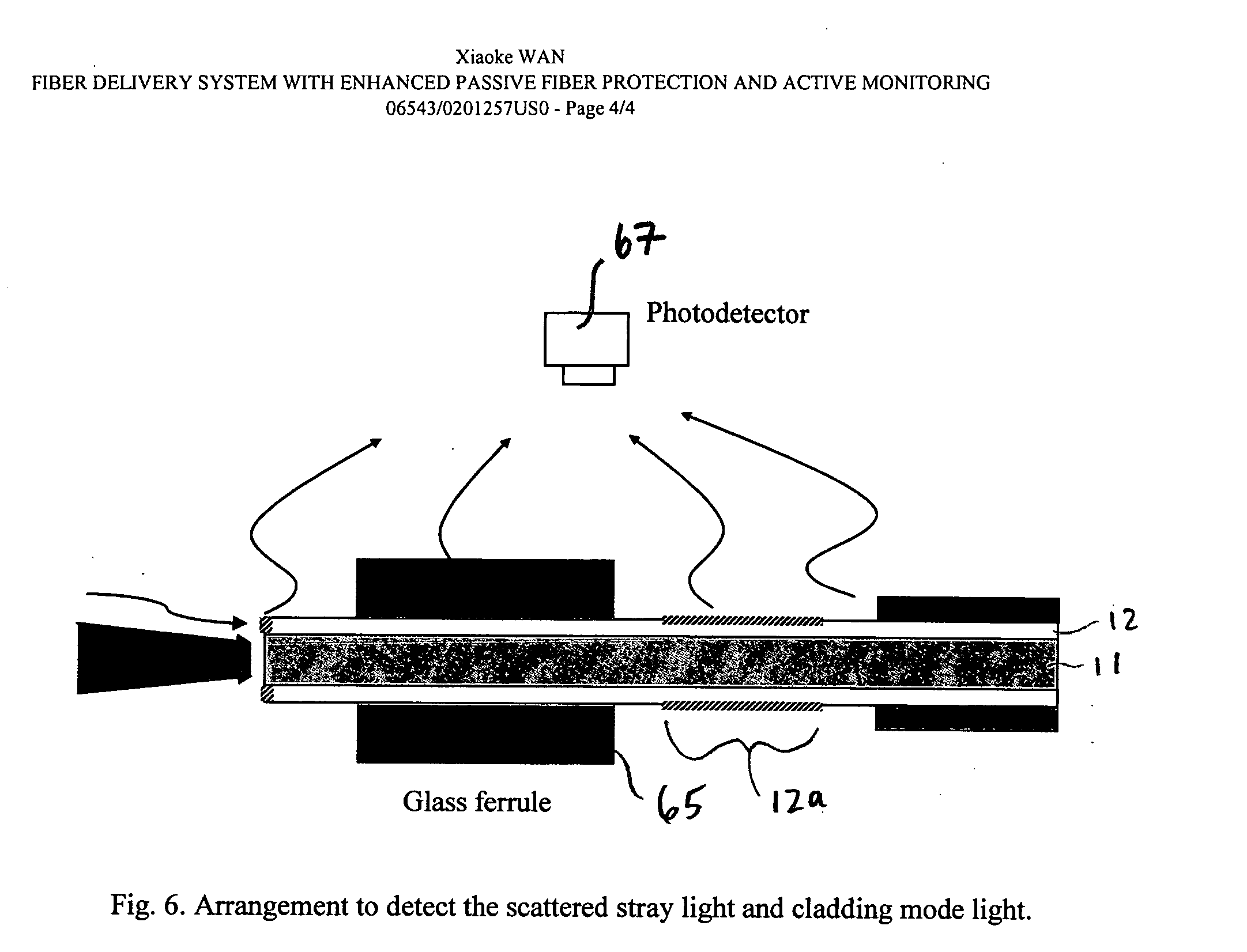

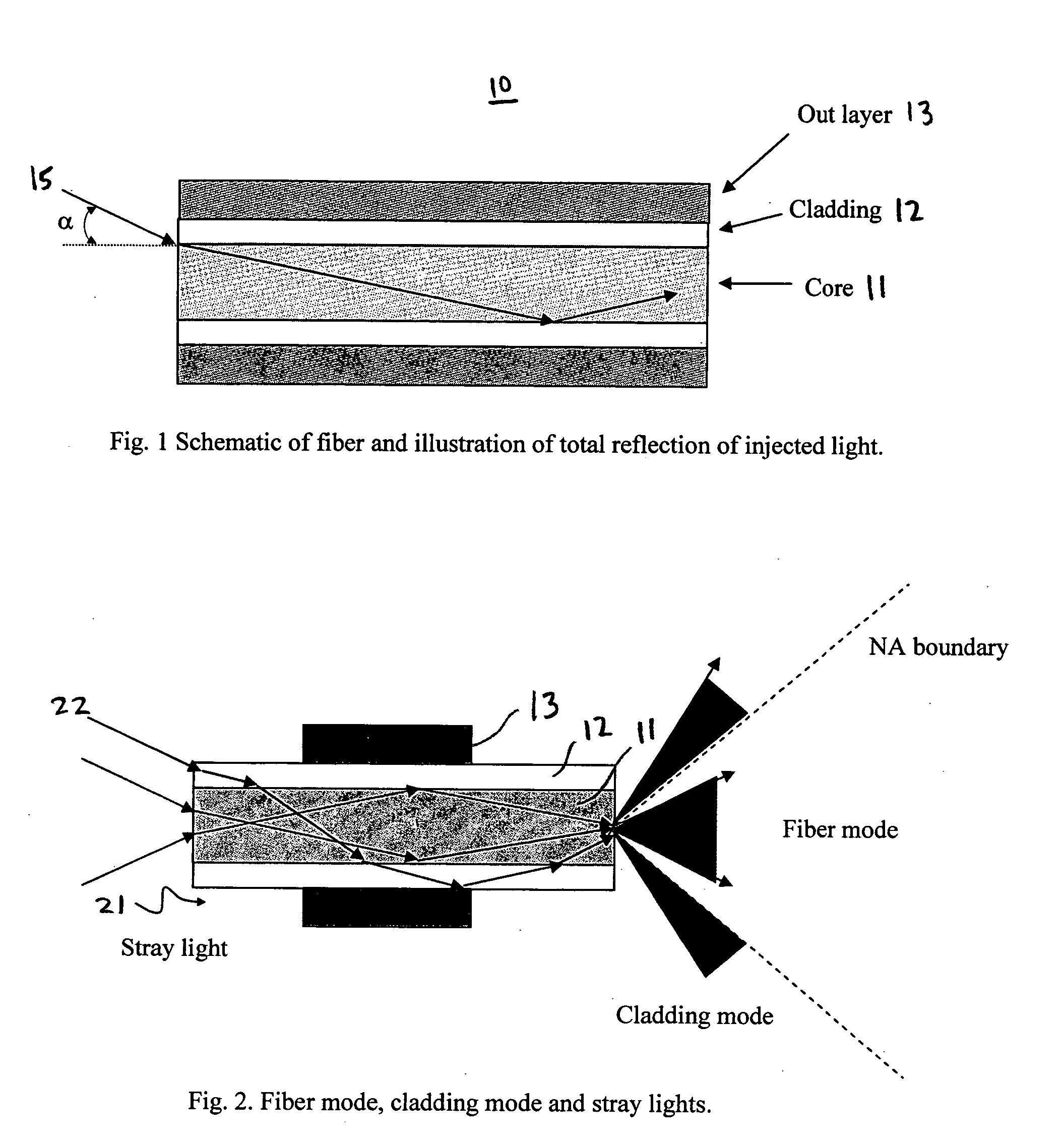

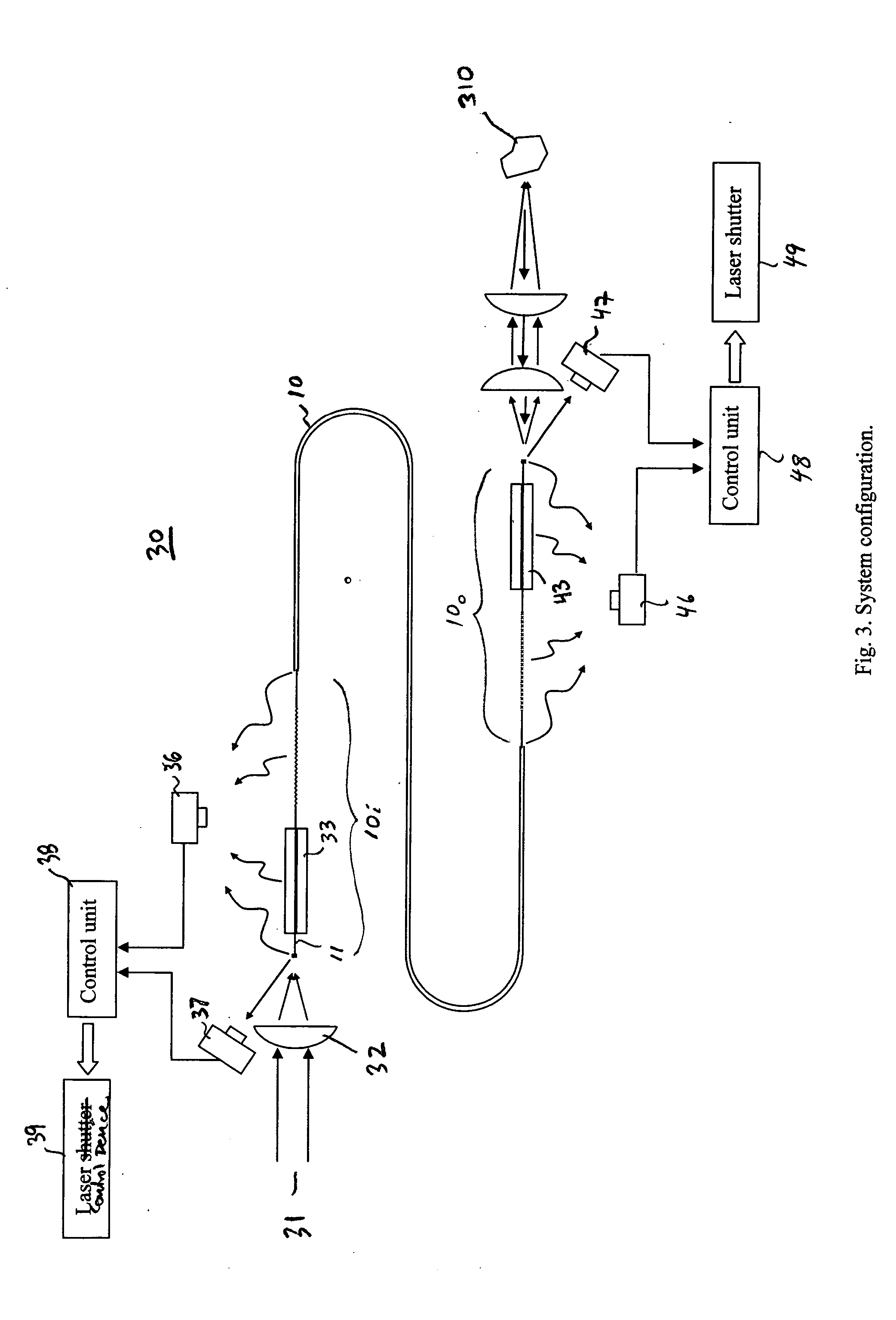

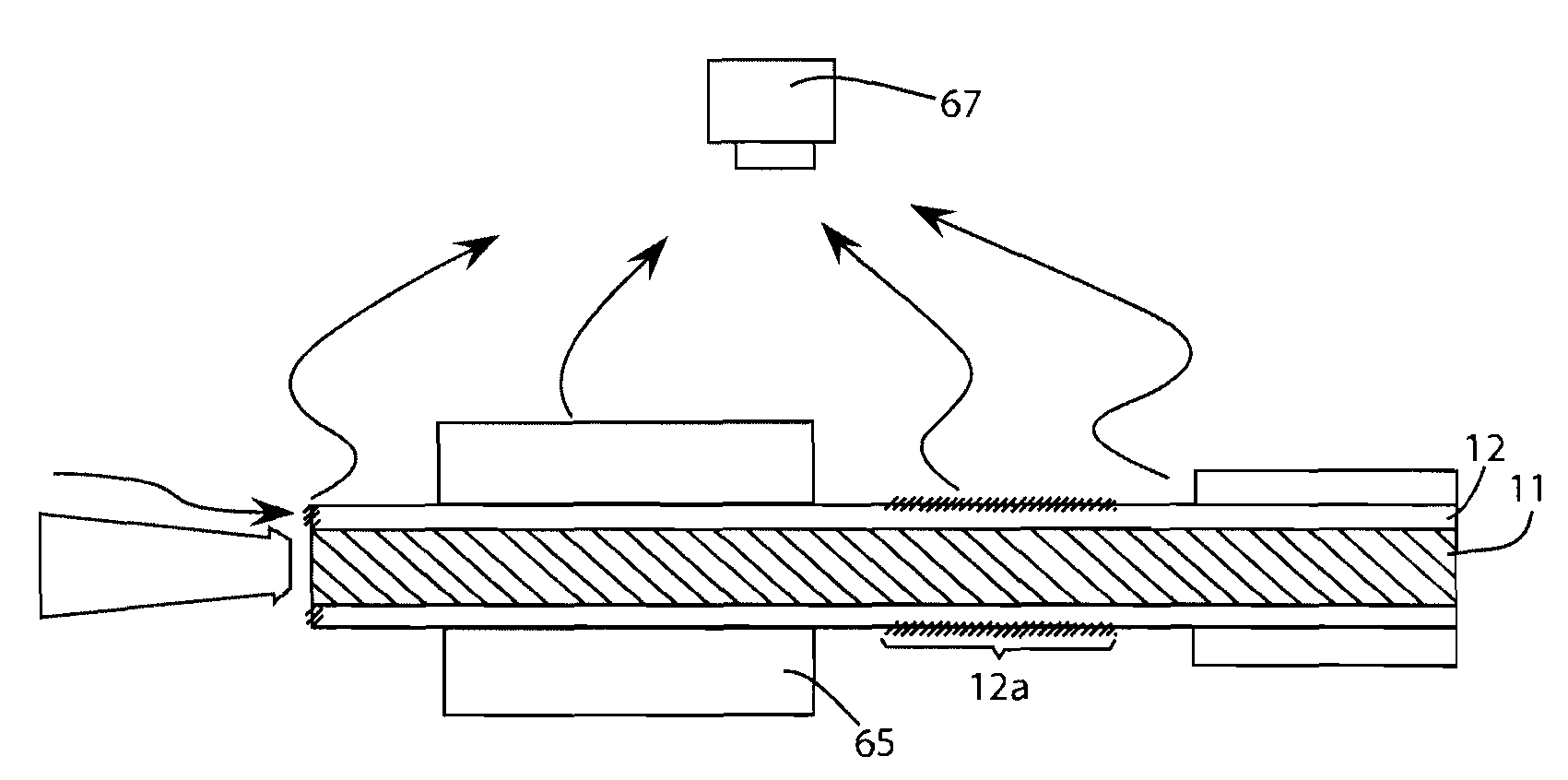

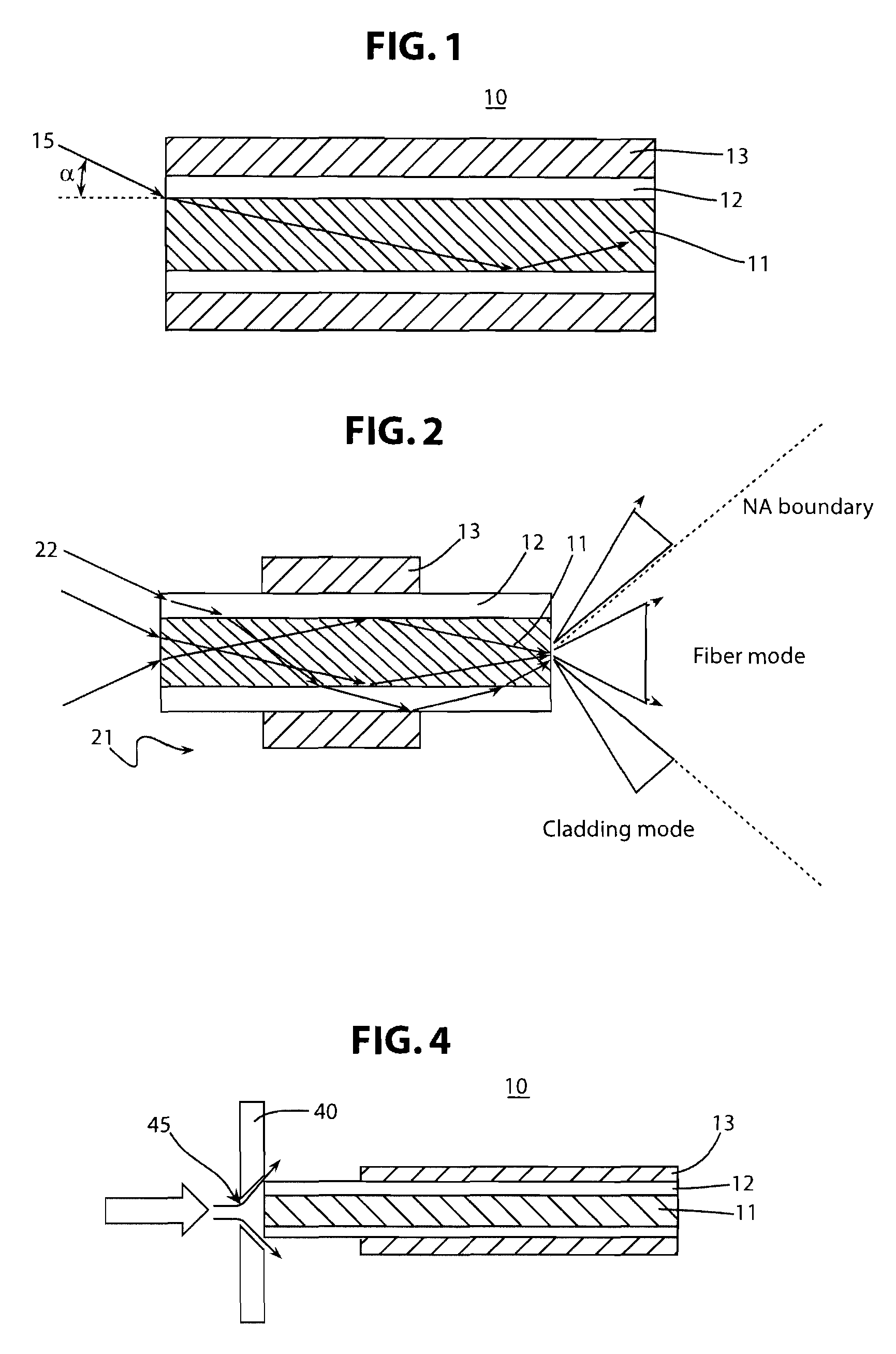

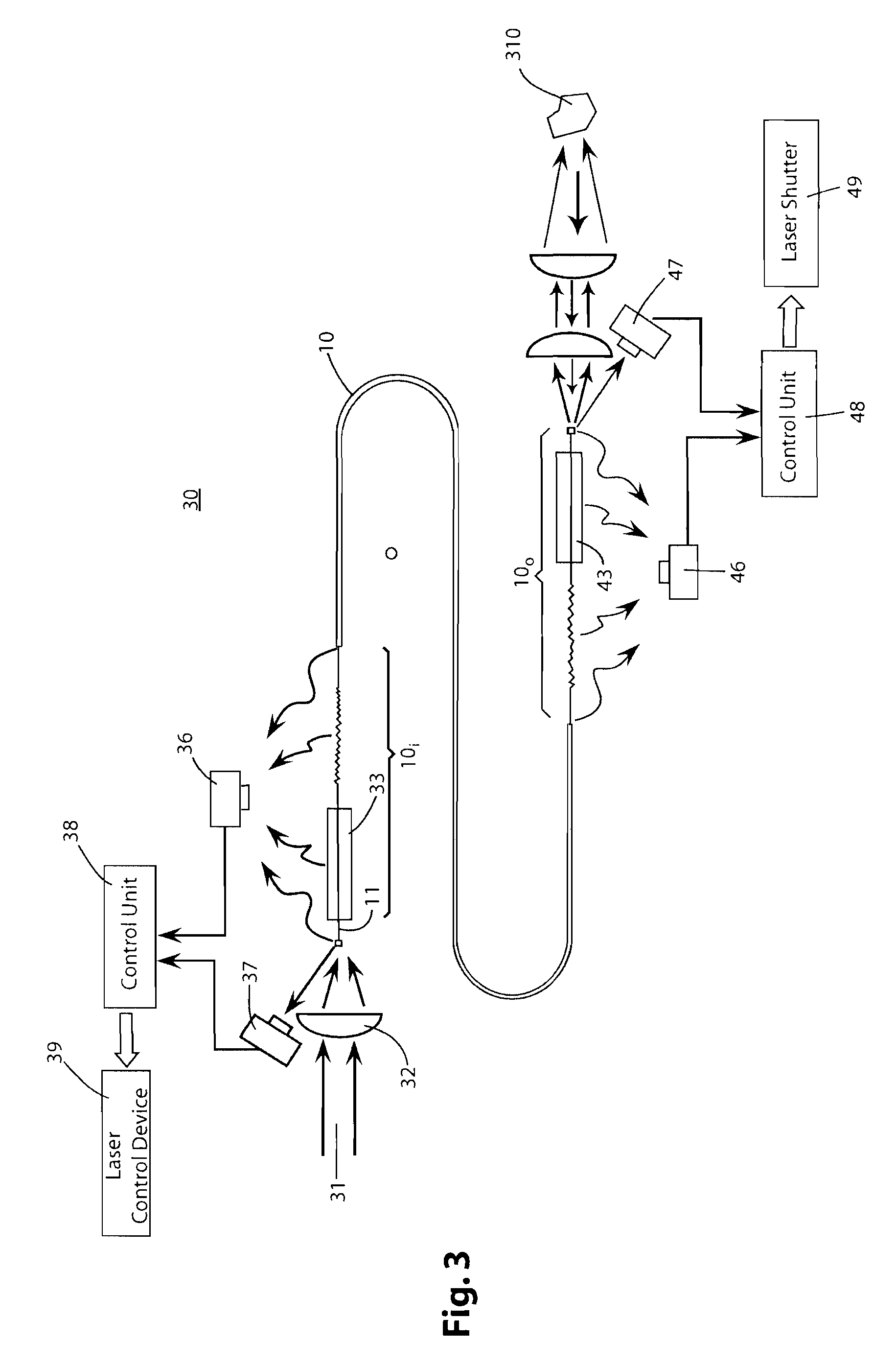

Fiber delivery system with enhanced passive fiber protection and active monitoring

In an optical fiber system for delivering laser, a laser beam is focused onto an optical fiber at an injection port of the system. The end portions of the fiber have cladding treatments to attenuate stray light and cladding mode light, so as to enhance the protection of the outer layer joint points. Photodetector sensors monitor scattered stray light, cladding mode light, and / or transmitted cladding mode light. Sensor signals are provided to a control unit for analyzing the fiber coupling performance. If need be, the control unit can control a laser shutter or the like to minimize or prevent damage. In materials processing applications, the photodetector signals can be analyzed to determine the processing status of a work piece.

Owner:CONTINUUM ELECTRO OPTICS

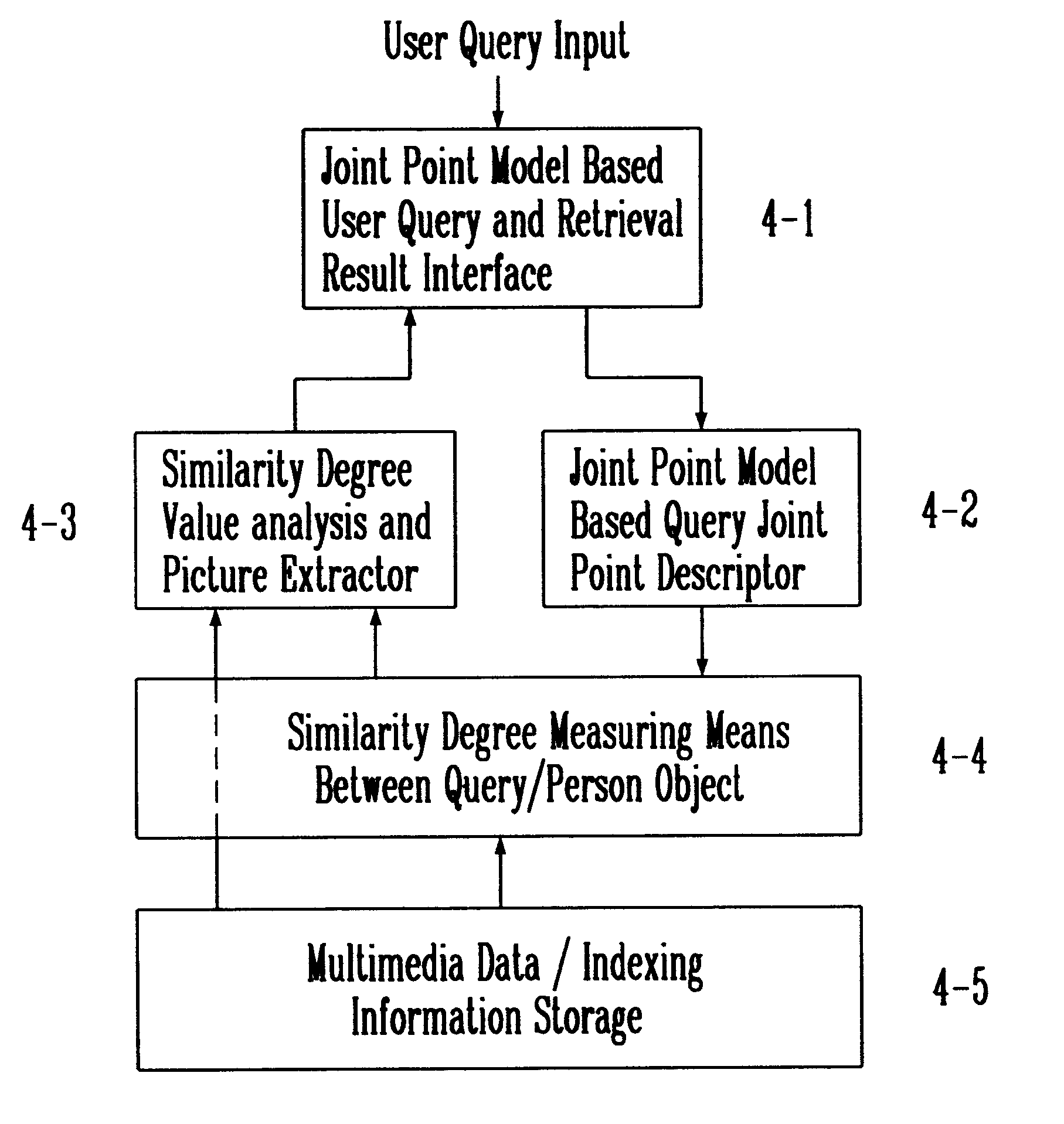

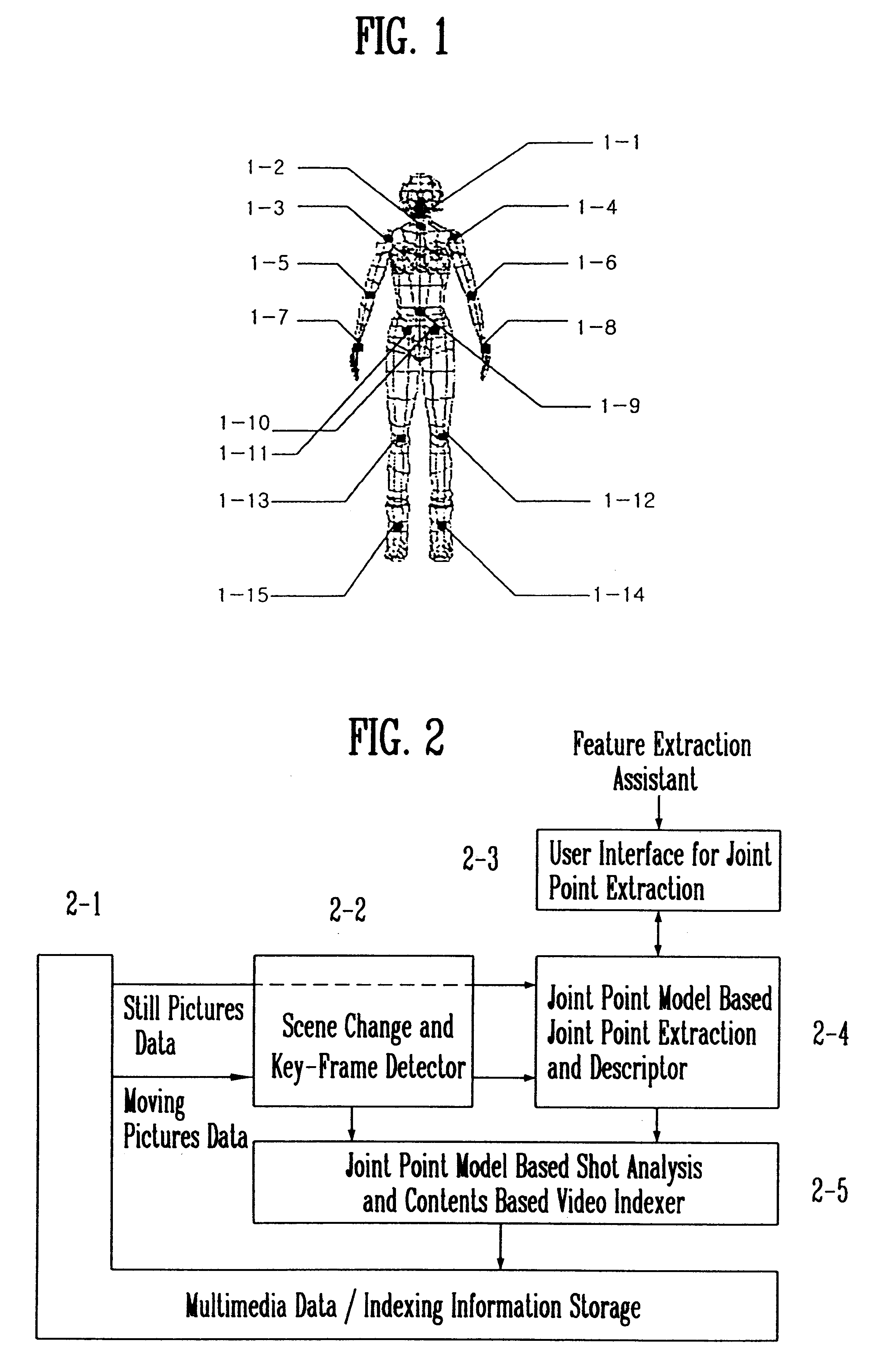

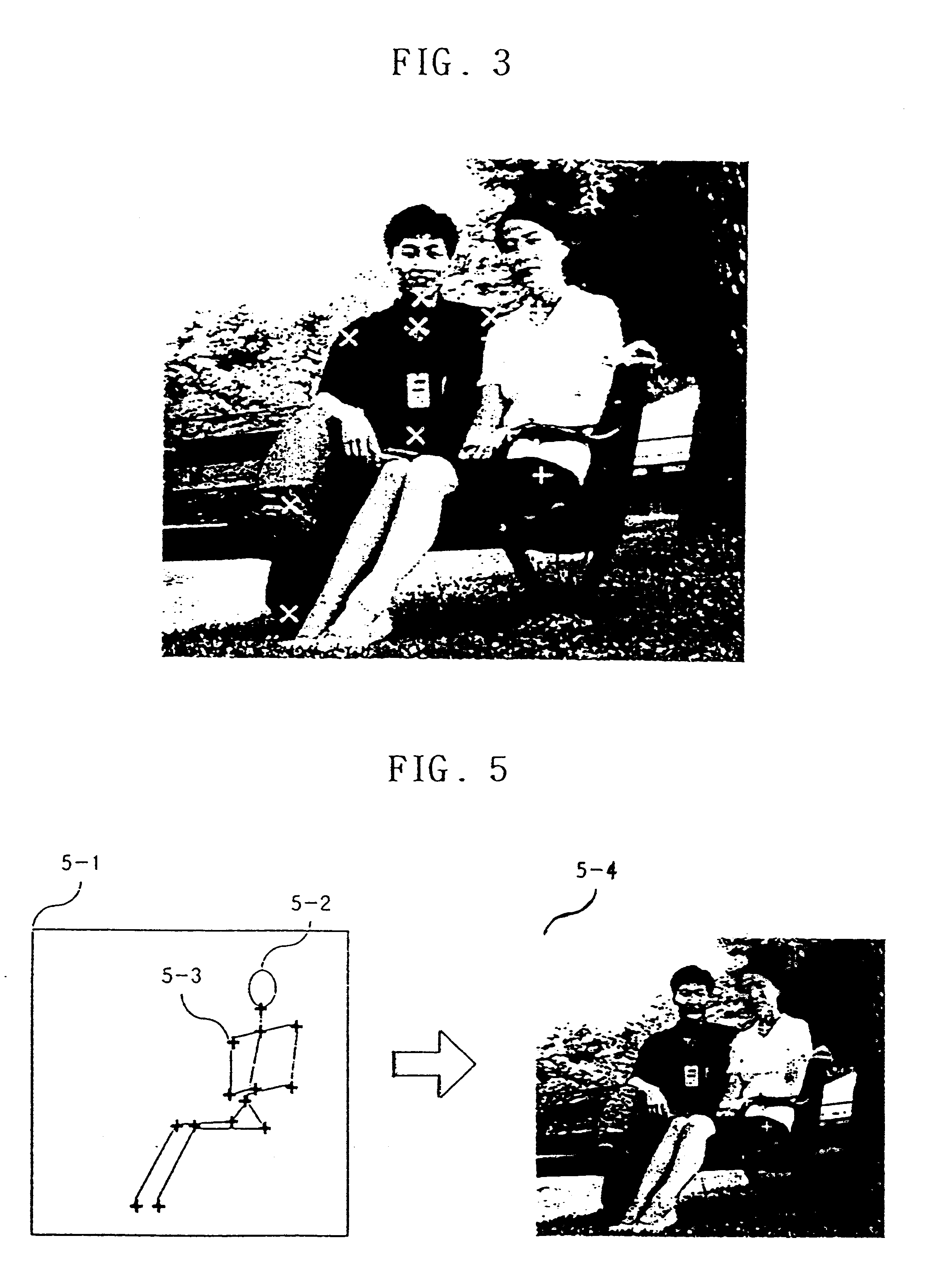

Method of retrieving moving pictures using joint points based on pose information

Disclosed is a method of retrieving moving pictures using joint-point-based pose information, which allows a user to retrieve pictures containing a desired person object, or a desired pose of the person object, from the still picture and moving picture data of multimedia data. The method of retrieving moving pictures using joint points based on pose information according to the present invention comprises the steps of: extracting and expressing joint points according to a joint point model, by means of a joint-point-model-based indexing apparatus, from those pictures in which a major person appears among the moving pictures or still pictures to be retrieved; and retrieving the person object according to the joint points extracted by said joint-point-model-based indexing apparatus.

Owner:ELECTRONICS & TELECOMM RES INST

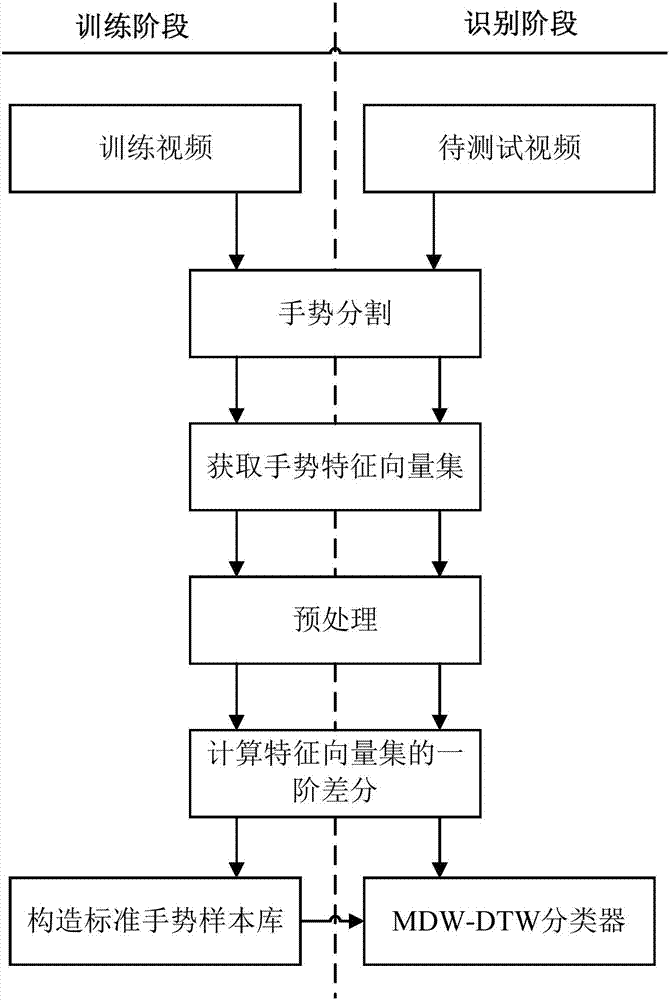

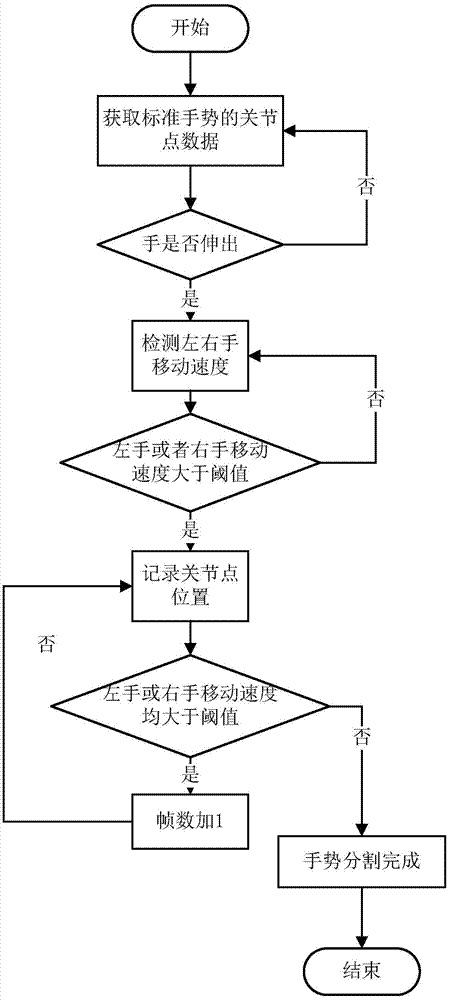

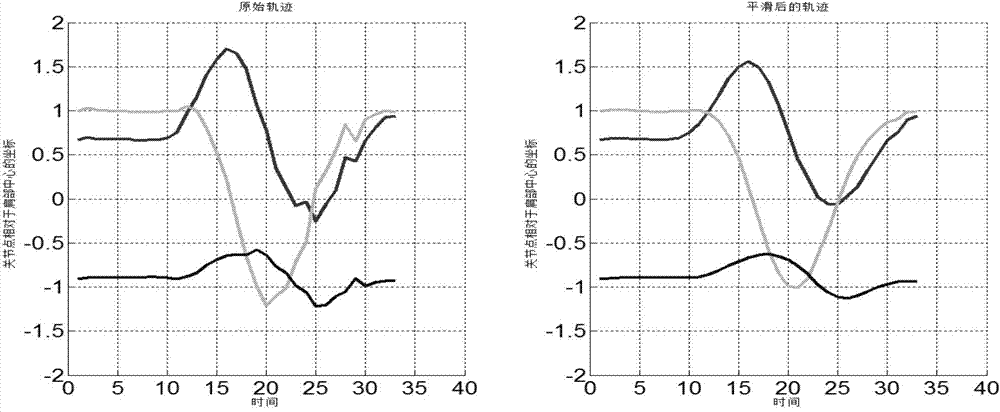

Multidimensional weighted 3D recognition method for dynamic gestures

Inactive | CN104123007A | Increase flexibility | Improve generalization ability | Input/output for user-computer interaction | Graph reading | Feature vector | System transformation

The invention discloses a multidimensional weighted 3D recognition method for dynamic gestures. At the training stage, standard gestures are first segmented to obtain their feature vectors; coordinate system transformation, normalization, smoothing, downsampling and differencing are then performed to obtain the feature vector set of each standard gesture, the weight of every joint point, and the weight of every dimension of the elements in the feature vector set, and in this way a standard gesture sample library is constructed. At the recognition stage, a multidimensional weighted dynamic time warping algorithm is used to calculate the dynamic warping distances between the feature vector set Ftest of the gesture to be recognized and the feature vector sets Fc (c = 1, 2, ..., C) of all standard gestures in the sample library. When the (m, n)th element S(m, n) of the cost matrix C is calculated, the weights of all joint points and of all element dimensions are taken into account, and joint points and coordinate dimensions that contribute nothing to gesture recognition are removed. This removes interference from joint jitter and unintended body movements, strengthens the algorithm's resistance to interference, and ultimately improves the accuracy and real-time performance of gesture recognition.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

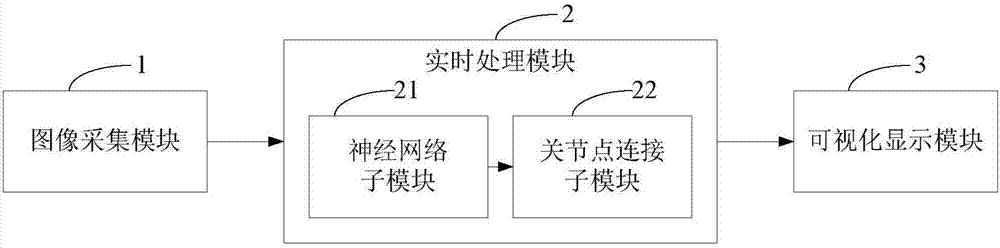

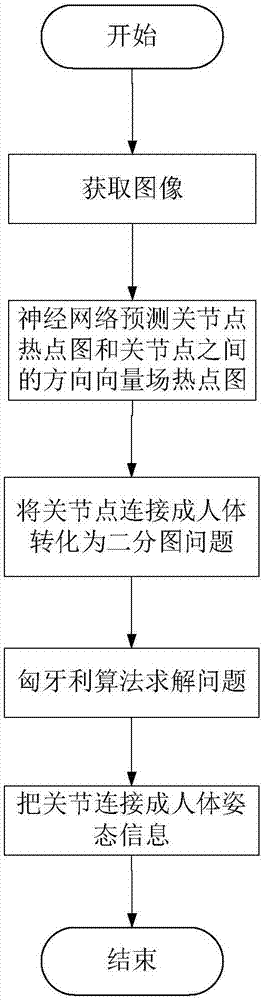

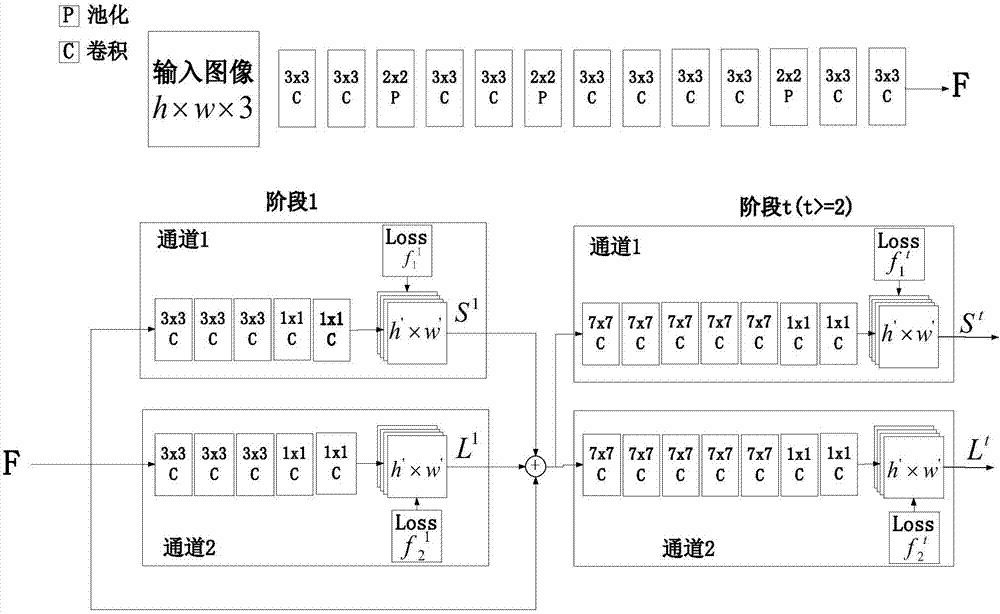

Real-time multi-target human body 2D attitude detection system and method

Inactive | CN107886069A | Accurate estimate | Easy to handle | Character and pattern recognition | Neural architectures | Human body | Nerve network

The invention relates to a real-time multi-target human body 2D attitude detection system and method. The system comprises an image acquisition module for acquiring image data; a real-time processing module for inputting the image data to a neural network for learning and prediction and generating human body attitude information from the predicted heat map of joint point positions and the heat map of the direction vector field between joint points; and a visual display module for presenting the predicted human body attitude information to users as connected lines. The system uses deep learning to encode the joint positions and the position and direction of the bones formed by connecting the joints, so that accurate human body 2D attitude estimation from a single image is achieved. In complex crowded scenes, the attitudes of multiple people can be accurately estimated, which helps users carry out further analysis, processing and mining of the human body attitudes and predict subsequent behaviors.

Owner:NORTHEASTERN UNIV

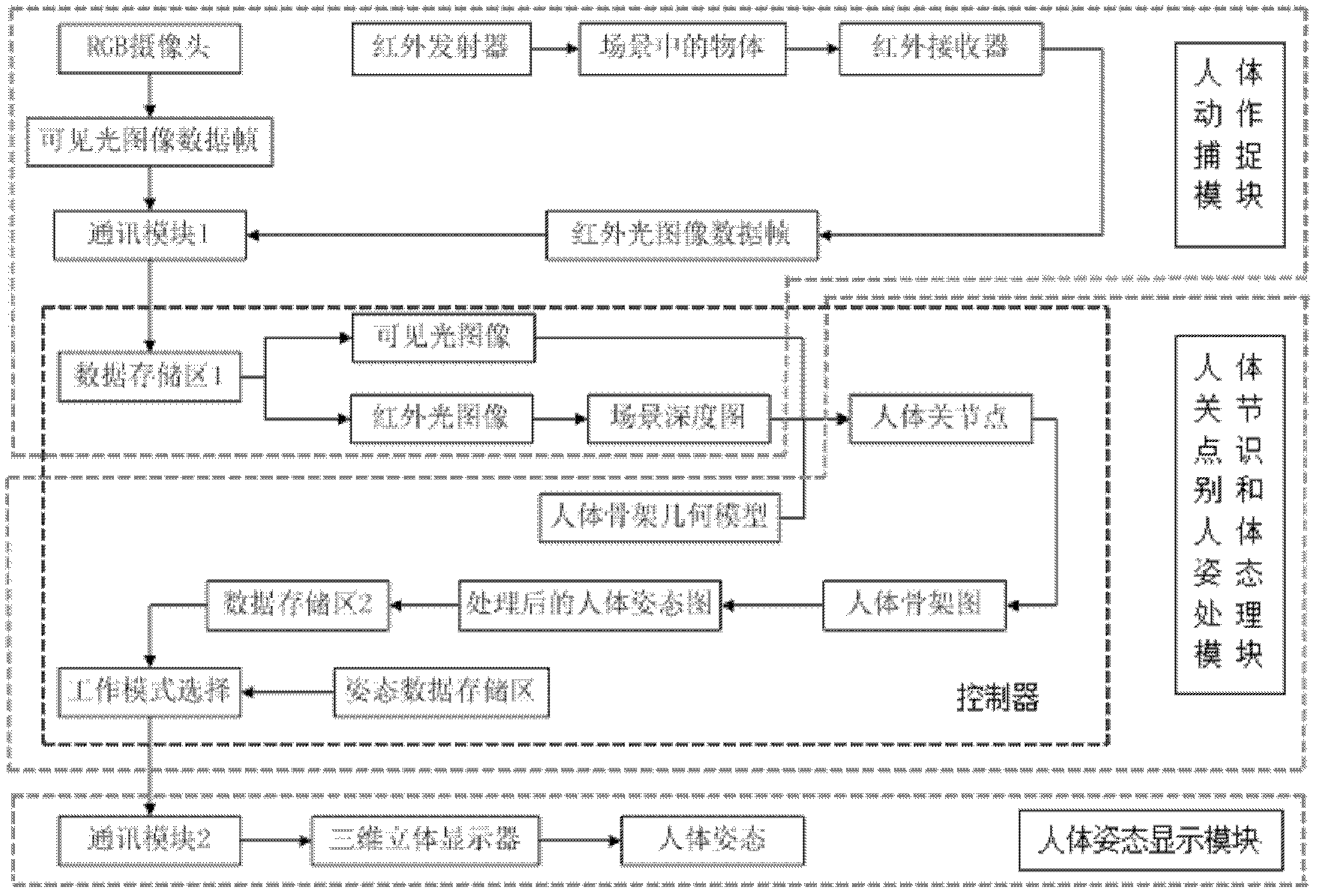

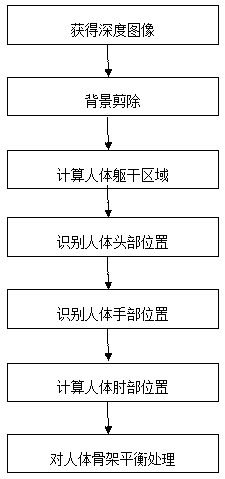

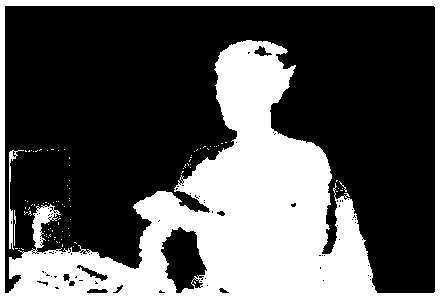

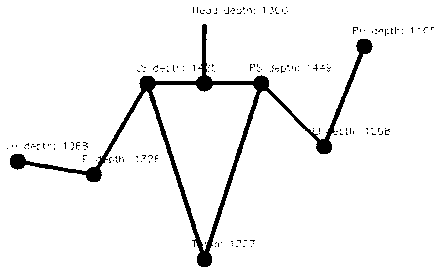

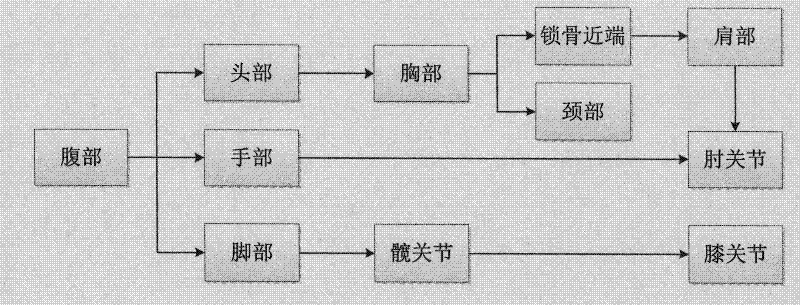

Method for recovering real-time three-dimensional body posture based on multimodal fusion

Inactive | CN102800126A | The motion capture process is easy | Improve stability | 3D-image rendering | 3D modelling | Color image | Time domain

The invention relates to a method for recovering a real-time three-dimensional body posture based on multimodal fusion. The method can be used for recovering three-dimensional framework information of a human body by utilizing multiple technologies of depth map analysis, color identification, face detection and the like to obtain coordinates of main joint points of the human body in a real world. According to the method, on the basis of scene depth images and scene color images synchronously acquired at different moments, position information of the head of the human body can be acquired by a face detection method; position information of the four-limb end points with color marks of the human body are acquired by a color identification method; position information of the elbows and the knees of the human body is figured out by virtue of the position information of the four-limb end points and a mapping relation between the color maps and the depth maps; and an acquired framework is subjected to smooth processing by time domain information to reconstruct movement information of the human body in real time. Compared with the conventional technology for recovering the three-dimensional body posture by near-infrared equipment, the method provided by the invention can improve the recovery stability, and allows a human body movement capture process to be more convenient.

Owner:ZHEJIANG UNIV

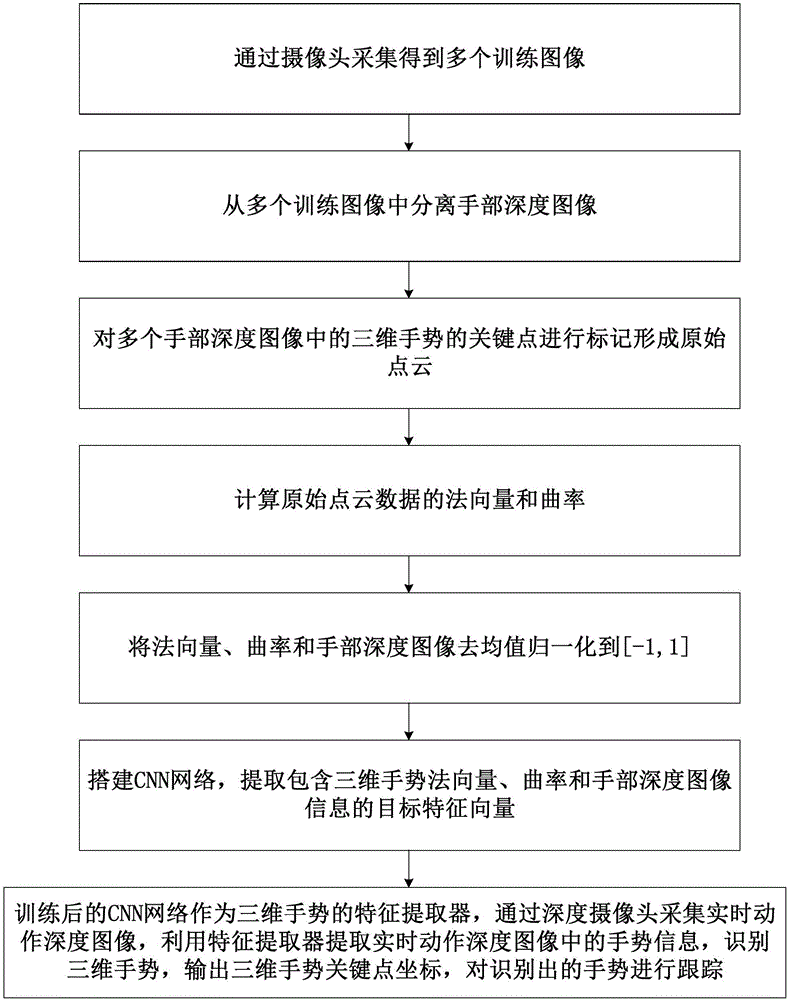

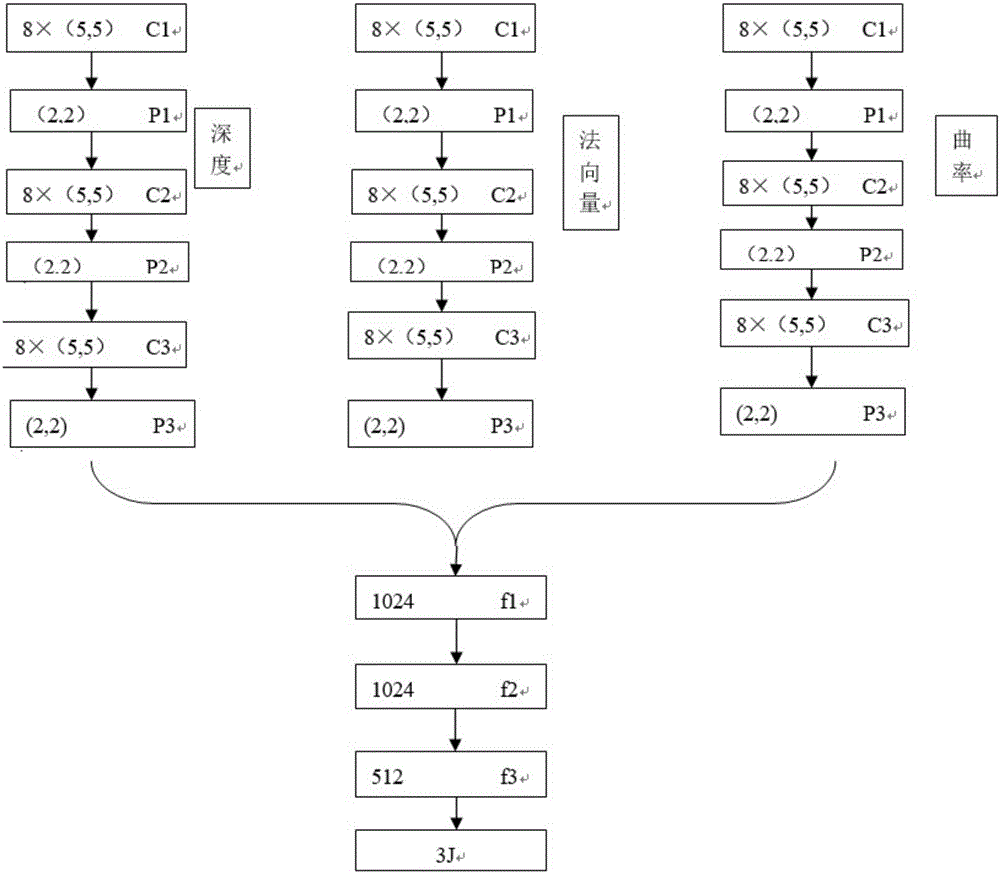

Gesture tracking method for VR headset device and VR headset device

Active | CN106648103A | High precision | Eliminate errors | Input/output for user-computer interaction | Character and pattern recognition | Point cloud | Feature extraction

The invention provides a gesture tracking method for a VR headset device, which comprises the following steps of: acquiring a plurality of training images; separating hand depth images; marking a three-dimensional gesture, and forming original point cloud; calculating a normal vector and a curvature, and carrying out mean removing normalization; setting up a CNN network, wherein an input end of the CNN network is used for respectively inputting multiple normal vectors, curvatures and hand depth images, and an output end of the CNN network is used for outputting three-dimensional coordinates of a plurality of joint points including a palm center; using the trained CNN network as a feature extractor of the three-dimensional gesture, acquiring real-time action depth images by a depth camera, extracting and processing normal vector, curvature and hand depth image information of the three-dimensional gesture which the real-time action depth images comprise by the feature extractor, outputting the three-dimensional coordinates of a plurality of joint points including the palm center, and carrying out tracking on the identified three-dimensional gesture. The invention further discloses a VR headset device. The gesture tracking method and the VR headset device which are provided by the invention fuse three-dimensional feature information, and have the advantage of high model identification rate.

Owner:GEER TECH CO LTD

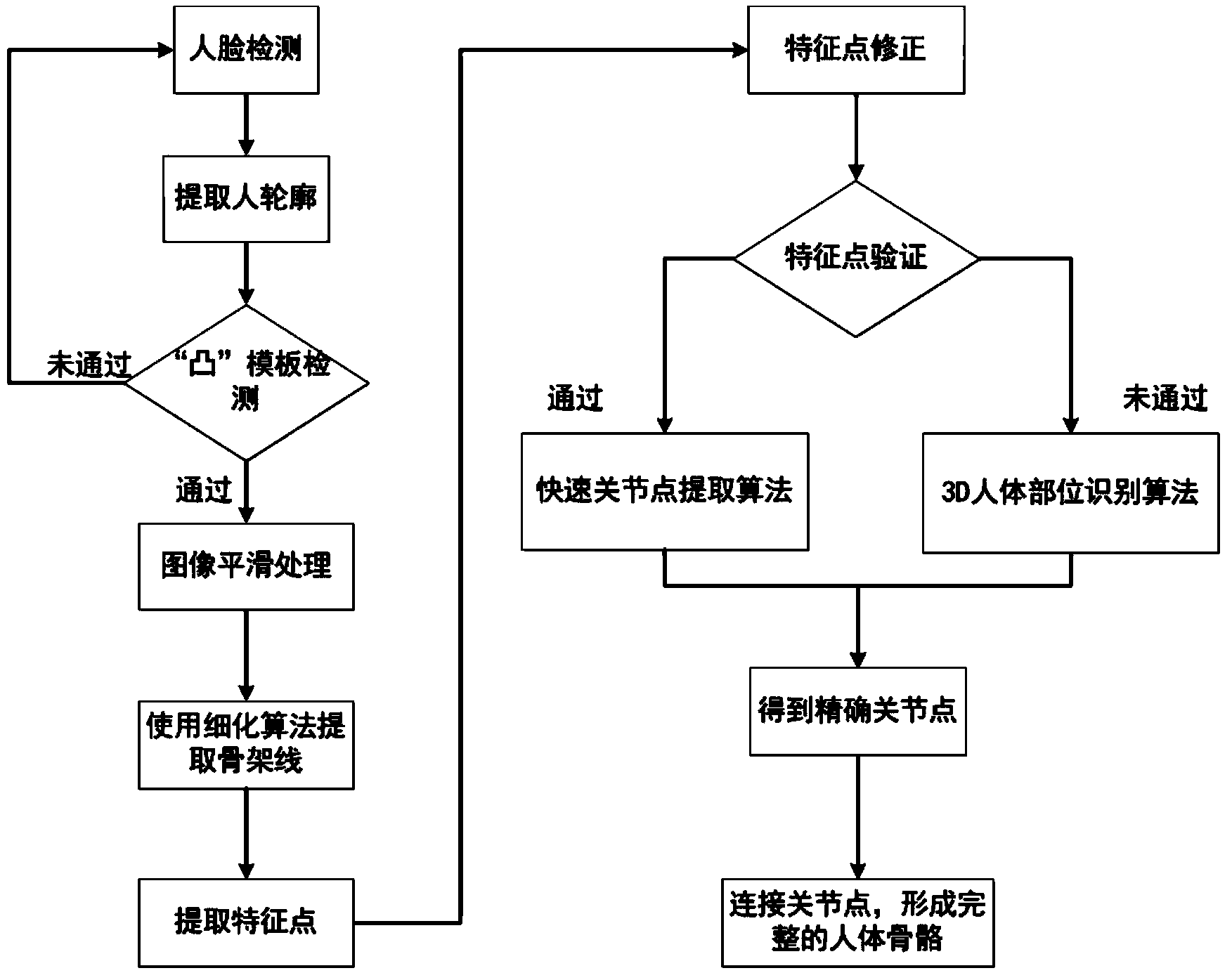

Fast 3D skeleton model detecting method based on depth camera

Active | CN103679175A | Quick check | Precise positioning | Character and pattern recognition | Face detection | Human body

The invention relates to the field of the computer visual technology, in particular to a fast 3D skeleton model detecting method based on a depth camera. The fast 3D skeleton model detecting method based on the depth camera comprises the steps that a whole human body is shot by using the depth camera, human face detection is carried out in the image by using an Adaboost algorithm, and thus depth information of the human face is obtained; the body silhouette is extracted based on the depth information of the human face; detection verification is carried out on the detected body silhouette through a 'convex template' verification algorithm; after the verification succeeds, image smoothness processing is carried out on the body silhouette, and the skeleton line of the body silhouette is obtained through a detailing algorithm; characteristic points on the skeleton line of the body silhouette are extracted, the number and the positions of the characteristic points are corrected, and interference points are removed; the corrected characteristic points are verified, and accurate joint points and other characteristics are obtained by adopting a fast joint point extracting algorithm if the verification succeeds. The fast 3D skeleton model detecting method based on the depth camera is high in operating speed, low in computing complexity and adaptive to various complex backgrounds, and each frame of image only needs 5ms.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

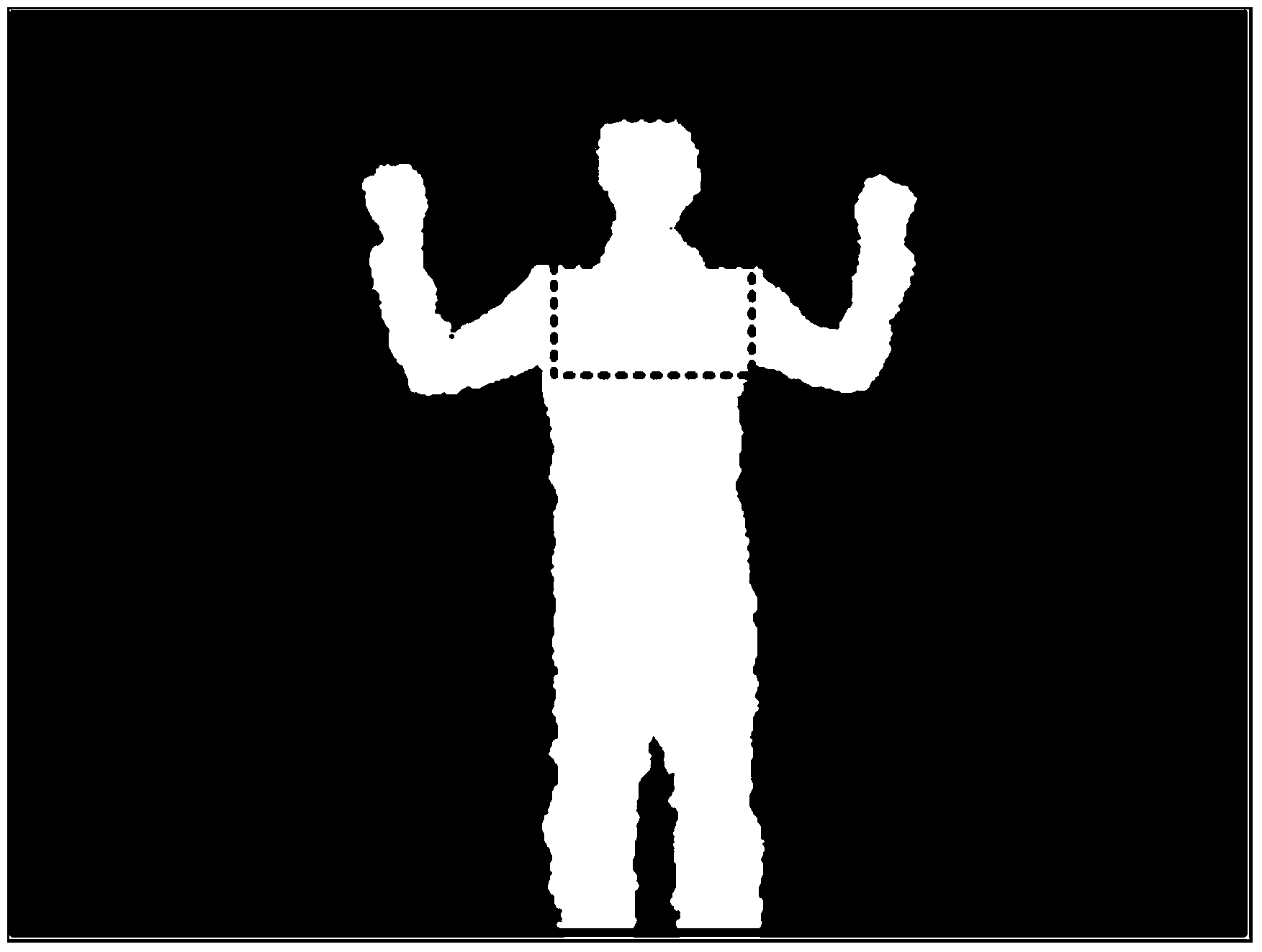

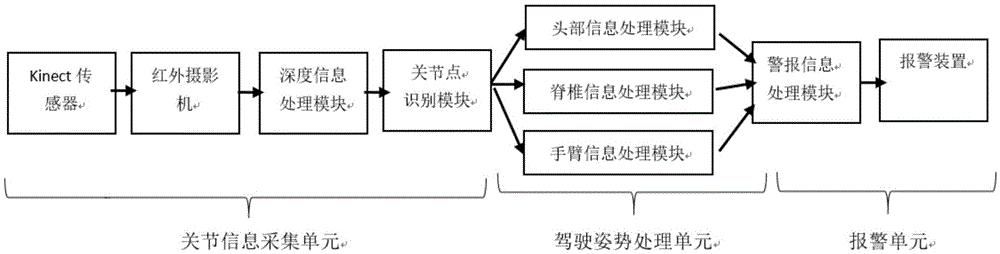

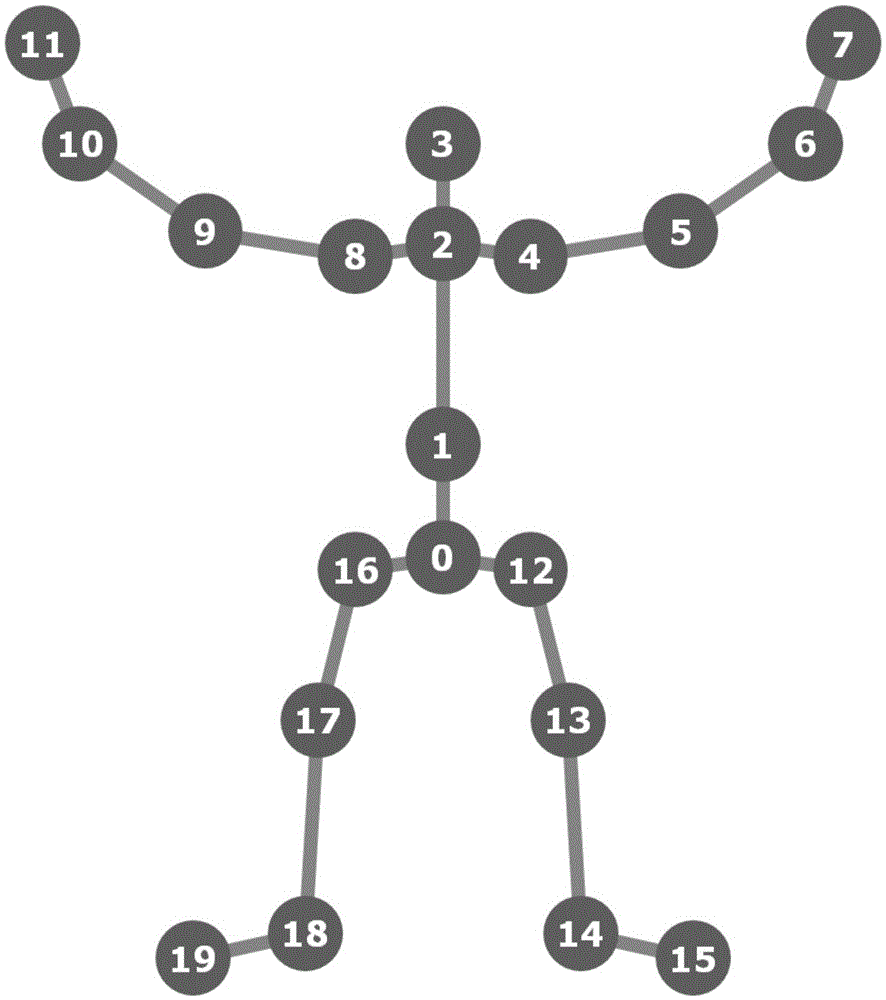

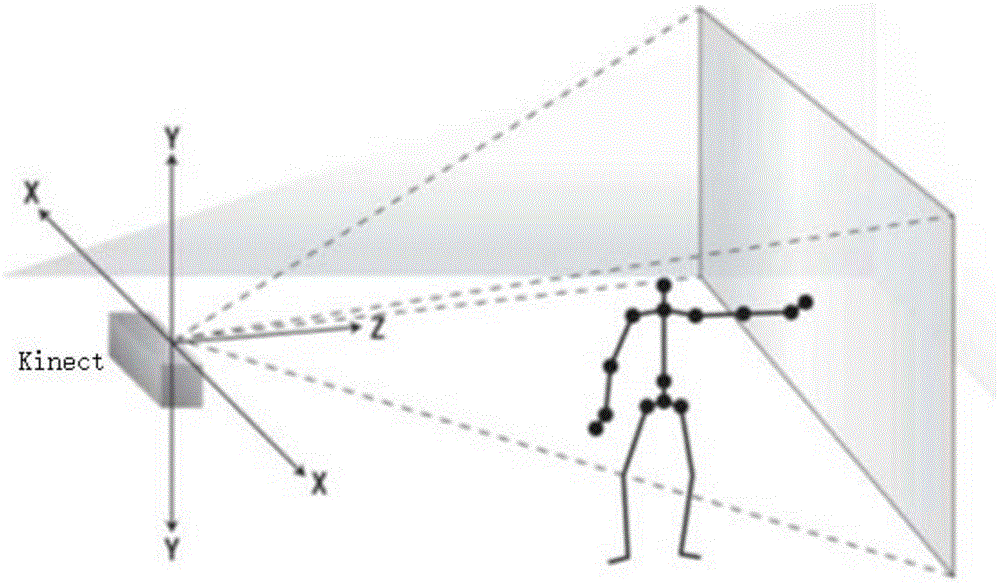

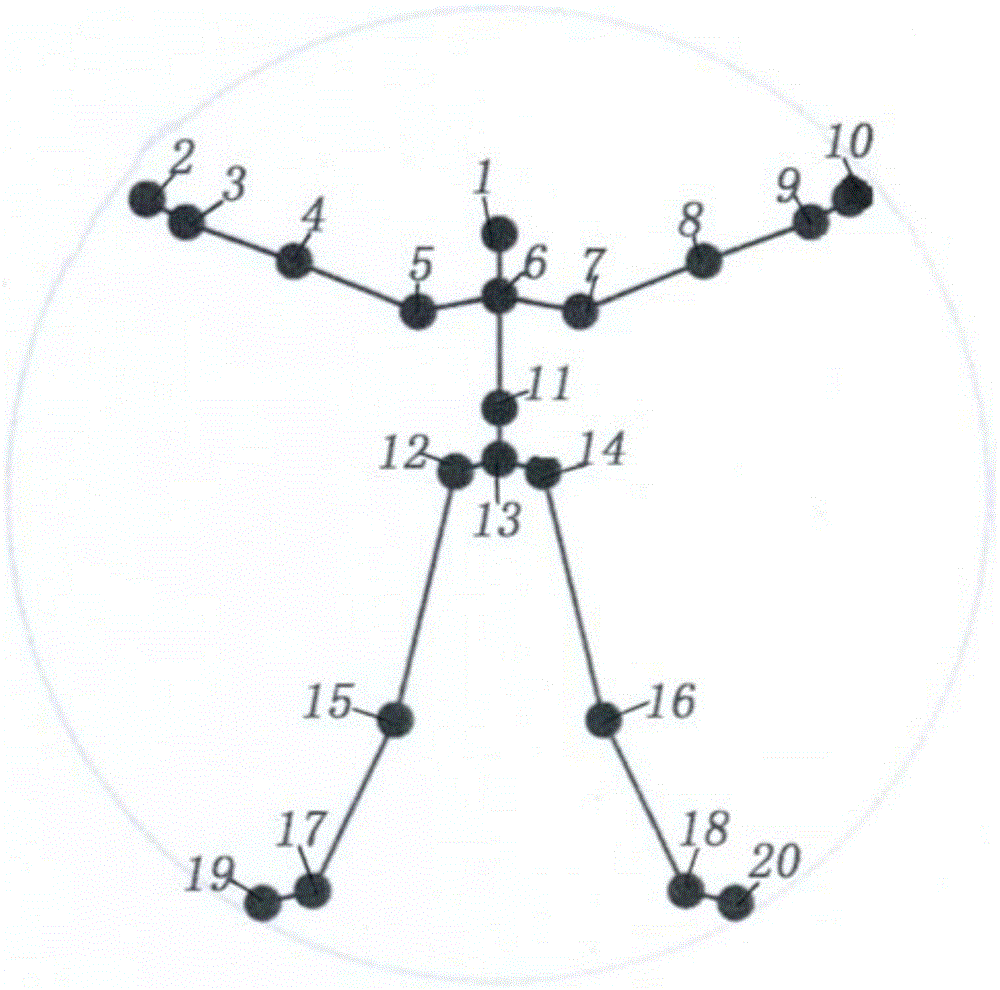

Driving state monitoring system based on Kinect human body posture recognition

The invention relates to a driving state monitoring and alarming system based on Kinect human body posture recognition. The system comprises a joint information acquisition unit, a driving posture processing unit and an alarming unit. The joint information acquisition unit is used for acquiring the body section image of a driver, obtaining the basic joint point positions of the human body according to an image recognition algorithm, binding joint point position data to a virtual skeleton model, establishing a space coordinate system and completing joint recognition. The driving posture processing unit is used for analyzing the virtual skeleton model of which joint recognition is completed, monitoring position information of all the joint points to obtain real-time movement information of the driver and comparing the position information of the joints with preset dangerous movement characteristic information, and transmitting a corresponding state abnormality signal to the alarming unit. The alarming unit is used for alarming after receiving the abnormality signal transmitted by the driving posture processing unit.

Owner:JILIN UNIV

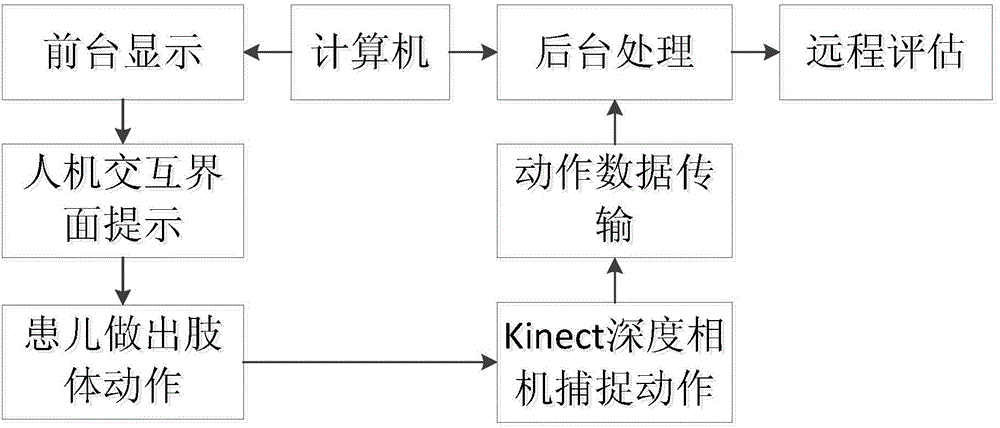

Cerebral palsy child rehabilitation training method based on Kinect sensor

Inactive | CN104524742A | Accurate detection of coordinated actions in real time | Improve coordination | Diagnostic recording/measuring | Sensors | Cerebral paralysis | Muscular force

The invention discloses a cerebral palsy child rehabilitation training method based on a Kinect sensor. The method comprises the following steps: S1, acquiring skeleton point data of a child; S2, carrying out limb movement training and judging the tilting angle and uplifting angle of the child's skeleton points, wherein after the child performs a movement, the movement is captured and the tilting angle and uplifting angle of the joint points of the head, the upper limbs and the lower limbs are judged; S3, carrying out robust interactive processing on the child's movement; S4, connecting a game engine and sending the child skeleton data obtained after the robust interactive processing in step S3 to the game engine; S5, giving voice feedback on the child's movement to point out non-standard movements and encourage standard ones; S6, estimating the progress of the child's rehabilitation training. According to the method, the movement behavior characteristics of the child in training are acquired with Microsoft Kinect, so that the child's cardiovascular endurance, muscular endurance, muscular force, balance and flexibility are comprehensively developed.

Owner:HOHAI UNIV CHANGZHOU
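
Step S2 of the abstract above judges tilting and uplifting angles at joint points, but does not say how they are computed. One plausible reading, sketched below, is the angle between a limb vector and a reference direction, obtained from the dot product. The function names, the choice of a y-up coordinate system, and the definition of the lift angle as the angle from the downward vertical are all assumptions for illustration.

```python
import math


def angle_between(u, v):
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        raise ValueError("angle is undefined for a zero-length vector")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def joint_angle(a, b, c):
    """Angle at joint b formed by the segments b->a and b->c."""
    ba = [x - y for x, y in zip(a, b)]
    bc = [x - y for x, y in zip(c, b)]
    return angle_between(ba, bc)


def lift_angle(shoulder, hand):
    """Angle between the arm (shoulder->hand) and straight down, assuming y points up."""
    arm = [h - s for h, s in zip(hand, shoulder)]
    return angle_between(arm, [0.0, -1.0, 0.0])


# Hypothetical joint positions in metres.
shoulder = [0.0, 1.4, 2.0]
hand_down = [0.0, 0.8, 2.0]
hand_side = [0.6, 1.4, 2.0]
print(lift_angle(shoulder, hand_down))
print(lift_angle(shoulder, hand_side))
```

An arm hanging at the side gives an angle near 0 degrees and an arm held out horizontally gives an angle near 90 degrees, so a training exercise could be scored by comparing the measured angle against a target range.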

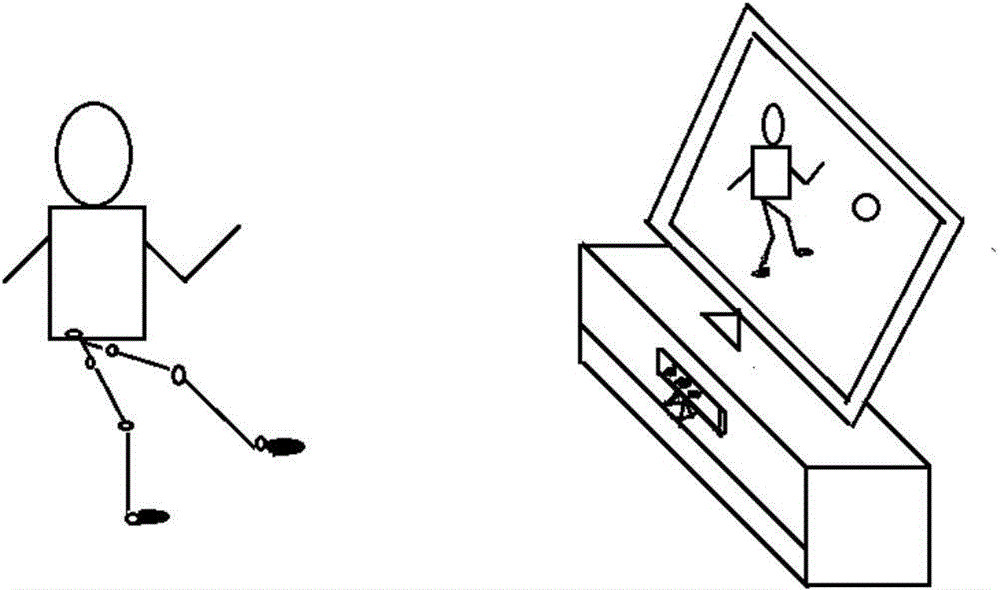

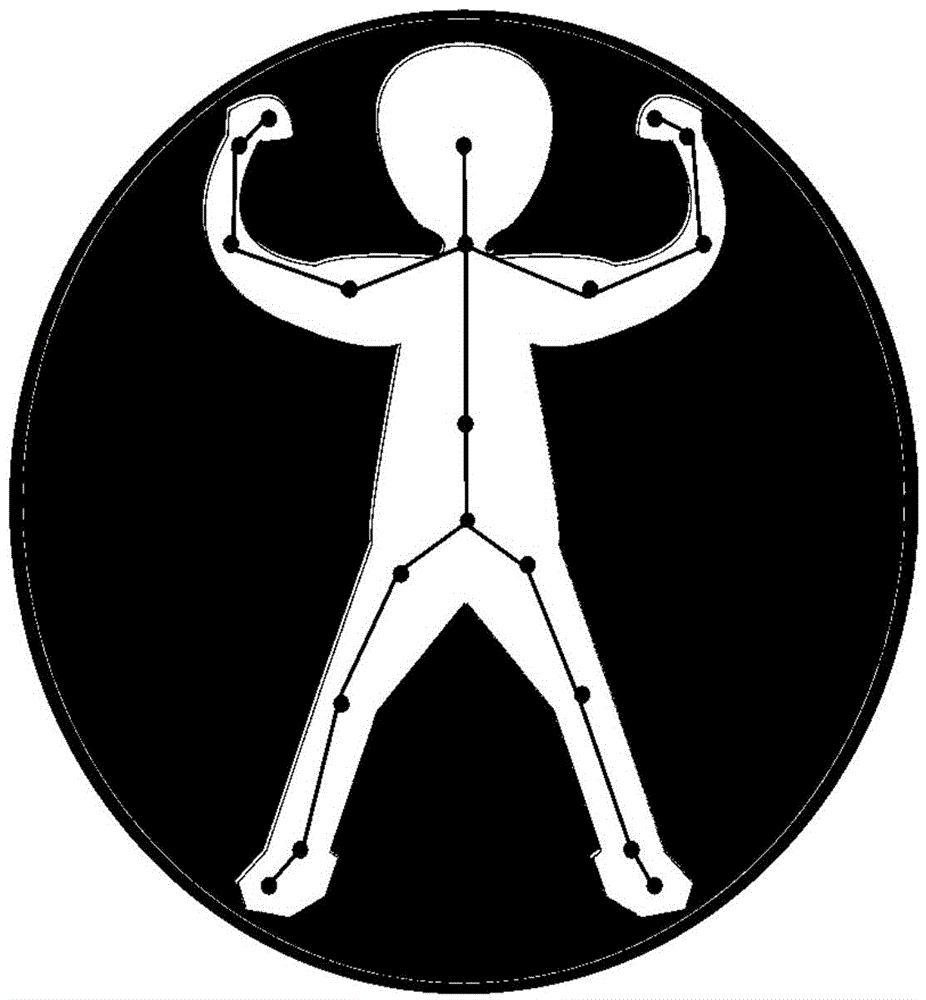

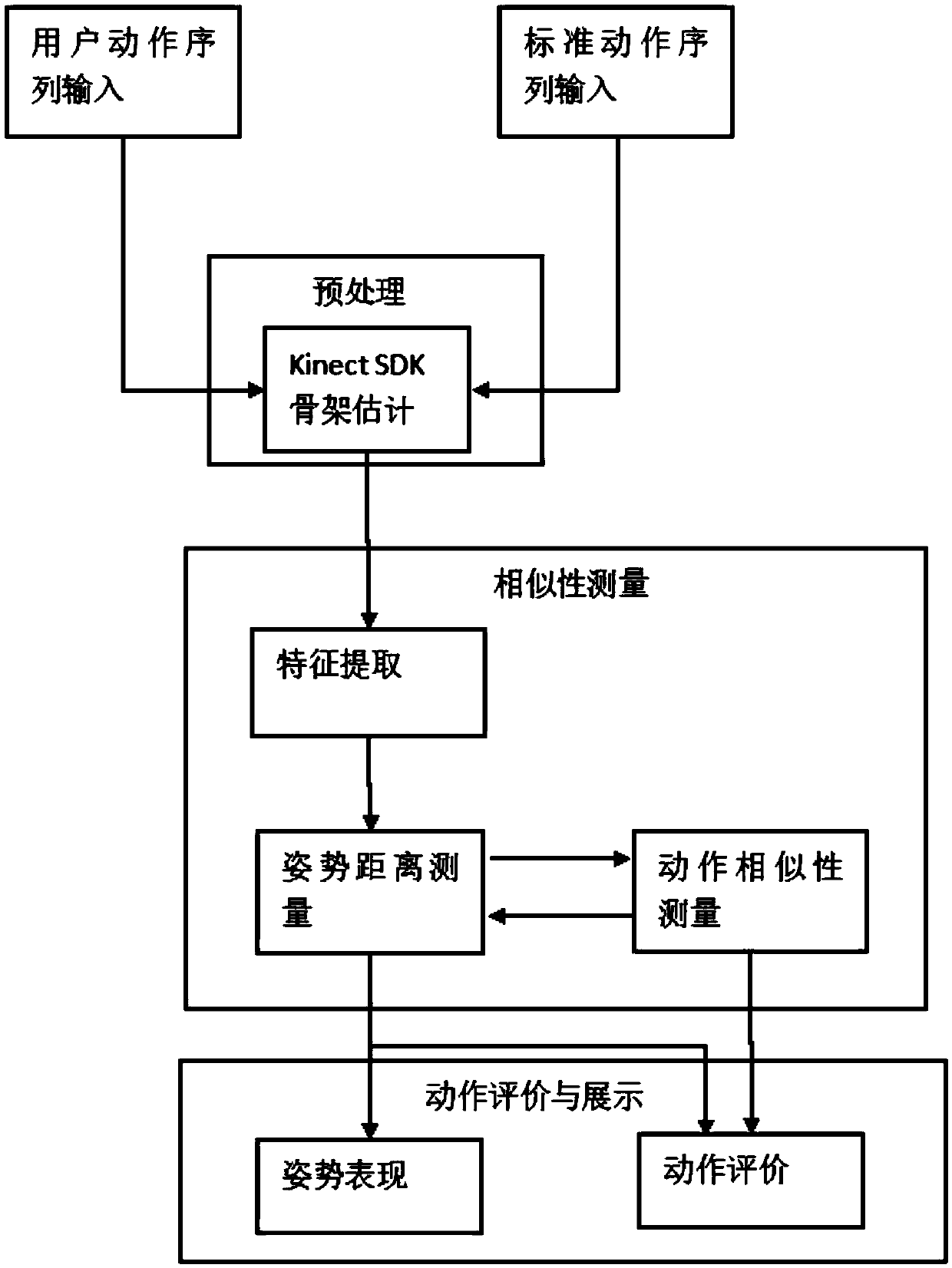

Kinect-based badminton motion guidance system

Inactive | CN105512621A | Avoid discomfort | Improve accuracy | Character and pattern recognition | Human body | Guidance system

The invention discloses a Kinect-based badminton motion guidance system, which comprises a pre-processing module, a similarity measurement module, and a motion evaluation and display module. The pre-processing module uses Microsoft's Kinect motion sensing equipment, tracks user motions through the Kinect SDK, and obtains skeleton features of the human body. The similarity measurement module matches the obtained skeleton features against the skeleton features of standard badminton actions and measures their similarity. The motion evaluation and display module performs a distance comparison over the motion time period found by the similarity measurement module (during which the user's motion poses match the standard motion poses), obtains the distance differences between joint points at corresponding positions in the user's motion and the standard motion sequence, and displays the results on a screen. With this system, the user does not need to wear clothes fitted with sensors and can learn standard badminton motions independently by following videos without a coach, thereby improving the accuracy of the user's racket swinging motions.

Owner:SOUTH CHINA UNIV OF TECH

Motion sensing technique based bank ATM intelligent monitoring method

Active | CN105913559A | Scalable | Meet the needs of different situations | Character and pattern recognition | Coin/paper handlers | Feature vector | Human body

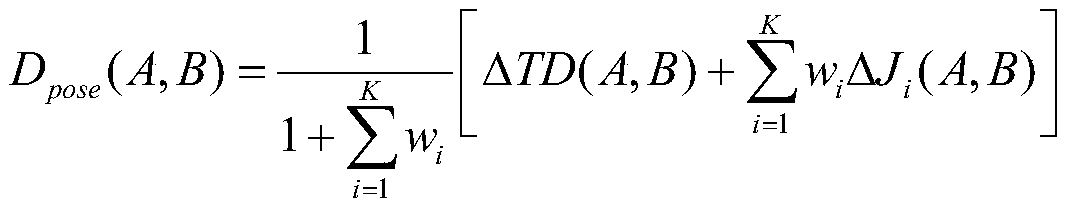

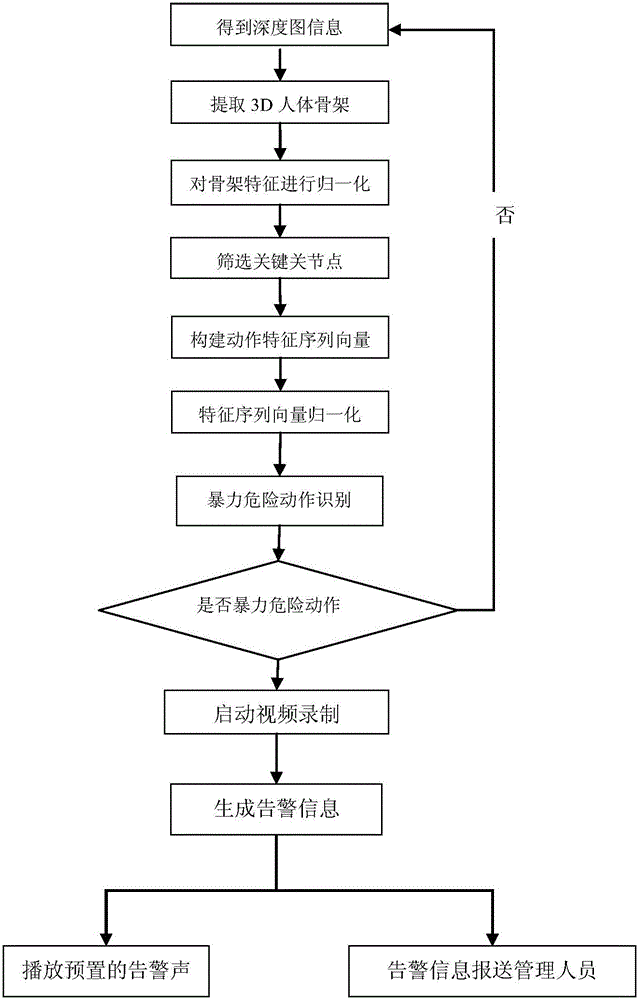

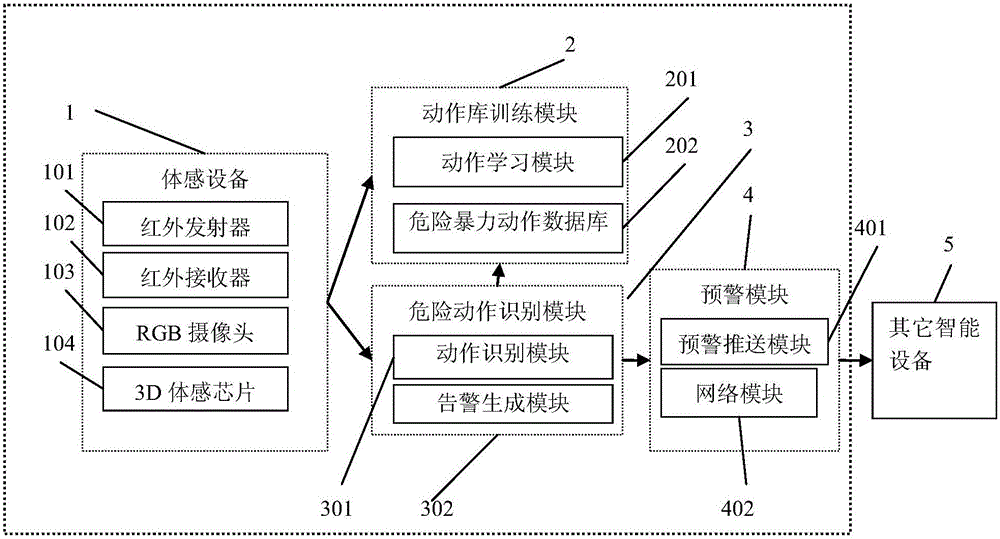

The invention relates to a bank ATM intelligent monitoring method based on motion sensing technology. The method includes: arranging a 3D motion sensing device in the operation zone of an ATM; capturing a 3D skeleton image of the operator's body in real time; extracting feature vectors of key joint points; inputting the feature vectors into a motion classifier for classification; and determining whether a motion is a dangerous violent motion that needs to be monitored. The motion classifier is trained in advance with a learning method. Using 3D visual perception, the method can detect, analyze and track human motion postures and movement trajectories, dynamically capture the motions of an ATM operator in real time, predict dangerous or violent motions such as falling to the ground, grappling and damaging the ATM, and raise an alarm in advance, so that abnormal behaviors in the ATM operation zone can be prevented.

Owner:NANJING HUAJIE IMI TECH CO LTD

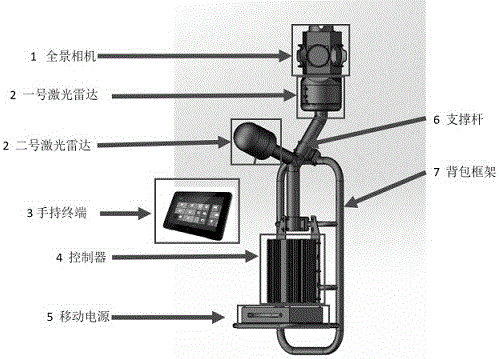

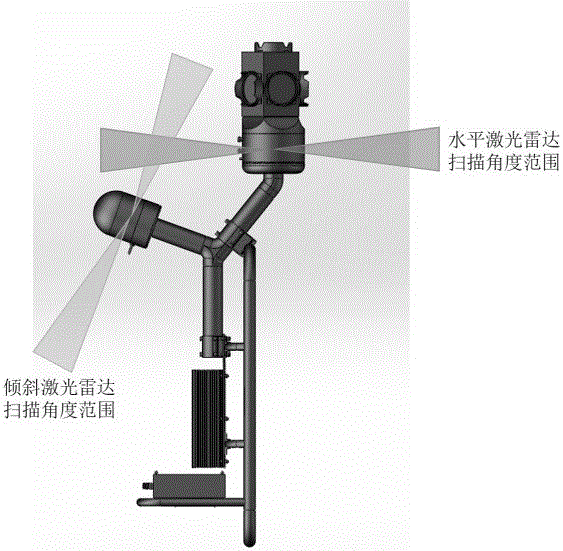

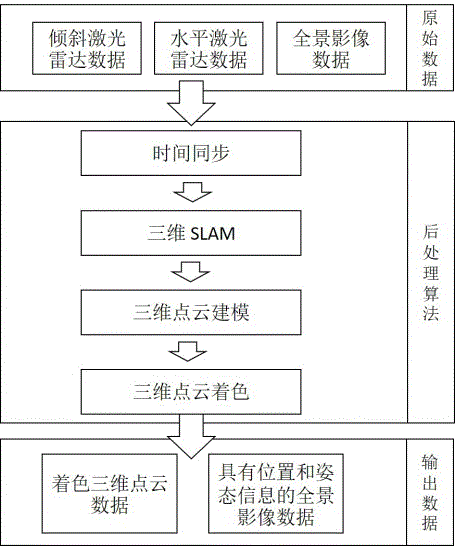

Piggyback mobile surveying and mapping system based on laser radar and panorama camera

Inactive | CN106443687A | Improve understanding | Realize mobile measurement | Photogrammetry/videogrammetry | Navigation instruments | Radar | Data acquisition

The present invention discloses a piggyback (backpack-mounted) mobile surveying and mapping system based on a laser radar and a panorama camera. The system mainly comprises a collection device module and a post-processing module. The collection device module is carried on the back and is mainly composed of a panorama camera, a laser radar, a controller, a hand-held terminal, a portable power source, a bracing piece and a piggyback frame, with every part connected to and supported by the piggyback frame. The system and method can acquire surrounding scene information continuously while moving, and offer good passing ability, high adaptability and high data acquisition efficiency. The system overcomes the following problems: (1) the failure of current ground mobile measurement systems in environments without GNSS; (2) the low efficiency of traditional fixed laser scanning, which requires many station changes and the joining of point clouds; and (3) the restriction of carts based on two-dimensional SLAM technology to level ground environments.

Owner:欧思徕(北京)智能科技有限公司

Human limb three-dimensional model building method based on image cutline

Inactive | CN1617174A | Reduce computational complexity | Simple method | 3D modelling | Circular cone | Curve fitting

The 3D human limb model building method based on image outline adopts rotating conic curved surface, and has joint points and line segments connecting the joint points to constitute skeleton layer to represent the human limb skeleton structure and rotating conic curved surface to represent the skin layer, with human limb skin deformation being reflected in two deforming parameters. Human limb edge contour information is first extracted from human limb image sequence obtained with binocular stereo vision system, the limb contours of the right and left images in different times are fitted with 2D conic curve, the deformation parameters of 2D conic curve equation are found out, corresponding 3D conic curve equation is found out and made to rotate around the skeleton line to find out the 3D rotating conic curved surface equation, and three spherical bodies and two rotating conic curved surfaces are adopted to draw human limb model. The method is simple and easy to realize.

Owner:SHANGHAI JIAO TONG UNIV

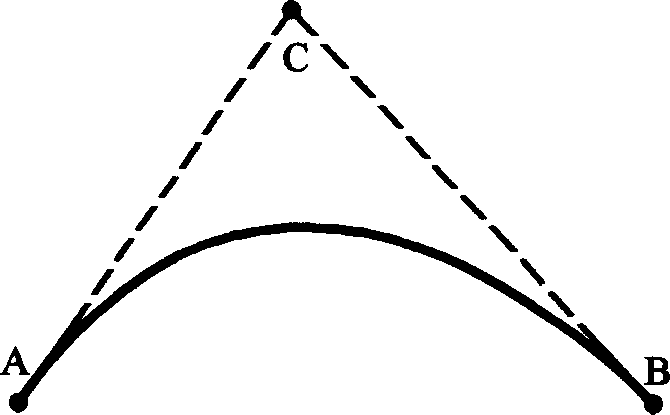

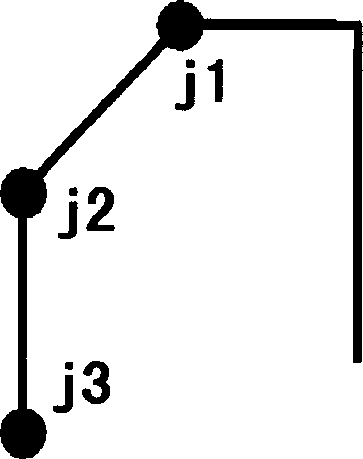

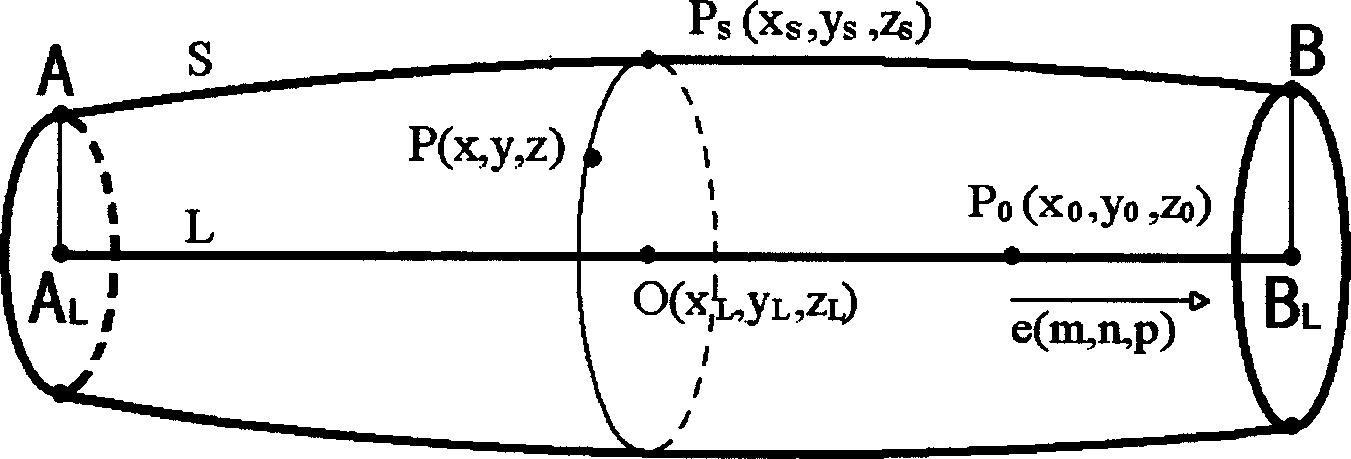

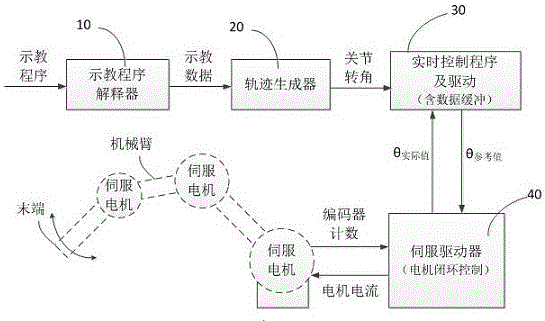

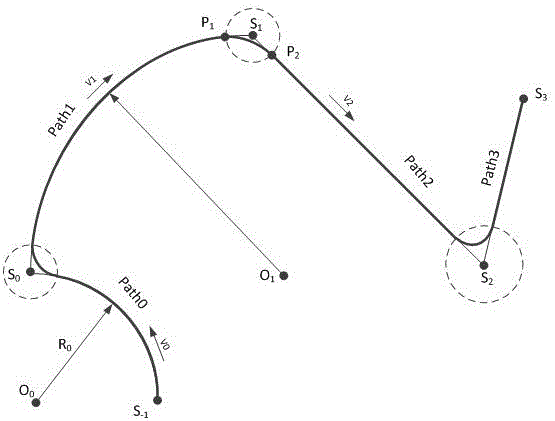

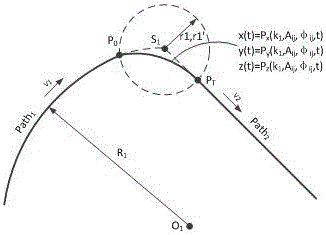

Continuous trajectory planning transition method for robot tail end

Active | CN105710881A | Reduce complexity | The algorithm flow is clear | Programme-controlled manipulator | Control system | Engineering

The invention discloses a continuous trajectory planning transition method for a robot tail end. The method comprises following steps that firstly, a first line segment and a second line segment which need to be subjected to continuous trajectory planning transition are determined, demonstration points of a connecting line segment are determined, and the transition distance between the demonstration points and the first line segment and the transition distance between the demonstration points and the second line segment are determined; secondly, according to the transition distance between the demonstration points and the first line segment, first transition joint points on the first line segment are determined, and according to the transition distance between the demonstration points and the second line segment, second transition joint points on the second line segment are determined; and thirdly, an amplitude coefficient, a phase coefficient and a speed zooming coefficient are calculated in a coordinate axis, and the amplitude coefficient, the phase coefficient and the speed zooming coefficient are brought into a limited term sine position planning function to determine a transition curve expression between the first transition joint points and the second transition joint points. The algorithm flow is clear, the calculating time is greatly shortened, and the complex degree of a robot control system is reduced.

Owner:HANGZHOU WAHAHA PRECISION MACHINERY
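The second step of the abstract above (locating transition joint points on each line segment from a transition distance) can be sketched as follows. This is only an illustration under stated assumptions: the function name `transition_point`, the choice to measure the distance along the segment starting from the demonstration point, and the sample coordinates are all hypothetical and are not taken from the patent. The sine position planning function itself is not specified in the abstract and is not reproduced here.

```python
import math


def transition_point(demo_point, far_end, distance):
    """Return the point on the segment demo_point -> far_end that lies
    `distance` away from demo_point (assumed interpretation of the
    'transition distance' in the abstract)."""
    delta = [f - d for f, d in zip(far_end, demo_point)]
    length = math.sqrt(sum(c * c for c in delta))
    if length == 0:
        raise ValueError("segment has zero length")
    if not 0 <= distance <= length:
        raise ValueError("transition distance must lie within the segment")
    scale = distance / length
    return tuple(d + scale * c for d, c in zip(demo_point, delta))


# Hypothetical data: two segments that meet at the demonstration point.
start = (0.0, 0.0, 0.0)
demo = (1.0, 0.0, 0.0)
end = (1.0, 2.0, 0.0)

first_joint = transition_point(demo, start, 0.25)  # on the first segment
second_joint = transition_point(demo, end, 0.5)    # on the second segment
print(first_joint, second_joint)
```

The transition curve would then be planned between `first_joint` and `second_joint`, replacing the sharp corner at the demonstration point.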

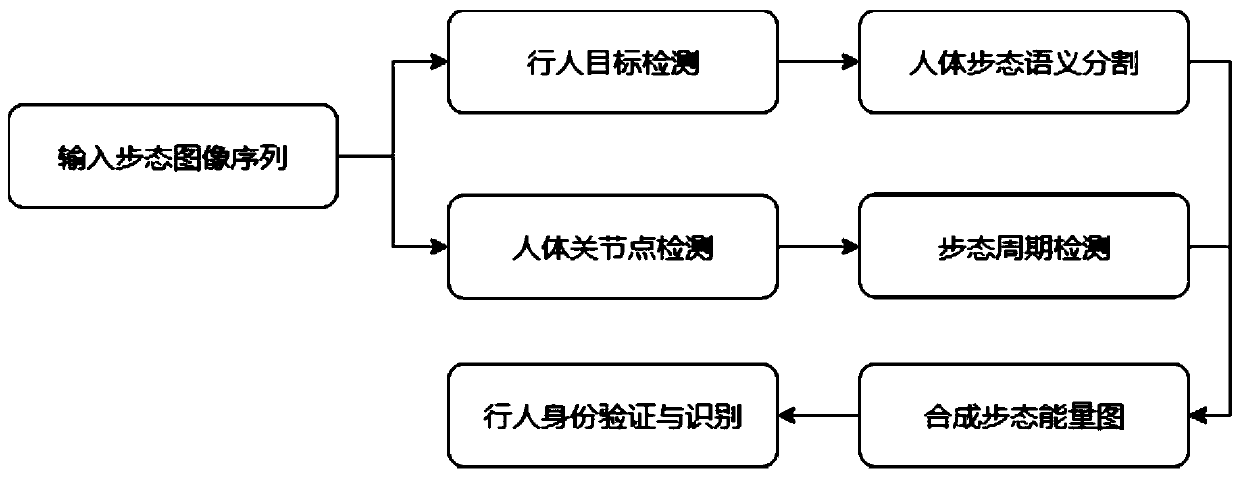

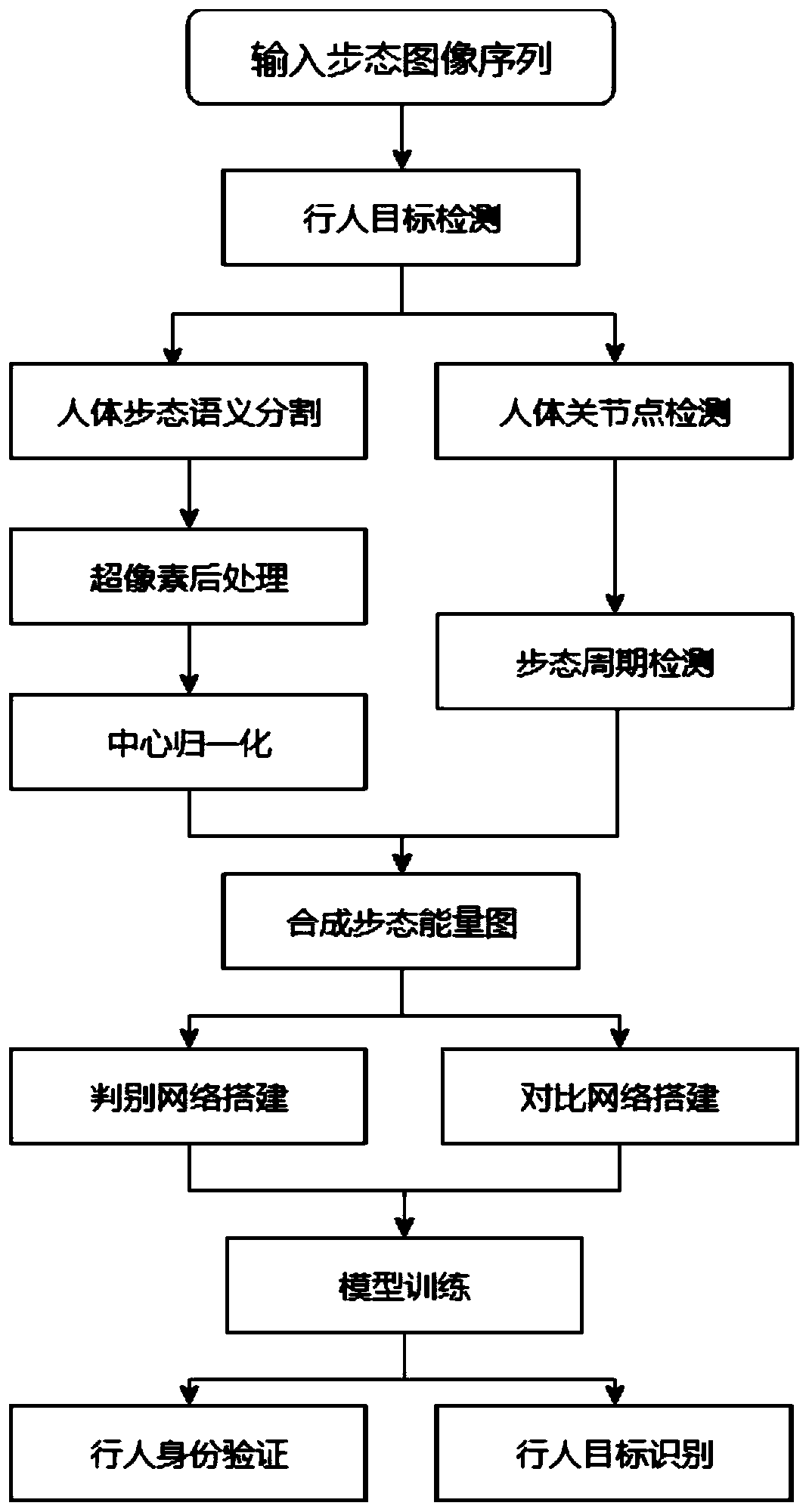

Gait feature extraction method and pedestrian identity recognition method based on gait features

Active | CN110084156A | Improve real-time performance | High utility value | Character and pattern recognition | Small sample | Feature extraction

The invention discloses a gait feature extraction method and a pedestrian identity recognition method based on gait features. The method comprises the following steps: A, for each frame of gait image in a gait image sequence, obtaining the region where a pedestrian is located as a region of interest; B, segmenting the pedestrian target in the region of interest; C, acquiring joint point position information of the pedestrian target in each frame of gait image; D, performing gait period detection based on the joint point position information of the pedestrian target in each frame of the gait image sequence; and E, synthesizing a gait energy image corresponding to the gait image sequence according to the detected gait period, and taking the gait energy image as the gait feature. Based on the extracted gait features, the pedestrian target is judged or identified using a judgment network and a comparison network. The method addresses the problem of insufficient sample size in small-sample classification in the field of gait recognition, and the algorithm has good real-time performance.

Owner:CENT SOUTH UNIV
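Step E of the abstract above refers to a gait energy image. In its common definition, a gait energy image is the pixel-wise average of the aligned binary silhouettes within one gait period. The sketch below shows that averaging in plain Python; it assumes the silhouettes are already segmented, aligned and equally sized, and the function name `gait_energy_image` and the tiny sample frames are hypothetical. The abstract does not state how the patent synthesizes the image, so this is not presented as its exact procedure.

```python
def gait_energy_image(silhouettes):
    """Average equally sized binary silhouettes pixel by pixel.

    Each silhouette is a list of rows containing 0 (background) or
    1 (pedestrian); the frames are assumed to span one gait period.
    """
    if not silhouettes:
        raise ValueError("need at least one silhouette")
    rows, cols = len(silhouettes[0]), len(silhouettes[0][0])
    for frame in silhouettes:
        if len(frame) != rows or any(len(row) != cols for row in frame):
            raise ValueError("all silhouettes must share one size")
    count = len(silhouettes)
    return [
        [sum(frame[r][c] for frame in silhouettes) / count for c in range(cols)]
        for r in range(rows)
    ]


# Hypothetical 2x2 silhouettes standing in for one detected gait period.
cycle = [
    [[0, 1], [1, 1]],
    [[0, 1], [0, 1]],
]
gei = gait_energy_image(cycle)
print(gei)
```

Pixels that belong to the pedestrian in every frame approach 1, while pixels covered only during part of the cycle take intermediate values.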

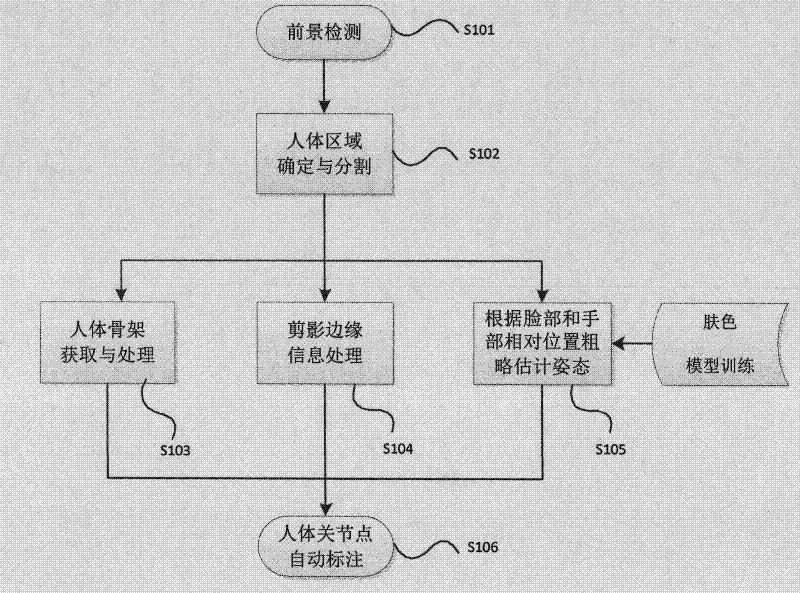

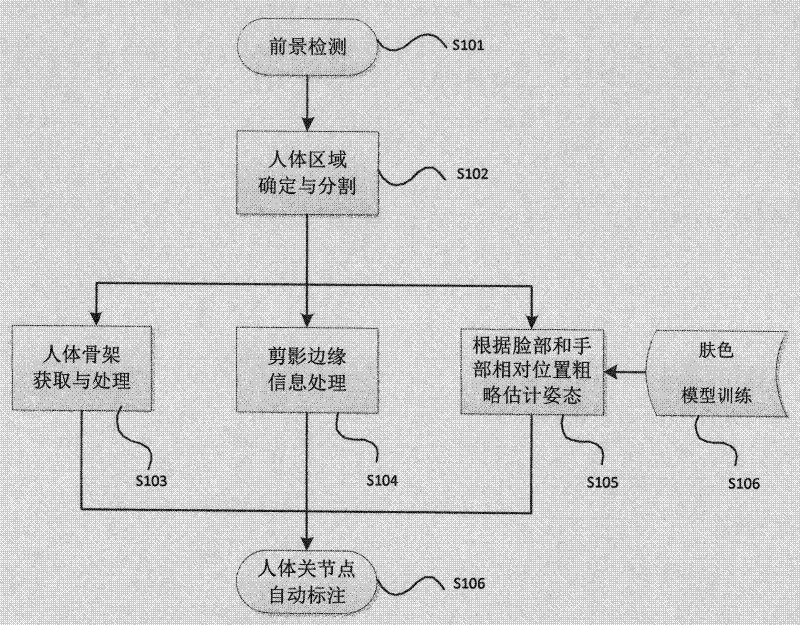

Automatic labeling method for human joint based on monocular video

Active | CN102609683A | Easy to get | Reduce complexity | Character and pattern recognition | Feature vector | Skin color

The invention provides an automatic labeling method for human joints based on monocular video. The method comprises the following steps: detecting the foreground and storing it as a region of interest; identifying the human body region, segmenting the body parts and obtaining the sketch outline; obtaining the skeleton of the human body and its key points; using the relative positions of the face and hands to roughly estimate the posture of the human body; and automatically labeling the human joint points. During automatic labeling, the outline, skin color and skeleton information from the sketch of the human body are used together, which ensures the accuracy of joint point extraction. The method segments human body parts accurately and efficiently, obtains the posture information of each limb, and provides a sound basis for the subsequent extraction and processing of human body feature vectors.

Owner:BEIJING UNIV OF POSTS & TELECOMM

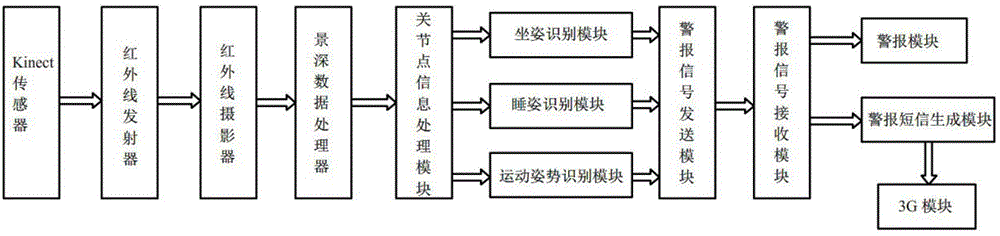

Human body posture correction device based on Kinect sensor

The invention discloses a human body posture correction device based on a Kinect sensor. The correction device comprises a skeleton information acquisition unit, a control unit and a warning unit. The skeleton information acquisition unit comprises the Kinect sensor, a depth-of-field data acquisition module and a joint point information processing module; the control unit comprises a posture judging module and a warning signal transmitting module; the warning unit comprises a warning signal receiving module, a warning module, a warning message generating module and a 3G module. The device offers excellent expandability, zero radiation and accurate information acquisition, and requires nothing to be worn by the user.

Owner:YANSHAN UNIV

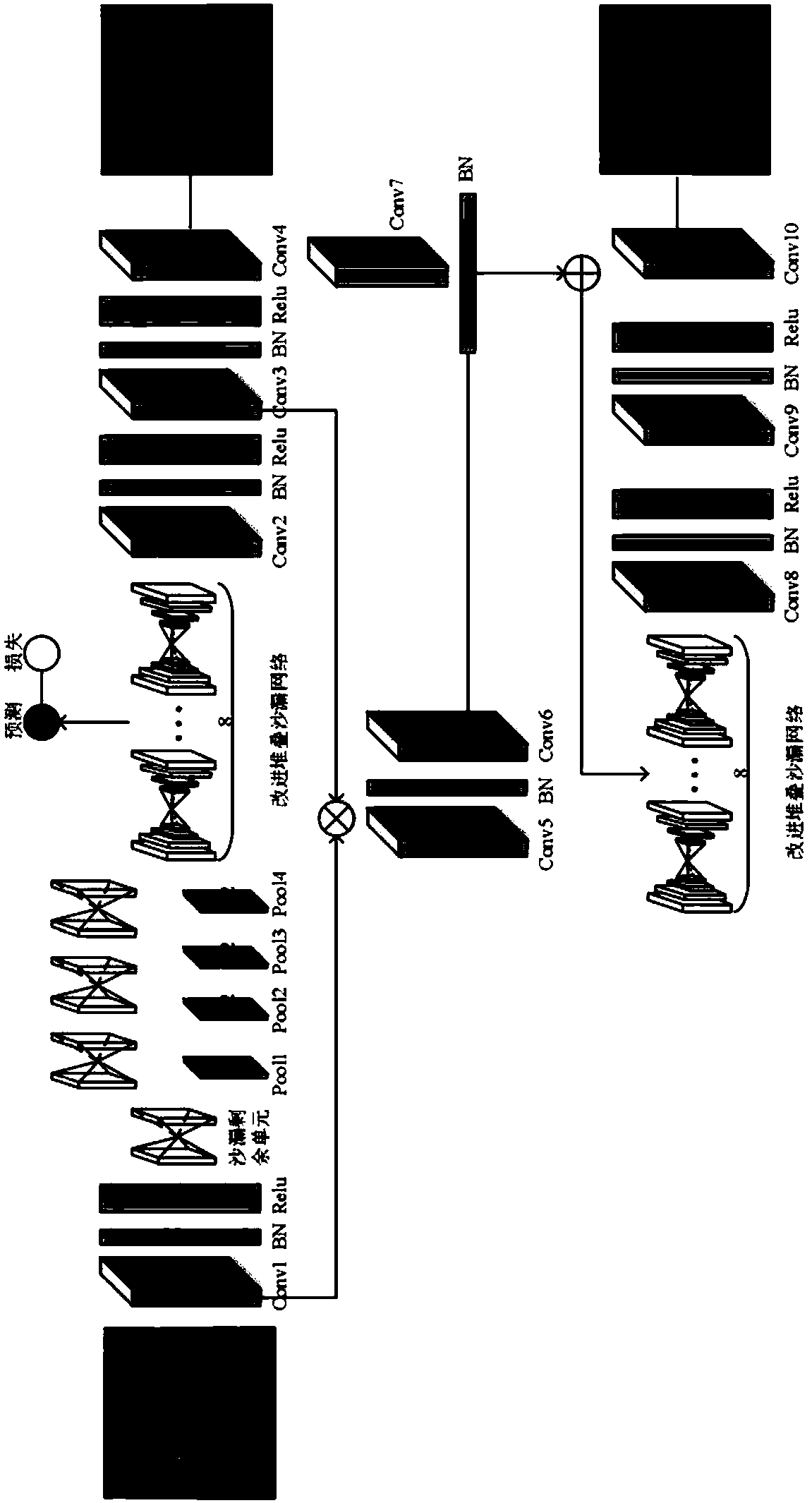

Multi-person attitude estimation method based on multi-layer fractal network and joint relative pattern

Active | CN108549844A | Improve intermediate forecasts | Improve match | Character and pattern recognition | NODAL | Reasoning algorithm

The invention relates to a multi-person attitude estimation method based on a multi-layer fractal network and a joint relative pattern. The method adopts a three-layer fractal network model to predict the key points of a human body and proposes a hierarchical bidirectional reasoning algorithm to match the joint points of a plurality of people. It achieves optimal matching of the joint points of multiple human bodies according to the relative degree and external space constraint relationship between each pair of joint points, effectively removes disordered matches among a large number of joint points, and can greatly improve the average precision of multi-person attitude estimation.

Owner:HUAQIAO UNIVERSITY +1

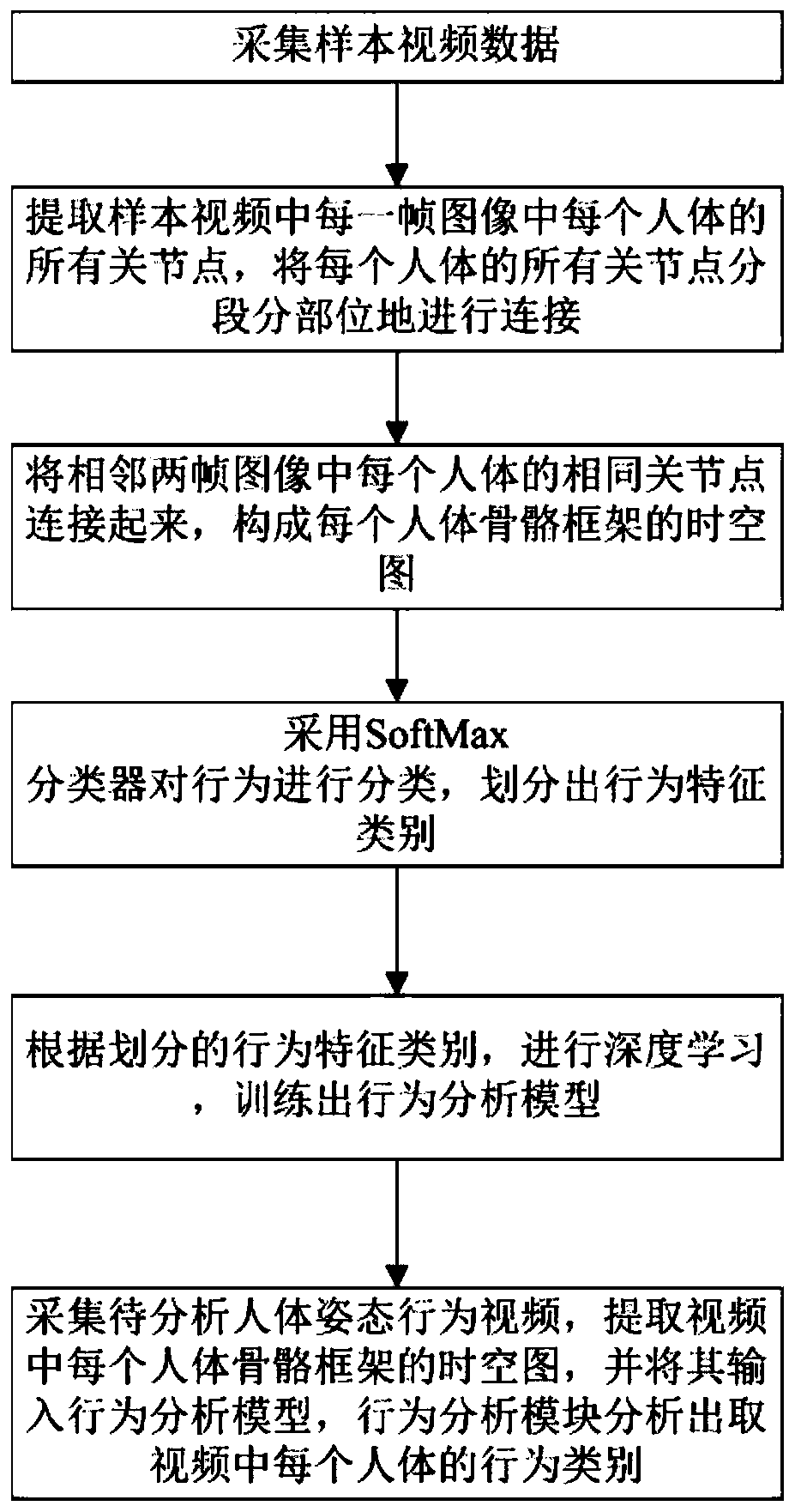

Human body posture behavior intelligent analysis and recognition method

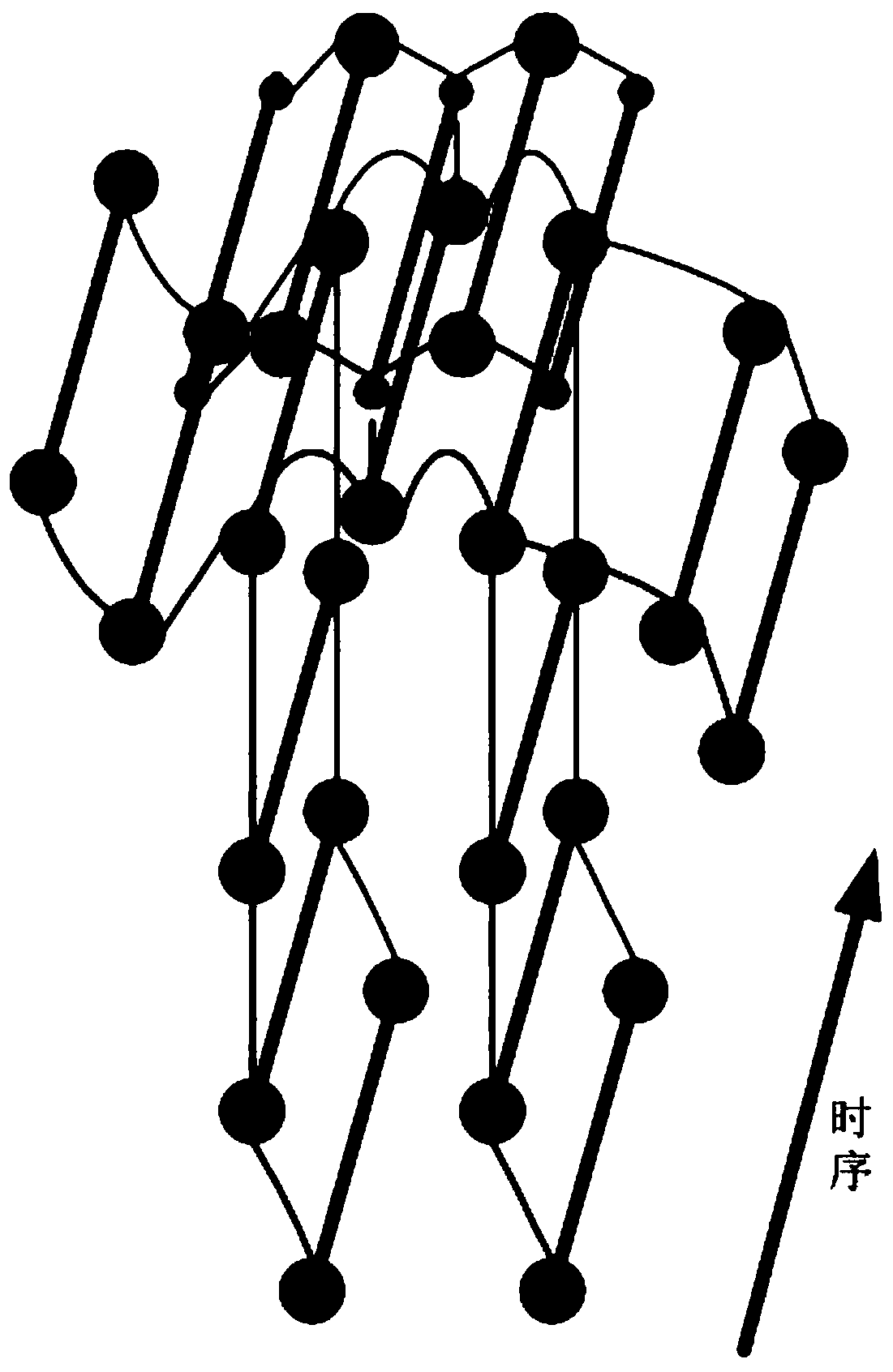

The invention discloses a human body posture behavior intelligent analysis and recognition method. The method comprises the following steps: collecting sample video data; extracting all joint points of each human body in each frame of the sample video, and connecting the joint points of each human body segment by segment, by body part; connecting the same joint point of each human body in two adjacent frames to form a space-time diagram of each human body skeleton framework; classifying the behaviors with a SoftMax classifier and dividing them into behavior feature categories; performing deep learning on the divided behavior feature categories to train a behavior analysis model; and collecting a human body posture behavior video to be analyzed, extracting the space-time diagram of each human body skeleton framework in the video, inputting the space-time diagrams into the behavior analysis model, and having the model determine the behavior category of each human body in the video. Human body posture behaviors are analyzed and recognized through feature extraction, behavior modeling and behavior classification, and the accuracy is high.

Owner:哈工大机器人义乌人工智能研究院
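The space-time diagram described in the abstract above has two kinds of connections: links between joint points of the same body within a frame, and links between the same joint point in adjacent frames. A minimal sketch of building both edge sets is shown below. The function name `build_space_time_edges`, the `(frame, joint)` node encoding and the three-joint example skeleton are assumptions made for illustration; the abstract does not specify a data layout.

```python
def build_space_time_edges(num_frames, num_joints, bones):
    """Build the edges of a space-time diagram for one skeleton.

    Nodes are (frame, joint) pairs. `bones` lists the joint pairs that
    are connected within a single frame (assumed skeleton layout).
    Returns (spatial_edges, temporal_edges).
    """
    for a, b in bones:
        if not (0 <= a < num_joints and 0 <= b < num_joints):
            raise ValueError("bone refers to an unknown joint")
    # Spatial edges: connect joint points of the same body in each frame.
    spatial = [((t, a), (t, b)) for t in range(num_frames) for a, b in bones]
    # Temporal edges: connect the same joint point in adjacent frames.
    temporal = [
        ((t, j), (t + 1, j))
        for t in range(num_frames - 1)
        for j in range(num_joints)
    ]
    return spatial, temporal


# Hypothetical skeleton: a chain of three joints observed over three frames.
bones = [(0, 1), (1, 2)]
spatial, temporal = build_space_time_edges(3, 3, bones)
print(len(spatial), len(temporal))
```

The resulting edge lists could then be turned into an adjacency matrix or passed to a graph library before the classification and training steps.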

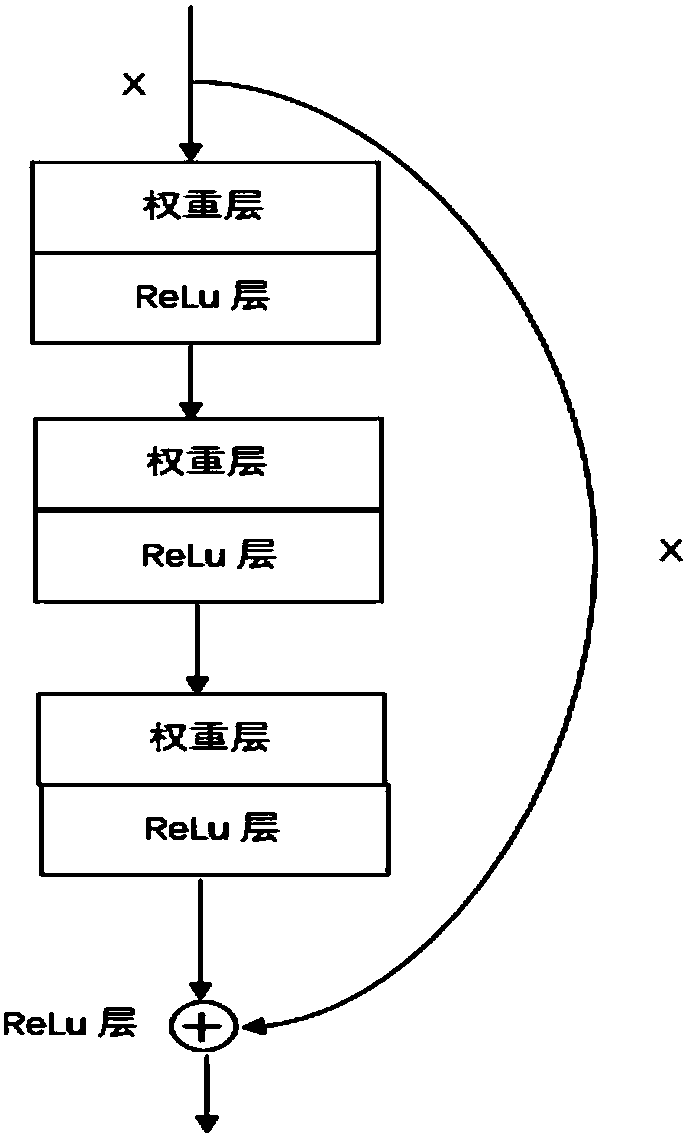

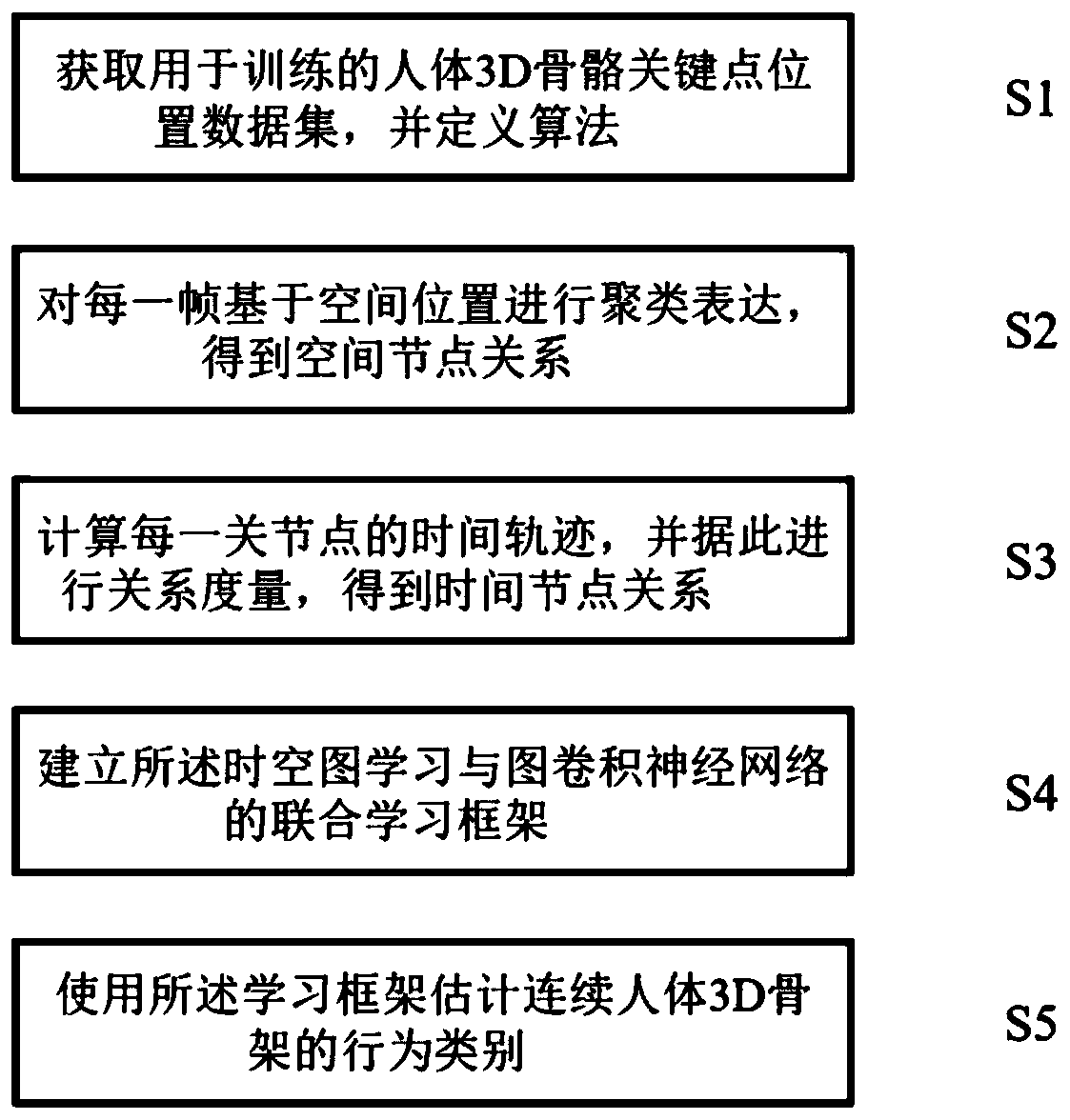

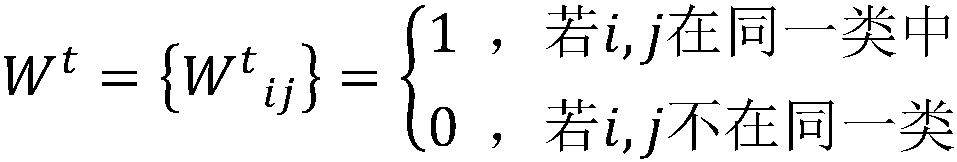

Behavior recognition method for learning human skeleton of neural network based on end-to-end space-time diagram

Active | CN109858390A | Solve behavior recognition problems | Improve forecast results | Character and pattern recognition | Neural architectures | NODAL | Human body

The invention discloses a behavior recognition method for learning a human skeleton of a neural network based on an end-to-end space-time diagram. The method is used for behavior recognition on a human 3D skeleton and comprises the following steps: obtaining a data set of human 3D skeleton key point positions for training, and defining the algorithm target; clustering each frame based on spatial position to obtain the spatial node relation; calculating the time trajectory of each joint point and measuring the relation between trajectories to obtain the temporal node relation; establishing a joint learning framework that combines space-time diagram learning with a graph convolutional neural network; and estimating the behavior category of a continuous human 3D skeleton sequence using the learning framework. The method is suitable for human action analysis in real video and is effective and robust under various complex conditions.

Owner:ZHEJIANG UNIV

Connecting structure for middle and outside wings of unmanned aerial vehicle

Inactive | CN101214853A | Reduce complexity | Reduce manufacturing errors | Wings | Toy aircrafts | Butt joint | High intensity

The present invention discloses a connection structure for the mid-wing and external wing of an unmanned aerial vehicle. The mid-wing end rib and the external-wing root rib are aluminium alloy reinforced ribs with comb-shaped joints. At the butt joint of the two reinforced ribs, the mid-wing and the external wing are joined by butt joint bolts made of high-strength aluminium alloy. After the mid-wing and the external wing are butted, the joint seam is covered by a cowling made of composite material, which is fixed to the reinforced ribs by two rows of bolts along its edge. Using the mid-wing end rib and the external-wing root rib as the butt joint integrates the joint and the structure as a whole, which reduces the complexity of the coordination and connection relations as well as the manufacturing and assembly errors introduced by a separate joint. The joint point, the girder and the supporting structure of the external wing lie on one axial line, which ensures continuous force transmission. The connection is simple, easy to disassemble and assemble, and convenient for checking the damage condition of the connection pieces.

Owner:BEIHANG UNIV
