562 results about "Unbalanced data" patented technology

Unbalanced data. In this context, unbalanced data refers to classification problems in which the classes have unequal numbers of instances. Unbalanced data is very common in general, and it is especially prevalent in disease data, where there are usually more healthy control samples than disease cases.

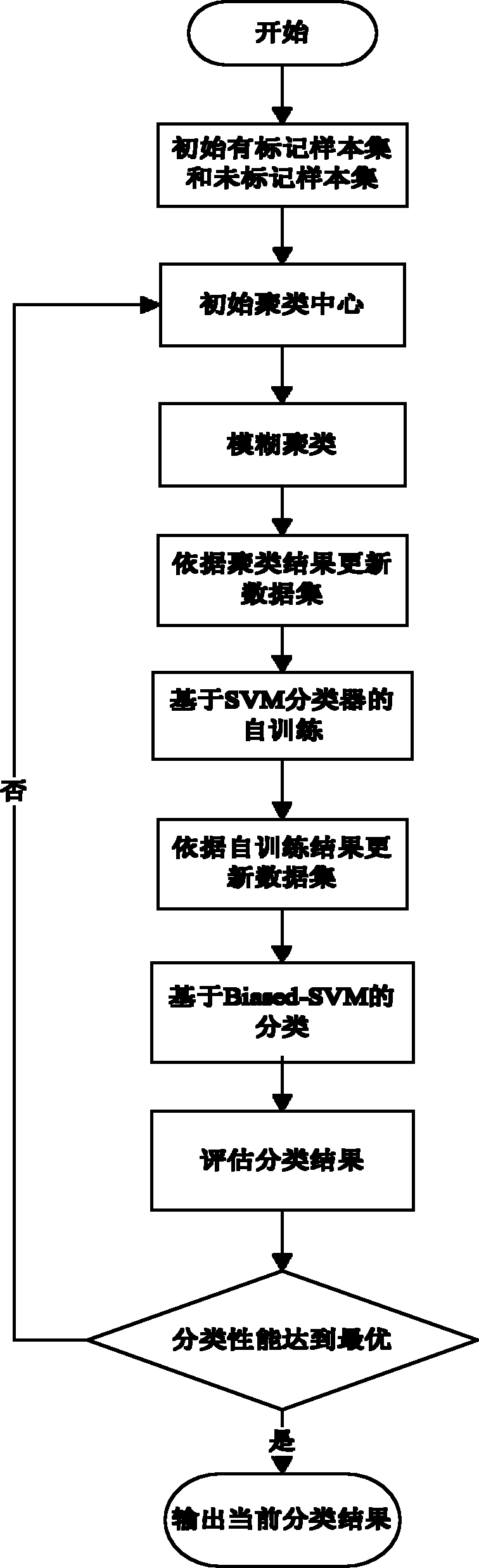

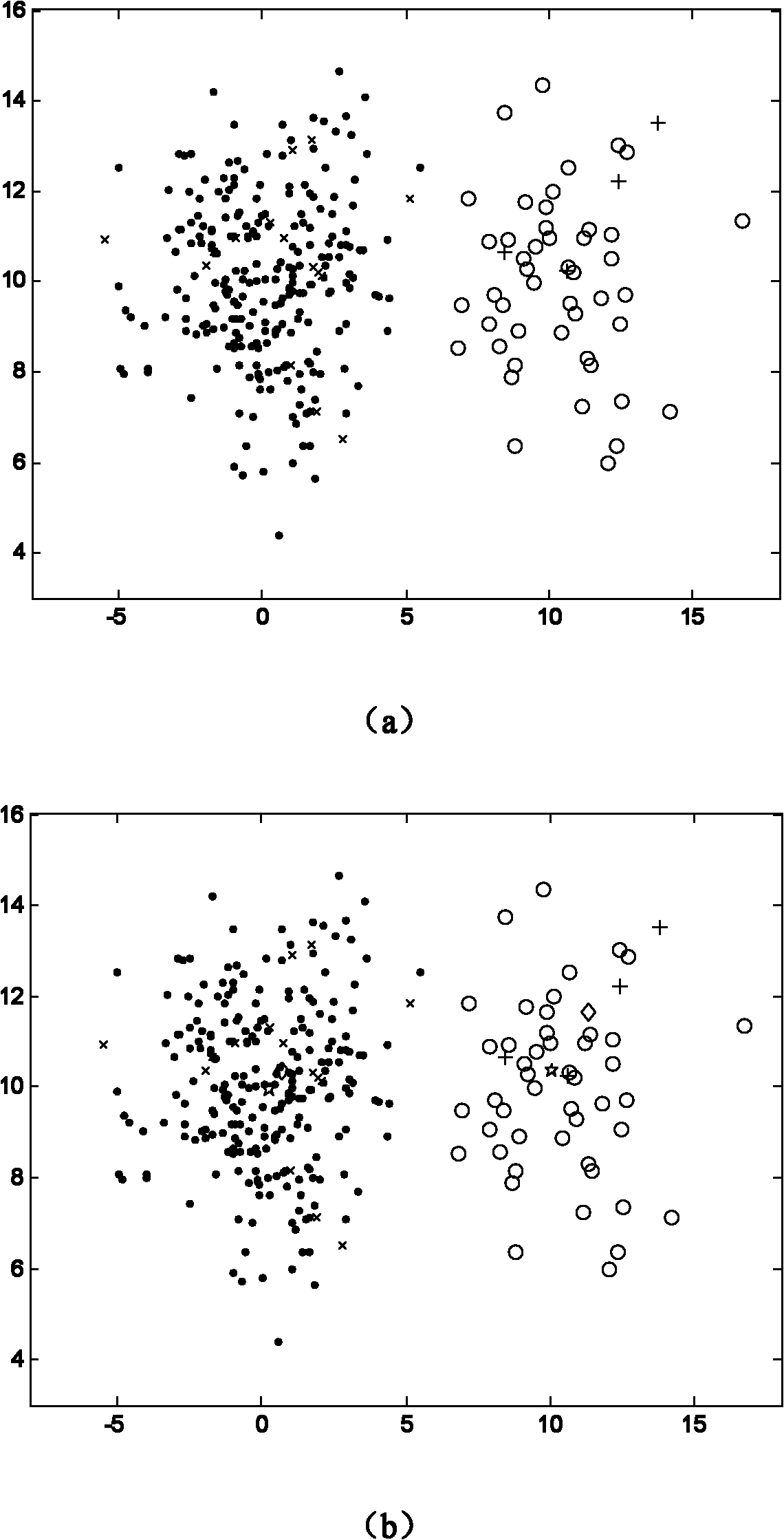

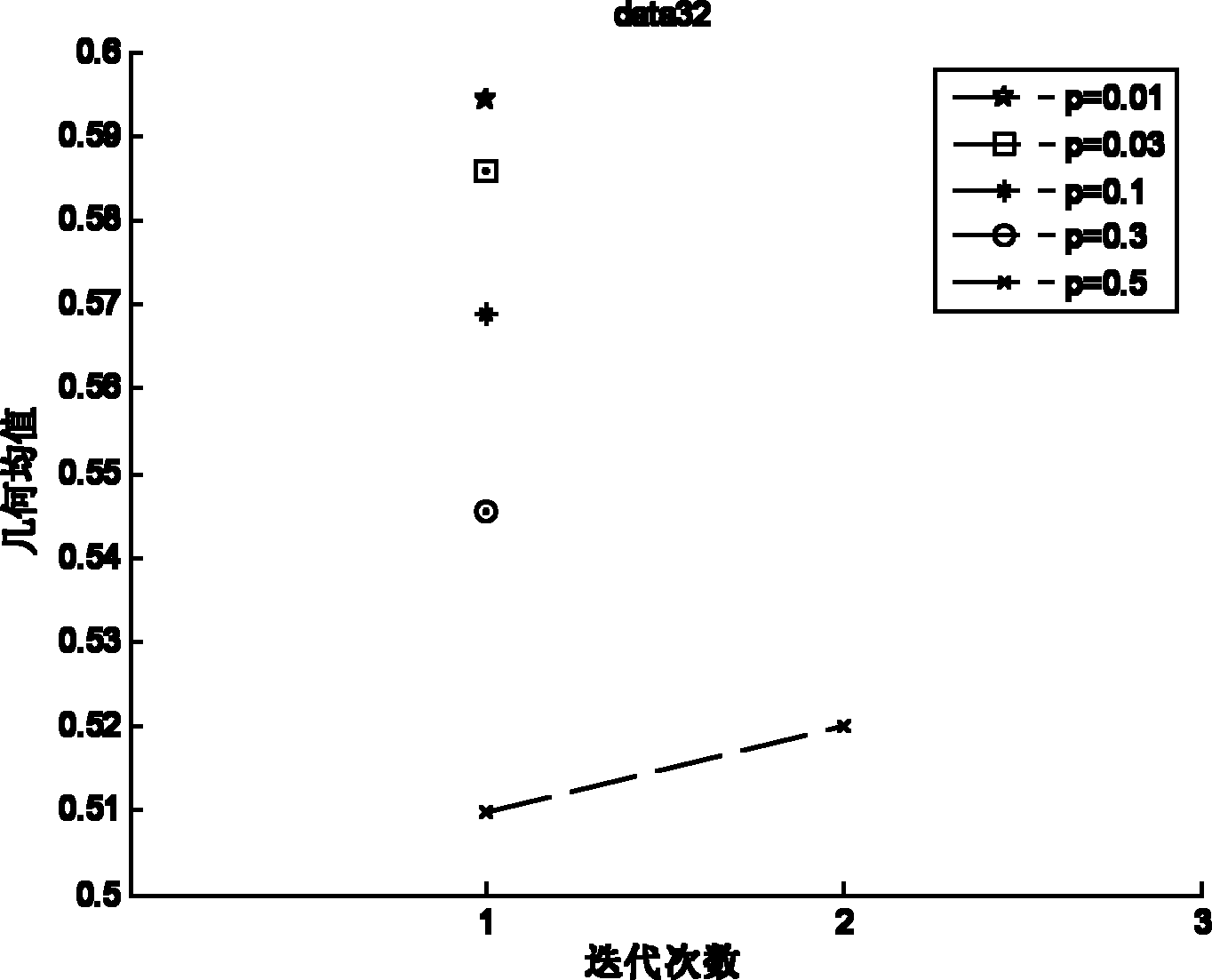

Semi-supervised classification method of unbalance data

Inactive · CN101980202A · Improve generalization ability · Tedious and time-consuming labeling work · Special data processing applications · Self training · Algorithm

The invention discloses a semi-supervised classification method for unbalanced data, which mainly addresses the low classification precision that prior-art methods achieve on minority-class data when labeled samples are few and the degree of imbalance is high. The method is implemented by the following steps: (1) initializing a labeled sample set and an unlabeled sample set; (2) initializing a cluster center; (3) performing fuzzy clustering; (4) updating the labeled and unlabeled sample sets according to the clustering result; (5) performing self-training based on a support vector machine (SVM) classifier; (6) updating the labeled and unlabeled sample sets according to the self-training result; (7) performing classification with a Biased-SVM, a support vector machine based on penalty parameters; and (8) evaluating and outputting the classification result. For unbalanced data with few labeled samples, the method improves the classification precision of the minority class, and it can be used for classifying and identifying unbalanced data that has few training samples.

Owner:XIDIAN UNIV

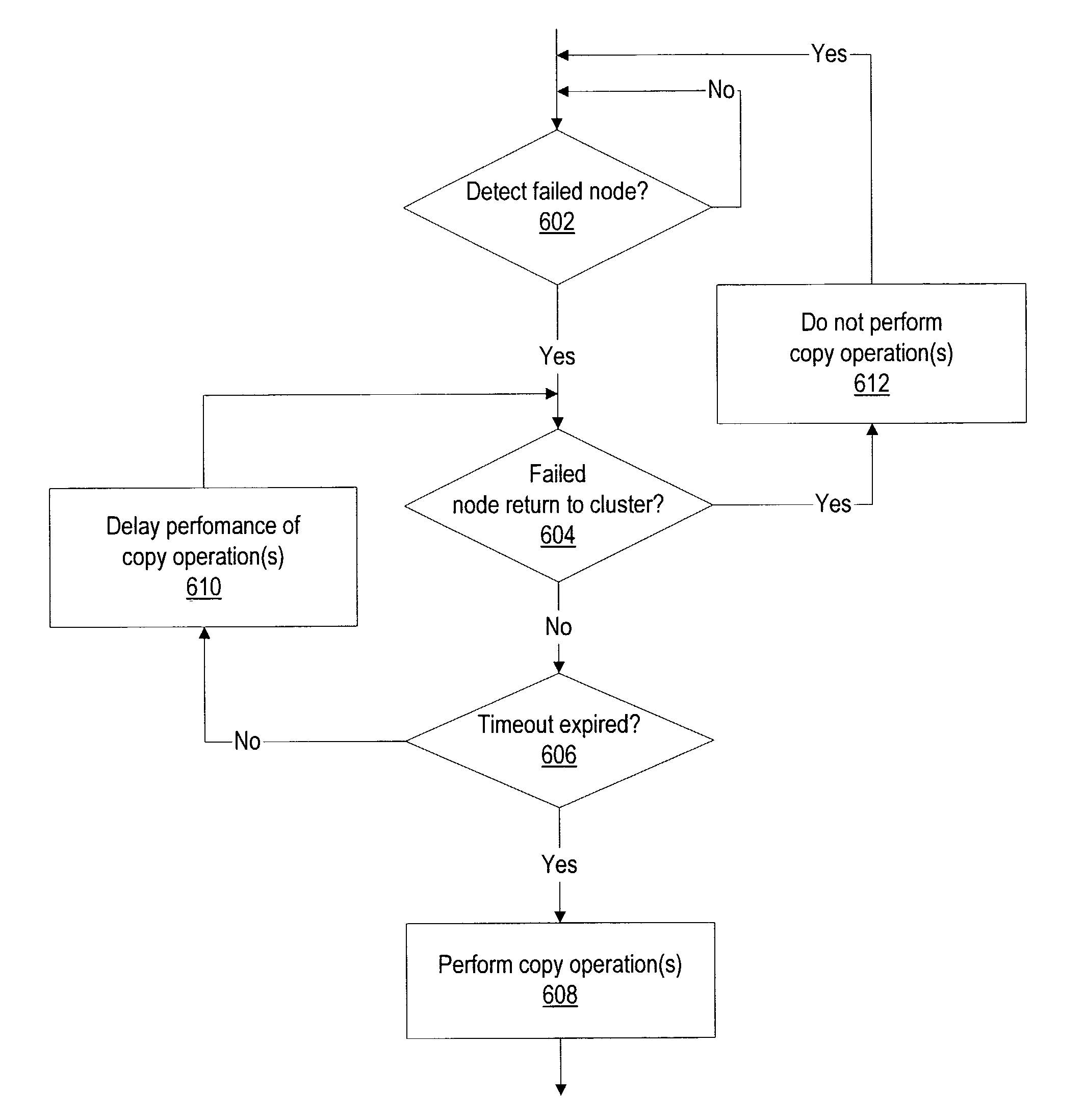

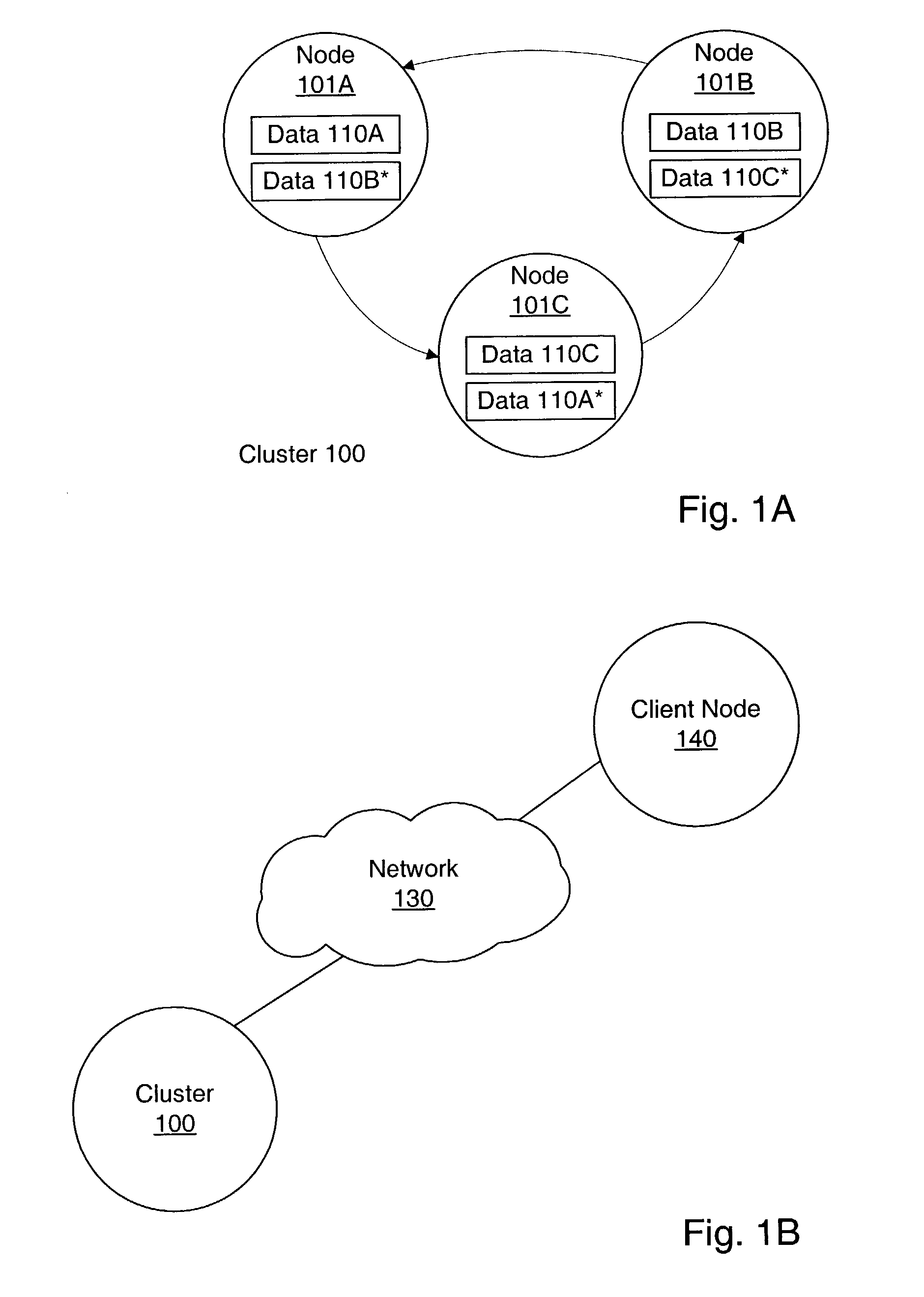

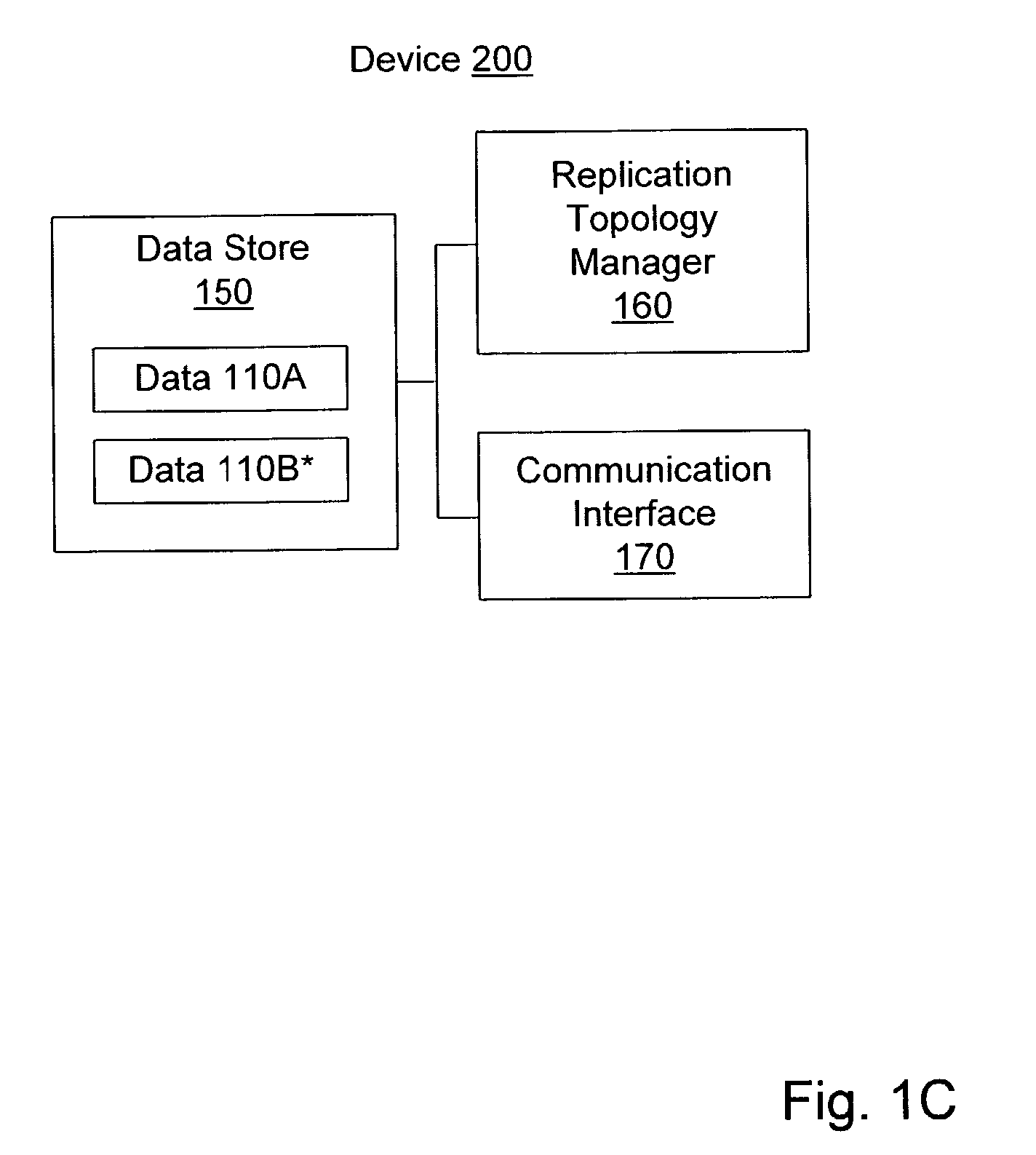

System and method for reforming a distributed data system cluster after temporary node failures or restarts

Active · US7206836B2 · Error detection/correction · Multiple digital computer combinations · Self-healing · Data system

Owner:ORACLE INT CORP

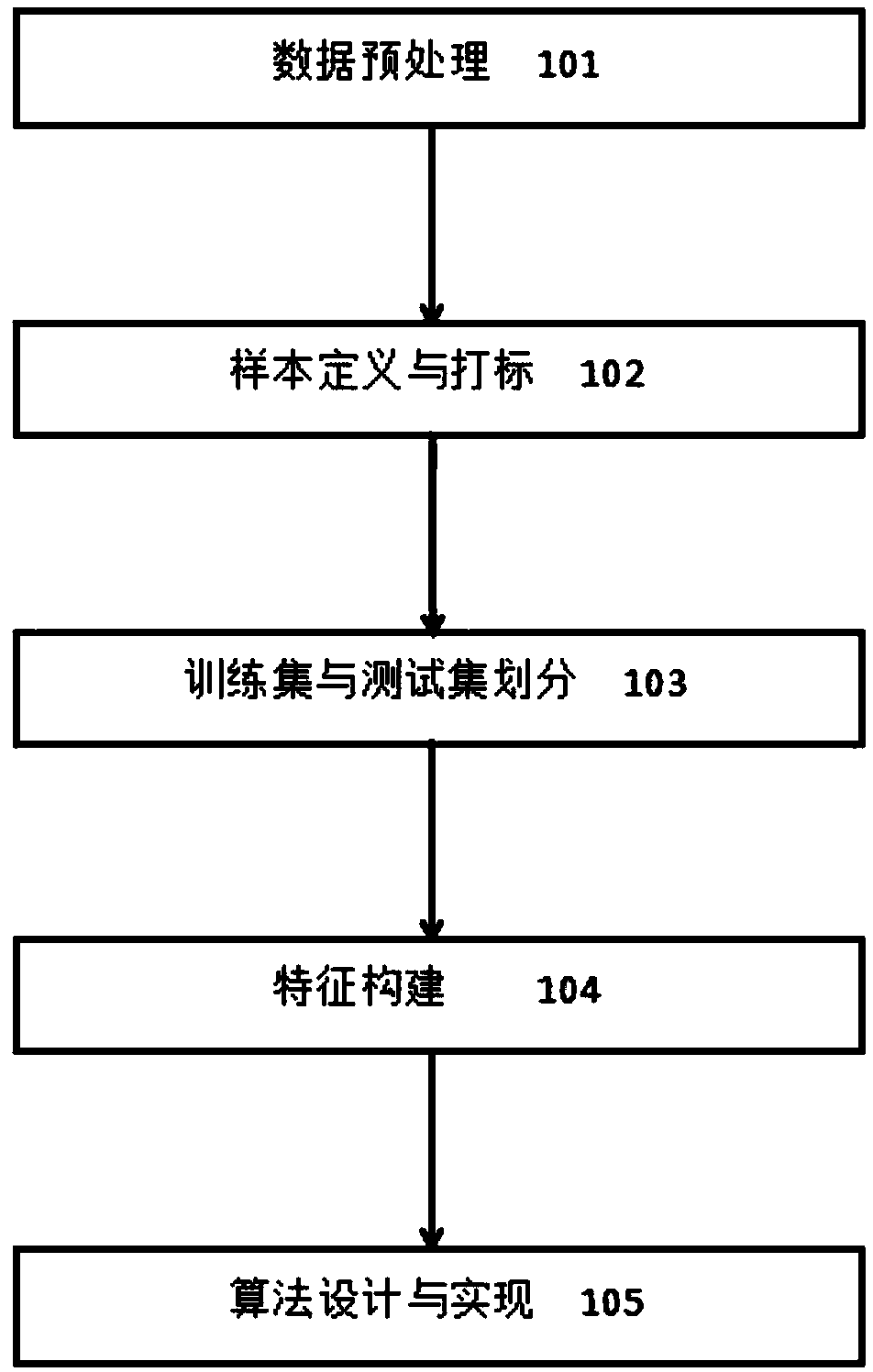

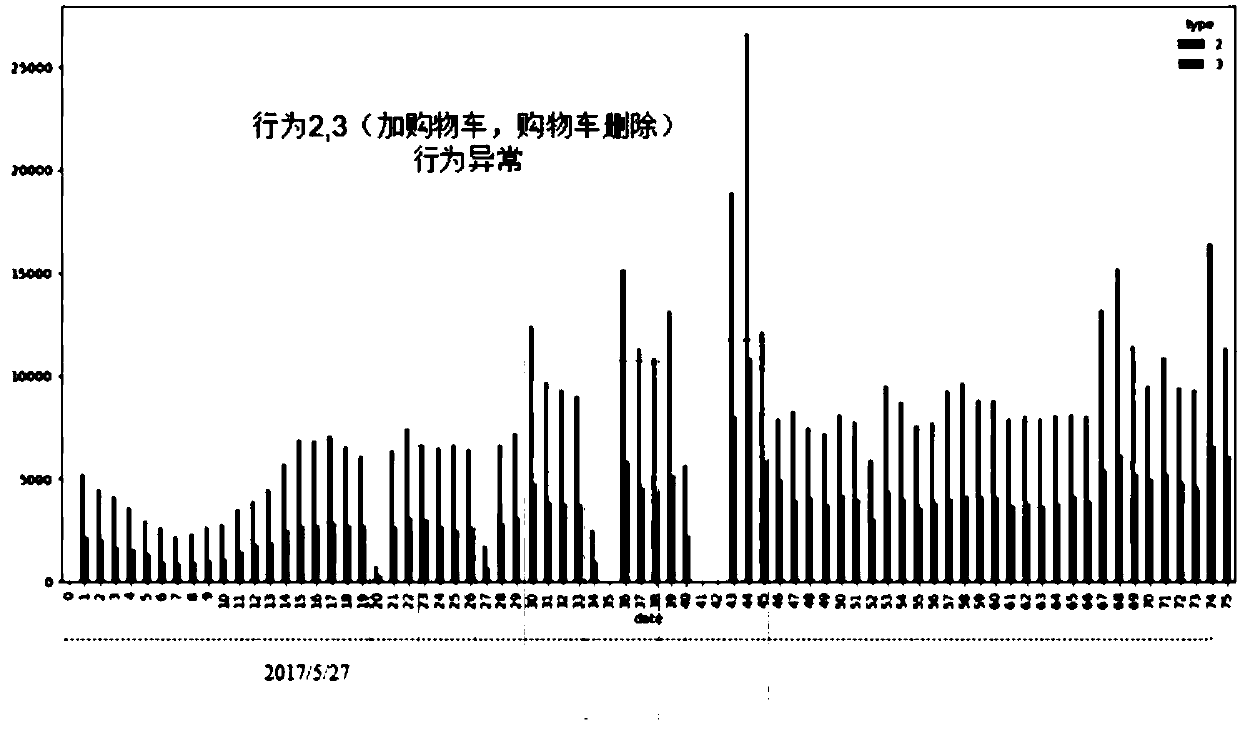

High potential user buying intention prediction method based on big data user behavior analysis

Active · CN107944913A · Reduce data redundancy · Precision marketing · Character and pattern recognition · Market data gathering · Iterative learning algorithm · Data set

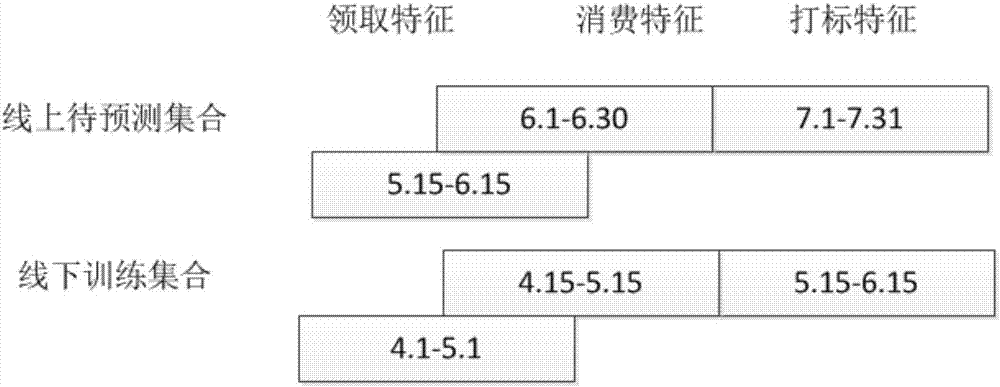

The invention provides a high potential user buying intention prediction method based on big data user behavior analysis. The method comprises the following steps: 101, data preprocessing: the historical behavior data set of the e-commerce user is preprocessed; 102, sample definition and labeling: samples are constructed from the user's historical consumption behavior, with each interacted user-product pair acting as the key; 103, division into a training set and a test set: the historical data are divided into the training set and the test set using a time window division method; 104, feature construction: feature engineering is performed on the historical behavior data of the user; and 105, algorithm design and implementation: feature selection on the feature group and unbalanced data processing of the data set are performed, and the final prediction is then produced by a two-layer model iterative learning algorithm. The prediction model is built on the historical behavior data of e-commerce users over a time span of 45 days, so that whether a user will order a commodity in the candidate commodity set P in the following 5 days can be predicted.

Owner:上海普瑾特信息技术服务股份有限公司
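As a rough illustration of steps 102 and 103 in the abstract above, the sketch below splits an event log around a cutoff date: 45 days of history feed the features, and purchases in the following 5 days give the labels. The event tuple layout `(user, item, day, action)`, the action name `"buy"`, and the function name `time_window_split` are assumptions made for the example, not details from the patent.

```python
from datetime import date, timedelta

def time_window_split(events, cutoff, history_days=45, label_days=5):
    """Split (user, item, day, action) events around a cutoff date.

    Events in [cutoff - history_days, cutoff) form the history window;
    "buy" events in [cutoff, cutoff + label_days) define the positive pairs.
    """
    start = cutoff - timedelta(days=history_days)
    end = cutoff + timedelta(days=label_days)
    history = [e for e in events if start <= e[2] < cutoff]
    buyers = {(e[0], e[1]) for e in events
              if cutoff <= e[2] < end and e[3] == "buy"}
    # One sample per interacted (user, item) pair, labelled 1 if bought later.
    pairs = sorted({(e[0], e[1]) for e in history})
    labels = [1 if p in buyers else 0 for p in pairs]
    return history, pairs, labels

events = [
    ("u1", "p1", date(2017, 3, 1), "click"),
    ("u1", "p1", date(2017, 4, 2), "buy"),
    ("u2", "p1", date(2017, 3, 20), "cart"),
    ("u2", "p2", date(2017, 1, 1), "click"),  # outside the 45-day window
]
history, pairs, labels = time_window_split(events, cutoff=date(2017, 4, 1))
```

Sliding the cutoff backwards in time yields further training windows, while the most recent window can be held out as the test set. Because few pairs end in a purchase, the resulting labels are typically heavily unbalanced.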

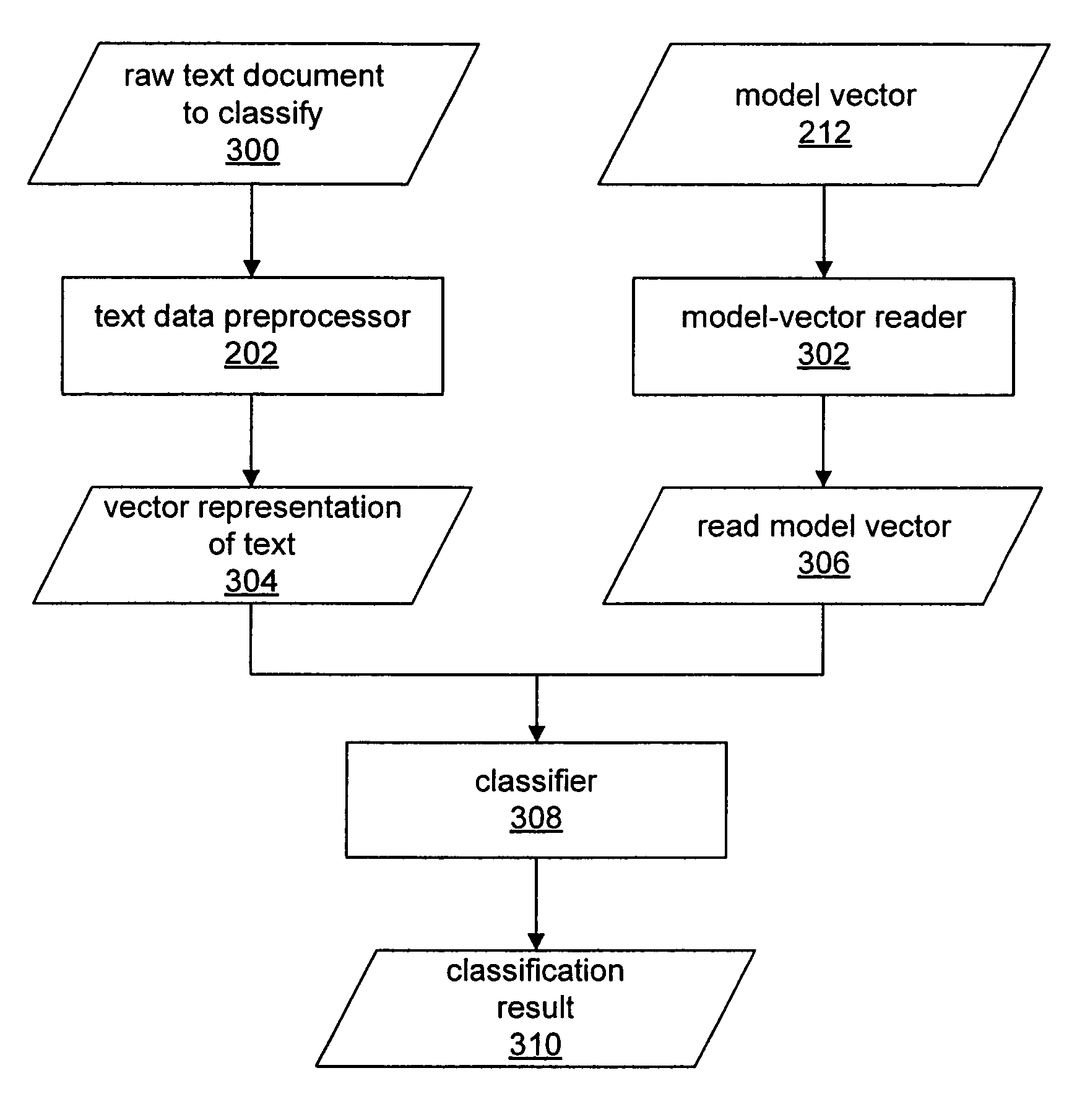

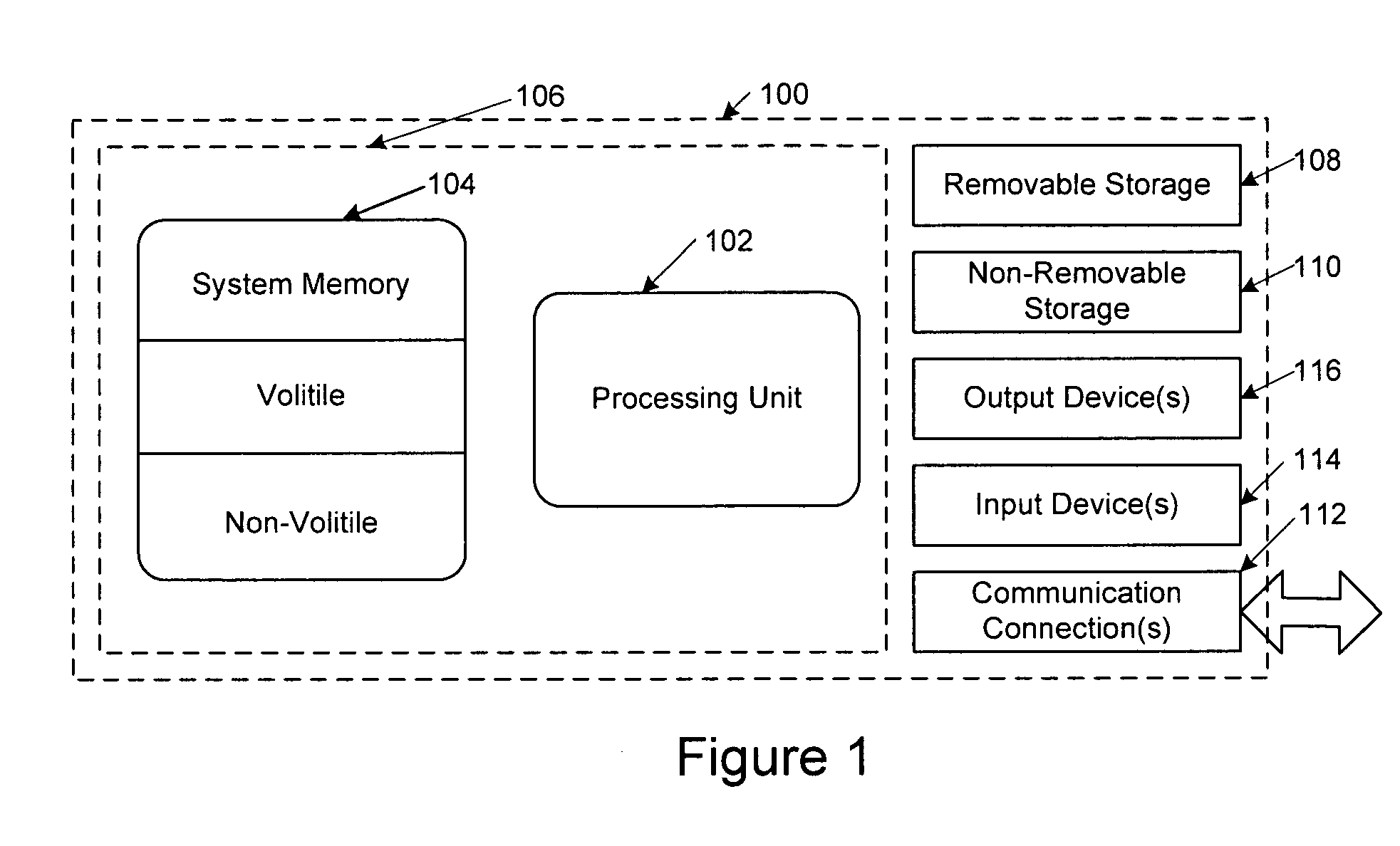

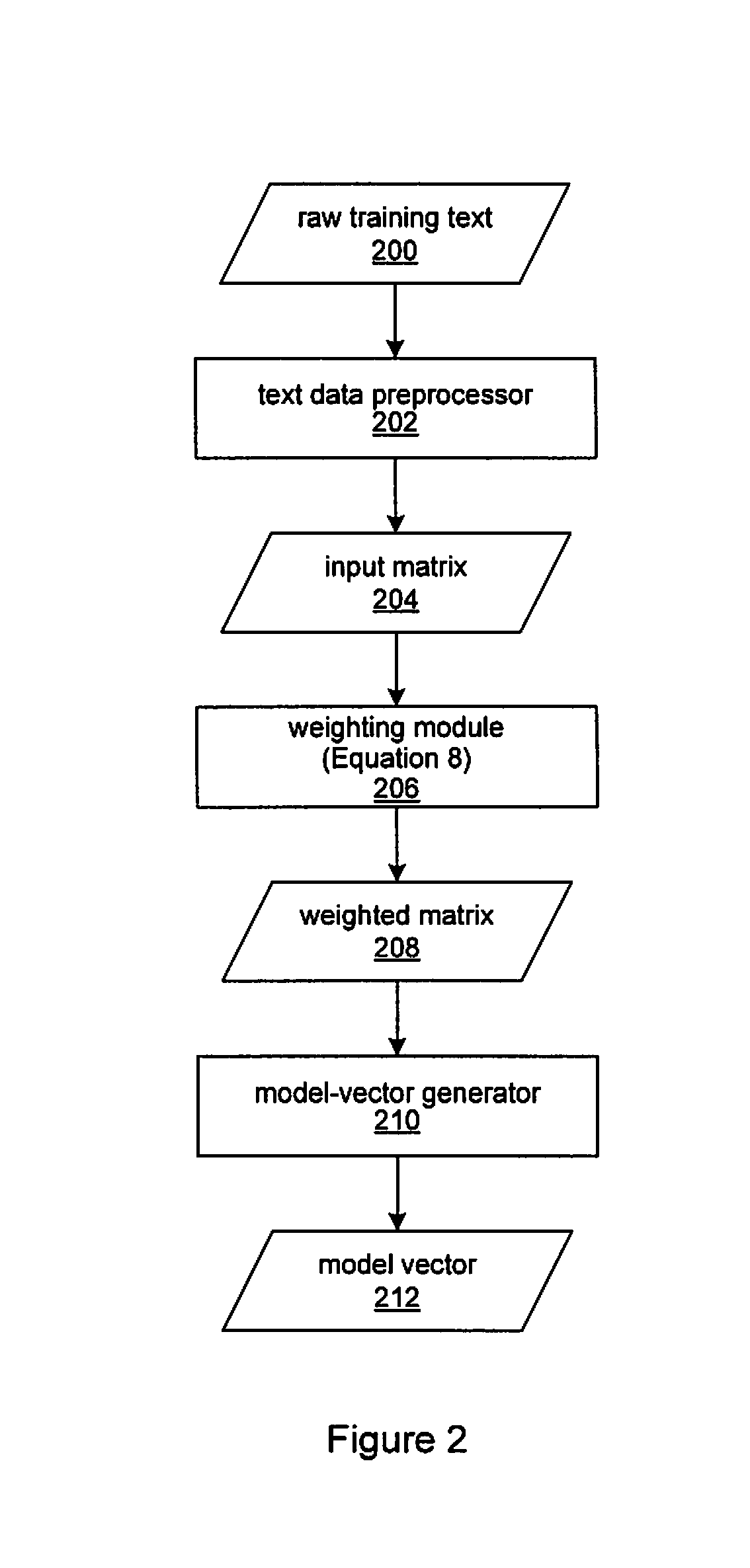

Text classification by weighted proximal support vector machine

Inactive · US20070239638A1 · Improve classification quality · Fast training · Digital data information retrieval · Digital computer details · Text categorization · Categorical models

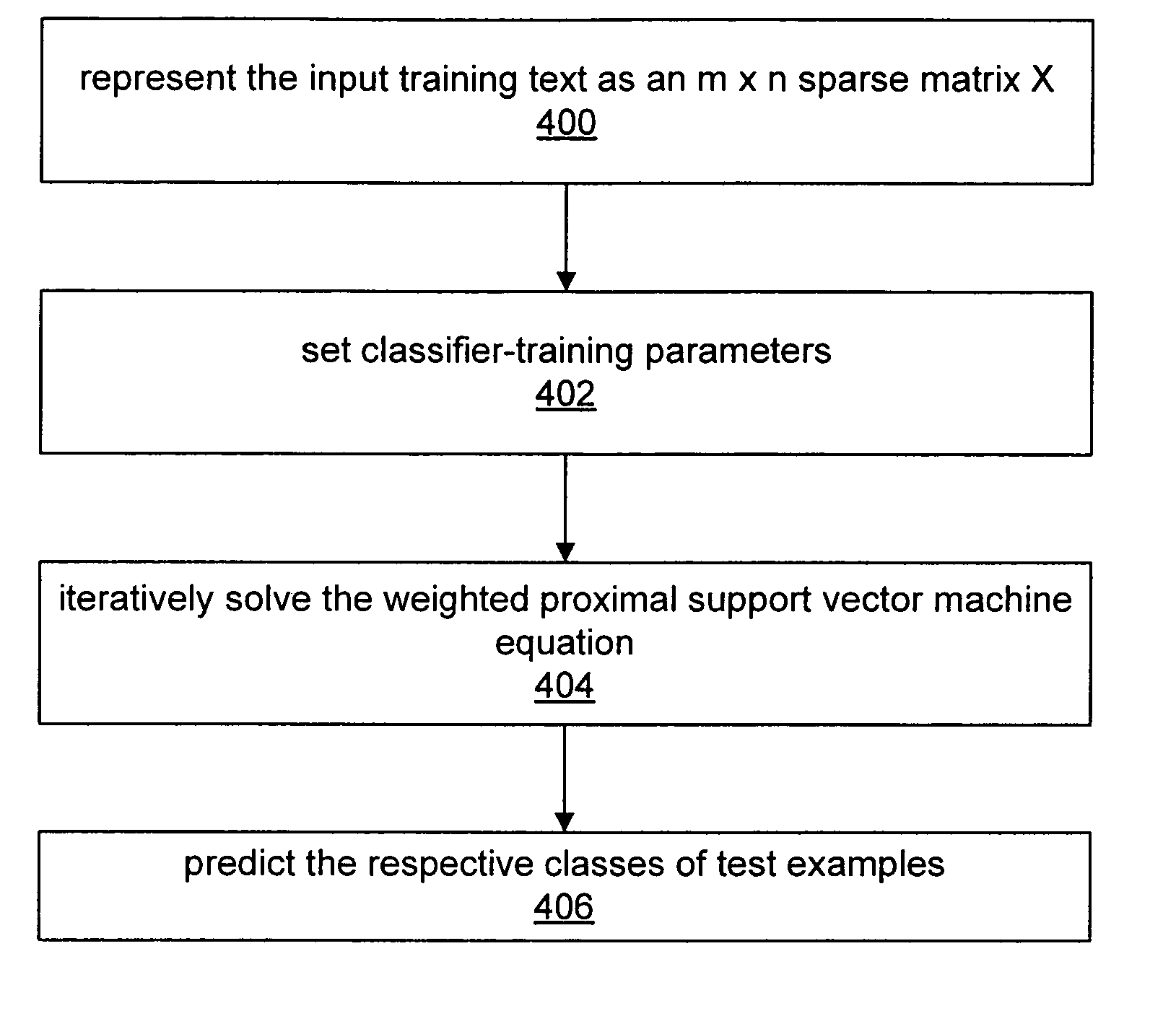

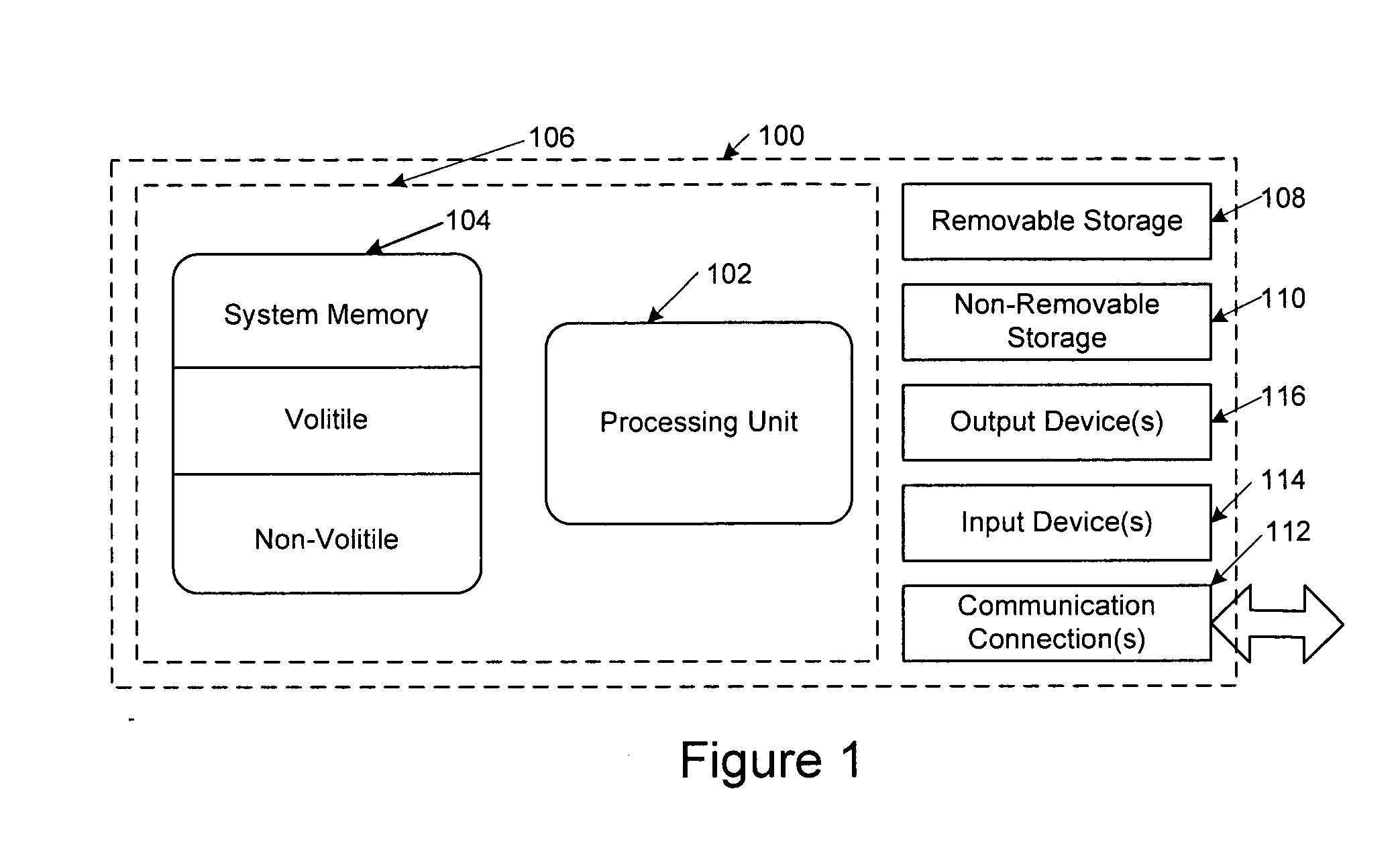

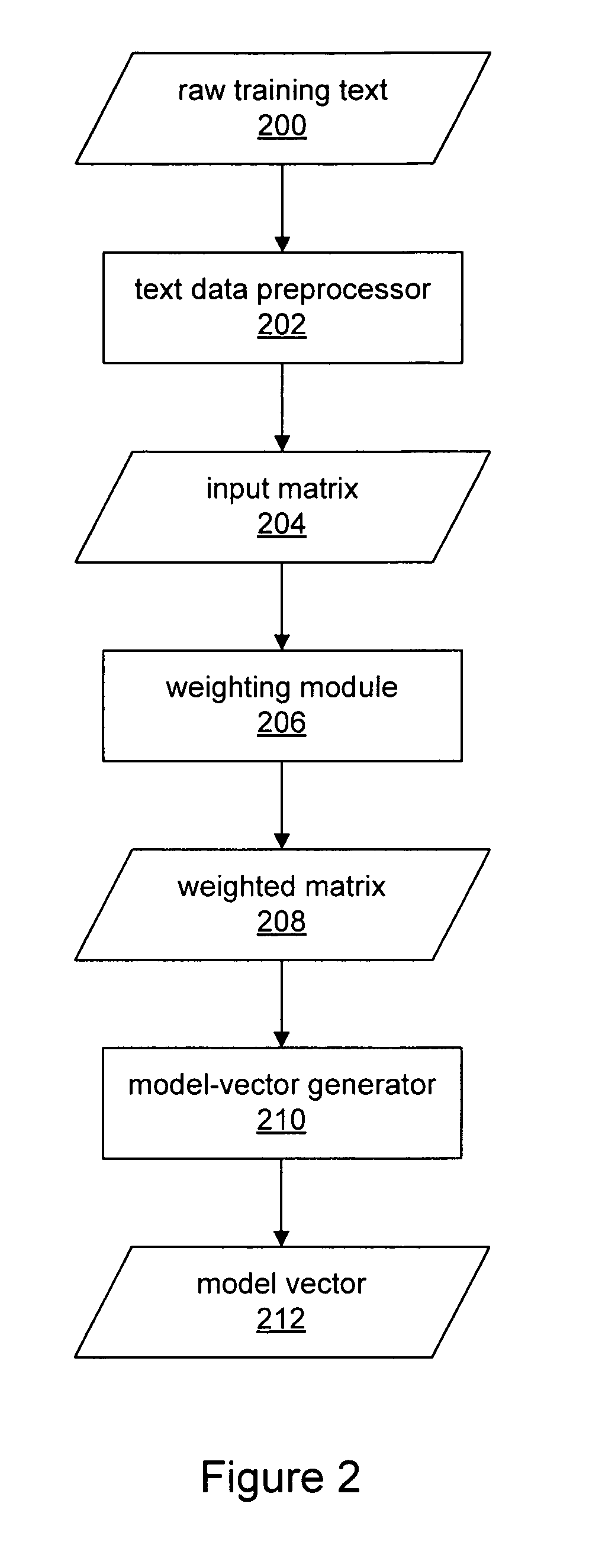

Embodiments of the invention relate to improvements to the support vector machine (SVM) classification model. When text data is significantly unbalanced (i.e., positive and negative labeled data are in disproportion), the classification quality of standard SVM deteriorates. Embodiments of the invention are directed to a weighted proximal SVM (WPSVM) model that achieves substantially the same accuracy as the traditional SVM model while requiring significantly less computational time. A weighted proximal SVM (WPSVM) model in accordance with embodiments of the invention may include a weight for each training error and a method for estimating the weights, which automatically solves the unbalanced data problem. And, instead of solving the optimization problem via the KKT (Karush-Kuhn-Tucker) conditions and the Sherman-Morrison-Woodbury formula, embodiments of the invention use an iterative algorithm to solve an unconstrained optimization problem, which makes WPSVM suitable for classifying relatively high dimensional data.

Owner:MICROSOFT TECH LICENSING LLC

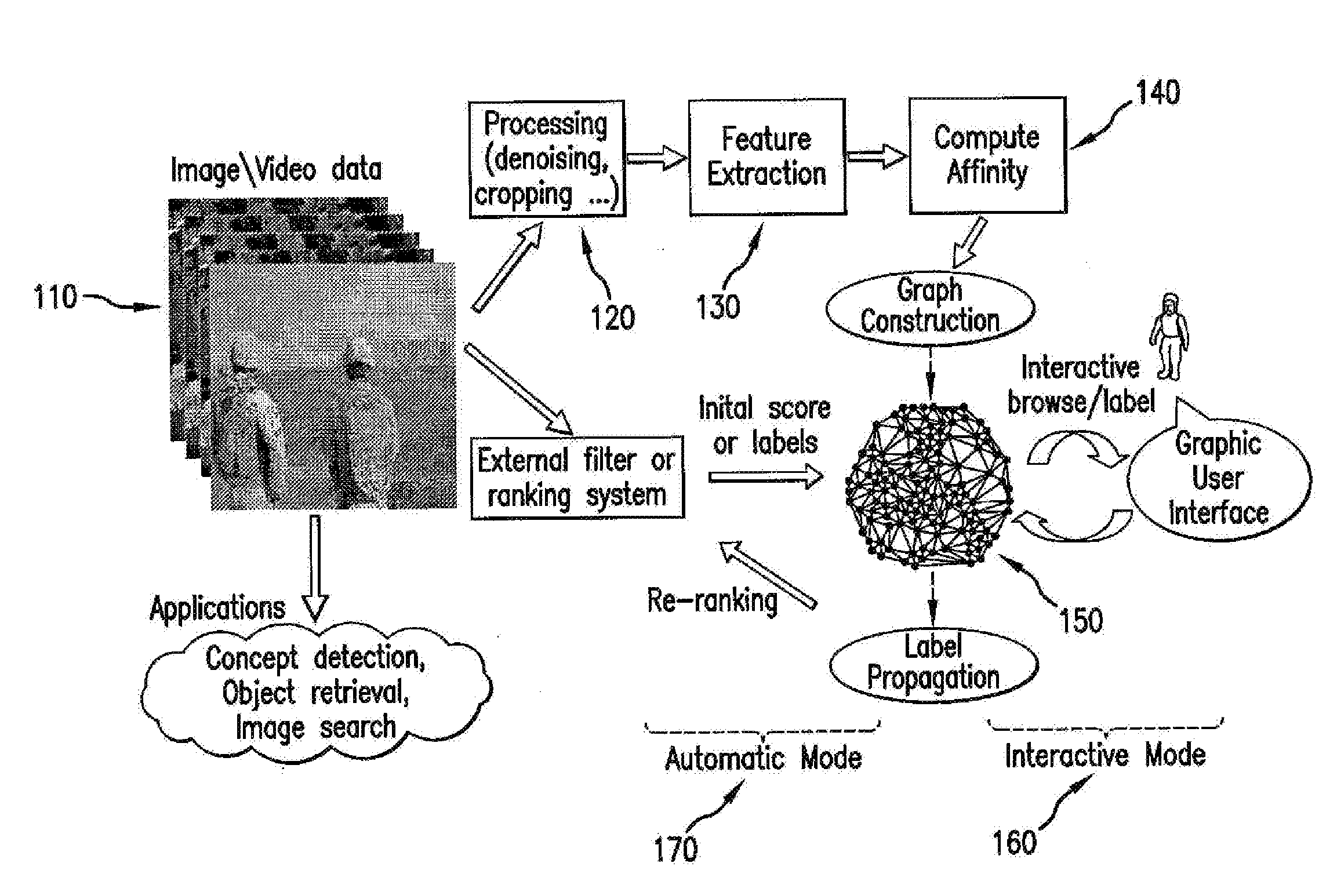

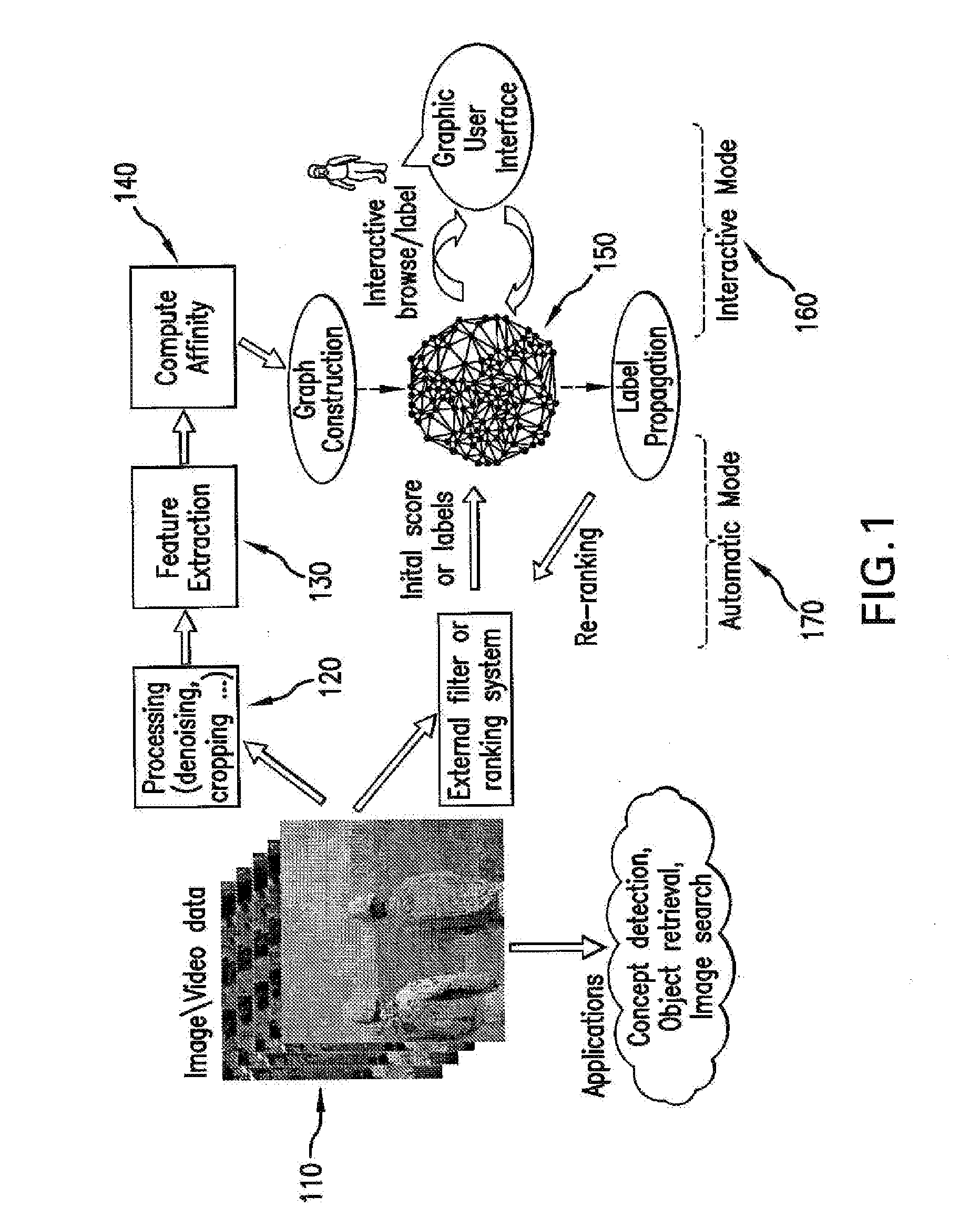

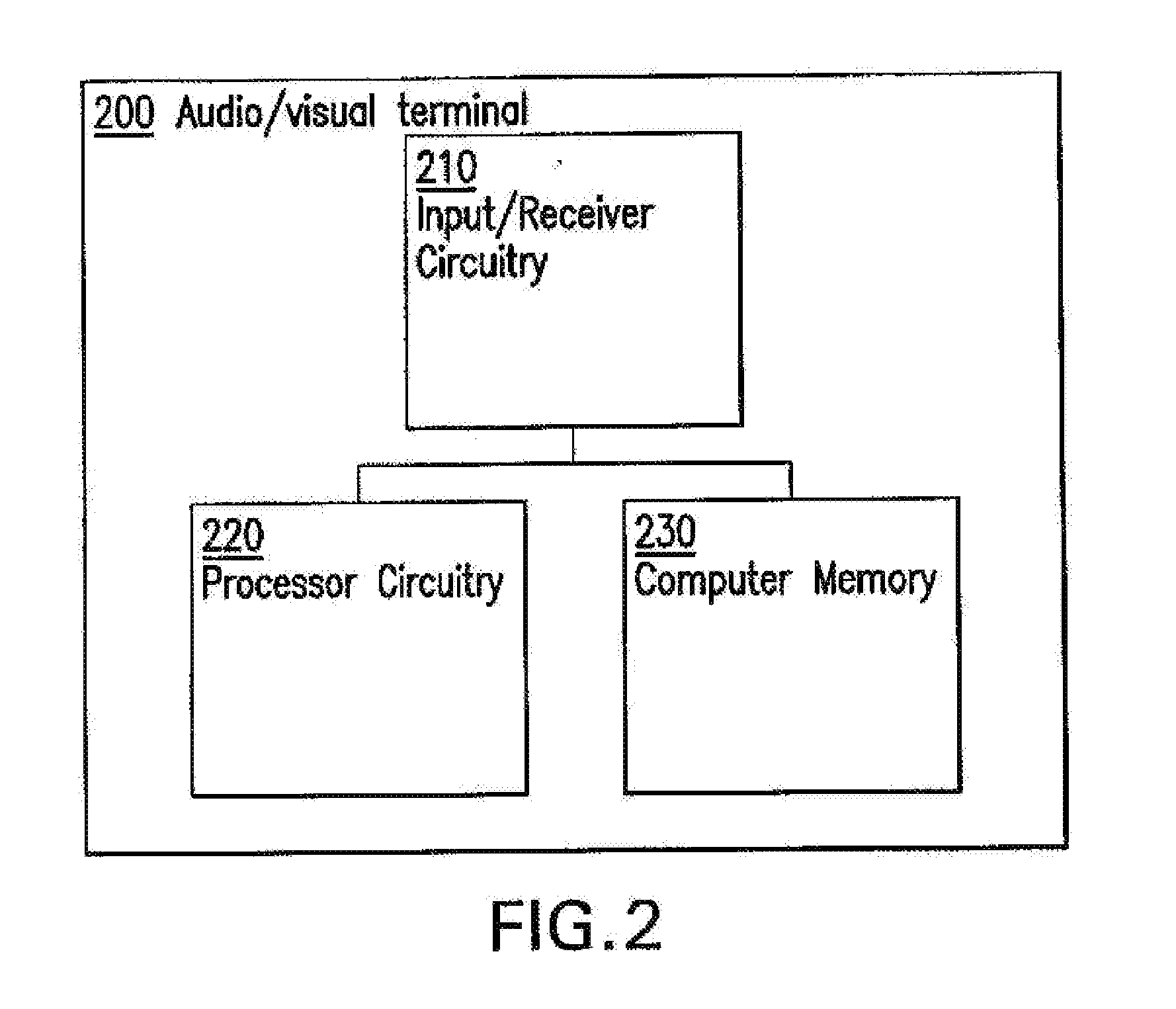

System And Method For Annotating And Searching Media

Inactive · US20110314367A1 · Facilitate rapid retrieval exploration · Promote results · Digital data information retrieval · Natural language data processing · Imbalanced data · Data mining

A system and method for labeling and classifying multimedia data is provided that includes novel label propagation techniques and classification function characteristics. The system and method correct and propagate a small number of potentially erroneous labels to a large amount of multimedia data and generate optimal ways of ranking, classifying, and presenting the data sets. The disclosed systems and methods improve upon prior systems and methods and provide an improved approach to the problems of imbalanced data sets and incorrect label data.

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK

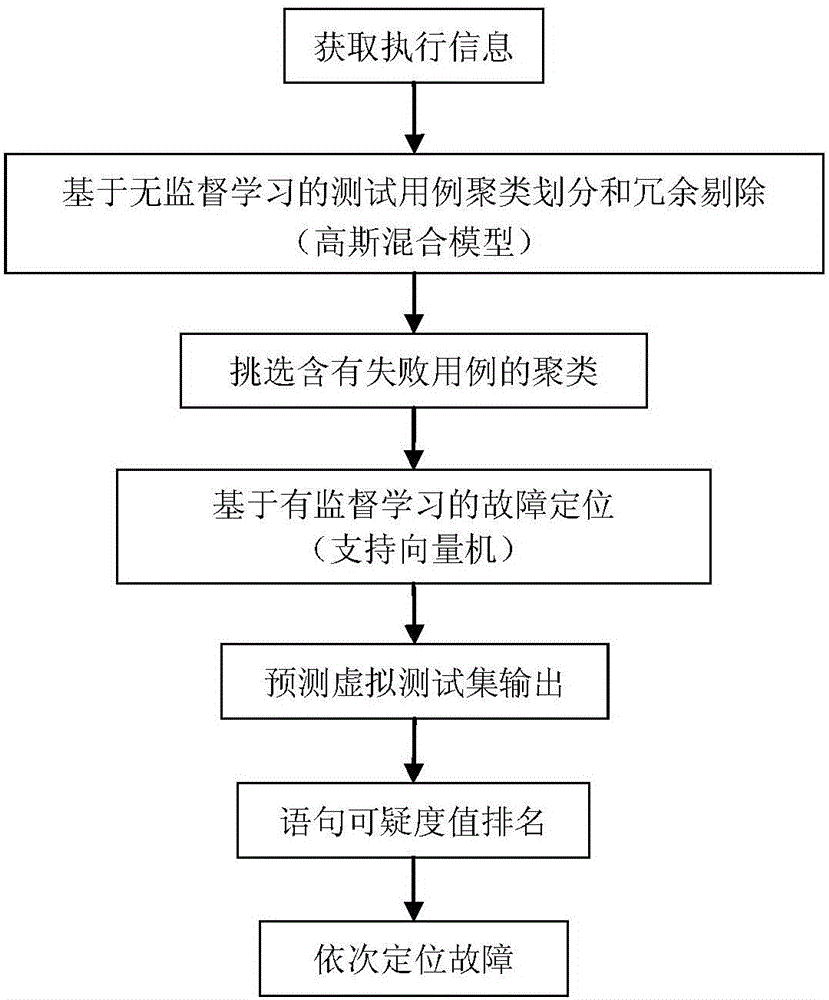

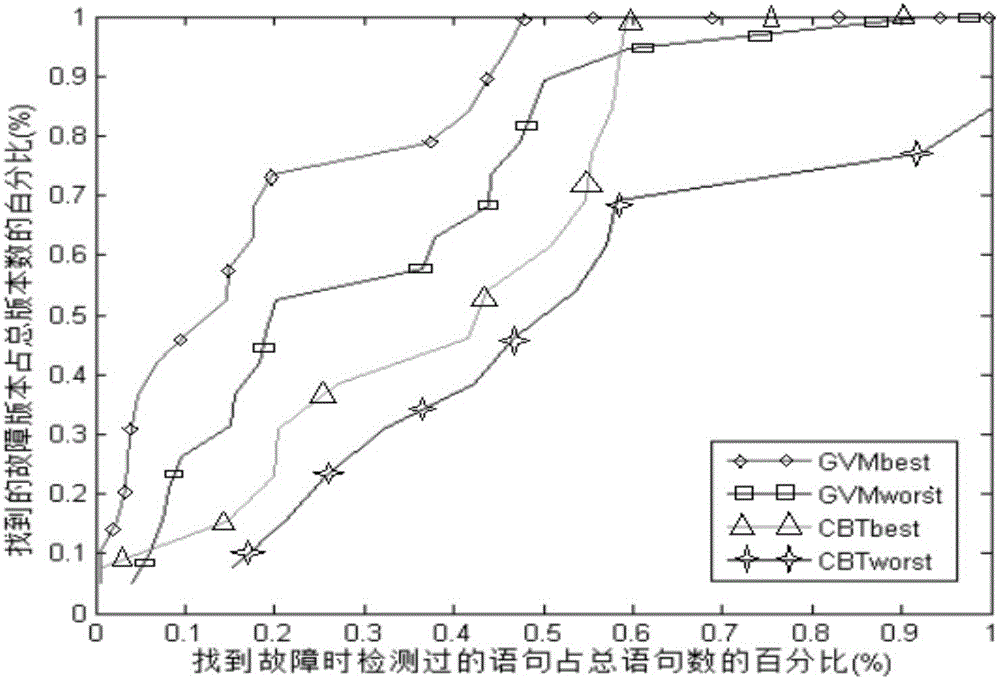

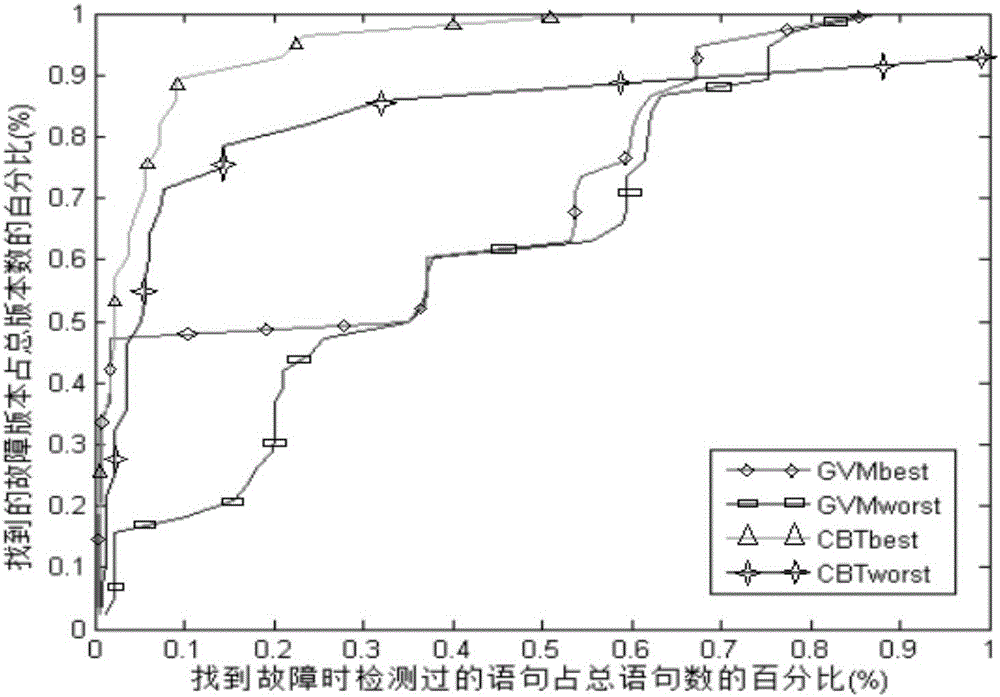

Software failure positioning method based on machine learning algorithm

Inactive · CN105893256A · Reduce adverse effects · Improve fault location efficiency · Software testing/debugging · Software failure · Gaussian mixture distribution

The invention discloses a software failure positioning method based on a machine learning algorithm, to solve the technical problem of low positioning efficiency of existing software failure positioning methods. According to the technical scheme, the method comprises the steps of describing the failure distribution possibly existing in an actual program based on a Gaussian mixture distribution, to make the failure distribution in the program more definite; removing redundant test samples with a cluster analysis method based on a Gaussian mixture model, and finding a special test set for a specific failure, so that the adverse effect of redundant use cases on positioning precision is reduced; modifying a support vector machine model to adapt it to unbalanced data samples, and finding the nonlinear mapping relation between use case coverage information and execution results by means of the parallel debugging theory, so that the machine learning algorithm is free from the local optimal solution problem caused by uneven samples; and finally, designing a virtual test suite, feeding the virtual test suite into the trained model for prediction, obtaining a statement equivocation value ranking result, and conducting failure positioning. In this way, software failure positioning efficiency is improved.

Owner:北京京航计算通讯研究所

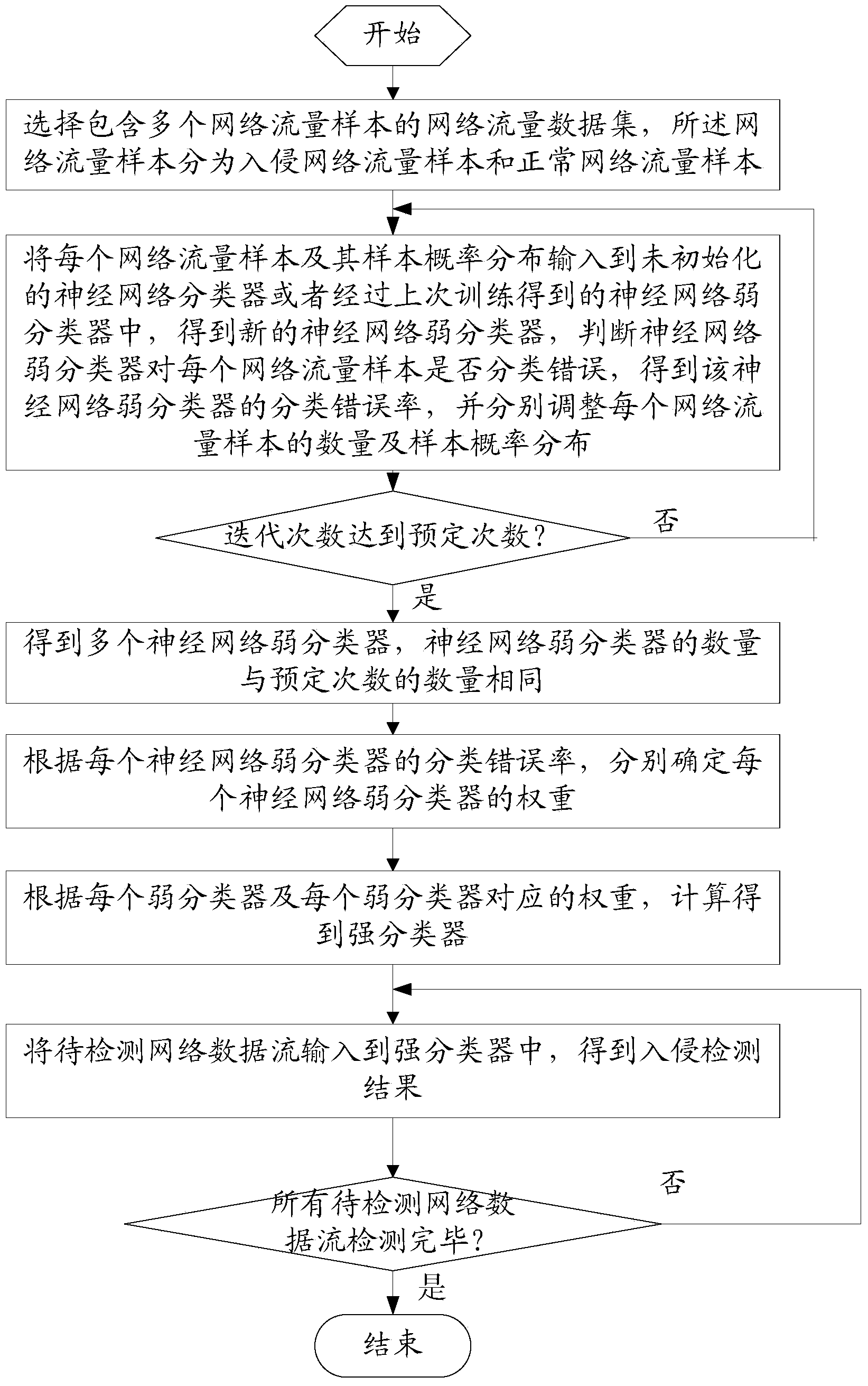

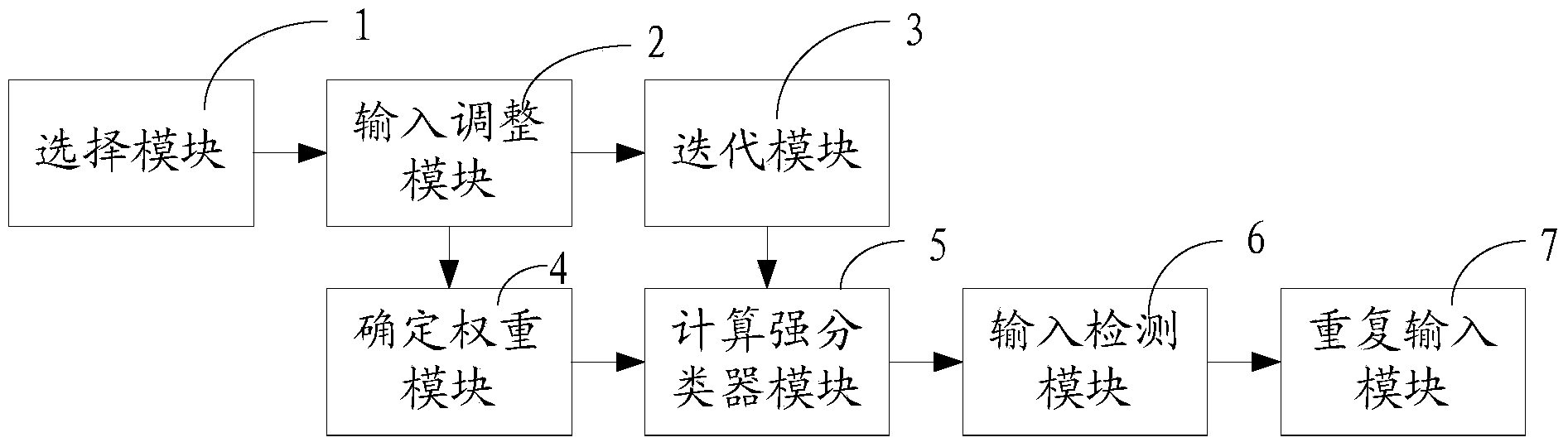

Abnormal intrusion detection ensemble learning method and apparatus based on Wiener process

Active · CN103716204A · Expand the border · Easy to detect · Biological neural network models · Data switching networks · Data stream · Data set

The invention relates to an abnormal intrusion detection ensemble learning method based on the Wiener process. The method comprises the following steps: selecting a network traffic data set; inputting each network traffic sample and sample probability distribution thereof to an uninitialized neural network classifier or a neural network weak classifier obtained through the previous training, judging whether the neural network weak classifier wrongly classifies each network traffic sample, and adjusting quantity and sample probability distribution of each network traffic sample; repeating the step 2 to obtain a plurality of neural network weak classifiers; determining the weight of each neural network weak classifier respectively; obtaining strong classifiers based on each weak classifier and the corresponding weight of each neural network weak classifier; inputting network data flow to be detected to the strong classifiers to obtain intrusion detection results; and repeating the step 6 until all the network data flow to be detected is detected. According to the method and apparatus in the invention, the problem of classification of the unbalanced data set can be solved, and an unbiased classifier with high classification correct rate can be obtained.

Owner:INST OF INFORMATION ENG CAS

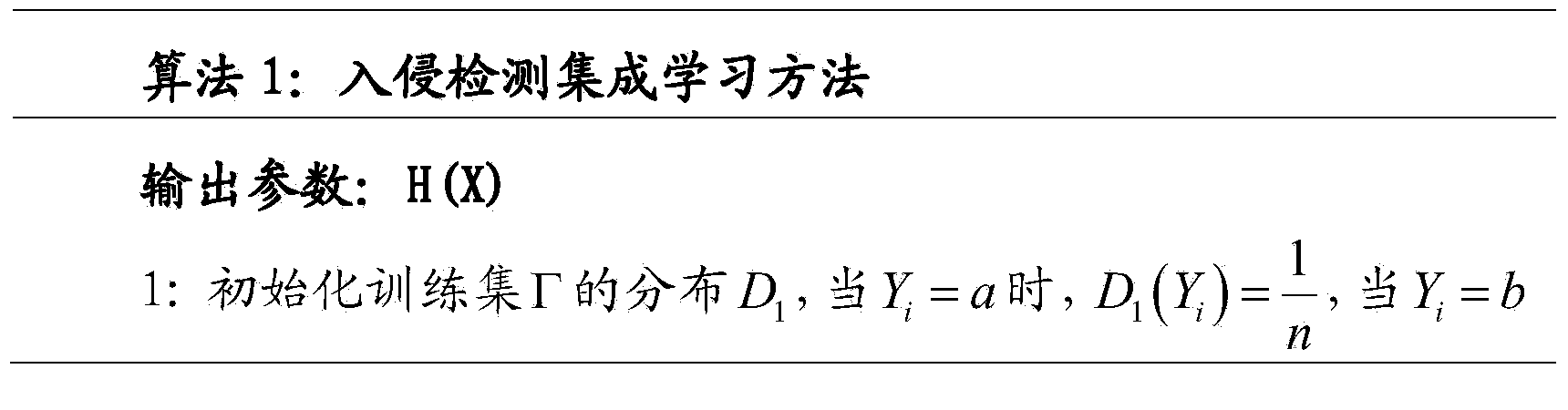

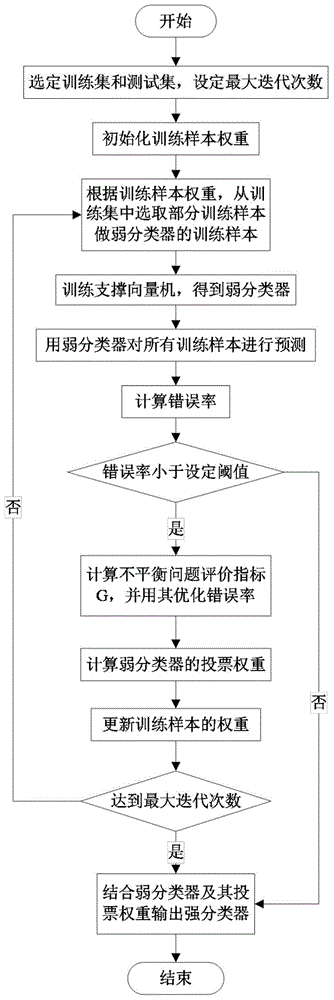

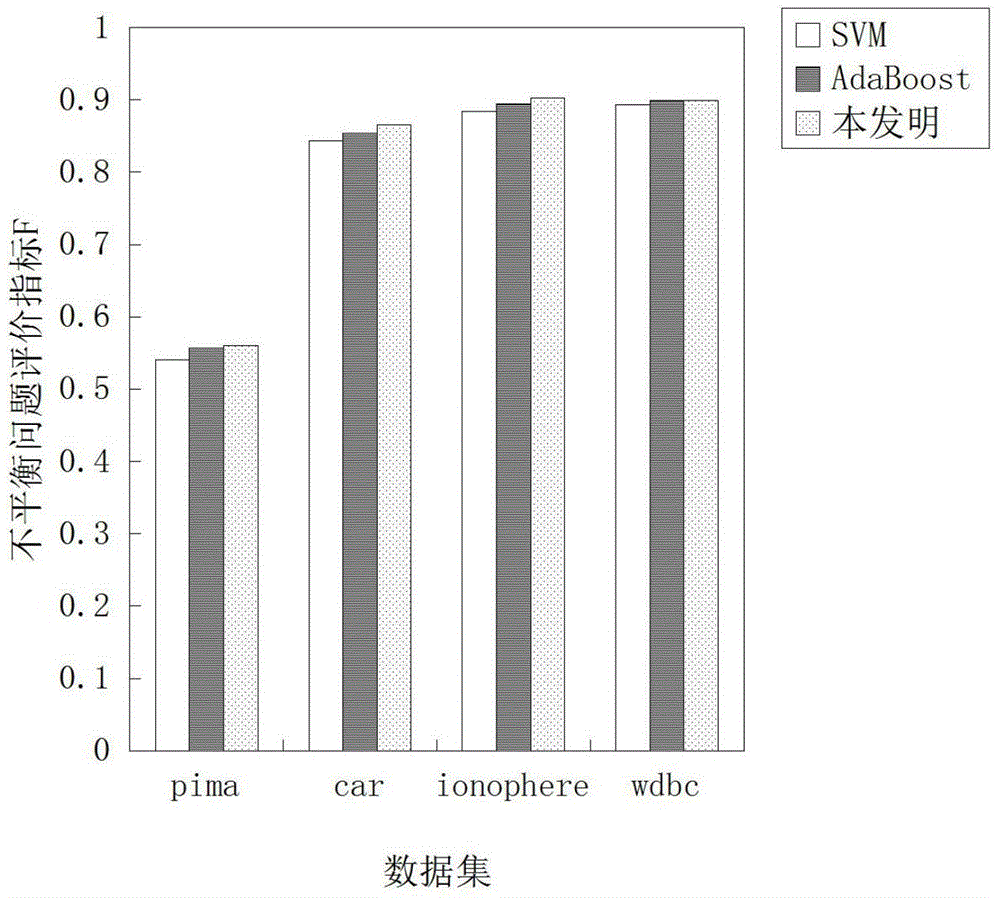

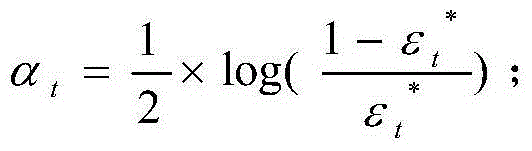

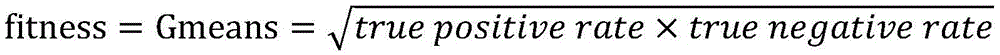

Unbalanced data classification method based on unbalanced classification indexes and integrated learning

Inactive · CN104951809A · Improve classification accuracy · Solving Imbalanced Classification Problems · Character and pattern recognition · Minority class · Classification methods

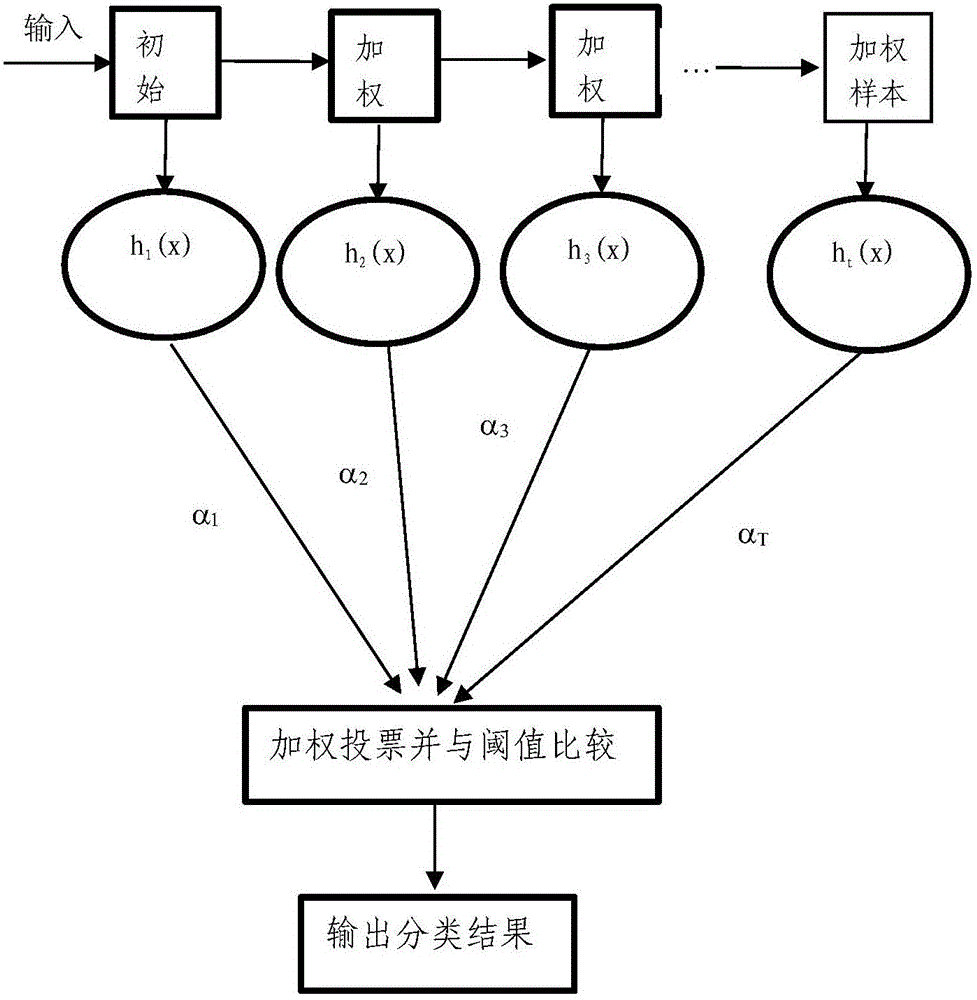

The invention discloses an unbalanced data classification method based on unbalanced classification indexes and integrated learning, and mainly solves the problem of low classification accuracy of the minority class of the unbalanced data in the prior art. The method comprises steps as follows: (1), a training set and a testing set are selected; (2), training sample weight is initialized; (3), part of training samples is selected according to the training sample weight for training a weak classifier, and the well trained weak classifier is used for classifying all training samples; (4), the classification error rate of the weak classifier on the training set is calculated, is compared with a set threshold value and is optimized; (5), voting weight of the weak classifier is calculated according to the error rate, and the training sample weight is updated; (6), whether the training of the weak classifier reaches the maximum number of iterations is judged, if the training of the weak classifier reaches the maximum number of iterations, a strong classifier is calculated according to the weak classifier and the voting weight of the weak classifier, and otherwise, the operation returns to the step (3). The classification accuracy of the minority class is improved, and the method can be applied to classification of the unbalanced data.

Owner:XIDIAN UNIV
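The loop in steps (2) to (6) of the abstract above has the same overall shape as boosting. The sketch below is plain discrete AdaBoost with decision stumps, shown only to make the weight-update and voting-weight steps concrete. It does not reproduce the patent's unbalanced classification indexes, its error-rate threshold, or its weighted subset selection. All function names are illustrative.

```python
import math

def train_stump(X, y, w):
    """Pick the (feature, threshold, polarity) with the lowest weighted error."""
    best = None
    for j in range(len(X[0])):
        for thr in sorted({x[j] for x in X}):
            for pol in (1, -1):
                pred = [pol if x[j] <= thr else -pol for x in X]
                err = sum(wi for wi, p, yi in zip(w, pred, y) if p != yi)
                if best is None or err < best[0]:
                    best = (err, j, thr, pol)
    return best

def stump_predict(stump, x):
    _, j, thr, pol = stump
    return pol if x[j] <= thr else -pol

def adaboost(X, y, rounds=5):
    n = len(X)
    w = [1.0 / n] * n                       # step (2): initial sample weights
    ensemble = []
    for _ in range(rounds):                 # step (6): fixed number of rounds
        stump = train_stump(X, y, w)        # step (3): train a weak classifier
        err = min(max(stump[0], 1e-10), 1 - 1e-10)   # step (4): error rate
        alpha = 0.5 * math.log((1 - err) / err)      # step (5): voting weight
        ensemble.append((alpha, stump))
        # Misclassified samples gain weight, correct ones lose weight.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for wi, xi, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def predict(ensemble, x):
    score = sum(a * stump_predict(s, x) for a, s in ensemble)
    return 1 if score >= 0 else -1

X = [[0.0], [1.0], [2.0], [3.0], [4.0], [5.0], [6.0], [7.0]]
y = [-1, -1, -1, -1, -1, -1, 1, 1]          # two minority samples out of eight
model = adaboost(X, y)
```

On unbalanced data the plain error rate used here is dominated by the majority class, which is presumably why the patent replaces it with indexes designed for unbalanced classification.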

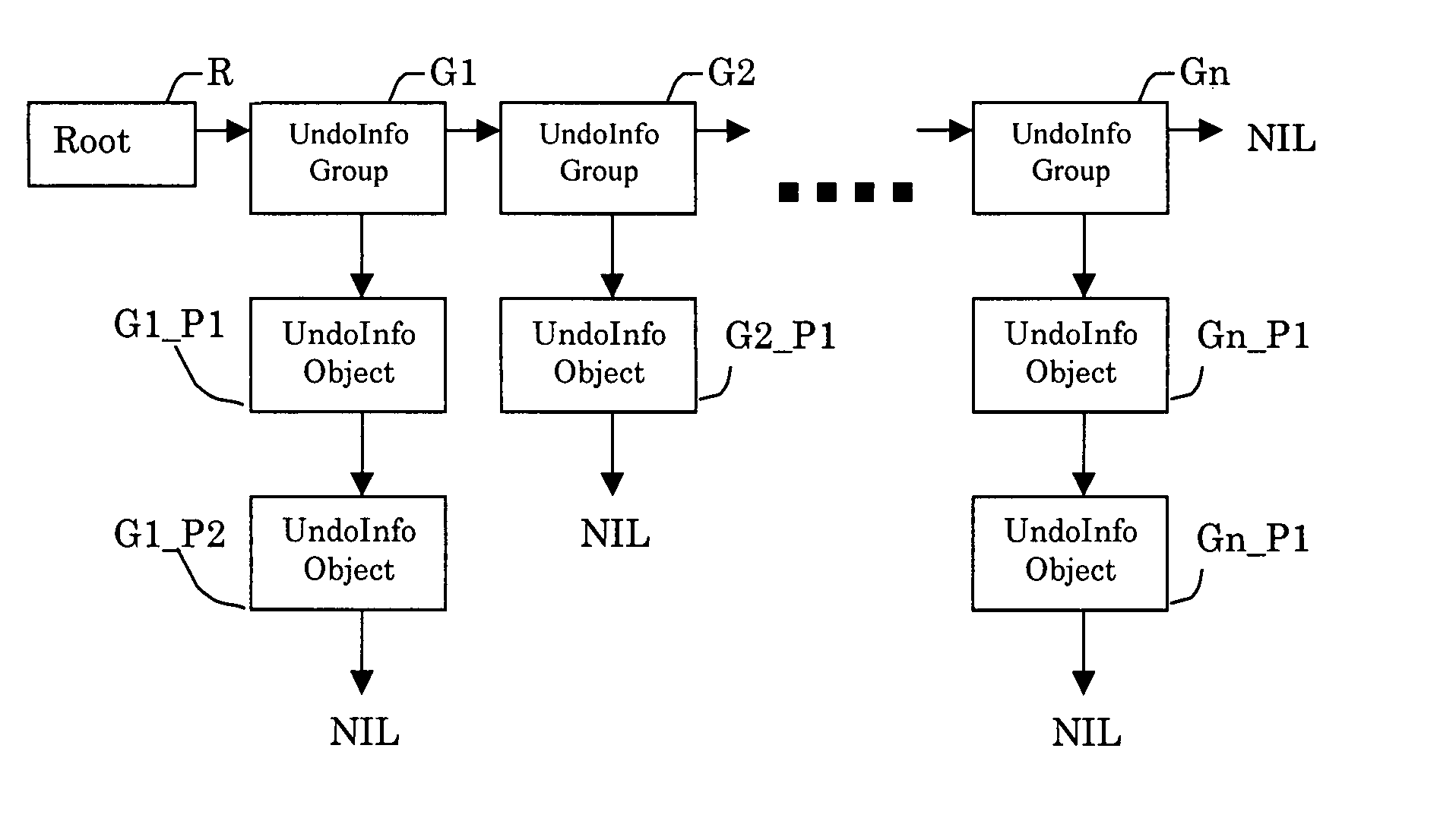

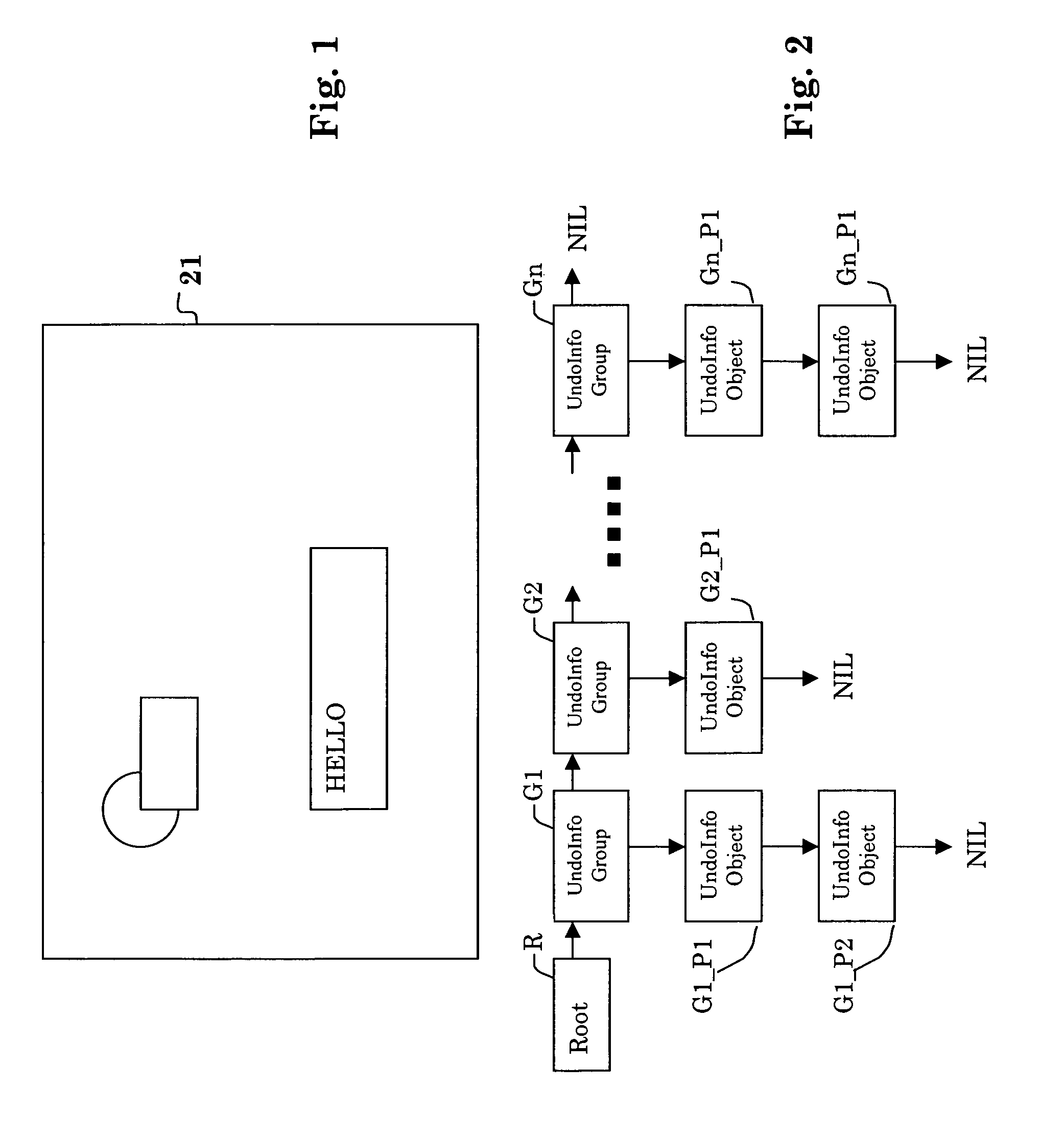

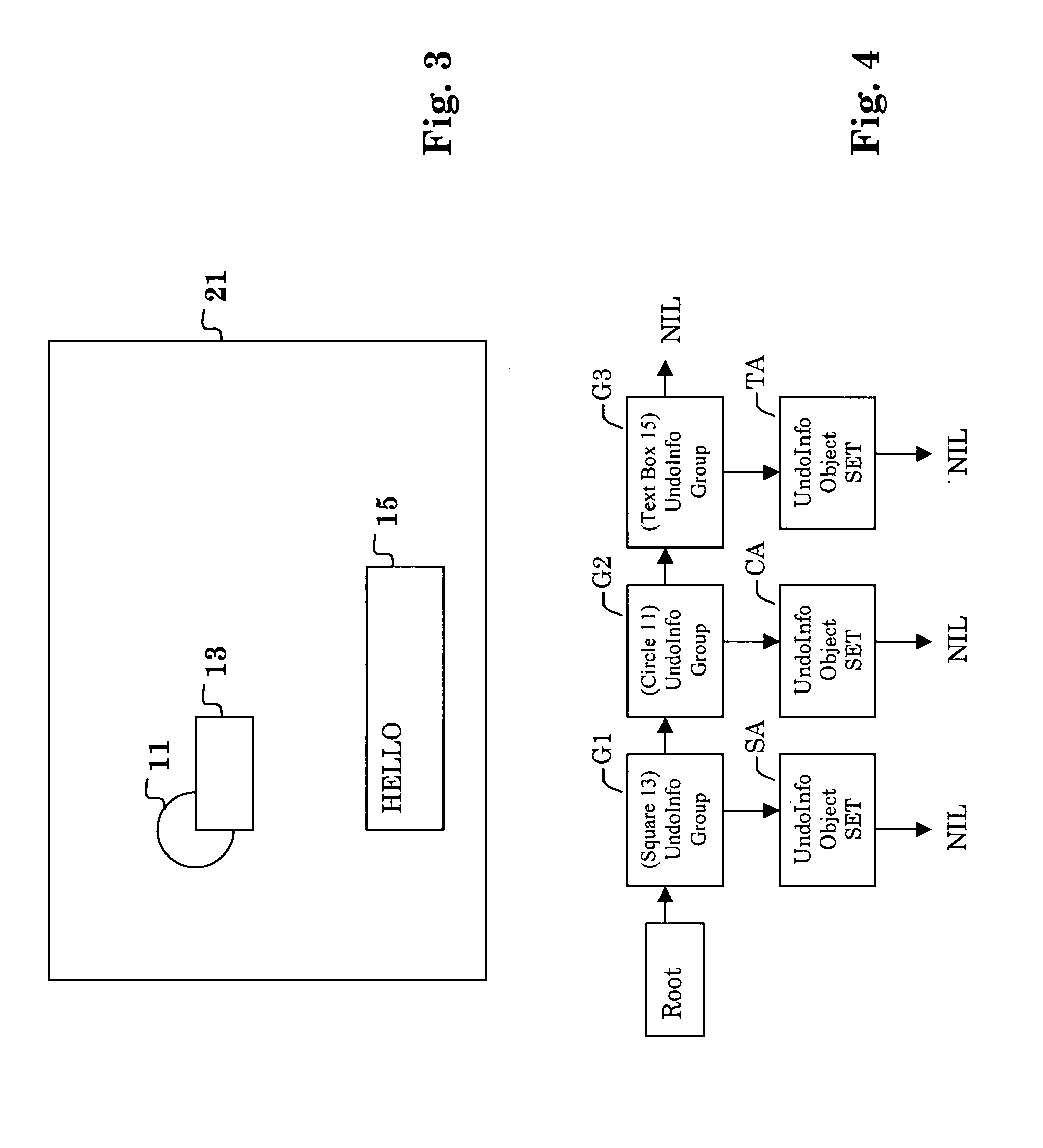

Undo/redo algorithm for a computer program

Inactive · US7003695B2 · Minimal modification · Software testing/debugging · Transmission · Present method · Unbalanced data

A method of tracking modifications of specific program objects during the runtime of a computer program facilitates the creation of general UnDo and ReDo operations, as well as the support of an object-specific UnDo operation. When an object is modified, the object is interrogated to collect information about it and how the modification may be undone. The collected information is stored in a highly unbalanced data-tree structure. Since the interrogation of an object is a characteristic of the programming language, and not necessarily a modification of the program being executed, the present method may be easily applied to different existing programs with minimal, if any, modification to the existing programs.

Owner:SEIKO EPSON CORP

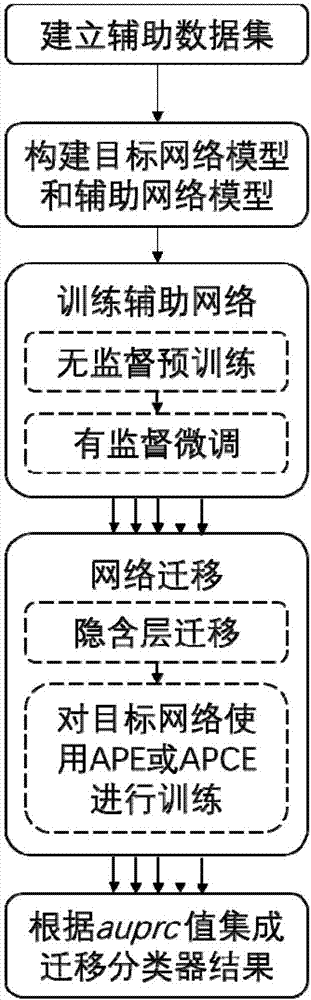

Deep transfer learning-based unbalanced classification ensemble method

Active · CN107316061A · For classification problems apply · Improve classification performance · Character and pattern recognition · Neural learning methods · Learning based · Data set

The invention discloses a deep transfer learning-based unbalanced classification ensemble method. The method comprises the following steps: an auxiliary data set is established; an auxiliary deep network model and a target deep network model are constructed; the auxiliary deep network is trained; the structure and parameters of the auxiliary deep network are transferred to the target deep network; and the products of AUPRC values are calculated and used as the weights of the classifiers, and a weighted ensemble is performed on the classification results of each transfer classifier, so that an ensemble classification result is obtained and used as the output of the ensemble classifier. The method adopts an improved average precision variance loss function (APE) and an average precision cross-entropy loss function (APCE); when the loss cost of samples is calculated, the sample weights are dynamically adjusted so that smaller weights are assigned to majority-class samples and larger weights to minority-class samples. The trained deep network therefore attaches more importance to the minority-class samples, which makes the method better suited to classifying unbalanced data.

Owner:SOUTH CHINA UNIV OF TECH
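To make the idea of weighting minority samples more heavily in the loss concrete, here is a minimal sketch of a class-weighted binary cross-entropy. It uses static inverse-frequency weights, which is a common simplification. The APE and APCE losses named in the abstract adjust weights dynamically and are not reproduced here.

```python
import math

def class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    n, k = len(labels), len(counts)
    return {c: n / (k * m) for c, m in counts.items()}

def weighted_cross_entropy(probs, labels, weights):
    """Mean binary cross-entropy; probs are the predicted P(y = 1)."""
    eps = 1e-12
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)       # avoid log(0)
        loss = -math.log(p) if y == 1 else -math.log(1 - p)
        total += weights[y] * loss          # rarer class, larger weight
    return total / len(labels)

labels = [0] * 9 + [1]                      # 9 majority samples, 1 minority
weights = class_weights(labels)
loss = weighted_cross_entropy([0.5] * 10, labels, weights)
```

With these weights the single minority sample contributes as much to the loss as the nine majority samples together, so a network trained on it cannot reduce the loss by ignoring the minority class.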

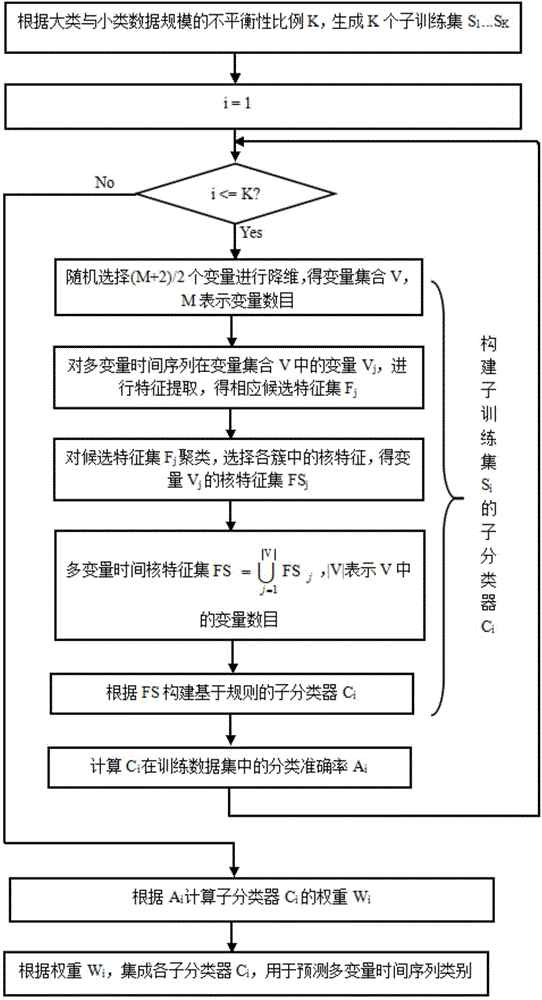

Method for early classifying imbalance multi-variable time sequence data

Inactive · CN104809226A · Solve classification problems · Improve accuracy · Special data processing applications · Data set · Algorithm

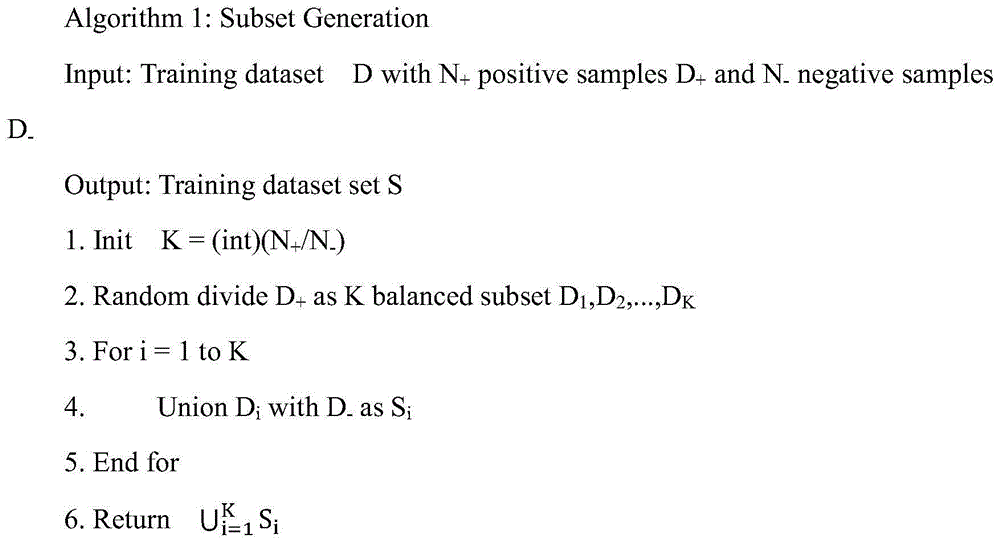

The invention discloses a method for early classification of imbalanced multivariate time series data. The method comprises the following steps: to address the imbalance in data scale between classes, under-sampling the majority-class data set into a plurality of subsets according to the imbalance ratio, and combining each subset with the minority-class data to form a plurality of training subsets; extracting and selecting core features of each training subset, and establishing a rule-based sub-classifier from the core features, wherein the feature selection is carried out by clustering in order to address the imbalance in data scale among sub-concepts within a class and so guarantee the diversity of the core features; and, for each sub-classifier, determining its weight from how well it classifies the data in the training set, and establishing an integrated classifier. The classifier can solve the multivariate time series classification problem on imbalanced data sets, with relatively high accuracy and good earliness.

Owner:WUHAN UNIV
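The first step of the abstract above, splitting the majority class into about as many subsets as the imbalance ratio and pairing each with the full minority class, can be sketched as follows. Random partitioning is an assumption, since the abstract does not say how the subsets are drawn, and `balanced_subsets` is an illustrative name.

```python
import random

def balanced_subsets(majority, minority, seed=0):
    """Partition the majority class into roughly IR chunks (IR = imbalance
    ratio) and combine each chunk with all minority samples."""
    ratio = max(1, round(len(majority) / len(minority)))
    shuffled = majority[:]
    random.Random(seed).shuffle(shuffled)
    chunks = [shuffled[i::ratio] for i in range(ratio)]
    return [chunk + minority for chunk in chunks]

majority = [("maj", i) for i in range(12)]
minority = [("min", i) for i in range(4)]
subsets = balanced_subsets(majority, minority)   # 3 subsets of 4 + 4 samples
```

Each subset is roughly balanced, and every majority sample is used exactly once across the subsets, so no majority information is discarded by the ensemble as a whole.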

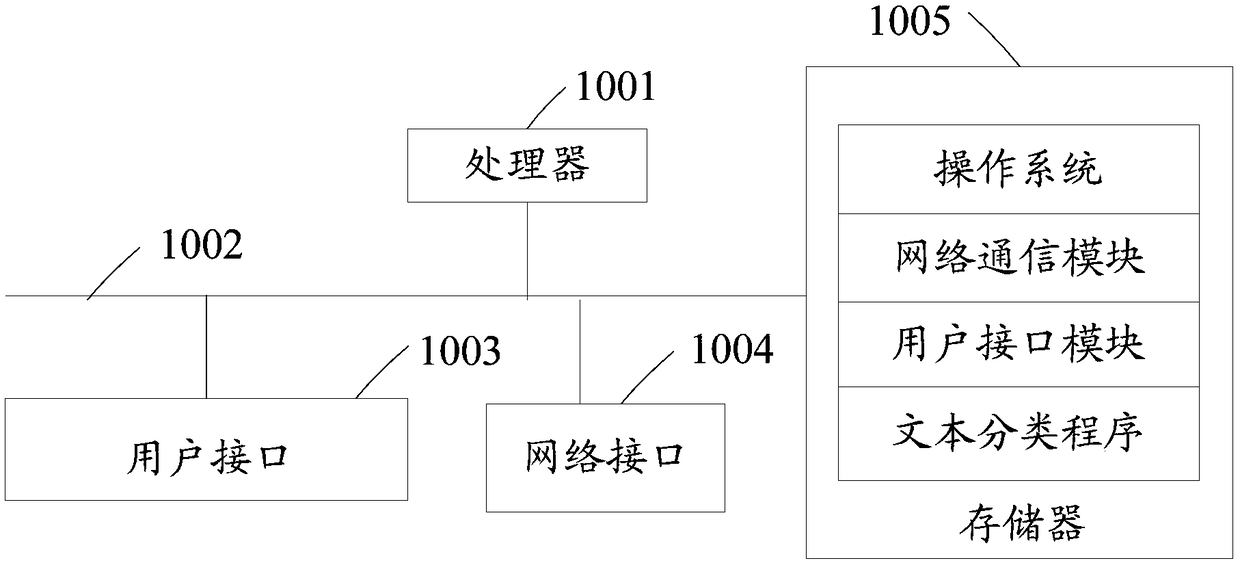

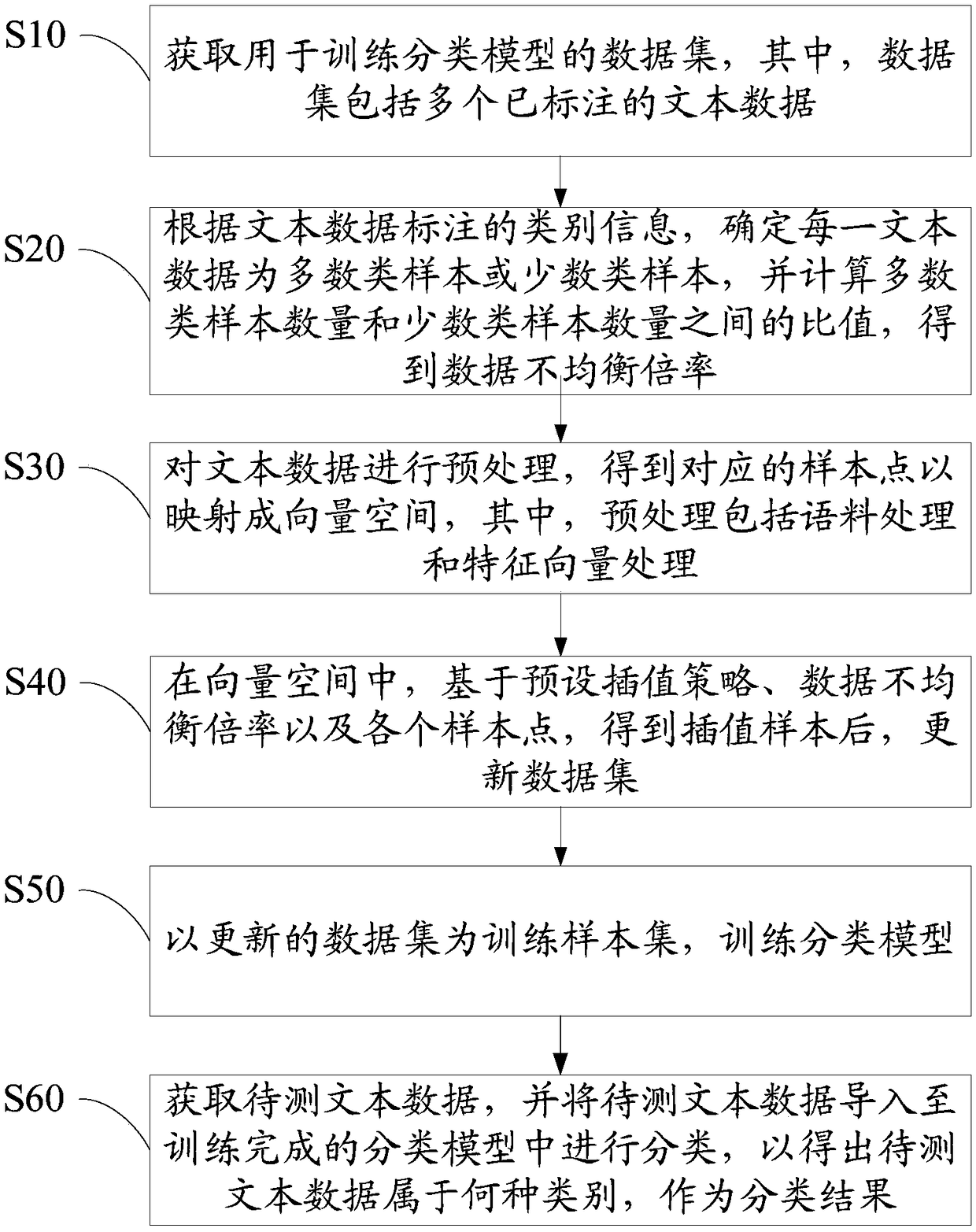

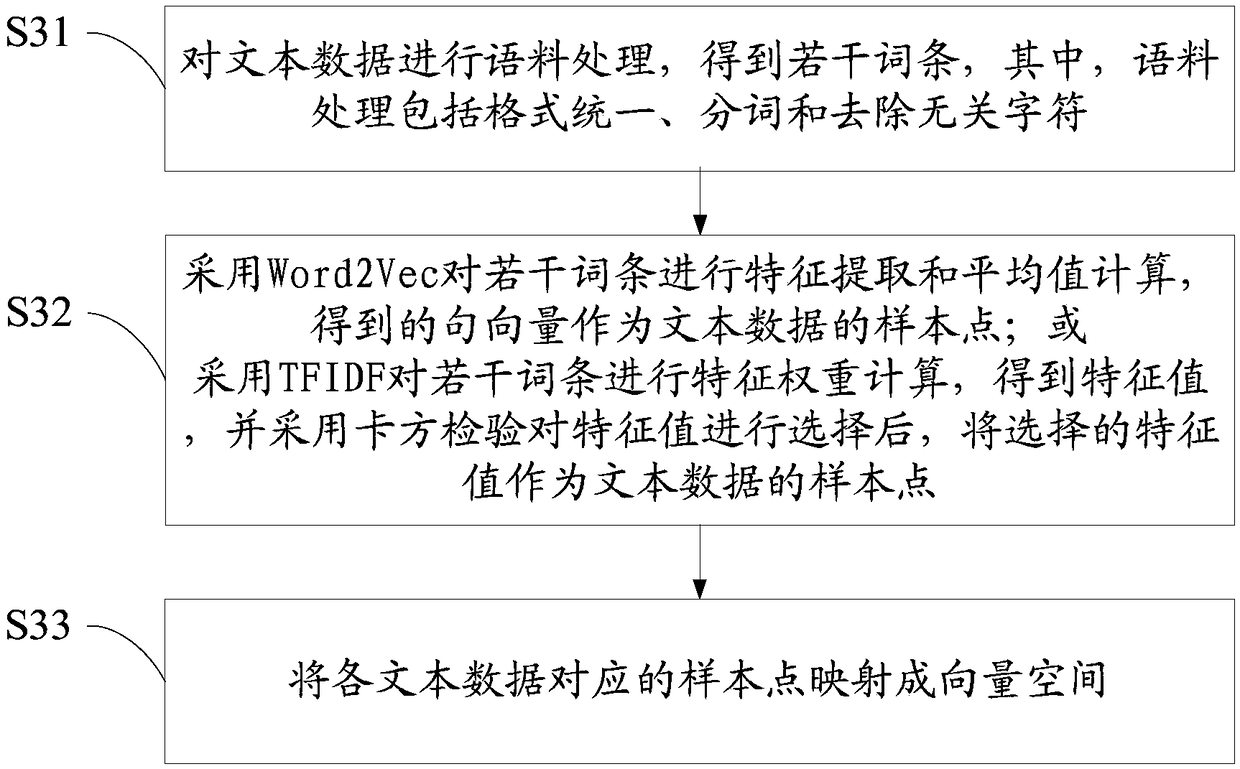

Text classification method, text classifier and storage medium for unbalanced data set

Active · CN108628971A · Improve classification performance · Expand border area · Character and pattern recognition · Special data processing applications · Data set · Text categorization

The invention discloses a text classification method, a text classifier and a storage medium for an unbalanced data set. The method comprises the following steps: acquiring the data set used for training a classification model; determining whether each text datum is a majority sample or a minority sample according to its labeled category information; calculating the ratio between the number of majority samples and the number of minority samples to obtain an imbalance ratio; pre-processing the text data to obtain corresponding sample points mapped into a vector space; obtaining interpolated samples based on a preset interpolation strategy, the imbalance ratio and each sample point, and updating the data set with them; training the classification model using the updated data set as the training sample set; and acquiring the text data to be tested and feeding it into the trained classification model to obtain its category as the classification result. According to the invention, the minority samples and their boundary region can be enlarged, and the classification performance of the model can be effectively improved.

Owner:WEBANK (CHINA)
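The abstract above leaves the "preset interpolation strategy" unspecified. One widely used strategy is SMOTE-style interpolation, in which each synthetic point lies on the segment between a minority sample and one of its nearest minority neighbours. The sketch below assumes that strategy and uses small 2-D vectors in place of real text embeddings.

```python
import random

def imbalance_ratio(n_majority, n_minority):
    return n_majority / n_minority

def interpolate_minority(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points between minority samples and their
    k nearest minority neighbours (SMOTE-style, assumed strategy)."""
    rng = random.Random(seed)

    def dist2(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbours = sorted((p for p in minority if p is not base),
                            key=lambda p: dist2(base, p))[:k]
        other = rng.choice(neighbours)
        t = rng.random()                    # position along the segment
        synthetic.append([b + t * (o - b) for b, o in zip(base, other)])
    return synthetic

majority = [[float(i), 0.0] for i in range(8)]
minority = [[0.0, 5.0], [1.0, 5.0], [0.0, 6.0], [1.0, 6.0]]
ratio = imbalance_ratio(len(majority), len(minority))
n_new = len(majority) - len(minority)       # enough to balance the classes
augmented = minority + interpolate_minority(minority, n_new)
```

Because every synthetic point is a convex combination of two minority samples, the new points fill in the region the minority class already occupies rather than duplicating existing samples.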

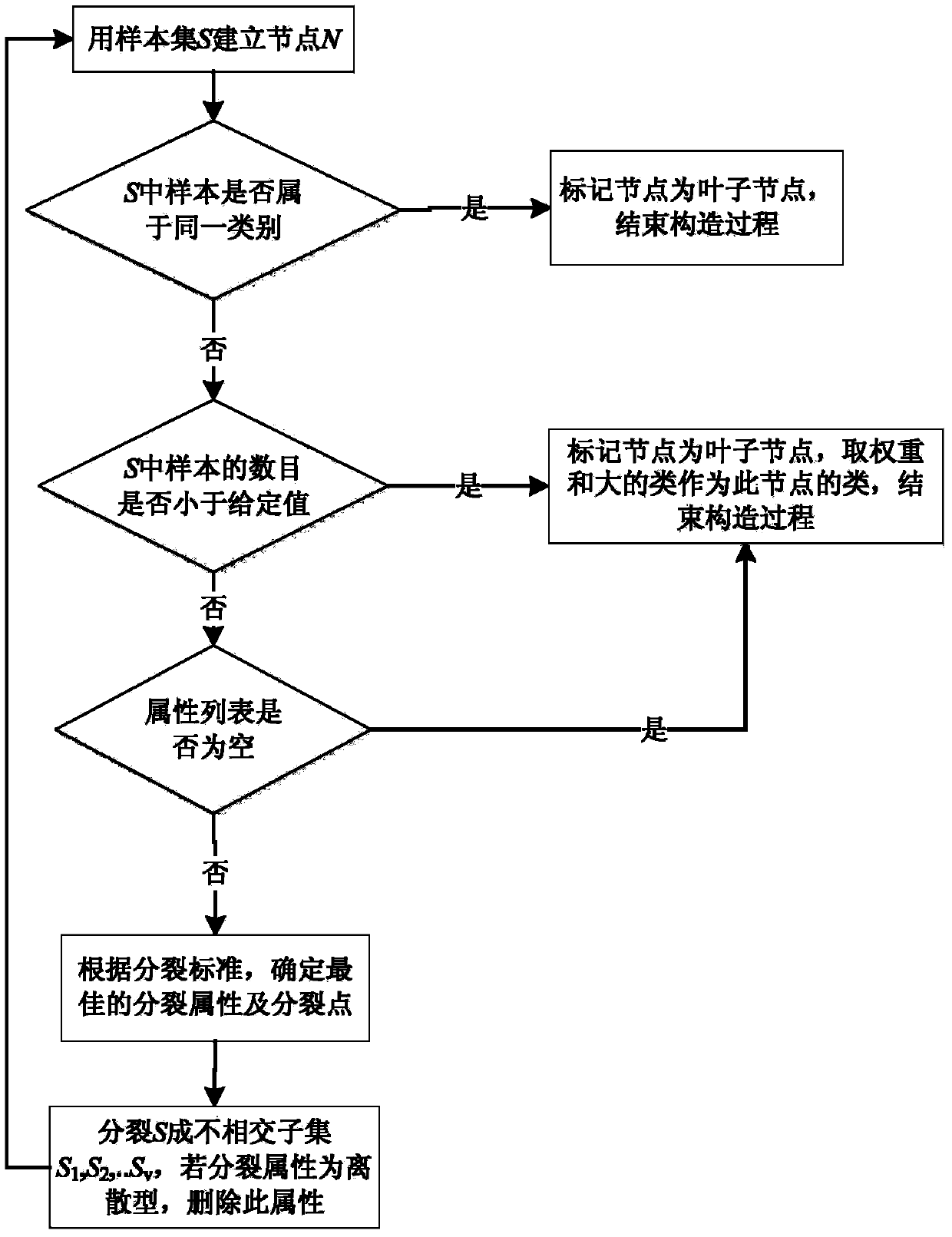

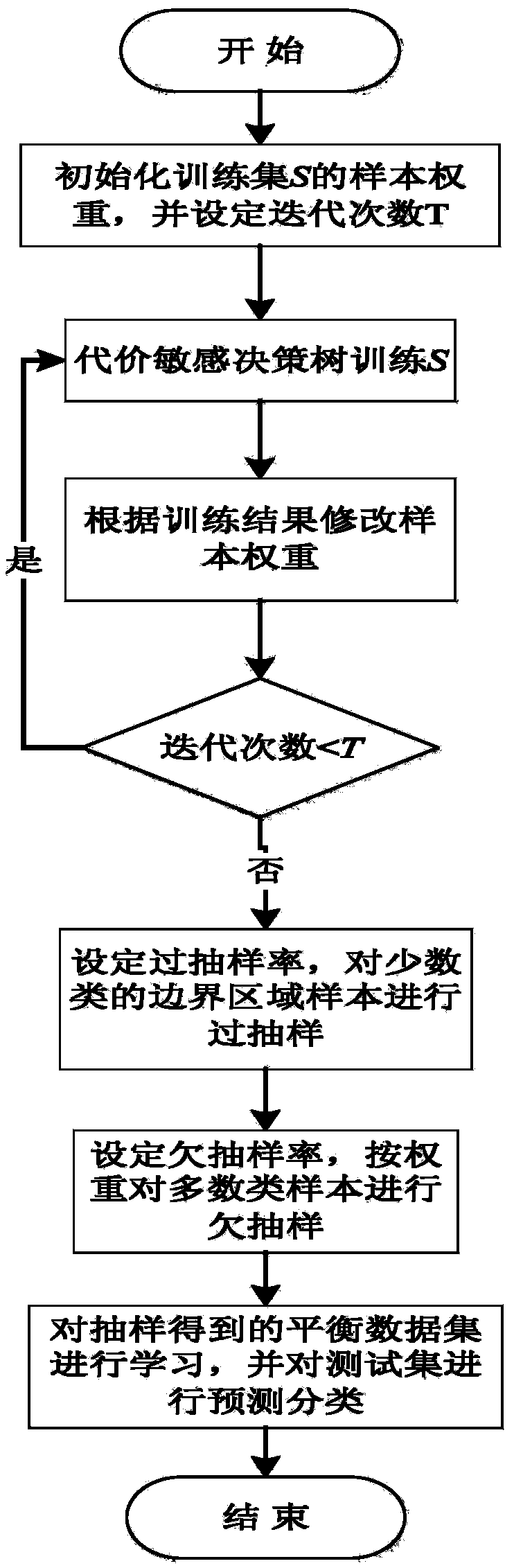

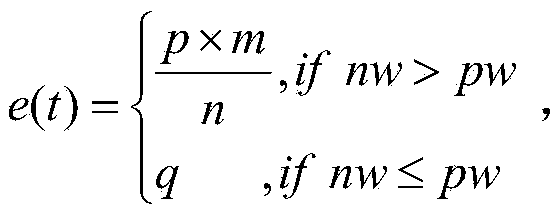

Unbalanced data sampling method in improved C4.5 decision tree algorithm

Inactive · CN105373606A · Reflect the distribution · Improve classification performance · Relational databases · Special data processing applications · Majority class · Data set

The invention relates to an unbalanced data sampling method in an improved C4.5 decision tree algorithm. The method comprises the steps as follows: firstly, initial weights of various samples are determined according to the number of various samples; the weights of the samples are modified through the training result of the improved C4.5 decision tree algorithm in each round; the information gain ratio and misclassified sample weights are taken into account by a division standard of the improved C4.5 algorithm; the final weights of the samples are obtained after T iterations; the samples in minority class boundary regions and majority class center regions are found out according to the sample weights; over-sampling is carried out on the samples in the minority class boundary regions by an SMOTE algorithm; and under-sampling is carried out on majority class samples by a weight sampling method, so that the samples in the center regions are relatively easily selected to improve the balance degree of different classes of data, and the recognition rates of the minority class and the overall data set are improved. According to the unbalanced data sampling method in the improved C4.5 decision tree algorithm, weight modification is carried out through the improved C4.5 decision tree algorithm; and over-sampling and under-sampling are specifically carried out according to the sample weights, so that the phenomena of classifier over-fitting, loss of useful information of the majority class and the like are effectively avoided.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

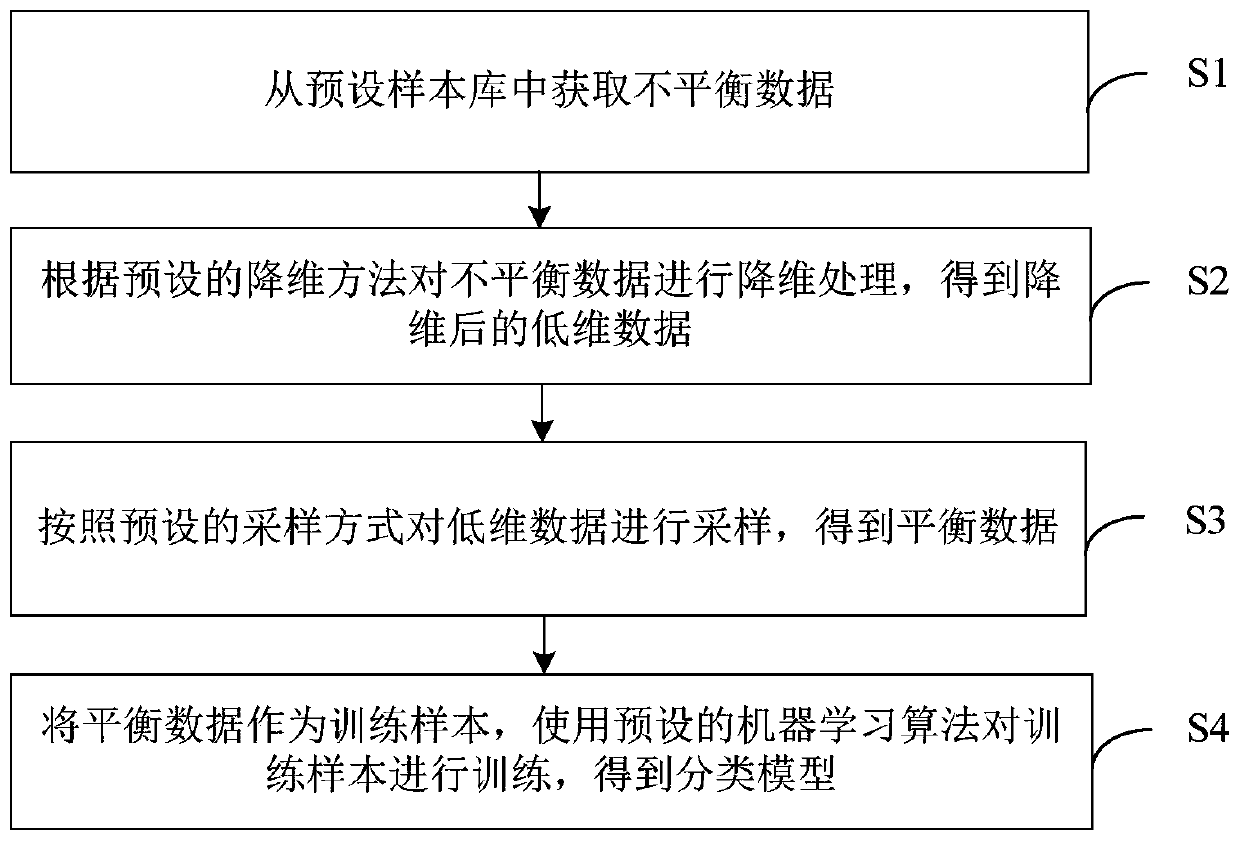

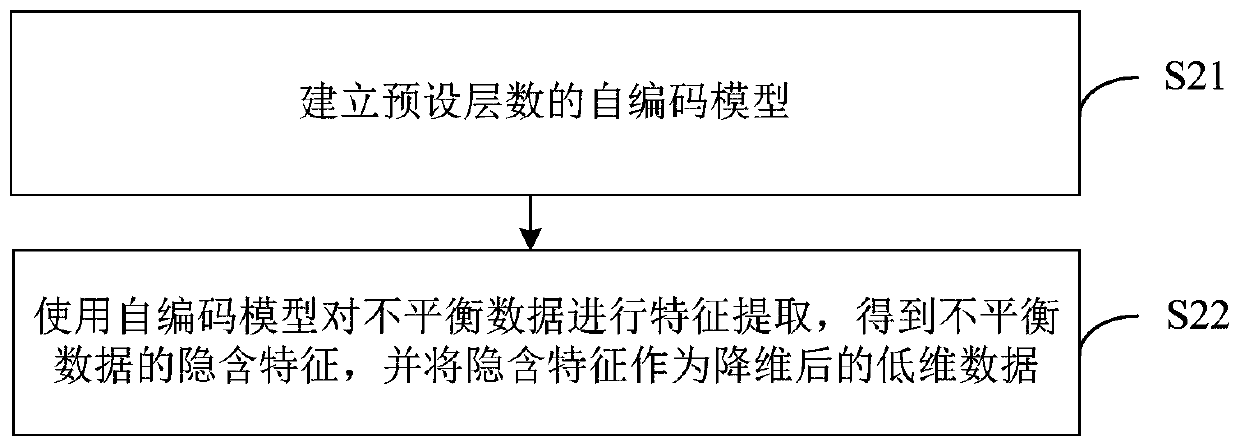

Unbalanced data classification model training method, device and equipment and storage medium

Pending · CN110163261A · Increase weight · Improve impact · Character and pattern recognition · Dimensionality reduction · Unbalanced data

The invention discloses an unbalanced data classification model training method and device, computer equipment and a storage medium. The method comprises the steps of obtaining unbalanced data from a preset sample library; performing dimension reduction on the unbalanced data according to a preset dimension reduction method to obtain low-dimensional data; sampling the low-dimensional data according to a preset sampling mode to obtain balanced data; and taking the balanced data as training samples and training on them with a preset machine learning algorithm to obtain a classification model. When the trained classification model is used for classification, the misjudgment rate for minority-class data in the unbalanced data can be reduced, and the classification accuracy is therefore improved.

Owner:PING AN TECH (SHENZHEN) CO LTD

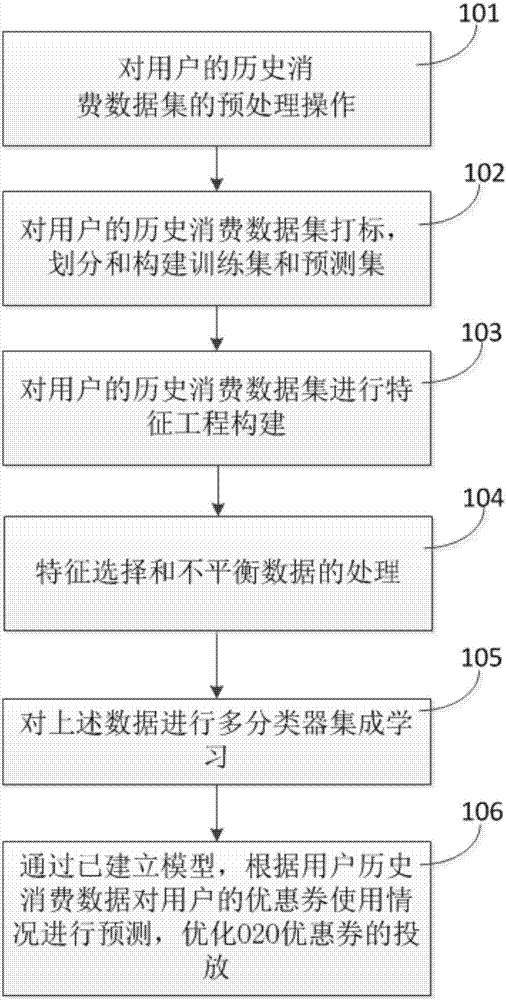

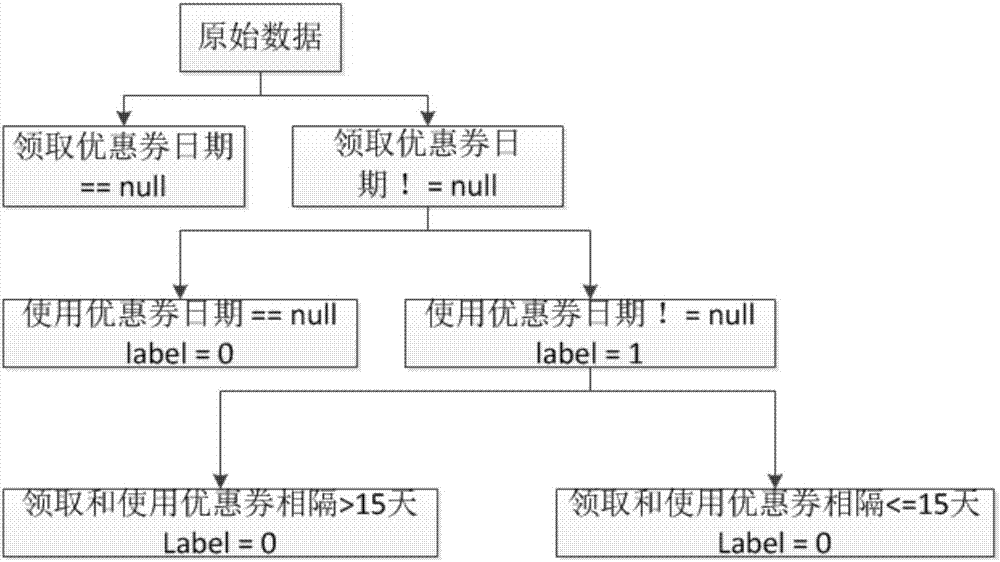

O2O coupon usage big data prediction method

The invention protects an O2O coupon usage big data prediction method. The method comprises the steps that 101, a historical consumption dataset of a user is subjected to preprocessing operation; 102, the historical consumption dataset of the user is marked, and a training set and a prediction set are divided and constructed; 103, the historical consumption dataset of the user is subjected to feature engineering construction; 104, feature selection and processing of unbalanced data are performed; 105, the data is subjected to multi-classifier integrated learning; and 106, coupon usage of the user is predicted through an established model according to historical consumption data of the user, and serving of an O2O coupon is optimized. According to the method, a prediction model is established mainly by processing the user consumption data and performing multi-classifier integrated learning on the data, therefore, the coupon usage of the user in the future is predicted, and serving of the O2O coupon is optimized.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

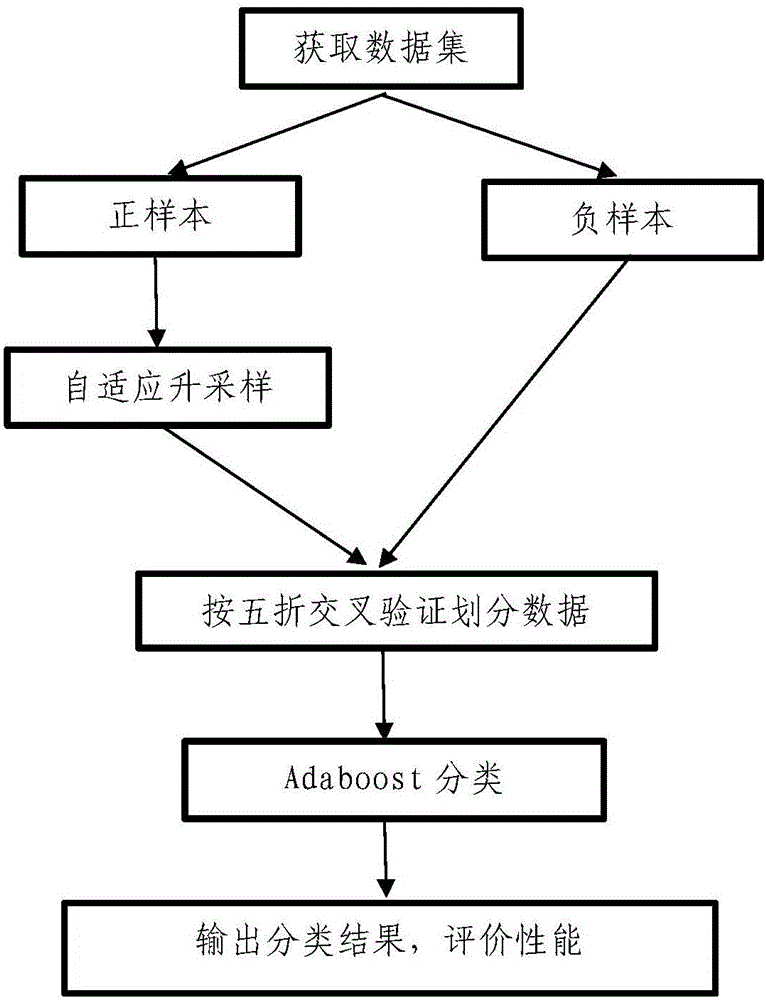

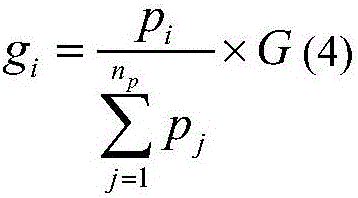

Unbalanced data classification method based on adaptive upsampling

Inactive · CN105975992A · Improve performance · Improve classification effect · Character and pattern recognition · Positive sample · Data set

The invention relates to an unbalanced data classification method based on adaptive upsampling. The method includes the following steps: calculating the total number of positive samples to be newly generated; calculating the probability density distribution for each positive sample, taking the Euclidean distance as the metric; determining the number of new samples to be generated for each positive sample; generating the new positive samples and adding them to the original unbalanced training set so that the positive and negative samples are equal in number, namely, obtaining a new balanced training set including n_n positive samples and n_n negative samples; and training on the new balanced training set by means of the AdaBoost algorithm to obtain a final classification model after T iterations. According to the invention, classification performance on unbalanced data sets is improved.

Owner:TIANJIN UNIV
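The first three steps of the abstract above decide how many synthetic samples each positive sample should receive. The abstract does not give the density formula, so the sketch below borrows the ADASYN-style rule as a stand-in: a positive sample surrounded by more negative neighbours receives a larger share. The function name and the choice of `k` are illustrative.

```python
def allocation(positives, negatives, k=2):
    """Return how many synthetic samples to generate per positive sample,
    proportional to the share of negatives among its k nearest neighbours."""
    def dist2(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    total_new = len(negatives) - len(positives)   # samples needed to balance
    points = [(p, 1) for p in positives] + [(q, 0) for q in negatives]
    ratios = []
    for p in positives:
        nearest = sorted((pt for pt in points if pt[0] is not p),
                         key=lambda pt: dist2(p, pt[0]))[:k]
        ratios.append(sum(1 for _, label in nearest if label == 0) / k)
    norm = sum(ratios)
    if norm == 0:                                 # no positive near a negative
        density = [1 / len(positives)] * len(positives)
    else:
        density = [r / norm for r in ratios]
    return [round(d * total_new) for d in density]

positives = [[0, 0], [0, 1], [1, 0], [5, 5]]
negatives = [[5, 4], [4, 5], [6, 5], [9, 9], [9, 10], [10, 9], [10, 10], [8, 9]]
counts = allocation(positives, negatives)
```

In this toy data only the positive sample at `[5, 5]` sits among negatives, so it receives all four new samples. Because of rounding, the per-sample counts may not sum exactly to the target in general.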

Unbalanced data classification method

Inactive · CN104239516A · Improve classification effect · Relational databases · Special data processing applications · Support vector machine · Data set

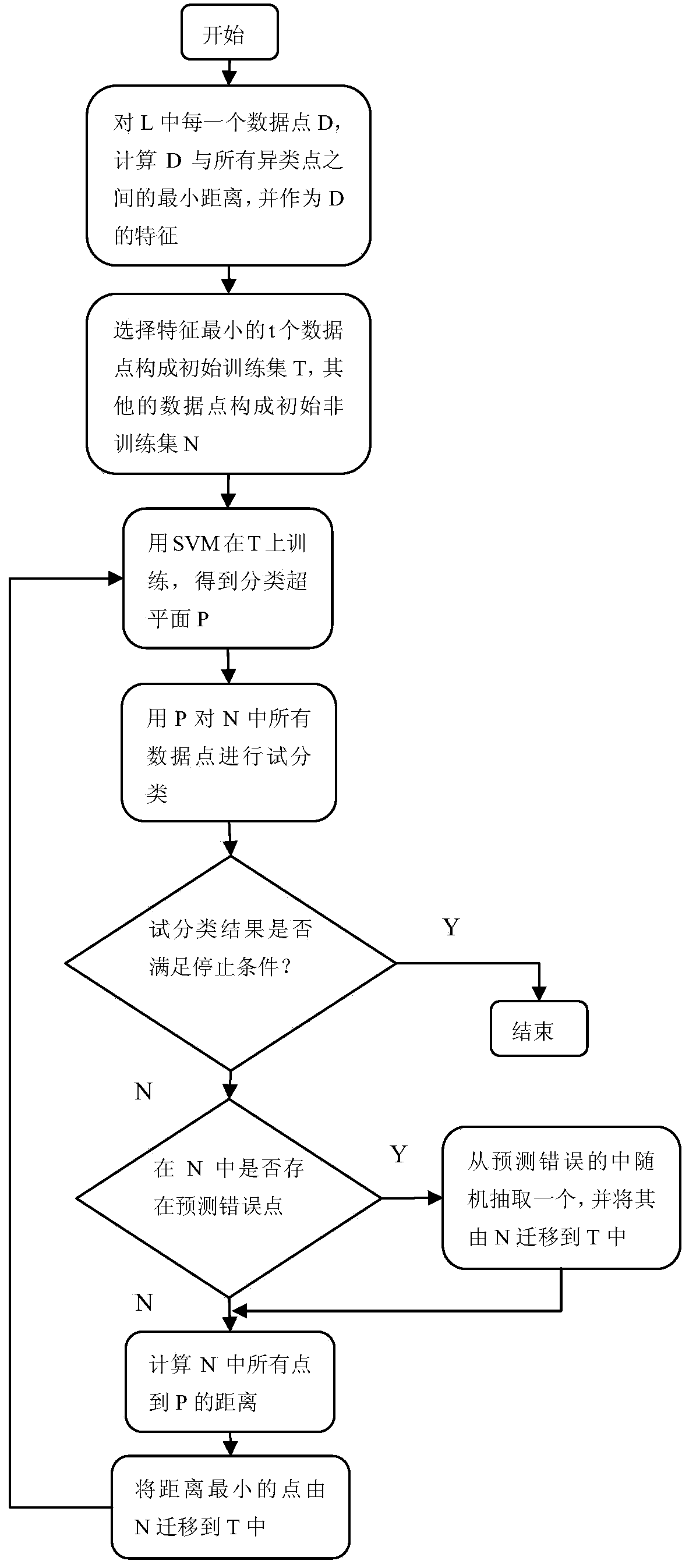

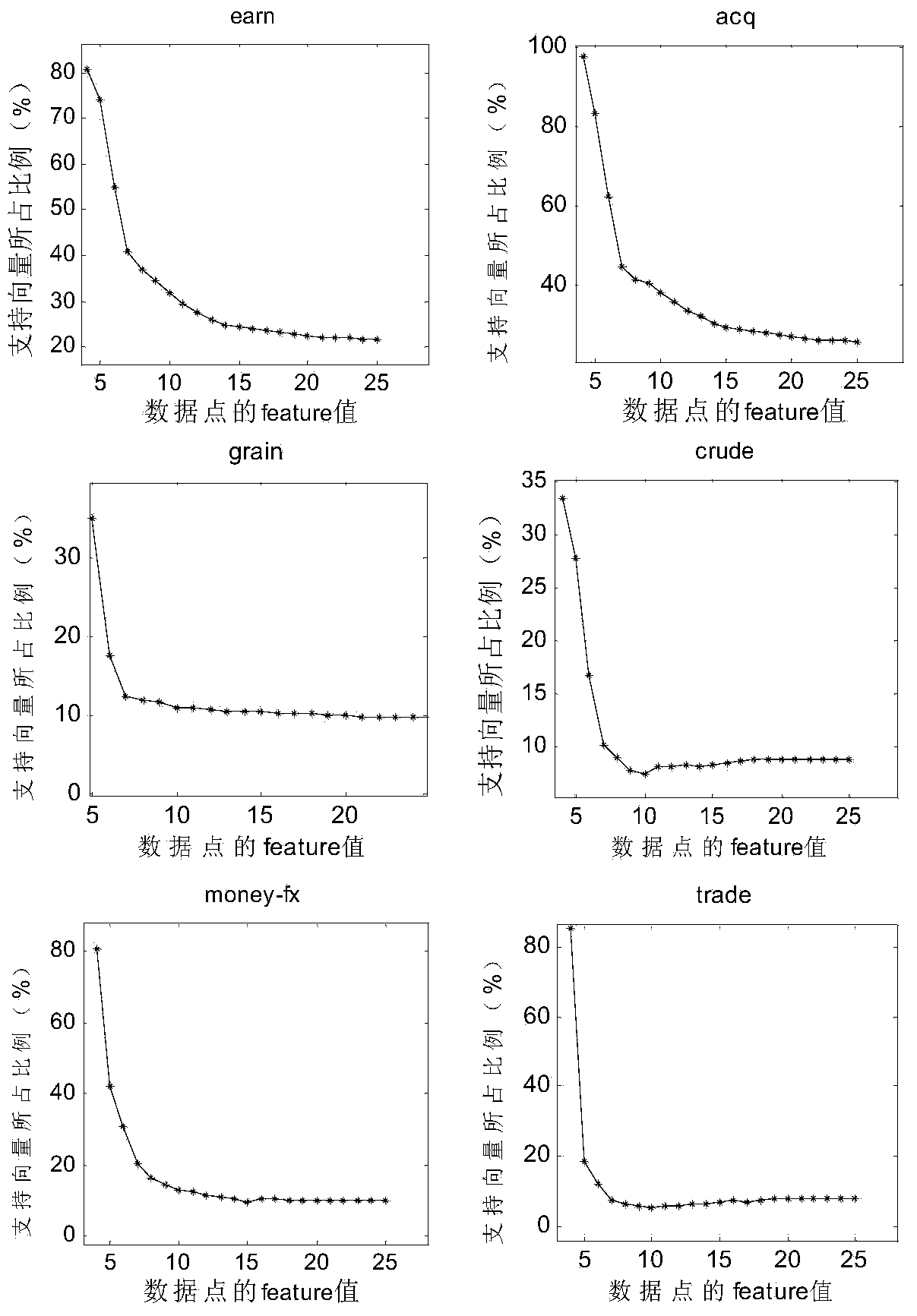

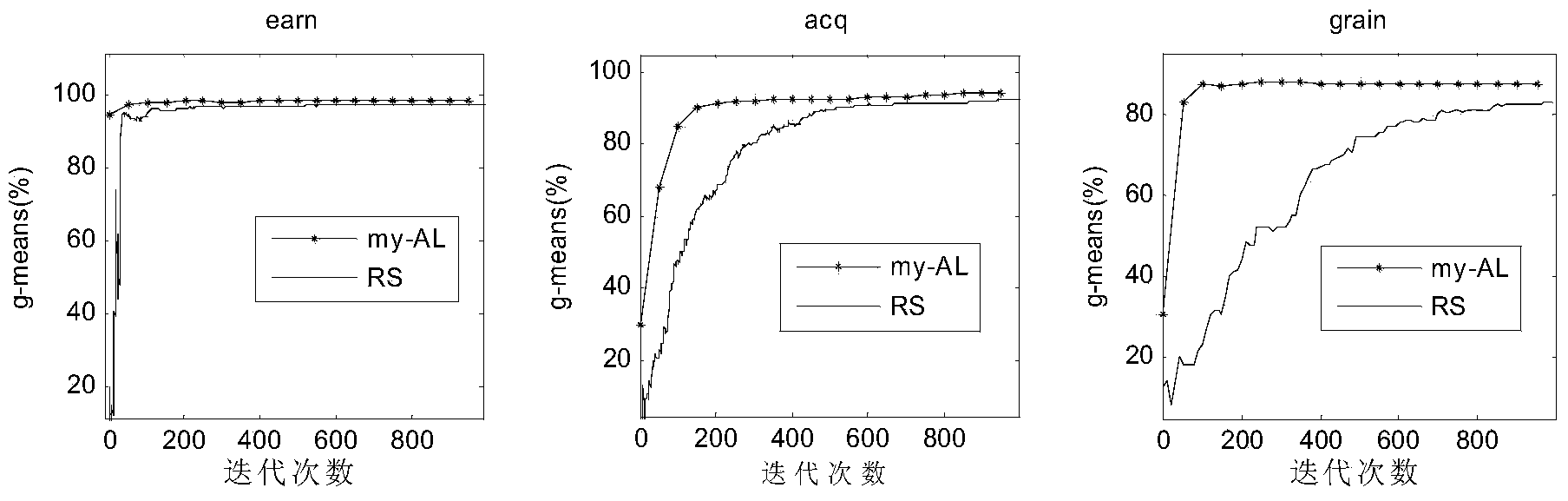

The invention relates to an unbalanced data classification method. The method comprises the following steps: in a labeled data set L, first processing each data point by calculating the distances between it and all data points of a different class, and keeping the shortest distance as the feature of that data point; sorting all the data points by this feature in ascending order, taking the first t data points with the smallest feature values to form an initial training set T, and letting the remaining data points form an initial non-training set N; and using a support vector machine with an active learning strategy to carry out iterative learning on the training set T. After training begins, a temporary classification hyperplane P is generated in each iteration, and P is used to trial-classify all the data points in N. If mispredicted data points exist, one of them is drawn at random and added to the training set T, and the data point in N closest to P is also added to T; if no mispredicted data points exist in N, the data point in N closest to P is selected and added to the training set T. Subsequent training is then carried out.

Owner:NANJING UNIV
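The initialization described in the abstract above (nearest different-class distance as a feature, then the t smallest values as the starting training set) can be sketched as follows. The SVM and the active learning loop are omitted, and the function names are illustrative.

```python
def nearest_enemy_distance(points, labels):
    """For each point, the distance to the closest point of a different class."""
    def dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5

    return [min(dist(p, q) for q, lq in zip(points, labels) if lq != lp)
            for p, lp in zip(points, labels)]

def initial_split(points, labels, t):
    """Indices of the initial training set T and the non-training set N."""
    feats = nearest_enemy_distance(points, labels)
    order = sorted(range(len(points)), key=lambda i: feats[i])
    return order[:t], order[t:]

points = [[0.0], [1.0], [2.0], [2.5], [6.0]]
labels = [0, 0, 0, 1, 1]
train_idx, rest_idx = initial_split(points, labels, t=2)
```

Points with a small feature value lie close to the class boundary, so the initial training set starts from the samples most likely to become support vectors, regardless of how many samples each class has.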

Data classification method based on intuitive fuzzy integration and system

Active · CN102402690A · Improve unbalanced conditions · Quantitative uncertainty · Character and pattern recognition · Original data · Classification methods

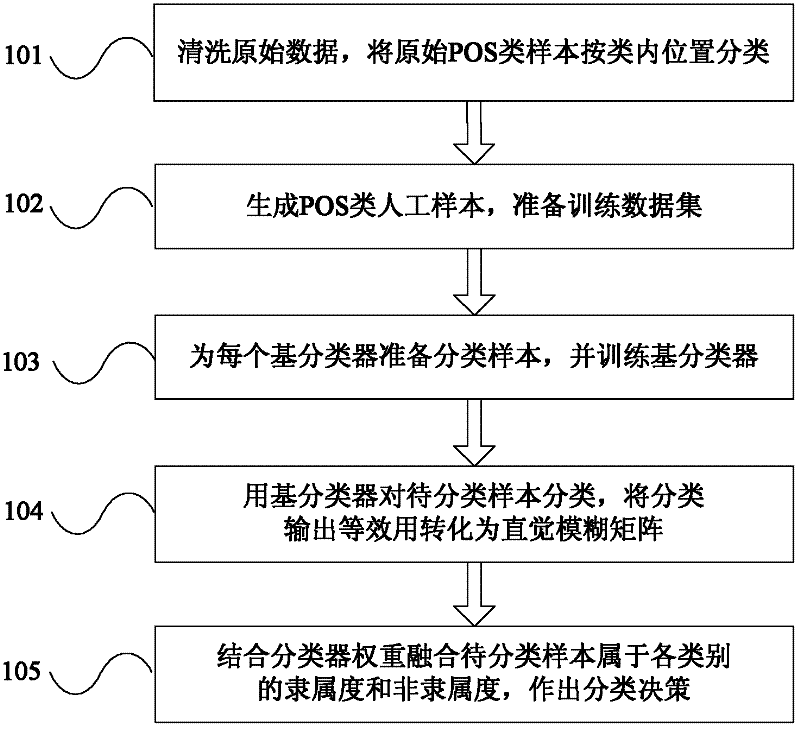

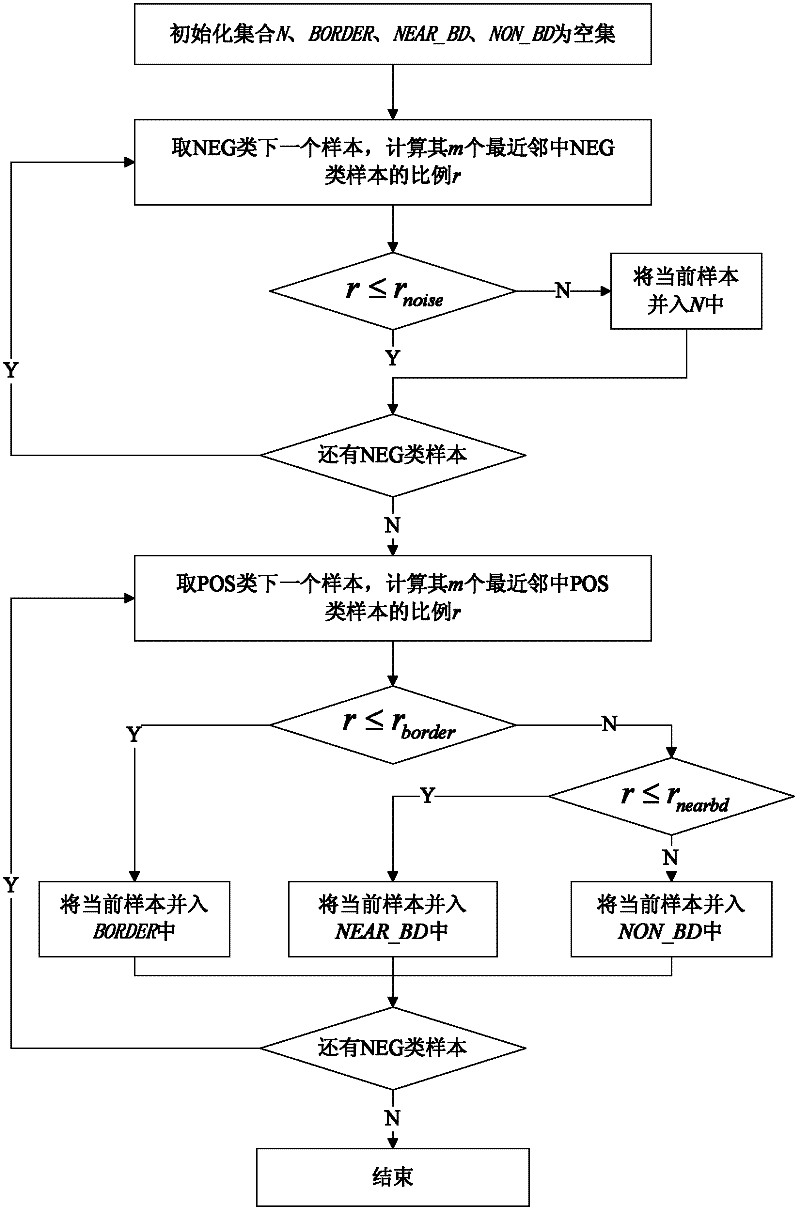

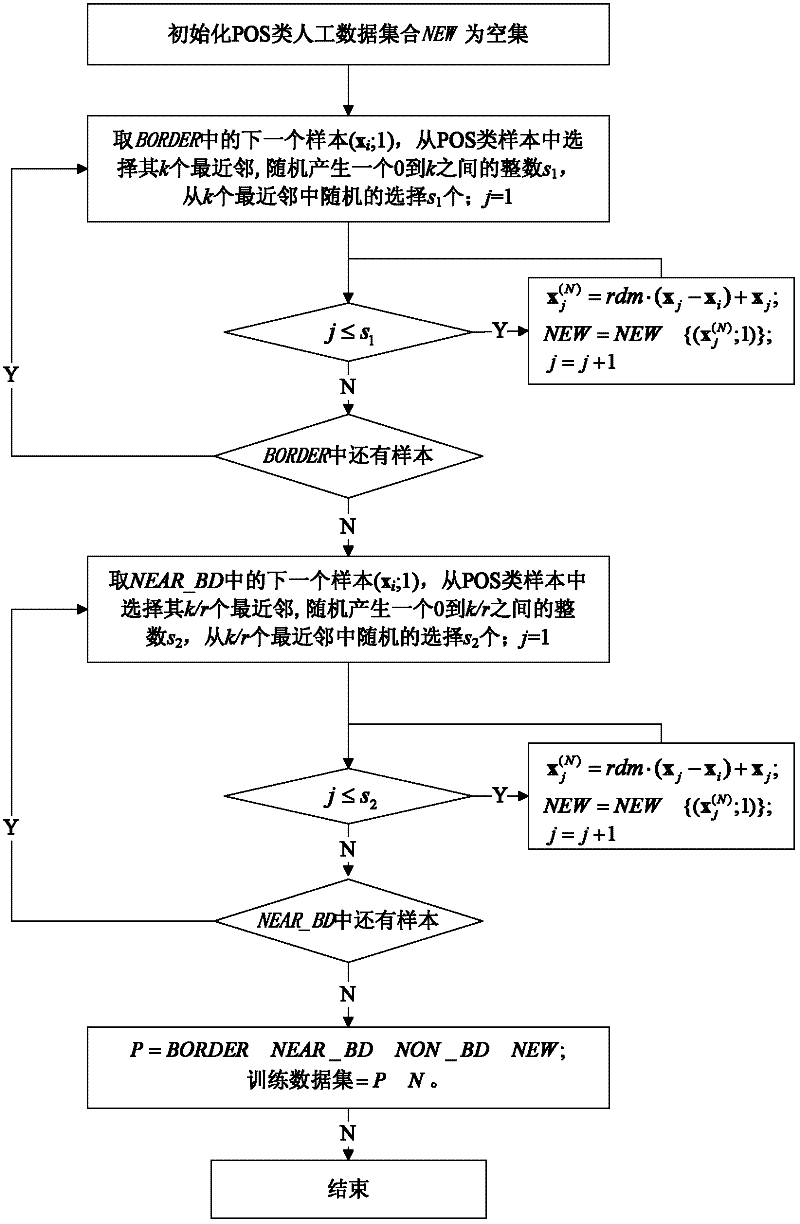

The invention relates to the field of pattern recognition, and discloses an unbalanced data classification method based on intuitive fuzzy integration and a system based on the method. The method comprises the following steps of: a) cleaning original data, and classifying original positive (POS) class samples according to intra-class positions to generate POS class artificial samples; b) training base classifiers by using different sample sets that are approximately balanced between classes; c) converting the classification output equal utility of the base classifier into an intuitive fuzzy matrix; and d) integrating the membership and non-membership of the samples to be classified in the POS class and the negative (NEG) class by combining the weights of the base classifiers, and making a classification decision. The invention has the following advantages: overfitting is avoided by combining over-sampling and under-sampling; the training samples of the base classifiers differ, which ensures diversity among the base classifiers; the base classifier is not specifically limited, so the method has good expandability; and the intuitive fuzzy reasoning method quantitatively describes the uncertainty in classification so as to improve the performance of integrated learning. The system based on the method can therefore better support medical diagnosis decisions and the like.

Owner:NANJING NORMAL UNIVERSITY

Image anomaly detection method in combination with CNN migration learning and SVDD

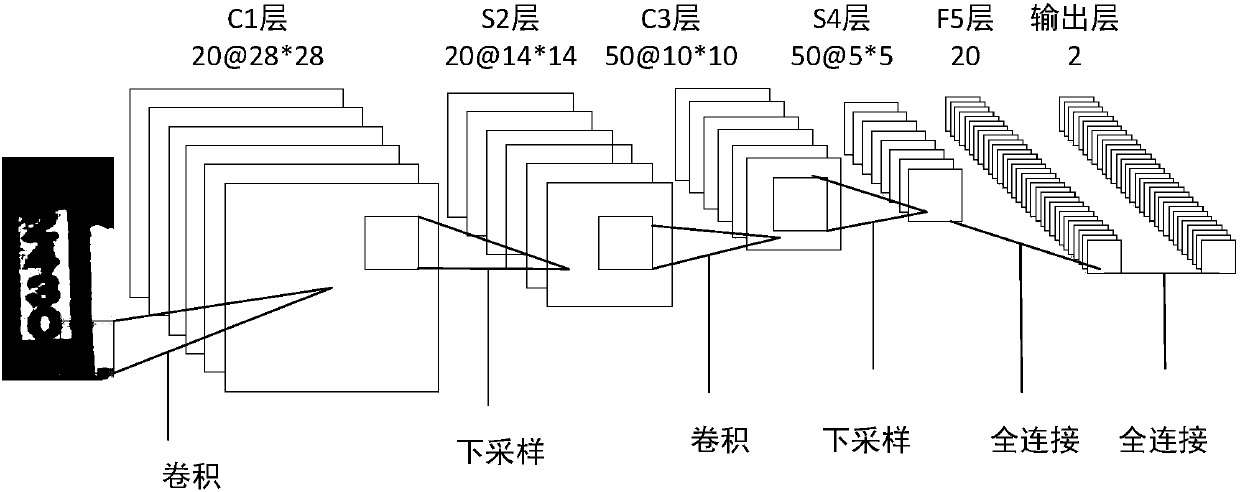

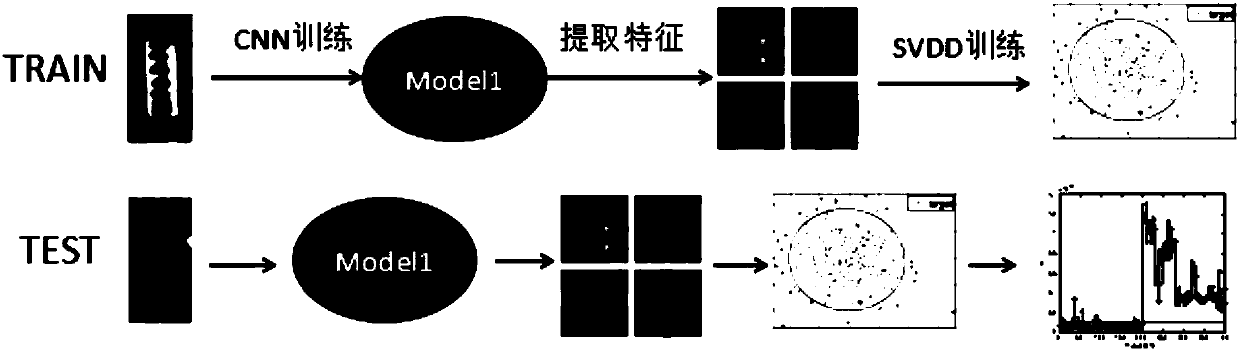

Inactive · CN107563431A · Efficient use of · Easy to handle · Image analysis · Character and pattern recognition · Positive sample · Data set

The invention discloses an image anomaly detection method in combination with CNN migration learning and SVDD. The method comprises the steps of manually capturing images around a to-be-detected image object according to video data, making a to-be-detected pillar number data set, expressing image data depth features by utilizing a CNN, fully extracting features of pillar number samples through pre-trained weight and parameter network models, and solving the problem of minority class data in unbalanced data; and constructing a positive sample feature set which needs to participate in training in a classifier, finally performing parameter optimization by utilizing an SVDD algorithm, grid search and the like, forming a normal domain of positive sample feature training, and realizing identification of a number state of a contact network through a boundary. The automated processing level is relatively high, so that the workload of operators can be greatly reduced; and the problem of pillar number anomaly of the contact network is discovered early, so that the inspection efficiency is improved.

Owner:SOUTHWEST JIAOTONG UNIV

Text classification by weighted proximal support vector machine based on positive and negative sample sizes and weights

Inactive · US7707129B2 · Quality improvement · Fast training · Digital data information retrieval · Digital computer details · Text categorization · High dimensional

Embodiments of the invention relate to improvements to the support vector machine (SVM) classification model. When text data is significantly unbalanced (i.e., positive and negative labeled data are in disproportion), the classification quality of standard SVM deteriorates. Embodiments of the invention are directed to a weighted proximal SVM (WPSVM) model that achieves substantially the same accuracy as the traditional SVM model while requiring significantly less computational time. A weighted proximal SVM (WPSVM) model in accordance with embodiments of the invention may include a weight for each training error and a method for estimating the weights, which automatically solves the unbalanced data problem. And, instead of solving the optimization problem via the KKT (Karush-Kuhn-Tucker) conditions and the Sherman-Morrison-Woodbury formula, embodiments of the invention use an iterative algorithm to solve an unconstrained optimization problem, which makes WPSVM suitable for classifying relatively high dimensional data.

Owner:MICROSOFT TECH LICENSING LLC

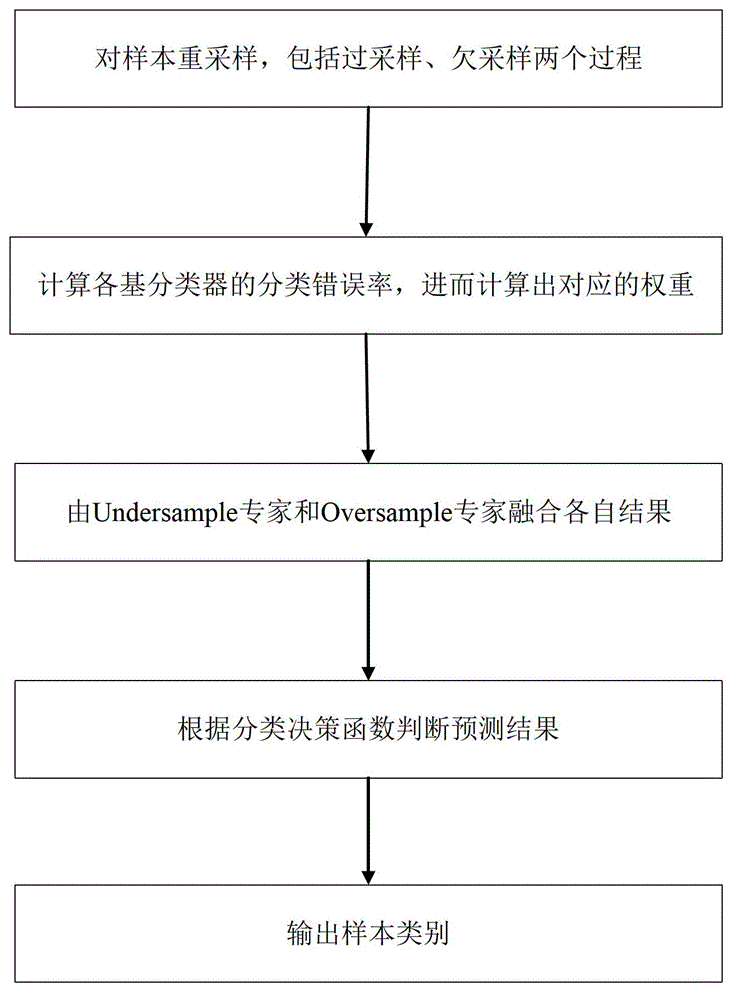

Unbalanced data distribution-based multi-heterogeneous base classifier fusion classification method

Inactive · CN102945280A · Diversity guaranteed · Performance pros and cons · Special data processing applications · Data set · Classification methods

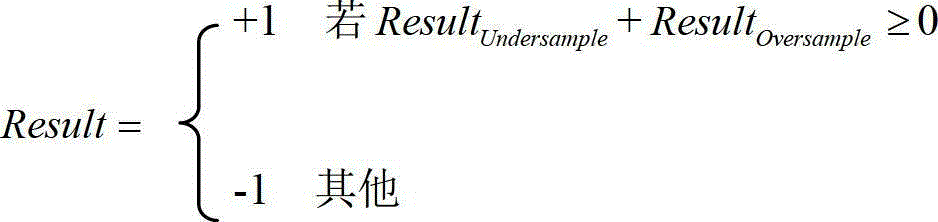

The invention discloses an unbalanced data distribution-based multi-heterogeneous base classifier fusion classification method, and relates to an unbalanced data classification technology in the field of data mining. The method comprises the following steps of: preprocessing a sample by using a difference sampling rate-based resampling algorithm, including an oversampling and an under-sampling process, thereby distributing different samples to be classified for different base classifiers; calculating a classification error rate of each base classifier and further calculating the corresponding weight; counting respective results by an oversampling expert and an under-sampling expert; and fusing the final prediction result according to a classification strategy function to obtain the category of the sample. By using the multi-heterogeneous base classifier fusion classification method, important characteristics of a few types of samples are found in mass data, and the accuracy of the few types of samples can be effectively improved, so that the aim of improving the integral classification accuracy of a data set is fulfilled.

Owner:翟云 +1

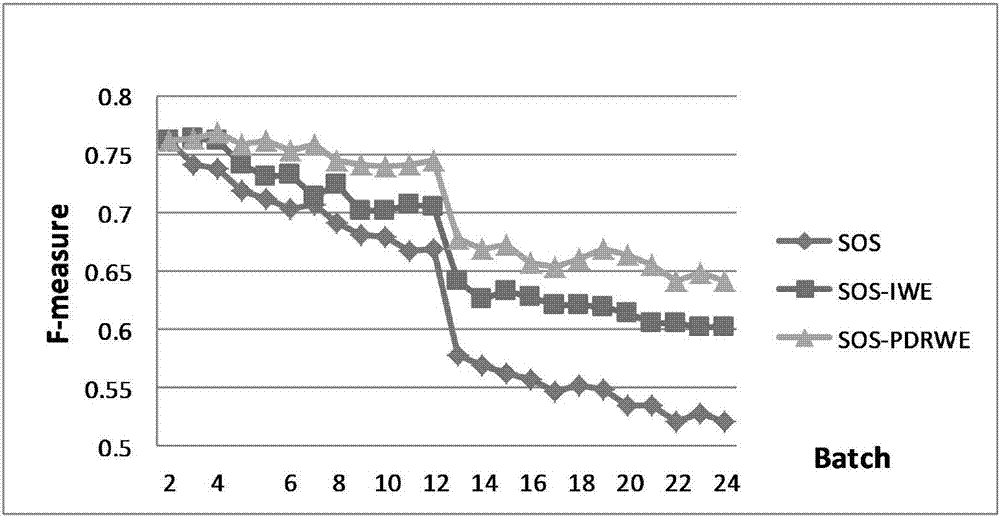

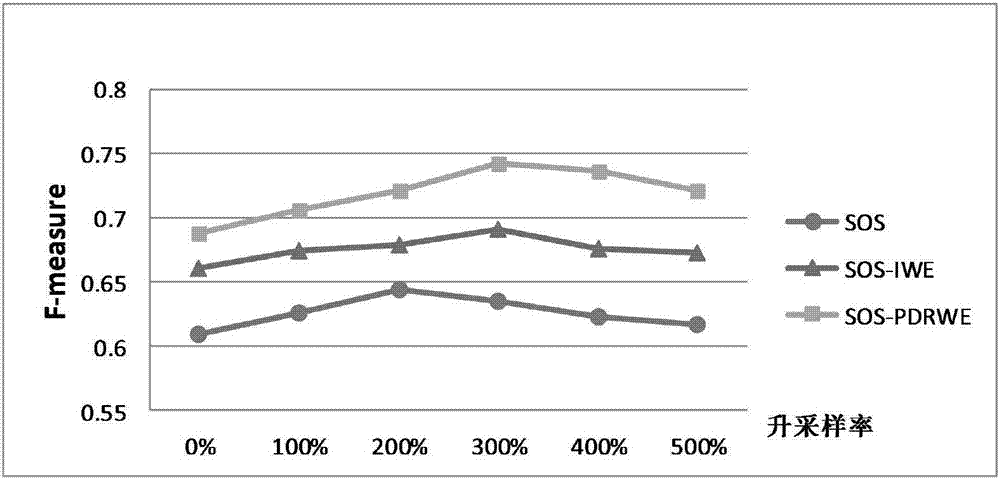

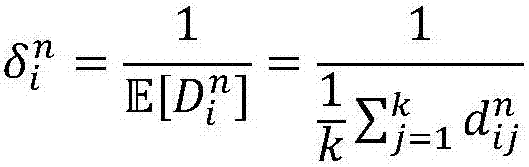

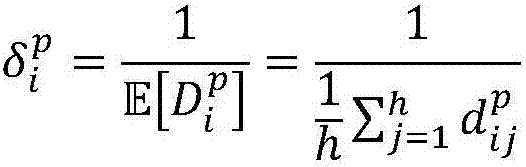

Selective up-sampling combined method for weighted ensemble classification prediction of unbalanced data flows

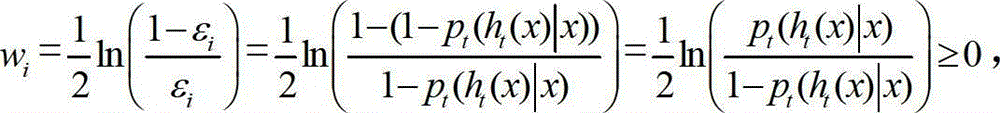

Inactive · CN107341497A · Improve recognition rate · Improve classification performance · Character and pattern recognition · Algorithm · Minority class

The invention relates to the technical field of data mining, and discloses a selective up-sampling combined method for weighted ensemble classification prediction of unbalanced data flows. The method comprises the following steps of: screening minority class samples of history data blocks according to a similarity, and selecting samples closest to the current training data block in the aspect of concept; synthesizing the selected samples into new samples in a decision boundary area so as to selectively implement up-sampling; and carrying out weighted ensemble classification on the new sample by adoption of a probability distribution relevancy-based weight distribution strategy. According to the method, the minority class sample information is effectively increased through selecting history data with high similarities and synthesizing new data at the boundary area, so that the decision domain of the minority class is enlarged; and meanwhile, in order to adapt the dynamic data with concept drift and use an ensemble classification thought, the probability distribution relevancy-based weight distribution strategy is designed, so that the overall classification precision is enhanced. Experiment results show that the method is capable of effectively improving the minority class identification rate and the overall classification performance, and has the advantage of better processing the unbalanced data flows.

Owner:NORTHEASTERN UNIV

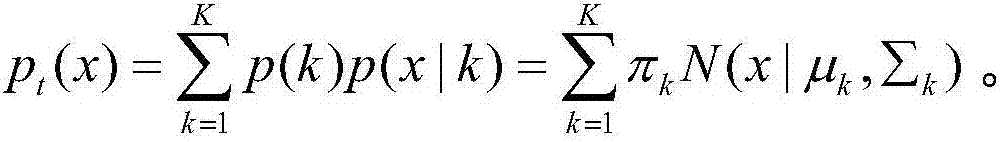

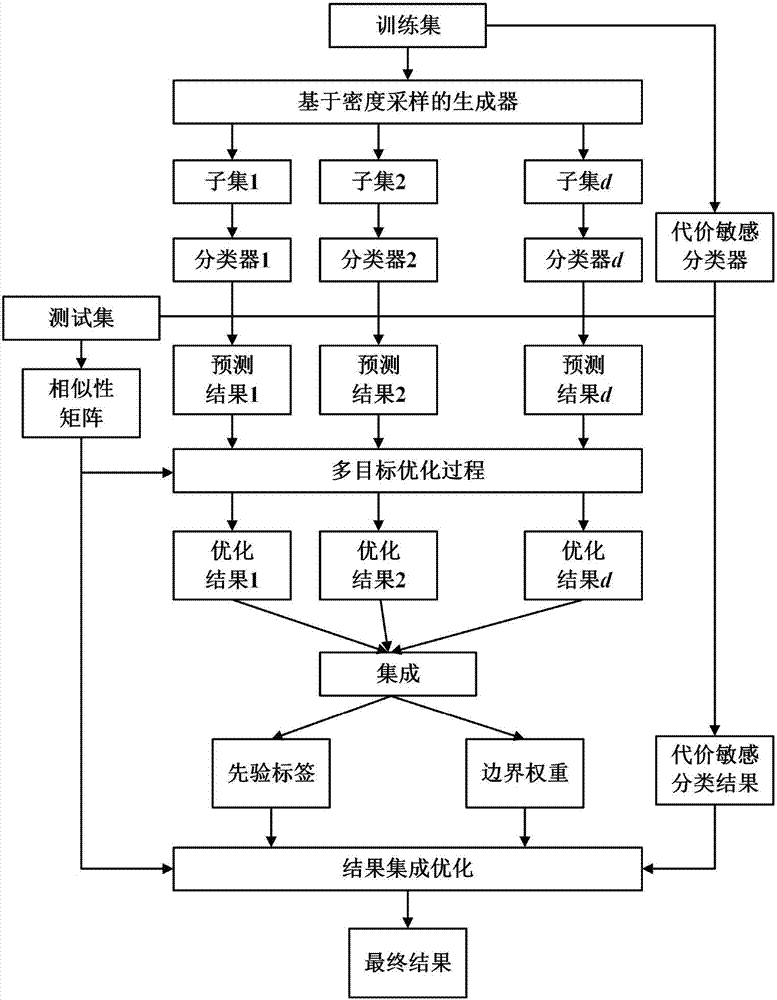

Re-sampling and cost-sensitive learning integrated unbalanced data integration and classification method

Inactive | CN107545275A | Improve accuracy | Improve stability | Character and pattern recognition | Data set | Algorithm

The invention discloses an unbalanced data ensemble classification method that combines resampling with cost-sensitive learning. It relates to the field of ensemble learning in artificial intelligence and mainly addresses the prior-art problem of classifying unbalanced data using complete data information. The steps of the method are: (1) input the training data set; (2) calculate the relative density of the sample space distribution; (3) resample to generate multiple subsets and train the base classifiers; (4) calculate the similarity matrix of the test samples; (5) use multi-objective optimization and ensembling to obtain prior results; (6) perform cost-sensitive learning prediction on the test set; (7) use KL divergence to optimize and fuse the results. The method designs a new sampling method to solve the problem of unbalanced data distribution, combines resampling with cost-sensitive learning to solve the problem of incomplete information, and makes full use of the data information of the test set itself to improve the ensemble performance of the classifier.

Owner:SOUTH CHINA UNIV OF TECH
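
Two ingredients named in the steps above, cost-sensitive prediction and KL divergence, can be sketched in a few lines. This is a generic illustration under stated assumptions, not the patent's procedure: the cost values, the binary setting and the function names `cost_sensitive_label` and `kl_divergence` are all hypothetical.

```python
import math


def cost_sensitive_label(p_minority, cost_fn=5.0, cost_fp=1.0):
    """Pick the label with the lower expected misclassification cost.

    p_minority: estimated probability that the sample is minority (1).
    cost_fn:    assumed cost of missing a minority sample.
    cost_fp:    assumed cost of a false alarm on a majority sample.
    """
    expected_cost_if_majority = p_minority * cost_fn
    expected_cost_if_minority = (1.0 - p_minority) * cost_fp
    return 1 if expected_cost_if_minority < expected_cost_if_majority else 0


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes.

    Assumes q is strictly positive wherever p is positive.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


label = cost_sensitive_label(0.3)                   # decision at the assumed 5:1 costs
gap = kl_divergence([0.9, 0.1], [0.5, 0.5])         # disagreement between two outputs
```

With these assumed costs the decision threshold on `p_minority` moves from 0.5 down to `cost_fp / (cost_fp + cost_fn)`, which is how a cost-sensitive rule favours the minority class.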

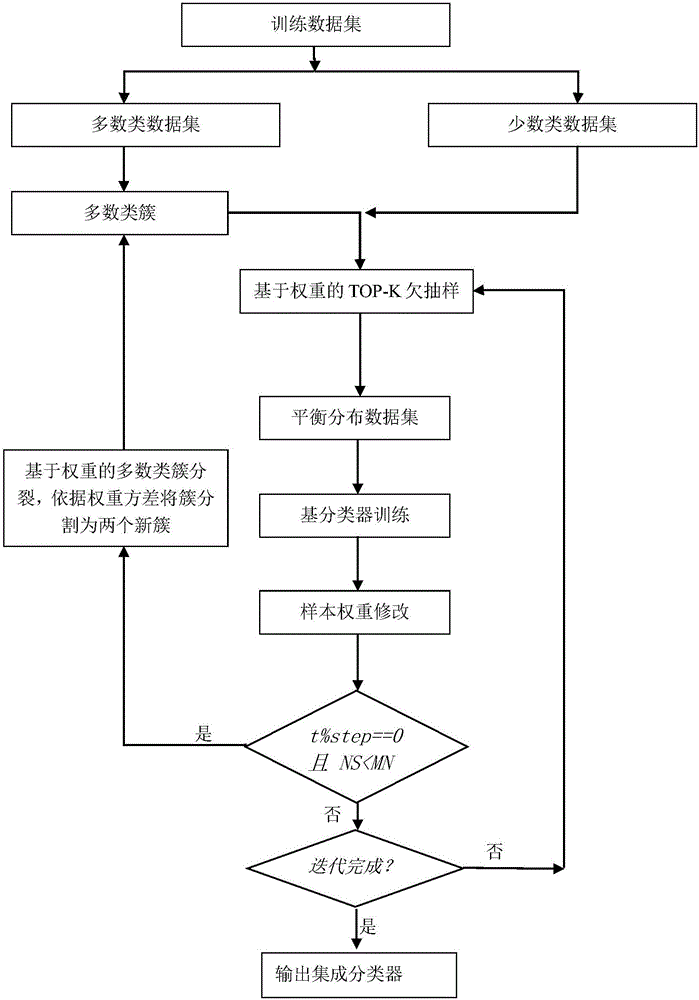

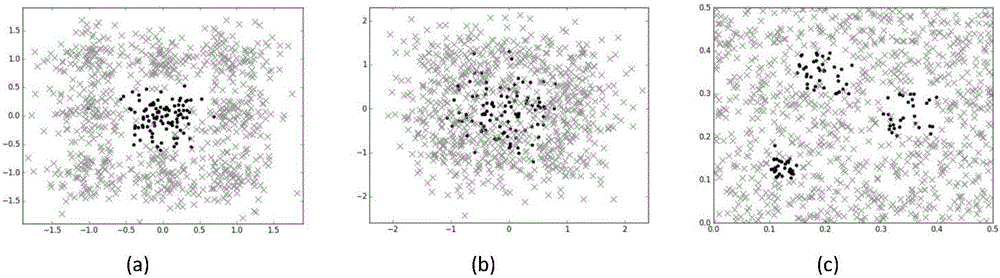

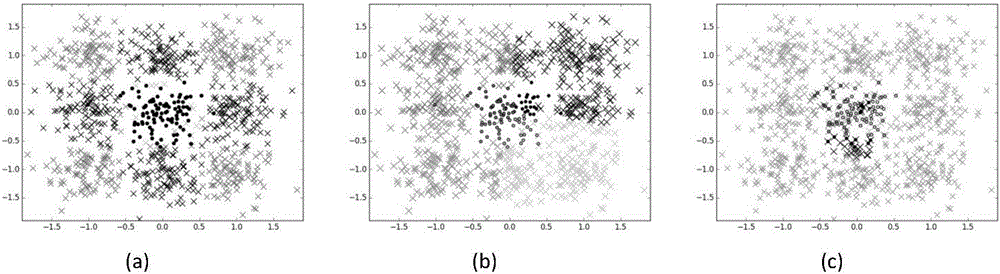

Weight clustering and under-sampling-based unbalanced data classification method

Inactive | CN106778853A | To achieve the effect of automatic clustering | Improve classification accuracy | Character and pattern recognition | Data dredging | Majority class

The classification of unbalanced data sets has become one of the most challenging problems in data mining. Because the number of minority class samples is far smaller than the number of majority class samples, conventional algorithms classify the minority class with low accuracy and poor generalization. Ensemble algorithms have become an important way of dealing with the problem; among them, ensemble algorithms based on random under-sampling and on clustering can effectively improve classification performance. However, the former easily loses information, and the latter is computationally complex and difficult to popularize. The invention provides an improved ensemble classification algorithm that fuses weight-based clustering with under-sampling, namely a weight clustering and under-sampling-based unbalanced data classification method. The algorithm divides the samples into clusters according to their weights, extracts a certain proportion of the majority class samples and all of the minority class samples from each cluster according to the sample weights to form a balanced data set, and integrates the classifiers within the AdaBoost framework to improve the classification result. Experimental results show that the algorithm is accurate, simple and highly stable.

Owner:CENT SOUTH UNIV
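
The per-cluster extraction described above can be sketched as follows. The sketch assumes the cluster assignment and the sample weights are already available, and it assumes that the majority samples with the highest weights are the ones kept; the abstract does not state which end of the weight ordering is preferred. The record layout and the name `undersample_by_cluster` are hypothetical.

```python
import math
from collections import defaultdict


def undersample_by_cluster(samples, ratio=0.5):
    """Keep all minority samples and a share of majority samples per cluster.

    samples: list of dicts with keys 'cluster', 'label' (1 = minority,
             0 = majority) and 'weight'.
    ratio:   assumed proportion of majority samples kept in each cluster.
    """
    by_cluster = defaultdict(list)
    for s in samples:
        by_cluster[s["cluster"]].append(s)

    selected = []
    for members in by_cluster.values():
        minority = [s for s in members if s["label"] == 1]
        # Assumption: prefer the majority samples with the largest weights.
        majority = sorted(
            (s for s in members if s["label"] == 0),
            key=lambda s: s["weight"],
            reverse=True,
        )
        keep = math.ceil(ratio * len(majority))
        selected.extend(minority)
        selected.extend(majority[:keep])
    return selected
```

Because every cluster contributes some majority samples, the reduced set still covers the whole majority distribution, which is the stated advantage over purely random under-sampling.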

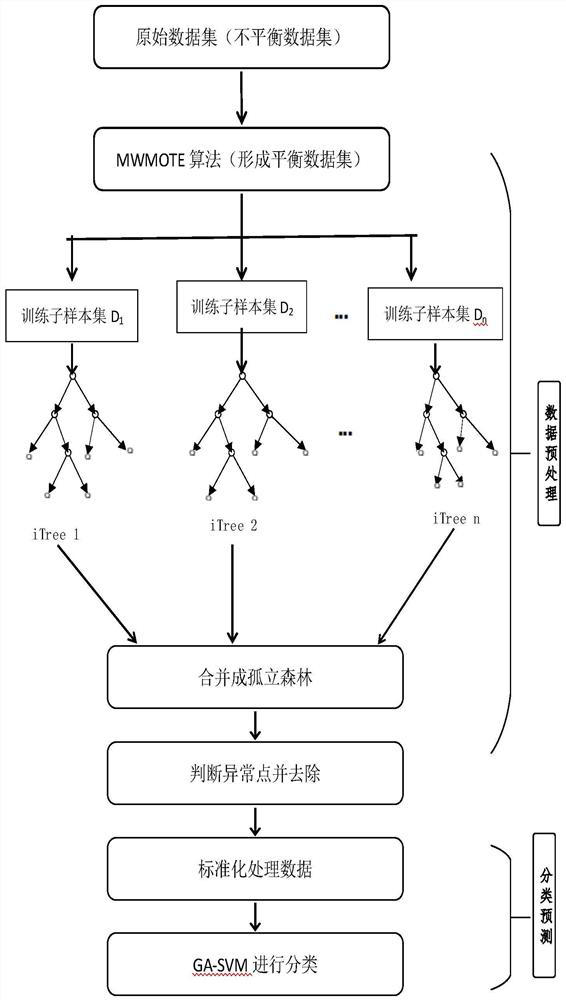

Prediction method for unbalanced data set based on isolated forest learning

Pending | CN112070125A | Improve forecast accuracy | Prediction results are stable | Finance | Character and pattern recognition | Data set | Support vector machine classifier

The invention discloses a prediction method for an unbalanced data set based on isolated forest learning. The prediction method comprises the following steps: receiving a prediction request; collecting data, and defining the features and labels in the data set and the numbers of minority class and majority class samples; converting the non-numerical feature columns and the label column in the data set into categorical numerical values; synthesizing minority class samples with a majority-weighted minority oversampling technique to form a balanced data set; identifying and removing abnormal points from the balanced data set using the isolation forest algorithm; standardizing the data and dividing it into a training set and a test set; constructing and training a support vector machine classifier model on the training set; adjusting the hyper-parameters of the support vector machine classifier model through a genetic algorithm, and obtaining a prediction model after training is completed; and inputting the test set into the prediction model to obtain a prediction result. The method is characterized by stable prediction results and high prediction precision.

Owner:XIAN UNIV OF TECH
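
Two of the preprocessing steps listed above, converting a non-numerical column into numerical codes and standardizing a numeric column, can be sketched with the standard library alone. This is an illustration of those two steps only; oversampling, outlier removal, the SVM and the genetic search are not shown. The names `encode_column` and `standardize` are hypothetical.

```python
from statistics import mean, pstdev


def encode_column(values):
    """Map each distinct value to an integer code (sorted for determinism)."""
    codes = {v: i for i, v in enumerate(sorted(set(values)))}
    return [codes[v] for v in values], codes


def standardize(column):
    """Z-score standardization: subtract the mean, divide by the std. dev."""
    mu, sigma = mean(column), pstdev(column)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(x - mu) / sigma for x in column]


encoded, mapping = encode_column(["no", "yes", "no"])
scaled = standardize([1.0, 2.0, 3.0])
```

After standardization every feature has zero mean and unit variance, which matters for a support vector machine because its kernel distances are sensitive to feature scale.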

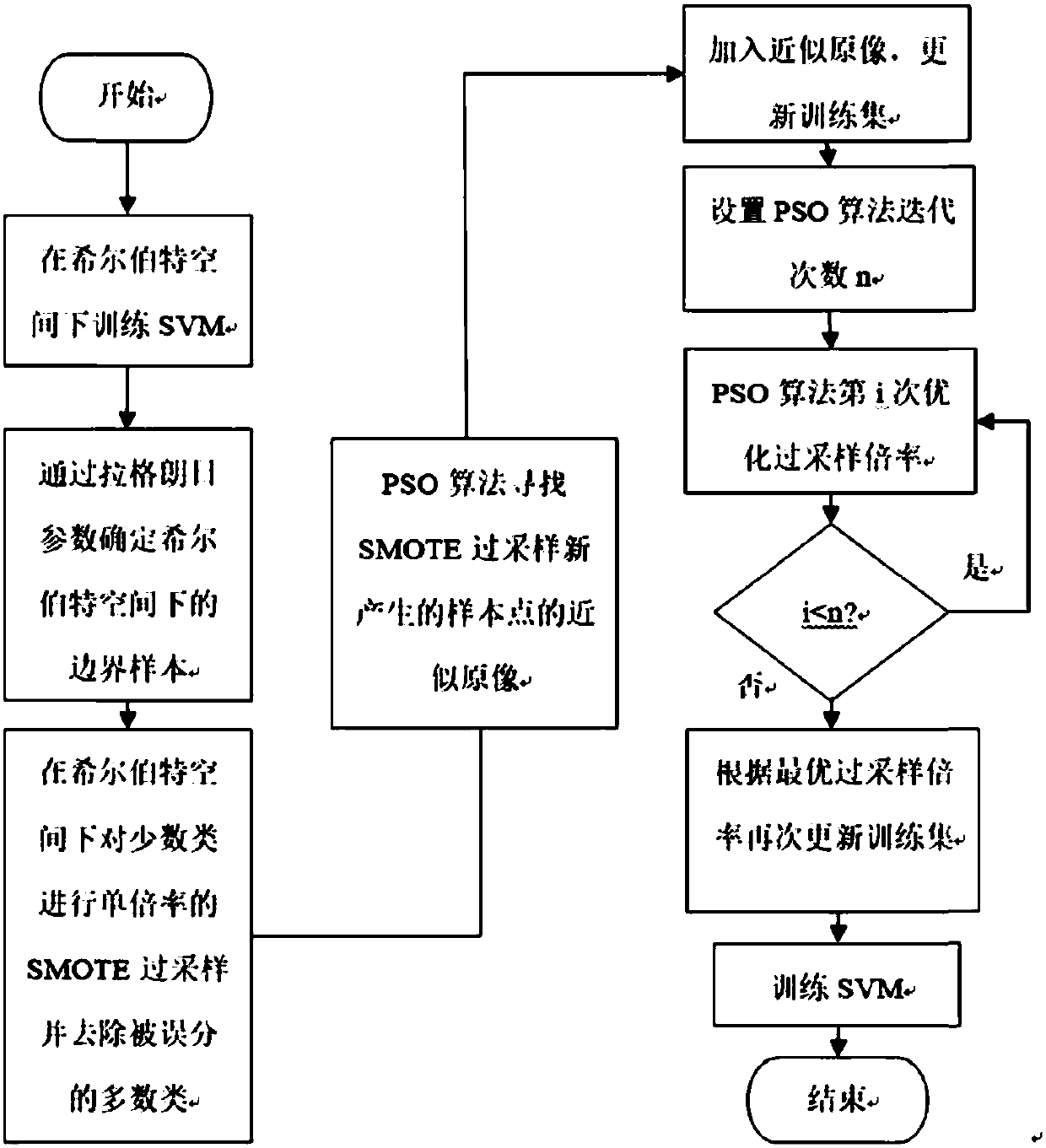

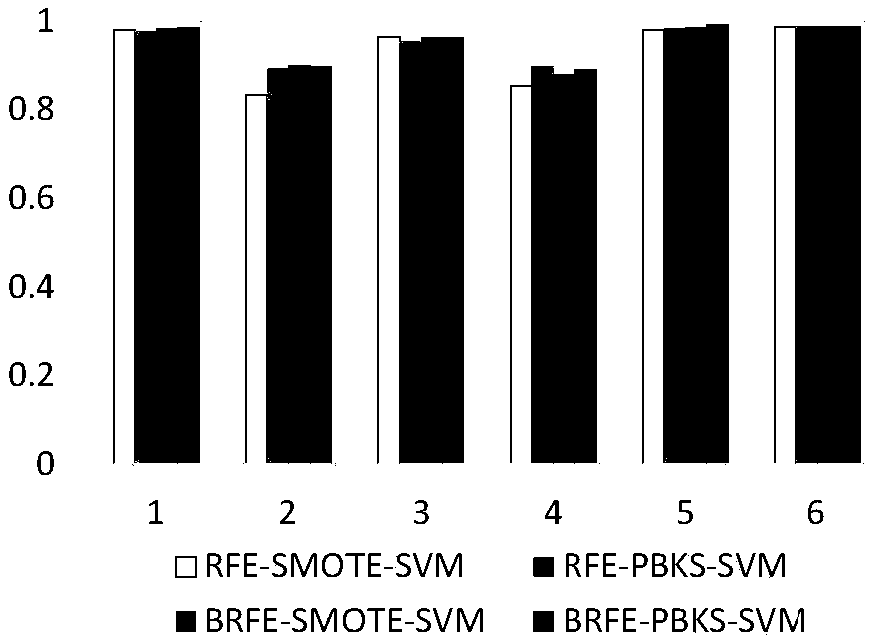

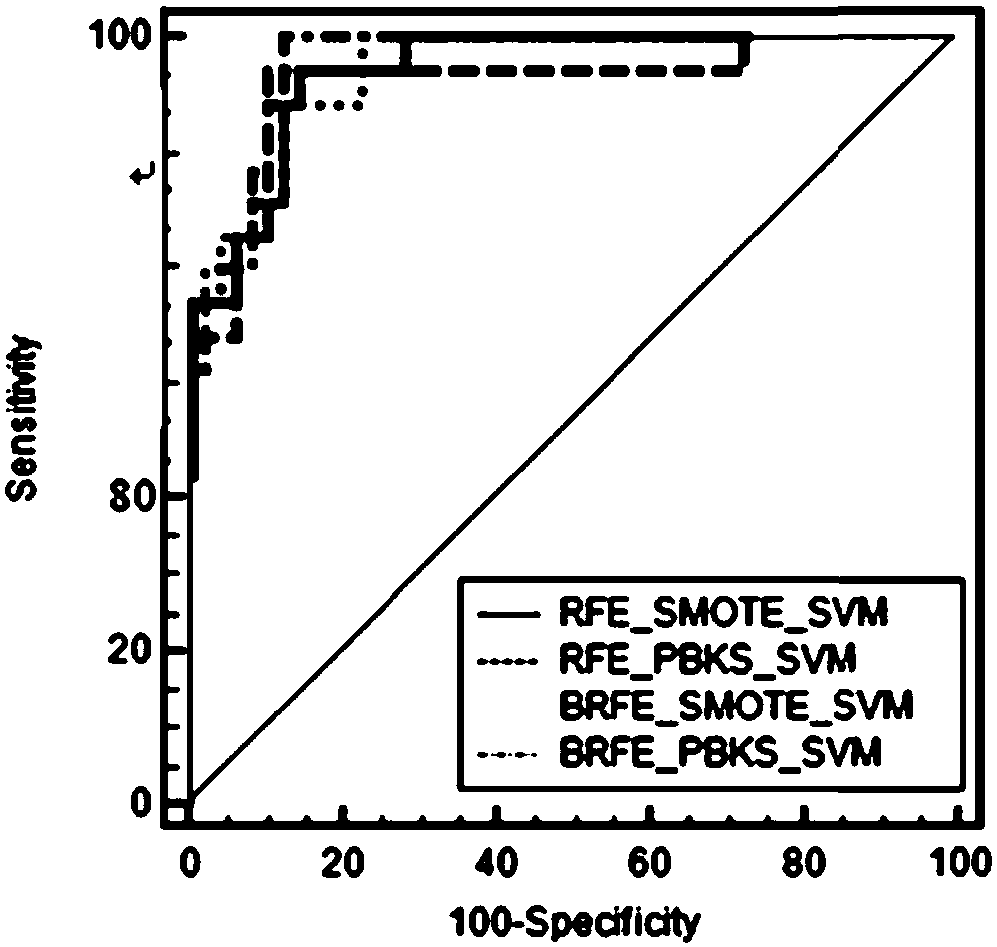

High-dimensional imbalanced data classification method based on SVM

Inactive | CN107563435A | Character and pattern recognition | Special data processing applications | Algorithm | Classification methods

The invention proposes a high-dimensional imbalanced data classification method based on SVM. The method has two parts. The first part is feature selection. An SVM-BRFE algorithm carries out boundary resampling to find the optimal feature weights, which are used to measure feature importance, select features and update the training set; the process is repeated. Finally, the features most conducive to raising the F1 value are retained and the other features are removed. Subsequent training is carried out with as little feature redundancy and as few irrelevant feature combinations as possible, and with as low a dimension as possible. This reduces the influence of the high-dimensional problem on the imbalance problem and the constraint of the SMOTE oversampling algorithm. The second part is data sampling. An improved SMOTE algorithm, the PBKS algorithm, is used. Minority class samples within the boundaries automatically partitioned by the SVM are used as distance constraints in the Hilbert space Dxij<H>, replacing the original constraints. A grid method is used to find the approximate preimage. The method can complete the classification of high-dimensional unbalanced data stably and effectively and obtains a considerable effect.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
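
The feature selection above is driven by the F1 value, which can be computed from predictions as in the sketch below. It assumes a binary problem with the minority class labelled 1; the function name `f1_score` is chosen for illustration and the code is independent of any library of the same name.

```python
def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        # No true positives: precision or recall is zero (or undefined).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


y_true = [1, 1, 0, 0, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0]
score = f1_score(y_true, y_pred)
```

F1 ignores true negatives, so a classifier cannot score well simply by predicting the majority class, which is why it is a common selection criterion on imbalanced data.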

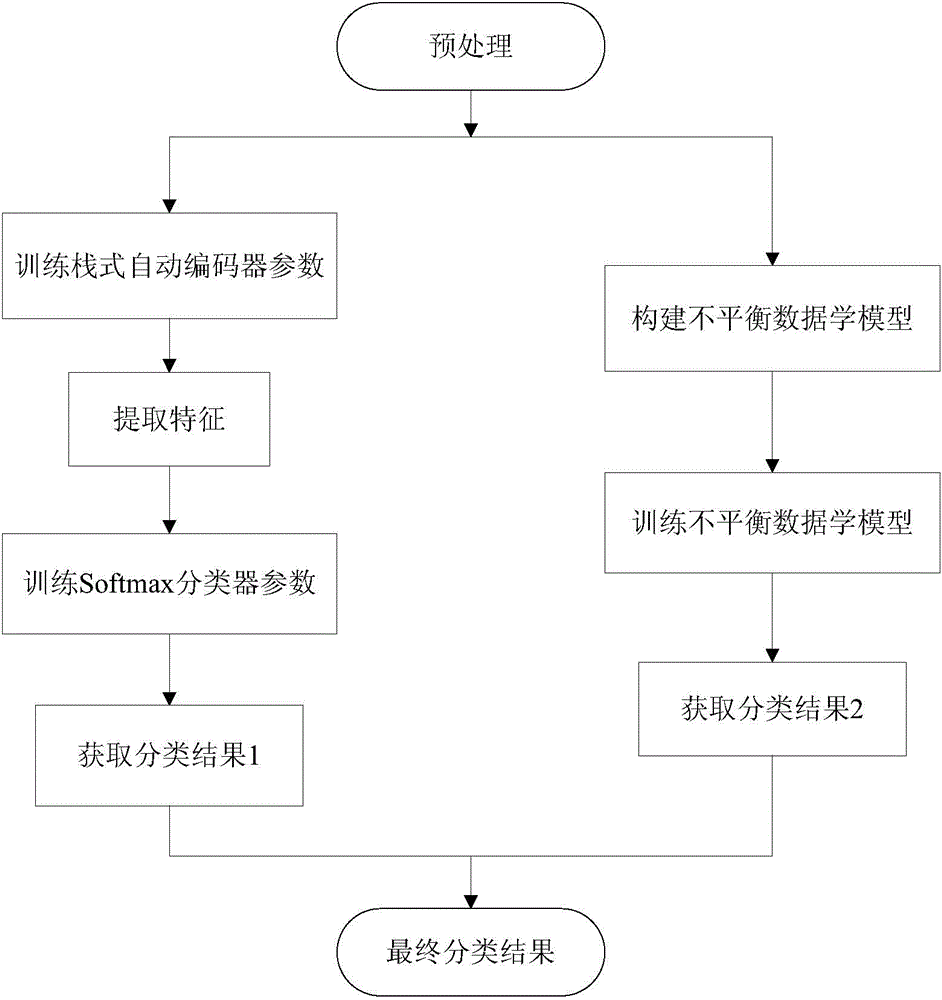

Polarized SAR image classification method on basis of SAE and IDL

Active | CN104156736A | Describe the essence | Overcome the shortcomings of large gaps in classification accuracy | Character and pattern recognition | Synthetic aperture radar | Classification methods

The invention discloses a polarized SAR (Synthetic Aperture Radar) image classification method based on an SAE (Stacked Auto-Encoder) and IDL (Imbalanced Data Learning). The method comprises the following steps: (1) preprocessing; (2) training the parameters of the SAE; (3) extracting features; (4) training the parameters of a Softmax classifier; (5) acquiring classification result I; (6) establishing an IDL model; (7) training the IDL model; (8) acquiring classification result II; (9) outputting the final classification result. The method uses the SAE to extract features that describe the original input more essentially. It also solves the problem of large differences in classification accuracy between classes caused by class imbalance in the training sample set, and improves classification accuracy and region consistency. The method can be applied to terrain classification, target detection and identification in remote sensing images.

Owner:XIDIAN UNIV
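
The Softmax classifier in step (4) turns a vector of class scores into class probabilities. A minimal, numerically stable sketch of that function follows; it illustrates the standard softmax formula only and says nothing about how the patent trains the classifier or builds the IDL model.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    # Subtracting the maximum avoids overflow in exp() for large scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([1.0, 2.0, 3.0])
predicted_class = probs.index(max(probs))
```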

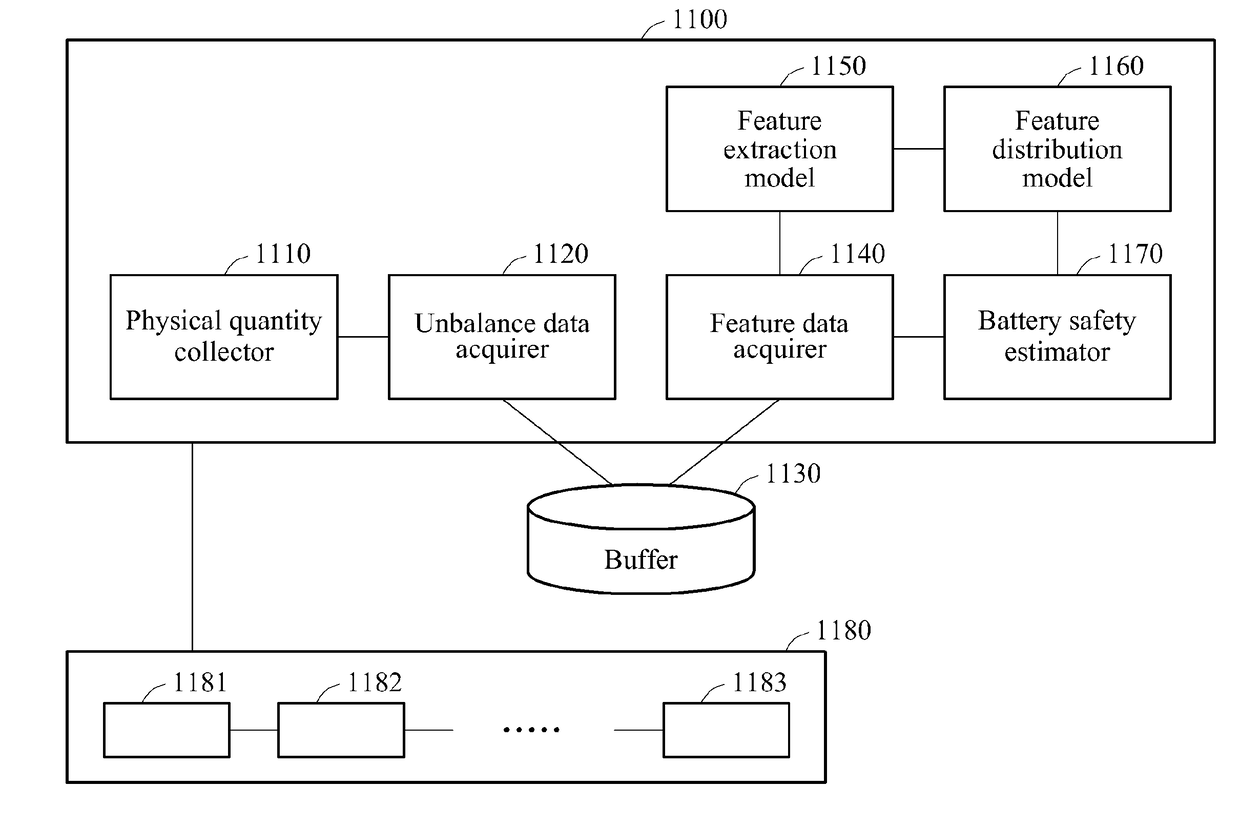

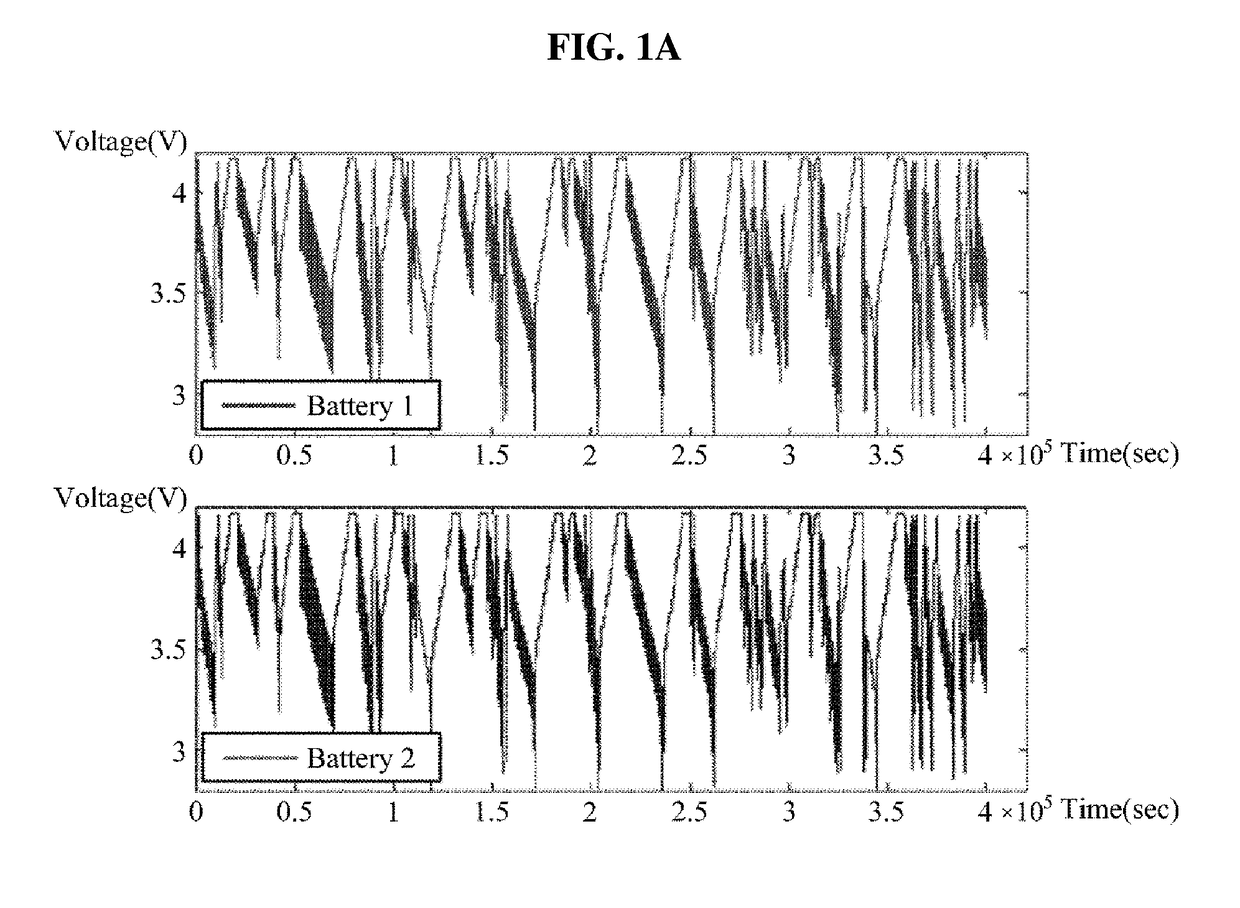

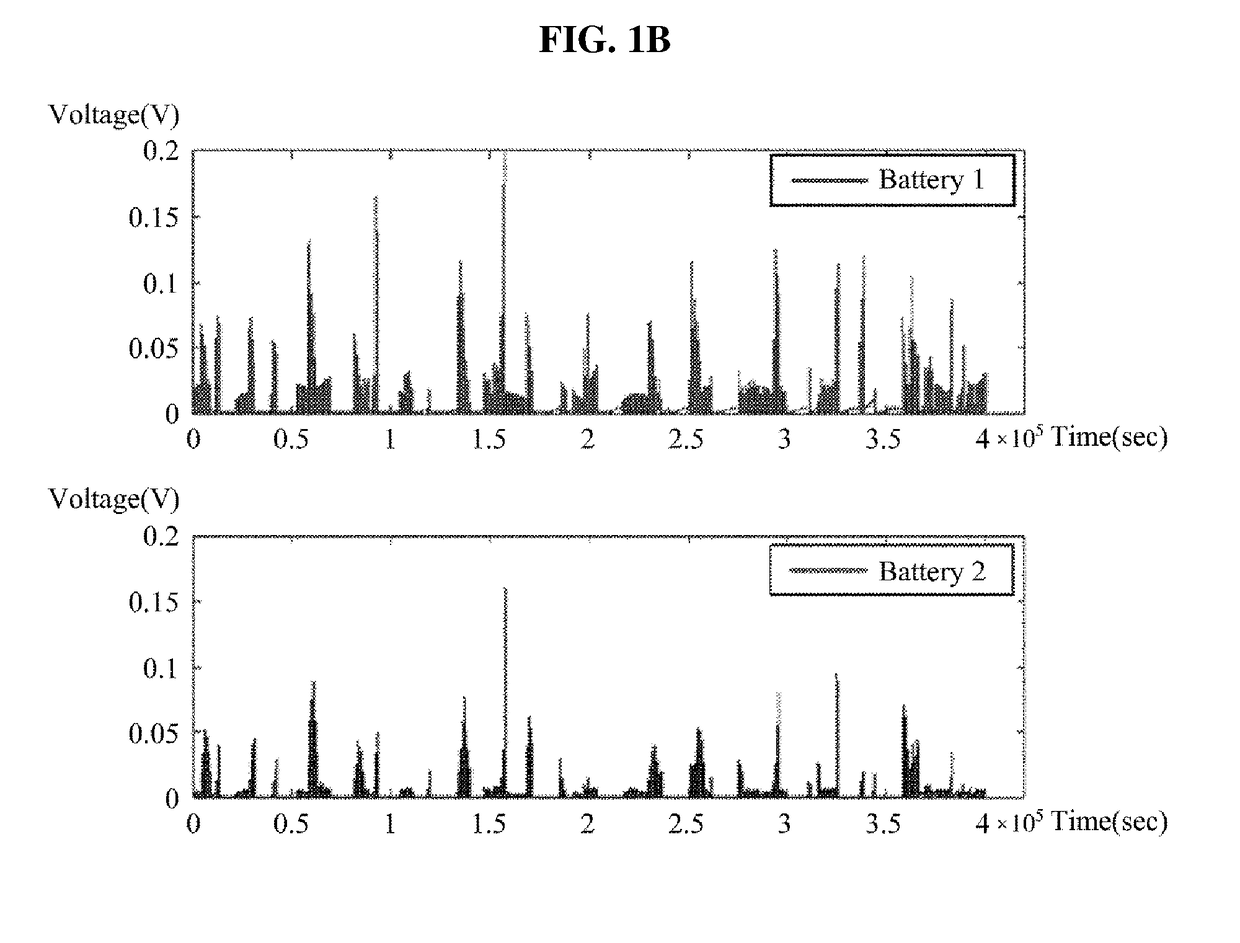

Battery management method and apparatus

Active | US20170126027A1 | Charge equalisation circuit | Electric devices | Approaches of management | Feature data

Provided are a battery management method and apparatus. The battery management method includes acquiring physical quantity data for each of a plurality of batteries while the corresponding physical quantities of the batteries vary dynamically, calculating unbalance data based on physical quantity difference information derived from the physical quantity data, calculating feature data for the physical quantity data by projecting the unbalance data to a feature space, and determining battery safety for one or more of the plurality of batteries based on determined distribution information of the feature data.

Owner:SAMSUNG ELECTRONICS CO LTD
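
One simple way to picture "unbalance data" derived from physical quantity differences is the deviation of each battery from the pack average at every time step. The sketch below assumes exactly that definition, which is an illustrative choice and not confirmed by the abstract; the projection to a feature space and the safety decision are not shown, and the name `unbalance_data` is hypothetical.

```python
from statistics import mean


def unbalance_data(readings):
    """Deviation of each battery from the per-time-step average.

    readings[t][i] is the measured quantity (for example a voltage)
    of battery i at time step t.
    """
    result = []
    for row in readings:
        avg = mean(row)
        result.append([value - avg for value in row])
    return result


voltages = [
    [3.7, 3.7, 3.4],  # third cell sags under load
    [4.0, 4.0, 4.0],  # balanced pack
]
deviations = unbalance_data(voltages)
```

A cell whose deviation grows over time while the others stay near zero is the kind of pattern a later distribution-based check could flag.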

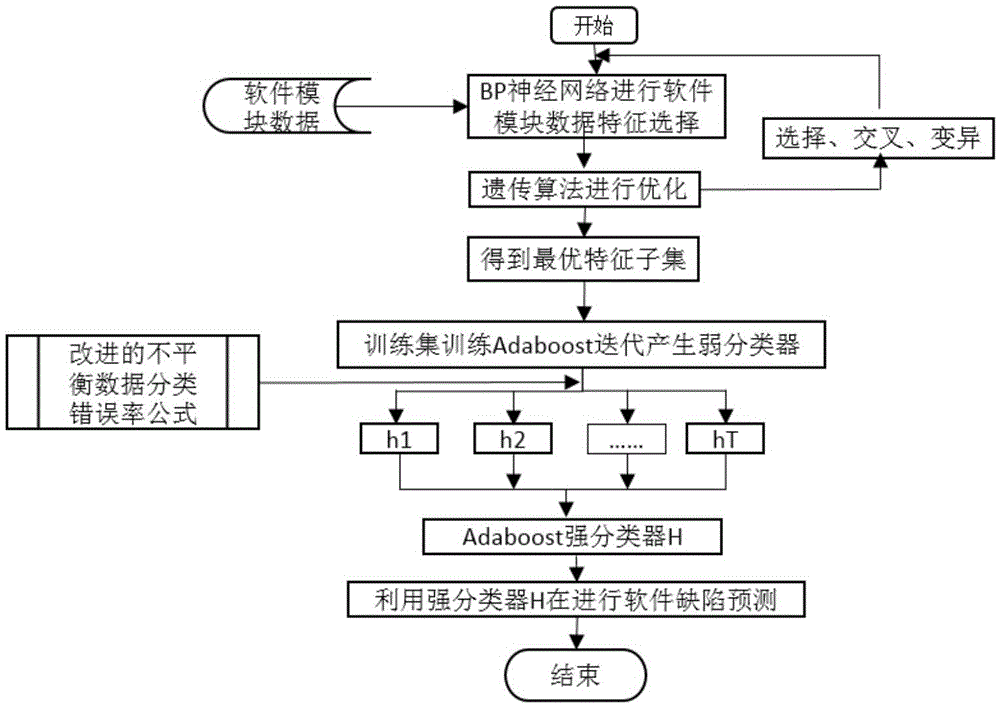

Adaboost software defect unbalanced data classification method based on improvement

Inactive | CN105677564A | Character and pattern recognition | Software testing/debugging | Data set | Classification methods

The invention discloses a method for classifying unbalanced software defect data based on an improved AdaBoost, which mainly solves the problem that existing software defect data classification methods classify minority classes poorly. The method comprises the following steps: 1, software data is acquired from a software data set and preprocessed, the software module data is divided into a training set and a testing set for training and testing, and cross validation is performed ten times; 2, features of the software data are selected by combining an improved genetic algorithm with a BP neural network to obtain an optimal feature subset, and dimension reduction is then performed on the software features; 3, taking full account of the imbalance of the software defect data, an improved AdaBoost classifier is trained to classify the software modules. The method improves the classification precision of the minority classes and detects defective software modules better.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
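
For orientation, one boosting round of standard discrete AdaBoost is sketched below. It is the textbook weight update, not the improved variant the patent claims, whose details the abstract does not give. Labels are assumed to be in {-1, +1} and the weights are assumed to sum to 1; the name `adaboost_round` is hypothetical.

```python
import math


def adaboost_round(weights, y_true, y_pred):
    """Return the learner weight alpha and the re-normalized sample weights."""
    # Weighted error of the current weak learner.
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    # Clamp to avoid division by zero or log of zero.
    err = min(max(err, 1e-10), 1 - 1e-10)
    alpha = 0.5 * math.log((1 - err) / err)
    # Misclassified samples (t * p == -1) are scaled up, correct ones down.
    updated = [
        w * math.exp(-alpha * t * p)
        for w, t, p in zip(weights, y_true, y_pred)
    ]
    total = sum(updated)
    return alpha, [w / total for w in updated]


weights = [0.25, 0.25, 0.25, 0.25]
y_true = [1, 1, -1, -1]
y_pred = [1, 1, -1, 1]  # the last sample is misclassified
alpha, weights = adaboost_round(weights, y_true, y_pred)
```

After the update the misclassified samples carry half of the total weight, so the next weak learner is pushed toward the cases the current one gets wrong, which on defect data are often the minority class.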

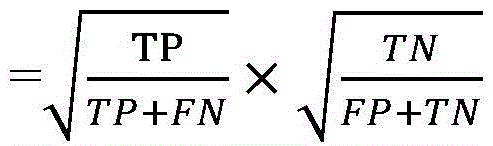

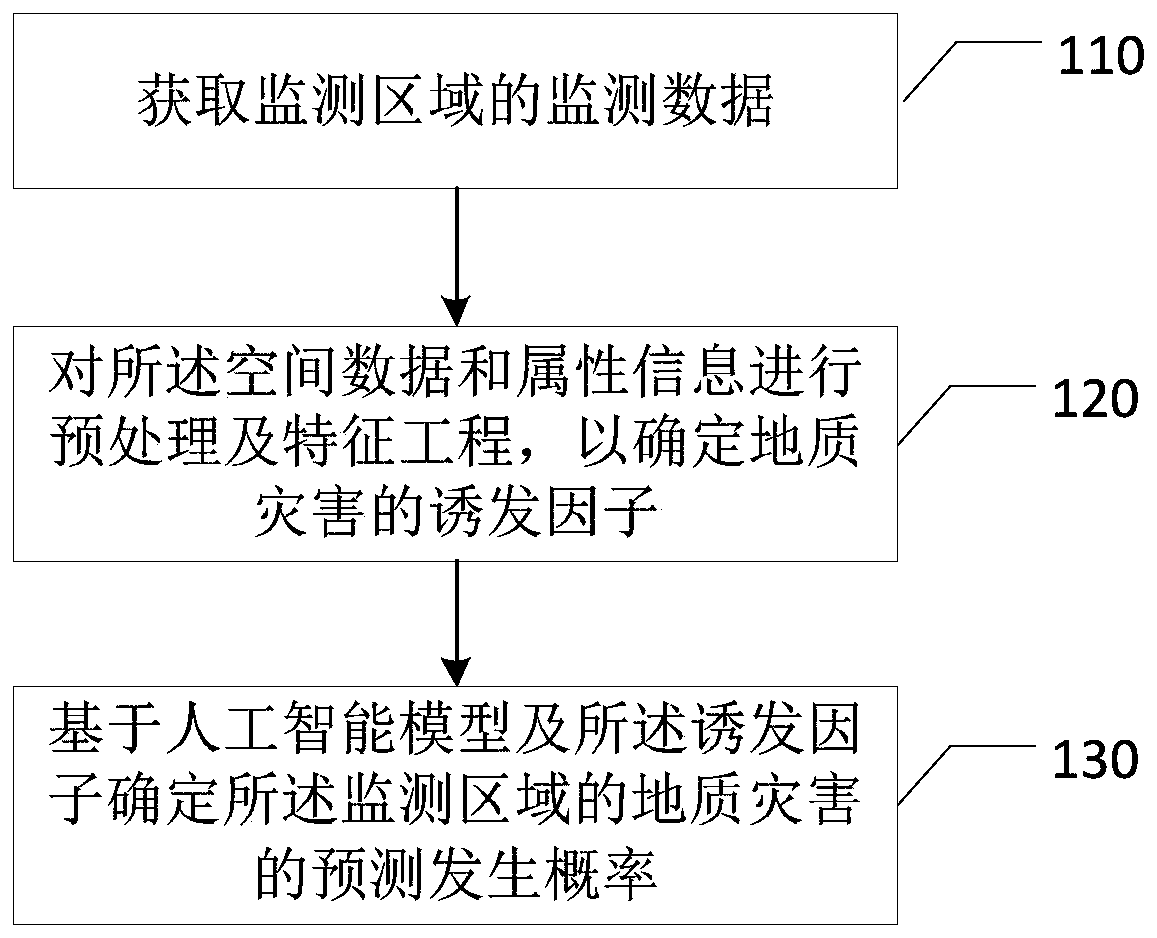

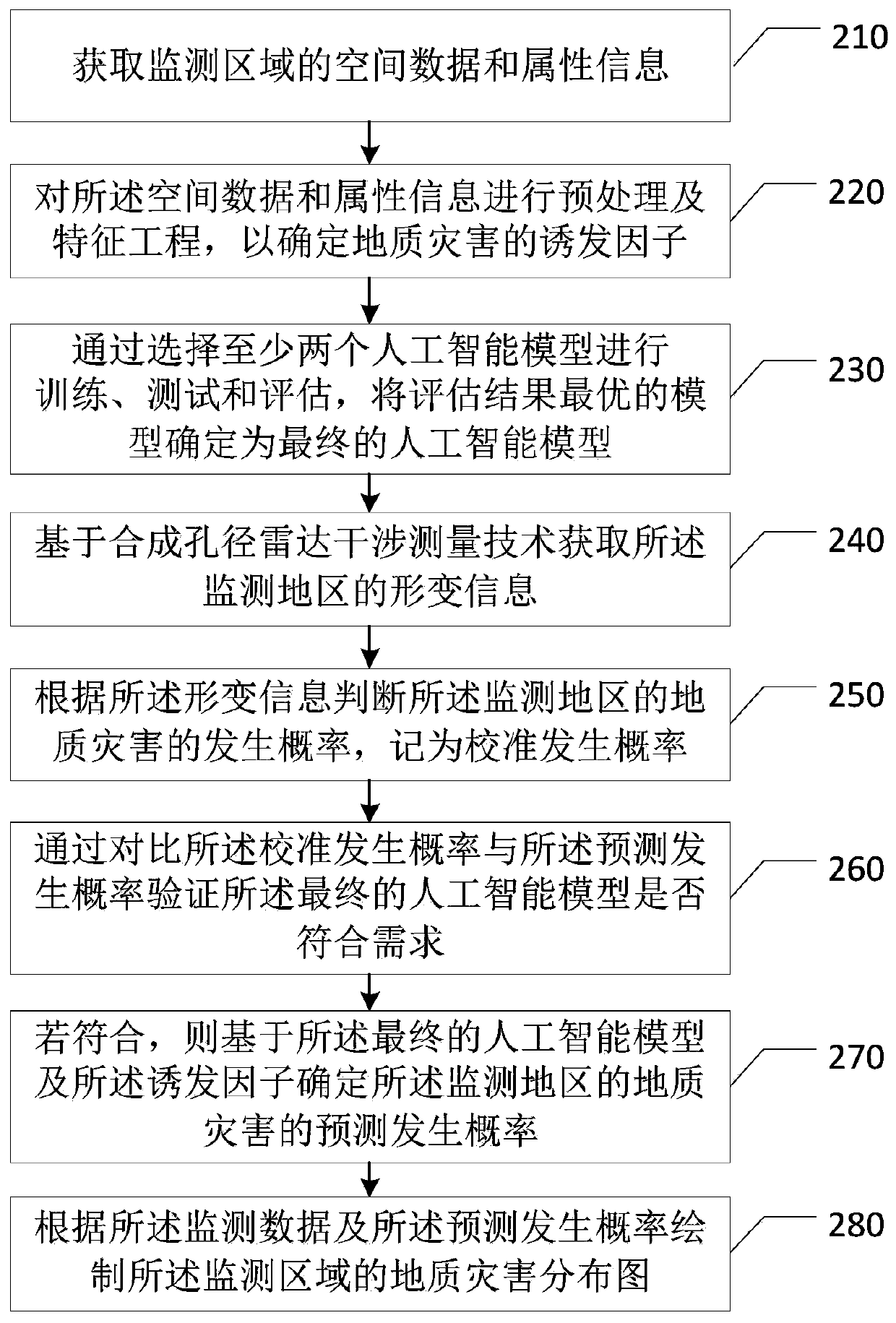

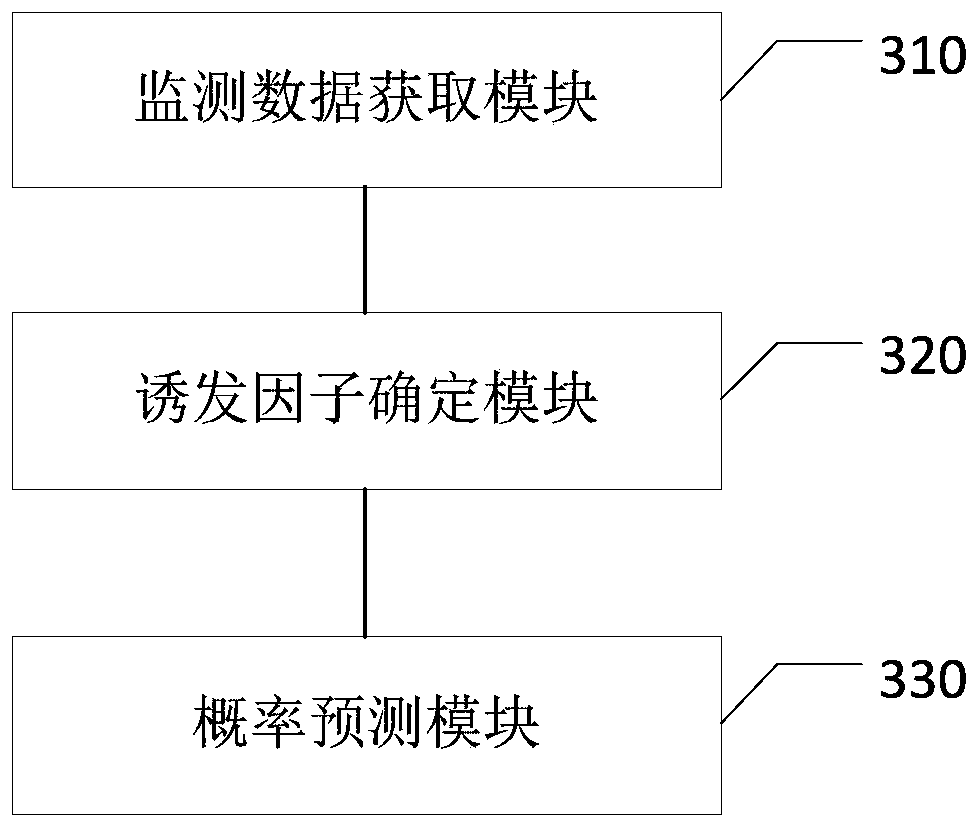

Geological disaster prediction method, device and equipment

The invention discloses a geological disaster prediction method, device and equipment. The method comprises: obtaining monitoring data of a monitoring region, wherein the monitoring data comprises spatial data and attribute information describing geological disasters; performing preprocessing and feature engineering on the spatial data and the attribute information to determine a feature subset of the geological disaster; and establishing an artificial intelligence model based on the feature subset to determine the predicted occurrence probability of a geological disaster in the monitoring region. The technical scheme of the embodiment obtains monitoring data, preprocesses it and applies feature engineering, extracts the feature subset related to geological disasters, up-samples or down-samples the unbalanced data, and determines the occurrence probability of geological disasters with the artificial intelligence model, thereby realizing real-time monitoring and automatic prediction of the occurrence probability and improving the comprehensiveness and accuracy of prediction.

Owner:杭州鲁尔物联科技有限公司
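
The up-sampling of unbalanced data mentioned above can be as simple as duplicating minority records at random until every class is equally represented. The sketch below shows that baseline; the abstract does not say which sampling scheme the patent uses, so random duplication is an assumption, and the name `random_oversample` is hypothetical.

```python
import random


def random_oversample(samples, labels, seed=0):
    """Duplicate random samples of smaller classes up to the largest class size."""
    rng = random.Random(seed)
    by_label = {}
    for sample, label in zip(samples, labels):
        by_label.setdefault(label, []).append(sample)

    target = max(len(group) for group in by_label.values())
    out_samples, out_labels = [], []
    for label, group in by_label.items():
        # Draw with replacement to fill the gap to the target size.
        extra = [rng.choice(group) for _ in range(target - len(group))]
        for sample in group + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels


readings = [[0.1], [0.2], [0.3], [0.4], [9.0]]
events = [0, 0, 0, 0, 1]  # 1 = disaster observed (rare)
balanced_x, balanced_y = random_oversample(readings, events)
```

Down-sampling is the mirror image: instead of duplicating the rare class, majority records are dropped at random until the class sizes match.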

© 2025 PatSnap. All rights reserved.