656 results about "Support vector machine classifier" patented technology

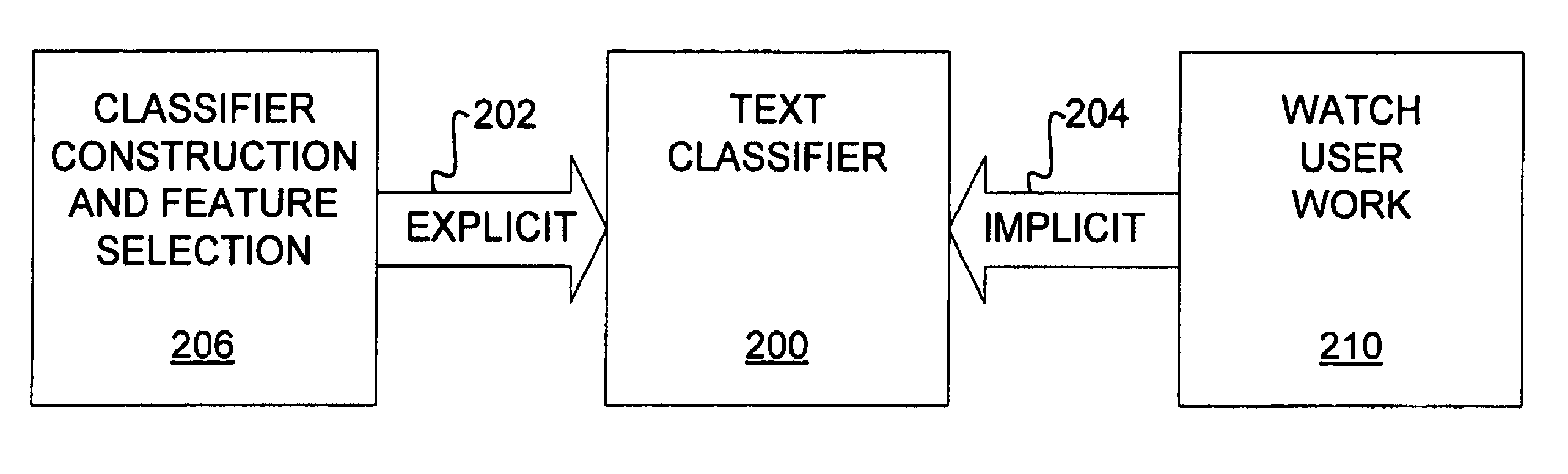

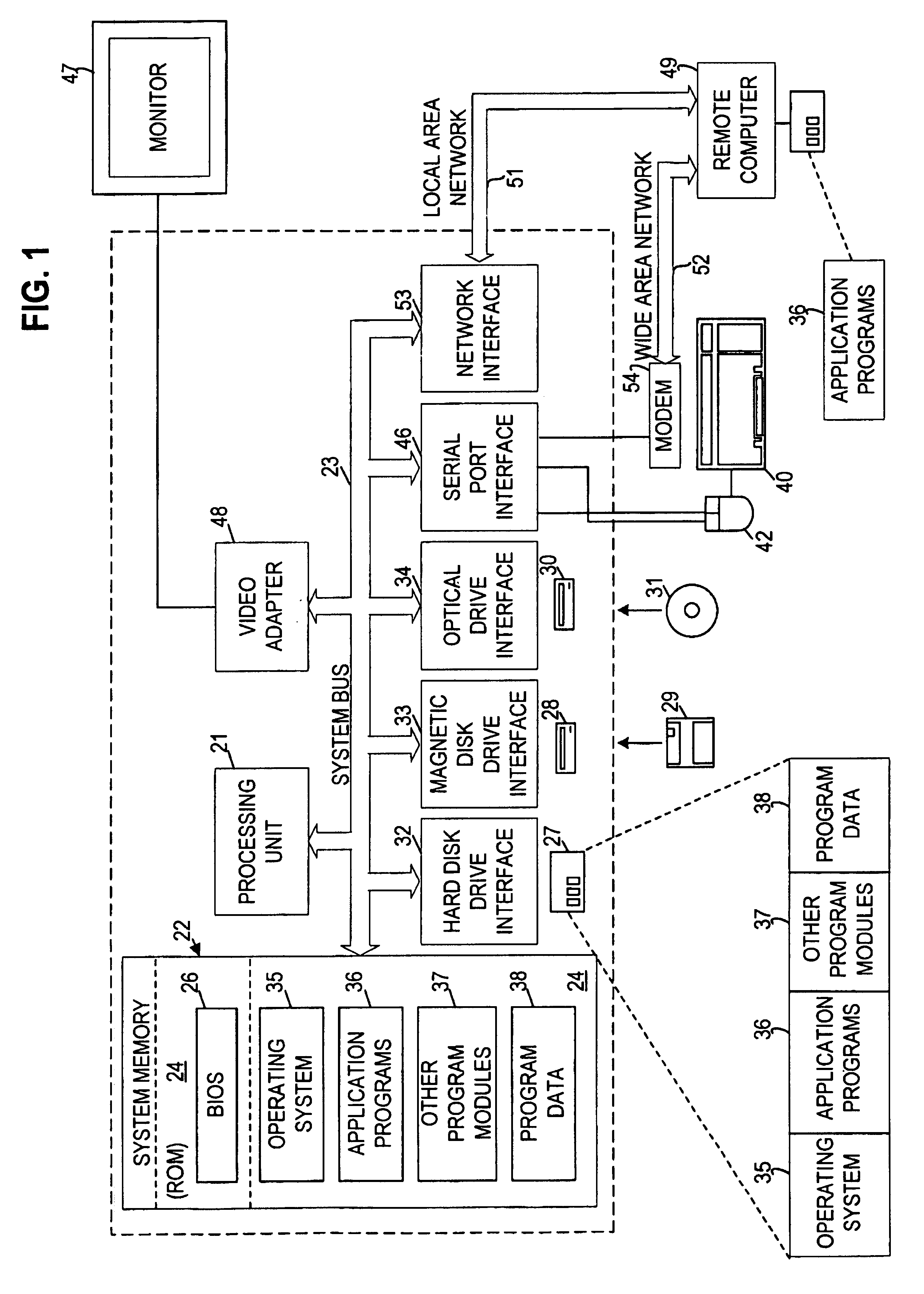

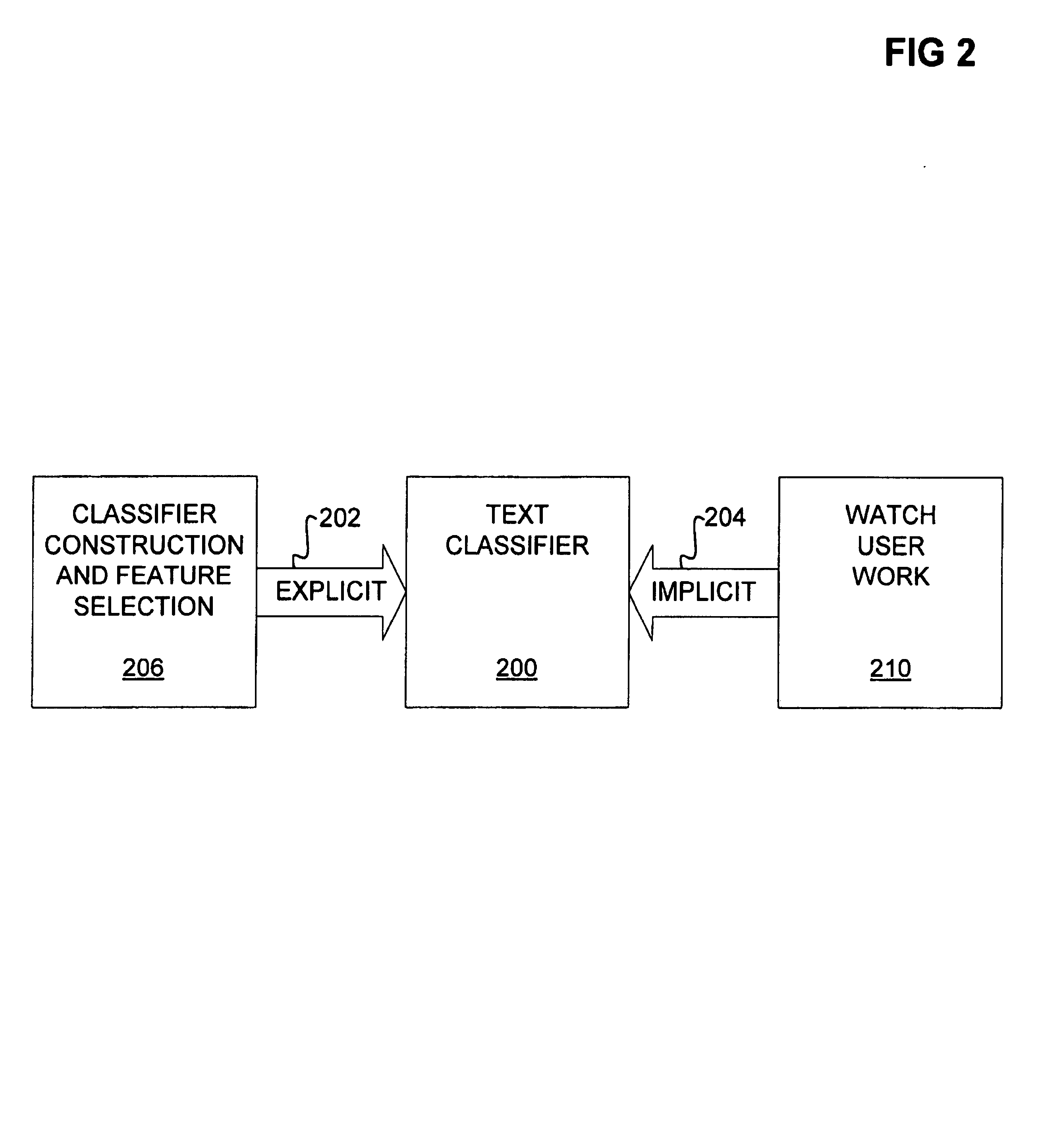

Methods for display, notification, and interaction with prioritized messages

Inactive | US7120865B1 | Digital computer details | Transmission | Level of detail | Support vector machine classifier

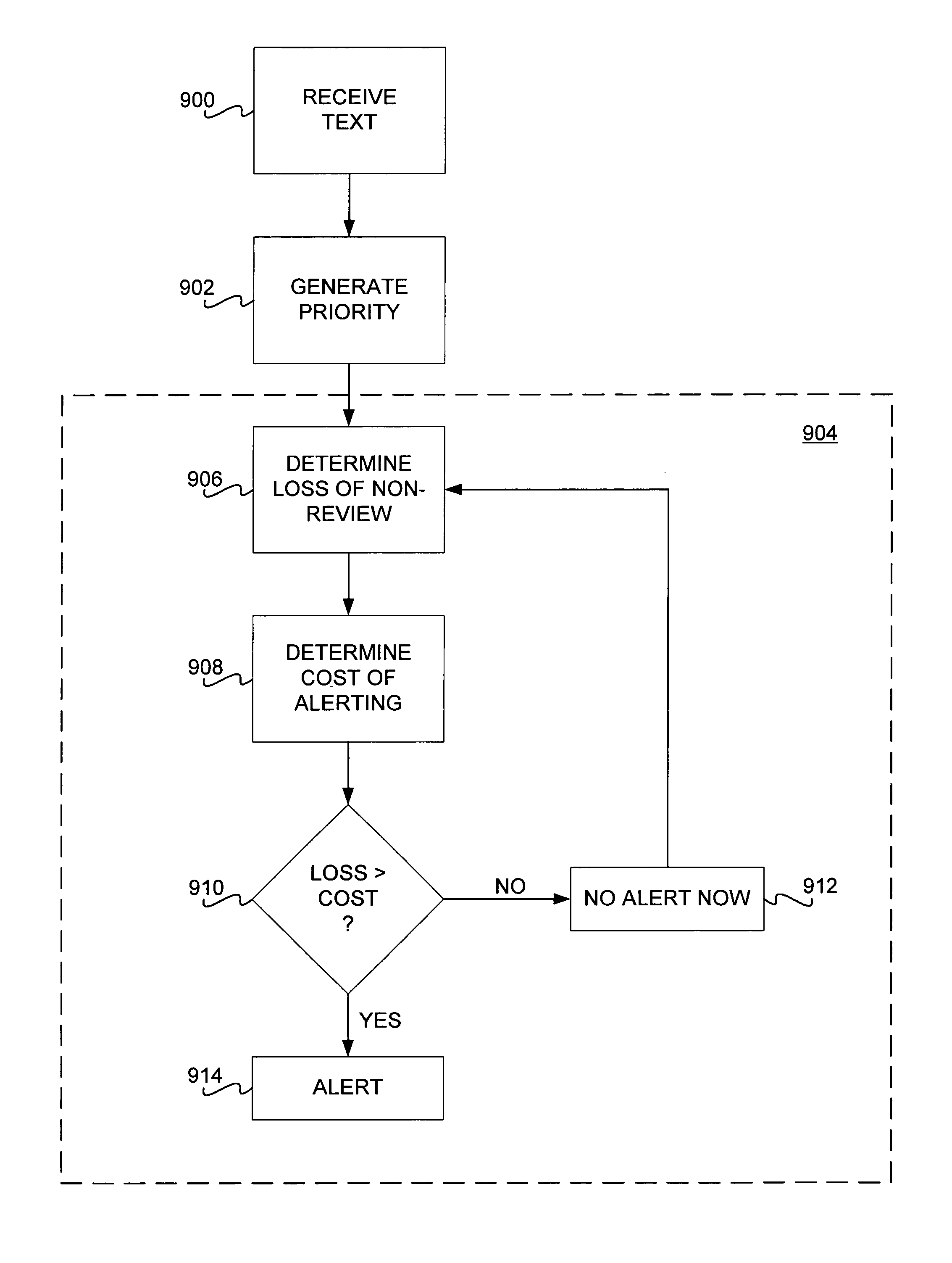

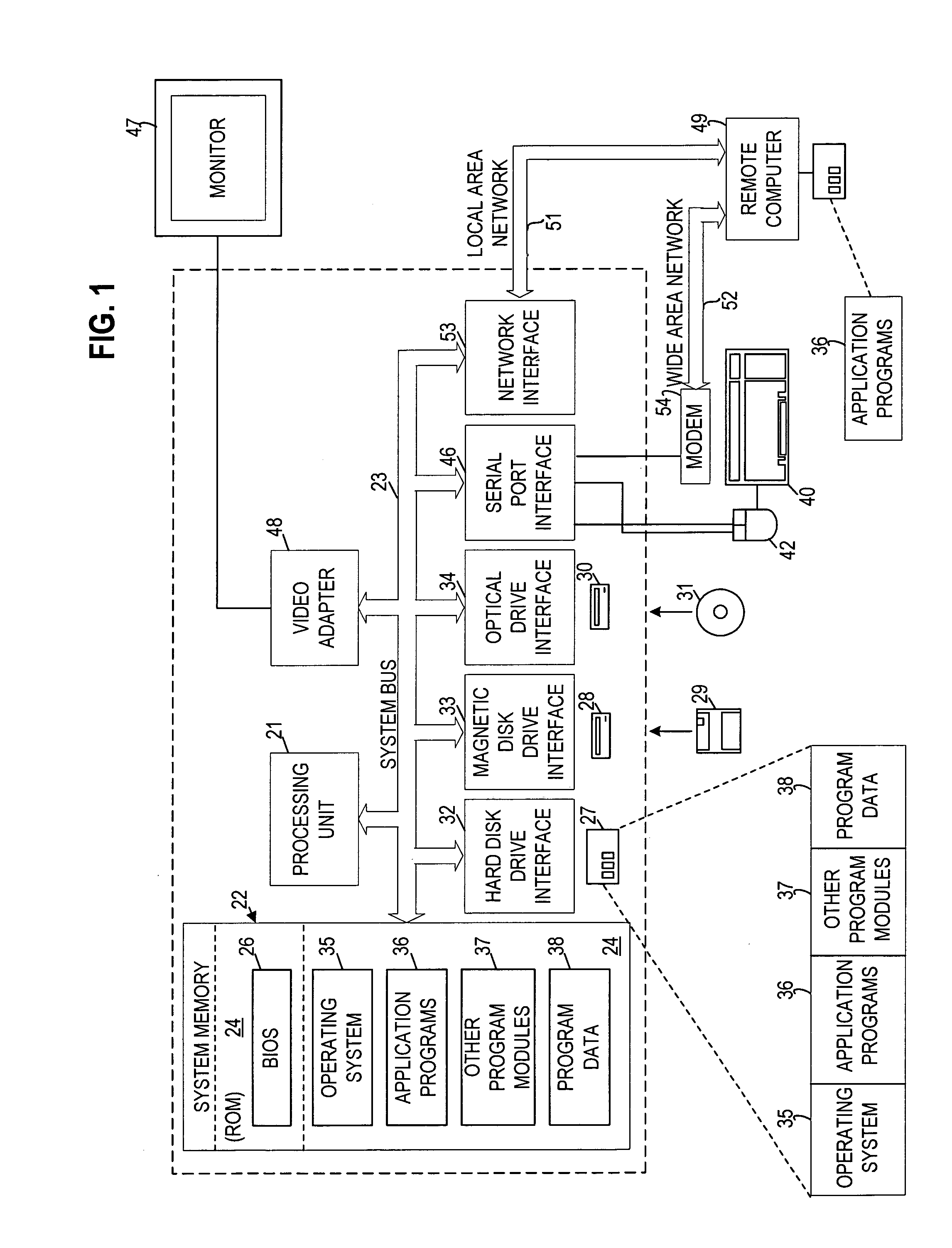

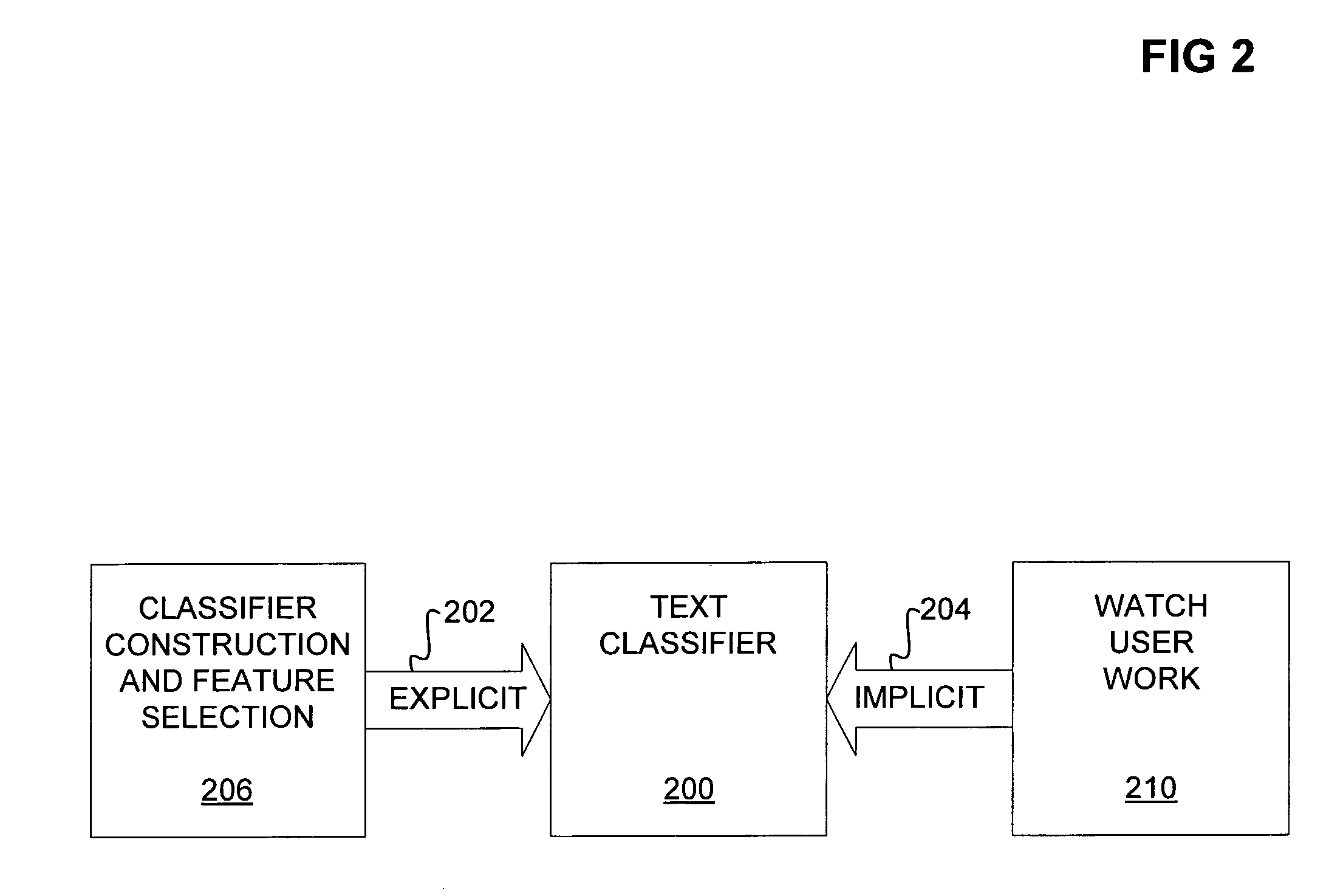

Prioritization of documents, such as email messages, is disclosed. In one embodiment, a computer-implemented method first receives a document. The method generates a priority for the document using a document classifier such as a Bayesian classifier or a support vector machine classifier. The method then outputs the priority. In one embodiment, the method alerts the user based on the expected loss of non-review of the document, compared with the expected cost of alerting the user to the document, at the current time. Several methods for display and interaction that leverage the assignment of priorities to documents are reviewed, including a means for guiding visual and auditory actions by the priority of incoming messages. Other aspects of the machinery include a special viewer that allows users to scope a list of email sorted by priority so that it can include varying histories of time, to annotate a list of messages with color or icons based on the automatically assigned priority, to harness the priority to control the level of detail provided in a summarization of a document, and to use a priority threshold to invoke an interaction context that lasts for a period of time that can be dictated by the priority of the incoming message.

Owner:MICROSOFT TECH LICENSING LLC
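
The alerting rule in the abstract above compares the expected loss of deferring review with the expected cost of interrupting the user. The following is a minimal sketch of that comparison, not the patented method. It assumes the classifier yields a probability per priority class and that the cost of delay grows linearly with time; the class names, cost values, and function names are all hypothetical.

```python
def expected_loss_of_delay(class_probs, delay_cost_per_min, delay_min):
    """Expected cost of deferring review.

    Assumption: cost of delay is linear in time, so the expected loss is
    sum over classes of p(class) * cost rate(class) * delay.
    """
    return sum(
        p * delay_cost_per_min[label] * delay_min
        for label, p in class_probs.items()
    )


def should_alert(class_probs, delay_cost_per_min, delay_min, alert_cost):
    """Alert only when deferring is expected to cost more than interrupting."""
    loss = expected_loss_of_delay(class_probs, delay_cost_per_min, delay_min)
    return loss > alert_cost


# Hypothetical classifier output and cost rates (cost units per minute).
probs = {"high": 0.7, "low": 0.3}
costs = {"high": 2.0, "low": 0.1}
loss = expected_loss_of_delay(probs, costs, delay_min=10)
```

With these illustrative numbers, a cheap interruption triggers an alert and an expensive one does not, which is the trade-off the abstract describes.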

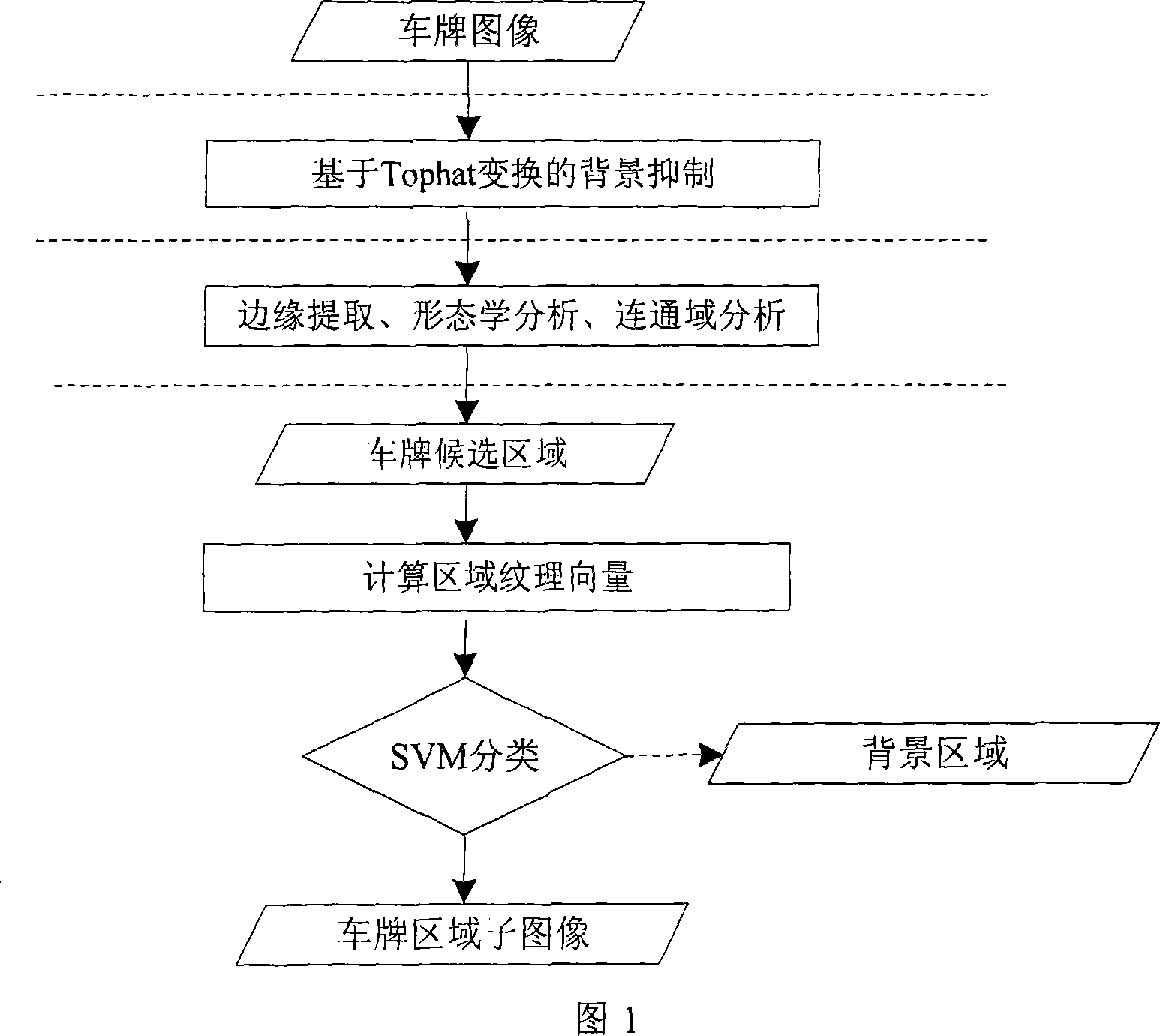

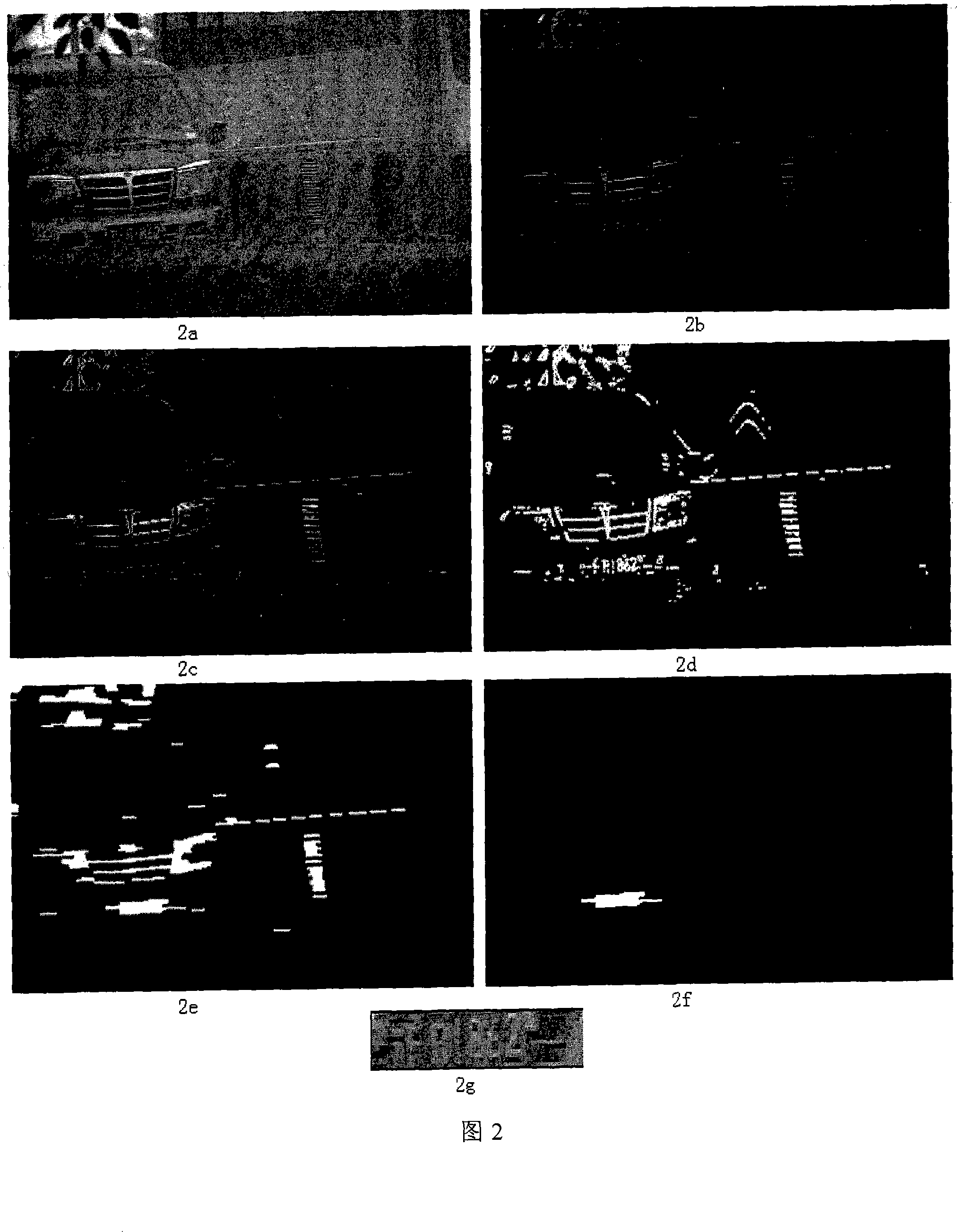

Fast license plate locating method

Inactive | CN101246551A | Reduce distractions | Improve adaptability | Character and pattern recognition | Pattern recognition | Computation complexity

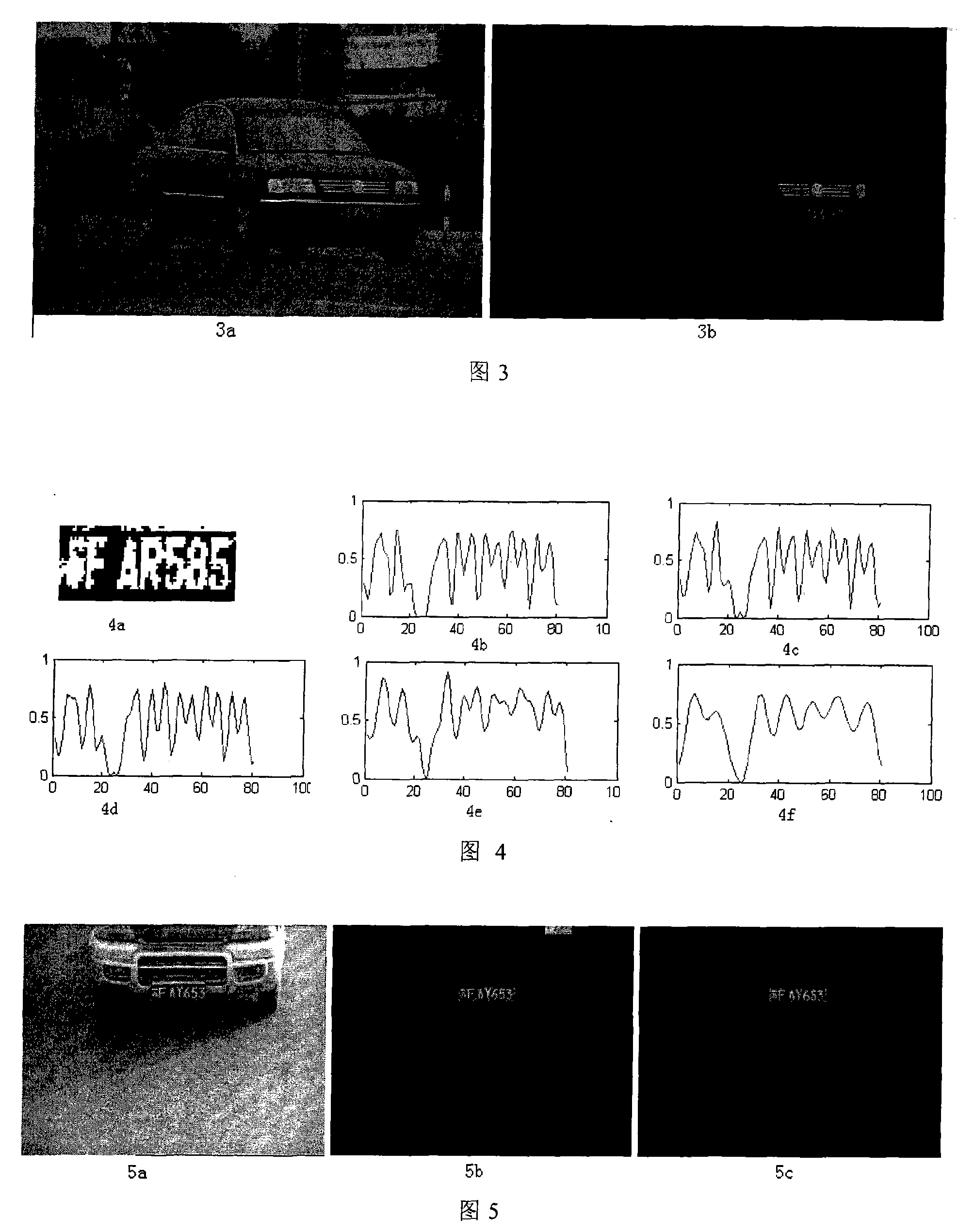

A fast license plate locating method is provided that uses the top-hat transform and character texture features. It proceeds in three steps: background subtraction, coarse location of the license plate region, and precise location. (1) Background subtraction: the captured color license plate image is converted to a grayscale image, and the top-hat transform is applied to suppress large background objects and highlight the license plate region. (2) Coarse location: the edge map of the image is computed and binarized, and morphological dilation, connected-component analysis, and related operations yield a set of candidate license plate regions of reasonable size. (3) Precise location: texture features are extracted from each candidate region, and a support vector machine classifier classifies the candidates to locate the license plate region accurately. Filtering the image with the top-hat transform reduces interference from large background objects and so greatly improves adaptability to the environment; the coarse-to-fine location strategy is also more efficient and greatly reduces computational complexity.

Owner:BEIHANG UNIV
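
To illustrate why a top-hat transform suppresses large background objects while keeping thin bright structures, here is a one-dimensional sketch of the white top-hat (signal minus its morphological opening). It is a simplified illustration on a single image row, not the patent's implementation; the window size, the truncated-window boundary handling, and the sample values are assumptions.

```python
def erode(signal, k):
    """Grayscale erosion: minimum over a centered window of width k.

    The window is truncated at the signal boundaries.
    """
    r = k // 2
    n = len(signal)
    return [min(signal[max(0, i - r):min(n, i + r + 1)]) for i in range(n)]


def dilate(signal, k):
    """Grayscale dilation: maximum over a centered window of width k."""
    r = k // 2
    n = len(signal)
    return [max(signal[max(0, i - r):min(n, i + r + 1)]) for i in range(n)]


def white_top_hat(signal, k):
    """Signal minus its opening (erosion followed by dilation)."""
    opened = dilate(erode(signal, k), k)
    return [s - o for s, o in zip(signal, opened)]


# One hypothetical image row: a 1-pixel bright stroke and a 5-pixel bright block.
row = [10, 10, 10, 50, 10, 10, 40, 40, 40, 40, 40, 10, 10]
result = white_top_hat(row, 3)
```

In this example the narrow stroke (narrower than the window) survives in `result`, while the wide block (at least as wide as the window) is removed by the opening and cancels out.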

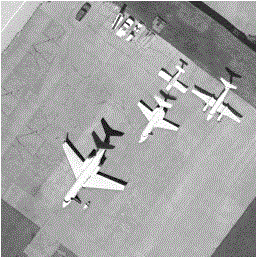

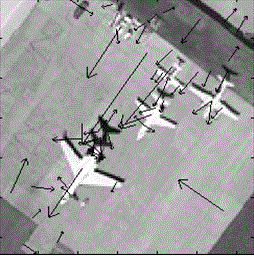

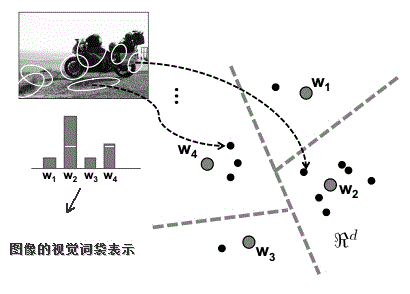

Remote sensing image classification method based on multi-feature fusion

Active | CN102622607A | Improve classification accuracy | Enhanced Feature Representation | Character and pattern recognition | Synthesis methods | Classification methods

The invention discloses a remote sensing image classification method based on multi-feature fusion, which includes the following steps: A, extracting bag-of-visual-words features, color histogram features, and texture features from the training set of remote sensing images; B, training a support vector machine on each of the three feature types to obtain three different support vector machine classifiers; and C, extracting the same three feature types from unknown test samples, using the corresponding classifiers from step B to predict categories, and combining the three groups of predictions by a weighted synthesis method to obtain the final classification result. The method further adopts an improved bag-of-words model for extracting the bag-of-visual-words features. Compared with the prior art, the method can obtain a more accurate classification result.

Owner:HOHAI UNIV
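
Step C of the abstract above combines three per-feature predictions by weighting. The abstract does not specify the weighting formula, so the sketch below assumes a plain weighted sum of per-class scores; the feature names, class labels, scores, and weights are hypothetical.

```python
def fuse_predictions(per_feature_scores, weights):
    """Weighted sum of per-class scores from several classifiers.

    Returns the winning class label and the fused score per class.
    """
    fused = {}
    for feature_name, scores in per_feature_scores.items():
        for label, score in scores.items():
            fused[label] = fused.get(label, 0.0) + weights[feature_name] * score
    return max(fused, key=fused.get), fused


# Hypothetical per-class scores from the three per-feature classifiers.
scores = {
    "bovw": {"water": 0.2, "forest": 0.8},
    "color": {"water": 0.6, "forest": 0.4},
    "texture": {"water": 0.7, "forest": 0.3},
}
weights = {"bovw": 0.5, "color": 0.25, "texture": 0.25}
label, fused = fuse_predictions(scores, weights)
```

Here two of the three classifiers favor "water", but the more heavily weighted one favors "forest", so the fused decision is "forest". A weighted scheme can therefore differ from a simple majority vote.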

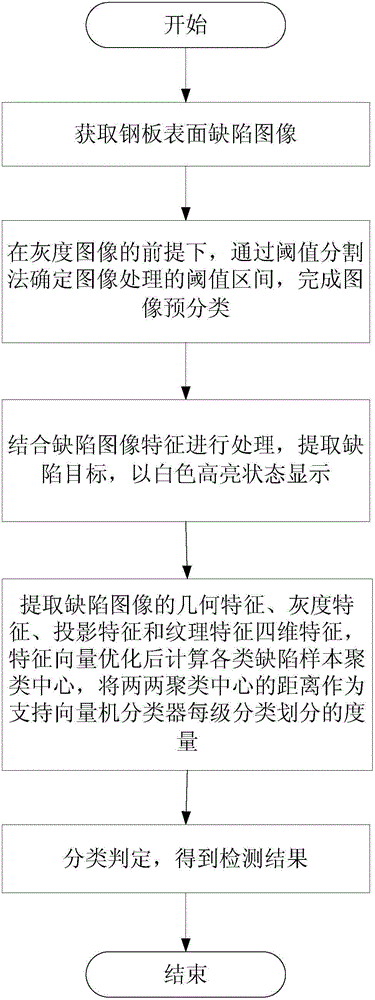

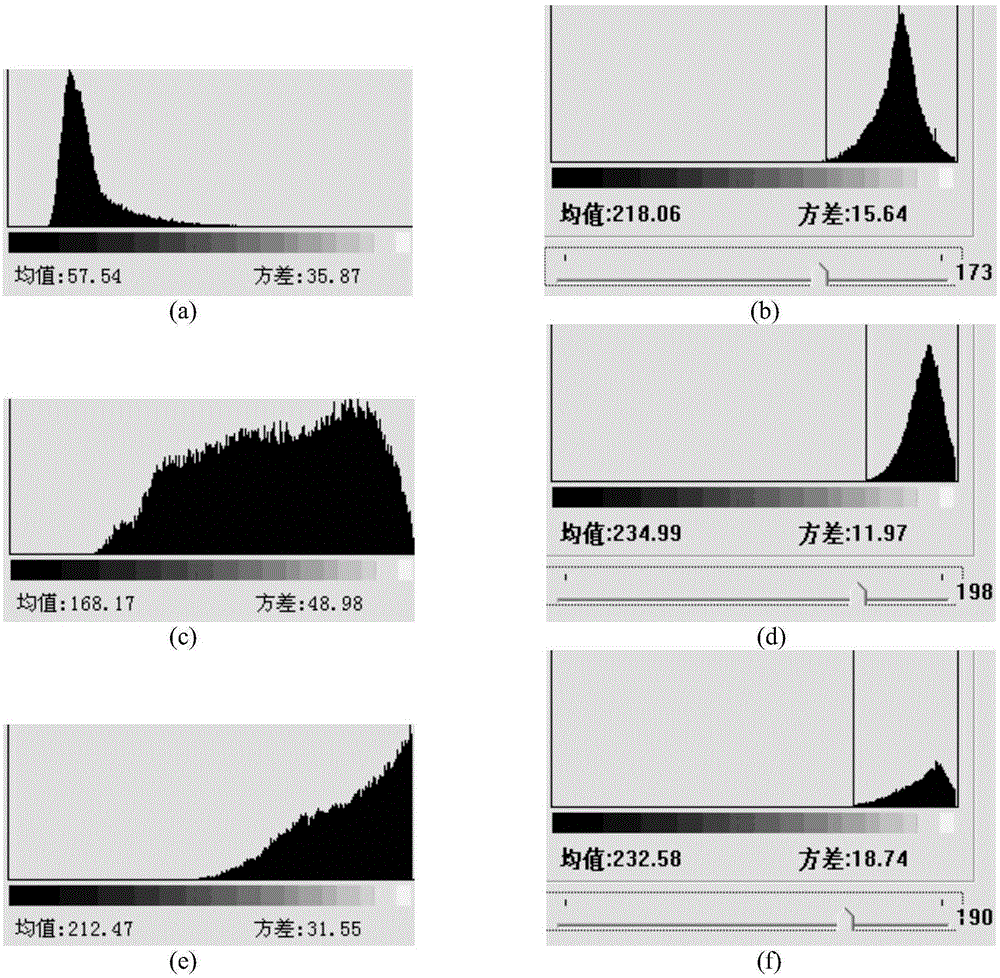

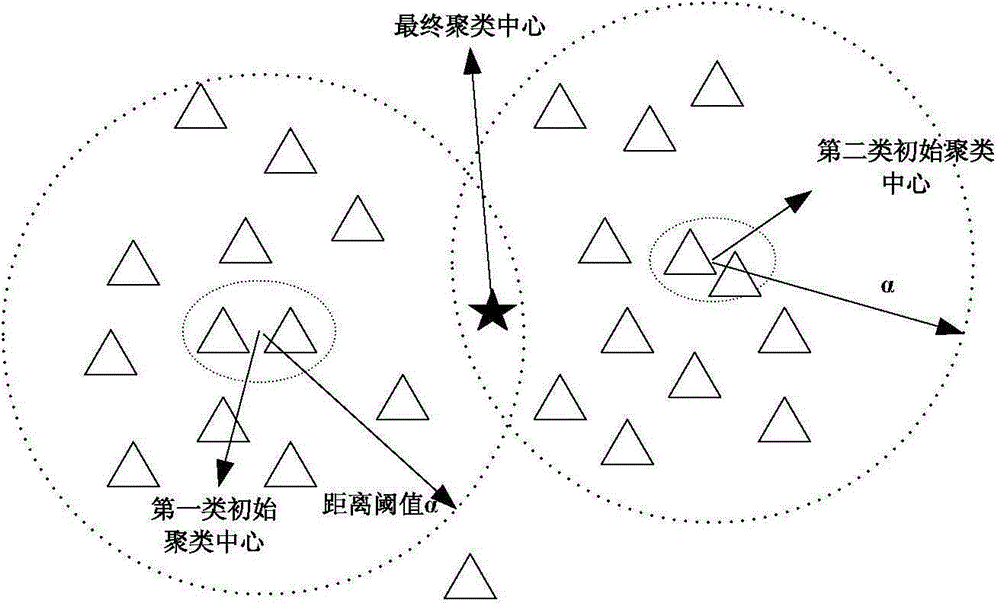

Fuzzy clustering steel plate surface defect detection method based on pre classification

Inactive | CN104794491A | Fast classification | Improve accuracy | Image analysis | Character and pattern recognition | Gray level | Dimensionality reduction

The invention relates to the technical field of digital image processing and pattern recognition and discloses a fuzzy clustering steel plate surface defect detection method based on pre-classification. It aims to overcome the missed and mistaken judgments of existing steel plate surface detection methods and to effectively improve the accuracy of online real-time detection of steel plate surface defects. The method includes the steps of: 1, acquiring steel plate surface defect images; 2, pre-classifying the images acquired in step 1 and determining the threshold intervals for image classification; 3, classifying the images according to the threshold intervals of step 2 and generating white highlighted defect targets; 4, extracting geometric, gray-level, projection, and texture features of the defect images, determining the input vectors of a support vector machine classifier through feature dimensionality reduction, calculating the cluster centers of the various samples with a fuzzy clustering algorithm, and using the distance between two cluster centers as the scale by which the support vector machine classifier classifies; 5, determining the class and obtaining the defect detection results.

Owner:CHONGQING UNIV

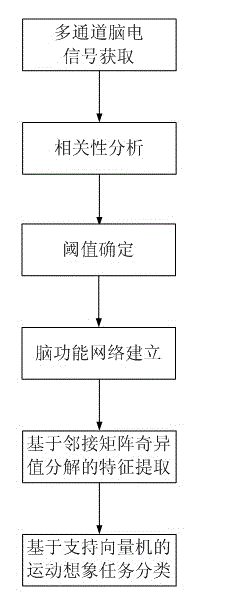

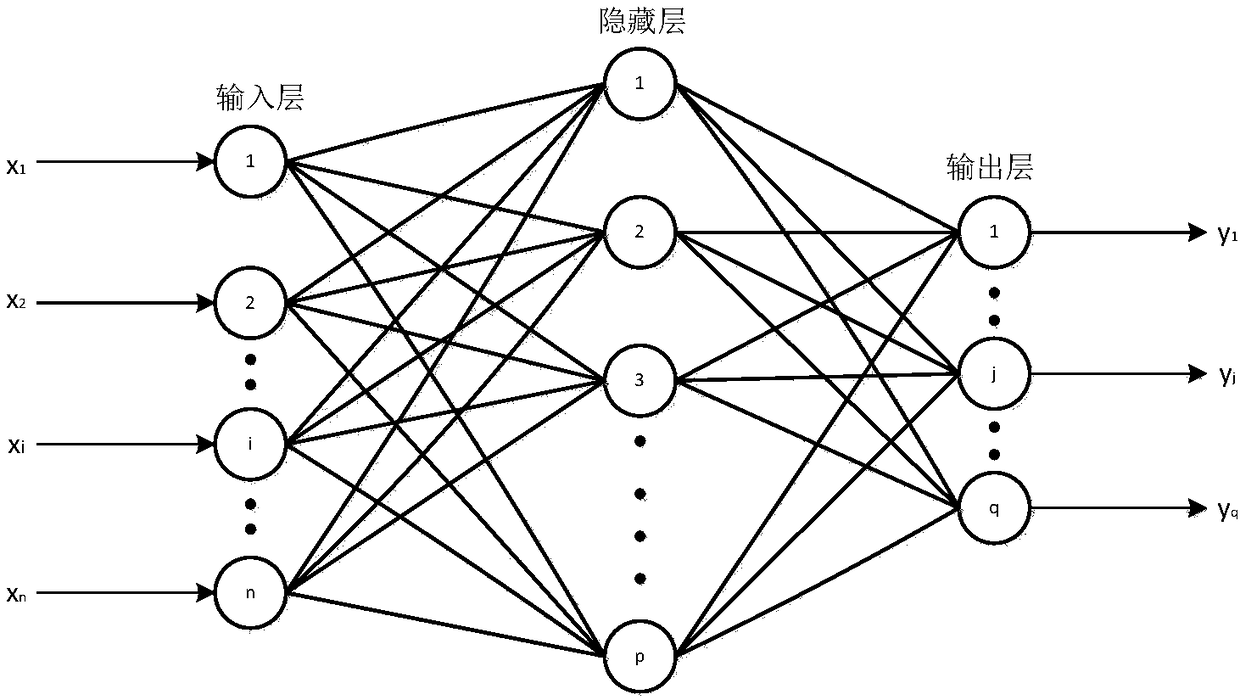

Electroencephalogram feature extracting method based on brain function network adjacent matrix decomposition

Inactive | CN102722727A | Ignore the relationship | Ignore coordination | Character and pattern recognition | Matrix decomposition | Singular value decomposition

The invention relates to an electroencephalogram feature extraction method based on decomposition of the adjacency matrix of a brain function network. Current feature extraction algorithms for motor imagery electroencephalogram signals mostly focus on qualitative and quantitative analysis of locally activated brain areas and ignore the interrelation of the brain areas and their overall coordination. Starting from the brain function network, and on the basis of complex brain network theory based on atlas analysis, the method comprises the steps of: first, establishing the brain function network from a multi-channel motor imagery electroencephalogram signal; second, carrying out singular value decomposition on the network adjacency matrix; third, identifying a group of feature parameters based on the singular values obtained by the decomposition to form the feature vector of the electroencephalogram signal; and fourth, inputting the feature vector into a support vector machine classifier to complete the classification and identification of various motor imagery tasks. The method has wide application prospects in the identification of motor imagery tasks in the field of brain-machine interfaces.

Owner:启东晟涵医疗科技有限公司

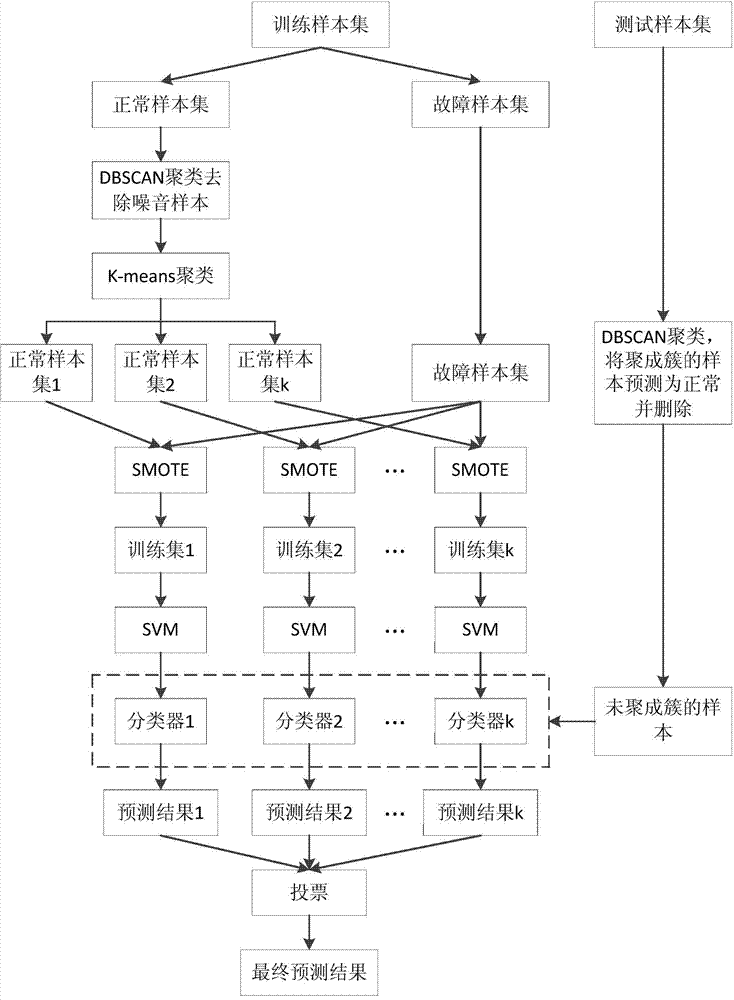

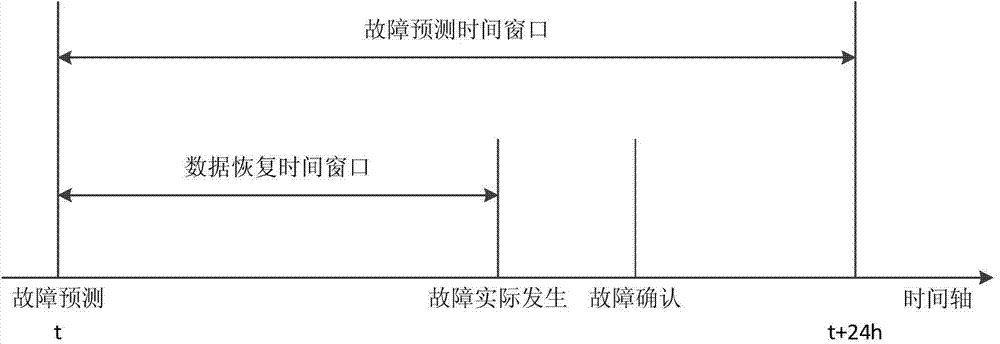

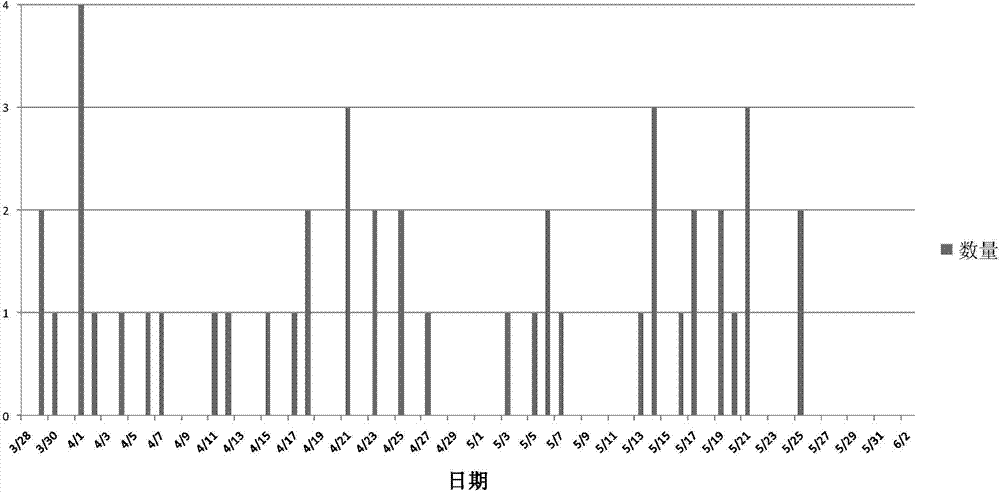

Hard disk failure prediction method for cloud computing platform

Inactive | CN104503874A | High fault recall rate | Improve performance | Detecting faulty computer hardware | Hardware monitoring | Density based | Support vector machine classifier

The invention discloses a hard disk failure prediction method for a cloud computing platform. The method comprises the following steps: labeling the SMART log data of hard disks as normal hard disk samples or faulty hard disk samples according to the hard disk maintenance records within a prediction time window; dividing the denoised normal hard disk samples into k disjoint subsets with a K-means clustering algorithm; combining each of the k disjoint subsets with the faulty hard disk samples; and generating k balanced training sets with SMOTE (Synthetic Minority Oversampling Technique) so as to train k support vector machine classifiers for predicting faulty hard disks. In the prediction stage, the test set is clustered with DBSCAN (Density-Based Spatial Clustering of Applications with Noise); a sample that falls in a cluster is predicted to be a normal hard disk sample, each noise sample is predicted by every trained classifier, and the final prediction result is obtained by voting. The method predicts hard disk faults from the SMART data of the hard disk and can obtain a relatively high fault recall rate and overall performance.

Owner:NANJING UNIV
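
The balancing step in the abstract above relies on SMOTE, which creates synthetic minority samples by interpolating between a minority sample and one of its nearest minority neighbors. The sketch below shows only that interpolation idea in plain Python. The function names, the two-feature sample values, and the choice of `k` are hypothetical, and a real pipeline would more likely use an established library implementation.

```python
import random


def nearest_neighbors(x, others, k):
    """Return the k points in `others` closest to x (squared Euclidean distance)."""
    return sorted(
        others, key=lambda o: sum((a - b) ** 2 for a, b in zip(x, o))
    )[:k]


def smote_sample(x, neighbor, rng):
    """One synthetic point on the segment between x and a minority neighbor."""
    gap = rng.random()  # uniform in [0, 1)
    return [a + gap * (b - a) for a, b in zip(x, neighbor)]


def smote(minority, n_new, k=2, seed=0):
    """Generate n_new synthetic minority samples by neighbor interpolation."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        others = [m for m in minority if m is not x]
        neighbor = rng.choice(nearest_neighbors(x, others, k))
        synthetic.append(smote_sample(x, neighbor, rng))
    return synthetic


# Hypothetical faulty-disk samples with two normalized SMART features each.
failed = [[1.0, 1.0], [2.0, 1.0], [1.0, 2.0], [2.0, 2.0]]
new_points = smote(failed, n_new=5)
```

Because every synthetic point lies on a segment between two existing minority samples, the new points stay inside the region the minority class already occupies.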

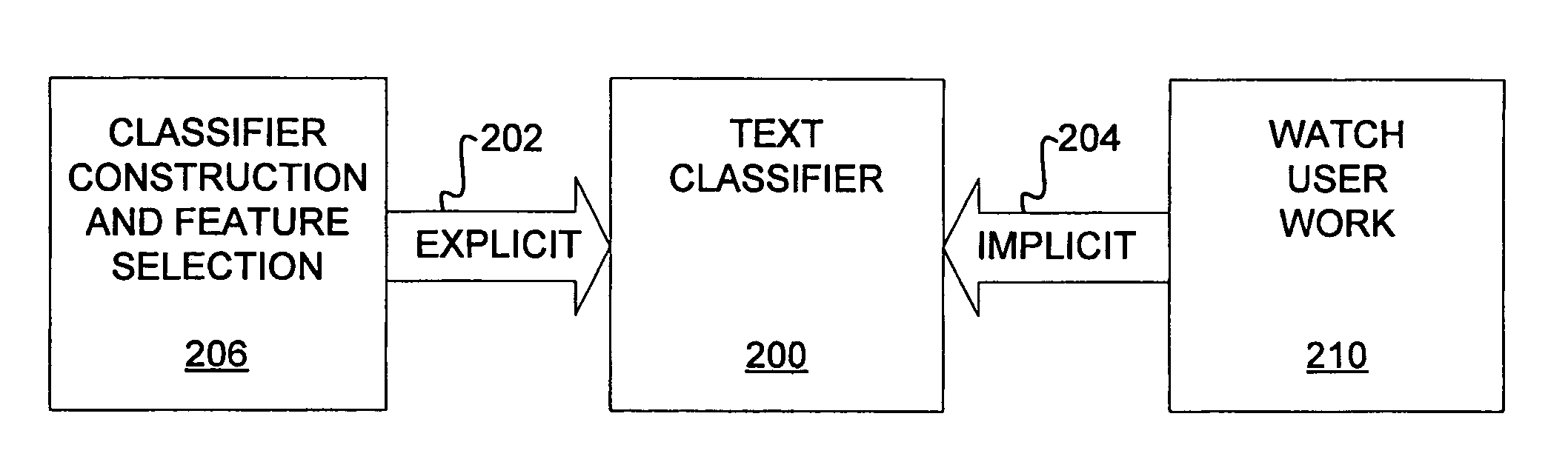

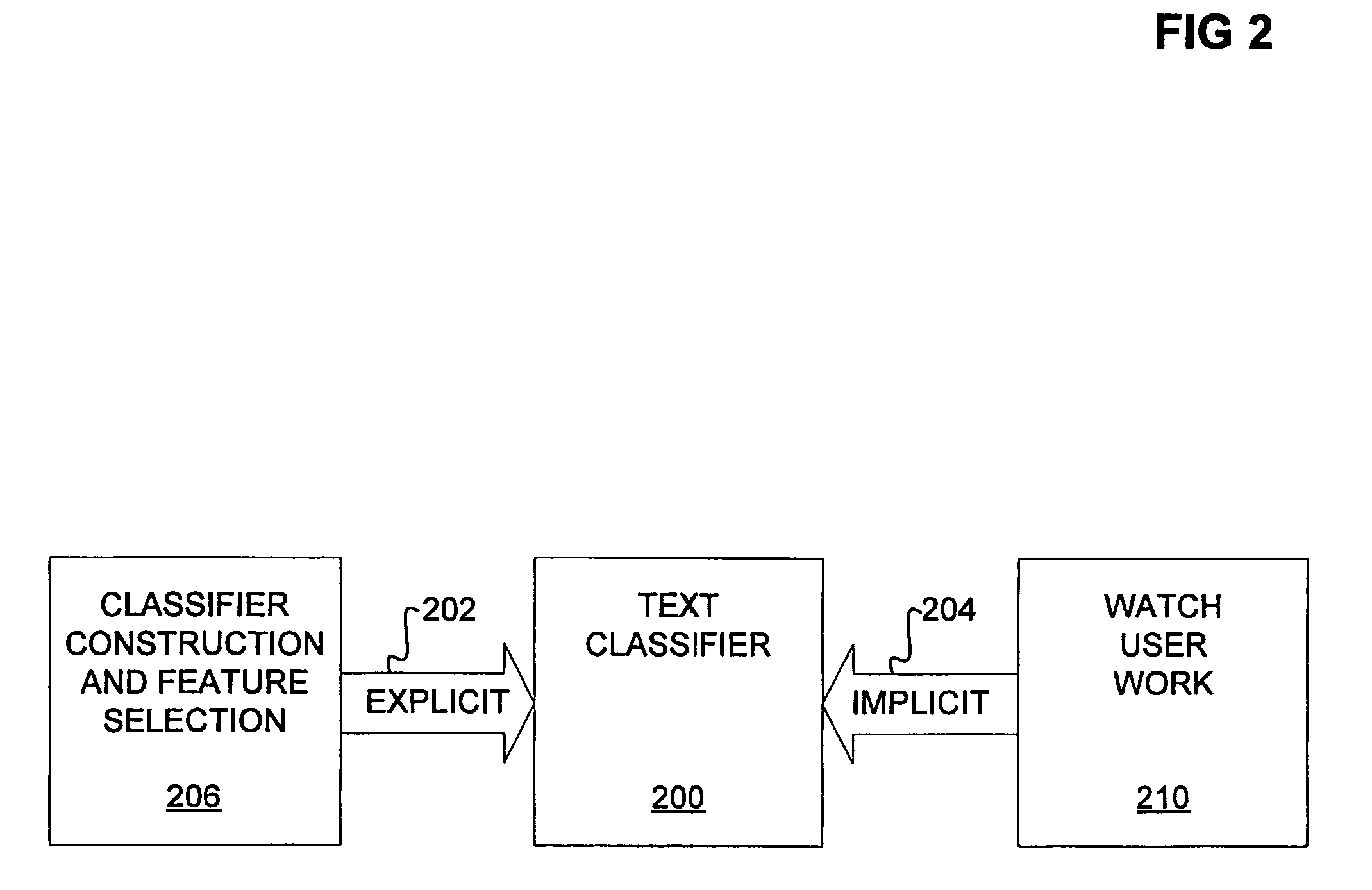

Method for automatically assigning priorities to documents and messages

Inactive | US7194681B1 | Data processing applications | Digital computer details | Distraction | Text categorization

Methods for prioritizing documents, such as email messages, are disclosed. In one embodiment, a computer-implemented method first receives a document. The method assigns a measure of priority to the document by employing a text classifier such as a Bayesian classifier or a support vector machine classifier. The method then outputs the priority. In one embodiment, the method includes alerting the user about a document, such as an email message, based on the expected loss associated with delays expected in reviewing the document as compared to the expected cost of distraction and transmission incurred with alerting the user about the document.

Owner:MICROSOFT TECH LICENSING LLC

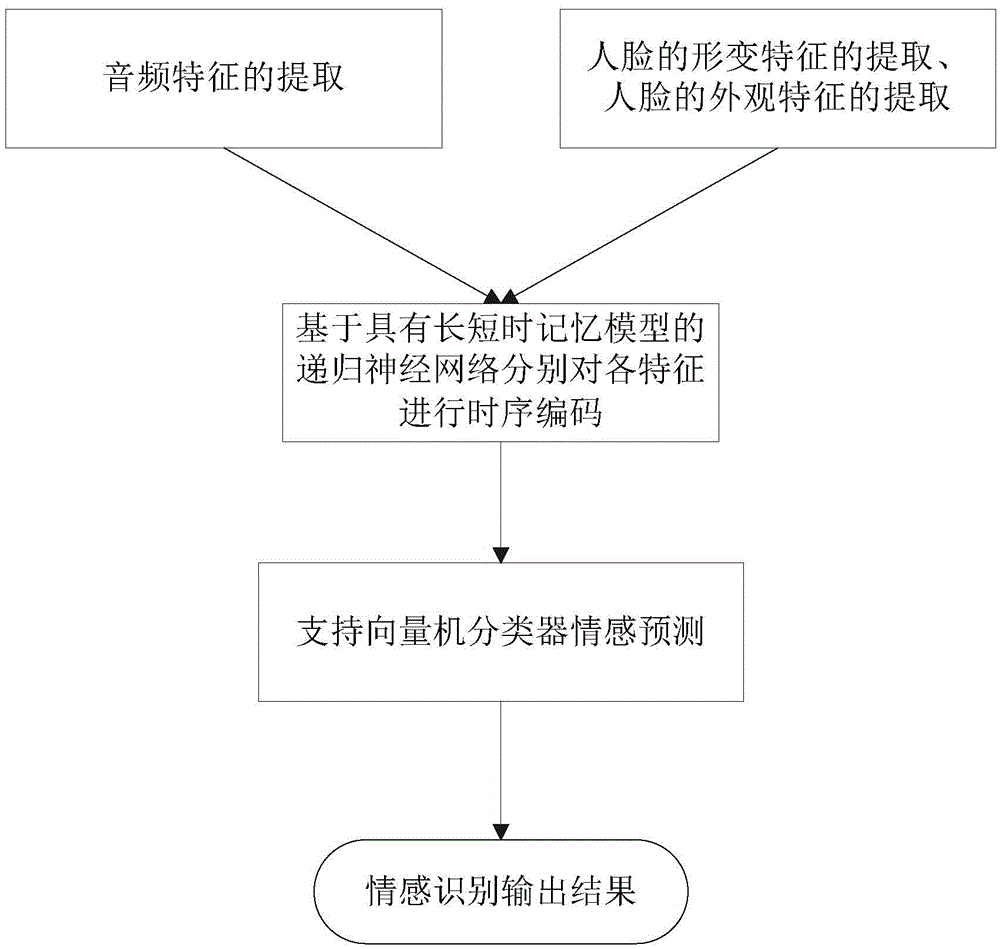

Recurrent neural network-based discrete emotion recognition method

Active | CN105469065A | Modeling implementation | Realize precise identification | Character and pattern recognition | Discrete emotions | Support vector machine classifier

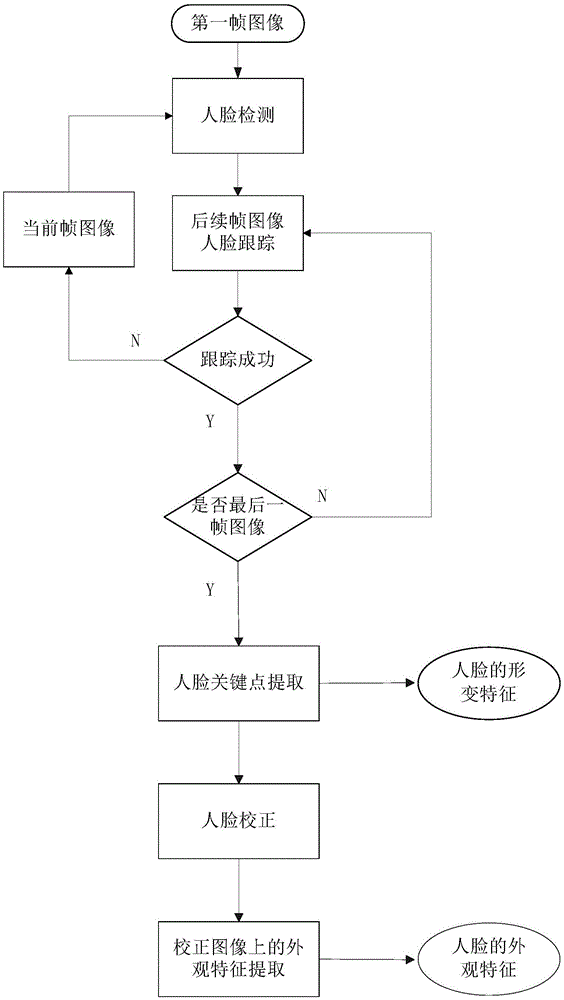

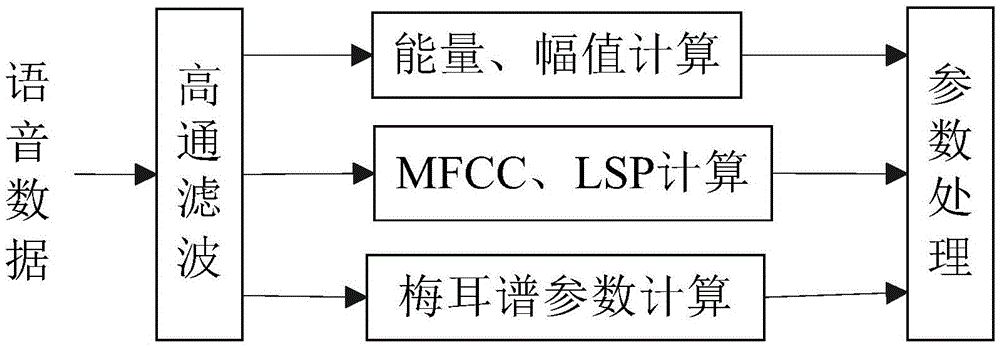

The invention provides a recurrent neural network-based discrete emotion recognition method. The method comprises the following steps: 1, performing face detection and tracking on the image signals in a video, extracting facial key points as the deformation features of the face once the face regions are obtained, cropping the face regions, normalizing them to a uniform size, and extracting the appearance features of the face; 2, windowing the audio signals in the video, segmenting out audio sequence units, and extracting audio features; 3, sequentially encoding each of the three kinds of features with a recurrent neural network using long short-term memory units to obtain fixed-length emotion representation vectors, and concatenating the vectors to obtain the final emotion expression features; and 4, predicting the emotion category from the final emotion expression features obtained in step 3 with a support vector machine classifier. The method makes full use of the dynamic information in the process of emotional expression, so that the emotions of participants in the video are recognized precisely.

Owner:北京中科欧科科技有限公司

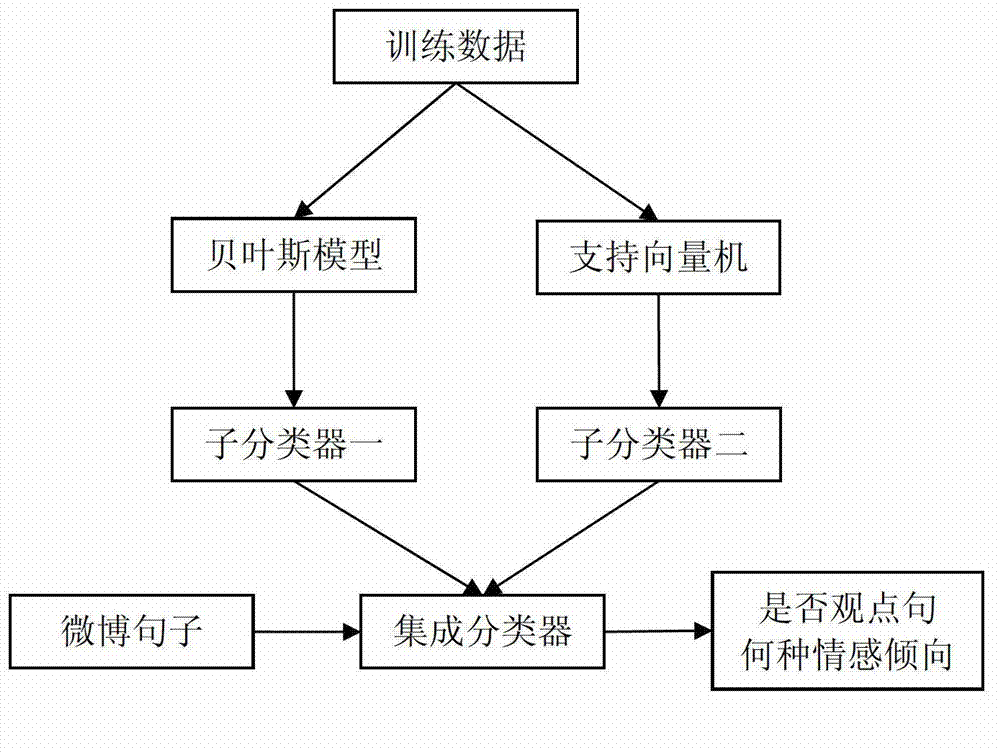

Emotion analyzing system and method

Inactive | CN103034626A | Easy to judge | Improving the performance of sentiment orientation classification | Special data processing applications | Viewpoints | Support vector machine classifier

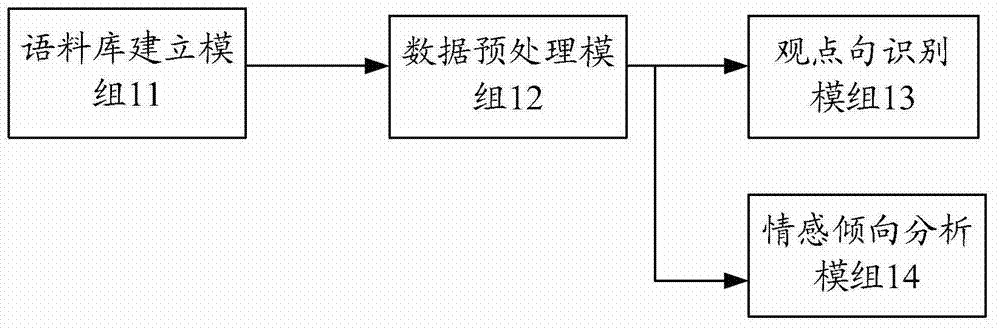

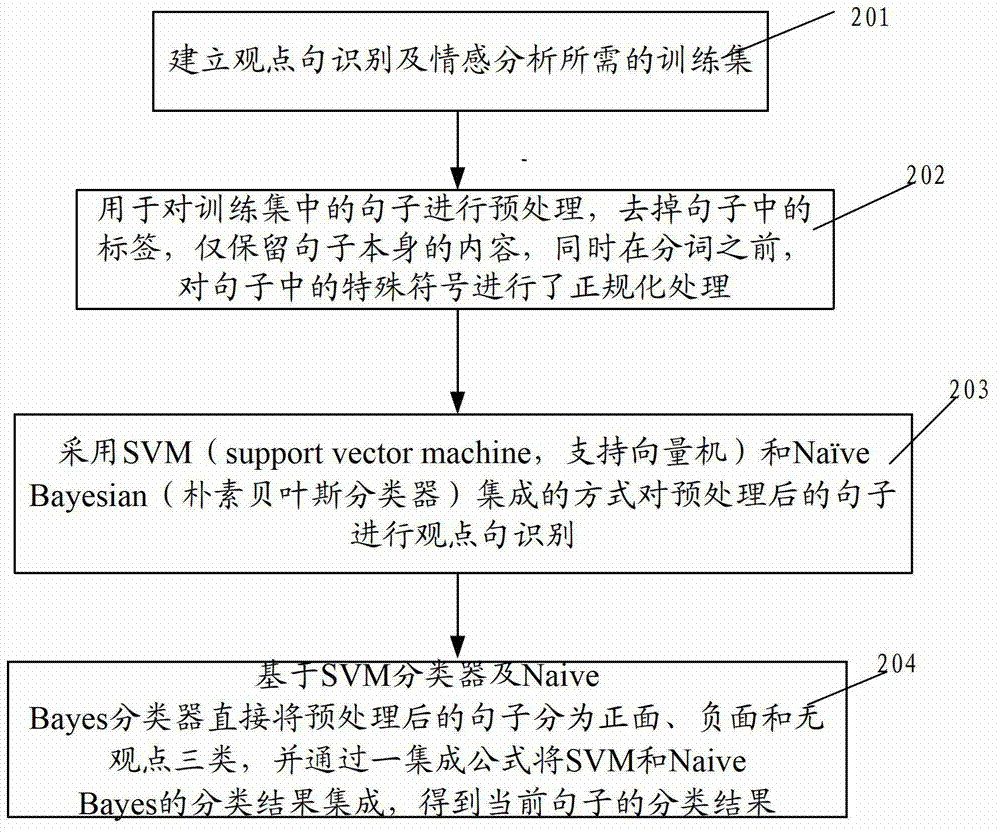

The invention discloses an emotion analyzing system and an emotion analyzing method. The system comprises a corpus establishing module, a data preprocessing module, a viewpoint sentence identifying module, and an emotion tendency analyzing module. The corpus establishing module establishes the training set needed for viewpoint sentence identification and emotion tendency analysis. The data preprocessing module preprocesses the sentences in the training set. The viewpoint sentence identifying module identifies viewpoint sentences among the preprocessed sentences with a support vector machine classifier and a Bayes classifier separately, and integrates the results of the two classifiers to obtain a final classification result. The emotion tendency analyzing module classifies the preprocessed sentences directly into positive, negative, and non-viewpoint sentences with the support vector machine classifier and the Bayes classifier separately, and integrates the classification results of the two classifiers through an integration formula to obtain the classification result of the current sentence. The system and method can improve the performance of viewpoint sentence identification and emotion tendency classification for Chinese microblogs.

Owner:SHANGHAI JIAO TONG UNIV

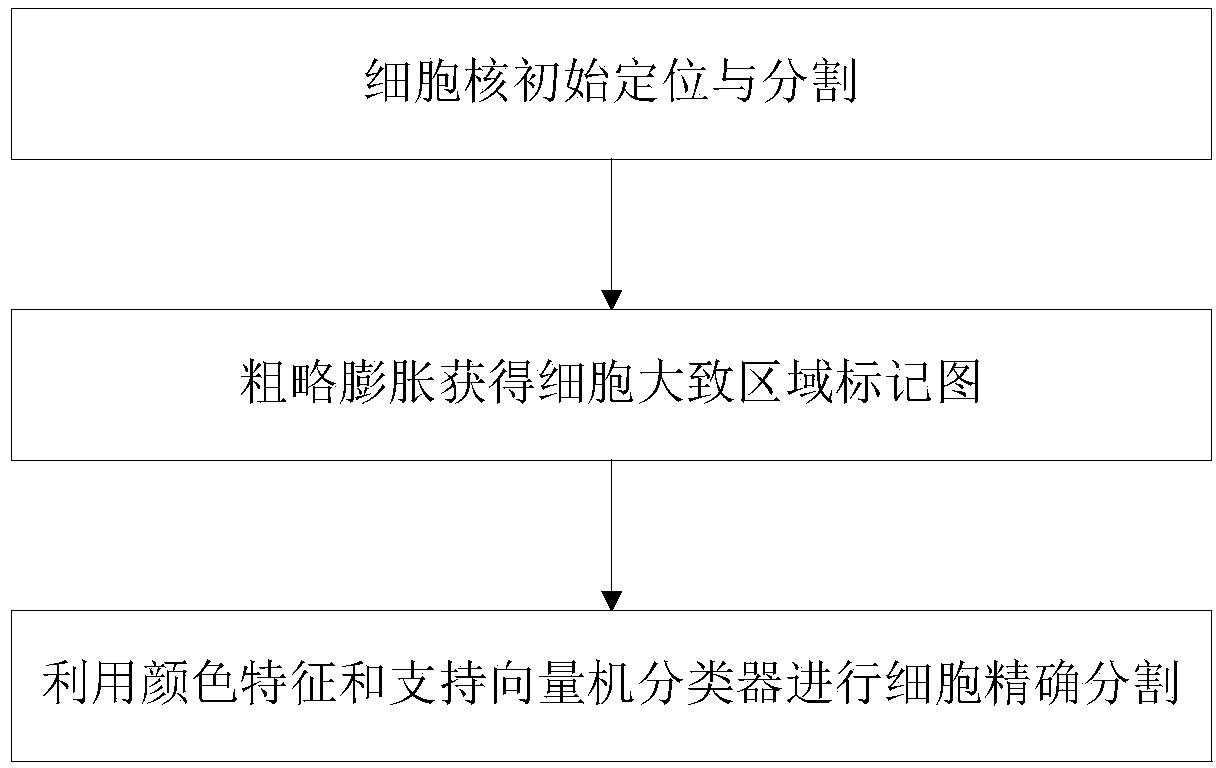

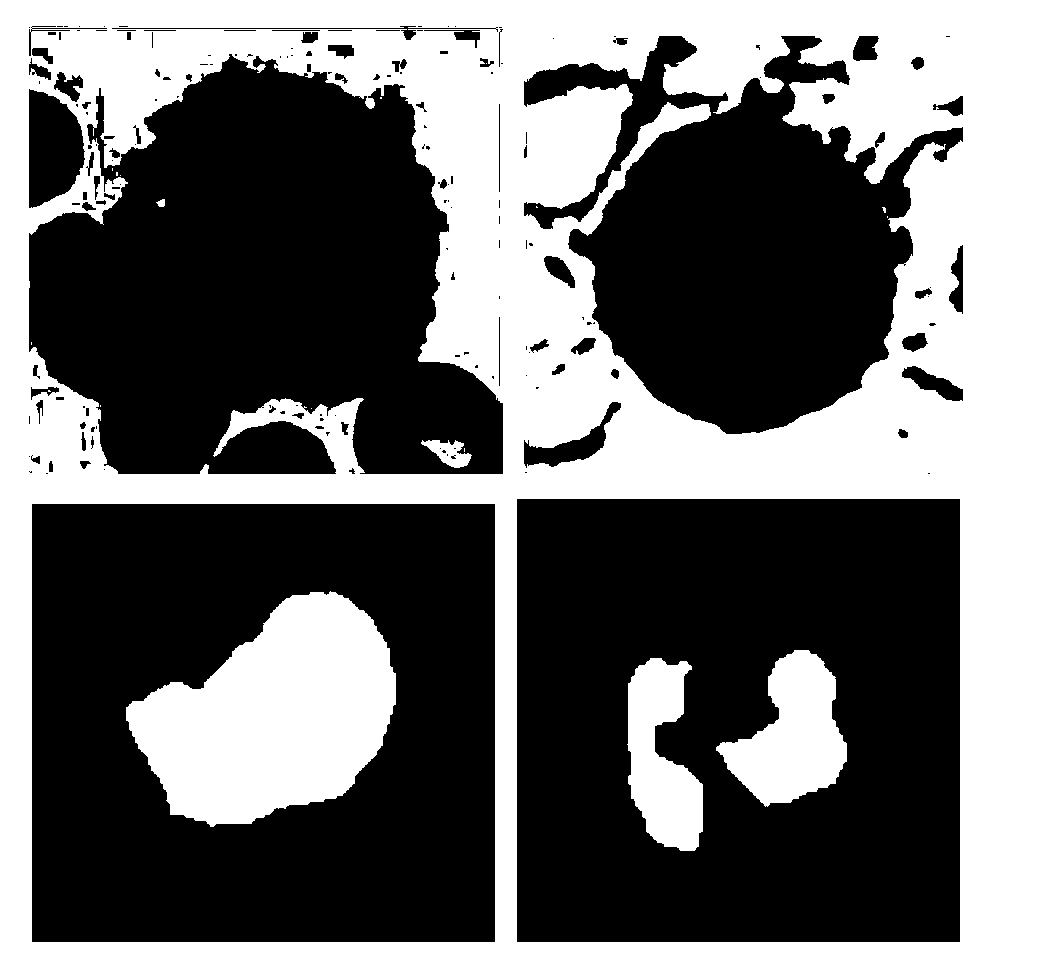

White blood cell image accurate segmentation method and system based on support vector machine

Inactive | CN103473739A | Accurate segmentation | Improve stability | Image enhancement | Image analysis | White blood cell | Visual saliency

The invention discloses a method and system for accurate segmentation of white blood cell images based on a support vector machine. The method comprises initially locating and segmenting the nucleus, performing a rough expansion to obtain a labeled map of the approximate cell area, and accurately segmenting the cells using color features and a support vector machine classifier. On one hand, following the human visual saliency attention mechanism, the method simulates the sensitivity of the human eye to changes at image edges, and the nucleus area can be segmented accurately and rapidly by clustering edge-color pairs. On the other hand, the support vector machine classifier has excellent stability and resistance to interference, and the method makes full use of the spatial relationship between color information and pixels to improve the way training samples for the classifier are sampled, so that white blood cells in a small cell image can be segmented accurately.

Owner:HUAZHONG UNIV OF SCI & TECH

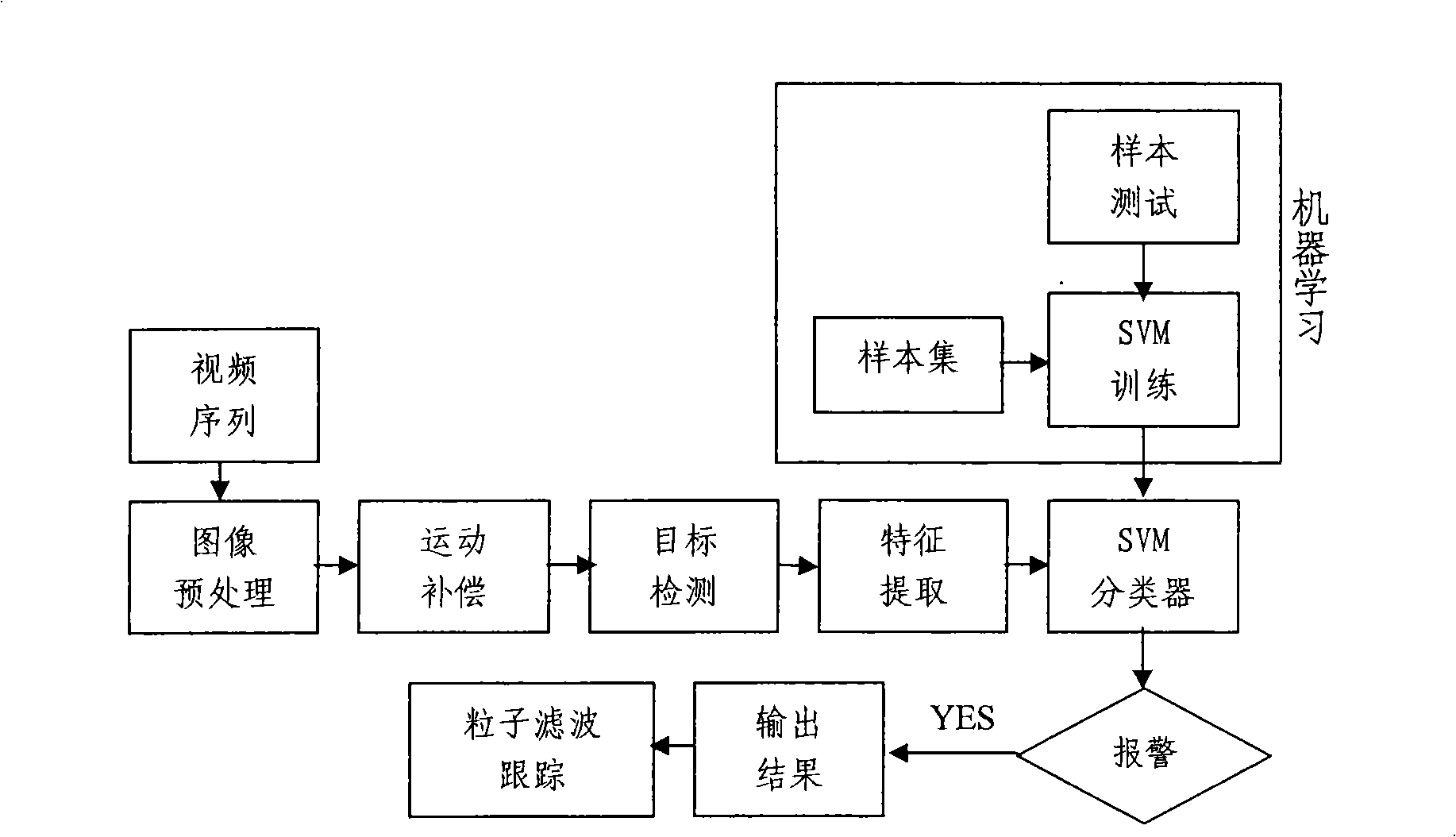

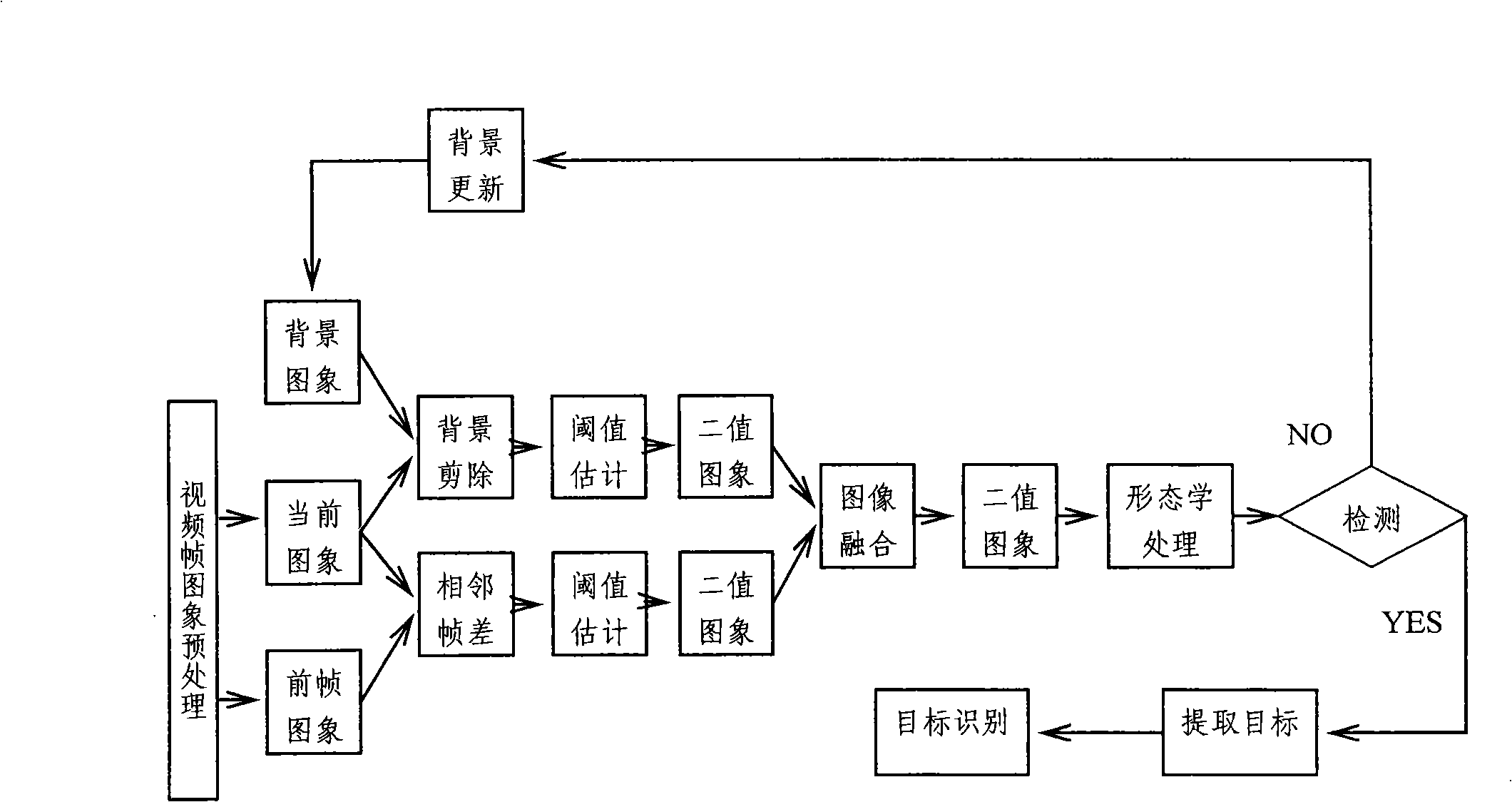

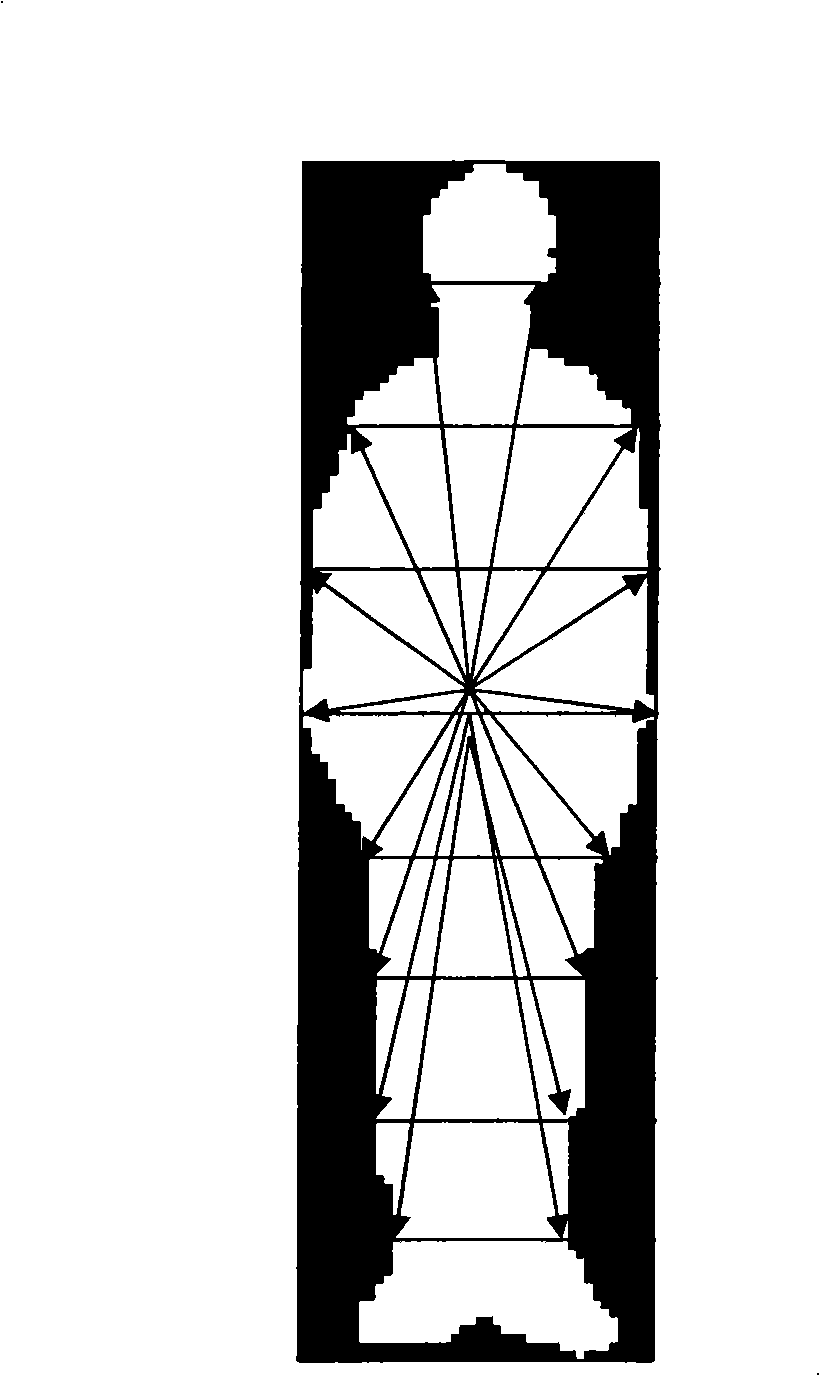

Portrait and vehicle recognition alarming and tracing method

Inactive | CN101295405A | Improve visibility | Solving recognition problems | Image analysis | Character and pattern recognition | Feature vector | Human body

The invention relates to an alarming and tracking method for the recognition of persons and vehicles. Its technical characteristics are as follows: first, target detection is performed, the changed target area is extracted from a video image, and a feature vector of the target area is extracted through a method of central beam; then a well-trained support vector machine classifier determines from the extracted feature vector whether the target is a human body or a vehicle. If abnormal conditions occur, alarm signals are given. Meanwhile, based on the continuity of the target's track during movement, a particle filter is used to carry out tracking only in regions where the target may exist. The method has the advantage that when the target is seriously disturbed or affected by noise, which lowers matching reliability, prediction can be applied to reasonably estimate the position of the target and maintain normal tracking. The method has a small computational load and excellent real-time performance, and can be widely applied in national defense and civil fields.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

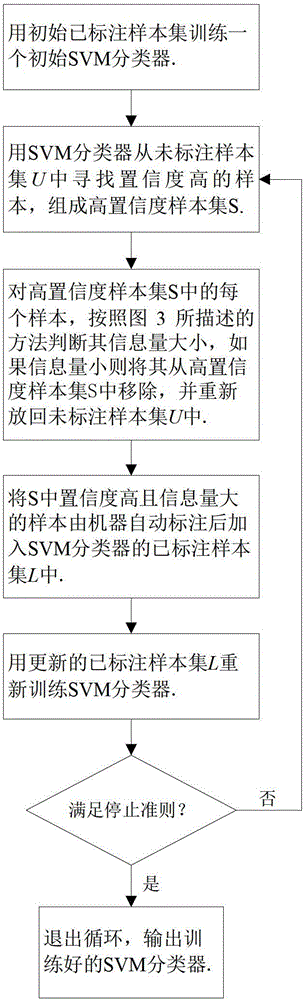

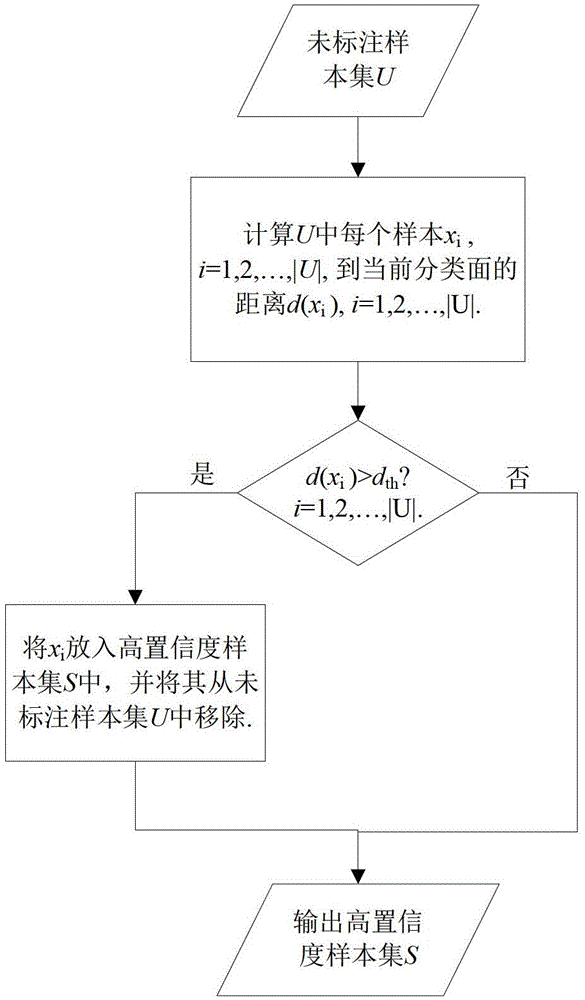

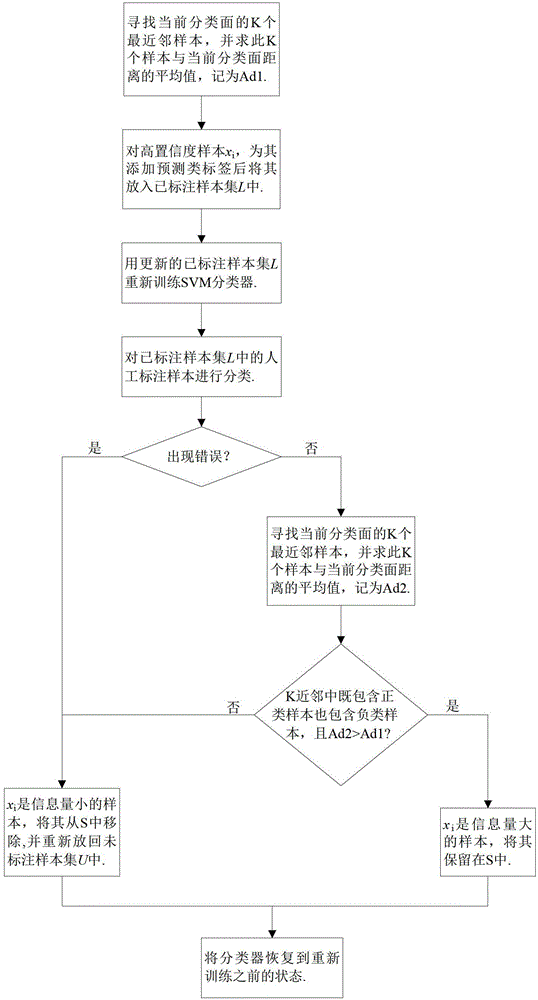

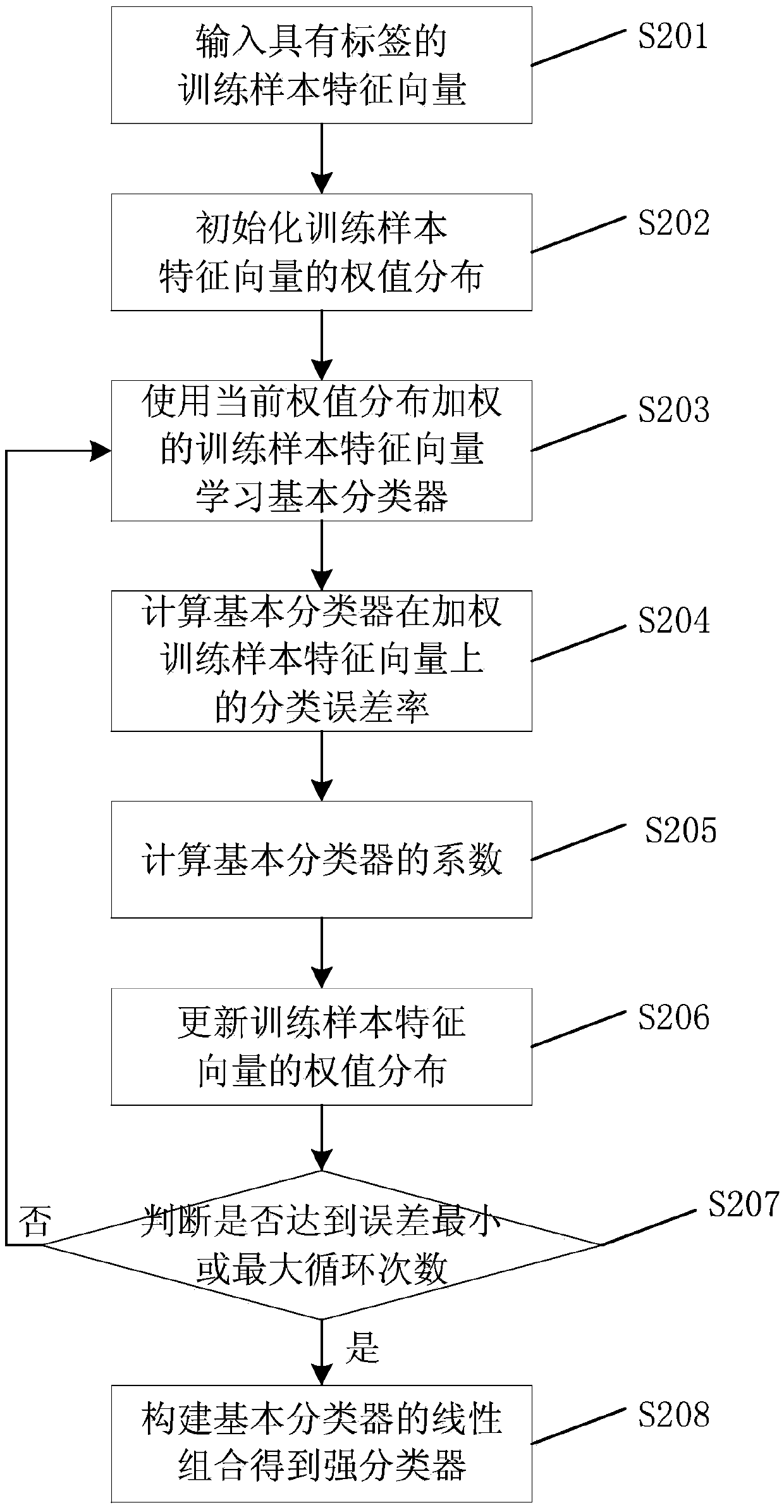

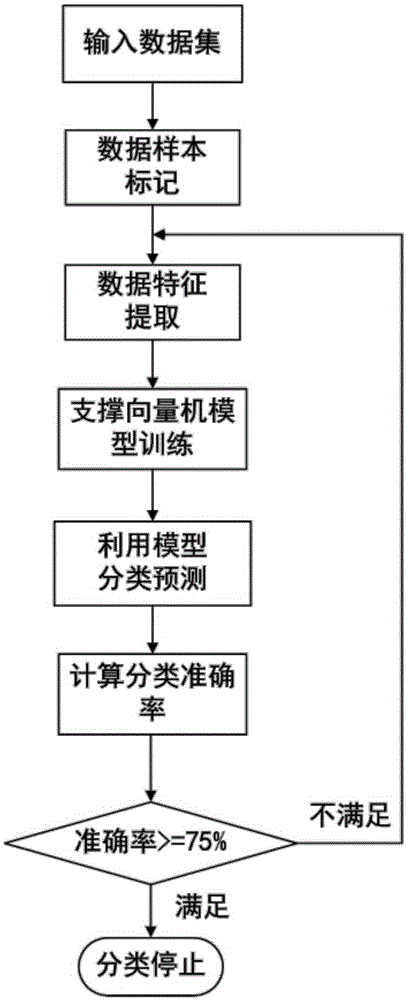

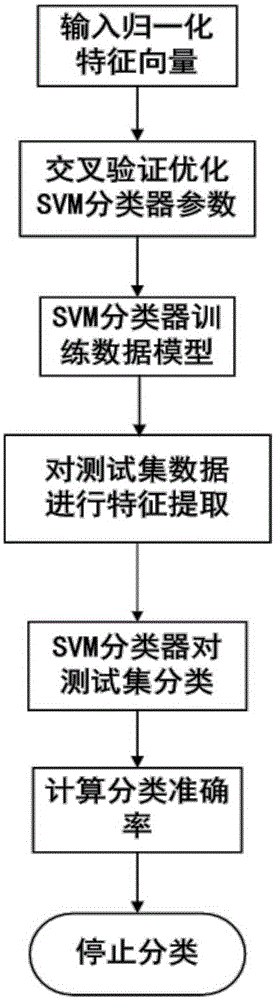

Training method of SVM (Support Vector Machine) classifier based on semi-supervised learning

Inactive | CN103150578A | Reduce workload | Fast convergence | Digital computer details | Character and pattern recognition | SVM classifier | Support vector machine classifier

The invention discloses a training method for an SVM (Support Vector Machine) classifier based on semi-supervised learning. The training method comprises the following steps: step 1, training an initial SVM classifier on an initial labeled sample set; step 2, finding samples with high classification confidence in an unlabeled sample set U to form a high-confidence sample set S; step 3, judging the amount of information of each sample in the high-confidence sample set S according to the method described in Figure 3, removing from S the samples whose amount of information is not large, and placing them back into the unlabeled sample set U; step 4, adding the samples in S that have high confidence and a large amount of information, after they are automatically labeled by the machine, to the labeled sample set L of the SVM classifier; step 5, retraining the SVM classifier on the updated labeled sample set L; and step 6, judging according to a stopping criterion whether the SVM classifier exits the loop or continues to iterate.

Owner:SHANDONG NORMAL UNIV

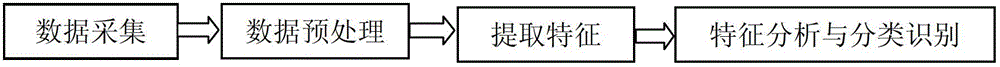

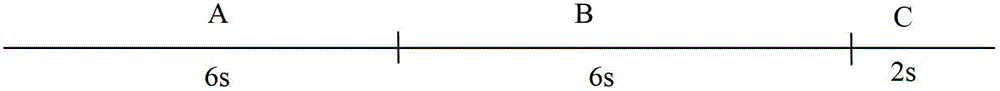

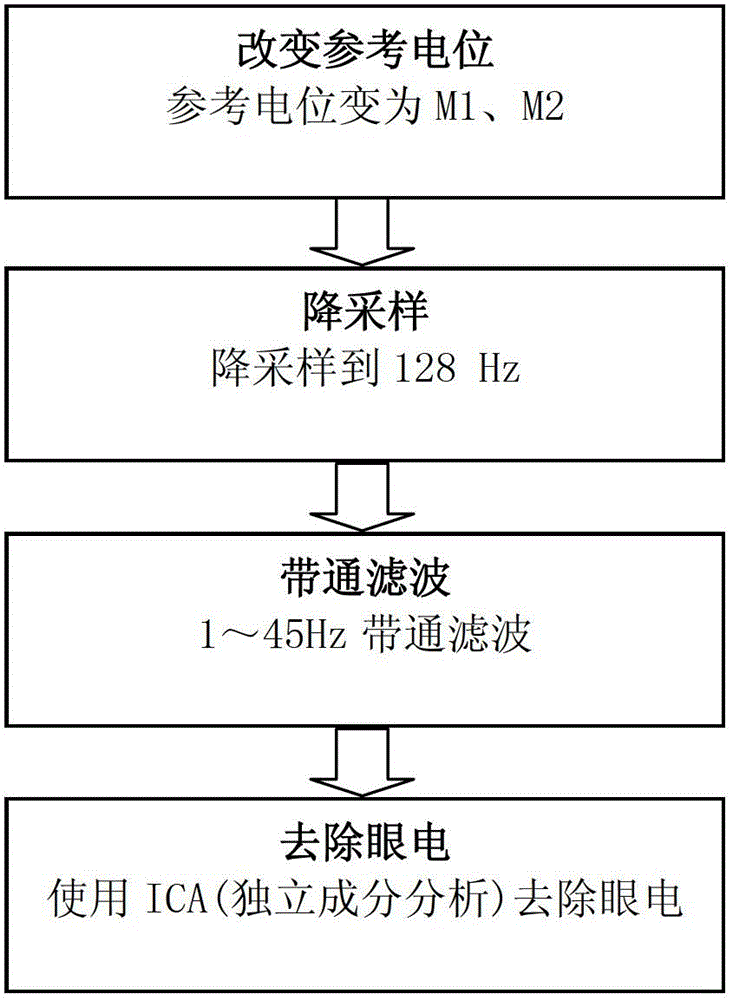

Brain electric features based emotional state recognition method

The invention discloses an emotional state recognition method based on electroencephalogram (EEG) features. The method comprises the following stages. Data acquisition: with emotions induced by international emotional pictures, 64-channel EEG data are recorded from subjects viewing pictures of different happiness levels. Data preprocessing: the collected 64-channel EEG data go through four steps, namely re-referencing, down-sampling, band-pass filtering, and electro-oculogram removal. Feature extraction: the preprocessed signals are filtered by a common spatial pattern algorithm, and time-domain features are then extracted. Feature recognition: the features are recognized with a support vector machine classifier to distinguish the different emotional states. The method uses an OVR (one versus rest) common spatial pattern algorithm to remove the interference of background signals and to strengthen the EEG signals induced by multiple types of emotion. Once the background signals are removed, the differences among the EEG of different emotion types are strengthened, the recognition accuracy for subjects is relatively good when time-domain variance features are used, and emotions of different happiness levels can be distinguished accurately.

Owner:TIANJIN UNIV

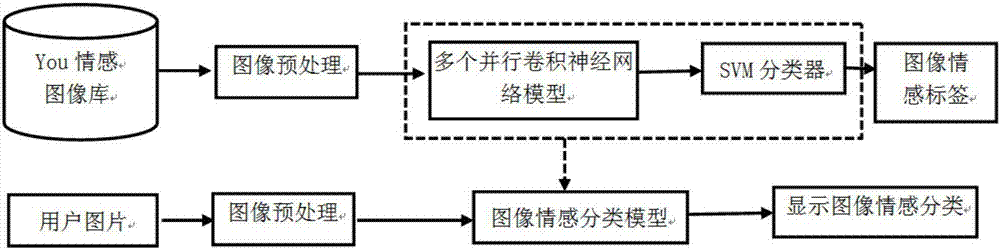

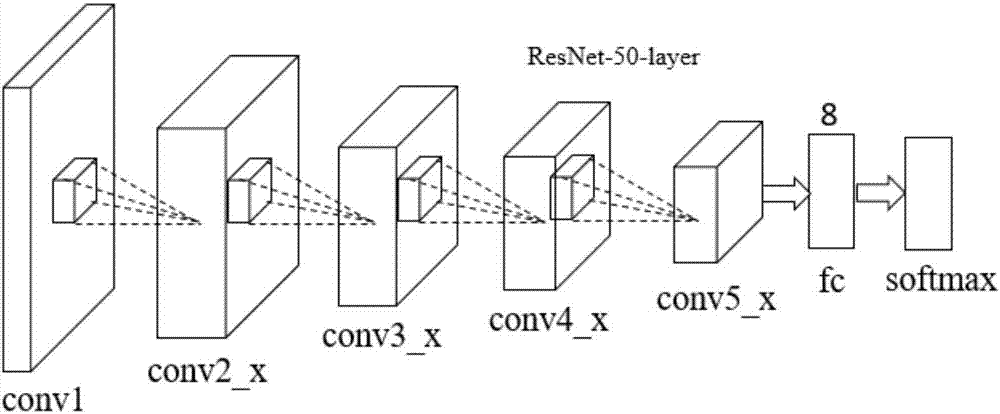

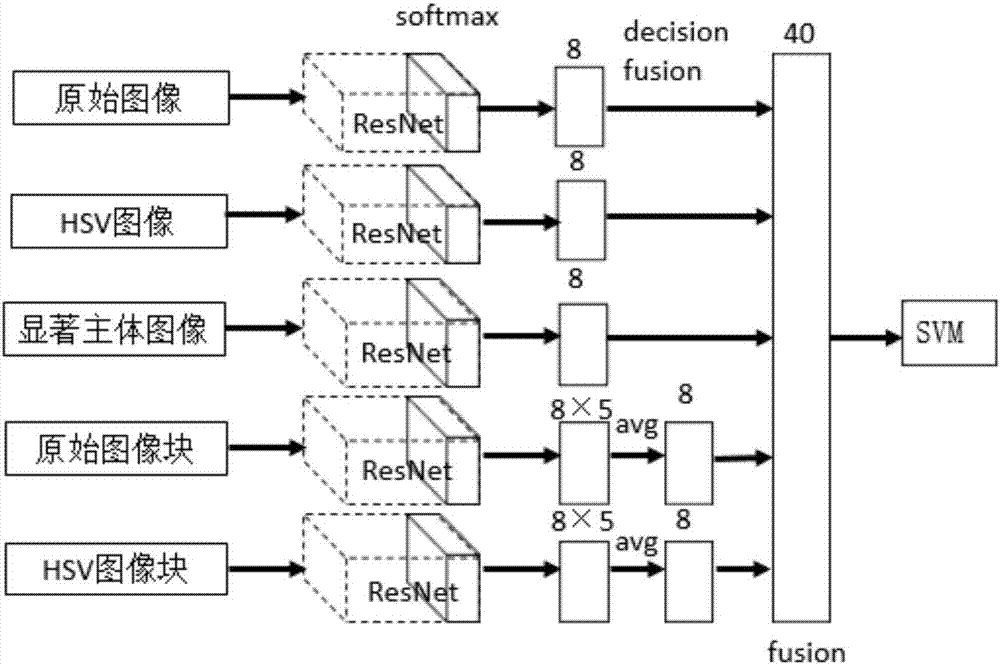

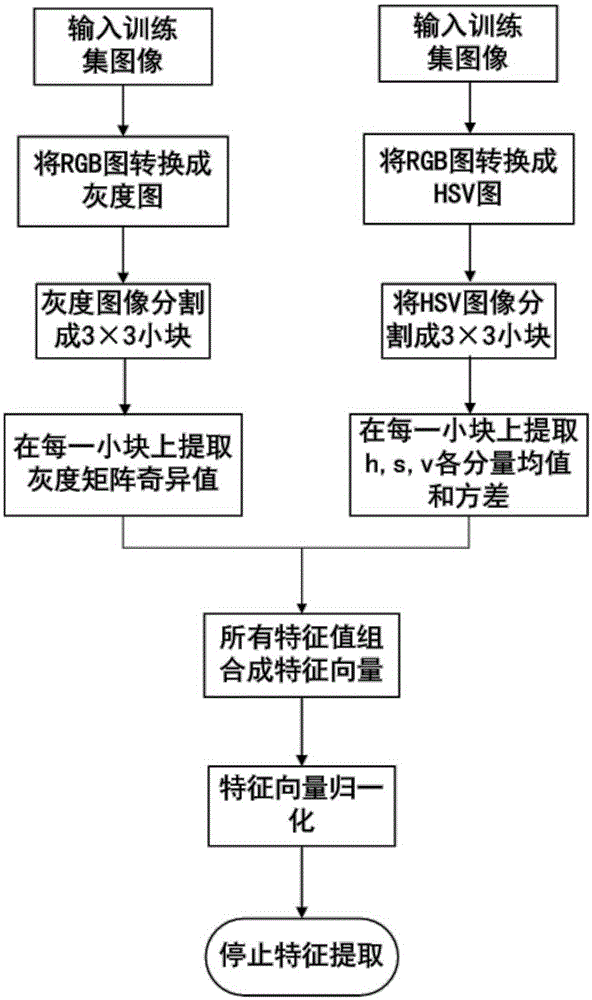

Multi-aspect deep learning expression-based image emotion classification method

Inactive | CN107341506A | Improve robustness | Shorten the time | Character and pattern recognition | SVM classifier | Support vector machine classifier

The invention discloses a multi-aspect deep learning expression-based image emotion classification method. The method comprises the following steps: (1) designing an image emotion classification model, which comprises a parallel convolutional neural network model and a support vector machine classifier that performs decision fusion on the network features; (2) designing the parallel convolutional neural network structure, which comprises 5 networks with the same structure, each comprising 5 convolutional layer groups, a fully connected layer, and a softmax layer; (3) performing salient subject extraction and HSV format conversion on the original image; (4) training the convolutional neural network model; (5) fusing the image emotion features learned and expressed by the multiple convolutional neural networks, and training the SVM classifier to perform decision fusion on the image emotion features; and (6) classifying user images with the trained image emotion classification model. The image emotion classification result obtained by the method accords with human emotional standards, and the judgment process requires no human participation, so that fully automatic machine-based image emotion classification is realized.

Owner:SOUTH CHINA UNIV OF TECH

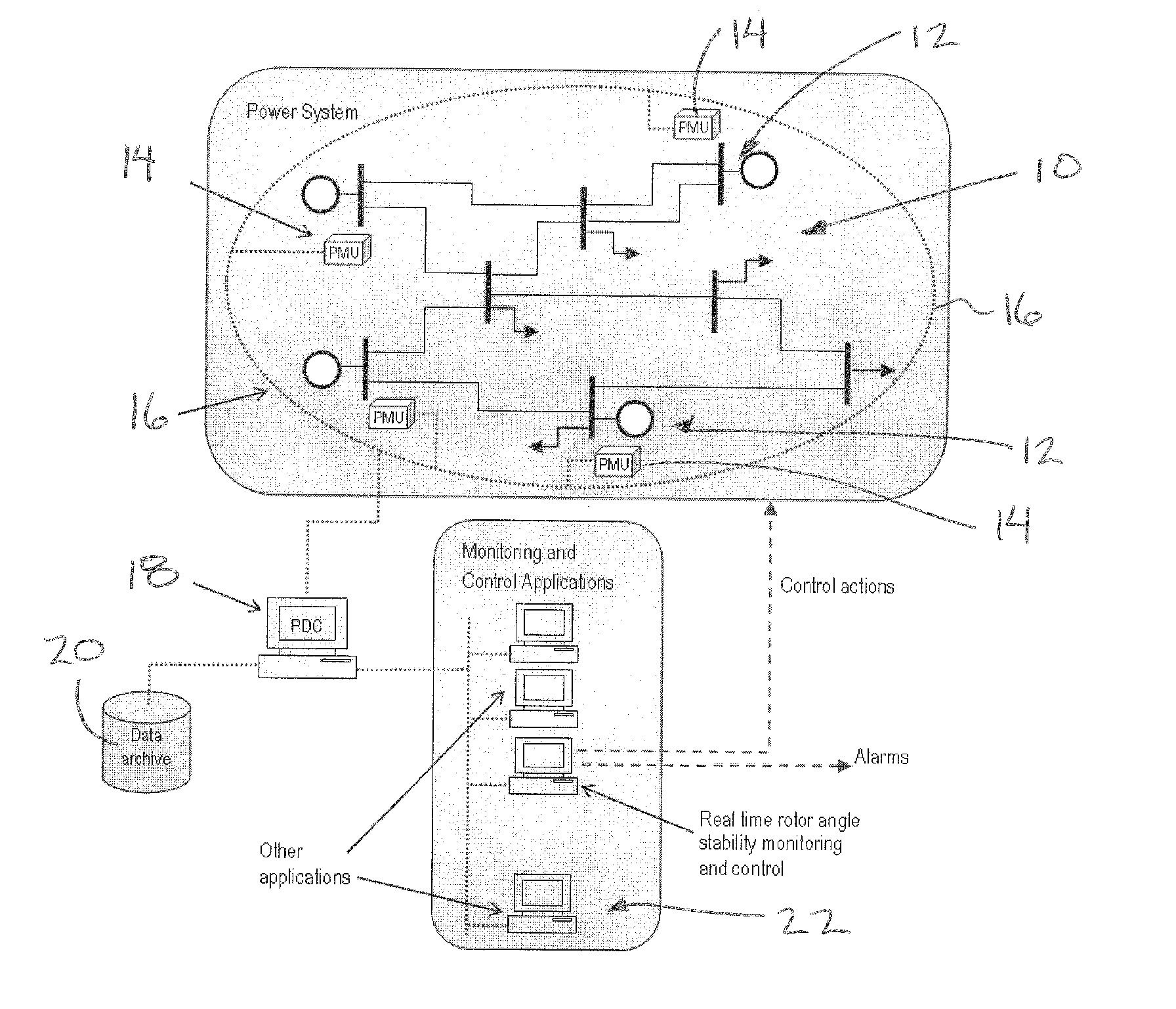

Rotor Angle Stability Prediction Using Post Disturbance Voltage Trajectories

Active | US20110022240A1 | Level control | Emergency protective circuit arrangements | Disturbance voltage | Support vector machine classifier

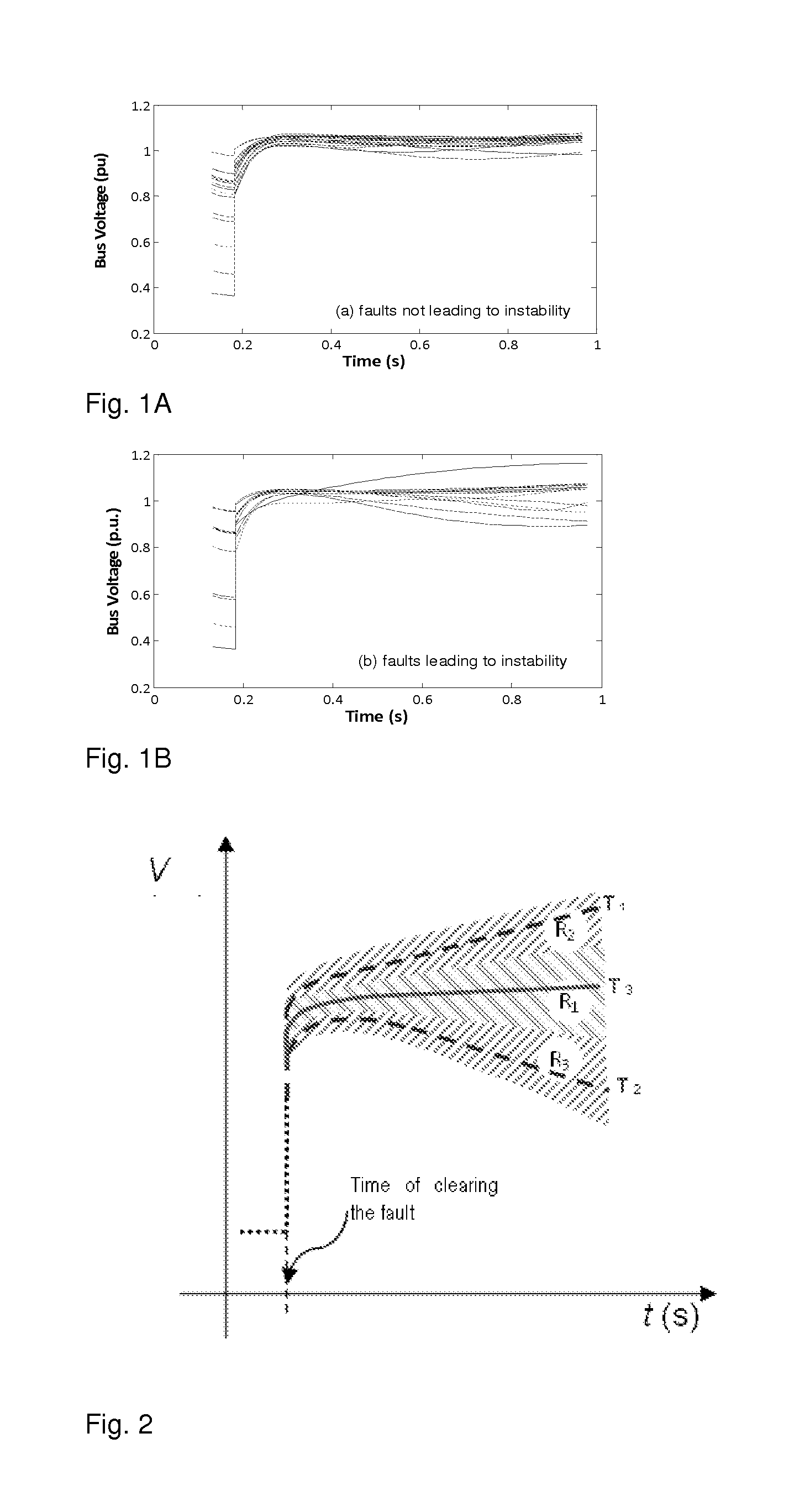

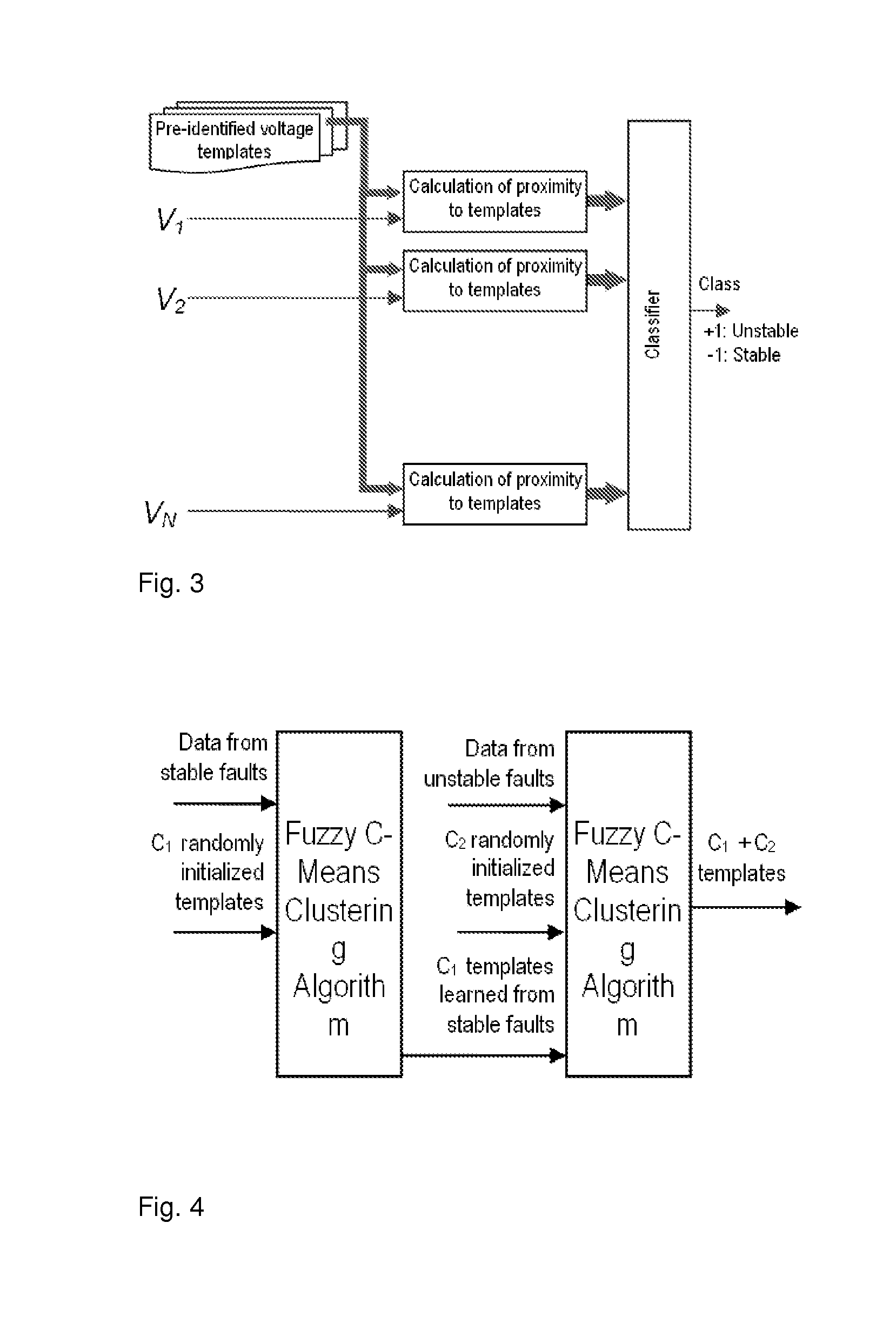

A new method for predicting the rotor angle stability status of a power system immediately after a large disturbance is presented. The proposed two stage method involves estimation of the similarity of post-fault voltage trajectories of the generator buses after the disturbance to some pre-identified templates and then prediction of the stability status using a classifier which takes the similarity values calculated at the different generator buses as inputs. The typical bus voltage variation patterns after a disturbance for both stable and unstable situations are identified from a database of simulations using fuzzy C-means clustering algorithm. The same database is used to train a support vector machine classifier which takes proximity of the actual voltage variations to the identified templates as features.

Owner:RAJAPAKSE ATHULA

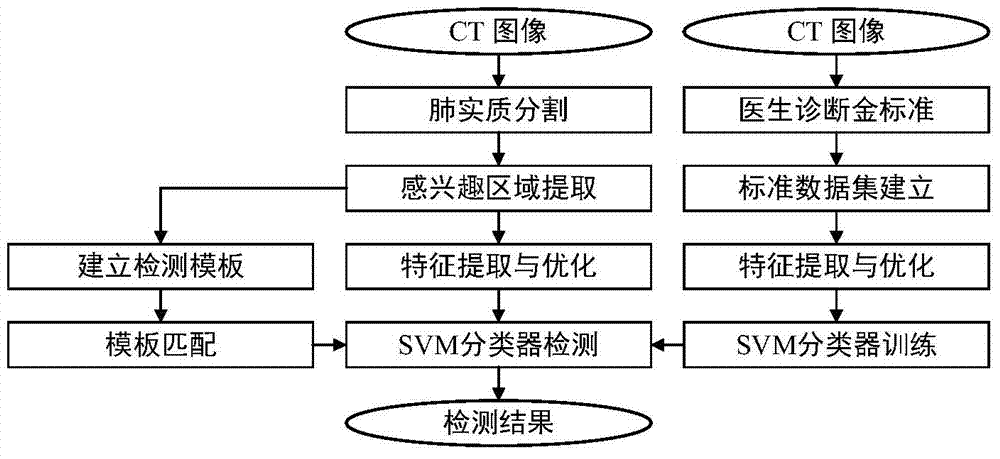

Pulmonary nodule detection device and method based on shape template matching and combining classifier

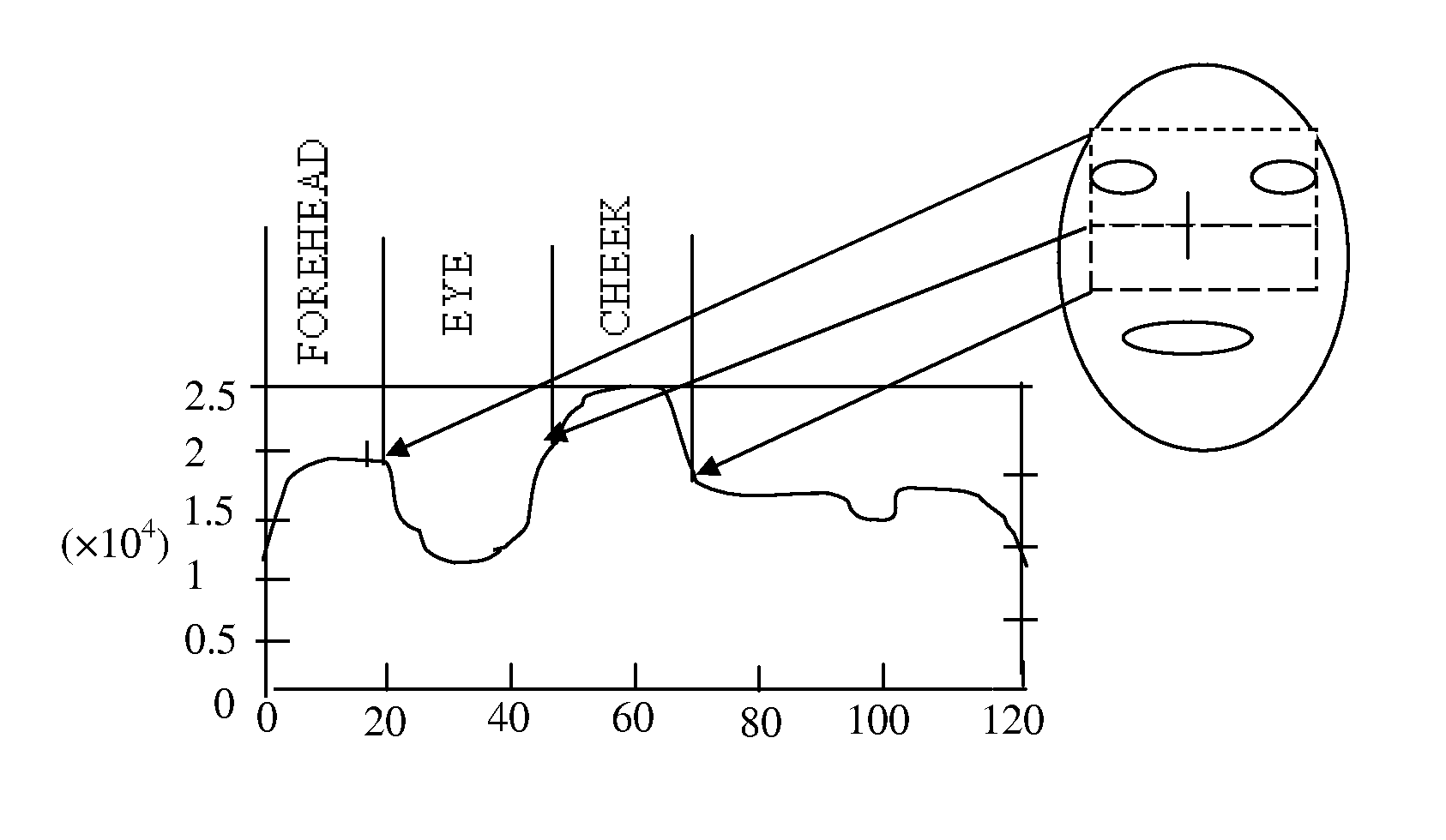

Active | CN104751178A | Increased sensitivity | Easy to detect | Image analysis | Character and pattern recognition | Pulmonary parenchyma | Pulmonary nodule

A pulmonary nodule detection device and method based on shape template matching and a combined classifier comprises an input unit, a pulmonary parenchyma region processing unit, an ROI (region of interest) extraction unit, a coarse screening unit, a feature extraction unit, and a secondary detection unit. The input unit inputs pulmonary CT sectional sequence images in DICOM format. The pulmonary parenchyma region processing unit segments the pulmonary parenchyma regions from the CT sectional sequence images, repairs the segmented regions with a boundary encoding algorithm, and reconstructs the repaired regions with a surface rendering algorithm for three-dimensional observation. The ROI extraction unit sets a gray-level threshold and extracts the ROI from the repaired pulmonary parenchyma regions. The coarse screening unit performs coarse screening on the ROI with a template matching algorithm designed from the morphological features of pulmonary nodules and obtains candidate pulmonary nodule regions. The feature extraction unit extracts various feature parameters from the gray levels and morphological features of the candidate nodules as sample sets for the subsequent detection. The secondary detection unit performs secondary detection on the candidate nodule regions with a support vector machine classifier and obtains the final detection result.

Owner:KANGDA INTERCONTINENTAL MEDICAL EQUIP CO LTD

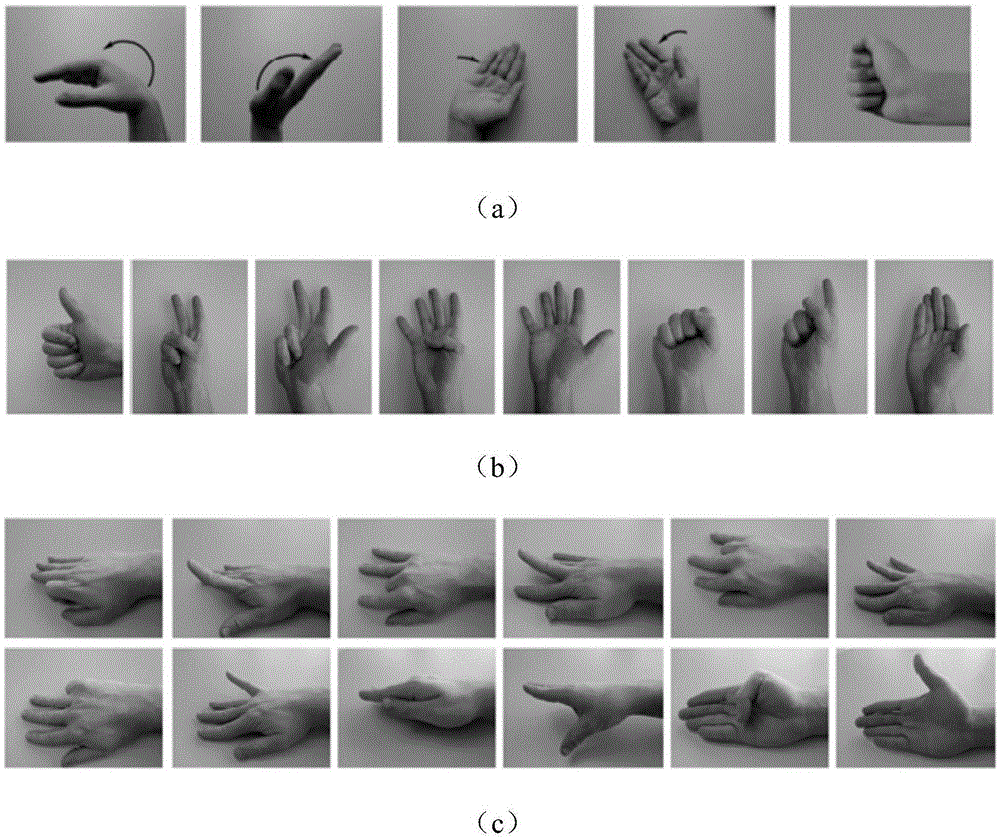

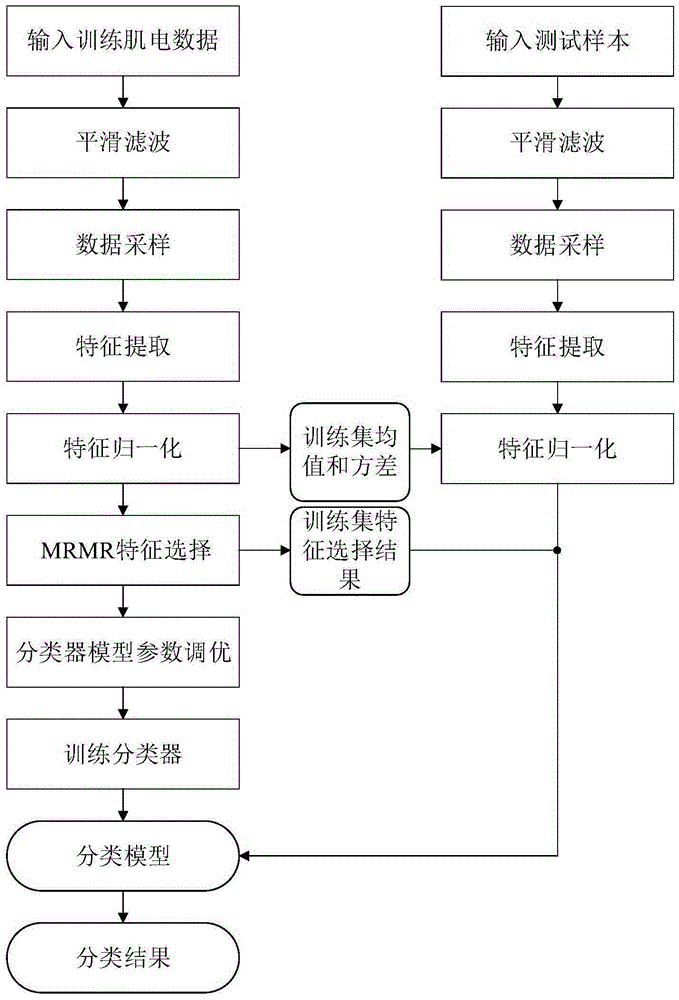

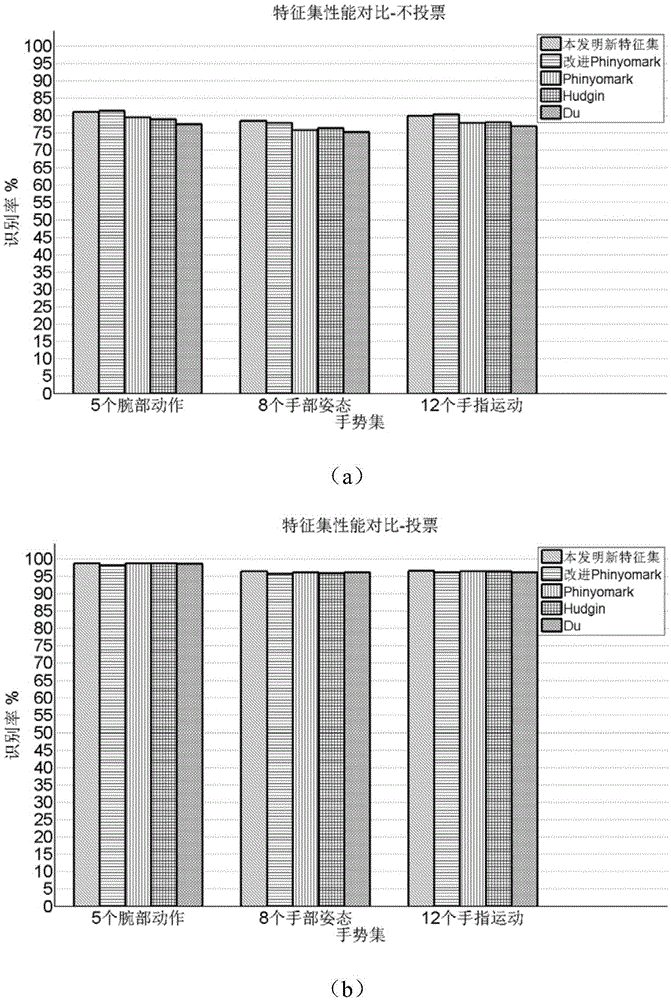

Support vector machine based surface electromyogram signal multi-hand action identification method

Inactive | CN105426842A | Improve classification performance | Character and pattern recognition | Time domain | Feature vector

The invention discloses a support vector machine based method for identifying multiple hand actions from surface electromyogram signals. The method comprises the following main steps: 1) obtaining electromyogram data, smoothing the signal by filtering, and generating data samples through sampling windows of different scales; 2) taking the data samples as units, extracting from each data sample a novel multi-feature set containing 19 time-domain, frequency-domain, and time-frequency-domain features, then normalizing the feature vectors and selecting features by the minimum redundancy maximum relevance criterion; 3) designing a support vector machine classifier based on the Pearson VII universal kernel and optimizing the parameters of the support vector machine with a coarse grid search based on cross validation; and 4) training a classification model with the data samples in a training set and the optimal classifier parameters obtained in the parameter optimization of step 3), then inputting the data samples of a test set into the classification model for classification testing.

Owner:ZHEJIANG UNIV
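
Step 3 of the abstract above names a Pearson VII kernel. The sketch below implements the commonly cited form of the Pearson VII universal kernel, K(x, y) = 1 / (1 + (2·‖x − y‖·sqrt(2^(1/ω) − 1) / σ)²)^ω. The abstract does not give the patent's exact parameterization, so the formula, the parameter names `sigma` and `omega`, and their defaults are assumptions.

```python
import math


def pearson_vii_kernel(x, y, sigma=1.0, omega=1.0):
    """Pearson VII universal kernel between two equal-length vectors.

    sigma controls the width of the peak and omega its tail shape
    (assumed parameter names, both must be positive).
    """
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))
    scaled = 2.0 * dist * math.sqrt(2.0 ** (1.0 / omega) - 1.0) / sigma
    return 1.0 / (1.0 + scaled ** 2) ** omega


same = pearson_vii_kernel([0.3, 0.7], [0.3, 0.7])
half = pearson_vii_kernel([0.0], [1.0], sigma=2.0, omega=1.0)
```

The kernel equals 1 for identical inputs and decays with distance. With `omega=1` it reduces to a Lorentzian shape, and `sigma` then sets the distance at which the value falls to one half (a distance of `sigma / 2`).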

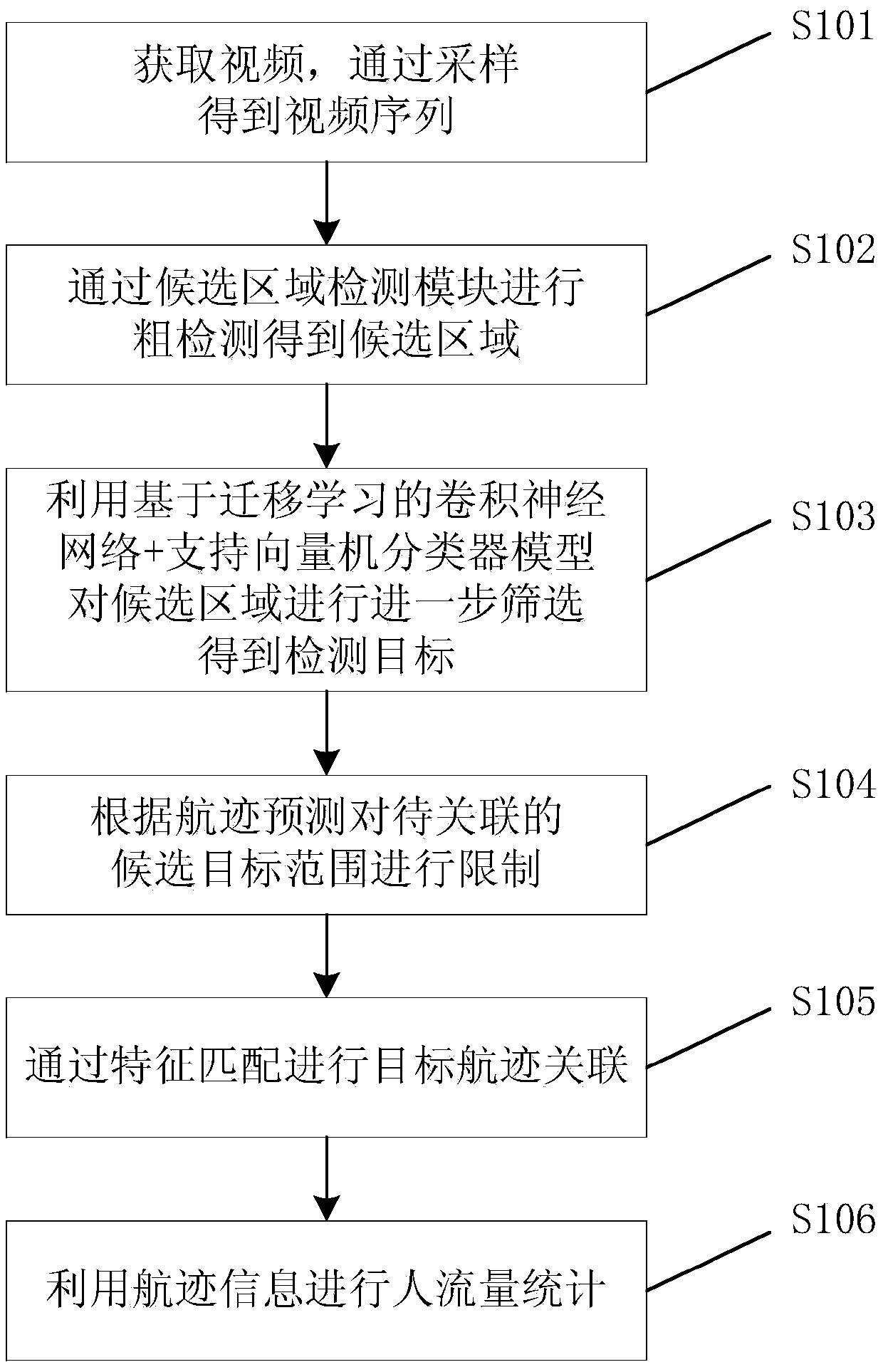

Method for acquiring people flow on the basis of video sequence

Active | CN105512640A | Overcome Counting Disadvantage | Save human resources | Image enhancement | Image analysis | Video monitoring | Support vector machine classifier

The invention relates to a method for acquiring a people flow on the basis of a video sequence and belongs to the technical field of image processing and video monitoring. The method comprises the following steps: 1) acquiring a video and obtaining the video sequence by sampling; 2) performing coarse detection with a candidate area detection module to obtain candidate target areas; 3) further screening the candidate target areas with a convolutional neural network based on transfer learning and a support vector machine classifier model to obtain the detection targets; 4) restricting the range of candidate targets to be associated according to track prediction; 5) associating target tracks by feature matching; and 6) acquiring the people flow from the track information. The method saves a large amount of human resources, prevents statistical errors due to human factors, overcomes the disadvantages of manual counting in some cases, and can accurately locate a single pedestrian, which is of great significance for subsequent analysis.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

Integration of a computer-based message priority system with mobile electronic devices

Inactive | US7444384B2 | Multiplex system selection arrangements | Special service provision for substation | Pager | Text categorization

Methods for integrating mobile electronic devices with computational methods for assigning priorities to documents are disclosed. In one embodiment, a computer-implemented method first receives a new document such as an electronic mail message. The method assigns a priority to the document, based on a text classifier such as a Bayesian classifier or a support vector machine classifier. The method then alerts a user on an electronic device, such as a pager or a cellular phone, based on alert criteria that can be made sensitive to information about the location, inferred task, and focus of attention of the user. Such information can be inferred under uncertainty or can be accessed directly from online information sources. One embodiment makes use of information from an online calendar to control the criteria used to make decisions about relaying information to a mobile device.

Owner:MICROSOFT TECH LICENSING LLC

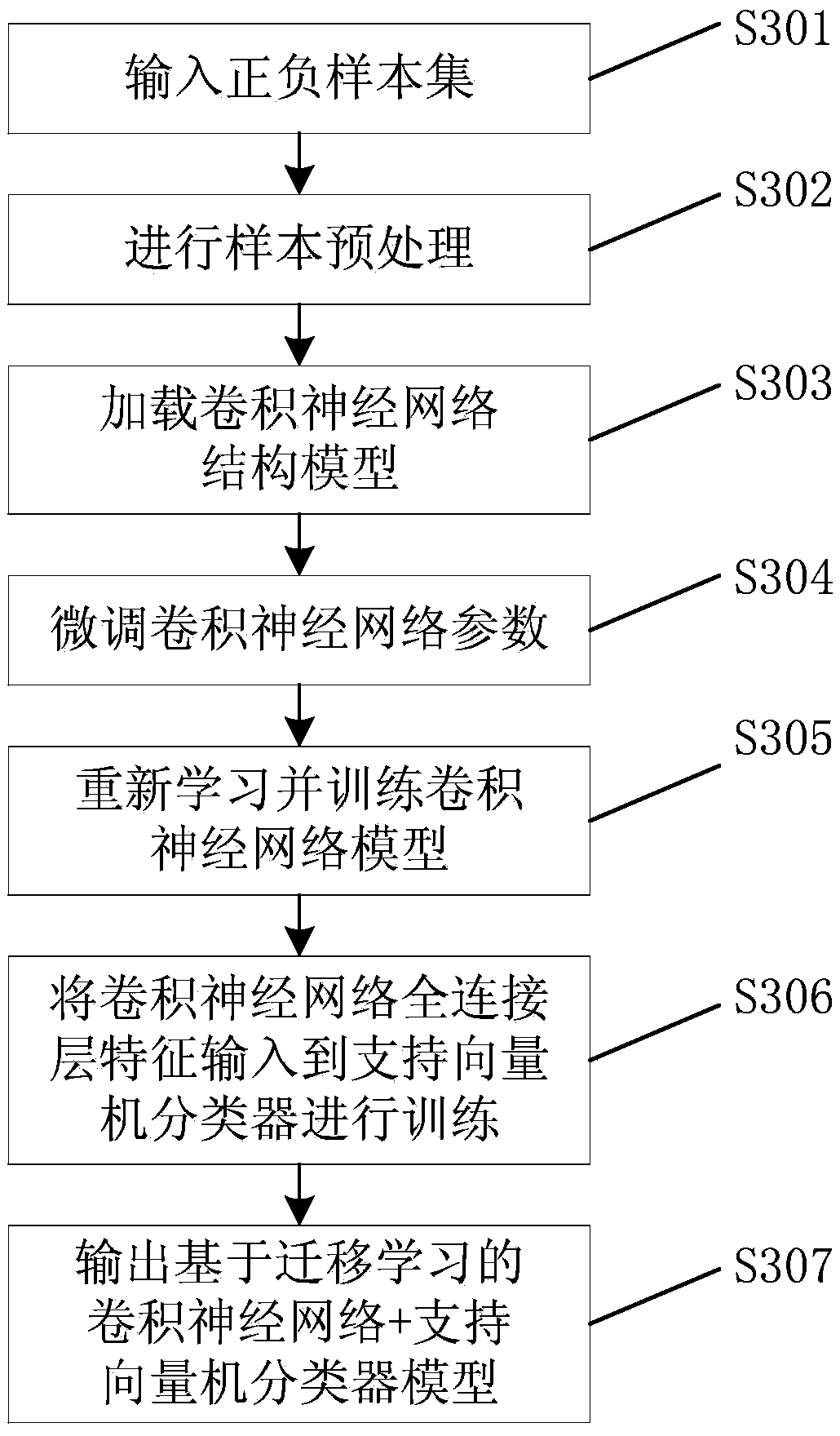

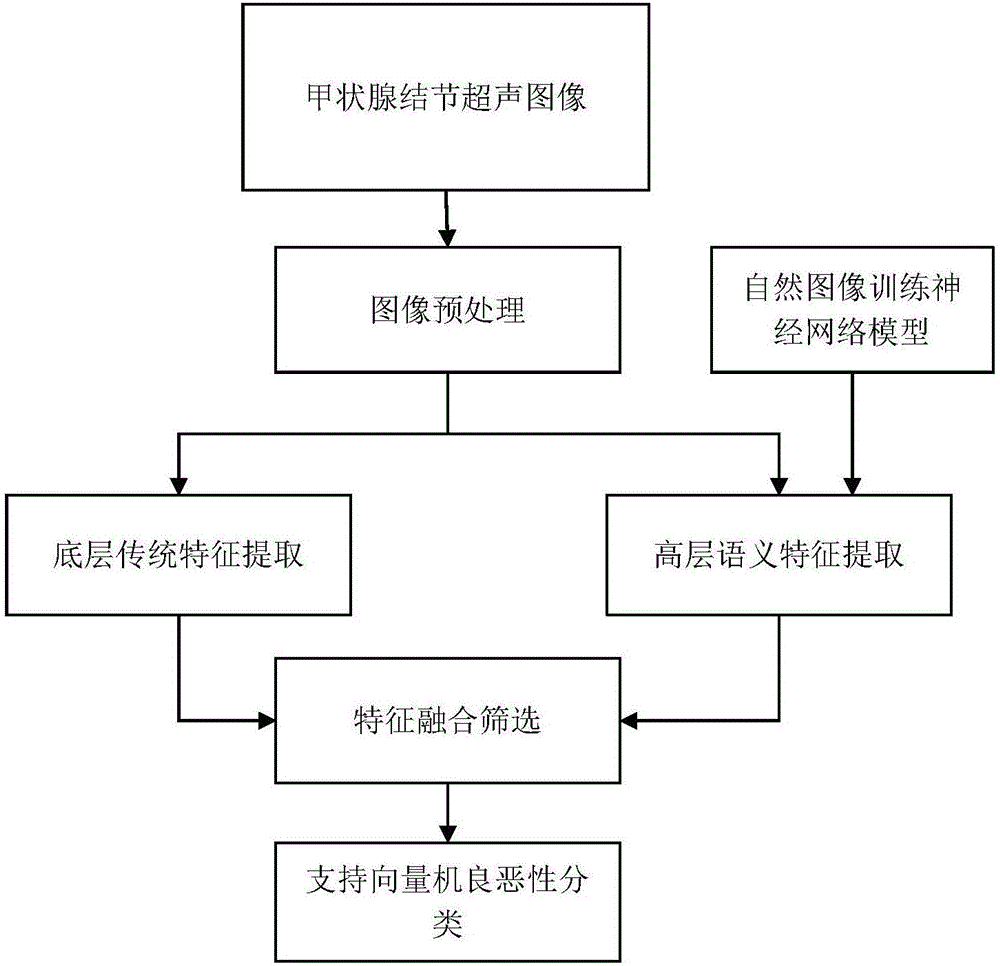

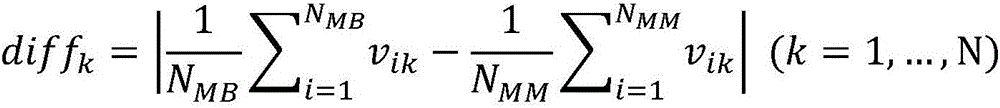

Transfer learning and feature fusion-based ultrasonic thyroid nodule benign and malignant classification method

Active | CN106780448A | Describe the characteristics of the case | Avoiding Obstacles That Cannot Train Convolutional Neural Networks | Image enhancement | Image analysis | Sonification | Support vector machine classifier

The invention discloses a transfer learning and feature fusion-based method for classifying ultrasonic thyroid nodules as benign or malignant. The method comprises the following steps: first preprocessing the ultrasonic image and scaling it to a uniform size; extracting traditional low-level features of the ultrasonic image; extracting high-level semantic features of the ultrasonic image by transfer learning, using a deep neural network model trained on natural images; fusing the low-level features with the high-level features; screening the features by their ability to distinguish benign from malignant thyroid nodules to obtain a final feature vector, which is used to train a support vector machine classifier; and carrying out the final benign or malignant classification of the thyroid nodule. By fusing the low-level and high-level features and screening for salient features, the method addresses the insufficient ability of single features to describe thyroid nodules at the semantic level and effectively improves classification precision; by introducing transfer learning, it addresses the problems that medical sample images are few and that deep features cannot be obtained by direct training.

Owner:TSINGHUA UNIV +1

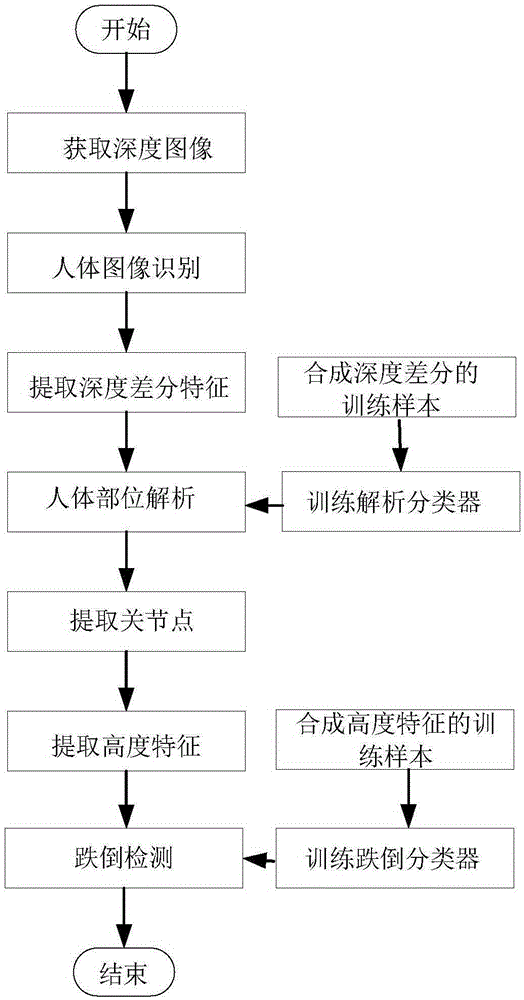

Fall-down behavior real-time detection method based on depth image

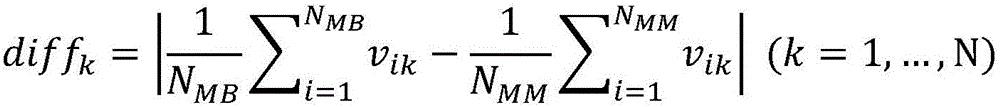

Active | CN105279483A | Illumination invariance | Privacy protection | Character and pattern recognition | Feature vector | NODAL

The invention discloses a real-time fall-down behavior detection method based on a depth image. The method comprises the steps of depth image acquisition, human body image identification, depth difference feature extraction, human body part analysis, articulation point extraction, height feature extraction, and fall-down behavior detection. Based on a depth image, a specific depth difference feature is selected on the identified human body image; a random forest classifier analyzes the human body parts and divides the body into a head part and a trunk part; articulation points are detected, and a height feature vector is then extracted; and a support vector machine classifier detects whether the detected subject is in a fall-down state. The method improves operation speed and achieves timely fall-down detection. Using the depth image for detection has two benefits: the method is unaffected by illumination and can operate around the clock, and personal privacy is better protected than with a color image. Only one depth sensor is required as hardware, which keeps the cost low.

Owner:HUAZHONG UNIV OF SCI & TECH

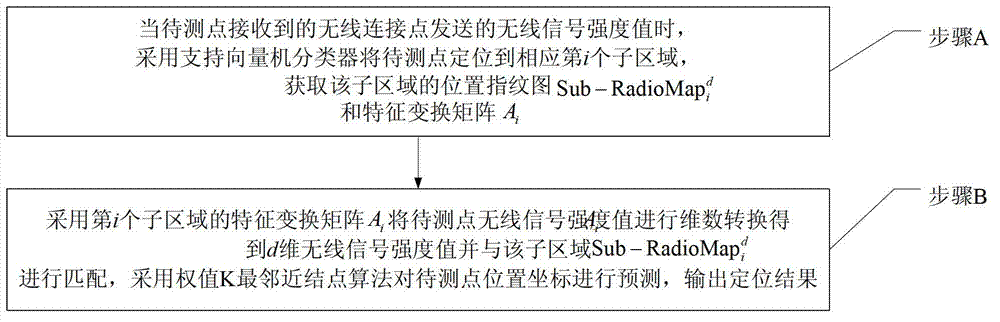

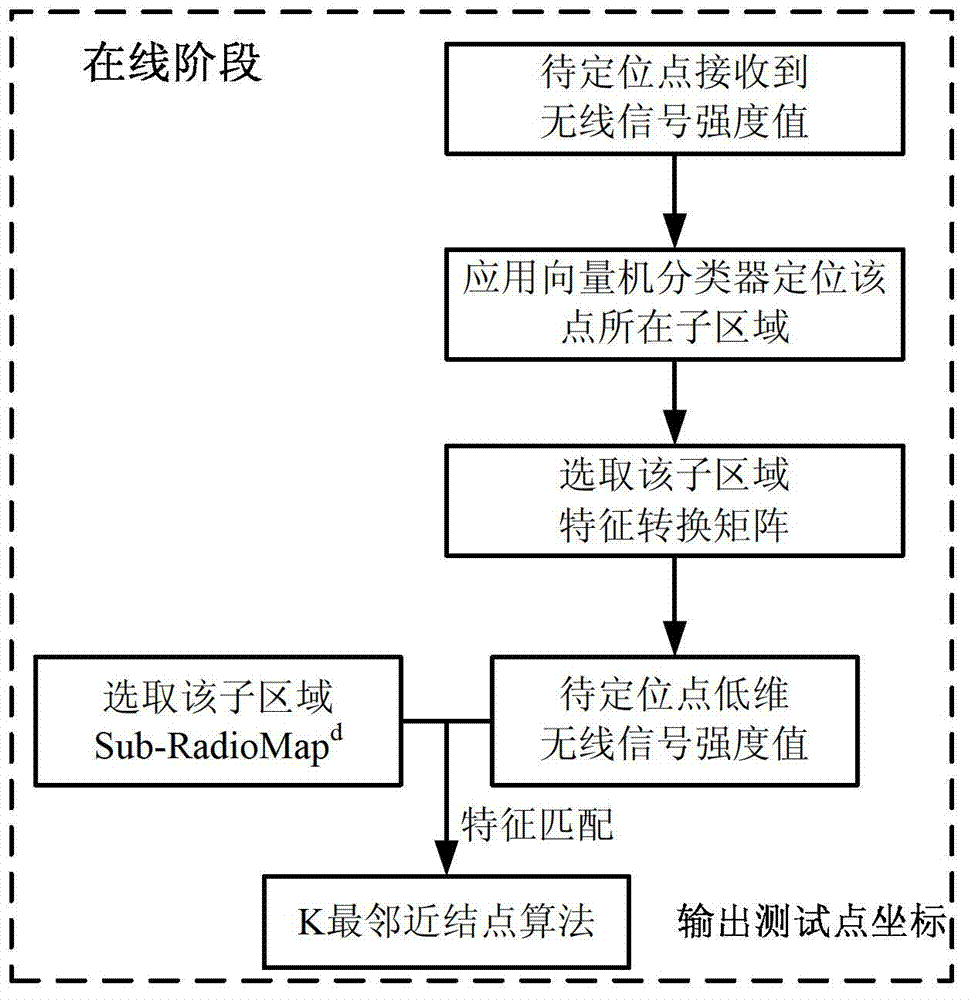

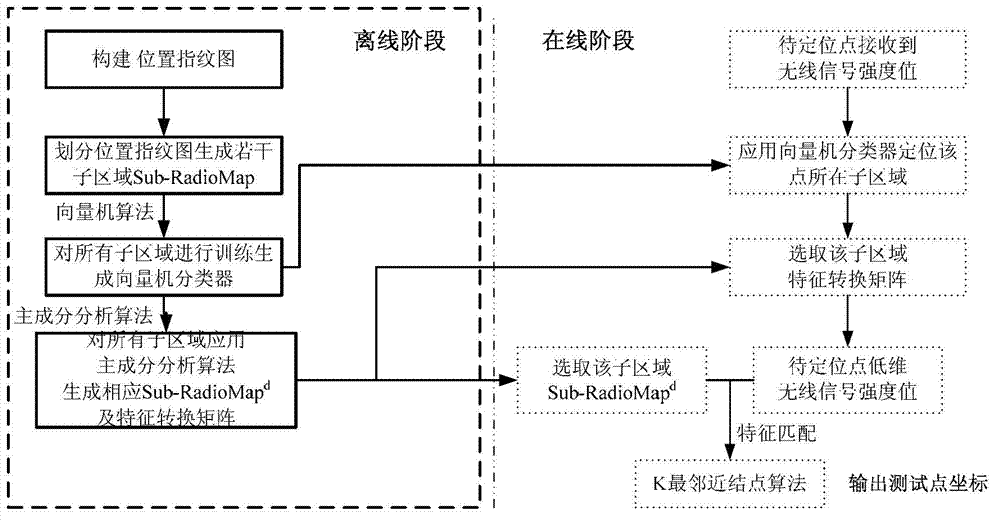

Wireless fidelity (Wi-Fi) indoor positioning method

InactiveCN103096466AReduce operational complexityImprove real-time performanceWireless communicationWi-FiComputation complexity

The invention relates to a wireless fidelity (Wi-Fi) indoor positioning method that aims to resolve problems of traditional Wi-Fi indoor positioning methods, such as an overly large position fingerprint map database, high computational complexity in the matching process of the online positioning phase, and poor real-time performance. In the method, when a point to be located receives wireless signal strength values sent by wireless access points, a support vector machine classifier assigns the point to a corresponding i-th subregion, and the position fingerprint map and the feature transformation matrix Ai of that subregion are obtained; the feature transformation matrix Ai of the i-th subregion is then applied to the wireless signal strength values of the point to transform their dimensionality, yielding a d-dimensional wireless signal strength vector that is matched against the subregion; a weighted K-nearest neighbor algorithm is used to predict the position coordinates of the point, and the positioning result is output. The Wi-Fi indoor positioning method is applied to the communication field.

Owner:HARBIN INST OF TECH
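
As a rough illustration of the last two steps in the abstract above (applying the subregion's transformation matrix, then weighted K-nearest neighbor matching), the following sketch assumes inverse-distance weights and Euclidean distance in the transformed signal space. Neither choice is stated in the abstract, and the matrix, signal values and coordinates are hypothetical.

```python
import math


def project(matrix, rss):
    """Apply a d x n feature transformation matrix to an n-dimensional RSS vector."""
    return [sum(a * r for a, r in zip(row, rss)) for row in matrix]


def weighted_knn_position(query, fingerprints, k=3, eps=1e-9):
    """Inverse-distance-weighted mean of the k nearest reference positions.

    fingerprints: list of (feature_vector, (x, y)) pairs for one subregion.
    """
    nearest = sorted(
        ((math.dist(query, feat), pos) for feat, pos in fingerprints),
        key=lambda item: item[0],
    )[:k]
    weights = [1.0 / (dist + eps) for dist, _ in nearest]
    total = sum(weights)
    x = sum(w * pos[0] for w, (_, pos) in zip(weights, nearest)) / total
    y = sum(w * pos[1] for w, (_, pos) in zip(weights, nearest)) / total
    return x, y


# Hypothetical data: a 2 x 3 matrix A_i and three reference points in subregion i.
A_i = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
references = [
    (project(A_i, [-40.0, -70.0, -55.0]), (0.0, 0.0)),
    (project(A_i, [-60.0, -50.0, -55.0]), (4.0, 0.0)),
    (project(A_i, [-90.0, -90.0, -55.0]), (10.0, 10.0)),
]
estimate = weighted_knn_position(project(A_i, [-50.0, -60.0, -55.0]), references, k=2)
```

With `k=2` the query is equally distant from the first two reference points, so the estimate falls midway between them. The subregion selection by the support vector machine classifier is not shown.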

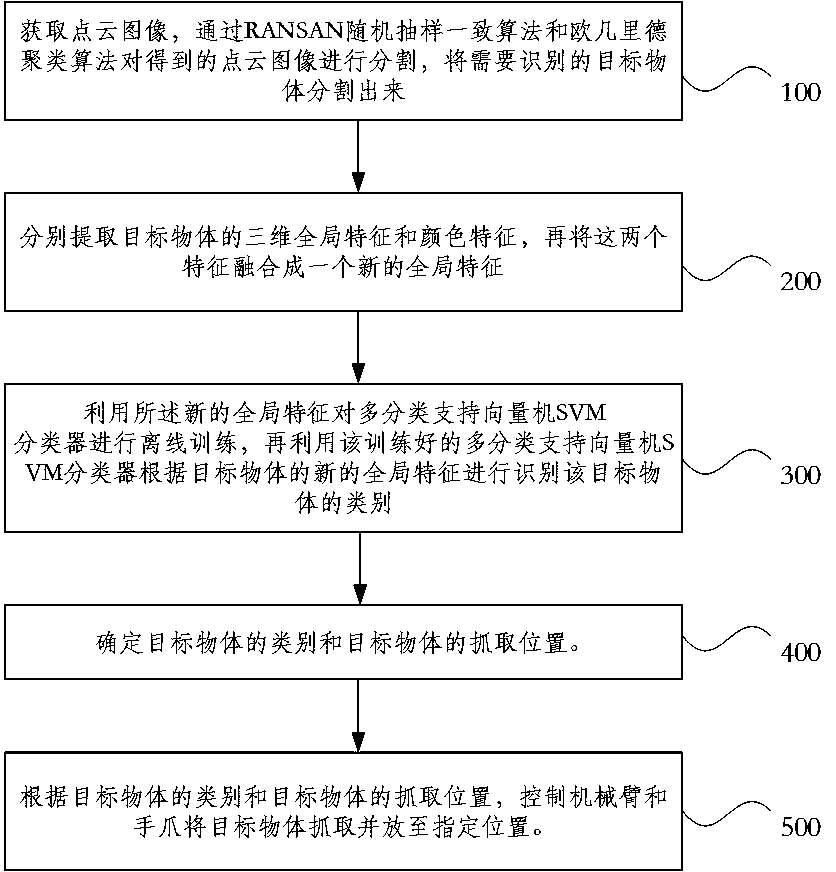

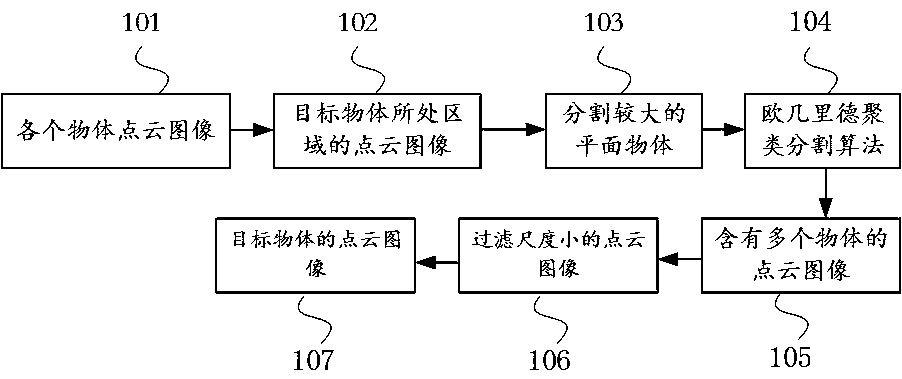

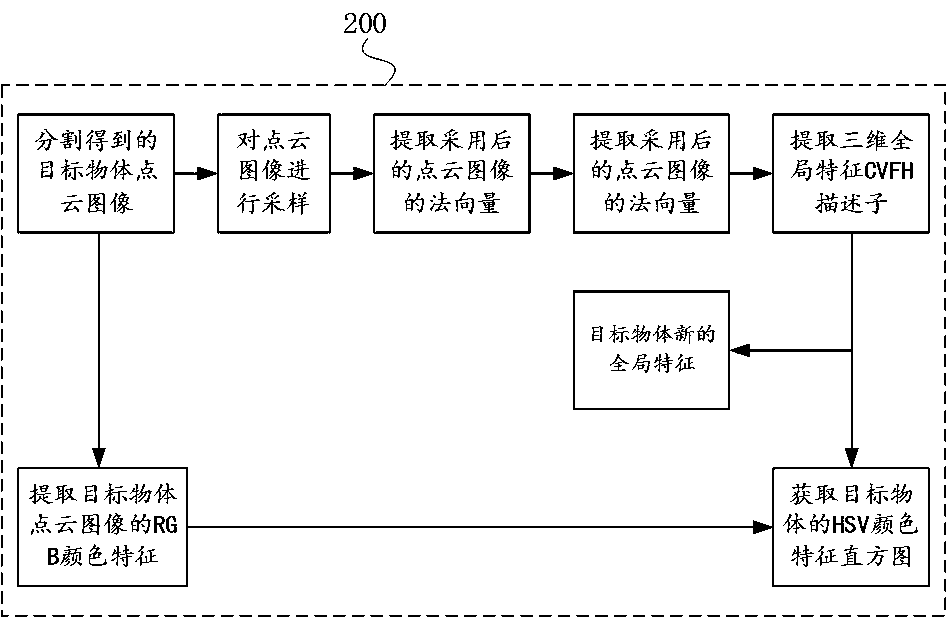

Visual capture method and device based on depth image and readable storage medium

InactiveCN107748890AImprove robustnessTexture features, so that the system can not only recognize lessCharacter and pattern recognitionCluster algorithmPattern recognition

The invention discloses a visual capture method and device based on a depth image and a readable storage medium. The method comprises the following steps: a point cloud image is acquired through a Kinect depth camera; the acquired point cloud image is segmented using the RANSAC (random sample consensus) algorithm and a Euclidean clustering algorithm to obtain the target object to be identified; 3D global features and color features of the object are extracted separately and fused to form a new global feature; a multi-class support vector machine (SVM) classifier is trained offline using the new global feature of the object, and the trained classifier identifies the category of the target object from that feature; the category and the grasping position of the target object are then determined; and finally, according to the category and the grasping position of the target object, a manipulator and a gripper are controlled to grasp the target object and move it to the specified position. The method has the advantage that the target object can be accurately identified and grasped.

Owner:SHANTOU UNIV

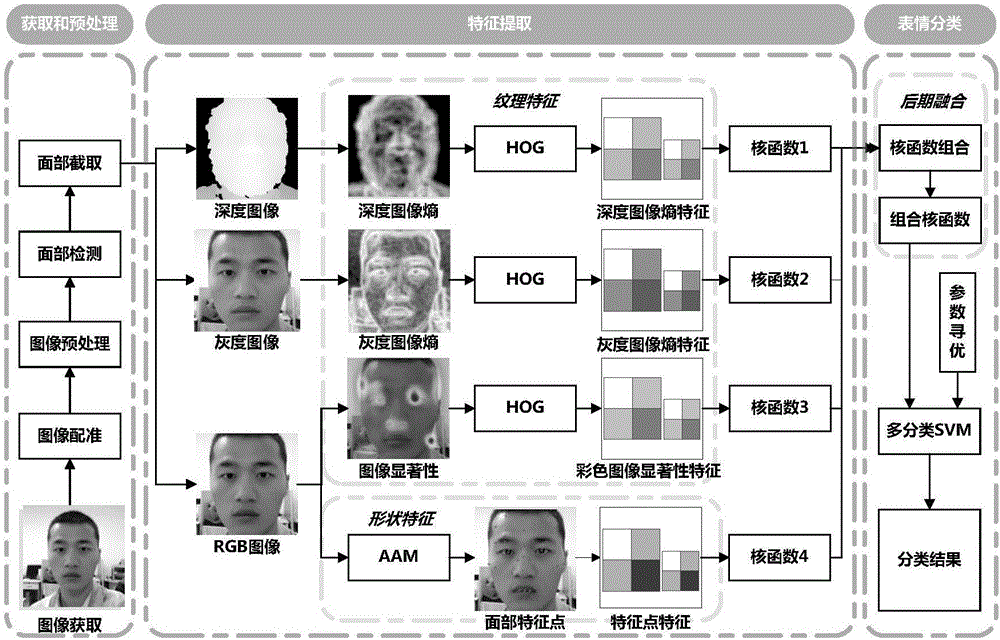

Expression identification method fusing depth image and multi-channel features

InactiveCN106778506AGuaranteed recognition efficiencyImprove robustnessCharacter and pattern recognitionColor imageSupport vector machine classifier

The invention discloses an expression identification method fusing a depth image and multi-channel features. The method comprises the steps of performing human face region identification on an input human face expression image and performing preprocessing operation; selecting the multi-channel features of the image, extracting a depth image entropy, a grayscale image entropy and a color image salient feature as human face expression texture information in the texture feature aspect, extracting texture features of the texture information by adopting a grayscale histogram method, and extracting facial expression feature points as geometric features from a color information image by utilizing an active appearance model in the geometric feature aspect; and fusing the texture features and the geometric features, selecting different kernel functions for different features to perform kernel function fusion, and transmitting a fusion result to a multi-class support vector machine classifier for performing expression classification. Compared with the prior art, the method has the advantages that the influence of factors such as different illumination, different head poses, complex backgrounds and the like in expression identification can be effectively overcome, the expression identification rate is increased, and the method has good real-time property and robustness.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

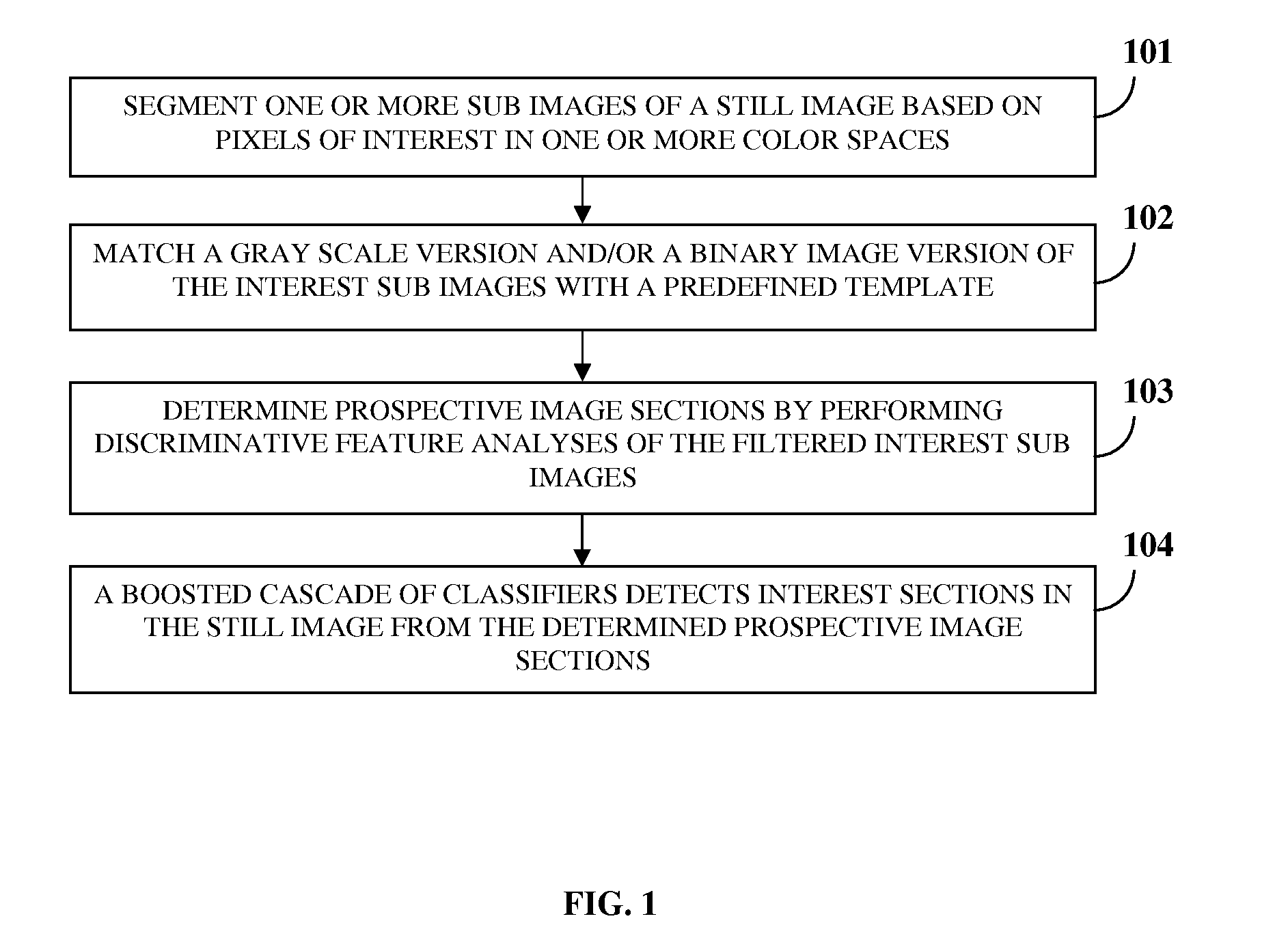

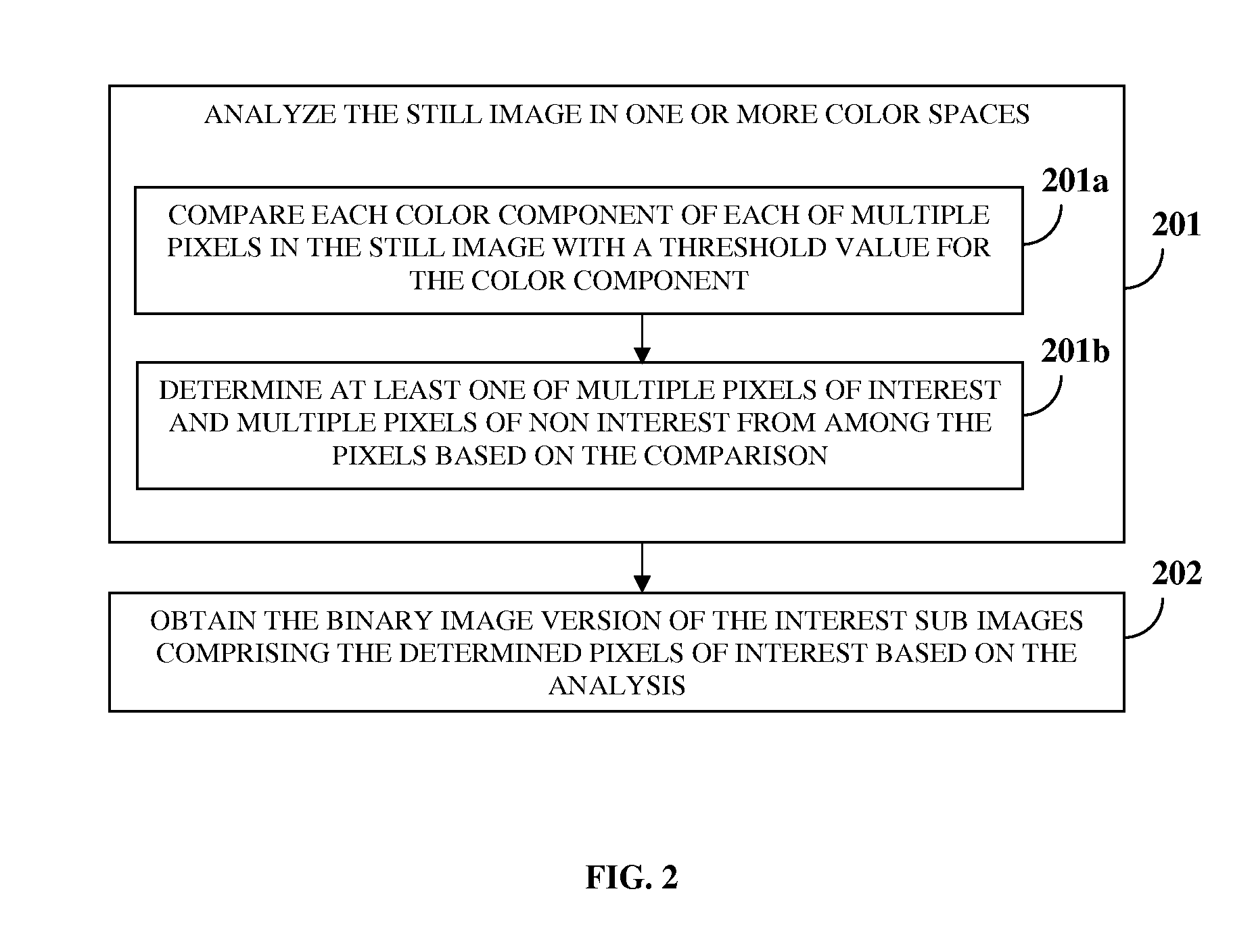

Detecting Objects Of Interest In Still Images

ActiveUS20110243431A1Good precisionSimple calculationCharacter and pattern recognitionTemplate matchingSupport vector machine classifier

A computer implemented method and system for detecting interest sections in a still image are provided. One or more sub images of the still image are subjected to segmentation. A gray scale version of interest sub images and / or a binary image version of the interest sub images are matched with a predefined template for filtering the interest sub images. Multiple prospective image sections comprising one or more of prospective interest sections and prospective near interest sections are determined by performing discriminative feature analyses of the filtered interest sub images using a Gabor feature filter. The discriminative feature analyses are processed by a boosted cascade of classifiers. The boosted cascade of classifiers detects the interest sections in the still image from the prospective interest sections and the prospective near interest sections. The detected interest sections are subjected to a support vector machine classifier for further detecting interest sections.

Owner:LTIMINDTREE LTD
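
The control flow described in the abstract above, a cascade of classifiers followed by a final support vector machine check, might be sketched as follows. This shows only the early-rejection structure; the boosting procedure, the Gabor features and the real classifiers are not reproduced, and every score, threshold and name below is made up for illustration.

```python
def run_cascade(candidates, stages, verifier):
    """Pass candidates through cheap stages first, then a final verifier.

    all() short-circuits, so a candidate rejected by an early stage
    never reaches the later stages.
    """
    survivors = [c for c in candidates if all(stage(c) for stage in stages)]
    return [c for c in survivors if verifier(c)]


# Hypothetical candidate regions described by two made-up scores.
regions = [
    {"id": "a", "template_score": 0.9, "texture_score": 0.8},
    {"id": "b", "template_score": 0.2, "texture_score": 0.9},
    {"id": "c", "template_score": 0.7, "texture_score": 0.4},
    {"id": "d", "template_score": 0.8, "texture_score": 0.7},
]
stages = [
    lambda r: r["template_score"] > 0.5,
    lambda r: r["texture_score"] > 0.5,
]


def verifier(region):
    """Stand-in for the final support vector machine decision function."""
    return 0.6 * region["template_score"] + 0.4 * region["texture_score"] > 0.8


detected = [r["id"] for r in run_cascade(regions, stages, verifier)]
```

Regions "b" and "c" are dropped by the cascade stages, and "d" passes the stages but fails the final check, which mirrors the two-level filtering the abstract describes.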

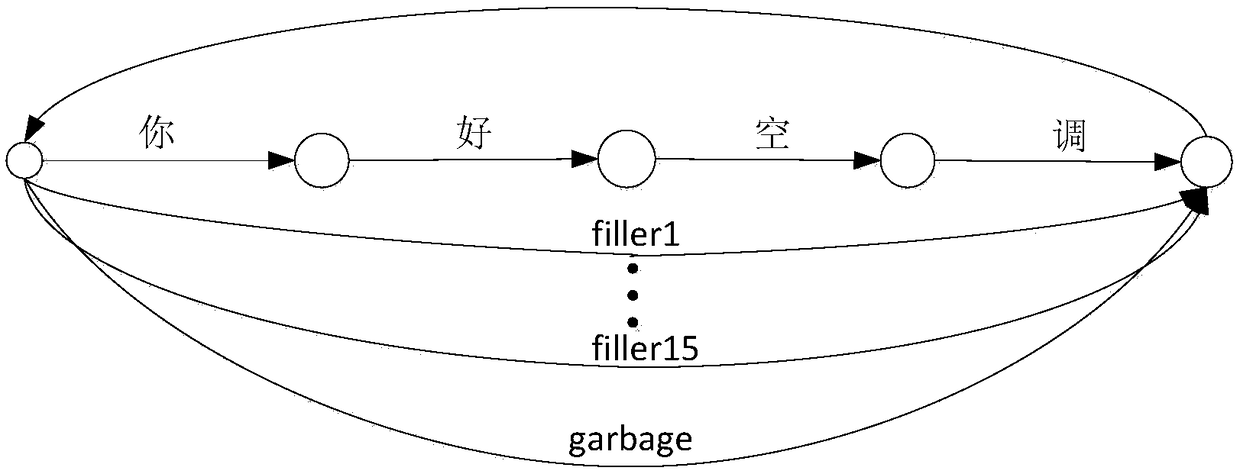

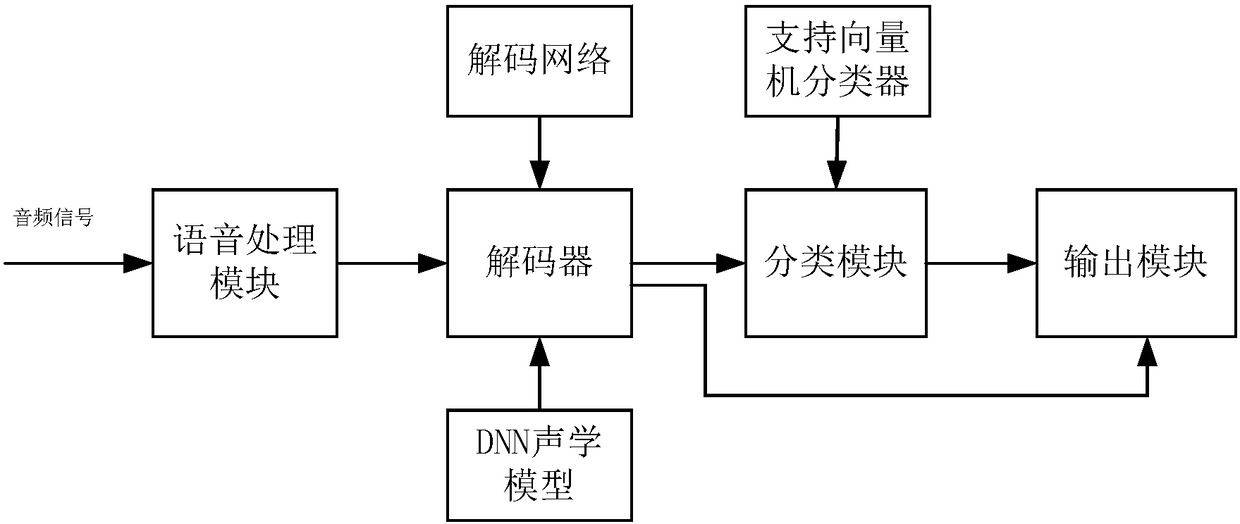

Universal voice awakening identification method and system under full-phoneme frame

InactiveCN108281137AImprove wake-up effectQuick changeSpeech recognitionSupport vector machine classifierAcoustic model

The invention discloses a universal voice awakening identification method and a system under a full-phoneme frame. The method comprises the following steps: firstly, training a deep neural network acoustic model, modifying a dictionary according to the awakening words, constructing a filler-based decoding network, and training a support vector machine classifier on training samples; then preprocessing the input voice, inputting the processed voice features into the decoding network for decoding, calculating an acoustic score according to the deep neural network acoustic model, and obtaining decoding results; and finally inputting statistics of the successfully recognized decoding results into the support vector machine classifier for classification to obtain the final recognition result. According to the method disclosed by the invention, the triphone states obtained by expanding all toneless phonemes are modeled, and a universal acoustic model is thereby obtained. During the decoding process, the decoding path is constrained, so the awakening performance can be improved. Meanwhile, a post-processing stage analyzes multi-dimensional statistics, such as the phoneme posterior probability, on each path. As a result, the risk of an increased false alarm rate is eliminated.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1

Color and singular value feature-based face in-vivo detection method

InactiveCN105354554APrevent extractionReduce computational complexitySpoof detectionBatch extractionSupport vector machine classifier

The invention discloses a color and singular value feature-based face in-vivo detection method, which mainly aims at solving the problem that existing face authenticity identification technology is computationally complex and has a low identification rate. The method is realized through the following steps: 1) marking positive and negative samples of a face database, and dividing the samples into a training set and a testing set; 2) segmenting the face images in the training set into blocks and extracting the color features and singular value features of the blocks in batches; 3) normalizing the extracted feature vectors and sending the normalized vectors to a support vector machine classifier for training so as to obtain a trained model; and 4) carrying out feature extraction on the data in the testing set and classifying the features with the trained model so as to obtain a classification result. The method is capable of improving classification efficiency and achieving higher classification accuracy, and can be used for face authenticity detection in social networks or real life.

Owner:XIDIAN UNIV
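
Steps 3) and 4) of the abstract above (normalize, train, then predict on held-out data) can be sketched with a small linear support vector machine. This is an illustrative stand-in rather than the patented procedure: z-score normalization, the Pegasos-style training loop without a bias term, and the placeholder feature values are all assumptions.

```python
from statistics import mean, pstdev


def fit_scaler(rows):
    """Per-column mean and standard deviation, learned on the training set only."""
    columns = list(zip(*rows))
    return [mean(c) for c in columns], [pstdev(c) or 1.0 for c in columns]


def scale(row, scaler):
    """Z-score one feature vector with the training-set statistics."""
    means, stds = scaler
    return [(v - m) / s for v, m, s in zip(row, means, stds)]


def train_linear_svm(X, y, lam=0.01, epochs=50):
    """Pegasos-style subgradient descent on the hinge loss (no bias term).

    Labels must be +1 or -1.
    """
    w = [0.0] * len(X[0])
    step = 0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            step += 1
            eta = 1.0 / (lam * step)
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrink (regularization)
            if margin < 1.0:  # sample violates the margin: move toward it
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
    return w


def predict(w, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1


# Placeholder feature vectors (not real color or singular-value features).
train_X = [[0.2, 10.0], [0.3, 12.0], [0.8, 30.0], [0.9, 28.0]]
train_y = [-1, -1, 1, 1]  # -1 = spoof, +1 = live (labeling is an assumption)
scaler = fit_scaler(train_X)
model = train_linear_svm([scale(r, scaler) for r in train_X], train_y)
test_predictions = [predict(model, scale(r, scaler)) for r in [[0.85, 29.0], [0.25, 11.0]]]
```

Fitting the scaler on the training set and reusing it for the testing set keeps the two sets on the same scale, which is the usual reason for normalizing before training a margin-based classifier.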

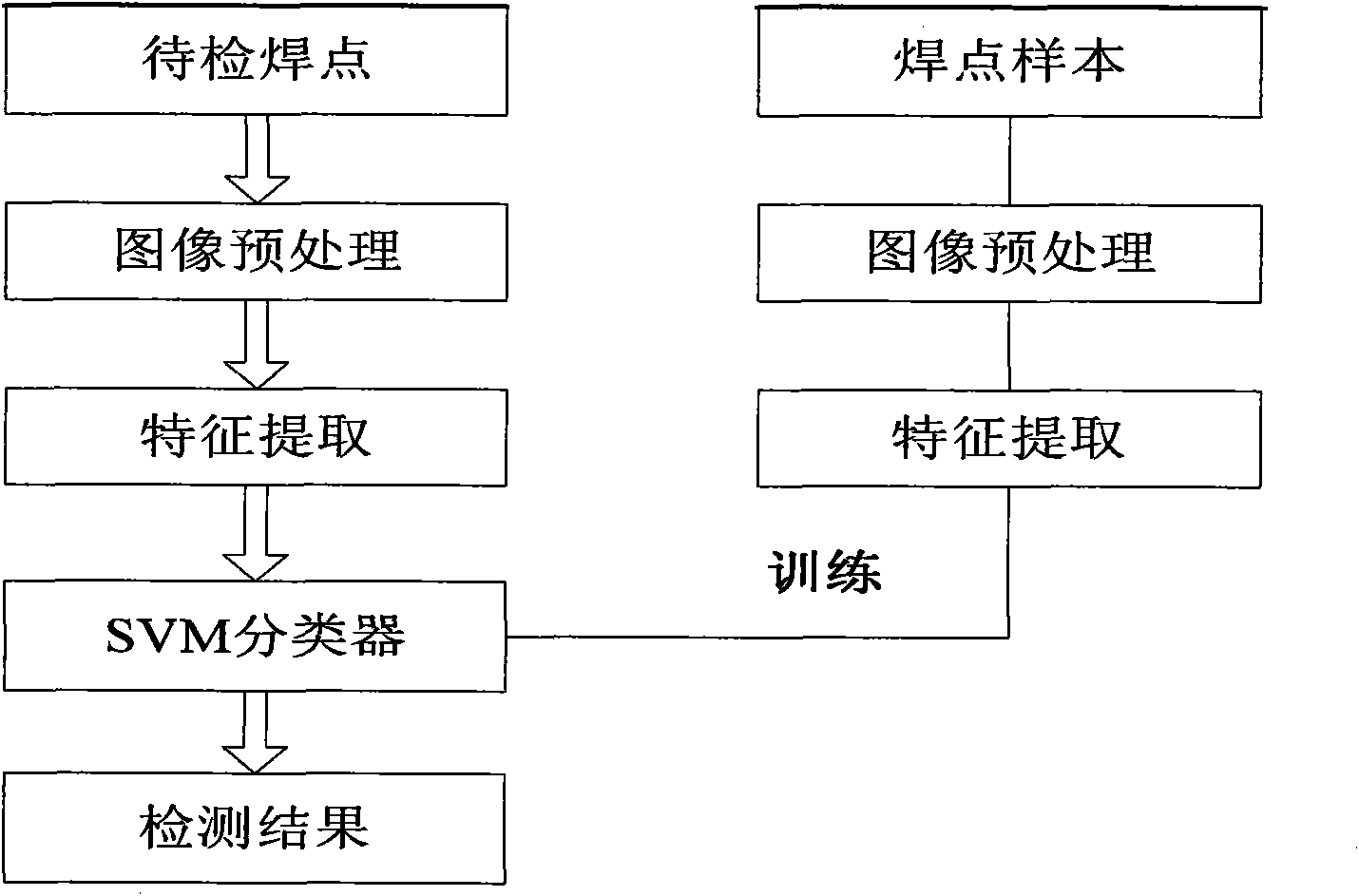

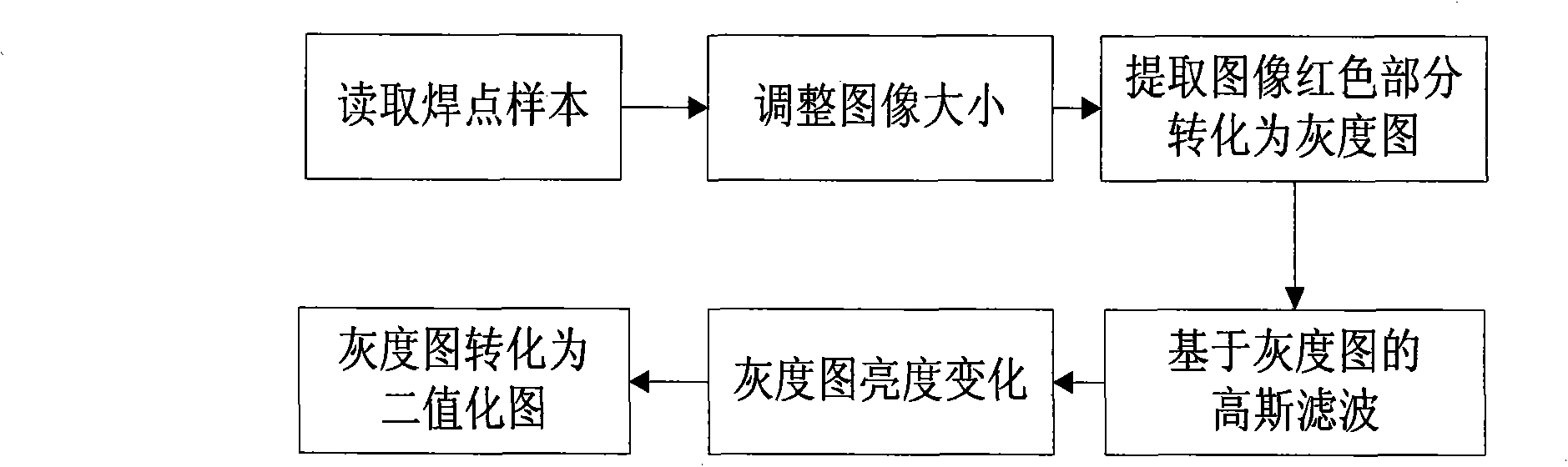

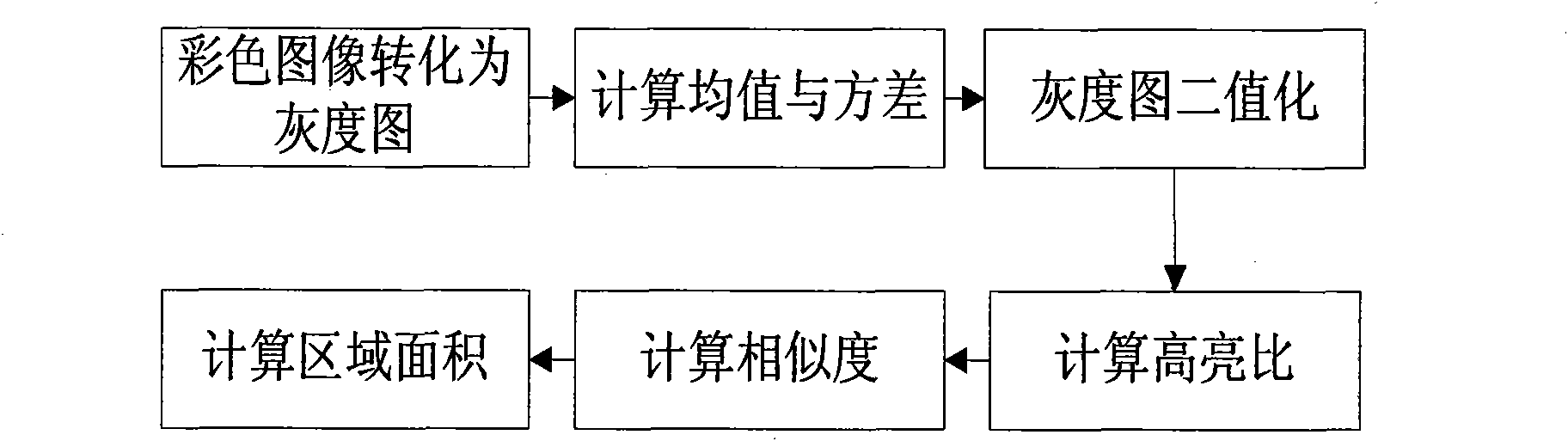

Automatic optical inspection method for printed circuit board comprising resistance element

InactiveCN101915769AActual production significanceEasy to distinguishImage analysisMaterial analysis by optical meansElectrical resistance and conductanceSupport vector machine classifier

The invention relates to an automatic optical inspection method for a printed circuit board comprising a resistance element. After the characteristics of the welding spots are extracted, a support vector machine classifier correctly classifies the welding spots into three types: normal, starved solder and missing part. The method is suitable for classifying and detecting these special welding spots during production. The method comprises the following steps: converting the red areas in welding spot images into a grayscale image and a binary image; calculating the grayscale image-based mean values and standard deviations, and the binary image-based height-lightness ratio, cross correlation and area of area color; and classifying the welding spots by quality in the support vector machine classifier using the mean values, the variance, the height-lightness ratio and the similarity level of the welding spot images. Defective welding spot types are classified through the mean values, the variance, the height-lightness ratio and the area characteristics of the welding spots. After the quality of the welding spots is distinguished, the defective welding spots can be further classified by the method into two types: starved solder and missing part.

Owner:SOUTH CHINA UNIV OF TECH
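
A few of the features named in the abstract above (grayscale mean, standard deviation, and an area measured on the binary image) can be computed as in the sketch below. The fixed threshold of 128 and the feature names are assumptions, and the height-lightness ratio and cross correlation are left out because the abstract does not define them.

```python
from statistics import mean, pstdev


def solder_features(gray, threshold=128):
    """Simple statistics for one welding spot image.

    gray: 2D list of grayscale values (0-255) taken from the red areas.
    """
    pixels = [p for row in gray for p in row]
    binary = [[1 if p >= threshold else 0 for p in row] for row in gray]
    area = sum(sum(row) for row in binary)  # number of bright pixels
    return {
        "mean": mean(pixels),
        "std": pstdev(pixels),
        "area": area,
        "area_ratio": area / len(pixels),
    }


# Toy 2 x 2 image with two bright and two dark pixels.
features = solder_features([[0, 255], [255, 0]])
```

A vector built from such values is what would be handed to the support vector machine classifier for the three-way decision.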

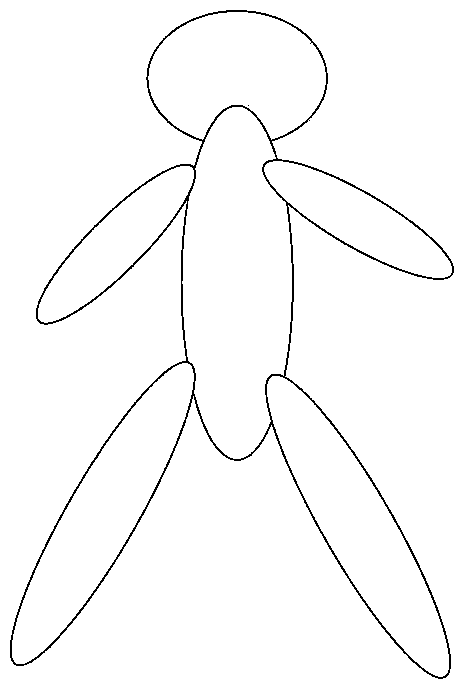

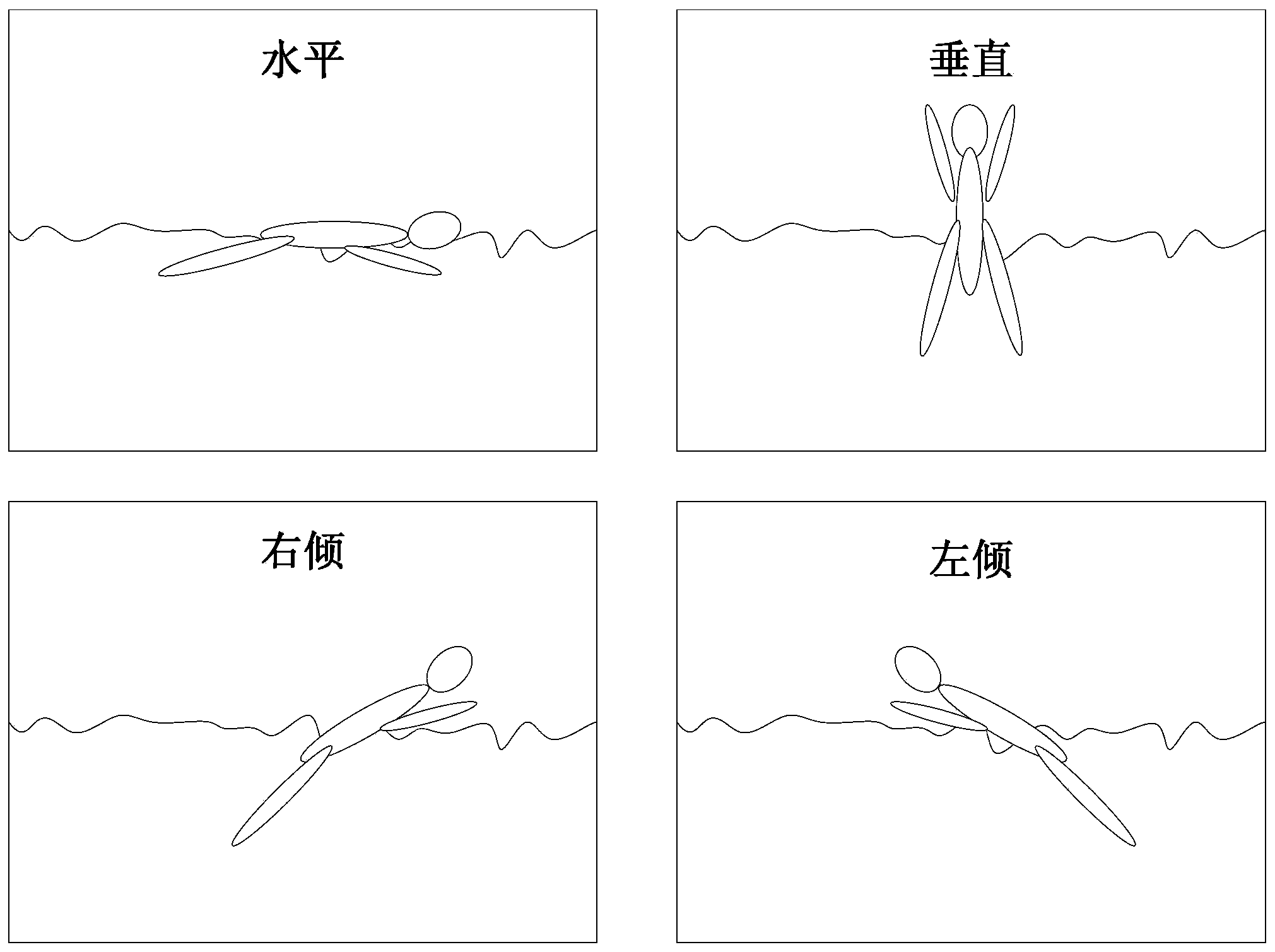

Near-drowning behavior detection method based on support vector machine

InactiveCN103413114ARescue in timeImprove environmental adaptabilityCharacter and pattern recognitionBehavioral stateSupport vector machine classifier

The invention discloses a near-drowning behavior detection method based on a support vector machine. According to the invention, a support vector machine is used as the machine learning classifier; the support vector machine classifier is trained on video sequence samples of near-drowning behavior and normal swimming behavior obtained in advance by simulation; a video image sequence of the pool is then acquired in real time through a camera arranged above the water; and the monitored video image sequence is input into the trained support vector machine classifier to determine the behavioral state of a swimmer. Therefore, a near-drowning swimmer can be detected automatically through the camera in an actual public swimming place, so that lives can be saved in time to the greatest possible extent. The method has the advantages of accurate and reliable detection, good robustness, high noise immunity and good adaptation to changes in lighting. In addition, because monitoring is done by a camera arranged above the water, the method lowers the cost of system implementation and has great value in engineering application.

Owner:ZHEJIANG UNIV

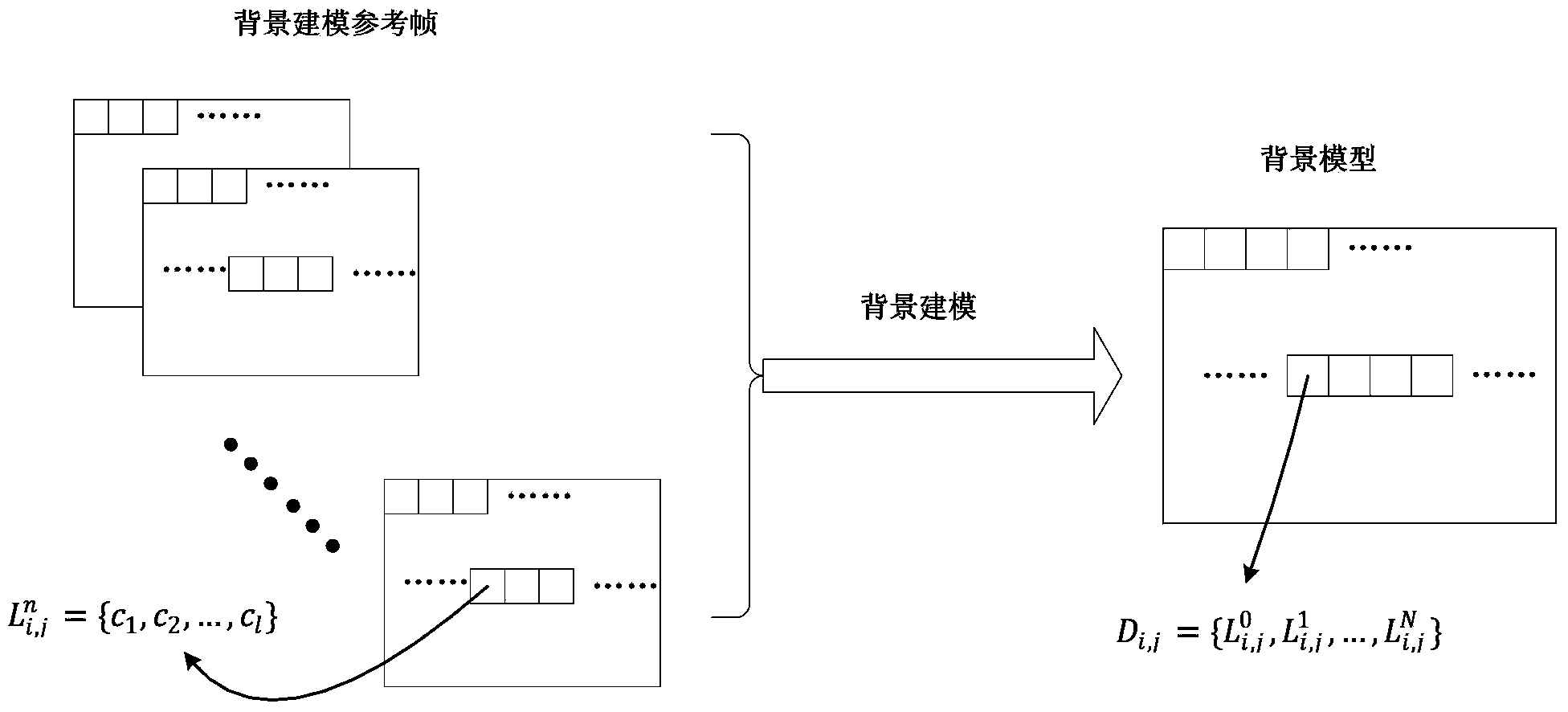

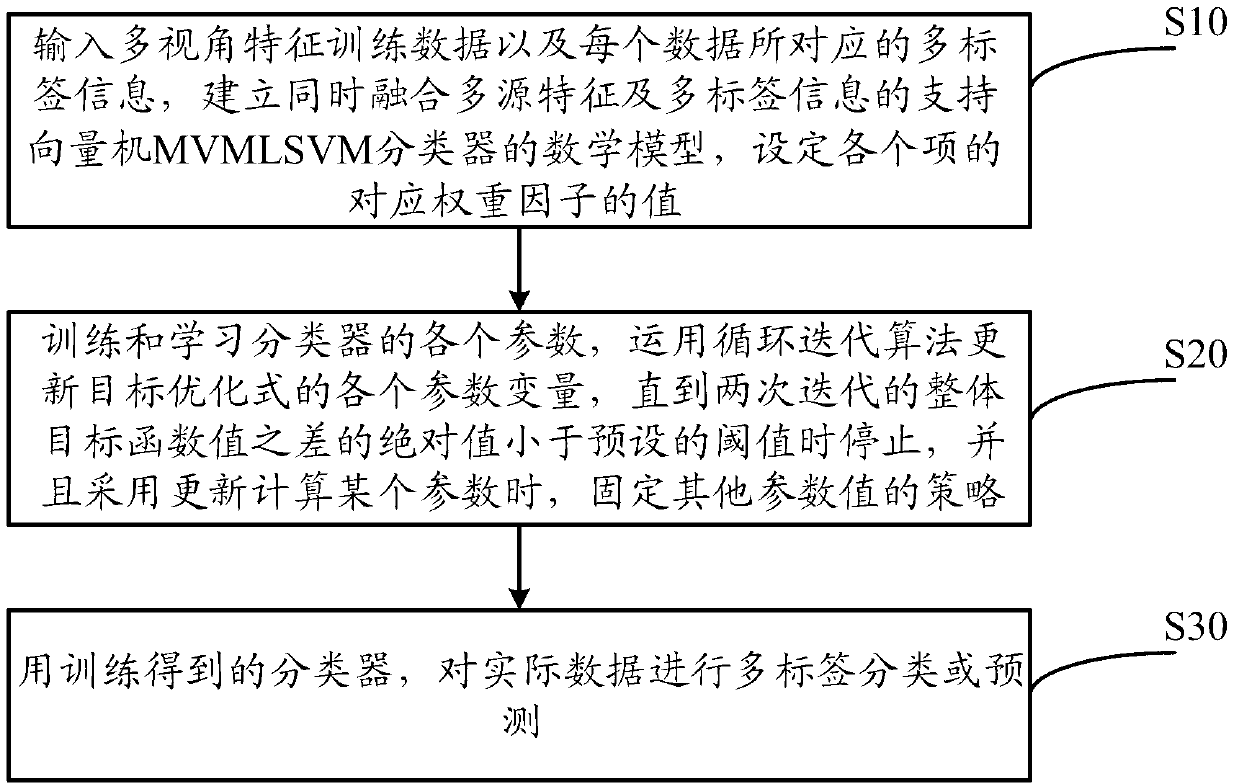

Support vector machine sorting method based on simultaneously blending multi-view features and multi-label information

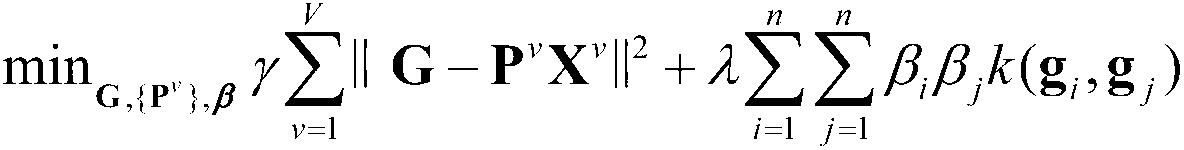

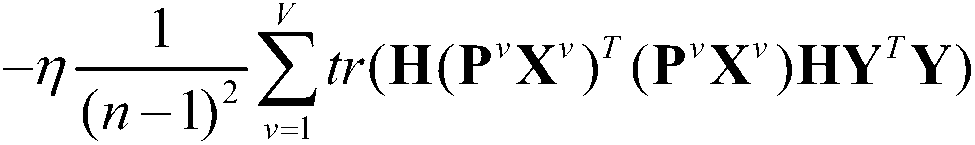

InactiveCN102982344AReduce noiseReduce redundancyCharacter and pattern recognitionSpecial data processing applicationsMathematical modelMulti-label classification

The invention discloses a support vector machine classification method based on simultaneously blending multi-view features and multi-label information. The method comprises the following steps: inputting multi-view feature training data and the multi-label information corresponding to each data item; establishing a mathematical model of a support vector machine classifier that simultaneously blends the multi-view features and the multi-label information, and setting the value of the weight factor of each term; and training the classifier to learn each parameter, using an alternating iterative algorithm to update all parameter variables of the target optimization formula until the absolute value of the difference between the overall objective function values of two successive iterations is less than a preset threshold value, at which point the iteration stops. When one parameter is updated, the values of the other parameters are held fixed. The classifier obtained by training then performs multi-label classification or prediction on actual data. Because a unified data representation in a new data space is learned while the support vector machine classification is carried out, the accuracy of the classifier is improved.

Owner:ZHEJIANG UNIV
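
The training loop in the abstract above (update one parameter with the others fixed, and stop when the objective changes by less than a preset threshold) follows a general alternating-minimization pattern, sketched here on a toy quadratic. The objective and update rules are stand-ins chosen so that each update has a closed form; they are not the patent's model.

```python
def alternating_minimize(objective, updates, params, tol=1e-8, max_iter=1000):
    """Update each parameter in turn with the others held fixed.

    Stops when the objective changes by less than `tol` between two sweeps.
    Returns the parameters, the objective value and the number of sweeps.
    """
    params = dict(params)
    previous = objective(params)
    for sweep in range(1, max_iter + 1):
        for name, update in updates.items():
            params[name] = update(params)  # other parameters stay fixed
        current = objective(params)
        if abs(previous - current) < tol:
            return params, current, sweep
        previous = current
    return params, previous, max_iter


def objective(p):
    """Toy convex objective: (a - 2)^2 + (b + 1)^2 + a*b."""
    a, b = p["a"], p["b"]
    return (a - 2.0) ** 2 + (b + 1.0) ** 2 + a * b


# Closed-form minimizers of the toy objective in one variable at a time.
updates = {
    "a": lambda p: 2.0 - p["b"] / 2.0,
    "b": lambda p: -1.0 - p["a"] / 2.0,
}
solution, value, sweeps = alternating_minimize(objective, updates, {"a": 0.0, "b": 0.0})
```

On this toy problem the iterates approach a = 10/3 and b = -8/3 within a handful of sweeps. In the patented method each update would presumably be a harder subproblem, but the stopping rule has the same shape.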
