Visual capture method and device based on depth image and readable storage medium

A depth-image and vision technology, applied in the fields of instruments, character and pattern recognition, and computer components. It addresses problems such as misjudgment that affects the accuracy of object recognition, and has the effects of saving storage space, reducing program running time, and improving robustness.


Embodiment Construction

[0037] To make the objectives, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings.

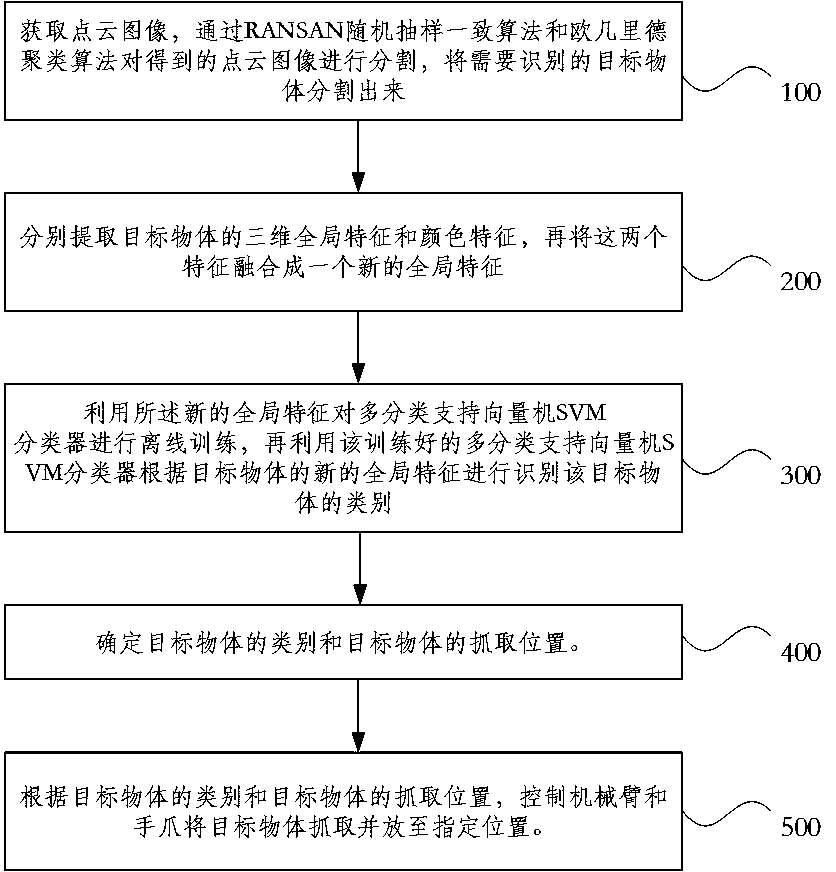

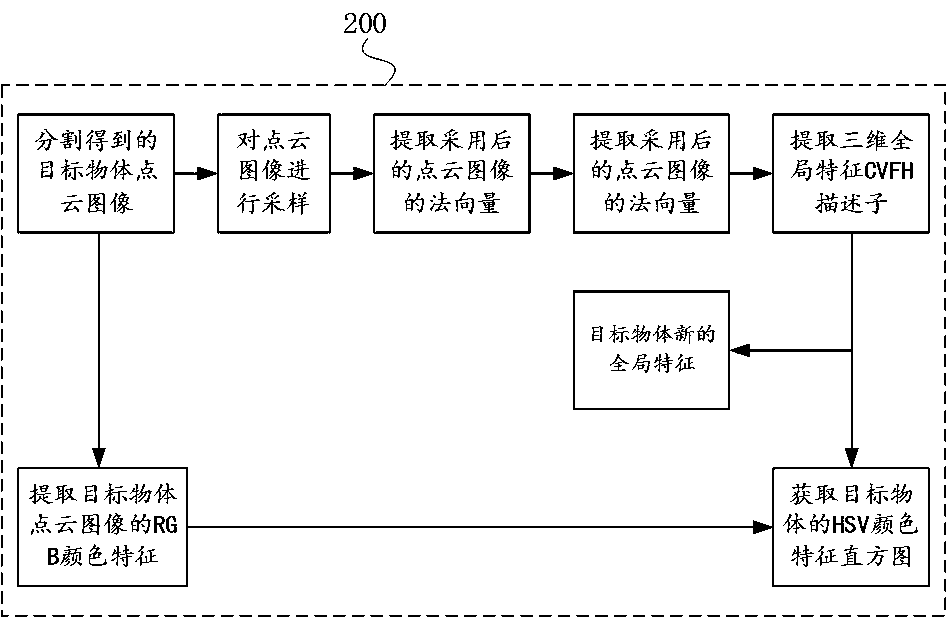

[0038] Referring to Figure 1, an embodiment of the present invention provides a visual capture method based on a depth image, comprising the following steps:

[0039] Step 100: Obtain the point cloud image, and segment it using the RANSAC (random sample consensus) algorithm and the Euclidean clustering algorithm to extract the target object to be identified.
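As a rough illustration of Step 100, the sketch below combines a RANSAC plane fit with Euclidean clustering in plain Python. It is not the patent's implementation. It assumes that RANSAC is used to find and remove a dominant supporting plane (for example a table top) and that the remaining points are then grouped by distance. The function names, the thresholds (`threshold`, `tolerance`, `min_size`), and the iteration count are all hypothetical.

```python
import random
from collections import deque


def fit_plane(p1, p2, p3):
    """Plane (a, b, c, d) with unit normal through three points, or None if collinear."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    # Normal vector = cross product of the two edge vectors.
    a, b, c = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-12:
        return None
    a, b, c = a / norm, b / norm, c / norm
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Indices of the inliers of the best plane found by random sampling."""
    if len(points) < 3:
        return []
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        plane = fit_plane(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        inliers = [i for i, (x, y, z) in enumerate(points)
                   if abs(a * x + b * y + c * z + d) <= threshold]
        if len(inliers) > len(best):
            best = inliers
    return best


def dist2(p, q):
    """Squared Euclidean distance between two 3D points."""
    return sum((p[i] - q[i]) ** 2 for i in range(3))


def euclidean_clusters(points, tolerance=0.05, min_size=3):
    """Group point indices so that neighbours within `tolerance` share a cluster."""
    unvisited = set(range(len(points)))
    tol2 = tolerance ** 2
    clusters = []
    while unvisited:
        start = unvisited.pop()
        queue, cluster = deque([start]), [start]
        while queue:  # breadth-first region growing
            i = queue.popleft()
            near = [j for j in unvisited if dist2(points[i], points[j]) <= tol2]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


def segment_objects(points, plane_threshold=0.01, tolerance=0.05):
    """Assumed pipeline: drop the dominant plane, then cluster what remains."""
    plane = set(ransac_plane(points, plane_threshold))
    remaining = [p for i, p in enumerate(points) if i not in plane]
    return [[remaining[i] for i in c]
            for c in euclidean_clusters(remaining, tolerance)]


if __name__ == "__main__":
    table = [(0.05 * i, 0.05 * j, 0.0) for i in range(10) for j in range(10)]
    box = [(0.10 + 0.01 * k, 0.10, 0.20) for k in range(5)]
    for obj in segment_objects(table + box):
        print(len(obj), "points in candidate object")
```

The neighbour search here is a brute-force scan, which is adequate for a small demonstration. A real point cloud from a depth camera would normally use a spatial index such as a k-d tree.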

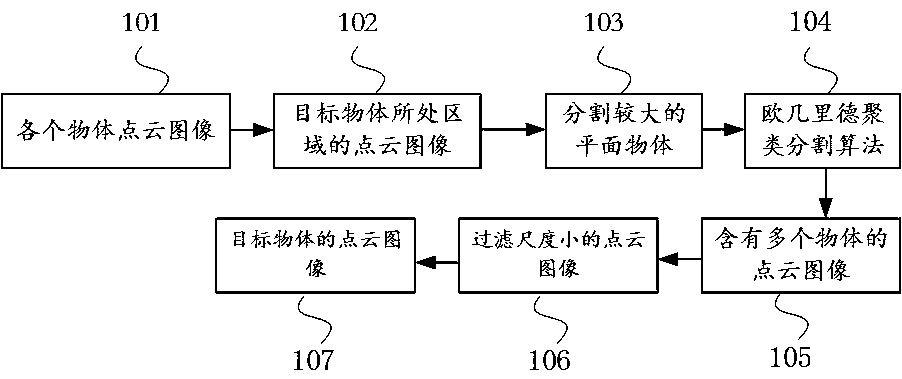

[0040] Referring to Figure 2, the point cloud image 101 of each object is first acquired with the Kinect depth camera. The point cloud image contains the position information (x, y, z) and color information (R, G, B) of the object. Before segmenting the target object from the point cloud image acquired by the Kinect depth camera, filter the point cloud image information far from the targ...
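The point layout described above (position plus color) and a pre-filtering pass can be sketched as follows. The source text is truncated, so the actual filtering criterion is not known. This example assumes a simple depth-range filter along the camera z-axis. `ColorPoint`, `passthrough_filter`, and the `z_min`/`z_max` limits are hypothetical names and values chosen only for illustration.

```python
from typing import List, NamedTuple


class ColorPoint(NamedTuple):
    """One point of the cloud: position in metres, color as 8-bit RGB."""
    x: float
    y: float
    z: float
    r: int
    g: int
    b: int


def passthrough_filter(points: List[ColorPoint],
                       z_min: float = 0.3,
                       z_max: float = 1.5) -> List[ColorPoint]:
    """Keep only points whose depth z lies in [z_min, z_max] (assumed criterion)."""
    return [p for p in points if z_min <= p.z <= z_max]


if __name__ == "__main__":
    cloud = [
        ColorPoint(0.0, 0.0, 0.8, 255, 0, 0),   # inside the assumed working range
        ColorPoint(0.0, 0.0, 3.0, 0, 255, 0),   # far background
        ColorPoint(0.1, 0.0, 0.1, 0, 0, 255),   # too close to the sensor
    ]
    print(passthrough_filter(cloud))
```

Dropping distant points before segmentation reduces the number of points that the later plane fit and clustering have to process.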
