73 results about "Visual capture" patented technology

In psychology, visual capture is the dominance of vision over other sense modalities in creating a percept. In this process, vision influences the other senses, producing a perceived environment that is not congruent with the actual stimuli. The visual system can override what another sensory system is conveying and supply a coherent explanation for the input the environment provides. Visual capture thus lets one interpret the location of a sound or the sensation of touch without relying on those stimuli directly, creating a percept of a coherent environment instead.

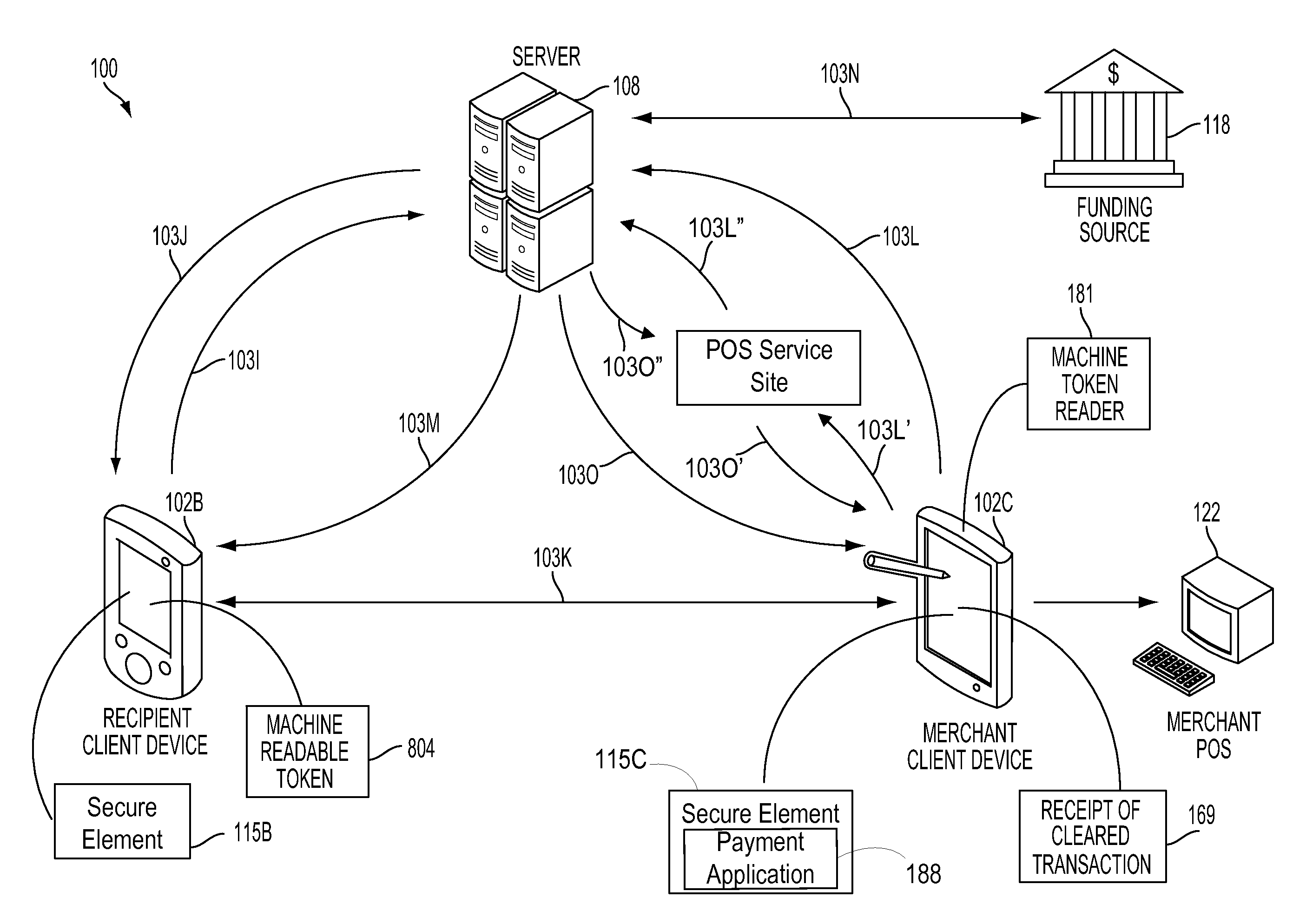

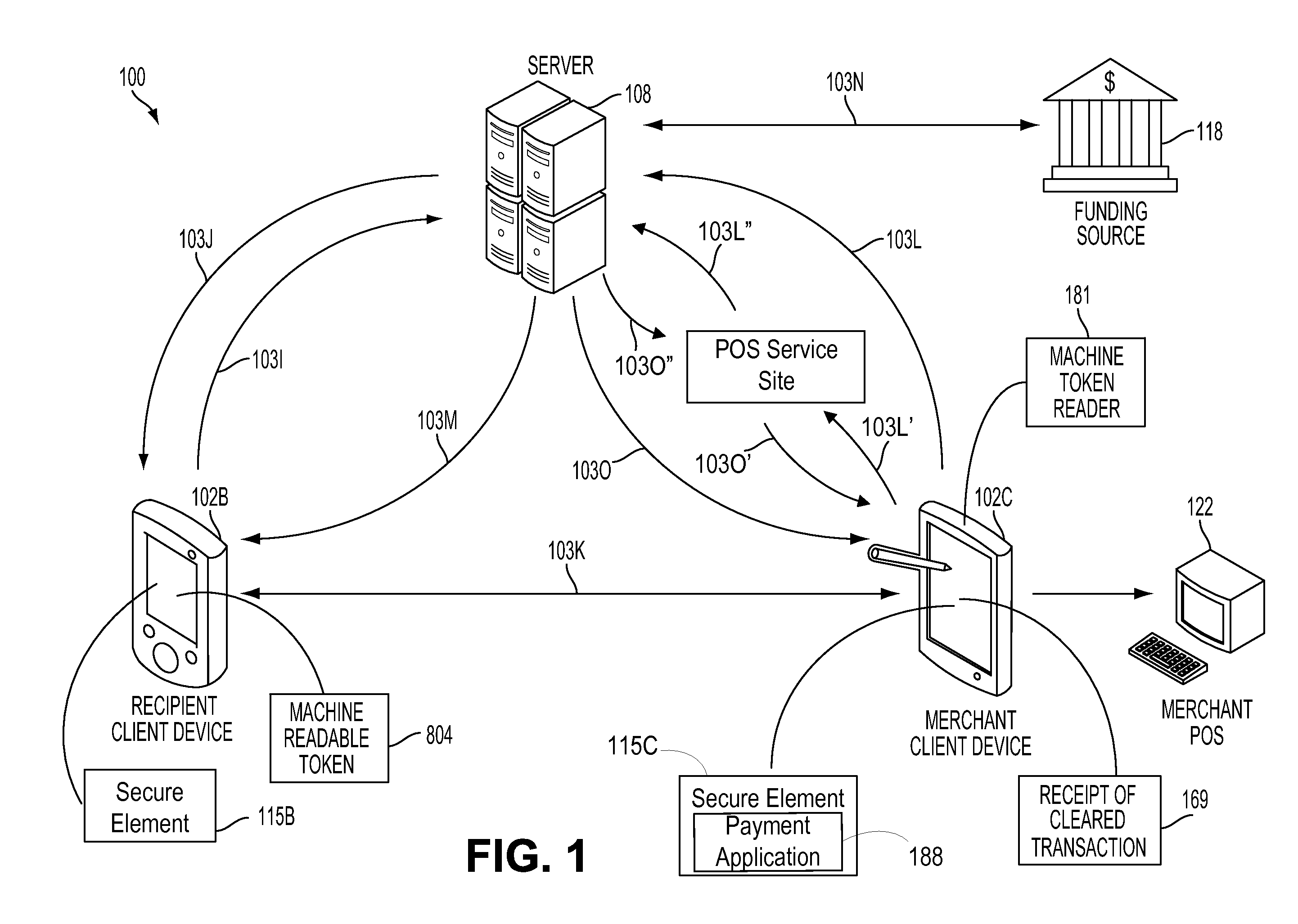

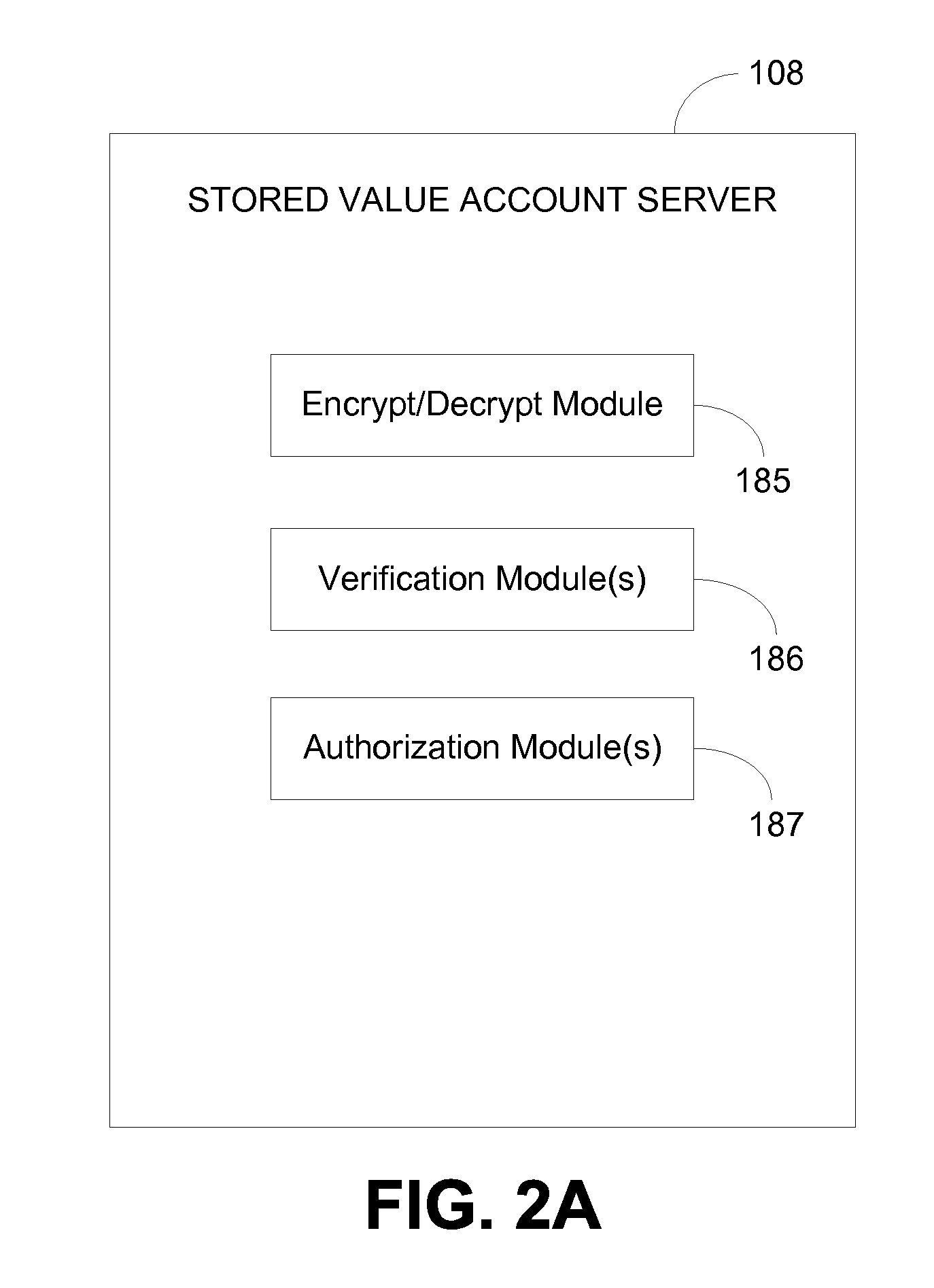

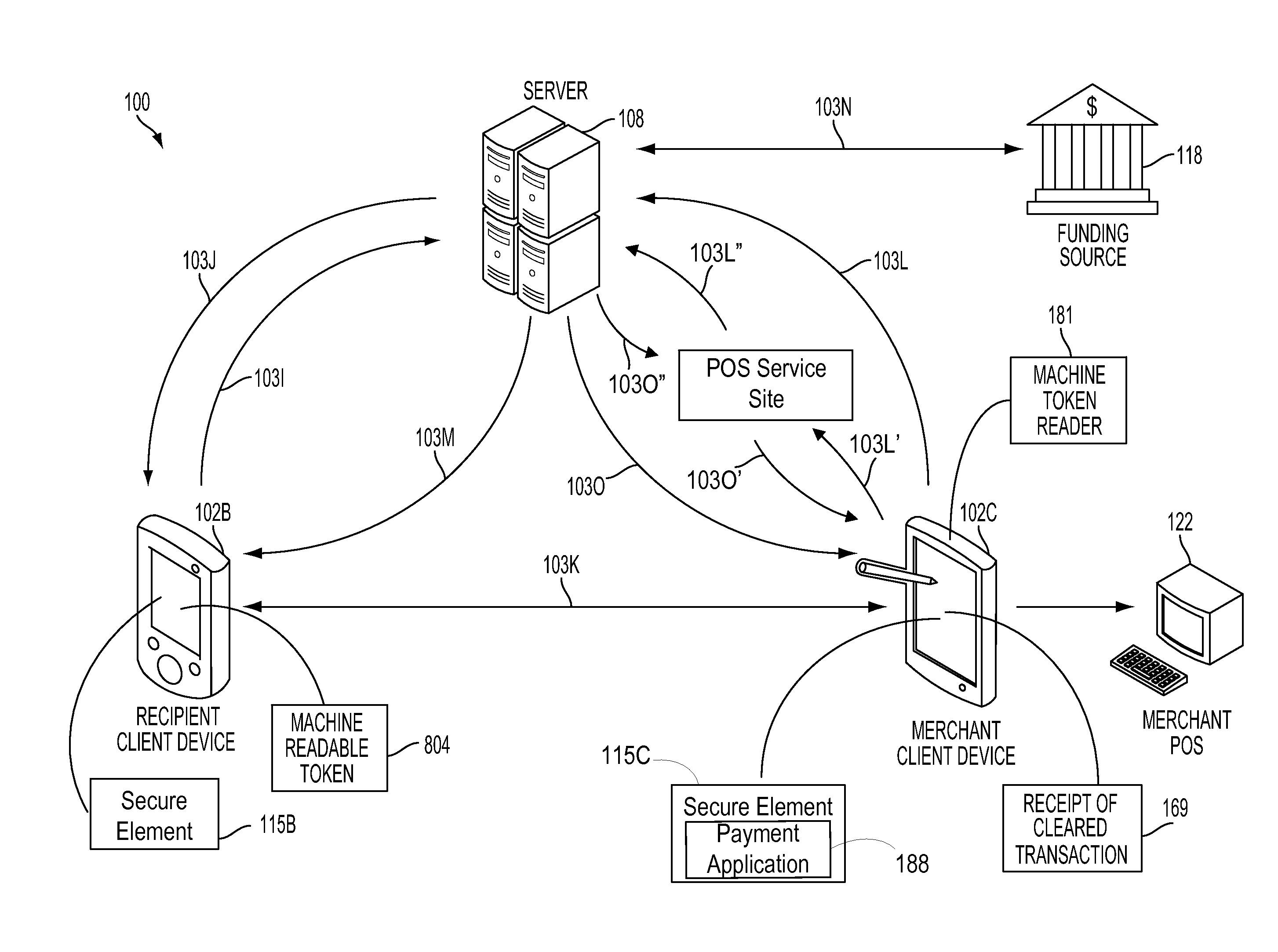

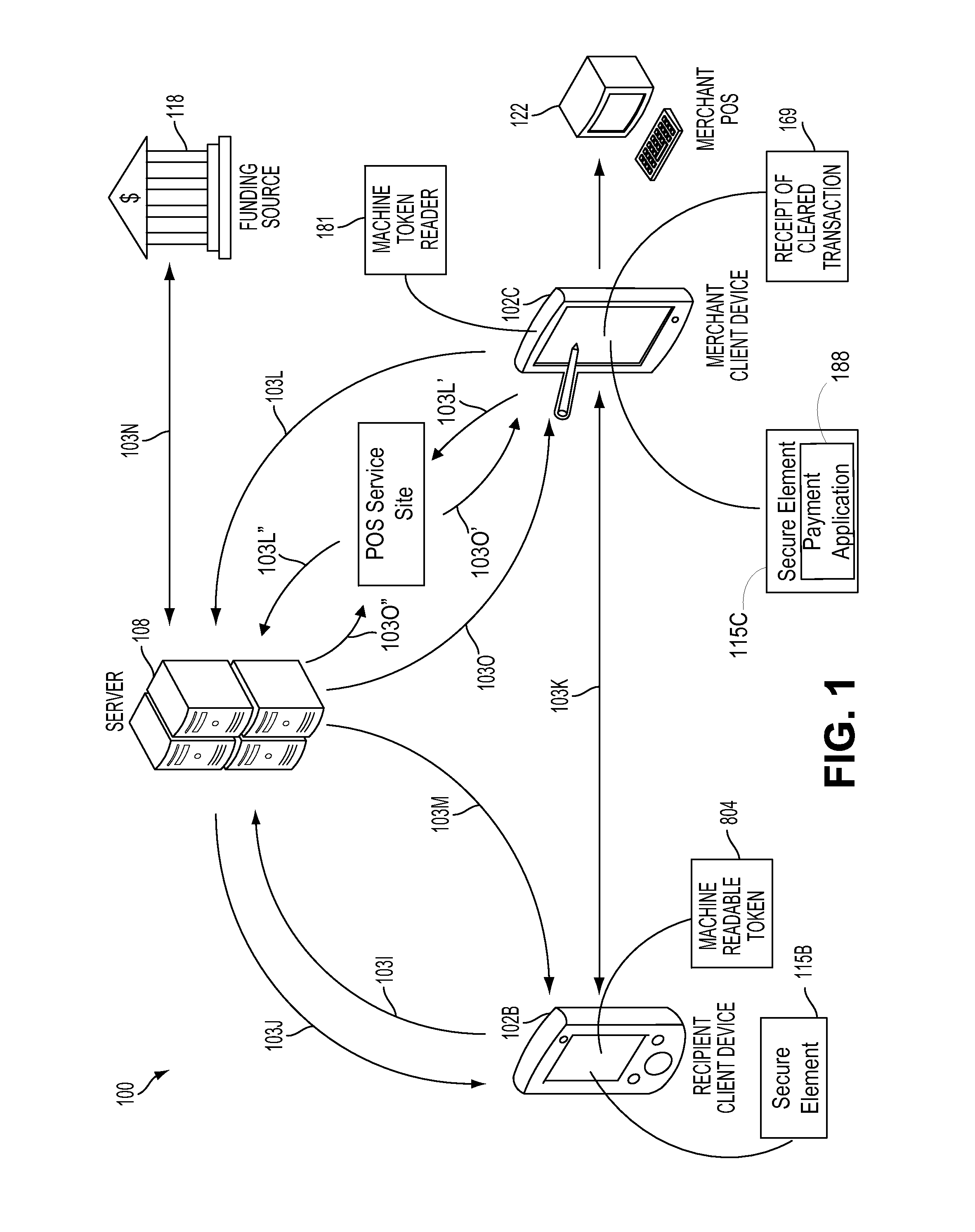

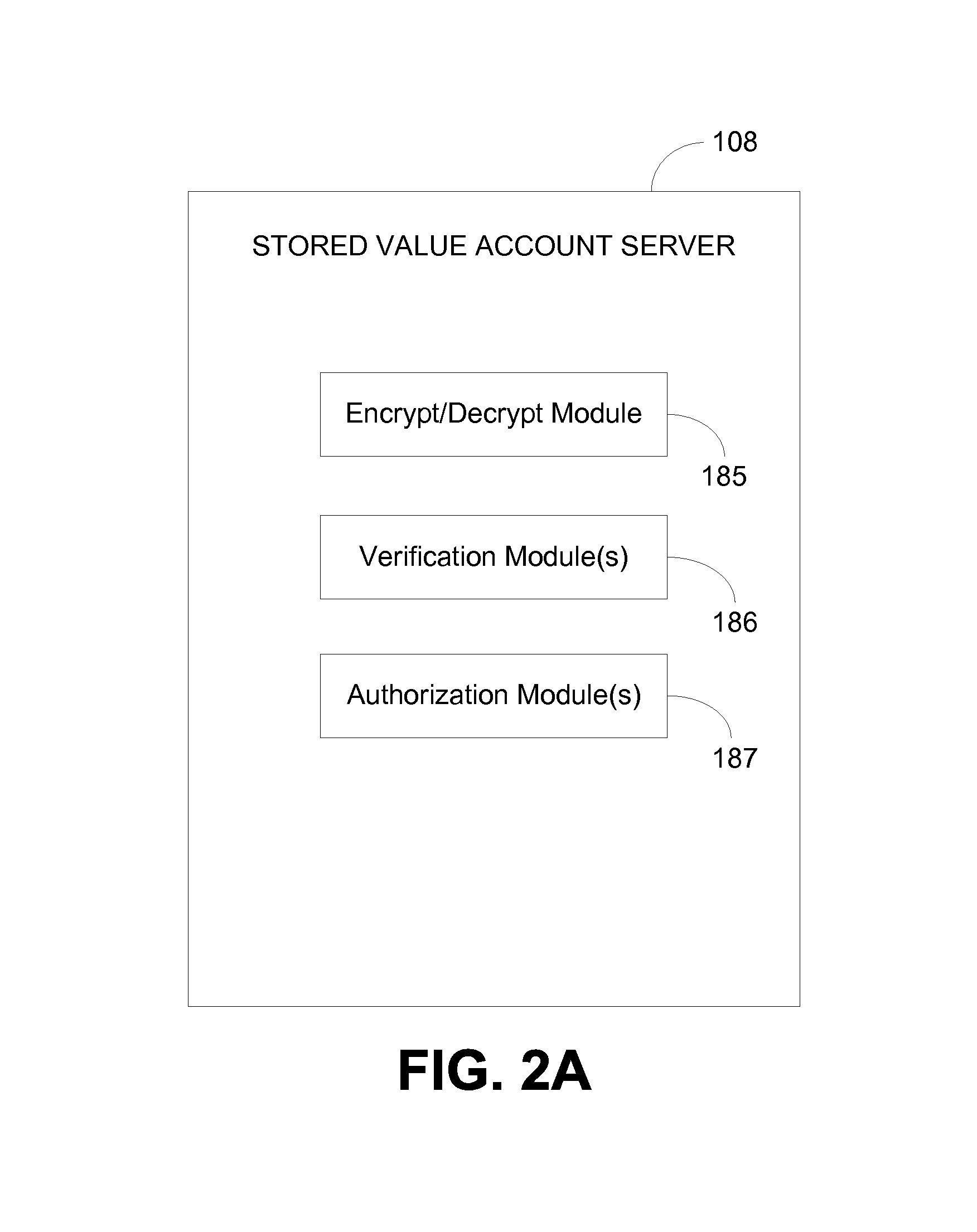

System and method for point of service payment acceptance via wireless communication

A system and method for remitting payment from a consumer's credit or stored value account by digital presentation of account information are described. A merchant may create one or more merchant accounts at a point-of-sale service site (“POS service site”) and associate one or more merchant portable computing devices (“MPCDs”) with one or more of the merchant accounts. Each MPCD may be configured to receive customer data from a customer via one of a visual capture and a wireless communication, such as a near field communication (“NFC”) or a machine-readable optical code. The customer data may be received from an NFC-capable physical token such as an EMV card, or from a virtual token presented by a customer portable computing device (“CPCD”) using NFC or a machine-readable optical code. Each MPCD may contain a point-of-sale payment application supplied by the POS service site that may capture data representative of a consumer account from the received customer data.

Owner:QUALCOMM INC

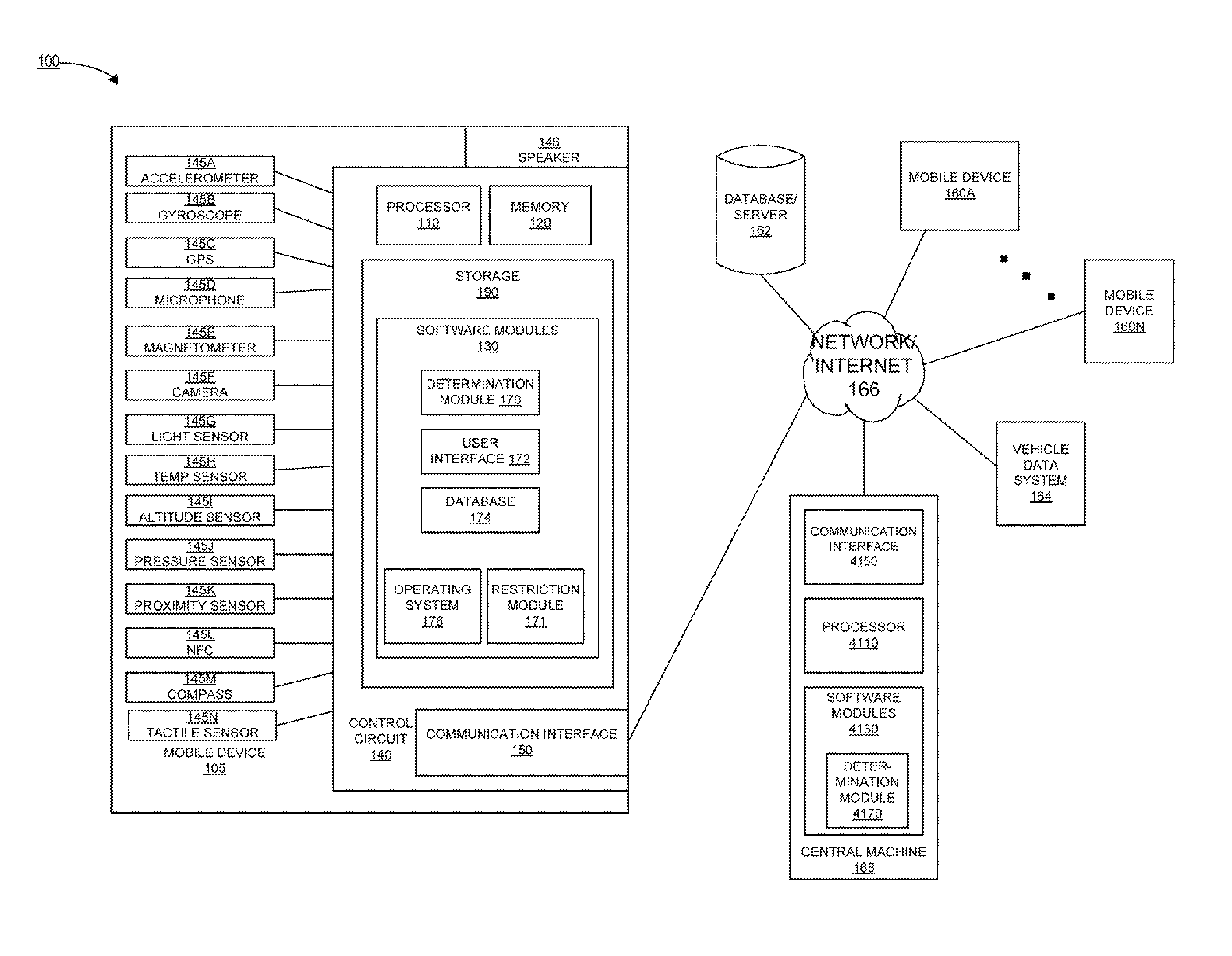

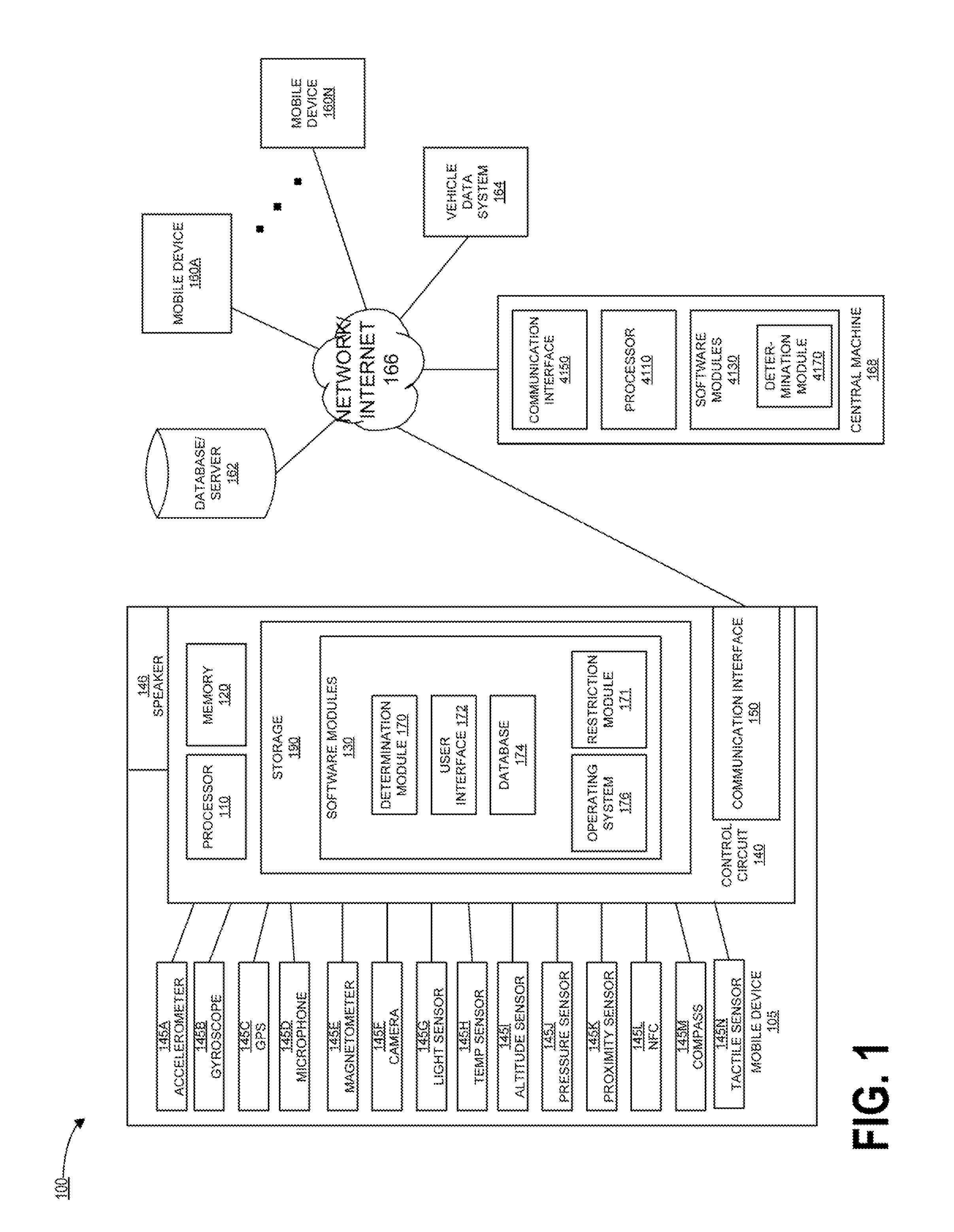

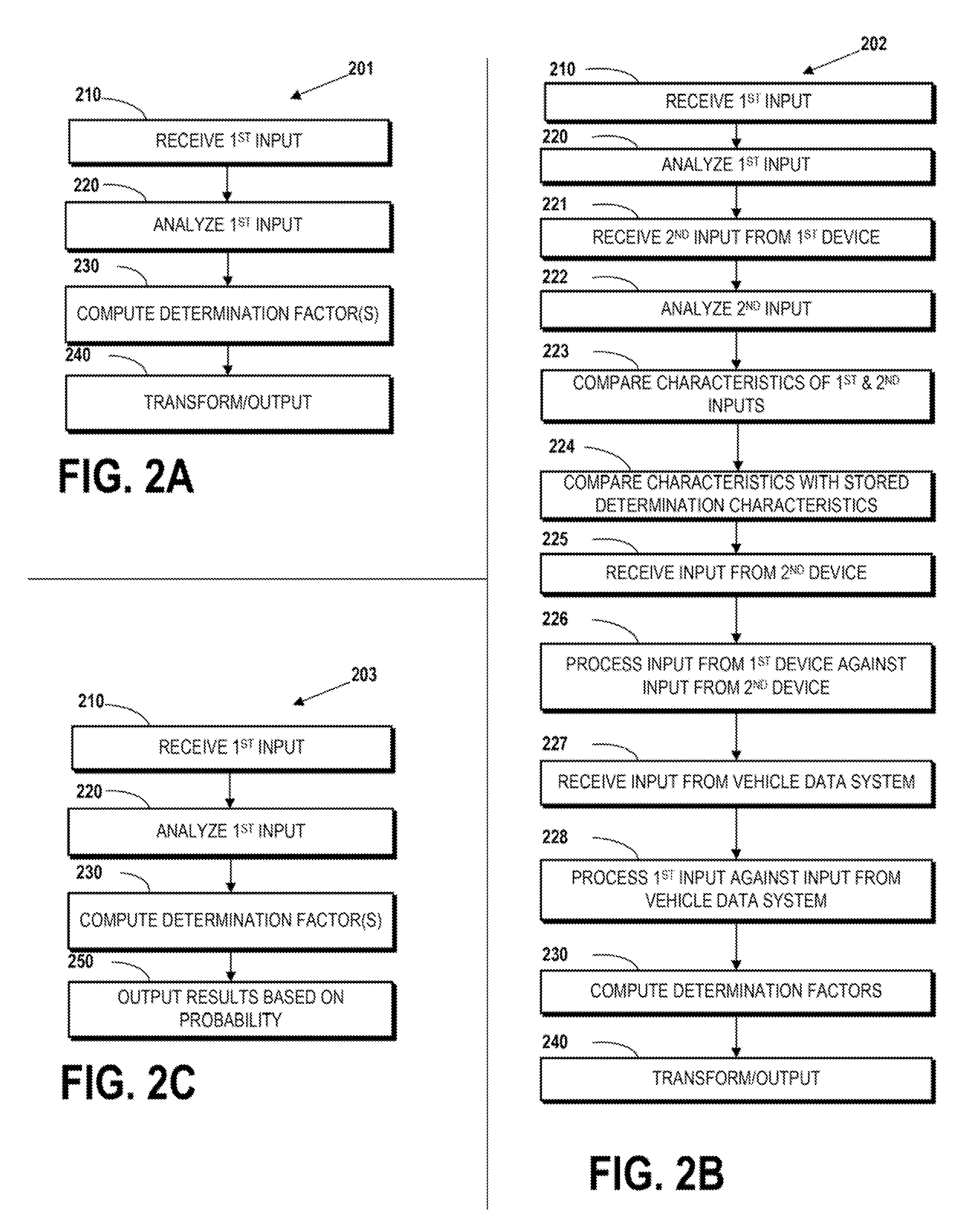

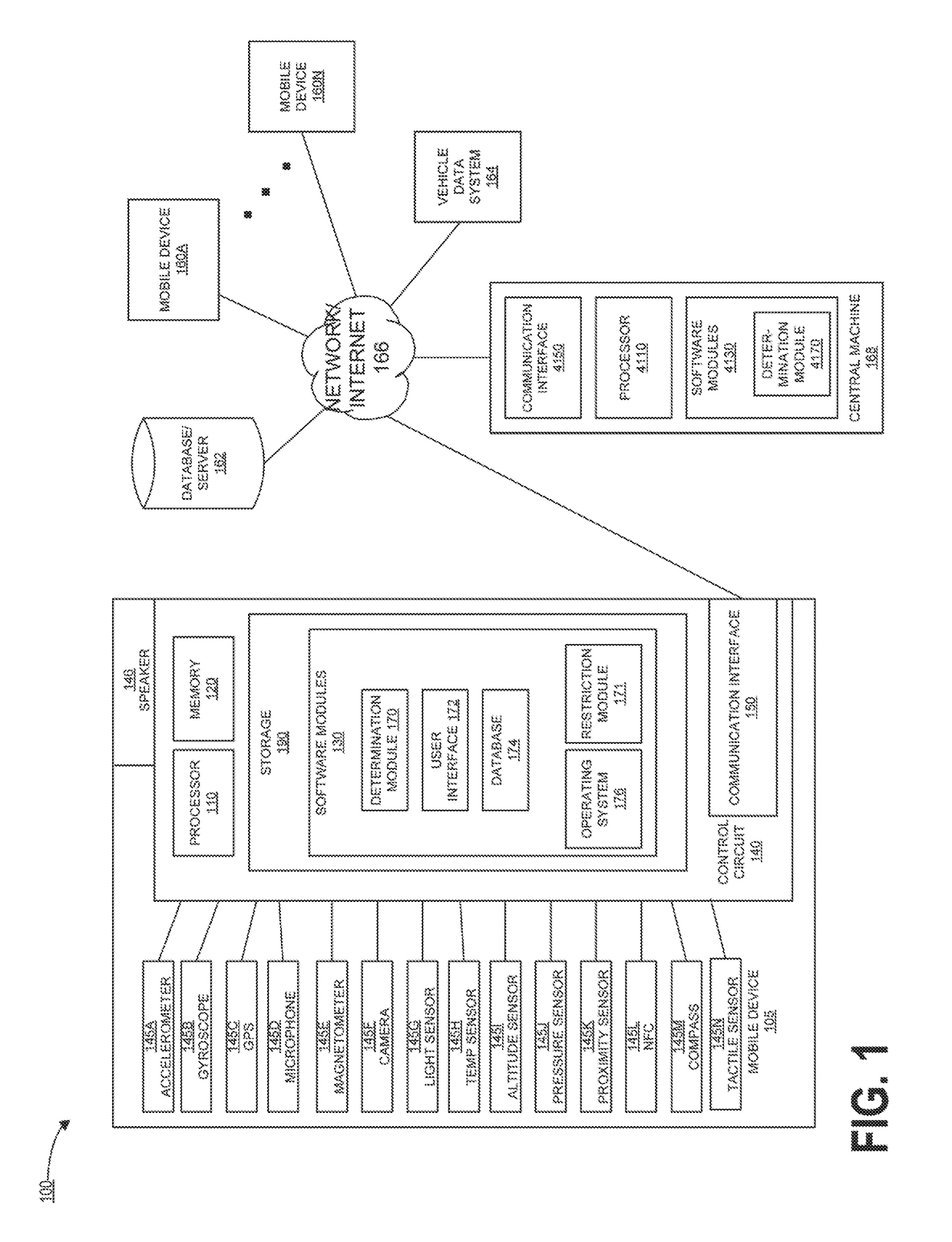

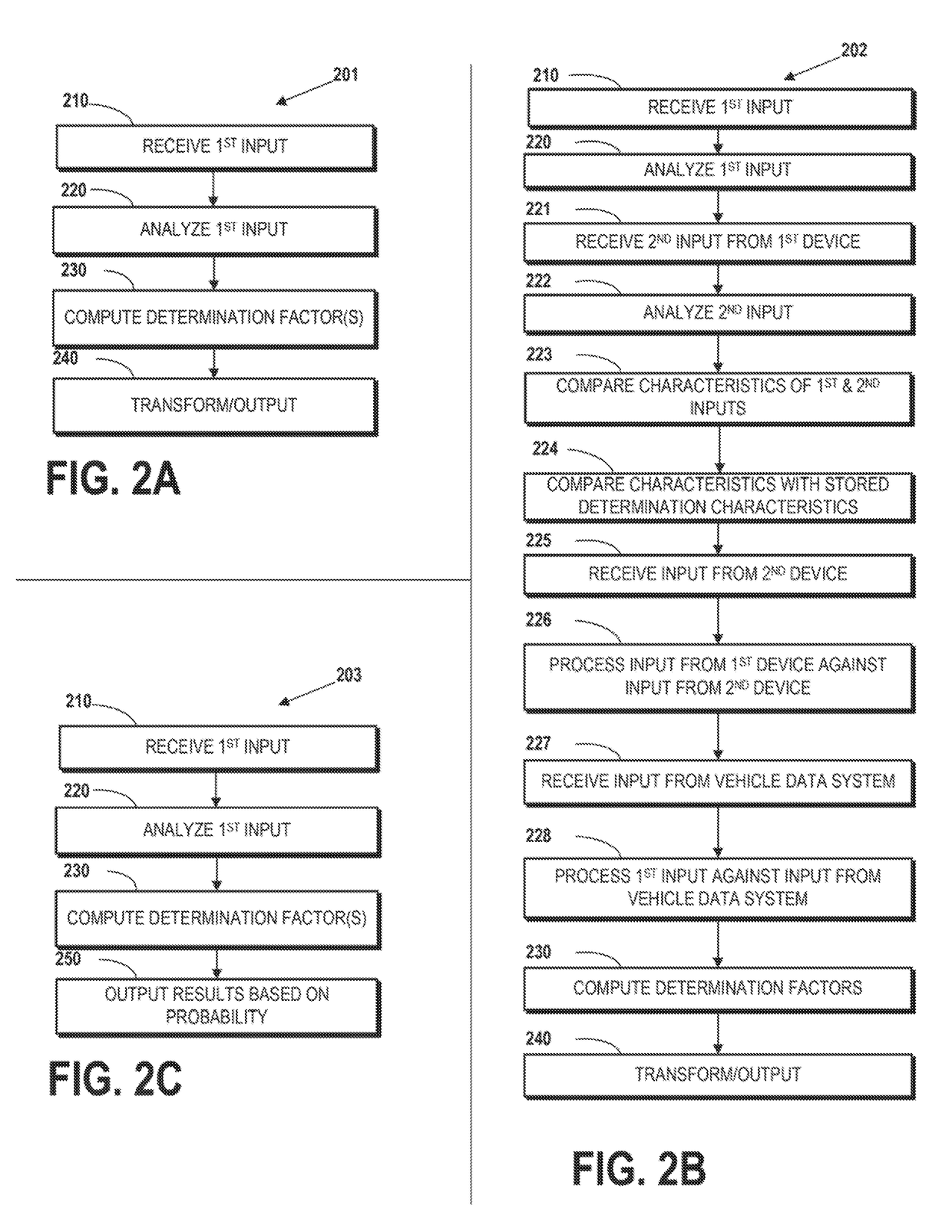

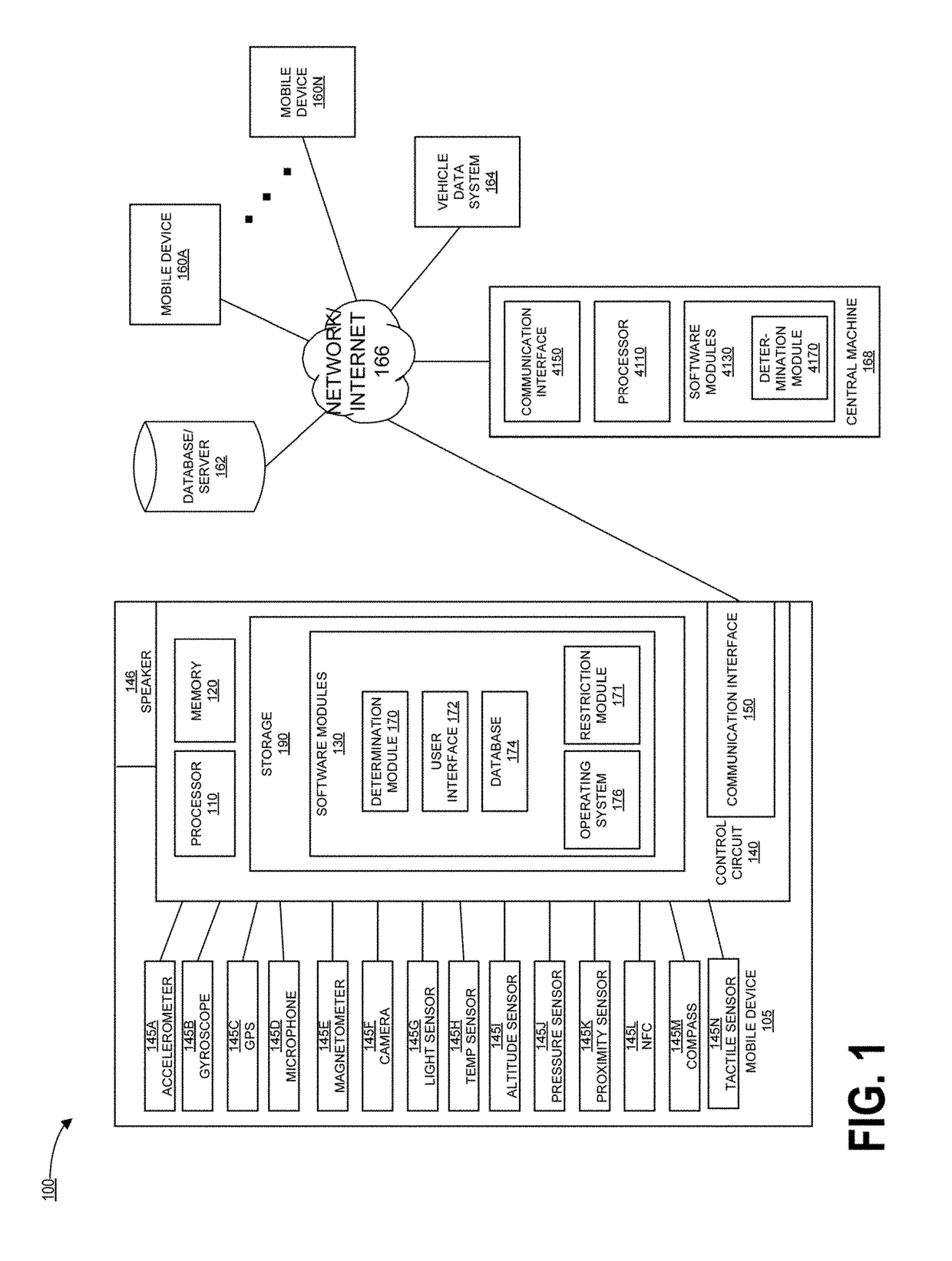

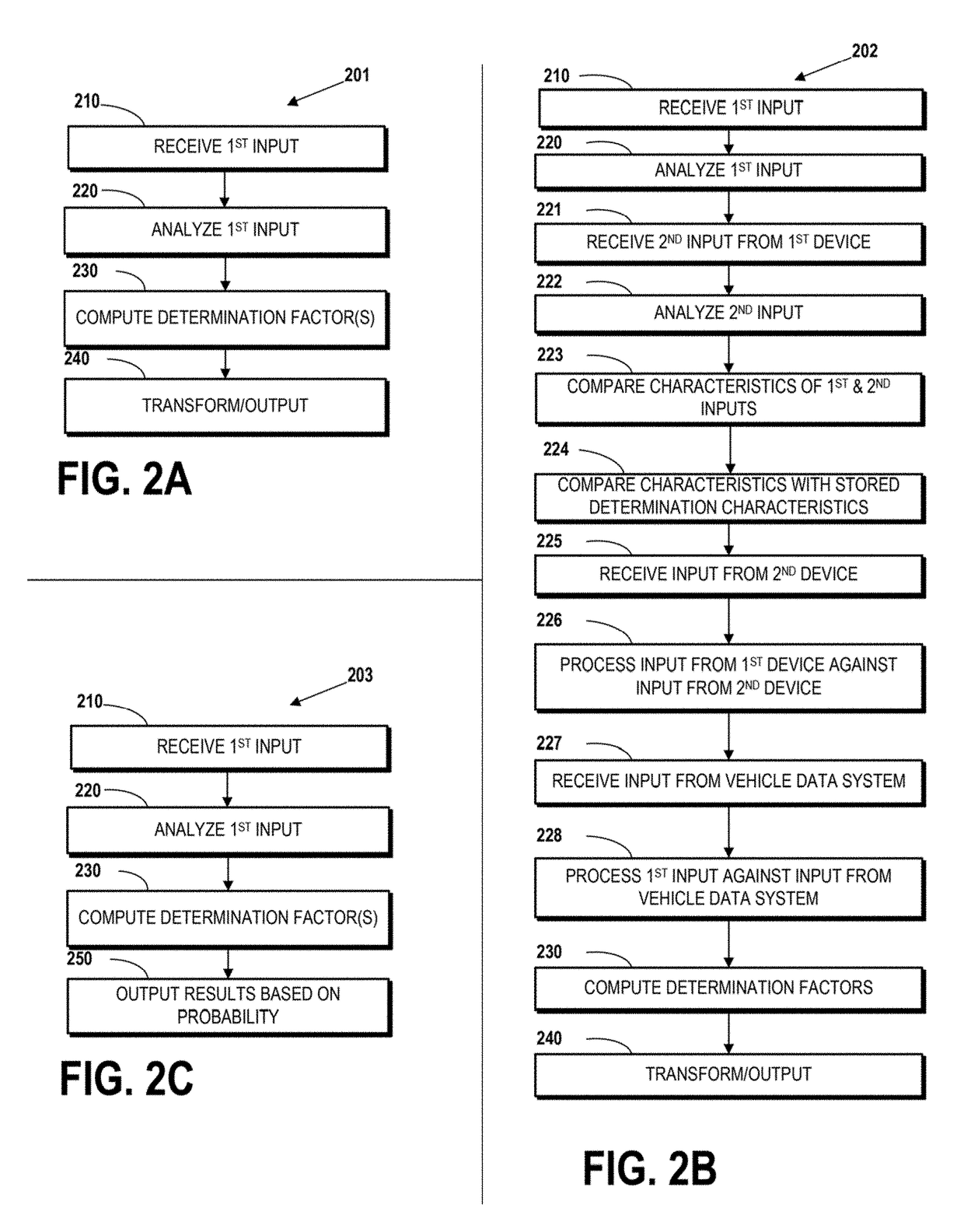

Restricting mobile device usage

InactiveUS20160021238A1Input/output for user-computer interactionDevices with GPS signal receiverIn vehicleMobile device

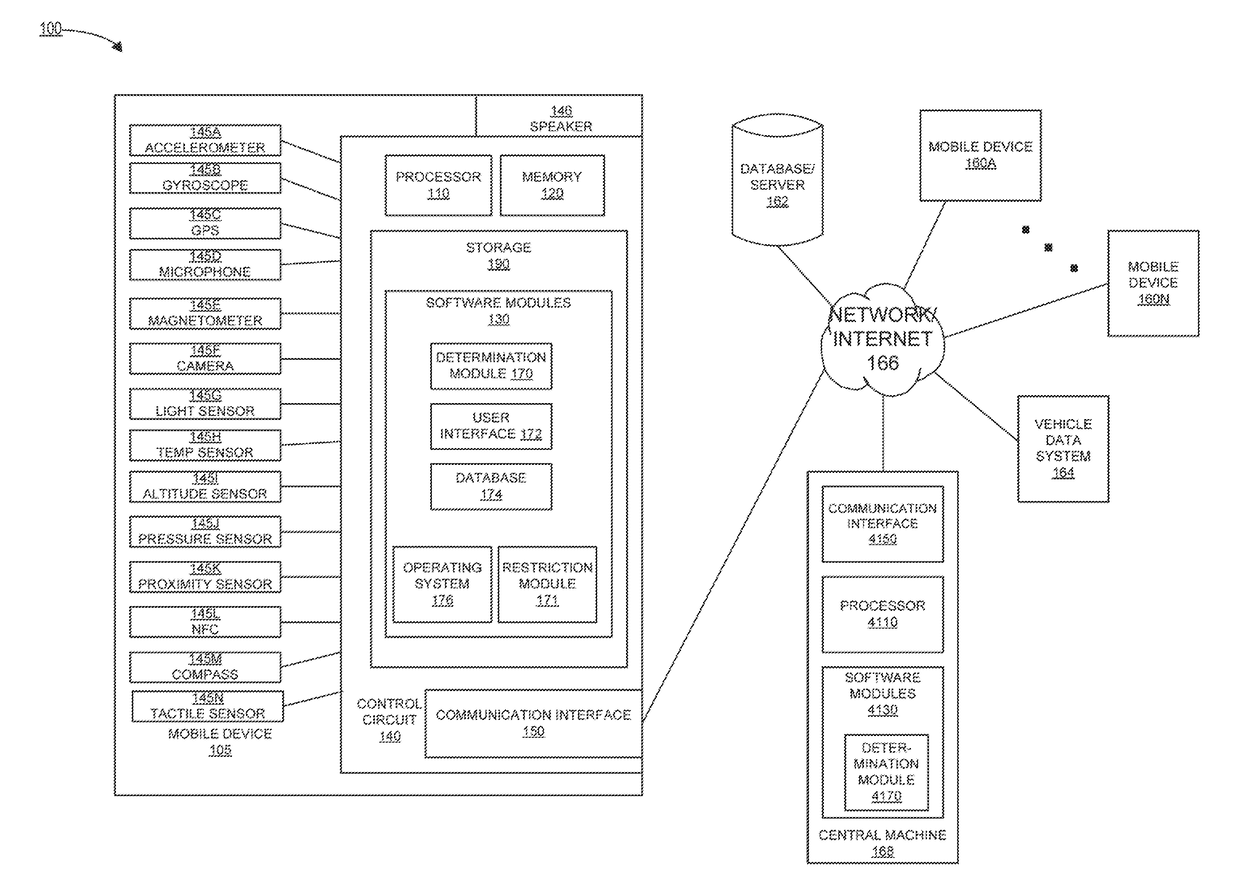

Systems and methods are provided for selectively restricting a mobile device. In one implementation, a visual capture can be to identify one or more indicators within the visual capture. Based on the indicators, an implementation of a restriction at the mobile device can be adjusted. In another implementation inputs can be processed to compute a determination, determination reflecting at least one of the in-vehicle role of the user as a driver and the in-vehicle role of the user as a passenger; and, an operation state of the mobile device can be modified based on such a determination. According to another implementation, one or more outputs can be projected from a mobile device, inputs can be received, and such inputs and outputs can be processed to determine a correlation between them. A restriction can then be modified based on the correlation.

Owner:CELLEPATHY

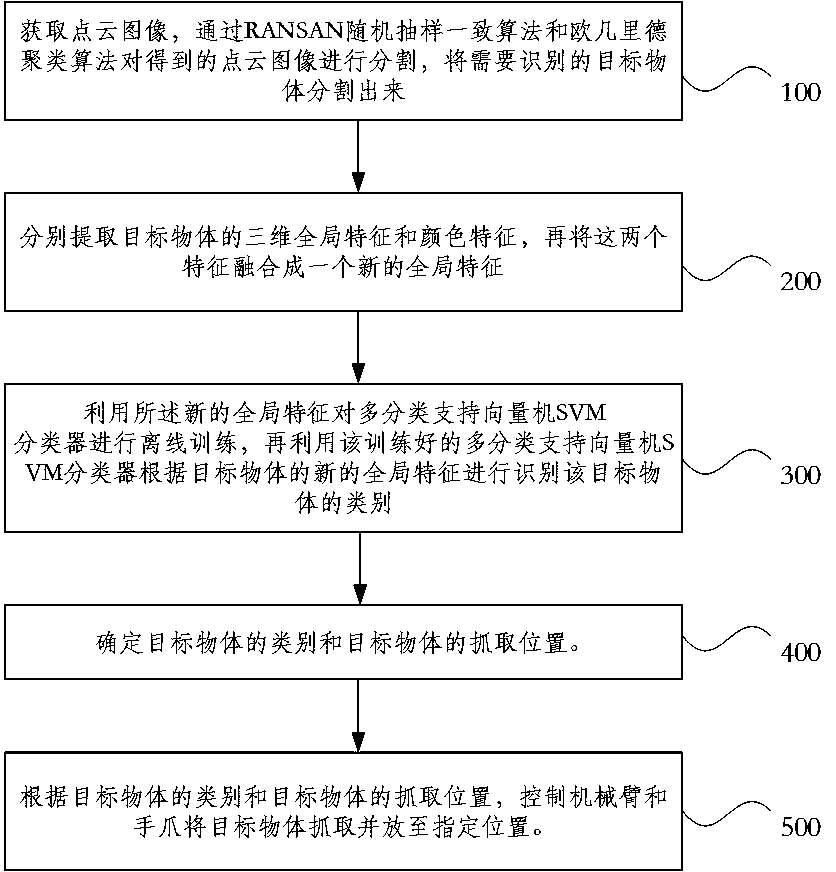

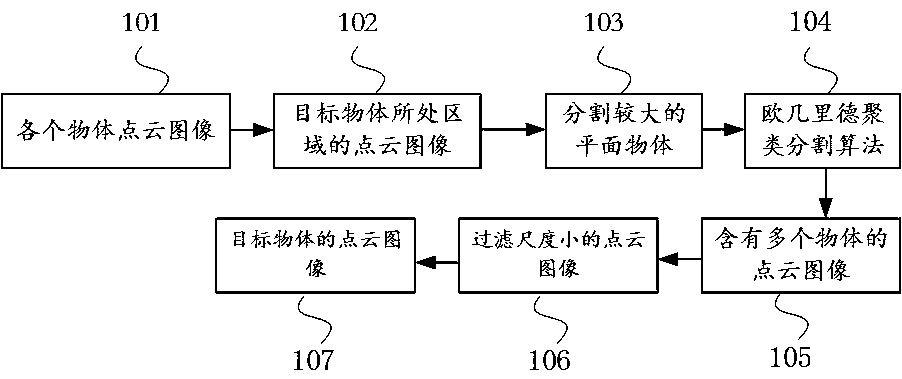

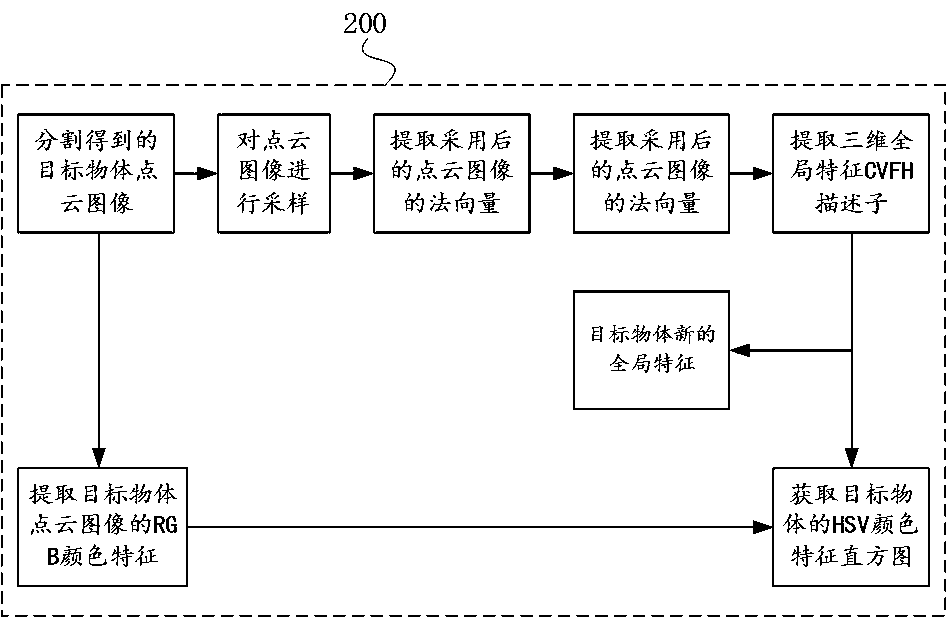

Visual capture method and device based on depth image and readable storage medium

Inactive | CN107748890A | Improve robustness | Texture features, so that the system can not only recognize less | Character and pattern recognition | Cluster algorithm | Pattern recognition

The invention discloses a visual capture method and device based on a depth image, and a readable storage medium. The method comprises the following steps: a point cloud image is acquired through a Kinect depth camera; the acquired point cloud is segmented using the RANSAC (random sample consensus) algorithm and a Euclidean clustering algorithm to obtain the target object to be identified; 3D global features and color features of the object are extracted separately and fused to form a new global feature; a multi-class support vector machine (SVM) classifier is trained offline on the new global feature, and the trained classifier identifies the category of the target object from that feature; the category and the grasping position of the target object are then determined; and lastly, according to the category and the grasping position, a manipulator and a gripper are controlled to grasp the target object and move it to the specified position. The method has the advantage that the target object can be accurately identified and grasped.

Owner:SHANTOU UNIV
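
The Euclidean clustering step named in the abstract above can be illustrated with a minimal sketch. It assumes the dominant plane has already been removed (for example by RANSAC) and groups the remaining 3D points by a distance tolerance. The function name `euclidean_clusters` and its parameters are hypothetical and are not taken from the patent; the brute-force neighbour search is chosen for brevity, whereas practical point-cloud libraries typically use a k-d tree.

```python
from collections import deque
from math import dist


def euclidean_clusters(points, tolerance, min_size=1):
    """Group points so each one lies within `tolerance` of another point in its cluster.

    points    -- list of (x, y, z) tuples (assumed: plane points already removed)
    tolerance -- maximum neighbour distance, in the same unit as the points
    min_size  -- clusters with fewer points are discarded as noise
    Returns a list of clusters, each a sorted list of point indices.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = min(unvisited)  # smallest index first keeps the output deterministic
        unvisited.remove(seed)
        queue = deque([seed])
        cluster = [seed]
        while queue:
            current = queue.popleft()
            # Brute-force neighbour search: O(n) per point, O(n^2) overall.
            neighbours = [i for i in unvisited
                          if dist(points[current], points[i]) <= tolerance]
            for i in neighbours:
                unvisited.remove(i)
                queue.append(i)
                cluster.append(i)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.02, 0.0, 0.0),
             (1.0, 1.0, 1.0), (1.01, 1.0, 1.0)]
    print(euclidean_clusters(cloud, tolerance=0.05))
```

Each resulting cluster would then be a candidate object from which features are extracted for the classifier; the `min_size` filter is one simple way to drop isolated noise points.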

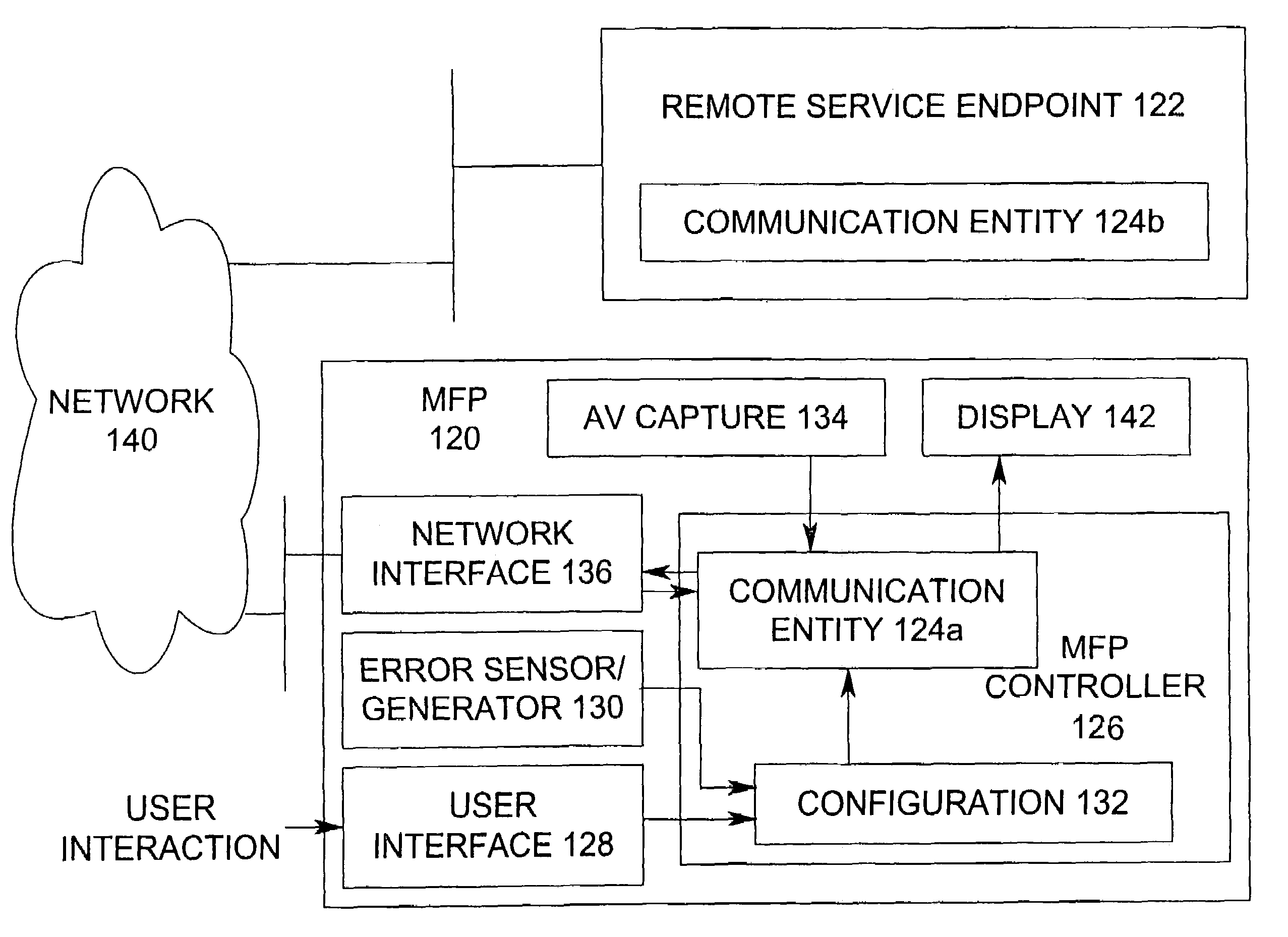

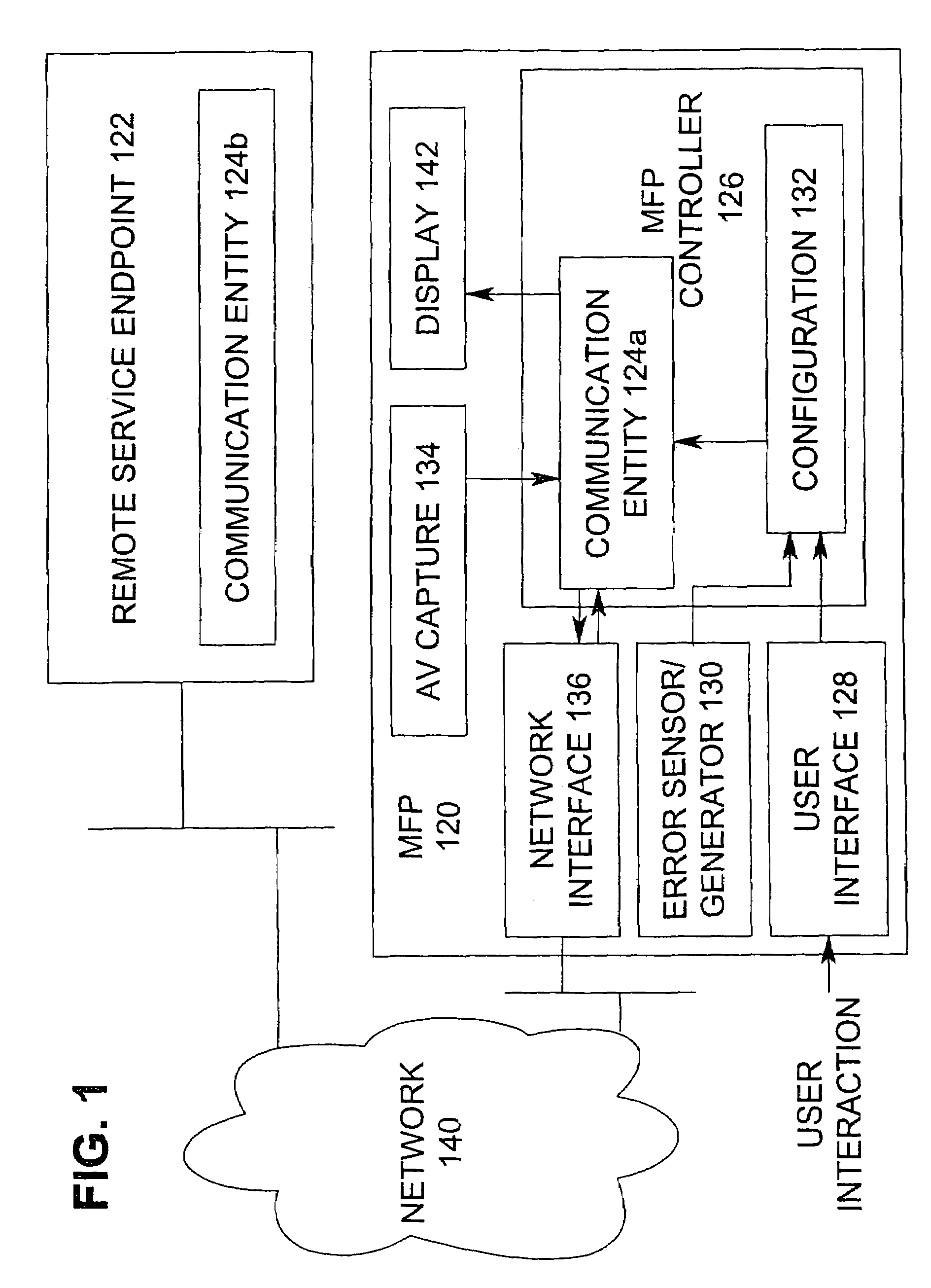

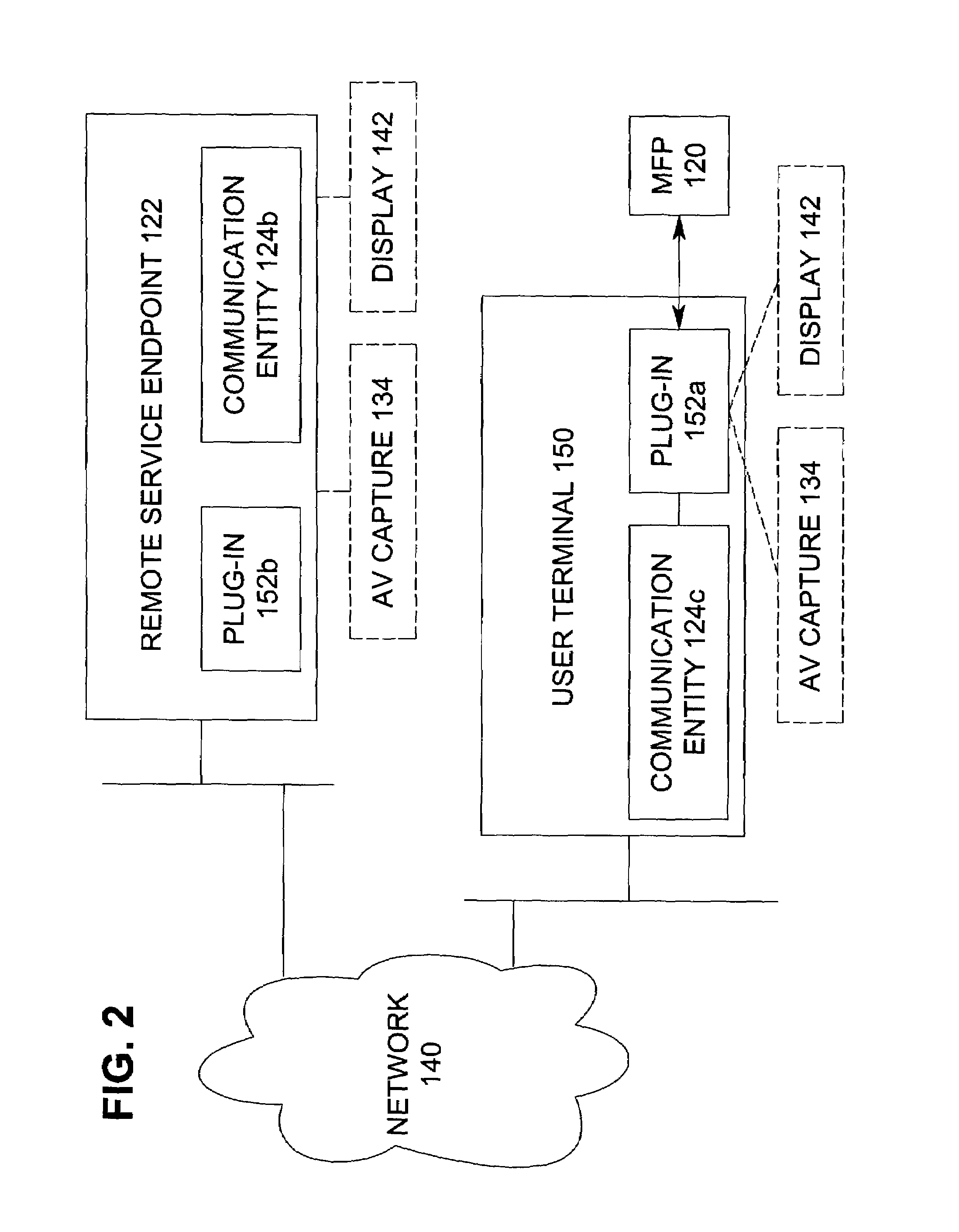

Interactive multimedia for remote diagnostics and maintenance of a multifunctional peripheral

Active | US7149936B2 | Detecting faulty hardware by remote test | Functional testing | Display device | Networked system

An interactive multimedia system for remote diagnostics of, maintenance of, and assistance pertaining to a multifunction peripheral preferably includes a multifunction peripheral, a remote service endpoint, and a network system over which the multifunction peripheral communicates with the remote service endpoint. An audio / visual capture device and a display are preferably communicatively associated with the multifunction peripheral communication entity. The remote service endpoint provides assistance based on the audio / video data over the display in such exemplary forms as text, images, multimedia presentation, audio presentations, video presentations, or live interactive communications. The present invention is also directed to a method performed by a multifunction peripheral that provides interactive multimedia for remote diagnostics of, maintenance of, and assistance regarding a multifunction peripheral.

Owner:SHARP KK

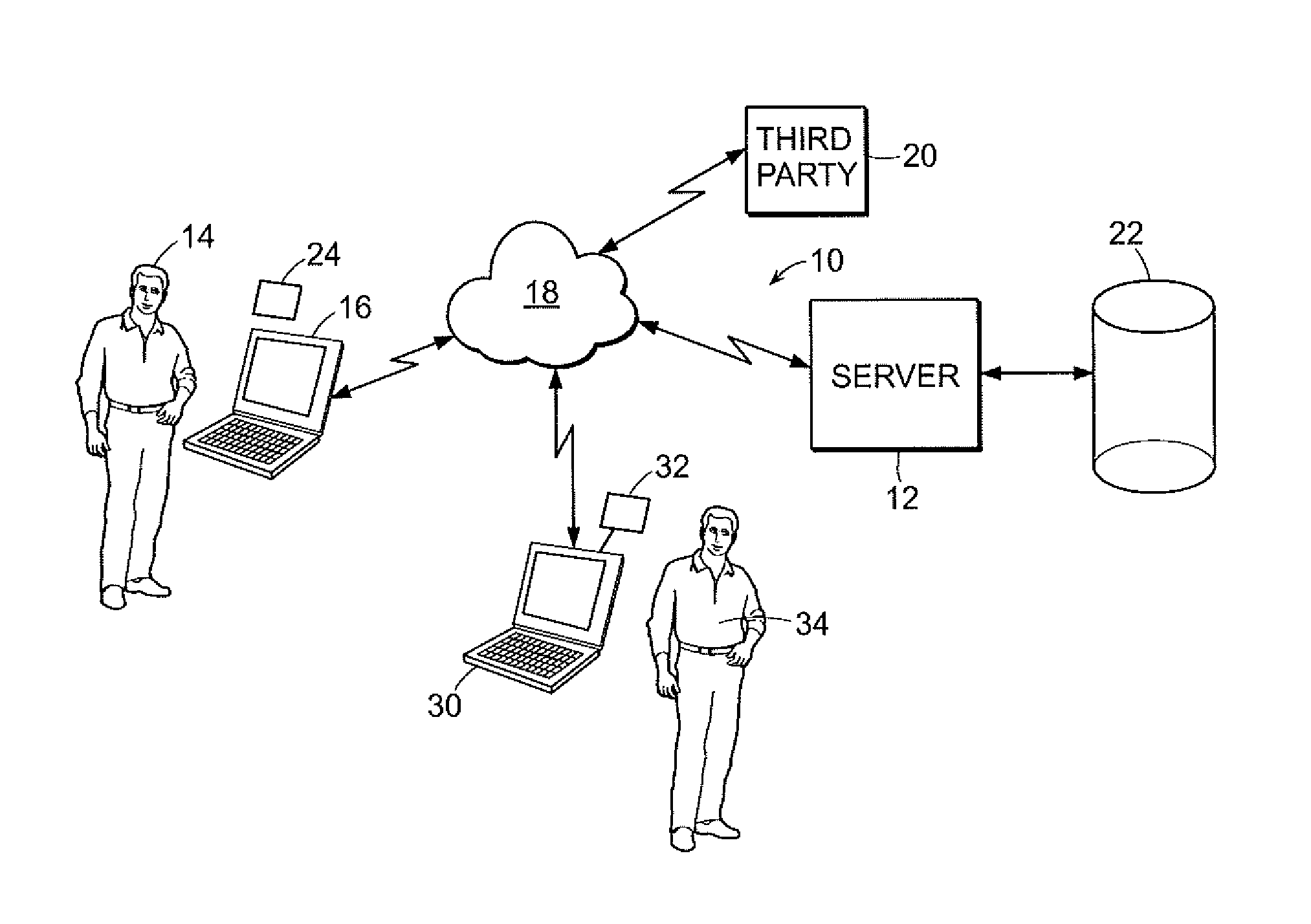

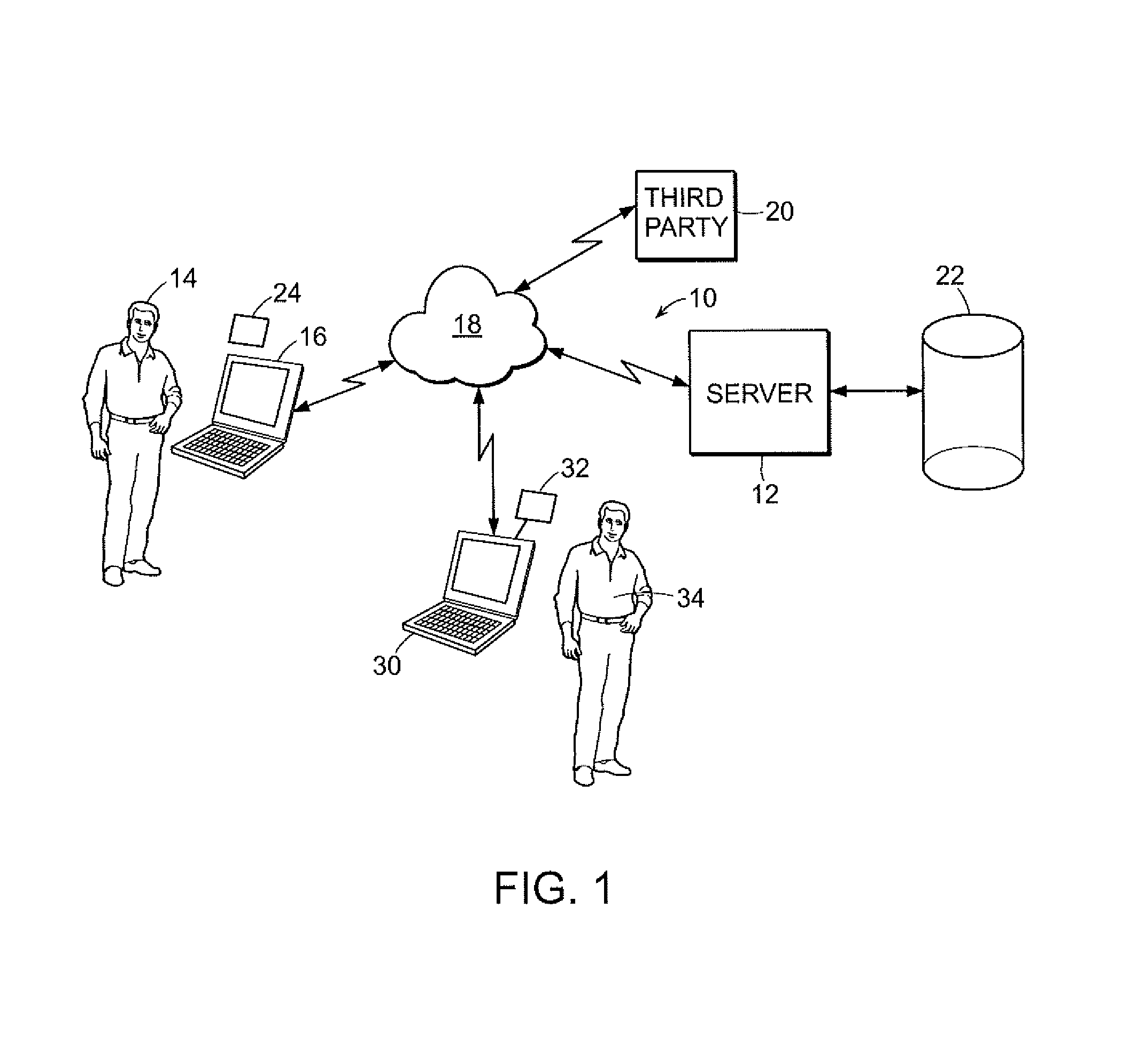

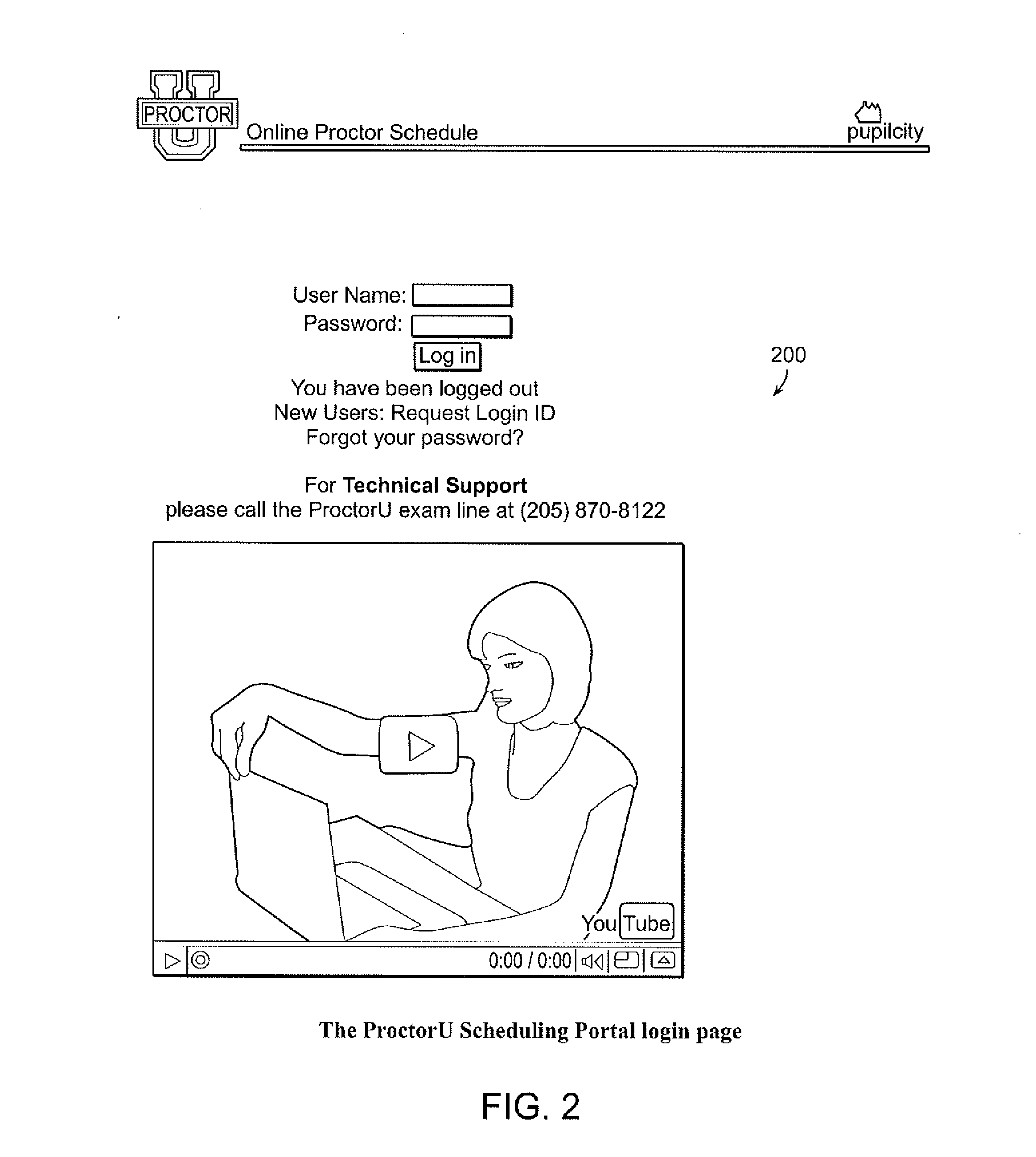

Online proctoring process for distance-based testing

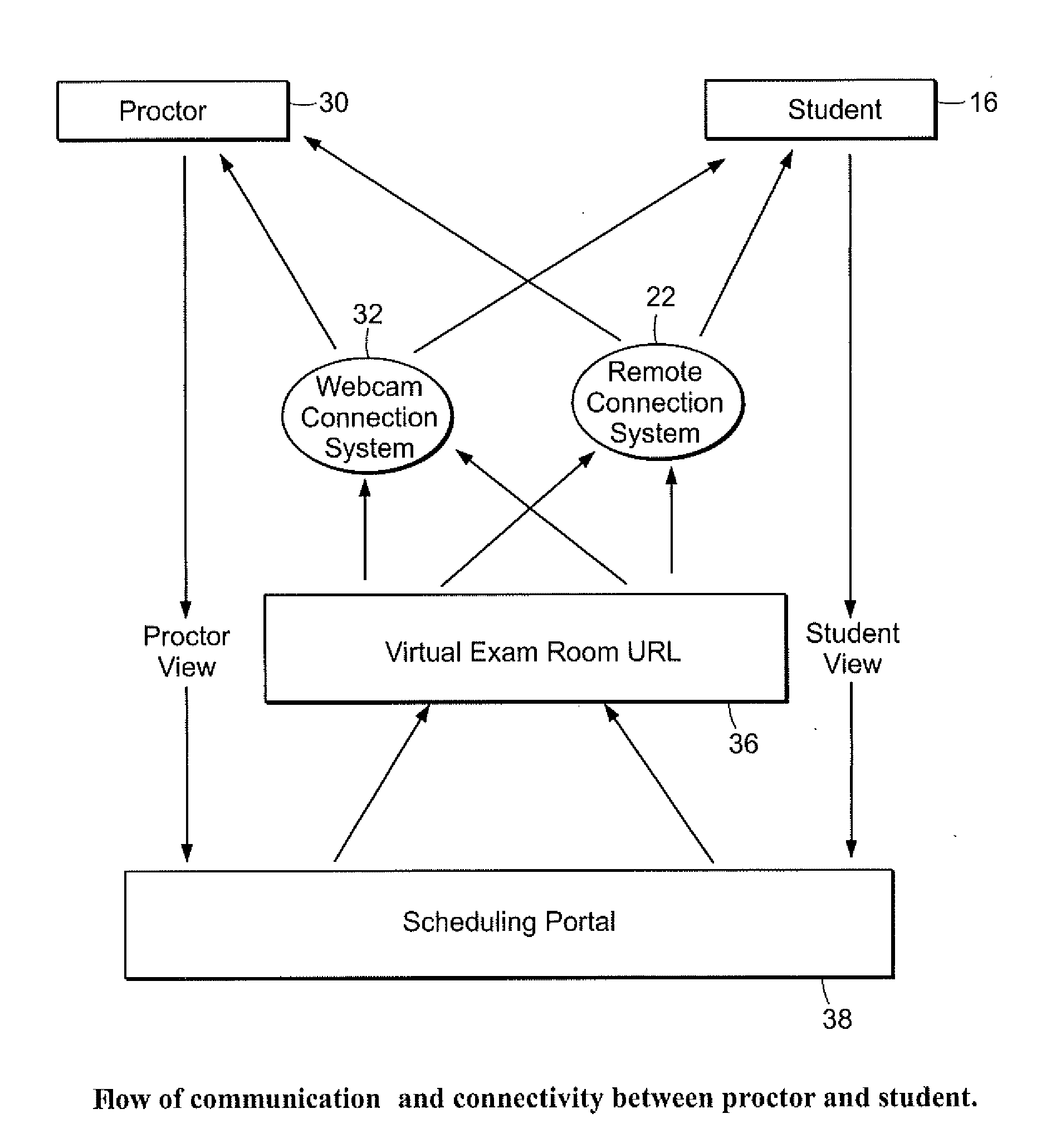

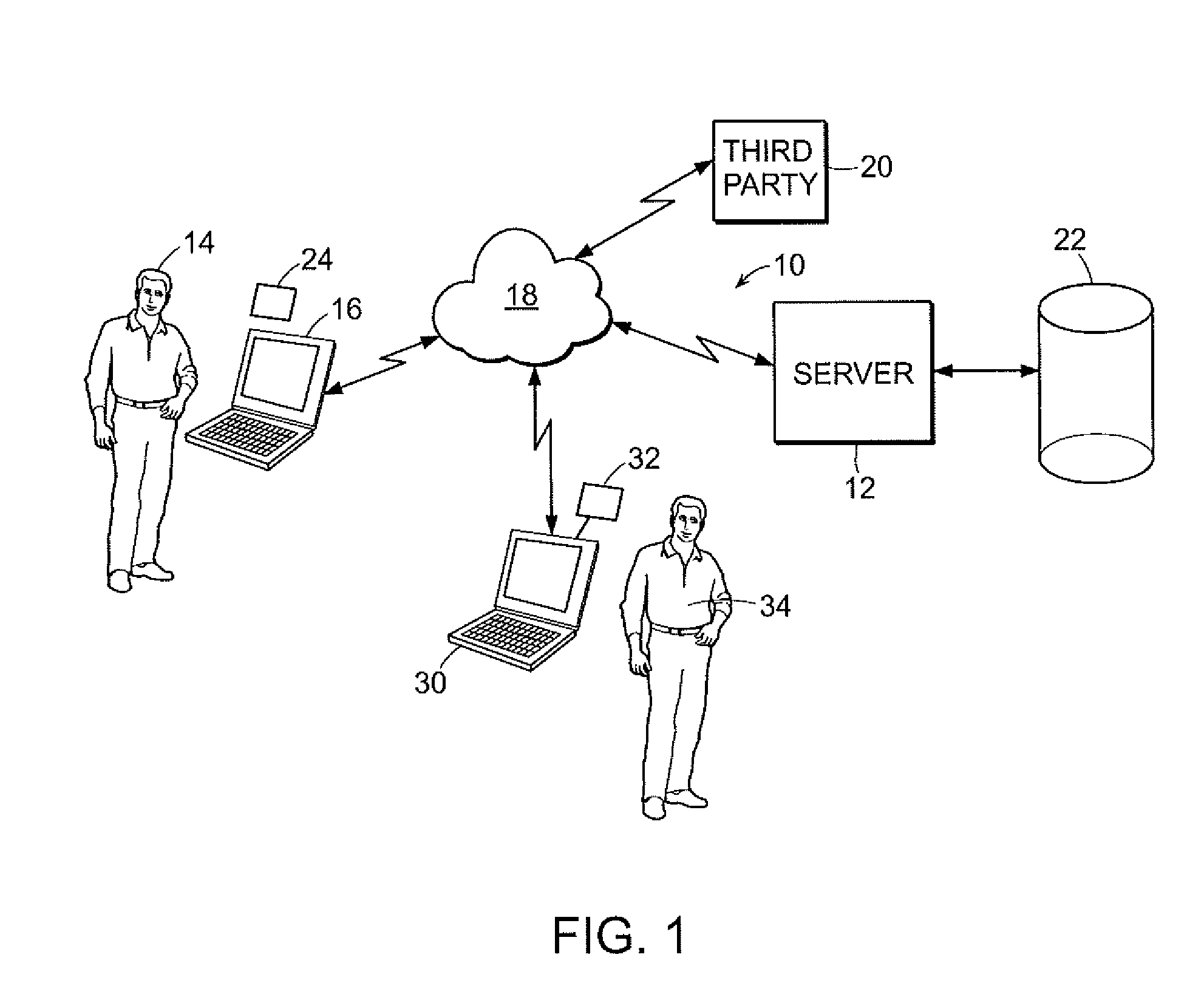

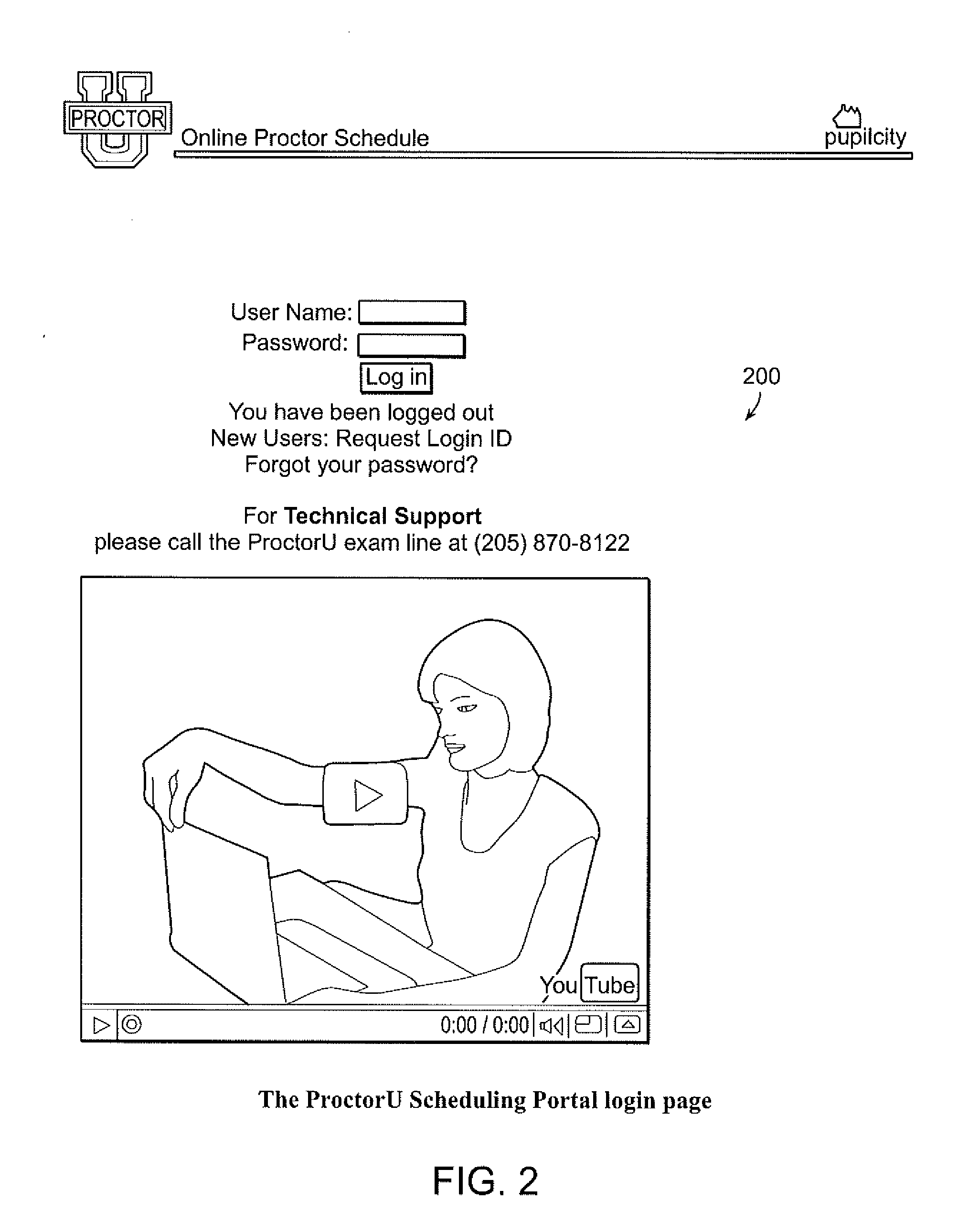

A system for enabling real time live proctoring of an exam across a distributed network includes a first remote computer. The first remote computer is capable of real time audio visual capture and display of an image of a user of the first remote computer. A second remote computer is capable of real time audio visual capture and display of an image of the user of the second remote computer. A server is in communication with the first remote computer and the second remote computer, and provides an interactive web based scheduling portal accessible from the first remote computer and the second remote computer. A database is associated with the server for storing data regarding the rules for proctoring of an exam including the rate at which an exam may be proctored at a given date and time. The server enables access to a virtual exam room by the first remote computer and the second remote computer in response to a request from the first remote computer through the scheduling portal for a date and time to take an exam administered at the first computer when the requested date and time fulfils the rules stored in the database.

Owner:PROCTORU

Restricting mobile device usage

Active | US20180124233A1 | Input/output for user-computer interaction | Unauthorised/fraudulent call prevention | In vehicle | Mobile device

Owner:CELLEPATHY

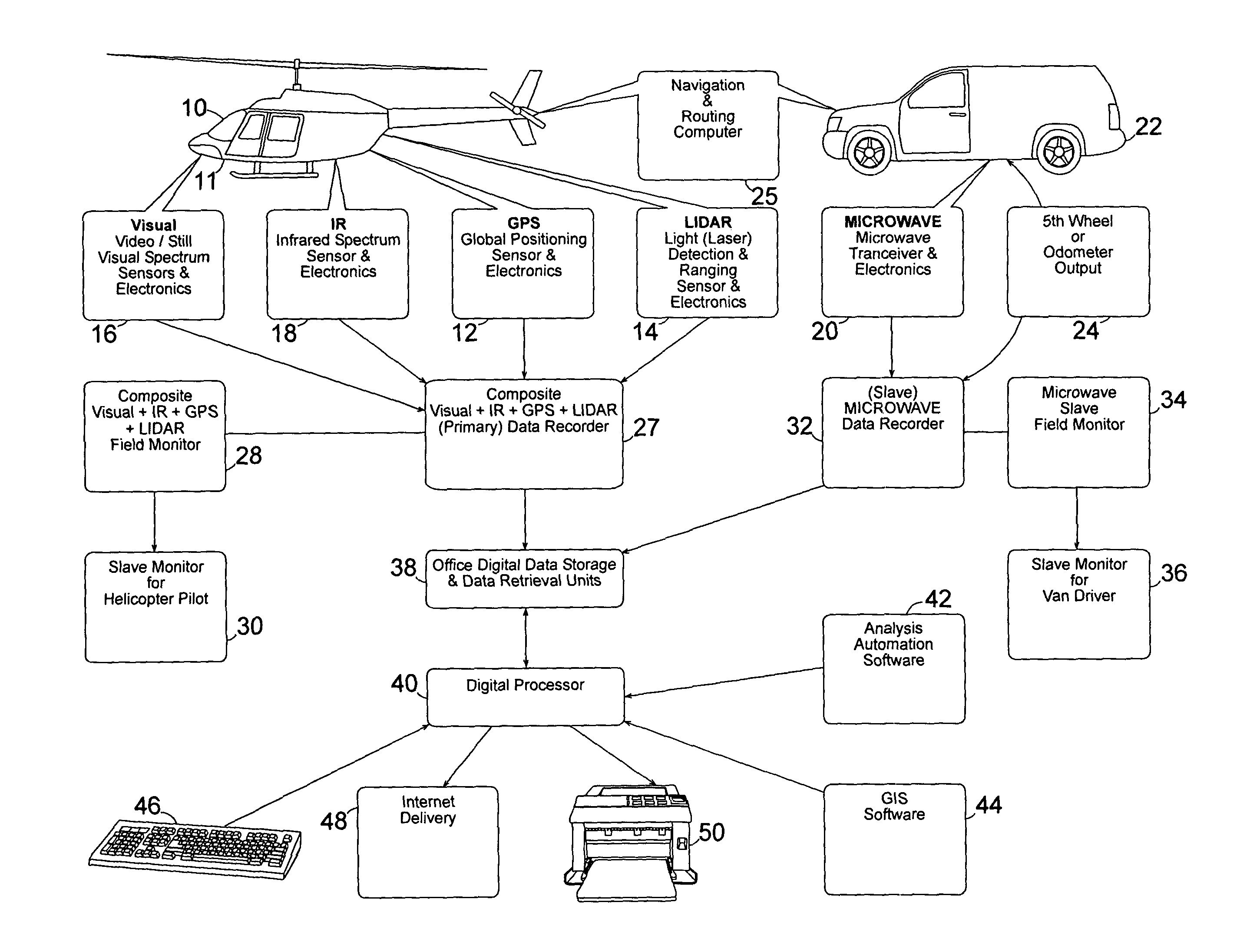

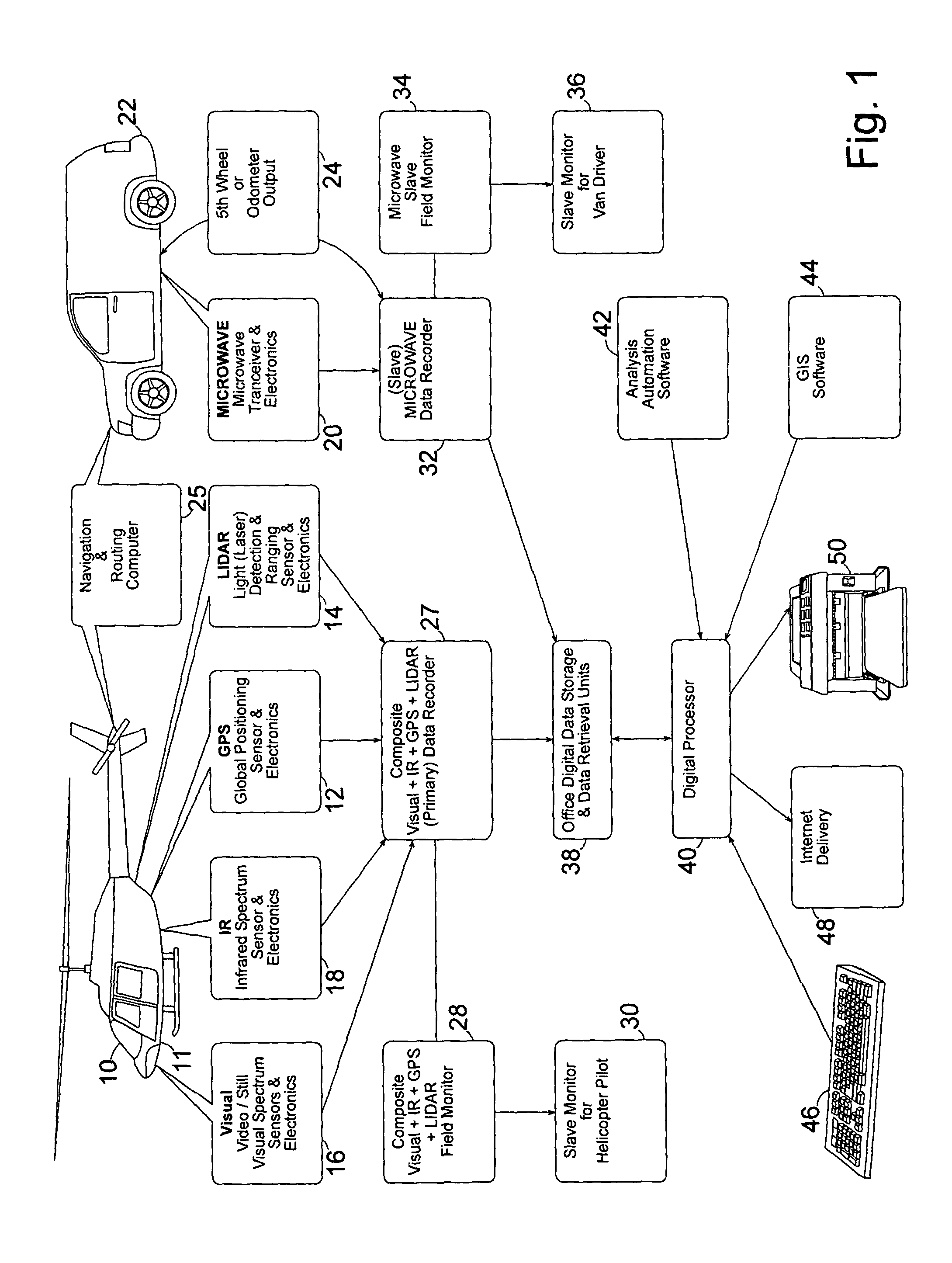

Complete remote sensing bridge investigation system

Inactive | US20130176424A1 | Less money | Efficiently determined | Color television details | Closed circuit television systems | Multiple sensor | Global Positioning System

A selectively mobile system and method detect and locate information to determine repair or replacement needs for bridge and roadway infrastructure by detecting subsurface defects. Multiple sensors collect data to be fused, including: 1) a visual capture device or devices (16) aboard a mobile carrier (10) that capture the visual spectrum in either HD or regular video and/or as still frame camera images; 2) infrared spectrum sensors (18) aboard the mobile carrier that capture video or still frame images; 3) global positioning sensors (GPS) (12) aboard the mobile carrier that determine position; 4) a light detection and ranging system (LIDAR) aboard the mobile carrier that gives precise locations; and 5) ground penetrating microwave radar (GPR) sensors carried by a vehicle (22), with related electronics, that determine the depth of subsurface defects. The sensor systems supply data to a digital processor (40) having analysis and graphical information system (GIS) software (44) that fuses the data and presents it for delivery and use.

Owner:ENTECH ENG

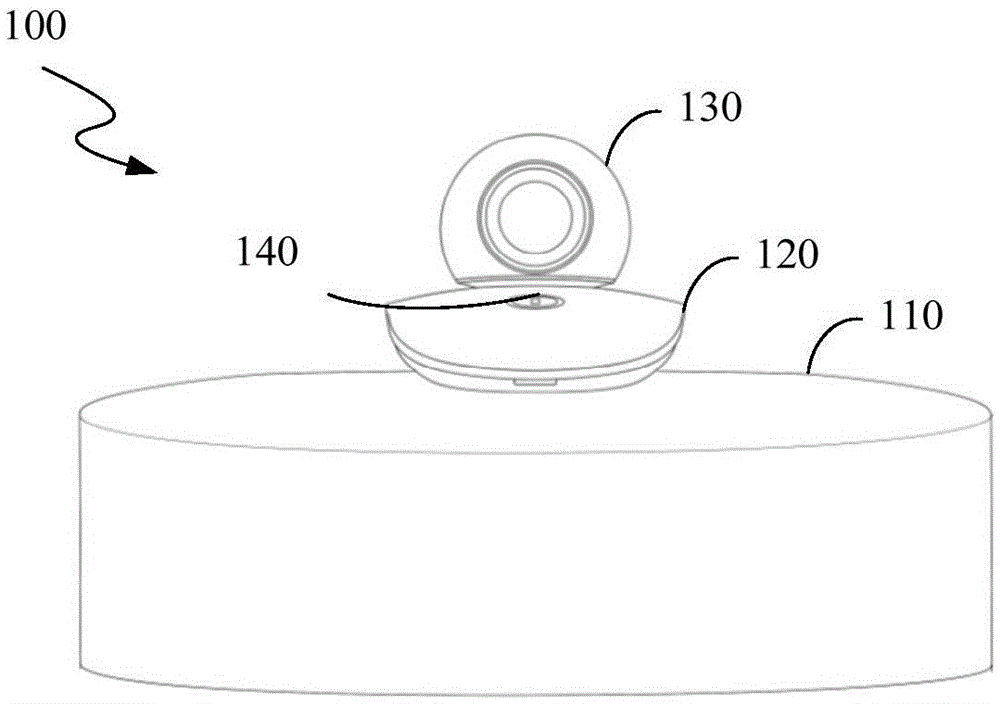

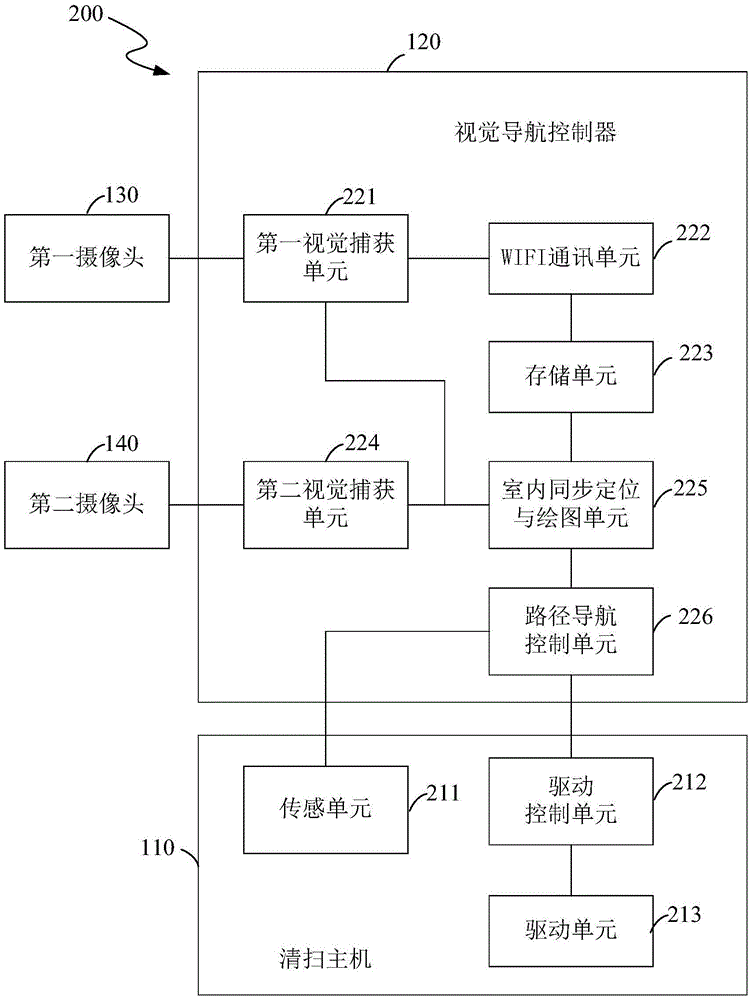

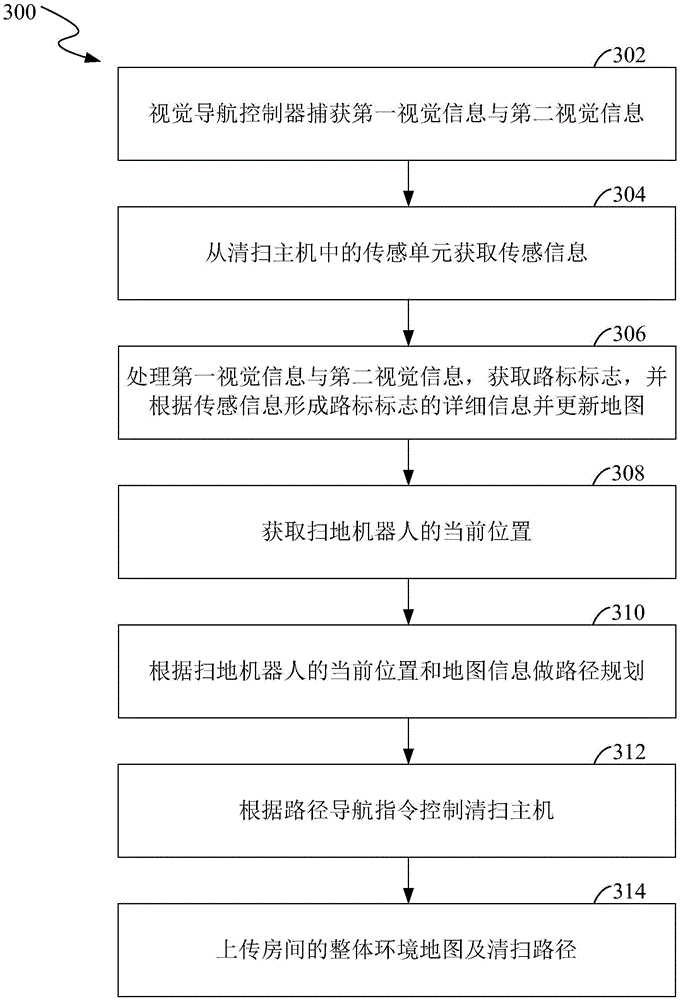

Floor sweeping robot having visual navigation function and navigation method thereof

Inactive | CN106569489A | Achieve visual effects | Realize function | Position/course control in two dimensions | Vehicles | Communication unit | Simulation

The invention provides a floor sweeping robot having the visual navigation function and a navigation method thereof. The floor sweeping robot comprises a sweeping host and a visual navigation controller. The sweeping host comprises a sensing unit, a driving unit, and a driving control unit. The visual navigation controller includes a first visual capture unit, a second visual capture unit, a WIFI communication unit, a storage unit, an indoor simultaneous positioning and mapping unit and a path navigation and control unit. The first visual capture unit and the second visual capture unit are configured to capture the related visual information. The related visual information includes the map information of the local map and the global map of a room wherein the floor sweeping robot is located. The path navigation and control unit is used for sending out a path navigation instruction according to the visual information, the map information and the sensing information. The driving control unit is used for controlling the driving unit according to the path navigation instruction. Advantageously, based on the floor sweeping robot having the visual navigation function and the navigation method thereof, the visual navigation and path planning function can be realized based on the simple modification of an ordinary floor sweeping robot (without a camera).

Owner:LOOQ SYST

Restricting mobile device usage

Inactive | US9800716B2 | Input/output for user-computer interaction | Substation speech amplifiers | In vehicle | Mobile device

Owner:CELLEPATHY

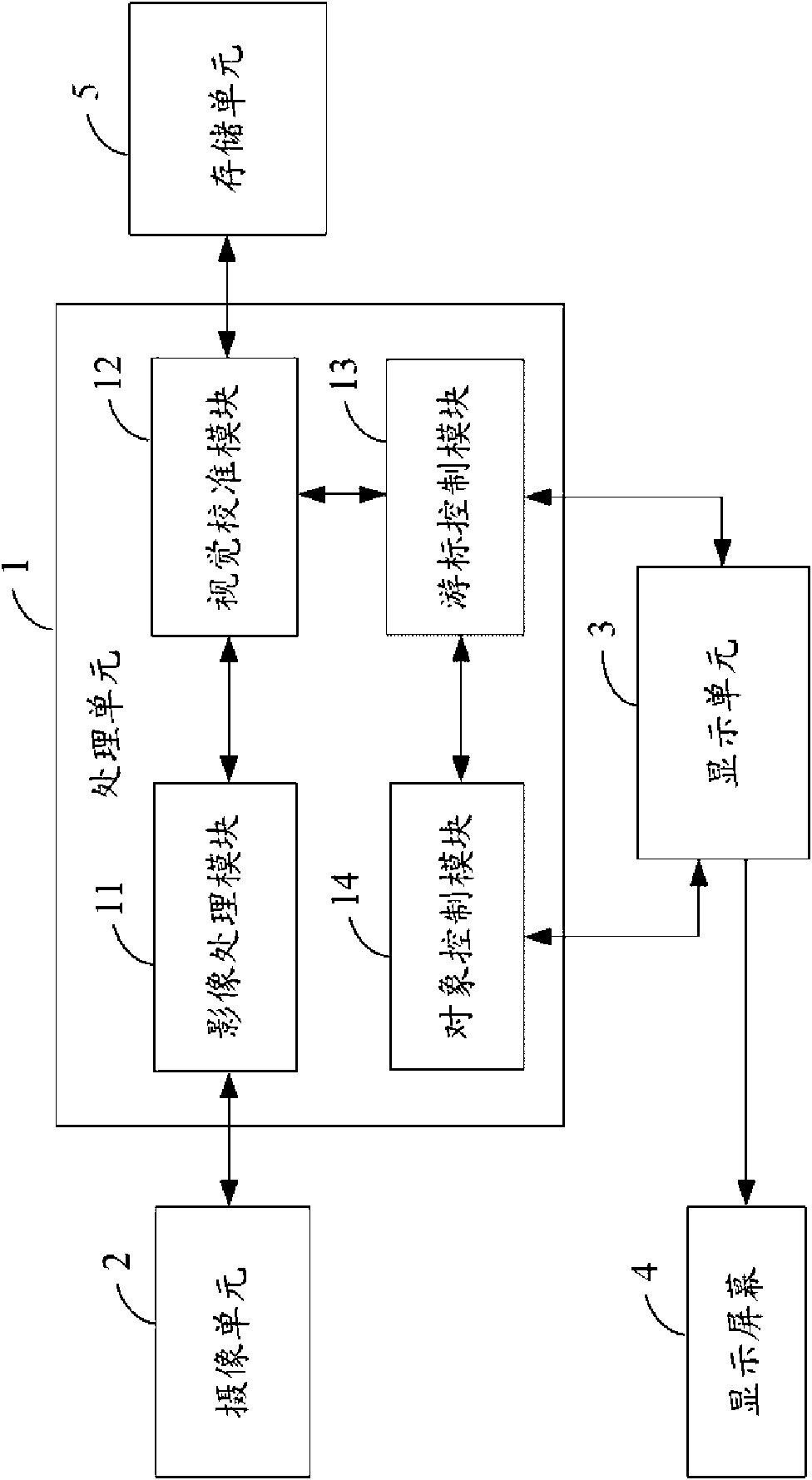

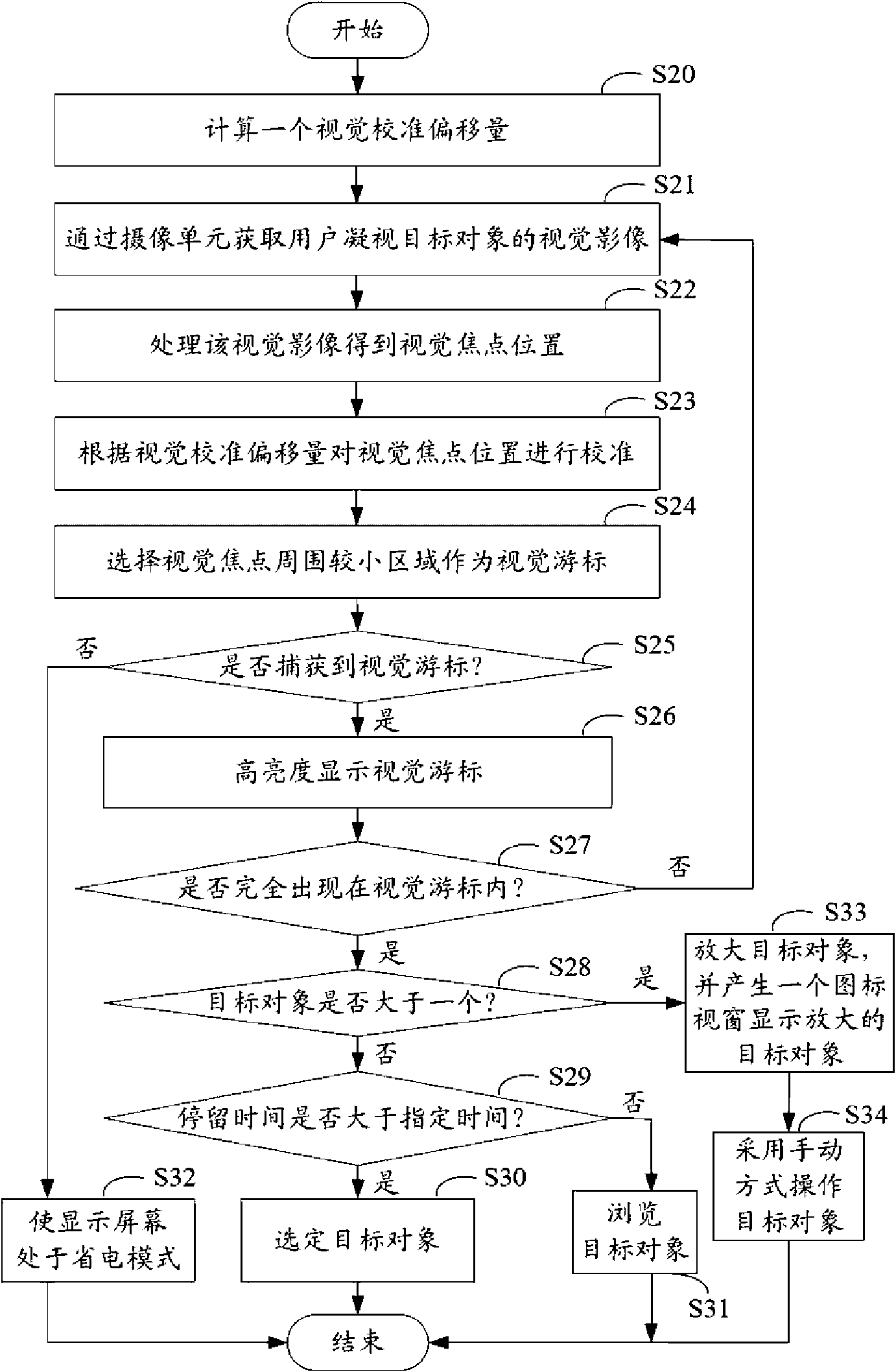

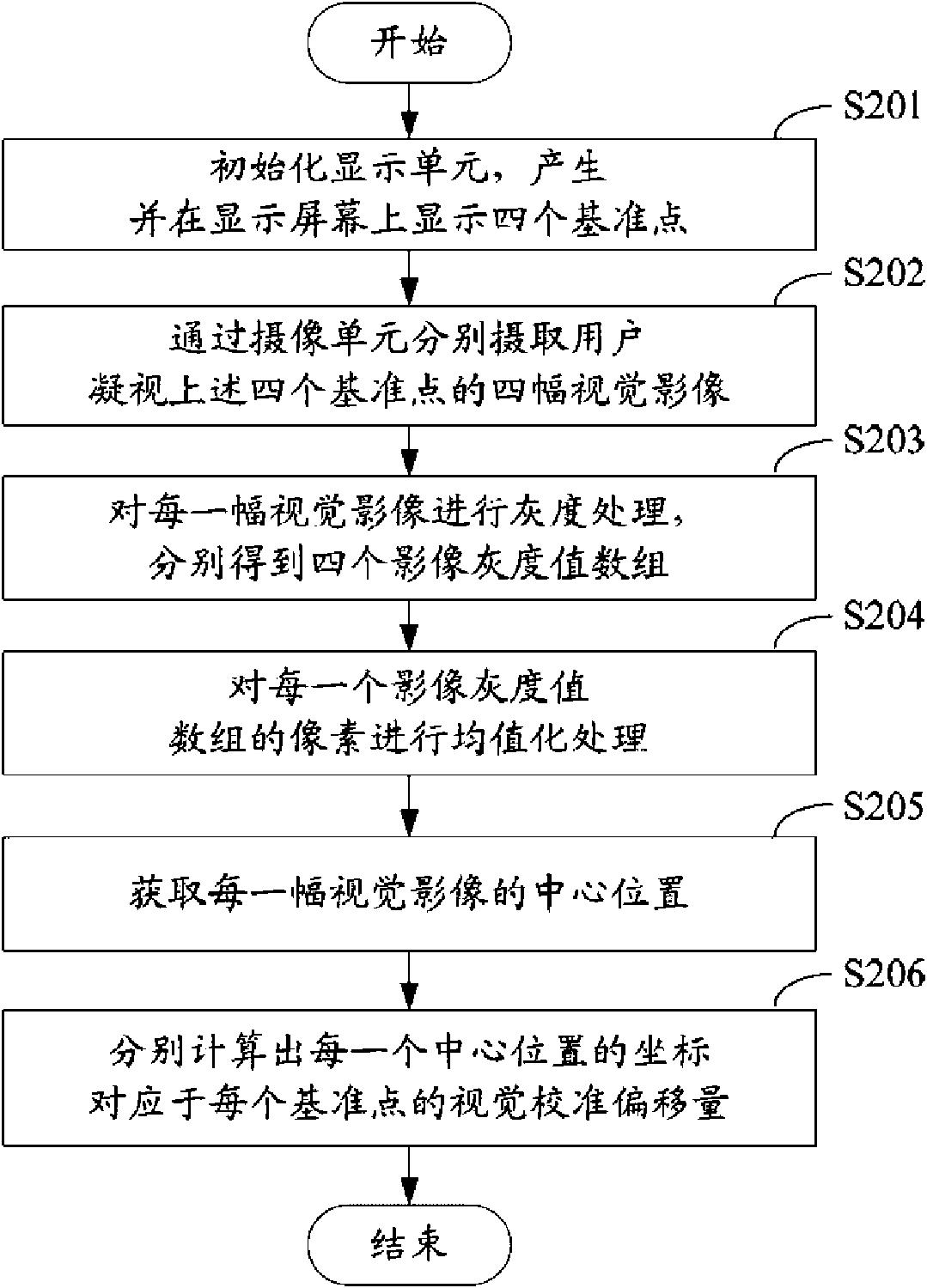

Visual perception device and control method thereof

Active | CN101840265A | Reduce uncertainty | Low reliability | Input/output for user-computer interaction | Graph reading | Electricity | Imaging processing

The invention relates to a visual perception device which comprises a processing unit, a camera unit, a display unit, a display screen and a storage unit, wherein the processing unit comprises an image processing module, a visual calibration module, a cursor control module and an object control module; the image processing module is used for controlling the camera unit to capture visual images of the eyes of a user when the user stares at a target object in the display screen, processing the visual images to obtain a visual focal position, and computing a visual calibration offset; the visual calibration module is used for carrying out coordinate calibration on the visual focal position according to the visual calibration offset; the cursor control module is used for selecting the peripheral area of the visual focal point as a visual cursor and judging whether the dwell time of the visual cursor is longer than a set time; and the object control module is used for controlling the visual cursor to select the target object when the dwell time is longer than the set time, and controlling the next target object to enter the visual cursor area when the dwell time is shorter than or equal to the set time. The invention can reduce the unreliability of visual capture and the number of manual interactions, and saves electricity and energy at the same time.

Owner:HENGQIN INT INTPROP EXCHANGE CENT CO LTD
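
The dwell-time rule described in the abstract above can be sketched as follows. This is only an illustration under stated assumptions: the calibration offset is taken to be additive, the visual cursor is modelled as a circle around the target, and the names `apply_calibration` and `dwell_select_time` are hypothetical rather than drawn from the patent.

```python
def apply_calibration(gaze_xy, offset_xy):
    """Shift a raw gaze point by the calibration offset (additive convention assumed)."""
    return (gaze_xy[0] + offset_xy[0], gaze_xy[1] + offset_xy[1])


def dwell_select_time(samples, cursor_center, cursor_radius, dwell_threshold_s):
    """Return the timestamp at which the target is selected, or None.

    samples           -- iterable of (timestamp_s, x, y) calibrated gaze points
    cursor_center     -- (x, y) centre of the visual cursor area
    cursor_radius     -- radius of the cursor area, in the same unit as x and y
    dwell_threshold_s -- selection fires once the gaze has stayed inside
                         the cursor area for strictly longer than this
    """
    cx, cy = cursor_center
    entered_at = None
    for t, x, y in samples:
        inside = (x - cx) ** 2 + (y - cy) ** 2 <= cursor_radius ** 2
        if not inside:
            entered_at = None  # leaving the area resets the dwell timer
            continue
        if entered_at is None:
            entered_at = t
        if t - entered_at > dwell_threshold_s:
            return t
    return None


if __name__ == "__main__":
    gaze = [(0.0, 100, 100), (0.5, 102, 99), (1.0, 101, 101), (1.5, 100, 100)]
    print(dwell_select_time(gaze, (100, 100), 10, 1.0))
```

Resetting the timer whenever the gaze leaves the area is a design choice made here for simplicity; a real device might tolerate brief excursions caused by blinks or tracking jitter.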

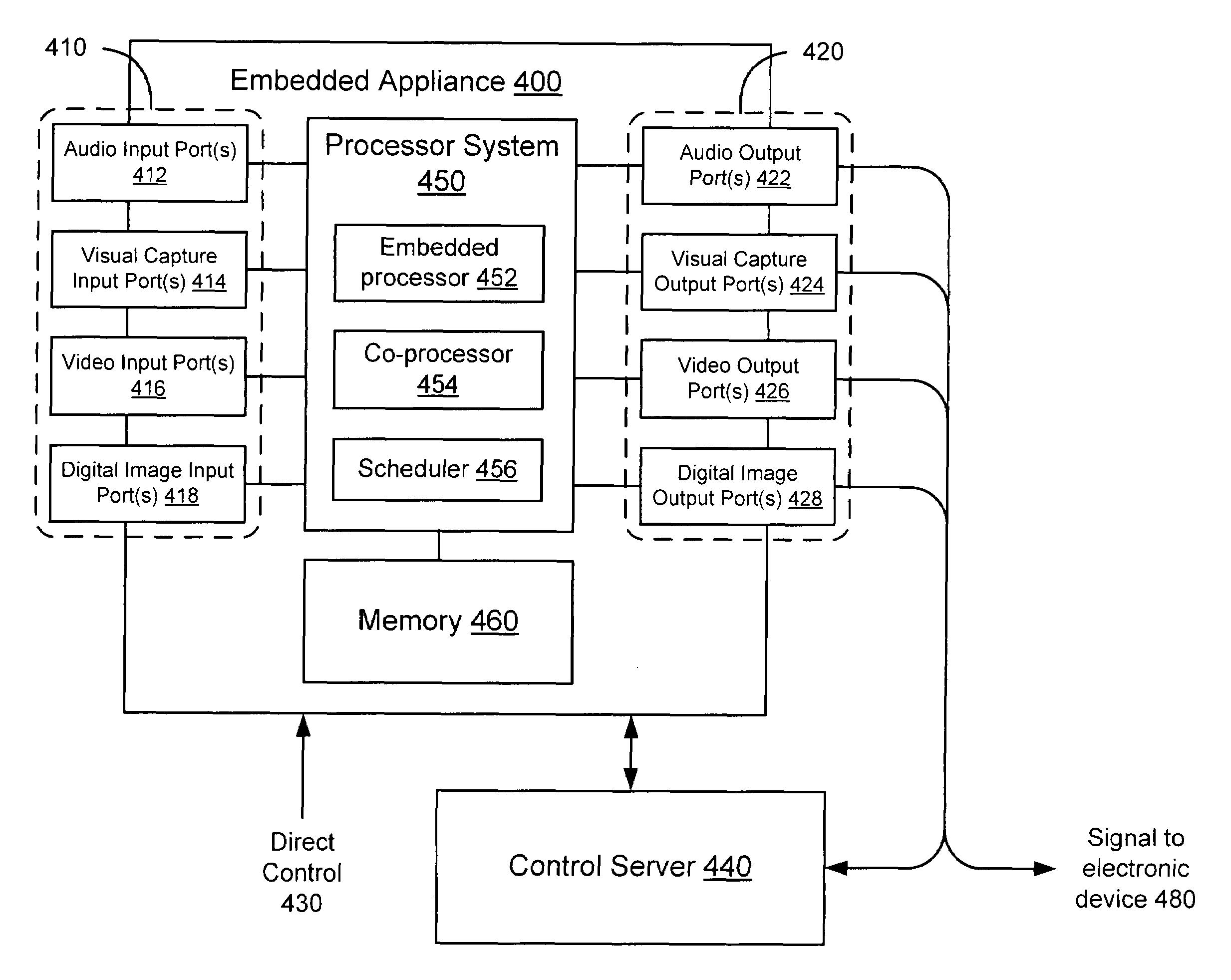

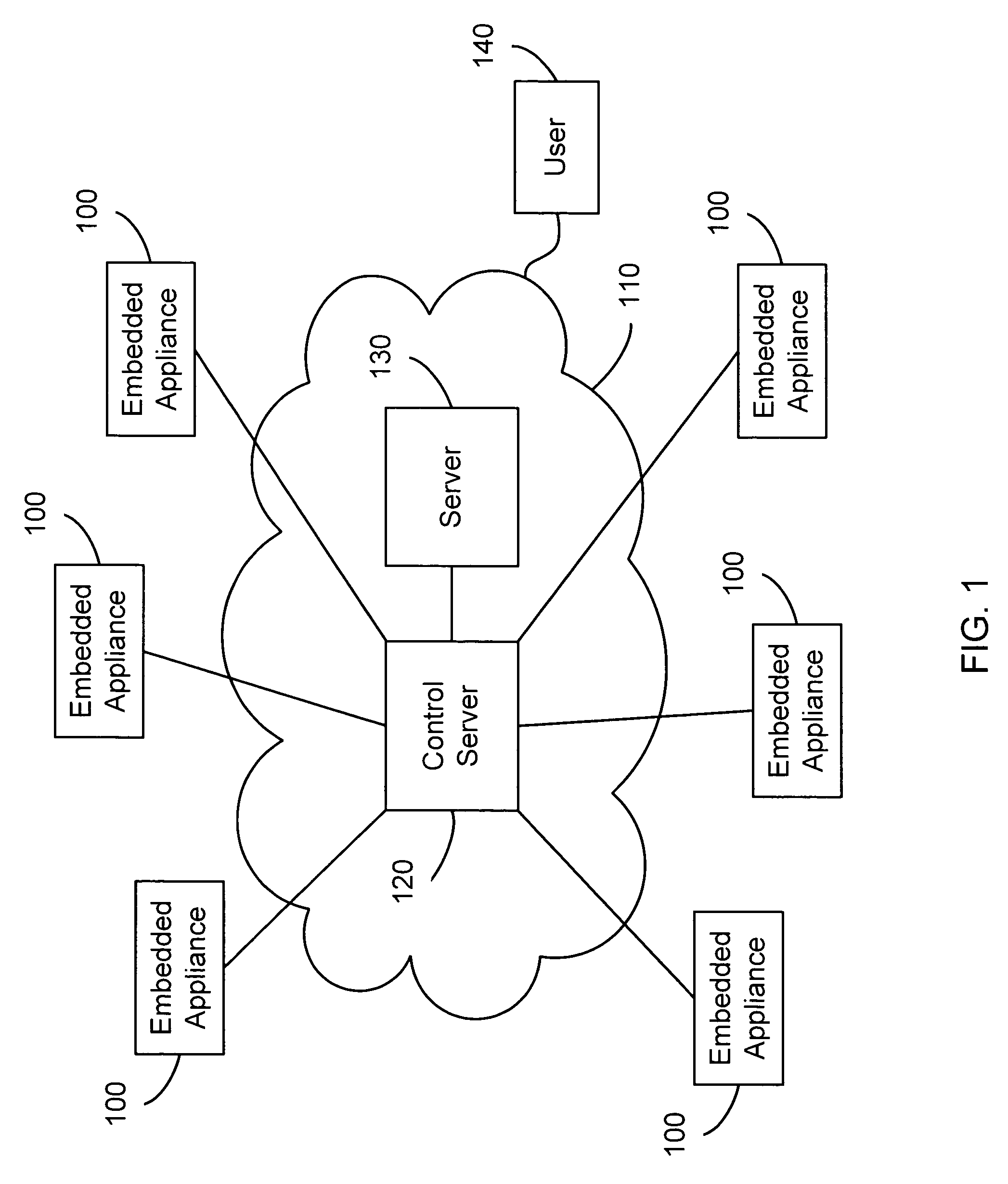

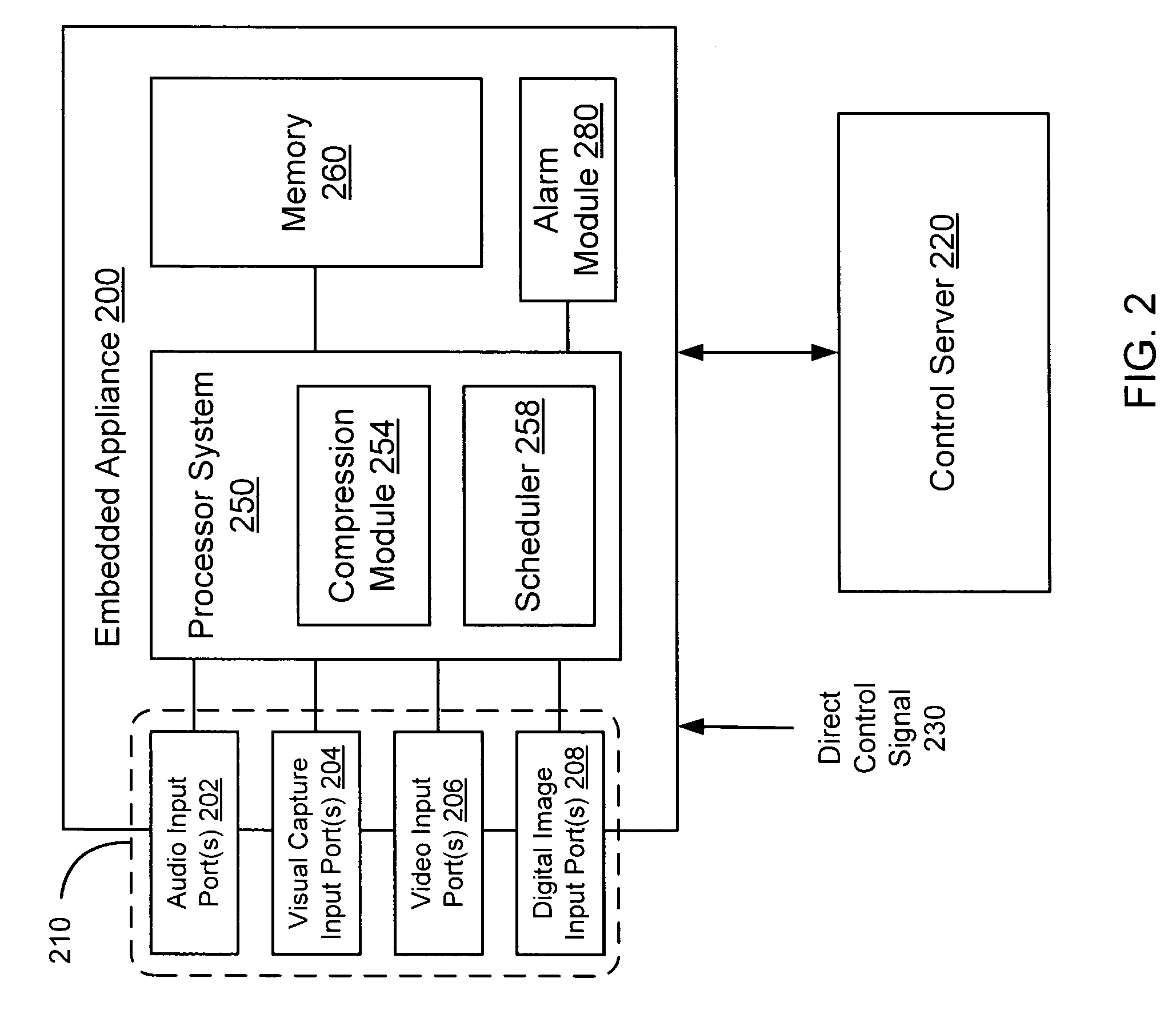

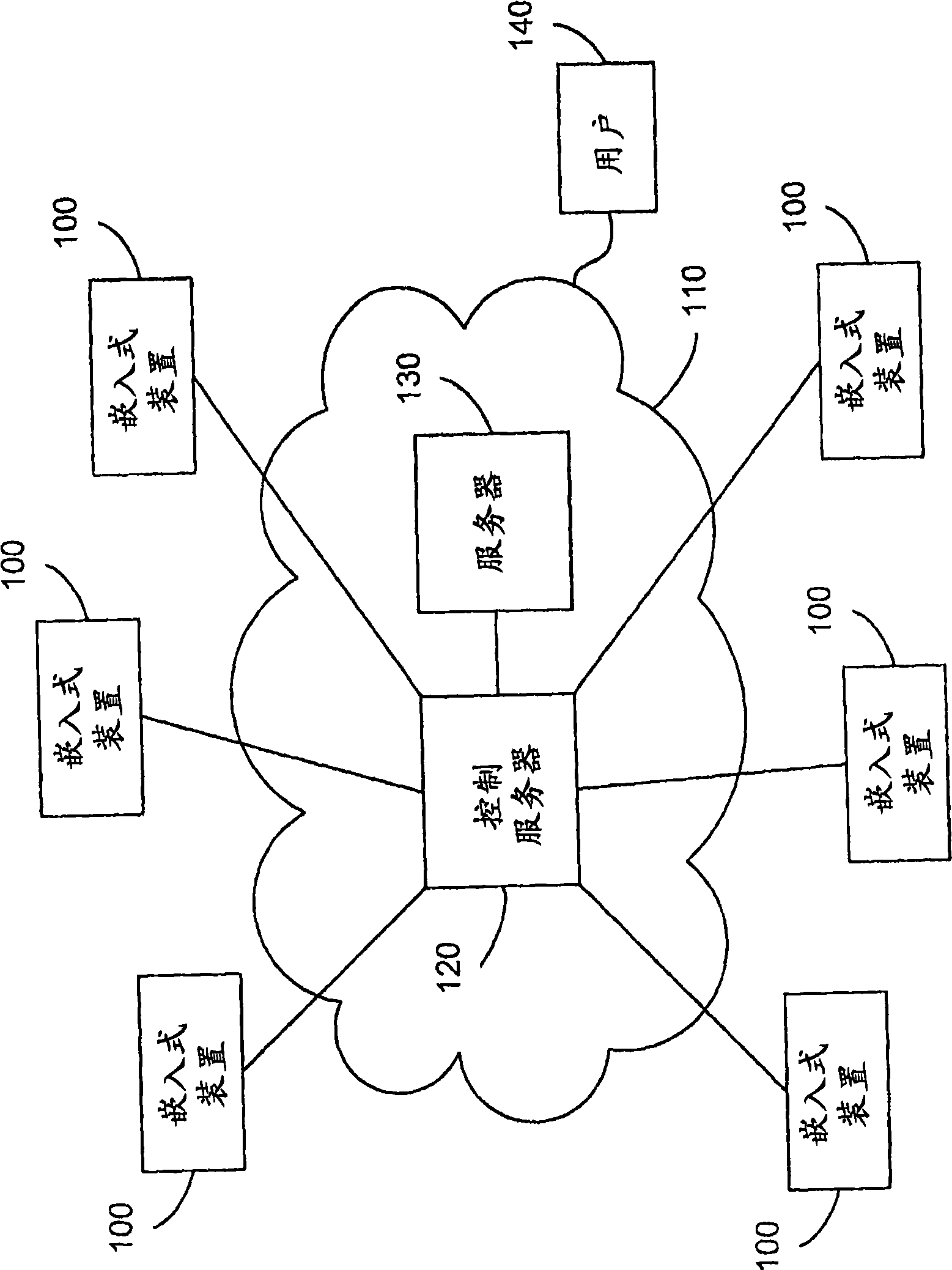

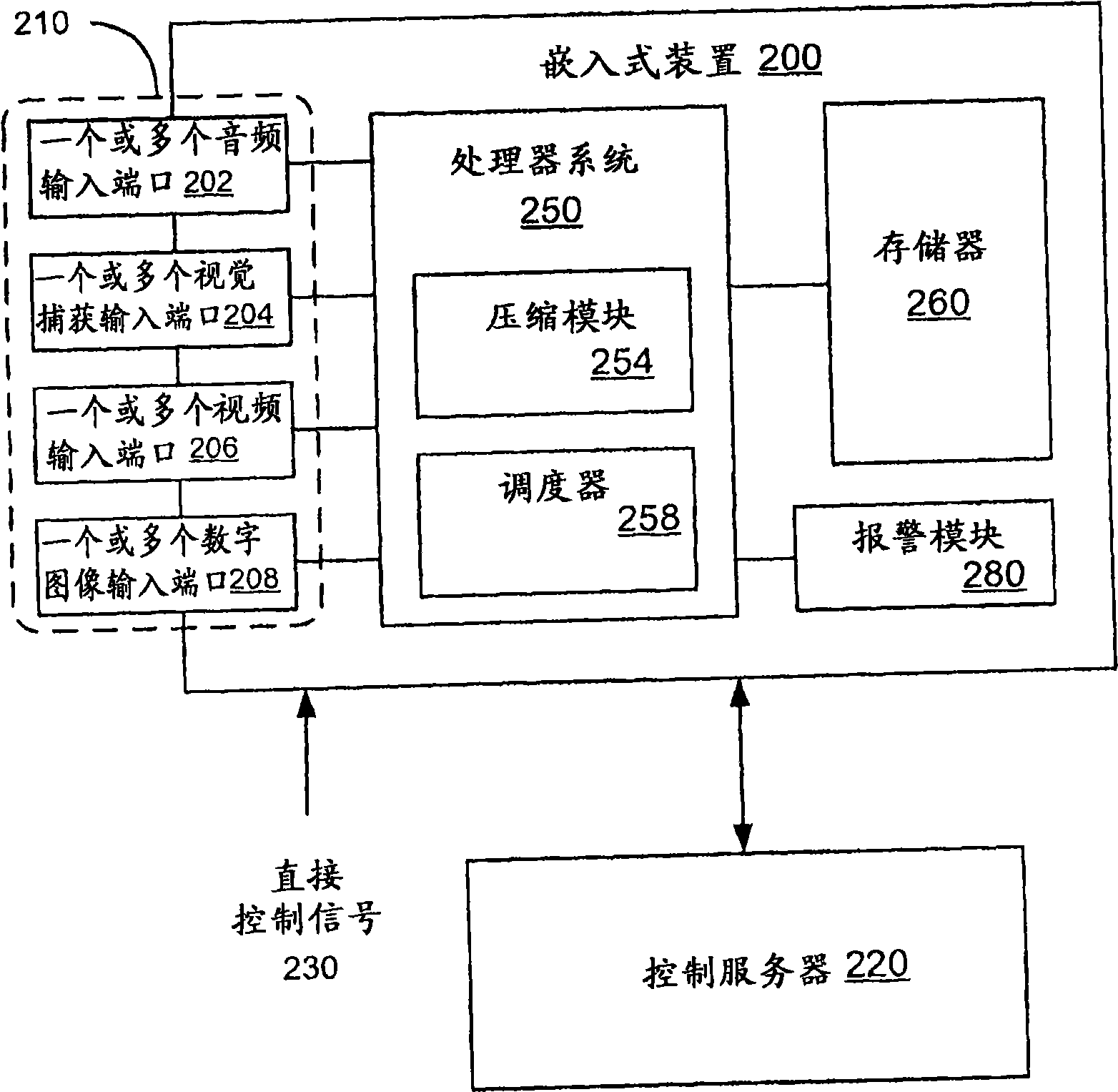

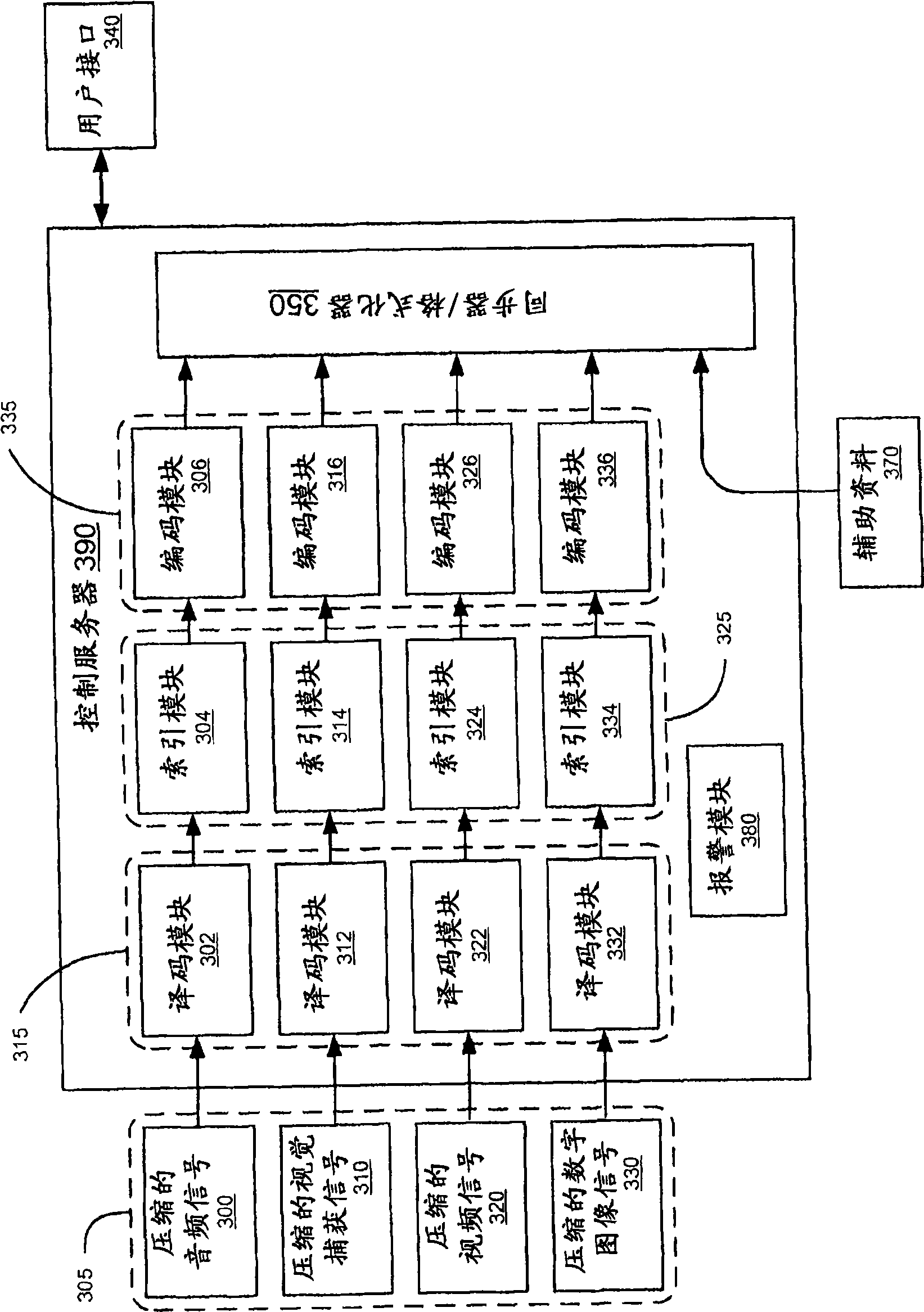

Embedded appliance for multimedia capture

Active | US7720251B2 | Television system details | Character and pattern recognition | Digital image | Visual capture

Owner:ECHO 360 INC

Online proctoring process for distance-based testing

A system for enabling real time live proctoring of an exam across a distributed network includes a first remote computer. The first remote computer is capable of real time audio visual capture and display of an image of a user of the first remote computer. A second remote computer is capable of real time audio visual capture and display of an image of the user of the second remote computer. A server is in communication with the first remote computer and the second remote computer, and provides an interactive web based scheduling portal accessible from the first remote computer and the second remote computer. A database is associated with the server for storing data regarding the rules for proctoring of an exam including the rate at which an exam may be proctored at a given date and time. The server enables access to a virtual exam room by the first remote computer and the second remote computer in response to a request from the first remote computer through the scheduling portal for a date and time to take an exam administered at the first computer when the requested date and time fulfils the rules stored in the database.

Owner:PROCTORU

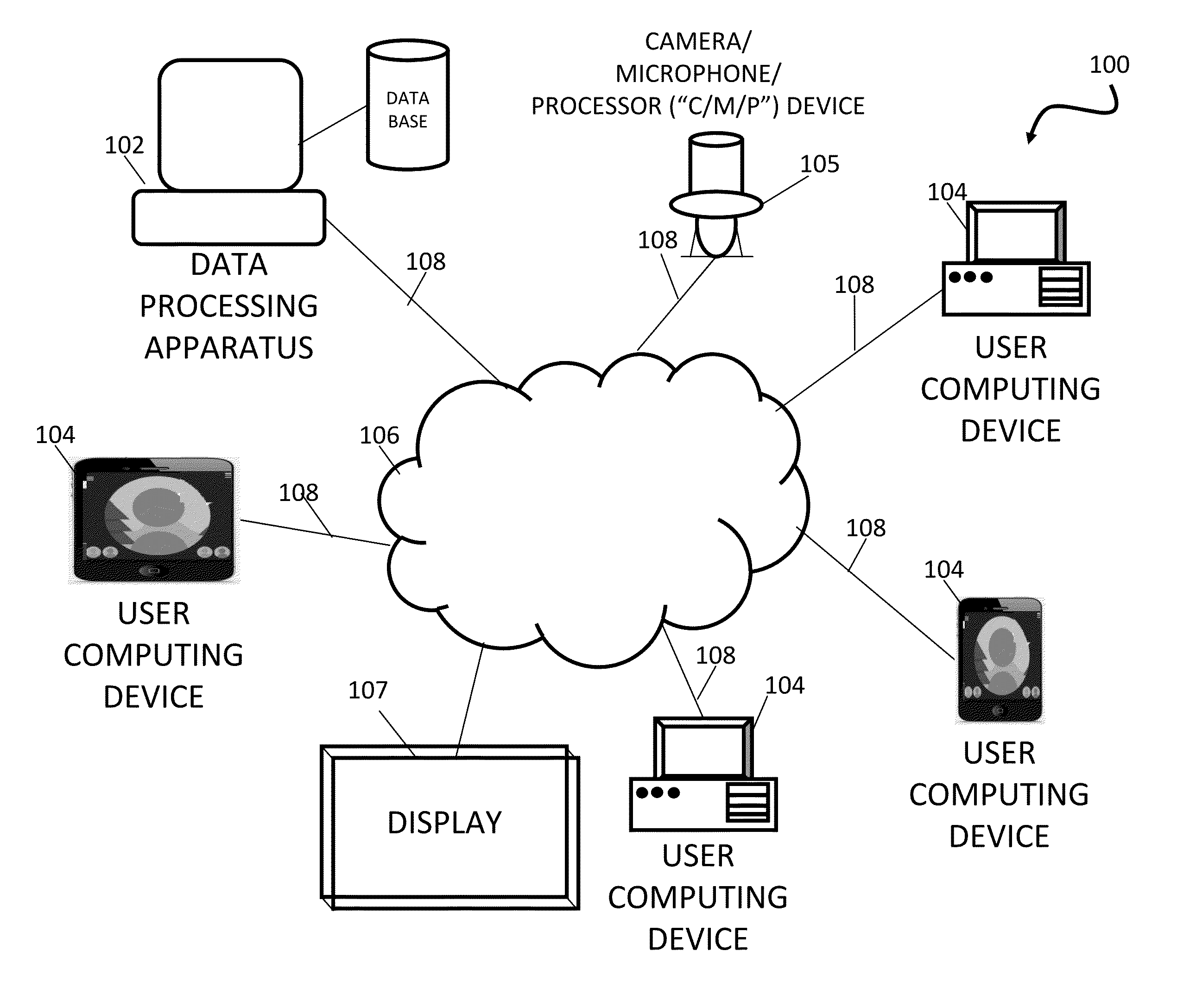

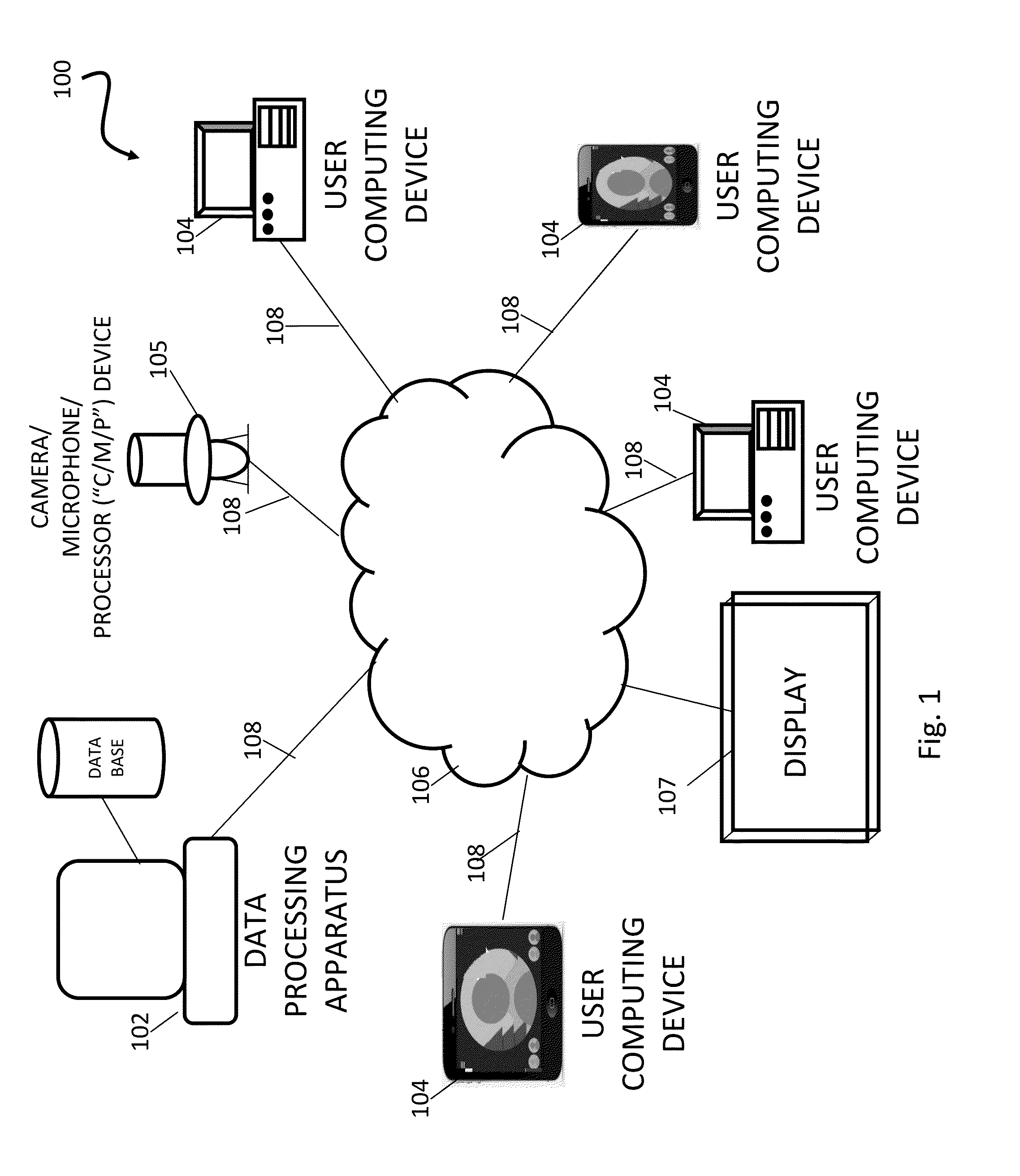

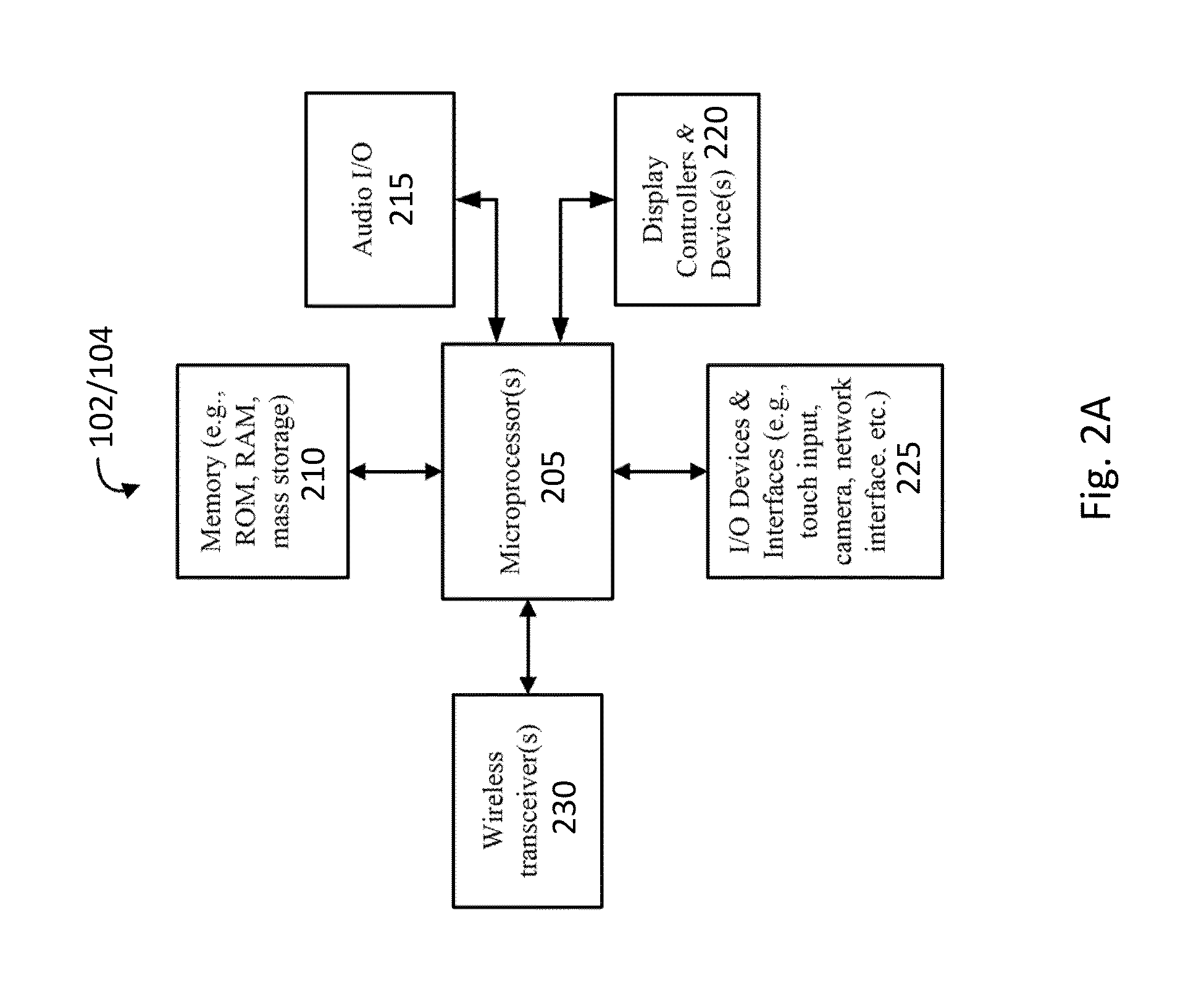

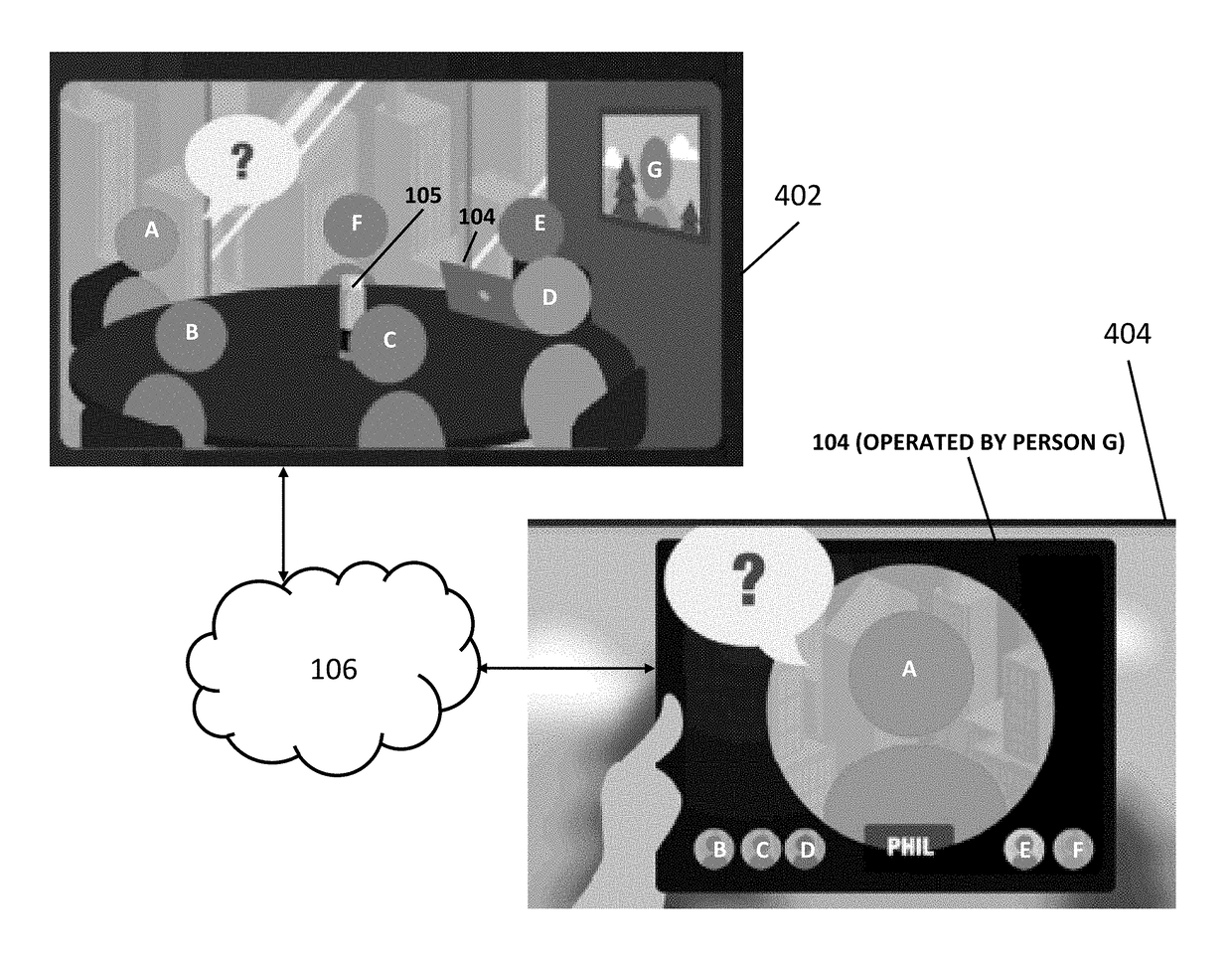

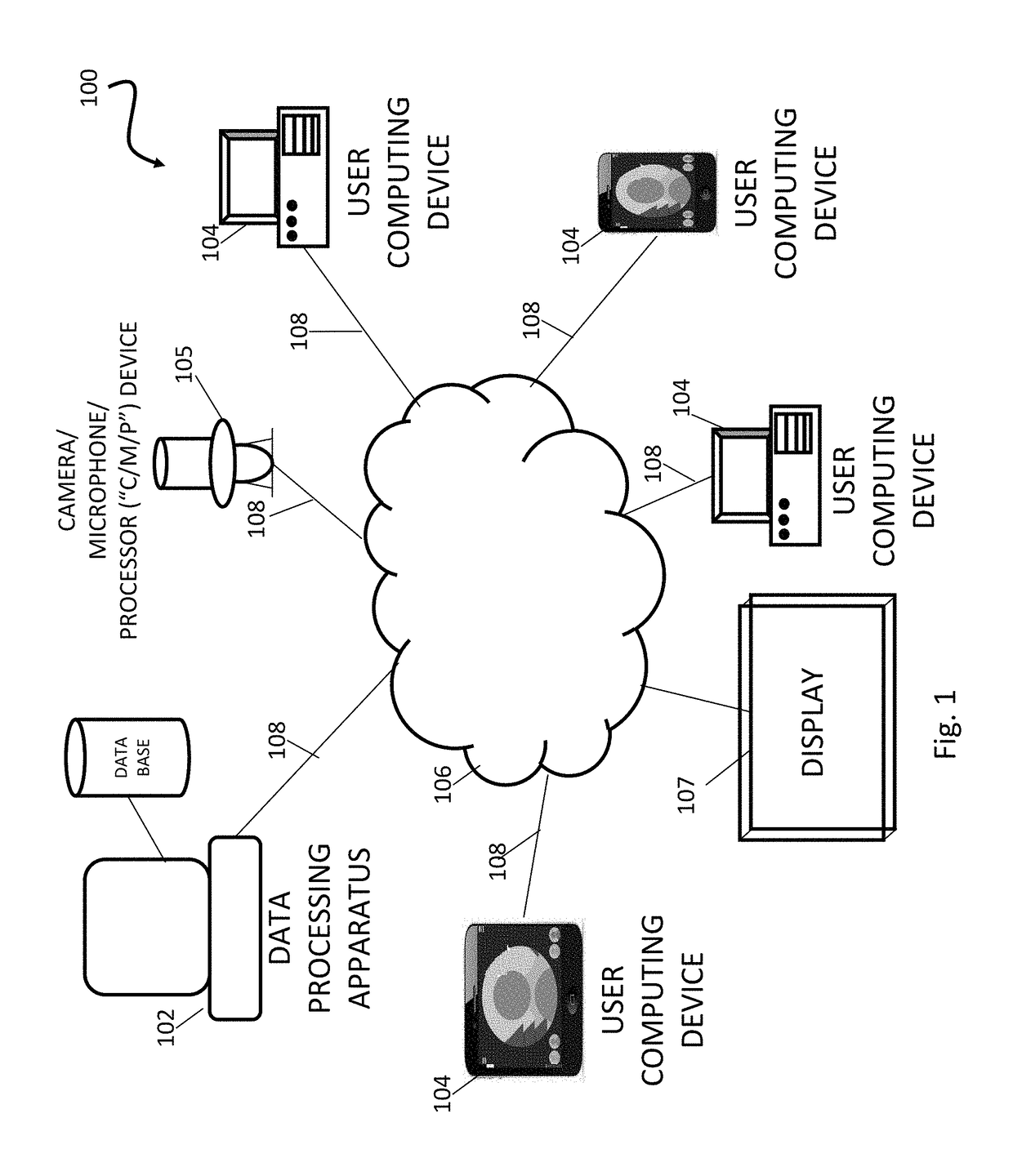

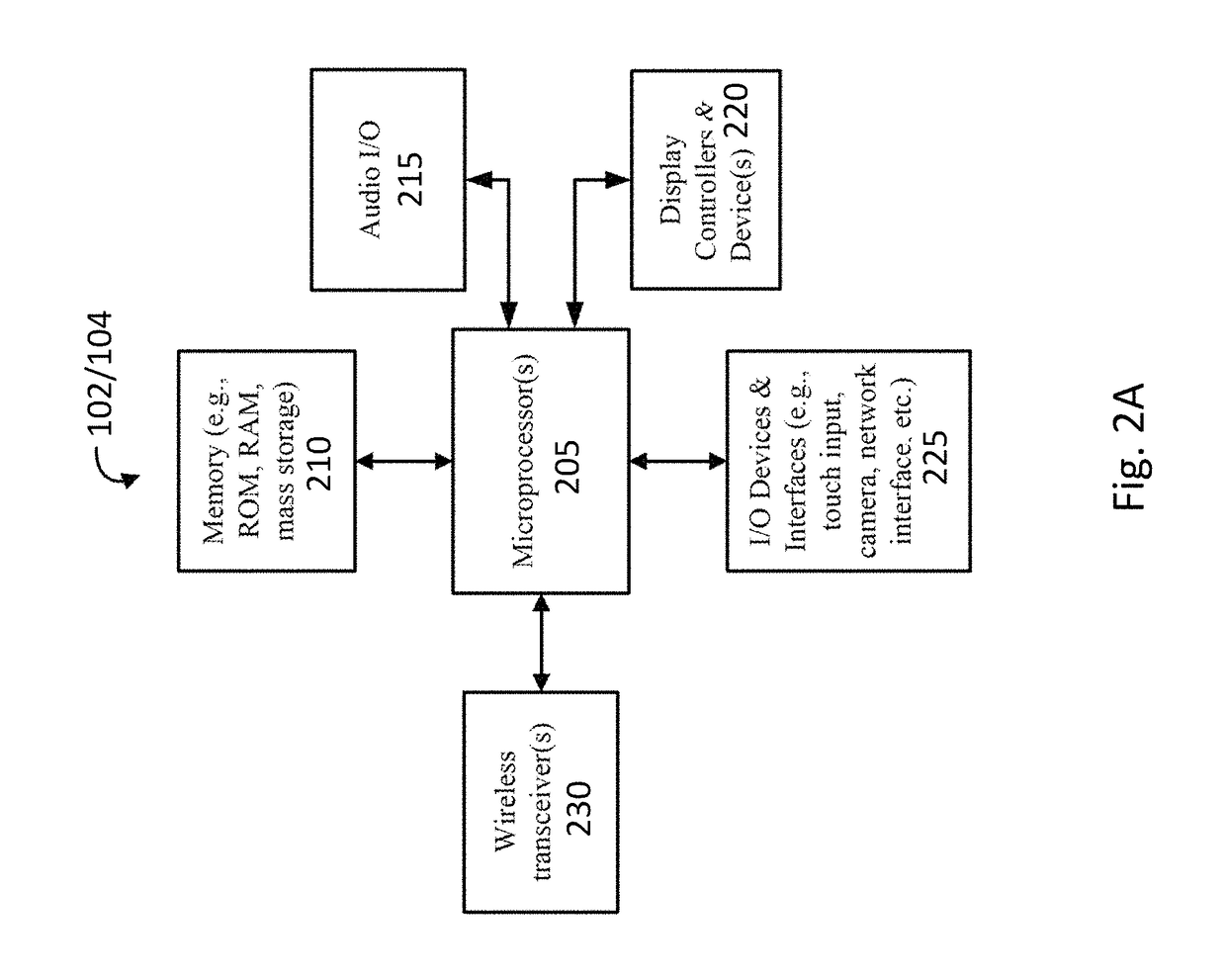

System for immersive telepresence

Active | US20160050394A1 | Television conference systems | Two-way working systems | Computer module | Human–computer interaction

Disclosed is a system and method for interactive telepresence that includes at least one data processing apparatus, at least one database, and an audio/visual capture device configured with at least one microphone and camera. A detection module is provided to detect which of a plurality of participants is speaking during a meeting, and a display module is configured to display the video that is generated.

Owner:THERE0

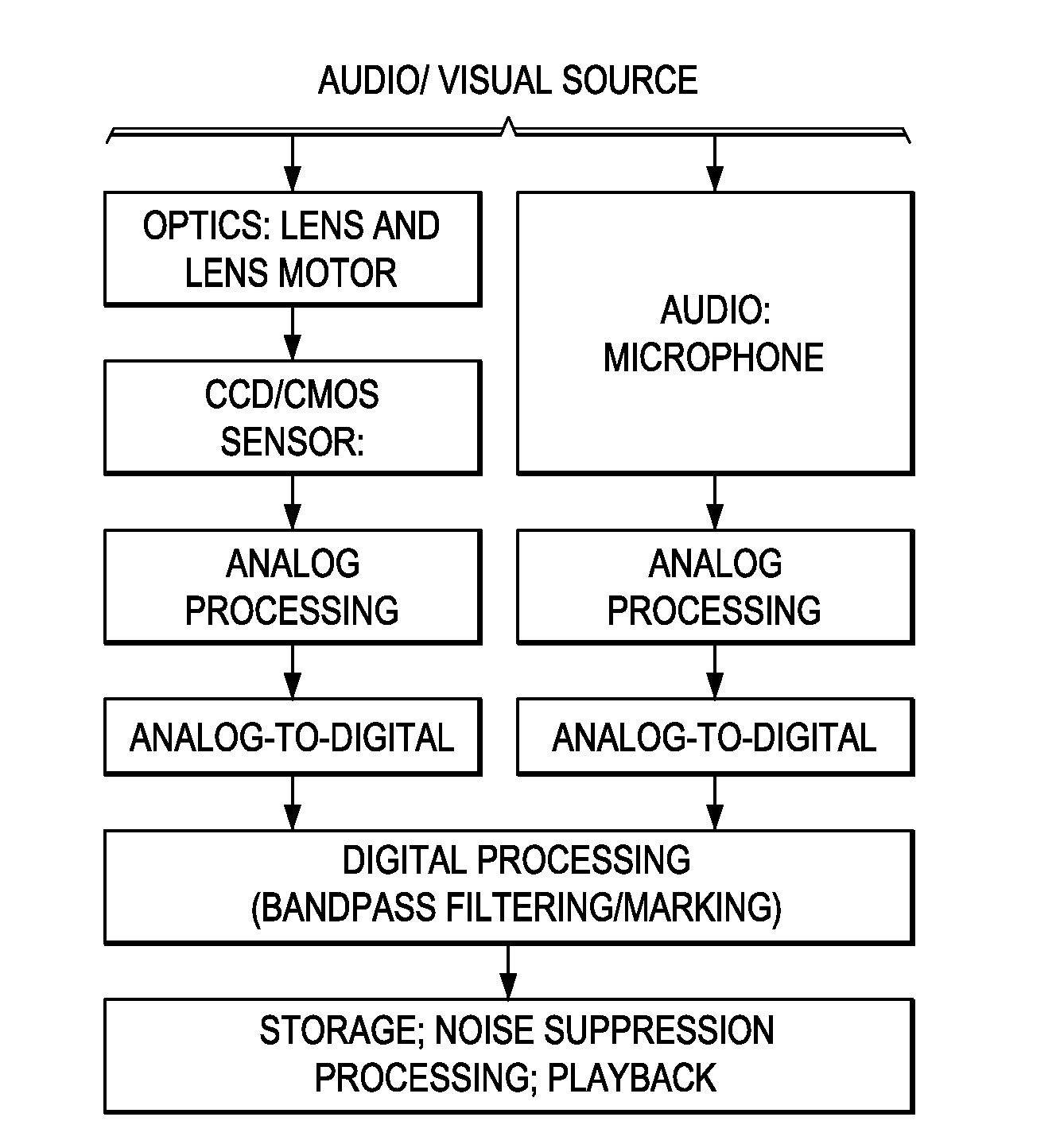

Method and apparatus for image processing

Inactive | US20080309786A1 | Improve speech intelligibility | Increase usage | Television system details | Color television details | Camera lens | Imaging processing

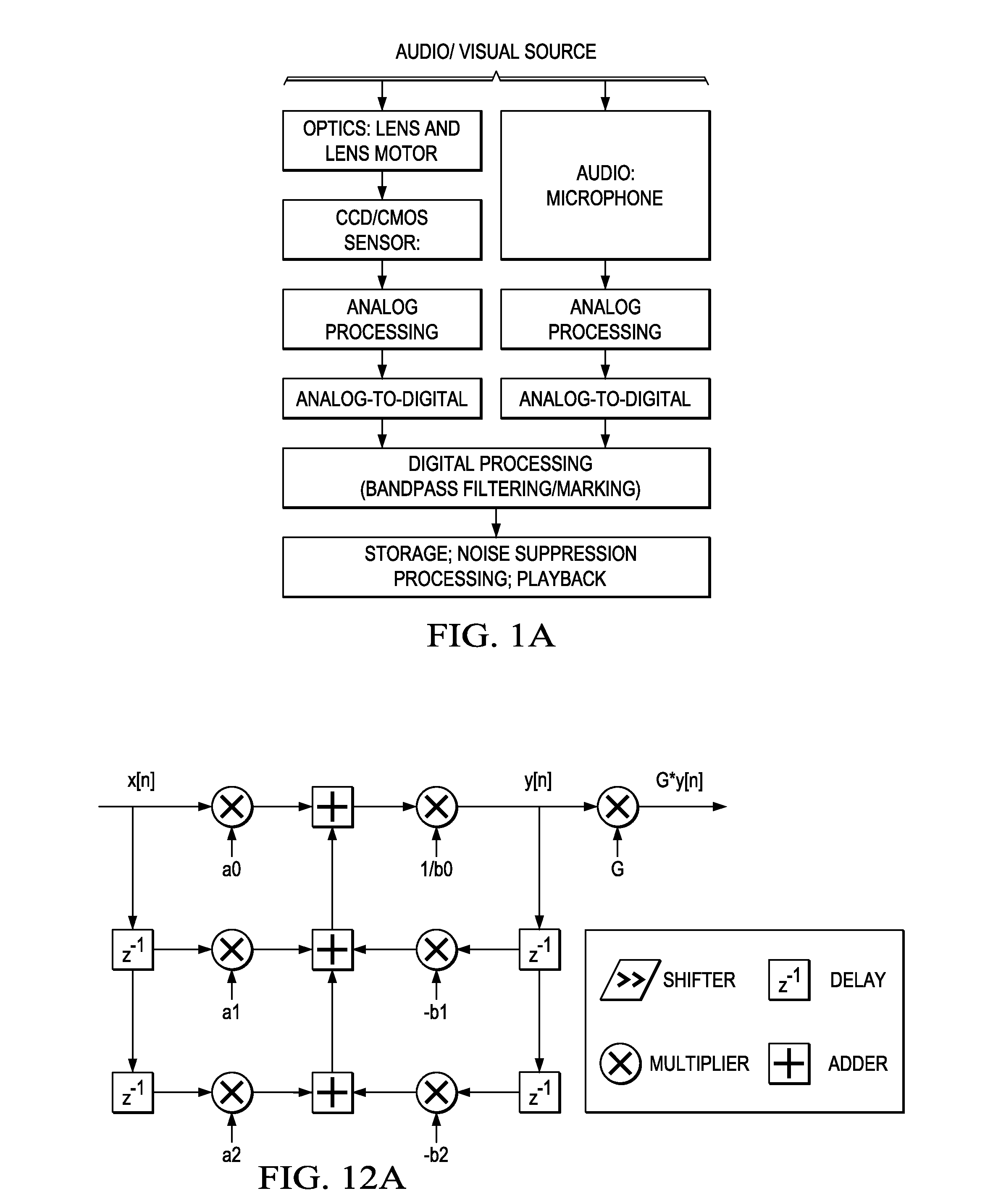

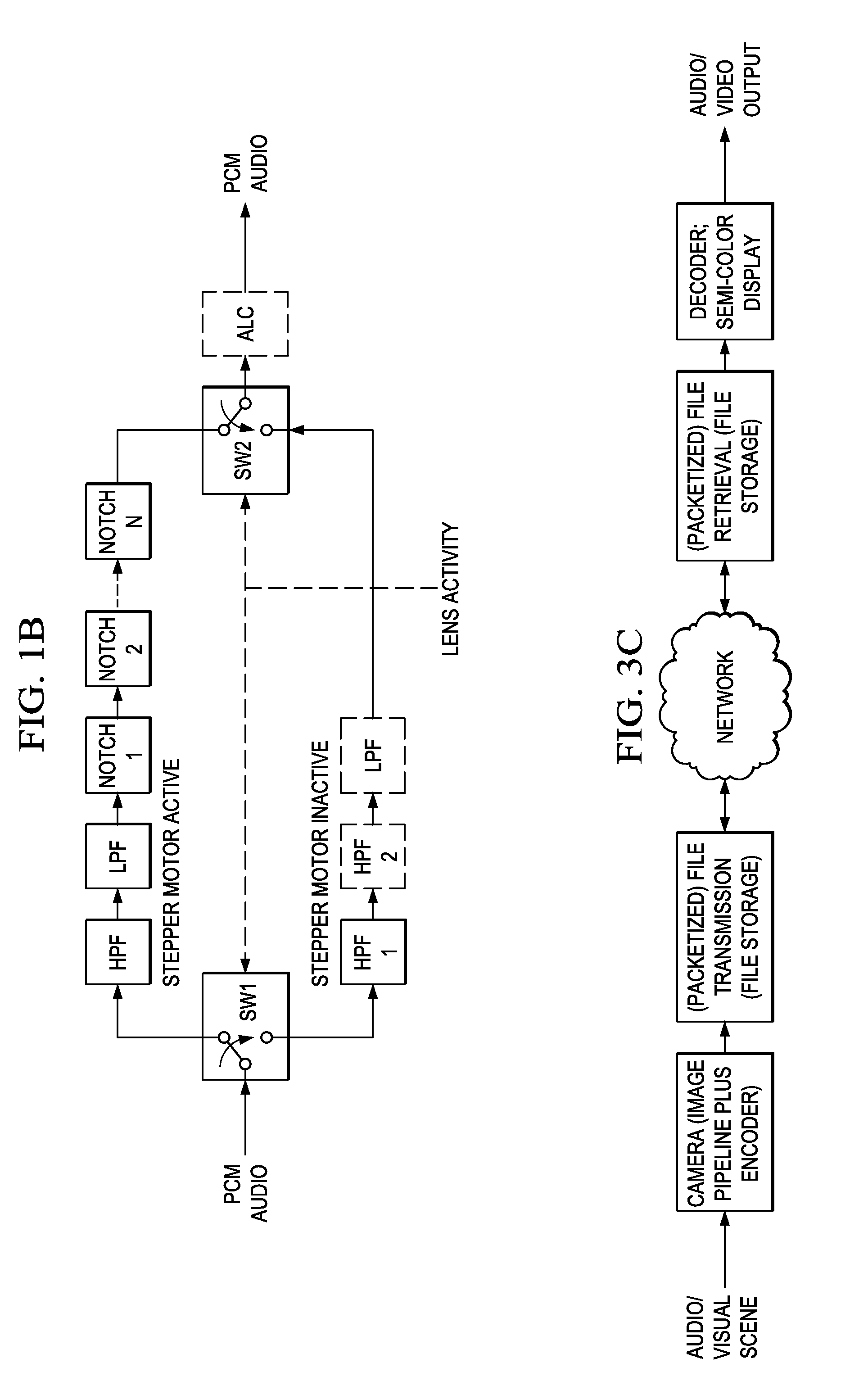

Digital camera audio / visual capture includes bandpass and notch filtering for the audio input during camera lens motor operation; the filtering may be active during capture or the audio segments may be marked for later noise suppression processing.

Owner:TEXAS INSTR INC

Embedded appliance for multimedia capture

Active | CN101536014A | Television system details | Character and pattern recognition | Digital image | Visual capture

A multimedia device includes input ports (210) dedicated to receiving a real-time media signal and a processor system (250) dedicated to capturing the real-time media signal. The processor system defines an embedded environment. The input ports (210) and the processor system (250) are integrated into the multimedia capture device. The input ports include an audio input port (202) and at least one of a visual-capture input port (204) or a digital-image input port (208).

Owner:ECHO 360 INC

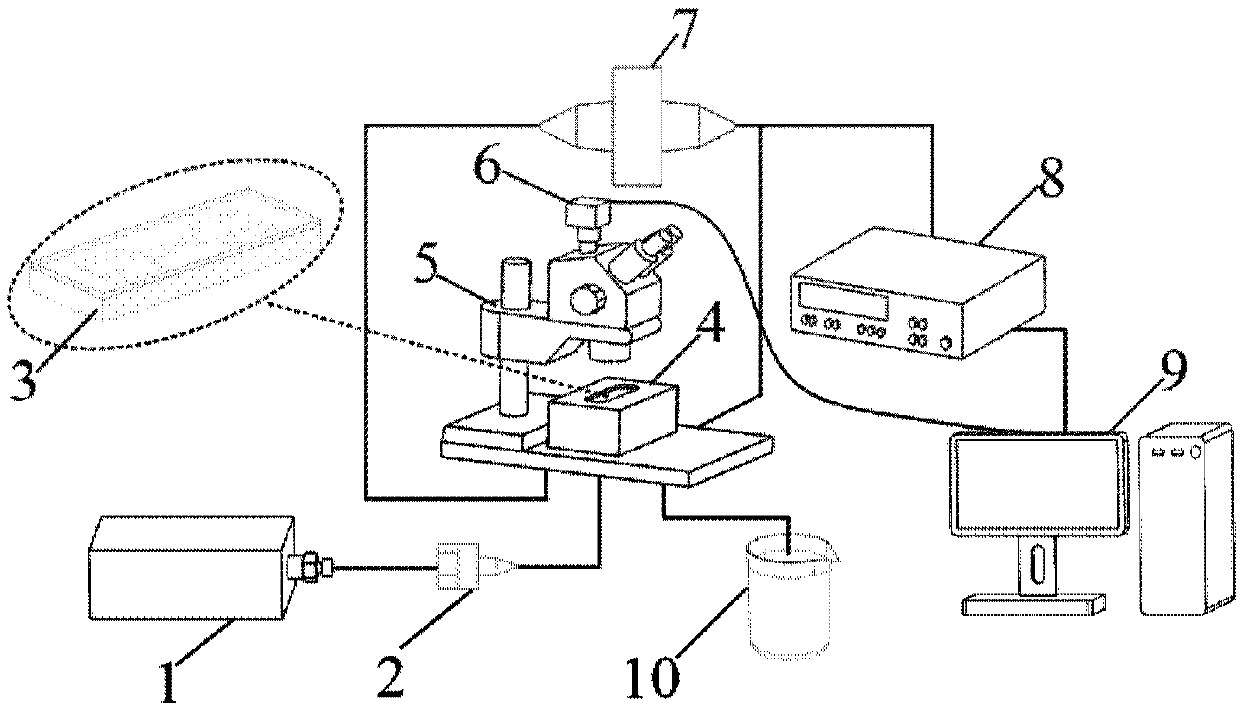

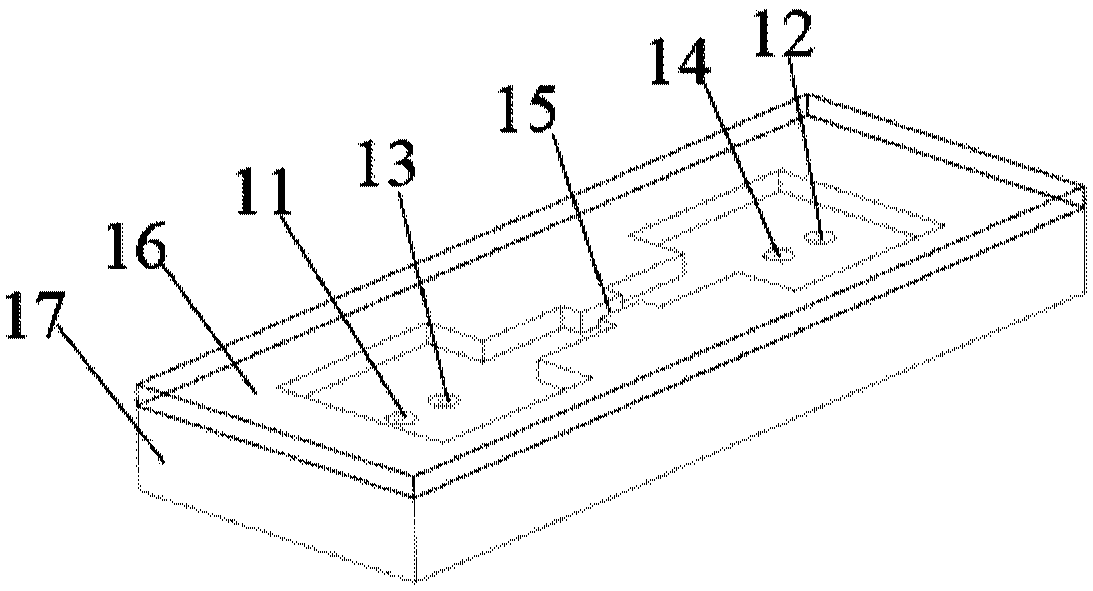

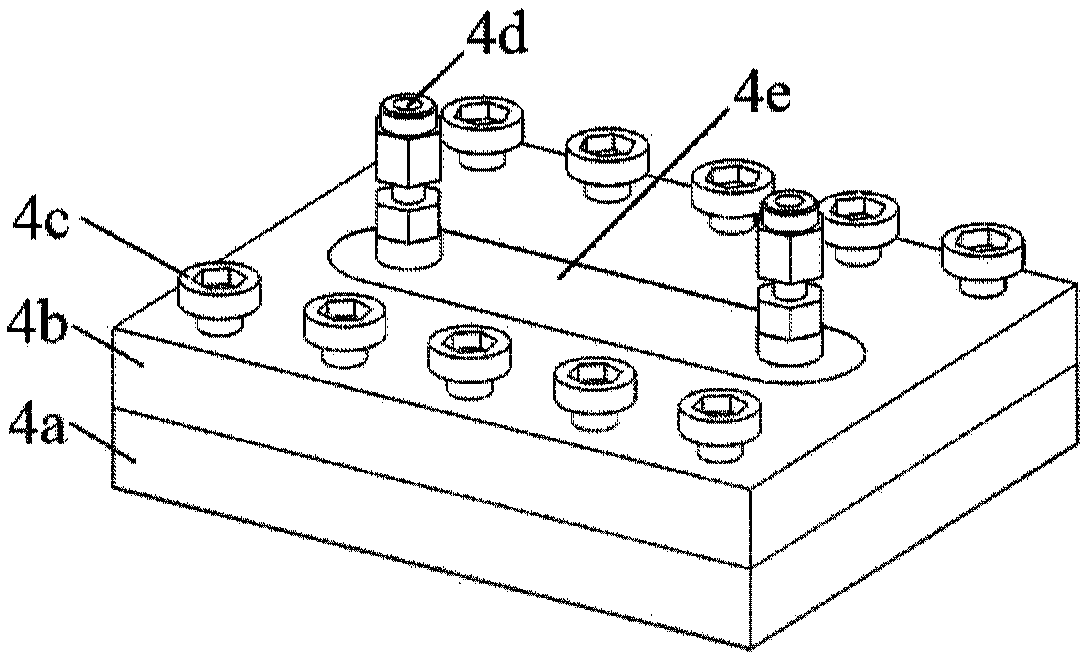

Measurement method for migration rule of pre-crosslinked gel particles in rock microscopic pore throat

The invention provides a measurement method for the migration rule of pre-crosslinked gel particles in a rock microscopic pore throat. The method includes: preparing a pre-crosslinked gel particle solution; building a visual measurement platform, and installing a rock microscopic pore throat model; utilizing a visual image acquisition and analysis system and a real-time pressure measurement and acquisition system respectively for real-time and visual capture and measurement of the morphologic change and pressure change of pre-crosslinked gel particles when passing the rock microscopic pore throat model; analyzing the changes to acquire microscopic seepage parameters of the pre-crosslinked gel particles when passing the rock microscopic pore throat model, and performing autoregression of a mathematical model corresponding to the morphologic change and pressure change of the particles; and analyzing the migration rule of the pre-crosslinked gel particles in the rock microscopic pore throat, thus realizing integrated measurement of deformation, blockage, breakage and other morphologic change characteristics and real-time pressure change situation involved when the pre-crosslinked gel particles pass through the rock microscopic pore throat.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

System for immersive telepresence

Active | US10057542B2 | Special service provision for substation | Television conference systems | Visual capture | Human–computer interaction

Owner:THERE0

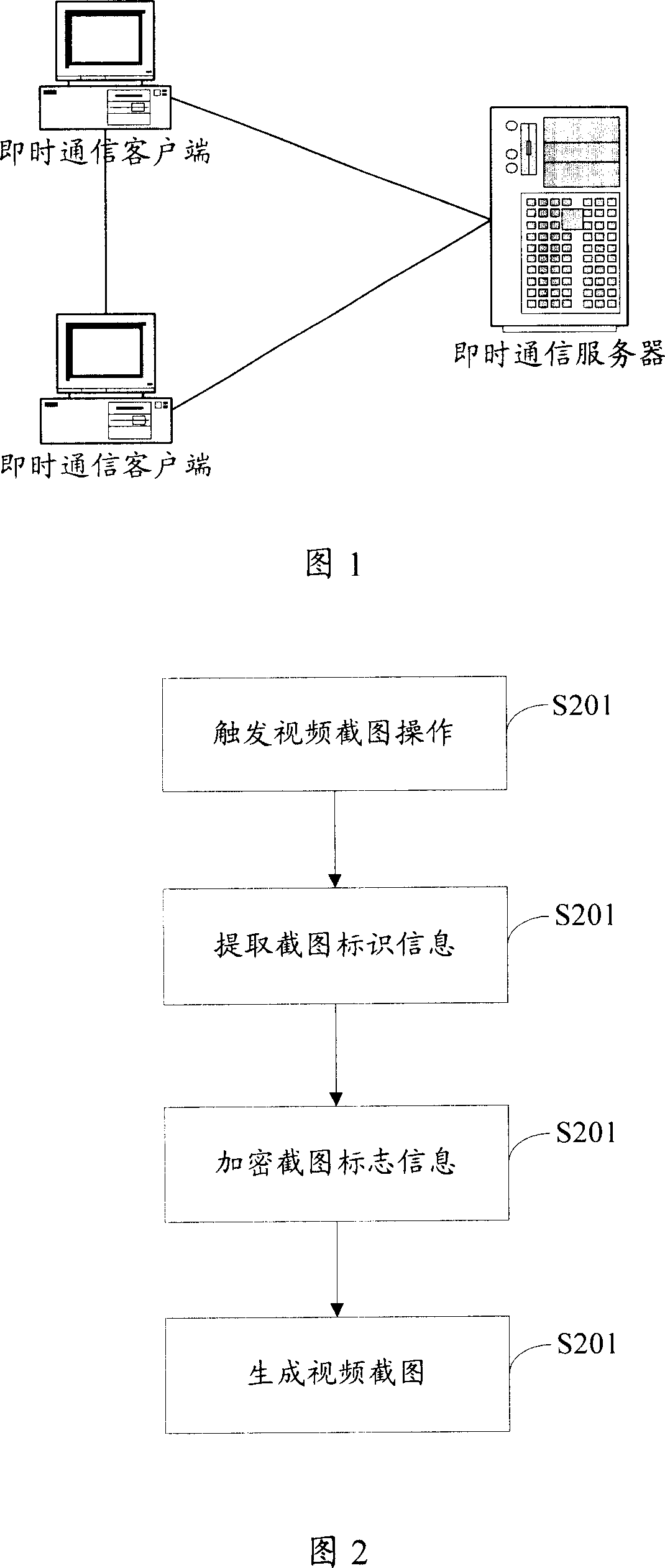

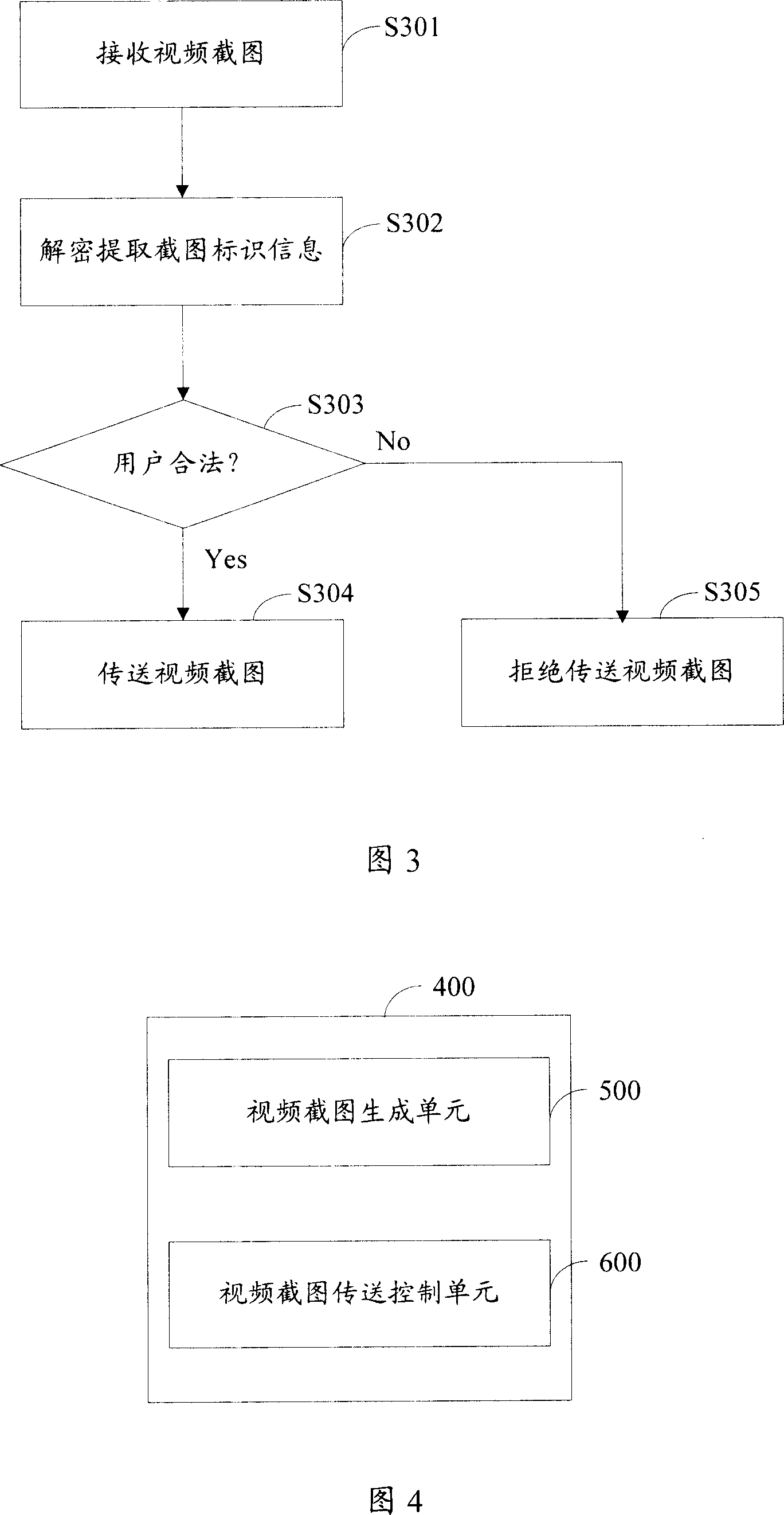

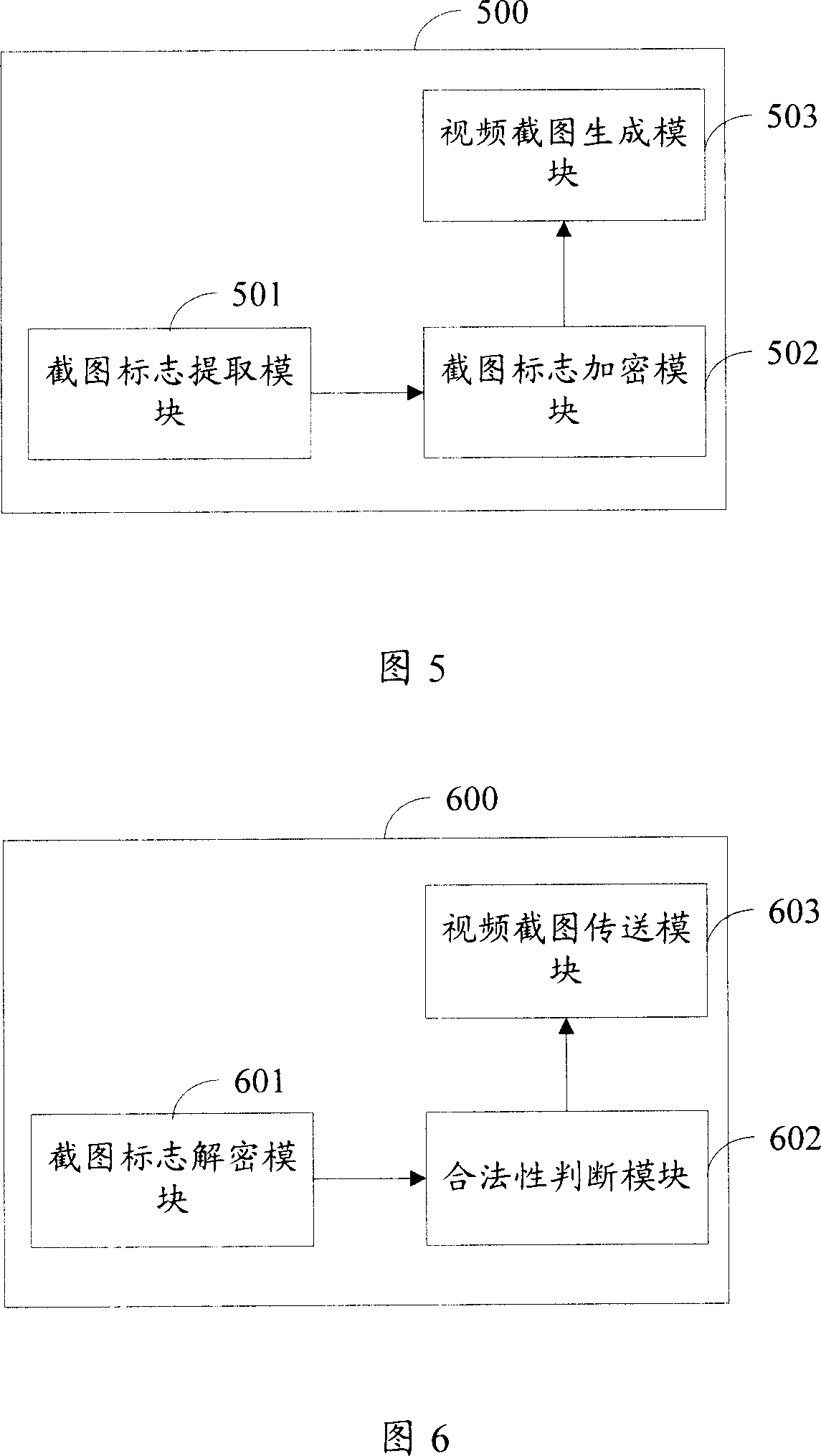

A method and system for control of the video captured figure in the instant communication

Active | CN1997141A | Prevent illegal transmission | Avoid disputes | Analogue secrecy/subscription systems | Two-way working systems | Pattern recognition | Information control

This invention provides a method and system for sending a visually captured image in instant communication, comprising the following steps: (a) adding capture label information to the visual section to generate a labeled visual section; (b) controlling delivery of the visual section through the section label information. By adding label information to the section, the invention controls its delivery through that information.

Owner:TENCENT TECH (SHENZHEN) CO LTD

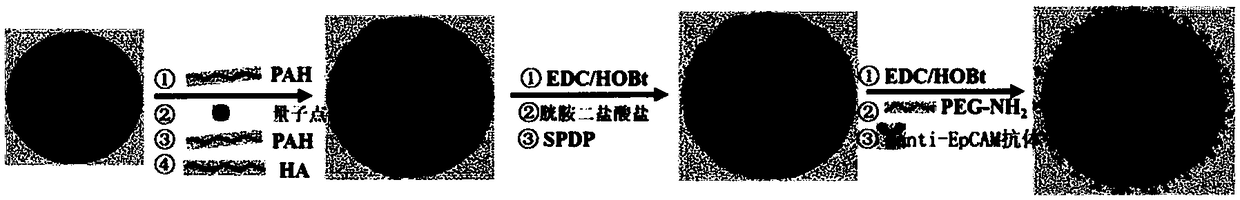

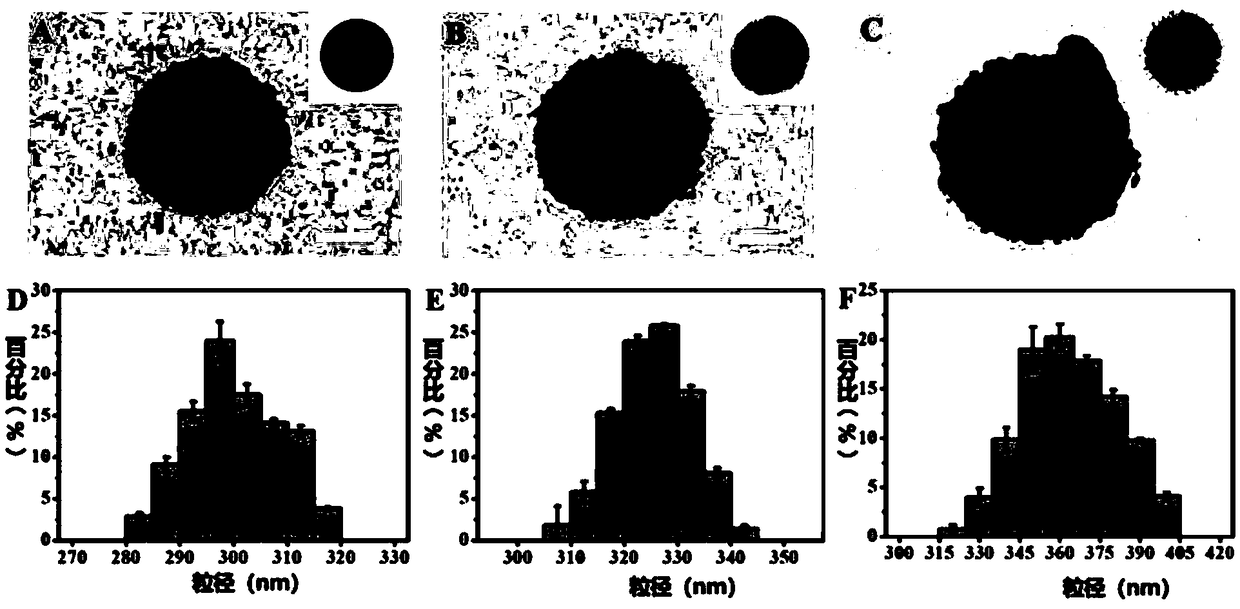

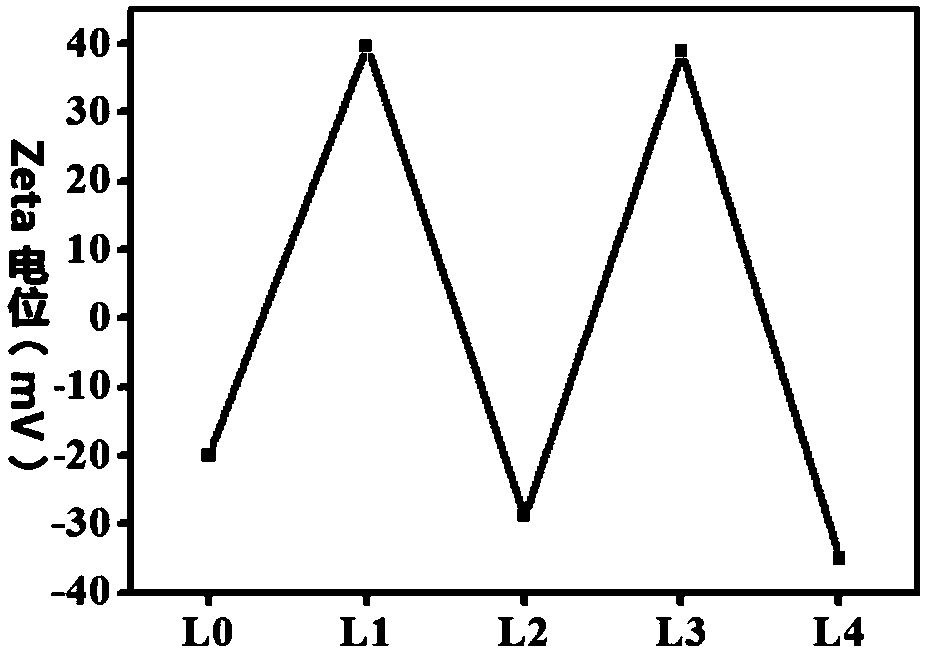

Immunomagnetic nano-particle for visual capture and release of circulating tumor cells, and preparation method thereof

Active | CN109100511A | High enrichment efficiency | Implement features | Inorganic material magnetism | Biological testing | Fluorescence | Polyethylene glycol

The invention discloses an immunomagnetic nano-particle with fluorescent properties and a preparation method and application thereof. The method comprises the following steps: using magnetic nano-particle Fe3O4 as a core, constructing a magnetic nano-particle with fluorescence properties by depositing a quantum dot layer on the surface of the magnetic nano-particle Fe3O4, and further introducing the anti-epithelial cell adhesion molecule antibody and the aminated polyethylene glycol molecule into the fluorescent magnetic nano-particle to obtain an immunomagnetic nano-particle. The immunomagnetic nano-particle enables rapid specific capture and release of circulating tumor cells. In addition, the quantum dot-labeled magnetic nano-particle enables visualization of capture and release processes of circulating tumor cells.

Owner:SICHUAN UNIV

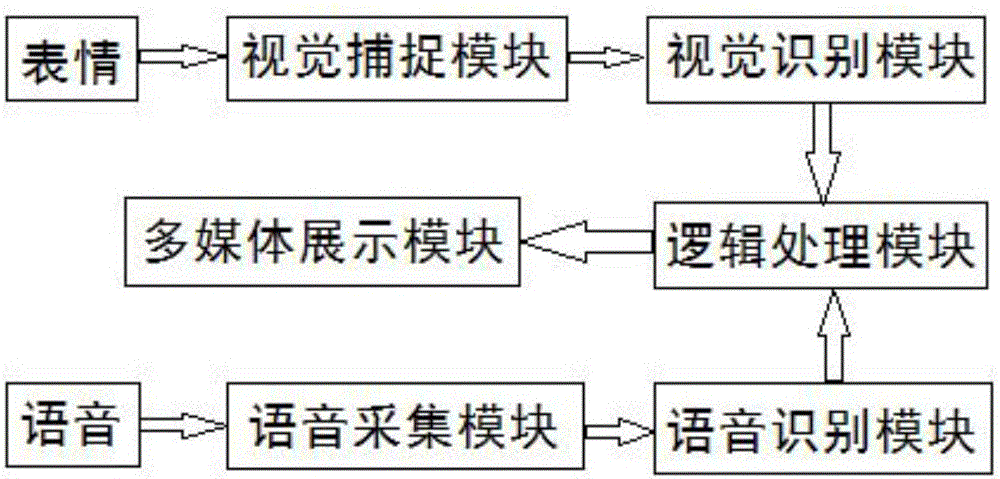

Game control system and method based on vision and speech recognition technology

The invention discloses a game control system based on the vision and speech recognition technology. The system comprises a vision capture module, a vision recognition module, a voice acquisition module, a voice recognition module, a logical processing module and a multimedia display module. The invention further provides a game control method based on the vision and speech recognition technology. The method includes the following steps of game running, vision and speech recognition, text processing and multimedia display. According to the system and the method, the user can control a game by combining facial expression, movement and voice commands and can play a mobile game and enjoy the happiness brought by the game at any time and any place; the user can also operate or control the game through facial expression, the interestingness of the game is improved, and illiterate old people or children can enjoy the entertainment of games; the method and the system can also be applied to the fields of education, interactive entertainment and the like, damage to the eyesight is reduced, and operation is convenient.

Owner:合肥泰壤信息科技有限公司

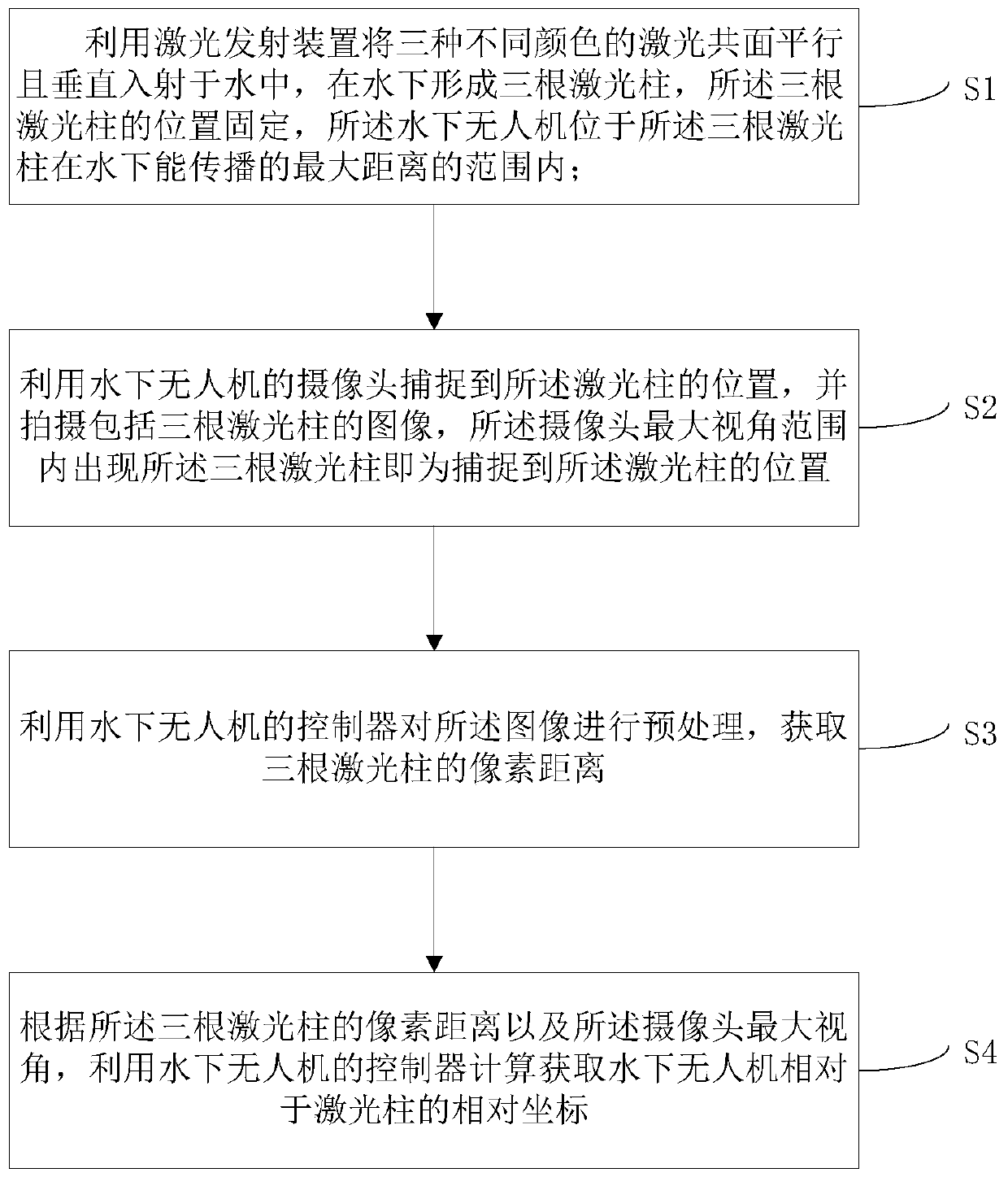

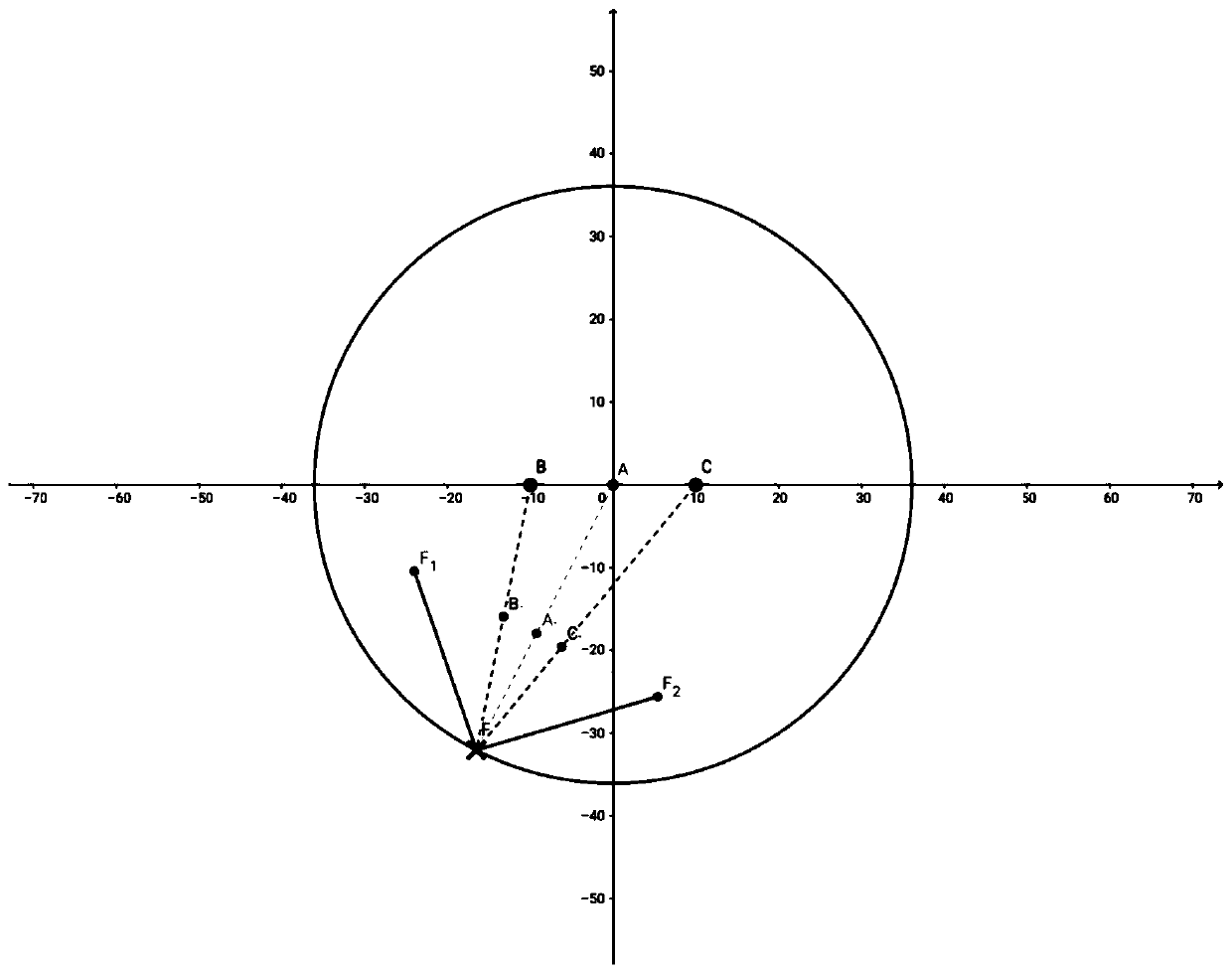

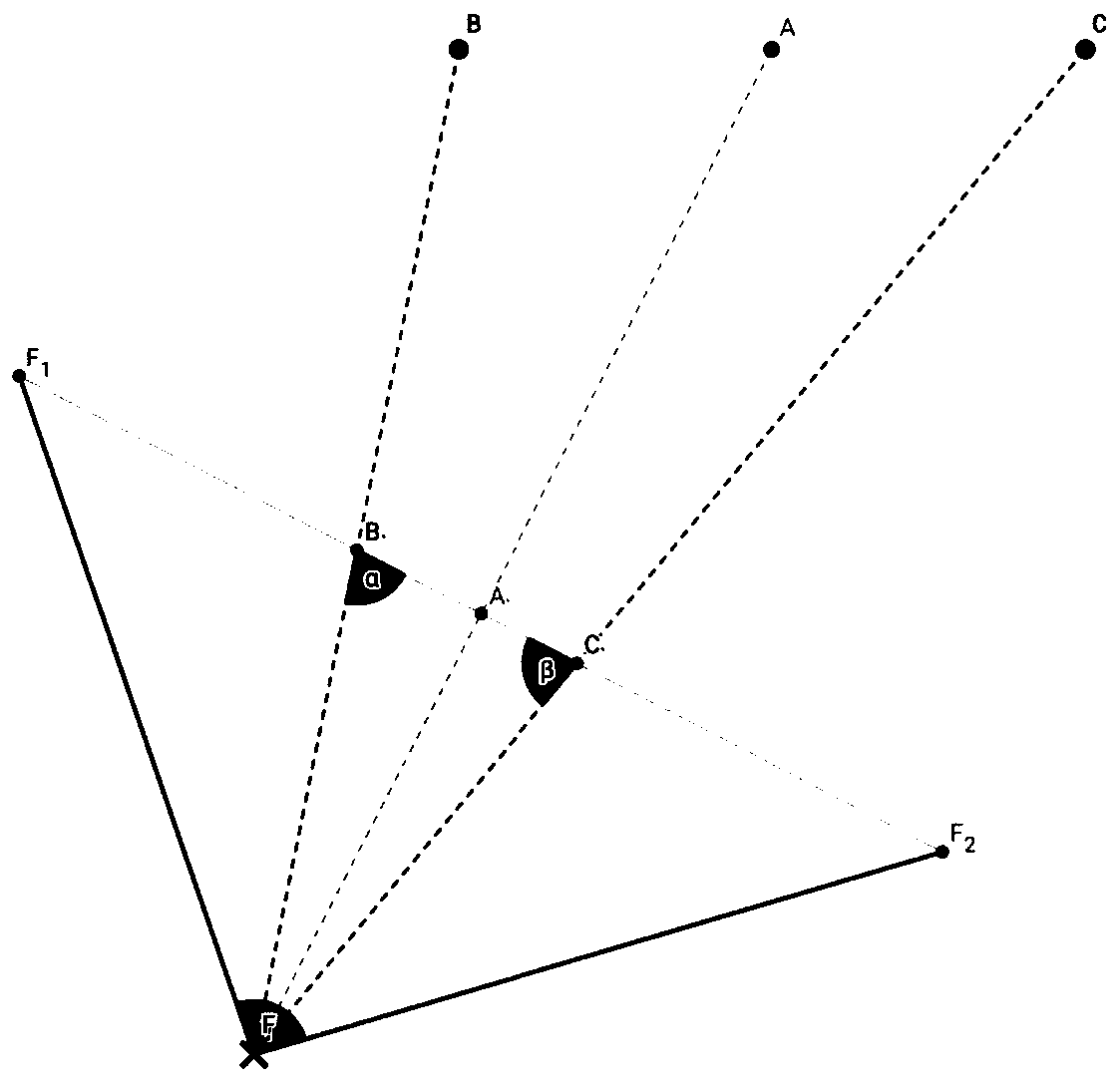

Underwater UAV positioning method and device based on three-color laser

Active | CN109975759A | Low cost | Precise positioning | Position fixation | Water resource assessment | Underwater | Turbid water

The invention discloses an underwater UAV positioning method and device based on three-color laser. The method comprises the following steps: S1, lasers of three different colors are directed into the water in a coplanar, parallel and vertical manner, forming three laser beams underwater; S2, an underwater UAV captures the positions of the laser beams and acquires an image containing the three beams; S3, the image is preprocessed to obtain the pixel distances of the three laser beams; S4, the coordinates of the underwater UAV relative to the laser beams are obtained from the pixel distances of the three laser beams and the photographic viewing angle of the image. By emitting three lasers of different colors into the water from above the surface, the method and device use the Tyndall effect to perform visual capture in turbid water, and calculate the position of the UAV relative to the lasers from the photographic viewing angle and the transverse pixel length, thereby achieving precise, low-cost positioning of the UAV.

Owner:GUANGDONG UNIV OF TECH
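
One plausible reading of step S4 in the abstract above is a pinhole-camera calculation. The sketch below assumes an ideal, distortion-free camera looking squarely at the plane of the beams, a known physical spacing between adjacent beams, and no refraction correction; none of these assumptions is stated in the patent. The function names and parameters are hypothetical.

```python
import math


def focal_length_px(image_width_px, horizontal_fov_deg):
    """Focal length in pixels for an ideal pinhole camera with the given field of view."""
    return image_width_px / (2.0 * math.tan(math.radians(horizontal_fov_deg) / 2.0))


def range_and_offset(beam_columns_px, beam_spacing_m, image_width_px, horizontal_fov_deg):
    """Estimate the camera's distance to the beam plane and the middle beam's lateral offset.

    beam_columns_px -- pixel columns of the three detected beams (any order)
    beam_spacing_m  -- assumed known spacing between adjacent beams, in metres
    Returns (distance_m, lateral_m), where lateral_m is the middle beam's
    position relative to the optical axis (positive means right of centre).
    """
    left, middle, right = sorted(beam_columns_px)
    f = focal_length_px(image_width_px, horizontal_fov_deg)
    mean_gap_px = (right - left) / 2.0  # average pixel gap between adjacent beams
    # Similar triangles: gap_px / f = spacing_m / distance_m
    distance_m = f * beam_spacing_m / mean_gap_px
    lateral_m = (middle - image_width_px / 2.0) * distance_m / f
    return distance_m, lateral_m


if __name__ == "__main__":
    print(range_and_offset([400, 500, 600], 0.2, 1000, 90.0))
```

Using three beams rather than two gives two pixel gaps to average, which is one reason a multi-beam arrangement could make the estimate less sensitive to detection noise in a single beam.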

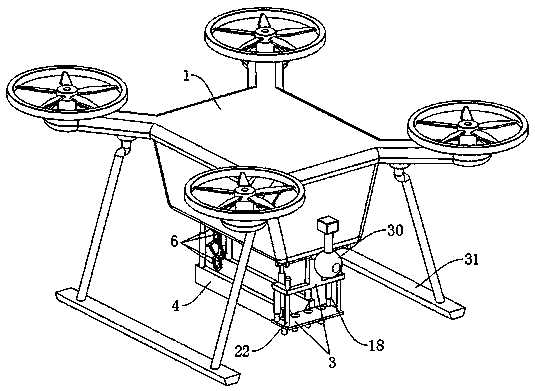

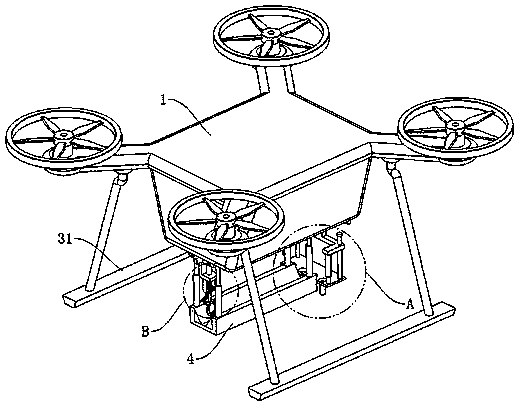

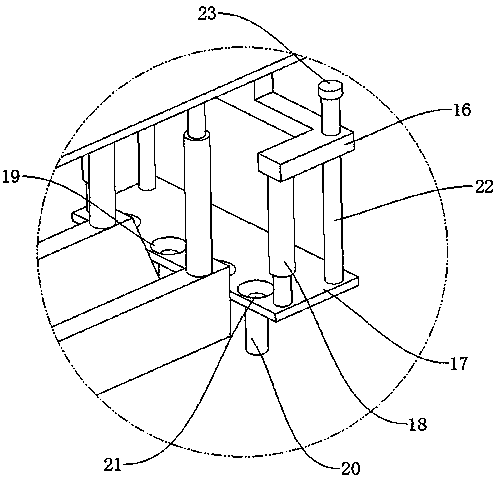

Visual capture based unmanned aerial vehicle transplanting system

Inactive | CN110431965A | Reduce destructive power | Improve work efficiency | Transplanting | Aircrafts | Uncrewed vehicle | Visual capture

The invention relates to the technical field of unmanned aerial vehicle applications, in particular to a visual capture based unmanned aerial vehicle transplanting system. The system comprises an engine body and a transplanting mechanism. The transplanting mechanism is slidably arranged at one end of the bottom of the engine body to receive seedlings for transplanting, and includes a first sliding table and a transplanting assembly. The system further includes a material channel and a clamping mechanism: the material channel is horizontally arranged at the other end of the base of the engine body to hold the seedlings to be transplanted, and the clamping mechanism, which includes a second sliding table and a clamping assembly, is arranged above the material channel at the other end of the base of the engine body to take seedlings from the material channel. Replacing the current mainstream manual transplanting mode with an automatic one improves working efficiency, saves time, and lowers labor intensity; compared with a vehicle transplanter in the prior art, the unmanned aerial vehicle transplanting system is also simpler in structure, smaller, less destructive to farmland, and lower in cost.

Owner:倪晋挺

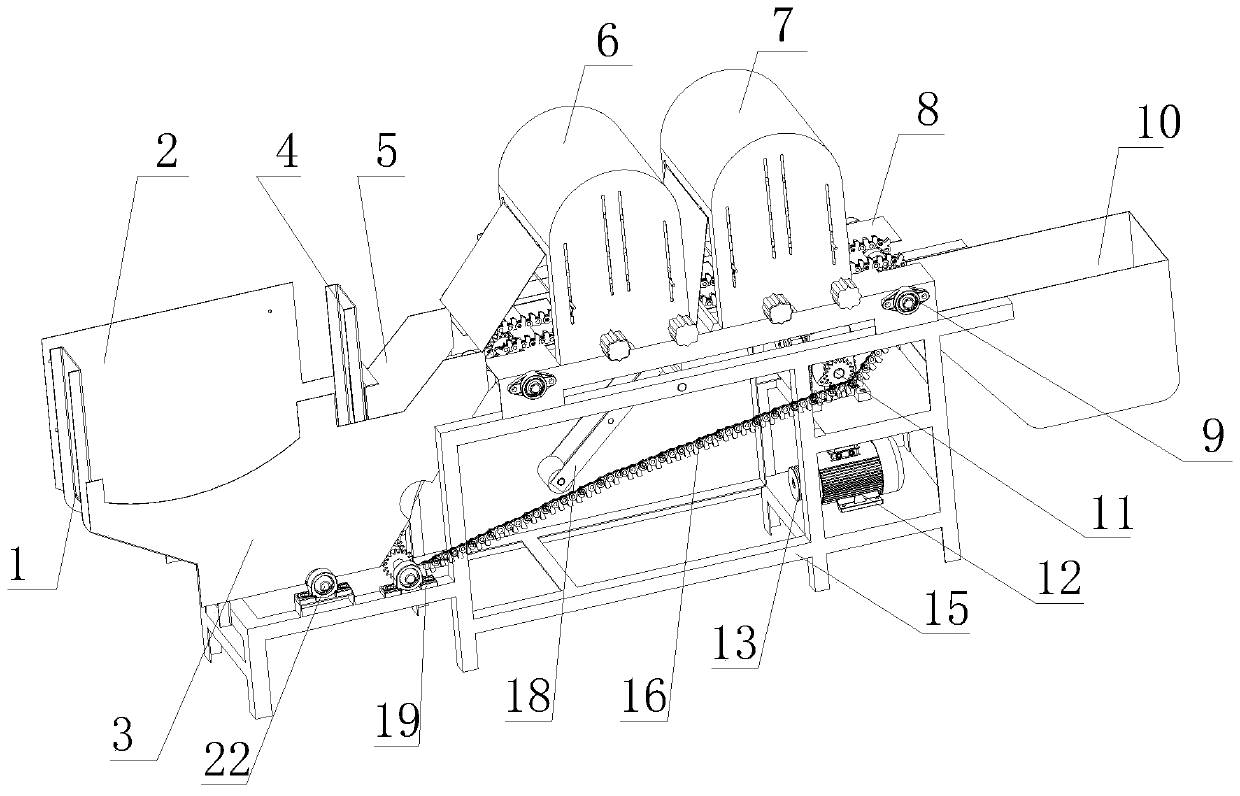

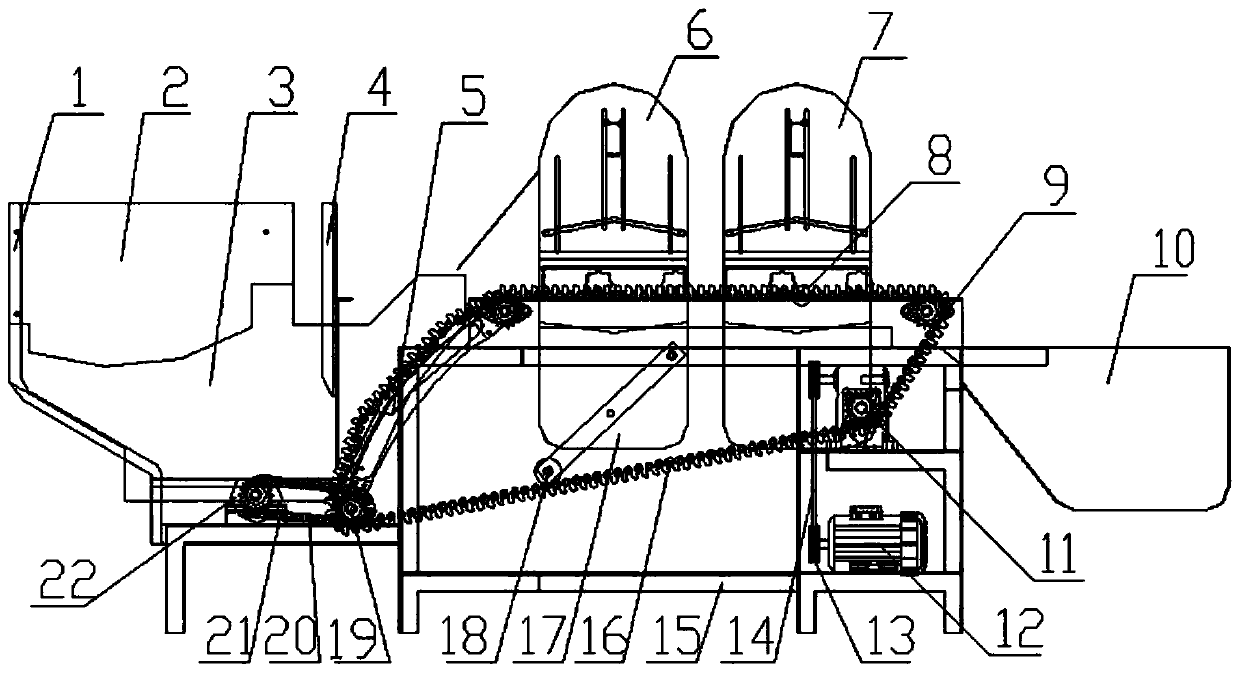

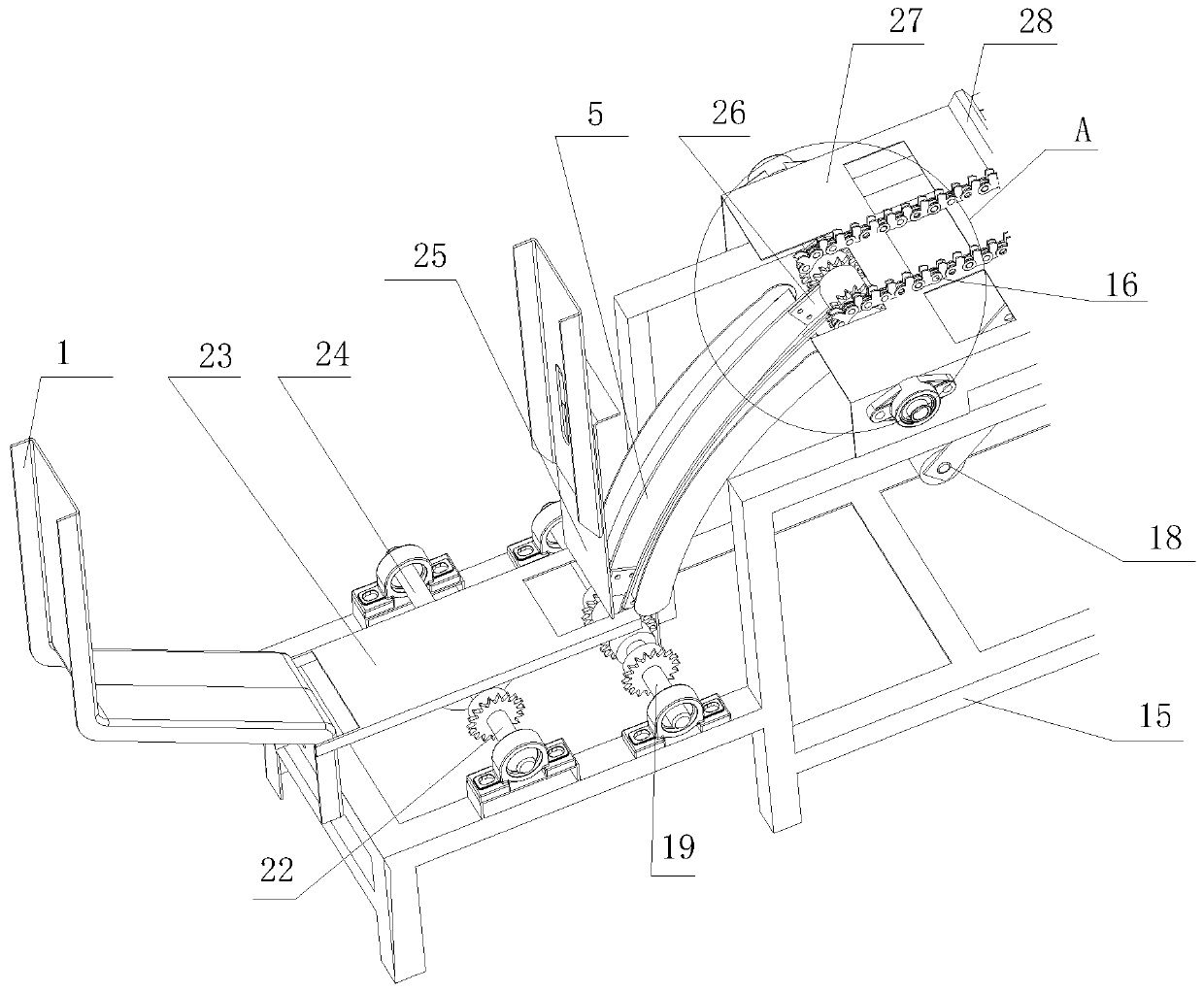

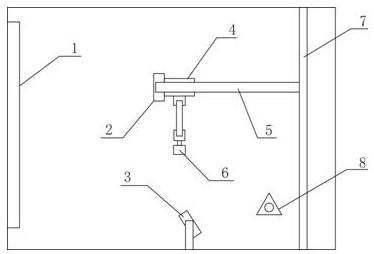

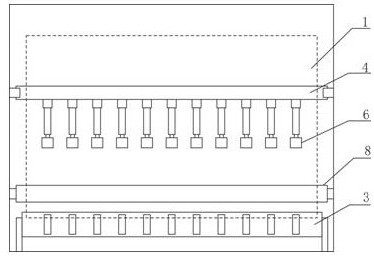

Chopstick sorting detecting device and algorithm

Active | CN111545483A | Improve sorting efficiency | Improve sorting quality | Character and pattern recognition | Sorting | Motor drive | Waste collection

The invention discloses a chopstick sorting device and algorithm. The device comprises a rack. A left side baffle, a side face baffle, a bottom baffle and a right side baffle are fixedly installed at the top of the rack, and a lifting frame is fixedly installed at one side of the right side baffle. In this device a camera replaces human eyes for recognition, measurement, positioning and similar functions, and manual work is replaced in removing chopsticks with stains, mildew or chipped edges. The visual detection system improves the detection speed and precision of the production line, greatly improves yield and quality, and avoids misjudgment caused by eye fatigue. The camera photographs the chopsticks, computer vision detects their defects and sends an instruction, a motor drives a transmission chain to lower the transmission speed, and an air injection pump is turned on to blow defective chopsticks into a waste collection box, greatly increasing sorting accuracy and improving sorting efficiency and quality.

Owner:JIANGXI AGRICULTURAL UNIVERSITY

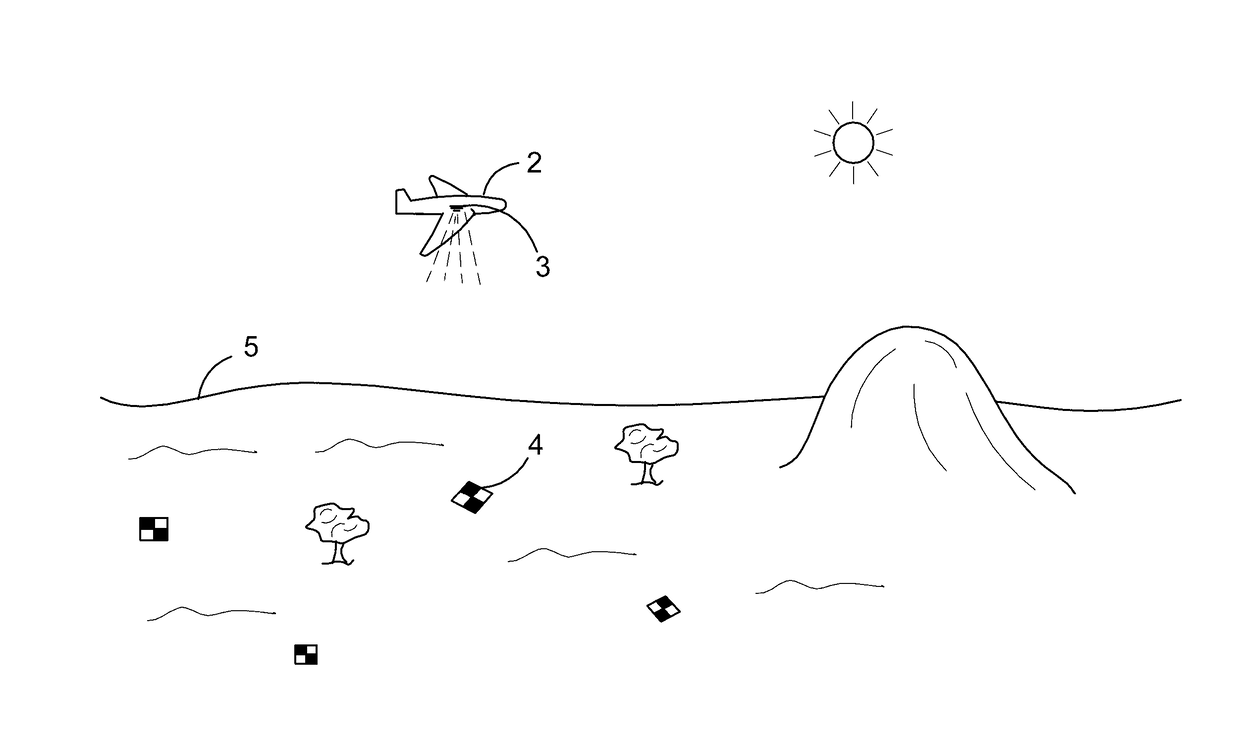

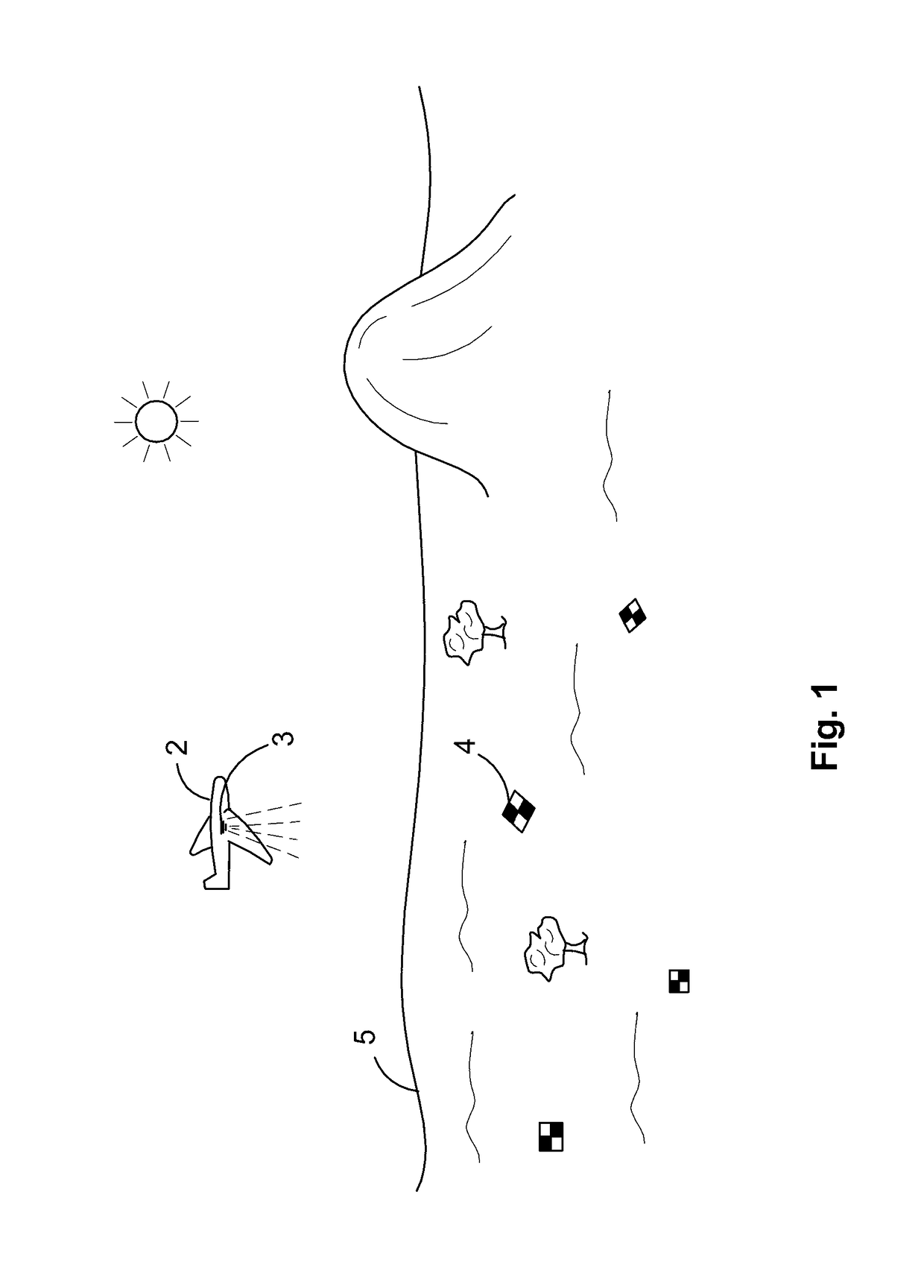

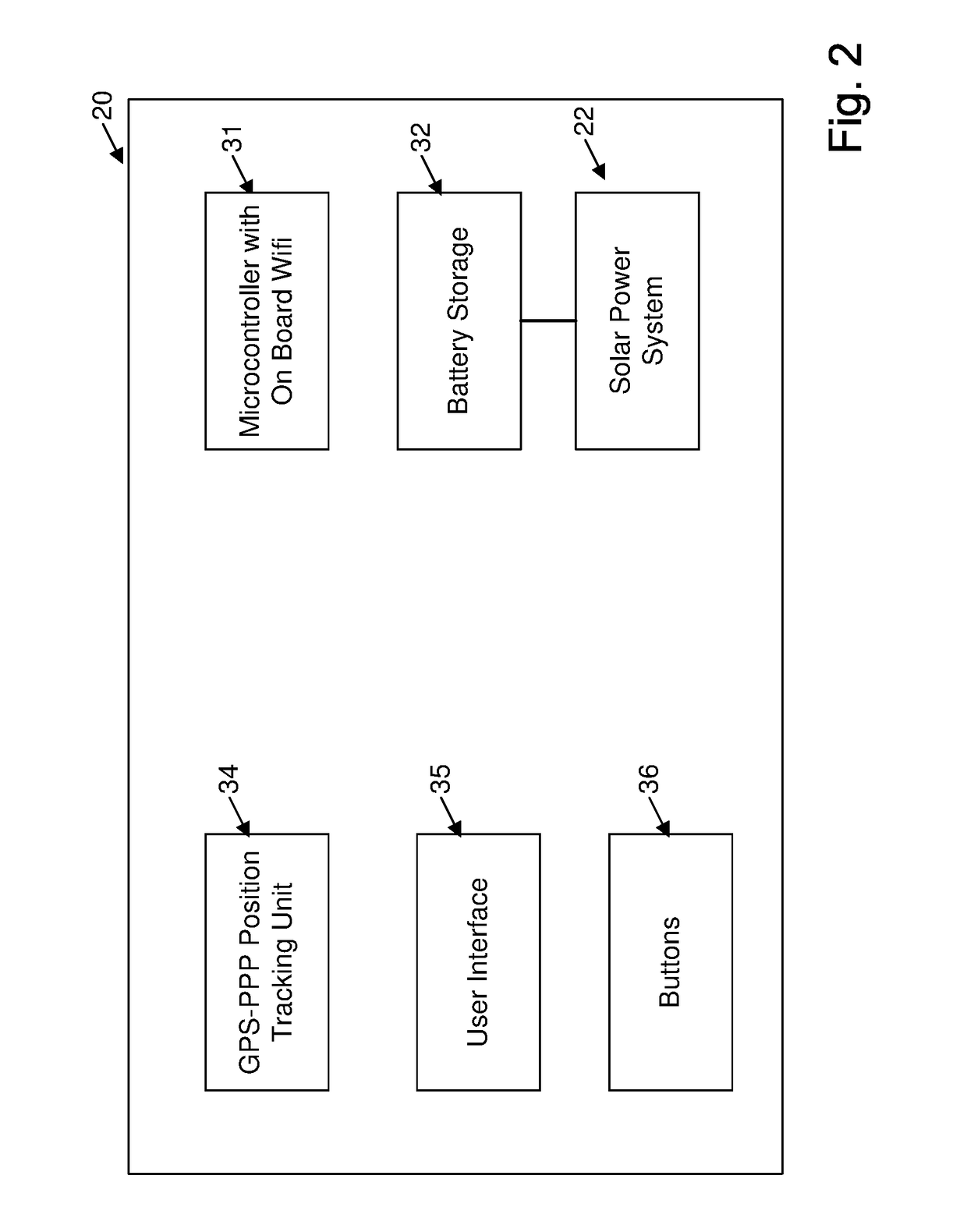

Integrated visual geo-referencing target unit and method of operation

Active | US20180239031A1 | Photogrammetry/videogrammetry | Navigation by terrestrial means | Microcontroller | Time range

A georeferencing target unit including: a generally planar top surface including a visual marker structure on the top surface, dimensioned to be observable at a distance by a remote visual capture device; an internal GPS tracking unit tracking the current position of the target unit; a microcontroller and storage means for storing GPS tracking data; a wireless network interconnection unit for interconnecting wirelessly with an external network for the downloading of stored GPS tracking data; a power supply for driving the GPS tracking unit, microcontroller, storage and wireless network interconnection unit; and a user interface including an activation mechanism for activating the internal GPS tracking unit to track the current position of the target unit over an extended time frame and store the tracked GPS tracking data in the storage means.

Owner:PROPELLER AEROBOTICS PTY LTD

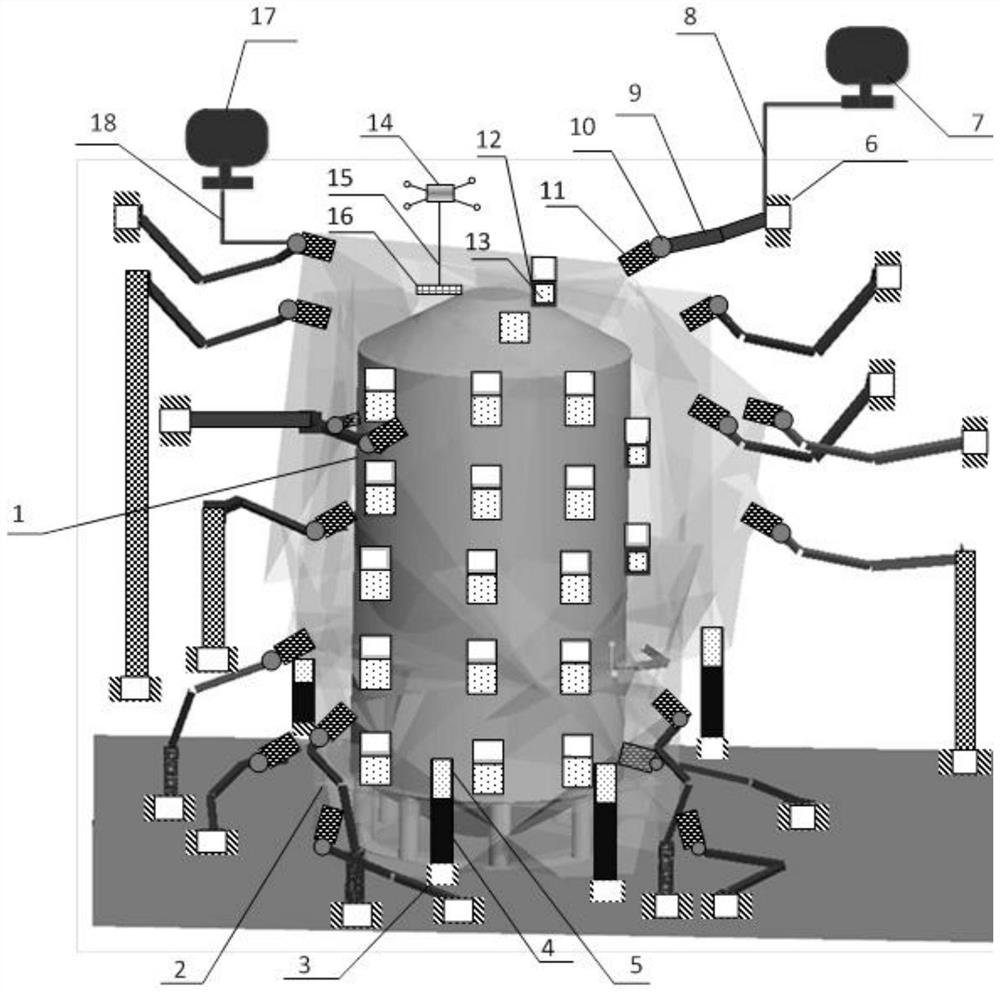

Large-view-field real-time deformation measurement system and method for spacecraft structure static test

Pending | CN113513999A | With dynamic real-time measurement | With real-time measurement | Using optical means | Strength properties | Classical mechanics | Uncrewed vehicle

The invention relates to a large-view-field real-time deformation measurement system and method for a spacecraft structure static test, and belongs to the field of structure deformation measurement in a large spacecraft structure static load test. The system comprises a test piece module, a calibration module, a multi-camera networking vision measurement module and a self-adaptive view field adjustment module; a test supporting tool is used for fixing a test piece and providing a loading boundary for the test piece, and the self-adaptive view field adjustment module is used for adjusting the measurement view field and the measurement position of the multi-camera networking system to achieve global visual capture of all measured points of measured pieces of various sizes and shapes. According to the measurement method, a fixed calibration target calibration system and a mobile unmanned aerial vehicle suspended calibration ruler calibration system are combined to calibrate a multi-camera measurement system. And high-precision measurement of the multi-camera networking measurement system is realized by adopting a method of carrying out real-time synchronous calibration and correction on the camera group before the test and before each measurement in the test.

Owner:BEIJING SATELLITE MFG FACTORY

Visual capture system for cable film aging test and test method thereof

Inactive | CN113267444A | Guaranteed to be normal | Avoid change | Weather/light/corrosion resistance | Material strength using tensile/compressive forces | Laser transmitter | Engineering

The invention relates to a visual capture system for cable film aging test and a test method thereof. The visual capture system comprises a test box body, and a sample rack and an optical measuring device arranged in the test box body. One side of the test box body is opened. The optical measuring device comprises prisms rotationally connected with the two sides of the test box body, a laser transmitter arranged opposite to the prisms and a photoelectric conversion screen arranged on the side, opposite to the opening, of the inner wall of the test box body, and the sample frame is arranged between the prisms and the photoelectric conversion screen. According to the invention, the length of the to-be-measured film is obtained in real time through the photoelectric conversion screen, the mechanical property of the to-be-tested film is analyzed and judged according to the length change of the to-be-tested film, the measurement precision is high by adopting an optical mode, the length of the film in the test box can be observed without frequently opening a box door of the test box, the change of the temperature in the box body is avoided, and the normal proceeding of an experiment is ensured.

Owner:NANYANG CABLE TIANJIN

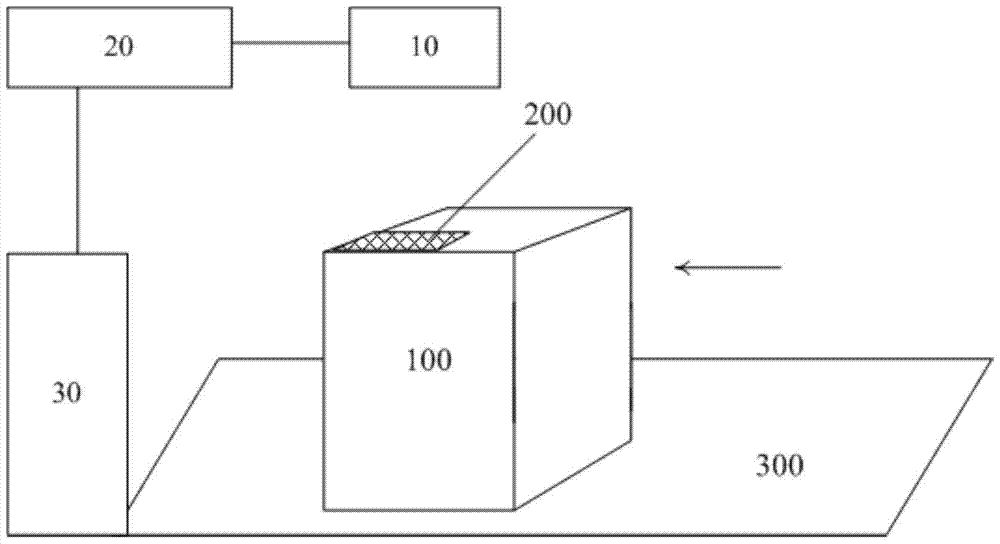

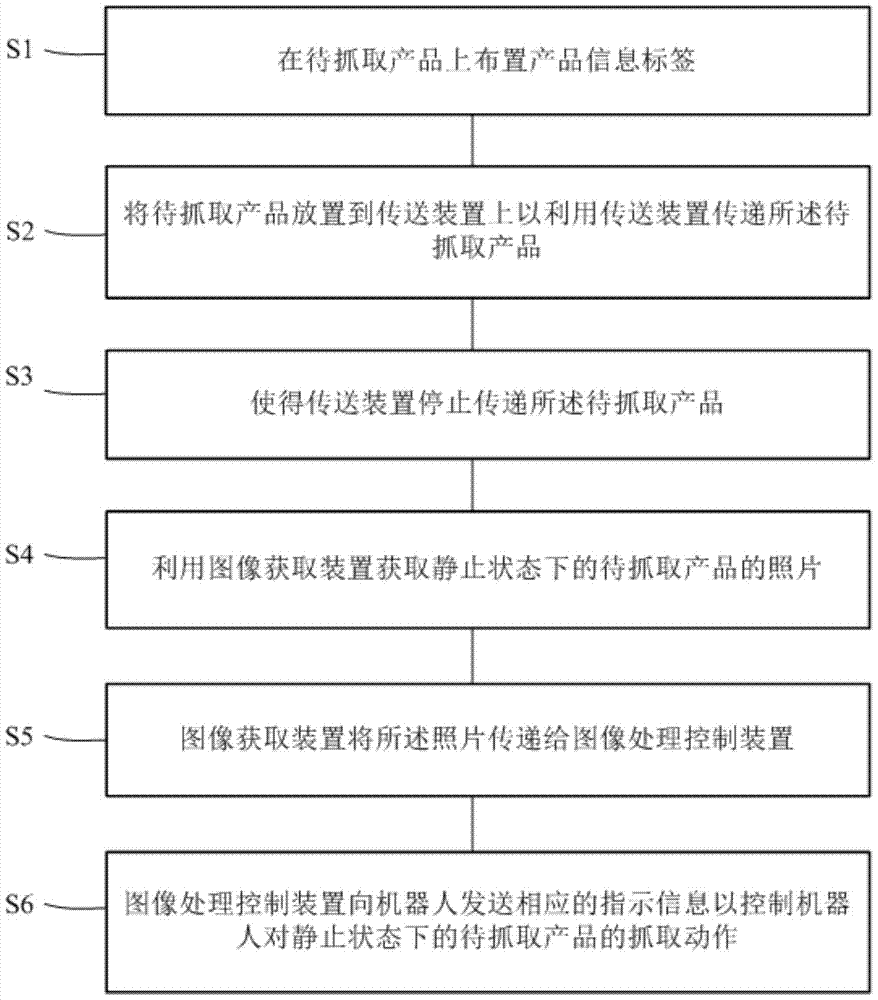

Robot Vision Grasping Method

Active | CN105459136B | Small amount of calculation | Rapid response | Manipulator | Imaging processing | Computer graphics (images)

Owner:FREESENSE IMAGE TECH

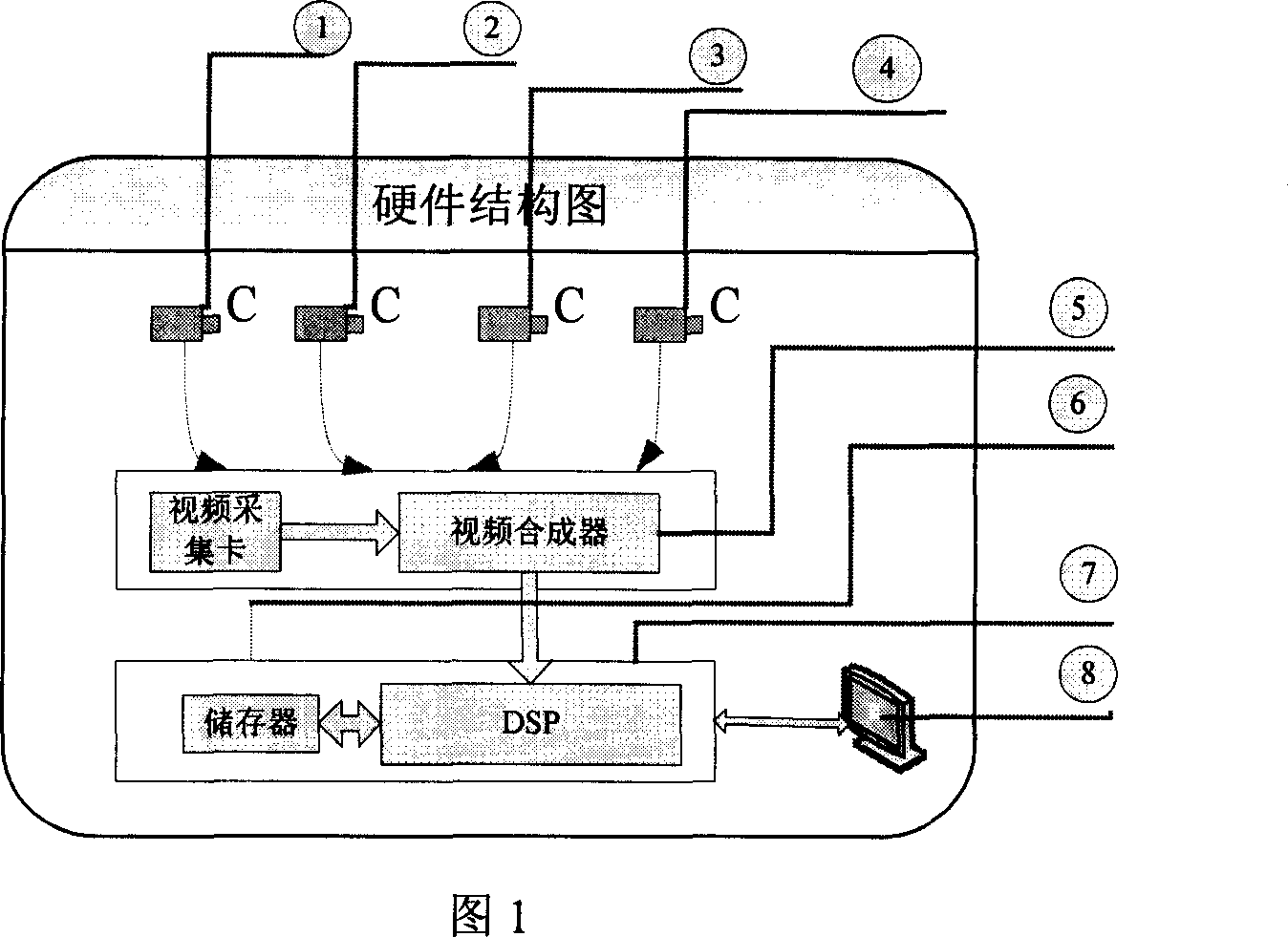

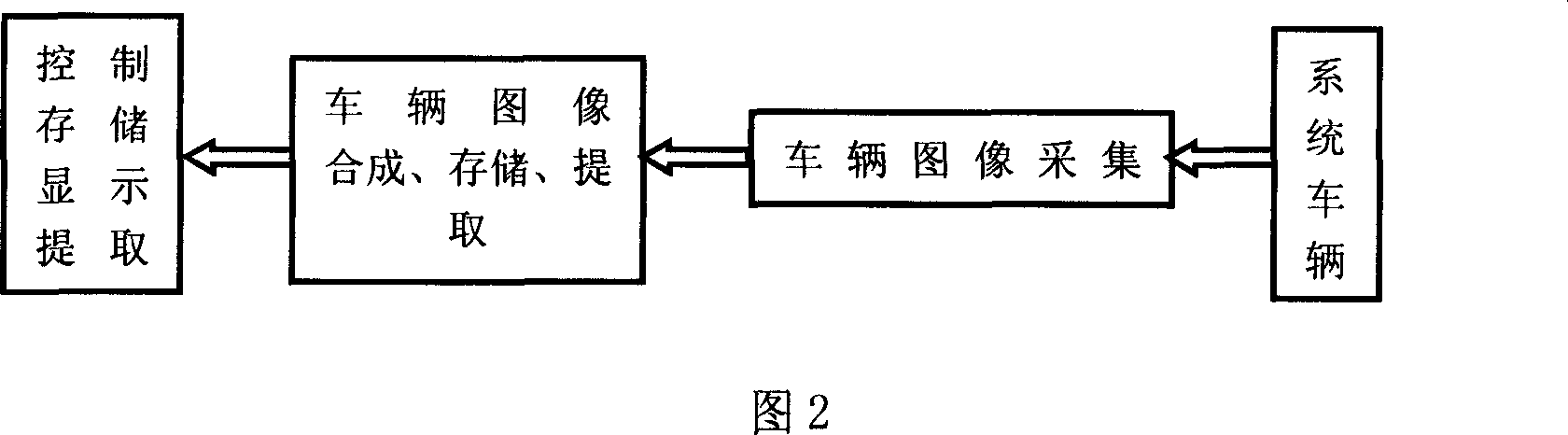

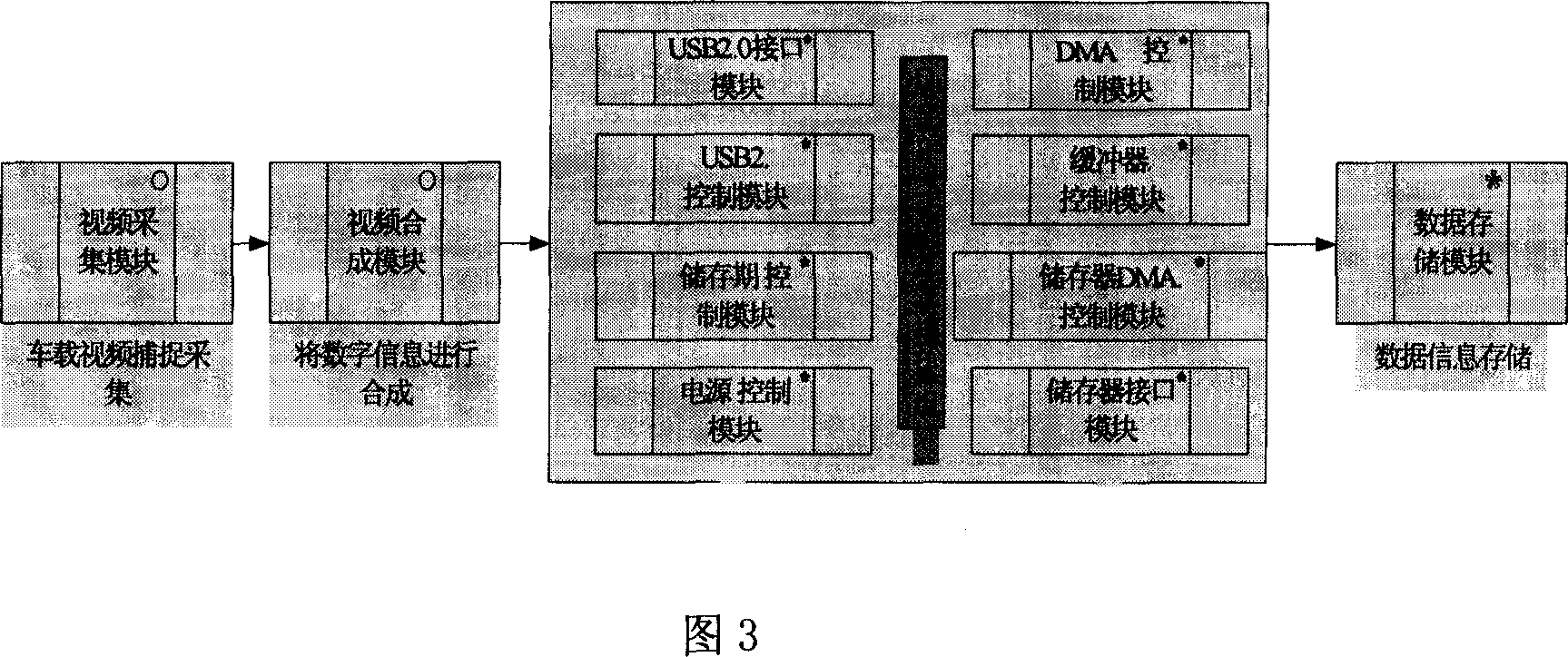

Vehicle mounted system for monitoring traffic accident

Inactive | CN101064042A | Real-time accident evidence | Intuitively provided | Registering/indicating working of vehicles | Closed circuit television systems | Visual integration | Integrator

This invention relates to a vehicle-mounted traffic accident monitoring system in the field of machine vision. The system comprises a hardware part and a software part. The hardware comprises camera heads one to four, a visual integrator, a digital memory, a DSP, and an optional display and data restore device, connected by wires and plugs. The software comprises visual capture, image collection, visual integration, data storage and query, display, and restore and extraction modules. The invention can collect, process, store, restore and extract visual data, realizing real-time vehicle-mounted monitoring of traffic accidents.

Owner:KUNMING TREASURE TECH

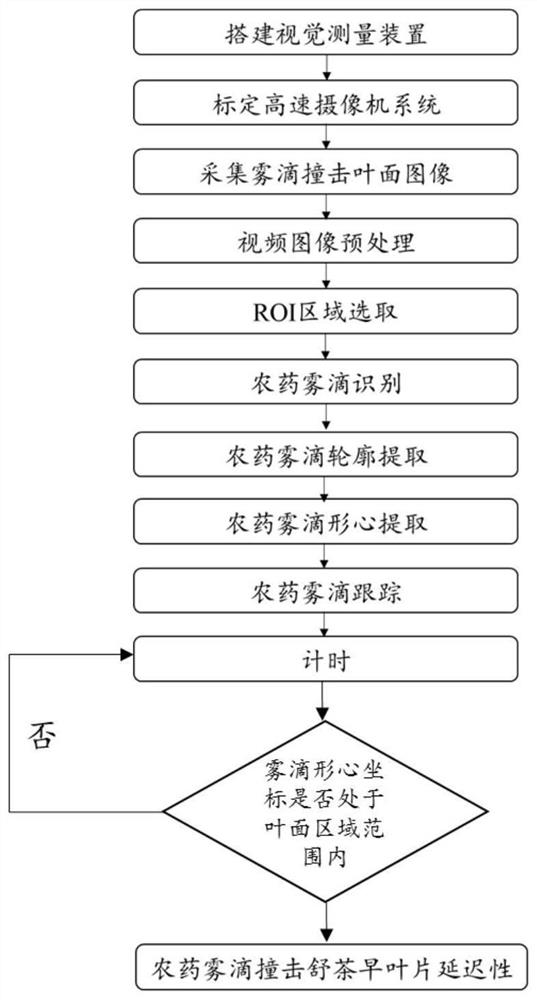

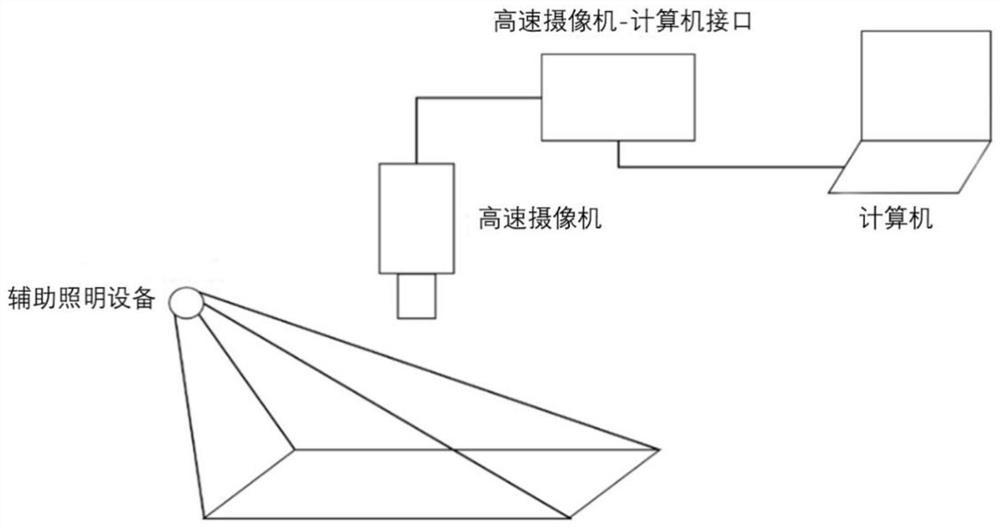

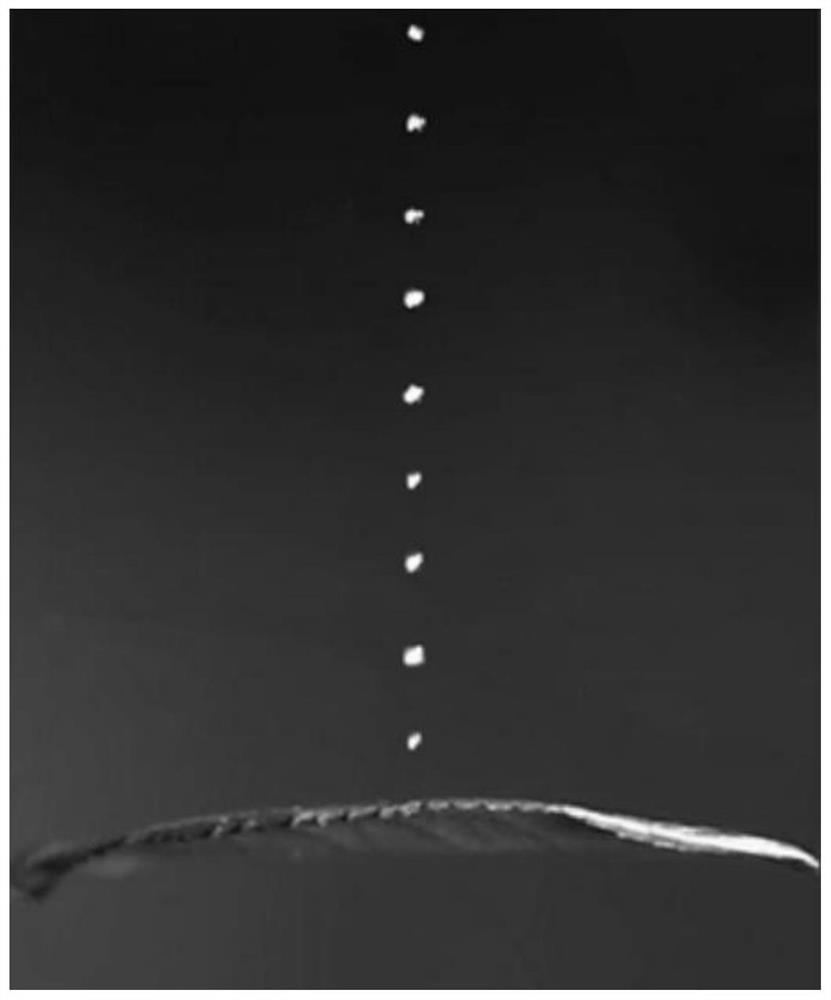

Method for measuring latency of multiple pesticide fog drops impacting leaf surfaces based on high-speed visual coupling contour feature extraction

Pending | CN114332629A | Optimizing Particle Spectrum Distribution | Improve deposition efficiency | Character and pattern recognition | Machine learning | Soil science | Feature extraction

The invention discloses a method for measuring the latency of multiple pesticide fog drops impacting a leaf surface based on high-speed visual coupling contour feature extraction. The method comprises the following steps: building a high-speed visual capture system, calibrating the high-speed camera system, collecting images of fog drops impacting the leaf surface, preprocessing the video images, selecting a region of interest (ROI), identifying the pesticide fog drops, extracting their contours, extracting their centroids, and tracking and timing the fog drops, so as to obtain the delay with which multiple pesticide fog drops impact the leaf surface. The invention provides a means of quantitative analysis for optimizing pesticide spraying parameters, especially for controlling the application amount, optimizing the droplet spectrum distribution and blending the pesticide liquid content; the measurement results have theoretical significance and application value for developing precise pesticide application technology and improving the deposition efficiency of tea tree pesticide spray.

Owner:ANHUI AGRICULTURAL UNIVERSITY
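
The centroid extraction and timing steps listed in the abstract above can be sketched in a simplified form. The example assumes one droplet per frame sequence, already segmented into a binary mask for each frame, and it defines the delay as the time from first detection until the centroid reaches a given leaf-surface row. That definition, the function names, and the parameters `leaf_row` and `fps` are assumptions for illustration and are not taken from the patent; tracking several droplets at once would additionally need connected-component labelling.

```python
def centroid(mask):
    """Return the (row, col) centroid of the nonzero pixels in a binary mask, or None."""
    count = row_sum = col_sum = 0
    for r, row in enumerate(mask):
        for c, value in enumerate(row):
            if value:
                count += 1
                row_sum += r
                col_sum += c
    if count == 0:
        return None
    return (row_sum / count, col_sum / count)


def impact_delay_s(masks, leaf_row, fps):
    """Time from first detection of the droplet until its centroid reaches `leaf_row`.

    masks    -- one binary mask (list of rows) per video frame, in capture order
    leaf_row -- image row of the leaf surface (assumed known, e.g. from calibration)
    fps      -- frame rate of the high-speed camera
    Returns the delay in seconds, or None if the droplet never reaches the leaf.
    """
    first_seen = None
    for frame_index, mask in enumerate(masks):
        centre = centroid(mask)
        if centre is None:
            continue  # droplet not visible in this frame
        if first_seen is None:
            first_seen = frame_index
        if centre[0] >= leaf_row:
            return (frame_index - first_seen) / fps
    return None


if __name__ == "__main__":
    frames = [
        [[0, 1, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0]],
        [[0, 0, 0], [0, 1, 0], [0, 0, 0], [0, 0, 0]],
        [[0, 0, 0], [0, 0, 0], [0, 1, 0], [0, 0, 0]],
        [[0, 0, 0], [0, 0, 0], [0, 0, 0], [0, 1, 0]],
    ]
    print(impact_delay_s(frames, leaf_row=3, fps=1000))
```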
