109 patent results for "Behavioral state"

Near-drowning behavior detection method based on support vector machine

Status: Inactive. Publication: CN103413114A. Tags: Rescue in time; Improve environmental adaptability; Character and pattern recognition; Behavioral state; Support vector machine classifier

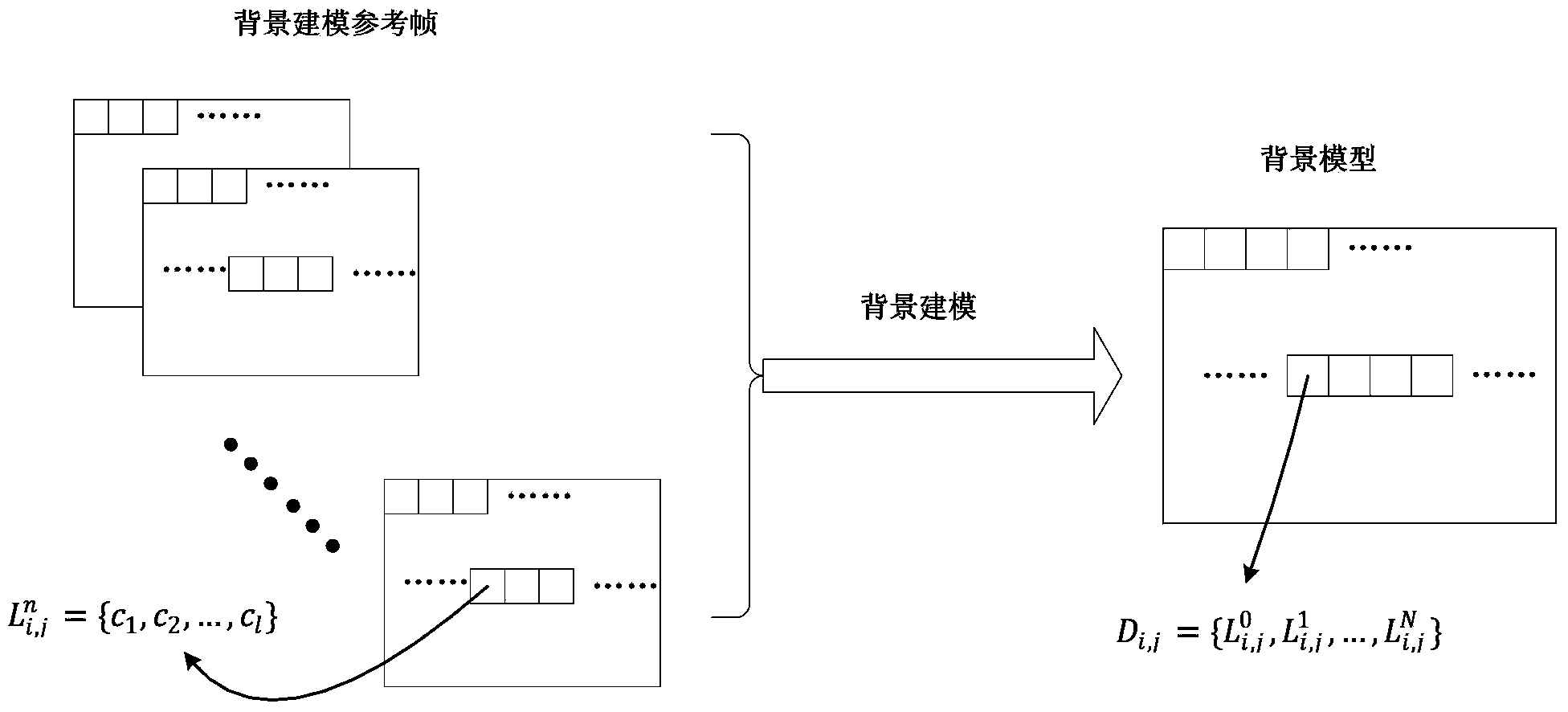

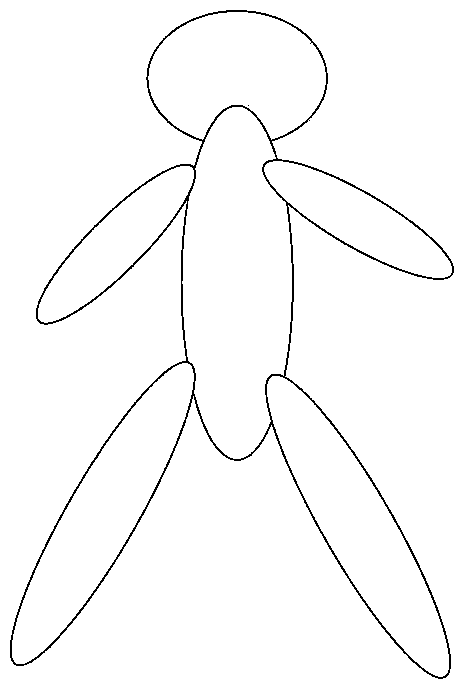

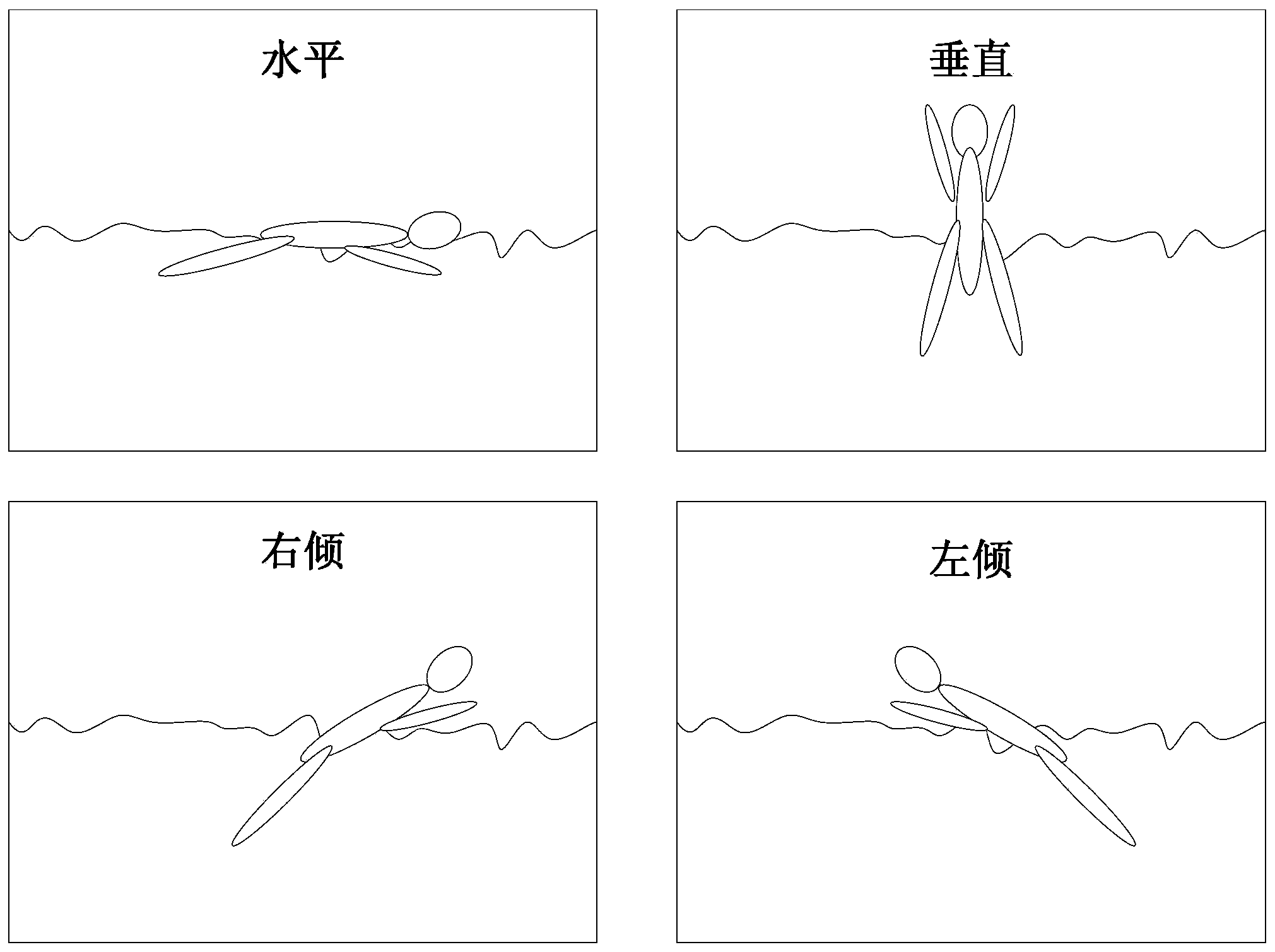

The invention discloses a near-drowning behavior detection method based on a support vector machine (SVM). The SVM serves as the machine-learning classifier and is trained on video sequence samples of near-drowning behavior and normal swimming behavior obtained in advance by simulation. A video image sequence of the pool is then acquired in real time by a camera mounted above the water, and the monitored sequence is input into the trained SVM classifier to determine the swimmer's behavioral state. A near-drowning swimmer can therefore be detected automatically by camera in an actual public swimming venue, maximizing the chance of a timely rescue. The method offers accurate and reliable detection, good robustness, high noise immunity, and good adaptation to changes in lighting. Because monitoring relies on a camera mounted above the water, the method also lowers system implementation costs and has considerable value in engineering applications.

Owner:ZHEJIANG UNIV
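
The classification step can be illustrated with a minimal linear SVM trained by Pegasos-style stochastic subgradient descent on the regularized hinge loss. This is a sketch under stated assumptions, not the patented implementation: the abstract does not specify the kernel, solver, or video features, so the two-dimensional feature vectors below (for example, a vertical-motion measure and a limb-activity measure) and their labels are hypothetical.

```python
from typing import List, Sequence, Tuple


def train_linear_svm(samples: Sequence[Tuple[Sequence[float], int]],
                     lam: float = 0.1, epochs: int = 100) -> List[float]:
    """Minimise lam/2 * ||w||^2 + mean hinge loss with Pegasos-style steps.

    Labels are +1 (near-drowning) or -1 (normal swimming). A constant 1.0
    feature is appended so the bias is learned as an ordinary weight.
    Samples are visited in a fixed order, so training is deterministic.
    """
    w = [0.0] * (len(samples[0][0]) + 1)
    t = 0
    for _ in range(epochs):
        for features, label in samples:
            t += 1
            x = list(features) + [1.0]
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = label * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # regularisation shrink
            if margin < 1.0:  # hinge loss active: step towards this sample
                w = [wi + eta * label * xi for wi, xi in zip(w, x)]
    return w


def predict(w: Sequence[float], features: Sequence[float]) -> int:
    """Return +1 for near-drowning, -1 for normal swimming."""
    x = list(features) + [1.0]
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) >= 0 else -1


train = [([2.0, 0.2], 1), ([1.8, 0.1], 1), ([0.2, 1.5], -1), ([0.1, 1.8], -1)]
weights = train_linear_svm(train)
print(predict(weights, [1.9, 0.3]), predict(weights, [0.3, 1.6]))
```

Absorbing the bias as a constant feature keeps the update to a single weight vector; for real video features a kernel SVM or an established solver would be the more usual choice.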

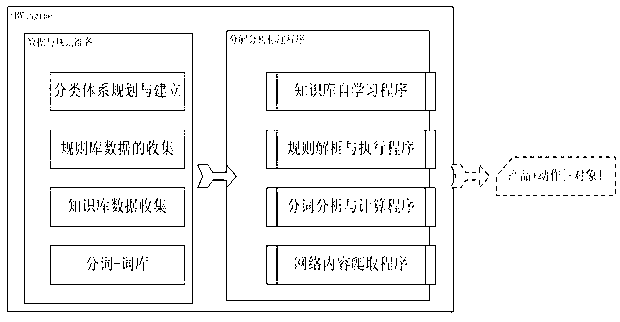

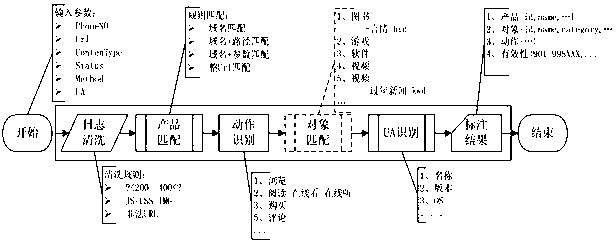

Internet behavior markup engine and behavior markup method corresponding to same

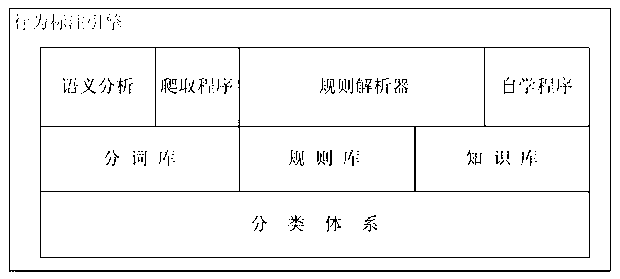

The invention discloses an internet behavior markup engine and a corresponding behavior markup method, belonging to the technical field of user internet behavior data collection and analysis. The markup engine comprises a classification system module, a word segmentation base module, a semantic analysis module, a crawling program module, a rule base module, a knowledge base module, a rule parser module, and a self-learning program module. The markup method is built on the basic logical structure user behavior = behavior agent + behavior identification + behavior state. The engine and method improve classification efficiency and accuracy, refine the granularity with which internet user behavior data is described, recognize the action, object, and environmental conditions of a single user behavior as a whole, and thereby reconstruct internet user behaviors in full. User behavior data output according to IUBML (Internet Universal Behavior Markup Language) rules can directly support accurate advertising services based on an understanding of user behavior and demand, meeting the marketing requirements of corporate clients.

Owner:北京宽连十方数字技术有限公司 (Beijing Kuanlian Shifang Digital Technology Co., Ltd.)
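
The logical structure user behavior = behavior agent + behavior identification + behavior state can be expressed as a small record type. This is only a sketch: the field names, example values, and the pipe-separated rendering are hypothetical and are not the actual IUBML syntax, which the abstract does not give.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserBehavior:
    """One user behavior = behavior agent + behavior identification + behavior state."""

    agent: str           # who acted, e.g. an anonymised user ID
    identification: str  # what was done, and to which object
    state: str           # conditions under which it happened (device, time, place)

    def to_markup(self) -> str:
        # Hypothetical flat serialisation; NOT the real IUBML format.
        return f"{self.agent}|{self.identification}|{self.state}"


record = UserBehavior("user-123", "search:running shoes", "mobile;evening")
print(record.to_markup())
```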

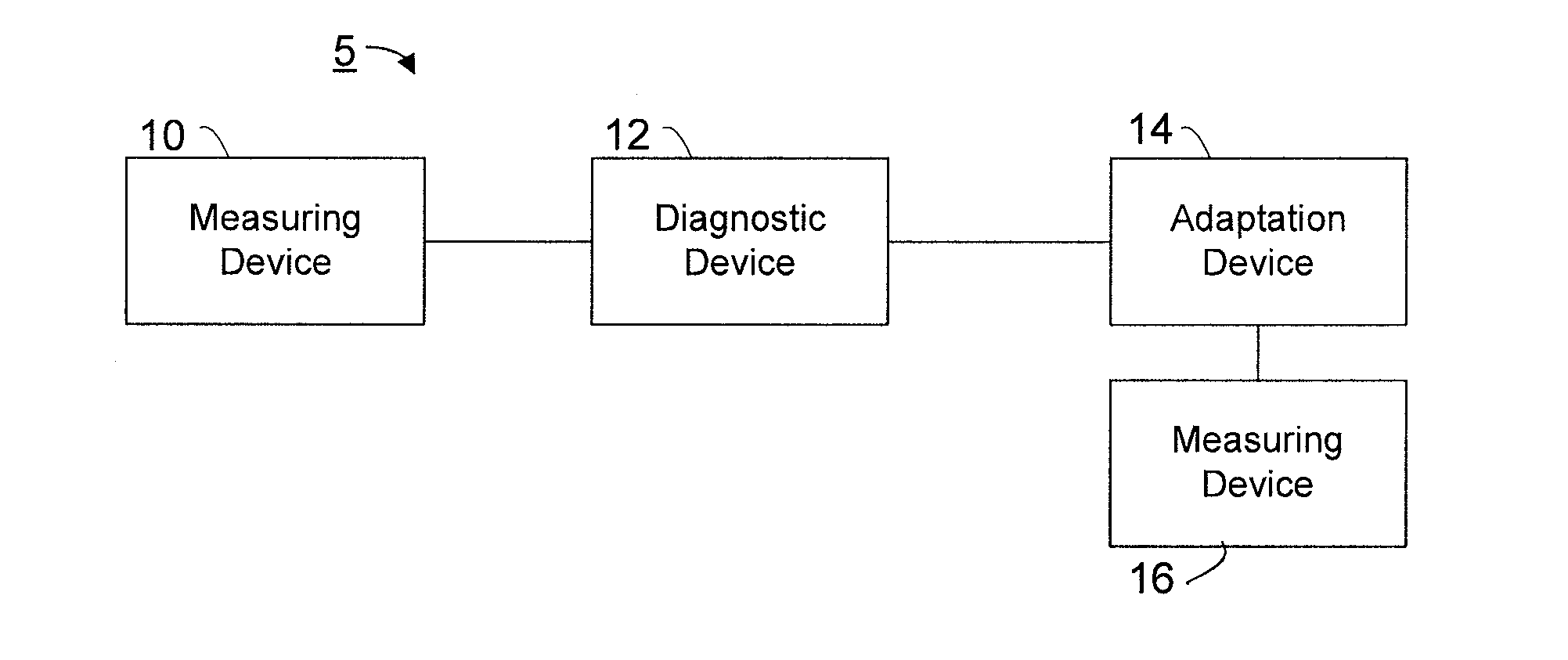

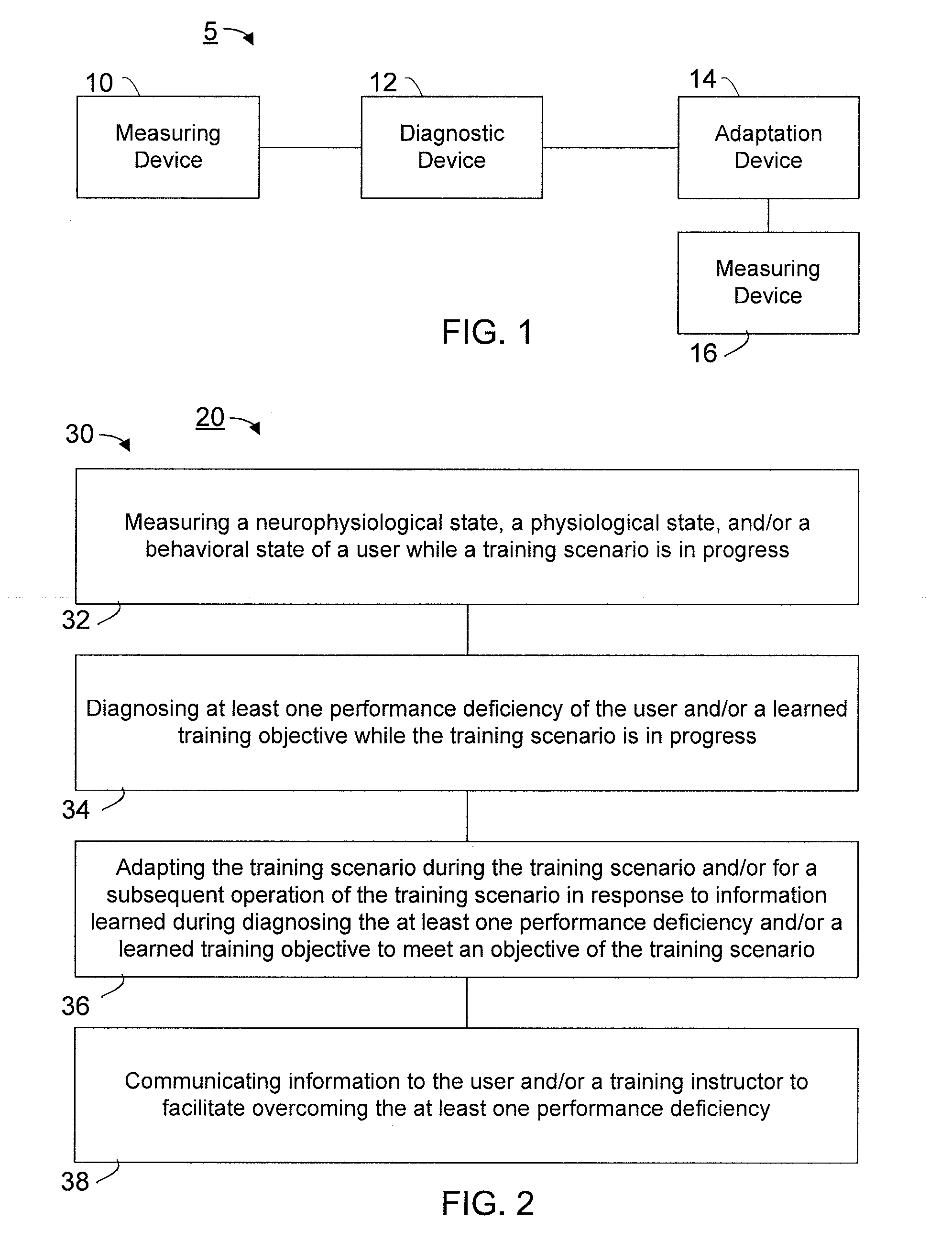

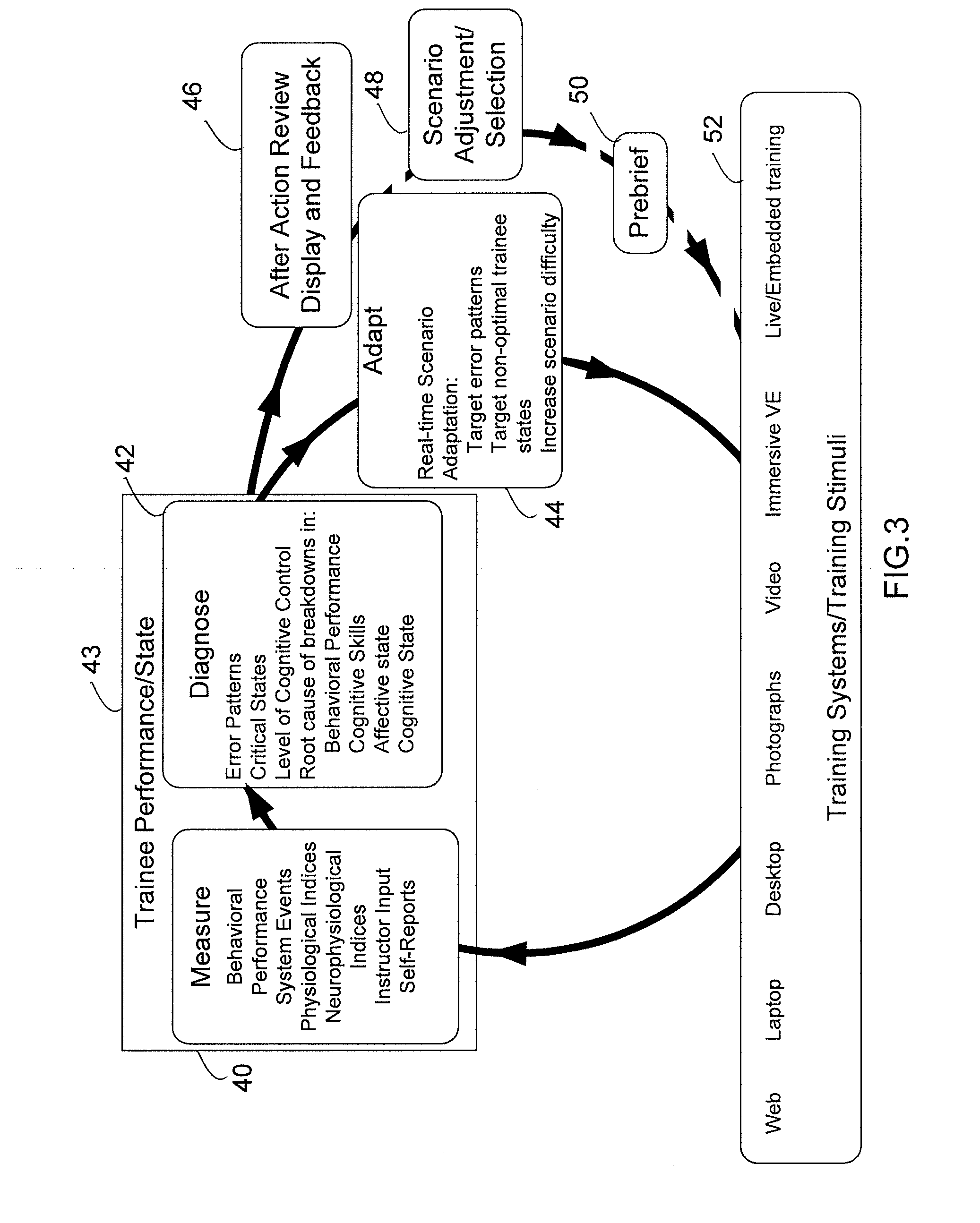

Method, system, and computer software code for the adaptation of training via performance diagnosis based on (NEURO)physiological metrics

Status: Inactive. Publication: US20110091847A1. Tags: Minimizing training; Performance deficiency; Electrical appliances; Teaching apparatus; Behavioral state; Habilitation training

A method for adapting a training system based on information obtained from a user using a training system during a training scenario, the method including measuring a neurophysiological state, a physiological state, and/or a behavioral state of a user while a training scenario is in progress, diagnosing at least one performance deficiency of the user and/or a learned training objective while the training scenario is in progress, and adapting the training scenario during the training scenario and/or for a subsequent operation of the training scenario in response to information learned during diagnosing the at least one performance deficiency and/or a learned training objective to meet an objective of the training scenario. A system and computer software code for adapting the training system based on information obtained from the user using the training system during a training scenario are also disclosed.

Owner:DESIGN INTERACTIVE

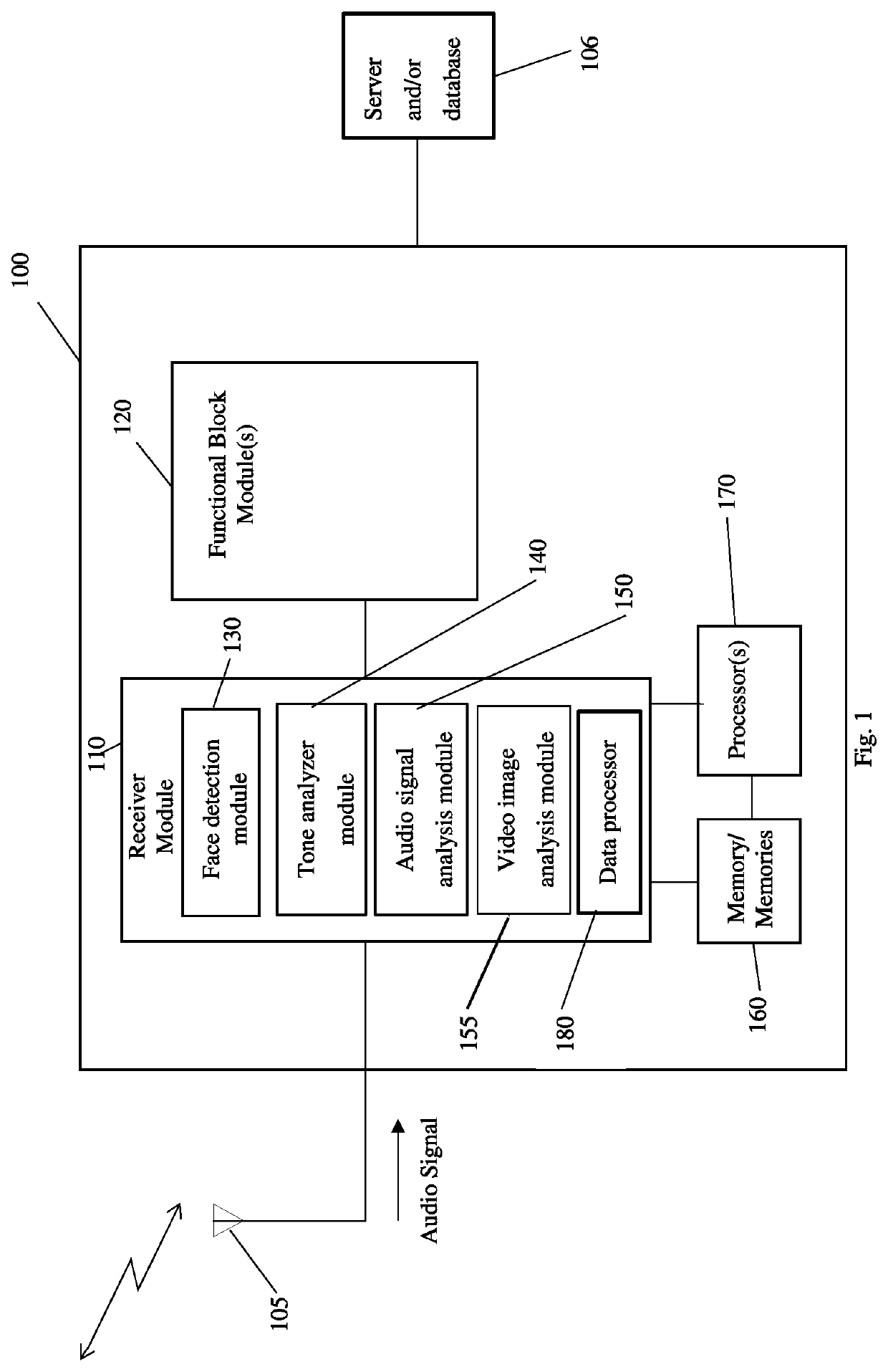

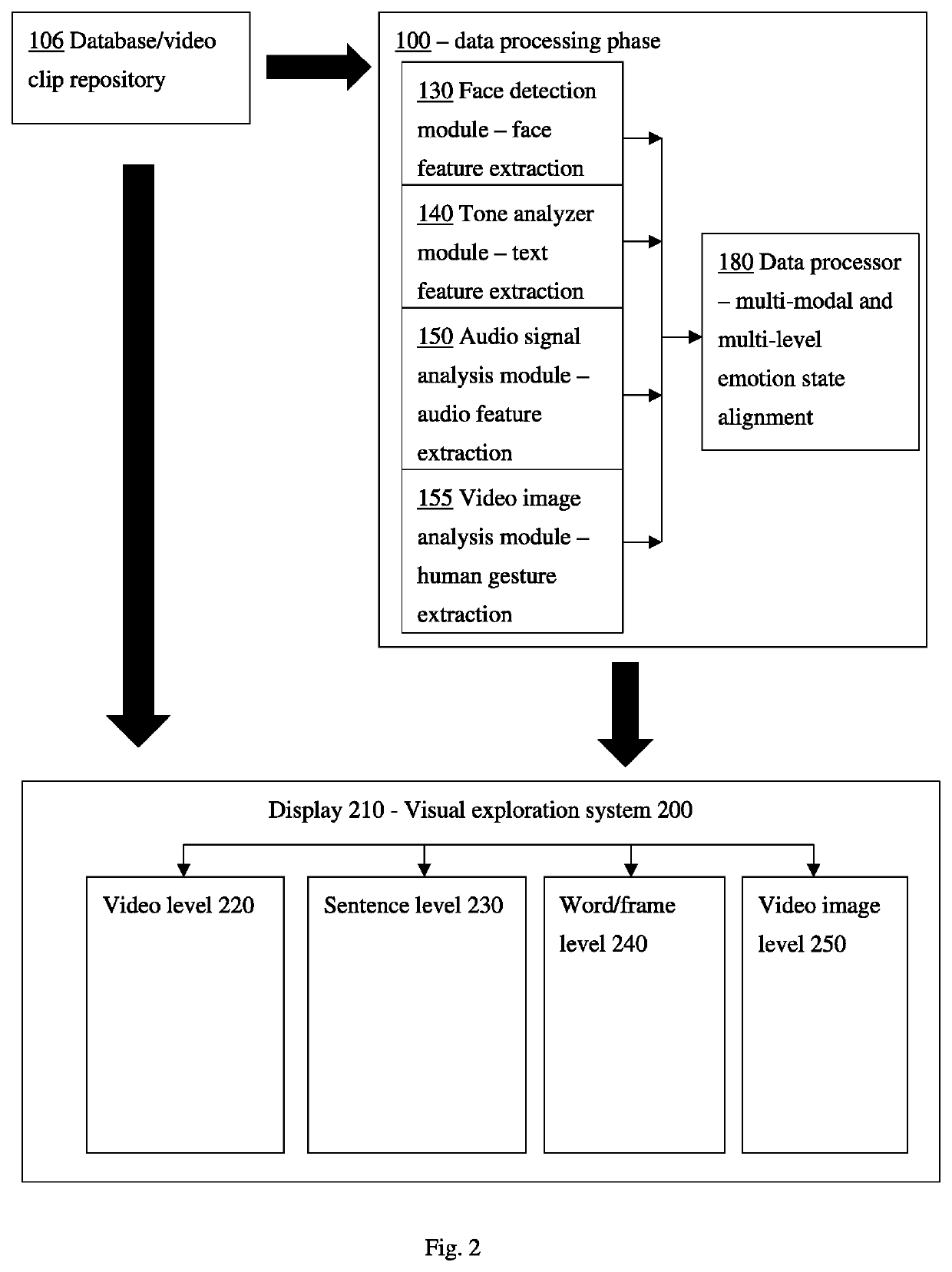

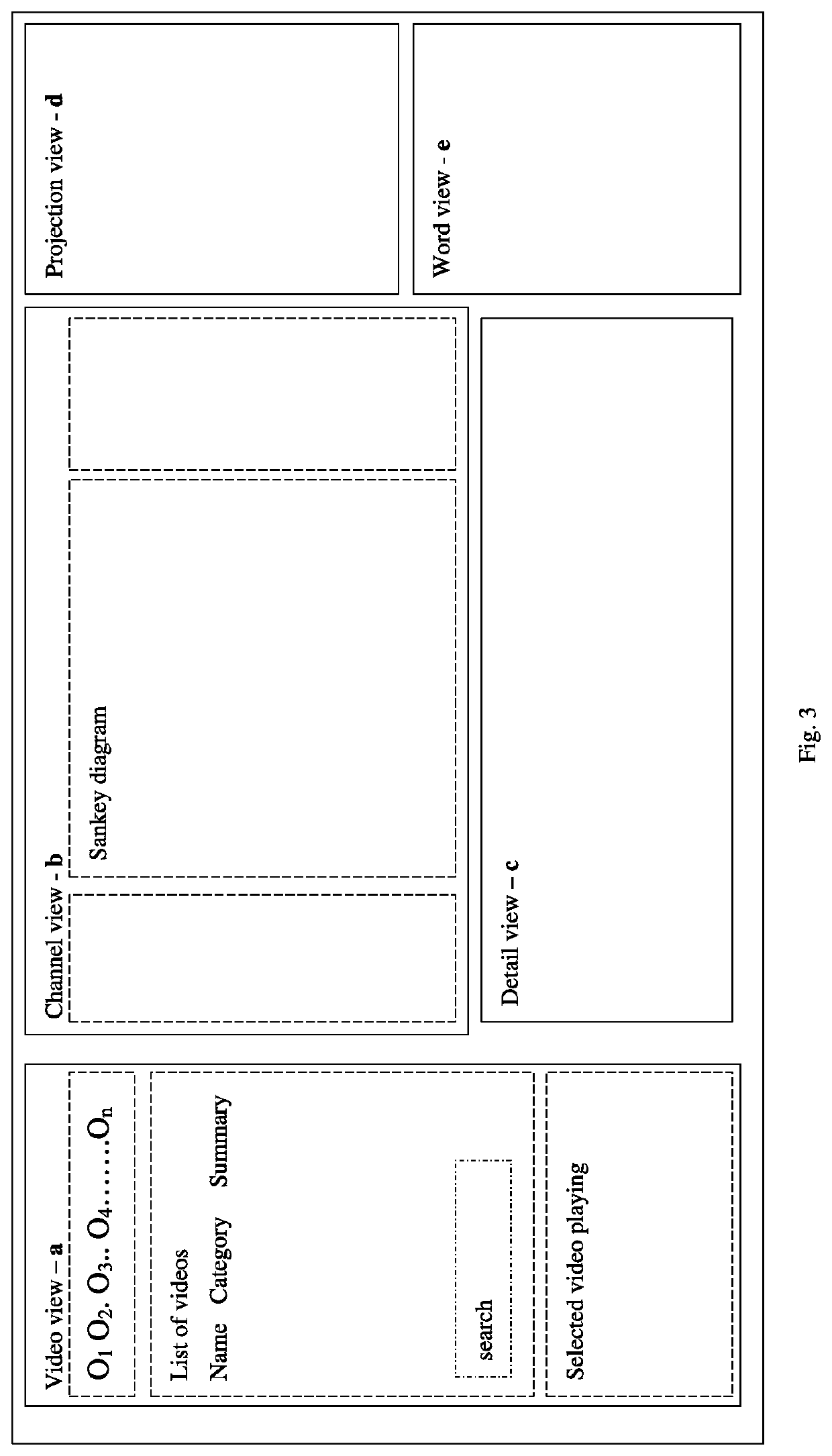

System and Method for Visual Analysis of Emotional Coherence in Videos

Status: Active. Publication: US20210073526A1. Tags: Mitigate and/or obviate problems; Natural language analysis; Speech analysis; Information processing; Computer graphics (images)

A computer-implemented method and system for processing a video signal. The method comprises the steps of: detecting a human face displayed in the video signal and extracting physiological, biological, or behavior state information from the displayed face at a first level of granularity of the video signal; processing any two or more of: (i) a script derived from or associated with the video signal, to extract language tone information from the script at a first level of granularity of the script; (ii) an audio signal derived from or associated with the video signal, to derive behavior state information from the audio signal at a first level of granularity of the audio signal; and (iii) a video image derived from the video signal, to detect one or more human gestures of the person whose face is displayed in the video signal; and merging the physiological, biological, or behavior state information extracted from the displayed face with any two or more of: (i) the language tone information extracted from the script; (ii) the behavior state information derived from the audio signal; and (iii) the one or more human gestures derived from the video image, wherein the merging step is based on behavior state categories and/or levels of granularity.

Owner:BLUE PLANET TRAINING INC

A pedestrian behavior identification and trajectory tracking method

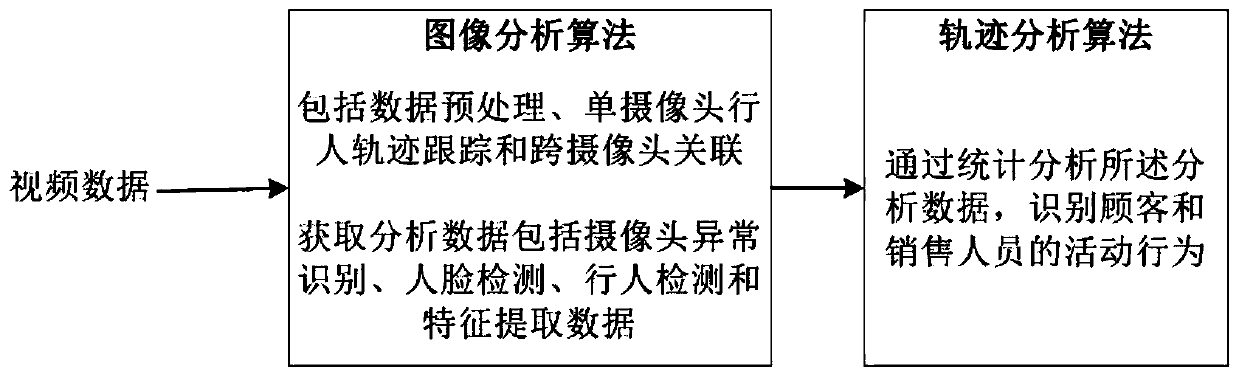

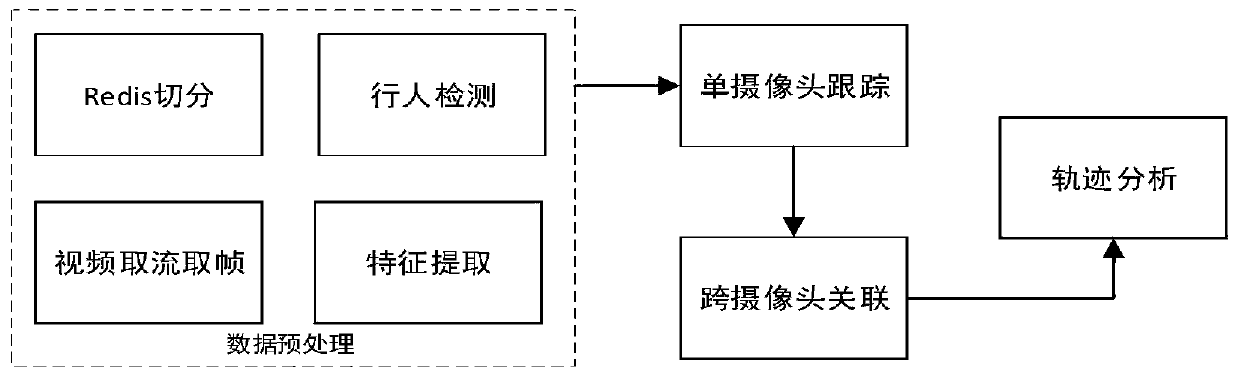

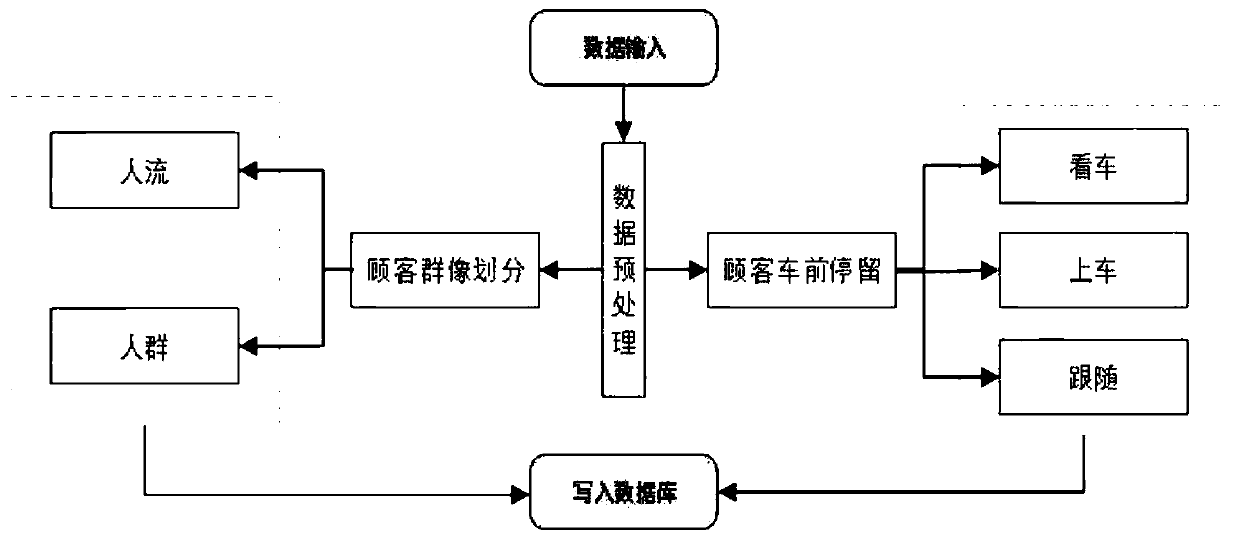

Status: Active. Publication: CN109784162A. Tags: Implement tracking; Intelligent judgment of behavior status; Character and pattern recognition; Marketing; Face detection; Feature extraction

The invention discloses a pedestrian behavior recognition and trajectory tracking method comprising an image analysis algorithm and a trajectory analysis algorithm; collected video data passes sequentially through the image analysis algorithm module and the trajectory analysis algorithm module. The image analysis algorithm comprises data preprocessing, single-camera pedestrian trajectory tracking with cross-camera association, and camera anomaly recognition, and provides face detection, pedestrian detection, and feature extraction. The trajectory analysis algorithm calculates and analyzes pedestrian trajectory data from the acquired images and identifies the activities of customers and sales staff. Pedestrian behavior trajectories can thus be monitored and recognized in real time and the behavior state of pedestrians judged intelligently, which facilitates centralized management of customer and sales-staff trajectories and supports better-optimized, more intelligent services for customers.

Owner:CHENGDU UNION BIG DATA TECH CO LTD

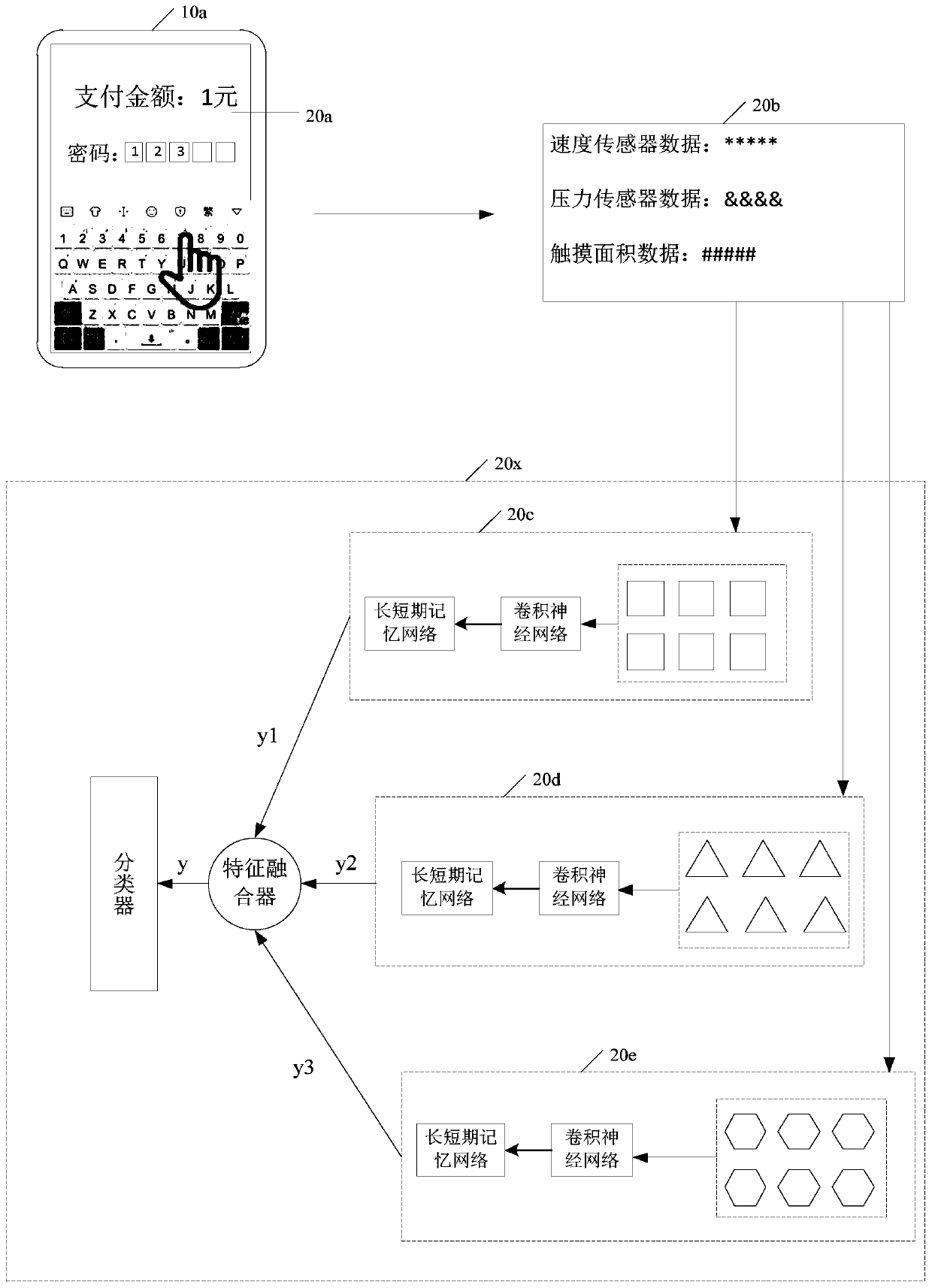

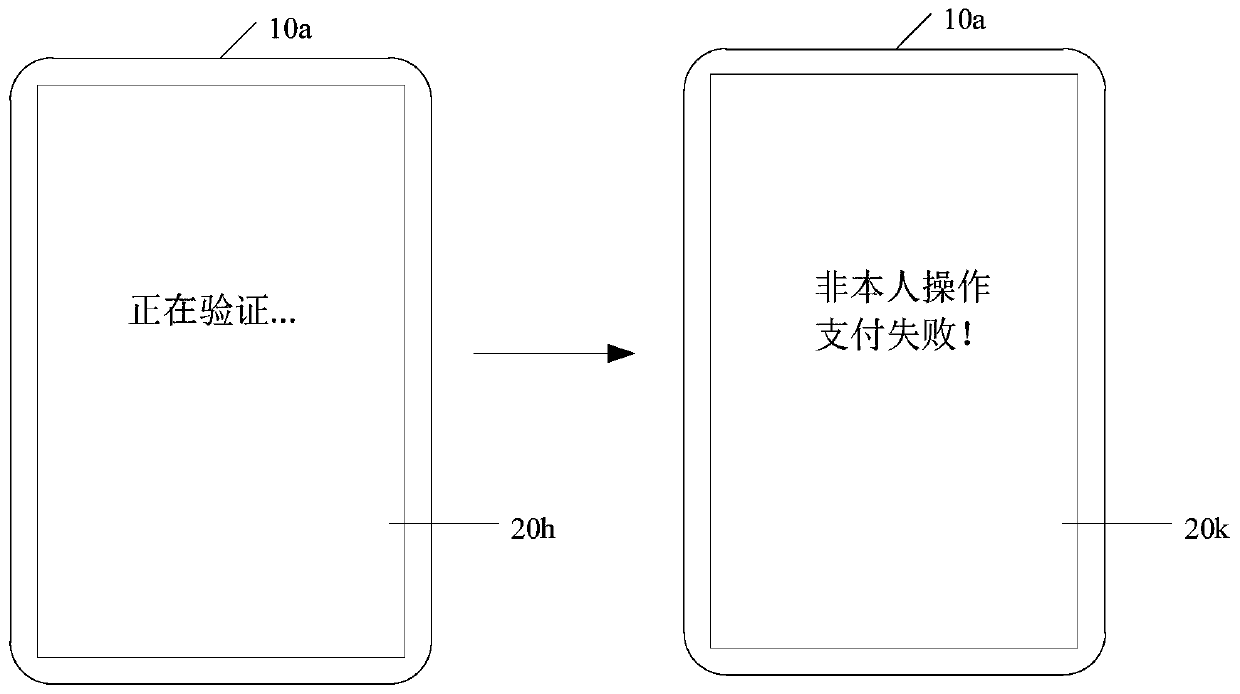

Identity recognition method and device and related equipment

Status: Active. Publication: CN110163611A. Tags: High-precision identification; Improve reliability; Protocol authorisation; Internet privacy; Identity recognition

An embodiment of the invention discloses an identity recognition method, device, and related equipment. The method comprises: when identity verification information input by a target user is received, obtaining target behavior state information, i.e. behavior state information generated from the target user's operation behavior while inputting the identity verification information; obtaining an identity authentication model corresponding to a registered user, the model having been trained on behavior state information from the registered user's input operation behavior; identifying, with the identity authentication model and the target behavior state information, the identity matching relationship between the target user and the registered user; and identifying the security type of the target user according to the identity matching relationship and the input identity verification information. The invention can improve the reliability of system security authentication.

Owner:TENCENT TECH (SHENZHEN) CO LTD
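
One way to picture the identity authentication model is keystroke-timing matching. This sketch assumes the behavior state information is a fixed-length vector of intervals between keystrokes (in milliseconds), reduces the trained model to a per-interval mean and standard deviation, accepts a target input when its mean absolute z-score is at most 2.0, and uses hypothetical security-type labels. The representation, model, threshold, and labels are all assumptions; the abstract does not say what the trained model is.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

Profile = List[Tuple[float, float]]  # (mean, std) for each keystroke interval


def build_profile(enrollments: Sequence[Sequence[float]]) -> Profile:
    """Train a per-interval (mean, std) model from a registered user's inputs.

    Every enrollment must contain the same number of intervals.
    """
    columns = list(zip(*enrollments))
    # Floor the std so a perfectly consistent interval cannot divide by zero.
    return [(mean(col), max(pstdev(col), 1e-3)) for col in columns]


def matches(profile: Profile, sample: Sequence[float], threshold: float = 2.0) -> bool:
    """True if the target user's timing vector is close to the registered profile."""
    if len(sample) != len(profile):
        return False
    z_scores = [abs(x - m) / s for x, (m, s) in zip(sample, profile)]
    return mean(z_scores) <= threshold


def security_type(matched: bool, credentials_ok: bool) -> str:
    """Hypothetical three-way classification combining both signals."""
    if credentials_ok and matched:
        return "trusted"
    if credentials_ok:
        return "suspicious"  # right password, unfamiliar typing rhythm
    return "rejected"


registered = build_profile([[120, 95, 140], [130, 100, 150], [125, 105, 145]])
print(matches(registered, [126, 99, 147]), matches(registered, [200, 40, 260]))
```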

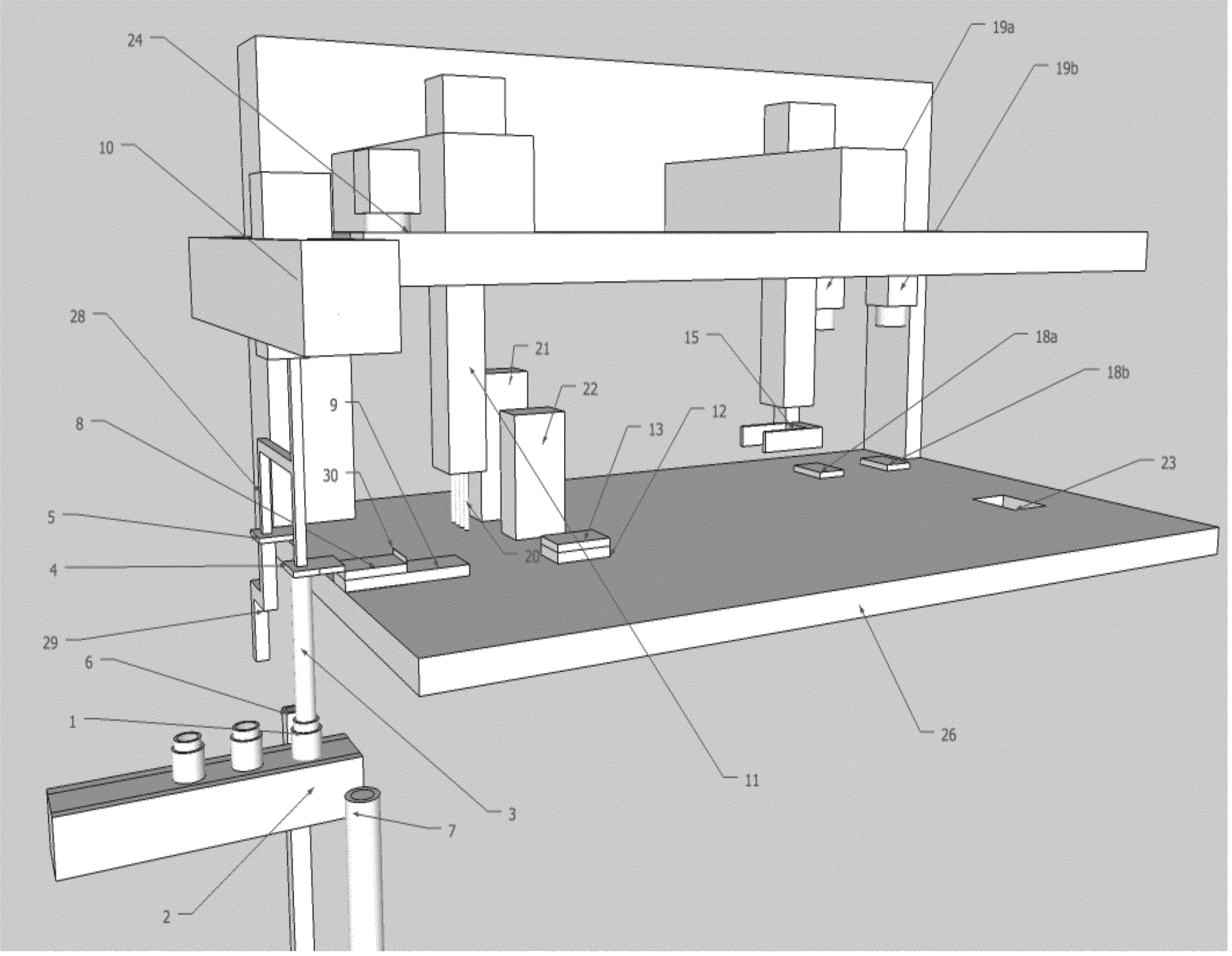

Device for automating behavioral experiments on animals

The present invention enables testing the effect of one or more test agents on one or more animals of a group of animals, preferably insects, to identify agents that affect the animals' behavioral properties. The invention comprises the steps of providing animals suitable for testing, bringing those animals into contact with the test agent(s), moving the animals from a growth container to isolate them, preparing and separating the animals for singulation, relocating the animals to a behavior arena, subjecting the animals to behavioral tracking to assess their behavioral state, and removing the animals from behavioral tracking to allow iterative analysis of further groups. The invention enables a method for preparing a therapeutic compound for the treatment of an animal disease.

Owner:CLARIDGE CHANG ADAM

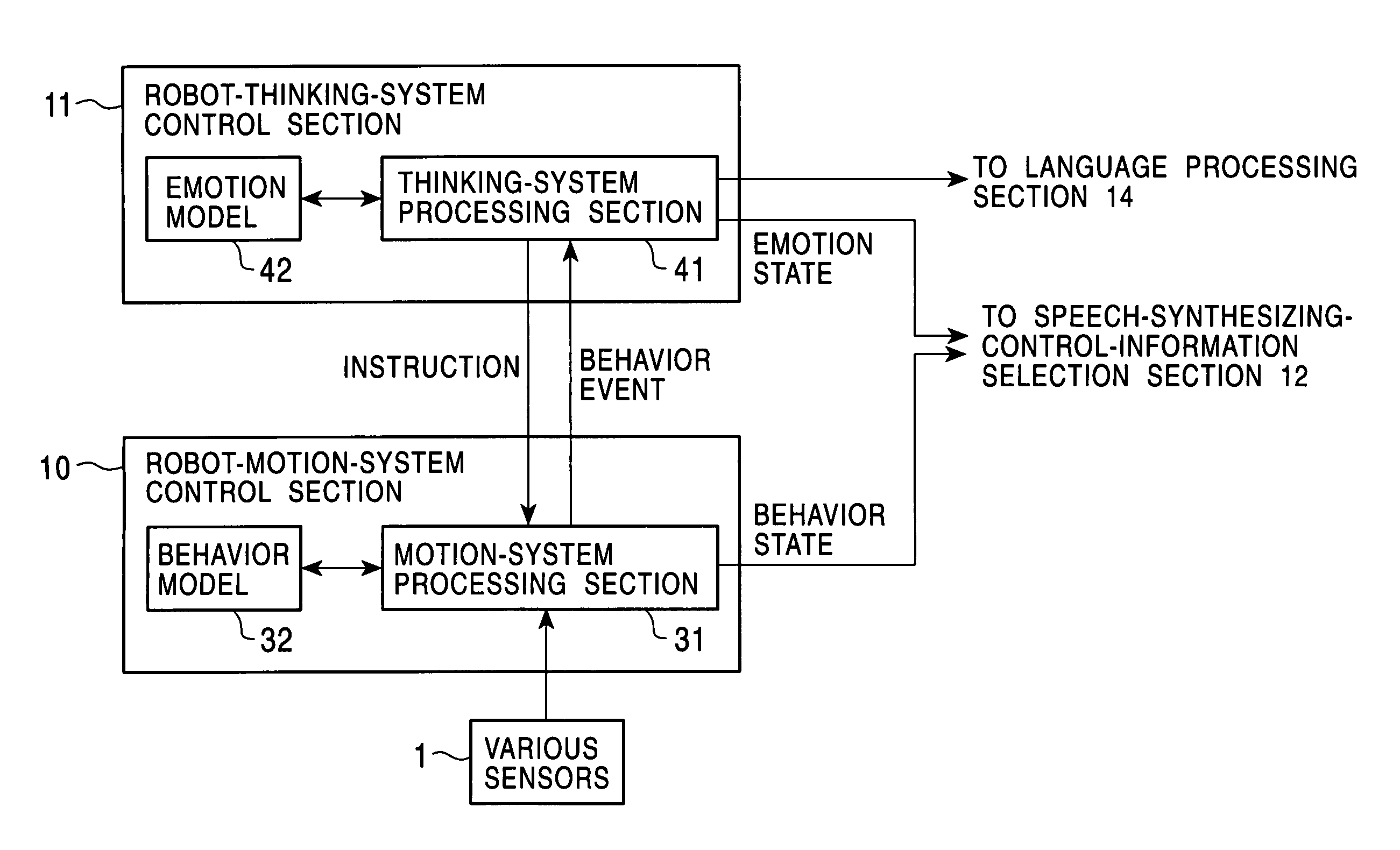

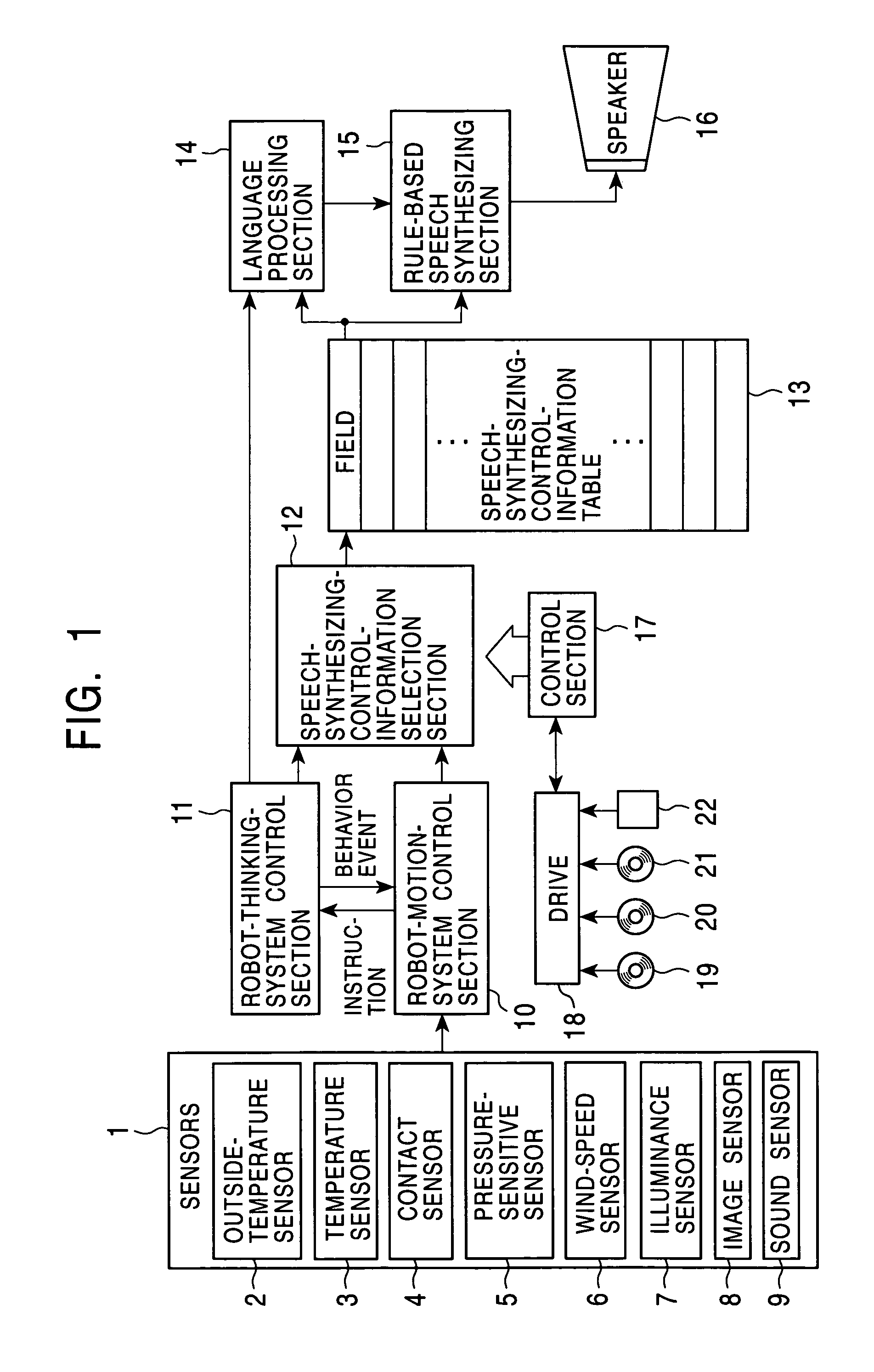

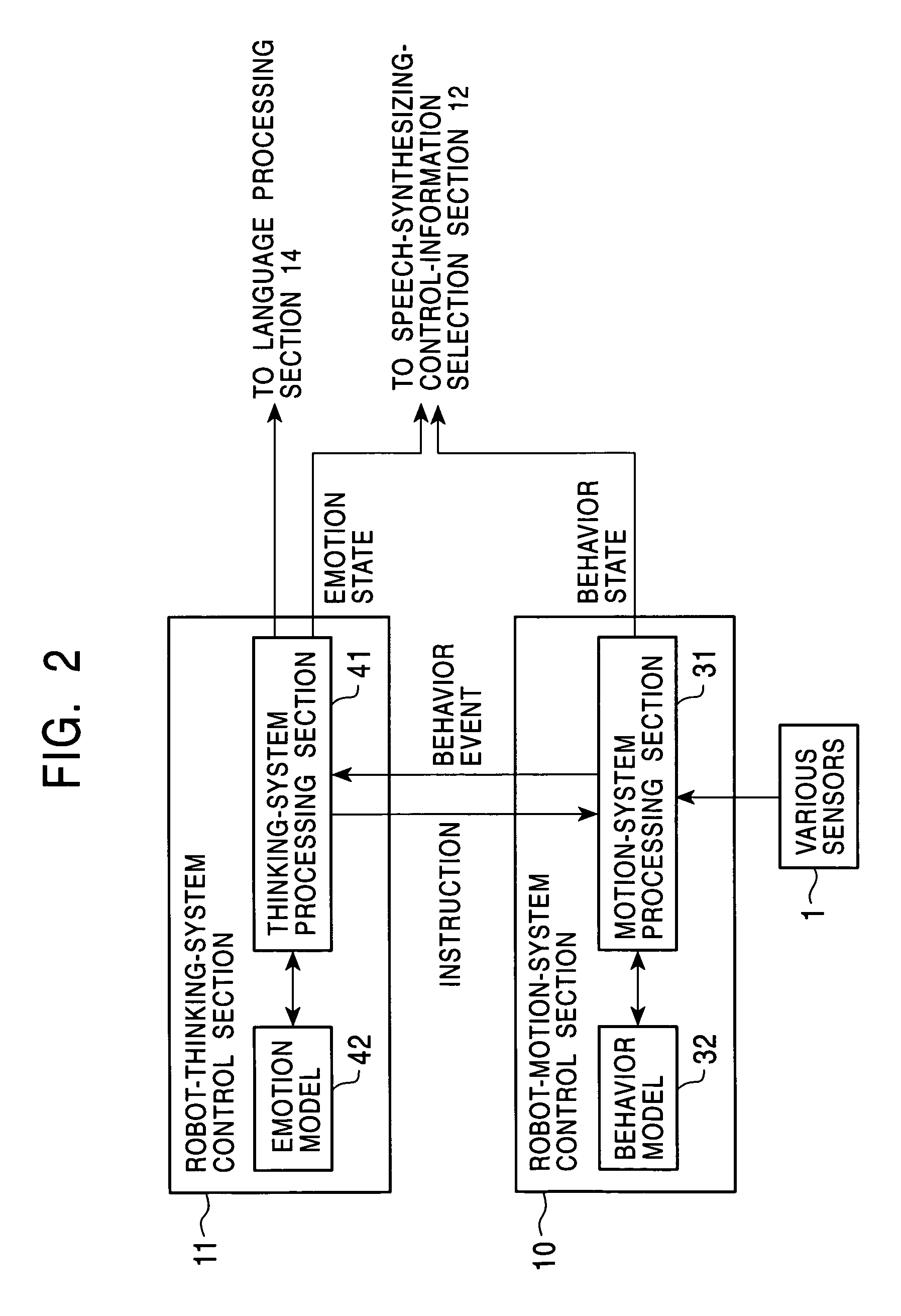

Speech synthesizing apparatus, speech synthesizing method, and recording medium using a plurality of substitute dictionaries corresponding to pre-programmed personality information

Various sensors detect conditions outside a robot and operations applied to the robot, and output the detection results to a robot-motion-system control section. The robot-motion-system control section determines a behavior state according to a behavior model, and a robot-thinking-system control section determines an emotion state according to an emotion model. A speech-synthesizing-control-information selection section selects a field of a speech-synthesizing-control-information table according to the behavior state and the emotion state. A language processing section grammatically analyzes the text for speech synthesis sent from the robot-thinking-system control section, converts a predetermined portion of it according to the speech-synthesizing control information, and outputs the result to a rule-based speech synthesizing section, which synthesizes a speech signal corresponding to the text.

Owner:SONY CORP
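
Selecting a field of the control-information table from the behavior state and emotion state amounts to a keyed lookup. In this sketch the states, the prosody parameters (pitch and rate scale factors), and the fallback default are hypothetical; the abstract does not list the table's contents.

```python
from typing import Dict, Tuple

# Hypothetical speech-synthesizing control information table:
# (behavior state, emotion state) -> prosody parameters.
CONTROL_TABLE: Dict[Tuple[str, str], Dict[str, float]] = {
    ("walking", "happy"): {"pitch_scale": 1.2, "rate_scale": 1.1},
    ("walking", "sad"): {"pitch_scale": 0.9, "rate_scale": 0.9},
    ("resting", "happy"): {"pitch_scale": 1.1, "rate_scale": 1.0},
    ("resting", "sad"): {"pitch_scale": 0.8, "rate_scale": 0.8},
}
DEFAULT_CONTROL: Dict[str, float] = {"pitch_scale": 1.0, "rate_scale": 1.0}


def select_control(behavior_state: str, emotion_state: str) -> Dict[str, float]:
    """Select the table field matching the current behavior and emotion states."""
    # Return a copy so callers cannot modify the shared table.
    return dict(CONTROL_TABLE.get((behavior_state, emotion_state), DEFAULT_CONTROL))


print(select_control("walking", "sad"))
```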

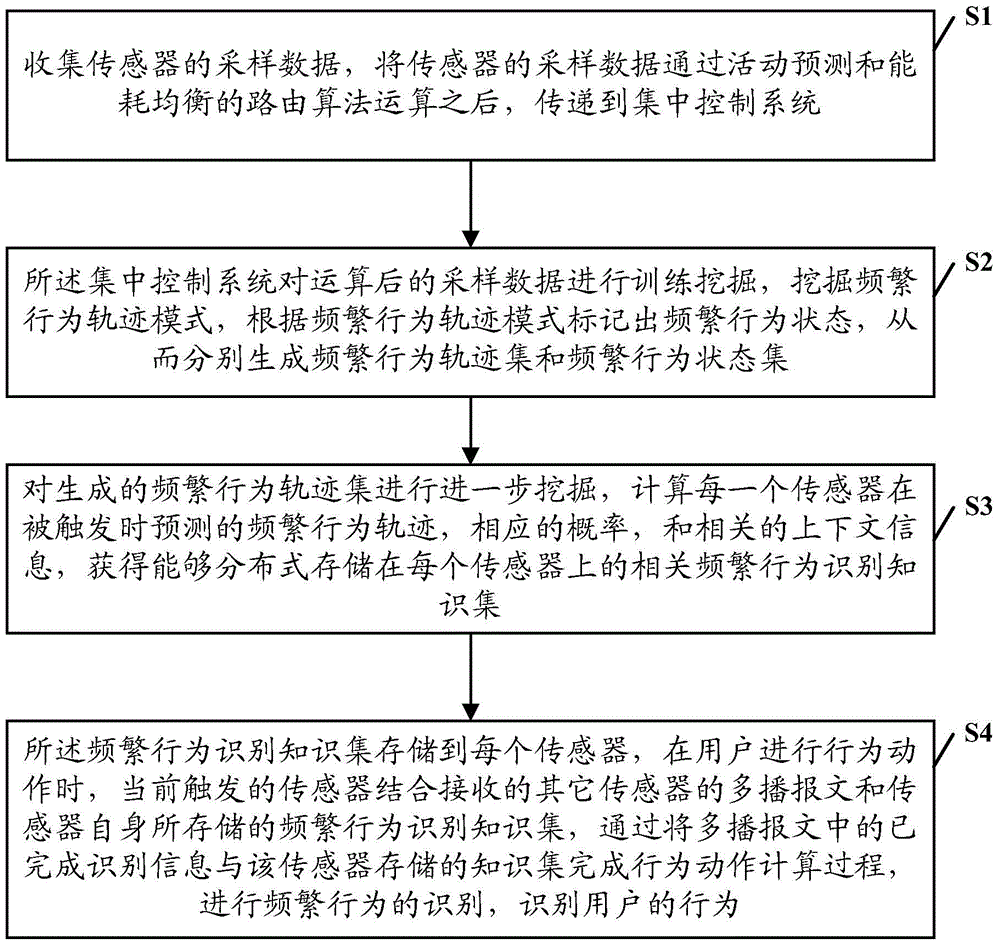

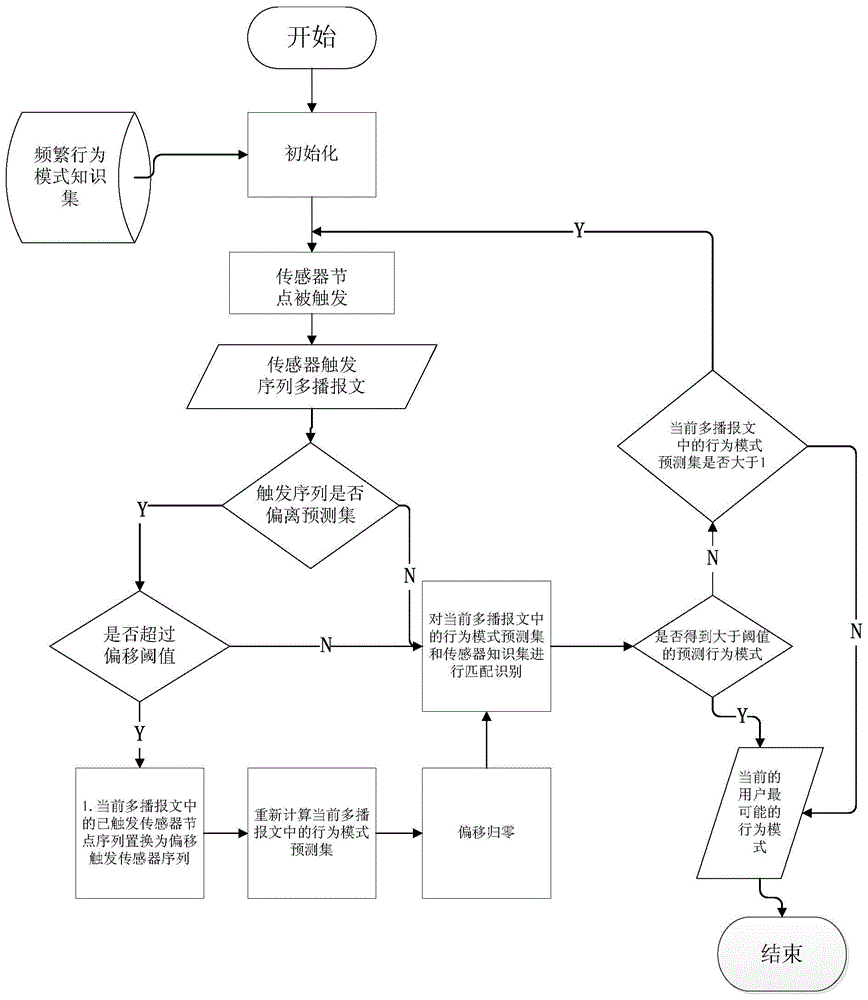

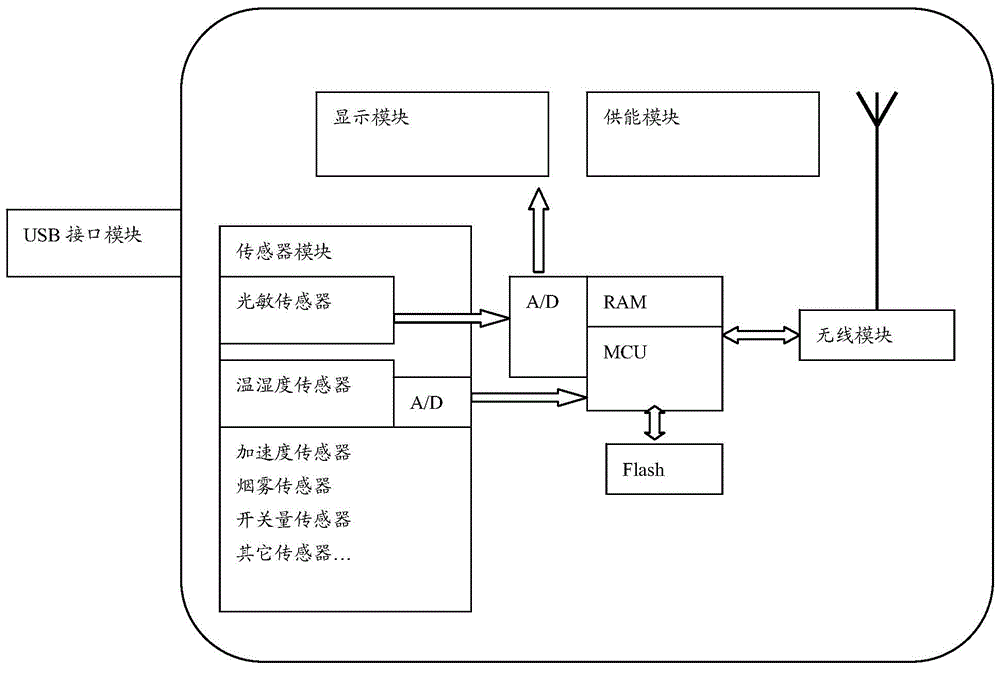

Distributed behavior identification method based on wireless sensor network

Status: Inactive. Publication: CN104035396A. Tags: Life safety; Energy saving and comfort for living; Network topologies; Programme total factory control; Wireless mesh network; Control system

The invention discloses a distributed behavior identification method based on a wireless sensor network. The method comprises the following steps: first, sensor sampling data is collected, processed with an active-prediction, energy-consumption-balancing routing algorithm, and transmitted to a centralized control system; second, the centralized control system mines the processed sampling data to discover frequent behavior trajectory patterns, generating a frequent behavior trajectory set and a frequent behavior state set; third, the generated frequent behavior sets are mined further to obtain associated frequent behavior identification knowledge sets, which can be stored in a distributed manner in each sensor; fourth, the knowledge sets are stored, and when the user performs an action, the behavior computation is completed using the identification information carried in a multicast message together with the knowledge set stored in the corresponding sensor, so that frequent behaviors, and thus the user's behaviors, are identified.

Owner:CHONGQING UNIV
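
The mining step can be approximated by counting contiguous behavior subsequences across recorded sequences and keeping those that occur in at least `min_support` of them. The abstract does not name its mining algorithm, so this support-counting stand-in, the room labels, and the thresholds are assumptions.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def frequent_patterns(sequences: Sequence[Sequence[str]], length: int,
                      min_support: int) -> Dict[Tuple[str, ...], int]:
    """Return contiguous patterns of `length` found in >= min_support sequences."""
    support: Counter = Counter()
    for seq in sequences:
        # Count each pattern at most once per sequence (support, not raw frequency).
        seen = {tuple(seq[i:i + length]) for i in range(len(seq) - length + 1)}
        support.update(seen)
    return {pattern: n for pattern, n in support.items() if n >= min_support}


traces = [
    ["bedroom", "bathroom", "kitchen"],
    ["bedroom", "bathroom", "living_room"],
    ["sofa", "kitchen"],
]
print(frequent_patterns(traces, length=2, min_support=2))
```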

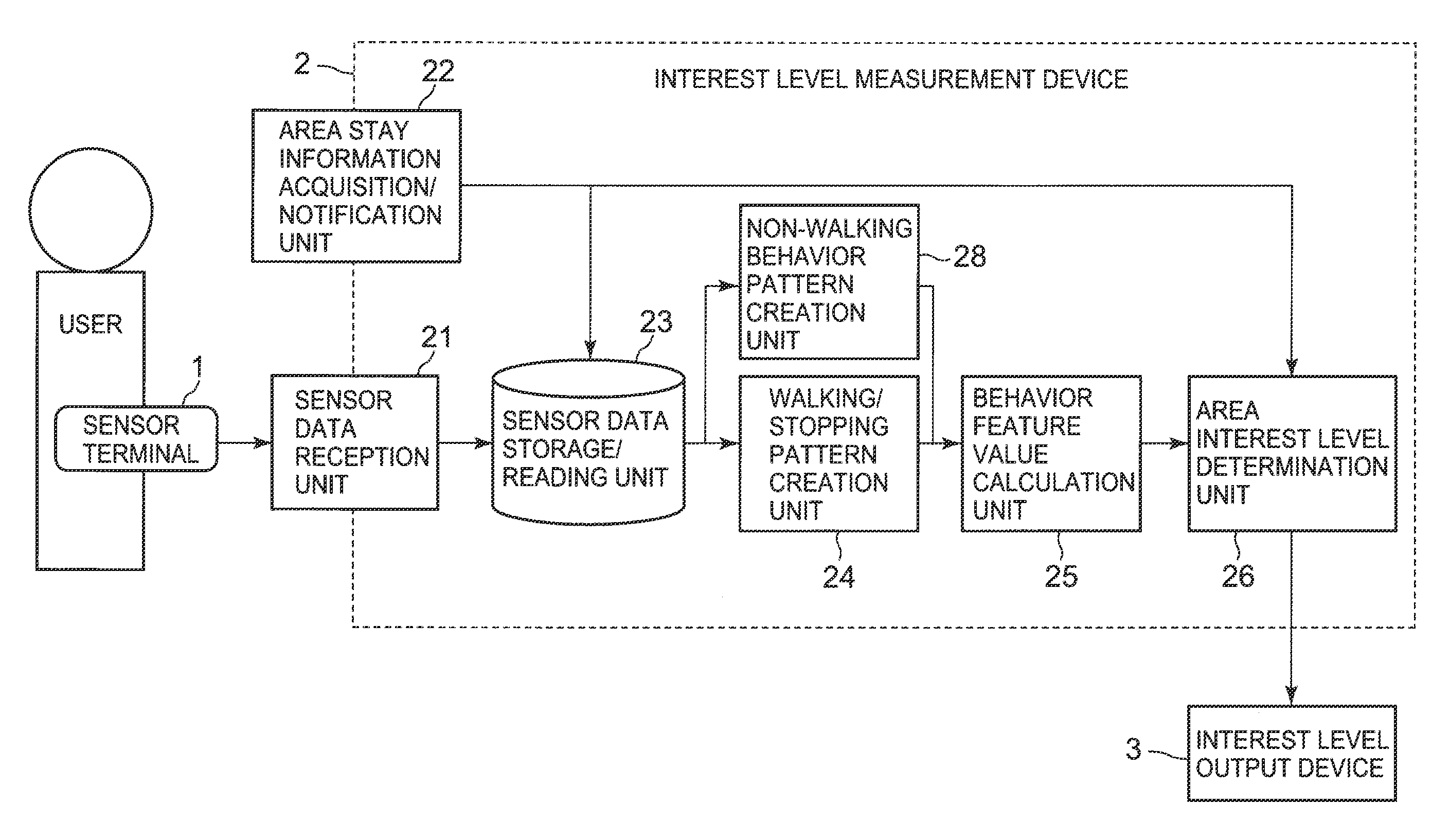

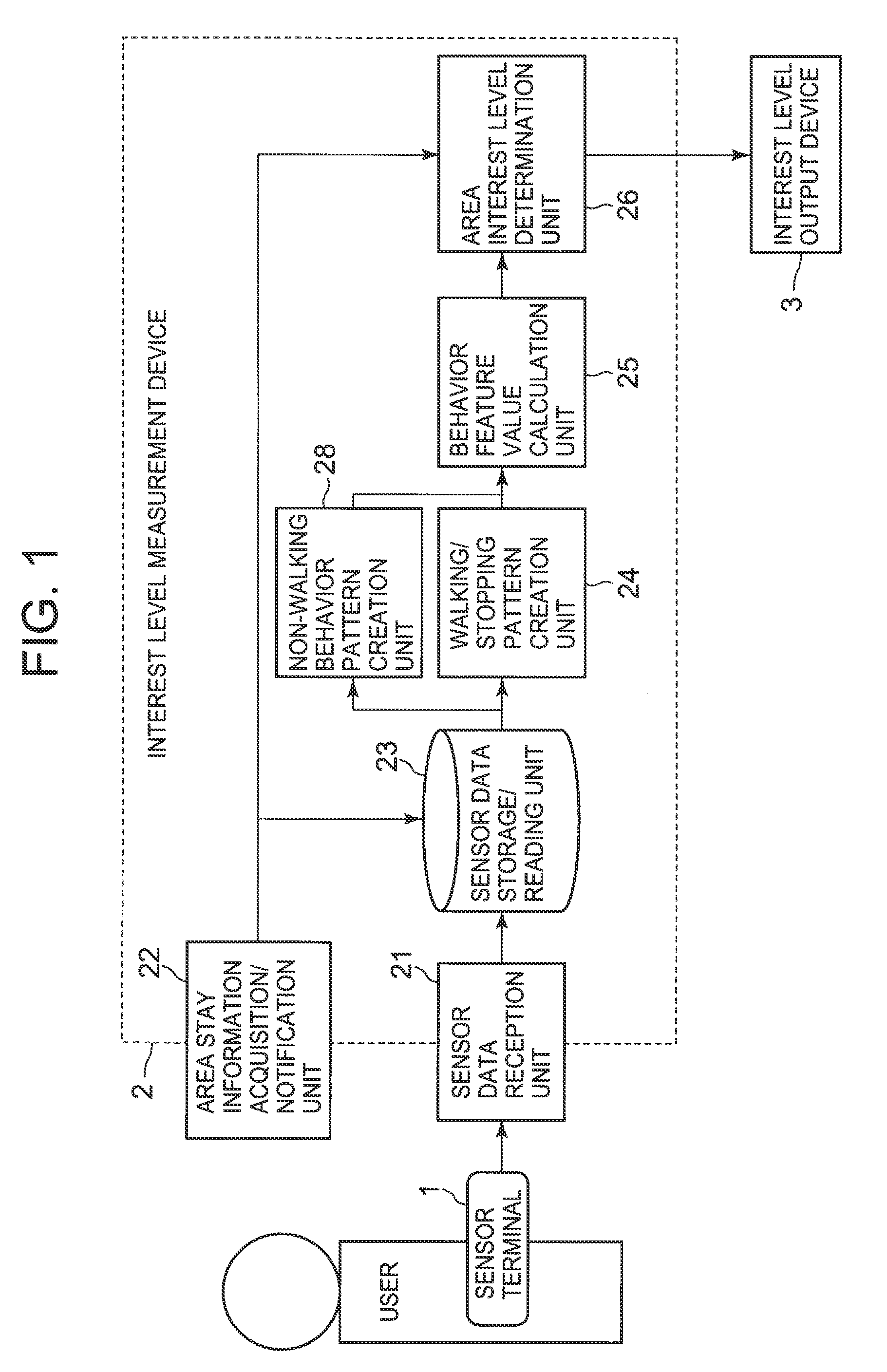

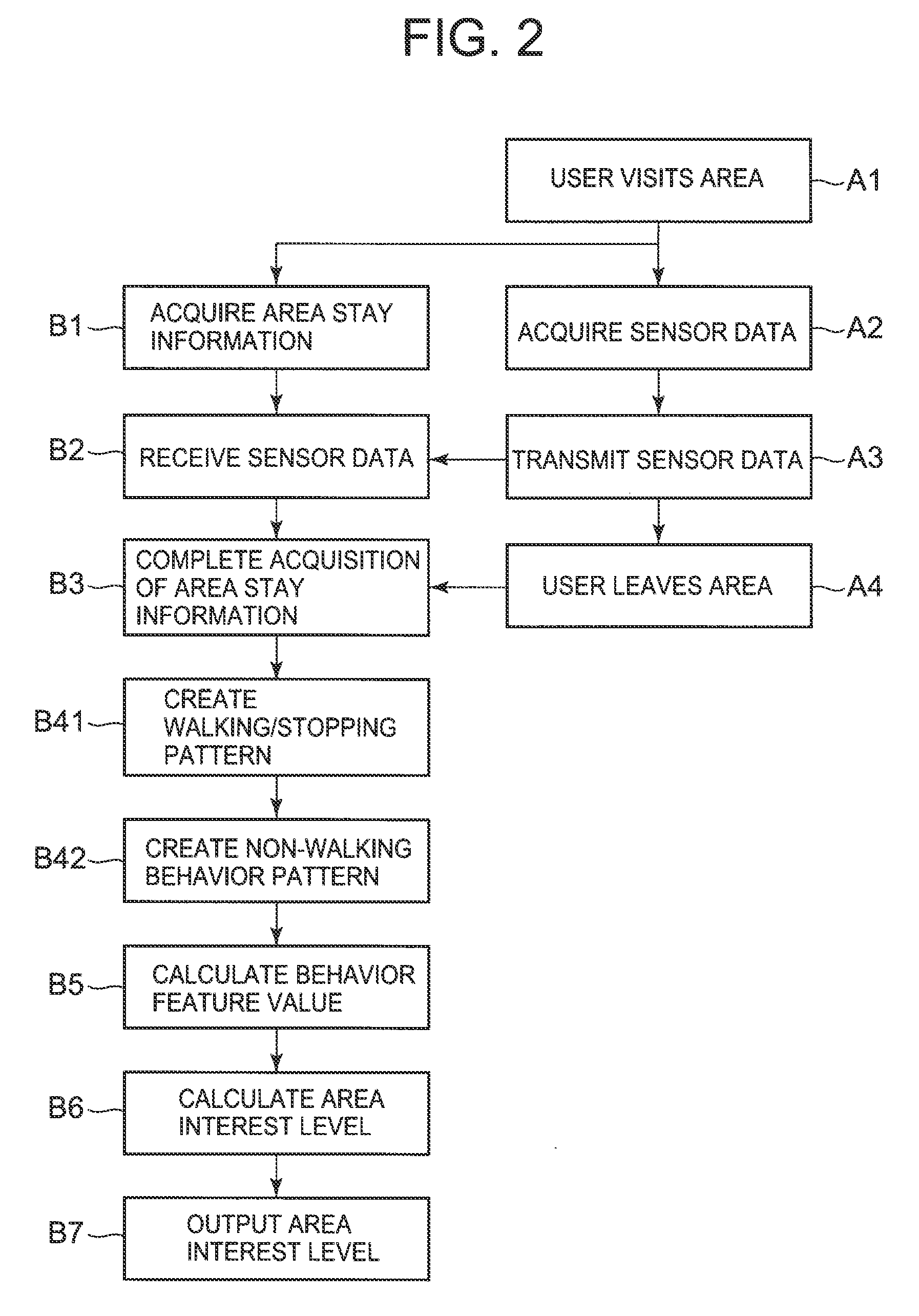

Interest level measurement system, interest level measurement device, interest level measurement method, and interest level measurement program

Status: Inactive. Publication: US20120317066A1. Tags: Data processing applications; Knowledge representation; Temporal information; Measurement device

Data that indicates an action state of a user is acquired by a user terminal. Area stay information that includes position information of an area in which the user stays and stay time information of a time during which the user stays in the area is acquired. The data acquired by the user terminal is stored, and the stored data is read according to the acquired area stay information. A behavior status of the user is determined based on the read data, and a behavior status time-series pattern that indicates the behavior status of the user is created. A behavior feature value that indicates a feature of a behavior of the user is calculated based on the created behavior status time-series pattern. An area interest level that indicates a degree and tendency of the user's interest in the area is determined using the calculated behavior feature value.

Owner:NEC CORP

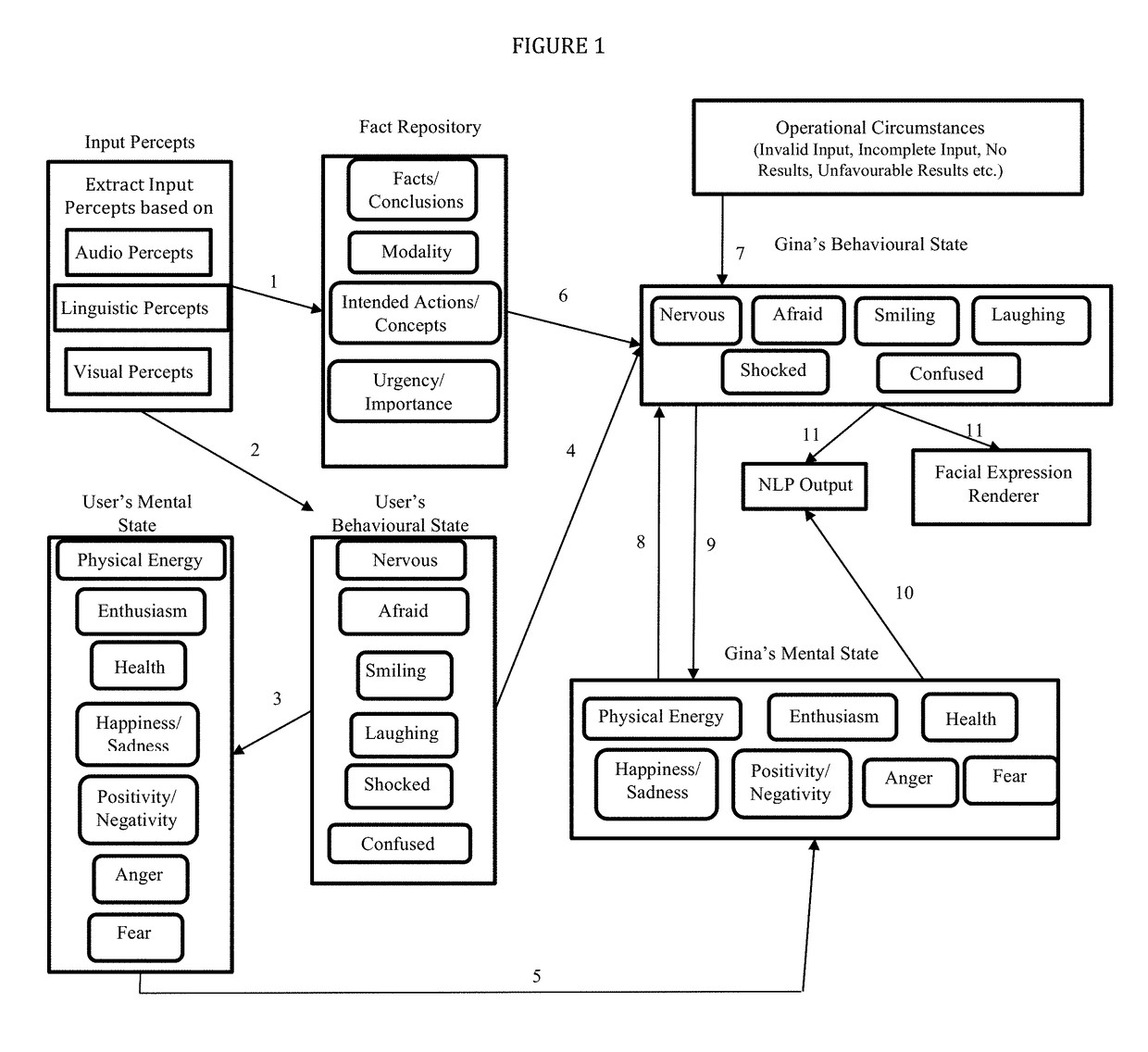

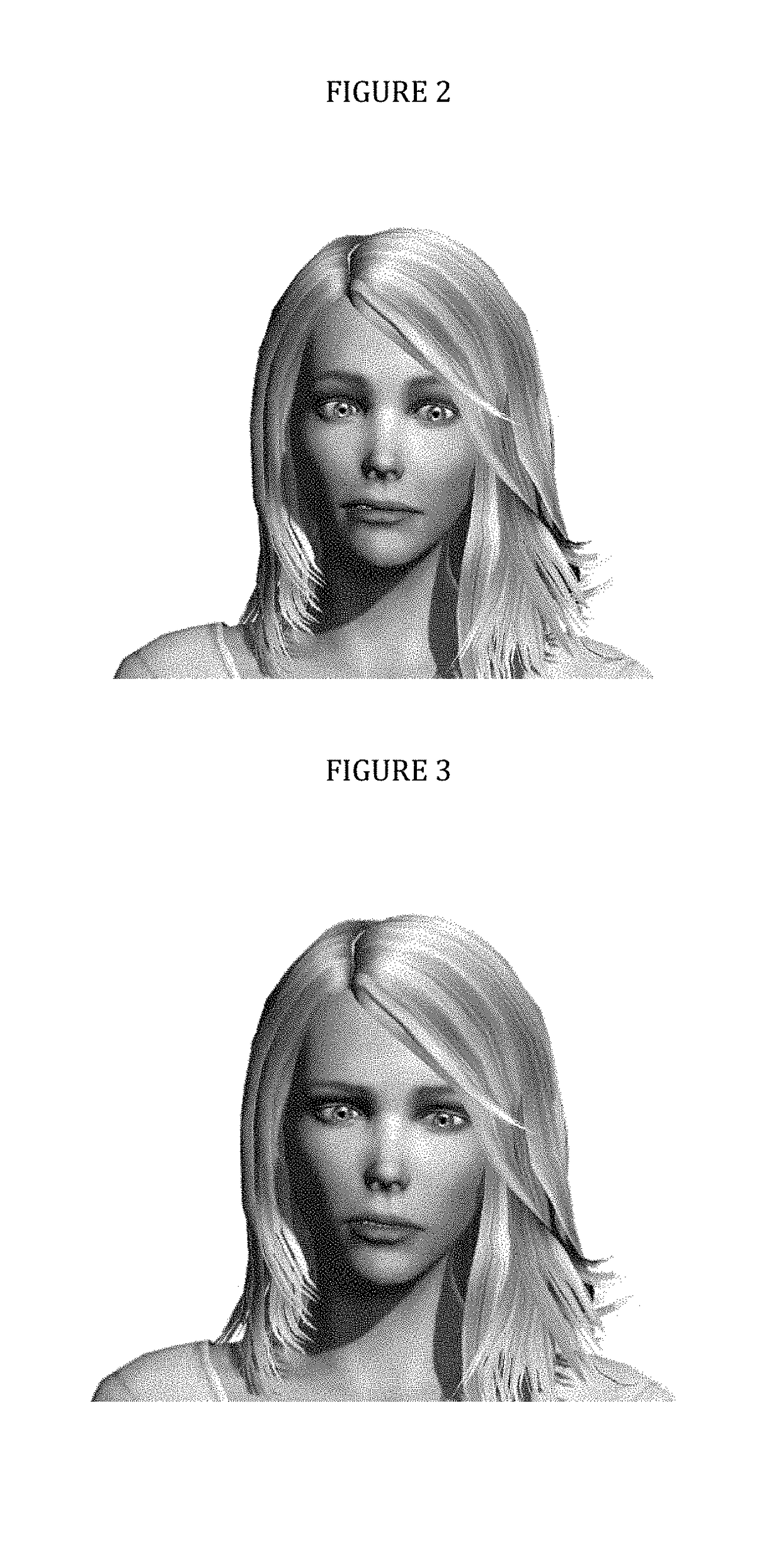

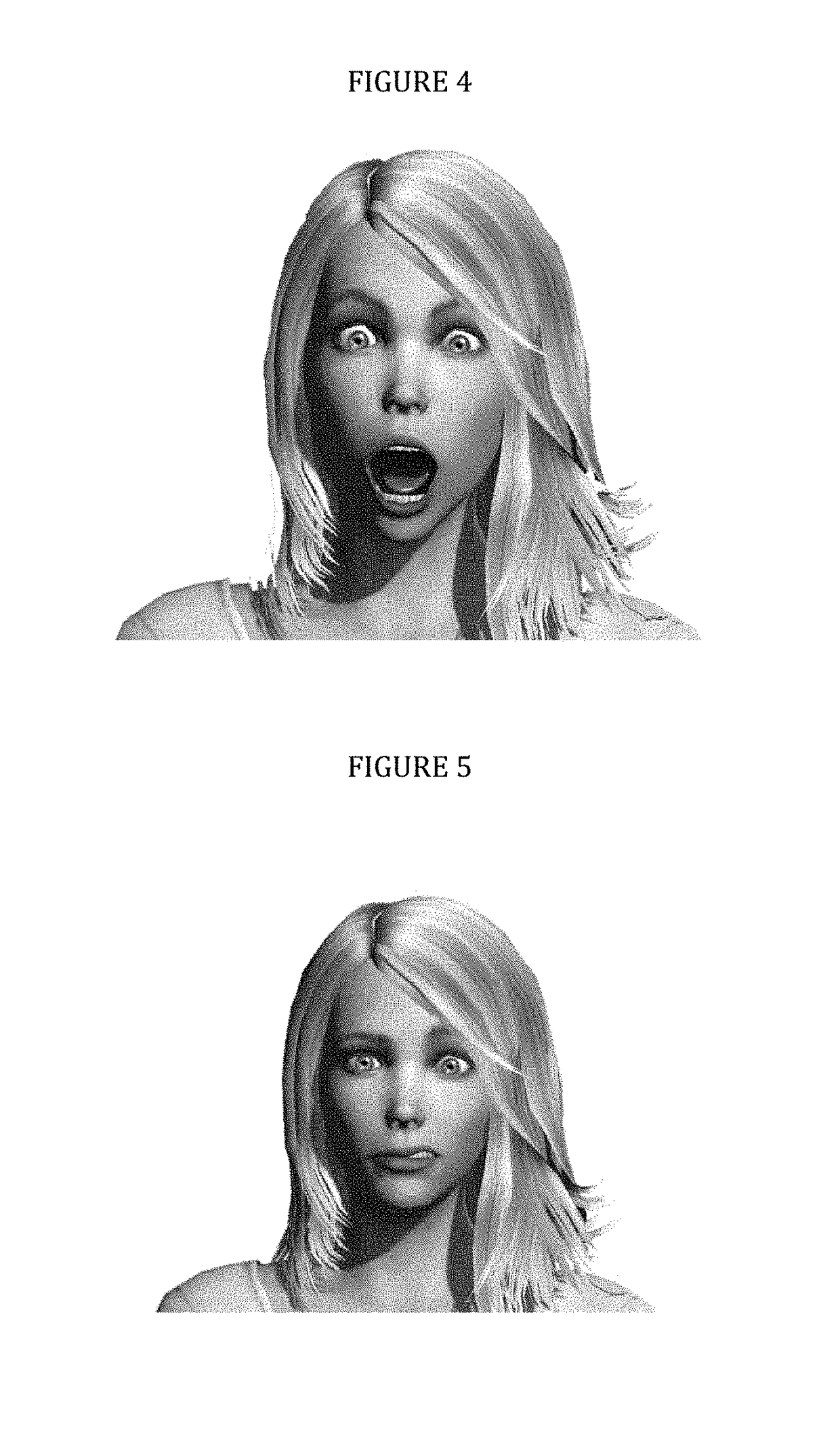

Intelligent agent / personal virtual assistant with animated 3D persona, facial expressions, human gestures, body movements and mental states

A computer-implemented system, method, and media for providing an Intelligent Interactive Agent that comprises an animated graphical representation of a plurality of behavioral states of the Intelligent Interactive Agent, said plurality of behavioral states comprising one or more states selected from the following: a Nervous state, an Afraid state, a Smiling state, a Laughing state, a Shocked state, or a Confused state, each behavioral state further comprising an index value indicating the intensity of that state.

Owner:VADODARIA VISHAL
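
The listed behavioral states, each carrying an intensity index, map naturally onto an enumeration plus a per-state value. This sketch assumes a 0 to 1 intensity scale and treats the strongest state as the one to animate; neither detail comes from the abstract.

```python
from enum import Enum
from typing import Dict


class BehavioralState(Enum):
    NERVOUS = "nervous"
    AFRAID = "afraid"
    SMILING = "smiling"
    LAUGHING = "laughing"
    SHOCKED = "shocked"
    CONFUSED = "confused"


class AgentPersona:
    """Holds an intensity index for every behavioral state of the agent."""

    def __init__(self) -> None:
        self.intensity: Dict[BehavioralState, float] = {s: 0.0 for s in BehavioralState}

    def set_state(self, state: BehavioralState, value: float) -> None:
        # Clamp to the assumed [0, 1] intensity range.
        self.intensity[state] = min(1.0, max(0.0, value))

    def dominant(self) -> BehavioralState:
        # The state the animation would render most strongly.
        return max(self.intensity, key=lambda s: self.intensity[s])


persona = AgentPersona()
persona.set_state(BehavioralState.SMILING, 0.4)
persona.set_state(BehavioralState.LAUGHING, 1.7)
print(persona.dominant(), persona.intensity[BehavioralState.LAUGHING])
```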

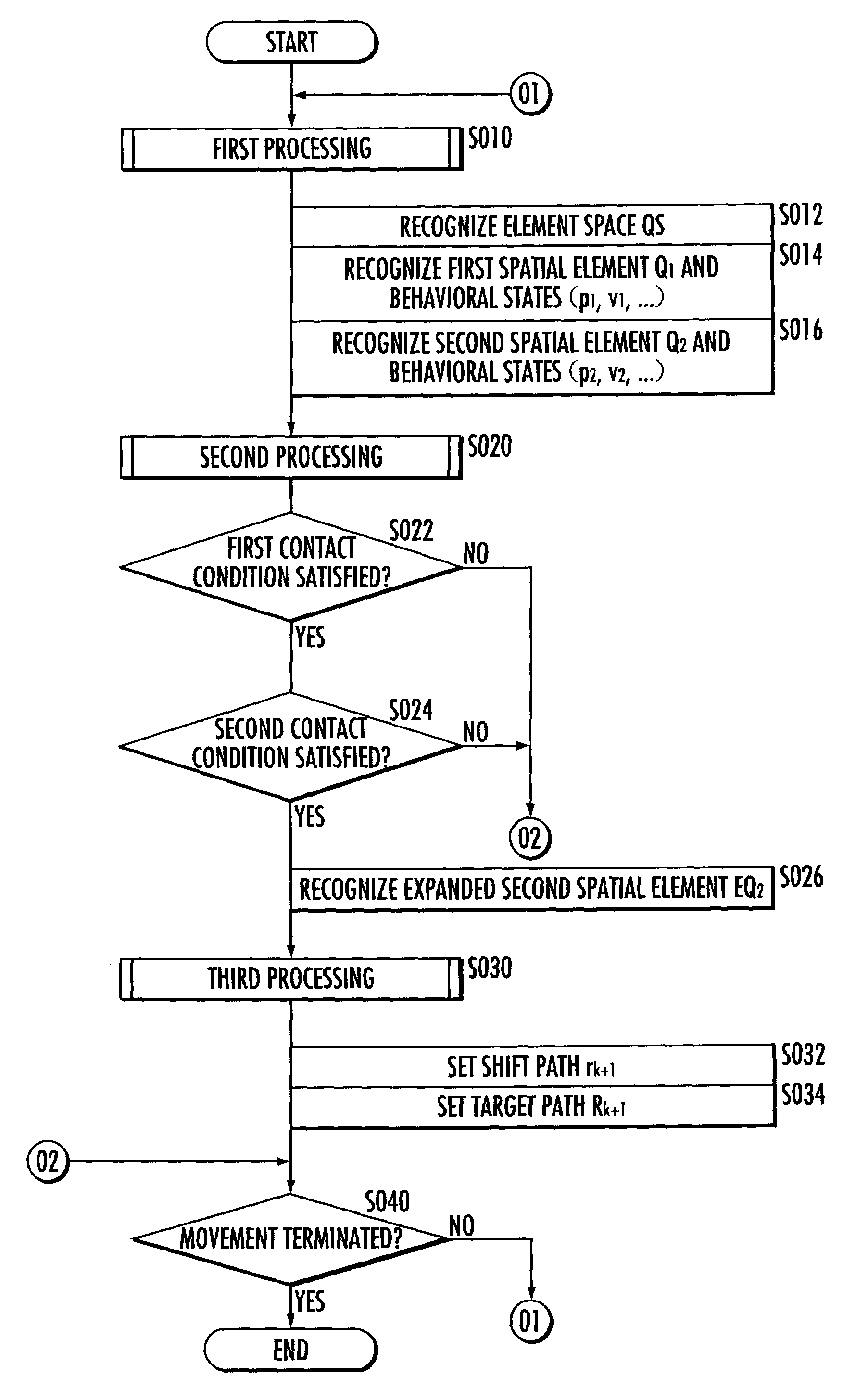

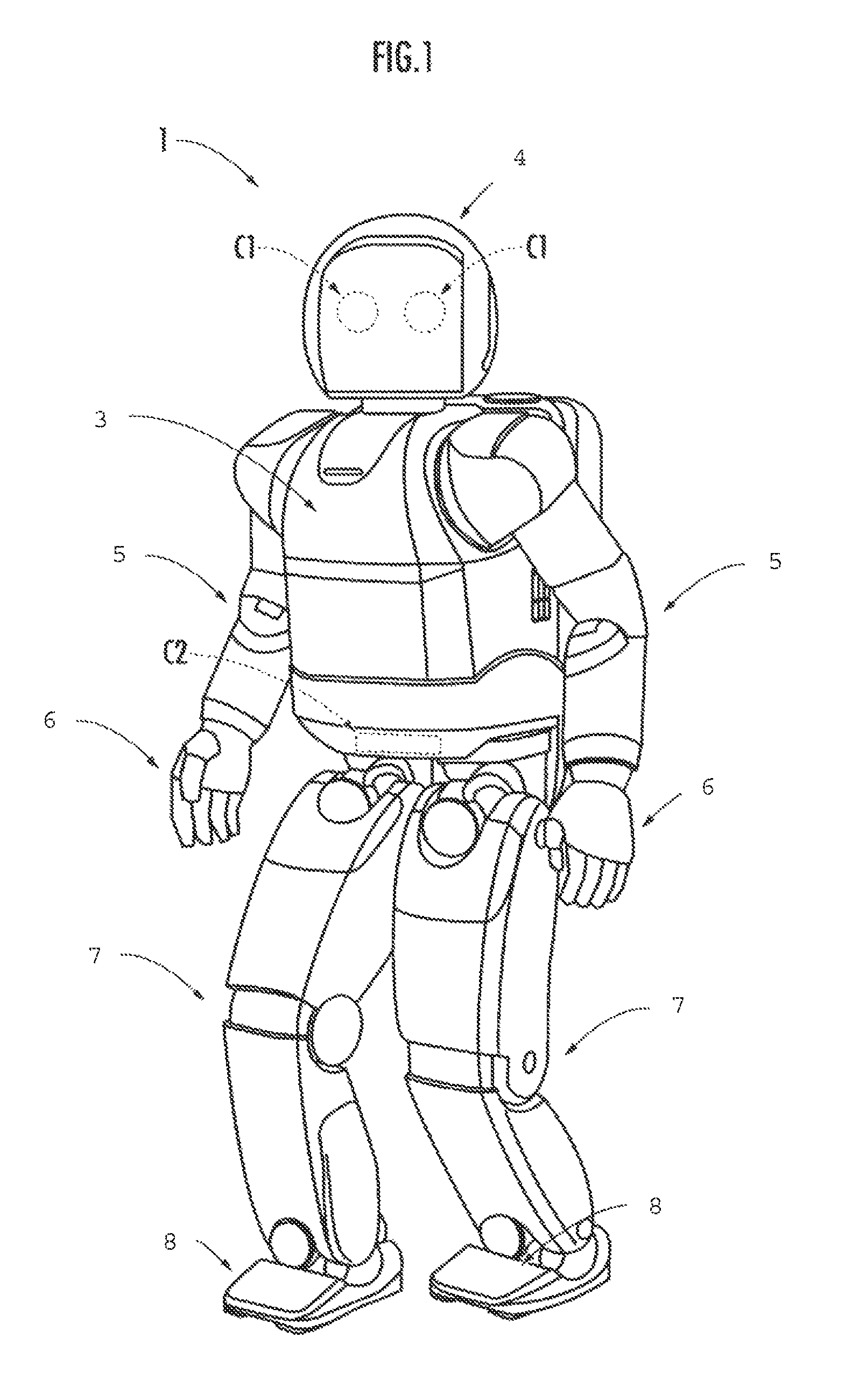

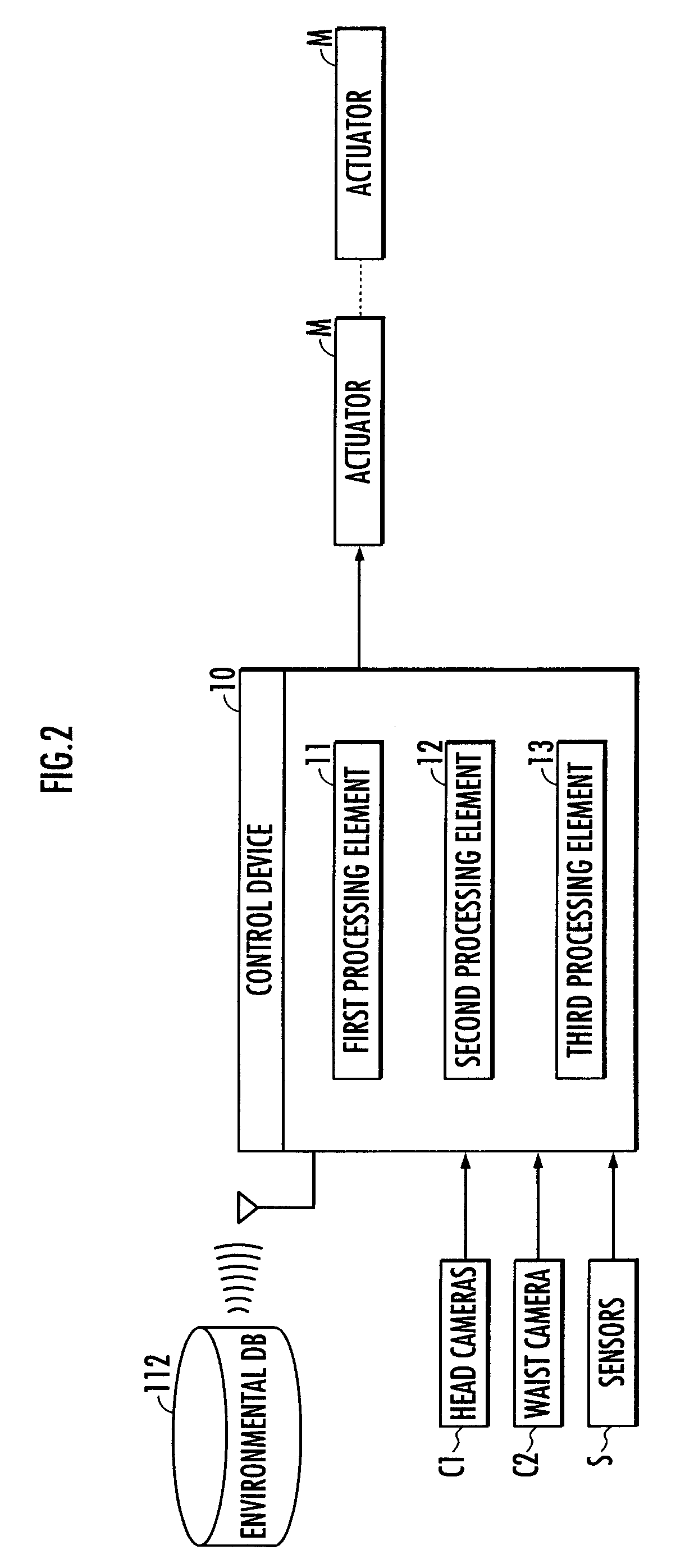

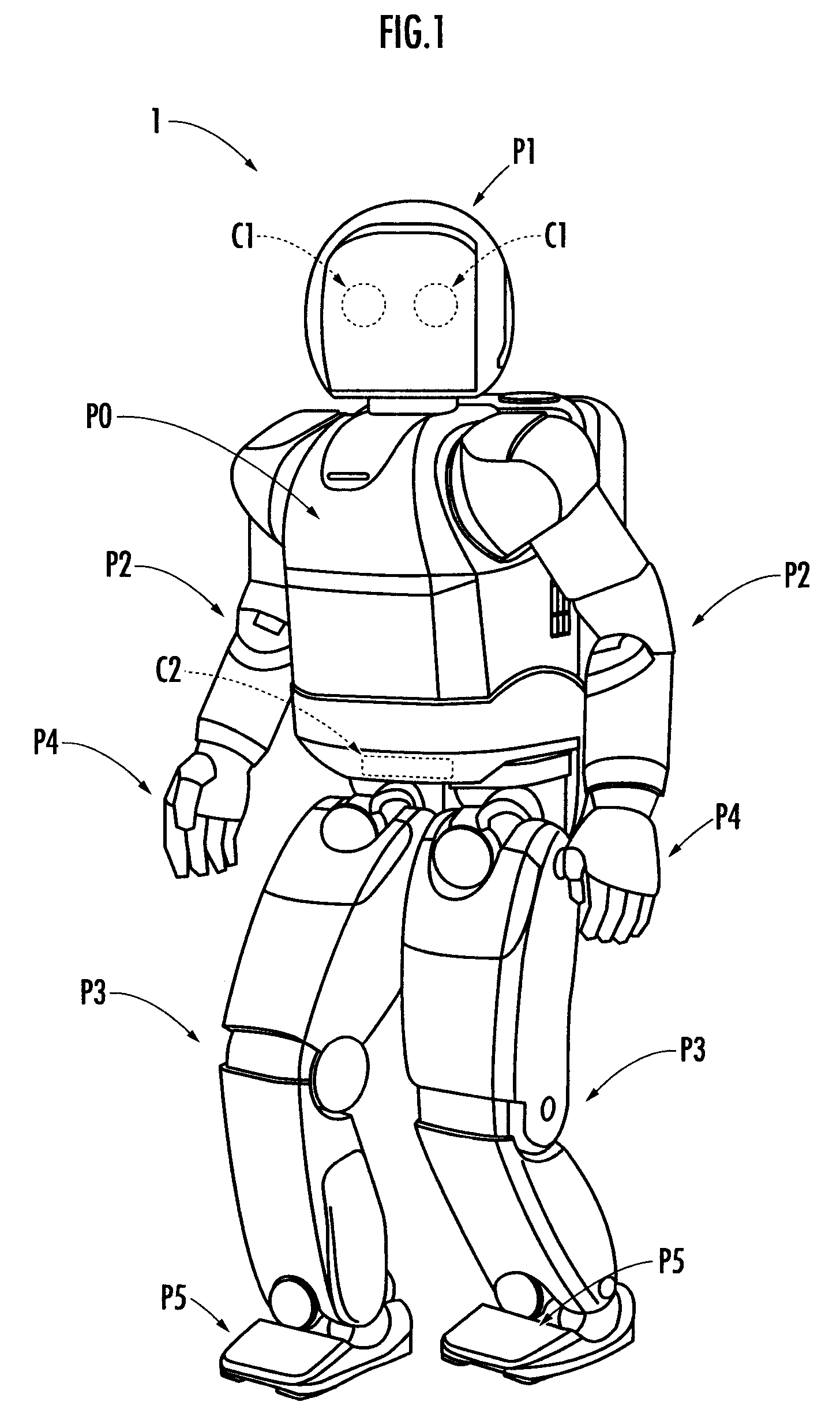

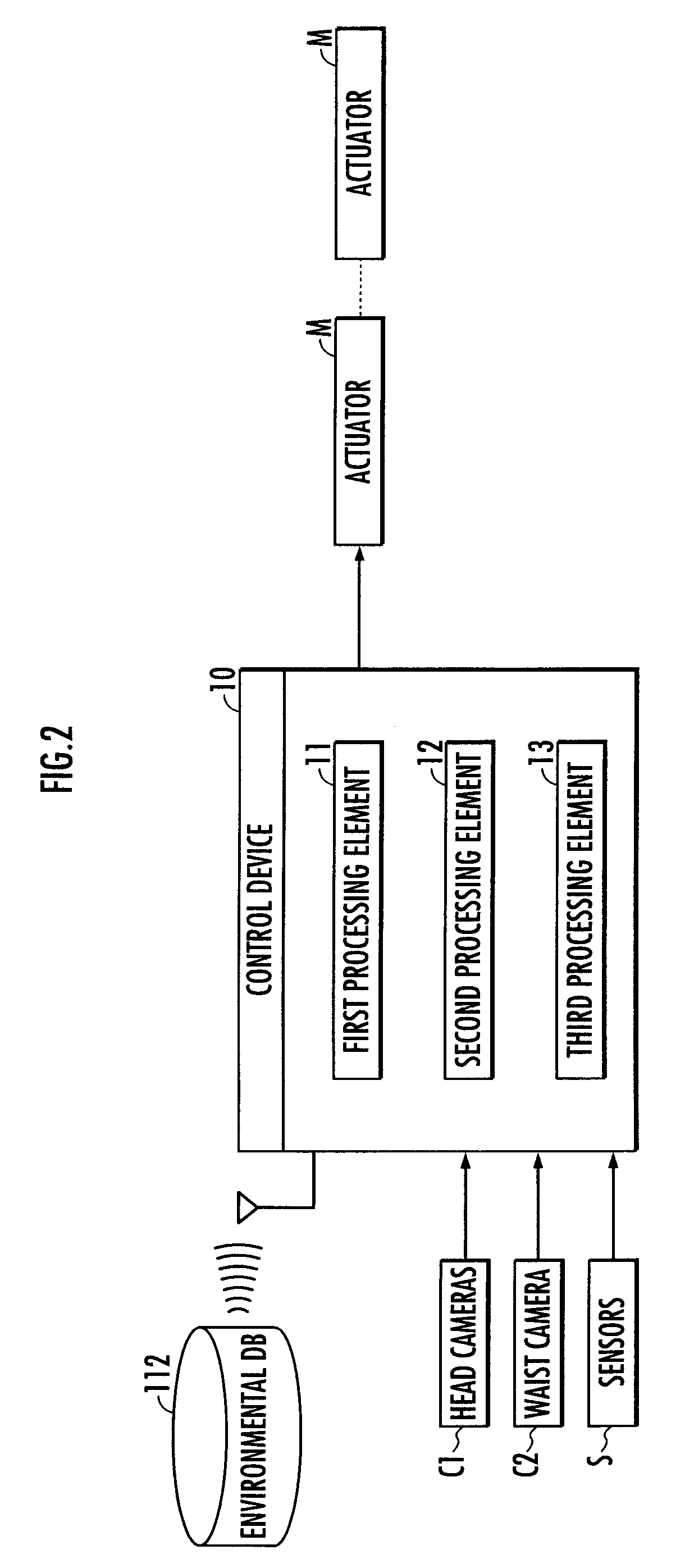

Mobile apparatus, control device and control program

A mobile apparatus capable of moving while avoiding contact with an object by allowing the object to recognize the behavioral change of the mobile apparatus is provided. The robot and its behavioral state and the object and its behavioral state are recognized as a first spatial element and its behavioral state and a second spatial element and its behavioral state, respectively, in an element space. Based on the recognition result, if the first spatial element may contact the second spatial element in the element space, a shift path is set which is tilted from the previous target path by an angle responsive to the distance between the first spatial element and the second spatial element. With the end point of the shift path as a start point, a path allowing the first spatial element to avoid contact with an expanded second spatial element is set as a new target path.

Owner:HONDA MOTOR CO LTD
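
The geometric step above, tilting the previous target path by an angle that depends on the distance between the two spatial elements, can be sketched in the plane. All of the following are hypothetical assumptions: the tilt grows linearly from 0 at `safe_distance` to `max_angle_deg` at contact, the path is tilted to the left, and the shift path has unit length. The abstract states only that the angle is responsive to the distance.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def shift_path_end(start: Point, target: Point, distance_to_object: float,
                   safe_distance: float = 2.0, max_angle_deg: float = 45.0,
                   length: float = 1.0) -> Point:
    """End point of a shift path tilted away from the previous target path."""
    # Closeness is 0 at or beyond safe_distance and 1 at contact.
    closeness = max(0.0, min(1.0, 1.0 - distance_to_object / safe_distance))
    angle = math.radians(max_angle_deg) * closeness
    heading = math.atan2(target[1] - start[1], target[0] - start[0])
    tilted = heading + angle  # tilt to the left; the side choice is an assumption
    return (start[0] + length * math.cos(tilted),
            start[1] + length * math.sin(tilted))


print(shift_path_end((0.0, 0.0), (10.0, 0.0), distance_to_object=0.5))
```

The end point returned here would serve as the start of the new avoidance path described in the abstract.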

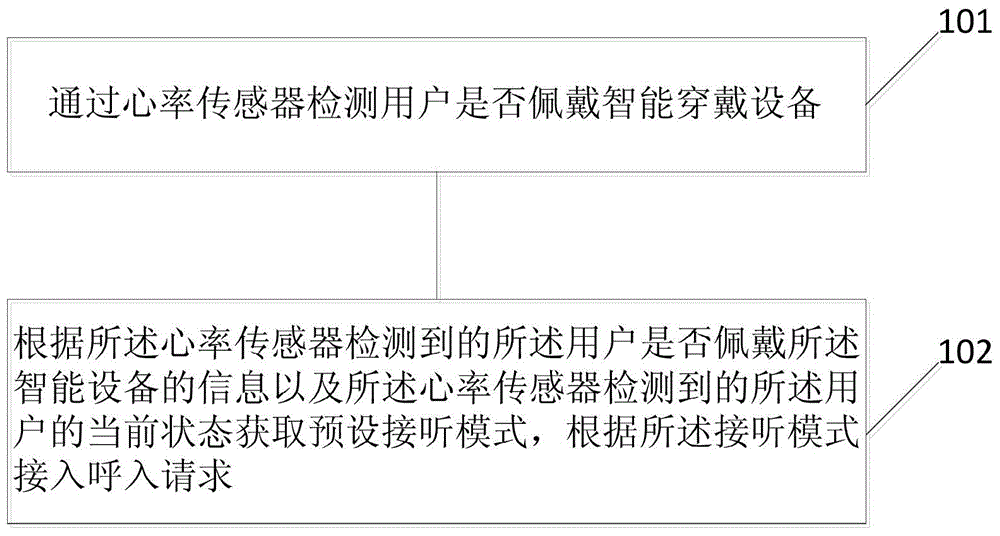

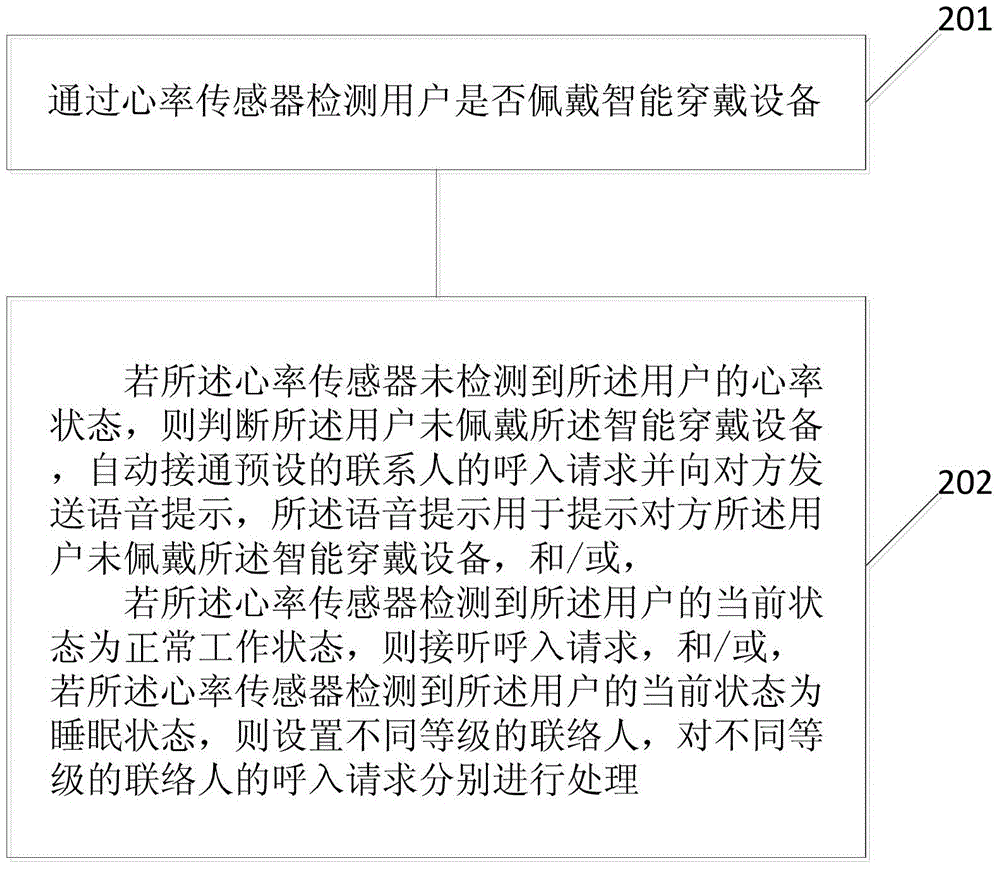

Telephone answering method and device of intelligent wearable equipment

Status: Active. Publication: CN104935727A. Tags: Improve experience; Diagnostic recording/measuring; Sensors; Behavioral state; Intelligent equipment

Owner:GUANGDONG XIAOTIANCAI TECH CO LTD

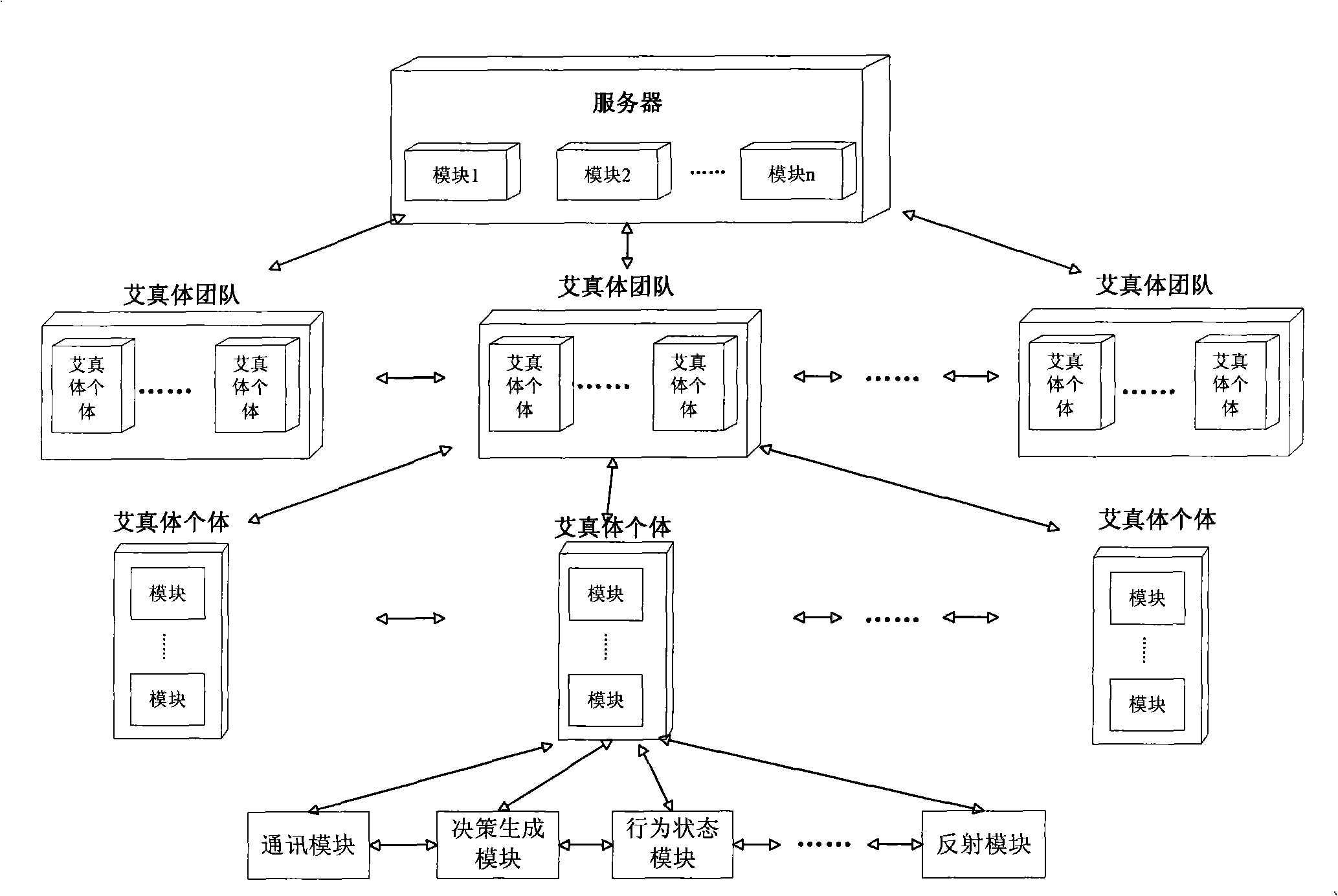

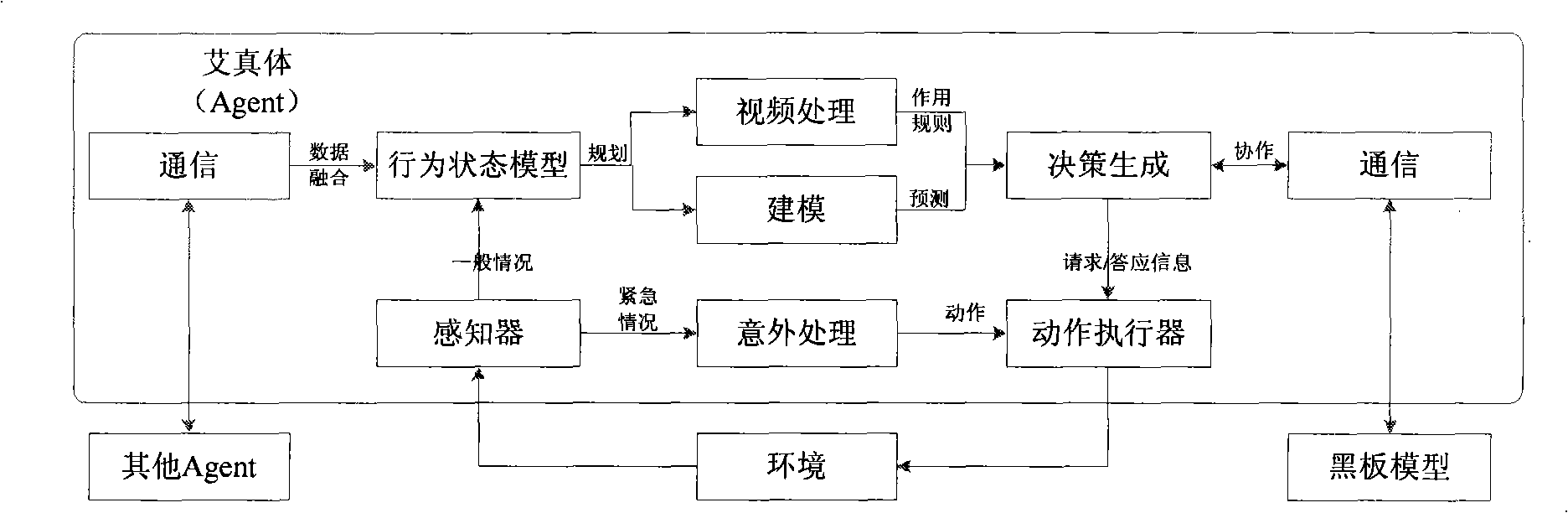

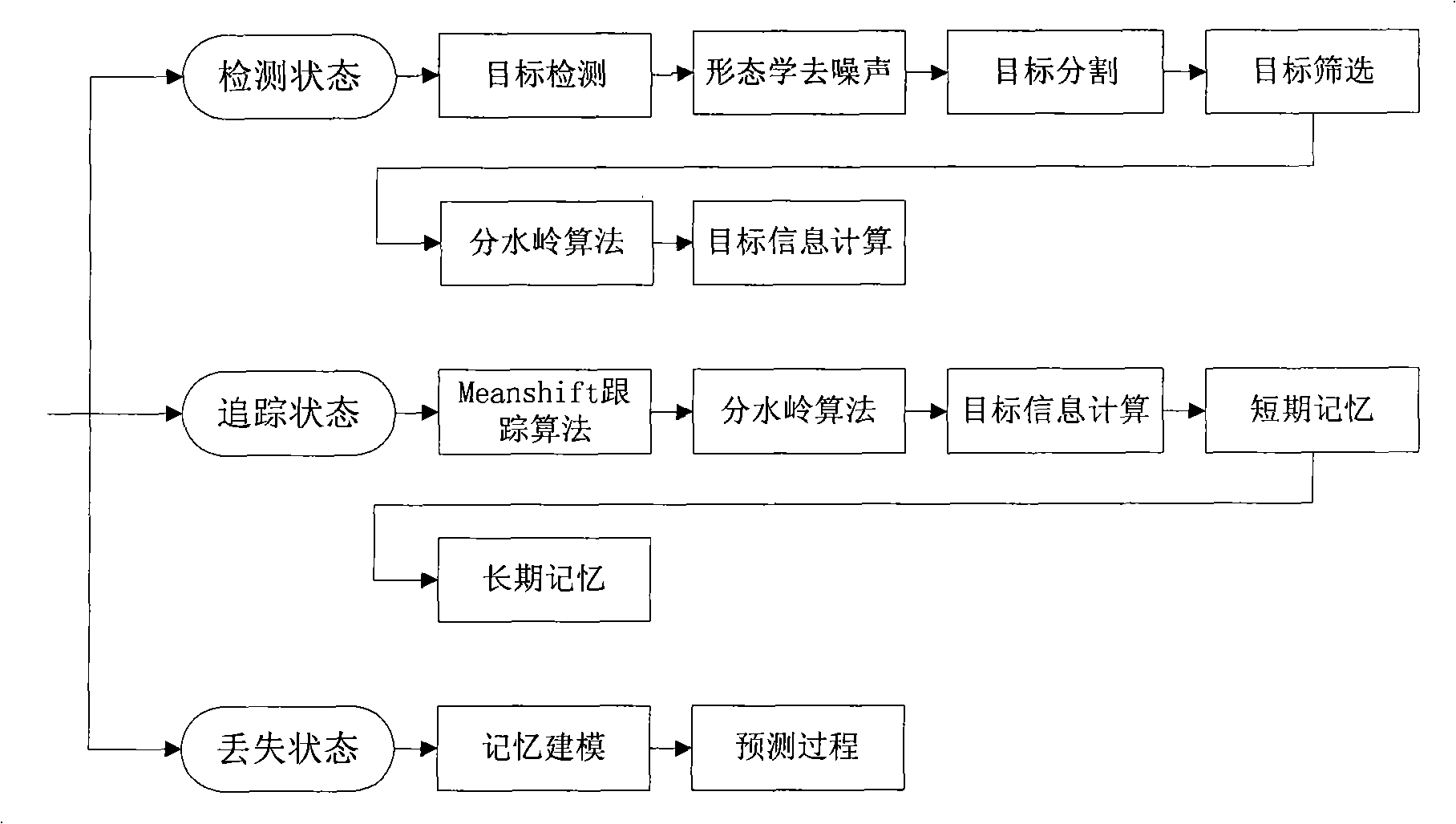

Multi-agent dynamic multi-target collaboration tracking method based on finite-state automata

Status: Inactive. Publication: CN101303589A. Tags: Improve work efficiency; Navigation instruments; Total factory control; Hybrid type; Interaction control

The invention discloses a multi-agent dynamic multi-target cooperative tracking method based on finite state automata. A combined agent selects one of a plurality of finite state automata to maintain its behavior state model, according to environmental information I that it detects itself, task information M to be fulfilled (sent by a server, other agents, or an agent-group manager), and/or manually designated information H sent by the server. The agent is driven by its behavior state, emotion information, and other factors, and can achieve team coordination either through centralized control or through information interaction among individual agents, alone or in combination with the server. The method is suitable for centralized, distributed, and hybrid architectures.

Owner:CENT SOUTH UNIV
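
Selecting one automaton from several and then stepping it through behavior states can be sketched with transition dictionaries. The automaton names, states, events, and the priority order used to combine the environment information I, task information M, and designated information H are hypothetical.

```python
from typing import Dict, Optional, Tuple

Transitions = Dict[Tuple[str, str], str]  # (state, event) -> next state

# Hypothetical candidate automata for one agent.
AUTOMATA: Dict[str, Transitions] = {
    "search": {("idle", "task"): "searching",
               ("searching", "target_seen"): "tracking"},
    "track": {("idle", "task"): "tracking",
              ("tracking", "target_lost"): "searching",
              ("searching", "target_seen"): "tracking"},
}


def select_automaton(target_visible: bool, task: str,
                     designated: Optional[str] = None) -> str:
    """Pick an automaton from environment info I, task M and designated info H."""
    if designated in AUTOMATA:  # assumed: designated information H has priority
        return designated
    if task == "track" and target_visible:
        return "track"
    return "search"


def step(automaton: str, state: str, event: str) -> str:
    """Advance the behavior state; unknown (state, event) pairs leave it unchanged."""
    return AUTOMATA[automaton].get((state, event), state)


fsm = select_automaton(target_visible=True, task="track")
print(fsm, step(fsm, "tracking", "target_lost"))
```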

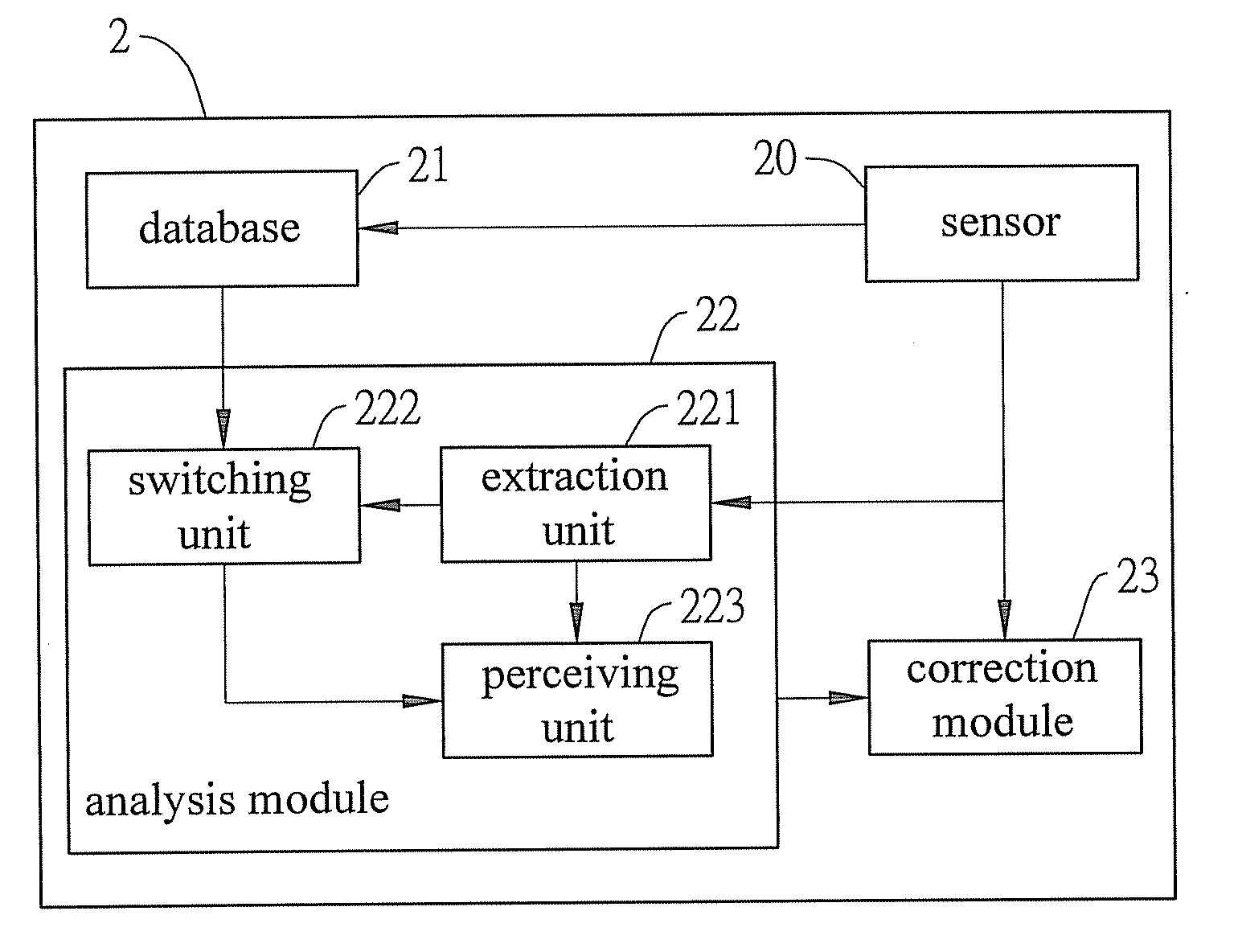

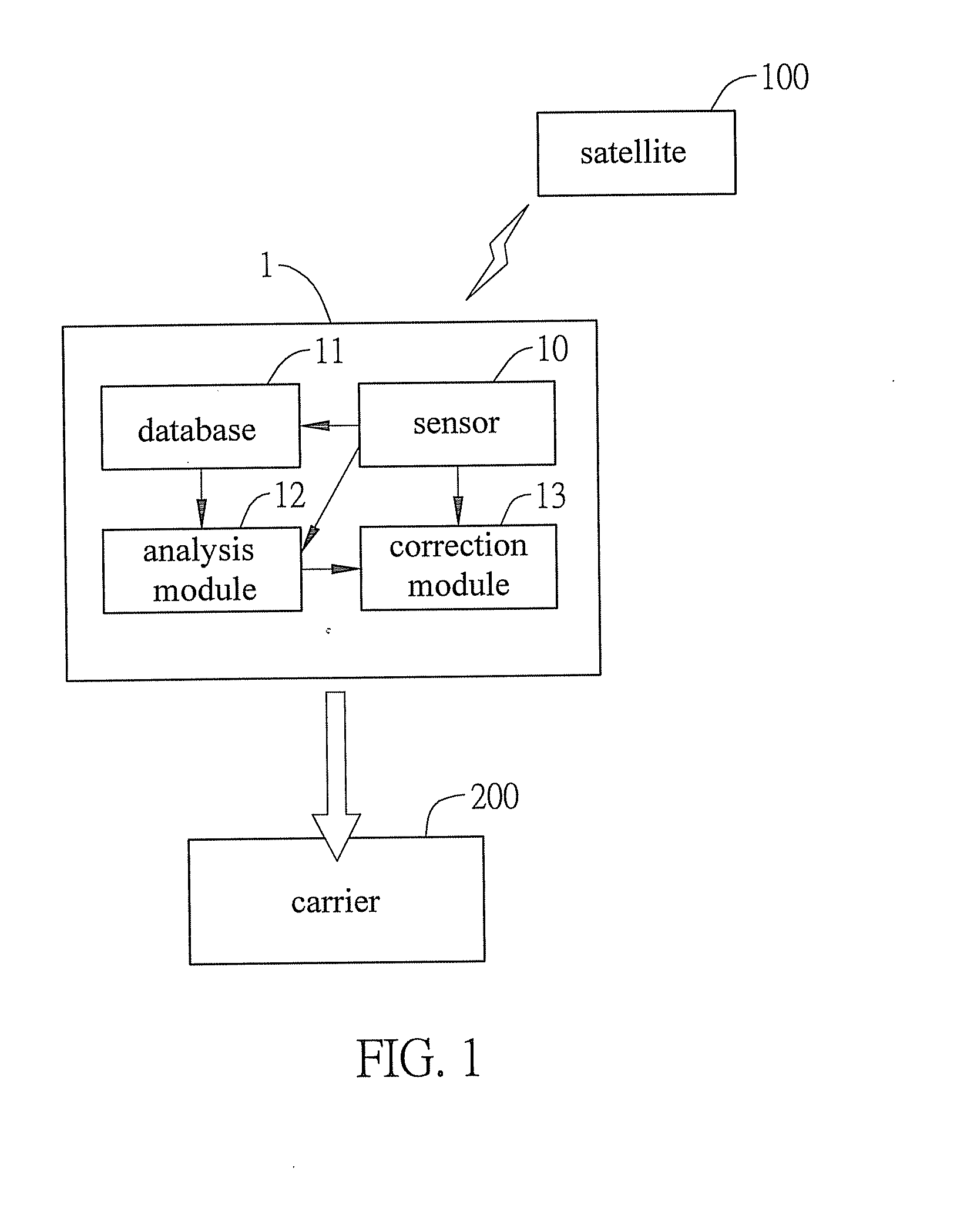

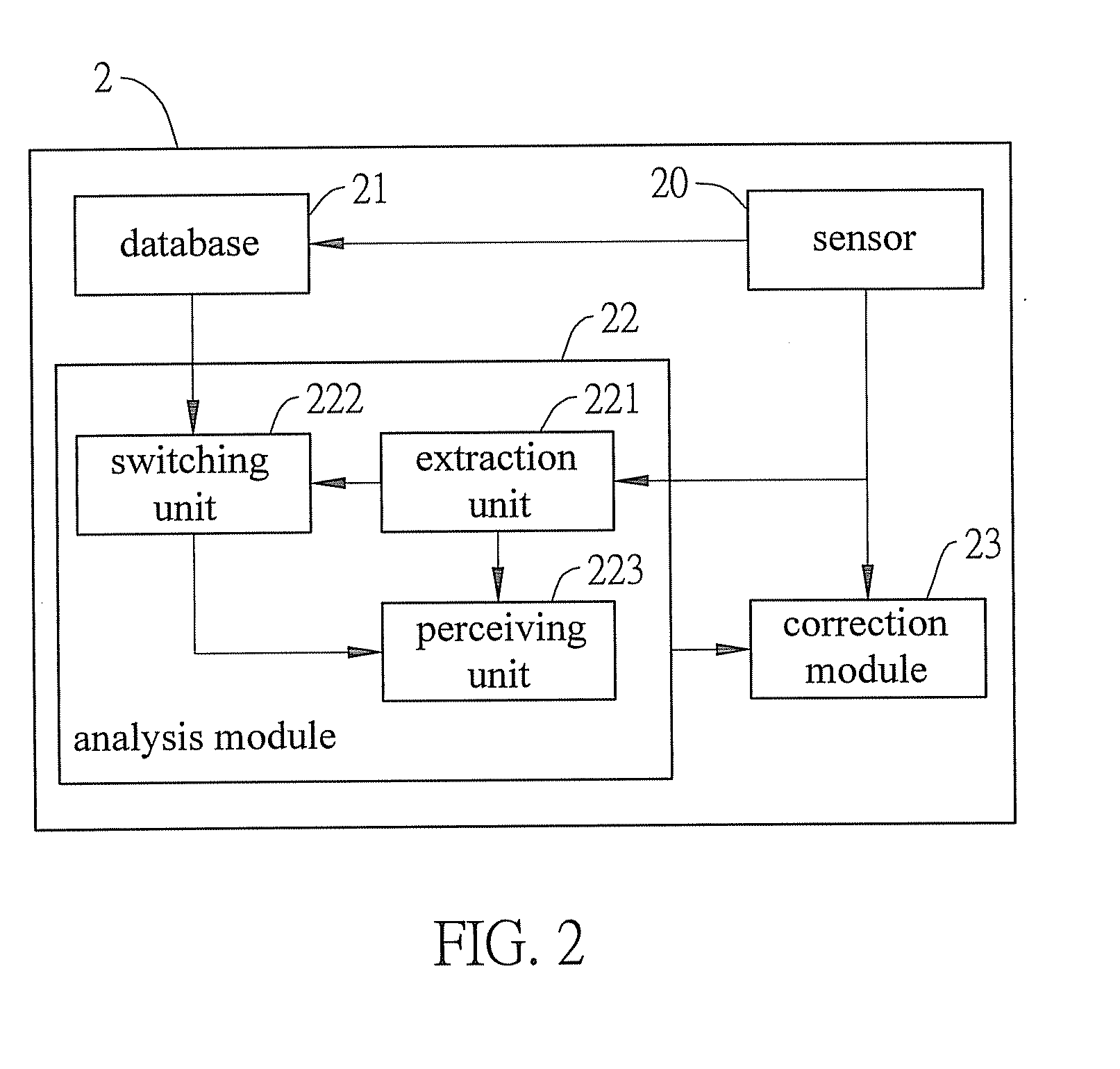

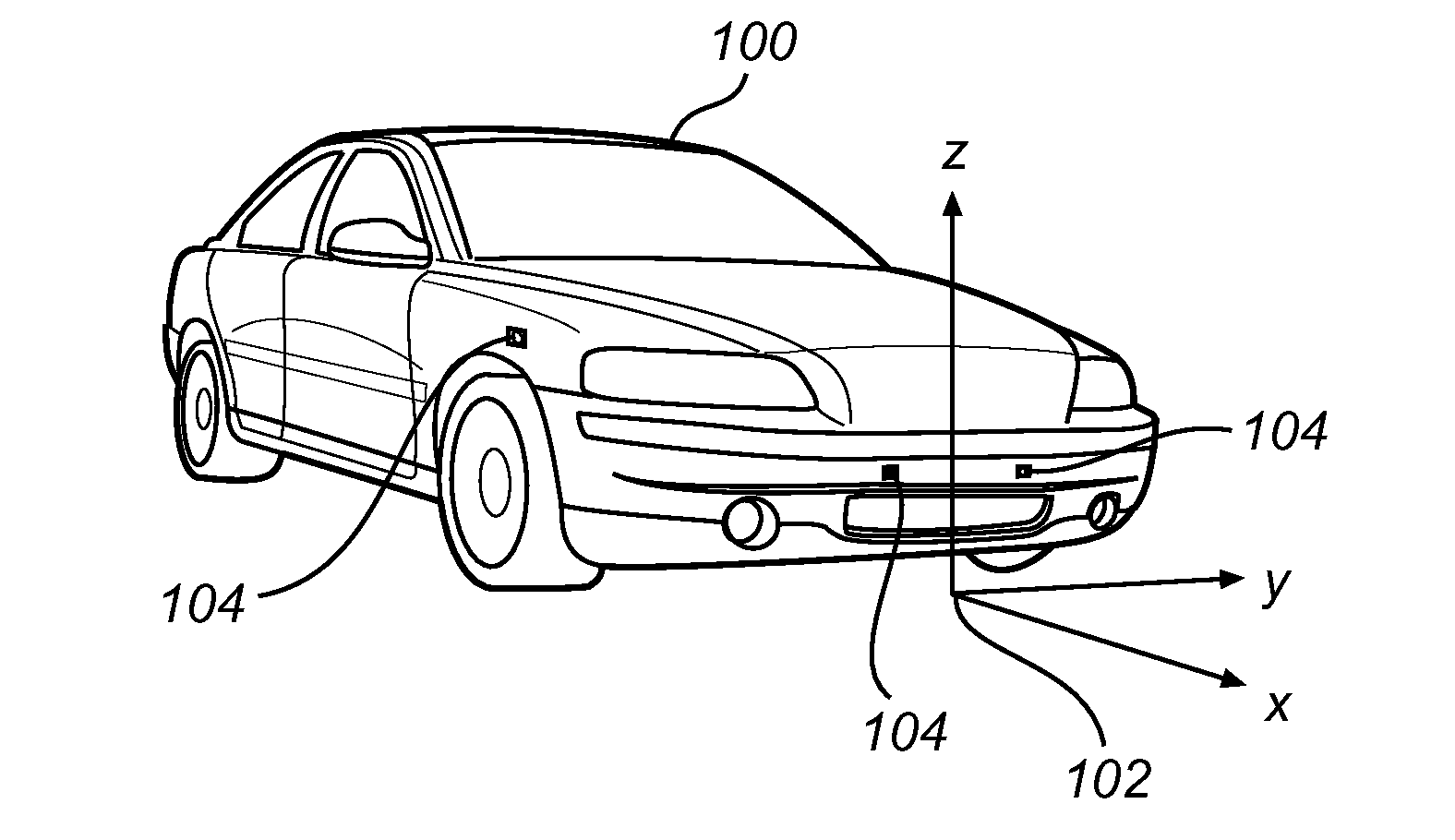

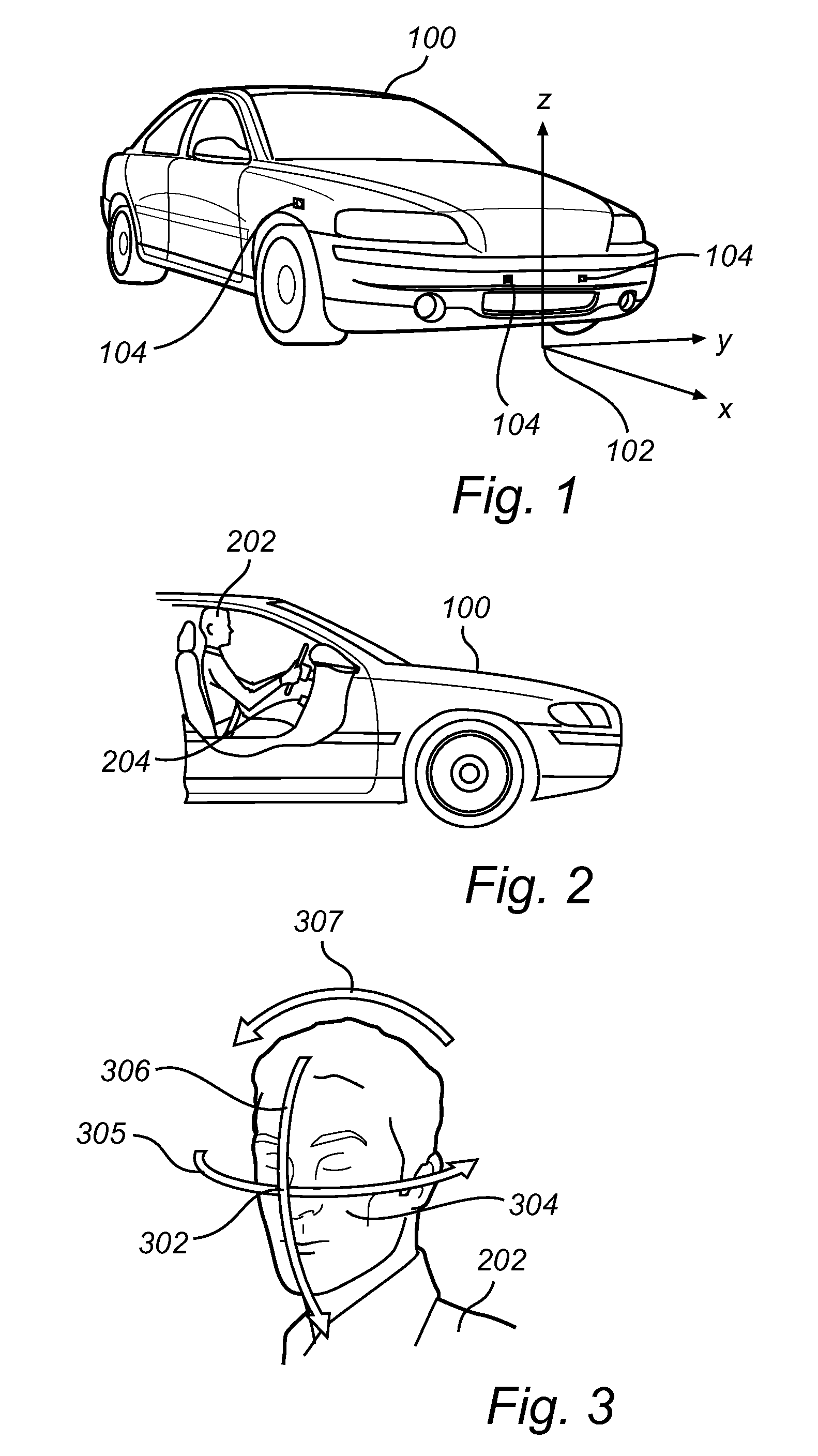

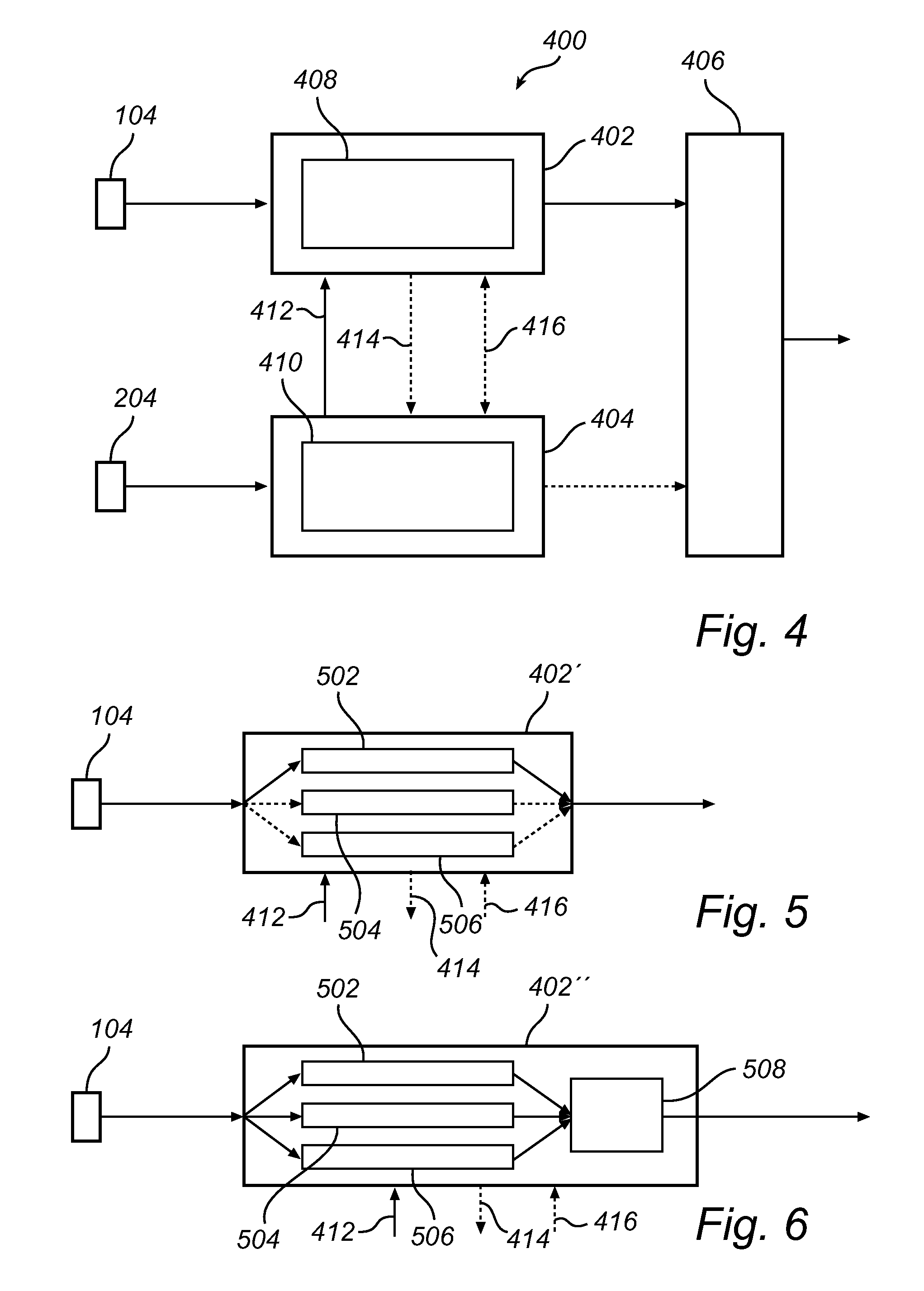

Perceptive global positioning device and method thereof

Status: Inactive. Publication: US20110285583A1. Tags: Low accuracy; Accuracy of positioning effect; Satellite radio beaconing; Behavioral state; Carrier signal

A perceptive global positioning device is disposed on or carried by a carrier (vehicle, pedestrian, etc.) for receiving positioning signals transmitted from satellites, to detect various behavioral states related to movement and correct errors in positioning data for positioning the carrier. The positioning device includes a sensor for receiving positioning data related to the global position of the carrier transmitted from satellites, a database for storing the positioning data received by the sensor and a preset behavioral transition matrix of the carrier, an analysis module for analyzing the positioning data and behavioral transition matrix to obtain predictable behavior data, and a correction module for comparing the predictable behavior data with the positioning data so as to correct errors of the positioning data of the carrier, thereby overcoming the defect of inaccurate data as encountered in the prior art.

Owner:JIUNG YAO HUANG +1
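
The correction idea can be sketched as follows: predict the next movement state from the preset behavioral transition matrix, derive a plausible displacement from it, and pull back any fix that jumps further than that. The states, speeds, matrix values, planar metre coordinates, tolerance, and clamping rule are all assumptions; the abstract does not describe how the comparison corrects the error.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]  # local planar coordinates in metres (assumption)

# Hypothetical behavioral transition matrix P(next | current) for a pedestrian.
TRANSITIONS: Dict[str, Dict[str, float]] = {
    "still": {"still": 0.7, "walking": 0.3},
    "walking": {"still": 0.2, "walking": 0.8},
}
SPEED_M_S: Dict[str, float] = {"still": 0.0, "walking": 1.4}


def predict_state(current: str) -> str:
    """Most likely next behavioral state under the transition matrix."""
    row = TRANSITIONS[current]
    return max(row, key=lambda s: row[s])


def correct_fix(prev: Point, fix: Point, current: str, dt: float,
                tolerance_m: float = 5.0) -> Point:
    """Keep a fix whose jump fits the predicted state; otherwise shorten the jump."""
    dx, dy = fix[0] - prev[0], fix[1] - prev[1]
    moved = (dx * dx + dy * dy) ** 0.5
    allowed = SPEED_M_S[predict_state(current)] * dt + tolerance_m
    if moved <= allowed:
        return fix
    scale = allowed / moved  # keep the direction, clamp the distance
    return (prev[0] + dx * scale, prev[1] + dy * scale)


print(correct_fix((0.0, 0.0), (30.0, 40.0), "still", dt=1.0))
```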

User behavior learning method based on PST in wireless network

Status: Inactive. Publication: CN103338467A. Tags: Improve quality of experience; Improve network performance; Mathematical models; Data switching networks; Quality of service; Wireless mesh network

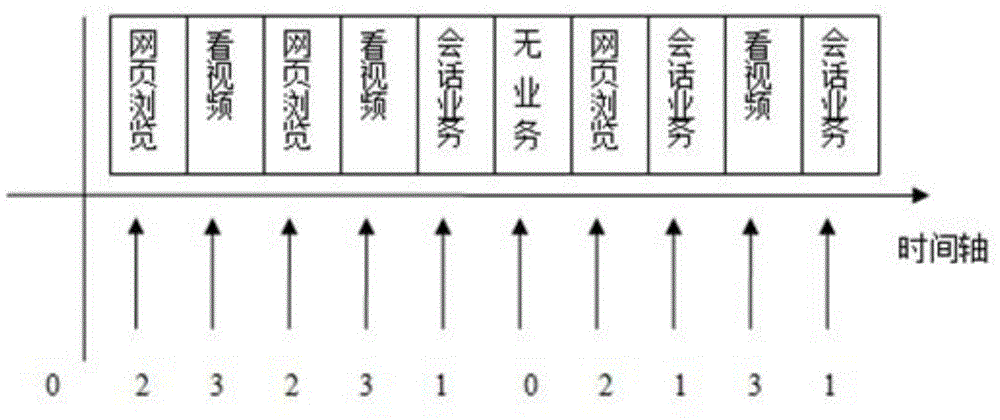

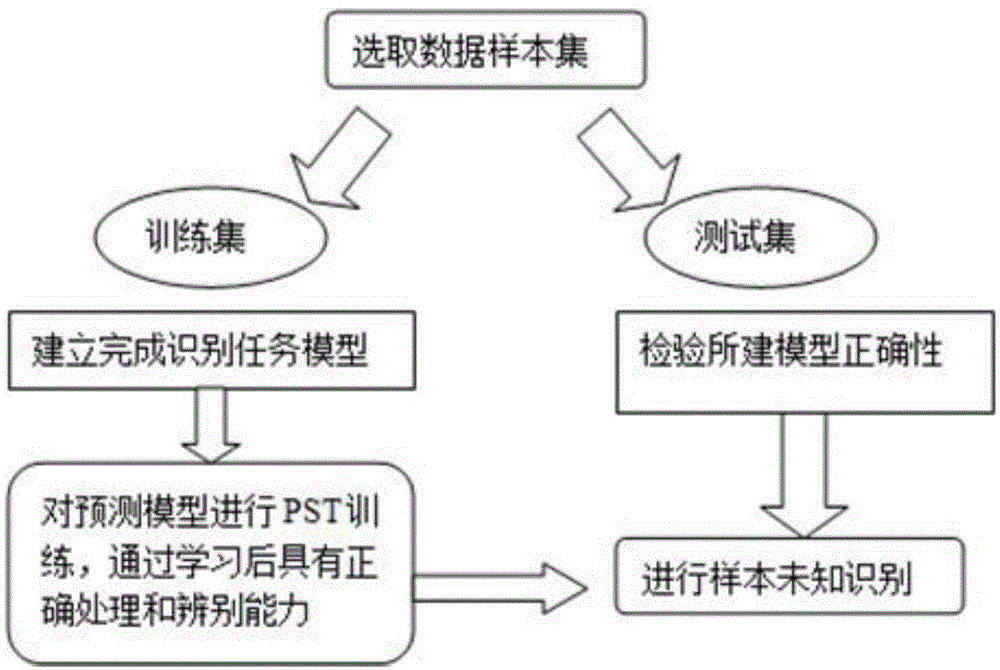

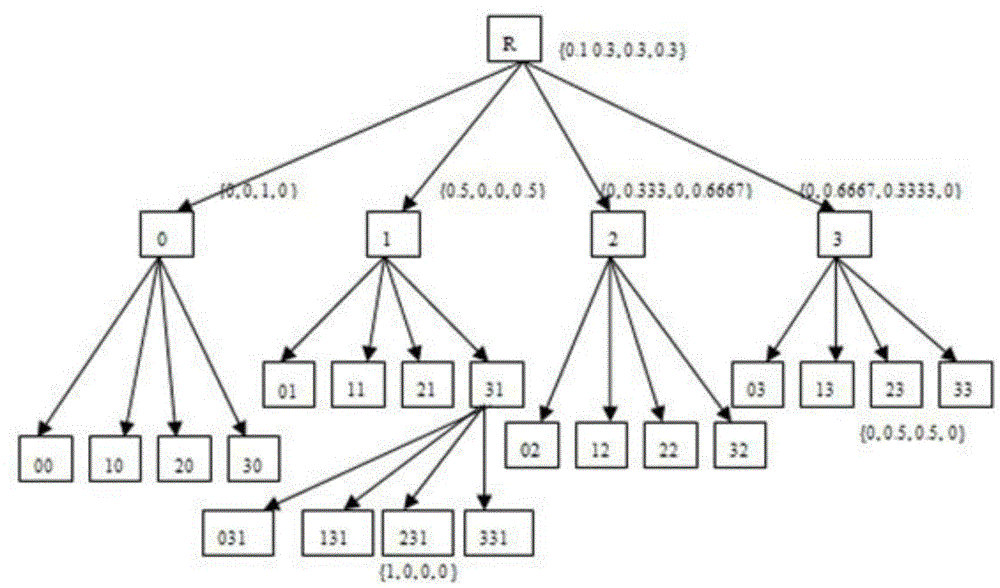

The invention provides a user behavior learning method based on a PST (Probabilistic Suffix Tree) in a wireless network. According to the different network QoS (Quality of Service) requirements of services in the wireless network, service-based user behavior is divided into four classes: no service, session service, interactive service, and streaming media service, from which a quaternary user behavior state sequence is generated. A PST is constructed and trained on this user behavior sequence, and the variable-length Markov model it represents is used to predict the user's likely behavior over a time period; an appropriate network resource can then be selected according to the predicted service behavior to provide the user with high-quality service. The method improves the accuracy of user behavior prediction, is simple and convenient to implement, and has good application prospects.

Owner:NANJING UNIV OF POSTS & TELECOMM
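
Variable-length Markov prediction can be illustrated with a simplified stand-in for a PST: count the next symbol after every context up to a maximum depth, then predict from the longest suffix of the history that was seen in training. A true PST also prunes contexts by statistical significance, which this sketch omits. The single-letter codes (N no service, S session, I interactive, M streaming media) are assumptions.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Sequence, Tuple

Model = DefaultDict[Tuple[str, ...], Counter]


def train_contexts(seq: Sequence[str], max_depth: int) -> Model:
    """Count which symbol follows each context of length 0..max_depth."""
    counts: Model = defaultdict(Counter)
    for i in range(len(seq)):
        for depth in range(max_depth + 1):
            if i - depth < 0:
                break
            counts[tuple(seq[i - depth:i])][seq[i]] += 1
    return counts


def predict_next(counts: Model, history: Sequence[str], max_depth: int) -> str:
    """Predict from the longest suffix of `history` seen during training."""
    for depth in range(min(max_depth, len(history)), -1, -1):
        context = tuple(history[len(history) - depth:])
        if context in counts:  # membership test does not create entries
            return counts[context].most_common(1)[0][0]
    raise ValueError("model is empty")


states = ["N", "S", "I", "S", "N", "S", "I", "S"]
model = train_contexts(states, max_depth=2)
print(predict_next(model, ["I", "S"], 2), predict_next(model, ["N", "S"], 2))
```

With depth 2 the model distinguishes "I S" (followed by N) from "N S" (followed by I), which a fixed first-order model conditioned only on "S" cannot do.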

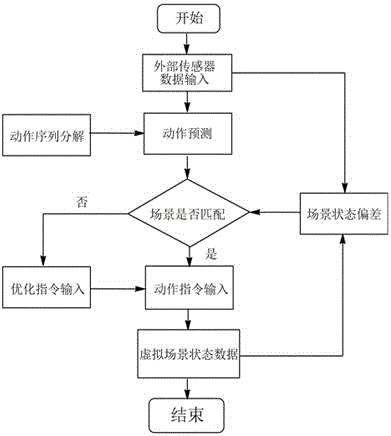

Matching method of real-time three-dimensional visual virtual monitoring for intelligent manufacturing

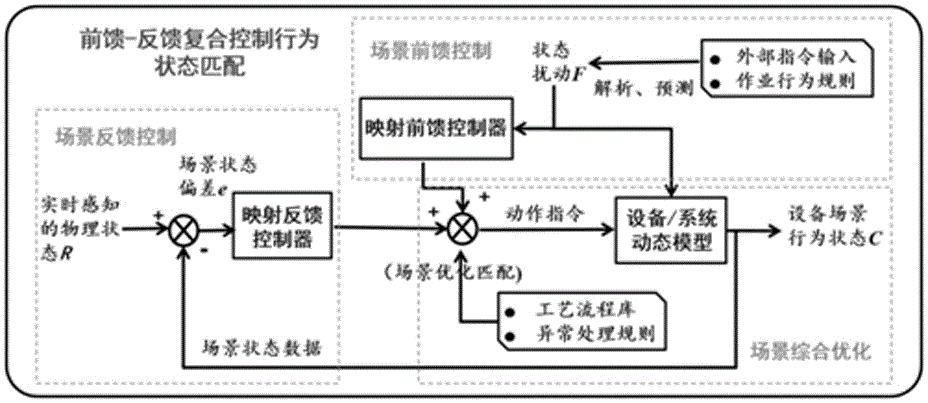

Status: Active. Publication: CN106157377A. Tags: Improve the real-time performance of actions; Increase or decrease the speed of movement; Image data processing; Real-time data; Behavioral state

The invention discloses a matching method for real-time three-dimensional visual virtual monitoring in intelligent manufacturing. The method realizes three-dimensional virtual monitoring of an intelligent manufacturing workshop by matching behavior states with real-time data under feedforward-feedback compound control. It comprises the following steps: first, the action sequences of the monitored intelligent devices in the workshop are decomposed and ordered according to their operational logic; the next action of the virtual model is predicted with a feedforward control method; and optimization matching is then performed between the predicted action and the state data fed back by the intelligent device in real time, yielding the current motion instruction that drives the three-dimensional model. The method can effectively improve the real-time performance and accuracy of model actions in three-dimensional visual virtual monitoring, optimize the virtual scene, and improve the real-time performance and realism of three-dimensional virtual monitoring of manufacturing workshops.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

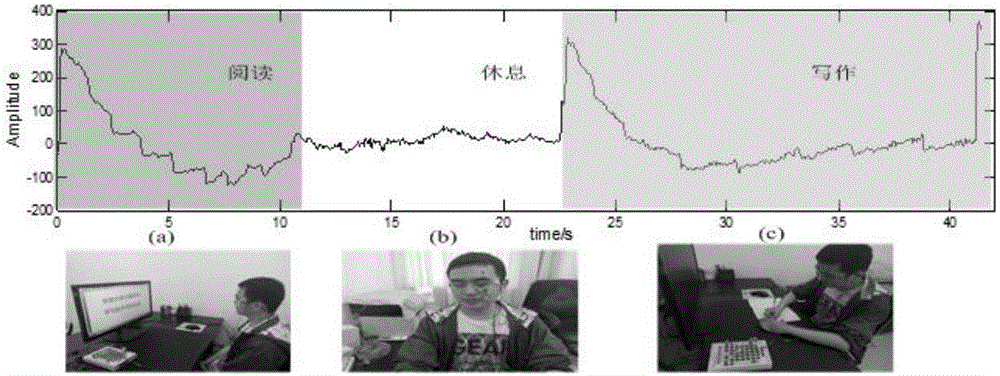

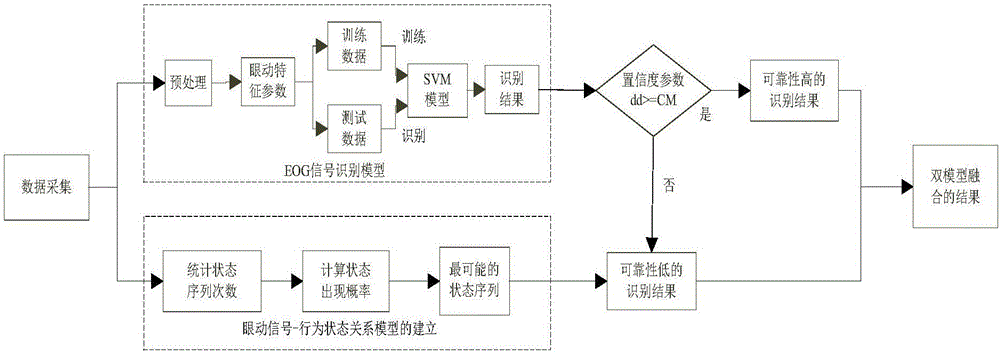

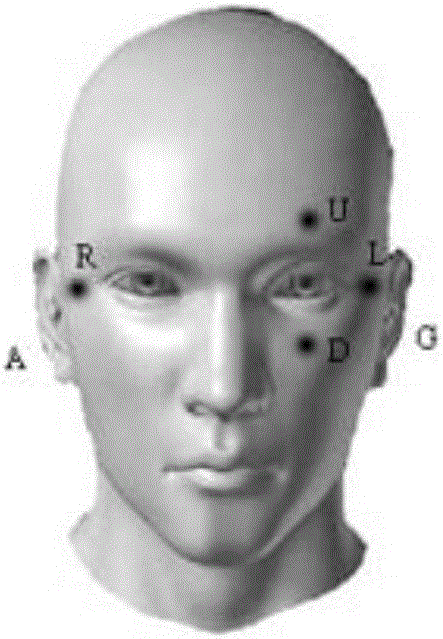

EOG-based human body behavior identification system and method

Status: Active. Publication: CN106491129A. Tags: Improve recognition accuracy; Improve scalability; Input/output for user-computer interaction; Character and pattern recognition; Extensibility; Pattern recognition

The invention discloses an EOG-based human body behavior identification system and method. First, an EOG signal recognition model based on Hjorth parameters is established to recognize raw unit EOG signals. Meanwhile, an N-gram method is used to collect statistics on the contextual relations between different behavior states under a background task, establishing a model of the relation between eye-movement signals and behavioral states. Finally, the most probable behavior state of the subject is obtained by jointly analyzing the outputs of the two models based on confidence parameters. The method has high recognition accuracy, strong extensibility, and good application prospects.

Owner:ANHUI UNIVERSITY
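
Hjorth parameters are standard time-domain descriptors: activity is the signal variance, mobility is the square root of the ratio of the first-derivative variance to the signal variance, and complexity is the mobility of the first derivative divided by the mobility of the signal. The sketch below computes them with first differences standing in for derivatives; how the patent windows or scales the EOG signal is not stated, and the N-gram and confidence-fusion stages are not shown.

```python
import math
from statistics import pvariance
from typing import List, Sequence, Tuple


def _diff(x: Sequence[float]) -> List[float]:
    """First difference, used as a discrete derivative."""
    return [b - a for a, b in zip(x, x[1:])]


def hjorth(signal: Sequence[float]) -> Tuple[float, float, float]:
    """Return (activity, mobility, complexity) of a sampled signal."""
    d1 = _diff(signal)
    d2 = _diff(d1)
    var0, var1, var2 = pvariance(signal), pvariance(d1), pvariance(d2)
    if var0 == 0 or var1 == 0:
        raise ValueError("signal and its first difference must not be constant")
    activity = var0
    mobility = math.sqrt(var1 / var0)
    complexity = math.sqrt(var2 / var1) / mobility
    return activity, mobility, complexity


# A pure sine wave (ten full periods) has complexity close to 1.
wave = [math.sin(2 * math.pi * k / 50) for k in range(500)]
print(hjorth(wave))
```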

Mobile apparatus, control device and control program

A mobile apparatus capable of moving while avoiding contact with an object by allowing the object to recognize the behavioral change of the mobile apparatus is provided. The robot and its behavioral state and the object and its behavioral state are recognized as a first spatial element and its behavioral state and a second spatial element and its behavioral state, respectively, in an element space. Based on the recognition result, if the first spatial element may contact the second spatial element in the element space, a shift path is set which is tilted from the previous target path by an angle responsive to the distance between the first spatial element and the second spatial element. With the end point of the shift path as a start point, a path allowing the first spatial element to avoid contact with an expanded second spatial element is set as a new target path.

Owner:HONDA MOTOR CO LTD

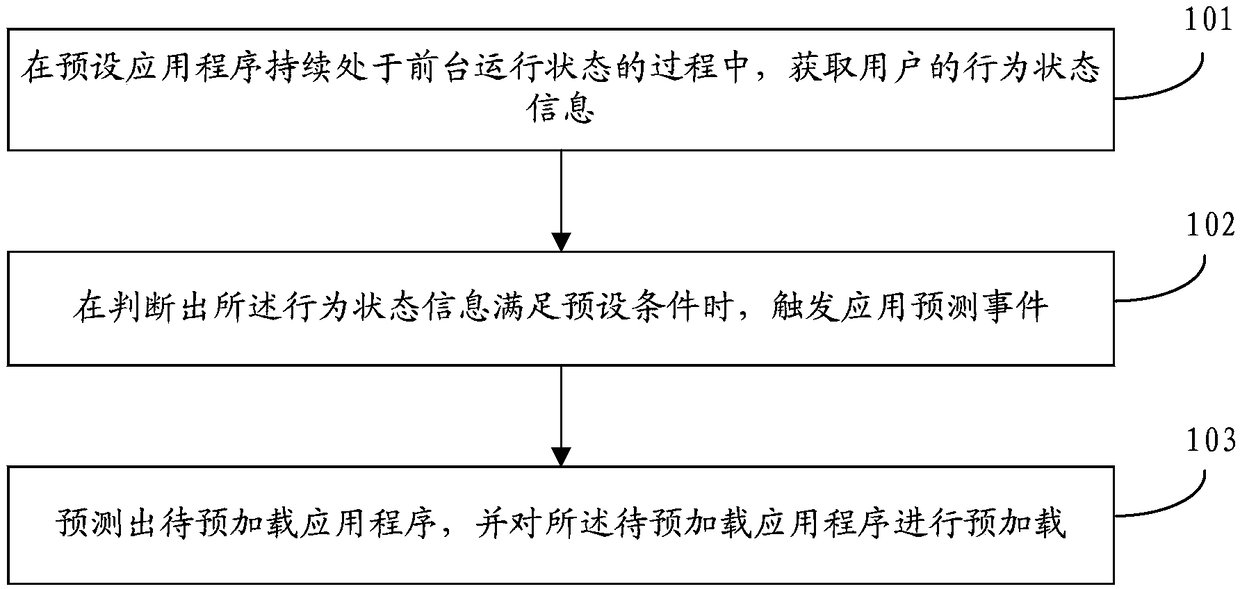

Application program pre-loading method and device, storage medium and terminal

Status: Active. Publication: CN108628645A. Tags: Achieve forecast; Implement preloading; Program loading/initiating; Behavioral state; Application software

The embodiment of the invention discloses an application program pre-loading method and device, a storage medium and a terminal. The method comprises the steps that behavior state information of a user is obtained in the process that a preset application program is continuously in a foreground running state; when it is judged that the behavior state information meets preset conditions, an application predicting event is triggered; an application program to be preloaded is predicted and is preloaded. By adopting the above technical scheme, the moment of triggering the application predicting event can be reasonably determined according to the behavior state information of the user, thus the predicted application program to be preloaded is preloaded, and prediction and preloading of application programs are achieved under the situation that system resources are saved.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

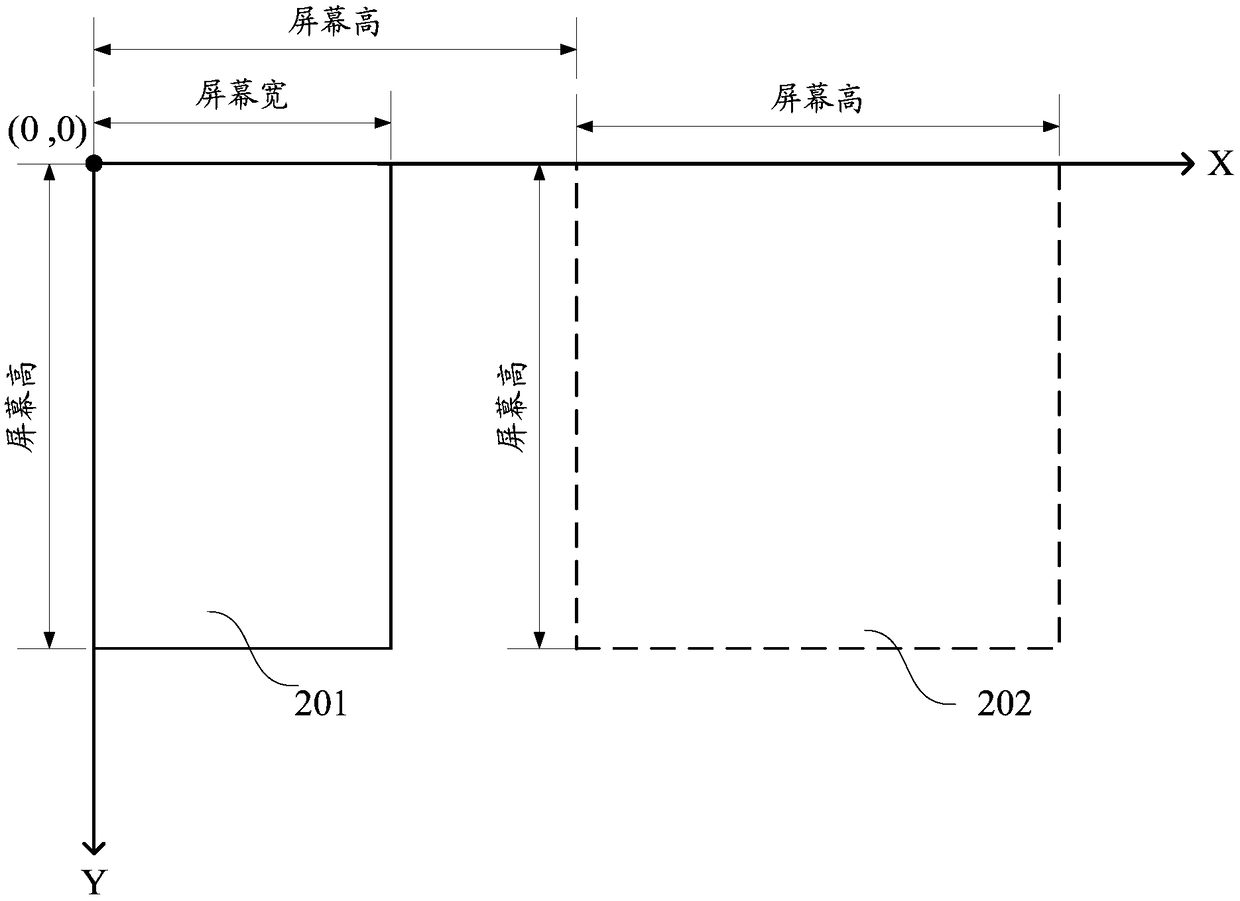

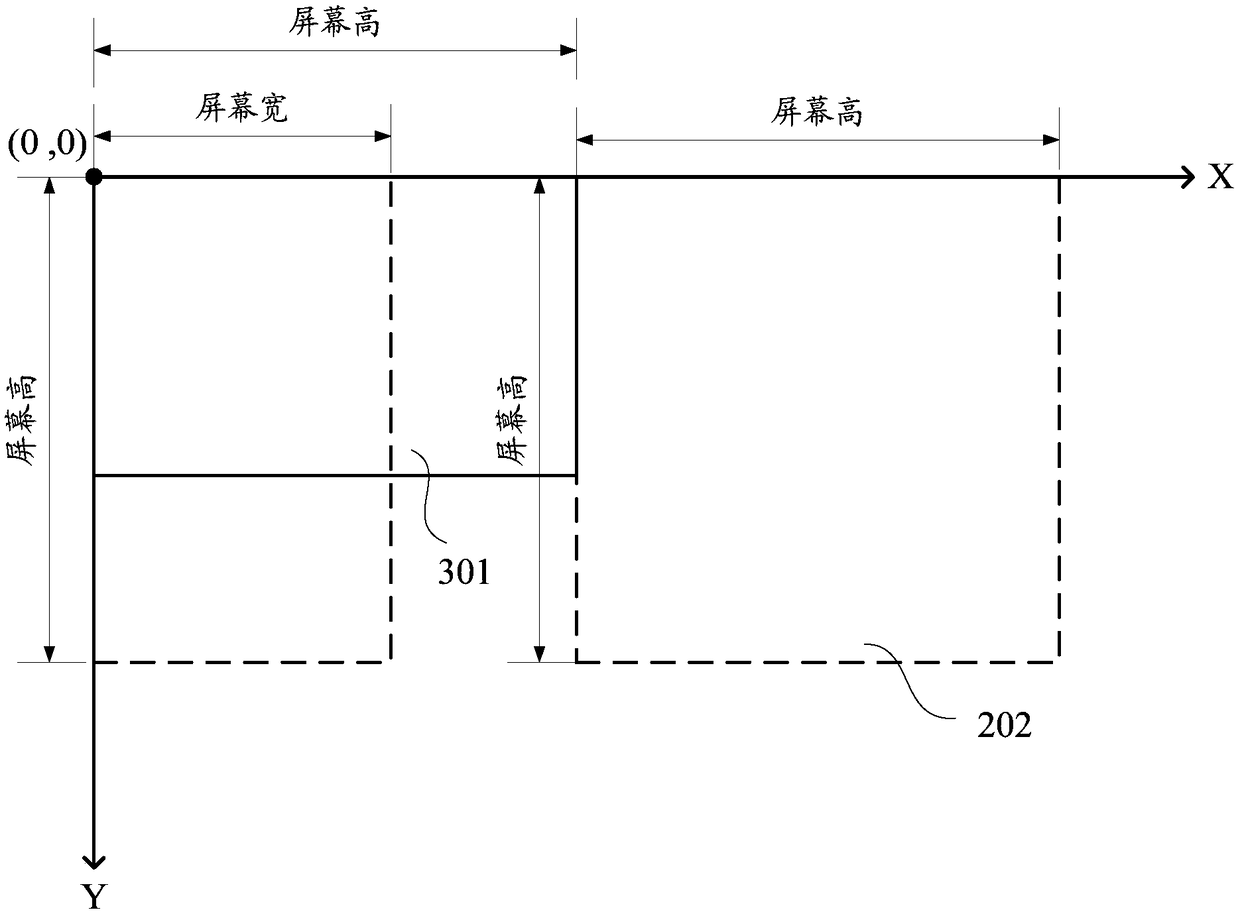

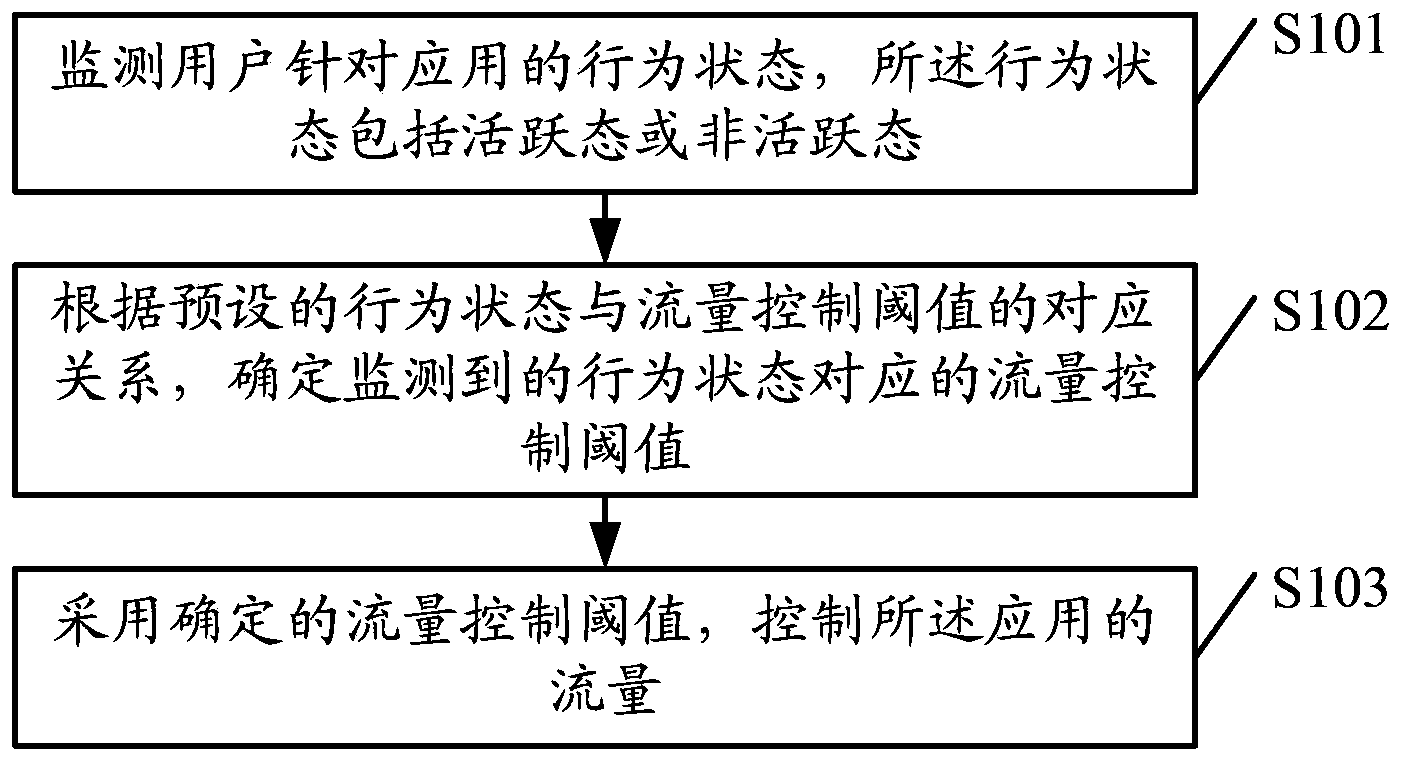

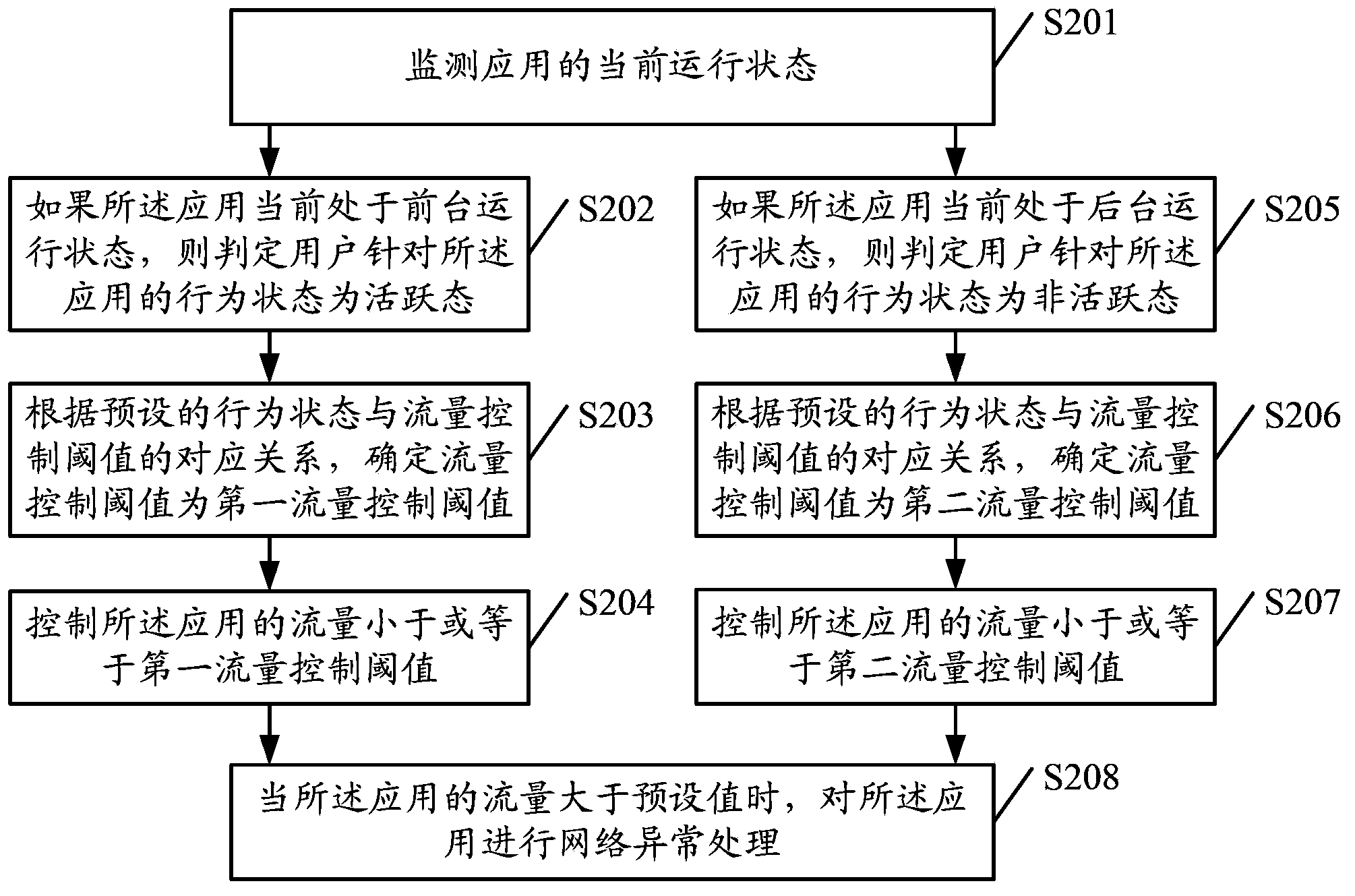

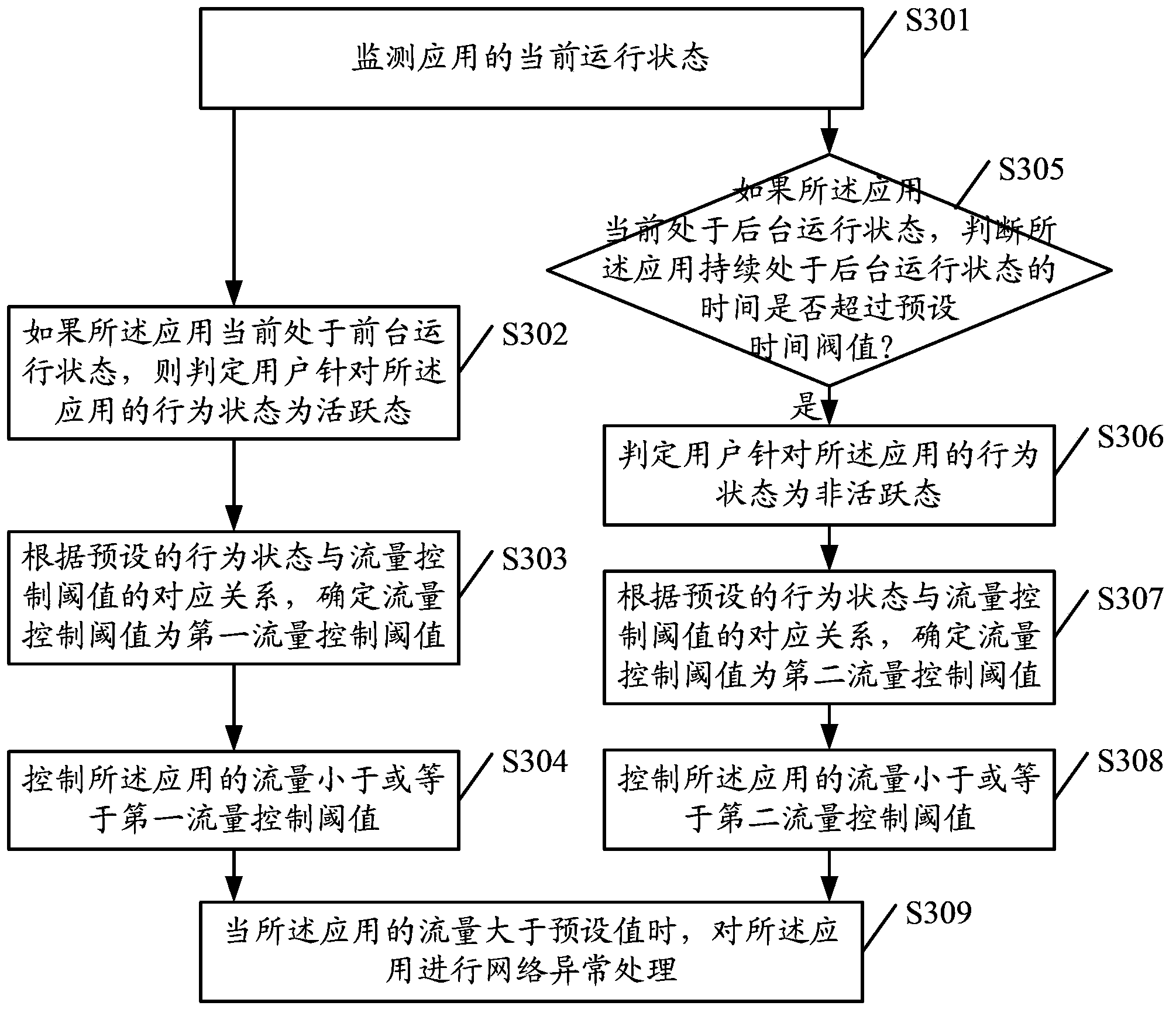

Flow control method and device

Status: Active. Publication: CN103780447A. Tags: Improve intelligence; Easy to use; Network traffic/resource management; Data switching networks; Behavioral state; Active state

Owner:TENCENT TECH (SHENZHEN) CO LTD +1

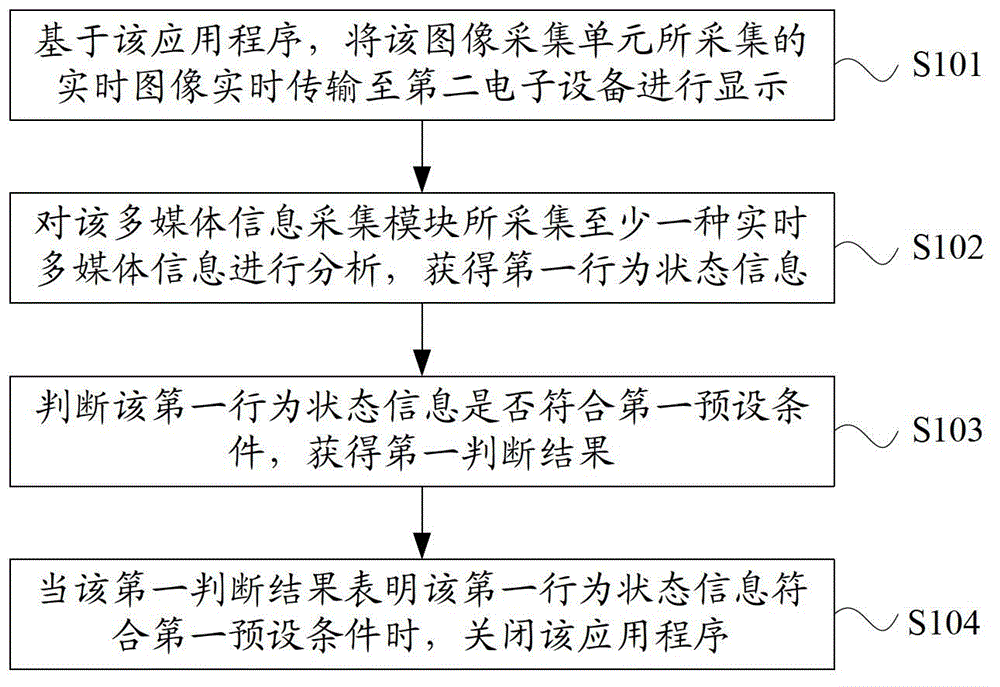

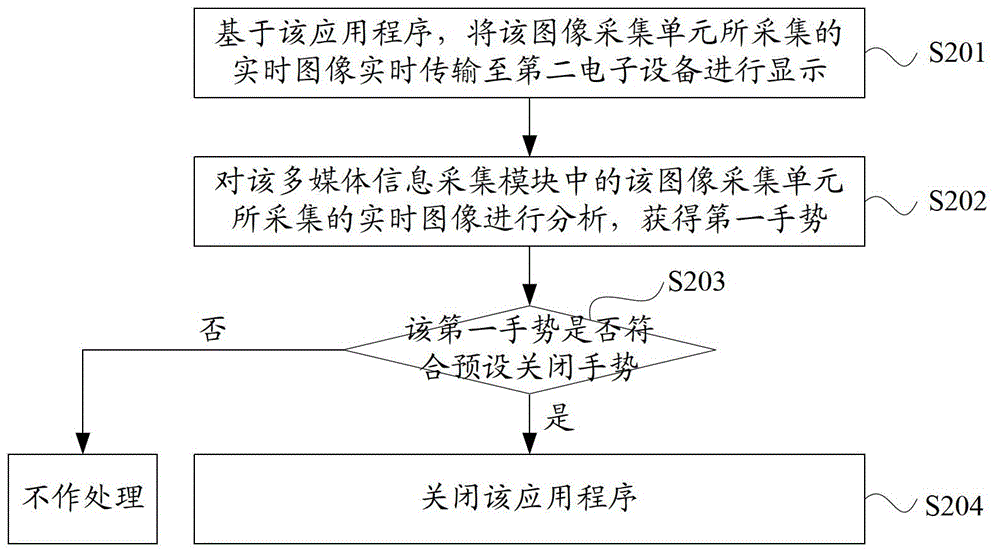

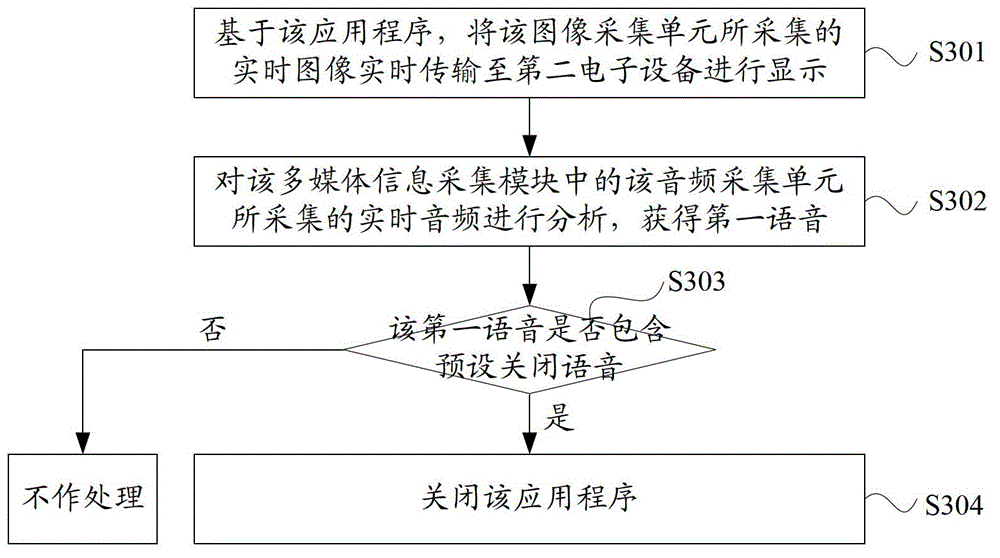

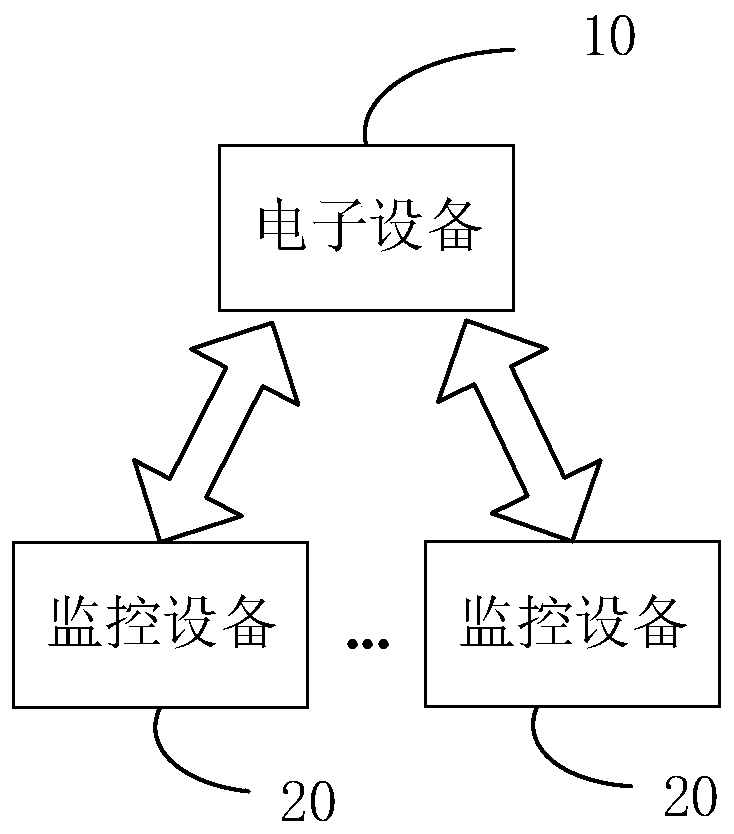

Information processing method and electronic equipment

The invention discloses an information processing method and a piece of electronic equipment. The method is applied to first electronic equipment. The first electronic equipment comprises a multimedia information acquisition module at least consisting of an image acquisition unit, and an application program based on the image acquisition unit. The method comprises the following steps: a real-time image acquired by the image acquisition unit is transmitted to second electronic equipment in real time for display based on the application program; at least one type of real-time multimedia information acquired by the multimedia information acquisition module is analyzed to obtain first behavioral state information; whether the first behavioral state information meets a first preset condition is judged to obtain a first judgment result; and the application program is closed when the first judgment result indicates that the first behavioral state information meets the first preset condition. By adopting the scheme, users do not need to manually click a corresponding closing button to end video call, and the user experience in the process of video call is improved.

Owner:LENOVO (BEIJING) LTD

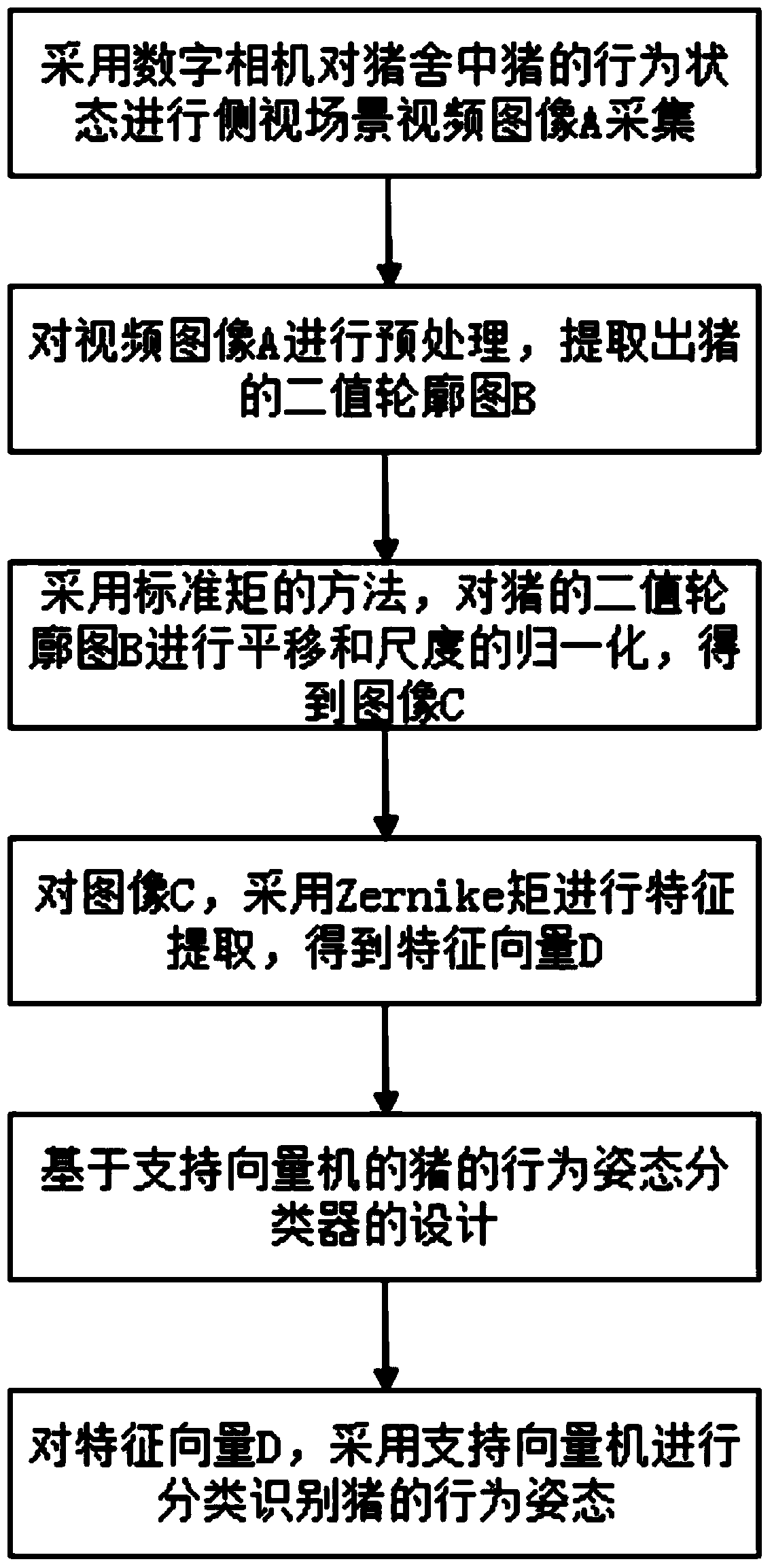

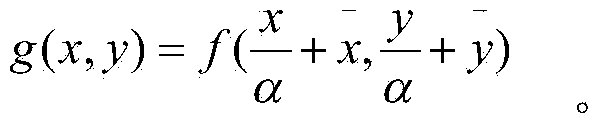

Pig posture recognition method based on Zernike moment and support vector machine

The invention provides a pig posture recognition method based on Zernike moments and a support vector machine. The method mainly comprises: collecting side-view video images of a pig's behavior states in a pig house with a digital camera; preprocessing the video images to extract a binary skeleton map of the pig; normalizing the binary skeleton map for translation and scale using regular (geometric) moments; extracting feature vectors from the normalized images with Zernike moments; designing a support vector machine classifier for the pig's behavior states; and classifying the behavior states from the feature vectors with the support vector machine. Using machine vision and support vector machine techniques, the method can identify the pig's normal walking, head-lowered walking, head-raised walking, and lying postures.

Owner:JIANGSU UNIV
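
Zernike moment magnitudes are useful posture features because they are invariant to rotation. The sketch computes the standard radial polynomial and |Z_nm| for a square binary image whose pixel centres are mapped onto the unit disk; that mapping is one common convention and is an assumption here, as is the choice of orders, which the abstract does not specify.

```python
import math
from typing import Sequence


def radial_poly(n: int, m: int, rho: float) -> float:
    """Zernike radial polynomial R_n^m(rho); needs n - |m| even and non-negative."""
    m = abs(m)
    if m > n or (n - m) % 2:
        raise ValueError("n - |m| must be a non-negative even number")
    total = 0.0
    for s in range((n - m) // 2 + 1):
        coeff = ((-1) ** s * math.factorial(n - s)
                 / (math.factorial(s)
                    * math.factorial((n + m) // 2 - s)
                    * math.factorial((n - m) // 2 - s)))
        total += coeff * rho ** (n - 2 * s)
    return total


def zernike_magnitude(image: Sequence[Sequence[int]], n: int, m: int) -> float:
    """|Z_nm| of a square binary image (side >= 2) mapped onto the unit disk."""
    half = (len(image) - 1) / 2.0
    re = im = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if not value:
                continue
            u, v = (x - half) / half, (y - half) / half  # pixel centre in [-1, 1]^2
            rho = math.hypot(u, v)
            if rho > 1.0:  # ignore pixels outside the unit disk
                continue
            theta = math.atan2(v, u)
            r = radial_poly(n, m, rho)
            re += r * math.cos(m * theta)
            im -= r * math.sin(m * theta)  # conjugate basis function V*_nm
    pixel_area = (1.0 / half) ** 2
    return (n + 1) / math.pi * math.hypot(re, im) * pixel_area


shape = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 0, 0],
    [0, 1, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
rotated = [list(row) for row in zip(*shape[::-1])]  # 90-degree rotation
print(zernike_magnitude(shape, 2, 2), zernike_magnitude(rotated, 2, 2))
```

In a full pipeline, magnitudes for several (n, m) pairs would form the feature vector passed to the SVM classifier.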

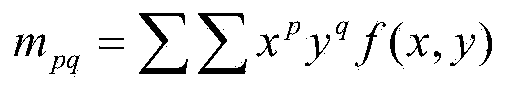

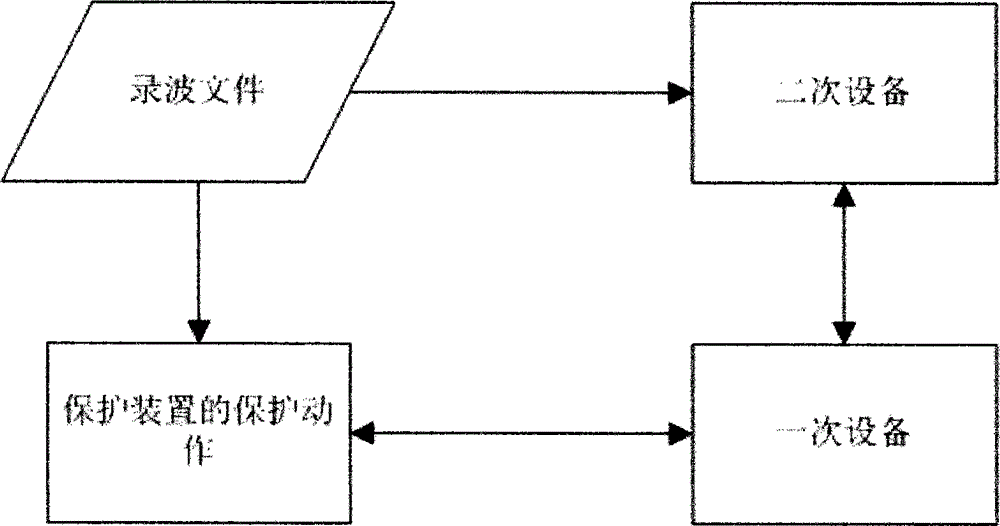

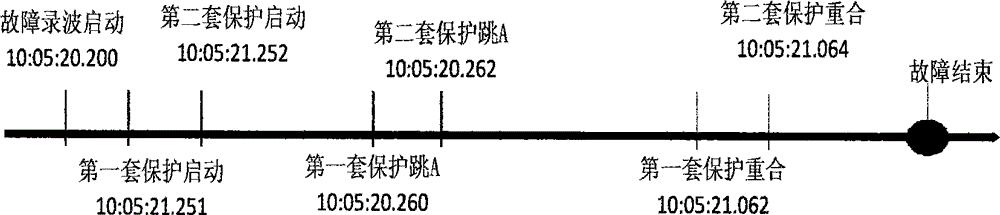

Fault-recording-based protection action information analyzing method

The invention relates to a protection action information analysis method based on fault recording. The method comprises the following steps. A configuration model is built: taking the primary equipment as the basis, a model is built of the associations among the primary equipment, the secondary equipment related to it, and the protection device. The protection action behavior states and timing relationships of the protection devices of the primary equipment and its related secondary equipment are determined, and the protection action behavior state of each protection device is described along a time axis. On the basis of these behavior states and timing relationships, combined with the protection action behavior logic and the relevant setting values in the actual process, the protection action behavior of each protection device is judged and the fault process is reconstructed by inversion. The results are analyzed comprehensively and a protection action report is produced. Because the method obtains its data from fault recordings, it provides a new means for analyzing protection actions in a power grid and a reference basis for judging protection action behavior after an accident.

Owner:STATE GRID ANHUI ELECTRIC POWER +2

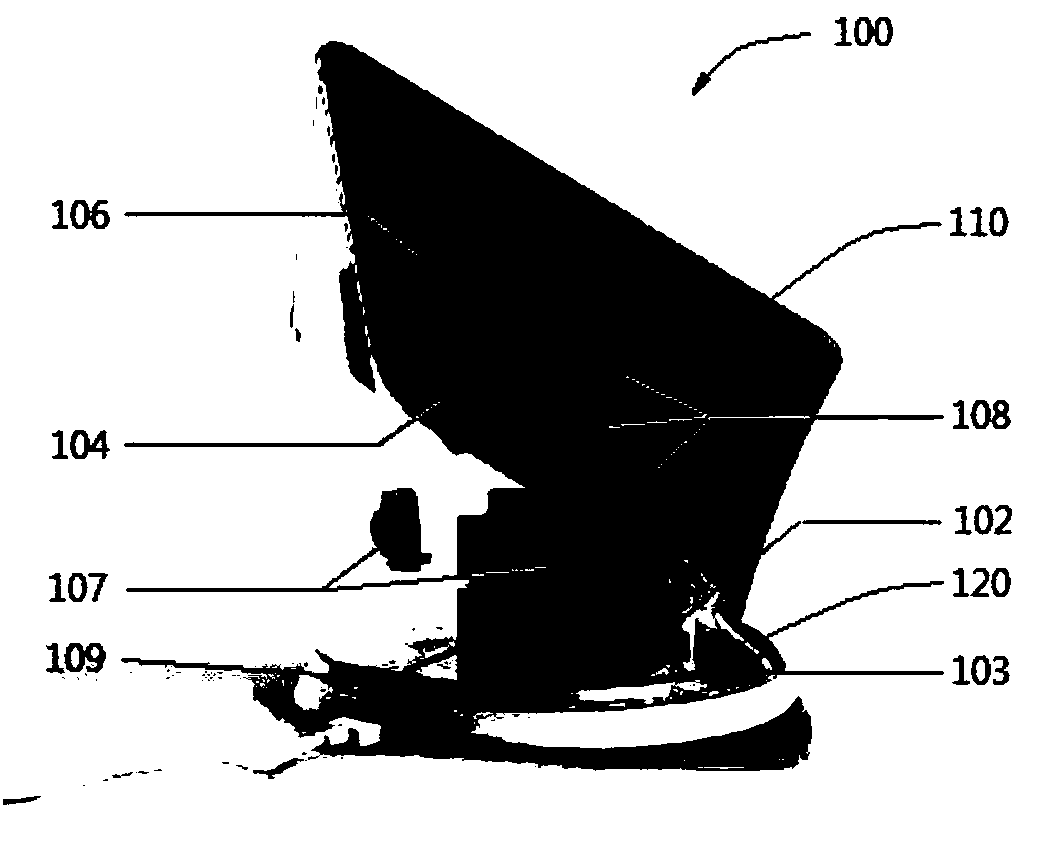

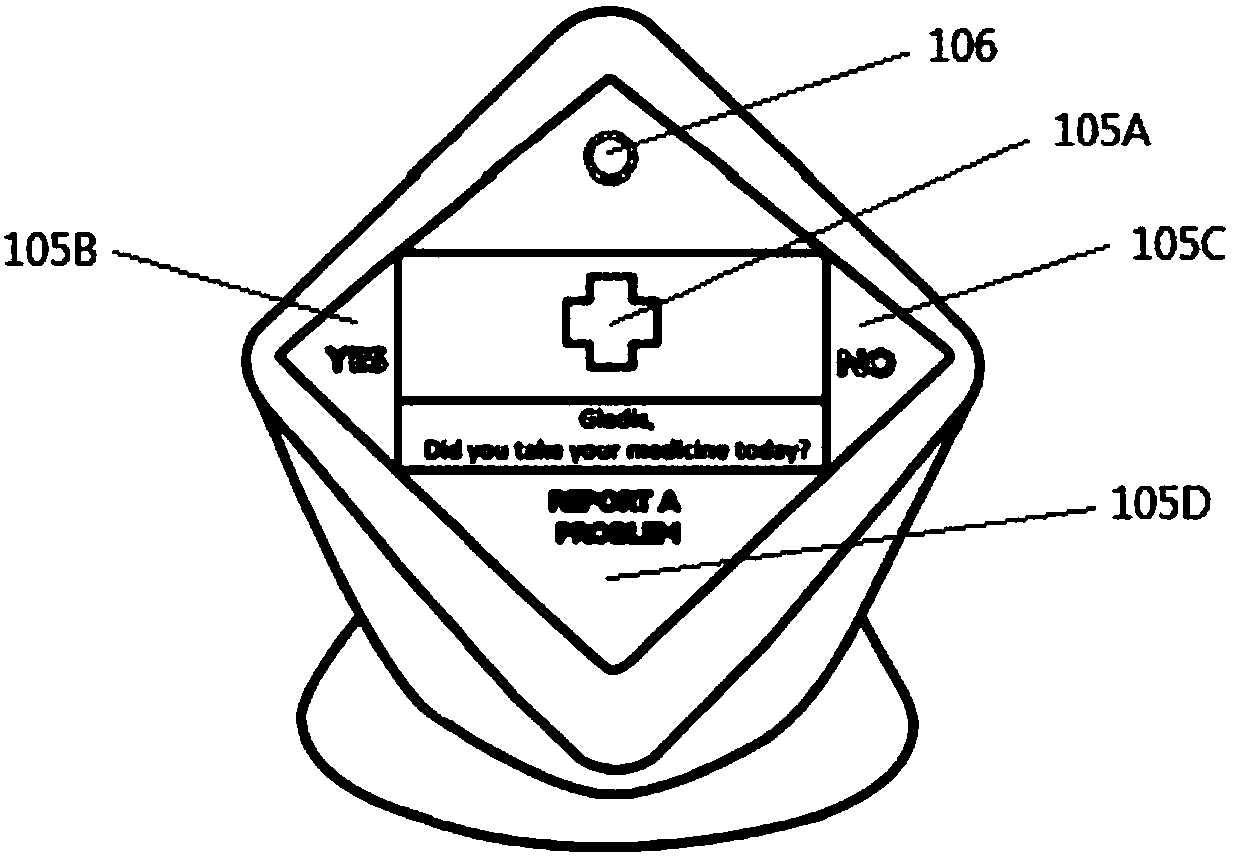

Nounou intelligent monitoring system for the health care and companionship of elderly people

The invention provides a Nounou intelligent monitoring system for the health care and companionship of elderly people. The system comprises an intelligent monitoring device, a sensing device, an information processing device, an information receiving device, an information transmission device, and an information storage device. The intelligent monitoring device includes a main body and a base that can rotate relative to the main body. The main body has a main processing board, a display device, a touch screen, a touch key, and audio and image sensing elements, and can be fitted with a wearable detection or sensing element. Through the information processing device, the intelligent monitoring device can perform information fusion and splitting, and it uses a neural network to learn the behavior status of the watched person intelligently through deep learning. The system can effectively monitor the physical and mental condition of elderly people, provide them with real-time communication and companionship, support communication with relevant personnel or facilities, and give the watched person timely advice and assistance.

Owner:朴一植

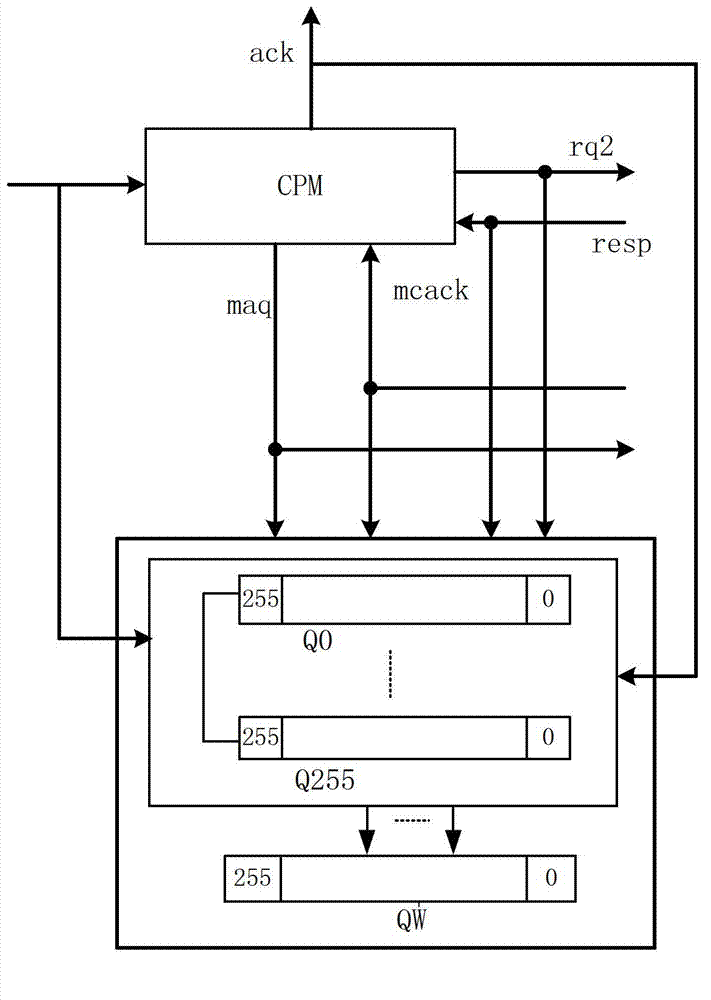

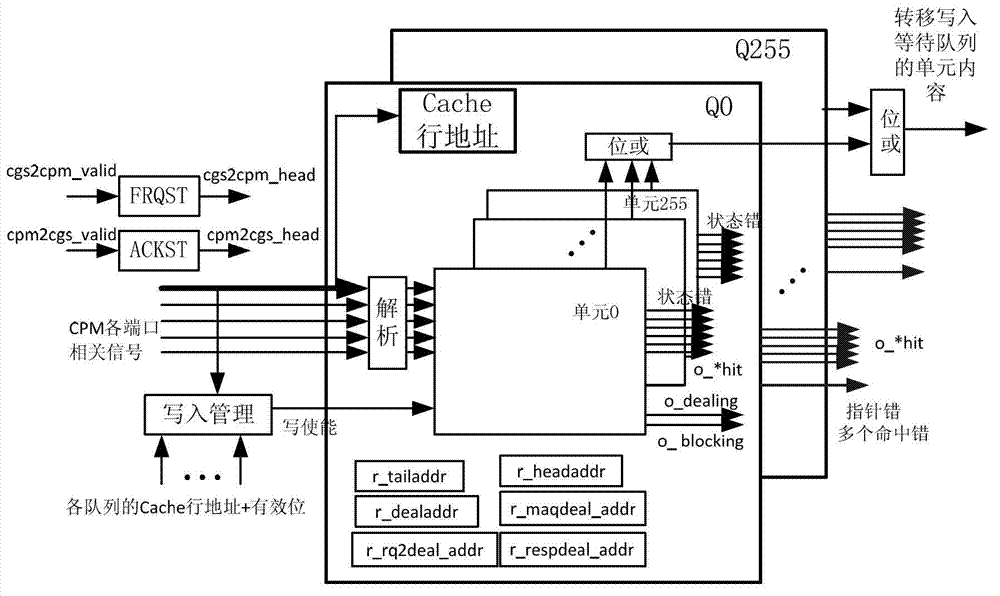

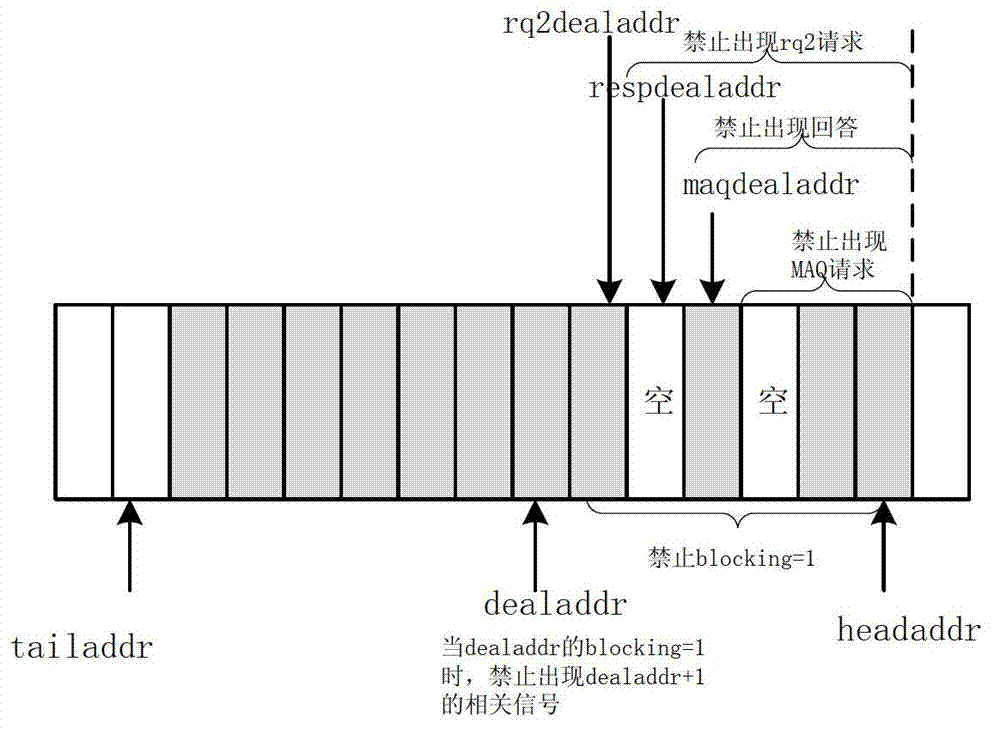

Method for verifying Cache coherence protocol and multi-core processor system

Status: Active. Publication: CN102880467A. Tags: Behavior Accurate Monitoring; Digital computer details; Specific program execution arrangements; Behavioral state; Processing element

The invention provides a method for verifying a Cache coherence protocol and a multi-core processor system. In the verification method, a plurality of queues is arranged in a monitor, each queue comprising a plurality of units that record all primary requests not yet fully processed. All requests related to the same address are stored in order in the units of the same queue, following the sequence in which they entered the coherence processing element, and each unit independently tracks the execution status of the request it records. Because the monitor-based method processes requests to the same memory-access address in order, as the Cache coherence protocol requires, the monitor can accurately track the protocol-level behavior of the Cache coherence processing element as well as the behavior of every request packet. By adjusting the content of the monitor, the method can be adapted to verify various coherence protocols.

Owner:JIANGNAN INST OF COMPUTING TECH

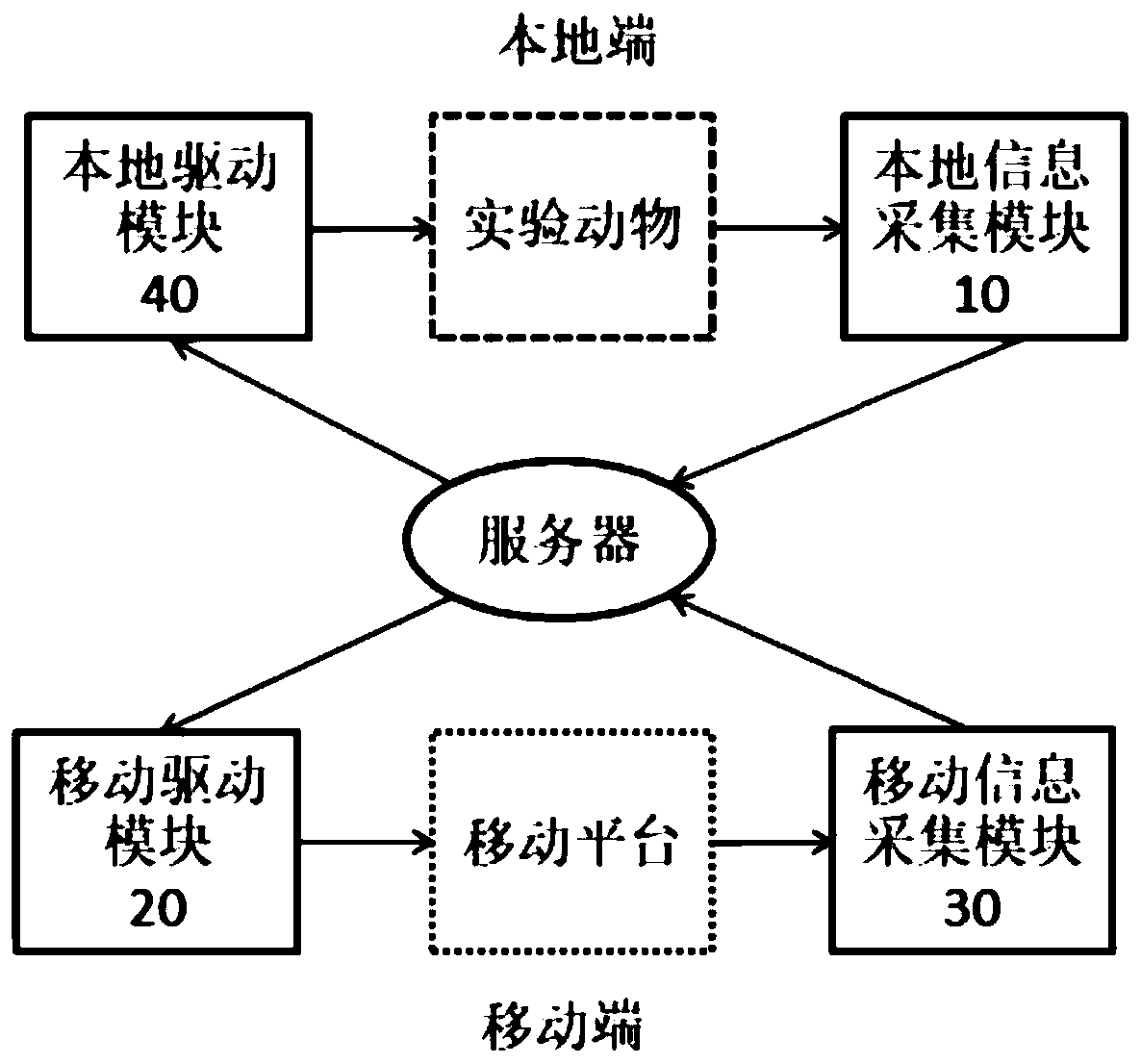

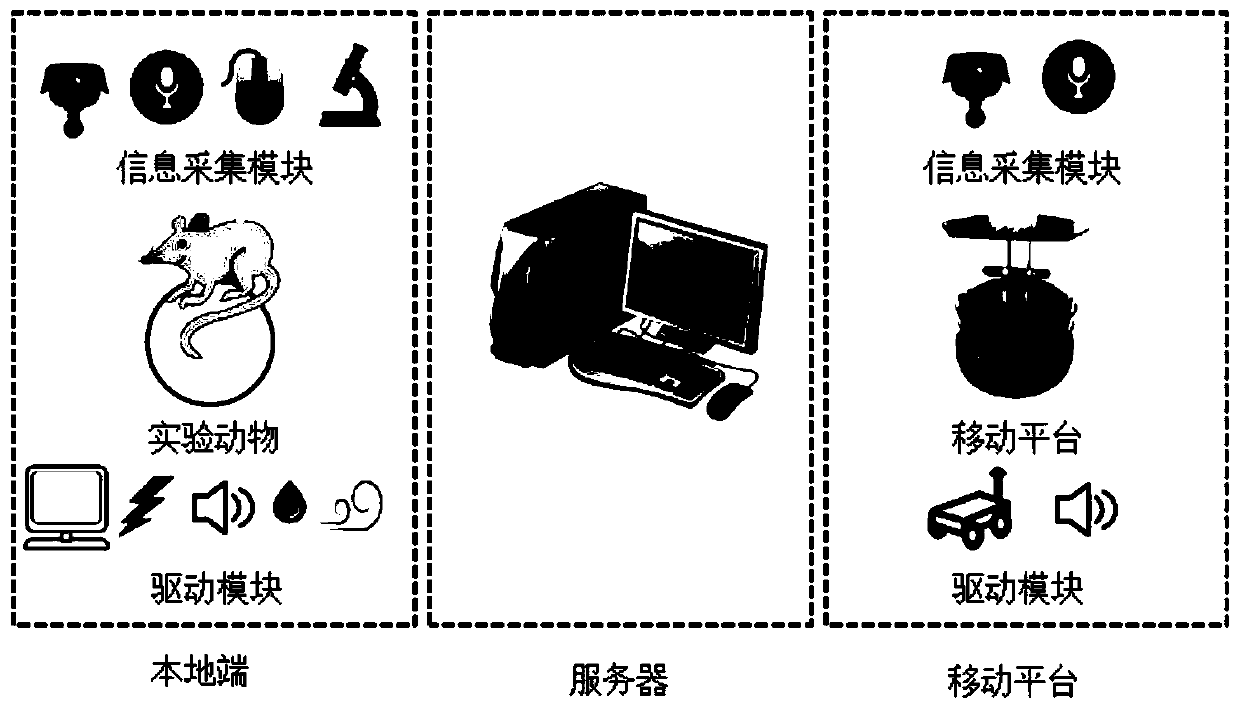

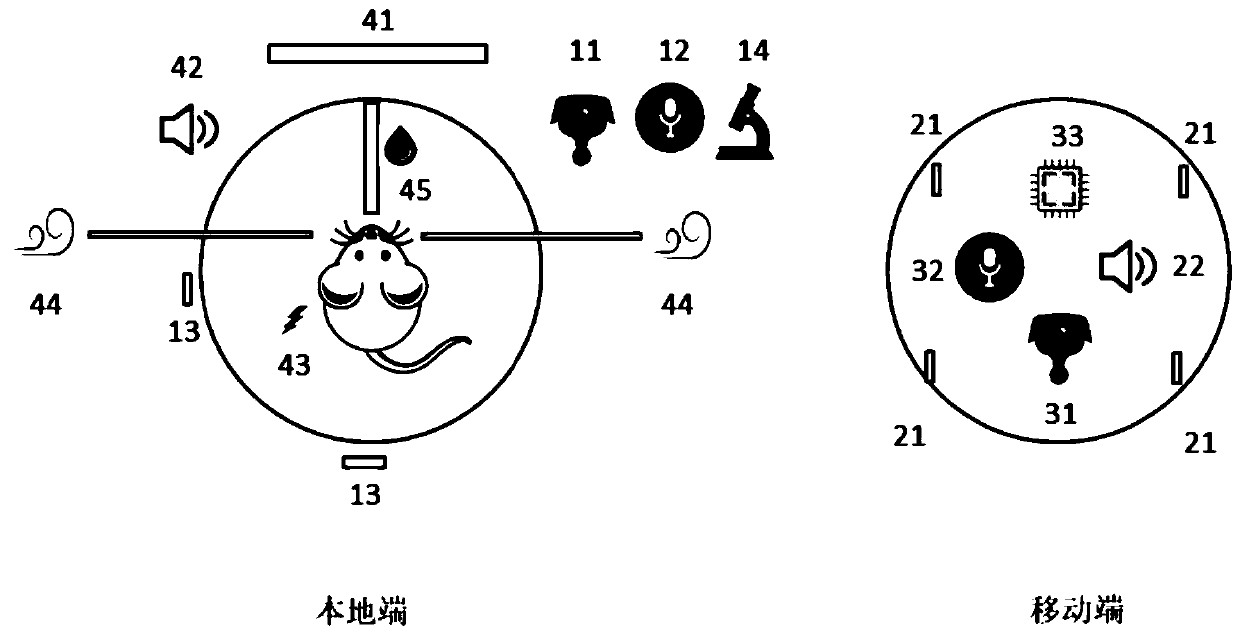

Virtual reality system and method for animal experiment

The invention discloses a virtual reality system and method for animal experiments. The system comprises a local driving module, a local information acquisition module, a mobile driving module, a mobile information acquisition module and a server module. The local driving module applies a stimulus to the experimental animal; the local information acquisition module acquires the physiological and behavioral states of the experimental animal; the mobile driving module drives a mobile platform; the mobile information acquisition module acquires signals from the surrounding environment; and the server module generates control instructions for the mobile driving module from the animal's physiological and behavioral states, so as to move the mobile platform, and generates control instructions for the local driving module from the surrounding signals, so as to apply the stimulus to the animal. According to the embodiments of the invention, the system establishes a behavioral experimental paradigm in which an animal can be restrained effectively and rapidly, economically and efficiently extends the functions of existing measurement instruments, and can measure the physiological parameters of a moving mouse with high accuracy.
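The two cross-coupled control paths of the server module (animal state drives the platform, surroundings drive the stimulus) can be sketched as follows. This is a hypothetical illustration only: the class and field names, the proportional speed mapping, the speed limit, and the distance-based stimulus ramp are assumptions made here rather than details from the patent, and a single behavioral signal (running speed and heading) stands in for the full physiological and behavioral state.

```python
from dataclasses import dataclass


@dataclass
class AnimalState:
    """Hypothetical output of the local information acquisition module."""
    speed: float    # running speed of the restrained animal, m/s (assumed sensor)
    heading: float  # intended running direction, radians


@dataclass
class Surroundings:
    """Hypothetical output of the mobile information acquisition module."""
    obstacle_distance: float  # metres from the mobile platform to the nearest obstacle


@dataclass
class ServerModule:
    gain: float = 1.0            # platform speed per unit of animal speed (assumed)
    max_speed: float = 0.5       # platform speed limit, m/s (assumed)
    alert_distance: float = 0.3  # distance below which a stimulus is applied (assumed)

    def platform_command(self, animal: AnimalState) -> tuple[float, float]:
        """Animal state -> mobile driving module: move the platform as the animal runs."""
        speed = min(self.gain * animal.speed, self.max_speed)
        return speed, animal.heading

    def stimulus_command(self, env: Surroundings) -> float:
        """Surrounding signal -> local driving module: stimulus intensity in [0, 1]."""
        if env.obstacle_distance >= self.alert_distance:
            return 0.0
        # Intensity ramps linearly from 0 at alert_distance up to 1 at contact.
        return 1.0 - max(env.obstacle_distance, 0.0) / self.alert_distance
```

Keeping the two mappings as separate methods mirrors the abstract's split between instructions for the mobile driving module and instructions for the local driving module; a real server would call both on every sensor update.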

Owner:TSINGHUA UNIV

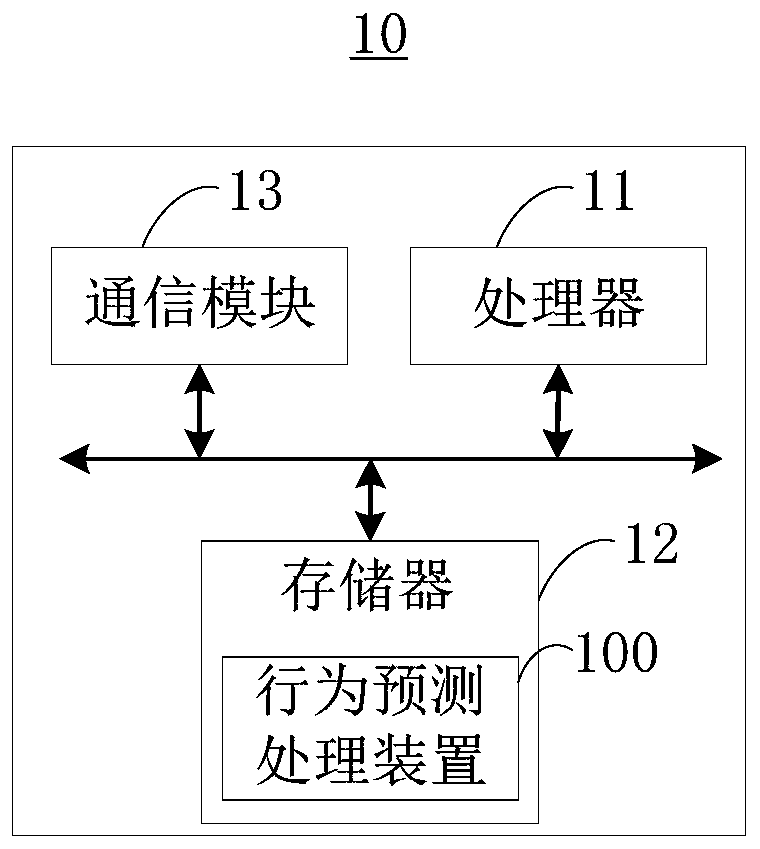

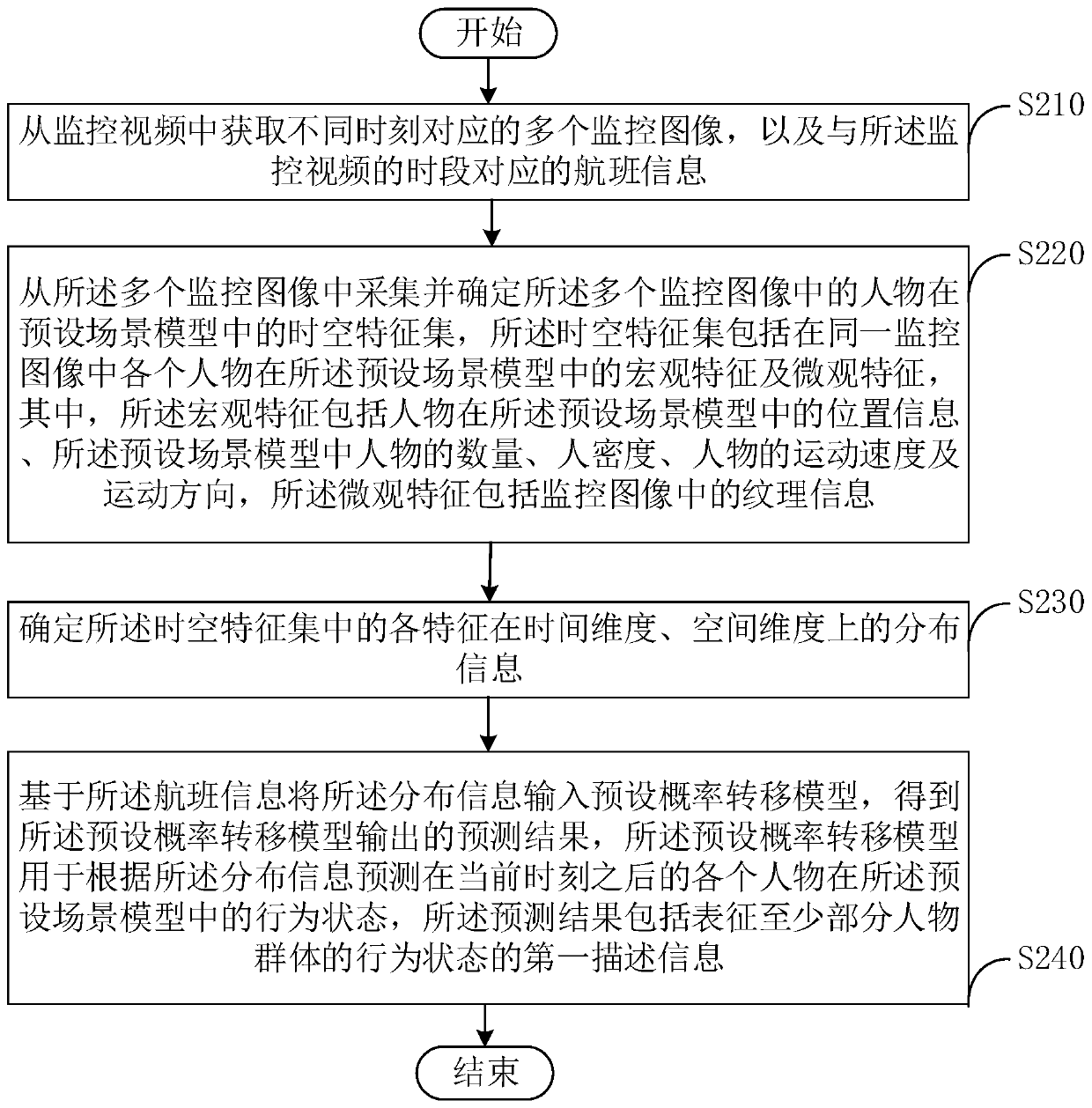

Behavior prediction processing method and device and electronic equipment

The invention provides a behavior prediction processing method and device and electronic equipment, and relates to the technical field of computer data processing. The method comprises the following steps: acquiring, from a monitoring video, a plurality of monitoring images corresponding to different moments, together with the flight information corresponding to the time period of the monitoring video; determining, from the monitoring images, macroscopic features and microscopic features of the people in the images within a preset scene model, wherein the macroscopic features comprise the positions of the people in the preset scene model, the number of people in the preset scene model, the crowd density, and the movement speed and movement direction of the people, and the microscopic features comprise texture information in the monitoring images; and inputting the distribution information into a preset probability transition model based on the flight information, and obtaining the prediction result output by that model. The method predicts the behavioral state of passengers and addresses the problem in the prior art that abnormal conditions cannot be prevented and handled in time from surveillance video alone.
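The prediction step can be illustrated with a discrete Markov chain, which is one common reading of a "probability transition model". This is a minimal sketch under stated assumptions: the four passenger behavior states, the transition matrices for each flight status, and the interpretation of the "distribution information" as the fraction of observed passengers in each state are all hypothetical, since the abstract does not specify them. The flight information simply selects which matrix is applied.

```python
# Hypothetical passenger behavior states (not taken from the patent).
STATES = ("walking", "queuing", "waiting", "boarding")

# Hypothetical row-stochastic transition matrices, one per flight status:
# TRANSITIONS[status][i][j] = P(next state j | current state i).
TRANSITIONS = {
    "on_time": [
        [0.5, 0.3, 0.2, 0.0],
        [0.1, 0.6, 0.2, 0.1],
        [0.1, 0.1, 0.5, 0.3],
        [0.0, 0.0, 0.0, 1.0],
    ],
    "delayed": [
        [0.5, 0.2, 0.3, 0.0],
        [0.1, 0.5, 0.4, 0.0],
        [0.1, 0.0, 0.9, 0.0],
        [0.0, 0.0, 0.1, 0.9],
    ],
}


def step(dist, matrix):
    """One transition of the chain: p'[j] = sum_i p[i] * T[i][j]."""
    n = len(dist)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def predict(dist, flight_status, steps=1):
    """Propagate the observed state distribution `steps` intervals ahead."""
    matrix = TRANSITIONS[flight_status]  # flight information selects the model
    for row in matrix:
        assert abs(sum(row) - 1.0) < 1e-9, "each row must sum to 1"
    for _ in range(steps):
        dist = step(dist, matrix)
    return dict(zip(STATES, dist))


if __name__ == "__main__":
    observed = [0.4, 0.3, 0.3, 0.0]  # hypothetical fractions from the video frames
    print(predict(observed, "delayed", steps=2))
```

Conditioning on flight status by switching matrices keeps the model simple; a downstream rule could then flag an abnormal condition when, for example, the predicted share of waiting passengers grows beyond a chosen threshold.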

Owner:THE SECOND RES INST OF CIVIL AVIATION ADMINISTRATION OF CHINA

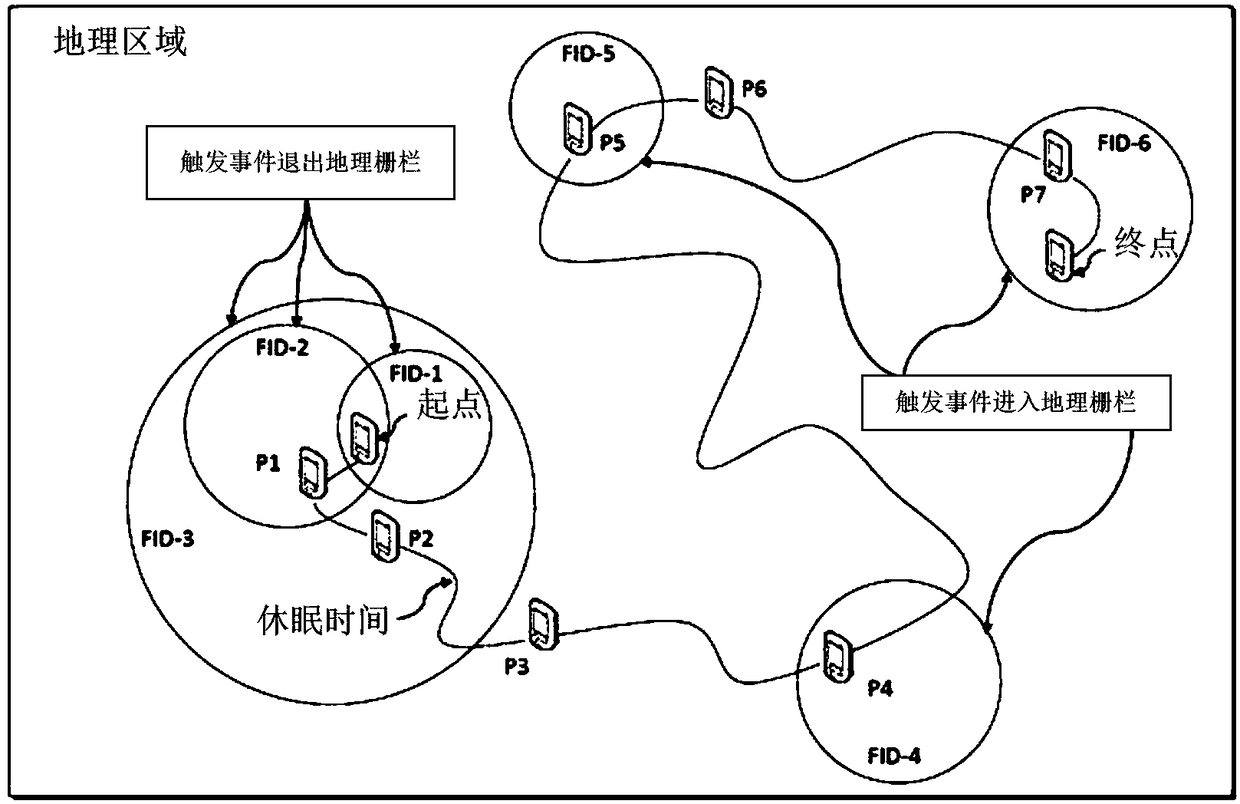

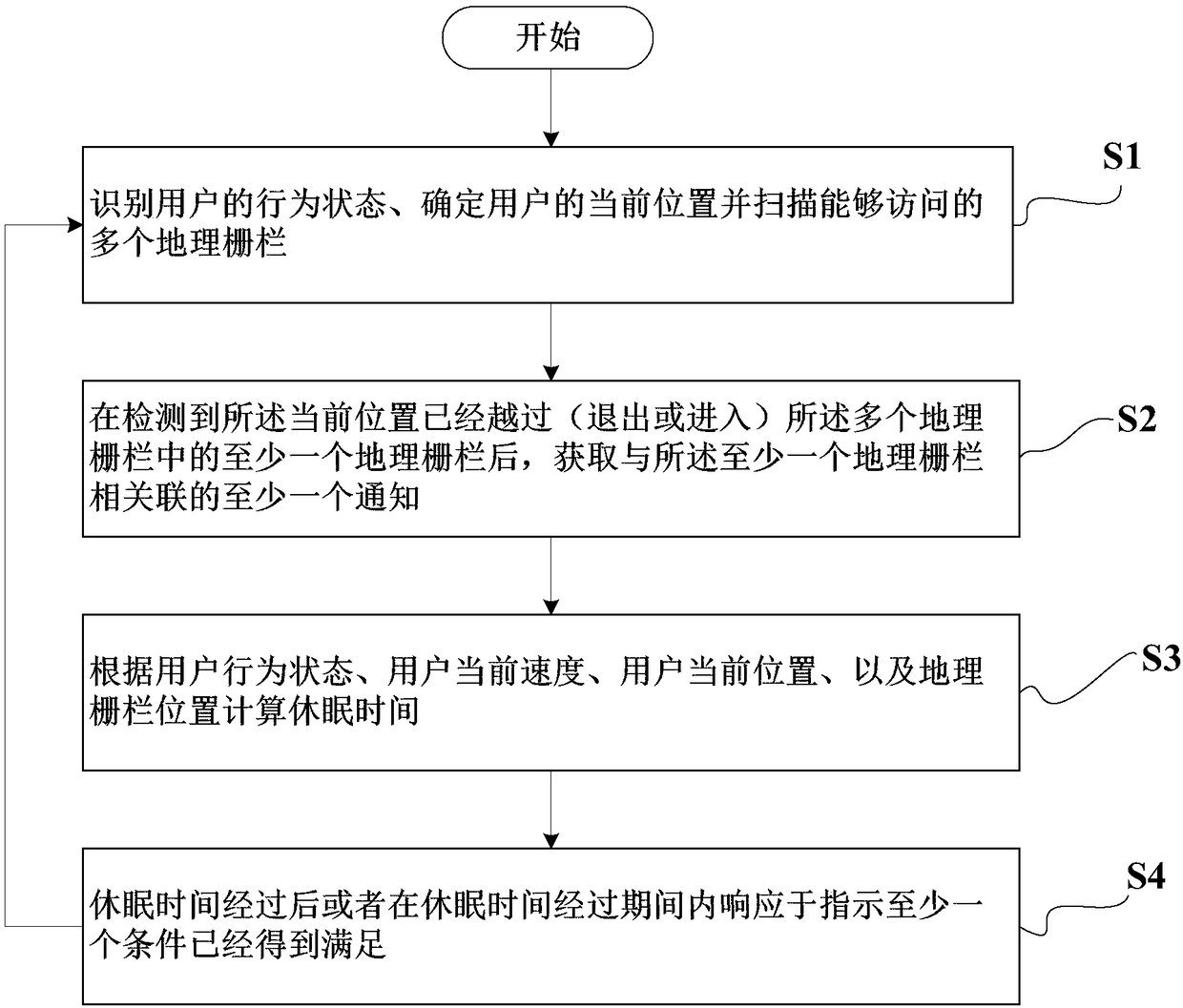

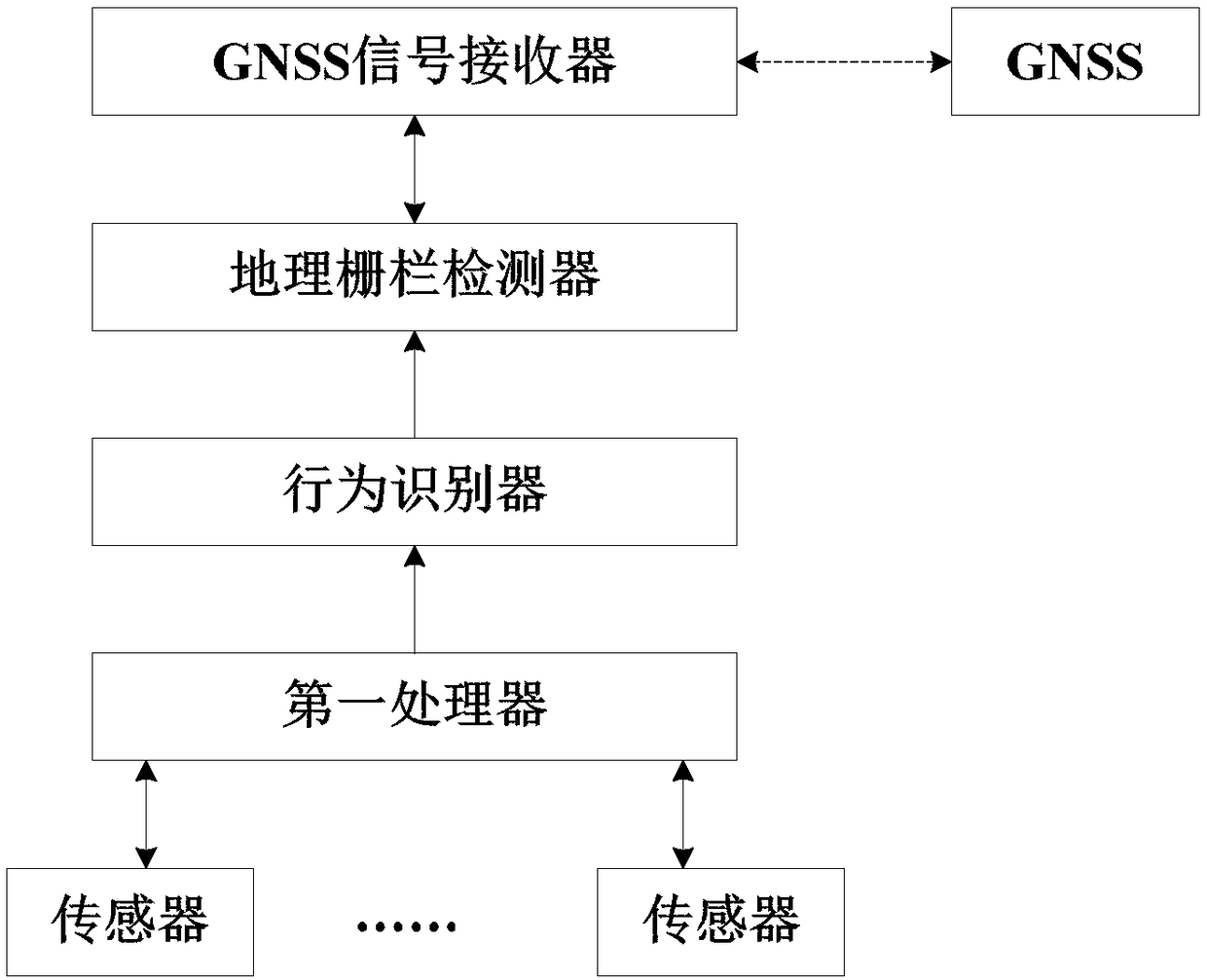

Adaptive geo-fence detection method, apparatus, electronic device and management method

Active | CN108318902A | Reduce power consumption | Accurate detection | Satellite radio beaconing | Location information based service | Sleep time | Behavioral state

The present invention belongs to the technical field of geo-fence detection and relates to an adaptive geo-fence detection method, an adaptive geo-fence detection apparatus, an electronic device with the adaptive geo-fence detection apparatus, and a method for managing a geo-fence detection process on the electronic device. In the adaptive method and apparatus, a sleep time is calculated from the user's behavioral state, current speed and current location, and the positions of the geo-fences. After the sleep time elapses, or in response to an indication that at least one condition has been satisfied during the sleep time, the device re-identifies the user's behavioral state, determines the user's current location, and scans a plurality of accessible geo-fences. Geo-fence events such as entering, exiting, or a combination of the two can therefore be tracked across multiple geo-fences, saving power while maintaining accurate detection.
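One way to compute an adaptive sleep time from the inputs the abstract lists is to sleep only as long as the user could not, at a worst-case speed for the current behavioral state, have reached the nearest fence boundary. The sketch below assumes circular fences in a local planar frame measured in metres; the per-state speed caps, the safety factor, and the clamping bounds are hypothetical values chosen for illustration, not figures from the patent.

```python
import math
from dataclasses import dataclass


@dataclass
class Fence:
    """Hypothetical circular geo-fence in a local planar frame (metres)."""
    x: float
    y: float
    radius: float


# Hypothetical worst-case speeds for each behavioral state, m/s.
STATE_MAX_SPEED = {"still": 0.5, "walking": 2.0, "running": 6.0, "driving": 35.0}


def boundary_distance(px: float, py: float, fence: Fence) -> float:
    """Distance from the user to the fence boundary, whether inside or outside."""
    return abs(math.hypot(px - fence.x, py - fence.y) - fence.radius)


def sleep_time(state, speed, px, py, fences, safety=0.5, min_s=5.0, max_s=600.0):
    """Seconds to sleep before the next behavior re-identification and fence scan."""
    if not fences:
        return max_s
    nearest = min(boundary_distance(px, py, f) for f in fences)
    # Use the larger of the measured speed and the state's cap; an unknown
    # state falls back to the most conservative (fastest) cap.
    worst_speed = max(speed, STATE_MAX_SPEED.get(state, 35.0))
    t = safety * nearest / worst_speed
    return min(max(t, min_s), max_s)


if __name__ == "__main__":
    fences = [Fence(0.0, 0.0, 100.0), Fence(1000.0, 0.0, 50.0)]
    print(sleep_time("walking", 1.2, 500.0, 0.0, fences))
    print(sleep_time("driving", 20.0, 500.0, 0.0, fences))
```

The same position yields a much shorter sleep while driving than while walking, which is how the behavioral state trades power consumption against detection accuracy. The early wake-up on a "condition satisfied" indication mentioned in the abstract is not modelled here.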

Owner:芯与物(上海)技术有限公司

© 2025 PatSnap. All rights reserved.