73 results about "Active appearance model" patented technology

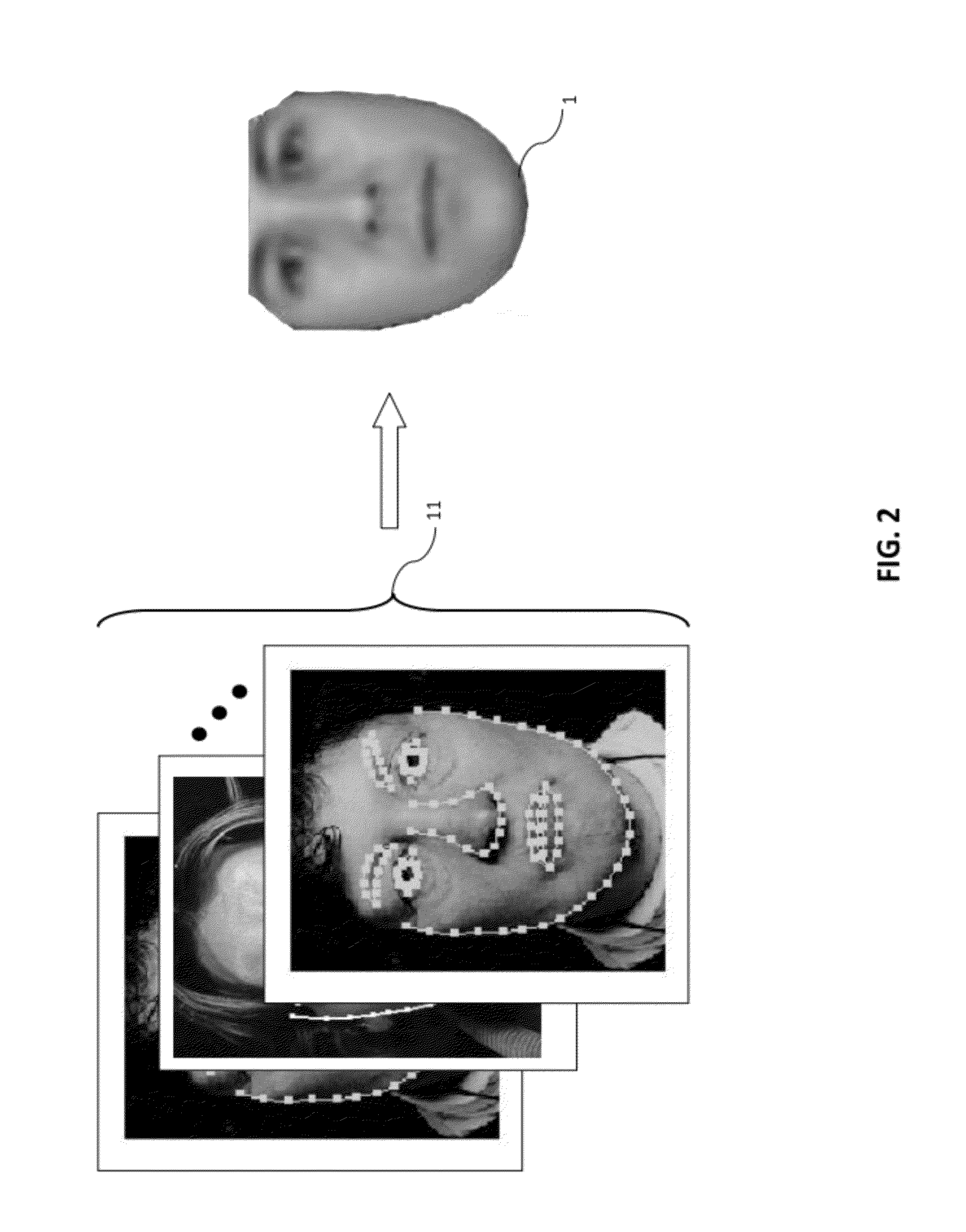

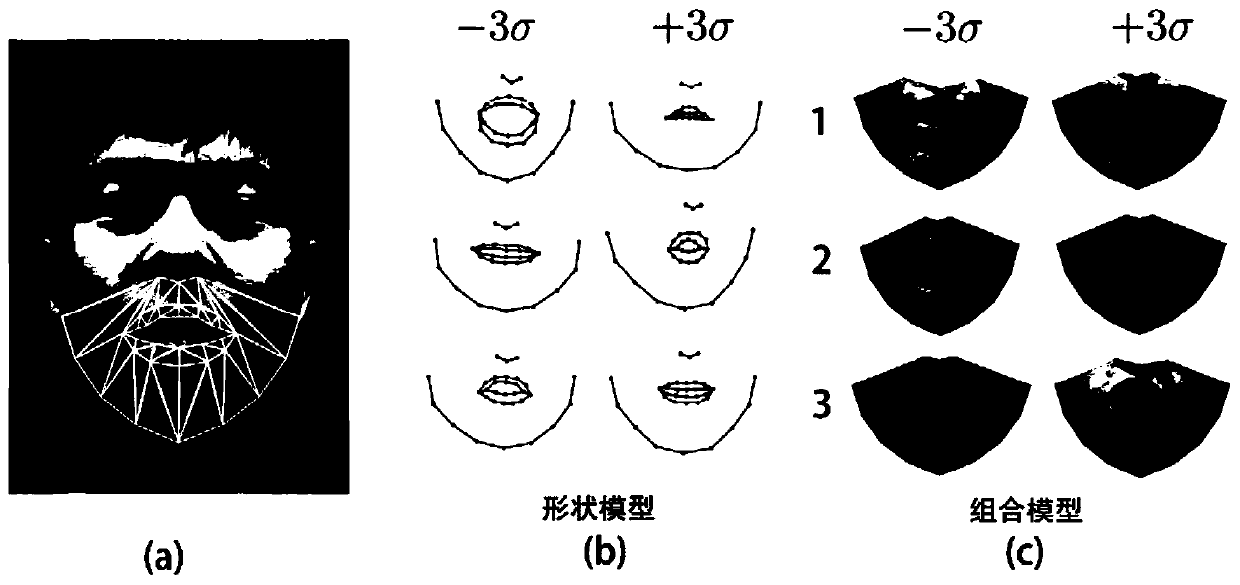

An active appearance model (AAM) is a computer vision algorithm for matching a statistical model of object shape and appearance to a new image. The model is built during a training phase, in which a set of images, together with the coordinates of landmarks that appear in all of the images, is provided to the training supervisor.
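As a rough illustration of the training phase, the sketch below builds only the shape half of such a statistical model by applying principal component analysis to landmark coordinates. It assumes the landmark sets are already aligned; the texture model and the image-fitting step are omitted, and the function names are hypothetical rather than taken from any particular library.

```python
import numpy as np

def build_shape_model(shapes, n_modes=2):
    """Build a linear shape model from aligned landmark sets.

    shapes: array of shape (n_samples, n_landmarks, 2).
    Assumption: the shapes are already aligned (e.g. by Procrustes analysis).
    """
    X = np.asarray(shapes, dtype=float).reshape(len(shapes), -1)
    mean = X.mean(axis=0)
    # The right singular vectors of the centered data are the principal modes.
    _, s, vt = np.linalg.svd(X - mean, full_matrices=False)
    modes = vt[:n_modes]
    variances = (s[:n_modes] ** 2) / max(len(X) - 1, 1)
    return mean, modes, variances

def project(shape, mean, modes):
    """Return the model parameters that best describe a shape."""
    return modes @ (np.asarray(shape, dtype=float).ravel() - mean)

def reconstruct(params, mean, modes):
    """Rebuild landmark coordinates from model parameters."""
    return (mean + params @ modes).reshape(-1, 2)
```

A new shape is then summarized by a handful of parameters from `project`, and `reconstruct` maps those parameters back to landmark positions.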

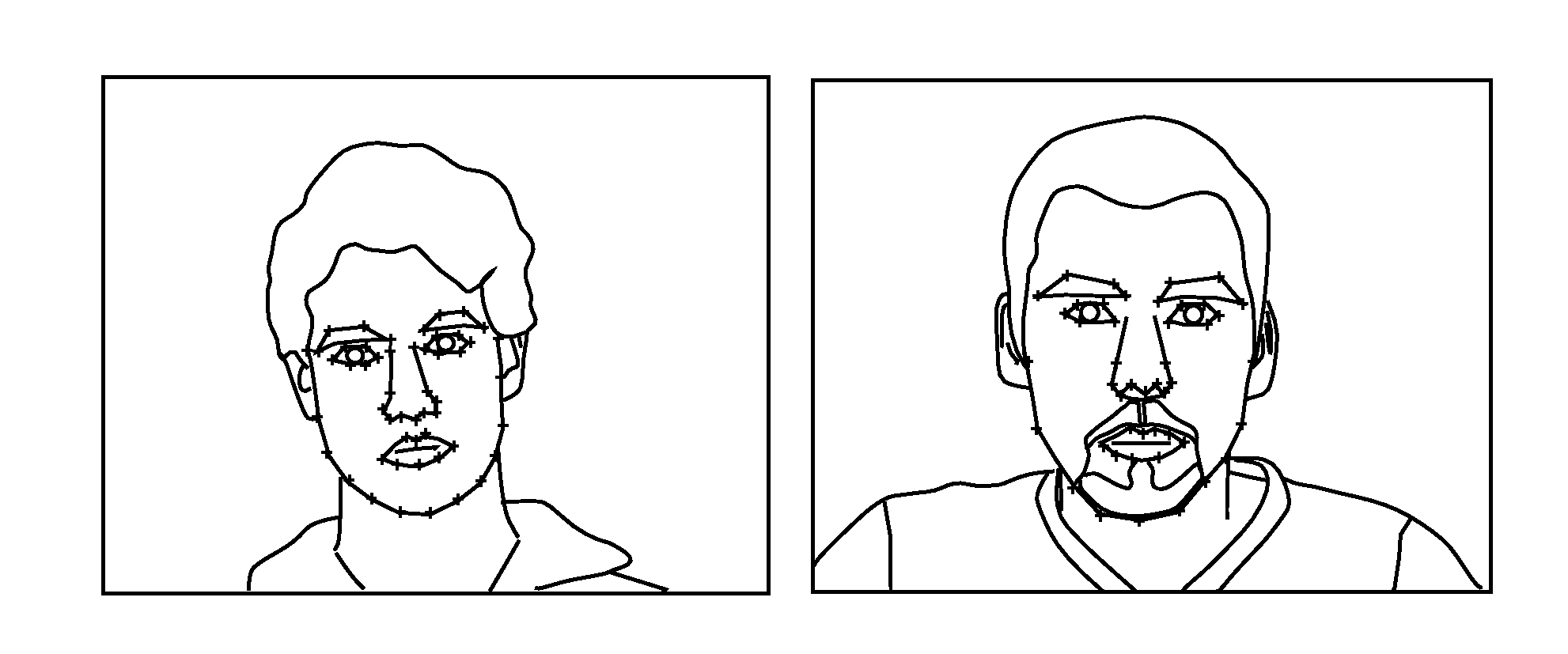

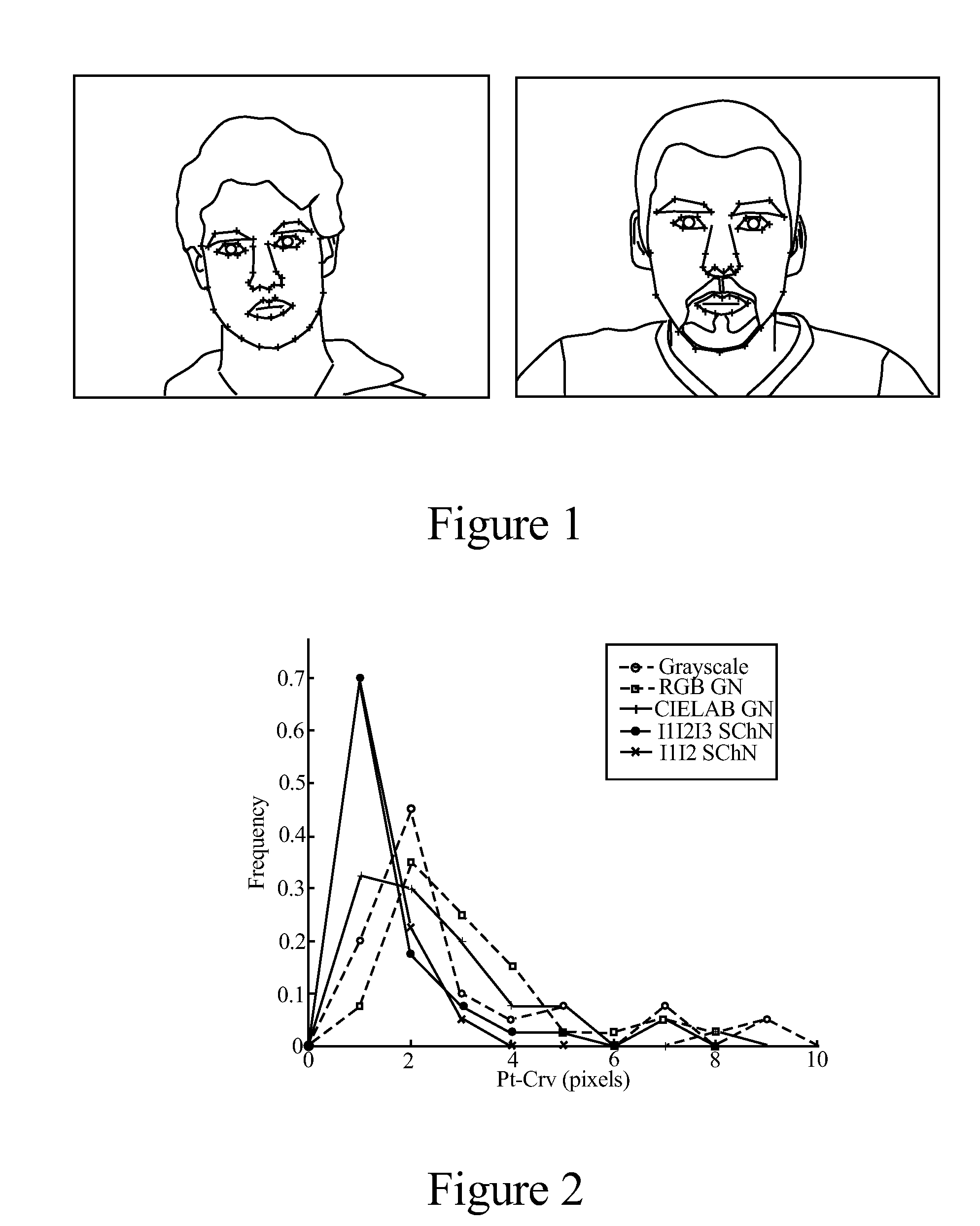

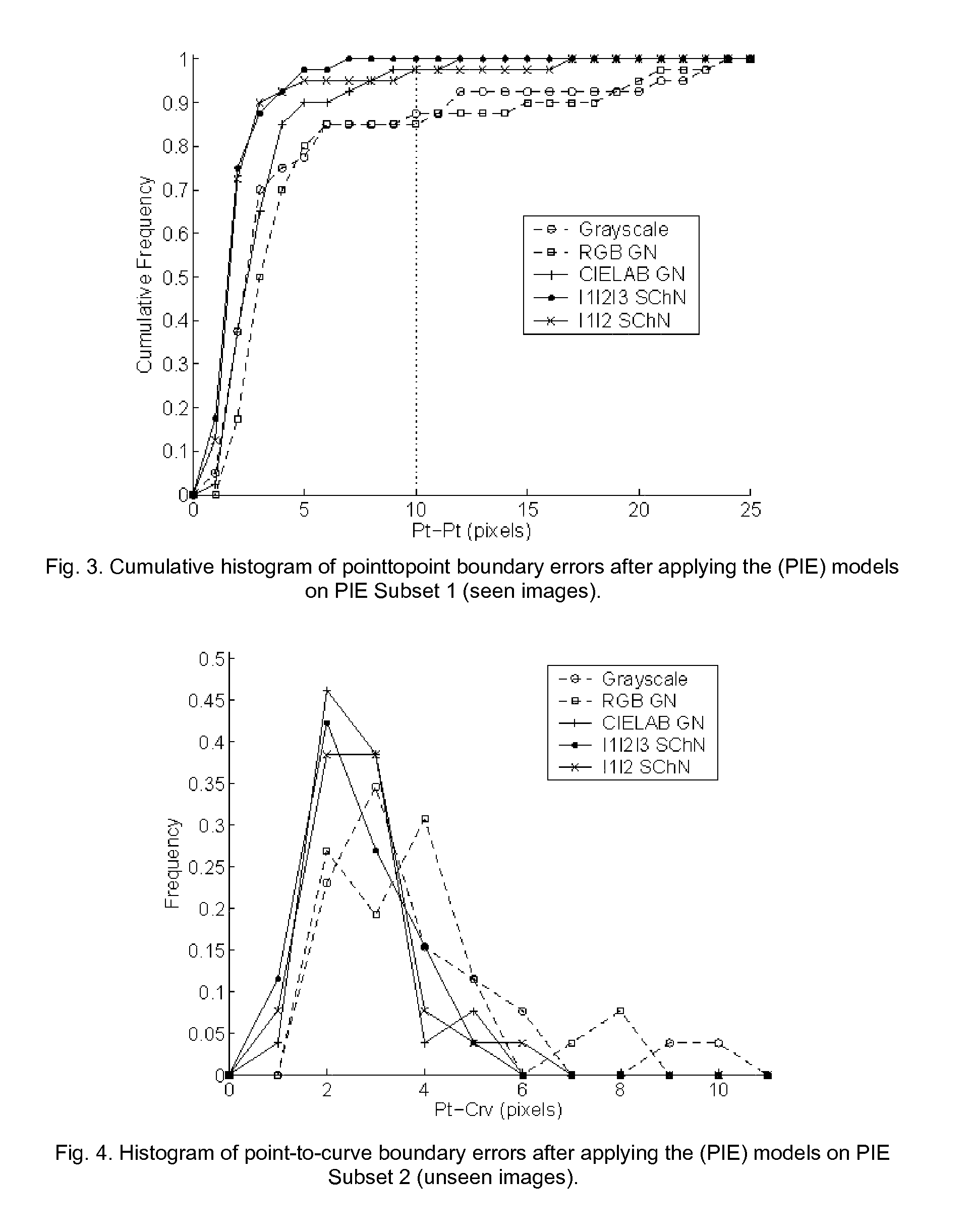

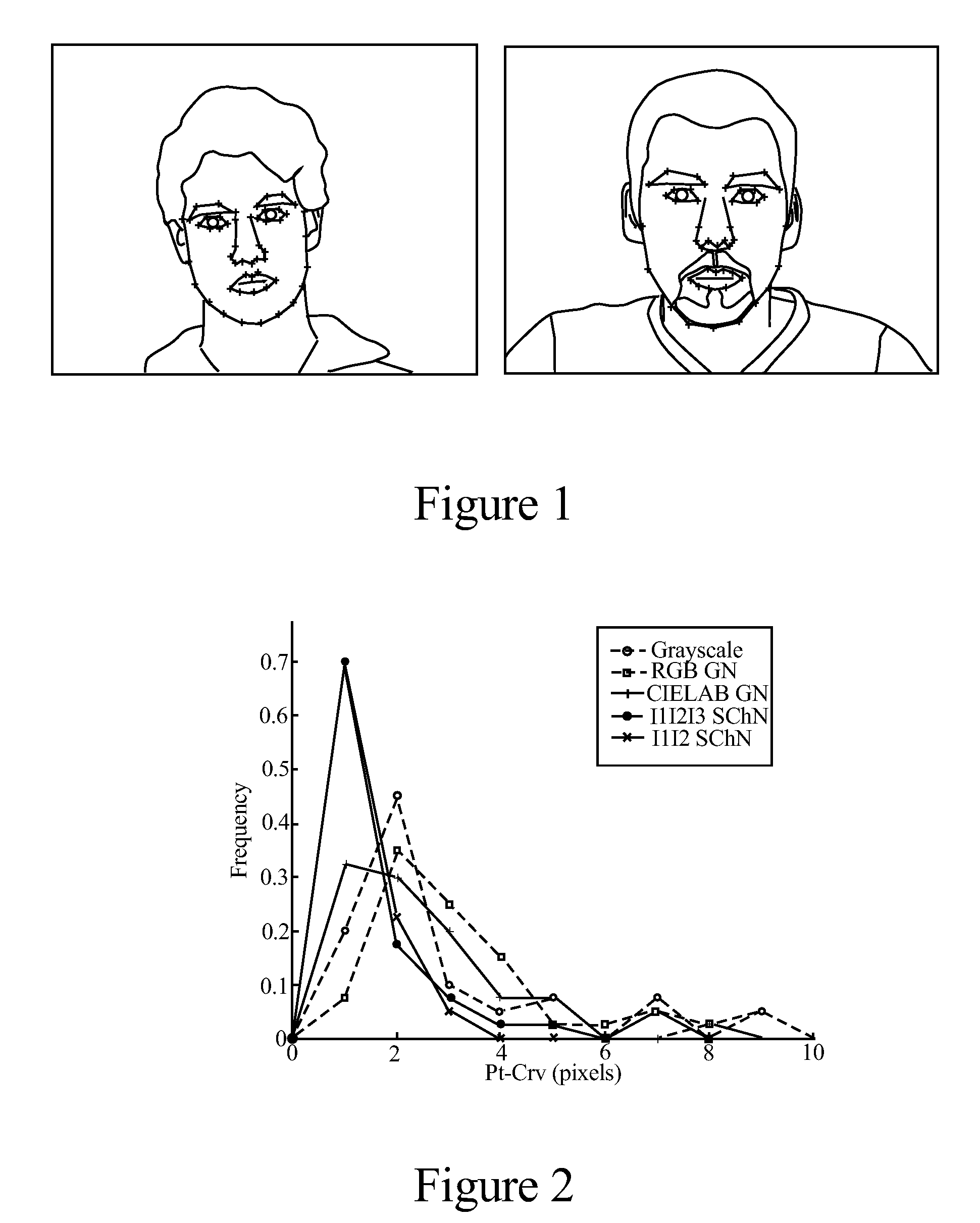

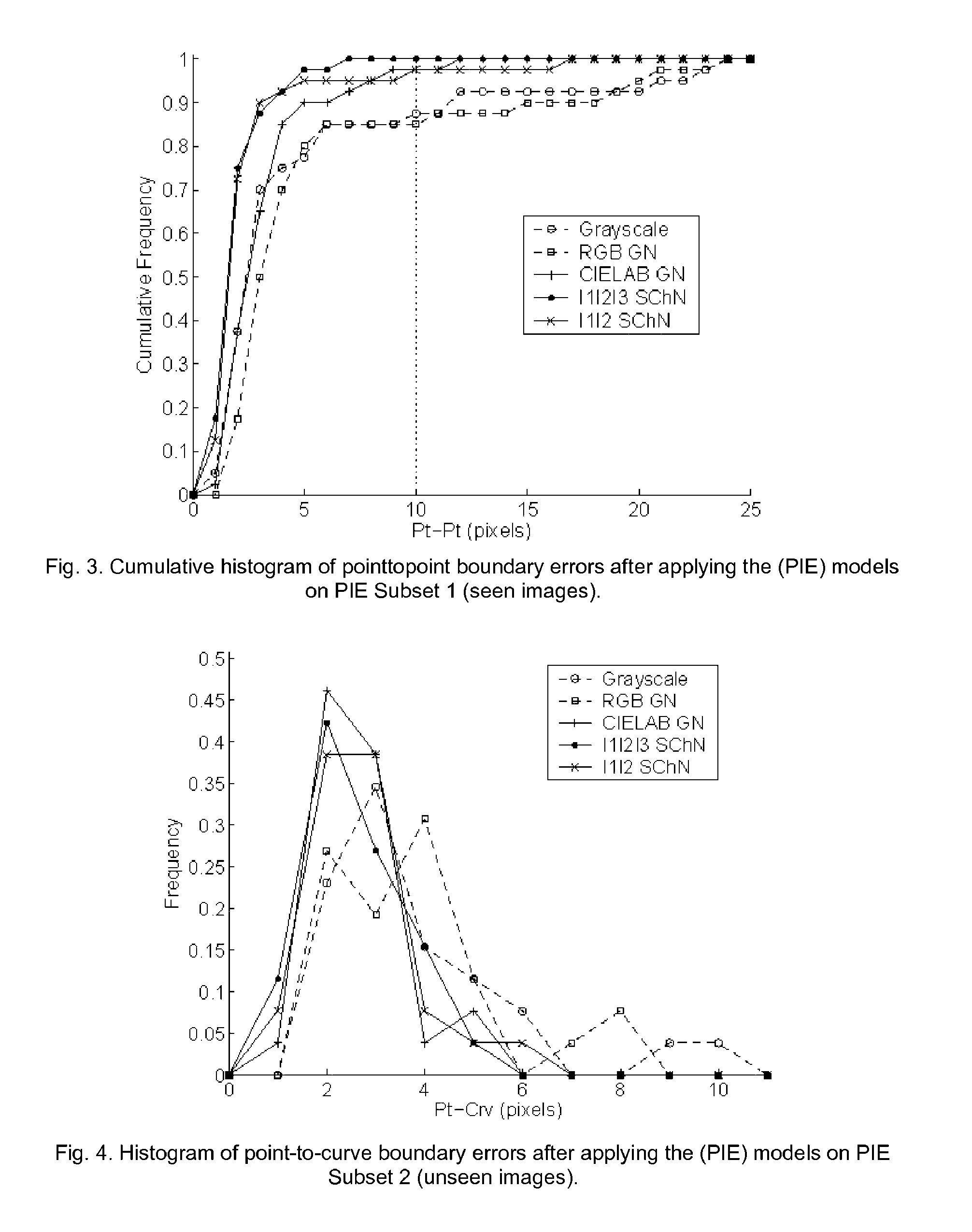

Advances in extending the AAM techniques from grayscale to color images

A face detection and/or detection method includes acquiring a digital color image. An active appearance model (AAM) is applied including an interchannel-decorrelated color space. One or more parameters of the model are matched to the image. Face detection results based on the matching and/or different results incorporating the face detection result are communicated.

Owner:TESSERA TECH IRELAND LTD +1
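To make the idea of an interchannel-decorrelated color space concrete, the sketch below converts RGB pixels to Ohta's I1I2I3 space, a well-known linear transform that approximately decorrelates the color channels. The abstract does not say which color space is used, so the choice of I1I2I3 here is an assumption made only for illustration.

```python
import numpy as np

def rgb_to_i1i2i3(image):
    """Convert an RGB image (..., 3) to Ohta's I1I2I3 color space.

    Assumption: I1I2I3 stands in for the unspecified decorrelated space.
    """
    rgb = np.asarray(image, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    i1 = (r + g + b) / 3.0          # intensity
    i2 = (r - b) / 2.0              # red-blue opponent channel
    i3 = (2.0 * g - r - b) / 4.0    # green-magenta opponent channel
    return np.stack([i1, i2, i3], axis=-1)
```

A gray pixel carries all of its energy in the first channel, which is the sense in which the channels are less correlated than raw RGB.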

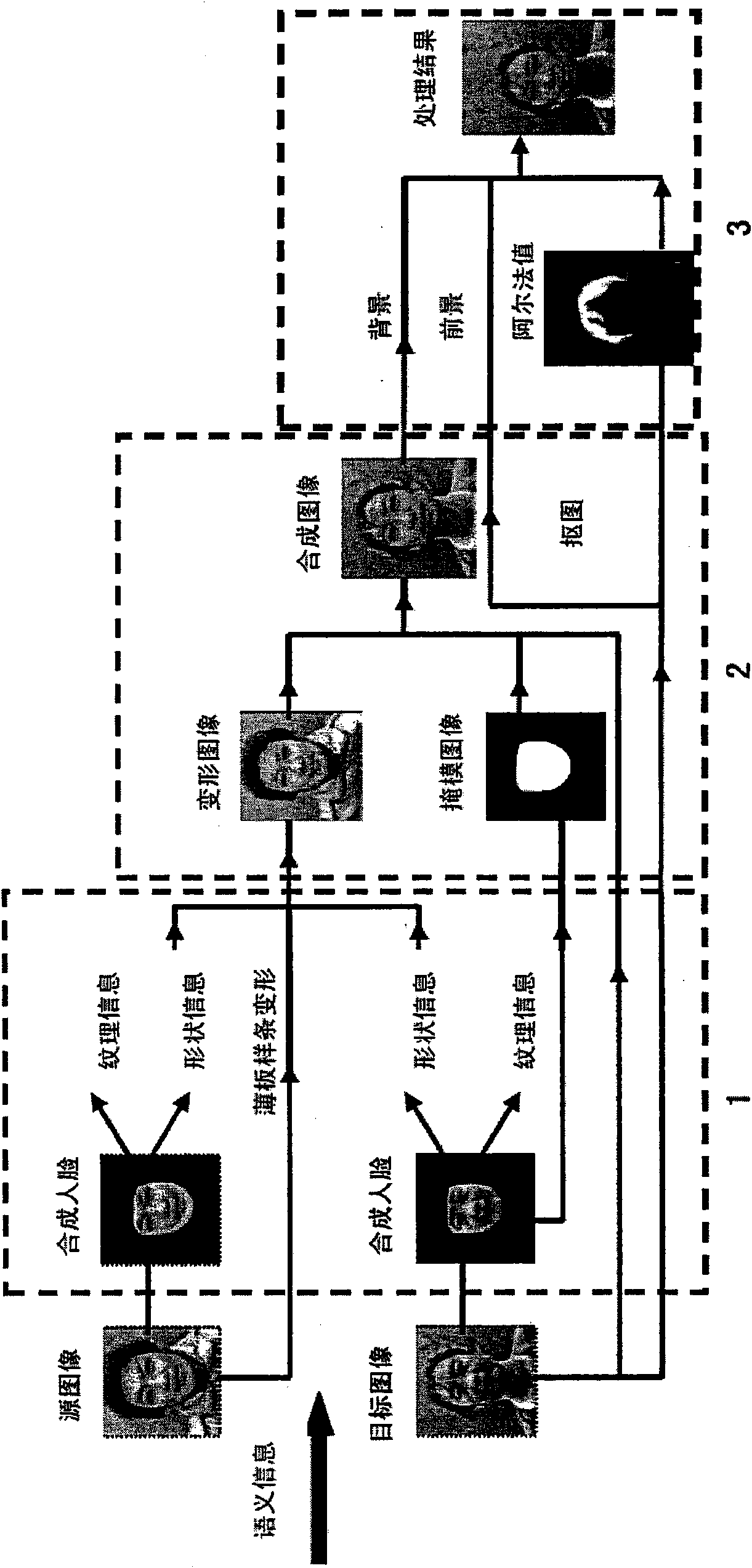

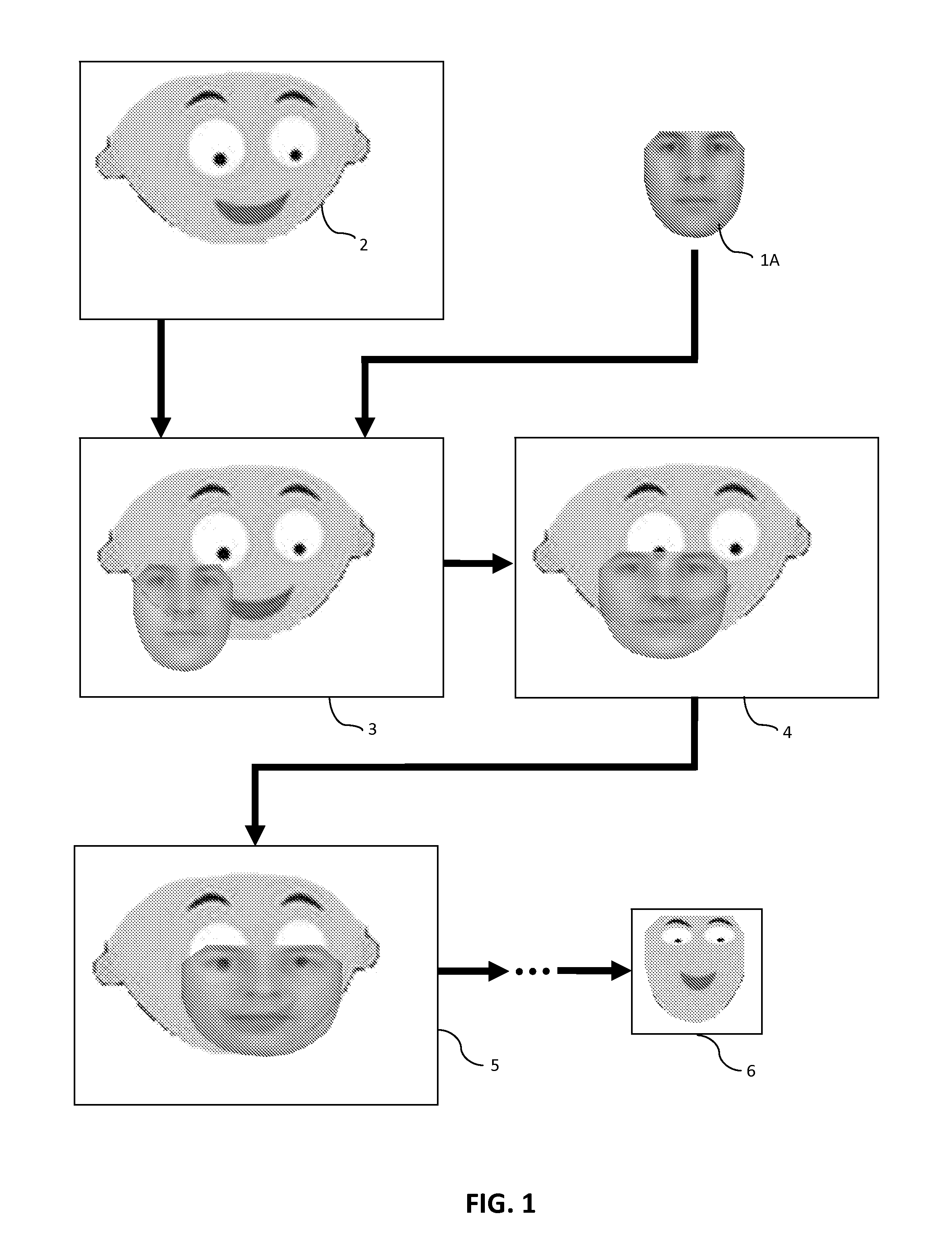

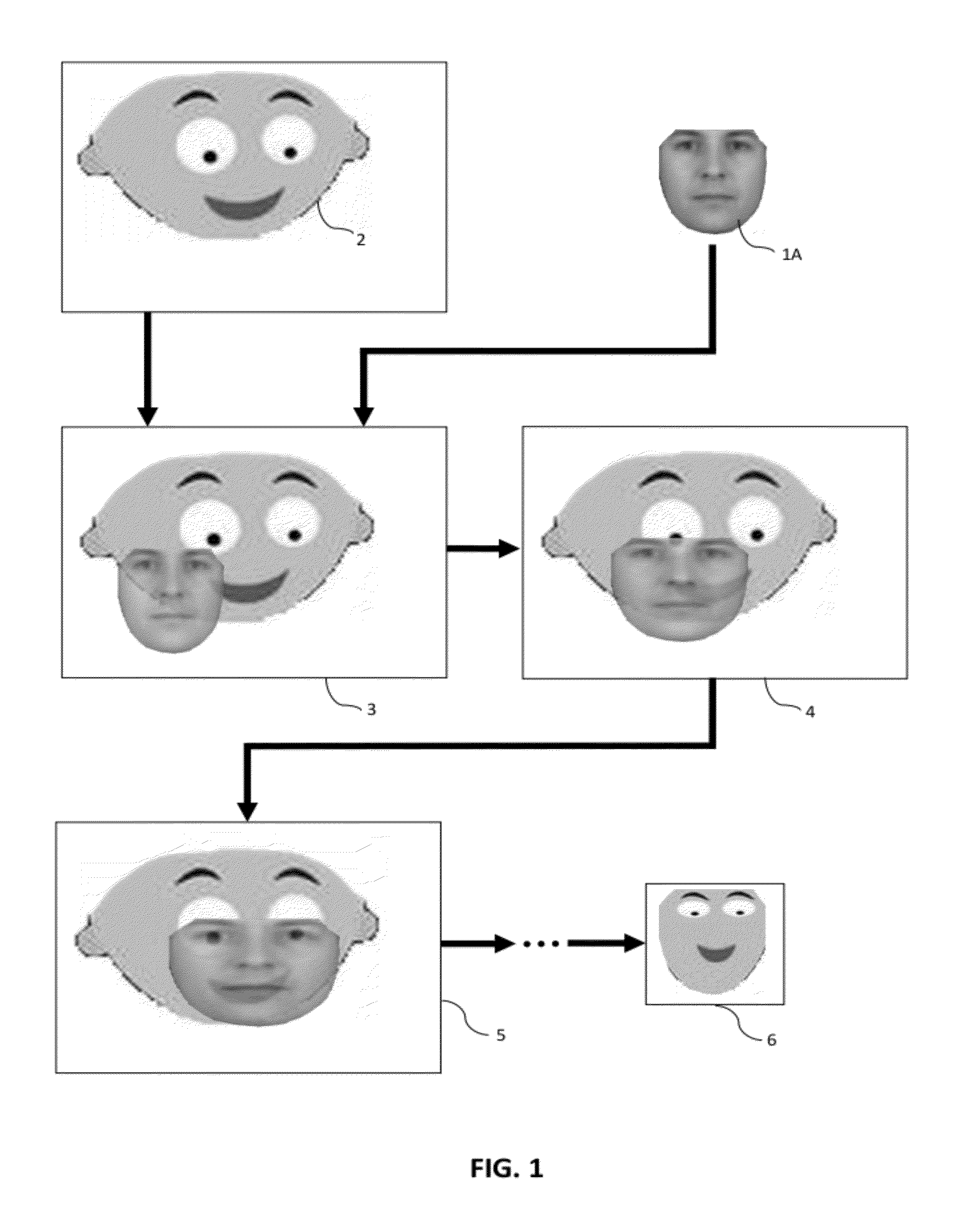

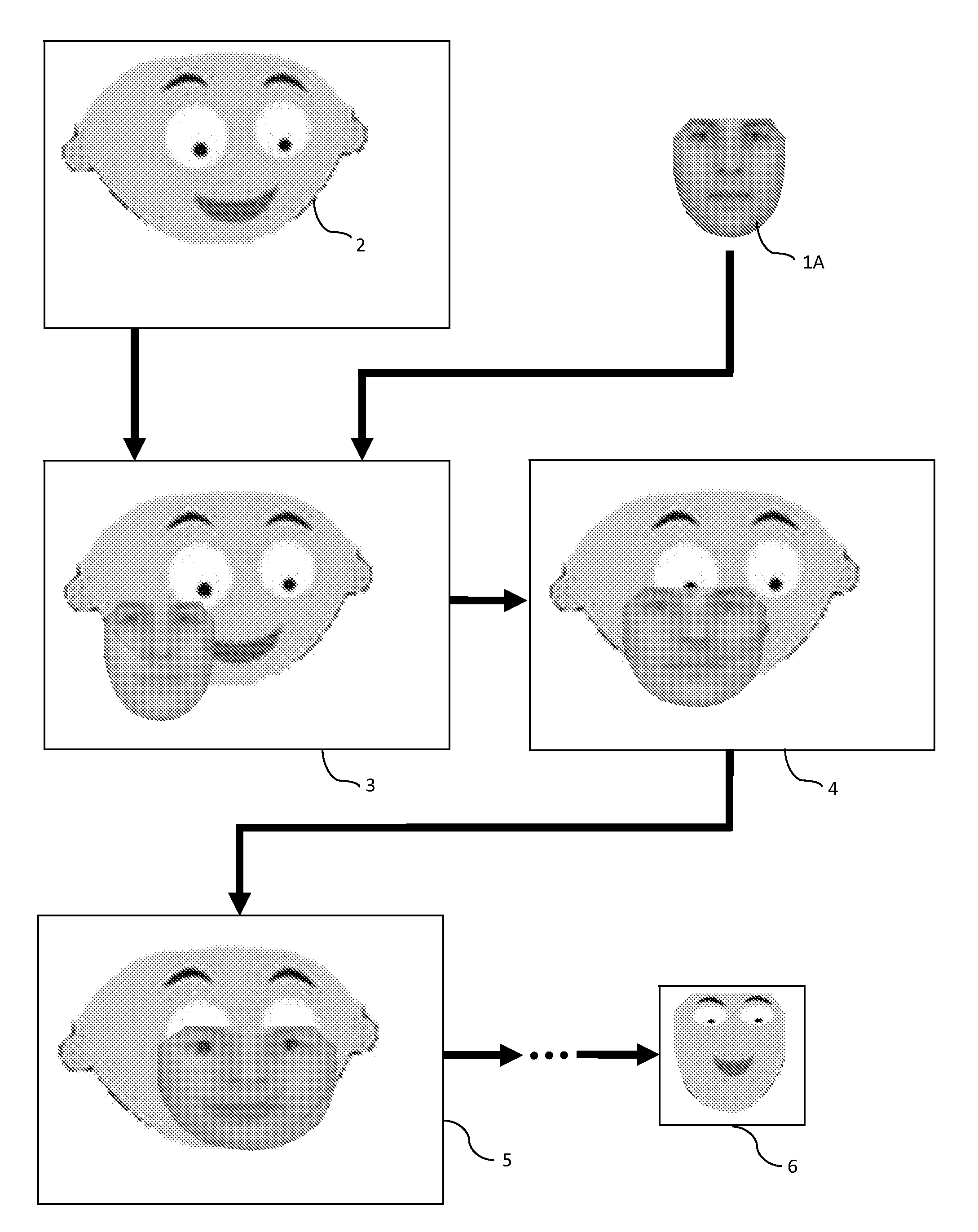

Automatic synthesis method for facial image

The invention provides an automatic synthesis method for facial images. A user inputs two facial source images, a target image, and semantic information about the area to be edited. A model matching module uses an active appearance model to search each image automatically and provide the shape and texture information of the face. The shapes of the two facial images are then aligned using the characteristics of the model, and the shapes of the source images in the edited area are aligned to the corresponding area in the target image by thin-plate spline deformation. A feature synthesis module combines the shape and texture information of the aligned source images and the target image to generate a synthetic image automatically. An occlusion processing module then handles occlusion in the synthetic image: the occluded areas of the source images are segmented, matched, and seamlessly blended into the target image. Compared with traditional facial synthesis, the invention integrates the feature areas of the two images and solves the problem of image distortion caused by partial face occlusion in the target image.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

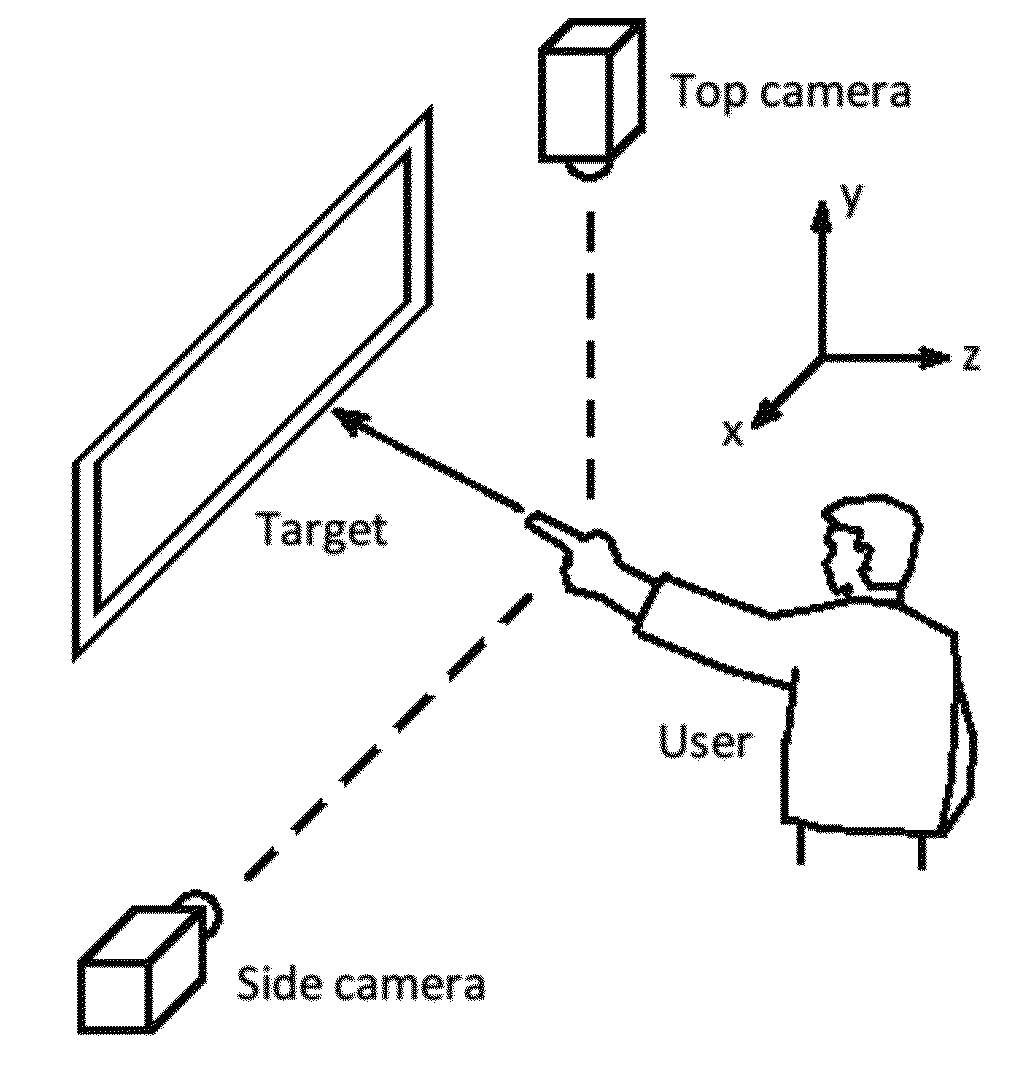

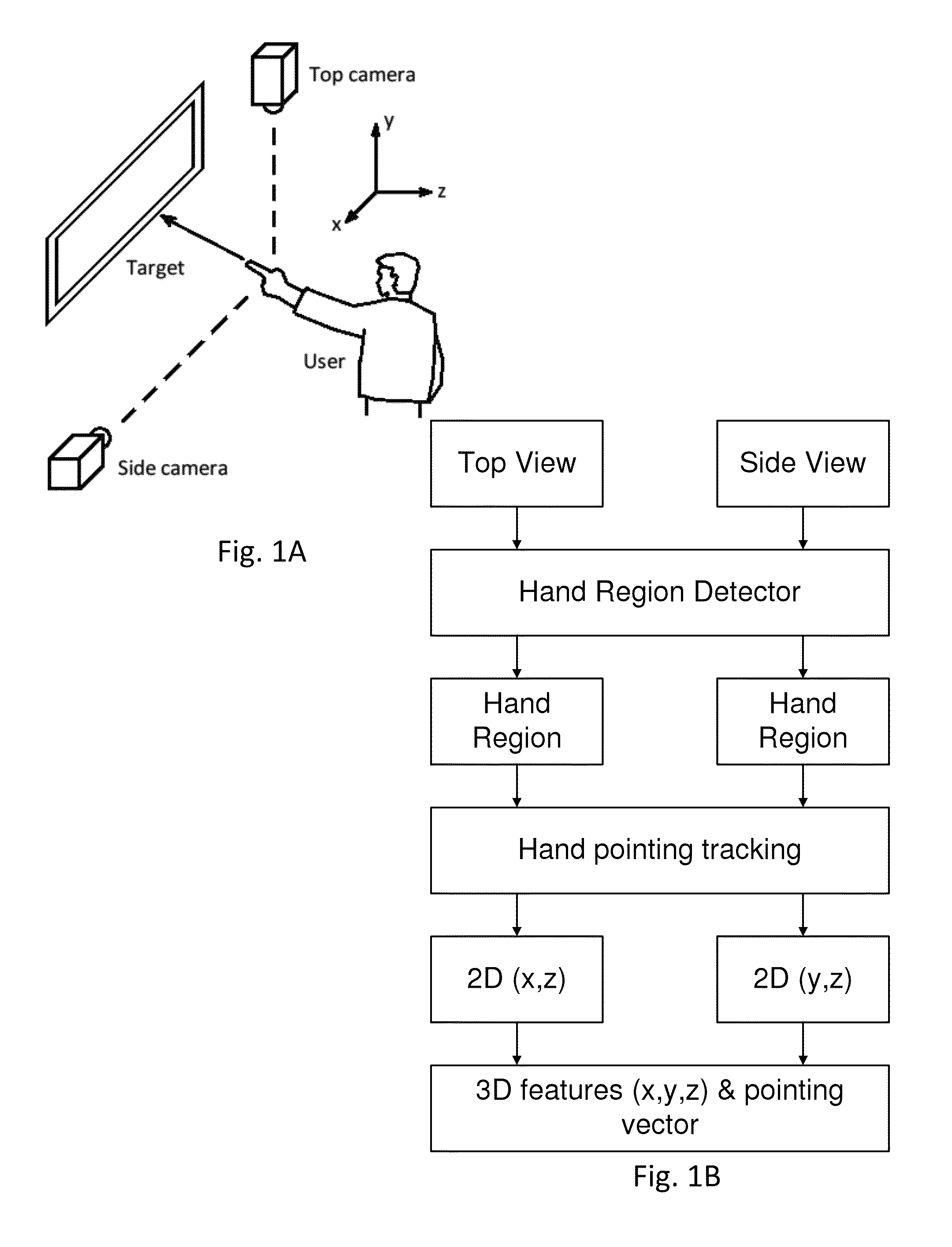

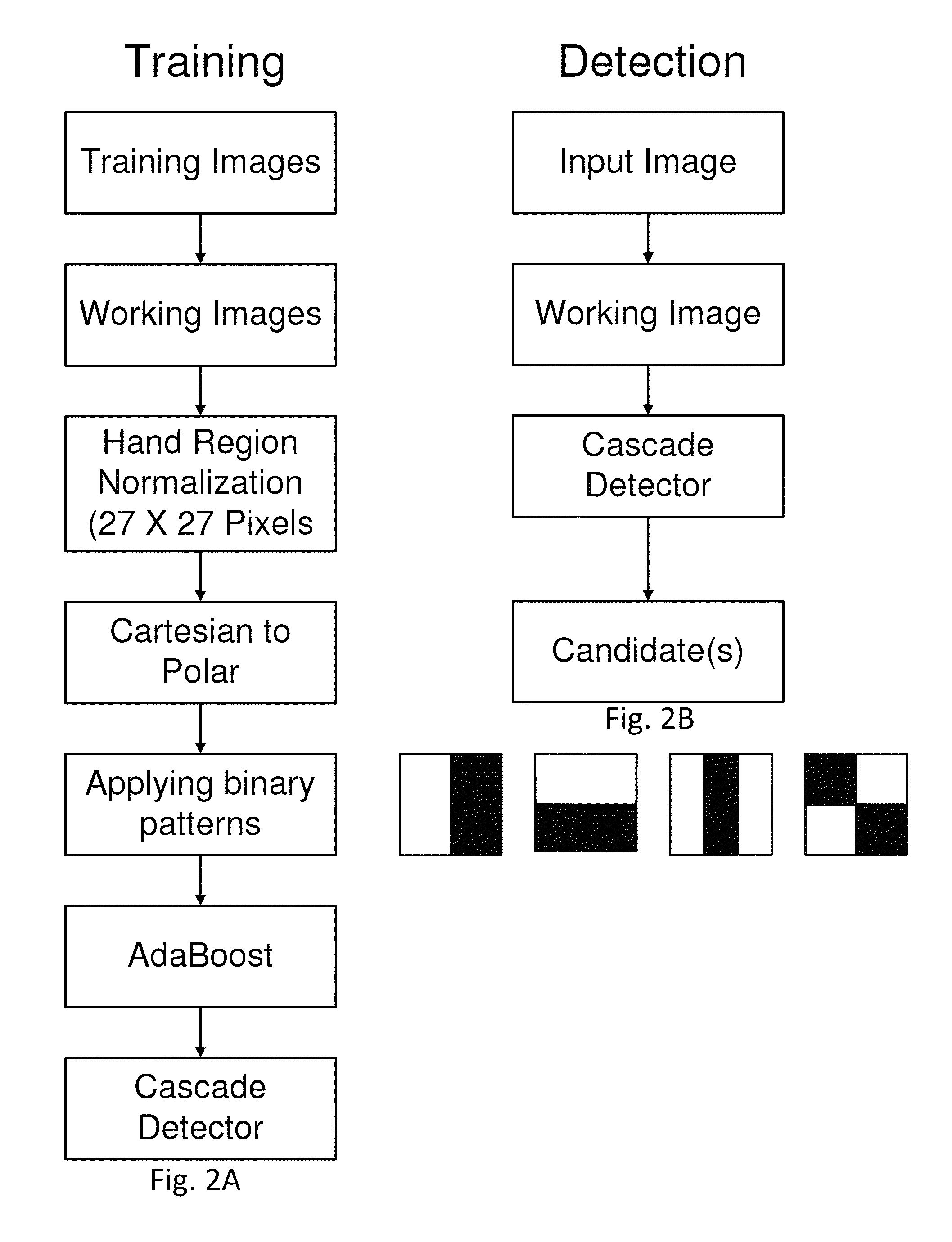

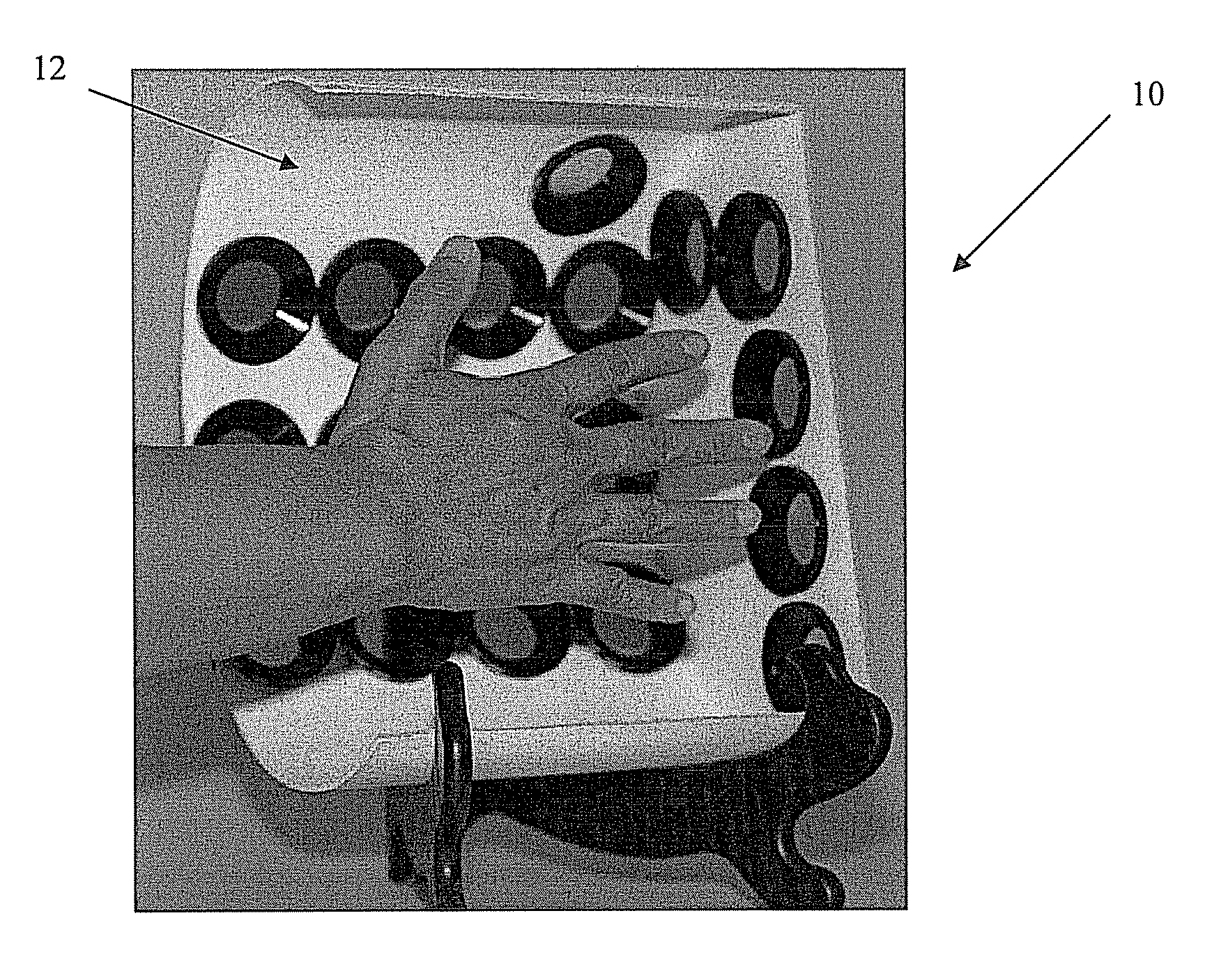

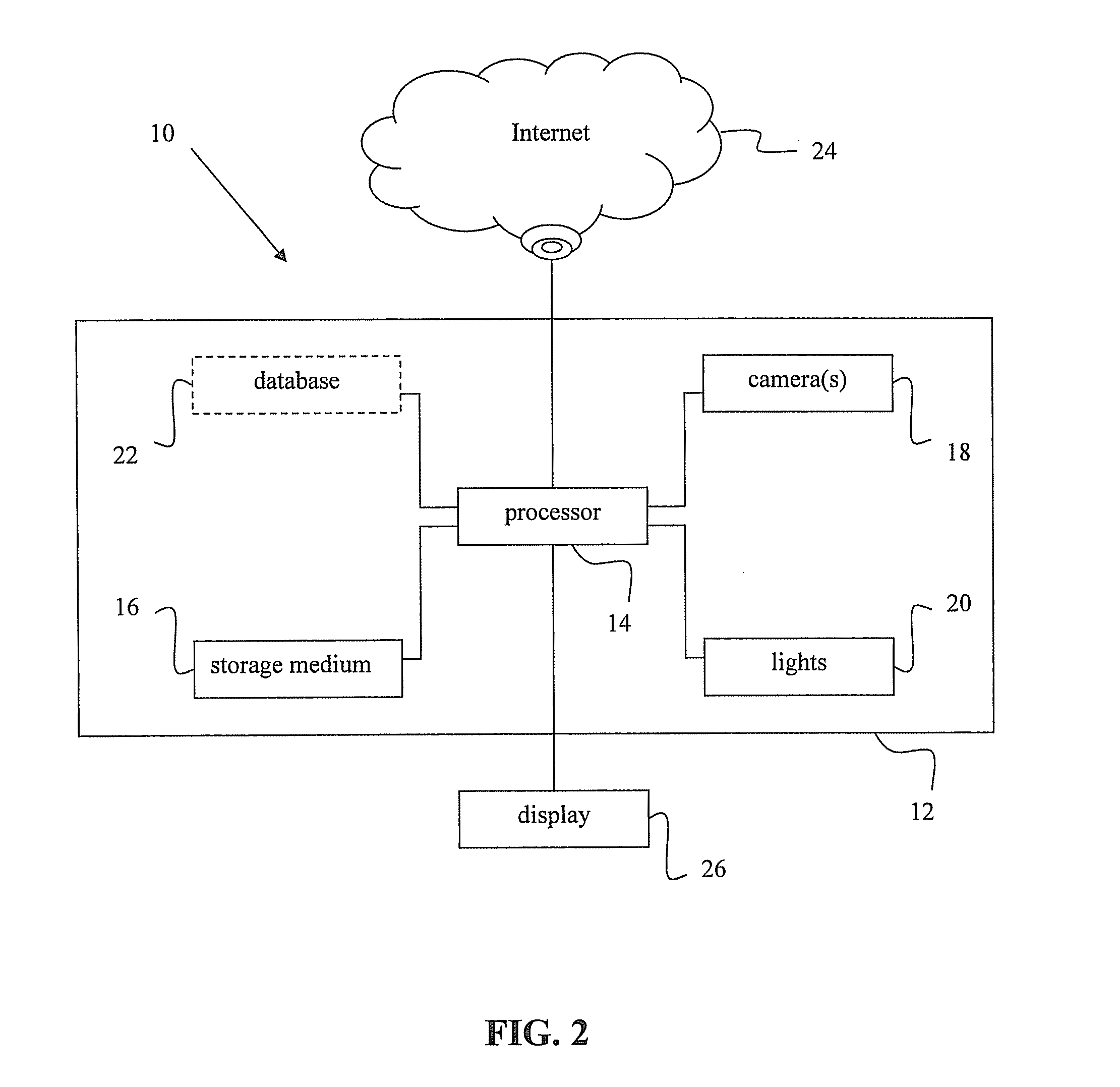

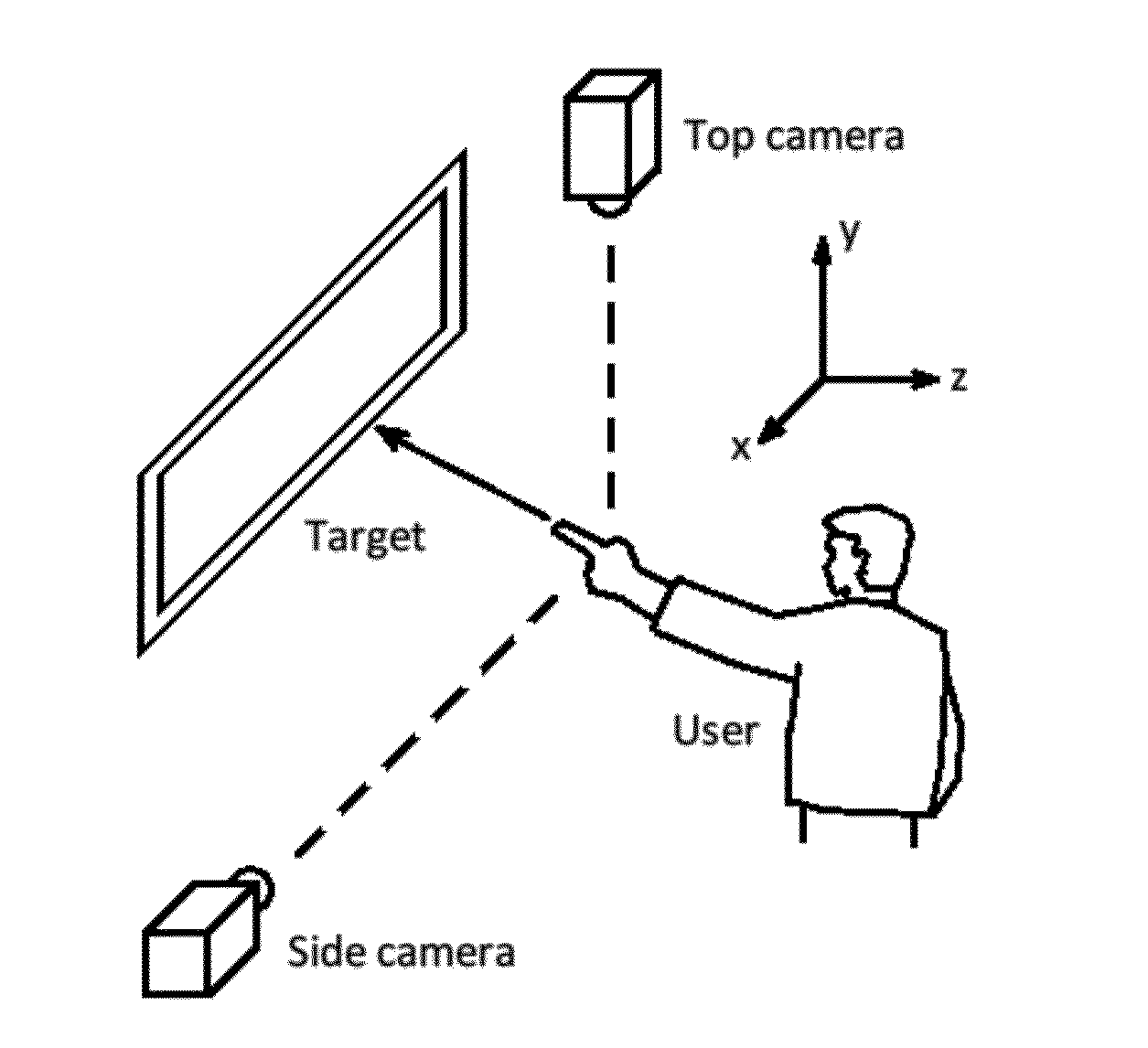

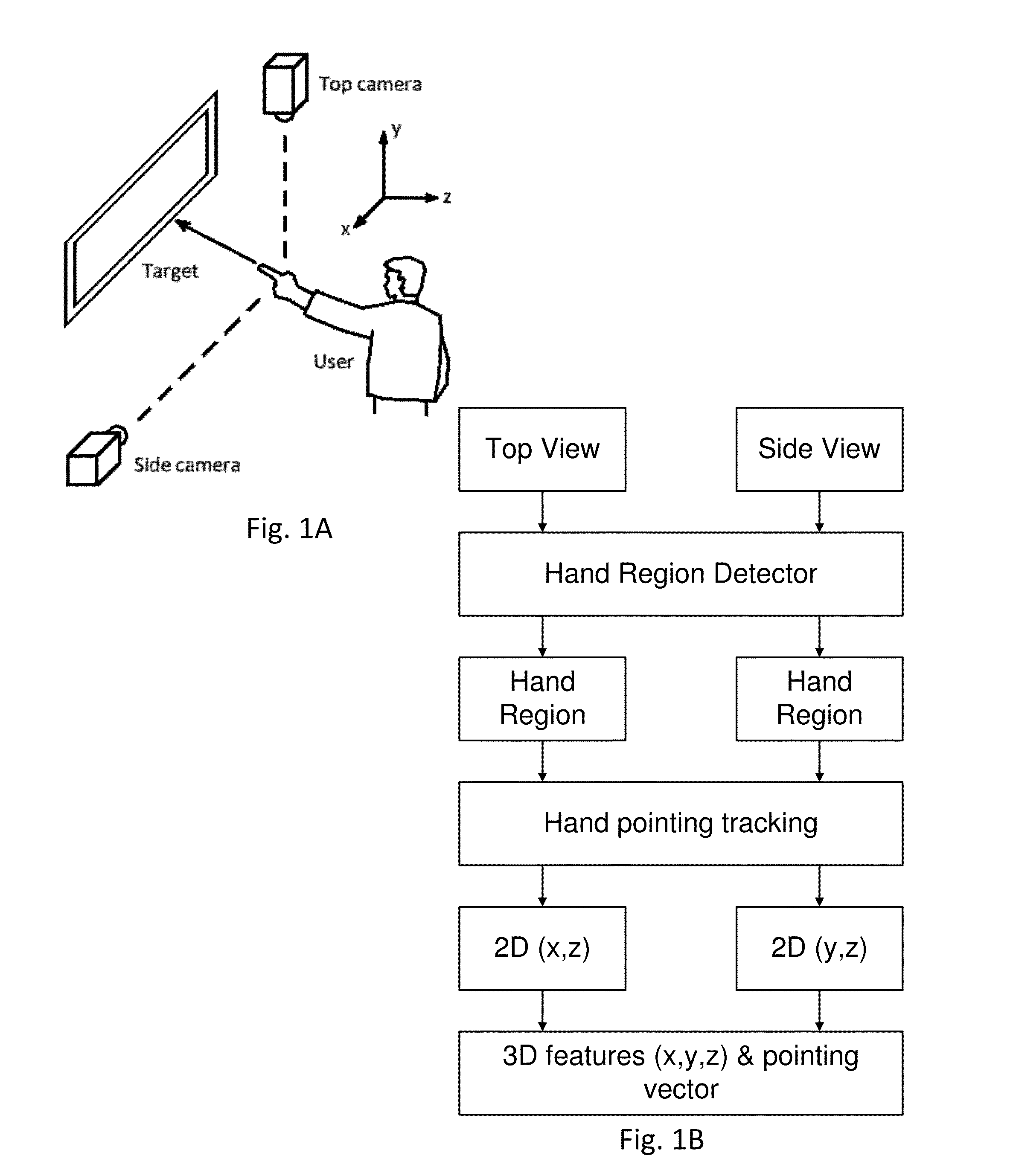

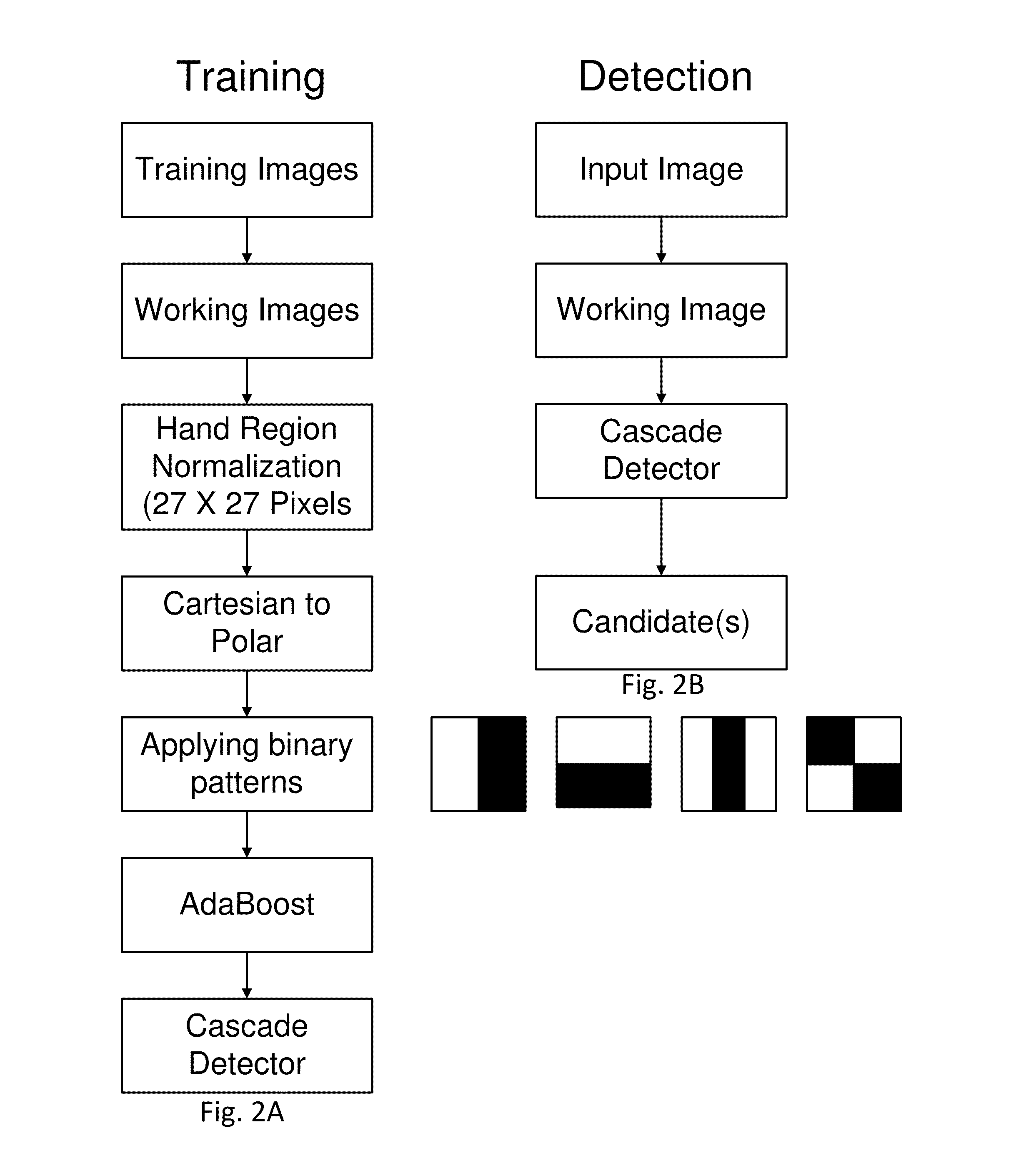

Hand pointing estimation for human computer interaction

Active | US8971572B1 | Simple classification | High degree of robustness | Image analysis | Character and pattern recognition | Human interaction | Human–robot interaction

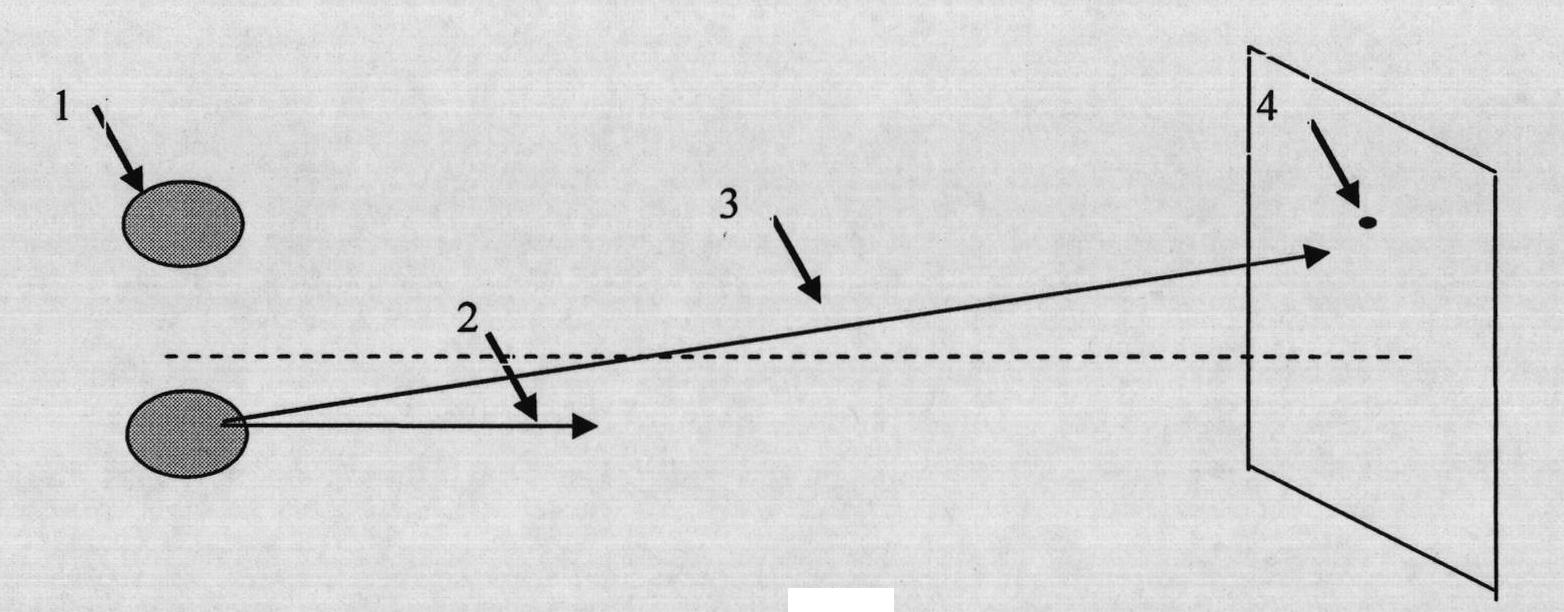

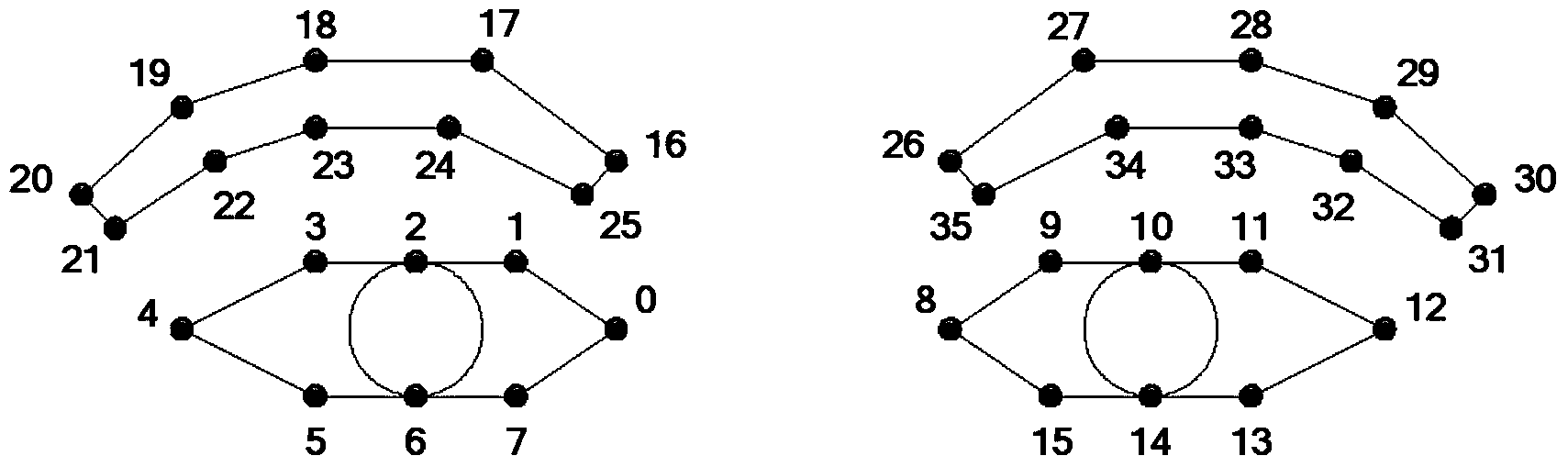

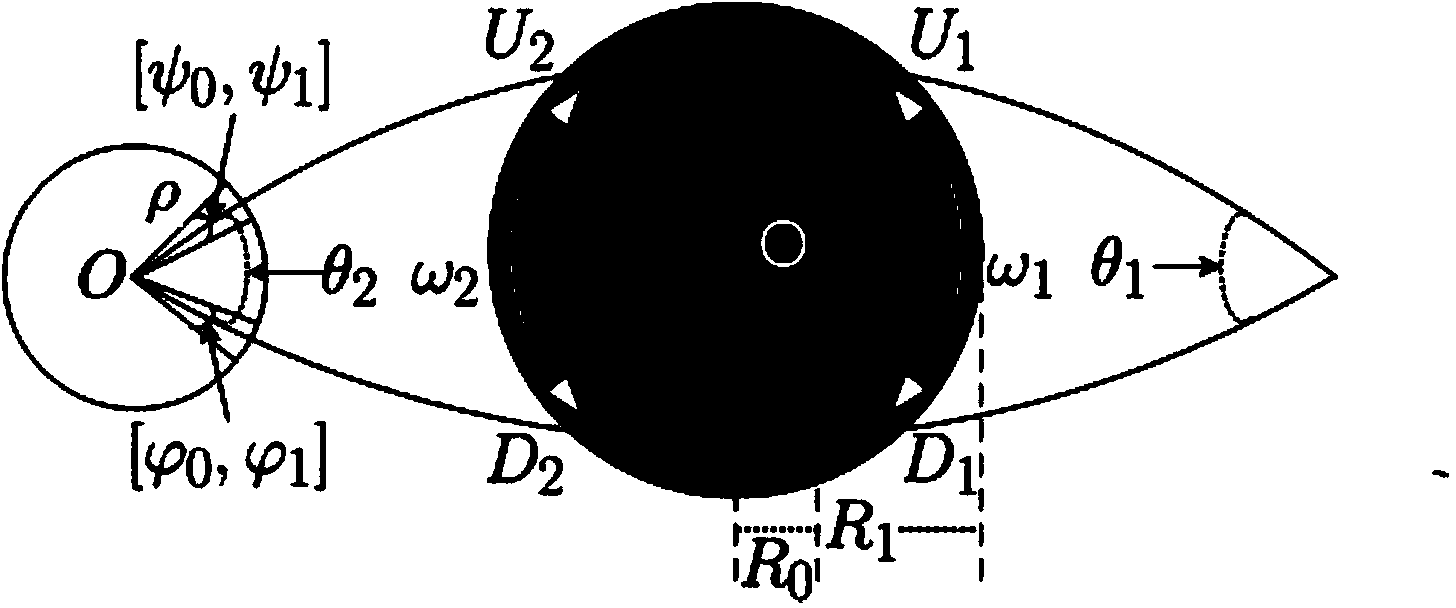

Hand pointing has been an intuitive gesture for human interaction with computers. A hand pointing estimation system is provided, based on two regular cameras, which includes hand region detection, hand finger estimation, two views' feature detection, and 3D pointing direction estimation. The technique may employ a polar coordinate system to represent the hand region, and tests show a good result in terms of the robustness to hand orientation variation. To estimate the pointing direction, Active Appearance Models are employed to detect and track, e.g., 14 feature points along the hand contour from a top view and a side view. Combining two views of the hand features, the 3D pointing direction is estimated.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

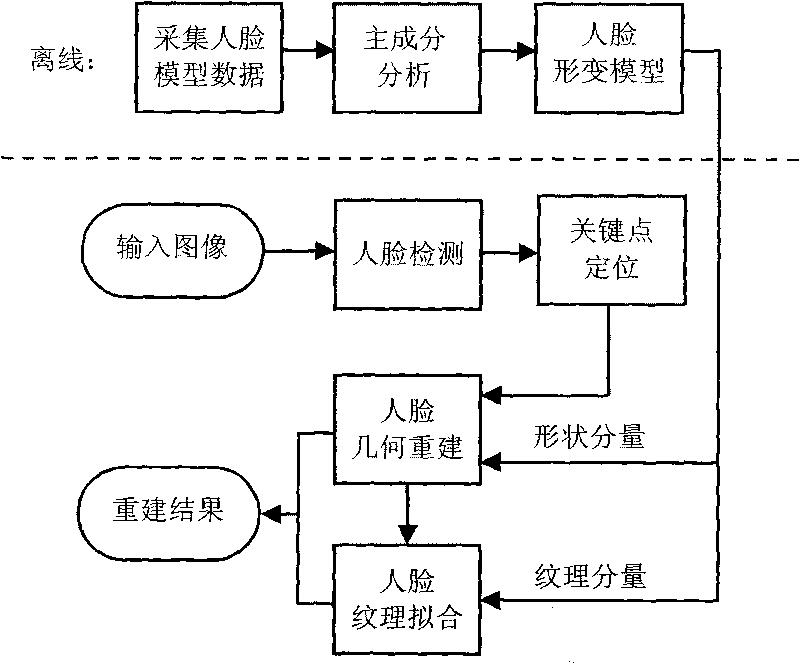

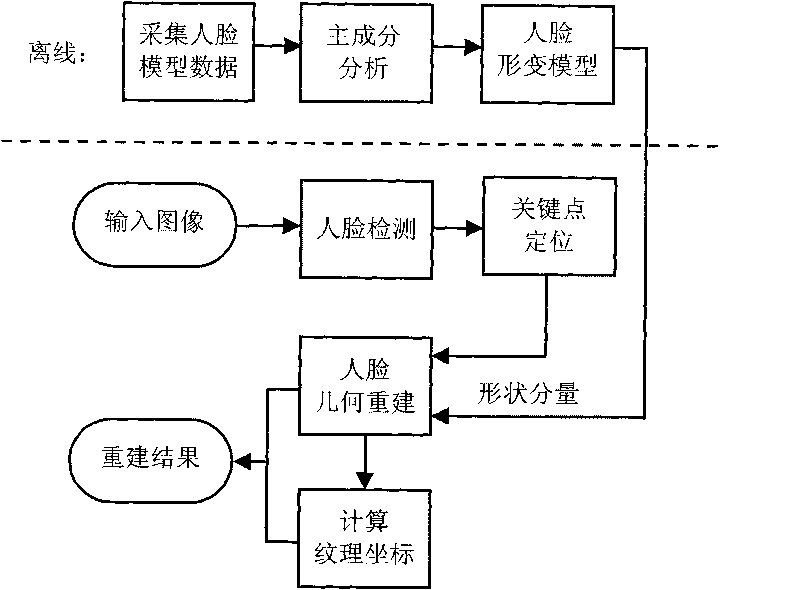

Three-dimensional facial reconstruction method

Inactive | CN101751689A | Geometry reconstruction speed reduced | Implement automatic rebuild | 3D-image rendering | 3D modelling | AdaBoost | Face model

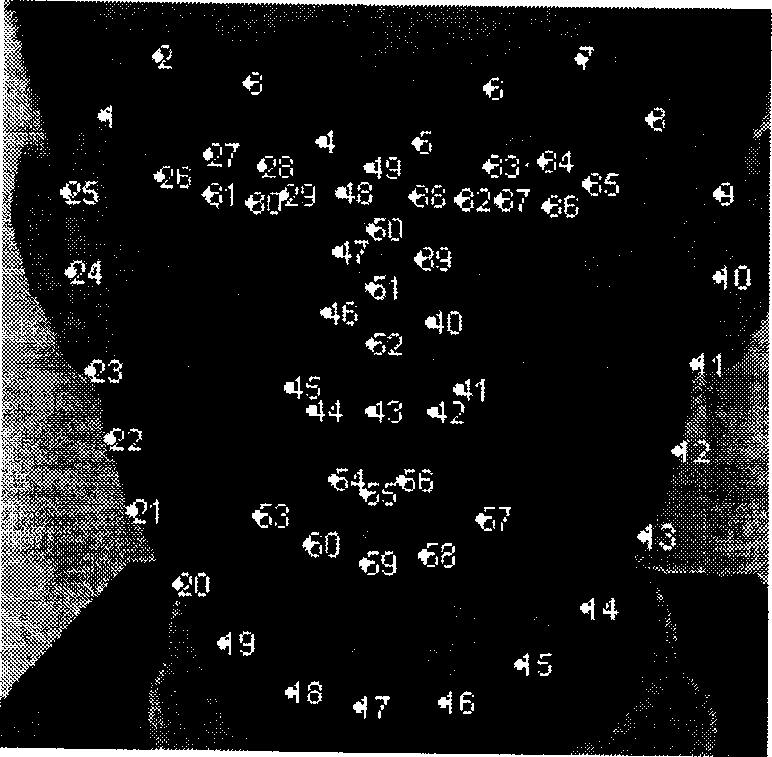

The invention relates to a three-dimensional facial reconstruction method, which can automatically reconstruct a three-dimensional facial model from a single front face image and puts forward two schemes. The first scheme is as follows: a deformable face model is generated off line; Adaboost is utilized to automatically detect face positions in the inputted image; an active appearance model is utilized to automatically locate key points on the face in the inputted image; based on the shape components of the deformable face model and the key points of the face on the image, the geometry of a three-dimensional face is reconstructed; with a shape-free texture as a target image, the texture components of the deformable face model are utilized to fit face textures, so that a whole face texture is obtained; and after texture mapping, a reconstructed result is obtained. The second scheme has the following differences from the first scheme: after the geometry of the three-dimensional face is reconstructed, face texture fitting is not carried out, but the inputted image is directly used as a texture image as a reconstructed result. The first scheme is applicable to fields such as film and television making and three-dimensional face recognition, and the reconstruction speed of the second scheme is high.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

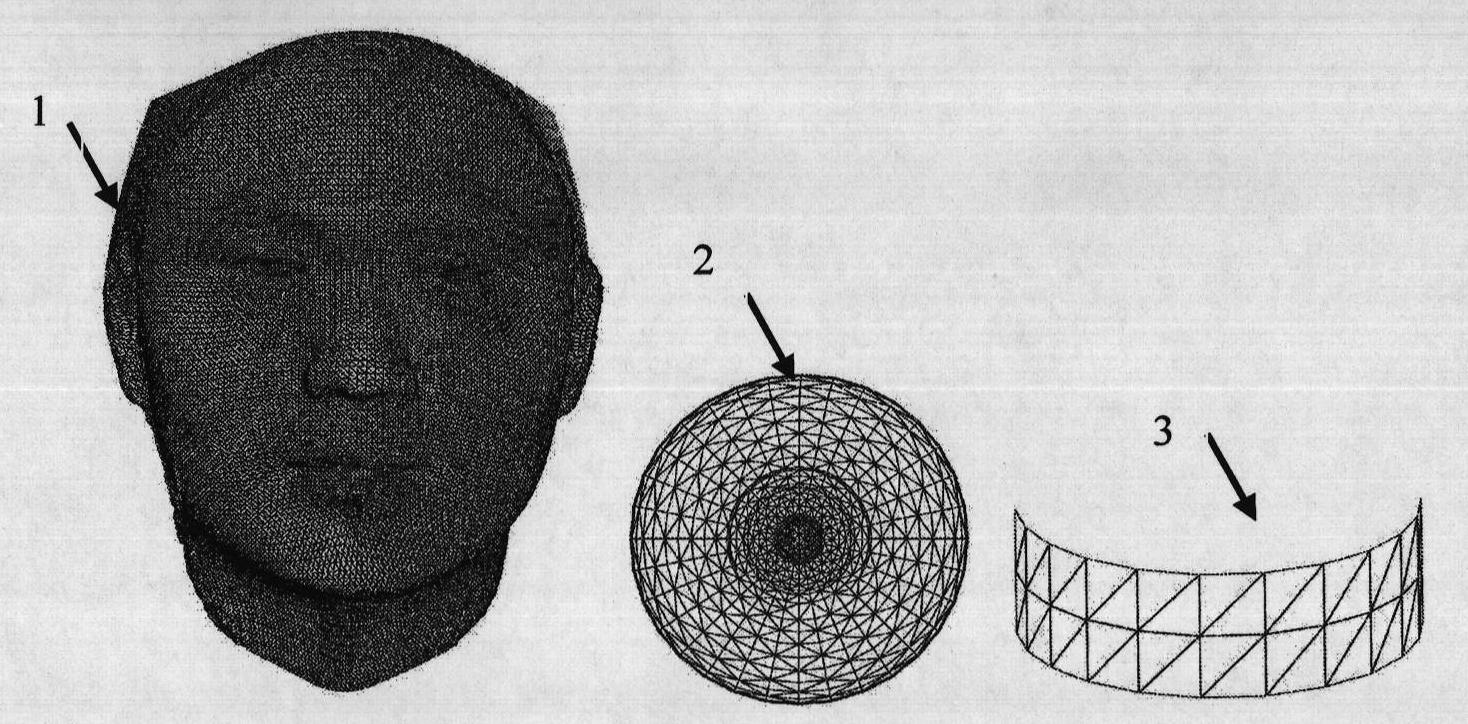

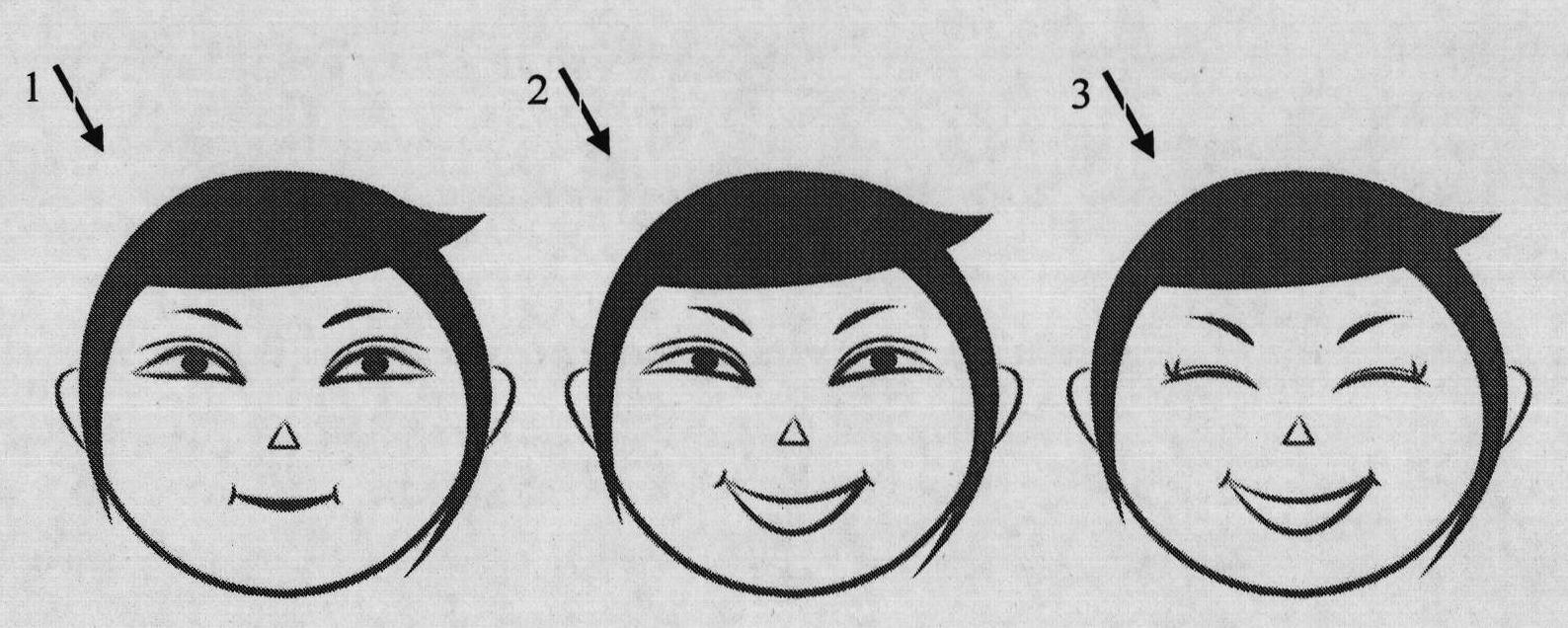

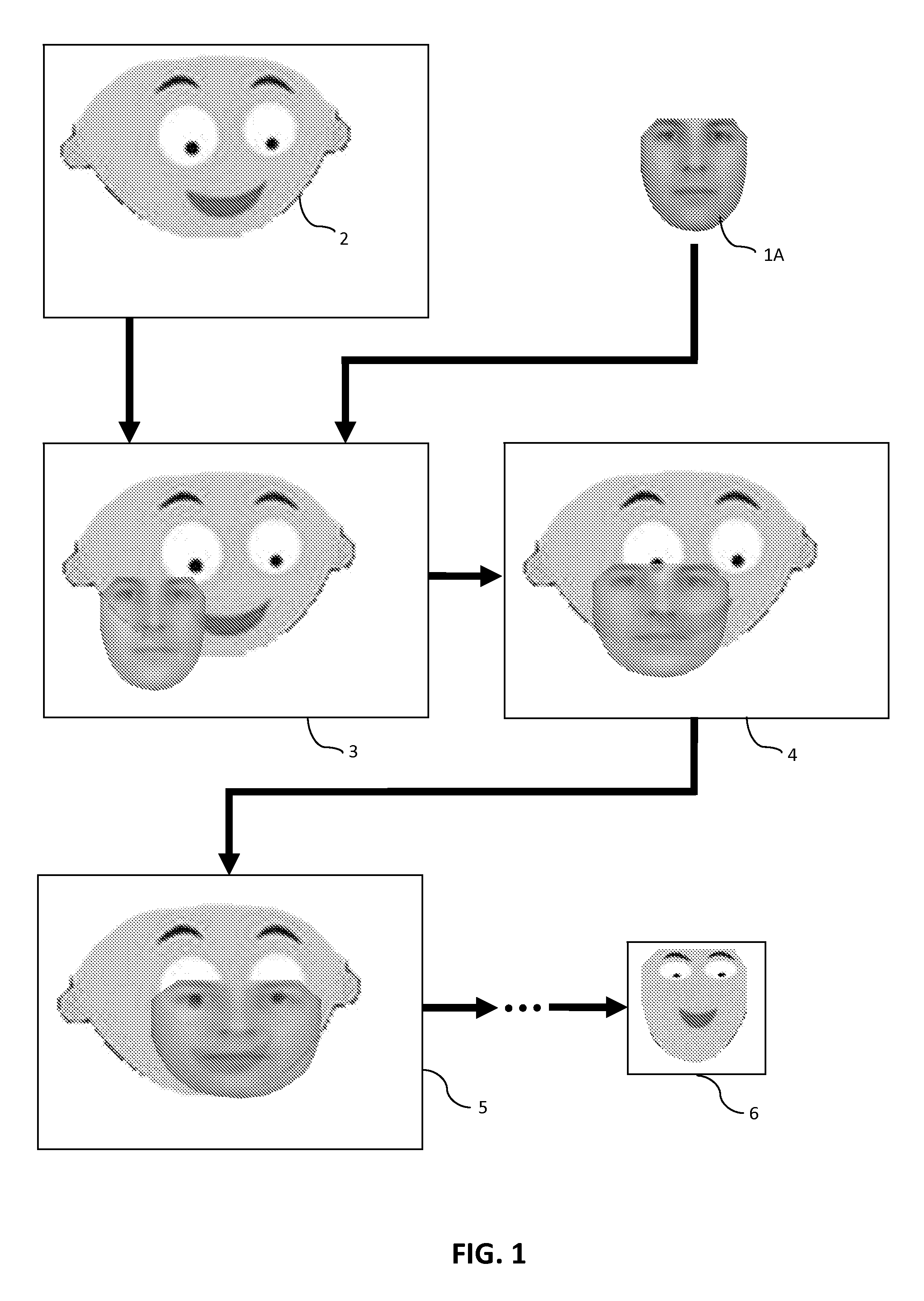

Single-photo-based human face animating method

The invention discloses a single-photo-based human face animating method, which belongs to the fields of graphics and image processing and computer vision. The method automatically reconstructs a three-dimensional model of a human face from a single frontal face photo and then drives the reconstructed model to produce personalized face animation. The method uses a face three-dimensional reconstruction unit and a face animation unit. The face three-dimensional reconstruction unit carries out the following steps: generating a shape-change model offline; automatically locating the key points on the face using an active appearance model; adding eye and tooth meshes to form a complete face model; and obtaining the reconstruction result by texture mapping. The face animation unit carries out the following steps: producing animation data for far-spaced key points; mapping the animation data onto a target face model using a radial basis function; interpolating the motion data using spherical parametrization; and generating the motion of the eyes. The method is highly automated, robust, and realistic, and is suitable for film and television production, three-dimensional games, and similar fields.

Owner:北京盛开智联科技有限公司

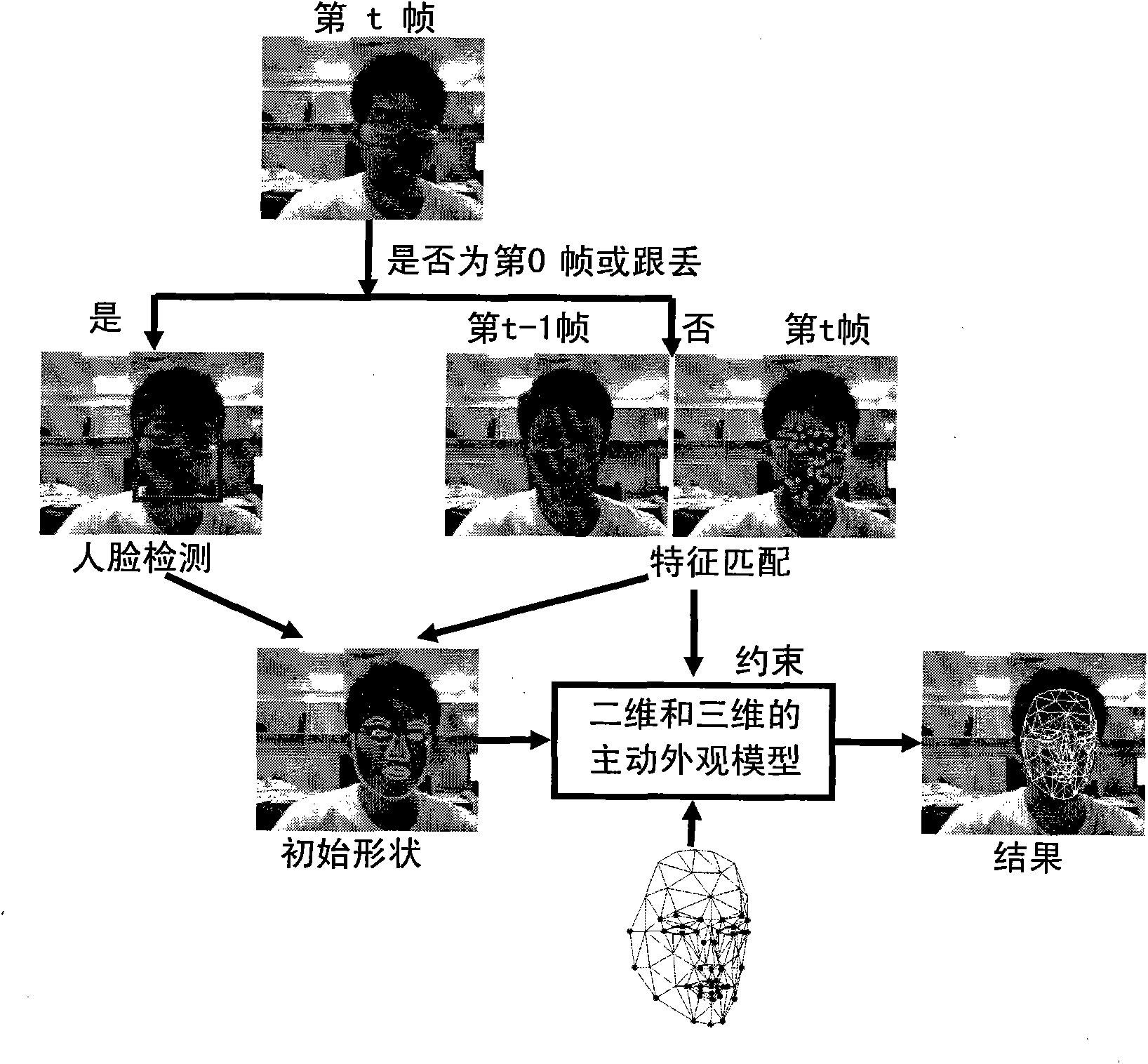

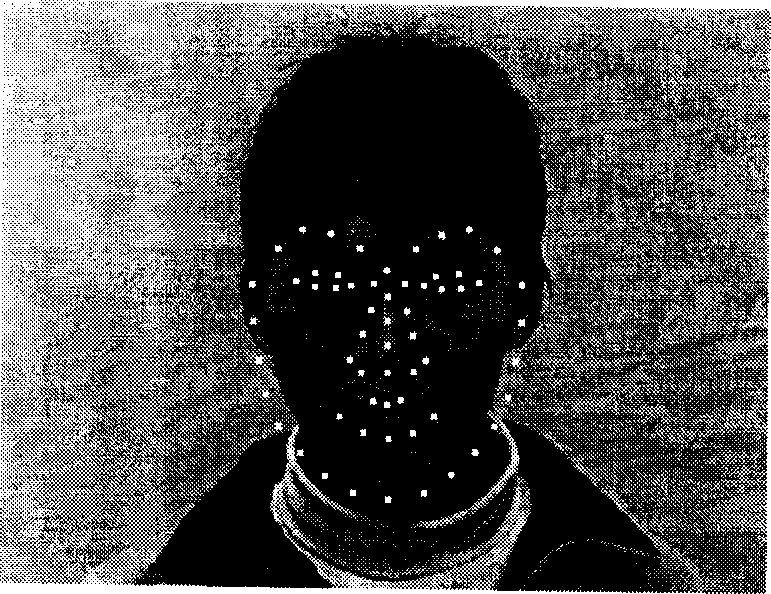

Method for tracking gestures and actions of human face

Inactive | CN102402691A | No human intervention required | Fast tracking | Image analysis | Character and pattern recognition | Face detection | Feature point matching

The invention discloses a method for tracking the pose and actions of a human face, comprising the following steps. In step S1, images are extracted frame by frame from a video stream, and face detection is carried out on the first frame of the input video, or whenever tracking fails, to obtain a face bounding box. In step S2, during normal tracking, once the iteration on the previous frame has converged, feature points with more salient texture in the face area of the previous frame are matched with the corresponding feature points found in the current frame to obtain feature point matching results. In step S3, the shape of an active appearance model is initialized from the face bounding box or the feature point matching results to obtain an initial value of the face shape in the current frame. In step S4, the active appearance model is fitted with an inverse compositional algorithm to obtain the three-dimensional face pose and facial action parameters. With this method, online tracking can be completed fully automatically and in real time under common illumination conditions.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
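The control flow of steps S1 to S4 in the abstract above can be sketched as a simple loop that falls back to face detection on the first frame or after a tracking failure. The three callables `detect_face`, `match_points`, and `fit_model` are hypothetical placeholders supplied by the caller; the abstract does not define their interfaces, and signalling failure by returning `None` is an assumption of this sketch.

```python
def track_video(frames, detect_face, match_points, fit_model):
    """Track a face through frames, re-detecting after any failure.

    detect_face(frame) -> initial estimate or None            (step S1)
    match_points(prev_frame, prev_fit, frame) -> estimate     (step S2)
    fit_model(frame, init) -> fit or None on failure          (steps S3, S4)
    All three callables are hypothetical placeholders.
    """
    results = []
    prev = None  # (frame, fit) of the last successfully tracked frame
    for frame in frames:
        if prev is None:
            # First frame or lost track: initialize from face detection.
            init = detect_face(frame)
        else:
            # Normal tracking: initialize from feature point matches.
            init = match_points(prev[0], prev[1], frame)
        fit = fit_model(frame, init) if init is not None else None
        results.append(fit)
        prev = (frame, fit) if fit is not None else None
    return results
```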

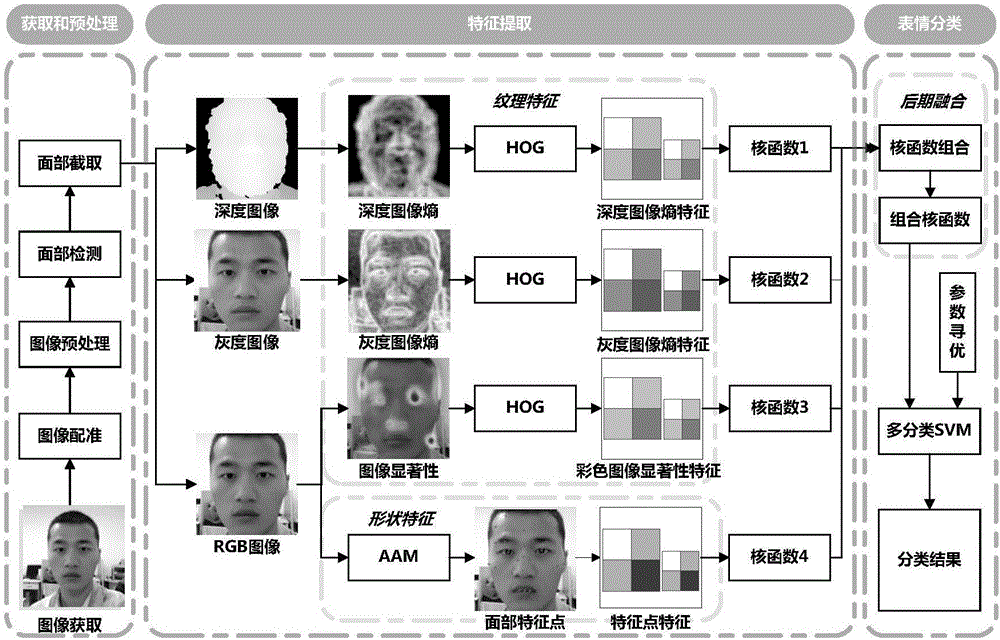

Expression identification method fusing depth image and multi-channel features

Inactive | CN106778506A | Guaranteed recognition efficiency | Improve robustness | Character and pattern recognition | Color image | Support vector machine classifier

The invention discloses an expression identification method fusing a depth image and multi-channel features. The method comprises the steps of performing human face region identification on an input human face expression image and performing preprocessing operation; selecting the multi-channel features of the image, extracting a depth image entropy, a grayscale image entropy and a color image salient feature as human face expression texture information in the texture feature aspect, extracting texture features of the texture information by adopting a grayscale histogram method, and extracting facial expression feature points as geometric features from a color information image by utilizing an active appearance model in the geometric feature aspect; and fusing the texture features and the geometric features, selecting different kernel functions for different features to perform kernel function fusion, and transmitting a fusion result to a multi-class support vector machine classifier for performing expression classification. Compared with the prior art, the method has the advantages that the influence of factors such as different illumination, different head poses, complex backgrounds and the like in expression identification can be effectively overcome, the expression identification rate is increased, and the method has good real-time property and robustness.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

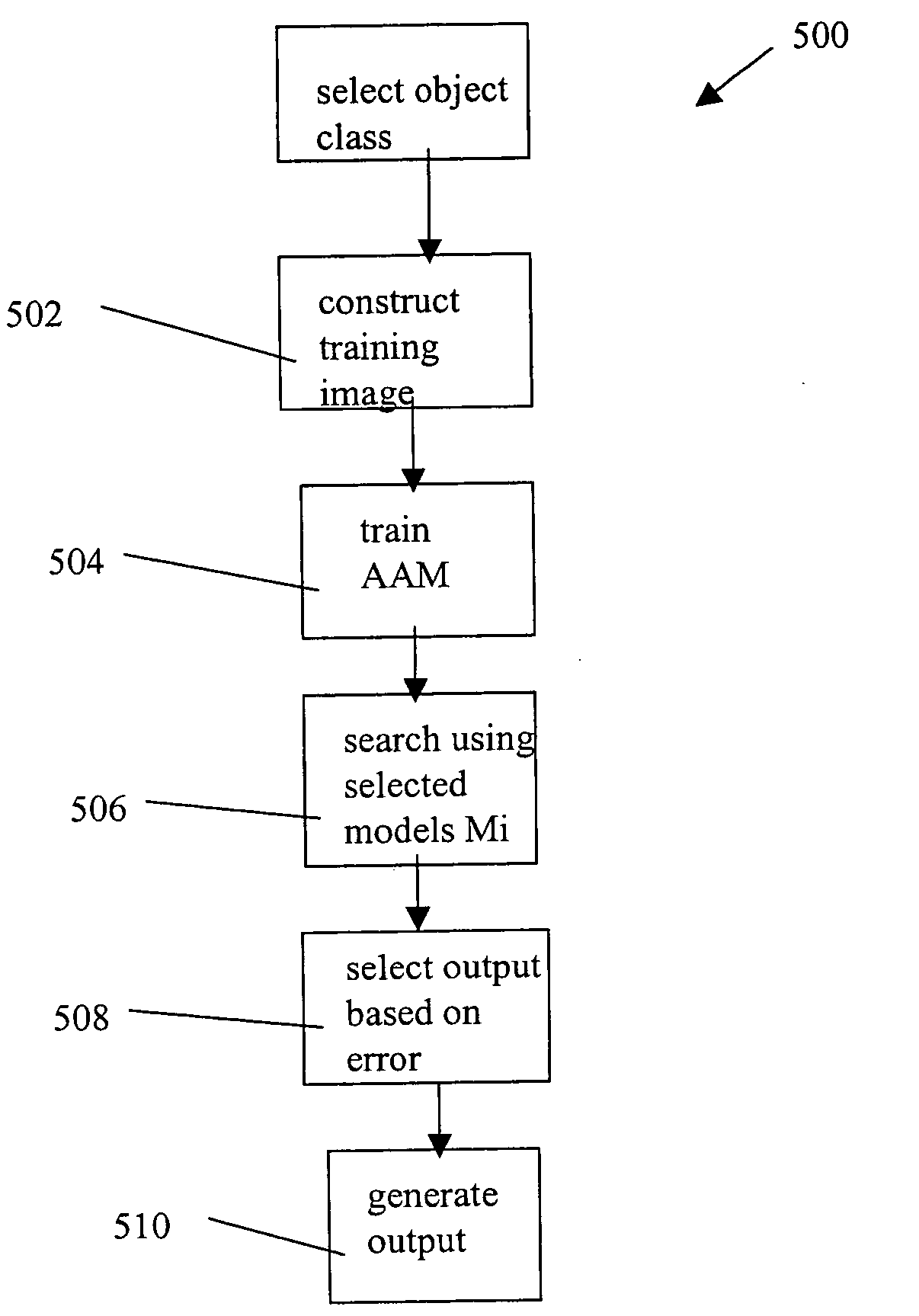

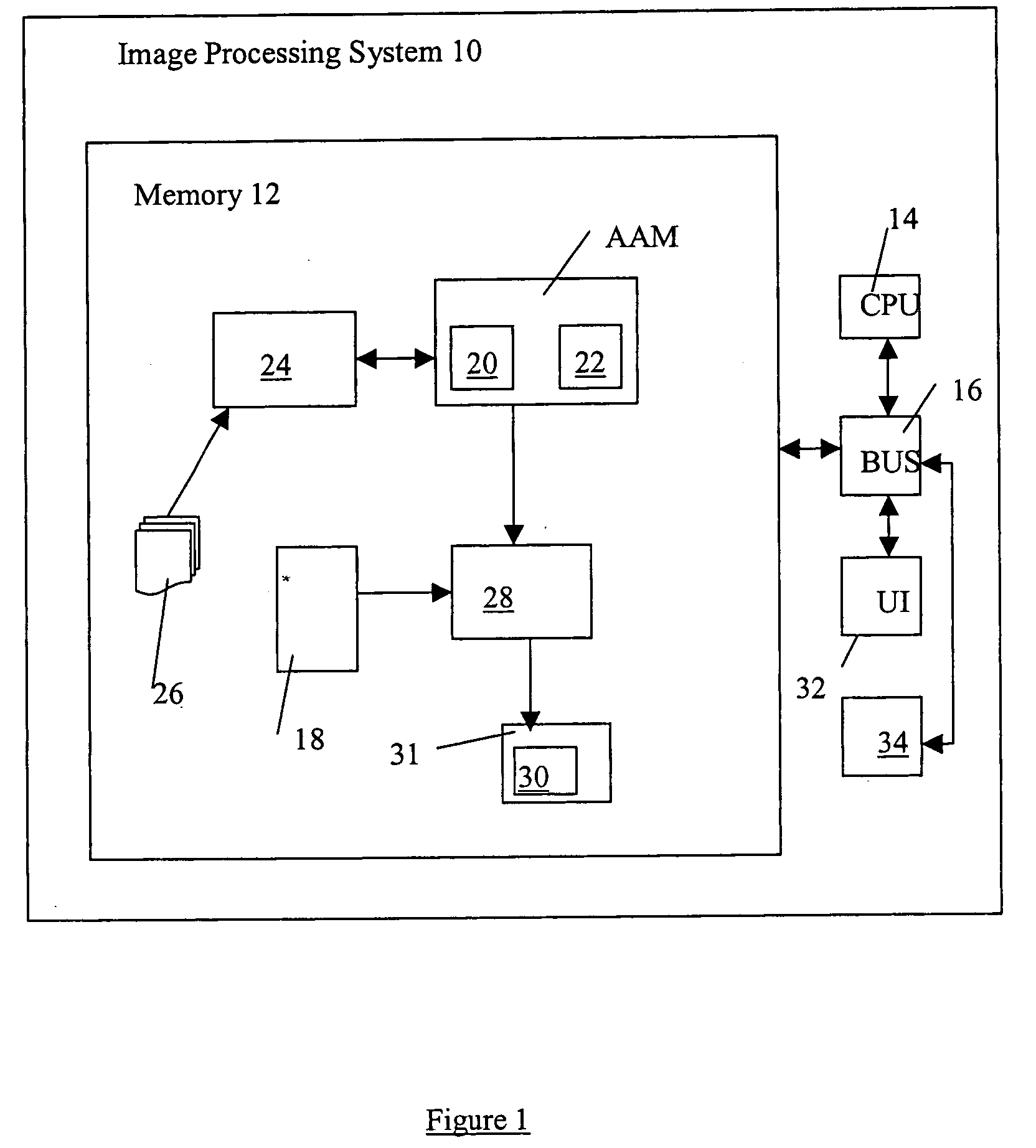

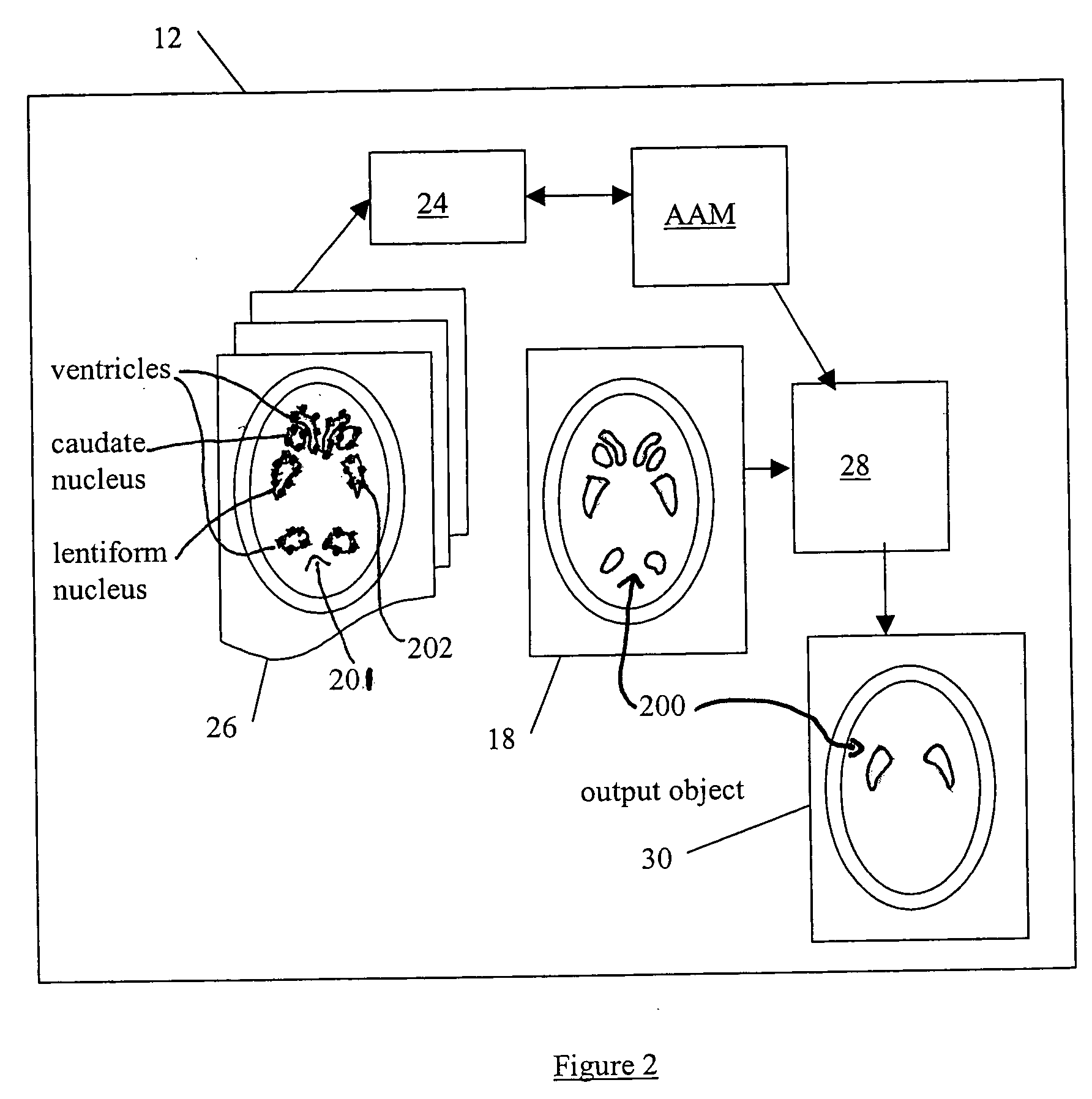

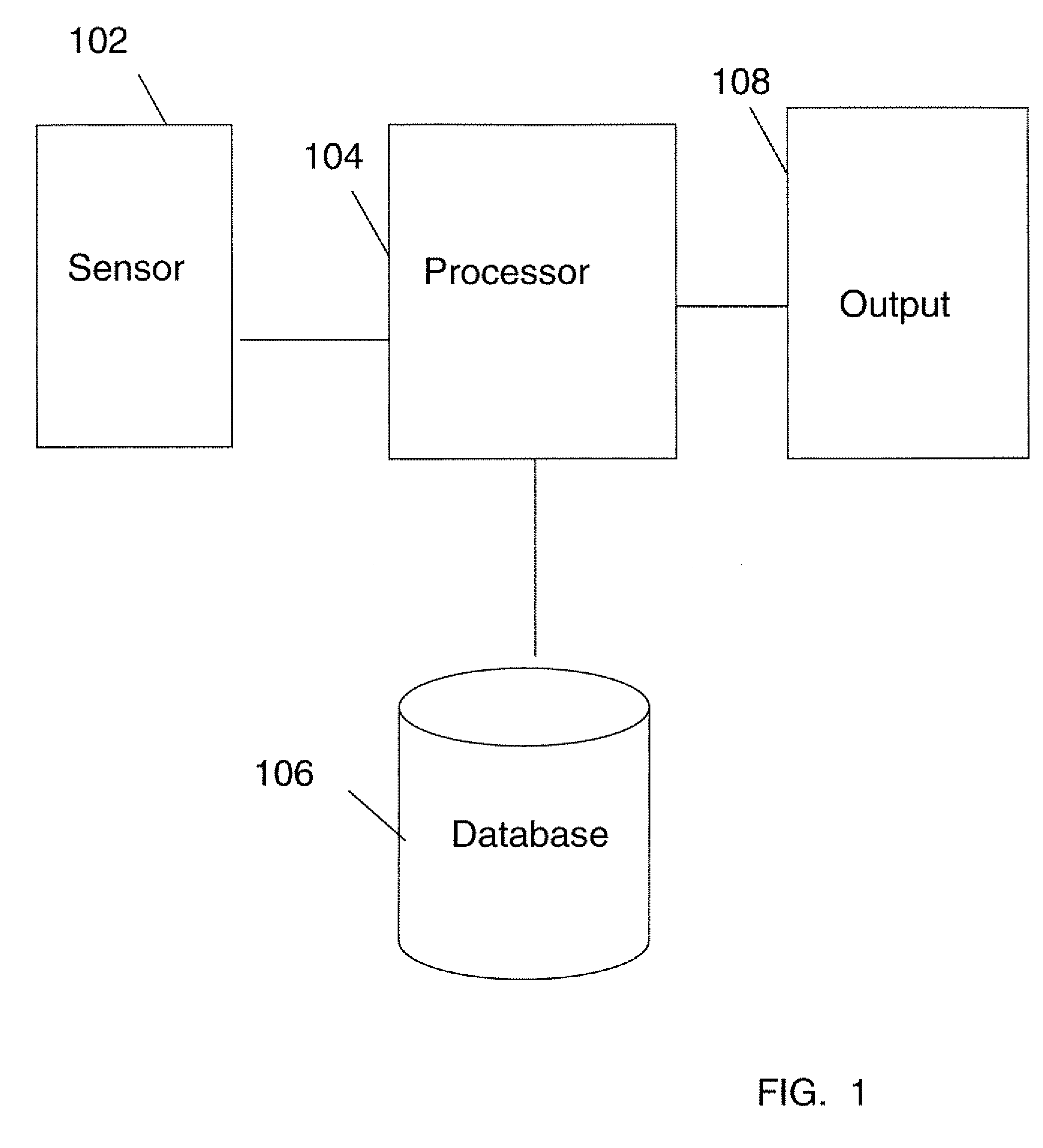

System and method for applying active appearance models to image analysis

An image processing system and method having a statistical appearance model for interpreting a digital image. The appearance model has at least one model parameter. The system and method comprises a two dimensional first model object including an associated first statistical relationship, the first model object configured for deforming to approximate a shape and texture of a two dimensional first target object in the digital image. Also included is a search module for selecting and applying the first model object to the image for generating a two dimensional first output object approximating the shape and texture of the first target object, the search module calculating a first error between the first output object and the first target object. Also included is an output module for providing data representing the first output object to an output. The processing system uses interpolation for improving image segmentation, as well as multiple models optimised for various target object configurations. Also included is a model labelling that is associated with model parameters, such that the labelling is attributed to solution images to aid in patient diagnosis.

Owner:IBM CORP

Method for extracting face features

Active | CN102880866A | Accurate tracking | Accurate facial feature calibration | Character and pattern recognition | Analysis data | Feature extraction

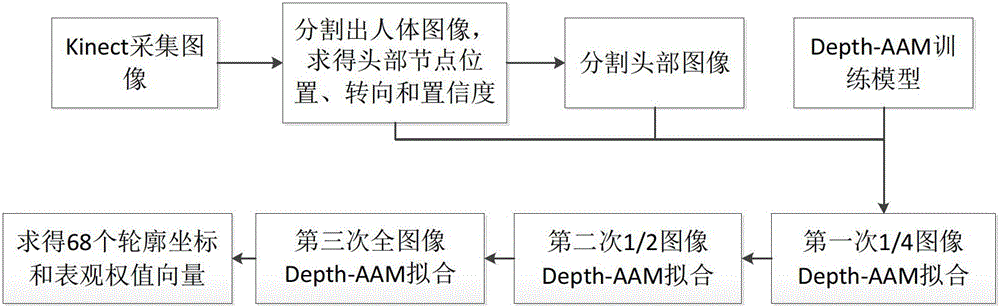

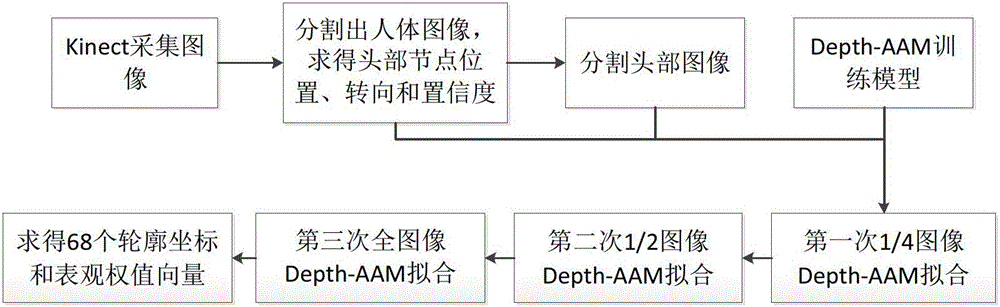

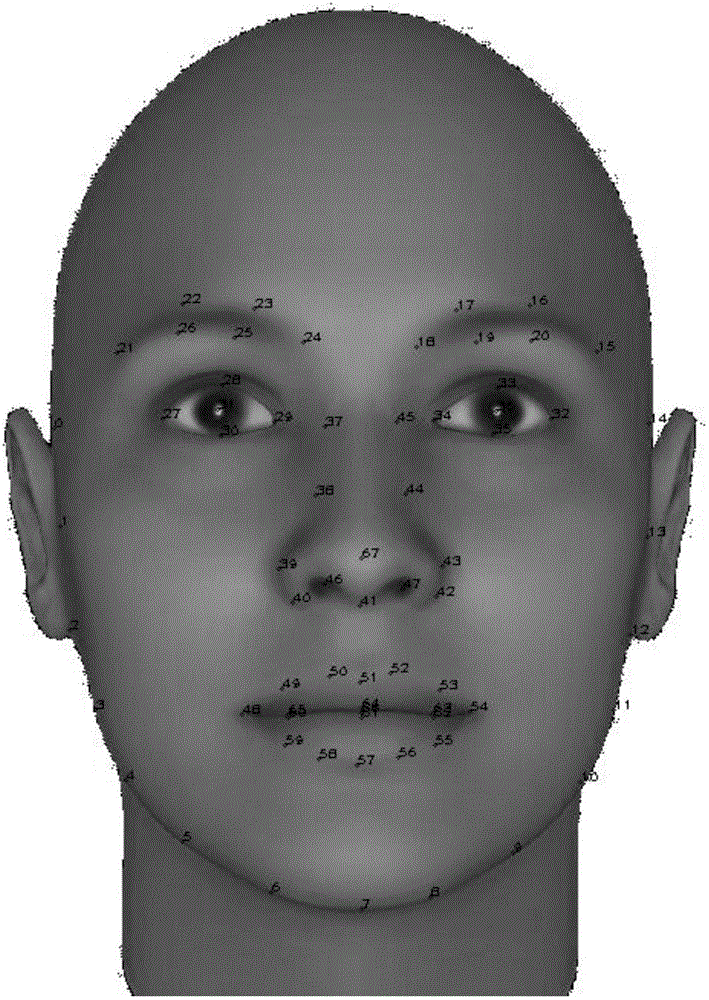

The invention discloses a method for extracting face features. Body posture analysis data and depth data provided by a Kinect camera are combined with a Depth-Active Appearance Model (Depth-AAM) algorithm to form a method based on 2.5-dimensional images. The method comprises training the AAM of the Depth-AAM algorithm using principal component analysis and, once training is complete, extracting face features based on the AAM of the Depth-AAM algorithm.

Owner:NINGBO UNIV

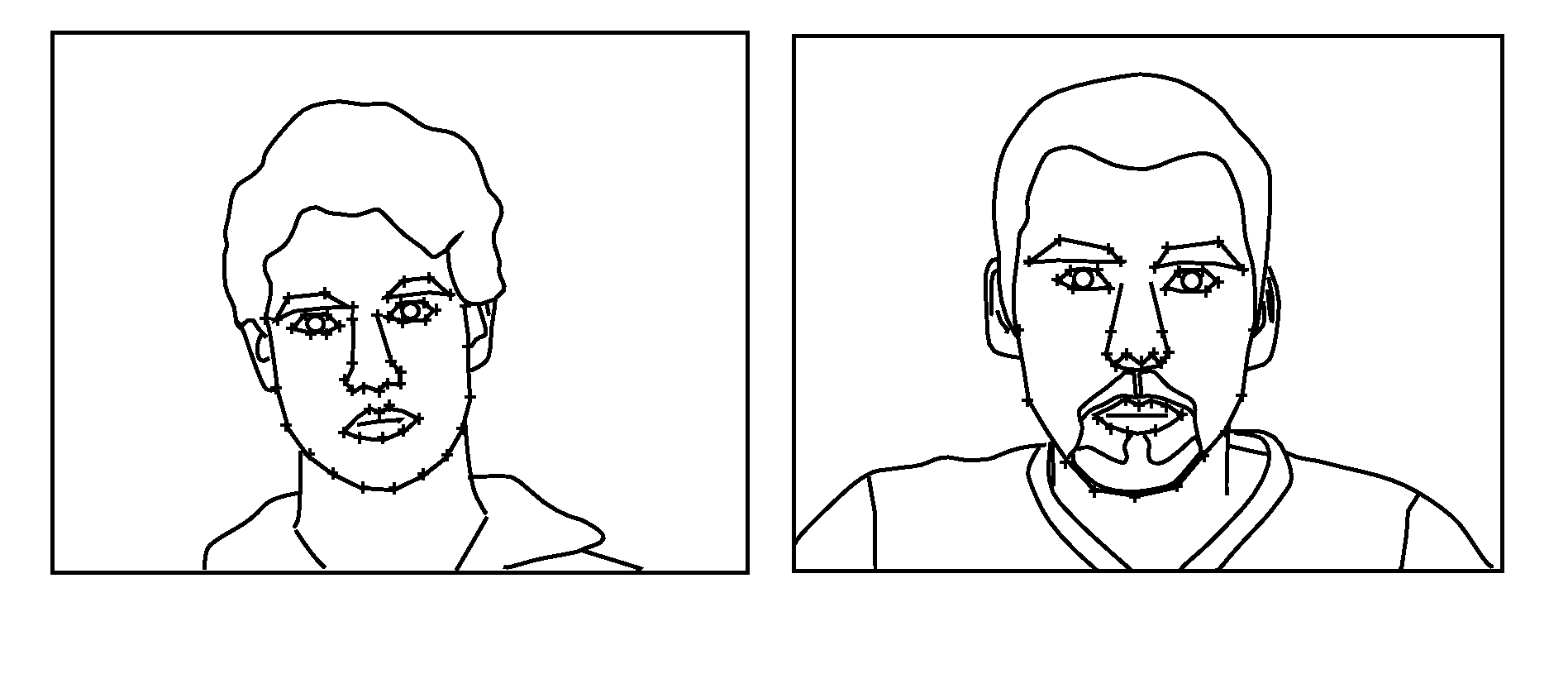

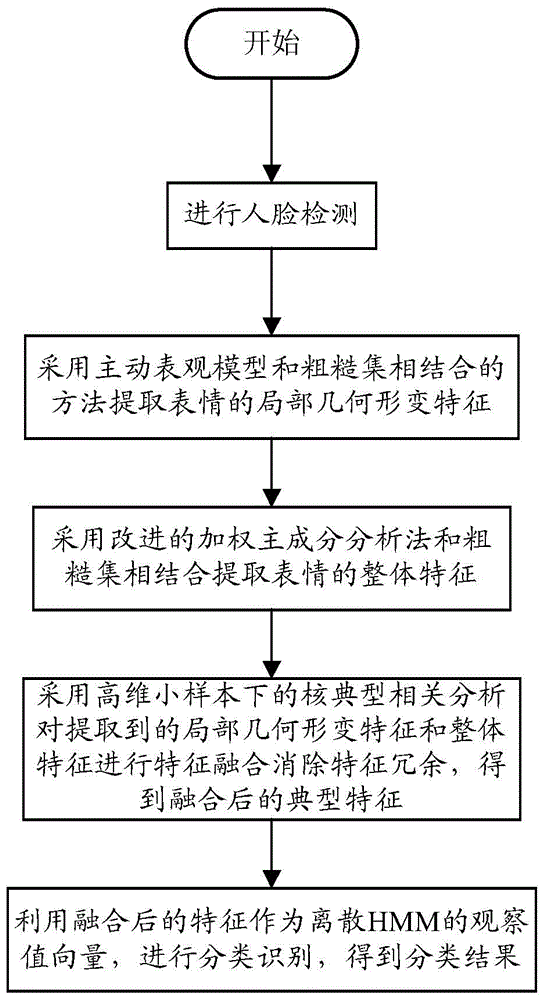

Facial expression recognition method based on rough set and mixed features

Inactive | CN103984919A | Improve recognition rate | Recognition time is short | Character and pattern recognition | Small sample | High dimensional

The invention discloses a facial expression recognition method based on a rough set and mixed features. The method includes the following steps: (1) face detection is carried out; (2) local geometric deformation features are extracted by a method combining an active appearance model and the rough set; (3) global features of expressions are extracted by combining an improved weighted principal component analysis method with the rough set; (4) the extracted local geometric deformation features and global features are fused by kernel canonical correlation analysis under high-dimensional, small-sample conditions to eliminate feature redundancy, yielding fused canonical features; (5) the fused canonical features serve as observation vectors of a discrete HMM for classification and recognition, and a classification result is obtained. Experiments show that the improved method shortens facial expression recognition time and improves the recognition rate.

Owner:上海优思通信科技有限公司

Frontal Hand Capture of Fingerprints, Palm Prints and Hand Geometry Using Contactless Photography

Inactive | US20100165090A1 | Easy to identify | Minimize the number | Color television details | Closed circuit television systems | Hand parts | Palm print

The present invention is a system and method that captures hand geometry, full-length fingerprints, and / or palm prints from a frontal view of a freely posed hand using contactless photography. A system and method for capturing biometric data of a hand includes the steps of and means for (a) digitally photographing a designated contactless capture area not having any predefined hand-positioning structures or platen for receiving a hand, to obtain a captured image of the capture area that includes a naturally-posed hand; (b) extracting a frontal hand image of the naturally-posed hand by applying an Active Appearance Model (AAM) to the captured image; (c) computing a plurality of hand geometry measurements of the frontal hand image; and (d) comparing the plurality of hand geometry measurements from the frontal hand image to corresponding existing hand geometry measurements, wherein the comparison results in a best match.

Owner:HANDSHOT
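Step (d) of the abstract above, comparing measured hand geometry against stored measurements to find a best match, can be sketched as a nearest-neighbor search. The use of Euclidean distance and the dictionary layout of enrolled records are assumptions for illustration; the abstract does not specify the comparison metric.

```python
import math

def best_match(query, enrolled):
    """Return the ID of the enrolled record closest to the query.

    query: sequence of hand geometry measurements.
    enrolled: dict mapping an ID to a measurement sequence of equal length.
    Assumption: Euclidean distance is the comparison metric.
    """
    return min(enrolled, key=lambda key: math.dist(query, enrolled[key]))
```

For example, `best_match([7.1, 8.0], {"a": [7.0, 8.1], "b": [9.0, 6.0]})` selects the record whose measurements lie nearest to the query.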

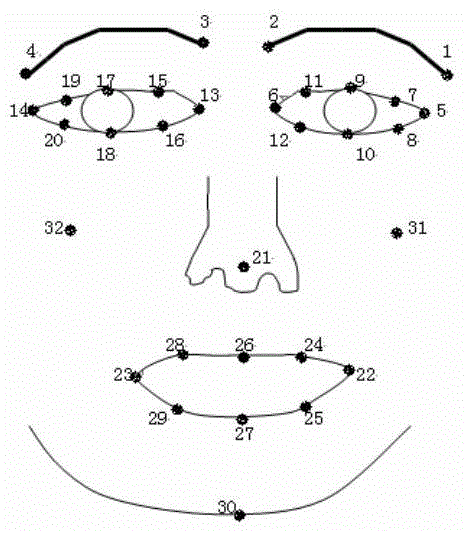

Face feature point localization method combining local search and active appearance model

Inactive | CN1687957A | Improve accuracy | High speed | Character and pattern recognition | Active appearance model | Characteristic point

Owner:SHANGHAI JIAO TONG UNIV

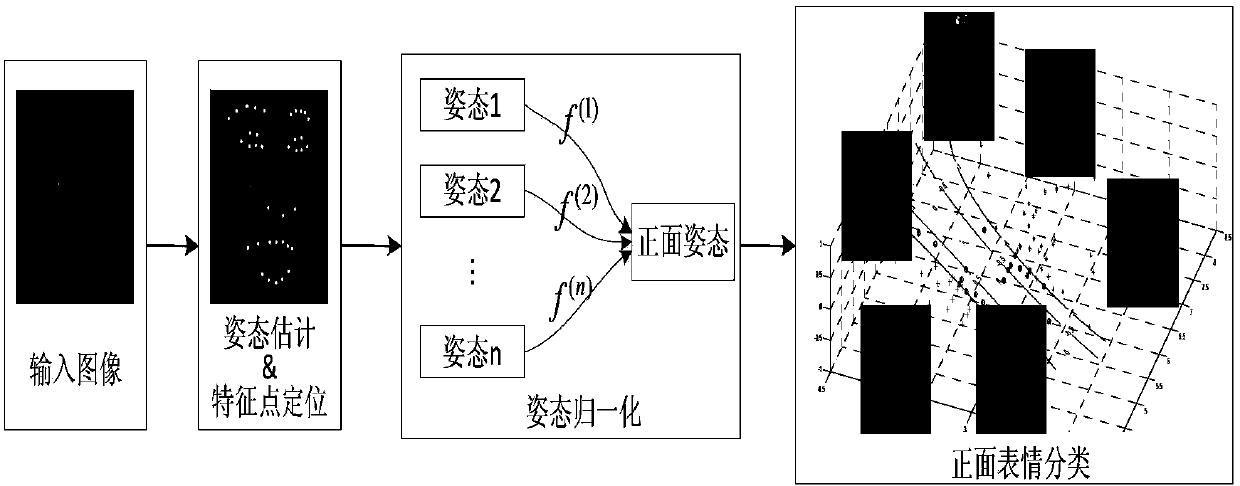

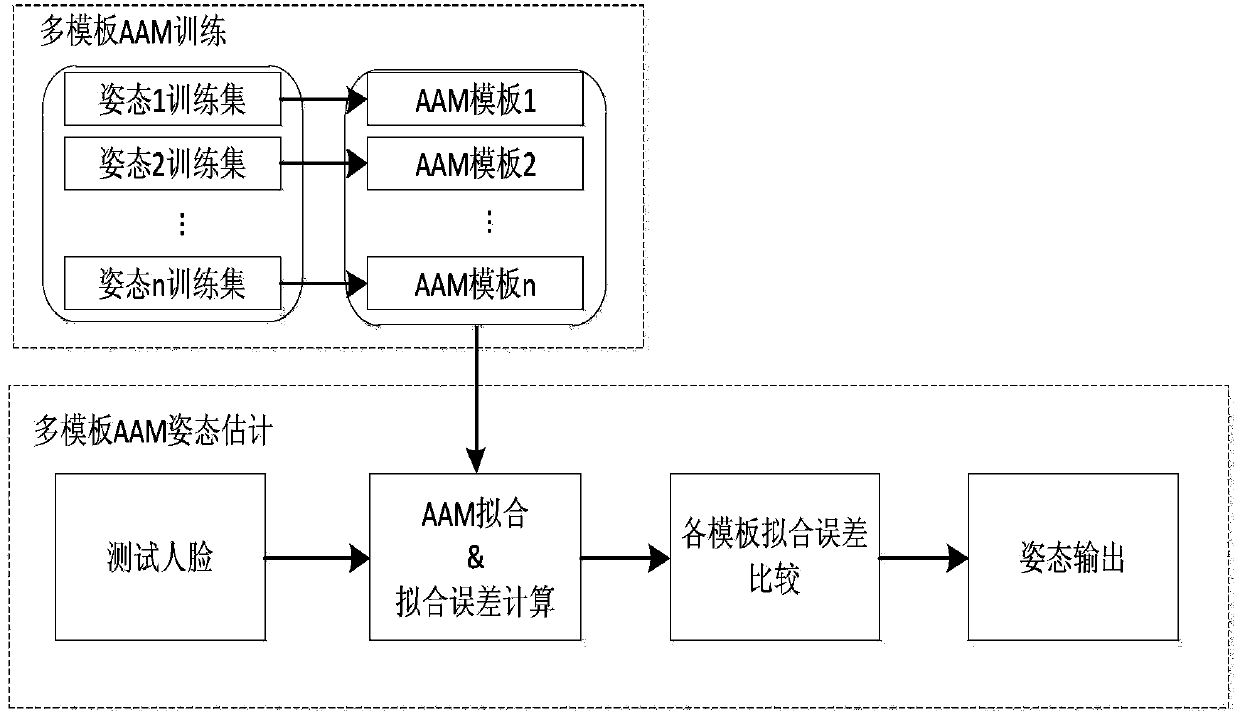

Method identifying non-front-side facial expression based on attitude normalization

Active | CN103400105A | Reduce in quantity | Positioning Noise Robust | Character and pattern recognition | Facial expression | Nonlinear regression

The invention discloses a method for identifying non-frontal facial expressions based on pose normalization. In the method, facial expressions in a training sample set are learned via a nonlinear regression model to obtain a mapping function from non-frontal facial feature points to frontal facial feature points; pose estimation and feature point localization are carried out on the non-frontal facial images under test via a multi-template active appearance model, and the feature points of the non-frontal face are normalized to a frontal pose via the corresponding pose mapping function; and the geometric positions of the frontal feature points are classified into expressions by a support vector machine. The method is simple and effective, addresses the way differing face poses alter the apparent expression, and satisfies the requirement of identifying non-frontal facial expressions in real time.

Owner:SOUTHEAST UNIV

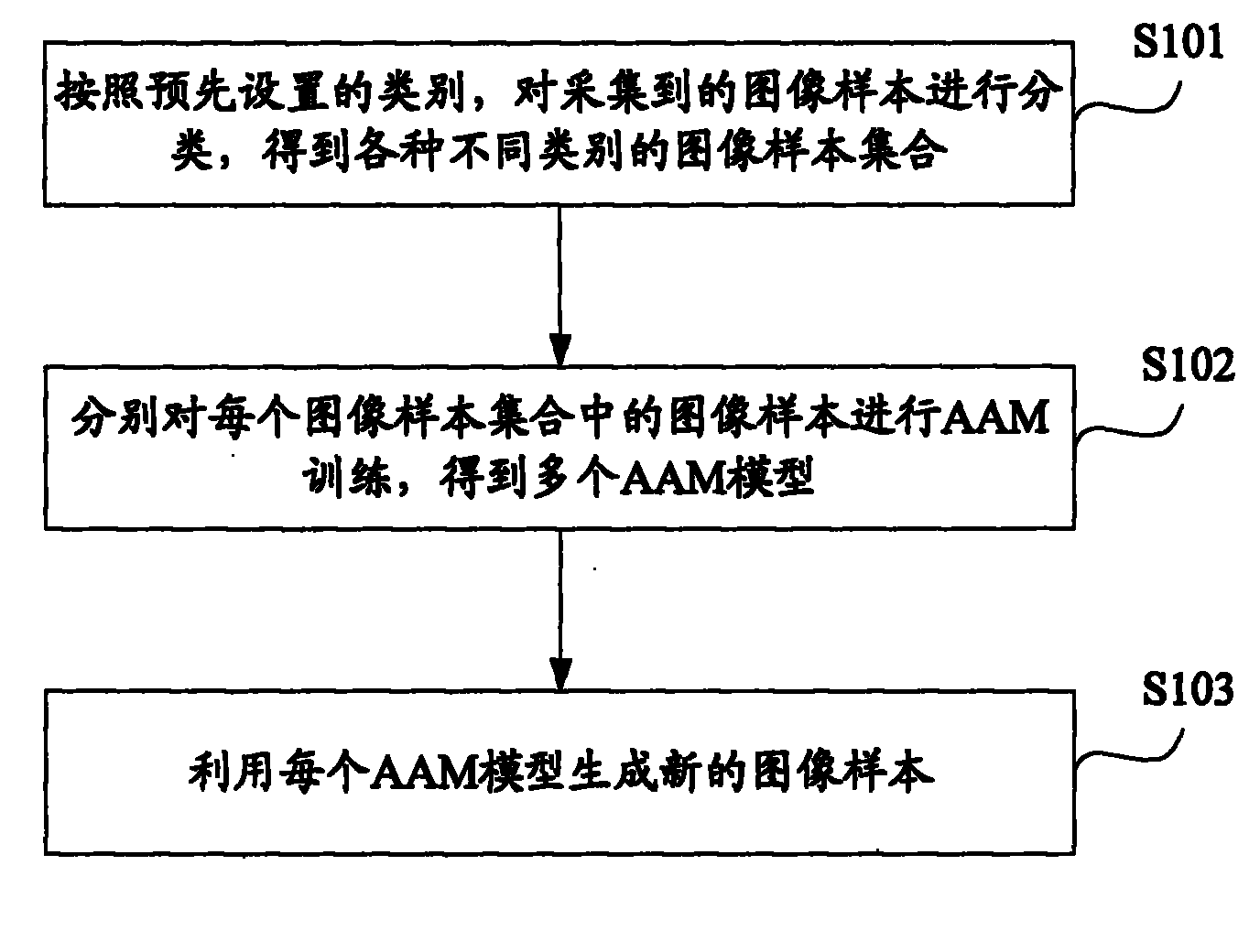

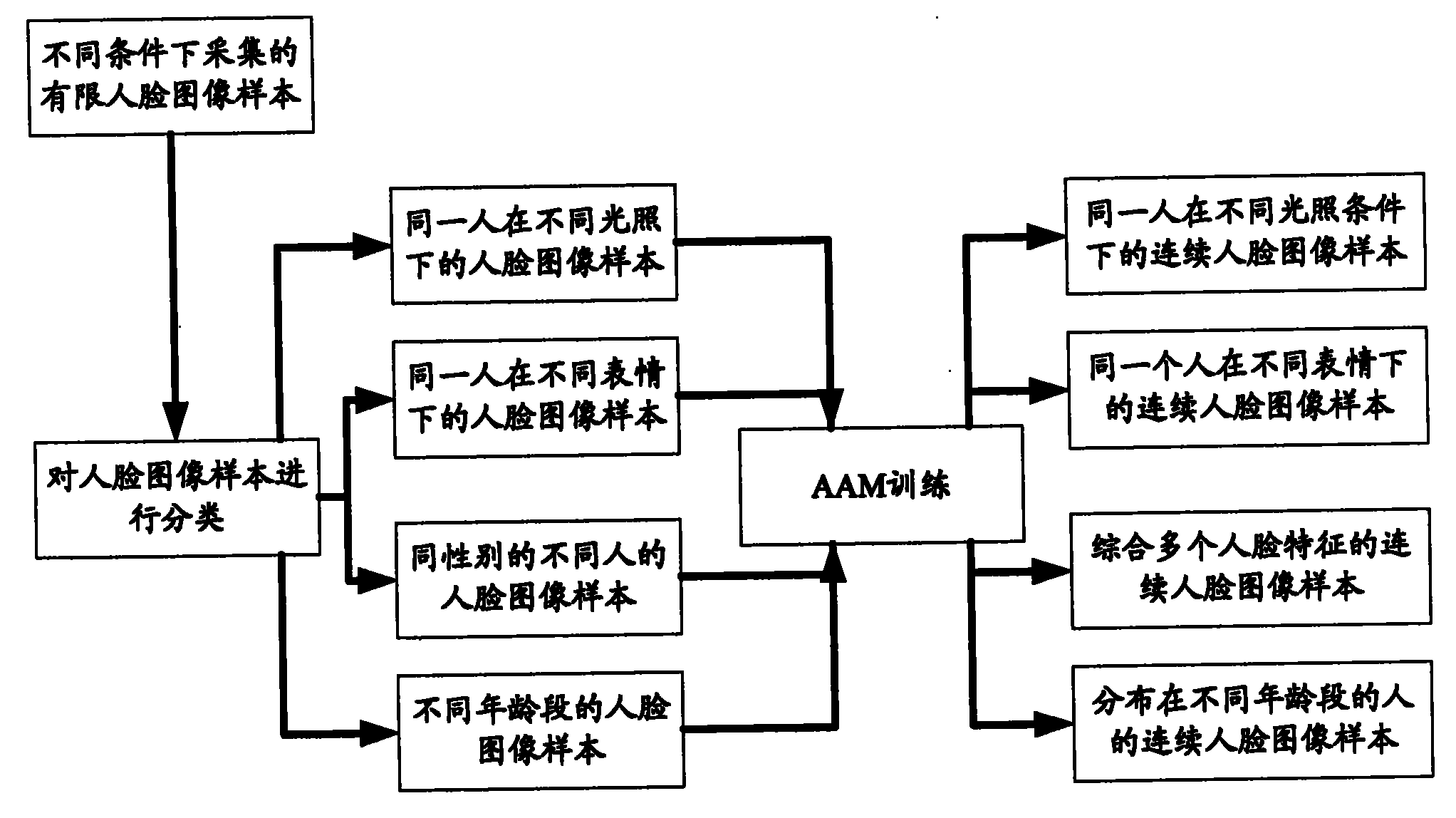

Method and device for generating image sample

Inactive | CN102103695A | Quality improvement | Improve training accuracy | Character and pattern recognition | Computer science | Active appearance model

The invention discloses a method and device for generating image samples, which yield higher-quality, more representative samples for expanding an image sample base, improving the training precision of an image sample classifier, and satisfying application demands. The method comprises the following steps: classifying the collected image samples according to predetermined types to obtain image sample sets of different types; performing AAM (Active Appearance Model) training on the image samples of each image sample set to obtain a plurality of AAM models; and generating new image samples from each AAM model.

Owner:VIMICRO CORP
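One common way to generate new samples from a trained linear model of this kind is to draw random model parameters and reconstruct the corresponding vector. The sketch below assumes a PCA-style model (a mean vector, orthonormal modes, and per-mode variances) and clips the parameters to three standard deviations; both choices are assumptions, since the abstract does not describe the generation step in detail.

```python
import numpy as np

def sample_from_model(mean, modes, variances, rng, limit=3.0):
    """Draw one synthetic sample vector from a linear (PCA-style) model.

    mean: (d,) vector; modes: (k, d) orthonormal rows; variances: (k,).
    Assumption: parameters are Gaussian and clipped to +/- limit std devs.
    """
    mean = np.asarray(mean, dtype=float)
    modes = np.asarray(modes, dtype=float)
    std = np.sqrt(np.asarray(variances, dtype=float))
    # Standard-normal coefficients, clipped so samples stay plausible.
    b = np.clip(rng.standard_normal(len(std)), -limit, limit) * std
    return mean + b @ modes
```

Calling it repeatedly with `rng = np.random.default_rng()` would yield a stream of synthetic shape or texture vectors for one class.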

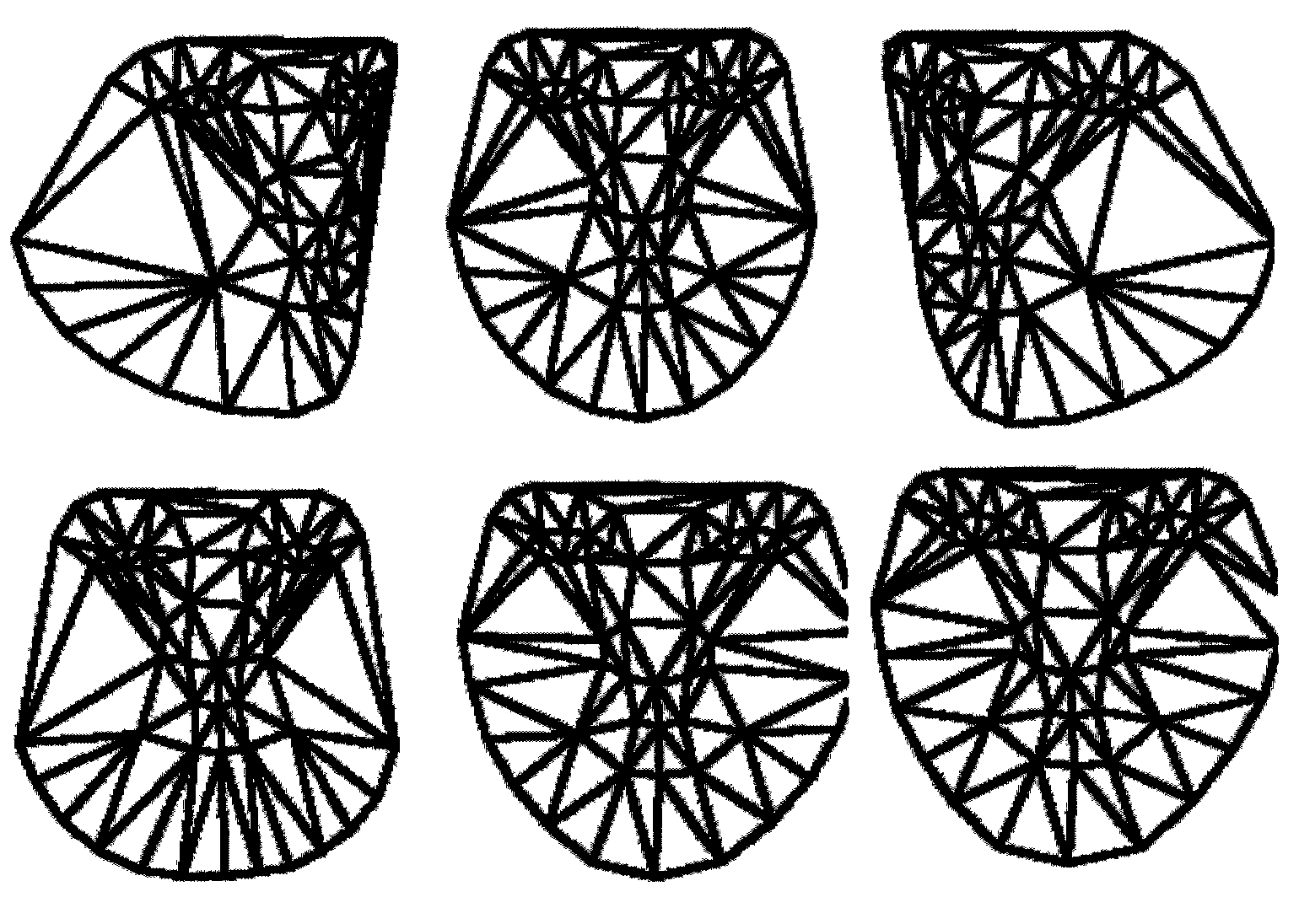

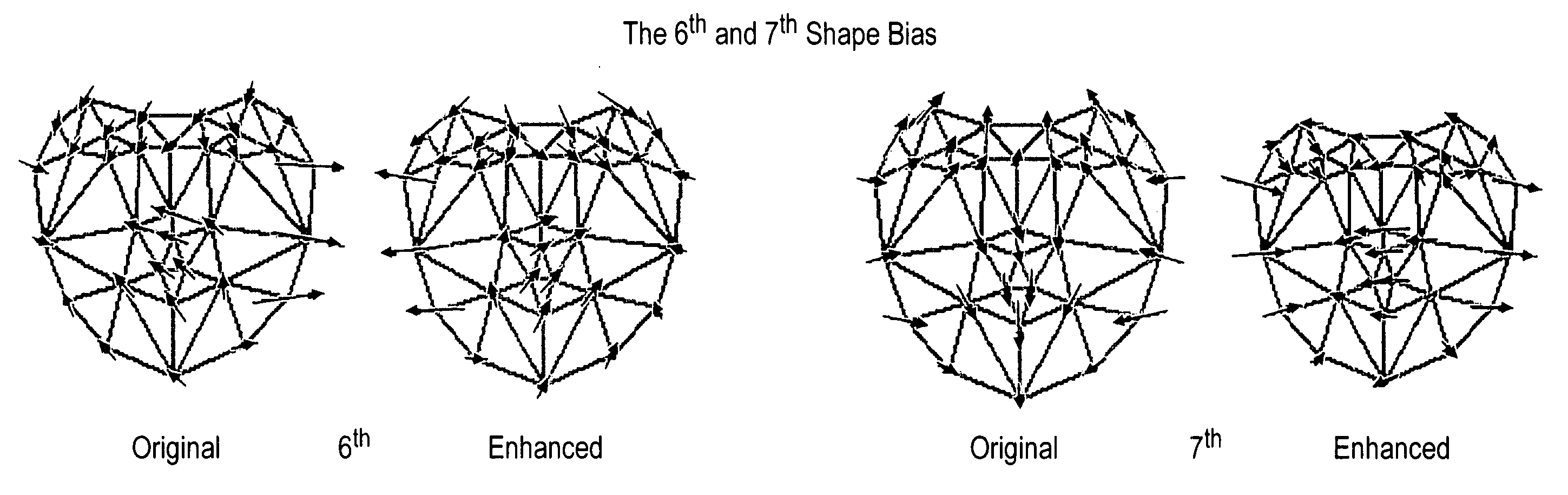

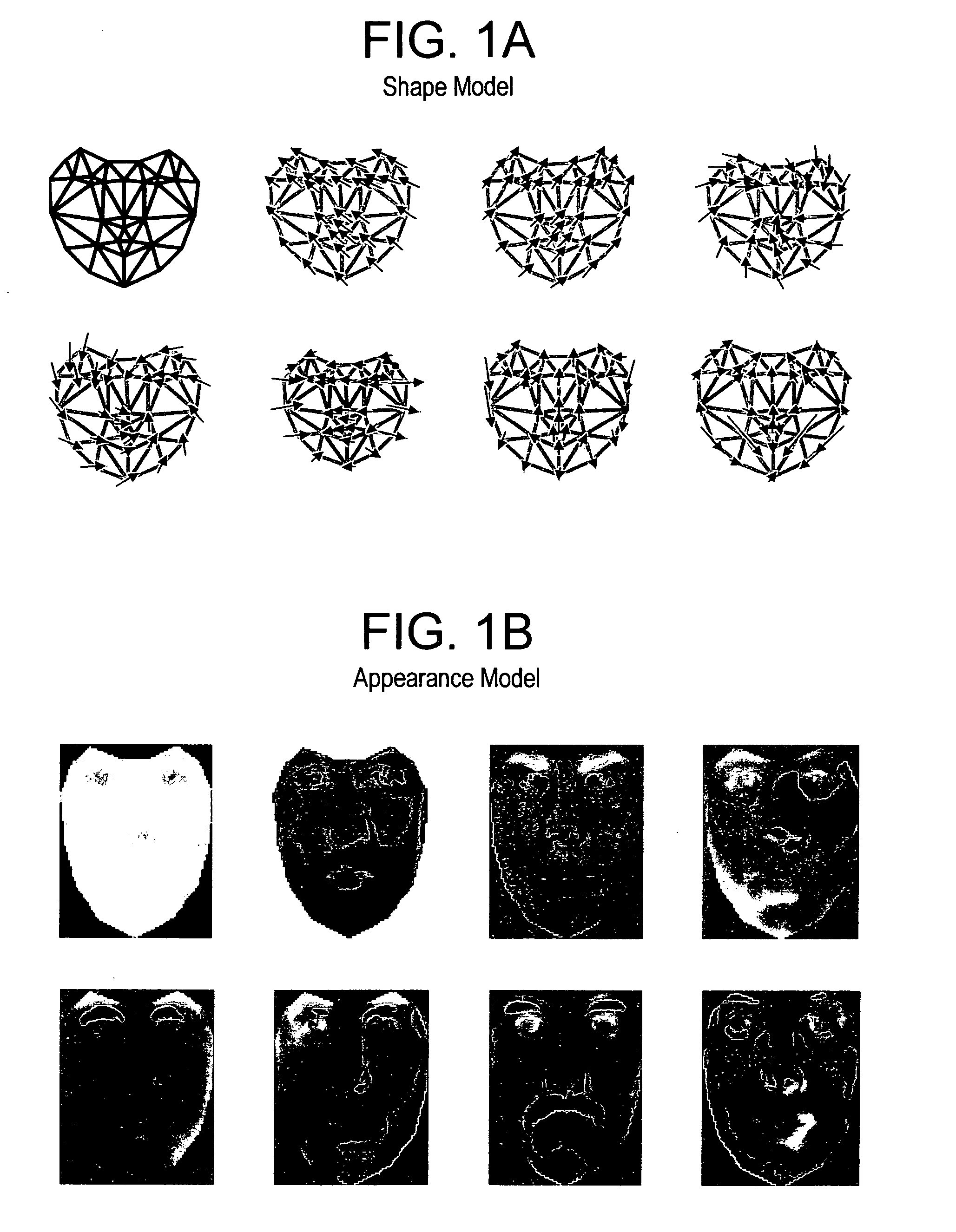

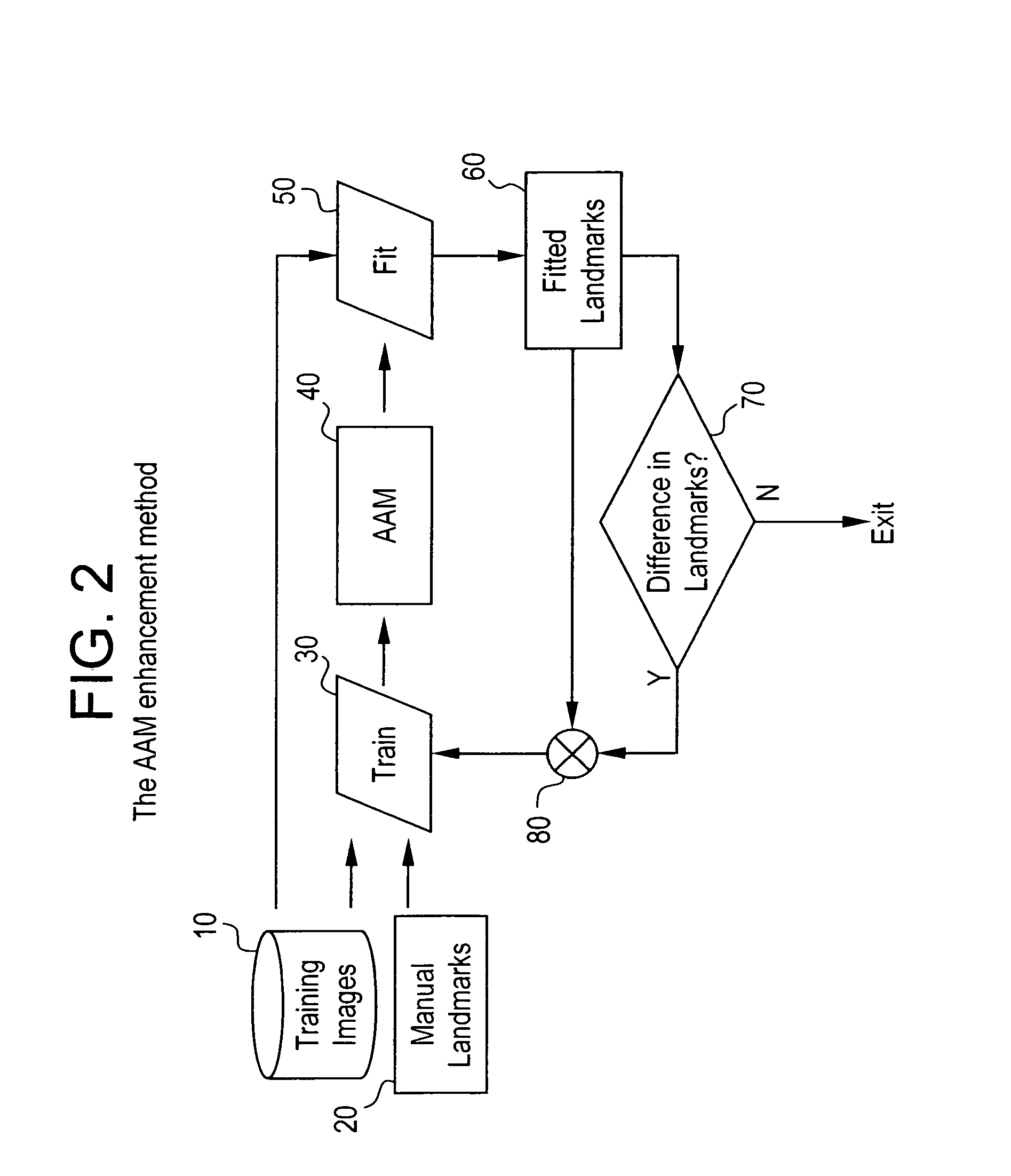

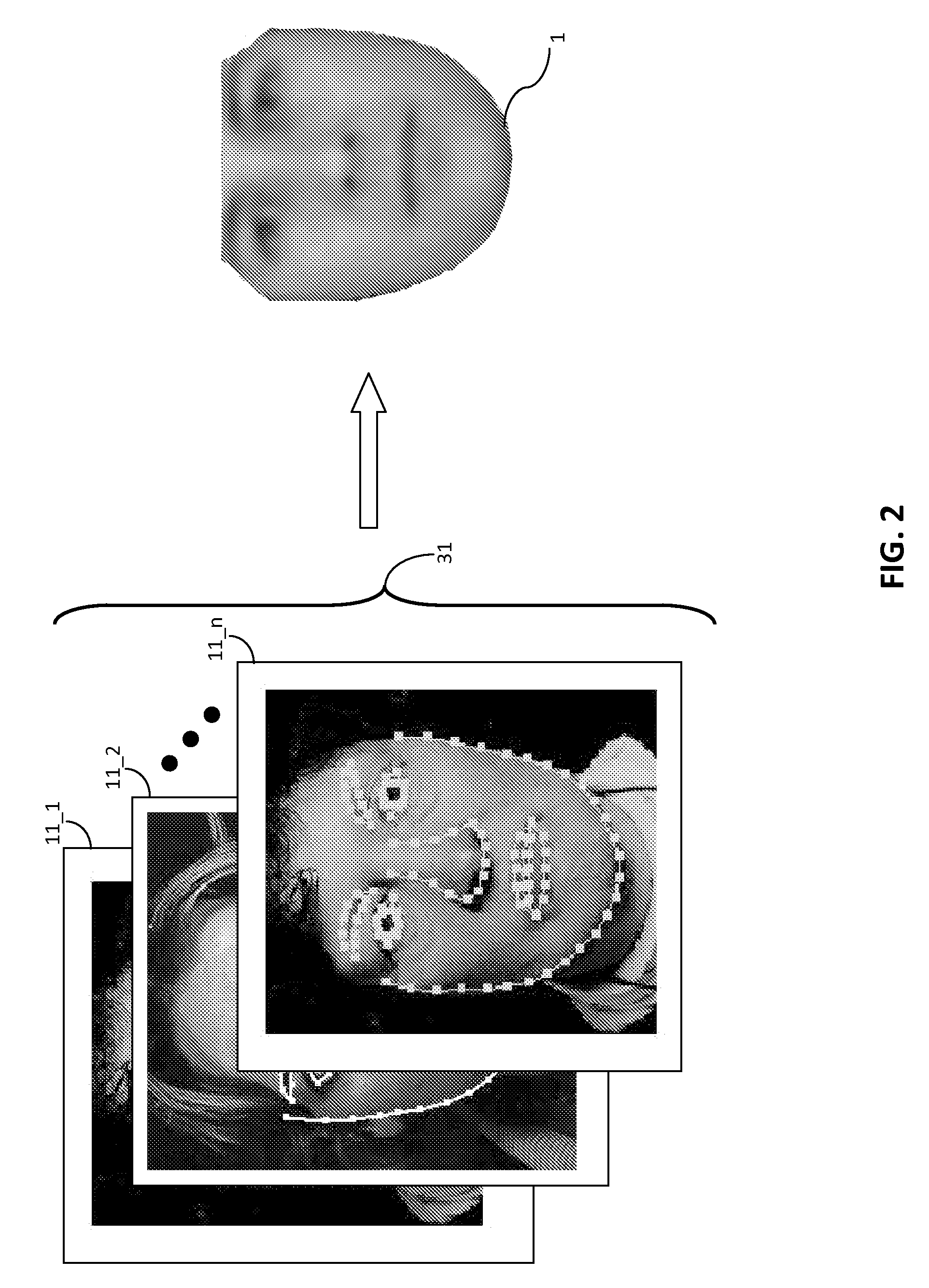

Method of combining images of multiple resolutions to produce an enhanced active appearance model

Inactive | US20070292049A1 | Easy alignment | Increases fitting speed | Character and pattern recognition | Image resolution | Multi resolution

A method of producing an enhanced Active Appearance Model (AAM) by combining images of multiple resolutions is described herein. The method generally includes processing a plurality of images, each having image landmarks and an original resolution level. The images are down-sampled into multiple scales of reduced resolution levels. The AAM is trained for each image at each reduced resolution level, thereby creating a multi-resolution AAM. An enhancement technique is then used to refine the image landmarks for training the AAM at the original resolution level. The landmarks for training the AAM at each level of reduced resolution are obtained by scaling the landmarks used at the original resolution level by a ratio in accordance with the multiple scales.

Owner:UTC FIRE & SECURITY AMERICAS CORPORATION INC
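The last sentence of the abstract above, scaling the original-resolution landmarks by the down-sampling ratio, can be sketched as follows. The halving factor per level and the function names are assumptions for illustration.

```python
def scale_landmarks(landmarks, ratio):
    """Scale (x, y) landmark coordinates by a resolution ratio."""
    return [(x * ratio, y * ratio) for x, y in landmarks]

def landmark_pyramid(landmarks, levels=3, factor=0.5):
    """Return landmarks for each pyramid level, original resolution first.

    Assumption: each level reduces resolution by the same factor.
    """
    return [scale_landmarks(landmarks, factor ** k) for k in range(levels)]
```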

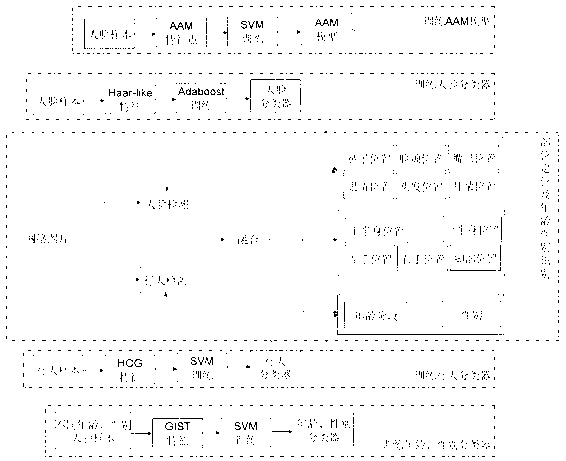

Method and system for figure detection, body part positioning, age estimation and gender identification in picture of network

The invention provides a method and system for figure detection, body part positioning, age estimation and gender identification in a picture of a network. The method includes the steps that by means of a face detection technique based on Haar-Like characteristics and Adaboost trainings and a pedestrian detection technique based on HOG characteristics and SVM trainings, a face area and an overall body area are primarily detected, and then a further fusion is carried out so that a face can correspond to a body if the body exists; active appearance models (AAM) are used in the face part so as to position the positions of the five sense organs of the face, and the position of the upper half body, the position of the lower half body, the positions of the left hand and the right hand, and the position of feet are confirmed on a body part according to a human body geometric model; eventually, identification of gender and age is carried out on the face part according to GIST characteristics and an SVM. Therefore, whether a figure exists in the picture of the network and various biological characteristics of the figure can be obtained through calculation.

Owner:北京明日时尚信息技术有限公司

Hand pointing estimation for human computer interaction

Active | US20150177846A1 | High degree of robustness | More robust | Input/output for user-computer interaction | Image analysis | Human interaction | Hand parts

Hand pointing has been an intuitive gesture for human interaction with computers. A hand pointing estimation system is provided, based on two regular cameras, which includes hand region detection, hand finger estimation, two views' feature detection, and 3D pointing direction estimation. The technique may employ a polar coordinate system to represent the hand region, and tests show a good result in terms of the robustness to hand orientation variation. To estimate the pointing direction, Active Appearance Models are employed to detect and track, e.g., 14 feature points along the hand contour from a top view and a side view. Combining two views of the hand features, the 3D pointing direction is estimated.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

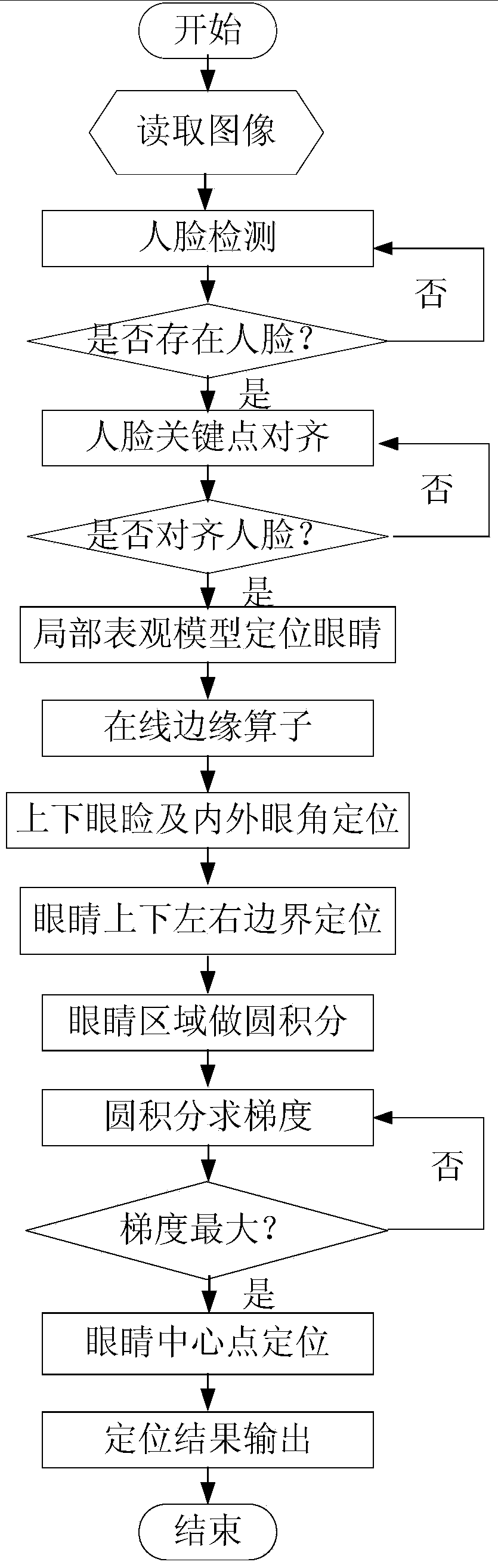

Method for locating central points of eyes in natural lighting front face image

Inactive | CN104063700A | Eliminate uneven lighting | Achieve precise positioning | Character and pattern recognition | Eyelid | Face detection

The invention discloses a method for locating the central points of eyes in a frontal face image under natural lighting. The method comprises the following steps: an automatic face detection algorithm detects the face area of the input frontal face image; an active appearance model automatically locates key points on the face within the rectangular frame of the face area to define preliminary eye areas; on the basis of this preliminary localization, a local eye appearance model is applied to refine the eye areas; lighting correction is performed on the refined eye areas to eliminate the influence of lighting on the local eye areas, a boundary operator is applied to detect boundary features, and the inner and outer eye corner points are accurately located using these boundary features; the line connecting the inner and outer corner points of each eye area serves as the starting point of the calculation, the point with the largest response is found by computing the gradient of a circular integral, and this point is the central point of the corresponding eye. The method locates the eyes accurately and is reasonably robust to lighting and eyelid occlusion.

Owner:WUHAN INSTITUTE OF TECHNOLOGY

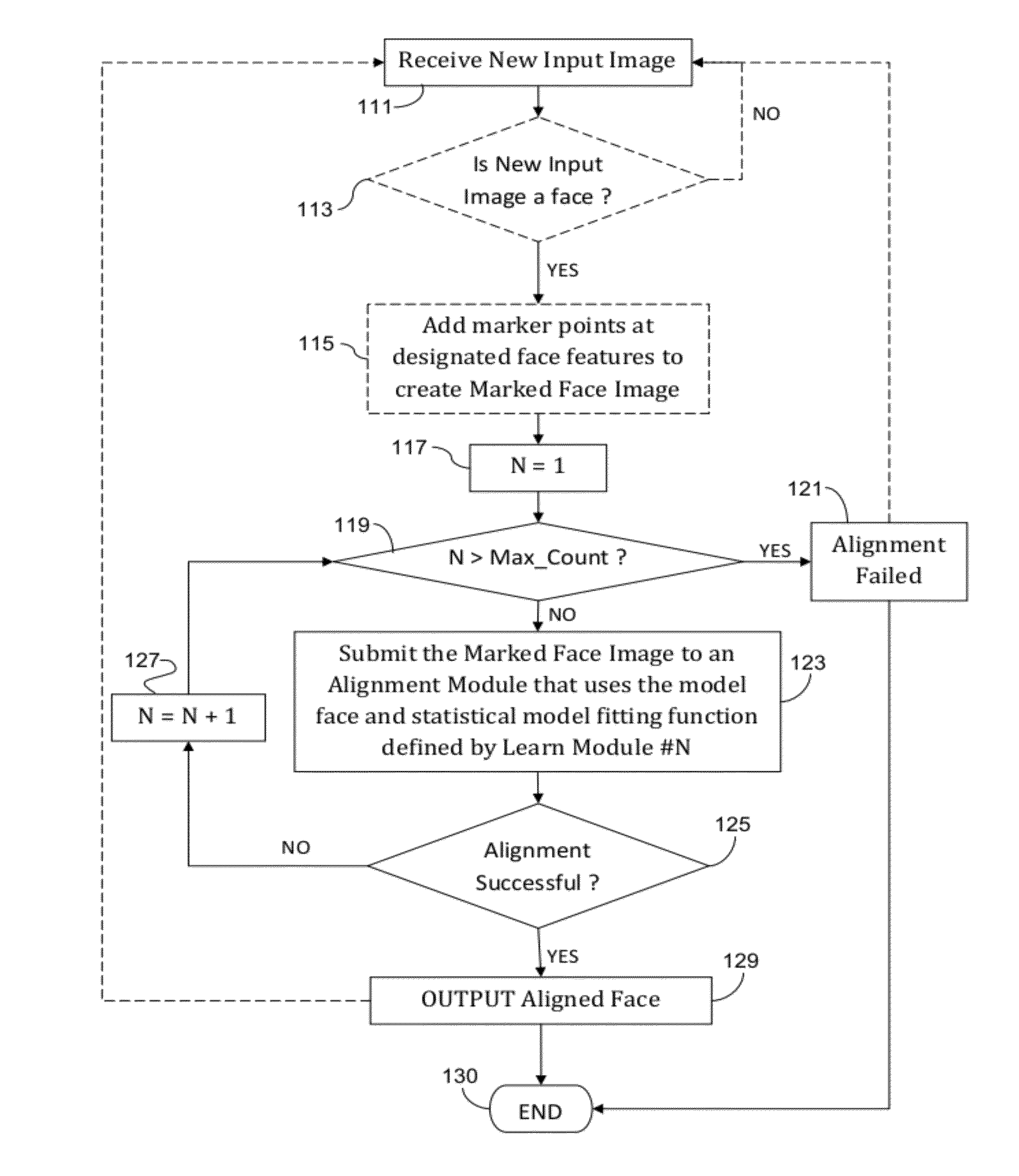

Hierarchical Tree AAM

Active | US20120195495A1 | Faster alignment process | Fast processing | Television system details | Character and pattern recognition | Physical therapy | Active appearance model

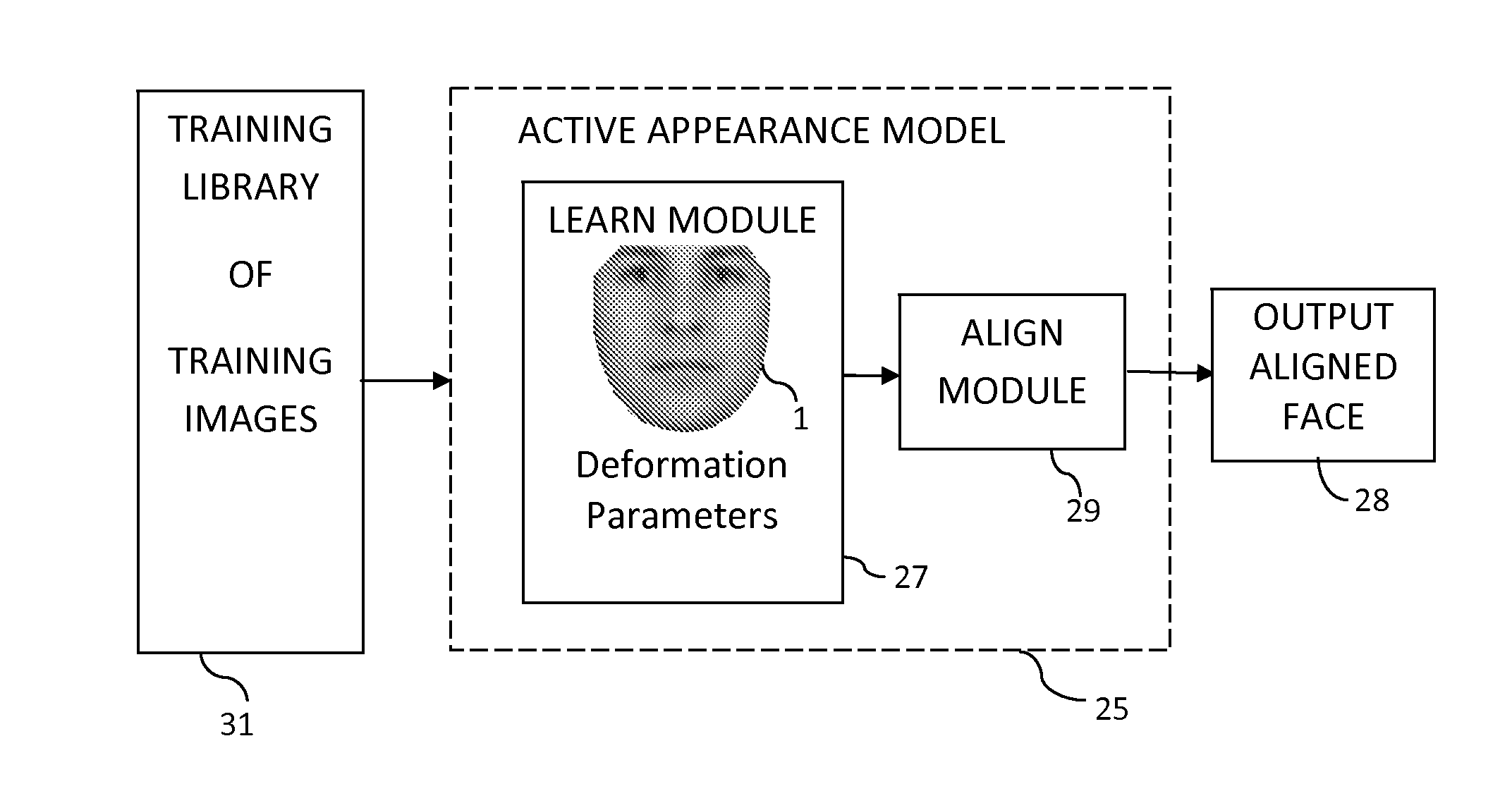

An active appearance model is built by arranging the training images in its training library into a hierarchical tree with the training images at each parent node being divided into two child nodes according to similarities in characteristic features. The number of node levels is such that the number of training images associated with each leaf node is smaller than a predefined maximum. A separate AAM, one per leaf node, is constructed using each leaf node's corresponding training images. In operation, starting at the root node, a test image is compared with each parent node's two child nodes and follows a node-path of model images that most closely matches the test image. The test image is submitted to an AAM selected for being associated with the leaf node at which the test image rests. The selected AAM's output aligned image may be resubmitted to the hierarchical tree if sufficient alignment is not achieved.

Owner:138 EAST LCD ADVANCEMENTS LTD
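The hierarchical arrangement described in the abstract above can be sketched as a binary tree over feature vectors of the training images. Here each parent is split by the sign of the projection onto the first principal direction, and a test vector descends toward the child with the nearer mean; both rules are stand-in assumptions, since the abstract only says that images are divided "according to similarities in characteristic features". Training one AAM per leaf is left out.

```python
import numpy as np

def build_tree(features, indices=None, max_leaf=4):
    """Recursively split training samples until each leaf is small enough.

    features: (n_samples, d) array of per-image feature vectors.
    Assumption: split by sign of projection on the first principal direction.
    """
    X = np.asarray(features, dtype=float)
    if indices is None:
        indices = np.arange(len(X))
    node = {"indices": indices, "mean": X[indices].mean(axis=0), "children": None}
    if len(indices) < max_leaf:
        return node  # leaf: fewer samples than the predefined maximum
    centered = X[indices] - node["mean"]
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    side = centered @ vt[0] > 0
    if side.all() or not side.any():
        return node  # identical samples cannot be split further
    node["children"] = [
        build_tree(X, indices[side], max_leaf),
        build_tree(X, indices[~side], max_leaf),
    ]
    return node

def find_leaf(node, x):
    """Follow the path of closest child means from the root to a leaf."""
    x = np.asarray(x, dtype=float)
    while node["children"] is not None:
        node = min(node["children"], key=lambda c: np.linalg.norm(x - c["mean"]))
    return node
```

The indices stored in the returned leaf identify which training images would be used to build the AAM applied to the test image.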

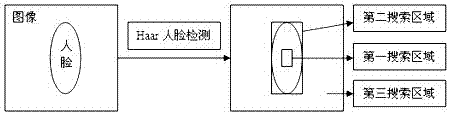

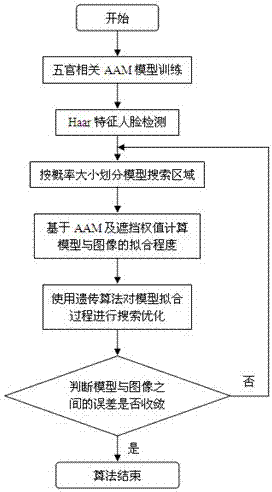

A method of facial feature localization based on facial features-related AAM model

Inactive | CN102270308A | Improve robustness | Improve search efficiency | Character and pattern recognition | Face detection | Facial region

The invention relates to a facial feature location method aiming at partially obscured images in complex scene. The method comprises the following steps of: based on a sample image set, respectively modeling for each facial organ, and training to obtain an AAM (Active Appearance Model) related to the five sense organs; determining search areas of the AAM through a Haar feature face detection technology while initially locating a facial area, and classifying the search areas according to the probability of being searched; at an AAM fitting calculation part, respectively performing error calculation for each facial organ based on an obscuring weight of the five sense organs, and then, comprehensively evaluating a fitting degree of the model and the image through an energy function; and performing search optimization on a fitting process of the AAM by a genetic algorithm. In comparison with the prior related algorithms, the method can locate the facial features of the partially obscured images more accurately, and can enhance the robustness of the algorithms and improve the efficiency of the algorithms while ensuring higher accuracy.

Owner:WUHAN UNIV

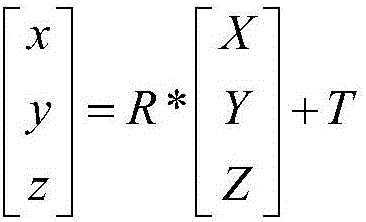

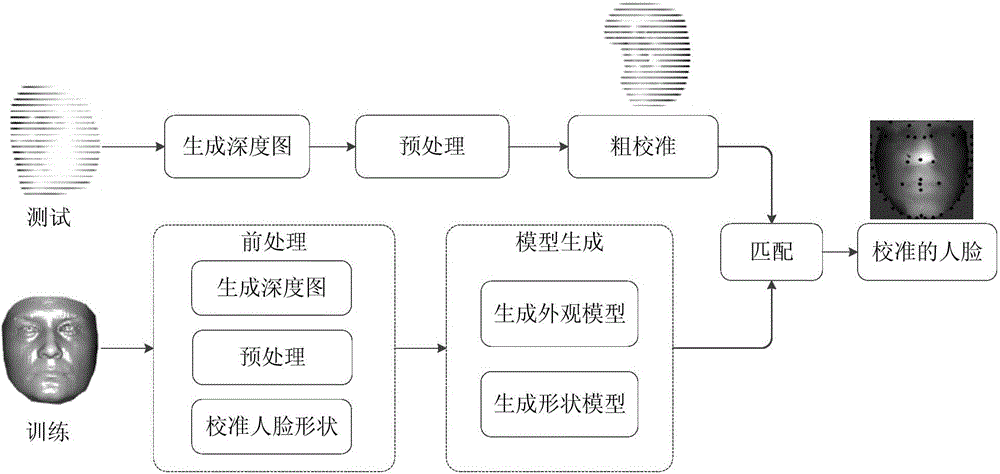

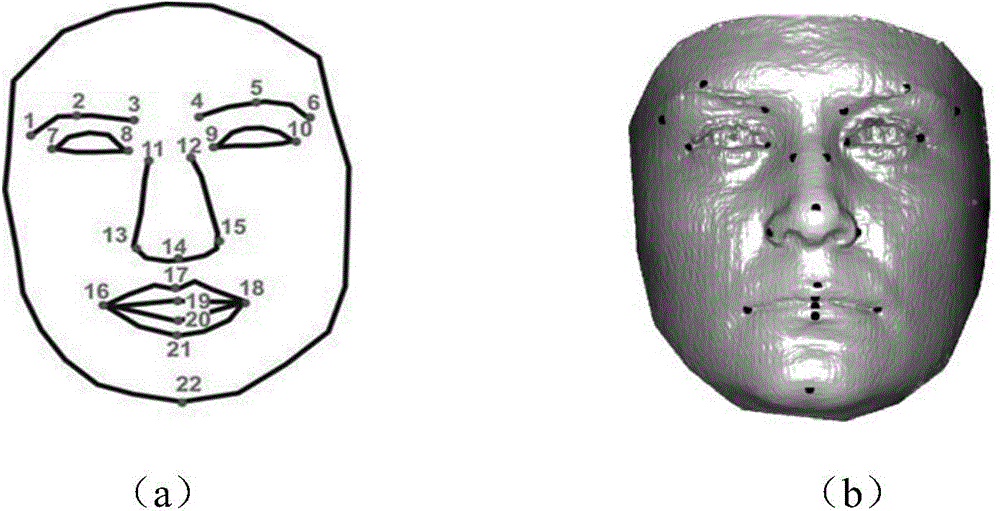

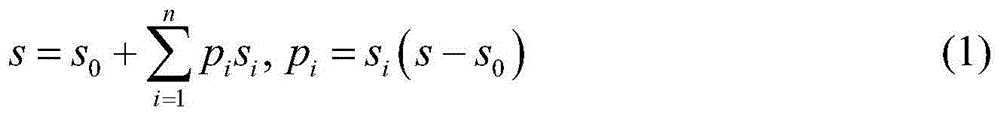

Three-dimensional face calibrating method capable of resisting posture and facial expression changes

Active | CN104657713A | Improve accuracy | Fast convergence | Character and pattern recognition | 3D-image rendering | Active appearance model

The invention discloses a three-dimensional face calibrating method capable of resisting posture and facial expression changes. The three-dimensional face calibrating method comprises an active appearance model establishing stage and a face calibrating stage. In the active appearance model establishing stage, a three-dimensional face is acquired through three-dimensional image acquisition equipment, important markers of the face are manually marked, and through the grid shape and appearance information of the face, an active appearance model based on a depth image is established; in the face calibrating stage, the face is roughly calibrated through an average nose model first and then a test face is matched with the active appearance model based on the depth image to finely calibrate the face. By the method from rough calibration to fine calibration, the posture and facial expression changes of the face can be resisted, so that the face can be accurately calibrated under a natural condition; through conversion of the three-dimensional face onto the depth image, the calibrating efficiency is improved; therefore, the three-dimensional face calibrating method has an important significance in promoting practical application of the three-dimensional face to identity authentication.

Owner:ZHEJIANG UNIV

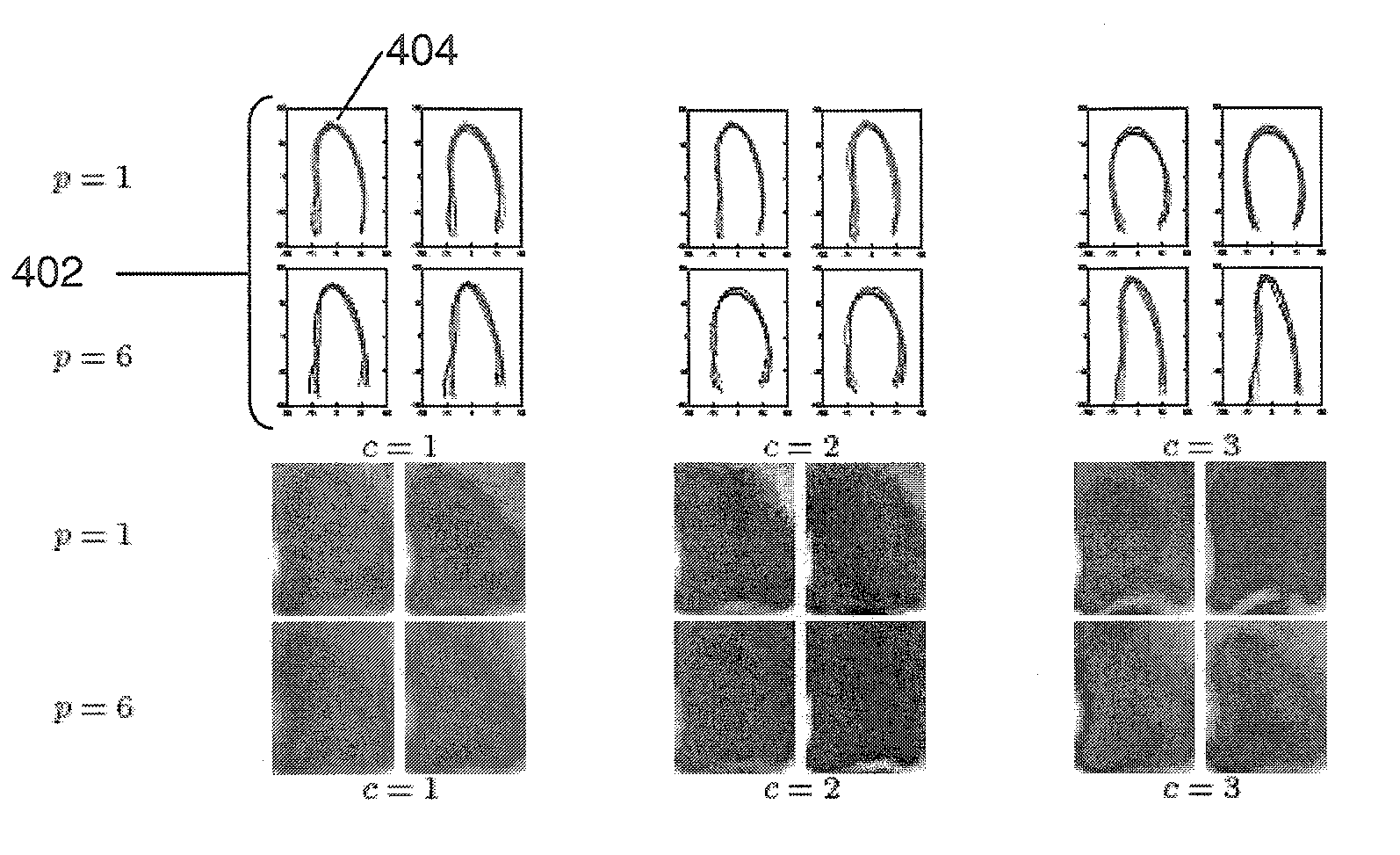

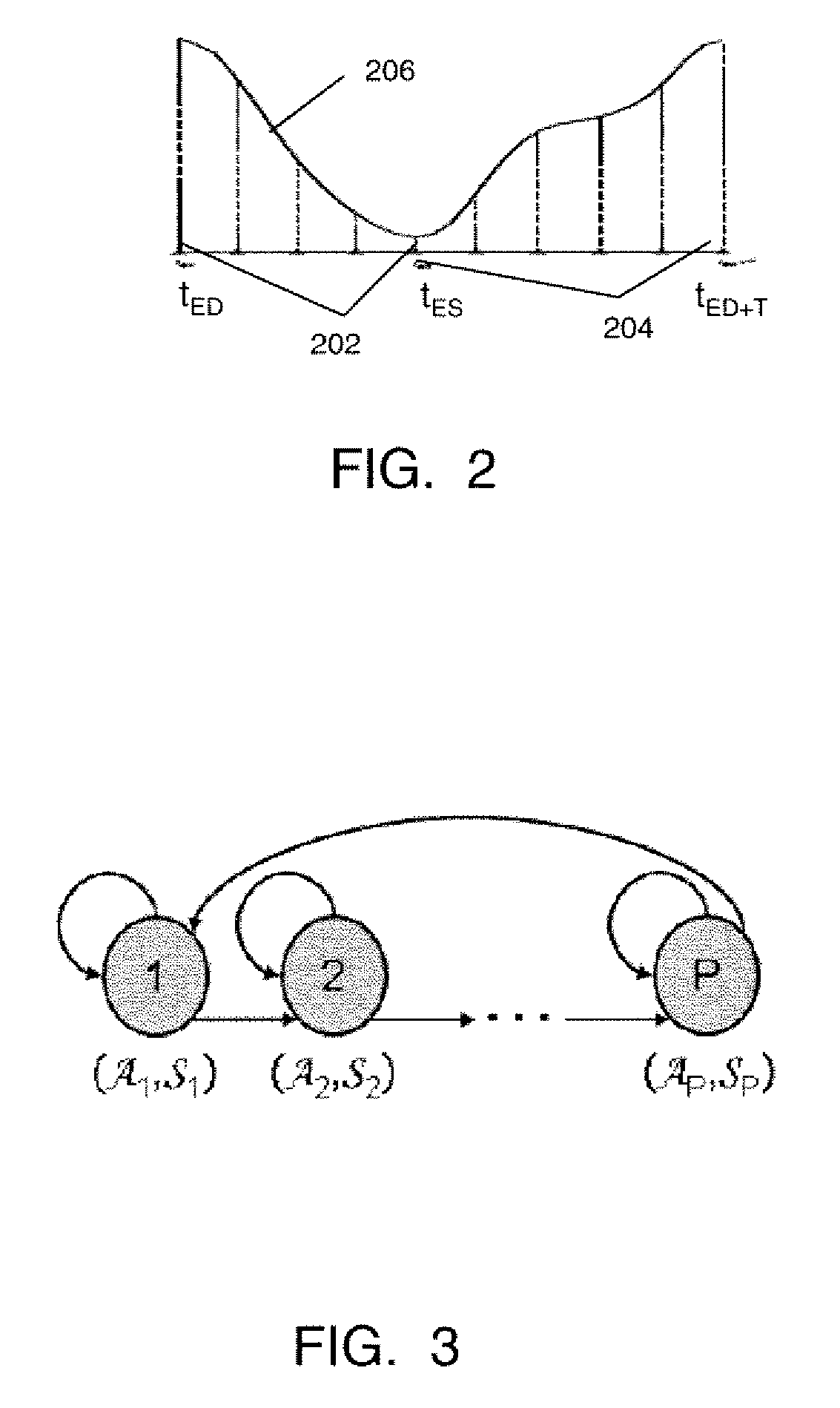

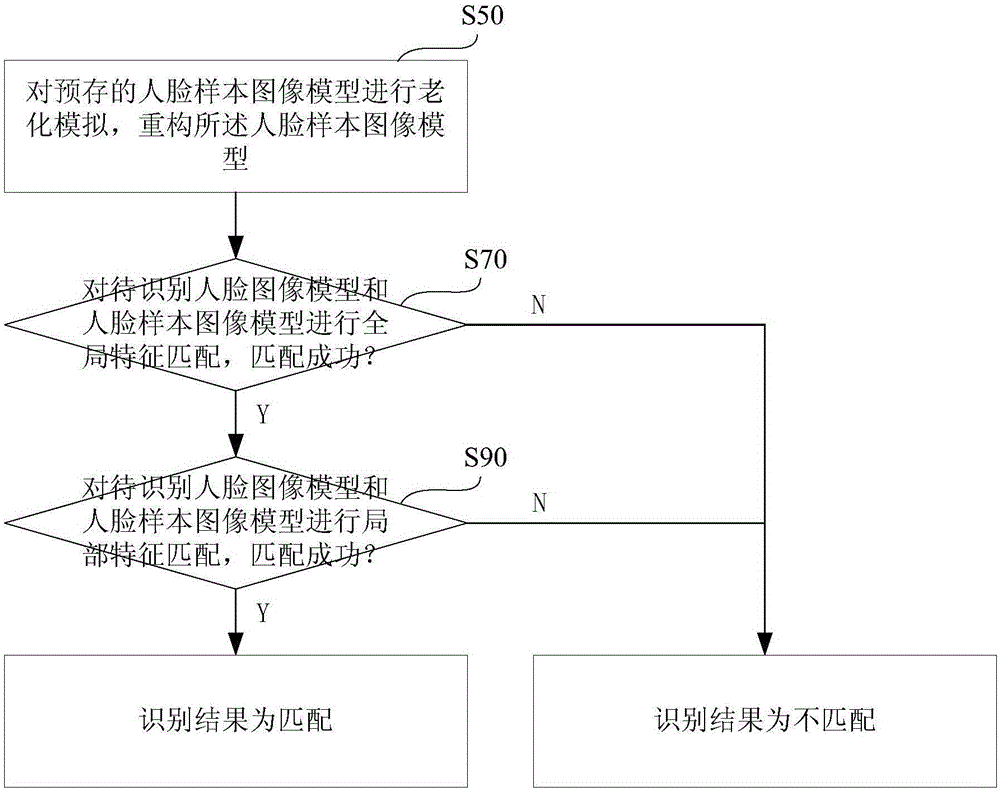

Method for characterizing shape, appearance and motion of an object that is being tracked

A method for generating Pairwise Active Appearance Models (PAAMs) that characterize shape, appearance and motion of an object and using the PAAM to track the motion of an object is disclosed. A plurality of video streams is received. Each video stream includes a series of image frames that depict an object in motion. Each video stream includes an index of identified motion phases that are associated with a motion cycle of the object. For each video stream, a shape of the object is represented by a shape vector. An appearance of an object is represented by an appearance vector. The shape and appearance vectors associated at two consecutive motion phases are concatenated. Paired data for the concatenated shape and appearance vectors is computed. Paired data is computed for each two consecutive motion phases in the motion cycle. A shape subspace is constructed based on the computed paired data. An appearance subspace is constructed based on the computed paired data. A joint subspace is constructed using a combination of the shape subspace and appearance subspace. A PAAM is generated using the joint subspace and the PAAM is stored in a database.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC
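The pairing step in the abstract above, concatenating the vectors of two consecutive motion phases, can be sketched as below. Treating the motion cycle as wrapping from the last phase back to the first is an assumption, controlled here by the `cyclic` flag; the subspace construction that follows is not shown.

```python
import numpy as np

def paired_data(phase_vectors, cyclic=True):
    """Concatenate each phase vector with the vector of the next phase.

    phase_vectors: (n_phases, d) array, one row per motion phase.
    Assumption: with cyclic=True the last phase is paired with the first.
    """
    V = np.asarray(phase_vectors, dtype=float)
    nxt = np.roll(V, -1, axis=0)  # row i holds the vector of phase i + 1
    pairs = np.concatenate([V, nxt], axis=1)
    return pairs if cyclic else pairs[:-1]
```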

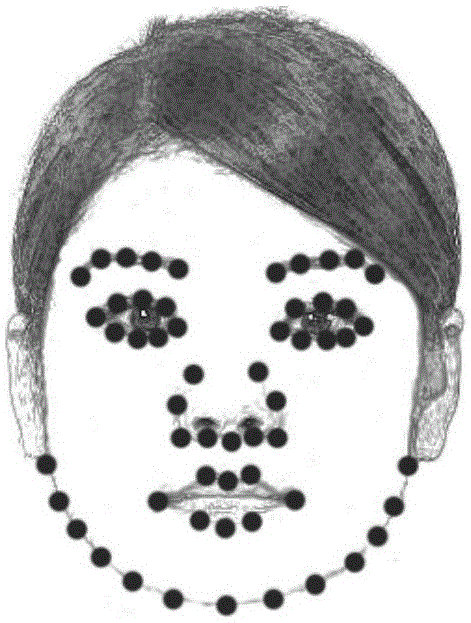

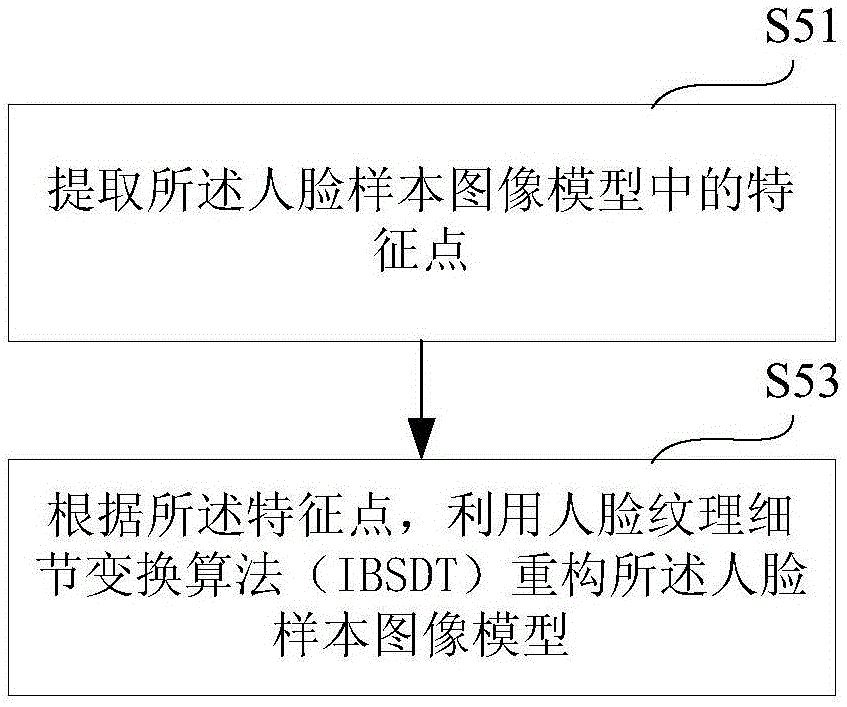

Single-sample human face recognition method compatible for human face aging recognition

Inactive | CN105117712A | Improve accuracy | Improve reliability | Character and pattern recognition | Single sample | Triangulation

The invention provides a single-sample human face recognition method compatible for human face aging recognition, which comprises the steps of conducting the aging simulation on the pre-stored image model of a human face sample to re-construct the image model of the human face sample; conducting the global feature matching for a to-be-recognized human face image model with the image model of the human face sample, wherein if the matching fails, regarding the recognition result as mismatching; and conducting the local feature matching for the to-be-recognized human face image model with the image model of the human face sample, wherein if the matching fails, regarding the recognition result as mismatching. The above to-be-recognized human face image model is an active appearance model of a to-be-recognized human face image. The image model of the human face sample is an active appearance model of a reserved human face sample image. According to the technical scheme of the invention, the recognition effect compatible for human face aging influence is realized and improved based on the combination of the AAM technique with the IBSDT technique. Meanwhile, based on the combination of the AAM technique with the triangulation matching technique, the matching reliability of global features is greatly improved. Based on the combination of the LBP technique with the SURF technique, the matching reliability of local features and the illumination robustness are improved. Finally, the high recognition rate for the reserved human face image as a single sample is realized.

Owner:BEIJING TCHZT INFO TECH CO LTD

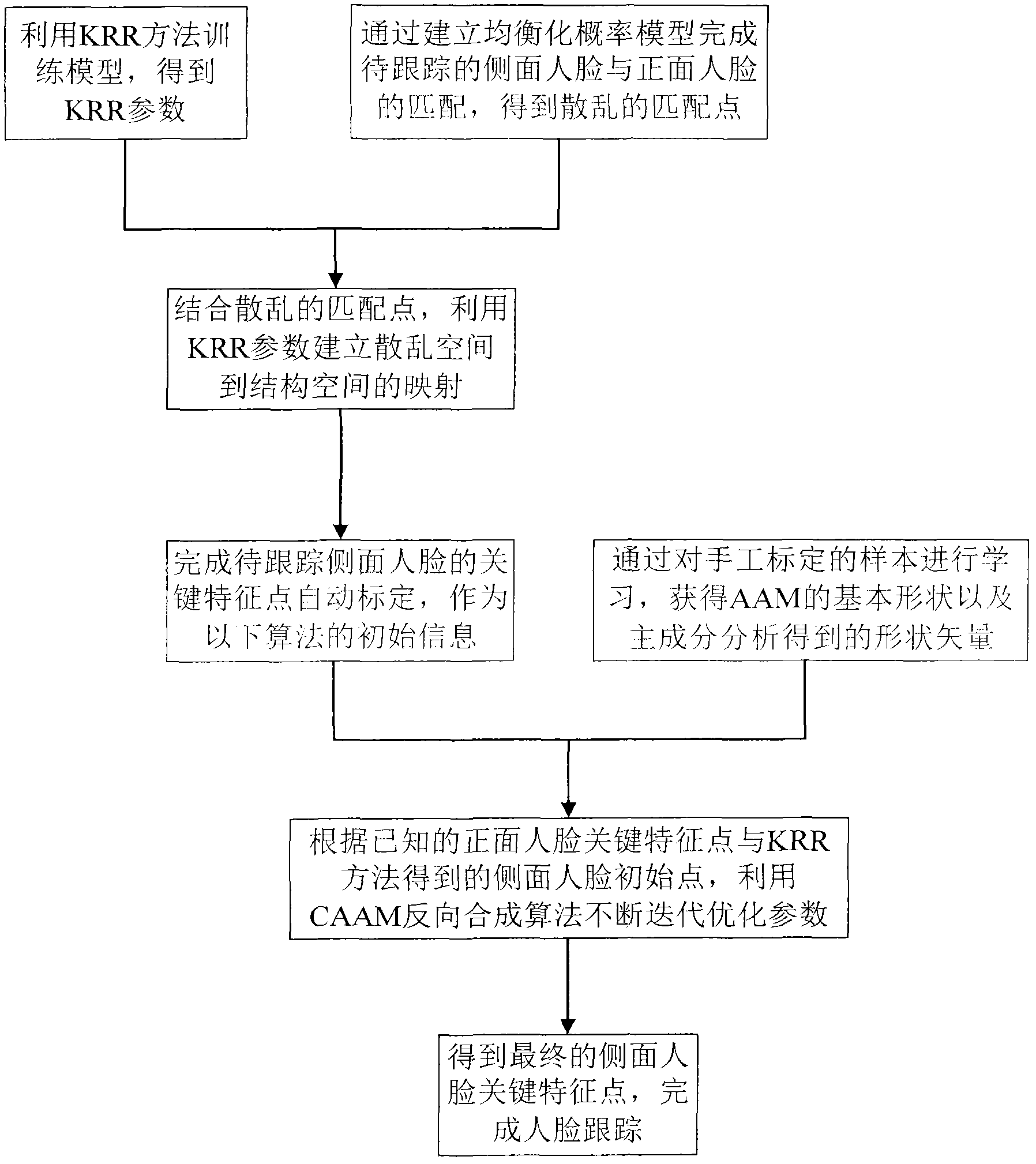

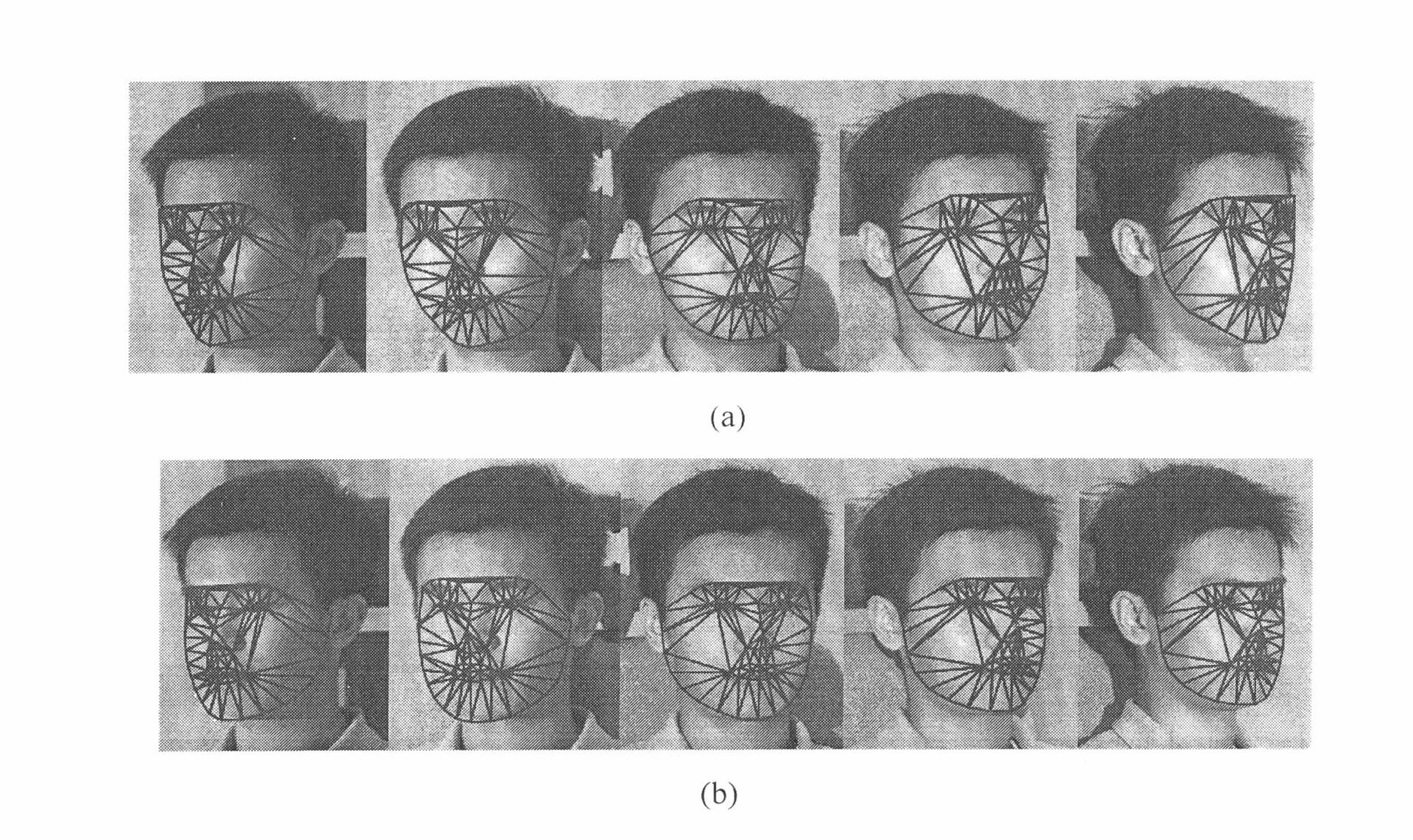

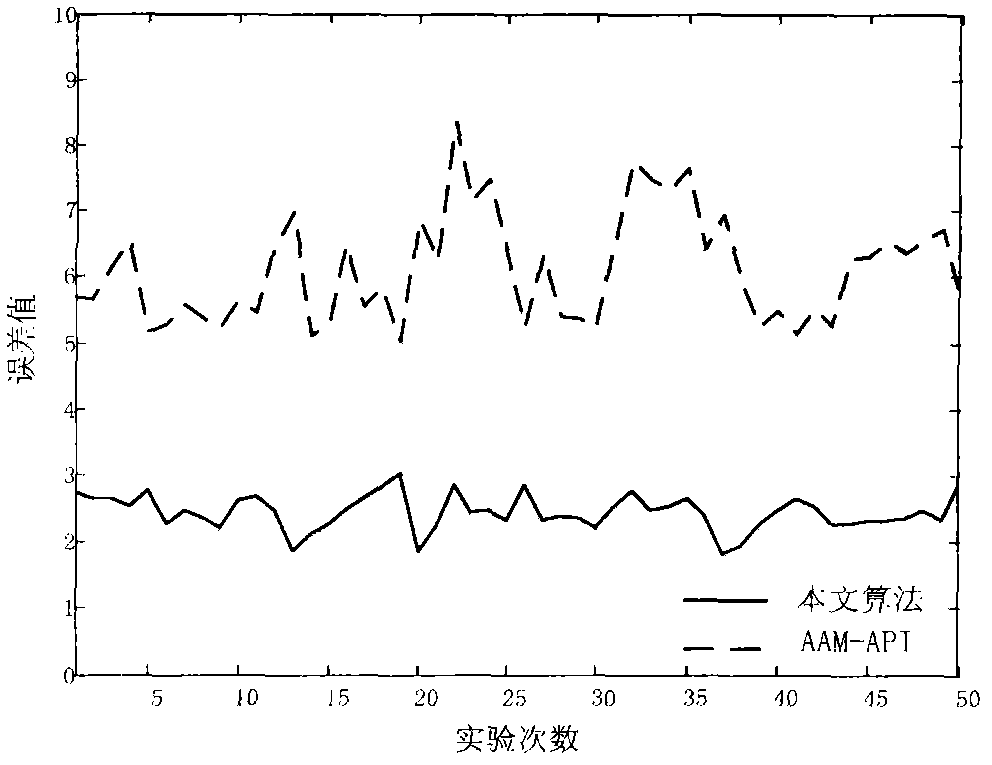

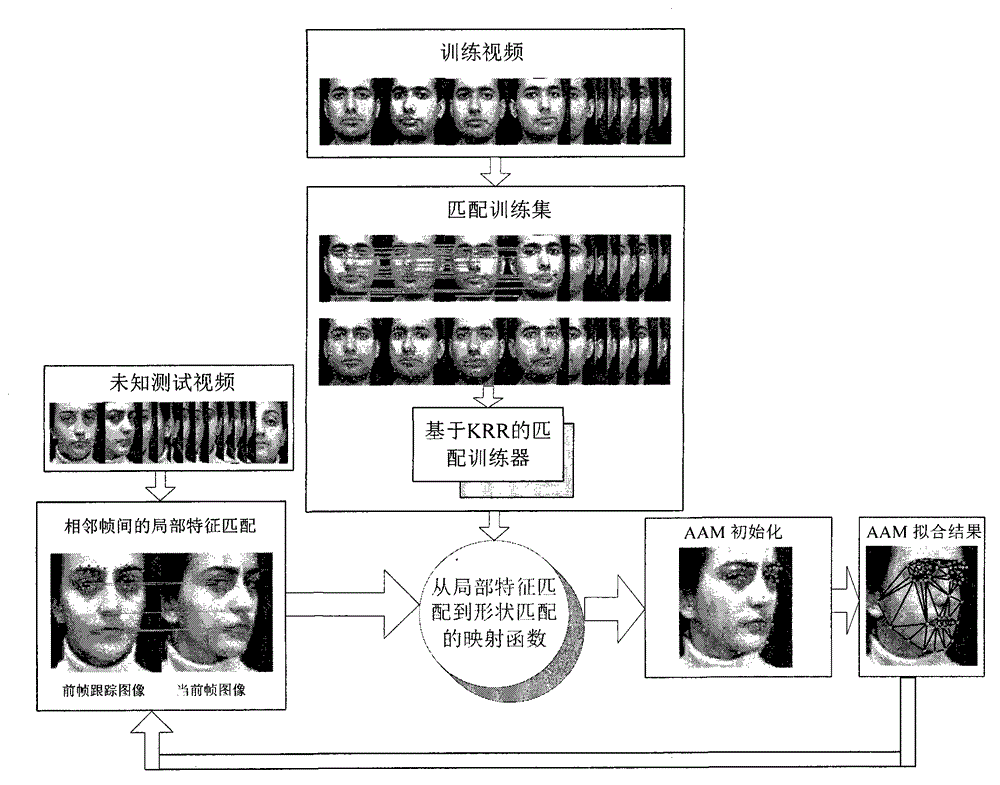

Face characteristic point automation calibration method based on conditional appearance model

Inactive | CN102663351A | To achieve the purpose of precise calibration | Improve balance | Character and pattern recognition | Kernel ridge regression | Model parameters

The invention, which belongs to the computer vision field, discloses an automatic face feature point calibration method based on a conditional appearance model. The method assumes that the frontal face calibration is known and comprises the following steps: first, correspondences are established between discrete feature points of the frontal face and discrete feature points of a side face; an initial calibration result for the side face is then acquired through a mapping relation between the discrete feature points and the structural calibration points, the mapping relation being obtained by a regression algorithm; next, a conditional model is established between the side face calibration points and the frontal face calibration points, and the model parameters are iteratively optimized with an inverse compositional algorithm to obtain the final calibration result. The spatial mapping between the discrete feature points and the structural calibration points is established through kernel ridge regression (KRR) to obtain the initial calibration of the face features, which reduces the number of subsequent iterations and improves calibration precision. Designing the conditional appearance model together with the inverse compositional iteration algorithm avoids searching over appearance deformations and improves search efficiency. Compared with a traditional active appearance model (AAM), the calibration method of the invention gives a more accurate calibration result.

Owner:JIANGNAN UNIV
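
The abstract above uses kernel ridge regression (KRR) to map discrete characteristic points to structural calibration points. The following is a minimal sketch of generic KRR in pure Python, assuming an RBF kernel and toy two-dimensional inputs; the kernel choice, the names `krr_fit` and `krr_predict`, and the parameters `lam` and `gamma` are illustrative assumptions, not details from the patent. It solves (K + lam I) alpha = Y and predicts with a kernel-weighted sum of the training targets.

```python
import math
from typing import List, Sequence


def rbf_kernel(a: Sequence[float], b: Sequence[float], gamma: float) -> float:
    """Gaussian (RBF) kernel between two vectors."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def solve(A: List[List[float]], B: List[List[float]]) -> List[List[float]]:
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting.

    Assumes A is square and non-singular (true for K + lam*I with lam > 0).
    """
    n = len(A)
    aug = [list(A[i]) + list(B[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def krr_fit(X, Y, lam: float, gamma: float) -> List[List[float]]:
    """Return dual coefficients alpha = (K + lam*I)^-1 Y."""
    n = len(X)
    K = [[rbf_kernel(X[i], X[j], gamma) + (lam if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    return solve(K, Y)


def krr_predict(X_train, alpha, x, gamma: float) -> List[float]:
    """Predict all output coordinates for one input vector x."""
    k = [rbf_kernel(x, xi, gamma) for xi in X_train]
    return [sum(k[i] * alpha[i][d] for i in range(len(k)))
            for d in range(len(alpha[0]))]


# Toy data (hypothetical): each input stands for sparse matched points,
# each output for a flattened set of landmark coordinates.
X = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
Y = [[0.0, 0.0, 1.0, 1.0], [2.0, 0.0, 3.0, 1.0], [0.0, 2.0, 1.0, 3.0]]
alpha = krr_fit(X, Y, lam=1e-9, gamma=1.0)
initial_shape = krr_predict(X, alpha, [0.5, 0.5], gamma=1.0)
```

The predicted shape would serve only as the starting point for the iterative fitting step; a larger `lam` trades fidelity on the training pairs for smoother predictions.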

Cascaded face model

Inactive | US8144976B1 | Cooperate effectively | Character and pattern recognition | Special data processing applications | Face model | Statistical model

An Active Appearance Model (AAM) is trained using an expanded library having examples of true outlier images. The AAM creates a first statistical fitting pair (a model image of the class of object and corresponding statistical model fitting) using characteristic features drawn only from the expanded library. All images within the expanded library that the first statistical fitting pair cannot align are collected into a smaller, second library of true outlier cases. A second statistical fitting pair is created using characteristic features drawn only from the second library, and samples not aligned by the second statistical fitting pair are collected into a still smaller, third library. This process is repeated until a desired percentage of all the images within the initial, expanded library have been aligned. In operation, the AAM applies each of its created statistical fitting pairs, in turn, until it has successfully aligned a submitted test image, or until a stop criterion has been reached.

Owner:SEIKO EPSON CORP
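
The training loop described in the abstract above (train on a library, collect what fails to align, retrain on the failures) can be sketched generically. In the following minimal sketch, `train` and `aligns` are hypothetical callables standing in for AAM training and fitting, and the toy "model" is simply a median of numbers; none of these names or the `max_stages` safeguard come from the patent.

```python
import statistics
from typing import Callable, List, Optional, Sequence, TypeVar

S = TypeVar("S")  # sample type (an image in the real setting)
M = TypeVar("M")  # model type (a statistical fitting pair in the real setting)


def build_cascade(library: Sequence[S],
                  train: Callable[[List[S]], M],
                  aligns: Callable[[M, S], bool],
                  target_fraction: float,
                  max_stages: int = 10) -> List[M]:
    """Train stages on successively smaller libraries of unaligned samples."""
    stages: List[M] = []
    remaining = list(library)
    total = len(remaining)
    while remaining and len(stages) < max_stages:
        model = train(remaining)
        unaligned = [s for s in remaining if not aligns(model, s)]
        if len(unaligned) == len(remaining):
            break  # this stage aligned nothing; stop to avoid looping forever
        stages.append(model)
        remaining = unaligned
        if (total - len(remaining)) / total >= target_fraction:
            break  # desired share of the initial library is aligned
    return stages


def fit_with_cascade(stages: Sequence[M], sample: S,
                     aligns: Callable[[M, S], bool]) -> Optional[int]:
    """Apply each stage in turn; return the index of the first that aligns."""
    for index, model in enumerate(stages):
        if aligns(model, sample):
            return index
    return None  # stop criterion reached: no stage aligned the sample


# Toy stand-ins: samples are numbers, a model is the median of its library,
# and a sample counts as aligned when it lies within 1.0 of the model.
library = [0.0, 0.1, -0.1, 0.2, 10.0, 10.1, 9.9]
close_enough = lambda model, sample: abs(sample - model) <= 1.0
stages = build_cascade(library, statistics.median, close_enough, 0.95)
```

With this toy data the first stage covers the cluster near 0 and the second stage is trained only on the outliers near 10, which mirrors the shrinking-library idea in the abstract.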

Regression-based active appearance model initialization method

Inactive | CN104036229A | Improve balance | High speed | Character and pattern recognition | Calibration result | Thresholding

The invention discloses a regression-based active appearance model initialization method and belongs to the computer vision field. The method is used when face characteristic points are tracked automatically with an active appearance model, and it assumes that the object position in the first frame of the tracked video is known. During subsequent tracking, a double-threshold characteristic correspondence algorithm finds corresponding discrete characteristics between neighboring frames. A spatial mapping between the discrete characteristic points and the structured calibration points, established through a kernel ridge regression algorithm, then gives the initial calibration of the face characteristics. This substantially reduces the number of subsequent iterations and also improves calibration precision. Compared with the initialization method of a conventional active appearance model, the assisted active appearance model gives more accurate face characteristic point calibration results.

Owner:JIANGNAN UNIV

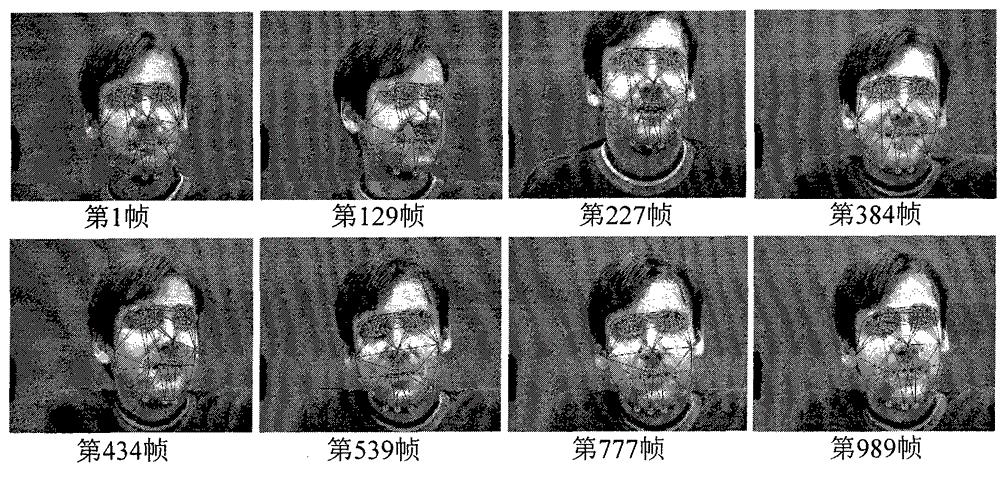

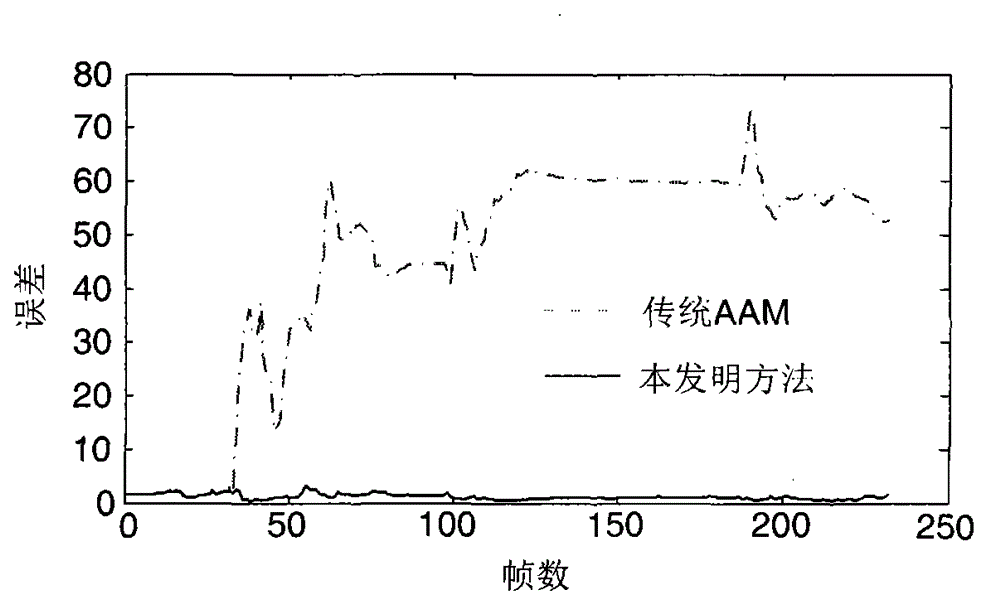

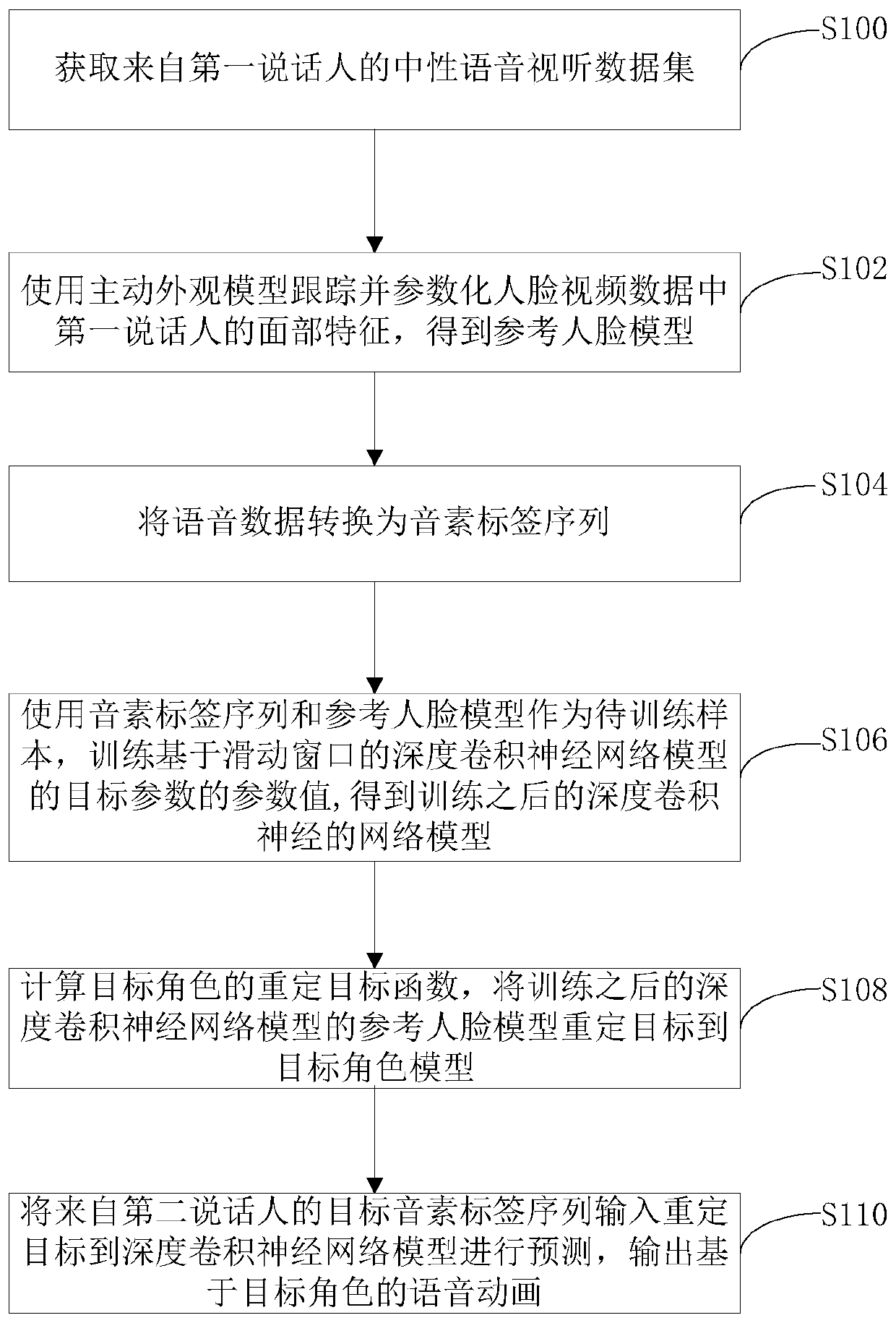

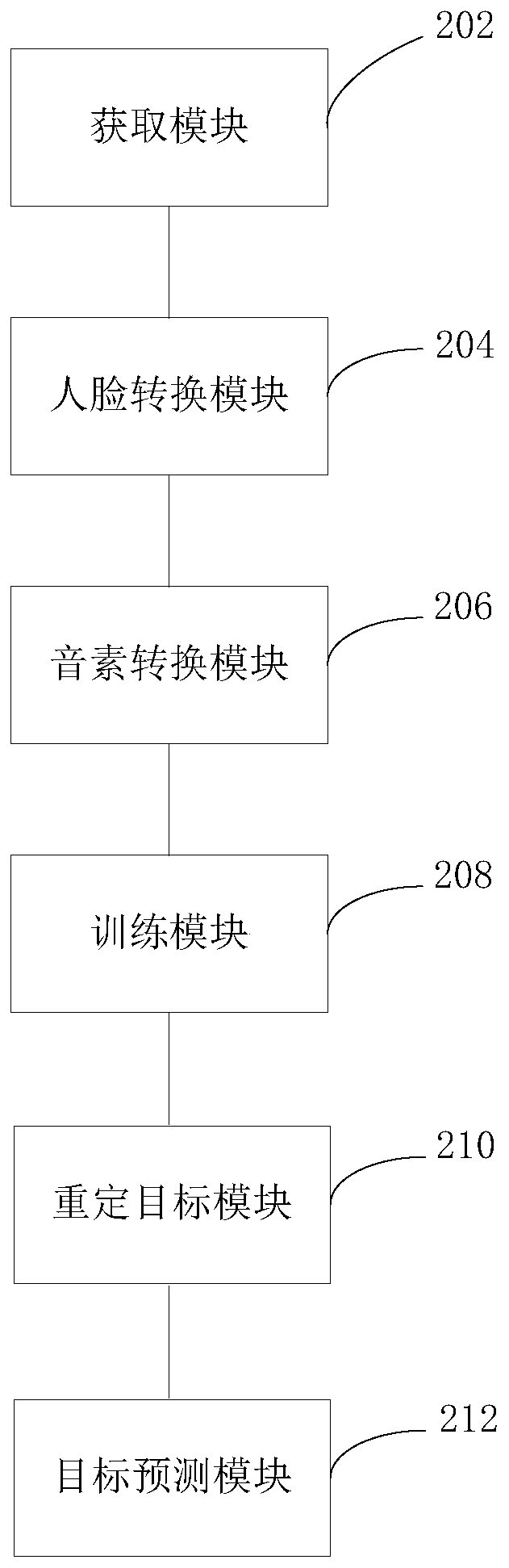

Method and system for driving human face animation through real-time voice

The invention discloses a method and a system for driving human face animation by real-time voice. The method comprises the following steps: acquiring a neutral voice audio-visual data set from a first speaker; tracking and parameterizing face video data by using an active appearance model; converting the voice data into a phoneme label sequence; training a deep convolutional neural network model based on a sliding window; relocating the target of the reference face model to the target role model; and inputting the target phoneme label sequence from the second speaker into the deep convolutional neural network model of the target role model for prediction. The system provided by the invention comprises an acquisition module, a face conversion module, a phoneme conversion module, a training module, a target redetermination module and a target prediction module. According to the method and the system provided by the invention, the problems that the existing voice animation method depends on a specific speaker and a specific speaking style and cannot retarget the generated animation to any facial equipment are solved.

Owner:北京中科深智科技有限公司
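
The abstract above trains its network on a sliding window over the phoneme label sequence but does not say how the windows are laid out. The following is a minimal sketch of one common arrangement, assuming a fixed odd window size, one window centred on each frame, and a hypothetical `"sil"` (silence) padding label at the sequence ends; all three choices are assumptions for illustration.

```python
from typing import List, Sequence


def sliding_windows(labels: Sequence[str], size: int,
                    pad: str = "sil") -> List[List[str]]:
    """Return one window of `size` labels centred on each input frame."""
    if size < 1 or size % 2 == 0:
        raise ValueError("size must be a positive odd number")
    half = size // 2
    # Pad both ends so the first and last frames also get full windows.
    padded = [pad] * half + list(labels) + [pad] * half
    return [padded[i:i + size] for i in range(len(labels))]


# Hypothetical per-frame phoneme labels for a short utterance.
frames = ["h", "h", "eh", "l", "ow"]
windows = sliding_windows(frames, size=3)
```

Each window would then be encoded (for example one-hot per label) and paired with the AAM parameters of the corresponding video frames as the regression target; that pairing is likewise an assumption about the setup rather than a detail given in the abstract.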

Feature point positioning method combined with active shape model and quick active appearance model

Inactive | CN1866272A | High precision | Improve search accuracy | Character and pattern recognition | Computer science | Image processing

The disclosed characteristic point locating method comprises: (1) building a Lucas AAM model, calculating its initial parameters, and obtaining an initial position; (2) building an ASM model; (3) searching for the human face characteristic points with the Lucas AAM method, starting from the initial position obtained in (1); and (4) searching for the new positions with the ASM method, starting from the positions obtained in (3). The invention combines the Lucas AAM and ASM methods and increases search speed.

Owner:SHANGHAI RUISHI MACHINE VISION TECH

Hierarchical tree AAM

Active | US8306257B2 | Fast processing | Increase the number of | Television system details | Character and pattern recognition | Physical therapy | Active appearance model

Owner:138 EAST LCD ADVANCEMENTS LTD


© 2025 PatSnap. All rights reserved.