Single-photo-based human face animation method

A face animation technology in the field of image-based 3D face modeling and animation. It addresses problems of existing methods such as the inability to run in real time, heavy computational cost, and unrealistic expressions, and it achieves realistic eye animation, a strong sense of realism, and broad applicability.


Detailed Description of Embodiments

[0024] The present invention will be described in detail below in conjunction with the accompanying drawings. It should be noted that the described embodiments are only intended to facilitate the understanding of the present invention, rather than limiting it in any way. The present invention is illustrated by the following examples:

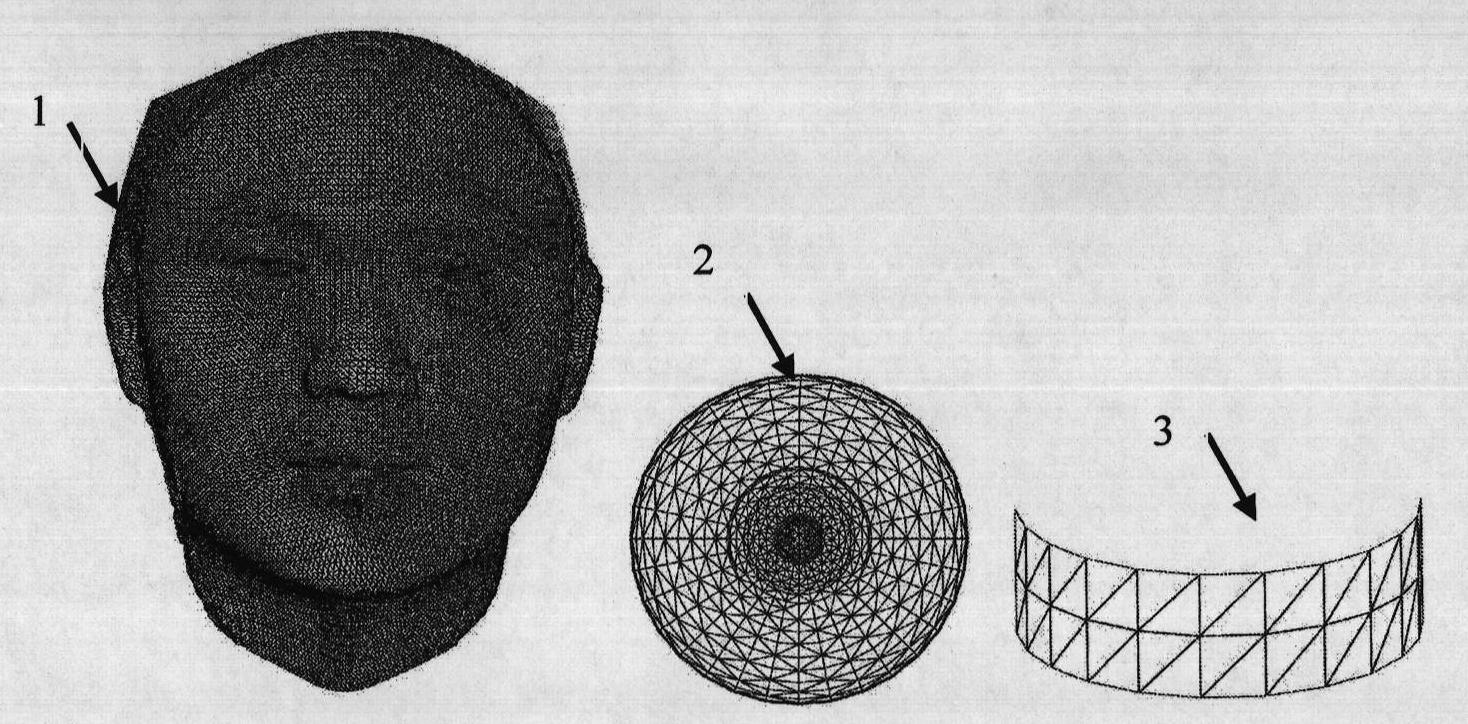

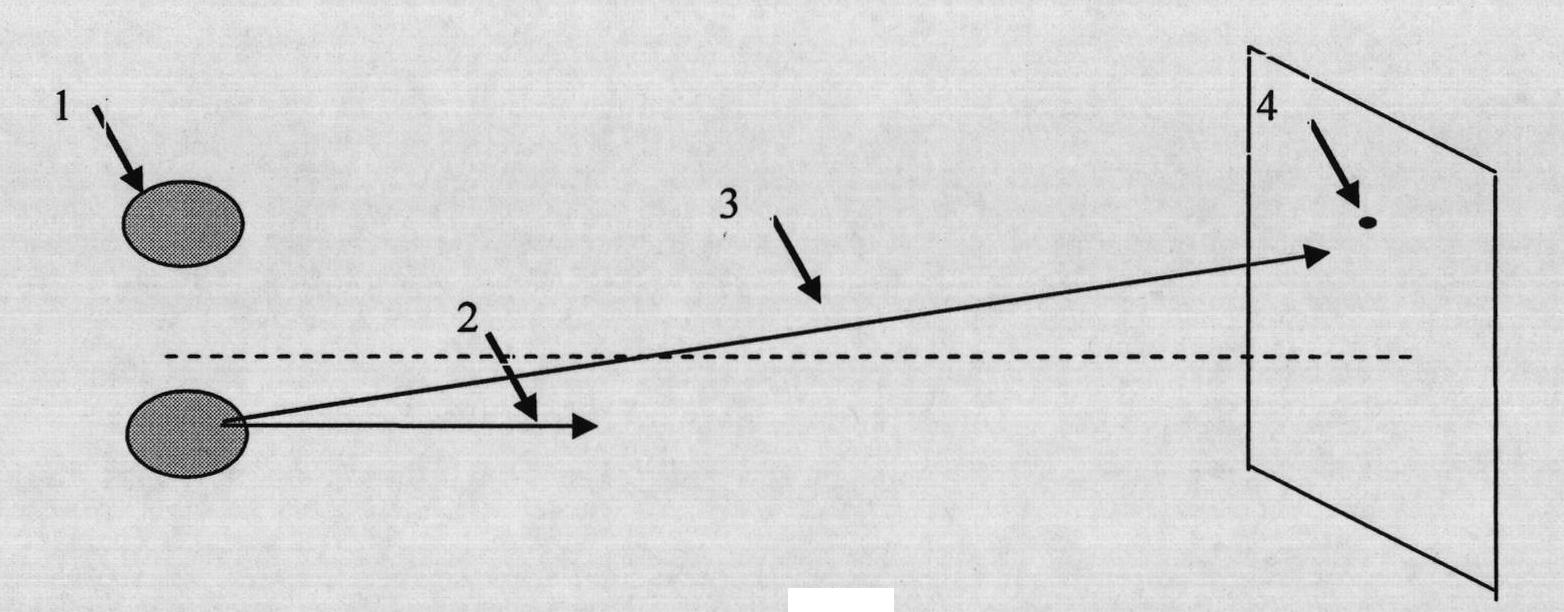

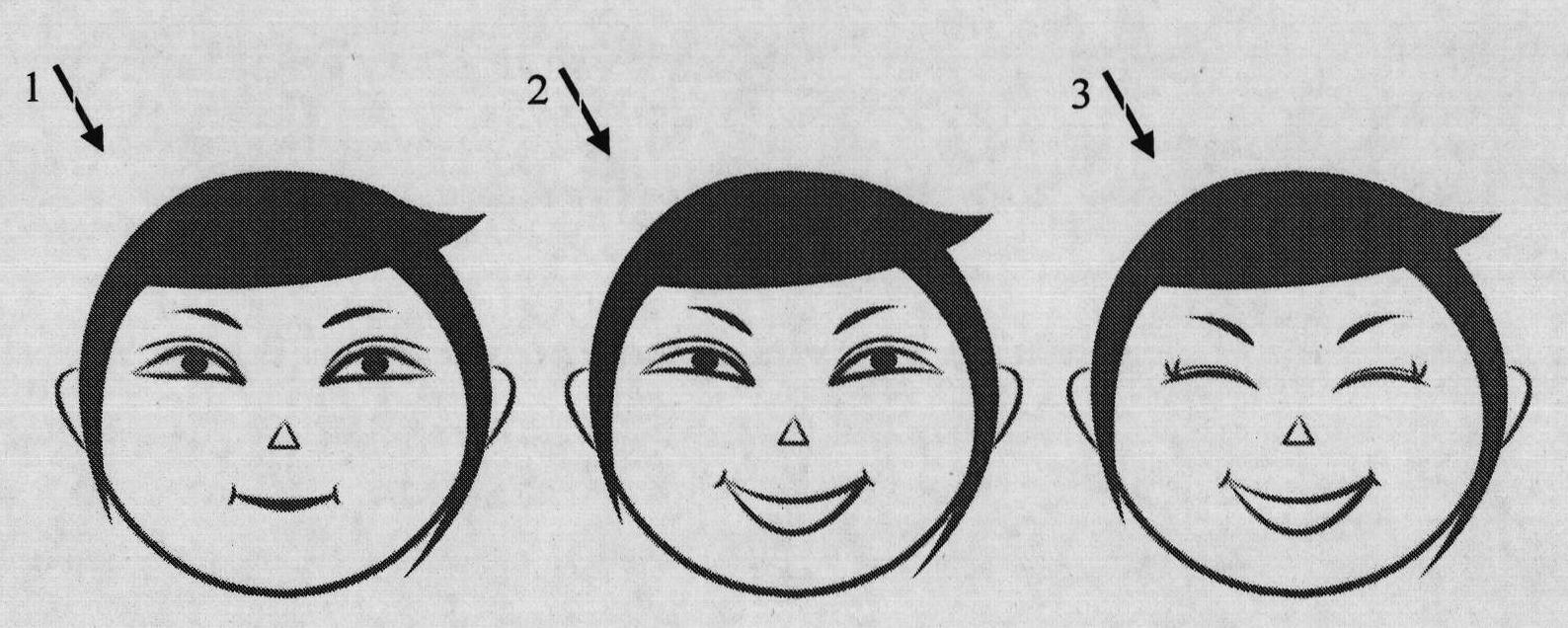

[0025] A single frontal face photo is input. The reconstruction result is obtained through face detection, facial key-point localization, face geometric reconstruction, model expansion, and other steps. Personalized facial animation is then produced through animation data production, animation data mapping, interpolation based on spherical parameterization, eye-motion processing, and other steps. The specific implementation is as follows:
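The paragraph names interpolation based on spherical parameterization but does not give its formula. The following is a minimal sketch under stated assumptions: every mesh vertex has already been mapped to a point on the unit sphere, animation displacements are known only at a few control vertices, and the remaining displacements are filled in by inverse-distance weighting over great-circle distance. The weighting scheme, the function names (`great_circle_distance`, `interpolate_on_sphere`) and the `power` parameter are hypothetical illustrations, not the patent's own method.

```python
import numpy as np


def great_circle_distance(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise angular distance between unit vectors a (N, 3) and b (M, 3)."""
    # Clip dot products to [-1, 1] so rounding error never breaks arccos.
    cos = np.clip(a @ b.T, -1.0, 1.0)
    return np.arccos(cos)


def interpolate_on_sphere(sphere_pts, ctrl_idx, ctrl_disp, power=2.0, eps=1e-9):
    """Spread displacements known at control vertices to all vertices.

    sphere_pts: (N, 3) unit-sphere positions of the mesh vertices, assumed to
                come from a spherical parameterization computed elsewhere.
    ctrl_idx:   (K,) indices of vertices whose displacement is known.
    ctrl_disp:  (K, 3) displacements at those control vertices.
    Hypothetical scheme: inverse-distance weighting on great-circle distance.
    """
    d = great_circle_distance(sphere_pts, sphere_pts[ctrl_idx])  # (N, K)
    w = 1.0 / (d + eps) ** power
    w /= w.sum(axis=1, keepdims=True)  # normalize weights per vertex
    disp = w @ ctrl_disp               # (N, 3) weighted average
    disp[ctrl_idx] = ctrl_disp         # control vertices keep exact values
    return disp


# Usage: 200 random vertices on the unit sphere, 3 control vertices.
rng = np.random.default_rng(0)
pts = rng.normal(size=(200, 3))
pts /= np.linalg.norm(pts, axis=1, keepdims=True)
ctrl = np.array([0, 50, 120])
ctrl_disp = np.array([[0.0, 0.01, 0.0], [0.0, -0.02, 0.0], [0.005, 0.0, 0.0]])
disp = interpolate_on_sphere(pts, ctrl, ctrl_disp)
print(disp.shape)  # (200, 3)
```

Because each interpolated value is a normalized weighted average, it always stays within the range of the control displacements; this keeps the sketch stable but means it cannot reproduce overshoot that a smoother scheme (for example radial basis functions on the sphere) might allow.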

[0026] 1. Establishing the deformation model.

[0027] A 3D scanner is used to collect real 3D face data; the data are regularized, and principal component analysis is performed on the regularized model shapes to obtain ...
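The paragraph is cut off, but the step it names is standard principal component analysis over stacked face shapes. The following is a minimal NumPy sketch, assuming every face has already been regularized into the same N-vertex layout (vertex j is the same anatomical point in every scan) and flattened to a 3N vector; the function names (`build_shape_model`, `synthesize`, `project`) and the `n_components` parameter are hypothetical. A new face is the mean shape plus a weighted sum of the principal components.

```python
import numpy as np


def build_shape_model(shapes: np.ndarray, n_components: int):
    """PCA shape model from regularized faces.

    shapes: (M, 3N) array; row i holds face i's N vertices (x, y, z) flattened.
    Returns mean (3N,), basis (k, 3N) with orthonormal rows sorted by
    explained variance, and the per-component standard deviations (k,).
    """
    mean = shapes.mean(axis=0)
    centered = shapes - mean
    # Rows of vt are the principal directions, sorted by singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    k = min(n_components, vt.shape[0])
    stddev = s[:k] / np.sqrt(max(shapes.shape[0] - 1, 1))
    return mean, vt[:k], stddev


def synthesize(mean, basis, coeffs):
    """New face shape = mean + sum_i coeffs[i] * basis[i]."""
    return mean + coeffs @ basis


def project(mean, basis, shape):
    """Least-squares coefficients of a shape in the orthonormal basis."""
    return basis @ (shape - mean)


# Usage with random stand-in data (20 faces, 50 vertices each).
rng = np.random.default_rng(1)
n_faces, n_vertices = 20, 50
faces = rng.normal(size=(n_faces, 3 * n_vertices))
mean, basis, stddev = build_shape_model(faces, n_components=5)
alpha = project(mean, basis, faces[0])   # 5 shape coefficients
approx = synthesize(mean, basis, alpha)  # rank-5 approximation of faces[0]
print(basis.shape)  # (5, 150)
```

Keeping only the first few components gives a compact model in which a face is described by a short coefficient vector; the `stddev` values can be used to keep fitted coefficients within a plausible range.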
