1406 results about "Feature point matching" patented technology

Point feature matching: in image processing, point feature matching is an effective method for detecting a specified target in a cluttered scene. It detects a single object rather than multiple instances of an object.
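
As a rough illustration of the matching step itself, the sketch below pairs descriptors by brute-force nearest-neighbour search and keeps a match only if it passes Lowe's ratio test. It is a generic example, not the method of any patent listed here: descriptors are plain lists of floats, and the function names and the 0.75 threshold are illustrative assumptions.

```python
import math

# Illustrative sketch only: names and the 0.75 ratio are assumptions.

def euclidean(a, b):
    """Euclidean distance between two equal-length descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def match_ratio_test(query, train, ratio=0.75):
    """Match each query descriptor to its nearest train descriptor, keeping
    the match only if it is clearly better than the second-nearest one
    (Lowe's ratio test). Returns a list of (query_index, train_index) pairs."""
    matches = []
    if len(train) < 2:
        return matches  # the ratio test needs at least two candidates
    for qi, q in enumerate(query):
        # Rank all train descriptors by distance to this query descriptor.
        ranked = sorted(range(len(train)), key=lambda ti: euclidean(q, train[ti]))
        best, second = ranked[0], ranked[1]
        if euclidean(q, train[best]) < ratio * euclidean(q, train[second]):
            matches.append((qi, best))
    return matches
```

Brute-force search costs O(n·m) distance evaluations per image pair; several of the patents below reduce this with a K-D tree search or by matching only inside the overlap region.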

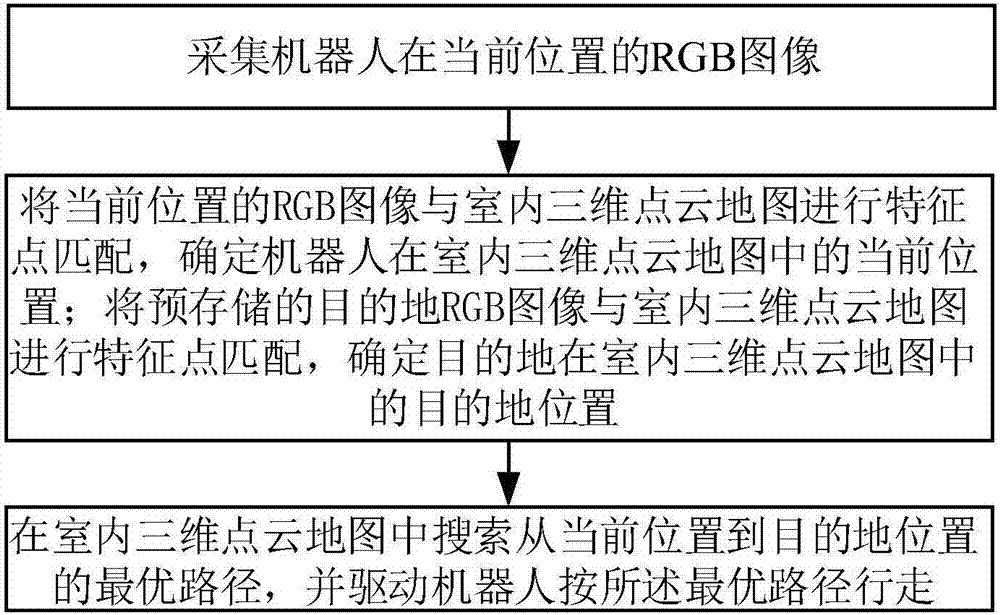

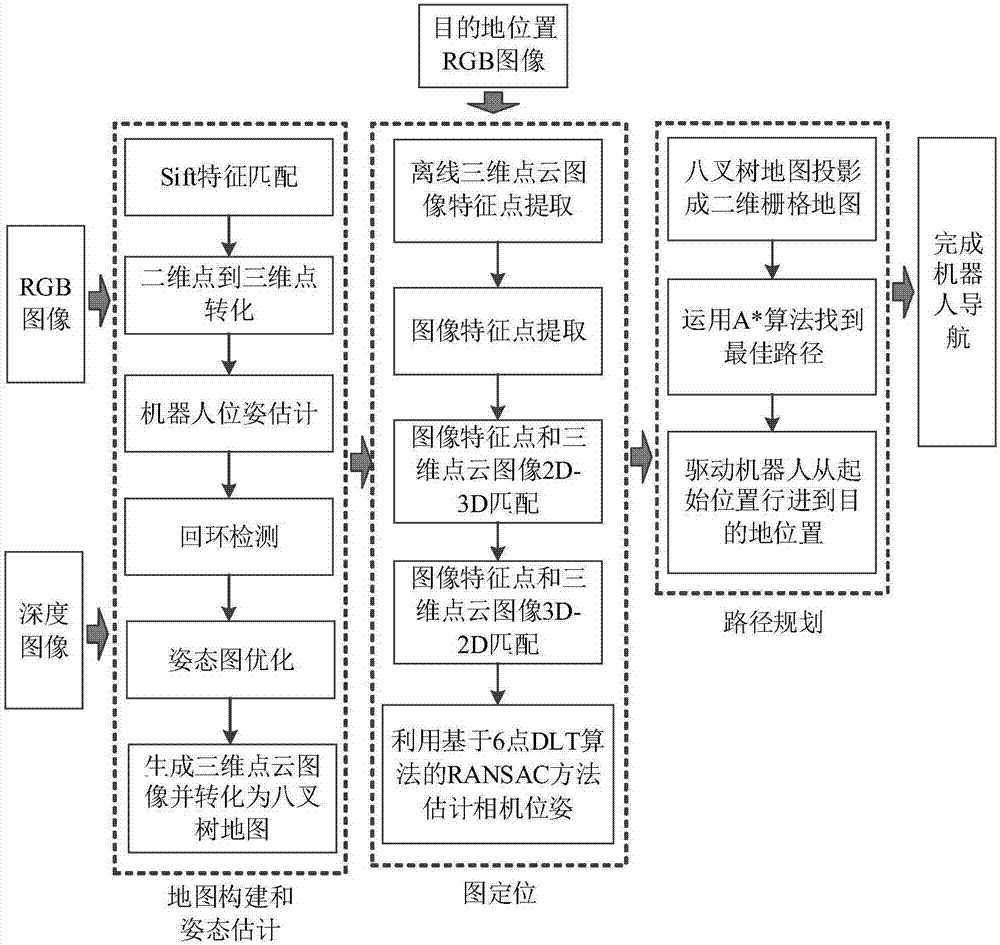

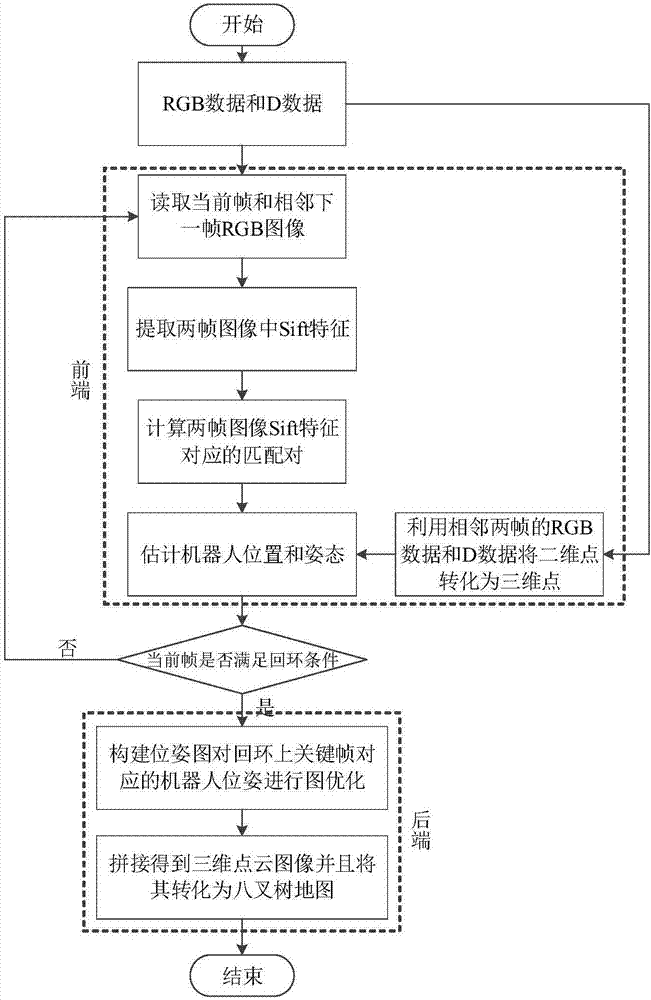

Autonomous location and navigation method and autonomous location and navigation system of robot

Active | CN106940186A | Automate processing, Fair price, Navigational calculation instruments, Point cloud, RGB image

The invention discloses an autonomous localization and navigation method and system for a robot. The method comprises the following steps: acquiring an RGB image at the robot's current position; performing feature point matching between this RGB image and an indoor three-dimensional (3D) point cloud map to determine the robot's current position in the map; performing feature point matching between a pre-stored RGB image of the destination and the same map to determine the destination's position in the map; and searching for an optimal path from the current position to the destination in the indoor 3D point cloud map and driving the robot along that path. Because localization and navigation are accomplished with a visual sensor alone, the method and system offer a simple device structure, low cost, easy operation and real-time path planning, and can be applied to unmanned driving and indoor localization and navigation.

Owner: HUAZHONG UNIV OF SCI & TECH
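
The abstract does not say how the optimal path is searched. A minimal sketch, assuming (hypothetically) that the 3D point cloud map has been flattened into a 2D occupancy grid and that A* with unit step costs is acceptable, could look like this:

```python
import heapq

# Hypothetical sketch: the 2D occupancy grid and unit step costs are
# assumptions, not details from the patent.

def astar(grid, start, goal):
    """A* shortest path on a 4-connected occupancy grid.
    grid[r][c] == 1 marks an obstacle. Returns the list of (row, col) cells
    from start to goal, or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry superseded by a cheaper one
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```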

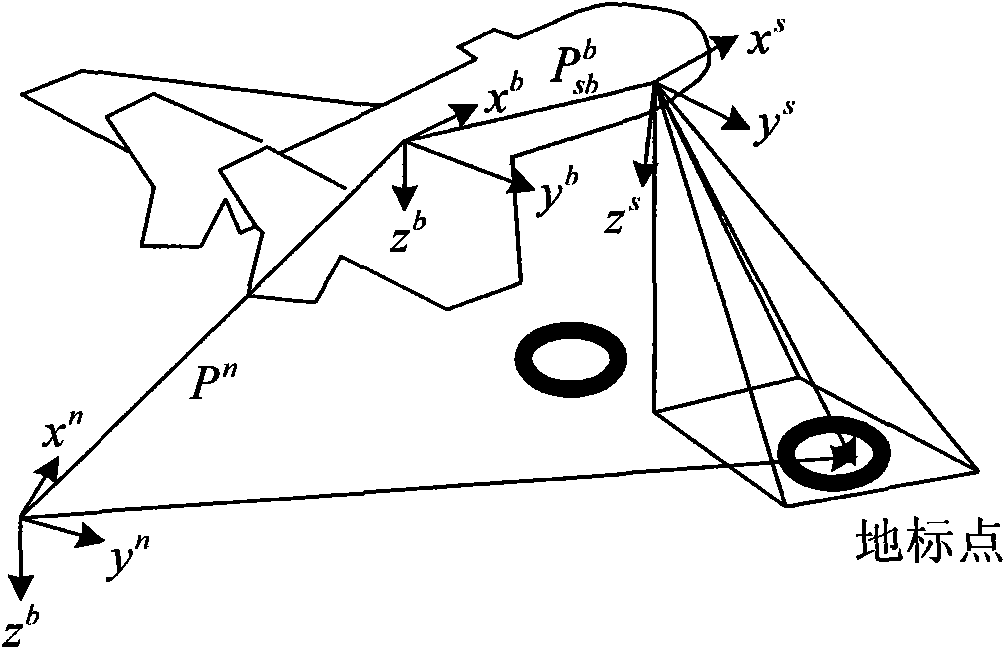

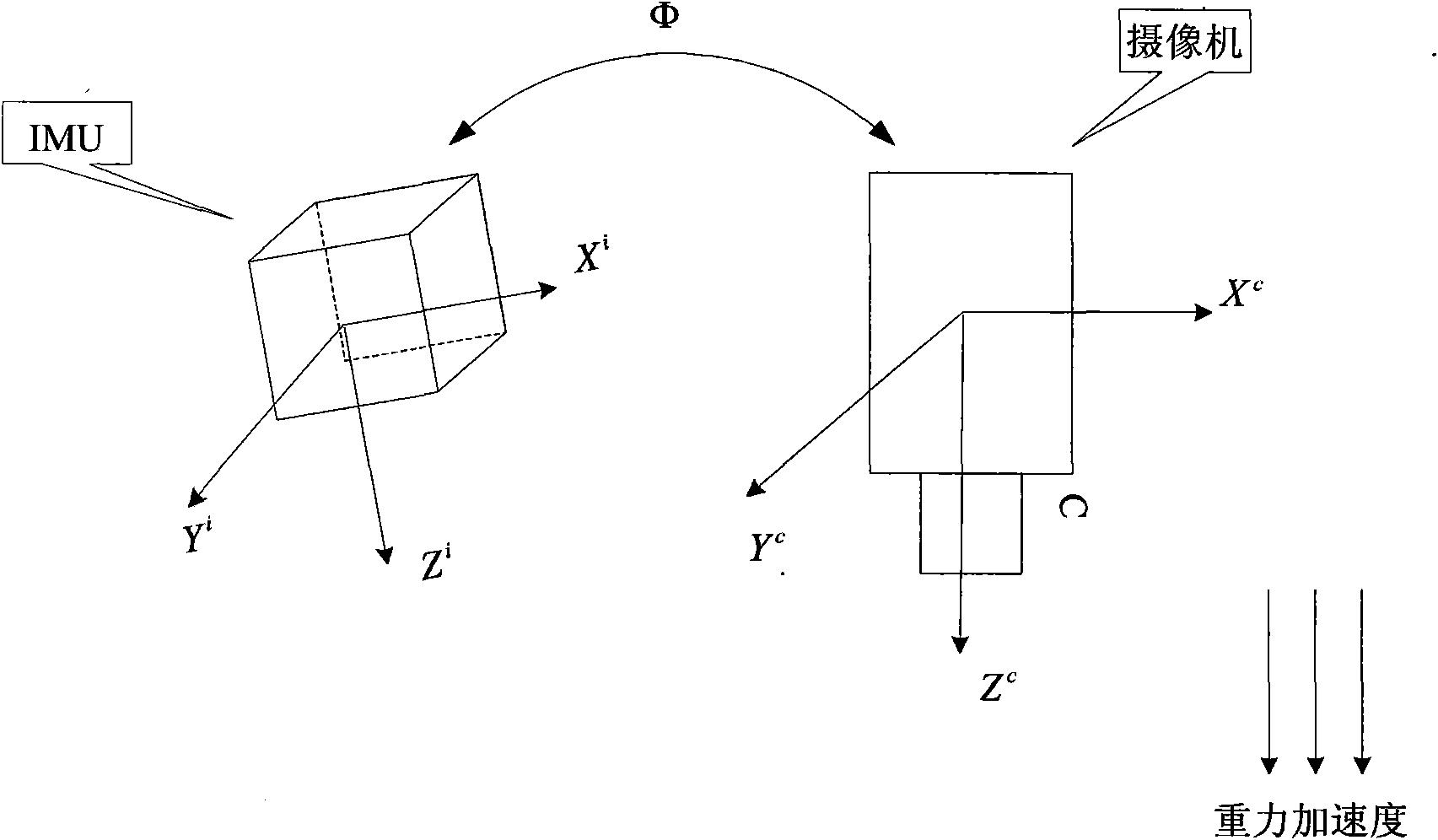

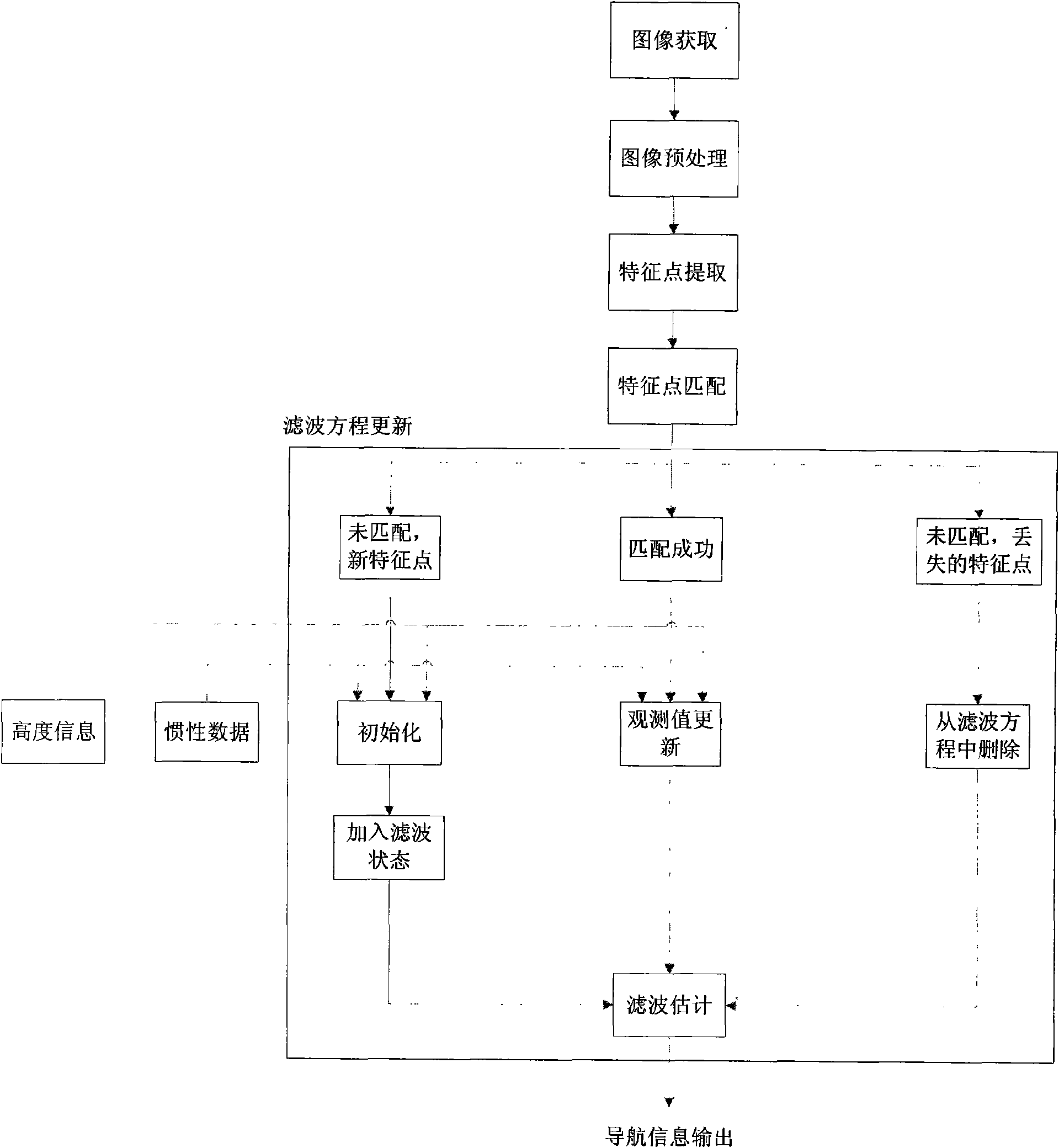

Unmanned aerial vehicle vision/inertia integrated navigation method in unknown environment

Inactive | CN101598556A | Wide adaptability, Improve concealment, Instruments for cosmonautical navigation, Imaging processing, Canyon

The invention relates to a vision/inertia integrated navigation method for an unmanned aerial vehicle in an unknown environment. The method involves image processing, inertial navigation computation and filter estimation; before the main navigation procedure is carried out, the coordinate systems are defined and aligned and the filter equations are set up. The navigation process comprises five steps: acquiring and preprocessing images; extracting feature points with SIFT; matching the feature points; updating the filter equations; and performing filter estimation with the updated equations. Because the method needs only the current ground image, rather than specific external information, for matching, it can in theory be used in any environment (including underwater, sheltered areas, canyons and underground); it also offers good concealment and high accuracy.

Owner: BEIHANG UNIV
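
The abstract does not specify the filter's state vector or equations. Purely as an illustration of the "update the filter, then estimate" loop, the hypothetical scalar Kalman filter below predicts a position from an inertial velocity and corrects it with a vision-derived position fix; all names and noise values are assumptions.

```python
# Hypothetical scalar Kalman filter; the patent's actual state vector and
# filter equations are not given in the abstract.

def kalman_step(x, p, velocity, dt, q, z, r):
    """One predict/update cycle of a scalar Kalman filter.
    x, p     : prior position estimate and its variance
    velocity : inertial velocity used for prediction (assumed known)
    dt       : time step
    q        : process-noise variance added during prediction
    z, r     : vision-derived position measurement and its variance
    Returns the posterior (x, p)."""
    # Predict: propagate the state with the inertial velocity.
    x_pred = x + velocity * dt
    p_pred = p + q
    # Update: blend in the visual measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1 - k) * p_pred
    return x_new, p_new
```

When the vision fix is noisy (large r) the gain k shrinks and the estimate leans on the inertial prediction; a precise fix pulls the estimate toward the measurement.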

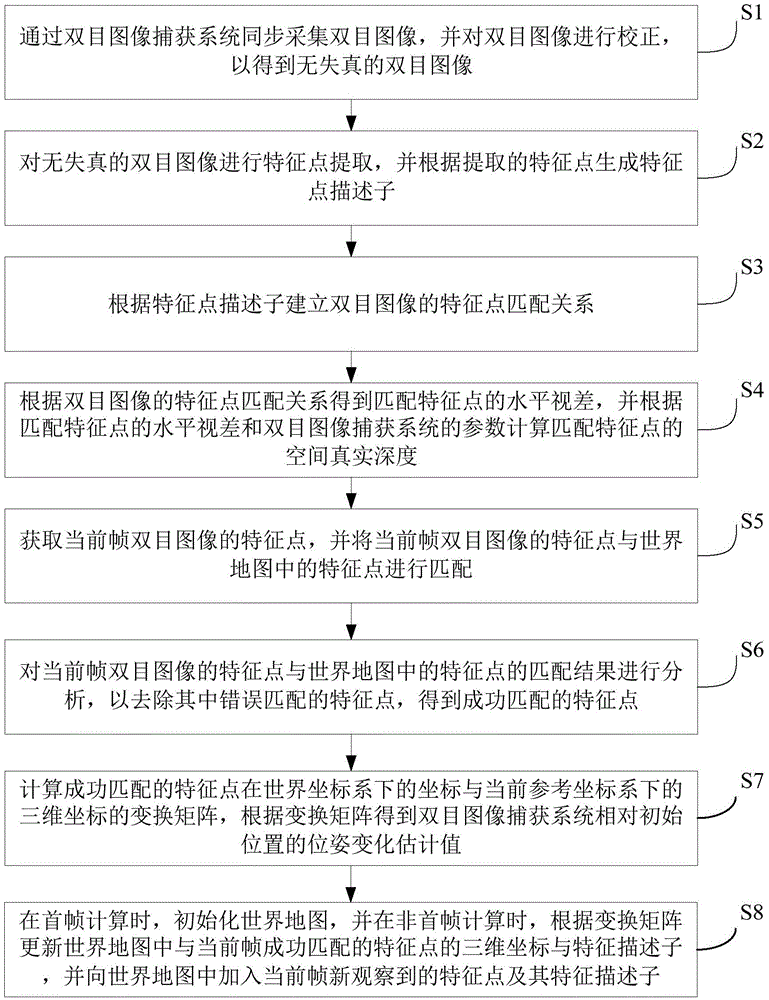

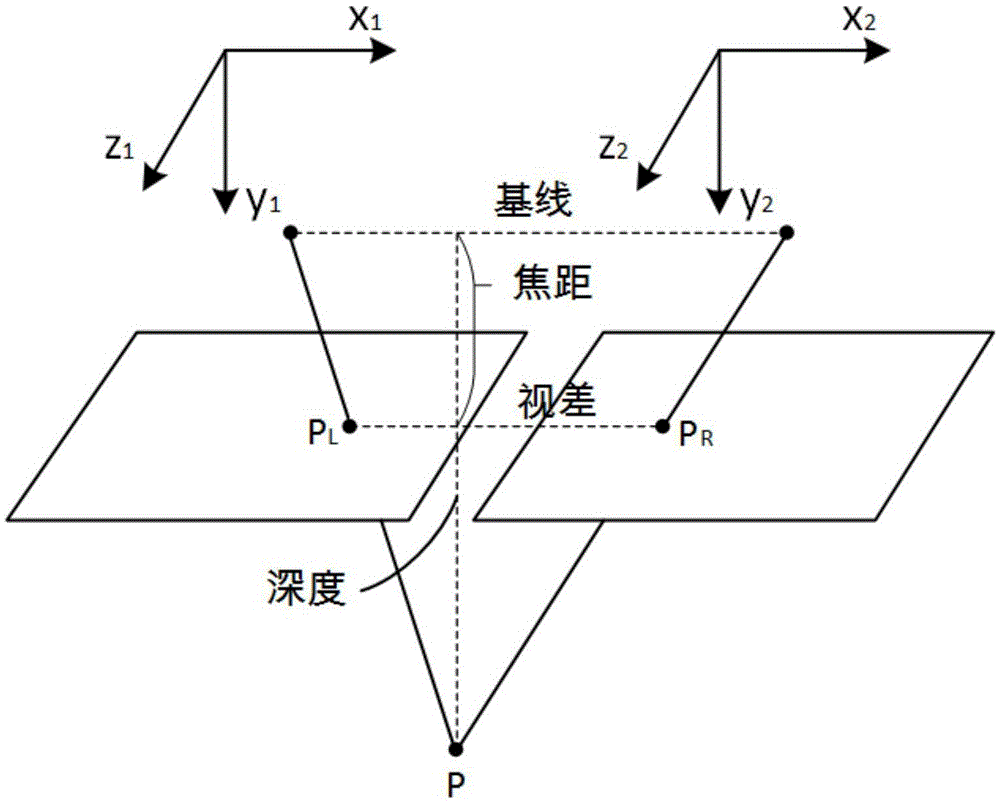

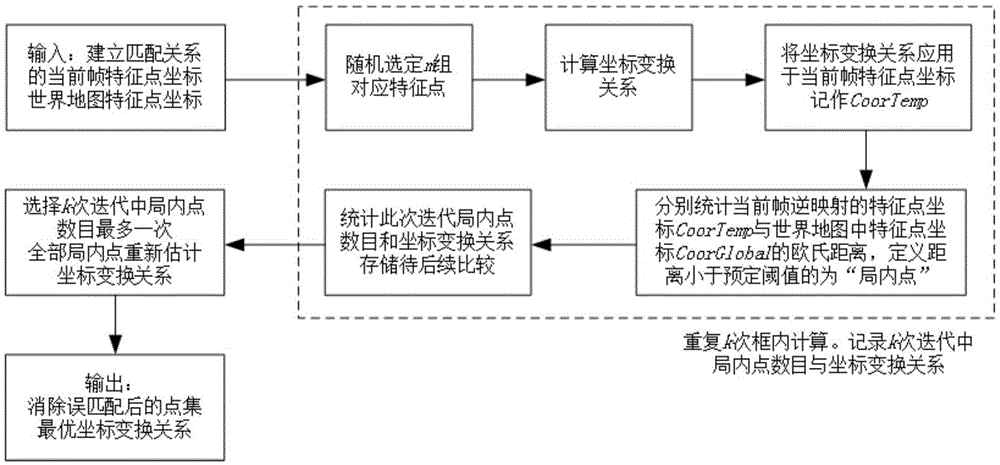

Visual ranging-based simultaneous localization and map construction method

Active | CN105469405A | Reduce computational complexity, Eliminate accumulation, Image enhancement, Image analysis, Simultaneous localization and mapping, Computation complexity

The invention provides a visual ranging-based simultaneous localization and map construction method, comprising the following steps: a binocular (stereo) image pair is acquired and rectified to obtain a distortion-free stereo pair; features are extracted from the distortion-free images and feature point descriptors are generated; feature point correspondences between the left and right images are established; the horizontal disparity of each matched feature point is obtained from these correspondences and, using the parameters of the stereo capture system, its real-world depth is calculated; the feature points of the current frame are matched against the feature points in a world map; mismatched feature points are removed, leaving the correctly matched ones; the transformation matrix between the world-frame coordinates and the current-reference-frame 3D coordinates of the correctly matched feature points is calculated, and from it the pose change of the stereo capture system relative to its initial position is estimated; and the world map is built and updated. The method has low computational complexity, centimeter-level positioning accuracy and unbiased position estimates.

Owner: 北京超星未来科技有限公司
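
The depth step in this abstract follows from similar triangles in a rectified stereo pair: Z = f * B / d, where f is the focal length in pixels, B the baseline and d the horizontal disparity in pixels. A small sketch, with hypothetical parameter names:

```python
# Sketch of depth from disparity for a rectified stereo pair; parameter
# names are illustrative, not taken from the patent.

def depth_from_disparity(x_left, x_right, focal_px, baseline_m):
    """Depth of a matched feature in a rectified stereo pair.
    Uses Z = f * B / d, where d = x_left - x_right is the horizontal
    disparity in pixels, f the focal length in pixels and B the baseline
    in meters. Returns the depth in meters."""
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    return focal_px * baseline_m / disparity
```

For example, f = 700 px, B = 0.12 m and d = 20 px give Z = 4.2 m. Because Z is inversely proportional to d, a one-pixel disparity error matters far more for distant points than for near ones.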

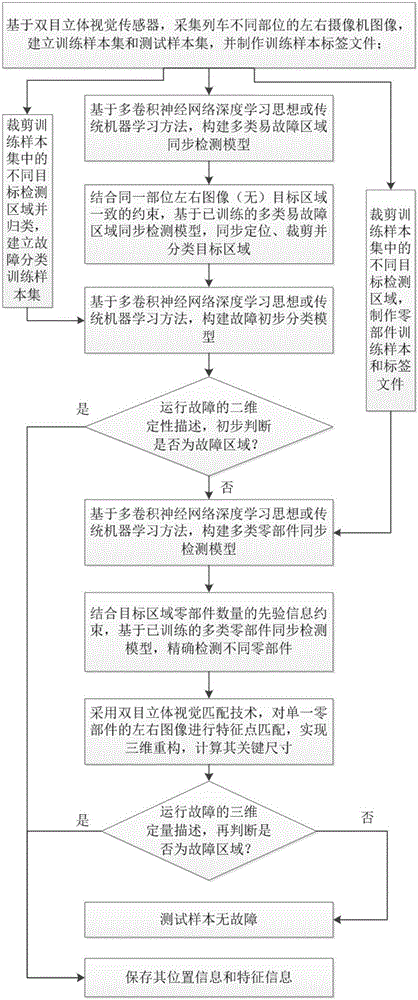

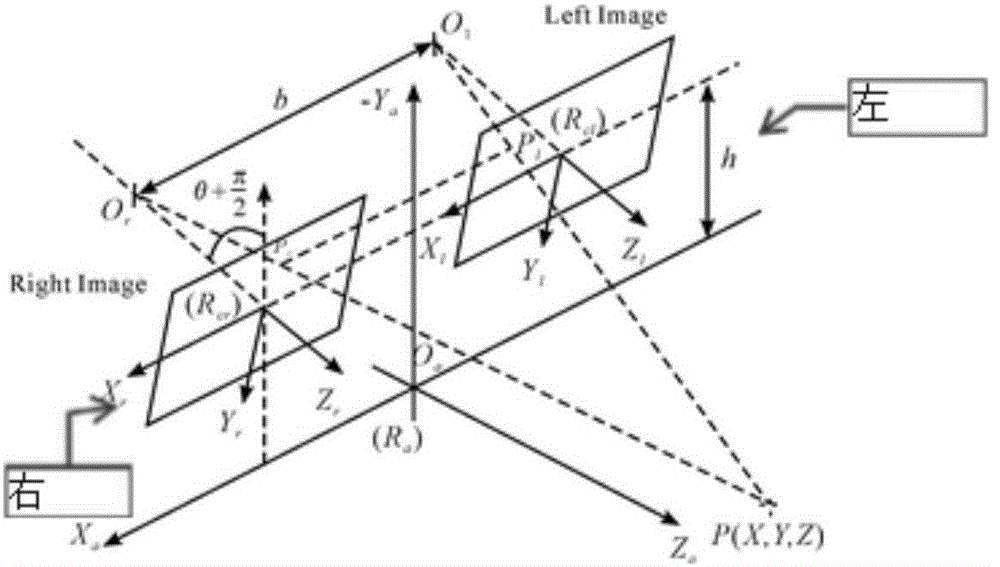

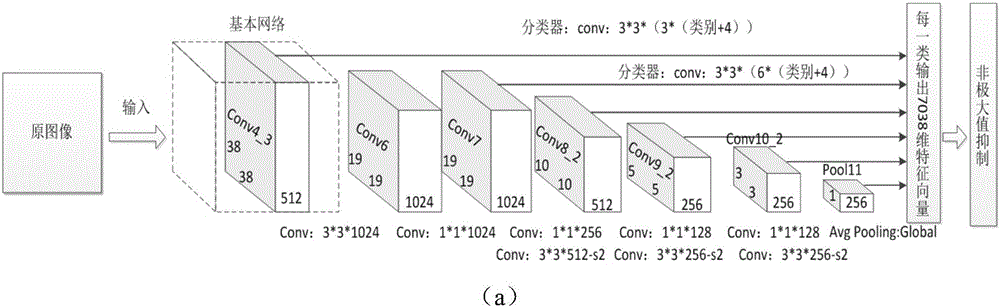

Train operation fault automatic detection system and method based on binocular stereoscopic vision

Active | CN106600581A | Reduce maintenance costs, Realize the second judgment of fault, Image analysis, Material analysis by optical means, Camera image, Study methods

The invention discloses a binocular stereo vision-based system and method for automatically detecting train operation faults. The method comprises: capturing left and right camera images of different parts of a train with a binocular stereo vision sensor; synchronously and precisely locating the various target regions prone to faults, using deep learning with a multi-layer convolutional neural network or a conventional machine learning method, combined with the constraint that the left and right images of the same part must agree on fault or no-fault status; performing a preliminary fault classification and recognition of each located region; synchronously and precisely locating multiple parts in fault-free regions using prior information on the number of parts in each target region; and matching feature points between the left and right images of the same part with binocular stereo vision, performing 3D reconstruction, calculating key dimensions, and quantitatively describing fine faults and gradually developing hidden faults such as loosening or play. The method achieves synchronous, precise detection of deformation, displacement and falling-off faults across all major parts of the train, and provides a 3D quantitative description of fine, gradually developing hidden faults, making detection more complete, timely and accurate.

Owner: BEIHANG UNIV

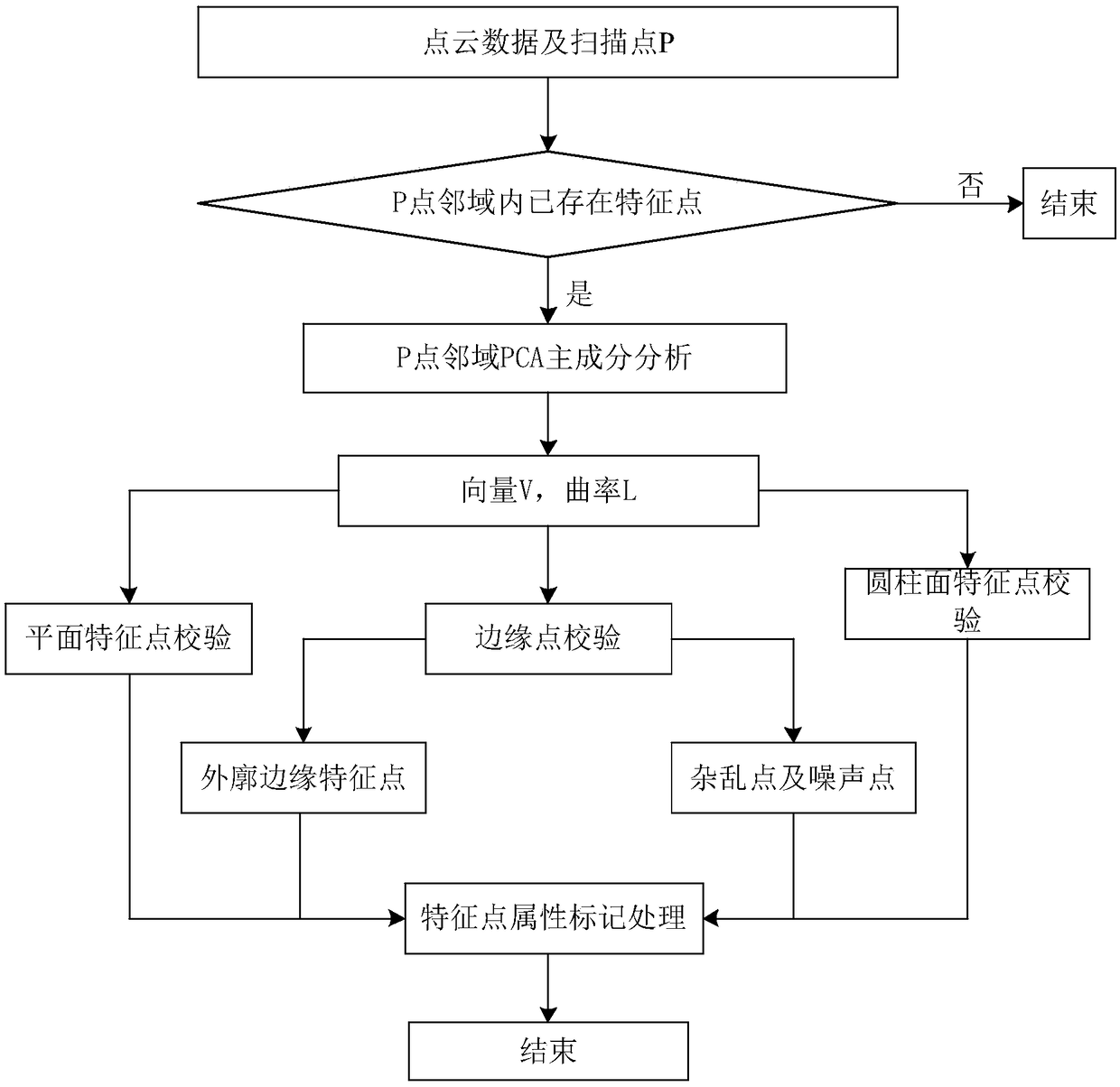

Remote obstacle detection method based on laser radar multi-frame point cloud fusion

Active | CN110221603A | Solve the problem of inability to effectively detect long-distance obstacles, Precision Fusion, Electromagnetic wave reradiation, Position/course control in two dimensions, Point cloud, Multiple frame

The invention discloses a long-range obstacle detection method based on multi-frame lidar point cloud fusion. A local coordinate system and a world coordinate system are established; for each laser point on the lidar's circular scan lines, a feature value is calculated from the raw point cloud data in the local coordinate system and feature points are extracted; inter-frame feature point matching and map feature point matching then yield the global pose of the current position relative to the initial position and the undistorted point cloud in the world coordinate system. The undistorted point clouds of the current and previous frames are fused into a denser point cloud, which is transformed back into the local coordinate system and projected onto a two-dimensional grid, and obstacles are screened according to the height variation within each grid cell. The method solves the problem of low detection rates for distant obstacles caused by sparse lidar point clouds: distant obstacles can be detected effectively with low false-detection and missed-detection rates, and system cost can be greatly reduced.

Owner: ZHEJIANG UNIV
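
The final screening step can be sketched as follows, assuming (this is not stated in the abstract) that a cell's height variation is simply the difference between the highest and lowest fused points falling into it. The cell size and threshold are hypothetical parameters.

```python
from collections import defaultdict

# Hypothetical sketch: "height variation" is assumed to be max z - min z
# per grid cell; cell_size and height_threshold are illustrative parameters.

def grid_obstacles(points, cell_size, height_threshold):
    """Project 3D points (x, y, z) onto a 2D grid and flag cells whose
    height range (max z - min z) exceeds height_threshold.
    Returns the sorted list of (ix, iy) cell indices marked as obstacles."""
    z_min = defaultdict(lambda: float("inf"))
    z_max = defaultdict(lambda: float("-inf"))
    for x, y, z in points:
        # Floor division keeps negative coordinates in the correct cell.
        cell = (int(x // cell_size), int(y // cell_size))
        z_min[cell] = min(z_min[cell], z)
        z_max[cell] = max(z_max[cell], z)
    return sorted(c for c in z_max if z_max[c] - z_min[c] > height_threshold)
```

Fusing several frames before this step matters because a cell needs at least two returns at different heights to show any height variation; sparse single-frame scans of distant objects often provide only one.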

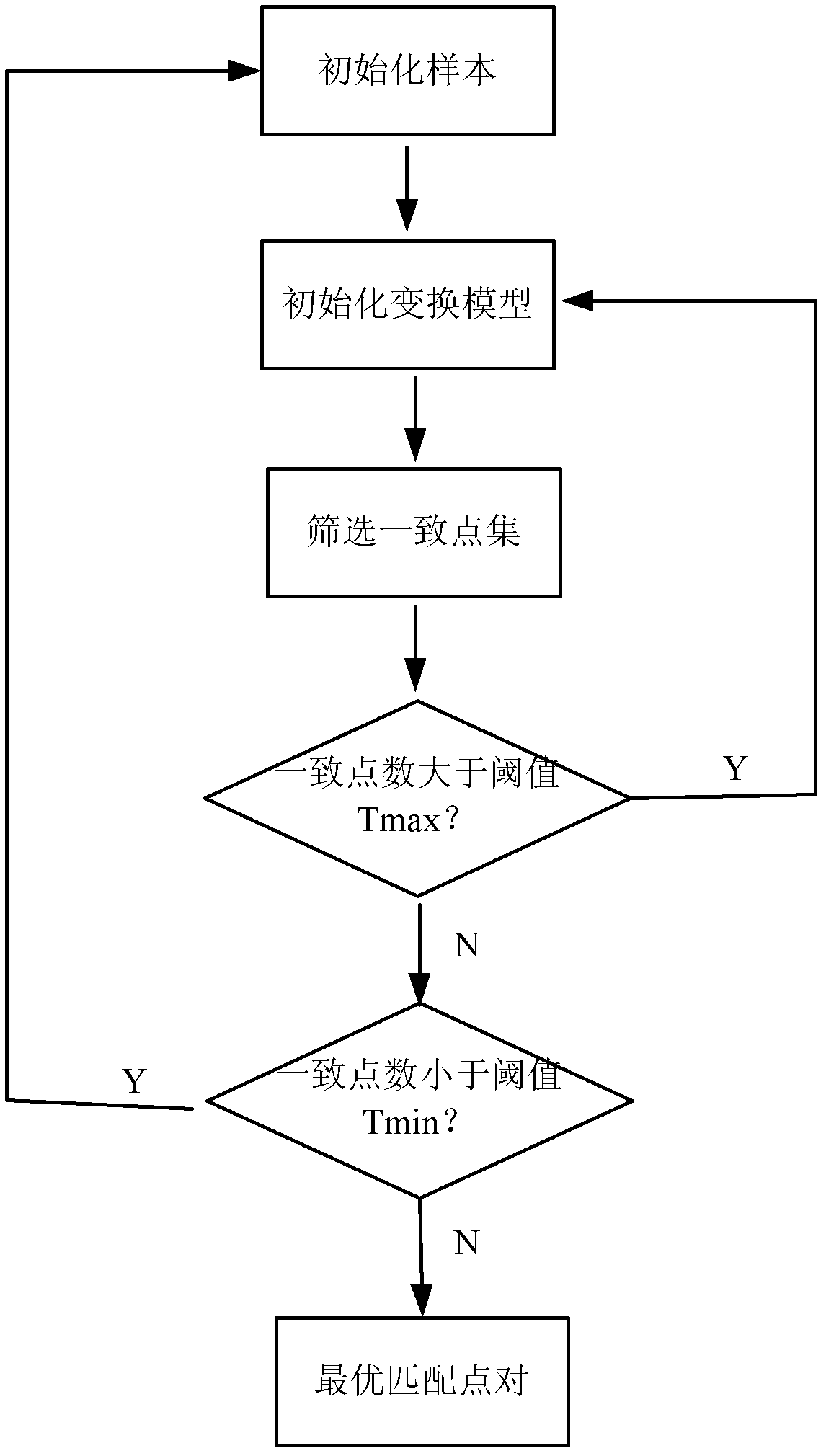

Remote sensing image registration method of multi-source sensor

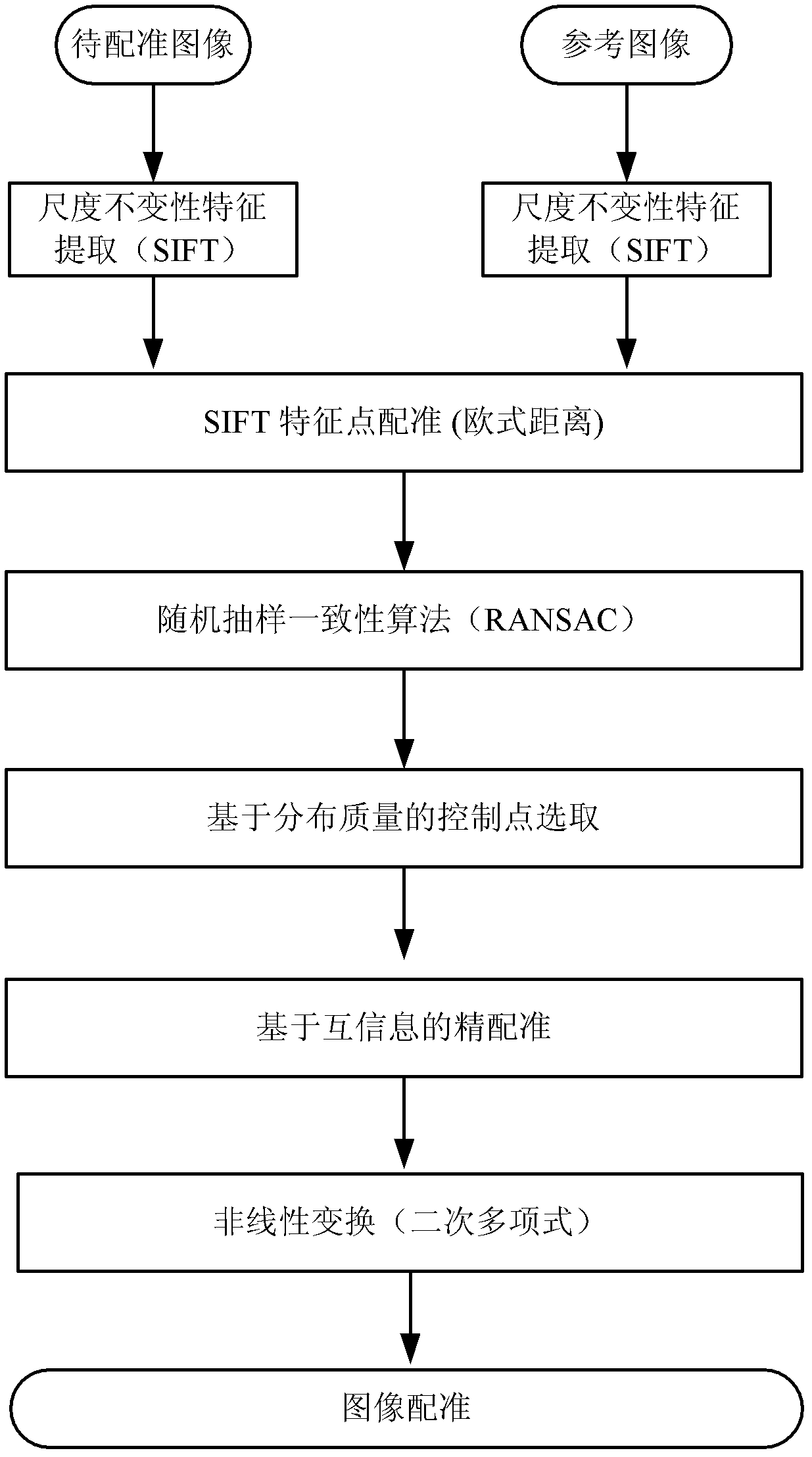

Active | CN103020945A | Quick registration, Precise registration, Image analysis, Weight coefficient, Mutual information

The invention provides a multi-source-sensor remote sensing image registration method in the field of image processing. The method comprises the following steps: applying the scale-invariant feature transform (SIFT) to the reference image and to the image to be registered to extract feature points; computing, for each feature point, the Euclidean distances to its nearest and second-nearest neighbours in the other image, and selecting the best matching point pairs according to their ratio; rejecting erroneous registration points with the random sample consensus (RANSAC) algorithm to obtain the initial registration point pairs; calculating distribution-quality parameters of the feature point pairs and selecting evenly distributed, effective control points according to a feature point weight coefficient; searching the control points of the image to be registered for the optimal registration points under a mutual information similarity criterion, thereby obtaining the optimal control point pairs; and estimating the geometric deformation parameters of the image to be registered with a polynomial transformation, thereby accurately registering it to the reference image. The method offers fast computation and high registration accuracy and meets the registration requirements of multi-sensor, multi-temporal and multi-view remote sensing images.

Owner: 济钢防务技术有限公司
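
The outlier-rejection stage can be illustrated with a deliberately simplified RANSAC loop. This sketch swaps the patent's polynomial deformation model for a pure 2D translation, so that a single correspondence defines a model; the function name, threshold and iteration count are assumptions.

```python
import random

# Simplified RANSAC sketch: a translation-only model stands in for the
# patent's polynomial transform; threshold and iterations are assumptions.

def ransac_translation(pairs, threshold=2.0, iterations=100, seed=0):
    """Estimate a 2D translation (dx, dy) from putative matches with RANSAC.
    pairs: list of ((x1, y1), (x2, y2)) point correspondences.
    Returns (dx, dy, inlier_indices) for the model with the most inliers."""
    if not pairs:
        return 0.0, 0.0, []
    rng = random.Random(seed)
    best = (0.0, 0.0, [])
    for _ in range(iterations):
        # A translation is fully determined by a single correspondence.
        (x1, y1), (x2, y2) = rng.choice(pairs)
        dx, dy = x2 - x1, y2 - y1
        inliers = [i for i, ((a, b), (c, d)) in enumerate(pairs)
                   if (c - a - dx) ** 2 + (d - b - dy) ** 2 <= threshold ** 2]
        if len(inliers) > len(best[2]):
            best = (dx, dy, inliers)
    # Refit on all inliers: the least-squares translation is the mean offset.
    dx, dy, inliers = best
    dx = sum(pairs[i][1][0] - pairs[i][0][0] for i in inliers) / len(inliers)
    dy = sum(pairs[i][1][1] - pairs[i][0][1] for i in inliers) / len(inliers)
    return dx, dy, inliers
```

With an affine or polynomial model the loop has the same shape; each hypothesis is simply fitted from the minimal number of correspondences that model requires.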

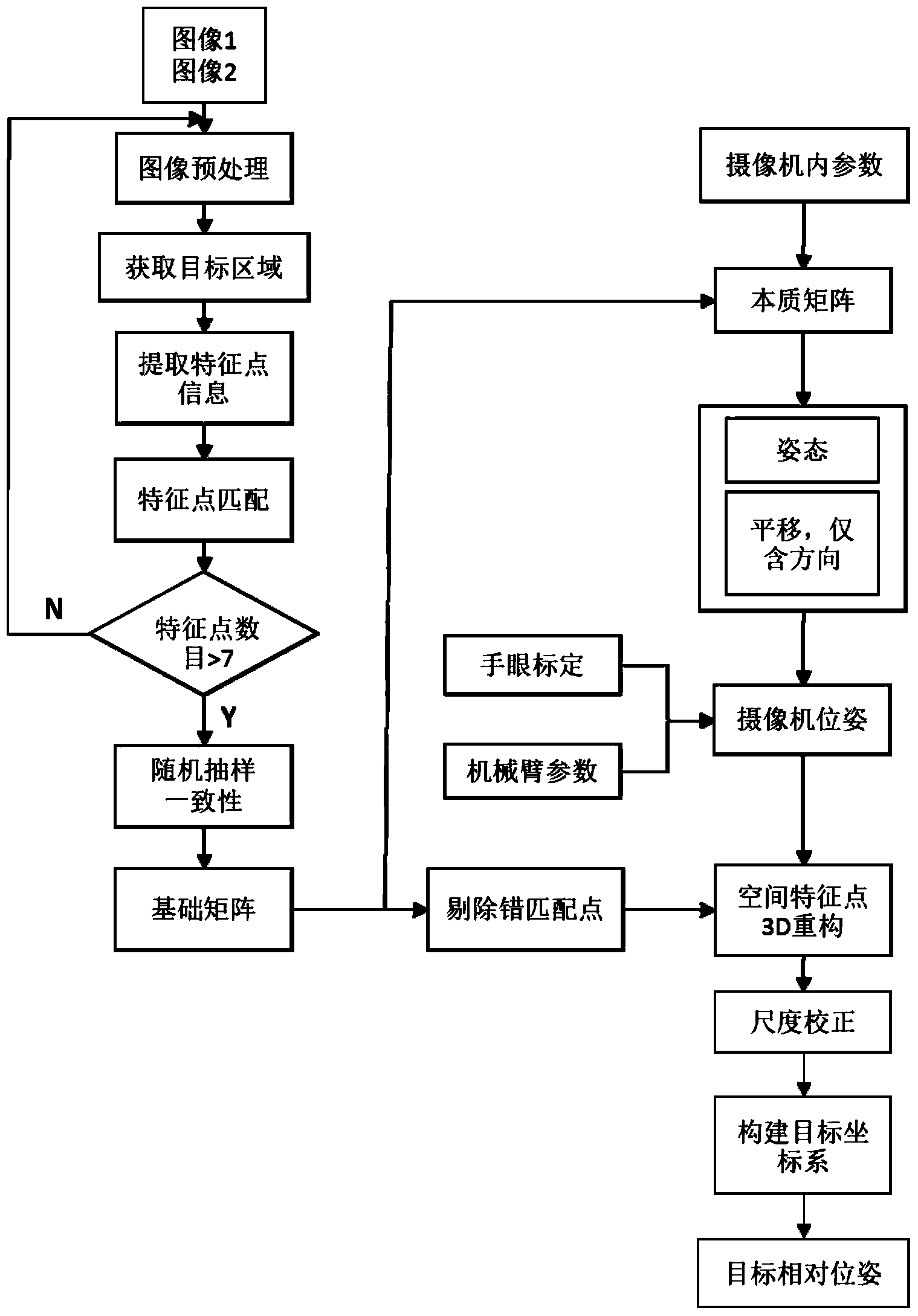

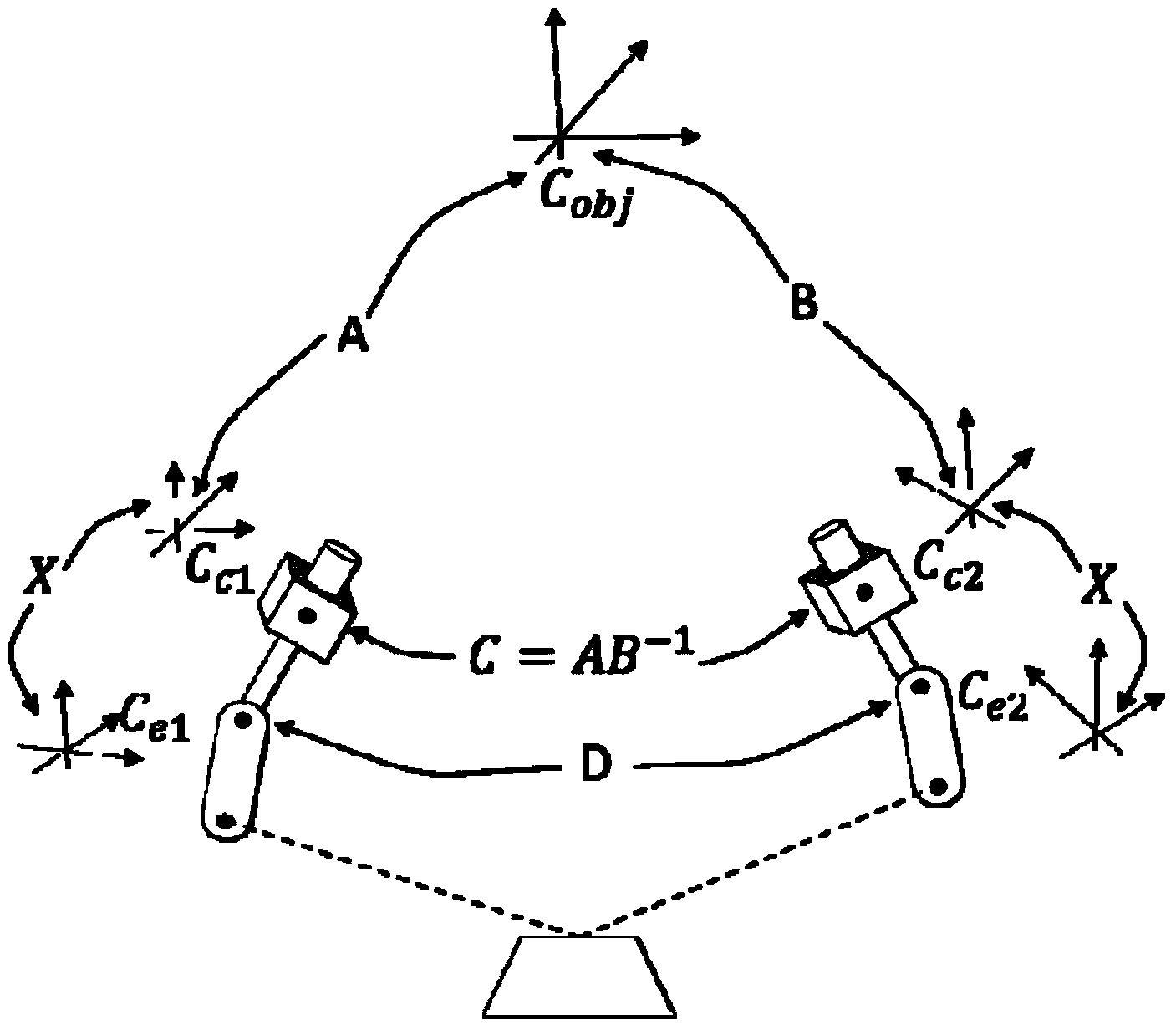

Dynamic target position and attitude measurement method based on monocular vision at tail end of mechanical arm

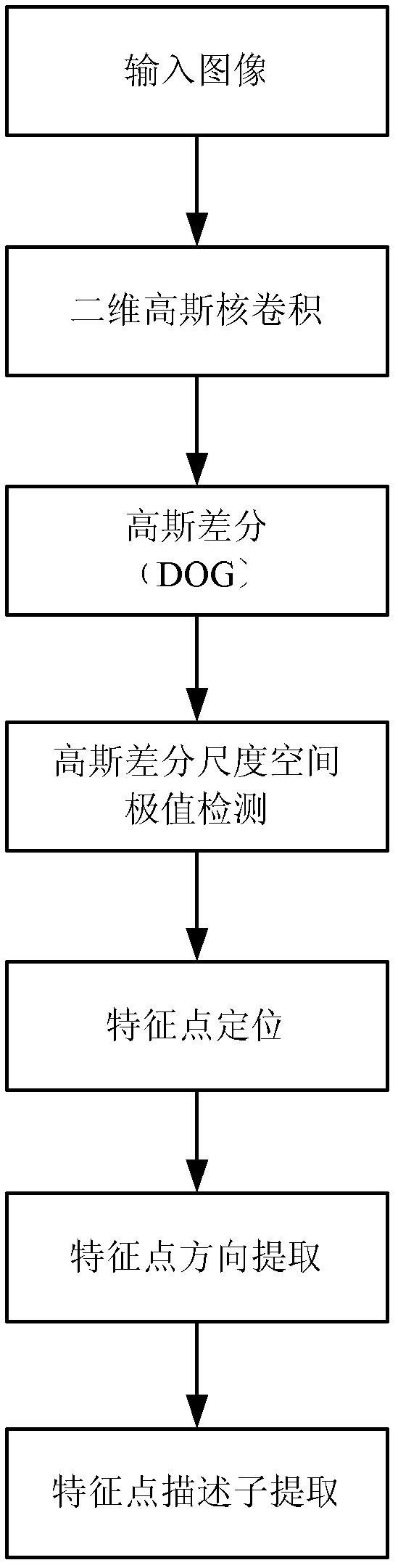

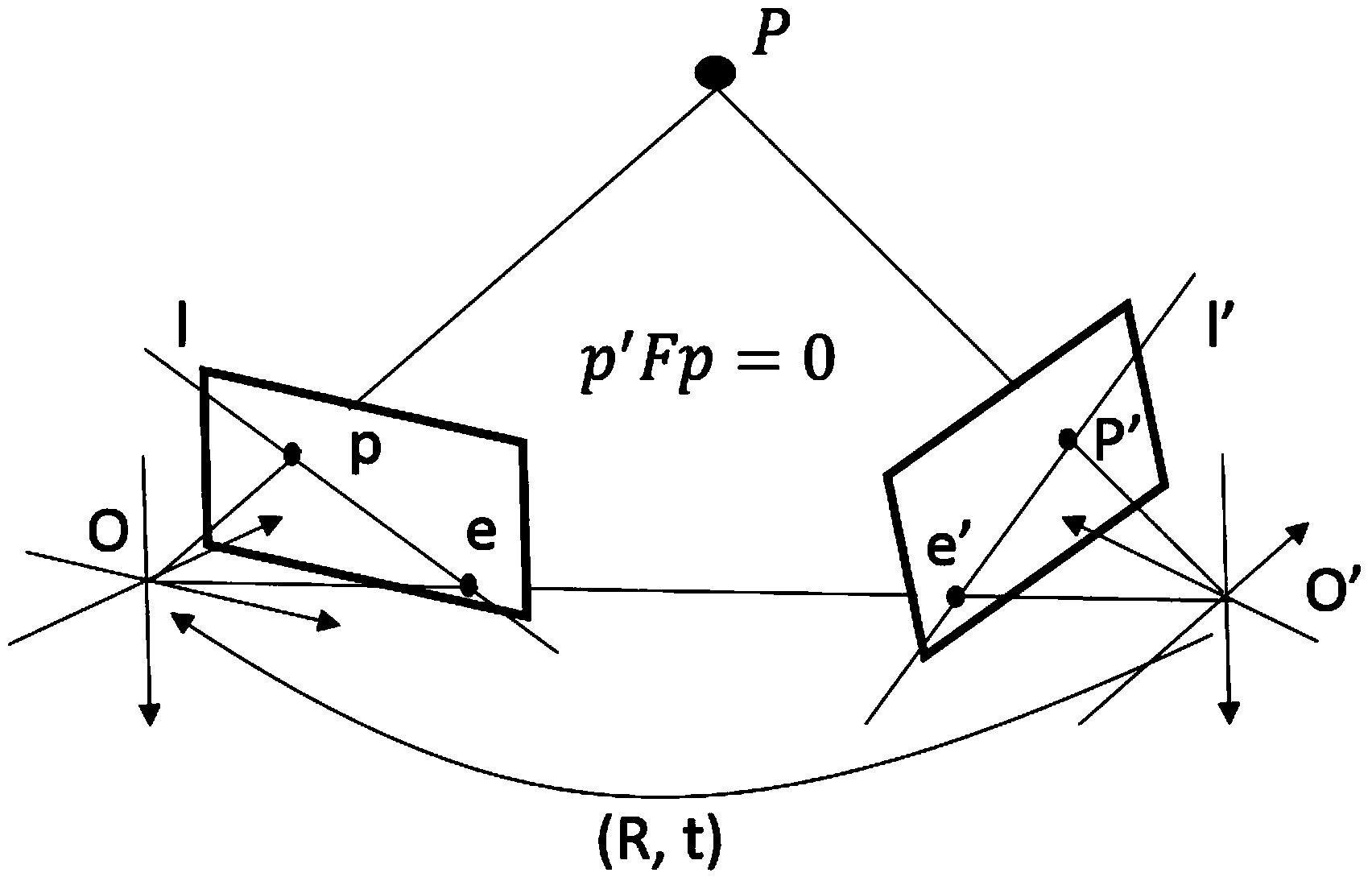

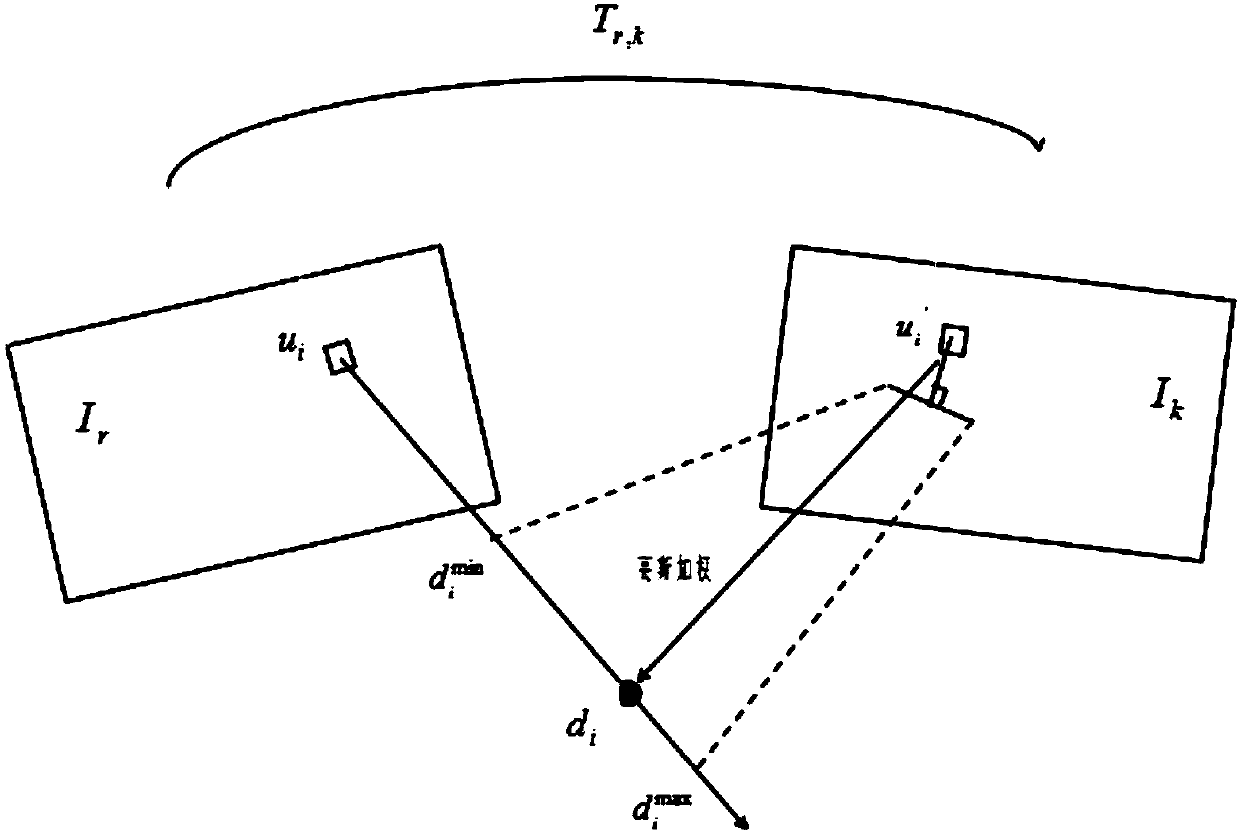

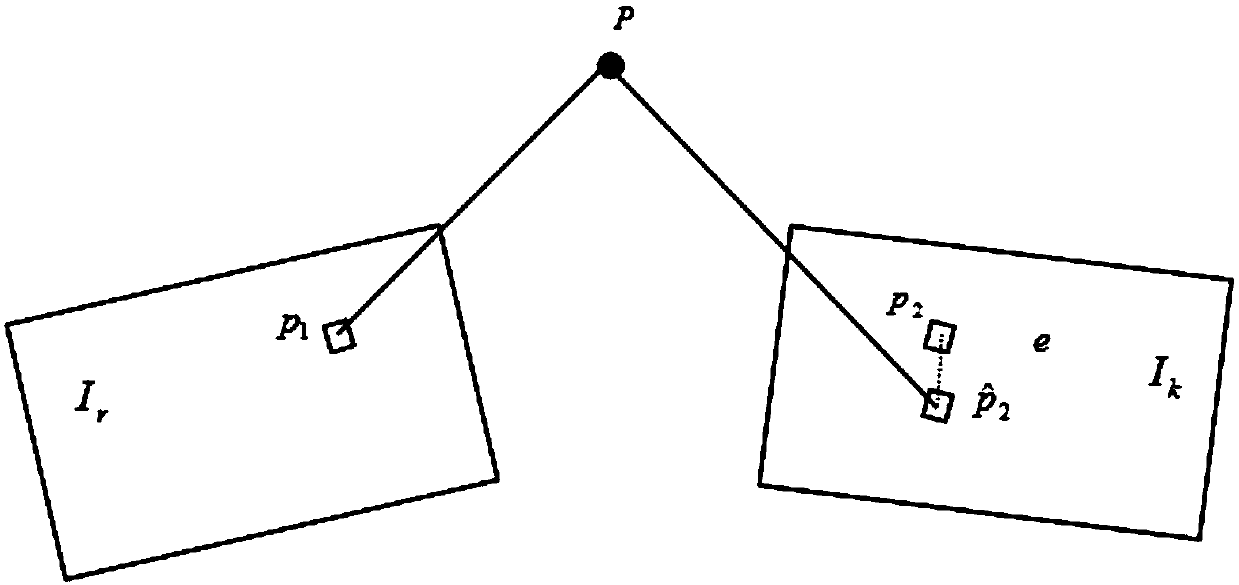

Active | CN103759716A | Simplified Calculation Process Method, Overcome deficiencies, Picture interpretation, Essential matrix, Feature point matching

The invention relates to a monocular-vision method for measuring the position and attitude of a dynamic target from the end of a mechanical arm, in the field of vision measurement. The method comprises the following steps: first, camera calibration and hand-eye calibration are performed; the camera then takes two pictures, spatial feature points in the target areas of the pictures are extracted with a scale-invariant feature extraction method, and the feature points are matched; the fundamental matrix between the two pictures is solved using epipolar geometry constraints to obtain the essential matrix, from which the rotation and translation of the camera are solved; the feature points are then reconstructed in 3D and their scale is corrected; finally, a target coordinate system is constructed from the reconstructed feature points to obtain the position and attitude of the target relative to the camera. The method uses monocular vision, simplifies the calculation, and, by using hand-eye calibration, simplifies the elimination of spurious solutions when measuring the camera's position and attitude. It is suitable for measuring the relative position and attitude of stationary and slowly moving (low-dynamic) targets.

Owner: TSINGHUA UNIV
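
For reference, the standard epipolar relations behind the "fundamental matrix, then essential matrix, then rotation and translation" step are shown below. They assume both pictures are taken by the same calibrated camera with intrinsic matrix K, and that x1, x2 are the homogeneous pixel coordinates of a matched feature point; how the patent chooses among the candidate solutions is not stated beyond its use of hand-eye calibration.

```latex
\begin{aligned}
\mathbf{x}_2^{\top} F \,\mathbf{x}_1 &= 0
  && \text{(epipolar constraint on each matched pair)} \\
E &= K^{\top} F \, K = [\mathbf{t}]_{\times} R
  && \text{(same intrinsics } K \text{ for both pictures)} \\
E &= U \operatorname{diag}(1, 1, 0) \, V^{\top}
  && \text{(singular value decomposition)} \\
R &\in \{\, U W V^{\top},\; U W^{\top} V^{\top} \,\}, \quad
\mathbf{t} = \pm \mathbf{u}_3, \quad
W = \begin{pmatrix} 0 & -1 & 0 \\ 1 & 0 & 0 \\ 0 & 0 & 1 \end{pmatrix}
\end{aligned}
```

The decomposition yields four (R, t) candidates; the physically valid one places the triangulated points in front of both camera positions. Because t is recovered only up to scale, a separate scale-correction step, such as the one in the abstract, is needed to obtain metric results.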

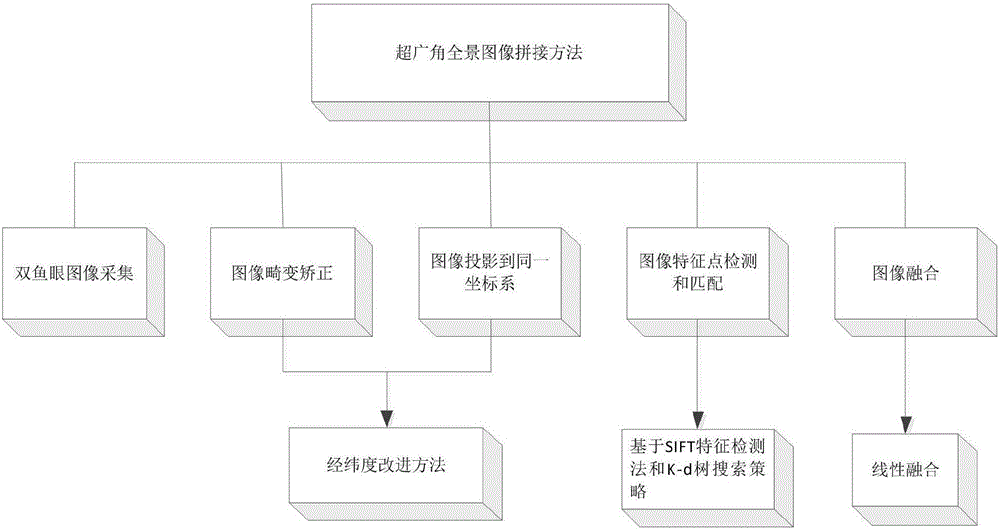

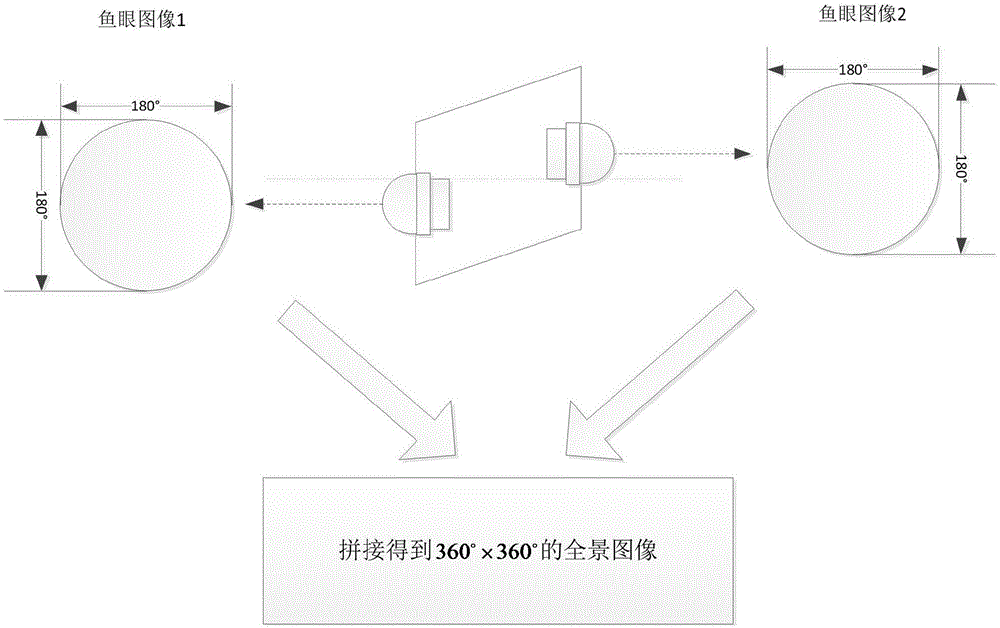

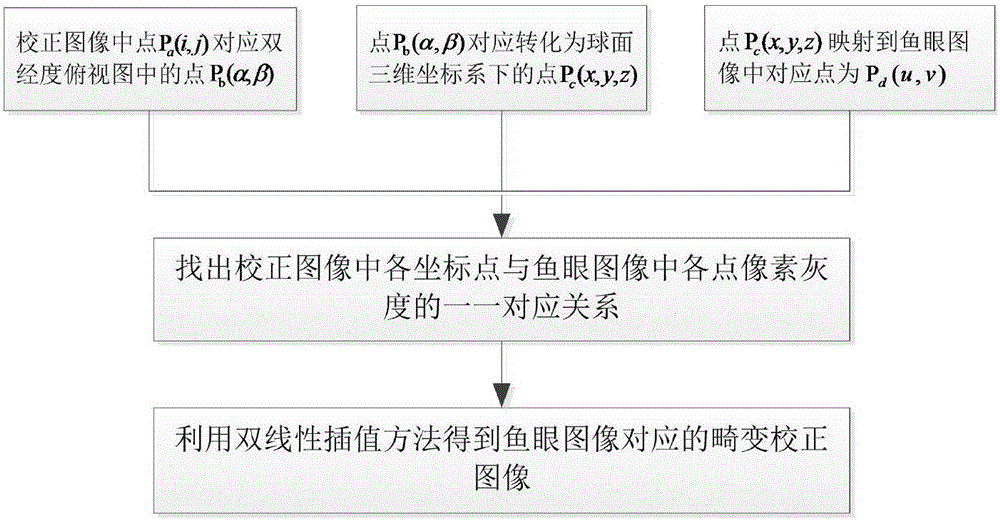

Binocular camera-based panoramic image splicing method

Inactive | CN106683045A | Improve operational efficiency, Achieve a natural transition effect, Image enhancement, Image analysis, Near neighbor, Longitude

The invention provides a binocular camera-based panoramic image stitching method. A binocular camera is placed at a viewpoint in space and captures two fisheye images in a single shot. To address the insufficient horizontal distortion correction of the conventional latitude-longitude correction method, the traditional algorithm is improved, and the corrected images are projected into a common coordinate system with a spherical orthographic projection, enabling fast correction of the fisheye images. Feature points in the overlapping area of the two projected images are extracted with SIFT feature point detection; a K-D tree search is used to find the Euclidean nearest neighbours of the feature points for feature point matching; the RANSAC (random sample consensus) algorithm removes noisy feature points and mismatches so that the images can be stitched; and a linear fusion method blends the stitched images, avoiding abrupt color and scene changes in the transition area.

Owner: 深圳市优象计算技术有限公司
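
The abstract does not give its linear fusion formula. A common form, sketched below under that assumption for a single grayscale row of two already-aligned images, ramps the left image's weight linearly from 1 to 0 across the overlap:

```python
# Assumed linear blending sketch; the patent's exact fusion weights are not
# given. Rows are lists of grayscale intensities from pre-aligned images.

def linear_blend_row(left_row, right_row, overlap):
    """Stitch two image rows whose last/first `overlap` pixels coincide.
    Inside the overlap the left image's weight falls linearly from 1 toward 0,
    so intensities ramp smoothly instead of jumping at a seam."""
    if overlap == 0:
        return list(left_row) + list(right_row)
    out = list(left_row[:len(left_row) - overlap])
    for i in range(overlap):
        w = 1.0 - (i + 1) / (overlap + 1)  # left weight, strictly between 0 and 1
        a = left_row[len(left_row) - overlap + i]
        b = right_row[i]
        out.append(w * a + (1.0 - w) * b)
    out.extend(right_row[overlap:])
    return out
```

For a 2D image the same weight is applied per column of the overlap; smarter schemes (for example multi-band blending) exist, but a linear ramp already removes the hard seam.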

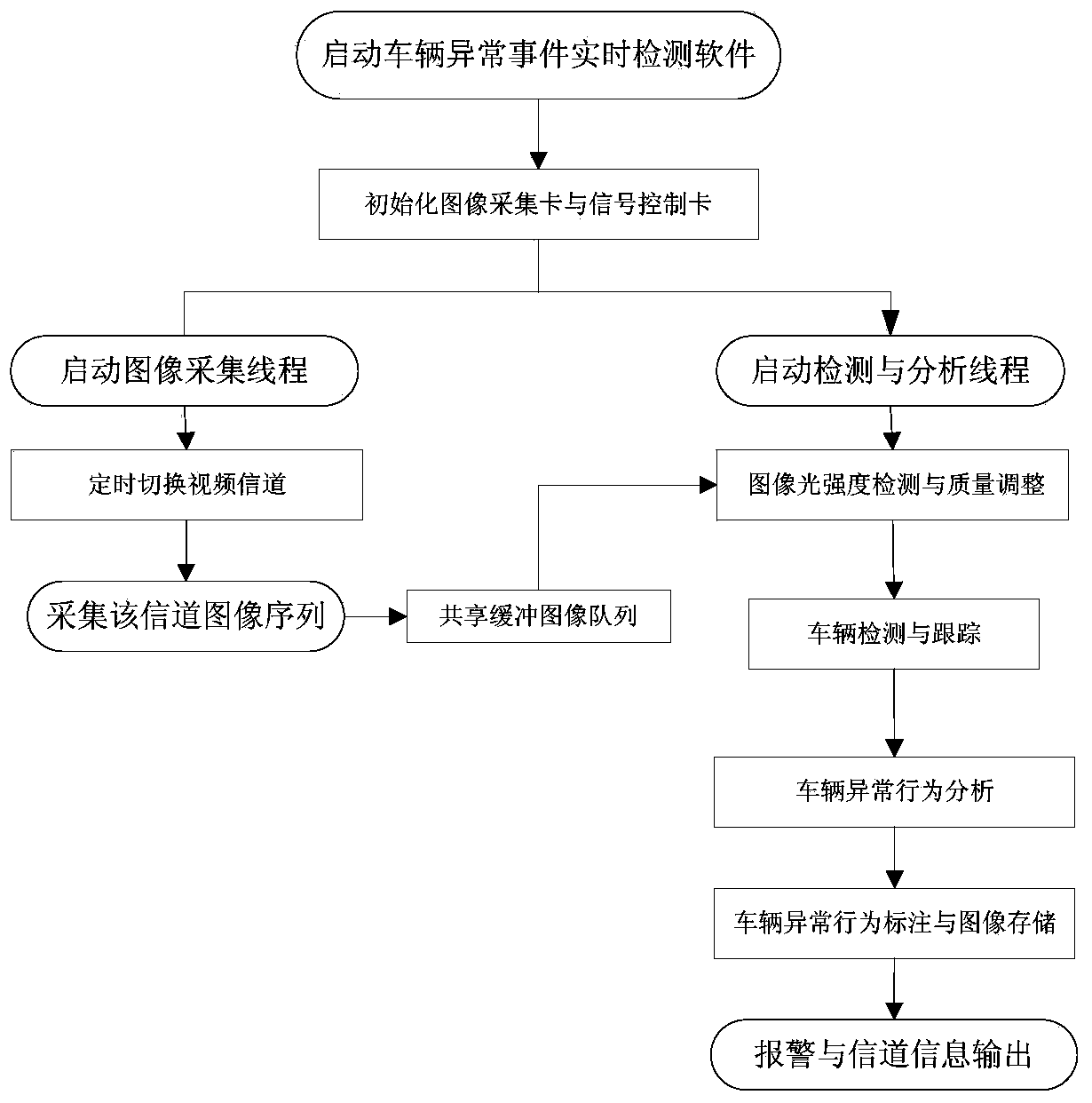

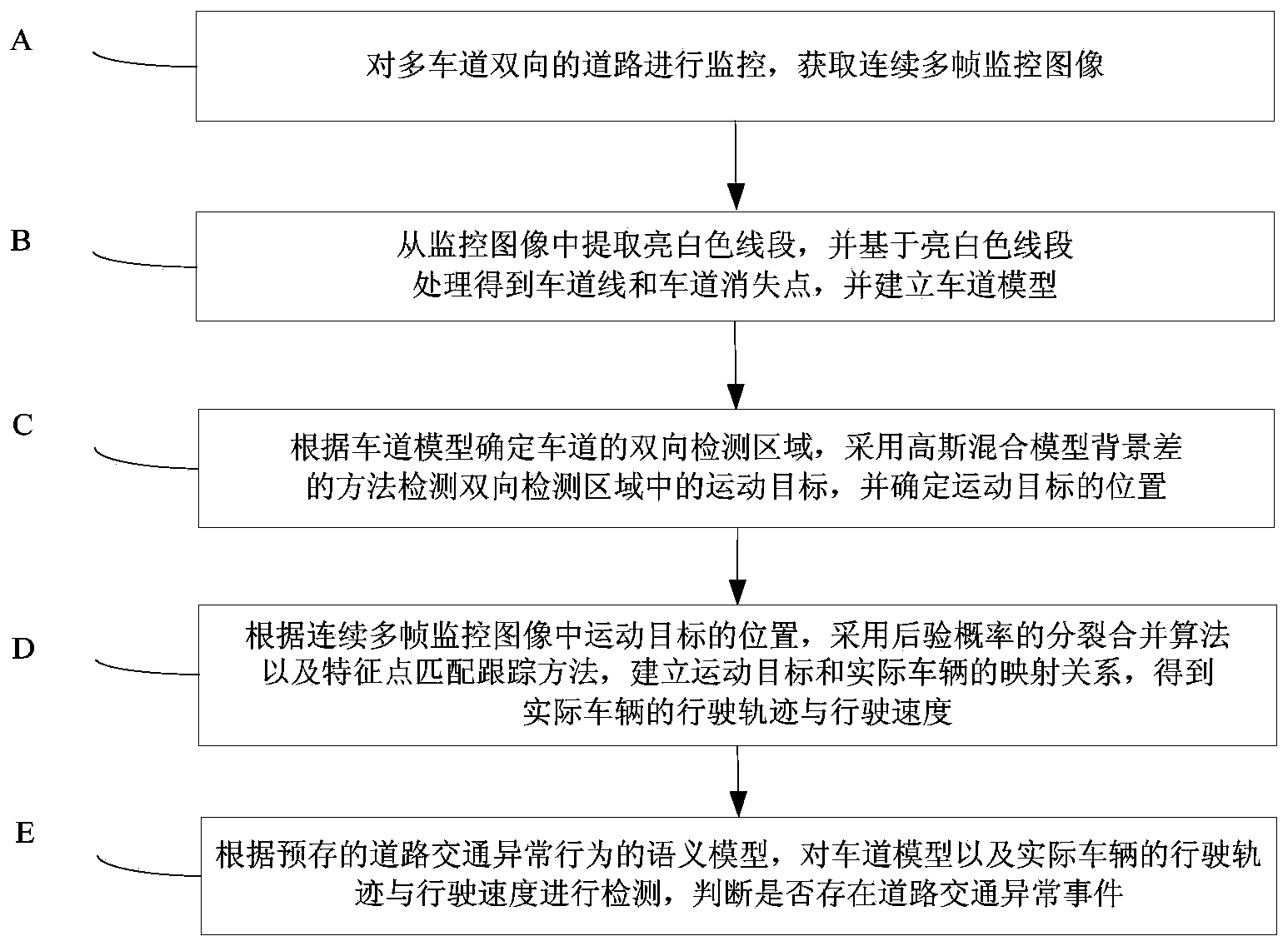

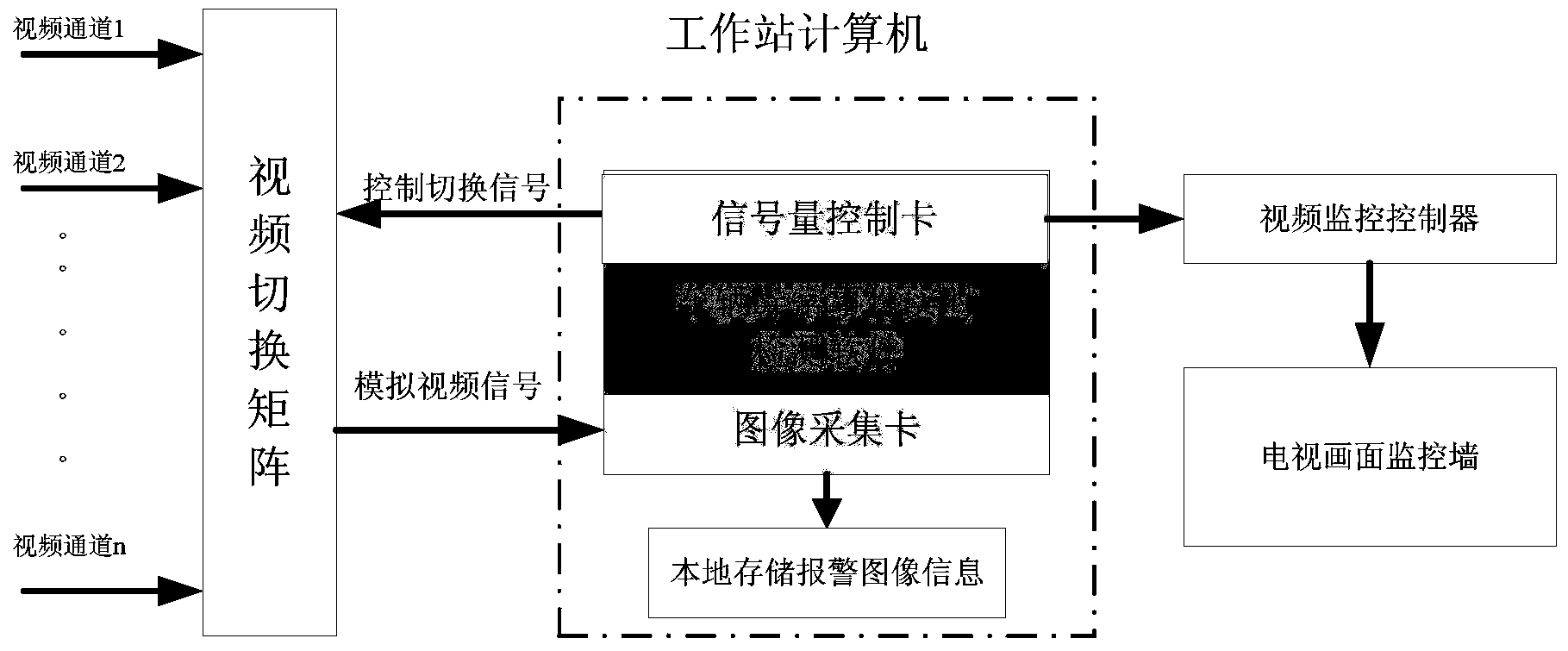

Method and device for detecting road traffic abnormal events in real time

Inactive | CN103971521A | Improve work efficiency, Reduce work intensity, Detection of traffic movement, Closed circuit television systems, Multiple frame, Merge algorithm

The invention provides a method and device for detecting road traffic abnormal events in real time. The method comprises: monitoring a road and obtaining multiple consecutive frames of surveillance images; extracting bright white segments from the images and processing them to obtain lane lines and lane end points, from which a lane model is built; determining a bidirectional detection area for each lane from the lane model; detecting moving objects in the bidirectional detection area with Gaussian mixture model background subtraction and determining their positions; mapping the moving objects to actual vehicles from their positions across the consecutive frames, using a posterior-probability split-and-merge algorithm together with feature point matching and tracking, to obtain each vehicle's trajectory and speed; and checking the lane model and the vehicles' trajectories and speeds against a prestored semantic model of abnormal road traffic behaviour to judge whether an abnormal event has occurred. The method is intelligent and highly accurate.

Owner: TSINGHUA UNIV +1

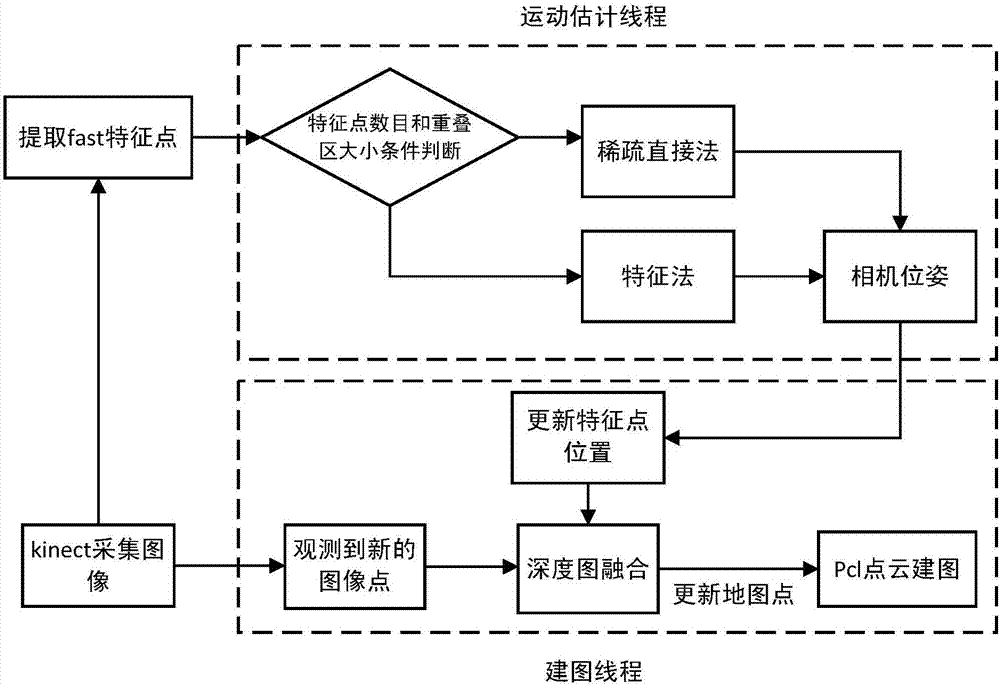

Depth camera-based visual odometer design method

Active | CN107025668A | Effective tracking, Effective estimate, Image enhancement, Image analysis, Color image, Frame based

The invention discloses a design method for a depth camera-based visual odometer. The method comprises the following steps: acquiring color and depth images of the environment with a depth camera; extracting feature points in an initial key frame and in all subsequent image frames; tracking the position of each feature point in the current frame with the optical flow method to obtain feature point pairs; depending on the number of actual feature points and the size of the region in which the feature points of two consecutive frames overlap, computing the relative position and attitude between the two frames with either the sparse direct method or the feature point method; using the depth information of the depth image and the accumulated relative poses between frames, calculating the 3D coordinates of the key-frame feature points in the world coordinate system; and, in a separate process, stitching the key-frame point clouds to construct a map. By combining the sparse direct method with the feature point method, the method improves the real-time performance and robustness of the visual odometer.

Owner: SOUTH CHINA UNIV OF TECH
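
The 3D-coordinate step relies on pinhole back-projection of a pixel with known depth, followed by a rigid transform into the world frame. A sketch, in which fx, fy, cx, cy (camera intrinsics) and the rotation/translation inputs are hypothetical names not taken from the patent:

```python
# Pinhole back-projection sketch; parameter names are illustrative and the
# pose (rotation, translation) is assumed to come from the odometry step.

def back_project(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with metric depth into camera coordinates
    using the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy."""
    z = depth
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)

def camera_to_world(point, rotation, translation):
    """Transform a camera-frame point into the world frame: p_w = R p_c + t.
    rotation is a 3x3 nested list, translation a 3-element sequence."""
    return tuple(sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
                 for i in range(3))
```

Usage: `camera_to_world(back_project(u, v, d, fx, fy, cx, cy), R, t)` gives the world coordinates of a key-frame feature point, where d is read from the depth image at (u, v).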

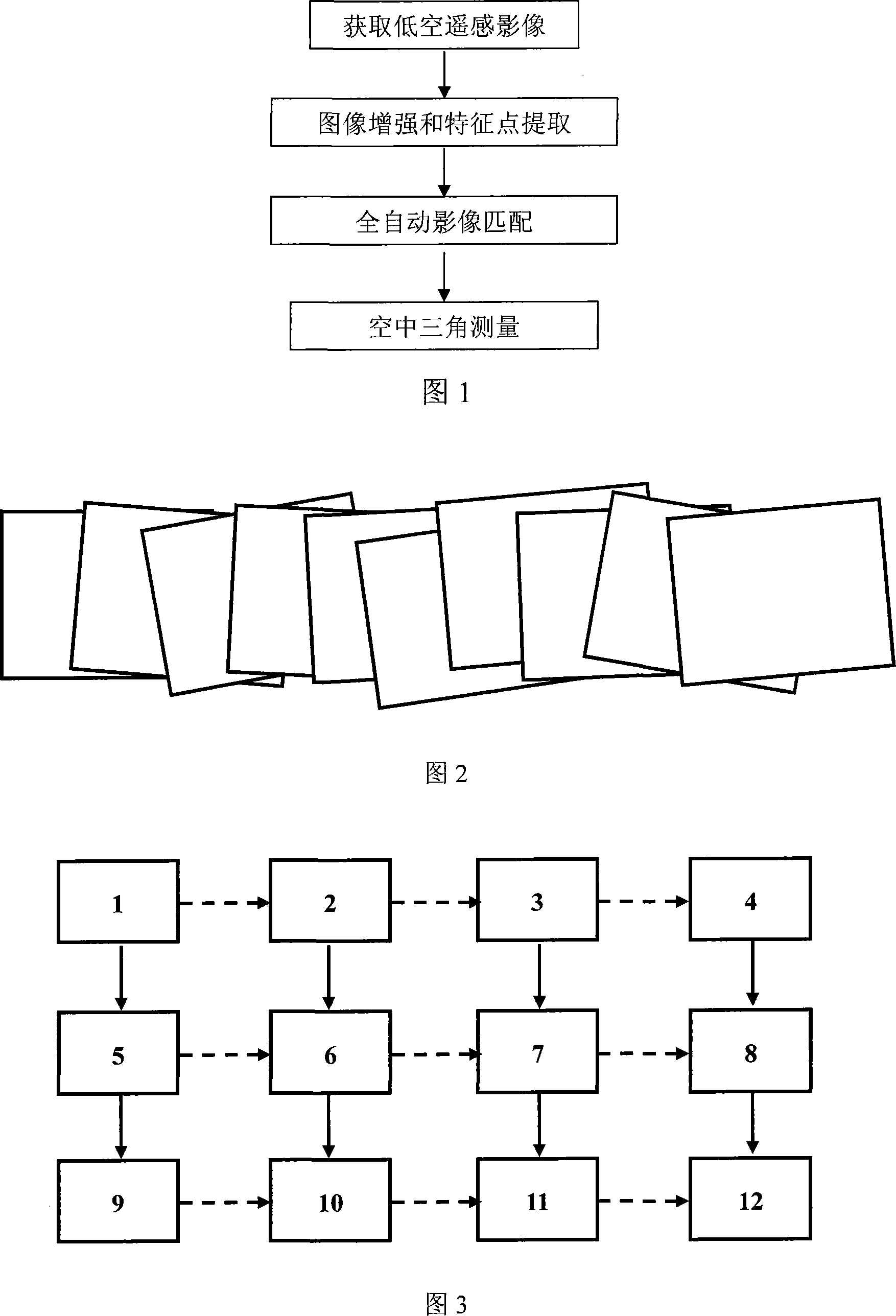

Quick low altitude remote sensing image automatic matching and airborne triangulation method

Inactive | CN101126639A | Guaranteed reliability, Reduce in quantity, Picture interpretation, Electromagnetic wave reradiation, Feature point matching, Low altitude

The utility model relates to a method for fast automatic matching of low-altitude remote sensing images and aerial triangulation, characterized in that: sequential images are captured from a low-altitude remote sensing platform; feature points are extracted from the images with a feature extraction technique, and all extracted feature points are saved automatically; corresponding (same-name) feature points in adjacent images are matched automatically, and the matched points are propagated automatically to all overlapping images, yielding a large number of tie points observed in three or more images; and the semi-automatically measured image coordinates of control points and check points can be combined with other non-photogrammetric observations for high-precision aerial triangulation and accuracy assessment of the adjustment results. The advantages are that stable, reliable matching results and higher aerial triangulation accuracy are obtained even when the low-altitude images have large rotation angles, meeting the requirements of large-scale mapping and high-precision 3D reconstruction.

Owner: WUHAN UNIV
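
Propagating matches from adjacent image pairs to all overlapping images amounts to chaining pairwise correspondences into multi-image tracks. One common way to do this, assumed here rather than taken from the patent, is union-find over (image index, keypoint index) observations:

```python
# Assumed track-building sketch using union-find; the observation format
# (image_index, keypoint_index) and function name are hypothetical.

def build_tracks(pairwise_matches, min_views=3):
    """Chain pairwise matches into multi-image tracks (tie points).
    pairwise_matches: iterable of ((img_a, kp_a), (img_b, kp_b)) observations.
    Returns sorted tracks observed in at least `min_views` distinct images."""
    parent = {}

    def find(obs):
        parent.setdefault(obs, obs)
        while parent[obs] != obs:
            parent[obs] = parent[parent[obs]]  # path halving
            obs = parent[obs]
        return obs

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    for a, b in pairwise_matches:
        union(a, b)

    # Group every observation under its root.
    groups = {}
    for obs in parent:
        groups.setdefault(find(obs), []).append(obs)
    tracks = []
    for members in groups.values():
        images = {img for img, _ in members}
        # Skip inconsistent tracks that hit the same image twice.
        if len(images) == len(members) and len(images) >= min_views:
            tracks.append(sorted(members))
    return tracks
```

Tracks that land on the same image twice are discarded as inconsistent; the remaining tracks with three or more observations correspond to the multi-image tie points the abstract describes.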

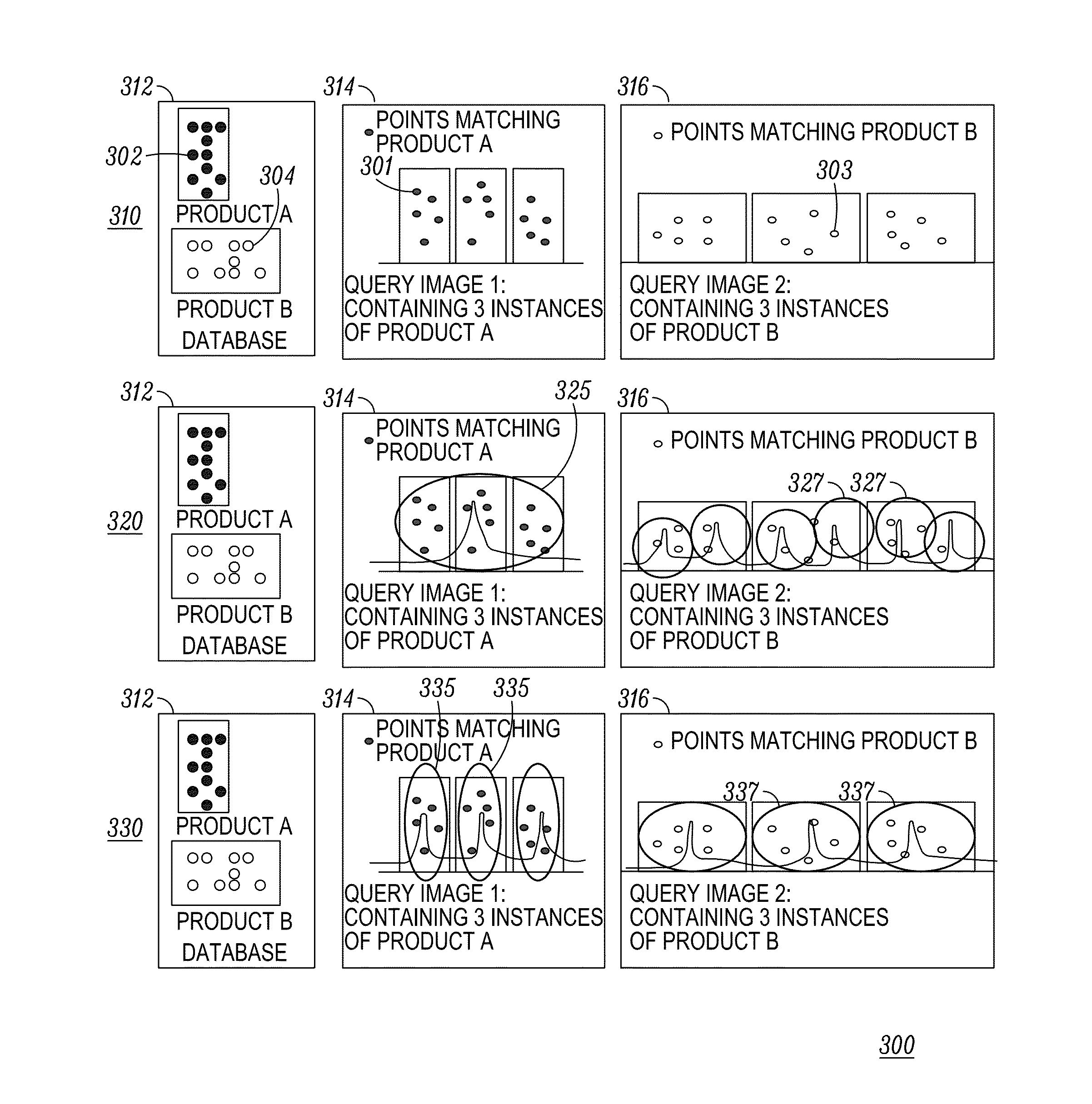

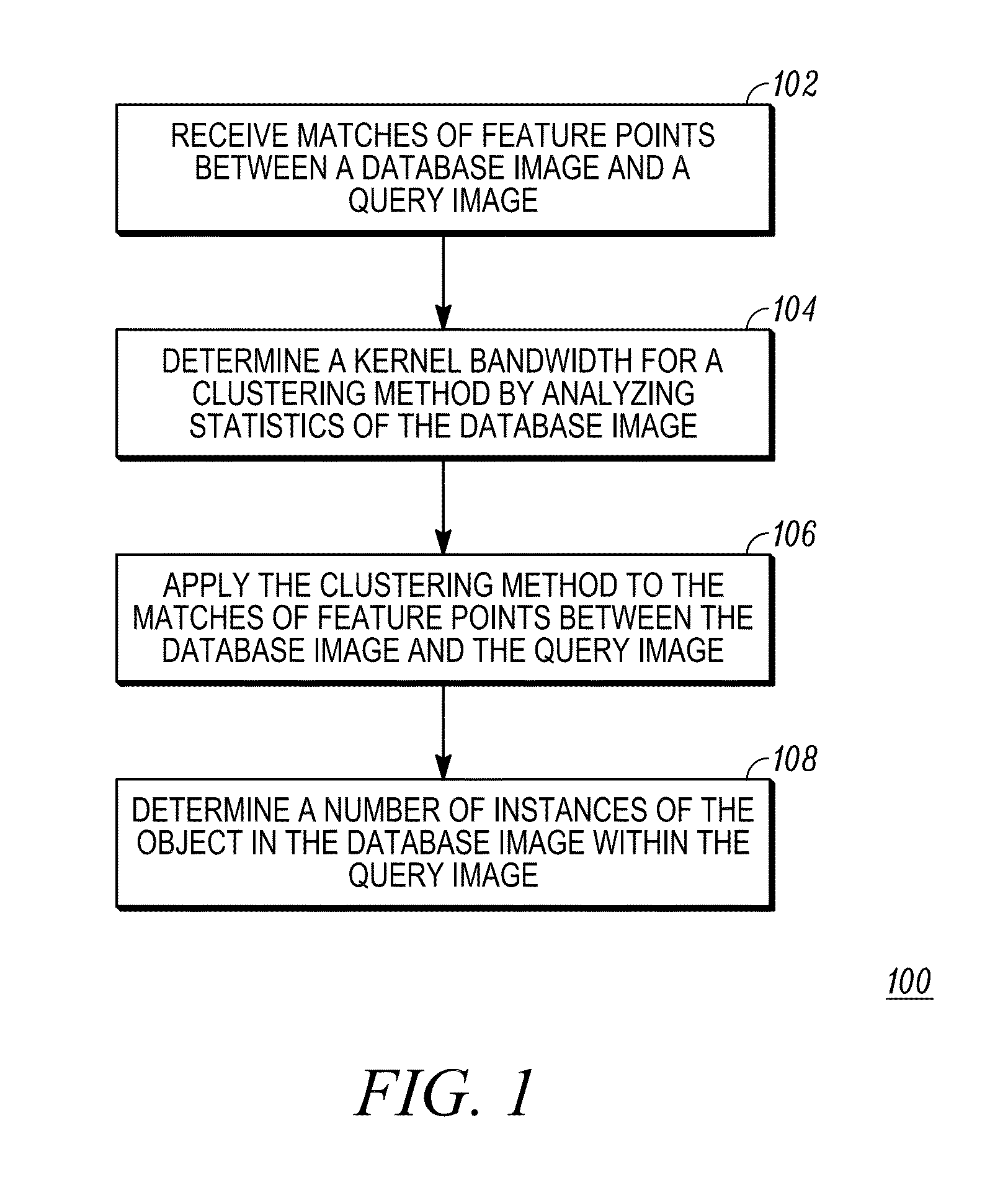

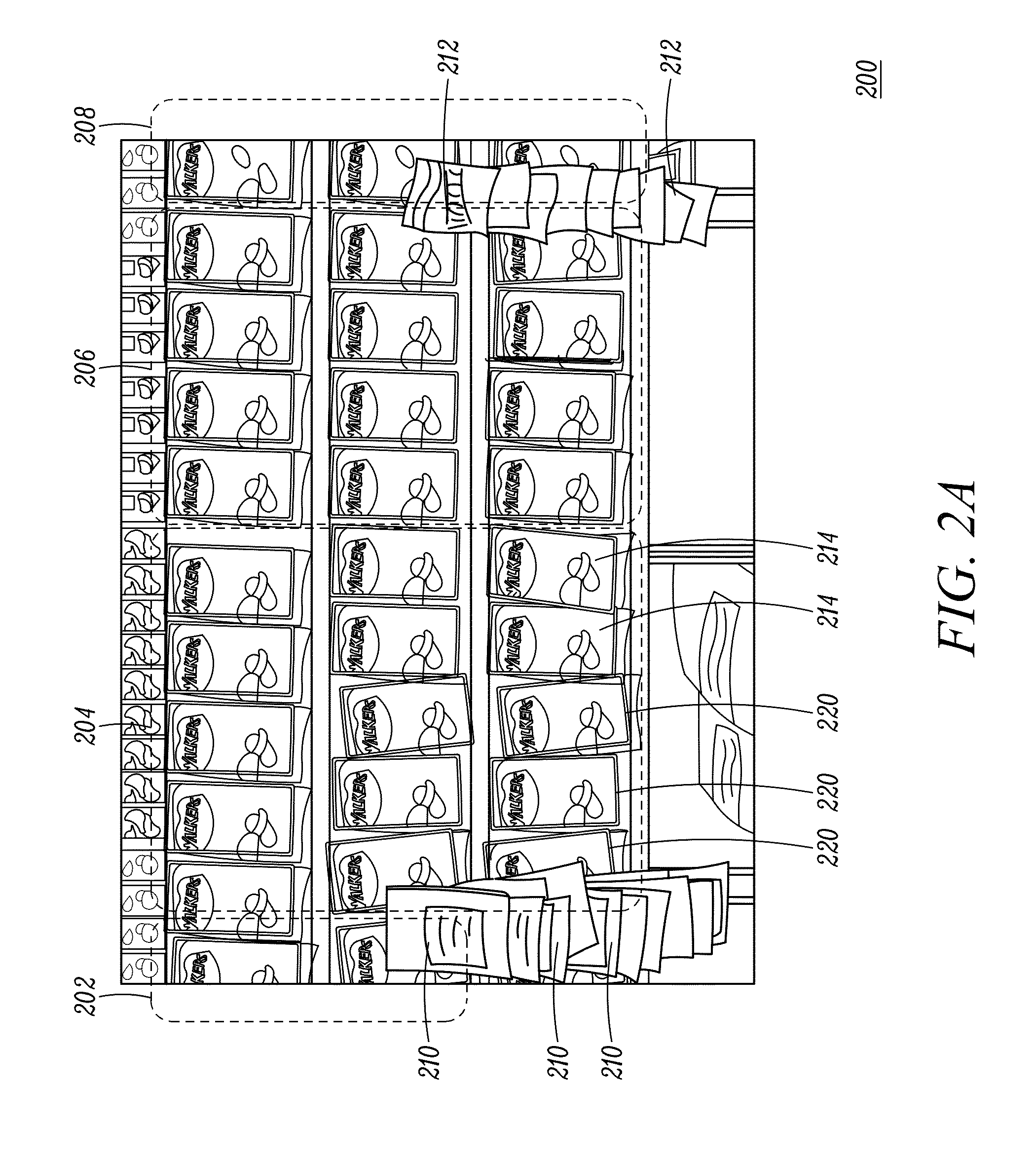

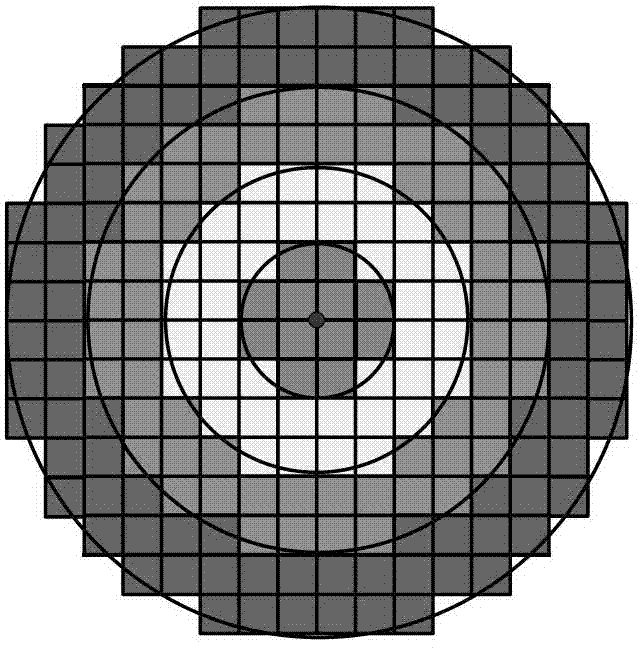

Method for detecting a plurality of instances of an object

Active | US20140369607A1 | Digital data information retrieval, Character and pattern recognition, Single image, Feature point matching

An improved object recognition method is provided that enables the recognition of many objects in a single image. Multiple instances of an object in an image can now be detected with high accuracy. The method receives a plurality of matches of feature points between a database image and a query image and determines a kernel bandwidth based on statistics of the database image. The kernel bandwidth is used in clustering the matches. The clustered matches are then analyzed to determine the number of instances of the object within each cluster. A recursive geometric fitting can be applied to each cluster to further improve accuracy.

Owner: SYMBOL TECH LLC
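
The abstract clusters matches with a kernel whose bandwidth is derived from database-image statistics, but does not name the clustering algorithm. As an assumed stand-in, the sketch below applies flat-kernel mean shift to the query-image locations of matched feature points, with a fixed, hypothetical bandwidth:

```python
import math

# Assumed mean-shift sketch; the patent derives its bandwidth from database
# image statistics, whereas here it is a fixed input parameter.

def mean_shift(points, bandwidth, iterations=50, merge_tol=1e-3):
    """Cluster 2D points with a flat-kernel mean shift.
    Each point is repeatedly moved to the mean of all input points within
    `bandwidth`; points converging to the same mode form one cluster.
    Returns a list of cluster labels, one per input point."""
    modes = []
    labels = []
    for x, y in points:
        for _ in range(iterations):
            # The window is never empty: some input point always lies within
            # `bandwidth` of the current mean.
            near = [q for q in points if math.dist((x, y), q) <= bandwidth]
            nx = sum(q[0] for q in near) / len(near)
            ny = sum(q[1] for q in near) / len(near)
            if math.dist((x, y), (nx, ny)) < merge_tol:
                break
            x, y = nx, ny
        # Assign to an existing mode if close enough, otherwise create one.
        for i, m in enumerate(modes):
            if math.dist(m, (x, y)) < bandwidth / 2:
                labels.append(i)
                break
        else:
            modes.append((x, y))
            labels.append(len(modes) - 1)
    return labels
```

Each resulting label is a candidate object instance; the patent then analyzes each cluster further and applies recursive geometric fitting, which this sketch does not cover.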

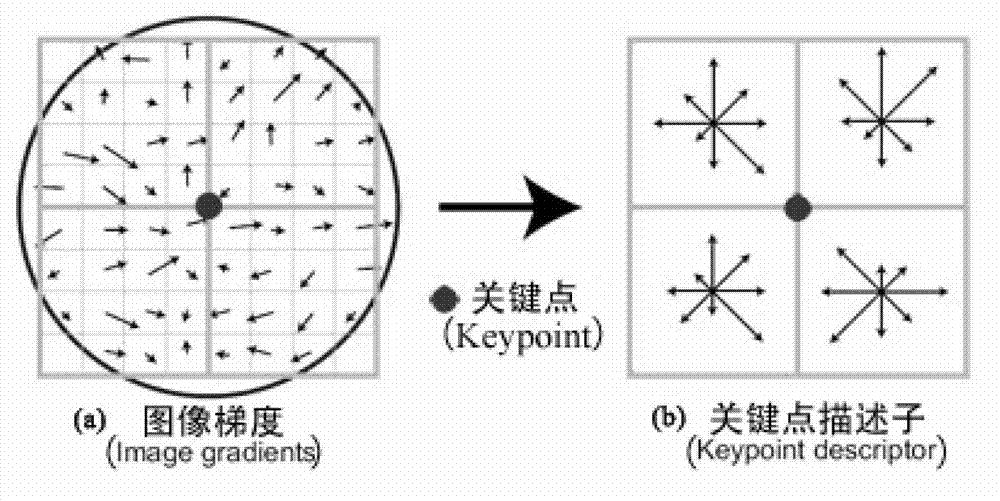

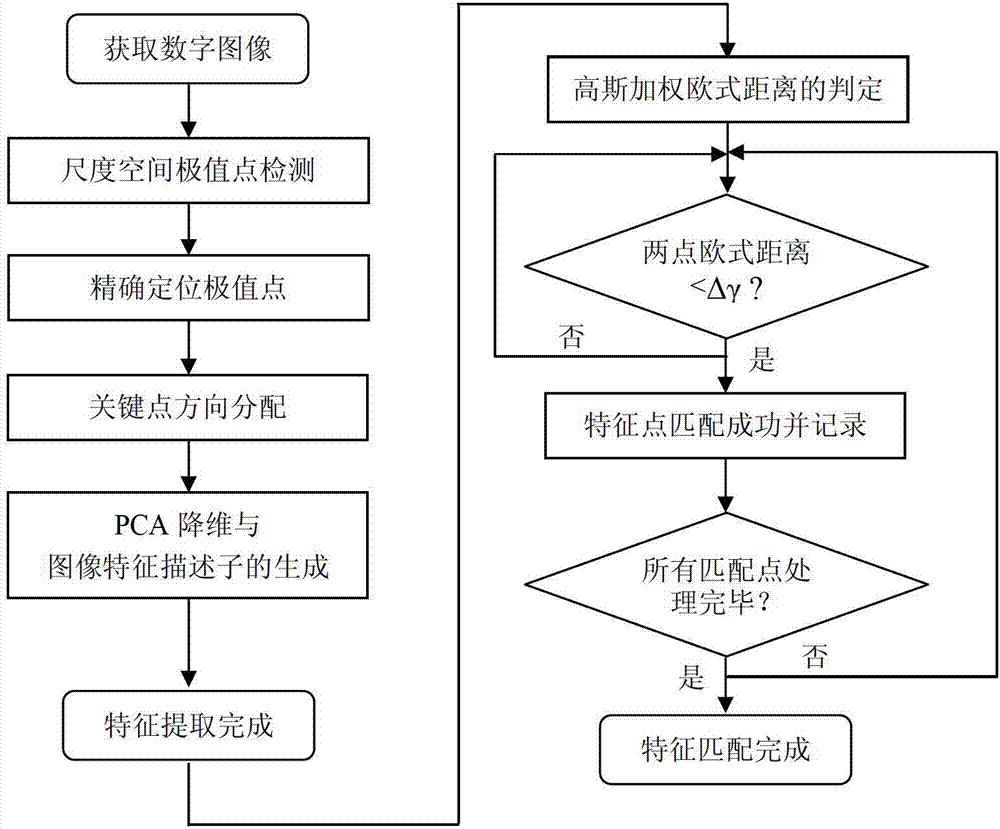

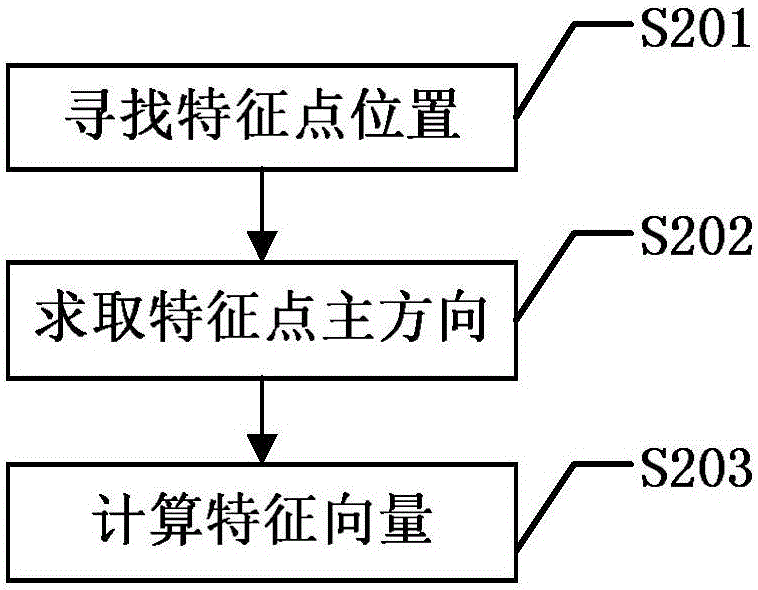

Feature extraction and matching method and device for digital image based on PCA (principal component analysis)

Active | CN103077512A | High precision, Improve matching speed, Image analysis, Digital video, Principal component analysis

The invention provides a PCA (principal component analysis)-based feature extraction and matching method and device for digital images, in the technical field of image analysis. The method comprises the following steps: 1) detecting scale-space extreme points; 2) locating the extreme points; 3) assigning orientations to the extreme points; 4) reducing dimensionality with PCA and generating image feature descriptors; and 5) measuring similarity and matching features. The device mainly comprises a numerical preprocessing module, a feature point extraction module and a feature point matching module. Compared with the existing SIFT (scale-invariant feature transform) feature extraction and matching algorithm, the method achieves higher accuracy and faster matching. The method and device can be applied directly in machine vision fields such as content-based digital image retrieval, content-based digital video retrieval, digital image fusion and super-resolution image reconstruction.

Owner: BEIJING UNIV OF TECH

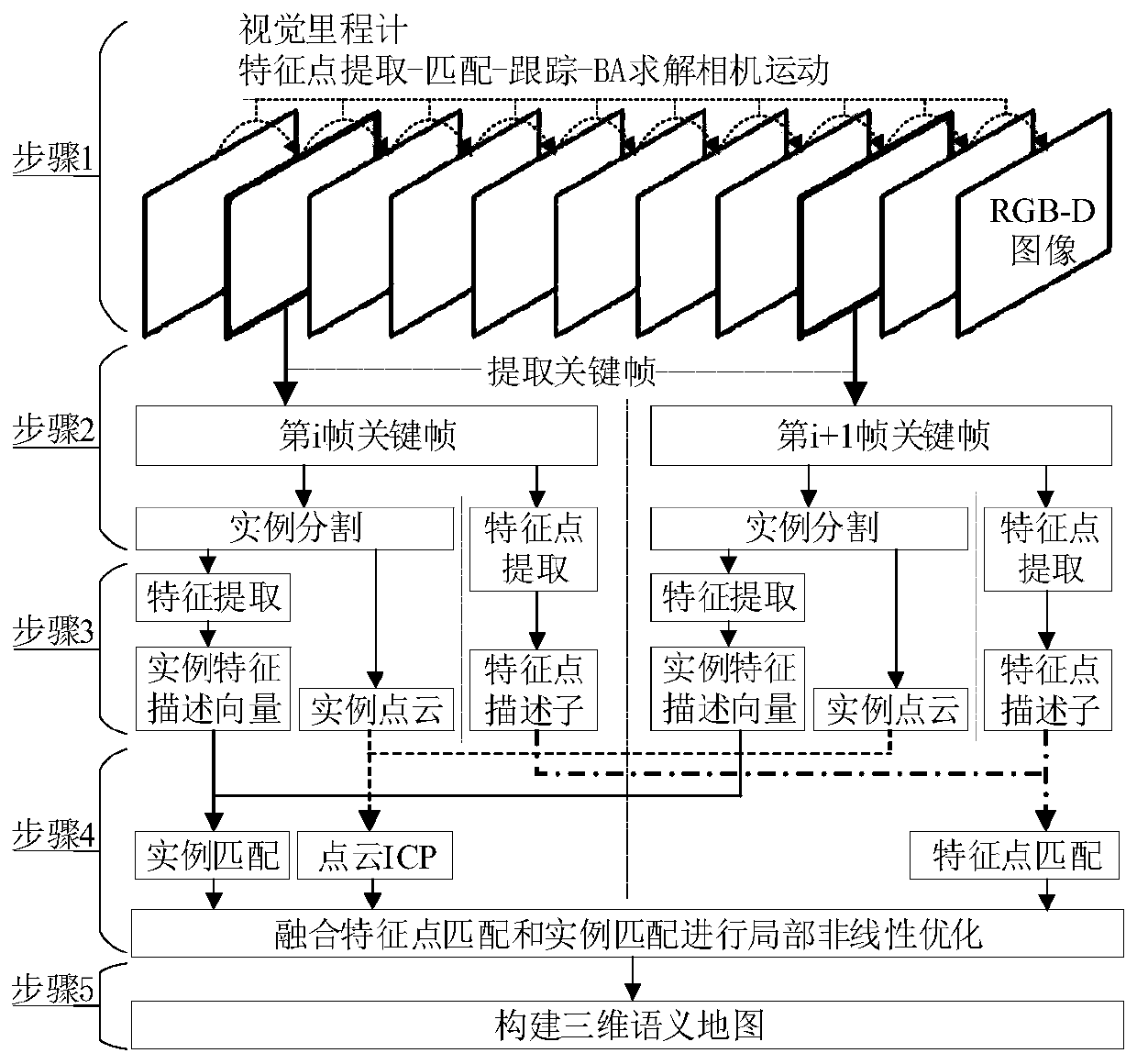

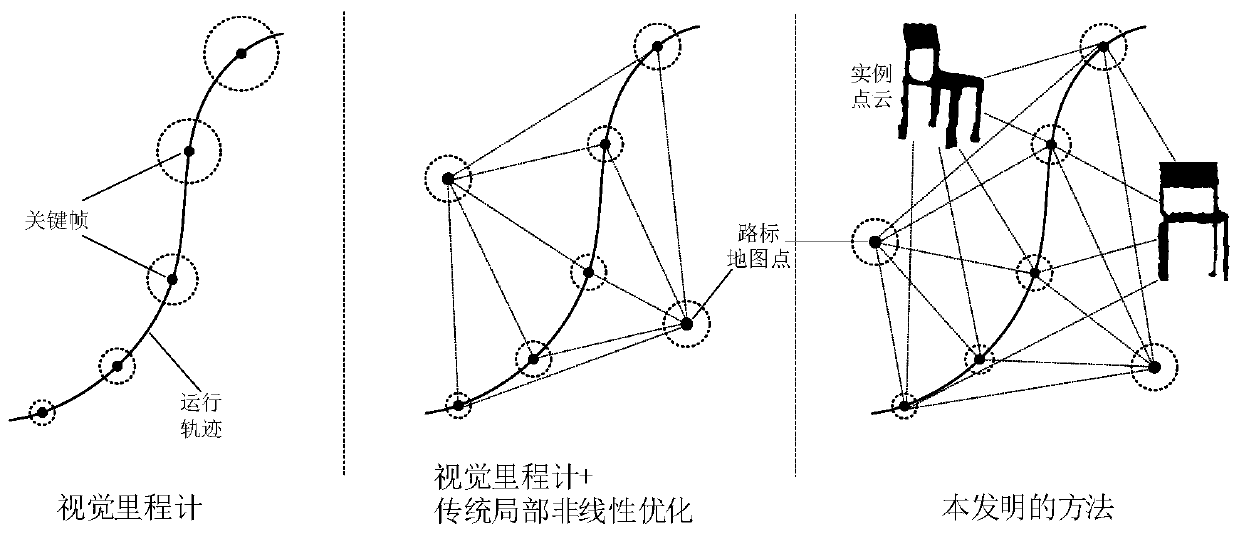

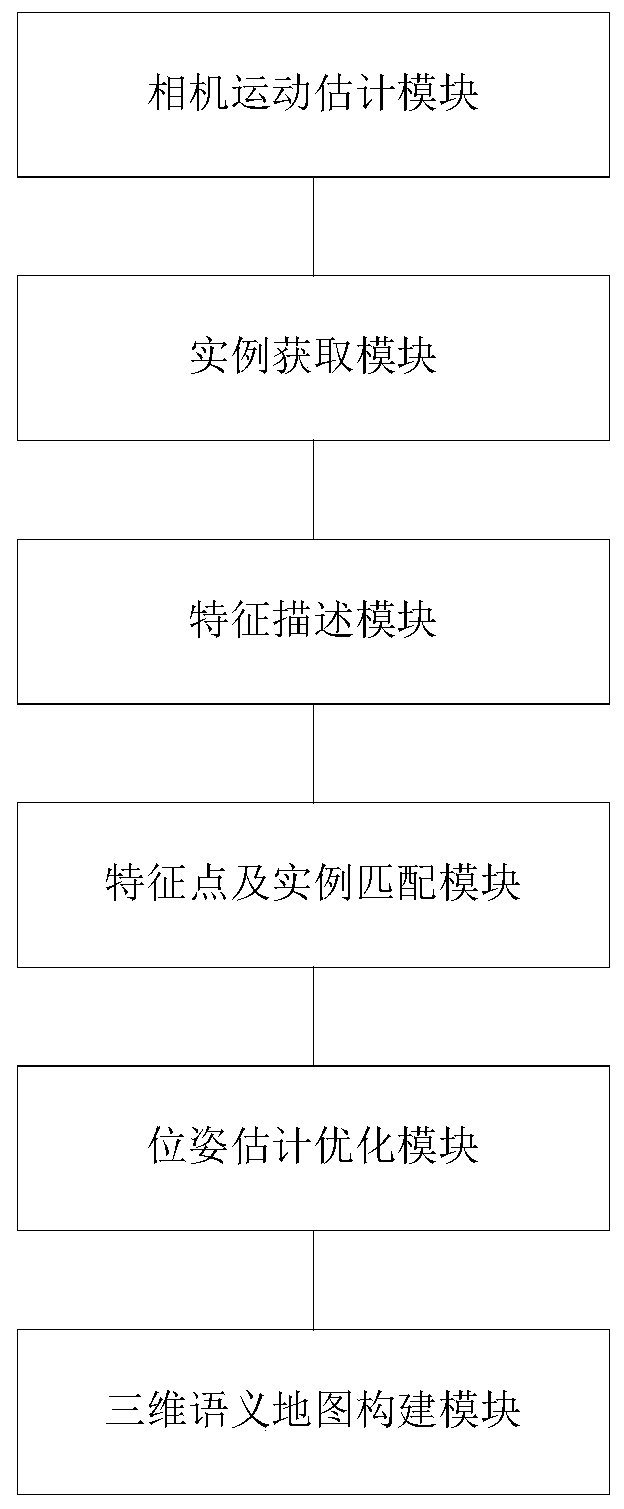

Robot semantic SLAM method based on object instance matching, processor and robot

Inactive | CN109816686A | Good estimate, High positioning accuracy, Image analysis, Neural architectures, Point cloud, Feature description

The invention provides a robot semantic SLAM method based on object instance matching, a processor and a robot. The method comprises the following steps: acquiring an image sequence shot while the robot operates, and performing feature point extraction, matching and tracking on each frame to estimate camera motion; extracting key frames, performing instance segmentation on each key frame and obtaining all object instances in it; extracting feature points from the key frame and computing their descriptors, extracting and encoding features of all object instances in the key frame to compute instance feature description vectors, and simultaneously obtaining 3D point clouds of the instances; performing feature point matching and instance matching between adjacent key frames; and performing local nonlinear optimization of the SLAM pose estimates by fusing the feature point matches and the instance matches, obtaining key frames annotated with object instance semantics, and mapping these key frames into the instance 3D point clouds to construct a 3D semantic map.

Owner: SHANDONG UNIV

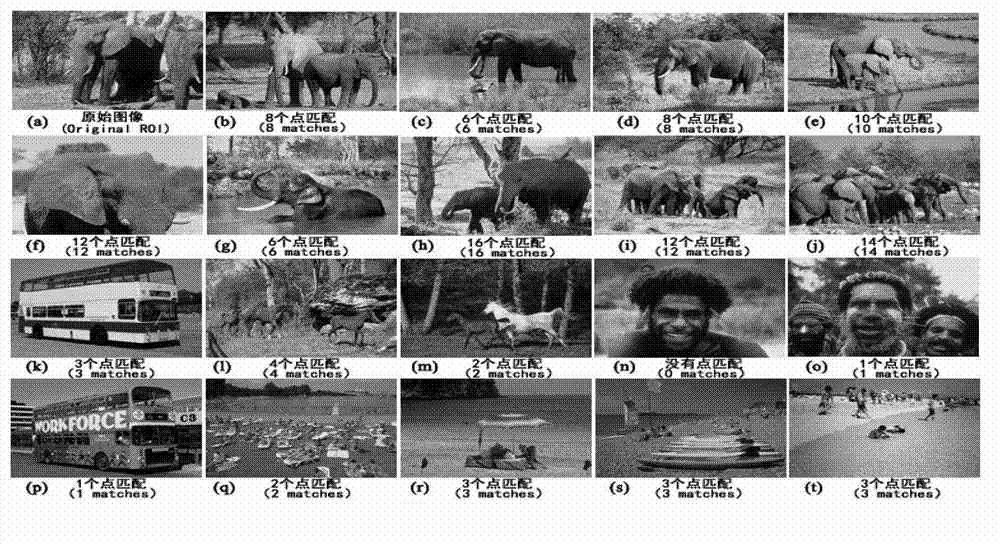

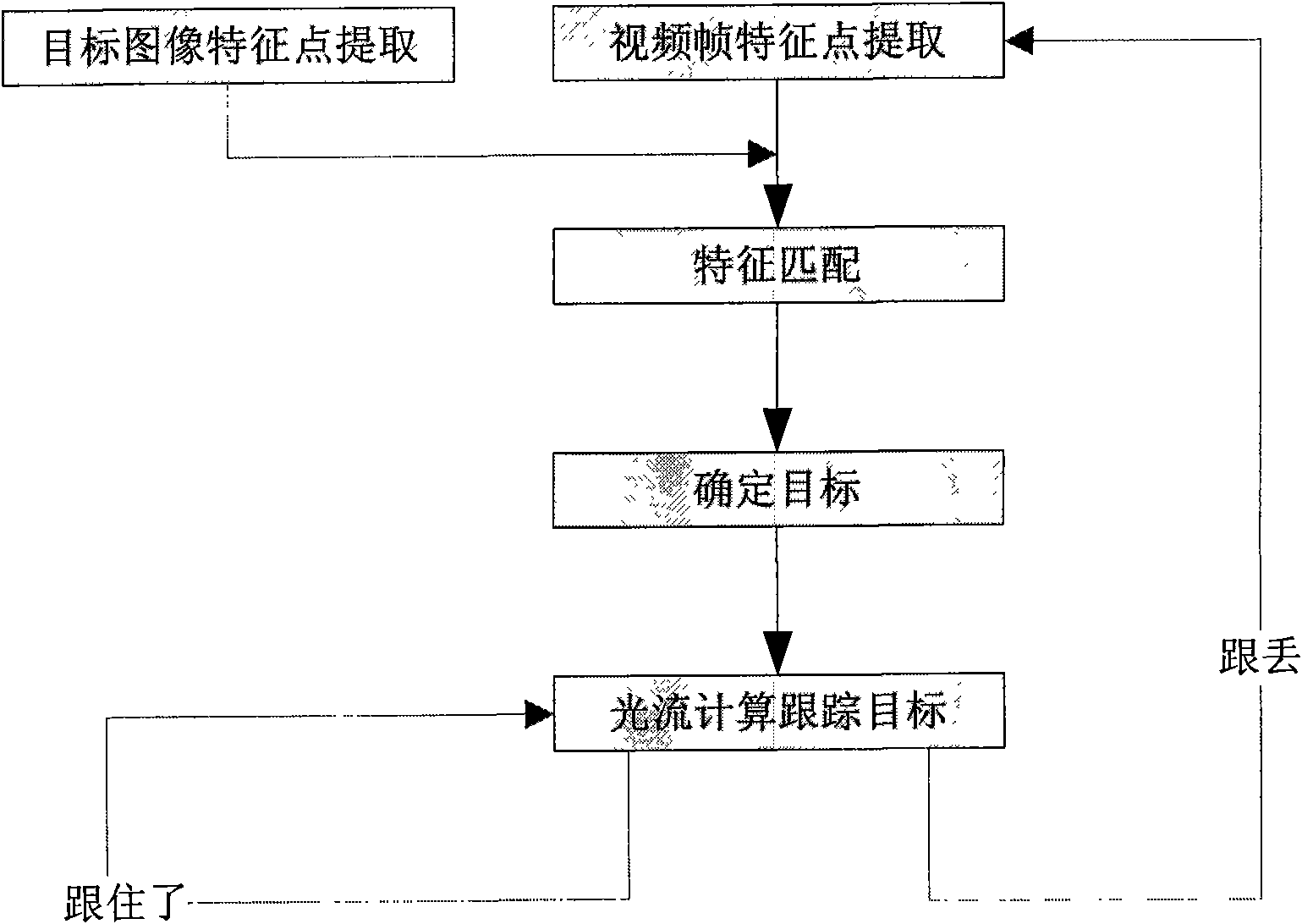

Target automatically recognizing and tracking method based on affine invariant point and optical flow calculation

Inactive | CN101770568A | Improve matching accuracy, Exact match, Image analysis, Character and pattern recognition, Video monitoring, Goal recognition

The invention discloses a method for automatic target recognition and tracking based on affine-invariant points and optical flow calculation, comprising the following steps: first, preprocessing the target image and the video frames and extracting affine-invariant feature points; then matching the feature points and eliminating mismatches, and declaring the target recognized when the number of matched pairs reaches a certain threshold and an affine transformation matrix can be generated; next, using the affine-invariant points obtained in the previous step for feature optical flow calculation to track the target in real time; and, if tracking of the target fails midway, immediately returning to the first step to recognize the target again. The feature point operator used is a local image feature descriptor built in scale space that is invariant to image scaling and rotation and even to affine transformation. The optical flow method adopted has a small computational load and high accuracy, enabling real-time tracking. The invention can be widely applied in video surveillance, image search, computer-aided driving systems, robotics and similar fields.

Owner: NANJING UNIV OF SCI & TECH
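
Three non-collinear correspondences are the minimum needed to generate an affine transformation matrix. A sketch of that minimal solve using Cramer's rule (the function name is hypothetical; in practice the model would be fitted robustly over all surviving pairs):

```python
# Minimal affine solve from exactly three correspondences; a hypothetical
# helper, not the patent's implementation.

def affine_from_three(src, dst):
    """Solve the 2D affine transform mapping three source points to three
    destination points: x' = a*x + b*y + c, y' = d*x + e*y + f.
    Returns ((a, b, c), (d, e, f)); raises ValueError if the source
    points are collinear (the linear system is then singular)."""
    (x1, y1), (x2, y2), (x3, y3) = src
    # Determinant of [[x1, y1, 1], [x2, y2, 1], [x3, y3, 1]].
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-12:
        raise ValueError("source points are collinear")

    def solve(t1, t2, t3):
        # Cramer's rule: replace one column of the matrix with the targets.
        p = (t1 * (y2 - y3) - y1 * (t2 - t3) + (t2 * y3 - t3 * y2)) / det
        q = (x1 * (t2 - t3) - t1 * (x2 - x3) + (x2 * t3 - x3 * t2)) / det
        r = (x1 * (y2 * t3 - y3 * t2) - y1 * (x2 * t3 - x3 * t2)
             + t1 * (x2 * y3 - x3 * y2)) / det
        return p, q, r

    row_x = solve(*(pt[0] for pt in dst))
    row_y = solve(*(pt[1] for pt in dst))
    return row_x, row_y
```

For example, mapping (0, 0), (1, 0), (0, 1) to (1, -1), (3, -1), (1, 2) recovers x' = 2x + 1 and y' = 3y - 1.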

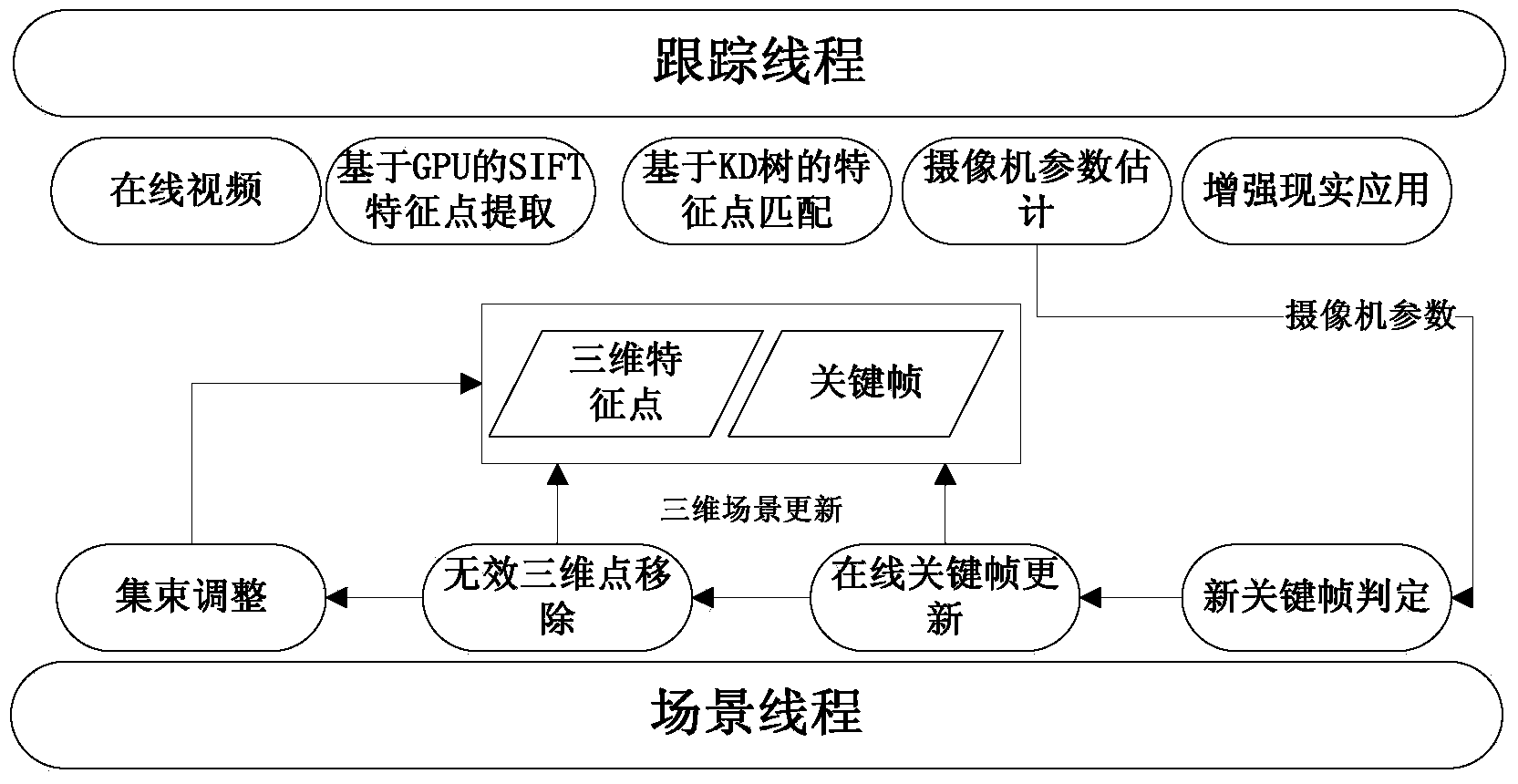

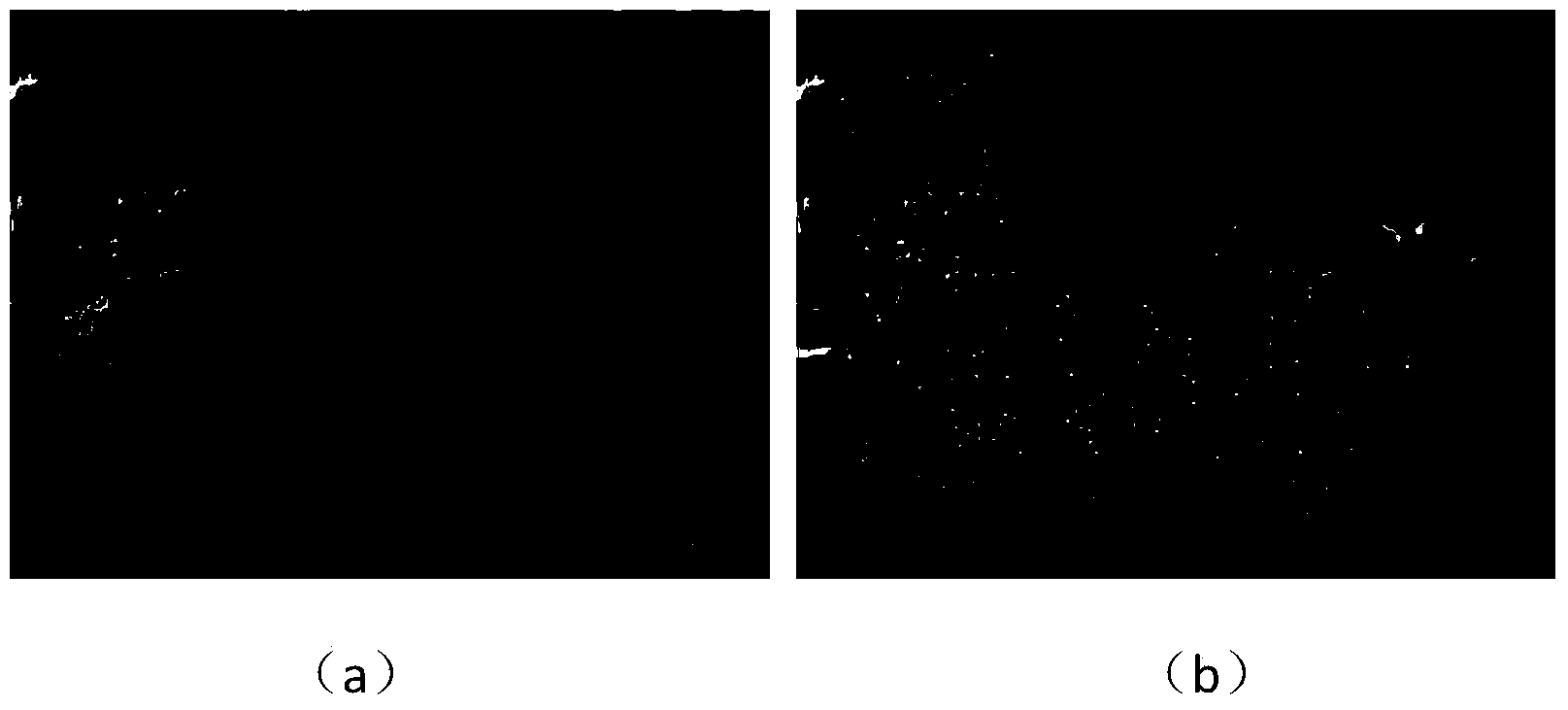

Real-time camera tracking method for dynamically-changed scene

Active | CN103646391A | Guaranteed accuracy, Accurate tracking, Television system details, Image analysis, Point cloud, Motion parameter

The invention discloses a real-time camera tracking method for dynamically changing scenes. The method can track and solve the camera pose stably in a scene that changes dynamically and continually. It first performs feature matching and camera parameter estimation and then updates the scene. In practice, foreground and background threads run cooperatively: the foreground thread performs feature point matching and camera motion parameter estimation for each frame, while the background thread continually maintains and updates the KD tree, key frames and 3D point cloud, and jointly optimizes the key frame camera motion parameters and 3D point positions. The method can still track the camera in real time when the scene changes dynamically, and is significantly better than existing camera tracking methods in tracking accuracy, stability and running efficiency.

Owner: ZHEJIANG SENSETIME TECH DEV CO LTD

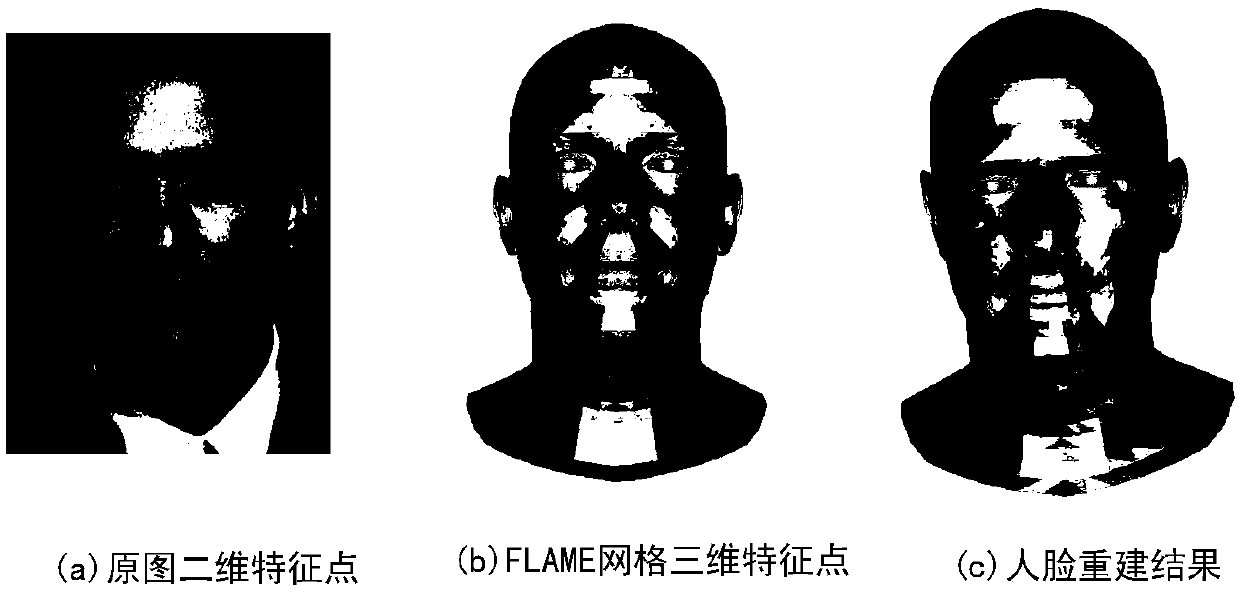

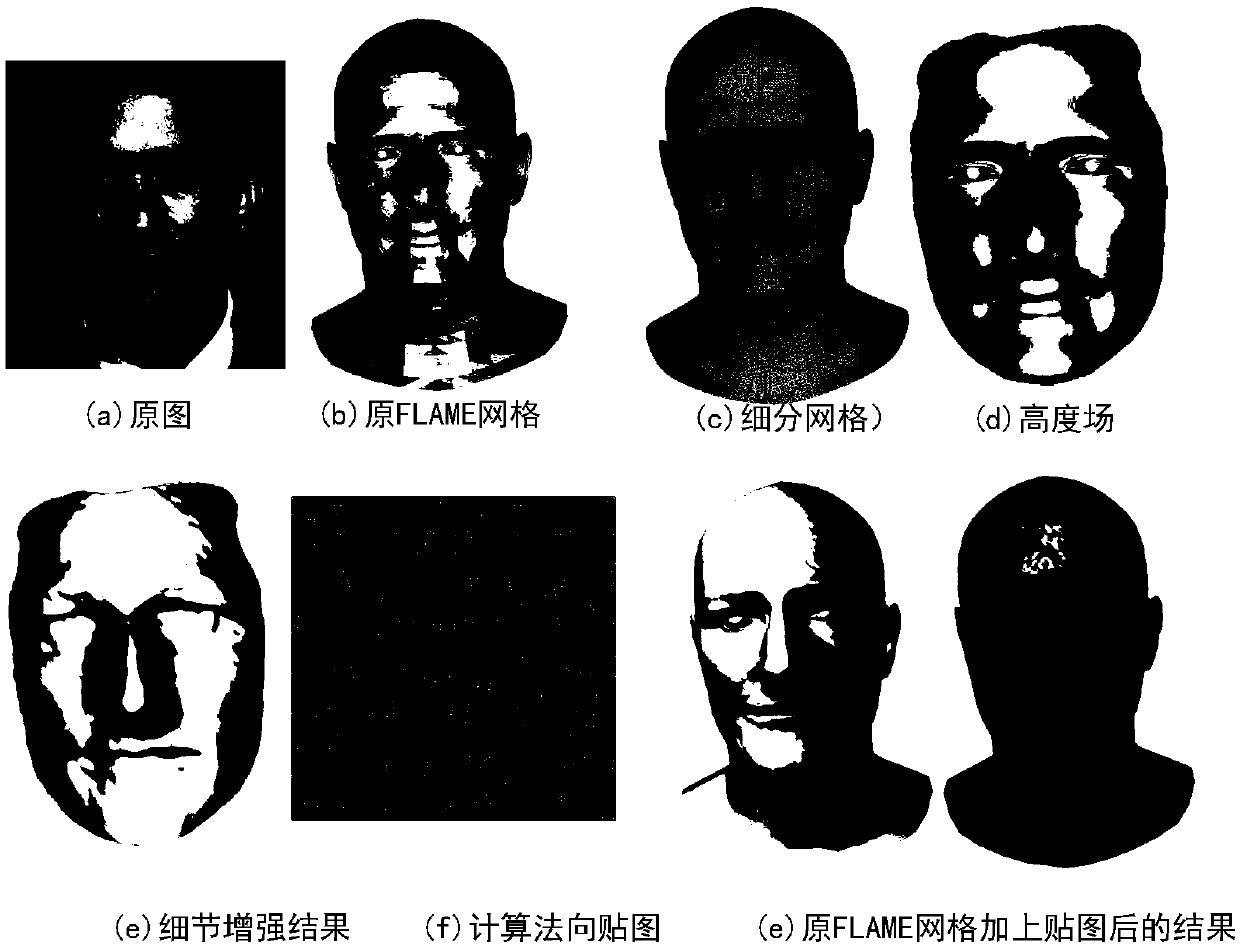

Three-dimensional human face reconstruction method based on single picture

Active | CN108765550A | Good reconstruction accuracy, Rebuild complete, Animation, 3D-image rendering, Image extraction

The invention discloses a method for reconstructing a 3D human face from a single picture. The method comprises the steps of: 1) face reconstruction based on the FLAME model; 2) detail enhancement of the FLAME mesh face; and 3) texture completion of the FLAME mesh. Given an input face image, the algorithm first builds a feature point matching energy from the facial feature points extracted from the image and the corresponding 3D feature points of the FLAME mesh, and obtains the shape of the face; second, it uses high-frequency information in the face region of the image to guide the movement of the mesh vertices of a face height field, reconstructing fine facial details; and finally, it completes the face texture map with a FLAME albedo parameterization model and removes illumination information. The method solves 3D face reconstruction from a single picture and can be applied to face reconstruction and facial animation production.

Owner: SOUTH CHINA UNIV OF TECH

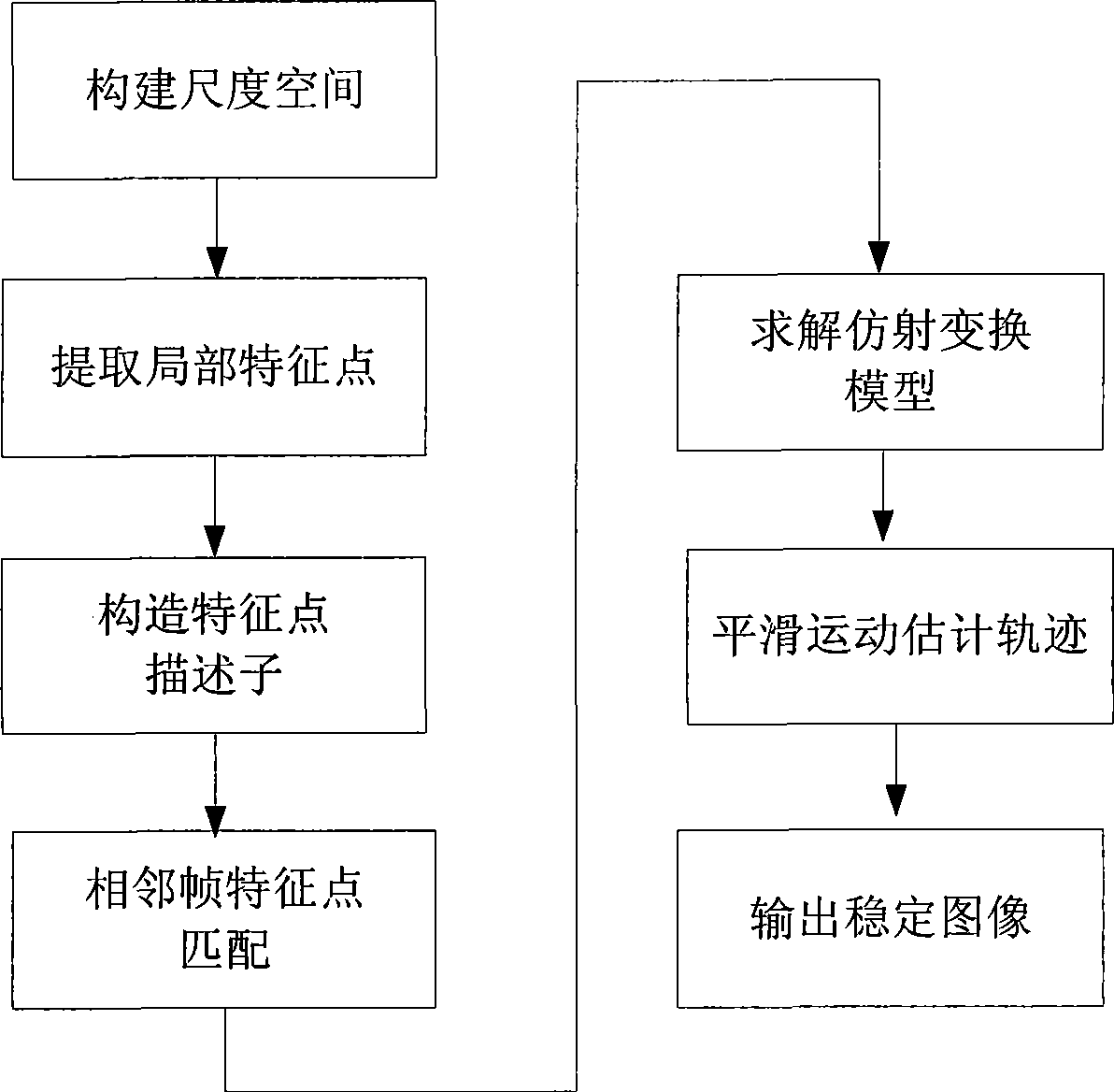

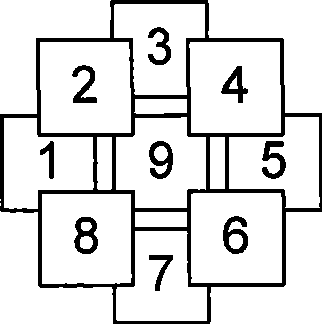

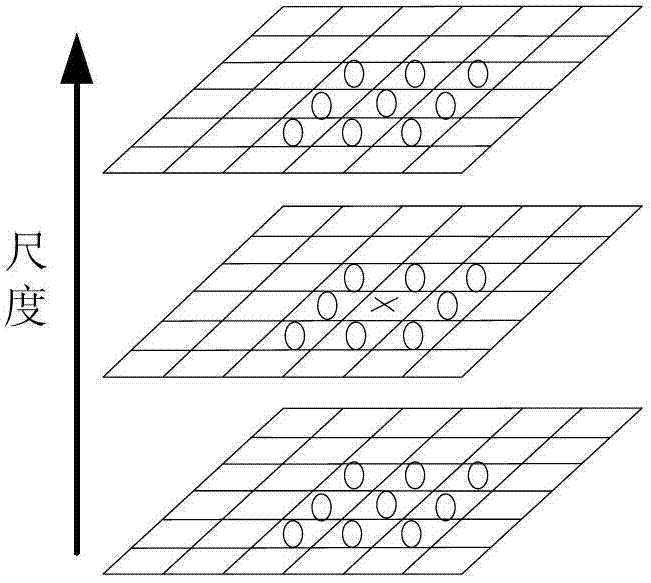

Real-time motion estimation method based on multi-scale invariant features

Inactive | CN101521740A | Improve estimation accuracy, Improve matching accuracy, Television system details, Image analysis, Electrical polarity, Real Time Kinematic

The invention relates to a real-time motion estimation method based on multi-scale invariant features, comprising the steps of: (1) constructing a Gaussian scale space and extracting local feature points; (2) constructing a feature descriptor based on the polar distribution within a rectangular window; (3) matching the feature points and establishing an inter-frame motion model; and (4) calculating the offset of the current frame's output position relative to the window center. The method adapts to scale, viewing angle and rotation, can accurately match images related by complex motion such as translation, rotation, scaling and moderate viewpoint change, and runs in real time. It is robust to blur, noise and other common video artifacts and estimates arbitrary, irregular, complex motion parameters with high accuracy. Combined with a motion compensation method based on motion state identification, it meets the stabilization requirements of video sequences shot arbitrarily in complex environments, achieving real-time output and video stabilization.

Owner: BEIHANG UNIV

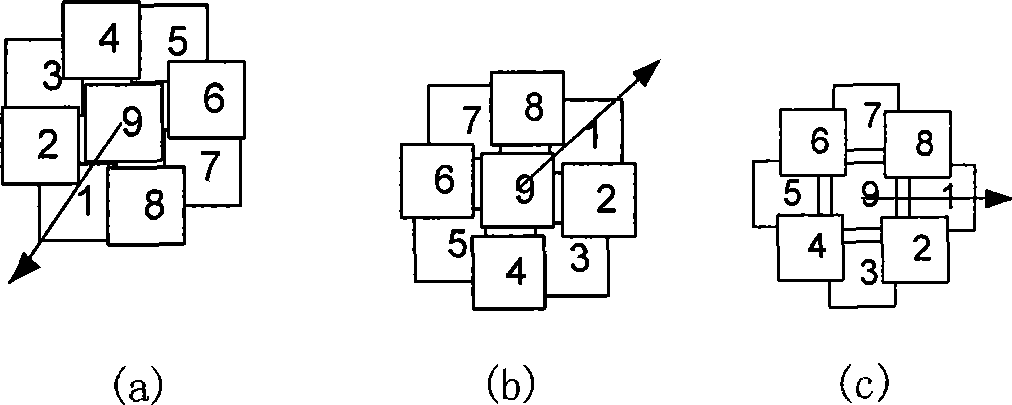

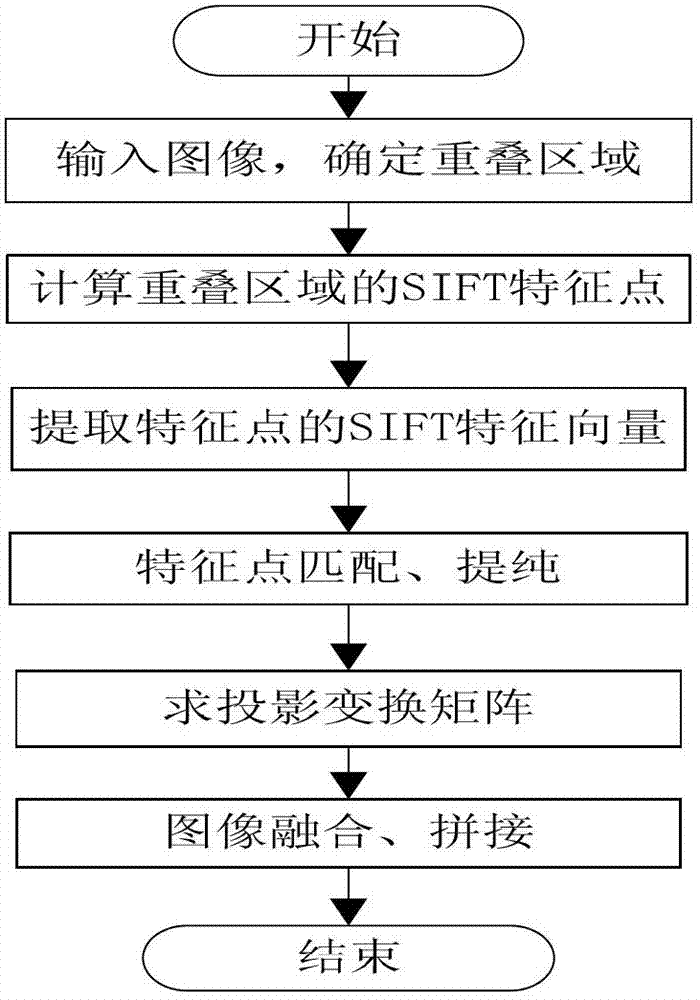

Image stitching method based on overlapping region scale-invariant feature transform (SIFT) feature points

Inactive | CN102968777A | Small amount of calculation, Reduce in quantity, Image enhancement, Geometric image transformation, Feature vector, Scale-invariant feature transform

The invention discloses an image stitching method based on SIFT (scale-invariant feature transform) feature points in the overlapping region, in the technical field of image processing. Conventional feature-based stitching algorithms extract features from the whole image, which requires heavy computation, and features from non-overlapping regions easily cause subsequent matching errors and redundant computation. To address this, the method extracts feature points only in the overlapping region of the images, reducing the number of feature points and greatly reducing computation; in addition, the feature points are represented with an improved SIFT feature vector extraction method, which further reduces the computation of feature point matching and lowers the mismatch rate. The invention also discloses a stitching method for images with optical imaging differences: the two images to be stitched are first converted into cylindrical coordinate space by projection transformation and then stitched with the overlapping-region SIFT method.

Owner: HOHAI UNIV
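
The abstract does not give its projection formula. The standard cylindrical warp, assumed here, maps pixel offsets (x, y) from the principal point onto a cylinder whose radius equals the focal length f in pixels:

```python
import math

# Standard cylindrical warp, assumed rather than taken from the patent.
# x, y are offsets from the principal point; f is the focal length in pixels.

def to_cylindrical(x, y, f):
    """Map image coordinates onto a cylinder of radius f:
    x' = f * atan(x / f),  y' = f * y / sqrt(x^2 + f^2)."""
    # atan2(x, f) equals atan(x / f) for f > 0 and avoids a division.
    return f * math.atan2(x, f), f * y / math.hypot(x, f)
```

After this warp, a camera rotating about its vertical axis produces images that differ mainly by a horizontal shift, which is what makes the subsequent overlap-region matching and stitching simpler.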

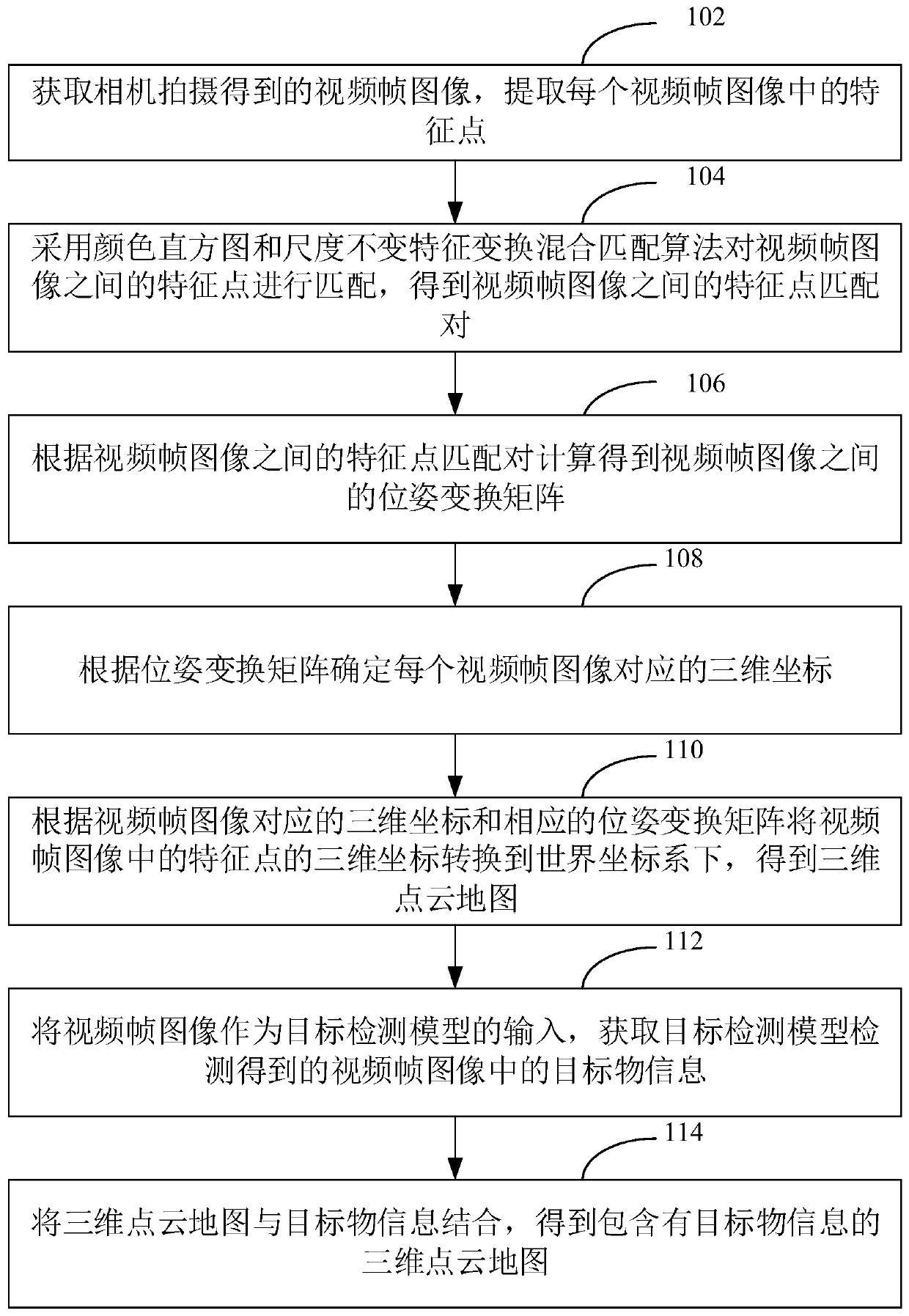

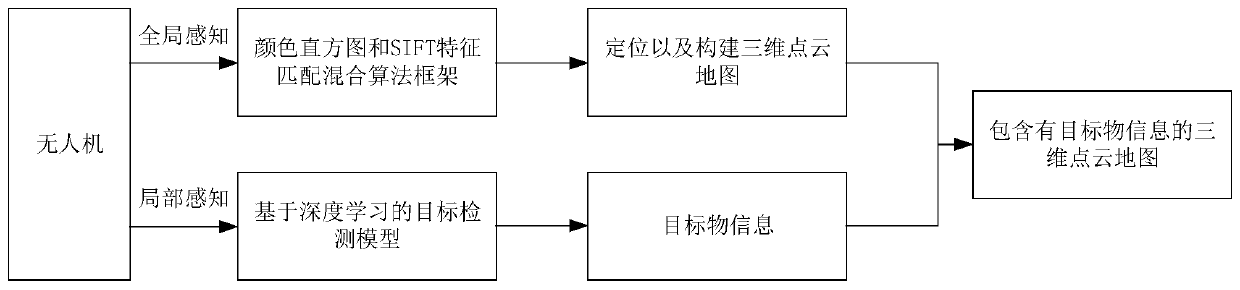

Unmanned aerial vehicle three-dimensional map construction method and device, computer equipment and storage medium

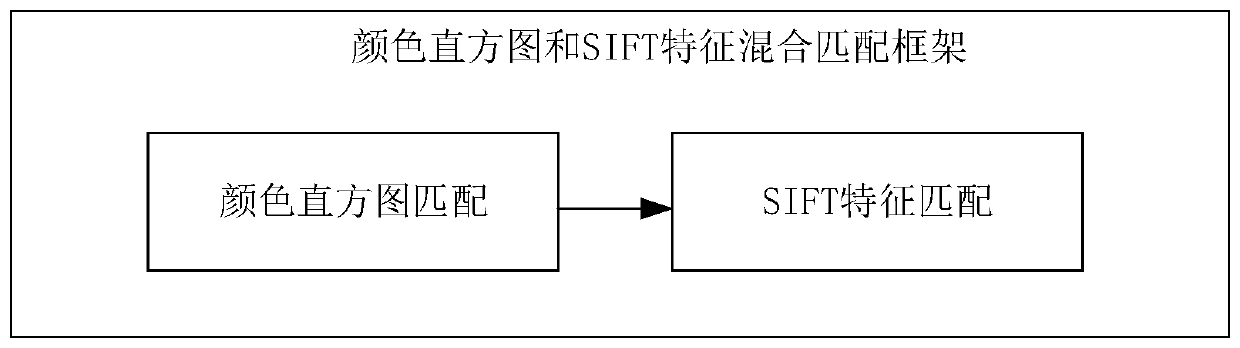

The invention relates to a method for constructing a 3D map with an unmanned aerial vehicle (UAV). The method comprises the following steps: obtaining video frame images shot by a camera and extracting feature points in each frame; matching the feature points with a hybrid matching algorithm combining color histograms and the scale-invariant feature transform to obtain feature point matching pairs; calculating a pose transformation matrix from the matching pairs; determining the 3D coordinates corresponding to each video frame from the pose transformation matrix and converting the 3D coordinates of the feature points in the frame into the world coordinate system to obtain a 3D point cloud map; feeding the video frames into a target detection model to obtain target object information; and combining the 3D point cloud map with the target object information to obtain a 3D point cloud map containing target object information. The method improves the real-time performance and accuracy of 3D point cloud map construction, and the resulting map carries rich information. The invention further provides a UAV 3D map construction device, computer equipment and a storage medium.

Owner: SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

Method for tracking gestures and actions of human face

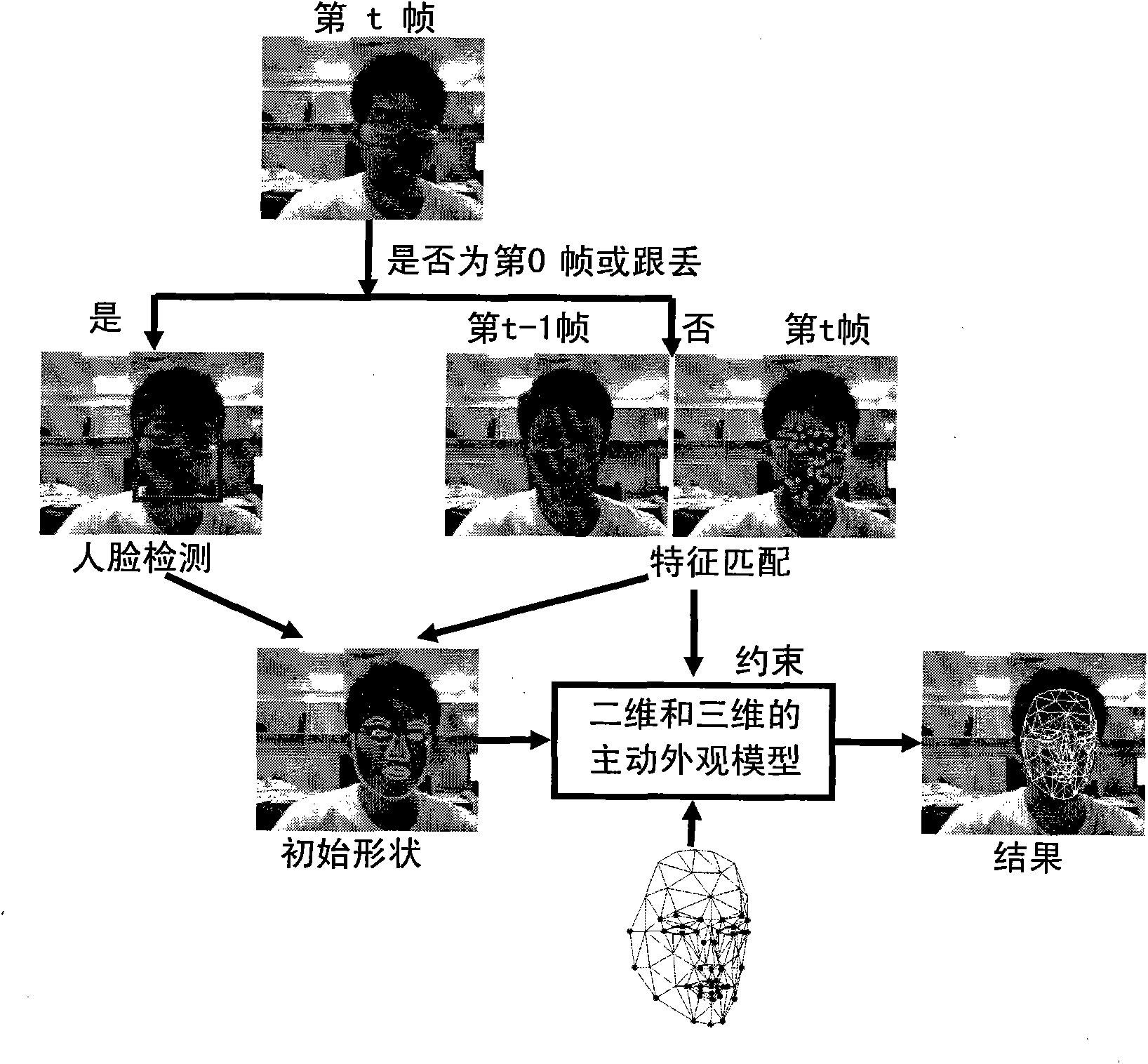

Inactive · CN102402691A · No human intervention required · Fast tracking · Image analysis · Character and pattern recognition · Face detection · Feature point matching

The invention discloses a method for tracking the pose and actions of a human face, comprising the following steps. S1: frames are extracted one by one from a video stream, and face detection is performed on the first frame of the input video, or whenever tracking fails, to obtain a face bounding box. S2: during normal tracking, after the iteration on the previous frame has converged, the feature points with the most distinctive texture in the face region of the previous frame are matched with the corresponding feature points found in the current frame, giving the feature point matching results. S3: the shape of an active appearance model is initialized from either the face bounding box or the feature point matching results, giving an initial estimate of the face shape in the current frame. S4: the active appearance model is fitted with an inverse compositional algorithm to obtain the three-dimensional pose of the face and the facial action parameters. The method performs fully automatic, real-time online tracking under ordinary illumination.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

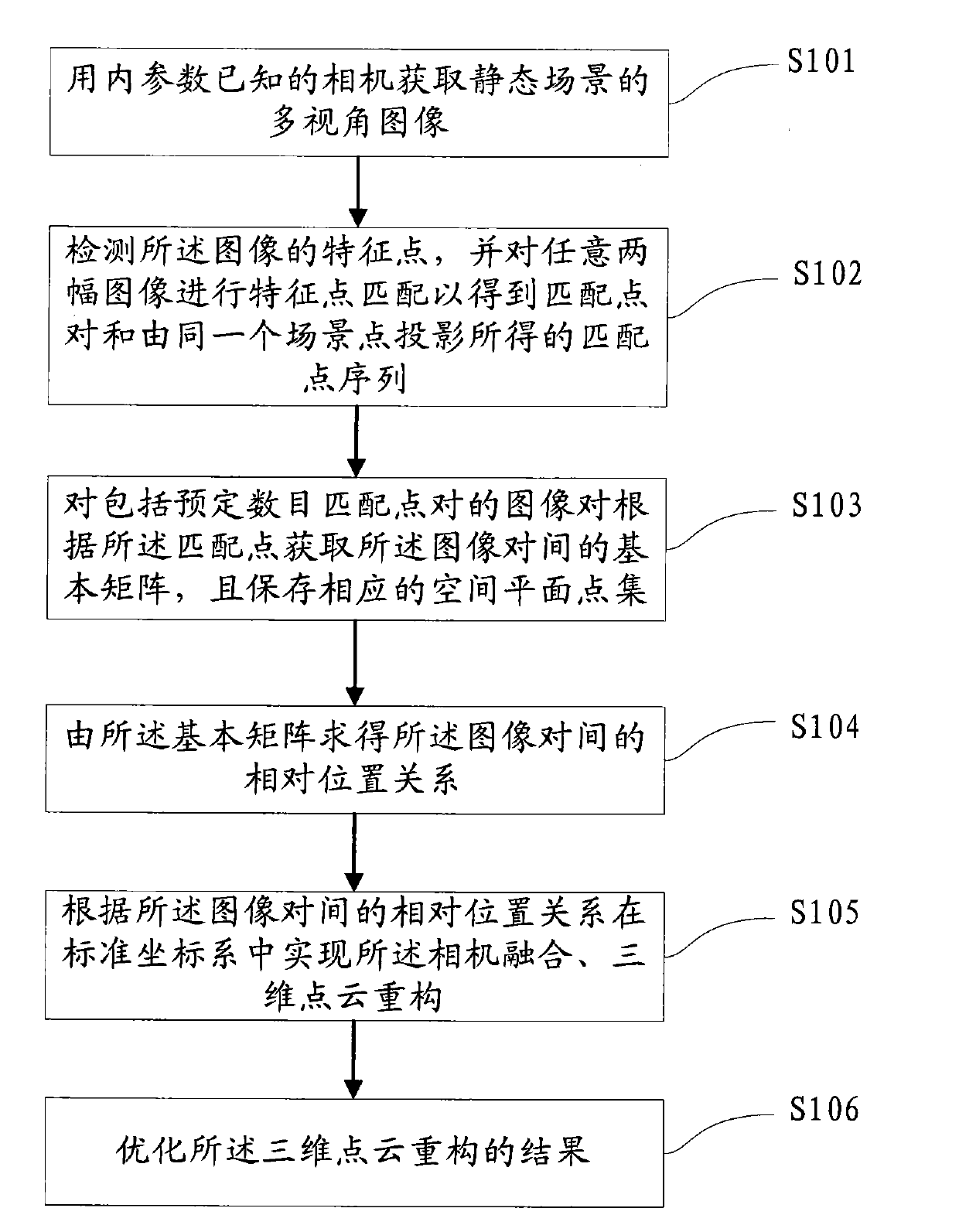

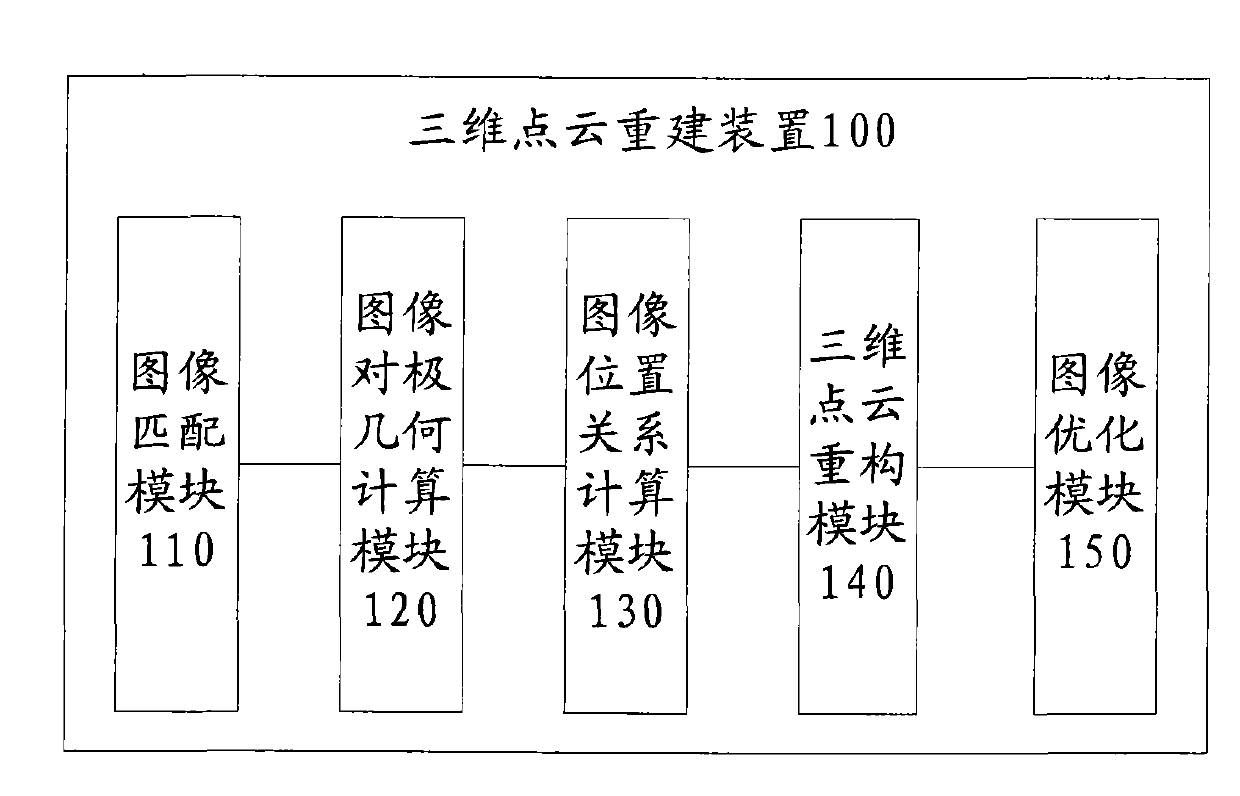

Reconstruction method and system for processing three-dimensional point cloud containing main plane scene

The invention proposes a reconstruction method and system for processing three-dimensional point clouds of scenes containing a main plane. The method comprises the following steps: capturing multi-angle images of a static scene with a camera whose intrinsic parameters are known; detecting feature points in the images and matching the feature points of every two images to obtain matched point pairs, and linking the projections of the same scene point into matched point sequences; for image pairs with at least a preset number of matched point pairs, computing the fundamental matrix between the two images from the matched points and storing the corresponding sets of points on spatial planes; determining the relative position between the images of each pair from the fundamental matrix; fusing the cameras and reconstructing the three-dimensional points in a standard coordinate frame according to these relative positions; and optimizing the reconstructed point cloud. The method overcomes shortcomings of existing point cloud reconstruction methods and performs three-dimensional reconstruction independently of the scene.
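The fundamental-matrix step can be illustrated with the normalized eight-point algorithm. This sketch assumes NumPy and clean correspondences; the abstract does not say which estimator the patent uses, and in practice a robust loop such as RANSAC usually wraps it. With a known intrinsic matrix `K` shared by both views, the essential matrix follows as `E = K.T @ F @ K`.

```python
import numpy as np

def _normalize(pts):
    """Hartley normalization: move the centroid to the origin, mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    s = np.sqrt(2) / np.sqrt(((pts - centroid) ** 2).sum(axis=1)).mean()
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homo = np.hstack([pts, np.ones((len(pts), 1))])
    return homo @ T.T, T

def fundamental_eight_point(x1, x2):
    """Estimate F with x2_h^T F x1_h = 0 from N >= 8 matched pixel points (N x 2 each)."""
    p1, T1 = _normalize(np.asarray(x1, float))
    p2, T2 = _normalize(np.asarray(x2, float))
    # One row per correspondence of the linear system A @ vec(F) = 0 (row-major F).
    A = np.column_stack([
        p2[:, 0] * p1[:, 0], p2[:, 0] * p1[:, 1], p2[:, 0],
        p2[:, 1] * p1[:, 0], p2[:, 1] * p1[:, 1], p2[:, 1],
        p1[:, 0], p1[:, 1], np.ones(len(p1)),
    ])
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)
    # Enforce rank 2 by zeroing the smallest singular value.
    U, S, Vt = np.linalg.svd(F)
    F = U @ np.diag([S[0], S[1], 0.0]) @ Vt
    # Undo the normalization and fix the arbitrary scale.
    F = T2.T @ F @ T1
    return F / np.linalg.norm(F)
```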

Owner:TSINGHUA UNIV

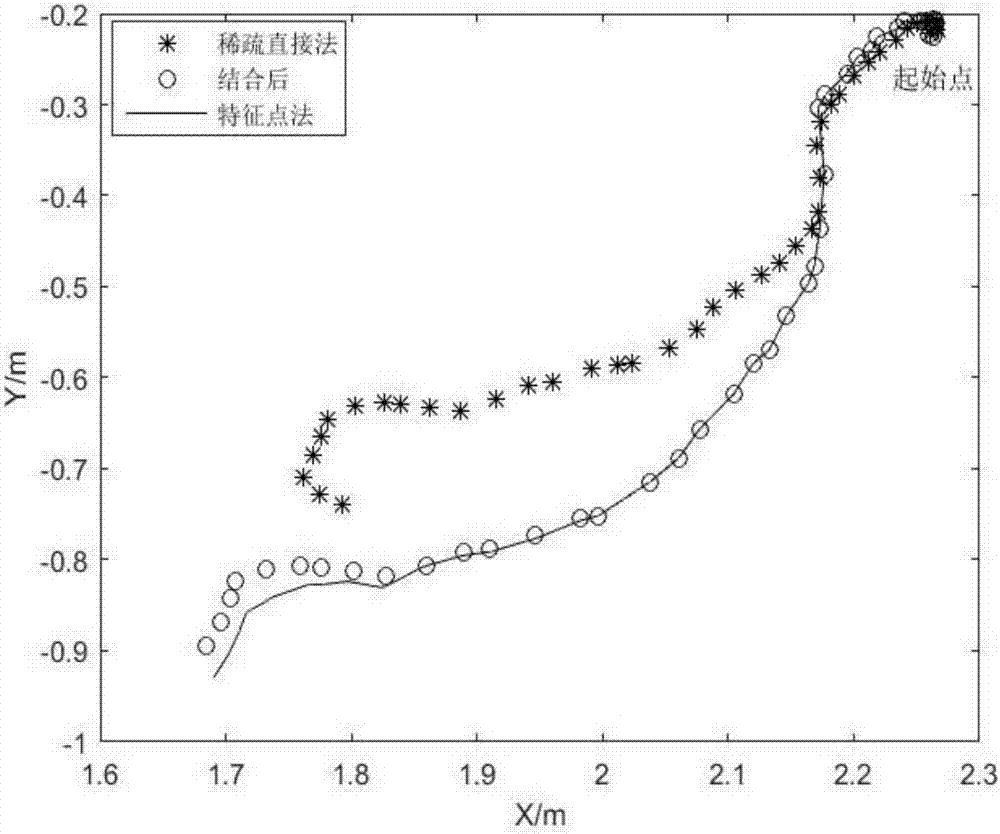

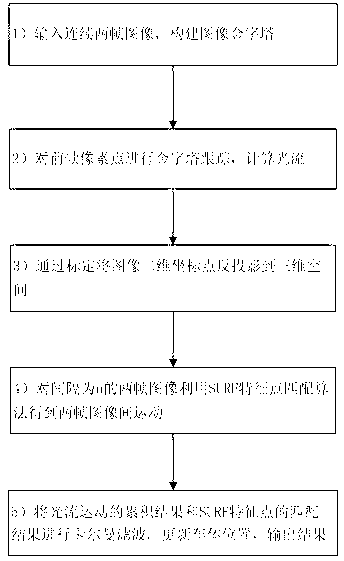

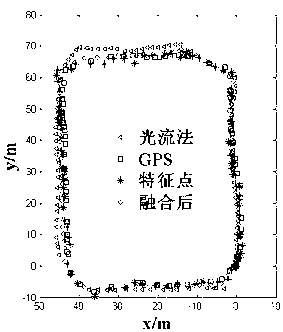

Method for designing monocular vision odometer with light stream method and feature point matching method integrated

Inactive · CN103325108A · Processing time advantage · Real-time positioning · Image analysis · Autonomous Navigation System · Road surface

The invention discloses a method for designing a monocular visual odometer that integrates an optical flow method with a feature point matching method. Accurate real-time positioning is important for autonomous navigation systems. Positioning based on SURF feature point matching is robust to illumination changes and highly accurate, but it is slow and cannot position in real time. Optical flow tracking runs in real time but is less accurate. The designed monocular visual odometer combines the two methods to obtain the advantages of both. Experimental results show that the integrated algorithm provides accurate real-time positioning output and remains robust under illumination changes and on road surfaces with little texture.
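The optical-flow side of such an odometer rests on the brightness-constancy equation `Ix*u + Iy*v + It = 0`. The sketch below solves it in the least-squares (Lucas-Kanade) sense for a single translation over the whole frame. This is an illustrative assumption: the abstract names neither the flow algorithm nor how it is fused with SURF matching, and practical trackers use small windows around feature points plus image pyramids.

```python
import numpy as np

def lucas_kanade_shift(prev: np.ndarray, curr: np.ndarray) -> tuple[float, float]:
    """Estimate one (dx, dy) translation between two grayscale frames.

    Solves [sum Ix^2, sum IxIy; sum IxIy, sum Iy^2] [u, v]^T = -[sum IxIt, sum IyIt]^T
    over the whole frame as one window; valid only for small motions.
    """
    prev = prev.astype(float)
    Iy, Ix = np.gradient(prev)           # derivatives along rows (y) and columns (x)
    It = curr.astype(float) - prev       # temporal derivative
    A = np.array([[np.sum(Ix * Ix), np.sum(Ix * Iy)],
                  [np.sum(Ix * Iy), np.sum(Iy * Iy)]])
    b = -np.array([np.sum(Ix * It), np.sum(Iy * It)])
    u, v = np.linalg.solve(A, b)
    return float(u), float(v)
```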

Owner:ZHEJIANG UNIV

Uncalibrated multi-viewpoint image correction method for parallel camera array

Inactive · CN102065313A · Freely adjust horizontal parallax · Increase the use range of multi-look correction · Image analysis · Stereoscopic systems · Parallax · Scale-invariant feature transform

The invention relates to an uncalibrated multi-viewpoint image correction method for a parallel camera array. First, a set of feature points is extracted from each viewpoint image, and matched point pairs are determined for every two adjacent images. The RANSAC (Random Sample Consensus) algorithm is then introduced to improve the matching precision of the SIFT (Scale-Invariant Feature Transform) feature points, and a block-wise feature extraction method is provided so that the refined positions of the feature points serve as input to the subsequent correction steps, in which the correction matrix of each uncalibrated stereoscopic image pair is calculated. Next, the multiple non-coplanar correction planes are projected onto one common correction plane, and the horizontal distance between adjacent viewpoints on that plane is calculated. Finally, the viewpoints are shifted horizontally until the parallaxes are uniform, which completes the correction. The composite stereoscopic image obtained after this uncalibrated multi-viewpoint correction has a strong sense of width and breadth and a markedly better stereoscopic effect than before correction, and the method can be applied to front-end signal processing in many 3DTV devices.
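RANSAC's role in cleaning up SIFT matches can be sketched as follows. This sketch assumes NumPy and fits a 2D affine model between matched points purely for illustration; the abstract does not state which geometric model the patent fits (for stereo pairs a fundamental matrix or homography is more common), and the threshold and iteration count are arbitrary.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares 2 x 3 affine matrix A with dst ~ A @ [x, y, 1]."""
    X = np.hstack([src, np.ones((len(src), 1))])     # N x 3
    sol, *_ = np.linalg.lstsq(X, dst, rcond=None)     # 3 x 2
    return sol.T

def ransac_affine(src, dst, thresh=2.0, iters=500, seed=0):
    """Return (affine model refit on the consensus set, boolean inlier mask)."""
    rng = np.random.default_rng(seed)
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    X = np.hstack([src, np.ones((len(src), 1))])
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), 3, replace=False)  # minimal sample for an affine model
        A = fit_affine(src[idx], dst[idx])
        inliers = np.linalg.norm(X @ A.T - dst, axis=1) < thresh
        if inliers.sum() > best.sum():               # keep the largest consensus set
            best = inliers
    return fit_affine(src[best], dst[best]), best
```

Matches outside the returned mask are treated as mismatches and dropped before the correction matrix is computed.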

Owner:SHANGHAI UNIV

Real-time three-dimensional scene reconstruction method for UAV based on EG-SLAM

Active · CN108648270A · Requirements for Reducing Repetition Rates · Improve realism · Image enhancement · Image analysis · Point cloud · Texture rendering

The present invention provides a real-time three-dimensional scene reconstruction method for a UAV (unmanned aerial vehicle) based on EG-SLAM. Visual information acquired by a camera on the UAV is used to reconstruct a large-scale three-dimensional scene with texture details. Compared with several existing methods, the collected images are processed directly on the CPU, and localization and three-dimensional map reconstruction are performed quickly and in real time. Instead of solving the UAV pose with the conventional PnP (Perspective-n-Point) method, the method uses EG-SLAM, which solves the pose directly from the feature point matches between two frames; this lowers the required repetition rate (overlap) of the collected images. In addition, the large amount of environmental information obtained gives the UAV a more detailed perception of the structure of its environment. Texture rendering is applied to the large-scale three-dimensional point cloud map generated in real time, so that a large-scale three-dimensional map is reconstructed and a more intuitive and realistic three-dimensional scene is obtained.
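Solving the pose directly from two-frame feature matches commonly goes through the essential matrix `E = [t]x R`. The sketch below shows only the decomposition step, assuming NumPy and an essential matrix already estimated from normalized matches; it is the textbook SVD decomposition, not necessarily the EG-SLAM formulation, which the abstract does not detail.

```python
import numpy as np

def decompose_essential(E):
    """Return the four (R, t) candidates encoded by an essential matrix E = [t]x R.

    t is recovered only up to scale and sign.
    """
    U, _, Vt = np.linalg.svd(E)
    # Flip signs so both factors are proper rotations (E is only defined up to sign).
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    R1, R2 = U @ W @ Vt, U @ W.T @ Vt
    t = U[:, 2]                        # left null vector of E, i.e. the baseline direction
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]
```

Choosing among the four candidates requires triangulating a few matches and keeping the candidate that places them in front of both cameras; with a single camera the translation is known only up to scale.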

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Method for identifying forgery seal based on feature line randomly generated by matching feature points

Inactive · CN101894260A · Increase the difficulty · Improve recognition accuracy · Character and pattern recognition · Pattern recognition · Image extraction

The invention provides a method for identifying a forged seal using feature lines randomly generated from matched feature points. The method comprises an off-line stage and an online identification stage, with the following steps: acquiring, with an imaging device, an image of a document bearing the seal stamp to be verified; preprocessing the stamp image and extracting the effective stamp image of the seal to be verified; extracting the feature points of the stamp image and constructing a database containing the location information and descriptor information of each feature point; matching the feature points of the seal to be verified with those of a reference seal; randomly generating image feature lines for the seal to be verified and the reference seal; and judging whether the seal to be verified is genuine or forged. Impressions stamped by the same seal under different conditions share two properties: a consistent number and distribution of feature points, and consistent image information. The method is simple, efficient, and accurate, and helps to prevent seal forgery.
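The matching step between the seal under test and the reference seal can be sketched with nearest-neighbour descriptor matching and Lowe's ratio test. This is an assumption: the abstract names neither the descriptor nor the matching criterion, and the `ratio=0.8` default is illustrative.

```python
import numpy as np

def ratio_test_match(desc_a, desc_b, ratio=0.8):
    """Match rows of desc_a (N x D) to rows of desc_b (M x D, M >= 2).

    Keeps (i, j) only when the nearest neighbour j is clearly closer than the
    second-nearest one, which discards ambiguous matches.
    """
    desc_a, desc_b = np.asarray(desc_a, float), np.asarray(desc_b, float)
    d = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)  # N x M distances
    order = np.argsort(d, axis=1)
    matches = []
    for i in range(len(desc_a)):
        j1, j2 = order[i, 0], order[i, 1]
        if d[i, j1] < ratio * d[i, j2]:
            matches.append((i, int(j1)))
    return matches
```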

Owner:BEIJING UNIV OF CHEM TECH

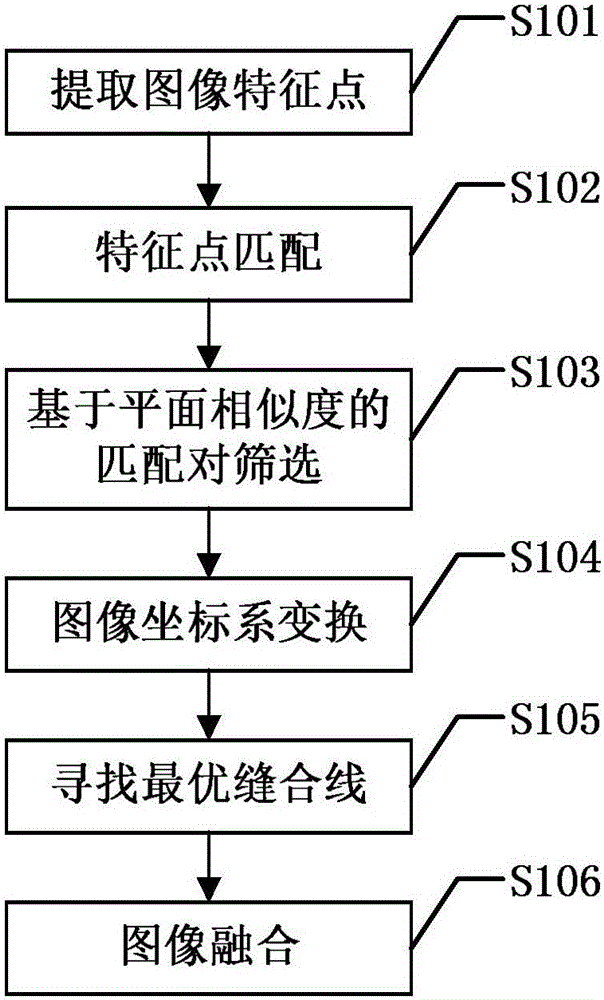

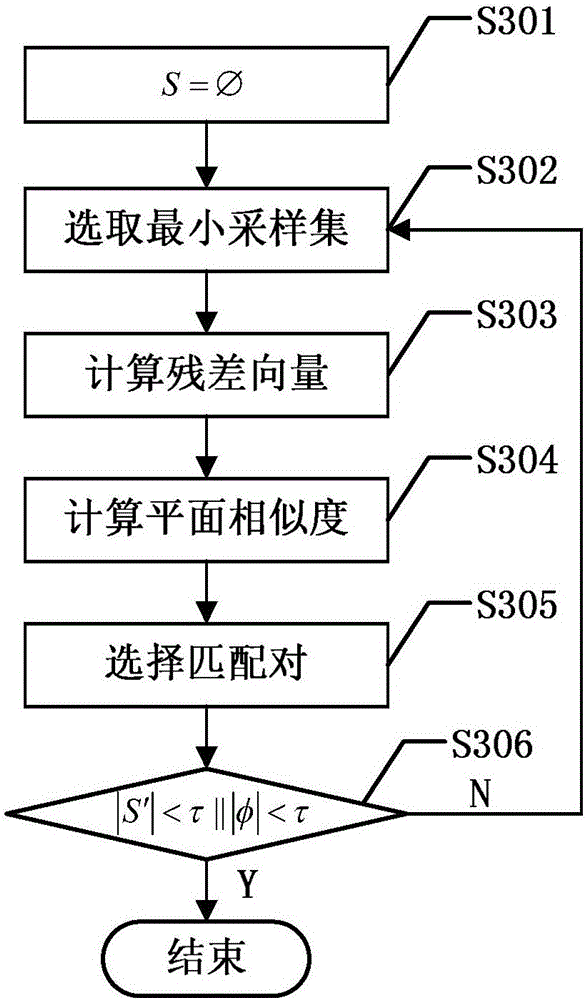

Image stitching method based on characteristic point plane similarity

Inactive · CN105957007A · Transform accurately · Improve accuracy · Geometric image transformation · Details involving image mosaicing · Screening method · Euclidean vector

The invention discloses an image stitching method based on the plane similarity of feature points. First, feature points are extracted from each of the two images to be stitched and matched to obtain feature point matching pairs. The matching pairs are then screened by plane similarity: a minimal sample set is selected at random and its homography matrix is calculated; the residual between each matching pair and the corresponding homography matrix is calculated to form a residual vector; and the plane similarity between every two matching pairs is calculated from their residual vectors and used to screen the matching pairs. A transformation matrix is then calculated from the screened matching set and used to transform the two images into the same coordinate system; an optimal seam line is searched for; and the images are blended along the optimal seam line to obtain the stitching result. Because registration and stitching use matching pairs screened by plane similarity, the method improves the accuracy and robustness of image stitching.
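The residual-vector screening can be sketched as below, assuming NumPy. The number of hypotheses, the inlier threshold, the Jaccard-style similarity between matches, and the rule "keep matches whose mean similarity to the others is high" are all hypothetical choices; the abstract does not define the similarity measure or the screening rule.

```python
import numpy as np

def homography_dlt(src, dst):
    """Direct linear transform: 3x3 H (up to scale) with dst ~ H @ [x, y, 1]."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    return np.linalg.svd(np.asarray(rows, float))[2][-1].reshape(3, 3)

def transfer_errors(H, src, dst):
    """Distance between H-mapped src points and their dst matches."""
    p = np.hstack([src, np.ones((len(src), 1))]) @ H.T
    return np.linalg.norm(p[:, :2] / p[:, 2:3] - dst, axis=1)

def residual_vectors(src, dst, n_hyp=200, seed=0):
    """Row i = residuals of match i under n_hyp homographies fit to random 4-point samples."""
    rng = np.random.default_rng(seed)
    R = np.empty((len(src), n_hyp))
    for k in range(n_hyp):
        idx = rng.choice(len(src), 4, replace=False)   # minimal sample for a homography
        R[:, k] = transfer_errors(homography_dlt(src[idx], dst[idx]), src, dst)
    return R

def screen_by_plane_similarity(R, thresh=3.0, min_sim=0.3):
    """Keep matches whose residual patterns resemble those of many other matches."""
    B = (R < thresh).astype(float)                     # which hypotheses each match fits
    inter = B @ B.T
    counts = B.sum(axis=1)
    union = counts[:, None] + counts[None, :] - inter
    S = inter / np.maximum(union, 1.0)                 # Jaccard similarity between matches
    mean_sim = (S.sum(axis=1) - np.diag(S)) / (len(S) - 1)
    return mean_sim > min_sim
```

Matches lying on the same plane are fitted by the same hypotheses, so their residual vectors agree; mismatches share few hypotheses with anything else and fall below the cutoff.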

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

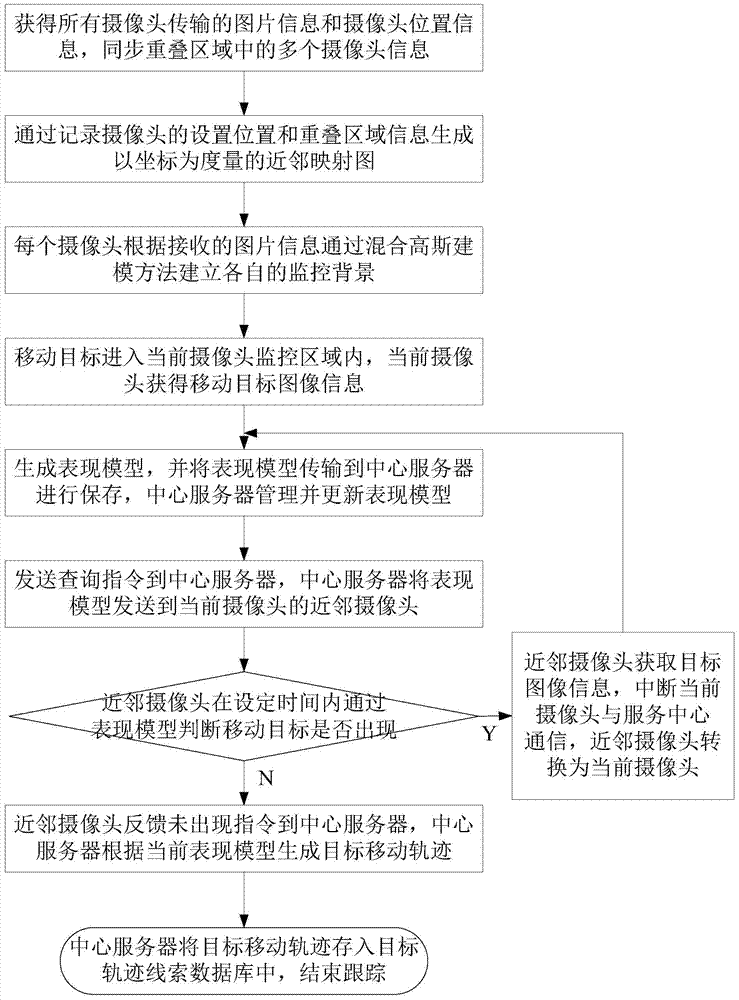

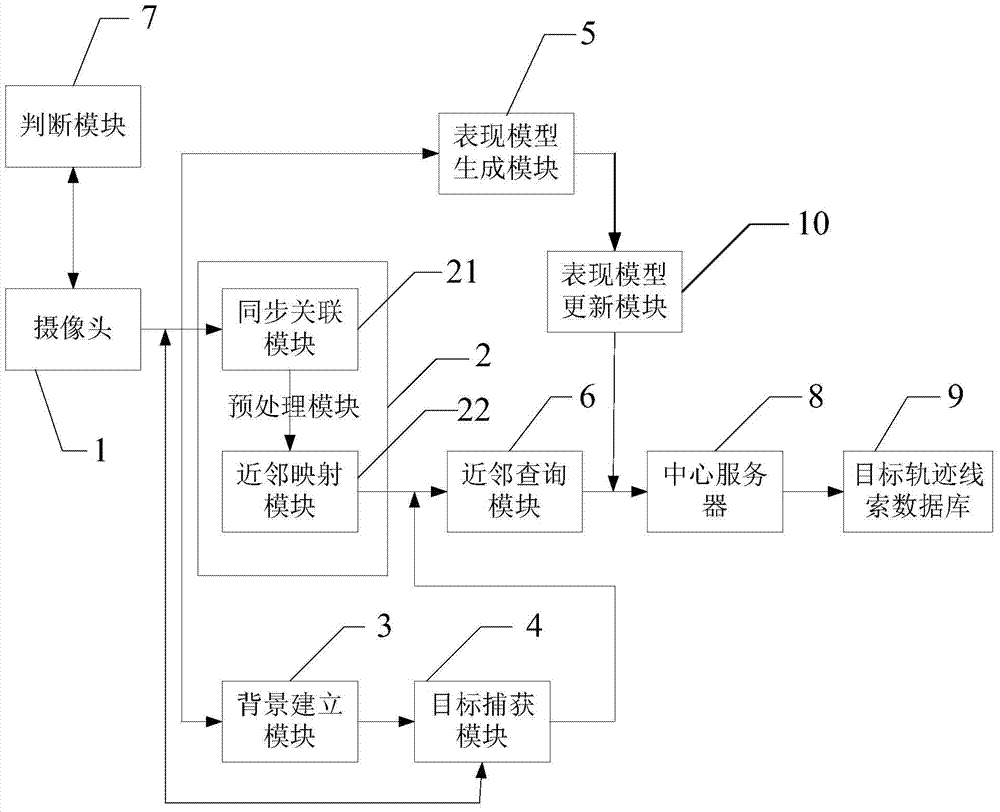

Online target tracking method and system based on multiple cameras

Active · CN104123732A · Achieve synchronization effect · Efficient synchronization · Television system details · Image analysis · Engineering · Feature point matching

The invention relates to an online target tracking method and system based on multiple cameras, which solves the coordination and timeliness problems among multiple cameras by combining a preset calibration-and-synchronization scheme with a self-learning tracking method. The calibration-and-synchronization scheme calculates a target projection matrix from matched feature points and uses it to synchronize the information shared by multiple cameras in their overlapping region. The self-learning tracking method records an appearance model of the monitored target and synchronizes it through a central server to neighboring cameras for detection and tracking, so that target information is passed on from camera to camera.
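Handing a target over between cameras with overlapping views can be sketched with one plane-induced homography per neighbouring camera. This sketch assumes NumPy, that each homography `H_ab` (camera A to neighbour B) was already estimated from matched feature points, and that the target's ground contact point lies on the shared plane; the function names and the camera-id dictionaries are hypothetical.

```python
import numpy as np

def transfer_point(H_ab: np.ndarray, pt: tuple[float, float]) -> tuple[float, float]:
    """Map pixel pt from camera A into camera B through homography H_ab."""
    x, y, w = H_ab @ np.array([pt[0], pt[1], 1.0])
    return float(x / w), float(y / w)

def cameras_seeing(pt_a, homographies: dict, sizes: dict) -> list:
    """Return ids of neighbour cameras whose image contains the transferred point."""
    visible = []
    for cam_id, H in homographies.items():
        x, y = transfer_point(H, pt_a)
        width, height = sizes[cam_id]
        if 0 <= x < width and 0 <= y < height:
            visible.append(cam_id)
    return visible
```

A central server could use such a check to decide which neighbouring cameras should receive the target's appearance model next.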

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI

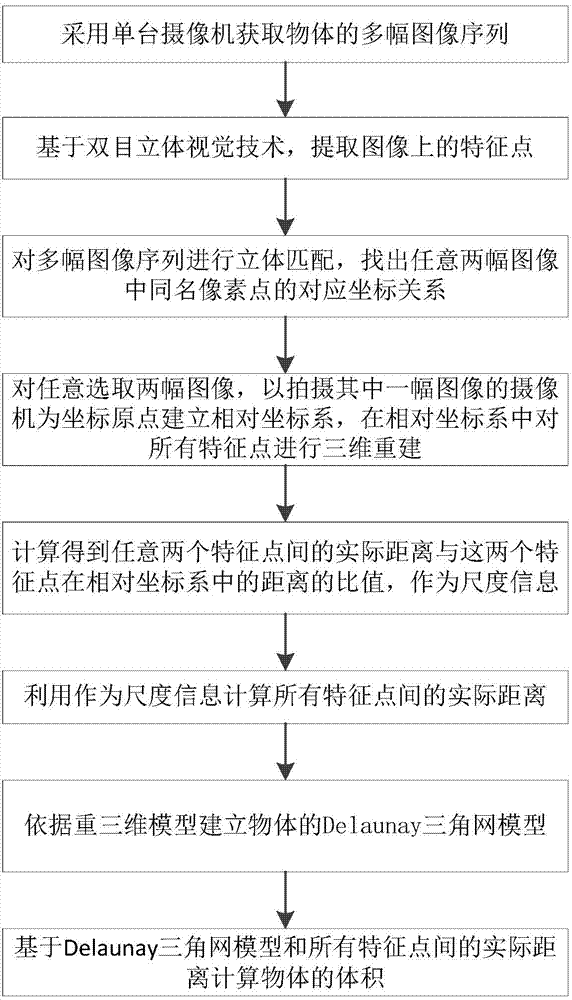

Rapid volume measurement method based on spatial invariant feature

Active · CN104778720A · Quick measurement · To achieve the purpose of photogrammetry · Image analysis · Point cloud · Volume computation

The invention provides a rapid volume measurement method based on spatially invariant features. The method comprises the following steps: extracting and matching feature points in the acquired images and performing three-dimensional reconstruction; adding scale information to the three-dimensional point cloud, namely the ratio between the actual real-world distances among feature points and their distances in the relative coordinate system; building a Delaunay triangular mesh from the three-dimensional coordinates of the feature points, adding boundary-edge detection elements, and fitting an optimal datum plane; and, following the idea of discrete integration, projecting the triangular mesh onto the datum plane to determine the volume of the irregular object enclosed by the mesh. The actual distances among the feature points of an object can be measured with just a camera and a ruler, which avoids tedious field measurement. In addition, because a data structure for detecting boundary edges is added when the Delaunay mesh is built, the surrounding datum plane formed by the boundary curve can be obtained effectively, and the method can be further applied to volume calculation.
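The discrete-integration step can be sketched as a sum of prisms under the mesh facets. This sketch assumes NumPy, vertices already expressed in a frame whose z axis is the normal of the fitted datum plane (so the plane is z = 0), and a surface that is a height field over that plane; the function name is hypothetical and the patent's exact procedure is not given in the abstract.

```python
import numpy as np

def volume_above_plane(vertices, triangles) -> float:
    """Volume between a triangulated surface and the plane z = 0.

    Each facet is projected onto the plane and contributes
    (projected area) x (mean vertex height), which is exact for a planar facet.
    """
    v = np.asarray(vertices, float)[np.asarray(triangles)]   # M x 3 x 3
    a, b, c = v[:, 0, :2], v[:, 1, :2], v[:, 2, :2]
    ab, ac = b - a, c - a
    area = 0.5 * np.abs(ab[:, 0] * ac[:, 1] - ab[:, 1] * ac[:, 0])
    mean_h = v[:, :, 2].mean(axis=1)
    return float(np.sum(area * mean_h))
```

If the reconstruction is known only up to a scale factor `s` (real length per reconstructed unit), the measured volume must be multiplied by `s**3`.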

Owner:SOUTHEAST UNIV

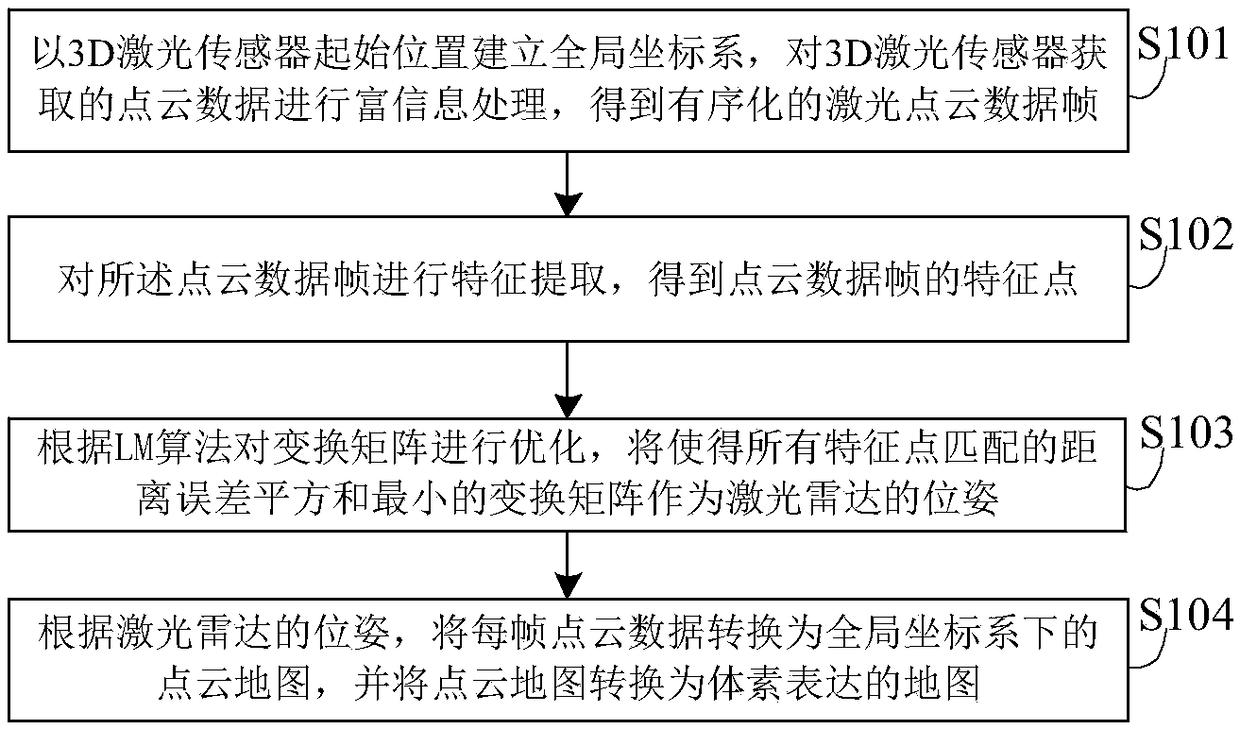

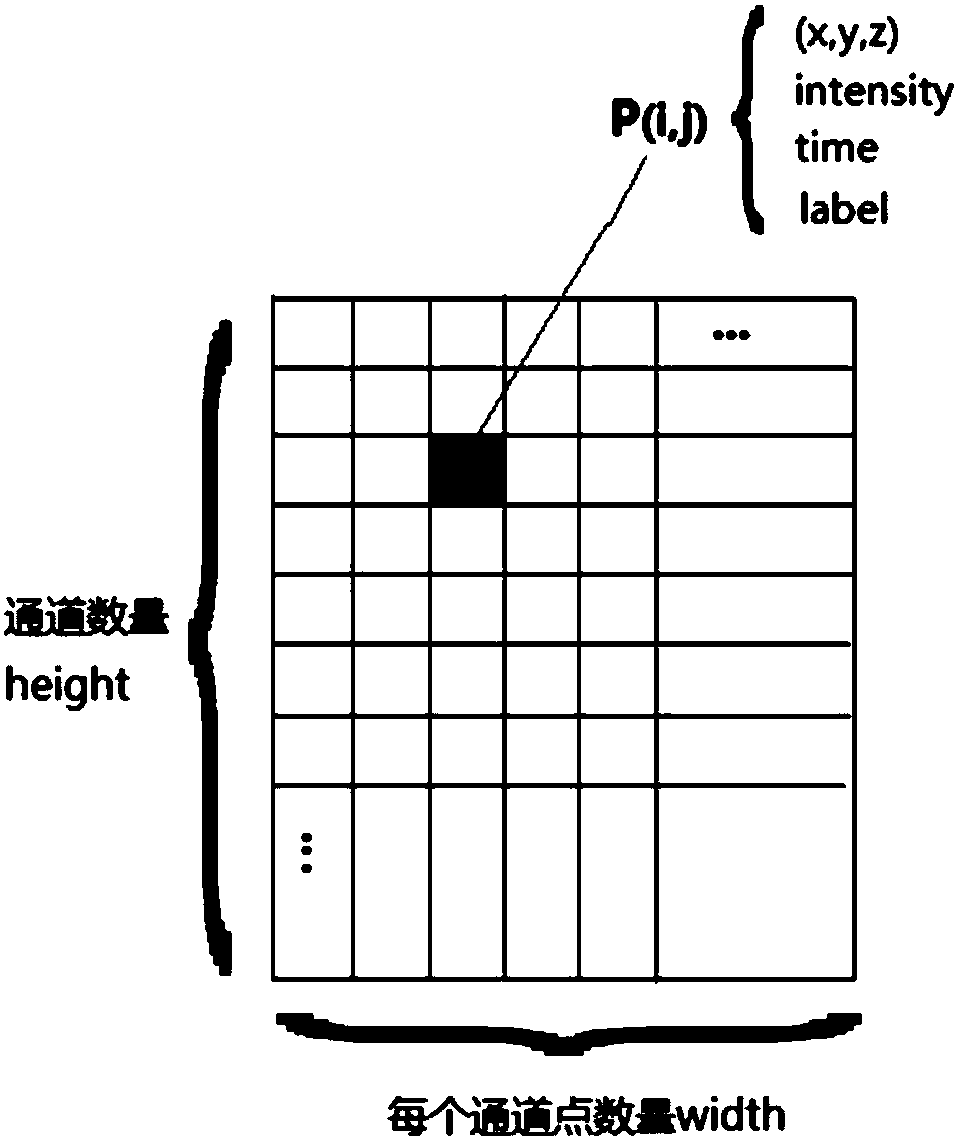

Method for creating 3D map based on 3D laser

Active · CN108320329A · High precision · Avoid the problem of inconvenient filtering and denoising · Image enhancement · Details involving processing steps · Information processing · Voxel

The invention discloses a method for creating a 3D map based on a 3D laser scanner, and belongs to the technical field of data processing. The method comprises the following steps: applying rich-information processing to the point cloud data obtained by a 3D laser sensor to obtain ordered laser point cloud data frames; extracting features from each point cloud frame to obtain its feature points; optimizing a transformation matrix with the Levenberg-Marquardt (LM) algorithm, and taking the transformation that minimizes the sum of squared distance errors over all matched feature points as the pose of the laser radar; and transforming each frame of point cloud data into a point cloud map in the global coordinate system according to the radar pose, then converting the point cloud map into a map expressed by voxels. The rich-information processing of the raw laser data provides the data basis for creating the 3D map. By representing the map with voxels, the method avoids the difficulty of filtering and denoising a point cloud map, and improves the clarity of the 3D map.
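The pose-optimization step can be sketched with Levenberg-Marquardt on a simplified problem. This sketch assumes NumPy and a 2D rigid transform `(theta, tx, ty)` with point-to-point residuals over already-matched pairs; the patent works with 3D LiDAR feature points and its exact parameterization and residuals are not given in the abstract, so treat this only as an illustration of the LM update.

```python
import numpy as np

def lm_rigid_2d(src, dst, iters=50, lam=1e-3):
    """Fit (theta, tx, ty) minimizing sum ||R(theta) @ src_i + t - dst_i||^2 with LM."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    p = np.zeros(3)                                    # theta, tx, ty

    def residuals(p):
        c, s = np.cos(p[0]), np.sin(p[0])
        R = np.array([[c, -s], [s, c]])
        return (src @ R.T + p[1:] - dst).ravel()       # [x0, y0, x1, y1, ...]

    r = residuals(p)
    for _ in range(iters):
        c, s = np.cos(p[0]), np.sin(p[0])
        # Jacobian of the interleaved (x, y) residuals w.r.t. (theta, tx, ty).
        J = np.zeros((2 * len(src), 3))
        J[0::2, 0] = -s * src[:, 0] - c * src[:, 1]
        J[1::2, 0] = c * src[:, 0] - s * src[:, 1]
        J[0::2, 1] = 1.0
        J[1::2, 2] = 1.0
        A, g = J.T @ J, J.T @ r
        step = np.linalg.solve(A + lam * np.diag(np.diag(A)), -g)
        r_new = residuals(p + step)
        if r_new @ r_new < r @ r:      # accept: behave more like Gauss-Newton
            p, r, lam = p + step, r_new, lam * 0.5
        else:                          # reject: behave more like gradient descent
            lam *= 10.0
    return p
```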

Owner:维坤智能科技(上海)有限公司 +1


© 2025 PatSnap. All rights reserved.