Real-time three-dimensional scene reconstruction method for UAV based on EG-SLAM

A real-time 3D scene reconstruction technology, applied in the fields of drone autonomous flight, computer vision, and map construction, that addresses two problems: 3D point clouds that contain only geometric structure and no texture information, and wasted transmission bandwidth and computing resources.



Embodiment Construction

[0086] Embodiments of the present invention are described in detail below. The embodiments are exemplary and intended to explain the present invention; they should not be construed as limiting it.

[0087] The main on-board devices of the drone in the present invention are an embedded microcomputer and an airborne industrial camera. During flight, the camera collects image information and transmits it to the embedded computer. The embedded computer processes the collected images and performs real-time localization and point cloud map construction. It then combines these results with the GPS data provided by the flight controller to reconstruct the 3D map scene.
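The excerpt does not say how the GPS data from the flight controller is combined with the SLAM map. A common first step is to express each GPS fix in a local metric East-North-Up (ENU) frame centred on a reference point, such as the take-off position, so that it can be compared with SLAM poses in metres. The sketch below shows that standard WGS-84 to ECEF to ENU conversion. The function names and the choice of reference point are illustrative assumptions, not details from the patent.

```python
import math

# WGS-84 ellipsoid constants
WGS84_A = 6378137.0                   # semi-major axis [m]
WGS84_F = 1.0 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, alt_m):
    """Convert WGS-84 latitude/longitude [deg] and altitude [m] to
    Earth-centred, Earth-fixed (ECEF) coordinates [m]."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    sin_lat = math.sin(lat)
    # Prime-vertical radius of curvature at this latitude.
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)
    x = (n + alt_m) * math.cos(lat) * math.cos(lon)
    y = (n + alt_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + alt_m) * sin_lat
    return x, y, z


def geodetic_to_enu(lat_deg, lon_deg, alt_m, ref_lat_deg, ref_lon_deg, ref_alt_m):
    """Express a GPS fix as East-North-Up offsets [m] from a reference fix
    (hypothetically the take-off point), giving a local metric map frame."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, alt_m)
    x0, y0, z0 = geodetic_to_ecef(ref_lat_deg, ref_lon_deg, ref_alt_m)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat0 = math.radians(ref_lat_deg)
    lon0 = math.radians(ref_lon_deg)
    # Rotate the ECEF difference vector into the local tangent plane.
    east = -math.sin(lon0) * dx + math.cos(lon0) * dy
    north = (-math.sin(lat0) * math.cos(lon0) * dx
             - math.sin(lat0) * math.sin(lon0) * dy
             + math.cos(lat0) * dz)
    up = (math.cos(lat0) * math.cos(lon0) * dx
          + math.cos(lat0) * math.sin(lon0) * dy
          + math.sin(lat0) * dz)
    return east, north, up
```

For example, a fix 0.001° of latitude north of the reference at the same altitude comes out roughly 111 m north, with essentially zero east and up components. Once GPS positions and SLAM positions share a metric frame, they can be aligned. The alignment method used in the patent is not shown in this excerpt.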

[0088] The following are the specific implementation steps:

[0089] Step 1: Acquire and process images:

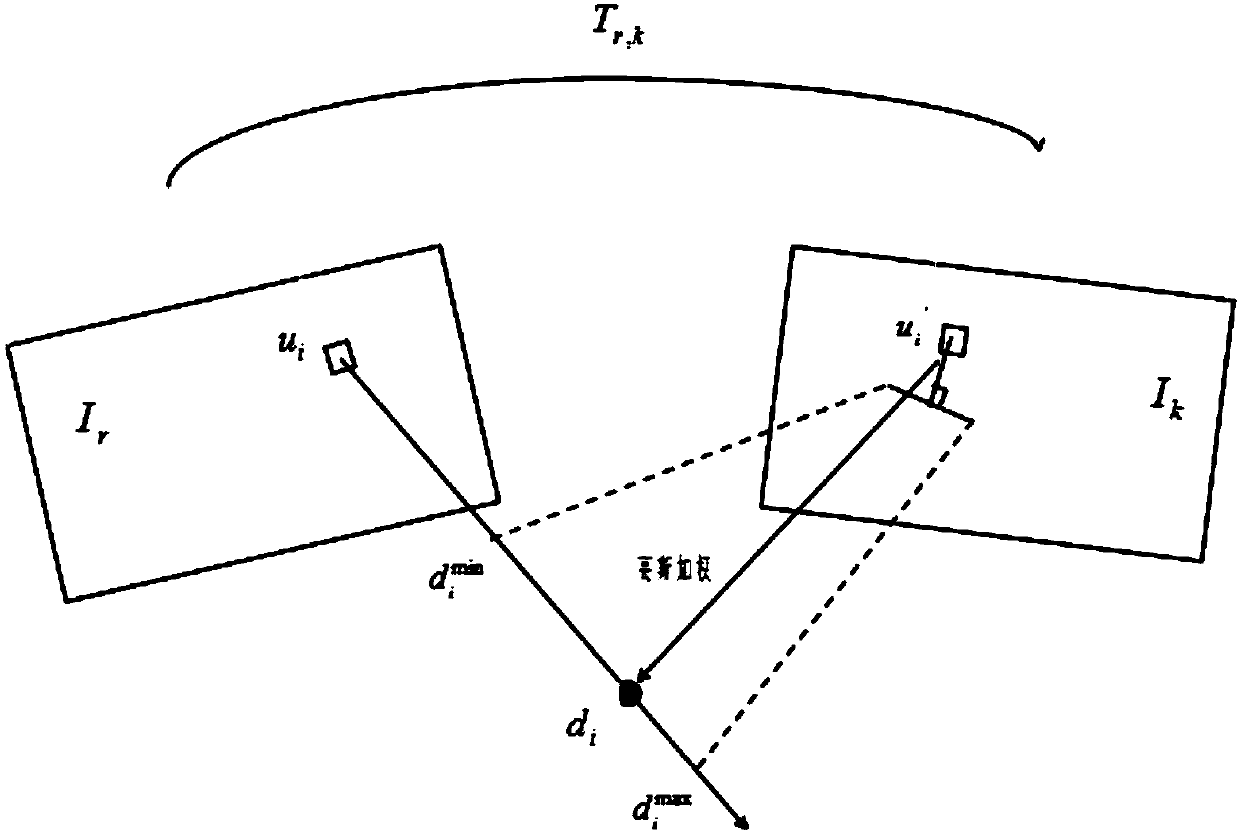

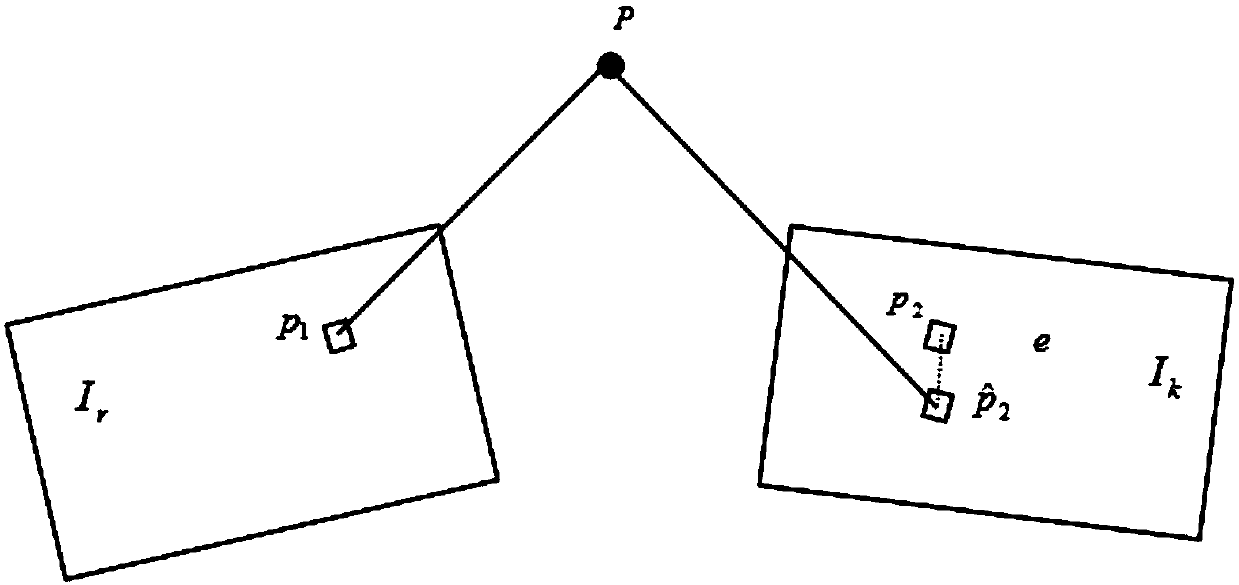

[0090] The drone's on-board camera collects a series of images and transmits them to the computing unit in real time, ensuring fast image transmission. Use the camera calibration data obtain...
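The excerpt breaks off right after mentioning camera calibration data, so the patent's exact use of it is not shown. One common use of calibration data is removing lens distortion before features are tracked. The sketch below assumes a pinhole camera with intrinsics fx, fy, cx, cy and OpenCV-style radial-tangential distortion coefficients k1, k2, p1, p2 (k3 omitted). The function names and the fixed iteration count are hypothetical choices for illustration.

```python
def distort_normalized(x, y, k1, k2, p1, p2):
    """Apply the radial-tangential (Brown-Conrady) lens model to an ideal
    normalized image point (x, y) = (X/Z, Y/Z)."""
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd


def undistort_pixel(u, v, fx, fy, cx, cy, k1, k2, p1, p2, iterations=20):
    """Map a distorted pixel (u, v) to where an ideal pinhole camera would
    see it, inverting distort_normalized by fixed-point iteration."""
    # Pixel -> distorted normalized coordinates using the intrinsics.
    xd = (u - cx) / fx
    yd = (v - cy) / fy
    x, y = xd, yd  # initial guess: assume no distortion
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 * r2
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        # Solve xd = x * radial + dx for x, holding radial and dx fixed.
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    # Ideal normalized coordinates -> pixel.
    return fx * x + cx, fy * y + cy
```

For mild distortion, the fixed-point iteration converges quickly. A distorted pixel produced with `distort_normalized` maps back to its ideal location to well below a pixel. Production pipelines usually precompute an undistortion map for the whole image instead of correcting each pixel this way.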

