64 results about "Spatial calibration" patented technology

The process of spatial calibration involves calibrating a single image against known values, then applying that calibration to your uncalibrated image. This assumes, of course, that both images are at the same magnification.
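As a rough illustration of the idea above, the sketch below derives a pixels-per-unit scale from two points a known distance apart in a reference image, then applies that scale to a measurement from a second image taken at the same magnification. The function names, the sample coordinates, and the millimetre unit are all assumptions made for this example.

```python
import math


def pixels_per_unit(p1, p2, known_length):
    """Scale from a reference image: pixel distance between two points
    divided by the known physical distance between them."""
    pixel_dist = math.dist(p1, p2)
    if known_length <= 0 or pixel_dist == 0:
        raise ValueError("need a positive known length and two distinct points")
    return pixel_dist / known_length


def to_physical(pixel_length, scale):
    """Convert a pixel measurement to physical units using a stored scale.
    Only valid if the image shares the reference image's magnification."""
    return pixel_length / scale


# Reference image: a 2.0 mm feature spans 400 px (hypothetical values).
scale = pixels_per_unit((100, 200), (500, 200), known_length=2.0)

# Uncalibrated image at the same magnification: a 150 px feature.
length_mm = to_physical(150.0, scale)
```

If the magnification differs between the two images, the stored scale no longer applies and the reference measurement has to be repeated.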

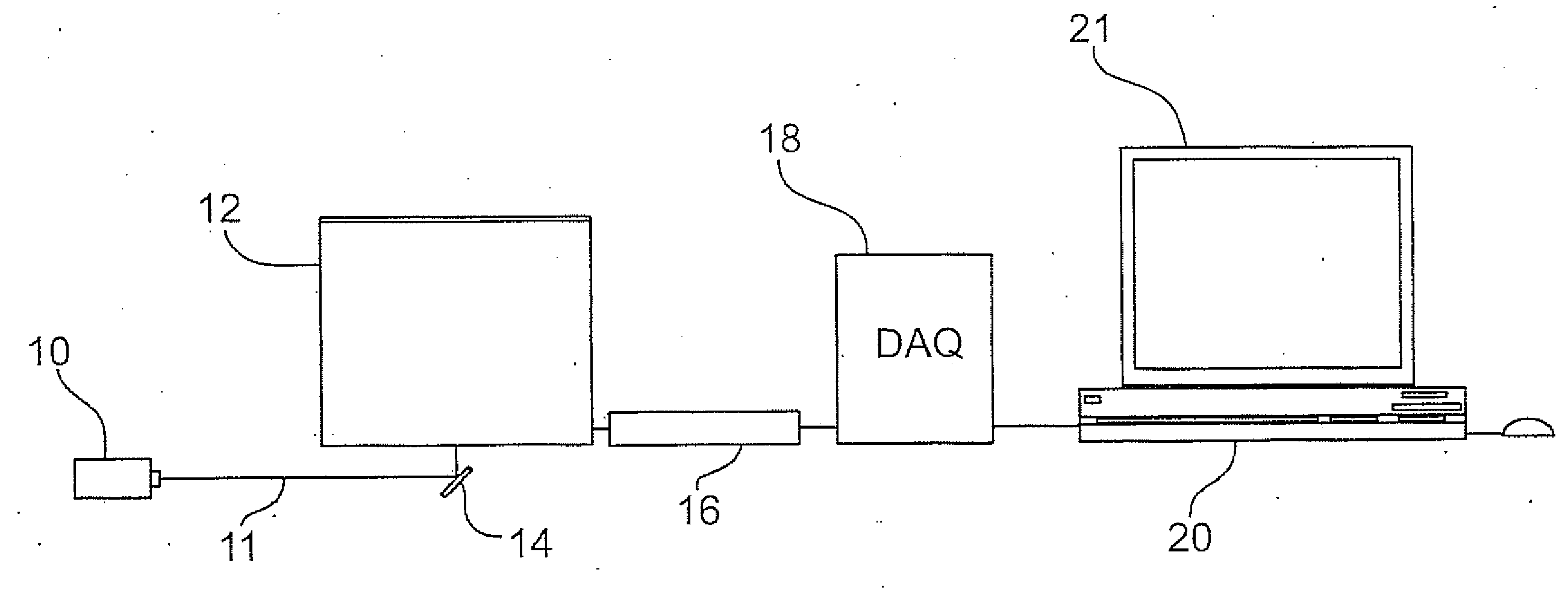

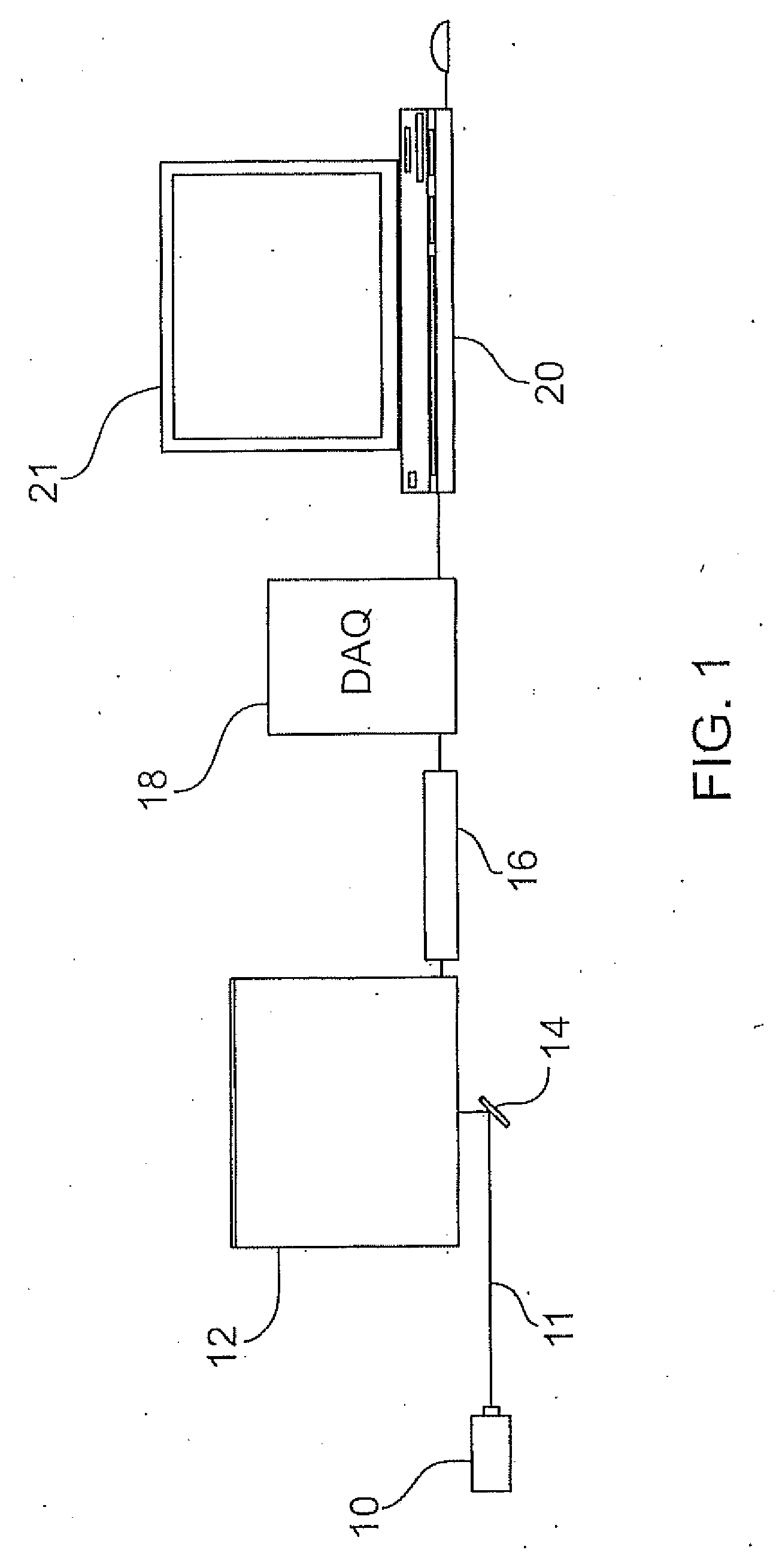

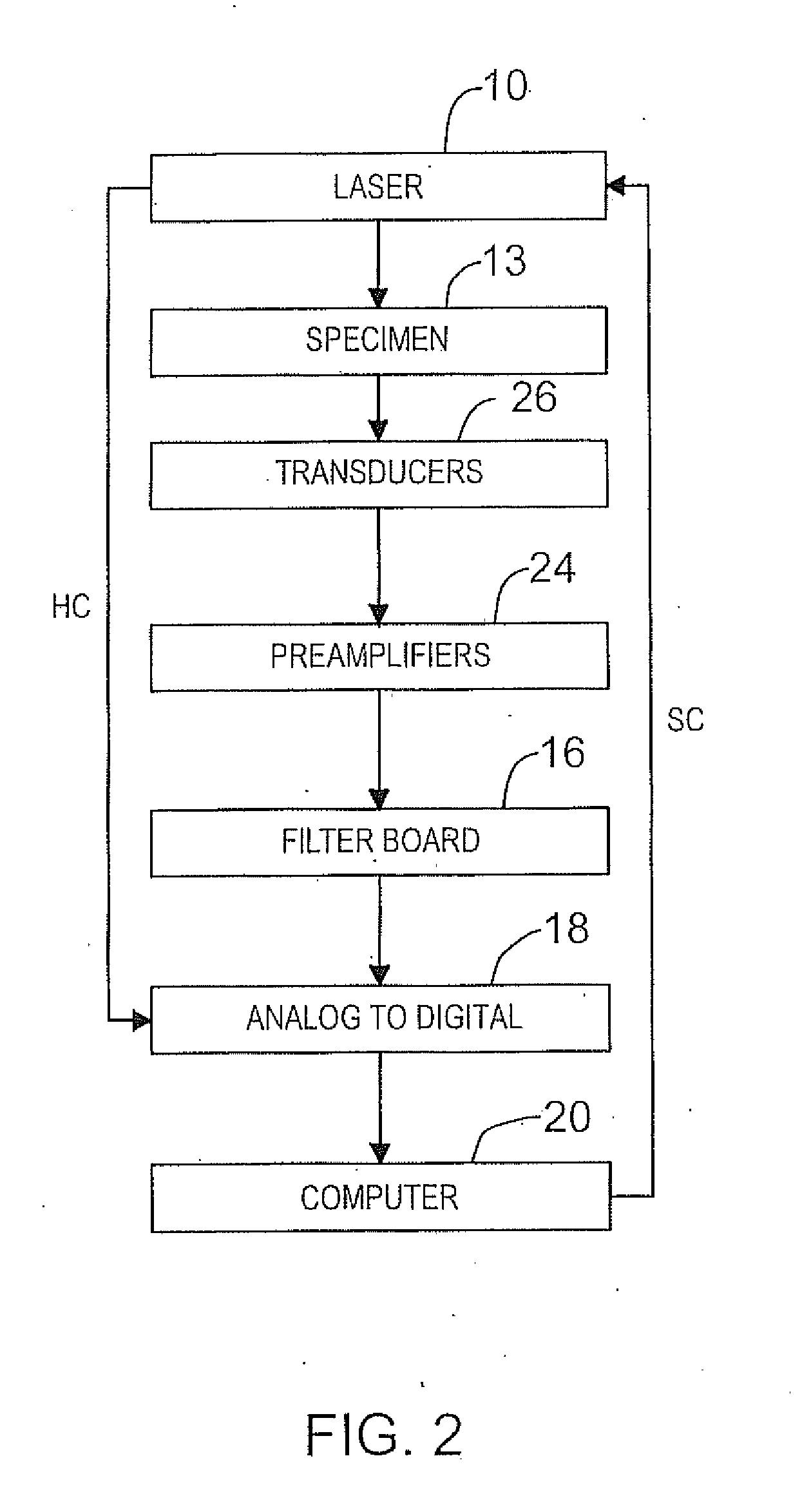

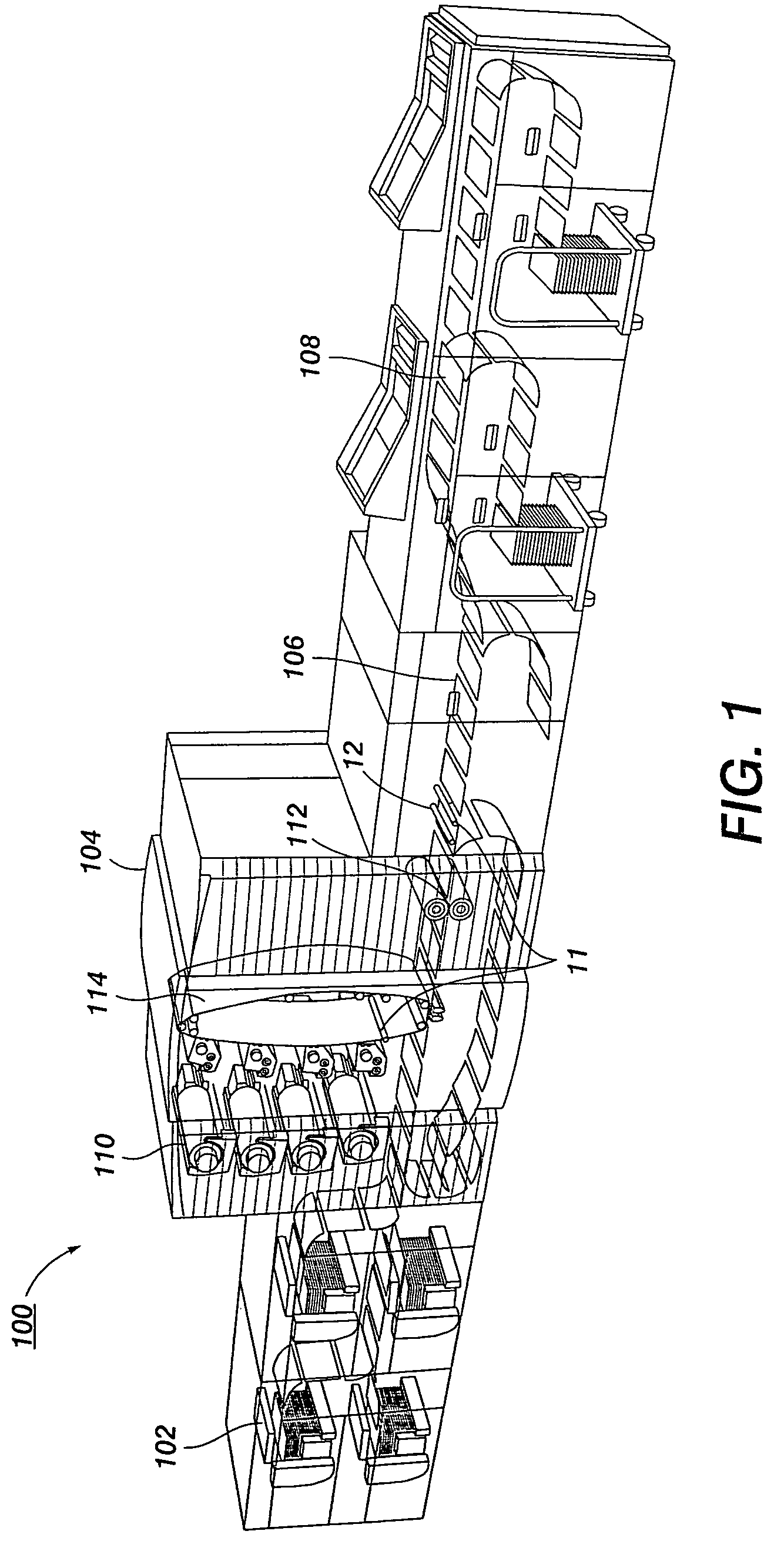

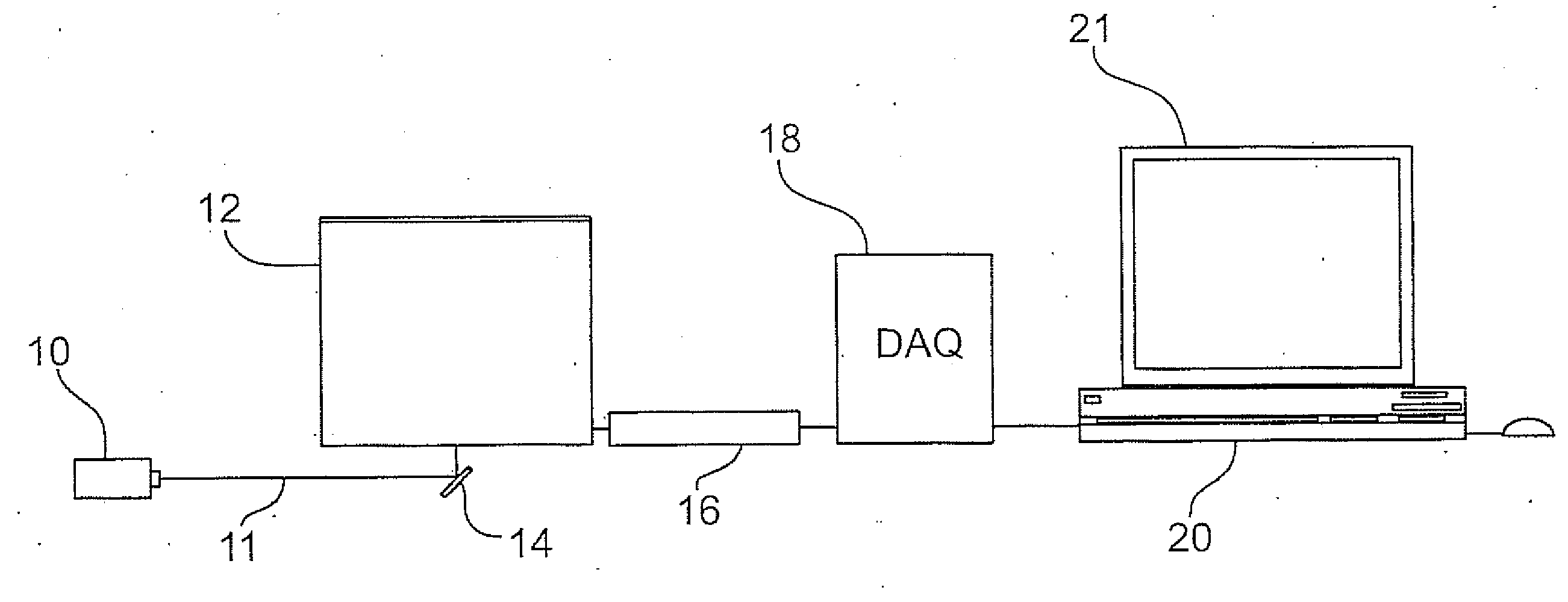

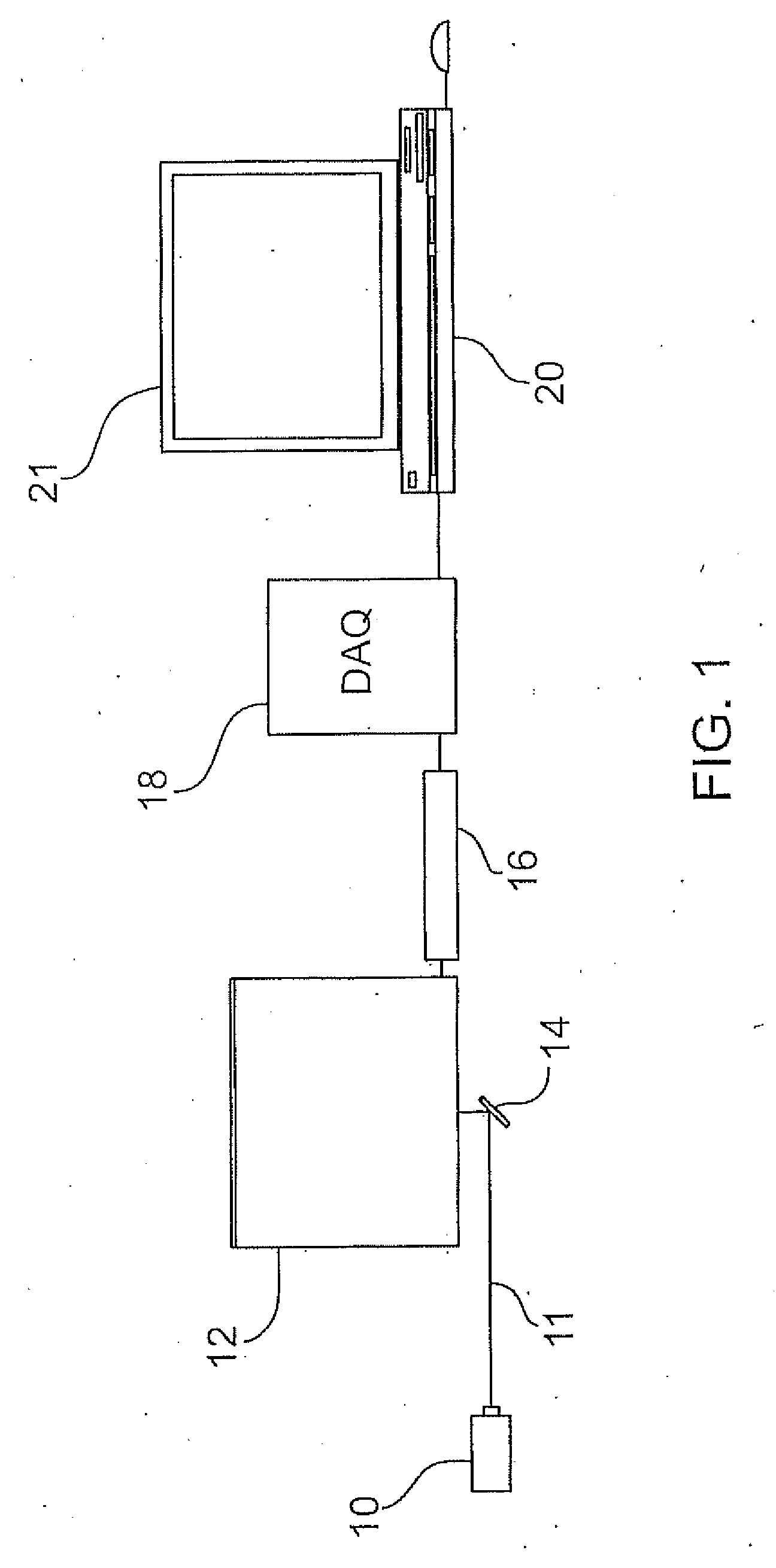

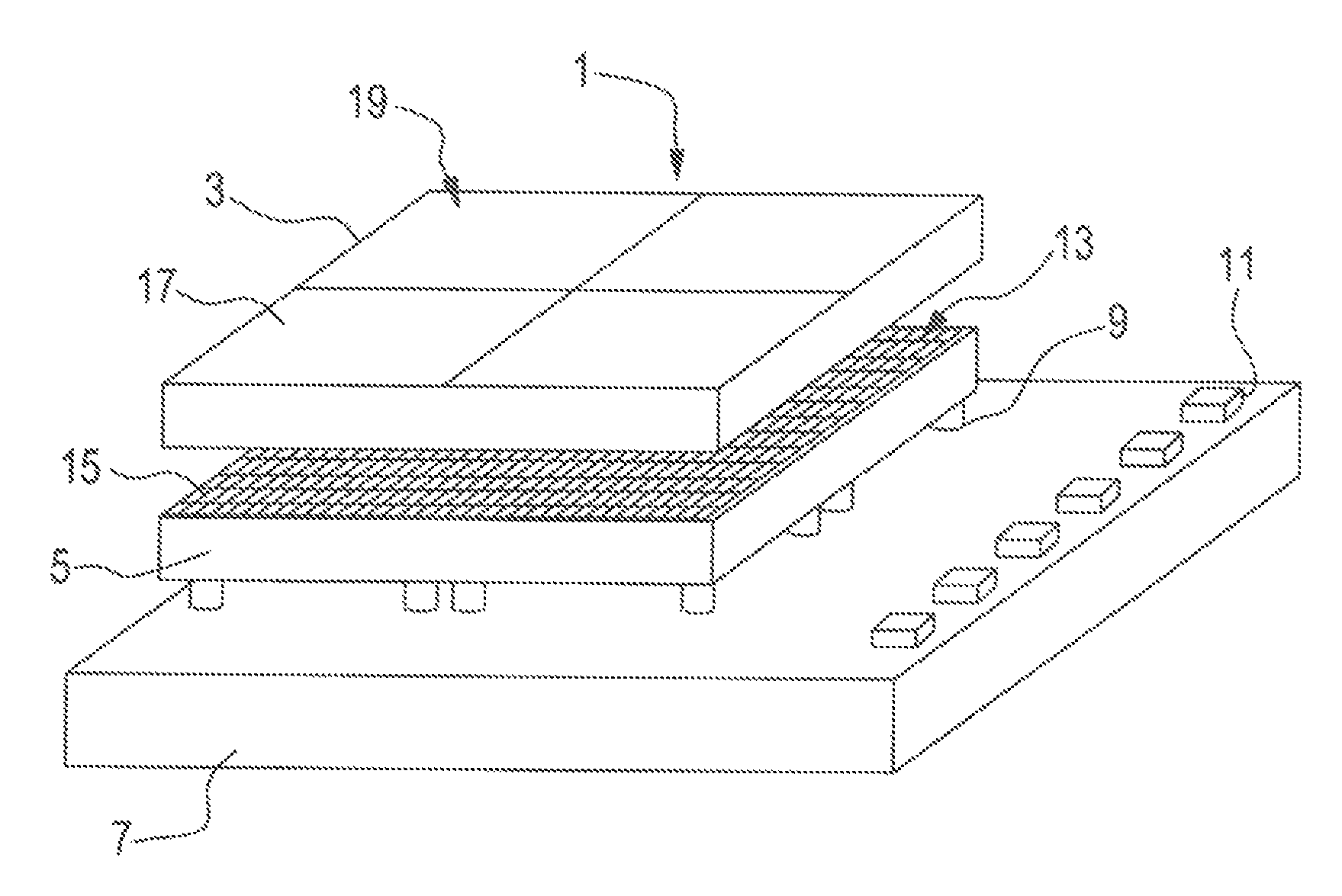

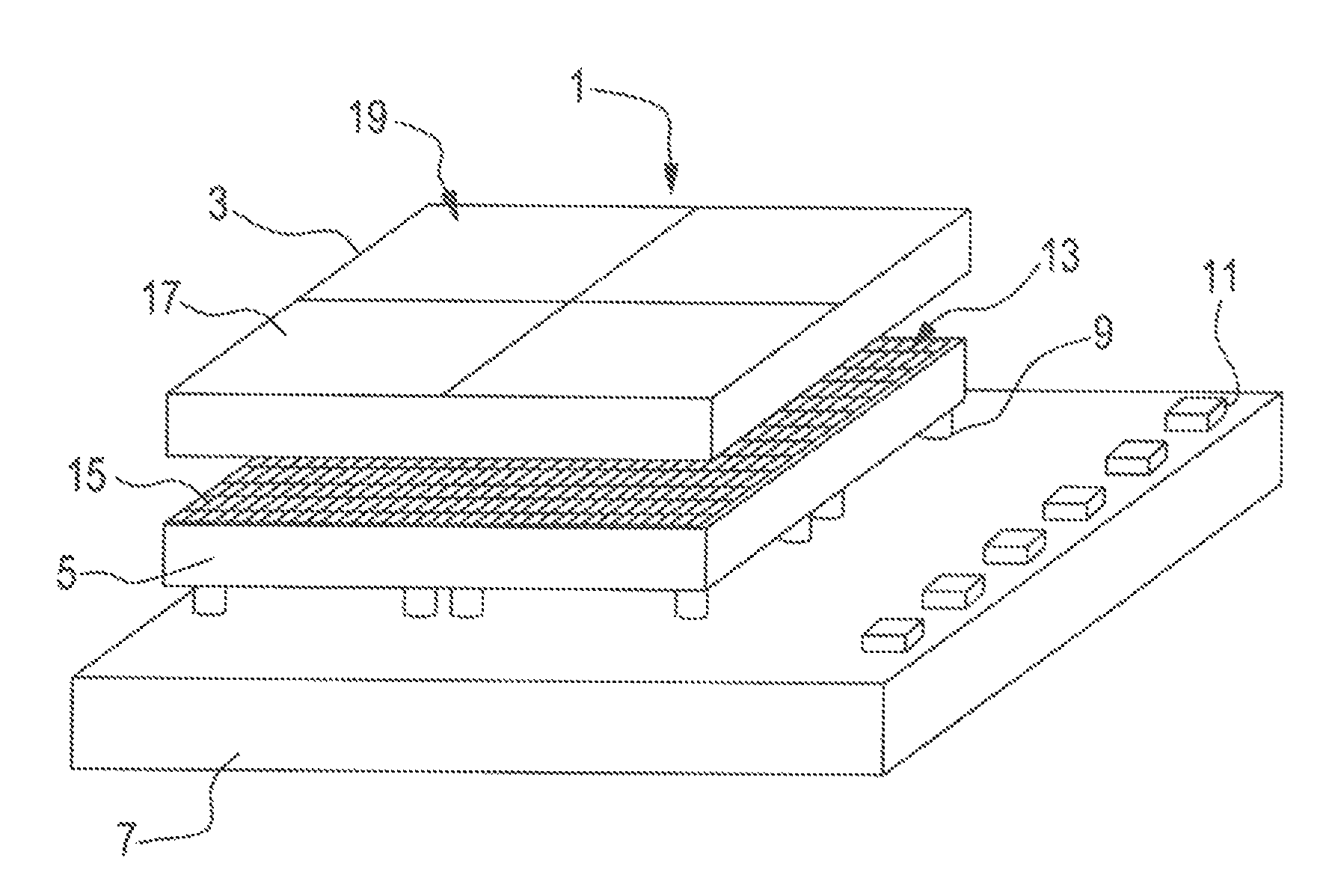

Three-dimensional photoacoustic imager and methods for calibrating an imager

Inactive | US20100249570A1 | Illuminating subject | Ultrasonic/sonic/infrasonic diagnostics | Material analysis using sonic/ultrasonic/infrasonic waves | Data acquisition | Reconstruction algorithm

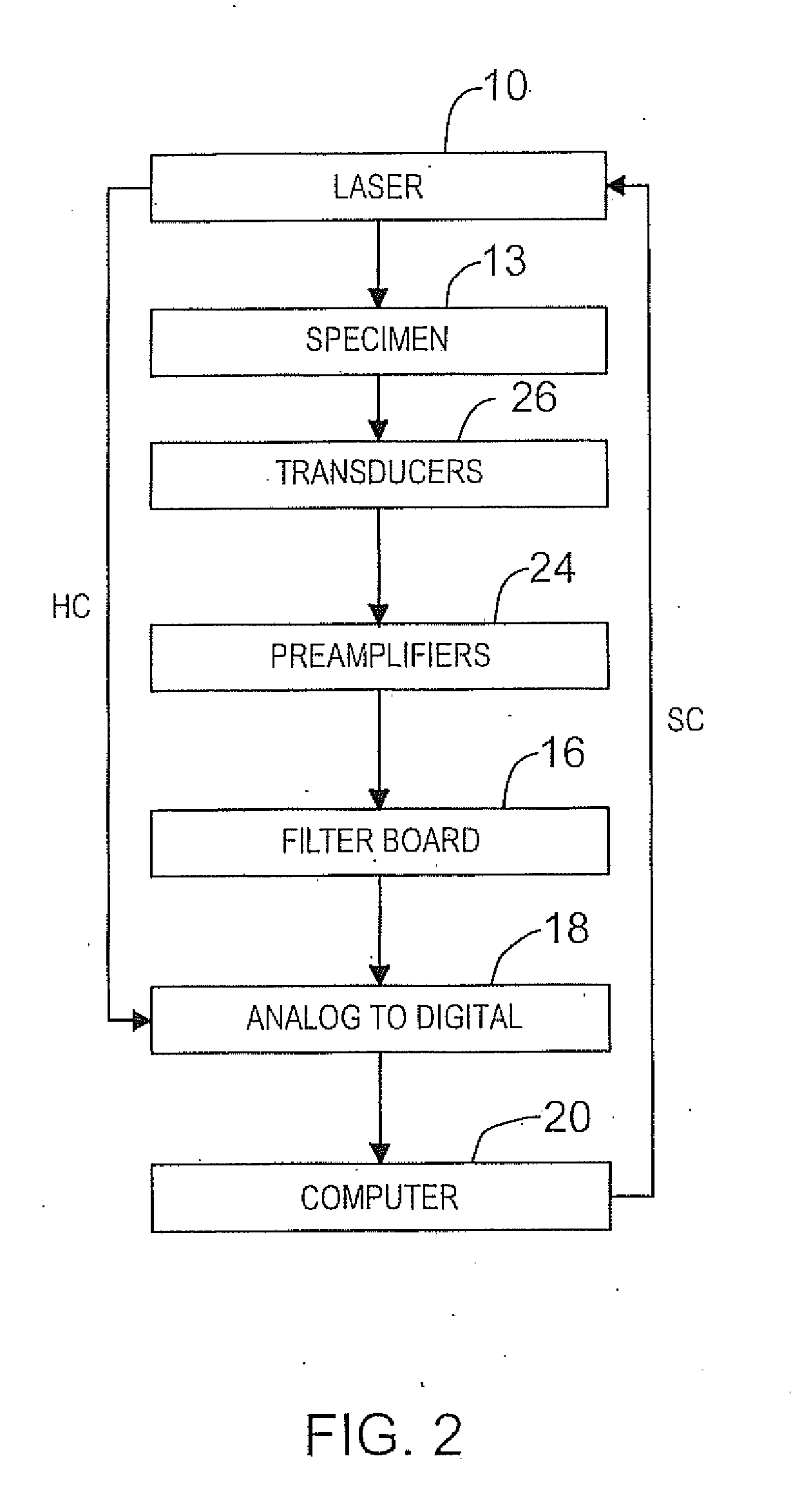

A photoacoustic imaging apparatus for medical or other imaging applications is provided, along with a method for calibrating the apparatus. The apparatus employs a sparse array of transducer elements and a reconstruction algorithm. Spatial calibration maps of the sparse array are used to optimize the reconstruction algorithm. The apparatus includes a laser that produces a pulsed laser beam to illuminate a subject for imaging and generate photoacoustic waves. The transducers are fixedly mounted on a holder so as to form the sparse array. Photoacoustic (PA) waves are received by each transducer. The resultant analog signals from each transducer are amplified, filtered, and converted to digital signals in parallel by a data acquisition system that is operatively connected to a computer. The computer receives the digital signals and processes them with the algorithm, which is based on iterative forward projection and back-projection, to produce the image.

Owner:MULTI MAGNETICS

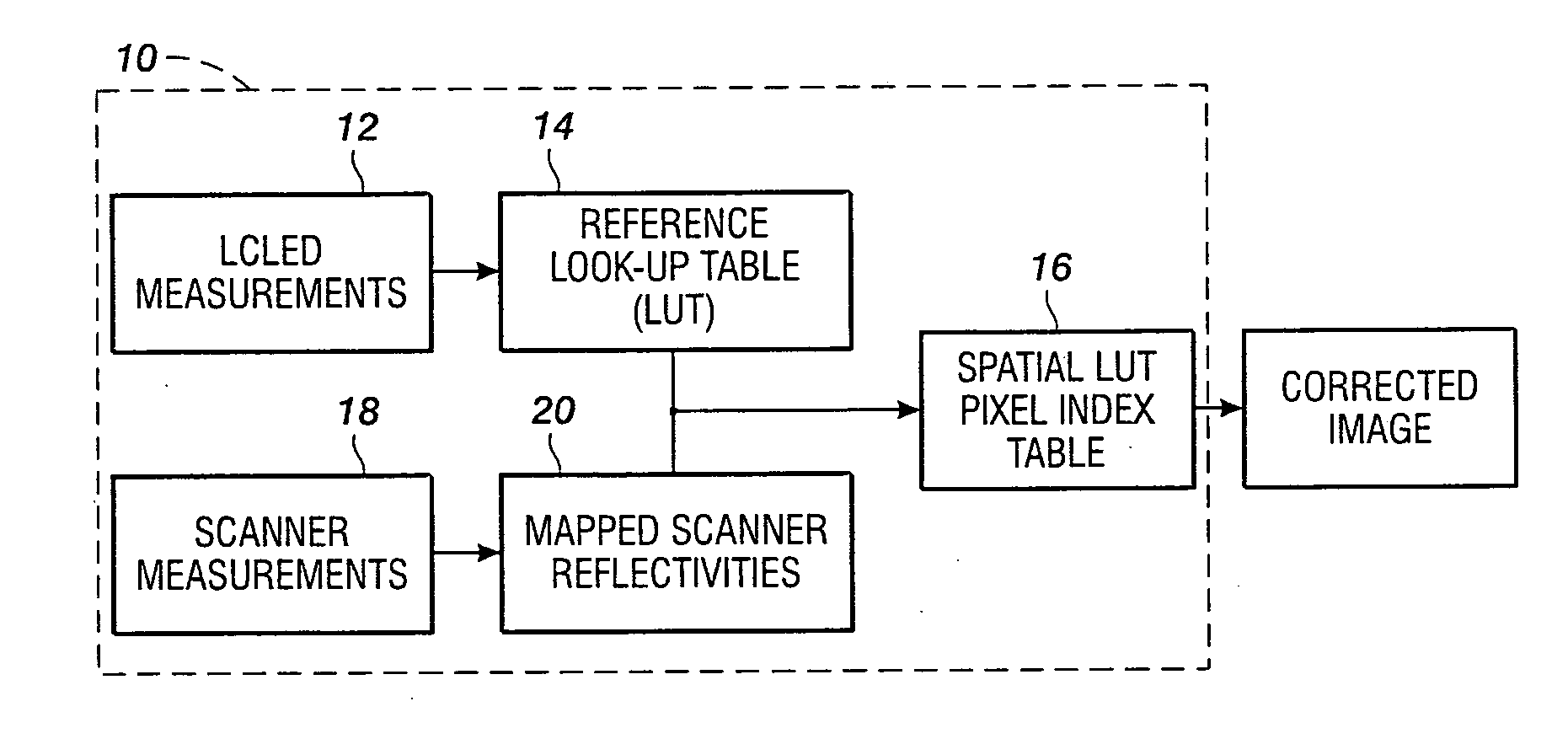

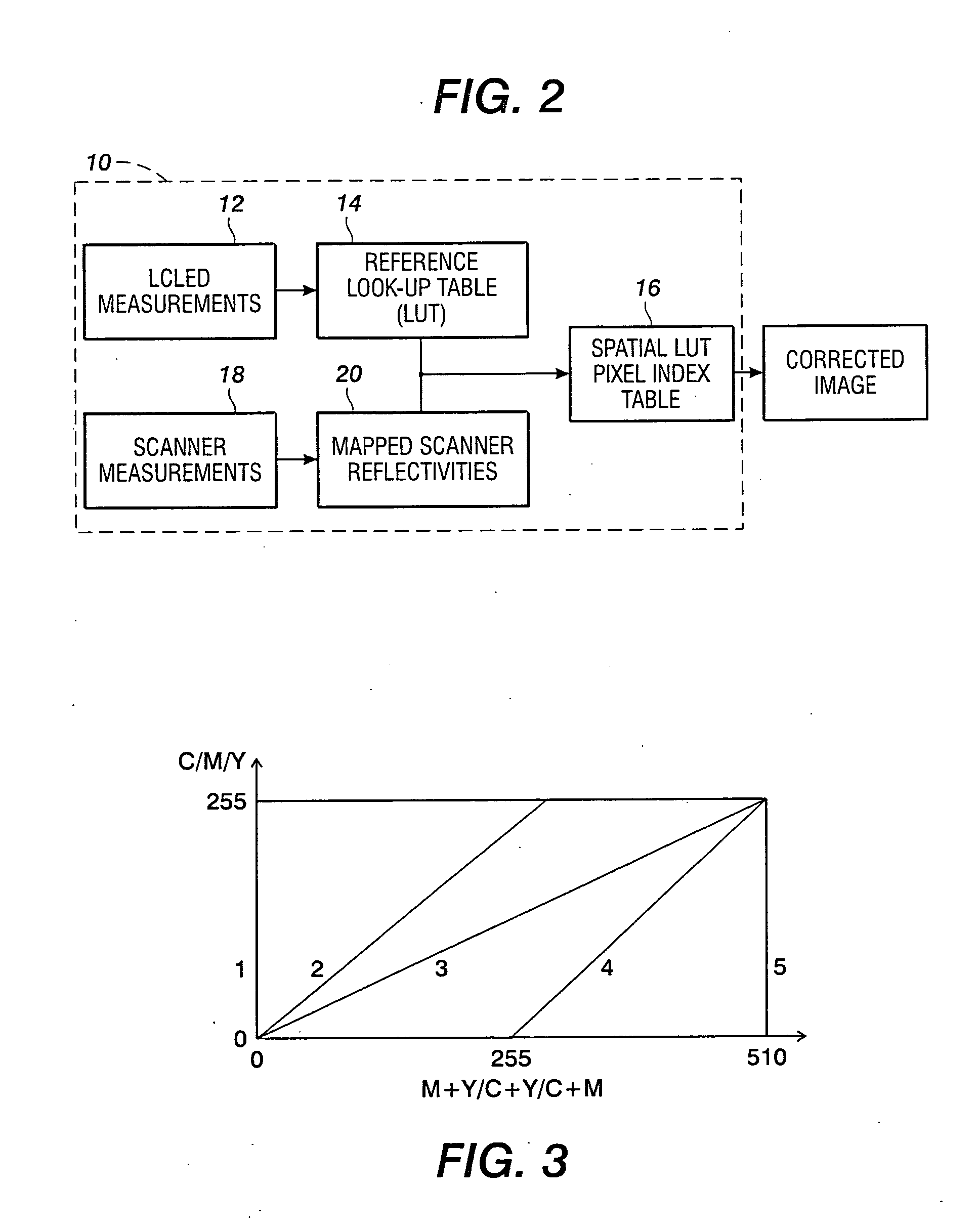

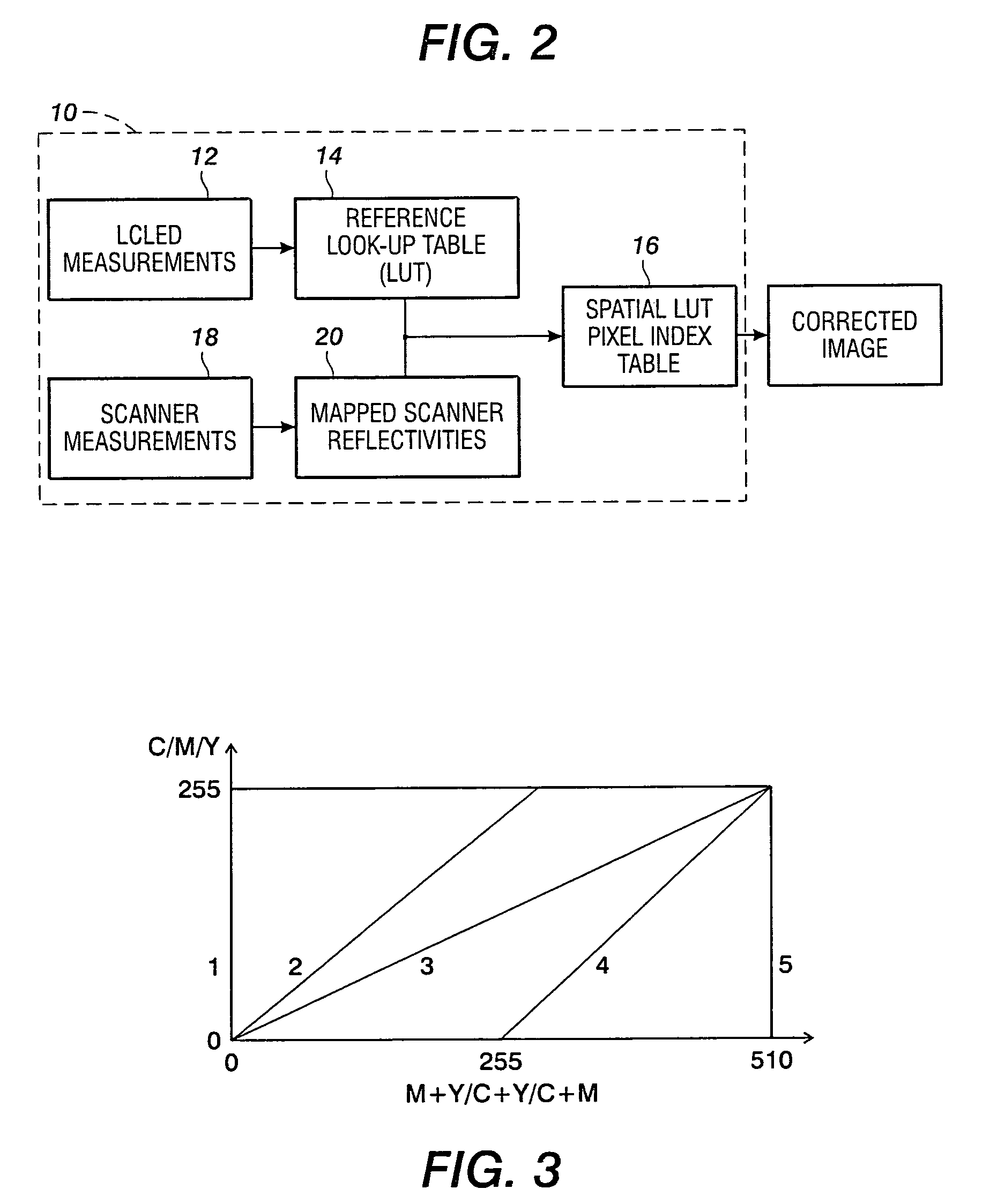

Method for spatial color calibration using hybrid sensing systems

Inactive | US20080037069A1 | Minimize spatial uniformity error | Space minimization | Digitally marking record carriers | Digital computer details | Output device | Computer vision

A method and system for color calibration of a color output device spectrophotometrically measures a test target including a preselected test color value. A multi-dimensional LUT of the device is generated, representative of the color information including the at least one preselected color. A second image is produced with the device, including the at least one preselected color located at a plurality of spatial locations in the second image. A second sensor measures the second image at the plurality of spatial locations having the preselected color to generate reflectance information for the preselected color at those locations. An error is determined between the measured color of the one preselected color and the reflectance information at the other pixel locations. The multi-dimensional LUT is adjusted to minimize spatial uniformity errors at the other pixel locations, thus calibrating device color output spatially.

Owner:XEROX CORP

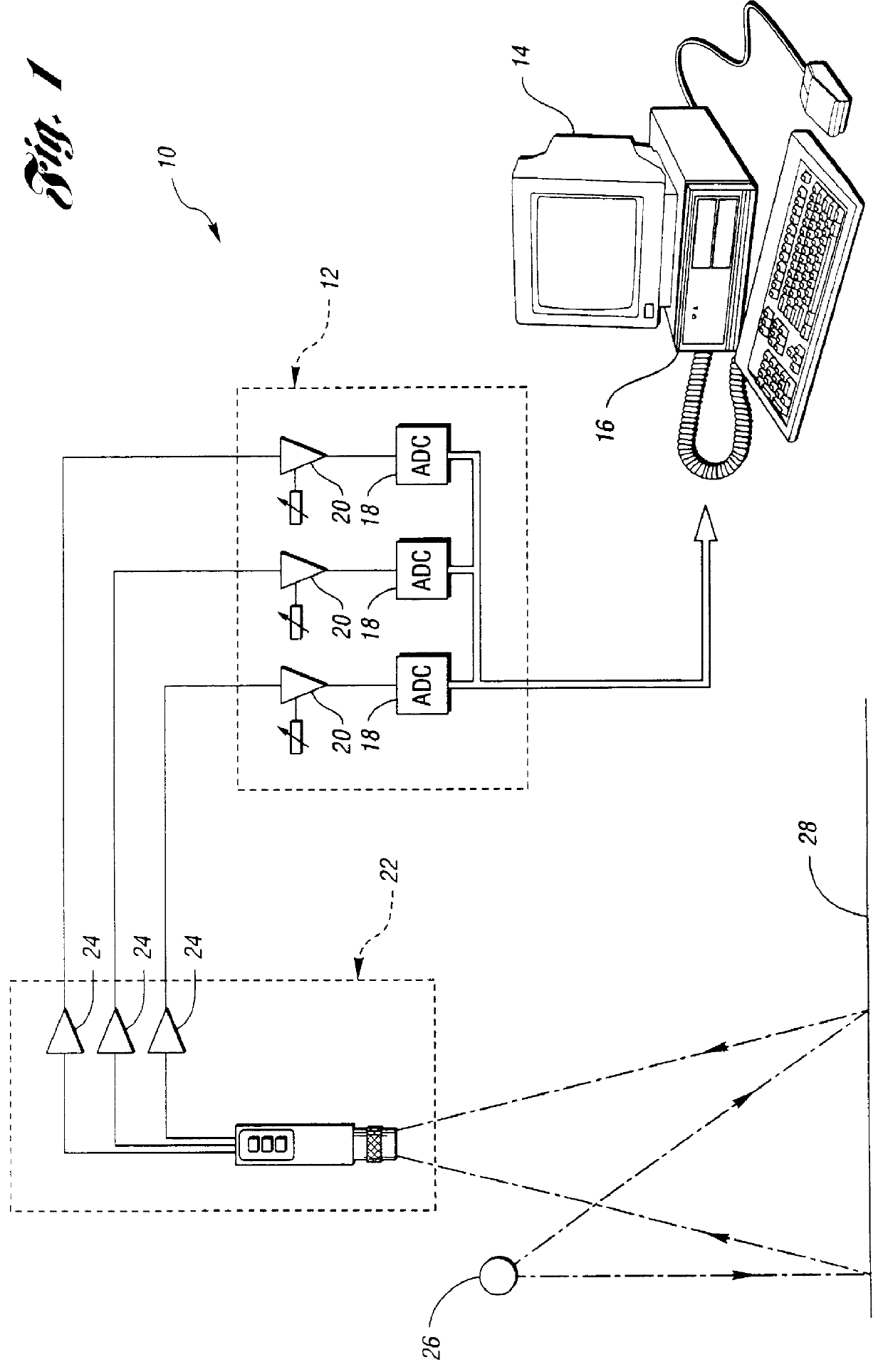

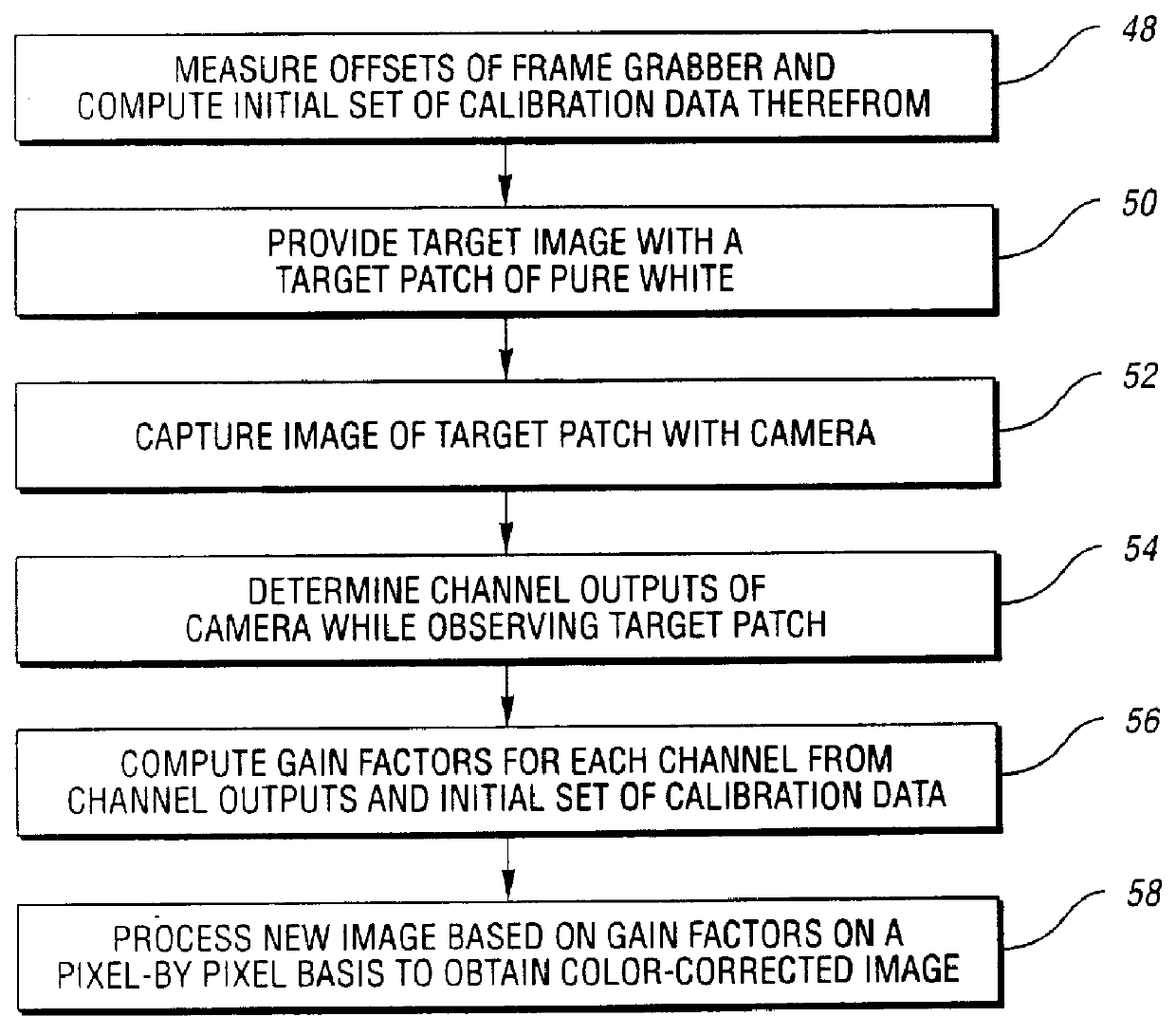

Method and system for automatically calibrating a color-based machine vision system

Inactive | US6016161A | Uneven illumination level | Effective imaging | Color signal processing circuits | Picture signal generators | Imaging condition | Color correction

A calibration algorithm is provided for calibrating a machine vision system including a color camera, a framegrabber and an illumination source wherein subsequently acquired images are processed on a pixel-by-pixel basis. After framegrabber offsets are measured to obtain initial or offset calibration data, the camera is pointed at a target monotone patch of known reflectance such as pure white and the camera outputs for red, green and blue are determined while observing the monotone patch to obtain spatial calibration data. The gain for each of the output channels of the color camera is automatically determined from the initial calibration data. Applying the three gain factors to a subsequent image on the pixel-by-pixel basis allows one to end up with a color-corrected image without incurring any time performance penalty. At any time during an inspection process, a small area of white reflectance can be observed. The resulting dynamic white calibration data is used to correct for any medium to long term temporal variation in imaging conditions.

Owner:INTEGRAL VISION +1
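The offset-then-gain correction described in the abstract above can be sketched roughly as follows. This is not the patented algorithm: the mean-of-patch gain estimate, the target white level of 255, and every name in the code are assumptions chosen for illustration.

```python
import numpy as np


def channel_gains(white_patch, offsets, target=255.0):
    """Per-channel gain from an image of a patch of known white reflectance.

    white_patch: H x W x 3 array of raw camera values (assumed layout).
    offsets:     length-3 array of framegrabber offsets measured beforehand.
    target:      value the corrected white patch should reach (assumption).
    """
    mean_rgb = white_patch.reshape(-1, 3).mean(axis=0) - offsets
    return target / mean_rgb


def correct(image, offsets, gains):
    """Apply offset subtraction and the three gains pixel by pixel."""
    out = (image.astype(np.float64) - offsets) * gains
    return np.clip(out, 0.0, 255.0)


# Hypothetical calibration data: a uniform patch with a colour cast.
offsets = np.array([4.0, 4.0, 4.0])
white = np.ones((4, 4, 3)) * np.array([204.0, 254.0, 129.0])

gains = channel_gains(white, offsets)
corrected = correct(white, offsets, gains)  # the patch itself becomes neutral white
```

Because the correction is a single multiply-add per channel, it can be applied to every later frame with little cost, which is consistent with the abstract's remark about time performance.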

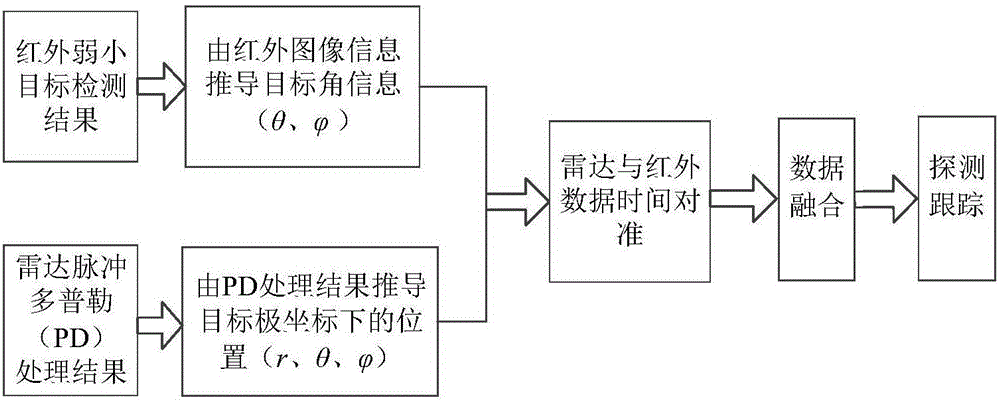

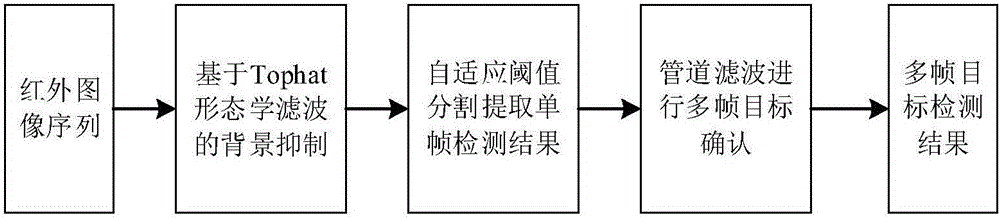

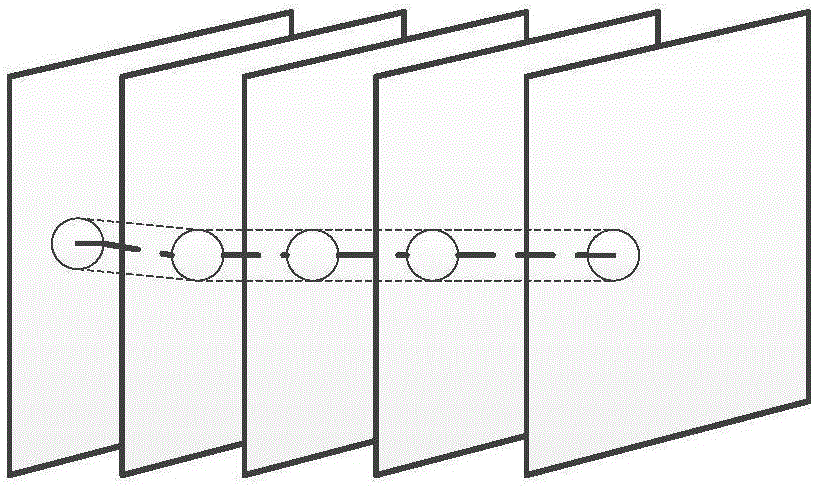

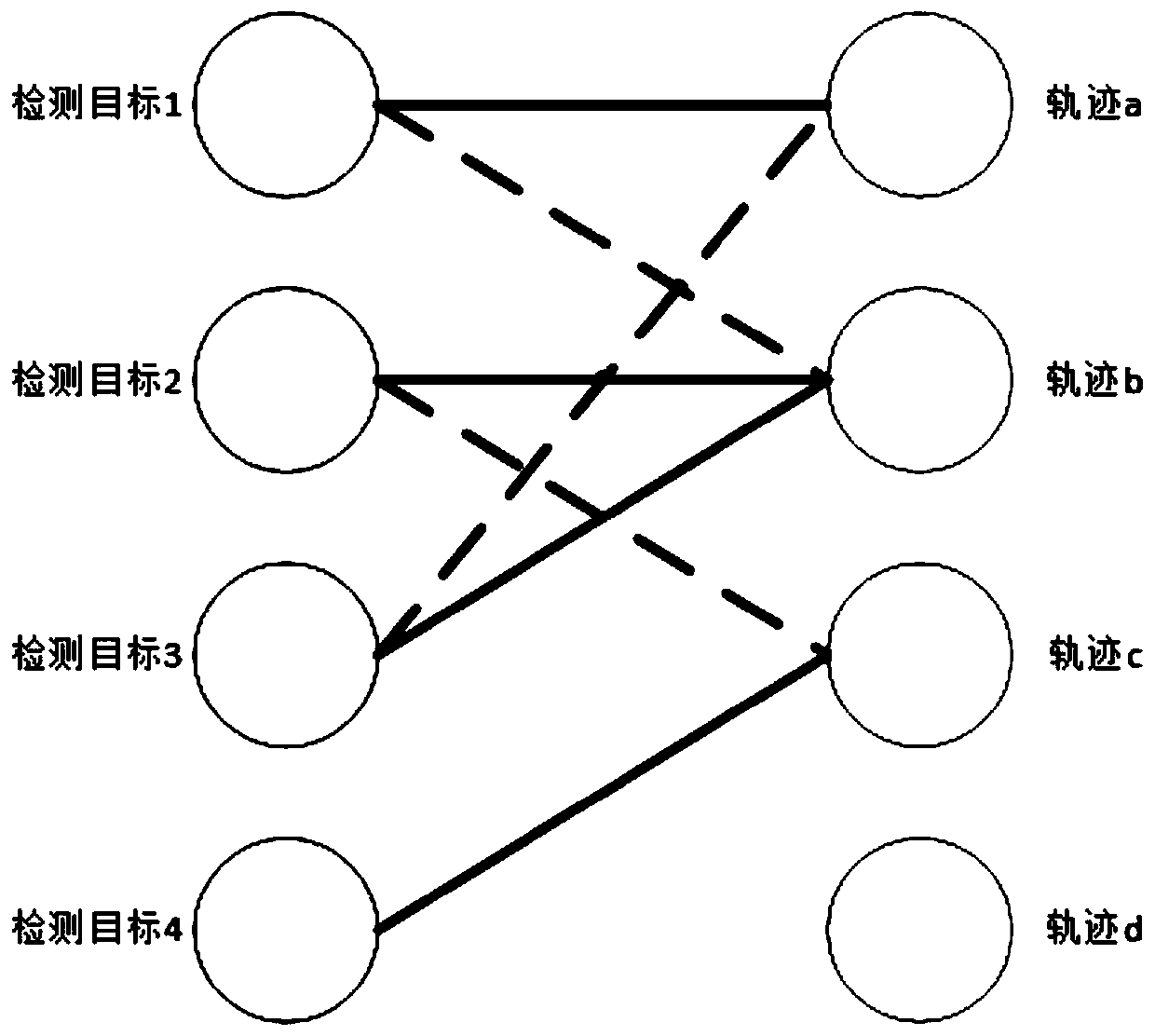

In-orbit moving target detecting method with space-based radar and infrared data fusion

Inactive | CN106204629A | Avoid moving out of the pipeline | Improve performance | Image enhancement | Image analysis | Radar detection | Track algorithm

The invention discloses an in-orbit moving target detecting method with space-based radar and infrared data fusion. When an infrared detecting system is used for detection, background suppression is carried out on each frame in an infrared image sequence with a morphology-based filtering method, adaptive threshold segmentation is carried out on the background-suppressed images, and a single-frame detection result is extracted. A multi-frame target is then determined through a tracking algorithm, and whether the detection is a real target is judged to obtain the multi-frame detection result. During radar detection, pulse-Doppler (PD) processing is adopted. After time calibration and space calibration are carried out on the obtained infrared and radar target information, the information is fused with a measurement fusion method, and track prediction is carried out on the fused data to obtain the estimated position of the target.

Owner:XIDIAN UNIV
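The abstract above does not say which measurement fusion method is used. As one common possibility, the sketch below fuses two already time- and space-aligned position measurements by inverse-variance weighting. The function name, the scalar variances, and the sample values are assumptions for this example only.

```python
import numpy as np


def fuse_measurements(z_a, var_a, z_b, var_b):
    """Inverse-variance weighted fusion of two measurements of the same
    quantity, expressed in the same coordinate frame and at the same time.
    Returns the fused estimate and its variance."""
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused = (w_a * np.asarray(z_a) + w_b * np.asarray(z_b)) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)
    return fused, fused_var


# Hypothetical aligned measurements: infrared is noisier than radar here.
z_infrared = np.array([10.0, 4.0])
z_radar = np.array([12.0, 6.0])

fused, fused_var = fuse_measurements(z_infrared, 4.0, z_radar, 1.0)
```

The fused estimate sits closer to the lower-variance sensor, and its variance is smaller than either input variance.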

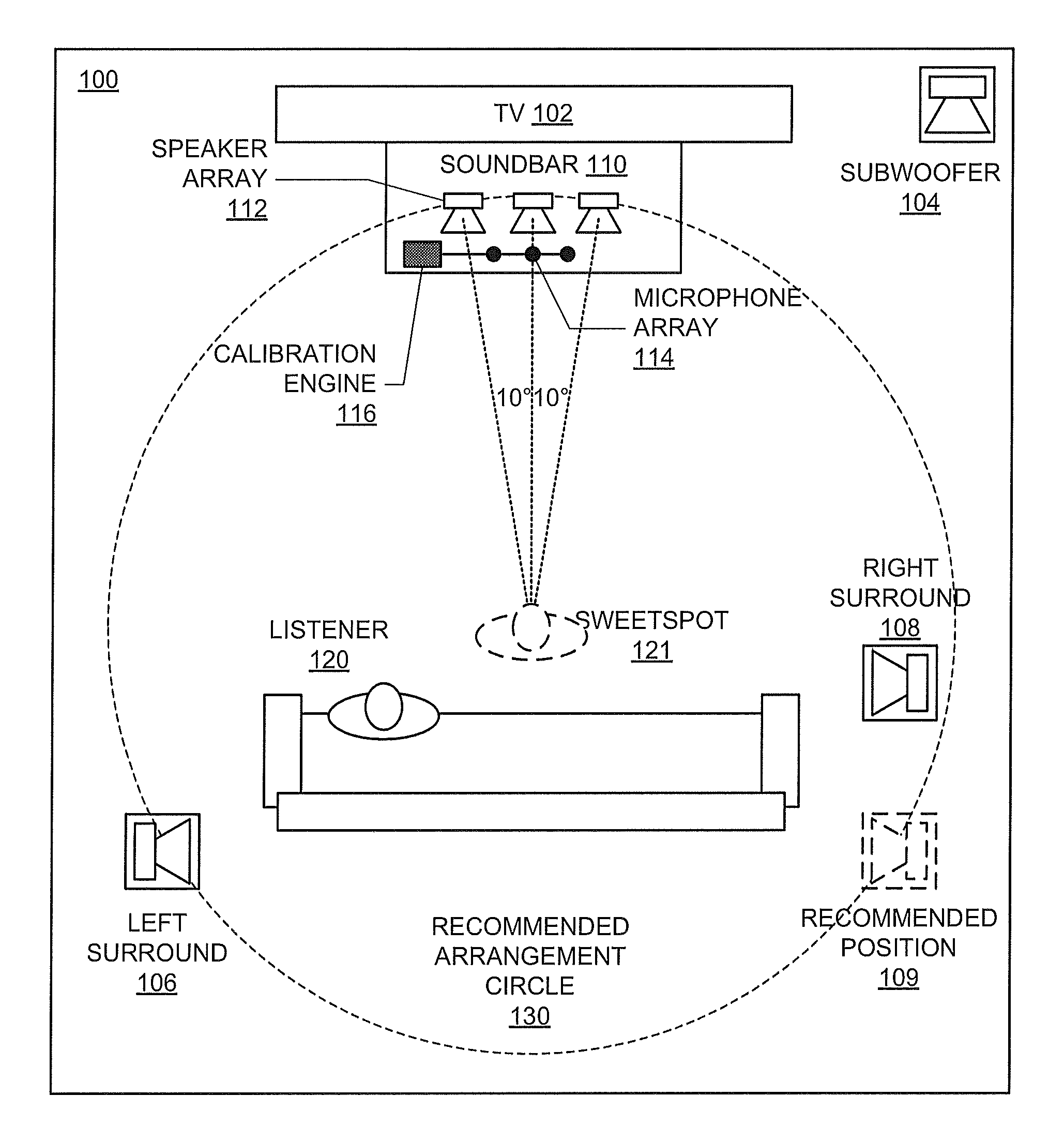

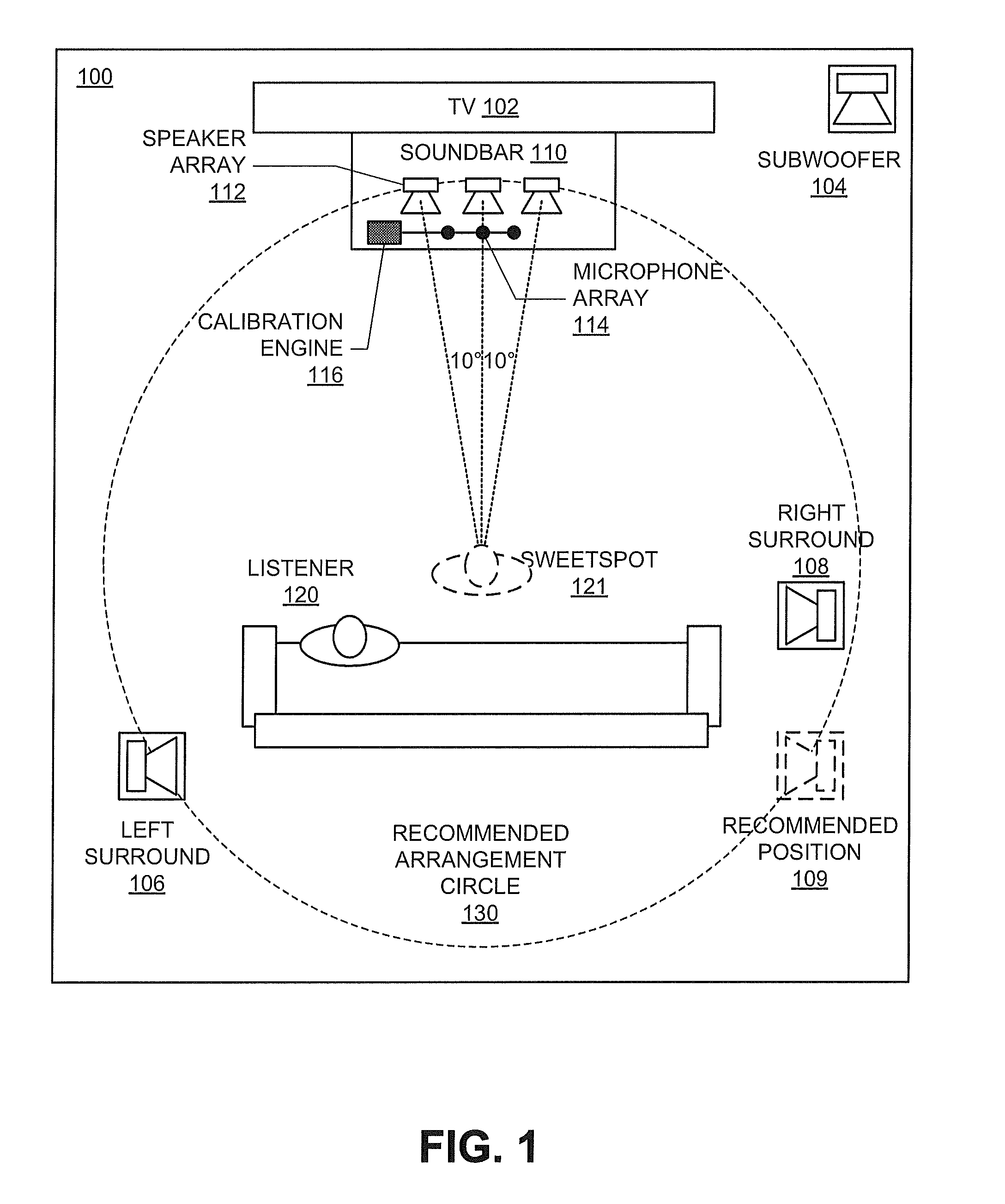

Spatial calibration of surround sound systems including listener position estimation

A method for calibrating a surround sound system is disclosed. The method utilizes a microphone array integrated in a front center loudspeaker of the surround sound system or a soundbar facing a listener. Positions of each loudspeaker relative to the microphone array can be estimated by playing a test signal at each loudspeaker and measuring the test signal received at the microphone array. The listener's position can also be estimated by receiving the listener's voice or other sound cues made by the listener using the microphone array. Once the positions of the loudspeakers and the listener's position are estimated, spatial calibrations can be performed for each loudspeaker in the surround sound system so that listening experience is optimized.

Owner:DTS

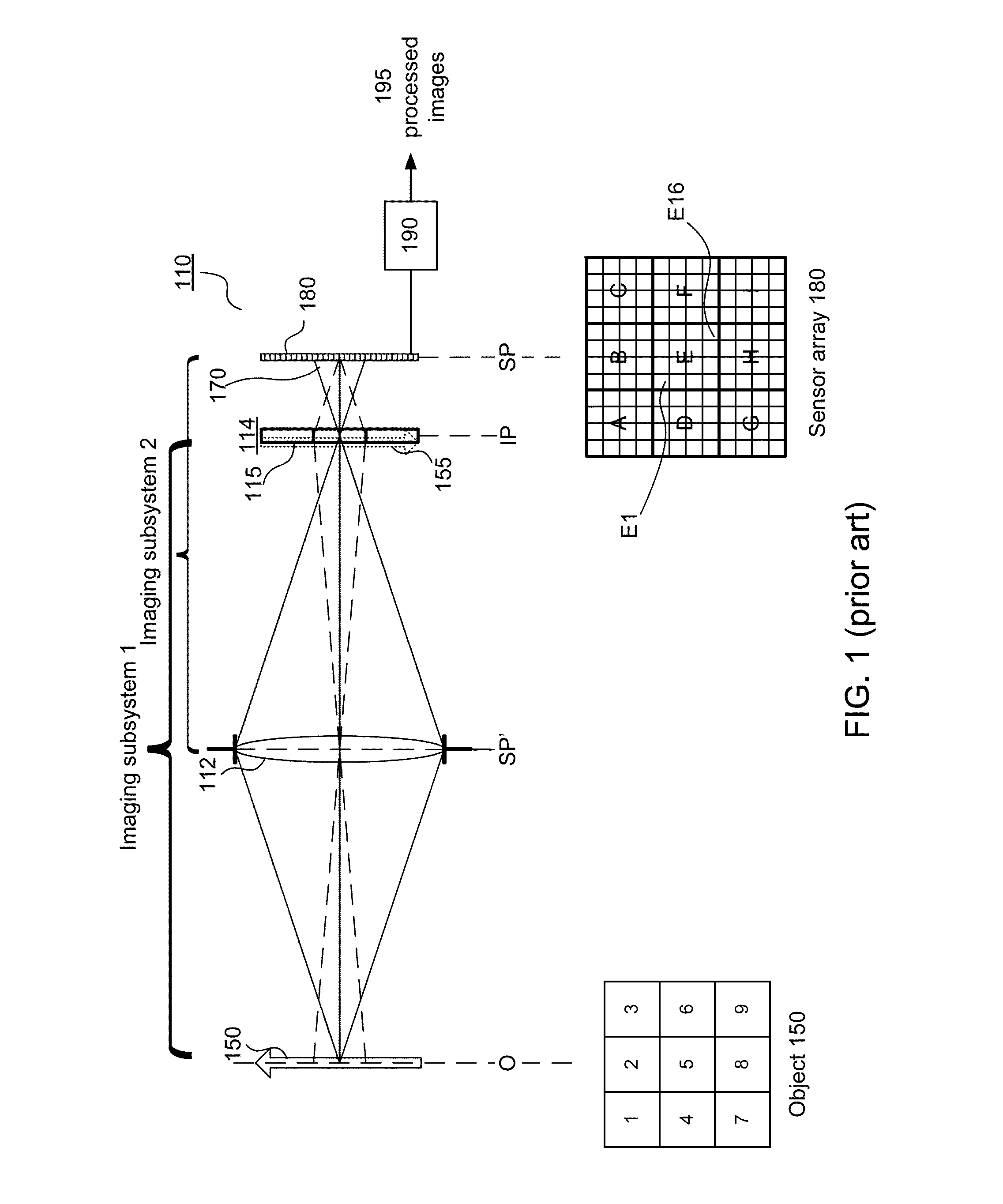

Object Space Calibration of Plenoptic Imaging Systems

Inactive | US20160205394A1 | Speed up the process | Overcome limitations | Image enhancement | Image analysis | Spatial calibration | Image capture

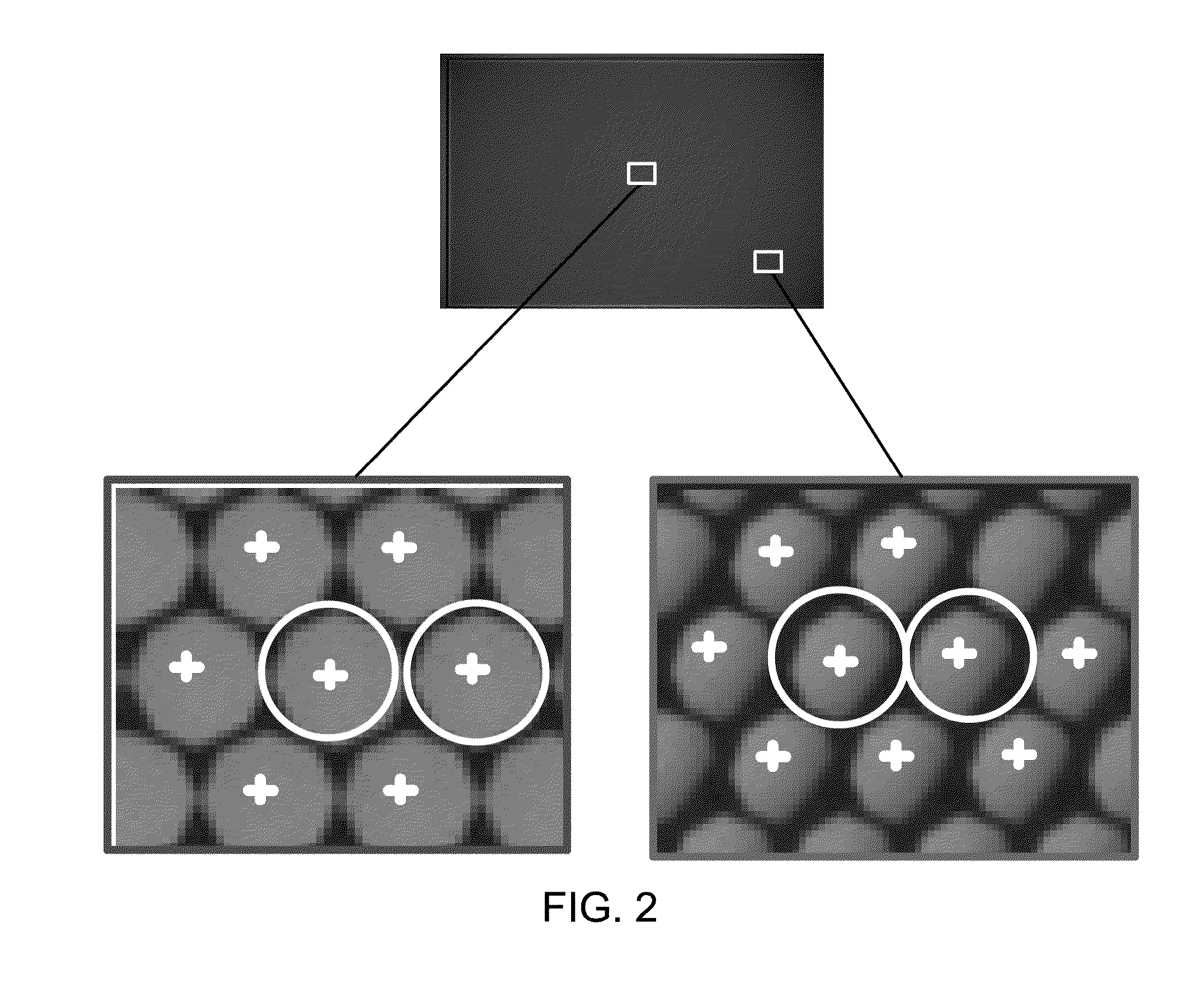

A collimated object is adjustable to produce collimated light propagating along different propagation directions. The plenoptic imaging system under calibration captures plenoptic images of the object adjusted to different propagation directions. The captured plenoptic images include superpixels, each of which includes subpixels. Each subpixel captures light from a corresponding light field viewing direction. Based on the captured plenoptic images, a calibration module calculates which propagation directions map to which subpixels. The mapping defines the light field viewing directions for the subpixels. This can be used to improve processing of plenoptic images captured by the plenoptic imaging system.

Owner:RICOH KK

Method and system to calibrate a camera system using phase demodulation sensing

Inactive | US7433029B1 | Simple technology | Quality improvement | Angle measurement | Using optical means | Wavefront | Continuous wave

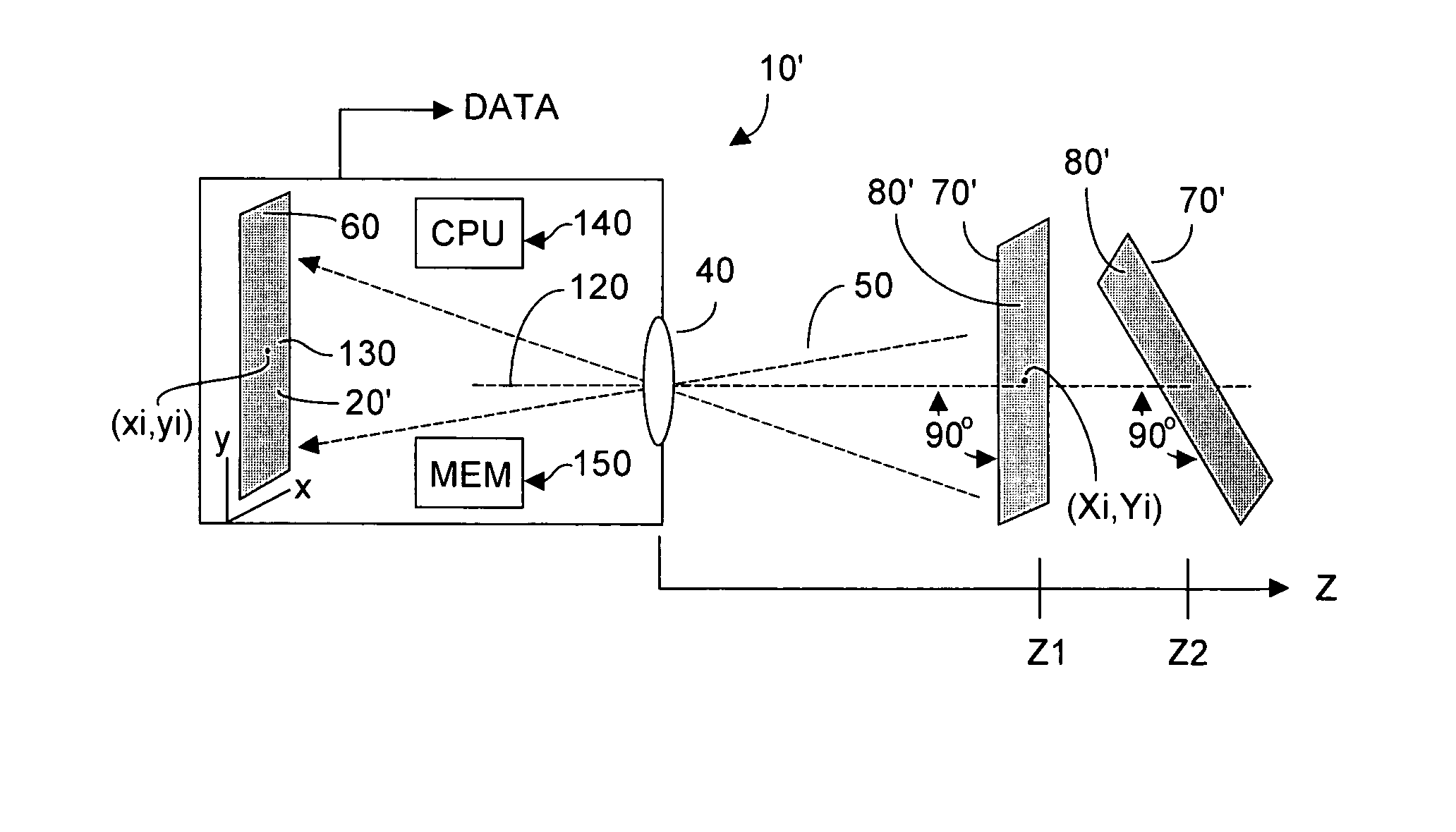

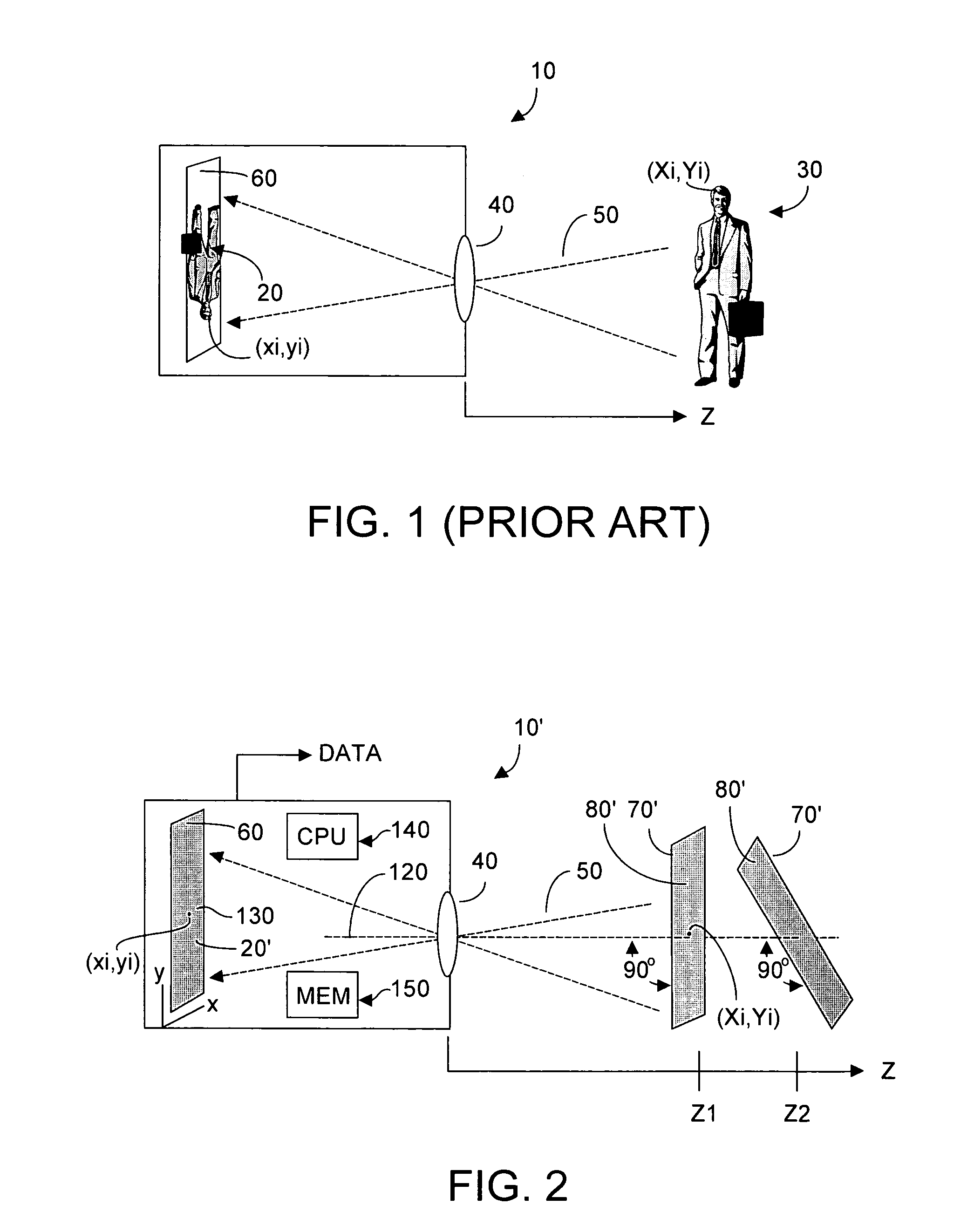

A preferably parametrically defined calibration target pattern definable in real-world coordinates (Xi,Yi) is used to spatially calibrate a camera system acquiring images in pixel coordinates (xi,yi). The calibration target pattern is used to produce a unique 1:1 mapping that lets the camera system accurately identify which point in the calibration target pattern maps to each pixel in the acquired image. Preferably the calibration target pattern is pre-distorted and includes at least two sinusoids that create a pattern of distorted wavefronts that, when imaged by the camera system, will appear substantially linearized. Since wavefront locations on the calibration target pattern are known, a mapping between those wavefronts and the substantially linearized wavefront pattern on the captured image can be carried out. Demodulation of the captured image enables recovery of the continuous wave functions therein, using spectral analysis and iteration.

Owner:MICROSOFT TECH LICENSING LLC

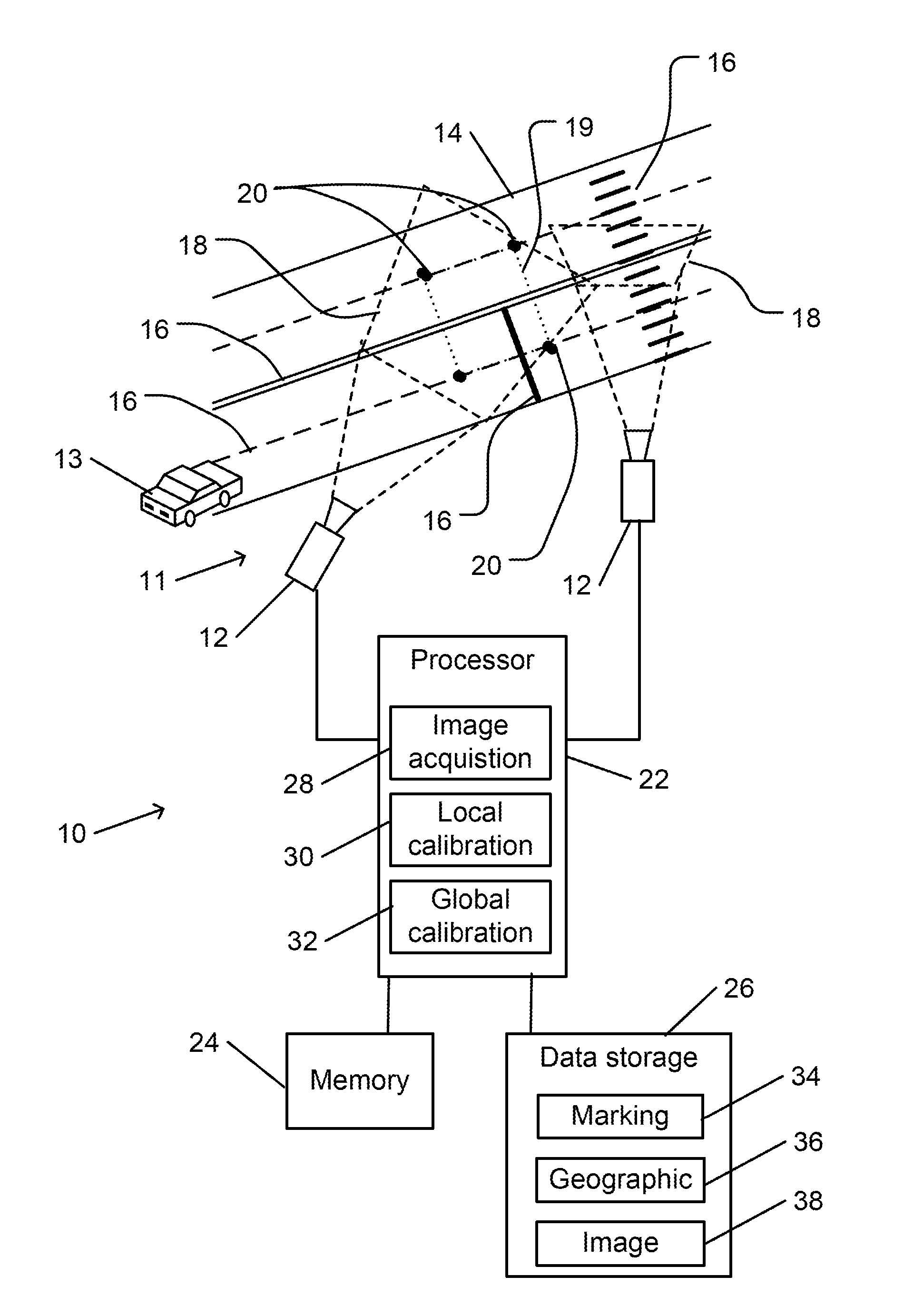

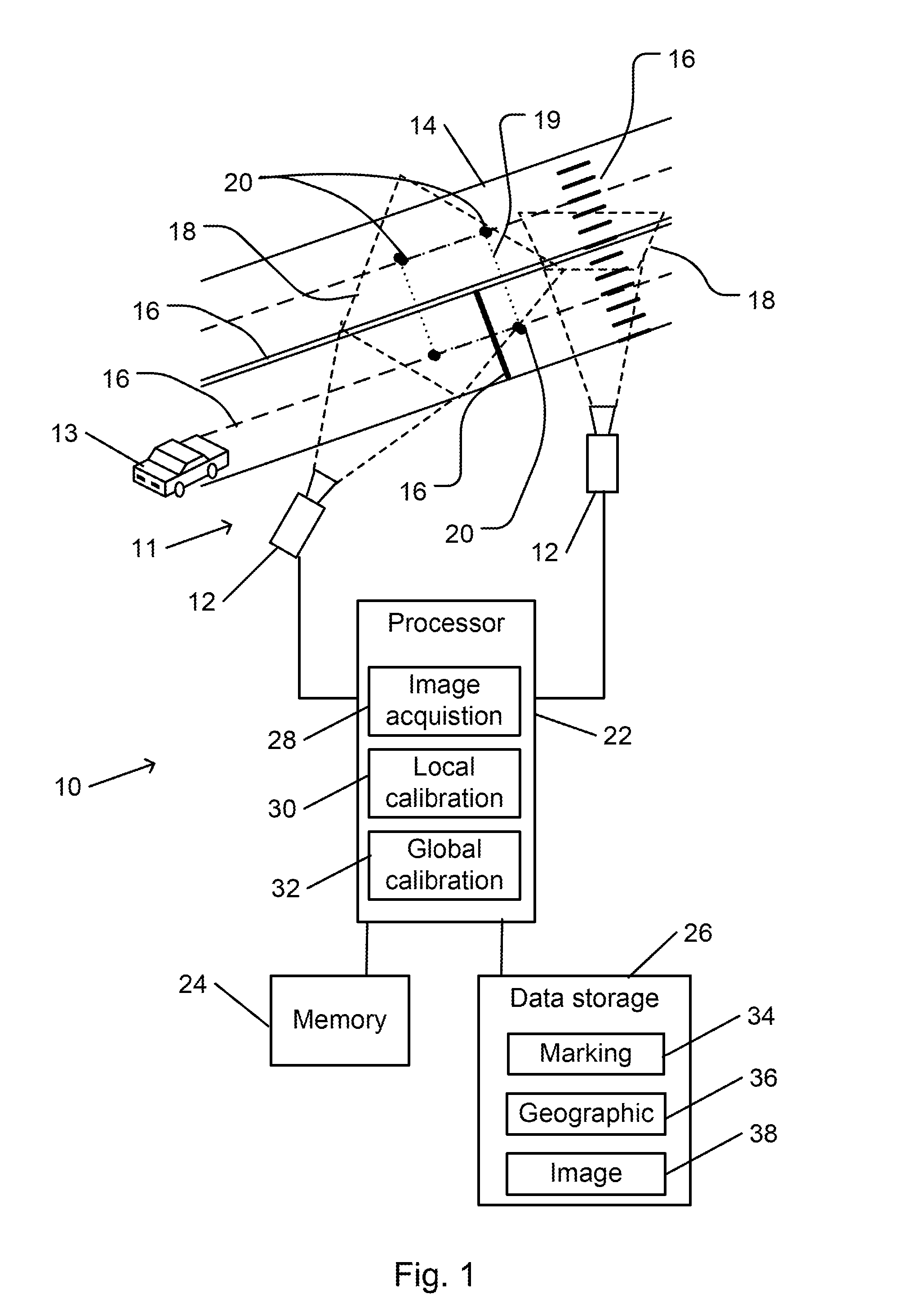

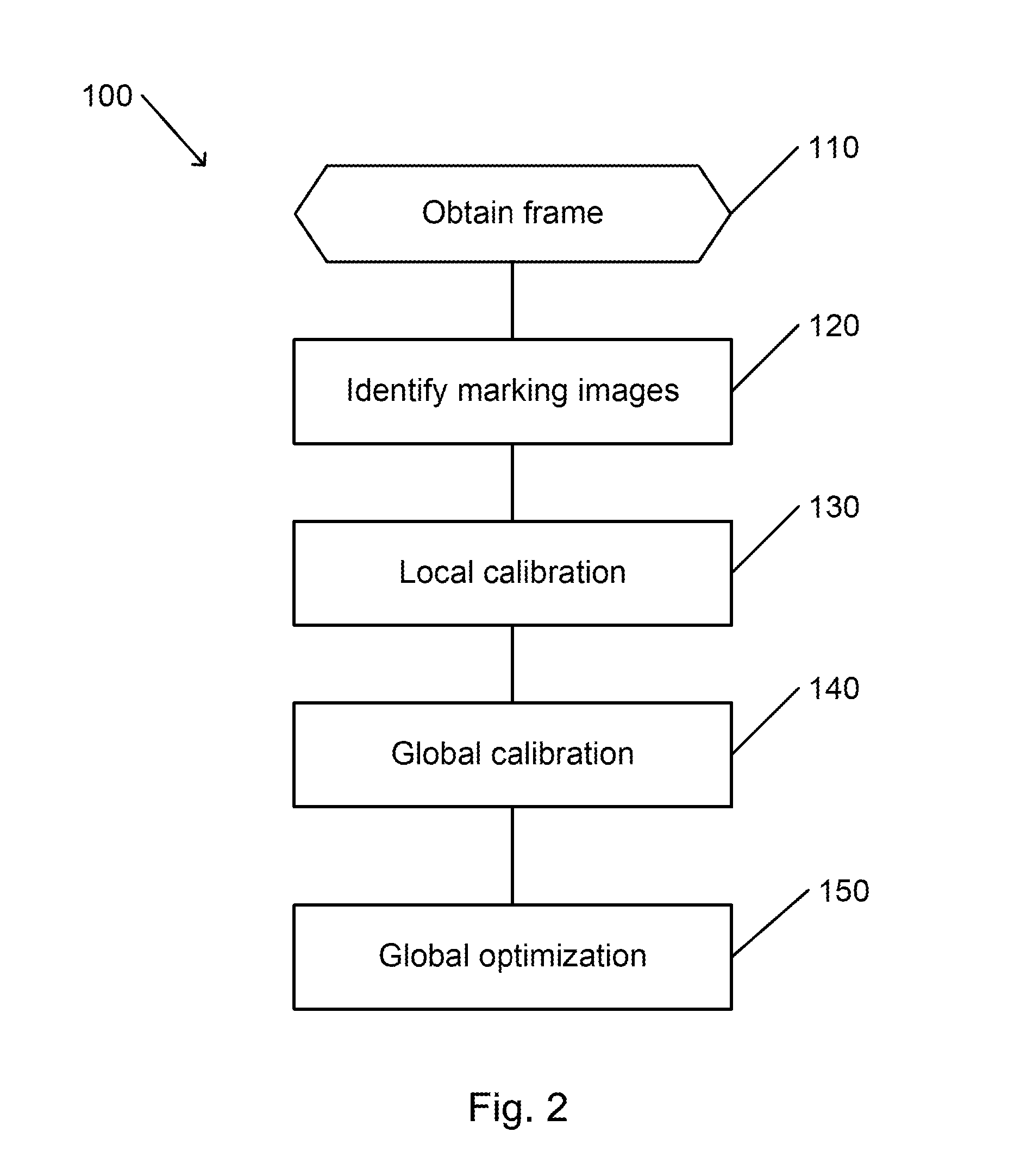

Automatic spatial calibration of camera network

Active | US20160012589A1 | Minimizes value | Television system details | Image enhancement | Computer graphics (images) | Radiology

A method for automatic spatial calibration of a network of cameras along a road includes processing a frame obtained from each camera of the network to automatically identify an image of a pattern of road markings that have a known spatial relationship to one another. The identified images are used to calculate a position of each camera relative to the pattern of road markings that is imaged by that camera. Geographical information is applied to calculate an absolute position of a field of view of each camera. A global optimization is applied to adjust the absolute position of the field of view of each camera of the camera network relative to the absolute positions of the fields of view of the other cameras of the camera network.

Owner:AGT INTERNATIONAL INC

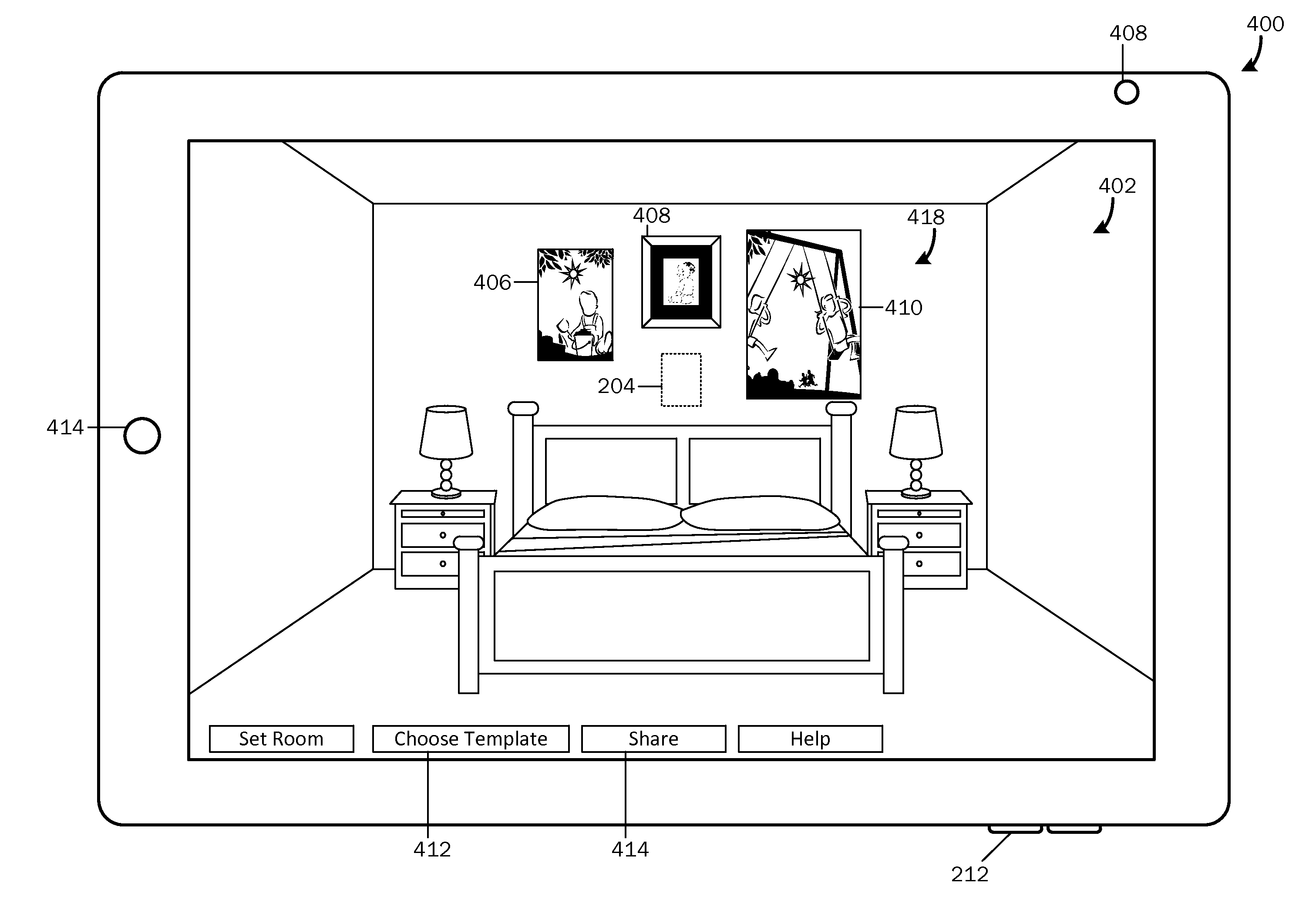

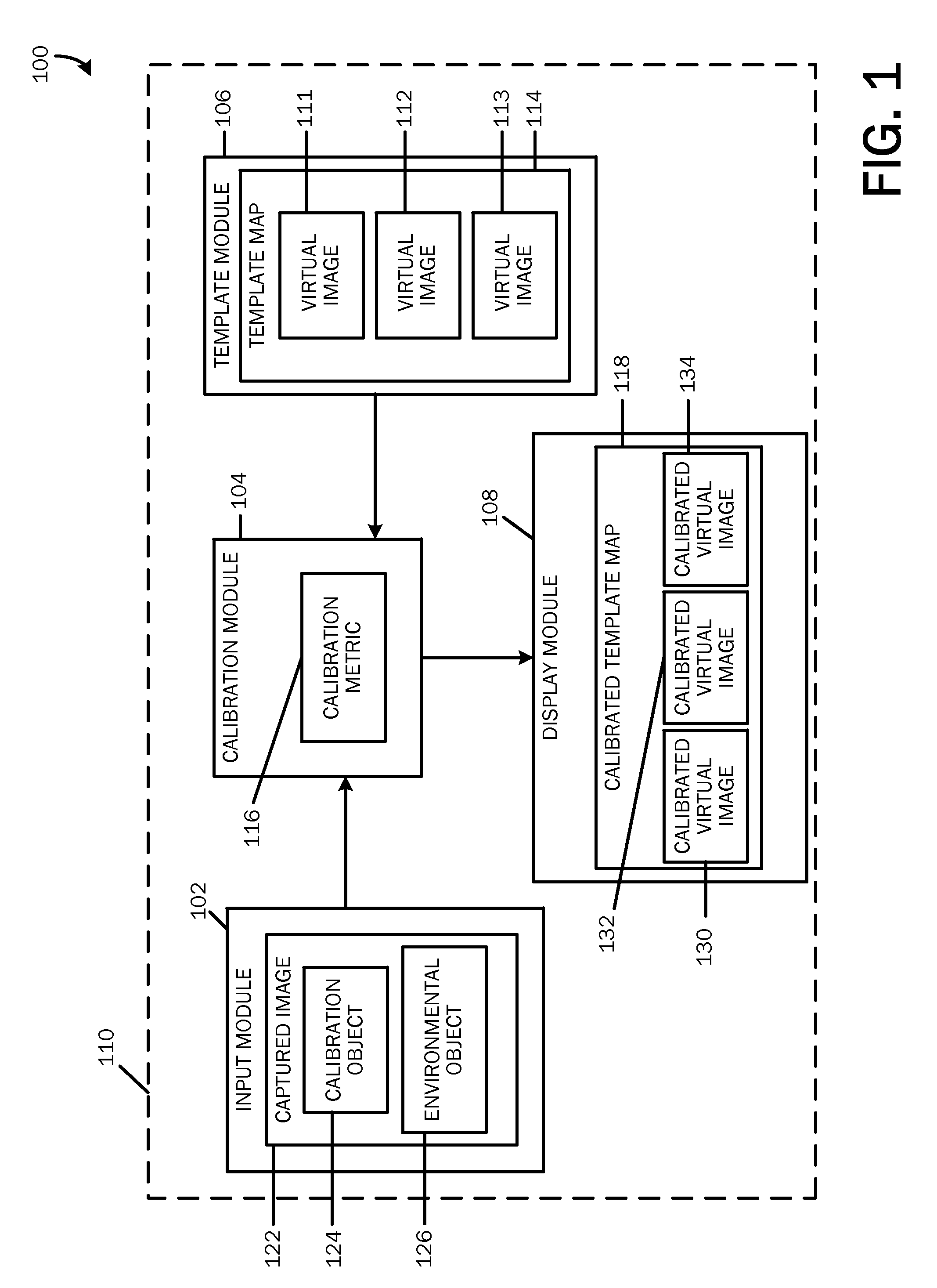

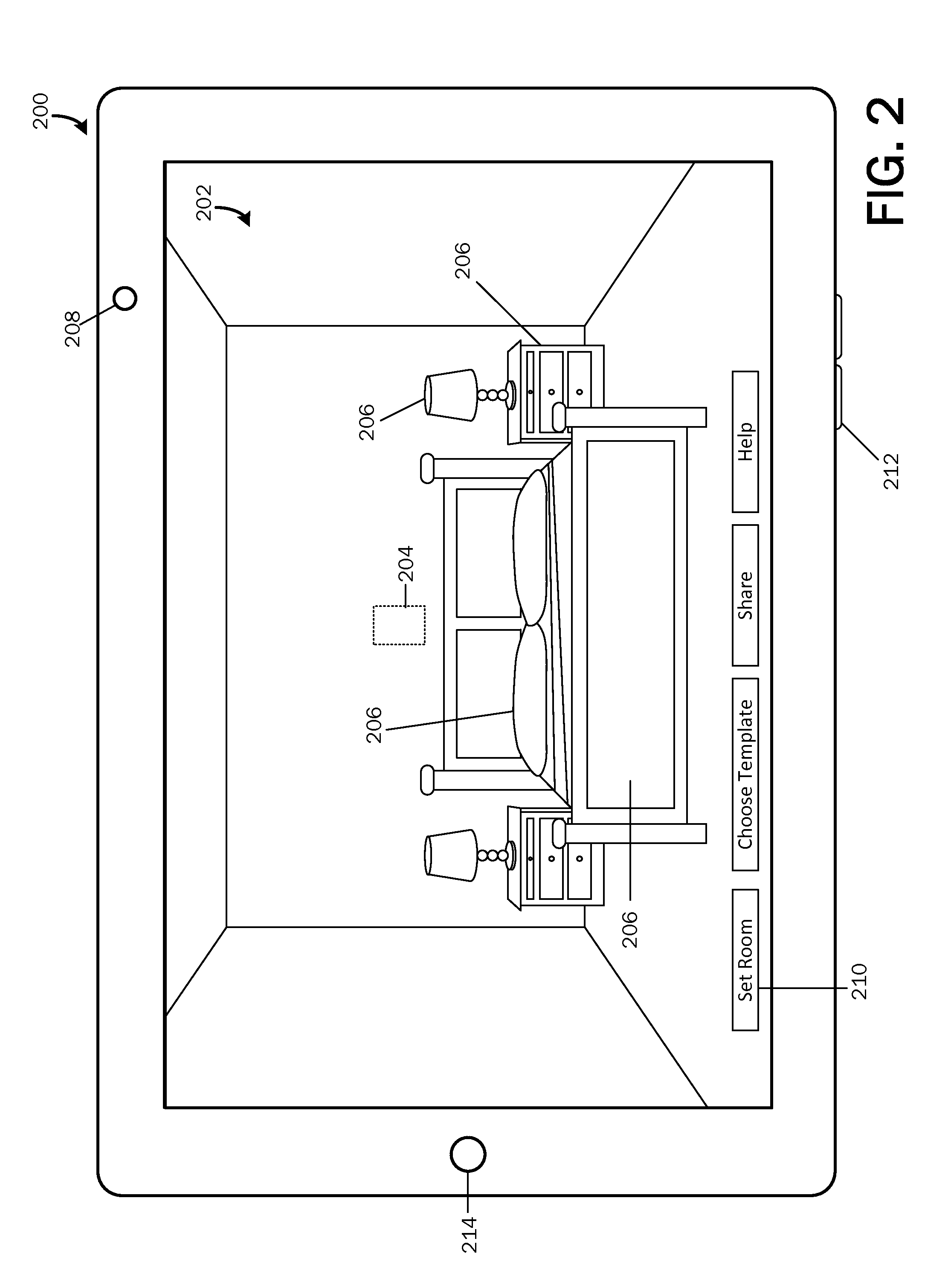

Spatial Calibration System for Augmented Reality Display

Inactive | US20140063063A1 | Cathode-ray tube indicators | Editing/combining figures or text | Design space | Spatial calibration

Systems and methods are described to allow a user of a computing device to augment a captured image of a design space, such as a photograph or a video of an interior room, with an image of a design element, such as a photograph. The disclosure provides systems and methods that enable users of computing devices to capture images from the design space, calibrate the size of a virtual image of a design element to the captured image of the design space, overlay the calibrated virtual image onto the captured image, and adjust the virtual image.

Owner:SIMPLY GALLERIES LLC

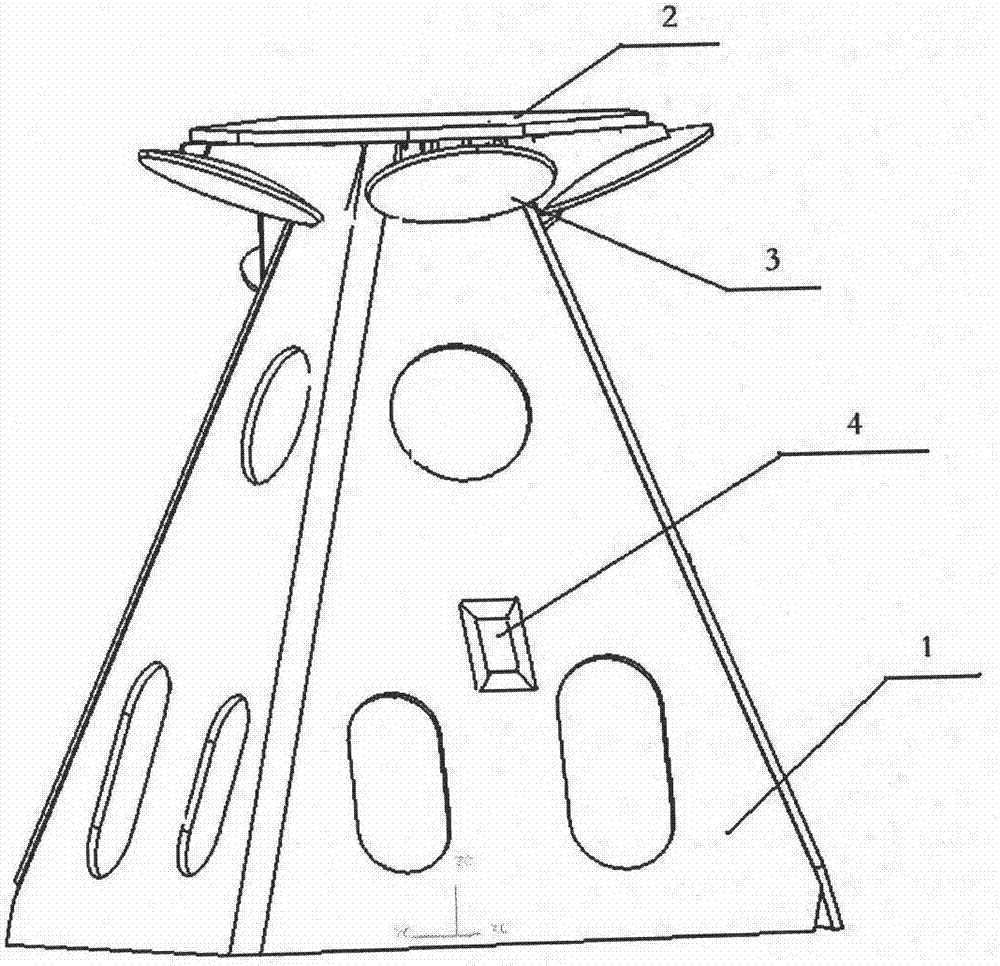

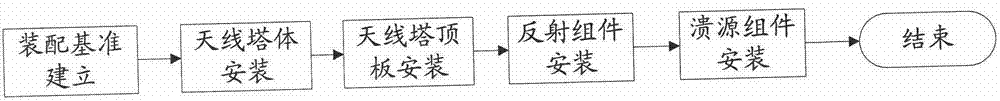

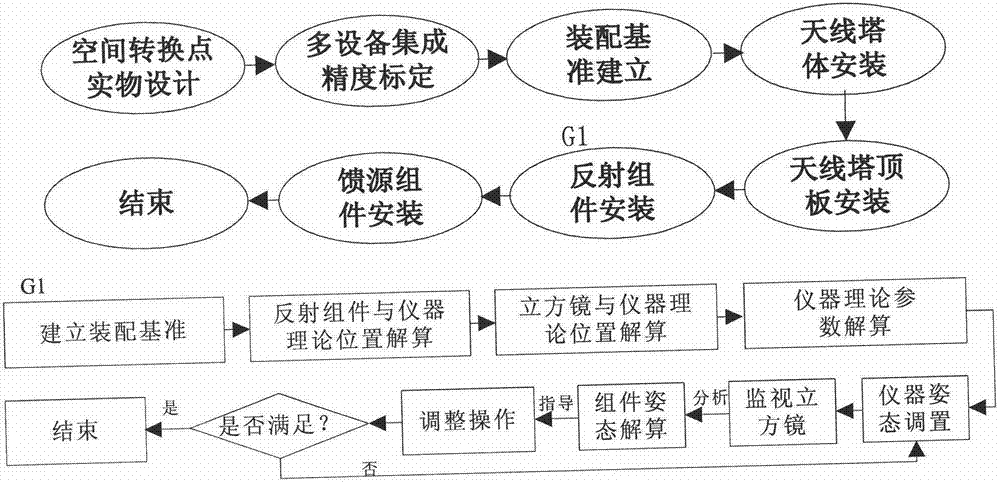

Antenna assembly detection method

Active | CN102735210A | Realize real-time monitoring | Avoid iterative solution process | Angle measurement | Surveying instruments | Theodolite | Radar systems

The invention relates to an antenna assembly detection method. An antenna tower is a basic structure of the antenna. A set of sub-reflector assembly is respectively arranged on each side of the antenna tower. A feed assembly is respectively arranged below each sub-reflector assembly. The above-mentioned parts are assembled on an assembling platform. Detection systems employed by the detection method are a laser tracker system, a theodolite system and a laser radar system. A space calibration point is arranged, and a spatial calibration process is implemented, such that a coordinate transformation relationship among the three detection systems is determined. One detection system is used for measuring the assembling platform, and for determining the transformation relationship between the assembling platform and an assembling reference coordinate system. When the parts are installed, the parts are installed according to the installation requirements and measuring characteristics. Detection means with lower implementation intensity and higher sampling efficiency is adopted.

Owner:BEIJING SATELLITE MFG FACTORY
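The abstract above says that shared space calibration points are used to determine the coordinate transformation between detection systems, without naming a solver. One standard way to do this, shown here as an assumption rather than the patent's method, is a least-squares rigid fit (the Kabsch algorithm via SVD). The point values and names are made up for the example.

```python
import numpy as np


def rigid_transform(src, dst):
    """Least-squares rotation R and translation t with dst ~= R @ src + t.

    src, dst: N x 3 arrays holding the same calibration points measured in
    two different coordinate systems (N >= 3, not all collinear).
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)        # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


# Hypothetical calibration points seen by system A (e.g. a laser tracker).
points_a = np.array([[0.0, 0.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0]])

# The same points as system B would see them: 90 degrees about z, then a shift.
R_true = np.array([[0.0, -1.0, 0.0],
                   [1.0,  0.0, 0.0],
                   [0.0,  0.0, 1.0]])
t_true = np.array([1.0, 2.0, 3.0])
points_b = points_a @ R_true.T + t_true

R_est, t_est = rigid_transform(points_a, points_b)
```

With three detection systems, the same fit would be run pairwise against a chosen reference system.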

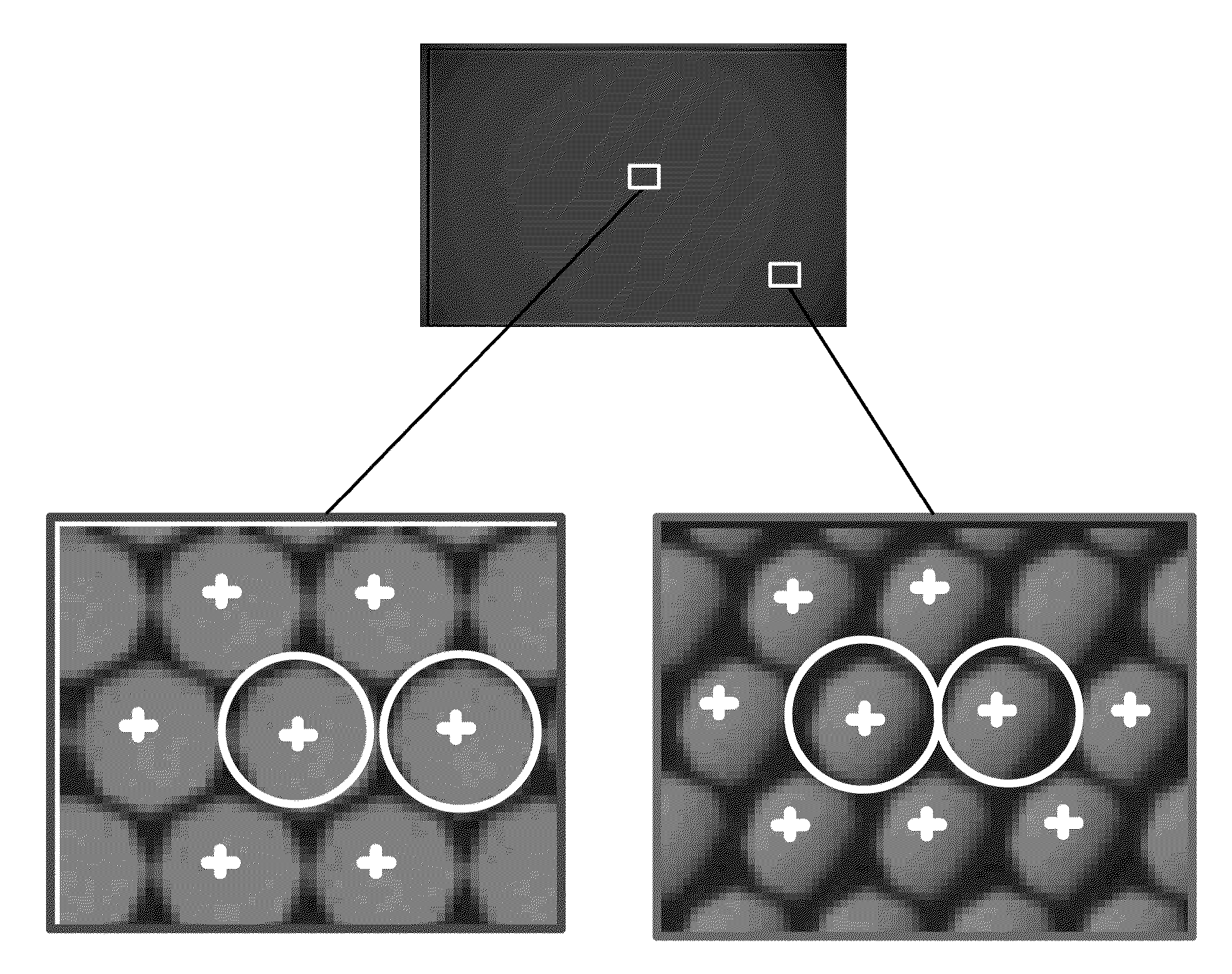

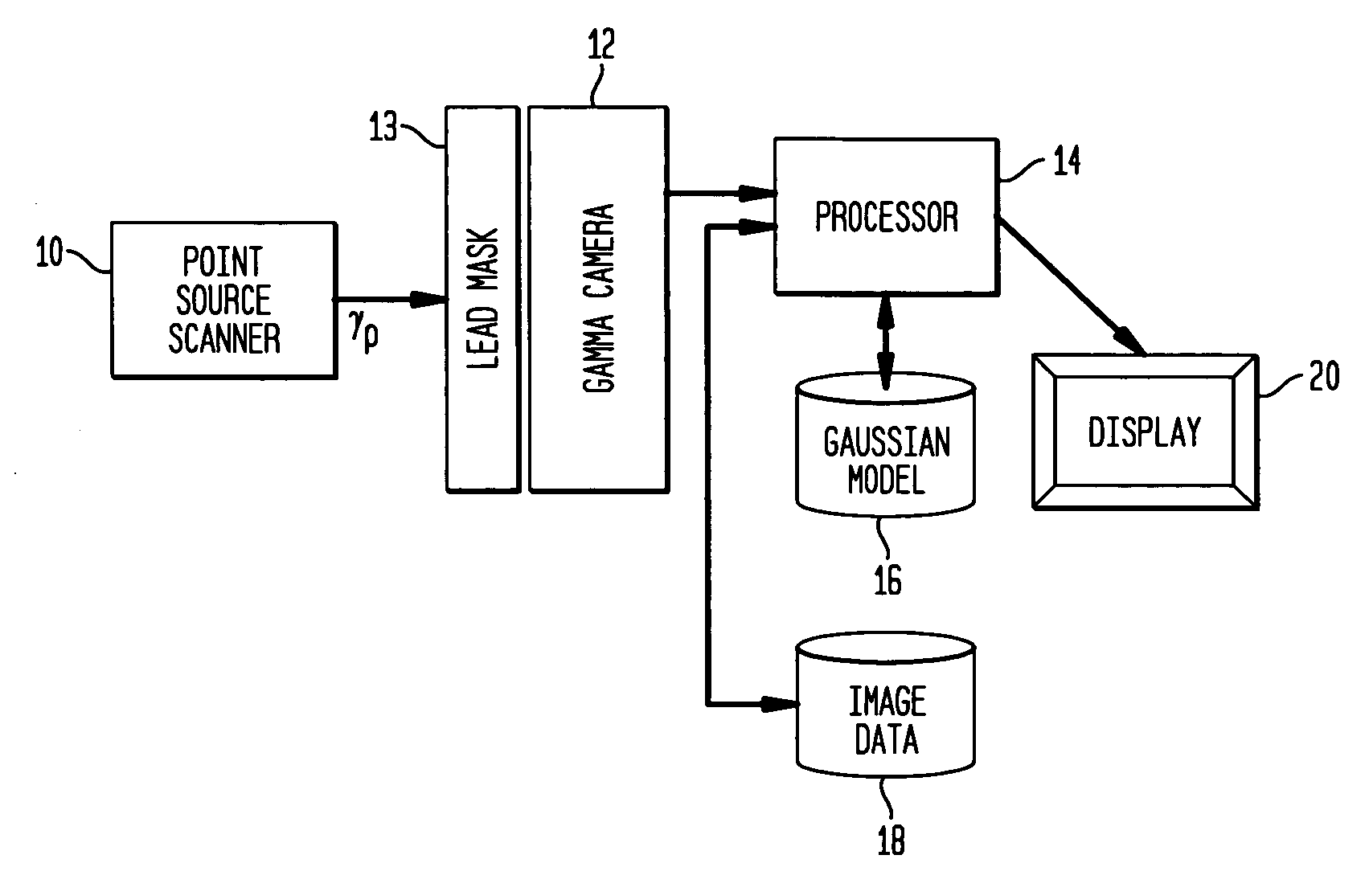

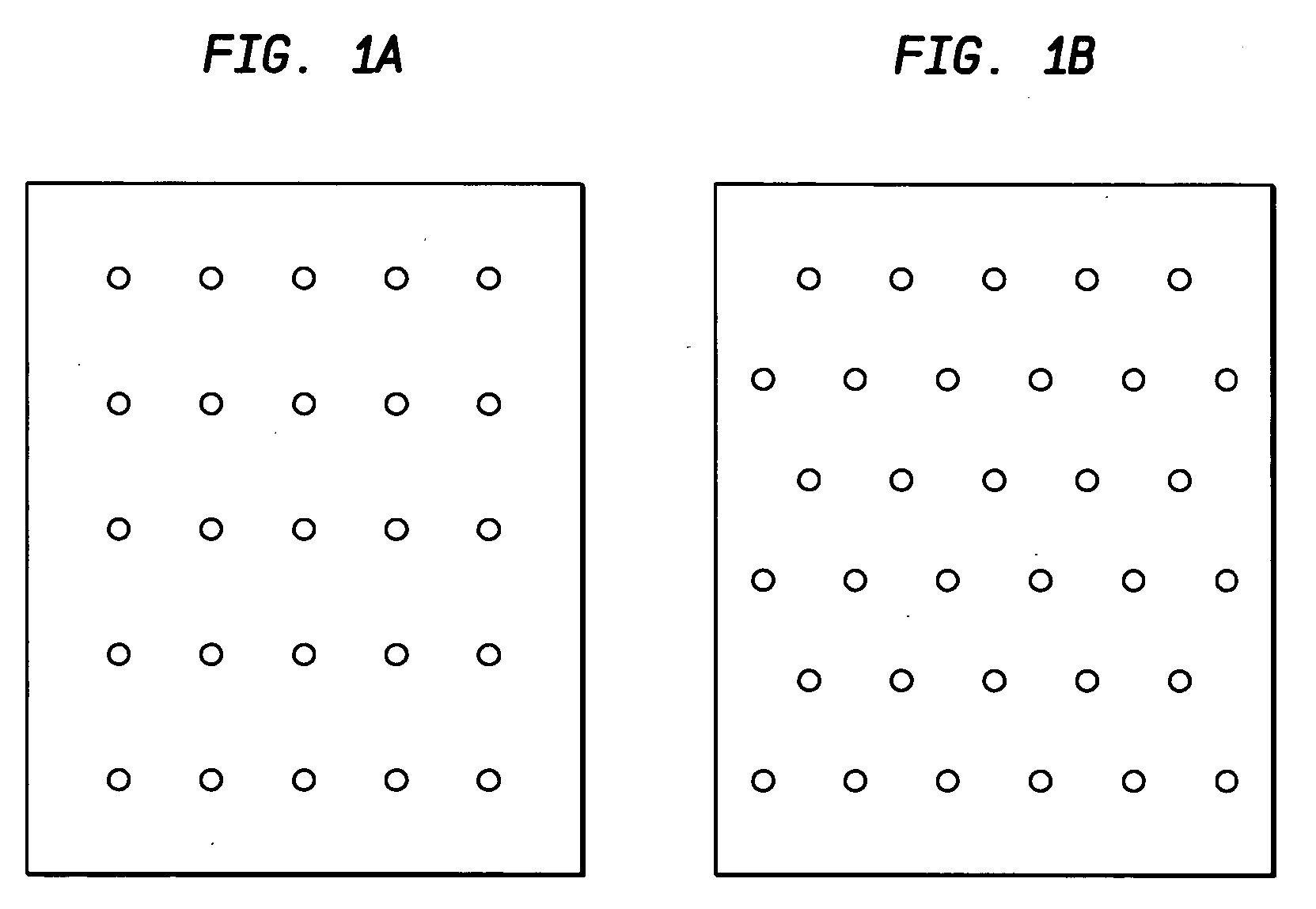

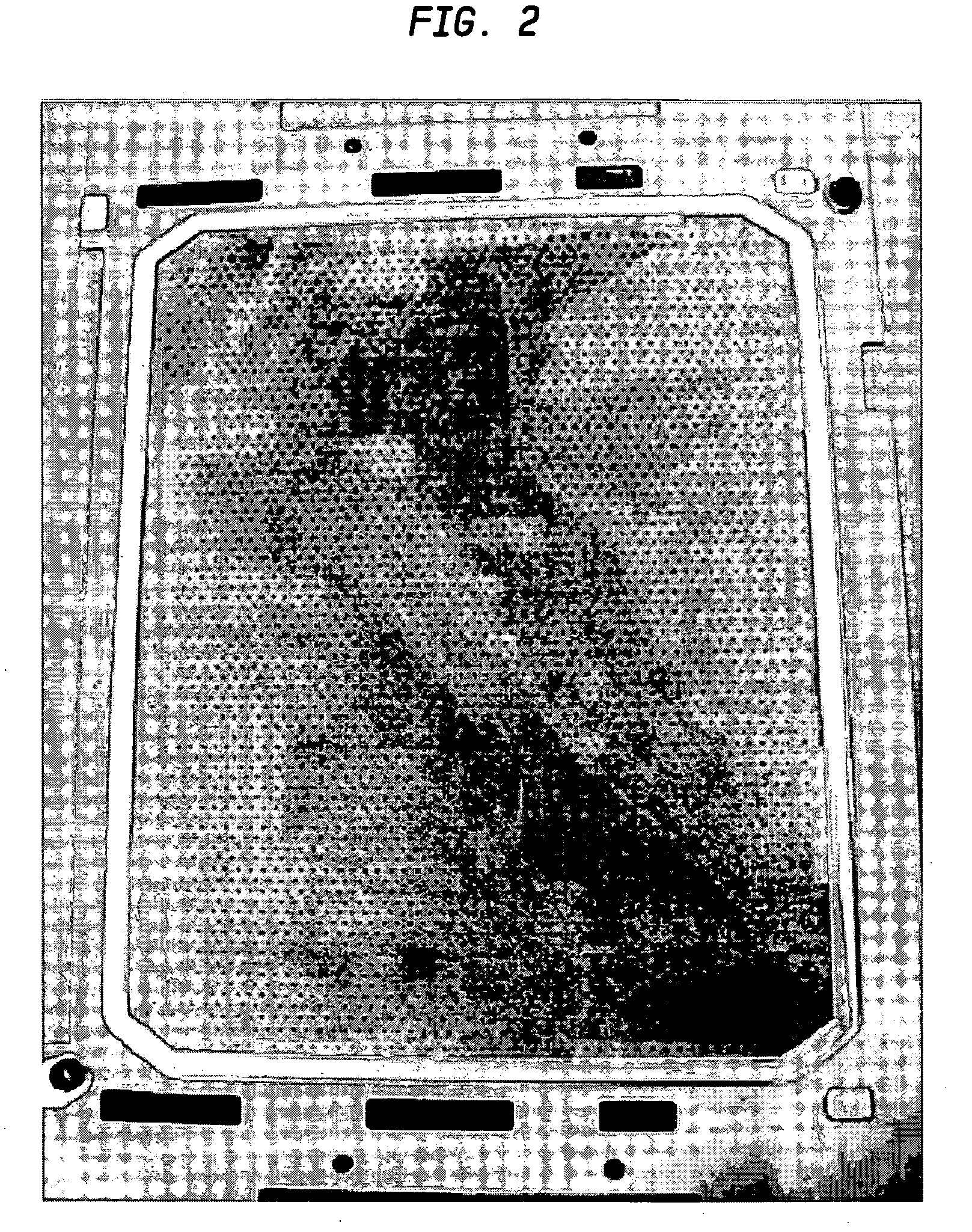

Peak detection calibration for gamma camera using non-uniform pinhole aperture grid mask

Active | US20060011847A1 | Material analysis by optical means | Calibration apparatus | Gaussian function | Spatial calibration

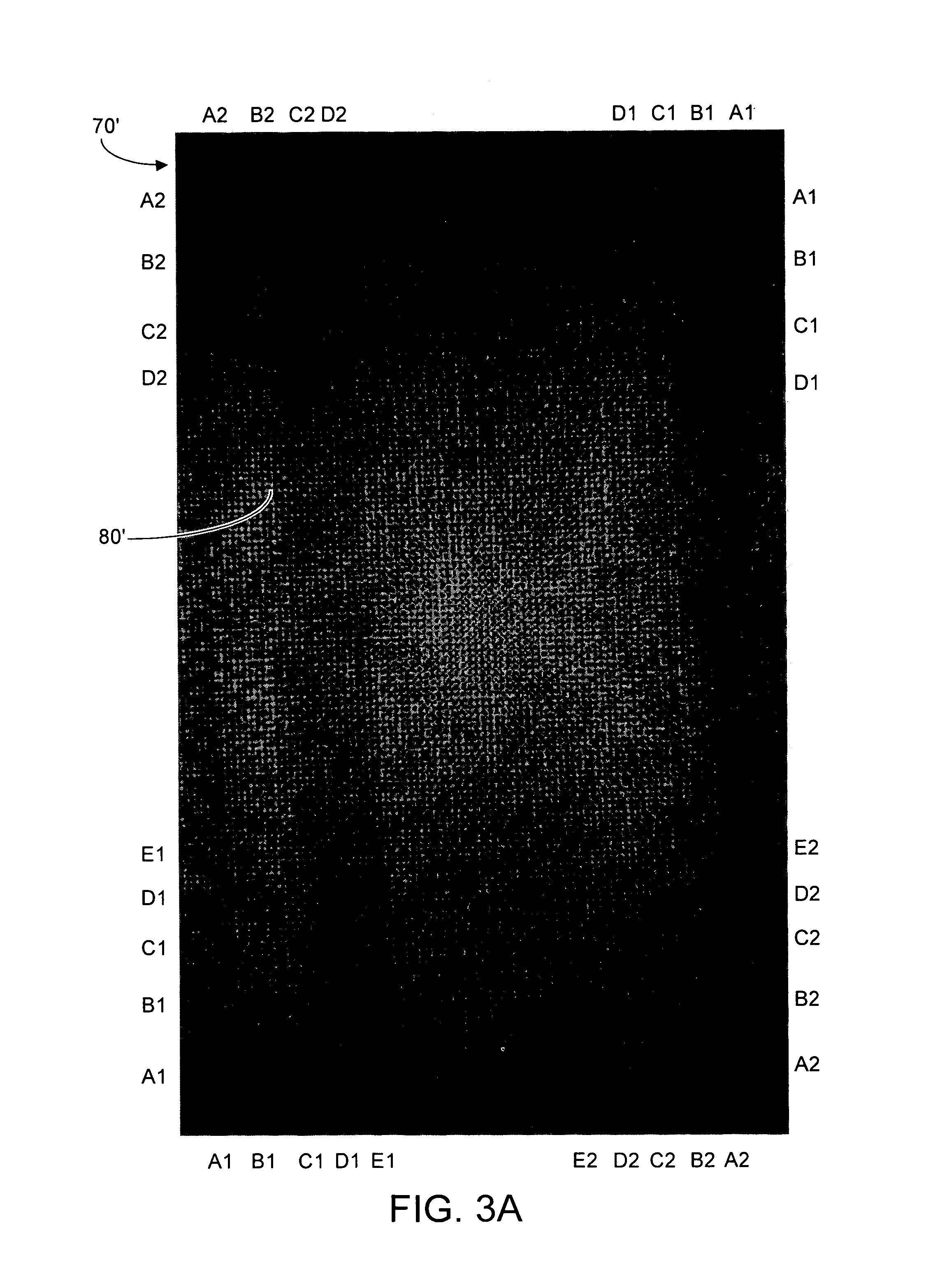

Point source responses of pinhole apertures in a non-uniform grid mask used to spatially calibrate a gamma camera can be modeled as a two-dimensional Gaussian function with a set of seven parameters. The Gaussian parameters can be measured using a surface-fitting algorithm that seeks minimum error in the least squares sense. The process is repeated for data from each pinhole location and the data are added together to generate a complete model of the flood image from the mask, which then can be used in a peak detection process for clinical images.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

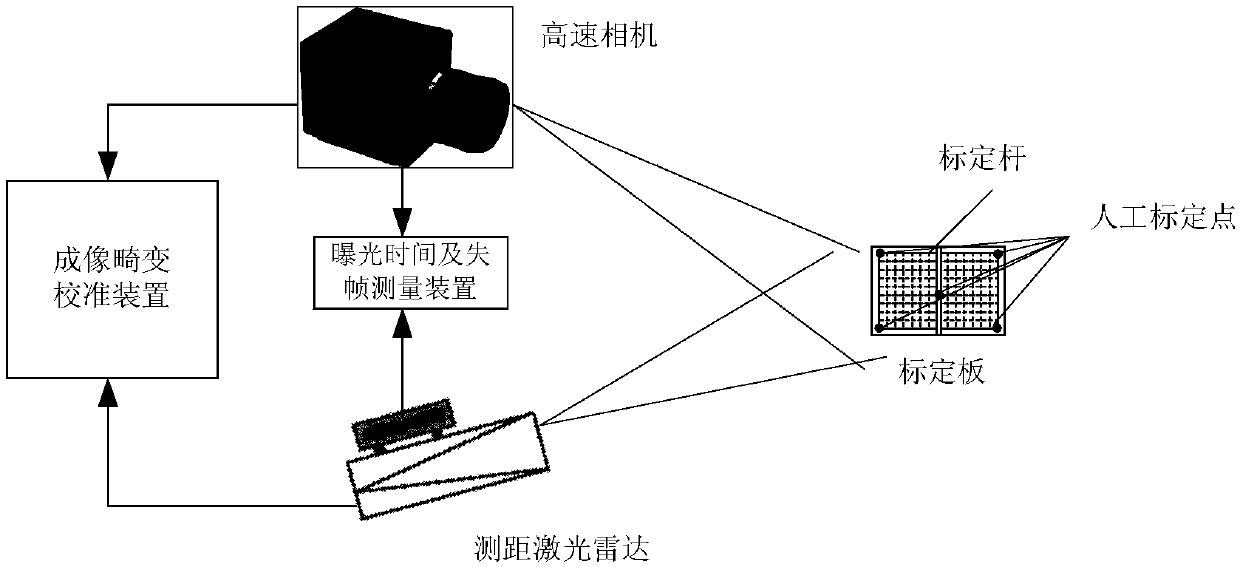

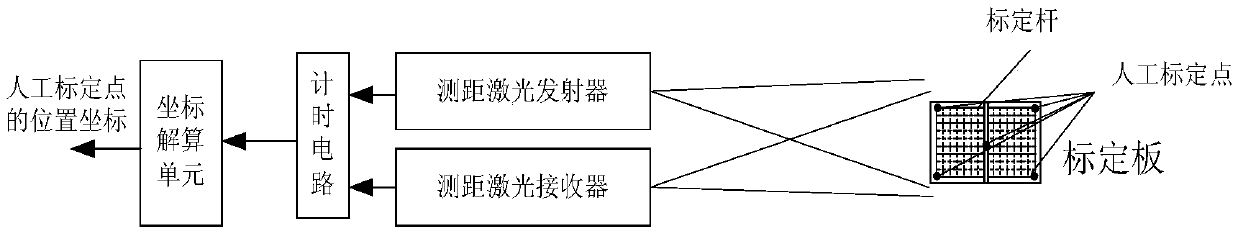

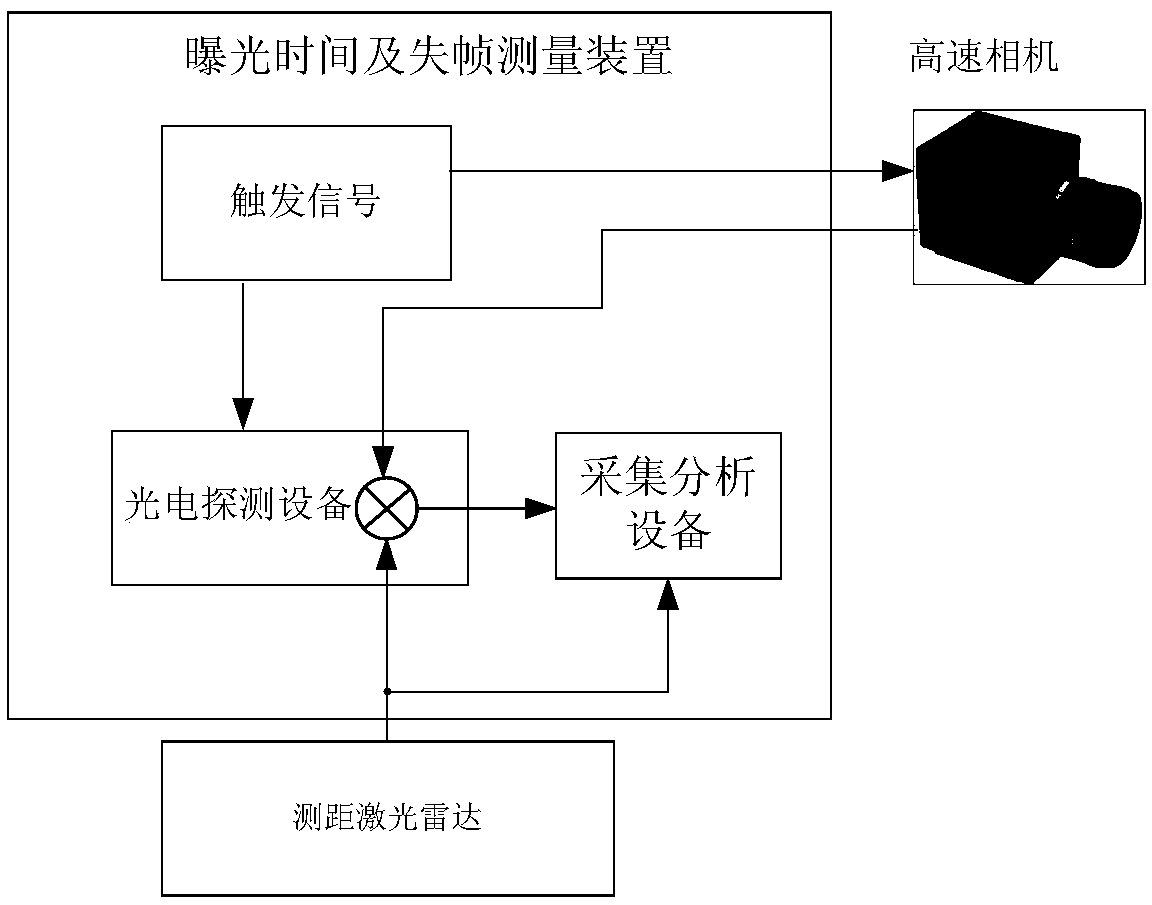

High-speed camera calibration system and method

Active | CN108964777A | Achieving Spatial Calibration | Optimizing the Distortion Matrix | Television system details | Color television details | Picosecond laser | Measurement device

The invention relates to a high-speed camera calibration system and method. The high-speed camera calibration system comprises a calibration board, a laser ranging radar, a high-speed camera, an exposure time and dropped frame measurement device and an imaging distortion calibration device. According to the system and method, a high-precision picosecond laser ranging technology is adopted to be combined with a camera parameter calibration algorithm, the camera focus and the distortion coefficient are determined, a distortion matrix is optimized, a precise camera image-to-spatial position three-dimensional reduction algorithm is formed, and spatial calibration on camera imaging distortion is achieved; the adopted measurement reference clock frequency is 4-5 orders of magnitude higher than the measured high-speed camera frequency; acquisition and analysis equipment with the sampling rate of 25 GHz is adopted to acquire the exposure time of the high-speed camera, the high-precision exposure time and dropped frame rate are obtained through measurement, and a data basis is provided for test parameters acquired for calibrating the high-speed camera.

Owner:南京恒泰利信电子科技有限公司

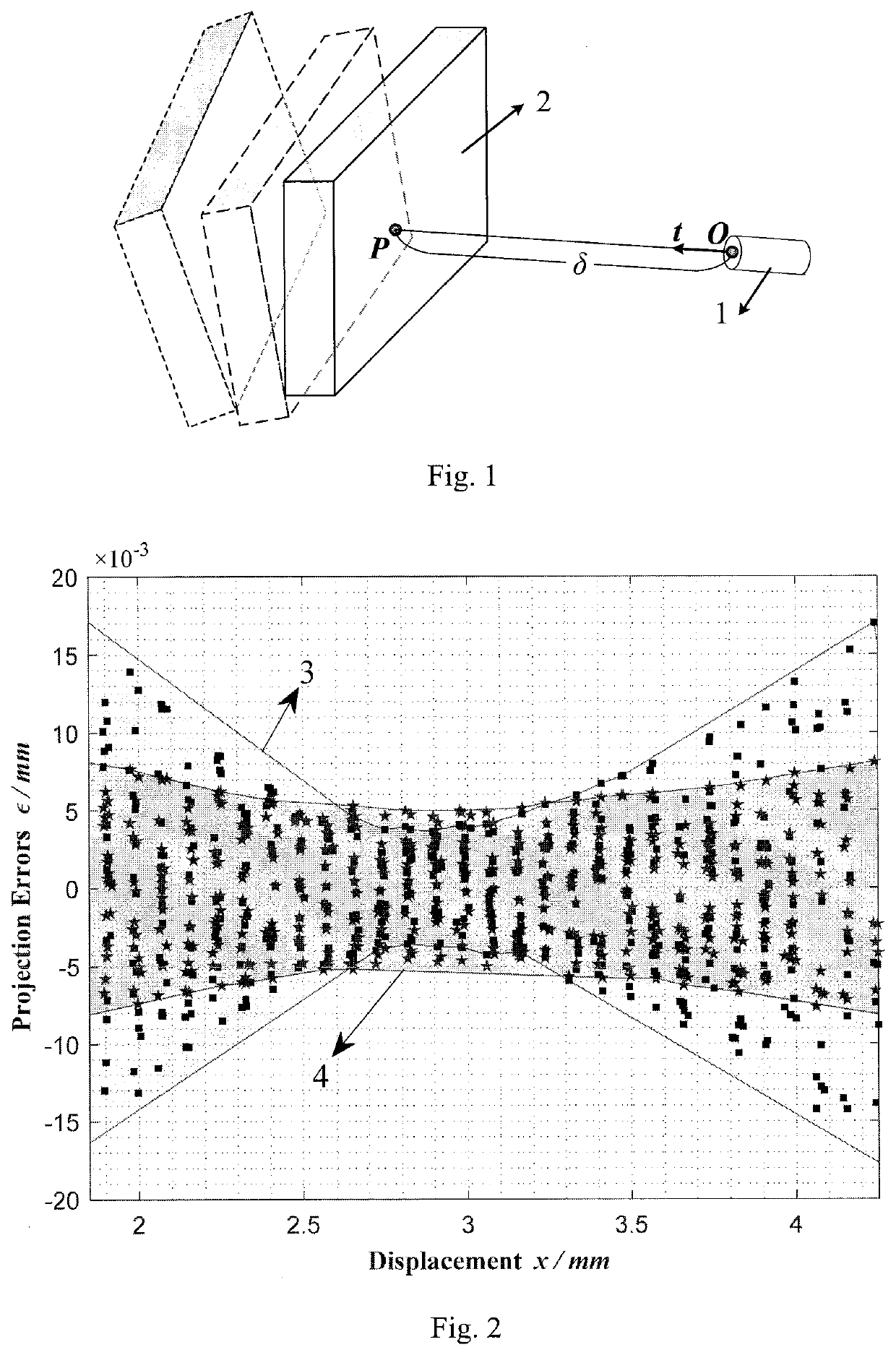

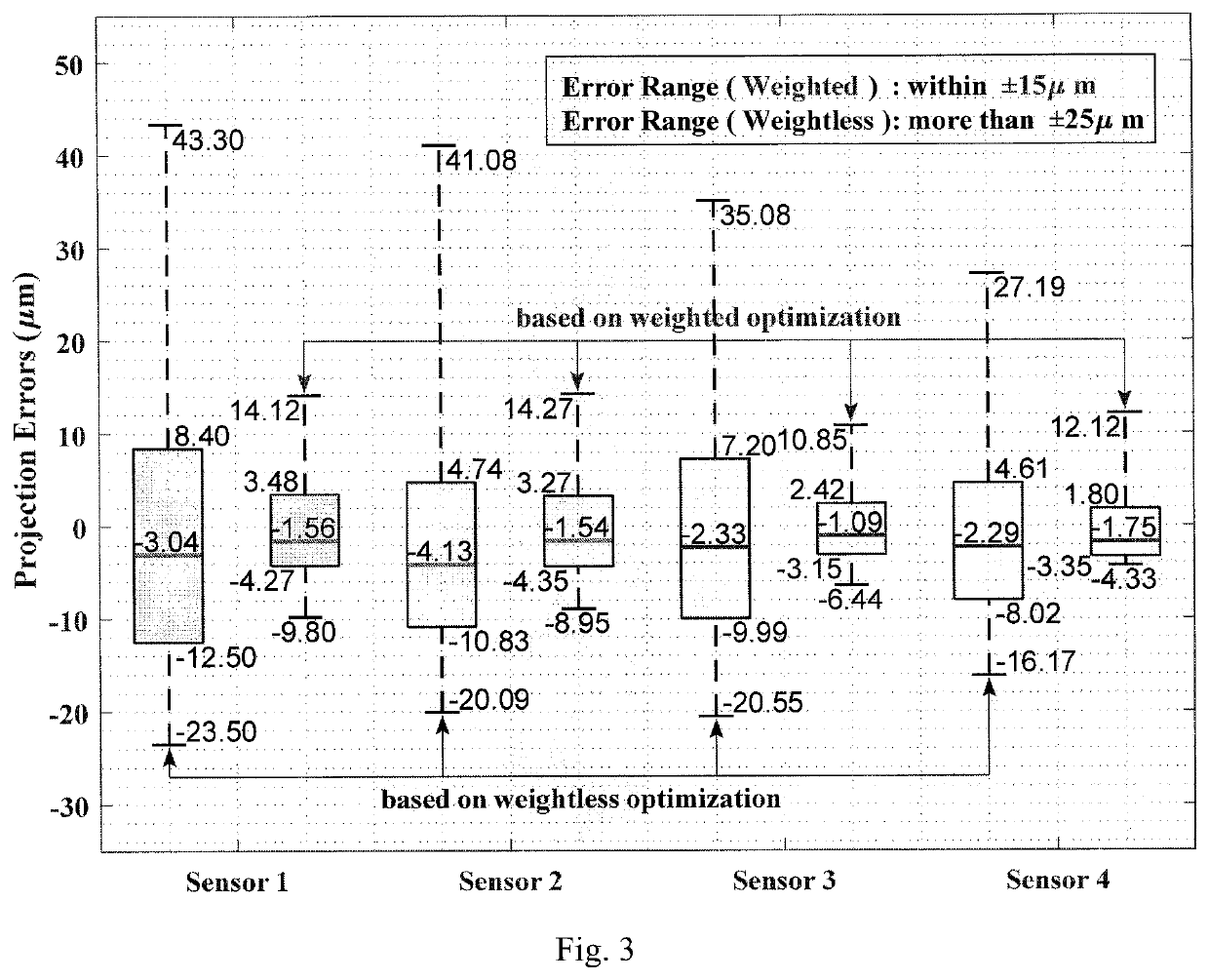

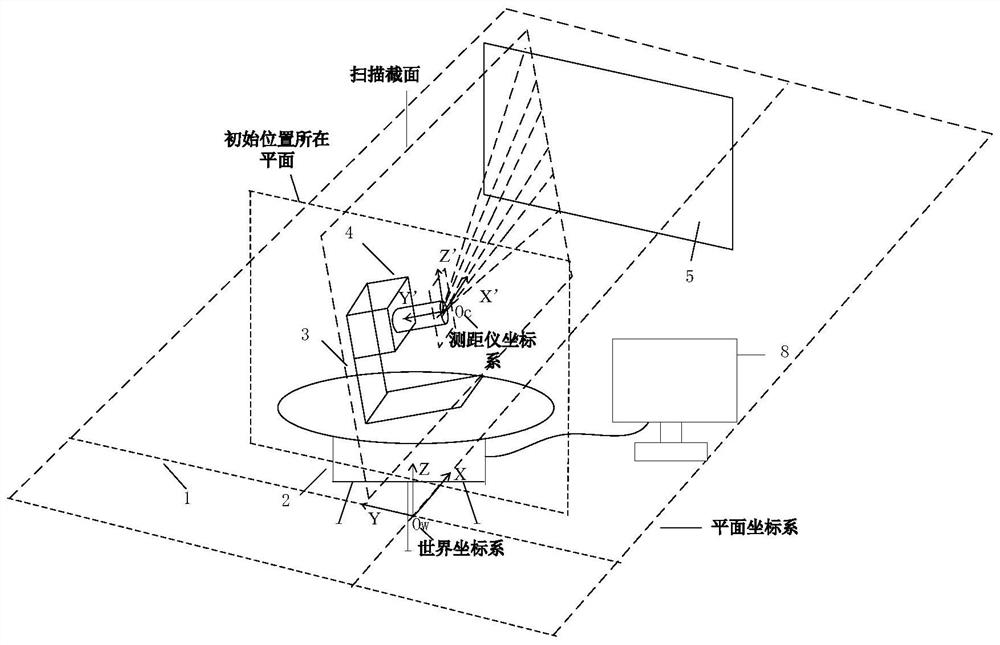

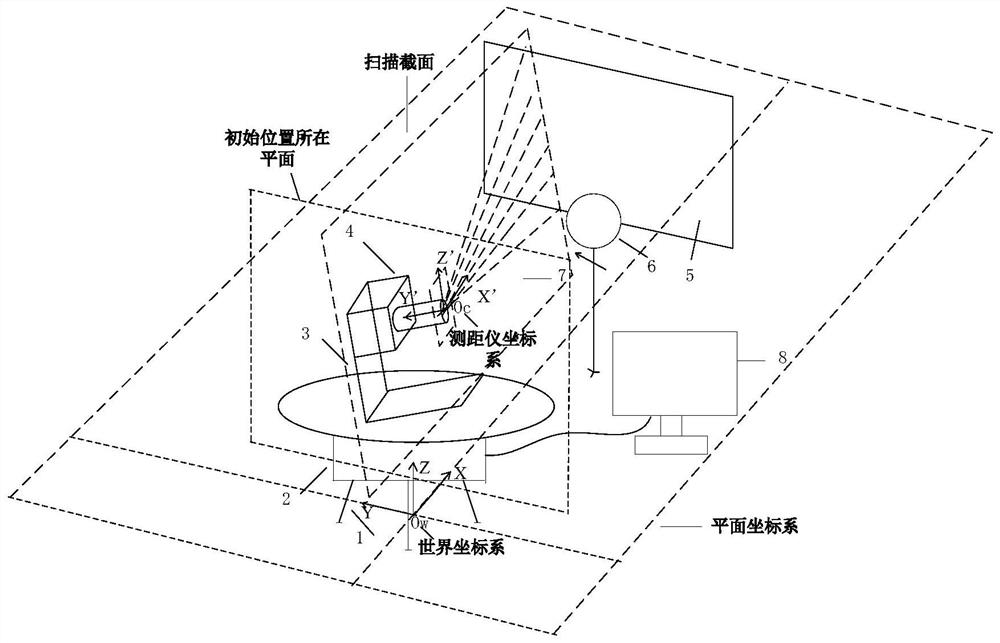

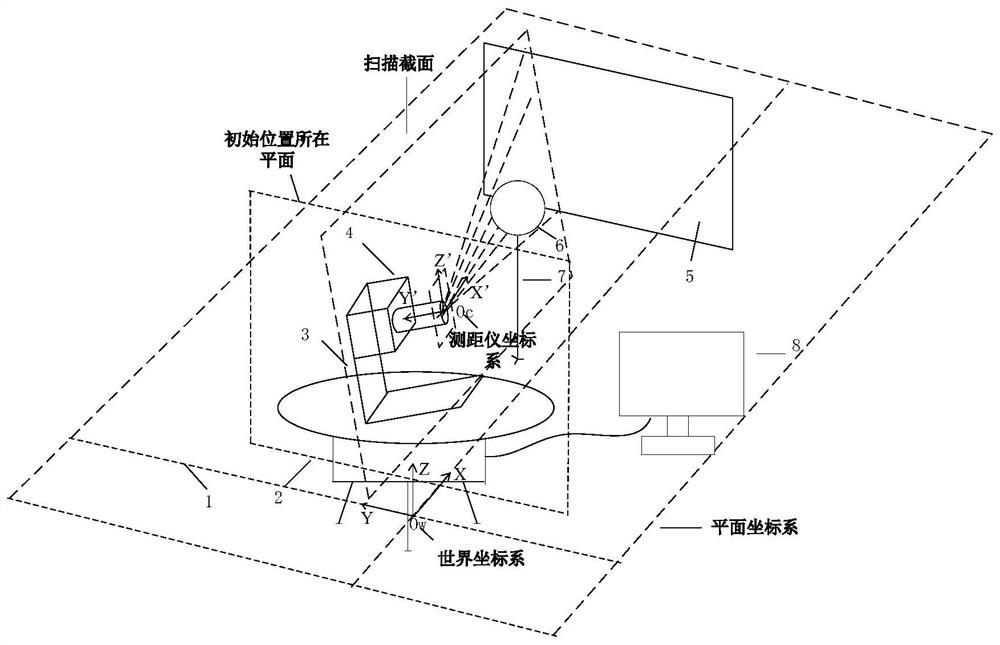

3D measurement model and spatial calibration method based on 1D displacement sensor

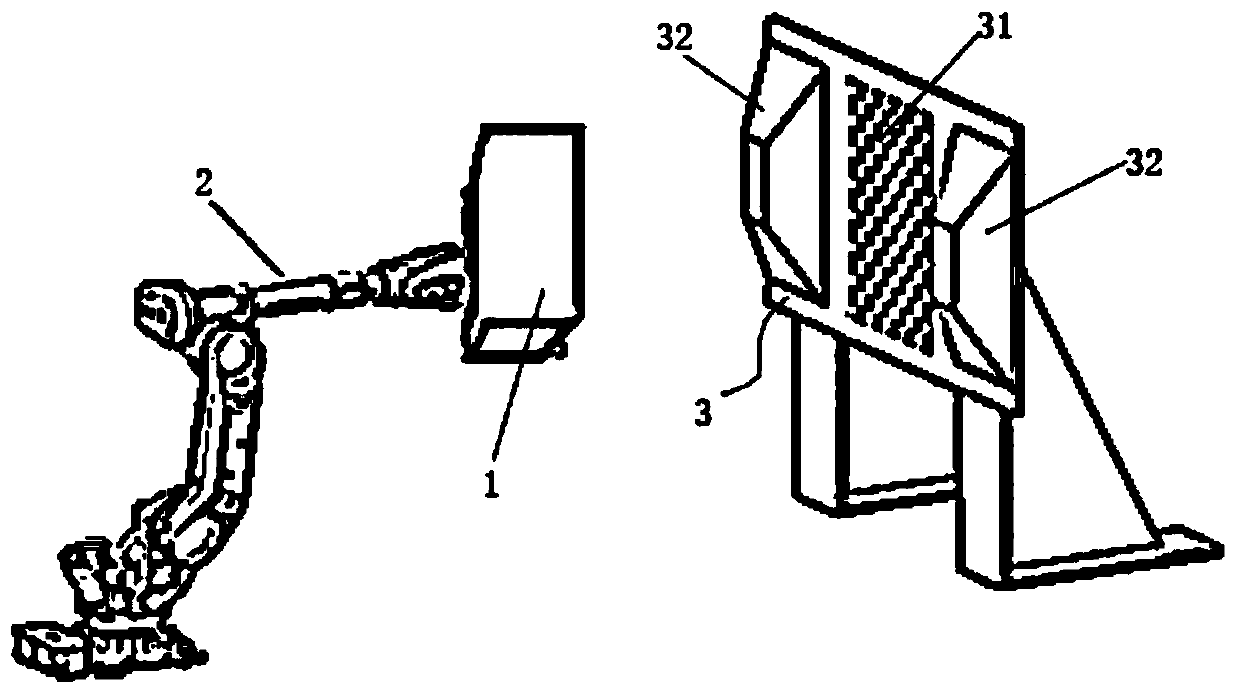

Active | US20210095959A1 | High precision | Universal applicability | Programme-controlled manipulator | Using electrical means | Algorithm | Computational physics

A 3D measurement model and a spatial calibration method based on a 1D displacement sensor are proposed. A 3D measurement system based on a fixed 1D displacement sensor is established, followed by a spatial measurement model based on the 1D displacement sensor. Spatial calibration constraint equations are then established from the high-precision pose data of the measurement plane and the sensor measurement data. A weighted iterative algorithm is employed to calculate extrinsic parameters of the 1D sensor that meet the precision requirements, which completes the calibration process. The resulting calibrated model improves the precision of 3D measurement and addresses inaccurate spatial measurement caused by errors in the sensor's extrinsic parameters.

Owner:DALIAN UNIV OF TECH

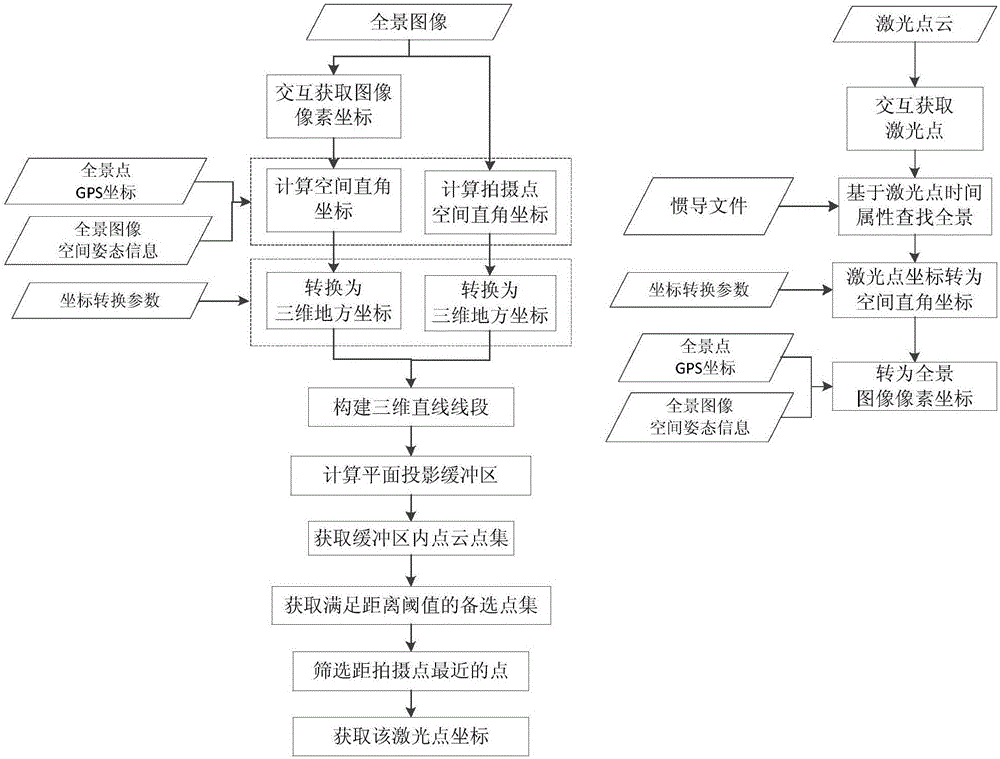

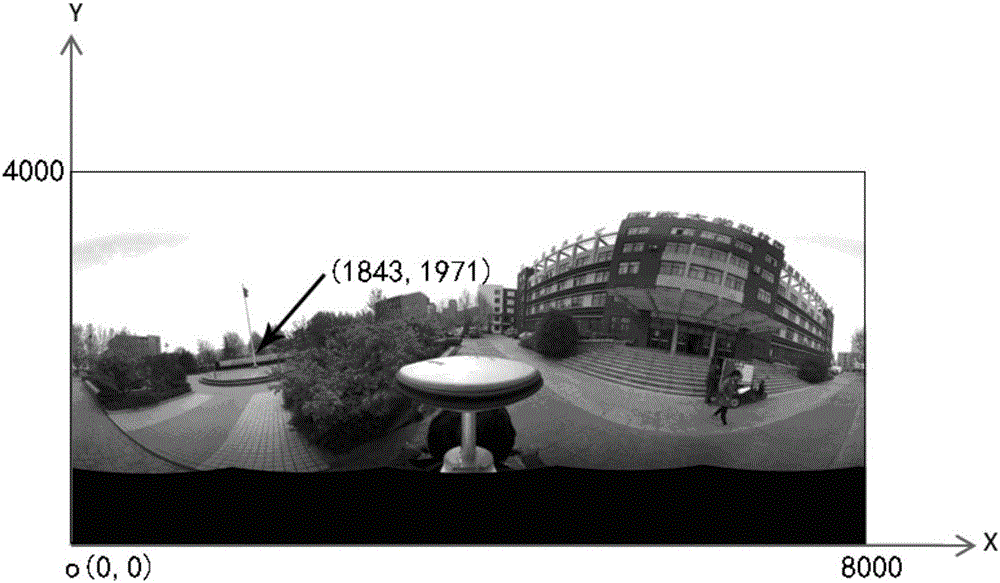

Interactive plotting method based on laser-point cloud and panorama image

The invention discloses an interactive plotting method based on laser-point cloud and a panorama image. The method includes forward interactive plotting and/or reverse interactive plotting. In the forward interactive plotting step, pixel coordinates of the panorama image are positioned to laser-point coordinates; in the reverse interactive plotting step, the laser-point coordinates are positioned to the pixel coordinates of the panorama image. During forward interaction, a plotting object is determined in the panorama image, which makes the selection visual, clear and definite. During reverse interaction, a specific laser point is obtained through interaction by means of the buffering method, coordinate conversion parameters are converted into spatial rectangular coordinates, the pixel coordinates of the panorama image are calculated by means of spatial calibration parameters, panorama point GPS coordinates and spatial posture information, and accordingly a direction corresponding to the laser point is displayed in the panorama image.

Owner:QINGDAO XIUSHAN MOBILE SURVEYING CO LTD

Planar and spatial feature-based laser radar and camera automatic joint calibration method

Pending | CN109828262A | Calibration is fast and reliable | The operation process is fully automated | Image analysis | Electromagnetic wave reradiation | Radar | Devices fixation

The invention provides a planar and spatial feature-based laser radar and camera automatic joint calibration method. According to the method, a laser radar and a camera are separately used to locate planar and spatial features designed on a calibration object, so that the laser radar and the camera can be calibrated quickly and reliably. The laser radar and the camera are mounted on a device to be calibrated, and the device is then fixed at the output end of a six-axis mechanical arm. In the initial state, a calibration plate is arranged directly in front of the laser radar and the camera, and two kinds of geometric features, namely planar calibration features and spatial calibration features, are designed on the calibration plate. The camera extracts calibration points from the planar calibration features, and the laser radar extracts calibration points from the spatial calibration features. The output end of the six-axis mechanical arm moves the device to be calibrated along a set trajectory, the camera obtains a corresponding calibration plate image, and the internal and external parameters of the camera are calibrated by the Zhang Zhengyou calibration method.

Owner:TZTEK TECH

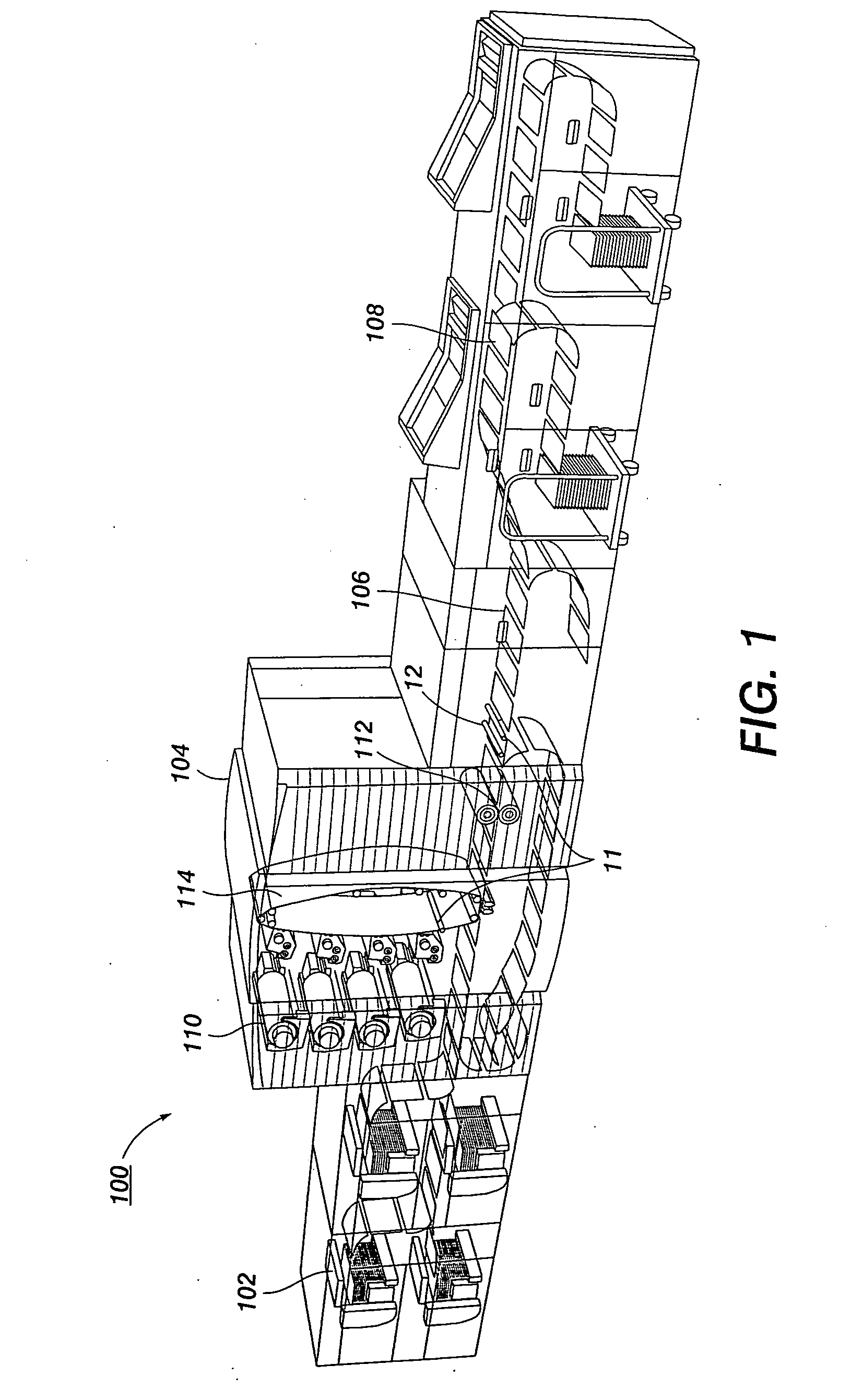

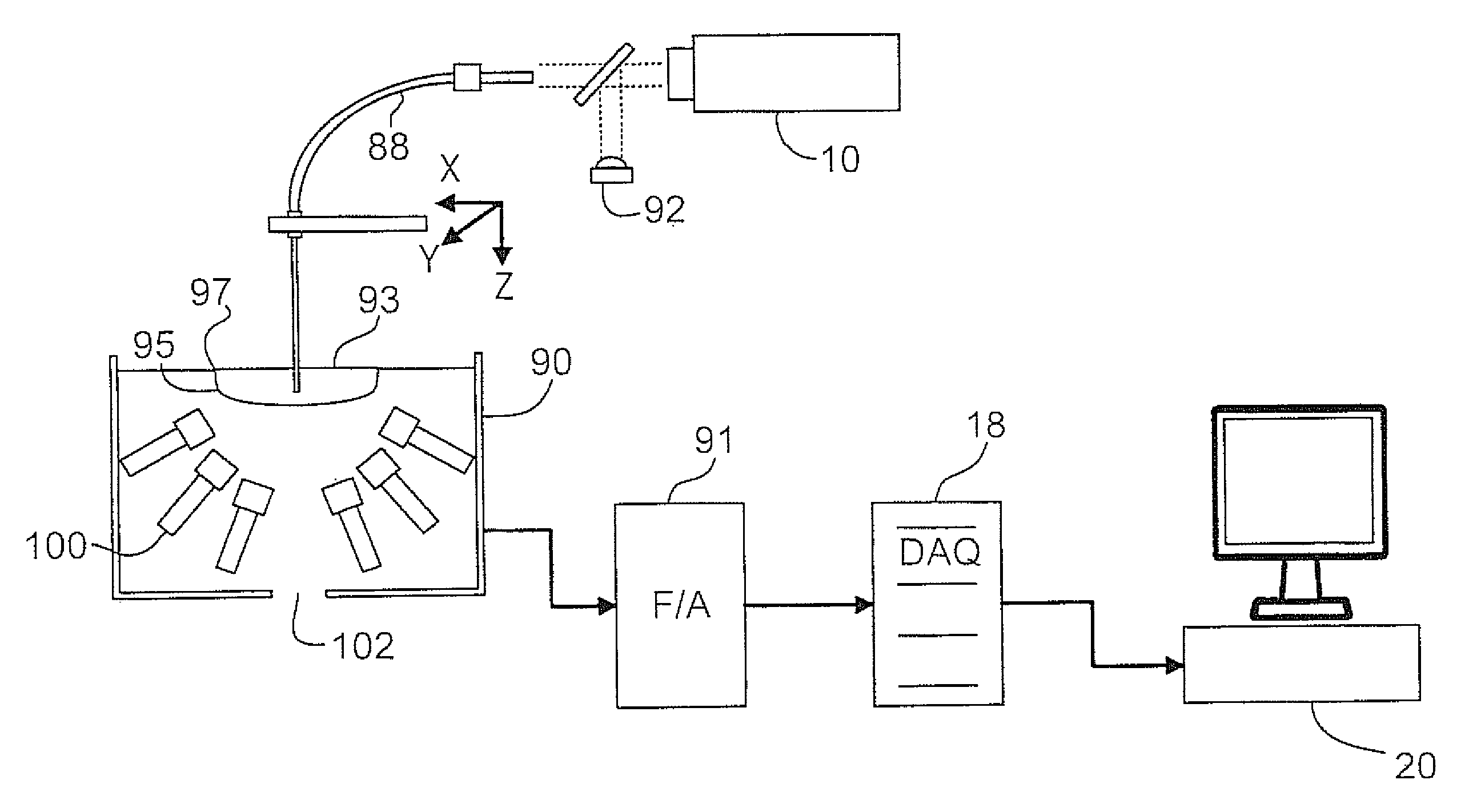

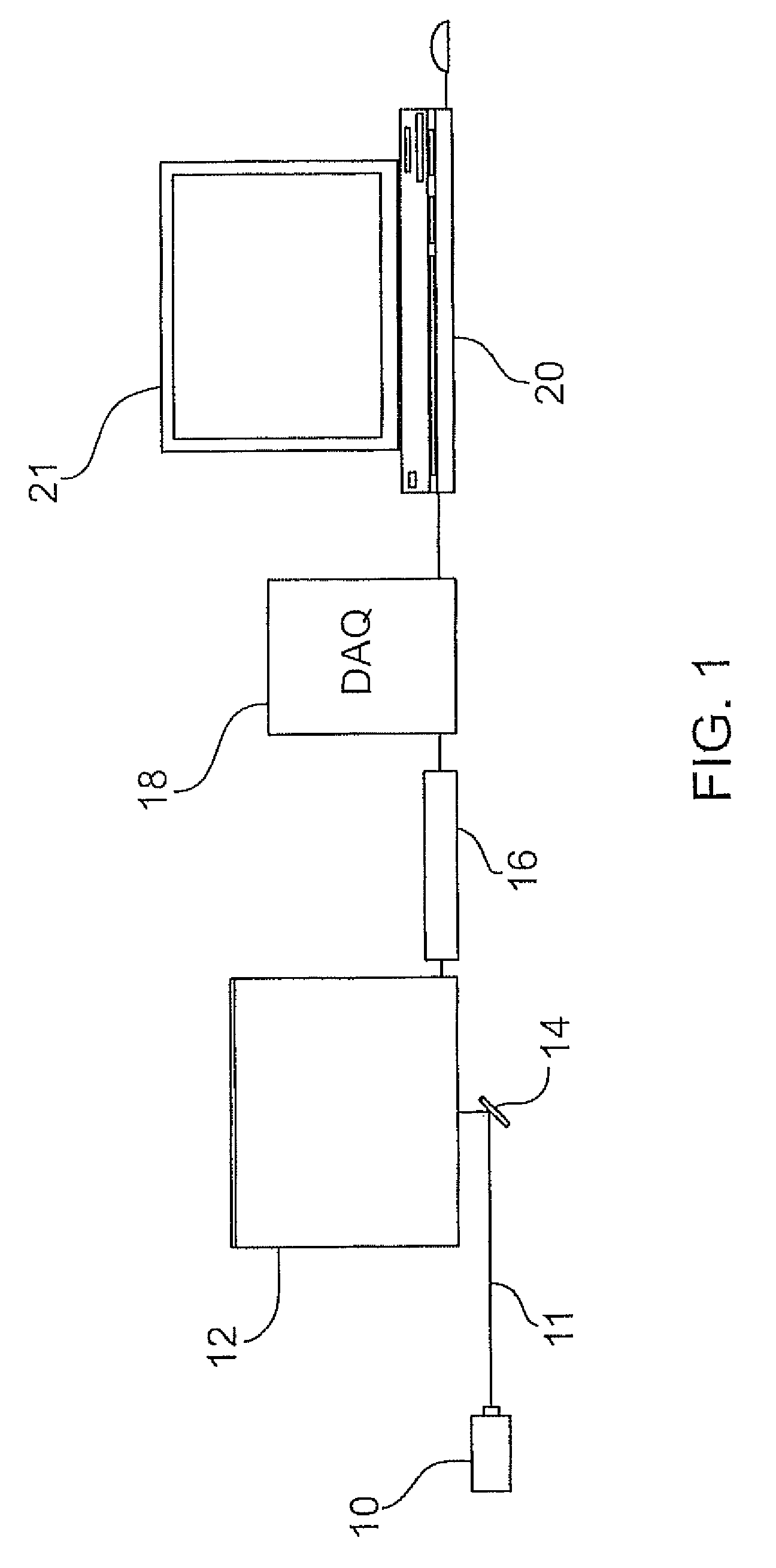

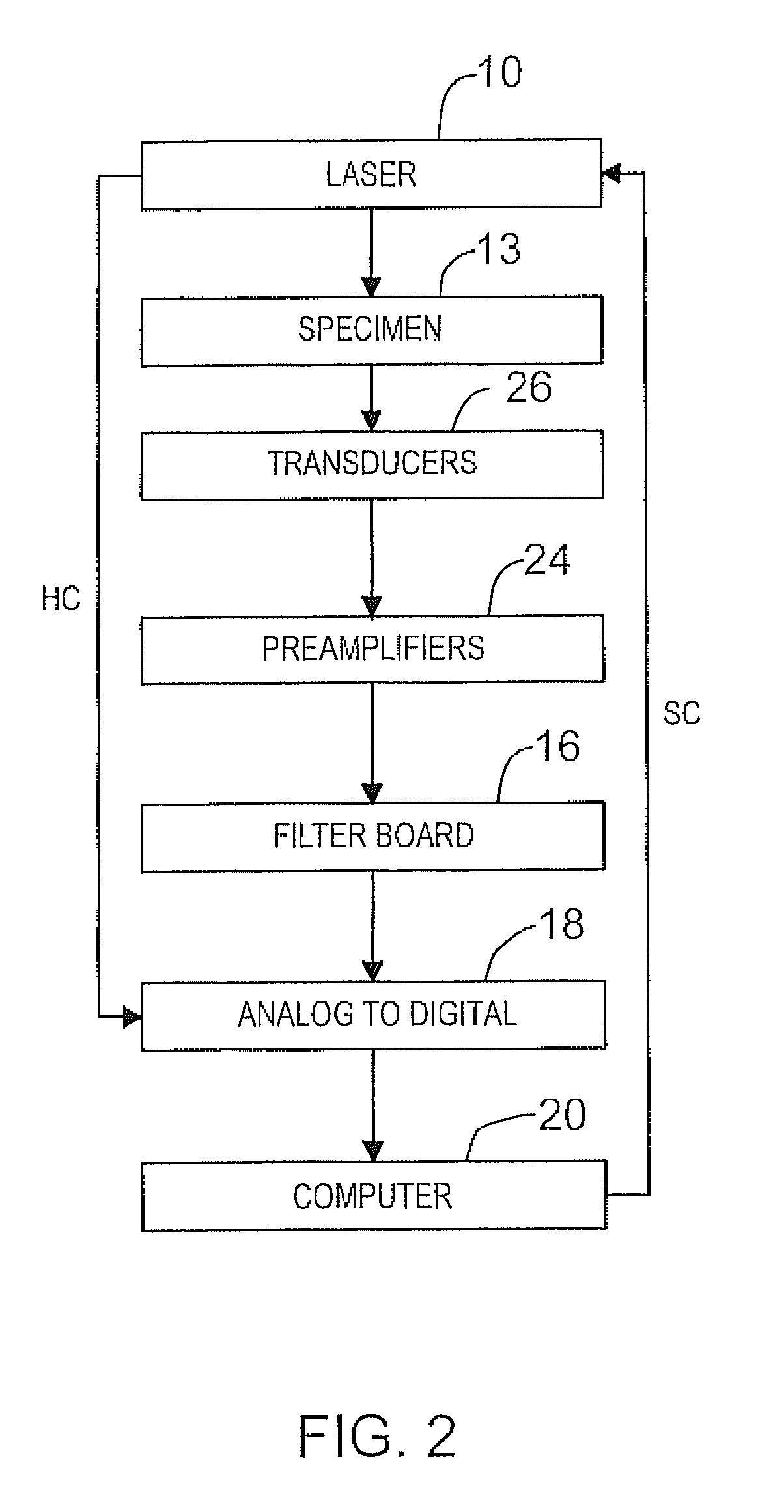

Three-dimensional staring sparse array photoacoustic imager and methods for calibrating an imager

Inactive | US9128032B2 | Illuminating subject | Analysing solids using sonic/ultrasonic/infrasonic waves | Diagnostics using light | Data acquisition | Light beam

A photoacoustic imaging apparatus for medical or other imaging applications is provided, along with a method for calibrating the apparatus. The apparatus employs a sparse array of transducer elements and a reconstruction algorithm. Spatial calibration maps of the sparse array are used to optimize the reconstruction algorithm. The apparatus includes a laser that produces a pulsed laser beam to illuminate a subject for imaging and generate photoacoustic waves. The transducers are fixedly mounted on a holder so as to form the sparse array. Photoacoustic (PA) waves are received by each transducer. The resultant analog signals from each transducer are amplified, filtered, and converted to digital signals in parallel by a data acquisition system that is operatively connected to a computer. The computer receives the digital signals and processes them with the algorithm, which is based on iterative forward projection and back-projection, to produce the image.

Owner:MULTI MAGNETICS

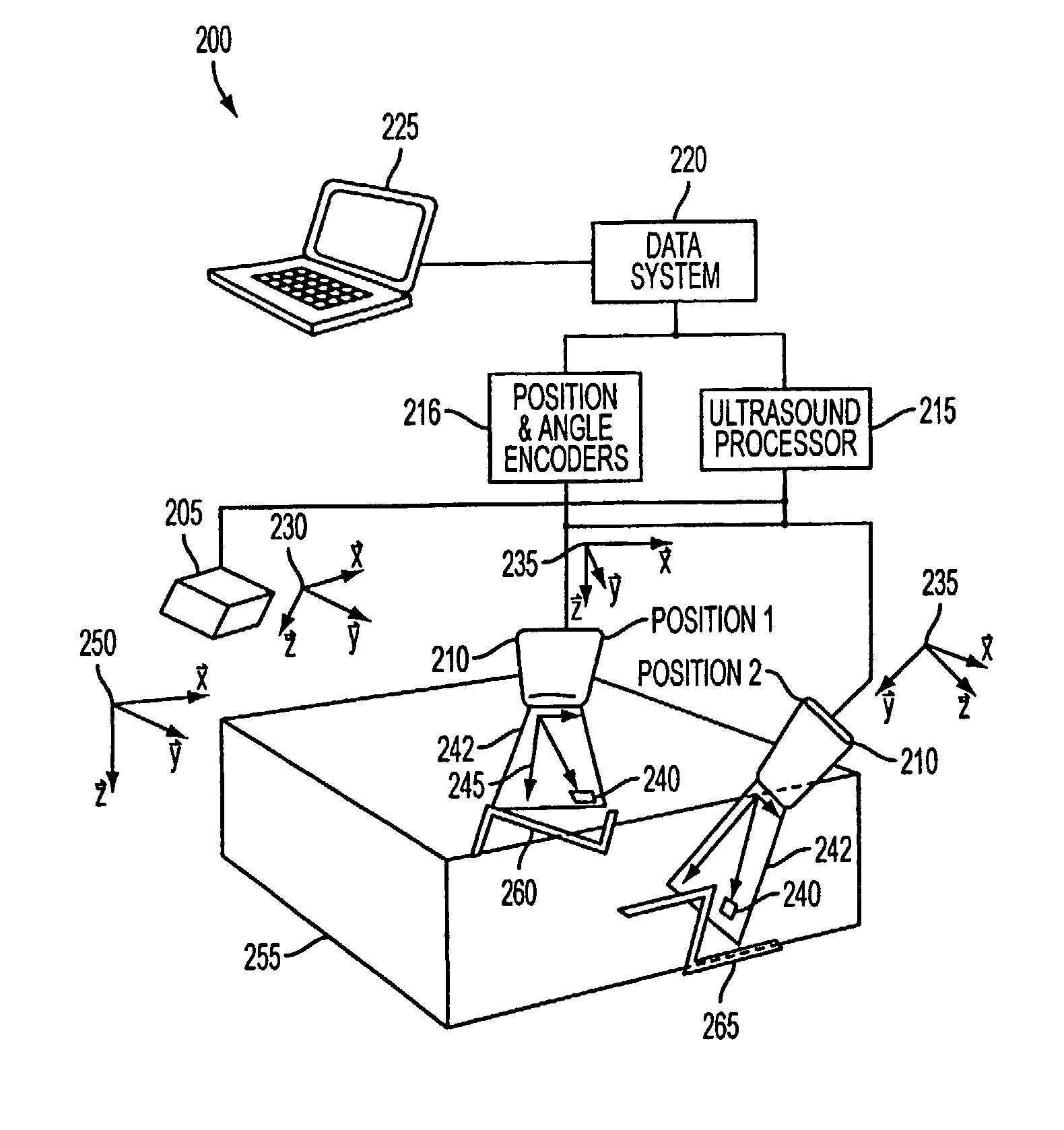

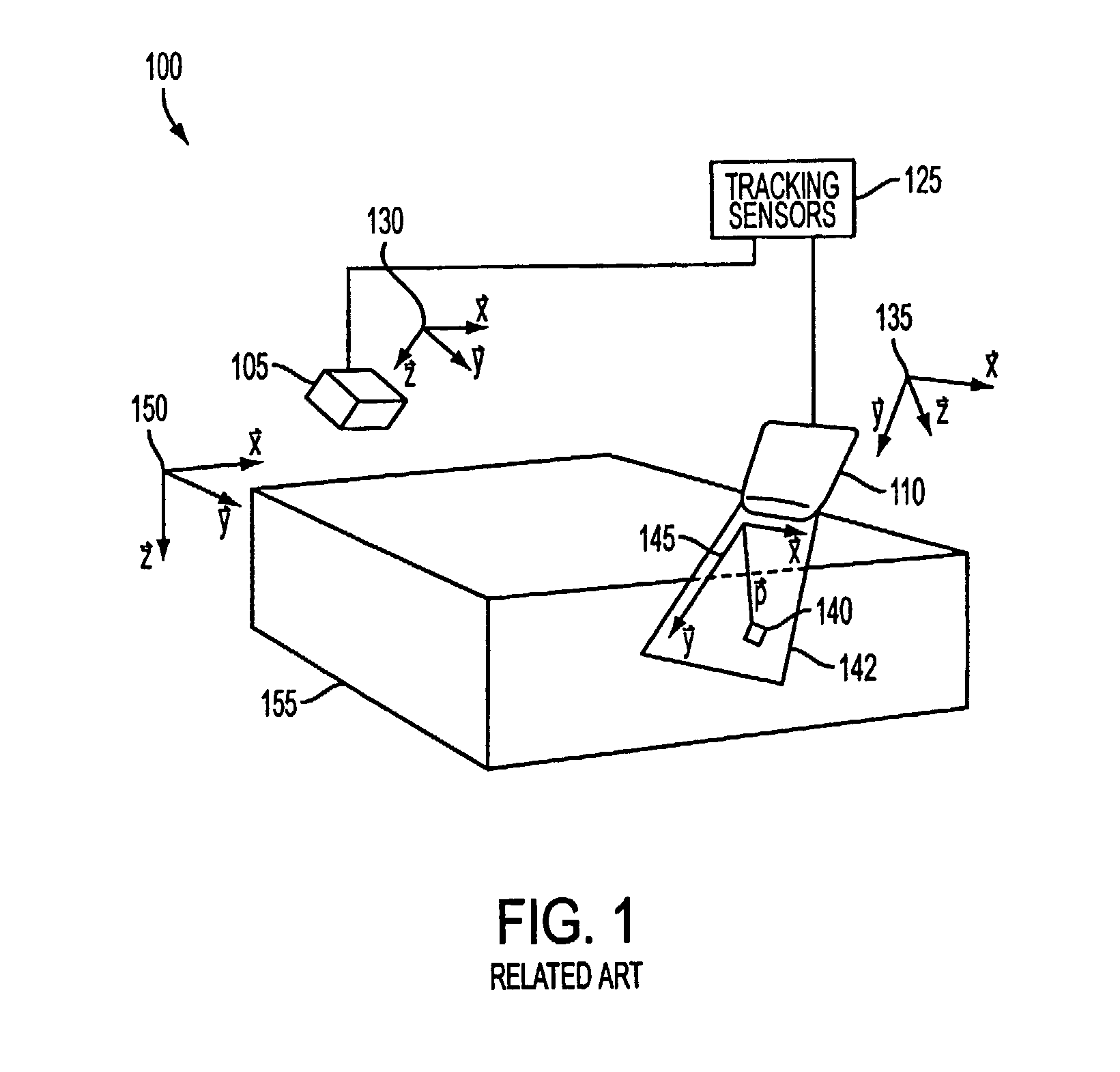

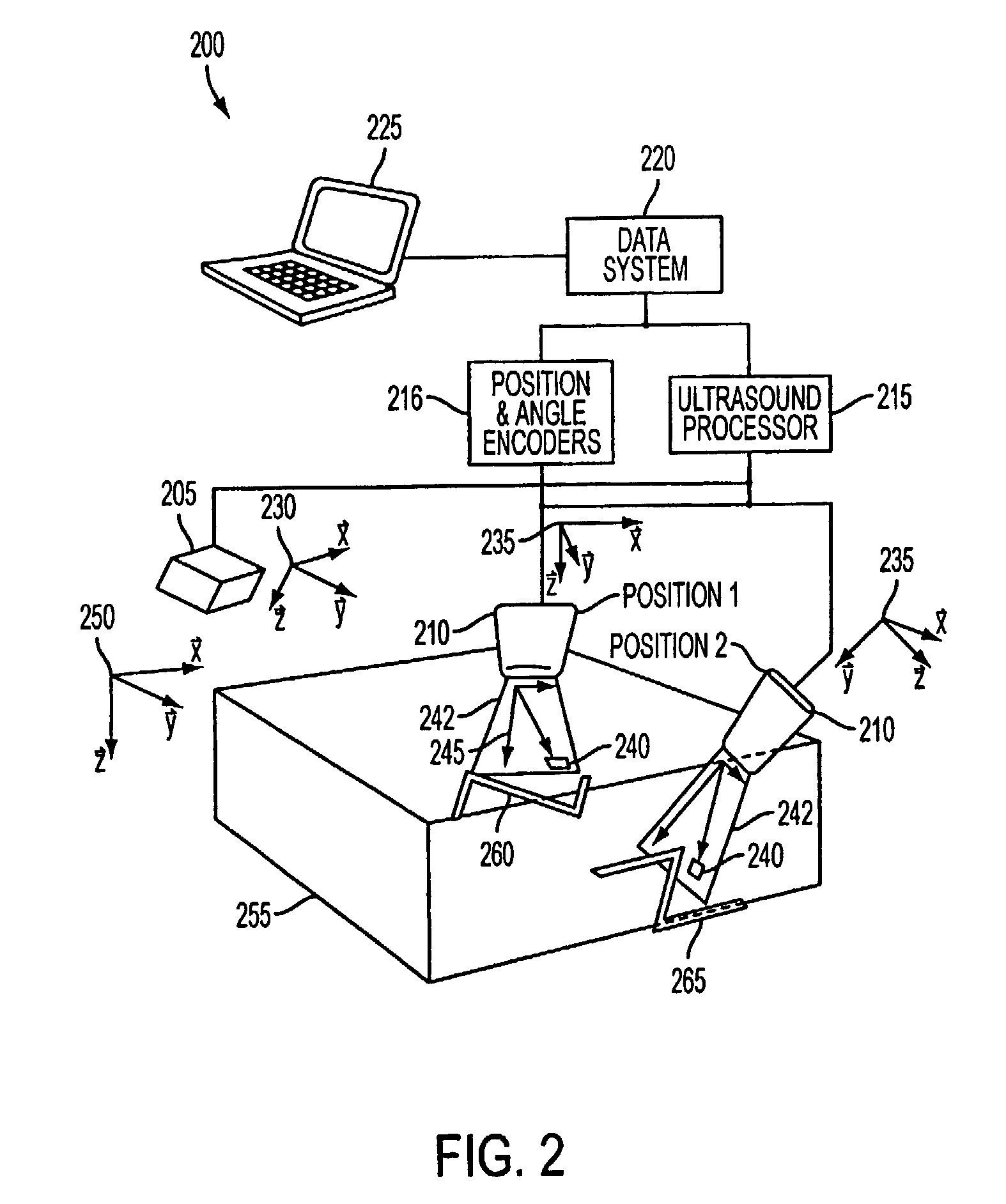

Ultrasound calibration and real-time quality assurance based on closed form formulation

Active | US7867167B2 | Slow change | Efficient and robust spatial | Ultrasonic/sonic/infrasonic diagnostics | Wave based measurement systems | Sonification | Closed form formulation

Disclosed is a system and method for intra-operatively spatially calibrating an ultrasound probe. The method includes determining the relative changes in ultrasound images of a phantom, or high-contrast feature points within a target volume, for three different ultrasound positions. Spatially calibrating the ultrasound probe includes measuring the change in position and orientation of the probe and computing a calibration matrix based on the measured changes in probe position and orientation and the estimated changes in position and orientation of the phantom.

Owner:THE JOHN HOPKINS UNIV SCHOOL OF MEDICINE

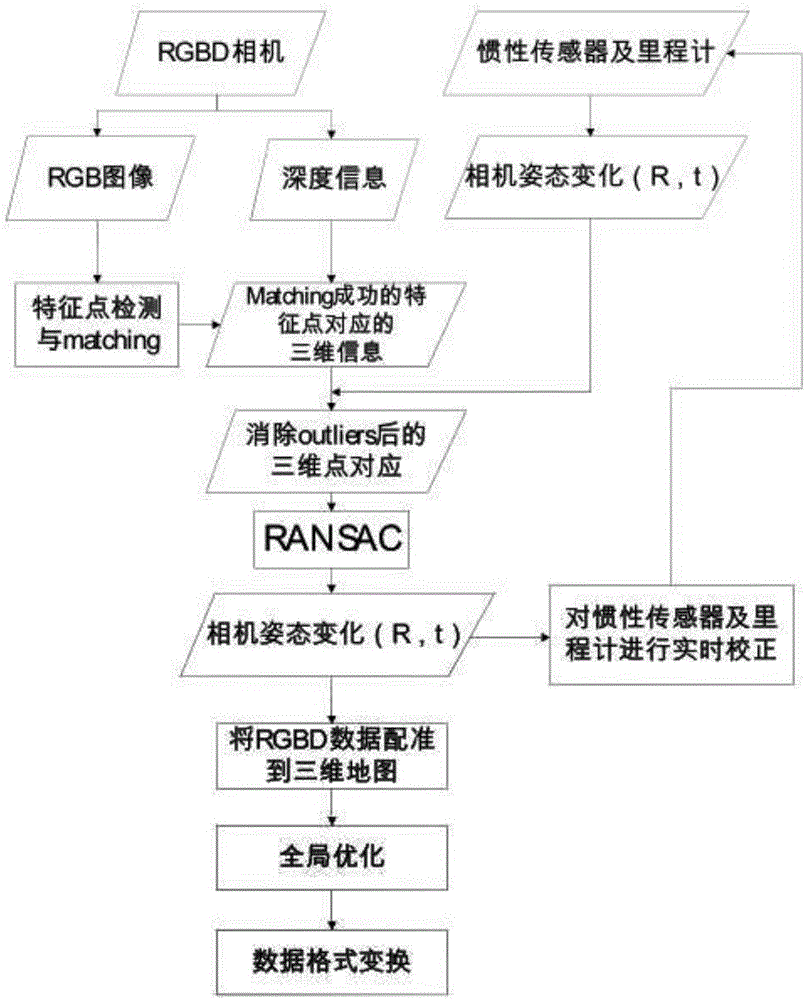

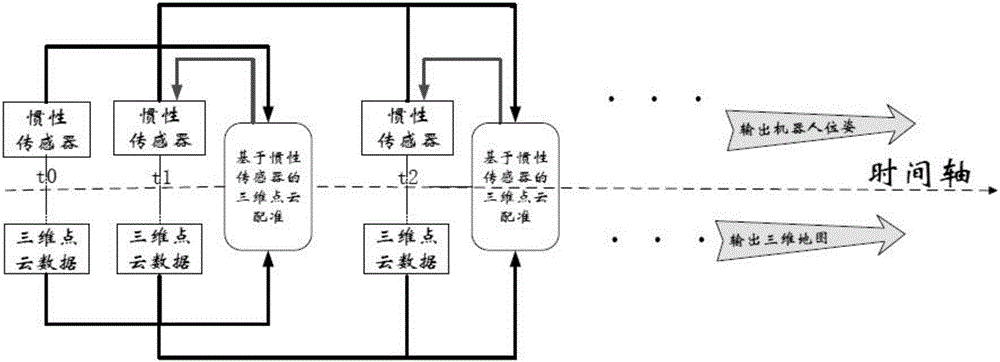

Scene three-dimensional data registration method and navigation system error correction method

Inactive | CN106705965A | Complementary characteristics are good | Solve the problem of corruption | Navigation by speed/acceleration measurements | Navigation system | Vision sensor

The invention discloses a scene three-dimensional data registration method. The method comprises the following steps: an inertial sensor and a visual sensor undergo spatial calibration and time synchronization; the inertial sensor outputs pose information at two adjacent moments and transmits it to the visual sensor, which registers two adjacent frames of data according to that pose information; the pose variation of the visual sensor is worked out through the visual sensor's data registration; the inertial sensor is corrected in real time using the pose variation of the visual sensor; finally, after data registration by the visual sensor, the data are registered to a three-dimensional map. The scene three-dimensional data registration method and the navigation system error correction method correct the inertial sensor through the visual sensor, resolve ambiguity in motion estimation performed by a single visual sensor or inertial sensor, improve moving-object detection performance, reduce the accumulation of inertial sensor error over time, and improve the precision of the inertial sensor.

Owner:苏州中德睿博智能科技有限公司

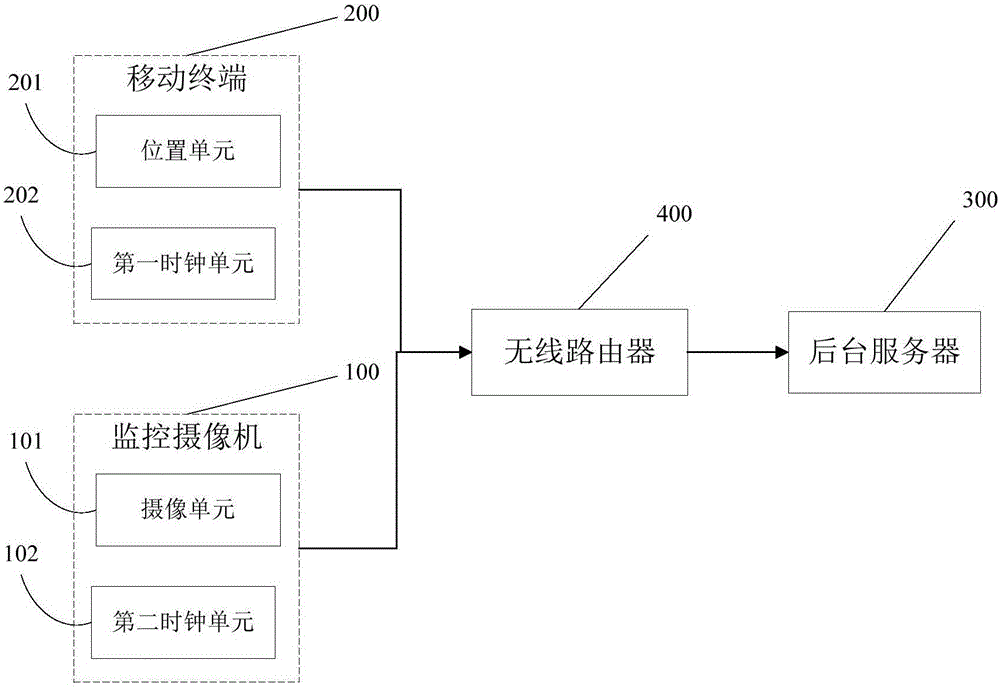

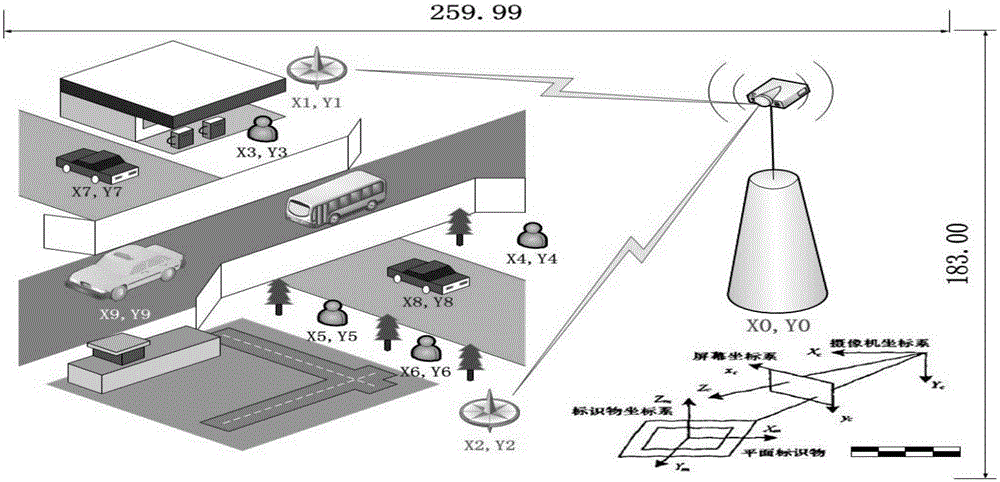

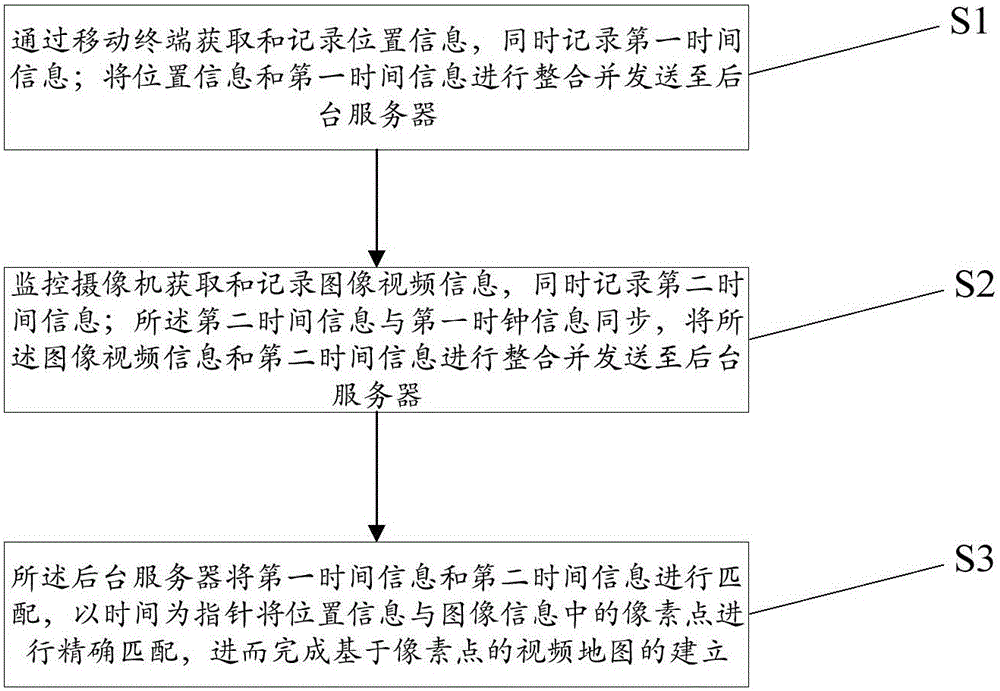

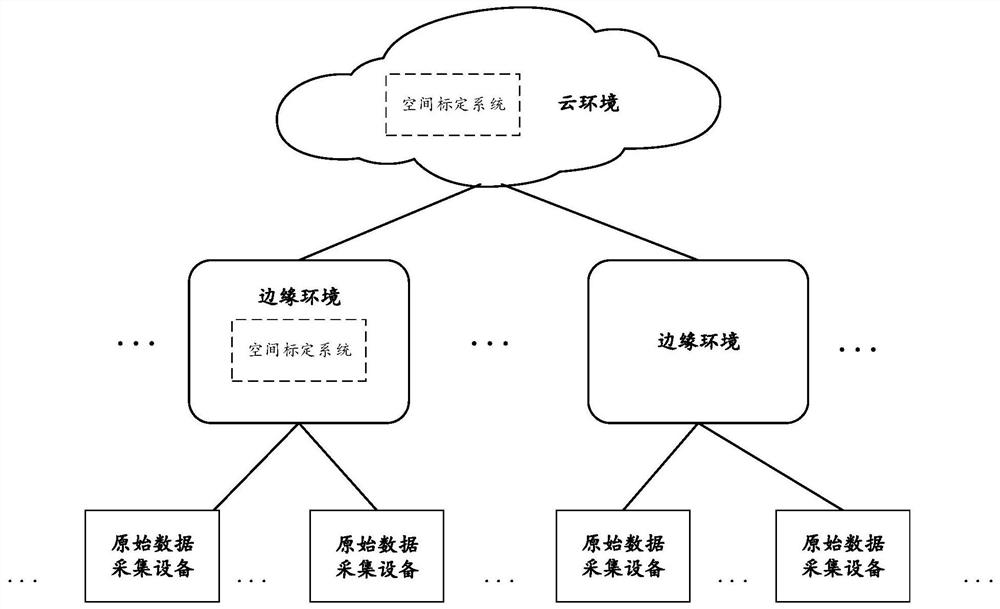

Positioning system and method

Active | CN106027960A | Realize spatial positioning | Improve efficiency | Television system details | Network topologies | Video monitoring | Spatial positioning

The invention relates to a spatial information technology, in particular to a video positioning system and method. The method comprises the following steps: establishing a mapping relationship between target pixels and spatial coordinates of the target pixels through an interactive operation and data transmission between a mobile terminal and a monitoring camera; performing processing through spatial calibration software to automatically finish spatial coordinate calibration of a video monitoring range; and building a video map in order that target pixel points on a monitoring video matrix have corresponding spatial coordinate values, thereby realizing spatial positioning of any dynamic / static target pixel within the monitoring range. Meanwhile, space-time coordinate calibration is performed semi-automatically through WIFI (Wireless Fidelity) functions of a mobile terminal positioning device and a wireless router, so that conventional manual processing is replaced; the working efficiency is increased; and the cost is reduced.

Owner:SHENZHEN INST OF ADVANCED TECH

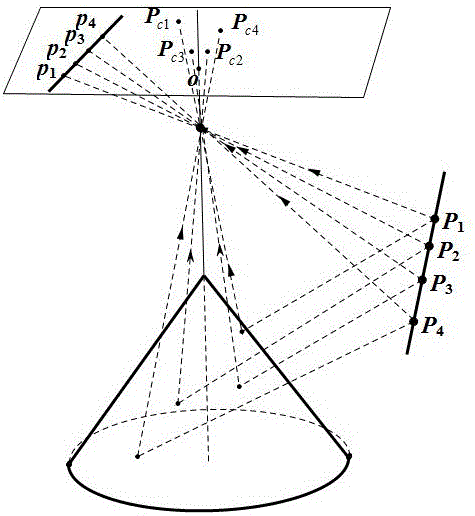

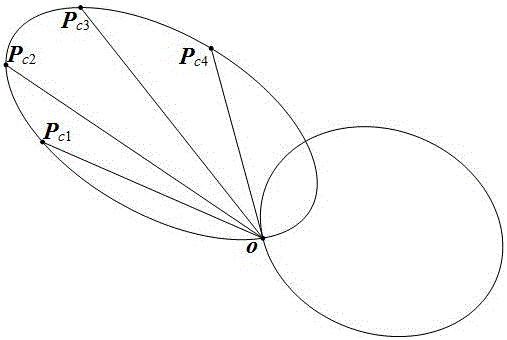

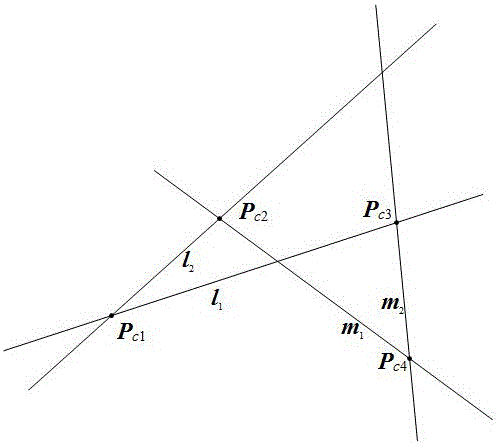

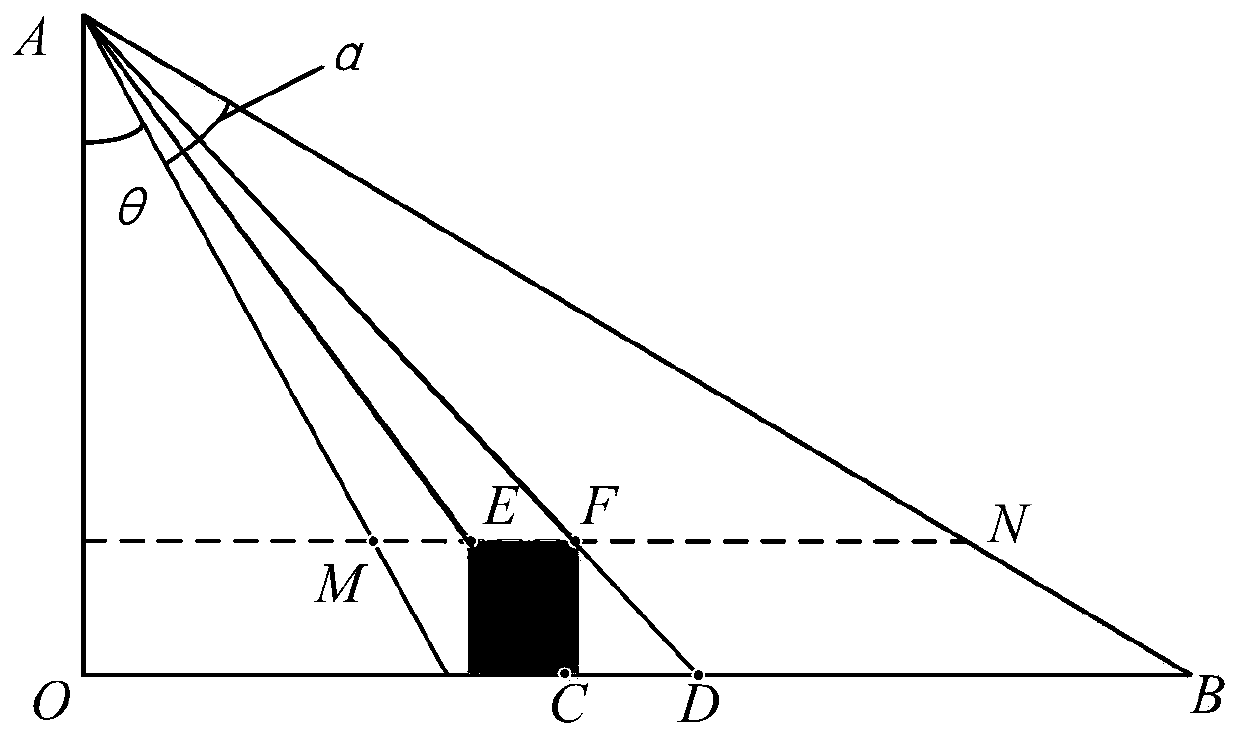

Method for calibrating conic refraction and reflection camera of non-center axial symmetrical system

The invention relates to a method for calibrating a conic refraction and reflection camera of a non-center axial symmetrical system. The method includes: a calibration block image is shot by employing the conic refraction and reflection camera, principal point locus curves are constrained through image points of the refraction and reflection image, and the coordinate of a principal point is determined by multiple loci; the polar line of the principal point is solved by employing a contour circle formed by a certain image point, wherein the intersection point of the polar line and a tangent passing the image point and the intersection point of the principal point and a connecting line of the image points are a group of vanishing points in the orthogonal direction; and two groups of vanishing points in the orthogonal direction are calculated by employing two image points so that the scale factor and the distortion factor of the conic mirror refraction and reflection camera are solved. By employing the method, the cross ratio is determined by directly employing the distances between the points on lines of the spatial calibration block, and point selection on the lines of the spatial calibration block is easy, convenient and accurate so that the accuracy of calibration results is improved.

Owner:YUNNAN UNIV

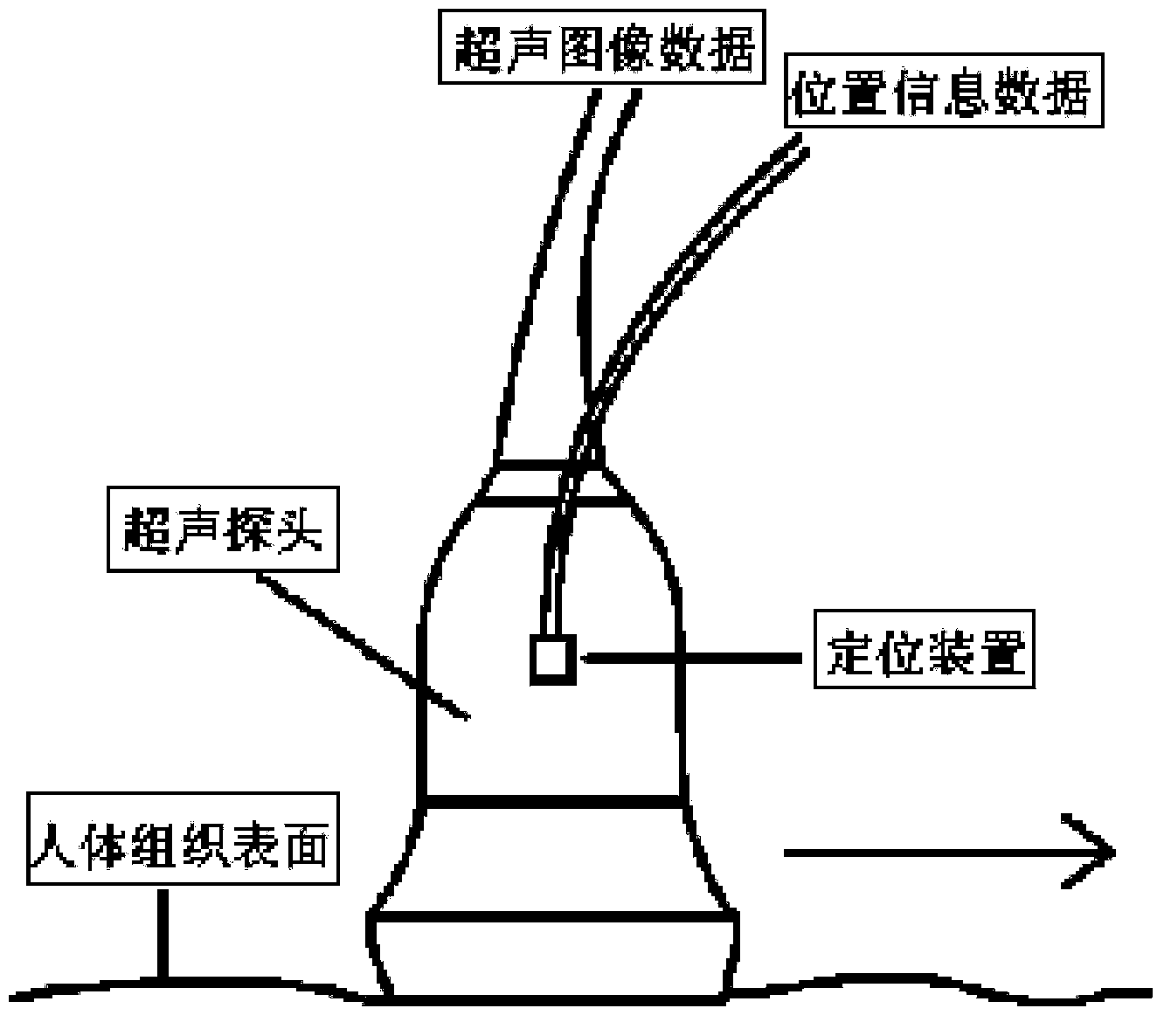

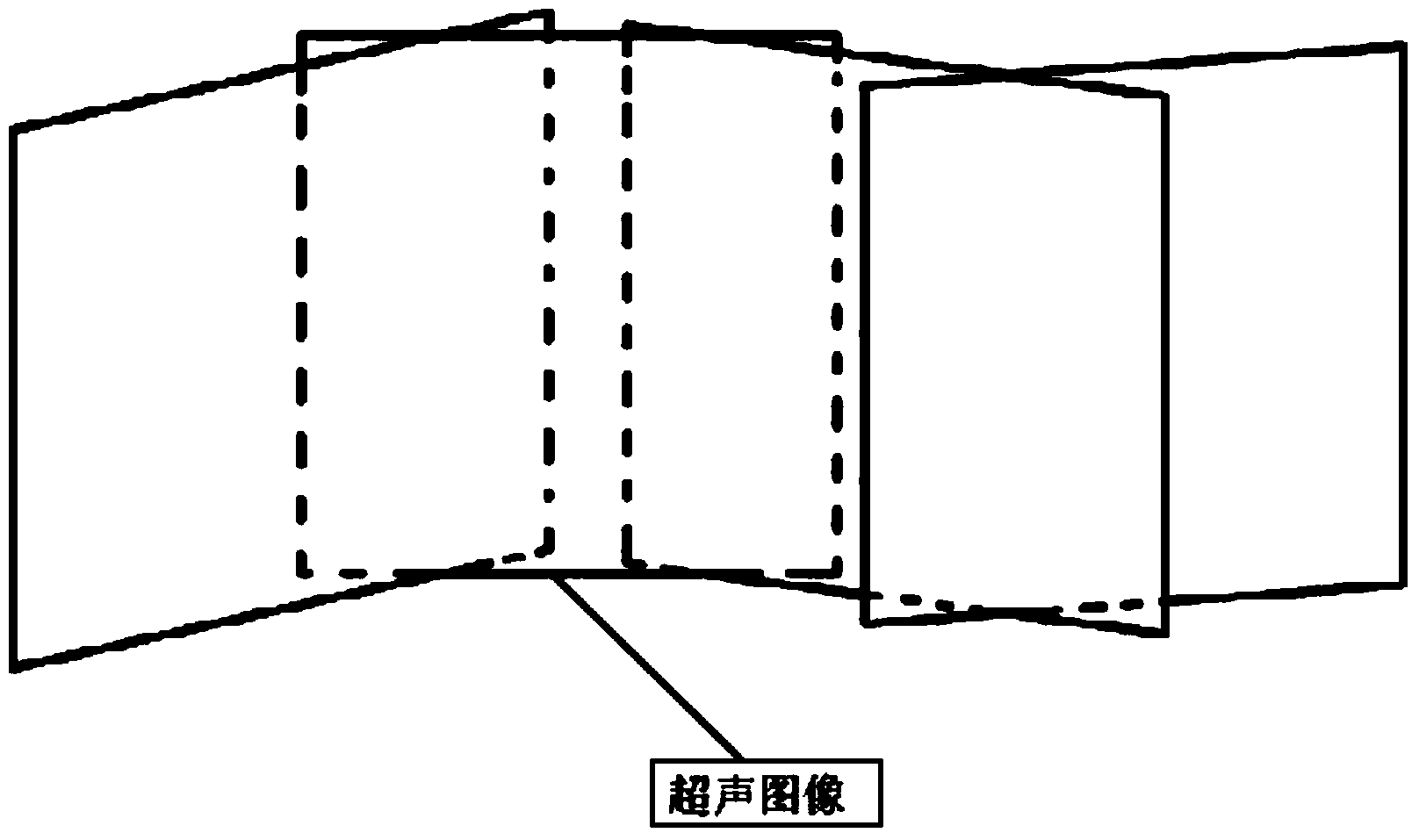

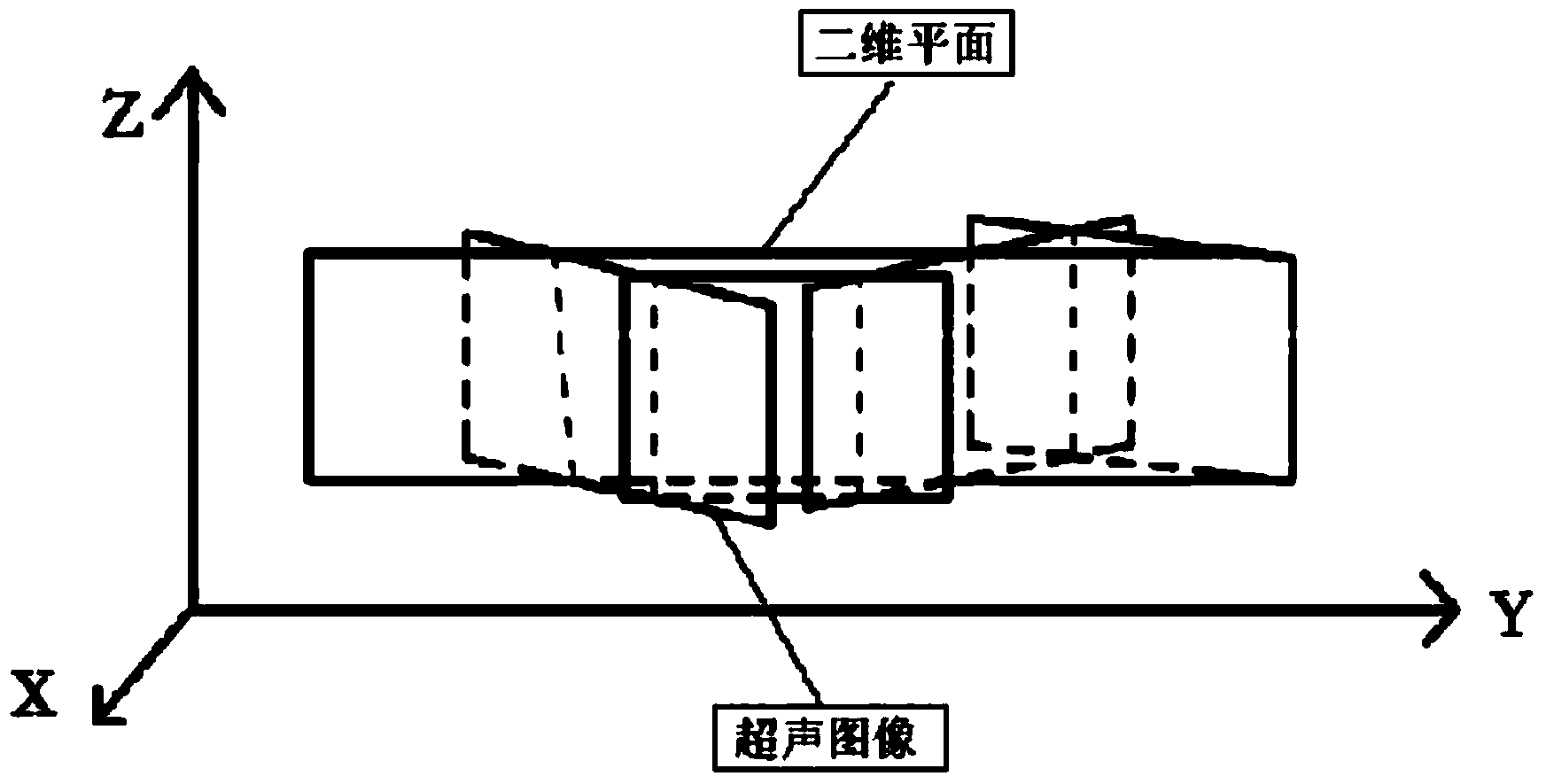

Position information based ultrasonic wide view imaging method

Inactive | CN103750859A | Correspondence is accurate | Small amount of calculation | Ultrasonic/sonic/infrasonic diagnostics | Image analysis | Sonification | Direction information

The invention discloses a position information based ultrasonic wide view imaging method. The method includes the following steps: (1) during image acquisition, an ultrasonic probe moves along a straight or approximately straight line, the system acquires an ultrasonic two-dimensional image sequence during the scan, and position information for the images is obtained through a positioning device; (2) time calibration is performed for the system so that each acquired frame is matched to its position information; (3) spatial calibration is performed so that each frame can be transformed into the coordinate system of the positioning device, the position and direction information acquired by the positioning device while the ultrasonic probe moves is recorded in a world coordinate system, and the coordinates of each frame in the world coordinate system are obtained by a final transformation for convenient display; (4) each frame is mapped onto a preset two-dimensional plane according to its spatial position in the world coordinate system to form a wide view image. The method can reduce the computation required for wide view imaging and improve real-time performance.

Owner:SOUTH CHINA UNIV OF TECH
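Step (3) of the abstract above amounts to chaining coordinate transforms: image pixel to image plane, image plane to positioning sensor (the spatial calibration), and sensor to world (the tracked pose). A minimal sketch with 4 x 4 homogeneous matrices follows. The matrix names, pixel spacing, and pose values are hypothetical and not taken from the patent.

```python
import numpy as np


def pixel_to_world(u, v, spacing_mm, T_sensor_image, T_world_sensor):
    """Map an image pixel (u, v) to world coordinates.

    spacing_mm:     (sx, sy) physical size of one pixel.
    T_sensor_image: 4 x 4 transform from the image plane to the positioning
                    sensor; this is what spatial calibration estimates.
    T_world_sensor: 4 x 4 sensor pose reported by the positioning device.
    """
    p_image = np.array([u * spacing_mm[0], v * spacing_mm[1], 0.0, 1.0])
    return (T_world_sensor @ T_sensor_image @ p_image)[:3]


# Hypothetical calibration result: image origin 10 mm along the sensor x axis.
T_sensor_image = np.eye(4)
T_sensor_image[:3, 3] = [10.0, 0.0, 0.0]

# Hypothetical tracked pose: 90 degrees about z, then 5 mm up.
T_world_sensor = np.array([[0.0, -1.0, 0.0, 0.0],
                           [1.0,  0.0, 0.0, 0.0],
                           [0.0,  0.0, 1.0, 5.0],
                           [0.0,  0.0, 0.0, 1.0]])

world_point = pixel_to_world(100, 50, (0.1, 0.2), T_sensor_image, T_world_sensor)
```

Only `T_world_sensor` changes from frame to frame. `T_sensor_image` is fixed once calibration is done, so each frame costs one matrix product per pixel position.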

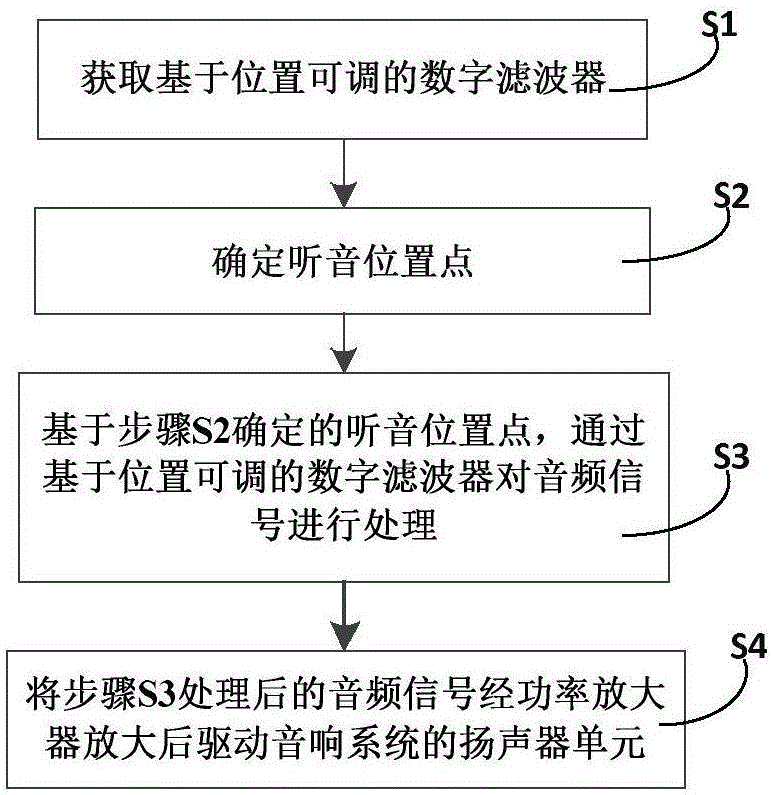

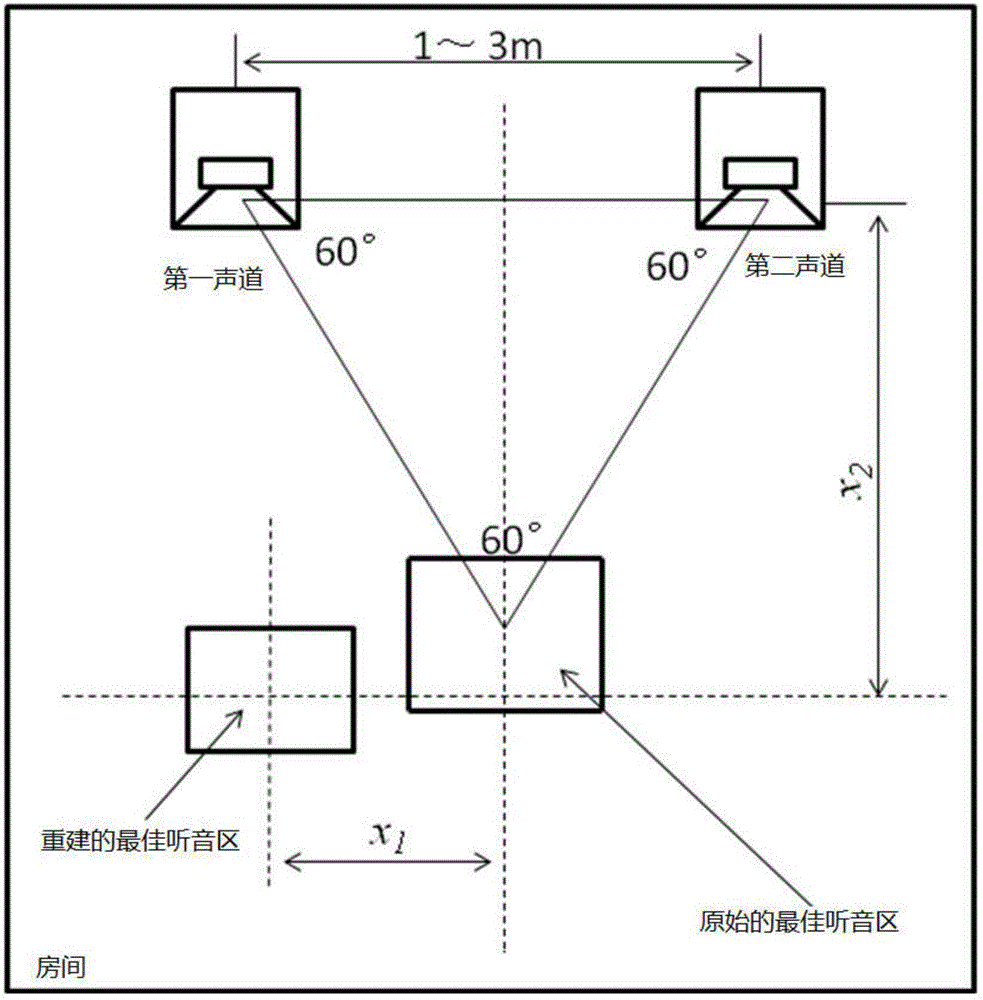

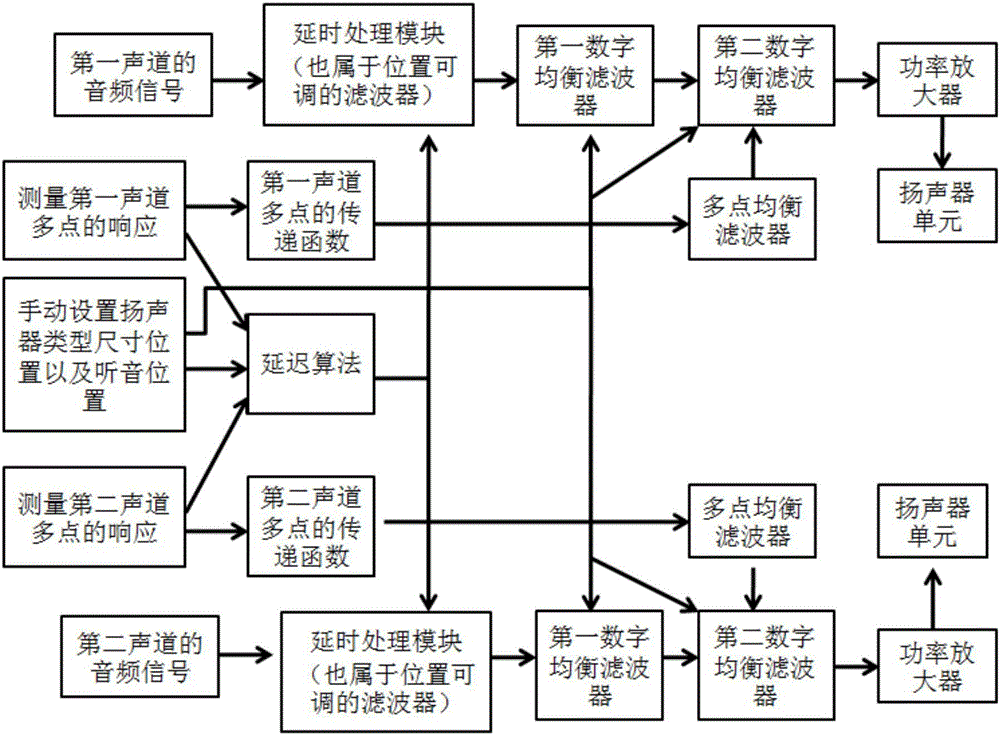

Spatial calibration method of stereo system and mobile terminal device thereof

Active | CN106535076A | Improve sound realism | Achieve calibration | Electrical apparatus | Spatial calibration | Loudspeaker

The invention provides a spatial calibration method of a stereo system and a mobile terminal device thereof. The spatial calibration method comprises the following steps: step S1, obtaining a digital filter based on an adjustable position; step S2, determining a listening position point; step S3, processing an audio signal through the digital filter based on the adjustable position based on the listening position point determined in step S2; and step S4, amplifying the audio signal processed in step S3, and driving a loudspeaker unit of the stereo system. According to the spatial calibration method provided by the invention, balance processing and delay processing are performed on the audio signal based on the listening position point selected by a user, the delay processing and the balance processing are performed on the sound radiation characteristics, the sound directivity and the spatial structure acoustic characteristics of the stereo system for different listening position points in a listening room so as to realize targeted calibration and optimization, and the validity of voice at different listening position points is maximally improved.

Owner:SHENZHEN IMKS TECH
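The abstract above describes delay and balance processing tied to a chosen listening position but gives no formulas. A simple geometric version is sketched below: nearer loudspeakers are delayed and attenuated so that all arrivals line up with the farthest one. The 343 m/s speed of sound, the inverse-distance level model, and the speaker layout are assumptions for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value in air at about 20 degrees C


def align_speakers(speakers, listener):
    """Per-speaker delay (ms) and gain (dB) for a given listening position.

    speakers: dict of name -> (x, y) position in metres.
    listener: (x, y) position in metres.
    The farthest speaker is the reference (0 ms, 0 dB); closer speakers are
    delayed and attenuated to match it (inverse-distance assumption).
    """
    dists = {name: math.dist(pos, listener) for name, pos in speakers.items()}
    farthest = max(dists.values())
    return {
        name: {
            "delay_ms": 1000.0 * (farthest - d) / SPEED_OF_SOUND_M_S,
            "gain_db": 20.0 * math.log10(d / farthest),
        }
        for name, d in dists.items()
    }


# Hypothetical stereo pair with the listener sitting right of centre.
settings = align_speakers(
    {"left": (-1.5, 2.0), "right": (1.5, 2.0)},
    listener=(0.5, 0.0),
)
```

In this layout the right speaker is closer, so it receives a positive delay and a negative gain while the left speaker is left unchanged.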

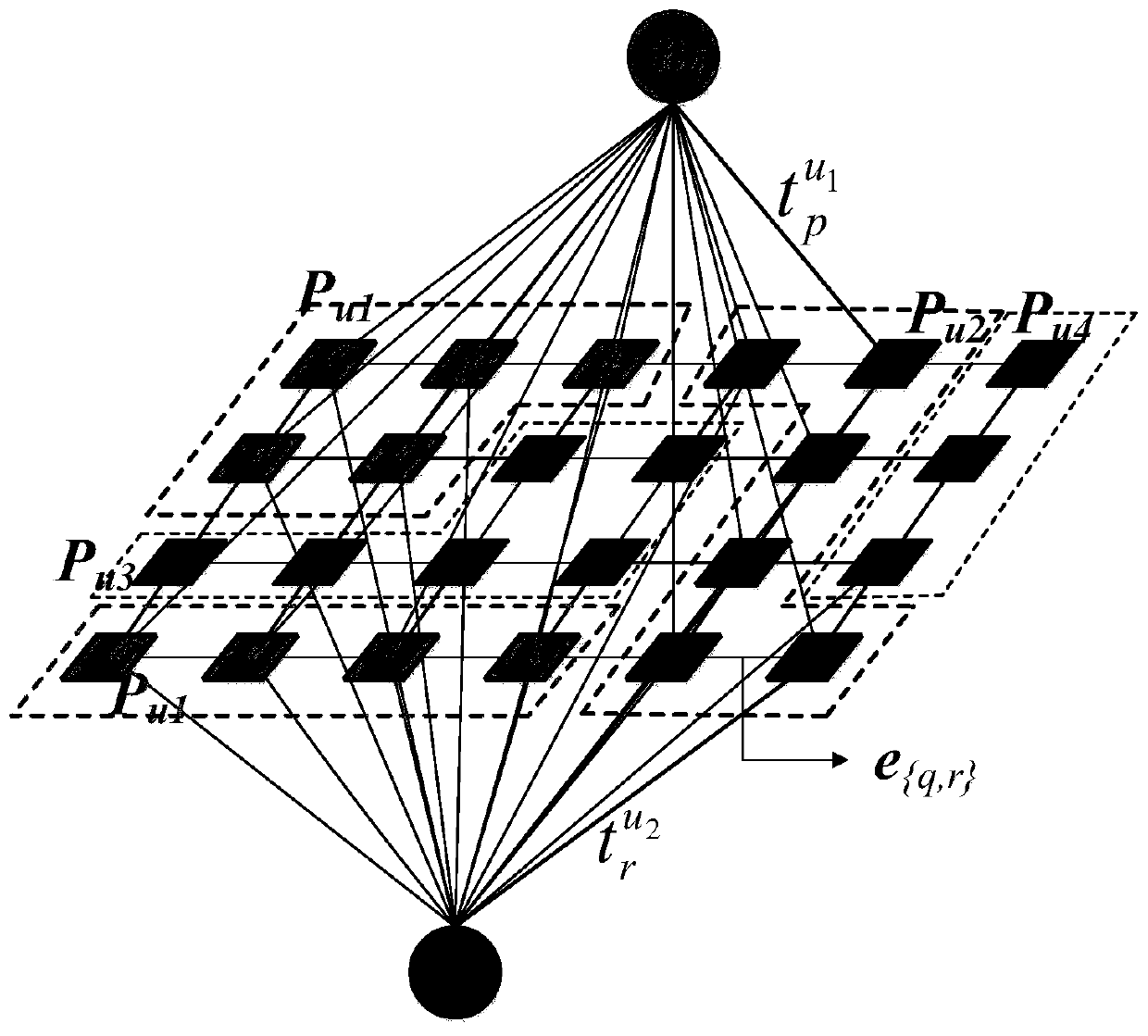

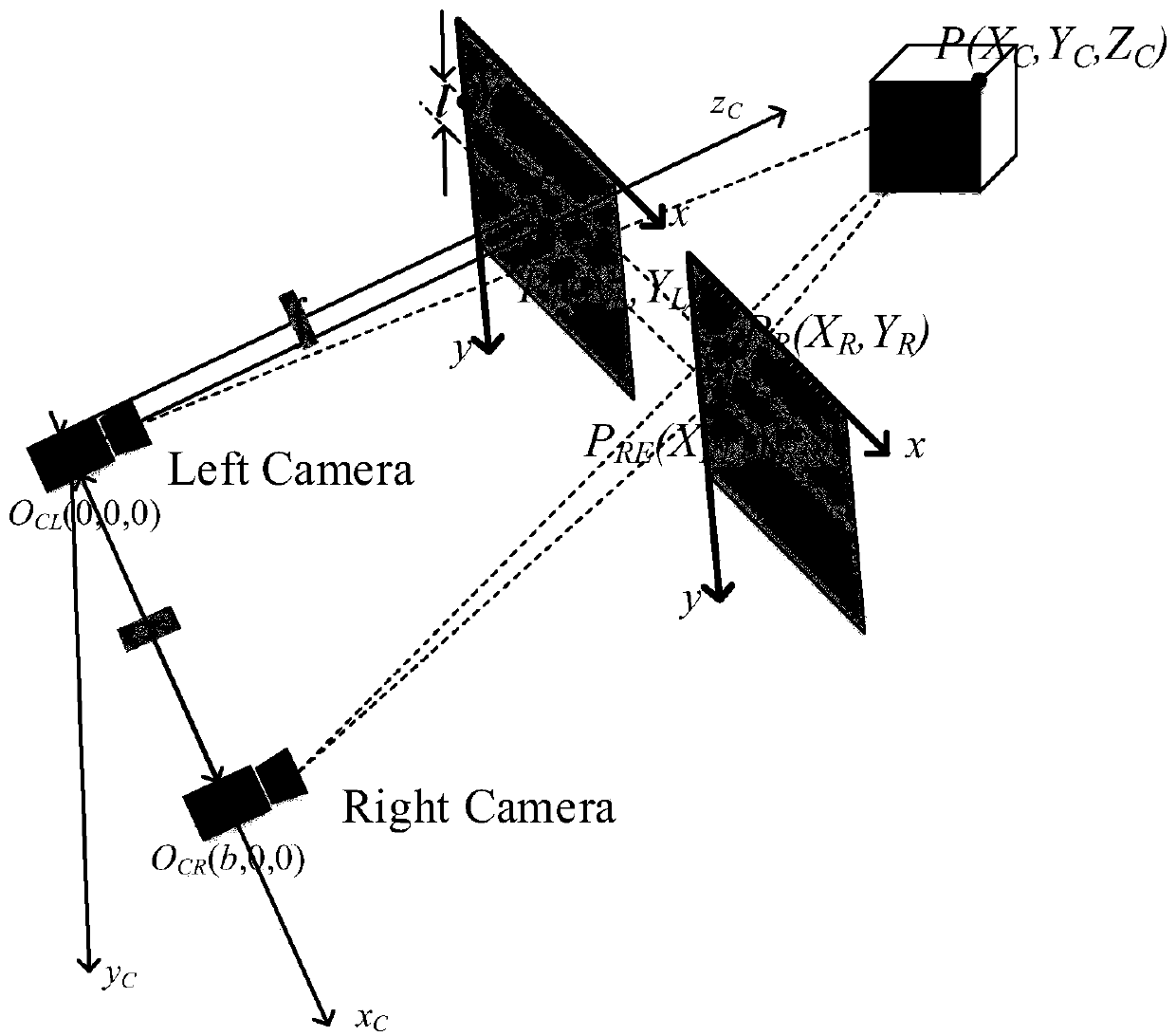

Depth imaging and information acquisition method based on binocular vision

Active | CN110288659A | Achieving Depth Imaging | Easy access | Image enhancement | Image analysis | Depth imaging | Spatial calibration

The invention provides a depth imaging and information acquisition method based on binocular vision. The method carries out spatial calibration on images shot by a binocular camera through distortion correction and epipolar line correction, then constructs a two-dimensional parallax label set based on the calibrated image pairs. Different label combinations are selected as the source and sink points each time to construct a network graph and design the edge weights. A minimum cut set of the network is solved iteratively with a maximum flow algorithm, and the two-dimensional parallax labels are assigned to pixels according to a designed label updating strategy. When the iteration finishes, a dense disparity map is obtained, and the depth information of the scene is calculated from it using the triangulation ranging principle. The result is further optimized by collecting the depth information of key points. By combining GPS data with the mounting height and angle of the camera, the position and physical scale of any target in the scene can be obtained.

Owner:魏运 +1
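The final triangulation step in the abstract above, turning a disparity map into depth, follows the standard rectified-stereo relation Z = f * B / d. The sketch below applies it to a small array. The focal length, baseline, and disparity values are made up, and the graph-cut disparity estimation itself is not shown.

```python
import numpy as np


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres from a rectified stereo disparity map.

    Uses Z = f * B / d, with f in pixels, B in metres, and d in pixels.
    Pixels with zero or negative disparity are treated as infinitely far.
    """
    disparity = np.asarray(disparity_px, dtype=np.float64)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


# Hypothetical 2 x 2 disparity map from an 800 px focal length, 12 cm baseline rig.
disparity = np.array([[32.0, 16.0],
                      [0.0,  8.0]])
depth = depth_from_disparity(disparity, focal_px=800.0, baseline_m=0.12)
```

Depth resolution degrades quickly at small disparities, which is one reason the abstract's key-point depth refinement is useful for distant targets.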

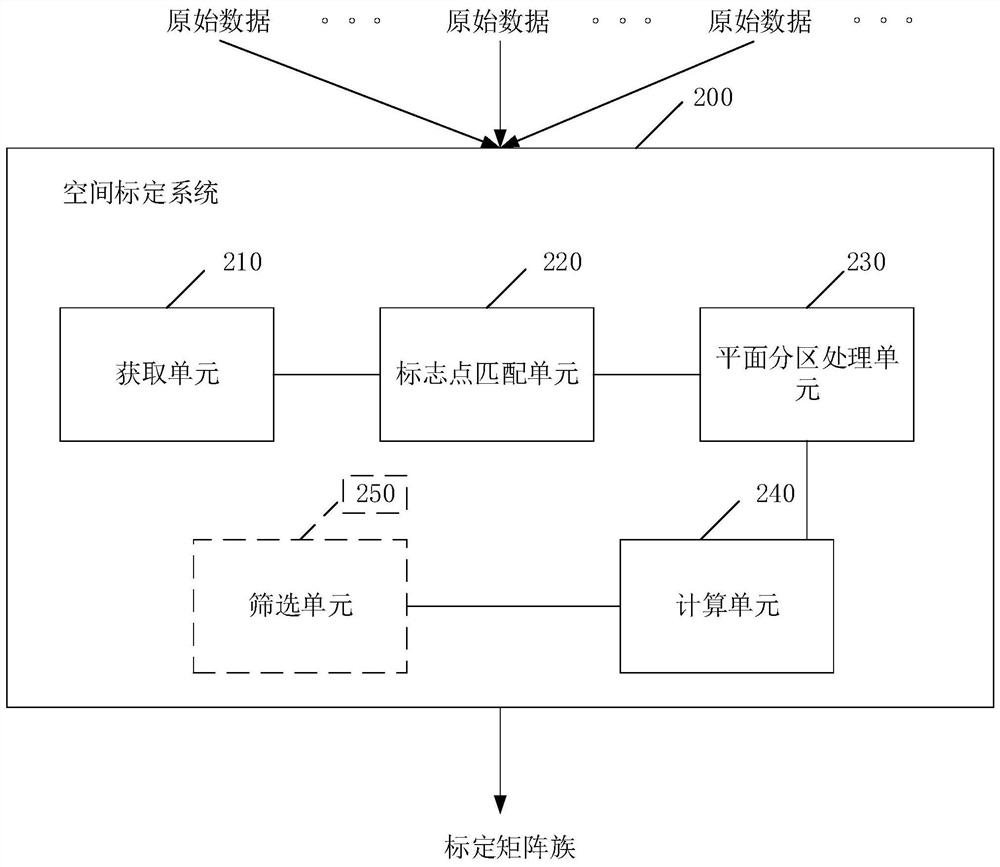

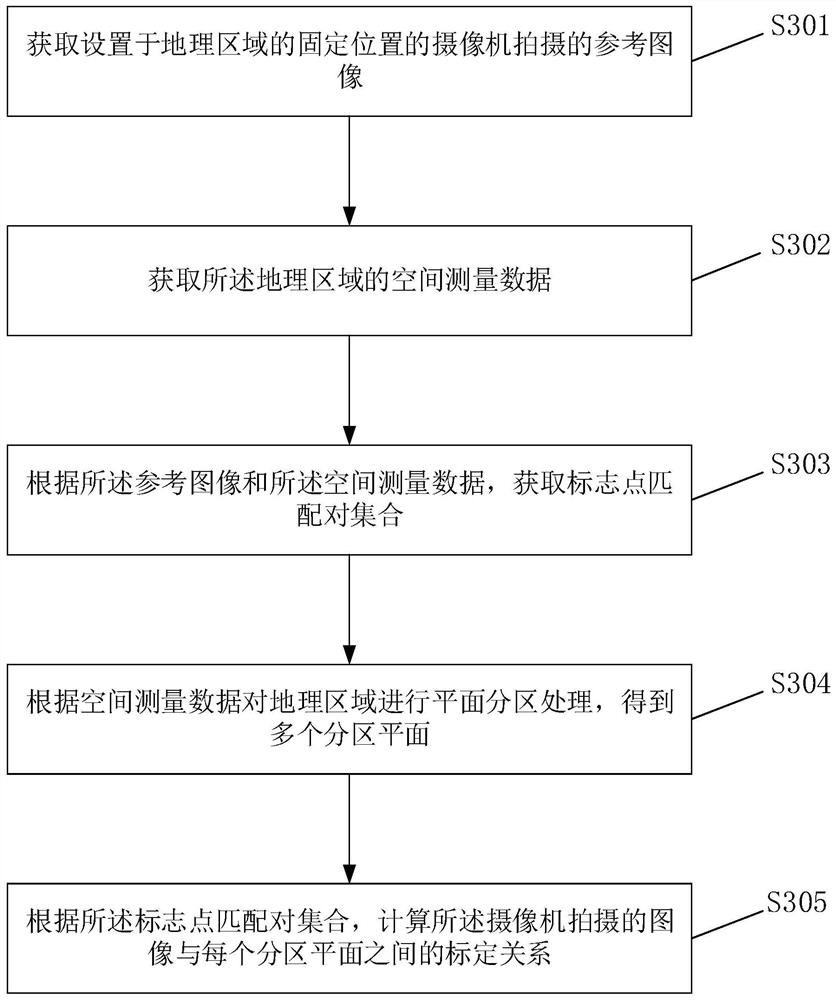

Spatial calibration method and system

The invention provides a spatial calibration method and system. The method comprises the following steps: collecting a reference image shot by a camera and spatial measurement data of a geographic area; carrying out plane partitioning on the geographic area according to the spatial measurement data to obtain a plurality of partition planes; obtaining a set of mark point matching pairs from the reference image and the spatial measurement data, where each matching pair comprises the geographic coordinates of a mark point in the geographic area and the pixel coordinates of that mark point in the image; and calculating, from the set of matching pairs, a calibration relationship between an image shot by the camera and each partition plane, yielding a family of calibration matrices between the image shot by the camera and the geographic area. The method can improve spatial calibration precision and broaden the range of application scenarios.

Owner:HUAWEI TECH CO LTD
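The abstract above does not specify how each per-plane calibration relationship is computed. One common choice for a pixel-to-plane mapping is a homography fitted by the direct linear transform (DLT), repeated once per partition plane. The sketch below assumes that approach. All names and point values are hypothetical, and coordinate normalisation is omitted for brevity.

```python
import numpy as np


def fit_homography(pixels, ground):
    """DLT fit of a 3 x 3 matrix H with ground ~ H @ [u, v, 1].

    pixels, ground: sequences of N matching (u, v) and (x, y) pairs, N >= 4,
    with no three points collinear. Assumes H[2, 2] is not near zero.
    """
    rows = []
    for (u, v), (x, y) in zip(pixels, ground):
        rows.append([-u, -v, -1.0, 0.0, 0.0, 0.0, x * u, x * v, x])
        rows.append([0.0, 0.0, 0.0, -u, -v, -1.0, y * u, y * v, y])
    _, _, Vt = np.linalg.svd(np.asarray(rows, dtype=np.float64))
    H = Vt[-1].reshape(3, 3)   # null-space vector = homography up to scale
    return H / H[2, 2]


def map_pixel(H, u, v):
    """Apply a homography to one pixel and dehomogenise."""
    x, y, w = H @ np.array([u, v, 1.0])
    return x / w, y / w


# Hypothetical matching pairs for a single partition plane.
pairs_by_plane = {
    "plane_0": (
        [(0, 0), (1, 0), (0, 1), (1, 1)],                  # pixel coordinates
        [(1.0, -2.0), (3.0, -2.0), (1.0, 1.0), (3.0, 1.0)],  # ground coordinates
    ),
}

# One calibration matrix per partition plane.
family = {name: fit_homography(px, geo) for name, (px, geo) in pairs_by_plane.items()}
x, y = map_pixel(family["plane_0"], 0.5, 0.5)
```

At lookup time a pixel would first be assigned to a partition plane, and then that plane's matrix would be applied.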

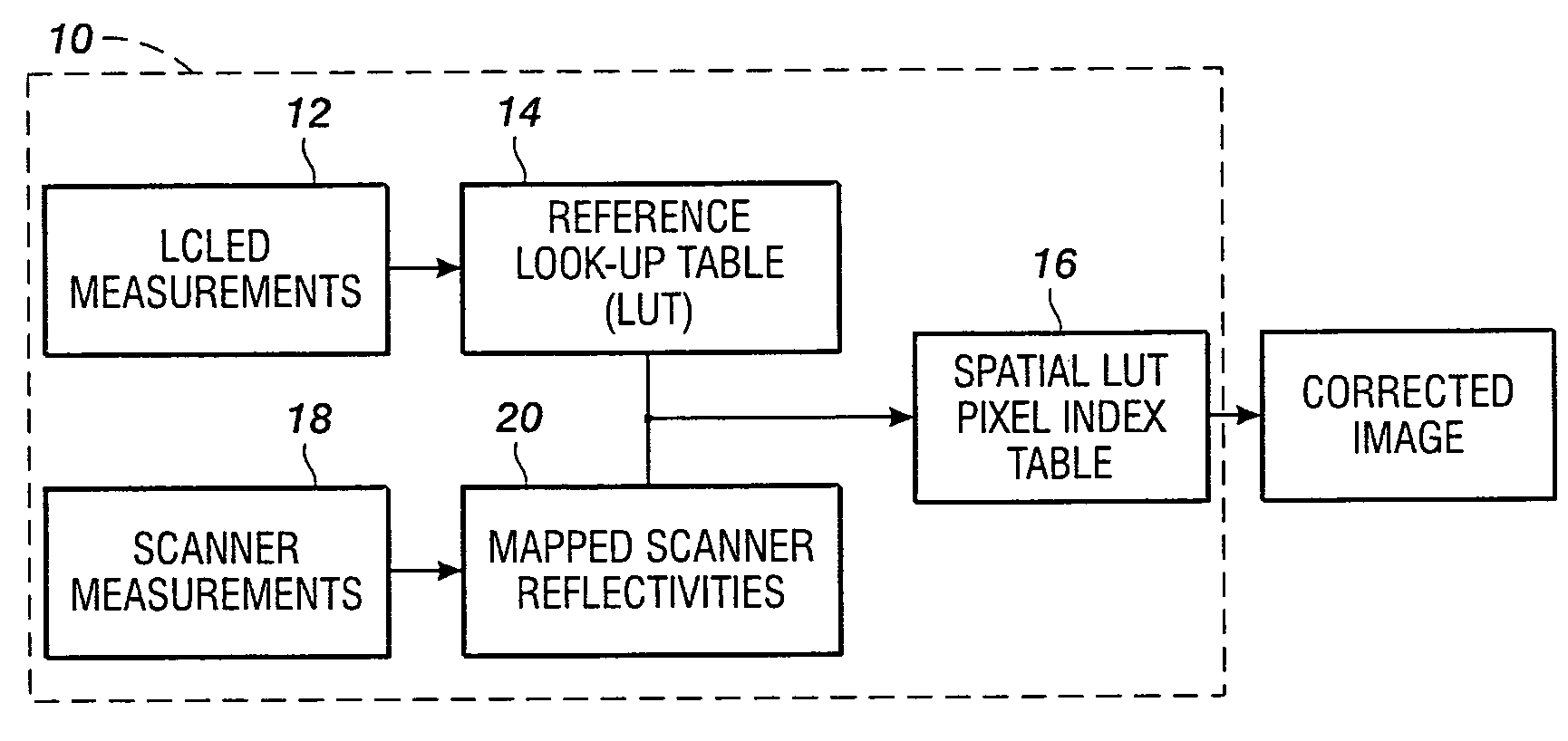

Method for spatial color calibration using hybrid sensing systems

Inactive | US7633647B2 | Space minimization | Uniform color | Digitally marking record carriers | Digital computer details | Output device | Computer vision

A method and system for color calibration of a color output device spectrophotometrically measures a test target including a preselected test color value. A multi-dimensional LUT of the device is generated, representative of the color information including the at least one preselected color. A second image is produced with the device, including the at least one preselected color located at a plurality of spatial locations in the second image. A second sensor measures the second image at the plurality of spatial locations having the preselected color to generate reflectance information for the preselected color at those locations. An error is determined between the measured color of the one preselected color and the reflectance information at the other pixel locations. The multi-dimensional LUT is adjusted to minimize spatial uniformity errors at the other pixel locations, thus calibrating device color output spatially.

Owner:XEROX CORP

Three-dimensional photoacoustic imager and methods for calibrating an imager

Inactive | US20130338474A9 | Illuminating subject | Ultrasonic/sonic/infrasonic diagnostics | Material analysis using sonic/ultrasonic/infrasonic waves | Data acquisition | Reconstruction algorithm

A photoacoustic imaging apparatus is provided for medical or other imaging applications, together with a method for calibrating the apparatus. The apparatus employs a sparse array of transducer elements and a reconstruction algorithm. Spatial calibration maps of the sparse array are used to optimize the reconstruction algorithm. The apparatus includes a laser producing a pulsed laser beam to illuminate a subject for imaging and generate photoacoustic waves. The transducers are fixedly mounted on a holder so as to form the sparse array. Photoacoustic (PA) waves are received by each transducer. The resultant analog signals from each transducer are amplified, filtered, and converted to digital signals in parallel by a data acquisition system which is operatively connected to a computer. The computer receives the digital signals and processes them with the algorithm, which is based on iterative forward projection and back-projection, to provide the image.

Owner:MULTI MAGNETICS
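
The abstract above names iterative forward projection and back-projection but gives no update rule. As a rough sketch, assuming the spatial calibration map can be written as a matrix `a` whose entry `a[i][j]` is the response of transducer sample `i` to a unit source in voxel `j`, a Landweber-style iteration is one common scheme of this kind. The function names, the step size, and the choice of Landweber iteration are assumptions for illustration, not the patented algorithm.

```python
def forward_project(a, image):
    """Predict the transducer signals that a candidate image would produce."""
    return [sum(aij * xj for aij, xj in zip(row, image)) for row in a]


def back_project(a, residual):
    """Spread the signal residual back onto the voxels (multiplication by a transposed)."""
    n_voxels = len(a[0])
    return [sum(a[i][j] * residual[i] for i in range(len(a))) for j in range(n_voxels)]


def reconstruct(a, signals, step, iterations):
    """Landweber-style loop: image += step * back_project(signals - forward_project(image))."""
    image = [0.0] * len(a[0])
    for _ in range(iterations):
        predicted = forward_project(a, image)
        residual = [s - p for s, p in zip(signals, predicted)]
        correction = back_project(a, residual)
        image = [x + step * c for x, c in zip(image, correction)]
    return image
```

The loop converges only when `step` is smaller than 2 divided by the largest eigenvalue of the product of `a` transposed and `a`, so in practice the step would be chosen from the measured calibration map.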

Large-object three-dimensional measurement LED label calibration method based on tracker

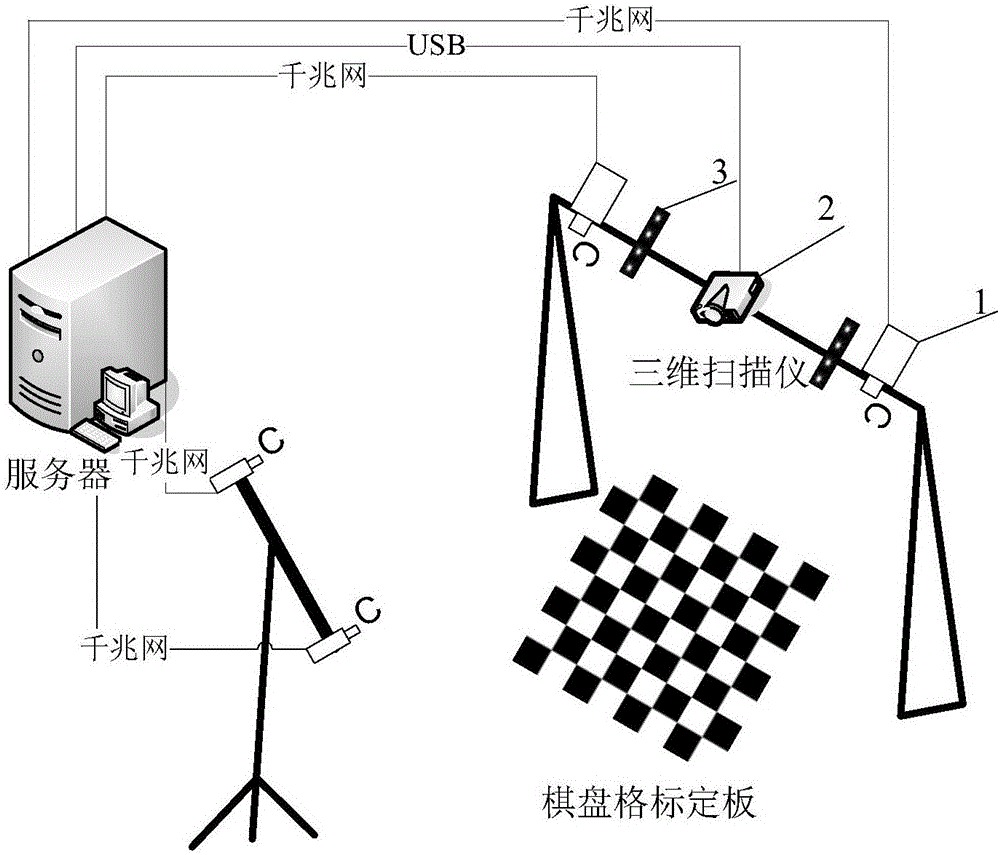

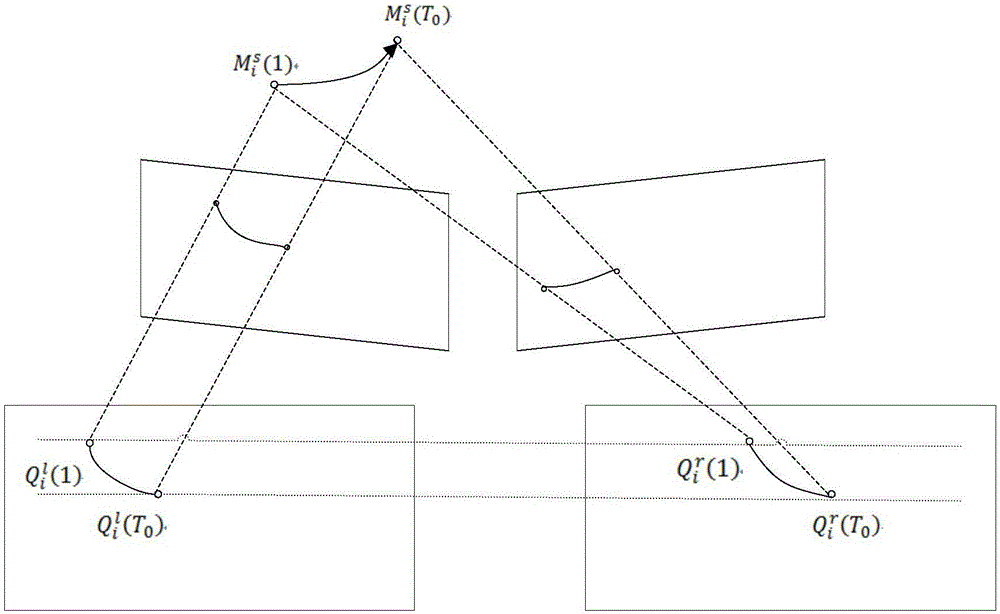

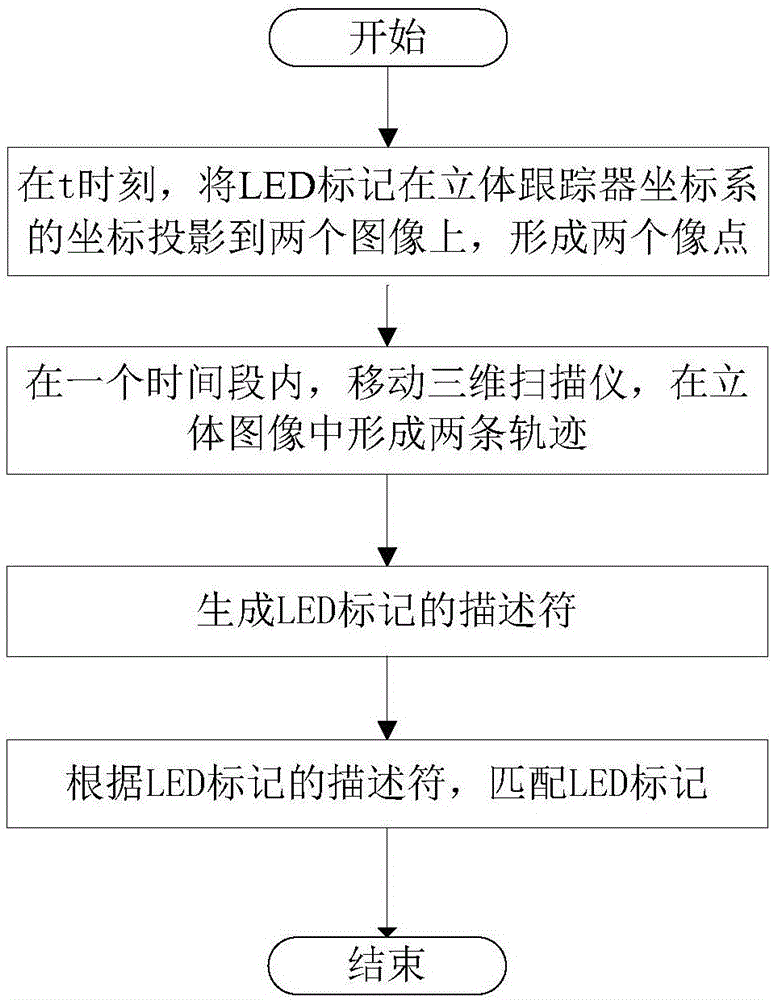

Active | CN106643504A | Reduces the possibility of false matches | Improve stability | Using optical means | Three dimensional measurement | Matching methods

The invention discloses a tracker-based LED label calibration method for three-dimensional measurement of large objects. The method includes the following steps: (1) matching the LED labels with a trajectory-based LED label matching method, in which a stereoscopic tracker observes the motion trajectories of the LED labels in its field of view, the trajectories observed in the tracker's two cameras are matched, and the three-dimensional coordinates of the LED labels in the stereoscopic tracker coordinate system are measured according to the matching result; and (2) calibrating the LED labels with a checkerboard-based LED label calibration method, in which the three-dimensional coordinates of the LED labels in the stereoscopic tracker coordinate system are converted into the coordinate system of a three-dimensional scanner. With this method, LED label matching accuracy is high, the point features of the spatial calibration objects can be found accurately, calibration precision and robustness are high, and the LED labels can be calibrated conveniently and accurately for three-dimensional measurement.

Owner:JIANGSU UNIV OF SCI & TECH
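
Step (2) of the abstract above converts coordinates from the tracker frame into the scanner frame, but the checkerboard procedure itself is not described. As an illustrative sketch only, assuming three non-collinear reference points are known in both frames and are noise-free, a rigid transform can be recovered by building an orthonormal frame from the points in each system. All names here (`frame_from_points`, `fit_rigid`, `tracker_to_scanner`) are hypothetical.

```python
import math


def sub(p, q):
    return tuple(a - b for a, b in zip(p, q))


def cross(p, q):
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])


def normalize(p):
    n = math.sqrt(sum(a * a for a in p))
    if n < 1e-12:
        raise ValueError("reference points are coincident or collinear")
    return tuple(a / n for a in p)


def frame_from_points(p0, p1, p2):
    """Orthonormal basis (e1, e2, e3) spanned by three non-collinear points."""
    e1 = normalize(sub(p1, p0))
    e3 = normalize(cross(e1, sub(p2, p0)))
    e2 = cross(e3, e1)
    return e1, e2, e3


def fit_rigid(tracker_pts, scanner_pts):
    """Return (rotation, translation) mapping tracker coordinates to scanner coordinates."""
    fa = frame_from_points(*tracker_pts)
    fb = frame_from_points(*scanner_pts)
    # rotation[i][j] = sum over basis vectors k of fb[k][i] * fa[k][j]
    rot = [[sum(fb[k][i] * fa[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)]
    rotated_origin = [sum(rot[i][j] * tracker_pts[0][j] for j in range(3)) for i in range(3)]
    trans = [scanner_pts[0][i] - rotated_origin[i] for i in range(3)]
    return rot, trans


def tracker_to_scanner(rot, trans, p):
    """Apply the fitted transform to one LED label position."""
    return tuple(sum(rot[i][j] * p[j] for j in range(3)) + trans[i] for i in range(3))
```

With noisy measurements or more than three reference points, a least-squares fit over all points would be preferable to this exact three-point construction.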

Spatial calibration method for rotary three-dimensional measurement system of two-dimensional laser range finder

Inactive | CN113009459A | Improve reconstruction accuracy | Easy to operate | Wave based measurement systems | Laser ranging | Point cloud

The invention discloses a spatial calibration method for the pose of a rotary three-dimensional measurement system built on a two-dimensional laser range finder. It addresses the problem that three-dimensional point cloud images acquired and stitched by the range finder are prone to errors and distortion when there is a pose deviation between the holder rotation center (the origin of the world coordinate system) and the measurement reference point of the laser range finder (the laser emission point). The method calibrates the deviation angle of the two-dimensional laser range finder and its offsets along the three coordinate axes without requiring a special calibration reference object. The steps are simple, calibration cost and time are effectively reduced, the calibration precision of the measurement system is improved, and errors and distortion in the stitched three-dimensional point cloud images are suppressed.

Owner:SHANGHAI MARITIME UNIVERSITY
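
To show where the calibrated quantities from the abstract above would enter, here is a minimal sketch of converting one range reading into world coordinates. The geometry is an assumption, not the patent's model: the range finder scans in a vertical plane, the holder rotates about the world z axis, the deviation angle is a yaw misalignment of the sensor about z, and the offset is the position of the laser emission point relative to the rotation center. The names `rot_z` and `scan_to_world` are hypothetical.

```python
import math


def rot_z(angle, p):
    """Rotate point p about the z axis by angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x - s * y, s * x + c * y, z)


def scan_to_world(pan, beam, rng, deviation=0.0, offset=(0.0, 0.0, 0.0)):
    """Convert one reading to world coordinates under the assumed geometry.

    pan       -- holder rotation angle about the world z axis
    beam      -- beam elevation angle inside the scan plane
    rng       -- measured range
    deviation -- calibrated yaw misalignment of the sensor (assumed model)
    offset    -- calibrated (dx, dy, dz) of the emission point from the rotation center
    """
    # Point in the sensor frame: x forward, z up, scan plane is the x-z plane.
    p = (rng * math.cos(beam), 0.0, rng * math.sin(beam))
    # Apply the calibrated misalignment and offset, then the holder rotation.
    p = rot_z(deviation, p)
    p = tuple(a + b for a, b in zip(p, offset))
    return rot_z(pan, p)
```

Leaving `deviation` and `offset` at zero corresponds to the uncalibrated case; because the error they introduce changes with the pan angle, the stitched point cloud is distorted rather than uniformly shifted.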

Dual color/dual function focal plane

Active | US8153978B1 | Accurate tracking | Eliminate need | Radiation pyrometry | Direction controllers | Wavelength | Spatial calibration

A single focal plane integrated circuit hybrid replaces multiple focal plane circuits and associated off-focal plane signal processing electronics. A dual function, dual color focal plane PSD sensor chip assembly includes a PSD array, a traditional pixelized camera array, a signal processing chip, and flip-chip interconnects and wirebond pads to support electronics on the signal processing chip. The camera array is made of a material sensitive to wavelengths longer than the PSD array material is sensitive to. The PSD array is disposed in the same substrate as the camera array. The PSD array tracks object locations and directs the camera array to window and zoom while capturing images. Inherent registration of PSD cells to the pixelized camera array makes responsivity map testing and spatial calibration unnecessary. Reduction in power dissipation is achieved by powering on the camera array only when the PSD detects a change in scene.

Owner:OCEANIT LAB

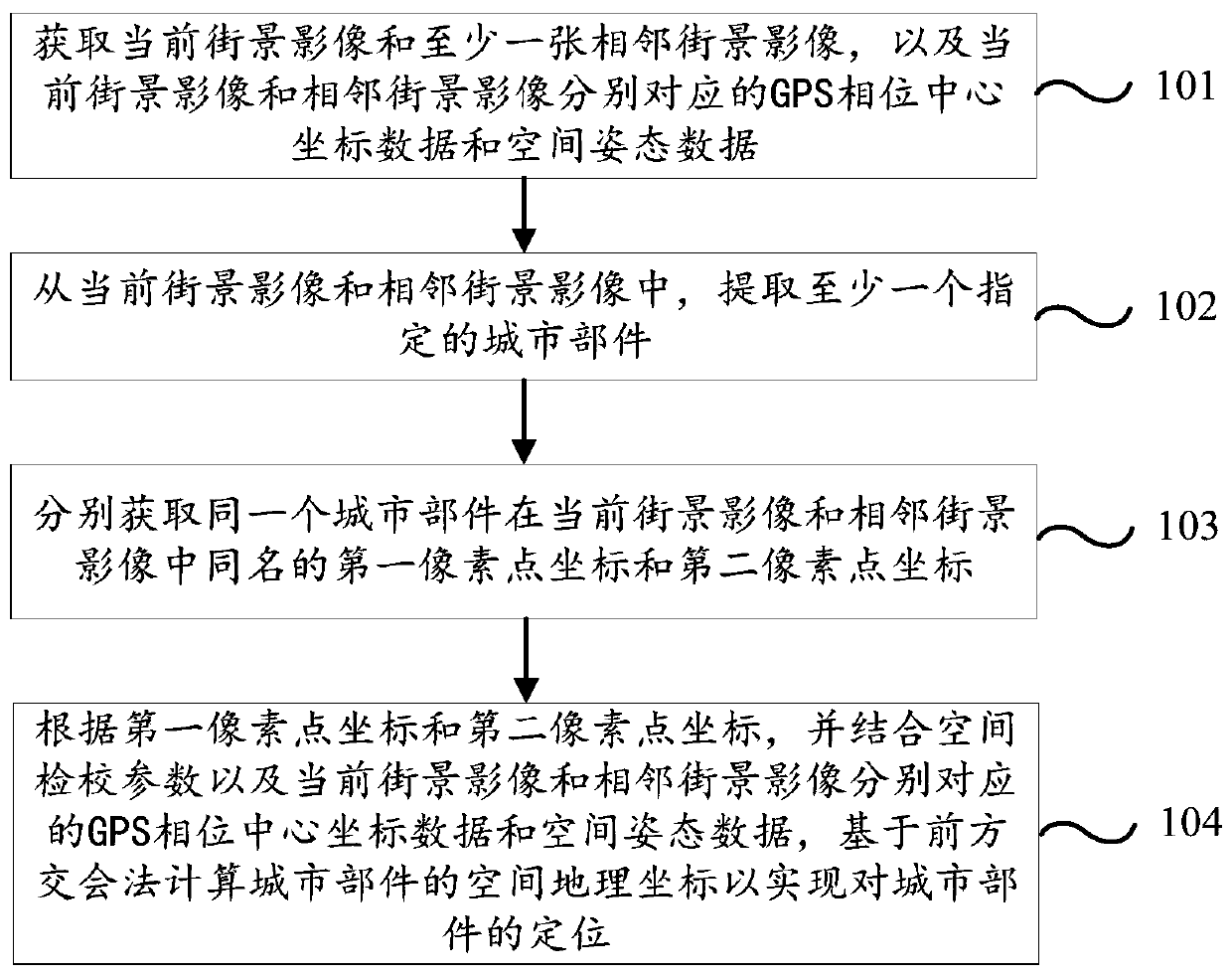

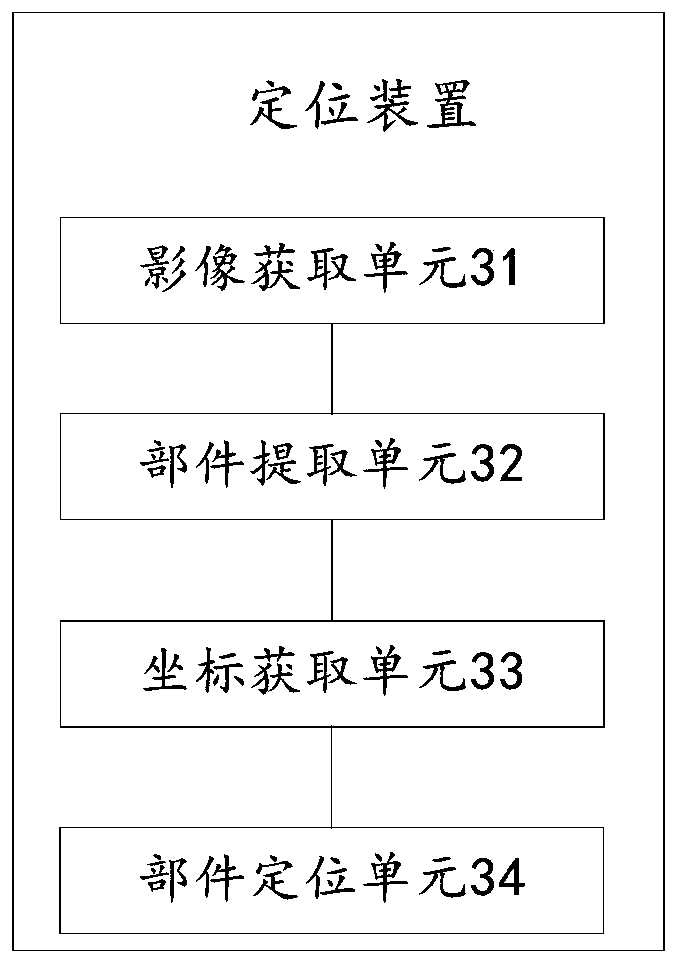

City component positioning method and device and vehicle-mounted mobile measurement system

Inactive | CN110763203A | Rapid positioning | Improve update efficiency | Image enhancement | Image analysis | Data ingestion | In vehicle

The invention provides a city component positioning method and device and a vehicle-mounted mobile measurement device. The method comprises the steps of: obtaining an image of the current street view, images of adjacent street views, and the corresponding GPS phase center coordinate data and spatial attitude data; extracting at least one specified city component; obtaining a first pixel coordinate and a second pixel coordinate of the corresponding (same-name) point of the same city component in the two images; and calculating the spatial geographic coordinates of the city component by forward intersection, combining a spatial calibration parameter with the corresponding GPS phase center coordinate data and spatial attitude data according to the first and second pixel coordinates. The method can automatically recognize and rapidly position city components, so that specified city components are updated automatically and tedious manual recognition and editing work is avoided. This greatly improves the efficiency of updating city components in related thematic maps and provides reliable support for thematic applications in departments such as traffic, firefighting, and public security.

Owner:XIAN AEROSPACE TIANHUI DATA TECH CO LTD
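
The forward intersection step in the abstract above can be sketched as intersecting two viewing rays, one from each street-view exposure. This sketch assumes the ray directions have already been derived from the pixel coordinates, the spatial calibration parameters, and the attitude data (that derivation is not shown), and it takes the midpoint of the shortest segment between the two rays as the component position. The function name `forward_intersection` and the midpoint choice are assumptions for illustration.

```python
def dot(p, q):
    return sum(a * b for a, b in zip(p, q))


def forward_intersection(c1, d1, c2, d2):
    """Estimate a 3D point from two rays c1 + s*d1 and c2 + t*d2.

    c1, c2 -- camera centers (for example, derived from GPS phase center data)
    d1, d2 -- ray directions toward the same city component in each image
    Returns the midpoint of the shortest segment joining the two rays.
    """
    w0 = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the intersection is undefined")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    return tuple((u + v) / 2.0 for u, v in zip(p1, p2))
```

When the two rays do not meet exactly, which is the usual case with measurement noise, the length of the segment between the two closest points gives a simple quality check on the match.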


© 2025 PatSnap. All rights reserved.