207 results about "Camera tracking" patented technology

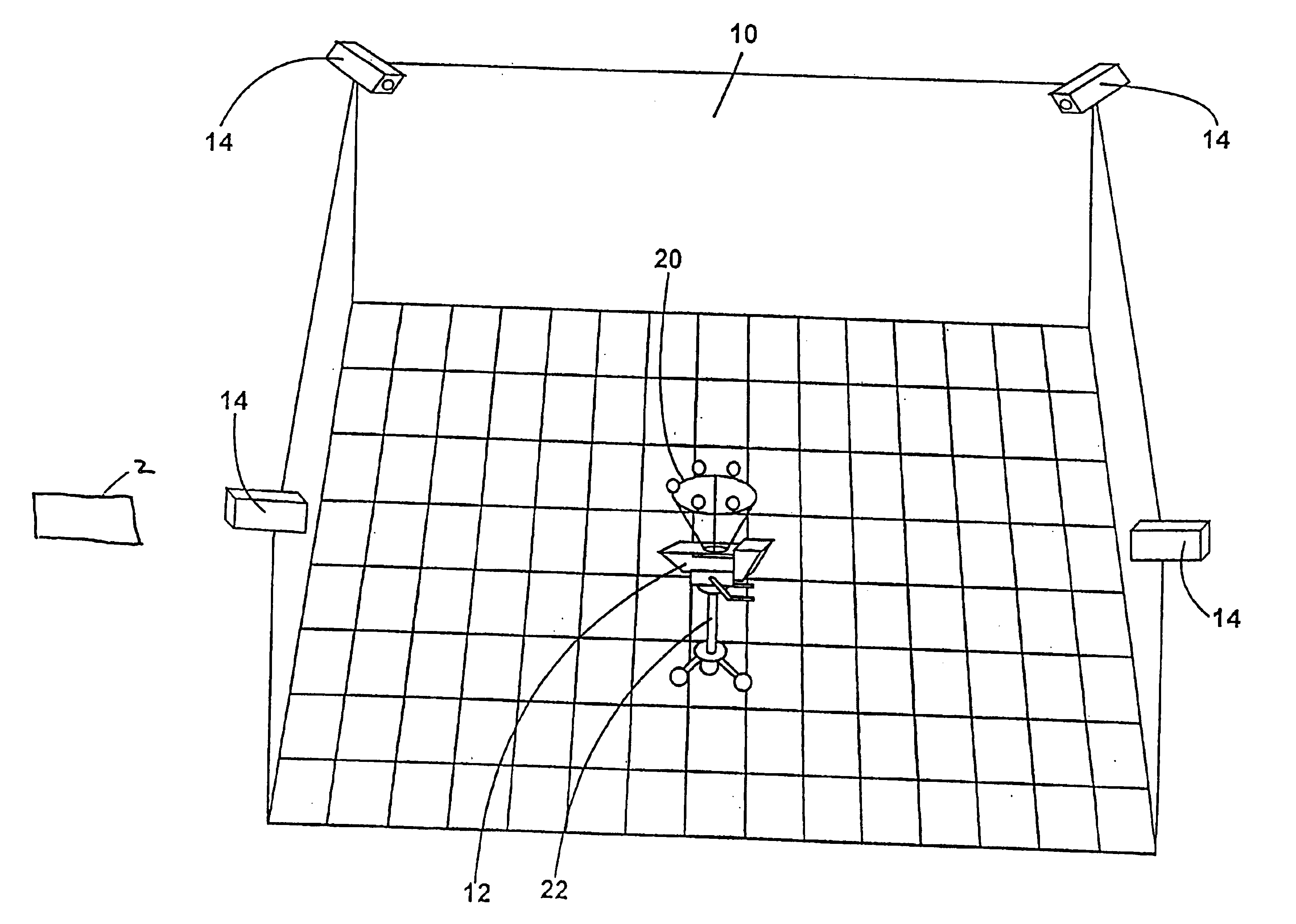

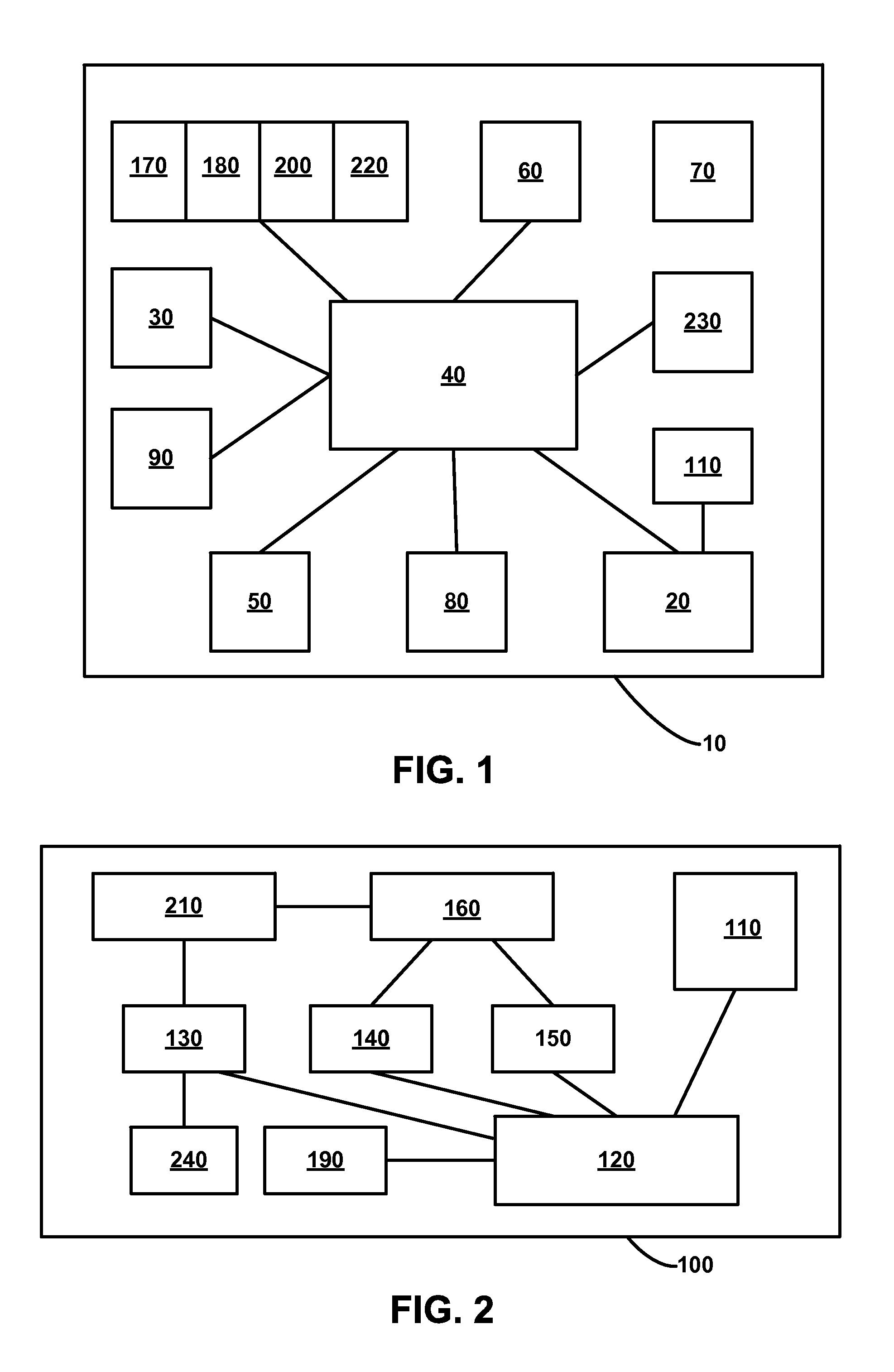

Multiple camera control system

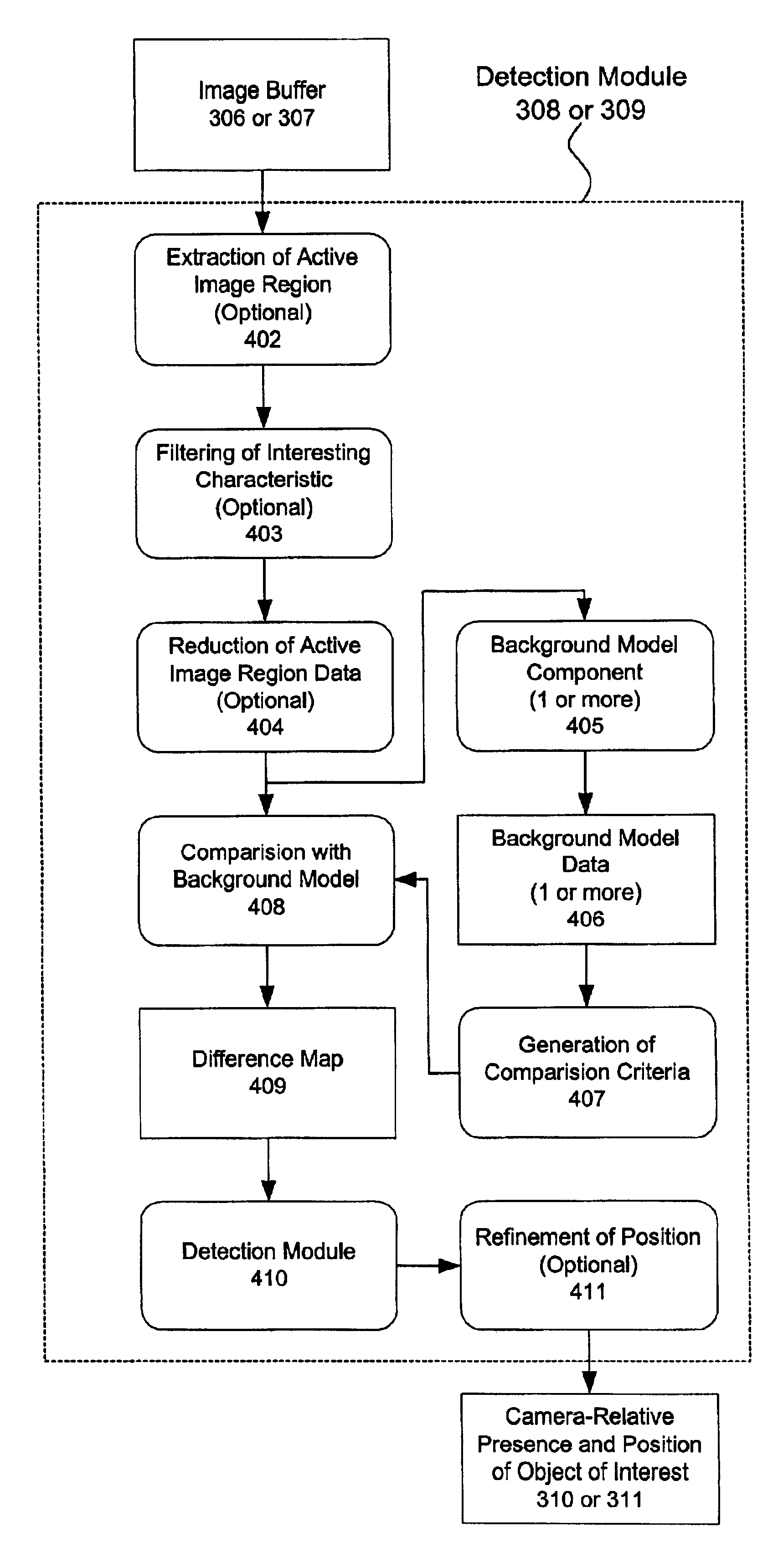

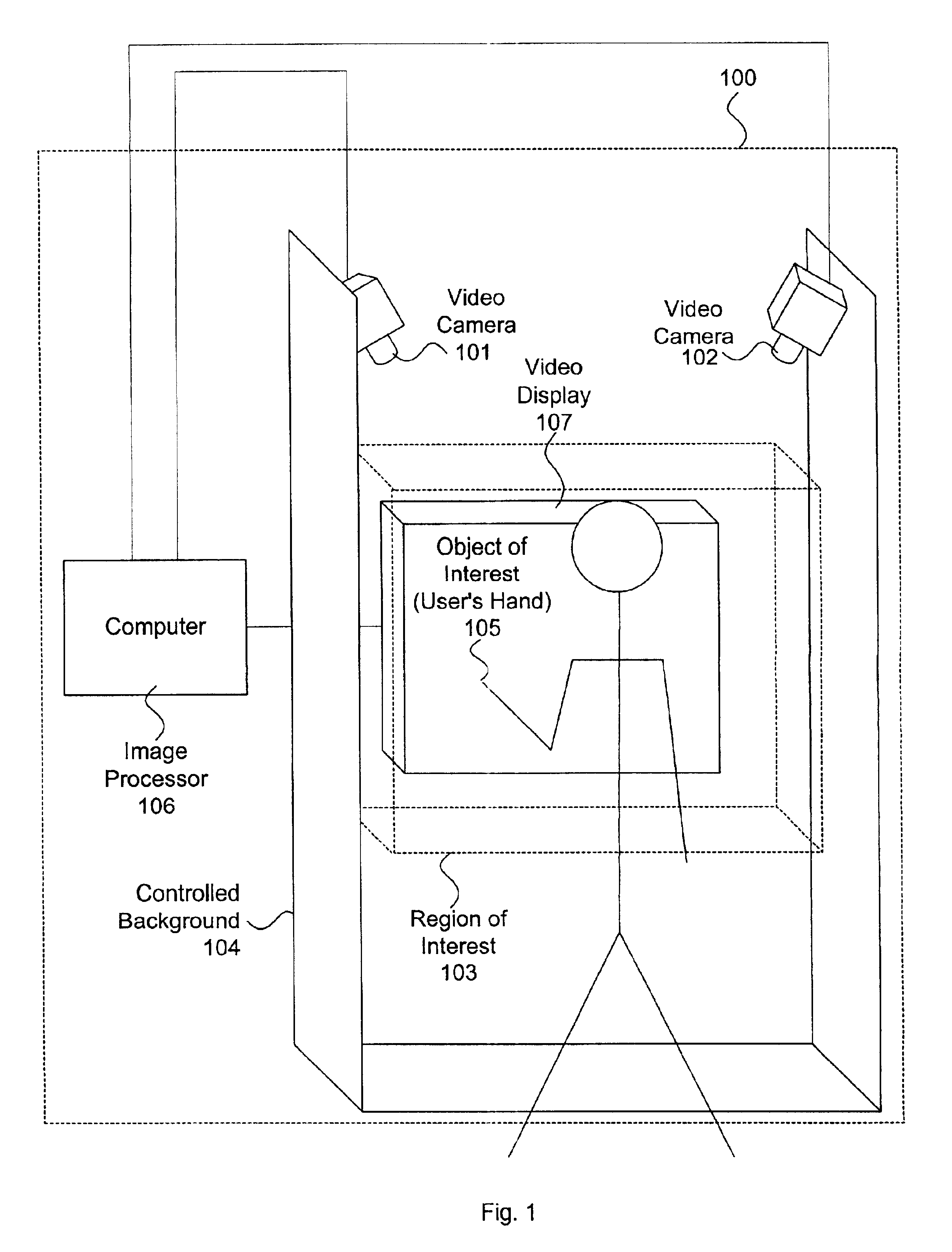

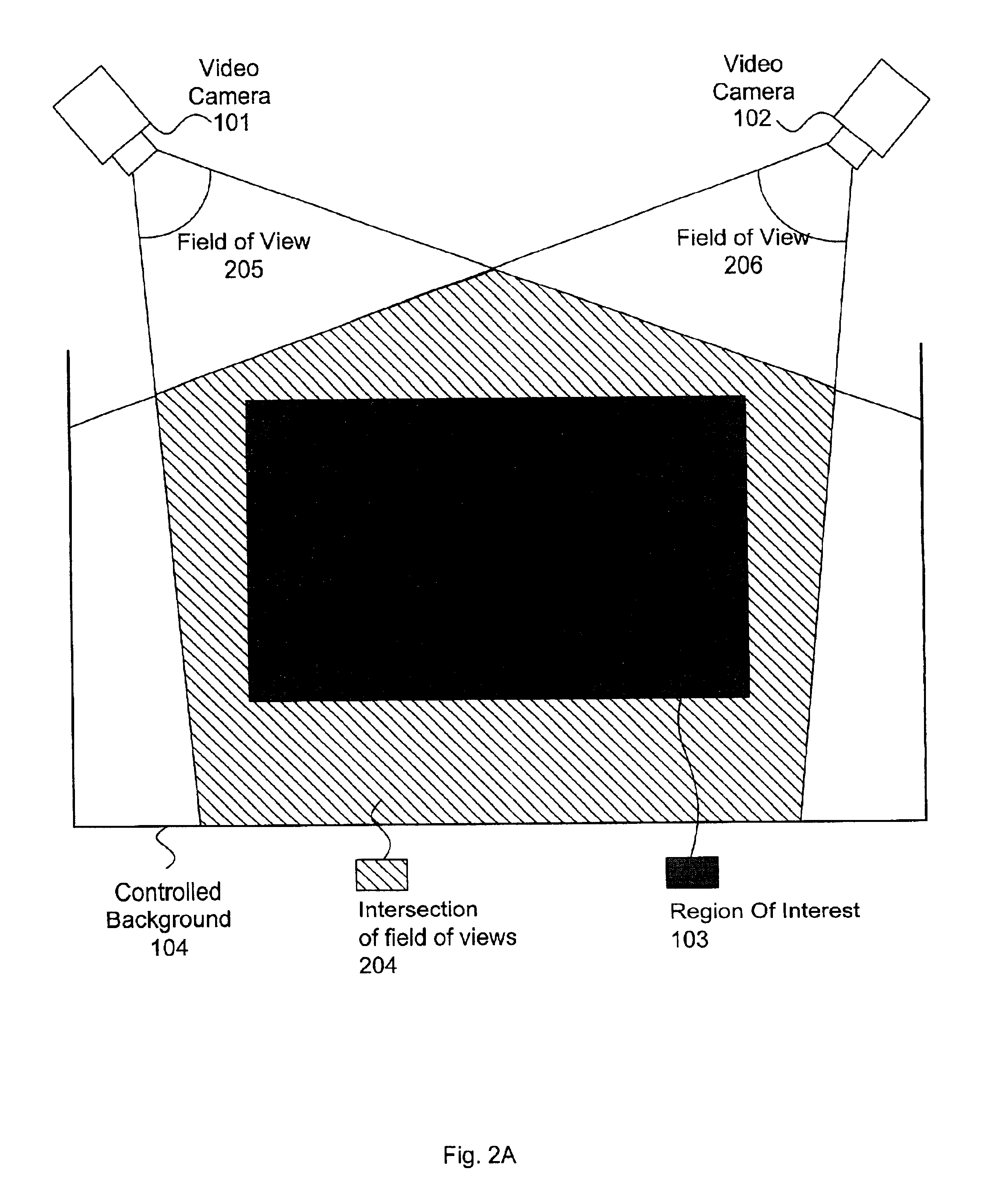

A multiple camera tracking system for interfacing with an application program running on a computer is provided. The tracking system includes two or more video cameras that are arranged to provide different viewpoints of a region of interest and are operable to produce a series of video images. A processor is operable to receive the series of video images and detect objects appearing in the region of interest. The processor executes a process to generate a background data set from the video images, generate an image data set for each received video image, compare each image data set to the background data set to produce a difference map for each image data set, detect a relative position of an object of interest within each difference map, produce an absolute position of the object of interest from the relative positions of the object of interest, and map the absolute position to a position indicator associated with the application program.
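
The per-camera steps in this abstract (background data set, difference map, relative position) can be illustrated with a minimal sketch. It assumes grayscale frames stored as nested lists and a fixed threshold; the names `difference_map` and `relative_position` and the centroid rule are illustrative assumptions, not the patented process, and the step that combines several cameras into an absolute position is not shown.

```python
def difference_map(frame, background, threshold=30):
    """Binary map: 1 where a pixel differs from the background by more than threshold."""
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def relative_position(diff):
    """Centroid (x, y) of changed pixels, normalised to [0, 1]; None if nothing changed."""
    points = [(x, y) for y, row in enumerate(diff) for x, v in enumerate(row) if v]
    if not points:
        return None
    height, width = len(diff), len(diff[0])
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    # max(..., 1) avoids division by zero for one-pixel-wide or one-pixel-high images.
    return cx / max(width - 1, 1), cy / max(height - 1, 1)
```

Each camera would yield one such relative position; how those are fused into an absolute position depends on the camera geometry, which the abstract does not specify.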

Owner:QUALCOMM INC

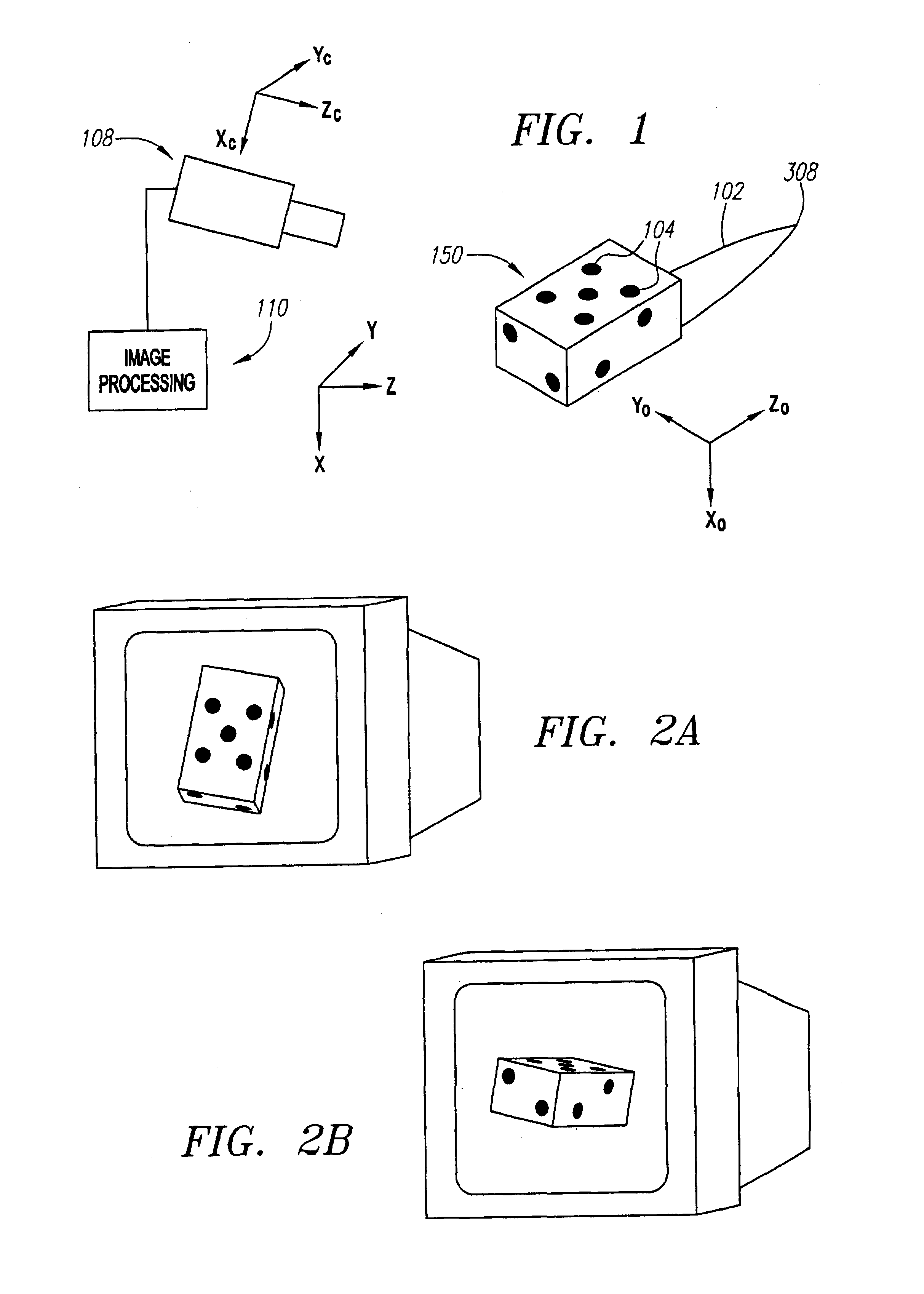

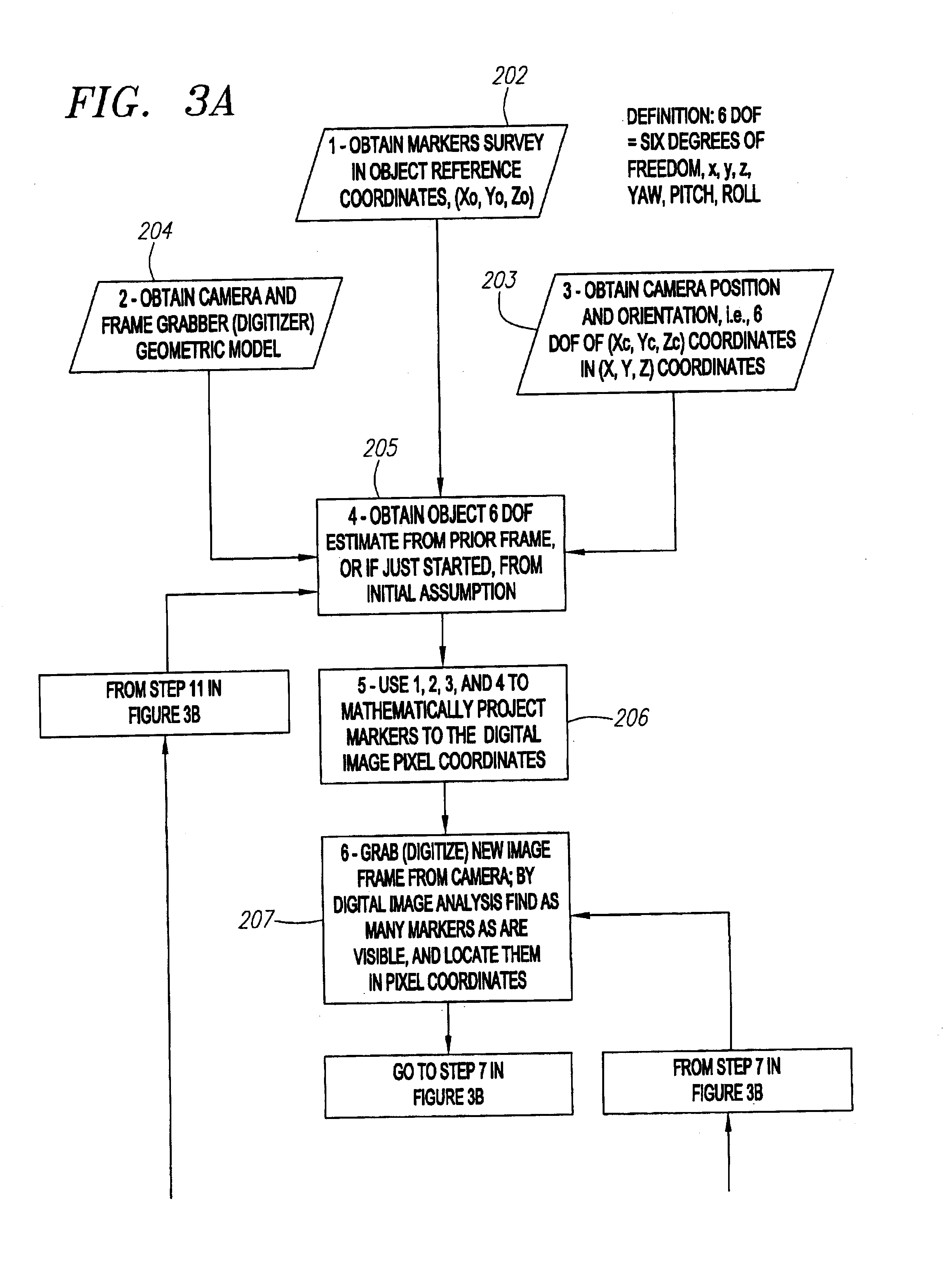

Single-camera tracking of an object

Inactive · US6973202B2 · Ultrasonic/sonic/infrasonic diagnostics · Image enhancement · Optical tracking · Video camera

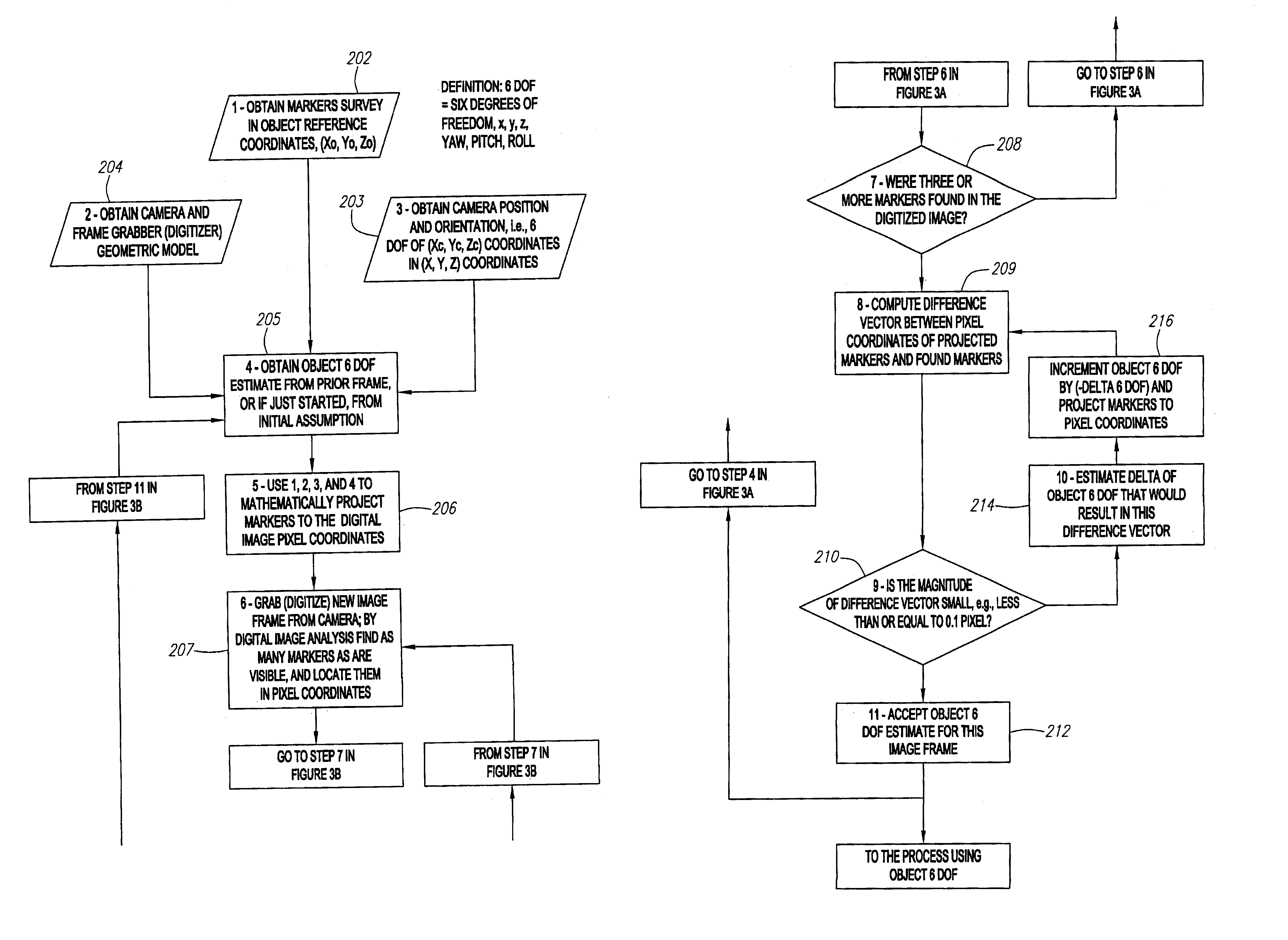

A method and system for determining the position and orientation of an object is disclosed. A set of markers attached to or associated with the object is optically tracked, and a geometric translation is performed that uses the coordinates of the set of markers to determine the location and orientation of the associated object.

Owner:VARIAN MEDICAL SYSTEMS

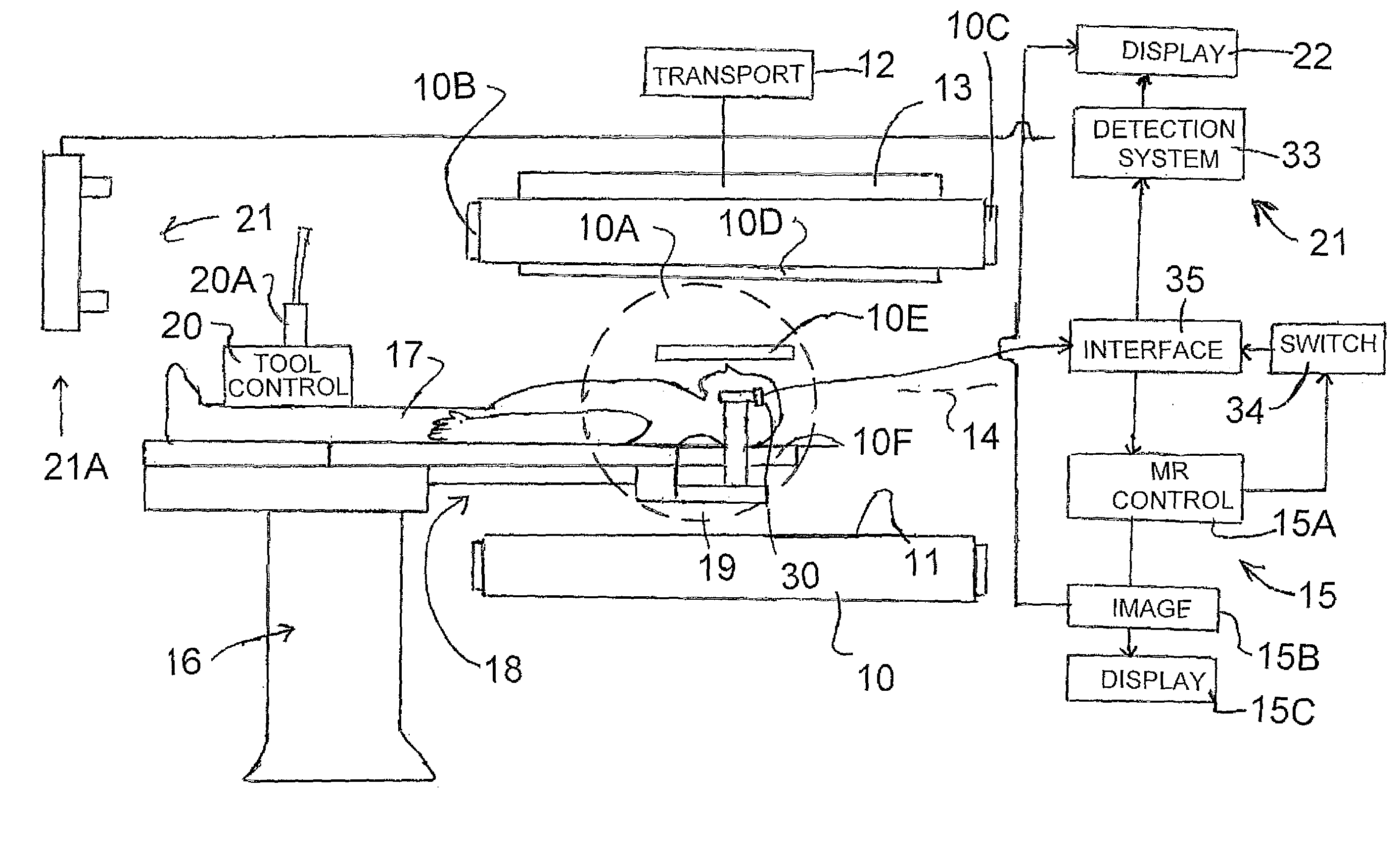

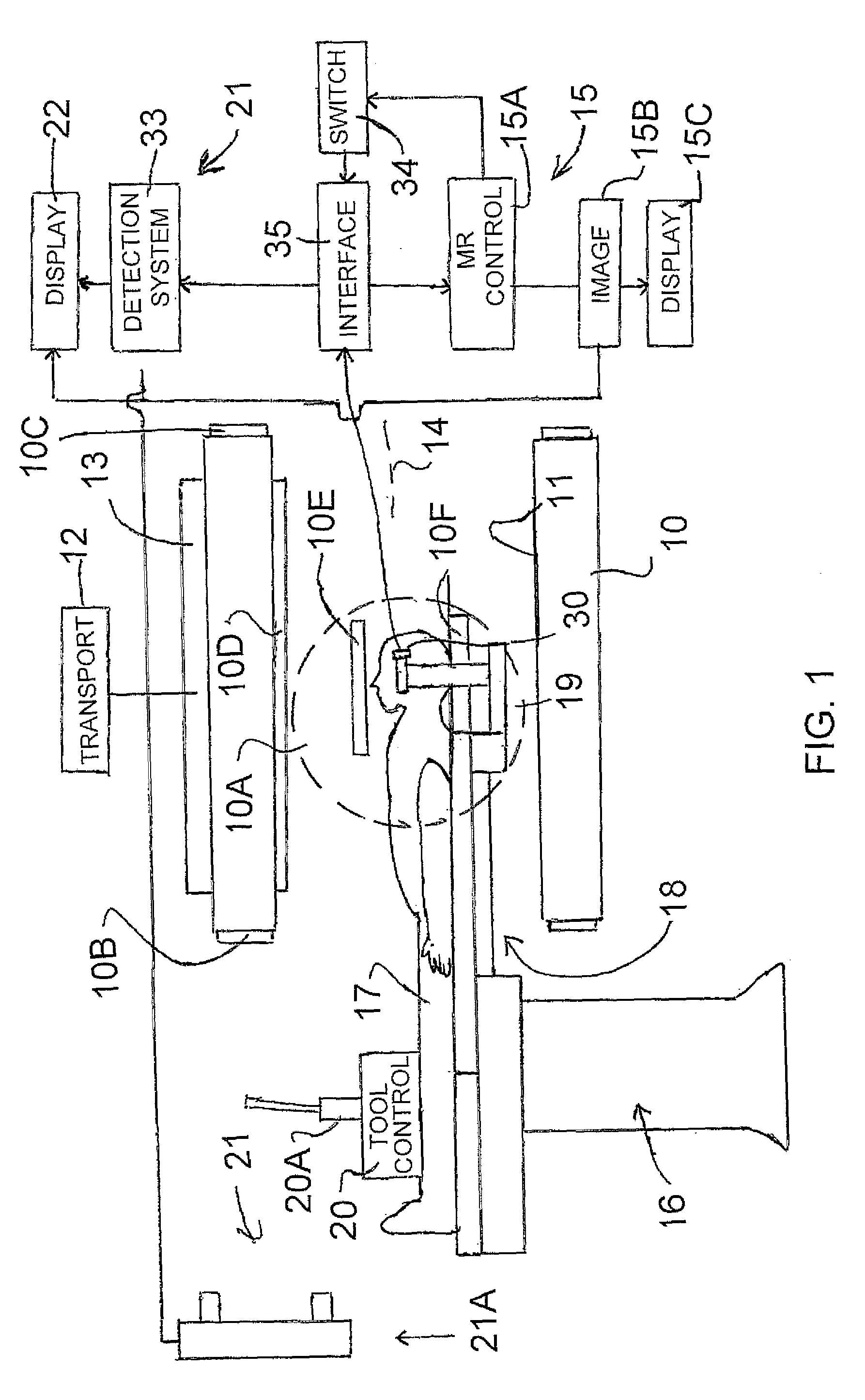

Automatic registration of images for image guided surgery

Inactive · US20110098553A1 · Robust recognition · Surgical navigation systems · Diagnostic recording/measuring · Image guidance · Computer science

Automatic registration of an MR image is carried out in an image guidance system by placing MR visible markers at known positions relative to markers visible in a camera tracking system. The markers are carried on a common fixture which is attached to a head clamp together with a reference marker which is used when the markers are covered or removed. The tracking system includes a camera with a detection array for detecting visible light and a processor arranged to analyze the output from the array. Each object to be detected carries a single marker with a pattern of contrasted areas of light and dark intersecting at a specific single feature point thereon, with an array around the specific location that allows the processor to detect an angle of rotation of the pattern and to distinguish each marker from the other markers.

Owner:IMRIS

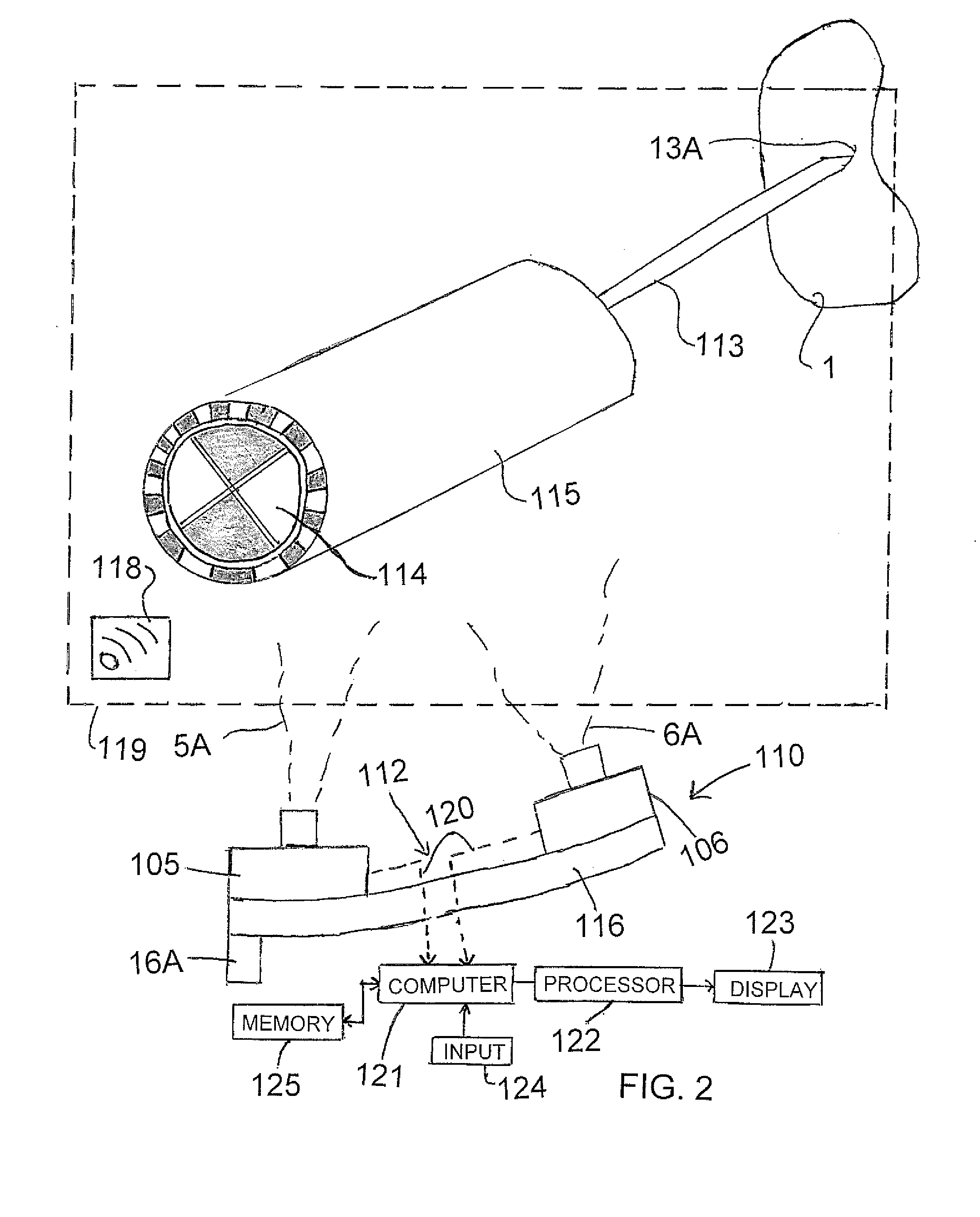

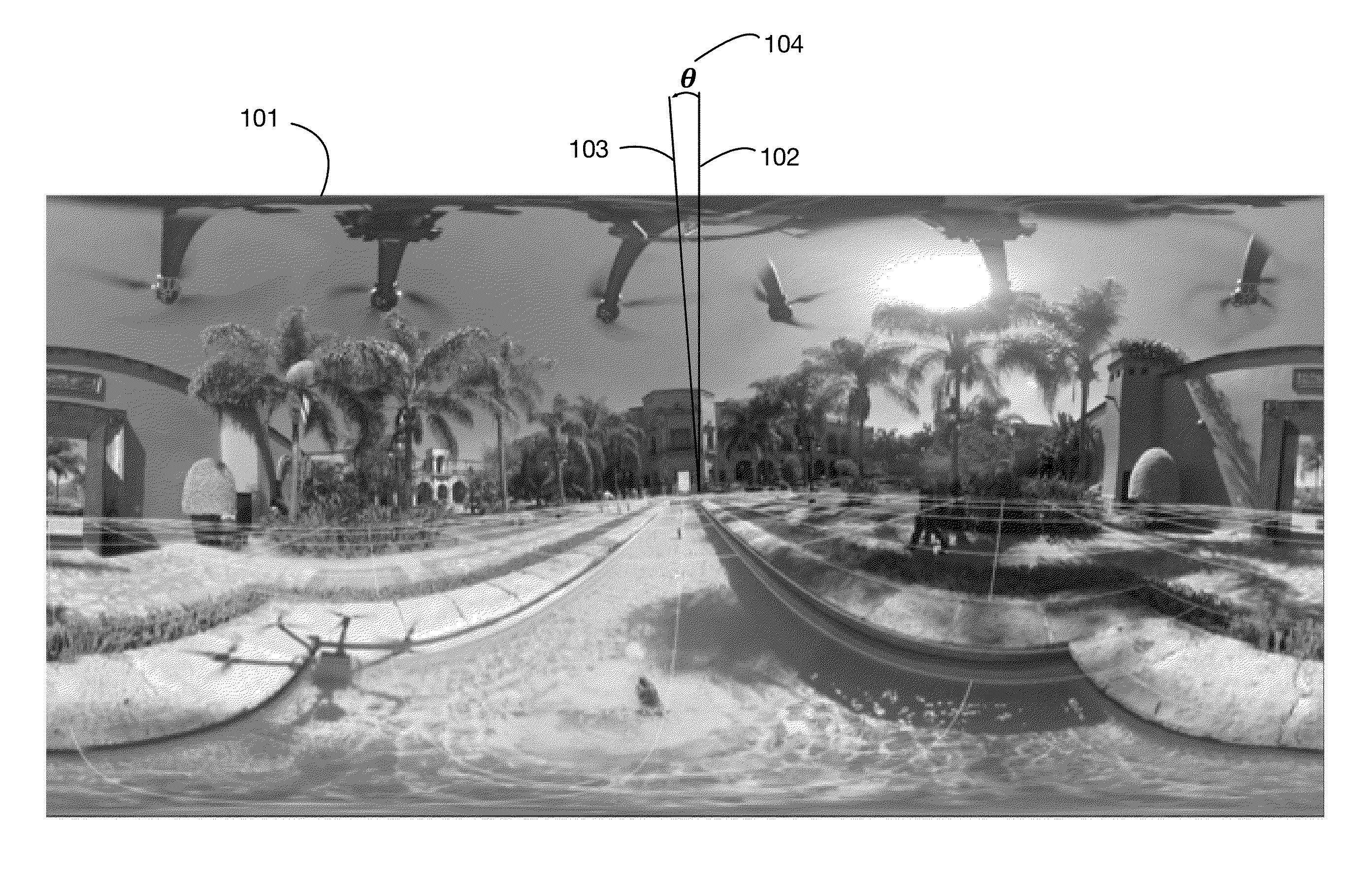

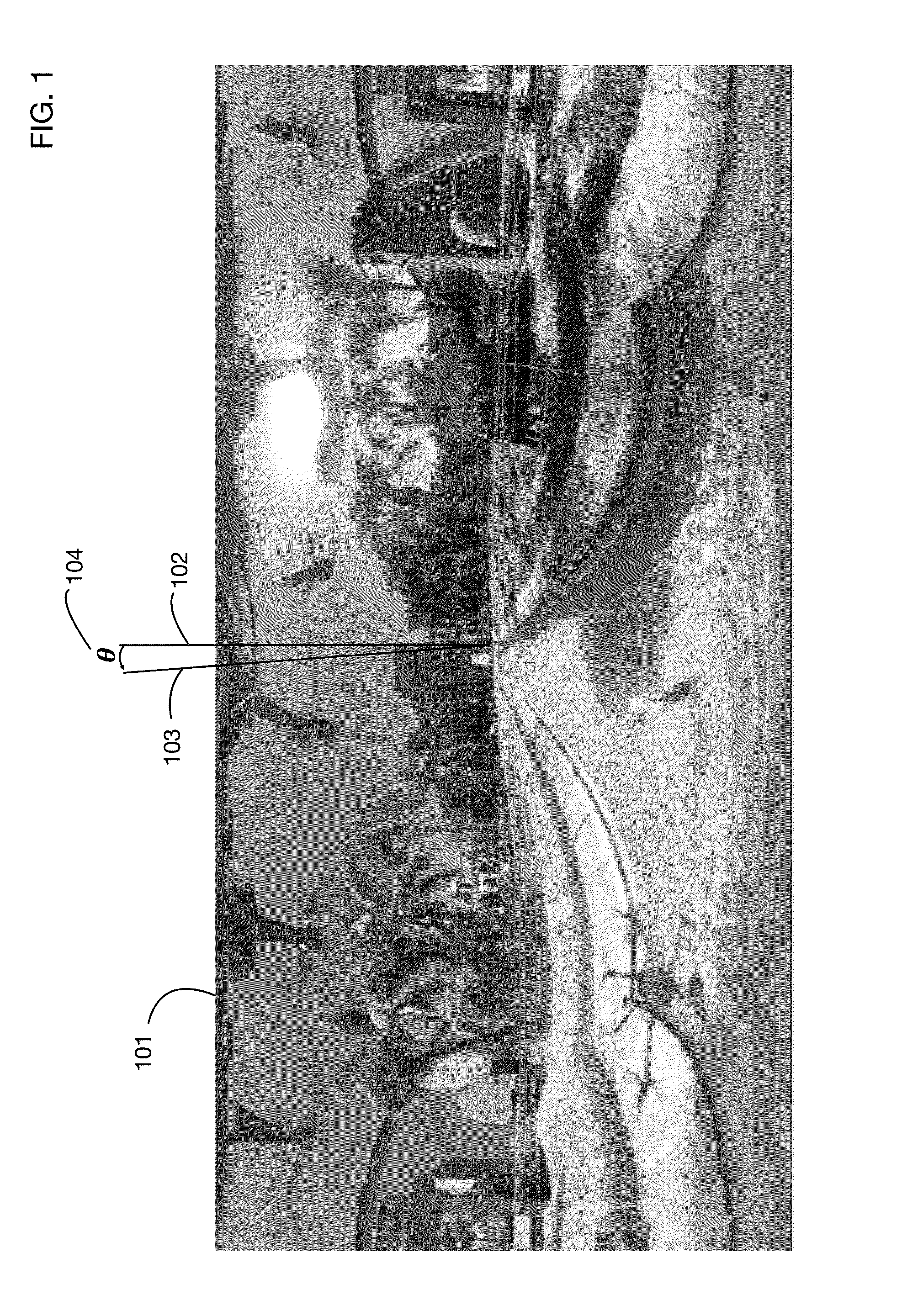

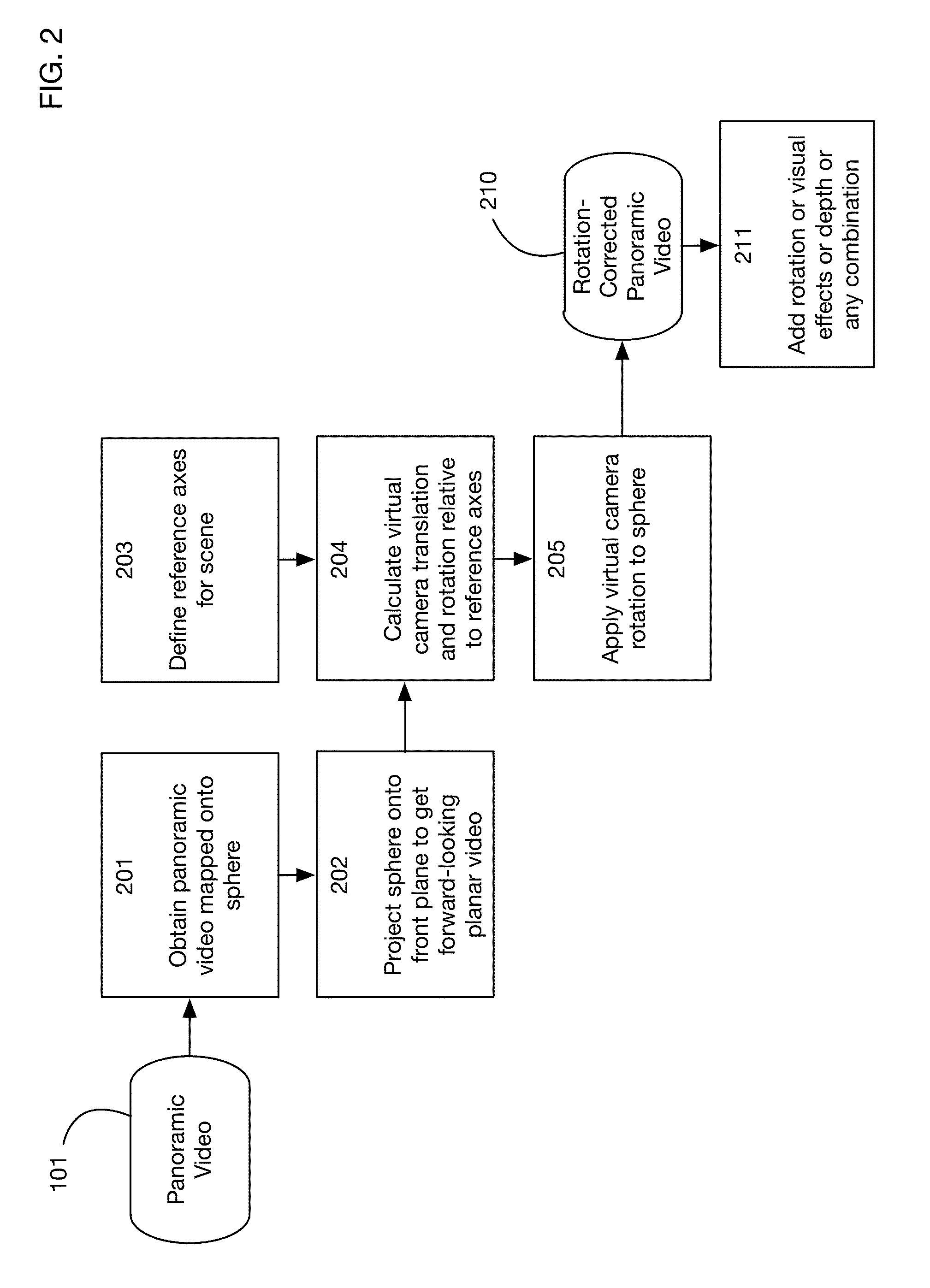

System and method for removing camera rotation from a panoramic video

Inactive · US9277122B1 · Eliminate relative rotation · Eliminate the effects of · Image enhancement · Television system details · Panorama · Forward looking

Removes undesirable camera rotation from a panoramic video. Video frames are mapped to a sphere and then projected to a forward-looking planar video. Camera tracking or other techniques are used to derive camera position and orientation in each frame. From the camera orientation, a correcting rotation is derived and is applied to the sphere onto which the panoramic video was mapped. Re-projecting video from the rotation-corrected sphere reduces or eliminates camera rotation artifacts from the panoramic video. Rotation may be re-introduced and other visual effects may be added to the stabilized video. May be utilized in 2D virtual reality and augmented reality displays. Depth may be accepted by the system for objects in the video and utilized to create 3D stereoscopic virtual reality and augmented reality displays.
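
The correcting rotation described above can be sketched as the inverse of the per-frame camera orientation, applied to viewing directions on the unit sphere. This is a minimal illustration under stated assumptions: the orientation is taken to be a 3x3 rotation matrix that maps world directions into the camera frame, and the helper names (`yaw_rotation`, `stabilise`) are hypothetical. Estimating the orientation from camera tracking and re-projecting to a planar video are not shown.

```python
import math


def yaw_rotation(angle_rad):
    """Rotation about the vertical (y) axis by the given angle."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def transpose(m):
    """Transpose of a 3x3 matrix; for a rotation matrix this is its inverse."""
    return [list(col) for col in zip(*m)]


def rotate(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def stabilise(direction_in_camera, camera_orientation):
    """Apply the correcting rotation (inverse of the camera orientation) to a sphere direction."""
    correction = transpose(camera_orientation)
    return rotate(correction, direction_in_camera)
```

Applying `stabilise` to every direction on the sphere for a frame undoes that frame's camera rotation, so a fixed world direction lands at the same place on the corrected sphere in every frame.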

Owner:LEGEND FILMS INC

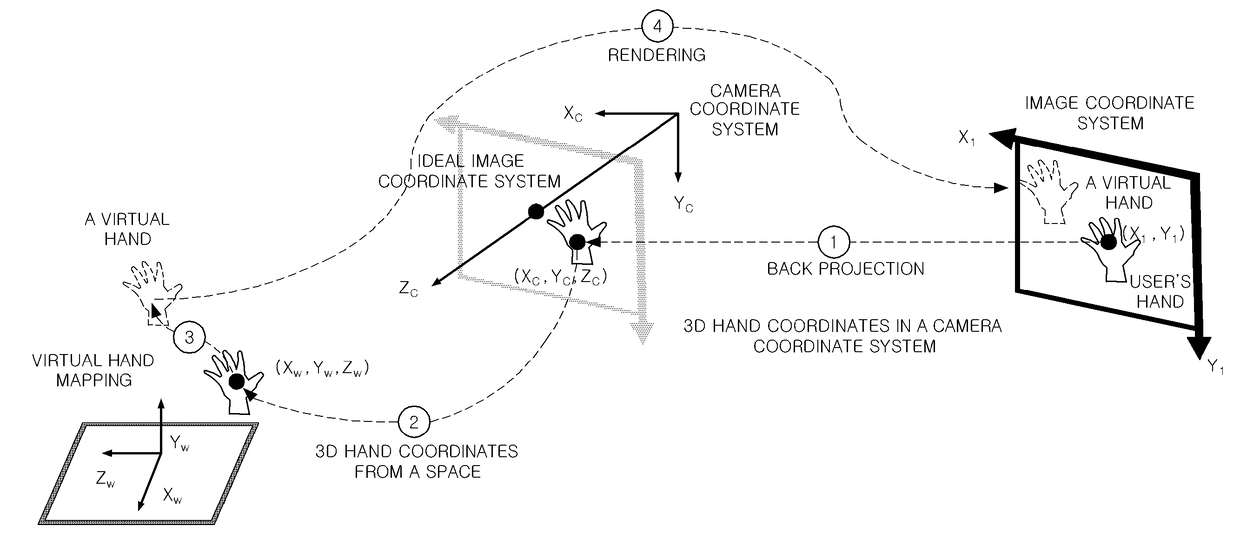

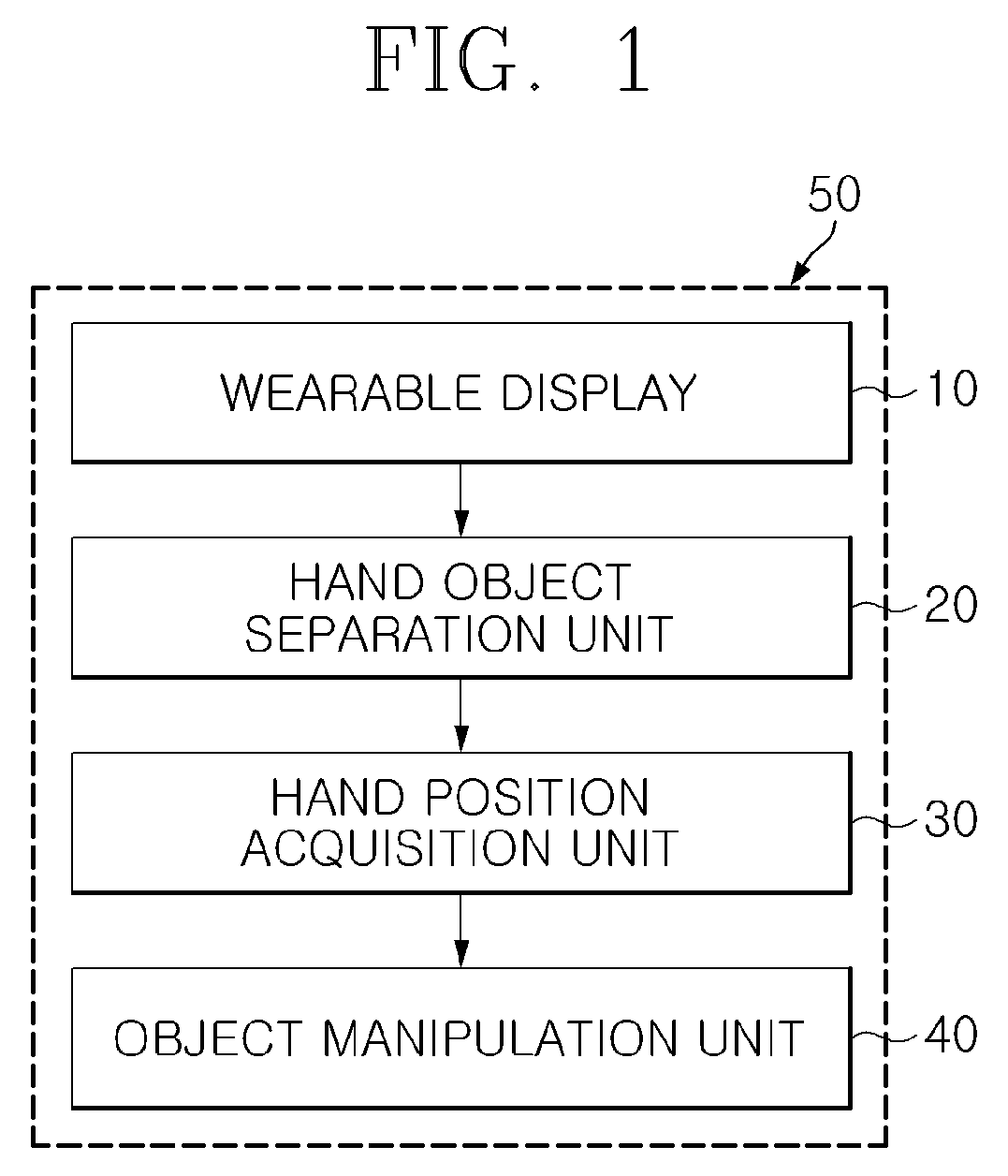

Apparatus and method for estimating hand position utilizing head mounted color depth camera, and bare hand interaction system using same

Inactive · US20170140552A1 · Increase distance · Recognition distance · Input/output for user-computer interaction · Image enhancement · Interaction systems · Color depth

The present invention relates to a technology that allows a user to manipulate a virtual three-dimensional (3D) object with his or her bare hand in a wearable augmented reality (AR) environment, and more particularly, to a technology that is capable of detecting 3D positions of a pair of cameras mounted on a wearable display and a 3D position of a user's hand in a space by using distance input data of an RGB-Depth (RGB-D) camera, without separate hand and camera tracking devices installed in the space (environment) and enabling a user's bare hand interaction based on the detected 3D positions.

Owner:KOREA ADVANCED INST OF SCI & TECH

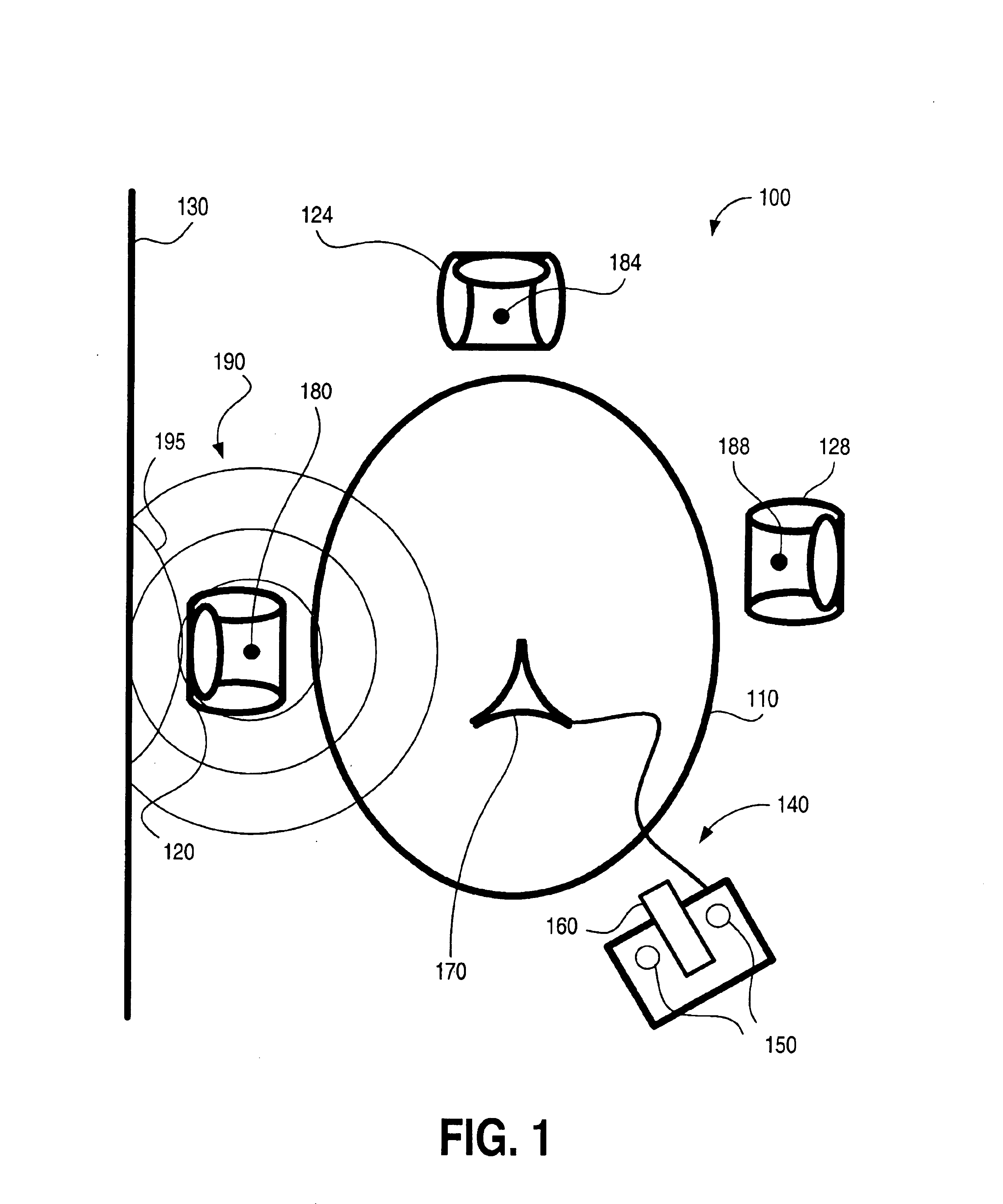

Automatic camera tracking using beamforming

Active · US6980485B2 · Maximize the sum amplitude of the signals received · Two-way working systems · Direction/deviation determination systems · Engineering · Microphone array

An acoustic tracking system that uses an array of microphones to determine the location of an acoustical source. Several points in space are determined to be potential locations of an acoustical source. Beamforming parameters for the array of microphones are determined at each potential location. The beamforming parameters are applied to the sound received by the microphone array for all of the potential locations and data is gathered for each beam. The most likely location is then determined by comparing the data from each beam. Once the location is determined, a camera is directed toward this source.
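
One common way to realise this kind of search is delay-and-sum beamforming: for each candidate location, each microphone channel is shifted by its expected propagation delay, the channels are summed, and the candidate with the highest summed energy is chosen. The sketch below assumes that technique (the abstract does not name it), a speed of sound of 343 m/s, and integer-sample delays. `steered_power` and `locate` are illustrative names.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second, assumed room-temperature value


def steered_power(signals, mic_positions, candidate, sample_rate):
    """Delay-and-sum: align each channel for `candidate`, return energy of the summed beam."""
    dists = [math.dist(m, candidate) for m in mic_positions]
    nearest = min(dists)
    # Extra propagation delay of each microphone relative to the nearest one, in samples.
    delays = [round((d - nearest) / SPEED_OF_SOUND * sample_rate) for d in dists]
    length = min(len(s) - k for s, k in zip(signals, delays))
    total = 0.0
    for n in range(length):
        summed = sum(s[n + k] for s, k in zip(signals, delays))
        total += summed * summed
    return total


def locate(signals, mic_positions, candidates, sample_rate):
    """Return the candidate location whose beam carries the most energy."""
    return max(
        candidates,
        key=lambda c: steered_power(signals, mic_positions, c, sample_rate),
    )
```

The location returned by `locate` would then be converted into pan and tilt commands for the camera; that conversion depends on the camera mount and is left out here.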

Owner:HEWLETT PACKARD DEV CO LP

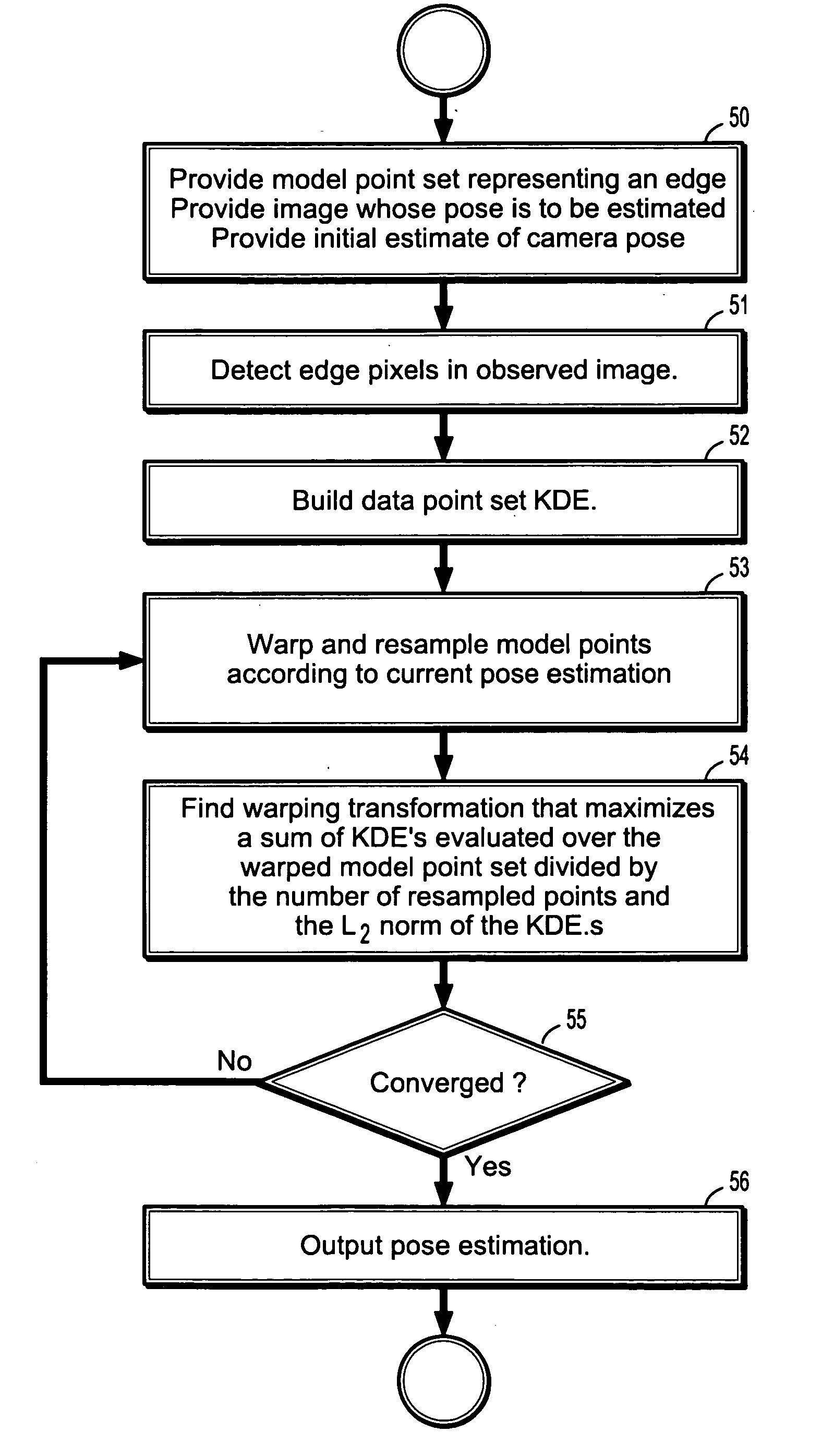

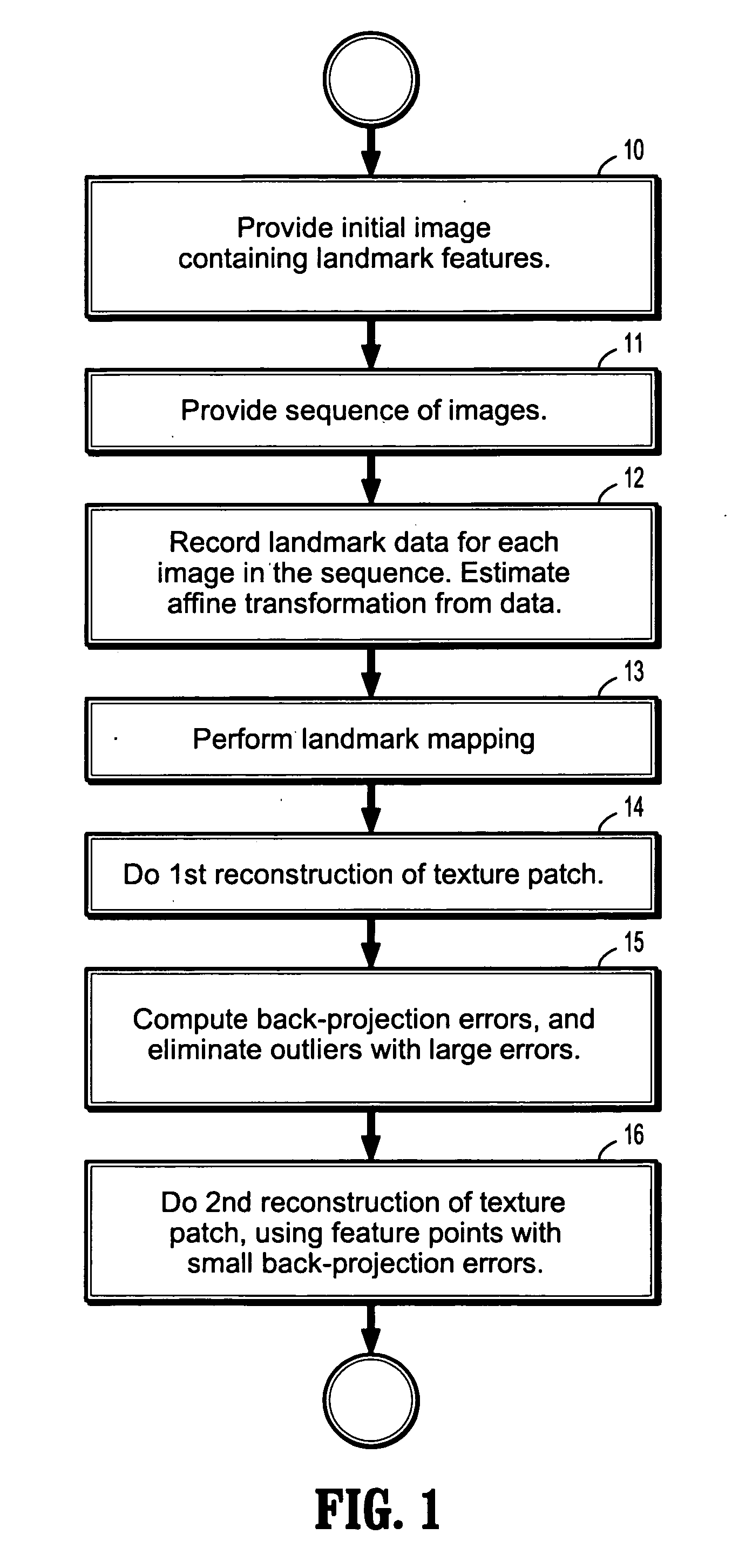

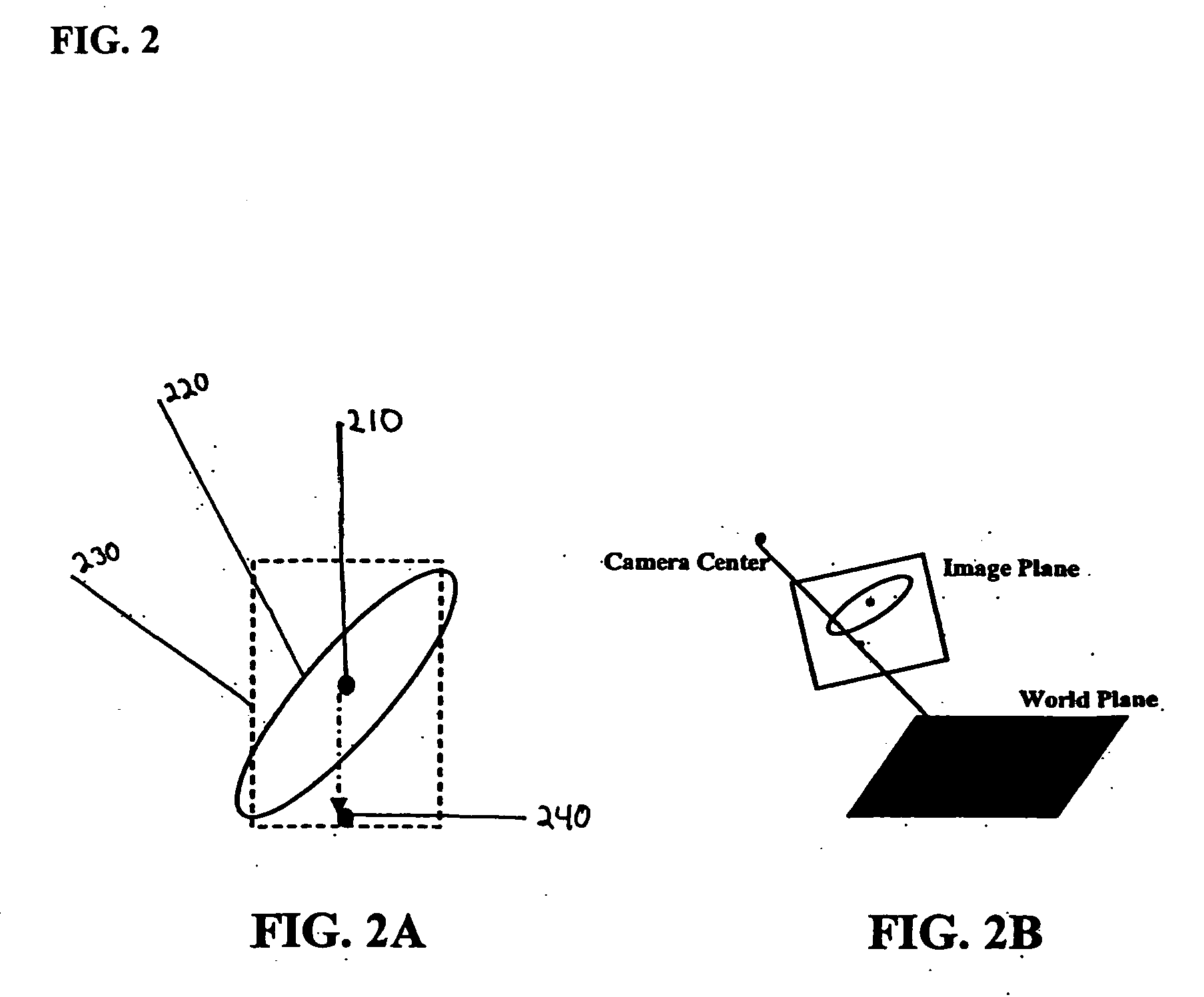

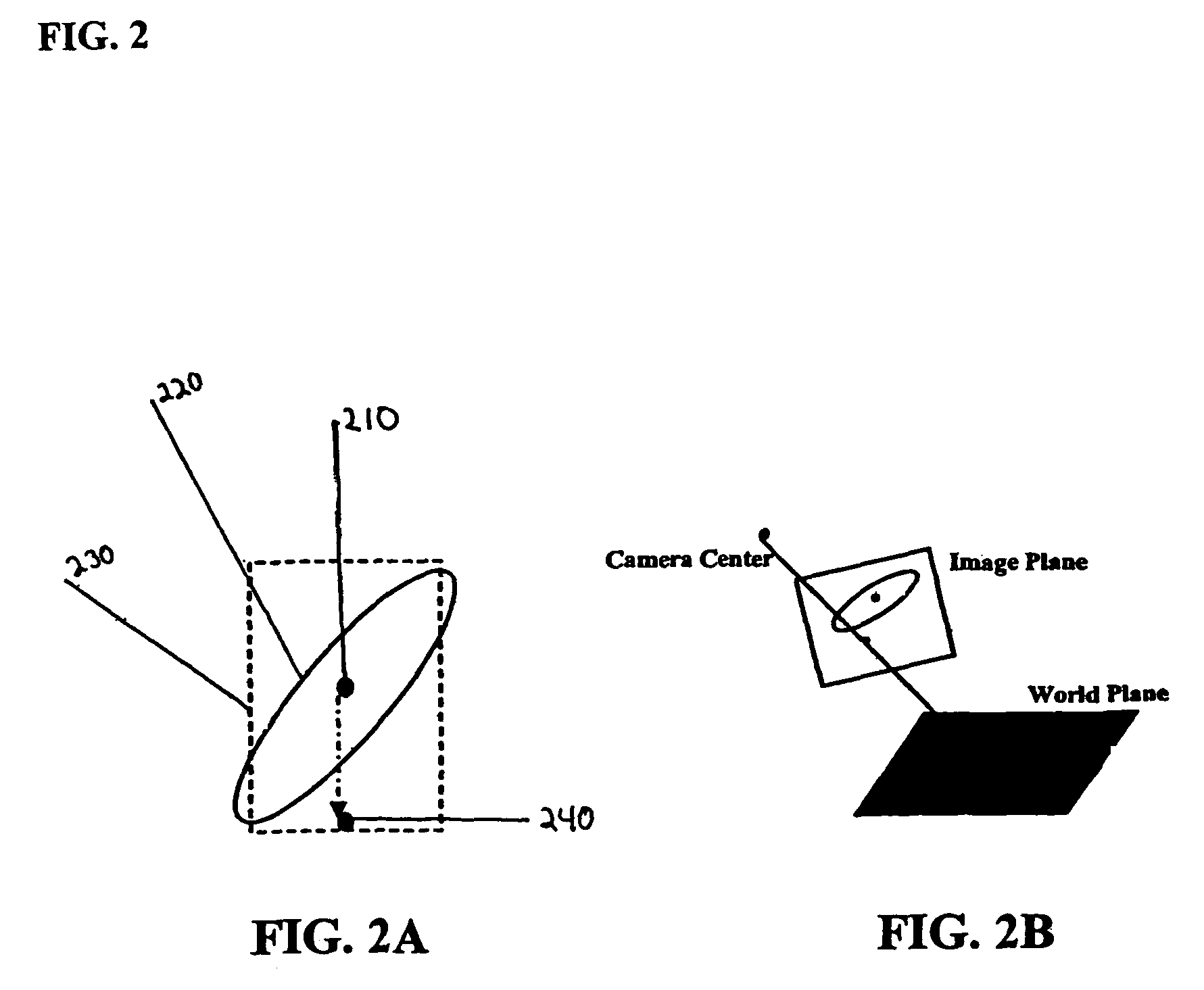

System and method for camera tracking and pose estimation

Inactive · US20060188131A1 · Promote reconstruction · Avoid drifting · Image analysis · Character and pattern recognition · Triangulation · Back projection

A method of tracking a pose of a moving camera includes receiving a first image from a camera, receiving a sequence of digitized images from said camera, recording, for each of said sequence of digitized images, the pose and 2D correspondences of landmarks, reconstructing a location and appearance of a 2-dimensional texture patch from 2D correspondences of the landmarks by triangulation and optimization, computing back-projection errors by comparing said reconstructed texture patch with said first received image; and reconstructing said location and appearance of said 2-dimensional texture patch from the 2D correspondences of the landmarks of said sequence of digitized images by triangulation and optimization after eliminating those landmarks with large back-projection errors.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

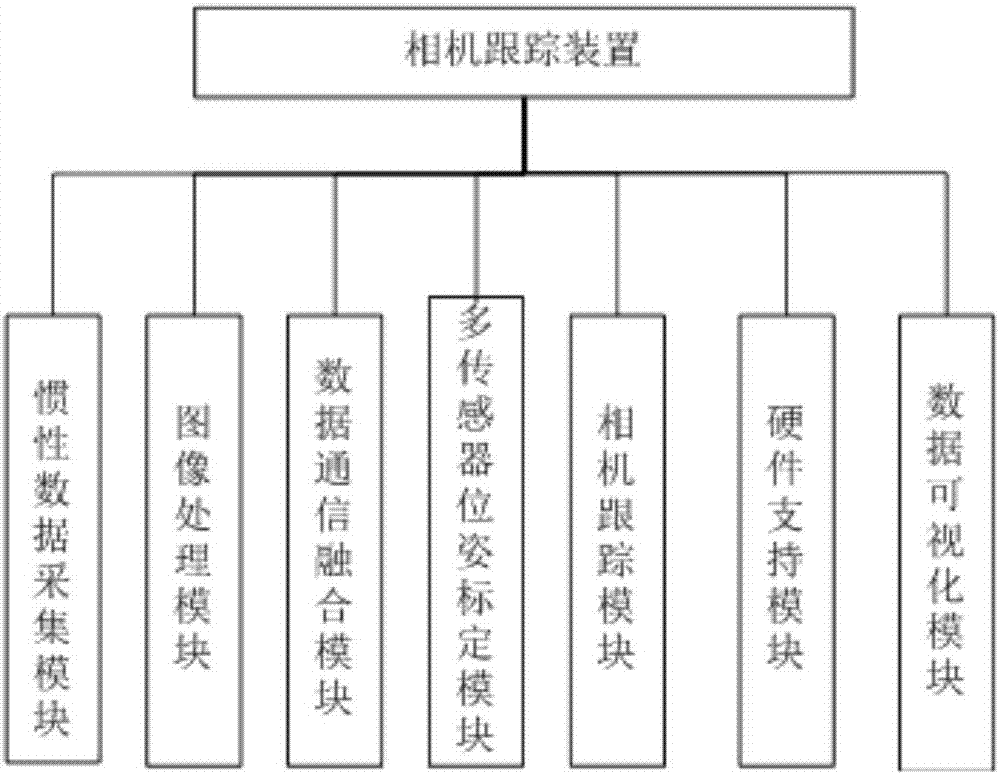

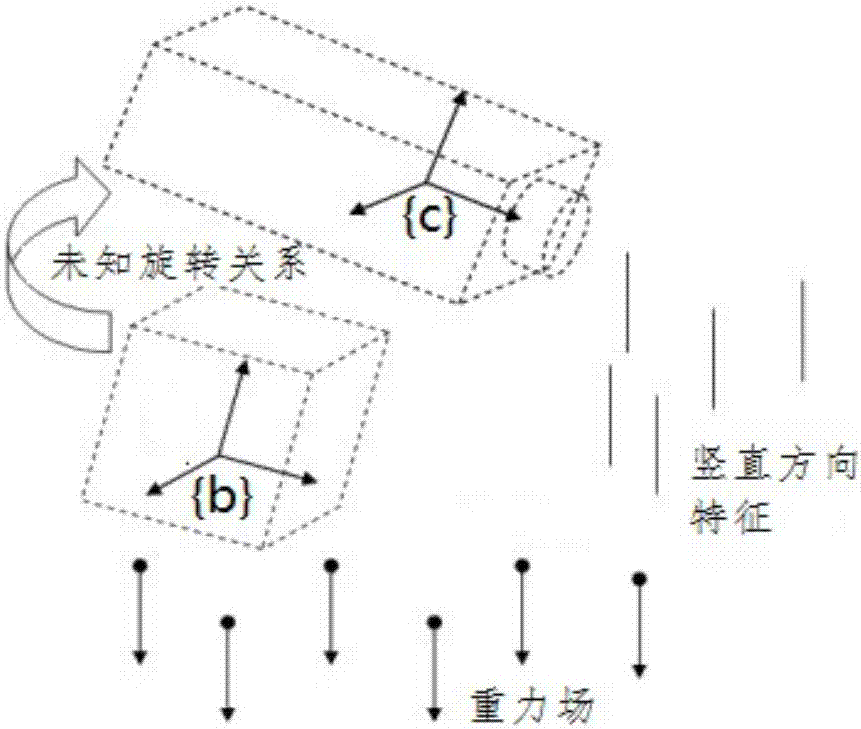

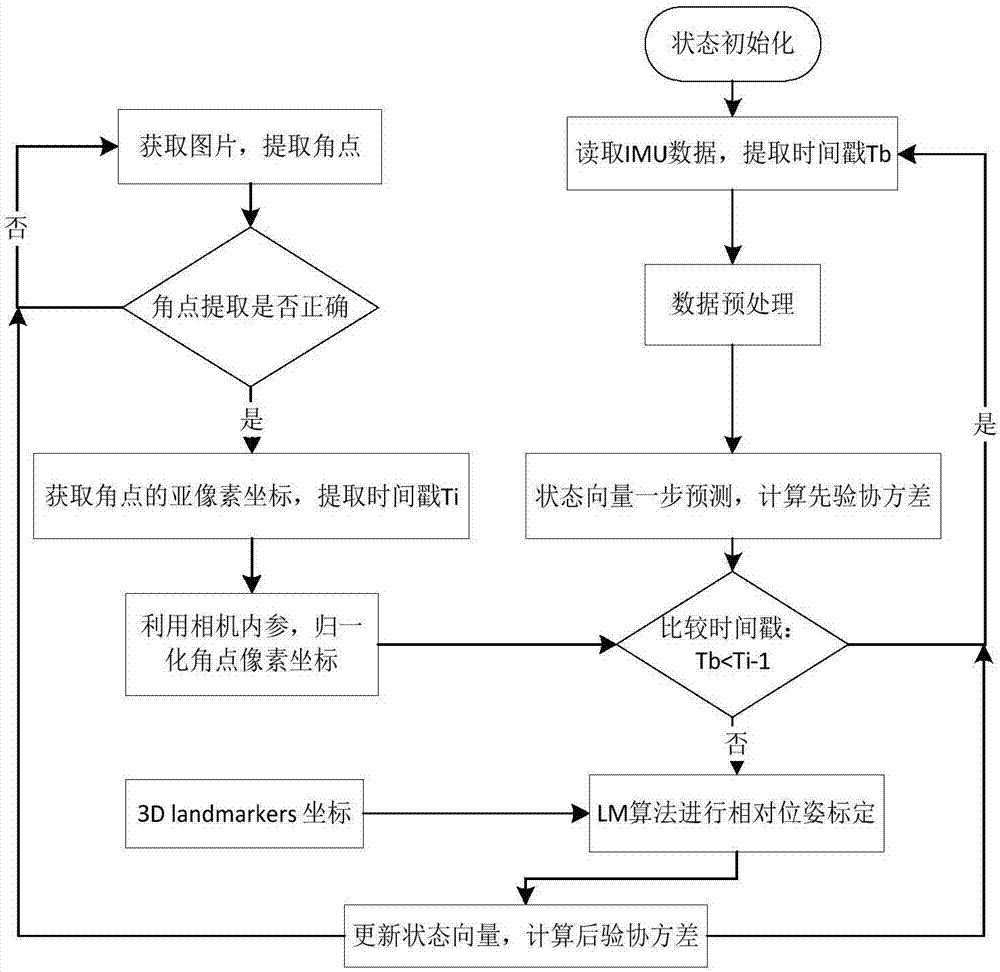

Indoor positioning method and device based on inertial data and visual features

Active · CN107255476A · Improve tracking accuracy · Improve robustness · Navigational calculation instruments · Navigation by speed/acceleration measurements · Data modeling · Visual perception

The invention discloses an indoor positioning technology based on inertial data and visual features, together with a corresponding device that implements its steps. The indoor positioning technology specifically comprises: (1) multi-sensor data processing: a camera calibration and image feature extraction method; an IMU data modeling and filtering method; (2) multi-sensor coordinate system calibration: a system modeling, relative attitude calibration and relative position and attitude joint calibration method; (3) an indoor positioning and tracking technology fusing the inertial data and the visual features. Existing single-camera tracking relies on a simple constant-speed motion assumption. By contrast, the disclosed technology uses the inertial data of an IMU to provide a better prediction, so that the search area during feature matching is smaller, matching is faster, the tracking results are more accurate, and camera tracking robustness under image degradation and in untextured areas is greatly improved.

Owner:青岛海通胜行智能科技有限公司

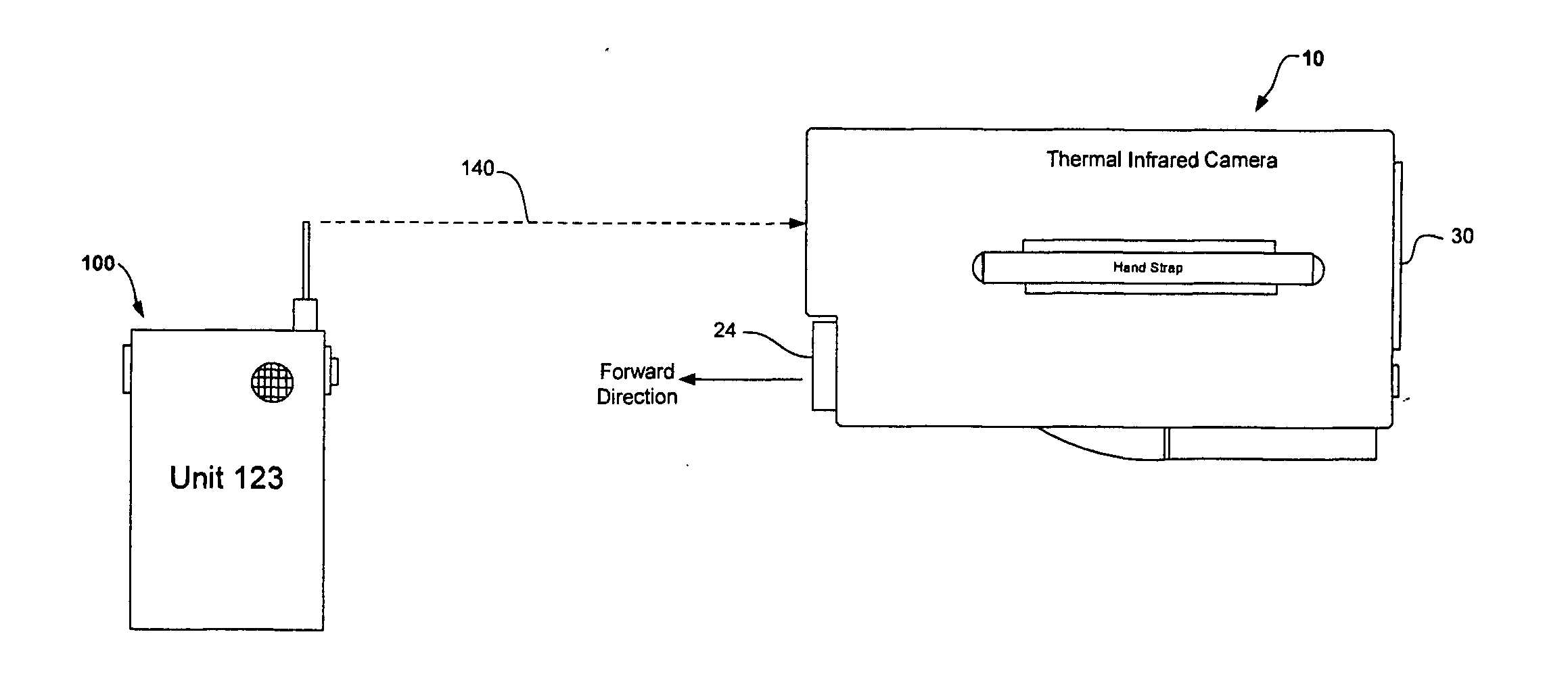

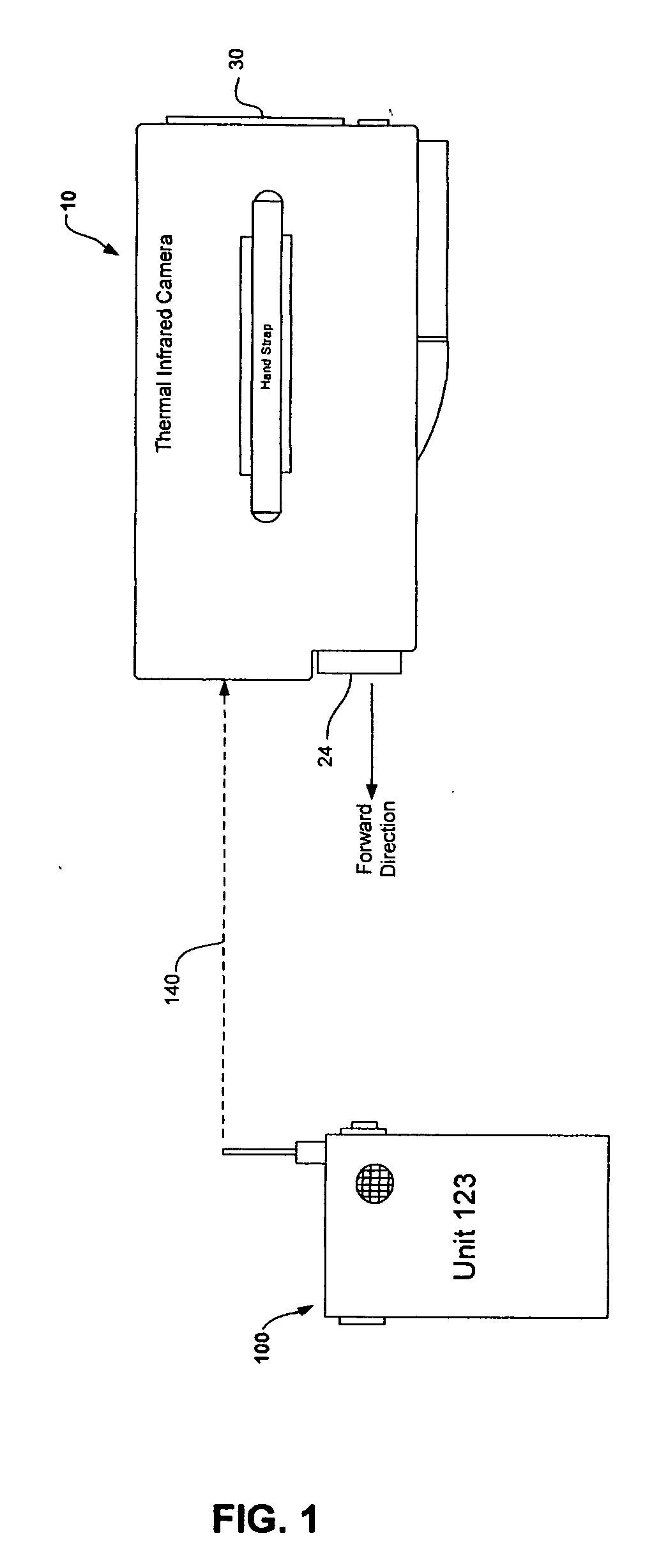

Thermal infrared camera tracking system utilizing receive signal strength

Inactive · US20060216011A1 · Reduce the risk of injury · Less time · Television system details · Alarms · Transceiver · Display device

A thermal infrared camera tracking system utilizing receive signal strength is provided for firefighters and emergency service first responders. The system can include a plurality of portable units which can be individually tracked and located using information simultaneously displayed with the thermal infrared video image on the video display of the thermal infrared camera. The thermal infrared camera encompasses an RF transceiver for receiving wireless RF signals transmitted by one or more portable units. The RF signal transmission of a portable unit is displayed as a unique identification (ID) name and, when displayed on the video display, is an indication of an emergency condition. The user of the thermal infrared camera selects one ID name (if more than one ID name is displayed) and views visual indicators on the video display that show the strength of the RF signal transmitted by the portable unit, in order to track and locate the selected portable unit. Upon selecting an ID name, the user views the visual indicators showing an RSSI value to determine a direction to and distance from the selected portable unit.

Owner:GODEHN KATAREYA

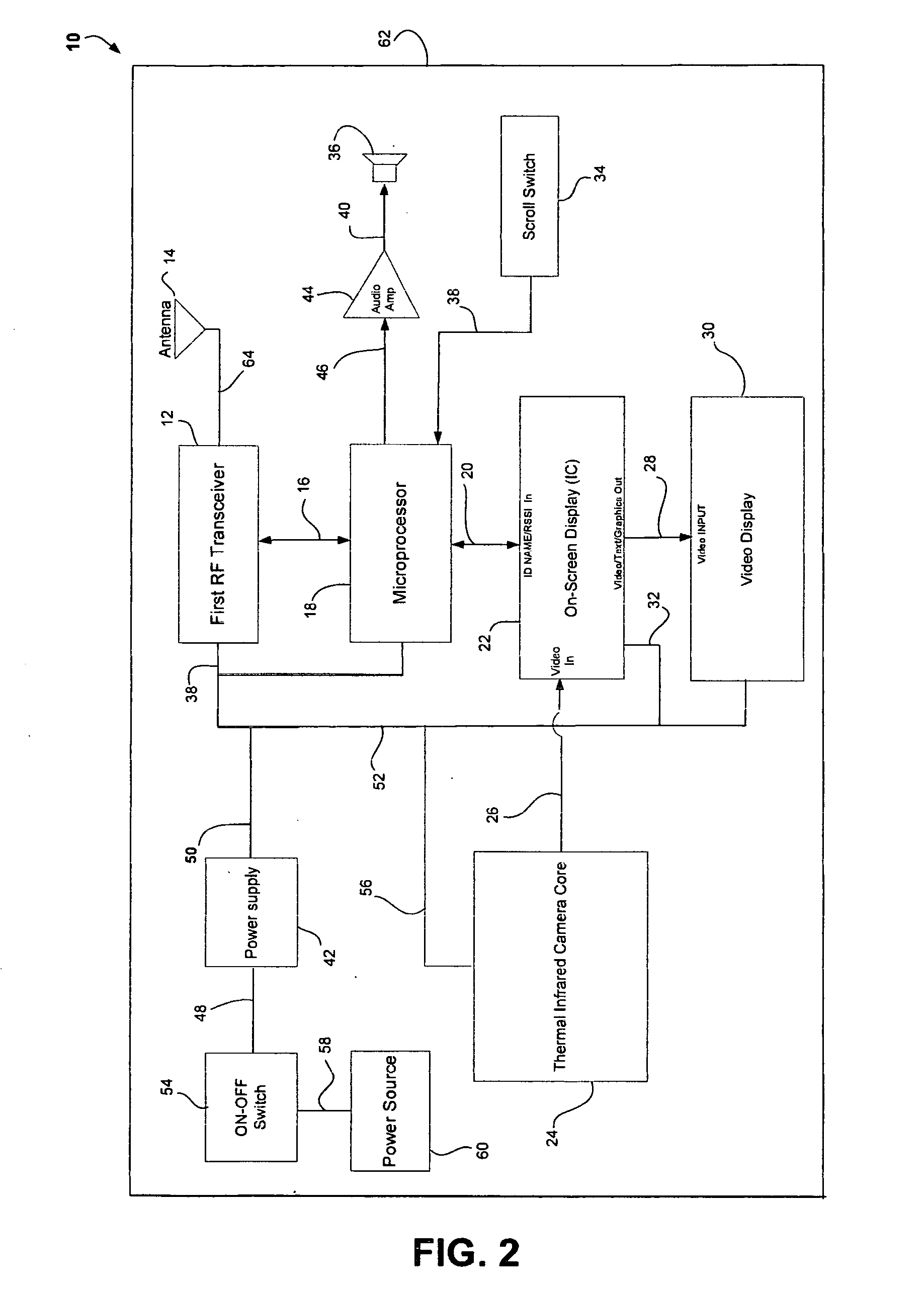

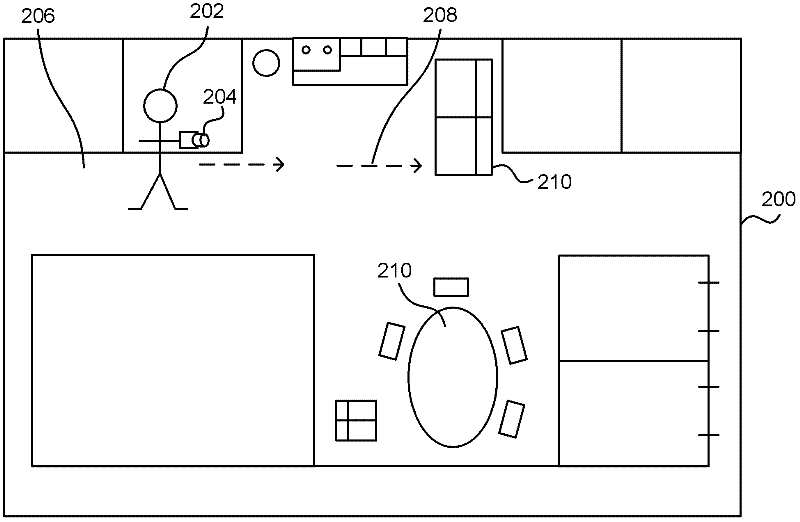

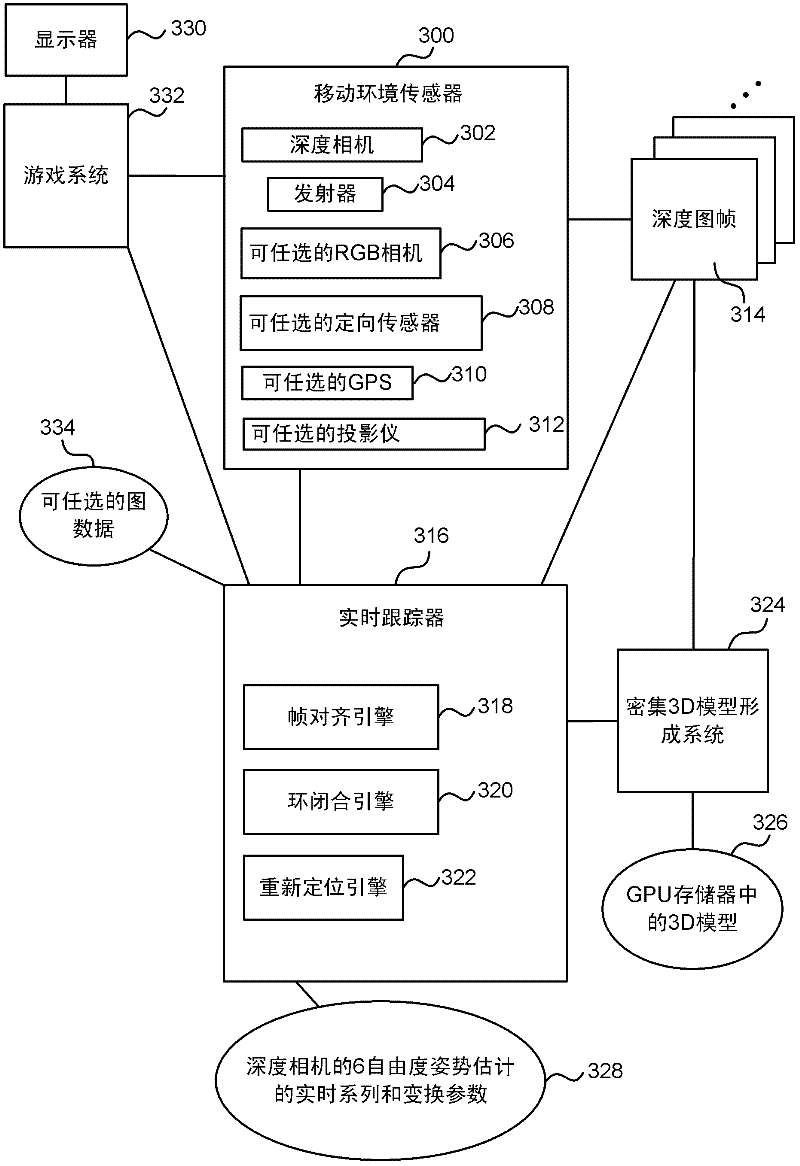

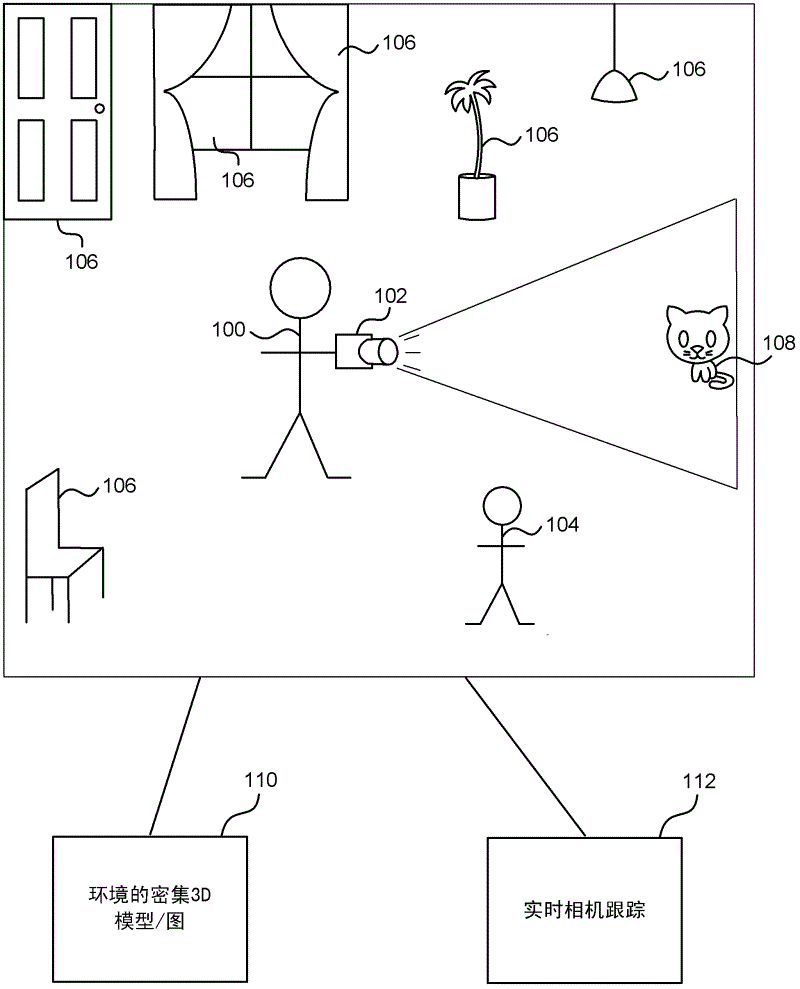

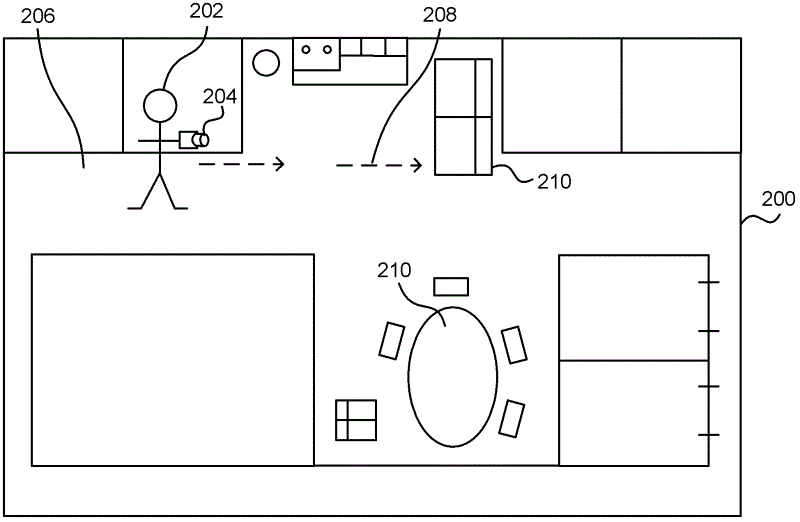

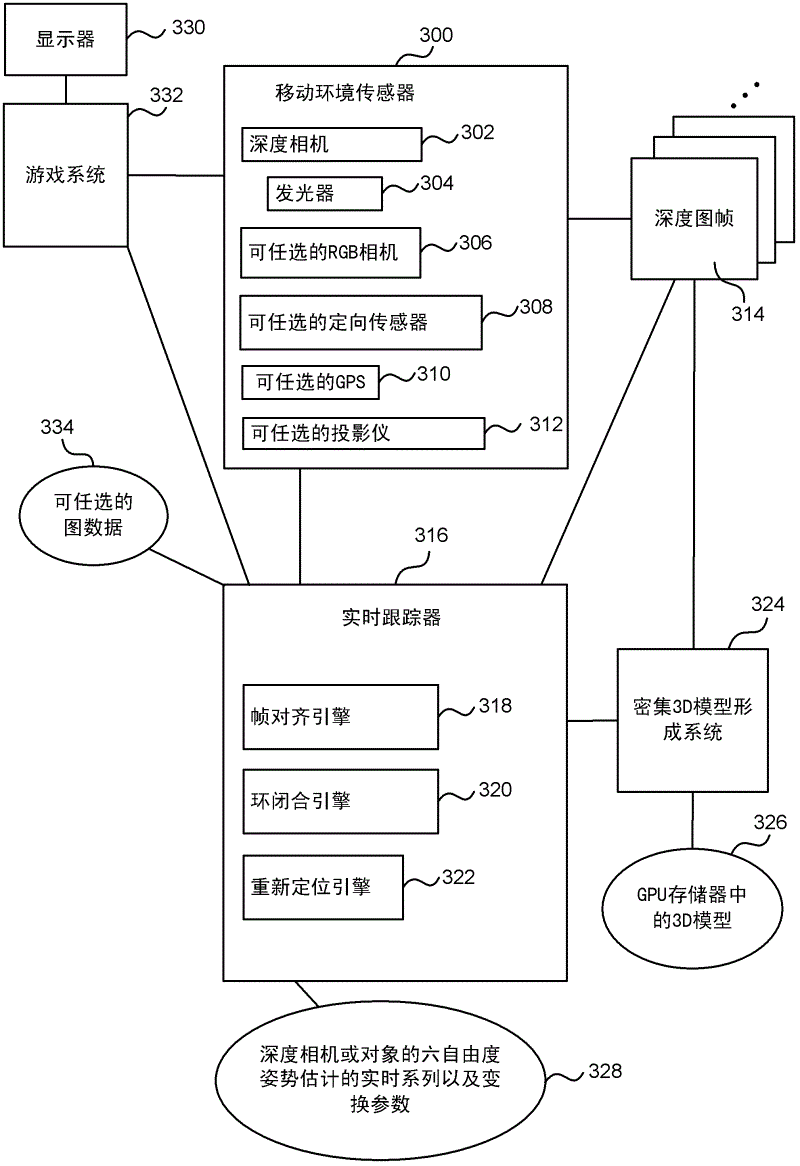

Mobile camera localization using depth maps

Mobile camera localization using depth maps is described for robotics, immersive gaming, augmented reality and other applications. In an embodiment a mobile depth camera is tracked in an environment at the same time as a 3D model of the environment is formed using the sensed depth data. In an embodiment, when camera tracking fails, this is detected and the camera is relocalized either by using previously gathered keyframes or in other ways. In an embodiment, loop closures are detected in which the mobile camera revisits a location, by comparing features of a current depth map with the 3D model in real time. In embodiments the detected loop closures are used to improve the consistency and accuracy of the 3D model of the environment.

Owner:MICROSOFT TECH LICENSING LLC

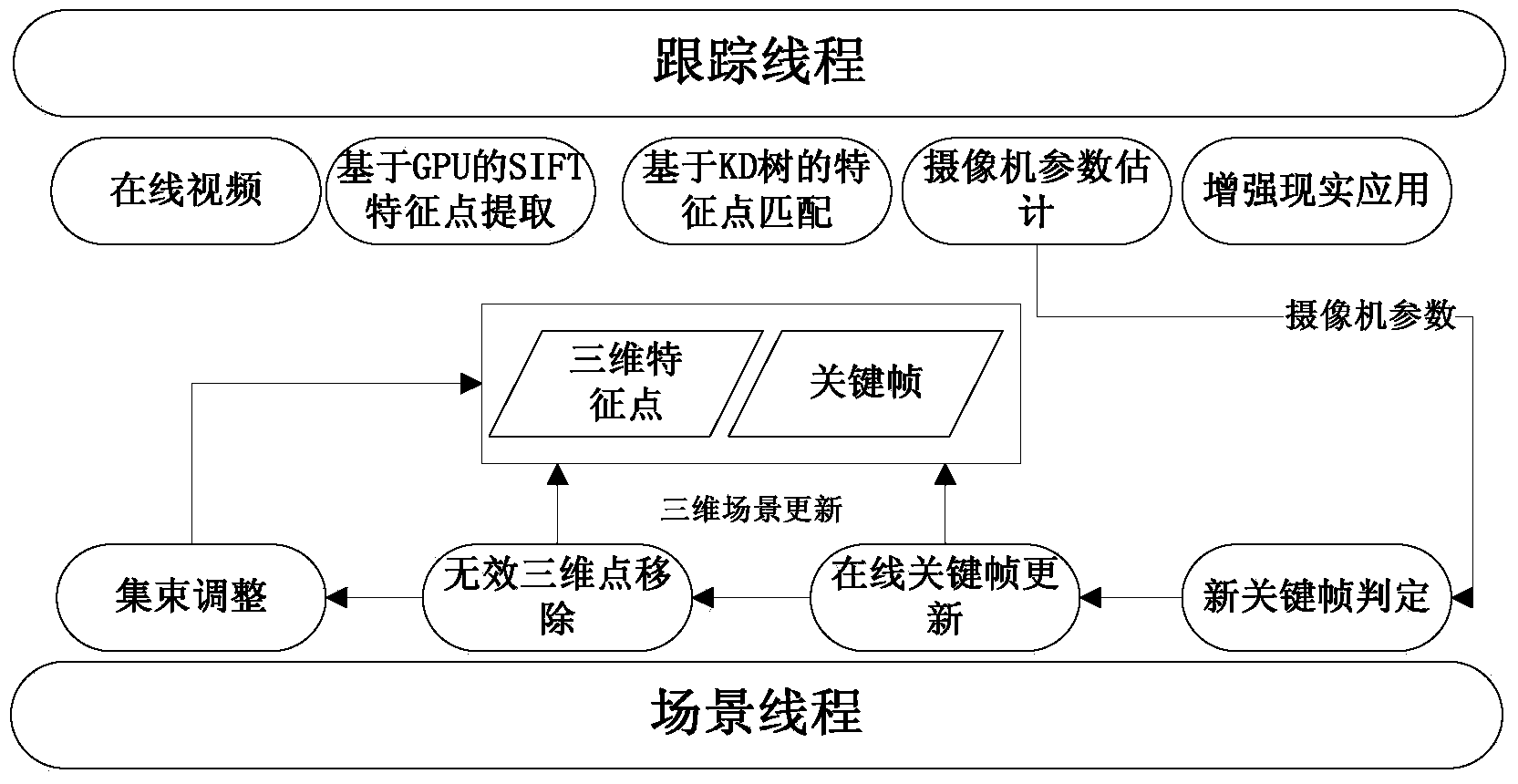

Real-time camera tracking method for dynamically-changed scene

Active · CN103646391A · Guaranteed accuracy · Accurate tracking · Television system details · Image analysis · Point cloud · Motion parameter

The invention discloses a real-time camera tracking method for a dynamically changing scene. The method can track and solve the camera pose stably in a scene that changes dynamically and continually. First, feature matching and camera parameter estimation are performed; then the scene is updated. In practical use, foreground and background threads run in coordination: the foreground thread performs feature point matching and camera motion parameter estimation on each frame, while the background thread continually maintains and updates the KD tree, key frames and three-dimensional point cloud, and jointly optimizes the key frame camera motion parameters and three-dimensional point positions. When the scene changes dynamically, the method can still perform camera tracking in real time, and it is significantly better than existing camera tracking methods in tracking accuracy, stability, operating efficiency, etc.

Owner:ZHEJIANG SENSETIME TECH DEV CO LTD

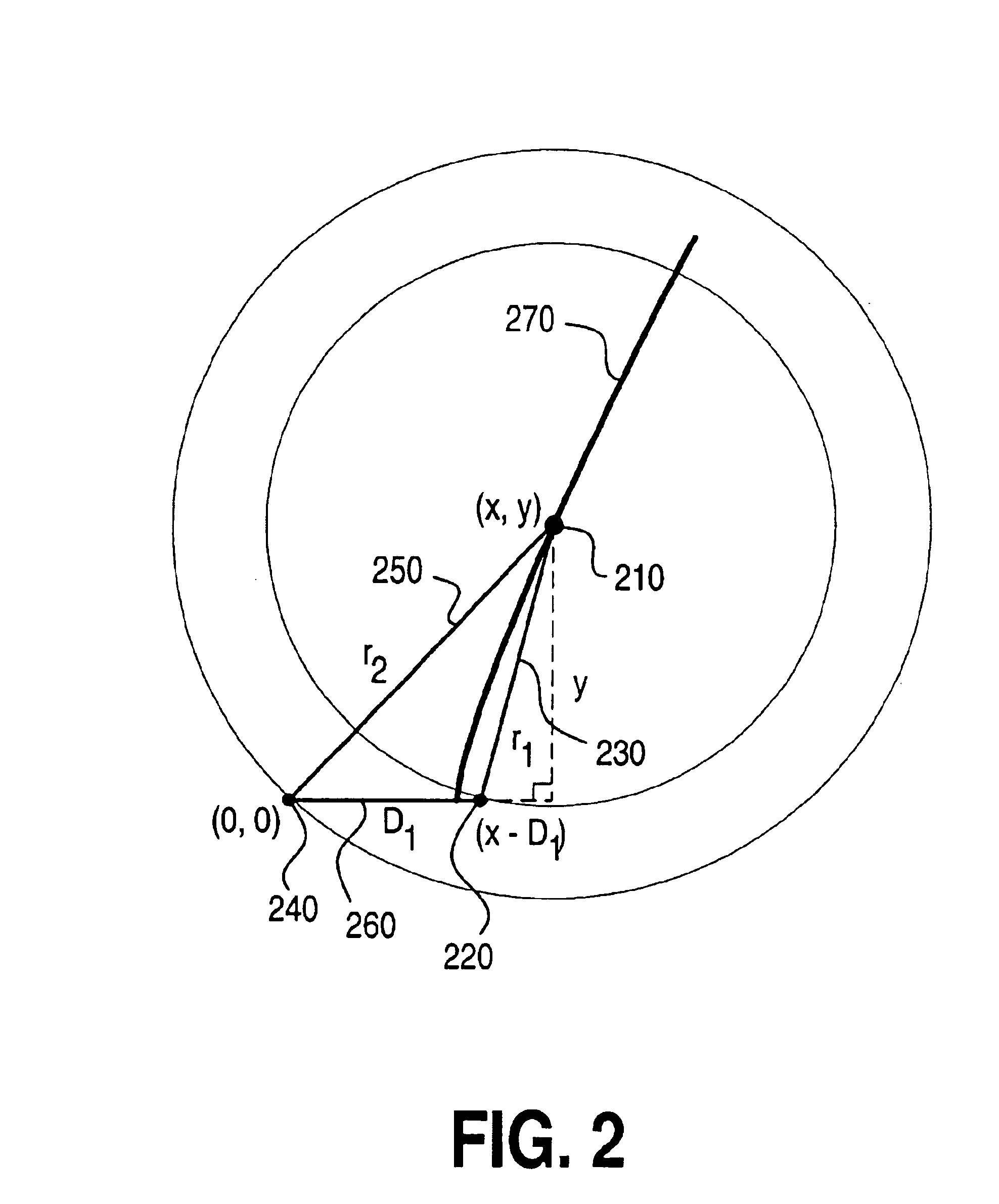

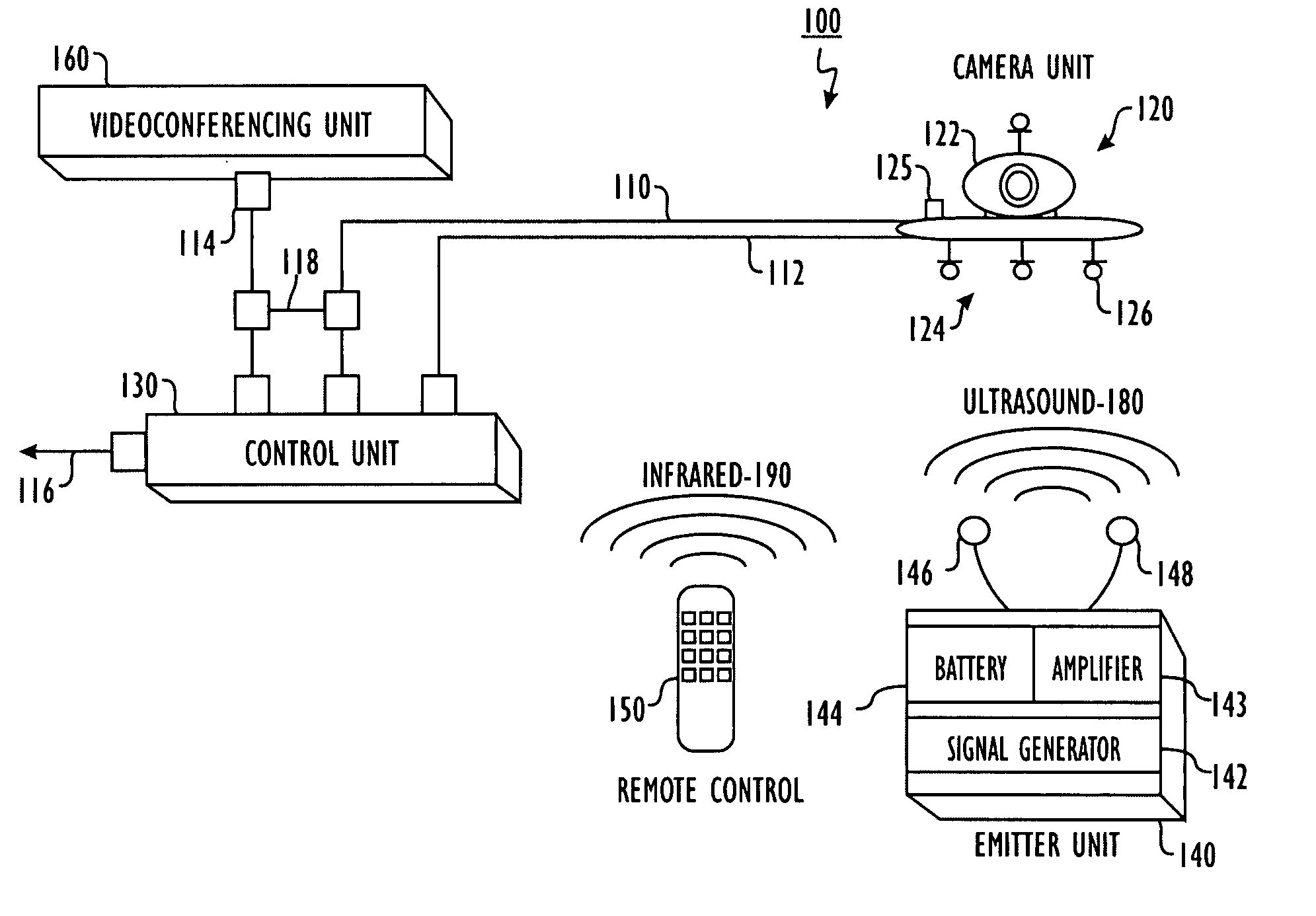

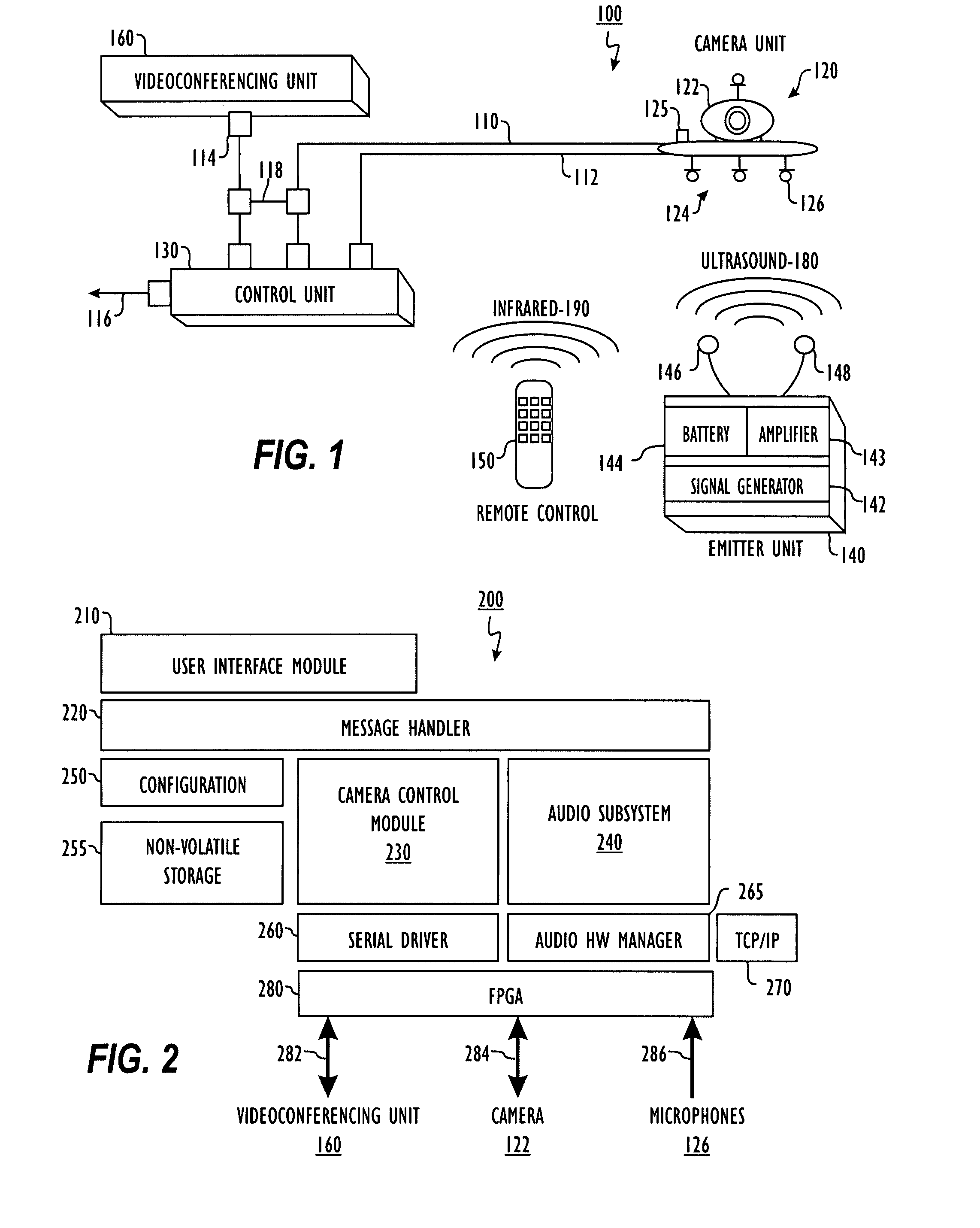

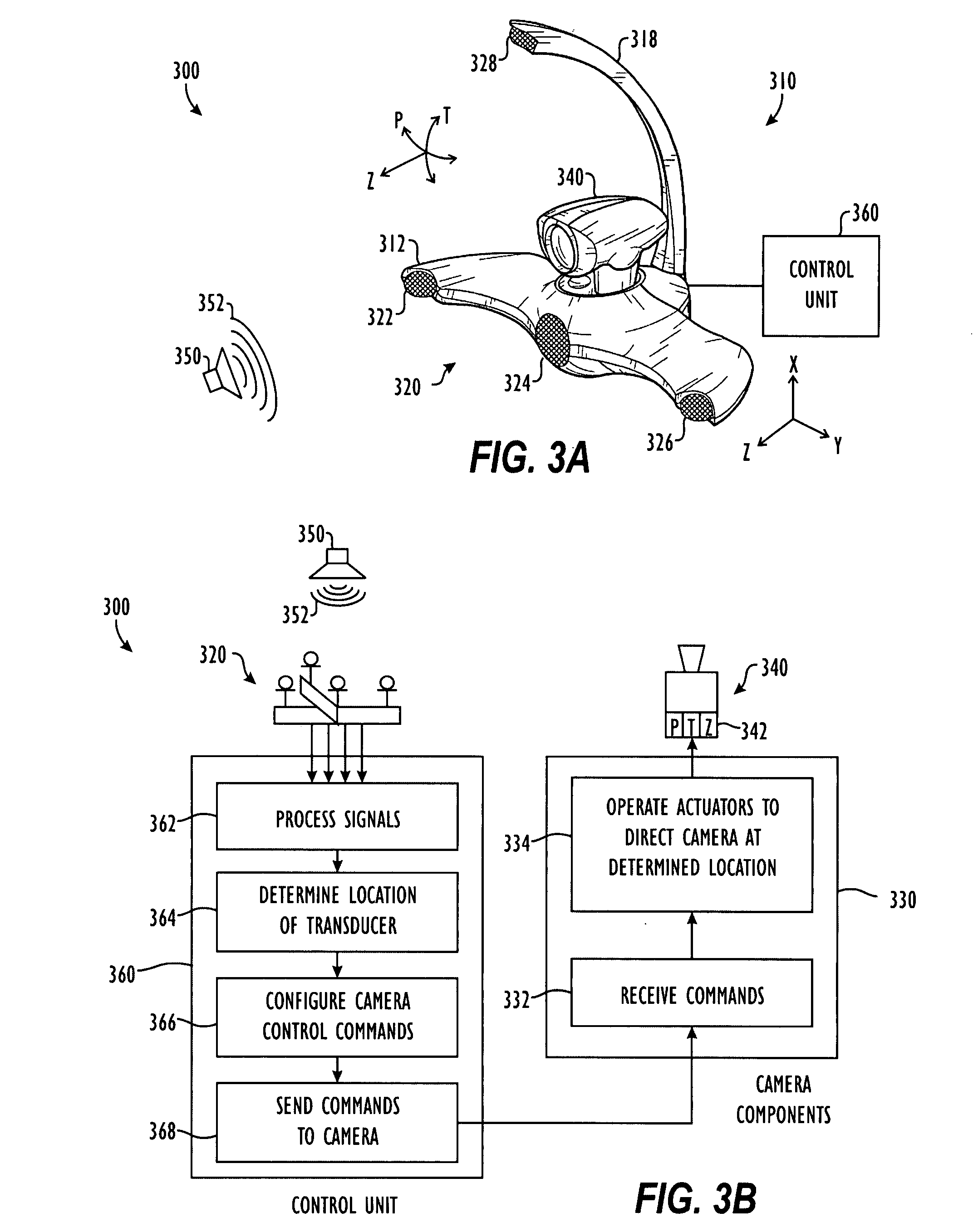

Ultrasonic camera tracking system and associated methods

Active · US20080095401A1 · Television conference systems · Position fixation · Ultrasonic sensor · Frequency spectrum

A camera tracking system includes a controllable camera, an array of microphones, and a controller. The microphones are positioned adjacent the controllable camera and are at least responsive to ultrasound emitted from a source. The microphones may additionally be capable of responding to sound in the audible spectrum. The controller receives ultrasound signals communicated from the microphones in response to ultrasound emitted from the source and processes the ultrasound signals to determine an at least approximate location of the source. Then, the controller sends one or more command signals to the controllable camera to direct it at least approximately at the determined location of the source. The camera tracking system tracks the source as it moves and continues to emit ultrasound. The source can be an emitter pack having one or more ultrasonic transducers that produce tones that sweep from about 24 kHz to about 40 kHz.

Owner:HEWLETT PACKARD DEV CO LP

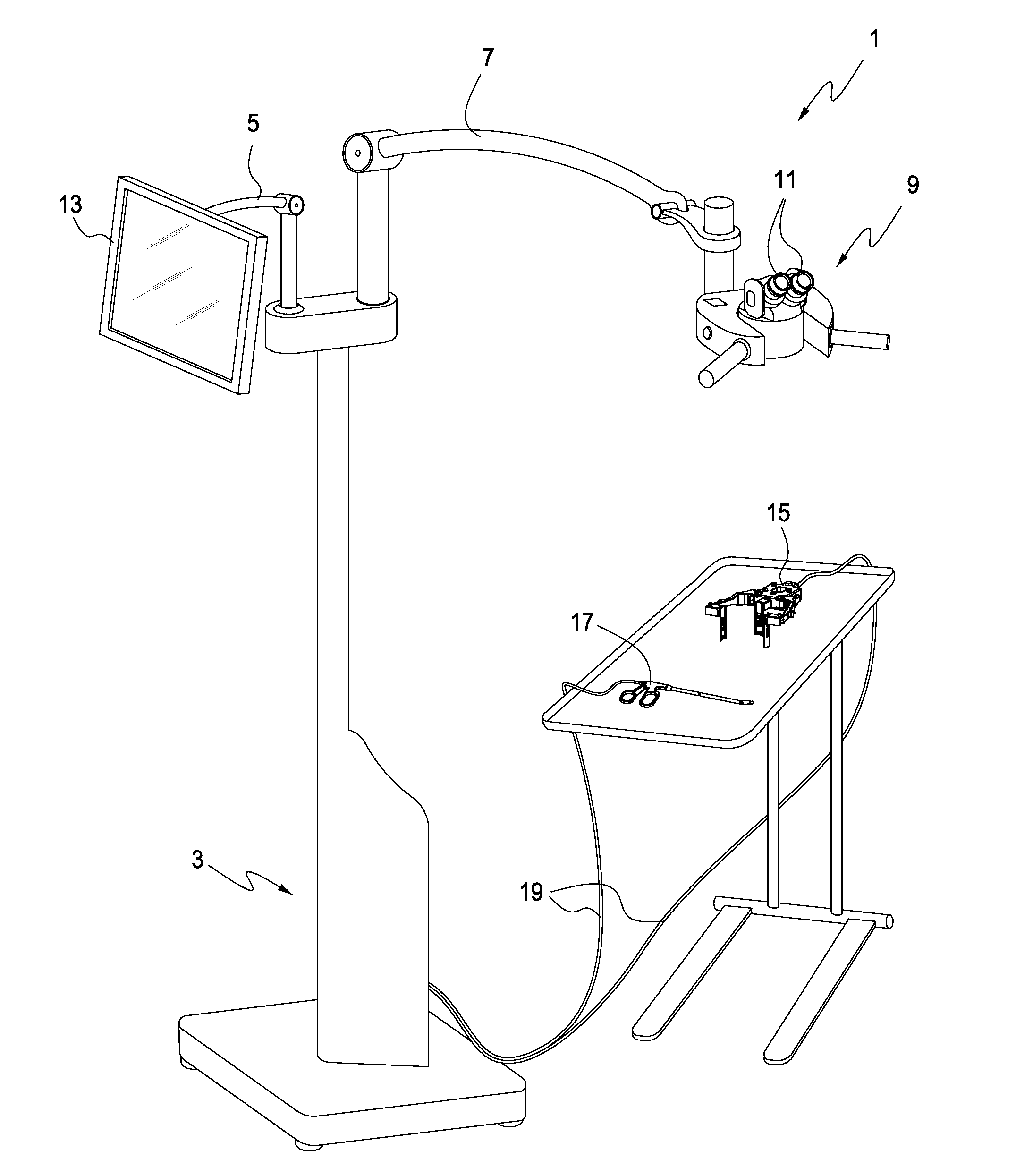

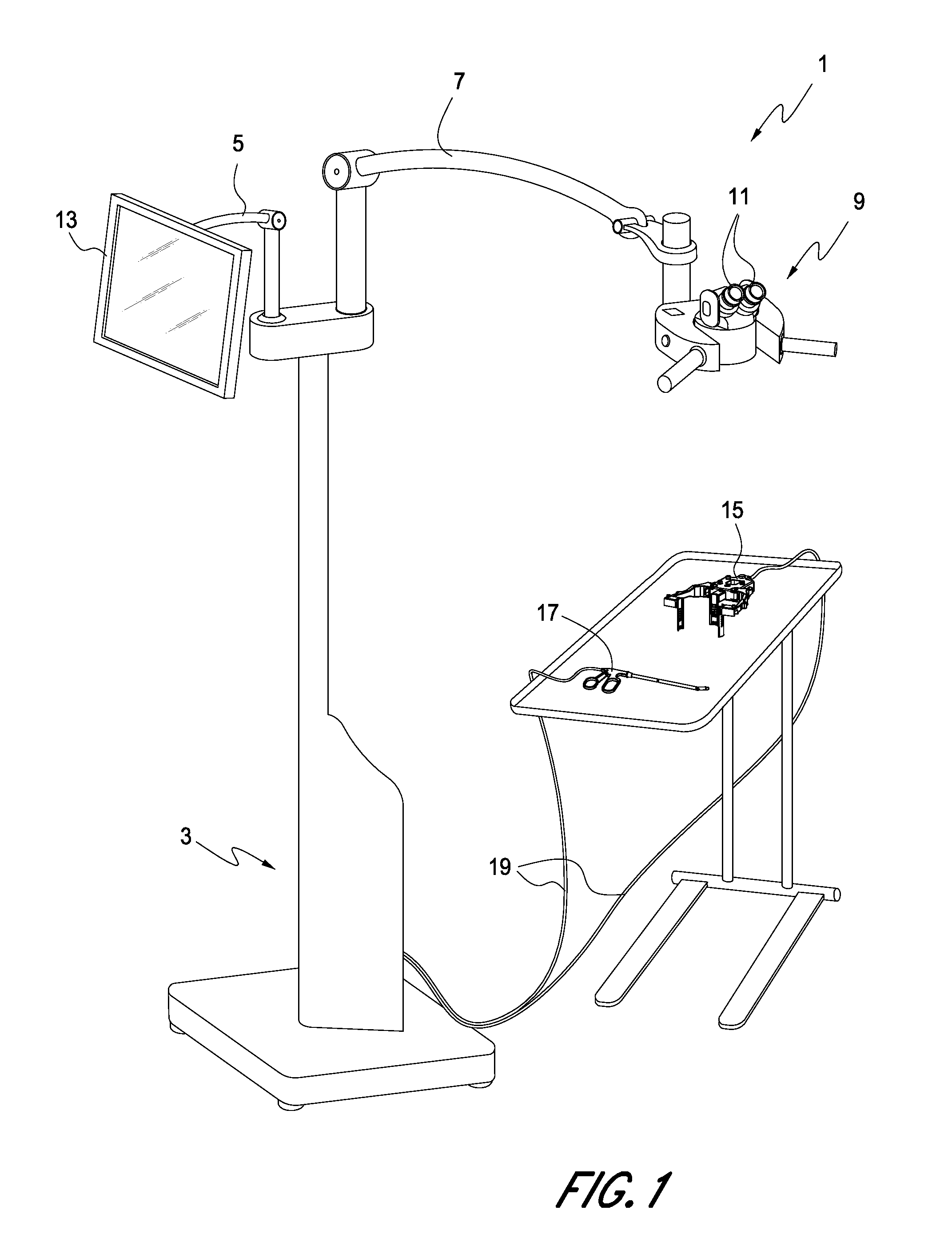

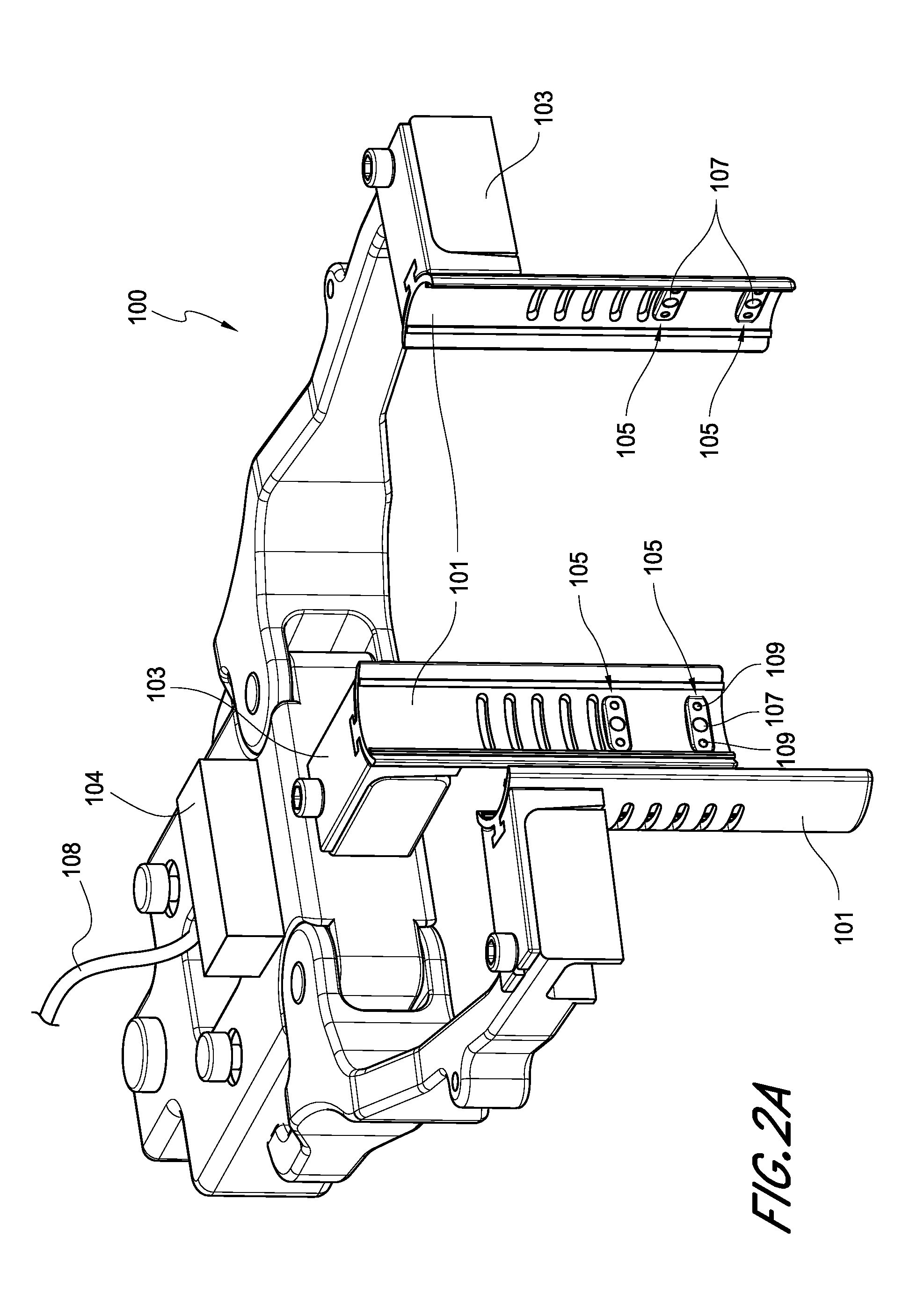

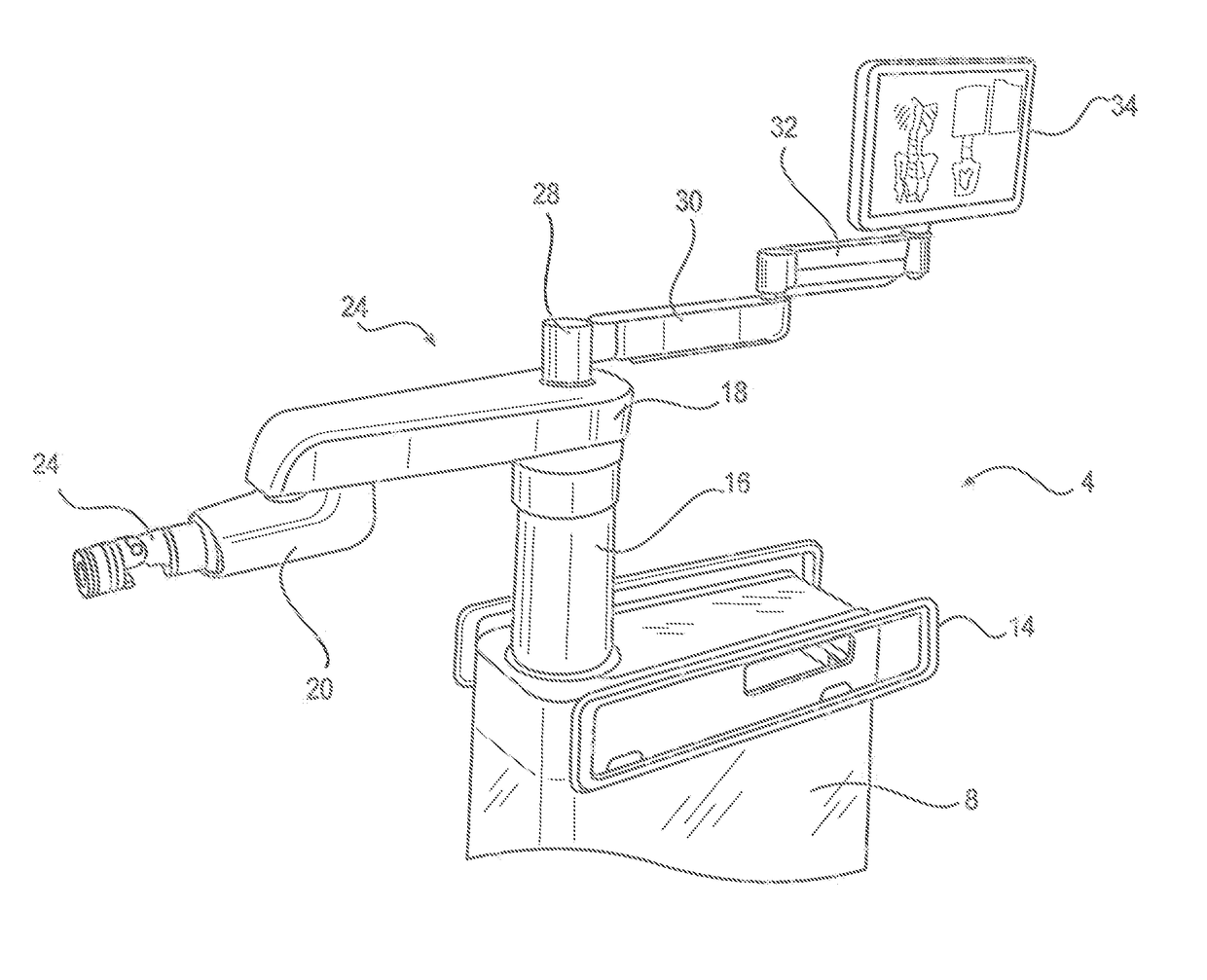

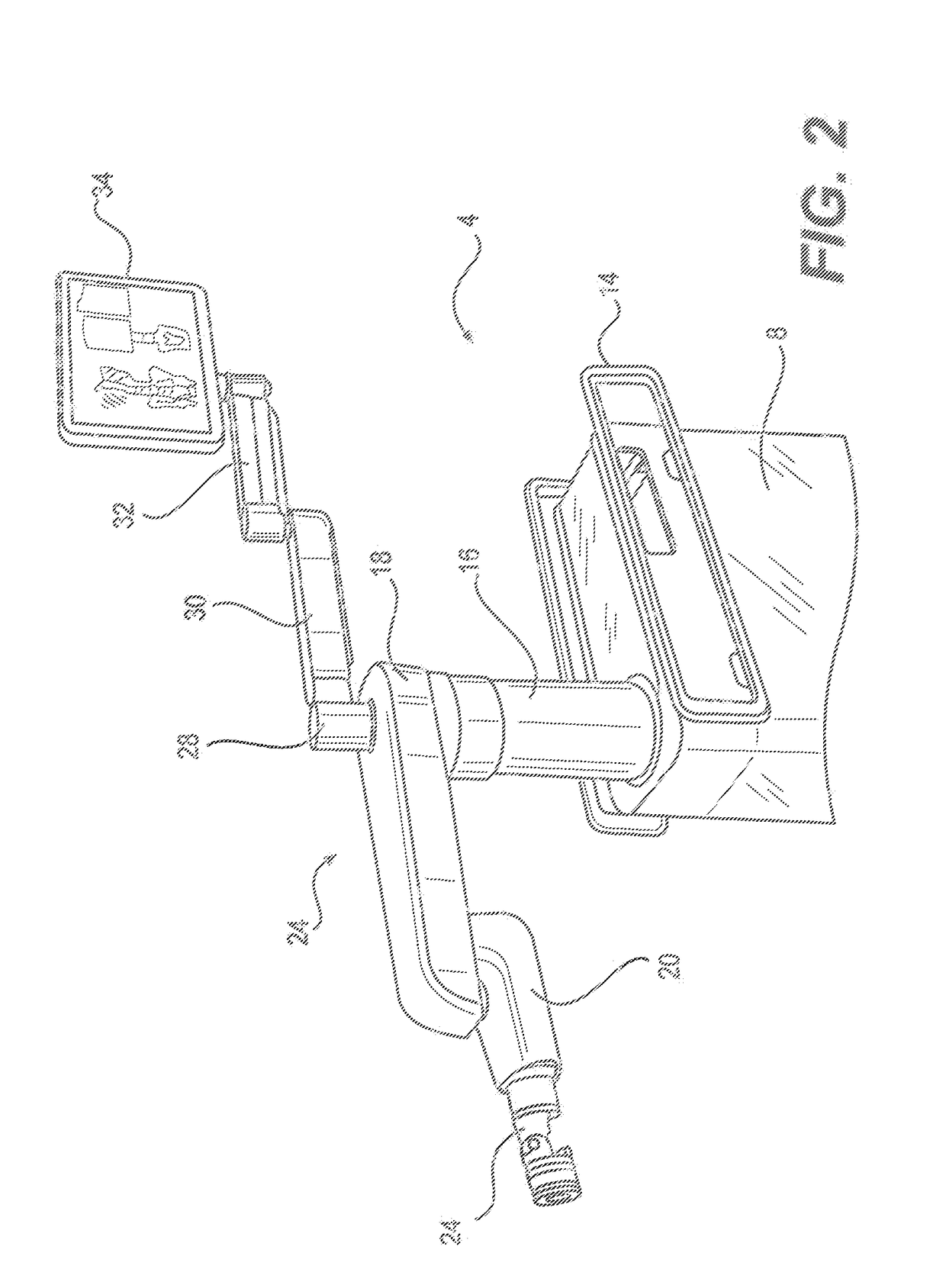

Surgical visualization system with camera tracking

Active · US20140005486A1 · Different stiffness · Medical imaging · Surgical furniture · Electromagnetic interference · Engineering

A surgical device includes a plurality of cameras integrated therein. The view of each of the plurality of cameras can be integrated together to provide a composite image. A surgical tool that includes an integrated camera may be used in conjunction with the surgical device. The image produced by the camera integrated with the surgical tool may be associated with the composite image generated by the plurality of cameras integrated in the surgical device. The position and orientation of the cameras and / or the surgical tool can be tracked, and the surgical tool can be rendered as transparent on the composite image. A surgical device may be powered by a hydraulic system, thereby reducing electromagnetic interference with tracking devices.

Owner:CAMPLEX

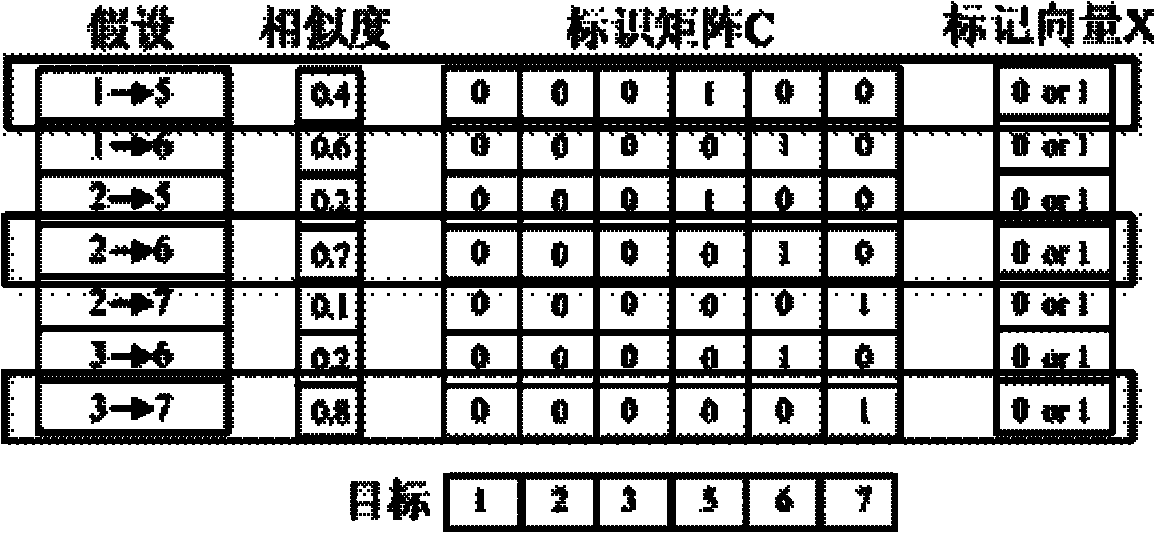

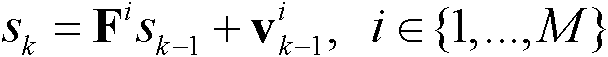

Cross-camera tracking method for multiple moving targets

Active · CN102156863A · Complete description · Image analysis · Character and pattern recognition · Human body · Filter model

The invention relates to image processing, motion analysis and the like. To implement cross-camera tracking of moving targets, the cross-camera tracking method for multiple moving targets comprises the following steps: 1, motion filtering for a single camera, specifically, decomposing complex human-body motion into a finite combination of relatively simple motion filtering models, wherein each single motion filtering model is represented by a combination of a linear motion state transition model and Gaussian noise, and the motion filtering method comprises two core parts: a motion model and an observation model, the observation model being $z_k = H s_k + w_k$; 2, cross-camera oriented motion association: (1) creation of a panorama; (2) target similarity measurement; (3) motion trail association; and transforming the problem of cross-camera association of multiple motion trails into a bipartite graph matching problem, which is solved through integer programming under constraint conditions. The cross-camera tracking method for multiple moving targets is mainly used in image processing, motion analysis and the like.
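
A linear state transition model with Gaussian noise, paired with a linear observation model, is the setting of a Kalman filter. The sketch below shows one predict-and-update step for a single simple model. Its assumptions are not from the abstract: a one-dimensional constant-velocity state (position, velocity), a transition matrix F = [[1, dt], [0, 1]], an observation matrix H = [1, 0], and the noise levels `q` and `r`. The decomposition into several models and the cross-camera association are not shown.

```python
def kalman_step(state, cov, z, dt=1.0, q=1e-3, r=1.0):
    """One predict + update step for a 1-D constant-velocity model (assumed for illustration)."""
    x, v = state
    (p00, p01), (p10, p11) = cov

    # Predict with the linear transition model F = [[1, dt], [0, 1]].
    x_pred = x + dt * v
    v_pred = v
    # Predicted covariance: F P F^T + Q, with Q = diag(q, q).
    a00 = p00 + dt * (p10 + p01) + dt * dt * p11 + q
    a01 = p01 + dt * p11
    a10 = p10 + dt * p11
    a11 = p11 + q

    # Update with the observation z = H s + w, where H = [1, 0] and w has variance r.
    innovation = z - x_pred
    innovation_var = a00 + r
    k0 = a00 / innovation_var
    k1 = a10 / innovation_var
    x_new = x_pred + k0 * innovation
    v_new = v_pred + k1 * innovation
    # Updated covariance: (I - K H) P.
    n00 = (1 - k0) * a00
    n01 = (1 - k0) * a01
    n10 = a10 - k1 * a00
    n11 = a11 - k1 * a01
    return (x_new, v_new), ((n00, n01), (n10, n11))
```

Feeding the filter one measurement per frame yields a smoothed position and a velocity estimate, which is the kind of per-camera track that a later association stage could match across cameras.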

Owner:ZHEJIANG E VISION ELECTRONICS TECH

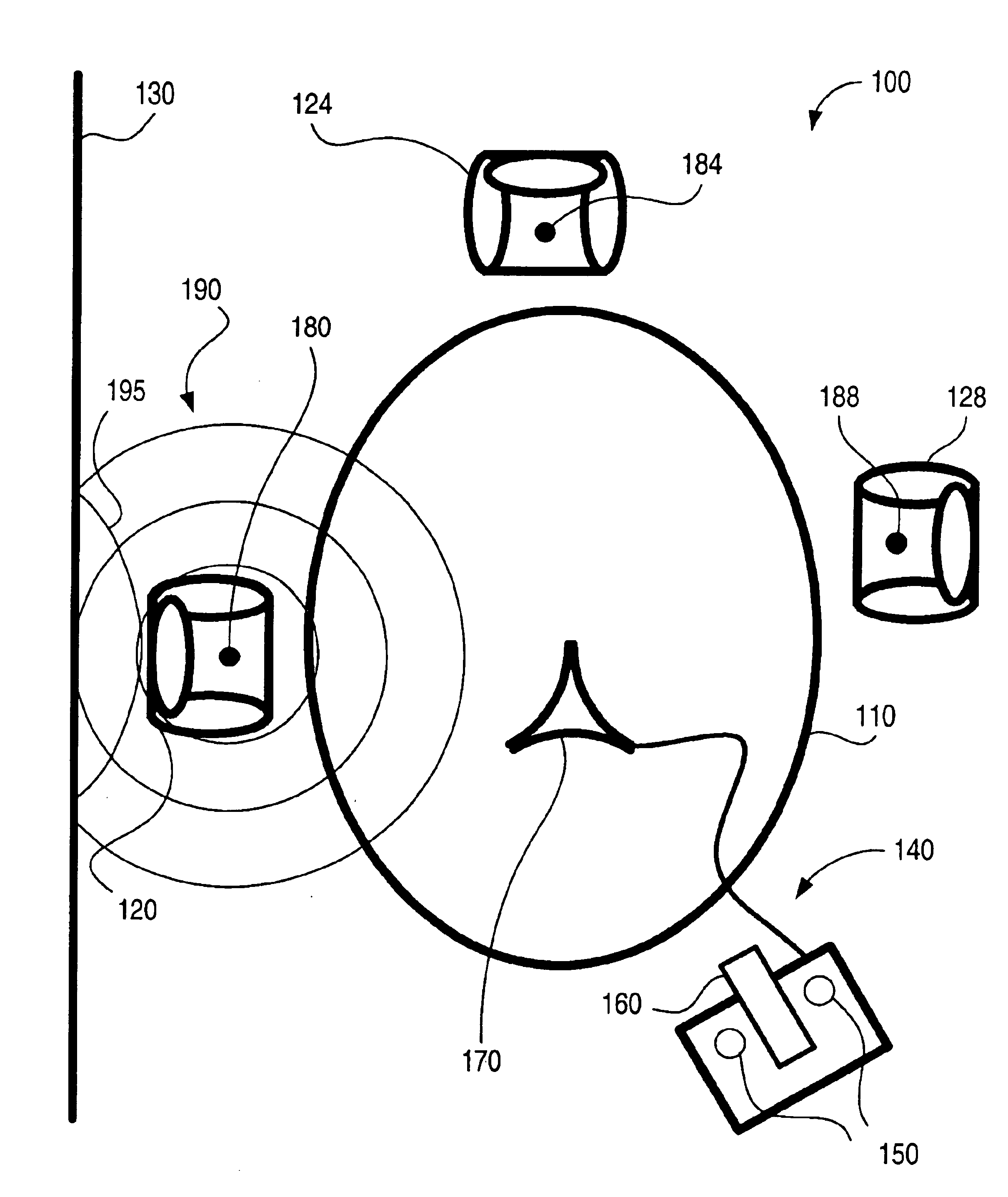

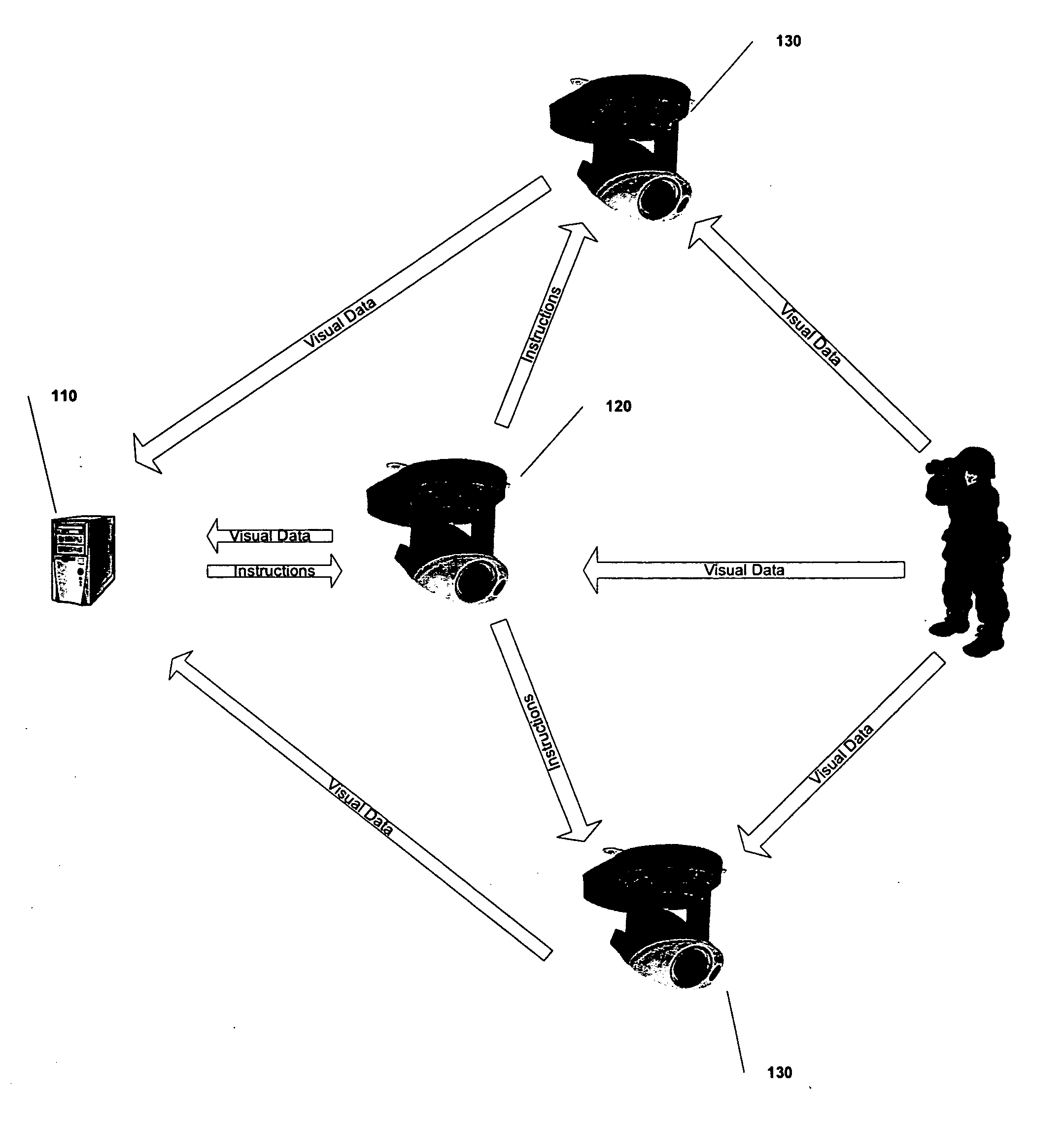

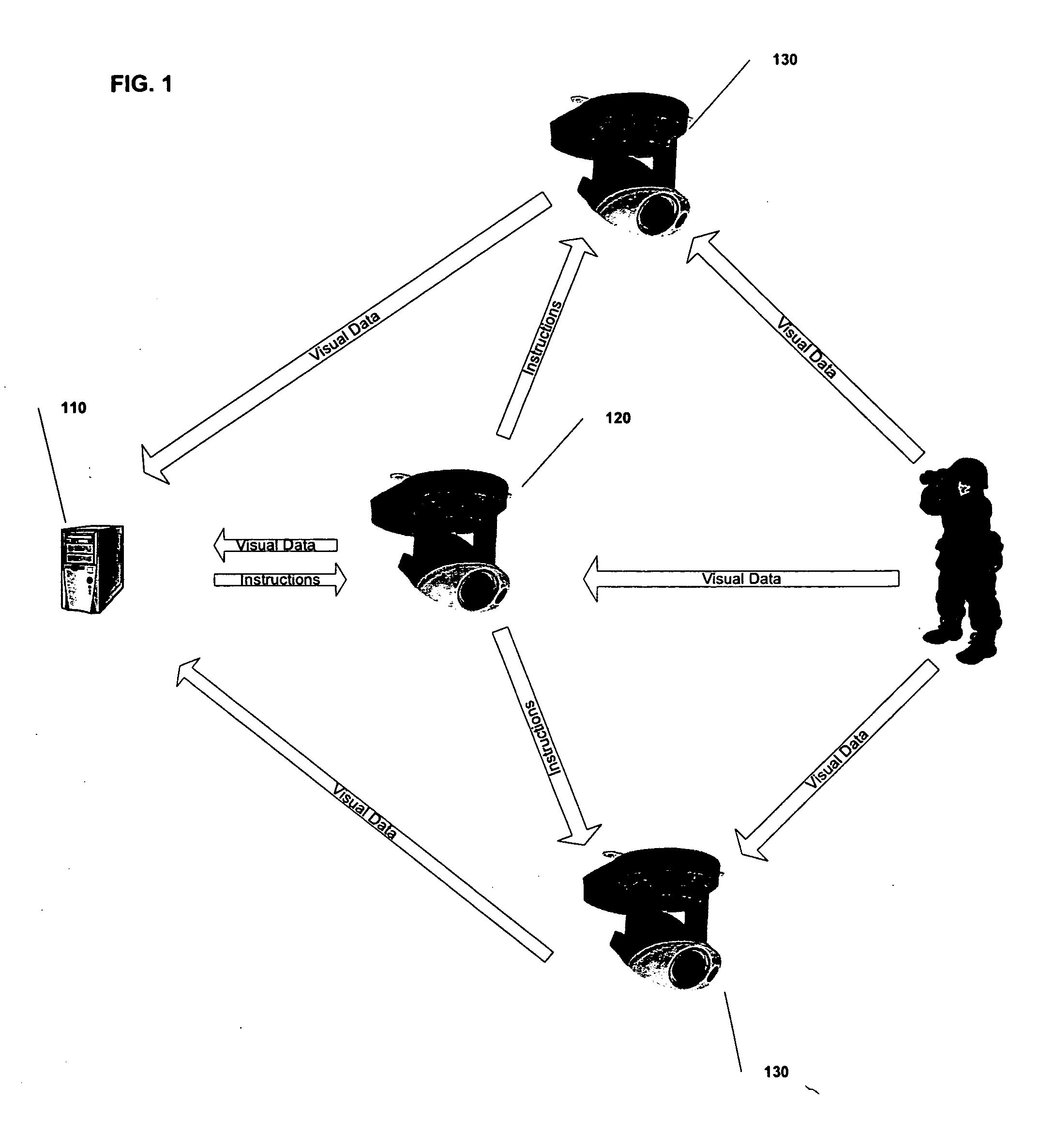

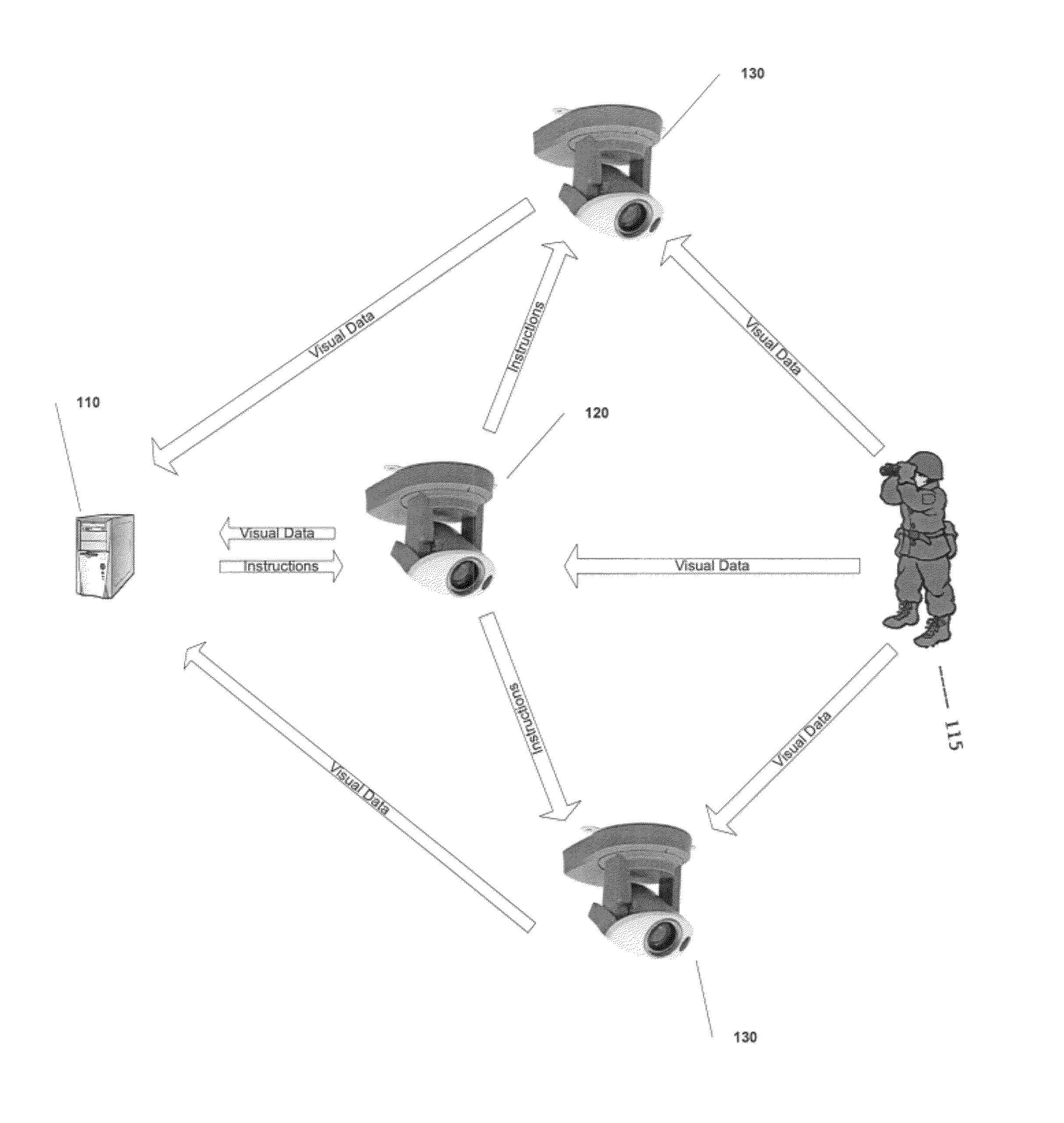

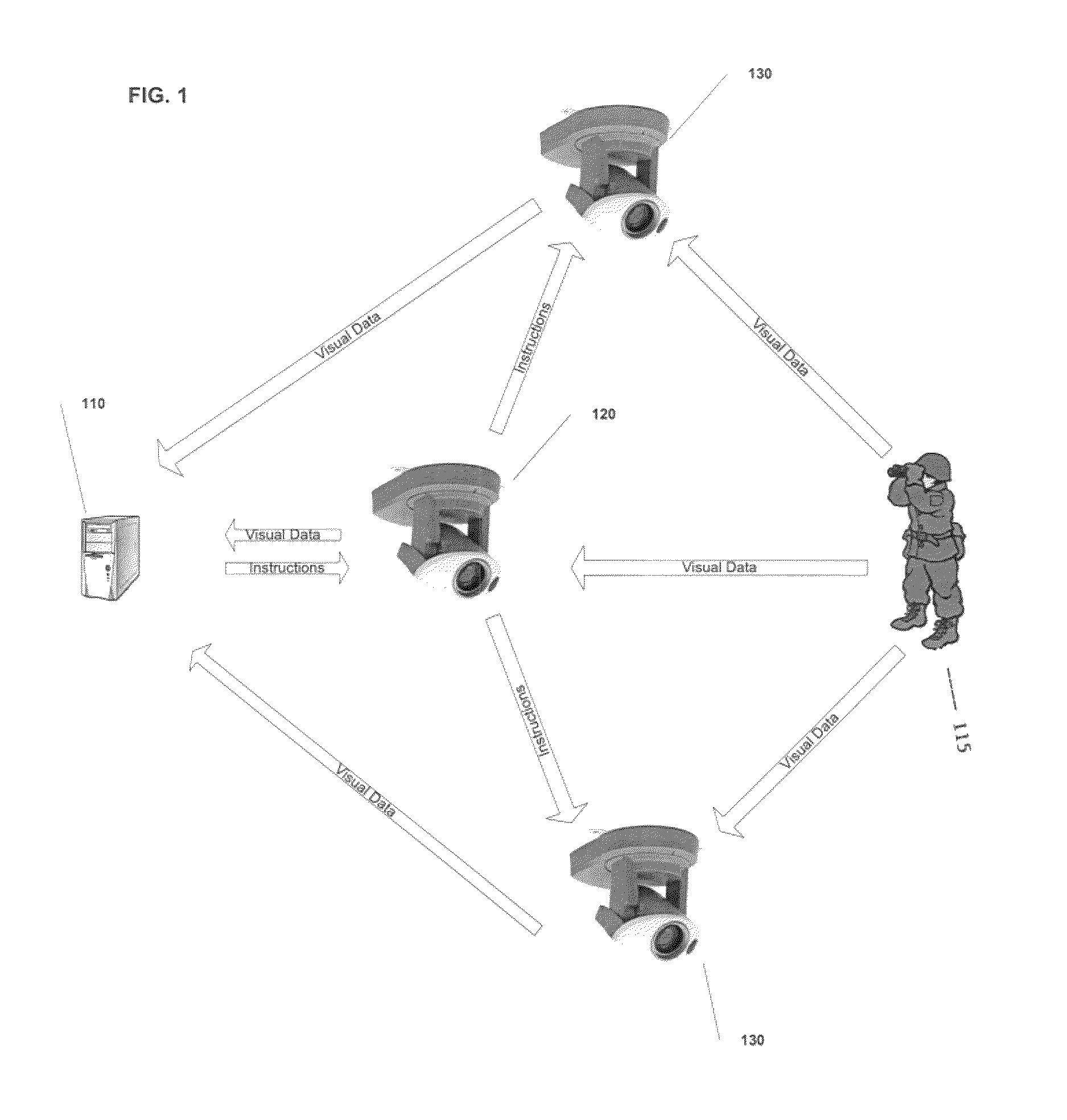

Method and apparatus for performing coordinated multi-PTZ camera tracking

Active · US20070064107A1 · Color television details · Closed circuit television systems · Control signal · Computer science

A system for tracking at least one object, comprising: a plurality of communicatively connected visual sensing units configured to capture visual data related to the at least one object; and a manager component communicatively connected to the plurality of visual sensing units, where the manager component is configured to assign one visual sensing unit to act as a visual sensing unit in a master mode and at least one visual sensing unit to act as a visual sensing unit in a slave mode, transmit at least one control signal to the plurality of visual sensing units, and receive the visual data from the plurality of visual sensing units.

Owner:SRI INTERNATIONAL

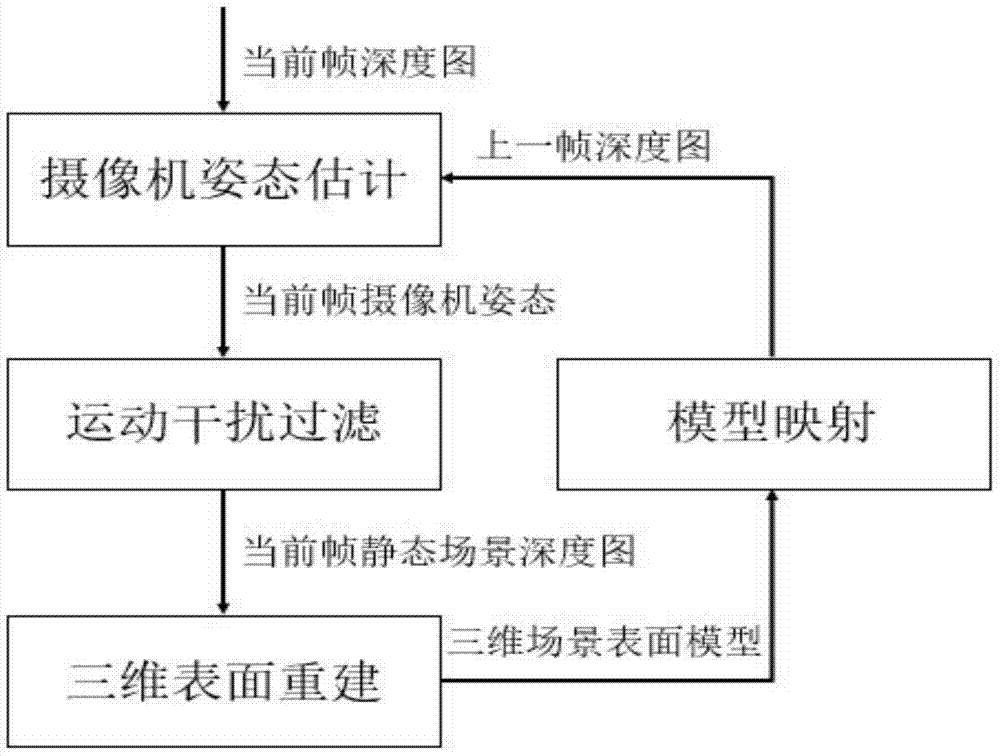

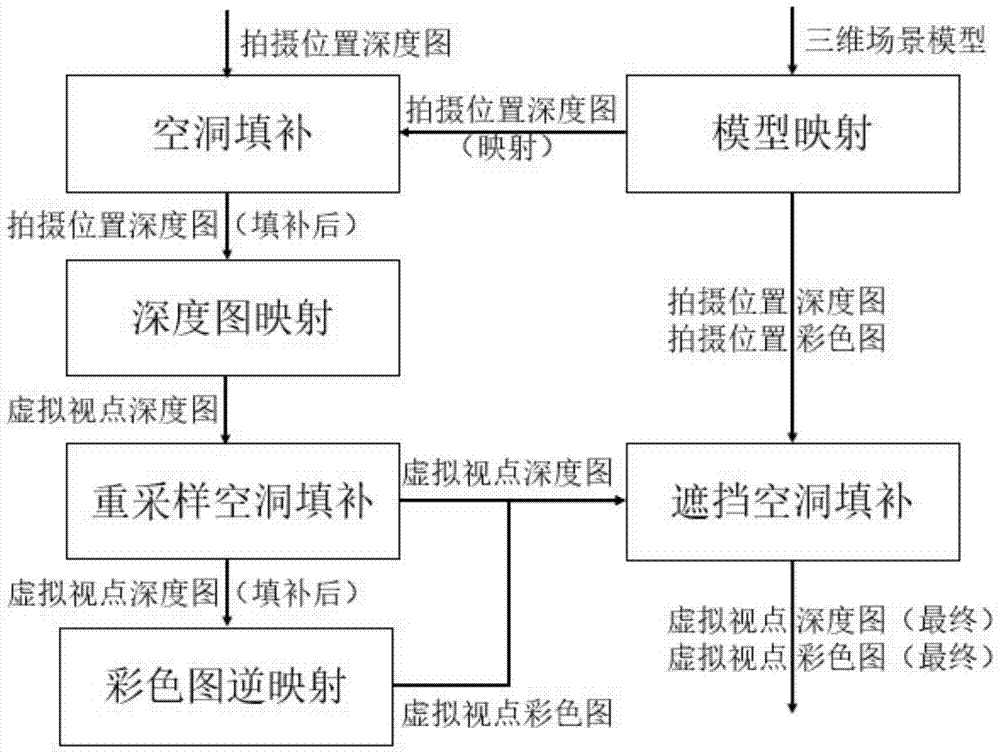

Method for generating virtual-real fusion image for stereo display

Active · CN104504671A · Improve tracking accuracy · Improve registration accuracy · Image enhancement · Image analysis · Viewpoints · Collision detection

The invention discloses a method for generating a virtual-real fusion image for stereo display. The method comprises the following steps: (1) using a monocular RGB-D camera to acquire a depth map and a color map of a real scene; (2) rebuilding a three-dimensional scene surface model and calculating the camera parameters; (3) mapping, thereby acquiring the depth map and the color map at a virtual viewpoint position; (4) completing the three-dimensional registration of a virtual object, rendering to acquire the depth map and the color map of the virtual object, and performing virtual-real fusion, thereby acquiring virtual-real fusion content for stereo display. Because the monocular RGB-D camera is used for shooting, and the three-dimensional scene surface model is rebuilt frame by frame and used simultaneously for tracking the camera and mapping the virtual viewpoint, higher camera tracking precision and virtual object registration precision can be achieved. The holes that appear in image-based virtual viewpoint rendering can be handled effectively, occlusion judgment and collision detection for the virtual-real scene can be realized, and a stereo display device can be used to obtain a vivid stereo display effect.

Owner:ZHEJIANG UNIV

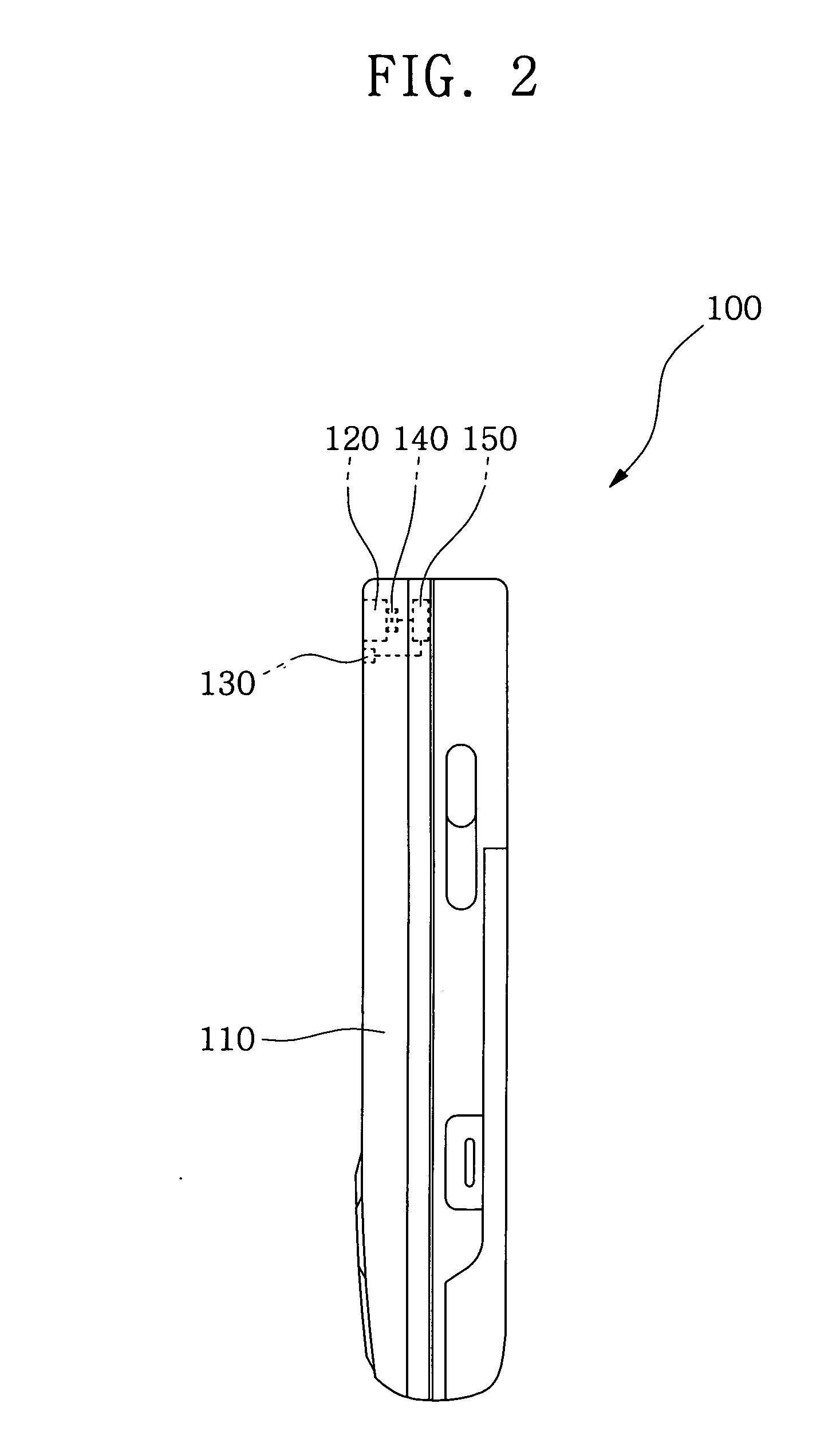

Video communication device and camera tracking method thereof

Active · US20090096858A1 · Wide angular field · Quality improvement · Television system details · Color television details · Color sensor · Communication device

A video communication device includes a video communication device body; a camera fitted to the video communication device body to record images of an object; at least one detection unit fitted to the video communication device body to detect the object according to a predetermined condition; and a camera rotation angle adjuster fitted to the video communication device body to adjust a rotation angle of the camera according to detection data from the detection unit so that the camera automatically tracks a position of the object when the object moves. The moving object can be located through a detection unit, such as a voice sensor, a codec, a color sensor and an IR sensor, and be automatically tracked by suitable adjustment of the rotation angle of a camera. A wide angular field is ensured and video telephony quality is improved.

Owner:SAMSUNG ELECTRONICS CO LTD

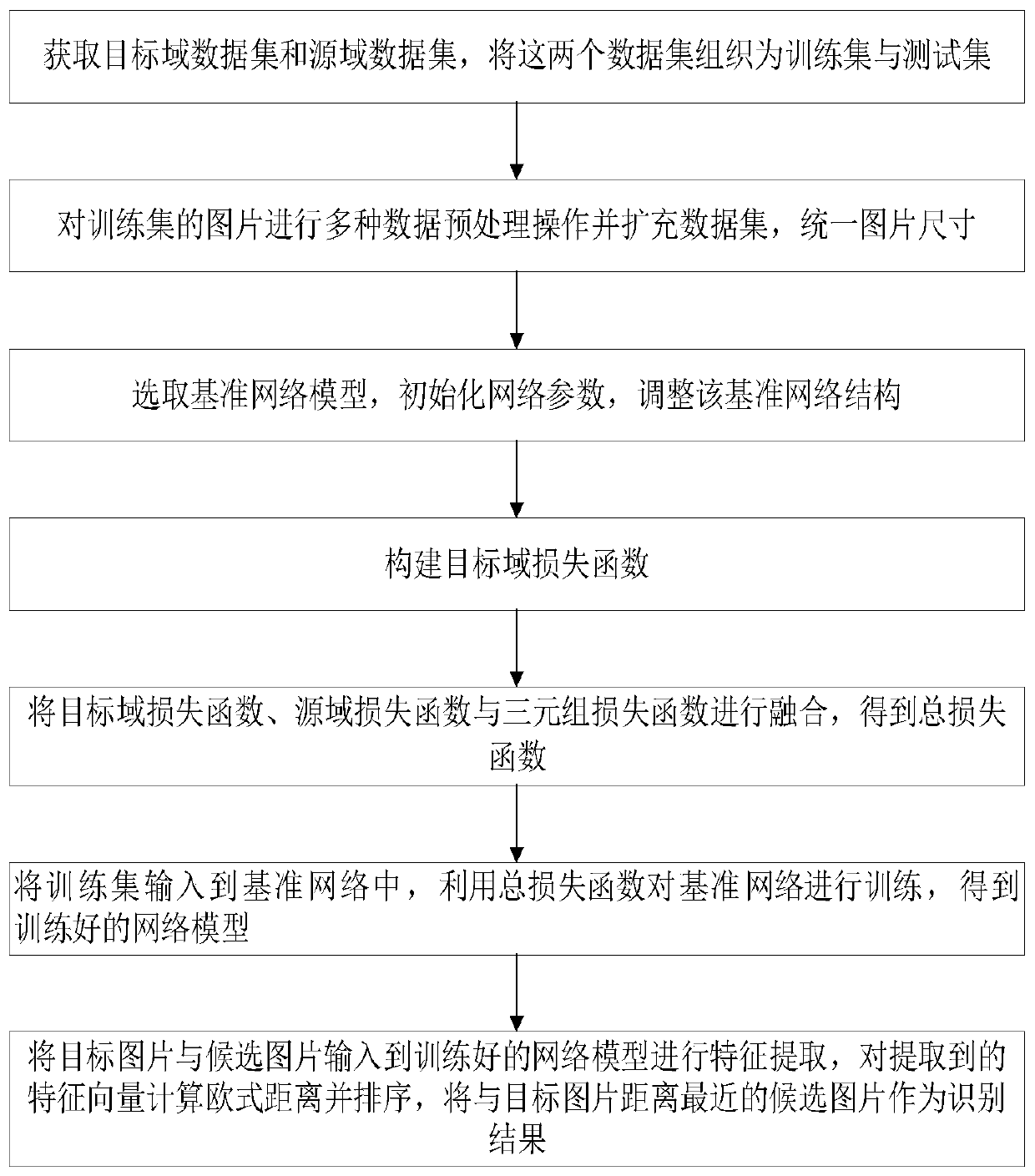

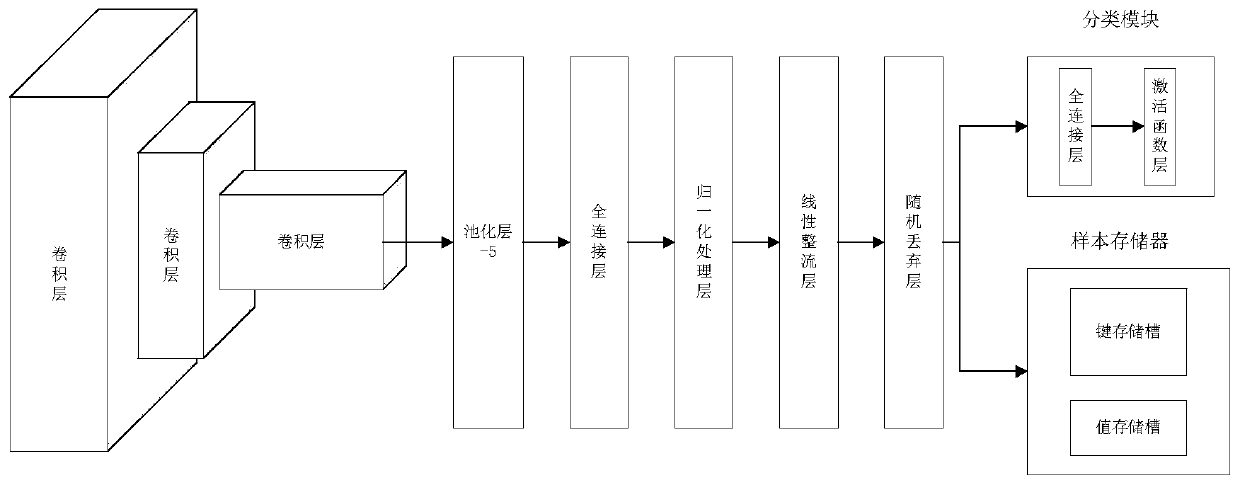

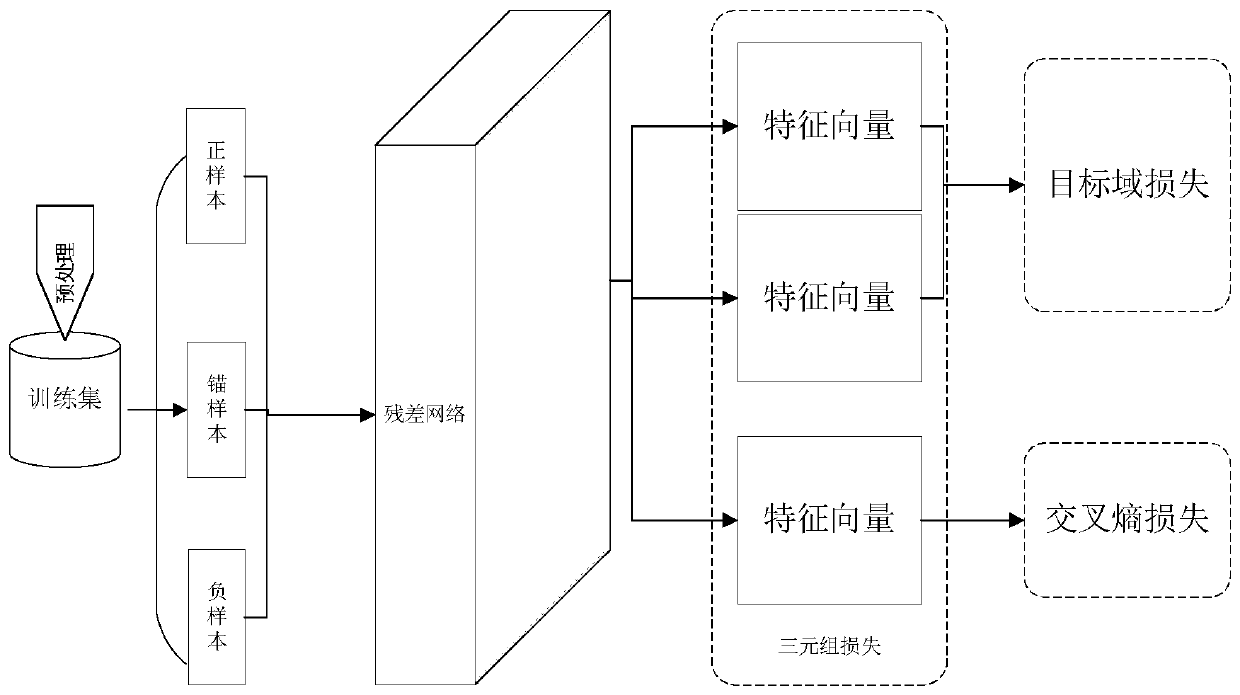

Cross-domain pedestrian re-identification method based on unsupervised joint multi-loss model

Active · CN111126360A · Improve generalization ability · Prevent overfitting · Internal combustion piston engines · Biometric pattern recognition · Data set · Network structure

The invention discloses a cross-domain pedestrian re-identification method based on an unsupervised joint multi-loss model. The method mainly solves the problem of low identification rate of an existing unsupervised cross-domain pedestrian re-identification method. The method comprises the following steps: 1) obtaining a data set, and dividing the data set into a training set and a test set; 2) carrying out a plurality of preprocessings and extensions on the training set; 3) selecting a residual network as a reference network model, initializing network parameters, and adjusting a network structure; 4) constructing a target domain loss function; 5) fusing the target domain loss function with the source domain loss function and the triple loss function to obtain a total loss function; 6) training the residual network by using the total loss function to obtain a trained network model; and 7) inputting the test set into the trained network model, and outputting an identification result. The identification rate of unsupervised cross-domain pedestrian re-identification is improved, the occurrence of an over-fitting situation is effectively avoided, and the method can be used for intelligent security of suspected target search and pedestrian cross-camera tracking.

Owner:XIDIAN UNIV

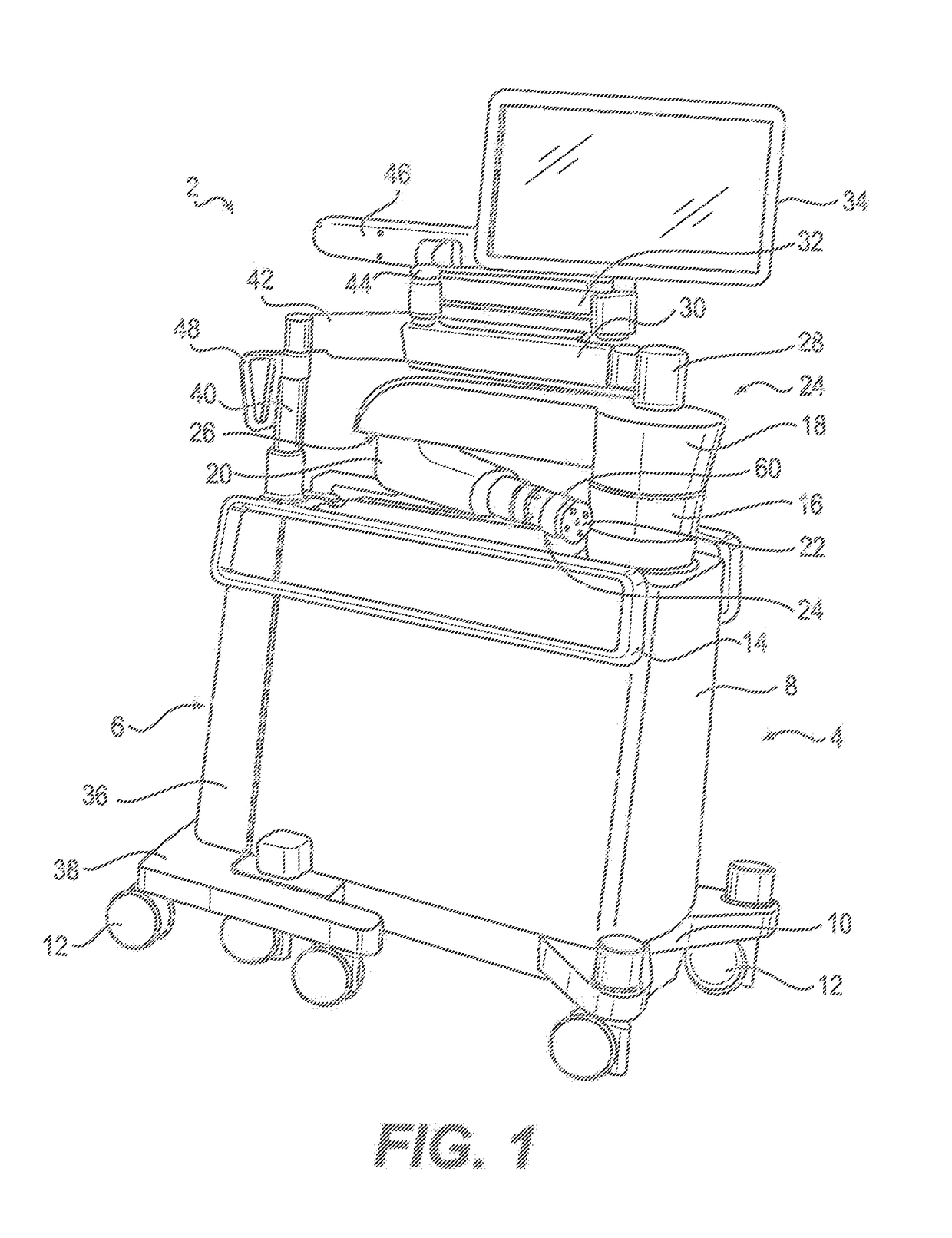

Surgical robotic systems and methods thereof

Active · US20170071685A1 · Surgical navigation systems · Surgical systems user interface · Supporting system · Engineering

An automated medical system and method for using the automated medical system. The automated medical system may comprise a robot support system. The robot support system may comprise a robot body. The robot support system may further comprise a selective compliance articulated robot arm coupled to the robot body and operable to position a tool at a selected position in a surgical procedure. The robot support system may further comprise an activation assembly operable to transmit a move signal to the selective compliance articulated robot arm allowing an operator to move the selective compliance articulated robot arm. The automated medical system may further comprise a camera tracking system and an automated imaging system.

Owner:GLOBUS MEDICAL INC

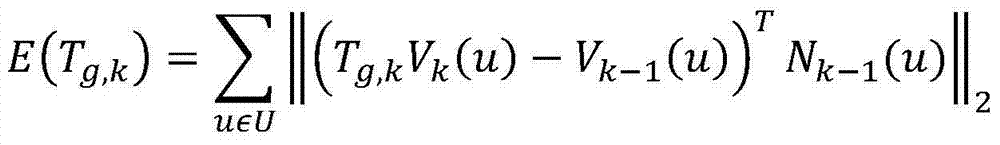

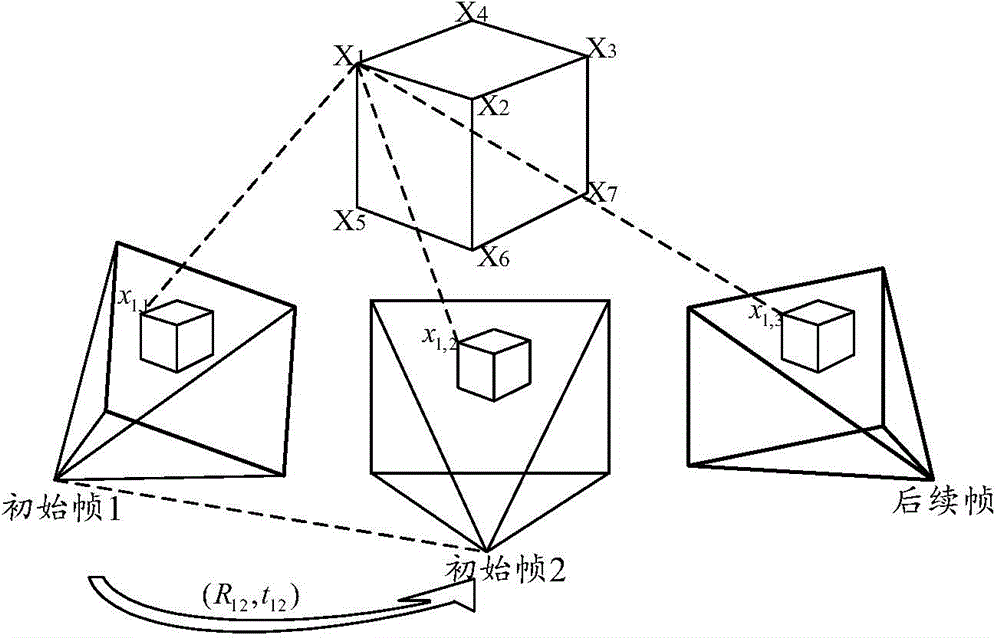

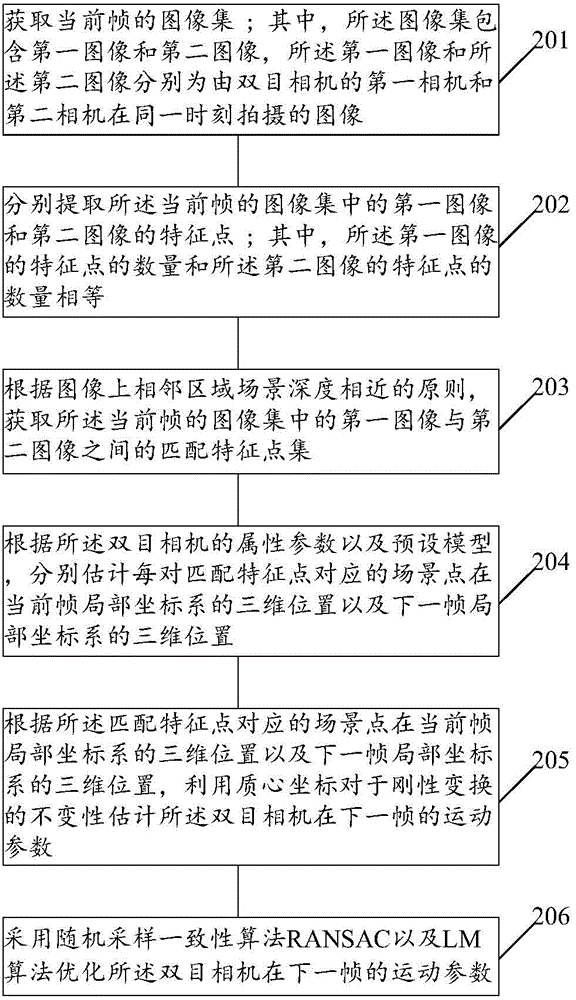

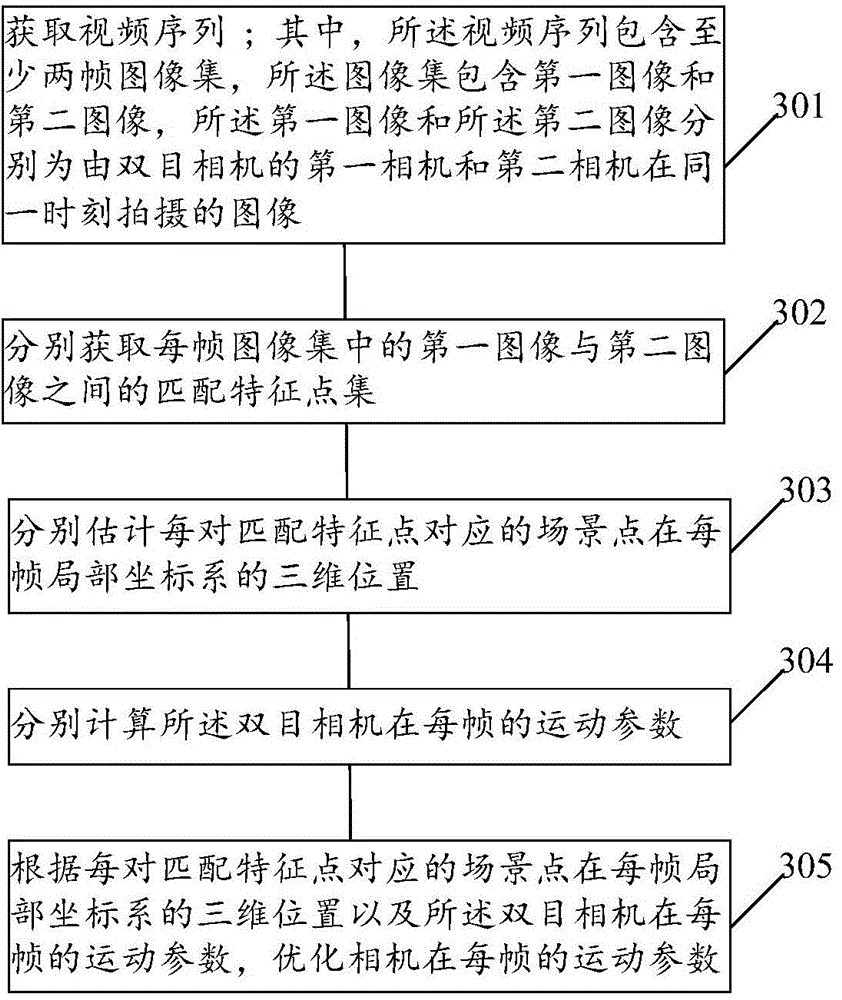

Camera tracking method and device

Inactive · CN104915965A · Avoid the defect of low tracking accuracy · Improve tracking accuracy · Image enhancement · Image analysis · Motion parameter · Rigid transformation

Provided are a camera tracking method and device, which use a binocular video image to perform camera tracking, thereby improving the tracking accuracy. The camera tracking method provided in the embodiments of the present invention comprises: acquiring an image set of a current frame; respectively extracting feature points of each image in the image set of the current frame; according to a principle that depths of scene in adjacent regions on an image are similar, acquiring a matched feature point set of the image set of the current frame; according to an attribute parameter and a pre-set model of a binocular camera, respectively estimating three-dimensional positions of scene points corresponding to each pair of matched feature points in a local coordinate system of the current frame and a local coordinate system of the next frame; and according to the three-dimensional positions of the scene points corresponding to the matched feature points in the local coordinate system of the current frame and the local coordinate system of the next frame, estimating a motion parameter of the binocular camera in the next frame using the invariance of a barycentric coordinate with respect to rigid transformation, and optimizing the motion parameter of the binocular camera in the next frame.

Owner:HUAWEI TECH CO LTD

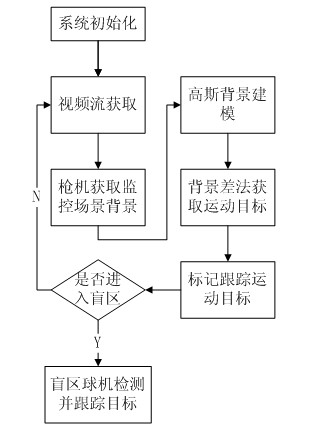

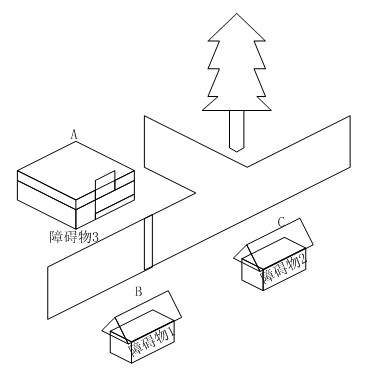

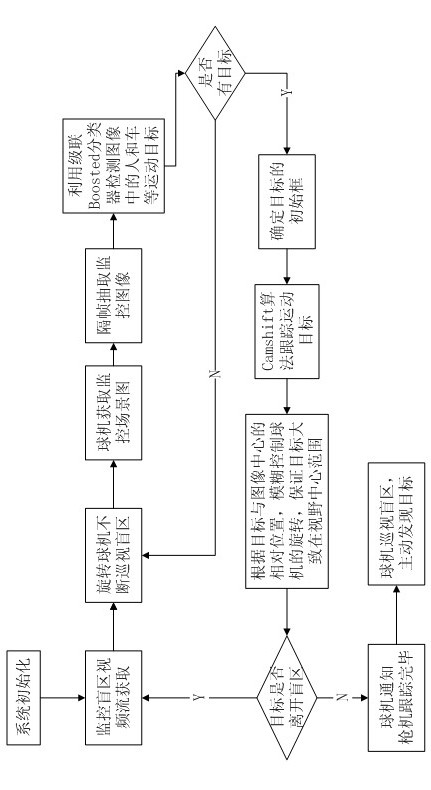

Non-blind-area multi-target cooperative tracking method and system

Inactive · CN102447835A · Realize monitoring · Ensure safety · Television system details · Image analysis · Background image · Field of view

The invention relates to a non-blind-area multi-target cooperative tracking method, which comprises the following steps: manually designating a monitoring area or a blind area in a gun camera monitoring background, and arranging a dome camera in the corresponding blind area; obtaining an image sequence of the gun camera monitoring scene, and carrying out Gaussian background modeling on the monitoring image sequence to obtain a background image; detecting the monitoring image to obtain a moving target; carrying out gun camera tracking and marking the detected moving target; and continuously detecting whether the moving target exists in the blind area by the gun camera, and, after the moving target is determined, tracking the moving target, transmitting the target position information back to a gun camera, and controlling the movement of the dome camera according to the movement of the moving target so that the moving target generally stays within the central range of the dome camera's view. The invention also discloses a non-blind-area multi-target cooperative tracking system. Because the dome camera and the gun camera cooperate by exchanging moving-target information, non-blind-area display is provided in a unified monitoring picture, all corners can be monitored, and the safety of the monitoring area is thereby guarded.

Owner:合肥博微安全电子科技有限公司

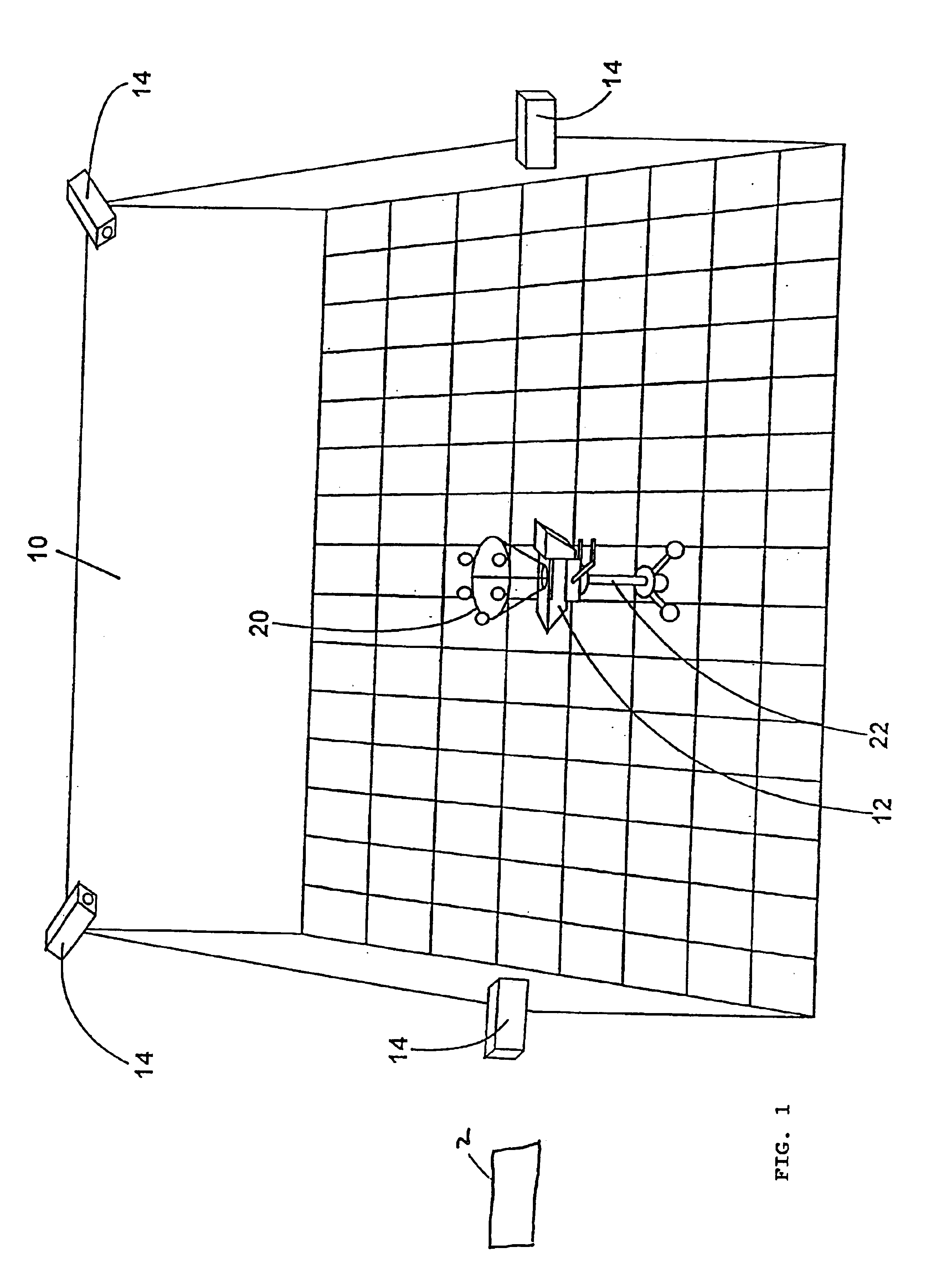

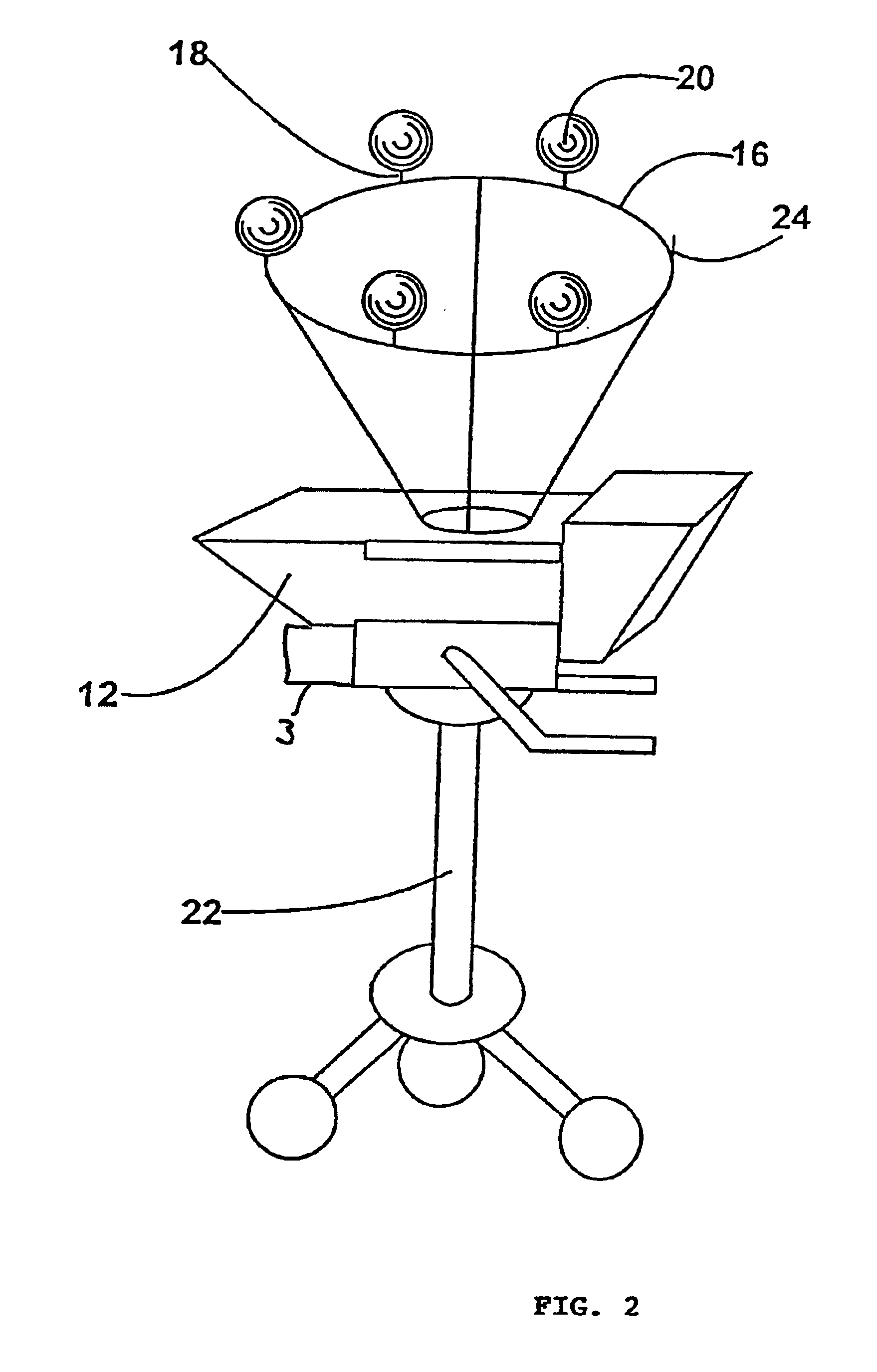

Camera tracking system for a virtual television or video studio

Inactive · US6856935B1 · Quickly and reliably determines the position and orientation · Television system details · Beacon systems using radio waves · Electromagnetic radiation · Computer science

A camera tracking system for a virtual television or video studio is provided. The camera tracking system is used to determine the position and / or orientation of recording cameras and comprises emitter devices for emitting electromagnetic radiation. The emitter devices can be mechanically coupled to the recording camera. The camera tracking system further comprises detecting devices for detecting the position of the emitter devices on the basis of the electromagnetic radiation emitted by the emitter devices, each detecting device being able to detect several emitter devices. A computer unit evaluates the electromagnetic radiation detected by the detecting devices and emitted by the emitter device, and determines the position and / or orientation of the emitter devices in relation to the detecting devices.

Owner:GMD-FORSCHUNGSZENTRUM INFORMATIONSTECHNIK

Method and apparatus for performing coordinated multi-PTZ camera tracking

A system for tracking at least one object is disclosed. The system includes a plurality of communicatively connected visual sensing units configured to capture visual data related to the at least one object. The system also includes a manager component communicatively connected to the plurality of visual sensing units. The manager component is configured to assign one visual sensing unit to act as a visual sensing unit in a master mode and at least one visual sensing unit to act as a visual sensing unit in a slave mode. The manager component is further configured to transmit at least one control signal to the plurality of visual sensing units, and receive the visual data from the plurality of visual sensing units.

Owner:SRI INTERNATIONAL

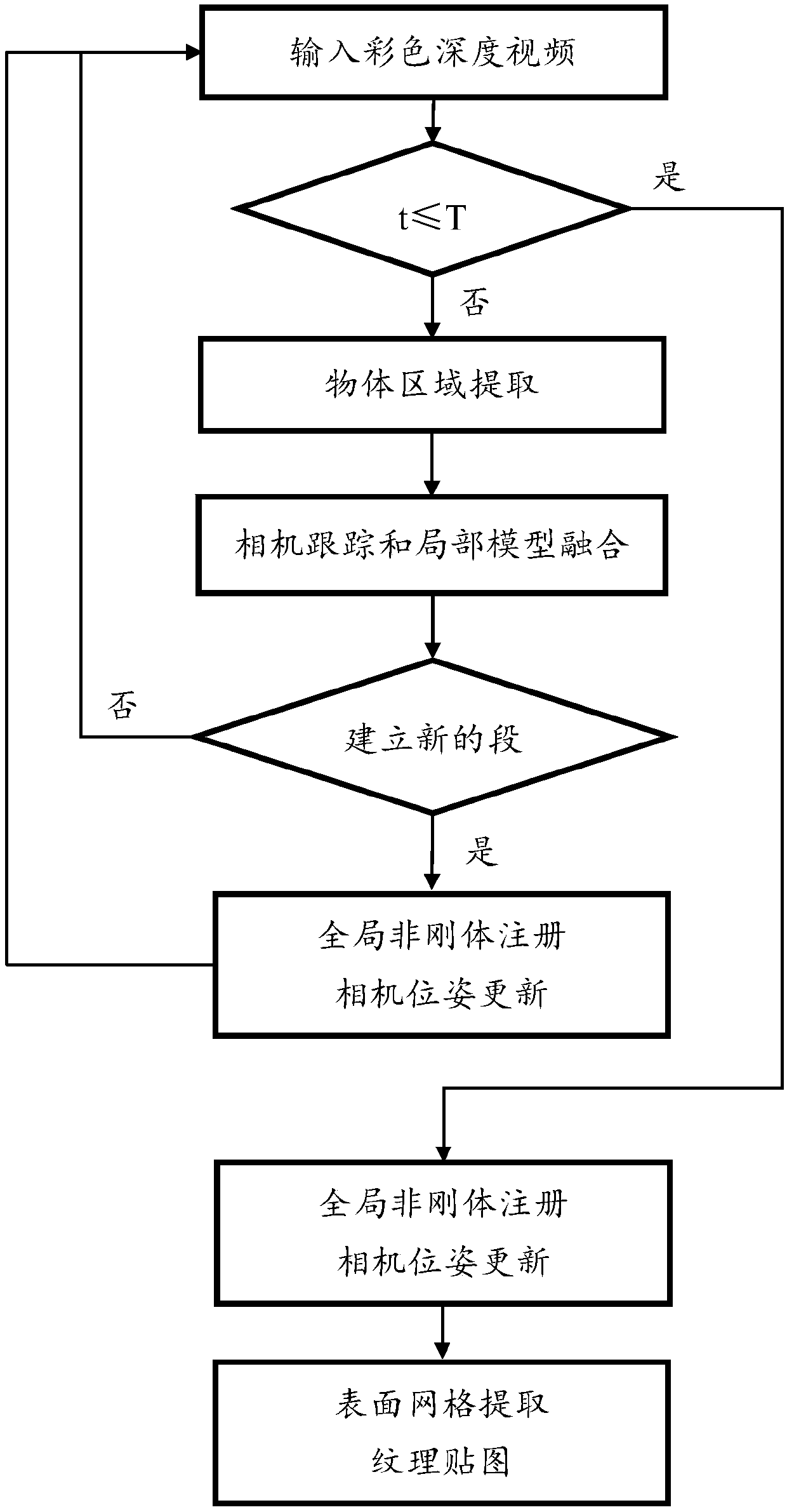

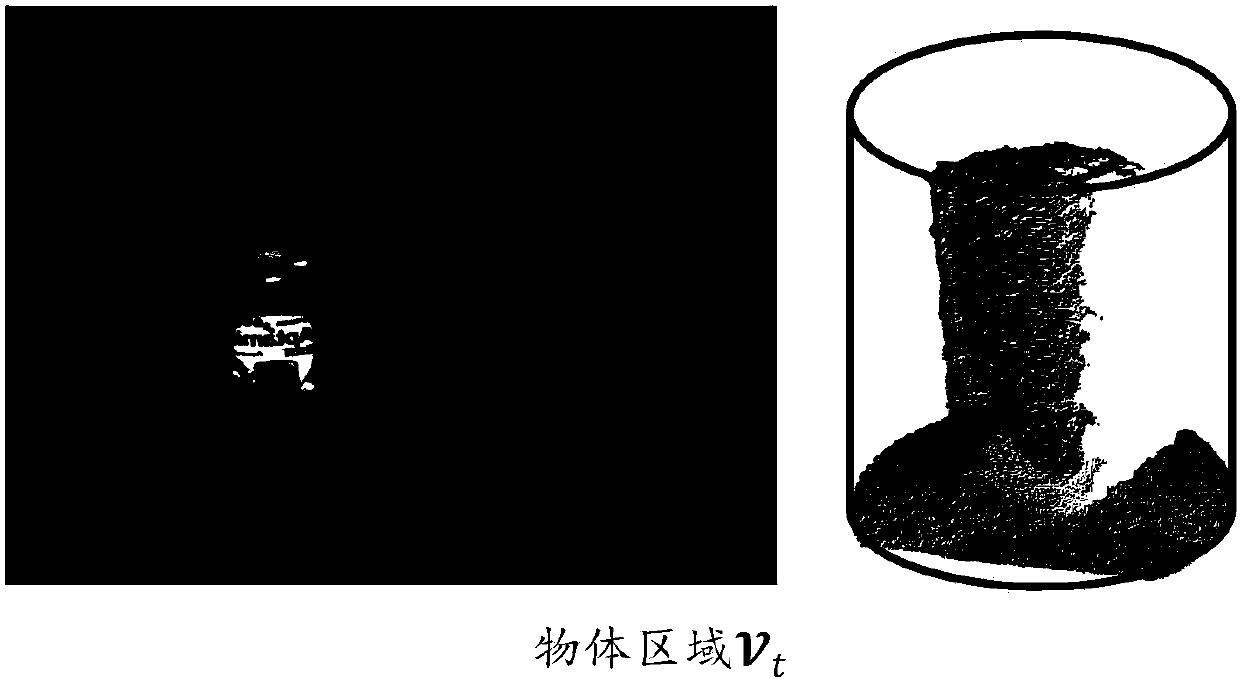

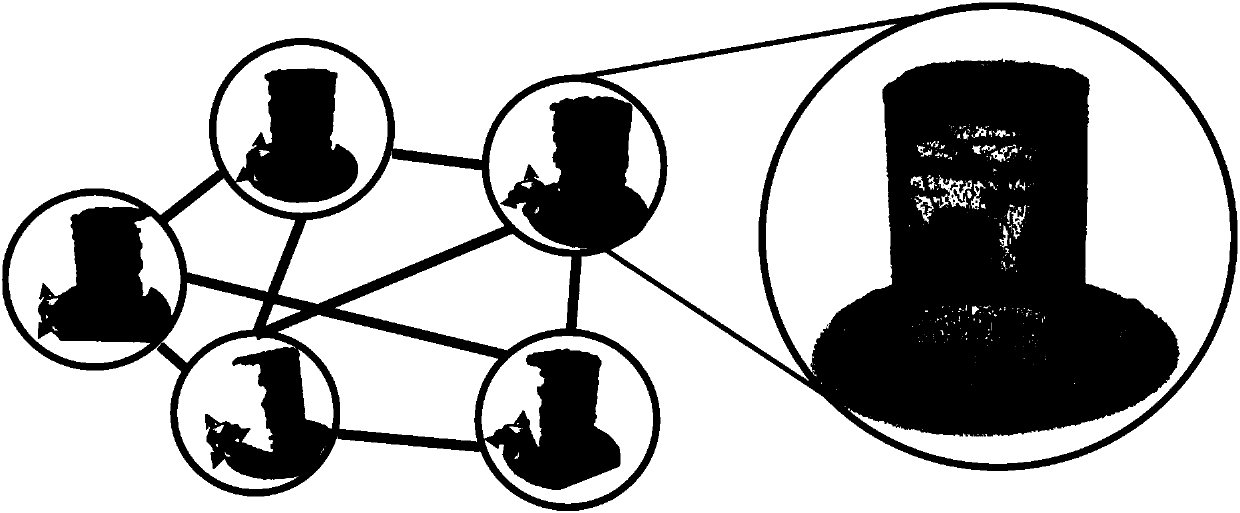

Three-dimensional reconstruction method of single object, based on color depth camera

Active · CN107845134A · Shorten the track · Image enhancement · Image analysis · Reconstruction method · Dimensional modeling

The invention discloses a three-dimensional reconstruction method for a single object, based on a color depth camera. The method includes three steps: 1) during the scanning process, extracting the scanned object area; 2) according to the color depth data, performing camera tracking and local fusion of the depth data, performing global non-rigid registration on the locally fused data, and gradually constructing an overall three-dimensional model and accurate key frame camera positions; and 3) performing mesh extraction on the fused model, and calculating the texture map of the three-dimensional mesh model from the obtained key frame camera positions and key frame color images. This framework ensures that, even if the object occupies a relatively small proportion of the image, high-quality geometric reconstruction and texture mapping can still be performed when a single object is reconstructed. The method is clear, fast and robust in its results, and can be applied to virtual reality scene construction and similar fields.

Owner:ZHEJIANG UNIV

Real-time camera tracking using depth maps

Real-time camera tracking using depth maps is described. In an embodiment depth map frames are captured by a mobile depth camera at over 20 frames per second and used to dynamically update in real-time a set of registration parameters which specify how the mobile depth camera has moved. In examples the real-time camera tracking output is used for computer game applications and robotics. In an example, an iterative closest point process is used with projective data association and a point-to-plane error metric in order to compute the updated registration parameters. In an example, a graphics processing unit (GPU) implementation is used to optimize the error metric in real-time. In some embodiments, a dense 3D model of the mobile camera environment is used.

Owner:MICROSOFT TECH LICENSING LLC

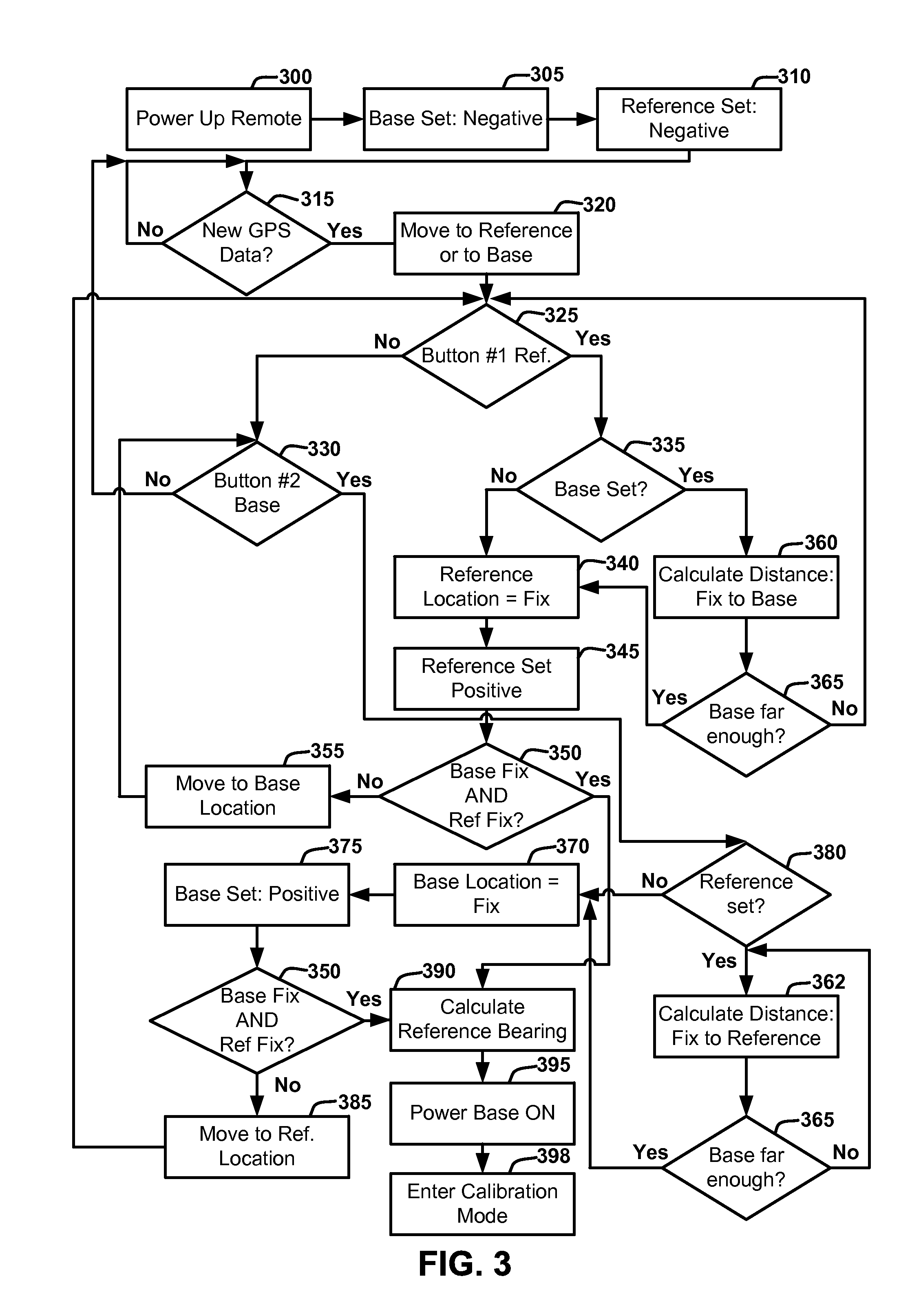

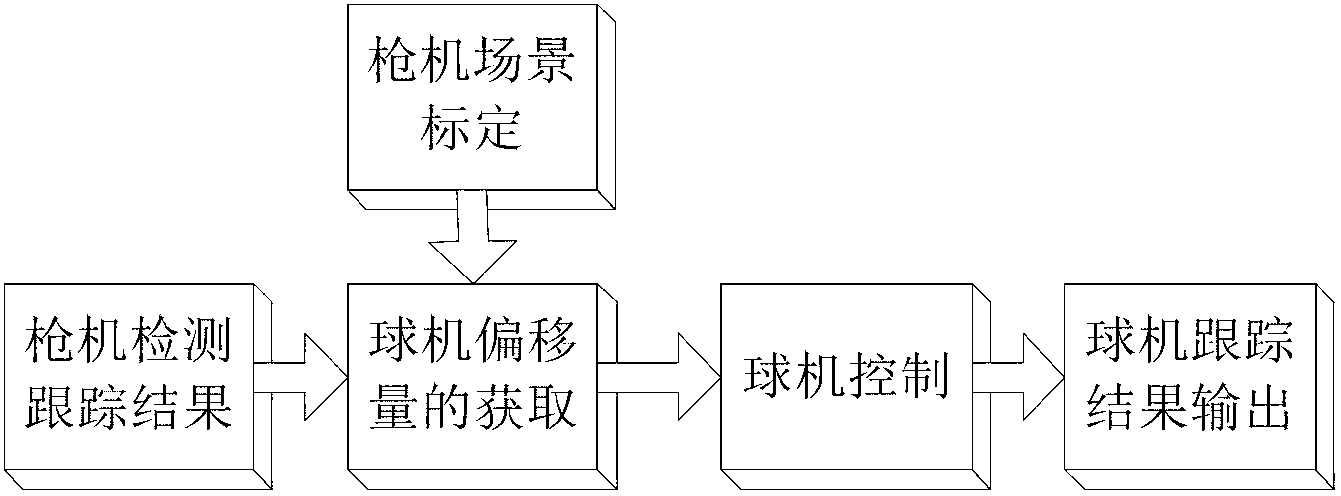

Remotely controlled automatic camera tracking system

A remotely controlled automatic camera tracking system for self-photography is disclosed. The systems hereof combine the functions of remote camera operation, including closed loop automatic zoom control, and camera tracking by employing a system architecture that eliminates the requirement to have microprocessors coupled to the tracking station for the calculation of range and bearing. The system enables range and bearing information to be viewed by the operator, if desired, and conserves radio power.

Owner:H4 ENG

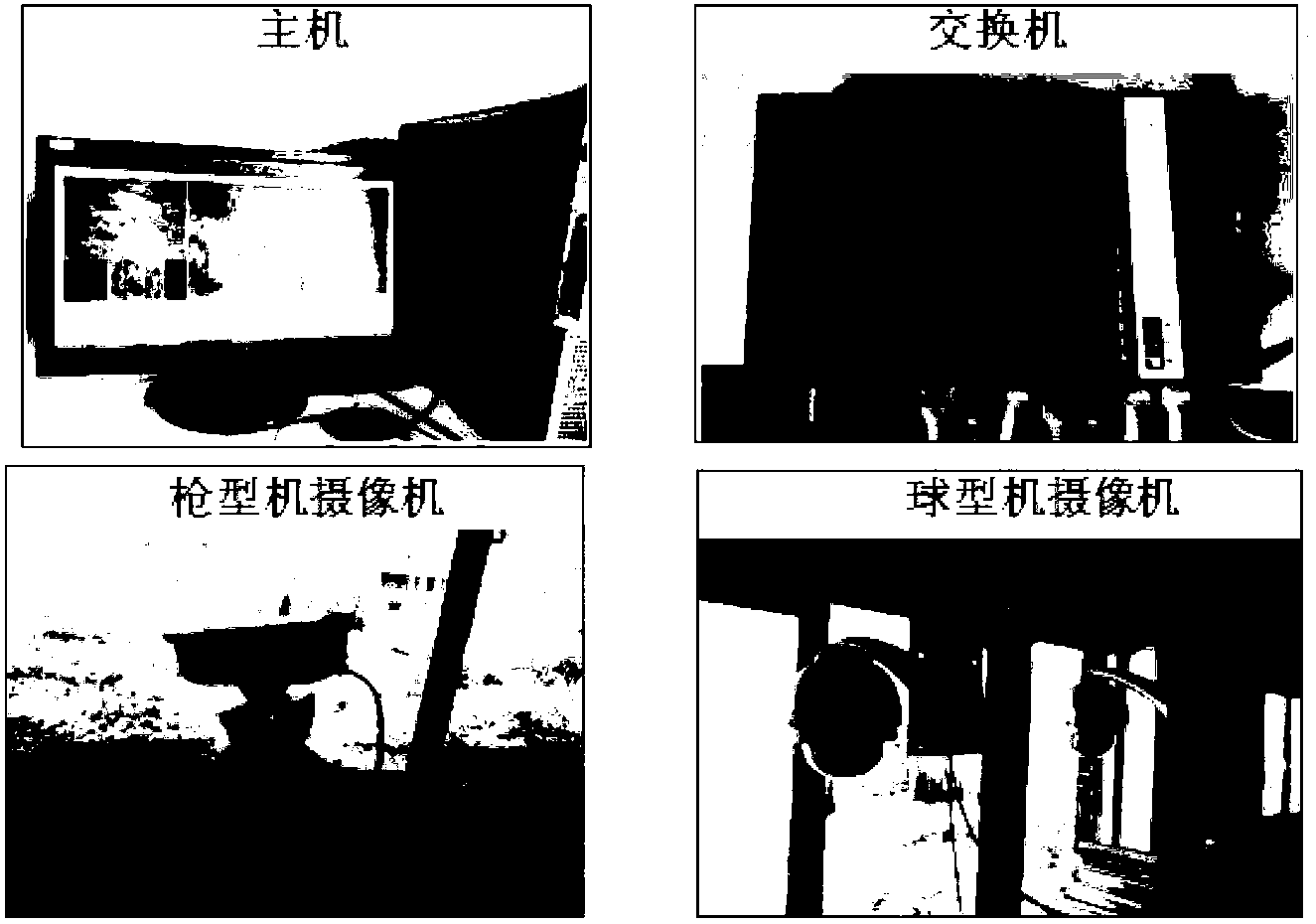

Single-gun-camera-multi-dome-camera linkage method based on grid combination interpolation

Inactive · CN103079059A · Overcome continuity · Overcome the shortcomings of the dome screen shaking · Closed circuit television systems · Mesh grid · Characteristic point

The invention provides a single-gun-camera-multi-dome-camera linkage method based on grid combination interpolation, which adopts a gun-camera-dome-camera linkage algorithm using grid combination interpolation. The method comprises the following steps: step a, calibrating the gun camera scene: uniformly dividing the gun camera picture into a grid of m rows and n columns, and selecting four characteristic points in each grid cell to be calibrated; step b, acquiring the detection and tracking result of the gun camera as input; step c, acquiring the deviation of a dome camera: determining the grid cell in which the target lies, selecting three adjacent characteristic points in that cell for interpolation, and solving for the deviation the dome camera needs in order to turn to the target; and step d, controlling the dome camera: using the three-dimensional positioning function of the dome camera's pan-tilt control to steer the dome camera to the target position so as to track the target. The method overcomes the weaknesses that the dome camera tracking picture is discontinuous and that the dome camera picture jitters.
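
Step c can be illustrated with a minimal sketch that interpolates a dome camera pan/tilt offset from three calibrated characteristic points. The abstract does not give the interpolation formula; barycentric (triangle) interpolation is assumed here, and the function names and the `((x, y), (pan, tilt))` data layout are hypothetical.

```python
def barycentric_weights(p, a, b, c):
    """Weights of point p with respect to the triangle (a, b, c) in image coordinates."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("calibration points are collinear")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb


def interpolate_pan_tilt(target, calibrated):
    """calibrated: three ((x, y), (pan, tilt)) pairs taken from the target's grid cell."""
    (a, pan_tilt_a), (b, pan_tilt_b), (c, pan_tilt_c) = calibrated
    wa, wb, wc = barycentric_weights(target, a, b, c)
    pan = wa * pan_tilt_a[0] + wb * pan_tilt_b[0] + wc * pan_tilt_c[0]
    tilt = wa * pan_tilt_a[1] + wb * pan_tilt_b[1] + wc * pan_tilt_c[1]
    return pan, tilt
```

Because the weights vary continuously as the target moves inside a triangle, the interpolated pan and tilt also vary smoothly, which is consistent with the stated aim of avoiding a discontinuous or jittering dome camera picture.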

Owner:UNIV OF SCI & TECH OF CHINA
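Steps a to c of the abstract above can be sketched as follows. This is a rough illustration under stated assumptions, not the patented algorithm: the four characteristic points of each grid are taken to be its corners, each corner has a calibrated dome camera (pan, tilt) pair stored in a hypothetical `calib` dictionary keyed by (row, column) corner index, and the three nearest corners are blended with barycentric weights.

```python
import math

def barycentric_weights(p, a, b, c):
    """Barycentric weights of point p with respect to triangle (a, b, c)."""
    (x, y), (x1, y1), (x2, y2), (x3, y3) = p, a, b, c
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    w1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    w2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    return w1, w2, 1.0 - w1 - w2

def dome_offset(target, width, height, rows, cols, calib):
    """Interpolate a dome camera (pan, tilt) for a target pixel in the gun camera image.

    calib maps corner indices (row, col), with row in 0..rows and col in 0..cols,
    to calibrated (pan, tilt) values. This layout is an assumption for illustration.
    """
    x, y = target
    cell_w, cell_h = width / cols, height / rows
    # Step c, part 1: find the grid cell that contains the target.
    col = min(int(x // cell_w), cols - 1)
    row = min(int(y // cell_h), rows - 1)
    corners = [(r, c) for r in (row, row + 1) for c in (col, col + 1)]

    def pixel(rc):
        return (rc[1] * cell_w, rc[0] * cell_h)

    # Step c, part 2: pick the three corners nearest to the target and interpolate.
    nearest = sorted(corners, key=lambda rc: math.dist(target, pixel(rc)))[:3]
    weights = barycentric_weights(target, *(pixel(rc) for rc in nearest))
    pan = sum(w * calib[rc][0] for w, rc in zip(weights, nearest))
    tilt = sum(w * calib[rc][1] for w, rc in zip(weights, nearest))
    return pan, tilt
```

Because the interpolation is piecewise linear over the calibrated points, the commanded angles change smoothly as the target moves within a cell, which is consistent with the abstract's goal of a continuous, steady dome camera picture.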

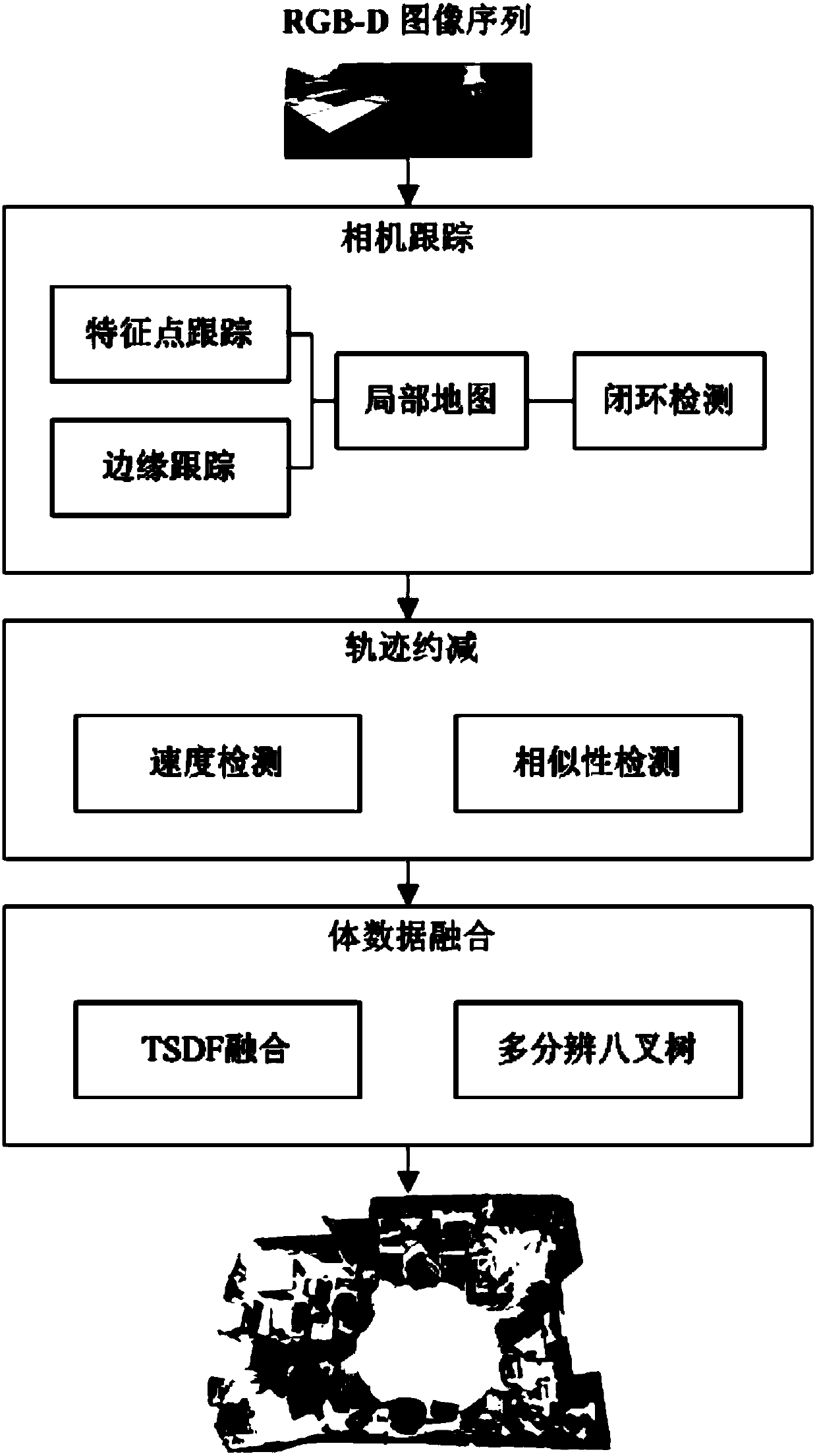

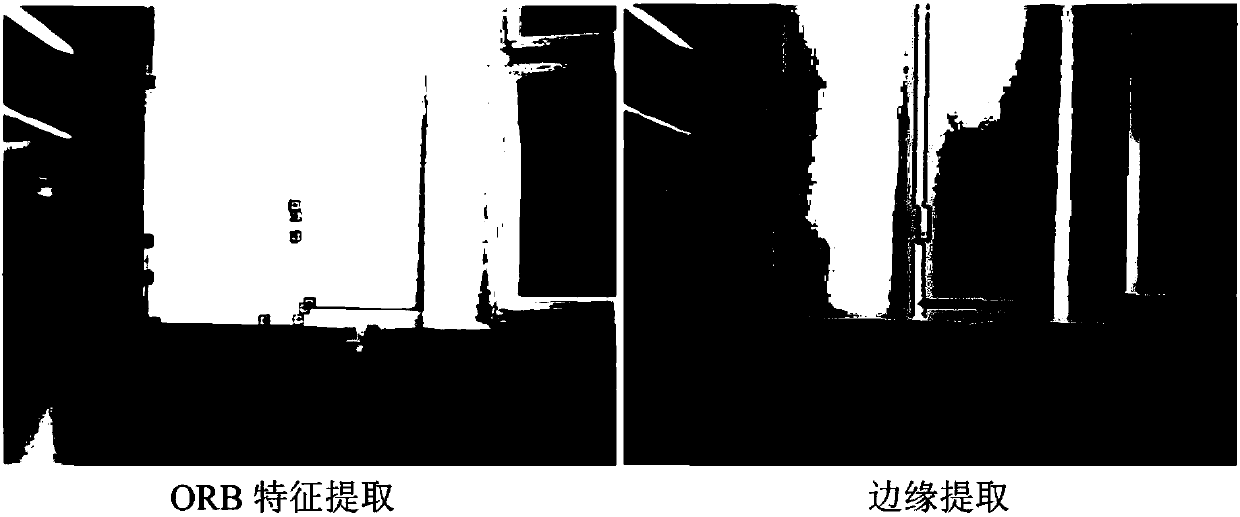

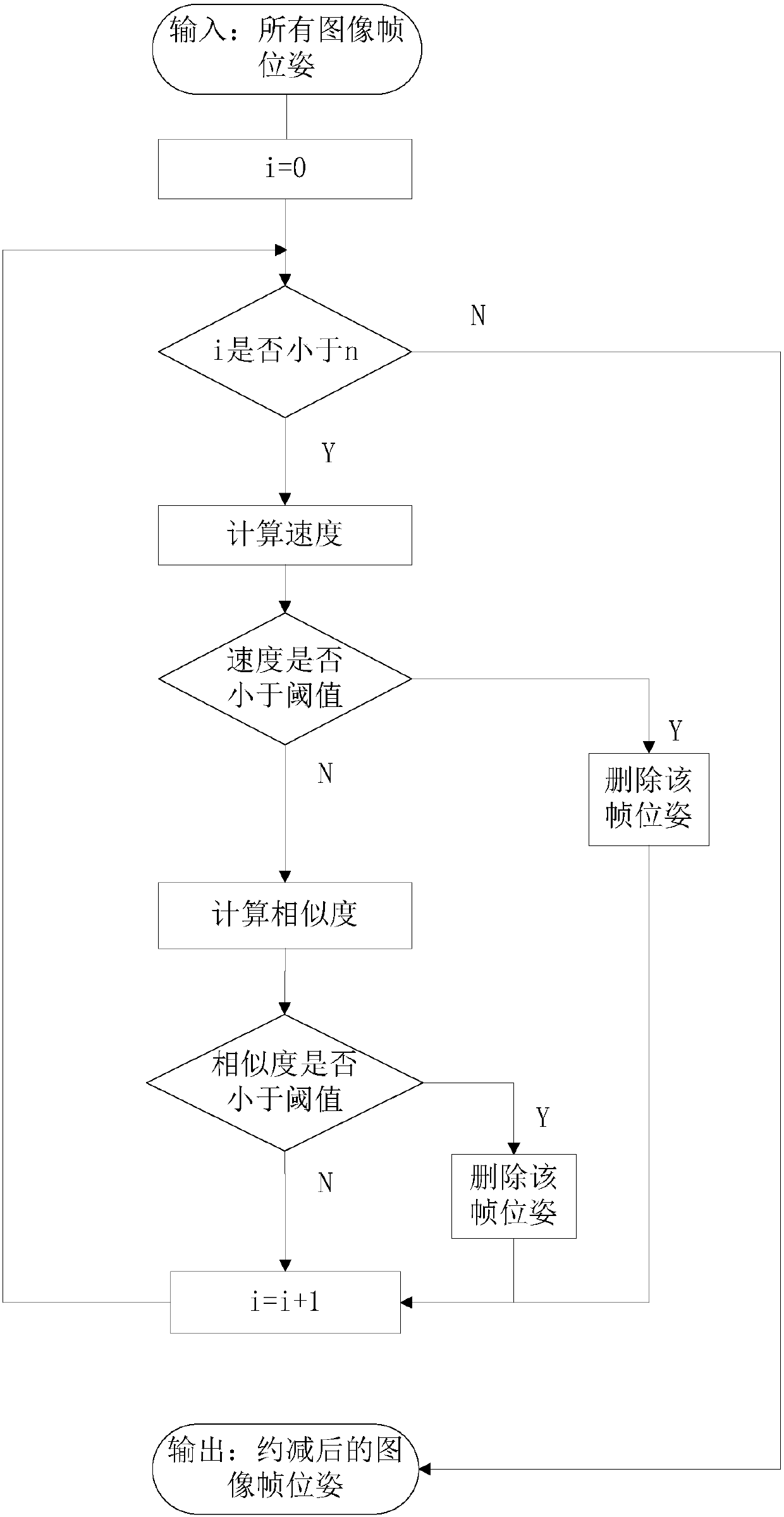

Quick and robust RGB-D indoor three-dimensional scene reconstruction method

Active | CN108564616A | Shorten speed | Reduce model accuracy | Image enhancement | Image analysis | Voxel | Reconstruction method

The invention relates to the field of three-dimensional reconstruction, in particular to a quick and robust RGB-D indoor three-dimensional scene reconstruction method, and aims to solve the problem that the efficiency of indoor three-dimensional scene reconstruction cannot meet demand. The method comprises the steps of scanning an indoor scene in real time with an RGB-D camera; performing real-time camera tracking based on a point-line fusion camera tracking algorithm; reducing the camera trajectory by detecting the camera state; and performing multi-scale voxel data fusion on the RGB-D images using the reduced camera trajectory information to generate a complete three-dimensional scene model. A complete indoor scene model can be obtained efficiently and accurately, and the system has good robustness and extensibility.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
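The abstract above does not say how the camera trajectory is reduced. One simple possibility, shown here purely as an assumption, is to drop poses recorded while the camera is nearly stationary, so that fewer frames reach the voxel fusion stage. The function name `reduce_trajectory` and the `min_move` threshold are hypothetical.

```python
import math

def reduce_trajectory(positions, min_move=0.05):
    """Return indices of poses to keep.

    positions: list of (x, y, z) camera positions in metres, in capture order.
    A pose is kept only if the camera has moved at least `min_move` metres
    since the last kept pose. This is an assumed criterion, not the patent's.
    """
    if not positions:
        return []
    kept = [0]  # always keep the first pose
    for i in range(1, len(positions)):
        if math.dist(positions[i], positions[kept[-1]]) >= min_move:
            kept.append(i)
    return kept
```

A fuller version would also compare orientation changes, since a camera rotating in place sees new parts of the scene without translating.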

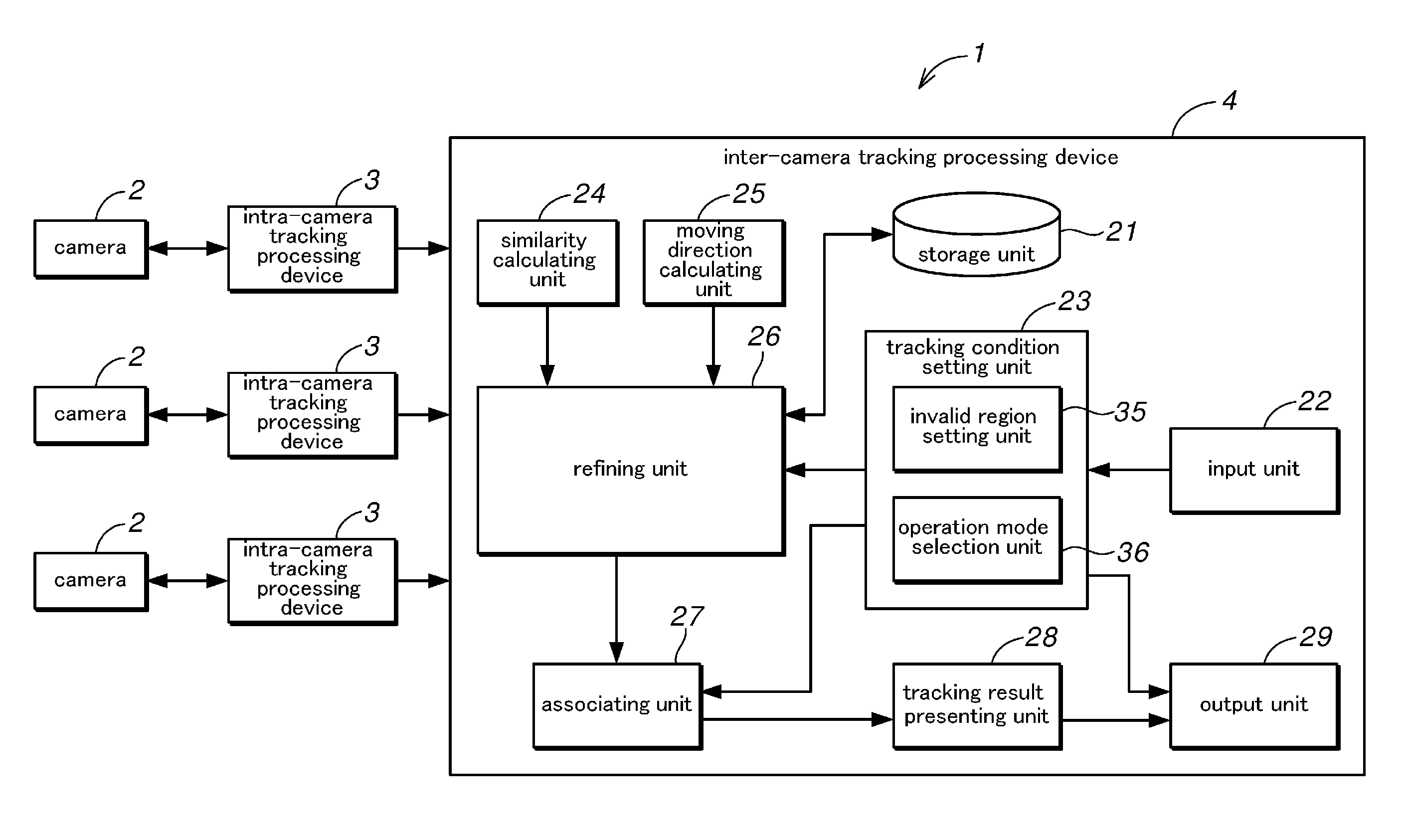

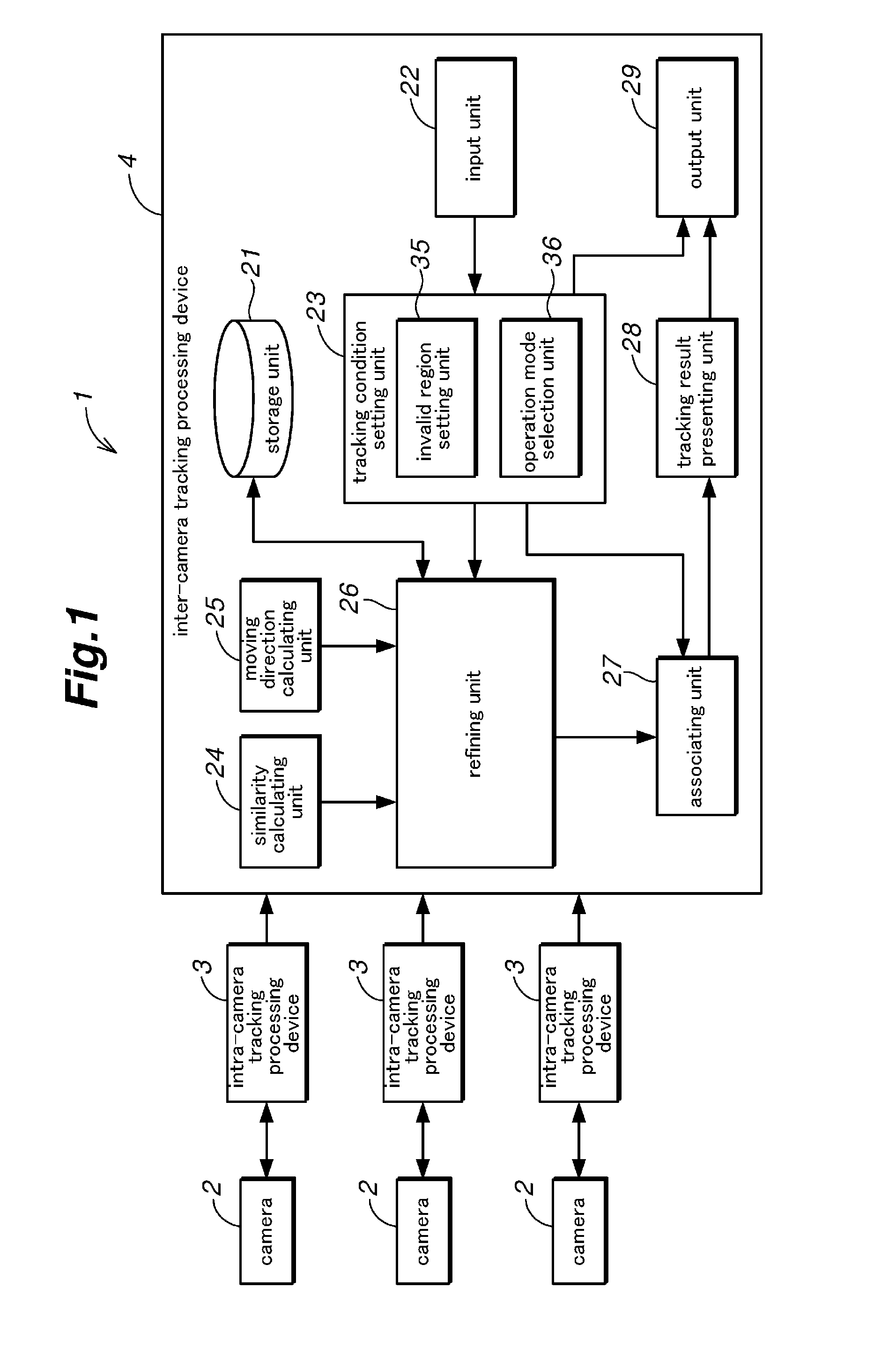

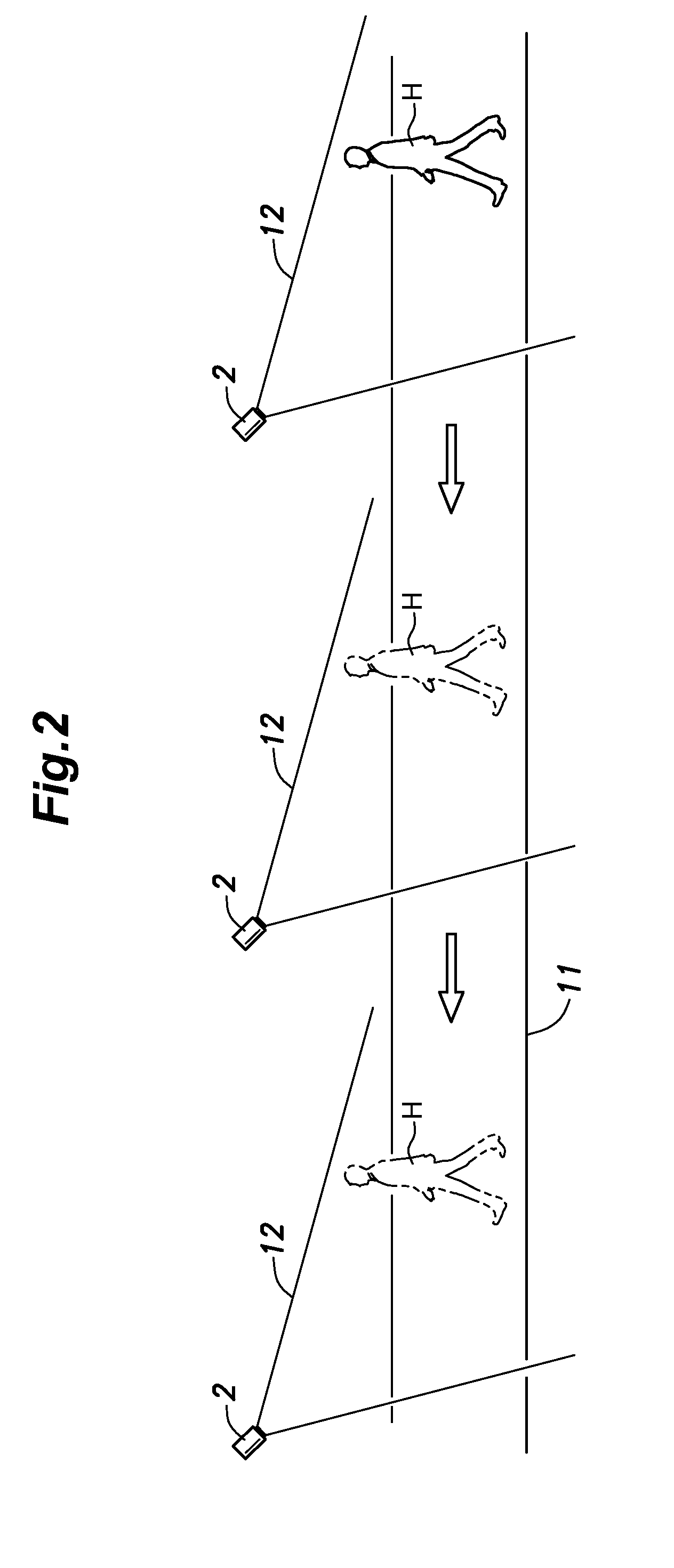

Tracking processing device and tracking processing system provided with same, and tracking processing method

To improve, when performing tracking of moving objects by using captured images taken by multiple cameras, the accuracy of the tracking process across the cameras, a tracking processing device includes: a storage unit that stores, for each camera, a plurality of pieces of intra-camera tracking information including image information of persons obtained from the captured images; a refining unit that performs refining of the plurality of pieces of intra-camera tracking information and thereby extracts pieces of inter-camera tracking information to be used in a process of tracking the persons across the multiple cameras; and an associating unit that, on the basis of the pieces of inter-camera tracking information, associates the persons in the captured images across the multiple cameras.

Owner:PANASONIC INTELLECTUAL PROPERTY MANAGEMENT CO LTD
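The refine-then-associate flow described in the abstract above can be illustrated with a small sketch. Everything specific here is an assumption: each person track is represented by a list of appearance feature vectors, refining keeps the samples closest to the track's mean feature, and association is a greedy nearest-distance match. The names `refine` and `associate` are hypothetical.

```python
import math

def mean_vector(vectors):
    """Component-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(v[k] for v in vectors) / n for k in range(len(vectors[0]))]

def refine(samples, keep=3):
    """Keep the `keep` samples closest to the track mean, discarding outliers."""
    center = mean_vector(samples)
    return sorted(samples, key=lambda s: math.dist(s, center))[:keep]

def associate(tracks_a, tracks_b, max_dist=0.5):
    """Greedily match track ids from camera A to camera B, one to one.

    tracks_a, tracks_b: dicts mapping a track id to its list of feature vectors.
    Pairs farther apart than `max_dist` are left unmatched.
    """
    reps_a = {tid: mean_vector(refine(s)) for tid, s in tracks_a.items()}
    reps_b = {tid: mean_vector(refine(s)) for tid, s in tracks_b.items()}
    pairs = sorted(
        (math.dist(fa, fb), a, b)
        for a, fa in reps_a.items()
        for b, fb in reps_b.items()
    )
    matched, used_a, used_b = {}, set(), set()
    for dist, a, b in pairs:
        if dist > max_dist:
            break  # remaining pairs are even farther apart
        if a in used_a or b in used_b:
            continue
        matched[a] = b
        used_a.add(a)
        used_b.add(b)
    return matched
```

Refining before matching keeps a single bad crop, such as an occluded or mislabeled frame, from skewing the representative feature used across cameras.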

Overlapped domain dual-camera target tracking system and method

Inactive | CN103997624A | Solve the problem of easy to lose | Improve anti-interference ability | Image analysis | Character and pattern recognition | Algorithm | Object tracking algorithm

The invention discloses an overlapped domain dual-camera target tracking system and method. In the system, an HSV-based spatial background-weighted Meanshift algorithm is combined with an improved visual field boundary target connection algorithm. According to the target tracking algorithm, a front-end single-camera tracking subsystem executes a relatively independent tracking task on the video sequence collected by each camera within that camera's visual field and obtains relevant information about the tracked target; data communication between the cameras is achieved with the target connection algorithm; and higher-level video processing, such as behavior judgment, is then carried out.

Owner:JIANGSU UNIV
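For readers unfamiliar with Meanshift tracking, the core window update can be sketched as below. This is plain mean shift over a precomputed weight map (for example, a hue histogram back-projection), not the background-weighted variant the abstract names. The function name `mean_shift` and the list-of-lists weight map are assumptions for illustration.

```python
import math

def mean_shift(weights, window, max_iter=20):
    """Shift a search window toward the local centroid of a weight map.

    weights: 2D list of non-negative values, indexed as weights[row][col].
    window: (x, y, w, h) with (x, y) the top-left corner in pixels.
    Returns the converged window as (x, y, w, h).
    """
    x, y, w, h = window
    rows, cols = len(weights), len(weights[0])
    for _ in range(max_iter):
        total = sum_x = sum_y = 0.0
        for r in range(max(y, 0), min(y + h, rows)):
            for c in range(max(x, 0), min(x + w, cols)):
                wt = weights[r][c]
                total += wt
                sum_x += wt * c
                sum_y += wt * r
        if total == 0:
            break  # no evidence inside the window, so stay put
        # Re-center the window on the weighted centroid, rounding half up.
        new_x = int(math.floor(sum_x / total - (w - 1) / 2 + 0.5))
        new_y = int(math.floor(sum_y / total - (h - 1) / 2 + 0.5))
        if (new_x, new_y) == (x, y):
            break  # converged
        x, y = new_x, new_y
    return x, y, w, h
```

In a dual-camera setup like the one described, each camera would run such a tracker independently, and the target connection step would hand the track over when the window approaches the shared visual field boundary.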

