601 results for "Color map" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

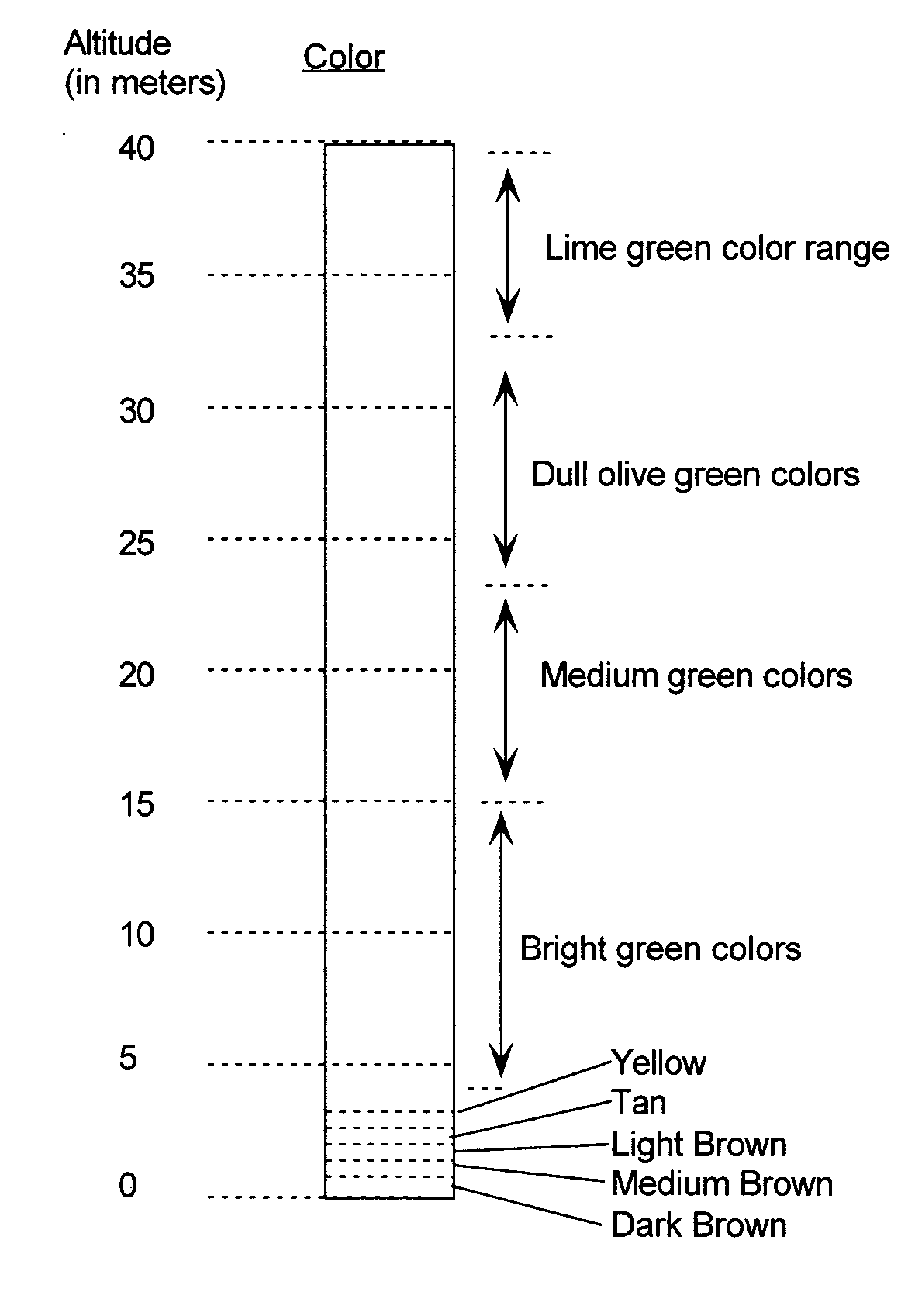

System and Method for Displaying Data Having Spatial Coordinates

Inactive | US20130300740A1 | Character and pattern recognition, Input/output processes for data processing, Material classification, Height map

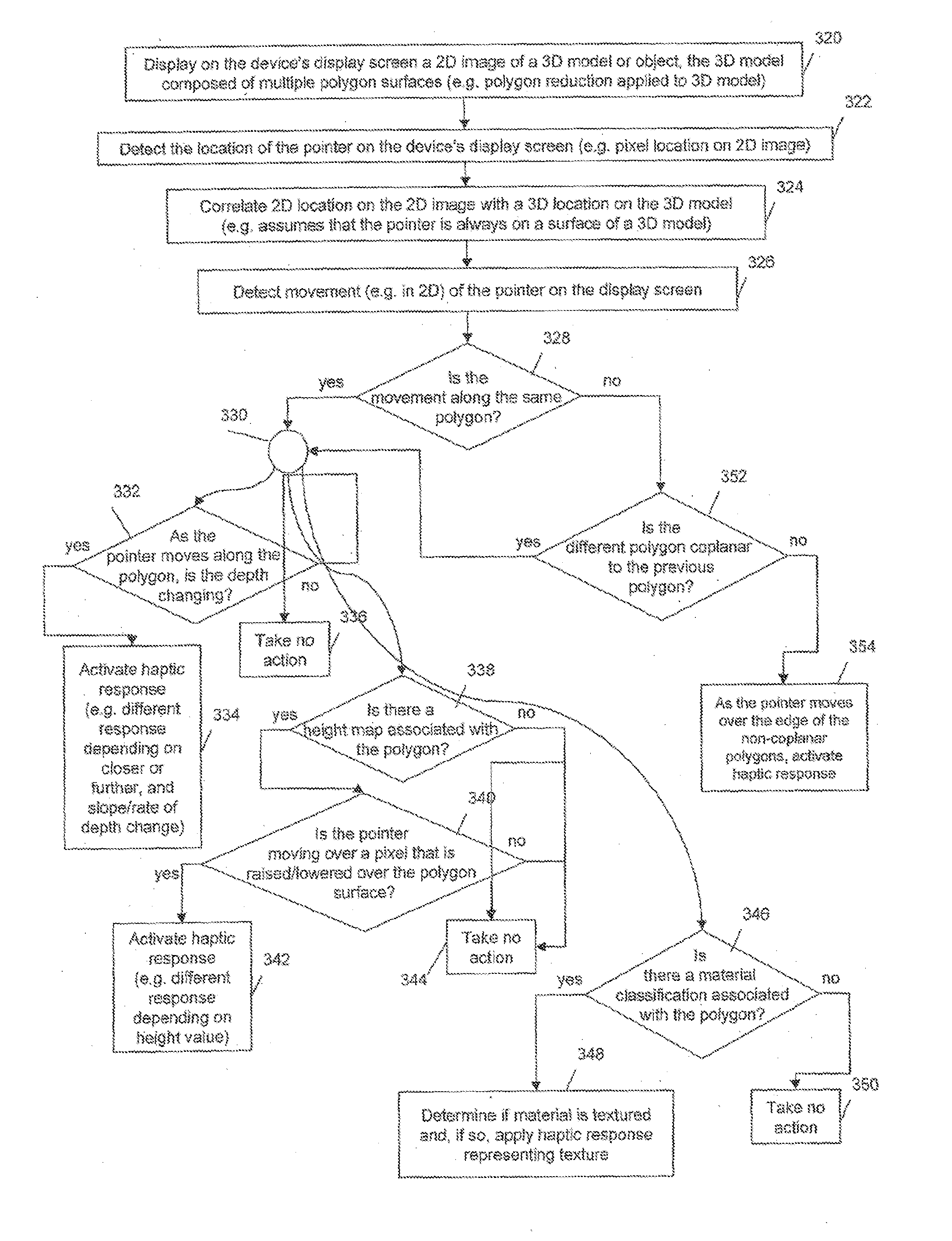

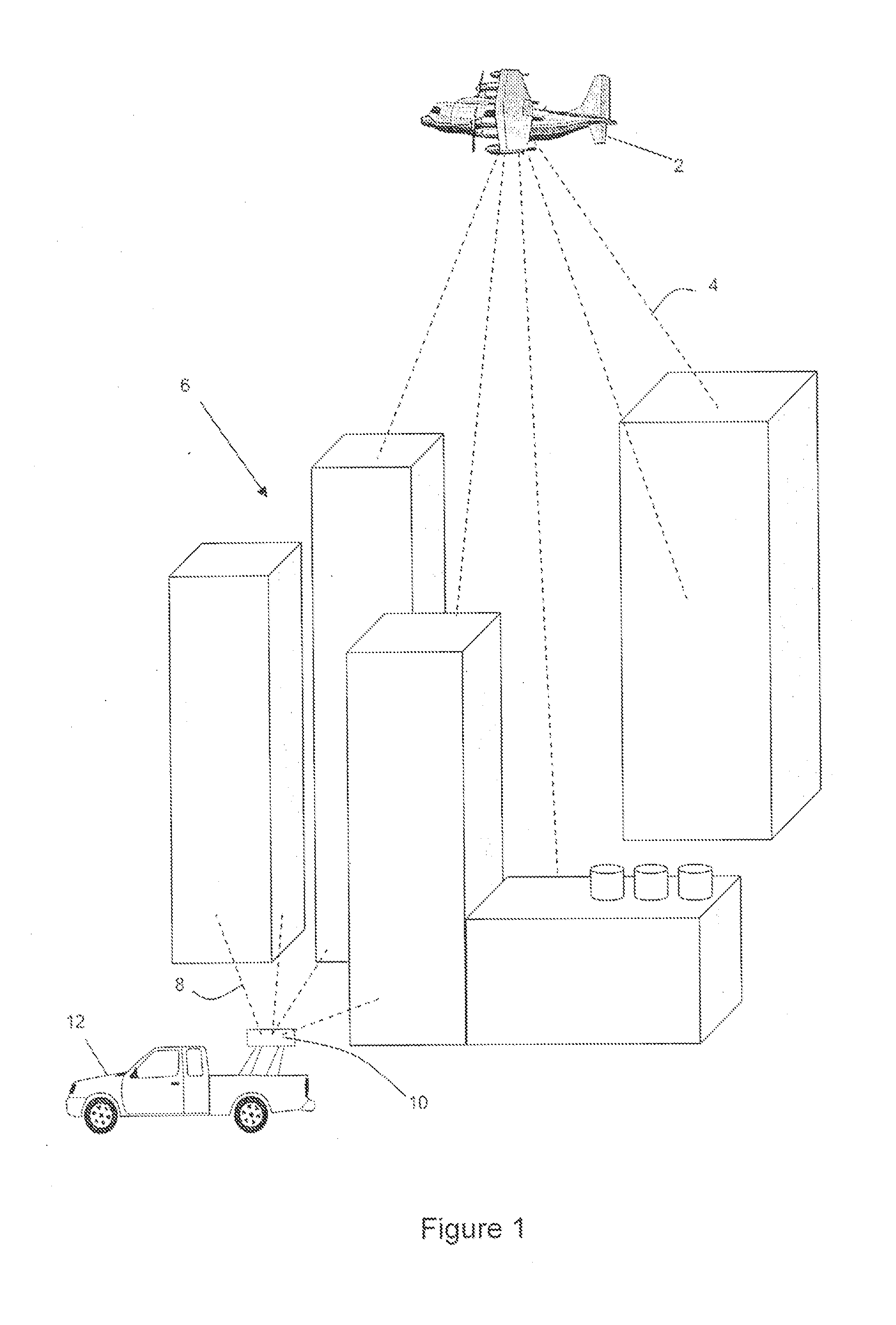

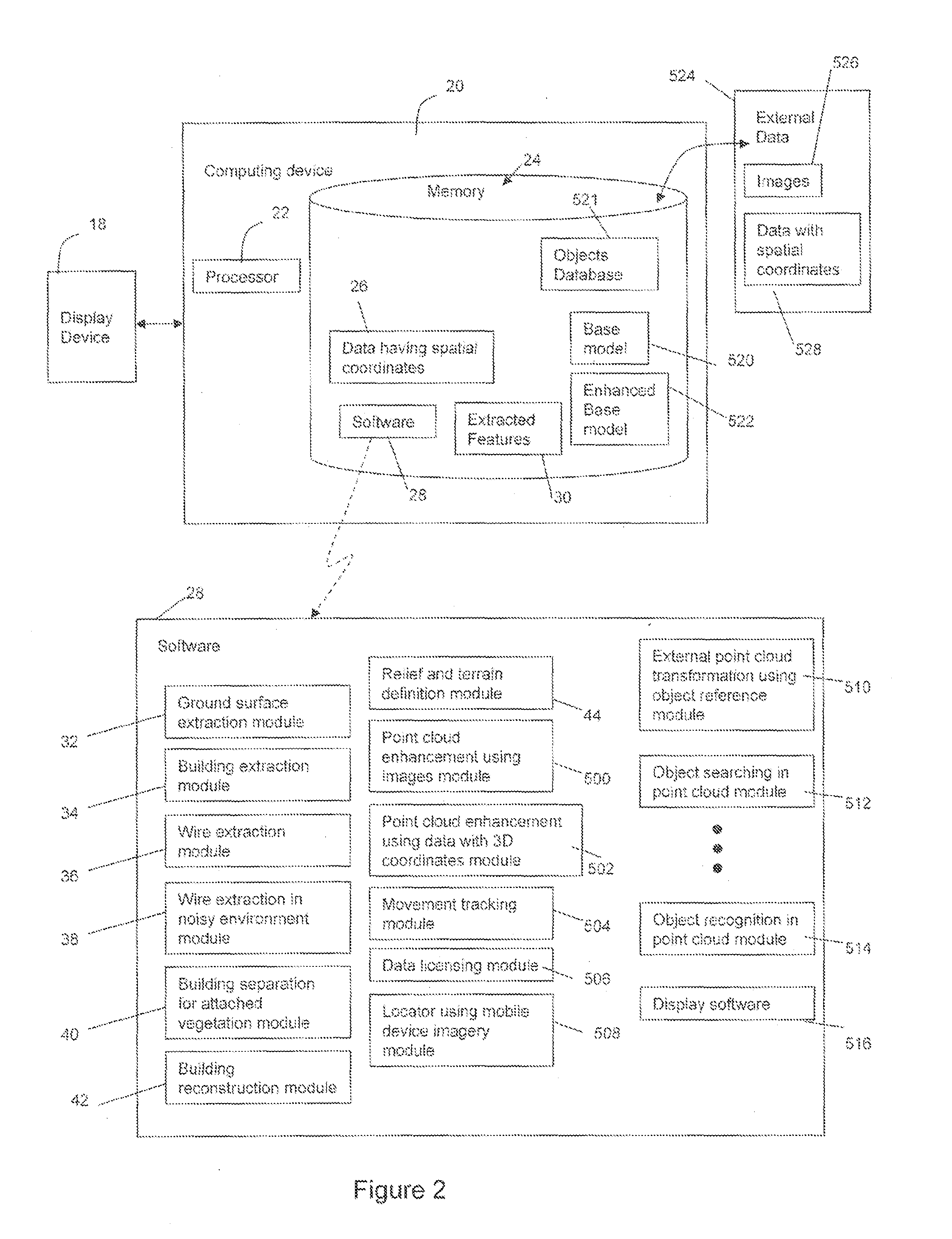

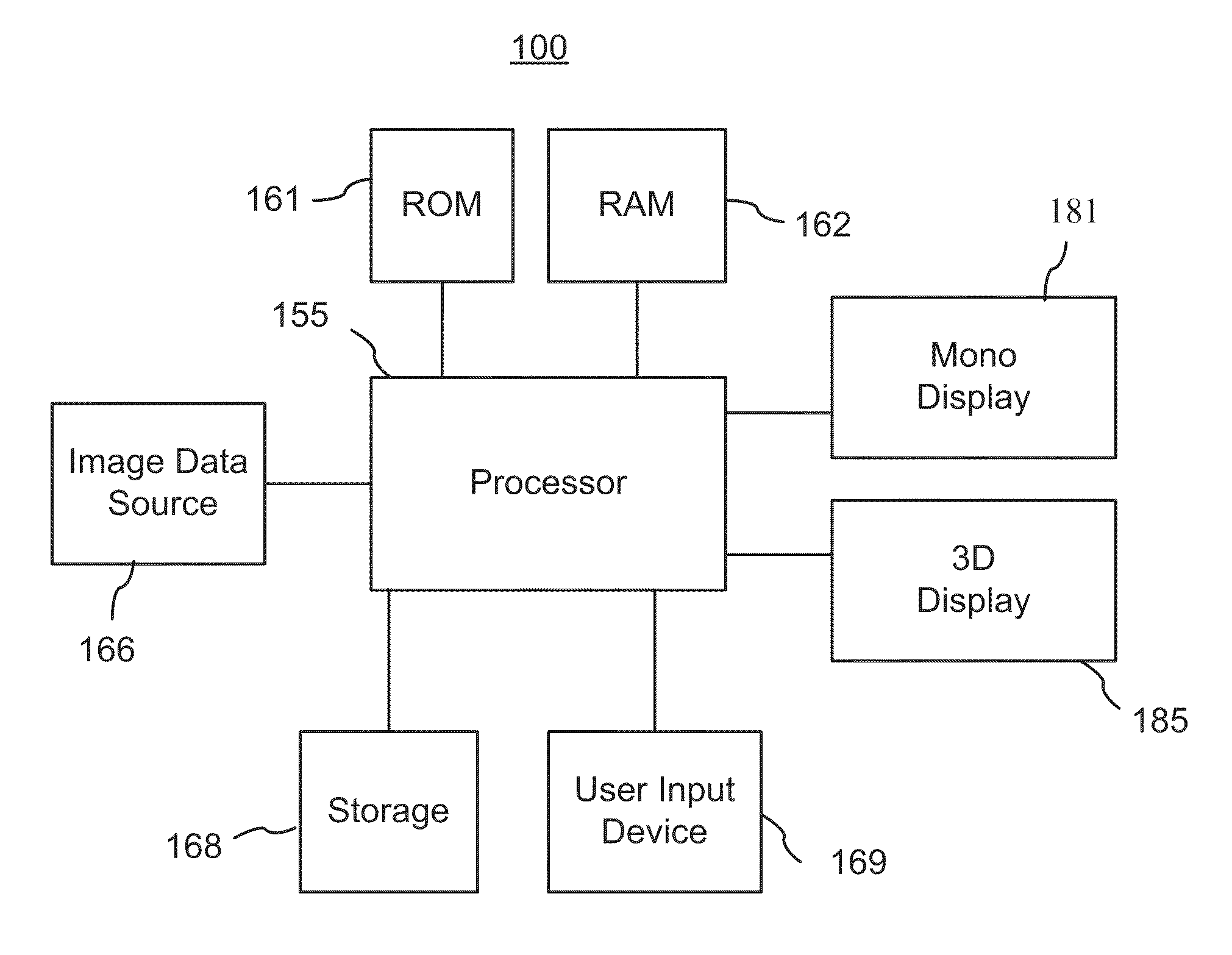

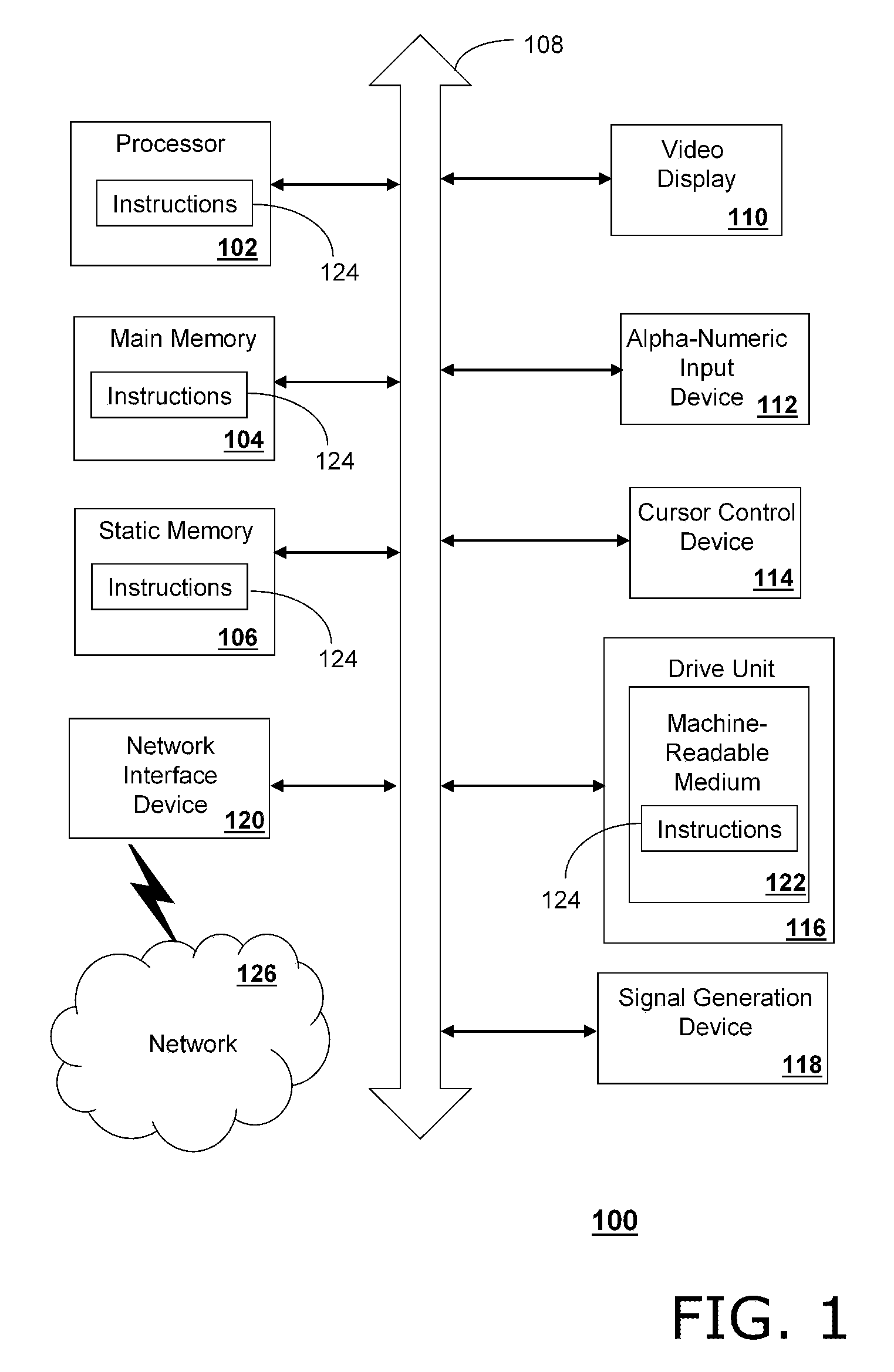

Systems and methods are provided for displaying data, such as 3D models, having spatial coordinates. In one aspect, a height map and color map are generated from the data. In another aspect, material classification is applied to surfaces within a 3D model. Based on the 3D model, the height map, the color map, and the material classification, haptic responses are generated on a haptic device. In another aspect, a 3D user interface (UI) data model comprising model definitions is derived from the 3D models. The 3D model is updated with video data. In another aspect, user controls are provided to navigate a point of view through the 3D model to determine which portions of the 3D model are displayed.

Owner:ALT SOFTWARE US
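The abstract does not say how the height map and color map are generated. The sketch below is one plausible reading, not ALT Software's method: it assumes the data is a list of colored 3D points projected top-down onto a regular x-y grid, where each cell keeps its highest point and that point's color. The name `rasterize` and the grid parameters are hypothetical.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, z); z is treated as height
Color = Tuple[int, int, int]        # (r, g, b)


def rasterize(points: List[Point], colors: List[Color], cell: float,
              width: int, height: int
              ) -> Tuple[List[List[Optional[float]]], List[List[Optional[Color]]]]:
    """Project points onto an x-y grid and return (height_map, color_map).

    Each cell keeps the highest z that falls into it and that point's color.
    Cells that receive no point stay None. (Hypothetical sketch.)
    """
    height_map: List[List[Optional[float]]] = [[None] * width for _ in range(height)]
    color_map: List[List[Optional[Color]]] = [[None] * width for _ in range(height)]
    for (x, y, z), rgb in zip(points, colors):
        col, row = int(x // cell), int(y // cell)
        if not (0 <= col < width and 0 <= row < height):
            continue  # point falls outside the grid
        current = height_map[row][col]
        if current is None or z > current:
            height_map[row][col] = z
            color_map[row][col] = rgb
    return height_map, color_map
```

Keeping the highest point per cell mimics what a viewer looking straight down would see; averaging the heights in each cell would be an equally reasonable choice.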

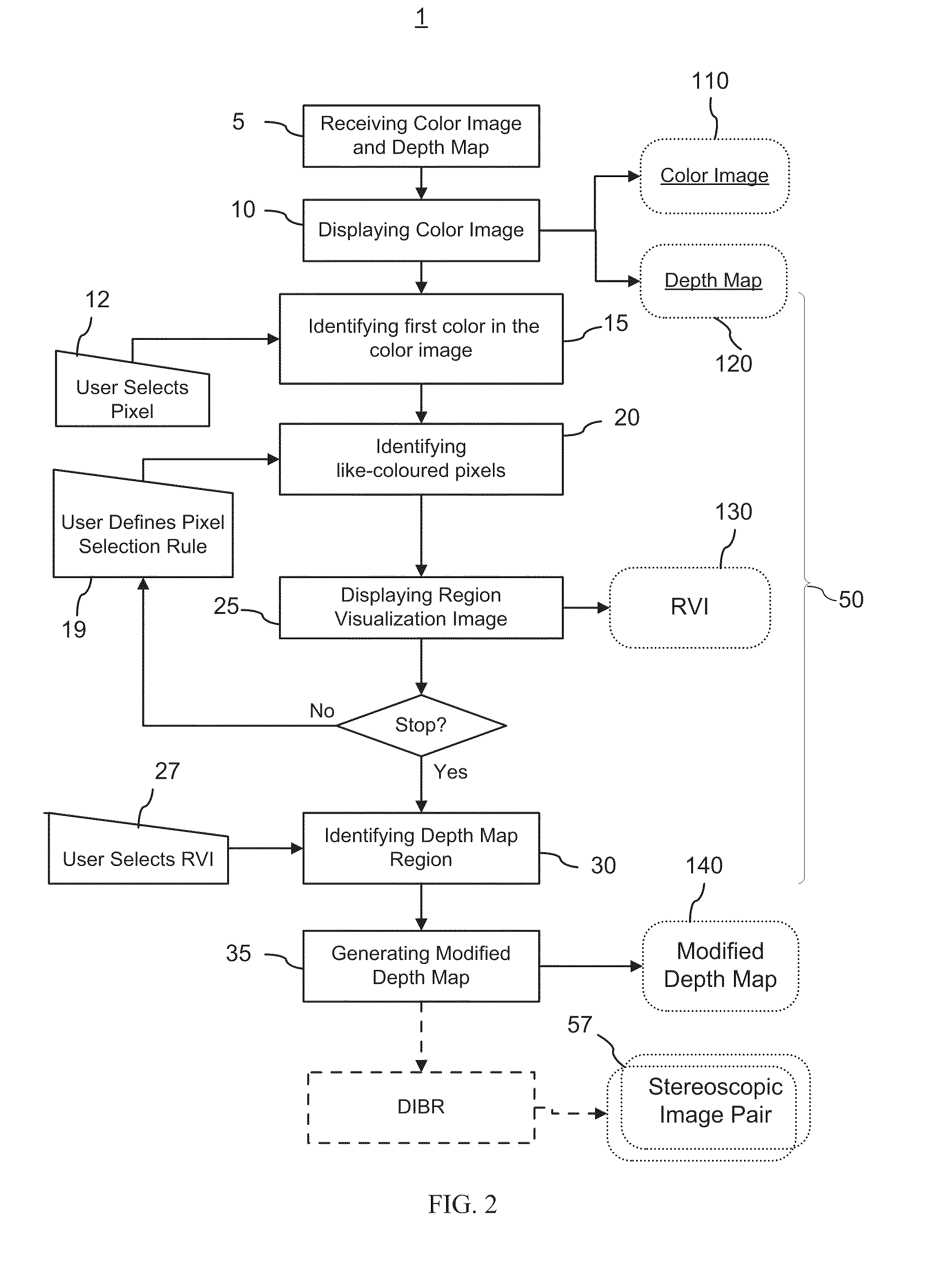

Method and graphical user interface for modifying depth maps

Owner:HER MAJESTY THE QUEEN & RIGHT OF CANADA REPRESENTED BY THE MIN OF IND THROUGH THE COMM RES CENT

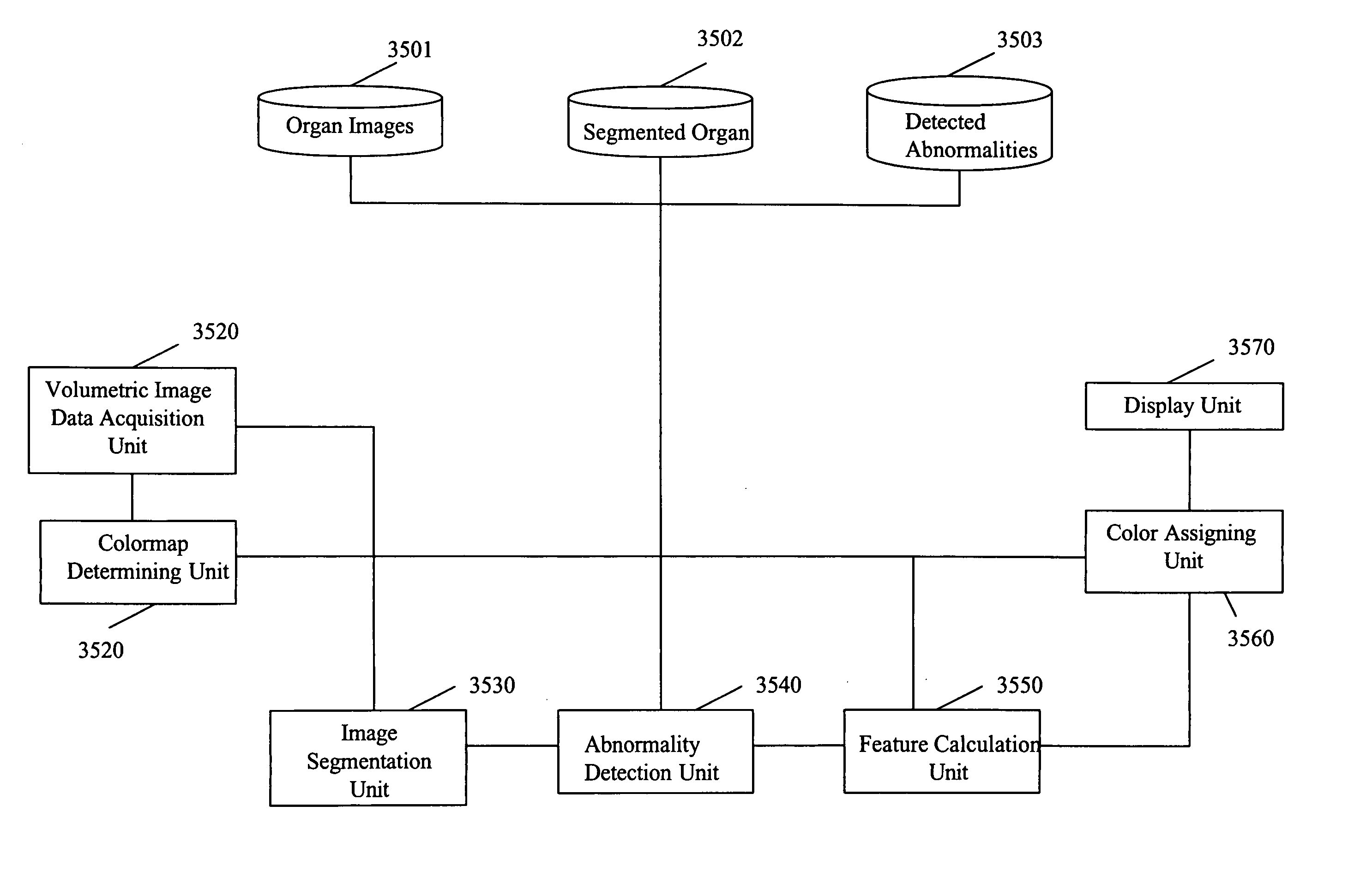

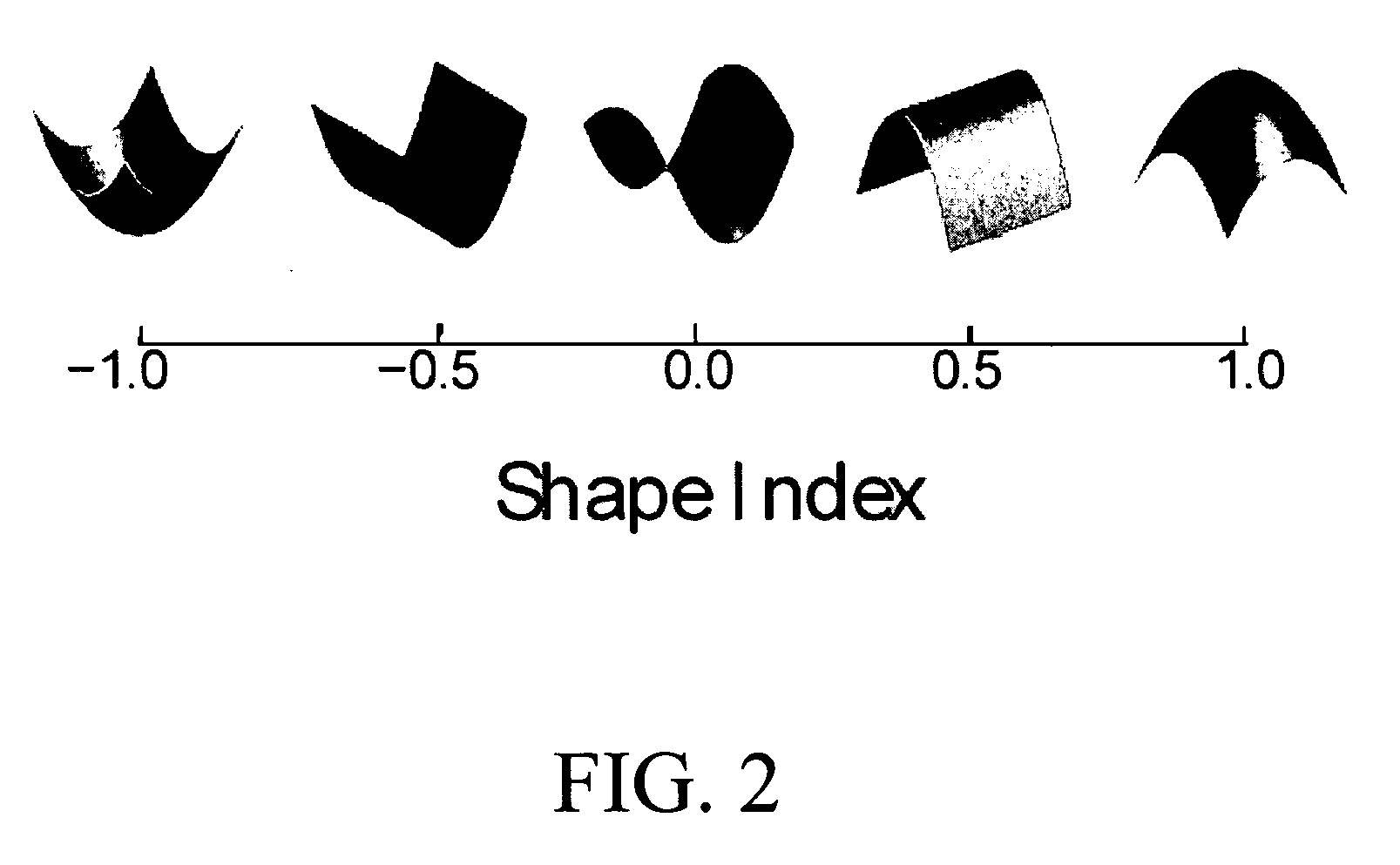

Method for virtual endoscopic visualization of the colon by shape-scale signatures, centerlining, and computerized detection of masses

Inactive | US20050152588A1 | Enhancing endoscopic visualization, Easy detection, Image enhancement, Image analysis, Pattern recognition, Data set

A visualization method and system for virtual endoscopic examination of CT colonographic data by use of shape-scale analysis. The method provides each colonic structure of interest with a unique color, thereby facilitating rapid diagnosis of the colon. Two shape features, called the local shape index and curvedness, are used for defining the shape-scale spectrum. The shape index and curvedness values within CT colonographic data are mapped to the shape-scale spectrum in which specific types of colonic structures are represented by unique characteristic signatures in the spectrum. The characteristic signatures of specific types of lesions can be determined by use of computer-simulated lesions or by use of clinical data sets subjected to a computerized detection scheme. The signatures are used for defining a 2-D color map by assignment of a unique color to each signature region.

Owner:UNIVERSITY OF CHICAGO
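The two shape features named in the abstract are commonly defined from the principal curvatures k1 >= k2 of the surface. A minimal sketch, assuming the usual definitions SI = 1/2 - (1/pi) * atan((k1 + k2) / (k1 - k2)) and CV = sqrt((k1^2 + k2^2) / 2); the region bounds and colors in `REGIONS` are invented for illustration, since the abstract derives the real signatures from simulated lesions or clinical data.

```python
import math
from typing import Tuple

Color = Tuple[int, int, int]


def shape_index(k1: float, k2: float) -> float:
    """Shape index in [0, 1] from the principal curvatures (k1 >= k2 after sorting).

    atan2 avoids dividing by zero at umbilic points where k1 == k2.
    Flat points (k1 == k2 == 0) get 0.5 here, although the index is
    really undefined there.
    """
    k1, k2 = max(k1, k2), min(k1, k2)
    return 0.5 - math.atan2(k1 + k2, k1 - k2) / math.pi


def curvedness(k1: float, k2: float) -> float:
    """Curvedness: the magnitude of bending, independent of shape."""
    return math.sqrt((k1 * k1 + k2 * k2) / 2.0)


# Hypothetical signature regions in the (SI, CV) plane, one color each.
# Bounds are illustrative only. Treating SI = 1 as cap-like depends on how
# the surface normals are oriented.
REGIONS = [
    ("cap-like", (0.9, 1.0), (0.05, 0.5), (255, 0, 0)),
    ("ridge-like", (0.7, 0.9), (0.05, 0.5), (255, 255, 0)),
    ("saddle-like", (0.4, 0.6), (0.05, 0.5), (0, 255, 0)),
]


def signature_color(k1: float, k2: float,
                    default: Color = (128, 128, 128)) -> Color:
    """Return the color of the first region containing (SI, CV), else `default`."""
    si, cv = shape_index(k1, k2), curvedness(k1, k2)
    for _name, (si_lo, si_hi), (cv_lo, cv_hi), rgb in REGIONS:
        if si_lo <= si <= si_hi and cv_lo <= cv <= cv_hi:
            return rgb
    return default
```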

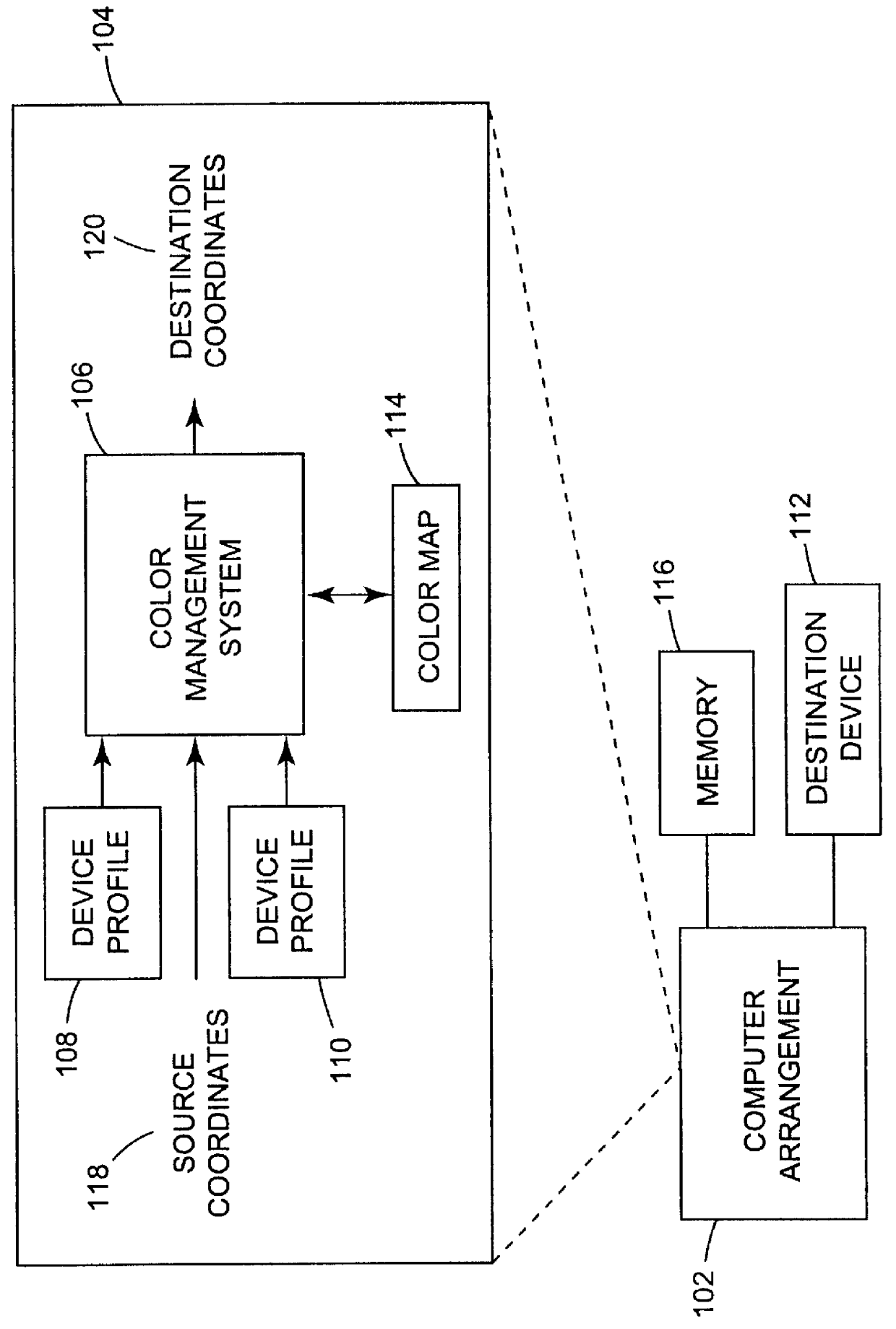

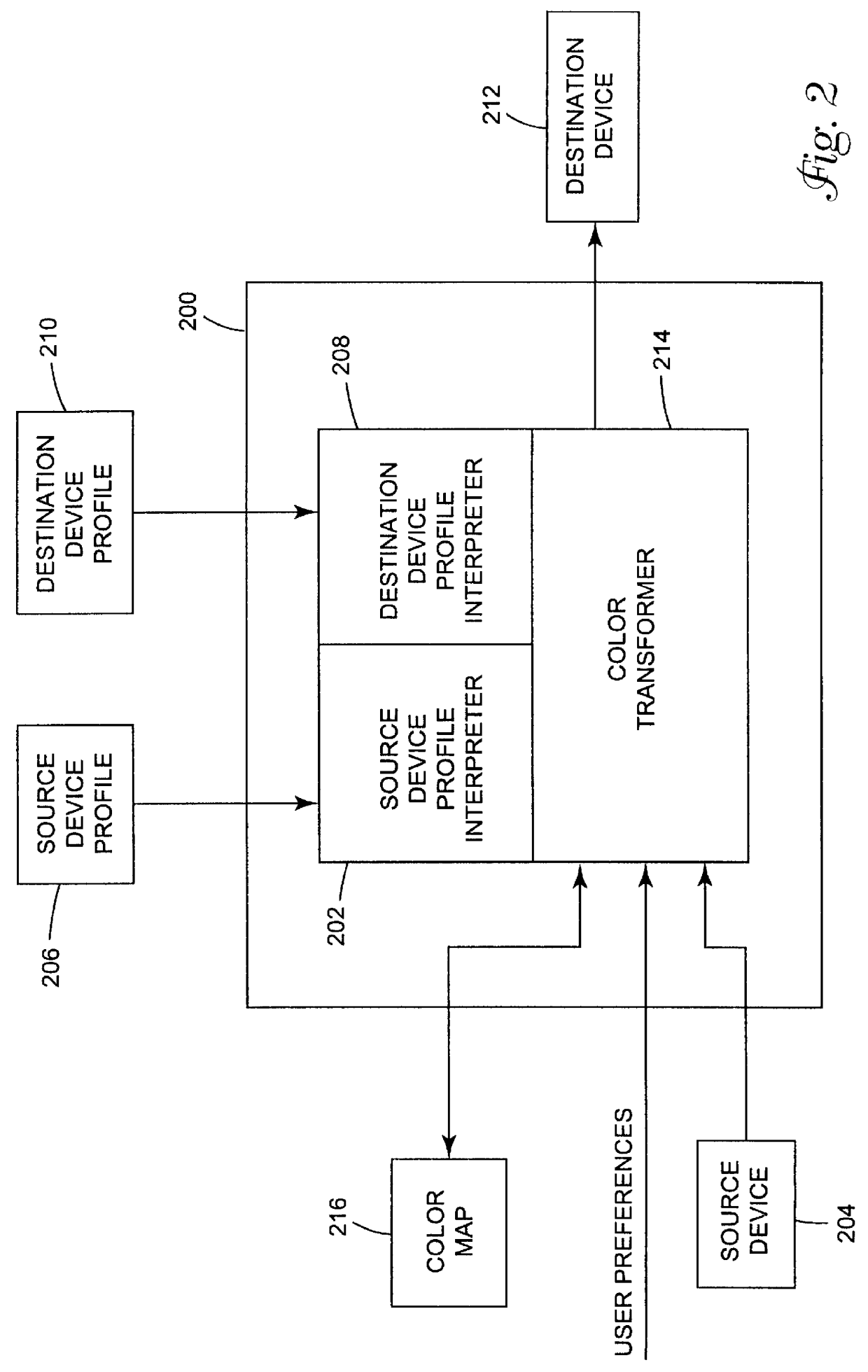

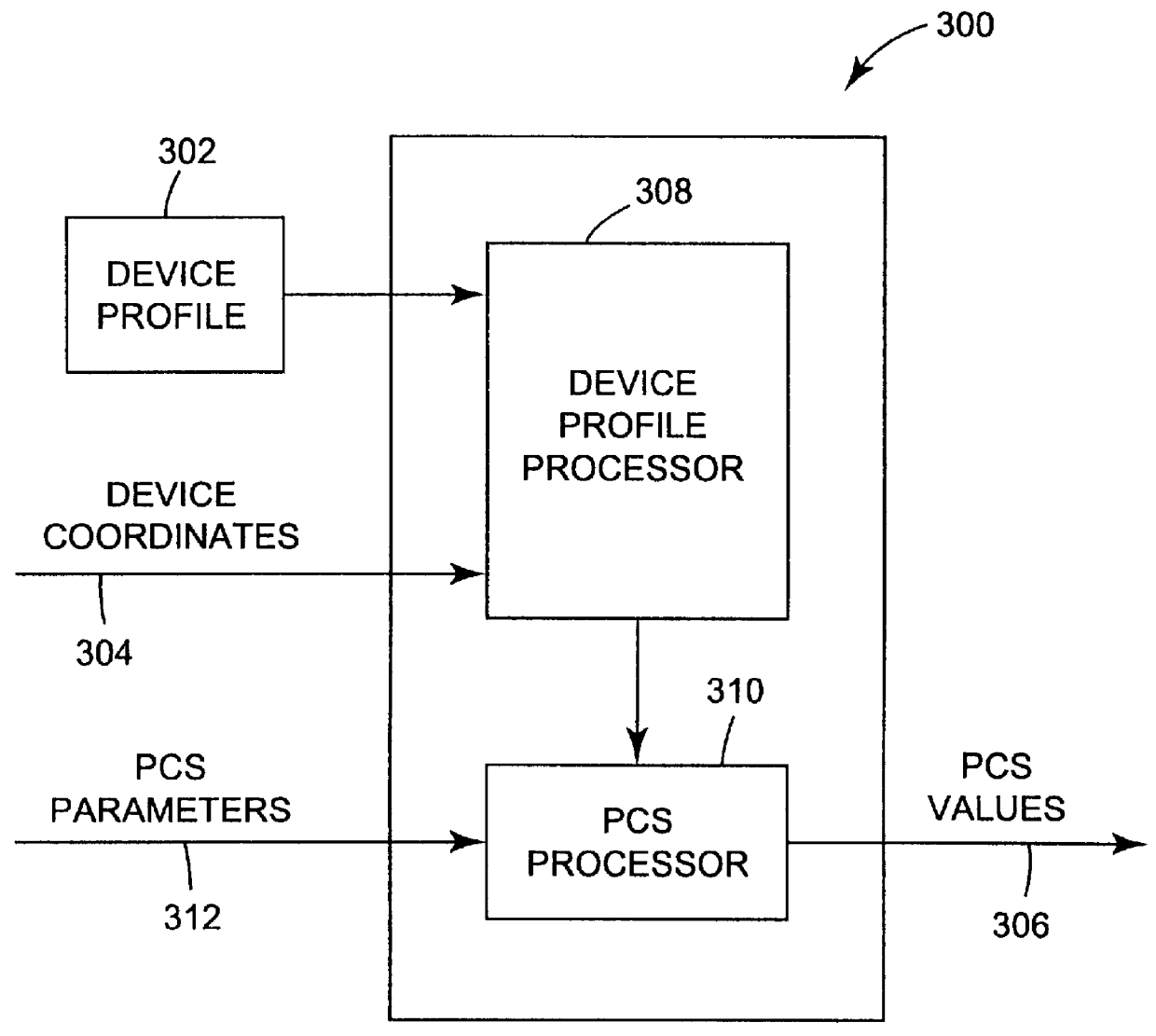

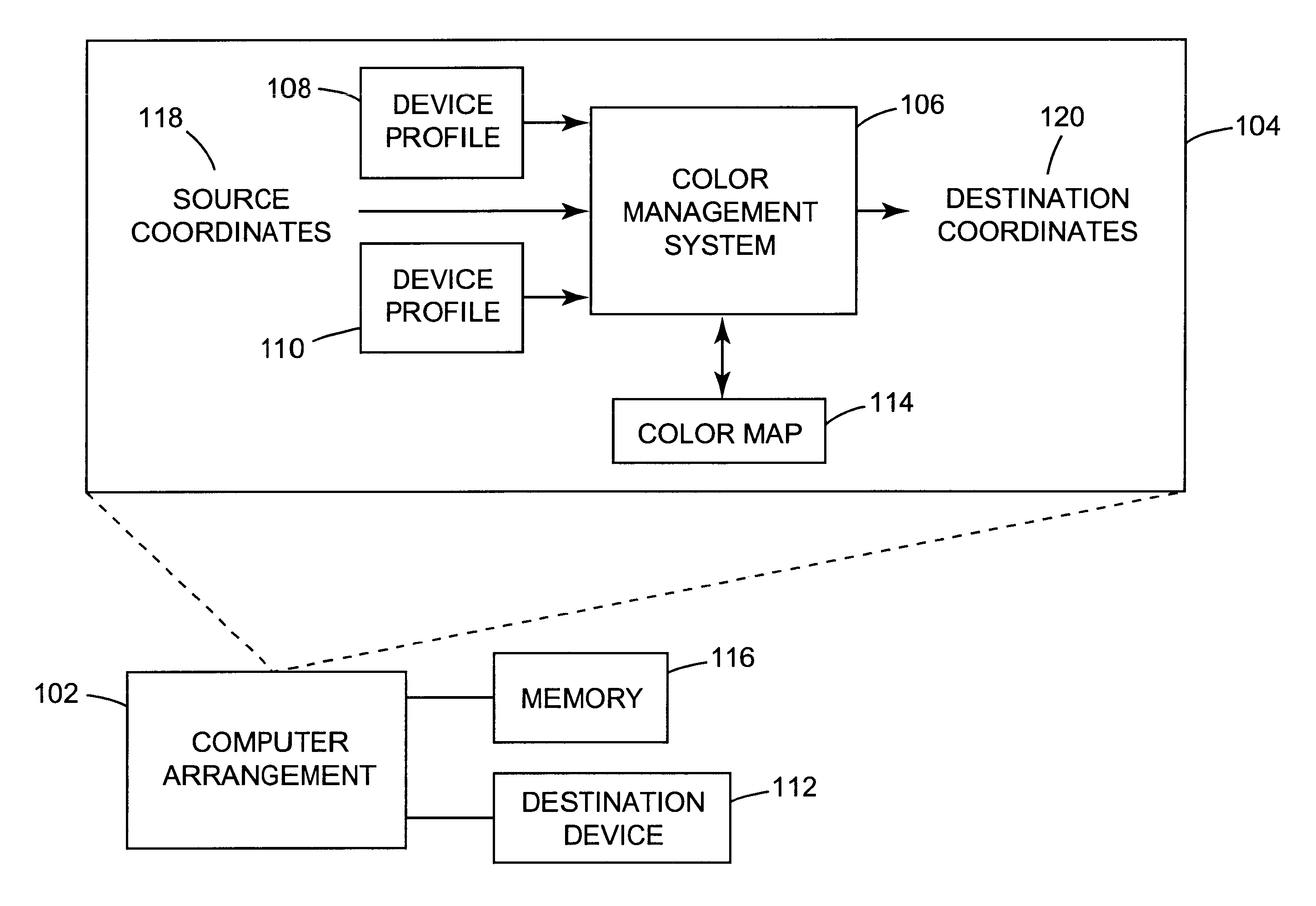

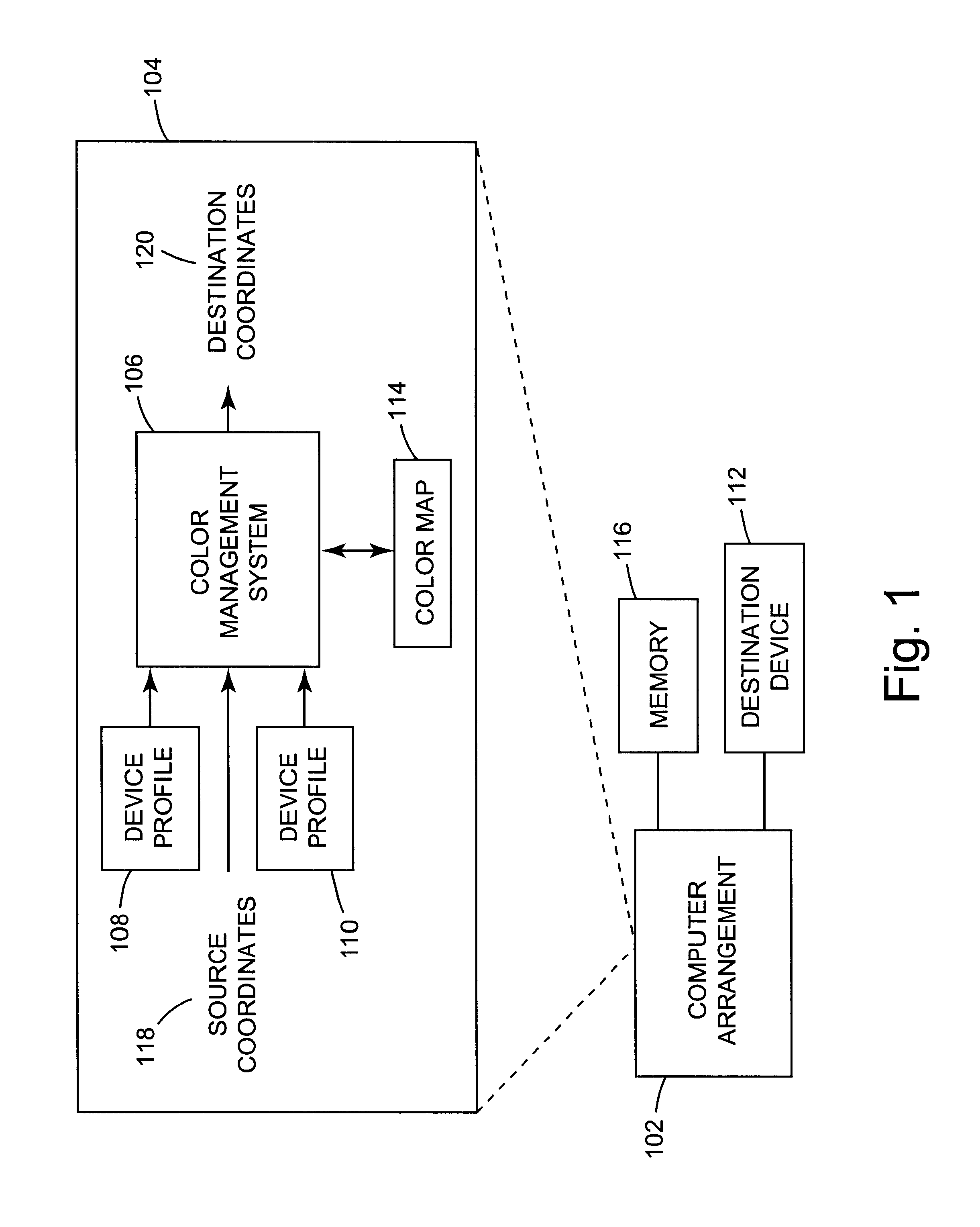

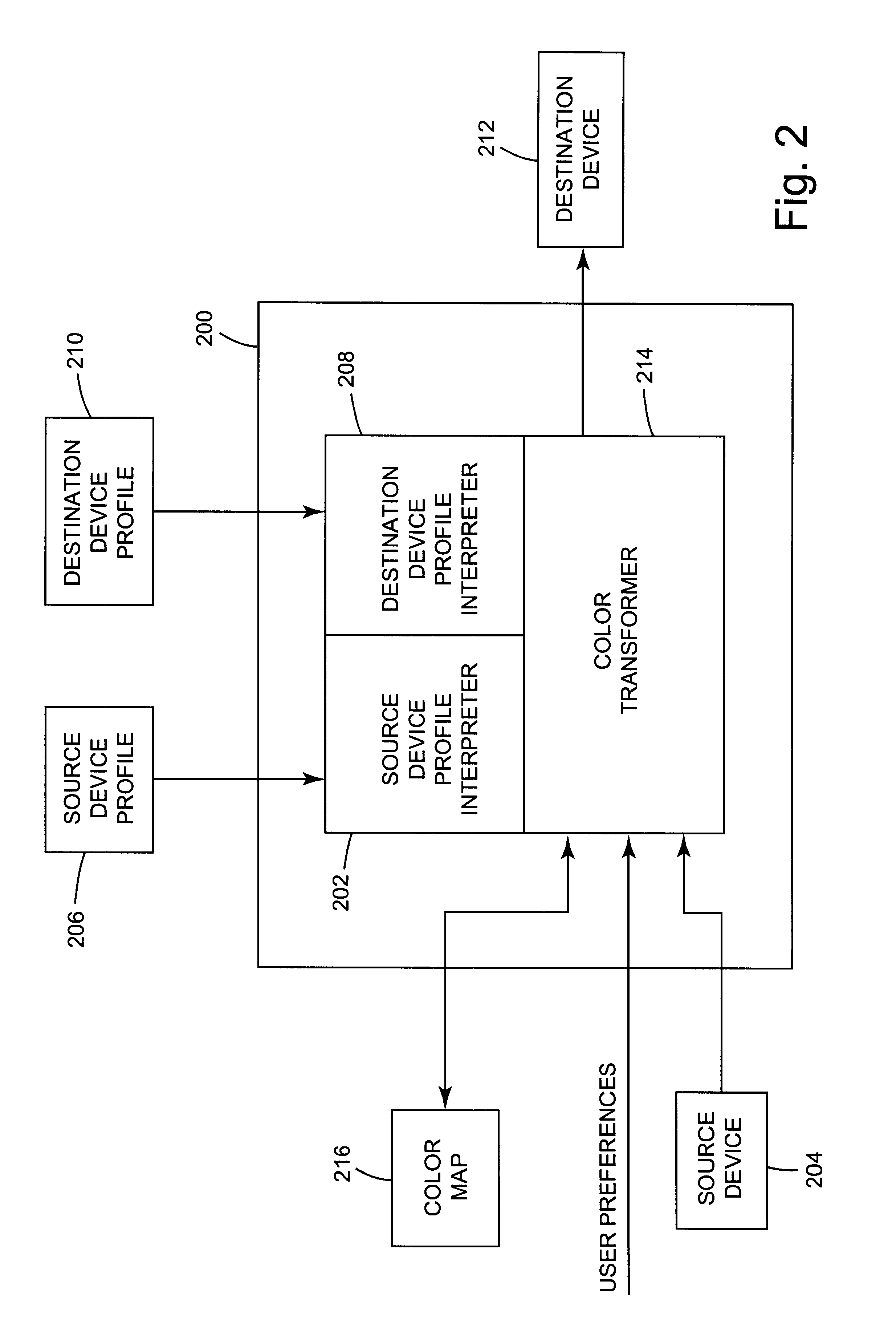

Arrangement for mapping colors between imaging systems and method therefor

A color mapping method is used in transforming colors between color imaging systems. The method includes using forward transformation profiles that characterize the color imaging systems to generate respective sets of device-independent color values for the color imaging systems. Color conversions are calculated by recursively reducing differences between the respective sets of device-independent color values. Based on these color conversions, a color map is constructed that describes a relationship between the color imaging systems.

Owner:KODAK POLYCHROME GRAPHICS

Arrangement for mapping colors between imaging systems and method therefor

Inactive | US6362808B1 | Character and pattern recognition, Cathode-ray tube indicators, Color transformation, Color mapping

A color mapping method is used in transforming colors between color imaging systems. The method includes using forward transformation profiles that characterize the color imaging systems to generate respective sets of device-independent color values for the color imaging systems. Color conversions are calculated by reducing differences between the respective sets of device-independent color values. Based on these color conversions, a color map is constructed that describes a relationship between the color imaging systems.

Owner:KODAK POLYCHROME GRAPHICS

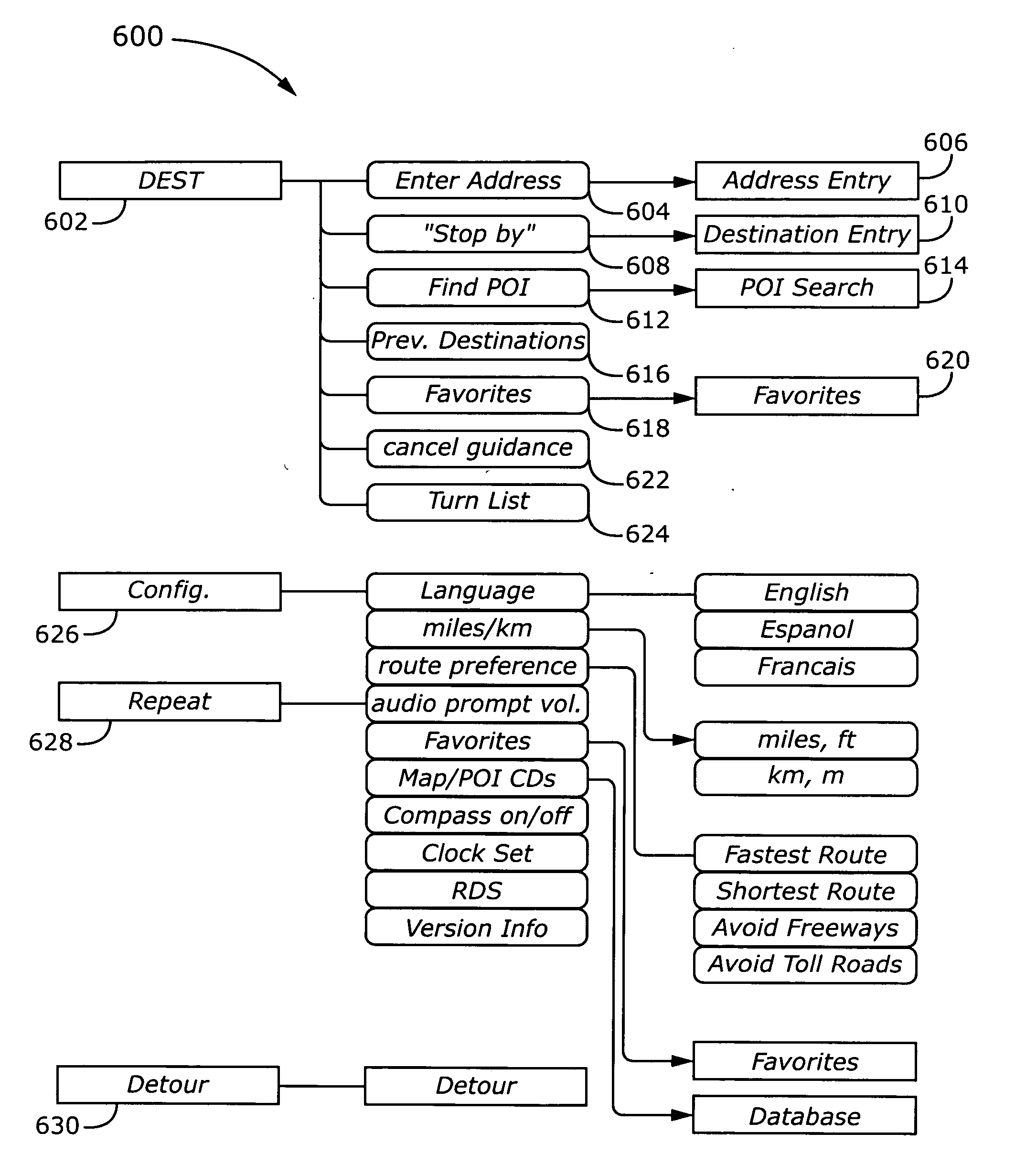

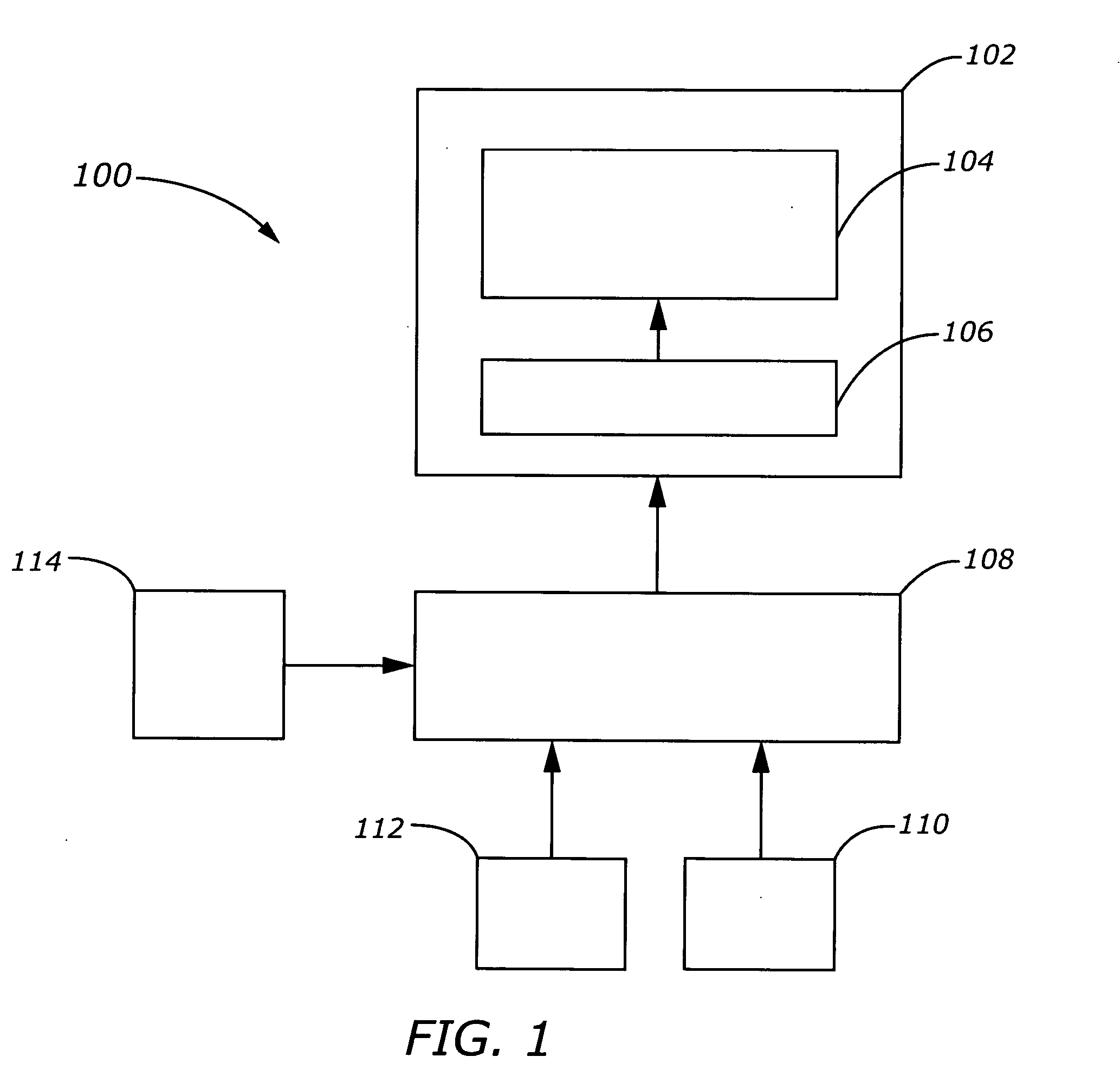

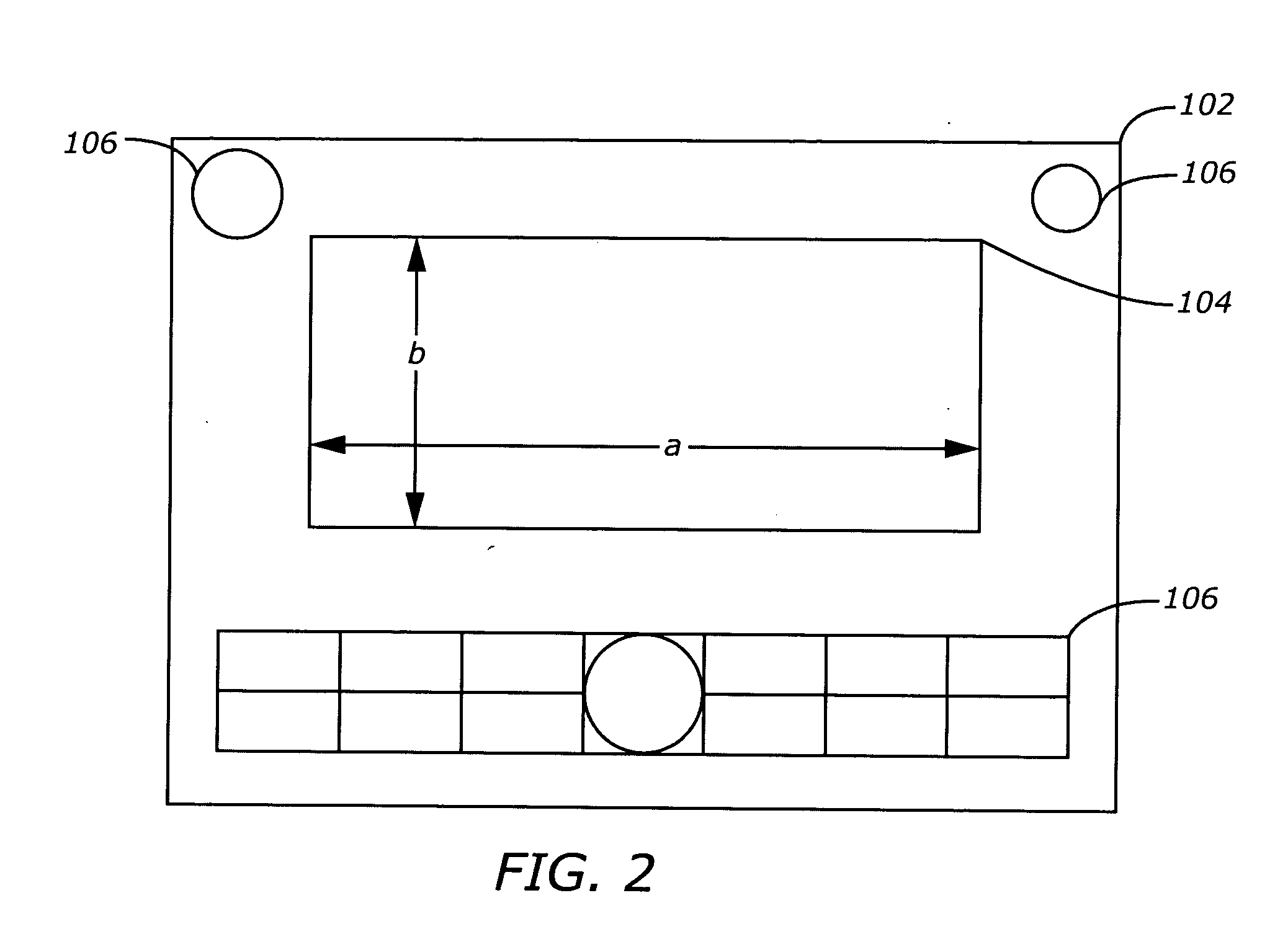

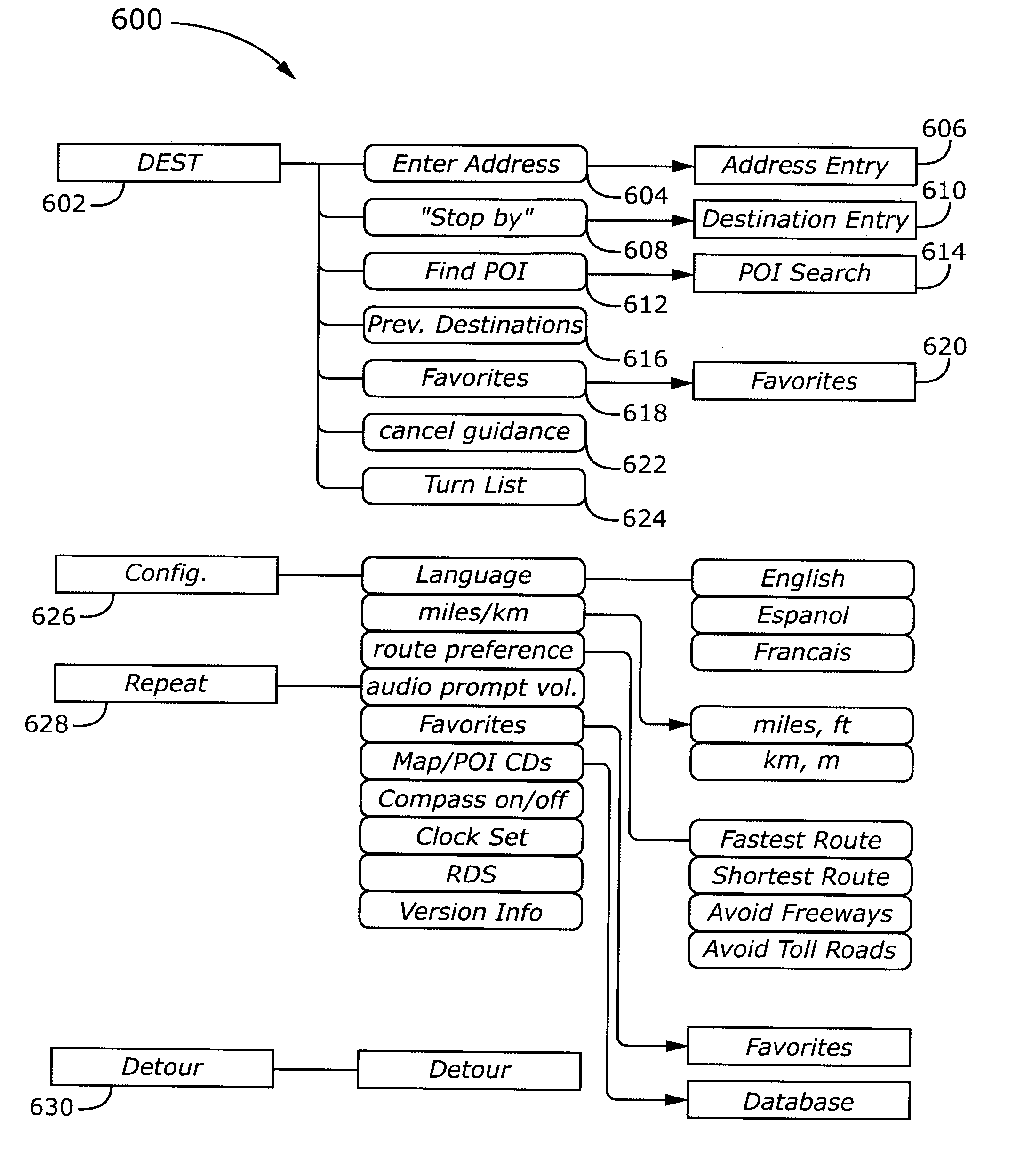

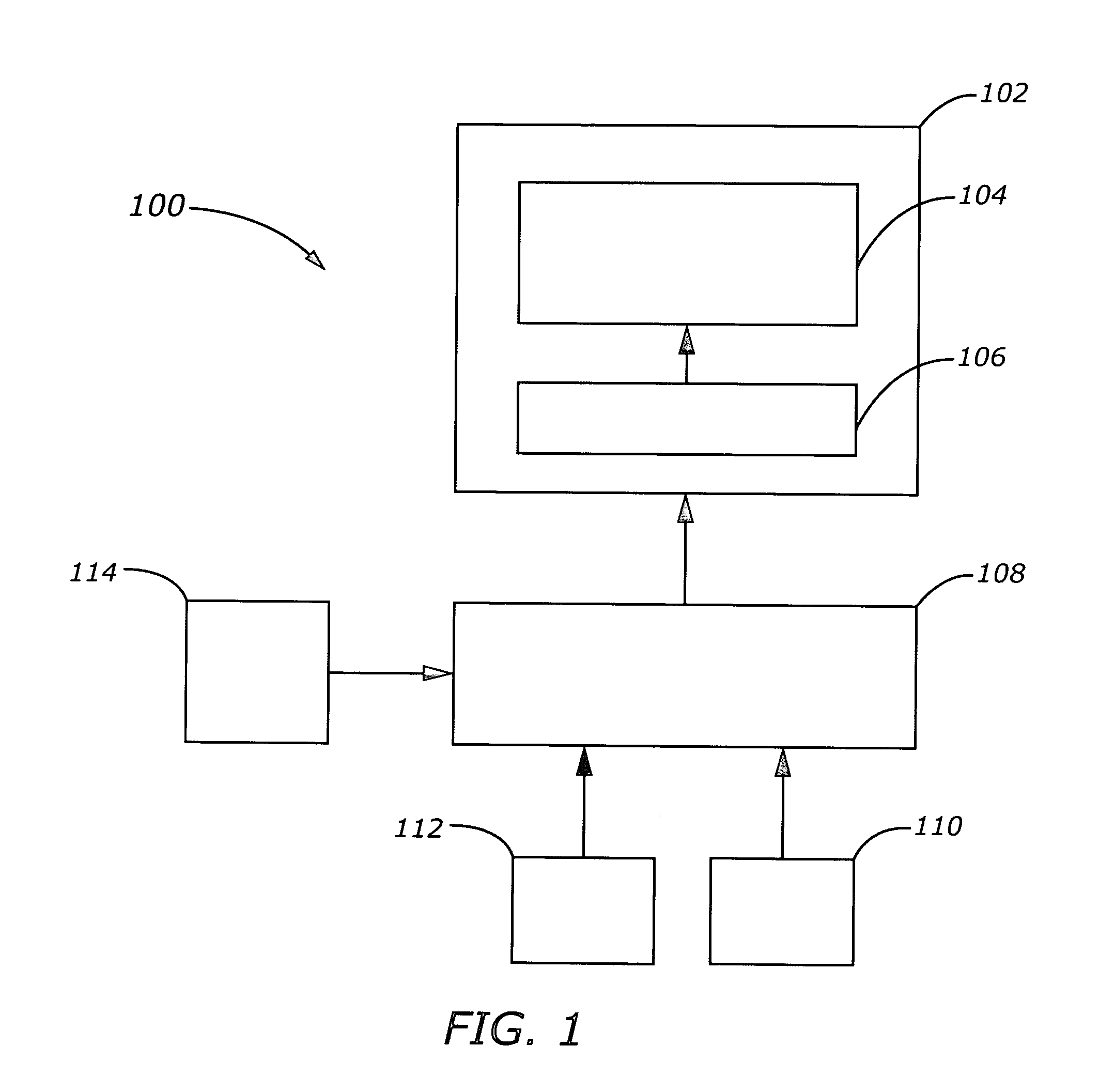

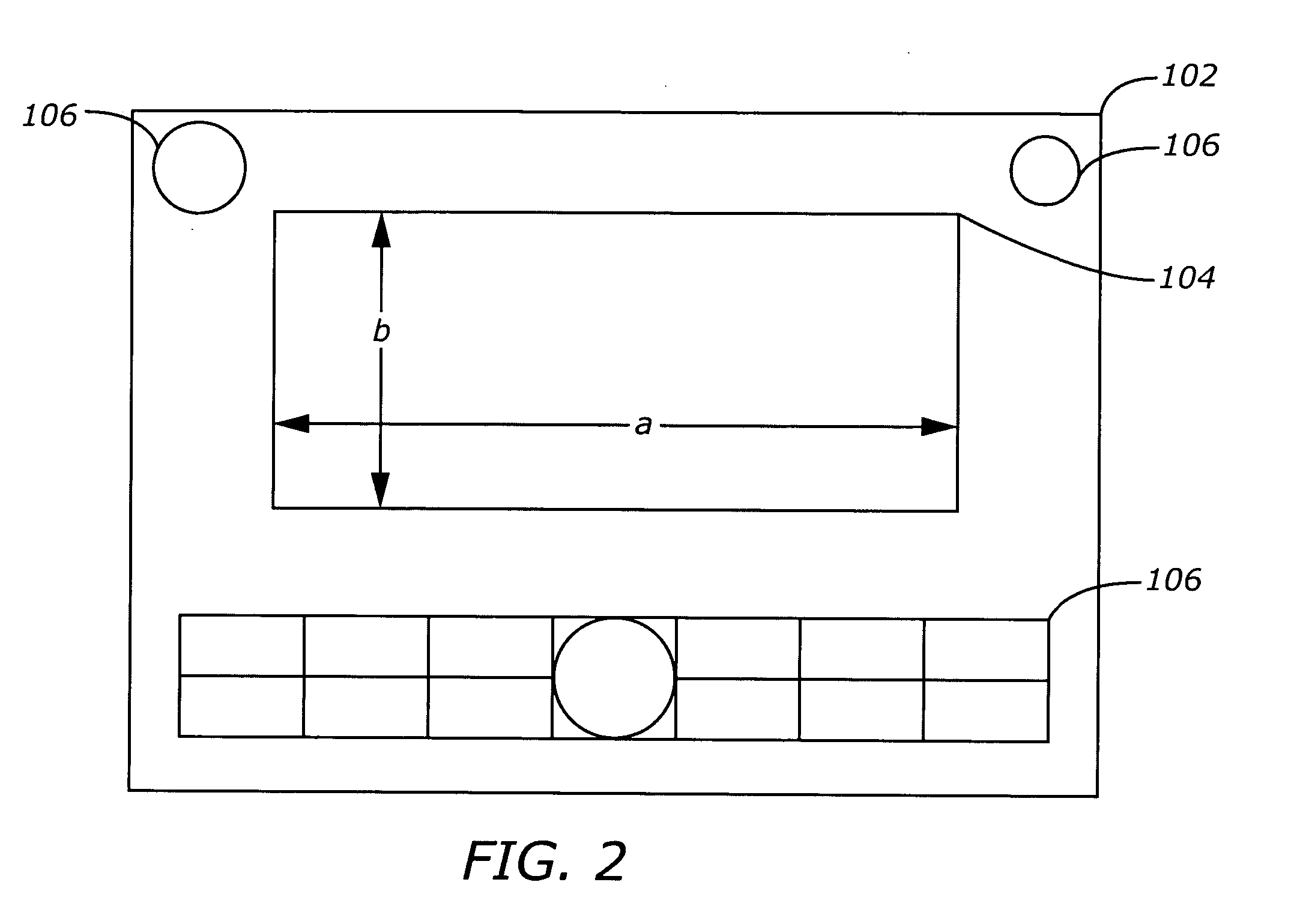

Turn-by-turn navigation system with special routing features

Inactive | US20050273251A1 | Minimum visual distraction, Effective and economical, Instruments for road network navigation, Road vehicles traffic control, Turn-by-turn navigation, Navigation system

Methods and apparatus are provided for generating route instructions on a turn-by-turn navigation system in a vehicle. Turn instructions can include a turn icon as well as visual and audio prompts. The turn-by-turn navigation system includes special routing features, such as “Where am I”, “Verification”, “Stop by”, pre-set destinations, and time-restricted road routing. The turn-by-turn navigation system can generally be produced more economically than a typical premium system having a full complement of costly features, such as a color map display. Moreover, the turn-by-turn navigation system can be configured with only those features deemed most useful to a broad-based market segment, in order to further reduce manufacturing costs.

Owner:GENERAL MOTORS COMPANY

Turn-by-turn navigation system with enhanced turn icon

Active | US20050273252A1 | Minimum visual distraction, Effective and economical, Instruments for road network navigation, Digital data processing details, Graphics, Turn-by-turn navigation

Methods and apparatus are provided for generating turn instructions on a turn-by-turn navigation system. The turn instructions can include a turn icon as well as visual and audio prompts. A countdown bar is typically embedded within the turn icon to provide upcoming turn information in intuitive graphic form, in order to reduce driver distraction. The turn-by-turn navigation system can generally be produced more economically than a typical premium system having a full complement of costly features, such as a color map display. Moreover, the turn-by-turn navigation system can be configured with only those features deemed most useful to a broad-based market segment, in order to further reduce manufacturing costs.

Owner:GM GLOBAL TECH OPERATIONS LLC

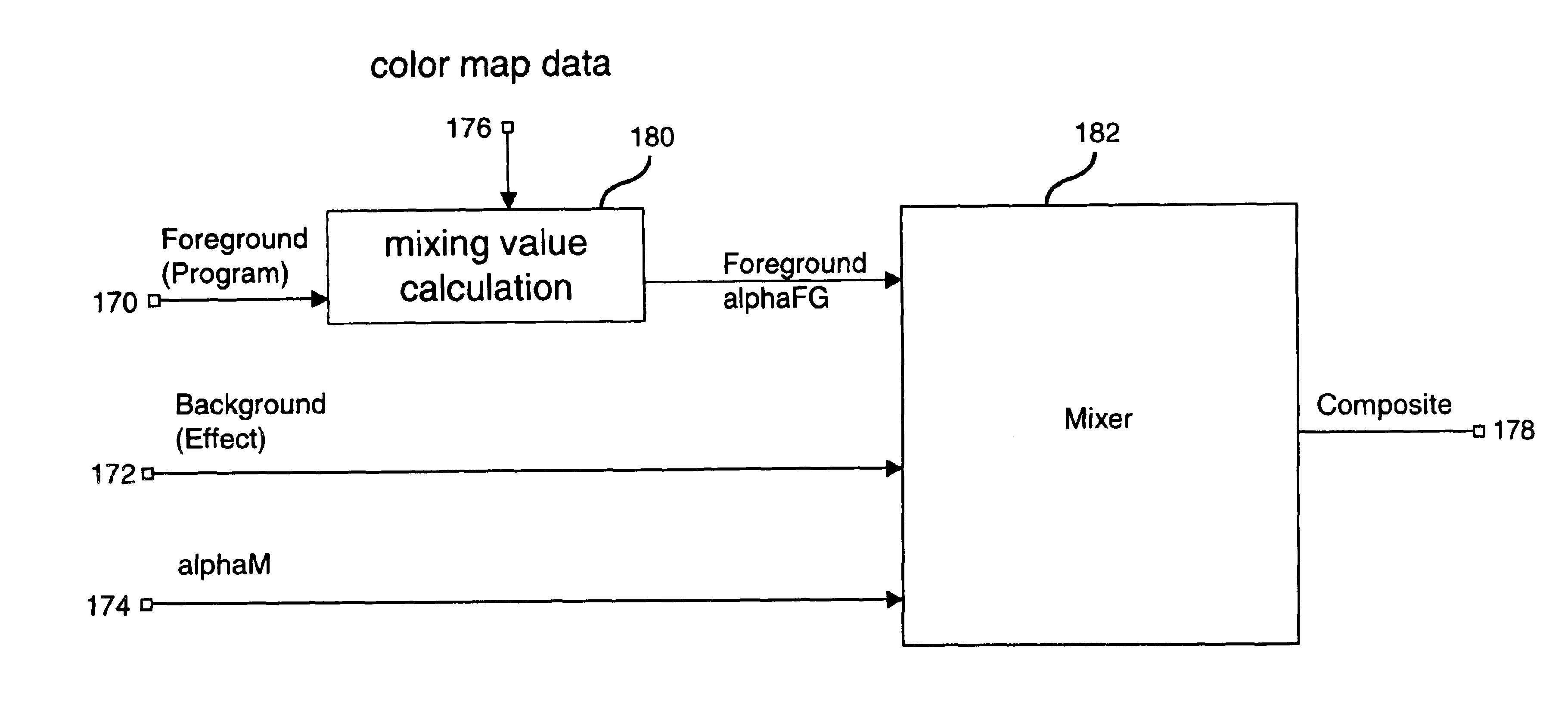

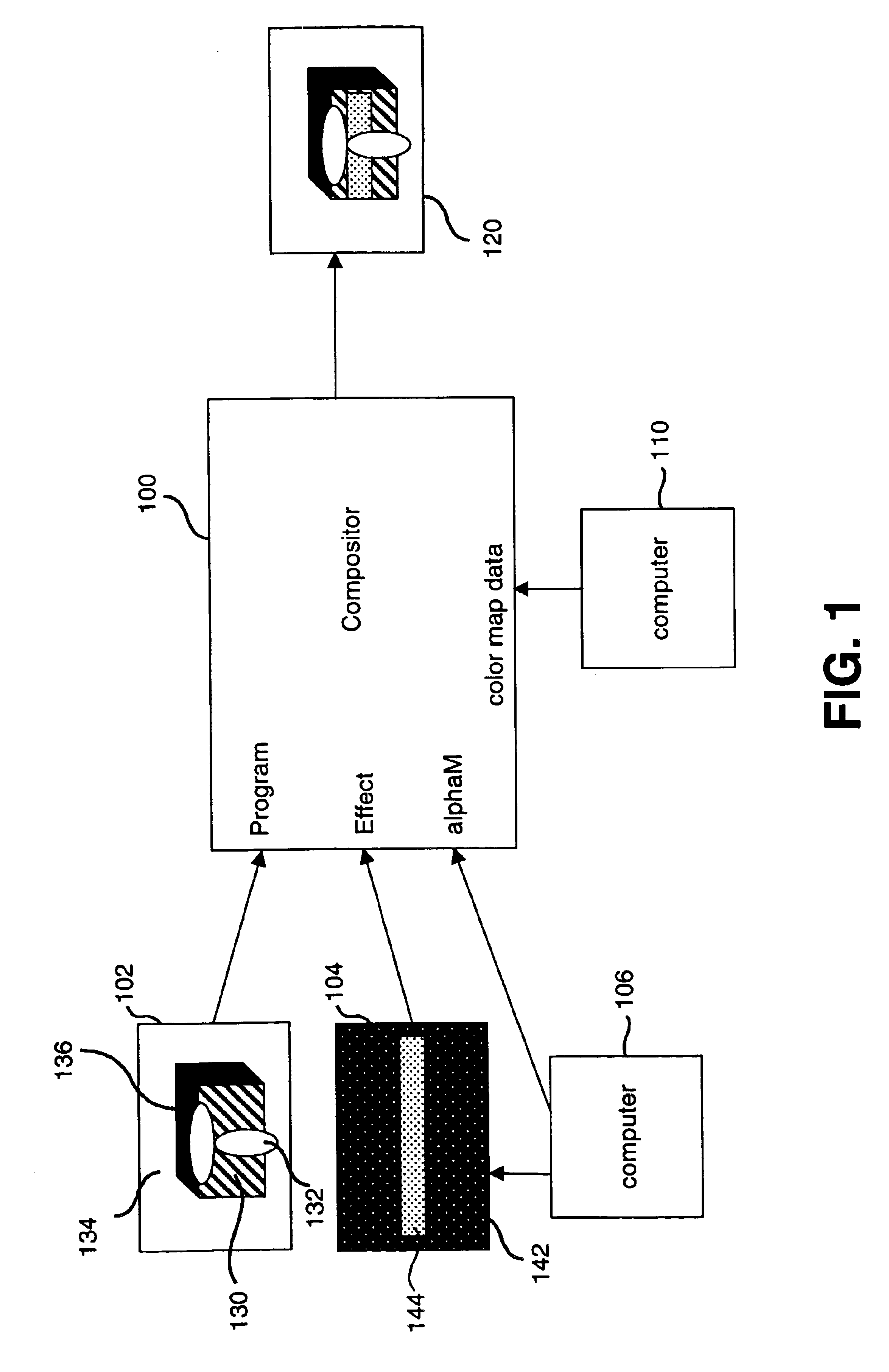

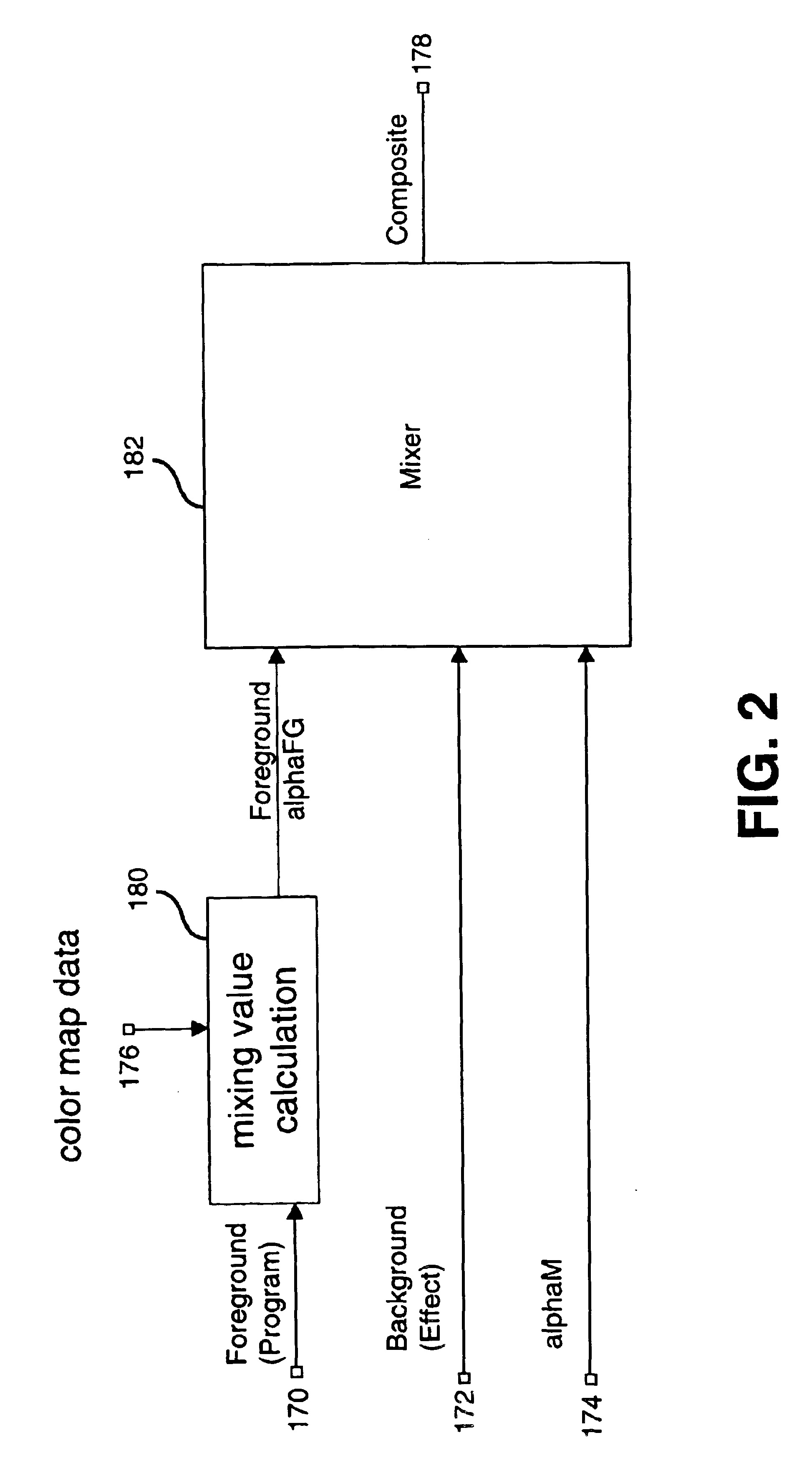

Video compositor

A system is disclosed for blending two images that makes use of a color map indicating which colors in a foreground can be mixed with the background and how much of each source to mix. One embodiment of the invention restricts the use of the color map to only those pixels in the foreground that correspond to a graphic (or effect) in the background. Another embodiment makes use of a grayscale matte that stores blending values for each pixel in the foreground.

Owner:SPORTSMEDIA TECH CORP

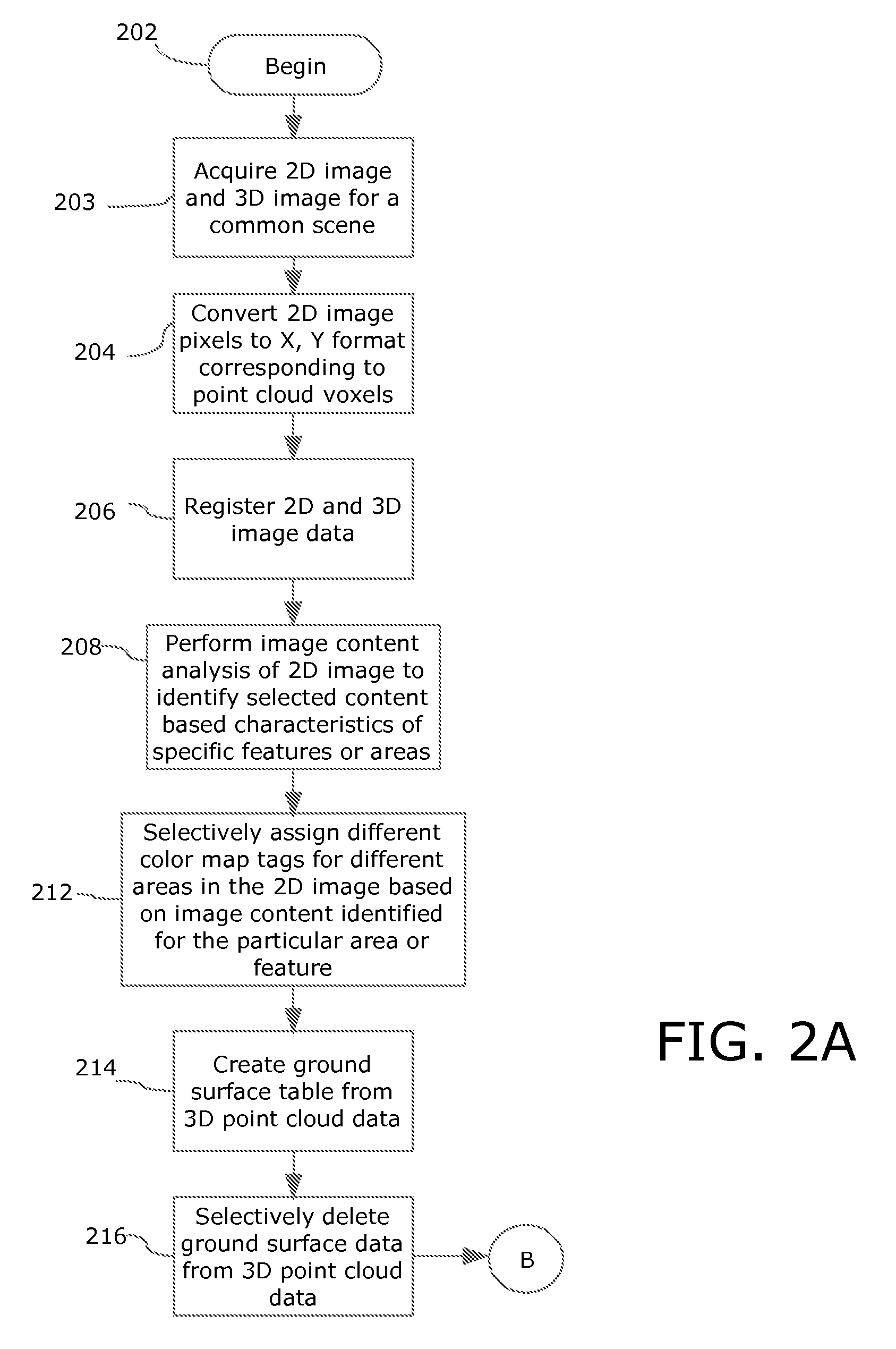

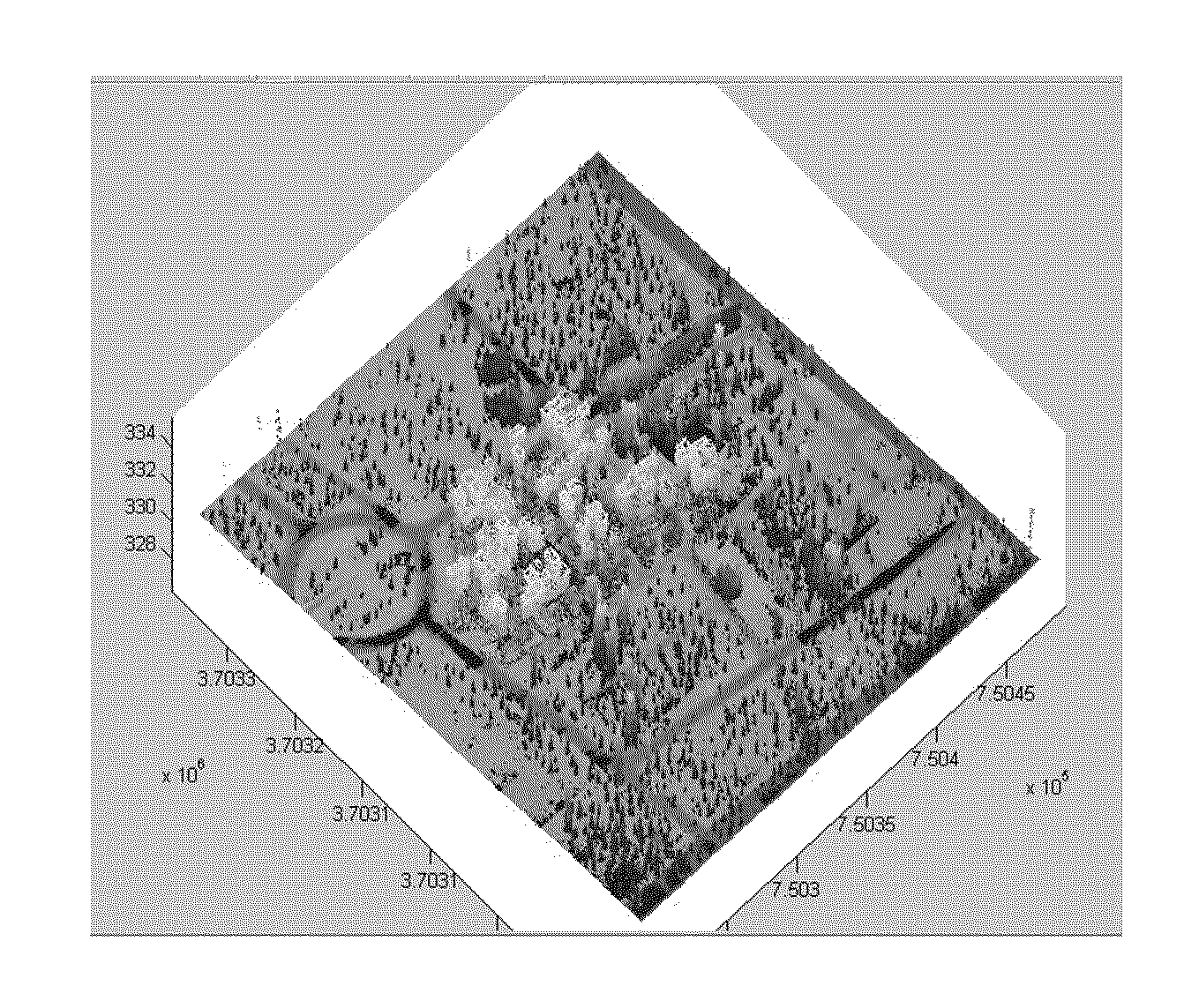

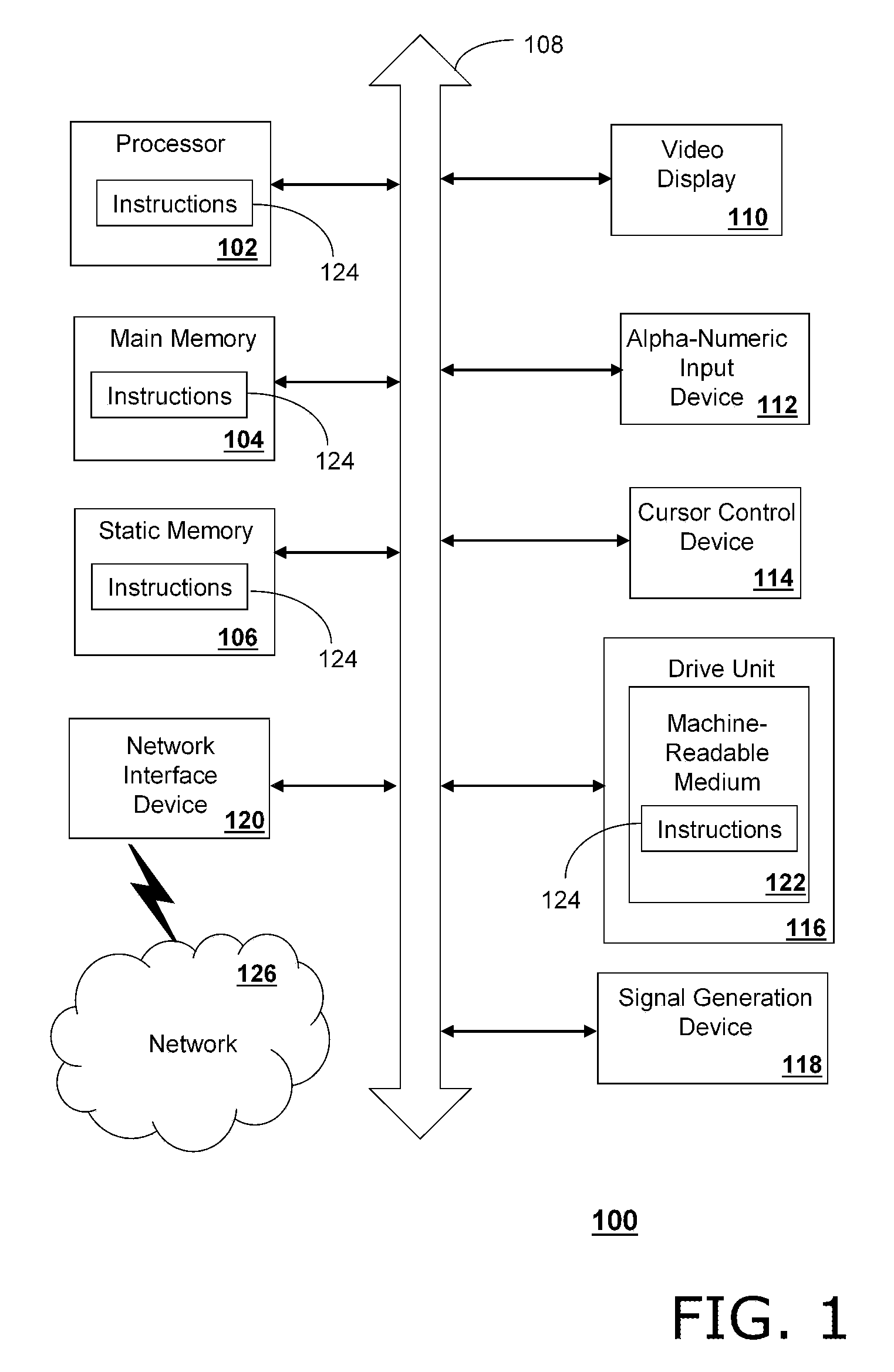

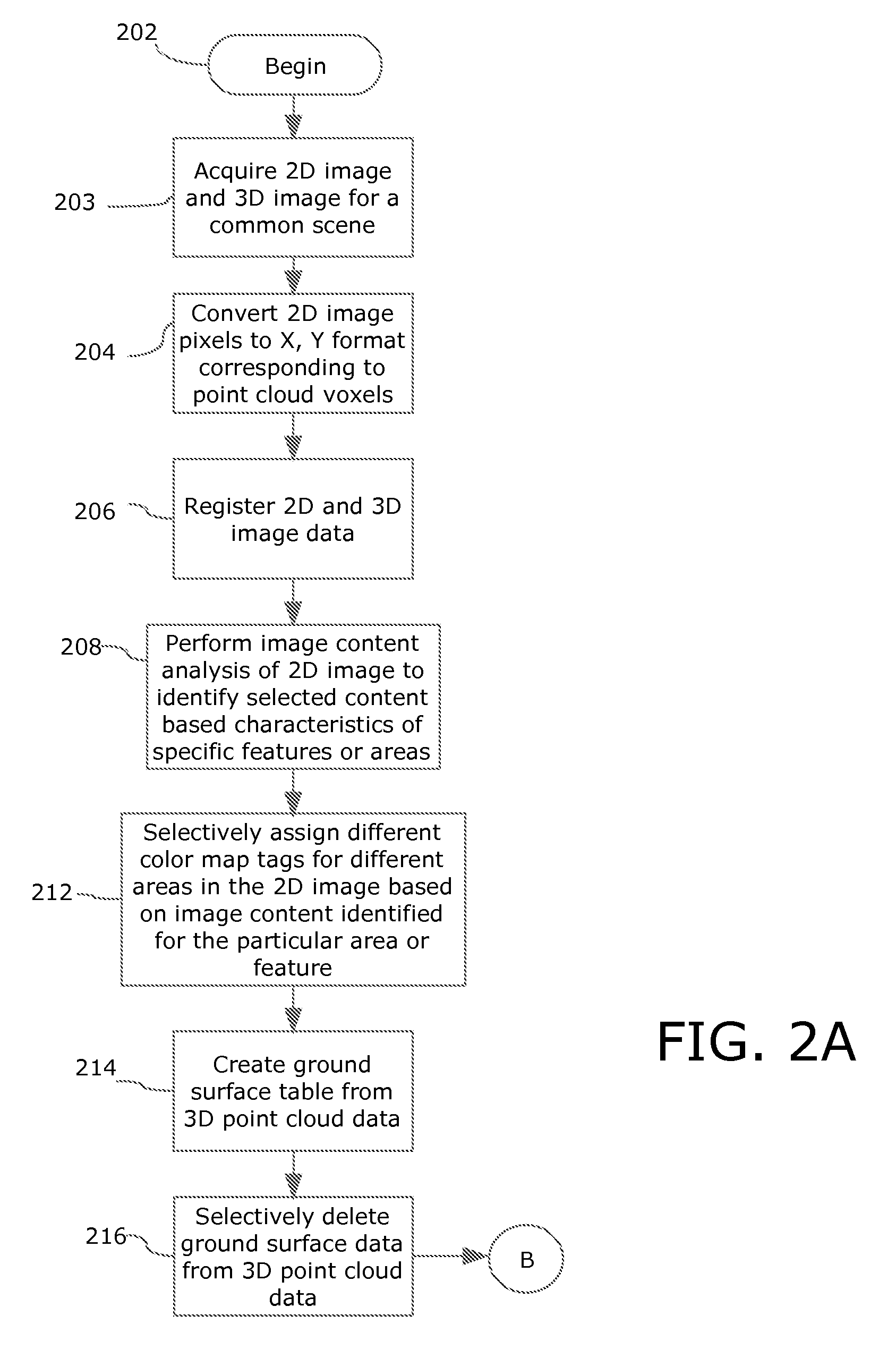

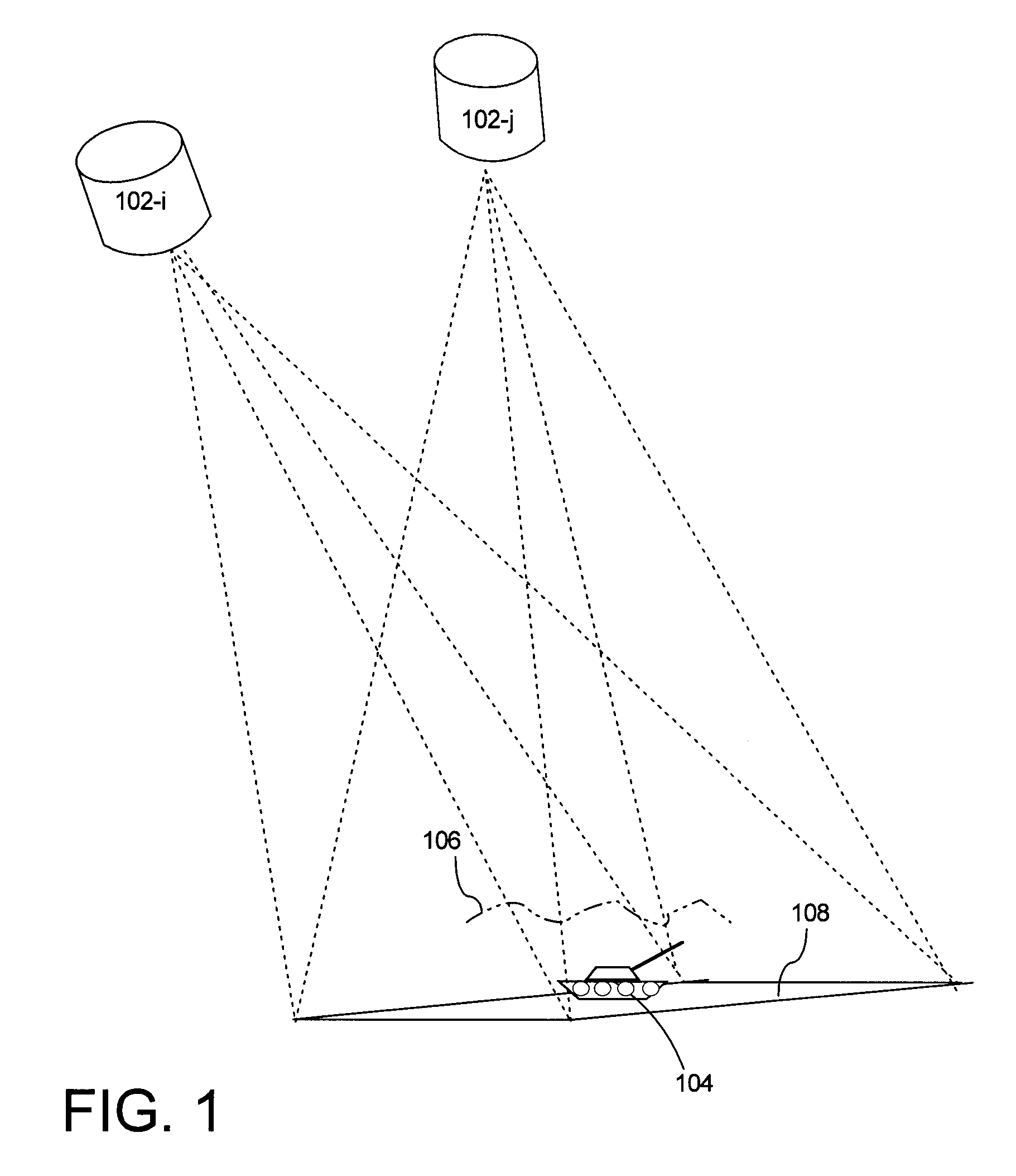

Fusion of a 2D electro-optical image and 3D point cloud data for scene interpretation and registration performance assessment

Method and system for combining a 2D image with a 3D point cloud for improved visualization of a common scene as well as interpretation of the success of the registration process. The resulting fused data contains the combined information from the original 3D point cloud and the information from the 2D image. The original 3D point cloud data is color coded in accordance with a color map tagging process. By fusing data from different sensors, the resulting scene has several useful attributes relating to battle space awareness, target identification, change detection within a rendered scene, and determination of registration success.

Owner:HARRIS CORP

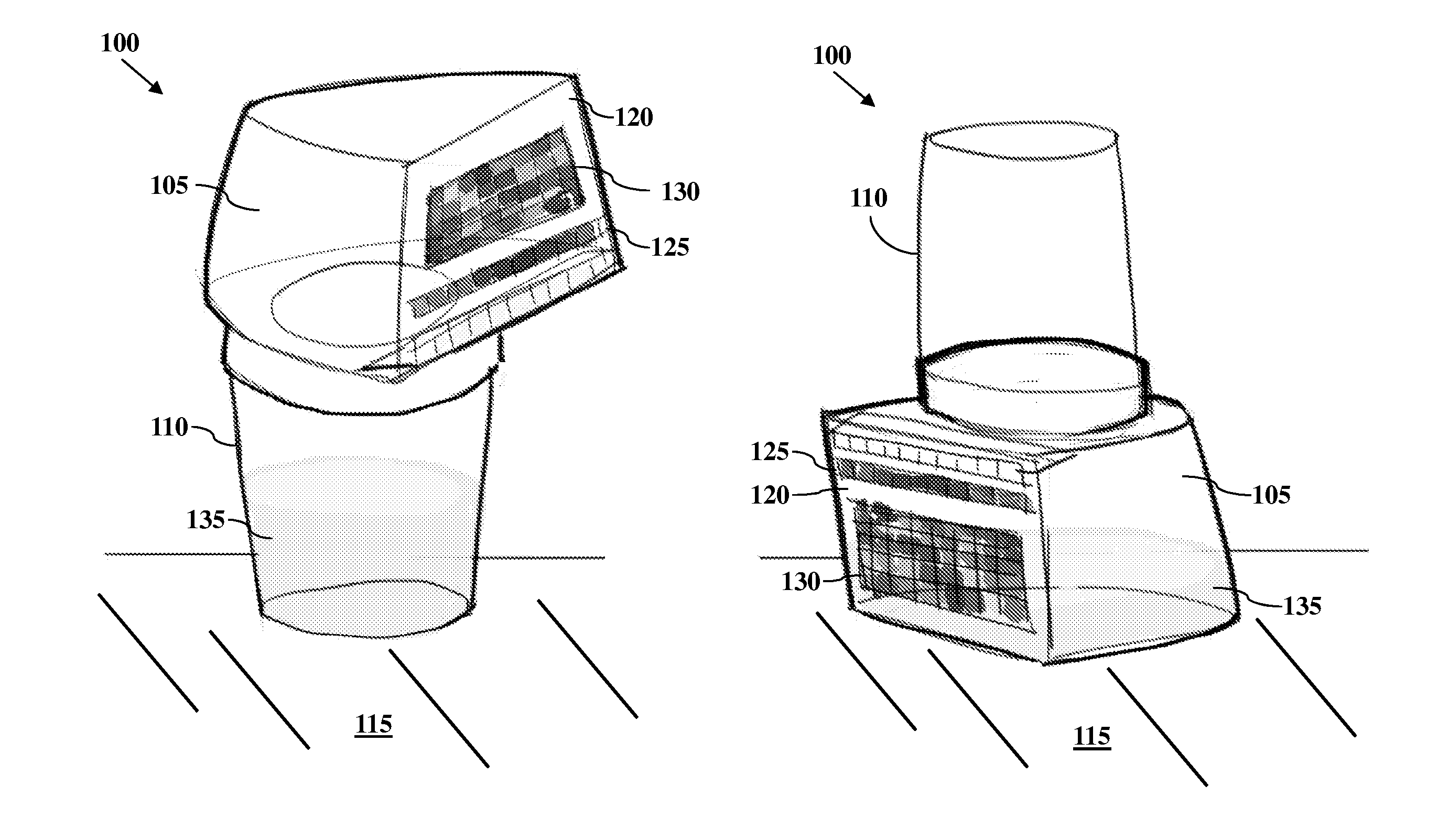

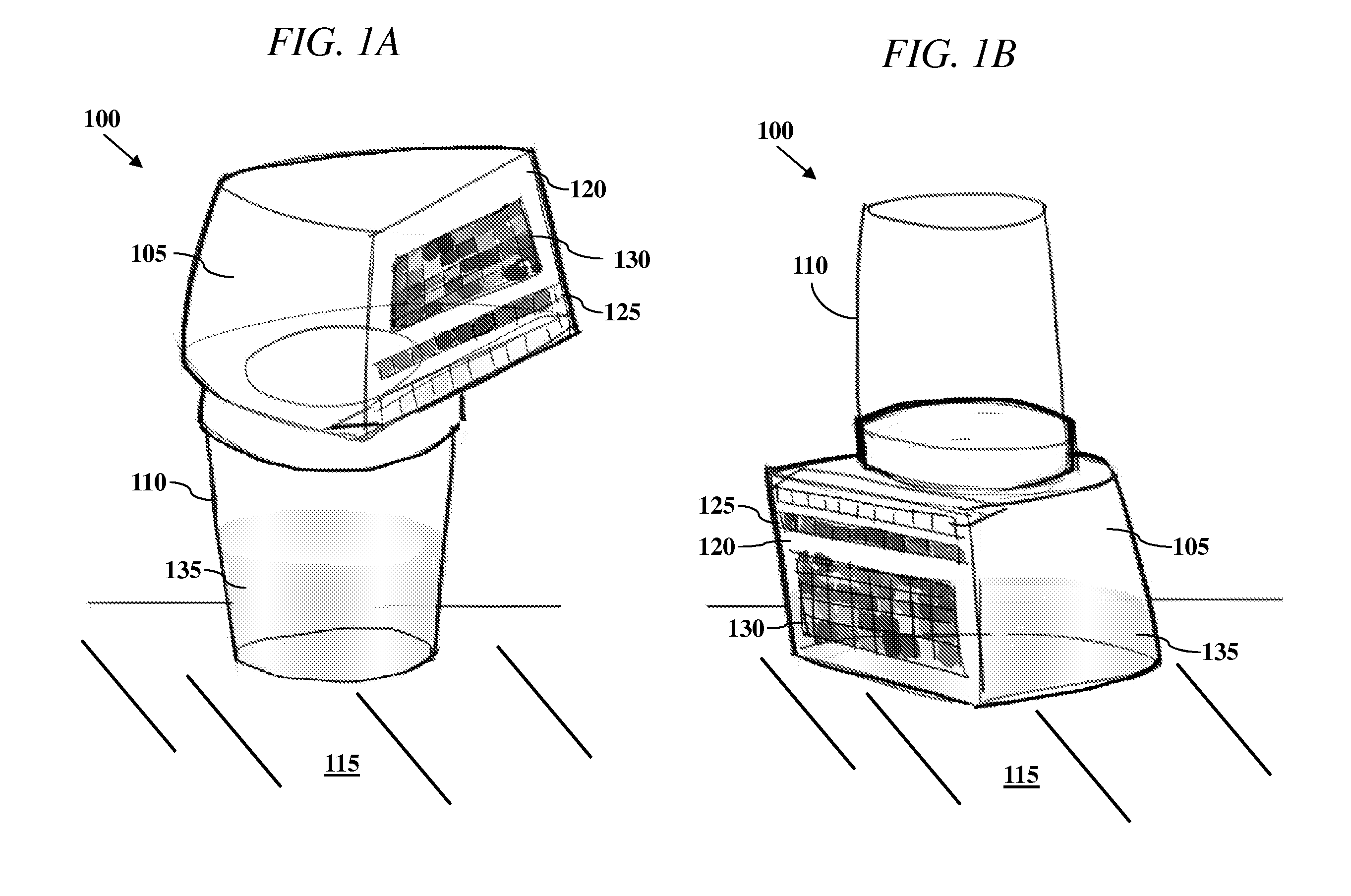

All-In-One Specimen Cup With Optically Readable Results

A biological material test strip and adjacently-located reference color chart are affixed to a lid portion of an all-in-one specimen cup to perform color-based reaction testing of collected biological specimens in an uncalibrated environment. After specimen collection, the lid portion is secured to a container portion of the specimen cup. The cup may then be rotated into an upside down position causing the specimen, under the force of gravity, to pass from the container portion and into a volume of the lid portion, such that the test strip is exposed to the specimen as it is received into the volume of the lid portion. An image of the exposed test strip and adjacently-located reference color chart may then be captured and processed to identify any color matches between the individual test pads on the test strip and the corresponding sequences of reference color blocks on the reference chart.

Owner:SCANWELL HEALTH INC
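The final step, matching each test pad against its row of reference blocks, can be sketched as a nearest-color search. This assumes both colors are sampled from the same photograph as RGB triples and compared by Euclidean distance; a perceptual space such as CIELAB would likely match better. The names `match_pad` and `read_strip` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def match_pad(pad: RGB, reference_blocks: Sequence[Tuple[str, RGB]]) -> str:
    """Return the label of the reference block whose color is closest to the pad."""
    label, _color = min(reference_blocks,
                        key=lambda block: math.dist(pad, block[1]))
    return label


def read_strip(pads: Sequence[RGB],
               chart: Sequence[Sequence[Tuple[str, RGB]]]) -> List[str]:
    """Match each pad against its own sequence of reference blocks."""
    return [match_pad(pad, blocks) for pad, blocks in zip(pads, chart)]
```

Because the chart sits beside the strip in the same image, both are presumably lit the same way, which is what lets a relative comparison work without calibration.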

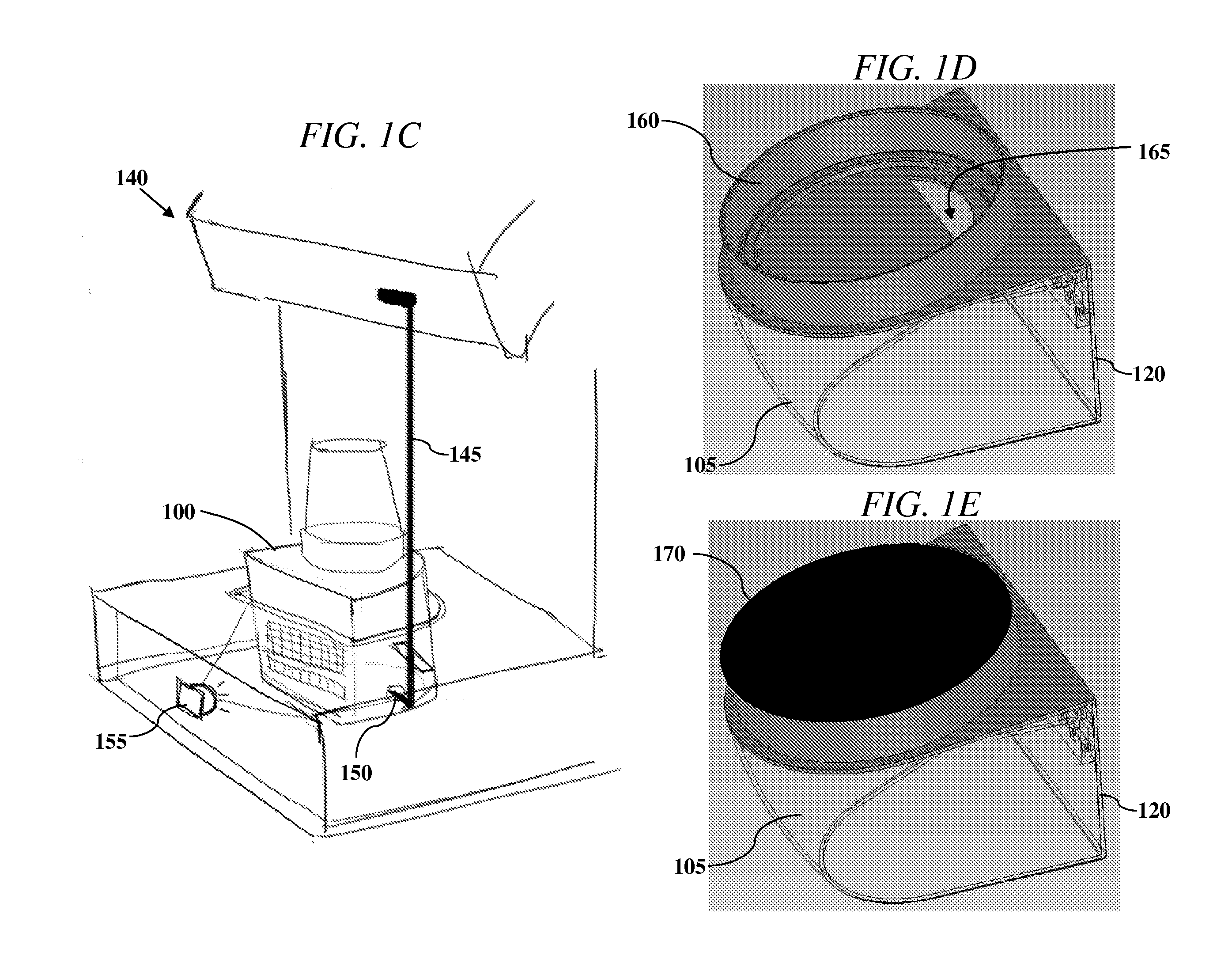

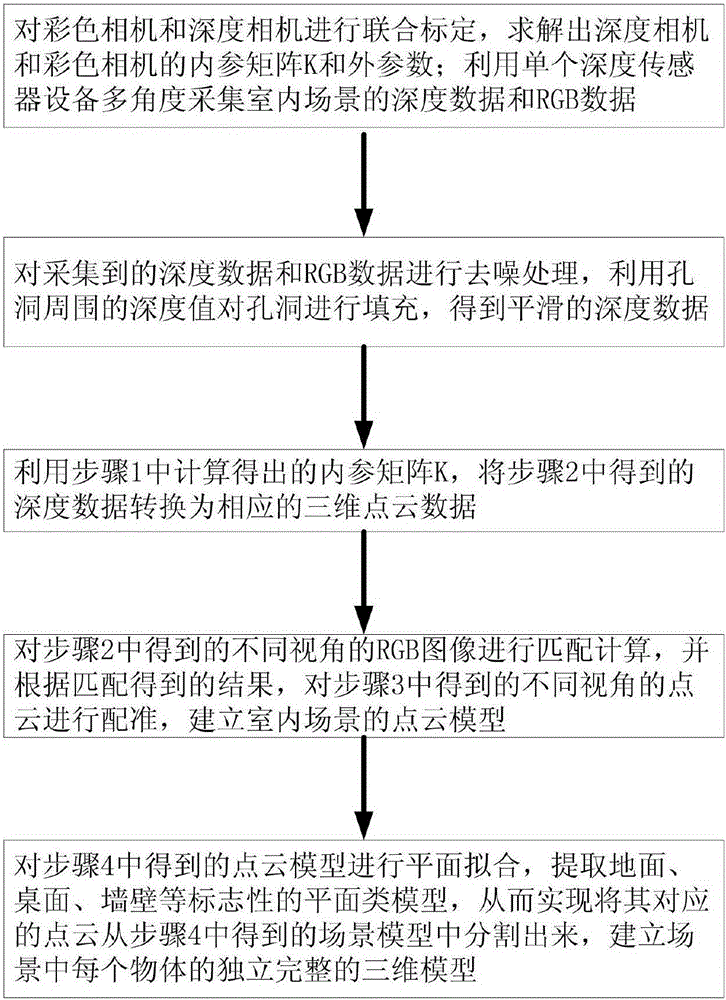

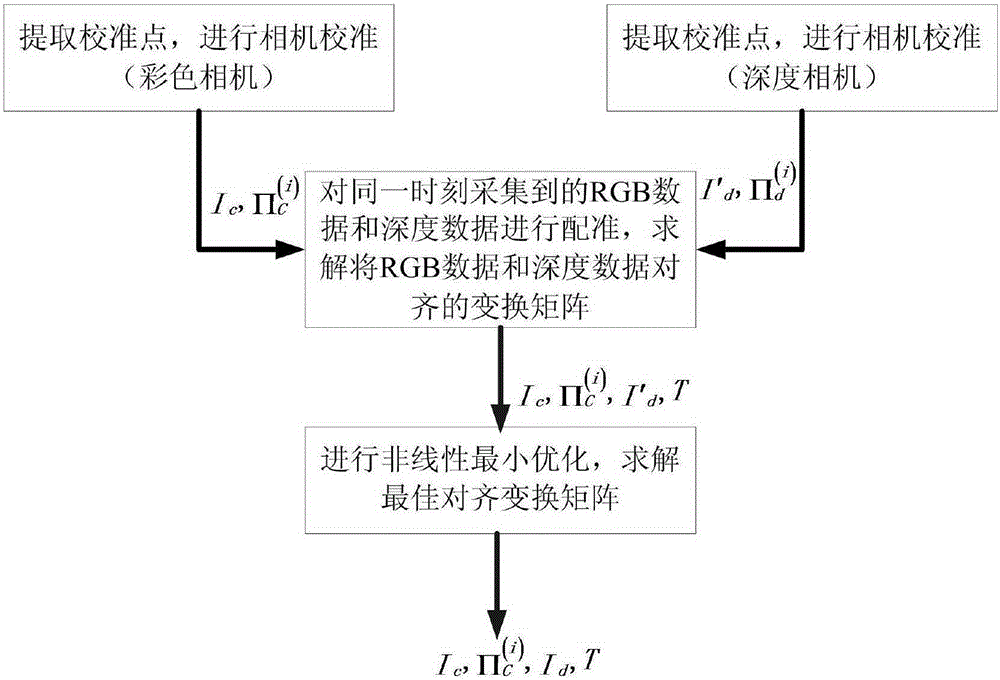

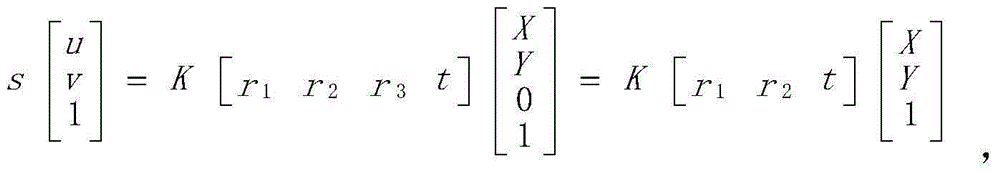

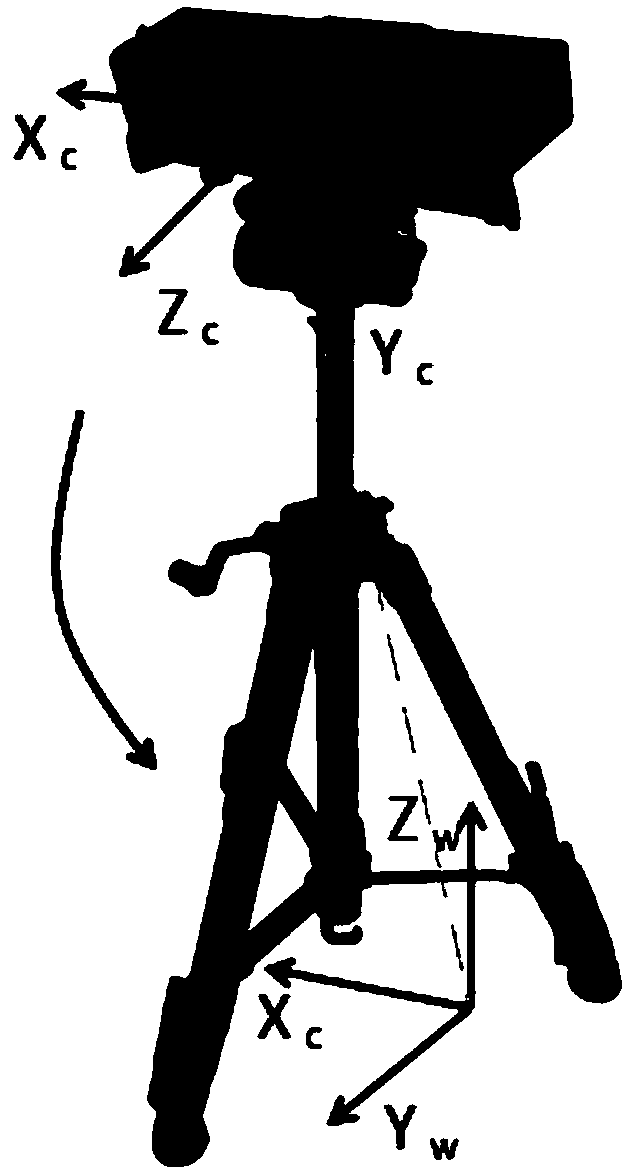

Indoor scene three-dimensional reconstruction method based on single depth vision sensor

Inactive | CN105205858A | Simplify the scanning process, Short scan time, Image analysis, 3D modelling, Viewpoints, Data information

The invention relates to an indoor scene three-dimensional reconstruction method based on a single depth vision sensor. The method comprises the following steps: first, continuously scanning the whole indoor scene with the single depth vision sensor; second, preprocessing the collected depth data, including denoising and hole repair, to obtain smooth depth data; third, calculating the point cloud data corresponding to the current depth frame from the depth data obtained in the second step; fourth, registering the point clouds obtained from depth frames at different viewpoints to obtain a complete point cloud of the indoor scene; fifth, performing plane fitting to segment the point cloud, and establishing an independent and complete three-dimensional model of each object in the indoor scene. The scanning equipment used by the method is simple, the scanned data are comprehensive, and both point cloud registration accuracy and computational efficiency are effectively improved; finally, a complete, high-quality set of three-dimensional models with geometric structure and a color map can be established for the indoor scene.

Owner:TIANJIN UNIVERSITY OF TECHNOLOGY
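The third step, computing a point cloud from a depth frame, is usually a pinhole-camera back-projection. A minimal sketch, assuming known intrinsics `fx`, `fy`, `cx`, `cy` (not given in the abstract) and depth values in metres, with 0 marking missing pixels:

```python
from typing import List, Sequence, Tuple


def depth_to_points(depth: Sequence[Sequence[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Back-project a row-major depth frame through a pinhole camera model.

    Pixels with depth <= 0 are treated as missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):      # v: pixel row index
        for u, z in enumerate(row):      # u: pixel column index
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```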

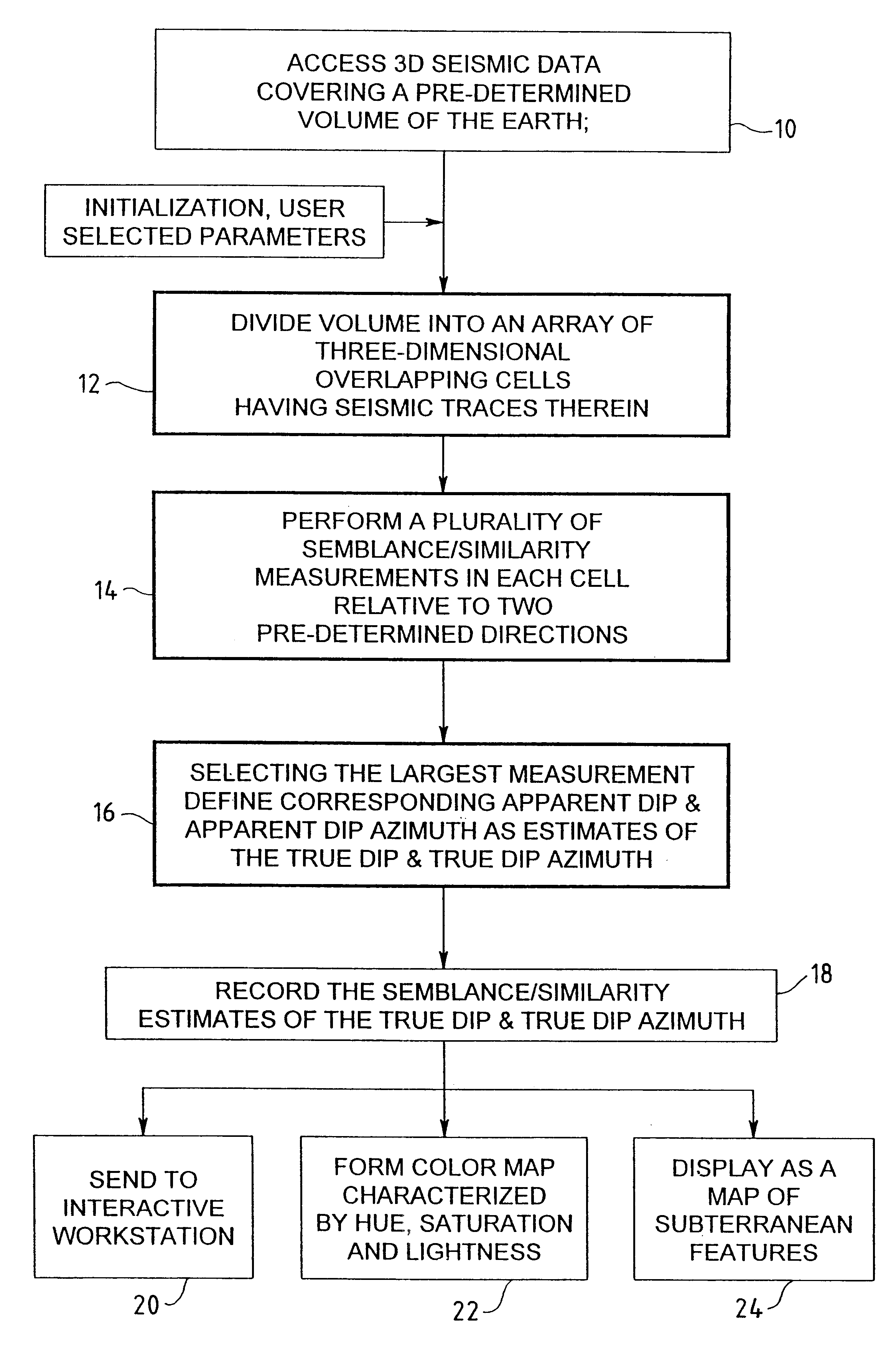

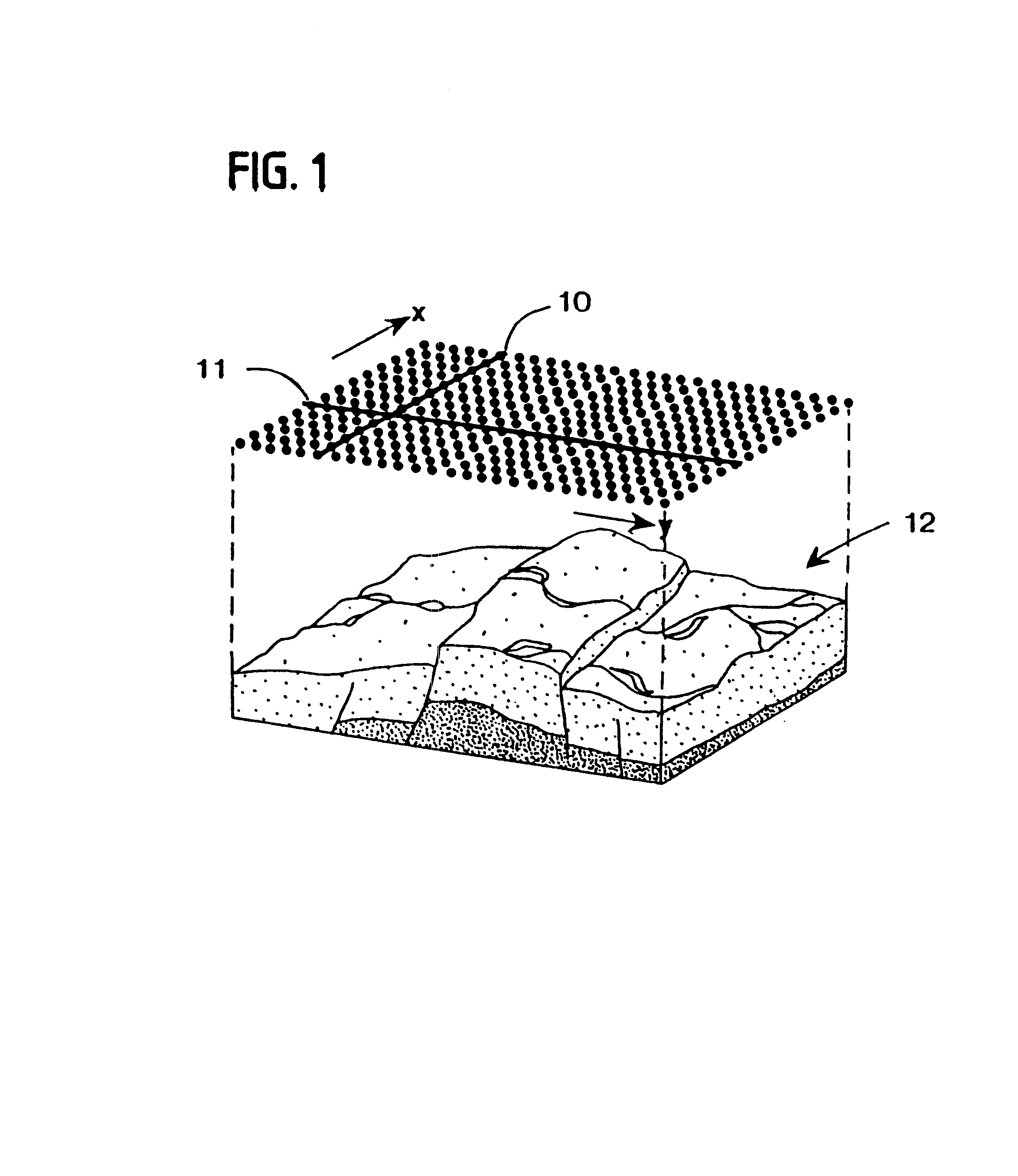

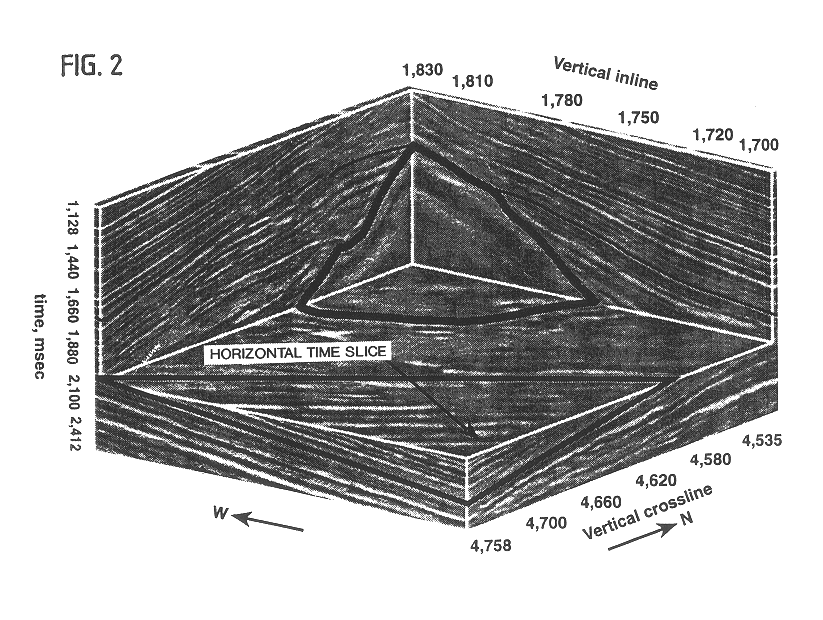

Method and apparatus for seismic signal processing and exploration

Inactive | USRE38229E1 | Quickly see, Seismic signal processing, Special data processing applications, Hydrocotyle bowlesioides, Seismic trace

A method, a map and an article of manufacture for the exploration of hydrocarbons. In one embodiment of the invention, the method comprises the steps of: accessing 3D seismic data; dividing the data into an array of relatively small three-dimensional cells; determining in each cell the semblance / similarity, the dip and the dip azimuth of the seismic traces contained therein; and displaying the dip, dip azimuth and semblance / similarity of each cell in the form of a two-dimensional map. In one embodiment, semblance / similarity is a function of time, the number of seismic traces within the cell, and the apparent dip and apparent dip azimuth of the traces within the cell; the semblance / similarity of a cell is determined by making a plurality of measurements of the semblance / similarity of the traces within the cell and selecting the largest of the measurements. In addition, the apparent dip and apparent dip azimuth corresponding to the largest measurement of semblance / similarity in the cell are deemed to be estimates of the true dip and true dip azimuth of the traces therein. A color map, characterized by hue, saturation and lightness, is used to depict the semblance / similarity, true dip azimuth and true dip of each cell: true dip azimuth is mapped onto the hue scale, true dip onto the saturation scale, and the largest measurement of semblance / similarity onto the lightness scale of the color map.

Owner:CORE LAB GLOBAL
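The measurement and coloring rules in the abstract can be sketched as follows. `semblance` uses the classic stacked-energy definition, which may differ from the patent's own semblance / similarity measure; shifting the traces along each trial dip and azimuth is not shown, so `best_measurement` only picks the largest of already-computed trials. `max_dip_deg`, which normalises dip onto the saturation scale, is a hypothetical parameter.

```python
import colorsys
from typing import Sequence, Tuple


def semblance(traces: Sequence[Sequence[float]]) -> float:
    """Semblance of N aligned traces: stacked energy / (N * total energy)."""
    n = len(traces)
    stacked = [sum(samples) for samples in zip(*traces)]
    numerator = sum(s * s for s in stacked)
    denominator = n * sum(x * x for trace in traces for x in trace)
    return numerator / denominator if denominator else 0.0


def best_measurement(measurements: Sequence[Tuple[float, float, float]]
                     ) -> Tuple[float, float, float]:
    """Pick the (semblance, dip, azimuth) trial with the largest semblance."""
    return max(measurements, key=lambda m: m[0])


def cell_color(azimuth_deg: float, dip_deg: float, sim: float,
               max_dip_deg: float = 45.0) -> Tuple[float, float, float]:
    """Azimuth -> hue, dip -> saturation, semblance -> lightness; RGB in [0, 1]."""
    hue = (azimuth_deg % 360.0) / 360.0
    saturation = min(max(dip_deg / max_dip_deg, 0.0), 1.0)
    lightness = min(max(sim, 0.0), 1.0)
    return colorsys.hls_to_rgb(hue, lightness, saturation)  # note h, l, s order
```

With this mapping a cell with zero dip has zero saturation and renders gray whatever its azimuth, and a cell with low semblance renders dark.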

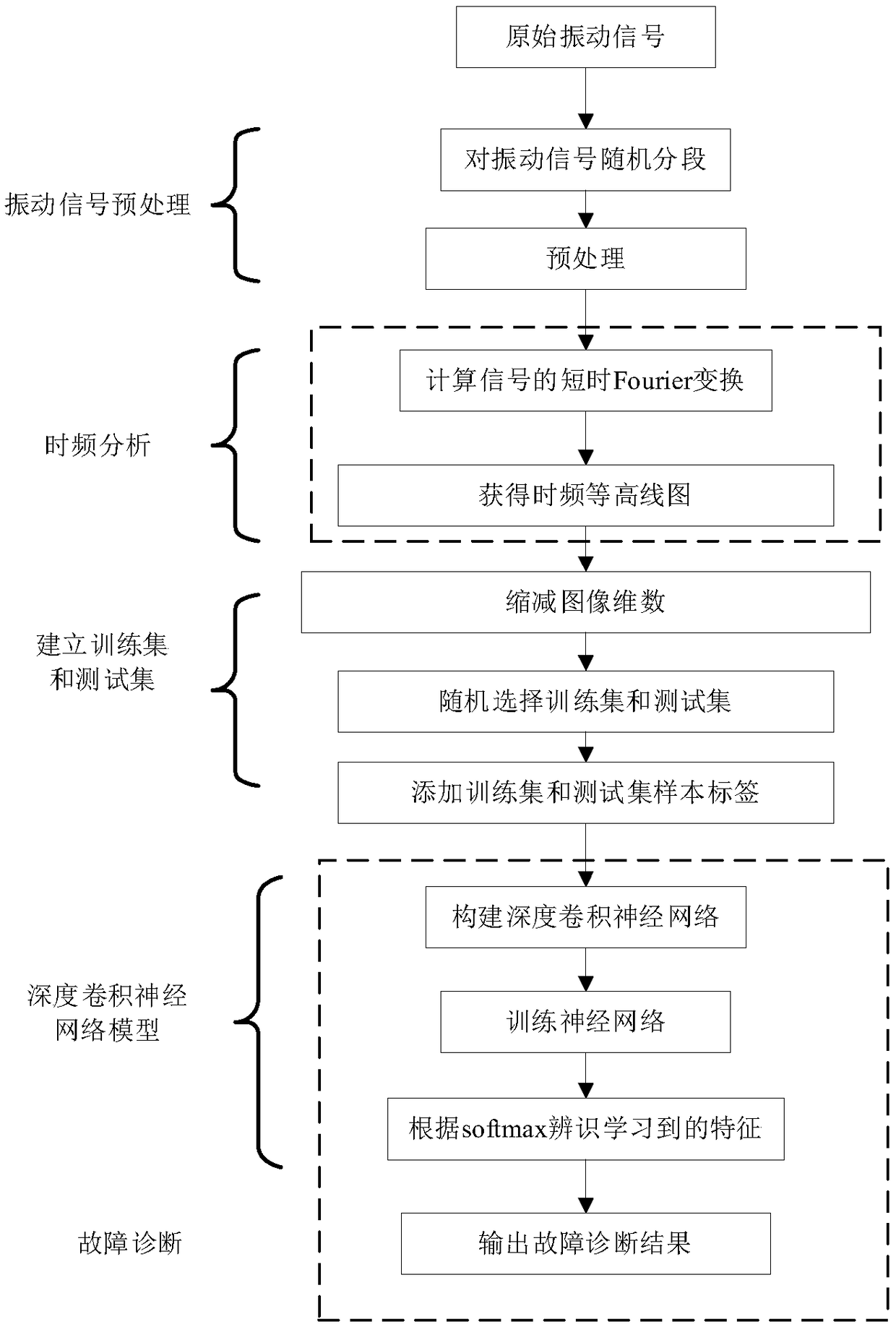

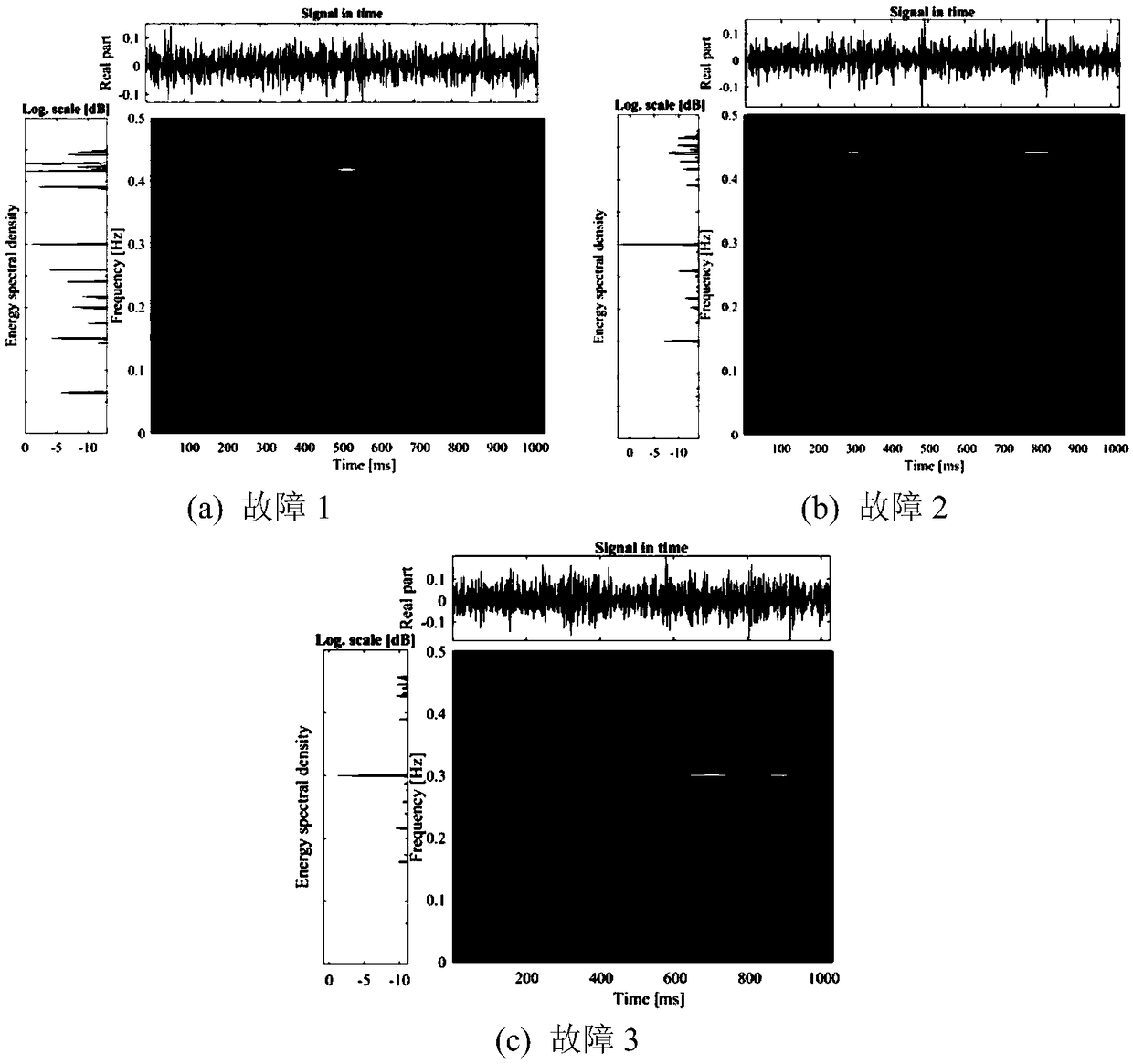

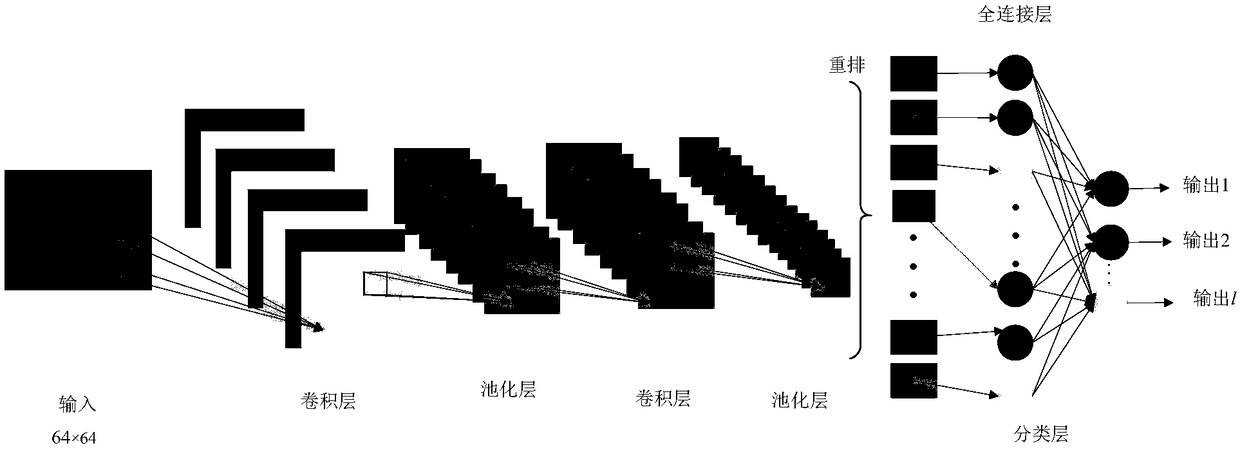

Method for intelligently diagnosing rotating machine fault feature based on deep CNN model

Active | CN108830127A | Accurately reflect the characteristics, Avoid features, Character and pattern recognition, Neural architectures, Algorithm, Network model

The invention discloses a method for intelligently diagnosing rotating machine fault features based on a deep CNN model. The method comprises: (1) acquiring rotating machine fault vibration signal data, segmenting the data, and removing trend components as preprocessing; (2) applying a short-time Fourier transform to the signal data to obtain the time-frequency representation of each vibration signal, and displaying that representation as a pseudo-color map; (3) reducing the image resolution by interpolation and superimposing the resulting images to form training samples and test samples as inputs to the CNN; (4) constructing the deep CNN model, comprising an input layer, two convolution layers, two pooling layers, a fully connected layer, a softmax classification layer, and an output layer; and (5) feeding the training samples into the model for training to obtain the convolution features, pooling features, and network structural parameters, and diagnosing unknown fault signals with the constructed deep CNN. The method has better accuracy and stability than existing time-domain or frequency-domain methods.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
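Step (2), turning each vibration segment into a time-frequency image shown in pseudo-color, can be sketched with a short-time Fourier transform. To stay dependency-free this uses a periodic Hann window and a naive O(N^2) DFT; the frame length, hop, and blue-green-red palette are assumptions, and the CNN stages are not shown.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def stft_magnitude(signal: Sequence[float], frame_len: int,
                   hop: int) -> List[List[float]]:
    """Magnitude STFT with a periodic Hann window and a naive DFT.

    Returns one list per frame holding bins 0 .. frame_len // 2.
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len)
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + n] * window[n] for n in range(frame_len)]
        frames.append([
            abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                    for n in range(frame_len)))
            for k in range(frame_len // 2 + 1)
        ])
    return frames


def pseudo_color(value: float) -> Tuple[int, int, int]:
    """Map [0, 1] onto a blue -> green -> red ramp (a hypothetical palette)."""
    v = min(max(value, 0.0), 1.0)
    if v < 0.5:
        t = v / 0.5
        return (0, round(255 * t), round(255 * (1 - t)))
    t = (v - 0.5) / 0.5
    return (round(255 * t), round(255 * (1 - t)), 0)


def to_image(frames: List[List[float]]) -> List[List[Tuple[int, int, int]]]:
    """Normalise by the global peak and color every time-frequency cell."""
    peak = max((m for frame in frames for m in frame), default=0.0) or 1.0
    return [[pseudo_color(m / peak) for m in frame] for frame in frames]
```

In practice an FFT routine would replace the naive DFT; the output shape (frames by frequency bins) stays the same.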

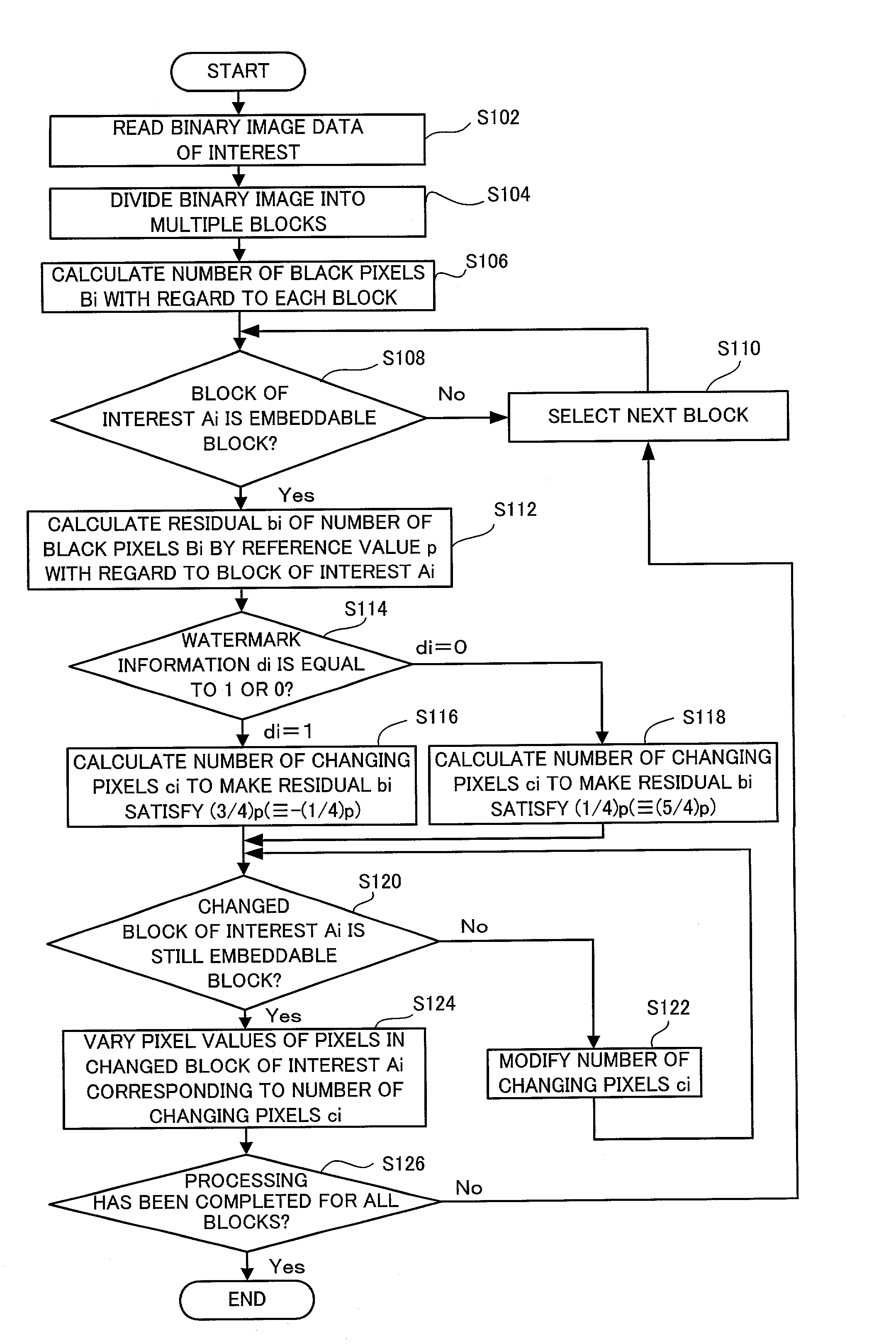

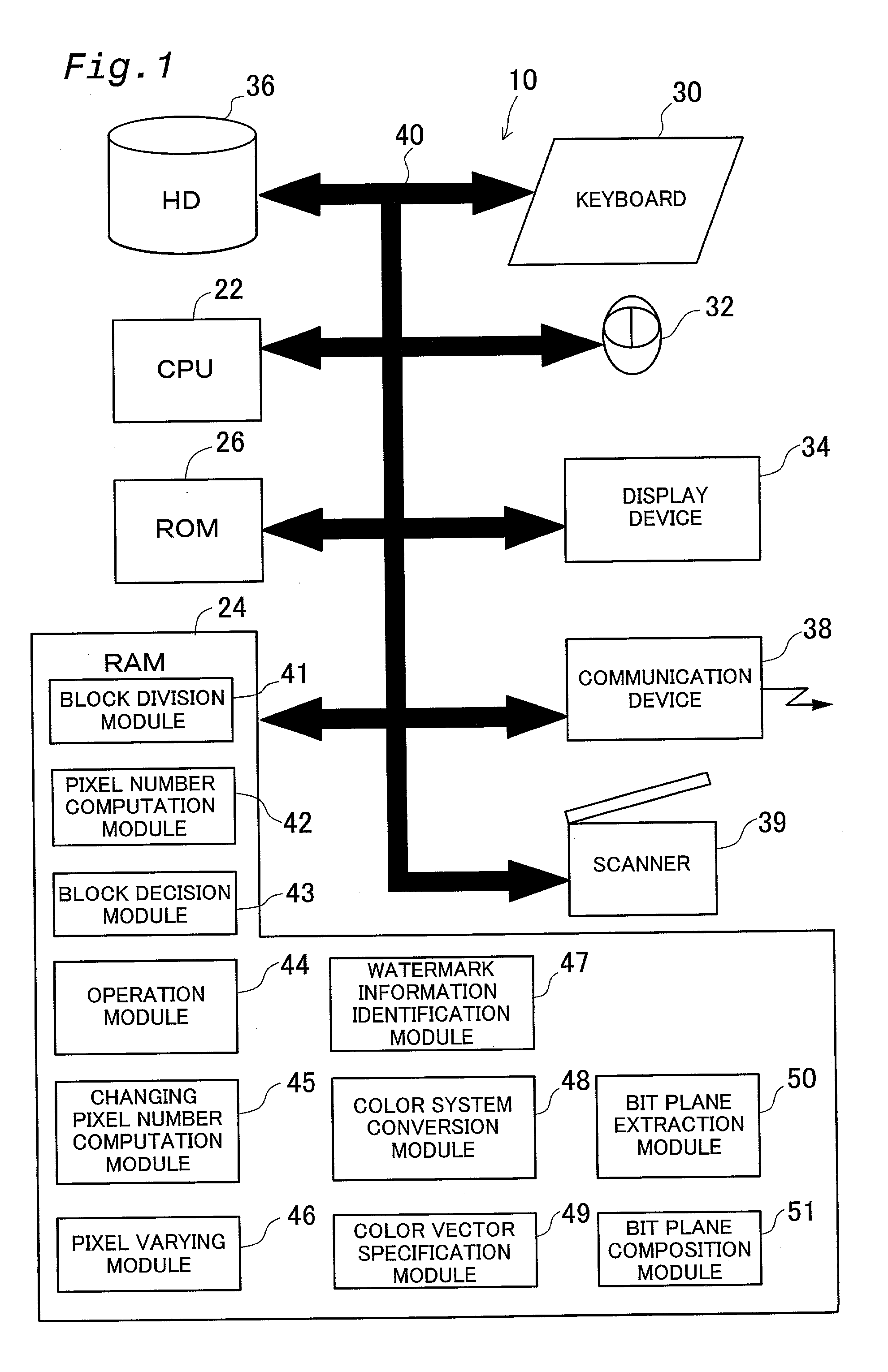

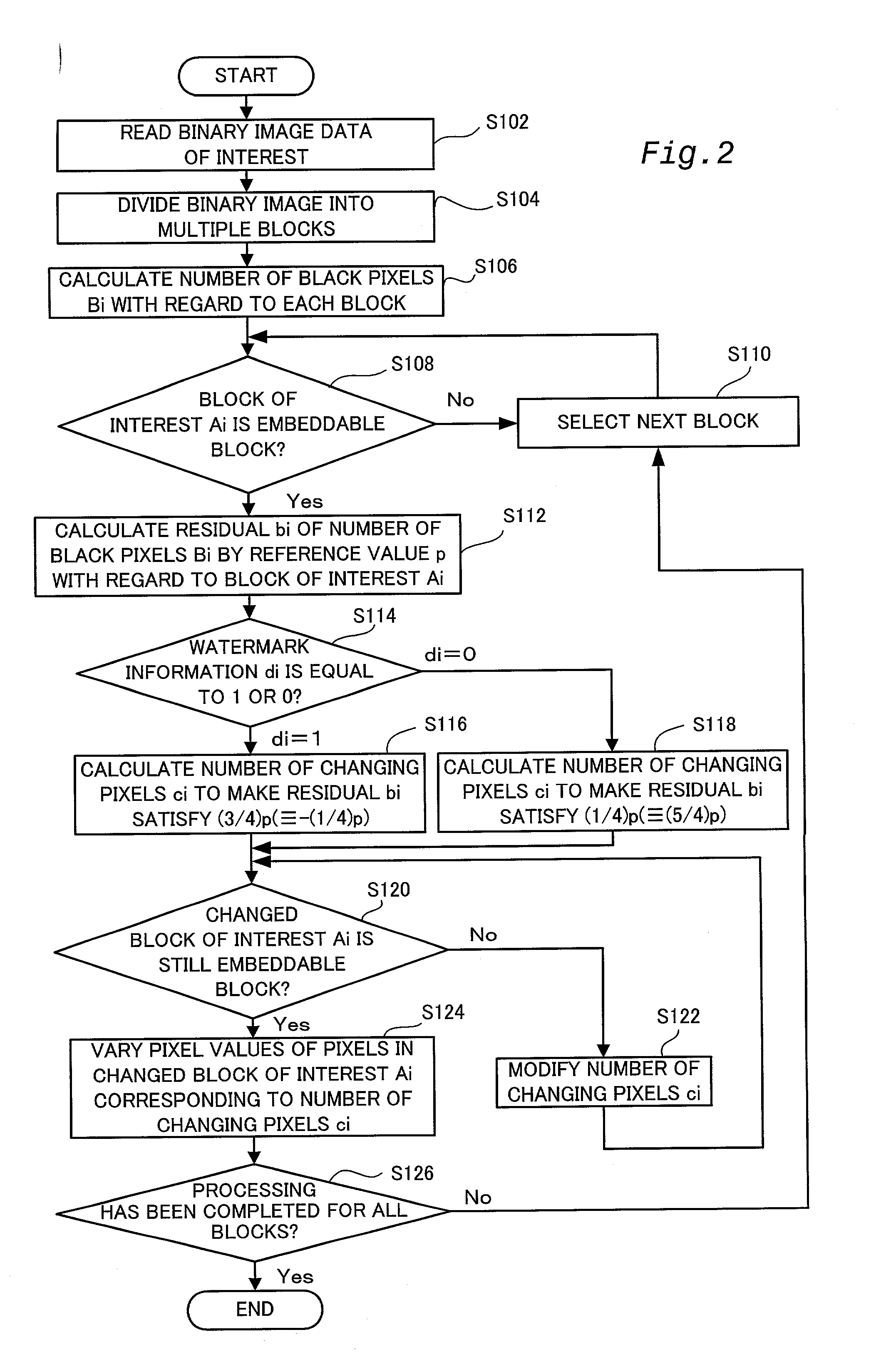

Method of embedding digital watermark, method of extracting embedded digital watermark and apparatuses for the same

Inactive | US20030076979A1 | Easy to process, Increase resistance, Character and pattern recognition, Image data processing details, Color image, Color transformation

A color conversion module 42 carries out color conversion of original color image data Grgb from the RGB color system into the CMYK color system to obtain color-converted original color image data Gcmyk (step S104). A DCT module 44 applies DCT (discrete cosine transform) over the whole color-converted original color image data Gcmyk to generate DCT coefficients Dcmyk (step S106). An embedding module 46 embeds the watermark information s into the components C, M, Y, and K of the DCT coefficients Dcmyk (step S108). An IDCT module 48 applies IDCT (inverse discrete cosine transform) onto DCT coefficients D'cmyk with the watermark information s embedded therein to generate embedding-processed color image data G'cmyk (step S110). The color conversion module 42 carries out color conversion of the embedding-processed color image data G'cmyk from the CMYK color system into the RGB color system to obtain embedding-processed color image data G'rgb (step S112). This arrangement does not require any correction of the position or the shape of image blocks in the process of extracting the embedded watermark information.

Owner:KOWA CO LTD

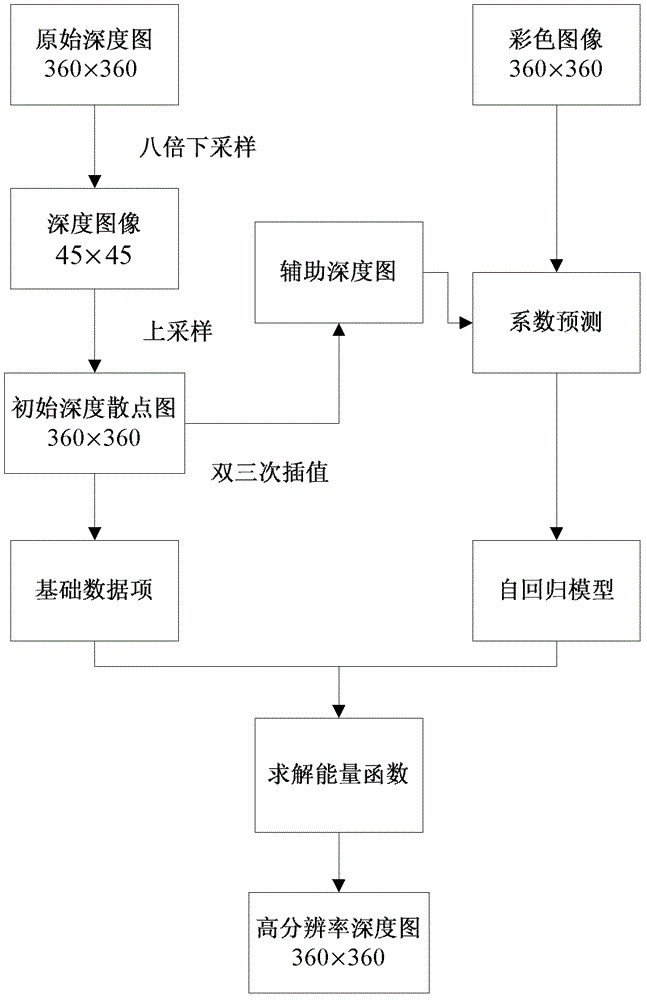

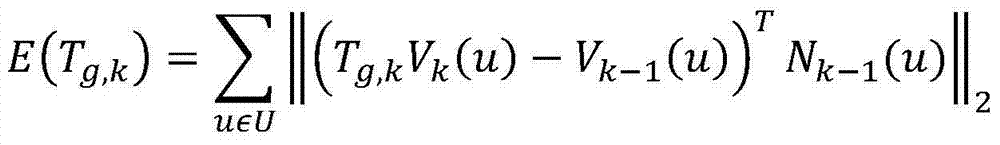

Super-resolution reconstruction method for depth map by adopting autoregressive model

Active | CN102722863A | Avoid change, Implementing the super-resolution process, Image enhancement, Data set, Imaging processing

The invention belongs to the field of computer vision. To provide a simple and practical super-resolution method, the invention adopts the following technical scheme: a super-resolution reconstruction method for a depth map using an autoregressive model, comprising the following steps: 1) taking a depth map and a color map of the same size, provided by the Middlebury data set, as experimental data; down-sampling the test depth map according to the super-resolution ratio; zero-fill up-sampling the resulting low-resolution input depth map back to the original resolution to obtain an initial (sparse) depth scatter map; 2) constructing the autoregressive model term of an energy function; 3) constructing the basic data term and the final solving equation of the energy function; and 4) solving the equation by a linear function optimization method. The super-resolution reconstruction method is mainly used for image processing.

Owner:TIANJIN UNIV
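The data preparation in step 1 (down-sampling the test depth map, then zero-fill up-sampling it back to full resolution) can be sketched as below. The autoregressive energy minimisation that later fills in the zero pixels is not shown, and the function names are hypothetical.

```python
from typing import List, Sequence


def downsample(depth: Sequence[Sequence[float]], factor: int) -> List[List[float]]:
    """Keep every `factor`-th pixel in both directions."""
    return [list(row[::factor]) for row in depth[::factor]]


def zero_fill_upsample(low: Sequence[Sequence[float]], factor: int,
                       height: int, width: int) -> List[List[float]]:
    """Place low-resolution samples on a full-resolution grid; fill the rest with 0."""
    up = [[0.0] * width for _ in range(height)]
    for i, row in enumerate(low):
        for j, value in enumerate(row):
            if i * factor < height and j * factor < width:
                up[i * factor][j * factor] = value
    return up
```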

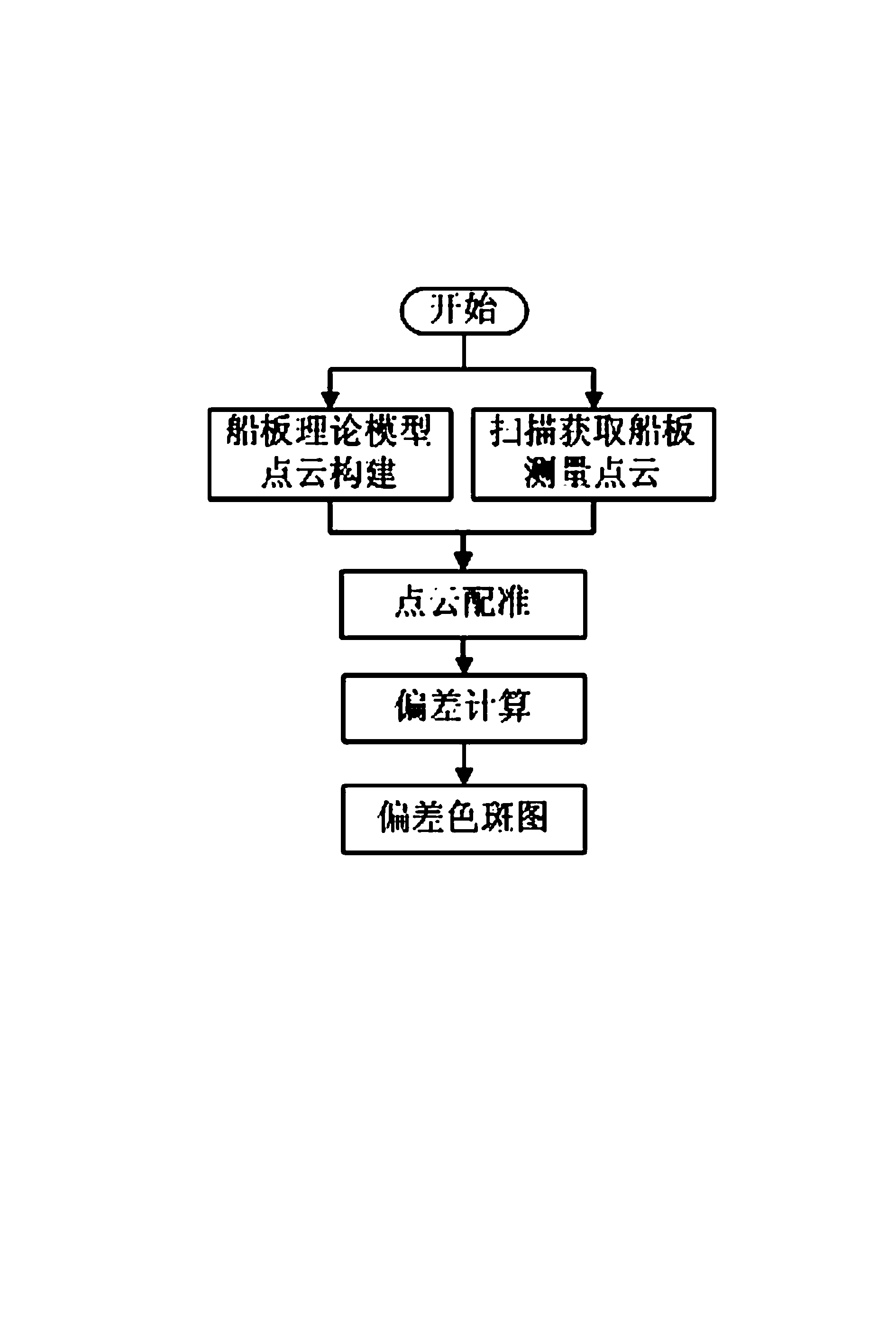

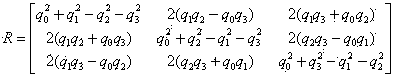

On-line detection method for line heating forming

The invention discloses an on-line detection method for line heating forming of a ship's outer hull plate, belonging to the fields of shipbuilding technology and optical measurement. The method comprises the following steps: A, establishing a theoretical-model point cloud of the ship; B, scanning to obtain a measured point cloud of the ship; C, registering the coordinate systems of the theoretical-model point cloud and the measured point cloud; D, calculating the distance between each point in the measured point cloud and the theoretical curved surface to obtain the deviation between the measured point cloud and the theoretical-model point cloud; E, expressing the deviation values with a color map. Compared with the traditional template (sample case / sample plate) inspection method, the method offers high detection efficiency and precision, and the detection results are quantifiable and easy to read.

Owner:SHANGHAI SHIPBUILDING TECH RES INST +1
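Steps D and E can be sketched as follows, with two simplifications that are assumptions rather than details from the abstract: the theoretical surface is approximated by its model point cloud (so the deviation is the unsigned distance to the nearest model point), and the color scale uses hypothetical `tolerance` and `limit` values.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def deviations(measured: Sequence[Point], model: Sequence[Point]) -> List[float]:
    """Distance from each measured point to its nearest model point (brute force)."""
    return [min(math.dist(p, q) for q in model) for p in measured]


def deviation_color(d: float, tolerance: float, limit: float) -> Tuple[int, int, int]:
    """Green inside the tolerance, then yellow fading to red at `limit` and beyond."""
    if d <= tolerance:
        return (0, 255, 0)
    t = min((d - tolerance) / (limit - tolerance), 1.0)
    return (255, round(255 * (1 - t)), 0)
```

The brute-force search costs O(n * m); a k-d tree, or a true point-to-surface distance, would be needed for full hull scans.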

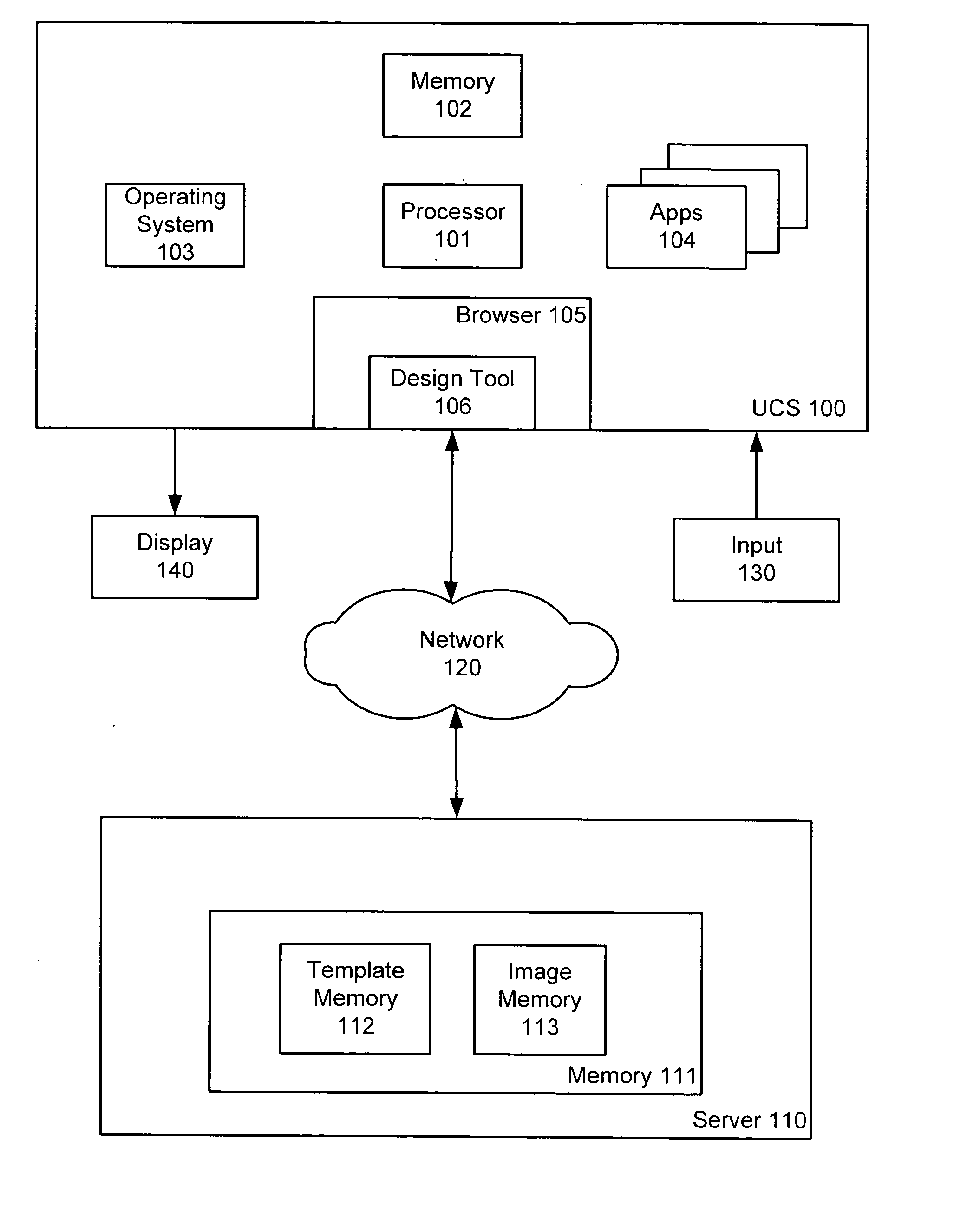

System and method for custom color design

Inactive | US20050122543A1 | Easy to modify, Texturing/coloring, Digital computer details, Color image, Electronic document

Electronic document design systems and methods allowing a user to generate many different color image versions from a grayscale-based image. Grayscale images are stored as patterns and applied as pattern fill of markup language shapes. When a grayscale image is applied as pattern fill, the original black and white color components of the grayscale image are replaced with the two colors specified by the pattern fill element and a color version of the image based on the two colors is displayed to the user as the content of the shape. By changing the colors associated with the pattern fill element, many different color variations of the image can be produced and displayed for user review. One or more color selection tools are provided to allow the user to select different pairs of colors to be used to generate color image versions.

Owner:VISTAPRINT SCHWEIZ
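The abstract says the black and white components of the grayscale image are replaced by the two pattern-fill colors; how intermediate gray levels are treated is not stated. A minimal per-pixel sketch, assuming a linear blend between the two colors (the markup pattern-fill machinery is not shown, and the function names are hypothetical):

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def recolor_gray(gray: int, dark: RGB, light: RGB) -> RGB:
    """Blend linearly from `dark` (gray level 0) to `light` (gray level 255)."""
    t = gray / 255.0
    return tuple(round(d + (l - d) * t) for d, l in zip(dark, light))


def recolor_image(image: Sequence[Sequence[int]], dark: RGB,
                  light: RGB) -> List[List[RGB]]:
    """Apply `recolor_gray` to every pixel of an 8-bit grayscale image."""
    return [[recolor_gray(g, dark, light) for g in row] for row in image]
```

Changing only the `dark` and `light` arguments produces a new color version from the same stored grayscale pattern, which is the property the abstract relies on.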

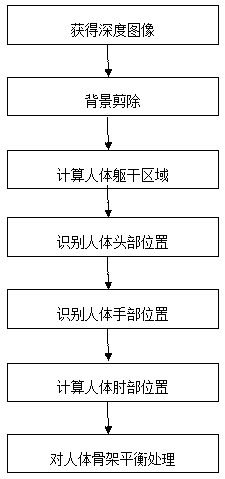

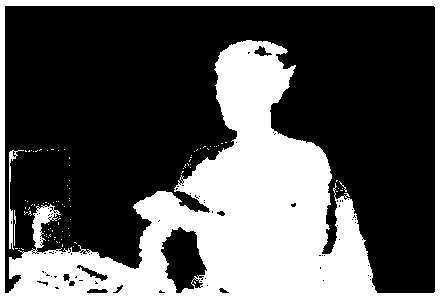

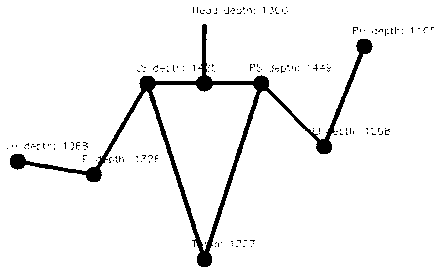

Method for recovering real-time three-dimensional body posture based on multimodal fusion

Inactive | CN102800126A | The motion capture process is easy, Improve stability, 3D-image rendering, 3D modelling, Color image, Time domain

The invention relates to a method for recovering a real-time three-dimensional body posture based on multimodal fusion. The method recovers three-dimensional skeleton information of a human body by combining depth map analysis, color identification, face detection, and other techniques to obtain the real-world coordinates of the body's main joints. On the basis of scene depth images and scene color images acquired synchronously at different moments, the position of the head is obtained by face detection; the positions of the four limb end points, which carry color markers, are obtained by color identification; the positions of the elbows and knees are computed from the limb end-point positions and the mapping relation between the color maps and the depth maps; and the acquired skeleton is smoothed using temporal information to reconstruct the body's movement in real time. Compared with conventional techniques that recover three-dimensional body posture with near-infrared equipment, the method improves recovery stability and makes the human motion capture process more convenient.

Owner:ZHEJIANG UNIV

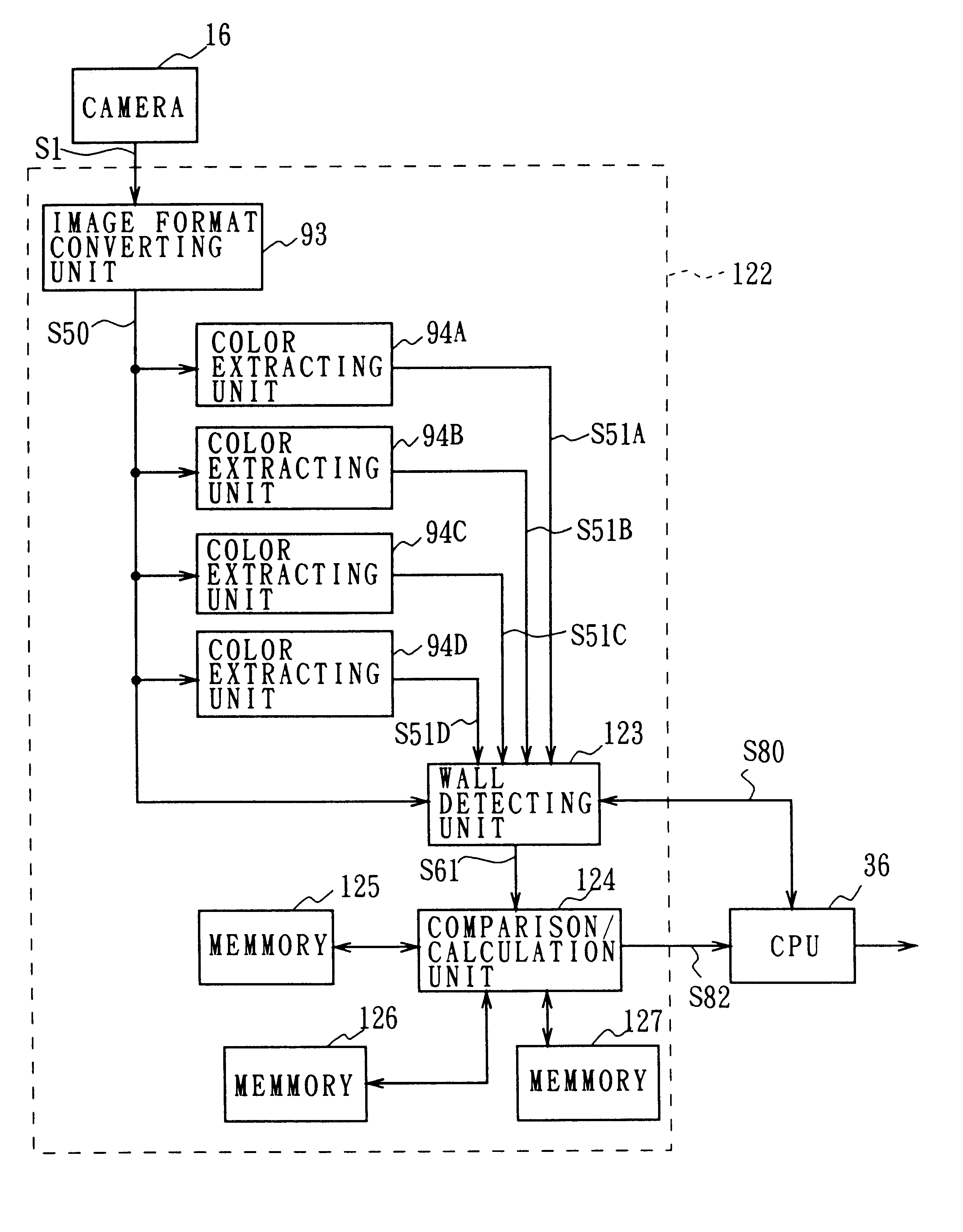

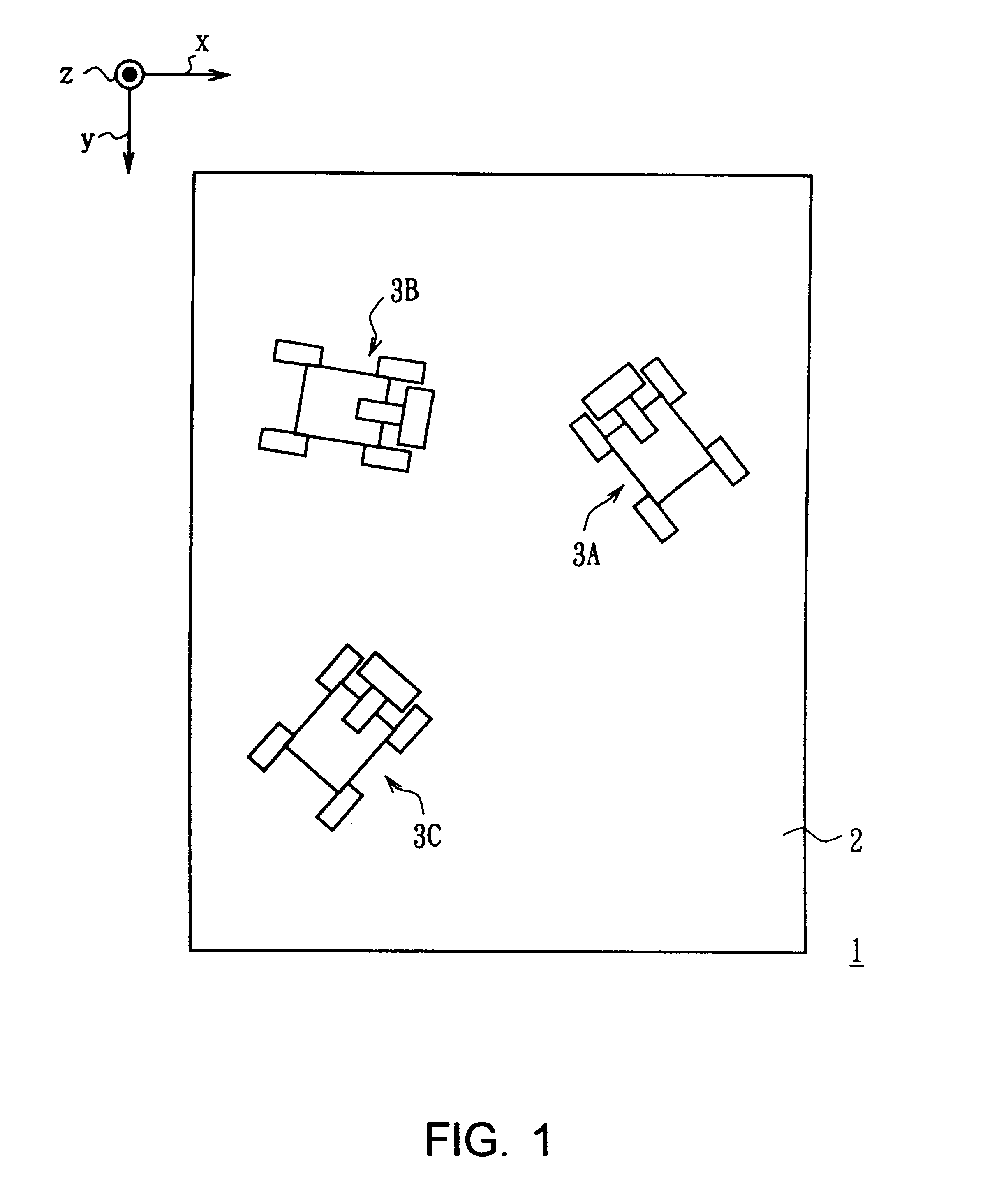

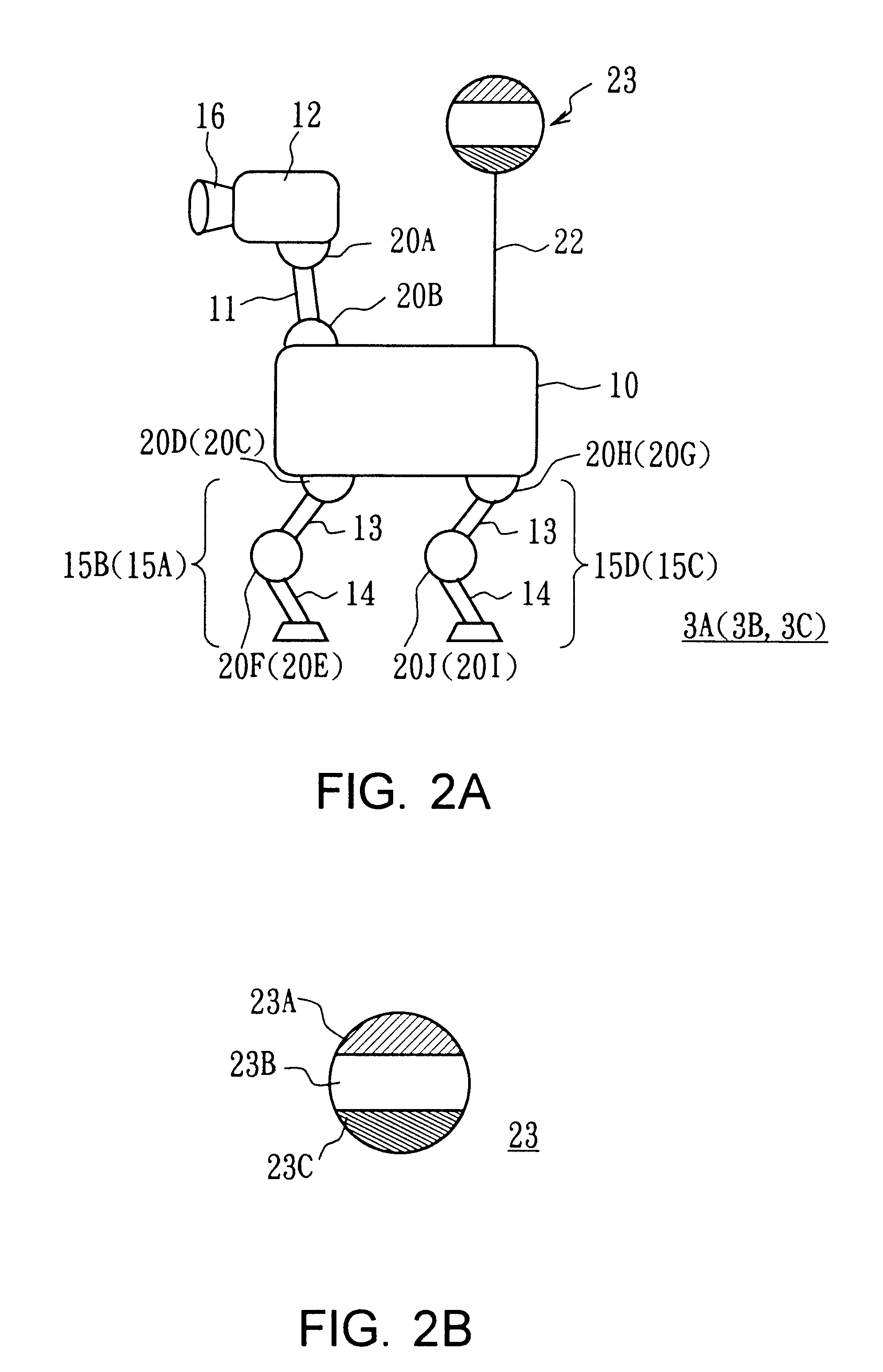

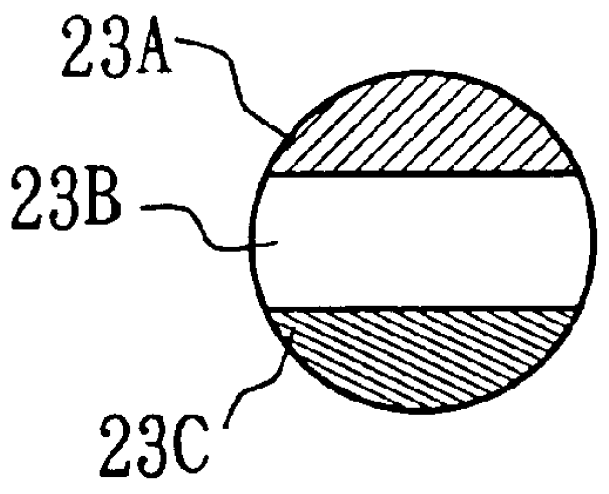

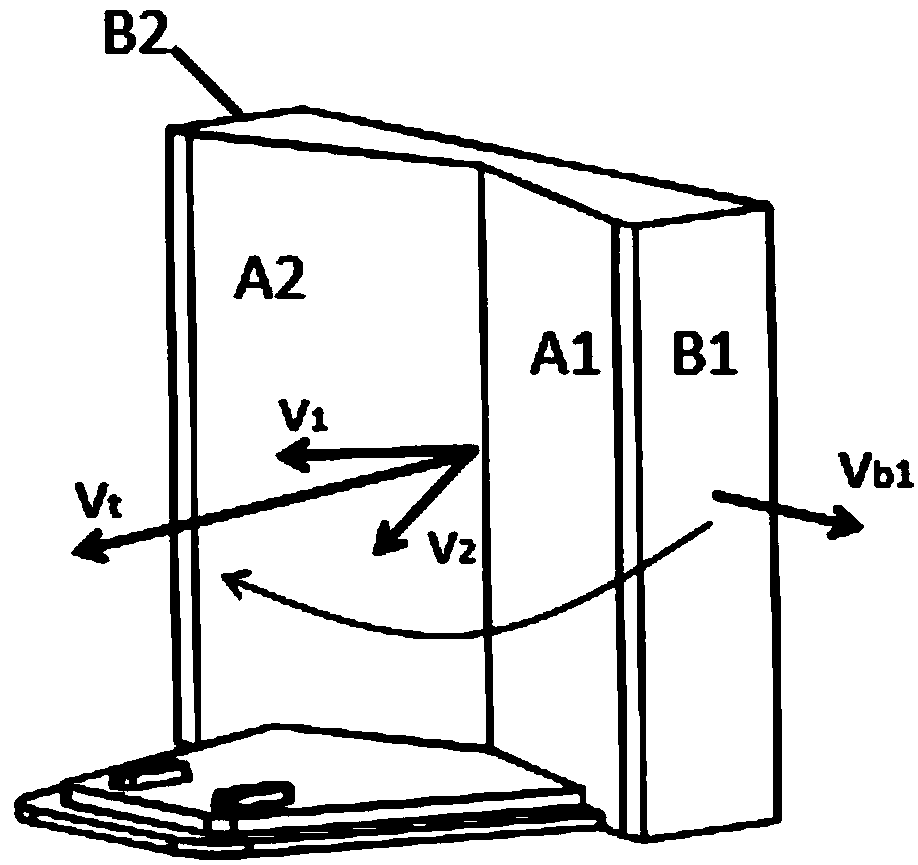

Identifying apparatus and method, position detecting apparatus and method, robot apparatus and color extracting apparatus

Inactive | US6453055B1 | Readily and reliably identify, Long lasting color, Character and pattern recognition, Vehicle position/course/altitude control, Location detection, Pattern recognition

Realizing an identifying apparatus and method and a robot apparatus capable of reliably identifying other moving objects or other objects, a position detecting apparatus and method and a robot apparatus capable of accurately detecting the position of a moving object or of itself within a region, and a color extracting apparatus capable of accurately extracting a desired color has been difficult. Objects are provided with identifiers having different color patterns so that the color patterns can be detected and identified through image processing. The objects of interest are also given color patterns that differ from each other, so that the position of an object can be detected by identifying its color pattern through image processing. Further, a plurality of wall surfaces having different colors are provided along the periphery of the region, so that the position of an object is detected on the basis of the colors of the wall surfaces through image processing. Finally, a luminance level and color difference levels are detected sequentially for each pixel, and a color is extracted by determining whether the color difference levels are within a predetermined range.

Owner:SONY CORP
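The per-pixel color extraction in the last sentence of the abstract can be sketched as below. The BT.601 luma weights, the fixed ranges, and the extra luminance range are assumptions; the abstract only says that the color-difference levels are checked against a predetermined range.

```python
from typing import List, Sequence, Tuple

Range = Tuple[float, float]


def luma_color_diff(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Return (Y, R-Y, B-Y) using ITU-R BT.601 luma weights."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, r - y, b - y


def extract_color(pixels: Sequence[Sequence[Tuple[int, int, int]]],
                  y_range: Range, ry_range: Range, by_range: Range
                  ) -> List[List[bool]]:
    """Mark pixels whose luminance and color differences fall inside the ranges."""
    def inside(value: float, bounds: Range) -> bool:
        return bounds[0] <= value <= bounds[1]

    mask = []
    for row in pixels:
        mask_row = []
        for r, g, b in row:
            y, ry, by = luma_color_diff(r, g, b)
            mask_row.append(inside(y, y_range) and inside(ry, ry_range)
                            and inside(by, by_range))
        mask.append(mask_row)
    return mask
```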

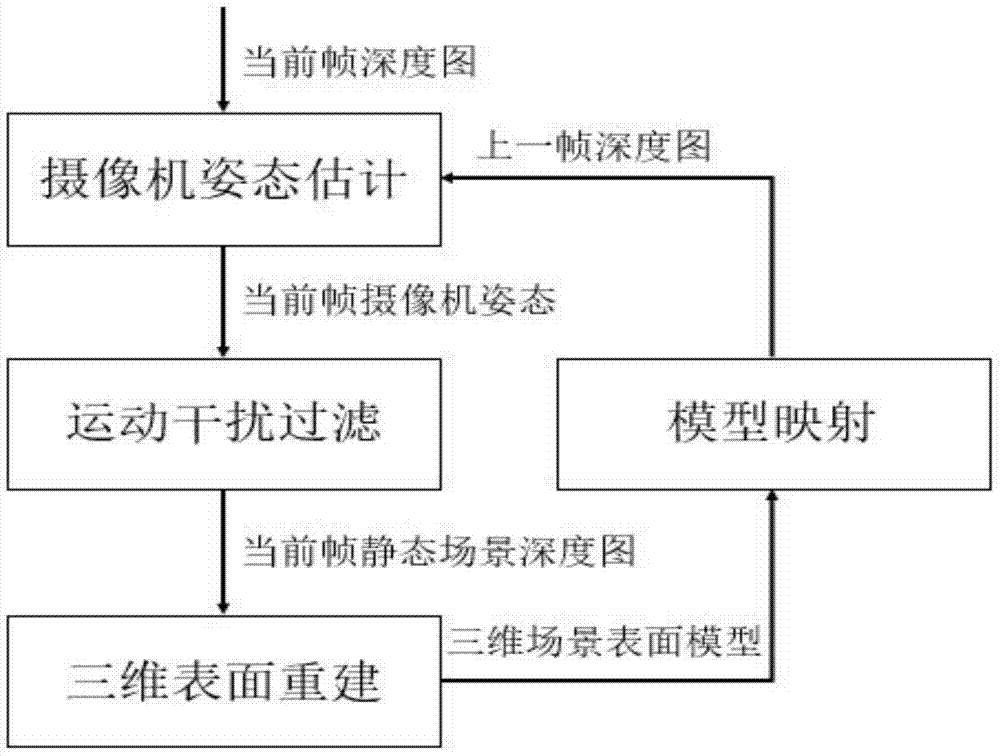

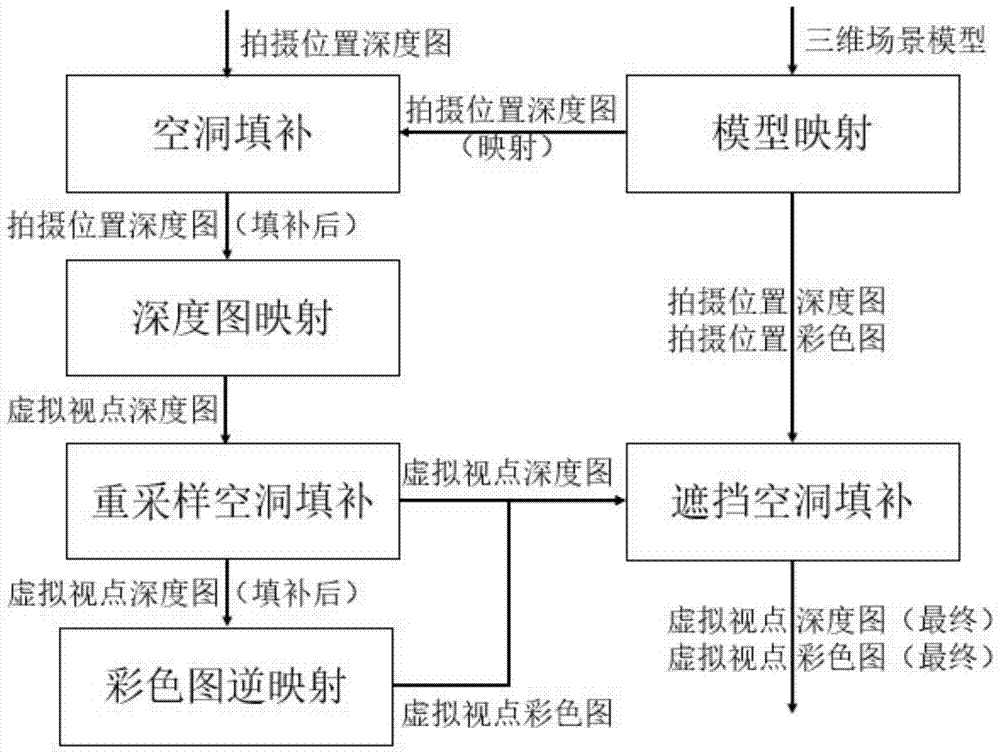

Method for generating virtual-real fusion image for stereo display

Active | CN104504671A | Improve tracking accuracy, Improve registration accuracy, Image enhancement, Image analysis, Viewpoints, Collision detection

The invention discloses a method for generating a virtual-real fusion image for stereo display. The method comprises the following steps: (1) using a monocular RGB-D camera to acquire a depth map and a color map of the real scene; (2) reconstructing a three-dimensional surface model of the scene and calculating the camera parameters; (3) mapping to obtain the depth map and the color map at a virtual viewpoint position; (4) completing the three-dimensional registration of a virtual object, rendering the depth map and the color map of the virtual object, and performing virtual-real fusion to obtain virtual-real fused content for stereo display. Because the scene is shot with a monocular RGB-D camera, and the three-dimensional scene surface model is reconstructed frame by frame and used both for camera tracking and for virtual-viewpoint mapping, higher camera tracking precision and virtual object registration precision can be obtained; the holes that appear in image-based virtual viewpoint rendering can be handled effectively; occlusion judgment and collision detection between the virtual and real scenes can be realized; and a stereo display device can be used to obtain a vivid stereoscopic effect.

Owner:ZHEJIANG UNIV
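The occlusion judgment in step (4) is commonly done with a per-pixel depth test. A minimal sketch, assuming the real and virtual depth maps have already been rendered at the same virtual viewpoint and in the same units, with `None` marking a pixel that has no virtual object or no real depth; the function name `fuse` is hypothetical.

```python
from typing import List, Optional, Sequence, TypeVar

Pixel = TypeVar("Pixel")


def fuse(real_rgb: Sequence[Sequence[Pixel]],
         real_depth: Sequence[Sequence[Optional[float]]],
         virt_rgb: Sequence[Sequence[Pixel]],
         virt_depth: Sequence[Sequence[Optional[float]]]) -> List[List[Pixel]]:
    """Per-pixel z-test: show the virtual pixel only where it is nearer than the real one.

    None in virt_depth means no virtual object covers that pixel; None in
    real_depth means the real depth is missing, so the virtual pixel wins.
    """
    fused = []
    for r_row, rd_row, v_row, vd_row in zip(real_rgb, real_depth, virt_rgb, virt_depth):
        fused.append([
            v if vd is not None and (rd is None or vd < rd) else r
            for r, rd, v, vd in zip(r_row, rd_row, v_row, vd_row)
        ])
    return fused
```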

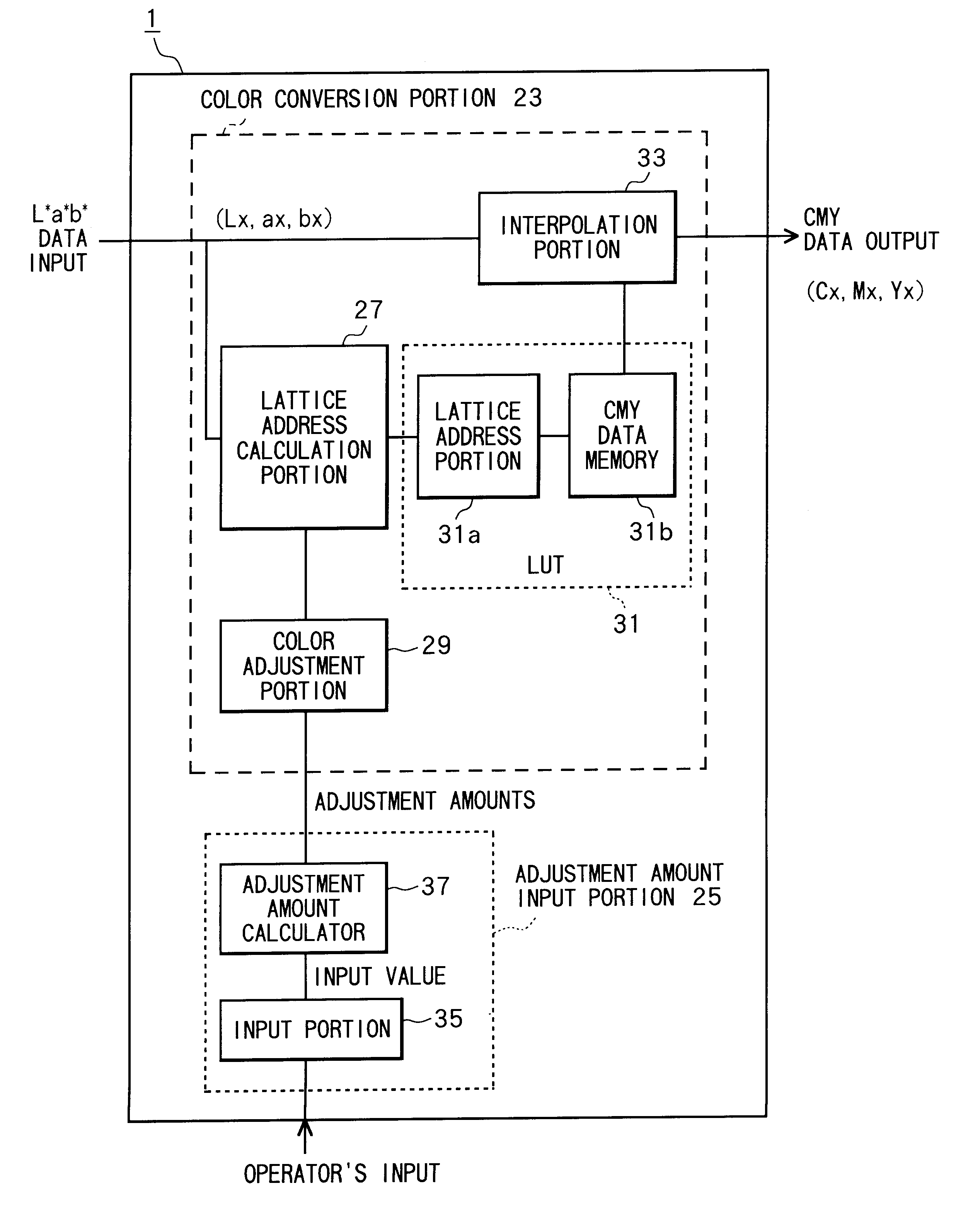

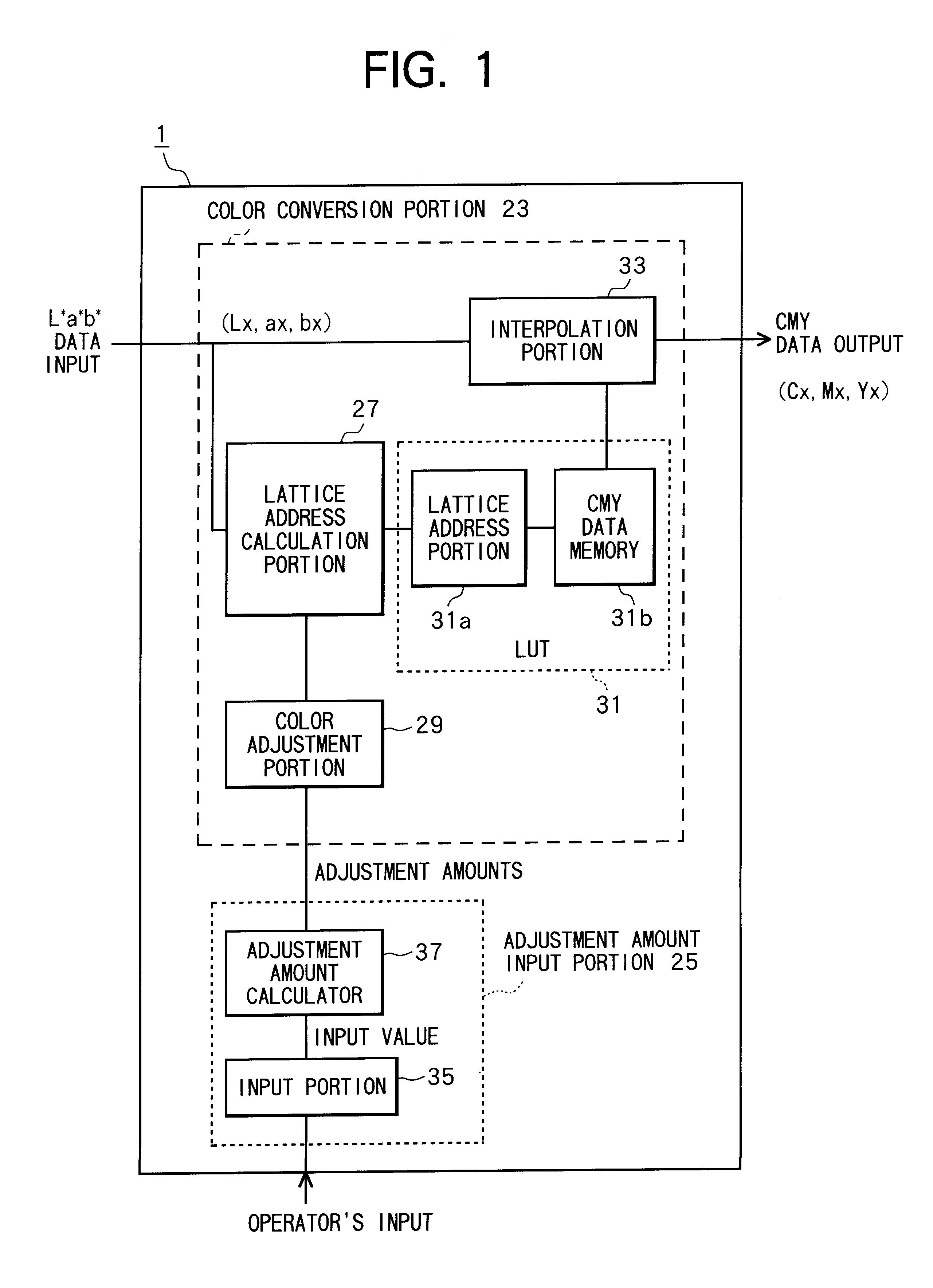

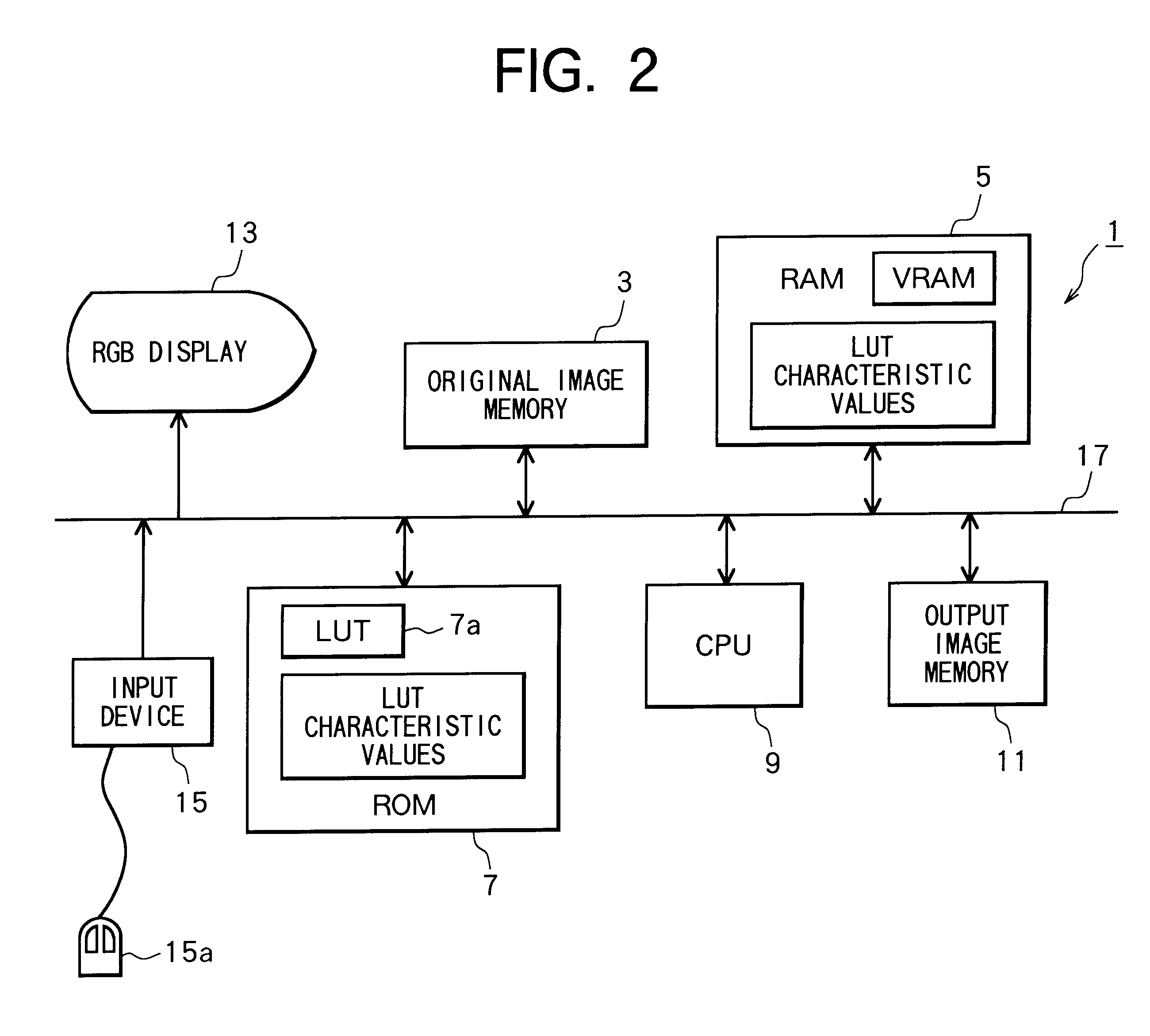

Color adjustment and conversion method

Inactive | US6172681B1 | Cathode-ray tube indicators, Filling planar surface with attributes, Pattern recognition, Data set

The user determines his or her desired color state using color maps prepared in a color system that corresponds to human visual perception. The LUT characteristic values Lmin, amin, bmin, astep, and bstep are adjusted to L'min, a'min, b'min, a'step, and b'step based on the user-designated color adjustment amounts ΔL, ΔC, ΔRG, and ΔYB. Then, based on the adjusted LUT characteristic values and the input Lab color data (Lx, ax, bx), discrimination number sets (Lgrid, agrid, bgrid) are determined for the eight lattice points surrounding the input color data. Using the CMY data sets (Ci, Mi, Yi) at those eight lattice points, an interpolation calculation yields the CMY control data set (Cx, Mx, Yx) for the input color data (Lx, ax, bx).

Owner:BROTHER KOGYO KK
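The eight-lattice-point interpolation can be sketched as trilinear interpolation over a regular Lab grid. Trilinear weighting is an assumption (the abstract does not name the scheme), as is a separate step for the L axis, since the abstract lists only astep and bstep. The lower lattice indices computed here presumably play the role of the abstract's (Lgrid, agrid, bgrid).

```python
import math
from typing import Sequence, Tuple

Triple = Tuple[float, float, float]


def lut_lookup(lut: Sequence[Sequence[Sequence[Triple]]], origin: Triple,
               step: Triple, lab: Triple) -> Triple:
    """Trilinearly interpolate CMY from the eight lattice points around `lab`.

    lut[i][j][k] holds the CMY value at L = origin[0] + i * step[0],
    a = origin[1] + j * step[1], b = origin[2] + k * step[2].
    Each axis needs at least two lattice points; inputs outside the grid are clamped.
    """
    sizes = (len(lut), len(lut[0]), len(lut[0][0]))
    base, frac = [], []
    for axis in range(3):
        pos = (lab[axis] - origin[axis]) / step[axis]
        i = min(max(math.floor(pos), 0), sizes[axis] - 2)  # lower lattice index
        base.append(i)
        frac.append(min(max(pos - i, 0.0), 1.0))           # position inside the cell
    out = [0.0, 0.0, 0.0]
    for di in (0, 1):
        for dj in (0, 1):
            for dk in (0, 1):
                weight = ((frac[0] if di else 1.0 - frac[0])
                          * (frac[1] if dj else 1.0 - frac[1])
                          * (frac[2] if dk else 1.0 - frac[2]))
                cmy = lut[base[0] + di][base[1] + dj][base[2] + dk]
                for c in range(3):
                    out[c] += weight * cmy[c]
    return (out[0], out[1], out[2])
```

Trilinear interpolation reproduces any LUT that is linear in L, a, and b exactly, which makes it easy to sanity-check.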

Fusion of a 2D electro-optical image and 3D point cloud data for scene interpretation and registration performance assessment

Owner:HARRIS CORP

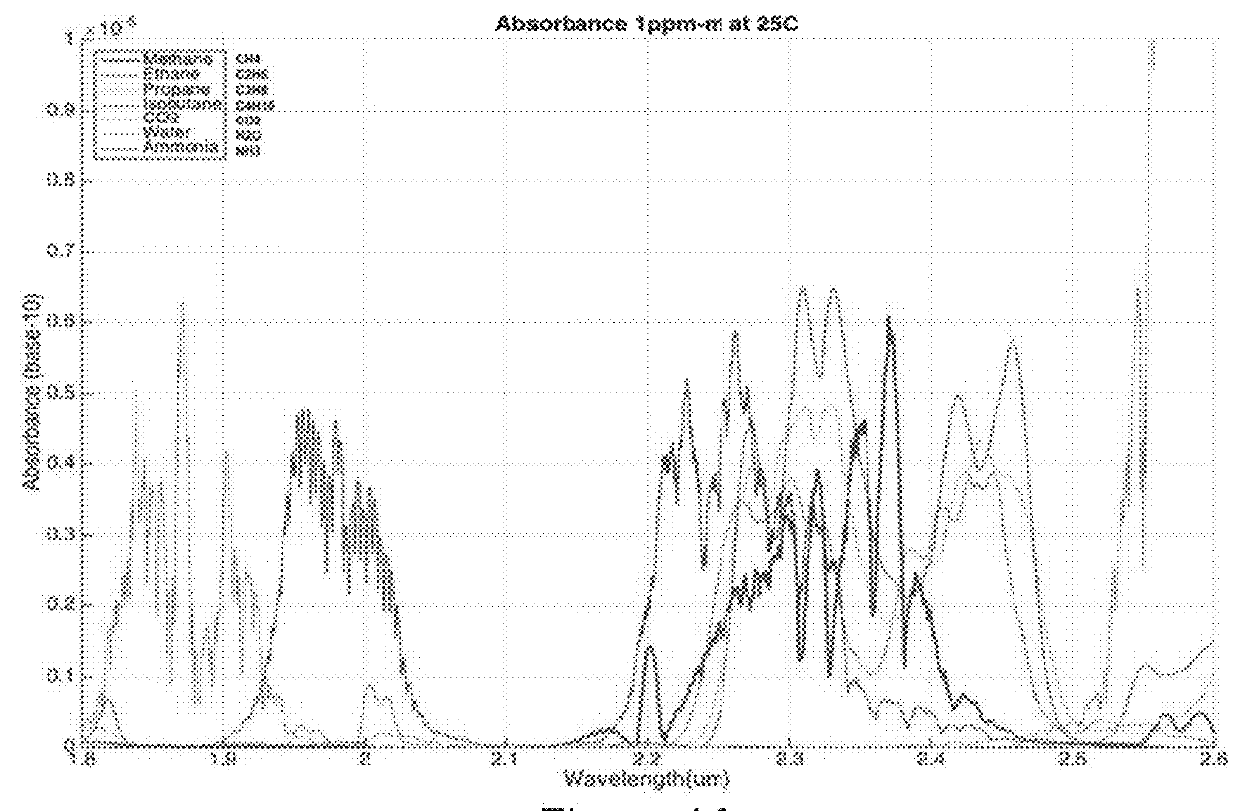

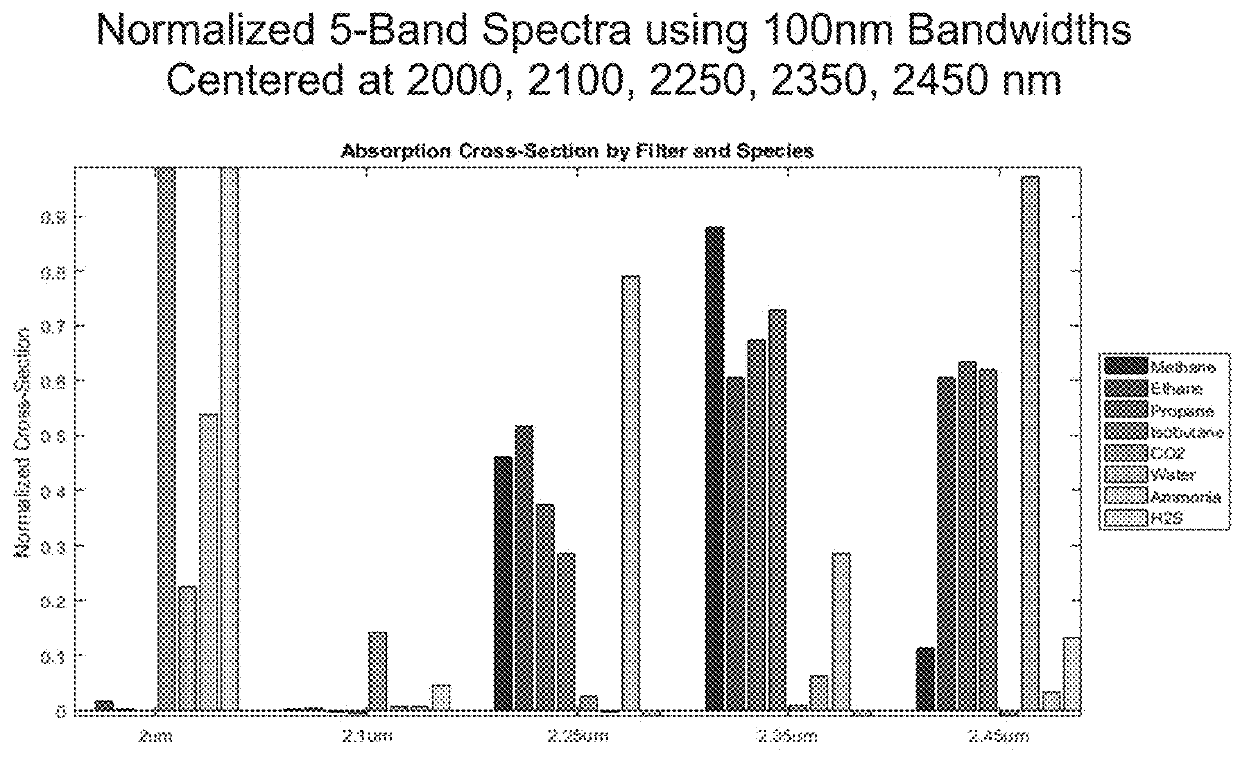

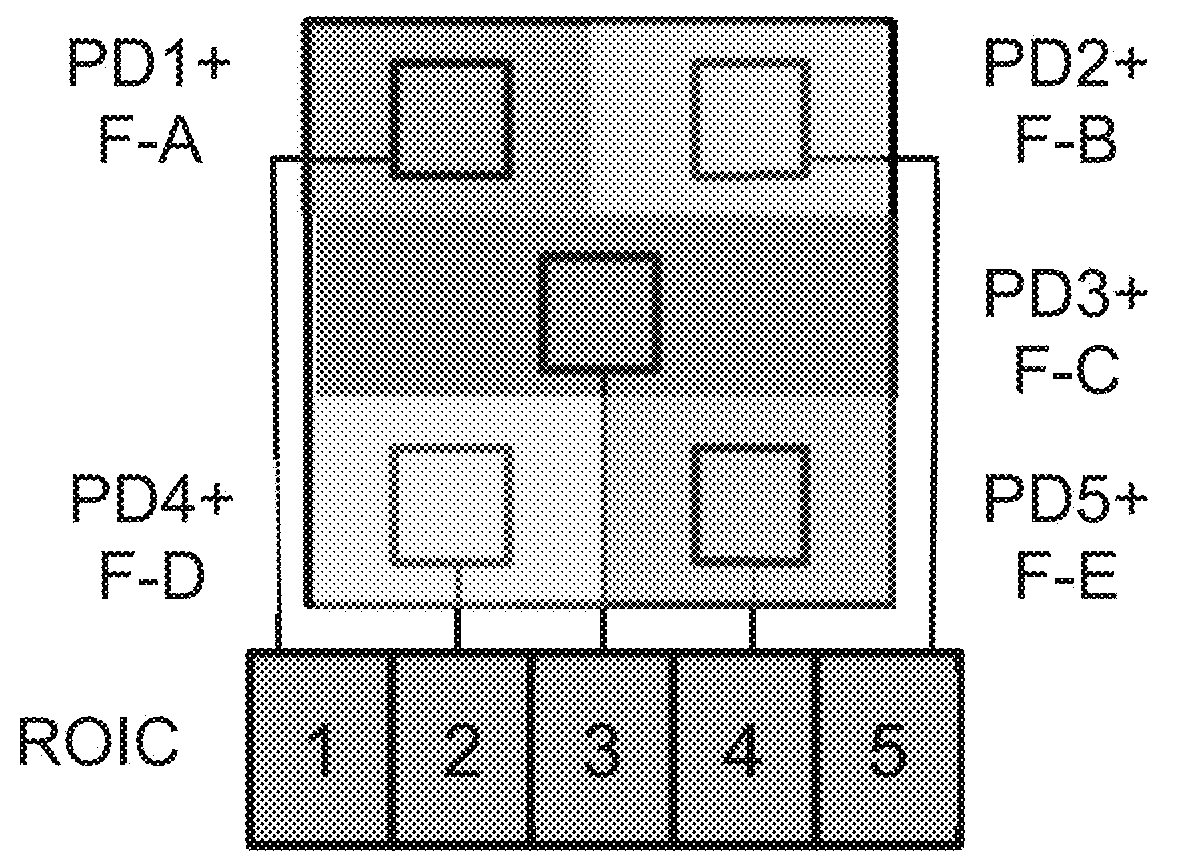

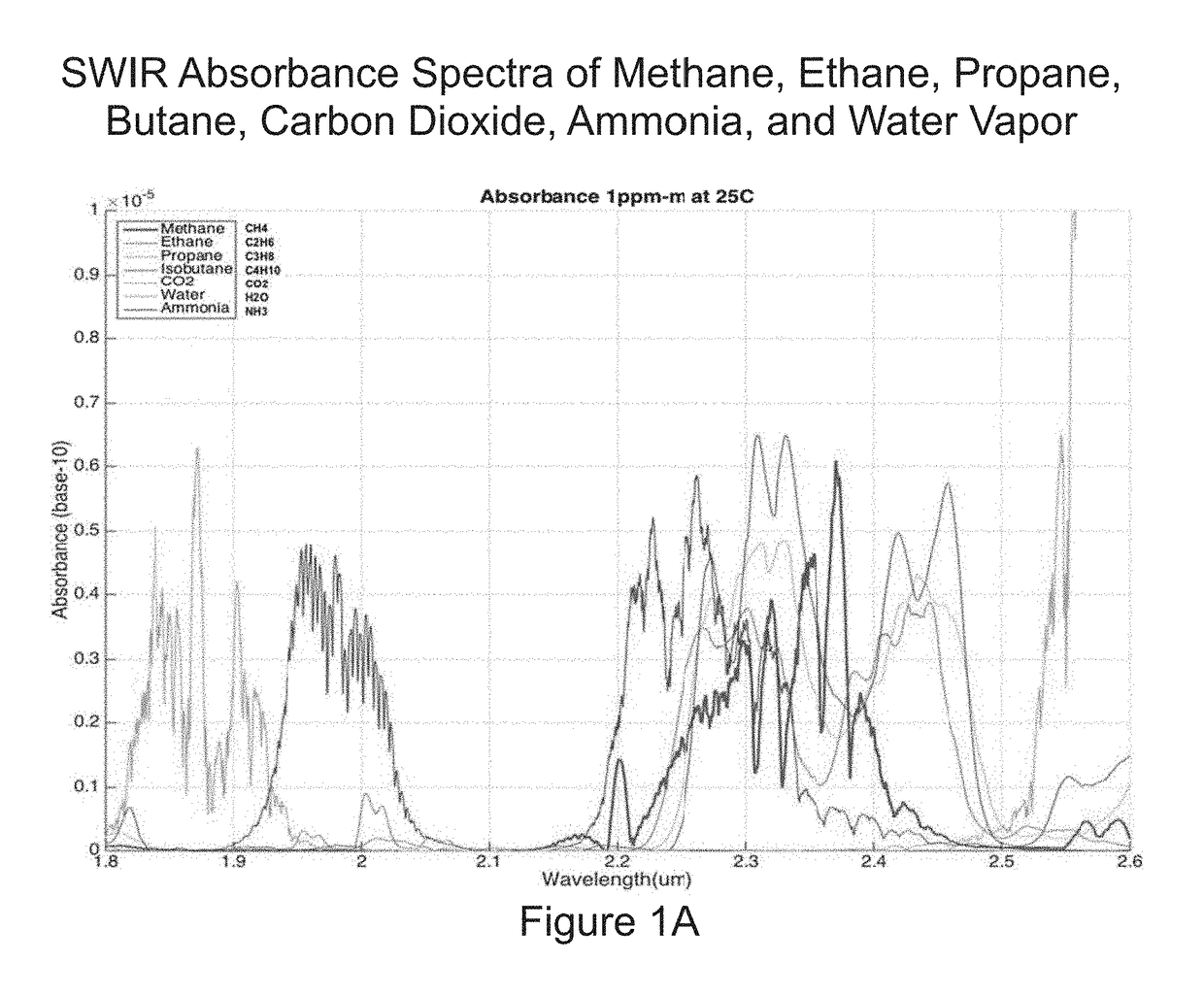

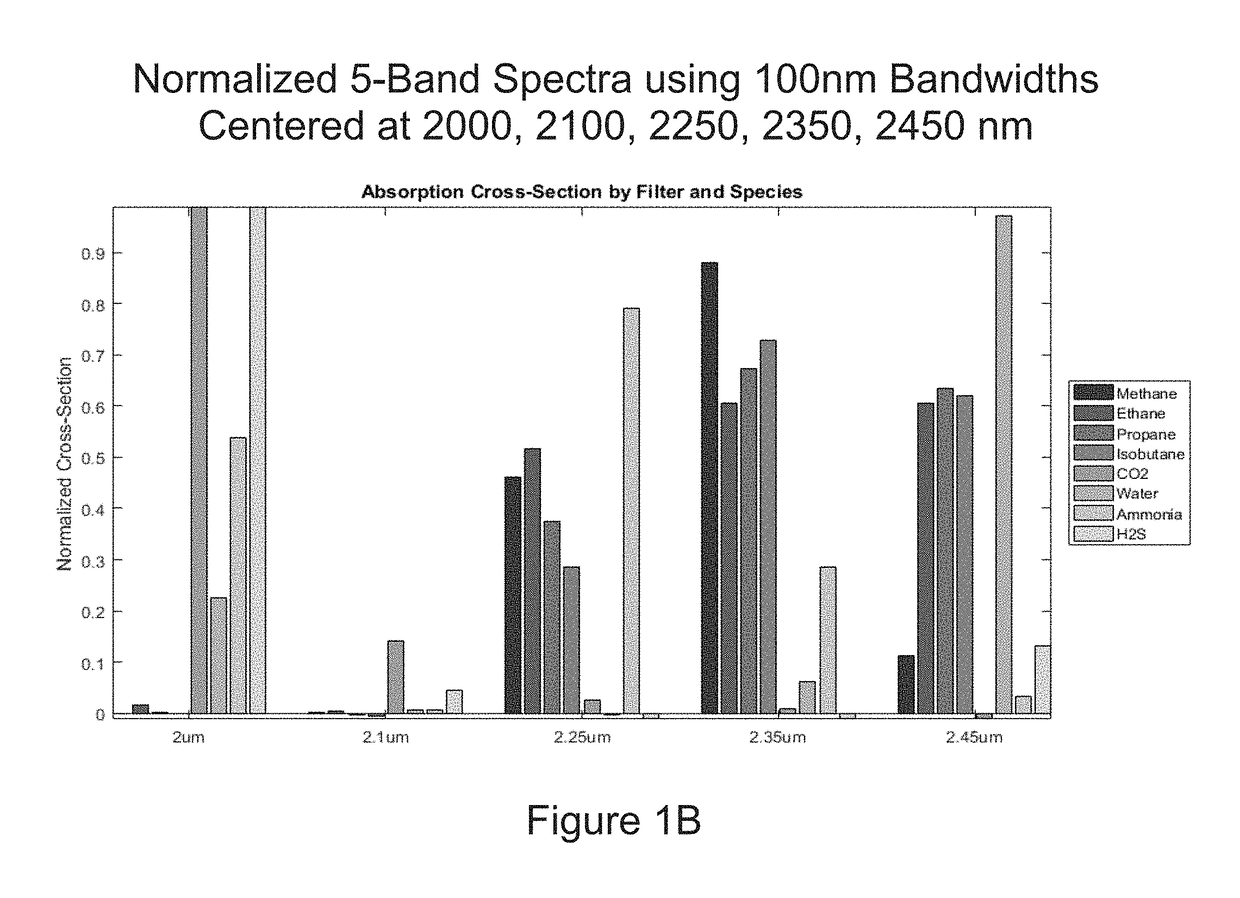

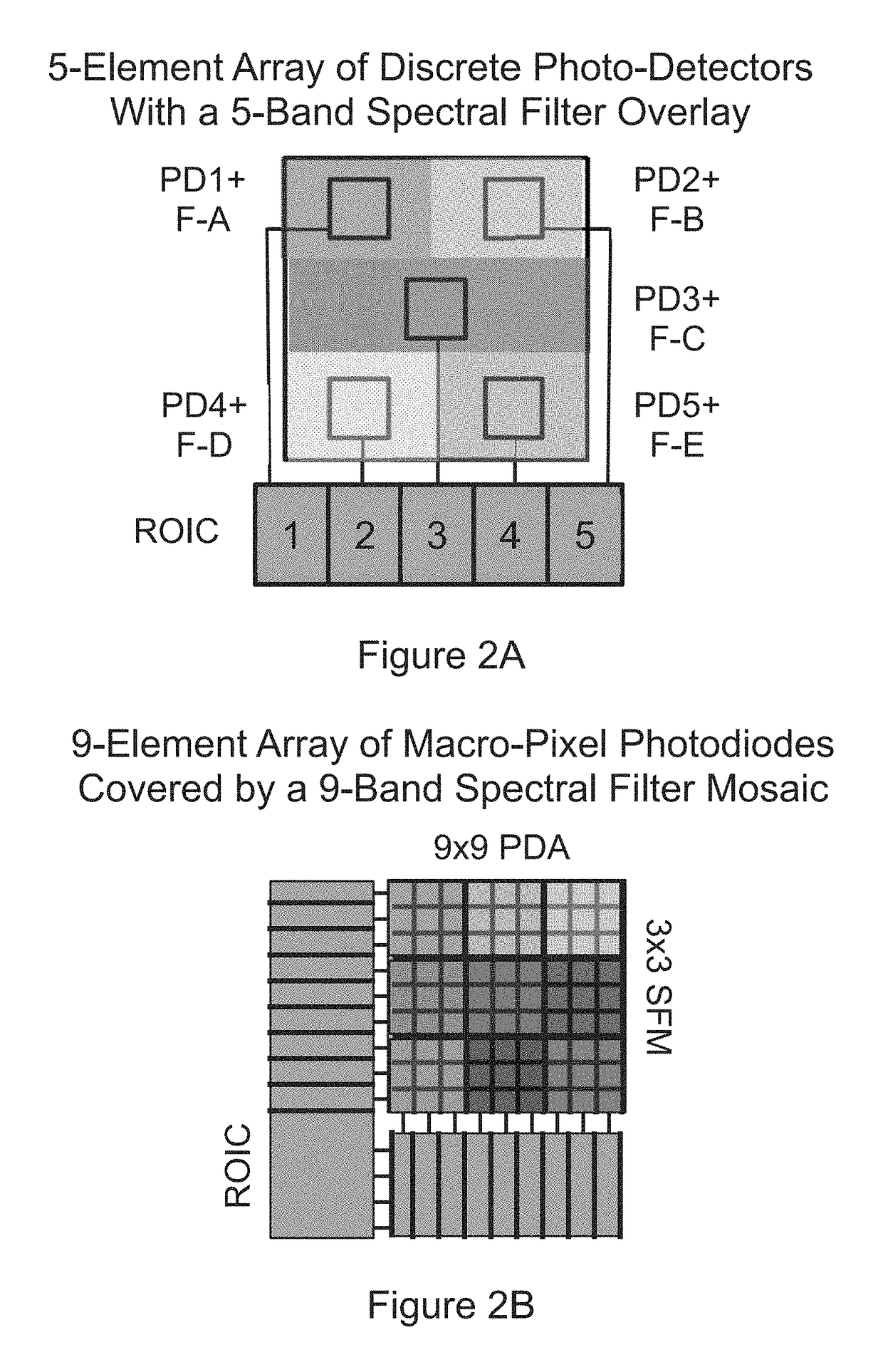

Scanning IR sensor for gas safety and emissions monitoring

Active | US20180266944A1 | More reliable, Less expensive, Mass flow measurement devices, Raman/scattering spectroscopy, Confocal, Optical depth

Apparatus and methods for rapidly detecting, localizing, imaging, and quantifying leaks of natural gas and other hydrocarbon and greenhouse gases. Scanning sensors, scan patterns, and data processing algorithms enable monitoring a site to rapidly detect, localize, image, and quantify amounts and rates of hydrocarbon leaks. Multispectral short-wave infrared detectors sense non-thermal infrared radiation from natural solar or artificial illumination sources by differential absorption spectroscopy. A multispectral sensor is scanned to envelop an area of interest, detect the presence and location of a leak, and raster scan the area around the leak to create an image of the leak. The resulting absorption image related to differential spectral optical depth is color mapped to render the degree of gas absorption across the scene. Analysis of this optical depth image, with factors including known inline pressures and / or surface wind speed measurements, enables estimation of the leak rate, i.e., the emission mass flux of gas.

Owner:MULTISENSOR SCI INC

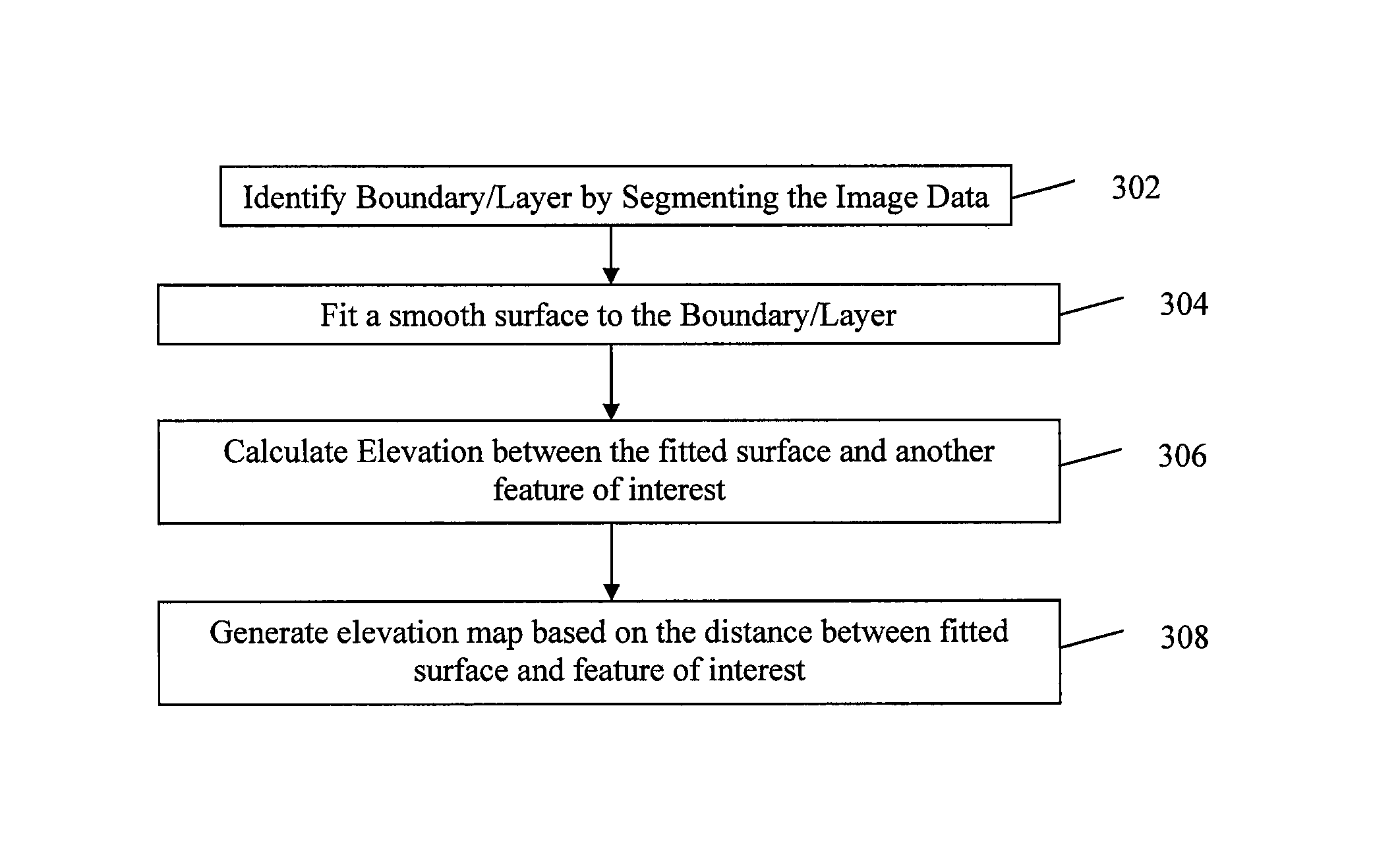

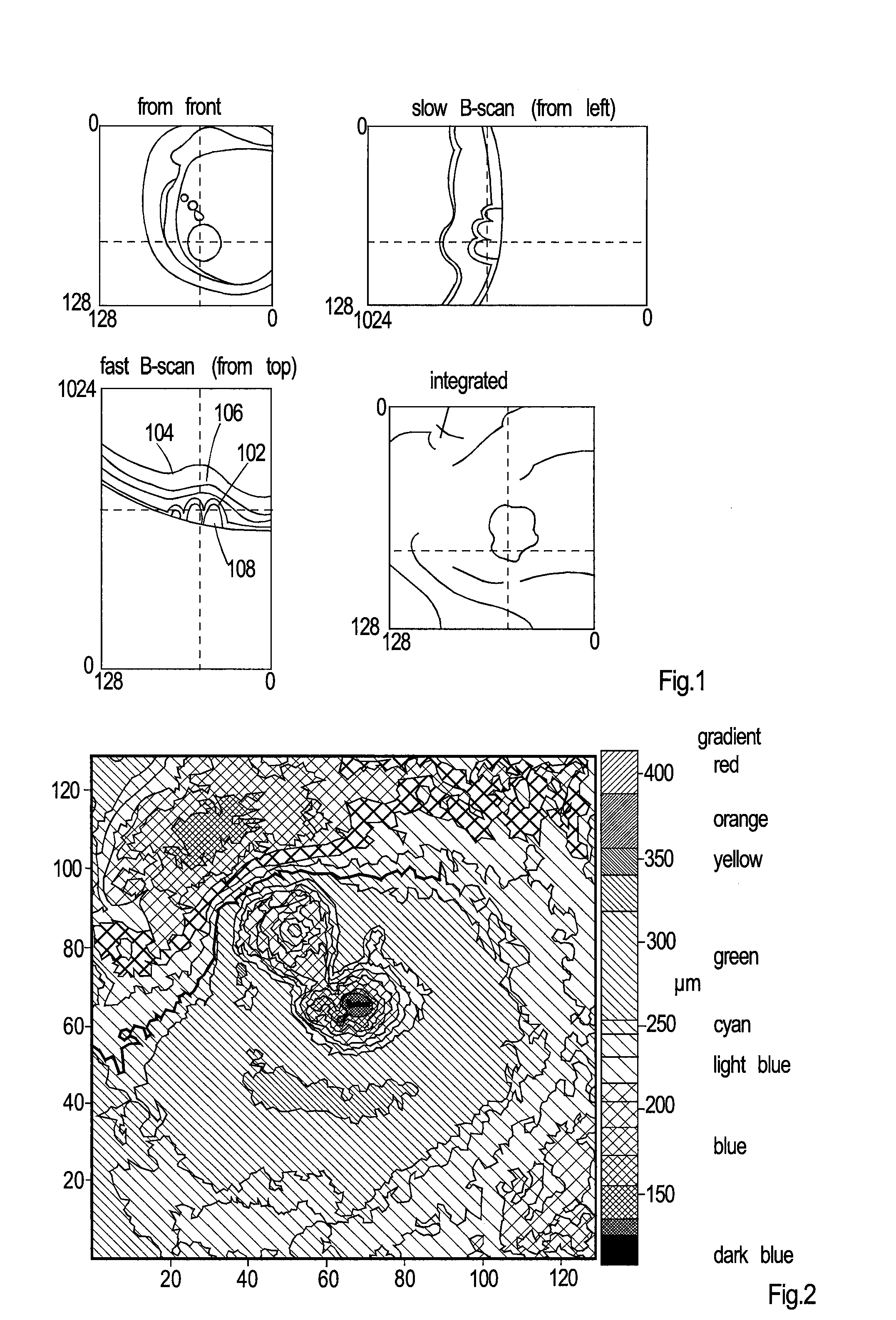

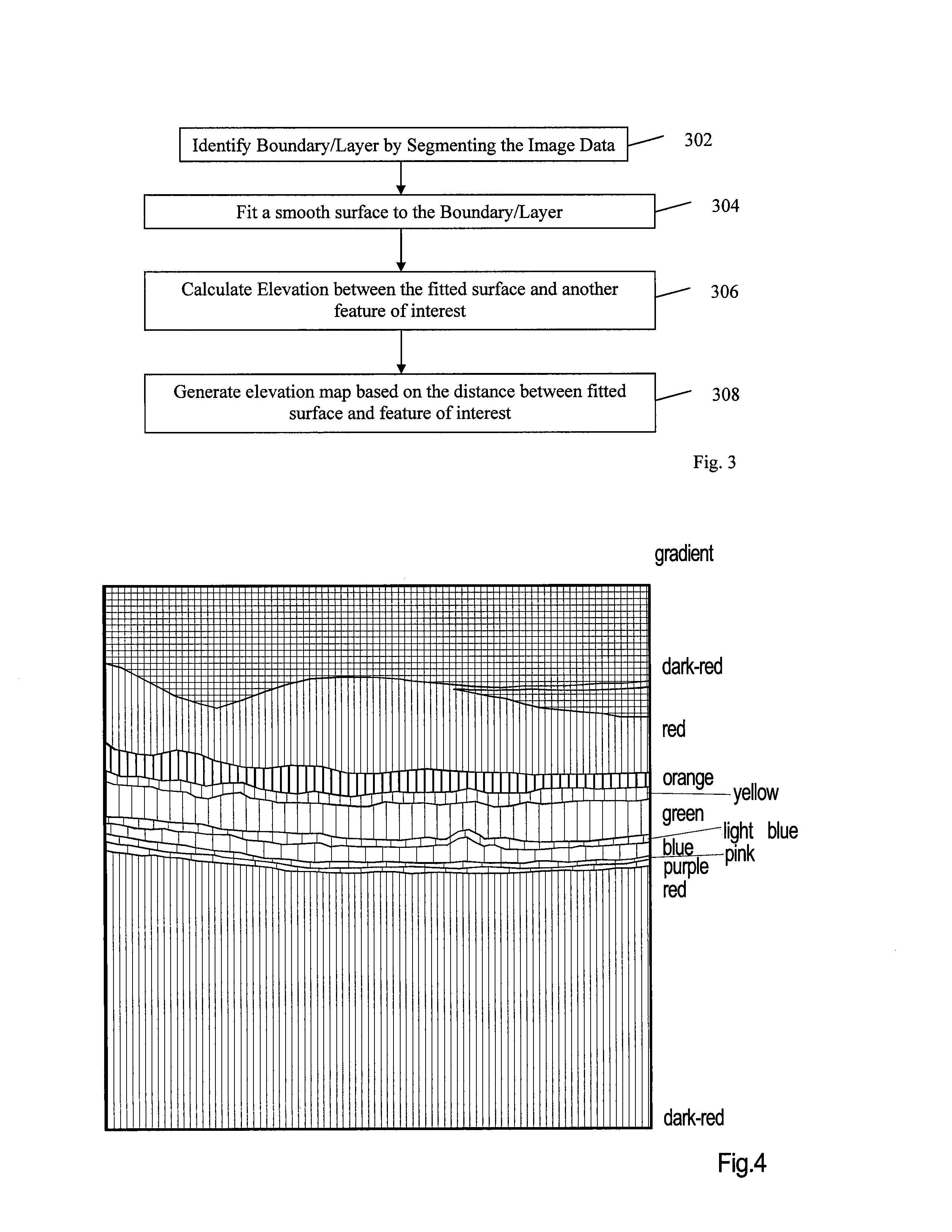

Method of bioimage data processing for revealing more meaningful anatomic features of diseased tissues

The present invention discloses a method for generating elevation maps or images of a tissue layer / boundary with respect to a fitted reference surface, comprising the steps of finding and segmenting a desired tissue layer / boundary; fitting a smooth reference surface to the segmented tissue layer / boundary; calculating elevations of the same or other tissue layer / boundary relative to the fitted reference surface; and generating maps of elevation relative to the fitted surface. The elevation can be displayed in various ways including three-dimensional surface renderings, topographical contour maps, contour maps, en-face color maps, and en-face grayscale maps. The elevation can also be combined and simultaneously displayed with another tissue layer / boundary dependent set of image data to provide additional information for diagnostics.

Owner:CARL ZEISS MEDITEC INC
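The fitting and elevation steps can be sketched with a least-squares plane as the reference surface. A plane is the simplest choice and an assumption; the abstract only asks for a smooth surface, and a low-order polynomial would follow curved layers better. Elevation is measured along z (the depth axis of the scan), also an assumption.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points: Sequence[Point]) -> Tuple[float, float, float]:
    """Least-squares plane z = a*x + b*y + c, solved from the normal equations by Cramer's rule."""
    sxx = sxy = syy = sx = sy = sxz = syz = sz = 0.0
    for x, y, z in points:
        sxx += x * x
        sxy += x * y
        syy += y * y
        sx += x
        sy += y
        sxz += x * z
        syz += y * z
        sz += z
    a_mat = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    det = _det3(a_mat)
    if abs(det) < 1e-12:
        raise ValueError("degenerate point set (for example, collinear points)")
    coeffs = []
    for col in range(3):
        m = [row[:] for row in a_mat]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(_det3(m) / det)
    return coeffs[0], coeffs[1], coeffs[2]


def elevations(layer: Sequence[Point], plane: Tuple[float, float, float]) -> List[float]:
    """Height of each layer point above (+) or below (-) the fitted plane, along z."""
    a, b, c = plane
    return [z - (a * x + b * y + c) for x, y, z in layer]
```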

Method for visualization of point cloud data

Inactive | US20090231327A1 | Improve visualization, Improve interpretation, Texturing/coloring, 3D-image rendering, Point cloud, Peak value

Owner:HARRIS CORP

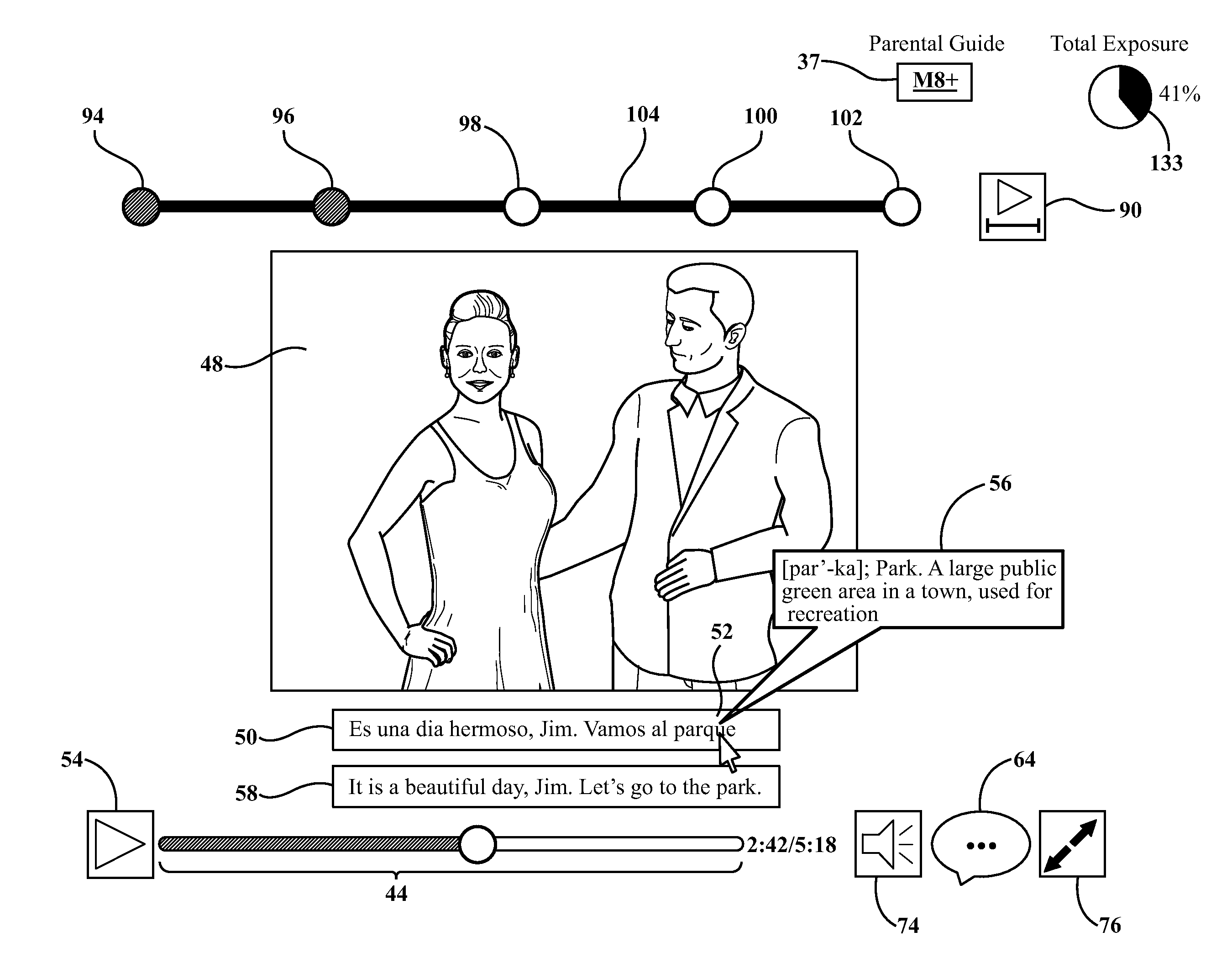

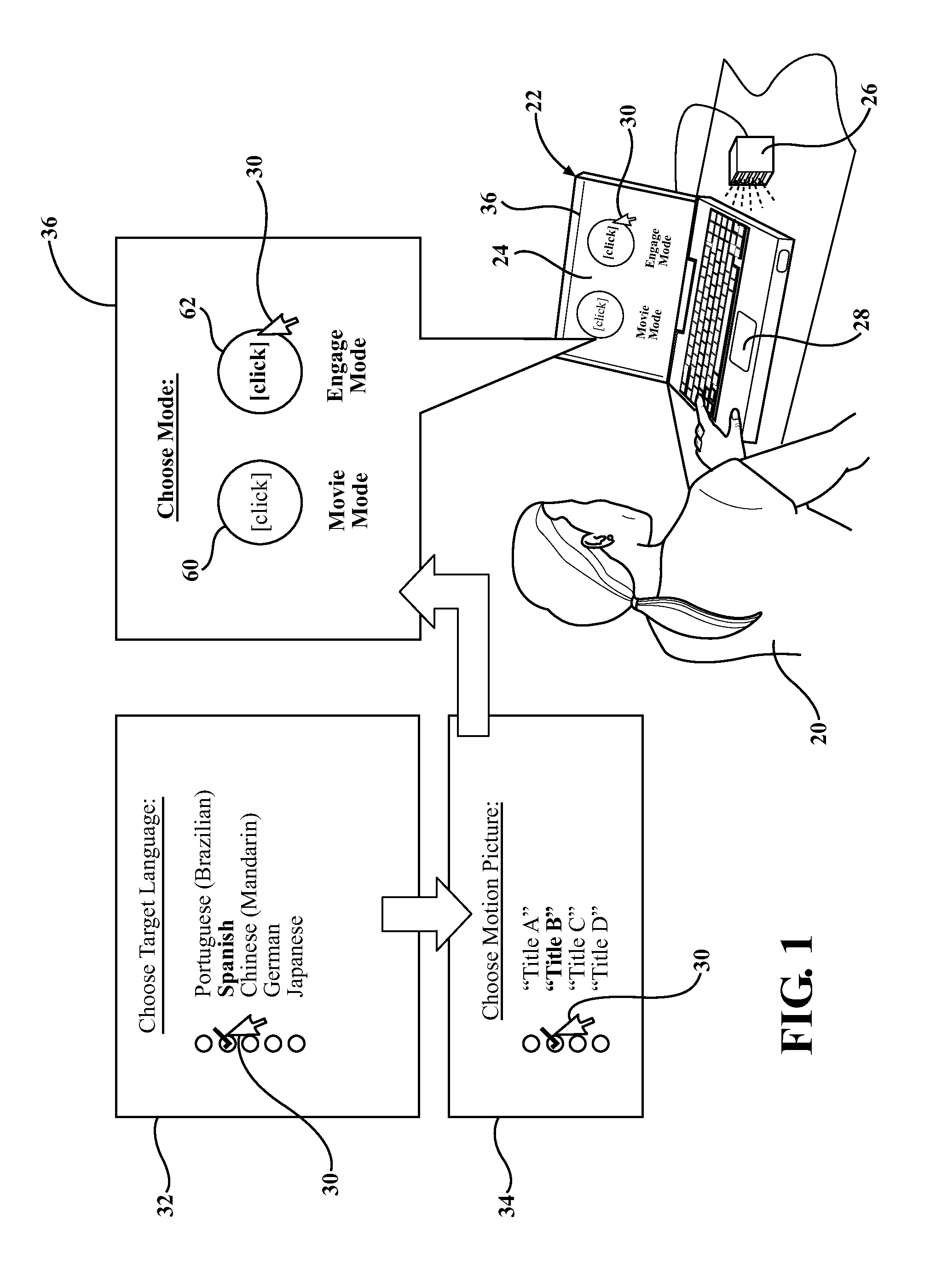

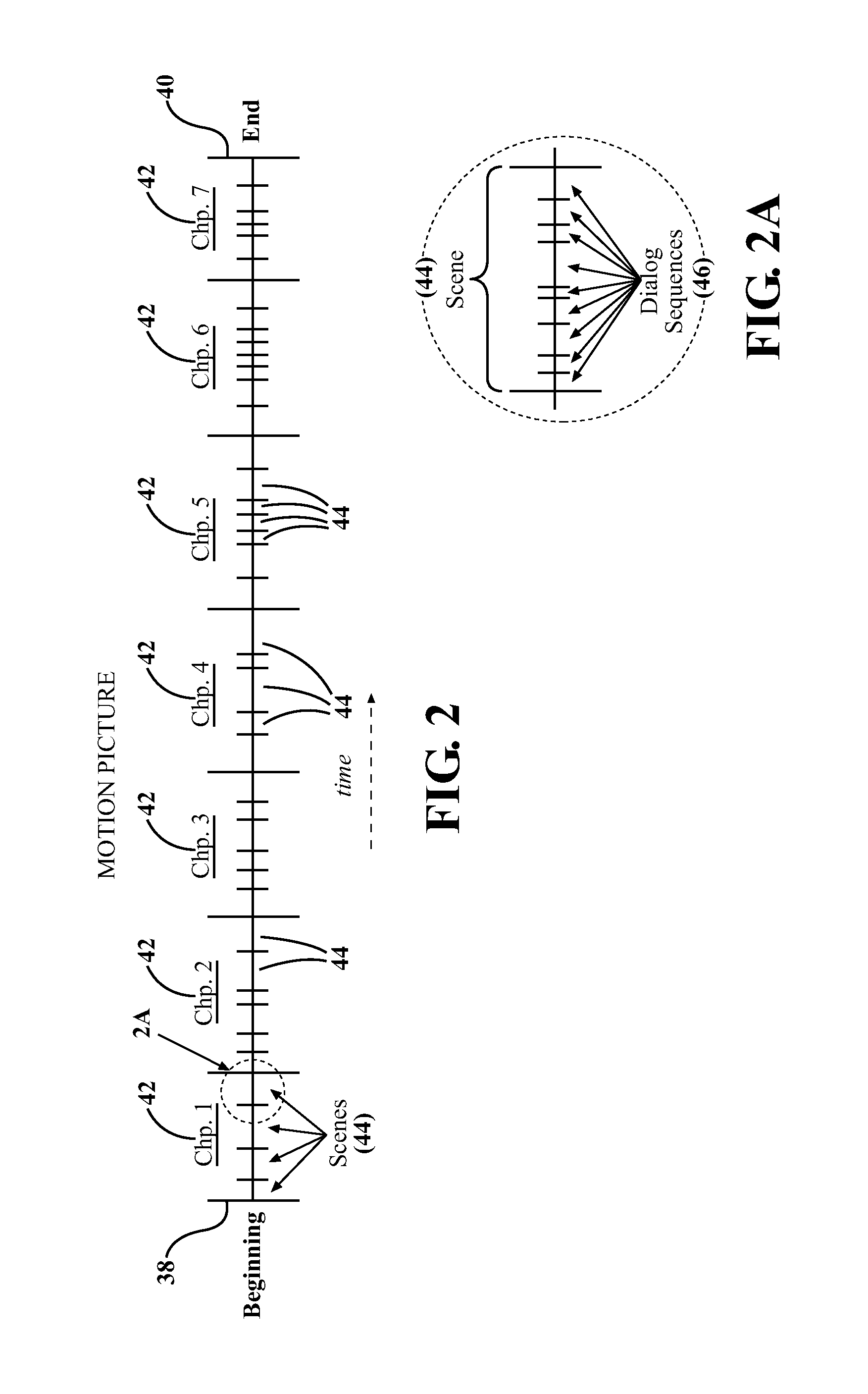

System and method for language learning through film

Active | US20160133154A1 | Improve ease, Improve effectiveness, Electrical appliances, Teaching apparatus, Animation, Theoretical computer science

A computer-assisted system and method for foreign language instruction. A motion picture having audio dialog in a target language is stored, in a computer-readable form, with chapter divisions, scene subdivisions and dialog sequence sub-subdivisions. In an Engage Mode, the motion picture is played on a display screen sequence-by-sequence, scene-by-scene, and chapter-by-chapter for a student listening to the audio dialog on a speaker. Interlinear target and source language subtitles are provided with interactive capabilities accessed through cursor movement or other means. The interlinear subtitles may be semantically color-mapped. After selecting a scene to view, the student is progressed through a series of modules that break down and dissect each dialog sequence of the scene. The student studies each dialog sequence before moving to the next scene. Likewise, all scenes in a chapter are studied before moving to the next chapter and ultimately completing the motion picture.

Owner:MANGO IP HLDG

Identifying apparatus and method, position detecting apparatus and method, robot apparatus and color extracting apparatus

Inactive | US6088469A | Readily and reliably identify, Long lasting color, Character and pattern recognition, Vehicle position/course/altitude control, Location detection, Pattern recognition

Realizing an identifying apparatus and method and a robot apparatus capable of reliably identifying other moving objects or other objects, a position detecting apparatus and method and a robot apparatus capable of accurately detecting the position of a moving object or of itself within a region, and a color extracting apparatus capable of accurately extracting a desired color has been difficult. Objects are provided with identifiers having different color patterns so that the color patterns can be detected and identified through image processing. The objects of interest are also given color patterns that differ from each other, so that the position of an object can be detected by identifying its color pattern through image processing. Further, a plurality of wall surfaces having different colors are provided along the periphery of the region, so that the position of an object is detected on the basis of the colors of the wall surfaces through image processing. Finally, a luminance level and color difference levels are detected sequentially for each pixel, and a color is extracted by determining whether the color difference levels are within a predetermined range.

Owner:SONY CORP

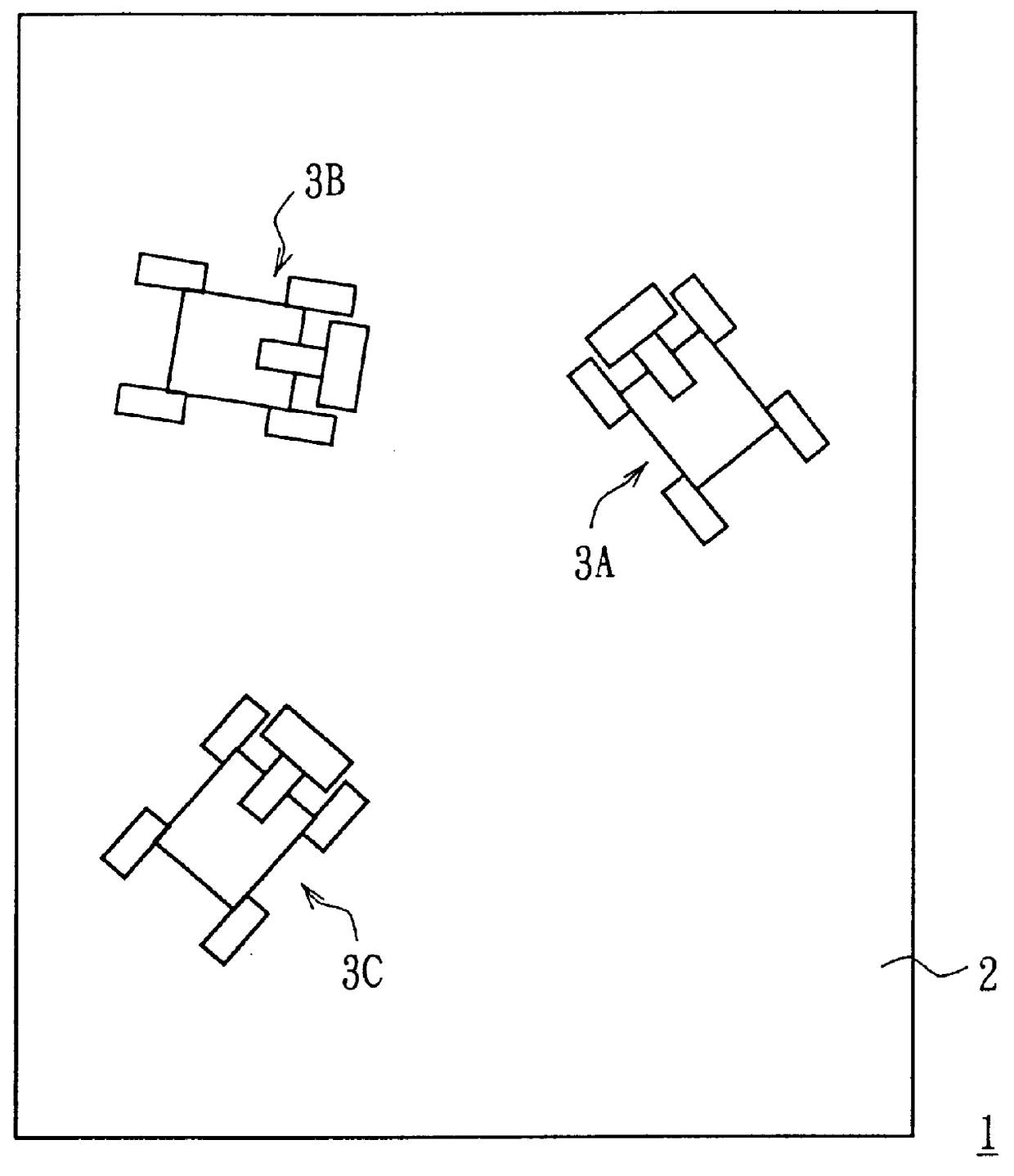

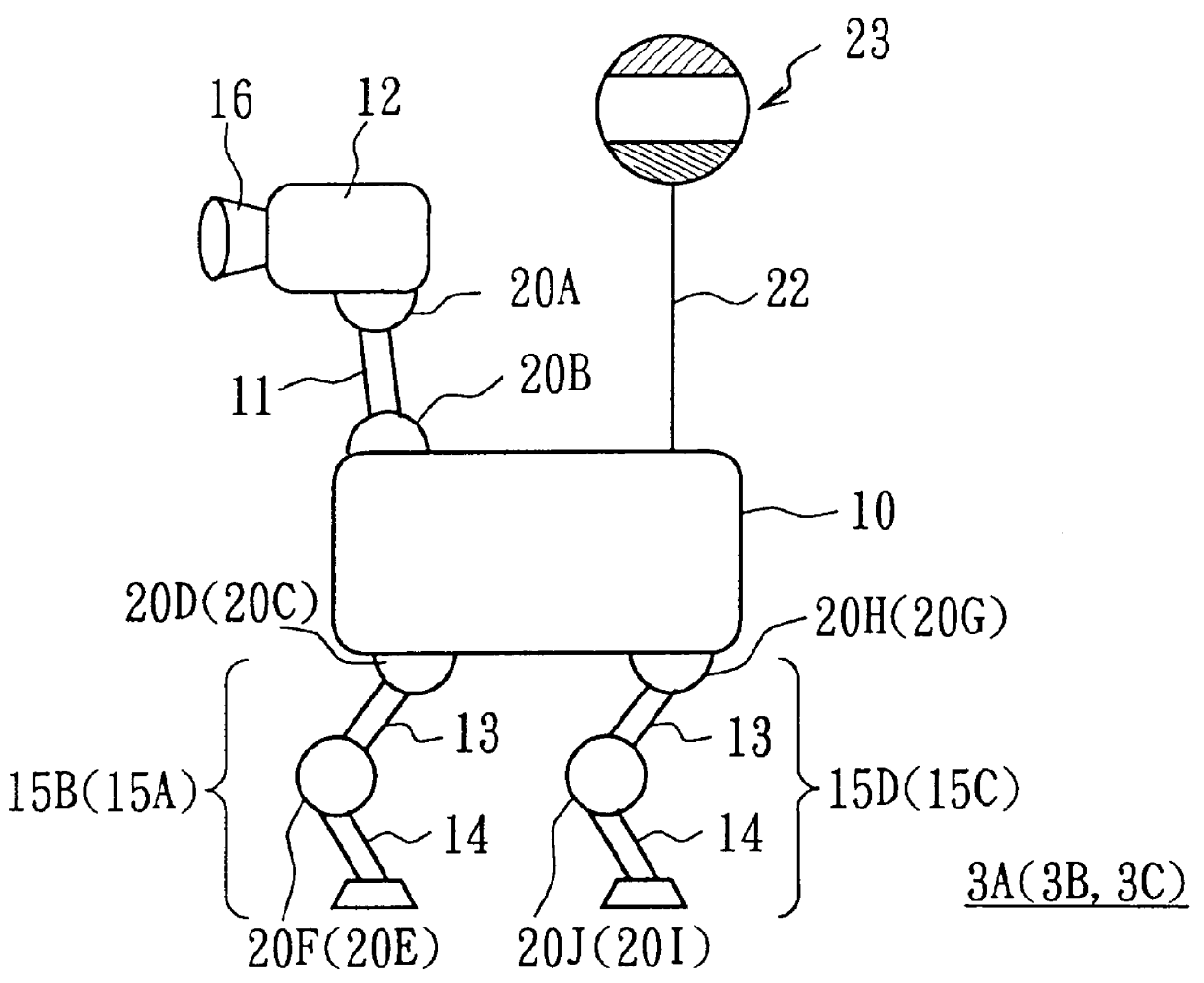

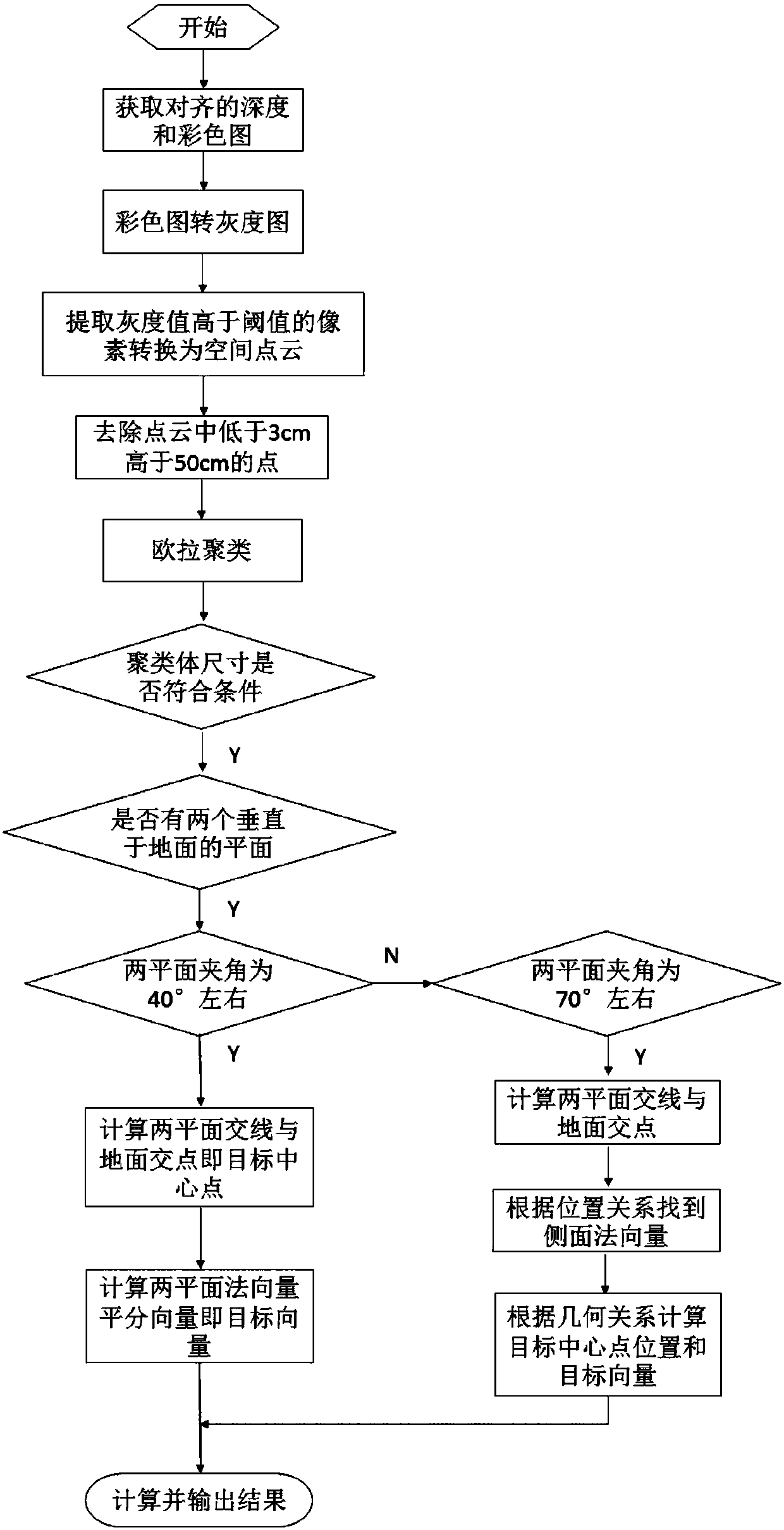

Method and system for dynamic identification and location of charging pile based on Kinect

Active | CN107590836A | Small amount of calculation, Accurate identification, Image analysis, Character and pattern recognition, Pattern recognition, Point cloud

The present invention provides a method and system for dynamic identification and location of a charging pile based on Kinect. The method comprises: step 1, calculating a transformation matrix from the camera coordinate system to a predefined world coordinate system according to three-dimensional point cloud data obtained by a Kinect sensor; step 2, aligning the pixels of a color map and a depth map one-to-one; step 3, removing invalid pixels from the image obtained in step 2, converting the remaining pixels to three-dimensional points, and eliminating points higher than 50 cm or lower than 3 cm; step 4, downsampling the point cloud obtained in step 3 to reduce the computation of subsequent processing, and applying radius filtering to remove outliers; step 5, performing Euclidean clustering on the point cloud obtained in step 4 to obtain one or more cluster objects; step 6, processing the cluster objects obtained in step 5 and selecting those having two feature planes; step 7, processing each cluster selected in step 6 and determining whether the geometric relationship between its two feature planes matches the three-dimensional shape of a charging pile; and step 8, computing, from the relative position of the two feature planes determined in step 7, the position and deflection angle of the charging pile relative to the origin of the world coordinate system, thereby locating the charging pile. The method and system offer accurate recognition, strong robustness, stable dynamic tracking, and low susceptibility to light interference, and target positioning requires little computation and gives accurate results.

Owner:斯坦德机器人昆山有限公司
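Steps 3 and 4 can be sketched as below. The 3 cm and 50 cm limits come from the abstract; treating z as height above the floor in metres (after step 1's world transform), using voxel-grid centroids for the downsampling, and the voxel size, radius, and neighbor count are assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def height_filter(points: Sequence[Point], low: float = 0.03,
                  high: float = 0.50) -> List[Point]:
    """Step 3: drop points lower than 3 cm or higher than 50 cm (z = height in metres)."""
    return [p for p in points if low <= p[2] <= high]


def voxel_downsample(points: Sequence[Point], voxel: float) -> List[Point]:
    """Step 4a: replace all points in each cubic voxel by their centroid."""
    buckets: Dict[Tuple[int, int, int], List[Point]] = {}
    for p in points:
        key = (math.floor(p[0] / voxel), math.floor(p[1] / voxel),
               math.floor(p[2] / voxel))
        buckets.setdefault(key, []).append(p)
    return [tuple(sum(axis) / len(group) for axis in zip(*group))
            for group in buckets.values()]


def radius_filter(points: Sequence[Point], radius: float,
                  min_neighbors: int) -> List[Point]:
    """Step 4b: keep points with at least `min_neighbors` others within `radius` (O(n^2))."""
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(1 for j, q in enumerate(points)
                        if j != i and math.dist(p, q) <= radius)
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept
```

The radius filter here compares every pair of points; after downsampling the cloud is small, but a spatial index would be needed for dense frames.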

Scanning IR sensor for gas safety and emissions monitoring

Active | US10190976B2 | More reliable, Less expensive, Mass flow measurement devices, Raman/scattering spectroscopy, Grating, Spectroscopy

Apparatus and methods for rapidly detecting, localizing, imaging, and quantifying leaks of natural gas and other hydrocarbon and greenhouse gases. Scanning sensors, scan patterns, and data processing algorithms enable monitoring a site to rapidly detect, localize, image, and quantify amounts and rates of hydrocarbon leaks. Multispectral short-wave infrared detectors sense non-thermal infrared radiation from natural solar or artificial illumination sources by differential absorption spectroscopy. A multispectral sensor is scanned to envelop an area of interest, detect the presence and location of a leak, and raster scan the area around the leak to create an image of the leak. The resulting absorption image related to differential spectral optical depth is color mapped to render the degree of gas absorption across the scene. Analysis of this optical depth image, with factors including known inline pressures and / or surface wind speed measurements, enables estimation of the leak rate, i.e., the emission mass flux of gas.

Owner:MULTISENSOR SCI INC

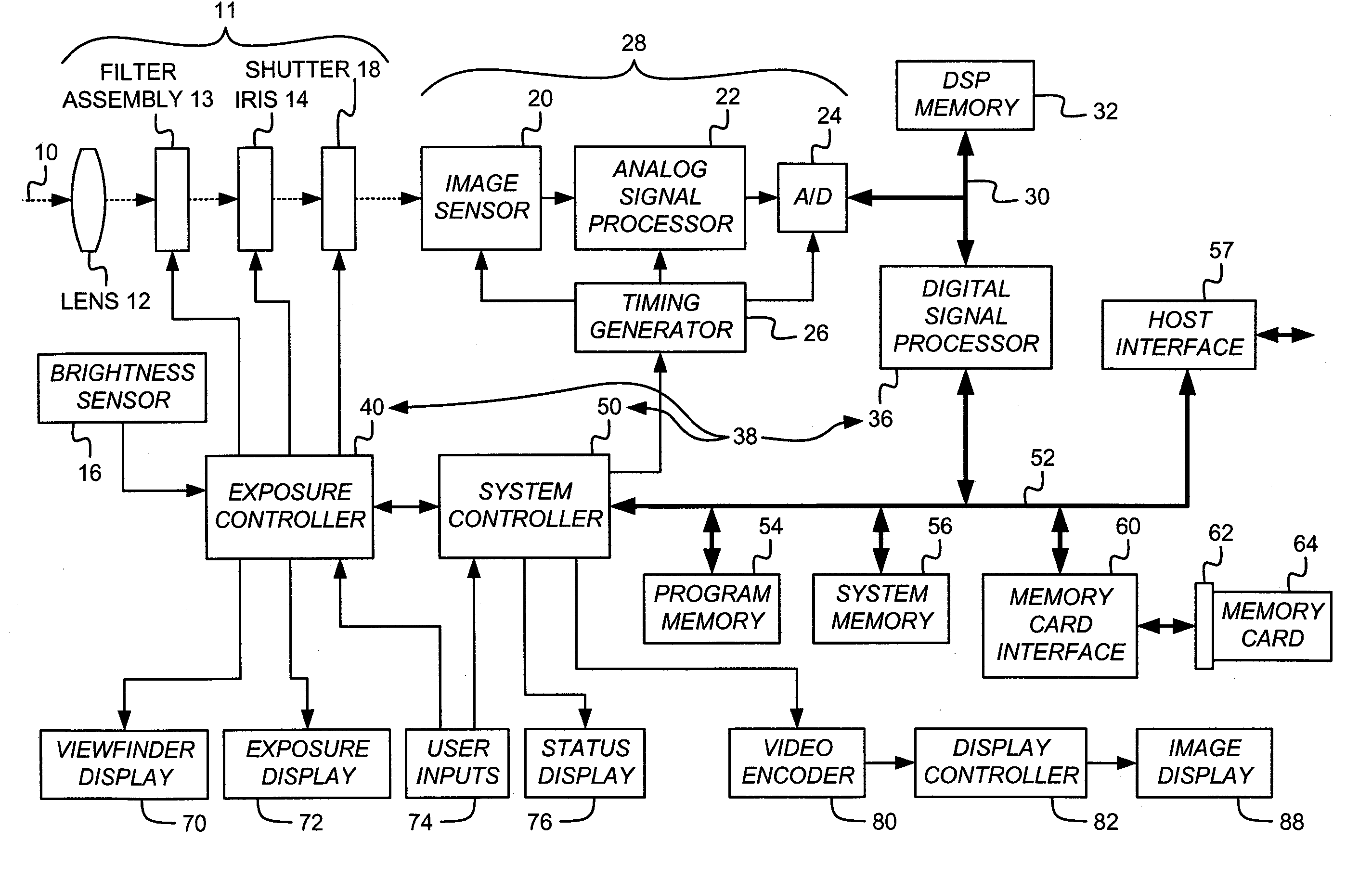

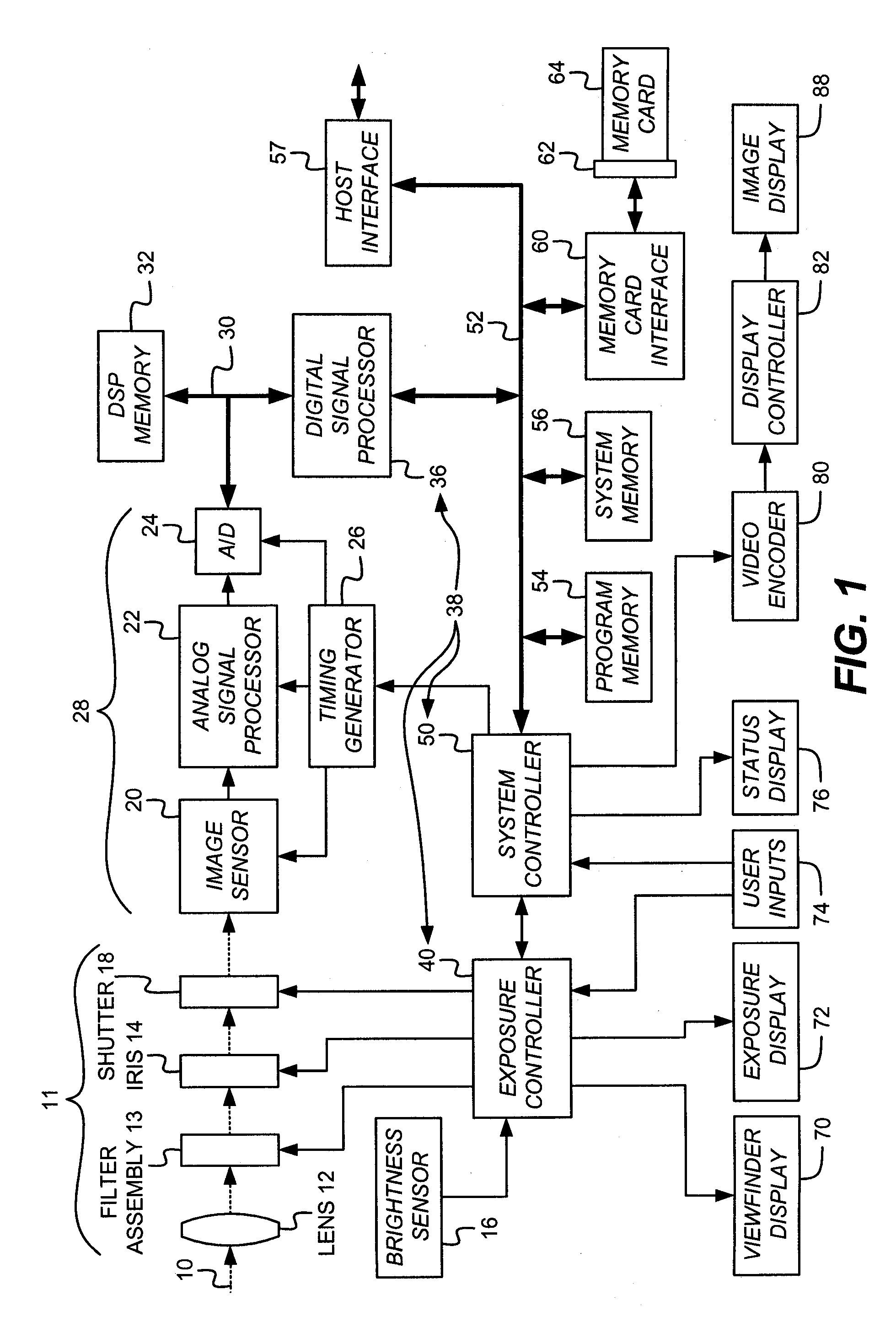

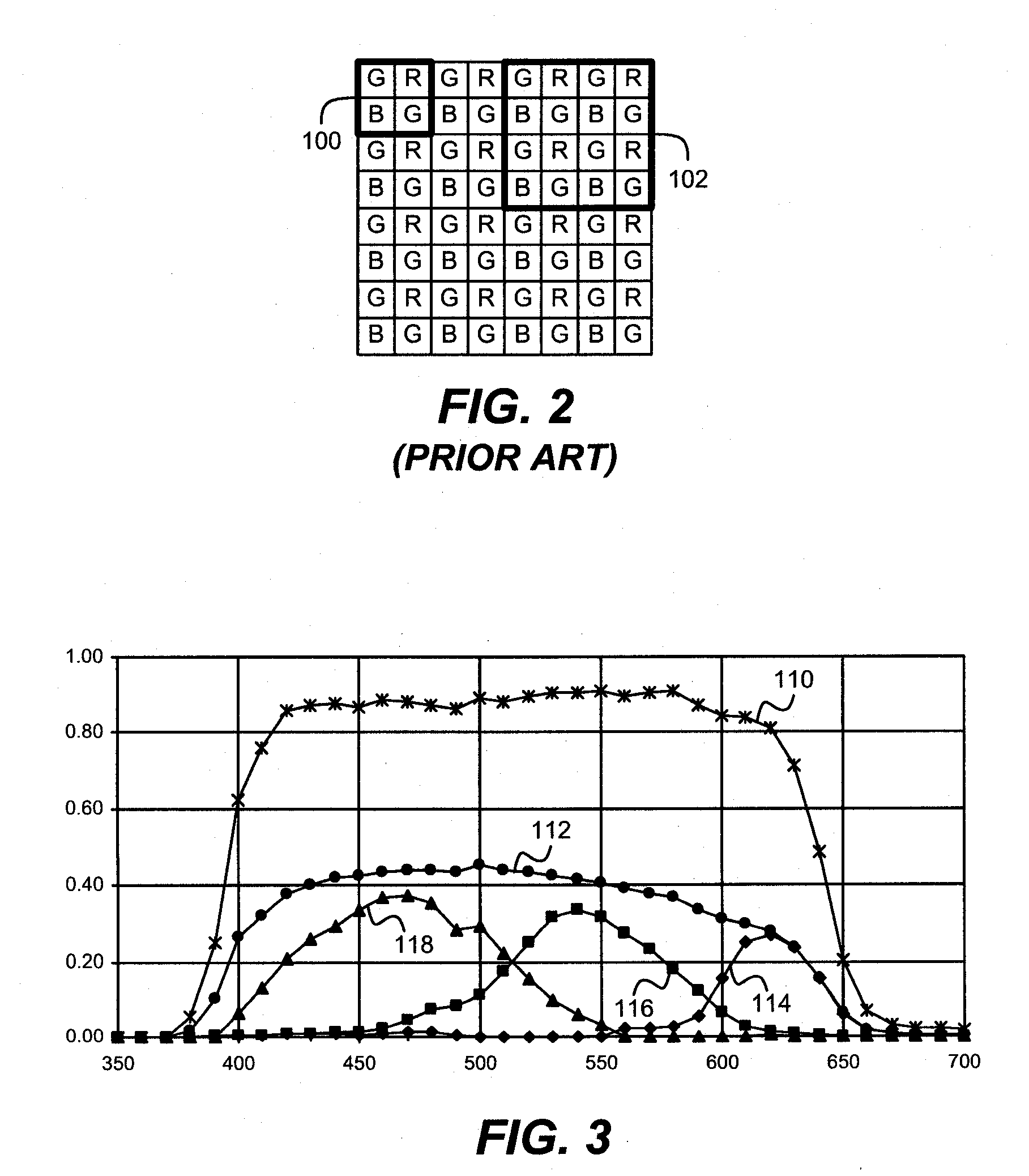

Pattern conversion for interpolation

Inactive | US20090087087A1 | Improve representation, Represents original subject, Television system details, Television system scanning details, Digital image, Computer vision

A method of processing a digital image having a predetermined color pattern, includes converting the predetermined color pattern of the digital image into a converted digital image having a different desired color pattern; and using algorithms adapted for use with the desired color pattern for processing the converted digital image.

Owner:MONUMENT PEAK VENTURES LLC

© 2025 PatSnap. All rights reserved.