Indoor scene three-dimensional reconstruction method based on single depth vision sensor

A method for 3D reconstruction of indoor scenes based on a single depth vision sensor, intended to address problems that degrade the quality of reconstructed 3D models of indoor scenes.

Embodiment Construction

[0129] Embodiments of the present invention are described in further detail below:

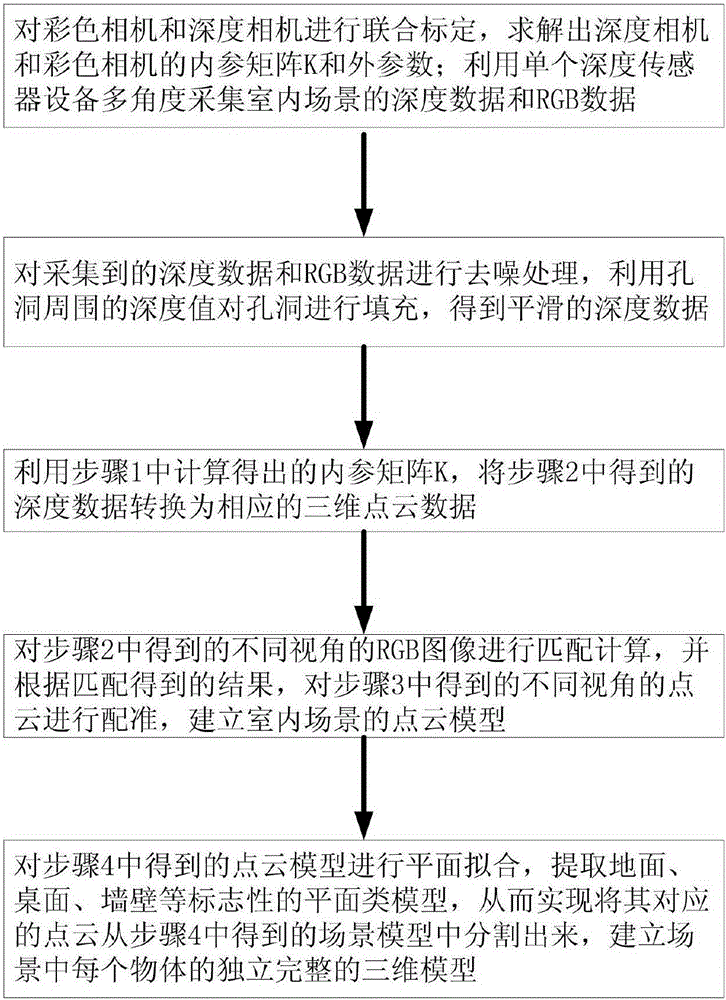

[0130] A method for 3D reconstruction of indoor scenes based on a single depth vision sensor, as shown in Figure 1, includes the following steps:

[0131] Step 1. Jointly calibrate the color camera and the depth camera: solve for the intrinsic parameter matrix K (the intrinsic parameters) and the extrinsic parameters of the depth and color cameras, and calibrate the depth data. Then use a single depth sensor device to collect depth data and RGB data of the indoor scene;
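As an illustration of how the quantities solved for in Step 1 are typically used, the following minimal sketch maps a depth pixel into the color image. It assumes an ideal pinhole model with no skew and no lens distortion, and it assumes the extrinsic parameters are expressed as a rotation `R` and translation `t` from the depth camera frame to the color camera frame. All function names and numeric values are hypothetical and are not taken from the patent.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Vec3 = Tuple[float, float, float]


def back_project(u: float, v: float, depth: float, K: Matrix3) -> Vec3:
    """Lift pixel (u, v) with metric depth to a 3D point in the camera frame."""
    fx, fy = K[0][0], K[1][1]
    cx, cy = K[0][2], K[1][2]
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def transform(p: Vec3, R: Matrix3, t: Sequence[float]) -> Vec3:
    """Apply the rigid transform p' = R * p + t (assumed depth -> color frame)."""
    x, y, z = (
        sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)
    )
    return (x, y, z)


def project(p: Vec3, K: Matrix3) -> Tuple[float, float]:
    """Project a 3D point in the camera frame to pixel coordinates."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    u = K[0][0] * x / z + K[0][2]
    v = K[1][1] * y / z + K[1][2]
    return (u, v)


def depth_pixel_to_color_pixel(
    u: float, v: float, depth: float,
    K_depth: Matrix3, K_color: Matrix3,
    R: Matrix3, t: Sequence[float],
) -> Tuple[float, float]:
    """Find where a depth pixel lands in the color image."""
    p_depth = back_project(u, v, depth, K_depth)
    p_color = transform(p_depth, R, t)
    return project(p_color, K_color)


if __name__ == "__main__":
    # Hypothetical intrinsics and extrinsics, for illustration only.
    K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    t = [0.025, 0.0, 0.0]  # assumed 2.5 cm baseline between the two cameras
    print(depth_pixel_to_color_pixel(320.0, 240.0, 2.0, K, K, R, t))
```

With this kind of mapping, each depth measurement can be paired with the RGB value at the corresponding color pixel, which is why both sets of intrinsic parameters and the extrinsic parameters are needed before data collection.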

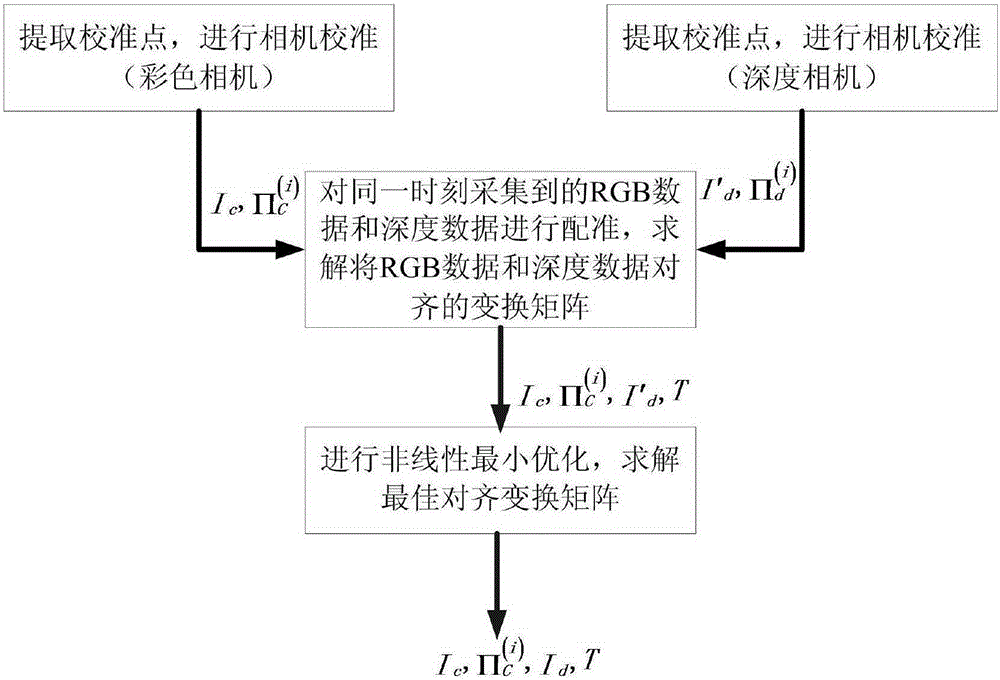

[0132] As shown in Figure 2, Step 1 includes the following specific steps:

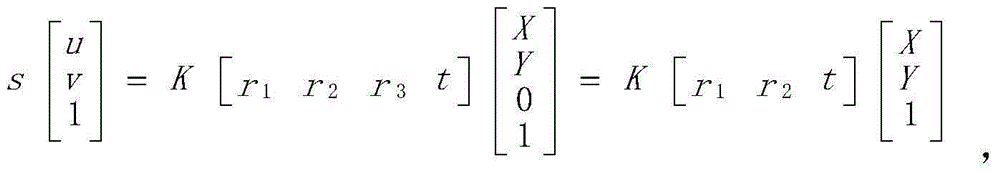

[0133] 1.1. Extract the corner points of the calibration chessboard images captured by the color camera and the depth camera as calibration points, perform camera calibration, and solve for the intrinsic parameter matrix K and the extrinsic parameters of the depth camera and the color camera;

[0134] (1) Extract the upper corner points of the calibration chessboard ima...
