80 results about "Fusion image" patented technology

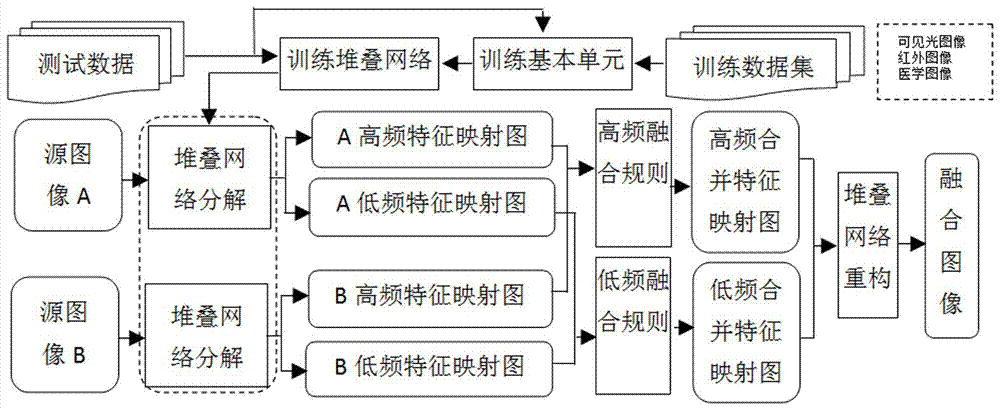

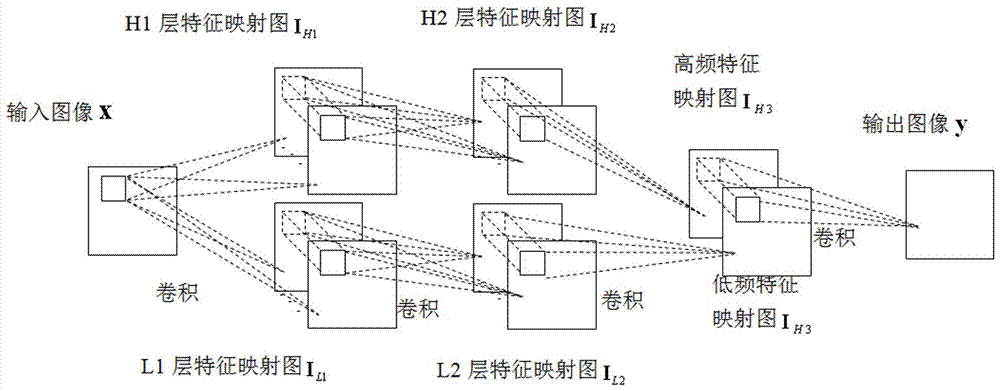

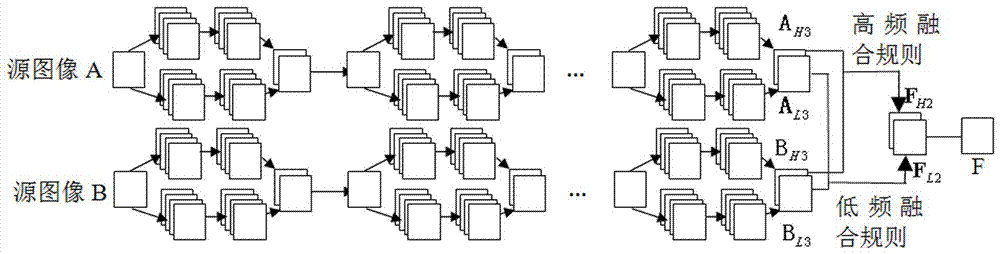

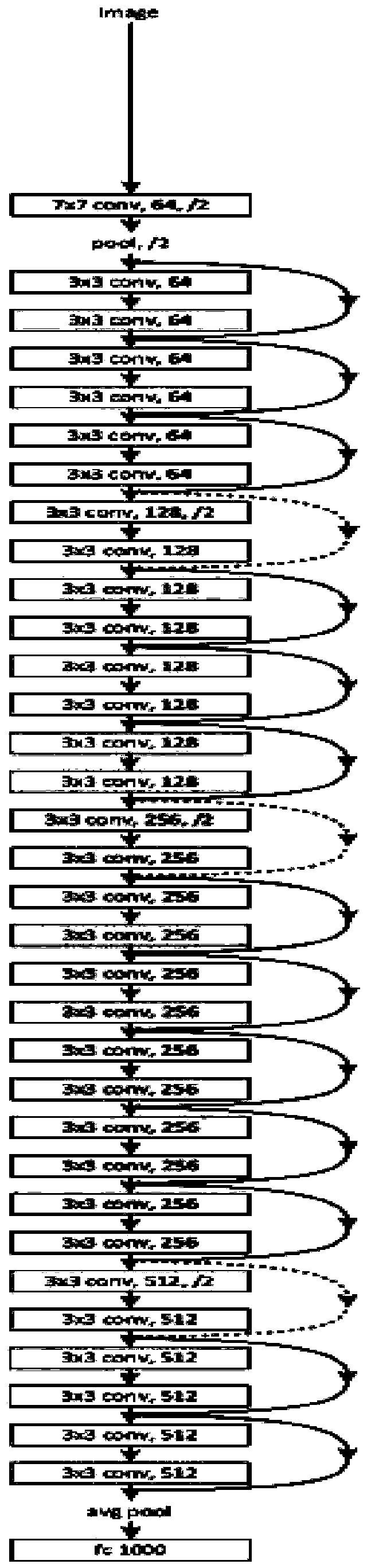

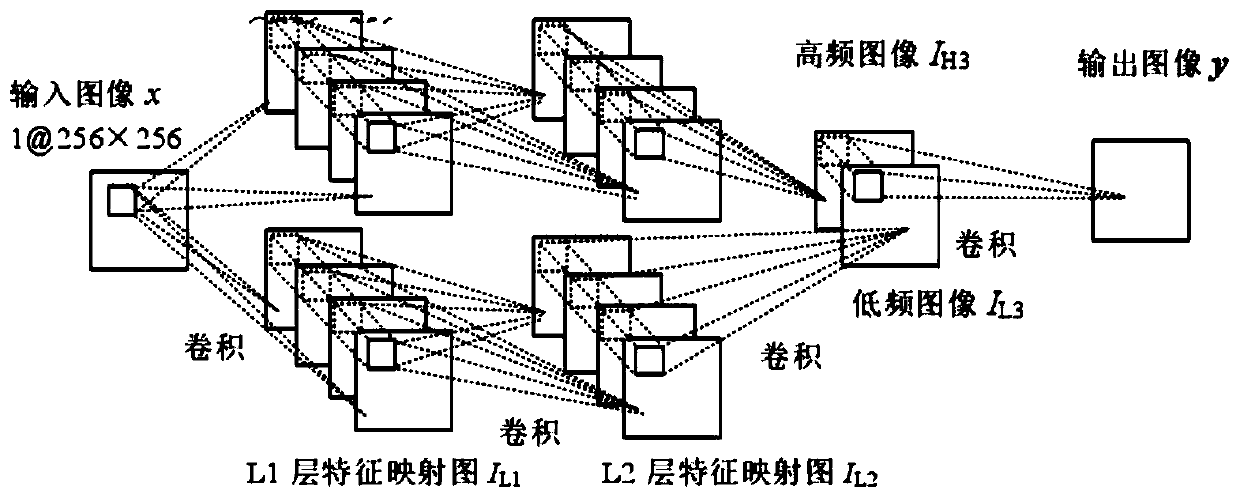

Image fusion method based on deep learning

The present invention relates to an image fusion method, in particular to an image fusion method based on deep learning. The method comprises: constructing basic units from convolutional layers, based on an autoencoder; stacking a plurality of basic units and training them to obtain a deep stacked neural network, then fine-tuning the stacked network end to end; using the stacked network to decompose the input images into high-frequency and low-frequency feature maps, and merging these feature maps using the local variance maximum and the region matching degree; and feeding the fused high-frequency feature map and the fused low-frequency feature map back into the last layer of the network to obtain the final fusion image. The method decomposes and reconstructs images adaptively: fusion needs only one high-frequency feature map and one low-frequency feature map, the number and types of filters need not be defined manually, the number of decomposition levels and of filtering directions need not be selected, and the dependence of the fusion algorithm on prior knowledge is greatly reduced.

Owner:ZHONGBEI UNIV
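
The local-variance-maximum rule named in the abstract above can be illustrated with a minimal sketch. It assumes two feature maps of equal size stored as nested lists, a square window whose `radius` is a hypothetical parameter, and a tie-break in favour of the first map; none of these details are given in the abstract, and the patented network itself is not reproduced here.

```python
from statistics import pvariance


def local_variance(img, r, c, radius=1):
    """Population variance of the window centred on (r, c), clipped at the borders."""
    rows, cols = len(img), len(img[0])
    window = [
        img[i][j]
        for i in range(max(0, r - radius), min(rows, r + radius + 1))
        for j in range(max(0, c - radius), min(cols, c + radius + 1))
    ]
    return pvariance(window)


def fuse_max_local_variance(map_a, map_b, radius=1):
    """Per pixel, keep the value from the map with the larger local variance.

    Ties keep the value from map_a (an assumption made for this sketch).
    """
    fused = []
    for r in range(len(map_a)):
        row = []
        for c in range(len(map_a[0])):
            var_a = local_variance(map_a, r, c, radius)
            var_b = local_variance(map_b, r, c, radius)
            row.append(map_a[r][c] if var_a >= var_b else map_b[r][c])
        fused.append(row)
    return fused
```

For a flat map and a textured map, the rule selects the textured map everywhere, since its local variance is larger at every pixel.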

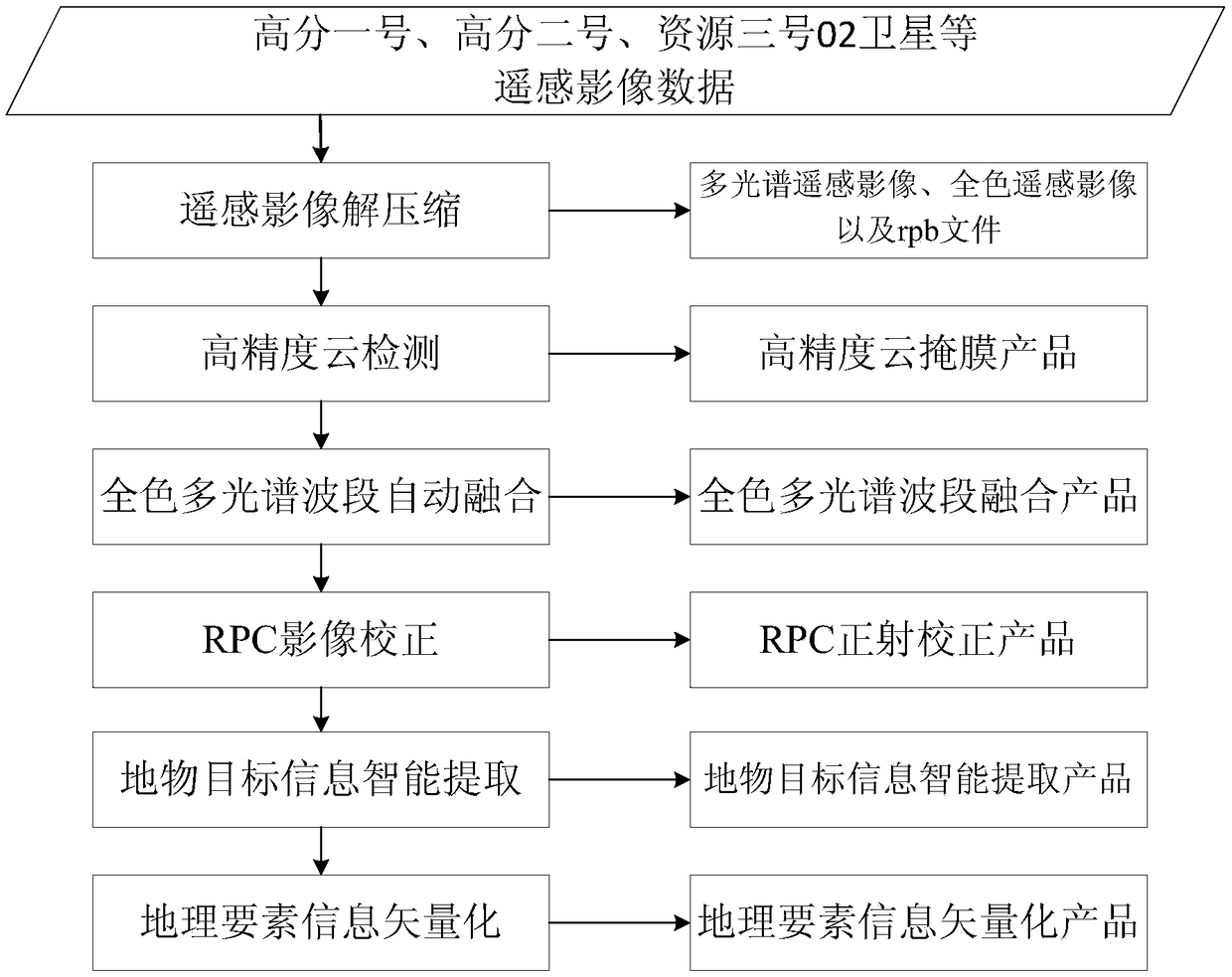

An intelligent information extraction method and system based on remote sensing image

Inactive; CN109215038A; Reduce manual operations; Improve processing efficiency; Image enhancement; Image analysis; Objective information; Image correction

The invention relates to an intelligent information extraction method and system based on remote sensing images. First, a Hadoop-based GPU computing cluster for remote sensing big data is constructed. An intelligent information extraction method and process is then designed, covering decompression of remote sensing images, high-precision cloud detection, automatic fusion of panchromatic and multispectral images, RPC image correction, intelligent extraction of ground object information, and vectorization of geographic element information; the intelligent information extraction platform adopts the Caffe deep learning framework. This process yields high-precision cloud mask products, panchromatic and multispectral band fusion products, RPC orthorectification products, intelligent ground object information extraction products, and geographic element vectorization products. It thereby converts level-1A original remote sensing images into level-2A products automatically, which greatly shortens the time from satellite standard data products to information products.

Owner:CHINA CENT FOR RESOURCES SATELLITE DATA & APPL

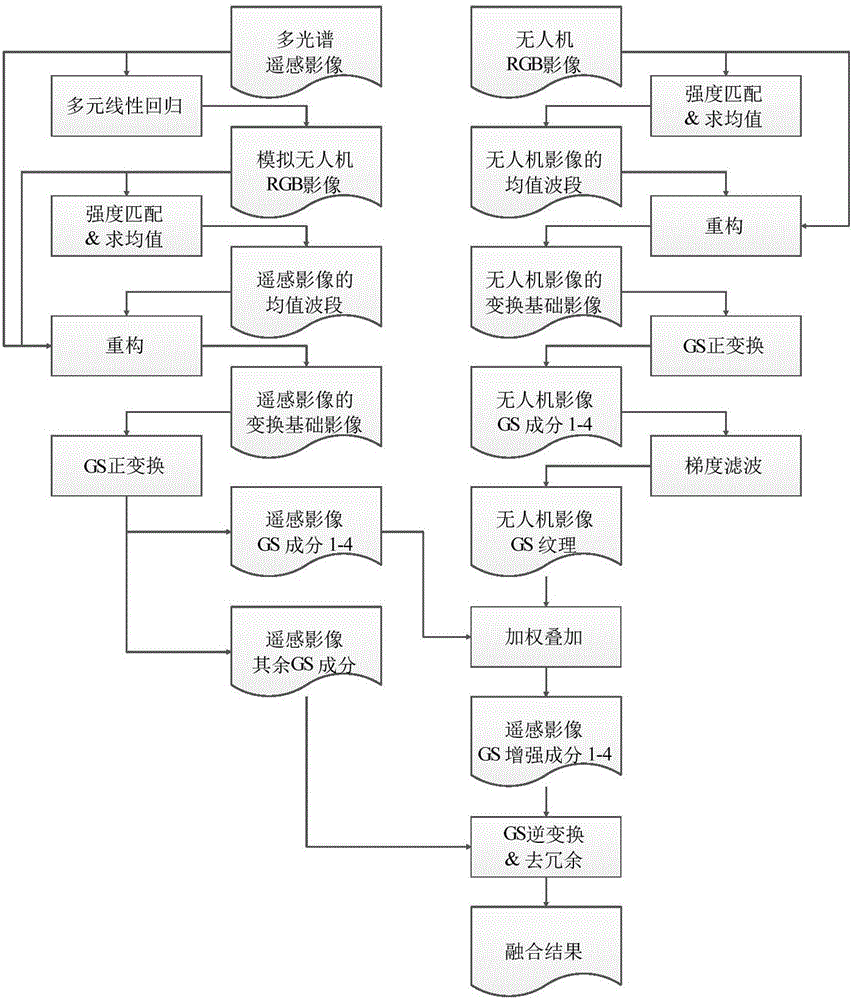

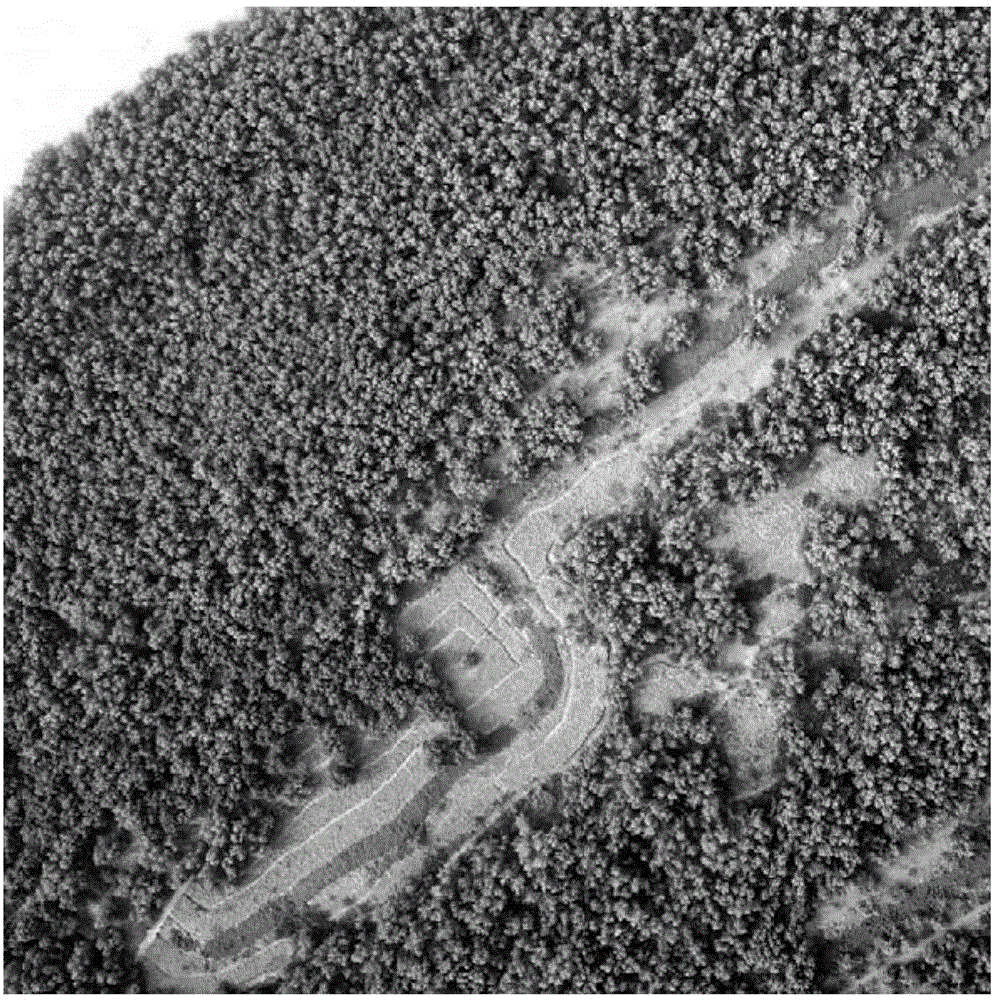

Method for fusing unmanned aerial vehicle image and multispectral image based on Gram-Schmidt

Active; CN106384332A; Conducive to definition; Conducive to inversion; Geometric image transformation; Image resolution; High spatial resolution

The invention discloses a method for fusing unmanned aerial vehicle (UAV) images and multispectral images based on the Gram-Schmidt transformation. The method comprises the following steps: image preprocessing yields two separate multiband image sets with the same pixel size, namely low-spatial-resolution multispectral remote sensing images and three-band high-spatial-resolution UAV visible-light images; multiple linear regression, reconstruction and Gram-Schmidt transformation are performed on the remote sensing images to obtain the remote sensing GS components, and the same reconstruction and Gram-Schmidt transformation are performed on the UAV images to obtain the UAV GS components; gradient filtering is applied to the UAV GS components to obtain texture information, which is added with a certain weight to GS components 1-4 of the remote sensing images; the inverse Gram-Schmidt transformation is applied to the enhanced result, redundant information is removed, and the final fused images are obtained. The invention extends prior-art fusion methods beyond the fusion of single-band panchromatic data with multispectral images, increases the diversity of data that can be fused, and provides a fusion method that takes both spectral retention and information quality into account.

Owner:SUN YAT SEN UNIV

Fusion detection method of infrared image and visible light image based on visual attention model

Active; CN108090888A; Not easy to cause visual fatigue; Solve the problem of weakening; Image enhancement; Image analysis; Color mapping; Vision based

The invention provides a fusion detection method for infrared and visible-light images based on a visual attention model. The method comprises the following steps: the collected infrared image and visible-light image are each preprocessed; based on a visual attention model of the human eye, the object of interest is extracted from the preprocessed infrared and visible-light images; with the preprocessed visible-light image as the background, the corresponding preprocessed infrared image is fused with it in gray scale to obtain a gray-scale fusion image; the object of interest is marked in the gray-scale fusion image by pseudo-color mapping, and the target pseudo-color fusion image is obtained and output. The invention solves the prior-art problem that the object of interest is weakened in the fusion image, greatly improves the accuracy and reliability of detecting and identifying the object of interest, and reduces the difficulty of object identification.

Owner:BEIJING INST OF ENVIRONMENTAL FEATURES

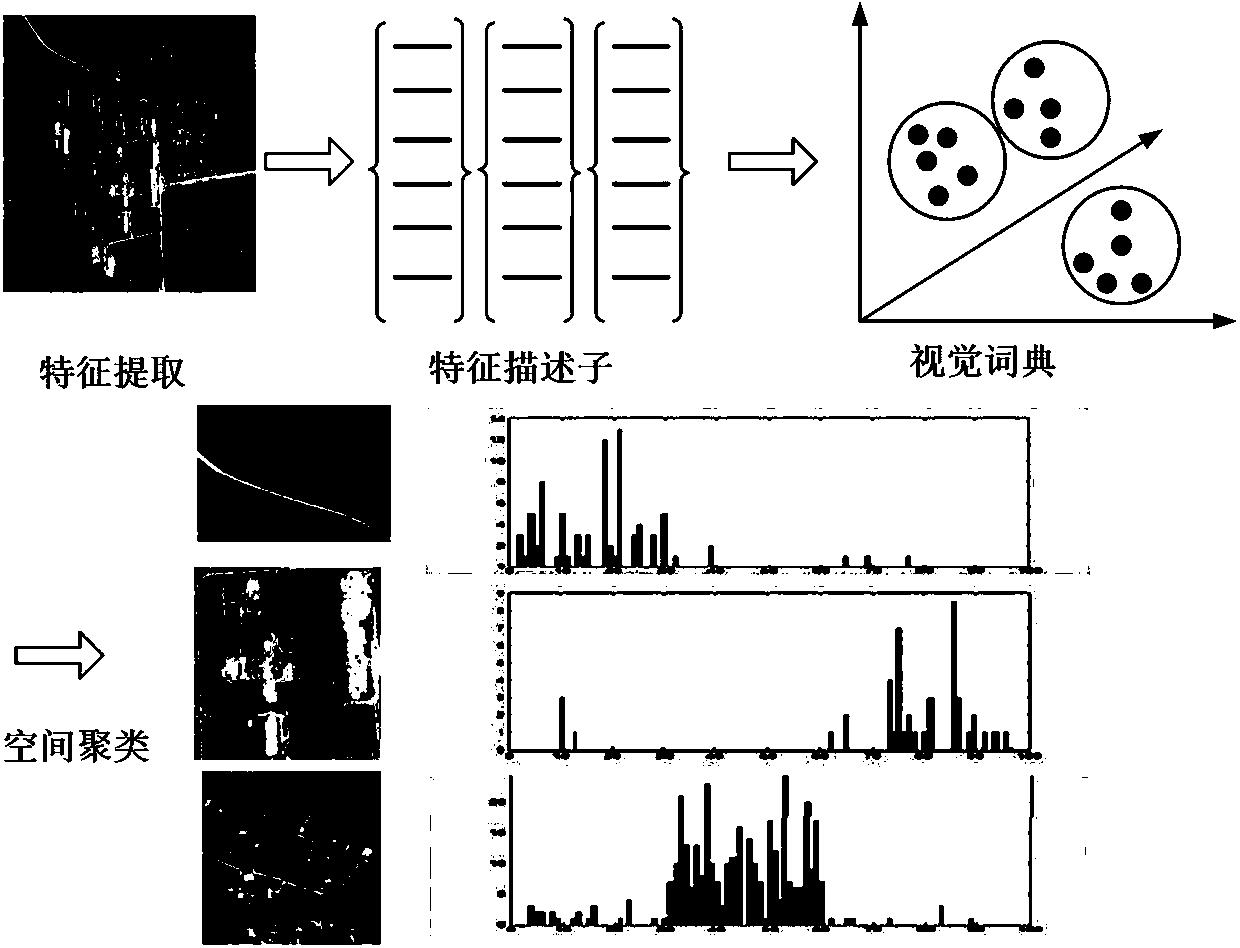

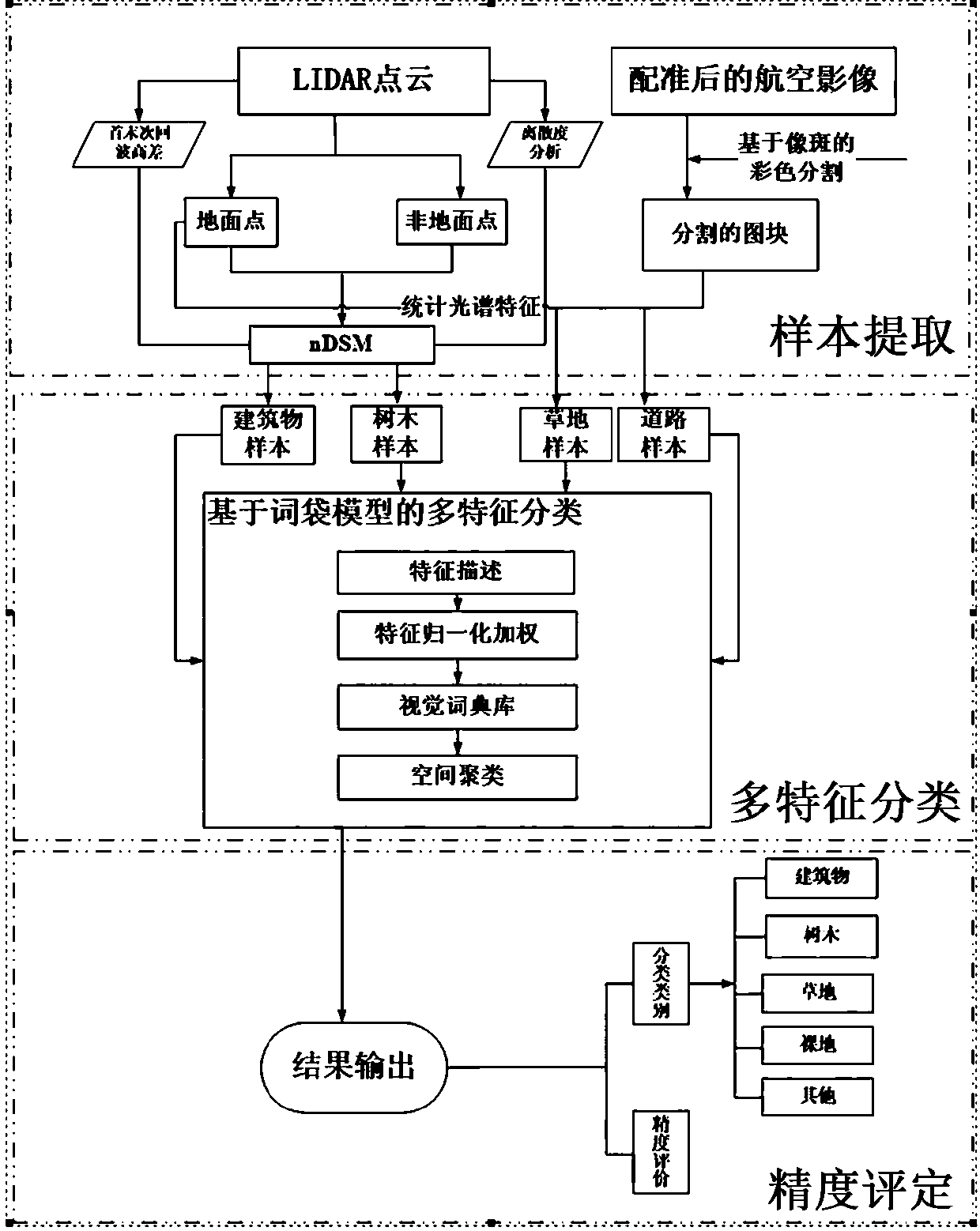

Laser-point cloud and image fusion data classification method based on multi-characteristic

Inactive; CN108241871A; Extract complete; Improve classification accuracy; Scene recognition; Aviation; Point cloud

A multi-feature classification method for fused laser point cloud and image data comprises the following steps: 1, data preprocessing: preprocessing the aerial images and the UAV laser point cloud data; 2, sample extraction: making full use of the geometric features of the point cloud data and the spectral features of the aerial images to extract samples of each class; 3, multi-feature classification of the fused data: using a vector description model to classify the sample data; 4, accuracy evaluation: evaluating the accuracy of the classified data. The method extracts surface objects completely and classifies them with high accuracy. Starting from the spectral information of the fusion image, it fuses the data according to the application purpose and the surface object classification requirements, sets classification rules for the main surface objects, and builds a correspondence between classes and classification features, thereby extracting complete surface object areas and reducing misclassification.

Owner:NORTH CHINA UNIV OF WATER RESOURCES & ELECTRIC POWER

Projection system and lightness adjusting method thereof

Active; CN104601915A; Uniform brightness; Convenient projection; Television system details; Picture reproducers using projection devices; Pattern recognition; Computer graphics (images)

Provided are a projection system and a lightness adjusting method thereof. The projection system comprises a plurality of projection devices that project a plurality of images onto a screen. The images together form a combined area and share an overlapping area. The lightness adjusting method comprises: capturing the images on the screen to obtain image information; selecting a target area from the image information so as to obtain, within the target area, a fused image corresponding to the projected images; executing a genetic algorithm according to the lightness information in the target area to obtain an optimal parameter set; and having the projection devices project according to the optimal parameter set. The target area is smaller than the combined area and includes at least one portion of the overlapping area. According to the invention, the projection devices in the projection system can be adjusted automatically so that the projected images have relatively uniform lightness in the overlapping area.

Owner:VIA TECH INC

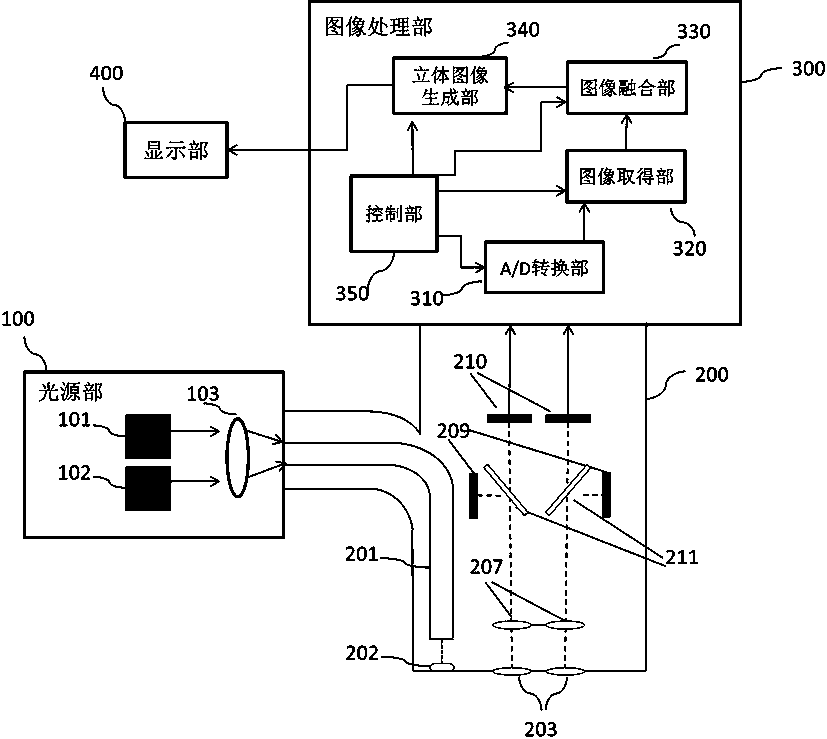

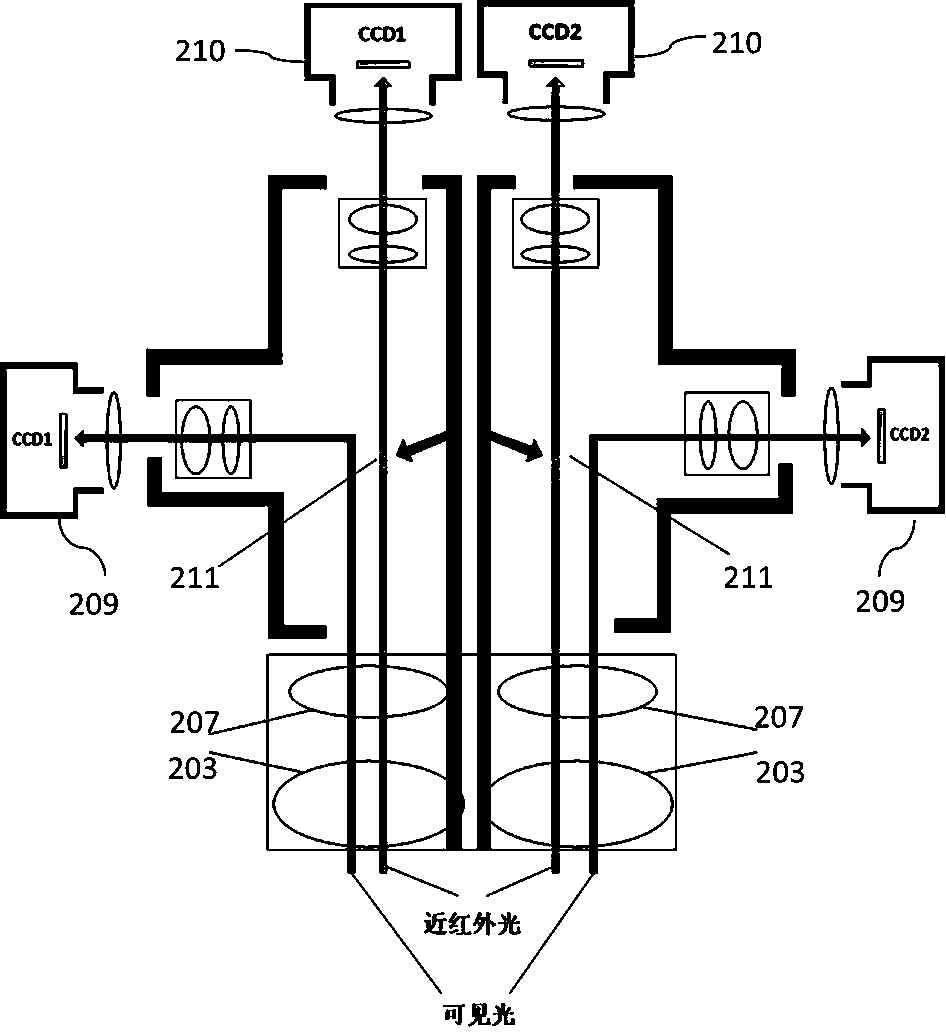

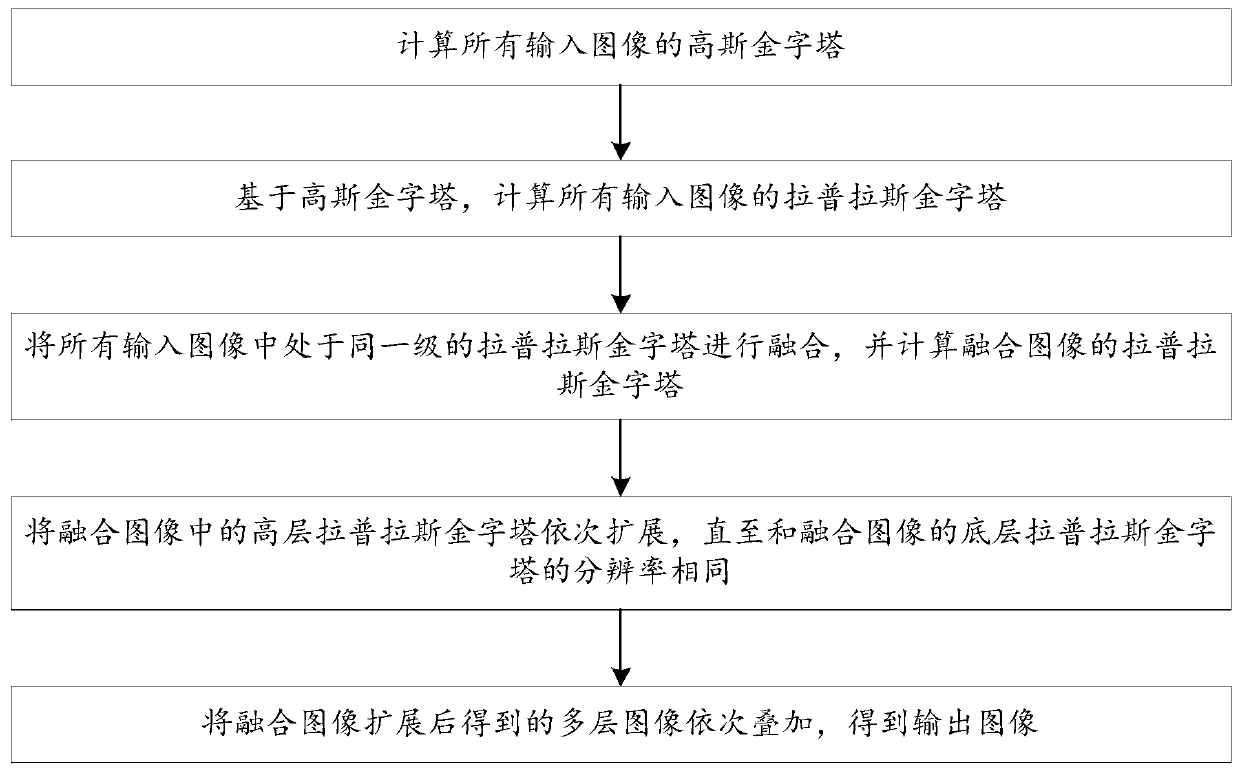

Multispectral stereoscopic vision endoscope device and image fusion method

Active; CN108040243A; Improve visibility; Fit closely; Television system details; Color television details; Parallax; Imaging processing

The invention discloses a multispectral stereoscopic vision endoscope device and an image fusion method, and belongs to the field of medical devices. The multispectral stereoscopic vision endoscope device comprises a light source part, a camera part, an image processing part and a display part. The image fusion method comprises the following steps: 1, images are acquired by an endoscope image acquisition unit; 2, Gaussian pyramids and Laplacian pyramids are built separately; 3, all layers except the top layers of the Laplacian pyramids of the near-infrared image and the green image are processed and fused; 4, the top-layer near-infrared pyramid images are subjected to target area segmentation; 5, the top-layer green images and the top-layer near-infrared images are processed and fused; 6, the bottom-layer green images are reconstructed from the fused Laplacian pyramids; 7, the reconstructed green images are combined with the other channels to obtain the final fusion images; and 8, the two fusion images are processed through an anaglyph shifting method to generate a stereoscopic image pair. According to the method, real three-dimensional structure information is obtained.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

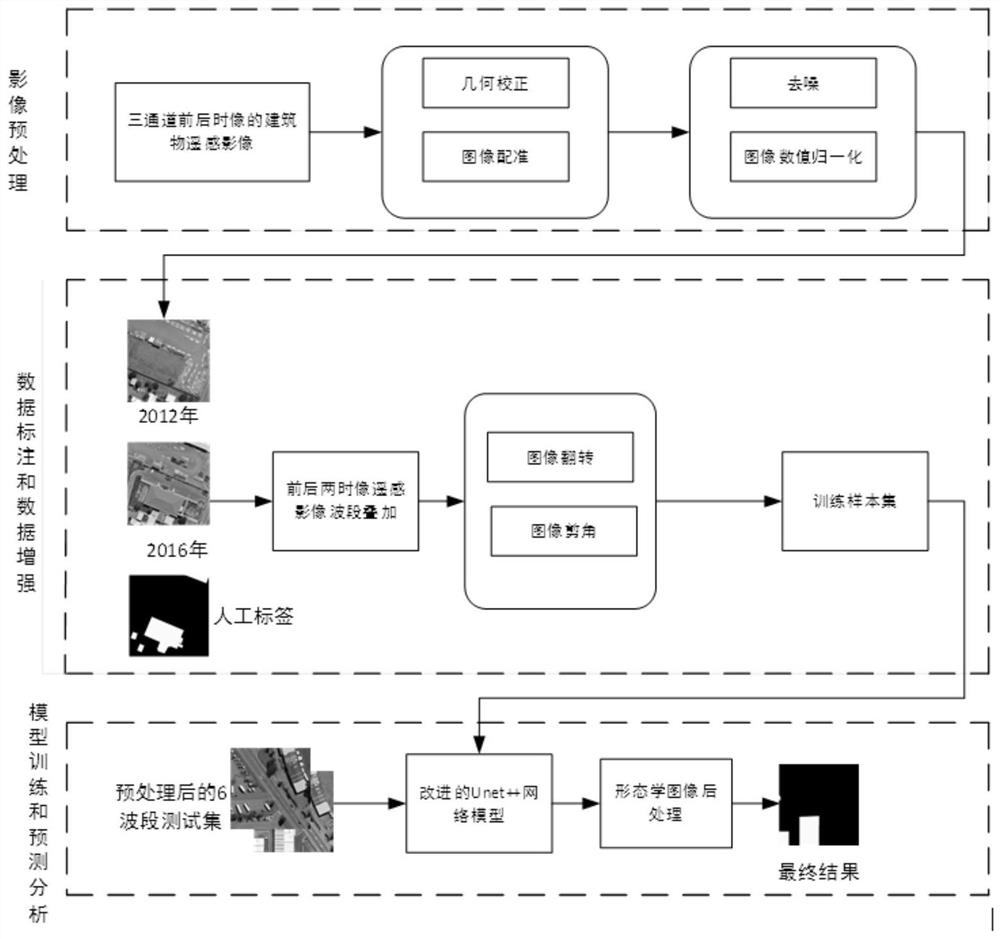

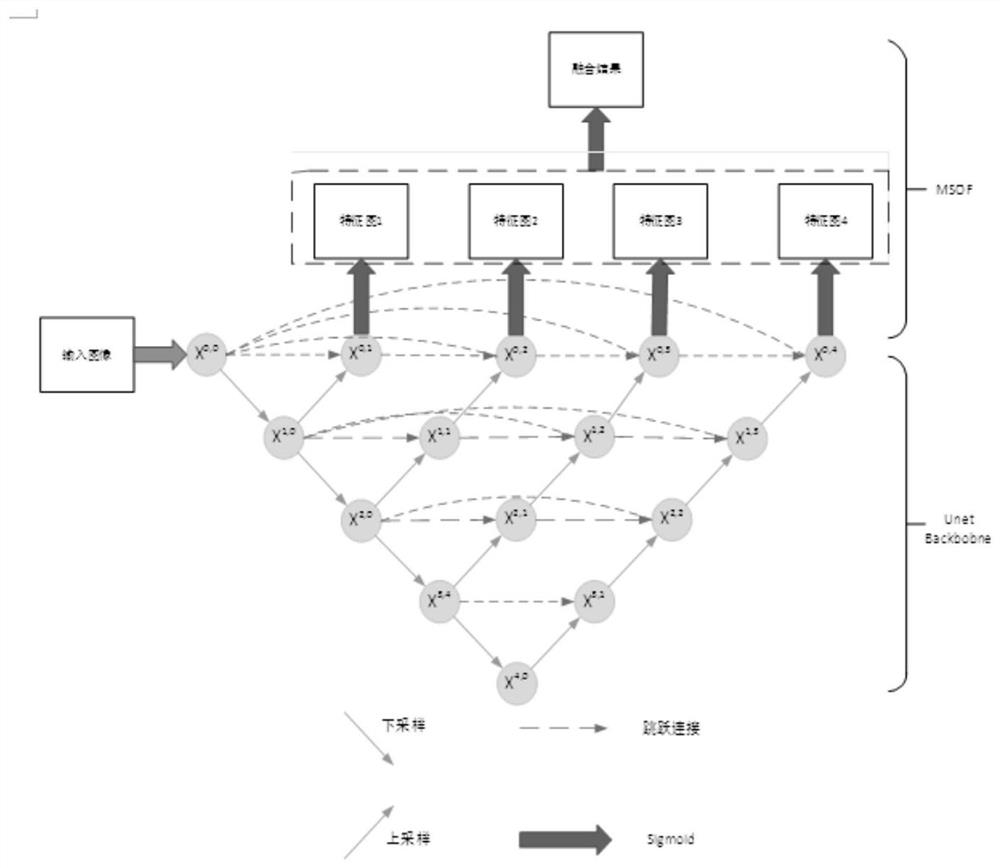

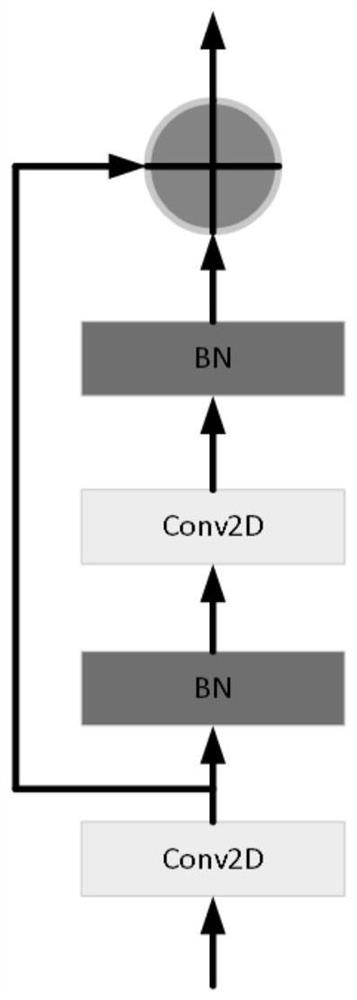

Urban illegal building detection method based on high-resolution remote sensing image

Pending; CN112287832A; Quick check; Character and pattern recognition; Neural architectures; Information support; Feature extraction

The invention relates to the technical field of urban construction, in particular to an urban illegal building detection method based on high-resolution remote sensing images. It aims to extract building change features between the earlier and later time phases of the remote sensing images, obtain a binary image of the building changes, compare that binary image with a government planning map, and detect illegal buildings, thereby providing convenient information support for urban planning management. The method comprises the following steps: (1) performing geometric correction, image registration, denoising and normalization preprocessing on the high-resolution remote sensing images of the earlier and later time phases to obtain high-resolution remote sensing image data with relatively consistent distributions; (2) cutting the preprocessed earlier and later high-resolution remote sensing images into tiles of the same size; and (3) stacking the bands of the cut earlier and later tiles using the GDAL library to obtain a six-channel remote sensing building fusion image.

Owner:NANJING KEBO SPACE INFORMATION TECH
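
Step (3) above amounts to concatenating the bands of two co-registered tiles. The sketch below shows that idea in plain Python rather than with GDAL, so it is a stand-in for the stacking logic only; the function names and the nested-list band representation are assumptions made for illustration.

```python
def band_shape(band):
    """Return (height, width) of a band stored as a list of rows."""
    return (len(band), len(band[0]) if band else 0)


def stack_bands(earlier, later):
    """Concatenate the band lists of two tiles, e.g. 3 + 3 bands -> 6 channels.

    Both tiles must already be co-registered and cut to the same size.
    """
    all_bands = earlier + later
    if len({band_shape(b) for b in all_bands}) != 1:
        raise ValueError("all bands must share one height and width")
    # Copy rows so the stacked image does not alias the input tiles.
    return [[row[:] for row in band] for band in all_bands]
```

With two three-band tiles the result has six channels: the first three come from the earlier time phase and the last three from the later one.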

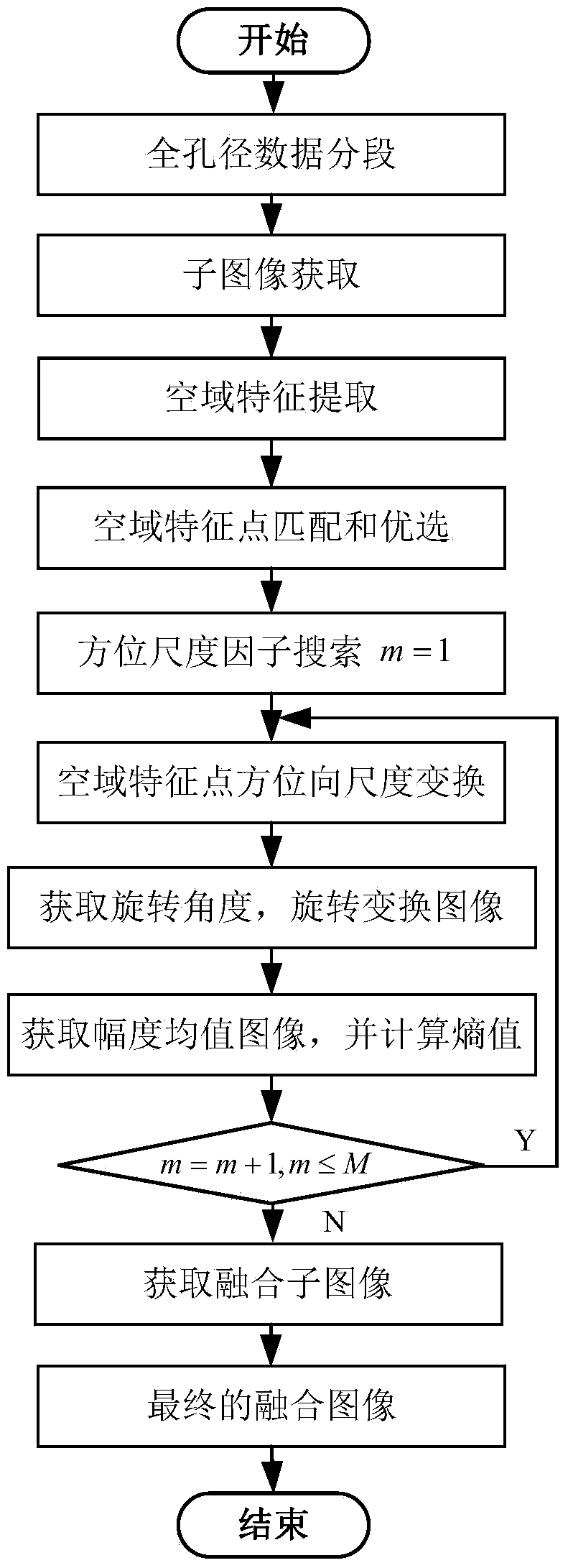

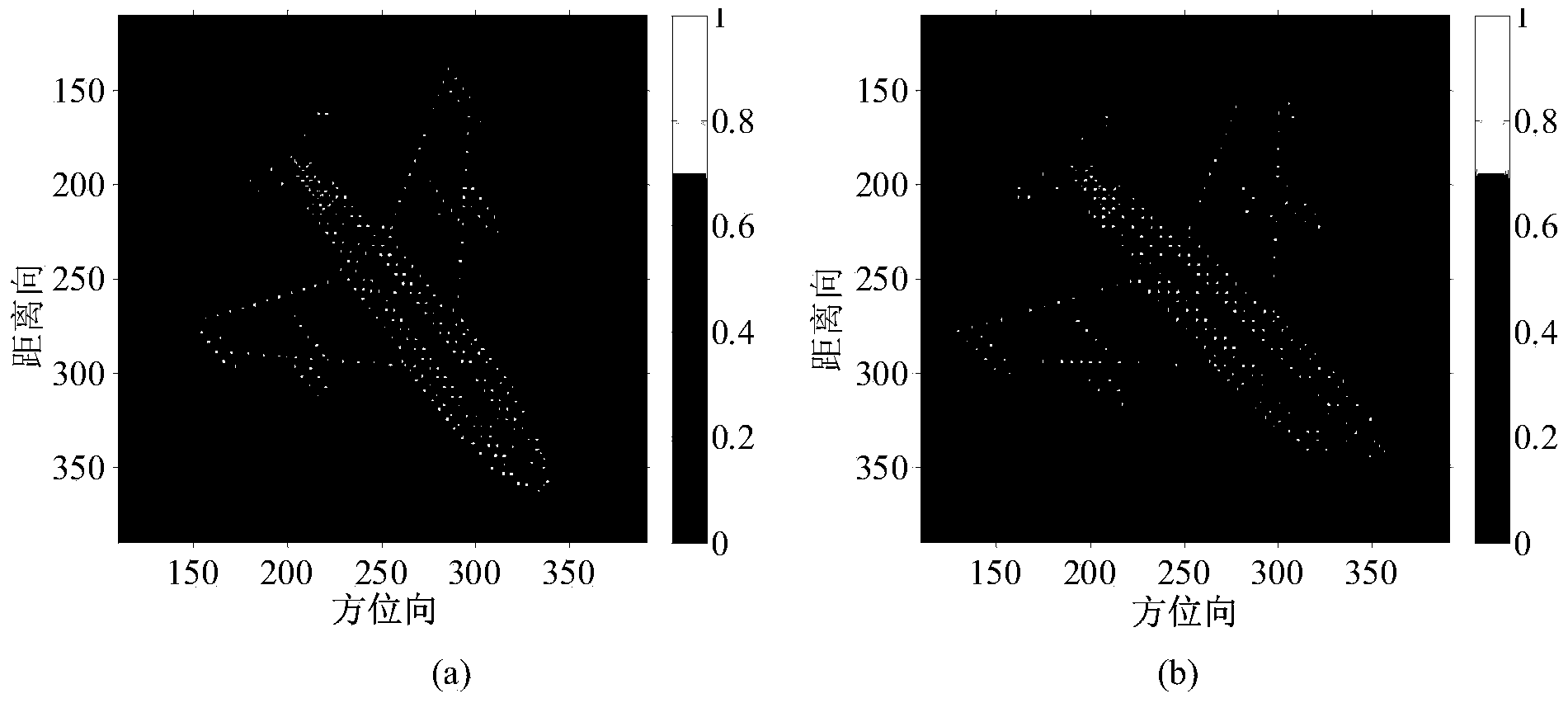

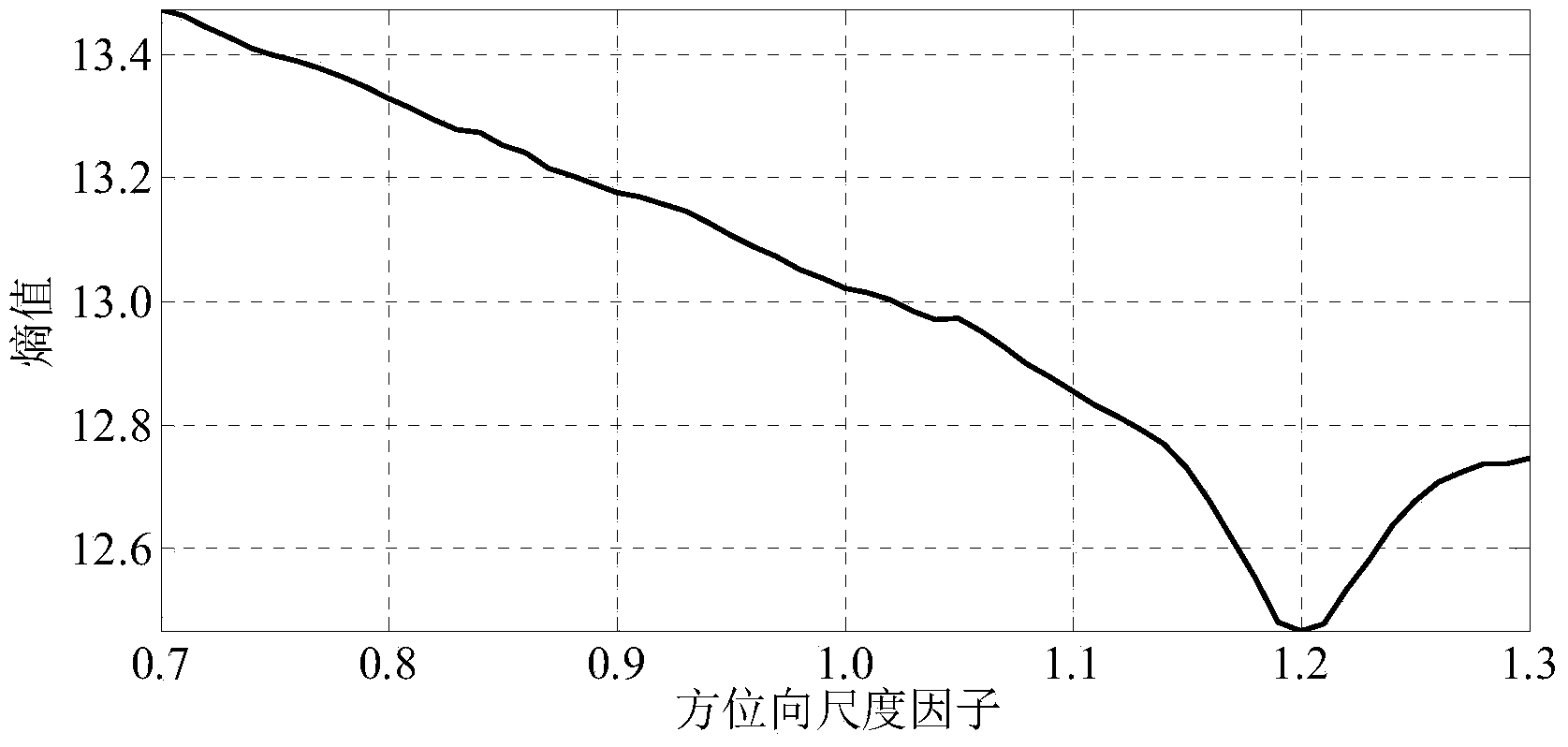

ISAR image fusion method based on target characteristics

Inactive; CN104240212A; Easy to keep; Estimates are accurate and stable; Image enhancement; Geometric image transformation; Matrix decomposition; Scale variation

The invention discloses an ISAR image fusion method based on target characteristics, and relates to the fusion of ISAR images. In the method, the full-aperture data are first segmented to obtain sub-images; spatial feature points and spatial feature descriptor vectors of the sub-images are extracted, and the spatial feature points are matched; the optimized spatial feature points are then selected; azimuth scaling and de-centering are performed using an azimuth scale factor search method; the rotation angles between the sub-images are calculated, the transformed images are rotated, and the entropy of the average range images is obtained; the sub-images to be fused are determined by minimum entropy; finally, the base vectors of the fusion images are obtained by a non-negative matrix decomposition method, and the final fusion images are determined. Complete information about the targets is preserved in the fusion images, and the method can be used for the fusion and real-time processing of different sub-images.

Owner:XIDIAN UNIV
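
The minimum-entropy selection mentioned above can be sketched as follows. The abstract does not define the entropy, so this example assumes the Shannon entropy of the normalized, non-negative pixel intensities, a definition commonly used as a focus measure for radar images; the function names are hypothetical.

```python
import math


def image_entropy(img):
    """Shannon entropy (natural log) of an image's normalized intensities."""
    total = sum(v for row in img for v in row)
    if total <= 0:
        raise ValueError("image must contain positive intensity")
    entropy = 0.0
    for row in img:
        for v in row:
            if v > 0:  # zero-intensity pixels contribute nothing
                p = v / total
                entropy -= p * math.log(p)
    return entropy


def pick_min_entropy(candidates):
    """Return the index of the candidate image with the lowest entropy."""
    return min(range(len(candidates)), key=lambda i: image_entropy(candidates[i]))
```

A sharply focused image concentrates its energy in few pixels and so has low entropy, which is why the minimum is taken.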

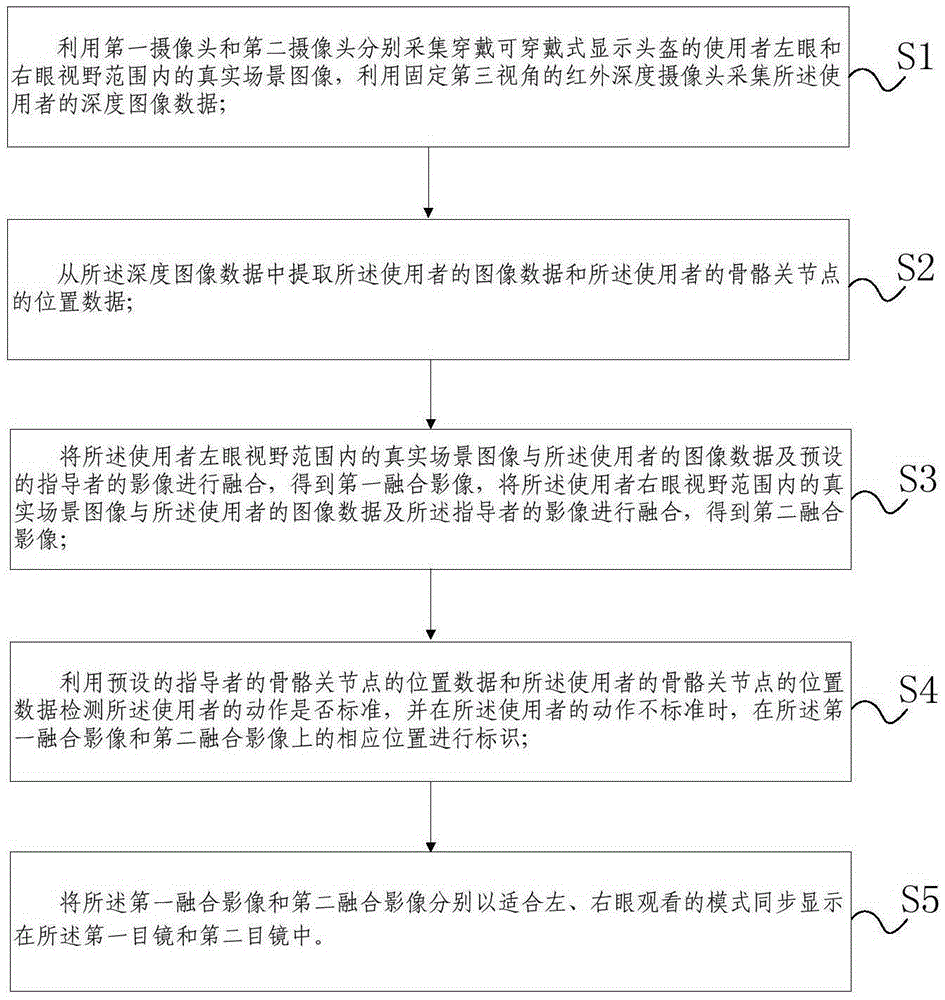

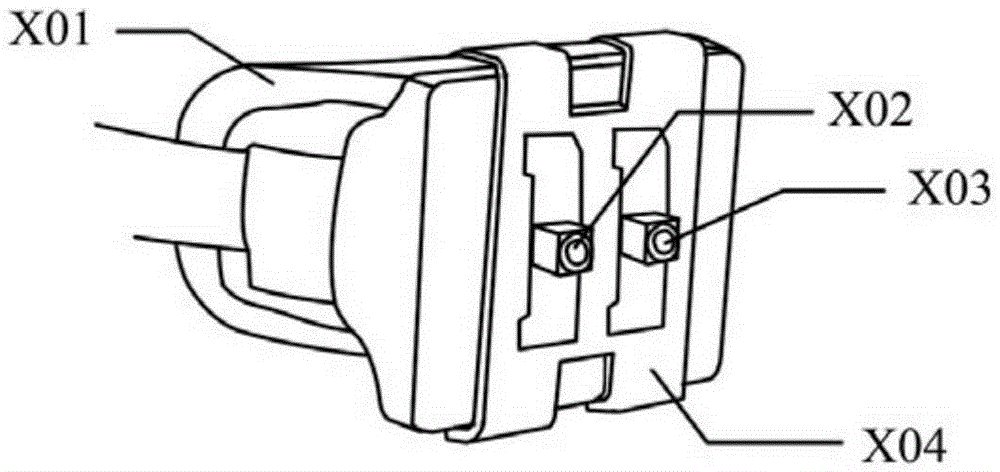

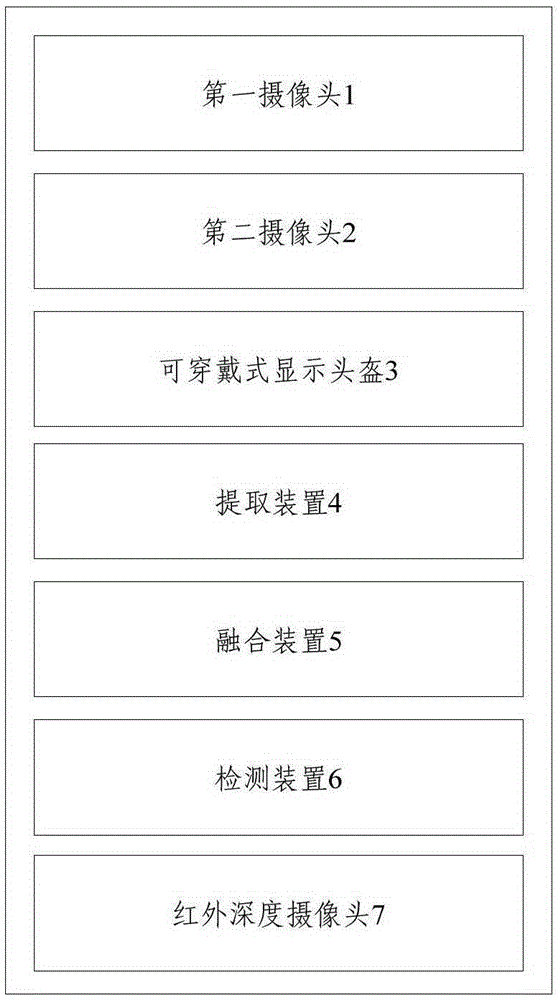

Stage performance assisted training method and system based on augmented reality technology

Active; CN105404395A; Real-time correction; Input/output for user-computer interaction; Character and pattern recognition; Eyepiece; Field of view

The present invention discloses a stage performance assisted training method and system based on augmented reality technology, which can detect whether a user's action is standard. The method comprises: acquiring real scene images within the fields of view of the left eye and the right eye of a user wearing a wearable display helmet by using a first camera and a second camera respectively, and acquiring depth image data of the user by using an infrared depth camera fixed at a third viewing angle; extracting the image data of the user and the position data of the user's skeleton joint points; fusing the real scene images with the user's image data and a preset image of an operator to obtain a fusion image; detecting whether the user's action is standard by comparing the preset position data of the operator's skeleton joint points with the position data of the user's skeleton joint points, and, when the action is nonstandard, marking the corresponding position in the fusion image; and synchronously displaying the fusion image in a first eyepiece and a second eyepiece of the wearable display helmet in forms suited to viewing by the left and right eyes respectively.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
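
The joint-comparison step above can be sketched as a per-joint distance check. The abstract does not say how "standard" is decided, so this example assumes a simple Euclidean distance against a hypothetical `tolerance`, with joints given as name-to-coordinate dictionaries already expressed in the same coordinate frame.

```python
import math


def nonstandard_joints(user, reference, tolerance):
    """Return the names of joints that deviate from the reference pose.

    A joint counts as nonstandard when its Euclidean distance from the
    reference position exceeds `tolerance` (same units as the coordinates).
    """
    return [
        name
        for name, position in user.items()
        if math.dist(position, reference[name]) > tolerance
    ]
```

The returned joint names could then drive the marking of the corresponding positions in the fusion image.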

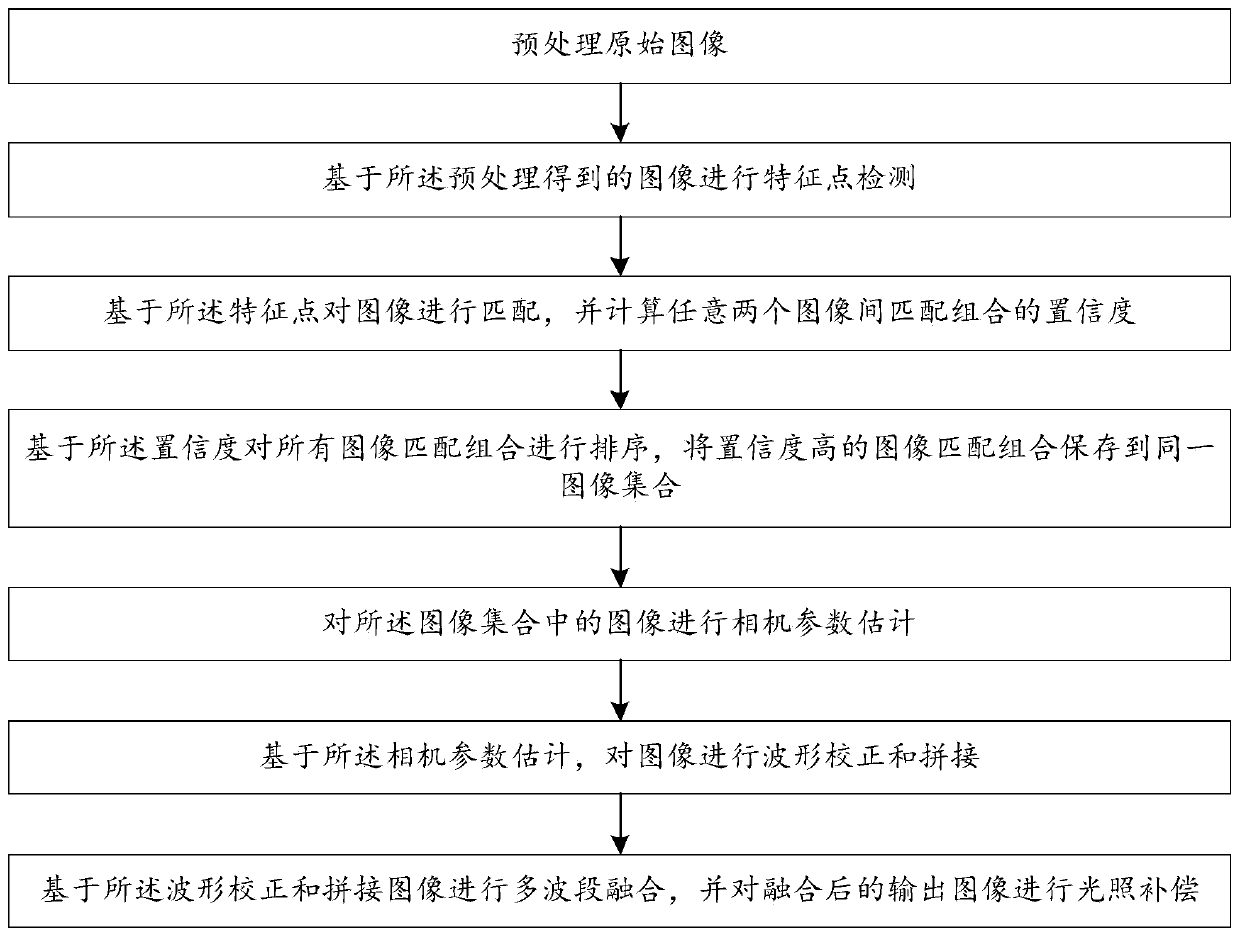

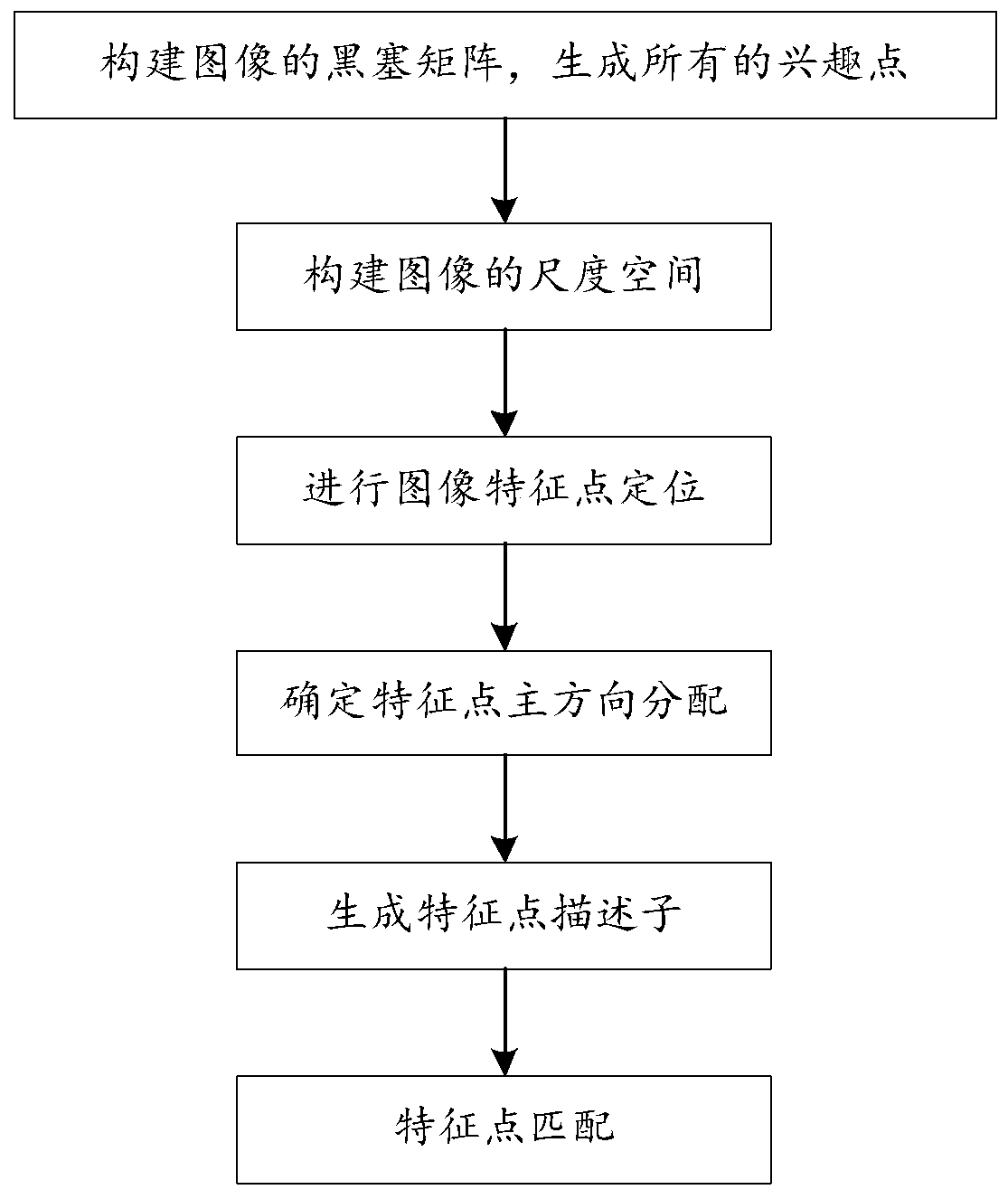

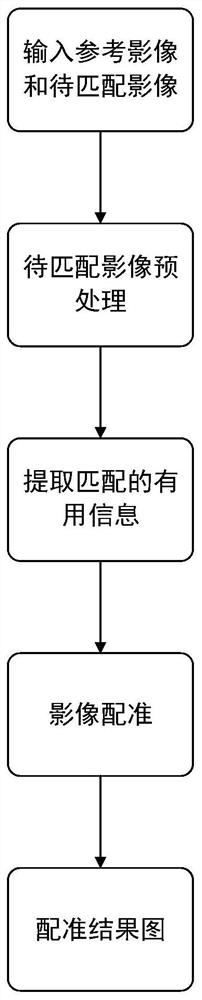

Image fusion method

Active; CN109858527A; Improve robustness; Good precision; Character and pattern recognition; Multi band; Image matching

The invention provides an image fusion method. The method comprises the steps of: preprocessing the original images; detecting feature points in the preprocessed images; matching the images based on the feature points, and calculating a confidence coefficient for the match between every pair of images; sorting all image matches by confidence coefficient, and storing the images of high-confidence matches in the same image set; estimating camera parameters for the images in the image set; performing waveform correction and splicing on the images based on the estimated camera parameters; and performing multi-band fusion on the waveform-corrected, spliced image, then applying illumination compensation to the fused output image. The image fusion method offers higher robustness, better precision and faster computation.

Owner:CRSC RESEARCH & DESIGN INSTITUTE GROUP CO LTD
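
The sorting and grouping step above can be sketched with a small union-find structure. The abstract gives no cut-off for "high confidence", so `threshold` is a hypothetical parameter, and each match is assumed to be a tuple of two image identifiers and a confidence value.

```python
def group_by_confidence(matches, threshold):
    """Group images linked by matches whose confidence is at least `threshold`.

    `matches` is an iterable of (image_a, image_b, confidence) tuples.
    Returns the groups as sorted lists, largest group first.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    # Visit matches from most to least confident and stop at the cut-off.
    for a, b, confidence in sorted(matches, key=lambda m: m[2], reverse=True):
        if confidence < threshold:
            break
        parent[find(a)] = find(b)

    groups = {}
    for image in list(parent):
        groups.setdefault(find(image), set()).add(image)
    return sorted((sorted(g) for g in groups.values()), key=len, reverse=True)
```

Images that appear only in low-confidence matches are left out of every group, which mirrors keeping only reliable matches for the later camera parameter estimation.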

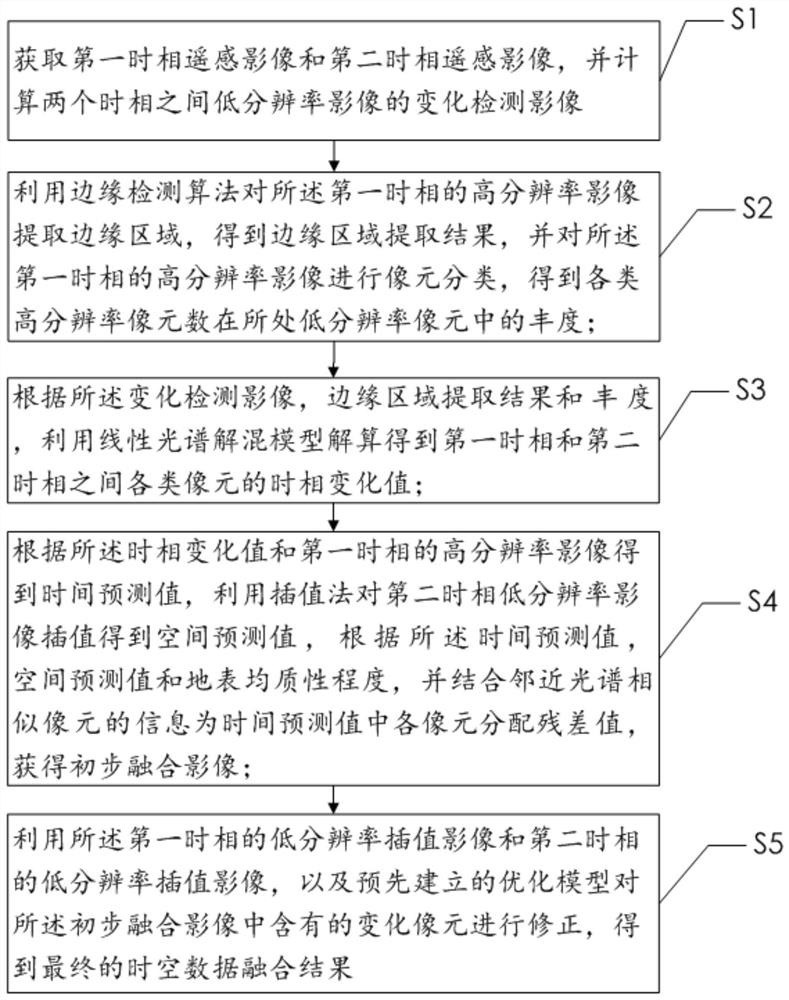

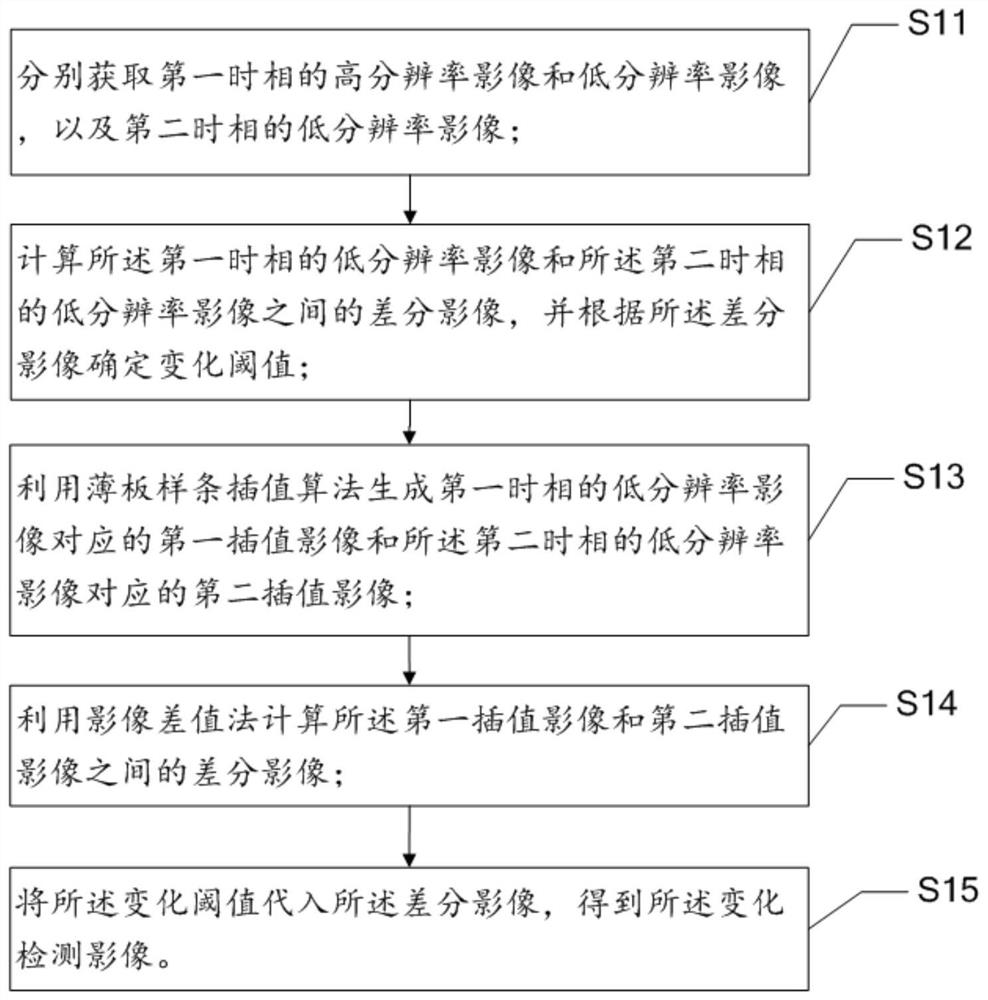

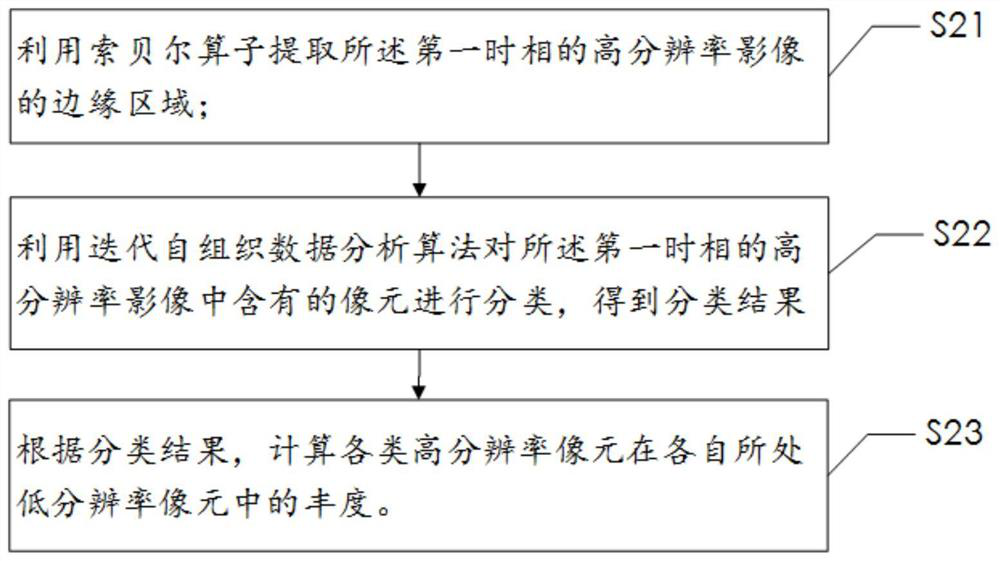

Space-time fusion method, system and device for remote sensing image data

Active; CN112017135A; Multi-space details; Improve abilities; Image enhancement; Image analysis; Spatial prediction; Data space

The invention provides a space-time fusion method, system and device for remote sensing image data. The method includes: obtaining a change detection image by calculation from the low-resolution remote sensing images of two time phases; extracting the edge region of the high-resolution image of the first time phase, and calculating the abundance corresponding to the number of high-resolution pixels of each class; calculating the time-phase change value of each pixel class from the edge region extraction result and the abundance; calculating a temporal prediction value and a spatial prediction value; distributing the residual value according to the degree of land surface homogeneity, the temporal prediction value and the spatial prediction value, in combination with neighborhood information, to obtain a preliminary fusion image; and using an established optimization model to correct the changed pixels in the preliminary fusion image to obtain the spatio-temporal data fusion result. The method provided by the embodiment of the invention comprehensively considers the applicability of different change detection algorithms in different scenes, improves the overall spectral accuracy of the fusion, preserves more spatial detail, and obtains a better spatio-temporal data fusion result.

Owner:THE HONG KONG POLYTECHNIC UNIV SHENZHEN RES INST

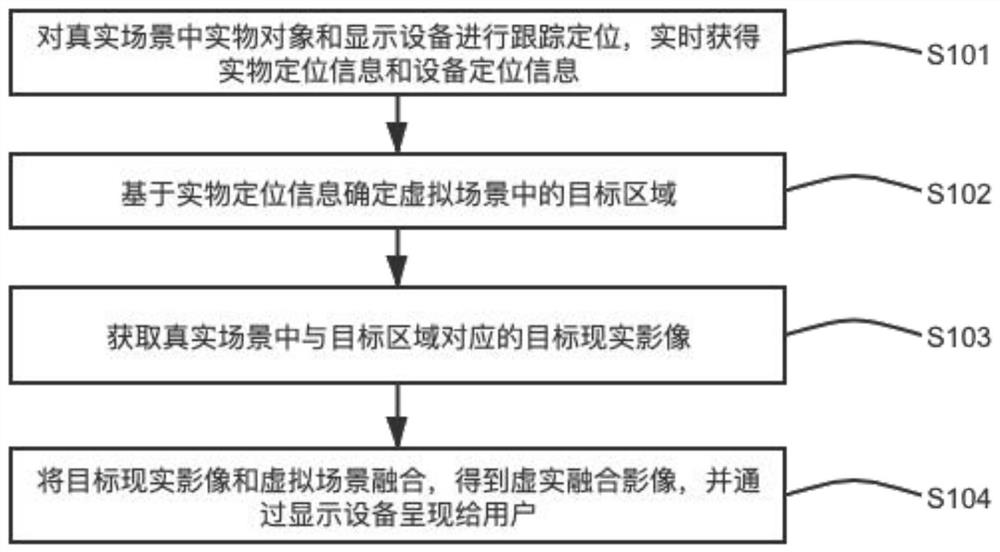

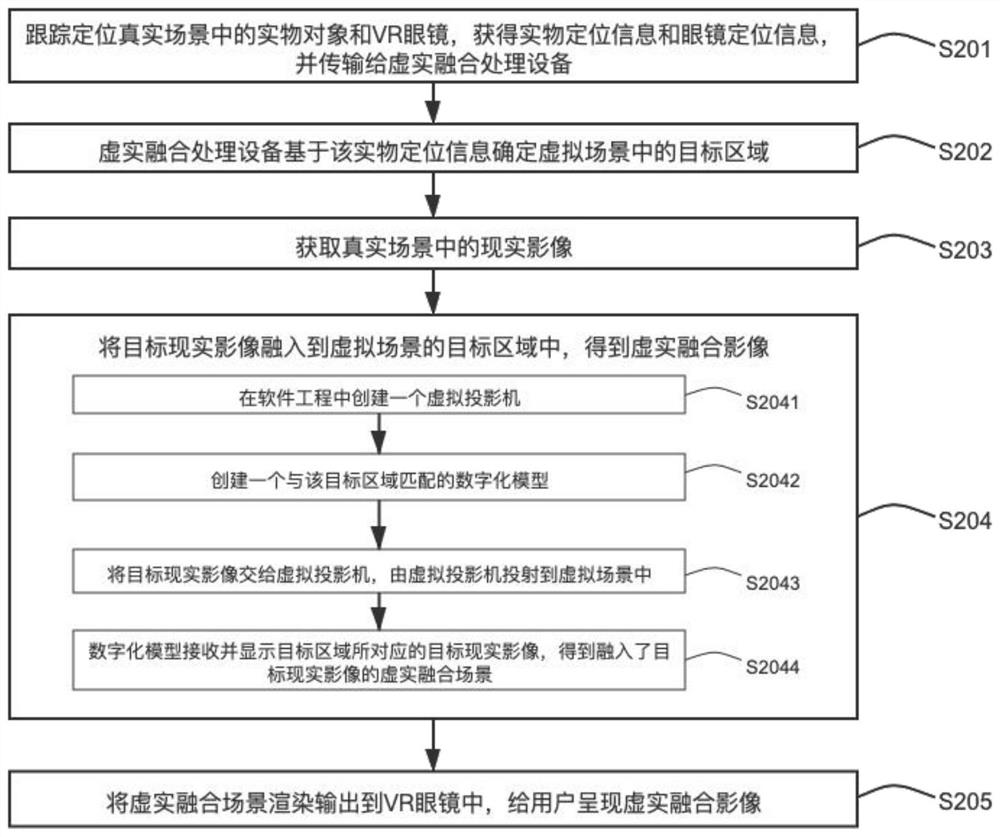

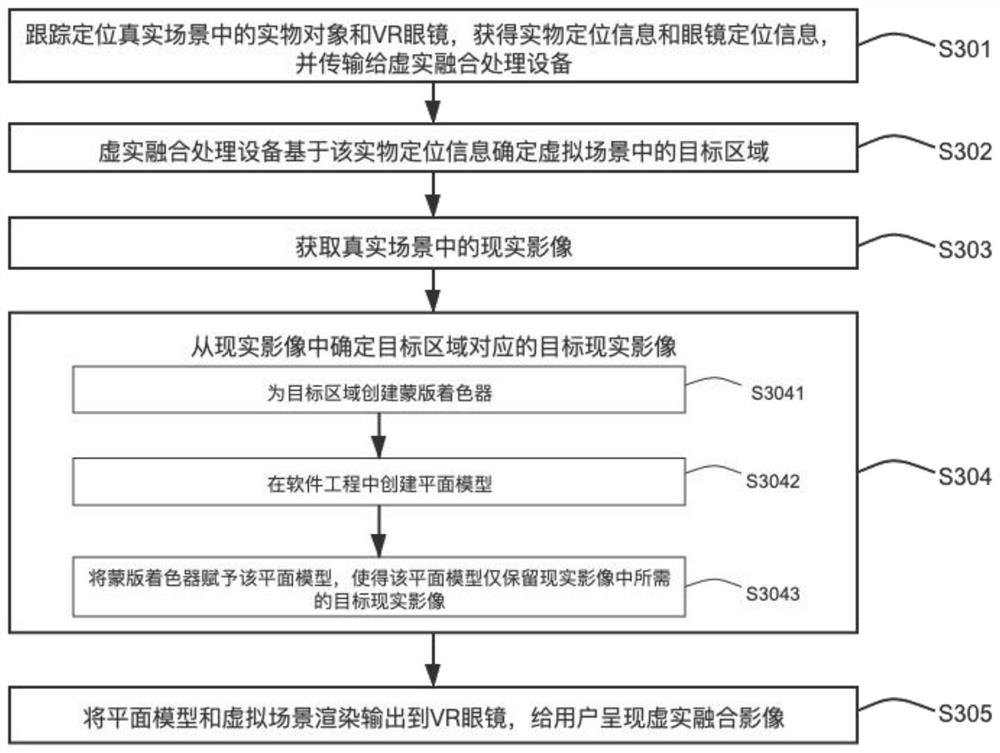

Virtual-real fusion implementation method and system and electronic equipment

Pending; CN112346572A; Input/output for user-computer interaction; Cosmonautic condition simulations; Human body; Computer graphics (images)

The invention discloses a virtual-real fusion implementation method and system and electronic equipment. In the virtual-real fusion implementation method, a virtual three-dimensional scene is accurately copied and constructed from a real scene through digital twin technology; the position and motion of a human body or object in the real scene are located, tracked and captured in real time based on motion capture technology; and the obtained real image is fused into the virtual scene to obtain a virtual-real fusion image, which is presented to the user. With the virtual-real fusion implementation method provided by the invention, a user can experience a real sense of interactive operation while also obtaining a good sense of visual immersion.

Owner:NANJING MENGYU THREE DIMENSIONAL TECH CO LTD

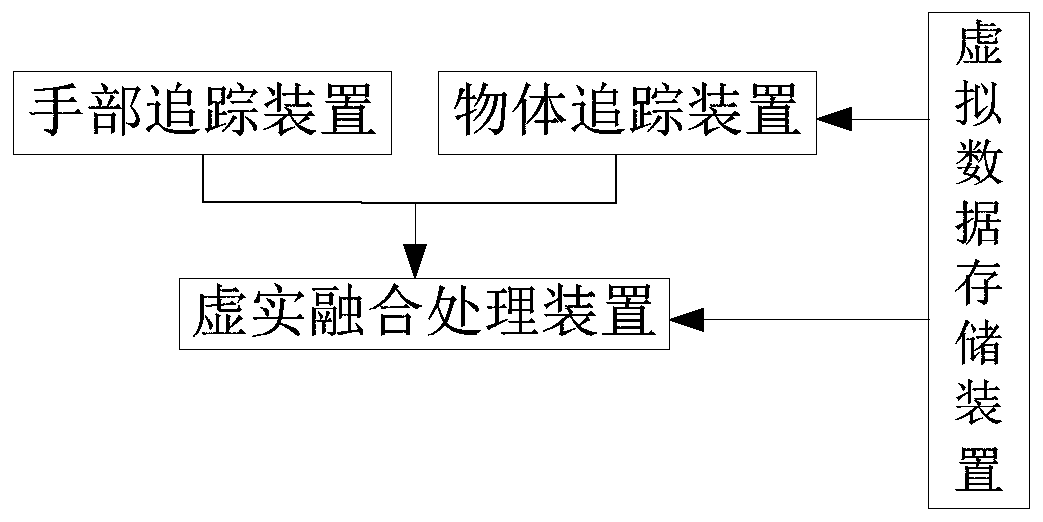

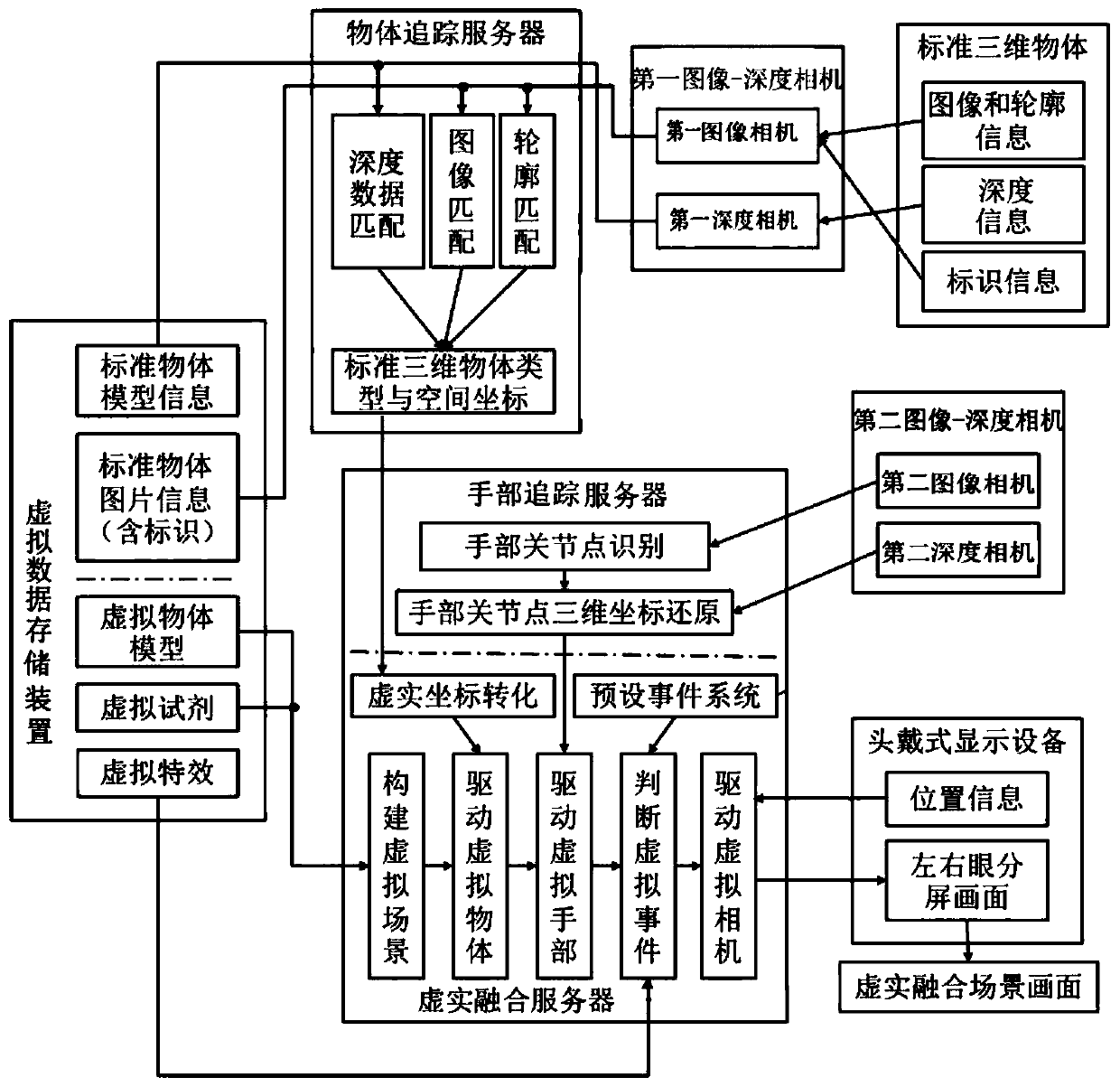

Three-dimensional virtual-real fusion experiment system

Inactive; CN110928414A; Cultivate practical ability; Cultivate interest in learning; Input/output for user-computer interaction; Cosmonautic condition simulations; Computer graphics (images); Three-dimensional space

The invention discloses a three-dimensional virtual-real fusion experiment system. The system comprises: an object tracking device, which recognizes the type of a real experiment object and locates its three-dimensional space coordinates in real time, obtaining the type of the real experiment object and its corresponding real-time three-dimensional space coordinates; a hand tracking device, which identifies the joint points of the operating hand and locates their three-dimensional space coordinates in real time, obtaining the real-time three-dimensional space coordinates of the joint points of the operating hand; and a virtual-real fusion processing device, which generates a corresponding virtual-real fusion image from the type of the real experiment object, its real-time three-dimensional space coordinates, and the real-time three-dimensional space coordinates of the joint points of the operating hand. The invention makes full use of the advantages of virtual-real fusion technology: the virtual object and the real experiment object are fused, the real experiment object is tracked in real time while the user moves it naturally, the user's experimental operation ability and learning interest are cultivated, and the experimental risk is reduced.

Owner:SHANGHAI JIAO TONG UNIV

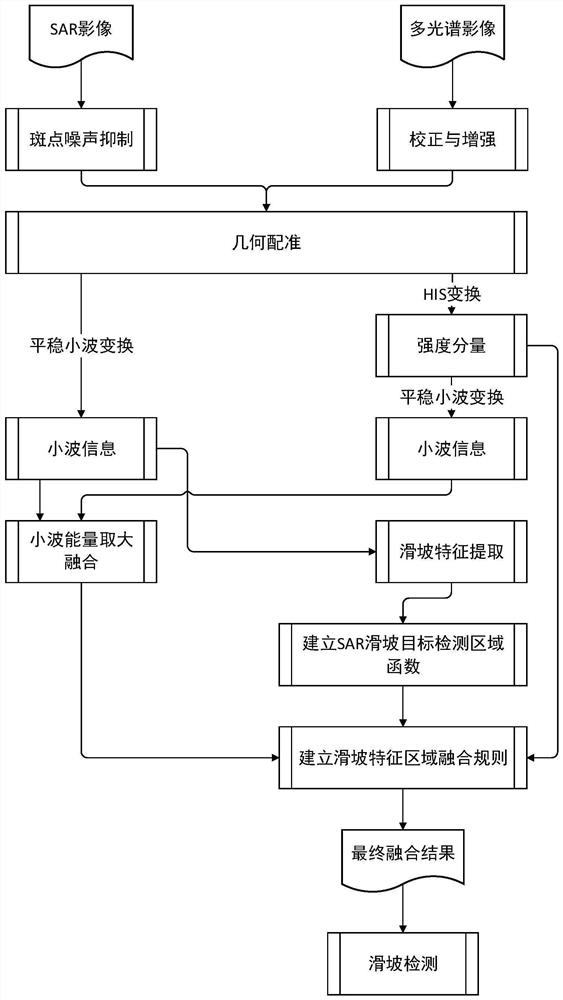

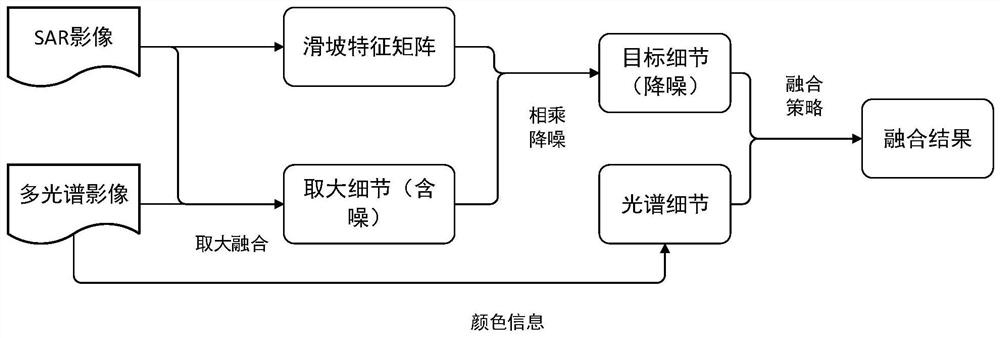

SAR and optical image fusion method and system for landslide detection

Pending; CN112307901A; Accurate judgment; Accurate analysis; Scene recognition; Noise removal; Landslide detection

The invention discloses an SAR and optical image fusion method for landslide detection. The method comprises the following steps: step 1, preprocessing the SAR image and the optical image; step 2, performing HIS conversion on the optical image to obtain the three components I, H and S; step 3, performing stationary wavelet transform and high-frequency component energy maximization fusion on the SAR image and the I component of the optical image; step 4, performing saliency detection on the low-frequency and high-frequency components of the SAR image and on the gray-scale information of the image, establishing a guidance function for the SAR salient target detection area, and partitioning the SAR image; step 5, establishing a fusion rule for salient regions, and performing image fusion according to a region-based fusion strategy; and step 6, identifying and extracting landslide disaster information from the fused image. The method adapts well to SAR and optical image fusion for landslide detection, applies dedicated processing measures for structure preservation, noise removal and spectrum preservation, and obtains an excellent effect.

Owner:ELECTRIC POWER RES INST OF STATE GRID ZHEJIANG ELECTRIC POWER COMPANY +3

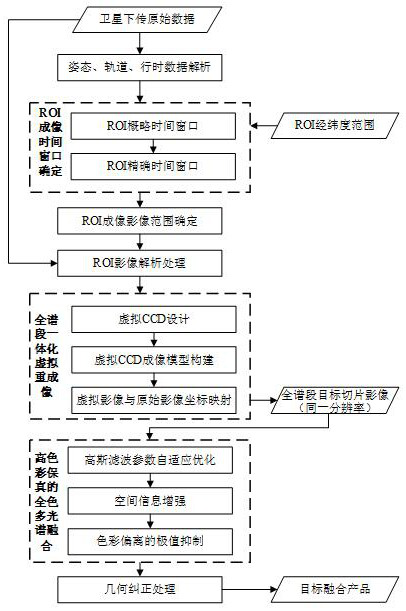

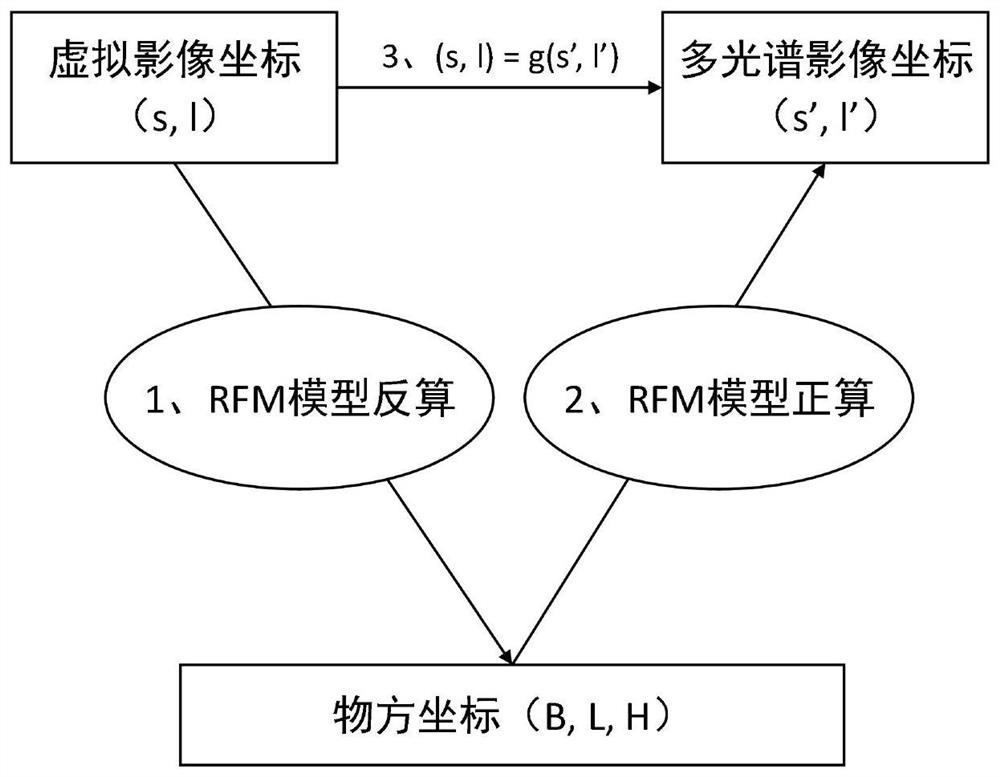

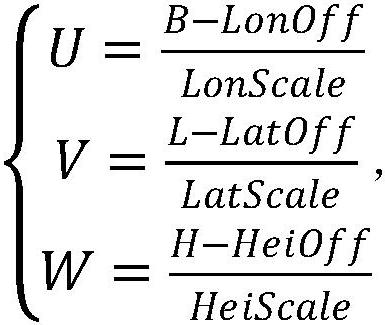

Optical satellite rapid fusion product manufacturing method based on target area

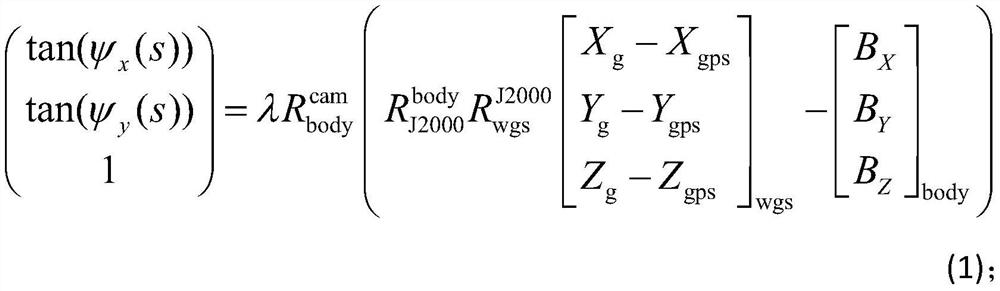

Active; CN112598608A; Solve the problem that coordinate inversion is difficult to realize; Avoid processing power; Image enhancement; Image analysis; Original data; Engineering

The invention discloses an optical satellite rapid fusion product manufacturing method based on a target area. The method comprises the following steps: first, determining the imaging time window of a target region of interest (ROI) by a dynamic search; back-projecting the longitude and latitude coordinates of the four corners of the ROI onto each CCD image to determine the image range covering the ROI; then, performing image analysis on the original data range corresponding to the ROI, performing full-spectrum integrated virtual re-imaging on the original panchromatic and multispectral ROI images, and outputting fusion-oriented panchromatic and multispectral image products of the same resolution; fusing the panchromatic and multispectral ROI images with a panchromatic-multispectral fusion technique of high color fidelity; and finally, performing indirect geometric correction on the ROI fusion image to generate a target fusion product with geographic coordinates. The invention has the advantages of small data volume, low computational cost and rapid task response, and improves the efficiency of information acquisition for emergency tasks.

Owner:HUBEI UNIV OF TECH

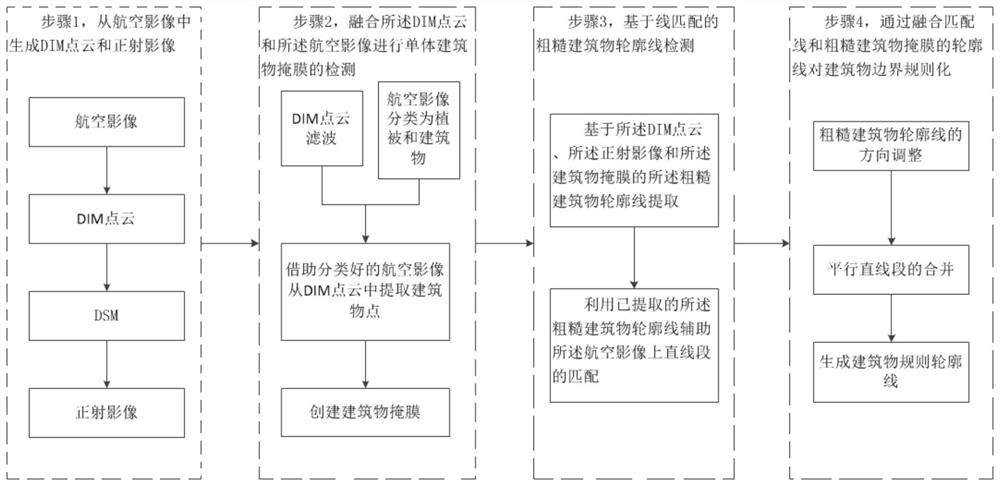

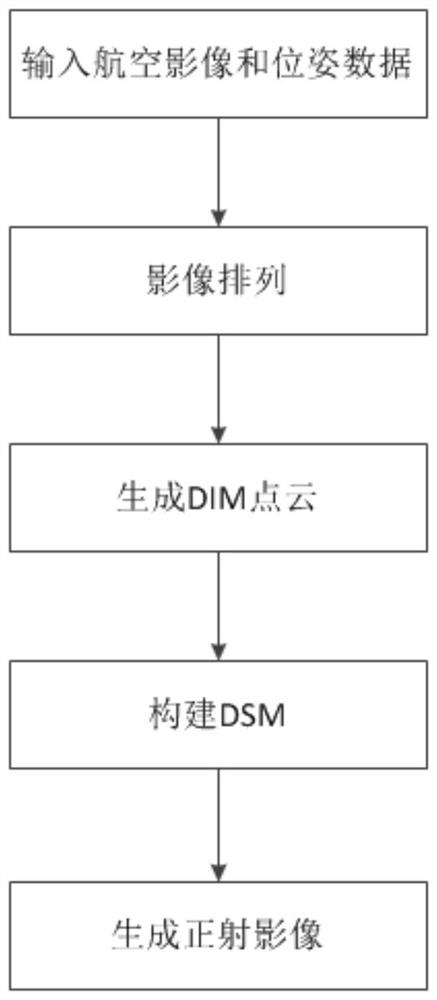

Building contour extraction method fusing image features and dense matching point cloud features

Active; CN111652241A; High precision; Improve detection accuracy; Character and pattern recognition; Point cloud; Computer graphics (images)

The invention provides a building contour extraction method fusing image features and dense matching point cloud features. The building contour extraction method comprises the following steps: step 1, generating a three-dimensional dense image matching (DIM) point cloud and an orthoimage from aerial images; step 2, fusing the DIM point cloud and the aerial images to detect the mask of each single building; step 3, detecting rough building contour lines based on line matching; and step 4, regularizing the building boundary by fusing the matched lines with the contour lines of the rough building mask. The invention improves ground object classification accuracy, improves detection accuracy, and generates regularized building contour lines.

Owner:CHINESE ACAD OF SURVEYING & MAPPING

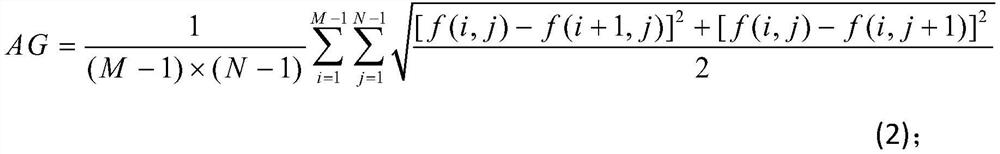

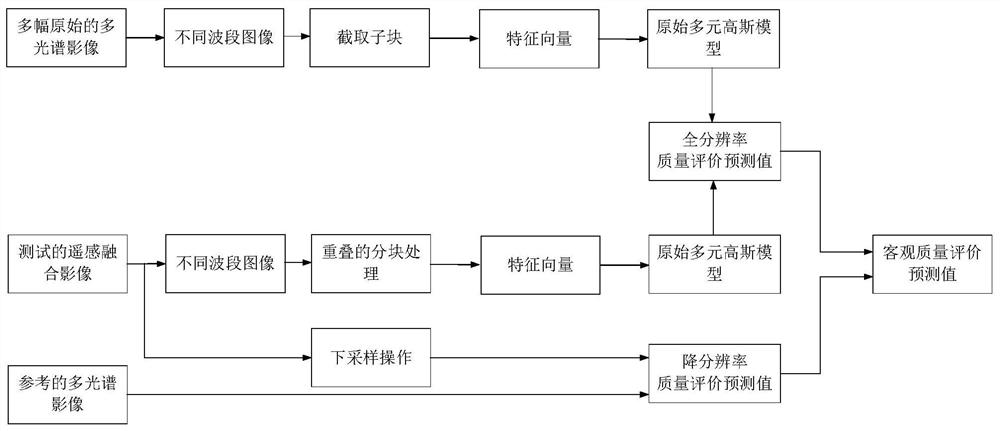

Remote sensing image fusion quality evaluation method

Pending; CN111681207A; Reflect quality changes; Improve relevance; Image enhancement; Image analysis; Remote sensing image fusion; Histogram

The invention discloses a remote sensing image fusion quality evaluation method. The method comprises: extracting LBP feature statistical histograms, edge histograms and spectral features of sub-blocks in the different waveband images as feature vectors, and constructing an original multivariate Gaussian model in the training stage; constructing an original multivariate Gaussian model in the test stage, and calculating a full-resolution quality evaluation prediction value from the two original multivariate Gaussian models constructed during training and testing; calculating the spectral similarity and spatial similarity between all waveband images of the multispectral image referenced by the test remote sensing fusion image and the downsampled waveband images obtained by downsampling all waveband images of the test remote sensing fusion image, to obtain a reduced-resolution quality evaluation prediction value; and obtaining an objective quality evaluation prediction value. Because the extracted feature vector information reflects quality changes in the remote sensing fusion image well, the correlation between the objective evaluation result and subjective human visual perception is effectively improved.

Owner:NINGBO UNIV

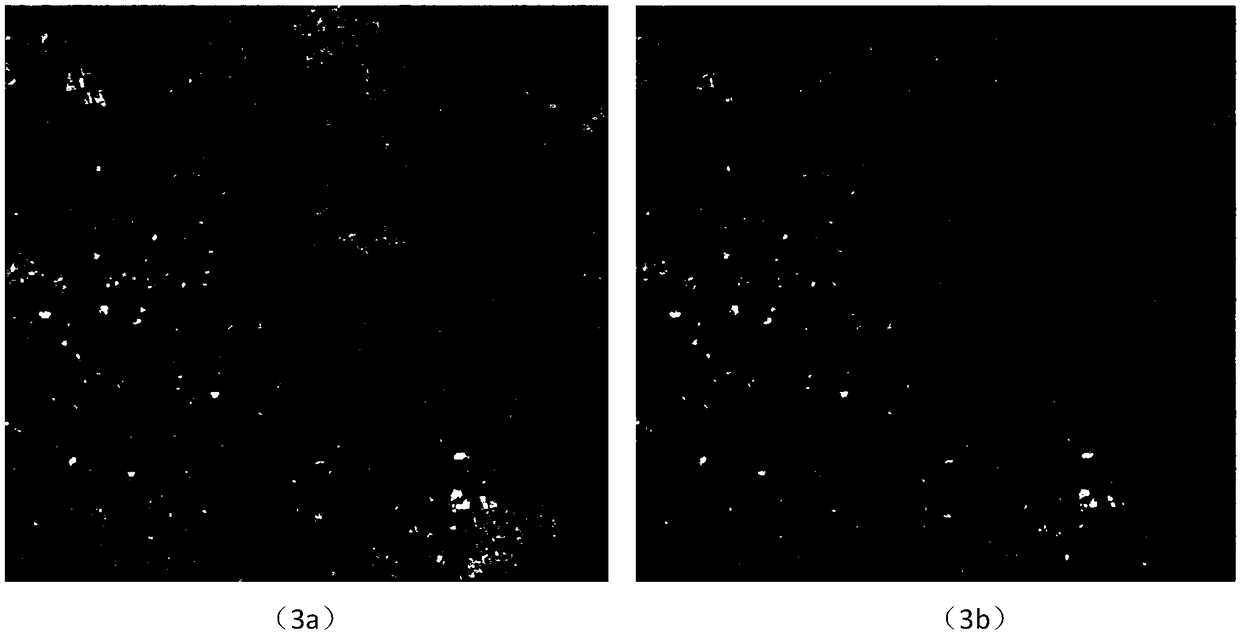

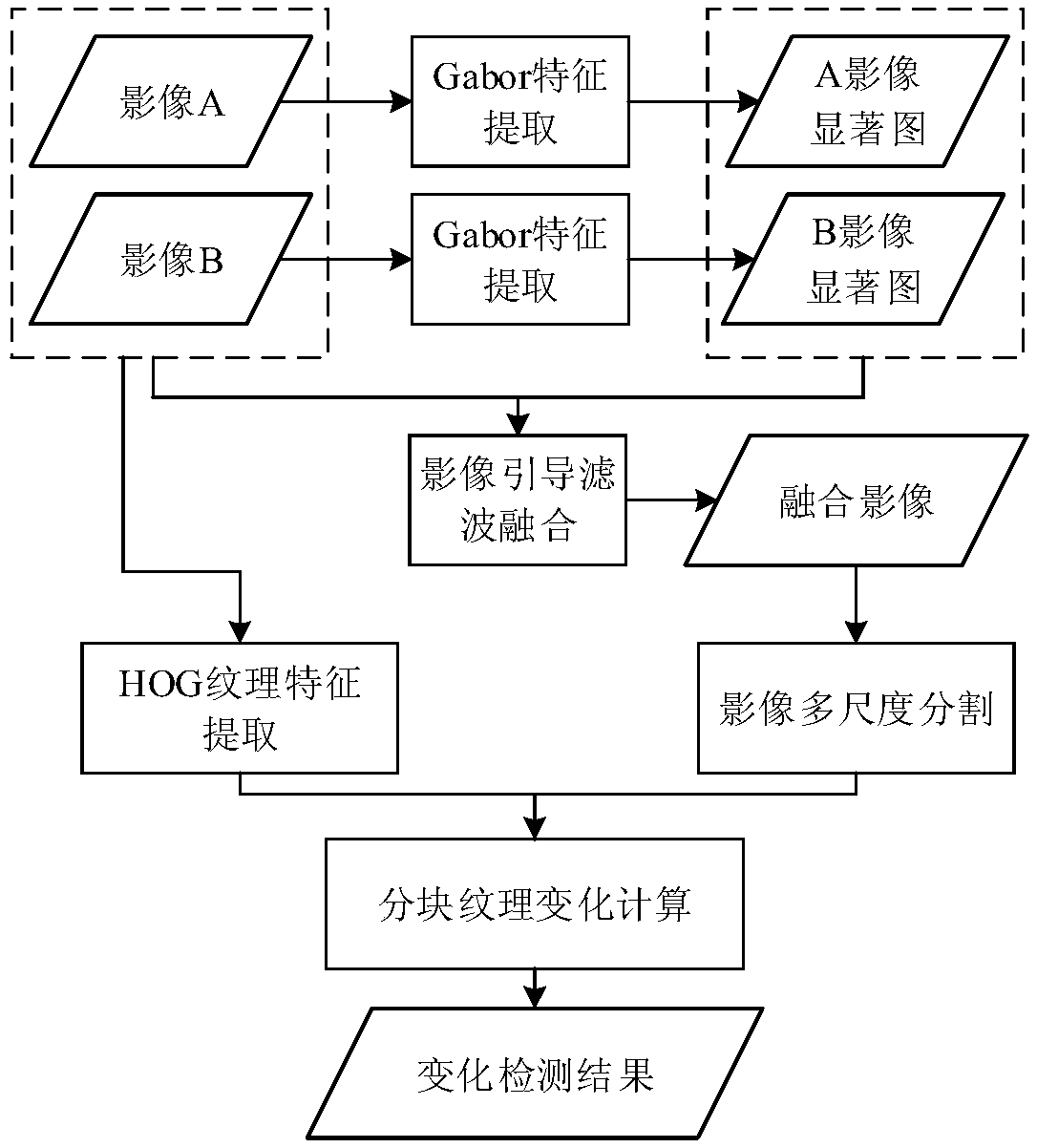

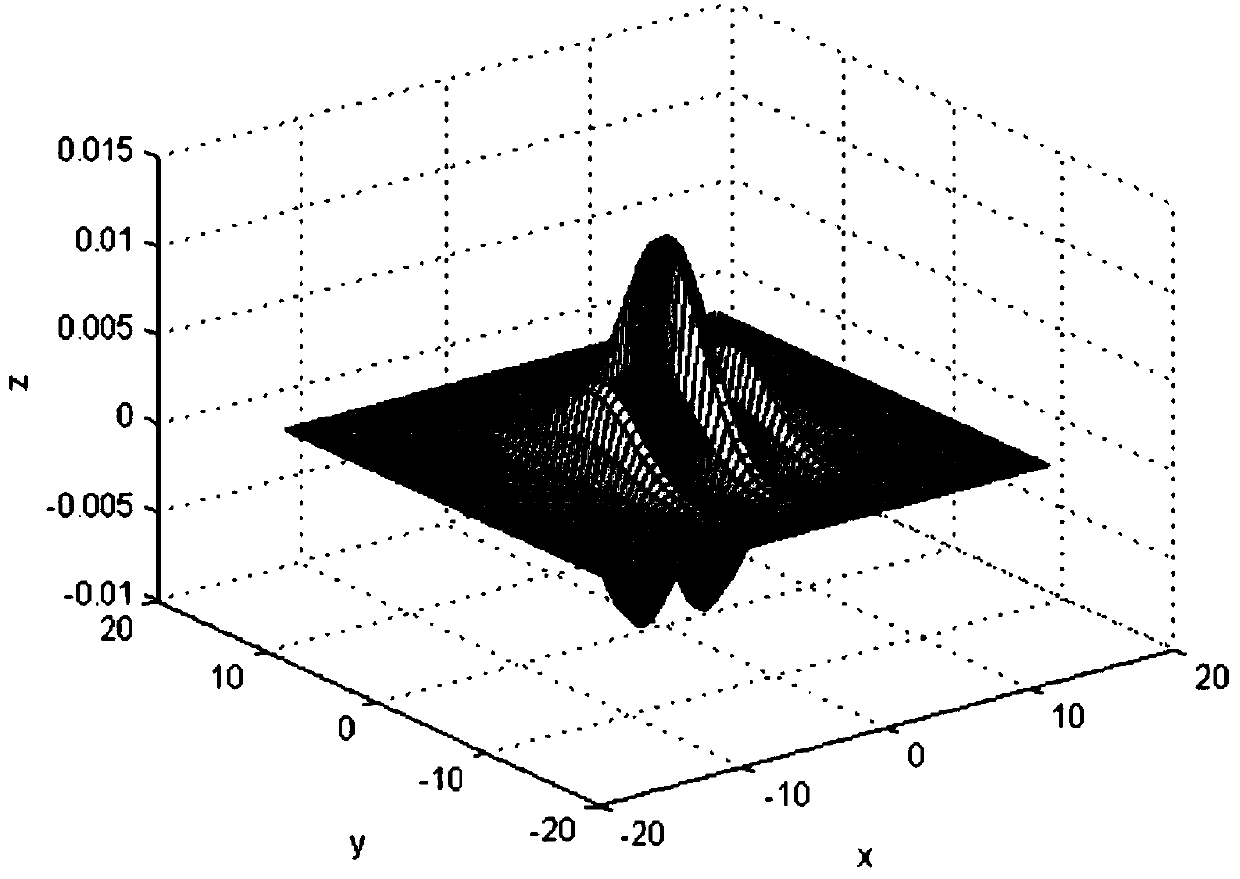

A method of target change detection

Active; CN109063564A; Strong scene adaptability; Strong anti-interference ability; Scene recognition; Mean-shift; Satellite imagery

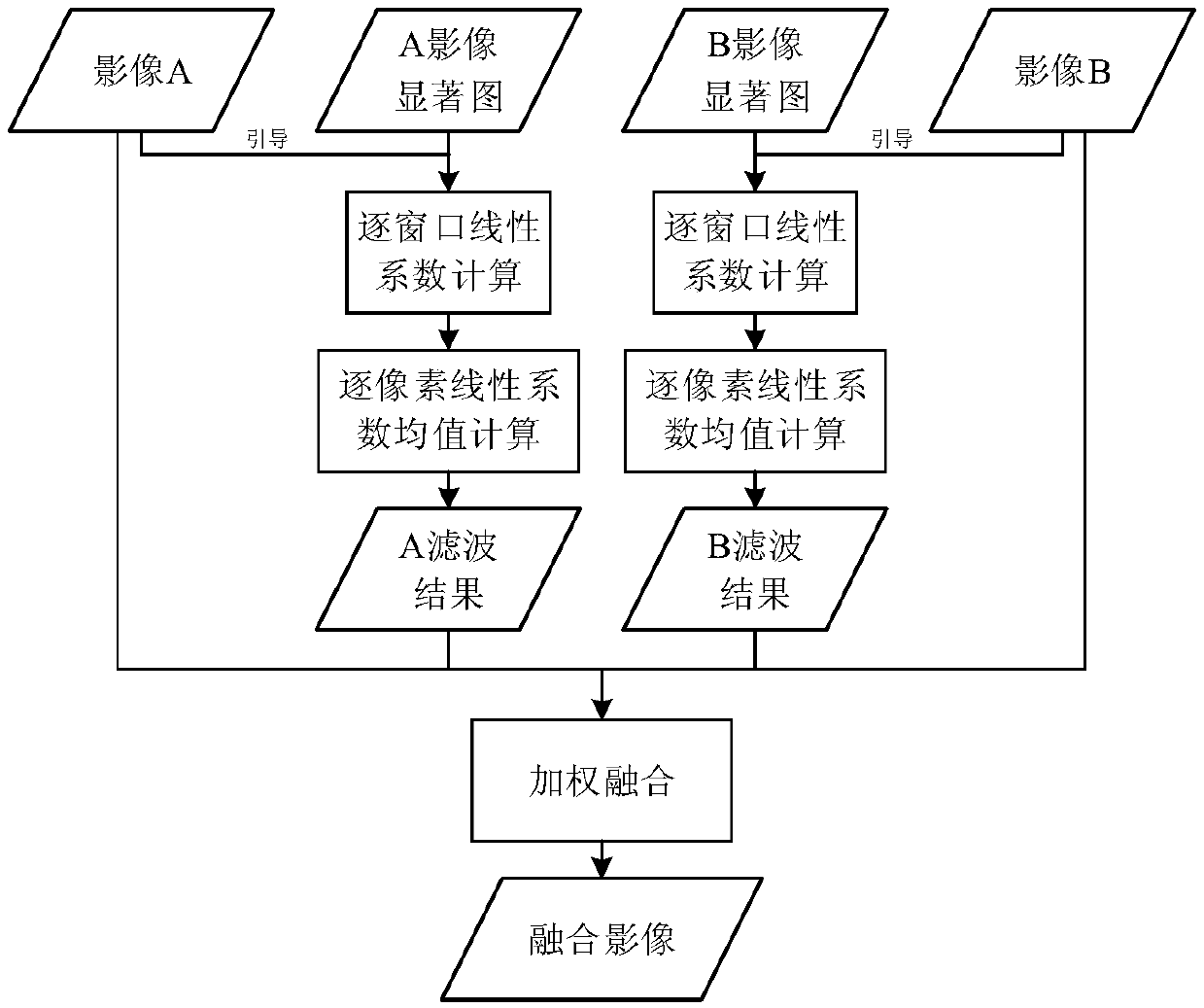

A method for detecting a change of a target. First, the salient region of the image is extracted using Gabor texture features; a fusion image containing the salient target is then obtained by guided filter fusion, and the fusion image is segmented by mean shift; HOG texture features are then used to compute the variance of the segmented texture, and the results are compared to obtain the final change detection result. The technical proposal of the invention is a military target change detection technique for high-resolution remote sensing satellite images based on guided filter image fusion and texture feature analysis. Through image fusion it ensures change detection precision and improves change detection efficiency, and achieves automatic, fast and accurate change detection of military targets.

Owner:BEIJING AEROSPACE AUTOMATIC CONTROL RES INST +1

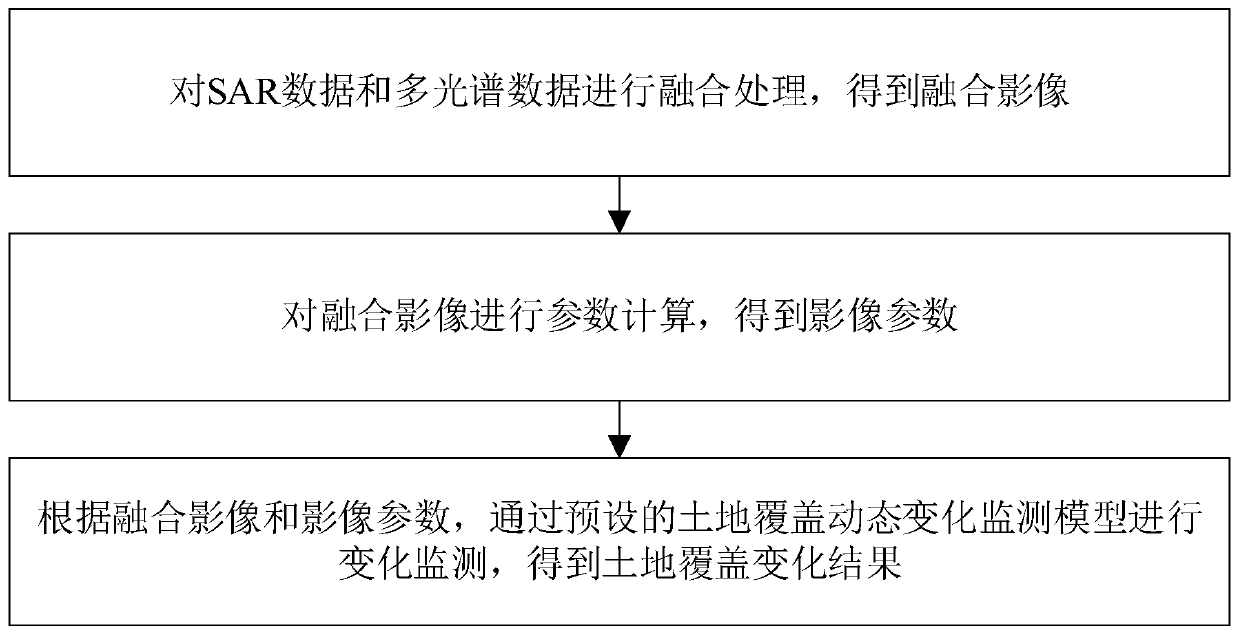

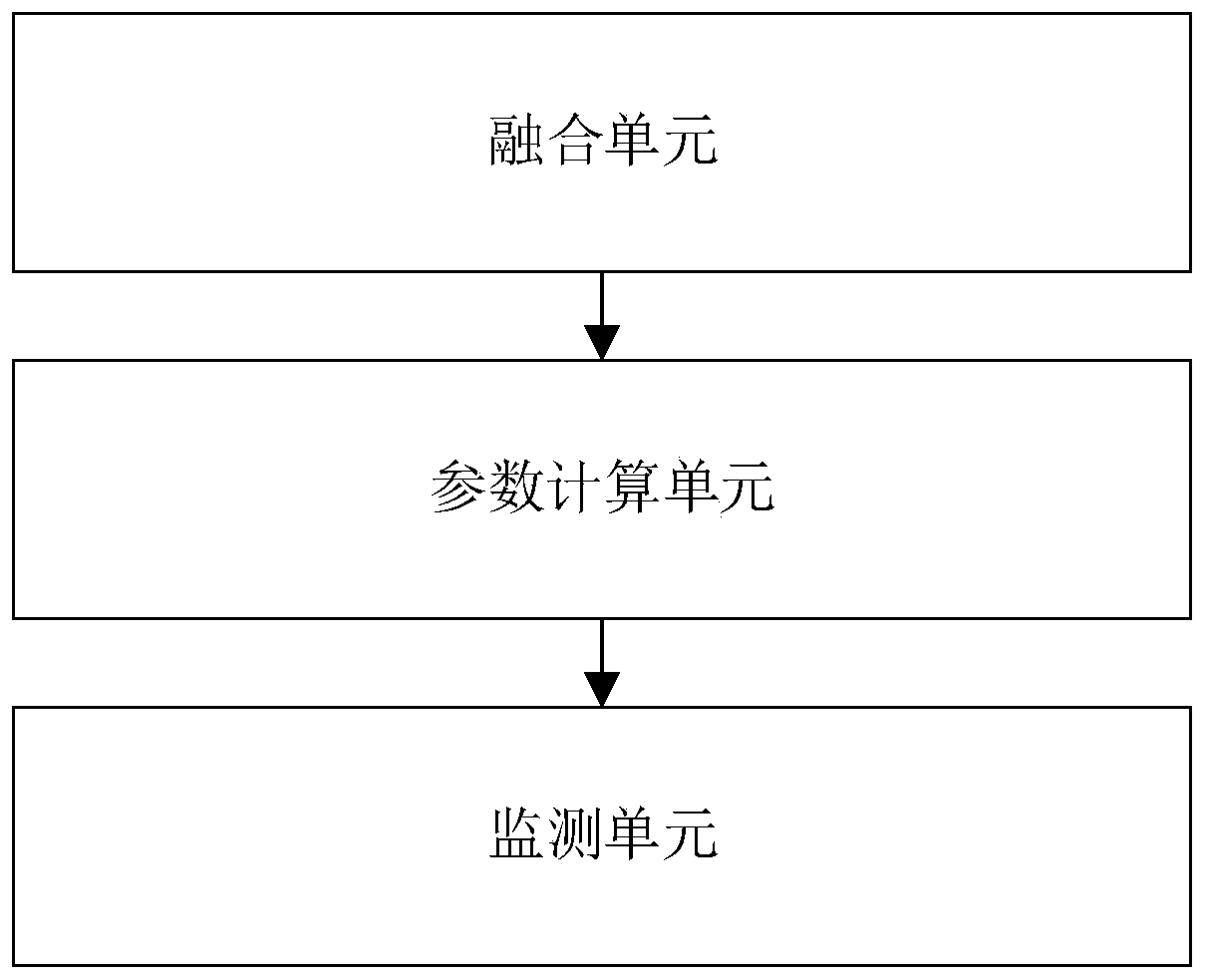

Land cover change monitoring method, system and device and storage medium

Active; CN110930375A; Detailed analysis; High resolution; Image enhancement; Image analysis; Land cover; Image resolution

The invention discloses a land cover change monitoring method, system and device and a storage medium. The method comprises the steps of: fusing SAR data and multispectral data to obtain a fused image; performing parameter calculation on the fused image to obtain image parameters; and performing change monitoring through a preset land cover dynamic change monitoring model according to the fused image and the image parameters to obtain a land cover change result. Because the SAR data and the multispectral data are fused and the fused image is subjected to change monitoring through the land cover dynamic change monitoring model, all-weather characteristics and texture characteristics are combined with spectral characteristics, the analysis is finer, and the monitoring resolution and monitoring efficiency are greatly improved. The invention can be widely applied to the field of land monitoring.

Owner:广东国地规划科技股份有限公司 +1

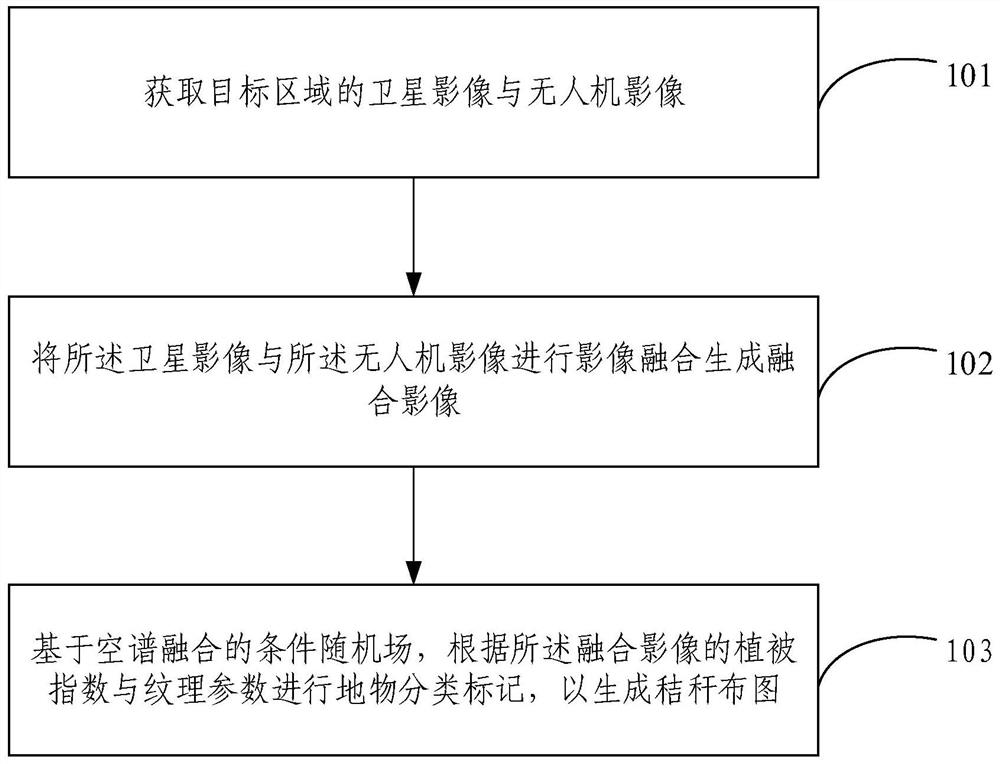

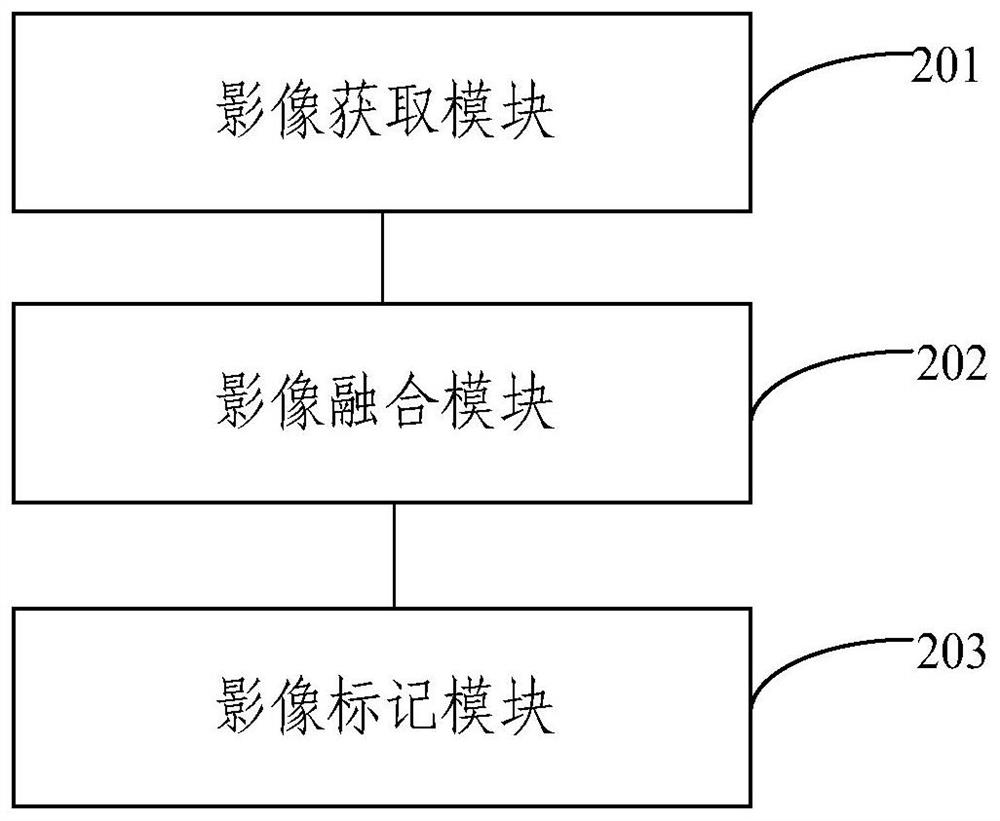

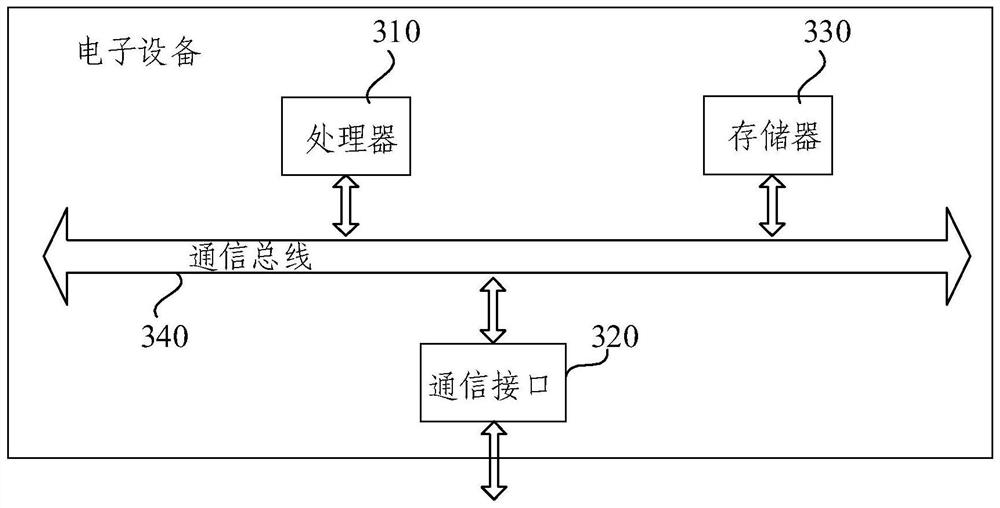

Field site straw extraction method and device based on aerospace remote sensing data fusion

The invention provides a field site straw extraction method and device based on aerospace remote sensing data fusion. The method comprises the following steps: acquiring a satellite image and an unmanned aerial vehicle image of a target area; performing image fusion on the satellite image and the unmanned aerial vehicle image to generate a fused image; and, based on a conditional random field of spatial-spectral fusion, performing ground feature classification marking according to the vegetation index and the texture parameters calculated from the fused image so as to generate a straw distribution map. The method combines the high spatial resolution of the unmanned aerial vehicle image with the rich spectral information, such as short-wave infrared, of the satellite image: fusing the two produces an image that has both centimeter-level spatial resolution and spectral information such as short-wave infrared. Ground features are then classified with the spatial-spectral fusion conditional random field using the vegetation index and texture features of the fused image, achieving rapid and high-precision extraction of field straw.

Owner:北京市农林科学院信息技术研究中心
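
The abstract above does not say which vegetation index is used. As an illustration only, the sketch below assumes the widely used normalized difference vegetation index, NDVI = (NIR - Red) / (NIR + Red), computed per pixel from hypothetical near-infrared and red bands of the fused image stored as nested lists.

```python
def ndvi(nir, red):
    """Per-pixel NDVI for two equally sized bands.

    Pixels where nir + red == 0 are set to 0.0 to avoid division by zero
    (a convention chosen for this sketch).
    """
    return [
        [(n - r) / (n + r) if (n + r) != 0 else 0.0 for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir, red)
    ]
```

Values near 1 indicate dense green vegetation, while values near 0 or below are typical of bare soil, residue or water; a classifier such as the conditional random field mentioned above could take this map as one of its input features.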

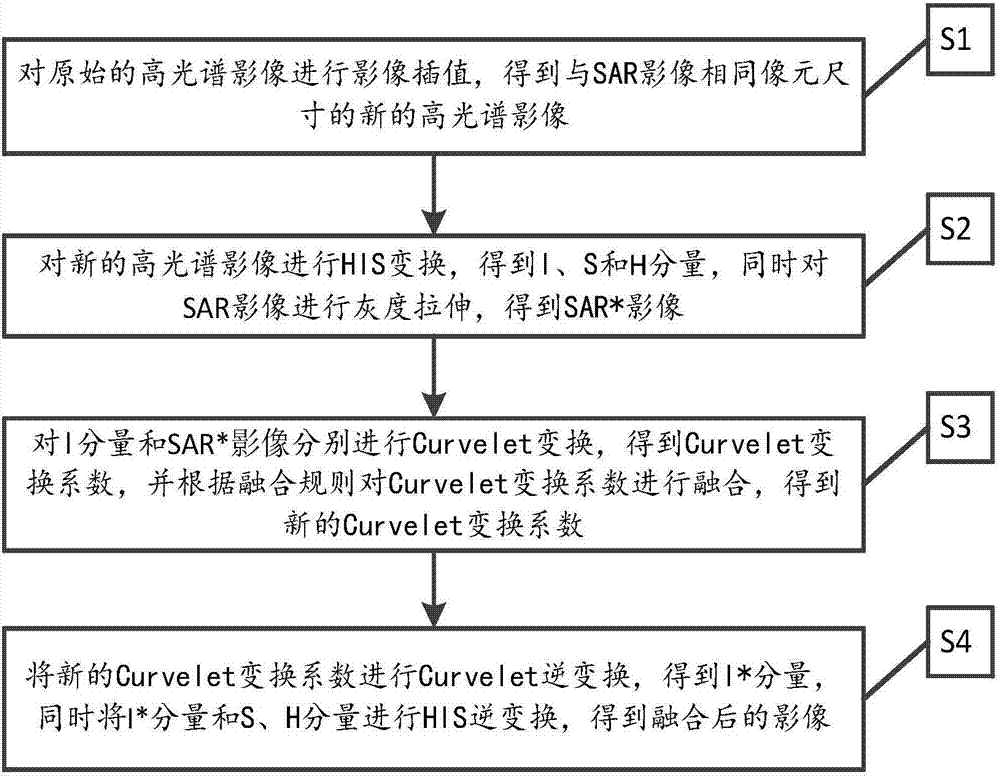

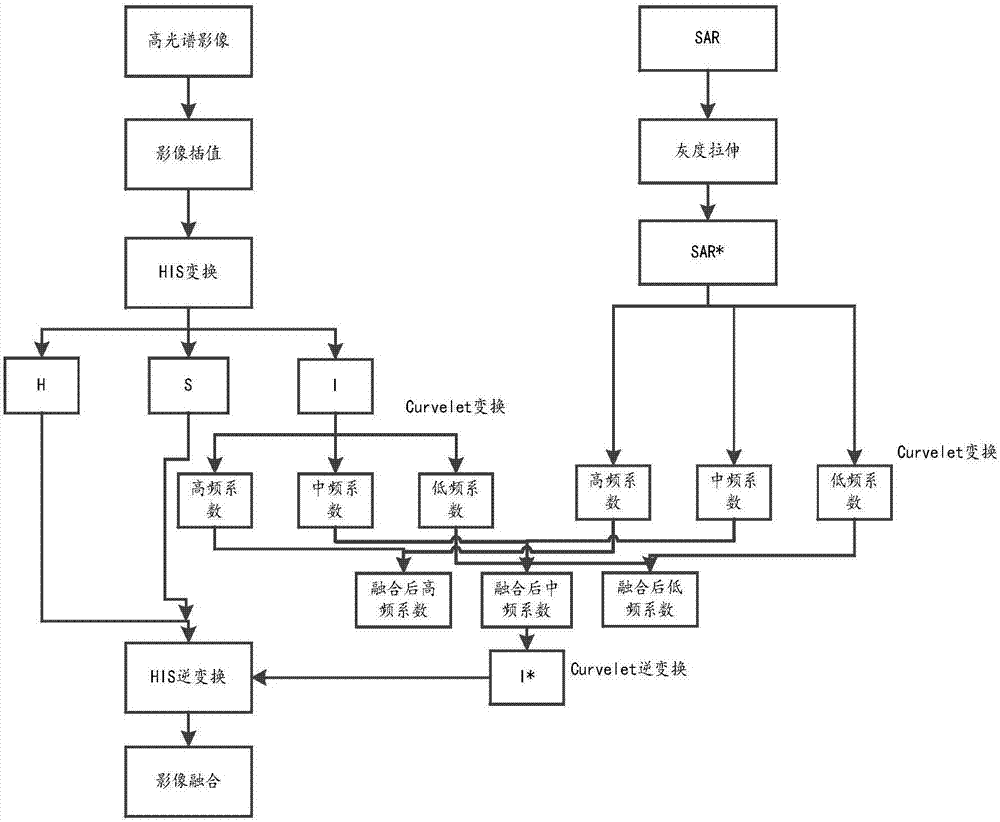

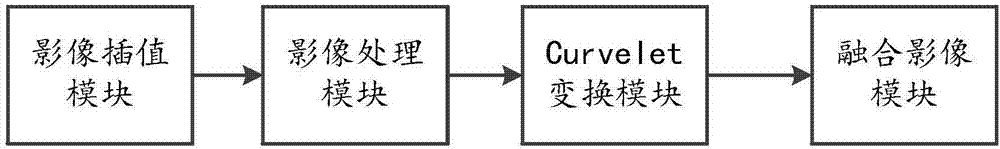

Method and system for image fusion based on Curvelet transformation

Inactive; CN107154020A; Improve spectral distortion problem; Improve spatial resolution; Image enhancement; Image analysis; Imaging processing; Image resolution

The invention relates to a method and a system for image fusion based on Curvelet transformation. The method comprises the following steps: interpolating an original hyperspectral image to get a new hyperspectral image; carrying out HIS transformation to get the components I, S and H, and stretching the gray levels of an SAR image to get an SAR* image; carrying out Curvelet transformation, and fusing according to fusion rules to get new Curvelet transformation coefficients; and finally, carrying out inverse Curvelet transformation to get a component I*, and carrying out inverse HIS transformation on the component I* and the components S and H to get a fused image. The invention further relates to a system which comprises an image interpolation module, an image processing module, a Curvelet transformation module, and an image fusion module. The spectral distortion of the fused images is reduced, and the spatial resolution is increased greatly.

Owner:TECH & ENG CENT FOR SPACE UTILIZATION CHINESE ACAD OF SCI

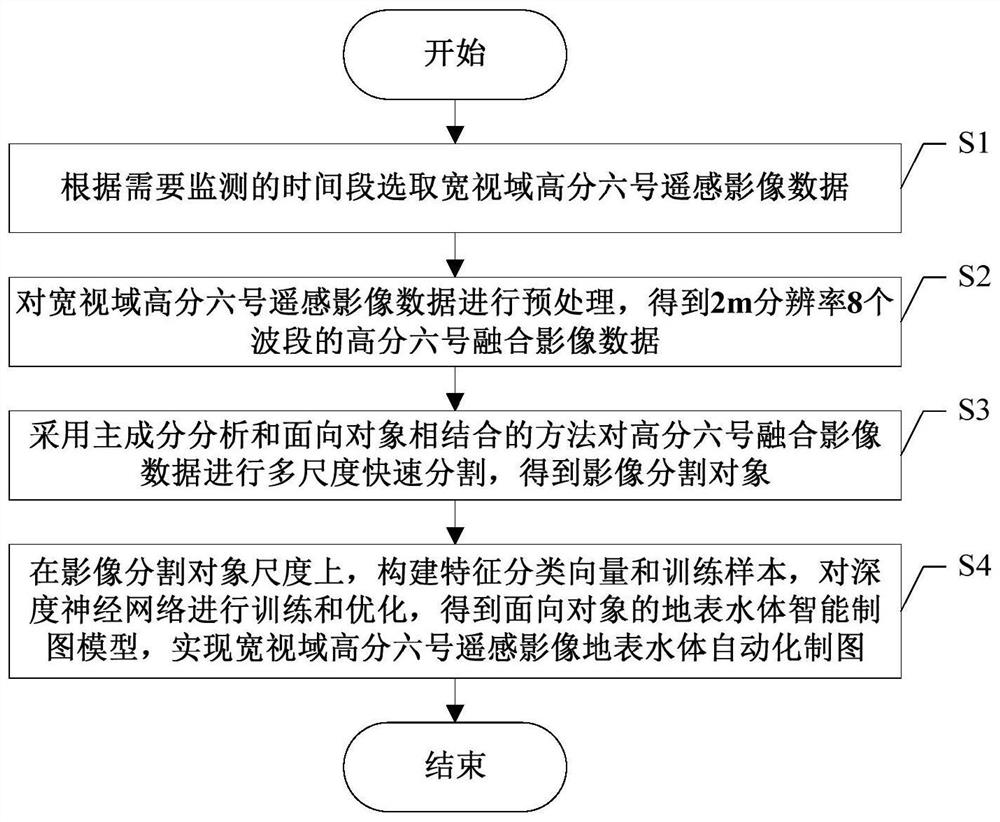

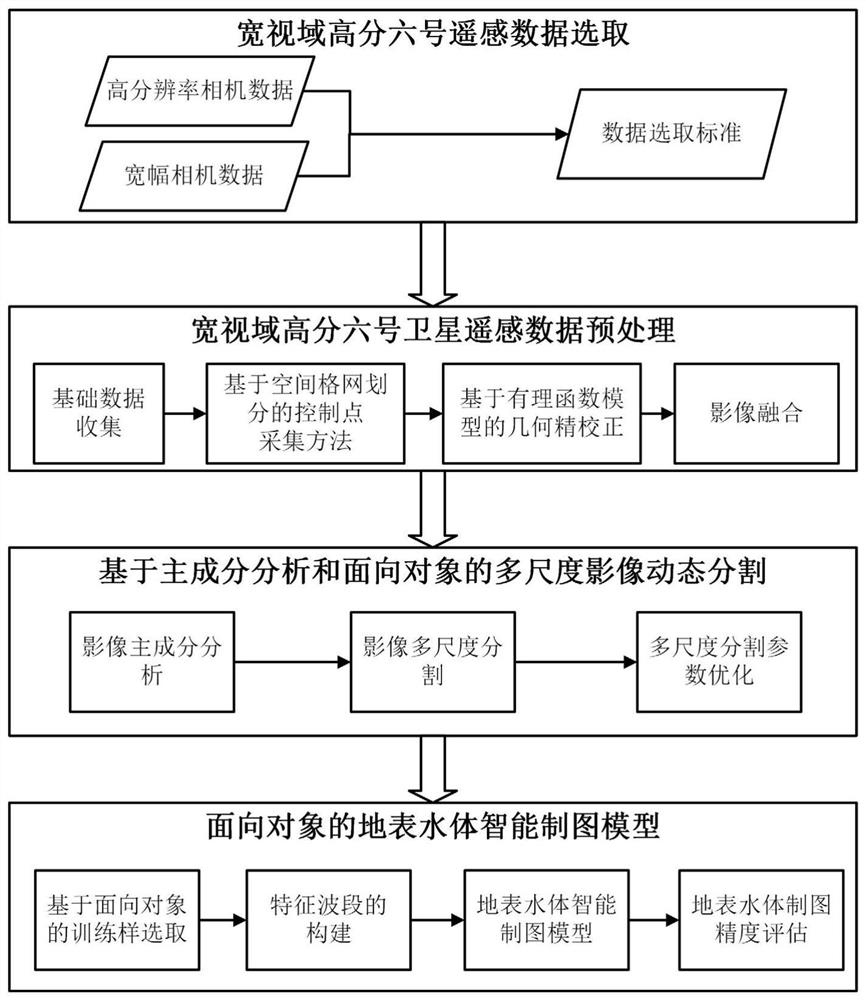

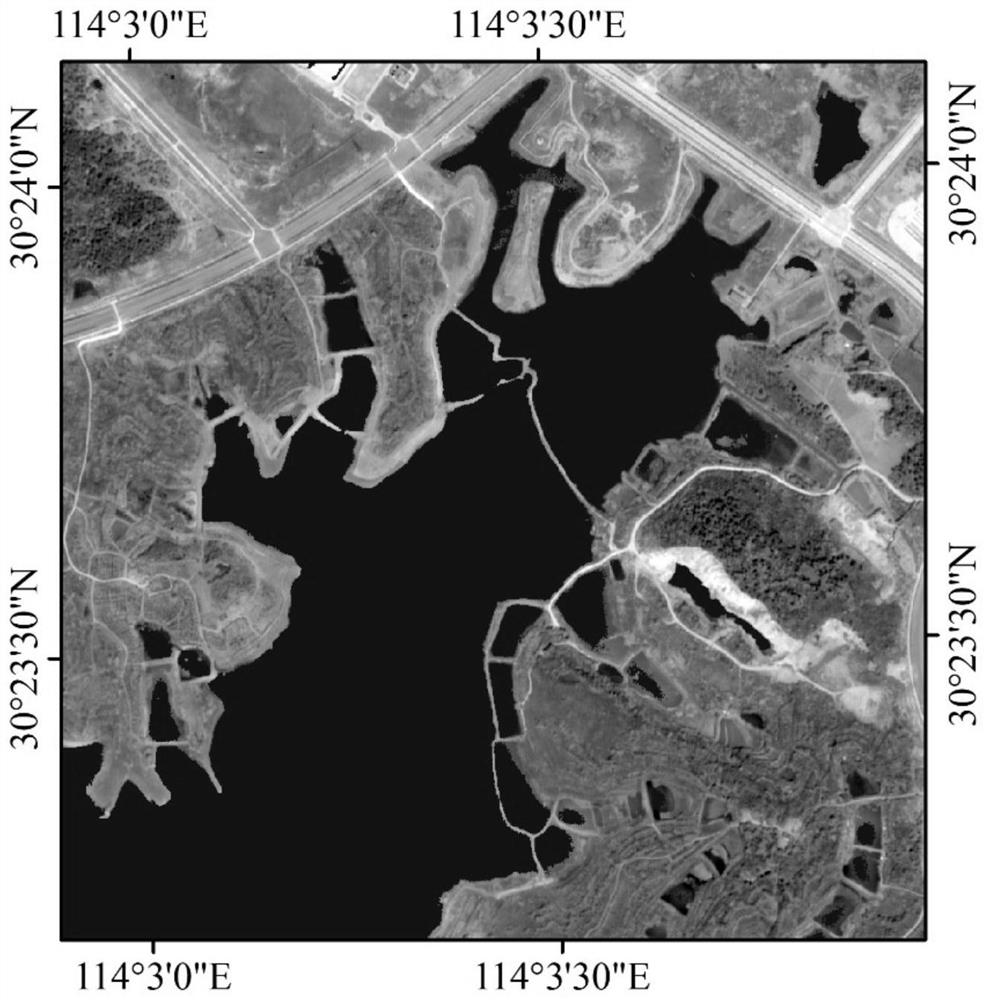

Intelligent drawing method for surface water body based on wide-view-field high-resolution No.6 satellite image

Active; CN112949414A; Improve calibration efficiency; Improve calibration accuracy; Scene recognition; Maps/plans/charts; Principal component analysis; Grid based

Owner:CHINA INST OF WATER RESOURCES & HYDROPOWER RES

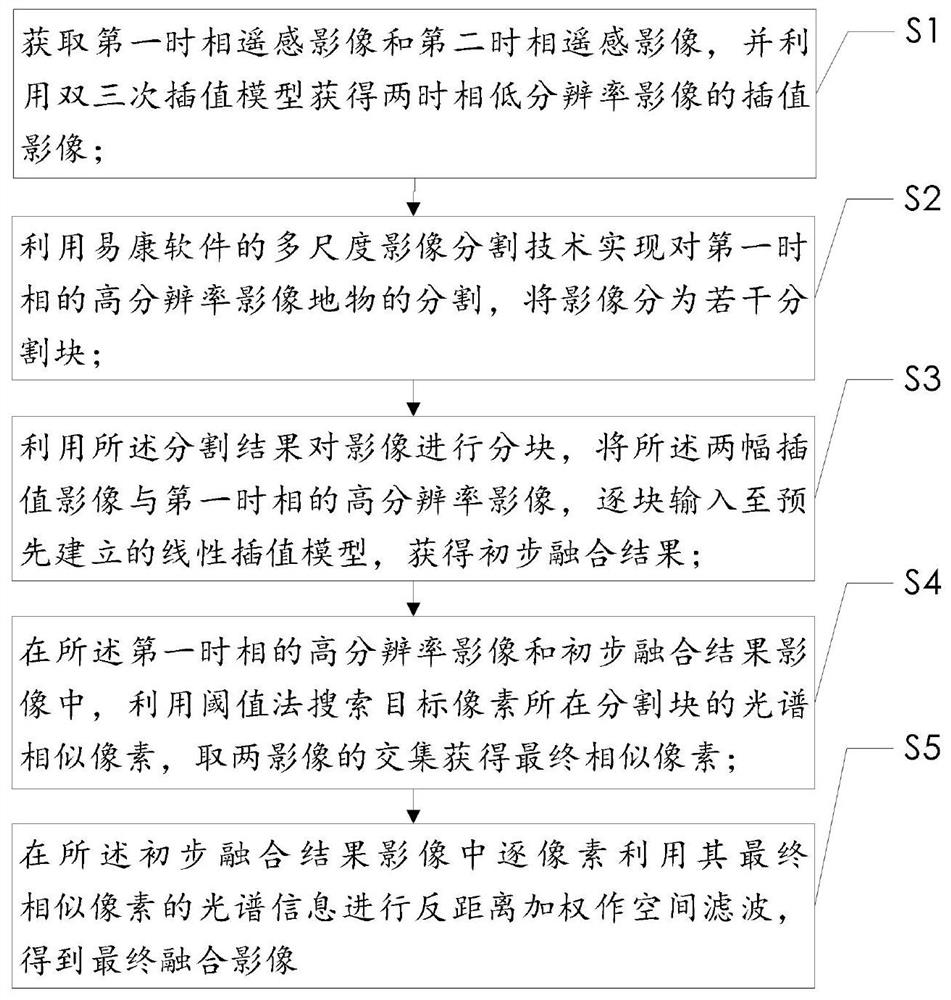

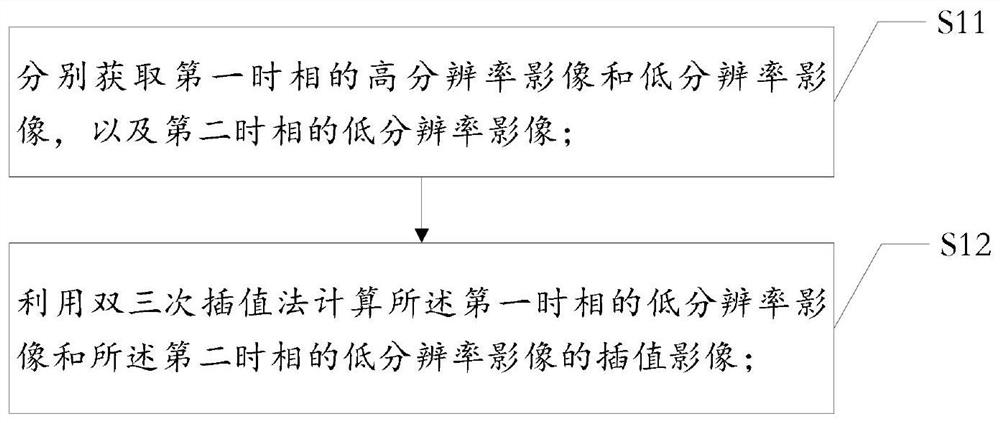

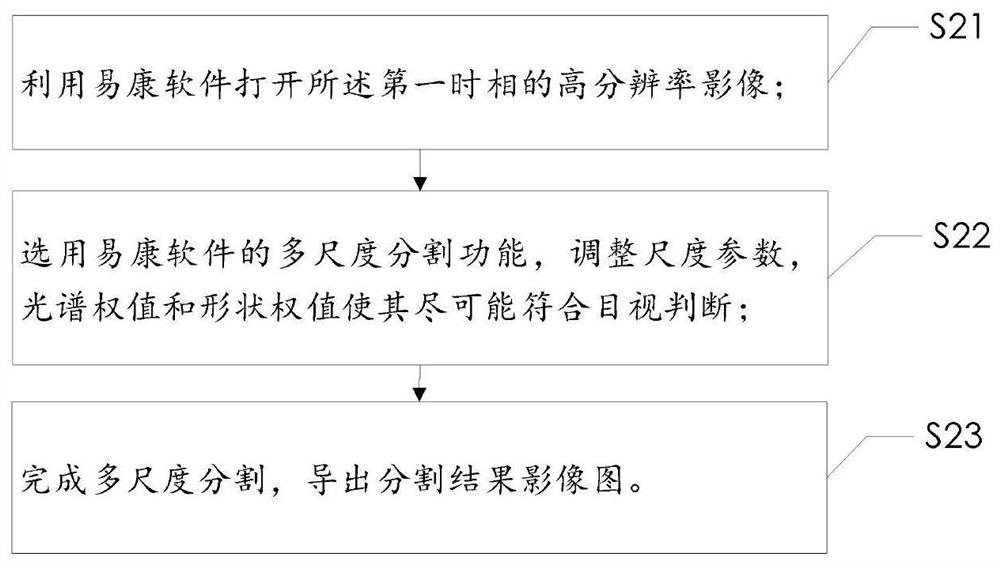

Object-oriented remote sensing image data space-time fusion method, system and device

Pending; CN112508832A; Achieve integration; Efficient capture; Image enhancement; Image analysis; Pattern recognition; Image resolution

The invention discloses an object-oriented remote sensing image data space-time fusion method, system and device, suitable for use in the technical field of remote sensing. The method comprises the following steps: firstly, acquiring a high-resolution image and a low-resolution image of a first time phase and a low-resolution image of a second time phase; downscaling the two time-phase low-spatial-resolution images to the same resolution as the first time-phase high-resolution image by using a bicubic interpolation model to obtain interpolation images; segmenting the ground objects of the first time-phase high-resolution image by using image segmentation; in each segmentation block, inputting the interpolation images and the high-resolution image of the first time phase into a pre-established linear interpolation model to obtain a preliminary fusion result; in each segmentation block, searching for the spectrally similar pixels of each target pixel, pixel by pixel, and taking the intersection of the results from the two images as the final set of spectrally similar pixels; and performing spatial filtering through inverse distance weighting in combination with the spectrally similar pixel information, so that a final fused image can be obtained. The steps are simple, and the obtained spatio-temporal data fusion result is better.
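The last two steps (intersecting the similar-pixel sets and filtering by inverse distance weighting) can be sketched as follows. This is an illustration under assumptions, not the patented procedure: the names `similar_pixels` and `idw_filter`, the square search window, the choice of the `k` closest values as "spectrally similar", and the weight `1 / (1 + distance)` are all hypothetical, and the segmentation blocks are omitted.

```python
import math


def similar_pixels(img, ty, tx, radius, k):
    """Return the k window pixels whose value is closest to the target's."""
    h, w = len(img), len(img[0])
    cands = [(abs(img[y][x] - img[ty][tx]), y, x)
             for y in range(max(0, ty - radius), min(h, ty + radius + 1))
             for x in range(max(0, tx - radius), min(w, tx + radius + 1))]
    cands.sort()
    return {(y, x) for _, y, x in cands[:k]}


def idw_filter(prelim, img_a, img_b, radius=1, k=3):
    """Smooth `prelim` using pixels that are similar in BOTH img_a and img_b."""
    h, w = len(prelim), len(prelim[0])
    out = [[0.0] * w for _ in range(h)]
    for ty in range(h):
        for tx in range(w):
            common = (similar_pixels(img_a, ty, tx, radius, k)
                      & similar_pixels(img_b, ty, tx, radius, k))
            common.add((ty, tx))  # keep the set non-empty
            num = den = 0.0
            for y, x in common:
                # Assumed weight: closer pixels count more; +1 avoids 1/0.
                wgt = 1.0 / (1.0 + math.hypot(y - ty, x - tx))
                num += wgt * prelim[y][x]
                den += wgt
            out[ty][tx] = num / den
    return out


# Two homogeneous regions: filtering should not blur across their boundary.
ref = [[0, 0, 1, 1] for _ in range(4)]
prelim = [[10.0, 10.0, 20.0, 20.0] for _ in range(4)]
filtered = idw_filter(prelim, ref, ref)
```

Restricting the weighted average to the intersection means a neighbor contributes only if it resembles the target in both images, which is what keeps values from mixing across object boundaries.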

Owner:CHINA UNIV OF MINING & TECH

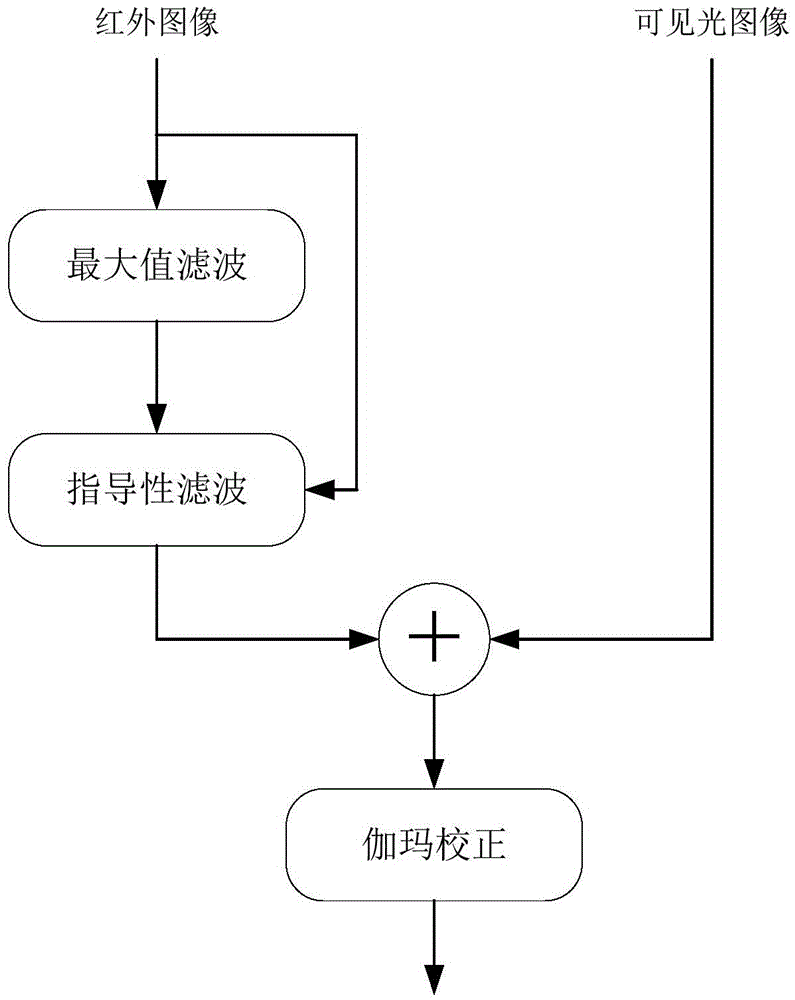

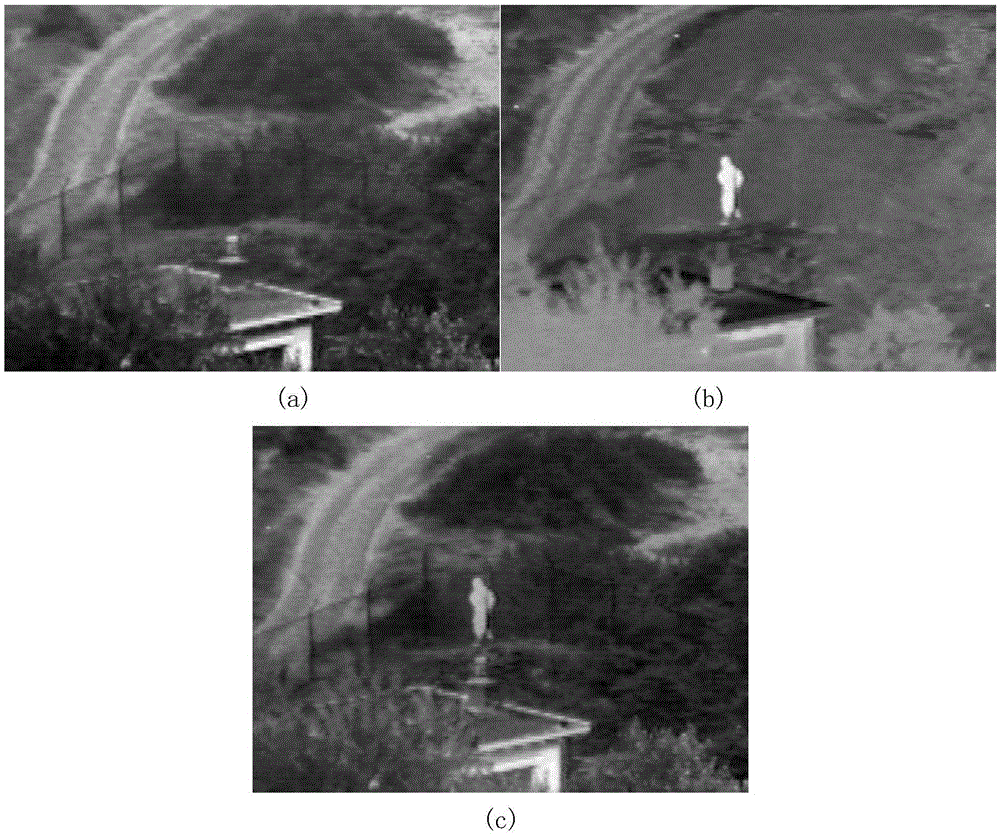

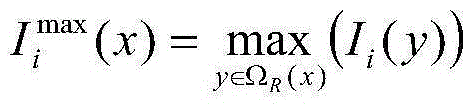

Image fusion method based on guidance filtering

Active; CN105528772A; Rich in details; Reduce computational complexity; Image enhancement; Image analysis; Gray level; Filter algorithm

The present invention relates to an image fusion method based on guidance filtering. The method comprises the steps of (1) carrying out maximum value filtering on an original infrared image I to obtain an image I<max>, (2) using a guidance filtering algorithm to further filter I<max> and output an image I<GF>, (3) directly overlaying the image I<GF> on an original visible light image I<v> to obtain an image I<f>, and (4) carrying out gamma correction on the image I<f> to obtain a final fusion image. The method is based on pixel-level fusion and raises the gray level of only part of the image, which avoids an unnatural-looking fusion result; in addition, the computational complexity of the algorithm is low, so real-time processing is easy.
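The four steps can be sketched in plain Python. This is a minimal illustration under several assumptions that the abstract does not confirm: pixel values are normalized to `[0, 1]`, the original infrared image serves as the guide for the filter, "overlaying" means clipped addition, and the window radius, `eps` and `gamma` values are arbitrary. The guided filter follows the standard formulation `q = mean(a) * guide + mean(b)`.

```python
def box_mean(img, r):
    """Mean over each pixel's (2r+1)x(2r+1) window, clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - r), min(h, y + r + 1))
                    for i in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def max_filter(img, r):
    """Step 1: maximum over each pixel's window."""
    h, w = len(img), len(img[0])
    return [[max(img[j][i]
                 for j in range(max(0, y - r), min(h, y + r + 1))
                 for i in range(max(0, x - r), min(w, x + r + 1)))
             for x in range(w)] for y in range(h)]


def guided_filter(guide, src, r, eps):
    """Step 2: edge-preserving smoothing of `src` steered by `guide`."""
    h, w = len(src), len(src[0])
    mul = lambda a, b: [[a[y][x] * b[y][x] for x in range(w)] for y in range(h)]
    mean_g, mean_s = box_mean(guide, r), box_mean(src, r)
    corr_gs = box_mean(mul(guide, src), r)
    corr_gg = box_mean(mul(guide, guide), r)
    a = [[(corr_gs[y][x] - mean_g[y][x] * mean_s[y][x])
          / (corr_gg[y][x] - mean_g[y][x] ** 2 + eps)
          for x in range(w)] for y in range(h)]
    b = [[mean_s[y][x] - a[y][x] * mean_g[y][x] for x in range(w)] for y in range(h)]
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return [[mean_a[y][x] * guide[y][x] + mean_b[y][x] for x in range(w)]
            for y in range(h)]


def fuse(infrared, visible, r=1, eps=1e-3, gamma=0.8):
    i_max = max_filter(infrared, r)
    i_gf = guided_filter(infrared, i_max, r, eps)  # assumption: IR image is the guide
    h, w = len(visible), len(visible[0])
    # Step 3: overlay, assumed here to be addition clipped to [0, 1].
    i_f = [[min(1.0, max(0.0, visible[y][x] + i_gf[y][x])) for x in range(w)]
           for y in range(h)]
    # Step 4: gamma correction.
    return [[v ** gamma for v in row] for row in i_f]
```

Where the infrared image is dark, `i_gf` stays near zero and the visible image passes through almost unchanged, which matches the abstract's point that only part of the image has its gray level raised.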

Owner:LUOYANG INST OF ELECTRO OPTICAL EQUIP OF AVIC

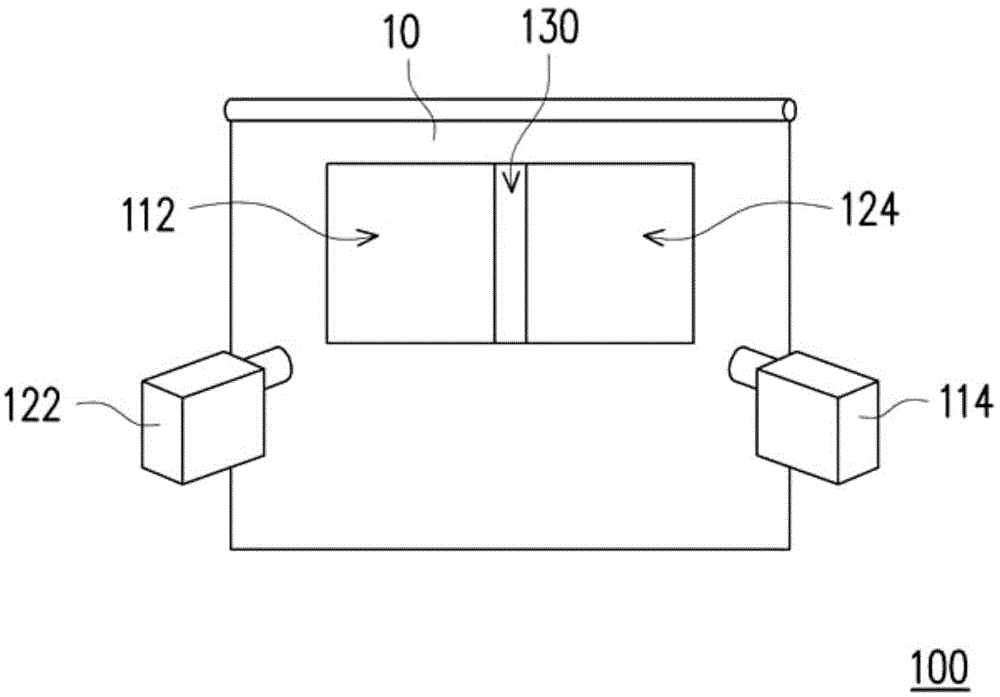

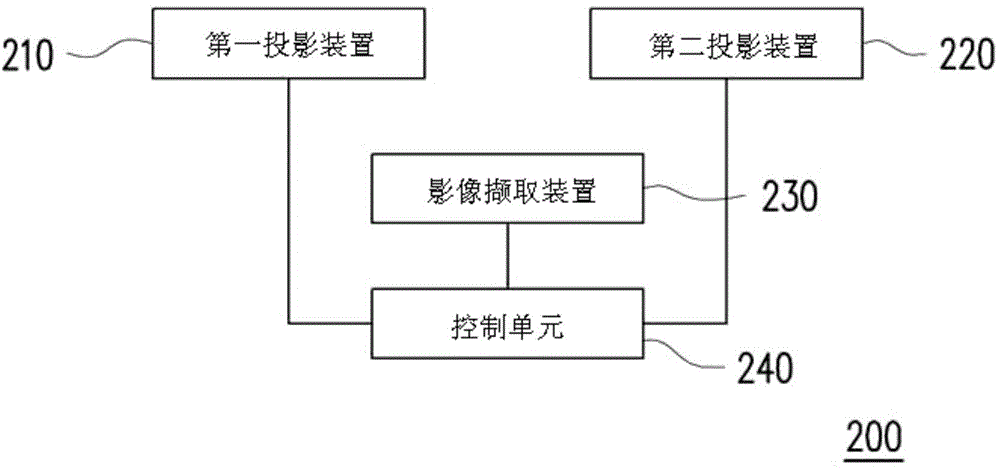

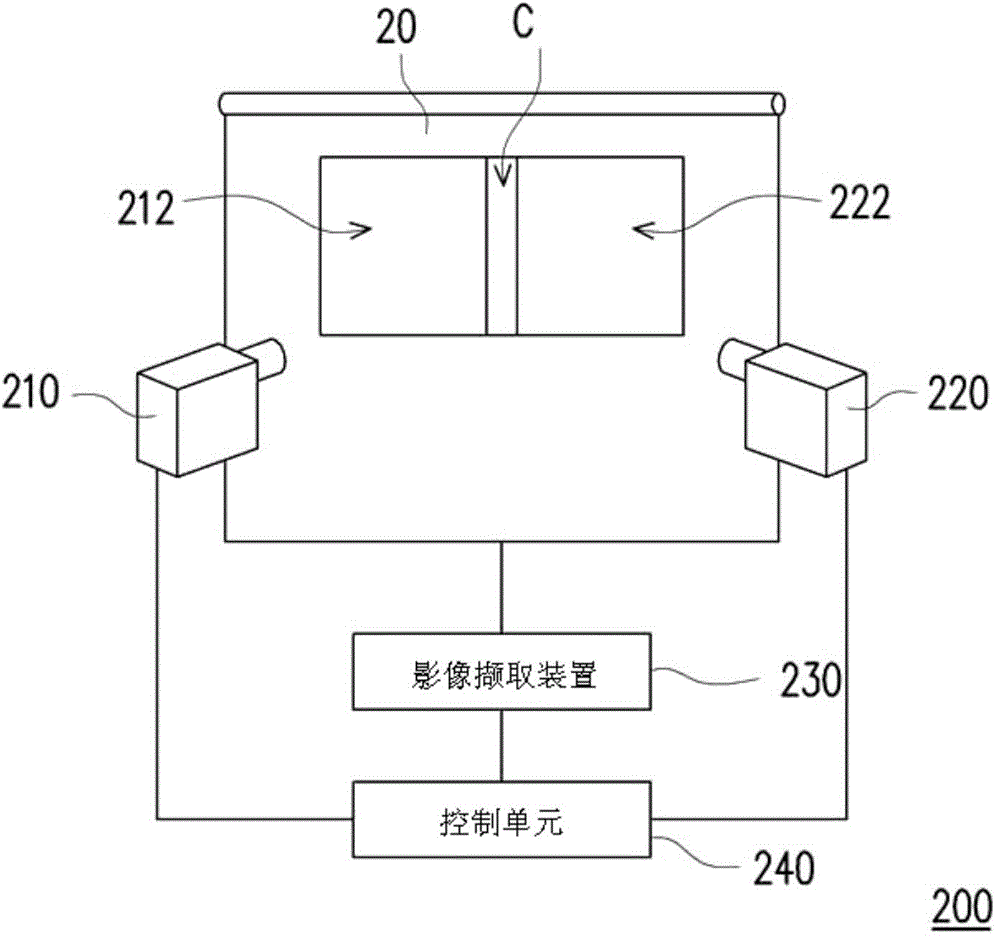

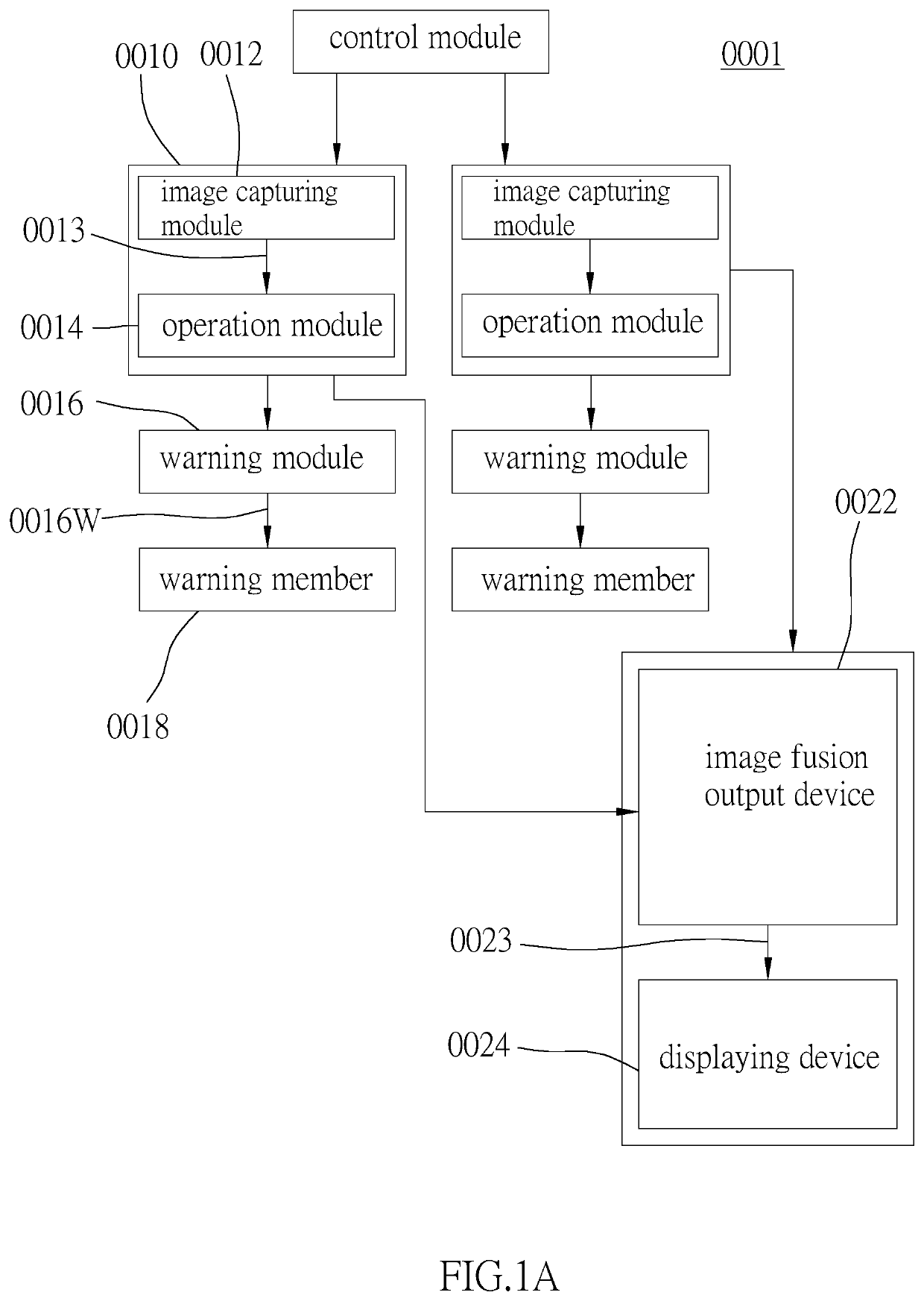

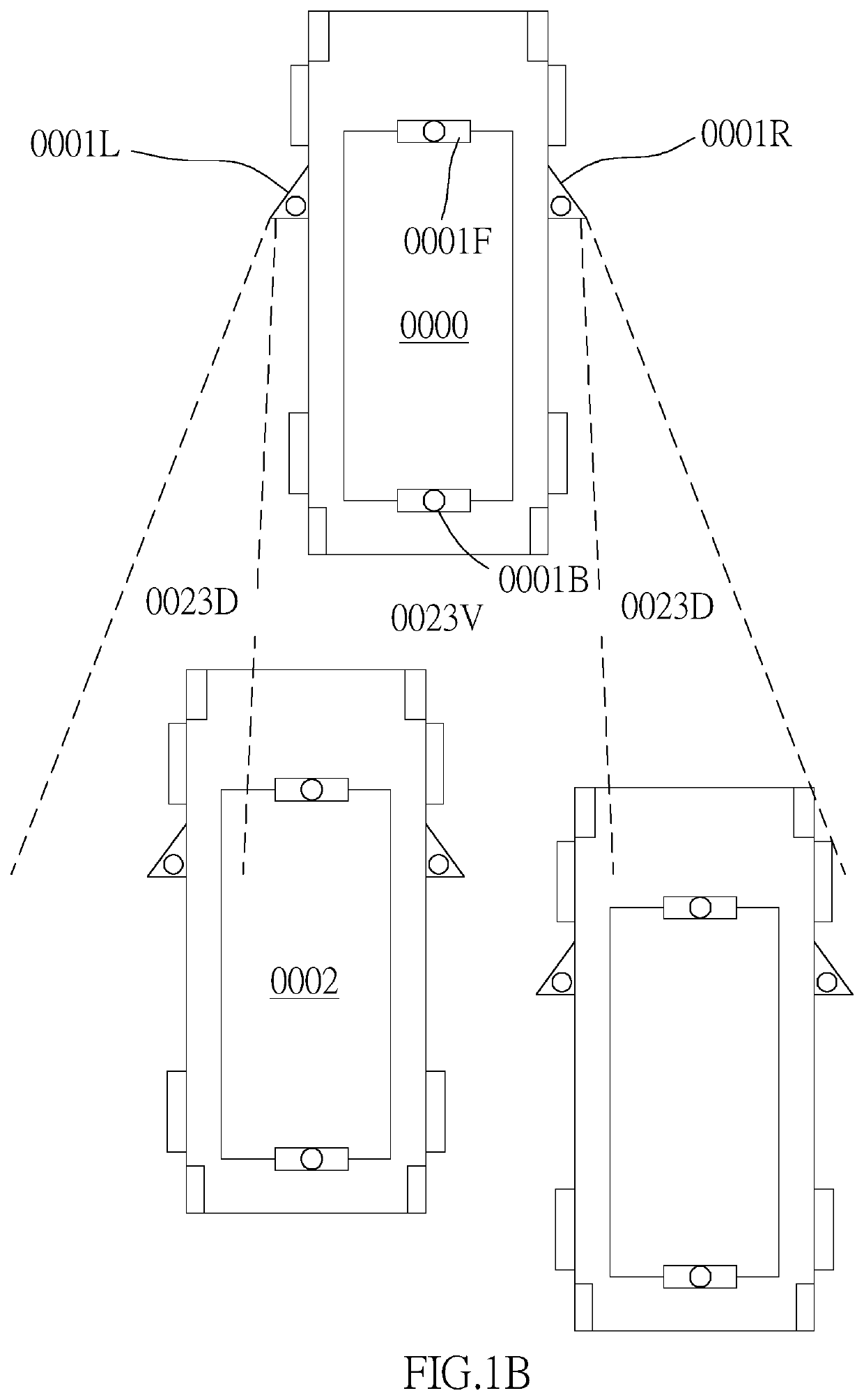

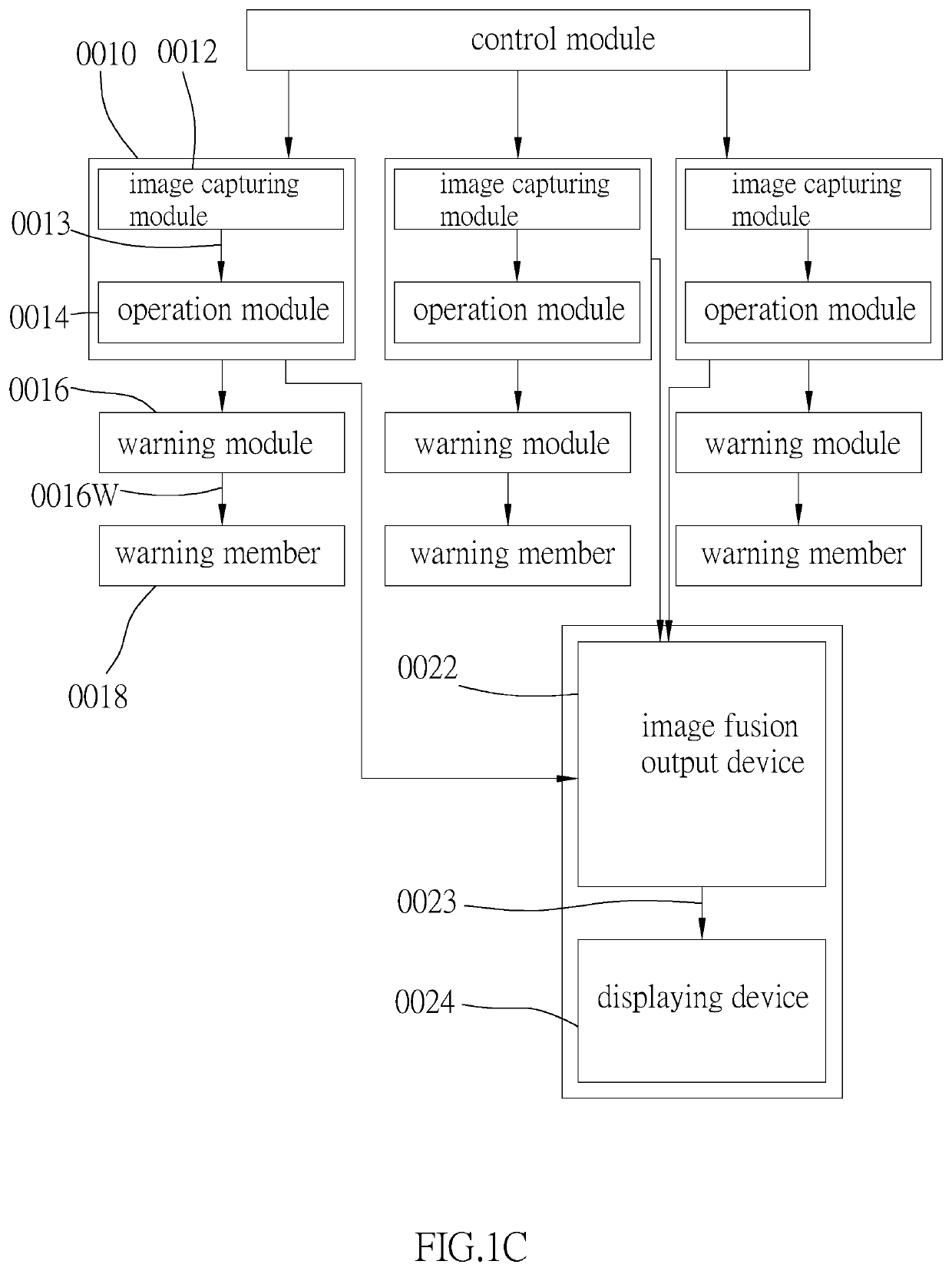

Movable carrier auxiliary system and vehicle auxiliary system

Inactive; US20200218945A1; Small size; Improve imaging pixel; Television system details; Optical viewing; Display device; Engineering

A movable carrier auxiliary system includes at least two optical image capturing systems respectively disposed on a left portion and a right portion of a movable carrier, at least one image fusion output device, and at least one displaying device. Each optical image capturing system includes an image capturing module and an operation module. The image capturing module captures and produces an environmental image surrounding the movable carrier. The operation module, which is electrically connected to the image capturing module, detects at least one moving object in the environmental image to generate a detecting signal and at least one tracking mark. The image fusion output device is disposed inside the movable carrier and is electrically connected to the optical image capturing systems, so as to receive the environmental images and generate a fusion image. The displaying device is electrically connected to the image fusion output device to display the fusion image and the at least one tracking mark.

Owner:ABILITY OPTO ELECTRONICS TECH

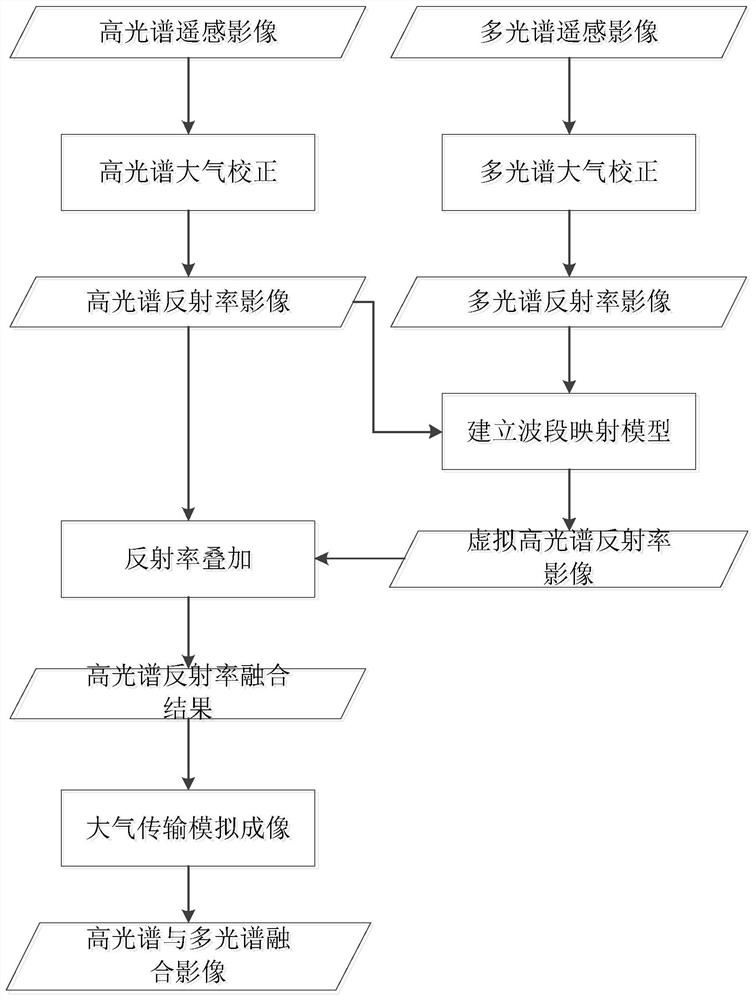

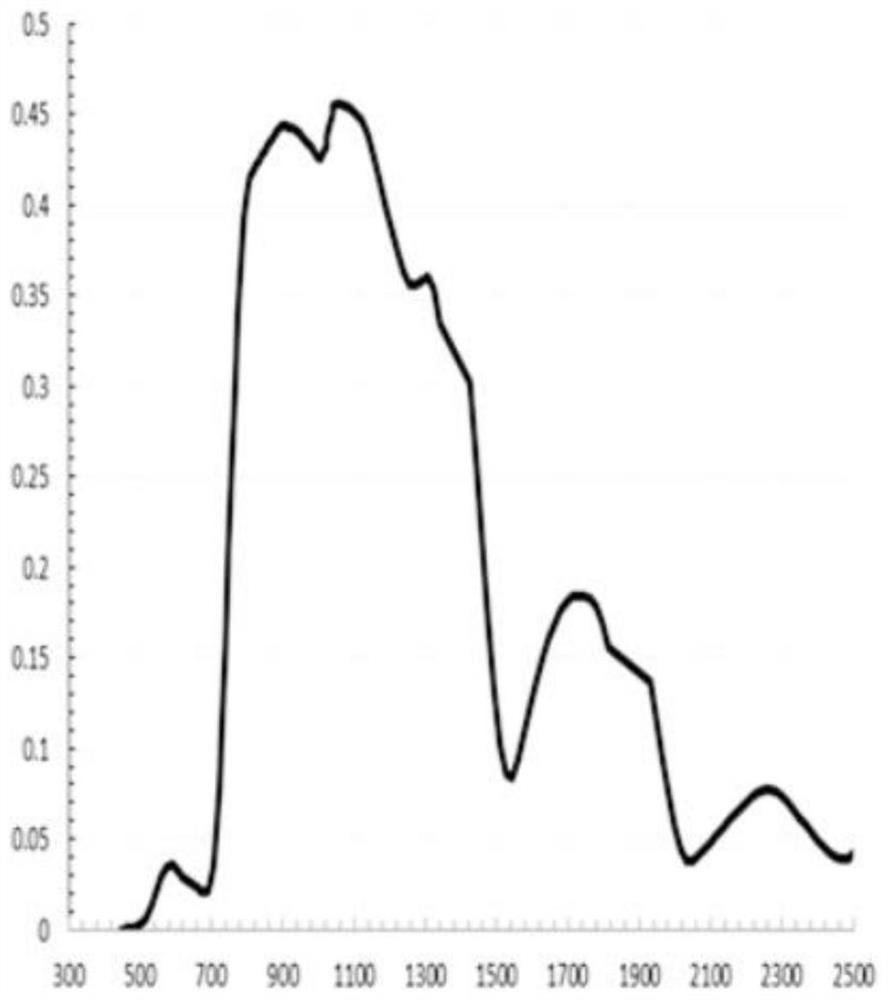

Hyperspectral and multispectral remote sensing information fusion method and system

Pending; CN113222836A; Easy to handle; Maintain application performance; Image enhancement; Image analysis; Atmospheric correction; Computer science

The invention relates to a hyperspectral and multispectral remote sensing information fusion method and system. The method comprises the following steps: performing hyperspectral atmospheric correction according to pre-acquired hyperspectral remote sensing image data; carrying out multispectral atmospheric correction according to multispectral remote sensing image data acquired in advance; establishing a wave band mapping model based on a hyperspectral reflectivity image result and a multispectral reflectivity image result of the earth surface generated after hyperspectral atmospheric correction and multispectral atmospheric correction; carrying out weighted calculation of the spectral reflectivity value on the virtual hyperspectral reflectivity image result by taking the original hyperspectral reflectivity image value as a reference, and generating a hyperspectral reflectivity fusion result; based on the hyperspectral reflectivity fusion result, adopting an atmospheric radiation transmission model for simulation, achieving atmospheric radiation transmission conversion of a reflection fusion image, and generating a fusion image result of a hyperspectral remote sensing image and a multispectral remote sensing image.

Owner:自然资源部国土卫星遥感应用中心

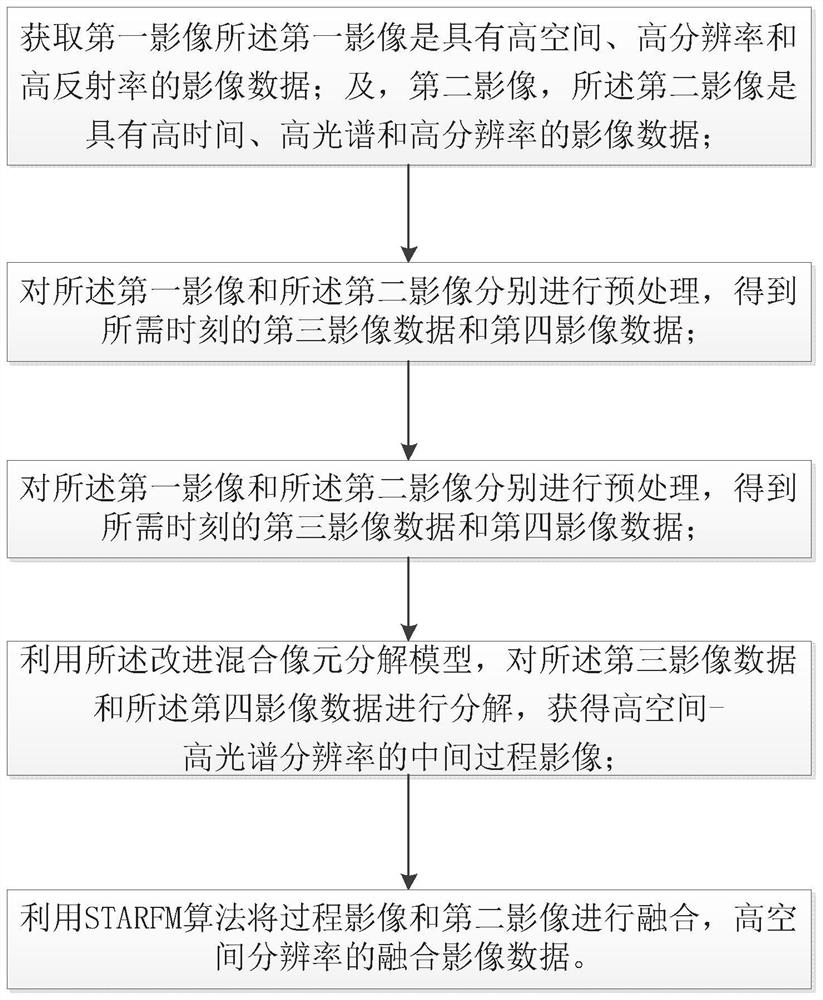

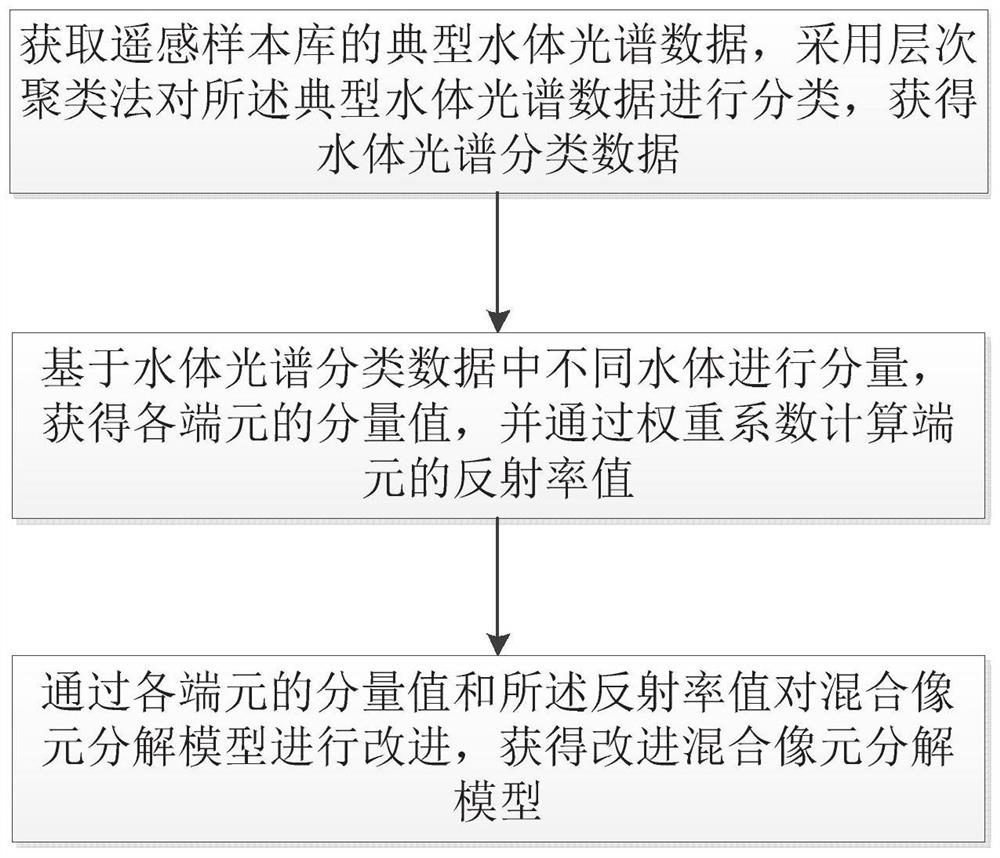

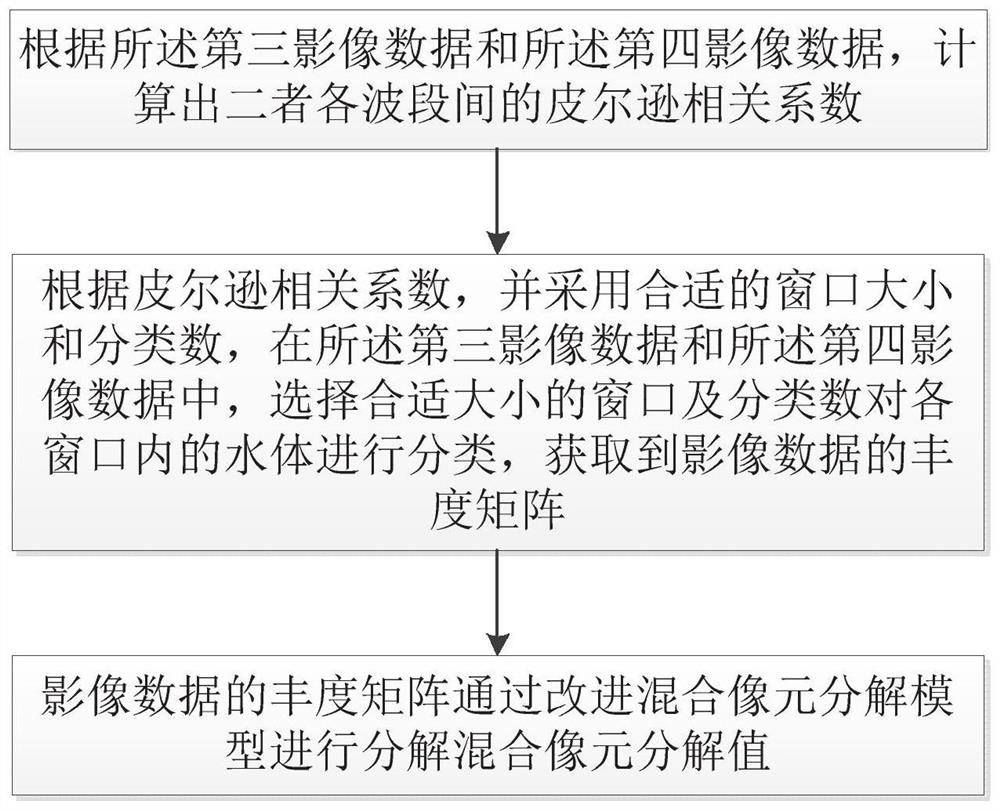

Fusion method and fusion device based on spectral information image and medium

The invention relates to the technical field of image fusion, in particular to a fusion method and device based on a spectral information image and a medium. The fusion method comprises the steps of obtaining a first image and a second image; preprocessing the first image and the second image to obtain third image data and fourth image data at the required moment; improving the mixed pixel decomposition model to obtain an improved mixed pixel decomposition model; decomposing image data by using the improved mixed pixel decomposition model to obtain an intermediate process image with high space-high spectral resolution; and fusing the images to obtain fused image data with high spatial resolution. According to the embodiment of the invention, through fusion of ocean and land satellite image data, the time, space and spectrum advantages of different remote sensing data are effectively utilized, and the remote sensing data which has high spatial resolution and high time resolution and also keeps the ocean image spectrum band is generated, so that the requirement of short-term dynamic monitoring of the water quality of an inland water body is met.

Owner:深圳市规划国土房产信息中心

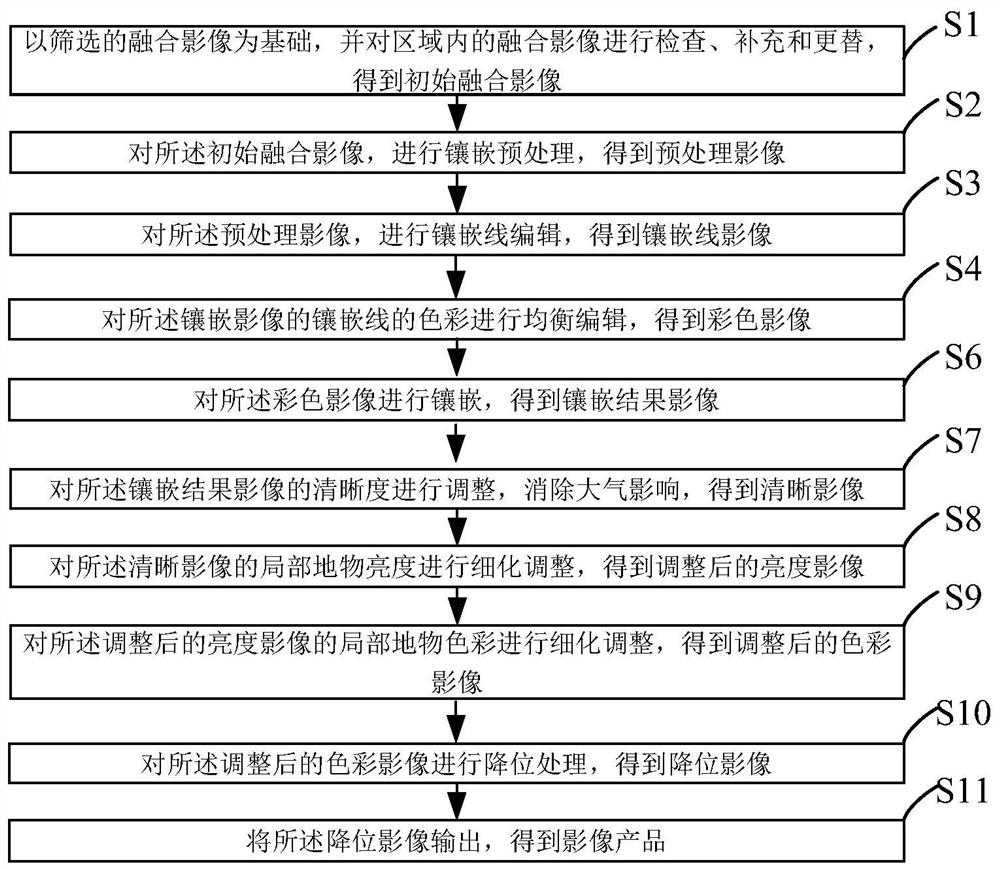

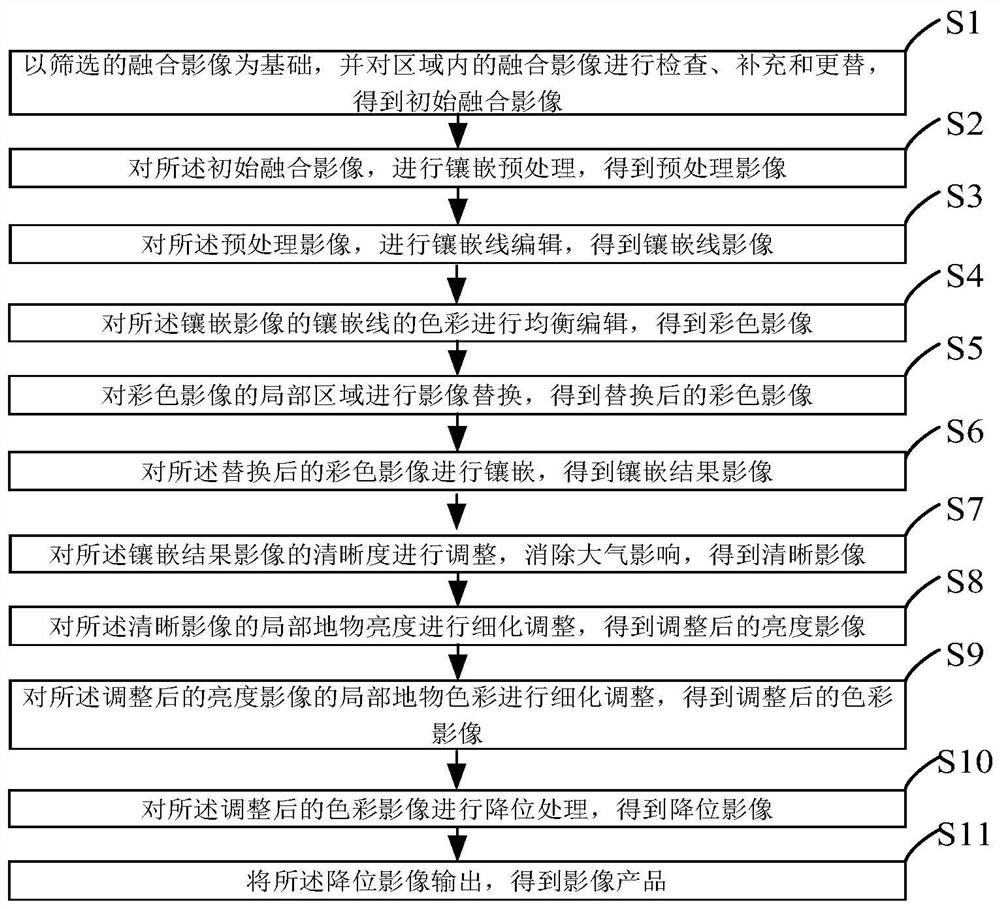

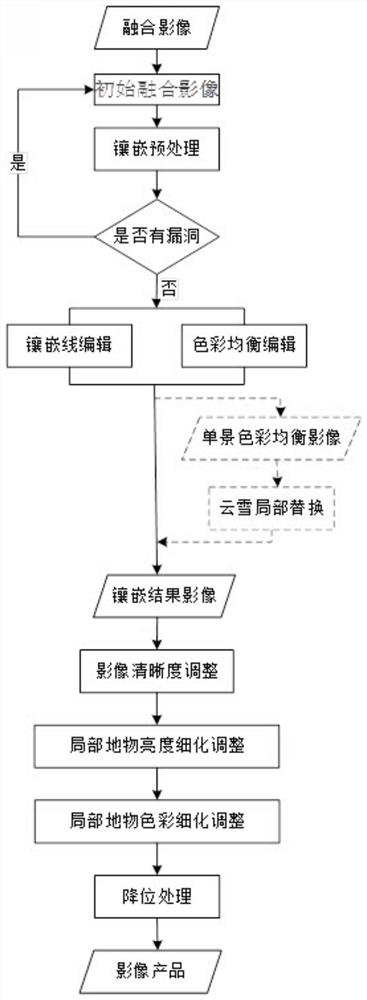

Large-area high-fidelity satellite remote sensing image uniform-color mosaic processing method and device

Active; CN113920030A; Solve color uniformity; Solve processing; Image enhancement; Image analysis; Satellite data; Imaging condition

The invention provides a method and device for uniform-color mosaic processing of large-area, high-fidelity satellite remote sensing images. The method comprises the following steps: screening the fusion images, and inspecting, supplementing and replacing them; carrying out mosaic preprocessing on the initial fusion images; editing the mosaic lines of the preprocessed images; balancing the color along the mosaic lines; mosaicking; adjusting the definition of the image; eliminating atmospheric influence; finely adjusting the brightness and color of local surface features; carrying out bit reduction processing on the image; and outputting an image product. The method addresses the following problems in the prior art: differences in imaging conditions, time and atmospheric environment cause large color differences between images of the same area acquired at different times; differences between sensors make color uniformizing and mosaicking of multiple satellite data sources very complex and the resulting data quality poor; and there is no effective, fixed solution method or process.

Owner:自然资源部国土卫星遥感应用中心

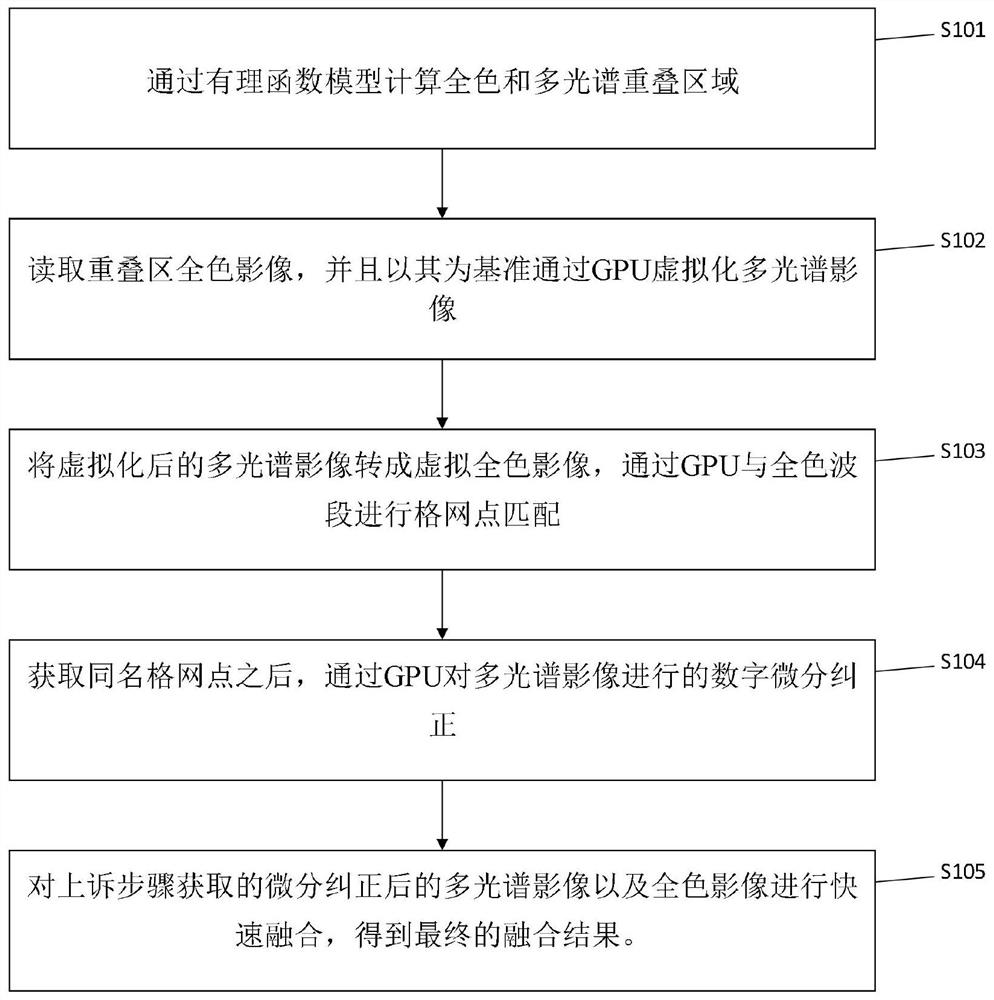

Panchromatic and multispectral image real-time fusion method based on cooperative processing of CPU and GPU

Active; CN113570536A; Limit geometric deviation; Good registration effect; Image enhancement; Image analysis; Pattern recognition; Term memory

The invention discloses a real-time panchromatic and multispectral image fusion method based on cooperative processing of CPU and GPU, which is used for quickly fusing panchromatic and multispectral data to generate a fused image with high spatial resolution and high spectral resolution. All steps of panchromatic and multispectral fusion are completed in memory, and the steps with a large calculation amount are mapped to the GPU, so fusion efficiency can be greatly improved while fusion precision is guaranteed. The strict geometric correspondence between the panchromatic and multispectral images is accounted for through the RFM, which makes the method highly robust. Geometric deviation that may exist between the panchromatic and multispectral images is limited to the maximum extent through differential correction, so that their registration can be optimal, and finally a fusion image with the optimal effect is obtained through a multi-scale SFIM fusion method and a panchromatic spectral decomposition image fusion method. The invention is suited to the fast fusion processing of sensor data with large data volumes.
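For orientation, the basic single-scale SFIM (smoothing-filter-based intensity modulation) rule is `fused = MS * PAN / smooth(PAN)`, and it can be sketched as below. This is not the multi-scale variant or the GPU mapping the abstract describes. The sketch assumes the multispectral band has already been registered and resampled to the panchromatic grid, and the names `box_mean` and `sfim`, the window radius `r` and the `eps` guard are hypothetical.

```python
def box_mean(img, r):
    """Mean over each pixel's (2r+1)x(2r+1) window, clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - r), min(h, y + r + 1))
                    for i in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def sfim(ms_band, pan, r=1, eps=1e-6):
    """Modulate one multispectral band by the PAN-to-smoothed-PAN ratio."""
    low = box_mean(pan, r)  # low-resolution approximation of the PAN image
    h, w = len(pan), len(pan[0])
    return [[ms_band[y][x] * pan[y][x] / max(low[y][x], eps)  # eps avoids 1/0
             for x in range(w)] for y in range(h)]


# Hypothetical 2x2 band and PAN patch, values normalized to [0, 1].
ms = [[0.2, 0.4], [0.6, 0.8]]
pan = [[0.5, 0.5], [0.5, 1.0]]
fused = sfim(ms, pan)
```

Because the ratio is close to 1 wherever the panchromatic image is locally smooth, the band's spectral values are preserved there and only spatial detail is injected. The per-pixel arithmetic is independent across pixels, which is the kind of step that lends itself to GPU execution.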

Owner:中国人民解放军61646部队
