212 results about "Remote sensing image fusion" patented technology

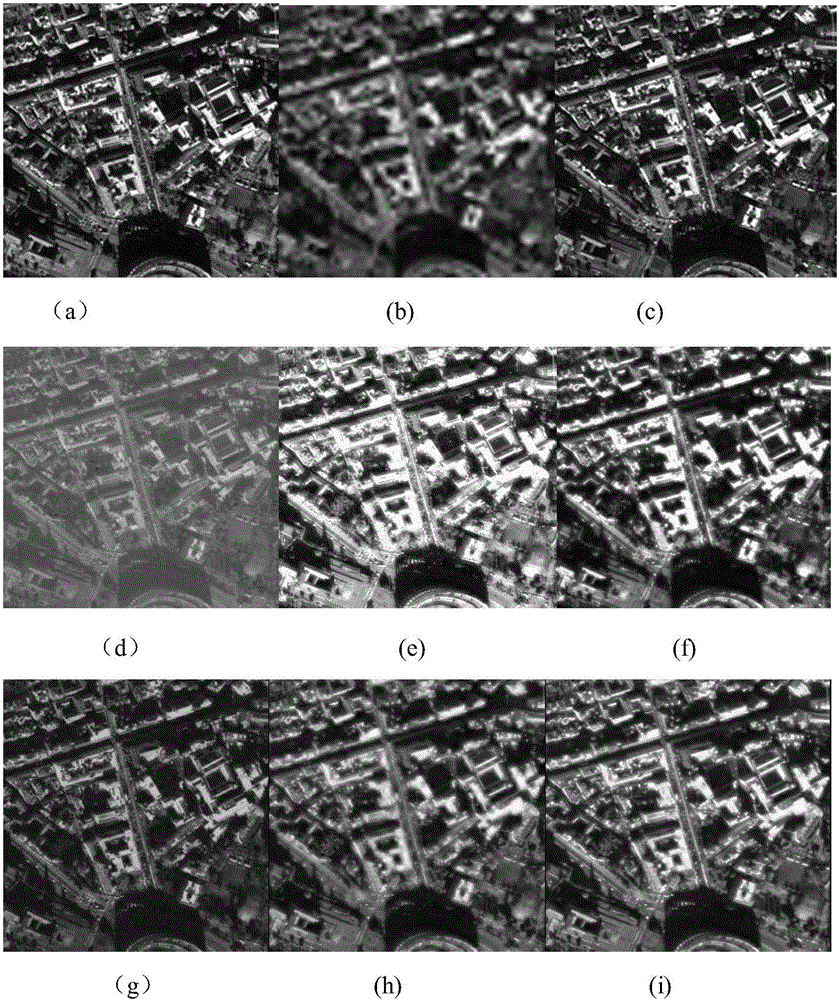

Remote sensing image fusion method based on sparse representation

Status: Active; Patent number: CN103208102A; Tags: Easy to handle, Easy to identify, Image enhancement, Remote sensing image fusion, Image resolution

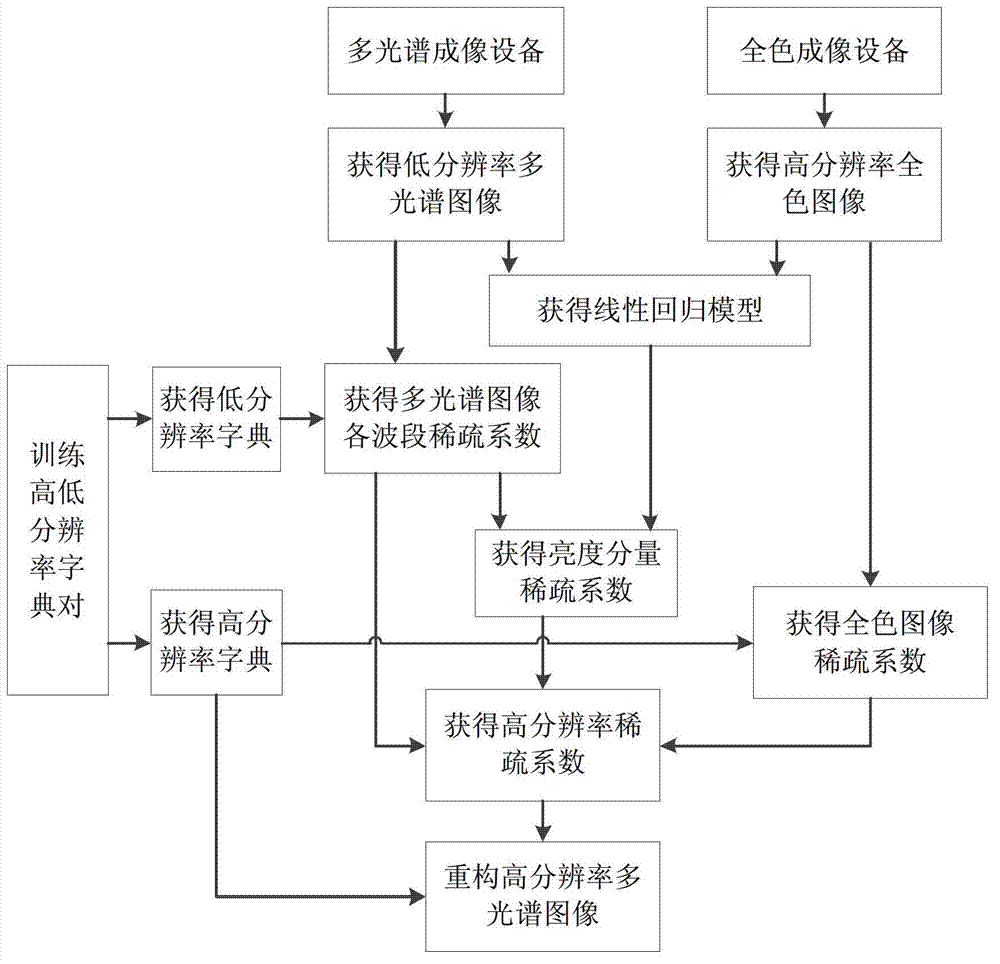

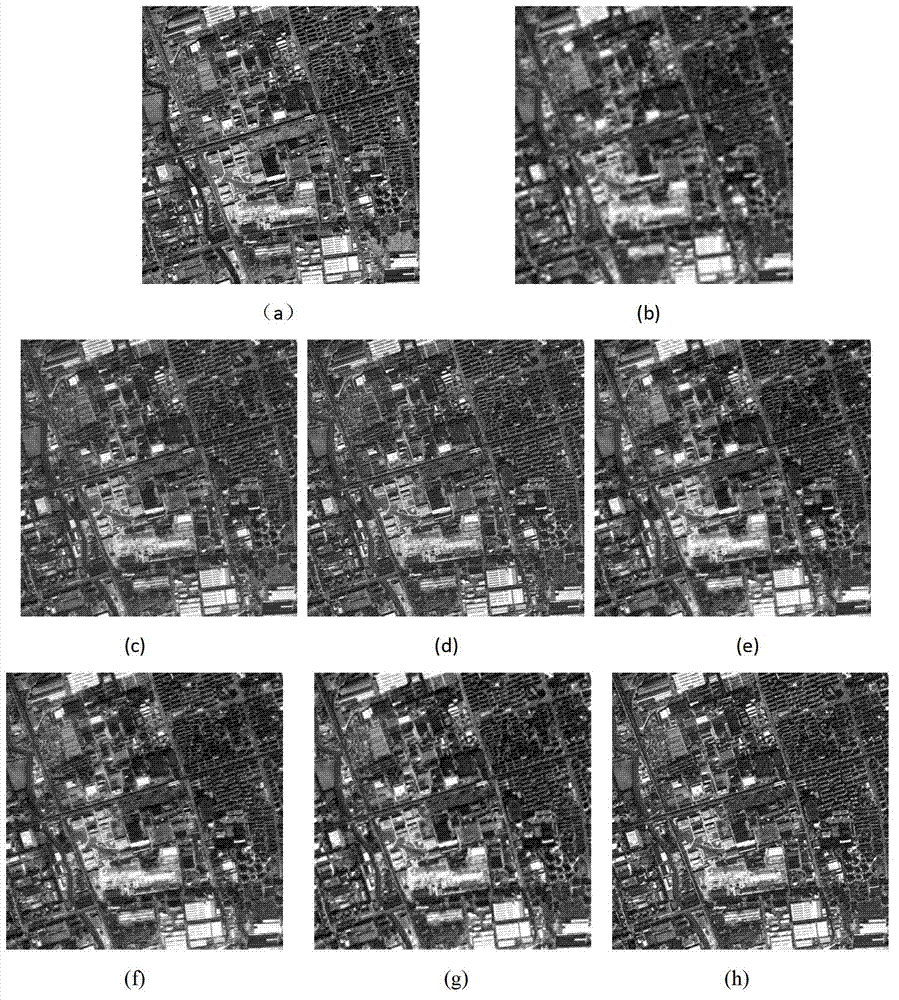

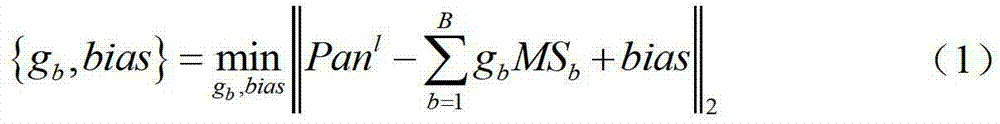

The invention discloses a remote sensing image fusion method based on sparse representation. The method comprises the following steps of: firstly, establishing a linear regression model between a multispectral image and a brightness component thereof; secondly, performing sparse representation on a panchromatic image and the multispectral image by using high and low resolution dictionaries respectively, and acquiring sparse representation coefficients of the brightness component of the multispectral image according to the linear regression model; thirdly, extracting detail components according to the sparse representation coefficients of the panchromatic image and the brightness component, and implanting the detail components to the sparse representation coefficients of each band of the multispectral image under a general component replacement fusion framework; and finally, performing image restoration to obtain a multispectral image with high spatial resolution. According to the method, the sparse representation technology is introduced into the field of remote sensing image fusion, so that the defect that high spatial resolution and spectral information cannot be simultaneously preserved in the prior art is overcome; and the fusion result of the method is superior to that of the conventional remote sensing image fusion method on the aspects of spectral preservation and spatial resolution improvement.
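The regression and detail-injection steps of this abstract can be illustrated in the pixel domain. The following is a minimal sketch only: it skips the sparse coding over high and low resolution dictionaries entirely, regresses the panchromatic image on the multispectral bands (an assumption about how the brightness model is fitted), and injects the same detail into every band. The function name `gcs_fuse` is hypothetical.

```python
import numpy as np

def gcs_fuse(ms, pan):
    """Pixel-domain component-substitution sketch (not the patented method).

    ms  : (bands, H, W) multispectral image, already resampled to the PAN grid
    pan : (H, W) panchromatic image
    """
    bands = ms.shape[0]
    # Design matrix: one column per band plus a constant offset term.
    X = np.column_stack([ms.reshape(bands, -1).T, np.ones(pan.size)])
    # Least-squares fit of pan ~ sum_b w_b * ms_b + c.
    coef, *_ = np.linalg.lstsq(X, pan.ravel(), rcond=None)
    intensity = (X @ coef).reshape(pan.shape)
    # Detail component: what PAN contains beyond the modelled brightness.
    detail = pan - intensity
    # Inject the same detail into every band (unit injection gain assumed).
    return ms + detail[None, :, :], coef
```

When the panchromatic image is exactly a linear combination of the bands, the detail is zero and the multispectral image is returned unchanged, which is a useful sanity check.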

Owner:SHANGHAI JIAO TONG UNIV

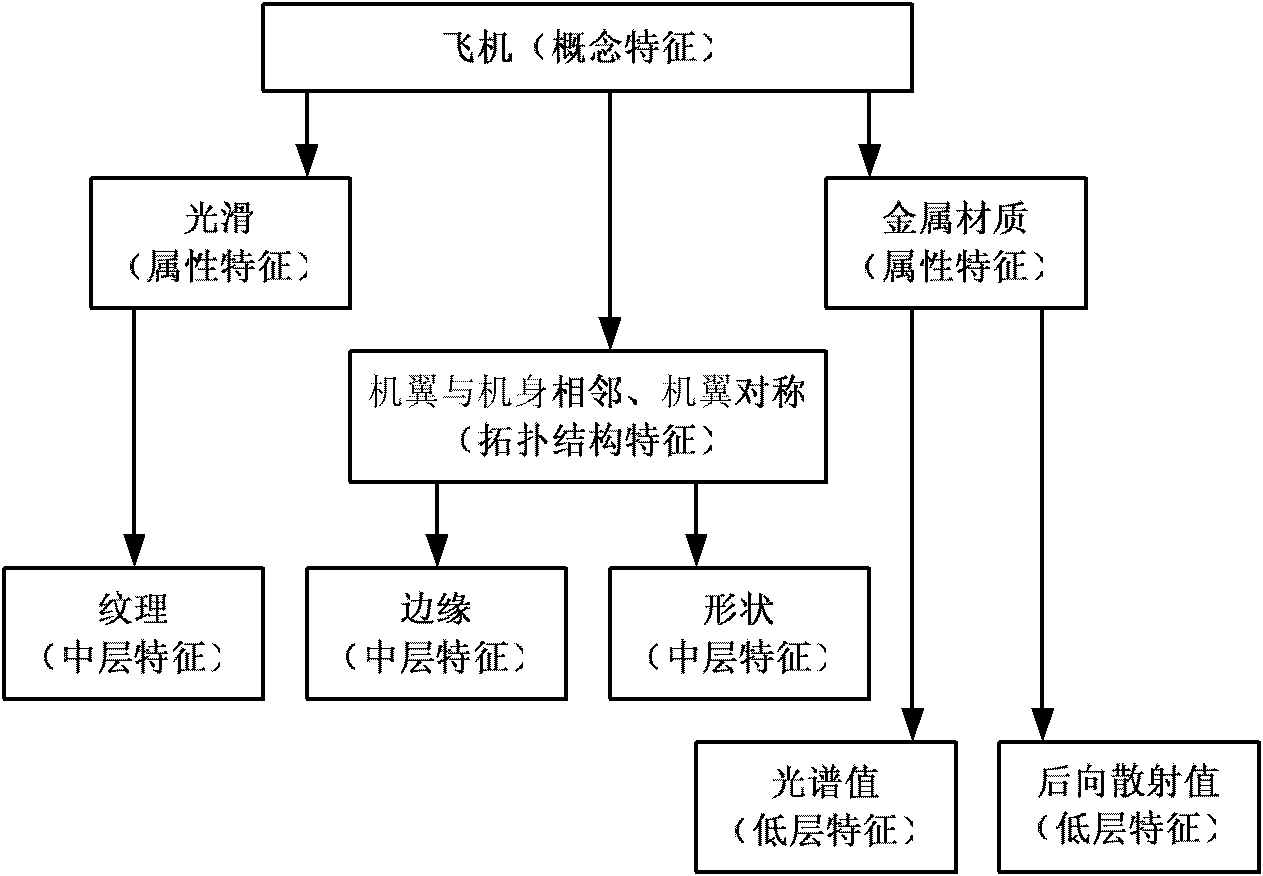

Method of identifying large and medium-sized objects based on multi-source remote sensing image fusion

Status: Active; Patent number: CN102663394A; Tags: Improve efficiency, Improve accuracy, Image analysis, Character and pattern recognition, Remote sensing image fusion, Object based

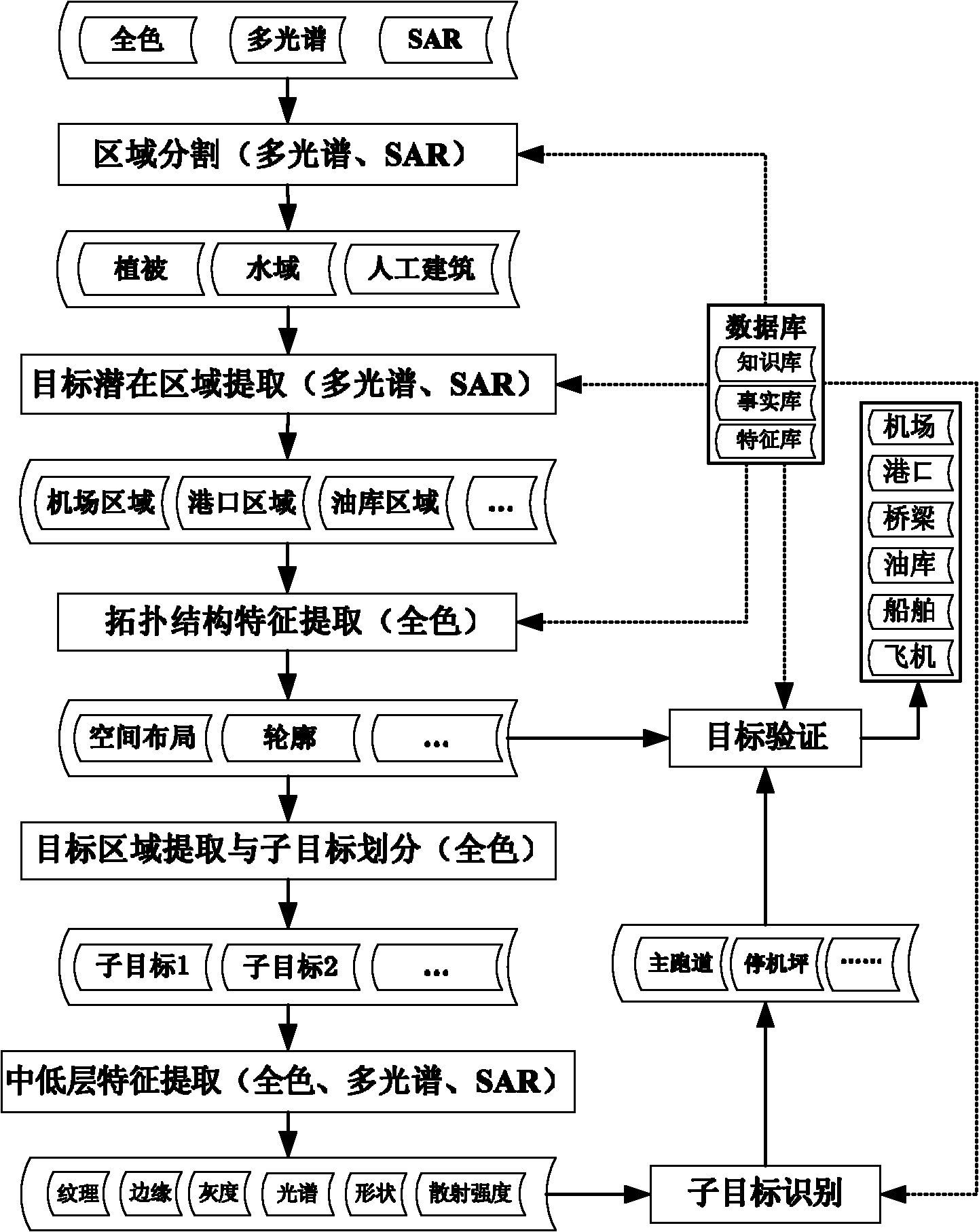

The invention provides a method of identifying large and medium-sized objects based on multi-source remote sensing image fusion. First, multispectral and SAR images are segmented into image areas according to the spectral distribution of different objects and differences in their electromagnetic radiation, and potential object areas are extracted using experience and knowledge of object distribution, so that feature extraction and object identification are more targeted and the identification efficiency and accuracy of the system improve. Second, object areas are determined by extracting the object contour, spatial layout and the like, and sub-object areas are divided according to the spatial relation between the object contour and the sub-object layout. Finally, sub-object features are extracted and, guided by the object feature set and prior knowledge of the object, sub-object identification and object verification are realized by executing identification rules and matching features.

Owner:BEIHANG UNIV

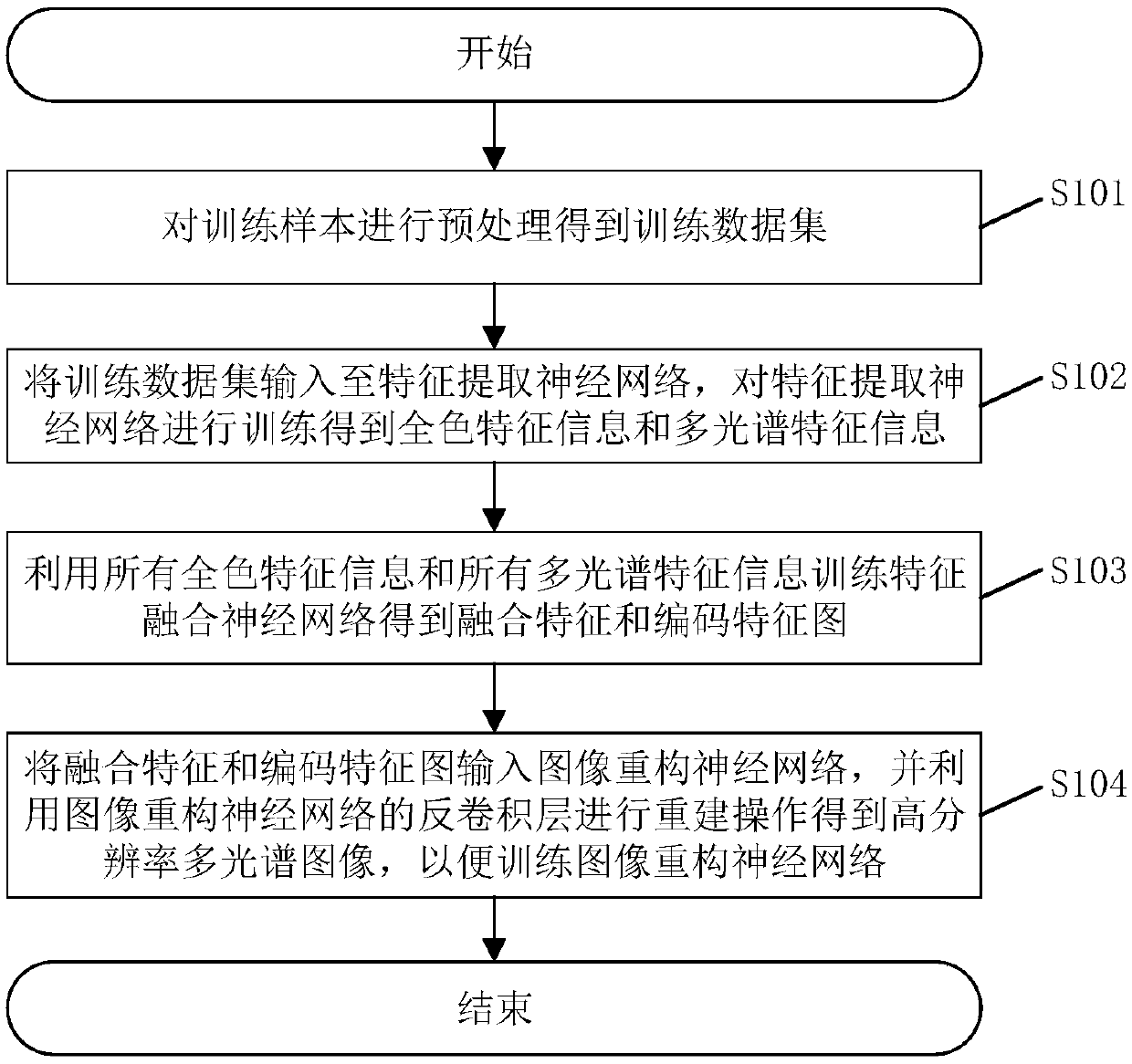

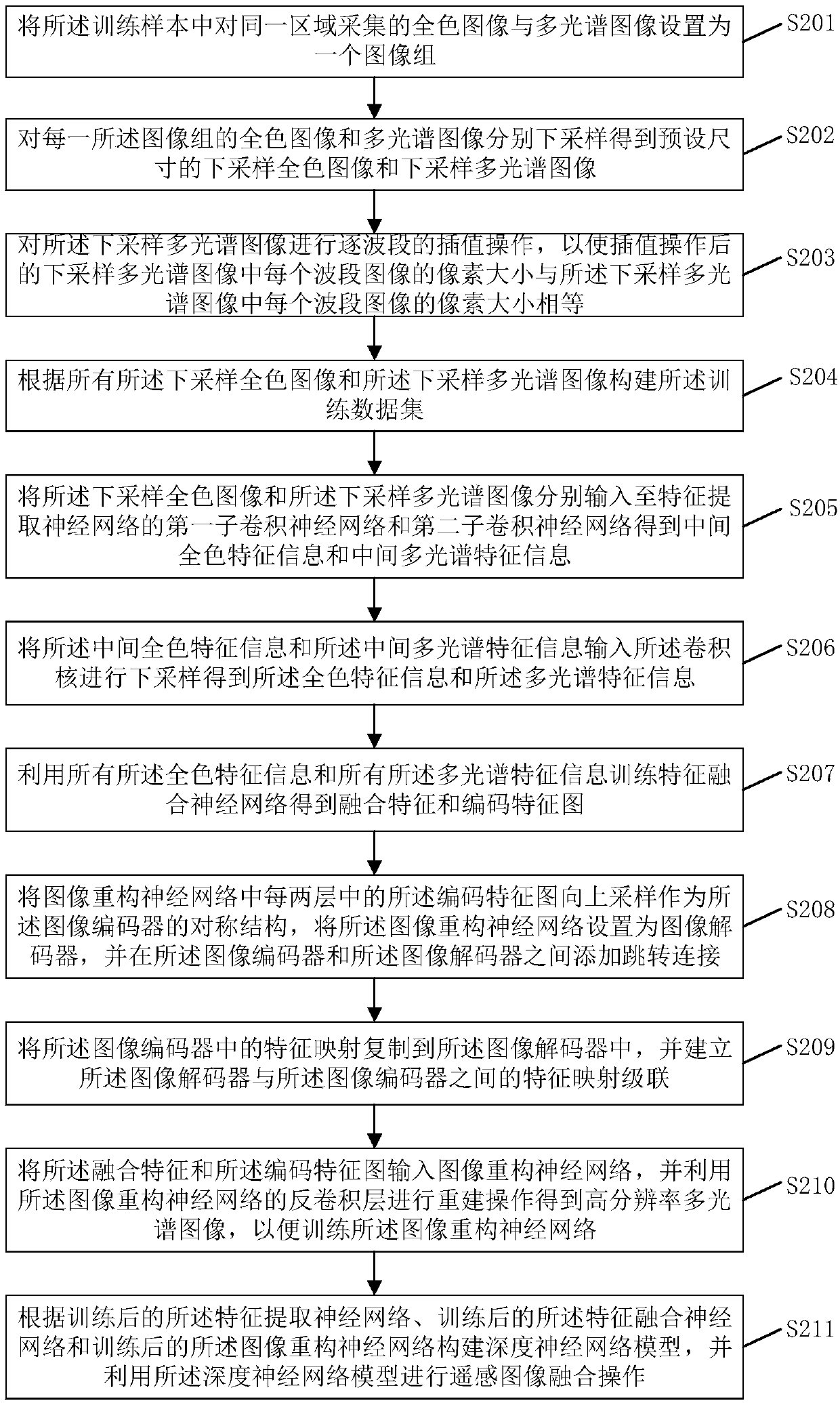

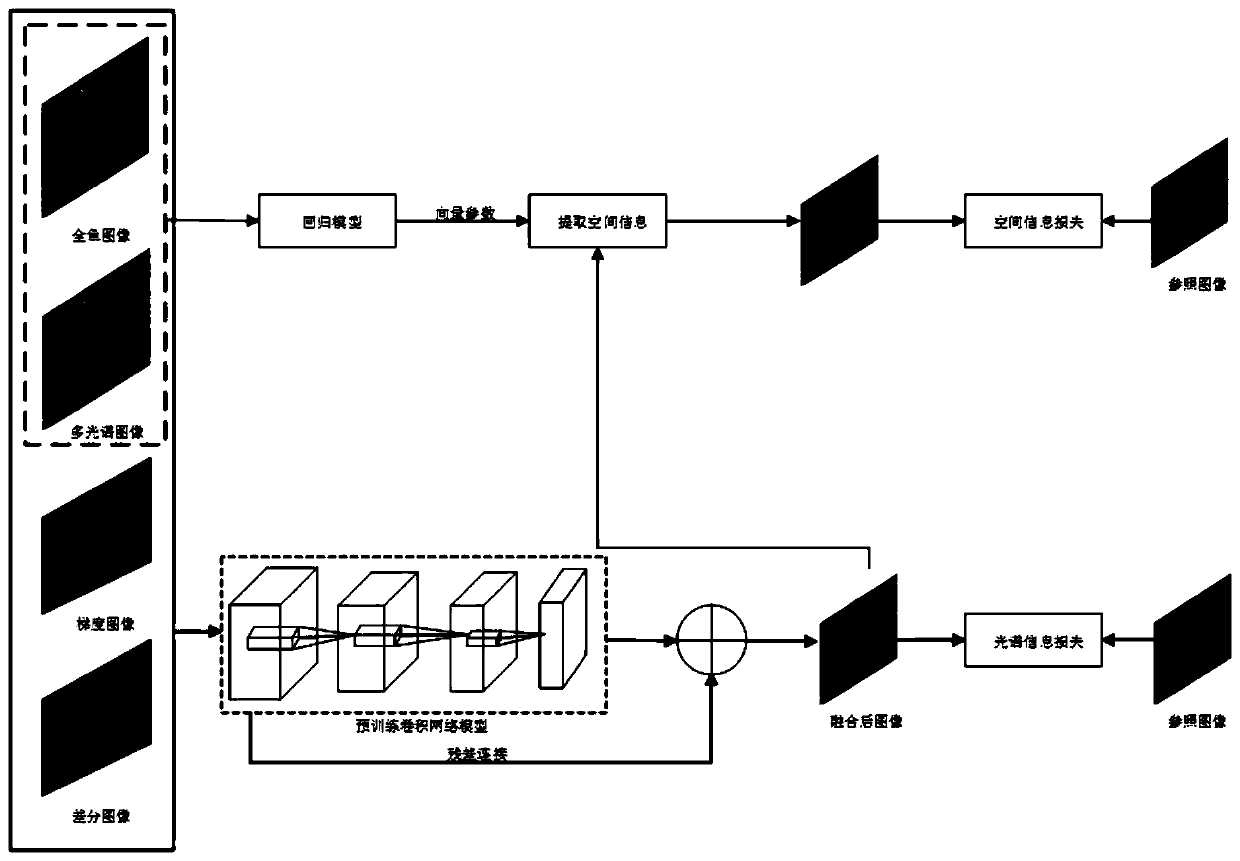

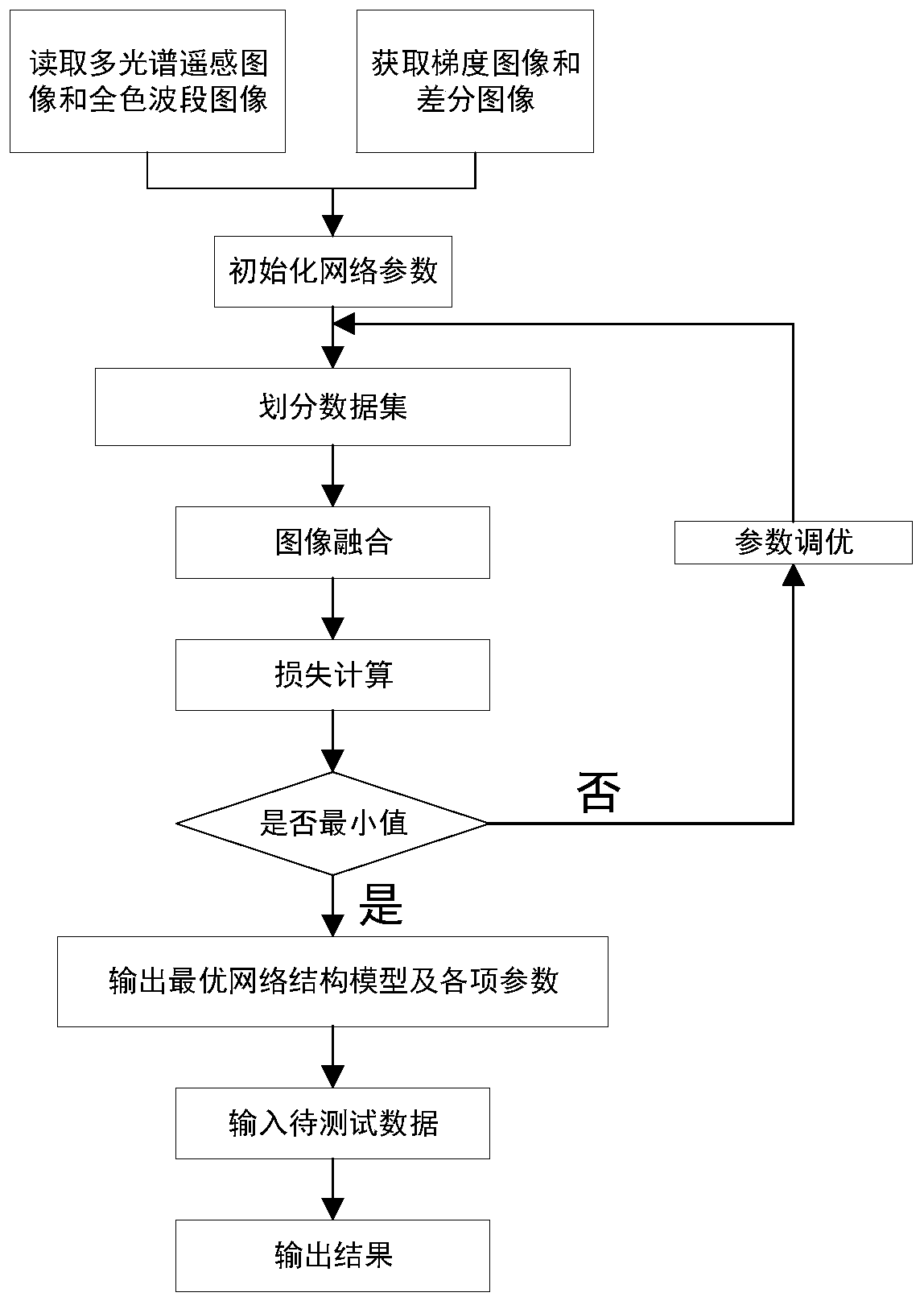

Remote sensing image fusion method, system and related components

Status: Inactive; Patent number: CN108960345A; Tags: Reduce losses, Quality improvement, Character and pattern recognition, Neural architectures, Remote sensing image fusion, Data set

The invention discloses a remote sensing image fusion method. The fusion method comprises the following steps: preprocessing training samples to obtain a training data set; inputting the training data set into a feature extraction neural network to obtain panchromatic feature information and multispectral feature information; training a feature fusion neural network with all the panchromatic and multispectral feature information to obtain fusion features and coding feature maps; inputting the fusion features and the coding feature maps into an image reconstruction neural network and performing reconstruction with its deconvolution layers to obtain a high-resolution multispectral image, thereby training the image reconstruction neural network; and constructing a deep neural network model and carrying out remote sensing image fusion with it. The method can improve the quality of the image fused from the panchromatic and multispectral images. The invention further discloses a remote sensing image fusion system, a computer-readable storage medium and electronic equipment that have the same beneficial effects.

Owner:GUANGDONG UNIV OF TECH

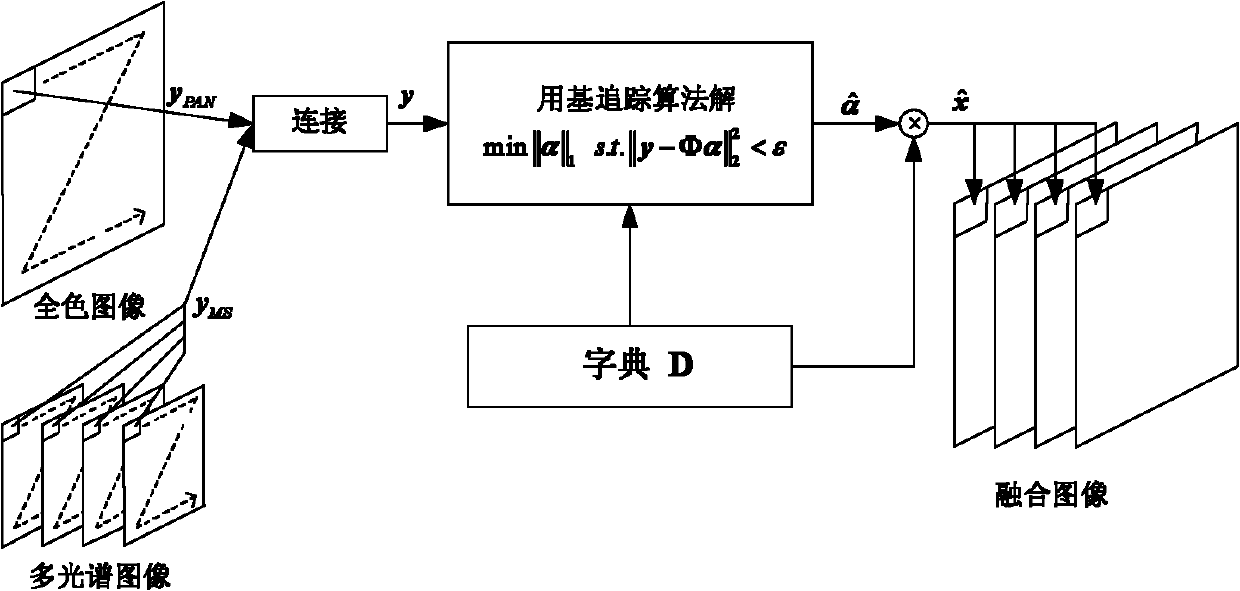

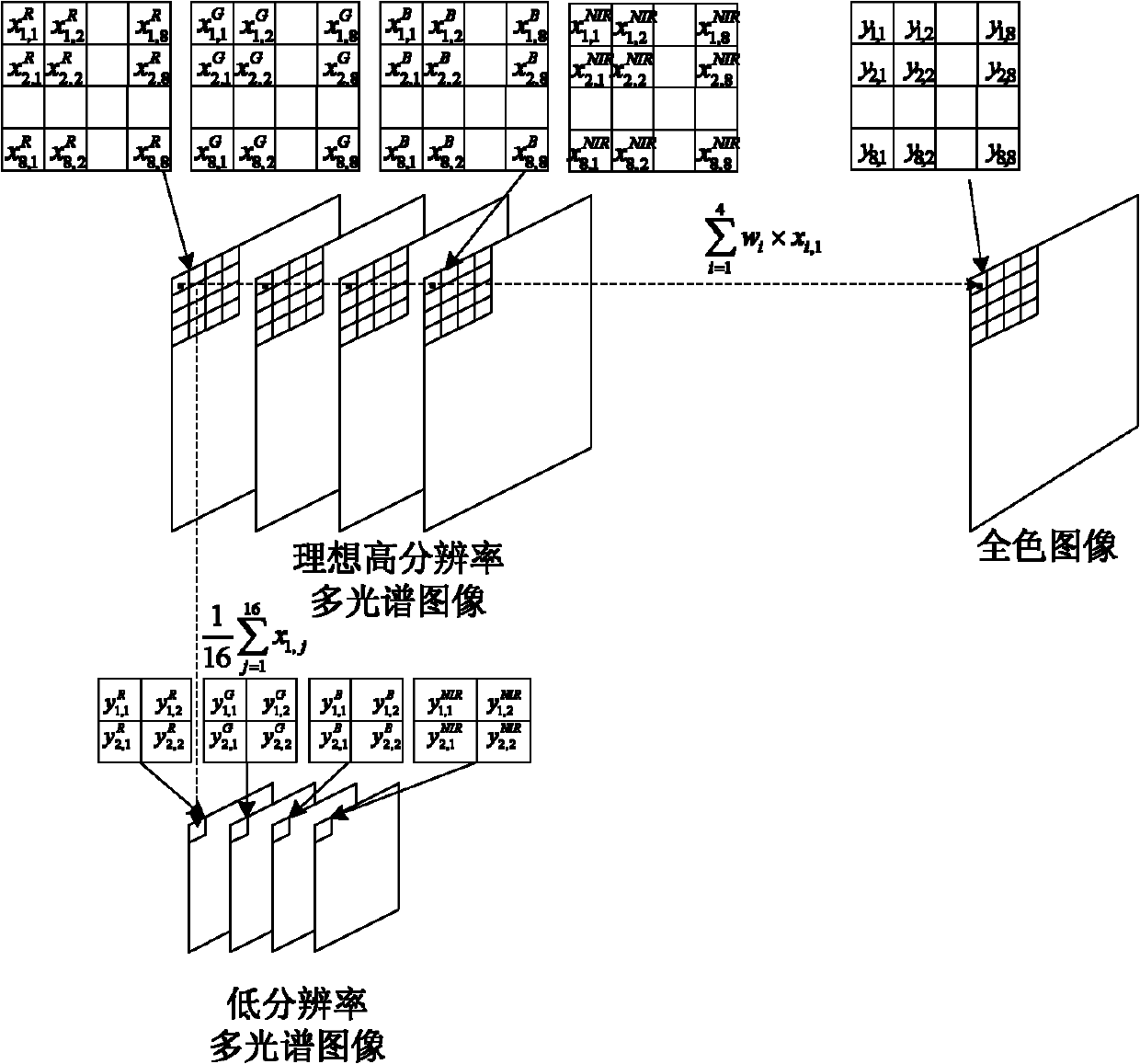

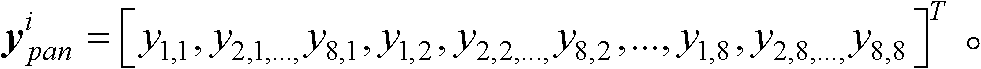

Compressive sensing theory-based satellite remote sensing image fusion method

Status: Inactive; Patent number: CN101996396A; Tags: Conform to visual characteristics, Avoid complexity, Image enhancement, Color image, Remote sensing image fusion

The invention discloses a compressive sensing theory-based satellite remote sensing image fusion method. The method comprises the following steps: vectorizing a panchromatic image with high spatial resolution and a multispectral image with low spatial resolution; constructing an over-complete dictionary for the sparse representation of high-spatial-resolution image blocks; establishing a model that maps the high-spatial-resolution multispectral image to the high-spatial-resolution panchromatic image and the low-spatial-resolution multispectral image according to the imaging principle of each land observation satellite; solving the compressive sensing problem of sparse signal recovery with a basis pursuit algorithm to obtain the sparse representation of the high-spatial-resolution multispectral color image in the over-complete dictionary; and multiplying the sparse representation by the preset over-complete dictionary to obtain the vector representation of the high-spatial-resolution multispectral color image block, then converting that vector back into an image block to obtain the fusion result. By introducing compressive sensing theory into image fusion, the quality of the fused image is markedly improved.
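The sparse recovery step can be sketched on a toy problem. This is an illustration under stated assumptions, not the patented solver: it replaces basis pursuit with the closely related L1-regularized least-squares problem and solves it by iterative soft thresholding (ISTA). The dictionary `D`, the observation model `M`, and the parameter `lam` are hypothetical placeholders for the learned dictionary, the satellite imaging model, and a tuning weight.

```python
import numpy as np

def soft_threshold(v, t):
    """Shrink every entry of v towards zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def objective(A, y, a, lam):
    """0.5 * ||A a - y||^2 + lam * ||a||_1."""
    return 0.5 * np.sum((A @ a - y) ** 2) + lam * np.sum(np.abs(a))

def ista(A, y, lam, n_iter=500):
    """Minimise the objective above by iterative soft thresholding."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1 / Lipschitz constant of the gradient
    a = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ a - y)
        a = soft_threshold(a - step * grad, step * lam)
    return a

def recover_patch(M, D, y, lam):
    """Estimate a high-resolution patch x = D @ alpha from observations y = M @ x."""
    alpha = ista(M @ D, y, lam)
    return D @ alpha, alpha
```

In this sketch `y` would stack the vectorized panchromatic block and the low-resolution multispectral block, and `M` would encode how the sensor produces both from the unknown high-resolution multispectral block.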

Owner:HUNAN UNIV

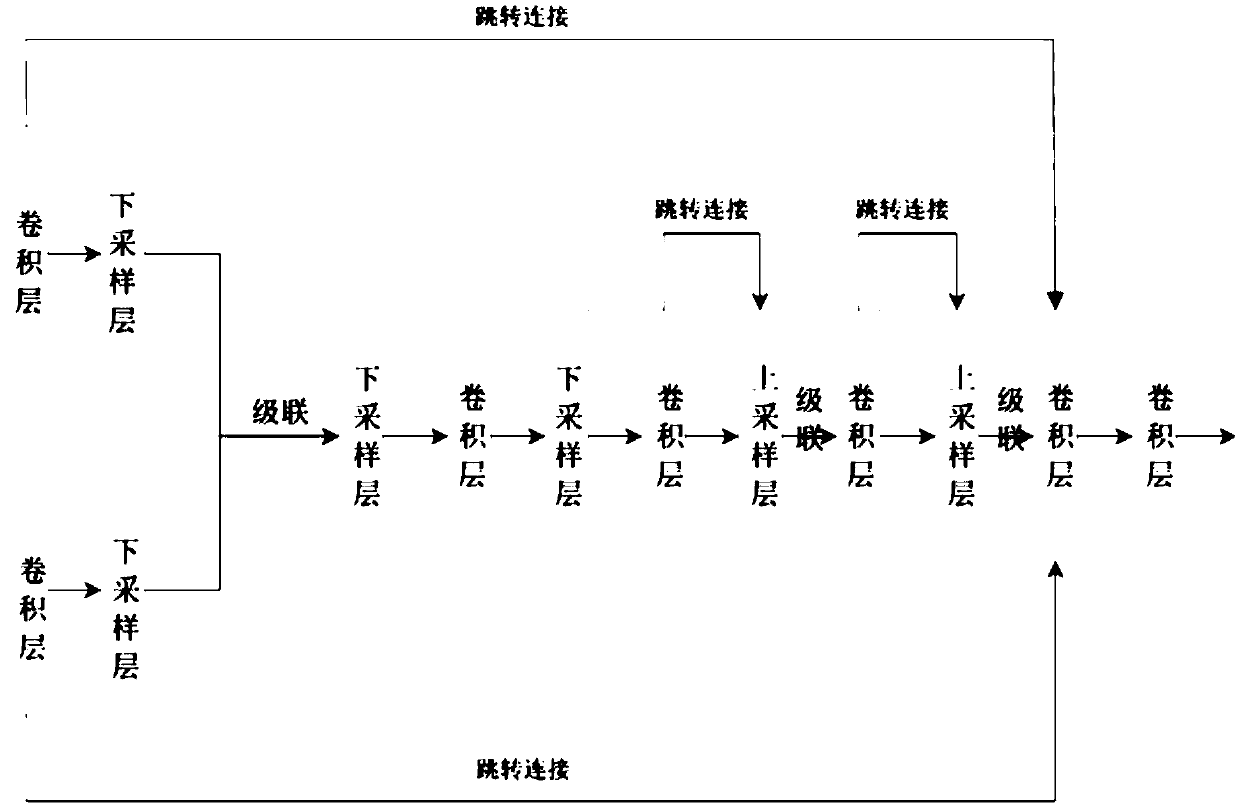

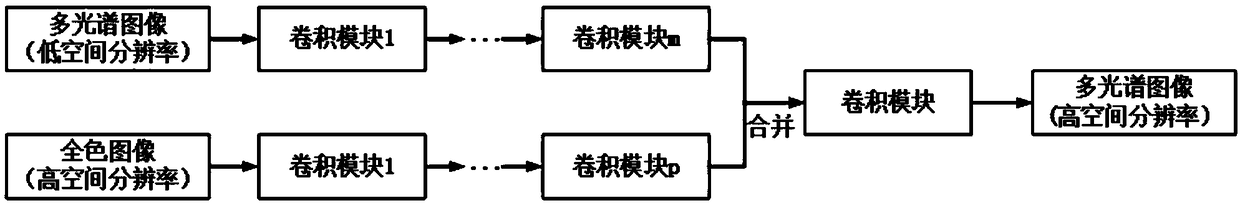

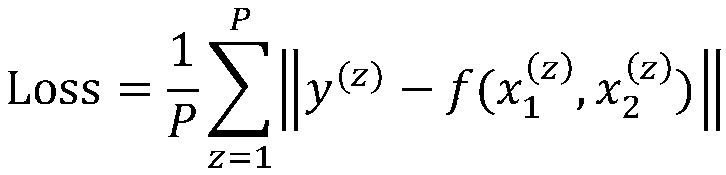

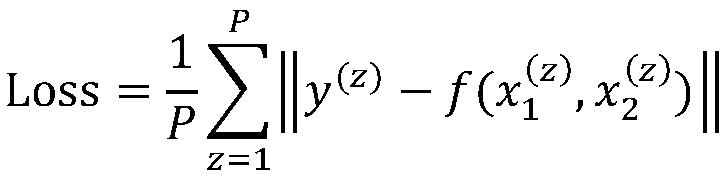

Remote sensing image fusion method and system based on dual-branch deep learning network

Status: Inactive; Patent number: CN109146831A; Tags: Fully extracted, Easy to integrate, Image enhancement, Image analysis, Stochastic gradient descent, Remote sensing image fusion

The invention provides a remote sensing image fusion method and a remote sensing image fusion system based on a dual-branch deep learning network. The method comprises the following steps: panchromatic and multispectral images used as sample data are each downsampled by the corresponding factor to obtain training samples; a dual-branch convolutional neural network is constructed and trained with the stochastic gradient descent algorithm; and the panchromatic and multispectral images to be fused are input into the trained dual-branch convolutional neural network to obtain a fused multispectral image with high spatial resolution. Aimed at the fusion of panchromatic and multispectral remote sensing images, the invention uses a deep convolutional network to extract image features more fully, integrates the complementary information of the two images, and generates multispectral images with high spatial resolution.
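The sample-preparation step, in which both images are downsampled so that the original multispectral image can serve as the training target, can be sketched as follows. Block averaging is an assumption here; the abstract does not say which downsampling filter is used, and the function names are hypothetical.

```python
import numpy as np

def block_mean(img, ratio):
    """Downsample the last two axes by averaging ratio x ratio blocks."""
    *lead, h, w = img.shape
    h2, w2 = h // ratio, w // ratio
    img = img[..., :h2 * ratio, :w2 * ratio]          # crop to a multiple of ratio
    blocks = img.reshape(*lead, h2, ratio, w2, ratio)
    return blocks.mean(axis=(-3, -1))

def make_training_sample(pan, ms, ratio):
    """Return ((pan_low, ms_low), target) for one reduced-resolution sample.

    pan : (H * ratio, W * ratio) panchromatic image
    ms  : (bands, H, W) multispectral image, used unchanged as the target
    """
    return (block_mean(pan, ratio), block_mean(ms, ratio)), ms
```

After downsampling, the reduced panchromatic image has the same grid as the original multispectral image, so the network can be trained to map the reduced pair back to it.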

Owner:WUHAN UNIV

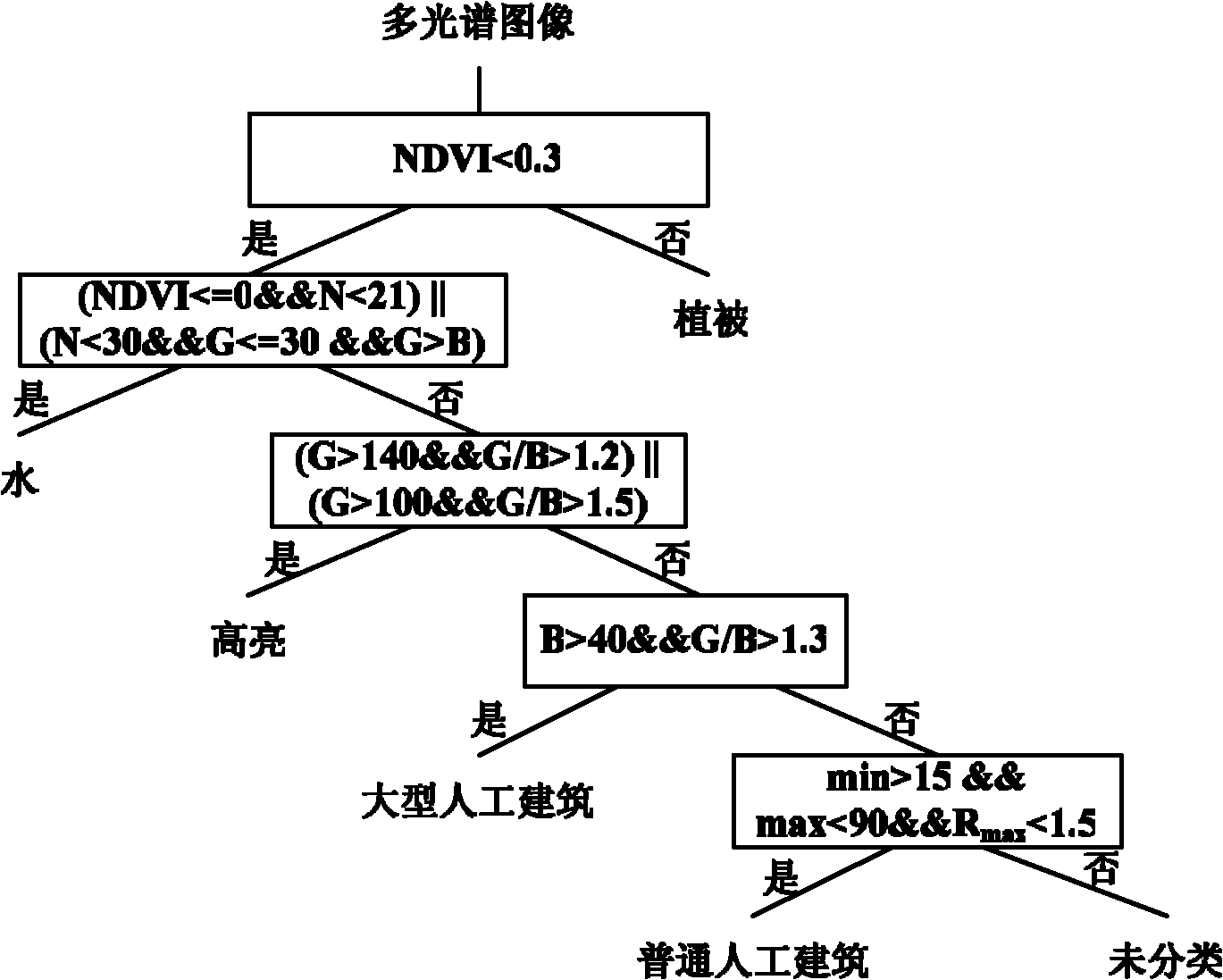

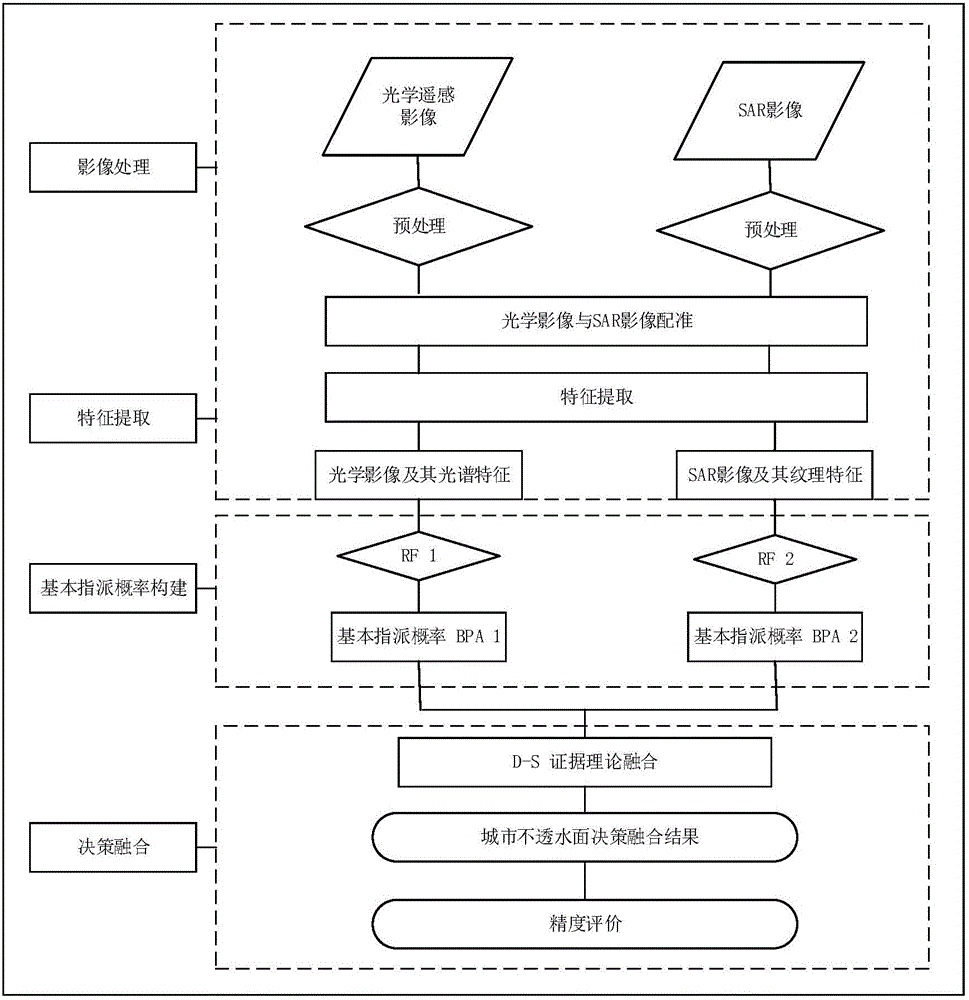

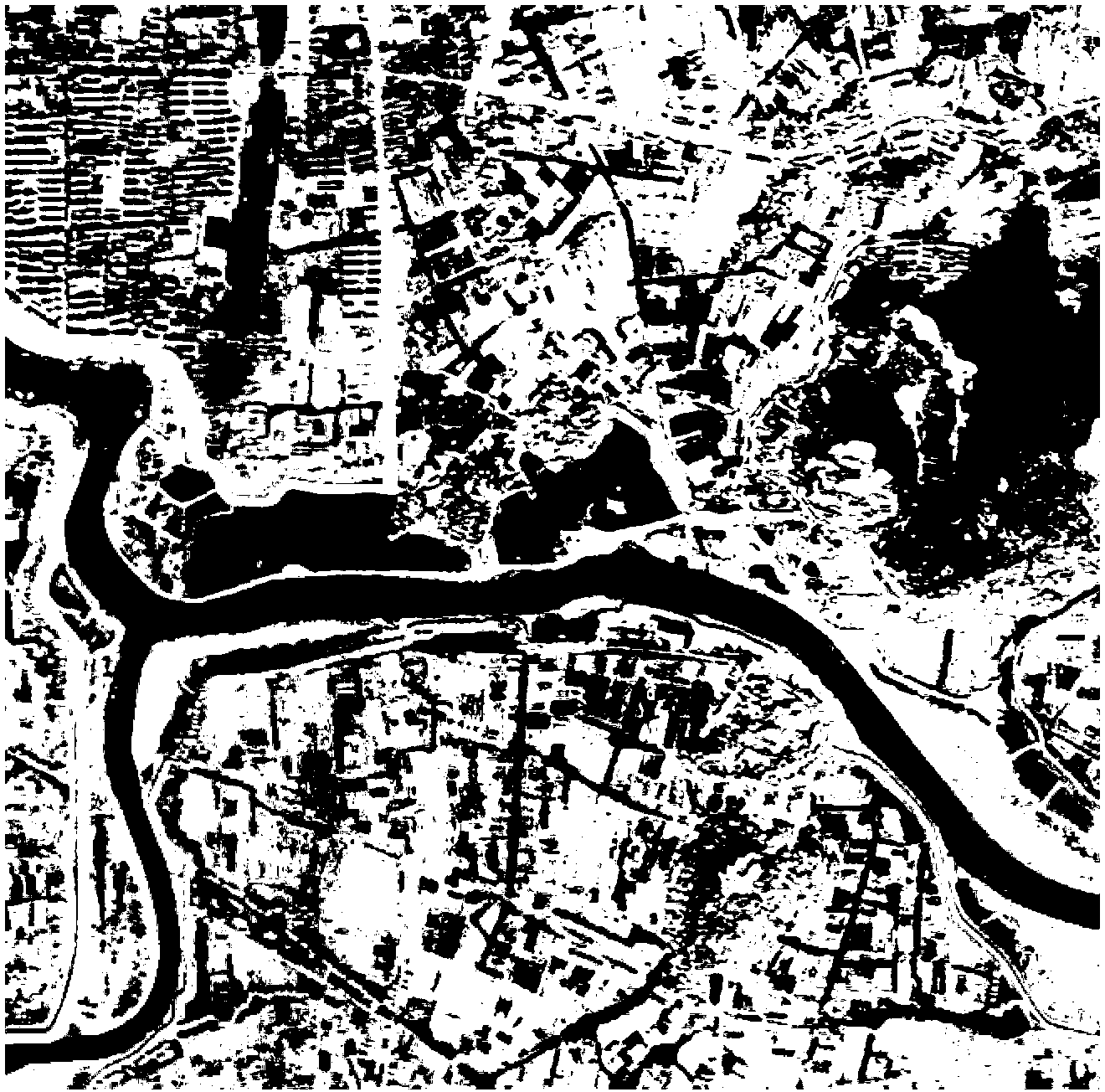

City impervious surface extraction method based on fusion of SAR image and optical remote sensing image

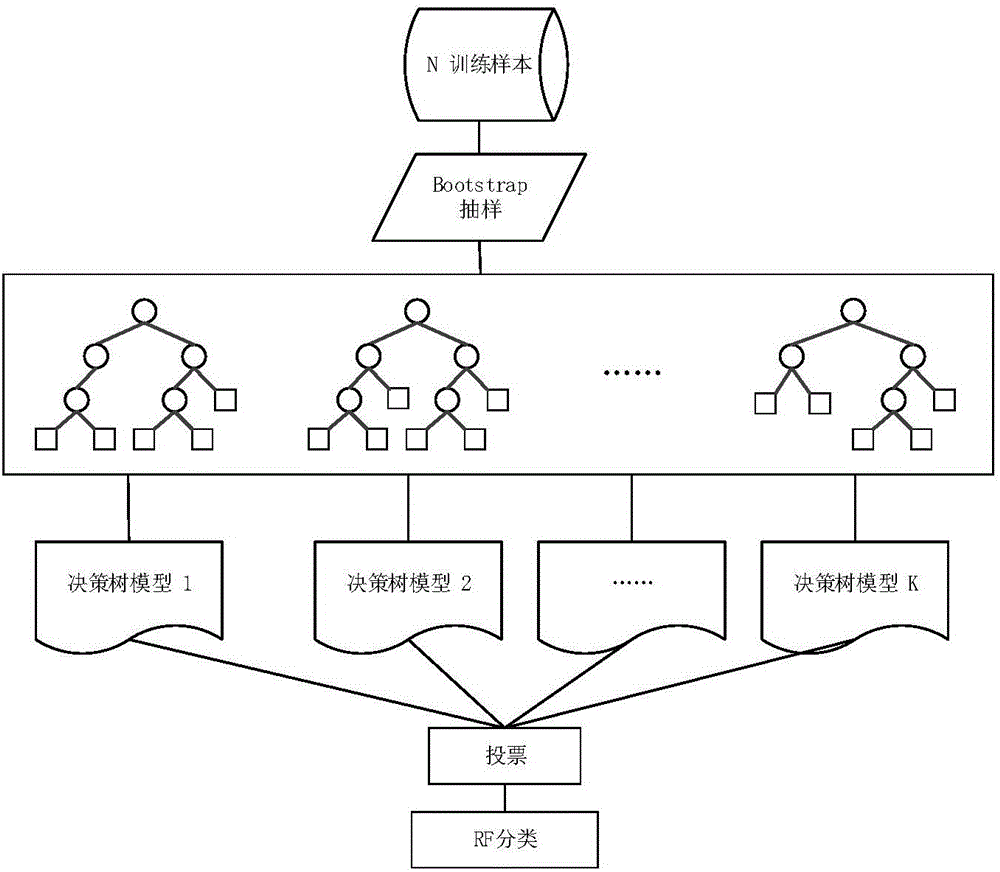

Status: Inactive; Patent number: CN105930772A; Tags: High precision, Character and pattern recognition, Remote sensing image fusion, Data source

Provided is a city impervious surface extraction method based on fusion of an SAR image and an optical remote sensing image. A general sample set formed by samples of the research area is selected in advance, and a classifier training set, a classifier test set and a precision verification set for the impervious surface extraction results are generated from it by random sampling. The optical remote sensing image is registered with the SAR image of the research area, and features are extracted from both images. A random forest (RF) classifier is trained and used to extract the city impervious surface preliminarily, giving RF preliminary extraction results for the optical remote sensing image data, for the SAR image data, and for the impervious surface. Decision-level fusion is then carried out with the D-S evidence theory combination rule to obtain the final impervious surface extraction result of the research area, and the precision of each extraction result is verified with the precision verification set. The method makes full use of the advantages of both the optical and the SAR data sources, and by fusing them on the basis of RF and D-S evidence theory it obtains a higher-precision impervious surface for the city.
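The decision-level fusion step relies on Dempster's rule of combination. The sketch below applies it to two mass functions for a single pixel; the class labels and mass values are made-up illustrations, and how the patent derives masses from the random forest outputs is not stated in the abstract.

```python
def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    m1, m2 : dict mapping frozenset (a hypothesis set) to its mass.
    """
    combined = {}
    conflict = 0.0
    for a, wa in m1.items():
        for b, wb in m2.items():
            inter = a & b
            if inter:
                combined[inter] = combined.get(inter, 0.0) + wa * wb
            else:
                conflict += wa * wb          # mass assigned to the empty set
    if 1.0 - conflict < 1e-12:
        raise ValueError("sources are in total conflict")
    # Renormalise so the remaining masses sum to one.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}

# Illustrative masses for one pixel (values are invented for the example).
IMP = frozenset({"impervious"})
PERV = frozenset({"pervious"})
BOTH = IMP | PERV                            # ignorance: either class
m_optical = {IMP: 0.6, PERV: 0.1, BOTH: 0.3}
m_sar = {IMP: 0.5, PERV: 0.2, BOTH: 0.3}
fused = dempster_combine(m_optical, m_sar)
label = max((IMP, PERV), key=lambda k: fused.get(k, 0.0))
```

With these numbers the conflict is 0.17, and the combined belief in the impervious class rises to 0.63 / 0.83, higher than either source gave alone.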

Owner:WUHAN UNIV

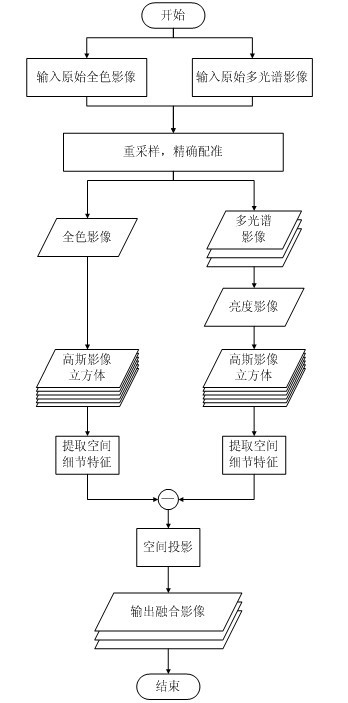

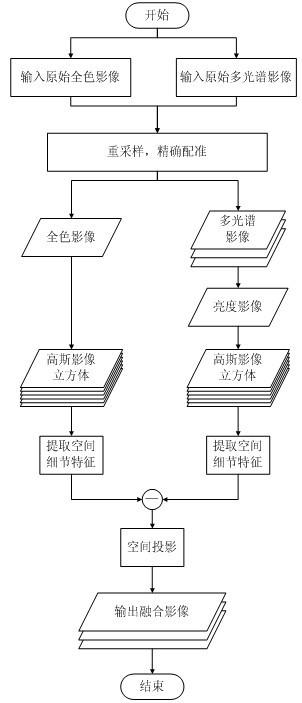

Multi-scale spatial projecting and remote sensing image fusing method

Status: Inactive; Patent number: CN101916436A; Tags: Describe spatial properties, Preserve spectral features, Image enhancement, Remote sensing image fusion, Image resolution

The invention relates to a multi-scale spatial projecting and remote sensing image fusing method. The method comprises the following steps: calculating the brightness image of the original low-spatial-resolution multispectral image; generating a Gaussian image cube for the brightness image and for the original high-spatial-resolution panchromatic image through an improved Gaussian scale-space theory; extracting the spatial detail features of the brightness image and the panchromatic image from the differences between layers of the Gaussian image cubes; and projecting the spatial detail features onto the original multispectral image according to a weighted fusion scheme to obtain a fused multispectral image with high spatial resolution and high spectral resolution. The method preserves the spectral information of the original multispectral image well while improving the fused image's ability to render spatial detail; its physical meaning is clear, its structure is simple to implement, and its fusion effect is good.
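The layer-difference idea can be sketched with a plain Gaussian scale space. The "improved" scale-space theory and the weighting scheme of the patent are not described in the abstract, so the sketch assumes standard Gaussian blurs, a band-mean brightness image, and user-supplied weights; all function names are hypothetical.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding (pure NumPy)."""
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def detail_layers(img, sigmas):
    """Differences between consecutive layers of the Gaussian cube."""
    cube = [img] + [gaussian_blur(img, s) for s in sigmas]
    details = [cube[i] - cube[i + 1] for i in range(len(sigmas))]
    return details, cube[-1]

def project_details(ms, pan, sigmas, weights):
    """Add weighted PAN-minus-brightness detail to every band of ms."""
    brightness = ms.mean(axis=0)                 # assumed brightness image
    d_pan, _ = detail_layers(pan, sigmas)
    d_bri, _ = detail_layers(brightness, sigmas)
    inject = sum(w * (p - b) for w, p, b in zip(weights, d_pan, d_bri))
    return ms + inject[None, :, :]
```

Because the detail layers telescope, the coarsest layer plus all detail layers reproduces the input image, which is what makes the decomposition lossless.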

Owner:WUHAN UNIV

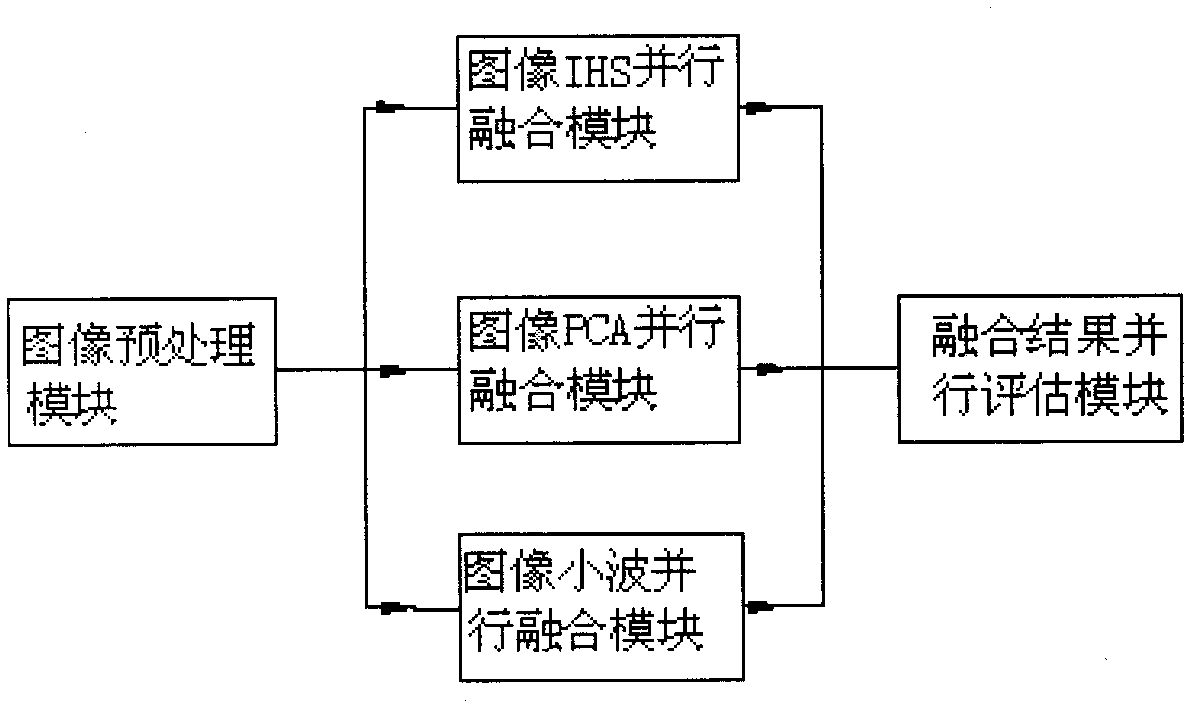

Fast fusion system and fast fusion method for images

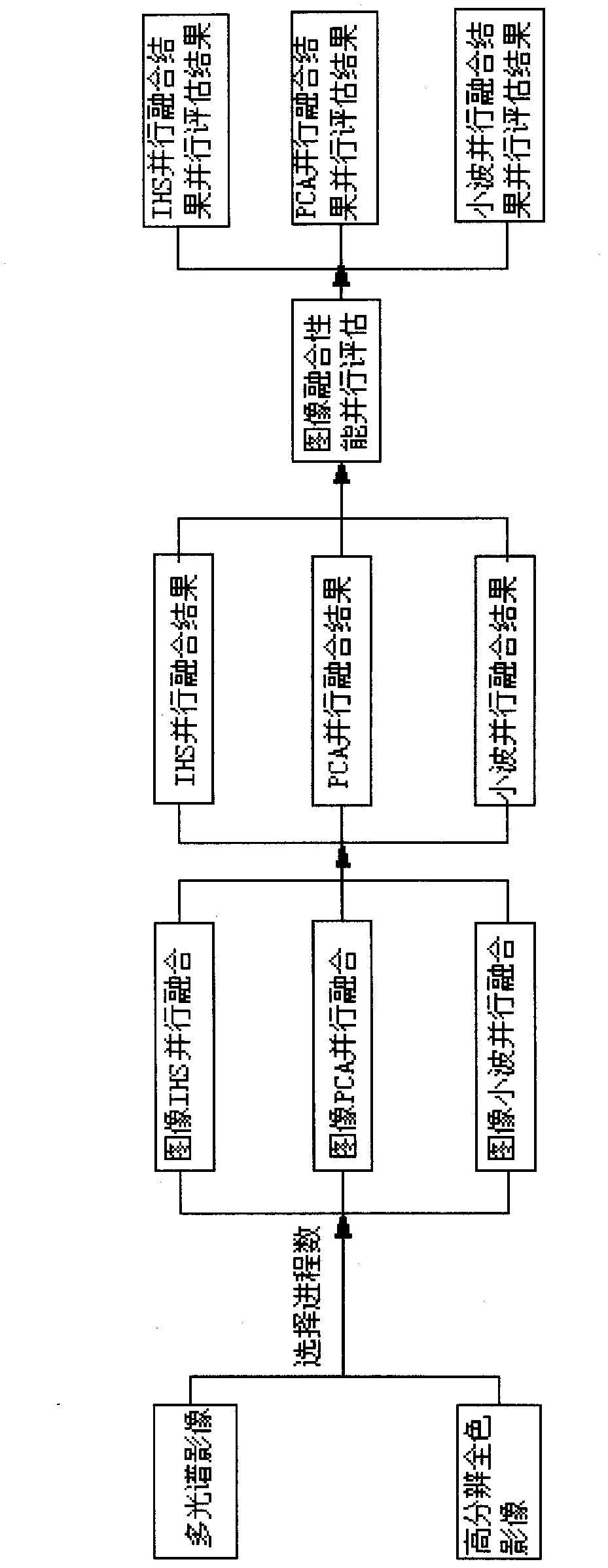

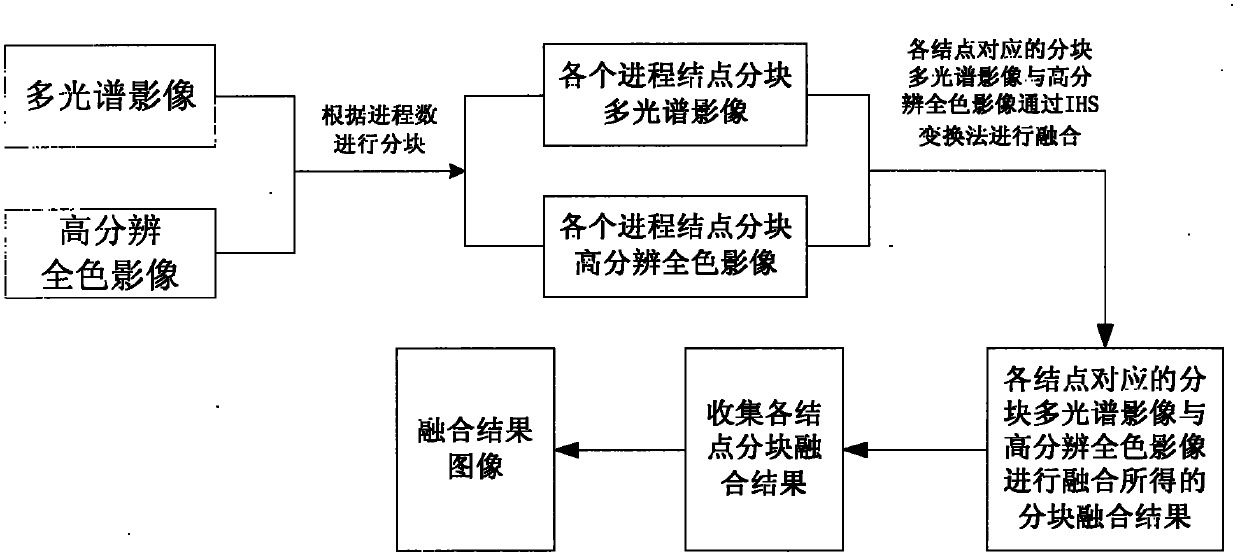

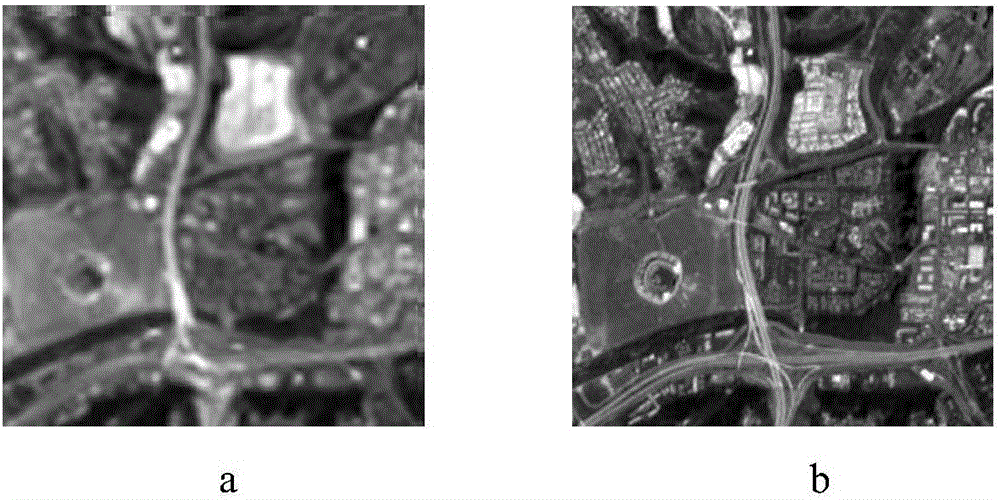

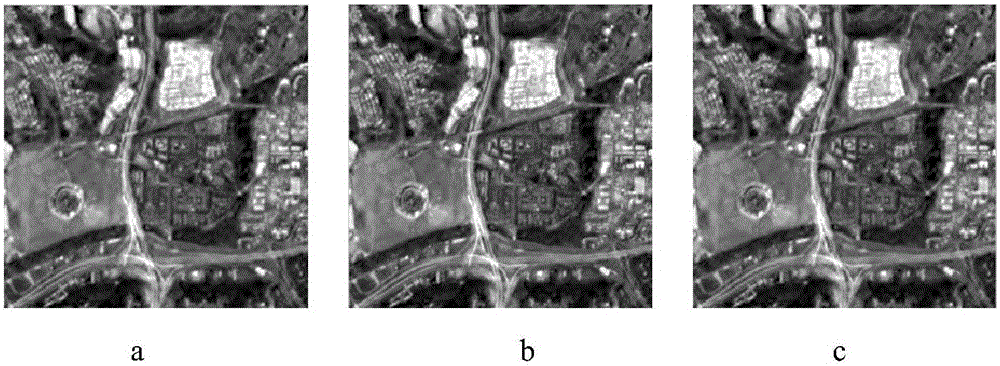

Status: Inactive; Patent number: CN102521815A; Tags: Solve the problem of slow fusion, High resolution, Image enhancement, Remote sensing image fusion, Principal component analysis

The invention provides a fast fusion system and an implementing method for remote sensing images. The fusion system comprises an image preprocessing module, an image IHS (Intensity, Hue, Saturation) parallel fusion module, an image PCA (Principal Component Analysis) parallel fusion module, an image wavelet parallel fusion module and an image fusion effect parallel evaluation module. Both the image preprocessing module and the image fusion effect parallel evaluation module are connected with the image IHS parallel fusion module, the image PCA parallel fusion module and the image wavelet parallel fusion module. According to the fusion method, parallel fusion and evaluation are adopted. The invention provides the simple, convenient and efficient fast fusion implementing method for the remote sensing images, provides the efficient fast fusion method and implementing system for the remote sensing images for the remote sensing application in the fields of disaster prevention and relief, military and the like, and solves the problem of low fusion speed of the remote sensing images with large data volumes.
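One way to picture the IHS parallel fusion module is to split the scene into strips and fuse them concurrently. The sketch below assumes the additive "fast IHS" form, in which intensity is the band mean and is replaced by the panchromatic image, and uses a thread pool; the patent's actual parallelization scheme is not given in the abstract.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np

def ihs_fuse(ms_rgb, pan):
    """Additive fast-IHS fusion: replace intensity (R+G+B)/3 by PAN.

    ms_rgb : (3, H, W) multispectral image resampled to the PAN grid
    pan    : (H, W) panchromatic image
    """
    intensity = ms_rgb.mean(axis=0)
    return ms_rgb + (pan - intensity)[None, :, :]

def parallel_ihs_fuse(ms_rgb, pan, n_strips=4):
    """Fuse horizontal strips independently and stitch them back together."""
    bounds = np.linspace(0, pan.shape[0], n_strips + 1, dtype=int)
    jobs = [(ms_rgb[:, a:b], pan[a:b]) for a, b in zip(bounds[:-1], bounds[1:])]
    with ThreadPoolExecutor() as pool:
        strips = list(pool.map(lambda job: ihs_fuse(*job), jobs))
    return np.concatenate(strips, axis=1)
```

The operation is per-pixel, so strips need no overlap and the stitched result equals the serial one. A wavelet or PCA module would need more care at strip boundaries or with global statistics.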

Owner:薛笑荣 +2

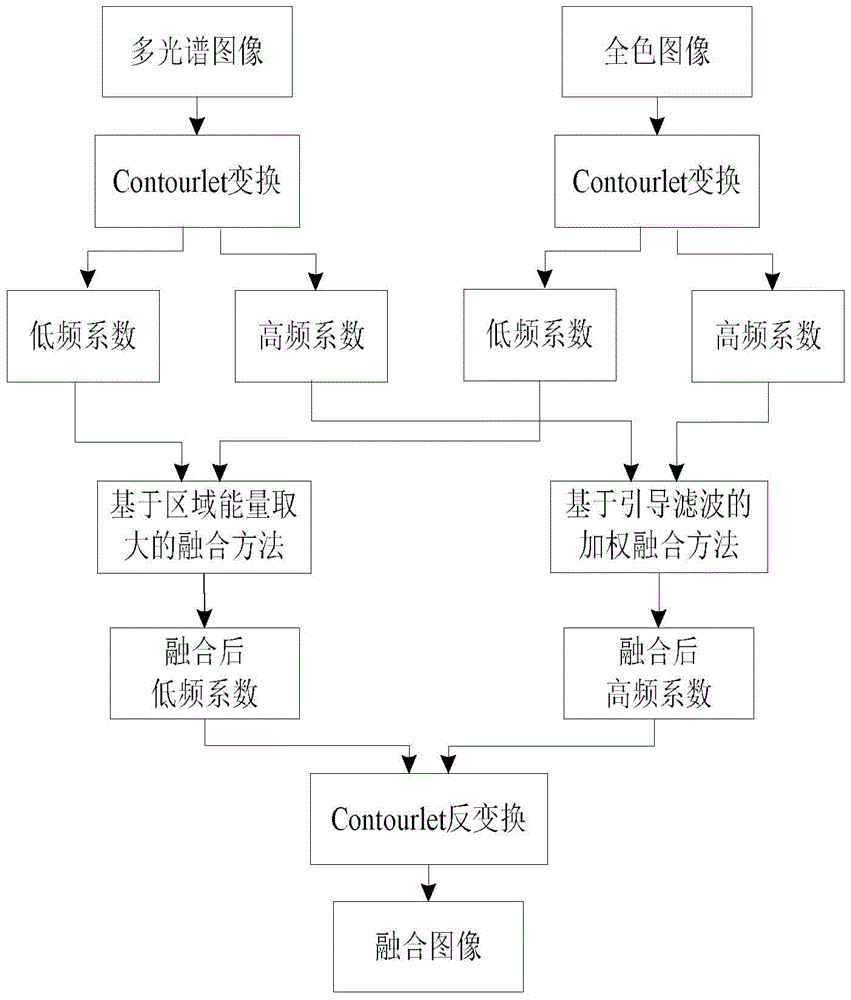

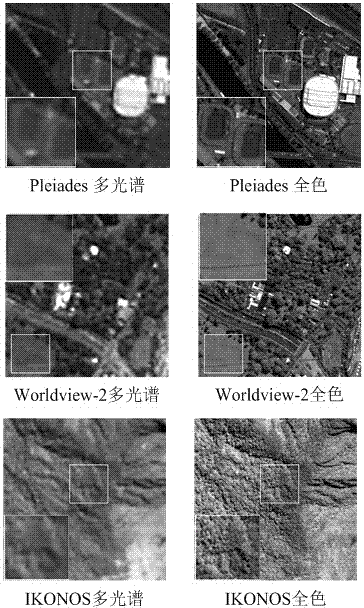

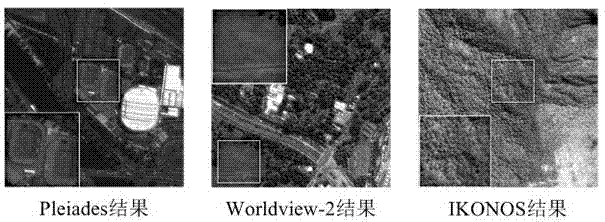

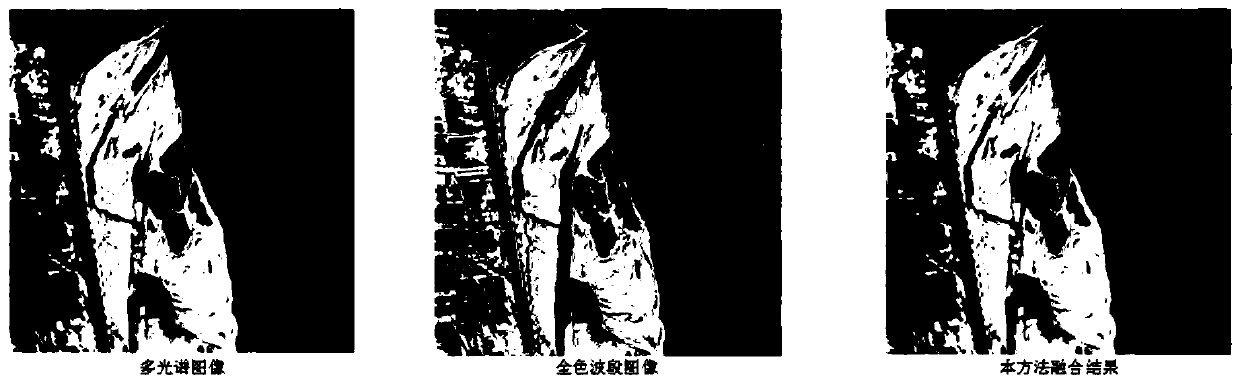

Remote sensing image fusion method based on contourlet transform and guided filter

Status: Active; Patent number: CN105761214A; Tags: Solve image contrast reduction, Solve the characteristics, Image enhancement, Remote sensing image fusion, Contourlet

The invention discloses a remote sensing image fusion method based on contourlet transform and guided filter, mainly to solve problems of image contrast reduction and unclear image edge characteristic expression caused by the existing image fusion method. The method particularly comprises steps: the same target is photographed to obtain a to-be-fused multispectral image and a to-be-fused panchromatic image for contourlet transform, and corresponding high-frequency coefficients and low-frequency coefficients are obtained; a weighted fusion method based on guided filter is applied to the high-frequency coefficients of the two source images for fusion, and high-frequency coefficients of the fused image are obtained; a region energy maximum method is applied to the low-frequency coefficients of the two source images for fusion, and low-frequency coefficients of the fused image are obtained; contourlet inverse transform is applied to the high-frequency coefficients and the low-frequency coefficients after fusion, and a fused image of the target is obtained. The method of the invention combines the contourlet transform and the guided filter, the fusion effects are obvious, the image evaluation parameters are high, and the method can be applied to aspects of image analysis and processing, surveying and mapping, geology and the like.
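The region energy maximum rule used for the low-frequency coefficients can be sketched independently of the transform. The window size is an assumption, and the inputs are taken to be two same-shaped coefficient arrays produced by whatever contourlet implementation is in use; neither the transform nor the guided-filter weighting of the high-frequency coefficients is shown.

```python
import numpy as np

def region_energy(coef, radius=1):
    """Sum of squared coefficients in a (2*radius+1)^2 window around each position."""
    h, w = coef.shape
    sq = np.pad(coef.astype(float) ** 2, radius, mode="edge")
    size = 2 * radius + 1
    return sum(sq[i:i + h, j:j + w] for i in range(size) for j in range(size))

def fuse_low_frequency(coef_a, coef_b, radius=1):
    """Keep, at each position, the coefficient whose neighbourhood has more energy."""
    take_a = region_energy(coef_a, radius) >= region_energy(coef_b, radius)
    return np.where(take_a, coef_a, coef_b)
```

Using neighbourhood energy instead of a single coefficient's magnitude makes the selection less sensitive to isolated noisy values.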

Owner:XIDIAN UNIV

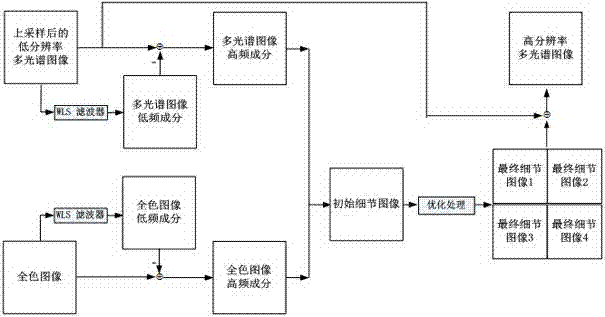

Adaptive remote sensing image panchromatic sharpening method

Status: Inactive; Patent number: CN104851077A; Tags: Avoid partial artifacts, Geometric image transformation, Remote sensing image fusion, Image resolution

The invention discloses an adaptive remote sensing image panchromatic sharpening method, and belongs to the technical field of remote sensing image fusion. The spectral distortion of fusion results is reduced, and the sharpening effect of fusion results is improved. The method comprises the following steps: interpolating and enlarging a low-resolution multispectral image so that it has the same resolution as the corresponding panchromatic image; filtering the panchromatic image and the multispectral image of the same resolution to obtain their low-frequency components; subtracting the low-frequency components from the panchromatic image and the multispectral image to obtain the corresponding high-frequency components; estimating the initial detail images missing from the multispectral image on the basis of the high-frequency components of the two images; building an optimization function for the initial detail images and optimizing them with a steepest descent method to obtain final detail images suited to the different channels of the multispectral image; and injecting the final detail images into the corresponding channels of the multispectral image to obtain a high-resolution multispectral image. The adaptive remote sensing image panchromatic sharpening method of the invention is used for remote sensing image panchromatic sharpening.

Owner:SICHUAN UNIV

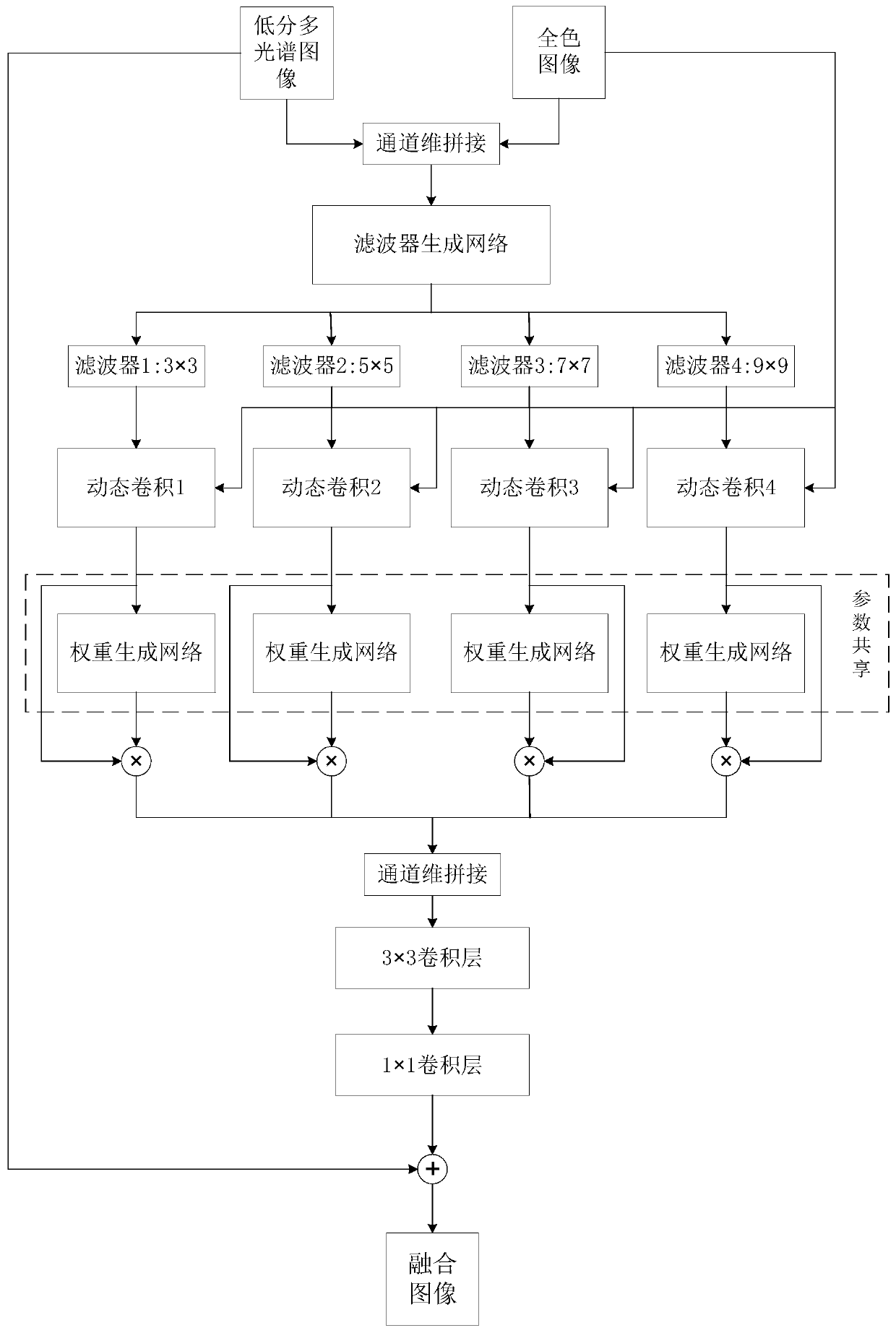

Remote sensing image fusion method and system based on multi-scale dynamic convolutional neural network

Status: Active; Patent number: CN111080567A; Tags: Improve adaptability, Speed up circulation, Image enhancement, Image analysis, Remote sensing image fusion, Adaptive filter

The invention discloses a remote sensing image fusion method and system based on a multi-scale dynamic convolutional neural network. First, a multi-scale filter generation network dynamically generates multi-scale filters from a high-resolution panchromatic image and a low-resolution multispectral image, and the filters are applied to the panchromatic image by multi-scale dynamic convolution. The detail features obtained by dynamic convolution are then weighted by a weight generation network, the weighted multi-scale detail features pass through two convolution layers to give a final detail image, and the detail image is added to the low-resolution multispectral image to obtain the fused image. Because multi-scale, locally adaptive dynamic convolution is adopted, a locally adaptive filter can be generated at each pixel position for each input image, which enhances the adaptability of the network, improves its generalization ability, and gives a good fusion effect. The method and system can be used for target detection, target recognition and the like.
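What distinguishes dynamic convolution from ordinary convolution is that each pixel position has its own filter. The sketch below applies a given stack of per-pixel filters to an image; in the patent the filters come from a generation network, which is not modelled here, so `filters` is simply a hypothetical input array.

```python
import numpy as np

def dynamic_conv(img, filters):
    """Apply a different k x k filter at every pixel.

    img     : (H, W) image, for example the panchromatic image
    filters : (H, W, k, k) array, k odd; filters[i, j] is used at pixel (i, j)
    """
    h, w, k, _ = filters.shape
    r = k // 2
    padded = np.pad(img.astype(float), r, mode="edge")
    out = np.zeros((h, w))
    # Accumulate one filter tap at a time over shifted views of the image.
    for di in range(k):
        for dj in range(k):
            out += filters[:, :, di, dj] * padded[di:di + h, dj:dj + w]
    return out
```

Running this with several kernel sizes and summing weighted outputs would mirror the multi-scale arrangement described above, with the result added to the upsampled multispectral bands.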

Owner:CHANGSHA UNIVERSITY OF SCIENCE AND TECHNOLOGY

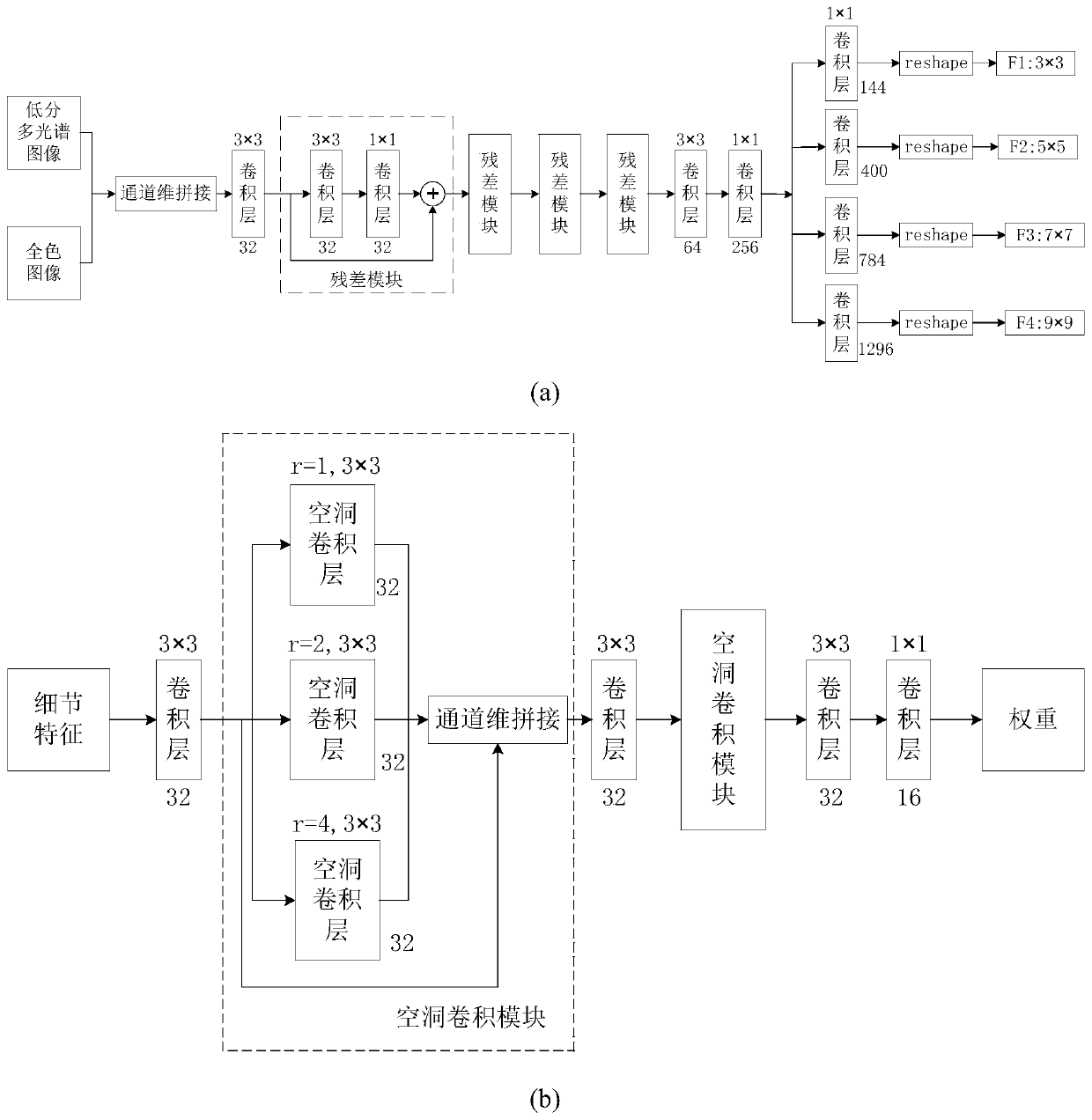

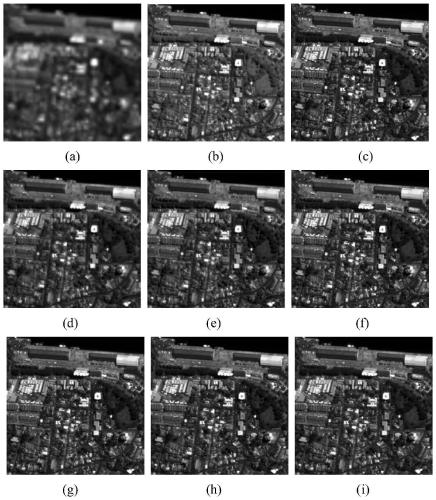

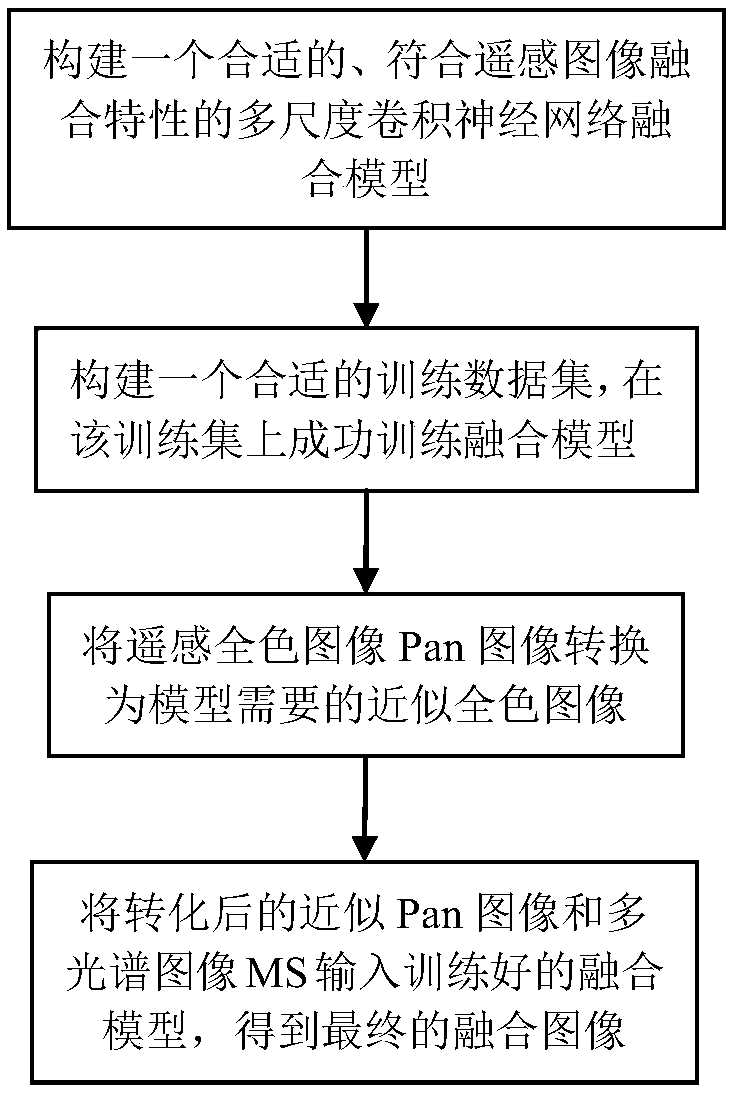

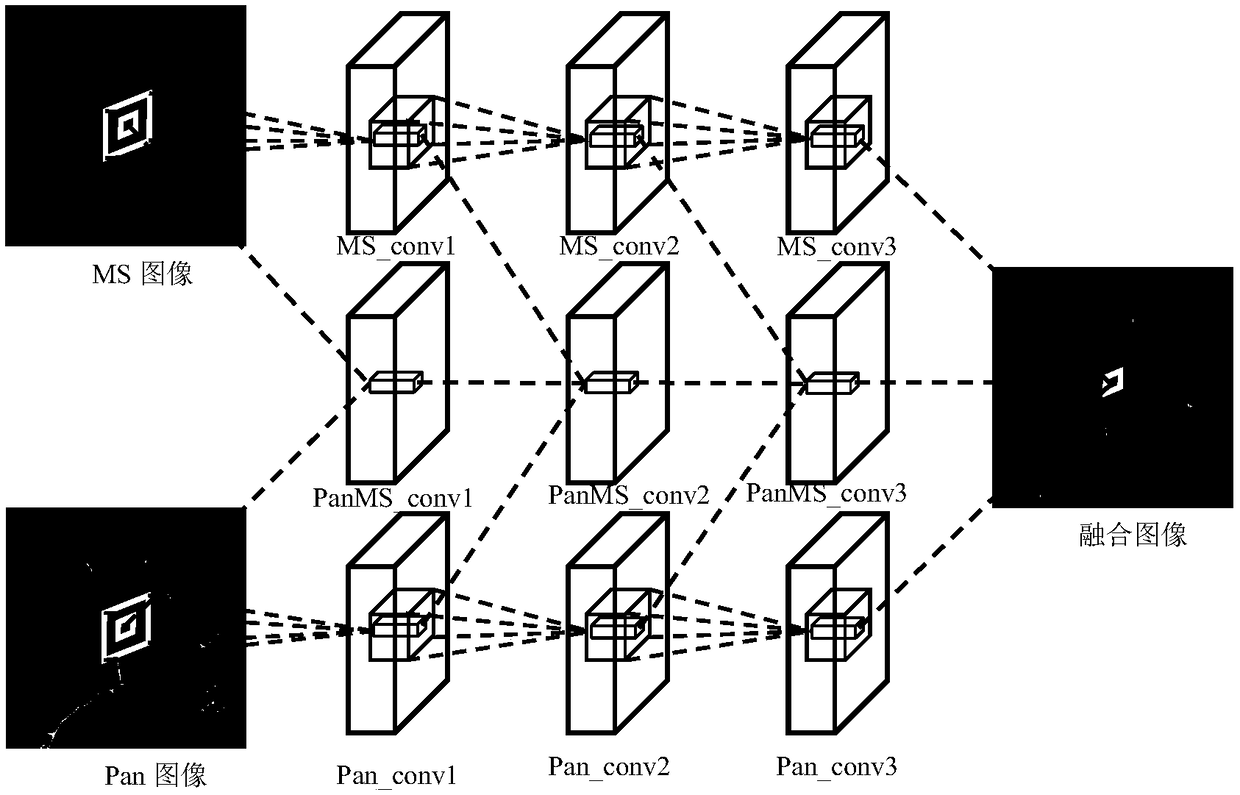

Multi-scale remote sensing image fusion method based on convolution neural network

Status: Active; Patent number: CN109272010A; Tags: Improve robustness, Easy to integrate, Character and pattern recognition, Data set, Remote sensing image fusion

The invention provides a multi-scale remote sensing image fusion method based on a convolutional neural network. The method comprises the following steps: first, a multi-scale convolutional neural network fusion model suited to the fusion characteristics of remote sensing images is constructed by exploiting the properties of convolutional neural networks, with the images to be fused as input and the fused image as output; second, a suitable training data set is constructed and the fusion model is trained on it; third, the panchromatic (Pan) image is converted into the form of to-be-fused image that the model requires; fourth, the converted approximate Pan image and the multispectral (MS) image are input into the trained fusion model to obtain the final fused image. The method learns an adaptive multi-scale fusion function from a large amount of data; because the function is obtained by statistical learning instead of being designed by hand, it is more reasonable. The experimental results show that the method can process remote sensing images from different satellites and in different bands.

Owner:JILIN UNIV

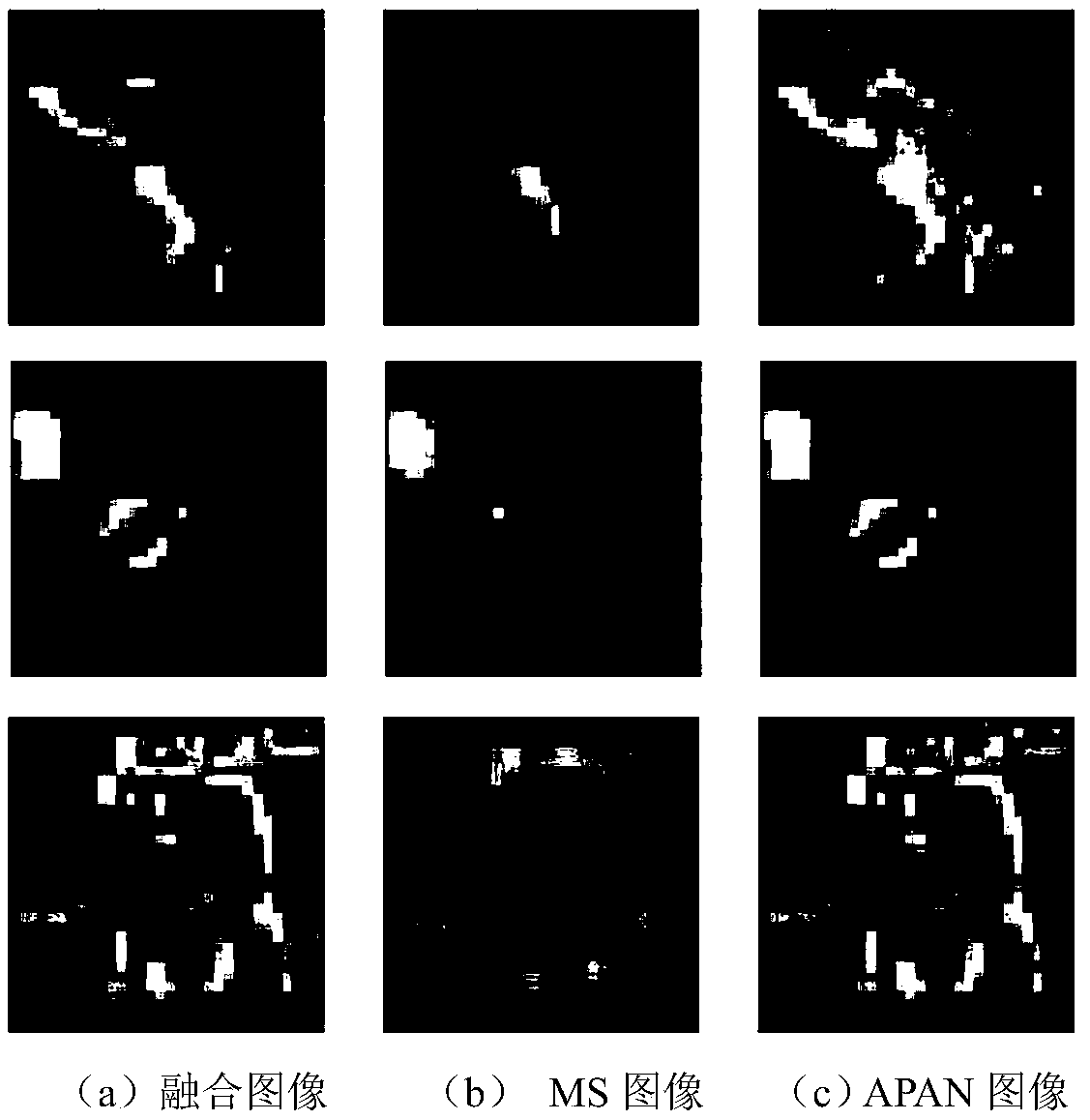

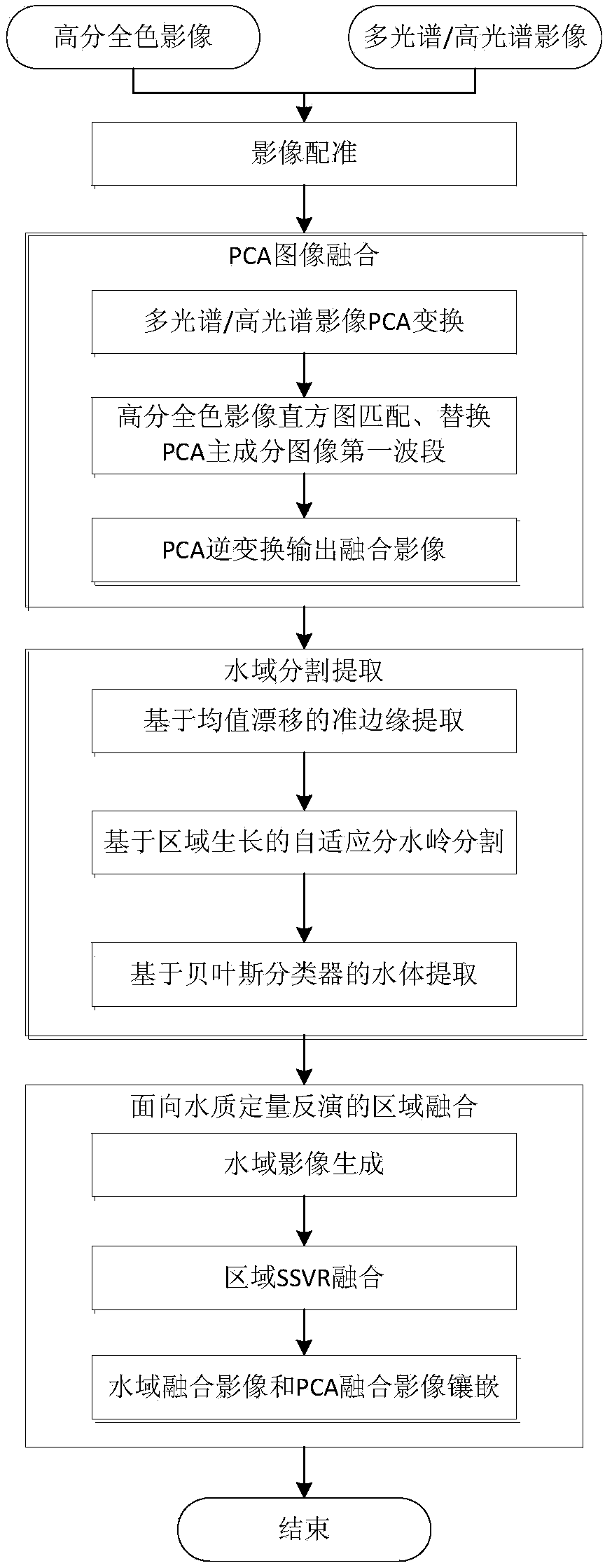

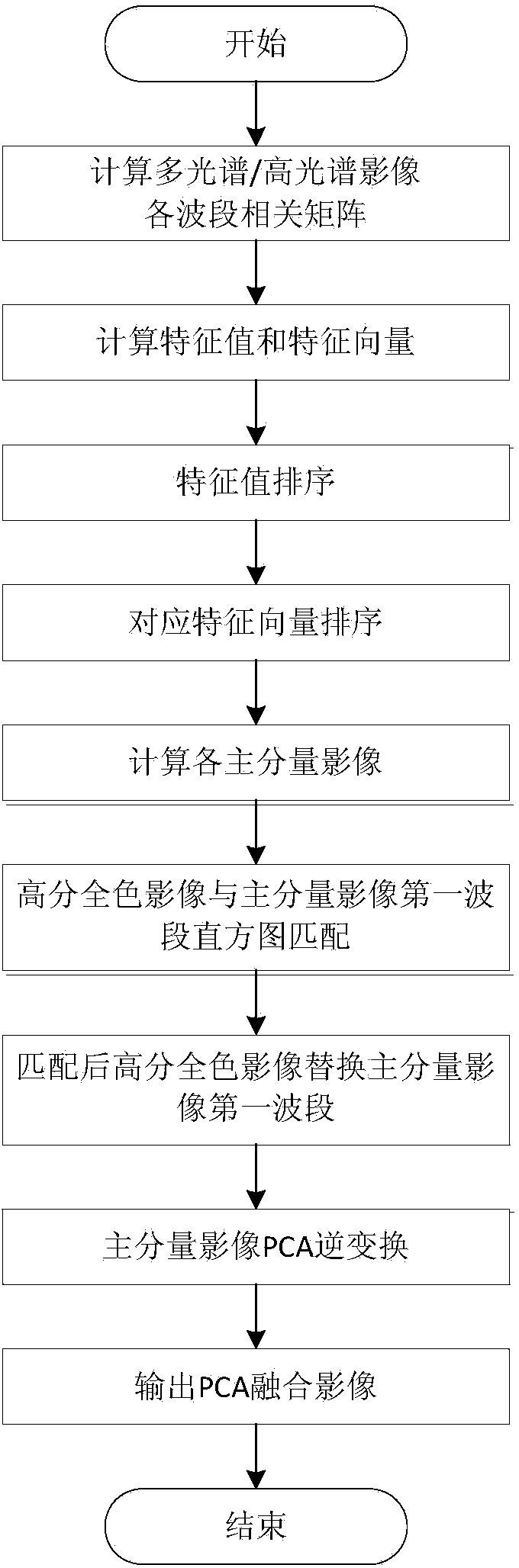

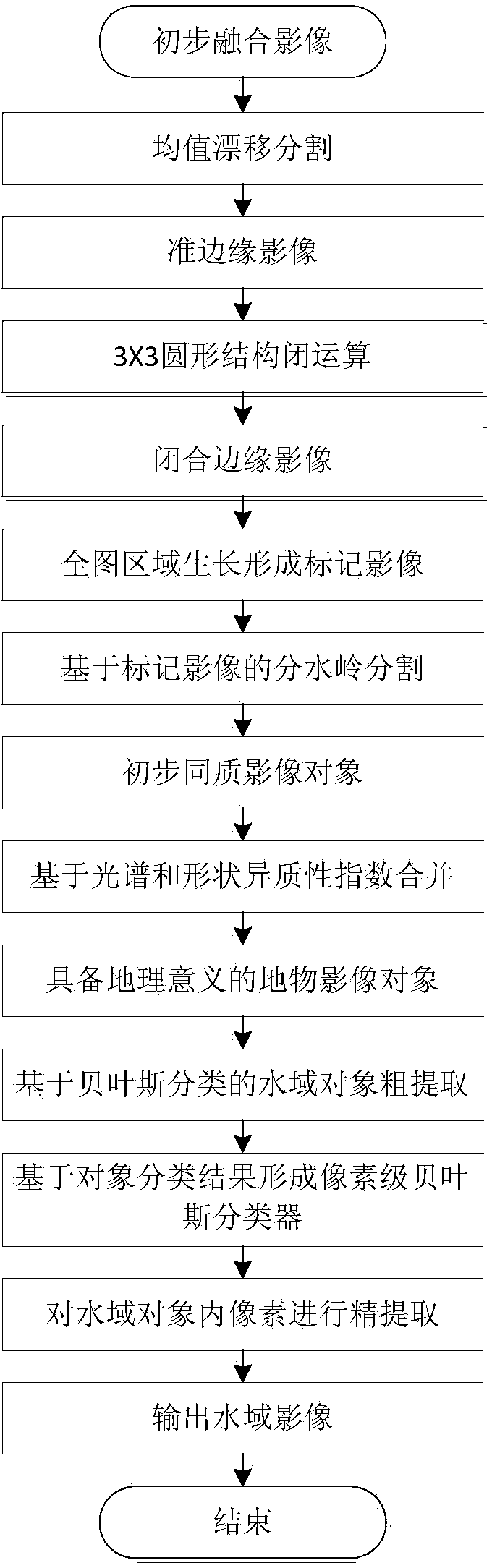

Remote sensing image fusion method oriented to water quality quantitative remote sensing application

Status: Active; Patent number: CN103679675A; Tags: Guaranteed spectral characteristics, Precise extraction of edge contours, Image enhancement, Image analysis, Remote sensing image fusion, Water quality

Disclosed is a remote sensing image fusion method oriented to water quality quantitative remote sensing application. By maintaining water area extraction accuracy and water area spectral characteristics furthest and combining PCA fusion and SSVR fusion, a two-level fusion method aiming at water quality quantitative remote sensing application is designed, a decision-level object-oriented surface feature classification and interpretation method is introduced in the process of fusion, and processing results have the characteristic that accurate water body object pixel profile and water area spectral characteristics are maintained. A lot of experiment results show that by the remote sensing image fusion method, water area interpretation accuracy reaches 90%, and water area quantitative inversion results are similar to inversion results acquired by directly utilizing hyperspectral or multispectral images.
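The PCA stage of the two-level fusion can be sketched as a standard principal-component substitution. This covers only the PCA part: the SSVR stage and the object-oriented classification are not shown, and matching the panchromatic image to the first component by mean and standard deviation is an assumption.

```python
import numpy as np

def pca_fuse(ms, pan):
    """Replace the first principal component of ms with a statistics-matched PAN.

    ms  : (bands, H, W) multispectral image resampled to the PAN grid
    pan : (H, W) panchromatic image (must not be constant)
    """
    bands, h, w = ms.shape
    X = ms.reshape(bands, -1).astype(float)
    mean = X.mean(axis=1, keepdims=True)
    Xc = X - mean
    cov = Xc @ Xc.T / Xc.shape[1]
    _, vecs = np.linalg.eigh(cov)            # eigenvalues in ascending order
    vecs = vecs[:, ::-1].copy()              # reorder to descending variance
    pcs = vecs.T @ Xc                        # principal components, (bands, H*W)
    p = pan.ravel().astype(float)
    # Resolve the sign ambiguity so PC1 correlates positively with PAN.
    if np.dot(p - p.mean(), pcs[0] - pcs[0].mean()) < 0:
        vecs[:, 0] *= -1.0
        pcs[0] *= -1.0
    # Match PAN to the mean and standard deviation of PC1, then substitute.
    pcs[0] = (p - p.mean()) / p.std() * pcs[0].std() + pcs[0].mean()
    return (vecs @ pcs + mean).reshape(bands, h, w)
```

If the panchromatic image carries exactly the information of the first component, the substitution changes nothing, which is the behaviour one wants when spectral fidelity over water matters.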

Owner:SPACE STAR TECH CO LTD

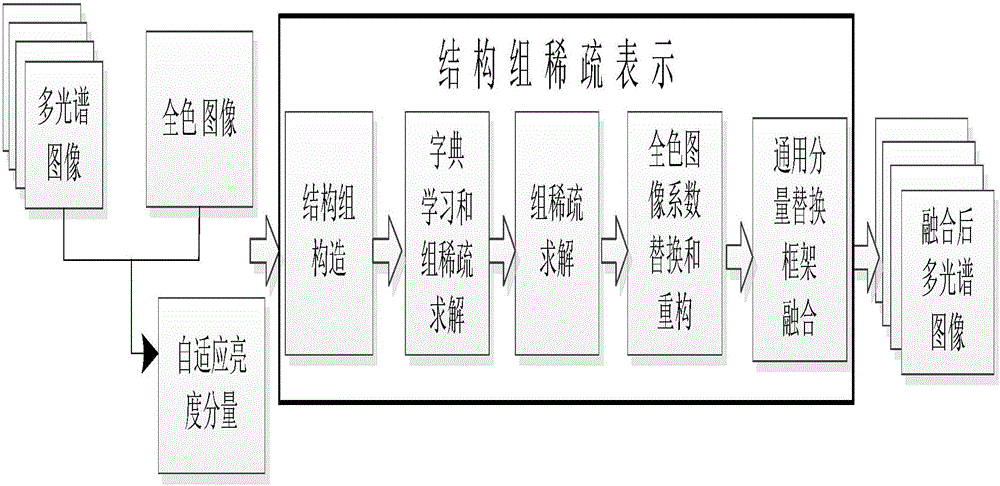

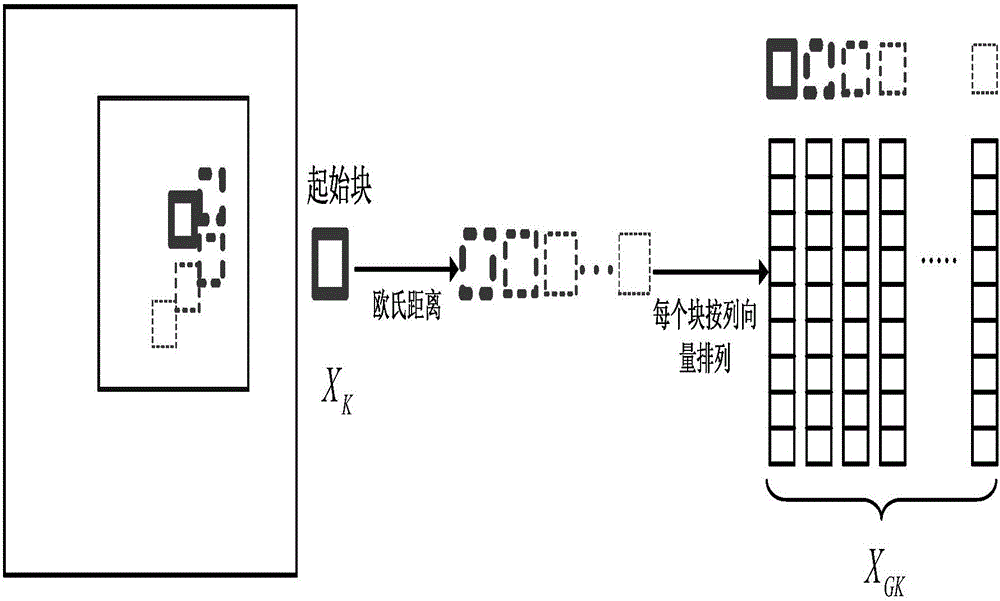

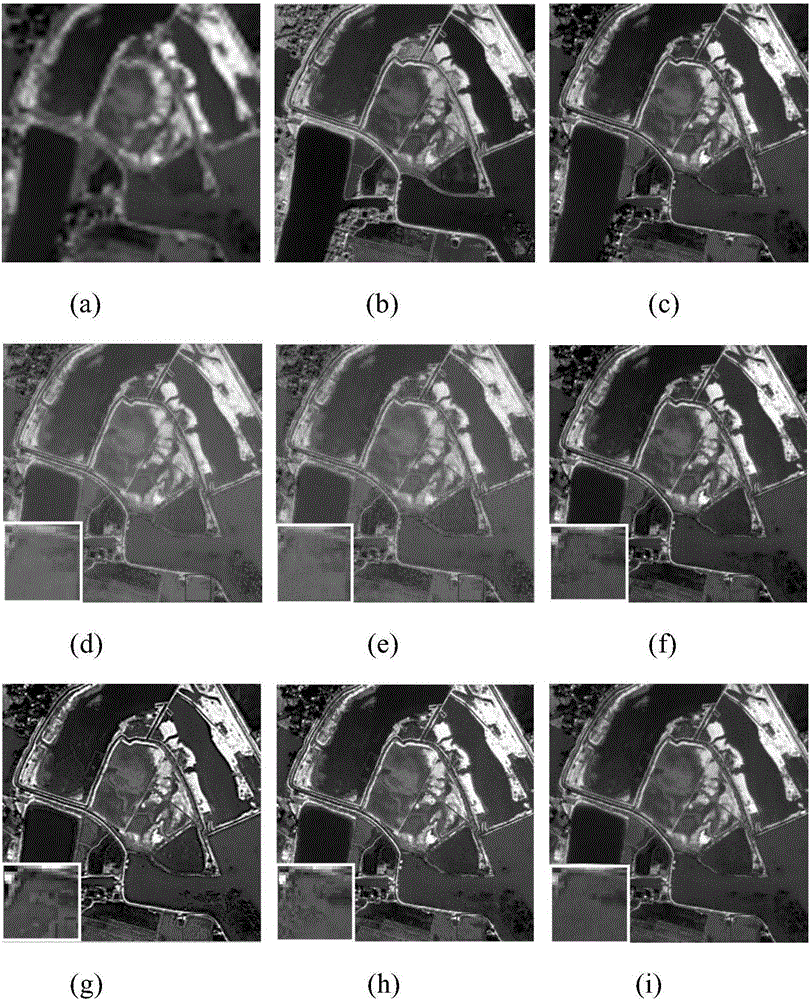

Structure sparse representation-based remote sensing image fusion method

Status: Inactive; Patent number: CN105761234A; Tags: Improve spatial resolution, High spectral information, Image enhancement, Image analysis, Pattern recognition, Remote sensing image fusion

The invention discloses a structure sparse representation-based remote sensing image fusion method. An adaptive weight coefficient calculation model is used for solving a luminance component of a multi-spectral image, similar image blocks are combined into a structure group, a structure group sparse model is used for solving structure group dictionaries and group sparse coefficients for the luminance component and a panchromatic image, an absolute value maximum rule is applied to partial replacement of the sparse coefficients of the panchromatic image, new sparse coefficients are generated, the group dictionary and the new sparse coefficients of the panchromatic image are used for reconstructing a high-spatial resolution luminance image, and finally, a universal component replacement model is used for fusion to acquire a high-resolution multi-spectral image. The method of the invention introduces the structure group sparse representation in the remote sensing image fusion method, overcomes the limitation that the typical sparse representation fusion method only considers a single image block, and compared with the typical sparse representation method, the method of the invention has excellent spectral preservation and spatial resolution improvement performance, and greatly shortens the dictionary training time during the remote sensing image fusion process.
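The absolute value maximum rule and the reconstruction that follows it reduce to a few array operations once the sparse codes are available. In this sketch the dictionary and the two coefficient arrays are hypothetical inputs; learning the structure-group dictionary and computing the group sparse codes are outside its scope.

```python
import numpy as np

def abs_max_merge(alpha_pan, alpha_lum):
    """Per coefficient, keep whichever of the two codes has larger magnitude."""
    keep_pan = np.abs(alpha_pan) >= np.abs(alpha_lum)
    return np.where(keep_pan, alpha_pan, alpha_lum)

def reconstruct_luminance(dictionary, alpha_pan, alpha_lum):
    """Rebuild high-resolution luminance patches from the merged codes.

    dictionary : (patch_len, atoms) dictionary of the panchromatic image
    alpha_*    : (atoms, n_patches) sparse coefficient matrices
    """
    return dictionary @ abs_max_merge(alpha_pan, alpha_lum)
```

The merged codes keep strong panchromatic structure while letting the luminance component override coefficients where it is more active.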

Owner:SOUTH CHINA AGRI UNIV

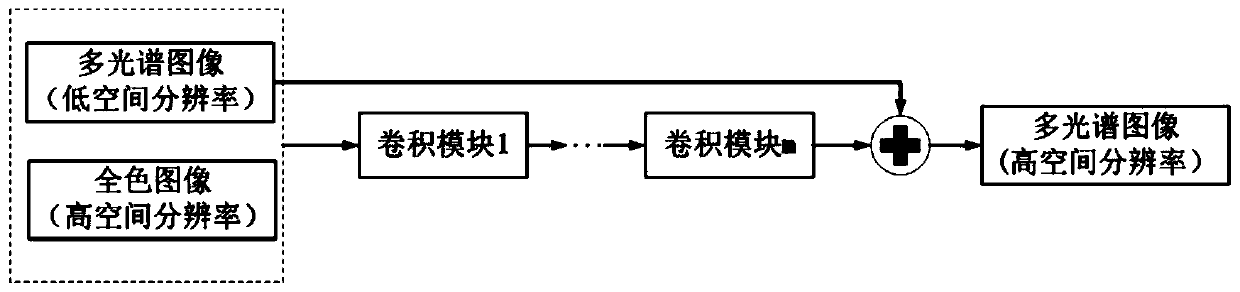

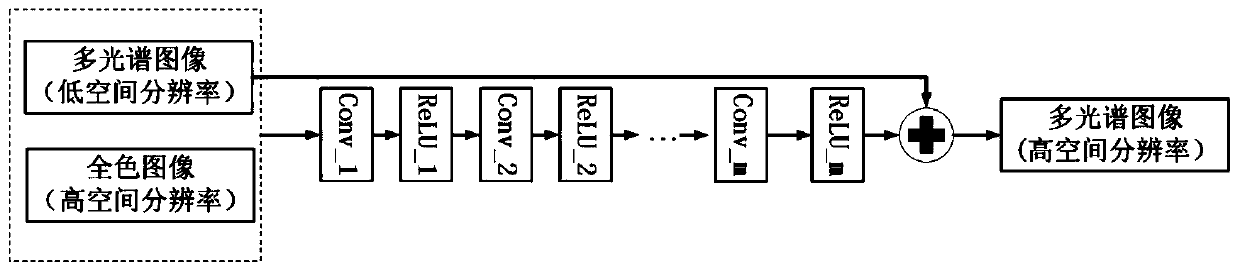

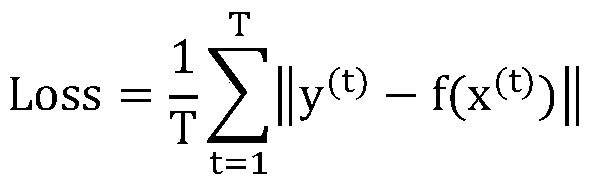

A remote sensing image fusion method and system based on a deep residual neural network

Status: Inactive; Patent number: CN109767412A; Tags: Suppress spectral distortion, Realize automatic learning, Image enhancement, Pattern recognition, Stochastic gradient descent

The invention discloses a remote sensing image fusion method and system based on a deep residual neural network. The method comprises the steps of: downsampling a panchromatic image and a multispectral image by the corresponding factors to obtain training samples; constructing a deep residual neural network with a deep convolutional structure, in which the input panchromatic and multispectral images pass through several convolution modules in sequence and the output is added to the input multispectral image to form a residual structure; training the deep residual neural network with the training samples and the stochastic gradient descent algorithm; and downsampling the multispectral image and the panchromatic image to be fused by the corresponding factors, then inputting them into the trained deep residual neural network to obtain a fused multispectral image with high spatial resolution. The invention integrates the key information of the two images and improves the spatial resolution of the multispectral image.

Owner:ZHUHAI DAHENGQIN TECH DEV CO LTD

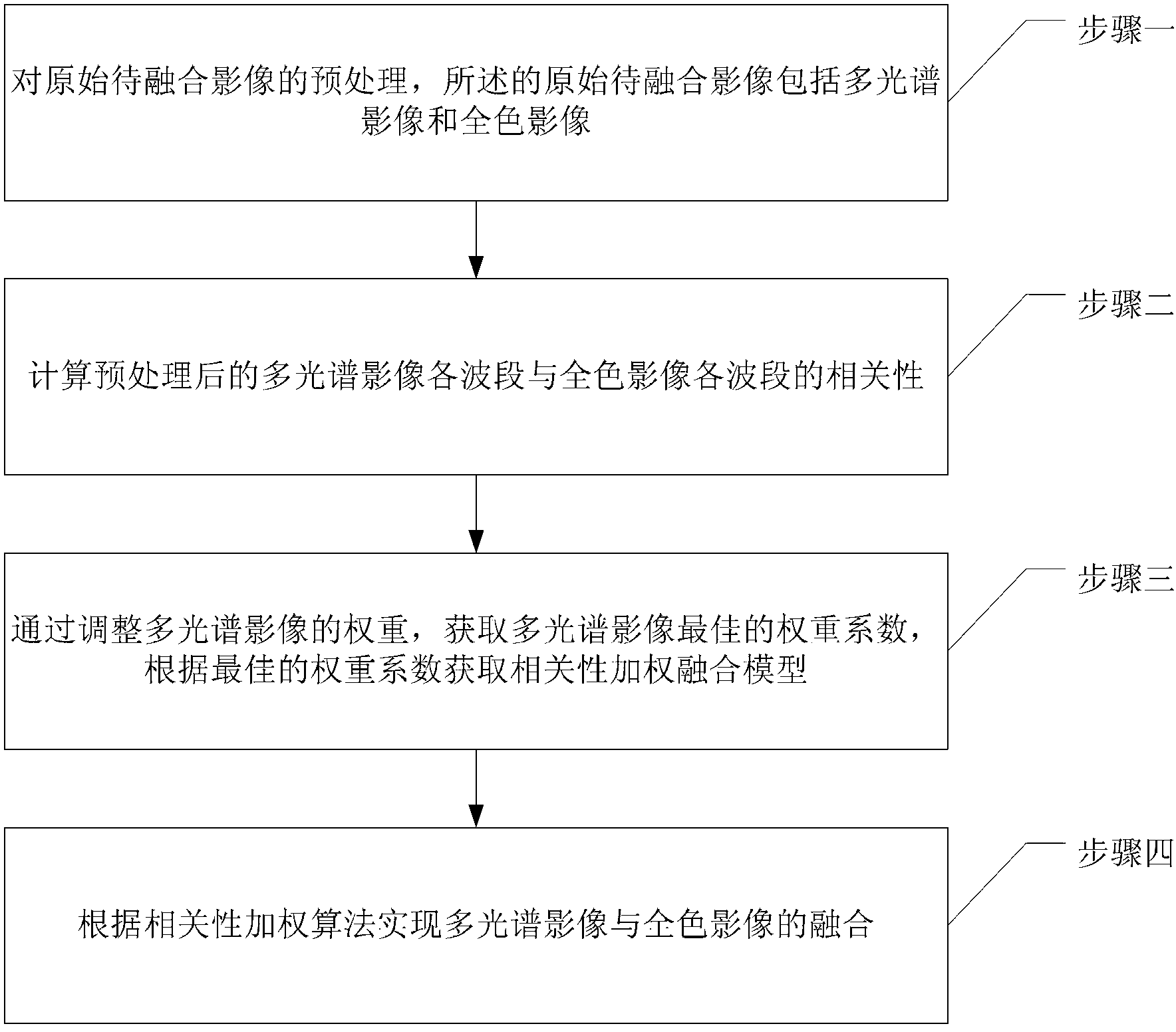

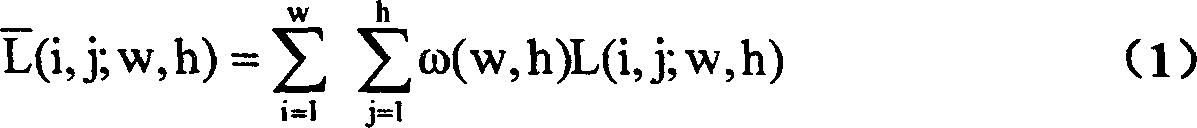

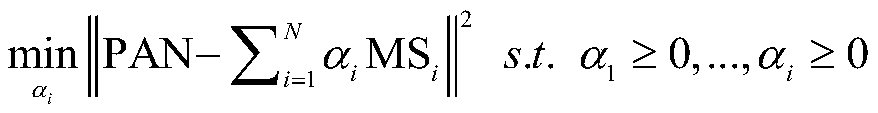

Correlation weighted remote-sensing image fusion method and fusion effect evaluation method thereof

Status: Inactive; Patent number: CN103065293A; Tags: Preserve spectral information, Reduce the difference, Image enhancement, Image analysis, Remote sensing image fusion, Imaging processing

The invention discloses a correlation weighted remote-sensing image fusion method and a method for evaluating its fusion effect, and relates to the technical field of remote-sensing image processing. The fusion method comprises a first step of preprocessing the original images to be fused, a second step of calculating the correlation between each band of the processed multispectral image and the panchromatic image, a third step of adjusting the weight of the multispectral image to obtain its best weight coefficient and building a correlation weighted fusion model from that coefficient, and a fourth step of fusing the multispectral image and the panchromatic image according to the weighting algorithm. The evaluation method comprises a first step of obtaining a fused image with the correlation weighted fusion method and a second step of evaluating the fused image with a quantitative statistical method that compares the images to be fused with the fused image on chosen evaluation indexes, namely variance, information entropy and distortion degree.
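The correlation and evaluation steps can be sketched with standard statistics. The abstract does not say how the best weight coefficient is derived, so using the absolute correlation directly as the blending weight is purely an assumption, and the function names are hypothetical.

```python
import numpy as np

def band_pan_correlation(ms, pan):
    """Pearson correlation between each multispectral band and PAN."""
    return np.array([np.corrcoef(b.ravel(), pan.ravel())[0, 1] for b in ms])

def correlation_weighted_fuse(ms, pan):
    """Blend PAN into each band in proportion to |correlation| (assumed rule)."""
    w = np.abs(band_pan_correlation(ms, pan))[:, None, None]
    return w * pan[None, :, :] + (1.0 - w) * ms

def entropy_bits(img, levels=256):
    """Shannon entropy of an integer-valued image, in bits per pixel."""
    hist = np.bincount(img.ravel().astype(np.int64), minlength=levels)
    p = hist[hist > 0] / img.size
    return float(-(p * np.log2(p)).sum())
```

Variance is available directly as `np.var(fused)`. A constant band has undefined correlation, so such bands would need special handling in practice.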

Owner:NORTHEAST INST OF GEOGRAPHY & AGRIECOLOGY C A S

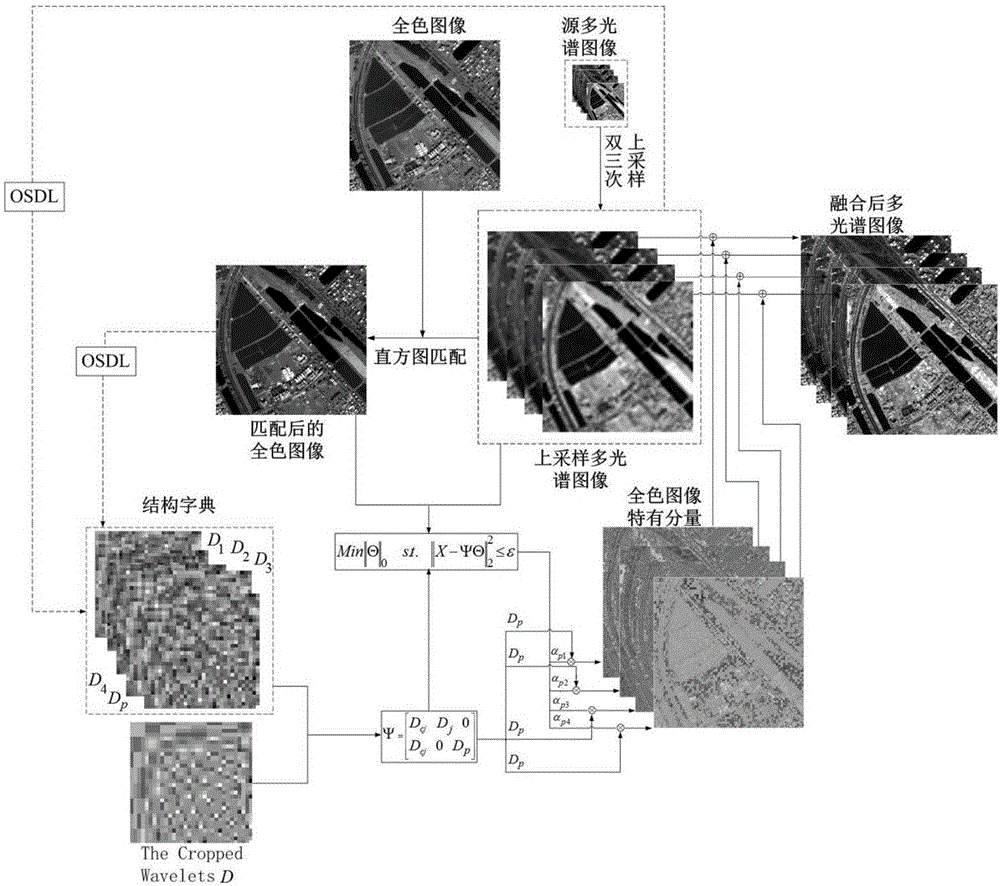

Remote sensing image fusion method based on joint sparse and structural dictionary

Status: Active; Patent number: CN106251320A; Tags: Reduce workload, High precision, Image enhancement, Image analysis, Remote sensing image fusion, Multispectral image

The invention discloses a remote sensing image fusion method based on a joint sparse and structural dictionary. The method comprises the steps of: first, obtaining a structural dictionary from the panchromatic image and from the multispectral image through an online sparse dictionary algorithm; second, obtaining the components specific to the panchromatic image, that is, those not shared with the multispectral image, by joint sparse representation; and finally, injecting those specific components into the multispectral image with an ARIS fusion framework to obtain a high-resolution multispectral image. The scheme makes effective use of the sparsity of the dictionary and the correlation between atoms, which reduces the complexity of dictionary training. The adaptability of the structural dictionary is improved, so the specific components are reconstructed more accurately and the quality of image fusion improves. The detail information and the low-frequency information of the panchromatic image are both taken into account, so the fusion is more comprehensive and more effective.

Owner:易迅通科技有限公司

Remote-sensing image fusion method based on local statistical property and colour space transformation

Status: Inactive; Patent number: CN1489111A; Tags: Accurate description, Easy to interpret, Image enhancement, Remote sensing image fusion, Image resolution

The method processes remote sensing images through the following steps. Starting from the IHS transform of the multispectral image and using the statistical characteristics of the remote sensing image, low-pass filtering is applied to the I component of the multispectral image, while high-pass filtering is applied to the panchromatic image with high spatial resolution. Through histogram matching within a local window, the gray value of each pixel of the high-resolution panchromatic image is remapped pixel by pixel according to its position, which gives the fused I component. The inverse IHS transform is then applied to obtain the fusion result. The method retains the spectral information of the multispectral image, raises spatial resolution, and describes ground features accurately, so the result suits a wide range of applications and is easy to interpret.

Owner:SHANGHAI JIAO TONG UNIV

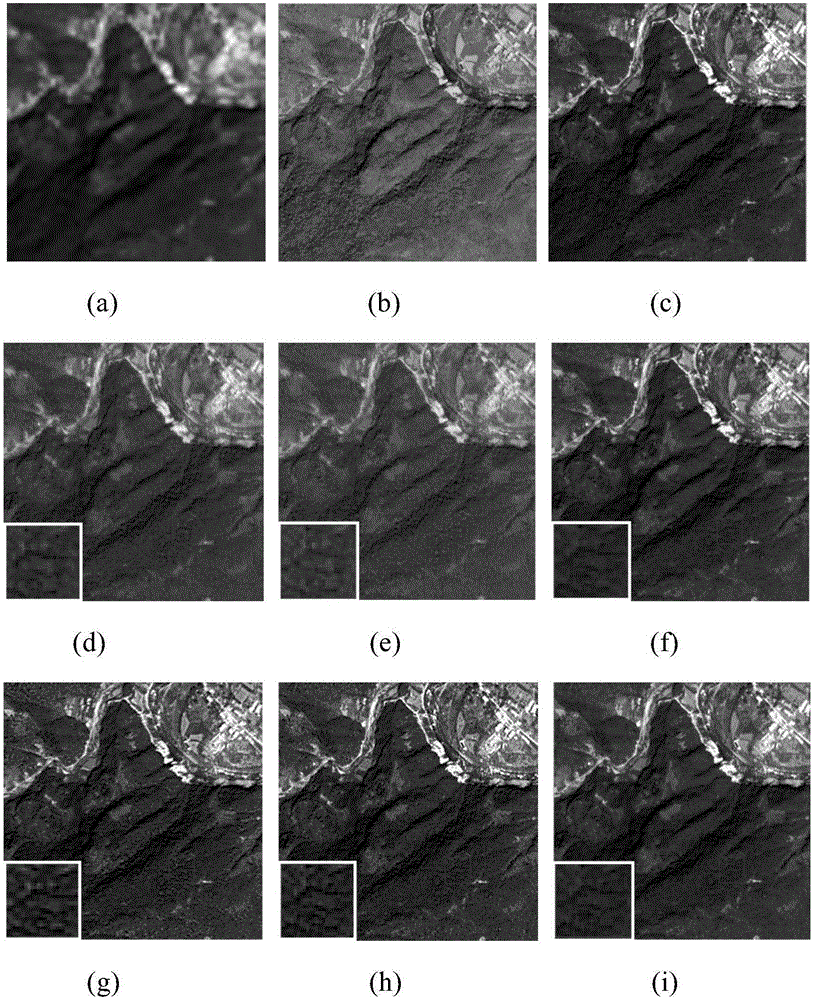

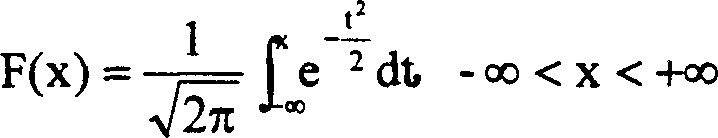

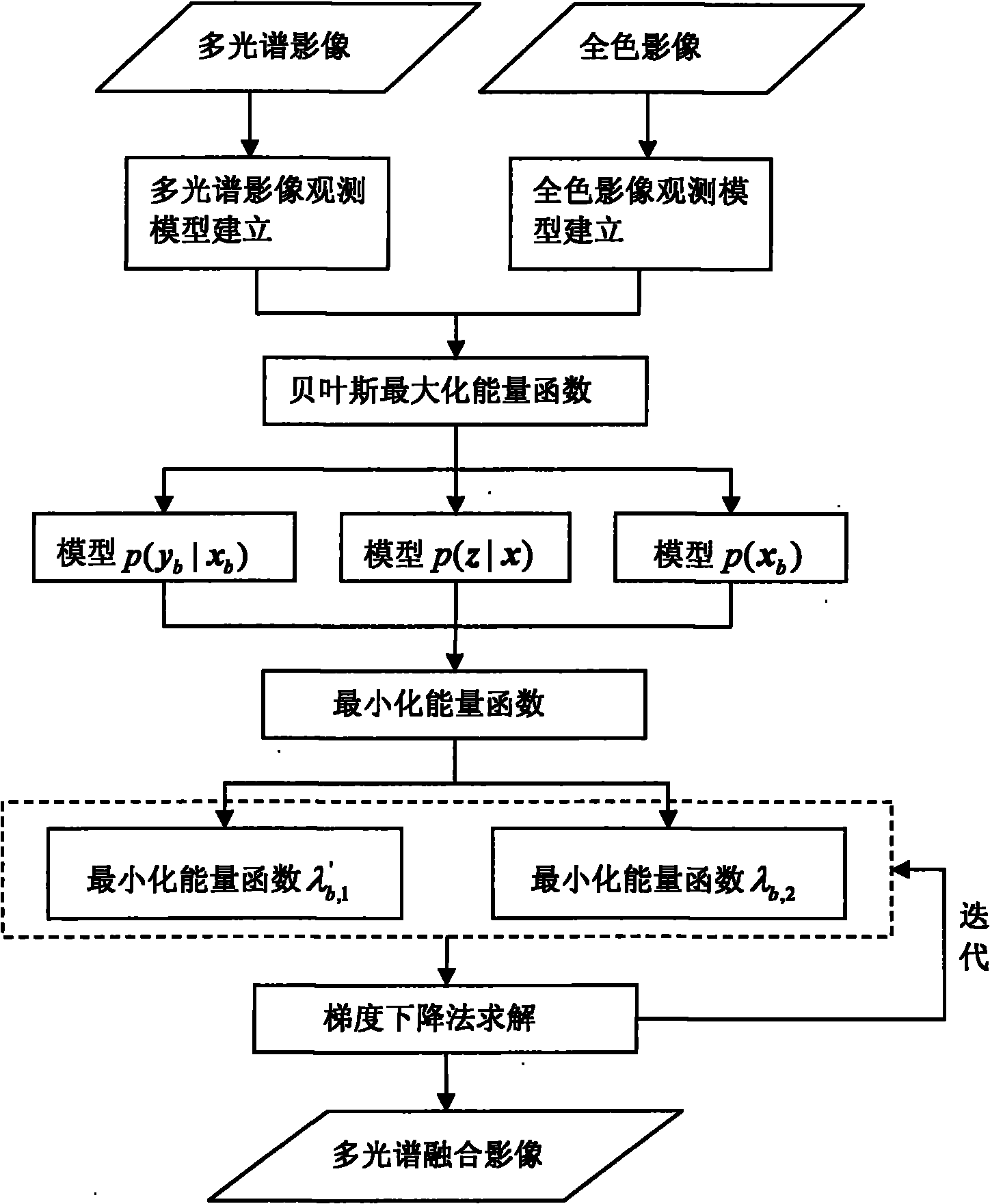

Adaptive variational remotely sensed image fusion method

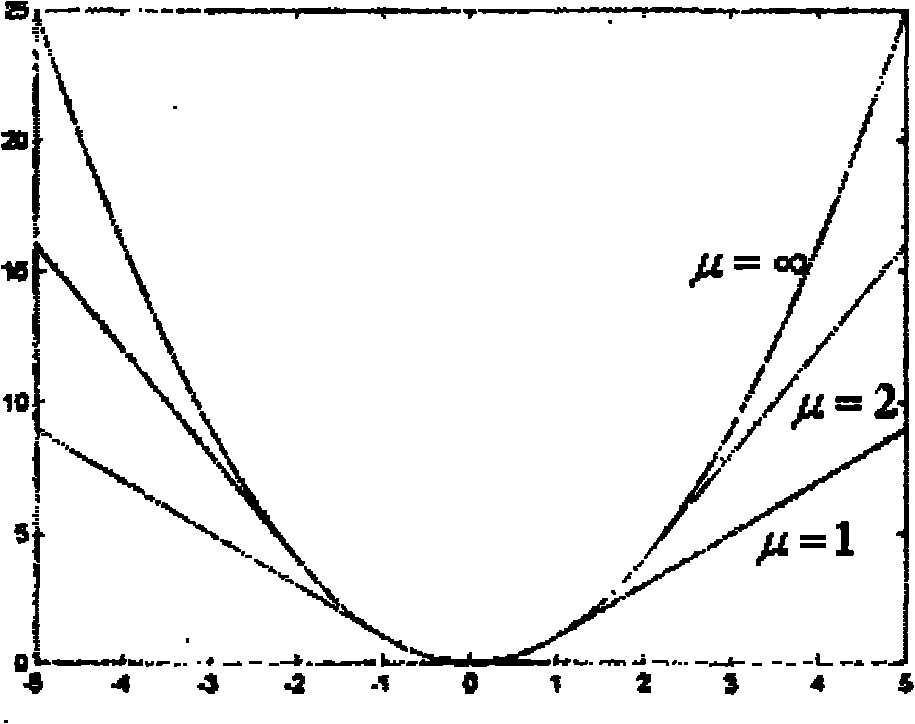

Status: Active; Patent number: CN101894365A; Tags: High degree of integration, Strong adaptive processing ability, Image enhancement, Remote sensing image fusion, Imaging data

The invention provides an adaptive variational remotely sensed image fusion method. In the method, observation models for the multispectral image and the panchromatic image are established by analyzing the image degradation process, the inverse problem corresponding to image fusion is described within a maximum a posteriori estimation framework, and a variational image fusion model is established that consists of a multispectral data consistency constraint, a panchromatic data consistency constraint and an image prior constraint. The model is solved iteratively with a gradient descent algorithm; the regularization parameters are determined adaptively by constructing a suitable function, and one adjustable parameter is retained to meet the requirements of different users. The method improves the spatial resolution of the original multispectral image, effectively maintains the original spectral information, and automatically selects suitable regularization parameters for different image data, so it offers high fidelity and a high degree of adaptivity.

Owner:WUHAN UNIV

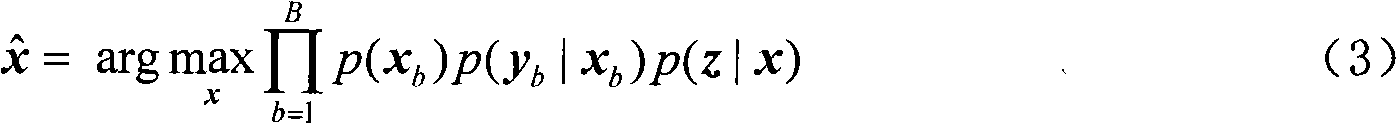

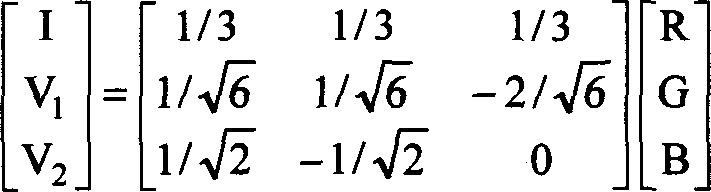

Method used for gully erosion extraction based on landform and remote sensing image fusion technology

Status: Inactive; Patent number: CN103940407A; Tags: No loss, Reduce losses, Wave based measurement systems, Picture interpretation, Remote sensing image fusion, Color intensity

The invention provides a method for gully erosion extraction based on landform and remote sensing image fusion technology. The method is based on conversion between the remote sensing RGB (red, green, and blue) color space and the HIS (hue, intensity, and saturation) color space. The linearly standardized surface roughness ω̄′ is taken as the weight of the shaded relief model (SRM) illuminated from the northwest, and 1 − ω̄′ is taken as the weight of the color intensity component; their weighted sum gives a new color intensity component I′. The conversion from HIS color space back to RGB color space is then performed with the new intensity image I′, which fuses the landform information with the remote sensing image so that gullies appear as recesses and peaks as convexes. Gully shoulder lines are interpreted on the fused remote sensing images in combination with gully shoulder line gradient threshold data calculated from a DEM (digital elevation model). Compared with traditional gully interpretation based on remote sensing images alone, the method provides two-dimensional remote sensing images that carry landform information in a form matching human visual habits, the image characteristics of gully bottoms are clear, and the interpretation accuracy of gully shoulder lines is high.
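The intensity blending described above is a one-line weighted sum. The sketch below assumes the linear intensity model I = (R + G + B) / 3, for which replacing the intensity is equivalent to adding the intensity change to every channel; the patent performs explicit forward and inverse HIS conversions, and `omega` stands for the standardized roughness weight.

```python
import numpy as np

def fuse_terrain(rgb, srm, omega):
    """Blend a shaded relief model into the intensity of an RGB image.

    rgb   : (3, H, W) remote sensing image
    srm   : (H, W) shaded relief model, same value range as rgb
    omega : scalar or (H, W) roughness weight in [0, 1]
    """
    intensity = rgb.mean(axis=0)
    # New intensity: I' = omega * SRM + (1 - omega) * I
    new_intensity = omega * srm + (1.0 - omega) * intensity
    # Additive substitution keeps the differences between channels unchanged.
    return rgb + (new_intensity - intensity)[None, :, :]
```

Where roughness is high, the relief shading dominates the brightness, which is what lets gullies read as recesses in the fused image.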

Owner:LUDONG UNIVERSITY

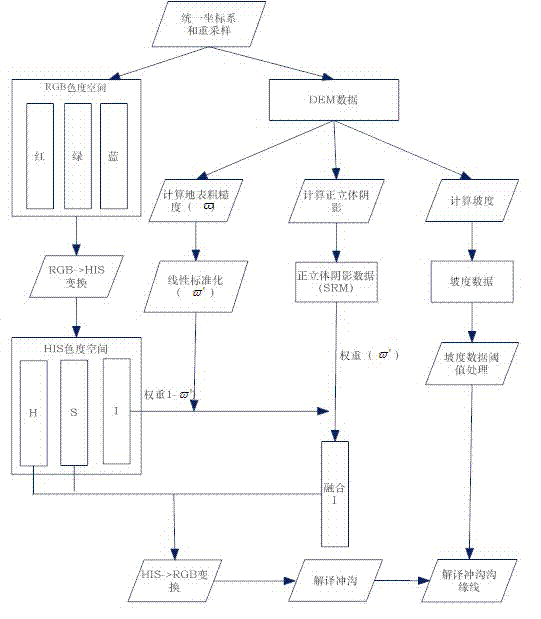

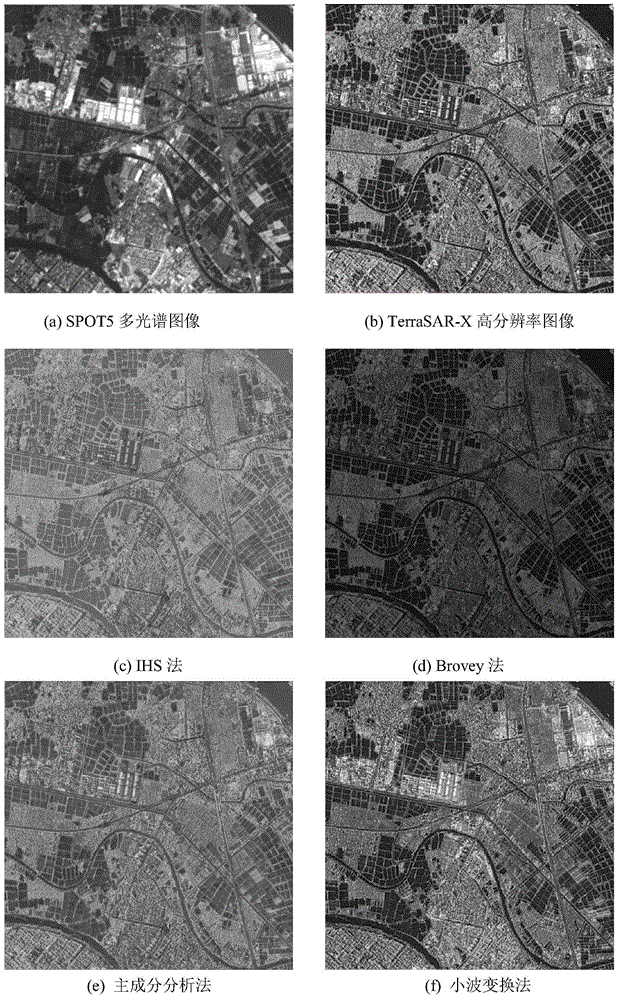

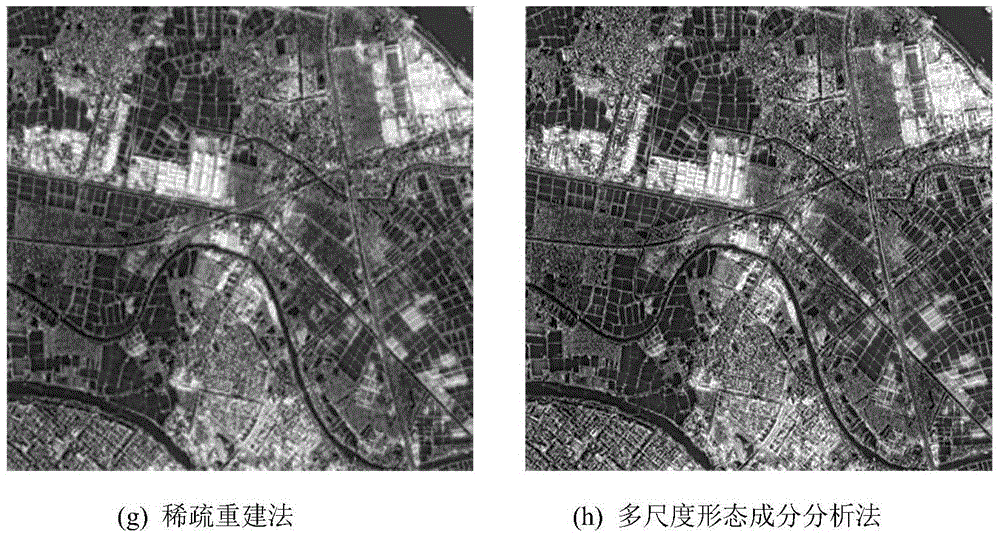

Remote sensing image fusion method based on multi-dimensional morphologic element analysis

The invention discloses a remote sensing image fusion method based on multi-dimensional morphologic element analysis, and belongs to the cross-disciplinary field of signal processing and remote sensing image processing. Morphologic element analysis is carried out on a high-resolution remote sensing image and on a multispectral remote sensing image at different dimensions; an iterative shrinkage method performs the sparse decomposition; and each image to be fused is divided into texture components and cartoon components at several dimensions. The cartoon and noise components of the high-resolution image and the texture and noise components of the multispectral image are removed; the texture component of the high-resolution image at the effective dimensions and the cartoon component of the multispectral image are retained and passed to sparse reconstruction to obtain the fused image. The method fuses the high-resolution and multispectral remote sensing images effectively: compared with existing fusion methods it improves spatial resolution and reduces spectral distortion, and compared with existing sparse reconstruction methods it is much faster.

Owner:YANTAI UNIV

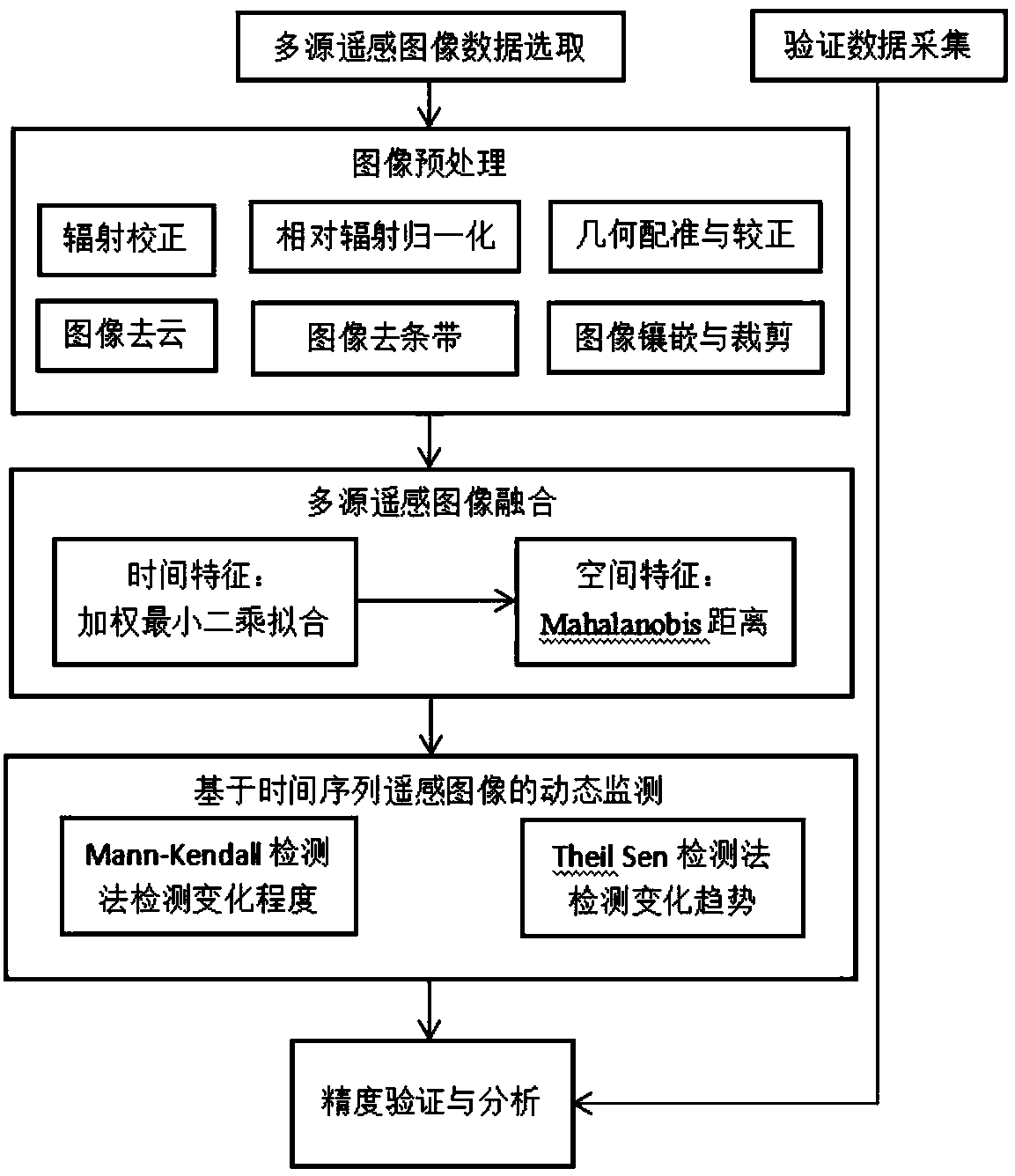

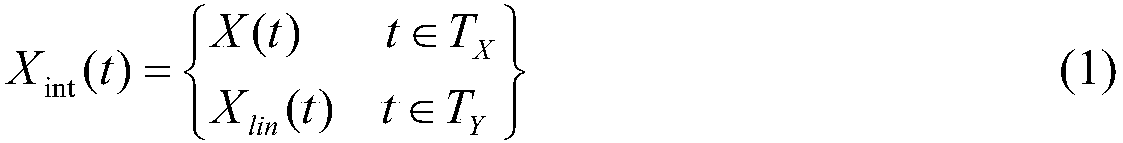

Multisource remote sensing sequence image-based dynamic monitoring method

InactiveCN107704807AImproving Image Registration AccuracyHigh precisionScene recognitionRemote sensing image fusionDynamic monitoring

The invention relates to a dynamic monitoring method based on multisource remote sensing sequence images. It aims to make effective use of the spatial information in the data in order to improve fusion methods, reduce feature differences among multisource remote sensing images, and improve the dynamic monitoring effect. The method comprises the following steps: first, multisource remote sensing image sequences are constructed and the sequence images are preprocessed with a suitable image preprocessing method, so that the sequences have consistent radiometric characteristics and relatively high image registration precision; next, the remote sensing image sequences are fused with eMulTiFuse, an enhanced multisource remote sensing image fusion method based on space-time distribution, so that the time sequences have more similar spatial features; finally, the time-sequence remote sensing images are dynamically monitored with a Mann-Kendall trend detection method on the basis of pixel features to obtain a monitoring result. The method has relatively high accuracy.
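The final step, Mann-Kendall trend detection on a per-pixel time series, can be sketched as below. This is the textbook form of the test without tie correction, offered as an illustration only; the abstract does not say which variant or significance level the patent uses, so the function names and the 1.96 threshold (a two-sided 5% level) are assumptions.

```python
import math


def mann_kendall(series):
    """Return (S, Z) for the Mann-Kendall trend test, without tie correction."""
    n = len(series)
    if n < 3:
        raise ValueError("need at least three observations")
    # S is the number of increasing pairs minus decreasing pairs over all i < j.
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    # Variance of S under the no-trend hypothesis, assuming no tied values.
    var_s = n * (n - 1) * (2 * n + 5) / 18.0
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    return s, z


def classify_trend(series, z_crit=1.96):
    """Label one pixel's time series; z_crit is an assumed threshold."""
    _, z = mann_kendall(series)
    if z > z_crit:
        return "increasing"
    if z < -z_crit:
        return "decreasing"
    return "no trend"
```

Applied to every pixel of the fused image sequence, `classify_trend` would yield a trend map; real series with many repeated values would need the tie-corrected variance instead.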

Owner:BEIHANG UNIV +1

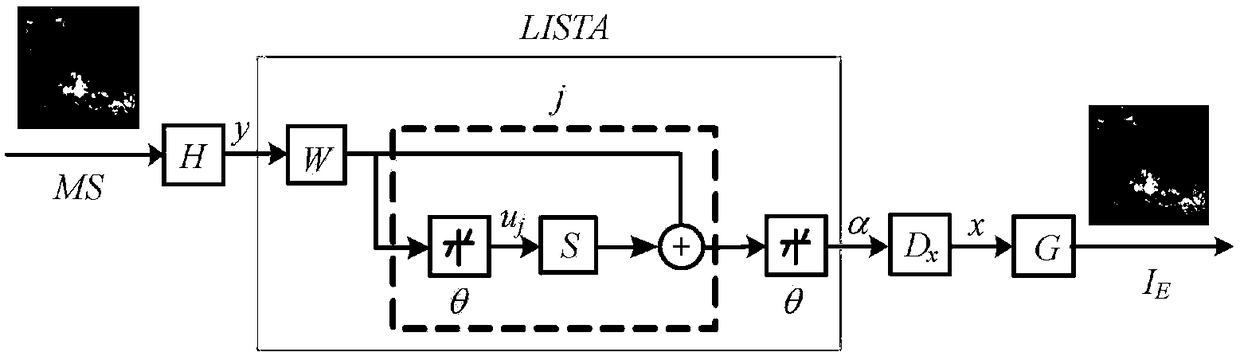

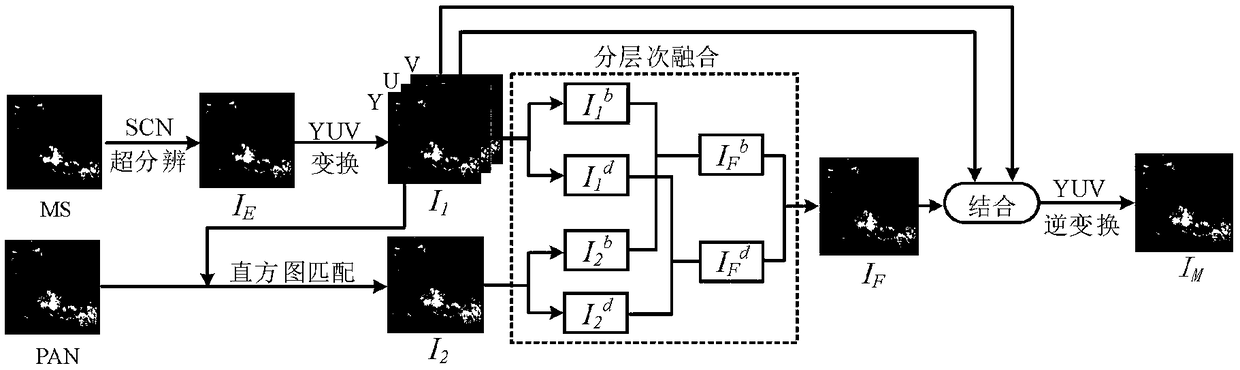

Hierarchical remote sensing image fusion method using layer-by-layer iterative super-resolution

PendingCN109509160ASpatial detail enhancementSmall distortionImage enhancementImage analysisImaging processingRemote sensing image fusion

The invention belongs to the technical field of image processing, and in particular relates to a hierarchical remote sensing image fusion method using layer-by-layer iterative super-resolution. The method comprises the following steps: A1, a layer-by-layer iterative deep neural network is used for super-resolution processing of a low-resolution multi-spectral image to obtain a reconstructed multi-spectral image; A2, the luminance components of the panchromatic image and the reconstructed multi-spectral image are hierarchically fused to obtain a high-resolution multi-spectral image. The method preserves the spectral information well while enhancing the spatial detail of the image as far as possible.

Owner:CHANGSHA UNIVERSITY OF SCIENCE AND TECHNOLOGY

Remote-sensing image fusion method based on image local spectral characteristics

InactiveCN1581230AHigh-resolutionEasy to keepImage enhancementRemote sensing image fusionHistogram matching

The present invention relates to a remote-sensing image fusion method based on image local spectral characteristics. The method combines the local correlation moment and the local variance of the remote-sensing image to perform fusion. After the multispectral image undergoes the IHS transformation, histogram matching is performed between the resulting I component and the high-spectral image, and the matched I component and the high-spectral image are then each decomposed by wavelet transformation. For the low-frequency components obtained by wavelet decomposition, the method applies a fusion criterion based on the local normalized correlation moment; for the high-frequency components, it applies a fusion criterion based on variance. All fused wavelet components are then reconstructed to obtain a new I component, and finally the new I component and the original H and S components undergo the inverse IHS transformation to obtain the fusion result.
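A variance-based rule for the high-frequency wavelet components might look like the sketch below. The abstract only says the criterion is "based on variance", so the specific rule here (keep, at each position, the coefficient whose sub-band has the larger local variance) is an assumption, as are the function names and the 3x3 window. Sub-bands are plain nested lists of equal size.

```python
def local_variance(grid, row, col, radius=1):
    """Variance of the window around (row, col), clipped at the grid edges."""
    rows, cols = len(grid), len(grid[0])
    window = [
        grid[r][c]
        for r in range(max(0, row - radius), min(rows, row + radius + 1))
        for c in range(max(0, col - radius), min(cols, col + radius + 1))
    ]
    mean = sum(window) / len(window)
    return sum((v - mean) ** 2 for v in window) / len(window)


def fuse_high_frequency(coeff_a, coeff_b, radius=1):
    """Per position, keep the coefficient from the sub-band with larger local variance.

    Assumed selection rule; ties go to coeff_a.
    """
    fused = []
    for r in range(len(coeff_a)):
        fused_row = []
        for c in range(len(coeff_a[0])):
            var_a = local_variance(coeff_a, r, c, radius)
            var_b = local_variance(coeff_b, r, c, radius)
            fused_row.append(coeff_a[r][c] if var_a >= var_b else coeff_b[r][c])
        fused.append(fused_row)
    return fused
```

The intuition is that high local variance in a detail sub-band marks edges and texture, so the rule favors whichever source carries more spatial detail at that location.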

Owner:SHANGHAI JIAO TONG UNIV

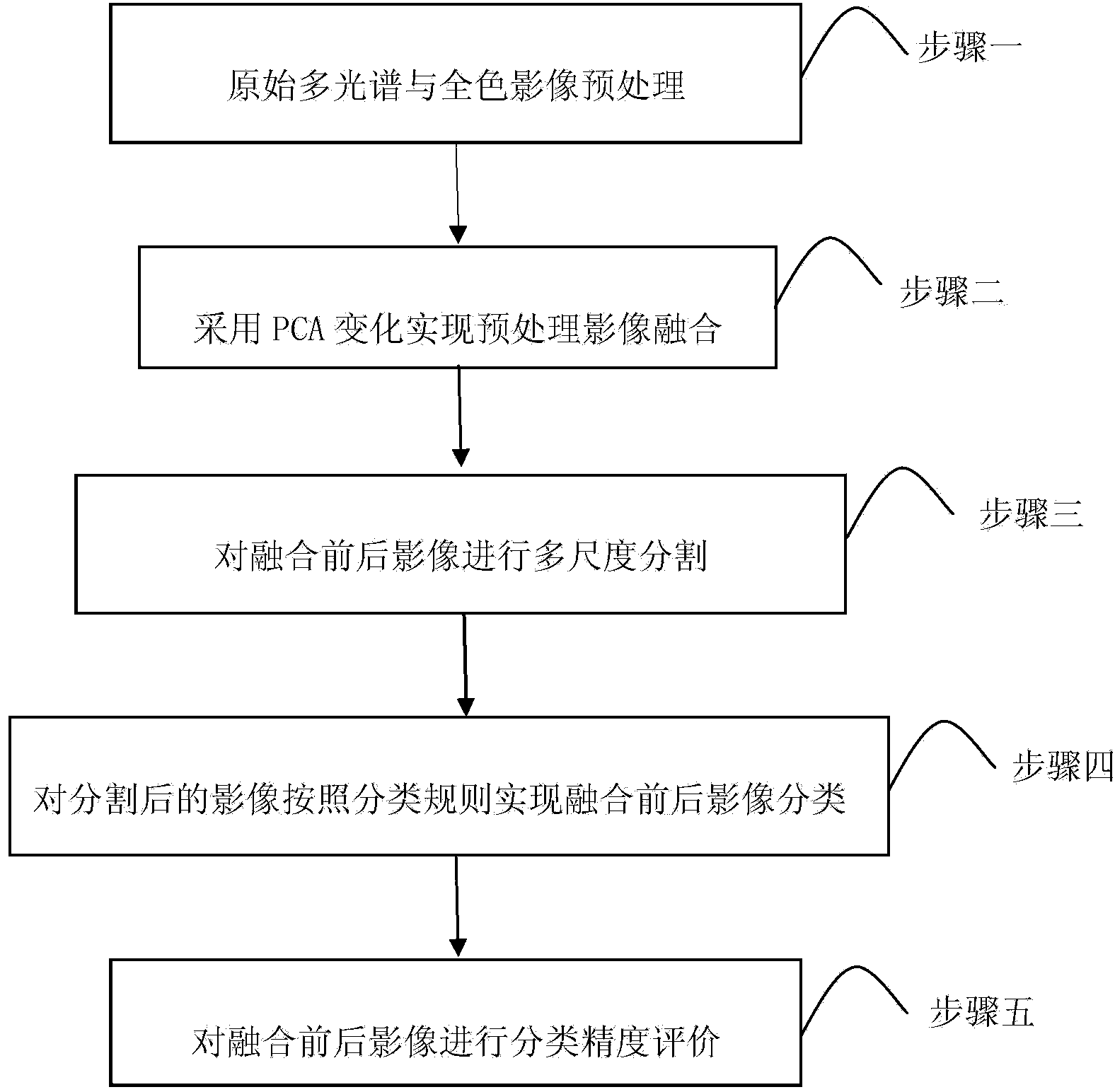

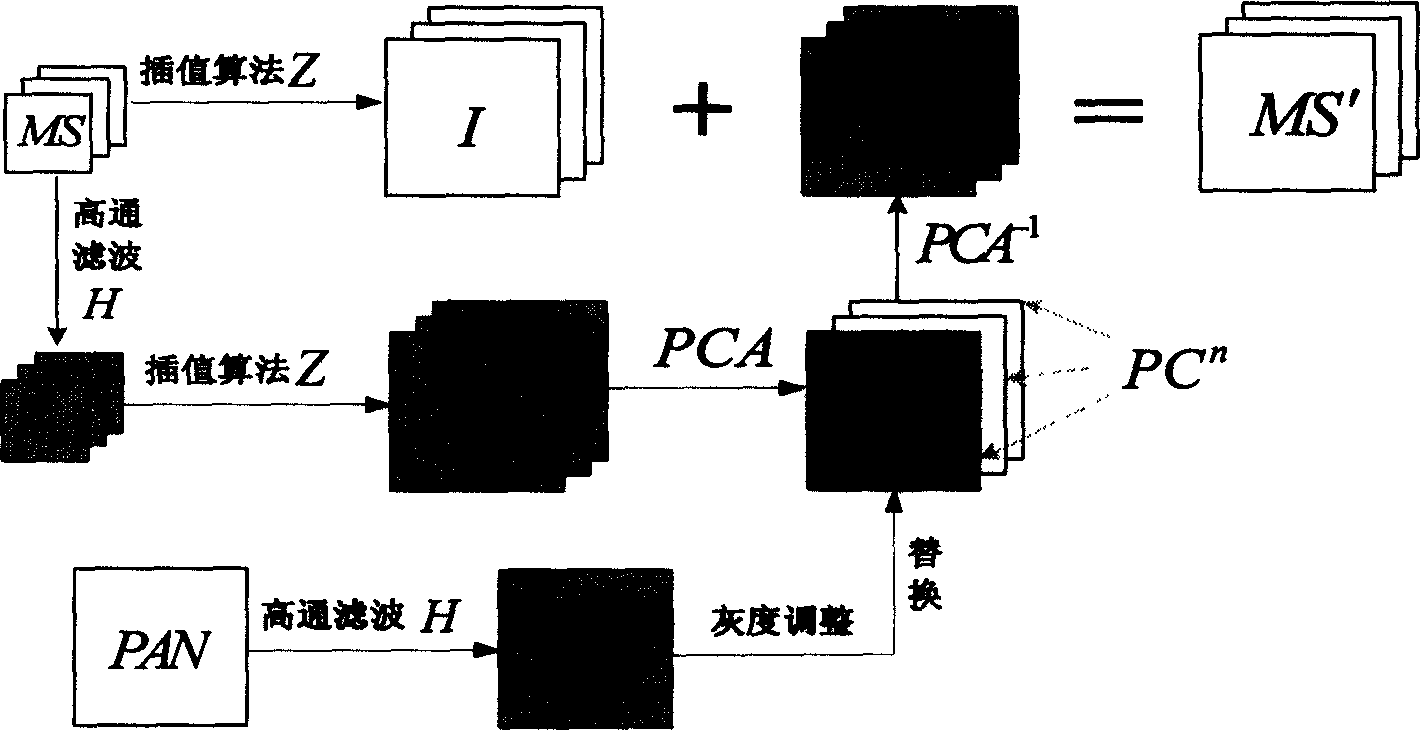

Method for evaluating remote-sensing image fusion effect

InactiveCN103383775AImprove classification accuracyEvaluation results are objectiveImage enhancementImage analysisRemote sensing image fusionImaging quality

The invention relates to the evaluation of remote-sensing image processing results, and in particular to a method for evaluating the effect of remote-sensing image fusion. It addresses the shortcomings of existing evaluation methods: subjective visual assessment is strongly affected by human factors and is error-prone, while objective statistical analysis lacks a uniform standard for index selection, so image quality is hard to evaluate comprehensively. The method includes the following steps: first, the original images to be fused are preprocessed; second, the preprocessed multispectral image and panchromatic image are fused; third, object-oriented segmentation is applied to the images before and after fusion; fourth, a classification rule is used to classify the segmented remote-sensing images; fifth, the classification results are assessed in terms of producer's accuracy, user's accuracy and the Kappa coefficient, and the classification accuracies of the images before and after fusion are compared in order to evaluate the fusion effect. The method can be applied in the technical field of remote-sensing image processing.
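The fifth step relies on three standard measures that can all be read off a confusion matrix. The sketch below uses the usual definitions; the matrix layout (rows are reference classes, columns are assigned classes) and the function name are assumptions for the example, and the sample counts in the usage note are invented purely for illustration.

```python
def accuracy_report(confusion):
    """Return (producer's accuracies, user's accuracies, kappa).

    confusion[i][j] is the number of samples of reference class i that the
    classifier assigned to class j (assumed layout).
    """
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    diag = [confusion[i][i] for i in range(k)]
    # Producer's accuracy: correct samples over reference totals (per row).
    producers = [d / r if r else 0.0 for d, r in zip(diag, row_sums)]
    # User's accuracy: correct samples over assigned totals (per column).
    users = [d / c if c else 0.0 for d, c in zip(diag, col_sums)]
    observed = sum(diag) / total
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    kappa = (observed - expected) / (1.0 - expected)
    return producers, users, kappa
```

For a made-up two-class matrix `[[40, 10], [5, 45]]` the overall agreement is 0.85, the chance agreement is 0.5, and kappa is 0.7. Running the same report on the classifications before and after fusion and comparing the values is the comparison the abstract describes.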

Owner:NORTHEAST INST OF GEOGRAPHY & AGRIECOLOGY C A S

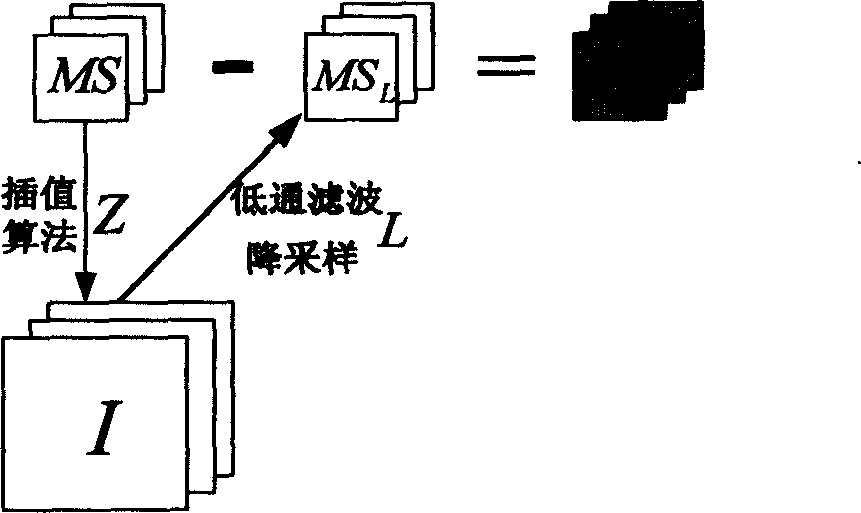

Remote sensing image fusion method based on residual error

InactiveCN1588447ASolve space problemsSolve the contradiction of keeping spectral informationImage enhancementColor imageRemote sensing image fusion

The invention relates to a remote sensing image fusion method based on residual error. The method obtains a residual image of the multispectral image and a residual image of the panchromatic image with a residual extraction algorithm; the residual image of the multispectral image is then interpolated to the same size as the residual image of the panchromatic image, and the interpolated multispectral residual image is fused with the panchromatic residual image to recover the high-resolution residual of the multispectral image; finally, a high-resolution estimate of the multispectral image is obtained by adding the interpolated multispectral image and the recovered residual image of the multispectral image. The invention enhances the spatial detail of the fused image while preserving the spectral information of the original multispectral image; its structure is simple and its results are excellent.

Owner:FUDAN UNIV

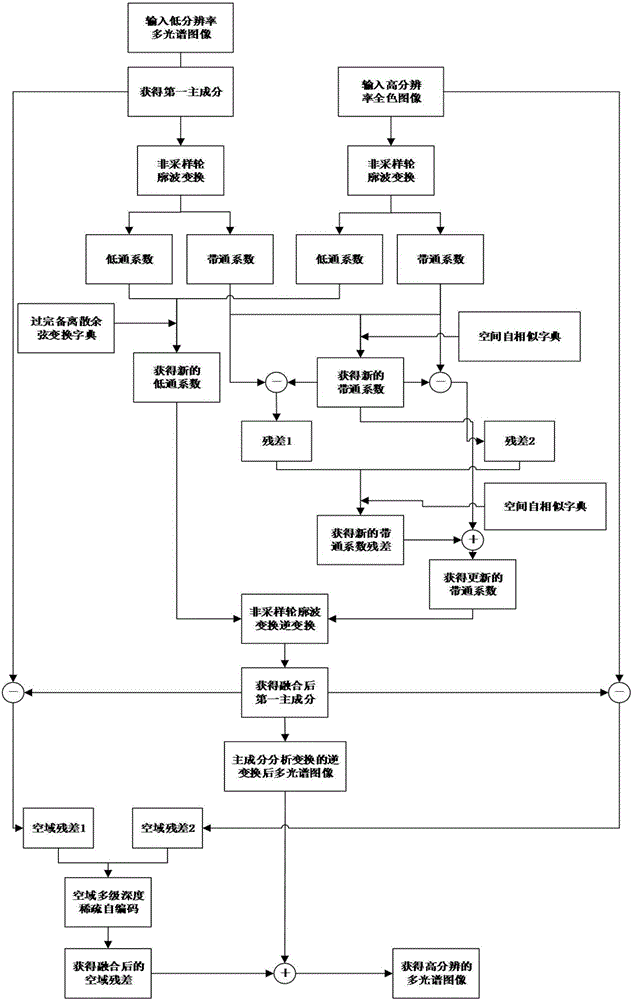

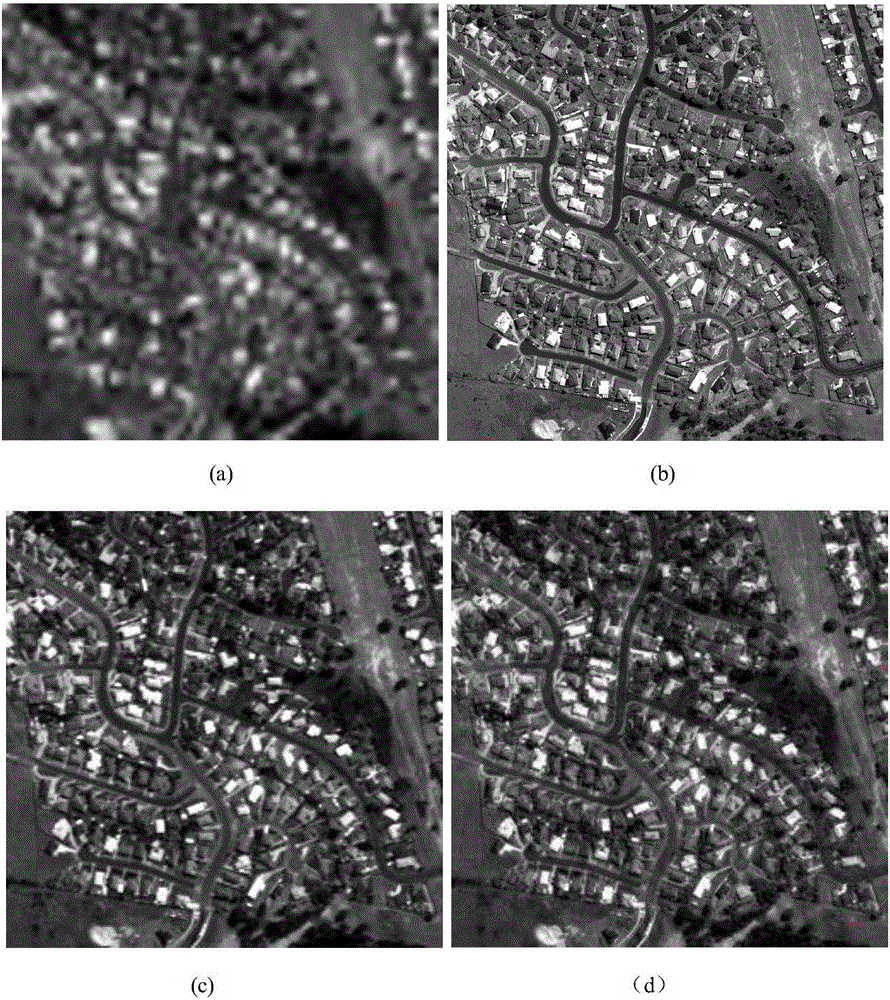

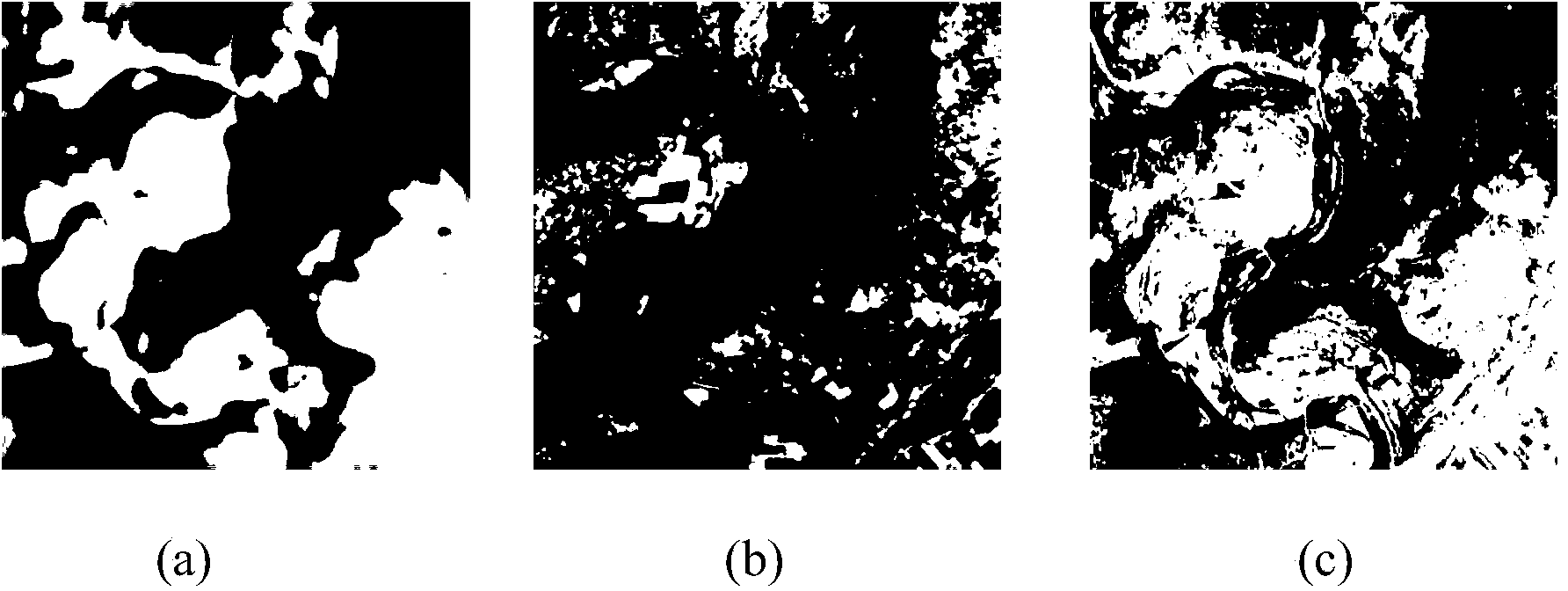

Multi-scale geometric remote sensing image fusion method based on deep sparse self-coding

ActiveCN106204450AIntegrity guaranteedKeep Spectral InformationImage enhancementImage analysisRemote sensing image fusionImage resolution

The invention discloses a multi-scale geometric remote sensing image fusion method based on deep sparse self-coding. The method comprises the following steps: 1) inputting a low-resolution multispectral image M and a high-resolution full-color image P, and extracting the first principal component C1 of M; 2) obtaining the low-pass coefficient LM and the band-pass coefficient HM of C1, and the low-pass coefficient LP and the band-pass coefficient HP of P; 3) fusing LM and LP to obtain a low-pass coefficient LN; 4) constructing a spatial self-similar dictionary DS and fusing HM and HP under the dictionary DS to obtain the band-pass coefficient HN; 5) updating HN to obtain the band-pass coefficient HNS; 6) performing inverse transformation on LN and HNS to obtain a fused first principal component C2, and updating C2 to obtain an updated first principal component CS; and 7) performing inverse transformation on CS to obtain the high-resolution multispectral image. The method reduces the injection of mismatched details, reduces the spatial distortion of the fused multispectral image, and can be used for target recognition, terrain classification and remote sensing monitoring.

Owner:XIANYANG NORMAL UNIV

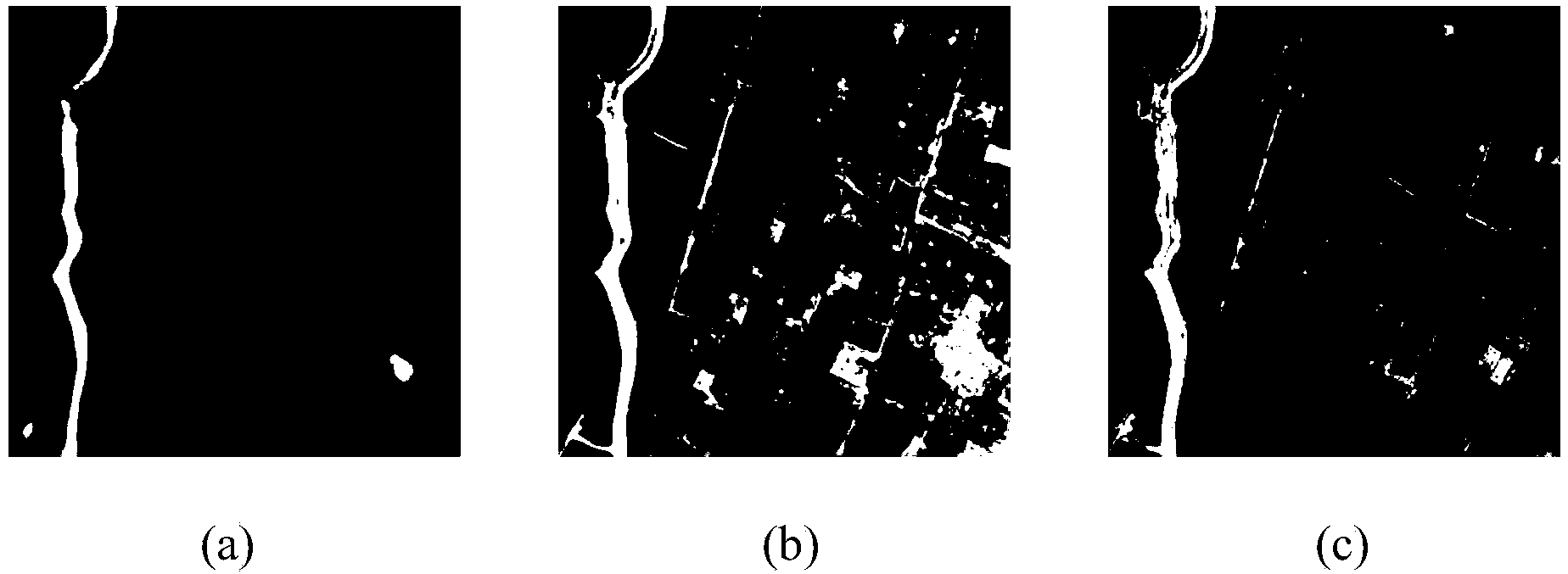

A remote sensing image fusion method based on difference image sparse representation

ActiveCN103617597AEfficient use ofReduce spectral distortionImage enhancementCharacter and pattern recognitionData setRemote sensing image fusion

The invention discloses a remote sensing image fusion method based on difference image sparse representation and mainly resolves a problem of spectrum distortion in a conventional remote sensing image fusion method. The implementation steps of the method comprise: inputting an image set and performing block acquisition in order to acquire an image block data set; constructing a high-low resolution image training set according to the image block data set; training the high-low resolution image training set with a semi-symmetric dictionary training method in order to obtain a training dictionary; inputting a low-resolution multispectral image to be fused and a high-resolution full-color image and calculating a low-resolution difference image to be fused; and performing super-resolution processing on the low-resolution difference image to be fused with a semi-symmetric dictionary image super-resolution method in order to obtain a high-resolution difference image and performing inverse transformation on the high-resolution difference image in order to obtain a high-resolution multispectral image. Compared with a classic remote sensing image fusion method, the remote sensing image fusion method based on difference image sparse representation reduces spectrum distortion because of the use of a fusion model based on difference images and can be used in object identification.

Owner:XIDIAN UNIV

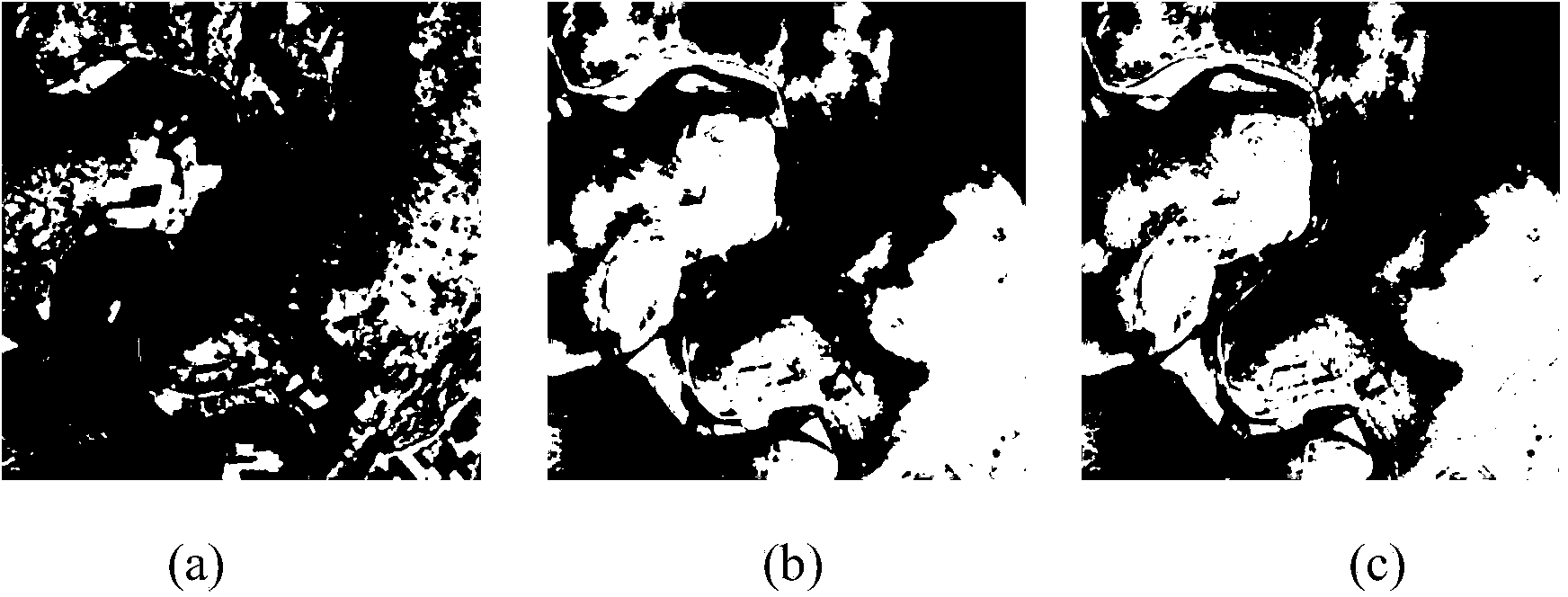

Multispectral remote sensing image fusion method and device based on residual learning

ActiveCN110415199AImprove performanceReduce processing stepsImage enhancementImage analysisMean squareRemote sensing image fusion

The invention discloses a multispectral remote sensing image fusion method and a multispectral remote sensing image fusion device based on residual learning. The method comprises the following steps: (1) acquiring a plurality of original multispectral remote sensing images IMS and corresponding original panchromatic band remote sensing images IPAN; (2) calculating to obtain an interpolation image IMSI of the IMS, a gradient image GPAN of the IPAN and a differential image DPAN; (3) constructing a convolutional neural network fusion model which comprises a feature extraction layer, a nonlinear mapping layer, a residual image reconstruction layer and an output layer which are connected in sequence, taking I=[IMSI,IPAN,GPAN,DPAN] as input for training, and taking a loss function adopted during training as a mean square error function for introducing residual learning; and (4) processing the multispectral remote sensing image I'MSRI to be fused and the corresponding original panchromatic band remote sensing image I'PAN to obtain corresponding data [I'MSI, I'PAN, G'PAN, D'PAN], inputting the data into the trained convolutional neural network fusion model, and outputting an image after fusion. The method is high in fusion speed, and the spectrum and space quality of the fused image is higher.

Owner:HOHAI UNIV

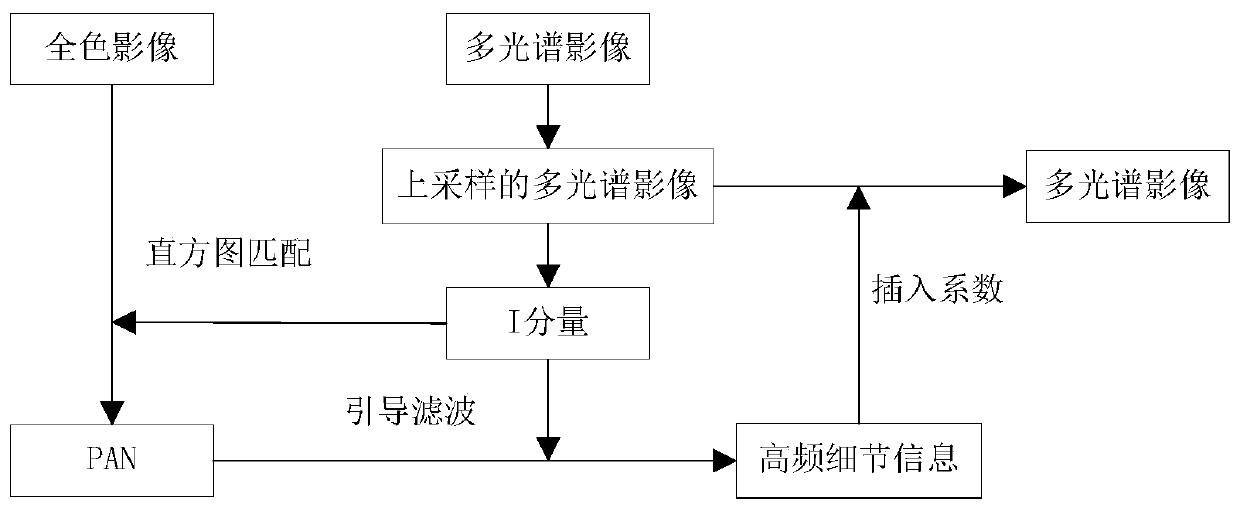

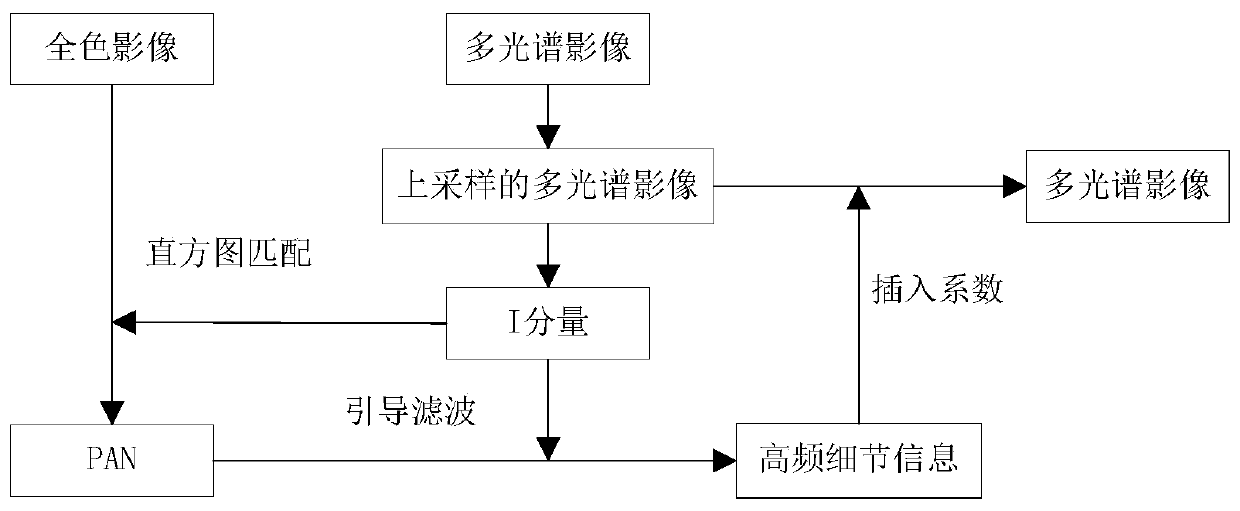

Remote sensing image fusion method combining guided filtering and IHS transformation

PendingCN109993717AReduce redundancyAvoid over-insertionImage enhancementImage analysisImage resolutionRemote sensing image fusion

The invention provides a remote sensing image fusion method combining guided filtering and IHS transformation. The method comprises steps of carrying out image fusion by adopting a detail injection model; performing multi-scale guided filtering on the preprocessed image to obtain detail information; designing a corresponding insertion coefficient; and finally, inserting detail information into the up-sampled multispectral image to obtain a fused image, wherein the fused image retains the spectral resolution of the original multispectral image, has higher spatial resolution, and is an effective fusion method suitable for fusion of the high-resolution remote sensing multispectral image and the panchromatic image.
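The detail injection model named in this abstract has the general form: fused band = up-sampled multispectral band + insertion coefficient x detail. The sketch below illustrates only that form. It swaps in a plain box mean filter for the multi-scale guided filtering, and takes the insertion coefficients as given numbers, because the abstract does not specify either; all names are hypothetical.

```python
def box_lowpass(image, radius=1):
    """Mean filter with edge clipping; a stand-in for guided filtering."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [
                image[i][j]
                for i in range(max(0, r - radius), min(rows, r + radius + 1))
                for j in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            out_row.append(sum(window) / len(window))
        out.append(out_row)
    return out


def inject_details(ms_bands, pan, gains, radius=1):
    """Add gain-weighted panchromatic detail to each up-sampled MS band.

    ms_bands: list of bands already resampled to the size of pan.
    gains: one assumed insertion coefficient per band.
    """
    low = box_lowpass(pan, radius)
    # Detail is what the low-pass filter removed from the panchromatic image.
    detail = [[p - l for p, l in zip(p_row, l_row)] for p_row, l_row in zip(pan, low)]
    return [
        [[m + g * d for m, d in zip(m_row, d_row)] for m_row, d_row in zip(band, detail)]
        for band, g in zip(ms_bands, gains)
    ]
```

A featureless panchromatic image yields zero detail, so the multispectral bands pass through unchanged; this is the property that lets a detail injection scheme keep the original spectral content where the panchromatic image adds nothing.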

Owner:CHONGQING UNIV OF POSTS & TELECOMM
