Method for evaluating remote-sensing image fusion effect

A method for evaluating remote sensing image fusion, applicable to image enhancement, image analysis, and image data processing. It addresses the problems that existing fusion-quality evaluation is error-prone, that image quality is difficult to judge, and that there is no uniform standard for selecting evaluation indices. The method yields objective evaluation results and improved classification accuracy.


Examples

Specific Embodiment 1

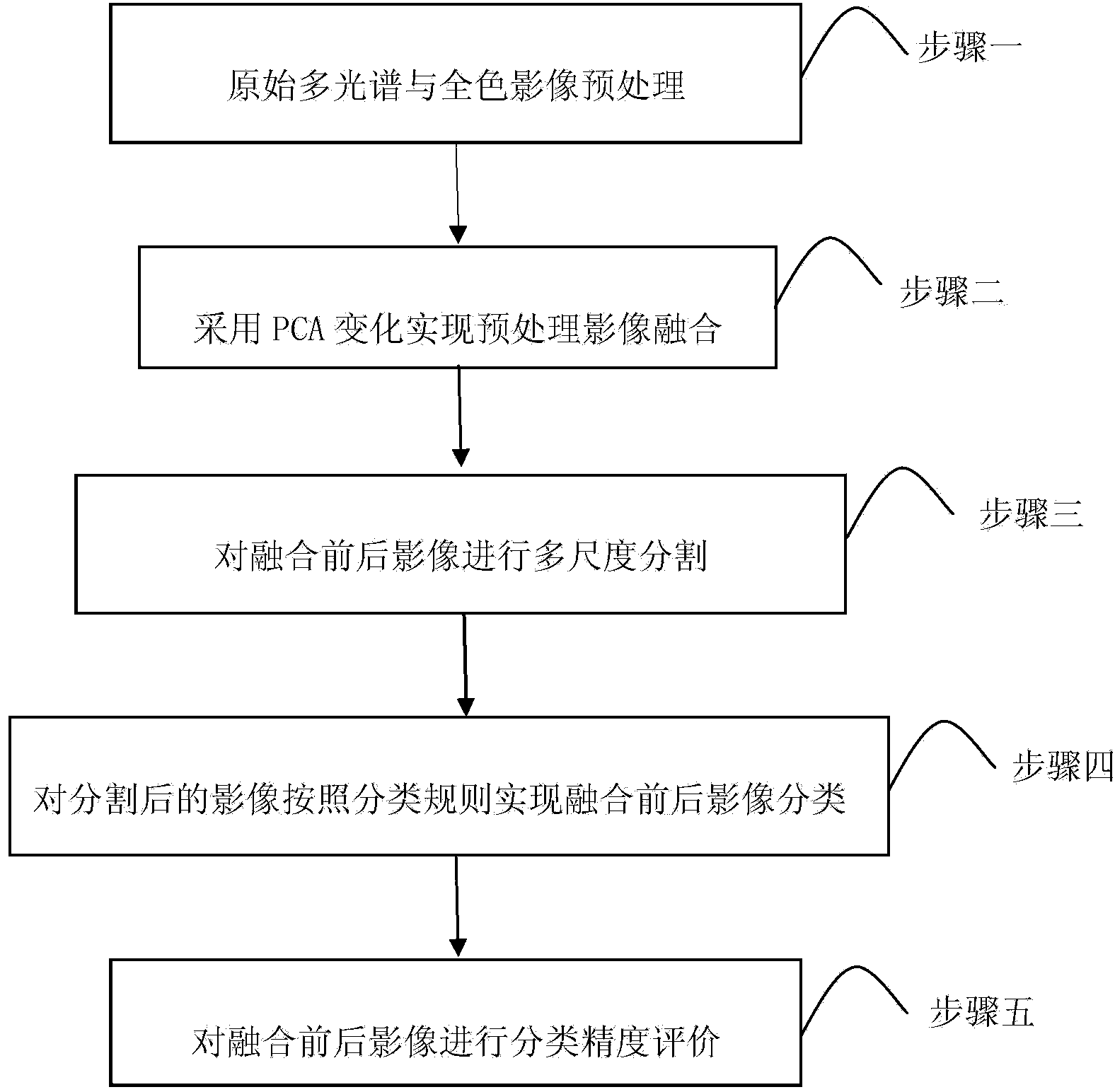

[0020] Specific Embodiment 1: The remote sensing image fusion effect evaluation method of this embodiment mainly includes the following steps:

[0021] Step 1: preprocess the original images to be fused;

[0022] Step 2: fuse the preprocessed multispectral image with the panchromatic image to obtain a fused result image;

[0023] Step 3: perform object-oriented segmentation on the images before and after fusion to obtain segmented remote sensing images;

[0024] Step 4: classify the segmented remote sensing images using classification rules to obtain the classification results of the images before and after fusion;

[0025] Step 5: assess the accuracy of the classification results in terms of producer's accuracy, user's accuracy, and the Kappa coefficient, and compare the classification accuracy of the images before and after fusion to evaluate the fusion effect.
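The accuracy measures named in Step 5 are conventionally computed from a confusion matrix. The sketch below assumes rows hold the reference (ground-truth) class and columns the predicted class, and that class labels are integers `0..n-1`; the helper names `confusion_matrix`, `accuracy_report`, and `fusion_gain` are hypothetical and not taken from the patent. Producer's accuracy divides each diagonal entry by its row (reference) total, user's accuracy divides it by its column (classified) total, and Kappa compares observed agreement with the agreement expected by chance.

```python
from typing import Dict, List, Sequence

Matrix = List[List[int]]


def confusion_matrix(reference: Sequence[int], predicted: Sequence[int], n_classes: int) -> Matrix:
    """Count samples; rows = reference class, columns = predicted class (assumed layout)."""
    m = [[0] * n_classes for _ in range(n_classes)]
    for r, p in zip(reference, predicted):
        m[r][p] += 1
    return m


def accuracy_report(m: Matrix) -> Dict[str, object]:
    """Overall accuracy, per-class producer's/user's accuracy, and Cohen's Kappa."""
    k = len(m)
    total = sum(sum(row) for row in m)
    diag = [m[i][i] for i in range(k)]
    row_tot = [sum(m[i]) for i in range(k)]                        # reference totals
    col_tot = [sum(m[i][j] for i in range(k)) for j in range(k)]   # classified totals
    nan = float("nan")
    producer = [diag[i] / row_tot[i] if row_tot[i] else nan for i in range(k)]
    user = [diag[j] / col_tot[j] if col_tot[j] else nan for j in range(k)]
    p_o = sum(diag) / total                                        # observed agreement
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / total ** 2  # chance agreement
    kappa = (p_o - p_e) / (1 - p_e) if p_e != 1 else nan
    return {"overall": p_o, "producer": producer, "user": user, "kappa": kappa}


def fusion_gain(before: Dict[str, object], after: Dict[str, object]) -> Dict[str, float]:
    """Hypothetical helper: positive values mean the fused image classified better."""
    return {
        "overall": after["overall"] - before["overall"],
        "kappa": after["kappa"] - before["kappa"],
    }
```

In use, one report would be built from the classification of the unfused multispectral image and another from the fused image, both checked against the same reference samples, and `fusion_gain` would then summarize whether fusion helped. Using identical reference samples for both images keeps the comparison fair.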

[0026] Effects of this embodiment:

[0027...

Specific Embodiment 2

[0029] Specific Embodiment 2: This embodiment differs from Specific Embodiment 1 in that the preprocessing of the original images to be fused in Step 1 includes the following steps:

[0030] The original multispectral image is geometrically registered to the panchromatic image using a quadratic polynomial model, so that the two images are geometrically consistent;

[0031] The multispectral image is resampled with nearest-neighbor interpolation so that the images to be fused have the same pixel size;

[0032] Based on the above processing, the test area is clipped from both images to obtain the original multispectral and panchromatic images of the same area to be fused. Other steps and parameters are the same as in Specific Embodiment 1.
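The registration and resampling steps above can be sketched together as follows. The patent does not say how the quadratic polynomial is fitted, so this sketch assumes a least-squares fit over ground control points (GCPs), an inverse mapping from each panchromatic pixel back to multispectral coordinates, and bands stored as plain Python lists. All function names (`fit_quadratic`, `register_nearest`, and the private helpers) are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (col, row) pixel coordinates


def _quad_terms(x: float, y: float) -> List[float]:
    # Second-order (quadratic) polynomial basis: 1, x, y, xy, x^2, y^2
    return [1.0, x, y, x * y, x * x, y * y]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a square linear system by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bv] for row, bv in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular system: use >= 6 well-spread control points")
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_quadratic(src: Sequence[Point], dst: Sequence[float]) -> List[float]:
    """Least-squares coefficients of dst ~ sum_k c_k * term_k(src), via normal equations."""
    rows = [_quad_terms(x, y) for x, y in src]
    ata = [[sum(t[i] * t[j] for t in rows) for j in range(6)] for i in range(6)]
    atb = [sum(t[i] * d for t, d in zip(rows, dst)) for i in range(6)]
    return _solve(ata, atb)


def register_nearest(ms_band, gcp_pan, gcp_ms, out_rows, out_cols, fill=0):
    """Resample one multispectral band onto the panchromatic pixel grid.

    gcp_pan[k] and gcp_ms[k] are the same control point in pan and MS pixel
    coordinates. The fitted polynomial maps each output (pan) pixel back to an
    MS location, which is read with nearest-neighbor interpolation.
    """
    cx = fit_quadratic(gcp_pan, [p[0] for p in gcp_ms])
    cy = fit_quadratic(gcp_pan, [p[1] for p in gcp_ms])
    out = [[fill] * out_cols for _ in range(out_rows)]
    for r in range(out_rows):
        for c in range(out_cols):
            t = _quad_terms(float(c), float(r))
            sx = sum(k * v for k, v in zip(cx, t))
            sy = sum(k * v for k, v in zip(cy, t))
            i, j = math.floor(sy + 0.5), math.floor(sx + 0.5)  # nearest MS pixel
            if 0 <= i < len(ms_band) and 0 <= j < len(ms_band[0]):
                out[r][c] = ms_band[i][j]
    return out
```

Nearest-neighbor resampling copies original pixel values rather than blending them, which preserves the multispectral radiometry before fusion. In practice this step is usually done in GIS or remote sensing software; for example, GDAL's `gdalwarp` accepts a polynomial order (`-order 2`) and nearest-neighbor resampling (`-r near`).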

Specific Embodiment 3

[0033] Specific Embodiment 3: This embodiment differs from Specific Embodiment 1 or 2 in that, in Step 2, the preprocessed multispectral image and the panchromatic image are fused using a selected fusion method to obtain the fused result image. Other steps and parameters are the same as in Specific Embodiment 1 or 2.
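Because the embodiment leaves the fusion method open, the sketch below uses the Brovey transform only as one commonly used example. It is not presented as the method of the patent. The sketch assumes the bands are already co-registered and resampled to the panchromatic grid (Step 1) and are stored as plain Python lists. Each multispectral band is divided by the sum of all bands and multiplied by the panchromatic value, pixel by pixel. The function name `brovey_fuse` and the `eps` guard are hypothetical.

```python
from typing import List

Band = List[List[float]]


def brovey_fuse(ms_bands: List[Band], pan: Band, eps: float = 1e-12) -> List[Band]:
    """Brovey transform: F_b = M_b / sum_k(M_k) * P, computed per pixel.

    Pixels whose multispectral sum is (near) zero are set to 0 to avoid
    division by zero; this handling is an assumption of the sketch.
    """
    rows, cols = len(pan), len(pan[0])
    fused = [[[0.0] * cols for _ in range(rows)] for _ in ms_bands]
    for r in range(rows):
        for c in range(cols):
            total = sum(band[r][c] for band in ms_bands)
            scale = pan[r][c] / total if abs(total) > eps else 0.0
            for k, band in enumerate(ms_bands):
                fused[k][r][c] = band[r][c] * scale
    return fused
```

A property of this form is that, at every pixel, the fused bands sum to the panchromatic value while keeping the original band ratios. The fused image injects panchromatic spatial detail but can shift colors. That trade-off is what the classification-based comparison in Step 5 is meant to expose.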
