Method for generating virtual-real fusion image for stereo display

A virtual-real fusion and image generation technology, applied in image enhancement, image analysis, image data processing, etc., that solves the problem of insufficient tracking accuracy, achieves a realistic three-dimensional display effect, and enables occlusion judgment and collision detection.
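The summary does not say how occlusion judgment is carried out. A common approach, assumed here, is a per-pixel depth test between the real scene's depth map and the rendered depth of the virtual content. The following is a minimal sketch under those assumptions: `fuse_with_occlusion` is a hypothetical name, both depth maps are taken to come from the same camera view in the same unit, and a depth of 0 is treated as "no surface".

```python
import numpy as np

def fuse_with_occlusion(real_rgb, real_depth, virt_rgb, virt_depth):
    """Overlay a rendered virtual layer on the real image using a per-pixel depth test.

    Assumed conventions (not from the patent): all arrays come from the same
    camera view, depths share one unit, and a depth of 0 means "no surface".
    """
    has_virtual = virt_depth > 0                  # pixels covered by virtual content
    real_missing = real_depth <= 0                # no real measurement at this pixel
    # A virtual pixel is shown only if it lies in front of the real surface.
    visible = has_virtual & (real_missing | (virt_depth < real_depth))
    fused = real_rgb.copy()
    fused[visible] = virt_rgb[visible]
    return fused, visible
```

For a stereoscopic display, the same test would presumably be run separately for the left-eye and right-eye views, each with its own rendered virtual depth.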

Embodiment Construction

[0033] In order to describe the present invention more specifically, the technical solutions of the present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

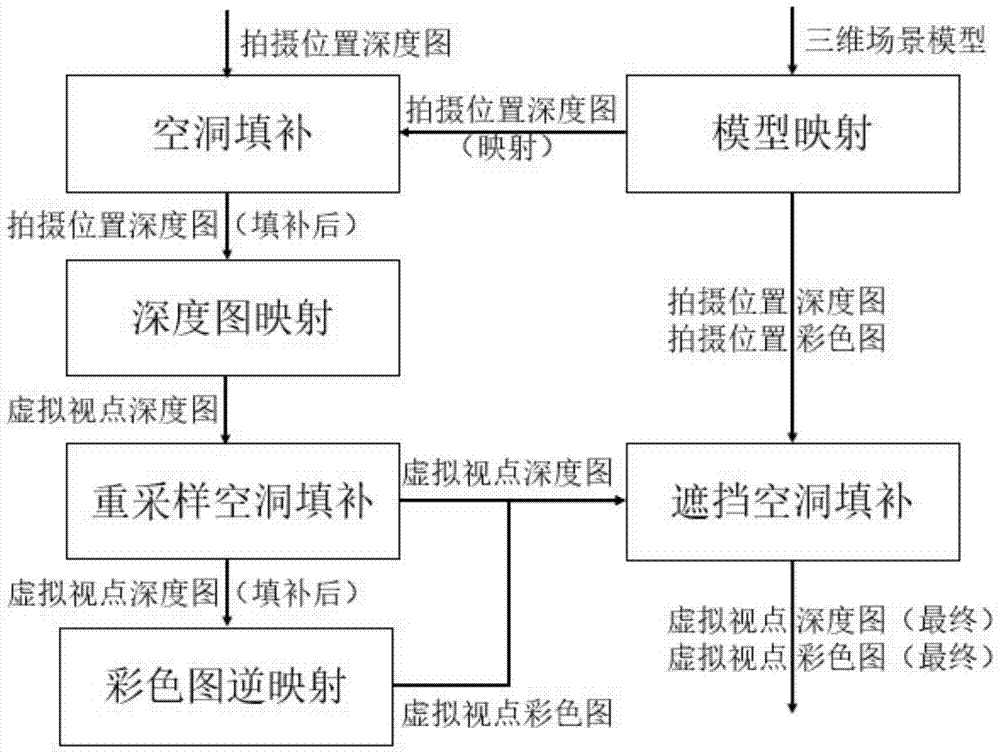

[0034] The method for generating a virtual-real fusion image for stereoscopic display of the present invention comprises the following steps:

[0035] (1) Use a monocular RGB-D camera to obtain scene depth and color information.

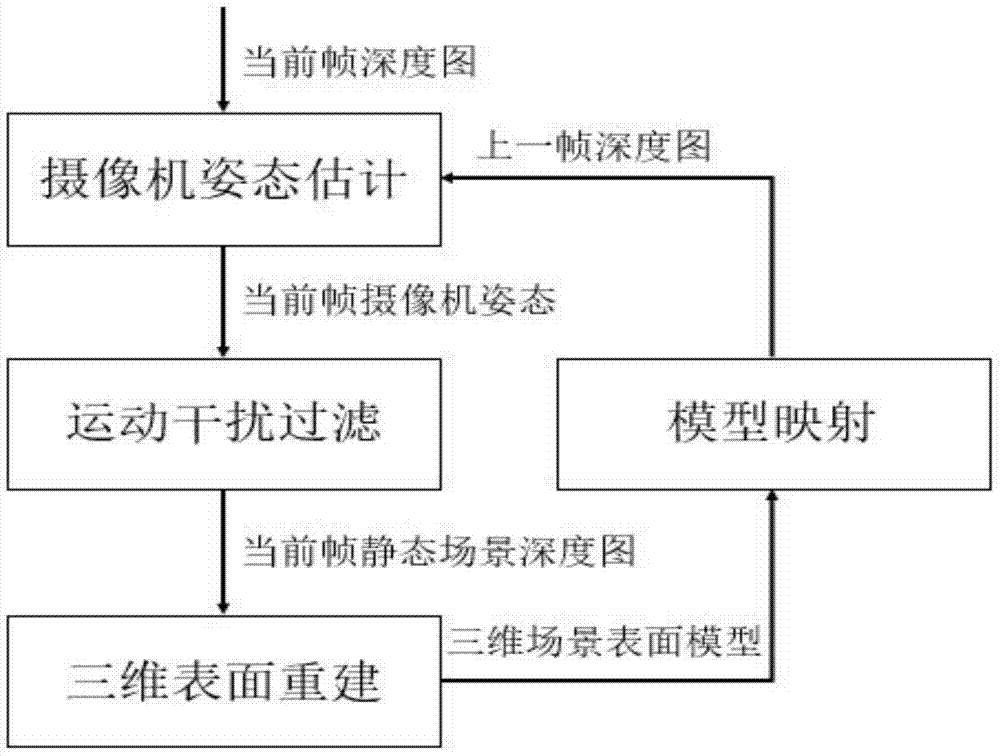
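Step (1) supplies per-pixel depth and color. Before such a frame can be tracked or fused, it is typically lifted to 3D points. This is a minimal sketch assuming a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, a depth image already registered to the color image and stored in metres, and 0 marking a missing reading; `backproject_rgbd` is a hypothetical helper, not part of the patent.

```python
import numpy as np

def backproject_rgbd(depth, color, fx, fy, cx, cy):
    """Lift an RGB-D frame to a colored point cloud in camera coordinates.

    depth: (H, W) float array in metres, 0 marking missing measurements (assumed).
    color: (H, W, 3) uint8 array registered to the depth image (assumed).
    Returns (N, 3) points and (N, 3) colors for the valid pixels, in row-major order.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]            # pixel row (v) and column (u) indices
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx         # inverts the pinhole projection u = fx * x / z + cx
    y = (v[valid] - cy) * z / fy         # likewise v = fy * y / z + cy
    points = np.stack([x, y, z], axis=1)
    colors = color[valid]
    return points, colors
```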

[0036] (2) Use the camera tracking module to determine the camera parameters of each frame from the 3D scene reconstruction model, while simultaneously integrating the scene depth and color information into the 3D scene reconstruction model frame by frame.

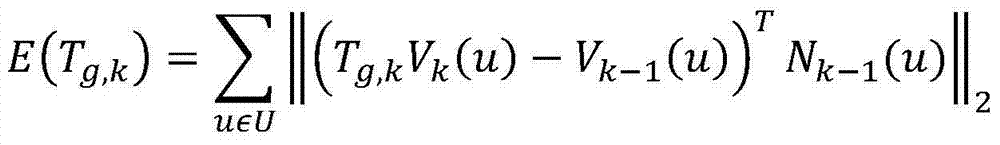

[0037] 2.1 Use the raycast algorithm to extract the previous frame's depth map from the 3D scene reconstruction model, based on the saved camera pose of the previous frame;

[0038] 2.2 Preprocess the depth map of the current frame. ...
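The text here does not name the representation of the 3D scene reconstruction model. Assuming a KinectFusion-style truncated signed distance function (TSDF) voxel grid, the frame-by-frame integration of step (2) and the raycast of step 2.1 could look roughly like this sketch. `TSDFVolume`, its parameters, and the unit-weight running average are illustrative assumptions; the lookup is nearest-voxel rather than trilinear for brevity, and color fusion, the preprocessing of step 2.2, and pose estimation are omitted.

```python
import numpy as np

class TSDFVolume:
    """Dense TSDF voxel grid (illustrative sketch; the patent does not specify this)."""

    def __init__(self, origin, dims, voxel_size, trunc):
        self.origin = np.asarray(origin, dtype=float)   # world position of the grid corner
        self.dims = tuple(dims)                         # voxel counts along x, y, z
        self.voxel = float(voxel_size)                  # voxel edge length (metres)
        self.trunc = float(trunc)                       # truncation distance (metres)
        self.tsdf = np.ones(self.dims)                  # normalised signed distance in [-1, 1]
        self.weight = np.zeros(self.dims)               # 0 = never observed
        idx = np.stack(np.meshgrid(*[np.arange(n) for n in self.dims], indexing="ij"), axis=-1)
        self.centres = self.origin + (idx + 0.5) * self.voxel   # (X, Y, Z, 3) world coords

    def integrate(self, depth, K, pose):
        """Fuse one depth map (metres) taken from a camera-to-world `pose` (4x4 matrix)."""
        R, t = pose[:3, :3], pose[:3, 3]
        pc = (self.centres - t) @ R                     # world -> camera: R^T (p - t)
        z = pc[..., 2]
        zs = np.where(z > 0, z, 1.0)                    # avoid dividing by z <= 0
        u = np.round(K[0, 0] * pc[..., 0] / zs + K[0, 2]).astype(int)
        v = np.round(K[1, 1] * pc[..., 1] / zs + K[1, 2]).astype(int)
        h, w = depth.shape
        inside = (z > 0) & (u >= 0) & (u < w) & (v >= 0) & (v < h)
        d = np.zeros_like(z)
        d[inside] = depth[v[inside], u[inside]]         # measured depth at each voxel's pixel
        sdf = d - z                                     # positive in front of the surface
        valid = inside & (d > 0) & (sdf >= -self.trunc) # skip voxels far behind the surface
        new = np.clip(sdf / self.trunc, -1.0, 1.0)
        w_old = self.weight[valid]
        # Running weighted average, each observation contributing weight 1.
        self.tsdf[valid] = (self.tsdf[valid] * w_old + new[valid]) / (w_old + 1.0)
        self.weight[valid] = w_old + 1.0

    def _lookup(self, pts):
        """Nearest-voxel TSDF and weight at world points (N, 3); weight 0 outside the grid."""
        ijk = np.floor((pts - self.origin) / self.voxel).astype(int)
        ok = np.all((ijk >= 0) & (ijk < np.array(self.dims)), axis=1)
        tsdf, wgt = np.ones(len(pts)), np.zeros(len(pts))
        i, j, k = ijk[ok].T
        tsdf[ok], wgt[ok] = self.tsdf[i, j, k], self.weight[i, j, k]
        return tsdf, wgt

    def raycast_depth(self, K, pose, shape, near=0.1, far=2.0):
        """Render a depth map from `pose` by marching each ray to the TSDF zero crossing."""
        h, w = shape
        vs, us = np.mgrid[0:h, 0:w]
        # Ray directions with unit z, so the marching parameter equals camera depth.
        dirs = np.stack([(us - K[0, 2]) / K[0, 0], (vs - K[1, 2]) / K[1, 1],
                         np.ones((h, w))], axis=-1).reshape(-1, 3)
        R, t = pose[:3, :3], pose[:3, 3]
        step = self.voxel / 2
        out = np.zeros(len(dirs))                       # 0 = no surface found yet
        prev, prev_ok = np.ones(len(dirs)), np.zeros(len(dirs), dtype=bool)
        for z in np.arange(near, far, step):
            pts = (dirs * z) @ R.T + t                  # camera -> world
            cur, wgt = self._lookup(pts)
            ok = wgt > 0
            hit = (out == 0) & prev_ok & ok & (prev > 0) & (cur <= 0)
            # Linear interpolation of the zero crossing between the two samples.
            out[hit] = z - step + step * prev[hit] / (prev[hit] - cur[hit])
            prev, prev_ok = cur, ok
        return out.reshape(h, w)
```

As a usage check, integrating a flat depth map of 1.0 m from the identity pose and raycasting from the same pose should return depths close to 1.0 m. In a tracking loop, the depth map raycast at the previous pose would presumably serve as the reference against which the current preprocessed frame is aligned (for example with ICP) before that frame is integrated at its new pose.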
