205 results about "Hand region" patented technology

Human-machine interaction method and device based on sight tracing and gesture discriminating

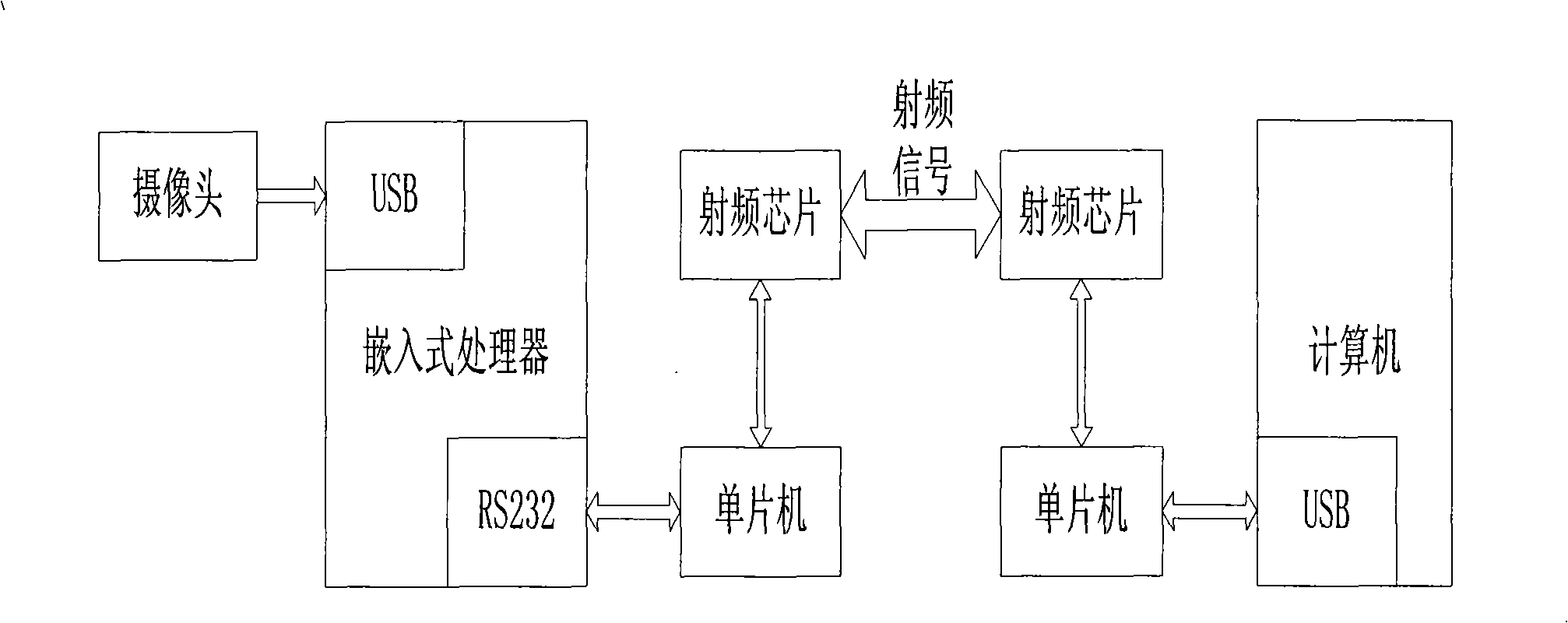

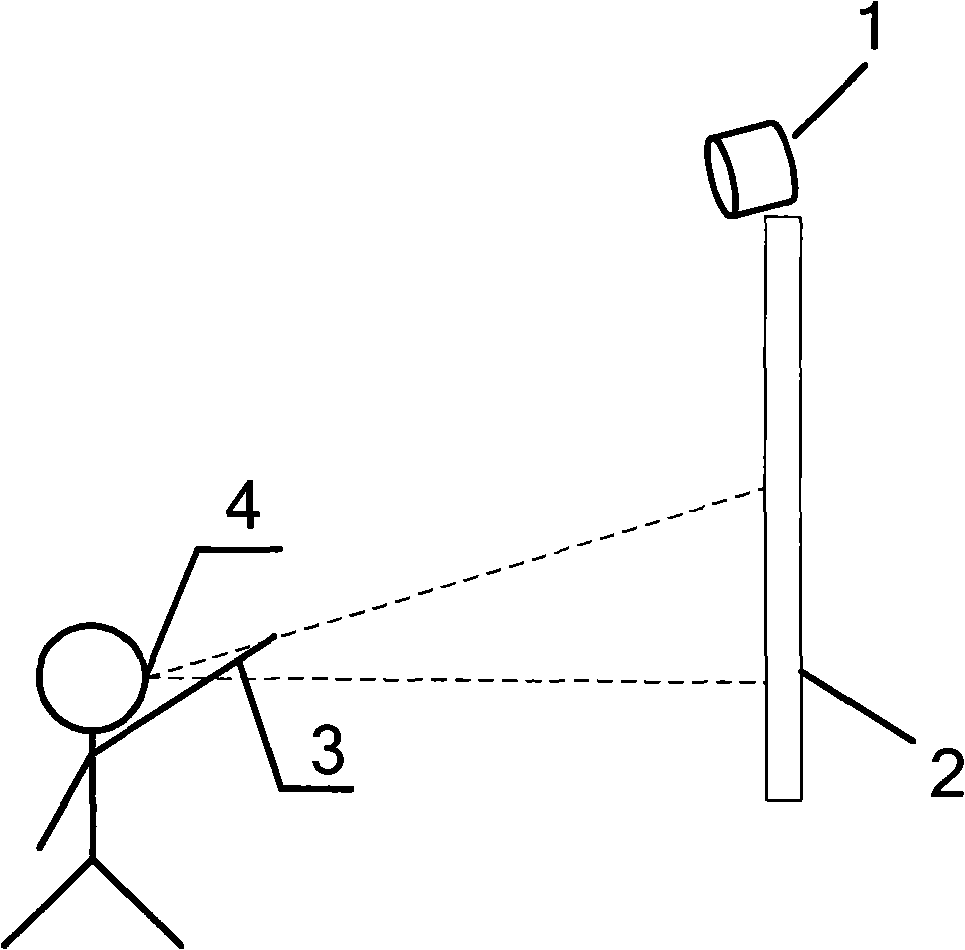

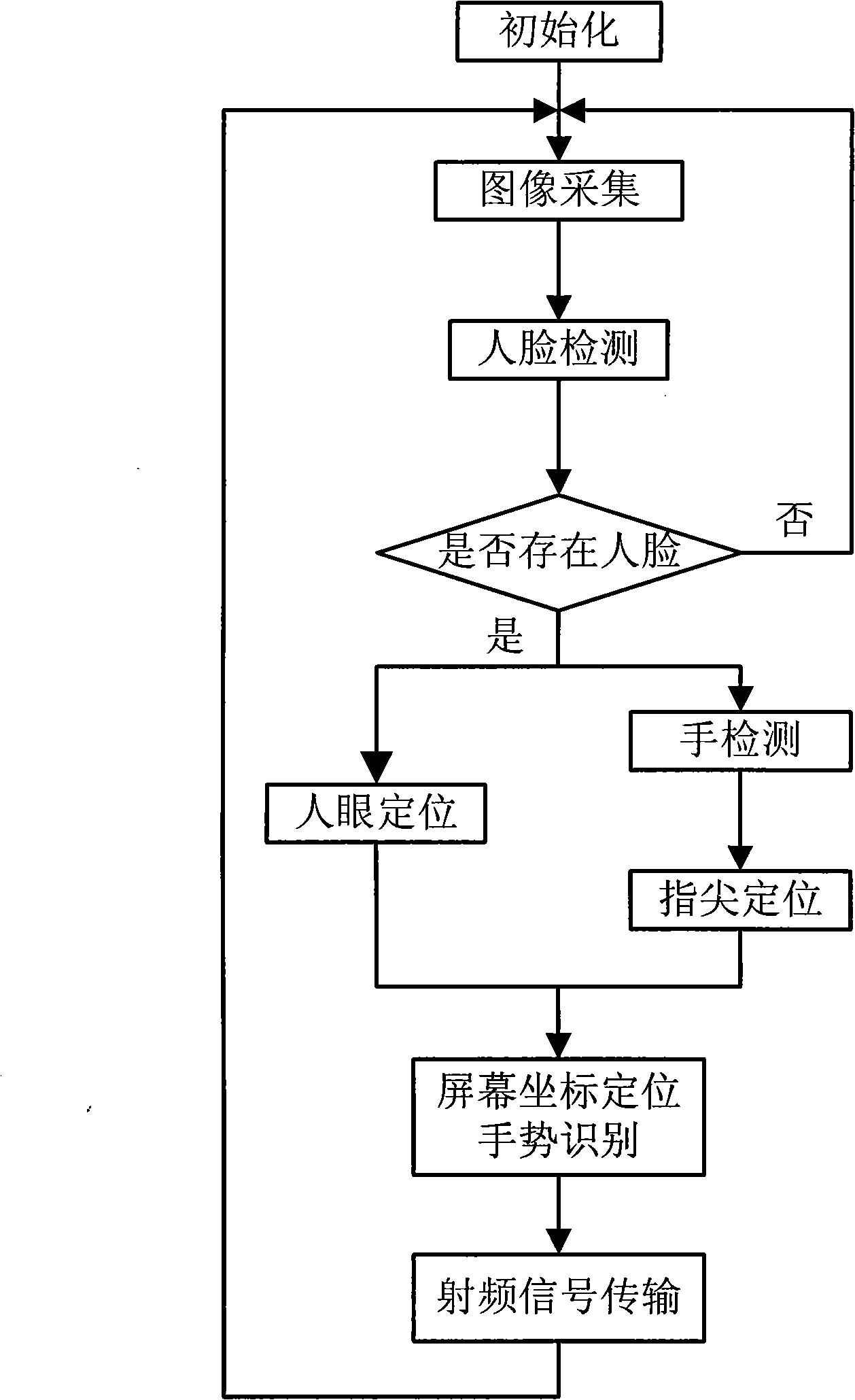

Inactive | CN101344816A | Easy to control | Solve bugs that limit users' freedom of use | Input/output for user-computer interaction | Character and pattern recognition | Imaging processing | Wireless transmission

The invention discloses a human-computer interaction method and device based on gaze tracking and gesture recognition. The method comprises the following steps: face region detection, hand region detection, eye localization, fingertip localization, screen localization, and gesture recognition. A straight line is determined between an eye and a fingertip; the point where this line intersects the screen is converted into the logical coordinates of the mouse cursor on the screen, and a mouse click is simulated by detecting a pressing motion of the finger. The device comprises an image acquisition module, an image processing module, and a wireless transmission module. A camera first captures images of the user in real time; an image processing algorithm then analyzes them to convert the screen position the user points at, and changes in gesture, into logical screen coordinates and control commands for the computer; the results are then transmitted to the computer through the wireless transmission module. The invention provides a natural, intuitive, and simple human-computer interaction method that enables remote operation of computers.
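The eye-to-fingertip line described above amounts to a ray-plane intersection. The following is a minimal sketch of that geometry only, not the patented implementation. It assumes that the eye, the fingertip, and the screen are already known in one 3D coordinate frame (in metres), and that the screen is described by its top-left corner and two unit vectors along its edges. The function name `pointing_pixel` and all parameter names are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def pointing_pixel(eye: Vec3, fingertip: Vec3, origin: Vec3,
                   u_axis: Vec3, v_axis: Vec3,
                   size_m: Tuple[float, float],
                   resolution: Tuple[int, int]) -> Optional[Tuple[int, int]]:
    """Intersect the eye->fingertip ray with the screen plane.

    origin is the screen's top-left corner; u_axis and v_axis are unit
    vectors pointing right and down along the screen edges (assumed layout).
    Returns pixel coordinates, or None if the ray misses the screen.
    """
    direction = _sub(fingertip, eye)
    normal = _cross(u_axis, v_axis)
    denom = _dot(direction, normal)
    if abs(denom) < 1e-9:          # ray is parallel to the screen plane
        return None
    t = _dot(_sub(origin, eye), normal) / denom
    if t <= 0:                     # screen lies behind the user
        return None
    hit = (eye[0] + t * direction[0],
           eye[1] + t * direction[1],
           eye[2] + t * direction[2])
    rel = _sub(hit, origin)
    x = _dot(rel, u_axis) / size_m[0]   # normalised 0..1 across the width
    y = _dot(rel, v_axis) / size_m[1]   # normalised 0..1 down the height
    if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
        return None
    px = min(int(x * resolution[0]), resolution[0] - 1)
    py = min(int(y * resolution[1]), resolution[1] - 1)
    return (px, py)
```

In a real system the 3D positions would come from camera calibration and the detection steps listed in the abstract; here they are simply passed in.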

Owner:SOUTH CHINA UNIV OF TECH

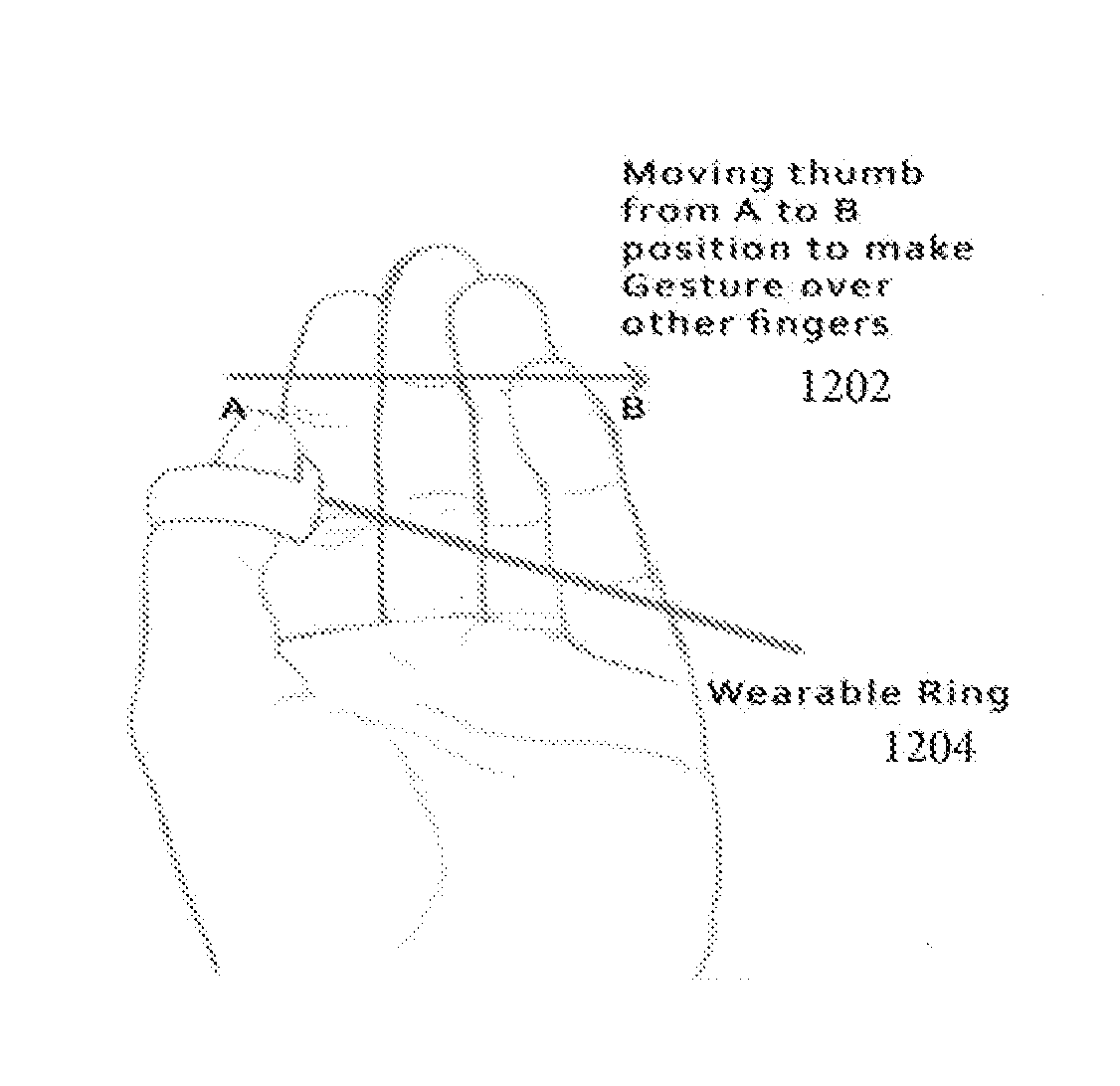

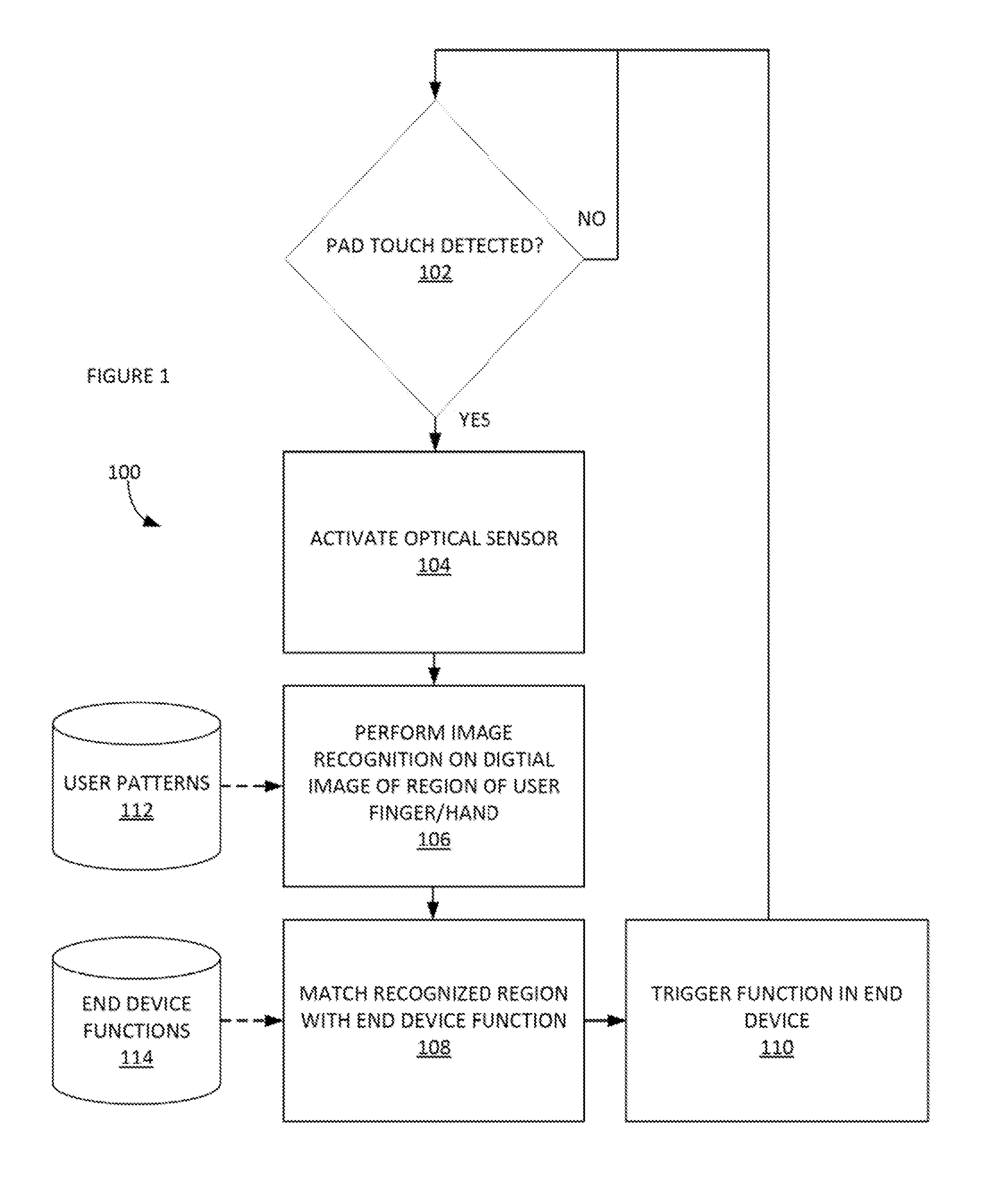

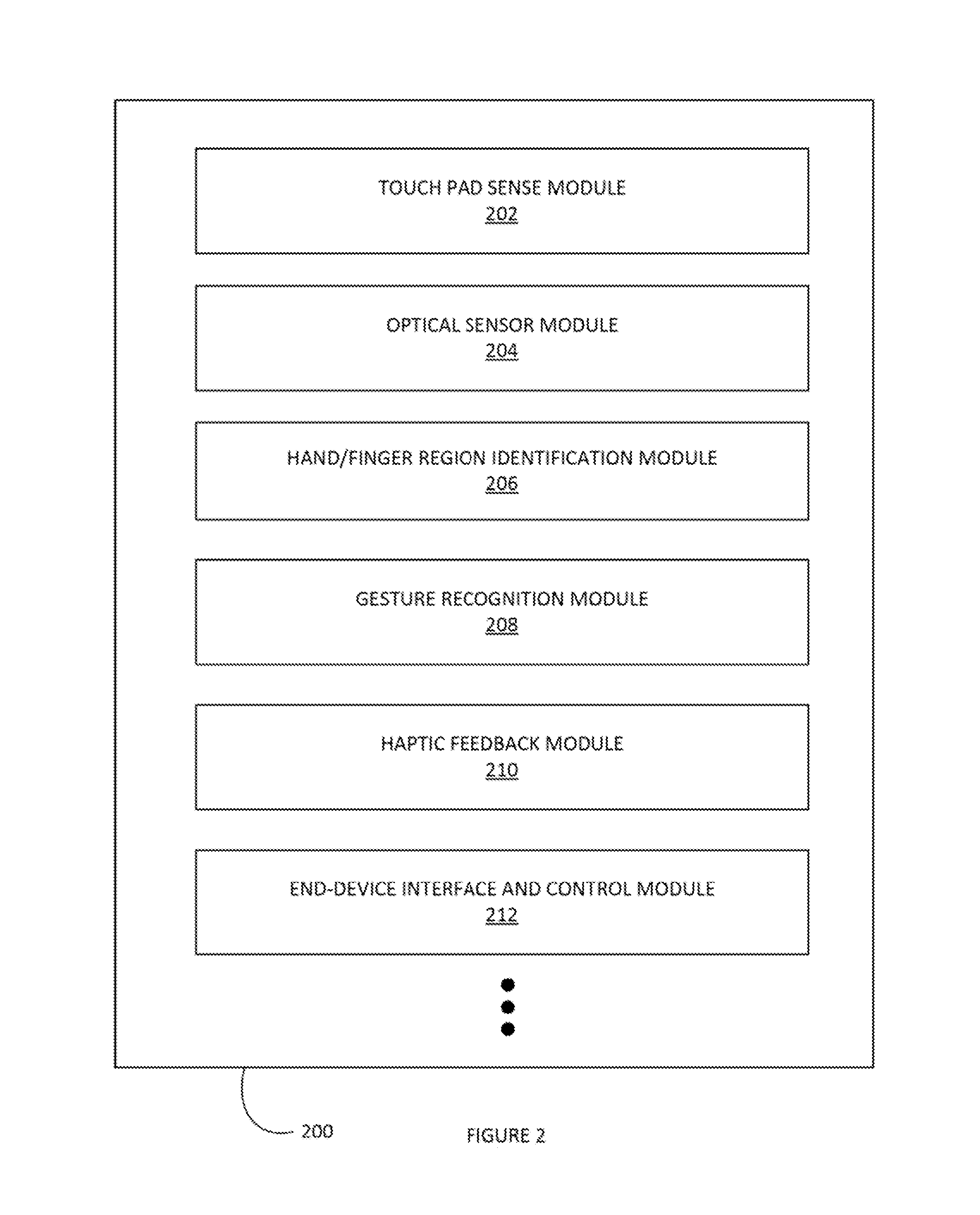

Method and system of a wearable ring device for management of another computing device

Inactive | US20150062086A1 | Input/output for user-computer interaction | Graph reading | Ring device | Terminal equipment

In one exemplary aspect, a method of a wearable ring device senses a touch event with a touch sensor in the wearable ring device. An optical sensor in the wearable ring device is activated. A digital image of a user hand region is obtained with the optical sensor. A list of end device functions is obtained. Each element of the list of end device functions is associated with a separate user hand region. The digital image of the user hand region obtained with the optical sensor is matched with an end device function. The end device function matched with the digital image of the user hand region is then triggered.

Owner:FIN ROBOTICS

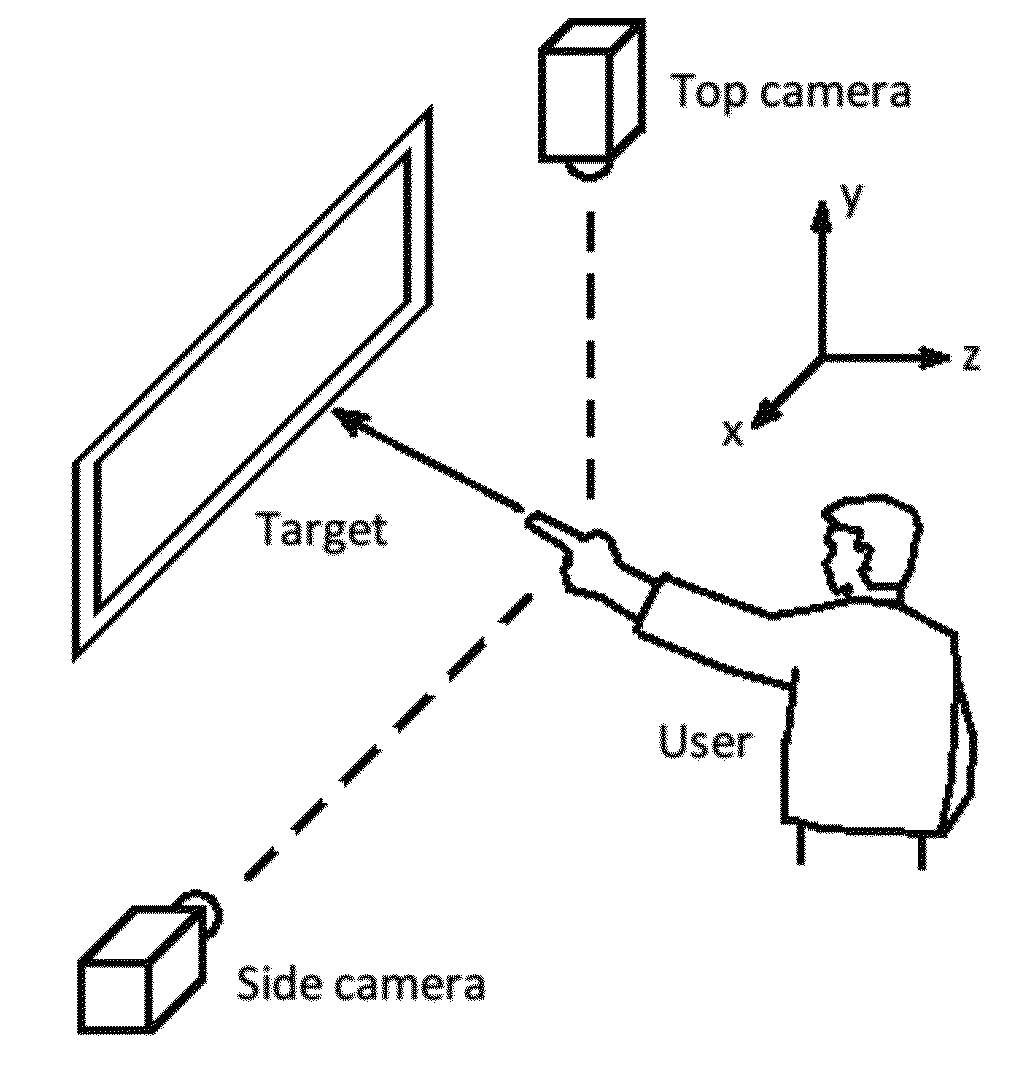

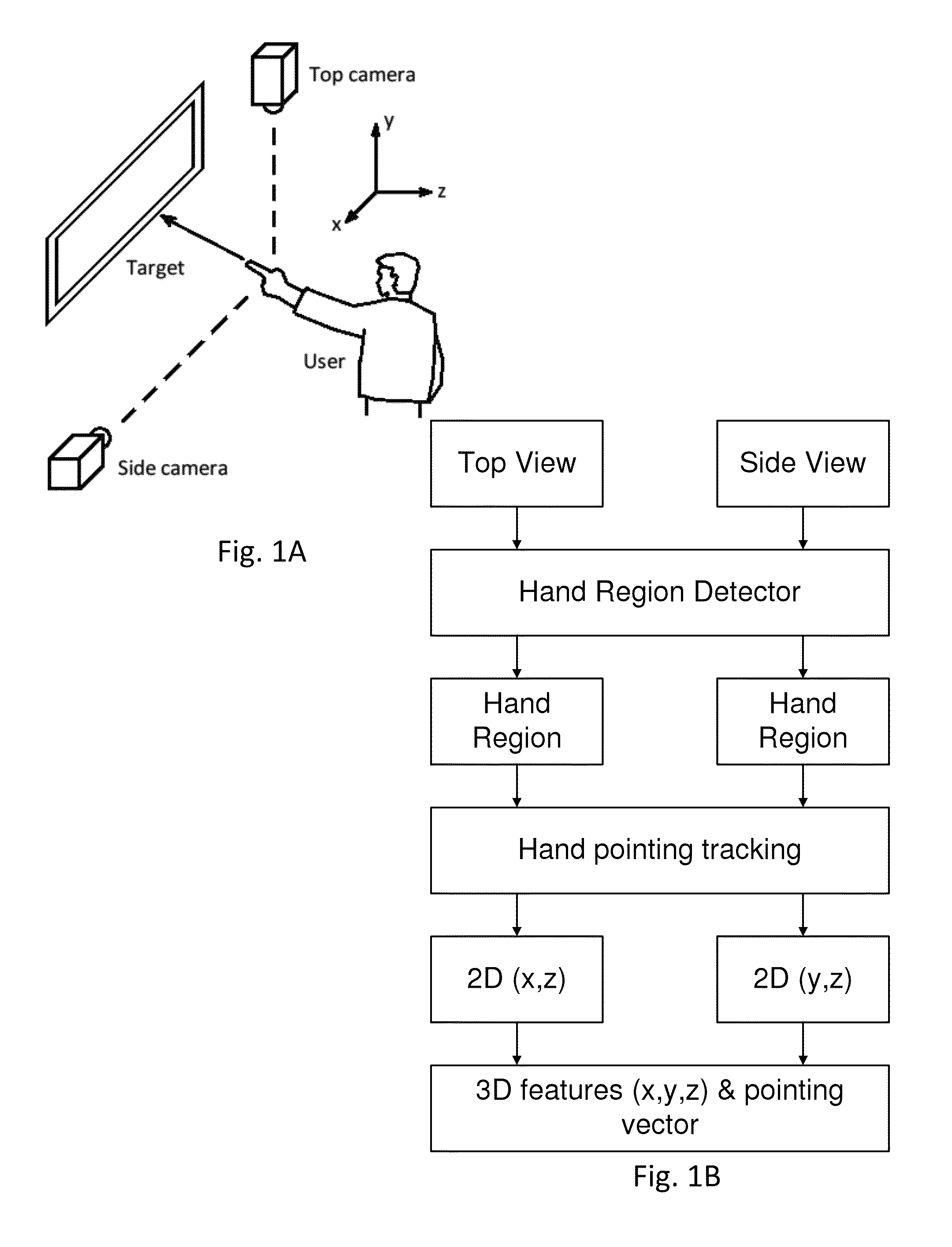

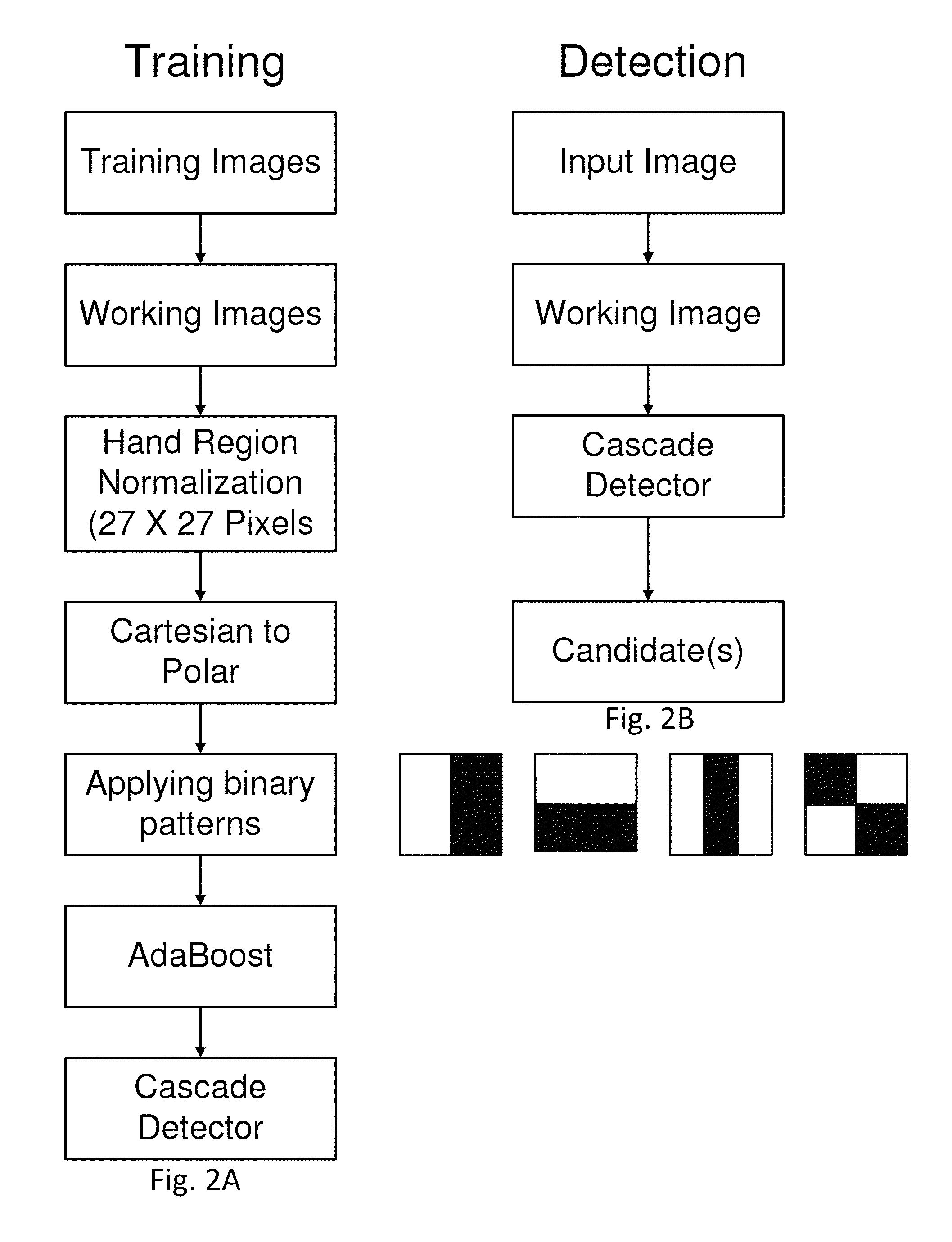

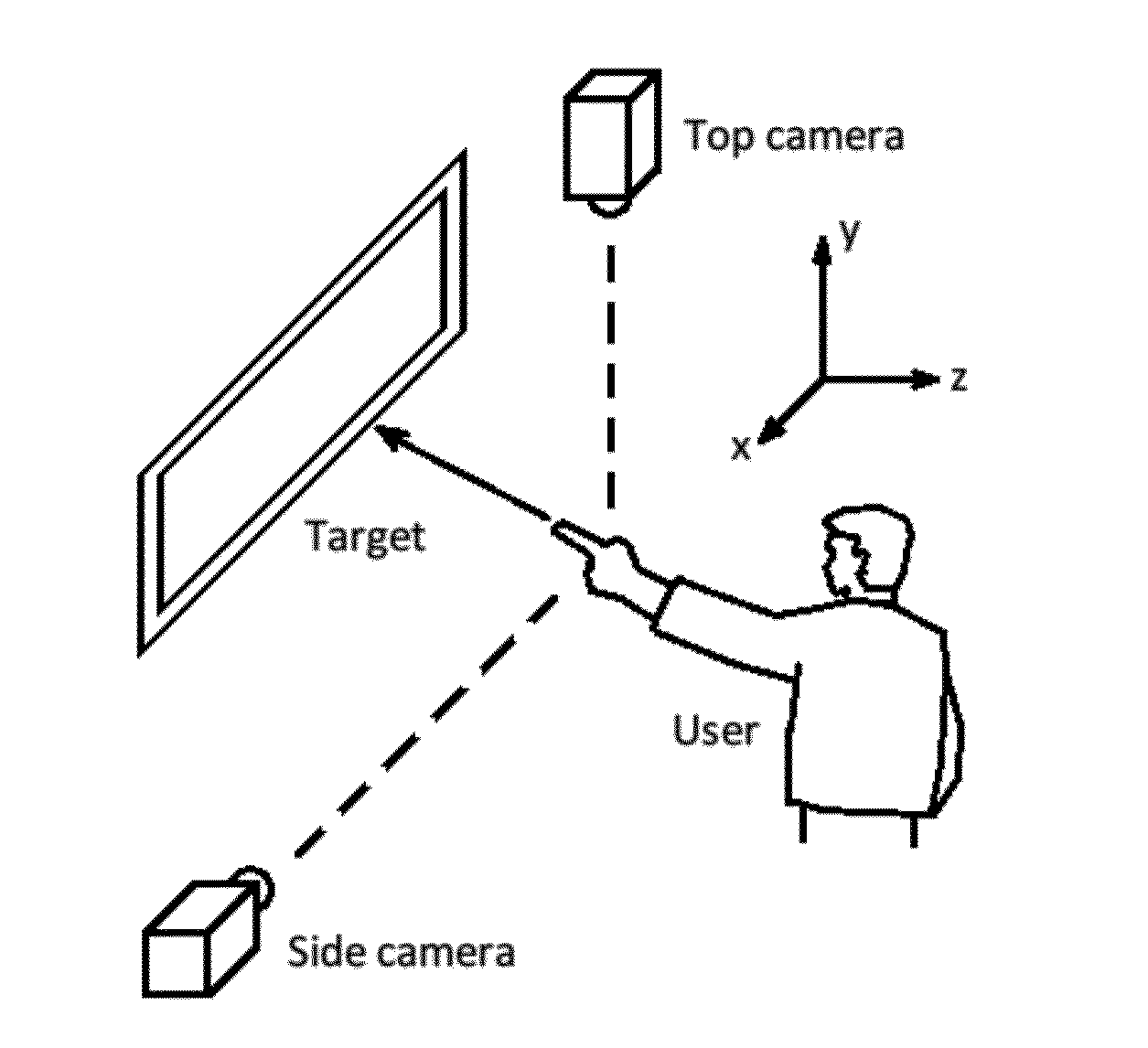

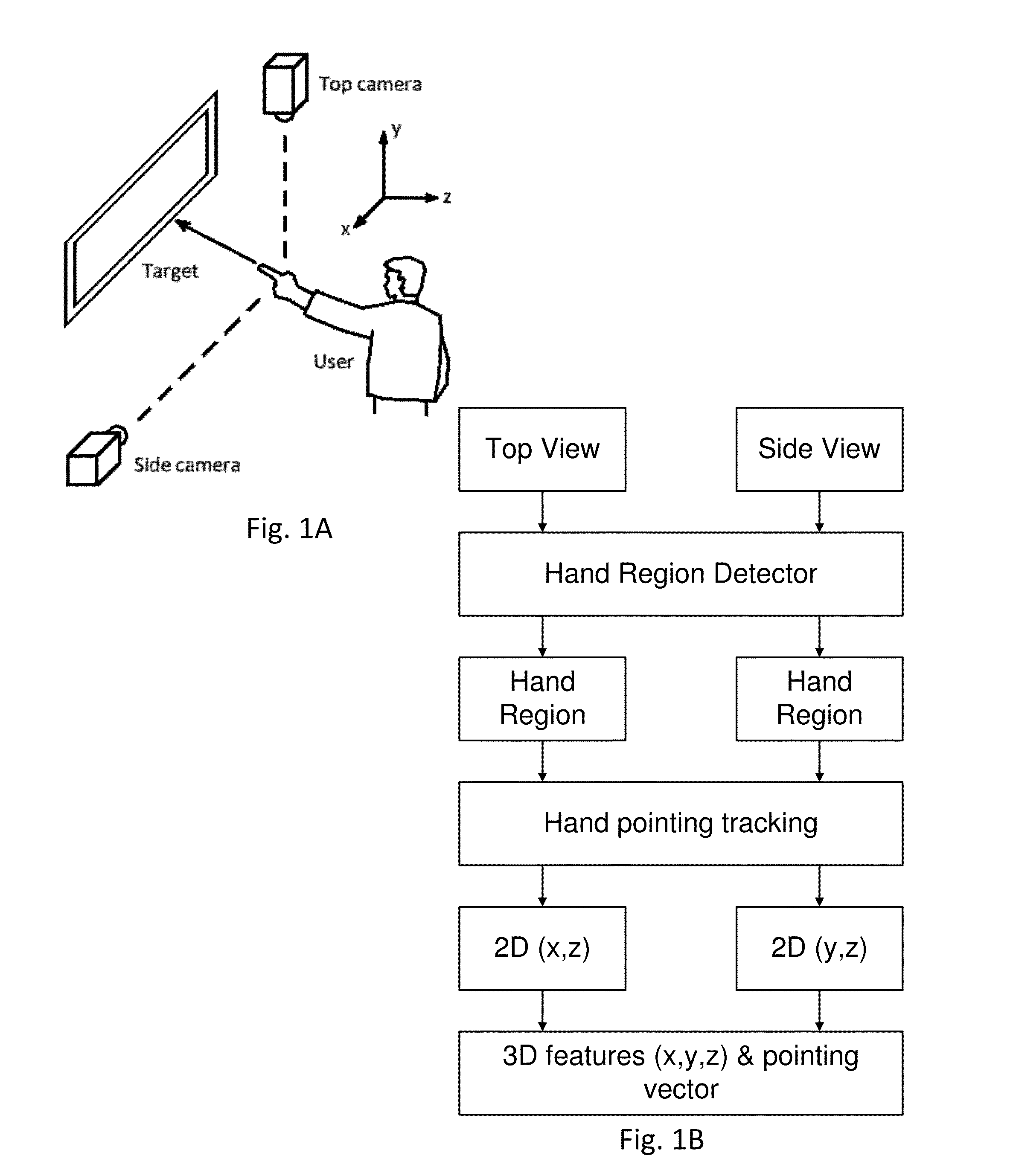

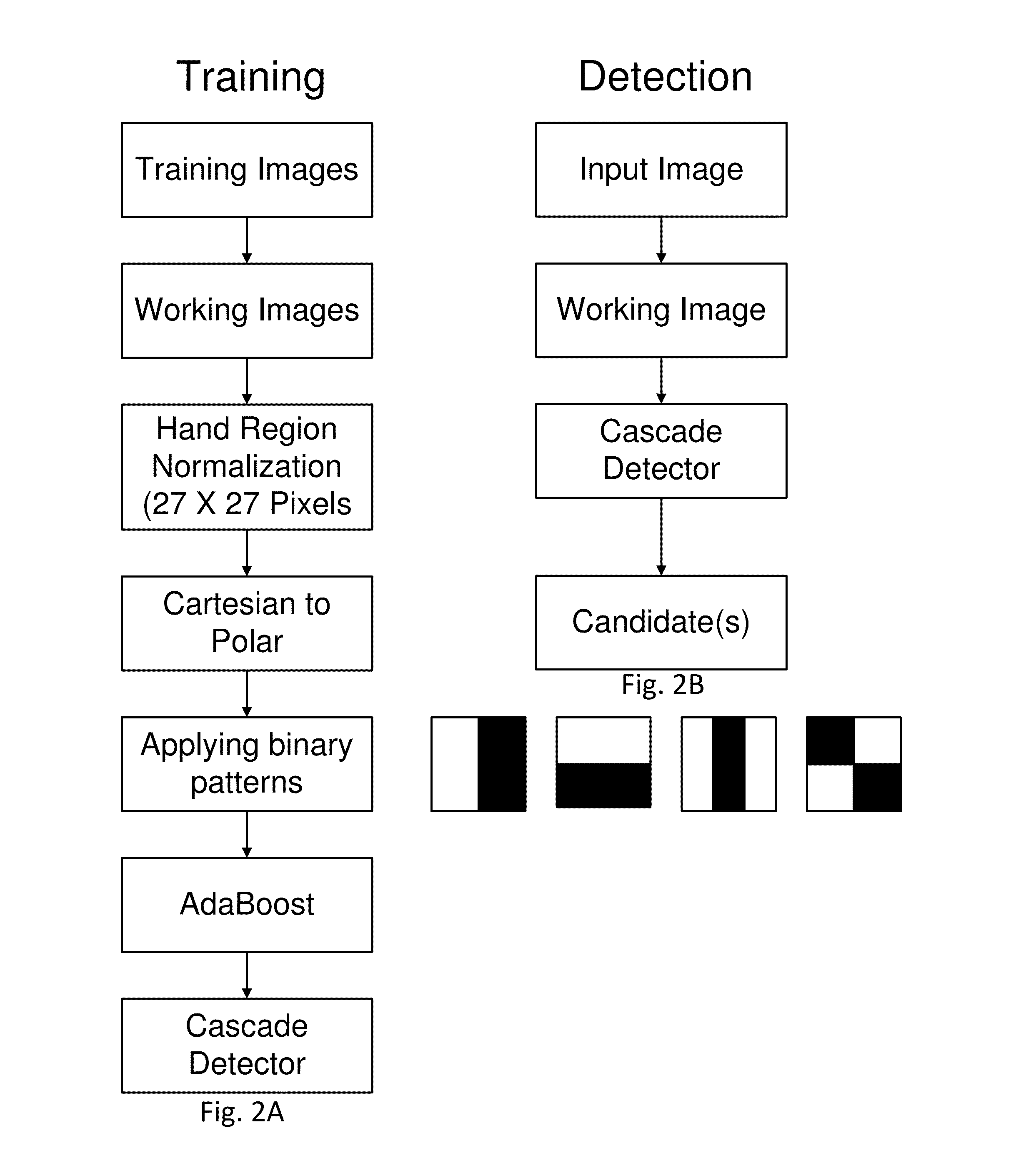

Hand pointing estimation for human computer interaction

Active | US8971572B1 | Simple classification | High degree of robustness | Image analysis | Character and pattern recognition | Human interaction | Human–robot interaction

Hand pointing has been an intuitive gesture for human interaction with computers. A hand pointing estimation system is provided, based on two regular cameras, which includes hand region detection, hand finger estimation, two views' feature detection, and 3D pointing direction estimation. The technique may employ a polar coordinate system to represent the hand region, and tests show a good result in terms of the robustness to hand orientation variation. To estimate the pointing direction, Active Appearance Models are employed to detect and track, e.g., 14 feature points along the hand contour from a top view and a side view. Combining two views of the hand features, the 3D pointing direction is estimated.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

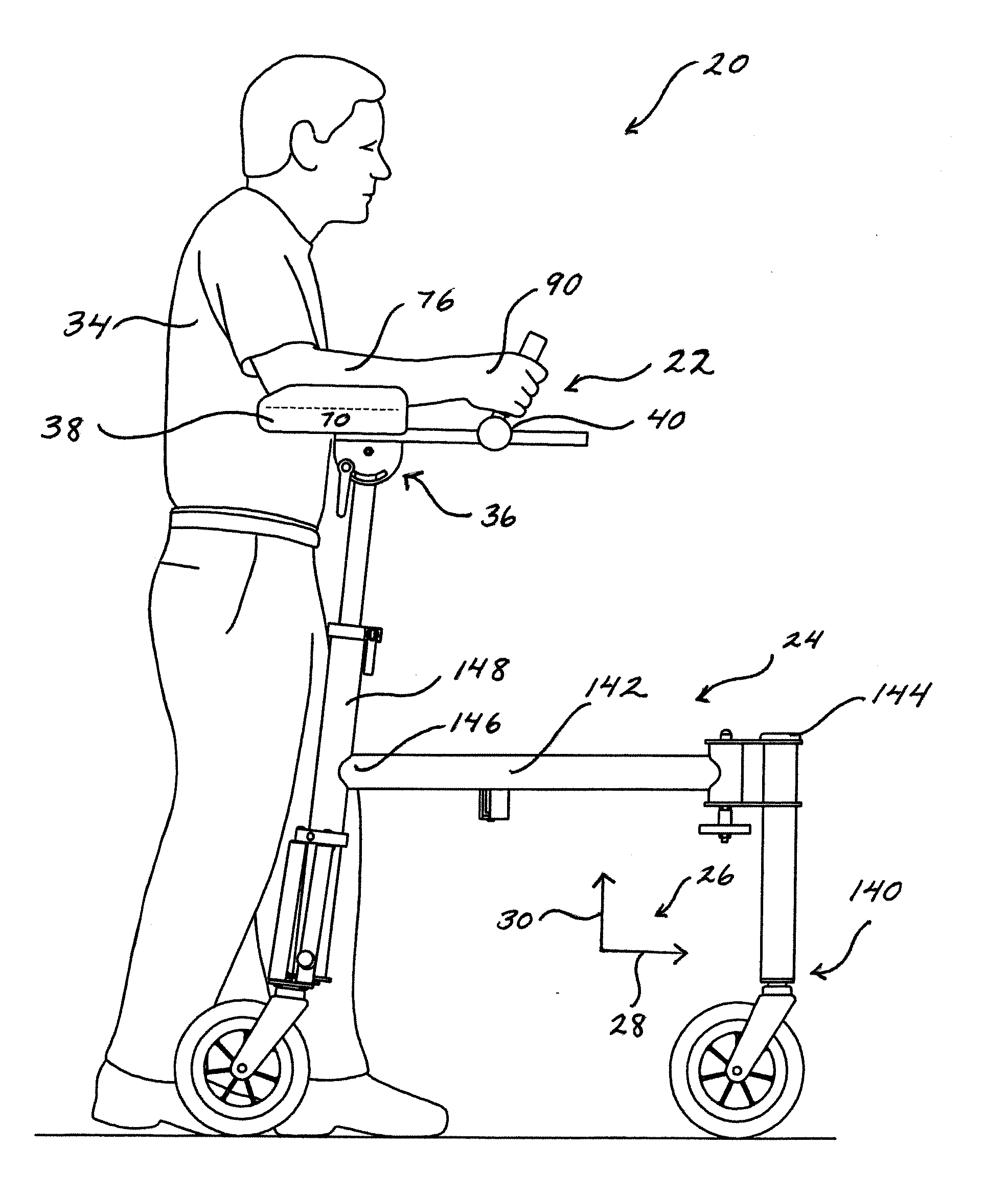

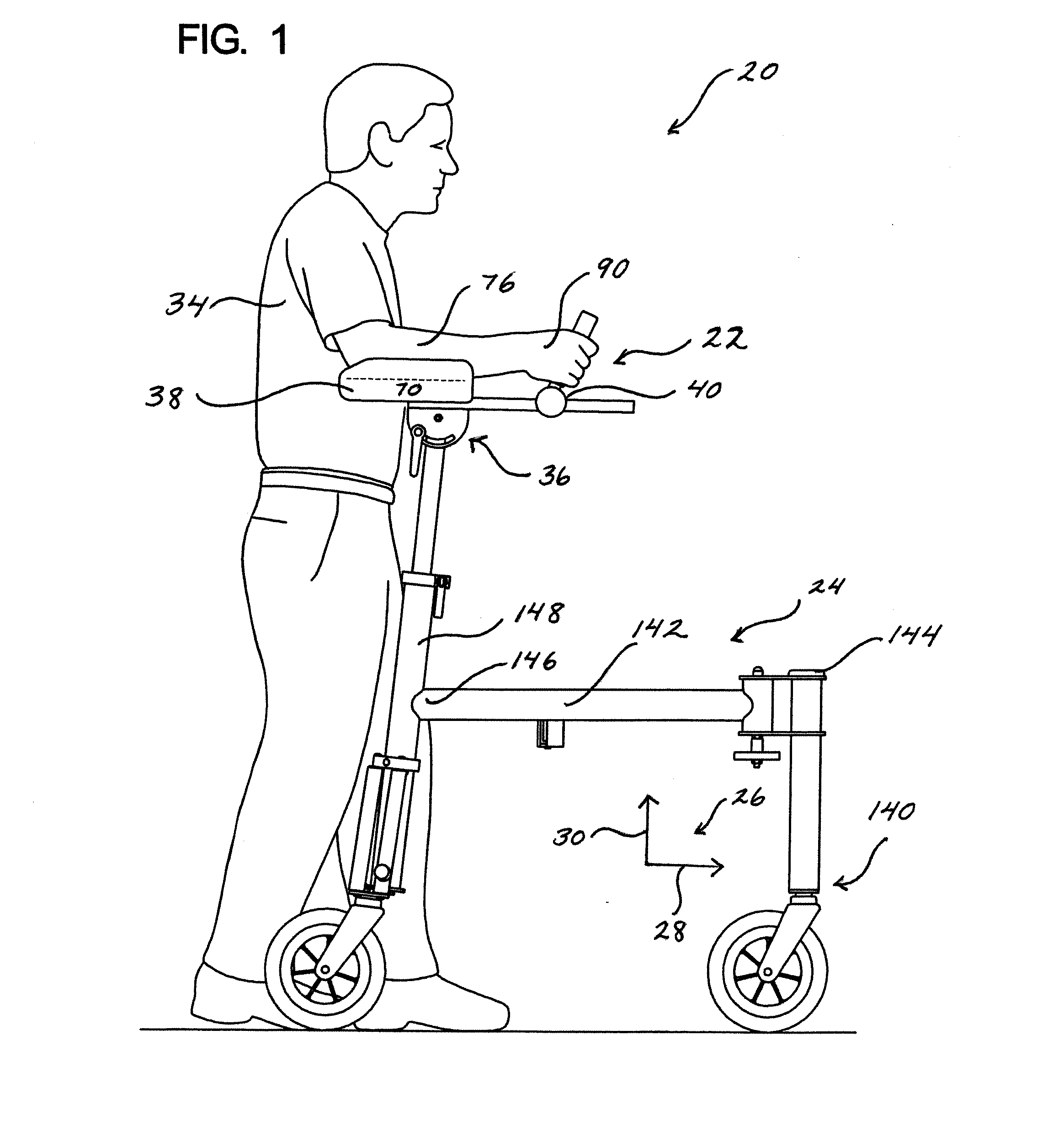

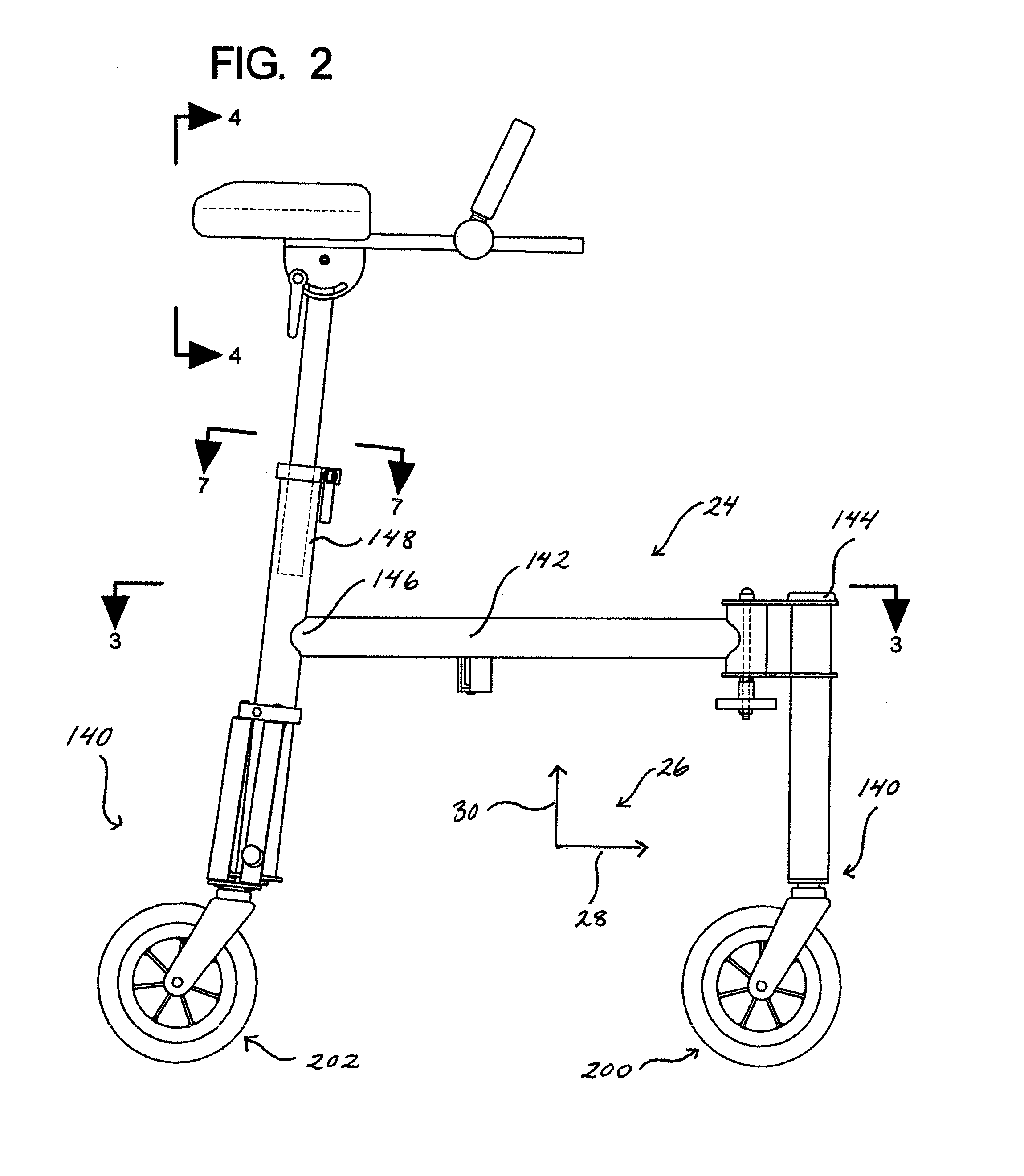

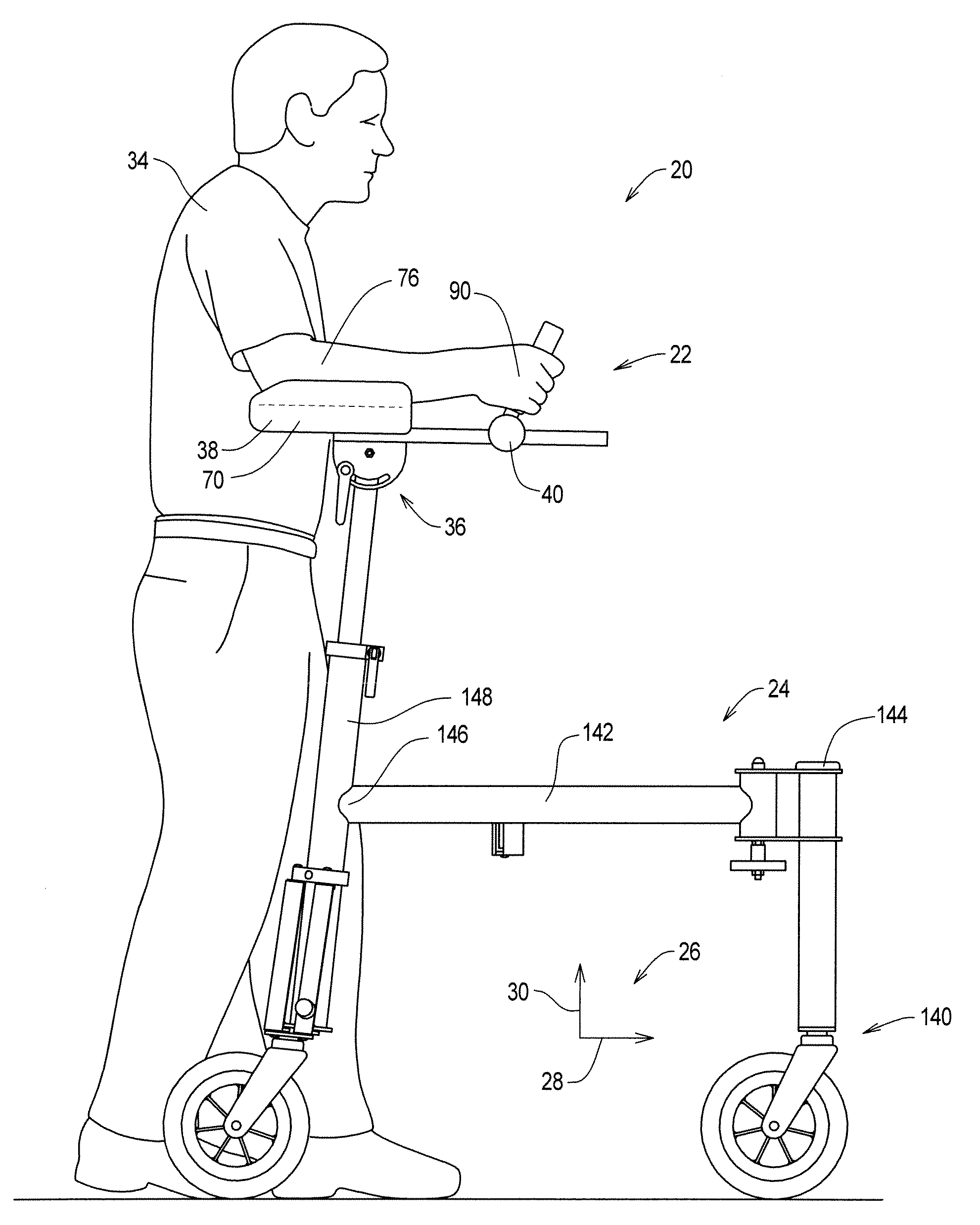

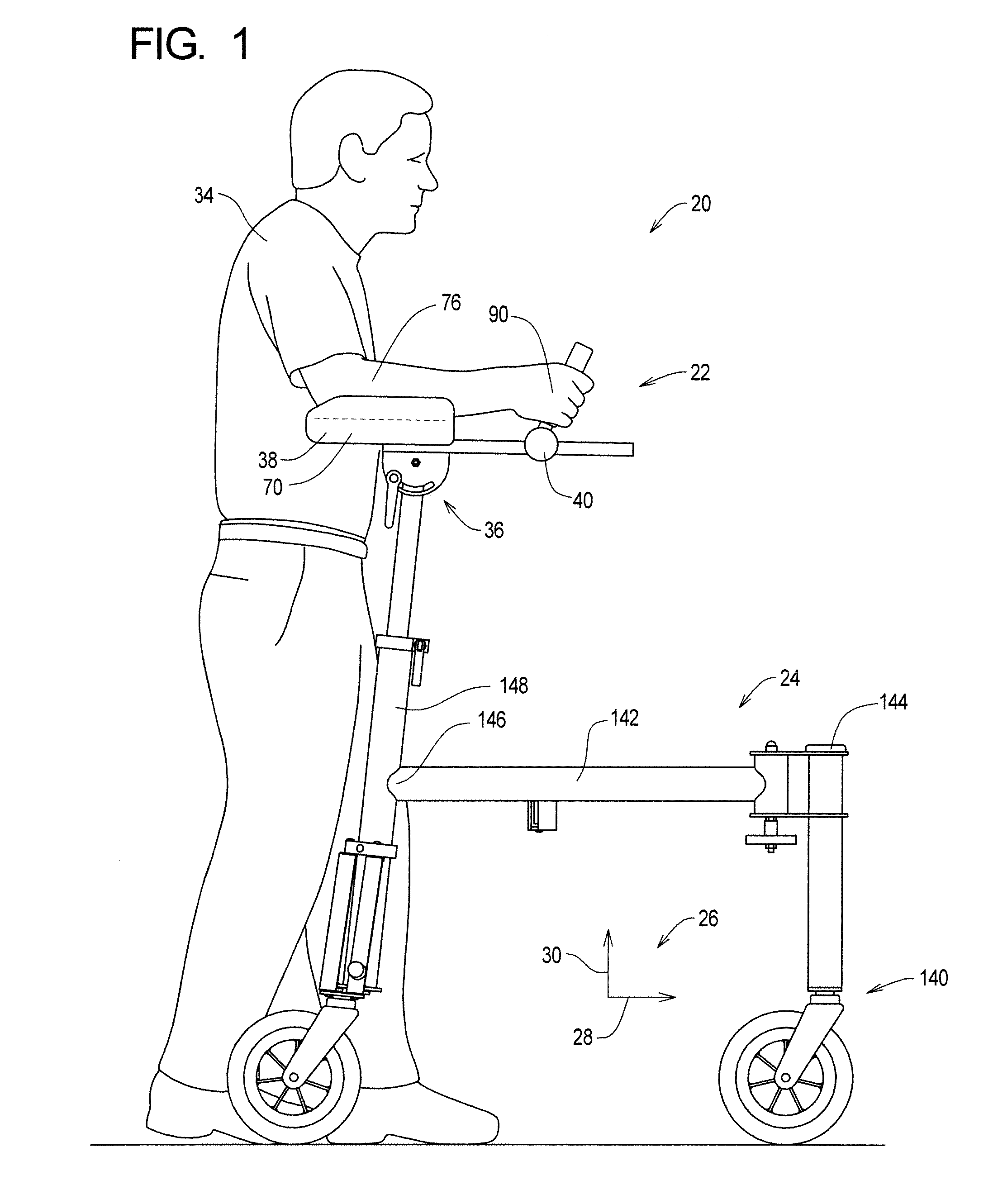

Bipedal motion assisting method and apparatus

A mobile support system having a central region that allows the legs to move in an unobstructed manner and providing an upper body support assembly where the weight is distributed between the elbow region and hand region of the individual for a desirable weight distribution for assisted bipedal motion such as walking or running.

Owner:GRAHAM GARY

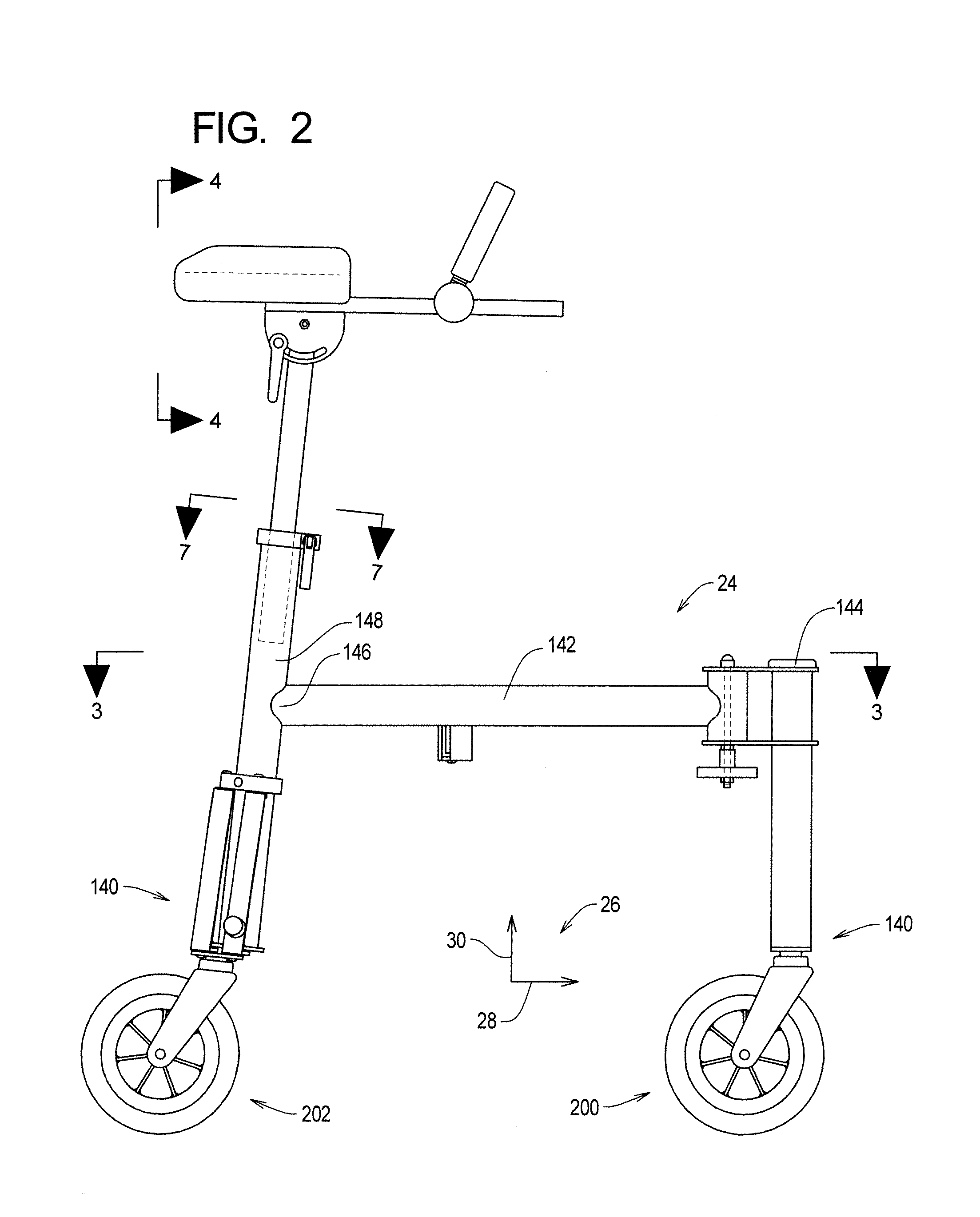

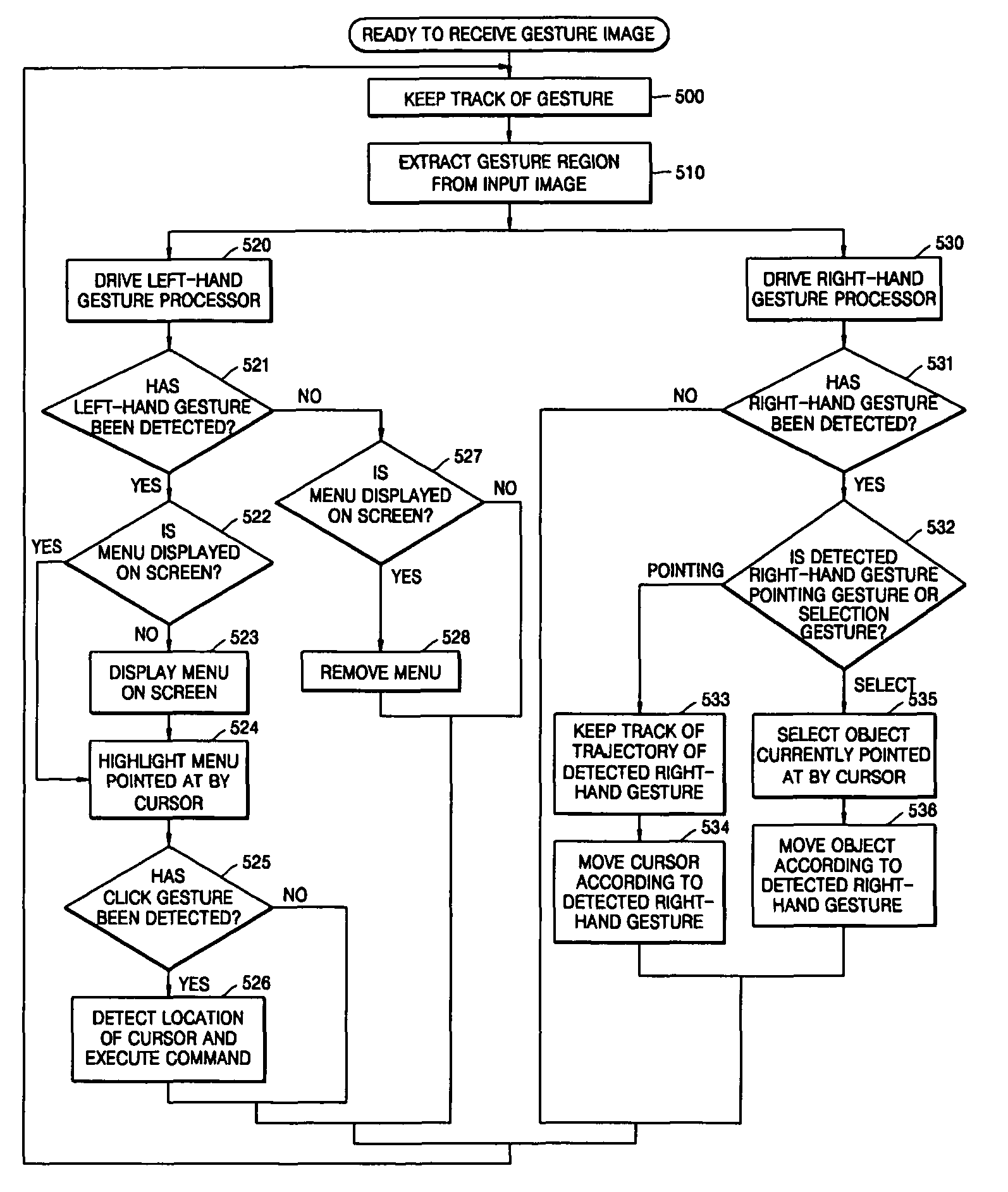

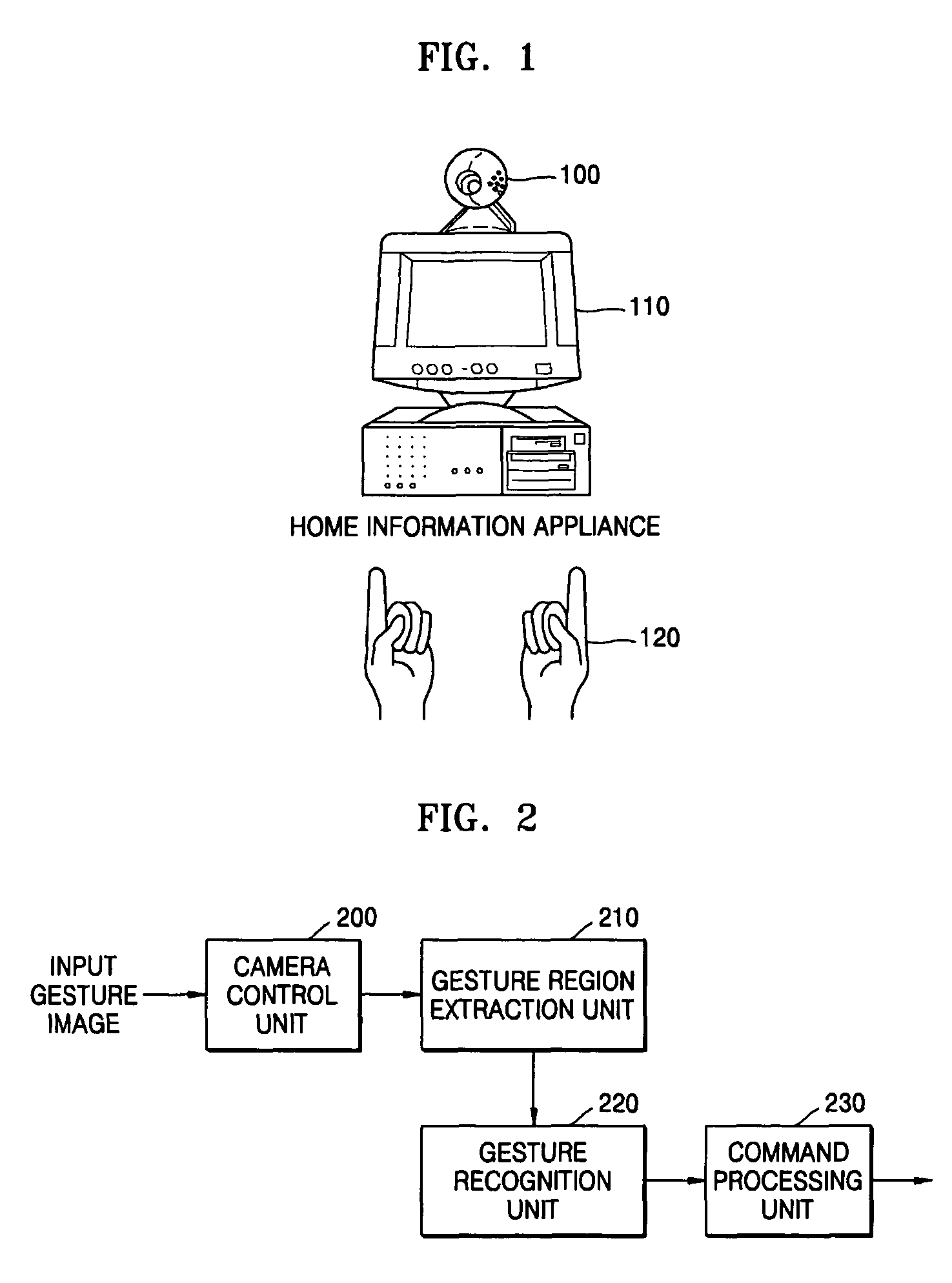

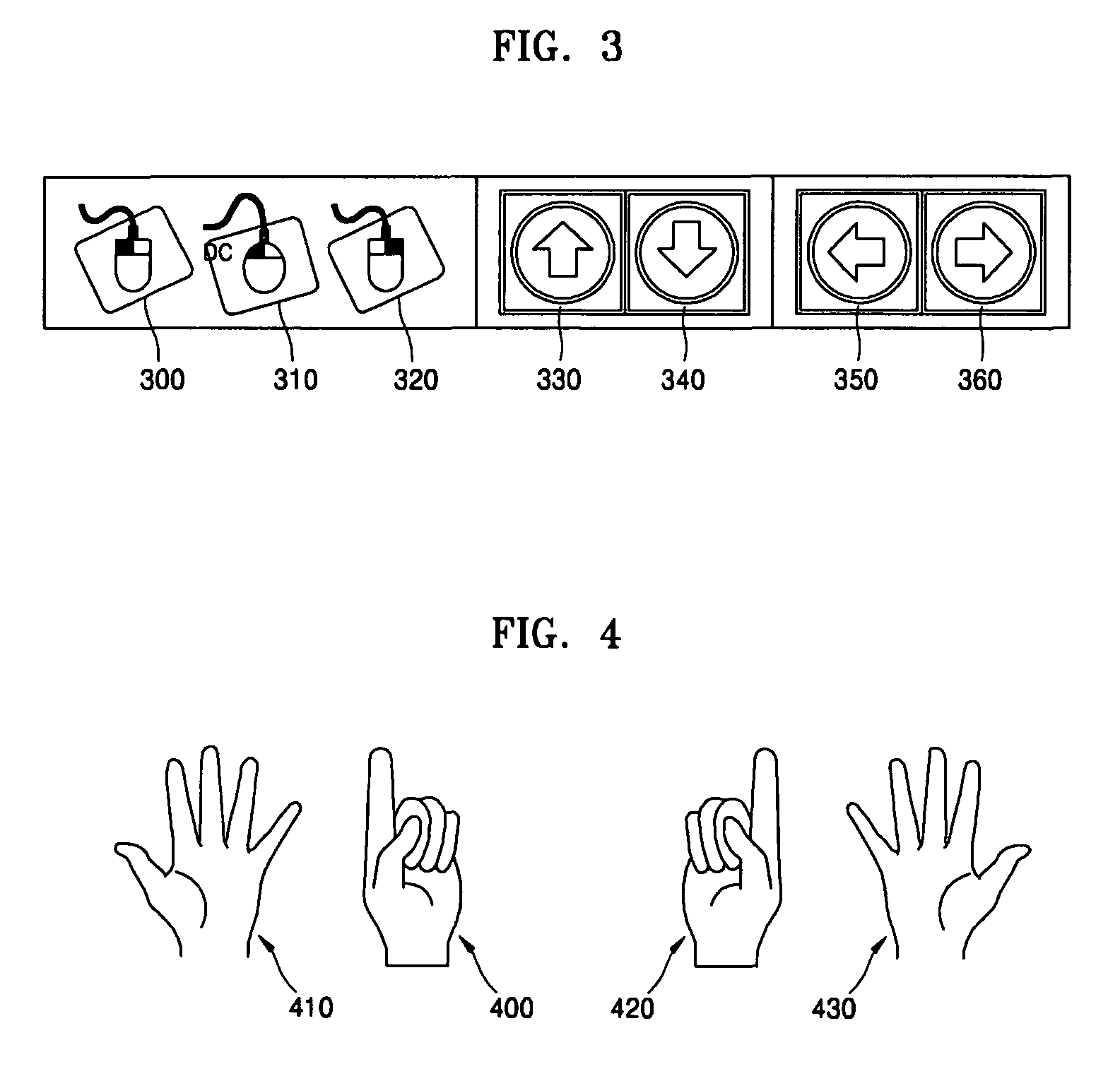

Virtual mouse driving apparatus and method using two-handed gestures

Inactive | US7849421B2 | Input/output for user-computer interaction | Cathode-ray tube indicators | Virtual mouse | Hand region

A virtual mouse driving apparatus and method for processing a variety of gesture commands as equivalent mouse commands based on two-handed gesture information obtained by a video camera are provided. The virtual mouse driving method includes: keeping track of an input gesture input with the video camera; removing a background portion of the input gesture image and extracting a left-hand region and a right-hand region from the input gesture image whose background portion has been removed; recognizing a left-hand gesture and a right-hand gesture from the extracted left-hand region and the extracted right-hand region, respectively, and recognizing gesture commands corresponding to the recognition results; and executing the recognized gesture commands.

Owner:ELECTRONICS & TELECOMM RES INST

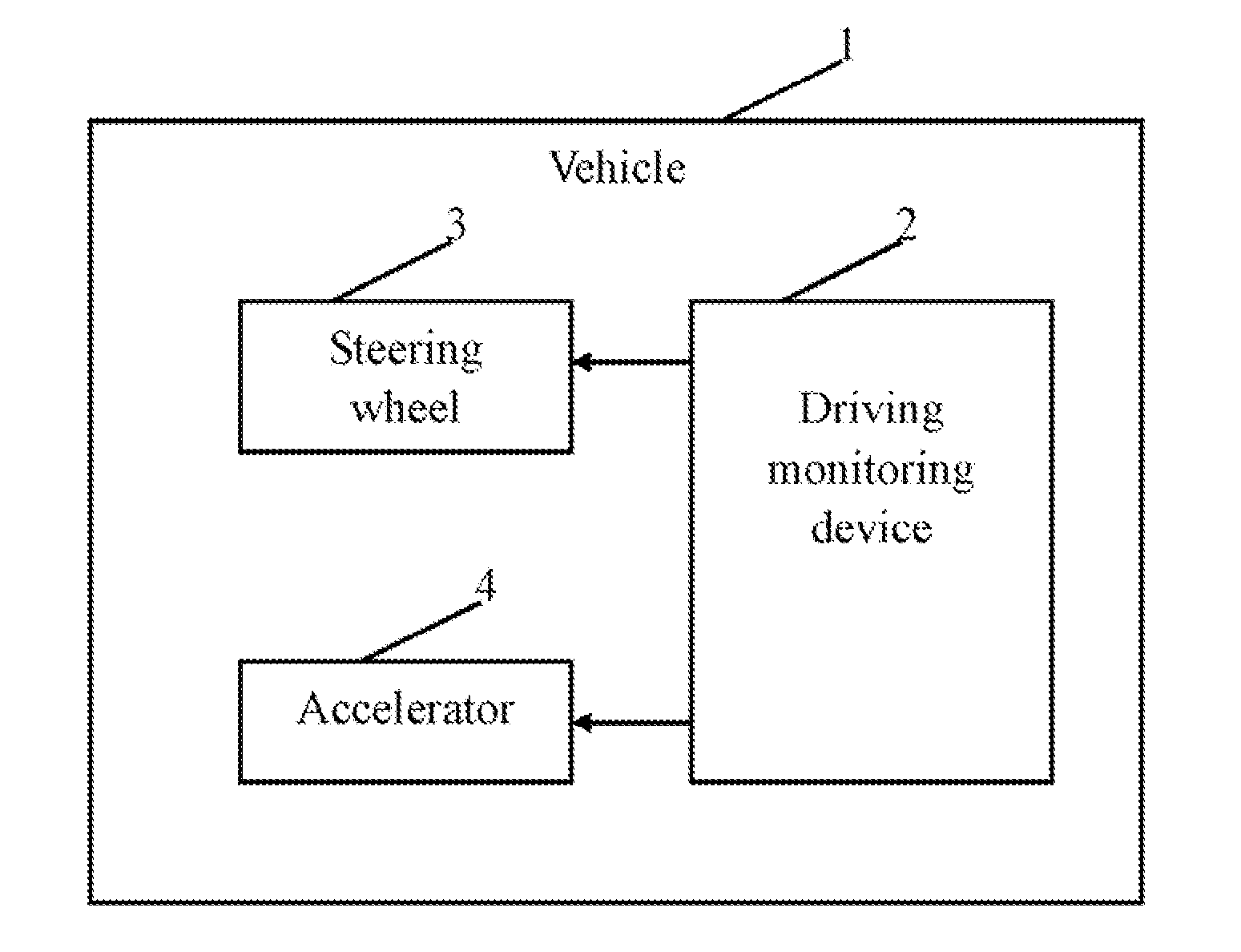

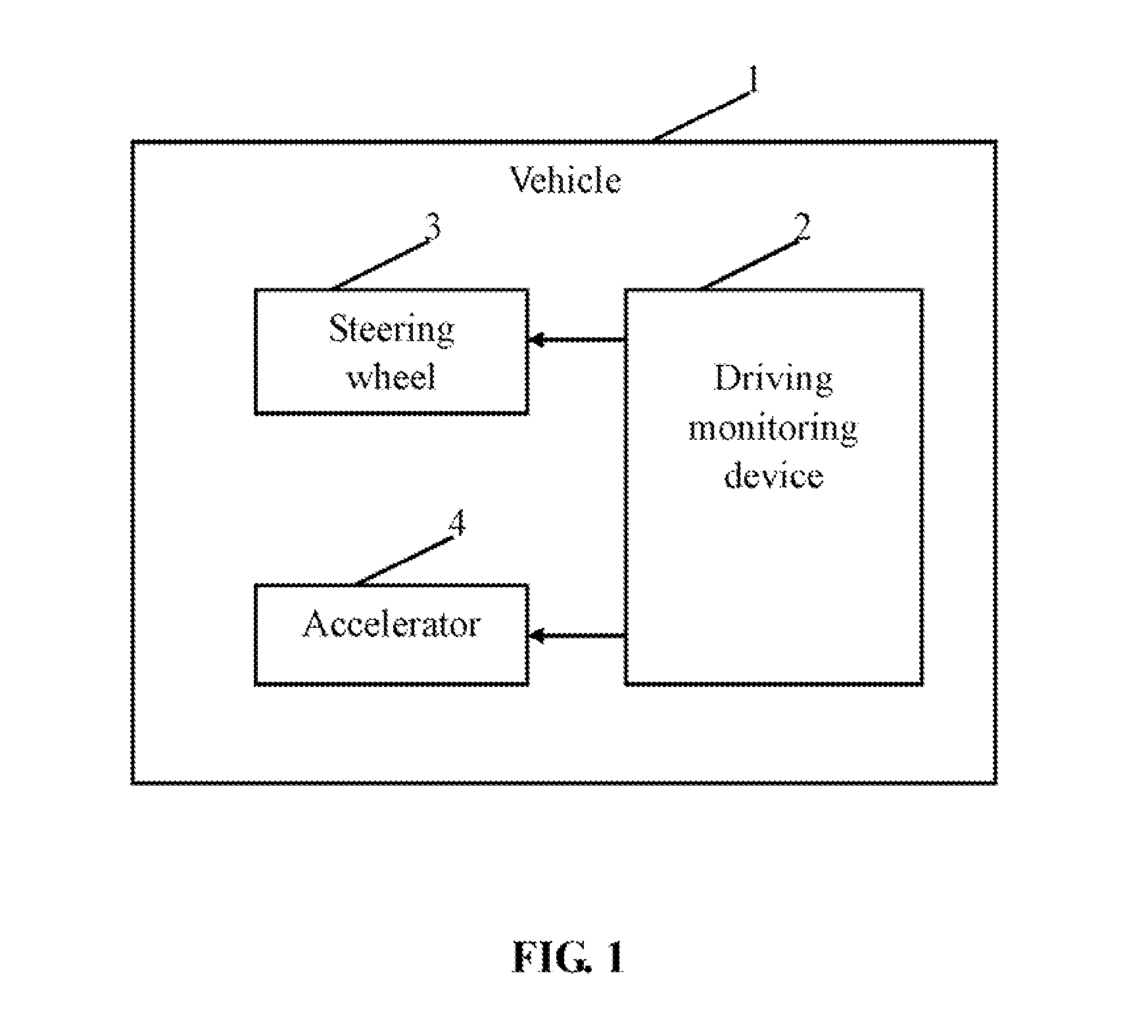

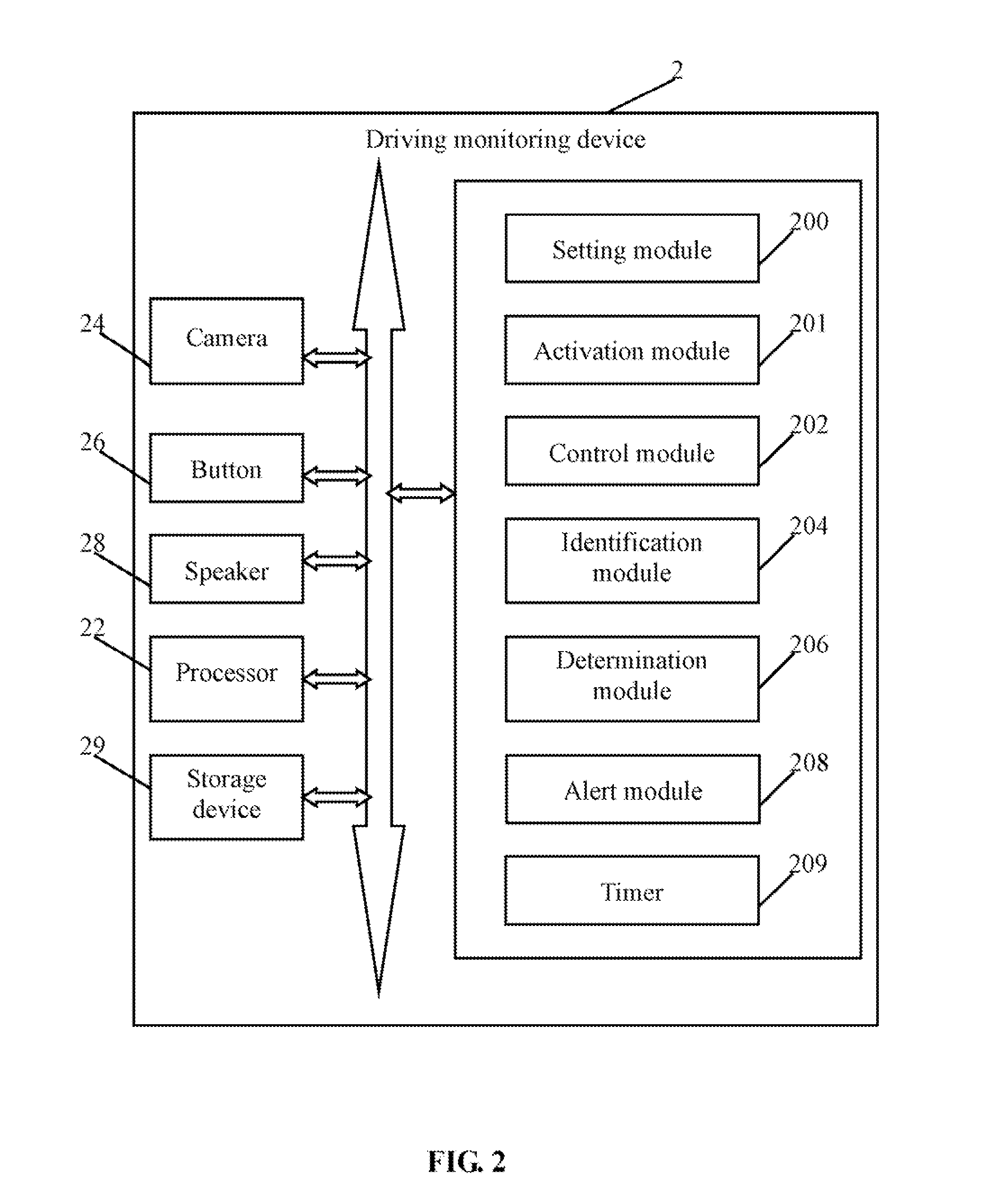

Driving monitoring device and method utilizing the same

Inactive | US20110121960A1 | Reduce traffic accidents | Road vehicles traffic control | Instrument arrangements/adaptations | Steering wheel | Engineering

A driving monitoring device and method includes controlling a camera to capture a face image of a driver, identifying the face image to determine if the driver is awake, and controlling a camera to capture a first image of the steering wheel. The driving monitoring device and method further includes identifying a steering wheel region and two hand regions, confirming one or more sub regions of the steering wheel region corresponding to the two hand regions, determining that the driver drives the vehicle inappropriately if the confirmed sub regions do not match standard positions, and outputting alert signals to prompt the driver.

Owner:HON HAI PRECISION IND CO LTD

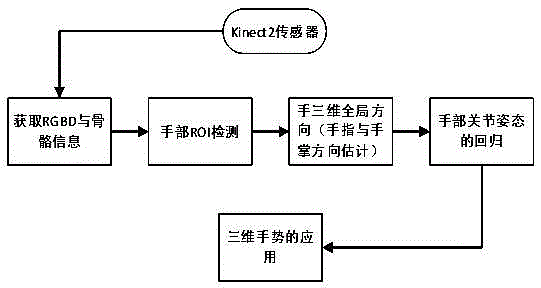

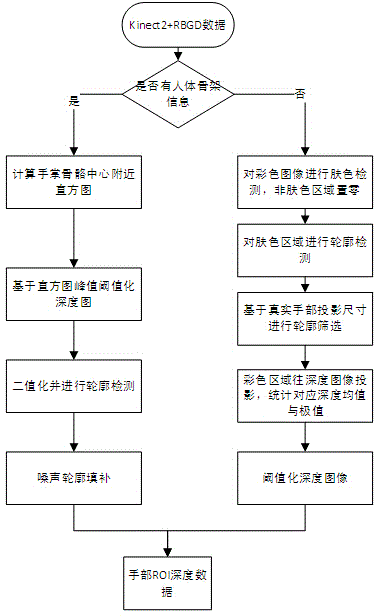

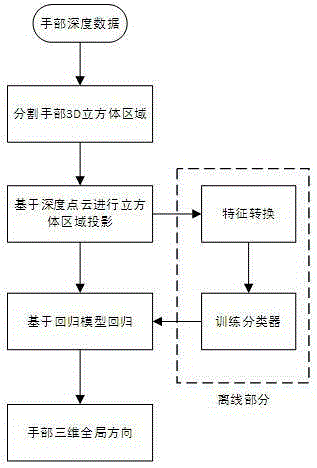

Three-dimensional gesture estimation method and three-dimensional gesture estimation system based on depth data

Active | CN105389539A | Good practical value | The estimate is reasonable | Character and pattern recognition | Feature extraction | Hand parts

The invention discloses a three-dimensional gesture estimation method and a three-dimensional gesture estimation system based on depth data. The three-dimensional gesture estimation method comprises the following steps of S1, performing hand region of interest (ROI) detection on photographed data, and acquiring hand depth data, wherein the S1 comprises the processes of (1), when bone point information can be obtained, performing hand ROI detection through single bone point of a palm; (2) when the bone point information cannot be obtained, performing hand ROI detection in a manner based on skin color; S2, performing preliminary estimation in a hand three-dimensional global direction, wherein the S2 comprises the processes of S21, performing characteristic extracting; S22, realizing regression in the hand global direction according to a classifier R1; and S3, performing joint gesture estimation on the three-dimensional gesture, wherein the S3 comprises the processes of S31, realizing gesture estimation according to a classifier R2; and S32, performing gesture correction. According to the three-dimensional gesture estimation method and the three-dimensional gesture estimation system, firstly cooperation of two manners is utilized for dividing hand ROI data; afterwards estimation in the hand global direction is finished according to a regression algorithm based on hand ROI data dividing; and finally three-dimensional gesture estimation is realized by means of the regression algorithm through utilizing the data as an aid. The three-dimensional gesture estimation method and the three-dimensional gesture estimation system have advantages of simple algorithm and high practical value.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

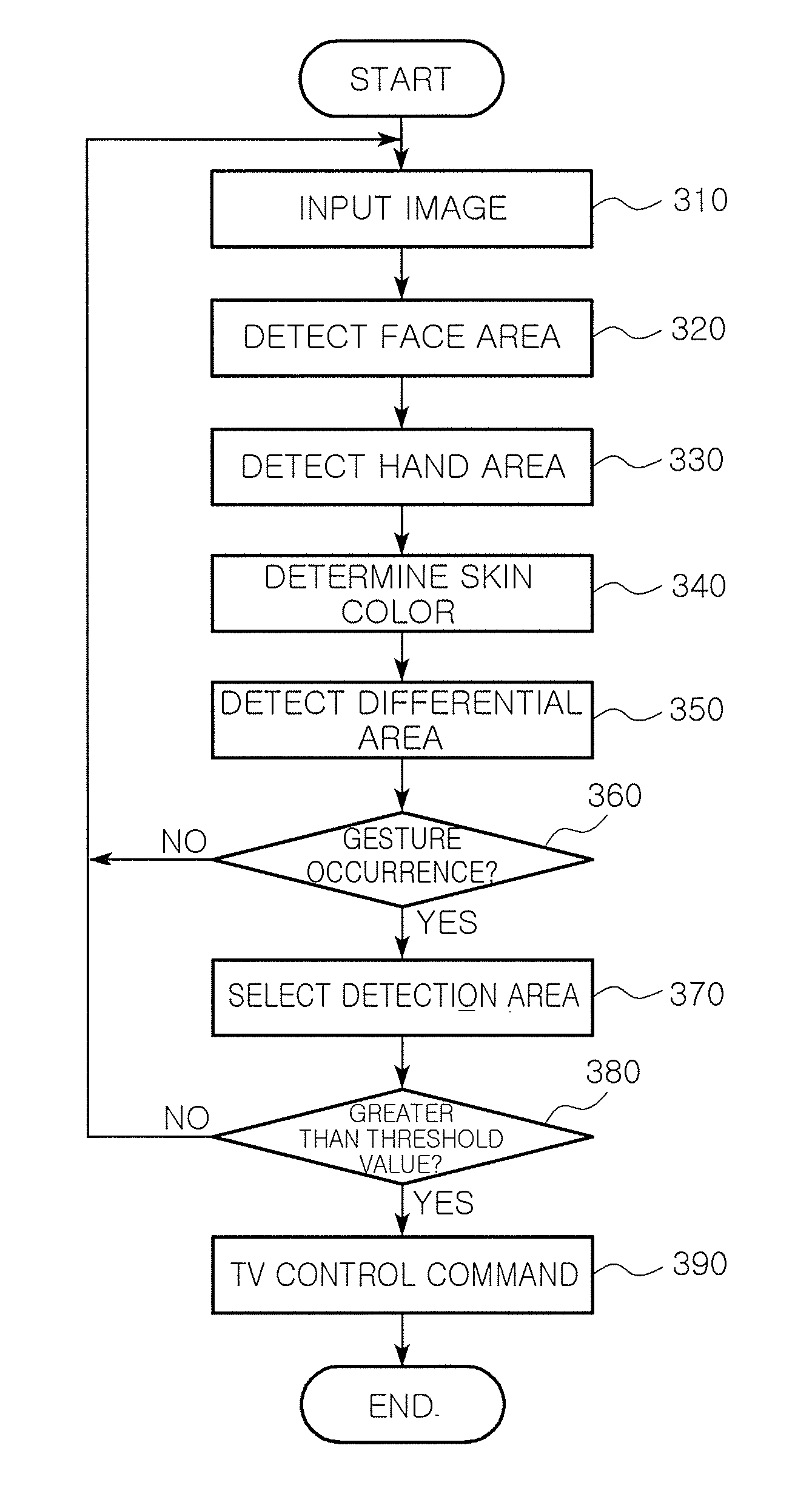

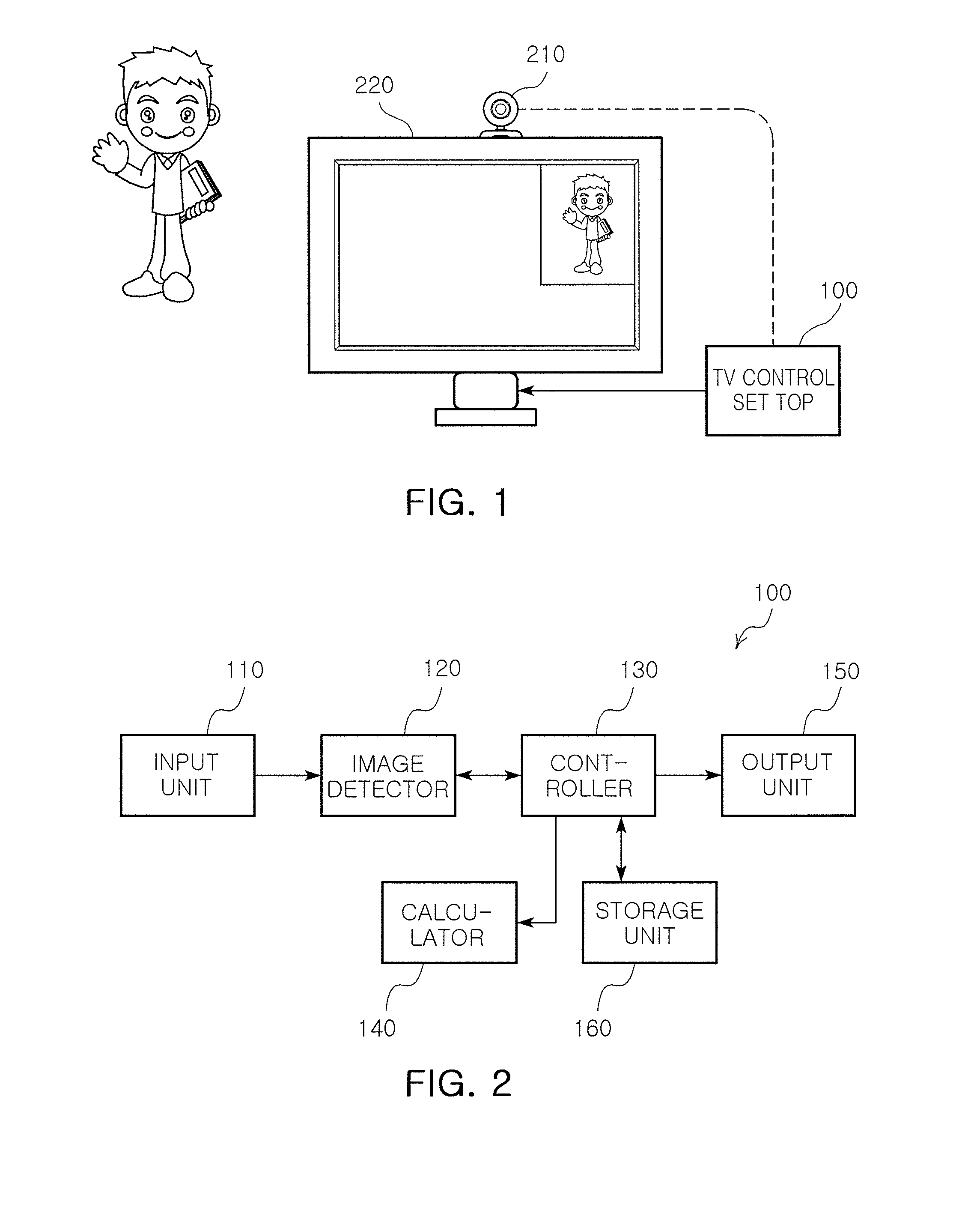

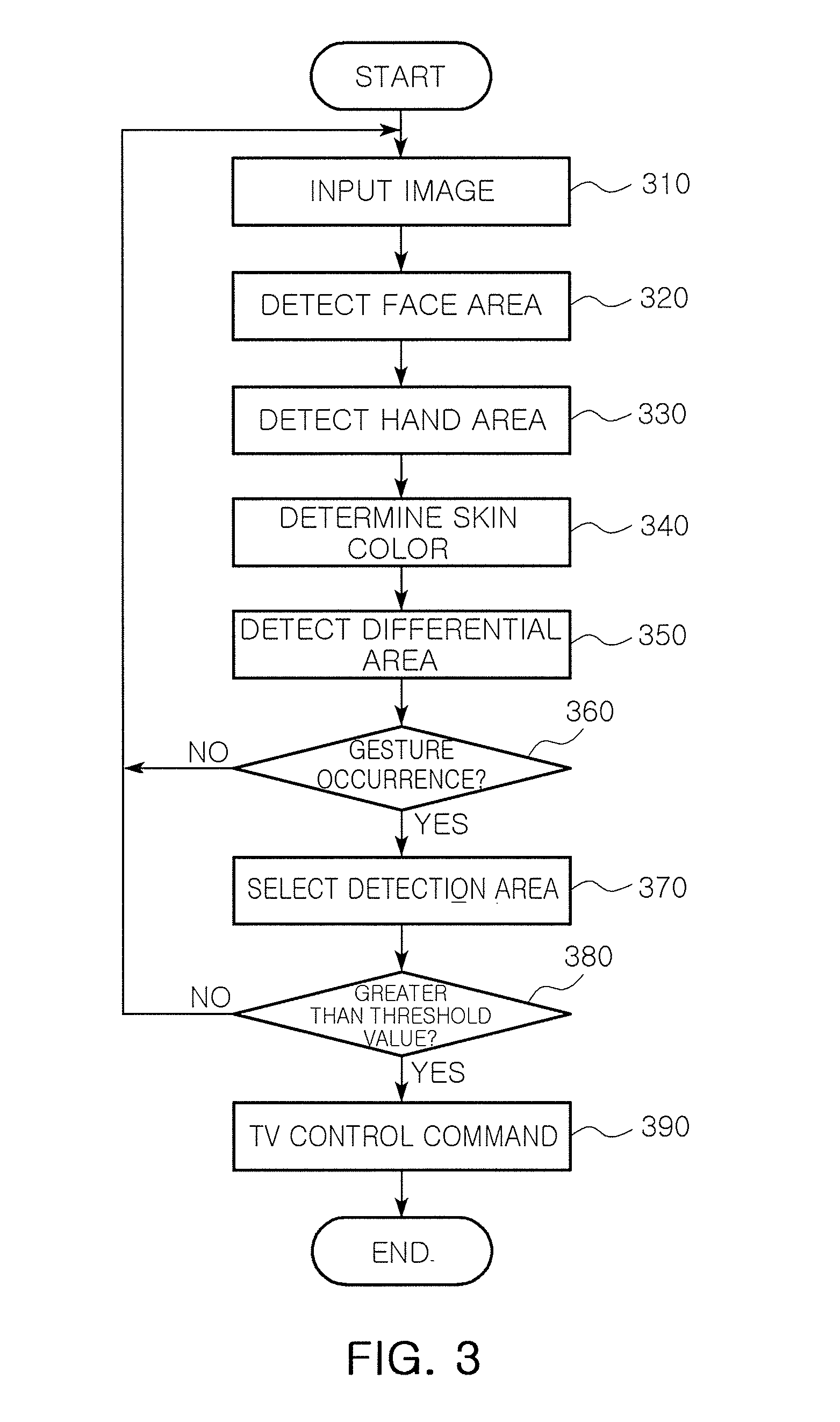

Method and apparatus for recognizing gesture in image processing system

Active | US8014567B2 | High resolution | Character and pattern recognition | Cathode-ray tube indicators | Imaging processing | Skin color

A method and apparatus for recognizing a gesture in an image processing system. In the apparatus, an input unit receives an image obtained by capturing a gesture of a user with a camera. A detector detects a face area in the input image and detects a hand area in gesture search areas, which are set by dividing the image into predetermined areas with reference to a predetermined location of the detected face area. A controller sets the gesture search areas, determines whether a gesture occurs in the detected hand area, and selects a detection area with respect to the gesture to generate a control command for controlling an image device. A calculator calculates skin-color information and differential-area information for checking a gesture in the detected hand area. Accordingly, a hand area can be accurately detected, and a gesture can be separated from peripheral movement information, so that malfunctions caused by gesture misrecognition can be reduced.

Owner:INTELLECTUAL DISCOVERY CO LTD

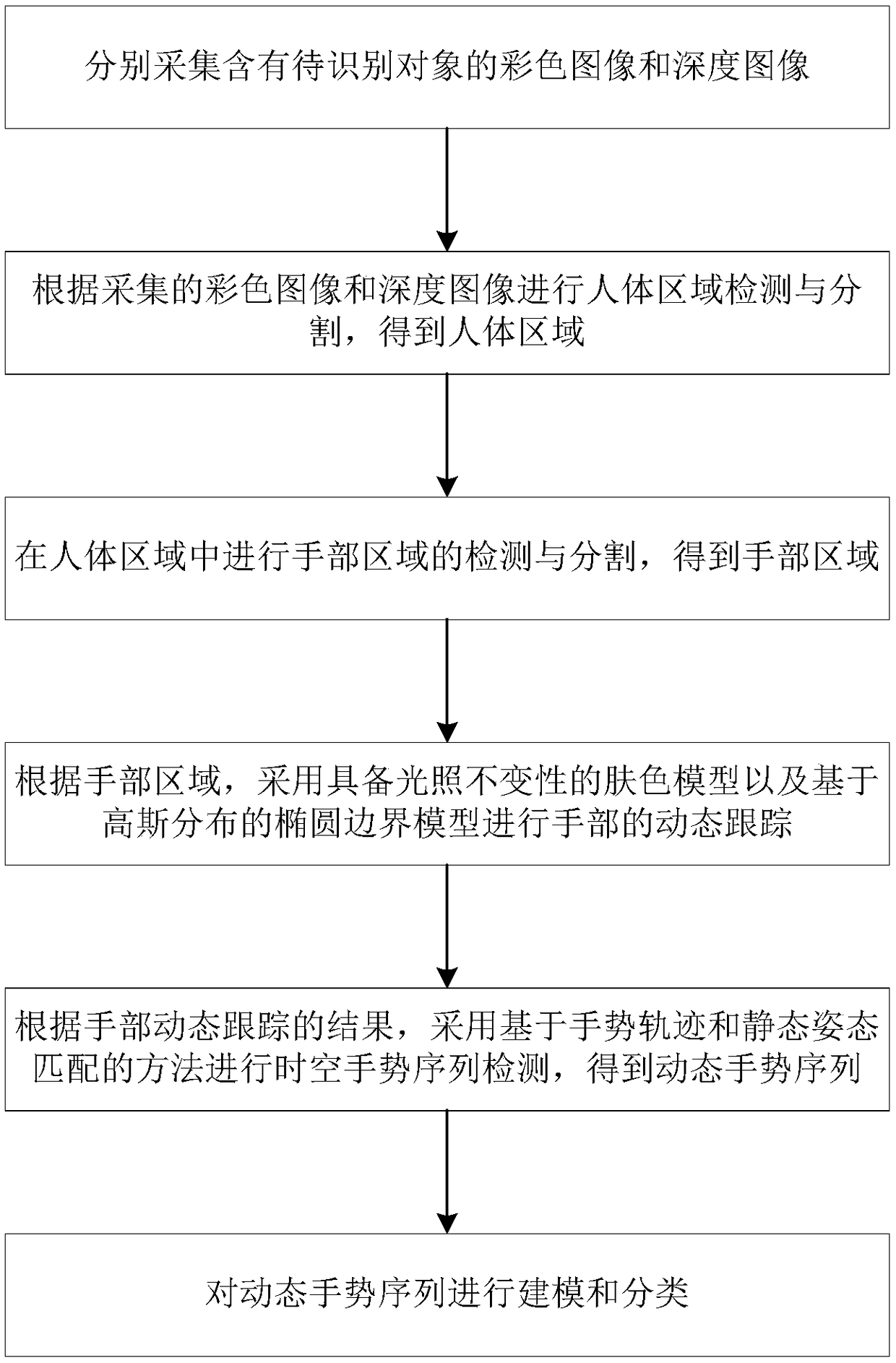

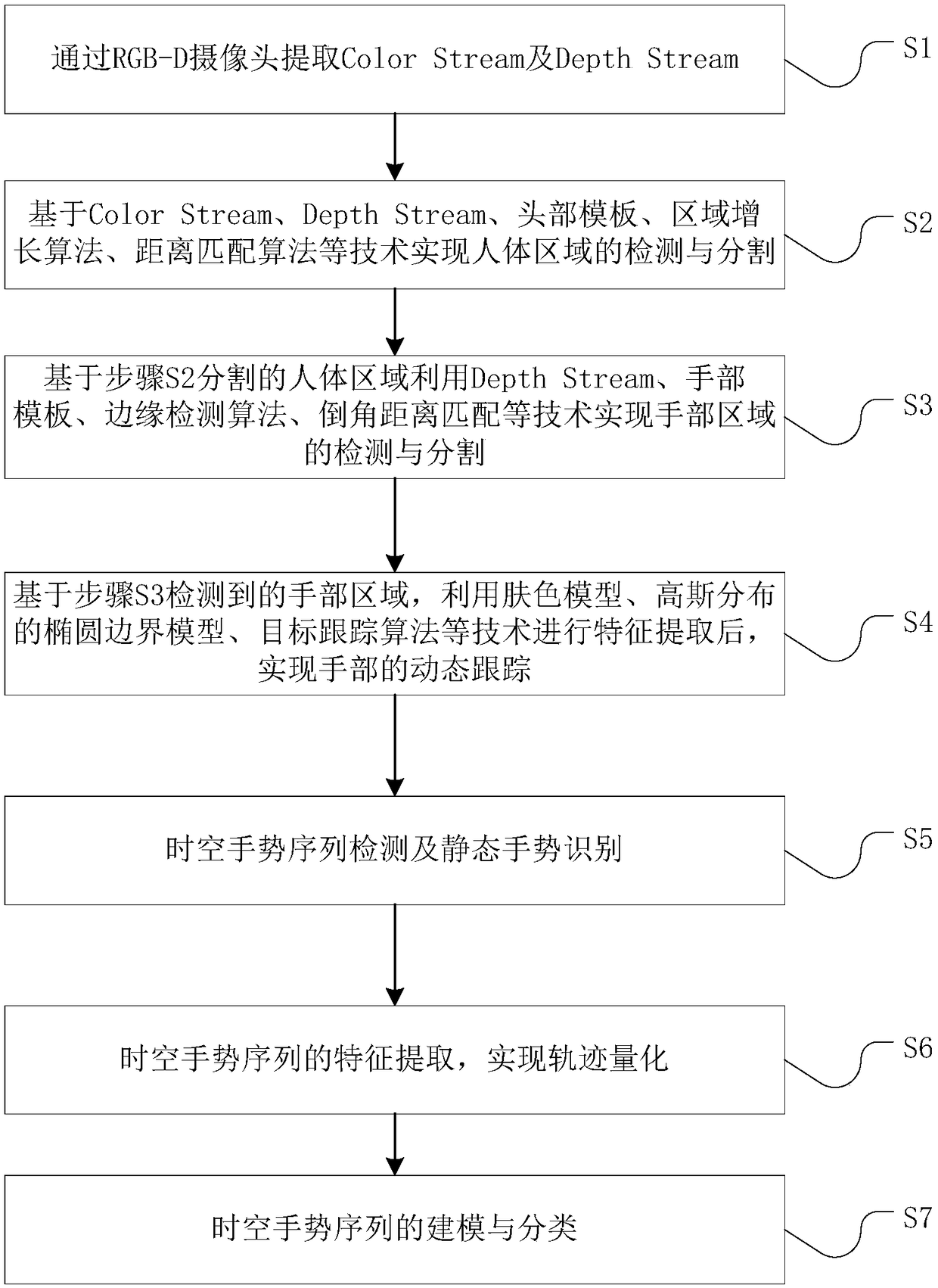

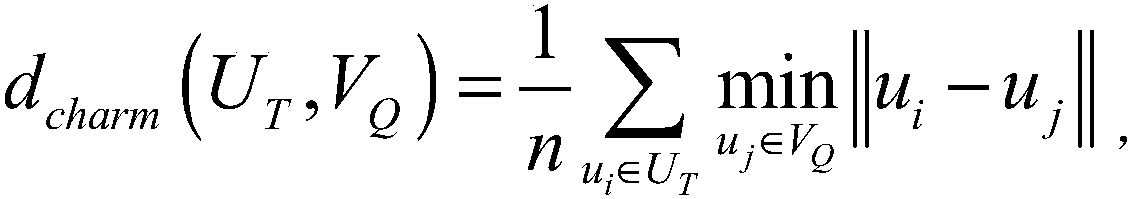

Dynamic gesture sequence real-time recognition method, system and device

Inactive | CN108256421A | Improve robustness | Easy to identify | Image enhancement | Image analysis | Human body | Color image

The invention discloses a real-time dynamic gesture sequence recognition method, system, and device. The method comprises the steps of: separately acquiring a color image and a depth image containing an object to be recognized; performing human body region detection and segmentation according to the acquired color image and depth image, so as to obtain a human body region; detecting and segmenting hand regions in the human body region so as to obtain the hand regions; adopting an illumination-invariant skin color model and an elliptical boundary model based on Gaussian distribution to track the hands dynamically according to the hand regions; adopting a method based on gesture trajectory and static pose matching to perform spatio-temporal gesture sequence detection according to the dynamic tracking results of the hands, so as to obtain a dynamic gesture sequence; and modeling and classifying the dynamic gesture sequence. By using the depth information together with the illumination-invariant skin color model and the Gaussian elliptical boundary model, the method, system, and device improve the robustness of gesture recognition, achieve good recognition results, and can be widely applied in the fields of artificial intelligence and computer vision.

Owner:盈盛资讯科技有限公司

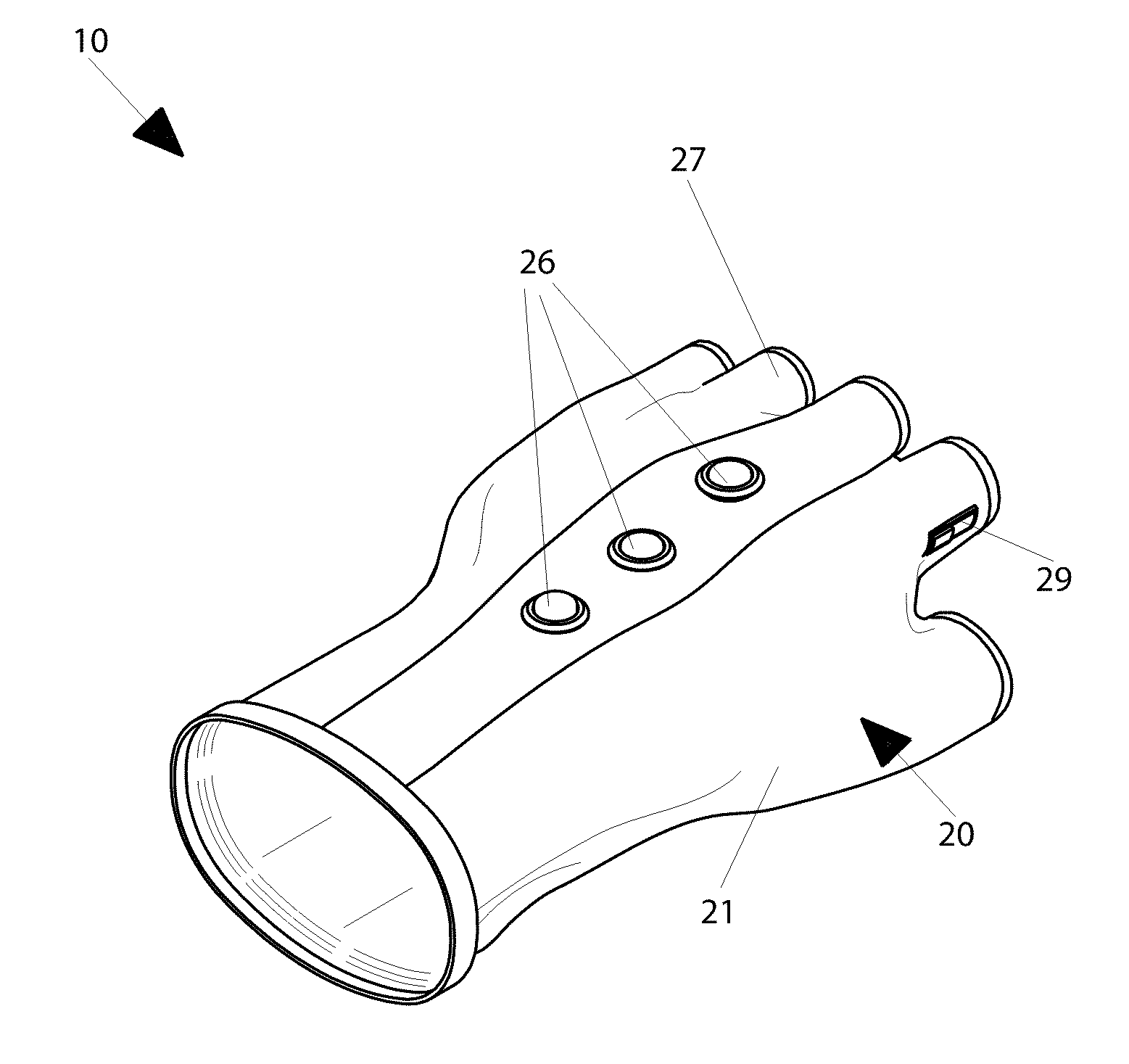

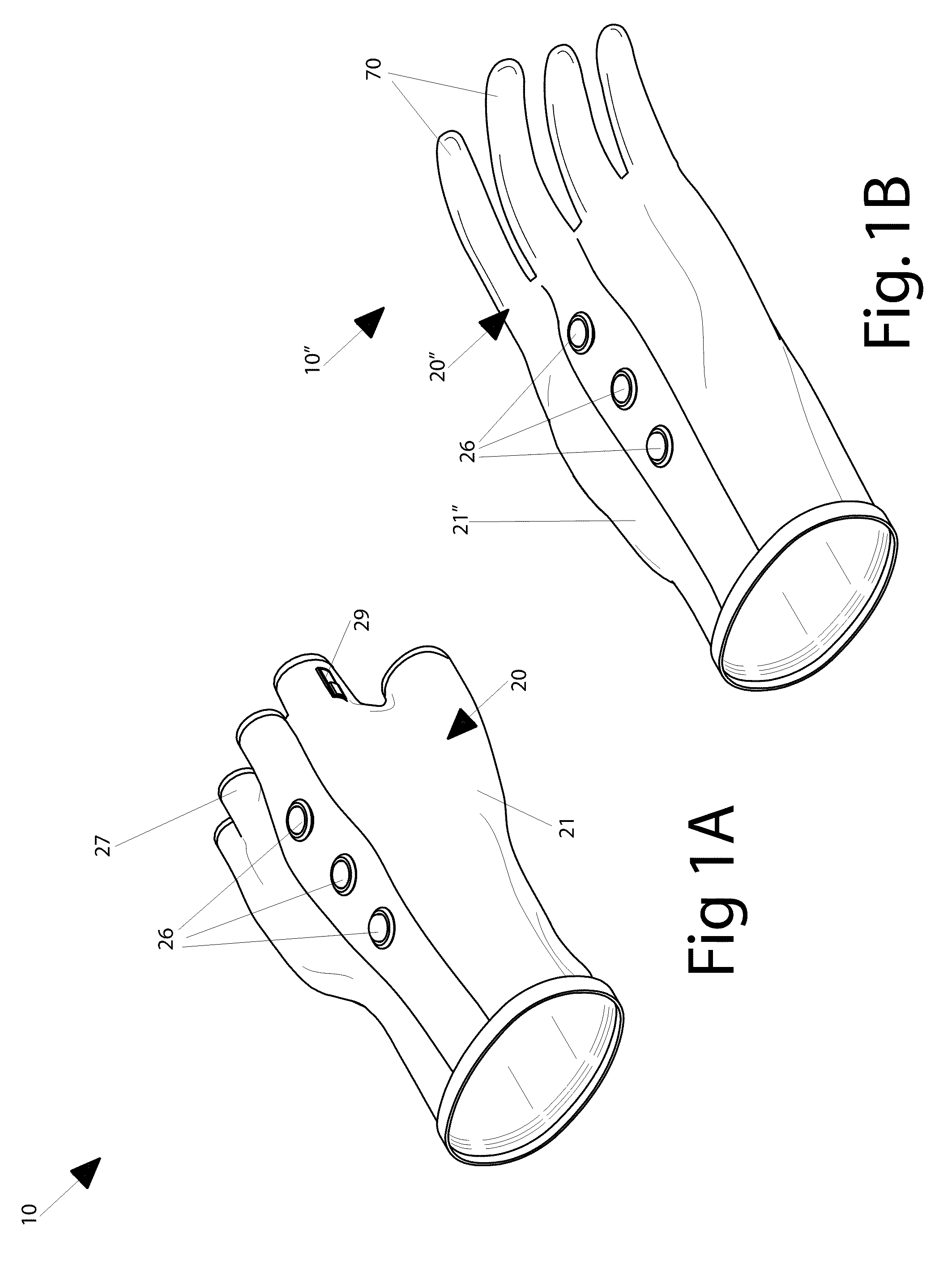

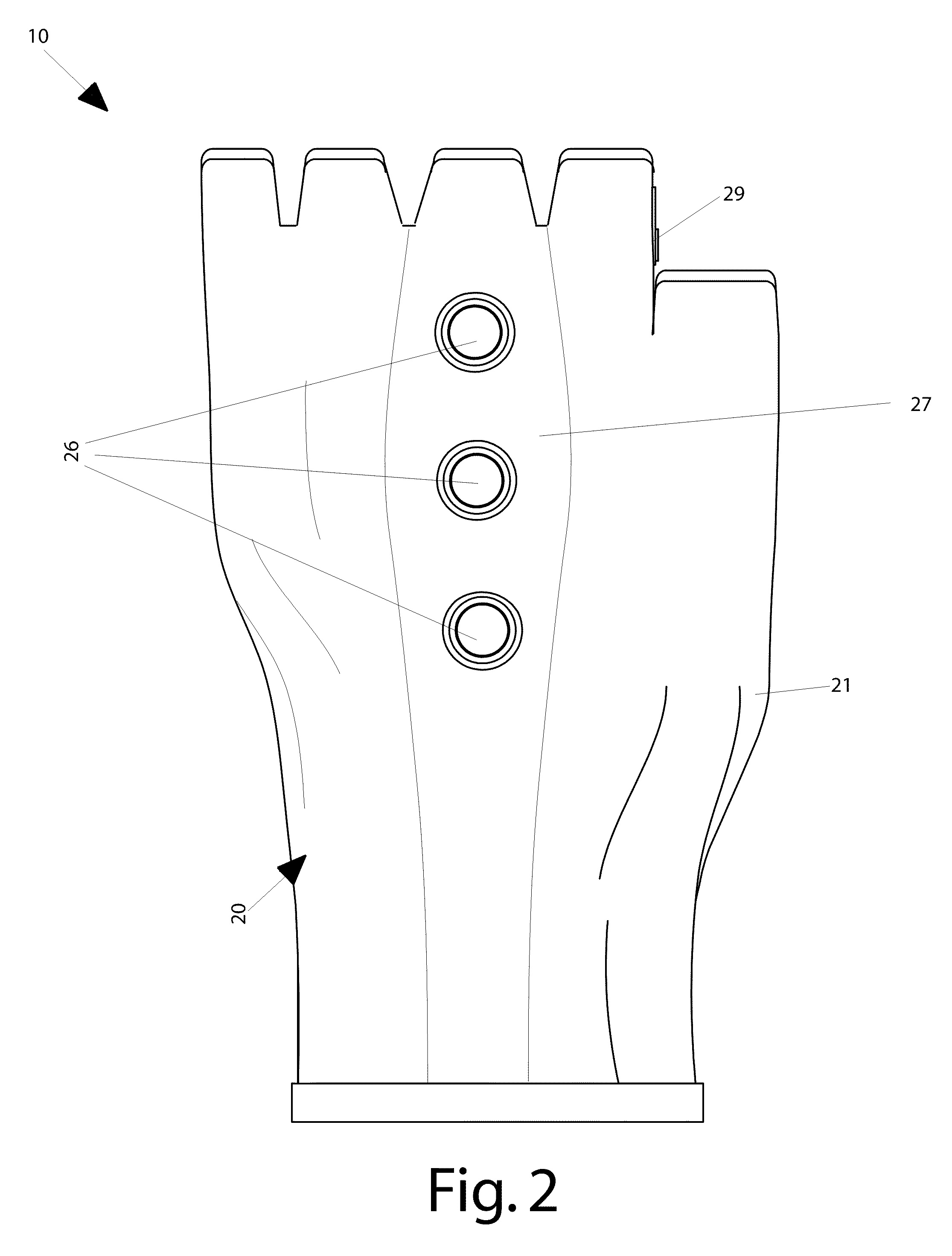

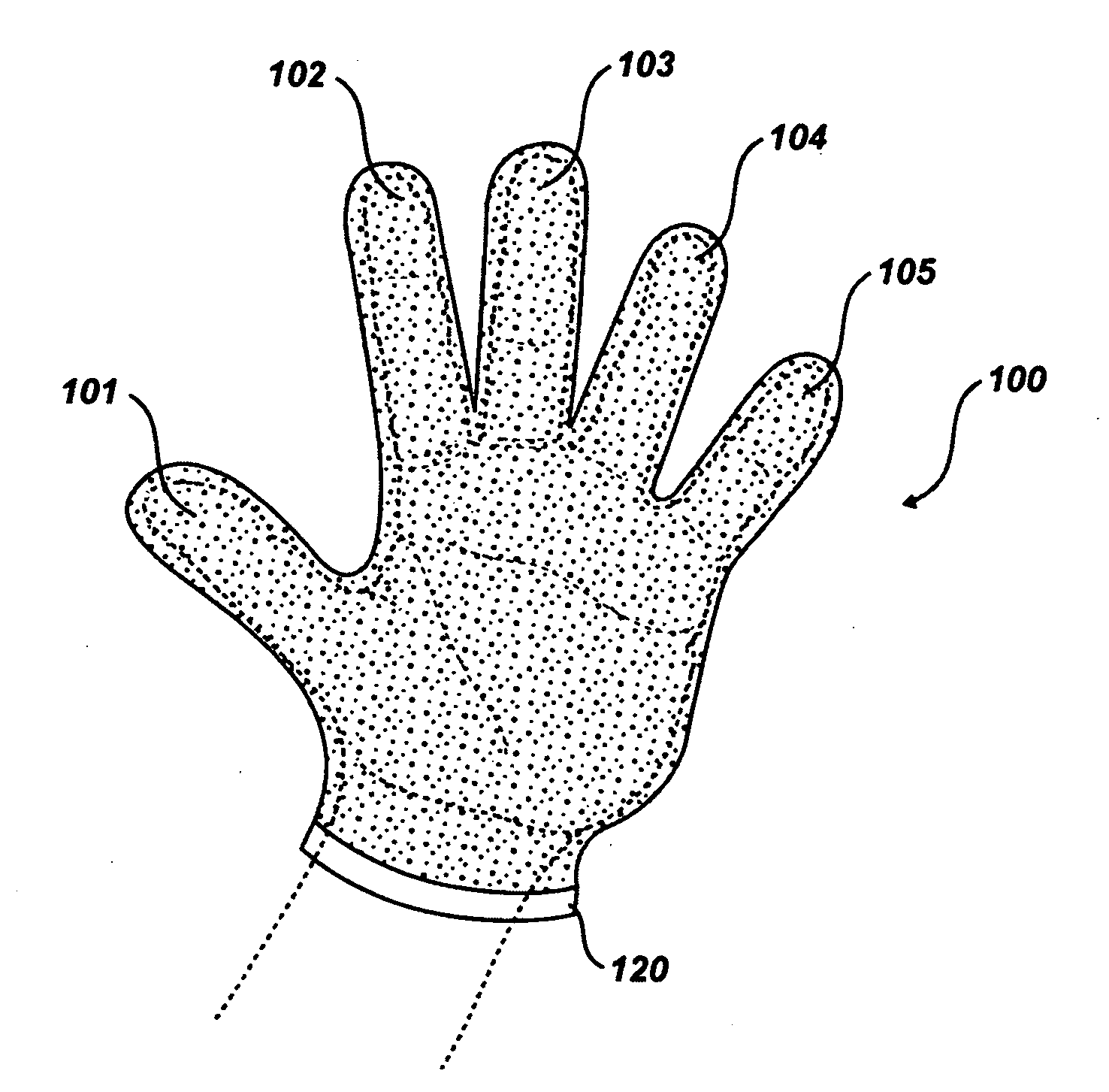

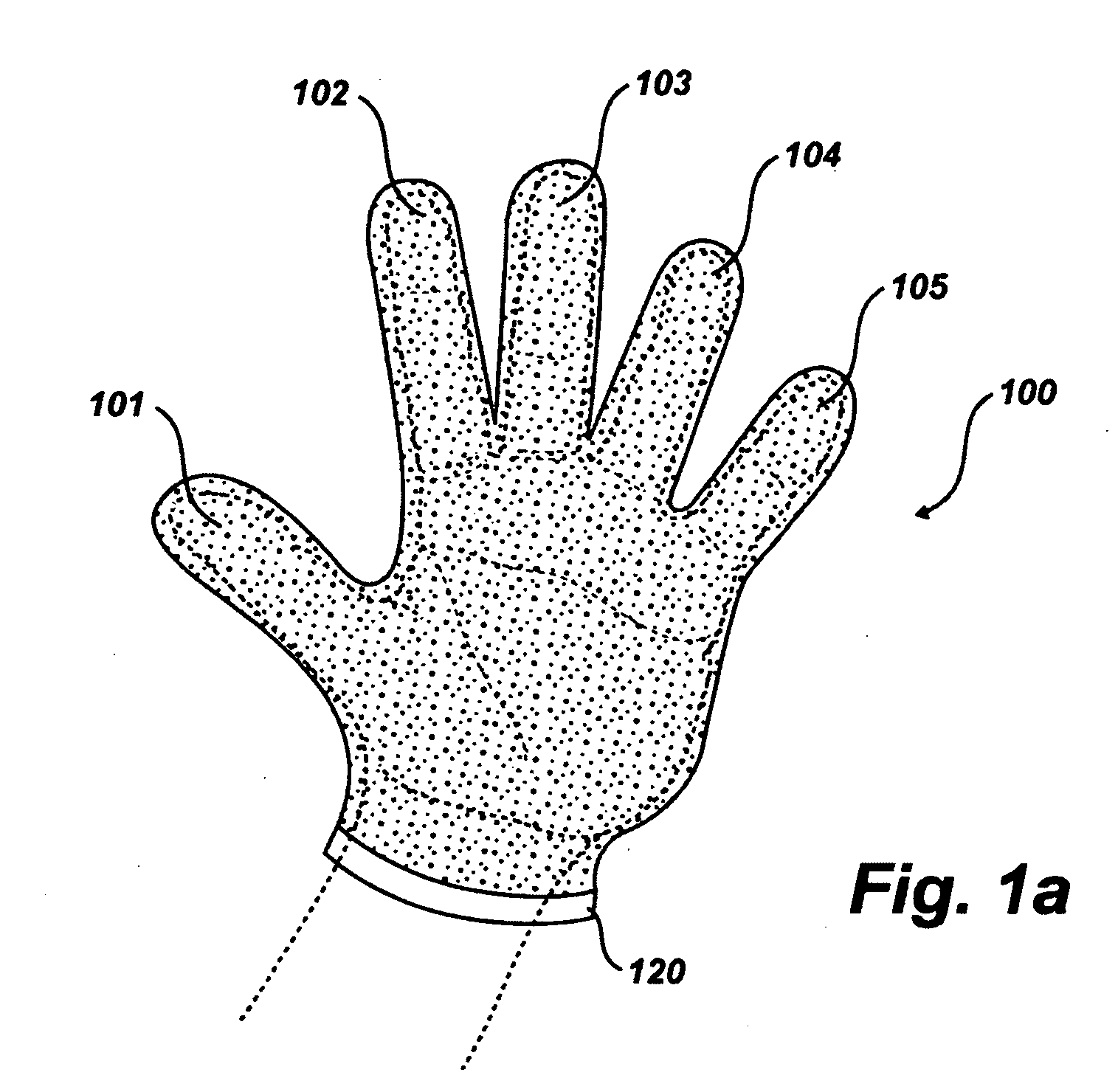

Illuminable hand-signaling glove and associated method

Inactive | US7959314B1 | Conveniently pivoted | Lighting support devices | Point-like light source | Biomedical engineering | User interface

An illuminable hand-signaling glove preferably includes a body adapted to be positioned at a hand region of the user. Such a body may include a glove. The body may further include a wrist band and a plurality of finger bands connected thereto. The device may further include a plurality of light-emitting sources displayed on an exterior surface of the body, and a mechanism may be included for selectively toggling independent ones of the light-emitting sources between illuminated and non-illuminated modes by selectively pivoting one metacarpal of the user hand region along a plurality of positions defined within the body while maintaining remaining metacarpals of the user hand region at a static position. Such a selectively toggling mechanism preferably includes a manually actuated user interface attached to the exterior surface of the body.

Owner:RODRIGUEZ WILLIAM

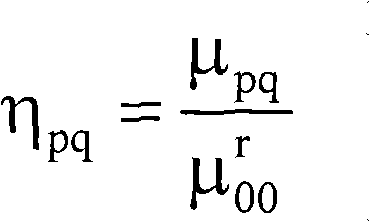

Static gesture identification method based on vision

Inactive | CN101661556A | Improve recognition rate | Easy to identify | Character and pattern recognition | Human body | Feature vector

The invention provides a vision-based static gesture recognition method comprising the following steps. S1, gesture image preprocessing: separating the hand region from the environment according to the skin-color characteristics of the human body, and obtaining the gesture contour through image filtering and morphological operations. S2, gesture feature extraction: extracting Hu invariant moment features, gesture region features, and Fourier descriptor parameters to form a feature vector. S3, gesture recognition: using a multilayer perceptron classifier that has self-organizing and self-learning abilities, resists noise effectively, handles incomplete patterns, and generalizes across patterns. The method first preprocesses and binarizes the original gesture image according to the skin-color characteristics of the human body. The extracted gesture feature parameters fall into three groups, namely the Hu invariant moment features, the gesture region features, and the Fourier descriptor parameters, which together form the feature vector. This feature vector yields a better recognition rate.
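To make the Hu invariant moment step (S2) concrete, here is a small sketch that computes only the first two of the seven Hu invariants from a binarized hand mask. It is an illustration under stated assumptions rather than the patent's feature extractor: the mask is a list of rows of 0/1 values, the function name `hu_first_two` is hypothetical, and the region features and Fourier descriptors are omitted.

```python
from typing import List, Tuple


def hu_first_two(mask: List[List[int]]) -> Tuple[float, float]:
    """Return the first two Hu invariant moments of a binary mask."""
    pixels = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    m00 = len(pixels)                       # area of the foreground region
    if m00 == 0:
        raise ValueError("mask contains no foreground pixels")

    # Centroid: subtracting it gives translation invariance.
    cx = sum(x for x, _ in pixels) / m00
    cy = sum(y for _, y in pixels) / m00

    # Second-order central moments.
    mu20 = sum((x - cx) ** 2 for x, _ in pixels)
    mu02 = sum((y - cy) ** 2 for _, y in pixels)
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels)

    # Normalisation for p + q = 2: divide by m00 ** (1 + 2 / 2).
    norm = float(m00 ** 2)
    eta20, eta02, eta11 = mu20 / norm, mu02 / norm, mu11 / norm

    phi1 = eta20 + eta02
    phi2 = (eta20 - eta02) ** 2 + 4.0 * eta11 ** 2
    return phi1, phi2
```

Because both values depend only on normalised central moments, a shape gives the same result after being shifted or rotated by 90 degrees, which is the property that makes these moments useful as gesture features.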

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

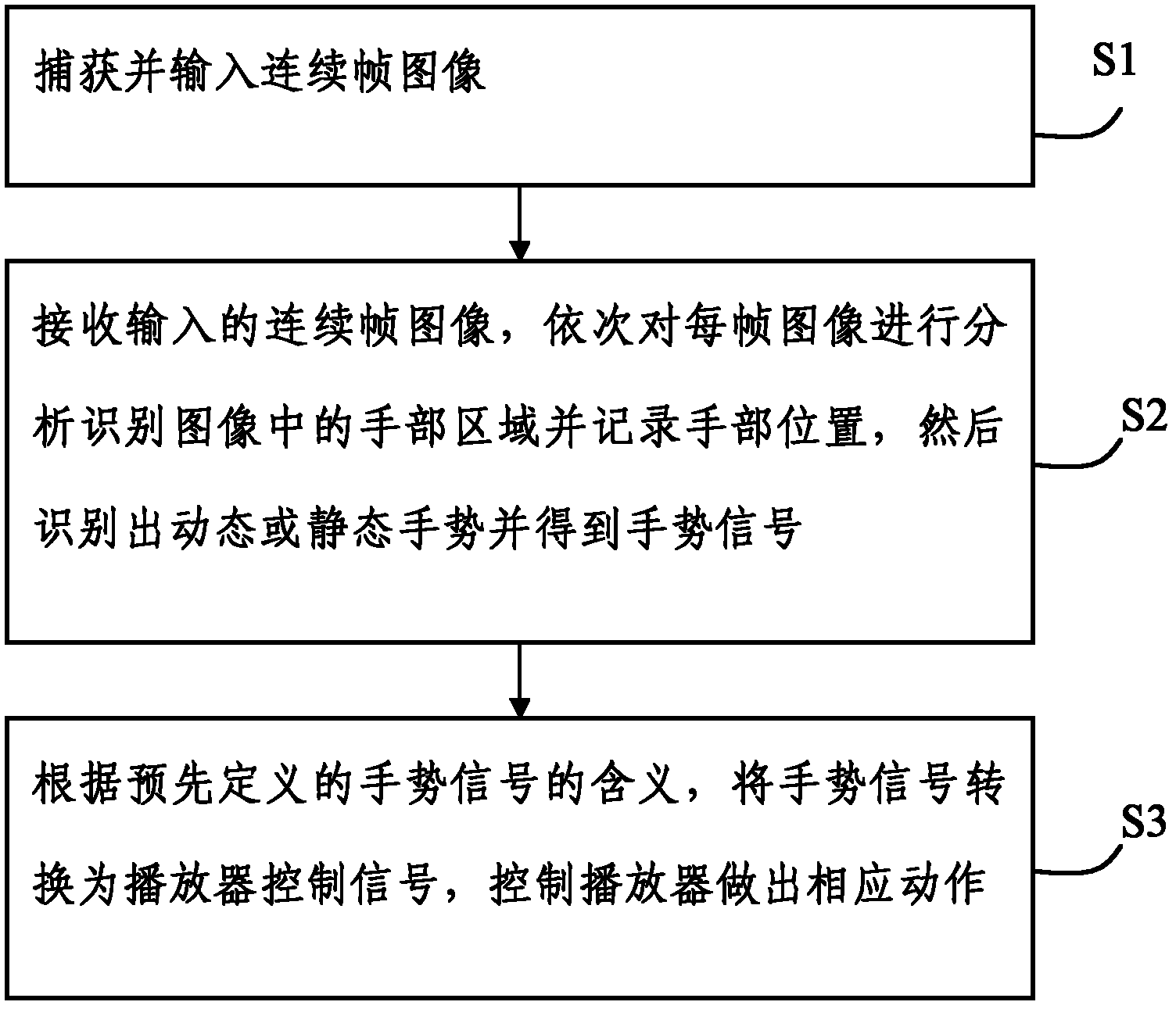

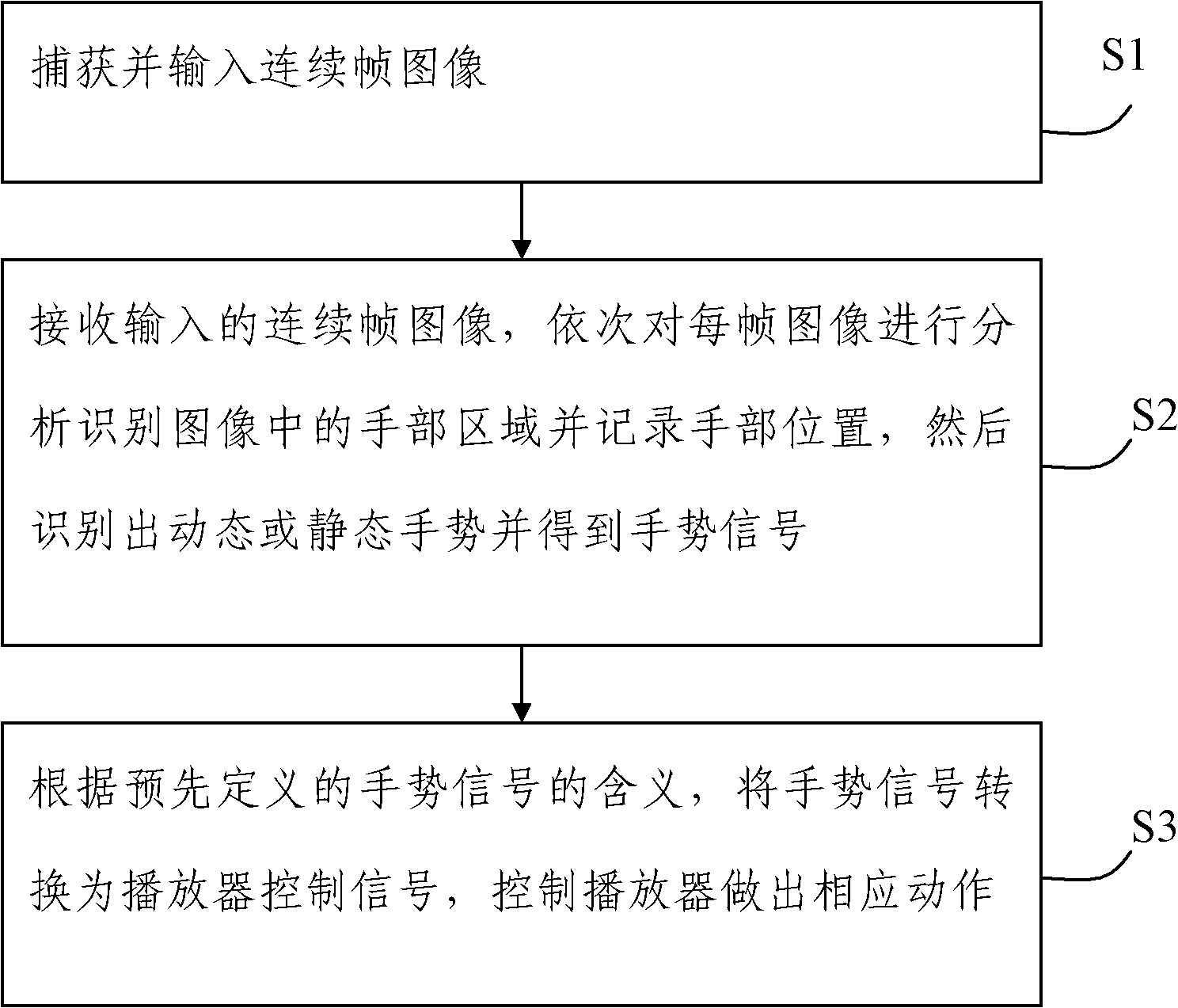

Method and device for controlling video playing by using gestures

Inactive | CN102609093A | Intuitive playback controls | Play Control Naturally | Input/output for user-computer interaction | Graph reading | Control signal | Human–computer interaction

The invention discloses a method for controlling video playing by using gestures. The method comprises: S1, capturing and inputting a sequential frame image; S2, receiving the inputted sequential frame image, analyzing each frame of the image to recognize the hand region in the image, recording the hand position, recognizing a dynamic or static gesture and obtaining a gesture signal; and S3, converting the gesture signal to a player control signal according to the meaning of a predetermined gesture signal, and controlling a player to execute corresponding action. The invention also discloses a device for controlling video playing by using gestures. The device for realizing the above method comprises a video acquisition module, a gesture recognition module and a player module for executing corresponding action according to the received player control signal. The device provided by the invention is low in cost, and can realize more visualized, natural and humanized video playing control.

Owner:CHINA AGRI UNIV

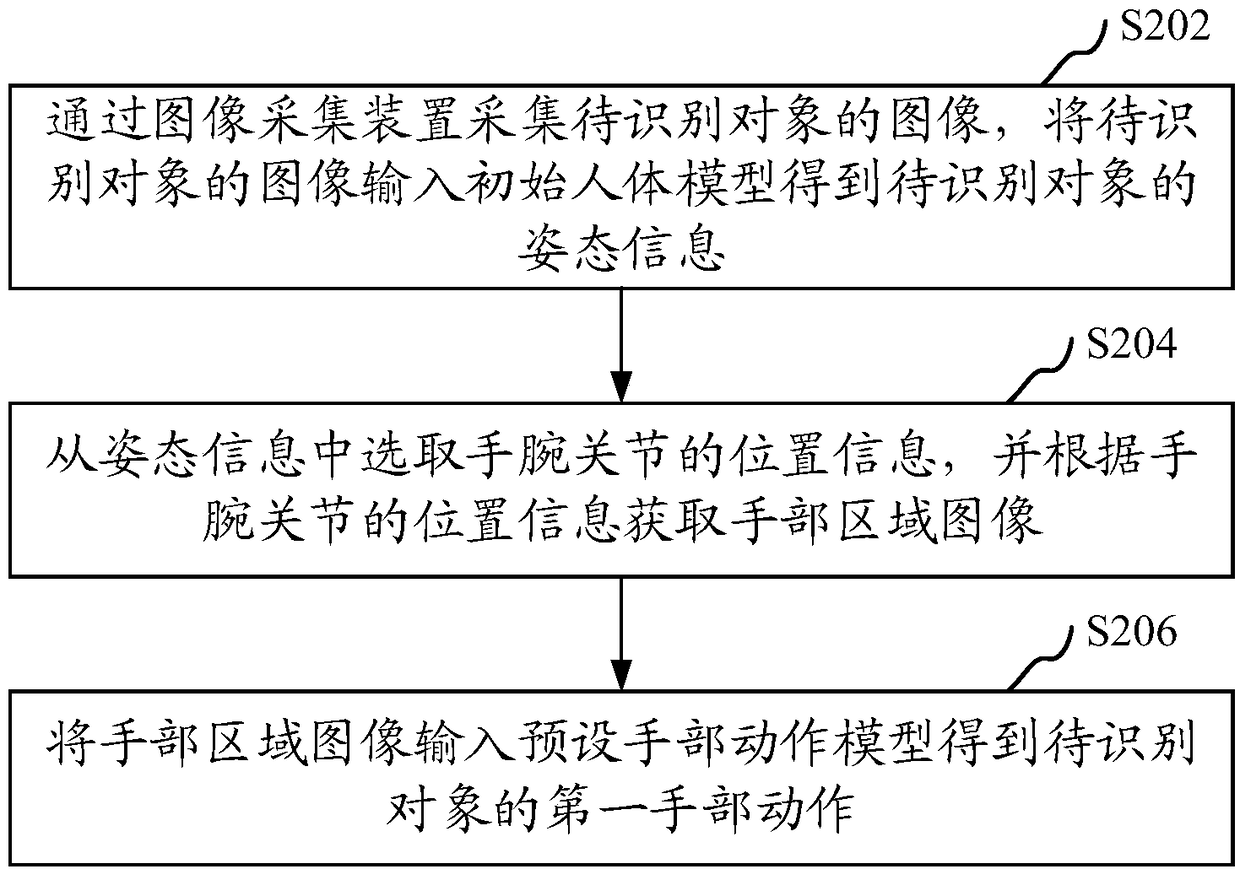

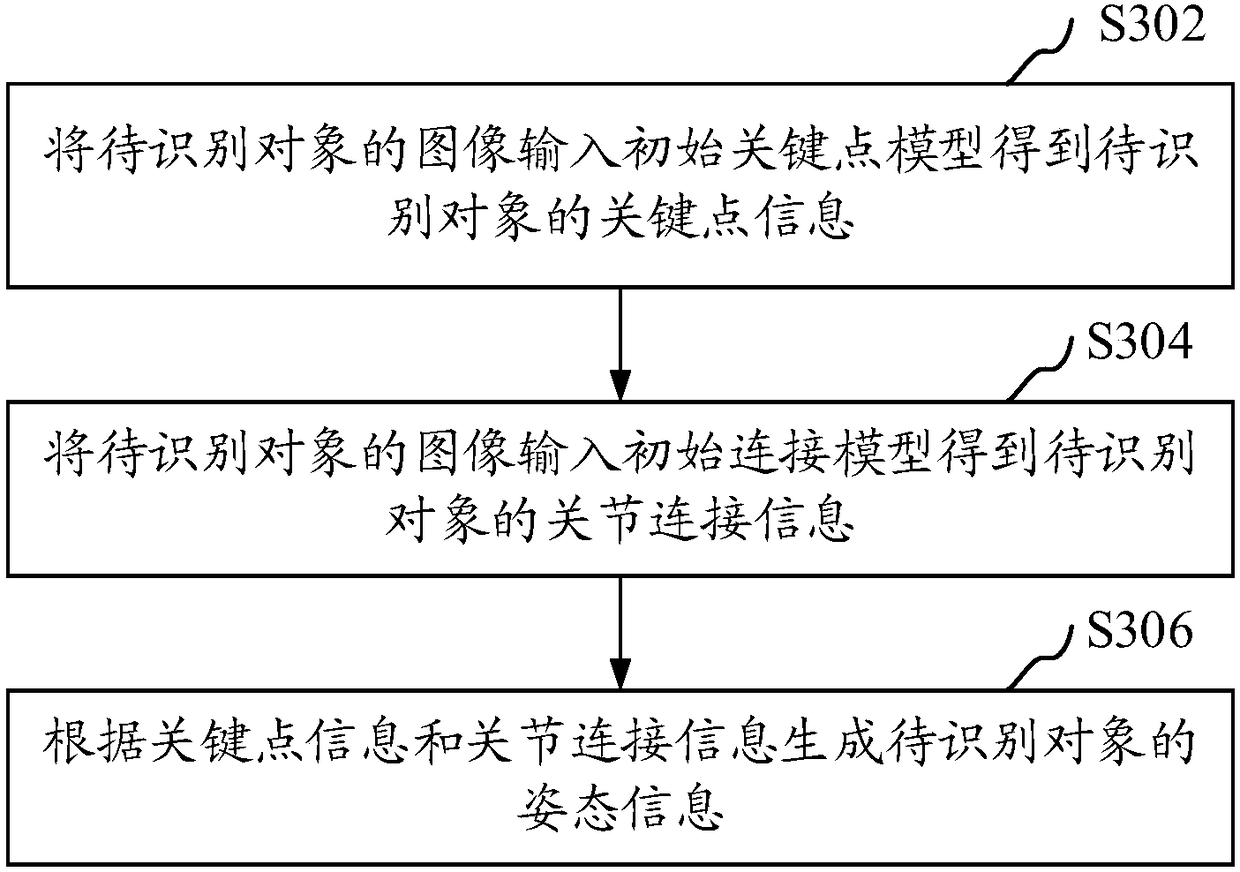

Hand action identification method and apparatus, computer device and readable storage medium

Inactive | CN108399367A | Improve accuracy | Input/output for user-computer interaction | Character and pattern recognition | Pattern recognition | Hand parts

The invention relates to a hand action recognition method and apparatus, a computer device, and a readable storage medium. The hand action recognition method comprises the following steps: collecting an image of a to-be-identified object through an image collection apparatus, and inputting the image to an initial human body model to obtain pose information of the to-be-identified object; selecting position information of the wrist joints from the pose information, and obtaining a hand region image according to that position information; and inputting the hand region image to a preset hand action model to obtain a first hand action of the to-be-identified object. According to the method, the overall pose of the person is identified first and the hand is located afterwards; when multiple to-be-identified objects are present in the identification region and occlude one another, the hand positions can be determined from the poses of the objects, so that the accuracy of hand action recognition is improved.
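The step of obtaining a hand region image from a wrist joint position can be sketched as a simple crop. The abstract does not say how the region is sized, so this sketch assumes a fixed square window centred on the wrist and clamped to the image bounds. The names `hand_box_from_wrist`, `crop`, and `half_size` are hypothetical, and the image is a plain list of pixel rows.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom), right/bottom exclusive


def hand_box_from_wrist(wrist: Tuple[int, int],
                        image_size: Tuple[int, int],
                        half_size: int) -> Box:
    """Square window around the wrist, clamped to the image (assumed sizing)."""
    x, y = wrist
    width, height = image_size
    left = max(0, x - half_size)
    top = max(0, y - half_size)
    right = min(width, x + half_size)
    bottom = min(height, y + half_size)
    return (left, top, right, bottom)


def crop(image: Sequence[Sequence[int]], box: Box) -> List[List[int]]:
    """Cut the boxed region out of an image stored as rows of pixels."""
    left, top, right, bottom = box
    return [list(row[left:right]) for row in image[top:bottom]]
```

The cropped patch would then be passed to the hand action model; a practical system would probably scale the window with the person's apparent size rather than use a constant.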

Owner:深圳市阿西莫夫科技有限公司

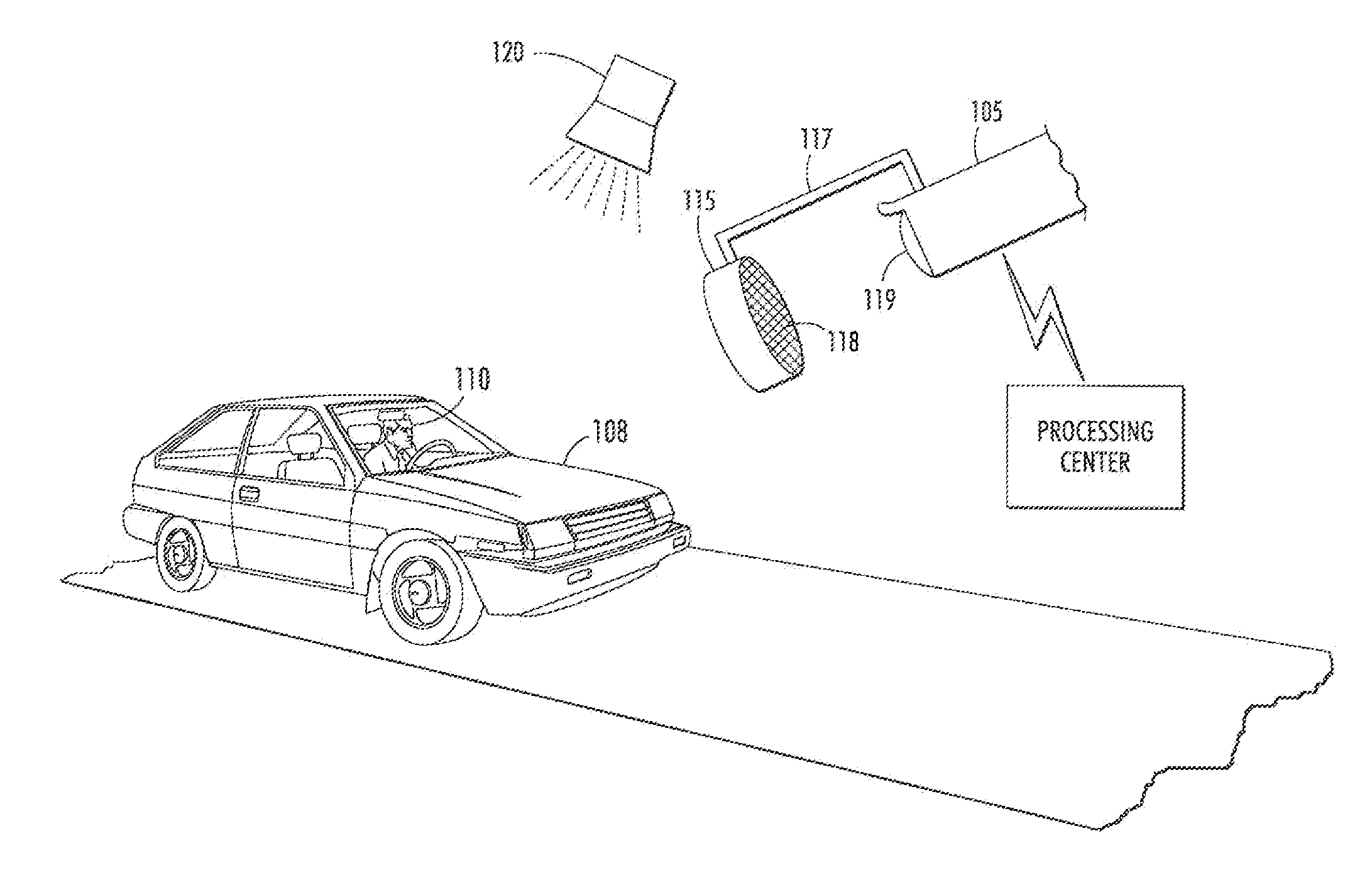

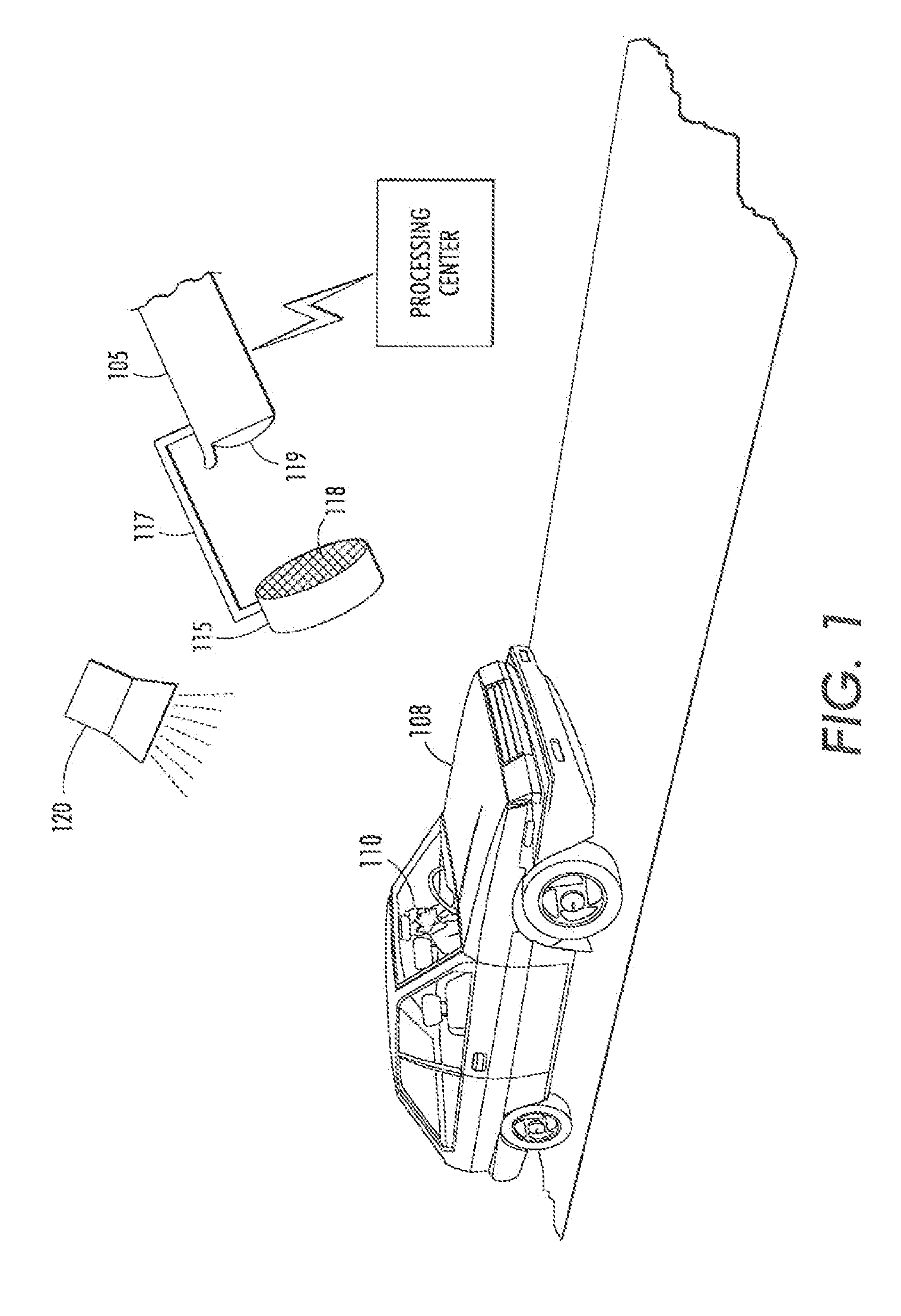

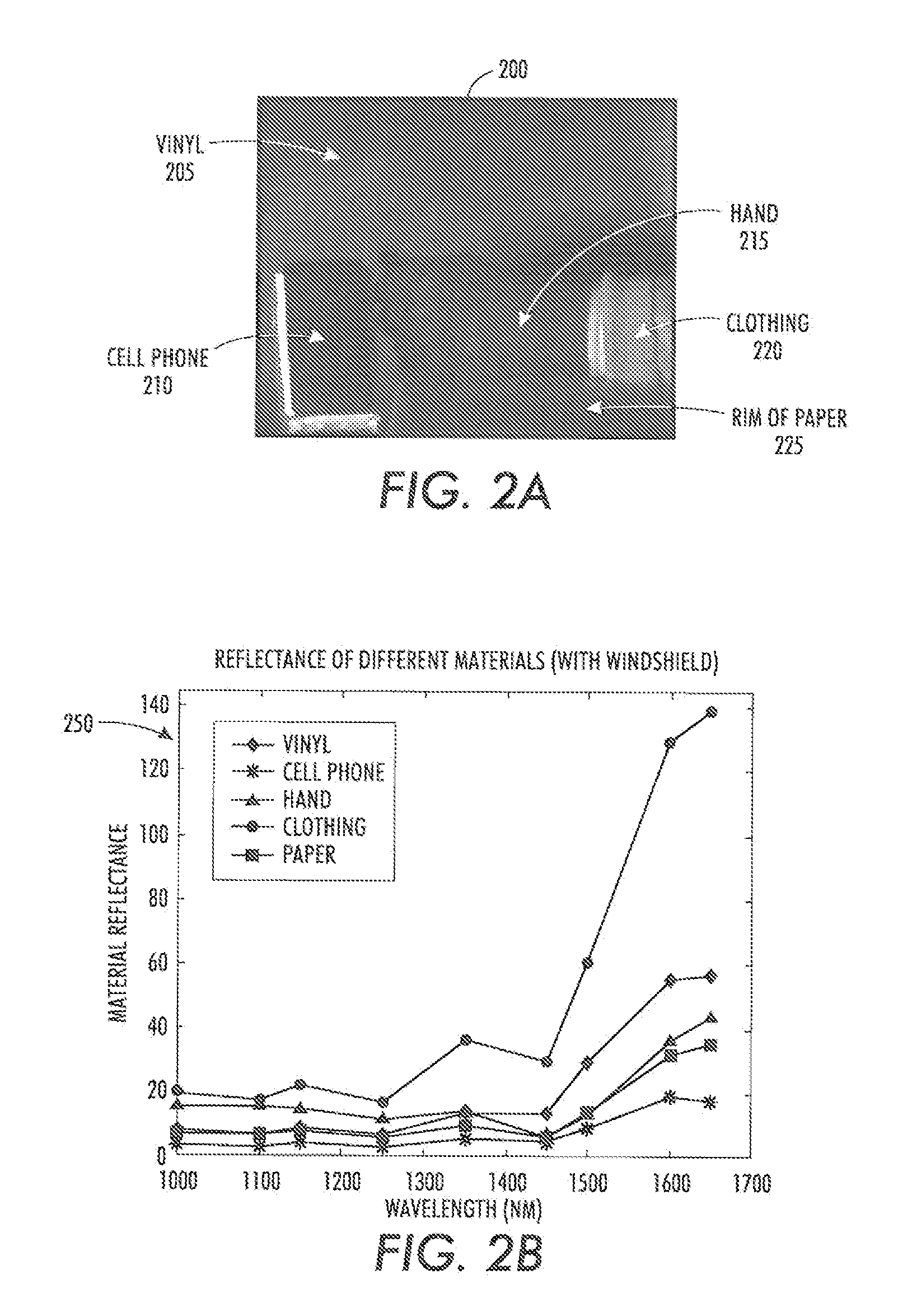

Systems and methods for detecting cell phone usage by a vehicle operator

An embodiment generally relates to systems and methods for determining cell phone usage automatically by individuals operating vehicles. A processing module can process multi-spectral images or videos of individuals and detect different regions in the image such as face regions, hand regions, and cell phone regions. Further, the processing module can analyze the regions based on locations and numbers of skin pixels and cell phone pixels to determine if the individual is holding his or her cell phone near his or her face. Based on the analysis, it can be determined whether the individual is operating the cell phone. Further, the analysis can yield a confidence level associated with the cell phone usage.

Owner:CONDUENT BUSINESS SERVICES LLC

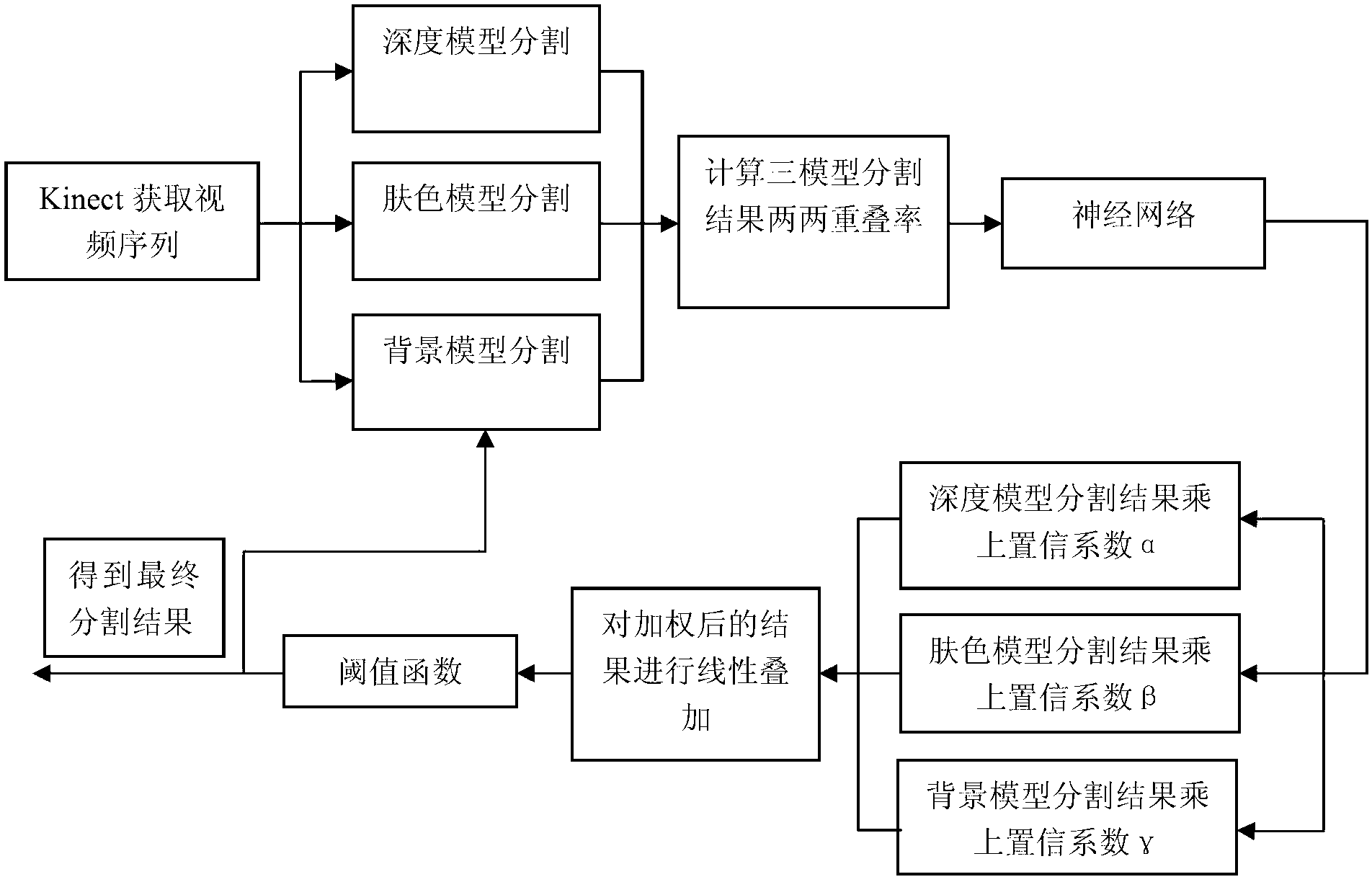

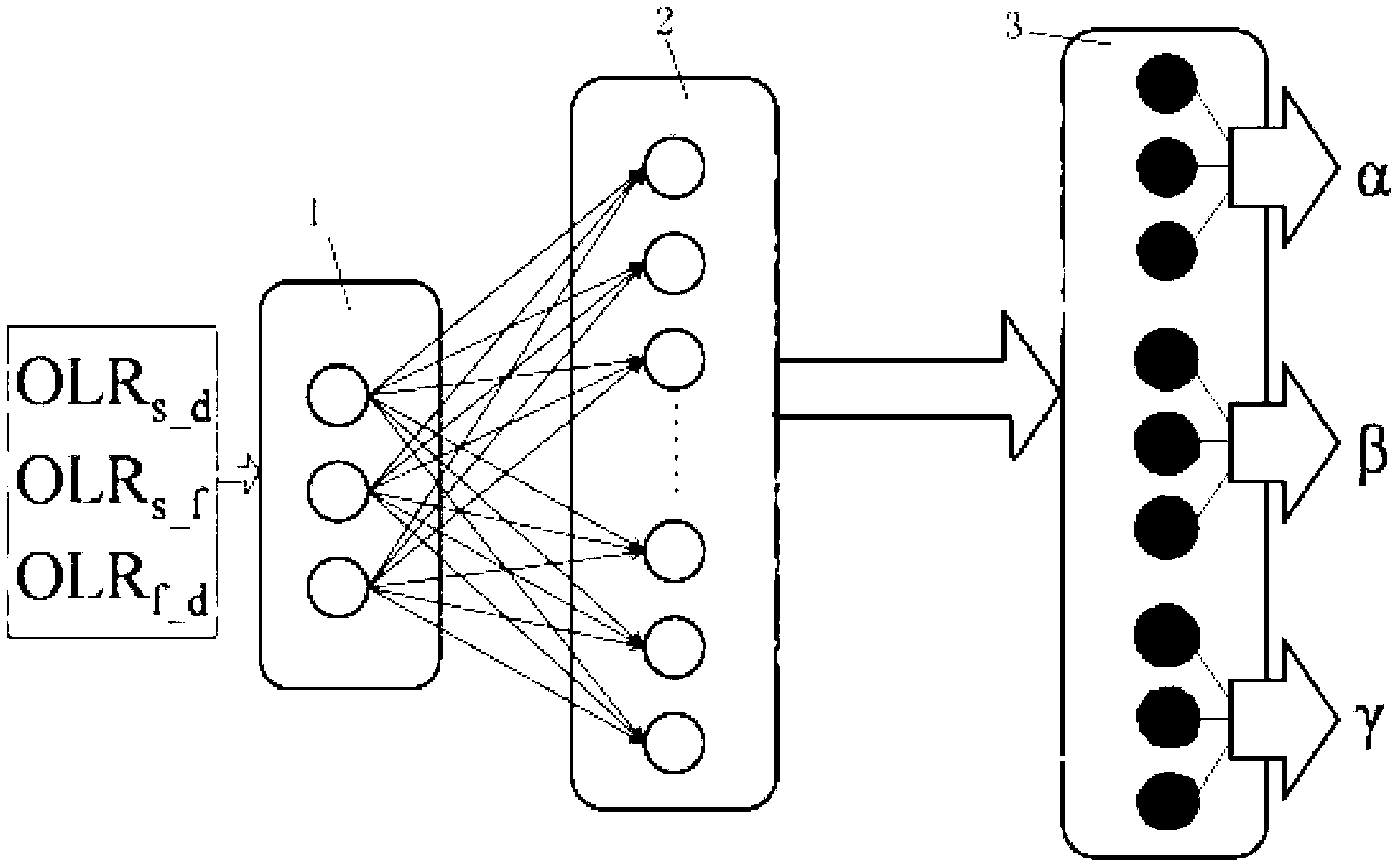

Multi-model fusion video hand division method based on Kinect

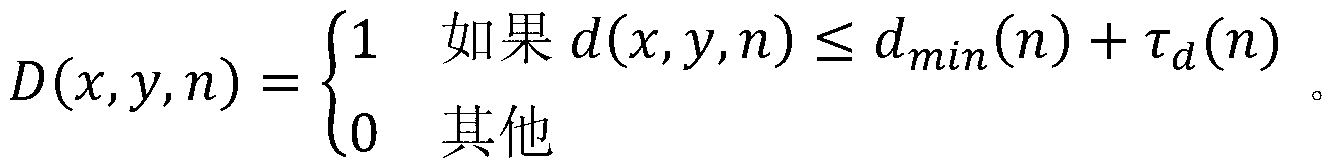

The invention provides a multi-model fusion video hand division method based on Kinect, which comprises the following steps of (1) capturing video information, (2) dividing images in a video respectively to obtain division results, namely a depth model, a skin color model and a background model, (3) calculating an overlapping rate of every two division results as a characteristic of judging division effects of the results and inputting the three overlapping rates into a neural network, (4) allowing the neural network to output three coefficients (namely confidence coefficients) showing respective reliability of the three models, and weighting the three division results with the confidence coefficients, (5) conducting linear superposition on the weighted division results of the three models, (6) outputting a final binary image of a superposed result through a threshold function and finally dividing an obtained video hand region, and (7) updating the background model, wherein the division results are expressed as binary images. The method has the advantages of low cost, good flexibility and the like.
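Steps (3) to (6) can be illustrated with a short sketch. Two things here are assumptions rather than details from the abstract: the overlap rate is taken to be intersection over union, and the confidence coefficients are passed in directly instead of being produced by a neural network. The function names `overlap_rate` and `fuse_masks` are hypothetical, and masks are lists of rows of 0/1 values.

```python
from typing import List, Sequence

Mask = List[List[int]]


def overlap_rate(a: Mask, b: Mask) -> float:
    """Intersection over union of two binary masks (assumed definition)."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += 1 if (pa and pb) else 0
            union += 1 if (pa or pb) else 0
    return inter / union if union else 0.0


def fuse_masks(masks: Sequence[Mask], weights: Sequence[float],
               threshold: float) -> Mask:
    """Weight each mask, sum them linearly, and threshold to a binary mask."""
    if len(masks) != len(weights):
        raise ValueError("one weight is required per mask")
    rows, cols = len(masks[0]), len(masks[0][0])
    fused = []
    for r in range(rows):
        fused_row = []
        for c in range(cols):
            score = sum(w * m[r][c] for m, w in zip(masks, weights))
            fused_row.append(1 if score >= threshold else 0)
        fused.append(fused_row)
    return fused
```

In the described method the three pairwise overlap rates would be the network's inputs and the three weights its outputs; the sketch leaves that mapping out.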

Owner:SOUTH CHINA UNIV OF TECH

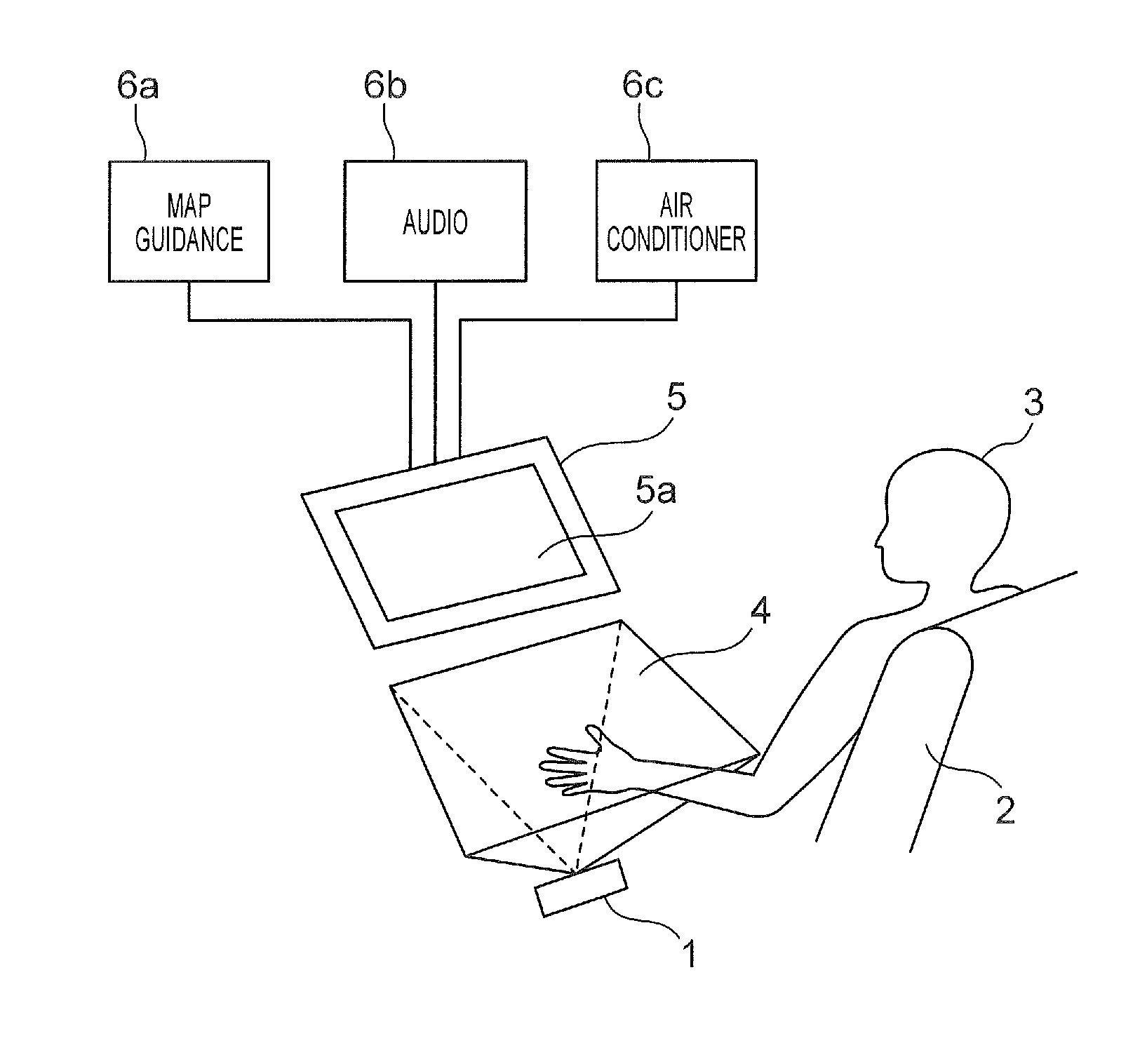

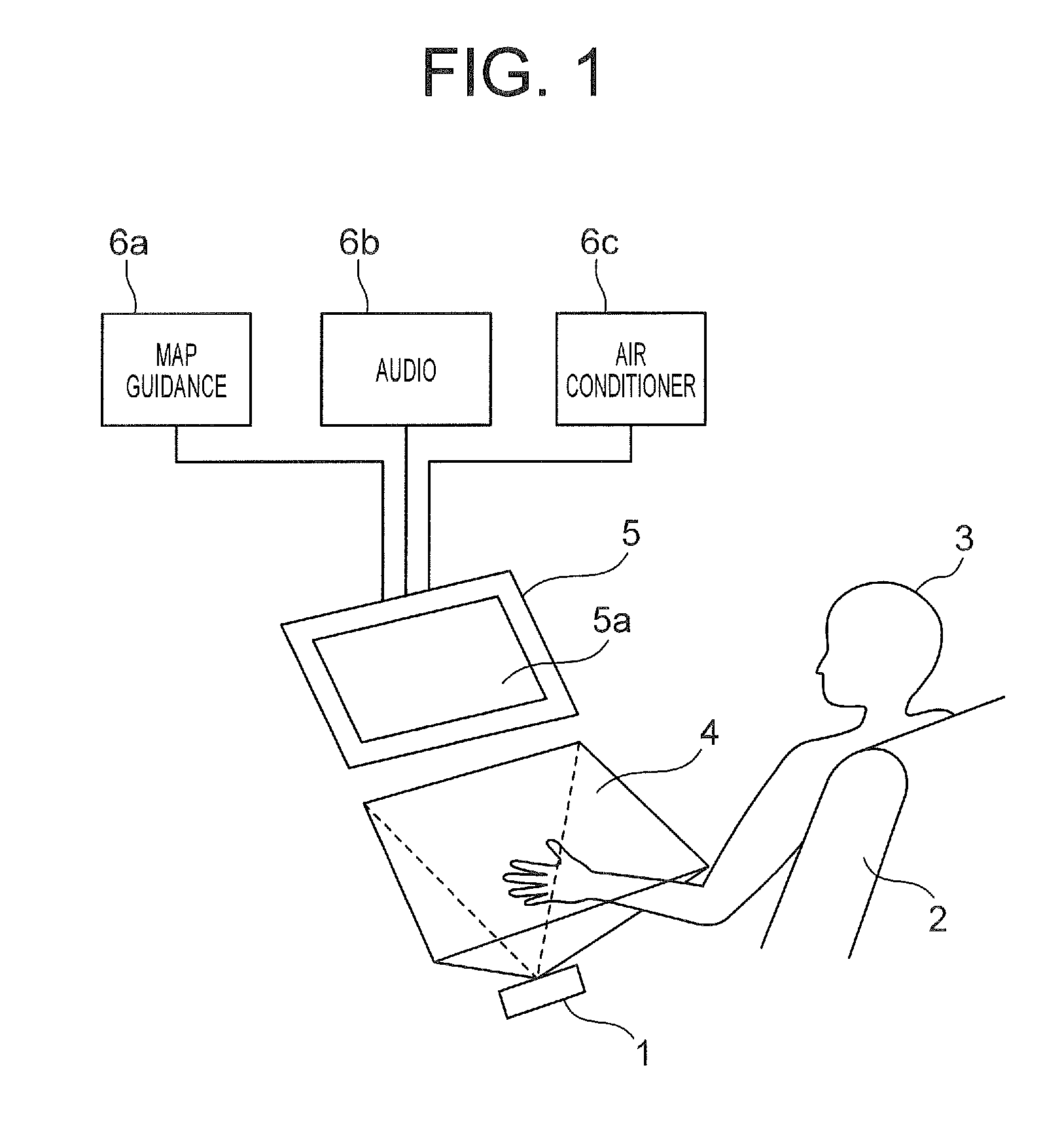

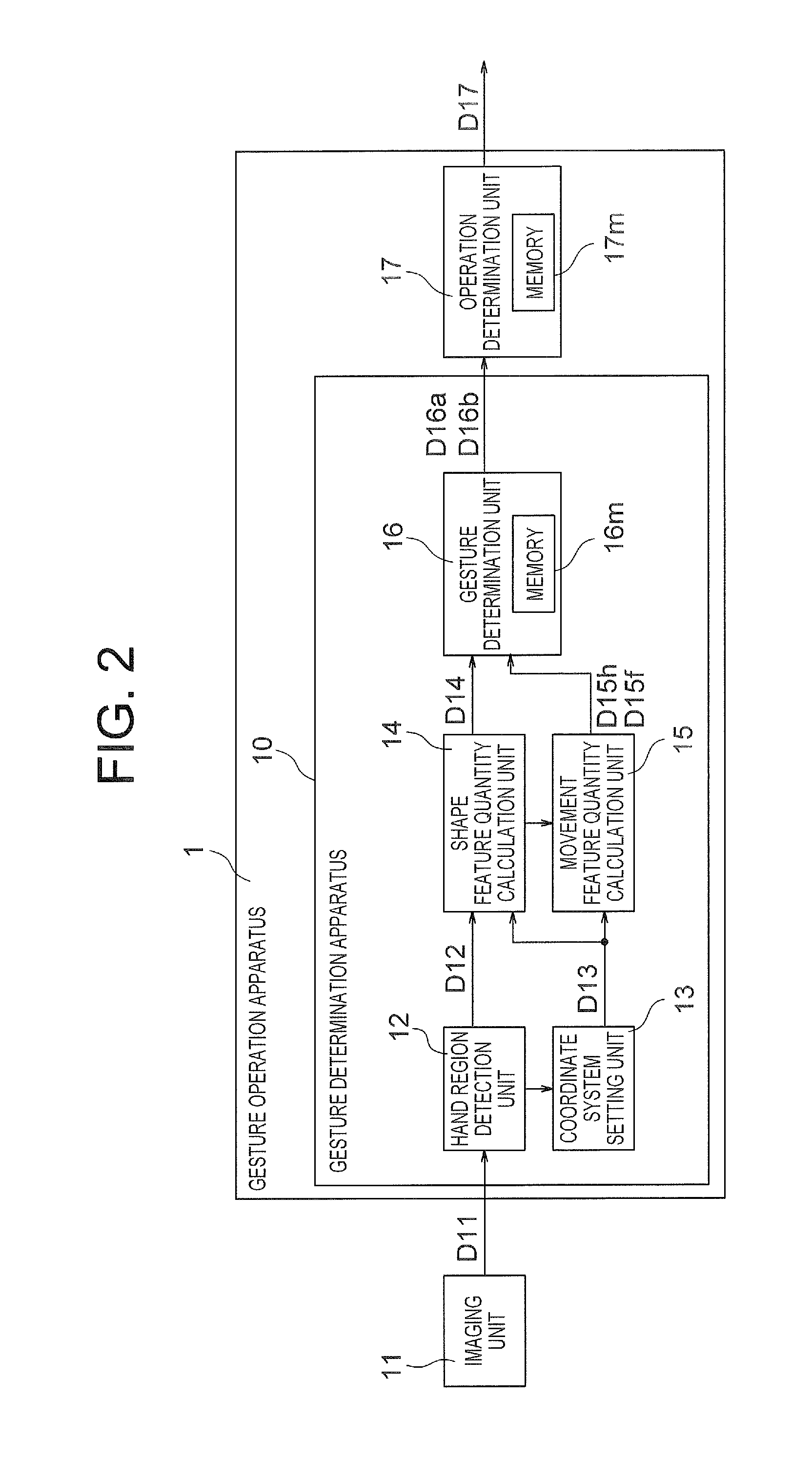

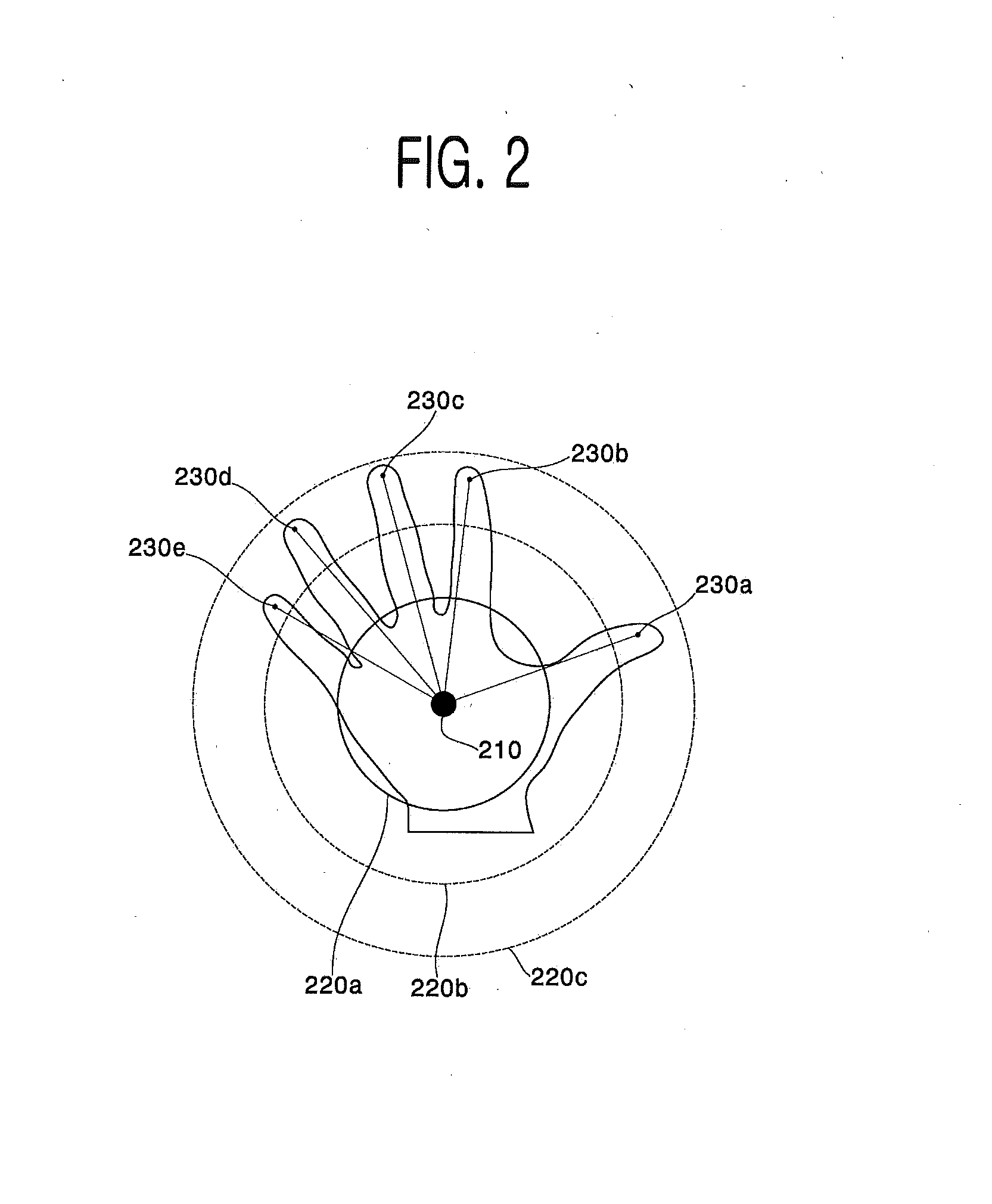

Gesture determination apparatus and method, gesture operation apparatus, program, and recording medium

Inactive | US20160132124A1 | Input/output for user-computer interaction | Image analysis | Computer science | Wrist

A hand region (Rh) of an operator is detected from a captured image, positions of a palm center (Po) and a wrist center (Wo) are determined, and origin coordinates (Cho) and a direction of a coordinate axis (Chu) of a hand coordinate system are calculated. A shape of the hand in the hand region (Rh) is detected using the hand coordinate system, and a shape feature quantity (D14) of the hand is calculated. Further, a movement feature quantity (D15f) of a finger is calculated based on the shape feature quantity (D14) of the hand. Gesture determination is made based on the calculated feature quantities. Since the gesture determination takes into consideration differences in the angle of the hand placed in the operation region and the direction in which the hand is moved, misrecognition of the operation can be reduced.
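A hand coordinate system of this kind can be sketched in a few lines. The abstract does not state exactly how the origin and axis are derived from Po and Wo, so this sketch assumes that the origin is the palm center and that the first axis points from the wrist center toward the palm center. The function names `hand_frame` and `to_hand_coords` are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def hand_frame(palm: Point, wrist: Point) -> Tuple[Point, Point, Point]:
    """Return (origin, u_axis, v_axis) of an assumed hand coordinate system."""
    dx, dy = palm[0] - wrist[0], palm[1] - wrist[1]
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("palm and wrist centers coincide")
    u_axis = (dx / length, dy / length)   # wrist -> palm direction
    v_axis = (-u_axis[1], u_axis[0])      # u rotated by 90 degrees
    return palm, u_axis, v_axis


def to_hand_coords(point: Point, palm: Point, wrist: Point) -> Point:
    """Express an image point in the hand coordinate system."""
    origin, u_axis, v_axis = hand_frame(palm, wrist)
    rx, ry = point[0] - origin[0], point[1] - origin[1]
    return (rx * u_axis[0] + ry * u_axis[1],
            rx * v_axis[0] + ry * v_axis[1])
```

A fingertip 8 pixels beyond the palm along the forearm direction maps to roughly (8, 0) however the hand is rotated in the image, which is why features computed in this frame are less sensitive to the angle of the hand.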

Owner:MITSUBISHI ELECTRIC CORP

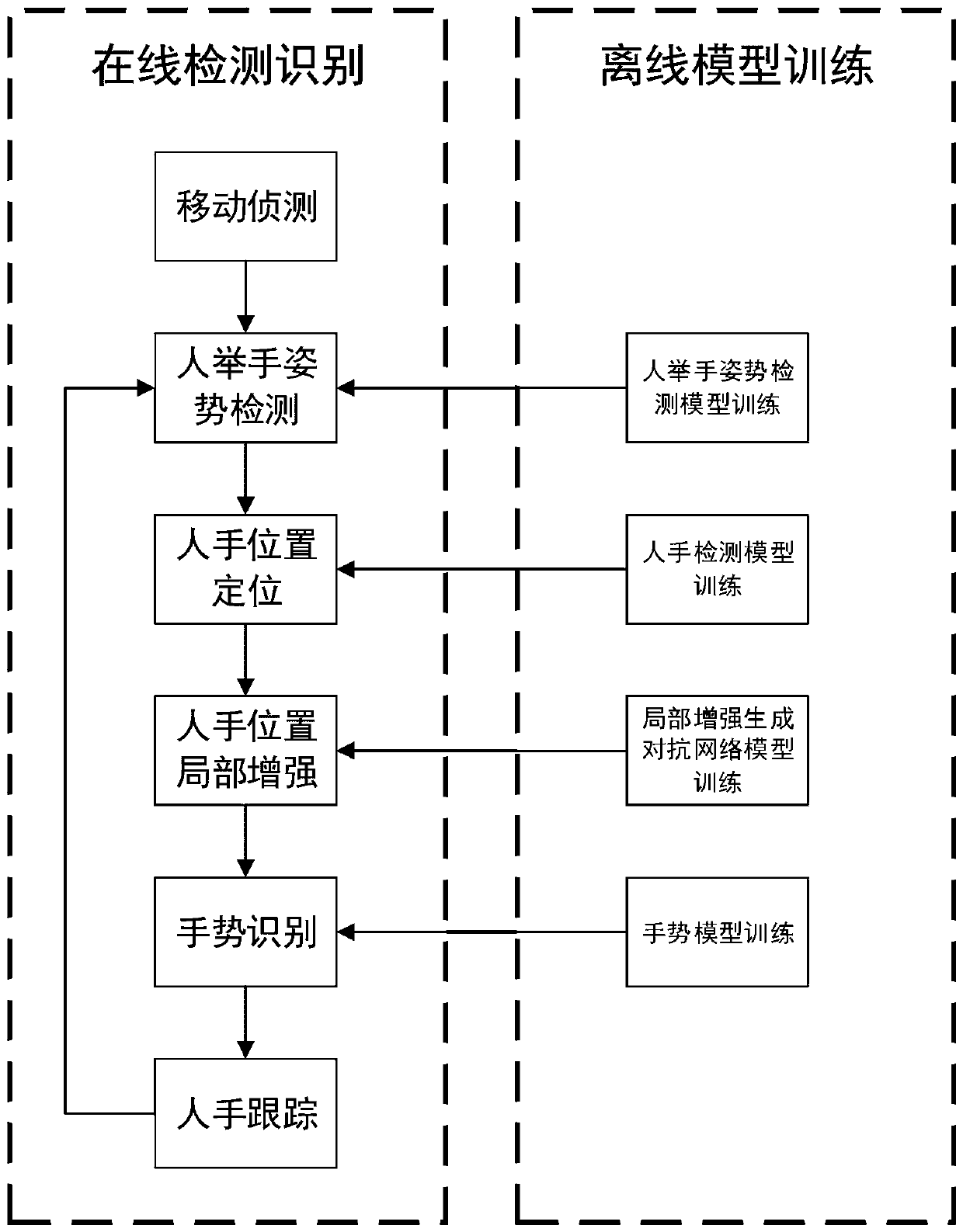

Local image enhancement-based home appliance gesture quick detection identification method

Active | CN108038452A | Improve experience | Character and pattern recognition | Neural architectures | Recognition algorithm | Home appliance

The invention relates to a local image enhancement-based method for quickly detecting and recognizing home appliance control gestures. The method comprises the steps of: extracting a motion region in an image sequence by a motion detection method; within the motion region, detecting a person's hand-raising posture with a detection algorithm and locating the hand region; performing local enhancement on the hand region; and recognizing specific gestures with a recognition algorithm. Because the hand region is enhanced, the region becomes clearer and the method can adapt to remote home appliance gesture control under varied and complex lighting conditions; false and missed recognitions caused by unclear gestures are effectively avoided, and the user experience is improved.

Owner:RECONOVA TECH CO LTD

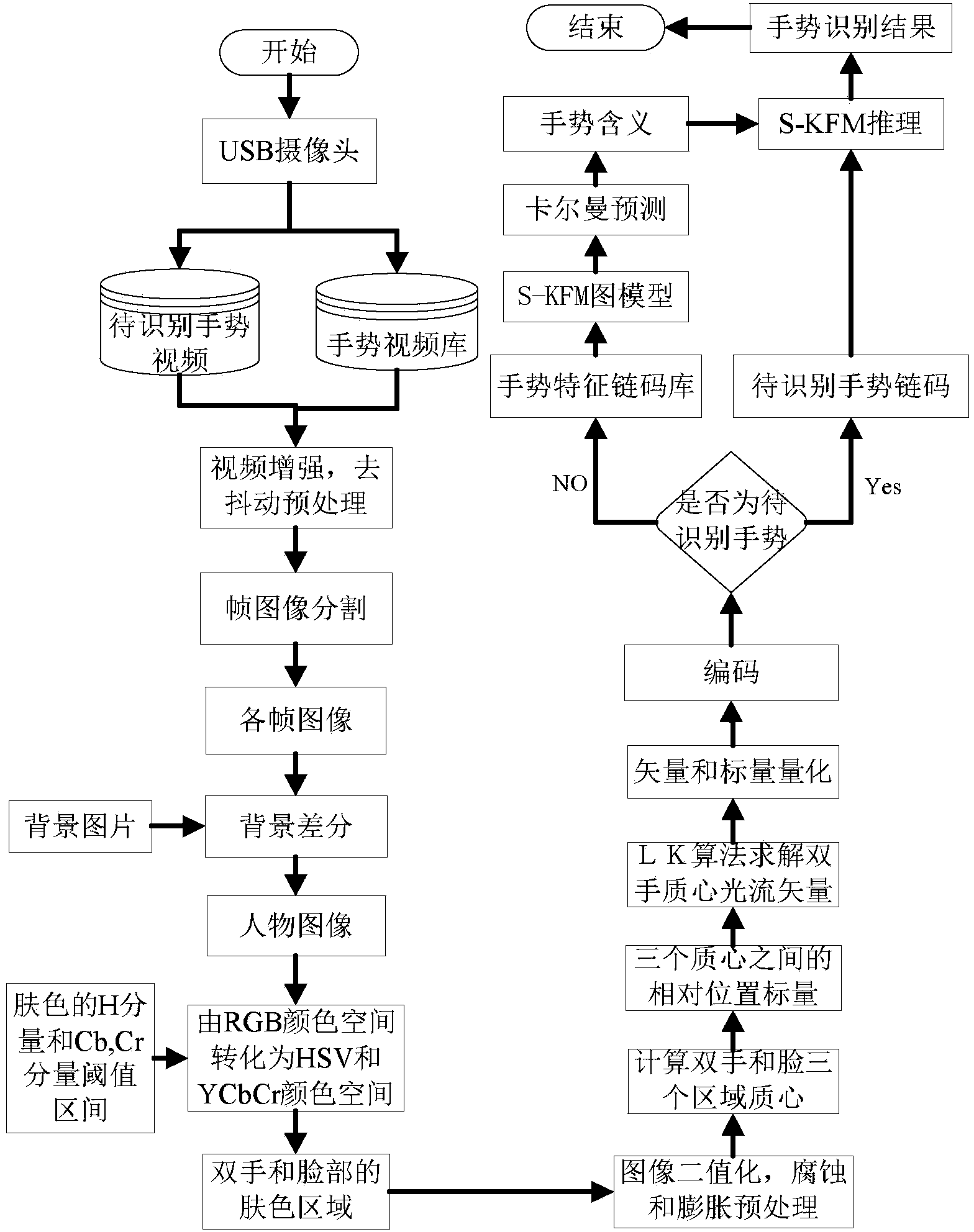

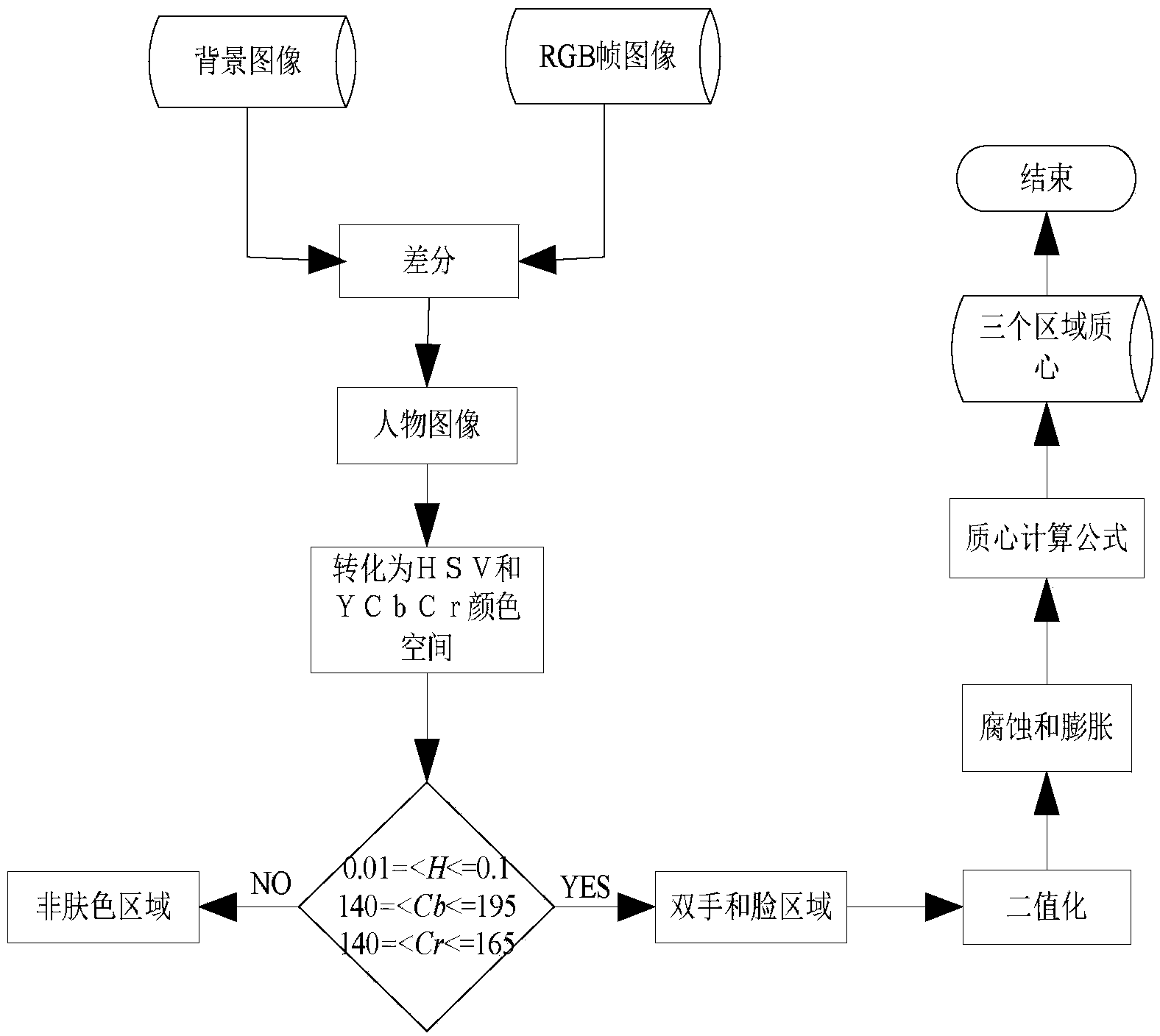

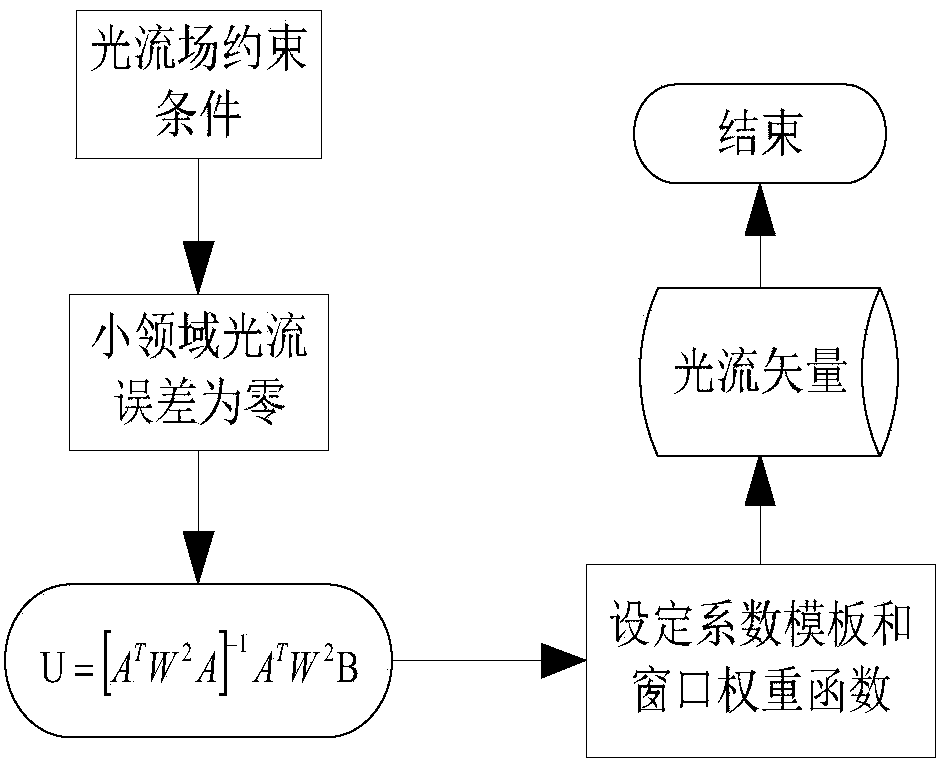

Hand gesture recognition method based on switching Kalman filtering model

Inactive | CN104050488A | The recognition result is accurate | Reduce recognition errors | Image enhancement | Character and pattern recognition | Pattern recognition | Skin color

The invention discloses a hand gesture recognition method based on a switching Kalman filtering model. The hand gesture recognition method based on a switching Kalman filtering model comprises the steps that a hand gesture video database is established, and the hand gesture video database is pre-processed; image backgrounds of video frames are removed, and two hand regions and a face region are separated out based on a skin color model; morphological operation is conducted on the three areas, mass centers are calculated respectively, and the position vectors of the face and the two hands and the position vector between the two hands are obtained; an optical flow field is calculated, and the optical flow vectors of the mass centers of the two hands are obtained; a coding rule is defined, the two optical flow vectors and the three position vectors of each frame of image are coded, so that a hand gesture characteristic chain code library is obtained; an S-KFM graph model is established, wherein a characteristic chain code sequence serves as an observation signal of the S-KFM graph model, and a hand gesture posture meaning sequence serves as an output signal of the S-KFM graph model; optimal parameters are obtained by conducting learning with the characteristic chain code library as a training sample of the S-KFM; relevant steps are executed again for a hand gesture video to be recognized, so that a corresponding characteristic chain code is obtained, reasoning is conducted with the corresponding characteristic chain code serving as input of the S-KFM, and finally a hand gesture recognition result is obtained.
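The centroid and position vector step can be sketched as follows. The abstract mentions three position vectors without defining them precisely, so this sketch assumes they are face to left hand, face to right hand, and left hand to right hand. The function names and dictionary keys are hypothetical, and each region is a binary mask given as rows of 0/1 values.

```python
from typing import Dict, List, Tuple

Mask = List[List[int]]
Point = Tuple[float, float]


def centroid(mask: Mask) -> Point:
    """Centre of mass (x, y) of the foreground pixels in a binary mask."""
    points = [(x, y) for y, row in enumerate(mask)
              for x, value in enumerate(row) if value]
    if not points:
        raise ValueError("mask contains no foreground pixels")
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def position_vectors(face: Mask, left_hand: Mask,
                     right_hand: Mask) -> Dict[str, Point]:
    """Three displacement vectors between region centroids (assumed set)."""
    fc, lc, rc = centroid(face), centroid(left_hand), centroid(right_hand)

    def vec(a: Point, b: Point) -> Point:
        return (b[0] - a[0], b[1] - a[1])

    return {
        "face_to_left": vec(fc, lc),
        "face_to_right": vec(fc, rc),
        "left_to_right": vec(lc, rc),
    }
```

These vectors, together with the optical flow vectors of the two hand centroids, are what the described coding rule would turn into a feature chain code; the coding itself is not shown.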

Owner:XIAN TECHNOLOGICAL UNIV

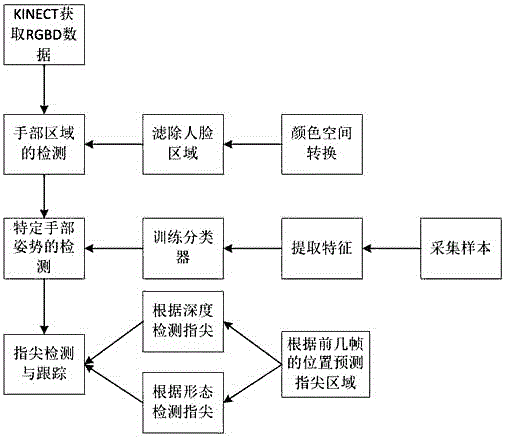

Multi-posture fingertip tracking method for natural man-machine interaction

Active | CN105739702A | Input/output for user-computer interaction | Character and pattern recognition | Face detection | Skin complexion

The present invention discloses a multi-posture fingertip tracking method for natural man-machine interaction. The method comprises the following steps: S1: acquiring RGBD data by adopting Kinect2, including depth information and color information; S2: detecting a hand region: by means of conversion of a color space, converting a color into a space that does not obviously react to brightness so as to detect a complexion region, then detecting a human face by means of a human face detection algorithm, so as to exclude the human face region and obtain the hand region, and calculating a central point of a hand; S3: by means of the depth information and in combination with an HOG feature and an SVM classifier, identifying and detecting a specific gesture; and S4: by means of positions of preceding frames of fingertips and in combination with an identified region of the hand, performing prediction on a current tracking window, and then detecting and tracking a fingertip by means of two modes, i.e. a depth-based fingertip detection mode and a form-based fingertip detection mode. The method disclosed by the present invention is mainly used for performing detection and tracking on a single fingertip under motion and various postures, and relatively high precision and real-time property need to be ensured.
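Step S2 converts color into a space that reacts weakly to brightness before detecting skin. One common way to do this, shown here as a sketch, is to convert RGB to YCbCr and threshold only the two chroma channels. The abstract does not name the color space or any thresholds: the YCbCr choice and the ranges 77 to 127 for Cb and 133 to 173 for Cr are assumptions taken from widely used skin-detection heuristics, and the function names are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]

# Assumed chroma ranges for skin; not taken from the patent.
CB_RANGE = (77.0, 127.0)
CR_RANGE = (133.0, 173.0)


def rgb_to_cbcr(pixel: RGB) -> Tuple[float, float]:
    """Chroma channels of the full-range BT.601 (JPEG-style) YCbCr transform."""
    r, g, b = pixel
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


def is_skin(pixel: RGB) -> bool:
    """Classify a pixel by chroma only, ignoring the luma channel Y."""
    cb, cr = rgb_to_cbcr(pixel)
    return CB_RANGE[0] <= cb <= CB_RANGE[1] and CR_RANGE[0] <= cr <= CR_RANGE[1]


def skin_mask(image: List[List[RGB]]) -> List[List[int]]:
    """Binary mask with 1 where a pixel falls inside the skin chroma ranges."""
    return [[1 if is_skin(p) else 0 for p in row] for row in image]
```

Because brightness is carried mostly by Y, a skin tone and a darker version of it land in similar chroma ranges. The face region would still need to be excluded afterwards, as the abstract describes.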

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

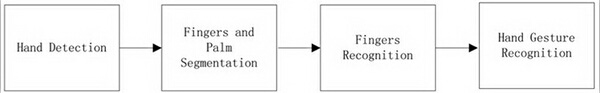

Real-time gesture recognition method based on finger division

Active | CN104063059A | Invariant to rotation | Scalable | Input/output for user-computer interaction | Character and pattern recognition | Pattern recognition | Interaction field

The invention discloses a real-time gesture recognition method based on finger division and relates to a novel gesture recognition technology. The gesture recognition technology is one of hot topics in the human-computer interaction field. The real-time gesture recognition method based on finger division comprises the steps of extracting the whole hand region through a background subtraction method, and dividing a palm part and a finger part on the extracted hand region; judging finger types according to positions, angles and other information of fingers; performing gesture recognition on the whole gesture through a rule classifier. Related gesture recognition tests performed through a large number of experimental images prove that the real-time gesture recognition method based on finger division is high in speed and efficiency, and real-time effect can be achieved.
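The background subtraction step can be sketched in its simplest form: compare each frame with a stored background image and keep pixels that differ by more than a threshold. This is only an illustration of the general technique. The abstract does not specify the variant used, and the grayscale input, the fixed threshold of 30, and the function name `subtract_background` are assumptions.

```python
from typing import List

Gray = List[List[int]]


def subtract_background(frame: Gray, background: Gray,
                        threshold: int = 30) -> List[List[int]]:
    """Return a binary foreground mask from a frame and a static background."""
    if len(frame) != len(background):
        raise ValueError("frame and background must have the same height")
    mask = []
    for frame_row, bg_row in zip(frame, background):
        if len(frame_row) != len(bg_row):
            raise ValueError("frame and background must have the same width")
        mask.append([1 if abs(f - b) > threshold else 0
                     for f, b in zip(frame_row, bg_row)])
    return mask
```

The resulting mask is the hand region on which the palm and finger segmentation would then operate.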

Owner:EAST CHINA UNIV OF SCI & TECH

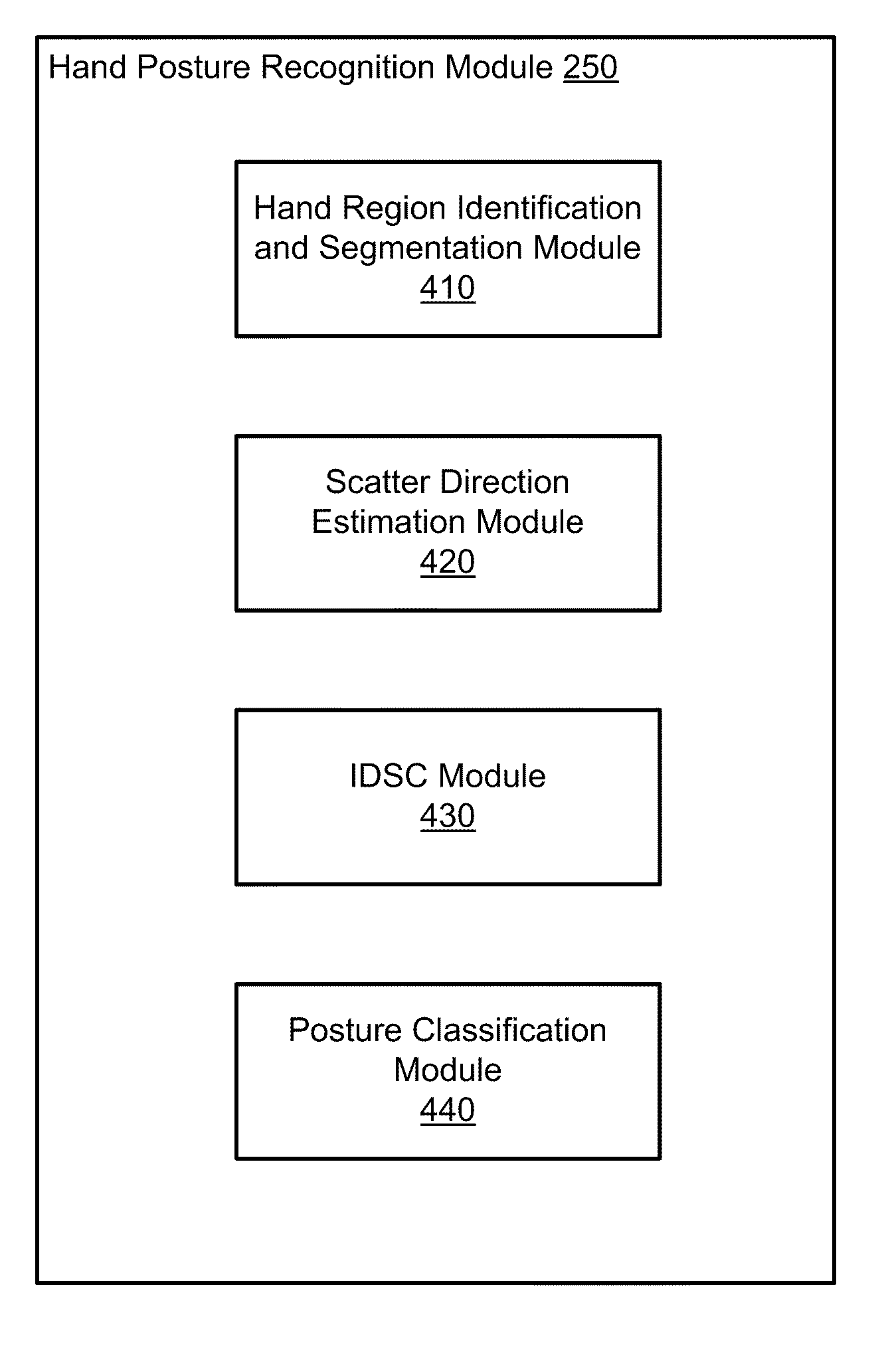

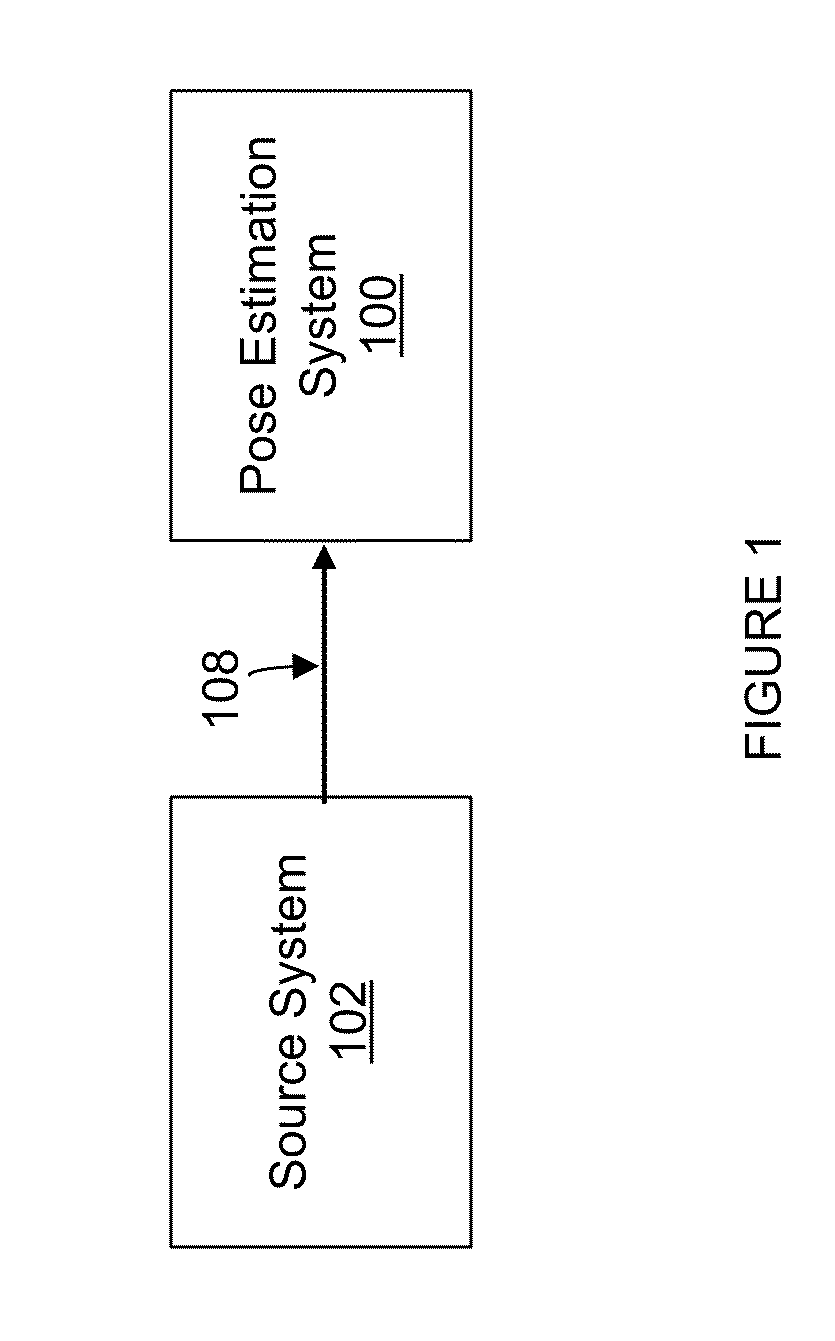

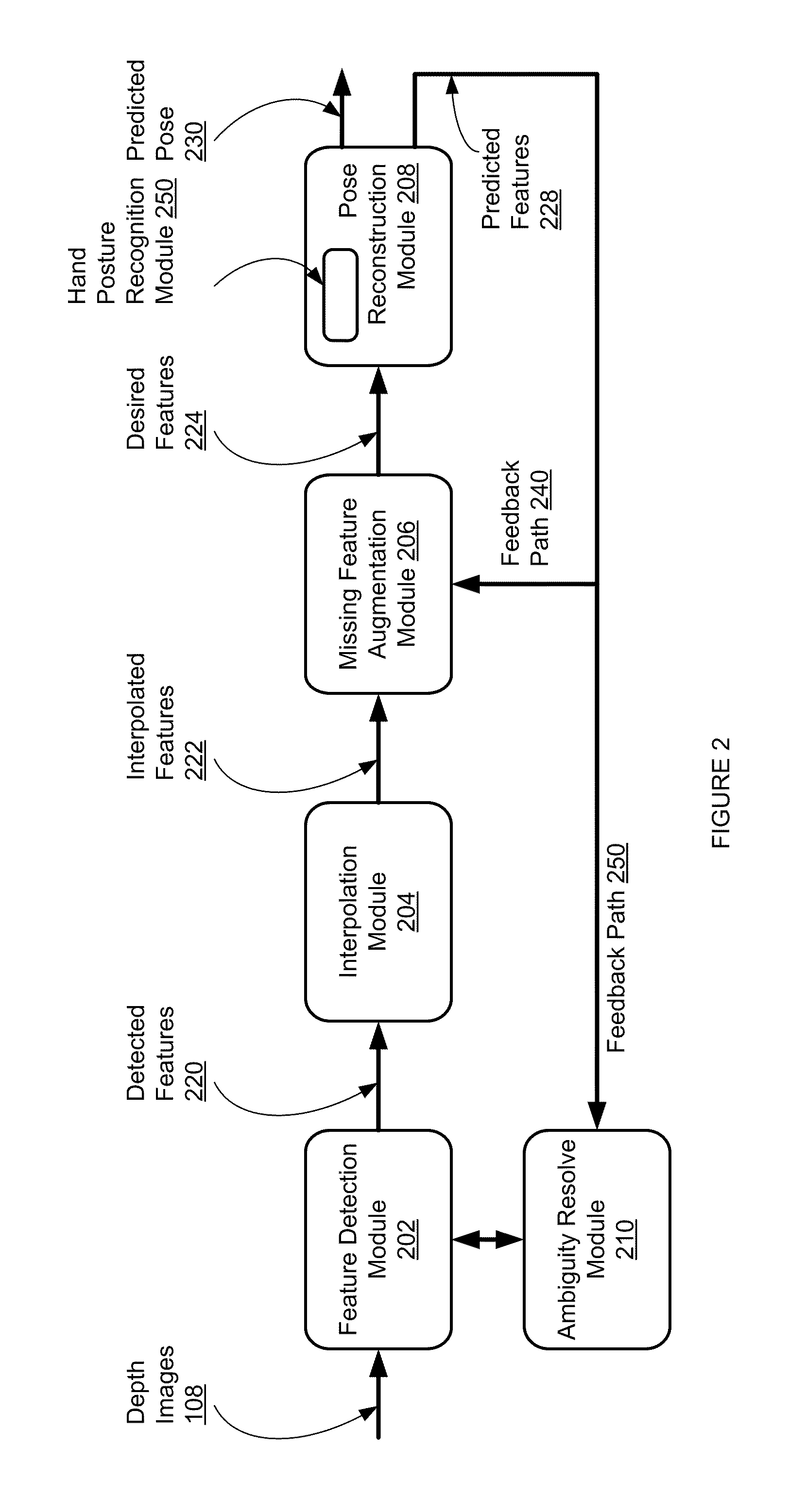

Capturing and recognizing hand postures using inner distance shape contexts

Inactive | US8428311B2 | Character and pattern recognition | Input/output processes for data processing | Hand parts | Svm classifier

A system, method, and computer program product for recognizing hand postures are described. According to one aspect, a set of training images is provided with labels identifying hand states captured in the training images. Inner Distance Shape Context (IDSC) descriptors are determined for the hand regions in the training images, and fed into a Support Vector Machine (SVM) classifier to train it to classify hand shapes into posture classes. An IDSC descriptor is determined for a hand region in a testing image, and classified by the SVM classifier into one of the posture classes the SVM classifier was trained for. The hand posture captured in the testing image is recognized based on the classification.

Owner:HONDA MOTOR CO LTD

Hand pointing estimation for human computer interaction

Active | US20150177846A1 | High degree of robustness | More robust | Input/output for user-computer interaction | Image analysis | Human interaction | Hand parts

Hand pointing has been an intuitive gesture for human interaction with computers. A hand pointing estimation system is provided, based on two regular cameras, which includes hand region detection, hand finger estimation, two views' feature detection, and 3D pointing direction estimation. The technique may employ a polar coordinate system to represent the hand region, and tests show a good result in terms of the robustness to hand orientation variation. To estimate the pointing direction, Active Appearance Models are employed to detect and track, e.g., 14 feature points along the hand contour from a top view and a side view. Combining two views of the hand features, the 3D pointing direction is estimated.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

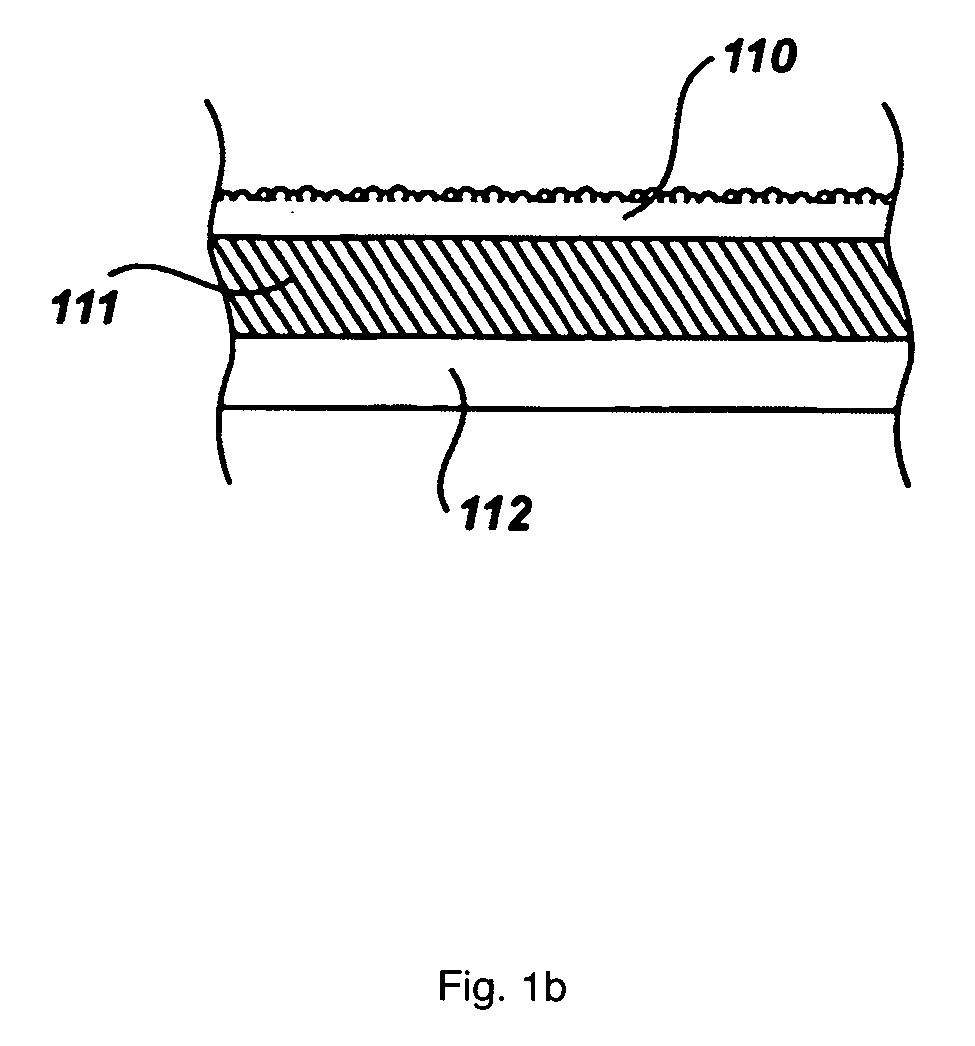

Disposable and ambidextrous glove sander

A glove sander comprising: an ambidextrous glove, an inner liner, and a sand layer, the ambidextrous glove having a thumb compartment, an index finger compartment, a middle finger compartment, a ring finger compartment, a pinky compartment and a hand region generally housing a user's hand extending to a user's wrist region, the inner liner comprised of a fabric which is affixed to the ambidextrous glove, the inner liner being housed within the ambidextrous glove and approximating the general inner space of the ambidextrous glove; the sand layer affixed to the outer surface of the ambidextrous glove, the sand layer extending from the wrist region to the finger and thumb compartments on both sides of the ambidextrous glove and in between the finger and thumb compartments.

Owner:COX STEPHEN

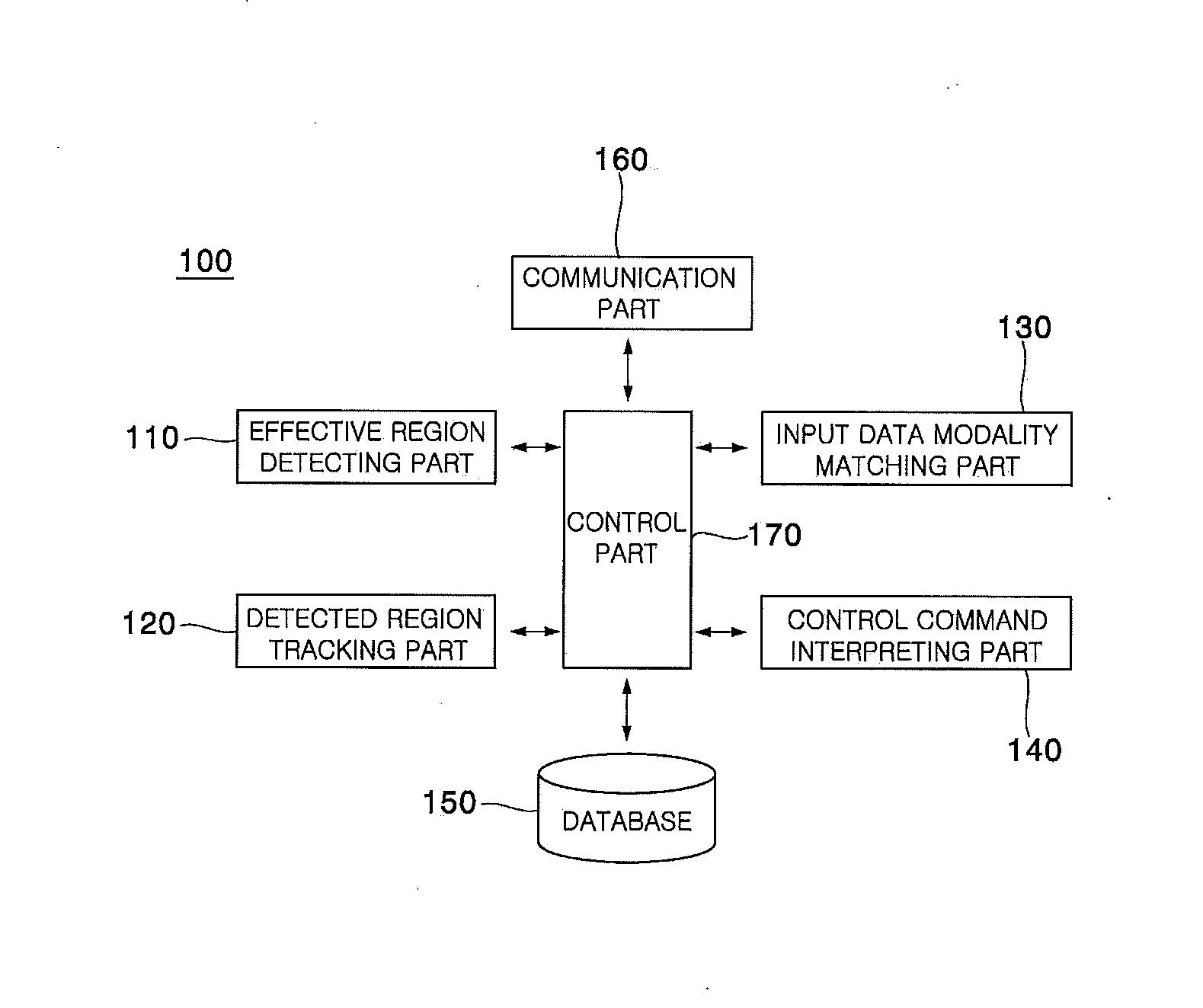

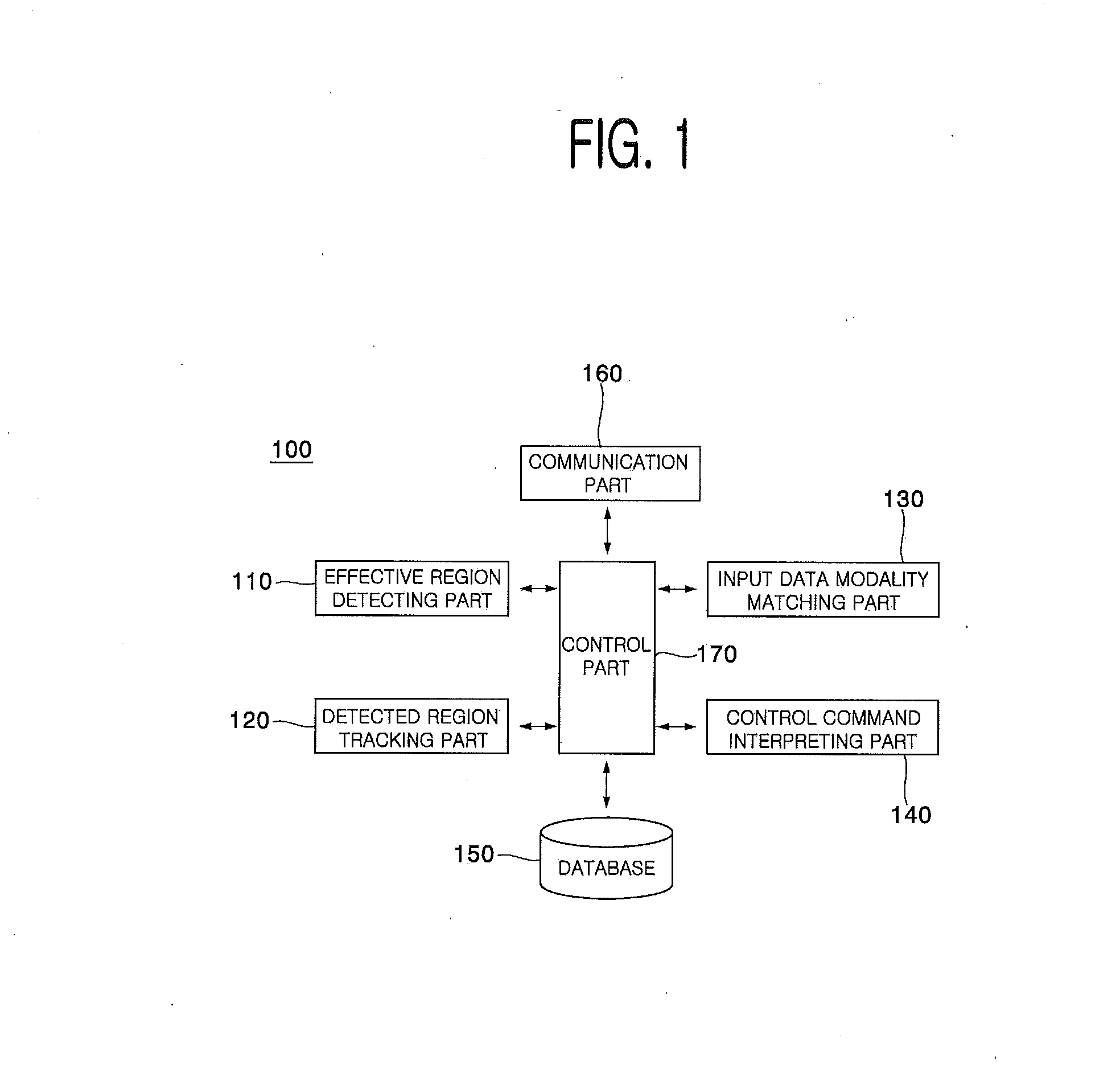

Method and terminal device for controlling content by sensing head gesture and hand gesture, and computer-readable recording medium

Inactive | US20140168074A1 | Input/output for user-computer interaction | Image analysis | Terminal equipment | Visual perception

The present invention relates to a method, a terminal, and a computer-readable medium for controlling content by detecting head and hand gestures. The method for controlling a content by detecting hand gestures and head gestures, includes steps of: (a) detecting a head region and a hand region of a user by analyzing an input image with an object detection technology; (b) tracking the gestures in the head region and the hand region of the user by using a computer vision technology; and (c) allowing the content to be controlled by referring to gestures-combining information including data on the tracked gestures in the head region and the hand region.

Owner:MEDIA INTERACTIVE +1

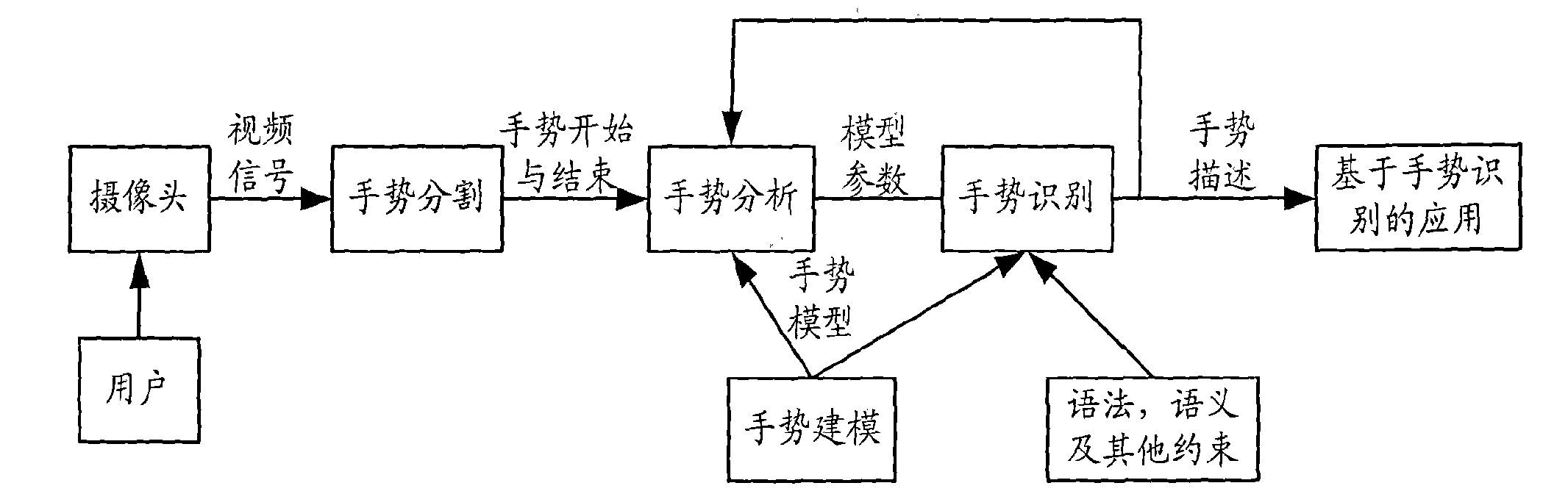

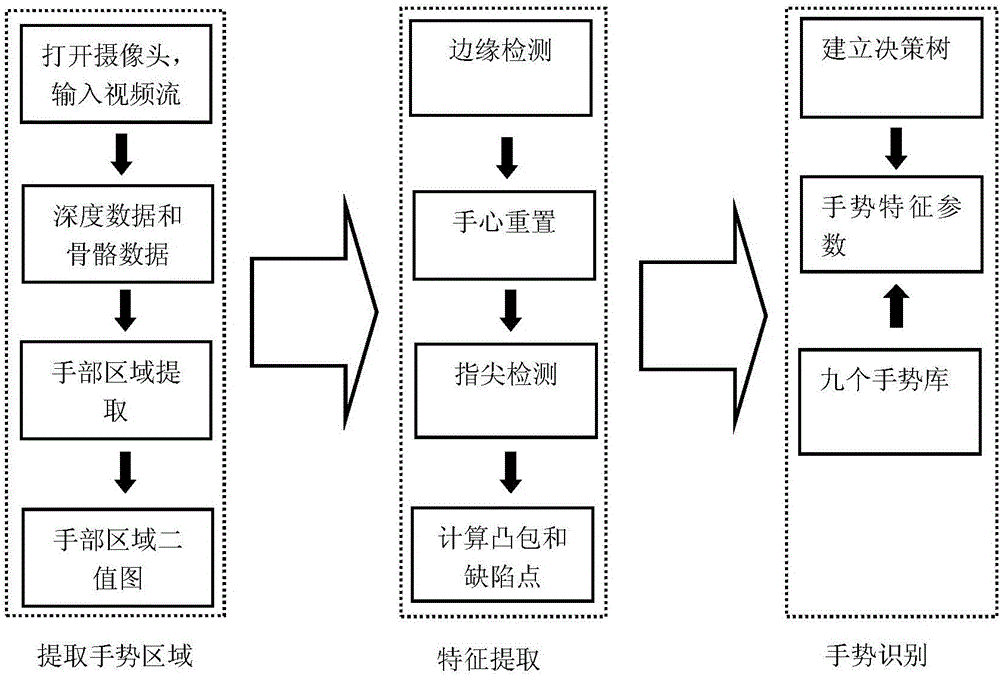

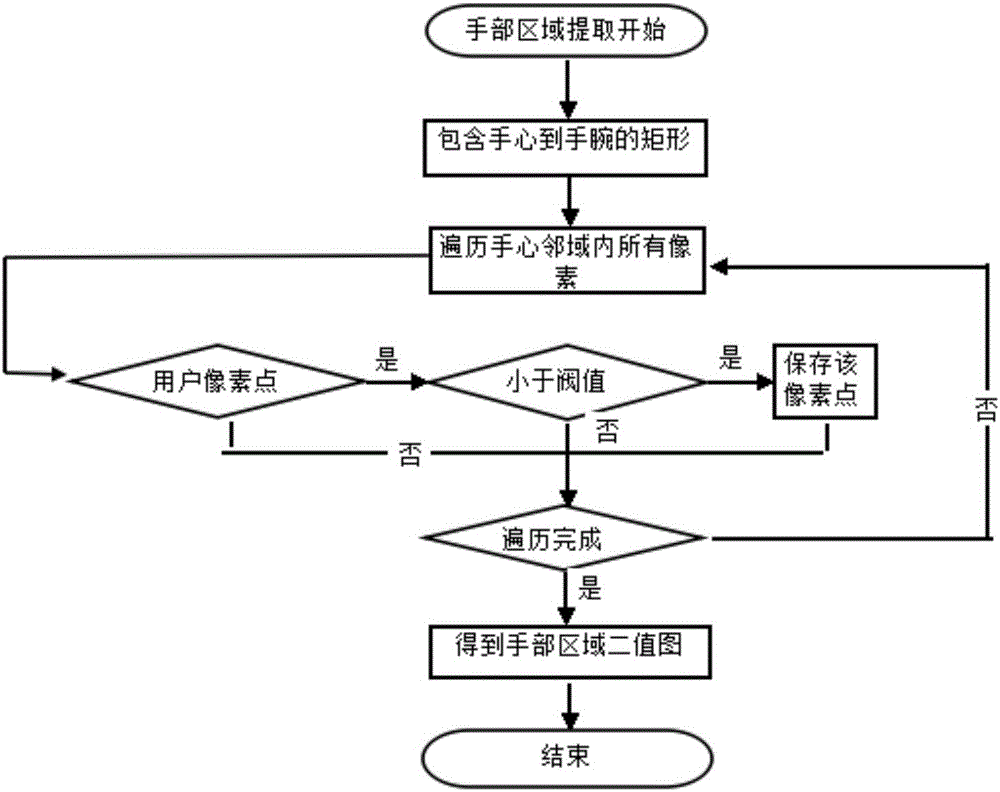

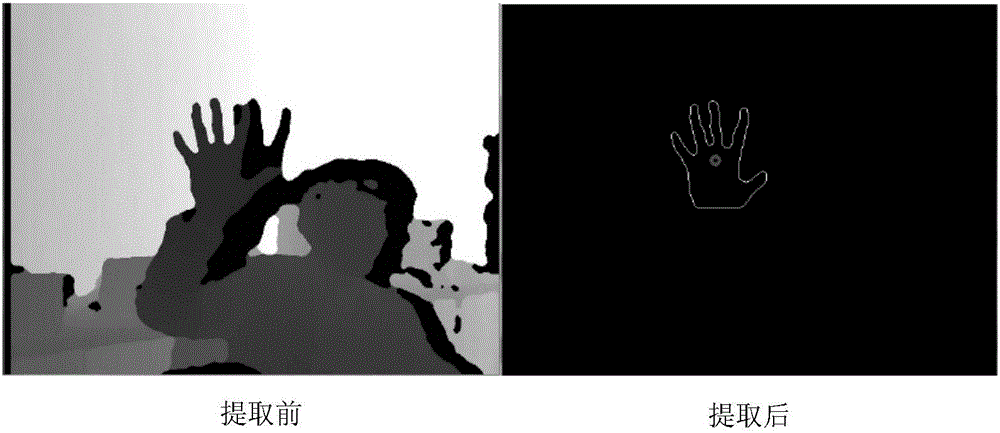

Gesture recognition method based on vision

Inactive | CN106326860A | Efficient identification | Character and pattern recognition | Vision based | Visual perception

The invention discloses a vision-based gesture recognition method comprising the following steps: step 1, capturing a video stream through a Kinect sensor, and obtaining depth image data and skeleton data; step 2, preprocessing the obtained depth image data with a median filter; step 3, extracting a hand region according to the depth image data and the skeleton data to obtain a hand region binary map; step 4, binarizing the edge image of the hand region binary map through edge detection, and extracting palm contour information; step 5, resetting the palm center according to the hand region binary map; step 6, calculating the convex hull vertices and a circumcircle according to the palm contour information; and step 7, recognizing gestures by using a classification decision tree to match the reset palm center, the convex hull vertices, and the circumcircle against the gestures in a gesture library.
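Step 6 relies on the convex hull of the palm contour. As a sketch of that one step, the code below computes a convex hull with Andrew's monotone chain algorithm, which is a standard technique and not necessarily the one used in the patent. The function name `convex_hull` is hypothetical, contour points are (x, y) tuples, and the circumcircle computation is left out.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def convex_hull(points: List[Point]) -> List[Point]:
    """Convex hull vertices, starting at the lexicographically smallest point.

    Collinear points on the hull boundary are dropped.
    """
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o: Point, a: Point, b: Point) -> int:
        # Twice the signed area of triangle o-a-b; positive means a left turn.
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)

    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)

    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]
```

For a hand contour, the hull vertices tend to sit at fingertips and at the wrist corners, which is why they are useful inputs to a decision-tree classifier.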

Owner:BRIGHTVISION TECH
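Step 6 of the abstract above computes convex hull vertices and a circle around the palm contour. The sketch below shows one way to do that in plain Python. It assumes the contour is already available as a list of `(x, y)` points, uses Andrew's monotone chain algorithm for the hull, and stands in a circle centered on the palm center for the circumscribed circle; none of these choices is stated in the patent, and the function names are hypothetical.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # Positive when the turn o -> a -> b is counter-clockwise.
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)

    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)

    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]


def enclosing_radius(center, hull):
    """Radius of the circle centered at `center` that reaches the farthest hull vertex."""
    return max(math.dist(center, vertex) for vertex in hull)
```

For a square contour with one interior point, `convex_hull` drops the interior point and returns the four corners; hull vertices far from the palm center relative to the radius are candidate fingertips for the later matching step.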

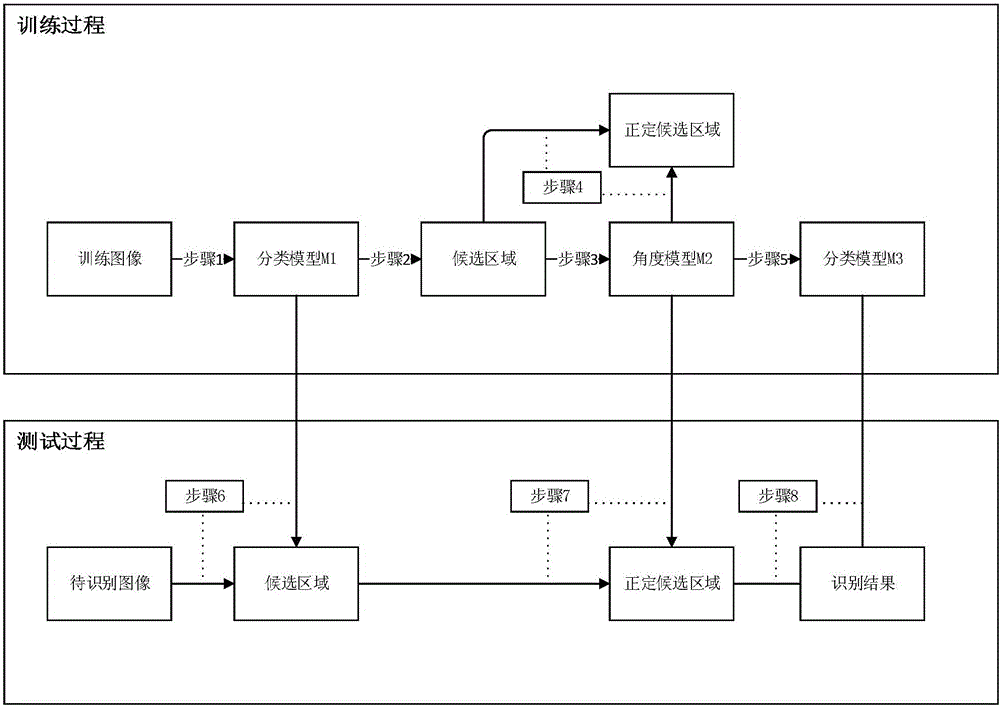

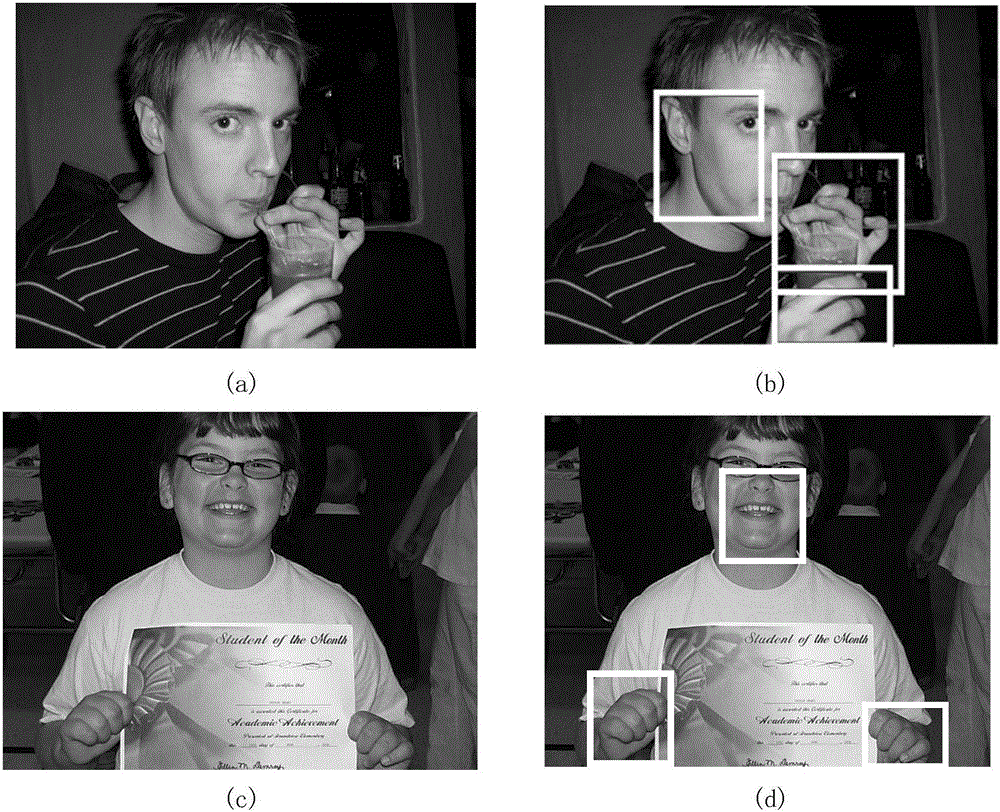

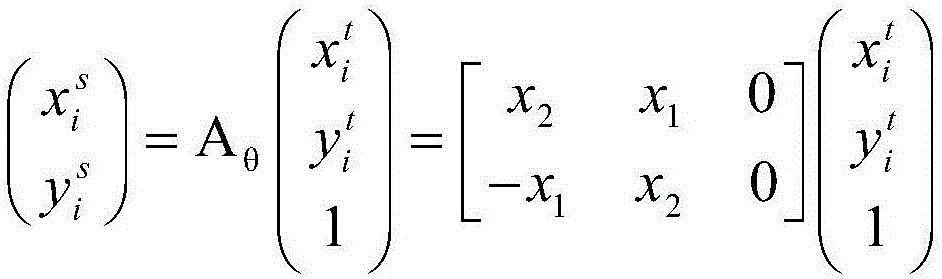

Convolutional neural network-based human hand image region detection method

Active | CN106127108A | Input/output for user-computer interaction | Character and pattern recognition | Base code | Feature extraction

The invention discloses a convolutional neural network-based human hand image region detection method. The method comprises the following steps: extracting features from an image with a convolutional neural network and training a weak classifier; for images with annotated angles, segmenting each image with the classifier to obtain a plurality of candidate regions; modeling each candidate region with the convolutional neural network to obtain an angle estimation model, and using the annotated angle to rotate the candidate region to an upright pose; modeling each candidate region again with the convolutional neural network to obtain a classification model; for a test image, first segmenting it with the weak classifier to obtain candidate regions, then estimating the angle of each candidate region with the angle estimation model and rotating the region to the upright pose; and inputting each upright candidate region into the classification model to obtain the position and angle of a human hand in the image. The method improves classification precision by adopting convolutional neural network-based coding classification, and the angle model gives it rotation invariance and very high hand region detection precision.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

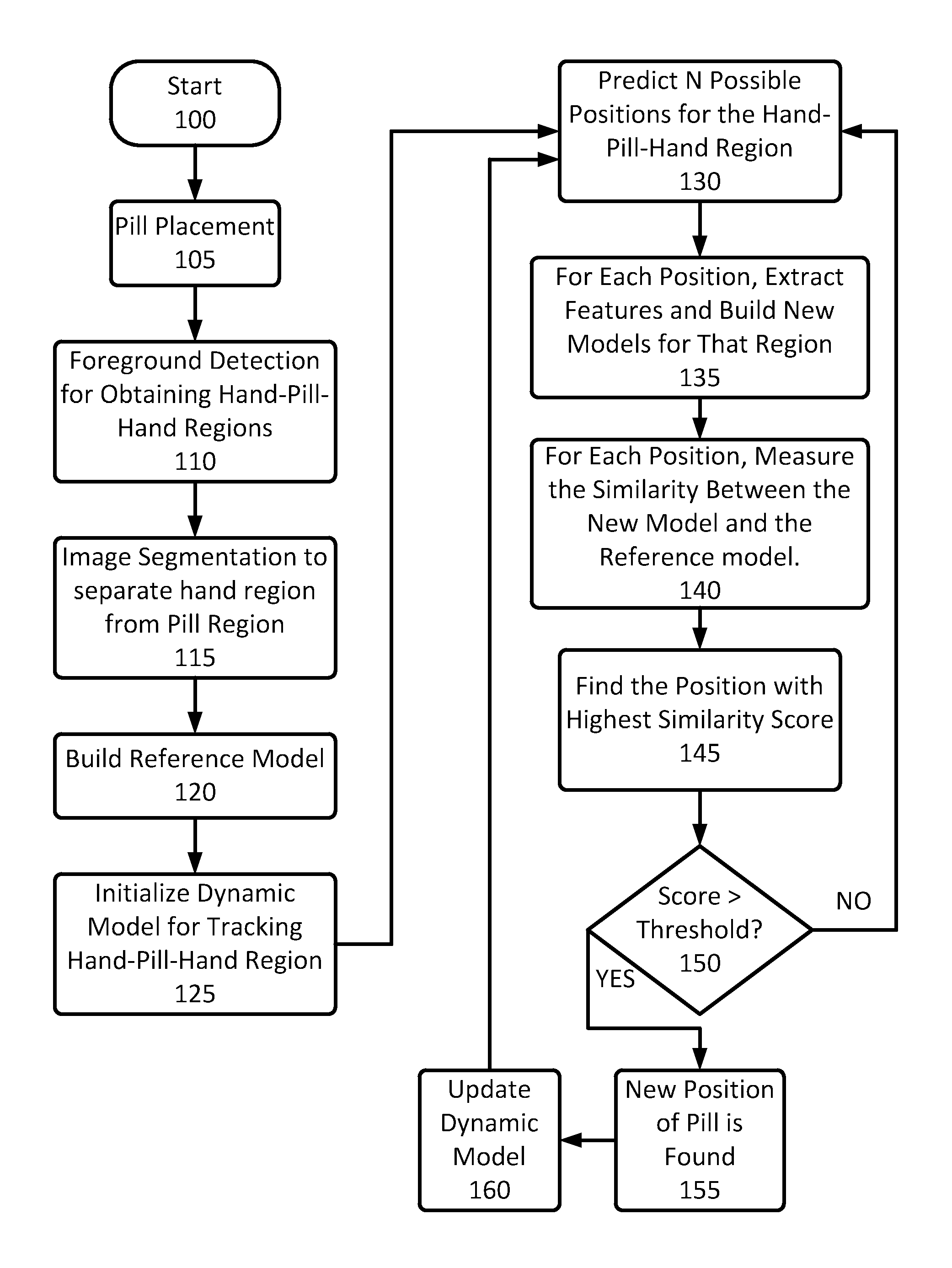

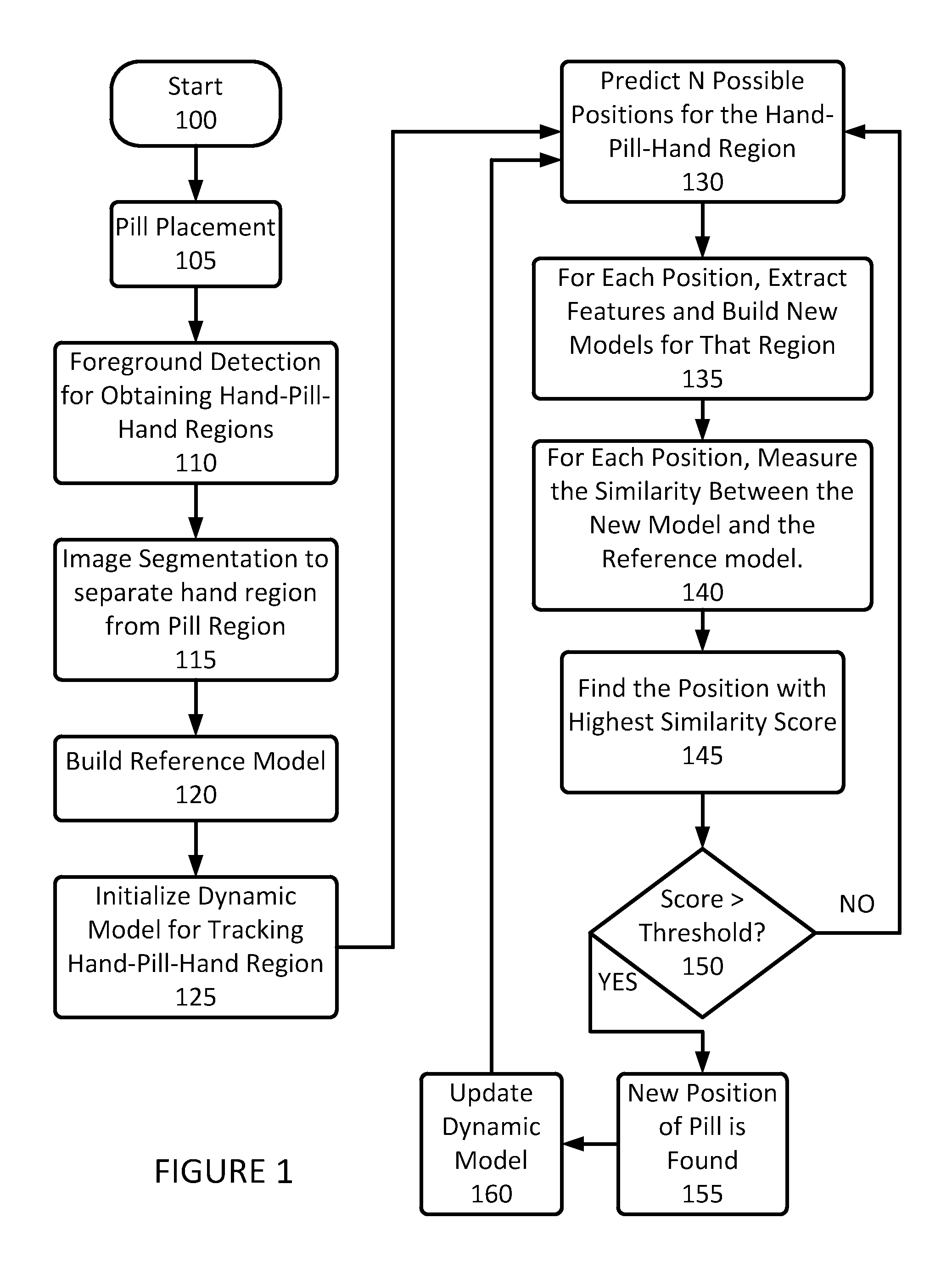

Method and Apparatus for Pattern Tracking

A method and apparatus for pattern tracking. The method includes the steps of: performing a foreground detection process to determine a hand-pill-hand region; performing image segmentation to separate the hand portions of the hand-pill-hand region from the pill portion; building three reference models, one for each hand region and one for the pill region; initializing a dynamic model for tracking the hand-pill-hand region; determining N possible next positions for the hand-pill-hand region; for each such position, determining various features and building a new model for the region at that position; comparing each new model with a reference model; determining the position whose new model generates the highest similarity score; and determining whether that similarity score is greater than a predetermined threshold. If it is, the object is tracked.

Owner:AIC INNOVATIONS GRP
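The selection step in the abstract above (score N candidate positions against a reference model, keep the best, accept it only above a threshold) can be sketched as follows. The abstract does not say how models are represented or compared; this sketch assumes normalized feature histograms and histogram intersection as the similarity score, and the names `histogram_intersection` and `track_step` are hypothetical.

```python
def histogram_intersection(h1, h2):
    """Similarity in [0, 1] between two normalized histograms of equal length."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def track_step(reference, candidates, threshold):
    """Pick the candidate position whose model best matches the reference.

    `candidates` is a list of (position, histogram) pairs, one per possible
    next position. Returns (position, score) when the best score exceeds
    `threshold`, otherwise None (the object is considered lost).
    """
    best_position, best_score = None, -1.0
    for position, histogram in candidates:
        score = histogram_intersection(reference, histogram)
        if score > best_score:
            best_position, best_score = position, score
    if best_score > threshold:
        return best_position, best_score
    return None
```

Returning `None` below the threshold keeps a poor match from silently dragging the tracker onto the background.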

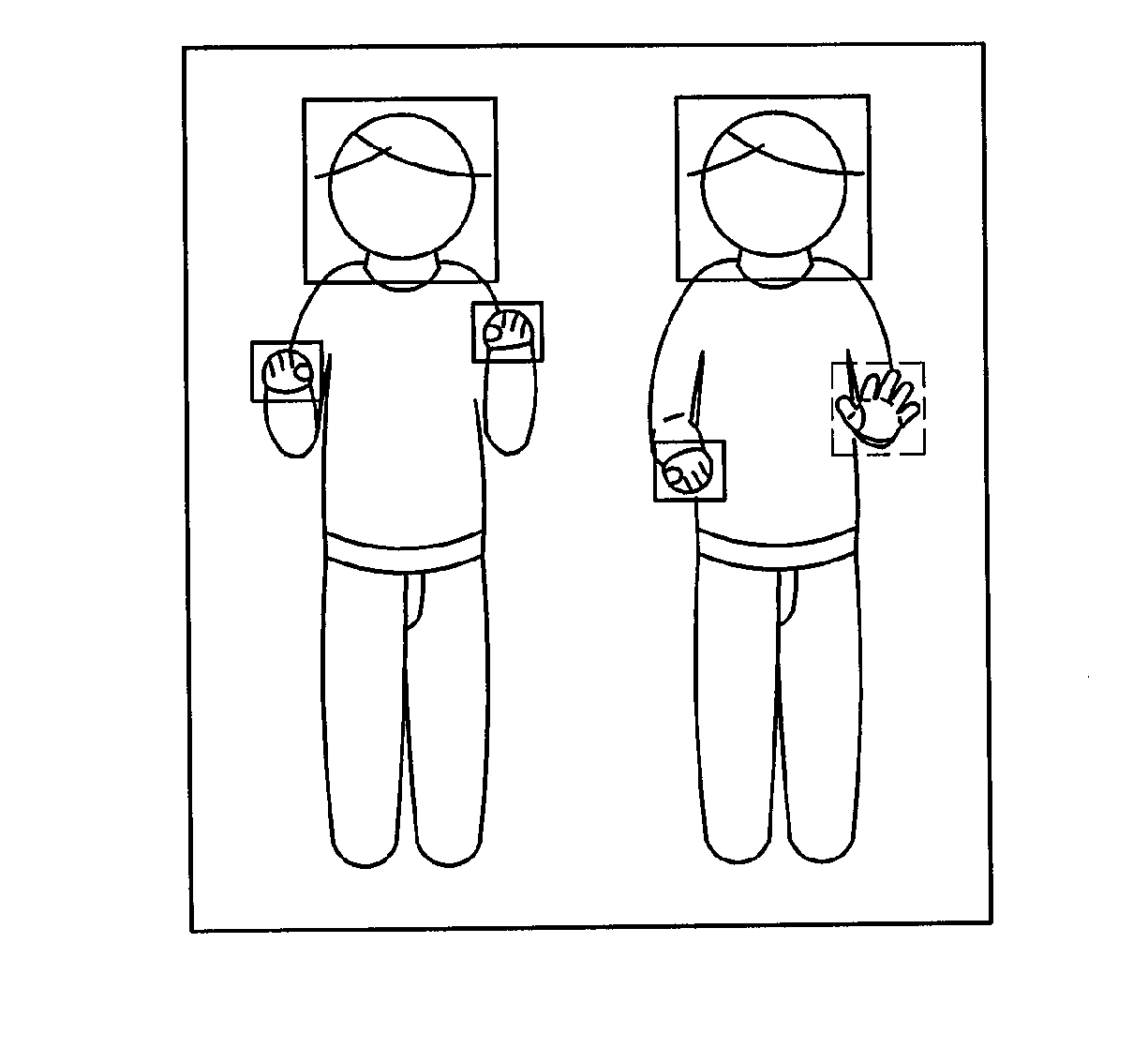

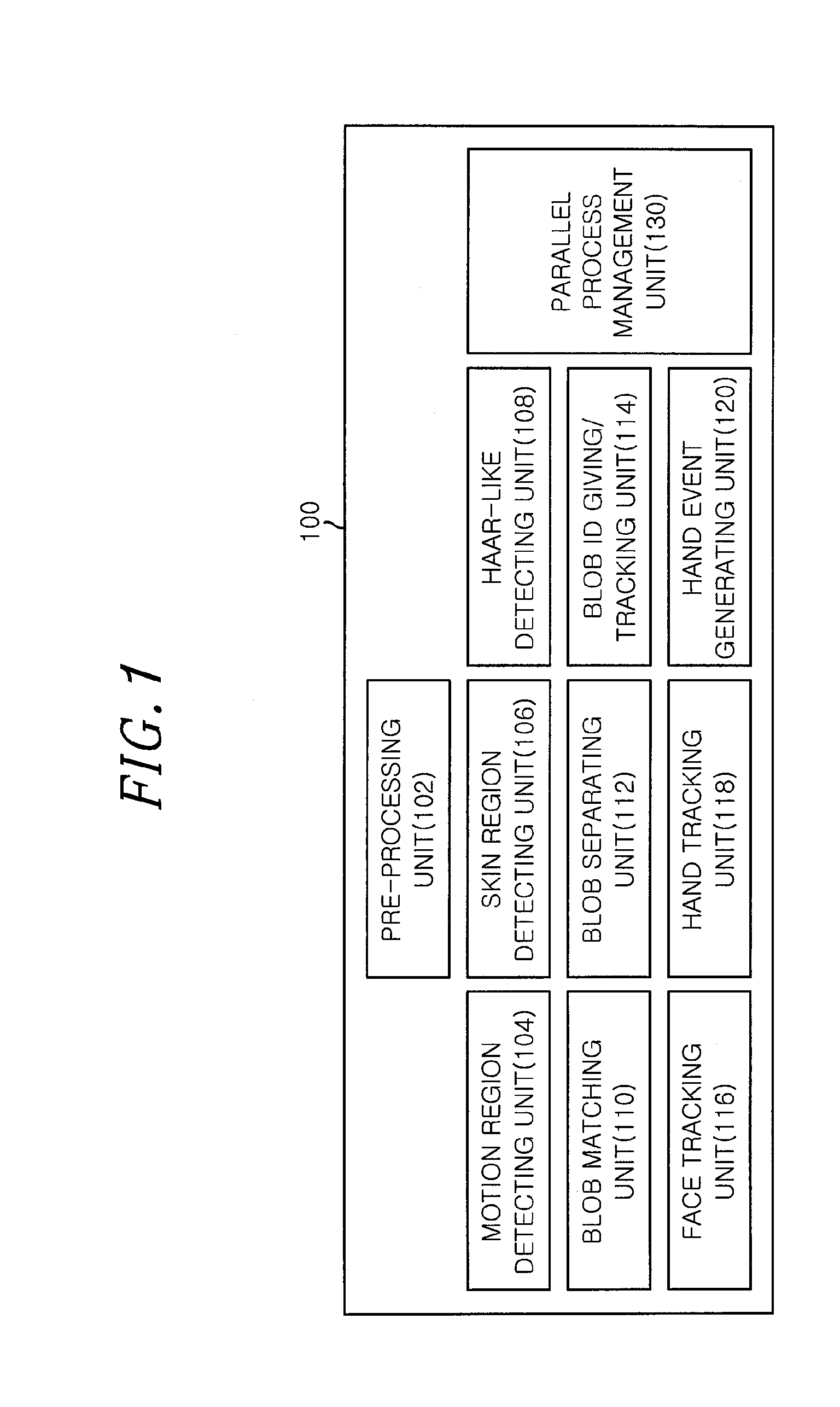

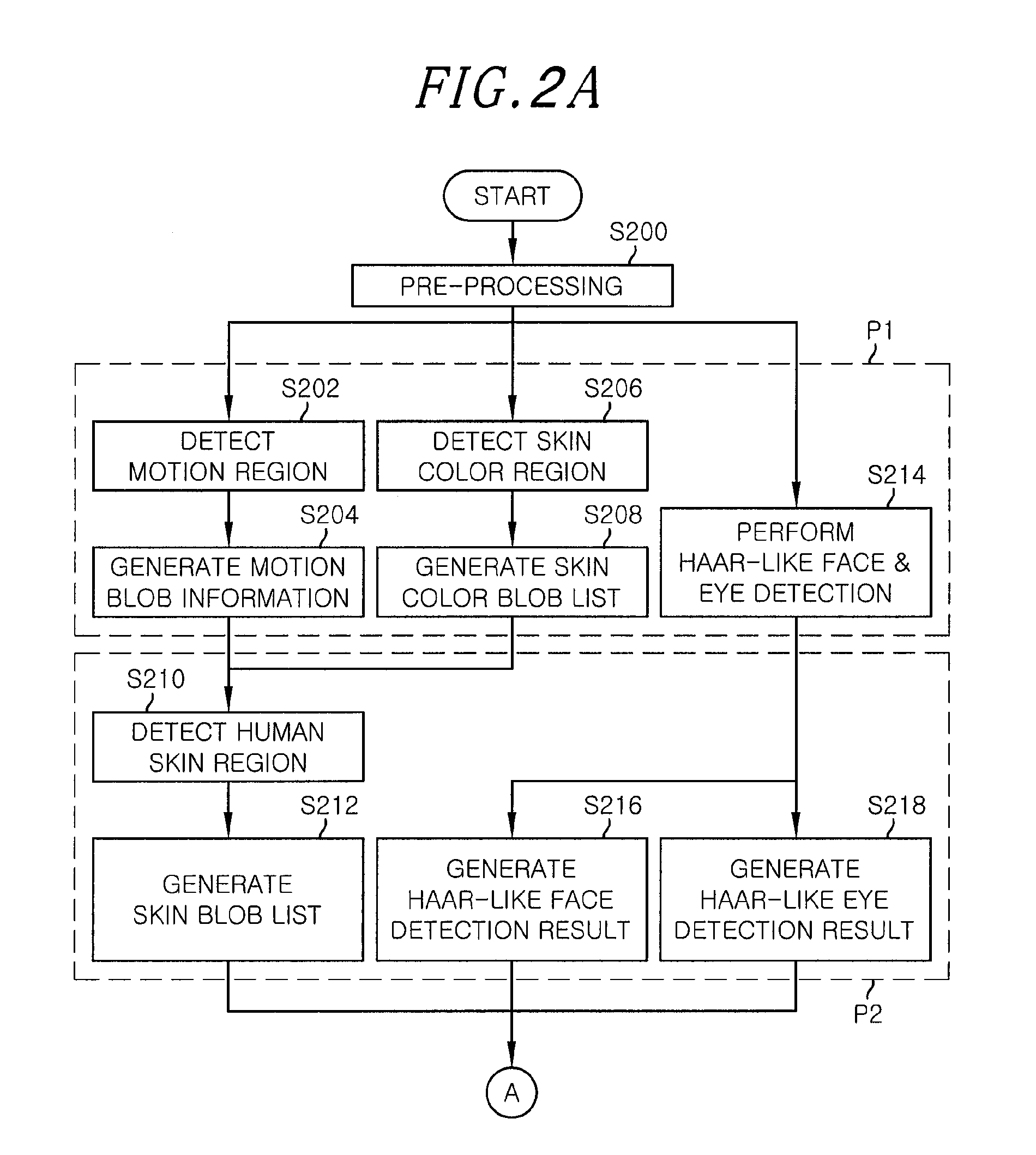

Apparatus and method for recognizing multi-user interactions

Inactive | US20120163661A1 | Efficient identification | Character and pattern recognition | Skin color | Contrast ratio

An apparatus for recognizing multi-user interactions includes: a pre-processing unit for receiving a single visible light image to perform pre-processing; a motion region detecting unit for detecting a motion region from the image to generate motion blob information; a skin region detecting unit for extracting information on a skin color region from the image to generate a skin blob list; a Haar-like detecting unit for performing Haar-like face and eye detection by using only contrast information from the image; a face tracking unit for recognizing a face of a user from the image by using the skin blob list and results of the Haar-like face and eye detection; and a hand tracking unit for recognizing a hand region of the user from the image.

Owner:ELECTRONICS & TELECOMM RES INST
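The skin region detecting unit in the abstract above extracts skin-colored pixels before blobs are formed. The abstract does not give its color model, so the sketch below substitutes a widely used fixed-threshold RGB heuristic for skin under ordinary daylight; the thresholds and the names `is_skin_rgb` and `skin_mask` are assumptions for illustration, not the patented method.

```python
def is_skin_rgb(r, g, b):
    """Fixed-threshold RGB skin heuristic (an assumed stand-in for the patent's detector)."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_mask(image):
    """Map an image given as rows of (r, g, b) tuples to rows of 0/1 skin flags."""
    return [[1 if is_skin_rgb(*pixel) else 0 for pixel in row] for row in image]
```

Connected groups of 1s in the mask would then be gathered into the skin blob list that the face and hand tracking units consume.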

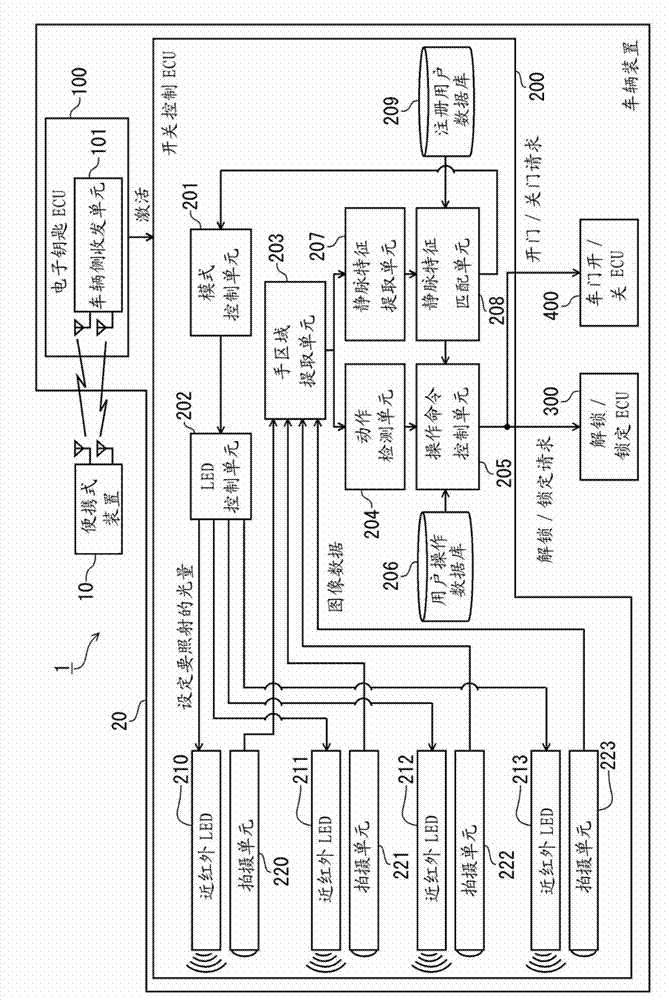

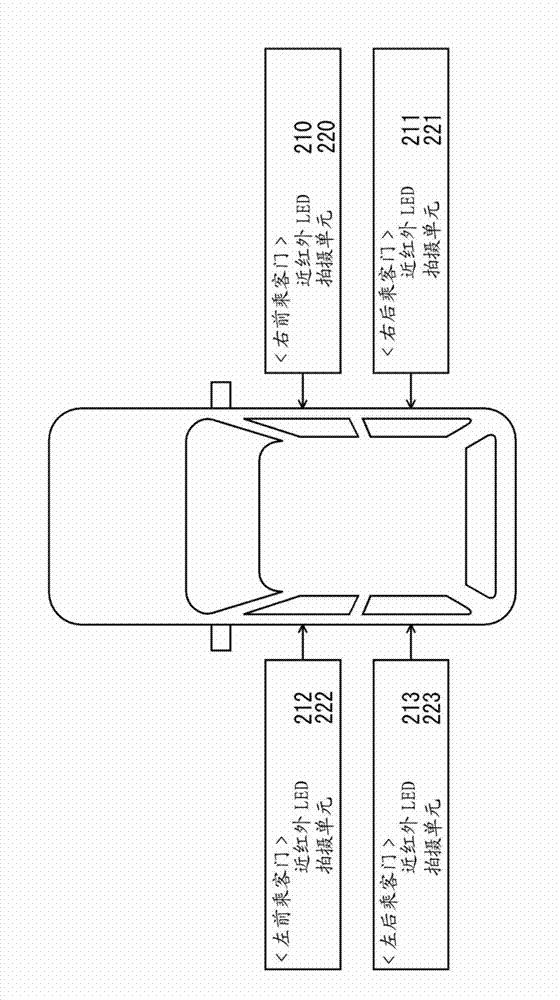

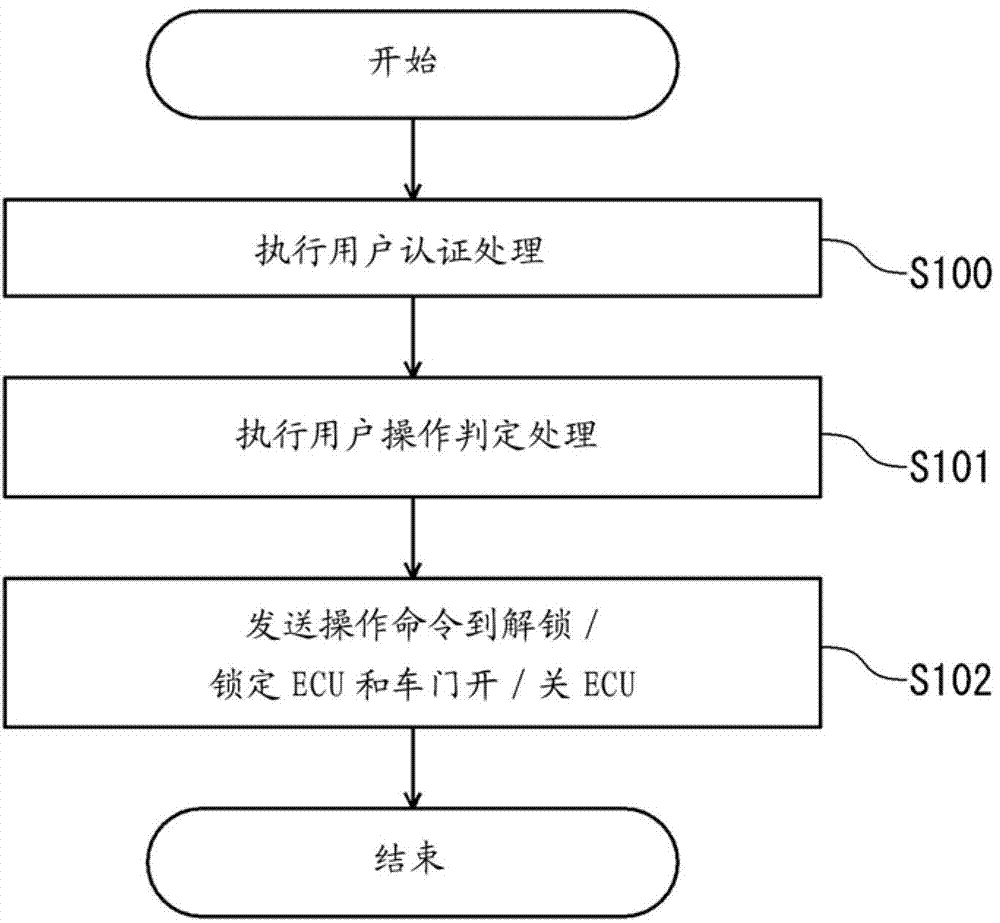

Apparatus for controlling vehicle opening/closing element

A system for controlling a vehicle opening/closing element according to the present invention comprises an irradiation unit for irradiating near-infrared light to the periphery of an opening/closing element, an image capture unit for capturing an image formed by the irradiated near-infrared light, a hand region extraction unit for extracting a user hand region on the basis of the luminance of the image captured by the image capture unit, an action detection unit for detecting movement of the user's hand from the extracted hand region, and a controller for determining whether the detected action matches a predetermined action that is set in advance and issuing an instruction to operate the opening/closing element on the basis of the determined action.

Owner:PANASONIC AUTOMOTIVE SYST CO LTD YOKOHAMA SHI
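The abstract above extracts the hand region from image luminance and then detects hand movement from that region. A minimal sketch of both steps follows, assuming grayscale frames given as rows of intensity values, a fixed luminance threshold, and a horizontal swipe as the predetermined action; the threshold logic and all function names are hypothetical.

```python
def extract_bright_region(frame, threshold):
    """Return (row, col) coordinates of pixels brighter than `threshold`."""
    return [
        (r, c)
        for r, row in enumerate(frame)
        for c, value in enumerate(row)
        if value > threshold
    ]


def centroid(pixels):
    """Mean (row, col) of a non-empty list of pixel coordinates."""
    n = len(pixels)
    return (sum(p[0] for p in pixels) / n, sum(p[1] for p in pixels) / n)


def detect_horizontal_swipe(frames, threshold, min_shift):
    """Return 'right', 'left', or None from the centroid's column shift."""
    centers = []
    for frame in frames:
        pixels = extract_bright_region(frame, threshold)
        if pixels:  # skip frames where no hand region was found
            centers.append(centroid(pixels))
    if len(centers) < 2:
        return None
    shift = centers[-1][1] - centers[0][1]
    if shift >= min_shift:
        return "right"
    if shift <= -min_shift:
        return "left"
    return None
```

Under near-infrared illumination a nearby hand reflects more light than the distant background, which is why a plain luminance threshold can serve as a first-pass segmentation in this sketch.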
