62 results about "Fingertip detection" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

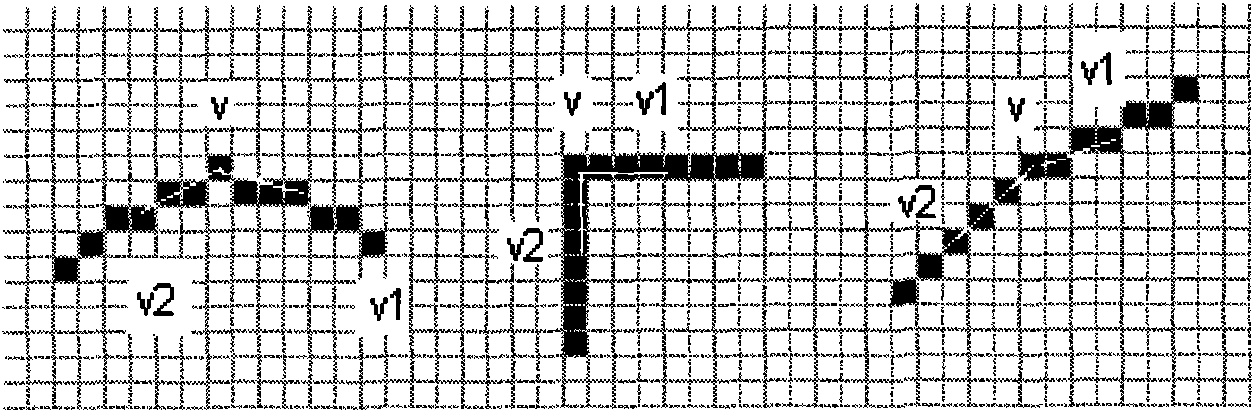

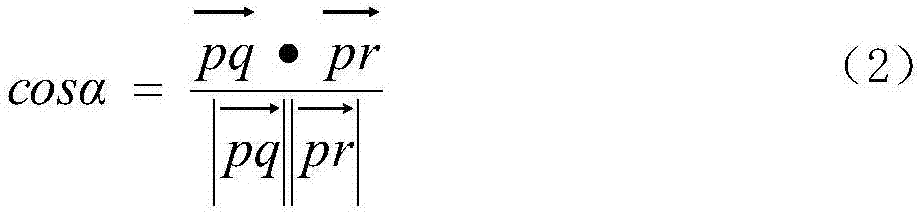

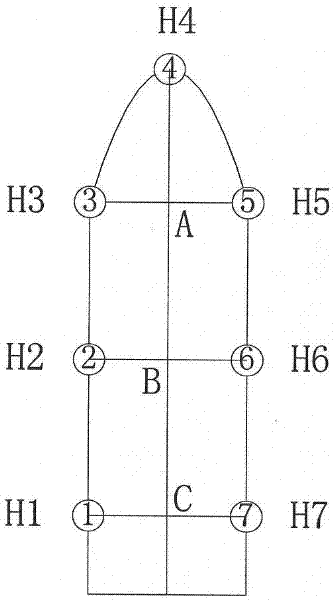

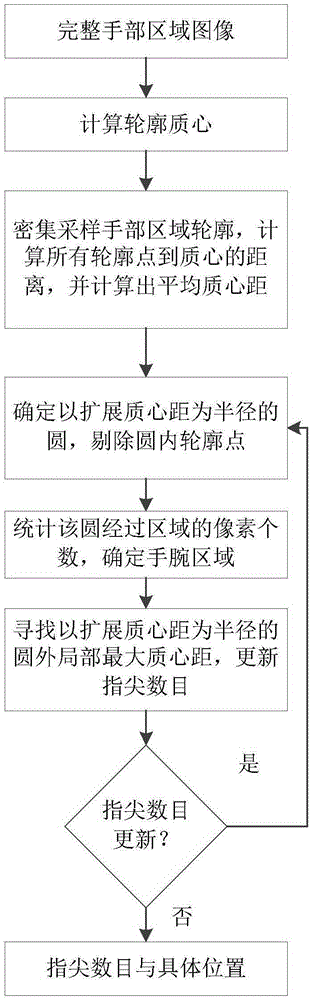

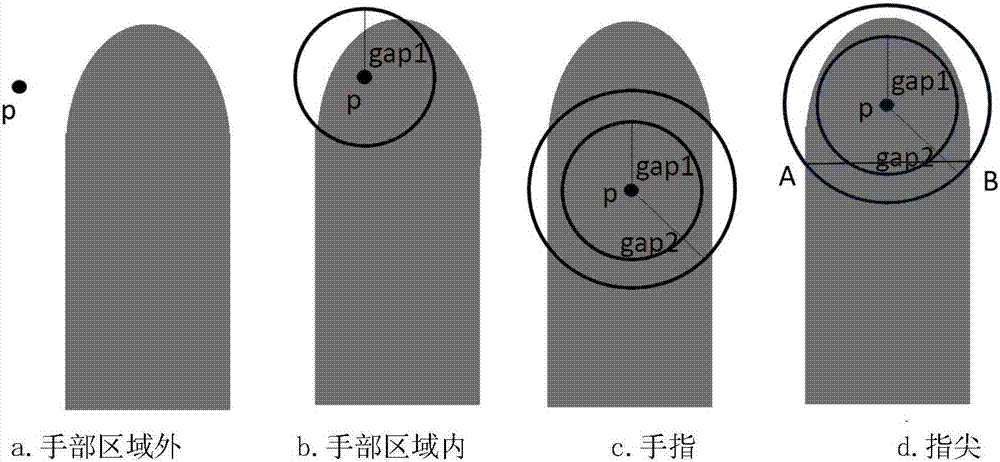

The fingertip detection approach consists of two stages. First, the fingertip is roughly detected by grid sampling and analysis of the sampled hand contour. Then, its location is refined precisely by circle feature matching.

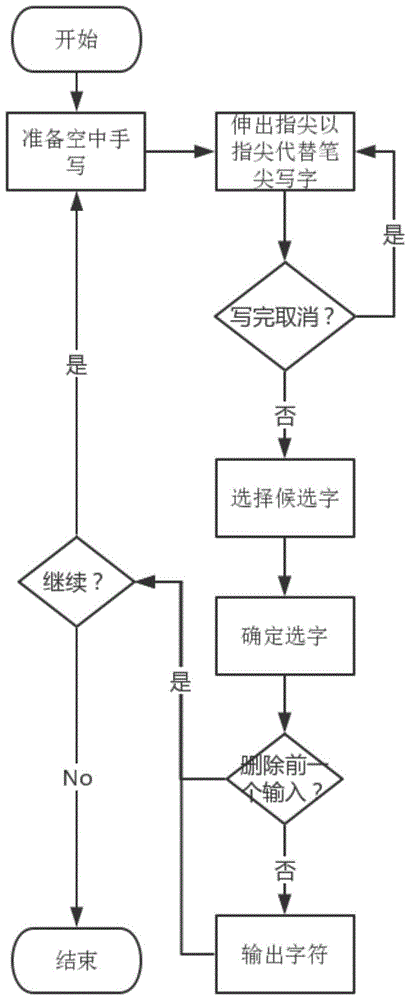

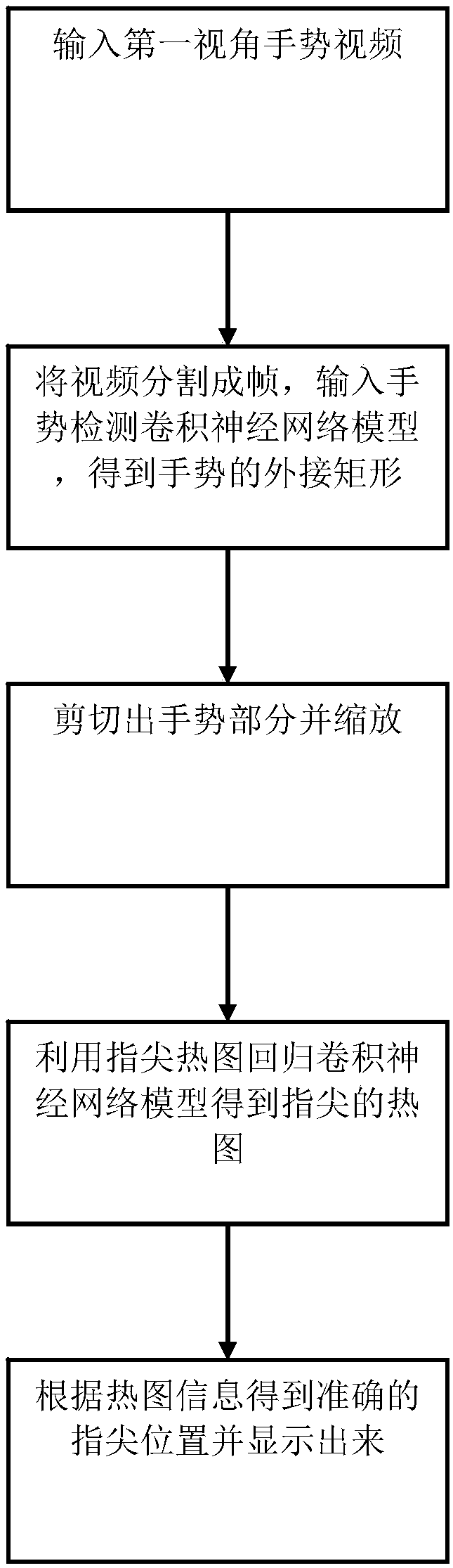

Egocentric vision in-the-air handwriting and in-the-air interaction method based on cascaded convolutional neural network

Status: Active | Publication: CN105718878A | Tags: Forecast stability; Reduce time performance consumption; Character and pattern recognition; Neural learning methods; Fingertip detection; Neural network

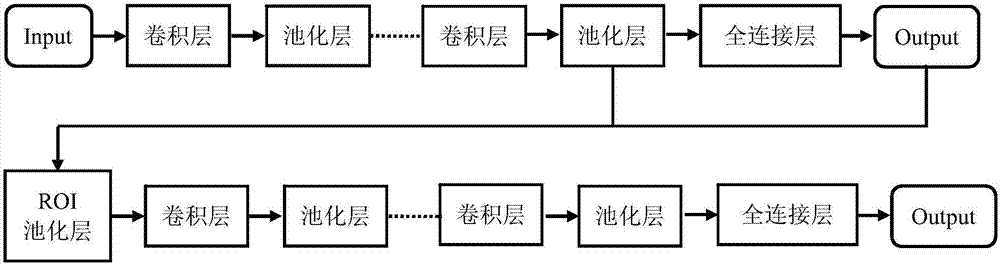

The invention discloses an egocentric-vision method for in-the-air handwriting and in-the-air interaction based on a cascaded convolutional neural network. The method comprises the following steps. S1: obtain training data. S2: design a deep convolutional neural network for hand detection. S3: design a deep convolutional neural network for gesture classification and fingertip detection. S4: cascade the first-level and second-level networks: crop a region of interest from the foreground bounding rectangle output by the first-level network to obtain a foreground region containing the hand, and use that region as the input of the second-level convolutional network for fingertip detection and gesture recognition. S5: check the recognized gesture; if it is a single-finger gesture, output its fingertip, then apply temporal smoothing and interpolate between points. S6: recognize characters from the fingertip coordinates sampled over consecutive frames. The invention provides a complete in-the-air handwriting and interaction algorithm that achieves accurate and robust fingertip detection and gesture classification, thereby enabling egocentric in-the-air handwriting and interaction.

Owner:SOUTH CHINA UNIV OF TECH
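
Step S5 of the entry above applies temporal smoothing to the fingertip track and then interpolates between points. The abstract does not name either technique. The following is a minimal sketch that assumes a centered moving average for smoothing and linear interpolation between consecutive samples. The function names and parameters are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def smooth_trajectory(points: List[Point], window: int = 3) -> List[Point]:
    """Centered moving average over up to `window` neighbouring frames (assumed smoother)."""
    half = window // 2
    smoothed = []
    for i in range(len(points)):
        lo, hi = max(0, i - half), min(len(points), i + half + 1)
        xs = [p[0] for p in points[lo:hi]]
        ys = [p[1] for p in points[lo:hi]]
        smoothed.append((sum(xs) / len(xs), sum(ys) / len(ys)))
    return smoothed

def interpolate(points: List[Point], steps: int = 2) -> List[Point]:
    """Linearly insert `steps - 1` evenly spaced points between consecutive samples."""
    if not points:
        return []
    out = [points[0]]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        for k in range(1, steps + 1):
            t = k / steps
            out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out
```

A denser, smoothed stroke produced this way would then feed the character recognizer of step S6.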

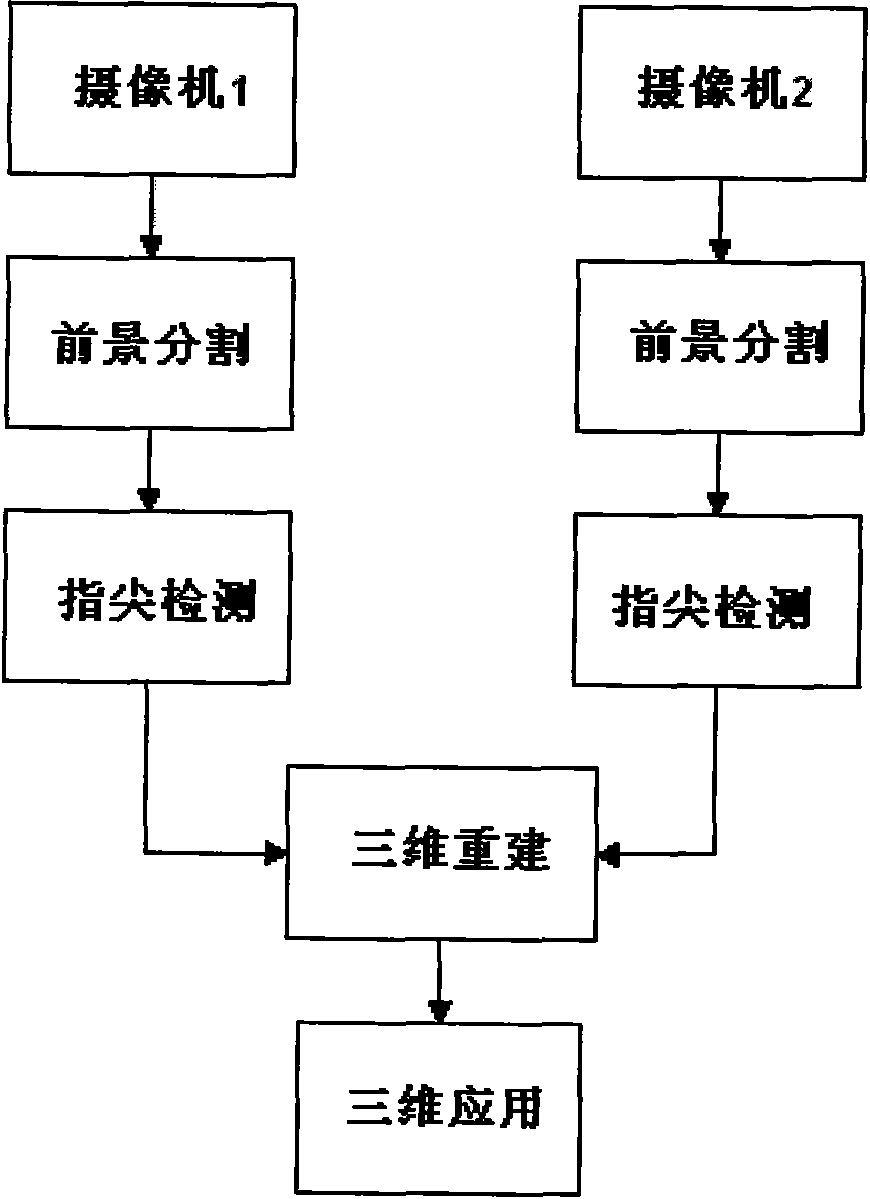

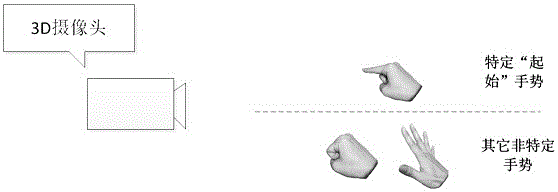

Natural interactive method based on three-dimensional gestures

Status: Active | Publication: CN101807114A | Tags: Meet the needs of interaction; Input/output for user-computer interaction; Character and pattern recognition; Fingertip detection; Visual technology

The invention discloses a natural interaction method based on three-dimensional gestures. The method uses computer vision to obtain local features of the hand through foreground segmentation and fingertip detection; these features include the fingertip positions, the palm contour, the palm center position, and the like. Using stereoscopic vision, hand features such as the fingertip and palm center positions are reconstructed in three-dimensional space. These three-dimensional positions are then parameterized, and a three-dimensional interaction model based on points, lines, and planes is defined. This enables various three-dimensional gestures, such as fingertip clicking, fingertip squeezing, palm flipping, and fingertip pointing. The method requires only two ordinary network cameras to meet the demands of real-time human-computer interaction.

Owner:ZHEJIANG UNIV

Regional convolutional neural network-based method for gesture identification and interaction under egocentric vision

Status: Active | Publication: CN107168527A | Tags: Recognition speed is fast; Improve recognition accuracy; Input/output for user-computer interaction; Character and pattern recognition; Fingertip detection; Neural network

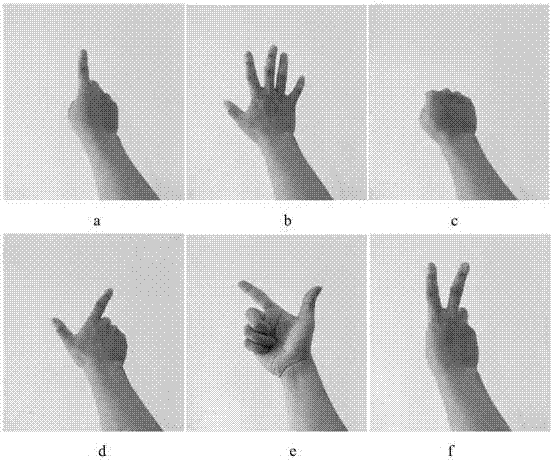

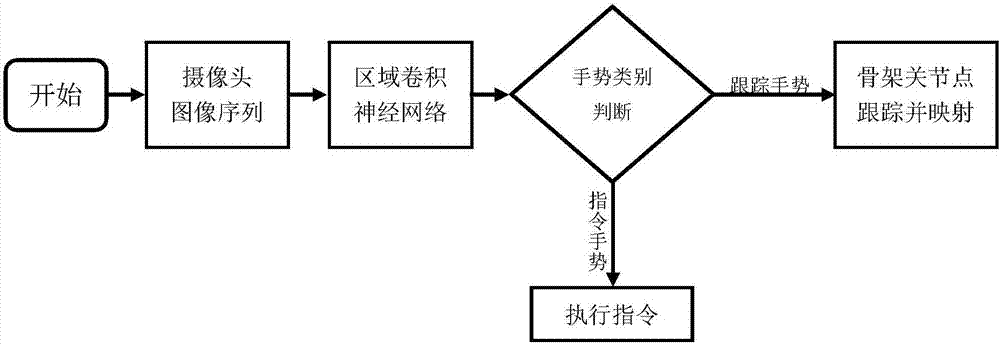

The invention discloses a region-based convolutional neural network method for gesture recognition and interaction under egocentric vision. The method comprises the following steps:

- S1: obtain training data.
- S2: design a region-based neural network that performs gesture classification and fingertip detection in addition to hand detection. The network input is a three-channel RGB image. The outputs are the top-left and top-right corner coordinates of the bounding rectangle of the gesture region, the gesture type, and the gesture skeleton keypoints.
- S3: determine the gesture type and output the corresponding interaction result according to the interaction requirements.

The invention provides a complete method for gesture recognition and interaction under egocentric vision. Through single-model training and partial network sharing, it increases both the speed and the accuracy of egocentric gesture recognition.

Owner:SOUTH CHINA UNIV OF TECH

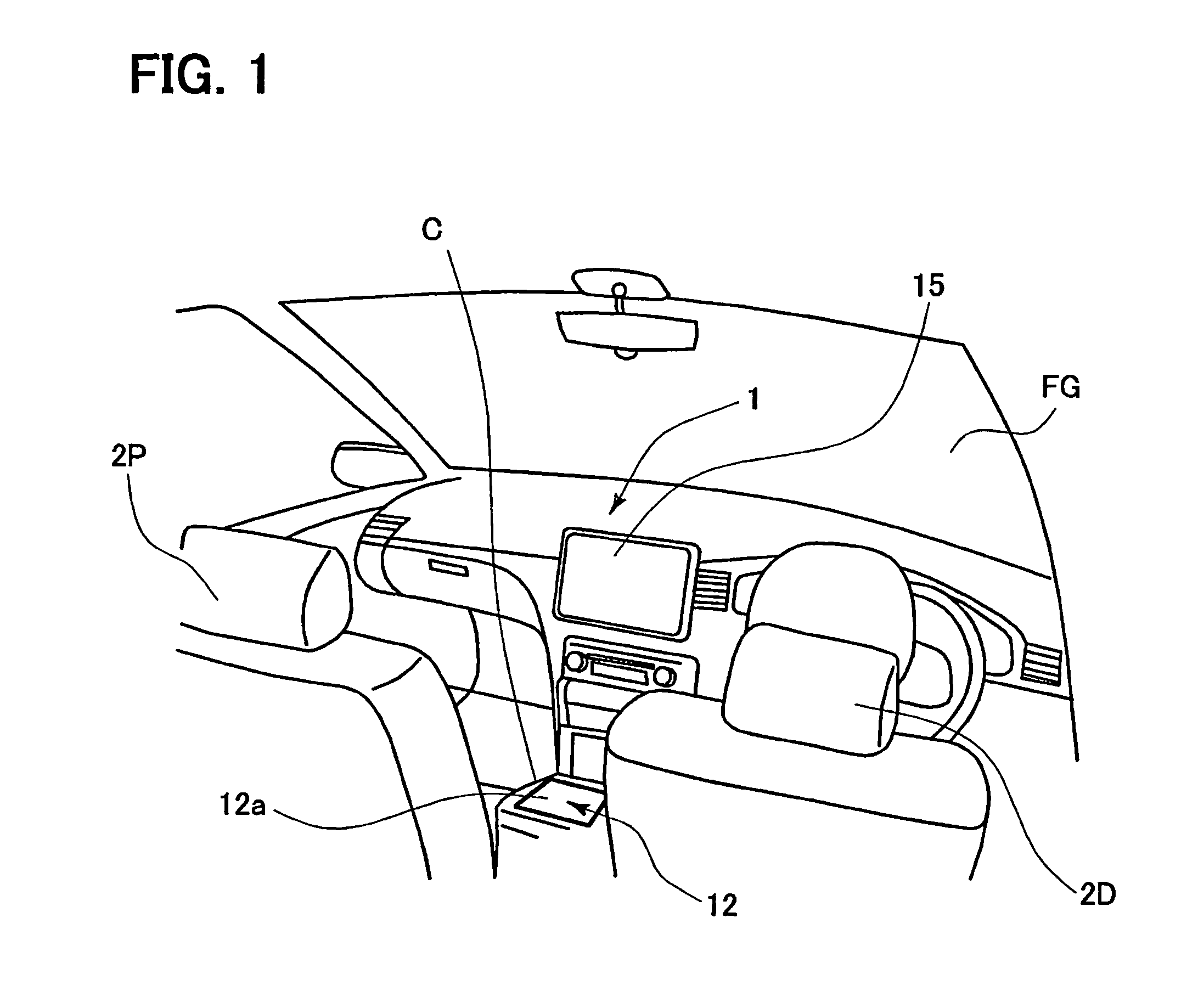

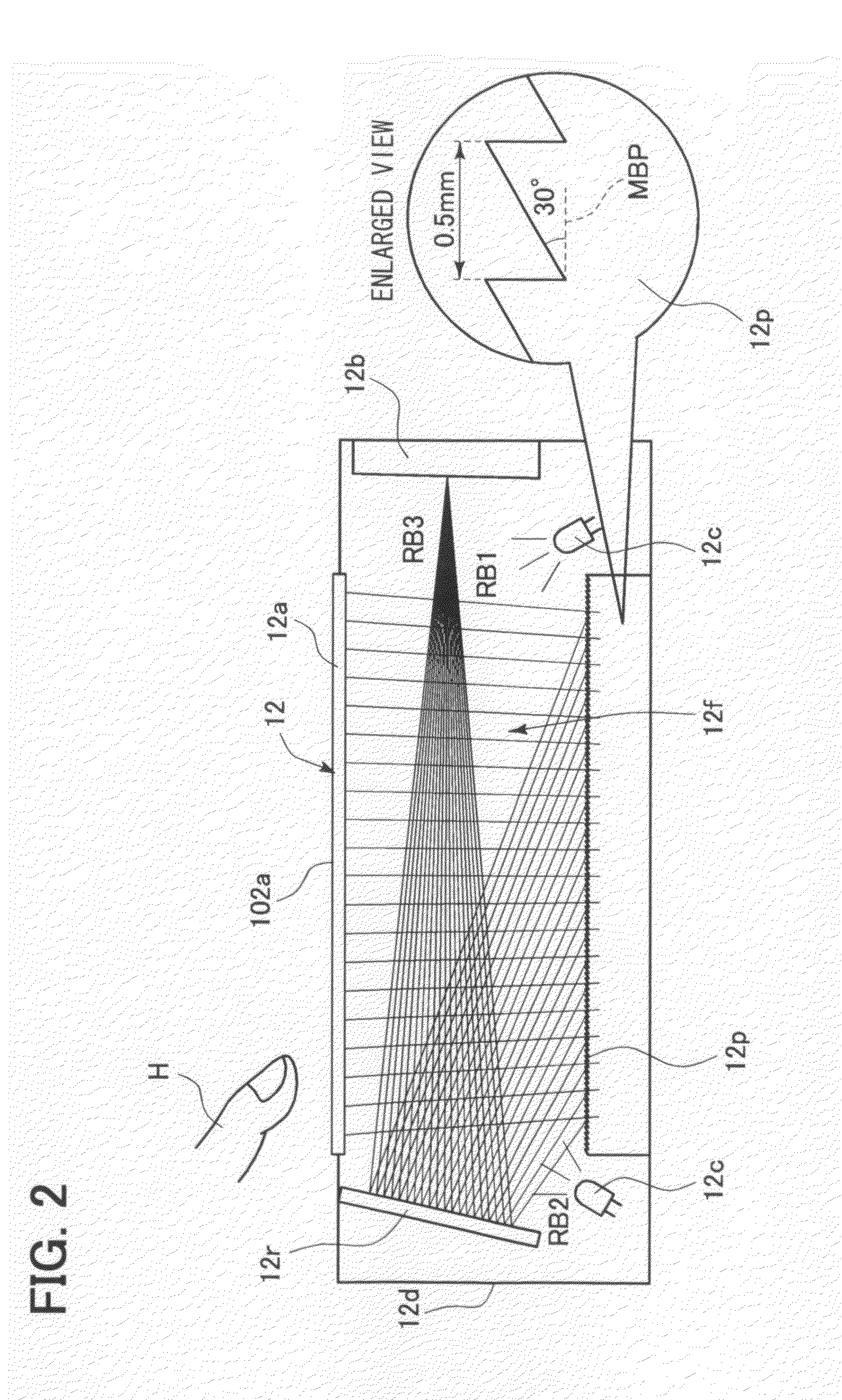

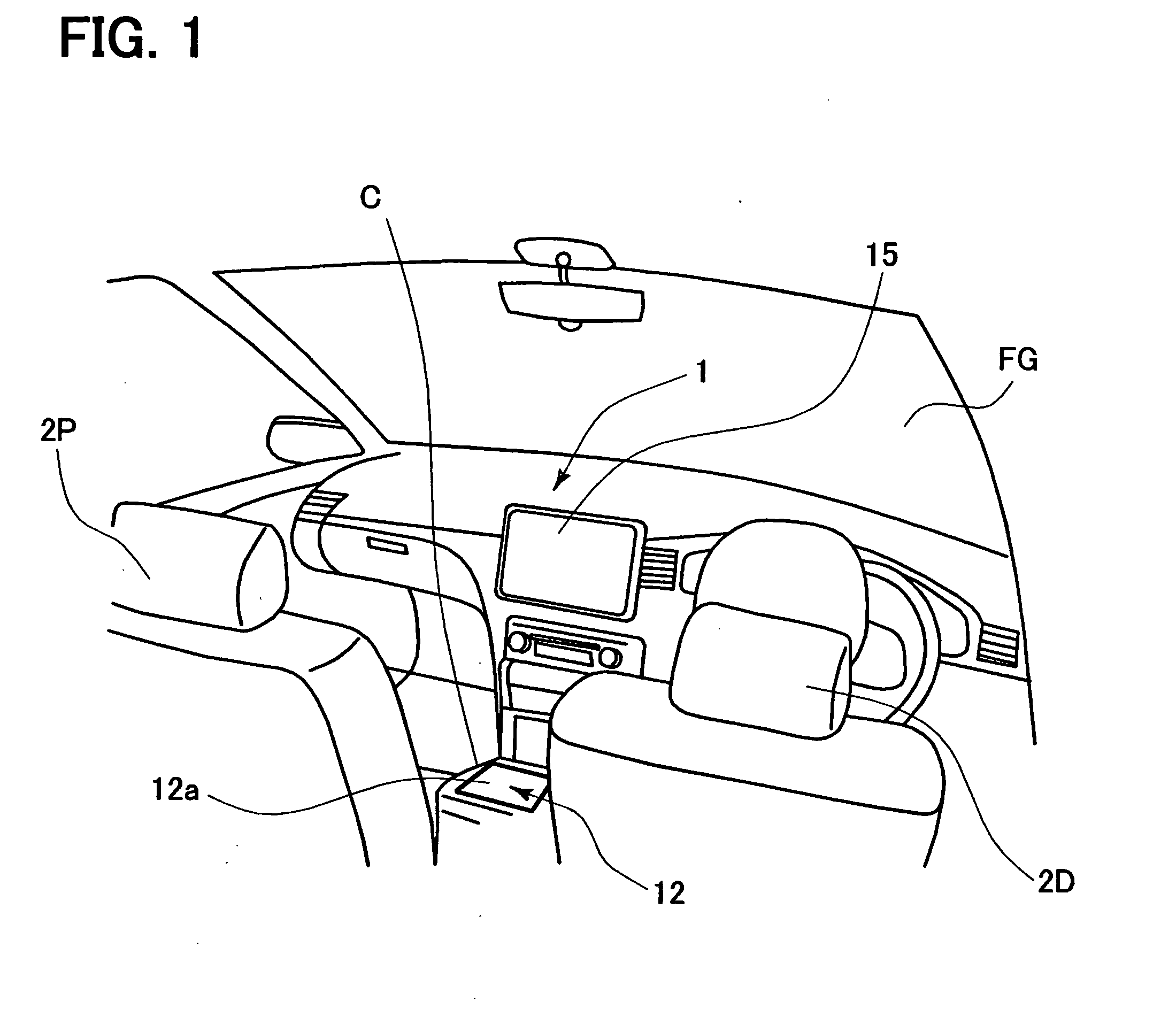

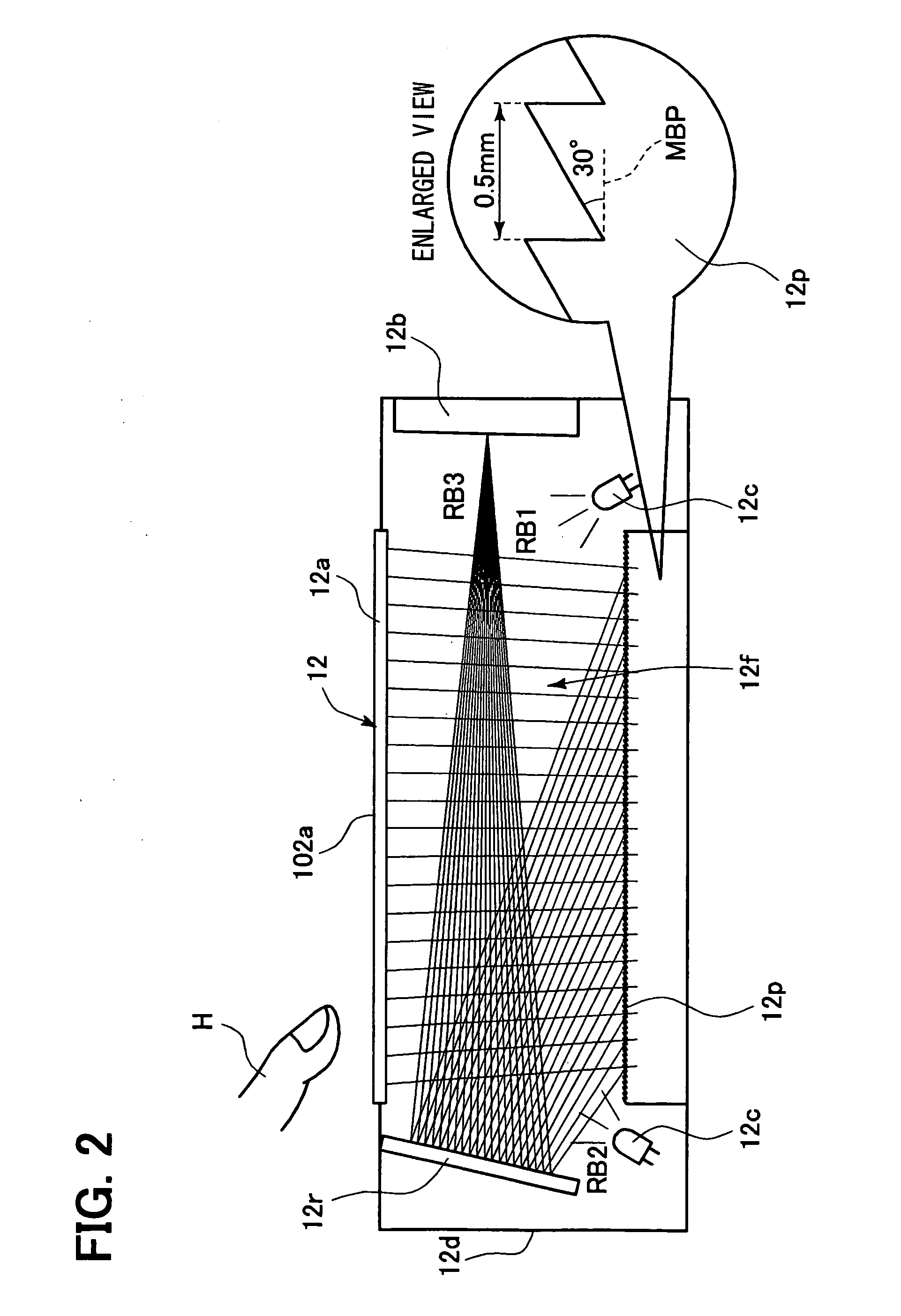

Operation apparatus for in-vehicle electronic device and method for controlling the same

Status: Inactive | Publication: US8593417B2 | Tags: Easy to operate; Instrument arrangements/adaptations; Character and pattern recognition; Fingertip detection; In vehicle

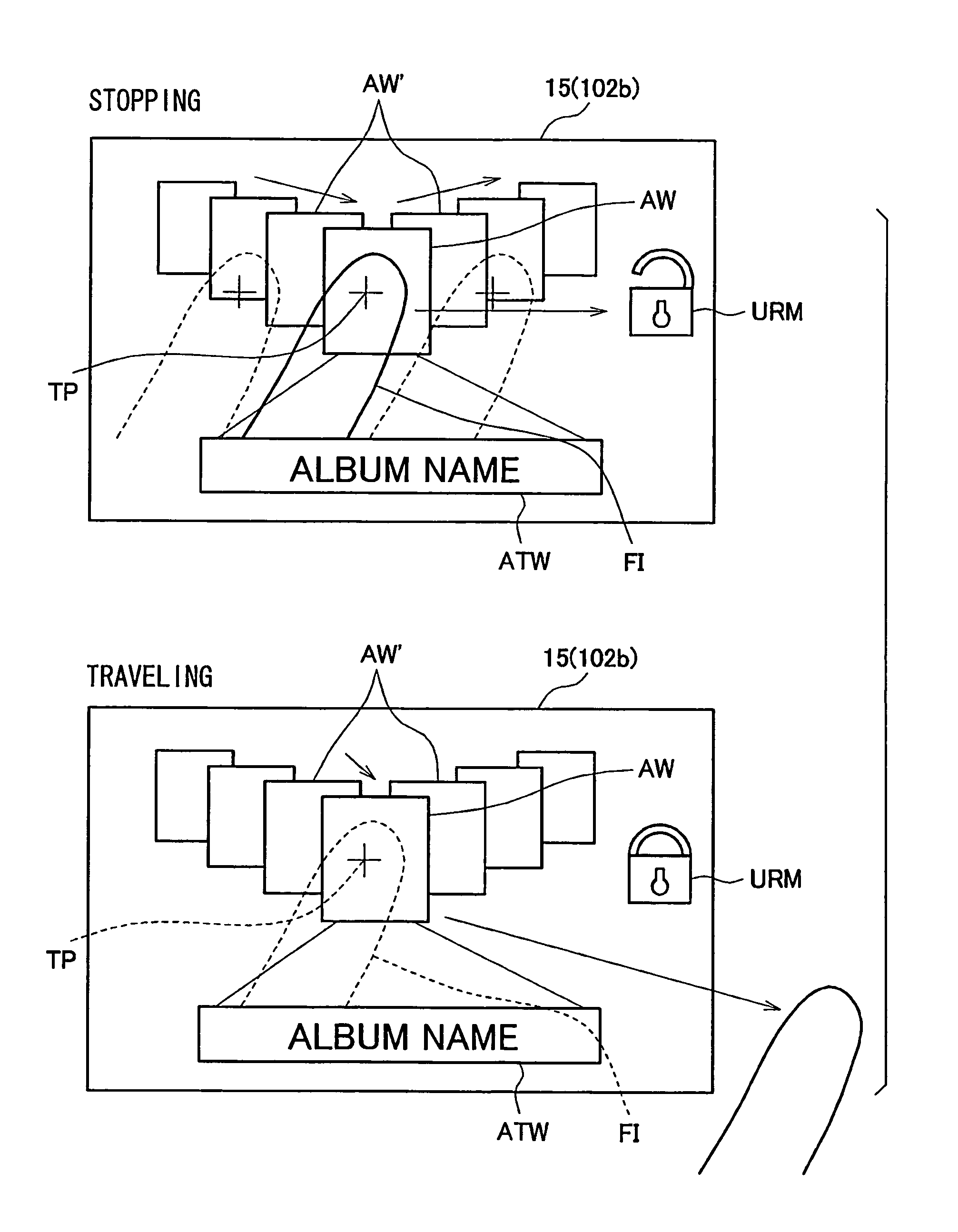

An operation surface detects a touch point specified by an operator. An imaging area obtains a hand image of the operator. A fingertip detection unit detects a fingertip in the hand image. A display device includes a display screen whose coordinates correspond uniquely to the operation surface and the imaging area. The display device shows the fingertip and an operation panel specific to the in-vehicle electronic device to be operated. An interface engine holds a prescribed interface relationship between an input and an output. The input is specified by at least one of the touch point and the fingertip. The output is sent to the in-vehicle electronic device according to the combination of the input and the interface relationship. An alteration unit alters the interface relationship according to the detected traveling state of the vehicle.

Owner:DENSO CORP

Finger gesture recognition method based on depth image

Status: Active | Publication: CN103984928A | Tags: Improve robustness; Overcoming the problem of misidentification; Character and pattern recognition; Fingertip detection; Pattern recognition

The invention discloses a finger gesture recognition method based on depth images. The method comprises the following steps: start a depth camera and obtain depth video data; estimate the hand length, and determine the palm position and hand-length data; segment and crop a spherical region around the hand, and preprocess it; and perform fingertip recognition, followed by gesture recognition based on geometric relationships. The hand region is cropped quickly using the properties of the depth image, so analysis and processing are performed only on the target region. This lowers computational complexity and adapts well to dynamic scene changes. A contour maximum-concave-point scanning algorithm is used for fingertip recognition, which improves its robustness. Once the fingertips are accurately recognized, individual fingers are identified from their direction vectors and geometric relationships, enabling recognition of various gestures. The method is simple, flexible, and easy to implement.

Owner:GUILIN UNIV OF ELECTRONIC TECH

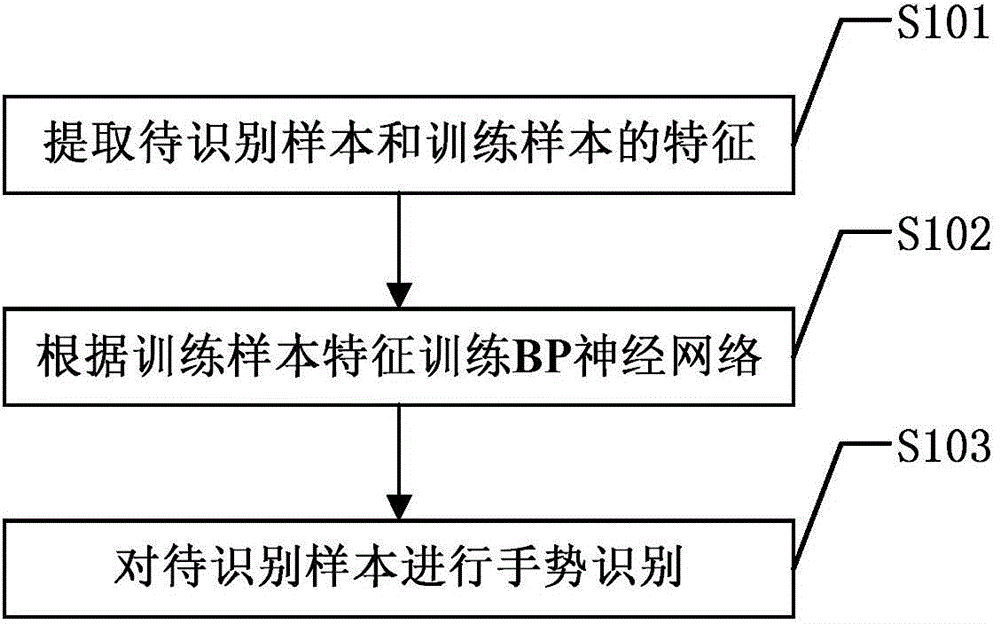

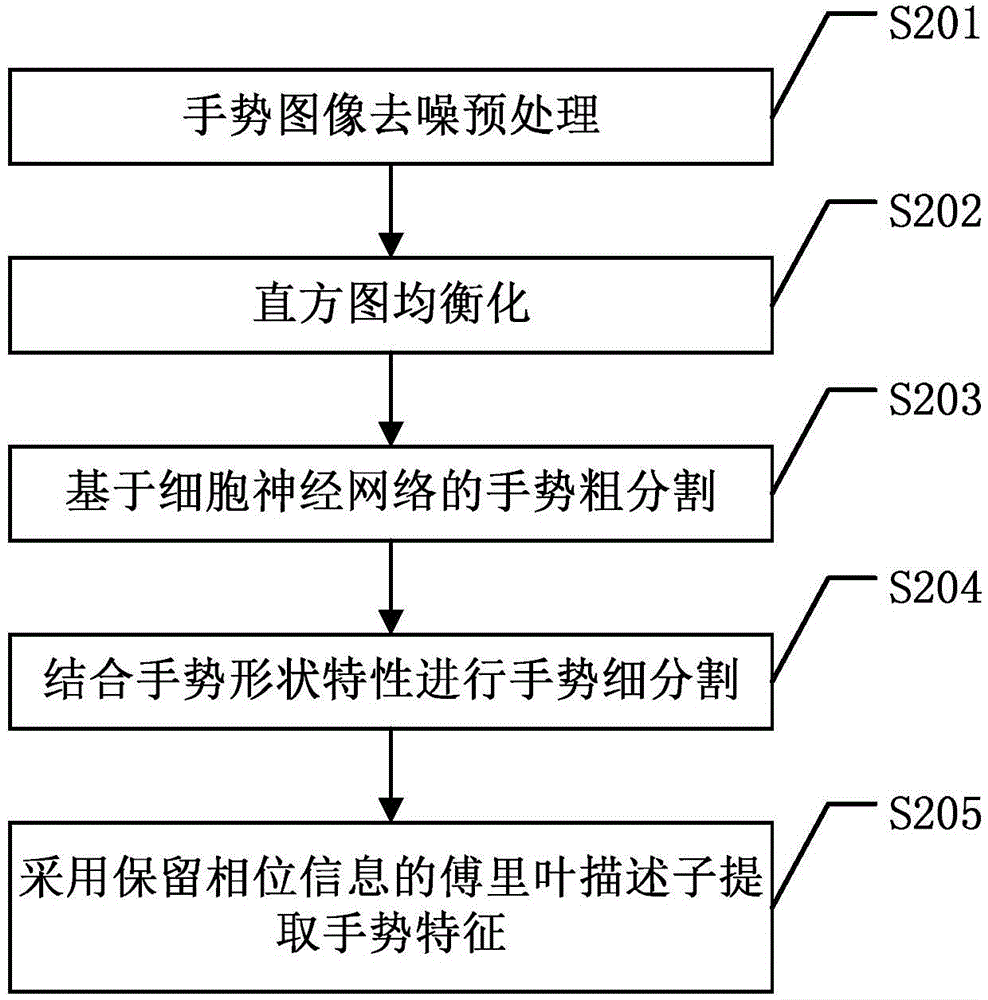

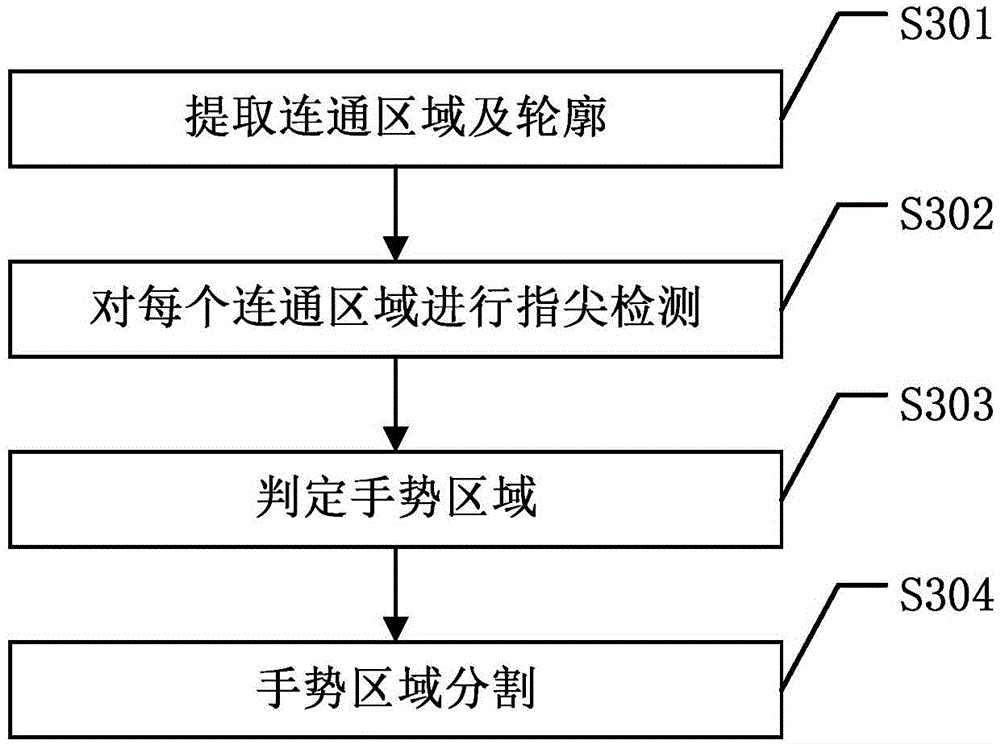

Hybrid neural network-based gesture recognition method

Status: Inactive | Publication: CN104834922A | Tags: Improve denoising effect; Improve accuracy; Character and pattern recognition; Neural learning methods; Fingertip detection; Pattern recognition

The invention discloses a gesture recognition method based on hybrid neural networks. It processes both the gesture image to be recognized and the gesture training samples as follows.

1. A pulse-coupled neural network detects noise points, which are then processed with a composite denoising algorithm.
2. A cellular neural network extracts edge points from the gesture image, and connected regions are obtained from these edge points.
3. Curvature-based fingertip detection is performed on each connected region to obtain candidate fingertip points, and interference from the face is eliminated to obtain the gesture region.
4. The gesture region is partitioned according to gesture shape features. Fourier descriptors that retain phase information are computed from the contour points of the partitioned region, and the first several descriptors are selected as gesture features.
5. A BP (backpropagation) neural network is trained on the gesture features of the training samples. The features of the image to be recognized are then fed into this network for recognition.

By combining several neural networks, the method improves the accuracy of gesture recognition.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
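
The entry above computes Fourier descriptors that keep phase information. The abstract does not give the normalization. This sketch makes two assumptions:

- The contour is treated as the complex signal x + iy.
- The DC term is dropped for translation invariance, and all coefficients are divided by the magnitude of the first harmonic for scale invariance.

The phases are left untouched, so this sketch is not rotation- or start-point-invariant. The function name and `n_keep` are illustrative.

```python
import numpy as np

def fourier_descriptors(contour: np.ndarray, n_keep: int = 8) -> np.ndarray:
    """contour: (N, 2) array of ordered boundary points (x, y); N must exceed n_keep."""
    z = contour[:, 0] + 1j * contour[:, 1]   # boundary as a complex signal
    coeffs = np.fft.fft(z)
    coeffs[0] = 0.0                           # drop the DC term -> translation invariance
    scale = np.abs(coeffs[1])
    if scale == 0:
        raise ValueError("degenerate contour: first harmonic is zero")
    coeffs = coeffs / scale                   # divide by |c1| -> scale invariance; phases kept
    return coeffs[1:n_keep + 1]               # "first several" descriptors as features
```

The resulting complex vectors (or their real and imaginary parts) would be the feature input for the BP network.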

Operation apparatus for in-vehicle electronic device and method for controlling the same

Status: Inactive | Publication: US20100277438A1 | Tags: Easy to operate; Instrument arrangements/adaptations; Character and pattern recognition; Fingertip detection; In vehicle

An operation surface detects a touch point specified by an operator. An imaging area obtains a hand image of the operator. A fingertip detection unit detects a fingertip in the hand image. A display device includes a display screen whose coordinates correspond uniquely to the operation surface and the imaging area. The display device shows the fingertip and an operation panel specific to the in-vehicle electronic device to be operated. An interface engine holds a prescribed interface relationship between an input and an output. The input is specified by at least one of the touch point and the fingertip. The output is sent to the in-vehicle electronic device according to the combination of the input and the interface relationship. An alteration unit alters the interface relationship according to the detected traveling state of the vehicle.

Owner:DENSO CORP

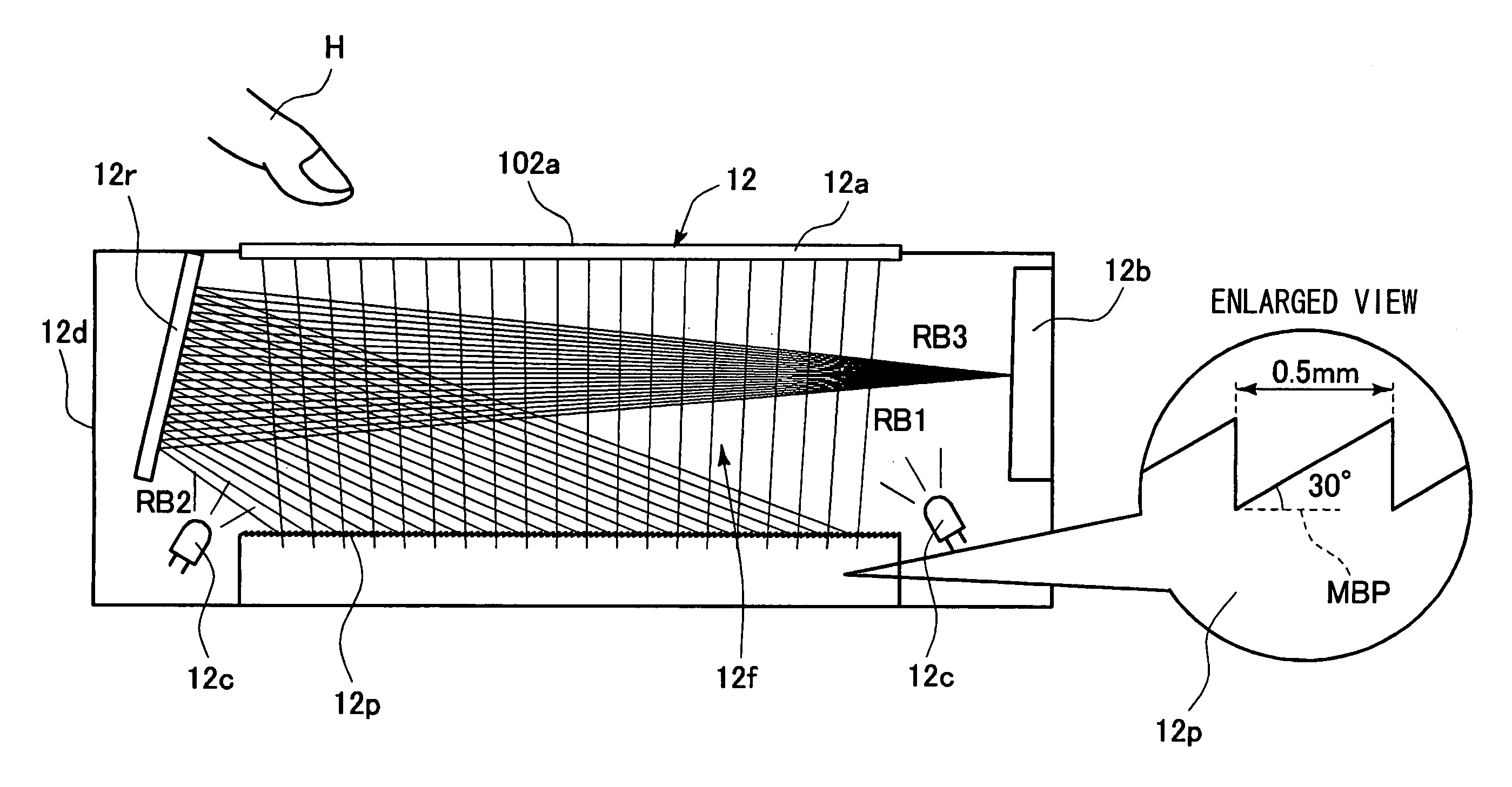

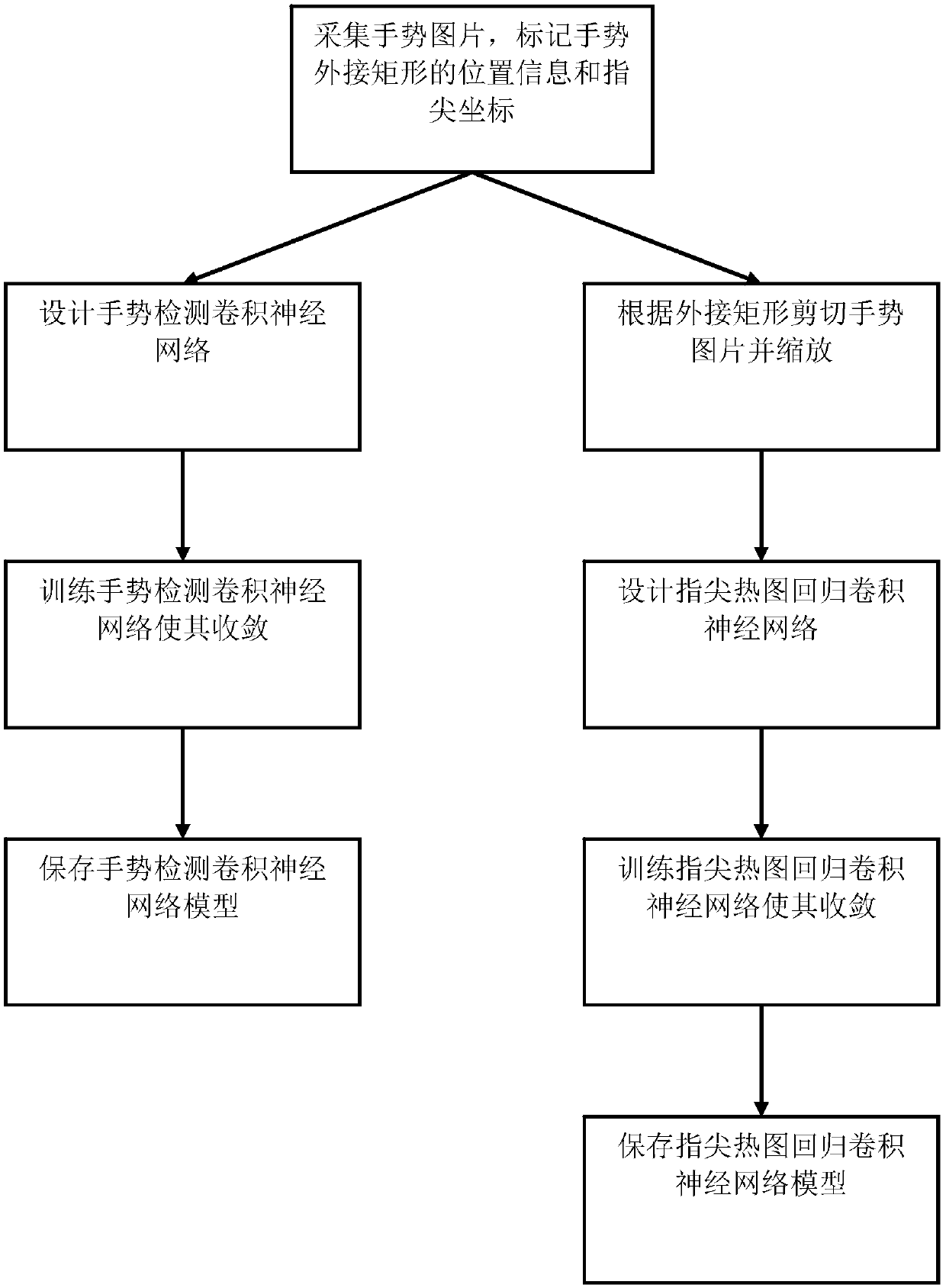

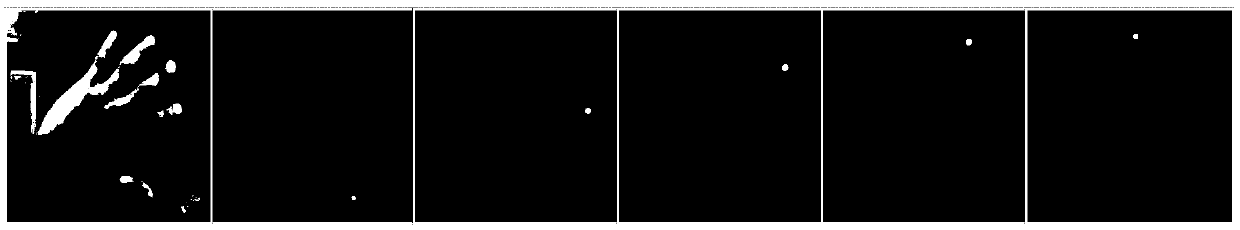

First-person-view fingertip detection method based on convolutional neural network and heat map

Status: Active | Publication: CN107563494A | Tags: Processing speed; The impact of reducing the accuracy of gesture detection; Input/output for user-computer interaction; Character and pattern recognition; Fingertip detection; Thematic map

Owner:SOUTH CHINA UNIV OF TECH

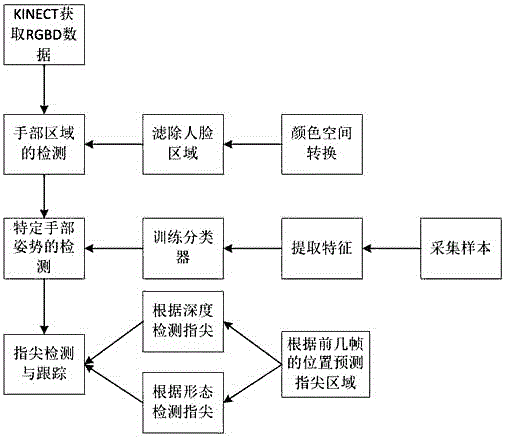

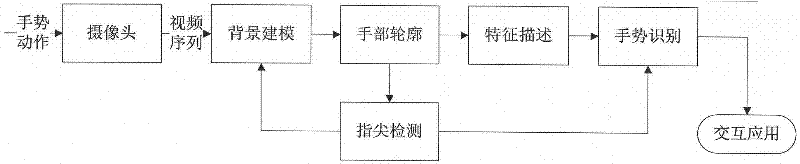

Multi-posture fingertip tracking method for natural man-machine interaction

Status: Active | Publication: CN105739702A | Tags: Input/output for user-computer interaction; Character and pattern recognition; Face detection; Skin complexion

The present invention discloses a multi-posture fingertip tracking method for natural human-computer interaction. The method comprises the following steps:

- S1: acquire RGB-D data, including depth and color information, with a Kinect2.
- S2: detect the hand region. The image is converted to a color space that is insensitive to brightness so that skin-color regions can be detected. Faces are then detected with a face detection algorithm and excluded, which yields the hand region, and the center point of the hand is computed.
- S3: identify and detect specific gestures using the depth information combined with HOG features and an SVM classifier.
- S4: predict the current tracking window from the fingertip positions in preceding frames together with the identified hand region. The fingertip is then detected and tracked in two modes: a depth-based fingertip detection mode and a form-based fingertip detection mode.

The method is mainly intended for detecting and tracking a single fingertip during motion and in various postures, where relatively high precision and real-time performance must be ensured.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

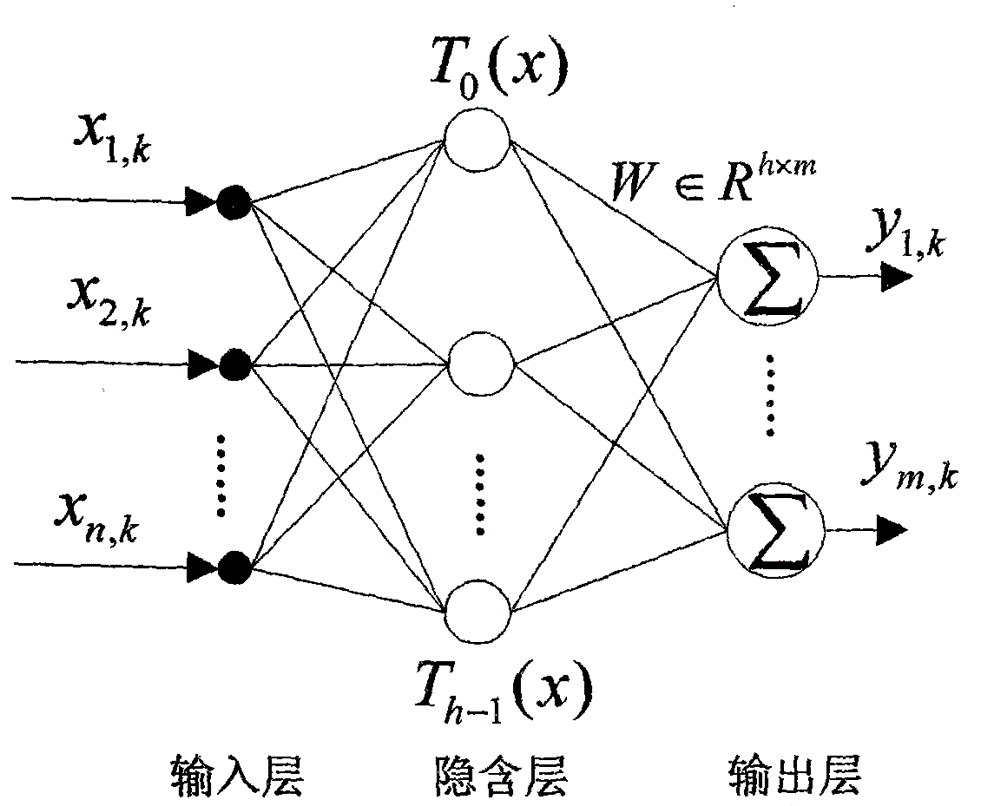

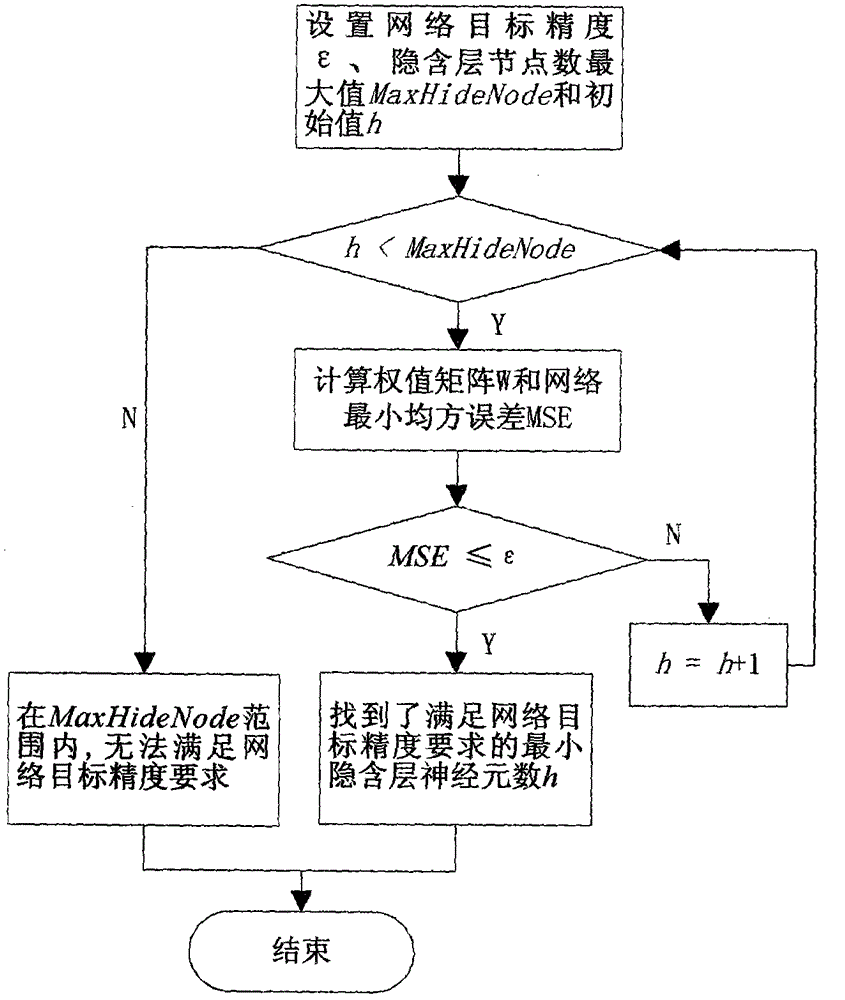

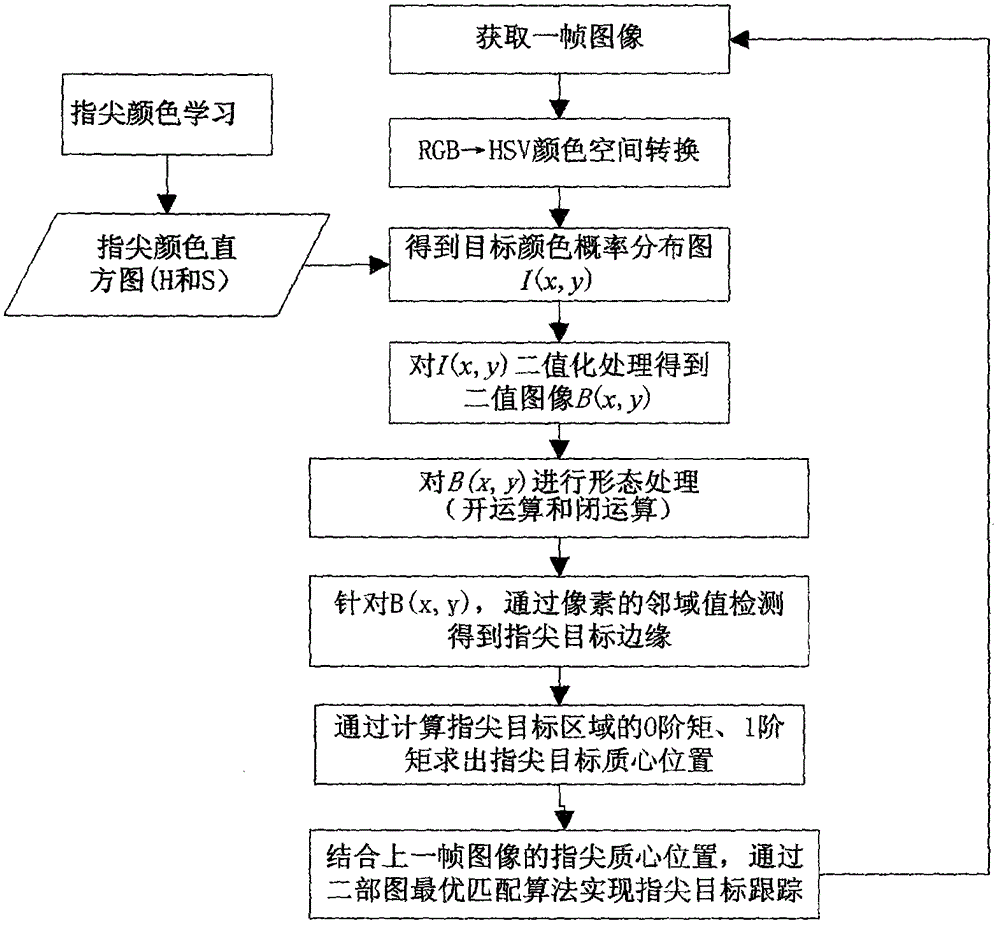

Dynamic gesture learning and identifying method based on Chebyshev neural network

Status: Inactive | Publication: CN104573621A | Tags: Learning Speed Blocks; Improve recognition accuracy; Biological neural network models; Character and pattern recognition; Multi input; Hidden layer

The invention discloses a dynamic gesture learning and recognition method based on a Chebyshev neural network. Chebyshev orthogonal polynomials serve as the activation functions of the hidden-layer neurons in a multi-input multi-output three-layer feedforward neural network. The invention also gives a direct weight-determination method and an adaptive algorithm for determining the number of hidden-layer nodes. To obtain the dynamic gesture trajectory in real time, it gives a fingertip detection algorithm based on a color histogram and a fingertip tracking algorithm based on optimal bipartite graph matching. The input/output structure of the MIMO-CNN (multi-input multi-output Chebyshev neural network) is designed, and its weights are trained, according to the requirements of dynamic gesture recognition. Dynamic gestures are then recognized by the trained MIMO-CNN. Test results show that the MIMO-CNN increases network training speed and precision, so dynamic gestures are learned faster and recognized more accurately. The network also shows relatively good robustness and generalization in dynamic gesture recognition.

Owner:李文生 +2
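
The Chebyshev network in the entry above determines its weights directly rather than by iterative training. A common reading of "weights direct determination" is a single pseudoinverse (least-squares) solve. This minimal sketch assumes that reading and makes two further assumptions:

- Each input, scaled to [-1, 1], is expanded additively into T_0..T_n.
- The outputs are linear in those hidden activations.

The adaptive choice of hidden-node count is not sketched. All names are hypothetical.

```python
import numpy as np

def chebyshev_features(x: np.ndarray, degree: int) -> np.ndarray:
    """Hidden-layer outputs: T_0 plus T_1..T_degree of every input column.

    x has shape (n_samples, n_inputs) and is assumed to be scaled to [-1, 1].
    """
    if degree < 1:
        raise ValueError("degree must be >= 1")
    x = np.asarray(x, dtype=float)
    t_prev, t_curr = np.ones_like(x), x              # T_0 and T_1 for each input
    columns = [np.ones((x.shape[0], 1)), t_curr]     # one shared bias column for T_0
    for _ in range(2, degree + 1):
        t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev   # T_{k+1} = 2x T_k - T_{k-1}
        columns.append(t_curr)
    return np.hstack(columns)

def fit_weights(x: np.ndarray, y: np.ndarray, degree: int) -> np.ndarray:
    """Direct weight determination: one least-squares solve via the pseudoinverse."""
    return np.linalg.pinv(chebyshev_features(x, degree)) @ np.asarray(y, dtype=float)

def predict(x: np.ndarray, weights: np.ndarray, degree: int) -> np.ndarray:
    return chebyshev_features(x, degree) @ weights
```

Because the fit is a single linear solve, training cost does not depend on a learning rate or epoch count. That is consistent with the abstract's claim of faster training, although this sketch is not the patent's exact formulation.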

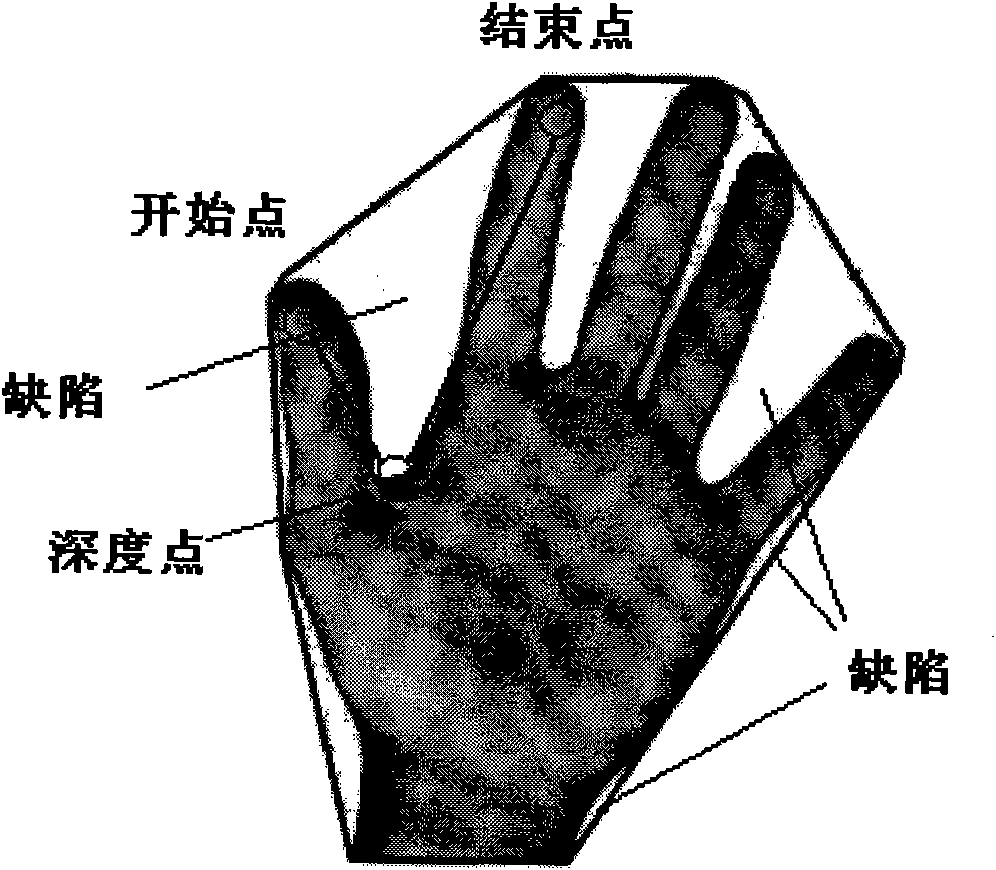

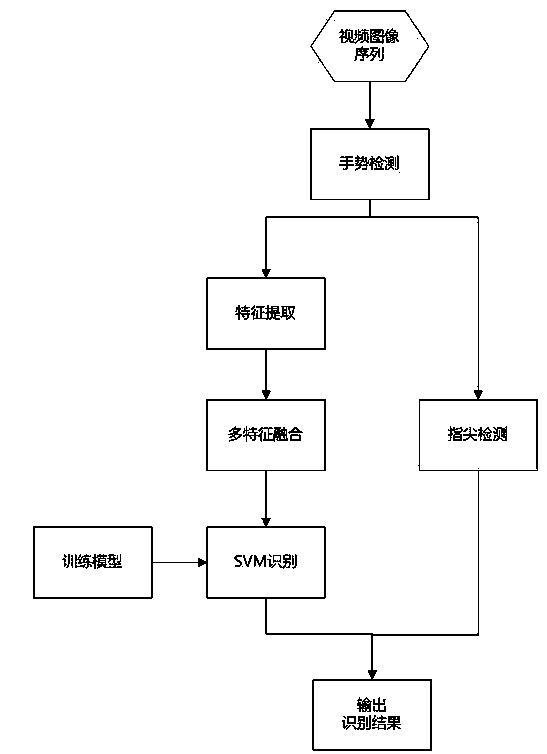

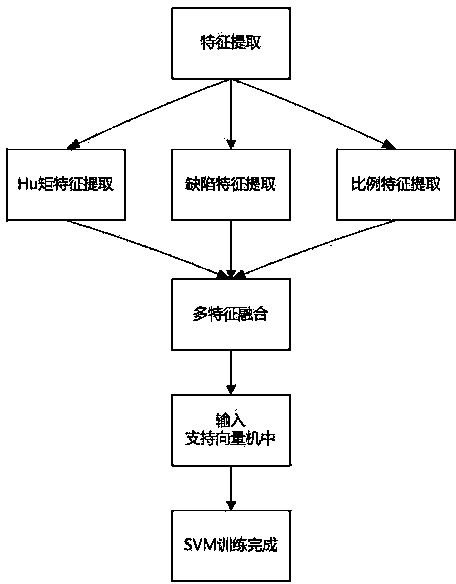

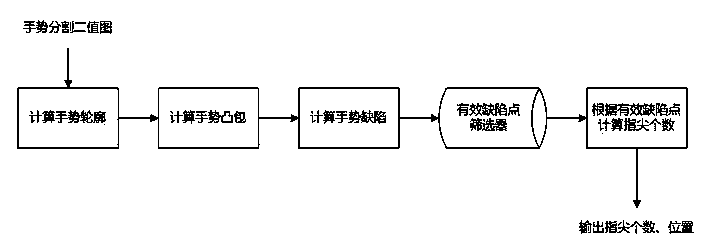

Hand gesture recognition method based on multi-feature fusion and fingertip detection

Status: Active | Publication: CN104299004A | Tags: The amount of feature calculation is small; Correct mistakes; Character and pattern recognition; Fingertip detection; Support vector machine

The invention discloses a hand gesture recognition method based on multi-feature fusion and fingertip detection. The method comprises a training process and a recognition process.

- Training: suitable features are selected for complex hand gestures, and a multi-feature fusion extraction algorithm is applied. A support vector machine is then trained on the gestures to form a training model.
- Recognition: hand gesture detection is first performed on an input video image sequence. Multiple features are then extracted, fused, and fed into the support vector machine to obtain a recognition result. In parallel, fingertip detection based on convexity defects is performed on the hand gesture, and a defect screener locates the fingertip positions. The two sets of recognition and detection results are then combined to obtain the final gesture recognition result.

The method effectively addresses the low gesture recognition rate in complex scenes, meets real-time requirements, and is well suited to human-machine interaction.

Owner:ZHEJIANG UNIV

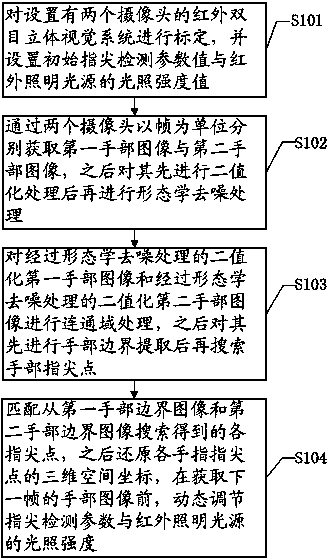

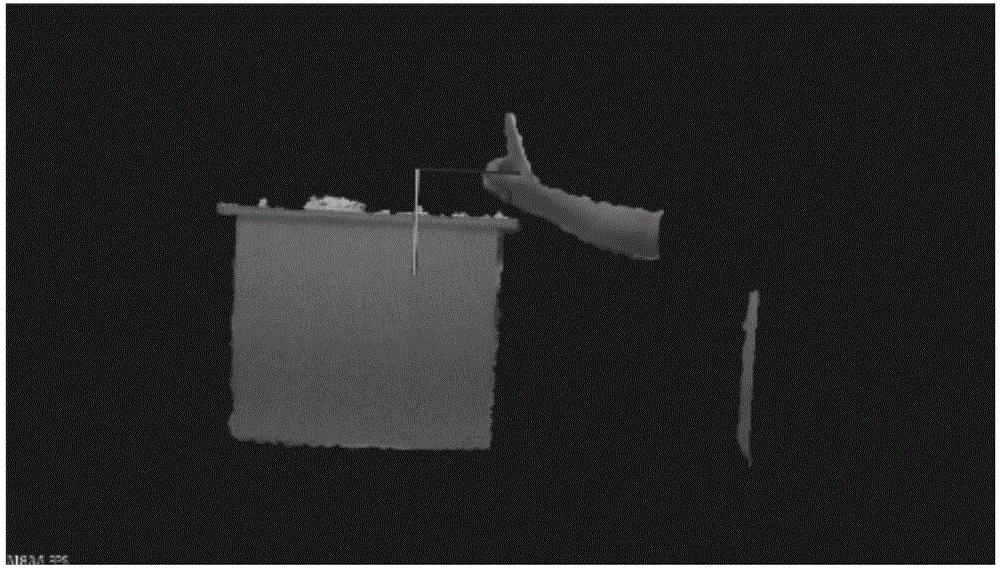

Method and system for detecting fingertip space position based on binocular stereoscopic vision

Status: Active | Publication: CN103714345A | Tags: Improve detection rate; Expand the usable range; Character and pattern recognition; Fingertip detection; Visual perception

The invention provides a method and system for detecting the spatial position of a fingertip based on binocular stereoscopic vision. Hand images acquired by two cameras are binarized and denoised frame by frame. Connected-domain computation, boundary extraction, fingertip point search, fingertip matching, and spatial coordinate reconstruction are then performed on them. Before the next frame is acquired, the detection parameters and the illumination intensity of an infrared light source are adjusted dynamically. The system uses infrared binocular vision and adjusts the illumination intensity and fingertip detection parameters according to the distance between the user's hands and the cameras. As a result, the fingertip detection rate is effectively improved and the usable range of the device is extended.

Owner:TCL CORPORATION
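
After the fingertip has been matched between the two views in the entry above, its spatial coordinates follow from stereo triangulation. The abstract does not describe the camera model. This minimal sketch assumes two things:

- A rectified stereo pair with a shared focal length `focal_px` (pixels), baseline `baseline_m` (metres), and principal point (`cx`, `cy`).
- Depth from disparity given by Z = f * B / d.

All parameter names are hypothetical.

```python
from typing import Tuple

def triangulate_rectified(left_px: Tuple[float, float], right_px: Tuple[float, float],
                          focal_px: float, baseline_m: float,
                          cx: float, cy: float) -> Tuple[float, float, float]:
    """3-D point (left-camera frame, metres) from a matched pixel pair in a rectified rig."""
    (xl, yl), (xr, _) = left_px, right_px
    disparity = xl - xr                      # horizontal shift between the two views
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = focal_px * baseline_m / disparity    # depth: Z = f * B / d
    return (xl - cx) * z / focal_px, (yl - cy) * z / focal_px, z
```

Because depth is inversely proportional to disparity, depth resolution degrades with distance. That helps explain why the entry adapts its detection parameters to the hand-camera distance.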

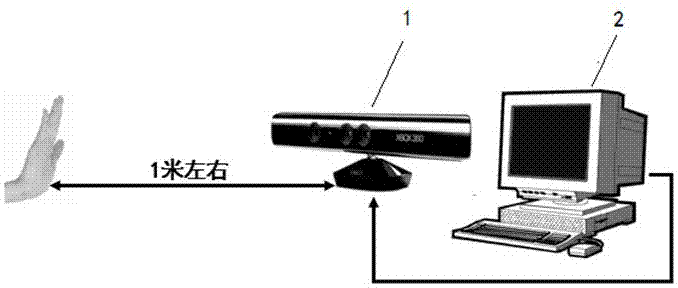

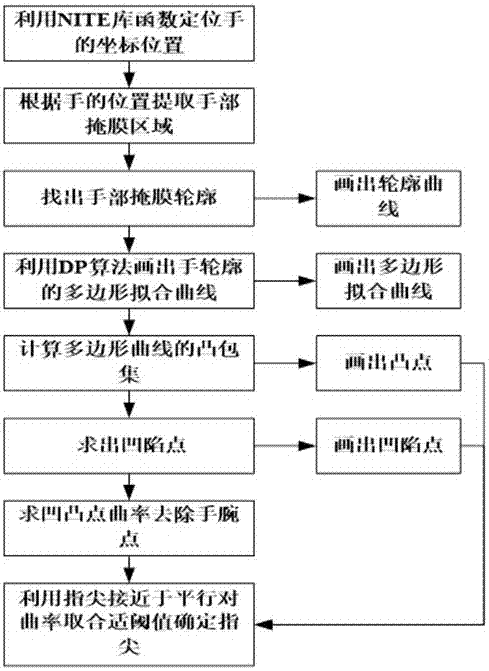

Fingertip detection method based on Kinect depth information

Status: Active | Publication: CN107885327A | Tags: Easy extraction; Accurate acquisition; Input/output for user-computer interaction; Character and pattern recognition; Fingertip detection; Computer vision

The invention relates to a fingertip detection method based on Kinect depth information, in which a Kinect device is connected to a computer by a cable. The method comprises four steps:

1. Extract the hand and acquire the palm coordinates.
2. Locate the fingertips, including image preprocessing and hand-contour extraction. Joint bilateral filtering is applied to the extracted hand area. The Douglas-Peucker algorithm then approximates the contour point set, yielding a fitted polygonal curve of the hand contour, which is drawn.
3. Use the convexHull() function to find and analyze the convex hull points of the hand.
4. Compute the curvature at each convex hull point. Because the curvature at the wrist differs from that at the fingertips, an appropriate threshold is set to remove the convex hull points at the wrist.

The method completes gesture recognition tasks accurately and in real time. It improves the real-time performance and accuracy of Kinect gesture recognition, as well as the natural gesture interaction experience.

Owner:CHANGCHUN UNIV OF SCI & TECH
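
The polygon-fitting step in the entry above uses the Douglas-Peucker algorithm. OpenCV offers `cv2.approxPolyDP` for this, and `convexHull()` matches OpenCV's `cv2.convexHull`. This sketch is a pure-Python illustration of the open-polyline Douglas-Peucker variant; a closed hand contour would need its endpoints handled separately. The function names and `epsilon` are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def _perpendicular_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from p to the infinite line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * px - dx * py + bx * ay - by * ax) / math.hypot(dx, dy)

def douglas_peucker(points: Sequence[Point], epsilon: float) -> List[Point]:
    """Recursively keep the point farthest from the chord while it exceeds epsilon."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    idx, dmax = 0, -1.0
    for i in range(1, len(points) - 1):
        d = _perpendicular_distance(points[i], a, b)
        if d > dmax:
            idx, dmax = i, d
    if dmax > epsilon:
        left = douglas_peucker(points[: idx + 1], epsilon)
        right = douglas_peucker(points[idx:], epsilon)
        return left[:-1] + right          # avoid duplicating the split point
    return [a, b]
```

A larger `epsilon` removes more small contour wiggles, so fewer spurious convex hull points reach the later curvature test.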

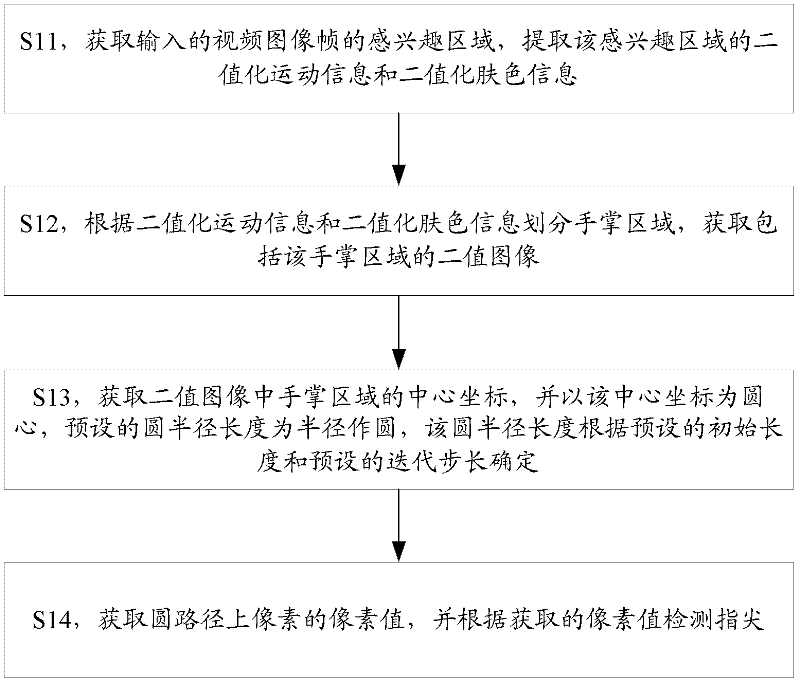

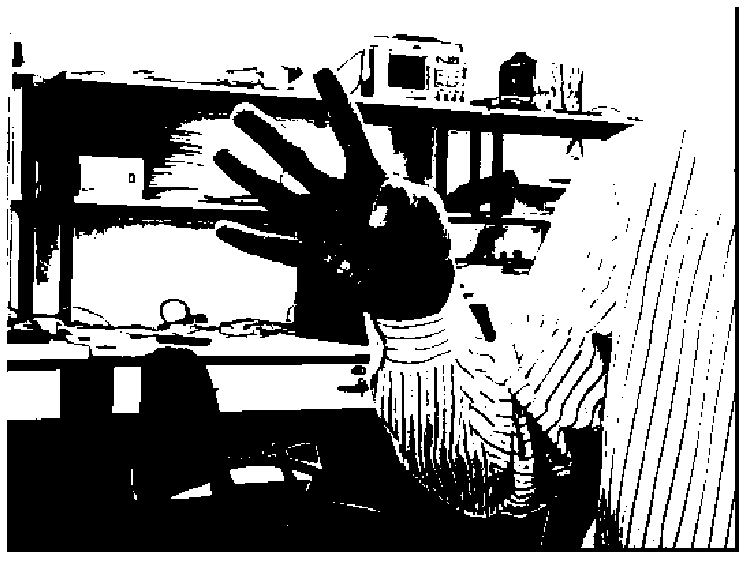

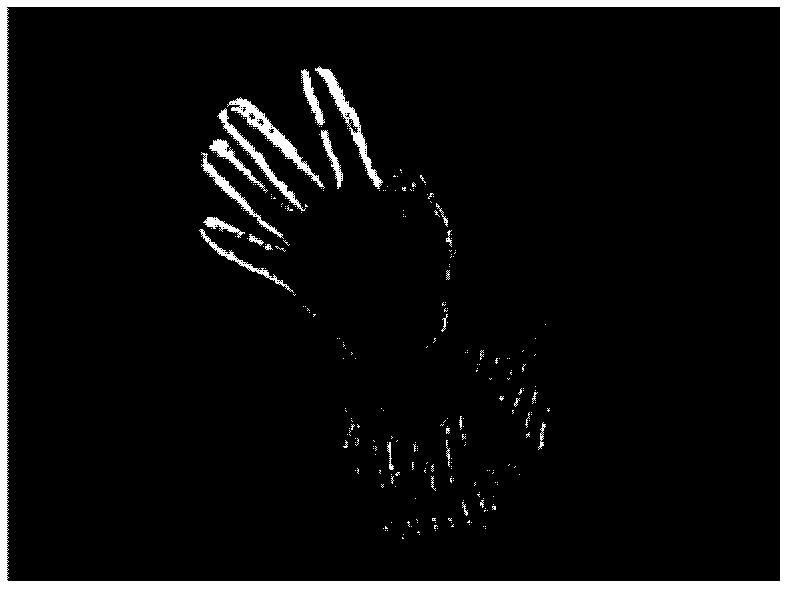

Human-computer interaction fingertip detection method, device and television

Status: Active | Publication: CN102521567A | Tags: Improve accuracy; Effectively eliminate interference; Television system details; Character and pattern recognition; Fingertip detection; Skin color

The invention relates to human-computer interaction and provides a human-computer interaction fingertip detection method, a device, and a television. The method comprises the following steps:

1. Acquire a region of interest from input video frames, and extract binary motion information and binary skin-color information from it.
2. Segment the palm region based on the binary motion and skin-color information to obtain a binary image containing the palm region.
3. Acquire the center coordinates of the palm region in the binary image, and draw a circle centered there with a preset radius. The preset radius is determined from a preset initial length and a preset iteration step size.
4. Acquire the values of the pixels along the circular path, and perform fingertip detection based on them.

Embodiments of the invention improve fingertip detection accuracy and broaden the scope of application.

Owner:TCL CORPORATION
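
The entry above detects fingertips from the pixel values along a circle around the palm center. The abstract does not say how those values are interpreted. This sketch assumes the simplest reading: count 0-to-1 transitions around the circle, so that each finger, and also the wrist or arm, crossing the circle contributes one foreground run. The mask layout (row-major, values 0/1) and all names are hypothetical. The iterative growth of the radius from an initial length by a fixed step is left to the caller.

```python
import math
from typing import List, Sequence

def sample_circle(mask: Sequence[Sequence[int]], cx: float, cy: float,
                  radius: float, n_samples: int = 360) -> List[int]:
    """Read binary pixel values (0/1) along a circle; points outside the image count as 0."""
    h, w = len(mask), len(mask[0])
    values = []
    for k in range(n_samples):
        theta = 2 * math.pi * k / n_samples
        x = int(round(cx + radius * math.cos(theta)))
        y = int(round(cy + radius * math.sin(theta)))
        values.append(mask[y][x] if 0 <= x < w and 0 <= y < h else 0)
    return values

def count_crossings(values: Sequence[int]) -> int:
    """Number of 0 -> 1 transitions around the closed circular path (index -1 wraps)."""
    return sum(1 for i in range(len(values)) if values[i - 1] == 0 and values[i] == 1)
```

For example, a vertical strip through the palm center (a finger above and the arm below) yields two runs. One of them would still have to be discarded as the wrist.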

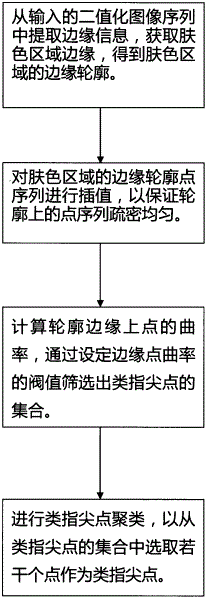

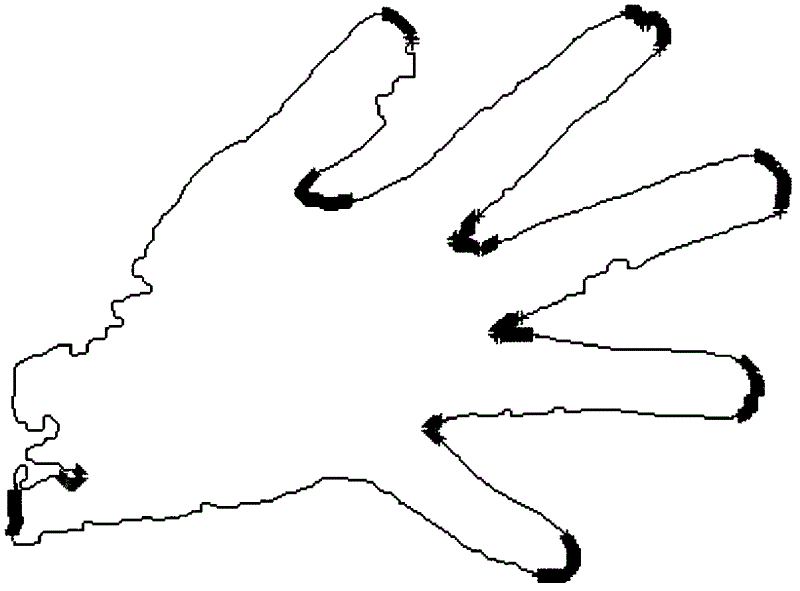

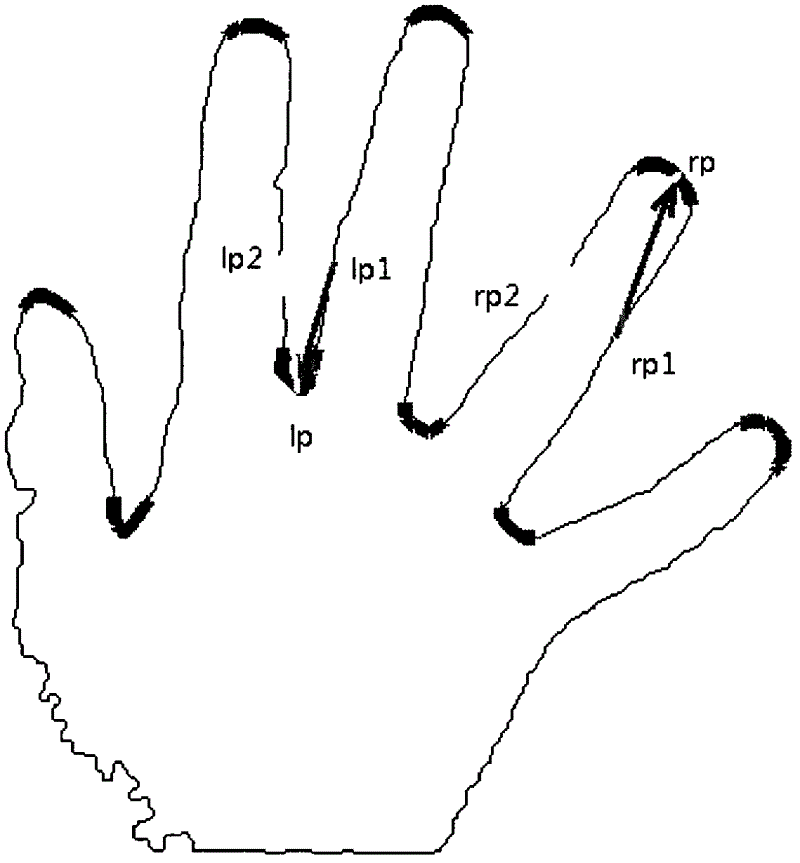

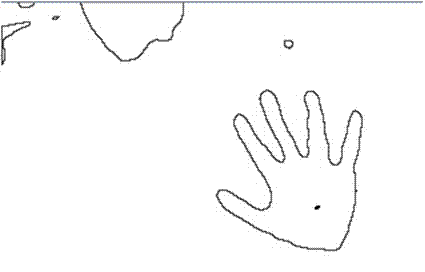

Fingertip detection method

Status: Inactive | Publication: CN102622601A | Tags: Improve efficiency; Meet real-time requirements; Character and pattern recognition; Fingertip detection; Pattern recognition

The invention discloses a fingertip detection method comprising the following steps:

- 11: extract edge information from an input binary image sequence to obtain the edge contour of the skin-color region.
- 12: interpolate the point sequence of the edge contour so that the points are uniformly dense along it.
- 13: calculate the curvature at each contour point, and screen out a set of candidate fingertip points by thresholding the curvature.
- 14: cluster the candidate fingertip points, and select several points from the candidate set as fingertip points.

Edge detection, tracking, and edge-point interpolation provide the basis for curvature-based detection of candidate fingertip points. Groove points between fingers and points on the arm are filtered out, so the remaining candidates are confirmed as fingertip points. Because most operations act only on the candidate points, overall efficiency is relatively high, the real-time requirements of the system can be met, and system performance is improved.

Owner:李博男
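
Steps 13 and 14 of the entry above screen contour points by curvature, reject groove points between fingers, and cluster the survivors. The patent does not give its curvature measure. This sketch substitutes the common k-curvature angle, that is, the angle at p[i] between p[i-k] and p[i+k]. It rejects valleys with a convexity test derived from the contour's signed area and takes the middle index of each run of adjacent candidates. It assumes the contour is already uniformly resampled (step 12). `k`, `max_angle_deg`, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def _signed_area(pts: Sequence[Point]) -> float:
    """Shoelace formula; its sign gives the contour's orientation."""
    return 0.5 * sum(x0 * y1 - x1 * y0
                     for (x0, y0), (x1, y1) in zip(pts, list(pts[1:]) + [pts[0]]))

def k_curvature_tips(contour: Sequence[Point], k: int = 3,
                     max_angle_deg: float = 40.0) -> List[Point]:
    n = len(contour)
    area = _signed_area(contour)
    candidates = []
    for i in range(n):
        px, py = contour[i]
        ax, ay = contour[(i - k) % n]
        bx, by = contour[(i + k) % n]
        v1x, v1y, v2x, v2y = ax - px, ay - py, bx - px, by - py
        n1, n2 = math.hypot(v1x, v1y), math.hypot(v2x, v2y)
        if n1 == 0 or n2 == 0:
            continue
        cos_a = (v1x * v2x + v1y * v2y) / (n1 * n2)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        convex = (v1x * v2y - v1y * v2x) * area < 0   # a peak, not a groove between fingers
        if angle < max_angle_deg and convex:
            candidates.append(i)
    # cluster runs of adjacent candidate indices, wrapping around the closed contour
    groups: List[List[int]] = []
    for i in candidates:
        if groups and i == groups[-1][-1] + 1:
            groups[-1].append(i)
        else:
            groups.append([i])
    if len(groups) > 1 and groups[0][0] == 0 and groups[-1][-1] == n - 1:
        groups[0] = groups.pop() + groups[0]
    return [contour[g[len(g) // 2]] for g in groups]   # middle point of each cluster
```

The angle threshold separates sharp tips from blunter corners such as the wrist edges. The convexity test works for either contour orientation, because both the cross product and the signed area flip sign together.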

Multifunctional method for identifying hand gestures

Status: Inactive | Publication: CN102194097A | Tags: Improve recognition rate; Easy to identify; Input/output for user-computer interaction; Character and pattern recognition; Fingertip detection; Hand parts

The invention provides a vision-based multifunctional method for recognizing hand gestures, comprising the following steps:

1. Extract the hand region from the background by background subtraction.
2. Perform fingertip detection on the extracted hand region to determine the number of fingertips in the gesture. At the same time, acquire the outline of the hand region and describe it with improved shape context descriptors.
3. Classify the extracted improved shape context descriptors with a directed acyclic graph support vector machine.
4. After classification, compare the number of fingers of the hand shape to which the descriptors were assigned with the fingertip detection result. If they match, output the final decision; otherwise, reject the decision.

The method is well suited to human-machine interaction, mobile devices, and sign language input.

Owner:范为

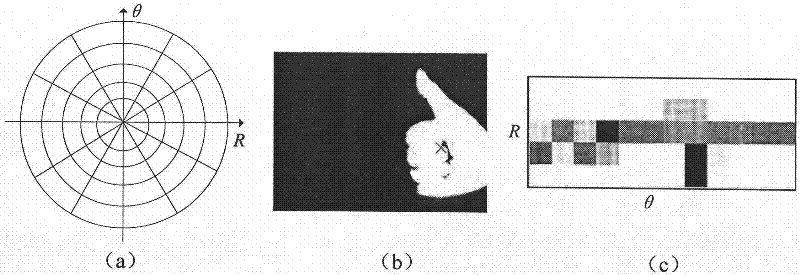

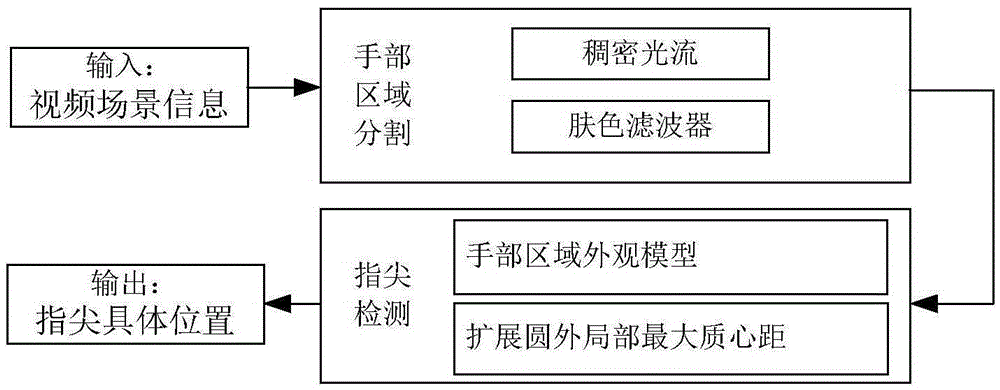

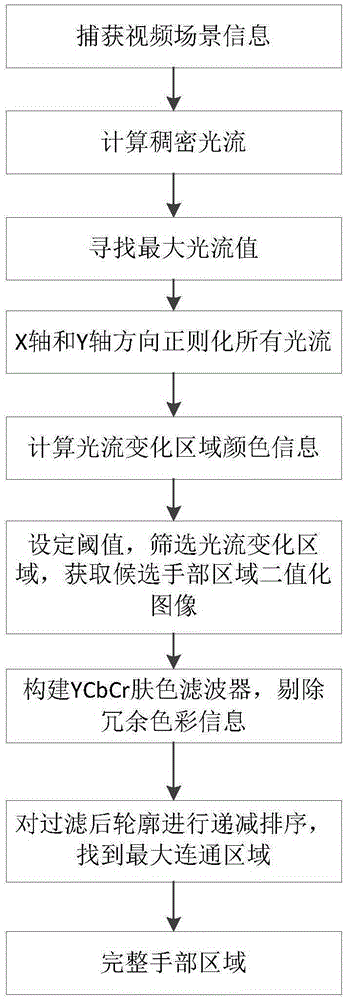

Fingertip detection method in complex environment

Status: Active | Publication: CN105335711A | Tags: Freedom of movement; Avoid interference; Character and pattern recognition; Fingertip detection; Pattern recognition

The invention provides a fingertip detection method for complex environments, comprising two steps.

Step 1: compute dense optical flow for the scene and reconstruct a skin-color filter to obtain the hand region.

Step 2: detect the fingertips as follows.
- Model the hand region in various gestures using equal-area blocks, and compute the centroid of the hand region.
- Compute the distance from every contour sampling point to the centroid, as well as the mean centroid distance.
- Determine an extended centroid distance from the number of detected fingertips, and draw a circle centered at the centroid with that distance as its radius.
- Remove the contour points inside the circle, as well as the wrist region, which is the longest run of consecutive pixels on the circle.
- Search for contour points outside the circle with locally maximal centroid distance, and mark them as fingertips.
- Compare the number of fingertips detected in this round with that of the previous round to decide whether to continue fingertip detection.

The method is highly robust. It detects fingertips correctly while a person's hand moves freely in front of a camera in a complex environment, thereby improving the accuracy and effectiveness of fingertip detection.

Owner:SOUTH CHINA UNIV OF TECH
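
The core of step 2 in the entry above is a centroid-distance test: draw a circle of an "extended" mean centroid distance and keep contour points outside it whose distance is a local maximum. This minimal sketch makes these simplifications:

- The centroid is taken as the mean of the contour points, not the region's mass center.
- The extended radius is a fixed multiple (`scale`, hypothetical) of the mean distance, rather than being derived from the fingertip count.
- Wrist removal and the round-to-round comparison are not shown.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def centroid_distance_tips(contour: Sequence[Point], scale: float = 1.2) -> List[Point]:
    n = len(contour)
    # simplification: mean of contour points instead of the hand region's mass centre
    cx = sum(x for x, _ in contour) / n
    cy = sum(y for _, y in contour) / n
    dist = [math.hypot(x - cx, y - cy) for x, y in contour]
    radius = scale * sum(dist) / n      # hypothetical "extended" mean centroid distance
    tips = []
    for i in range(n):
        d = dist[i]
        # outside the circle and a local maximum of the closed distance profile;
        # ">=" on one side keeps exactly one point per plateau
        if d > radius and d >= dist[i - 1] and d > dist[(i + 1) % n]:
            tips.append(contour[i])
    return tips
```

Points near the palm fall inside the circle and are discarded first, so the local-maximum search only runs over the protruding fingers (and the wrist, which the patent removes separately).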

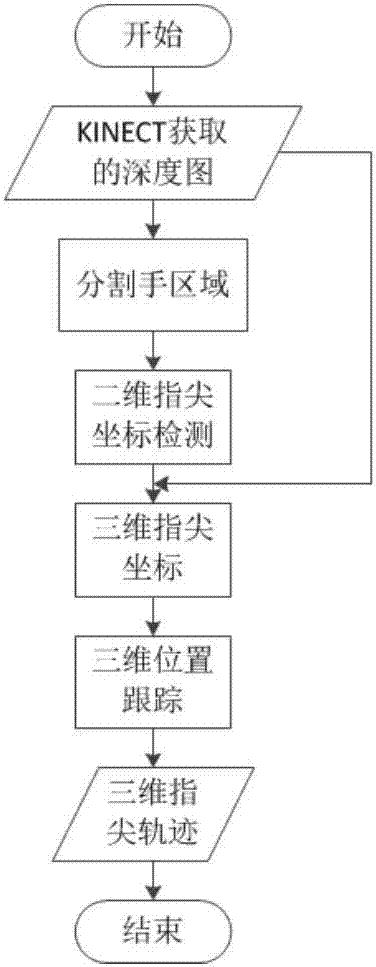

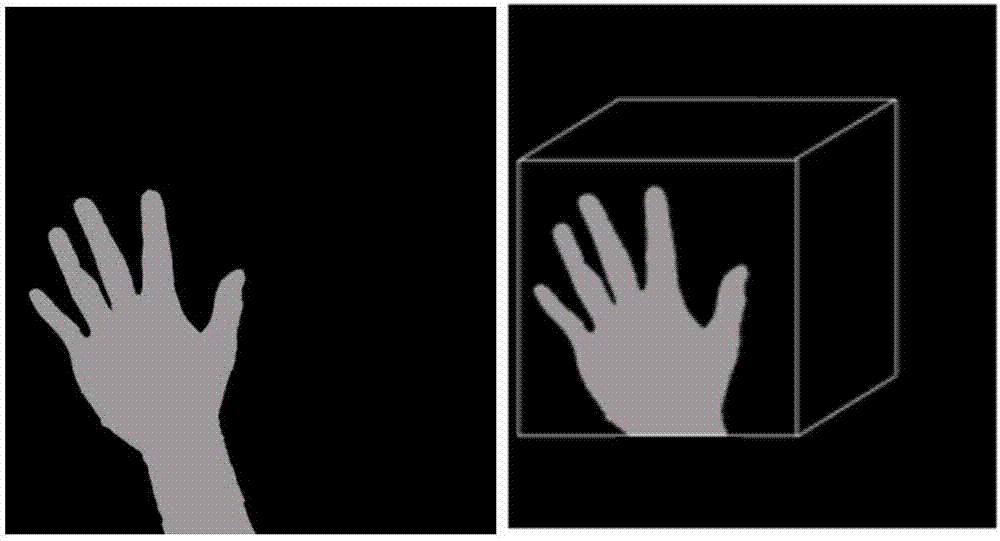

KINECT-based multi-finger real-time tracking method

Status: Inactive | Publication: CN107256083A | Tags: Fast and accurate real-time tracking; Improve experience; Input/output for user-computer interaction; Image enhancement; Pixel classification; Fingertip detection

The invention discloses a KINECT-based real-time multi-finger tracking method comprising the following steps:

1. Segment the hand area.
2. Detect fingertips based on pixel classification.
3. Obtain the three-dimensional position of each fingertip.
4. Track the three-dimensional fingertip trajectory.

The method tracks fingers quickly and accurately in real time, with high system stability, a good user experience, and fast response.

Owner:HOHAI UNIV CHANGZHOU
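
Step 3 of the entry above lifts a detected fingertip pixel to a three-dimensional position. For a depth camera this is usually done by pinhole back-projection using the depth value at that pixel. This minimal sketch assumes an undistorted pinhole model. The intrinsics `fx`, `fy`, `cx`, `cy` used in the example are placeholder numbers; real values come from calibration or the sensor SDK.

```python
from typing import Tuple

def pixel_to_camera(u: float, v: float, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with metric depth into camera coordinates (pinhole model)."""
    x = (u - cx) * depth_m / fx   # horizontal offset scaled by depth over focal length
    y = (v - cy) * depth_m / fy   # vertical offset, same scaling
    return x, y, depth_m
```

For example, with the placeholder intrinsics fx = fy = 365 and principal point (256, 212), a fingertip at pixel (356, 212) seen at 0.8 m lies about 0.22 m to the right of the optical axis. Successive 3-D points of this kind would form the trajectory tracked in step 4.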

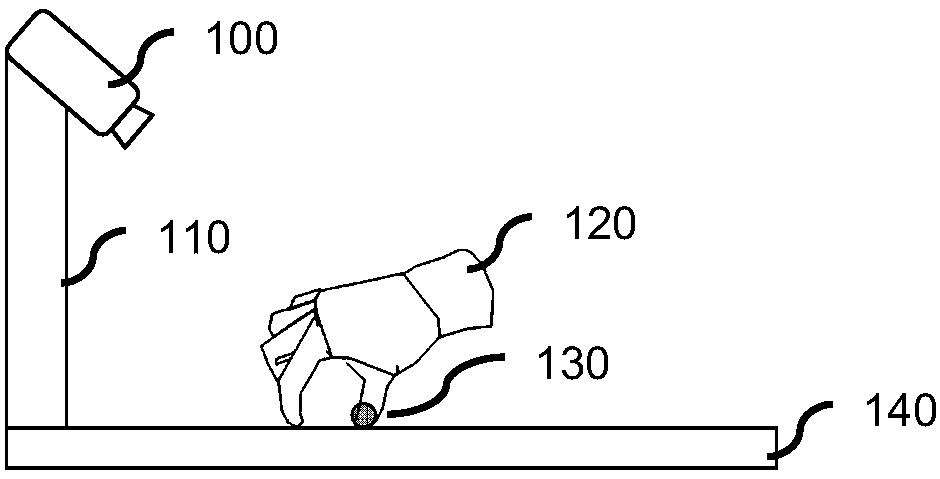

Enhanced assembly teaching system based on fingertip characteristics and control method thereof

Status: Active | Publication: CN110147162A | Tags: Simple features; Improve usability; Input/output for user-computer interaction; Image enhancement; Fingertip detection; Edge extraction

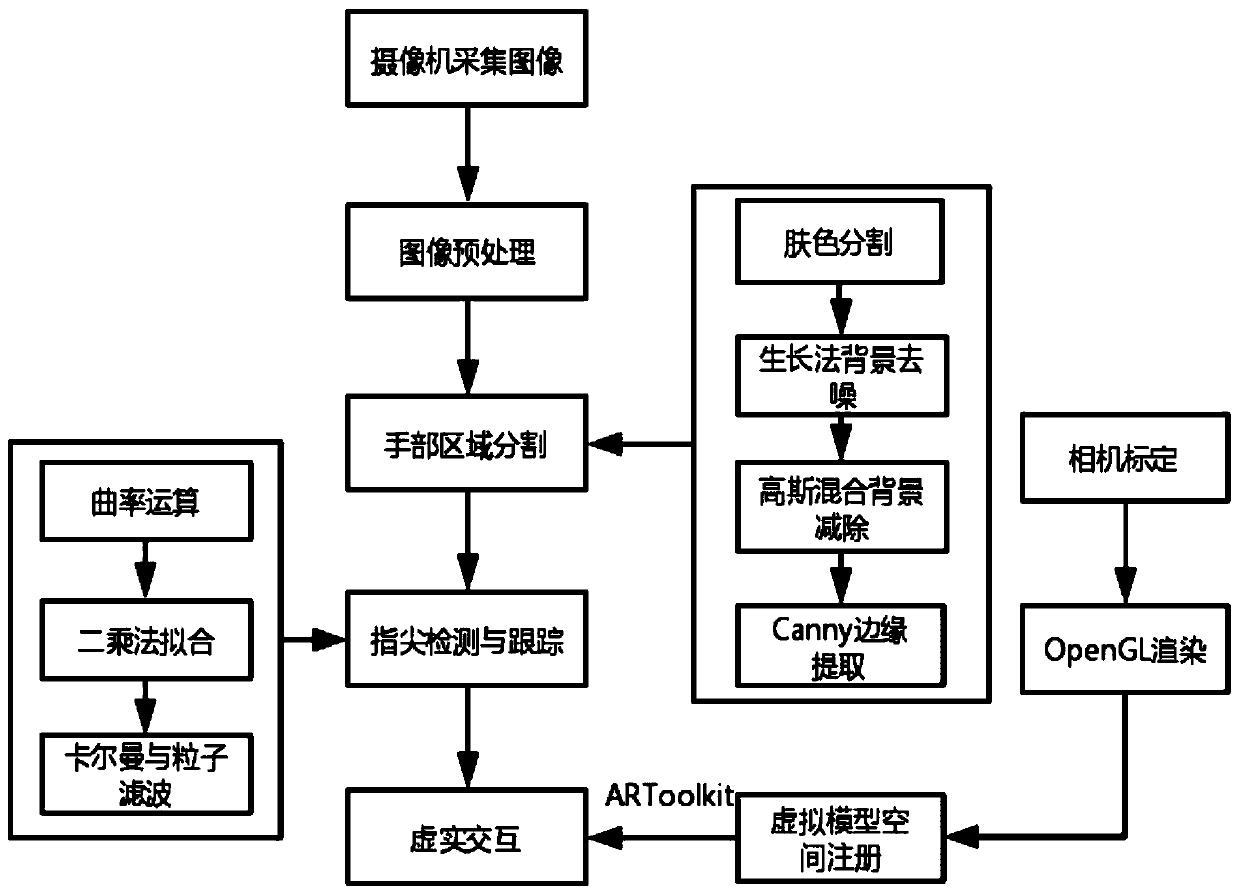

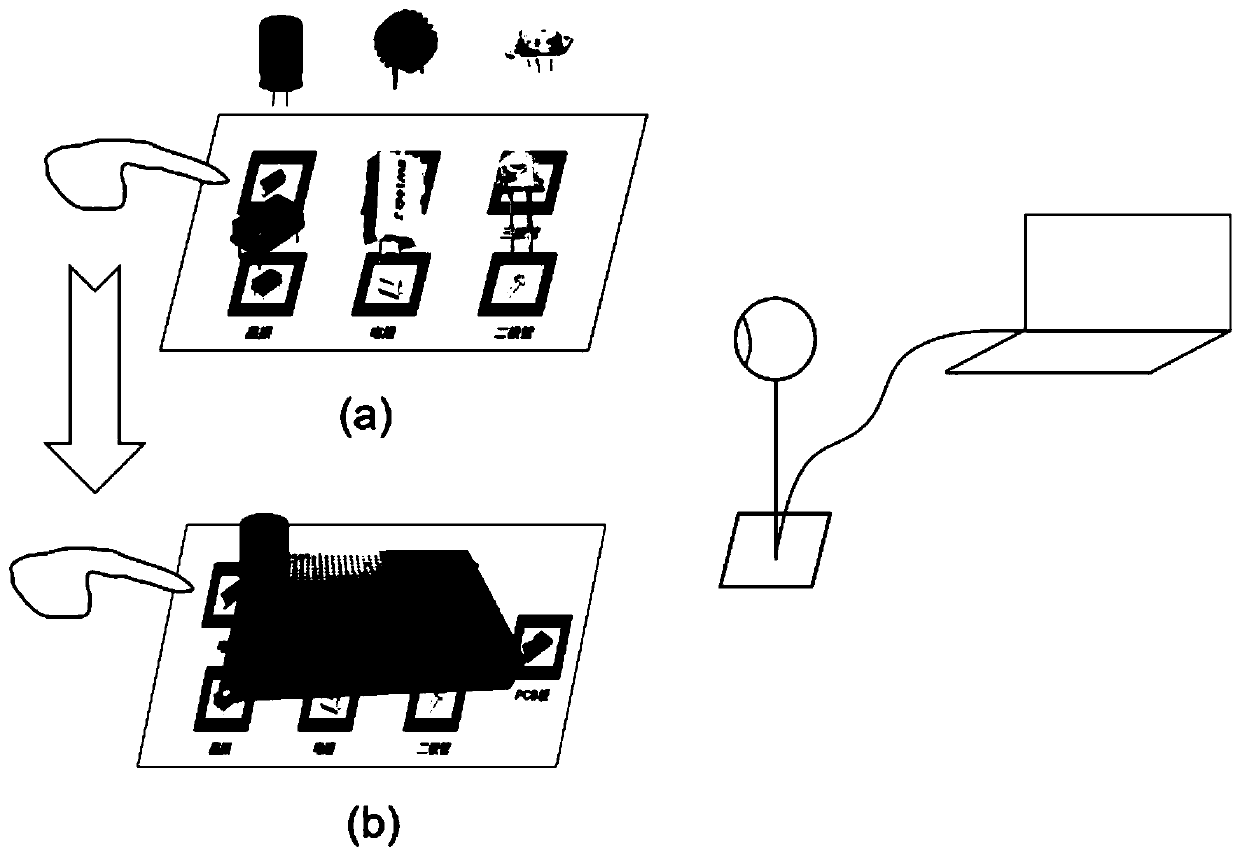

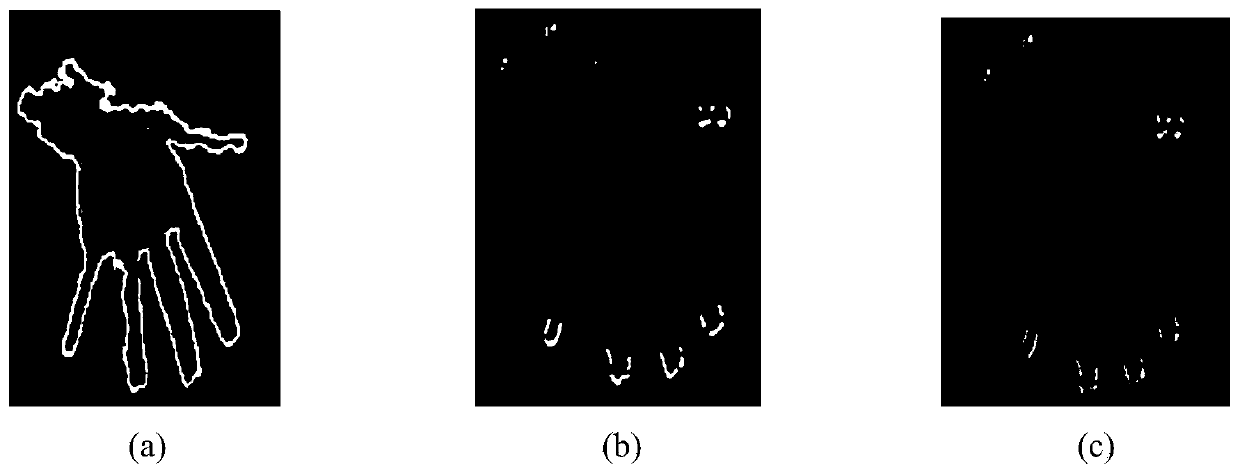

The invention provides an enhanced assembly teaching system based on fingertip features and a control method for it. The system comprises an image acquisition module, an image preprocessing module, a hand-area segmentation module, a fingertip detection and tracking module, and a virtual component model spatial registration module. The method includes the following steps:

1. Collect images of the finger and the interaction plane.
2. Preprocess the acquired images.
3. Segment the hand area and extract its edges.
4. Detect the fingertip based on curvature computation and least-squares fitting, and track it with a method that combines Kalman filtering and particle filtering.
5. Calibrate the image acquisition equipment, perform computer rendering, and register the virtual component model in space.
6. Let the fingertip interact with the virtual component to complete plug-in mounting.

The method uses fingertips as a new form of computer input for interacting with virtual objects, which avoids the inconvenience of a physical handheld marker. When the motion is nonlinear, combining Kalman filtering and particle filtering improves the positioning accuracy and real-time performance for the target object.

Owner:JIANGSU UNIV
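
The tracker in the entry above combines Kalman filtering with particle filtering; the combination scheme is not described. This sketch shows only the Kalman part, assuming a constant-velocity model on the state [x, y, vx, vy] with position-only measurements. The noise levels (`process_var`, `meas_var`) and the class name are hypothetical.

```python
import numpy as np

class FingertipKalman:
    """Constant-velocity Kalman filter on state [x, y, vx, vy] (assumed motion model)."""

    def __init__(self, dt: float = 1.0, process_var: float = 1e-2, meas_var: float = 1.0):
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)   # state transition
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)   # only position is measured
        self.Q = process_var * np.eye(4)                 # process noise covariance
        self.R = meas_var * np.eye(2)                    # measurement noise covariance
        self.x = np.zeros(4)
        self.P = np.eye(4) * 1e3                         # large initial uncertainty

    def predict(self) -> np.ndarray:
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()                         # predicted fingertip position

    def update(self, z) -> np.ndarray:
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)         # Kalman gain
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x[:2].copy()                         # corrected fingertip position
```

Usage per frame is `predict()` followed by `update(detected_tip)`. A linear filter like this suits near-constant-velocity motion, which is why the patent adds particle filtering for nonlinear motion.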

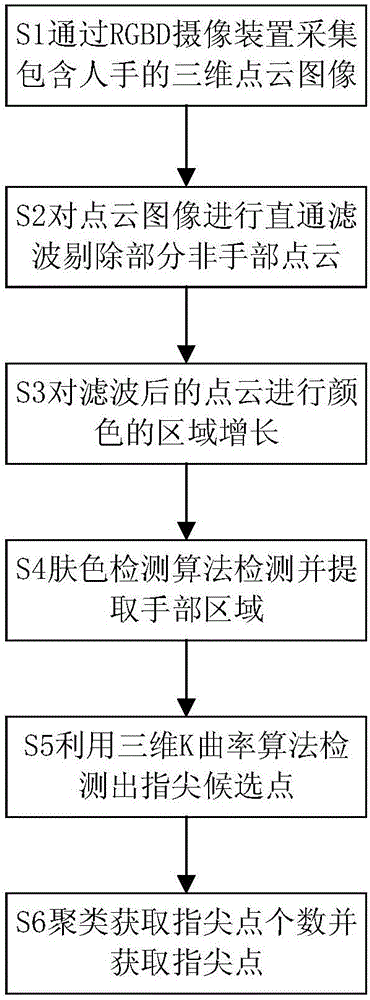

Fingertip detection method based on three-dimensional K curvature

Status: Active | Publication: CN106650628A | Tags: Easy to detect; Reduce distance error; Character and pattern recognition; Fingertip detection; Point cloud

The invention discloses a fingertip detection method based on three-dimensional K-curvature. The method has two steps.

- Step 1: extract the hand region by color-based region growing on the point cloud. The point cloud data acquired by an RGB-D sensor is filtered. Color region-growing segmentation is then performed on the filtered data. Finally, a skin-color detection algorithm is used to obtain the point cloud of the hand region.
- Step 2: extract fingertip points with a three-dimensional K-curvature algorithm. After the hand region is obtained, the hand point cloud is filtered to remove spatially scattered points. The point cloud is then processed following the idea of the K-curvature algorithm, and fingertip candidate points are determined and clustered to obtain the fingertip points.

The method detects fingertip points well at different positions, against different backgrounds, and under different lighting for several common gestures, such as those representing the numbers 1, 2, 3, 4, and 5. The distance error between the detected and actual fingertip points is only about 5 mm, so good precision and robustness are achieved.

Owner:NANJING UNIV OF POSTS & TELECOMM

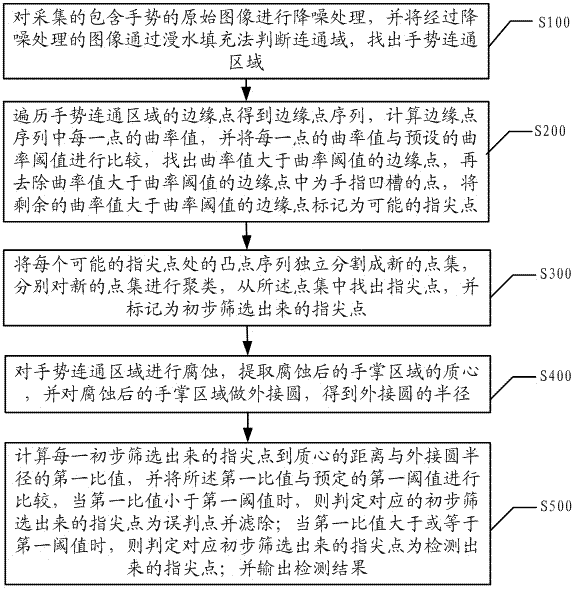

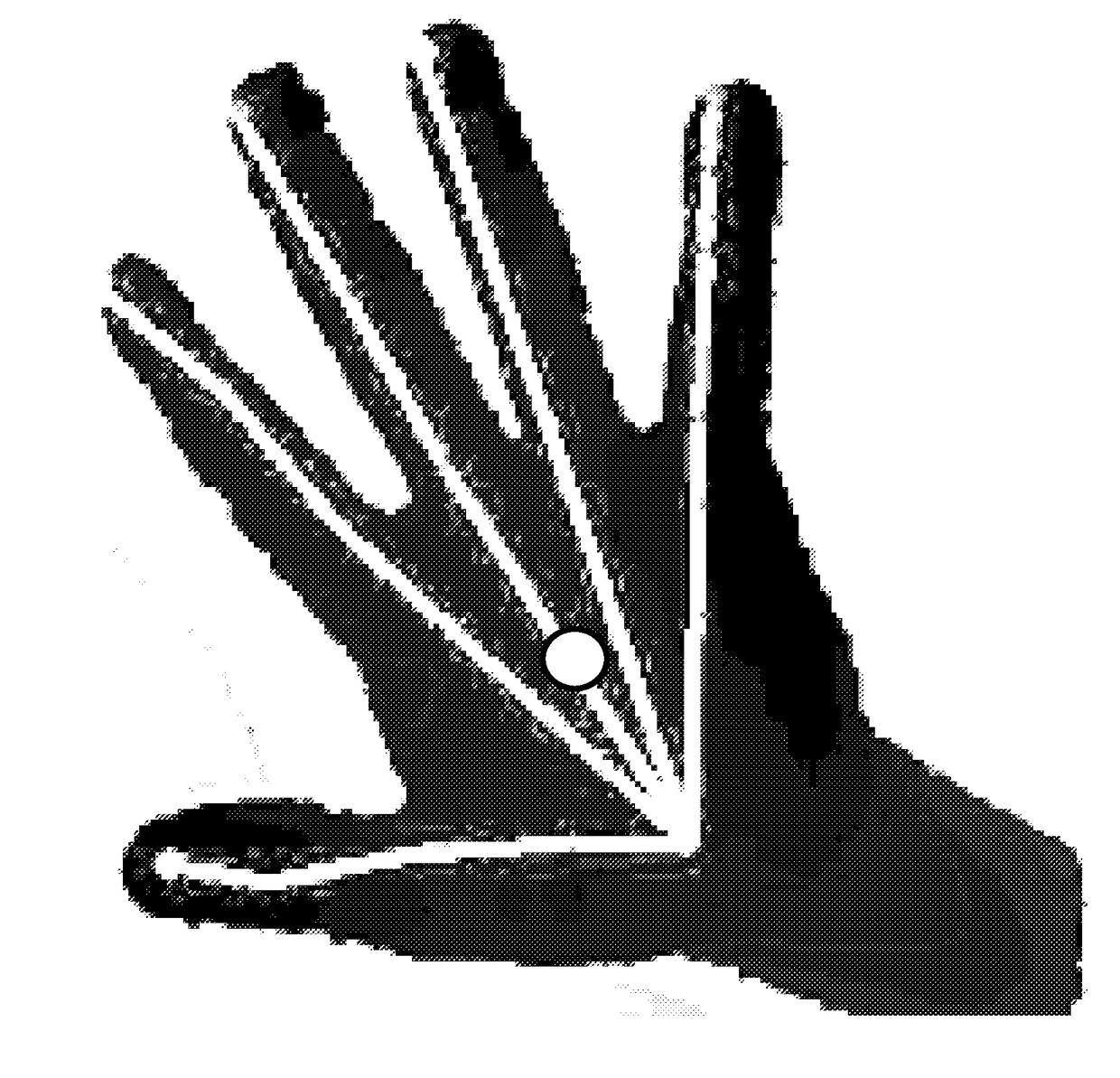

Fingertip detection method and device based on palm ranging

Status: Inactive | Publication: CN103544469A | Tags: Avoid misjudgment; Reduce false detection rate; Character and pattern recognition; Fingertip detection; Minutiae

The invention discloses a fingertip detection method and device based on palm ranging. The method includes the following steps:

1. Mark the initially screened fingertip points after clustering, and erode the connected gesture region.
2. Extract the centroid of the eroded palm region, draw its circumscribed circle, and obtain the circle's radius.
3. For each initially screened fingertip point, calculate a first ratio: its distance to the centroid divided by the circumcircle radius. Compare this ratio with a preset first threshold.
4. If the first ratio is smaller than the first threshold, the point is a false feature point and is filtered out. If the first ratio is greater than or equal to the first threshold, the point is a detected fingertip point.
5. Output the detection results.

This improves the accuracy of real-time fingertip detection and decreases the fingertip false detection rate.

Owner:TCL CORPORATION
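
The acceptance test in the entry above compares a "first ratio" against a preset threshold. The ratio is the candidate's distance to the palm centroid divided by the radius of the eroded palm's circumscribed circle. This sketch implements only that test. It assumes the centroid and circumcircle radius have already been computed. The threshold value 1.5 and the function name are placeholders, not values from the patent.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def filter_by_palm_ratio(candidates: Sequence[Point], centroid: Point,
                         palm_radius: float, threshold: float = 1.5) -> List[Point]:
    if palm_radius <= 0:
        raise ValueError("palm_radius must be positive")
    cx, cy = centroid
    kept = []
    for x, y in candidates:
        ratio = math.hypot(x - cx, y - cy) / palm_radius   # the "first ratio"
        if ratio >= threshold:       # far enough from the palm: keep as a fingertip
            kept.append((x, y))      # (smaller ratios are dropped as false points)
    return kept
```

Normalizing by the palm radius makes the test independent of how far the hand is from the camera, since both distances scale together.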

Method for detecting static gesture fingertip

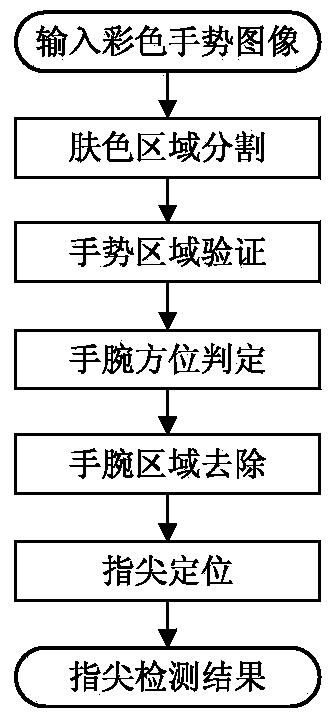

Status: Inactive | Publication: CN103426000A | Tags: Proof of validity; Input/output for user-computer interaction; Character and pattern recognition; Color image; Fingertip detection

The invention belongs to the field of specific-object detection in computer vision and relates to a method for detecting the fingertips of a static gesture. The method comprises the following steps:

1. Segment the gesture area from an input color image.
2. Examine the coordinates of points in the gesture area to determine the direction of the wrist.
3. Compute the center of gravity of the gesture area, and search for the minimum radius.
4. Handle the different wrist directions and situations separately to obtain a binary image of the hand area.
5. Extract the outer contour of this binary image, and compute the distance from the outer contour to the center of gravity of the gesture.
6. Smooth this distance, detect its maximum points to obtain a set of maxima, and derive the fingertip point set.

The method detects and locates the fingertip area in a gesture image rapidly and accurately.

Owner:TIANJIN UNIV

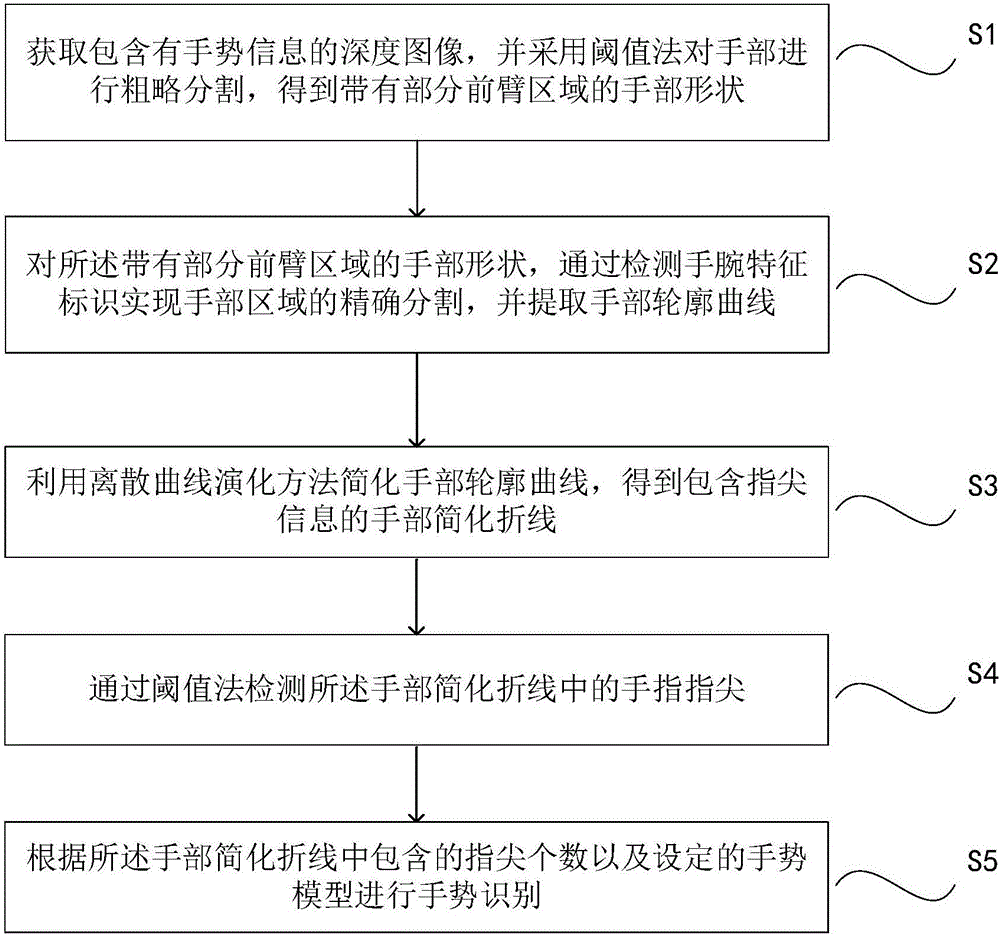

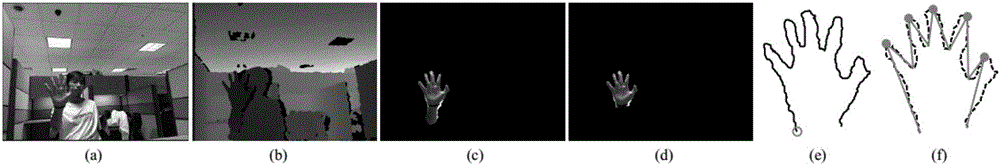

Fingertip detection and gesture identification method and system based on depth information

Status: Inactive | Publication: CN106529480A | Tags: Accurate segmentation; Avoid interference; Character and pattern recognition; Pattern recognition; Fingertip detection

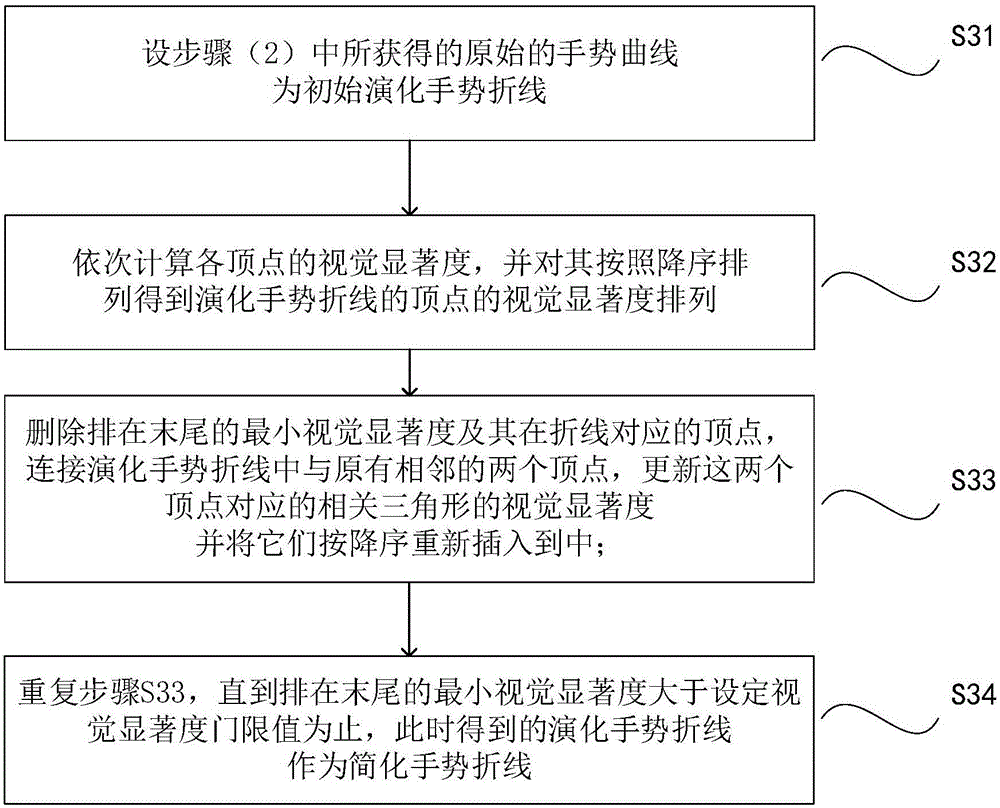

The invention discloses a fingertip detection and gesture recognition method based on depth information. The method comprises the following steps:

1. Obtain a depth image containing gesture information, and coarsely segment the hand with a threshold method. This yields a hand shape that still includes part of the forearm.
2. Accurately segment the hand area by detecting and identifying wrist features, and extract the hand contour curve.
3. Simplify the hand contour curve with the discrete curve evolution method to obtain a simplified hand polyline.
4. Detect fingertips on the simplified polyline with a threshold method.
5. Recognize the gesture from the number of fingertips on the simplified polyline and a predefined gesture model.

The method segments the hand area accurately while detecting fingertips and recognizing gestures. The invention further provides a corresponding depth-based fingertip detection and gesture recognition system.

Owner:JIANGHAN UNIVERSITY
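Discrete curve evolution, the contour simplification step named in this abstract, repeatedly deletes the polygon vertex that contributes least to the shape. The sketch below uses the relevance measure commonly used in DCE, K = β·l1·l2/(l1 + l2). Here β is the turn angle at a vertex and l1, l2 are the lengths of its two adjacent segments. The abstract does not say when the evolution stops, so a fixed vertex count `n_keep` is assumed. The sketch also assumes there are no duplicate consecutive points. The subsequent fingertip threshold step is not shown.

```python
import numpy as np


def turn_angle(prev_pt, pt, next_pt):
    """Angle (radians) by which the polygon turns at pt; 0 for collinear points."""
    d1 = pt - prev_pt
    d2 = next_pt - pt
    cos = np.dot(d1, d2) / (np.linalg.norm(d1) * np.linalg.norm(d2))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))


def dce_simplify(points, n_keep):
    """Discrete curve evolution on a closed polygon: repeatedly delete the vertex
    with the smallest relevance K = beta * l1 * l2 / (l1 + l2).
    n_keep is an assumed stopping rule (target vertex count)."""
    pts = [np.asarray(p, dtype=float) for p in points]
    while len(pts) > max(n_keep, 3):
        n = len(pts)
        relevance = []
        for i in range(n):
            prev_pt, pt, next_pt = pts[i - 1], pts[i], pts[(i + 1) % n]
            l1 = np.linalg.norm(pt - prev_pt)
            l2 = np.linalg.norm(next_pt - pt)
            beta = turn_angle(prev_pt, pt, next_pt)
            relevance.append(beta * l1 * l2 / (l1 + l2))
        # Lengths are left unnormalized: dividing every length by the perimeter
        # rescales all K values equally and does not change which one is smallest.
        del pts[int(np.argmin(relevance))]
    return np.array(pts)
```

Collinear vertices have zero turn angle and are removed first, so a square sampled with extra edge midpoints evolves back to its four corners.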

Gesture three-dimensional interactive projection technology

Inactive | CN108932060A | Realize screen interaction, Implement tactile sensing, Input/output for user-computer interaction, Graph reading, Line sensor, Sonification

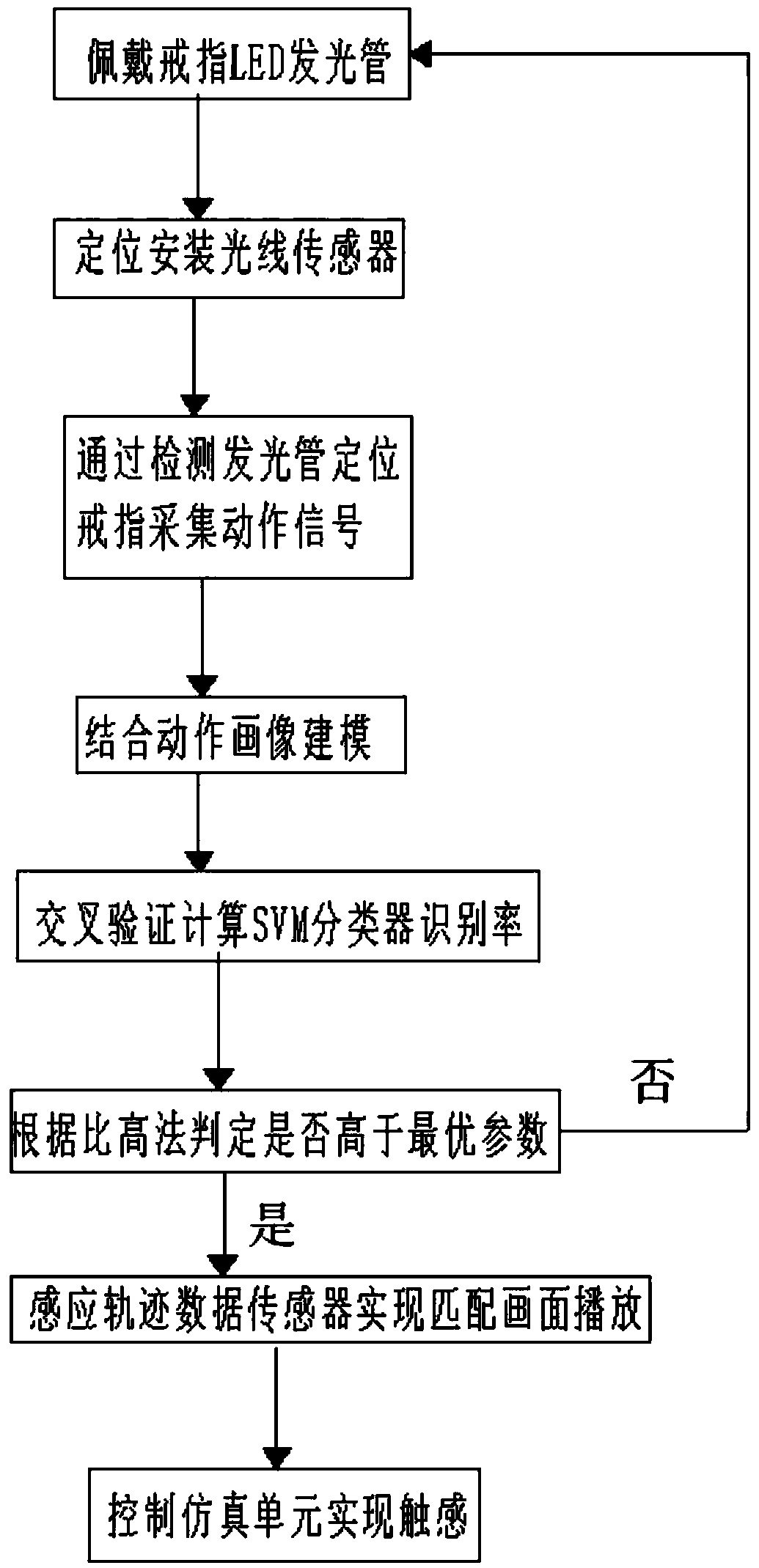

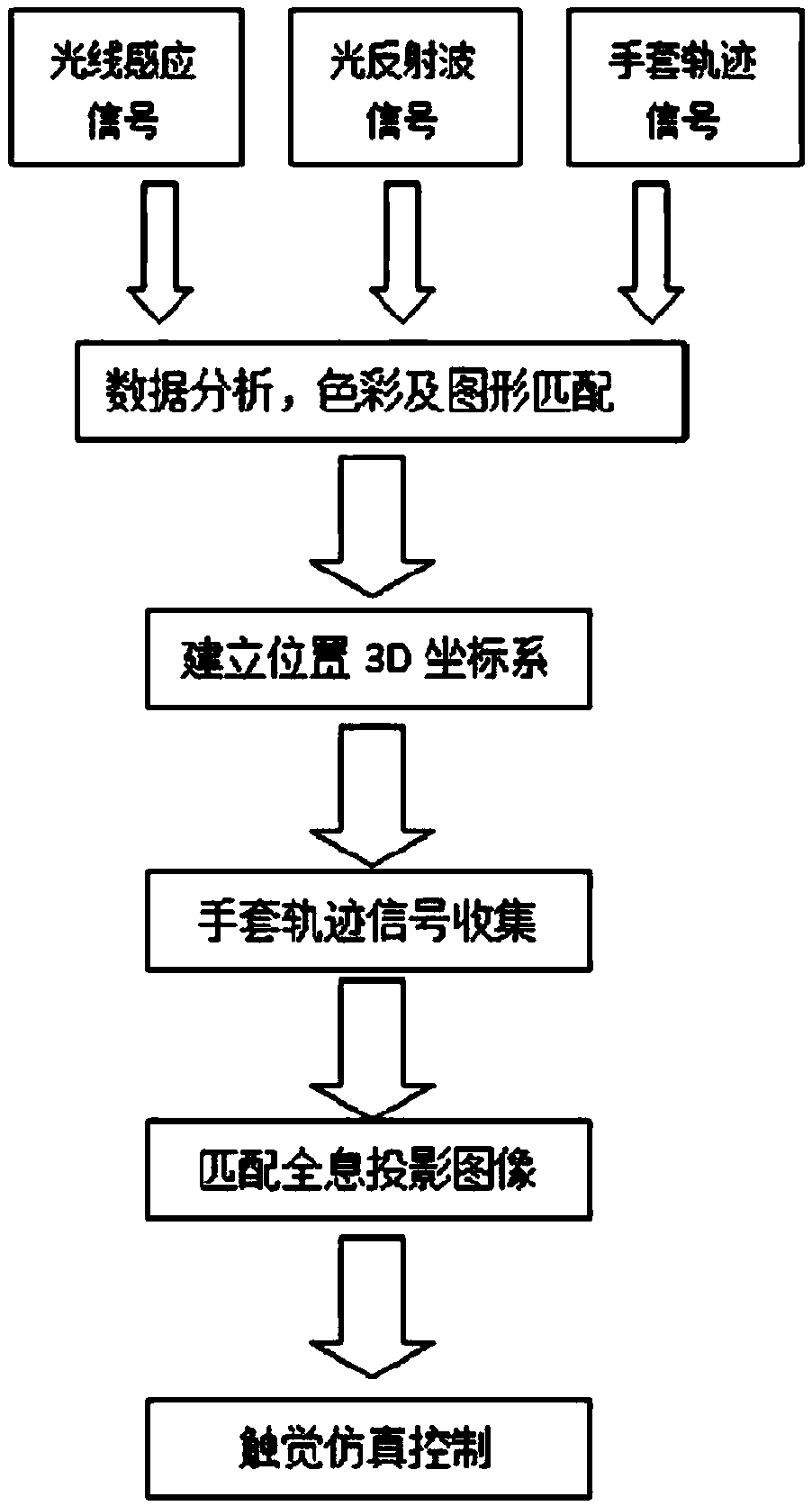

The invention relates to a gesture-based three-dimensional interactive projection technology. The user wears ring-shaped LED emitters on one or more fingers. Tracking these few marker points simplifies processing, tracking, identification, and positioning, because the system detects the LED emitters rather than performing fingertip detection. The light spot is used to point at a specific position on the screen. Signals are acquired by a six-axis acceleration sensor, an infrared signal generator, ultrasonic and infrared positioning grids on the screen, and a dual depth-of-field camera mounted above the screen. The stated benefits are as follows. Processing the signals of hand trajectories and correcting the light-sensing data enables interaction with a holographic projection. A touch simulation unit provides touch sensing and makes the interaction feel more realistic. A light sensor and a trajectory data sensor in a light-sensing data glove let users receive tactile feedback from holographic images at the same time. By continuously increasing the number of samples and optimizing parameters, the gesture interactive projection adapts to individual users.

Owner:SHENZHEN VVETIME TECH CO LTD
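Detecting LED markers instead of fingertips reduces, in its simplest form, to finding bright blobs and taking their centroids. The following is a minimal sketch under stated assumptions, not the patent's pipeline. It assumes a grayscale frame in which the LED rings are the brightest regions. The `threshold` and `min_area` values are hypothetical, and blobs are grouped with 4-connectivity.

```python
from collections import deque

import numpy as np


def bright_spot_centroids(gray, threshold=200, min_area=3):
    """Return (x, y) centroids of 4-connected bright blobs in a grayscale image.
    threshold and min_area are hypothetical tuning values."""
    mask = gray >= threshold
    seen = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    spots = []
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or seen[y, x]:
                continue
            # Breadth-first flood fill collects one connected blob.
            queue = deque([(y, x)])
            seen[y, x] = True
            pixels = []
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_area:  # drop isolated noise pixels
                ys, xs = zip(*pixels)
                spots.append((float(np.mean(xs)), float(np.mean(ys))))
    return spots
```

Blobs are reported in row-major scan order. Associating each blob with a particular finger across frames would need a separate tracking step.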

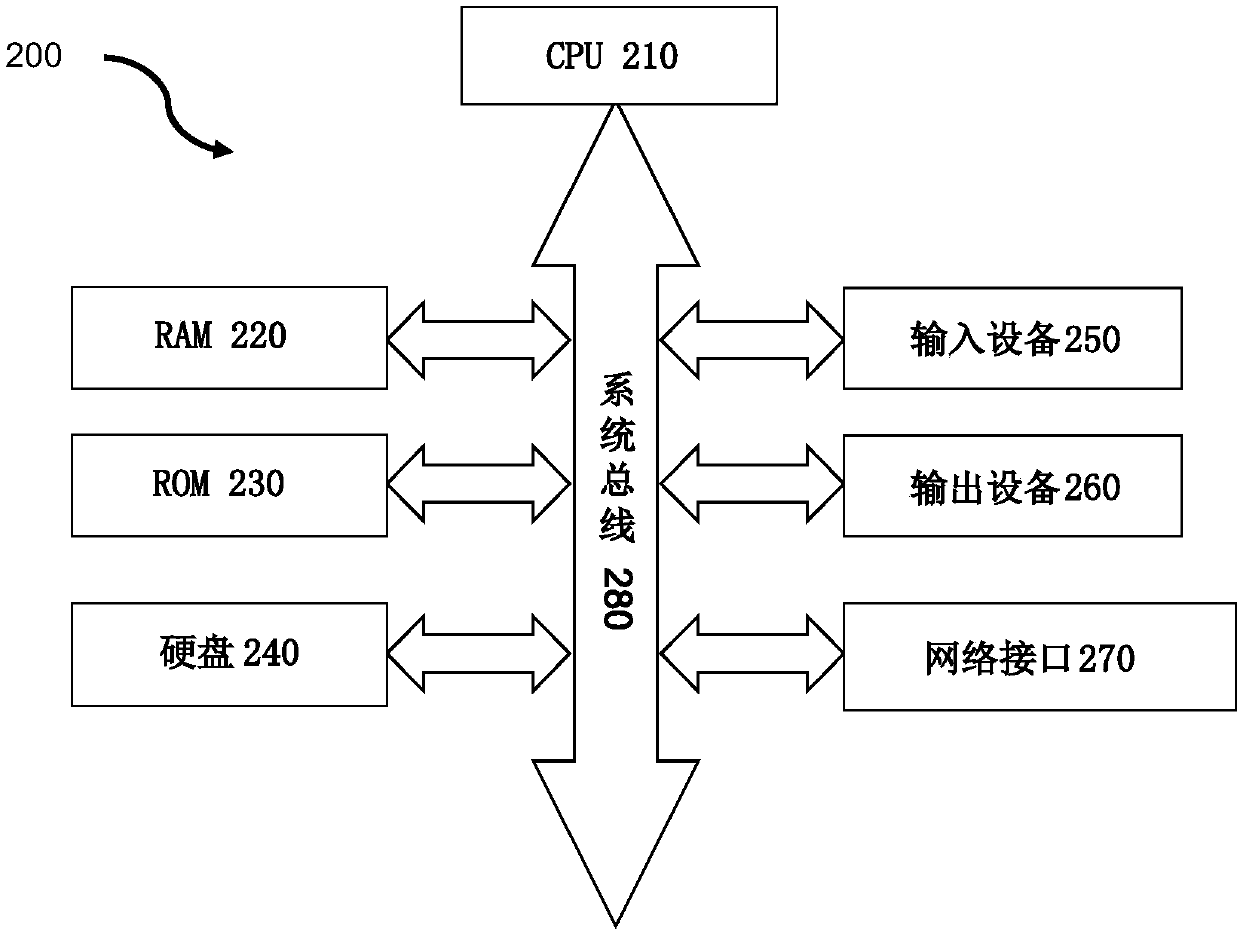

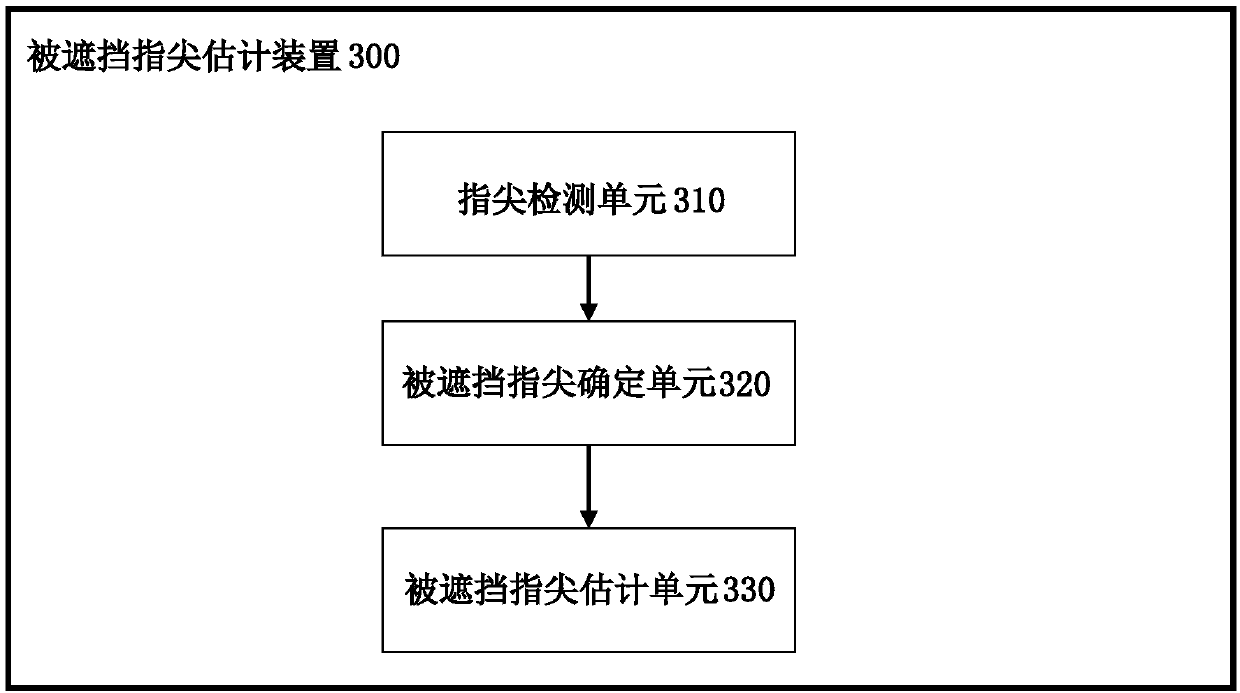

Method and device for estimating shielded fingertip, posture recognition system and storage medium

Inactive | CN110377187A | Character and pattern recognition, Input/output processes for data processing, Fingertip detection, Text detection

The invention discloses a method and device for estimating a shielded (occluded) fingertip, a posture recognition system, and a storage medium. The method comprises three steps. A fingertip detection step detects fingertips in the current frame of a video image. An occluded-fingertip determination step determines which fingertips are occluded and which are visible. An occluded-fingertip estimation step estimates a first position of each occluded fingertip from the visible fingertips and a posture center determined by the visible fingertips. The method improves the accuracy of text detection.

Owner:CANON KK
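The abstract says only that the occluded position is derived from the visible fingertips and a posture center computed from them. One hypothetical way to do that is sketched below; this is not Canon's method. It assumes a reference frame in which all fingertips were visible. It takes the posture center as the mean of the fingertips visible in both frames. It then moves each missing fingertip by the same shift as that center, which is a pure-translation assumption.

```python
import numpy as np


def estimate_occluded(reference_tips, visible_tips):
    """Estimate positions of fingertips missing from the current frame.

    reference_tips -- dict finger_id -> (x, y) from an earlier frame in which
                      every fingertip was visible (hypothetical input)
    visible_tips   -- dict finger_id -> (x, y) detected in the current frame
    """
    shared = [k for k in visible_tips if k in reference_tips]
    if not shared:
        return {}
    # Posture center = mean of the fingertips visible in both frames.
    center_now = np.mean([visible_tips[k] for k in shared], axis=0)
    center_ref = np.mean([reference_tips[k] for k in shared], axis=0)
    shift = center_now - center_ref  # assumed: the hand only translated
    return {
        k: tuple(np.asarray(p, dtype=float) + shift)
        for k, p in reference_tips.items()
        if k not in visible_tips
    }
```

A rotation- or scale-aware variant would fit a similarity transform to the shared fingertips instead of a single shift.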

Fingertip identification for gesture control

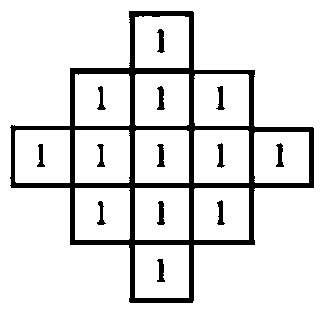

Active | US20180260601A1 | Easy to get, Accurate detection, Input/output for user-computer interaction, Television system details, Fingertip detection, Computerized system

A computer implemented method of fingertip centroid identification in real-time, implemented on a computer system comprising a processor, memory, and a camera system. The processor receives image data from the camera system; runs a first kernel comprising a set of concentric closed shapes over image data to identify an occupancy pattern in which internal closed shapes are at least nearly fully occupied, and in which a subsequent closed shape has at least a relatively low occupancy level, so as to identify one or more fingertips in the image data; for each identified fingertip, runs a second kernel over the identified one or more fingertips to establish a best fit closed shape which covers each identified fingertip; calculates a centroid for each best fit closed shape which corresponds to an identified fingertip; and stores in the memory the calculated centroids for the identified one or more fingertips.

Owner:UMAJIN
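The occupancy-pattern test in this claim can be approximated in Python as below. This is a hypothetical sketch rather than Umajin's implementation, and it makes several assumptions. The concentric closed shapes are taken to be square rings at Chebyshev distance r, and pixels outside the image count as empty. The thresholds `full`, `low`, `inner`, `outer`, and `merge_dist` are illustrative values. The patent's second best-fit kernel is replaced by simply averaging nearby candidate pixels.

```python
import numpy as np


def ring_occupancy(mask, y, x, r):
    """Fraction of pixels on the square ring at Chebyshev distance r from (y, x)
    that are foreground; pixels outside the image count as background."""
    h, w = mask.shape
    total = hits = 0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            if max(abs(dy), abs(dx)) != r:
                continue  # inside the square, not on the ring
            total += 1
            yy, xx = y + dy, x + dx
            if 0 <= yy < h and 0 <= xx < w and mask[yy, xx]:
                hits += 1
    return hits / total


def fingertip_centroids(mask, inner=2, outer=5, full=0.95, low=0.2, merge_dist=3.0):
    """Hypothetical thresholds: rings 1..inner must be (nearly) full and the
    ring at distance outer must be sparsely occupied."""
    candidates = []
    for y, x in zip(*np.nonzero(mask)):
        inner_ok = all(ring_occupancy(mask, y, x, r) >= full for r in range(1, inner + 1))
        if inner_ok and ring_occupancy(mask, y, x, outer) <= low:
            candidates.append((float(x), float(y)))
    # Greedy grouping stands in for the patent's best-fit second kernel.
    clusters = []
    for p in candidates:
        for c in clusters:
            if np.hypot(p[0] - c[0][0], p[1] - c[0][1]) <= merge_dist:
                c.append(p)
                break
        else:
            clusters.append([p])
    return [tuple(np.mean(c, axis=0)) for c in clusters]
```

Consider a 5-pixel-wide finger attached to a palm. Near the tip, the outer ring crosses the finger only once, so its occupancy falls below `low`. Further down the finger, the ring crosses the finger twice or reaches the palm, and occupancy is higher.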

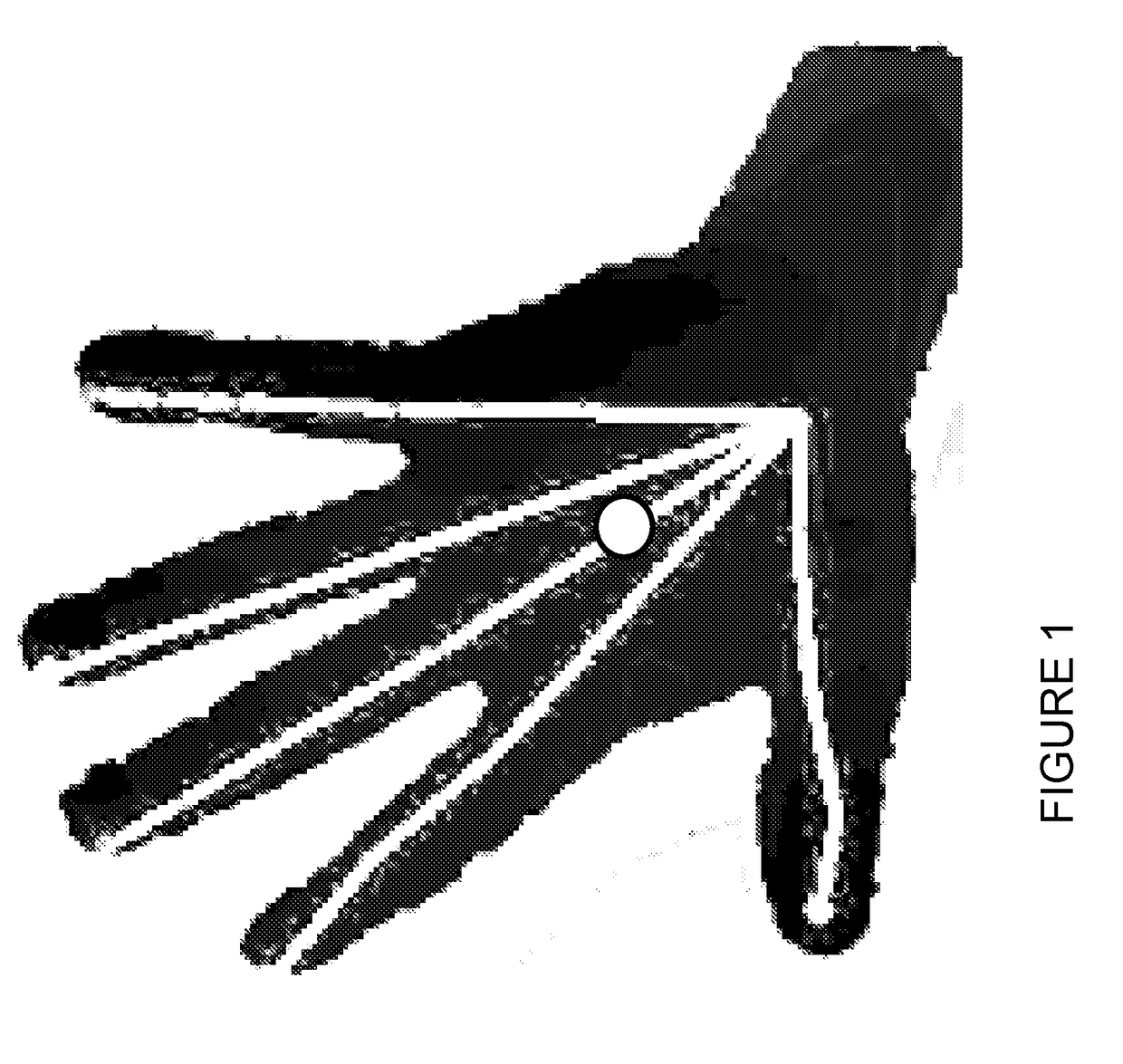

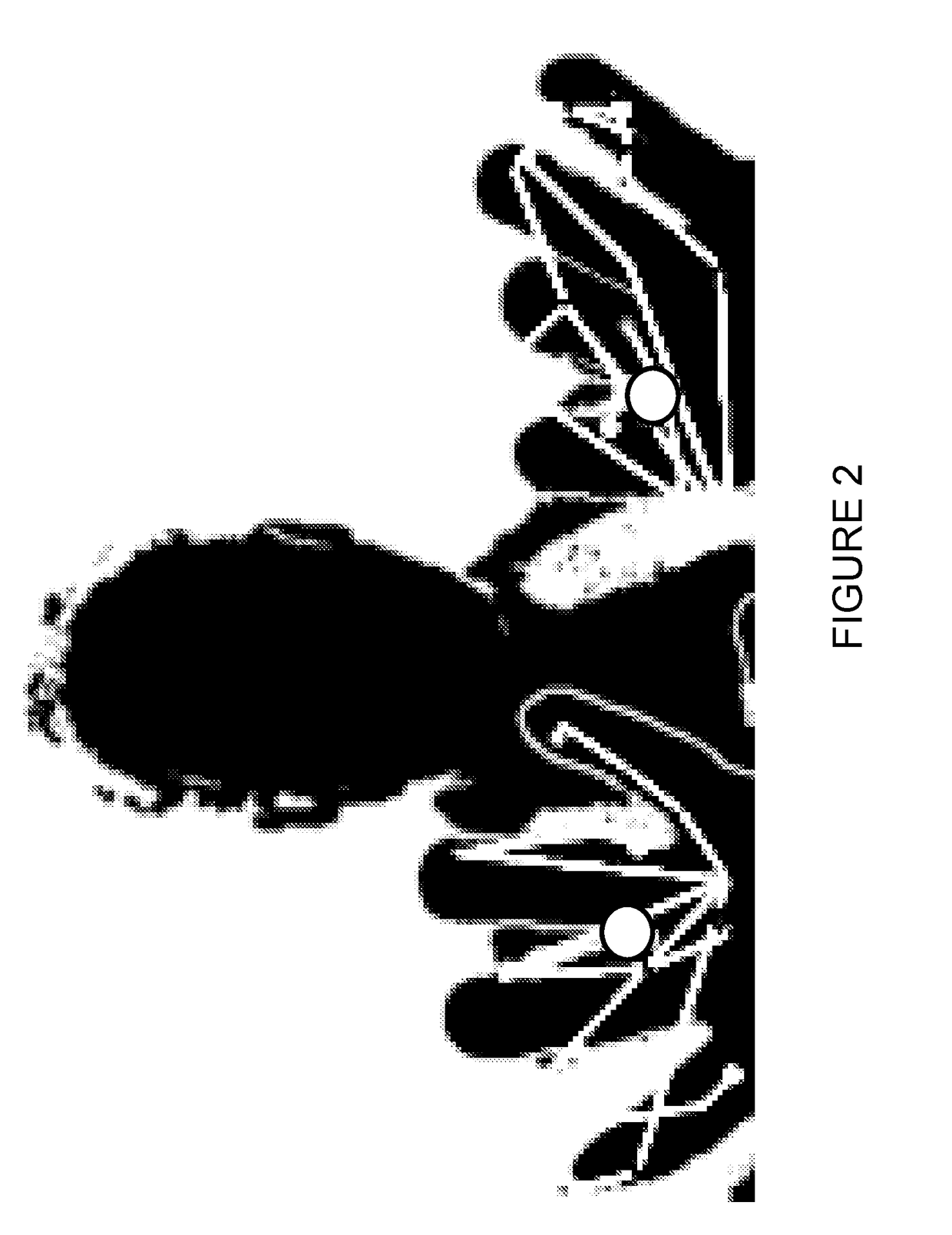

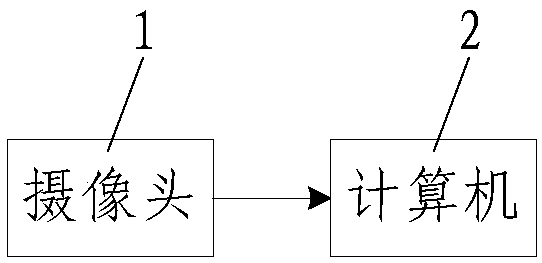

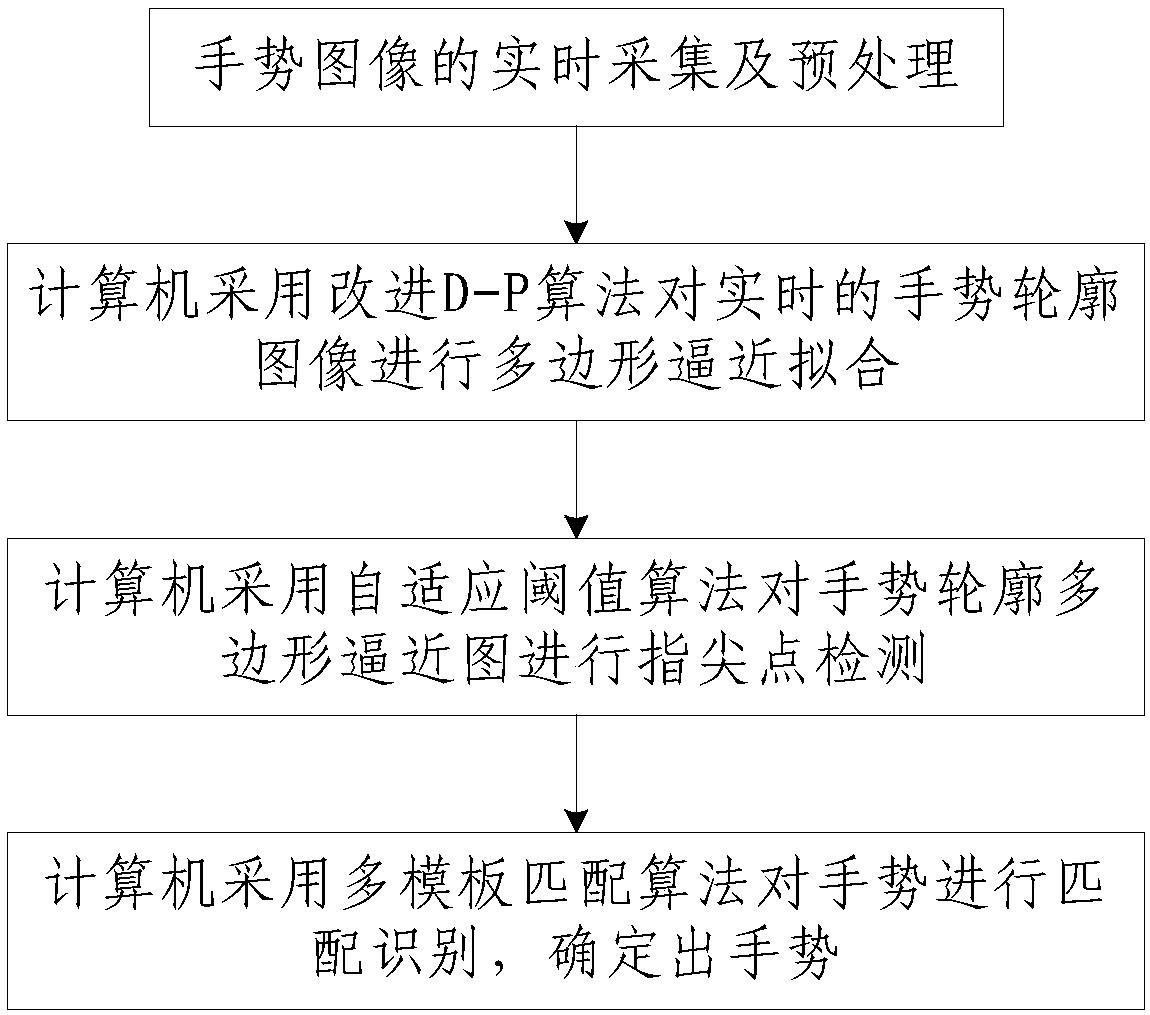

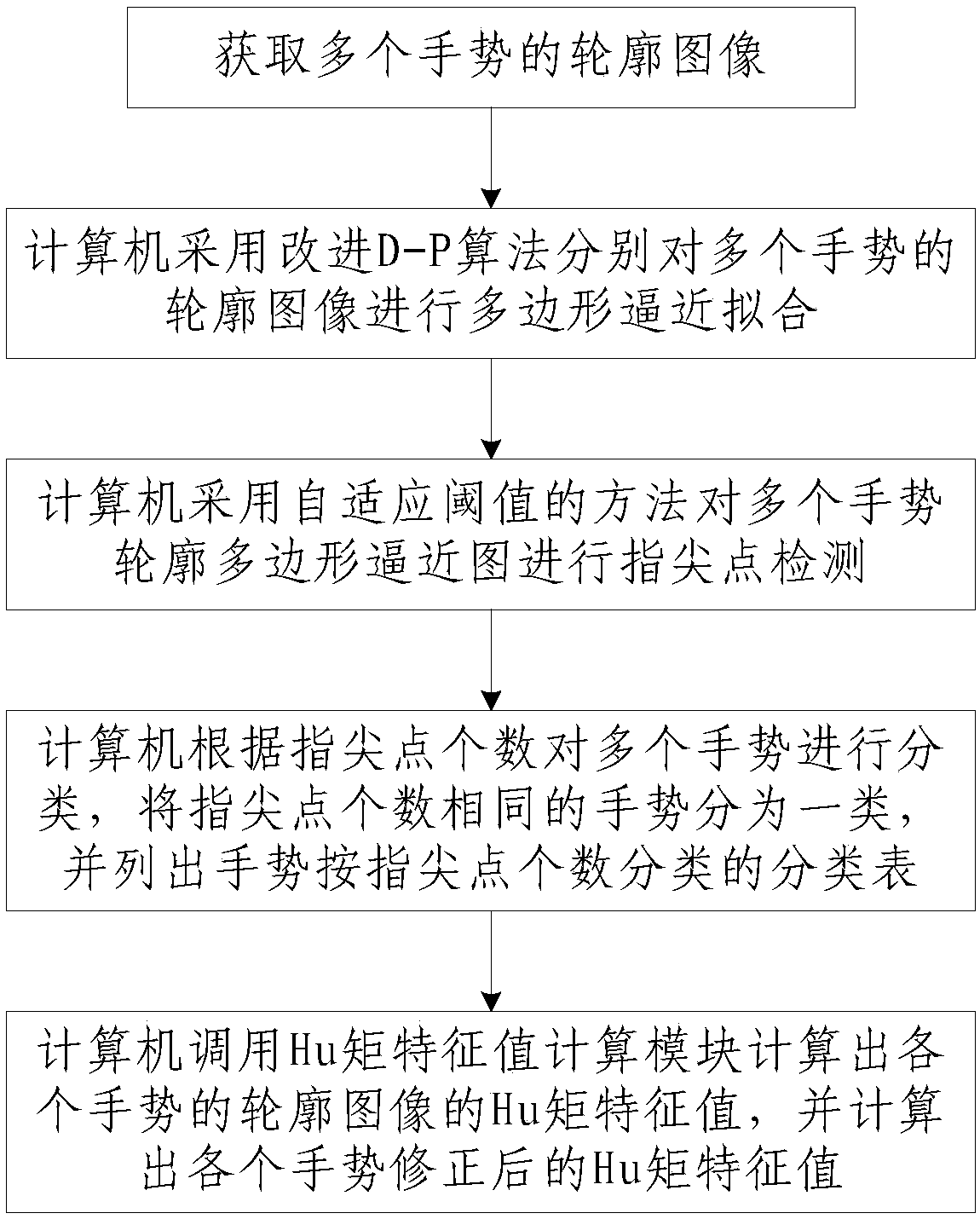

Gesture recognition method based on improved D-P algorithm and multi-template matching

Inactive | CN107220634A | Accurate identification, Novel and reasonable design, Character and pattern recognition, Fingertip detection, Template matching

The invention discloses a gesture recognition method based on an improved D-P algorithm and multi-template matching. The method comprises four steps: (1) acquiring and preprocessing gesture images in real time; (2) fitting a polygonal approximation to the real-time gesture contour from step one with the improved D-P algorithm; (3) detecting fingertips on the polygonal approximation from step two with an adaptive threshold algorithm; and (4) recognizing the gesture with a multi-template matching algorithm. The method is novel and reasonable in design and achieves a high recognition rate and accuracy with good real-time performance. It is highly practical, effective in use, and easy to popularize and apply.

Owner:XIAN UNIV OF SCI & TECH
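"D-P" in polygonal contour approximation most likely refers to the Douglas-Peucker algorithm. The abstract does not describe the improvement, so the sketch below implements only the standard recursive version on an open polyline. It keeps the point farthest from the chord whenever that distance exceeds `epsilon`. The fallback for a degenerate chord, where start and end coincide, is an assumption added for closed contours.

```python
import numpy as np


def douglas_peucker(points, epsilon):
    """Standard (not the patent's improved) recursive Douglas-Peucker
    simplification of an open polyline given as (x, y) points."""
    pts = np.asarray(points, dtype=float)
    if len(pts) < 3:
        return pts
    start, end = pts[0], pts[-1]
    d = end - start
    rel = pts[1:-1] - start
    length = np.hypot(d[0], d[1])
    if length == 0.0:
        # Degenerate chord (e.g. a closed contour): use distance from the start point.
        dists = np.hypot(rel[:, 0], rel[:, 1])
    else:
        # Perpendicular distance to the chord via the 2-D cross product.
        dists = np.abs(d[0] * rel[:, 1] - d[1] * rel[:, 0]) / length
    idx = int(np.argmax(dists)) + 1
    if dists[idx - 1] > epsilon:
        left = douglas_peucker(pts[: idx + 1], epsilon)
        right = douglas_peucker(pts[idx:], epsilon)
        return np.vstack([left[:-1], right])  # drop the shared split point once
    return np.array([start, end])
```

With `epsilon = 1.0`, the small wobbles in the first segment of the example polyline disappear while the corner at (3, 0) is kept. OpenCV's `approxPolyDP` provides a production implementation of the standard algorithm.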

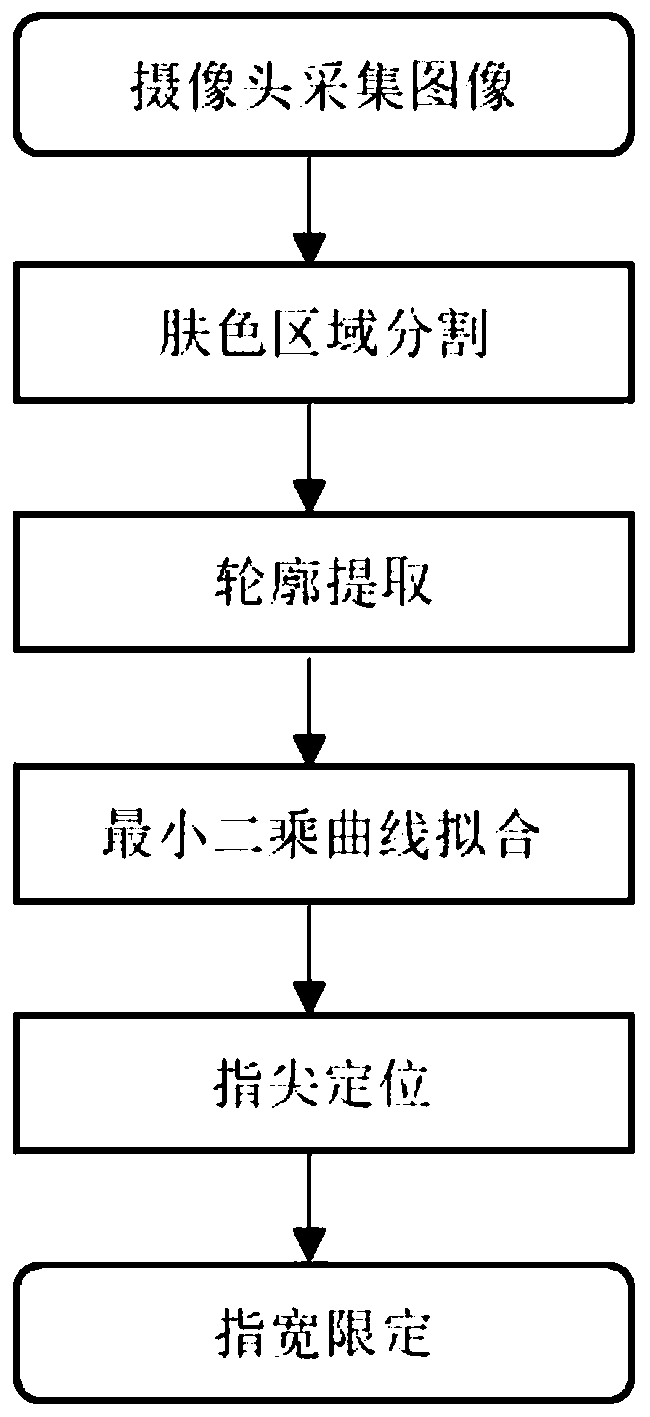

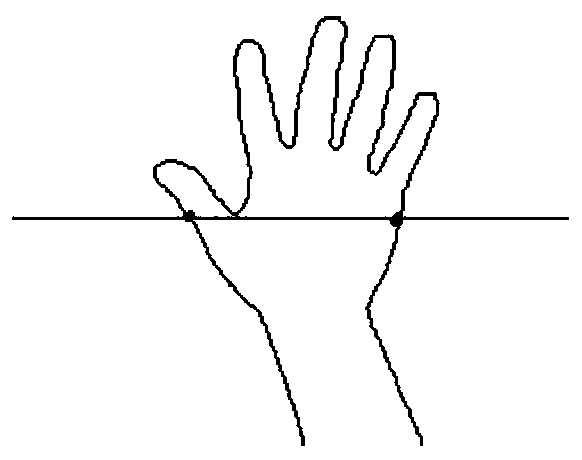

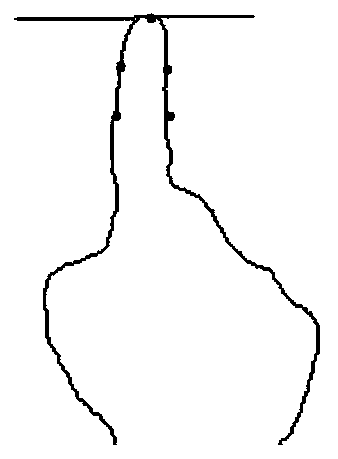

A fingertip detection method based on least-squares curve fitting

Active | CN109740497A | Accurate and effective positioning, Improve convenience, Character and pattern recognition, Fingertip detection, Hand parts

The invention discloses a fingertip detection method based on least-squares curve fitting. The method first segments the hand region with a skin color model in the RGB color space and then extracts the outer contour of the hand with a contour extraction method. At each contour point, a parabola is fitted by least squares to the section of curve within a neighborhood of the point. The distances from points at a certain interval to the tangent line at that point are accumulated as the point's curvature. Points whose curvature exceeds a set threshold are treated as candidate fingertip points, and a finger-width limit is finally applied to eliminate non-fingertip points. Because a quadratic polynomial is fitted to the upper part of the finger and fingertips are detected with both a threshold and a finger-width limit, fingertip positioning accuracy is high. The detection result is not affected by changes in the distance between the hand and the camera. The method also remains effective and relatively robust when the shape of the finger changes within a certain range.

Owner:HOHAI UNIV
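The abstract's curvature measure can be read in more than one way, so the sketch below adopts one interpretation as an assumption. Around each contour point, x(t) and y(t) are each fitted with a least-squares quadratic over a window of neighboring points; a parametric quadratic traces a parabola. The tangent at the point comes from the fitted linear coefficients. The score is the sum of distances from the two contour points `offset` steps away to that tangent line. `half_window` and `offset` are hypothetical parameters, and the finger-width limit is not shown.

```python
import numpy as np


def lsq_tangent_scores(contour, half_window=5, offset=3):
    """Curvature-like score per point of a closed contour (one reading of the
    abstract): fit x(t), y(t) by least-squares quadratics around each point,
    then sum distances of the points at t = -offset and t = +offset to the tangent."""
    pts = np.asarray(contour, dtype=float)
    n = len(pts)
    t = np.arange(-half_window, half_window + 1)
    scores = np.zeros(n)
    for i in range(n):
        seg = pts[(i + t) % n]  # neighborhood of point i, wrapping around the contour
        _, bx, cx = np.polyfit(t, seg[:, 0], 2)  # x(t) ~ ax*t^2 + bx*t + cx
        _, by, cy = np.polyfit(t, seg[:, 1], 2)  # y(t) ~ ay*t^2 + by*t + cy
        norm = np.hypot(bx, by)  # tangent direction at t = 0 is (bx, by)
        if norm == 0.0:
            continue
        for q in (pts[(i - offset) % n], pts[(i + offset) % n]):
            vx, vy = q[0] - cx, q[1] - cy
            scores[i] += abs(bx * vy - by * vx) / norm
    return scores
```

On straight contour sections, the quadratic fit reproduces the line exactly and the score is zero. Corners and fingertips score high. Candidates would then be `np.flatnonzero(scores > threshold)` for some hypothetical threshold.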

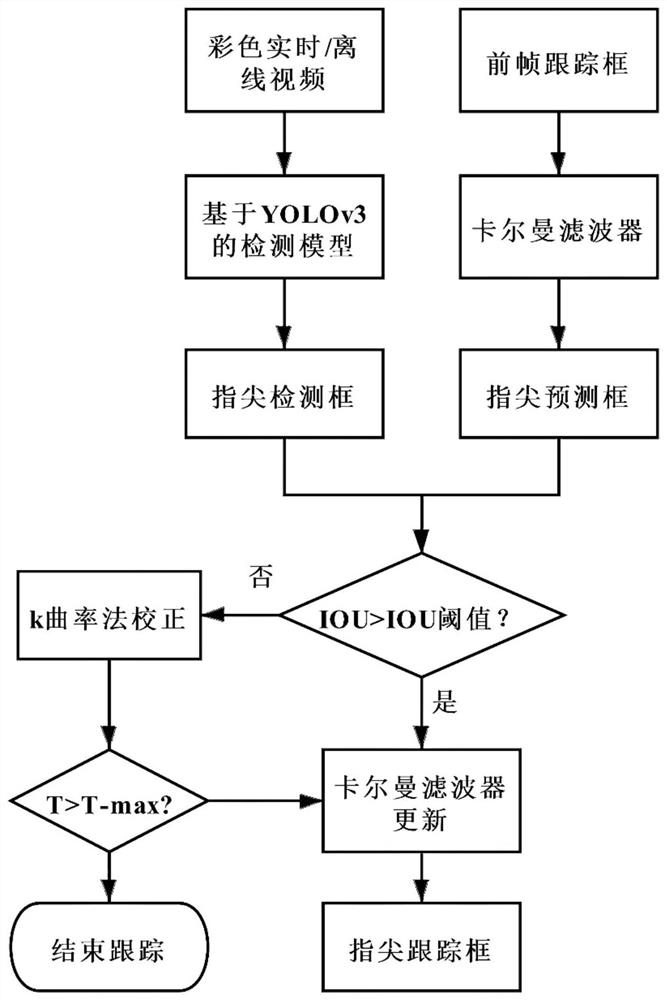

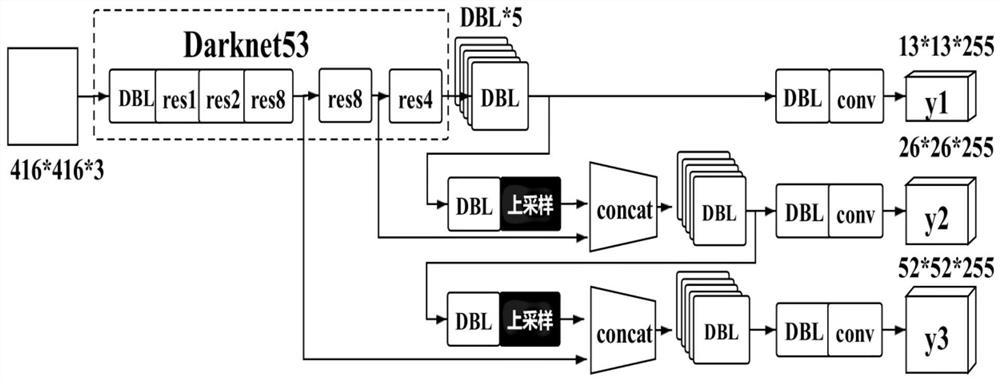

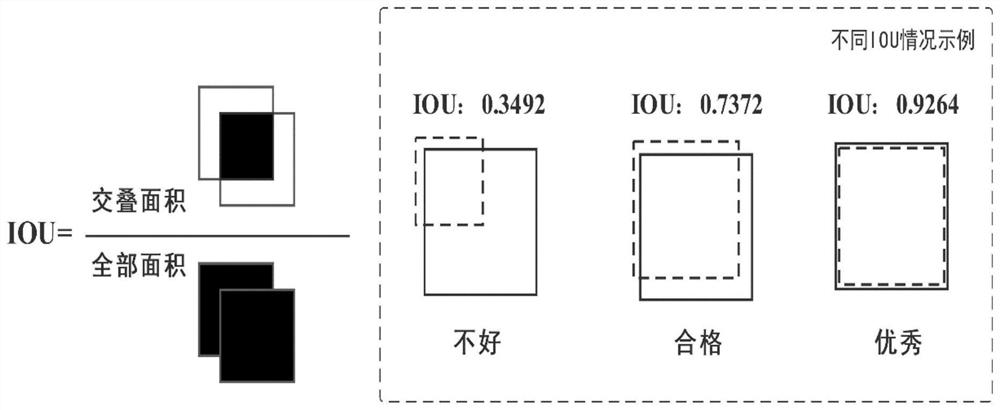

Fingertip tracking method based on deep learning and K-curvature method

Active | CN113608663A | Improve real-time performance, Improve accuracy, Character and pattern recognition, Neural architectures, Kalman filter, Fingertip detection

The invention discloses a fingertip tracking method based on deep learning and the K-curvature method. A YOLOv3 network model is trained on a preprocessed data set to obtain a fingertip detection model. A camera captures a video stream, which is fed into the detection model to obtain detection boxes, and a Kalman filter is initialized. The Kalman filter then produces a prediction box. The IOU between the current frame's detection box and prediction box is computed and compared with a preset IOU threshold. If the IOU exceeds the threshold, the Kalman filter is updated to obtain the fingertip tracking box. Otherwise, the fingertip position is corrected with the K-curvature method and the Kalman filter is updated. Finally, a time threshold T-max is set, and tracking stops if no fingertip is detected within T-max frames. The method reduces the influence of complex environments on detection accuracy, increases detection speed, and improves accuracy and robustness.

Owner:HARBIN ENG UNIV
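Two of the building blocks in this tracking loop are small enough to sketch. The first is the IOU gate between the detector's box and the Kalman prediction. The second is the K-curvature test used as the fallback, which measures the angle at contour point i between the vectors to points i-k and i+k; small angles mark sharp turns. This is a minimal sketch, not the patent's code. The YOLOv3 detector and the Kalman filter are omitted, and `iou_threshold`, `k`, and `max_angle_deg` are hypothetical values. Finger valleys also produce small K-curvature angles, so practical versions usually add a convexity check, which is not shown here.

```python
import numpy as np


def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def trust_detection(detection, prediction, iou_threshold=0.5):
    """True when the detector box agrees with the predicted box
    (iou_threshold is a hypothetical value)."""
    return iou(detection, prediction) > iou_threshold


def k_curvature_tips(contour, k=5, max_angle_deg=60.0):
    """Indices of closed-contour points whose K-curvature angle is sharp."""
    pts = np.asarray(contour, dtype=float)
    n = len(pts)
    tips = []
    for i in range(n):
        a = pts[(i - k) % n] - pts[i]
        b = pts[(i + k) % n] - pts[i]
        cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
        if np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))) <= max_angle_deg:
            tips.append(i)
    return tips
```

When `trust_detection` returns False, the tracker would run `k_curvature_tips` on the hand contour inside the predicted box and update the filter with the corrected point. That wiring is left out here.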

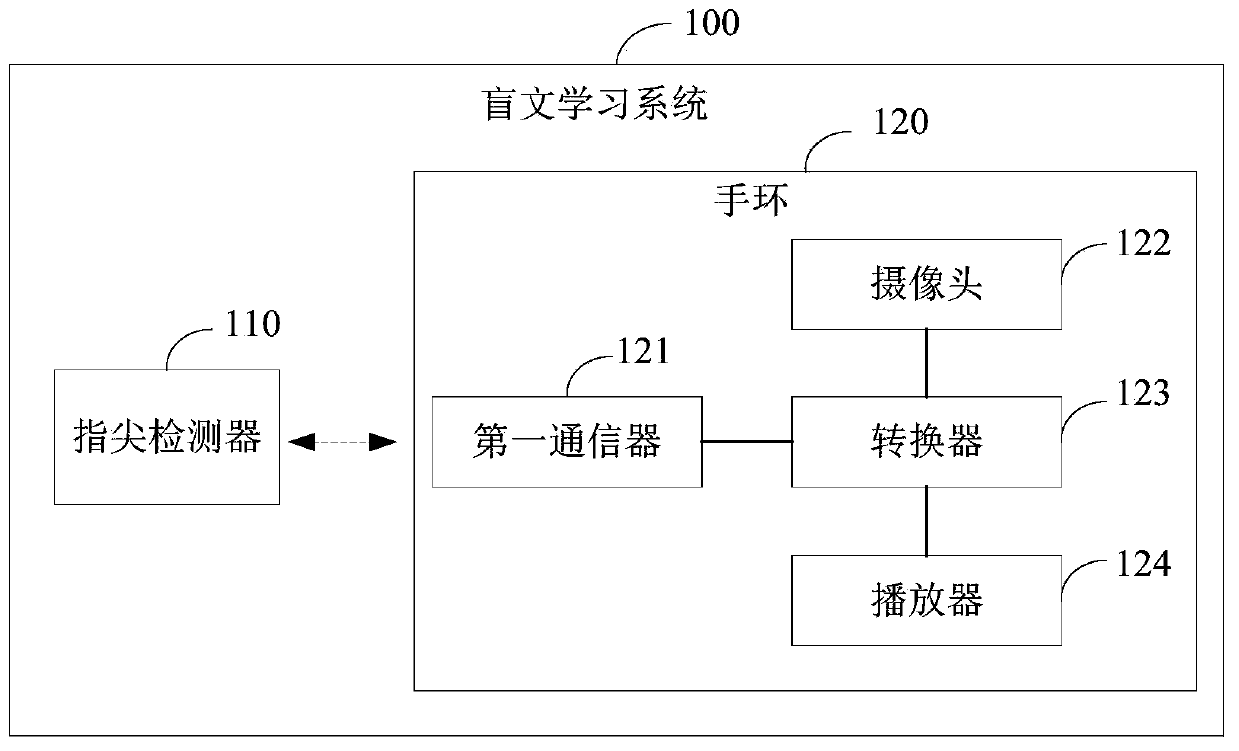

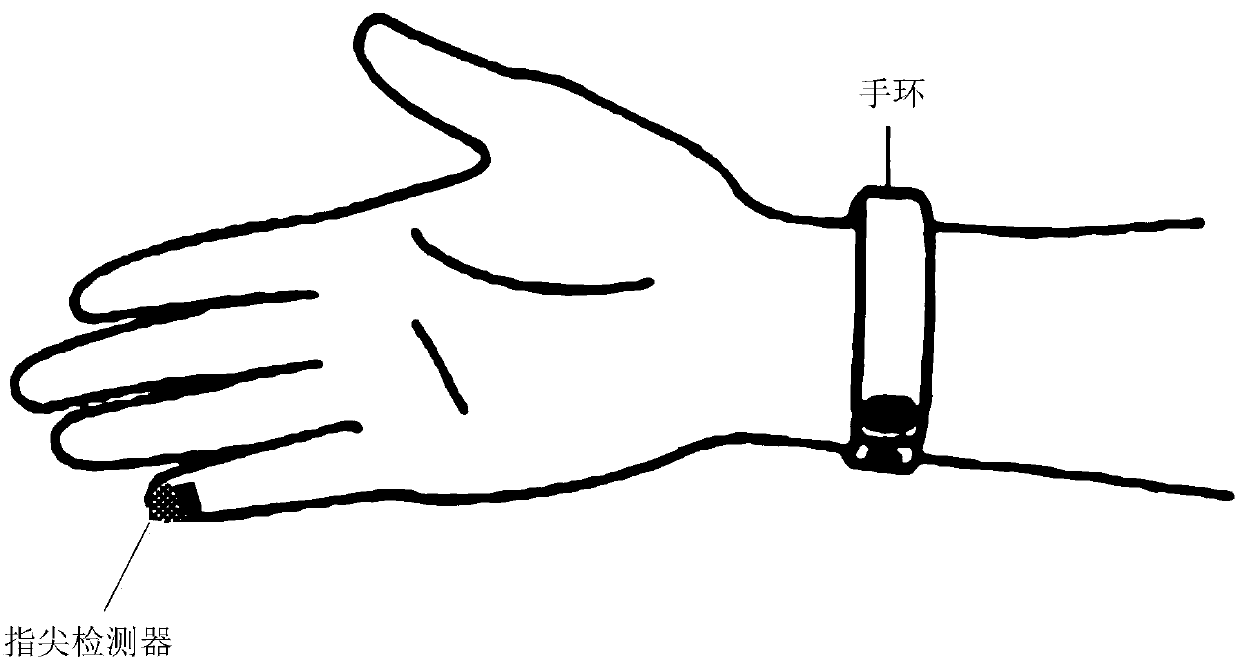

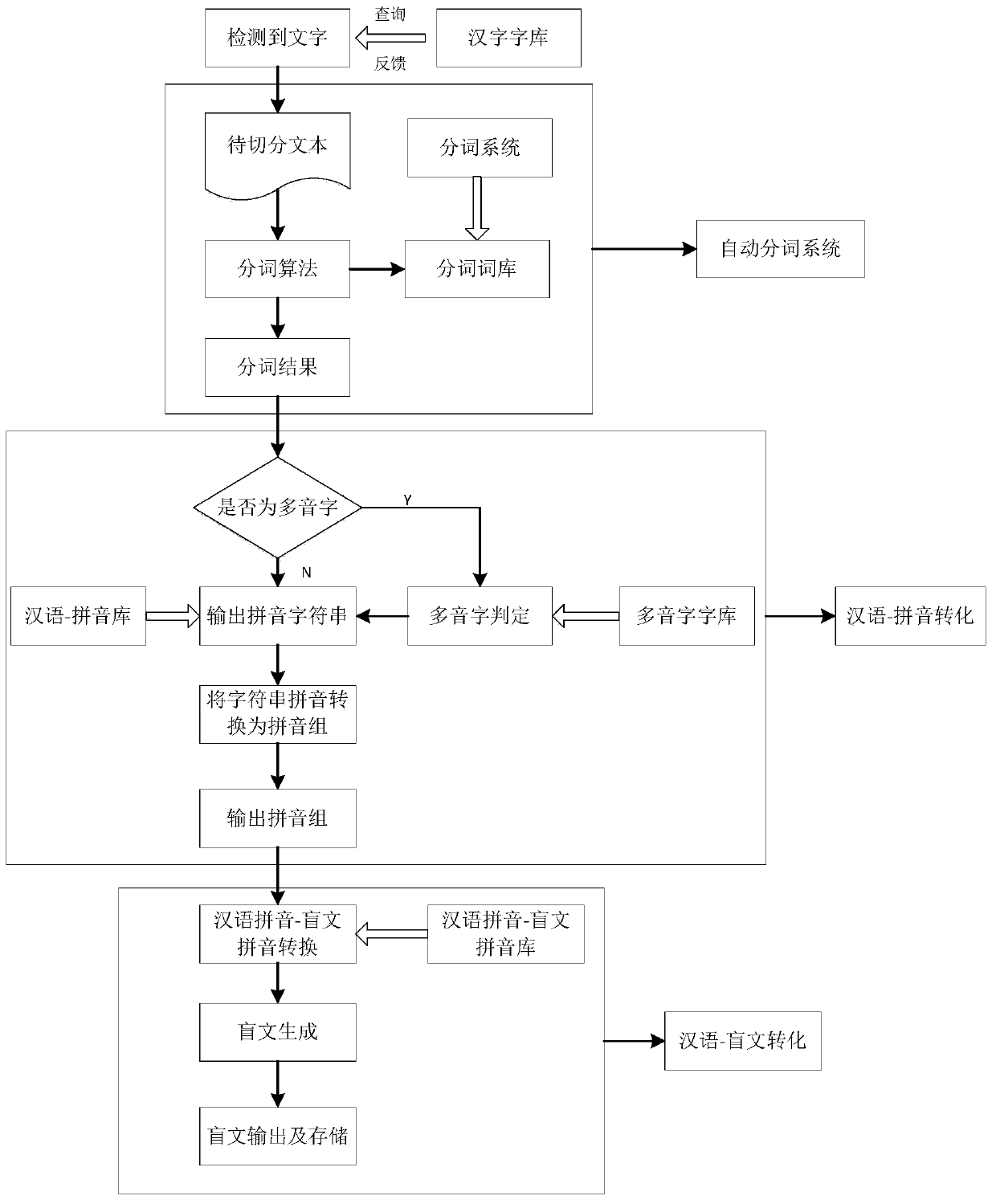

Braille learning system, fingertip sensor and forming method of fingertip sensor

Active | CN110728886A | Implementing Haptic Feedback, Teaching apparatus, Fingertip detection, Computer graphics (images)

The application discloses a Braille learning system, a fingertip sensor, and a method of forming the fingertip sensor. The system comprises a fingertip detector and a wristband; the wristband comprises a first communicator, a camera, and a converter. The fingertip detector detects Braille and sends it to the wristband. The converter in the wristband converts the detected Braille into identification characters, which a player then plays. The camera in the wristband captures target characters, which the converter converts into simulated Braille and sends to the fingertip detector. The fingertip detector receives the simulated Braille, generates a Braille pulse signal from it, and stimulates the user's finger to reproduce it. The Braille learning system is inexpensive and portable. Users can read both Braille and printed characters, which widens the reading range of blind users and makes their lives more convenient. The system's tactile feedback function lets blind users feel the Braille.

Owner:BOE TECH GRP CO LTD +1
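The converter's character-to-Braille step can be illustrated with uncontracted (Grade 1) English letters rendered as Unicode Braille cells. This is an illustrative sketch only; the patent does not specify its encoding. The sketch handles only a-z and spaces. It uses the standard construction in which k-t are a-j plus dot 3, and u, v, x, y, z are a-e plus dots 3 and 6, with w as the exception. In the Unicode Braille Patterns block, dot n sets bit n-1 of the offset from U+2800.

```python
import string

# Dots for a-j; the later letters of Grade 1 Braille are built from these.
FIRST_DECADE = {
    "a": {1}, "b": {1, 2}, "c": {1, 4}, "d": {1, 4, 5}, "e": {1, 5},
    "f": {1, 2, 4}, "g": {1, 2, 4, 5}, "h": {1, 2, 5}, "i": {2, 4}, "j": {2, 4, 5},
}


def letter_dots(ch):
    """Raised dots (numbered 1-6) of the Braille cell for a Latin letter."""
    ch = ch.lower()
    if len(ch) != 1 or ch not in string.ascii_lowercase:
        raise ValueError(f"unsupported character: {ch!r}")
    if ch == "w":
        return {2, 4, 5, 6}  # w does not follow the decade pattern
    idx = ord(ch) - ord("a")
    if idx < 10:
        return set(FIRST_DECADE[ch])
    if idx < 20:  # k-t: a-j plus dot 3
        return FIRST_DECADE[chr(ord("a") + idx - 10)] | {3}
    # u, v, x, y, z: a-e plus dots 3 and 6
    return FIRST_DECADE["abcde"["uvxyz".index(ch)]] | {3, 6}


def to_unicode_braille(text):
    """Render letters and spaces as Unicode Braille cells (U+2800 block)."""
    cells = []
    for ch in text:
        if ch == " ":
            cells.append(chr(0x2800))  # blank cell
            continue
        mask = sum(1 << (d - 1) for d in letter_dots(ch))
        cells.append(chr(0x2800 + mask))
    return "".join(cells)
```

A device driving tactile pins would use the dot sets from `letter_dots` directly rather than the Unicode characters.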


© 2025 PatSnap. All rights reserved.