151 results about "Gesture classification" patented technology

The actual gesture classification is based on the low-level characteristics of each individual angular data stream. Whenever new low-level characteristics are detected, the gesture classifier is activated. Using the set of current angular characteristics, the classifier determines a gesture label from a built-in gesture model.

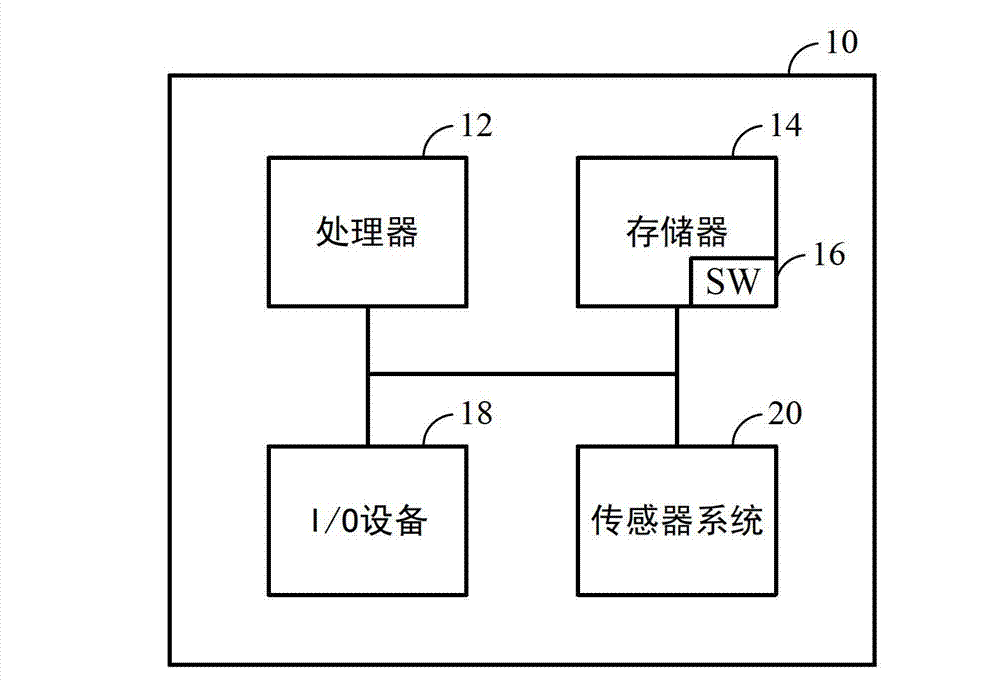

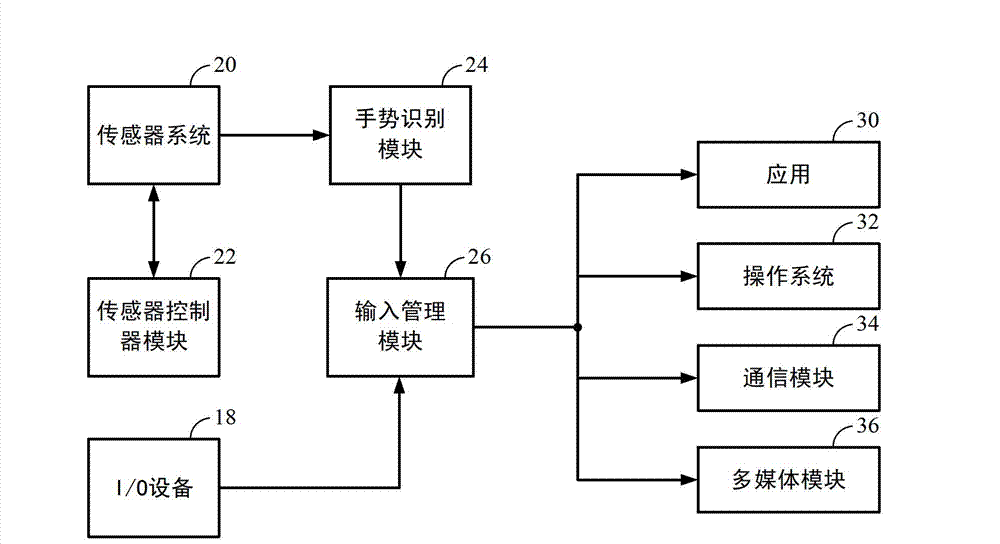

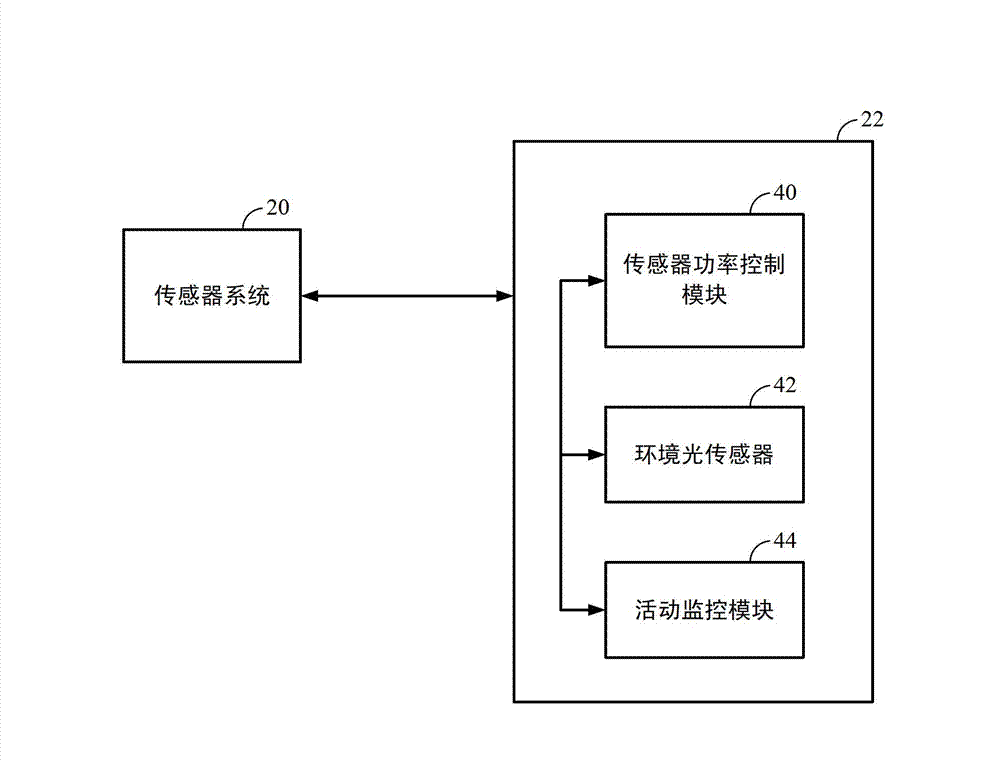

Methods and apparatus for contactless gesture recognition

Status: Inactive | Publication: US20110310005A1 | Tags: Reduce wear, Improve aesthetics, Input/output for user-computer interaction, Energy efficient ICT, Proximity sensor, Human–computer interaction

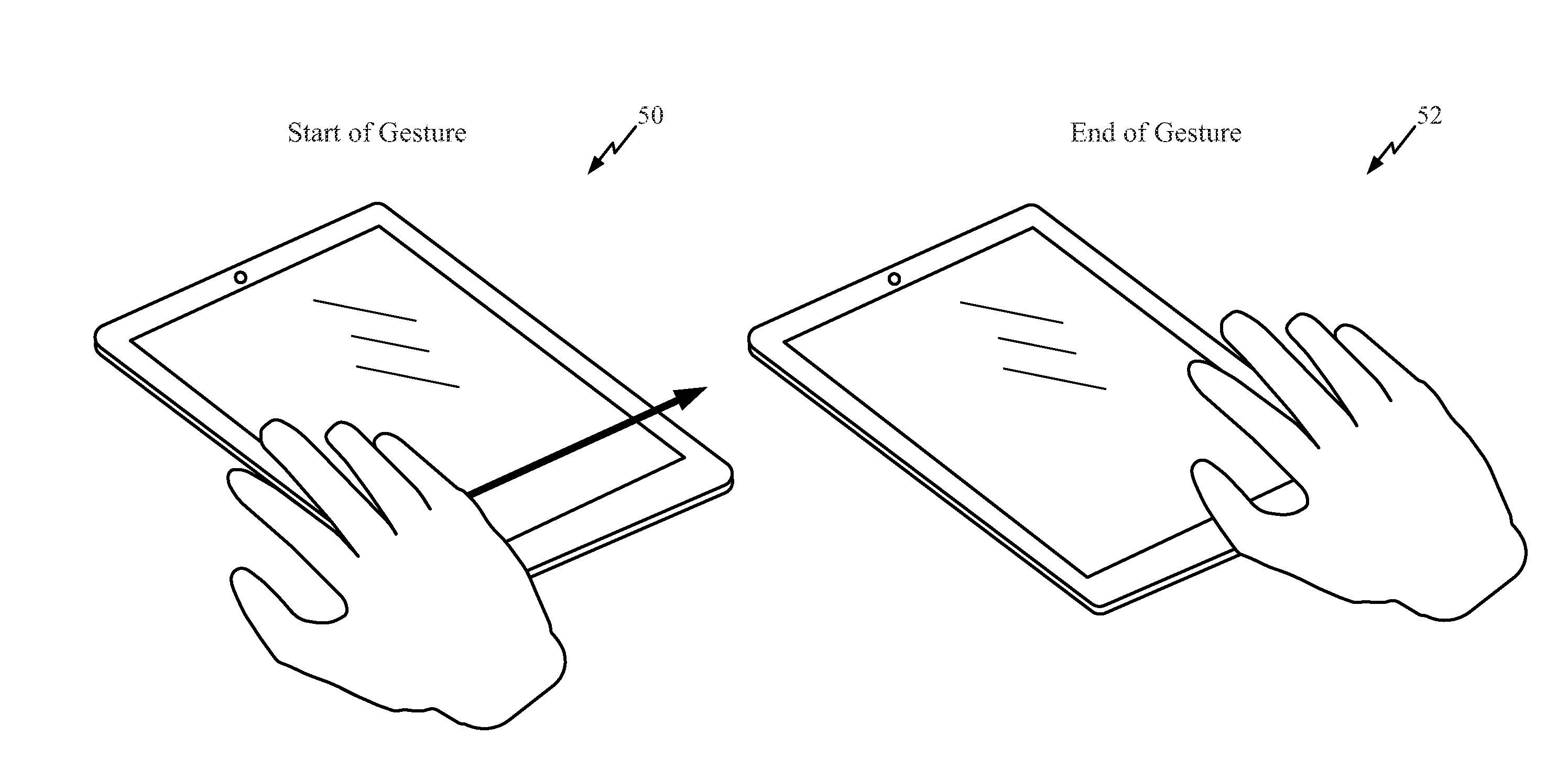

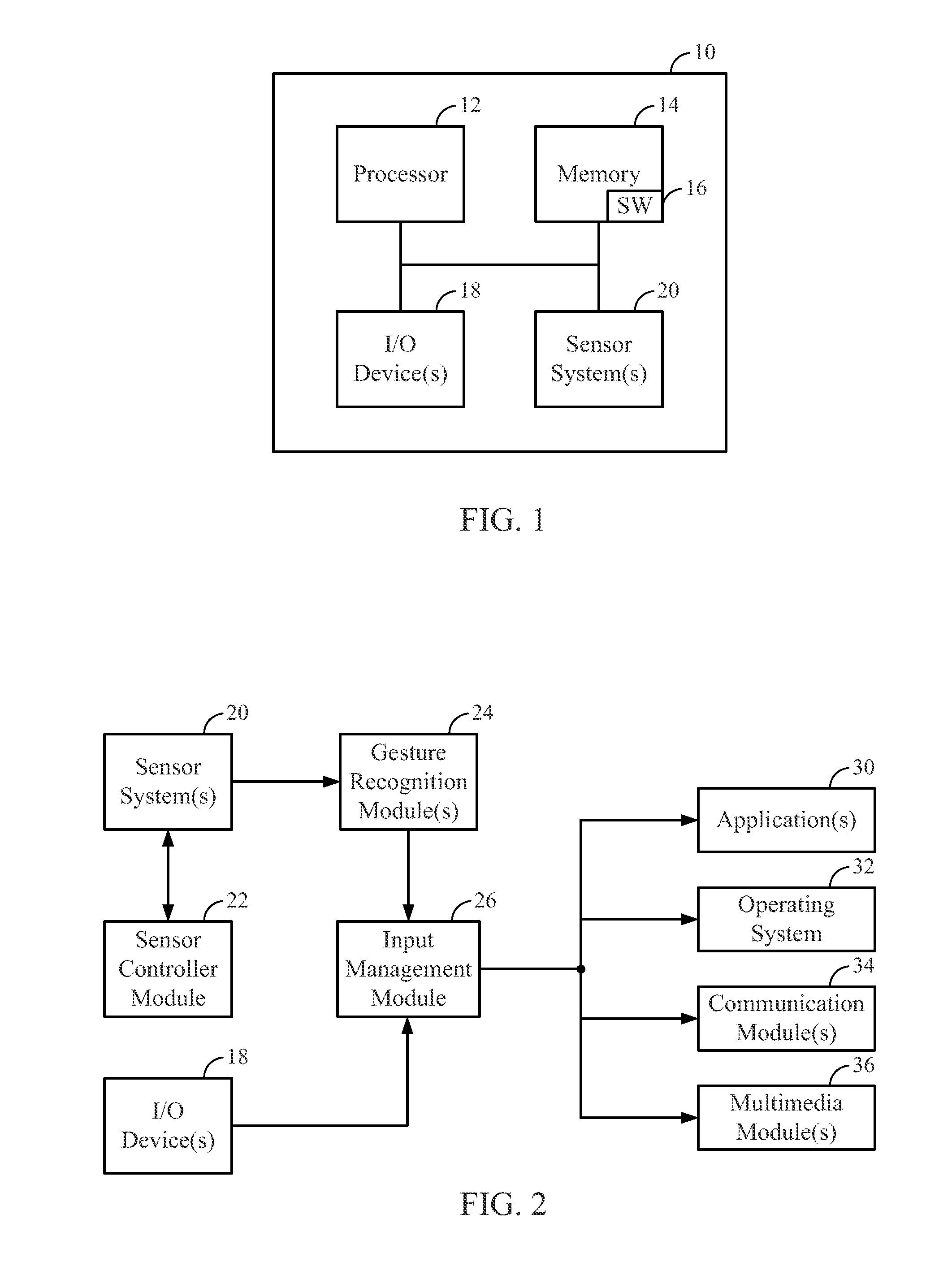

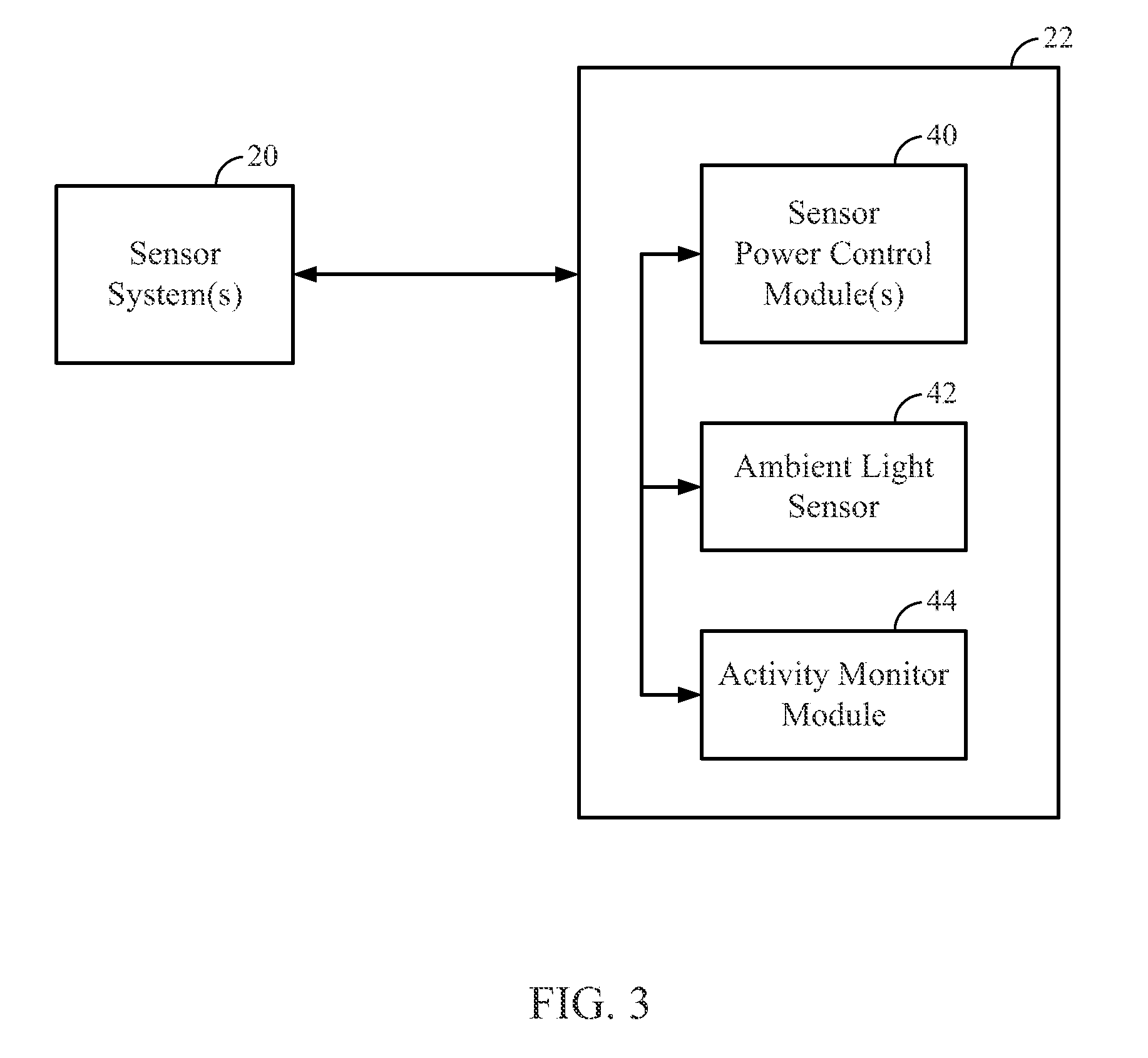

Systems and methods are described for performing contactless gesture recognition for a computing device, such as a mobile computing device. An example technique for managing a gesture-based input mechanism for a computing device described herein includes identifying parameters of the computing device relating to accuracy of gesture classification performed by the gesture-based input mechanism and managing a power consumption level of at least an infrared (IR) light emitting diode (LED) or an IR proximity sensor of the gesture-based input mechanism based on the parameters of the computing device.

Owner:QUALCOMM INC

Apparatus, method and computer program for recognizing a gesture in a picture, and apparatus, method and computer program for controlling a device

Status: Active | Publication: US20110234840A1 | Tags: Improve processing efficiency, Reduces expenditure involved, Television system details, Character and pattern recognition, Hough transform, Transformer

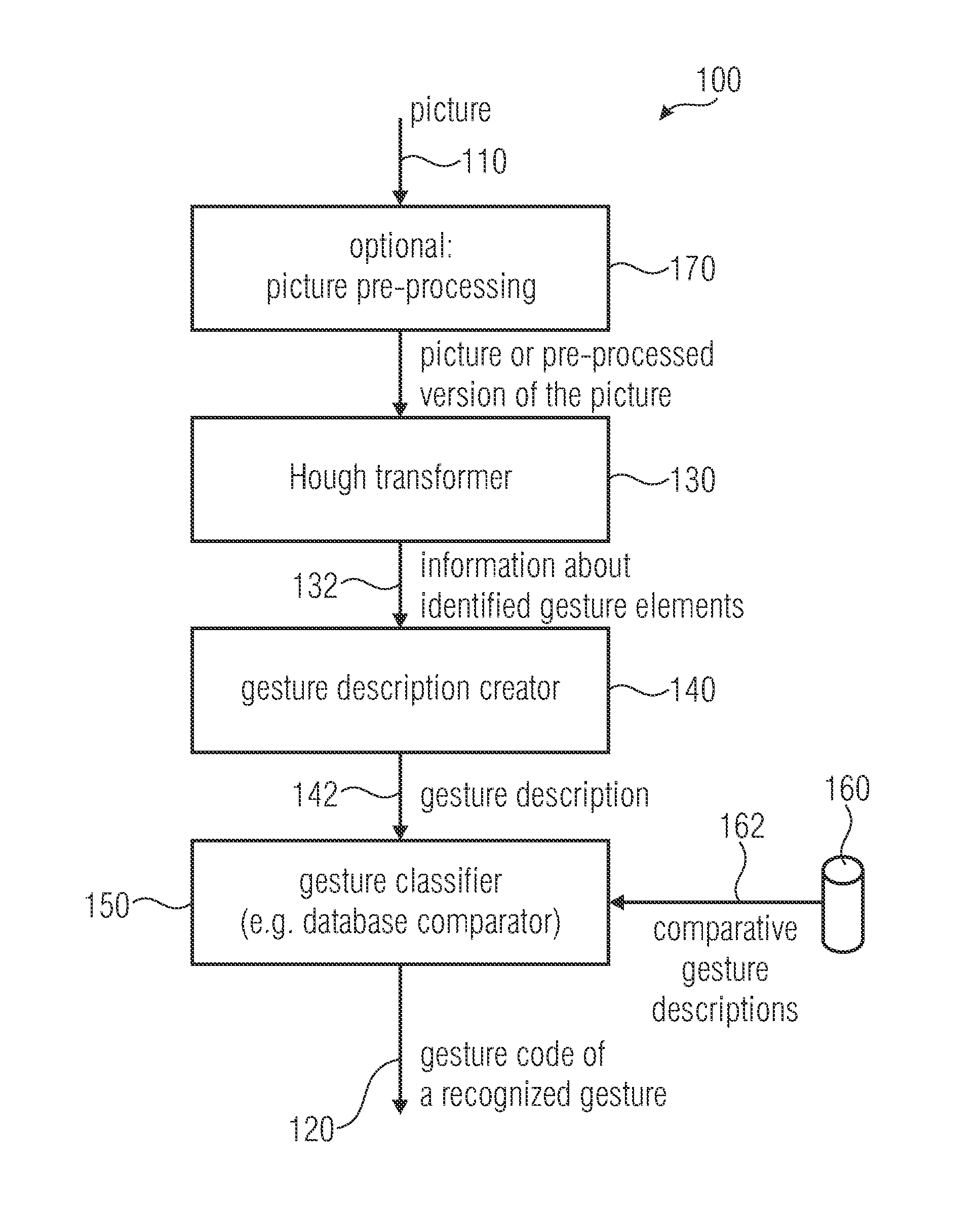

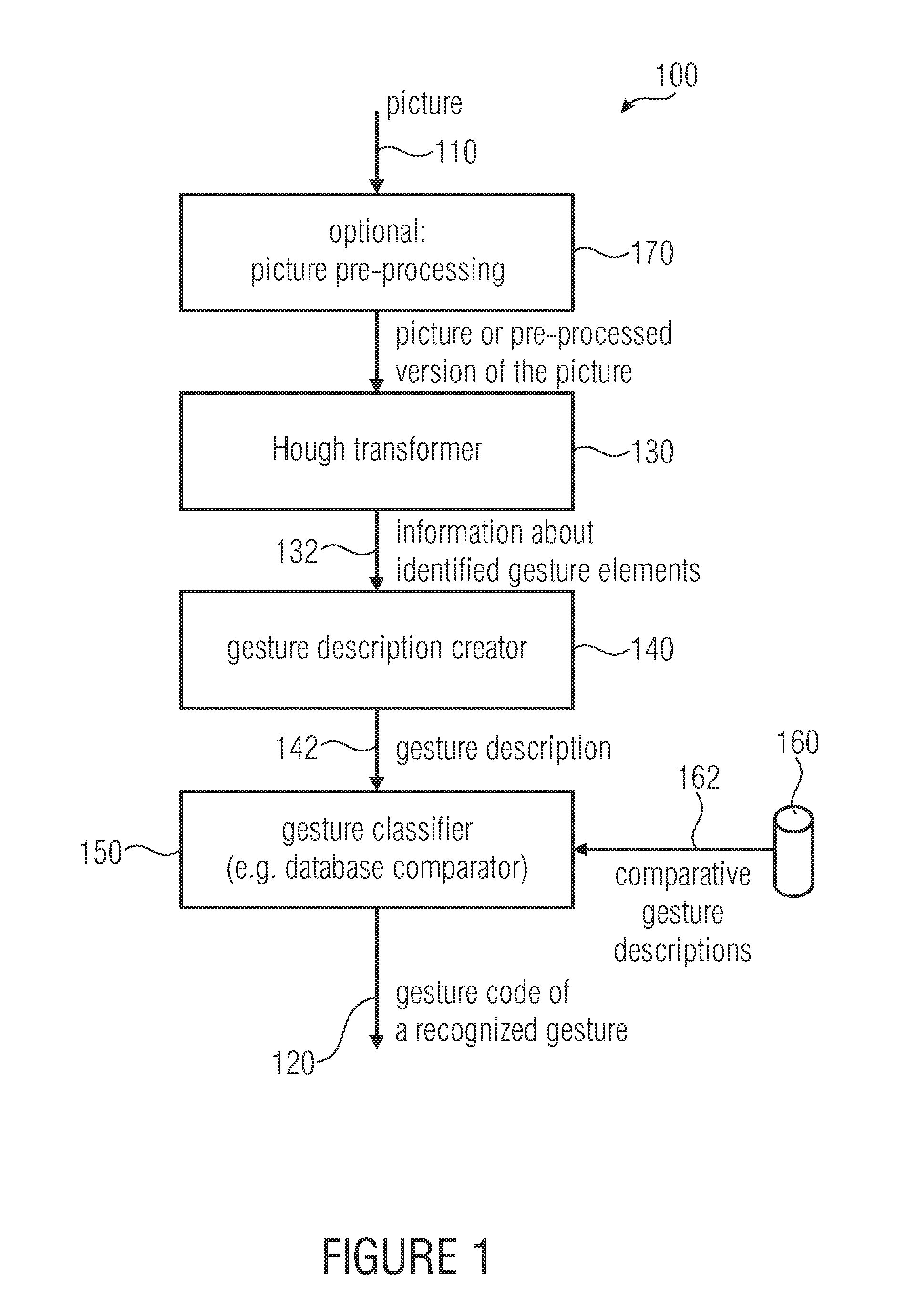

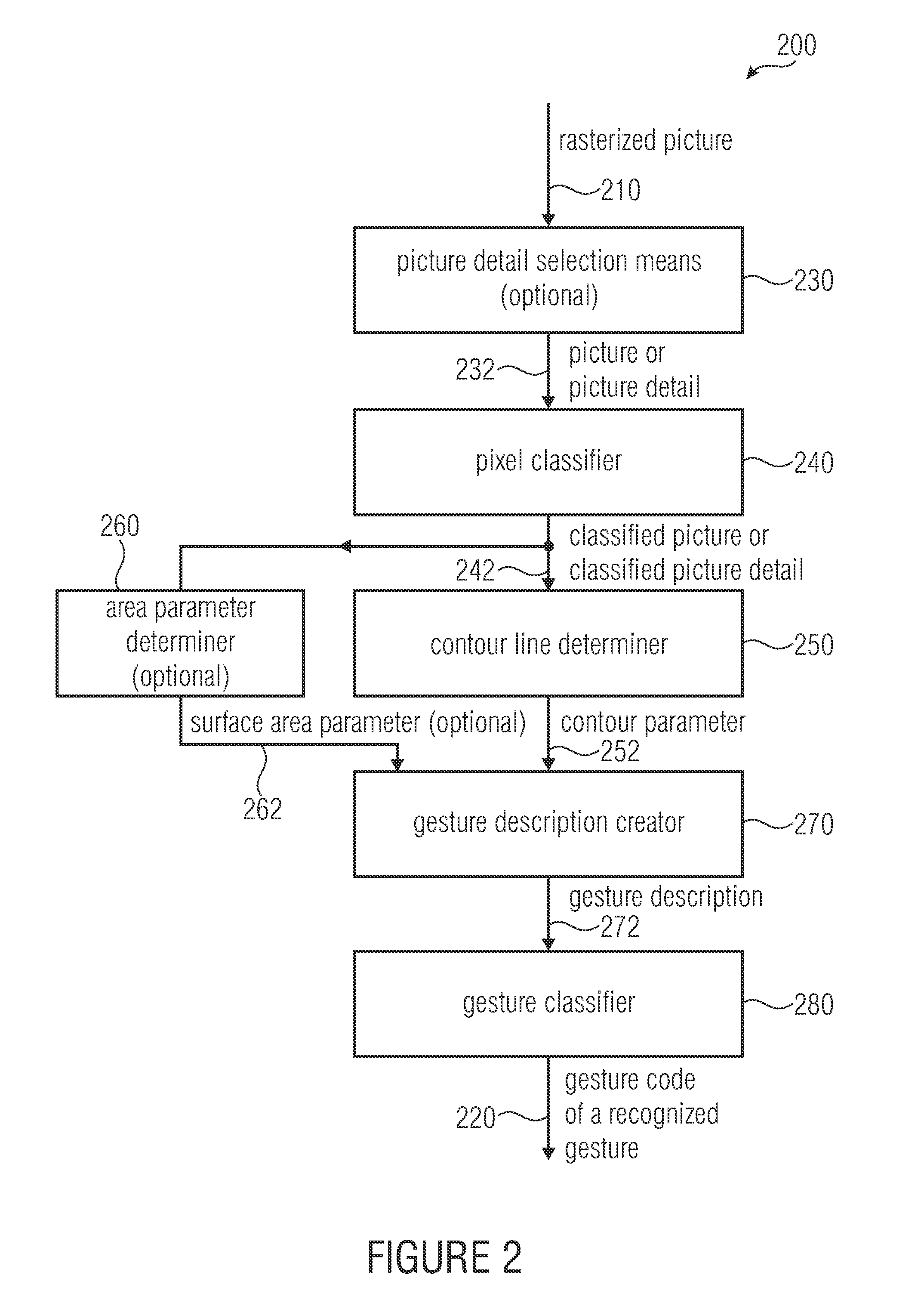

An apparatus for recognizing gestures in a picture includes a Hough transformer configured to identify elements in the picture, or in a pre-processed version of the picture, as identified gesture elements and to obtain information about the identified gesture elements. The apparatus further includes a gesture description creator configured to obtain a gesture description using the information about the identified gesture elements. Moreover, the apparatus includes a gesture classifier configured to compare the gesture description with a plurality of comparative gesture descriptions, each having an associated gesture code. As the result of the comparison, the gesture classifier provides the gesture code of the recognized gesture.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV
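
The final comparison step can be pictured as a nearest-neighbour lookup. This is a minimal sketch, not the patented classifier: it assumes a gesture description is a fixed-length numeric vector and that the comparative description closest in Euclidean distance wins. The names `COMPARATIVE` and `classify` and the gesture codes are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical library of comparative gesture descriptions: each entry is a
# fixed-length feature vector paired with the gesture code associated with it.
COMPARATIVE: List[Tuple[Tuple[float, ...], str]] = [
    ((1.0, 0.0, 0.0), "G_OPEN_HAND"),
    ((0.0, 1.0, 0.0), "G_FIST"),
    ((0.0, 0.0, 1.0), "G_POINT"),
]


def classify(description: Sequence[float],
             library: List[Tuple[Tuple[float, ...], str]] = COMPARATIVE) -> str:
    """Return the gesture code of the comparative description nearest to `description`.

    Euclidean distance is an assumed comparison criterion (math.dist needs Python 3.8+).
    """
    _, code = min(library, key=lambda entry: math.dist(description, entry[0]))
    return code
```

For example, `classify((0.9, 0.1, 0.0))` returns `"G_OPEN_HAND"`. A real system would likely use a richer description and a tolerance for rejecting unknown gestures.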

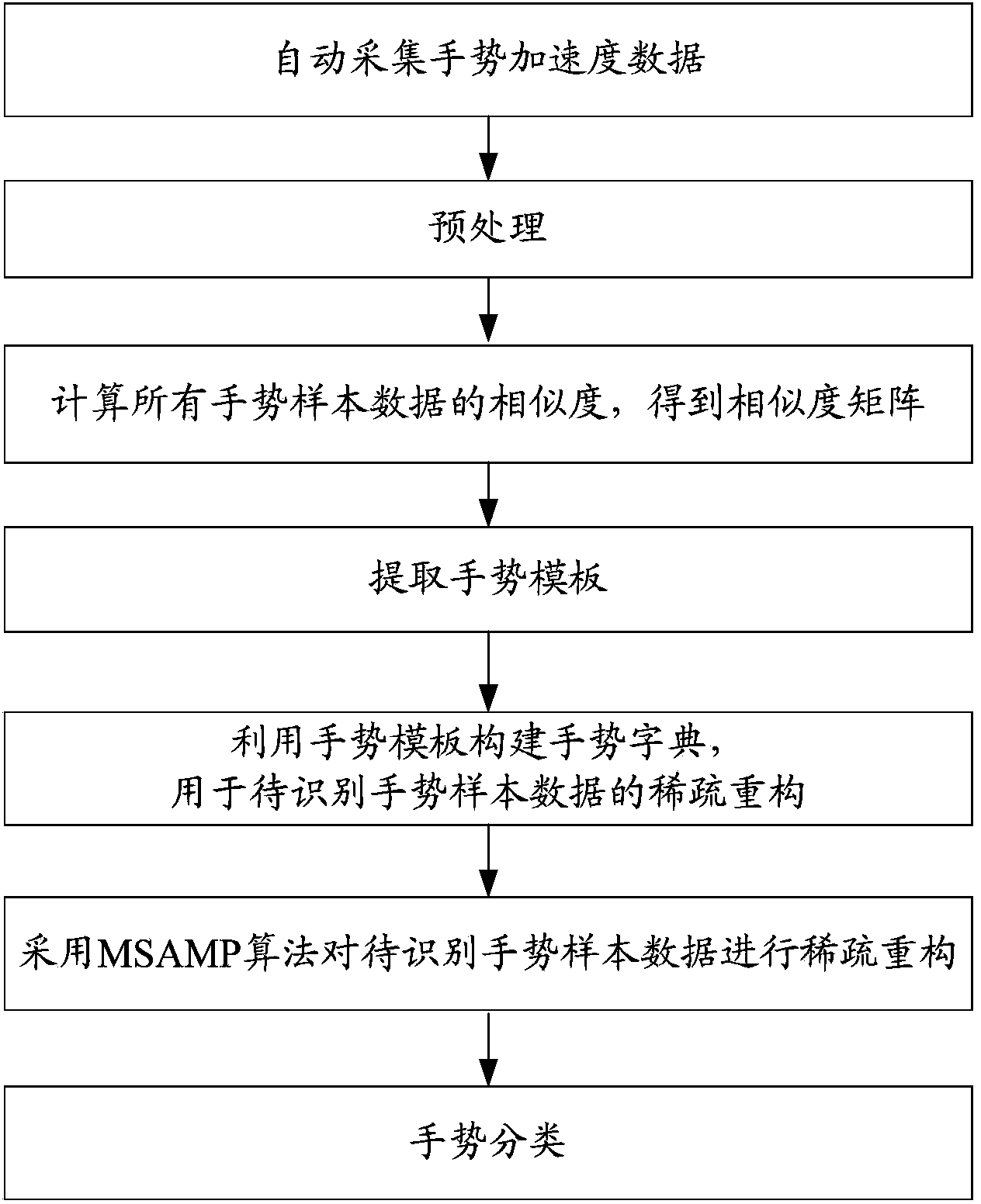

Gesture recognition method based on acceleration sensor

Status: Inactive | Publication: CN103984416A | Tags: Smooth waveform, Waveform Regularization and Unification, Input/output for user-computer interaction, Character and pattern recognition, Adaptive matching, Compressed sensing

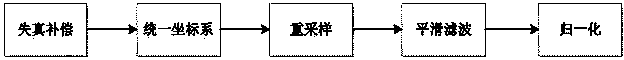

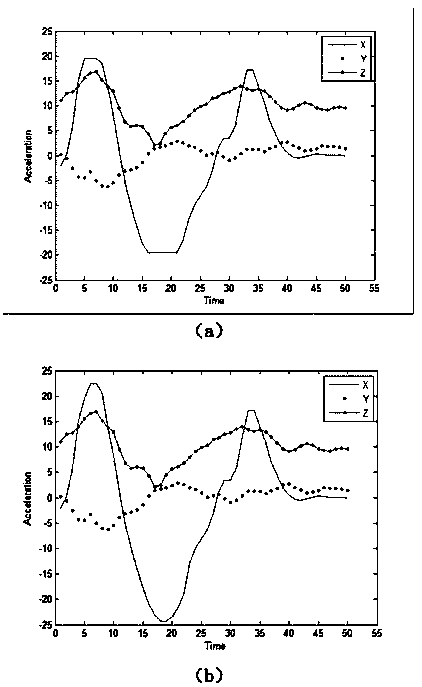

The invention discloses a gesture recognition method based on an acceleration sensor. The method comprises the following steps: automatically collecting gesture acceleration data; preprocessing; calculating the similarity between all gesture samples to obtain a similarity matrix; extracting gesture templates; constructing a gesture dictionary from the templates; and performing sparse reconstruction and gesture classification of the gesture sample to be recognized using an MSAMP (modified sparsity adaptive matching pursuit) algorithm. The invention combines compressed sensing with the traditional DTW (dynamic time warping) algorithm, which improves the adaptability of gesture recognition to different gesturing habits, and applies multiple preprocessing methods to improve practicability. The invention also discloses an algorithm for automatically collecting gesture acceleration data, which eliminates the extra operations required by traditional gesture collection and improves the user experience. No special sensor is required: the method can run on any terminal equipped with an acceleration sensor, which gives good hardware adaptability and enhances the practicability of the recognition method. The coordinate system is unified, so the method can adapt to a variety of gesturing habits.

Owner:BEIJING UNIV OF POSTS & TELECOMM
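
The DTW part of this pipeline can be illustrated with the classic dynamic-programming recurrence. The sketch below is an illustration, not the patent's algorithm: it computes DTW distances between one-dimensional acceleration traces, builds the pairwise distance matrix over all samples (lower means more similar), and picks as template the sample with the smallest total distance to the others (a medoid). The medoid rule is an assumption, and the MSAMP sparse-reconstruction stage is not shown.

```python
from typing import List, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic DTW with absolute-difference local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def distance_matrix(samples: List[Sequence[float]]) -> List[List[float]]:
    """Pairwise DTW distances between all gesture samples."""
    k = len(samples)
    return [[dtw_distance(samples[i], samples[j]) for j in range(k)] for i in range(k)]


def medoid_template(samples: List[Sequence[float]]) -> Sequence[float]:
    """Assumed template rule: the sample closest overall to all the others."""
    m = distance_matrix(samples)
    best = min(range(len(samples)), key=lambda i: sum(m[i]))
    return samples[best]
```

DTW tolerates the speed differences between repetitions of the same gesture, which is why `dtw_distance([0, 1, 2], [0, 1, 1, 2])` is zero even though the traces have different lengths.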

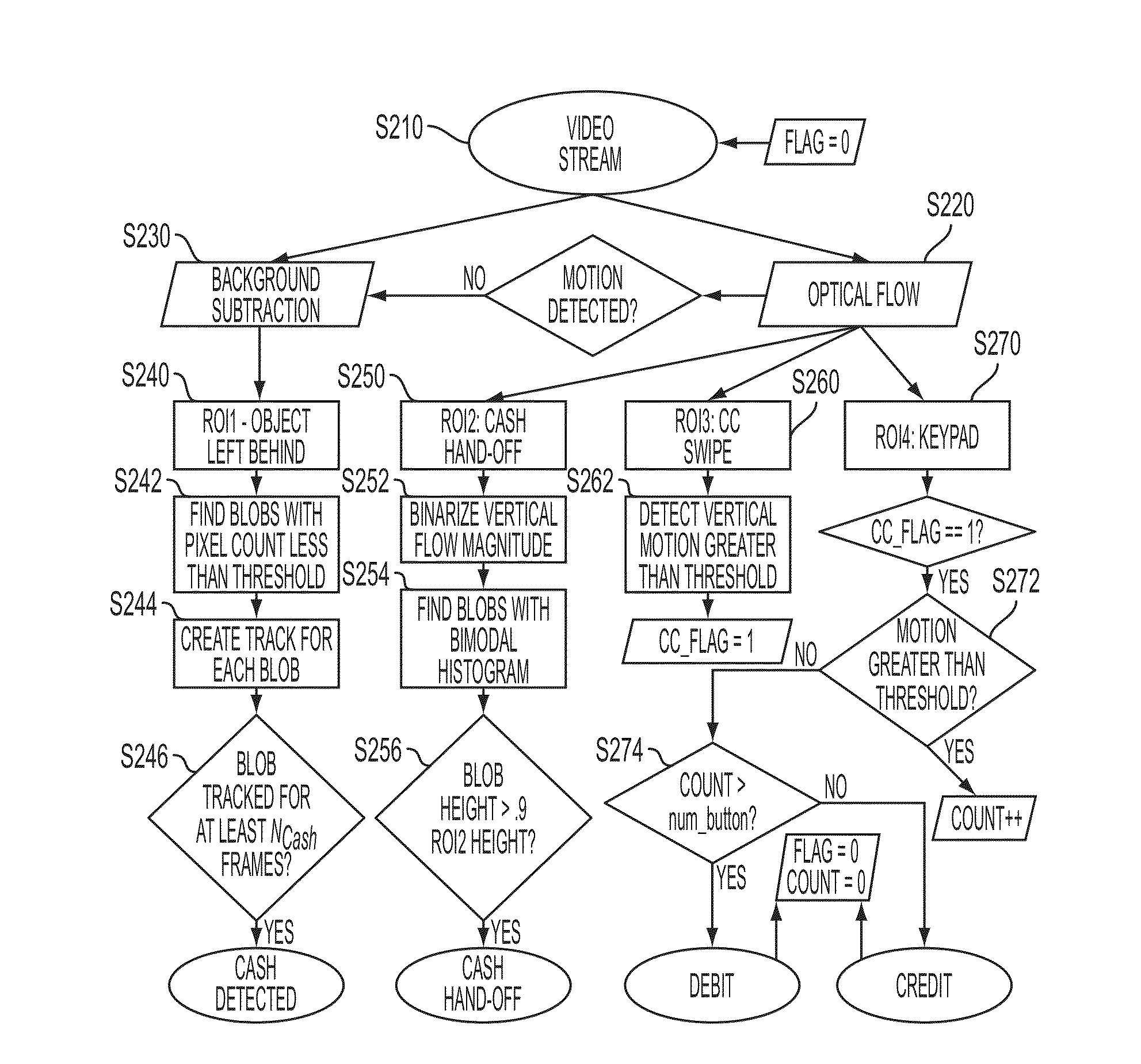

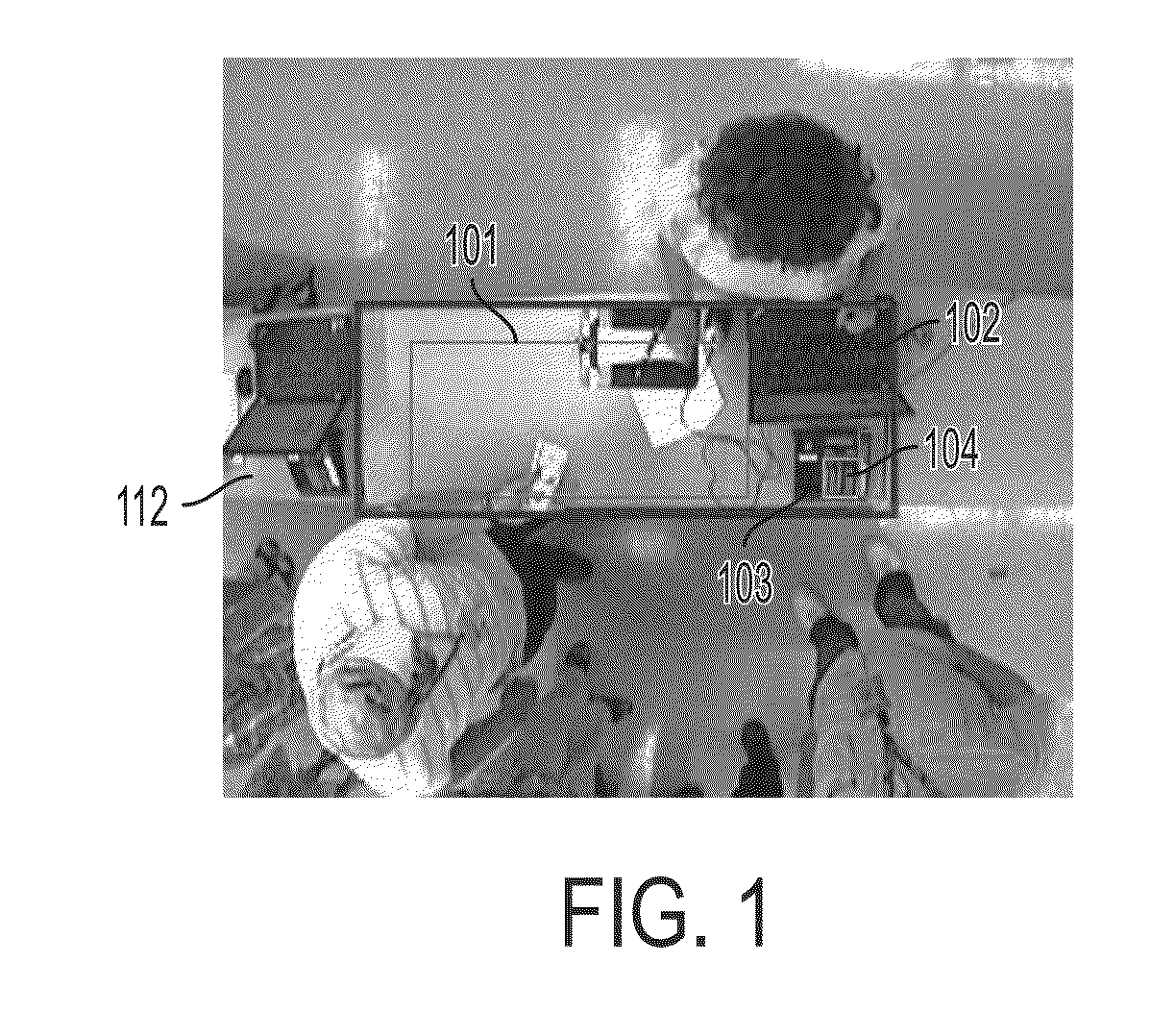

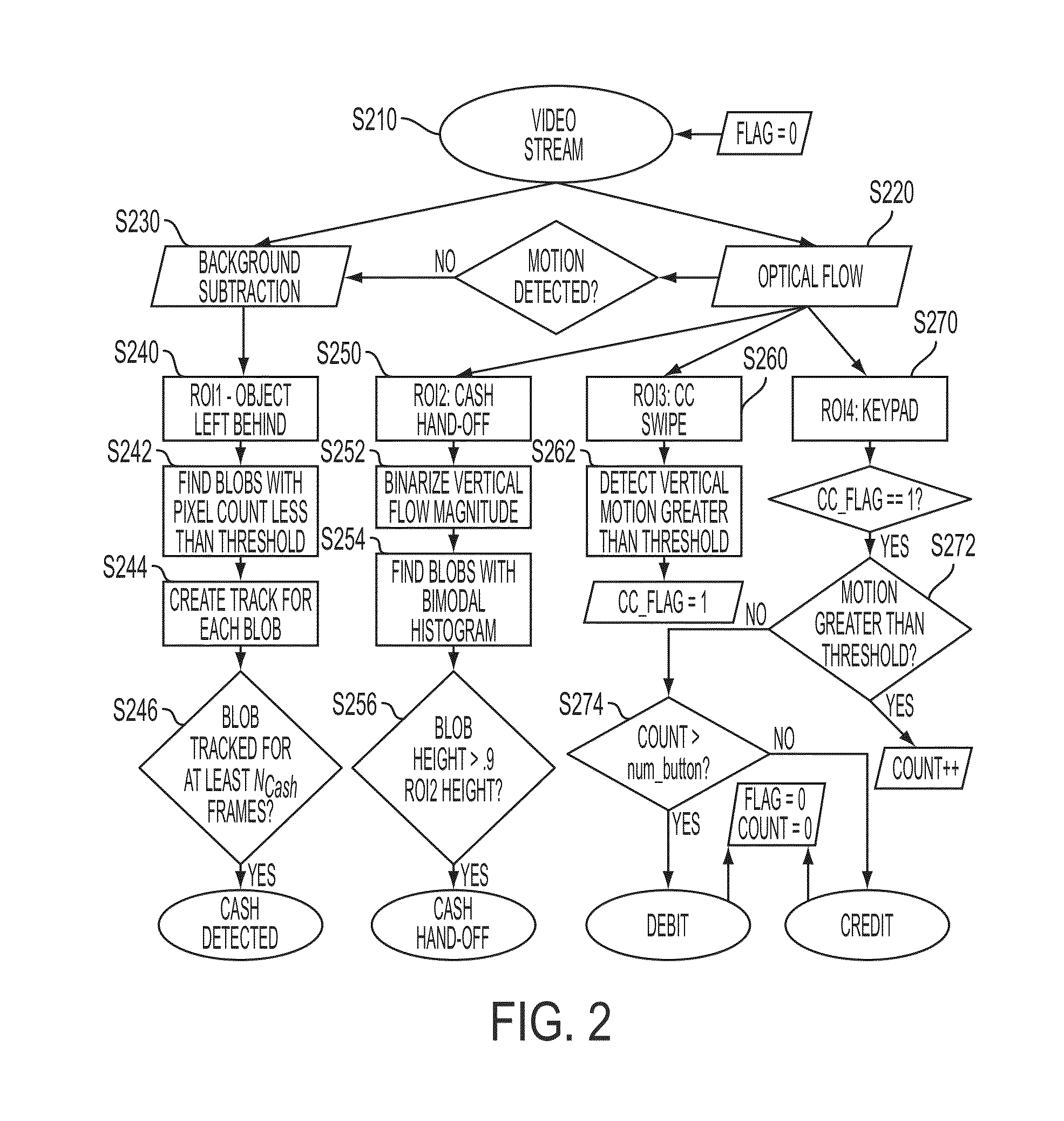

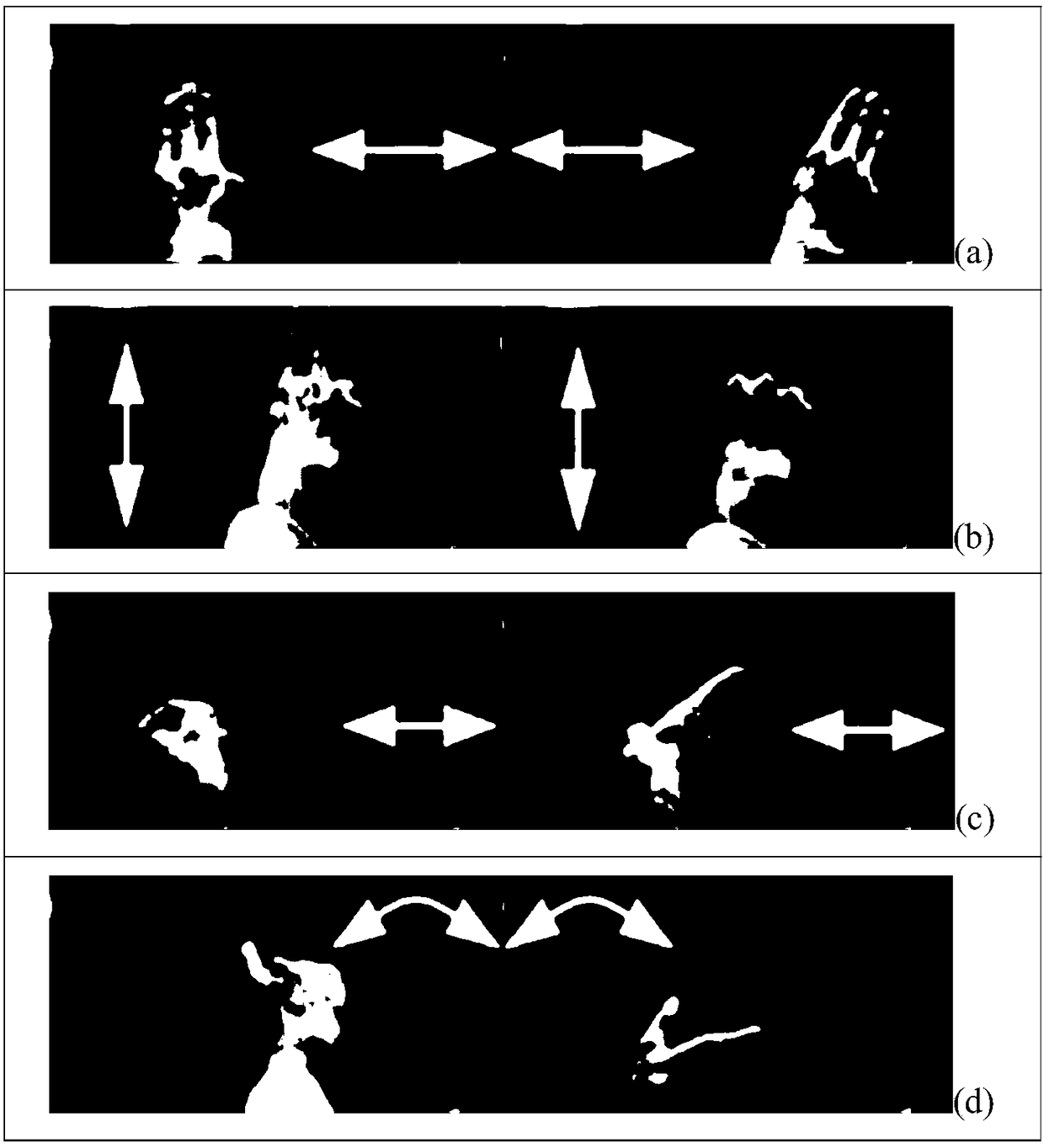

Heuristic-based approach for automatic payment gesture classification and detection

A system and method for automatic classification and detection of a payment gesture are disclosed. The method includes obtaining a video stream from a camera placed above at least one region of interest, the region of interest classifying the payment gesture. A background image is generated from the obtained video stream. Motion is estimated in at least two consecutive frames from the video stream. A representation is created from the background image and the estimated motion occurring within the at least one region of interest. The payment gesture is detected based on the representation.

Owner:CONDUENT BUSINESS SERVICES LLC
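
A heavily simplified illustration of the background and motion steps: the sketch keeps a running-average background, thresholds the difference to the background and between consecutive frames, and flags a candidate gesture when enough pixels inside a region of interest are both foreground and moving. Frames are assumed to be small grayscale lists of lists; all thresholds, the running-average model, and the function names are assumptions, and the patent's own heuristics are not reproduced.

```python
from typing import List, Tuple

Frame = List[List[float]]
ROI = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def update_background(bg: Frame, frame: Frame, alpha: float = 0.05) -> Frame:
    """Running-average background model: bg <- (1 - alpha) * bg + alpha * frame."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(brow, frow)]
            for brow, frow in zip(bg, frame)]


def diff_mask(a: Frame, b: Frame, thresh: float) -> List[List[bool]]:
    """Per-pixel mask of absolute differences above `thresh`."""
    return [[abs(y - x) > thresh for x, y in zip(arow, brow)]
            for arow, brow in zip(a, b)]


def roi_fraction(mask: List[List[bool]], roi: ROI) -> float:
    """Fraction of pixels inside the region of interest that are set in `mask`."""
    top, left, bottom, right = roi
    cells = [mask[r][c] for r in range(top, bottom) for c in range(left, right)]
    return sum(cells) / len(cells)


def detect_gesture(bg: Frame, prev: Frame, curr: Frame, roi: ROI,
                   fg_thresh: float = 30.0, motion_thresh: float = 20.0,
                   min_fraction: float = 0.1) -> bool:
    """Flag a candidate gesture when the ROI shows both foreground and motion."""
    fg = roi_fraction(diff_mask(bg, curr, fg_thresh), roi)
    motion = roi_fraction(diff_mask(prev, curr, motion_thresh), roi)
    return fg >= min_fraction and motion >= min_fraction
```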

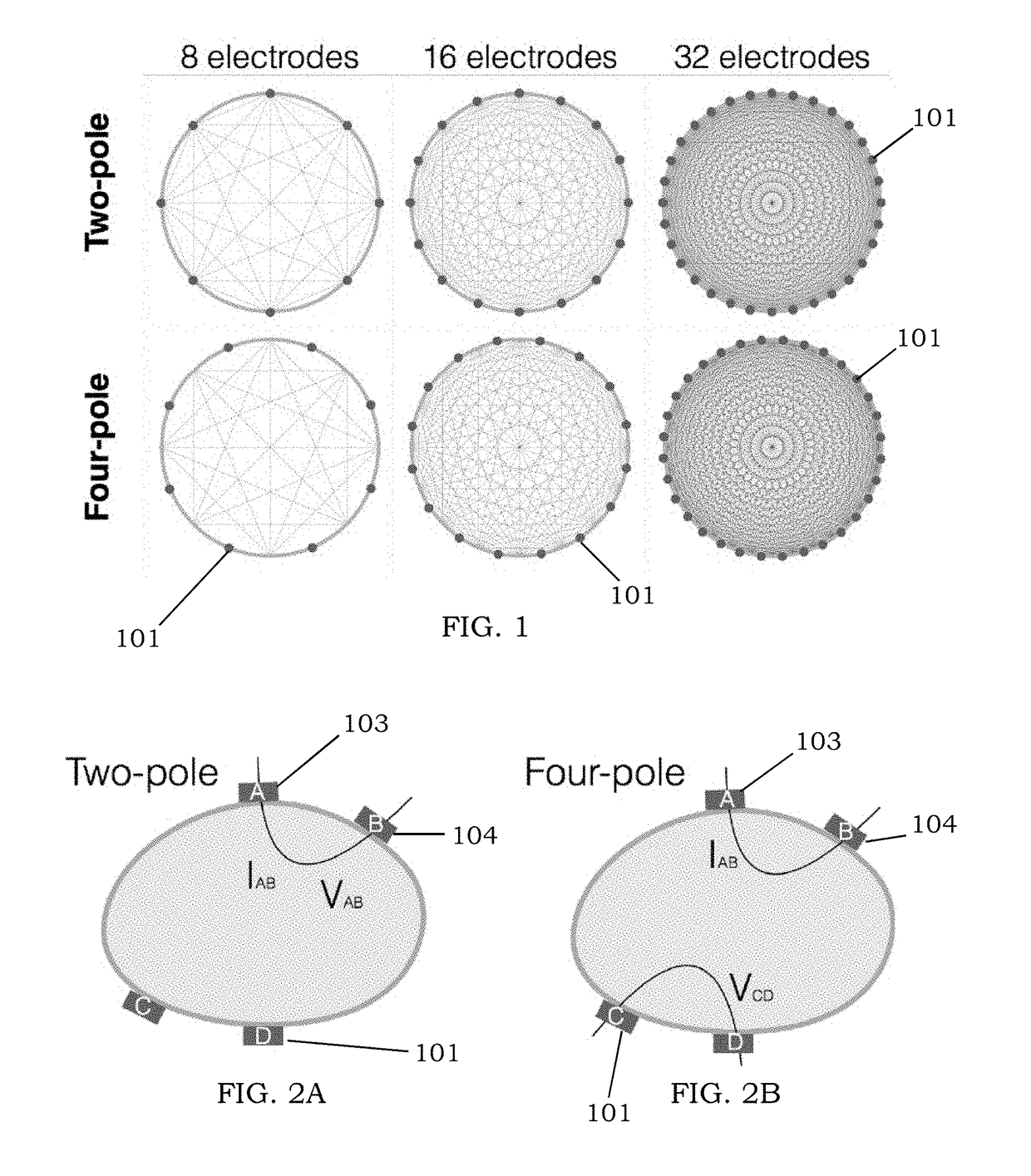

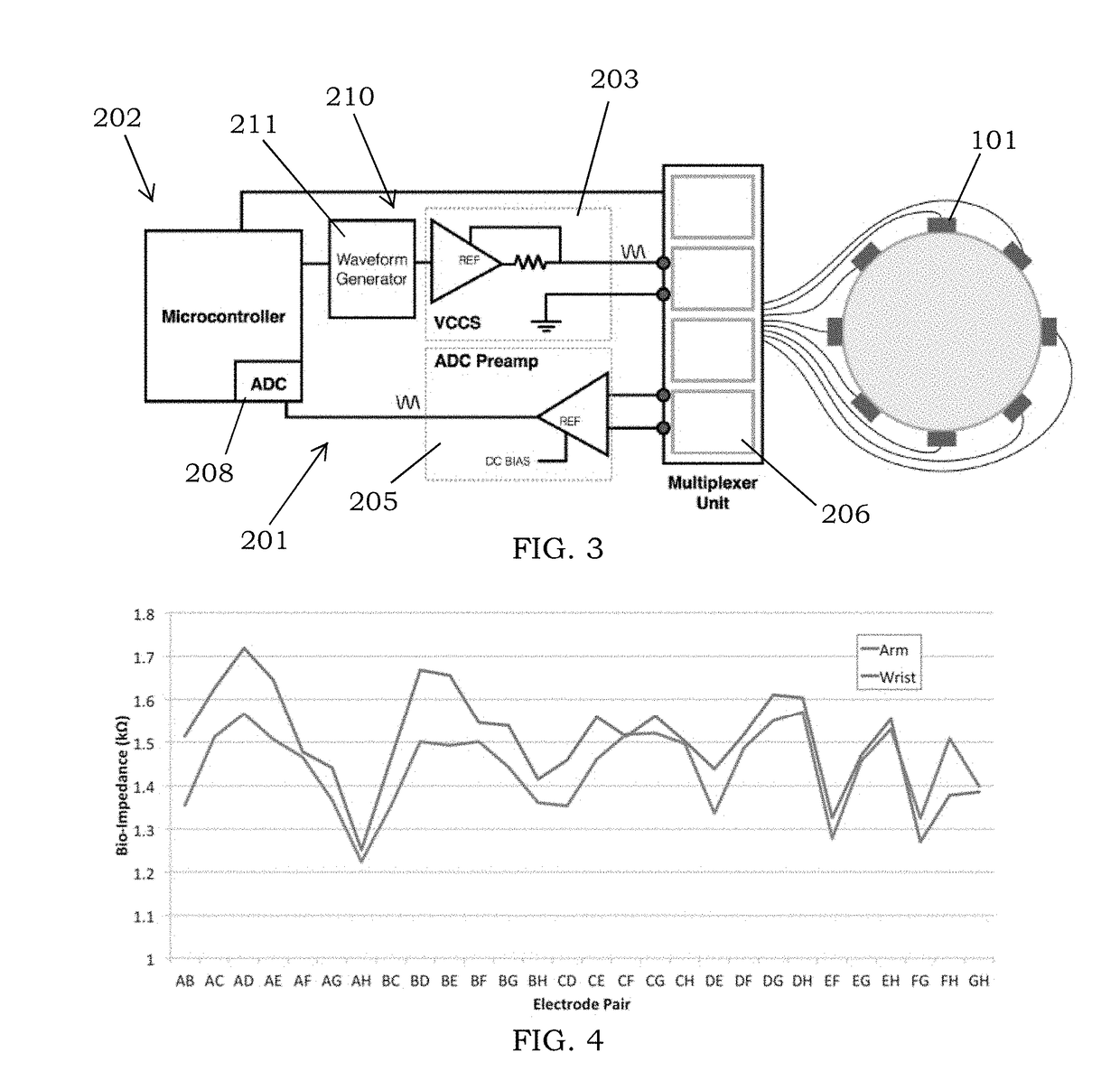

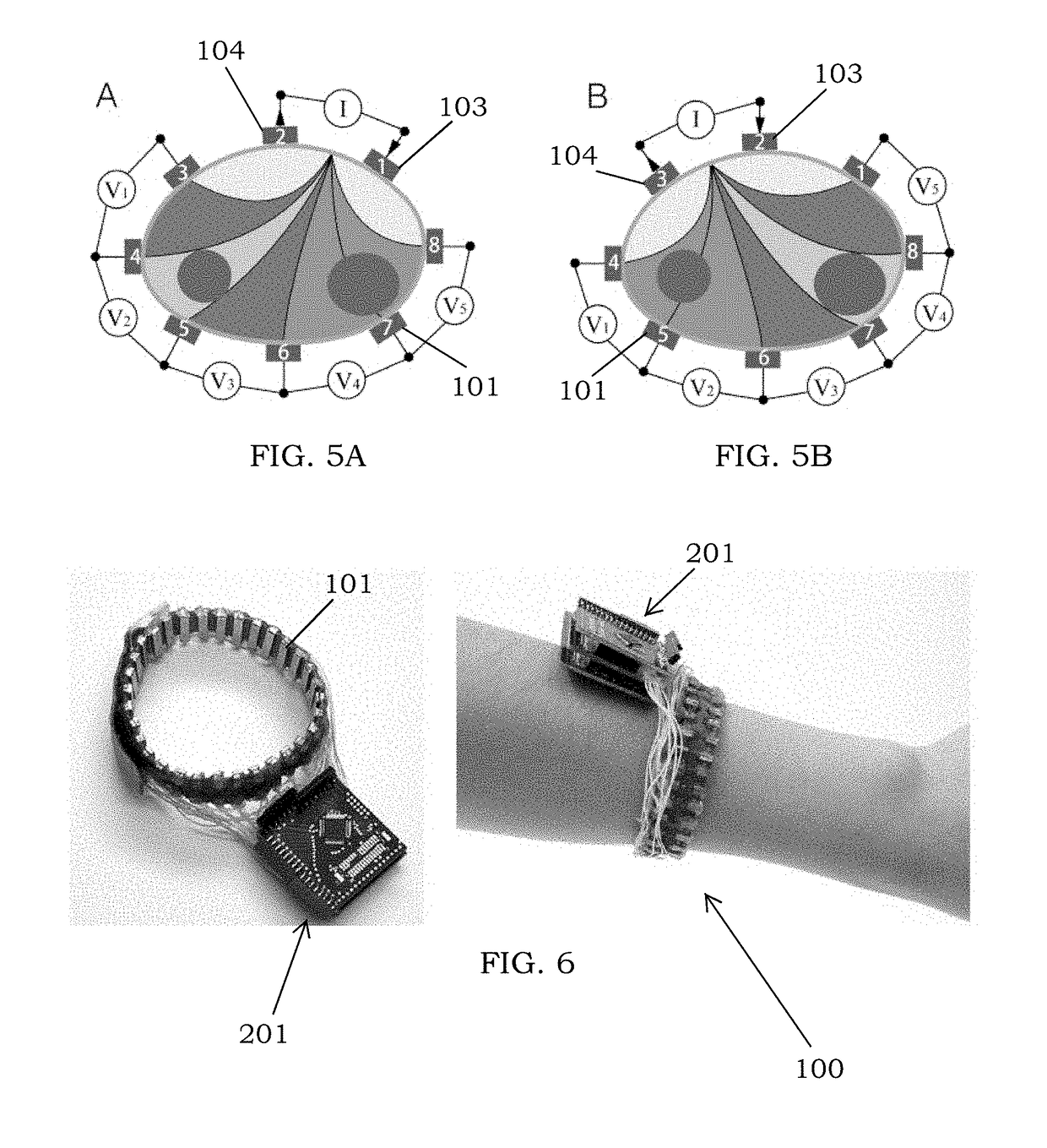

System for Wearable, Low-Cost Electrical Impedance Tomography for Non-Invasive Gesture Recognition

Status: Active | Publication: US20180360379A1 | Tags: Different profile, Input/output for user-computer interaction, Medical automated diagnosis, Electrical resistance and conductance, Impedance distribution

The disclosure describes a wearable, low-cost and low-power Electrical Impedance Tomography system for gesture recognition. The system measures cross-sectional bio-impedance using electrodes on wearers' skin. Using all-pairs measurements, the interior impedance distribution is recovered, which is then fed to a hand gesture classifier. This system also solves the problem of poor accuracy of gesture recognition often observed with other gesture recognition approaches.

Owner:CARNEGIE MELLON UNIV

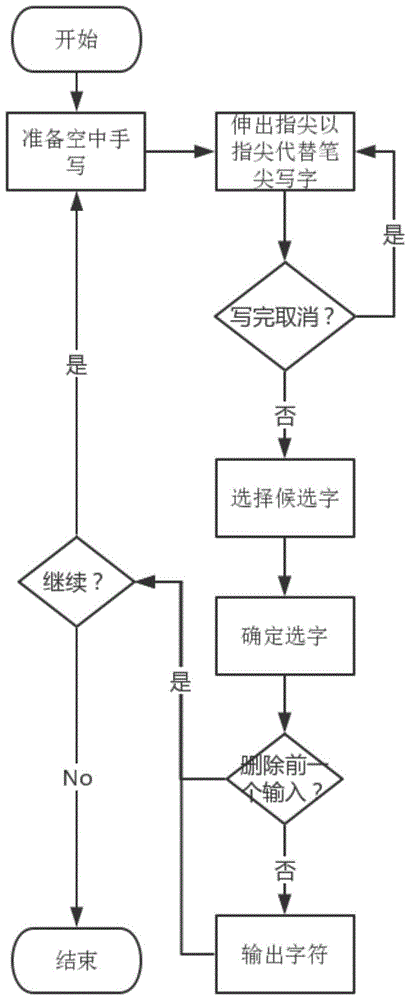

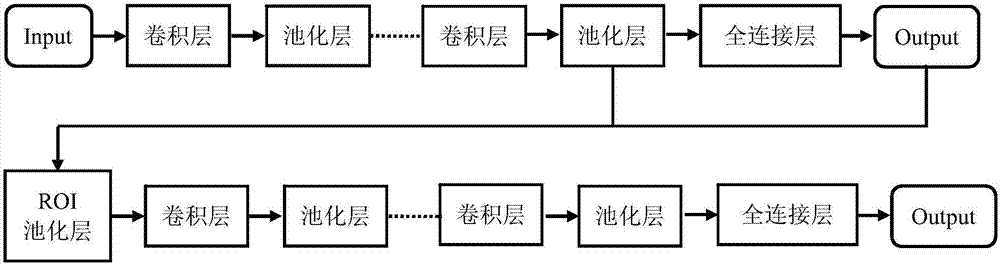

Egocentric-vision in-the-air handwriting and in-the-air interaction method based on a cascaded convolutional neural network

Status: Active | Publication: CN105718878A | Tags: Forecast stability, Reduce time performance consumption, Character and pattern recognition, Neural learning methods, Fingertip detection, Neural network

The invention discloses an egocentric-vision in-the-air handwriting and in-the-air interaction method based on a cascaded convolutional neural network. The method comprises the following steps: S1, obtaining training data; S2, designing a deep convolutional neural network for hand detection; S3, designing a deep convolutional neural network for gesture classification and fingertip detection; S4, cascading the first-level and second-level networks: a region of interest is cropped from the foreground bounding rectangle output by the first-level network to obtain a foreground region containing the hand, which is then used as the input of the second-level convolutional network for fingertip detection and gesture identification; S5, checking the identified gesture and, if it is a single-finger gesture, outputting its fingertip and then applying temporal smoothing and interpolation between points; and S6, performing character recognition on the fingertip coordinates sampled over consecutive frames. The invention provides a complete in-the-air handwriting and in-the-air interaction algorithm that achieves accurate and robust fingertip detection and gesture classification, thereby enabling egocentric-vision in-the-air handwriting and interaction.

Owner:SOUTH CHINA UNIV OF TECH

Method and system for gesture classification

Status: Inactive | Publication: US7970176B2 | Tags: Prevent overfitting, Character and pattern recognition, Video games, Human–computer interaction, Gesture classification

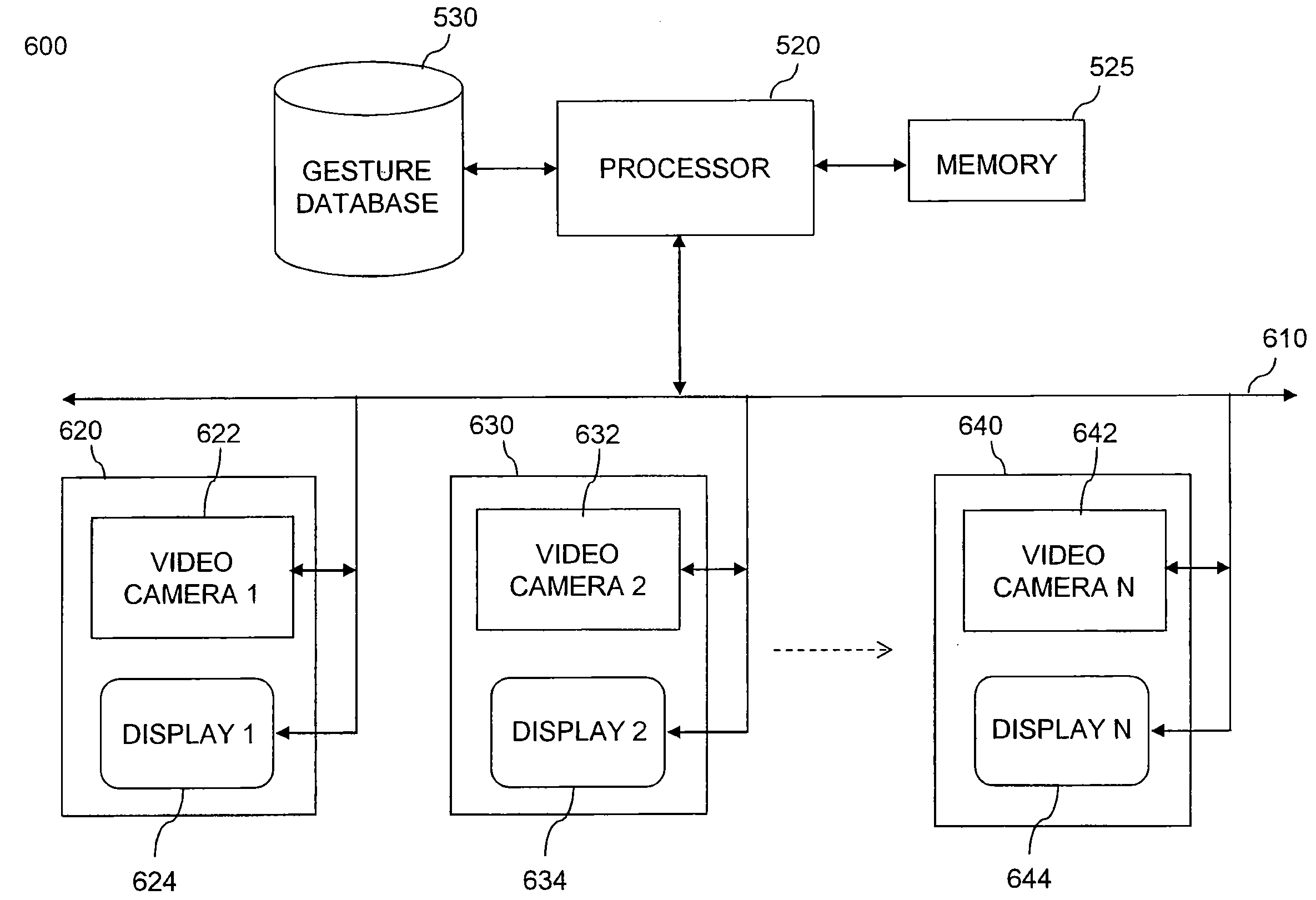

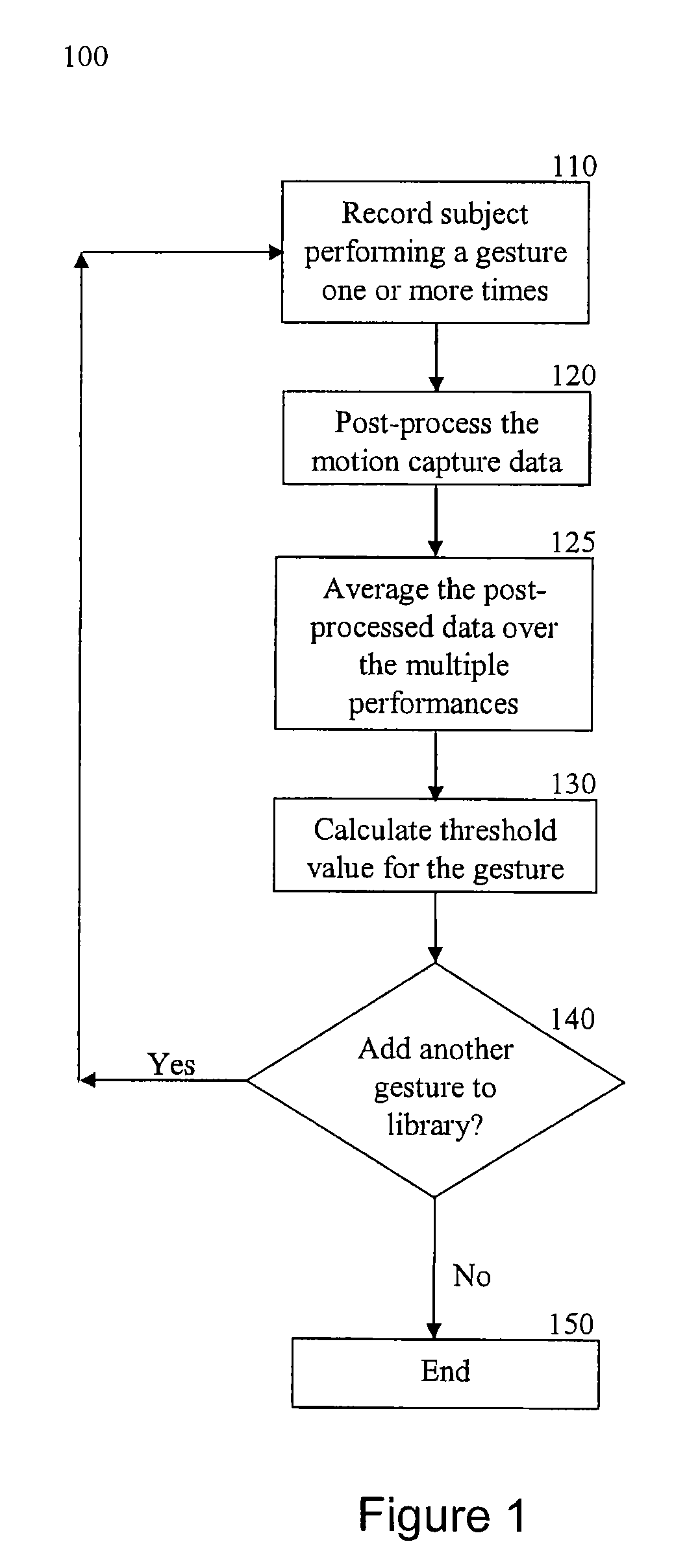

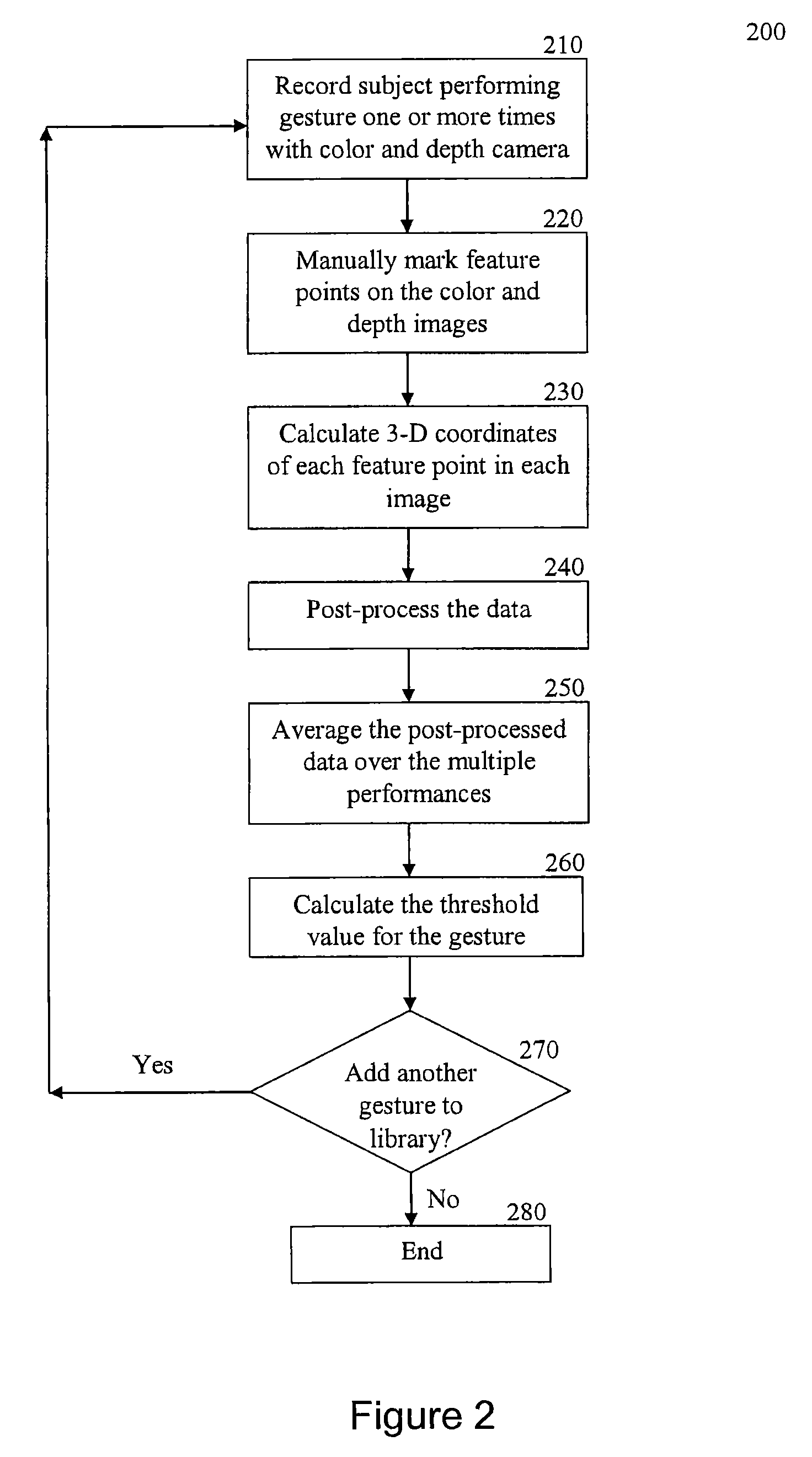

The present invention is a method of identifying a user's gestures for use in an interactive game application. Video camera images of the user are obtained, and feature point locations on the user's body are identified in the images. A similarity measure is used to compare the feature point locations in the images with a library of gestures. The library gesture with the largest calculated similarity measure that is greater than that gesture's threshold value is identified as the user's gesture. The identified gesture may be integrated into the user's movements within a virtual gaming environment, and visual feedback is provided to the user.

Owner:TAHOE RES LTD
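
The thresholded library match can be sketched as follows. This is a minimal sketch under stated assumptions: feature points are 2D coordinates already matched one-to-one with a template, the similarity measure is the hypothetical `1 / (1 + mean point distance)`, and each library gesture carries its own threshold. Only gestures whose similarity exceeds their own threshold are candidates; the most similar candidate wins, and `None` means no gesture was recognized.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
# Hypothetical library format: gesture name -> (template feature points, threshold).
Library = Dict[str, Tuple[List[Point], float]]


def similarity(observed: List[Point], template: List[Point]) -> float:
    """Assumed measure: 1 / (1 + mean Euclidean distance between matched points)."""
    mean_d = sum(math.dist(p, q) for p, q in zip(observed, template)) / len(template)
    return 1.0 / (1.0 + mean_d)


def identify(observed: List[Point], library: Library) -> Optional[str]:
    """Return the most similar gesture whose similarity exceeds its own threshold."""
    candidates = []
    for name, (template, threshold) in library.items():
        s = similarity(observed, template)
        if s > threshold:
            candidates.append((s, name))
    return max(candidates)[1] if candidates else None
```

Per-gesture thresholds let easily confused gestures demand a closer match than distinctive ones.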

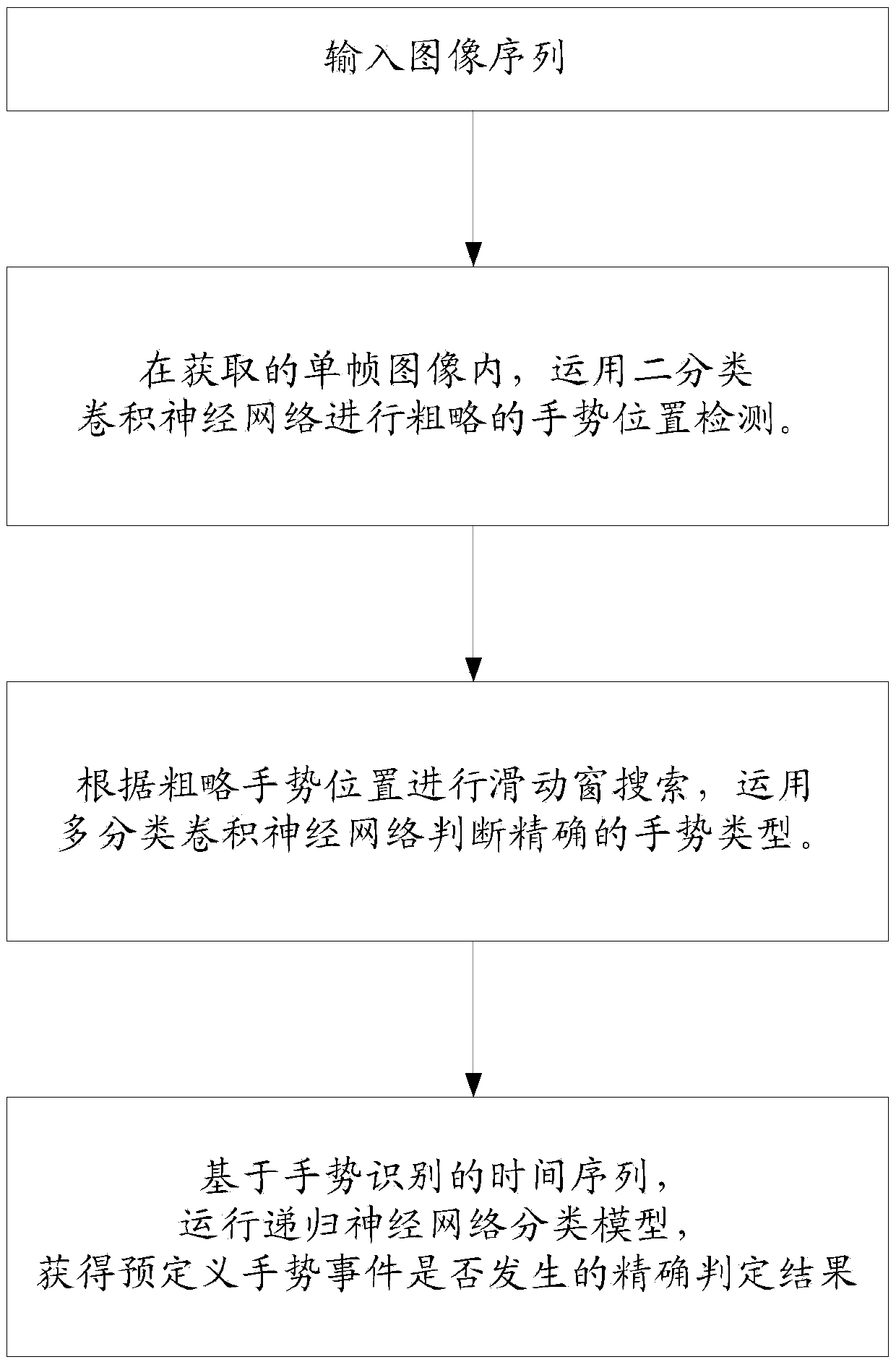

Method and device for hand gesture recognition and detection based on a deep neural network

Status: Active | Publication: CN105373785A | Tags: Input/output for user-computer interaction, Genetic models, Pattern recognition, Gesture classification

Owner:BEIJING HORIZON ROBOTICS TECH RES & DEV CO LTD

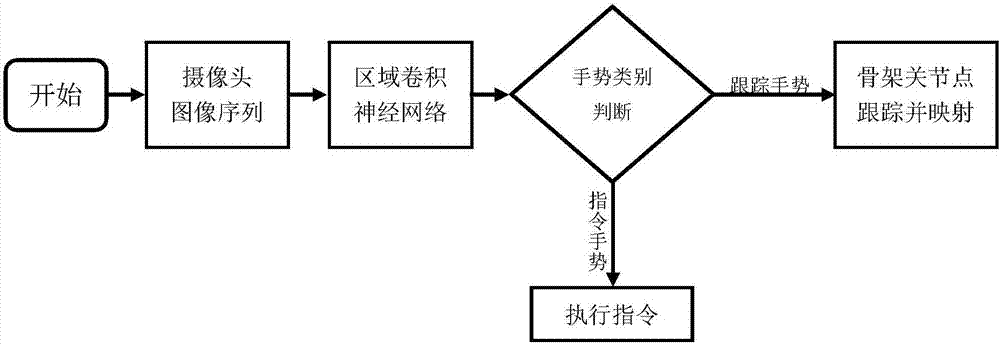

Regional convolutional neural network-based method for gesture identification and interaction under egocentric vision

Status: Active | Publication: CN107168527A | Tags: Recognition speed is fast, Improve recognition accuracy, Input/output for user-computer interaction, Character and pattern recognition, Fingertip detection, Neural network

The invention discloses a regional convolutional neural network-based method for gesture identification and interaction under egocentric vision. The method comprises the following steps: S1, obtaining training data; S2, designing a regional neural network that performs hand detection together with gesture classification and fingertip detection, where the network input is a three-channel RGB image and the outputs are the top-left and bottom-right corner coordinates of the bounding rectangle of the gesture region, the gesture type, and the gesture skeleton key points; and S3, determining the gesture type and outputting the corresponding interaction result according to the interaction requirements. The invention provides a complete method for gesture identification and interaction under egocentric vision; through single-model training and partial network sharing, it increases both the speed and the accuracy of gesture identification under egocentric vision.

Owner:SOUTH CHINA UNIV OF TECH

Methods and apparatus for contactless gesture recognition and power reduction

Status: Active | Publication: CN102971701A | Tags: Reduce contact frequency, Reduce generation, Input/output for user-computer interaction, Energy efficient ICT, Proximity sensor, Human–computer interaction

Owner:QUALCOMM INC

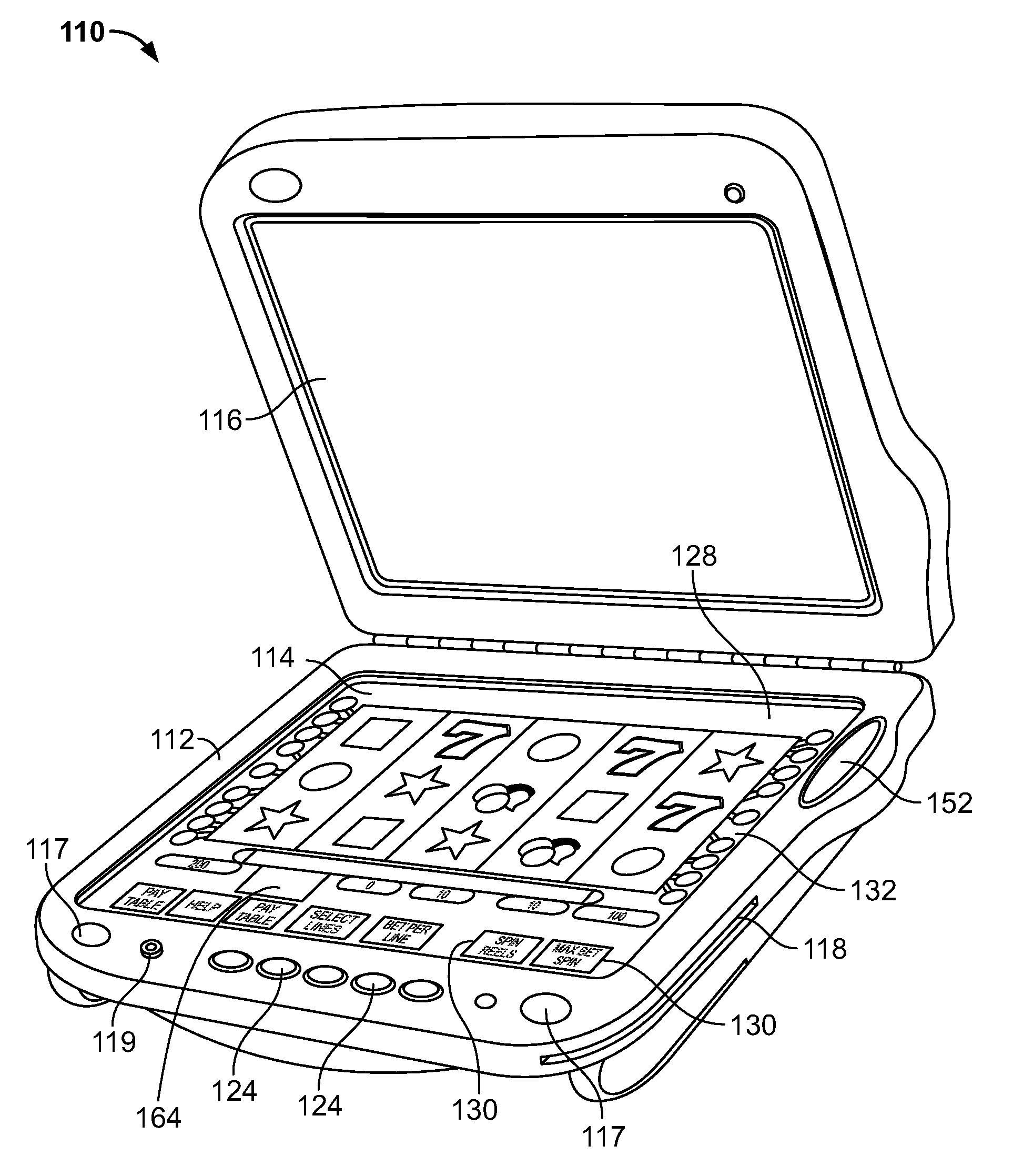

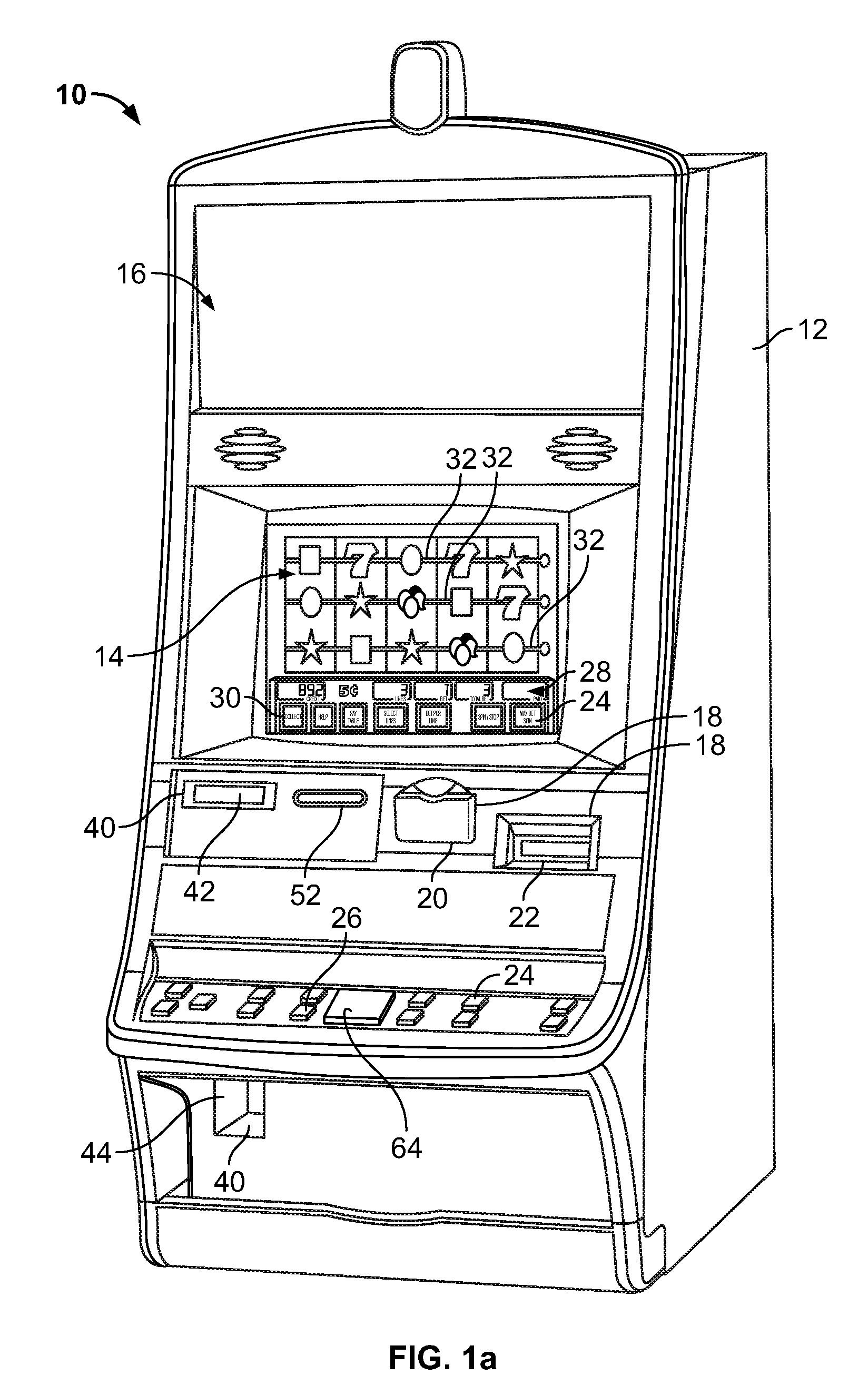

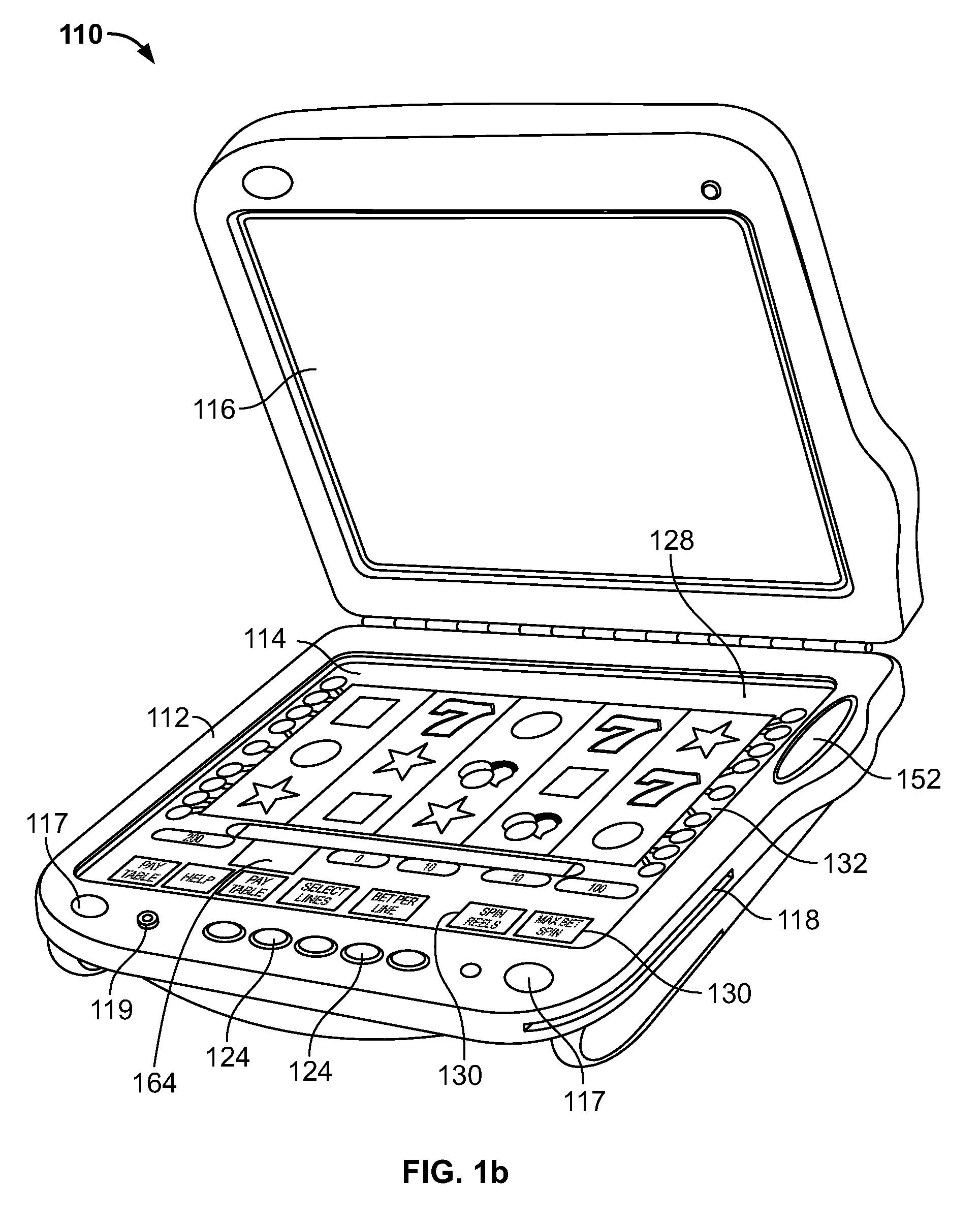

Gaming machine having multi-touch sensing device

Status: Inactive | Publication: US20090325691A1 | Tags: Chemical analysis using catalysis, Cathode-ray tube indicators, Sensor array, Display device

A gaming system and method for conducting a wagering game includes a primary display that displays a randomly selected outcome. The gaming system includes a multi-touch input system having a multi-touch sensing device, a memory, and a local controller. The multi-touch sensing device includes an array of input sensors that detect a multi-point gesture. Each sensor detects a touch input made by a player of the wagering game. The memory includes gesture classification codes each representing a distinct combination of characteristics relating to the gesture. The local controller receives data indicative of at least two of the characteristics related to the multi-point gesture and determines whether the data corresponds to any of the gesture classification codes. A main controller is coupled to the local controller to receive the gesture classification code responsive to the local controller determining that the data corresponds to a gesture classification code.

Owner:BALLY GAMING INC
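
The local controller's check can be sketched as a table lookup keyed by a combination of characteristics. This is a minimal sketch: the code table, the two characteristics chosen (number of touch points and direction), and `lookup_code` are all hypothetical, and forwarding the result to the main controller is left out.

```python
from typing import Dict, Optional, Tuple

# Hypothetical code table: each code stands for a distinct combination of
# gesture characteristics (here, number of touch points and movement direction).
GESTURE_CODES: Dict[Tuple[int, str], int] = {
    (1, "up"): 0x01,
    (1, "down"): 0x02,
    (2, "up"): 0x11,
    (2, "rotate_cw"): 0x12,
}


def lookup_code(characteristics: dict) -> Optional[int]:
    """Return the matching gesture classification code, or None if none matches.

    Mirrors the abstract's requirement of at least two characteristics.
    """
    if len(characteristics) < 2:
        return None
    key = (characteristics.get("touch_points"), characteristics.get("direction"))
    return GESTURE_CODES.get(key)
```

A non-`None` result is what the local controller would pass on to the main controller.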

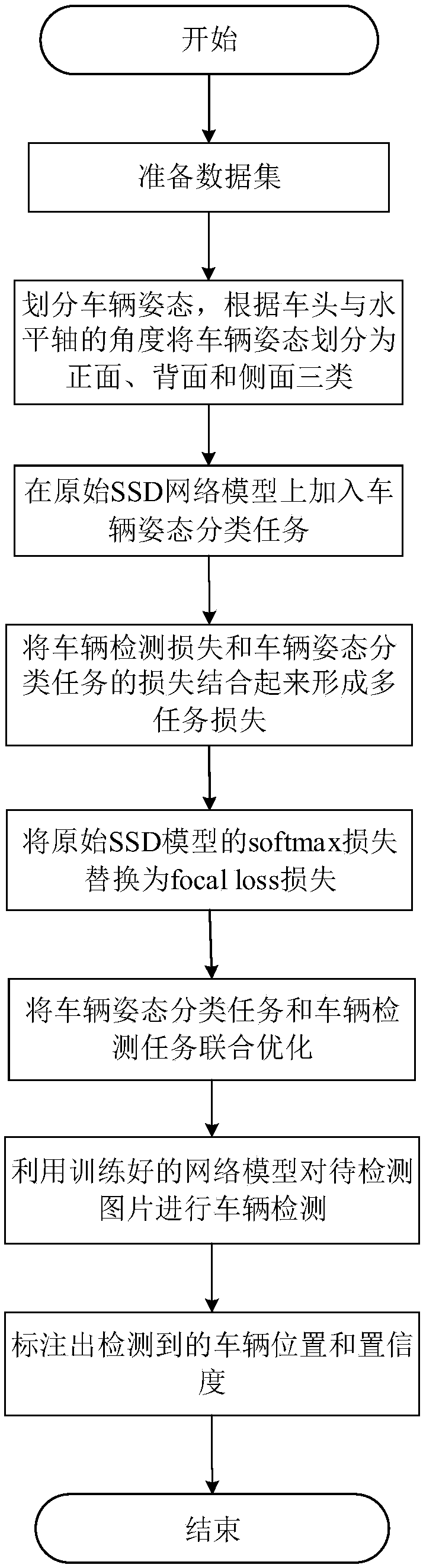

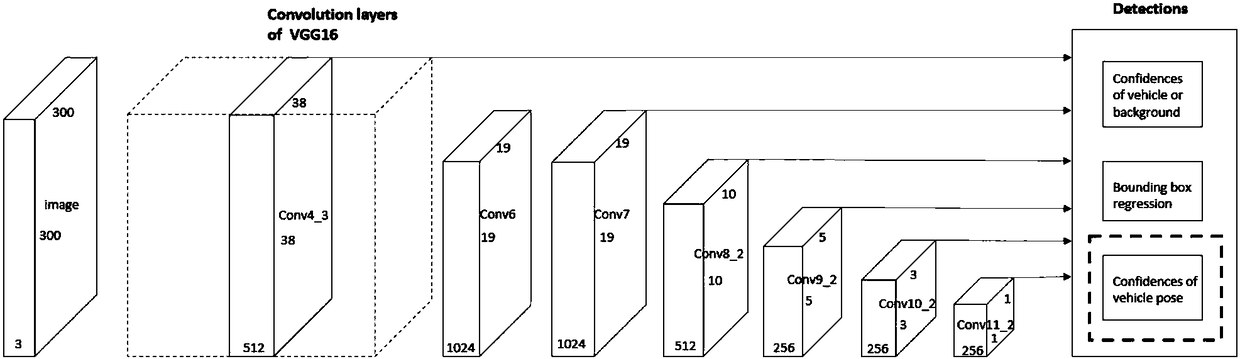

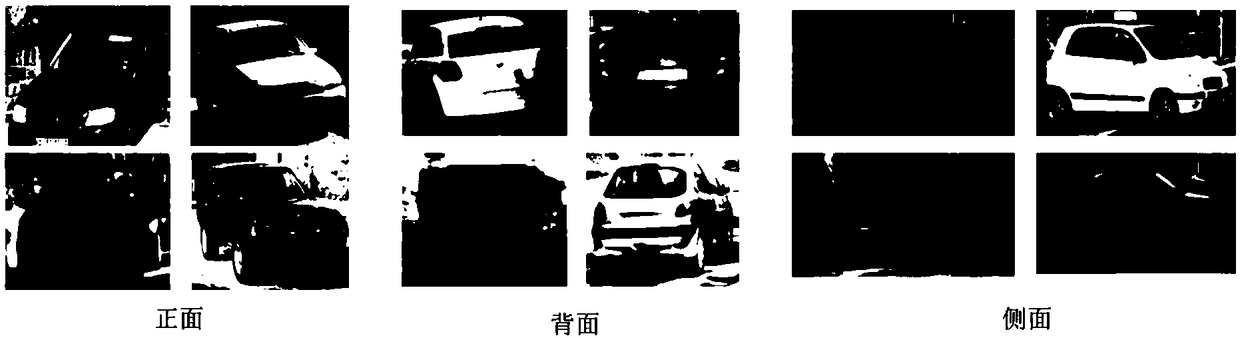

Vehicle detection method and system based on SSD (Single Shot MultiBox Detector) and vehicle gesture classification

Status: Inactive | Publication: CN108596053A | Tags: Improve detection accuracy, High precision, Road vehicles traffic control, Character and pattern recognition, Horizontal axis, Vehicle detection

The invention discloses a vehicle detection method and system based on an SSD (Single Shot MultiBox Detector) and vehicle gesture classification. The method comprises the following steps: dividing vehicle gestures (poses) into classes according to the angle between the vehicle head and the horizontal axis; adding a vehicle gesture classification task to the original SSD network model; combining the vehicle detection loss with the vehicle gesture classification loss to form a multi-task loss; replacing the softmax loss of the original SSD model with a focal loss; jointly optimizing the vehicle gesture classification task and the vehicle detection task; training to obtain a detection model; and using the detection model to detect vehicles in an input picture, achieving multi-scale and multi-angle vehicle detection. The method uses the deep-learning object detector SSD for vehicle detection, trains vehicle gesture classification jointly with vehicle detection as an auxiliary task, and adds the focal loss to address the imbalance among vehicle samples, thereby improving the accuracy and stability of the system.

Owner:HUAZHONG UNIV OF SCI & TECH
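
The focal loss referred to above (introduced by Lin et al. for dense object detection) is FL(p_t) = -α (1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class; with γ = 0 and α = 1 it reduces to ordinary cross-entropy. The sketch below applies it to one prediction and adds a weighted pose term to a detection loss. The weighting scheme, the function names, and the defaults (α = 0.25 and γ = 2, the values commonly used with that paper) are assumptions, not details from this patent.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def focal_loss(logits: Sequence[float], target: int,
               gamma: float = 2.0, alpha: float = 0.25) -> float:
    """FL(p_t) = -alpha * (1 - p_t)**gamma * log(p_t) for a single prediction."""
    p_t = softmax(logits)[target]
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


def multitask_loss(det_loss: float, pose_logits: Sequence[float],
                   pose_target: int, weight: float = 1.0) -> float:
    """Hypothetical joint objective: detection loss plus weighted pose focal loss."""
    return det_loss + weight * focal_loss(pose_logits, pose_target)
```

The (1 - p_t)^γ factor shrinks the loss of well-classified (usually majority-class) samples, so training focuses on the rarer, harder ones.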

3D convolutional neural network-based gesture recognition method

Status: Active | Publication: CN108197580A | Tags: Improve robustness, Guaranteed accuracy, Character and pattern recognition, Neural architectures, Video image, Deconvolution

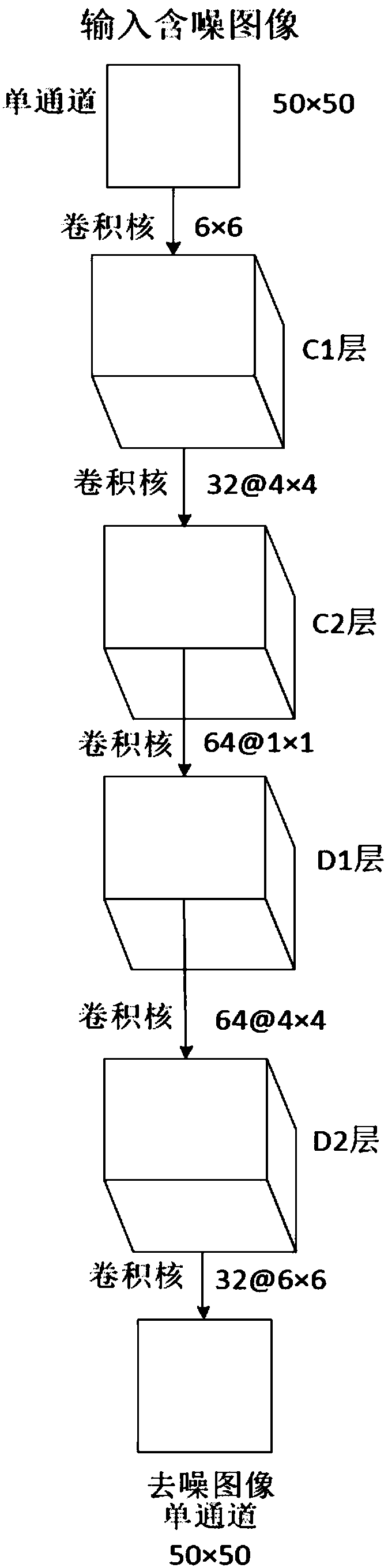

The invention belongs to the field of gesture recognition and relates to a gesture recognition method based on a 3D convolutional neural network. A series of preprocessing steps is applied to ordinary videos and to depth videos captured by a depth camera. In addition to common preprocessing methods, a denoising method that combines a convolutional neural sub-network with a deconvolutional neural sub-network is used to address noise points in the video images, and a 3D convolutional neural network is used to model the spatio-temporal relationships in the videos. The method substantially increases the gesture classification rate and improves the reliability of the recognition basis and the reasonableness of the results.

Owner:JILIN UNIV

System and method for hand gesture recognition for remote control of an internet protocol TV

Status: Active | Publication: US8582037B2 | Tags: Television system details, Image enhancement, Internet protocol suite, Pattern recognition

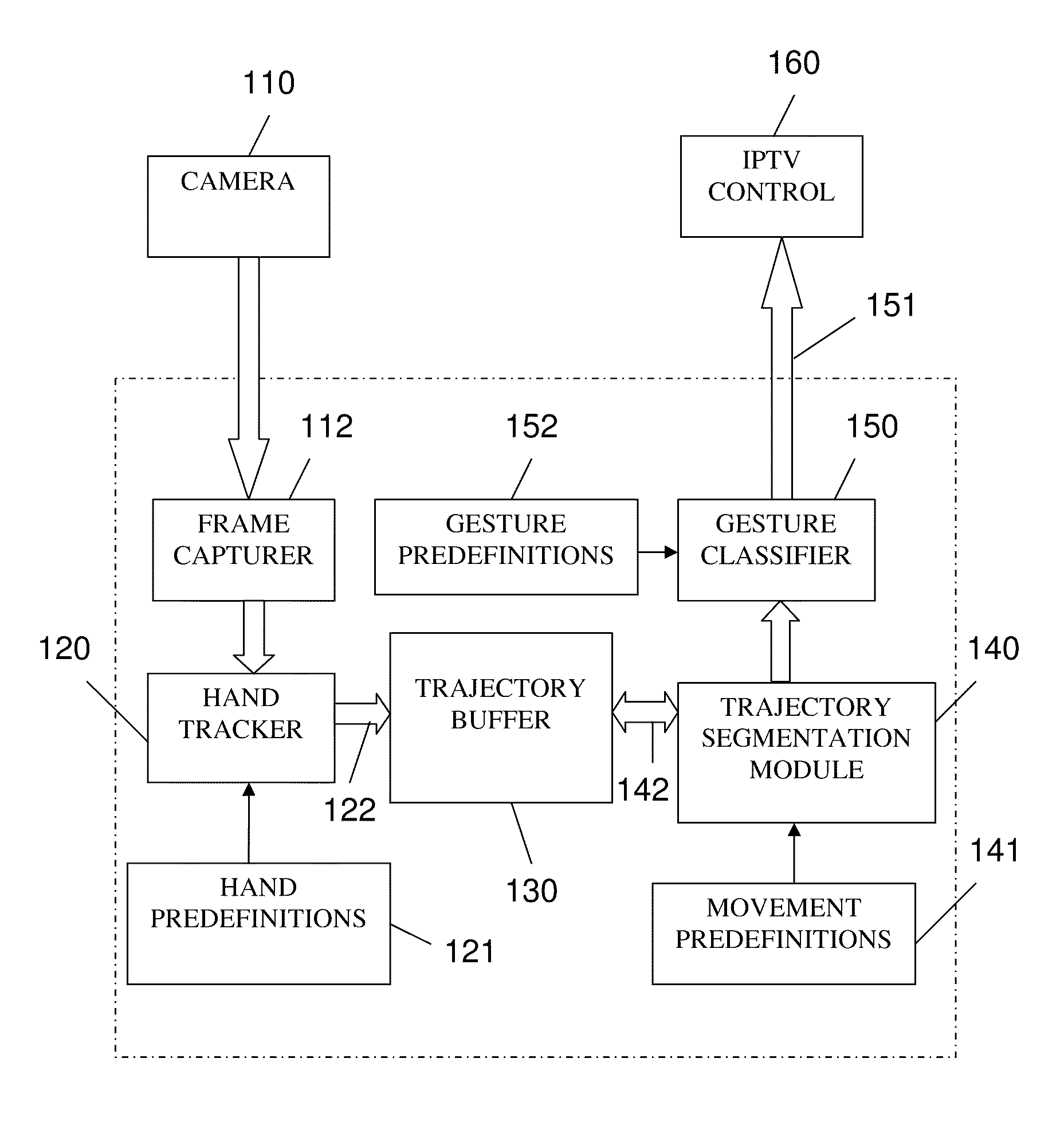

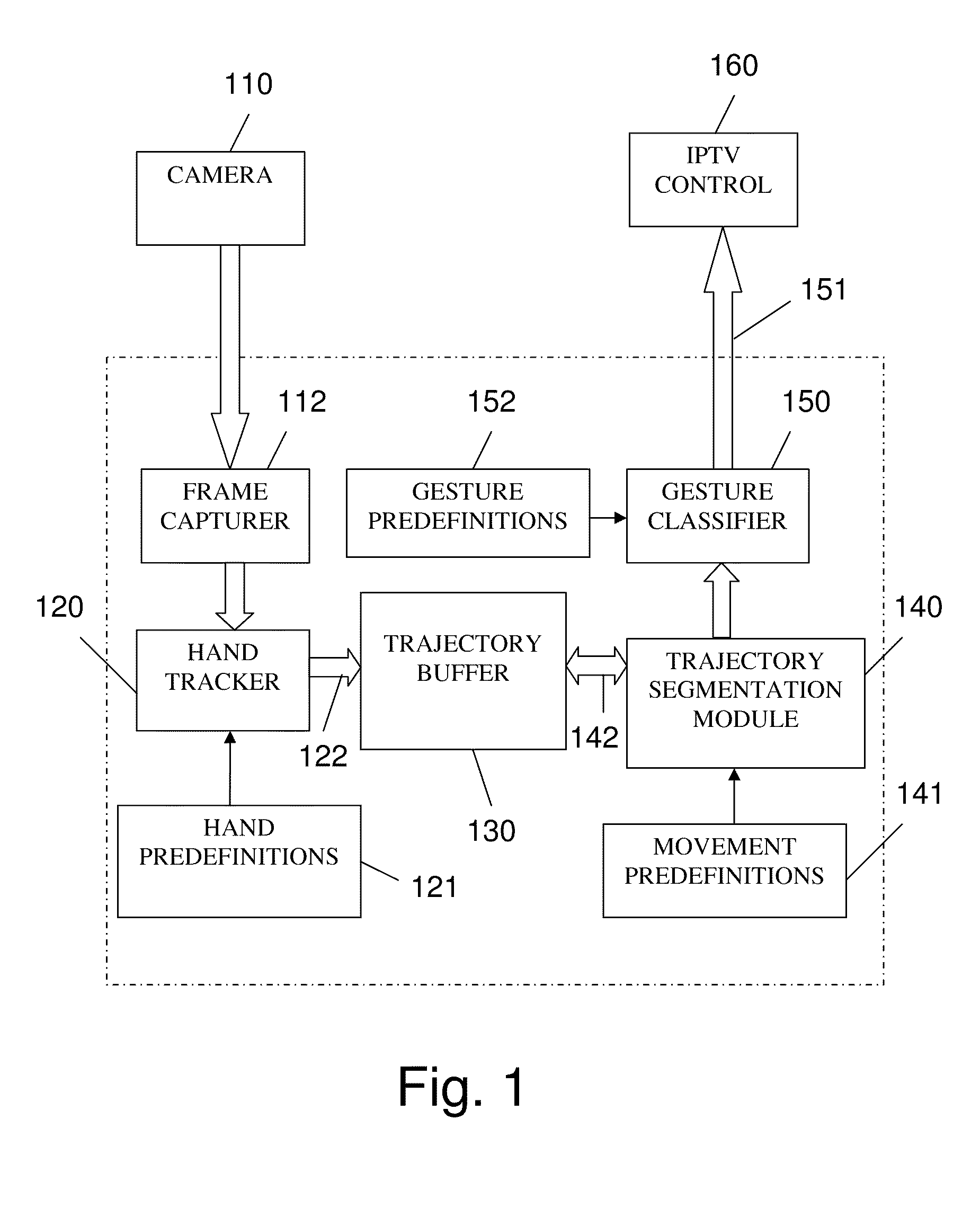

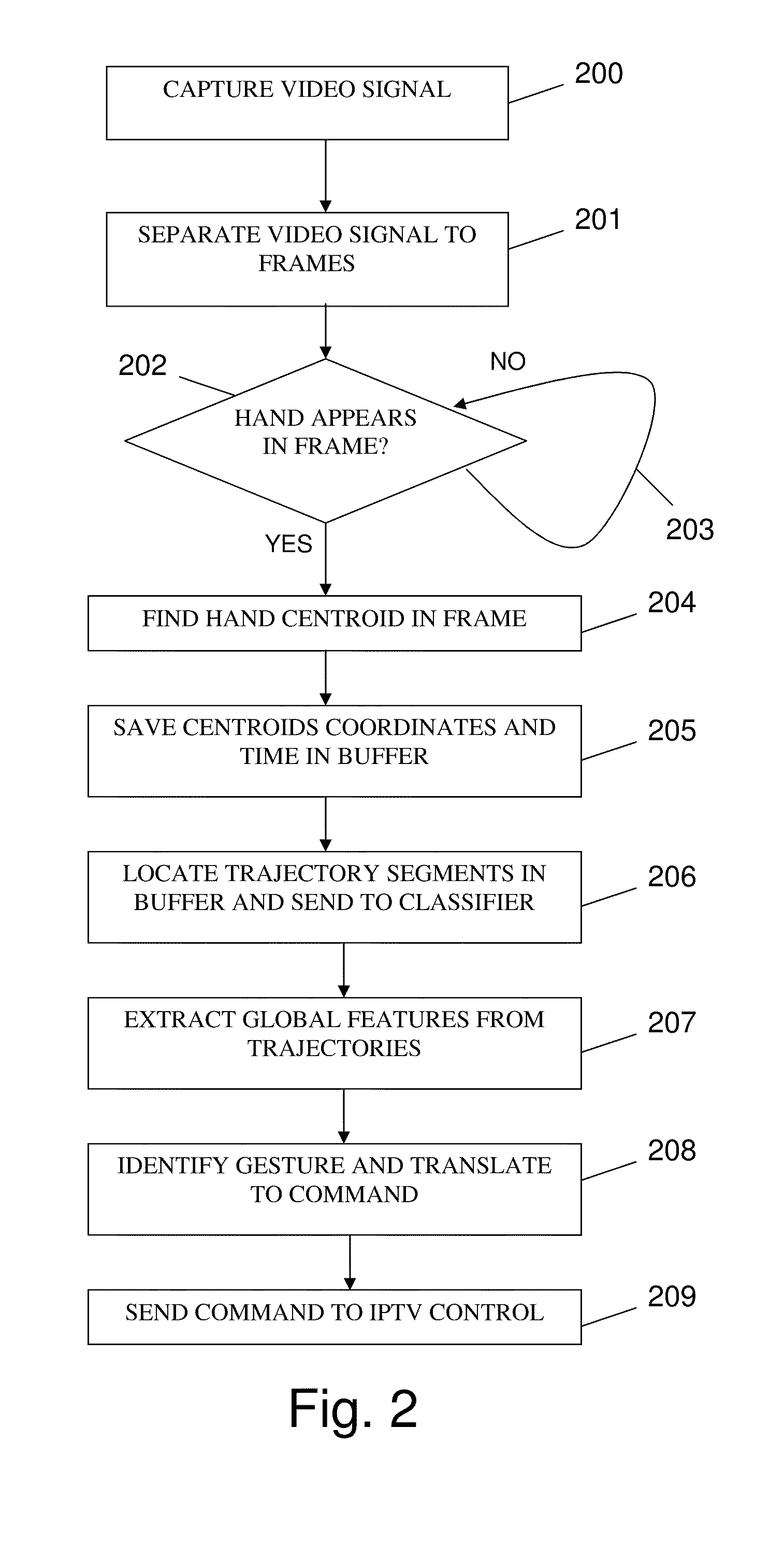

A gesture recognition system for remotely controlling a TV is disclosed. The system comprises: (a) a webcam for capturing a video sequence of the user, composed of video frames; (b) a hand tracker that receives the video sequence and, when a hand is found within a frame, calculates the hand centroid; (c) a trajectory buffer that receives the hand centroids determined from a plurality of frames; (d) a trajectory segmentation module that continuously inspects the buffer for relevant trajectories corresponding to a hand gesture pattern and, whenever a relevant trajectory is detected, extracts the corresponding trajectory segment and conveys it to a gesture classifier; and (e) the gesture classifier, which verifies whether the segment corresponds to a specific gesture command and, if so, transfers the respective command to the TV.

Owner:DEUTSCHE TELEKOM AG
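
Components (c) through (e) can be sketched as a fixed-size centroid buffer whose oldest-to-newest displacement is checked after every frame. This is a minimal sketch, not the patented segmentation: it assumes a segment is "relevant" when the hand has moved at least `min_displacement` pixels across a full buffer, and it classifies the segment as a swipe by its dominant direction (image y grows downward). The class, the thresholds, and the TV command names are hypothetical.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Point = Tuple[float, float]


def classify_swipe(dx: float, dy: float) -> str:
    """Map the dominant movement direction to a hypothetical TV command."""
    if abs(dx) >= abs(dy):
        return "VOLUME_UP" if dx > 0 else "VOLUME_DOWN"
    return "CHANNEL_DOWN" if dy > 0 else "CHANNEL_UP"


class TrajectoryBuffer:
    def __init__(self, size: int = 10, min_displacement: float = 50.0):
        self.points: Deque[Point] = deque(maxlen=size)
        self.min_displacement = min_displacement

    def push(self, centroid: Point) -> Optional[str]:
        """Add a hand centroid; return a command when a relevant segment is found."""
        self.points.append(centroid)
        if len(self.points) < self.points.maxlen:
            return None  # not enough history yet
        (x0, y0), (x1, y1) = self.points[0], self.points[-1]
        dx, dy = x1 - x0, y1 - y0
        if max(abs(dx), abs(dy)) < self.min_displacement:
            return None  # hand roughly stationary: no gesture segment
        self.points.clear()  # consume the segment so it fires only once
        return classify_swipe(dx, dy)
```

Clearing the buffer after a detection keeps one swipe from triggering the same command on several consecutive frames.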

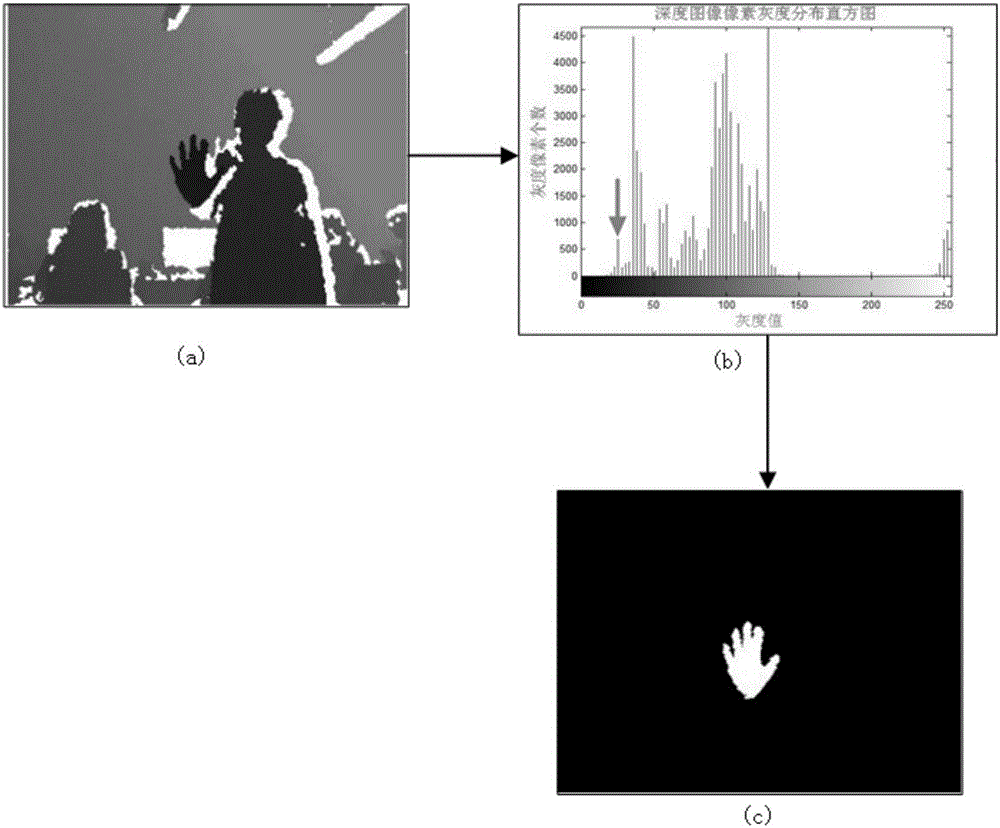

Gesture recognition method based on depth sensor

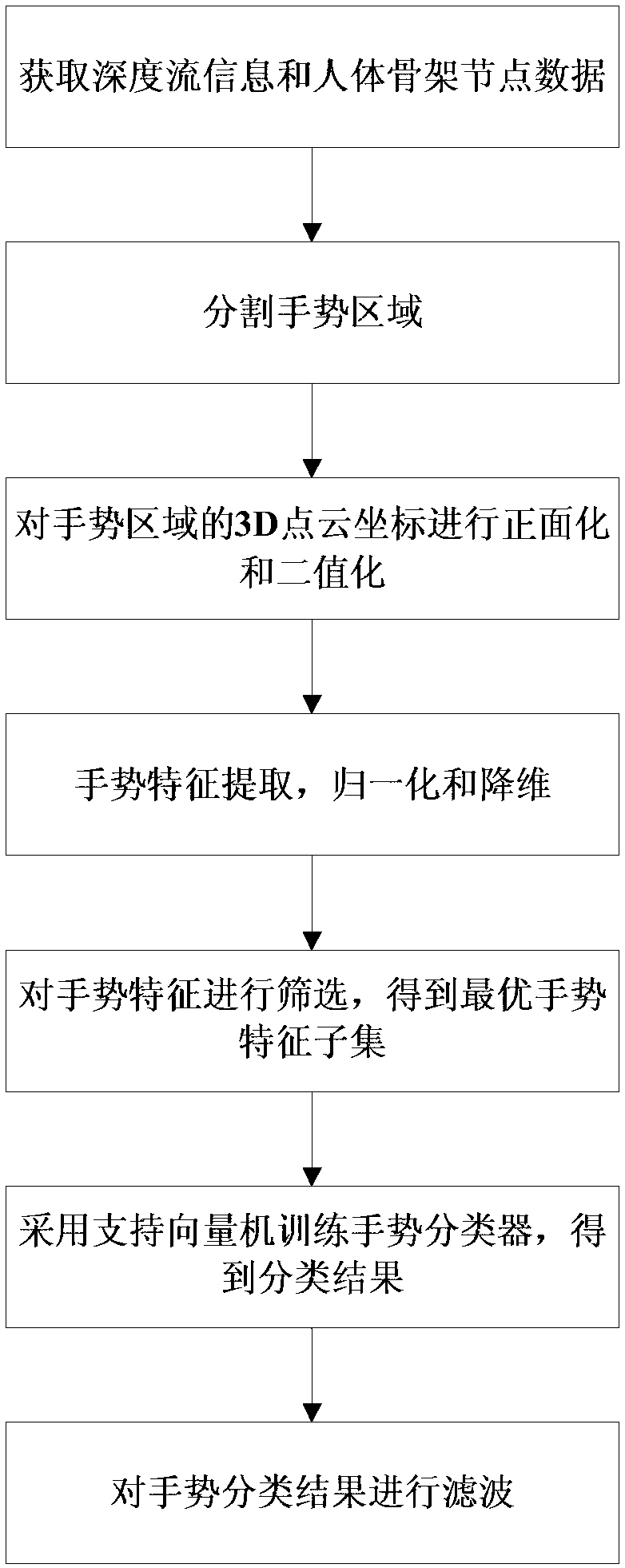

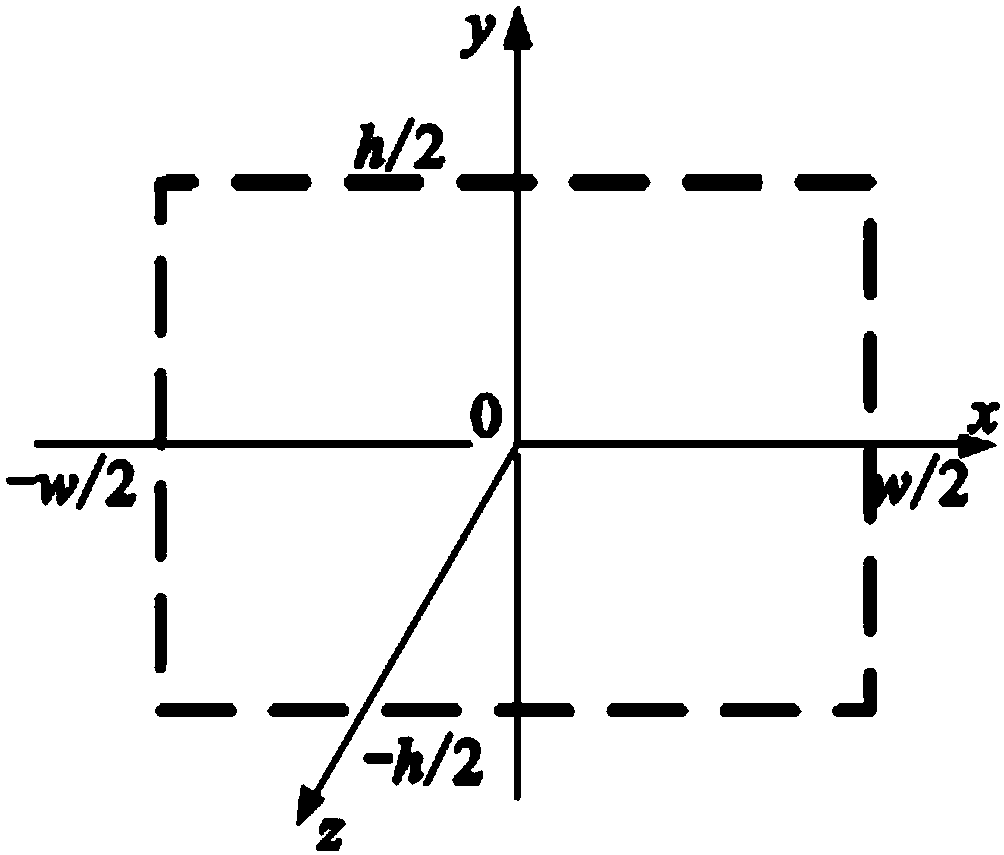

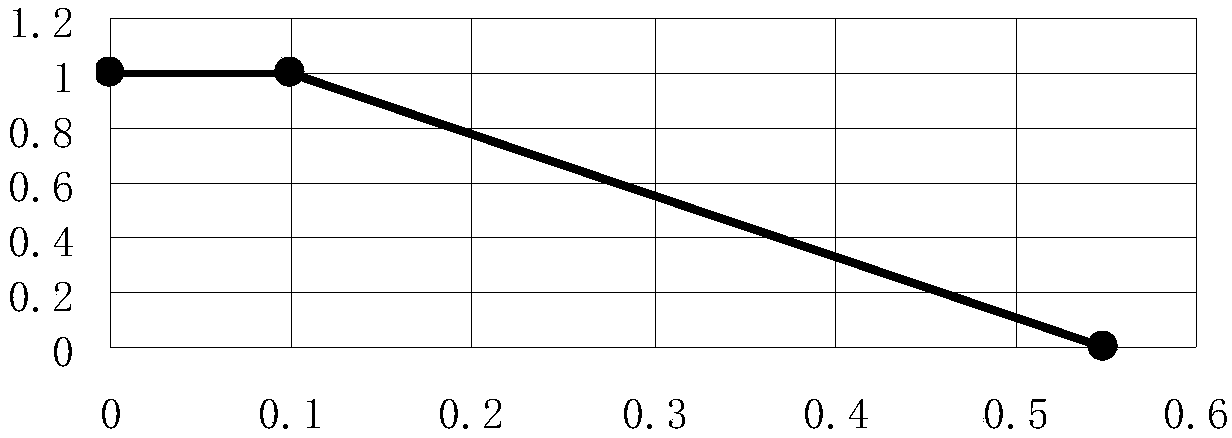

Status: Active | Publication: CN107742102A | Tags: Improve algorithm efficiency, Improve recognition rate, Character and pattern recognition, Support vector machine, Point cloud

The invention discloses a gesture recognition method based on a depth sensor. The method comprises the following steps: sequentially obtaining depth stream information and human skeleton node data; cropping the gesture region; performing front- and side-view processing and binarization on the 3D point-cloud coordinates of the gesture region; extracting, normalizing, and reducing the dimensionality of gesture features; screening the gesture features to obtain an optimal gesture feature subset; training a support vector machine (SVM) gesture classifier and obtaining a classification result; and filtering the gesture classification result. The method overcomes shortcomings of the prior art and improves the precision, stability, and efficiency of gesture recognition.

Owner:BEIJING HUAJIE IMI TECH CO LTD

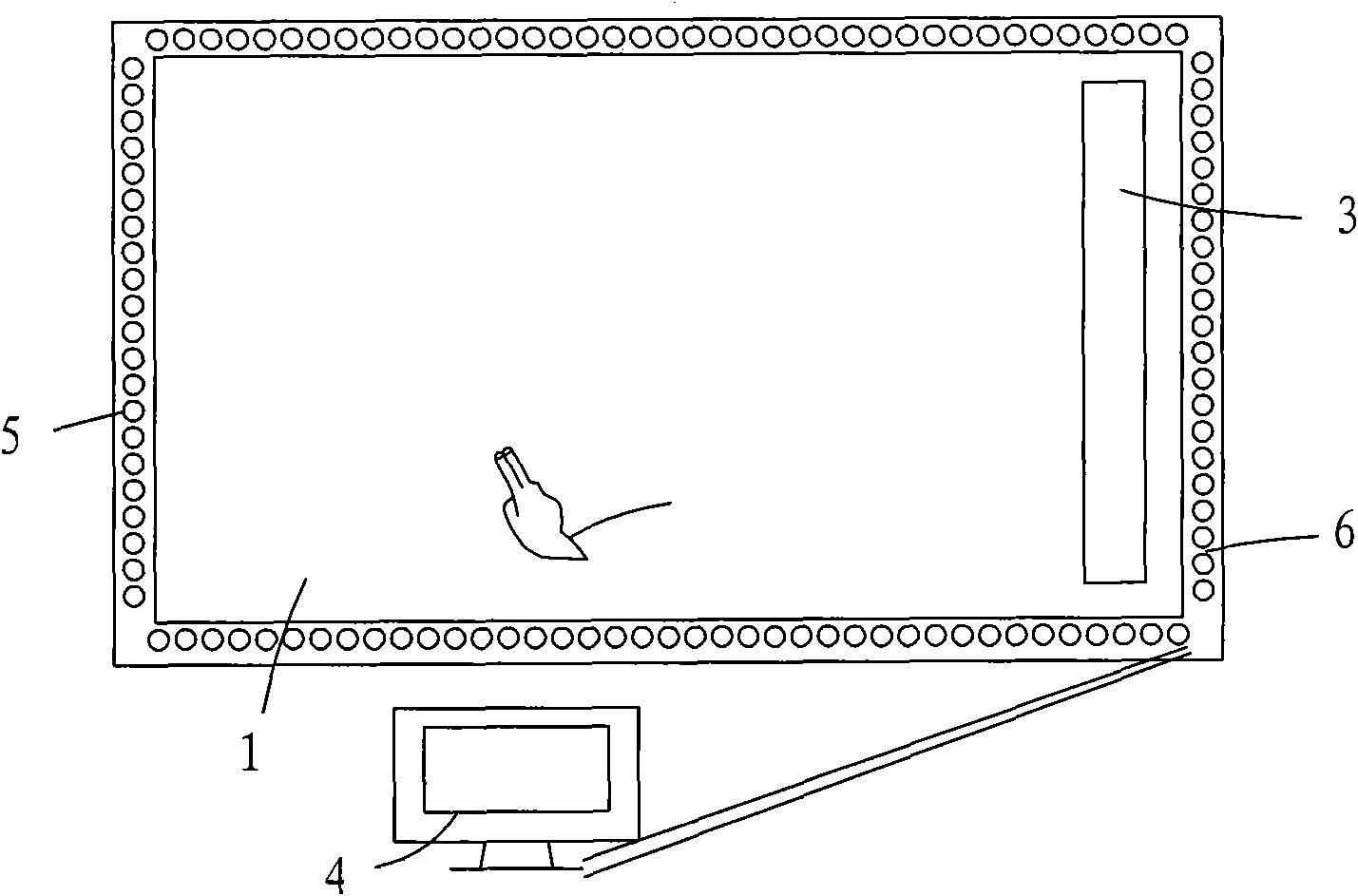

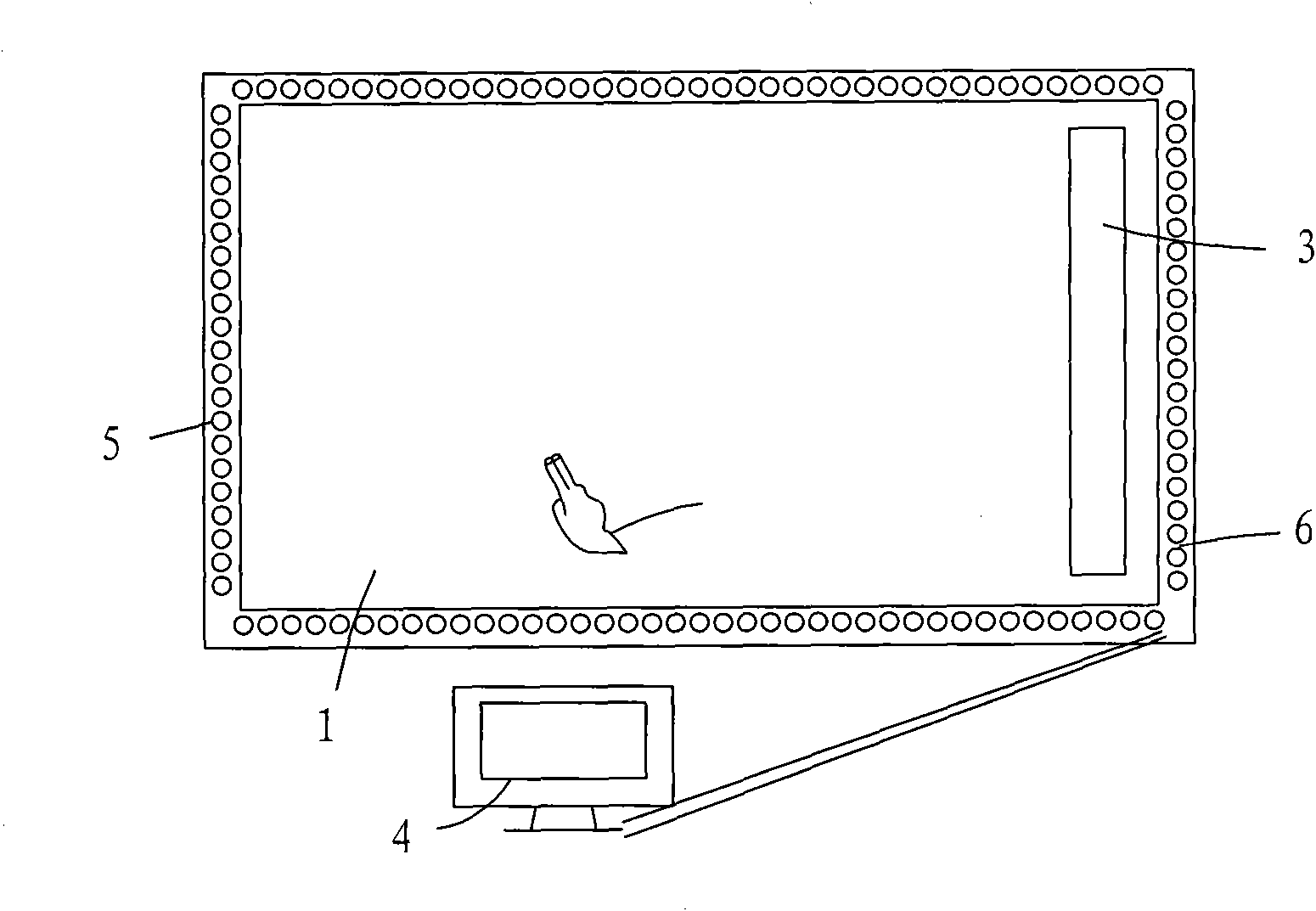

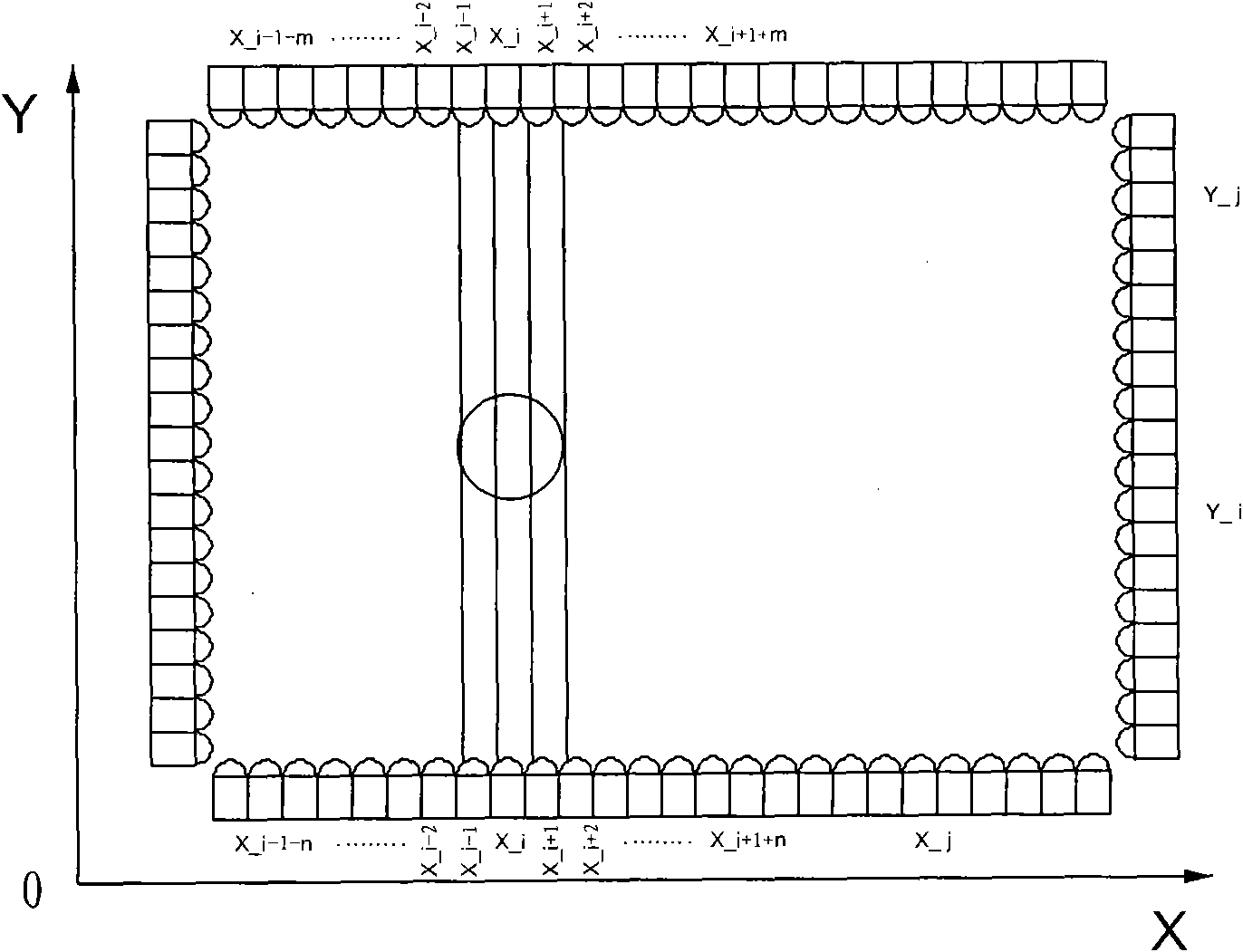

Method for recognizing gesture on infrared induction type electronic whiteboard

Status: Inactive | Publication: CN101813995A | Tags: High recognition rate and accuracy, Input/output processes for data processing, Whiteboard, Pattern recognition

The invention provides a method for recognizing gestures on an infrared-sensing electronic whiteboard. The method comprises the following steps: a first-level classification of the gesture according to the number of touching objects; a second-level classification according to the area of the touching objects, which further distinguishes the specific type of gesture; and identification of the exact gesture from the determined physical coordinates, after which the whiteboard software performs the function of the gesture on the infrared whiteboard. This recognition scheme can recognize most gestures currently used on infrared whiteboards, and it notably includes recognition of a right-mouse-button gesture. Its advantages are a high recognition rate, accurate recognition, and few misrecognitions.

Owner:RETURNSTAR INTERACTIVE TECH GRP +1
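
The three-level decision described above (count, then area, then coordinates) can be sketched as a small rule cascade. The gesture labels, the area and spread thresholds, and the choice of pinch-versus-stationary as the coordinate test are all hypothetical; the abstract does not specify them.

```python
import math
from typing import List, Optional, Tuple

Touch = Tuple[float, float, float]  # (x, y, contact area), hypothetical units


def classify_touch(touches: List[Touch], prev: Optional[List[Touch]] = None,
                   large_area: float = 400.0, spread_tol: float = 5.0) -> str:
    """Rule cascade: number of contacts, then contact area, then coordinates."""
    n = len(touches)  # level 1: number of touching objects
    if n == 0:
        return "none"
    if n == 1:
        # Level 2: contact area separates a pen or finger from a palm.
        return "erase" if touches[0][2] >= large_area else "draw"
    if n == 2:
        # Level 3: coordinates separate a pinch from a stationary two-finger tap.
        if prev is not None and len(prev) == 2:
            d_now = math.dist(touches[0][:2], touches[1][:2])
            d_prev = math.dist(prev[0][:2], prev[1][:2])
            if abs(d_now - d_prev) > spread_tol:
                return "zoom"
        return "right_click"
    return "pan"
```

Checking the cheap features first (count, then area) means the coordinate comparison only runs for the ambiguous two-contact case.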

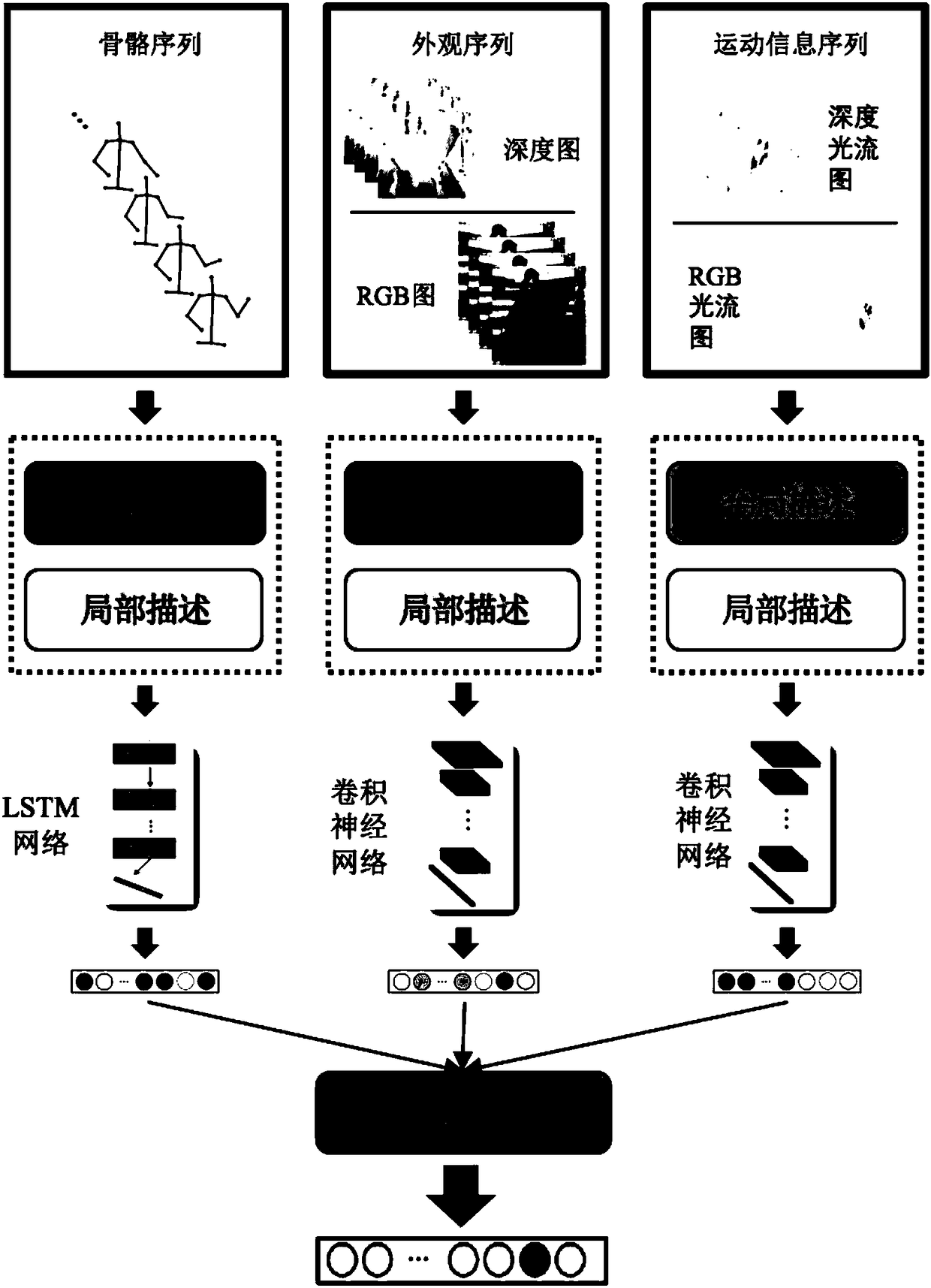

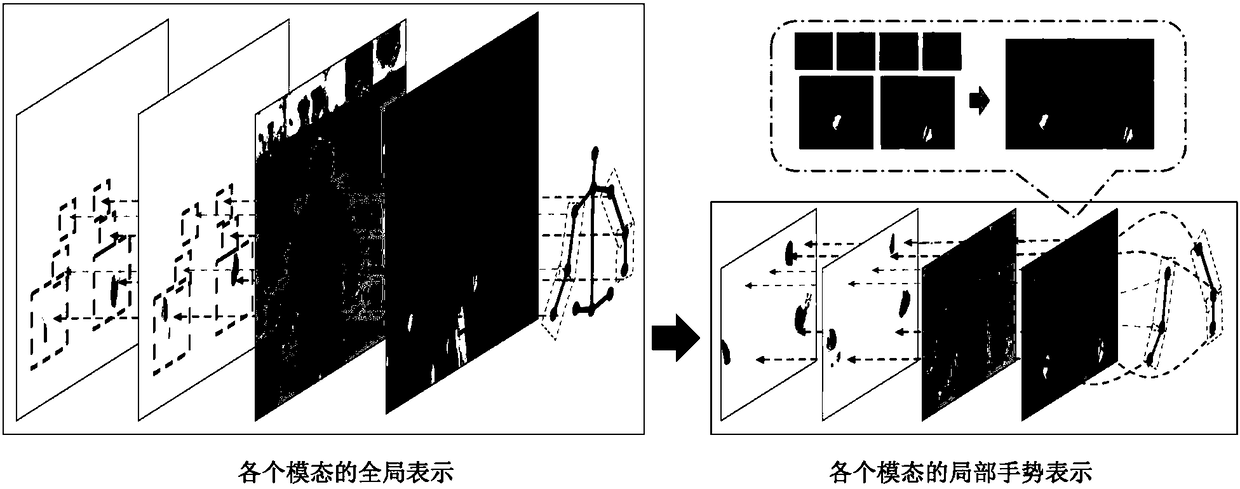

Global-local RGB-D multimode-based gesture recognition method

Status: Active | Publication: CN108388882A | Tags: Gesture recognition performance improvement, Wide application background, Character and pattern recognition, RGB image, User input

The invention discloses a global-local RGB-D multimodal gesture recognition method. An input gesture video is represented mainly through data modalities comprising skeleton positions, RGB images, depth images, and optical-flow images. After this multimodal representation is obtained, convolutional neural networks and recurrent neural networks extract features from the gesture data in each modality, and the features of each modality are used for gesture classification. Finally, the per-class gesture scores obtained in the different modalities are fused to produce the final multimodal gesture classification result. The method can run on a client or in the cloud to recognize a gesture video input by the user, so that computer or mobile phone software or hardware responds accordingly to the gesture input.

Owner:SUN YAT SEN UNIV

A monocular static gesture recognition method based on multi-feature fusion

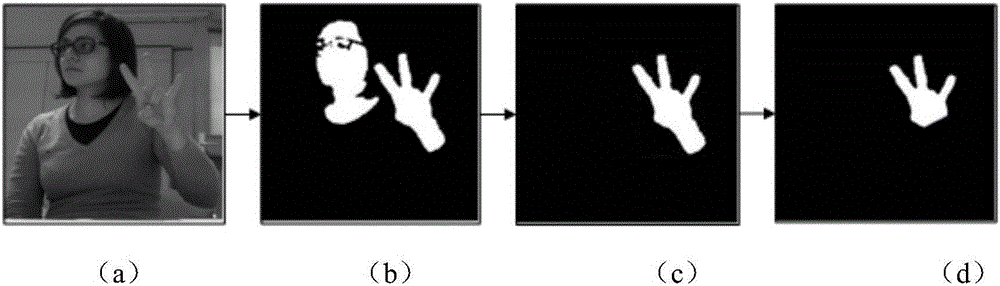

Status: Inactive | Publication: CN109190496A | Tags: Accurate separation, Improve robustness, Image analysis, Character and pattern recognition, RGB image, Skin color

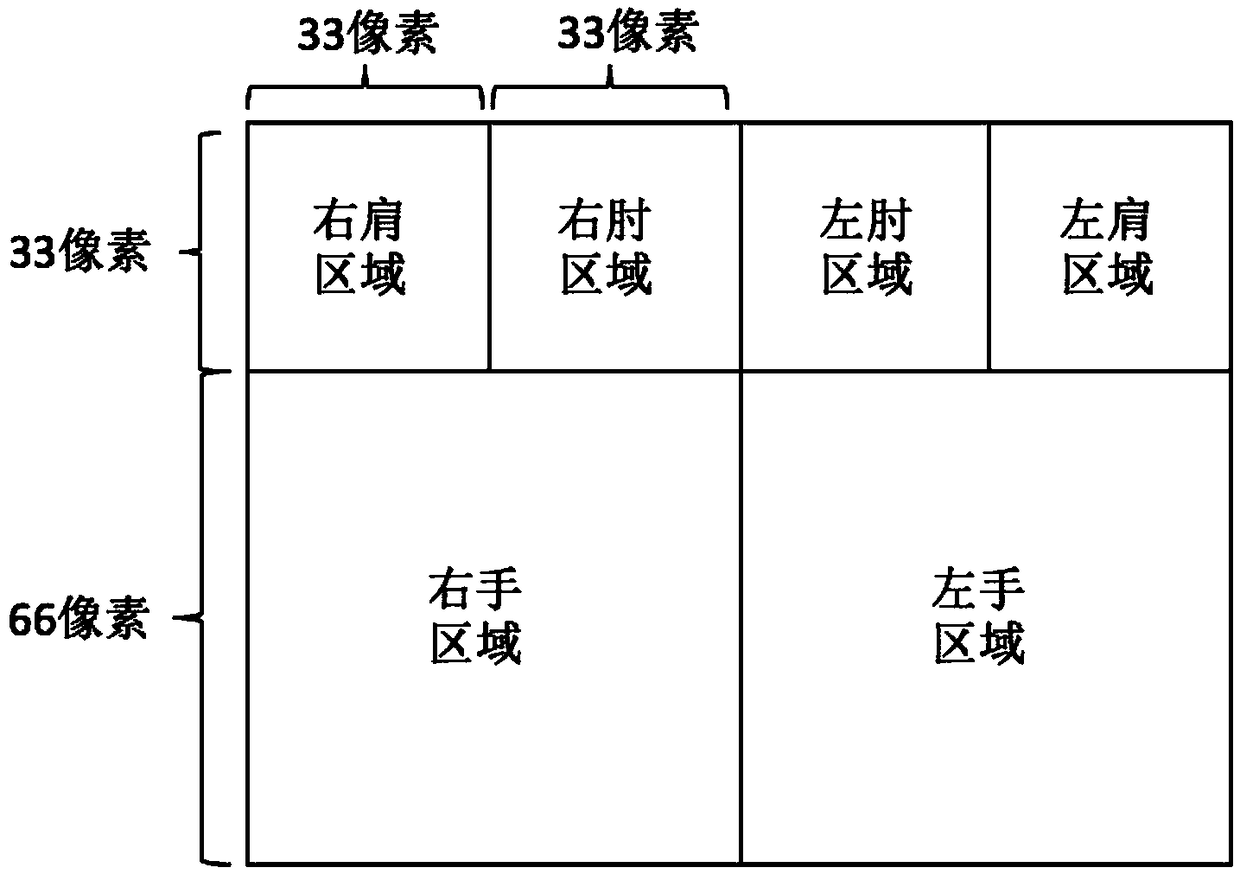

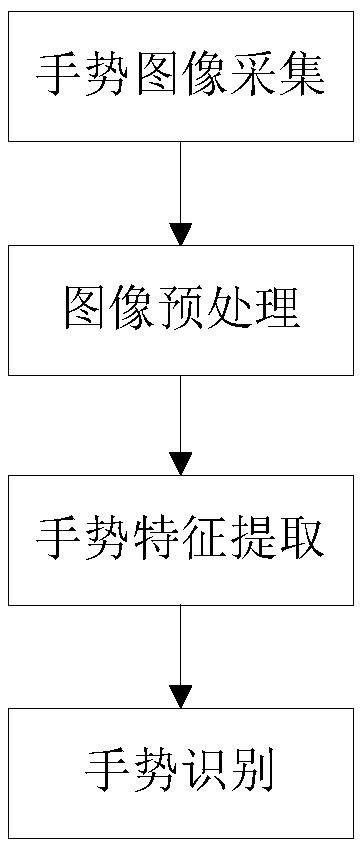

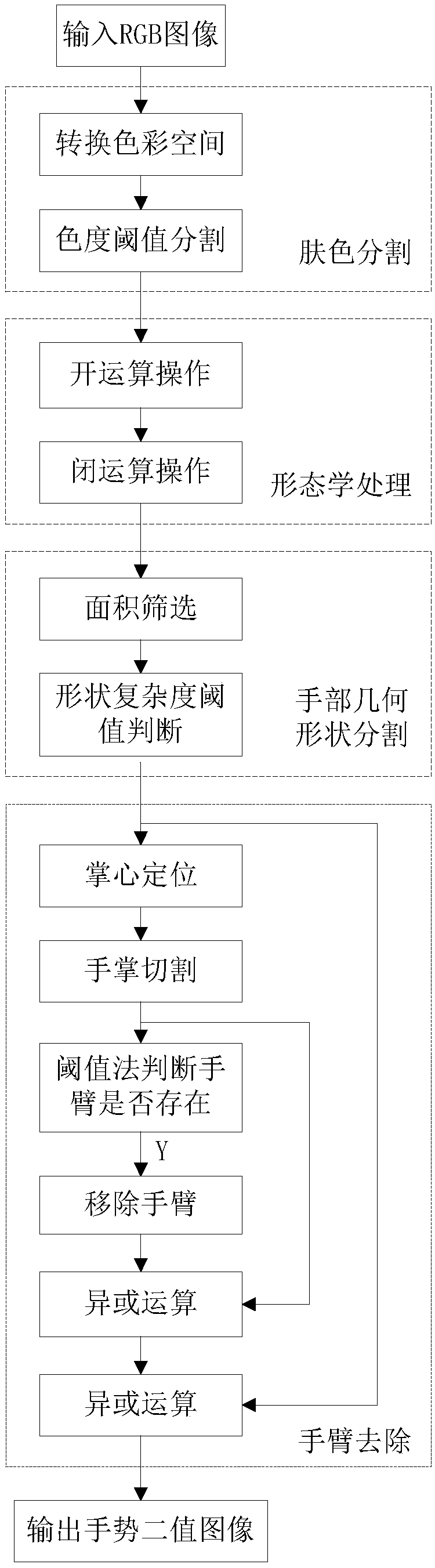

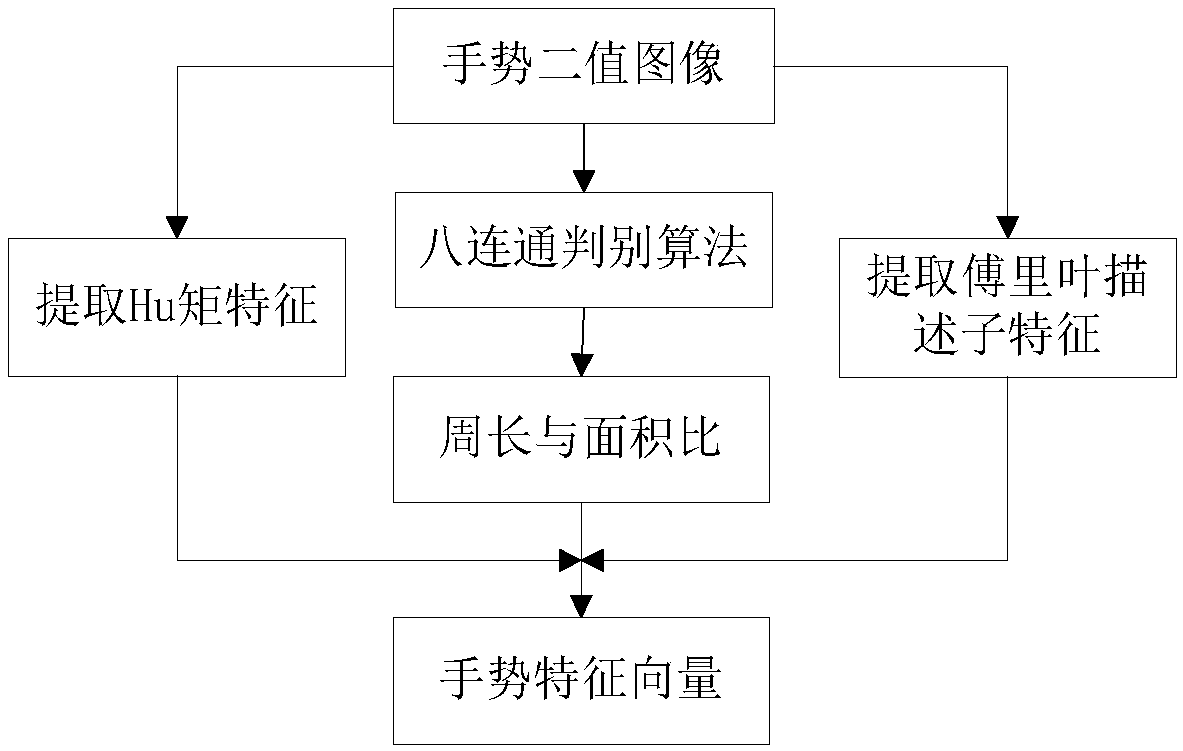

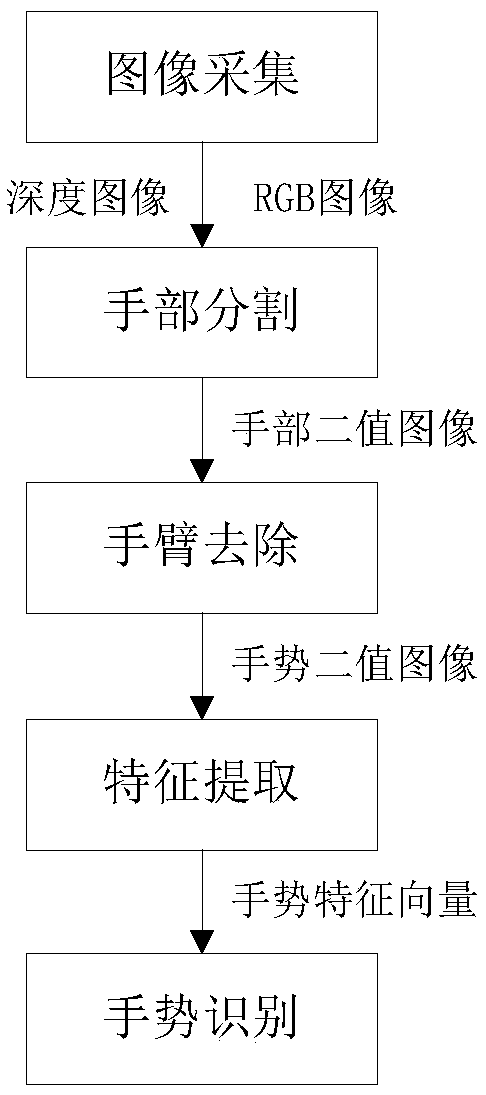

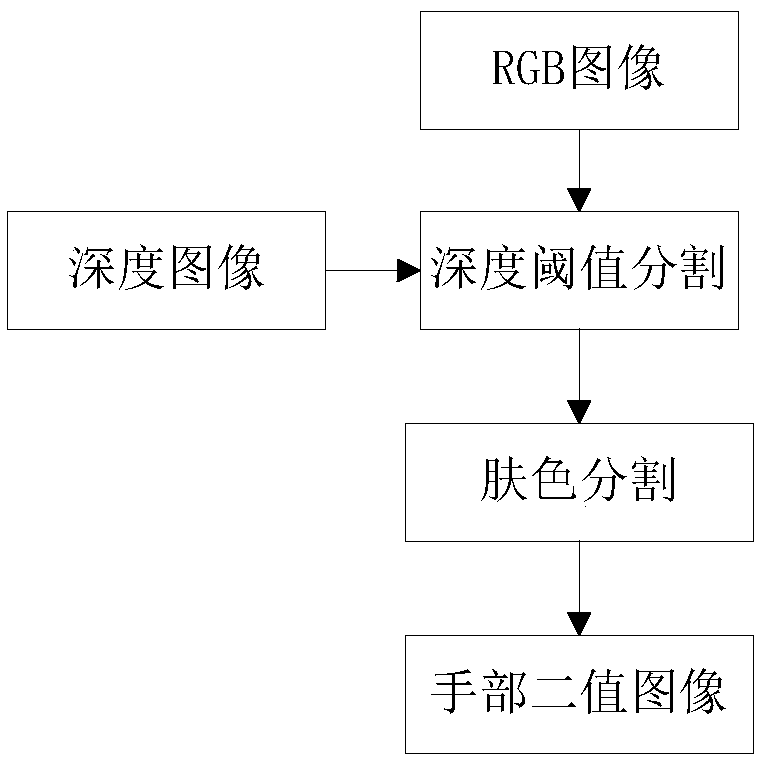

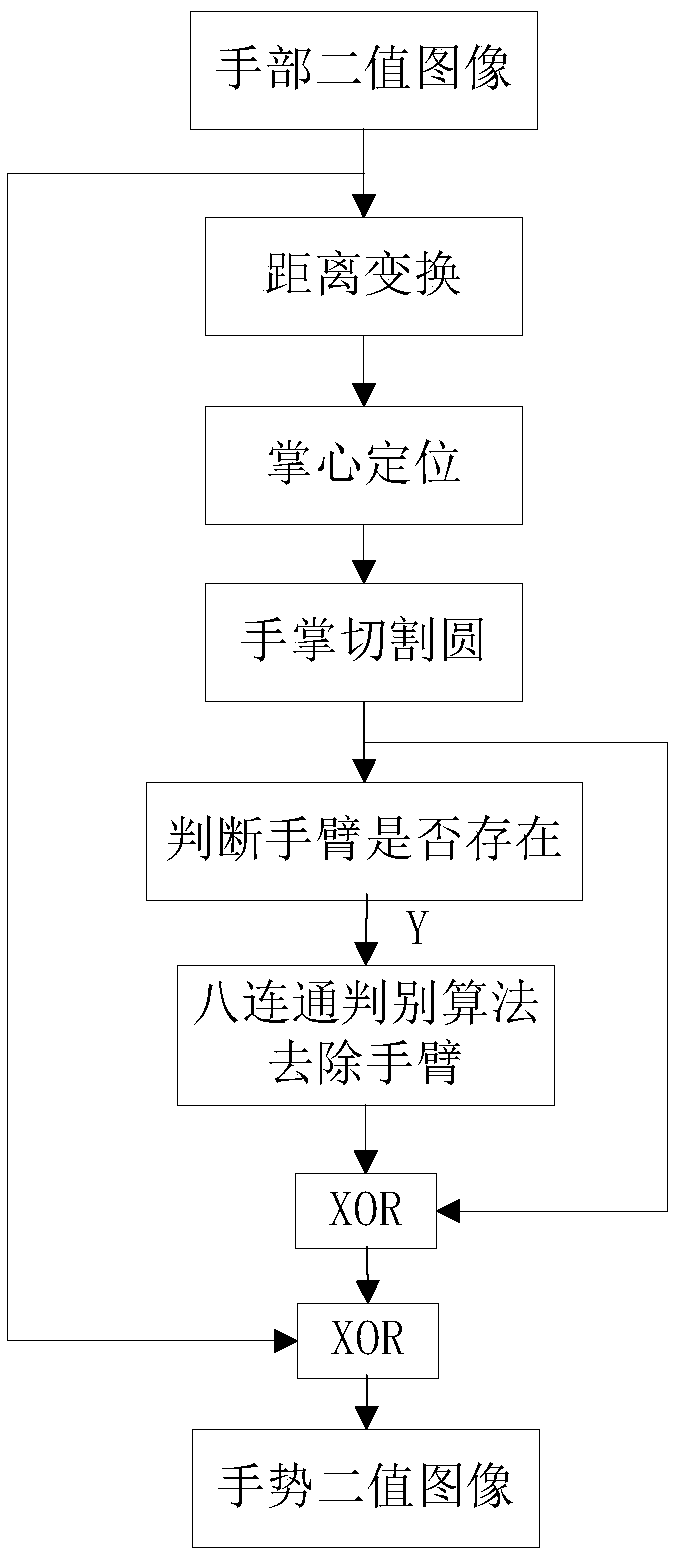

The invention discloses a monocular static gesture recognition method based on multi-feature fusion. The method comprises the following steps. Gesture image collection: an RGB image containing a gesture is collected with a monocular camera. Image preprocessing: skin color information is used for skin color segmentation; morphological processing combined with the geometric characteristics of the hand separates the hand from a complex background; and a distance transform locates the palm center and removes the arm region, yielding a binary gesture image. Gesture feature extraction: the perimeter-to-area ratio, Hu moments, and Fourier descriptors of the gesture are calculated to form a gesture feature vector. Gesture recognition: the gesture feature vectors are used to train a BP (backpropagation) neural network that performs static gesture classification. The invention combines skin color information with the geometric characteristics of the hand and, through morphological processing and distance transforms, achieves accurate gesture segmentation under monocular vision. By training the BP neural network on a combination of gesture features, it obtains a robust and accurate gesture classifier.

Owner:SOUTH CHINA UNIV OF TECH

Gesture identification method based on recursive model

Status: Active | Publication: CN106845384A | Tags: Overcome the unequal length problem, Improve robustness, Character and pattern recognition, Recursive model, Sequence point

The invention discloses a gesture identification method based on a recursive model. The method comprises the basic steps of: 1, carrying out preprocessing on static and dynamic gesture images; 2, extracting static and dynamic gesture space sequences; 3, according to the gesture space sequences, constructing a gesture recursive model; and 4, by the gesture recursive model, carrying out gesture classification. According to the gesture identification method disclosed by the invention, in a form of converting the gesture space sequences into the recursive model, problems caused by different lengths of acquired gesture space sequences and incomparability of data values of sequence points are effectively solved, and robustness of a gesture identification algorithm is improved.

Owner:NORTHWEST UNIV

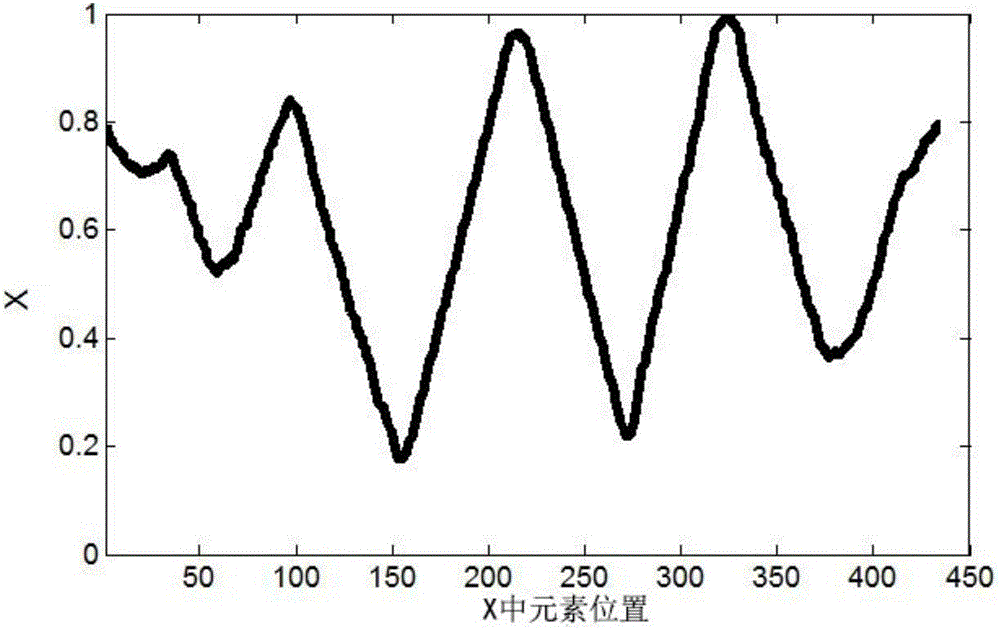

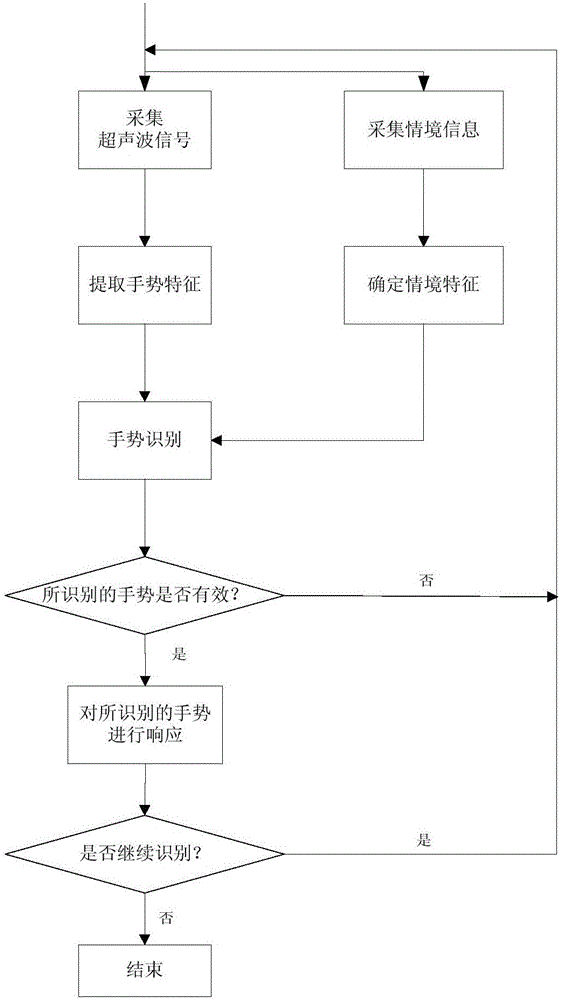

Ultrasonic gesture recognition method and system

Status: Active | Publication: CN106203380A | Tags: Improve interactive experience, Reduce invalid or even erroneous responses, Character and pattern recognition, Human–robot interaction, Human–computer interaction

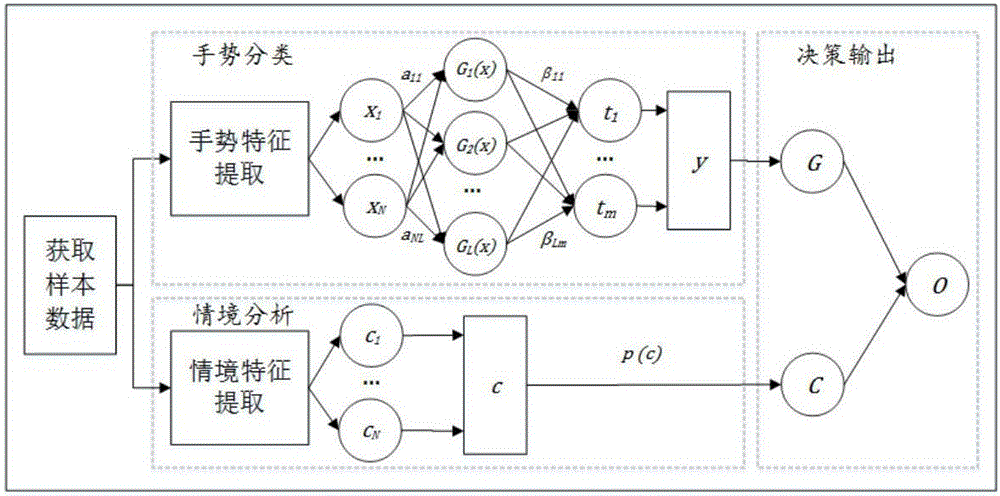

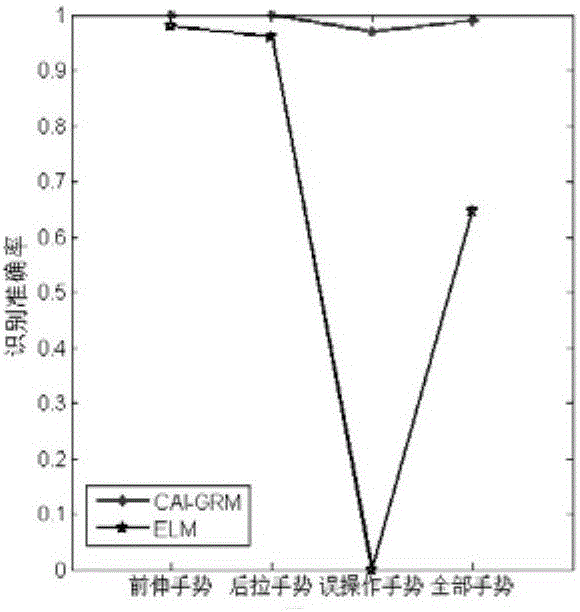

The invention provides an ultrasonic gesture recognition method. Ultrasonic signals and situation (context) information about the current situation are collected simultaneously; gesture features are obtained from the collected ultrasonic signals, and a pre-trained gesture classification model gives the probability that the gesture features belong to each preset gesture. The probability that each gesture occurs in the current situation is determined from the collected situation information. From these two probabilities, the probability that the gesture features belong to each preset gesture in the current situation is calculated, and the gesture with the largest probability is recognized as the gesture corresponding to the collected ultrasonic signals. By fusing the gesture signals with the situation information, the method filters out users' accidental gestures, corrects misrecognized gestures, and reduces invalid or even wrong responses, thereby improving the accuracy and robustness of gesture recognition and the human-machine interaction experience.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

A static gesture recognition method combining depth information and skin color information

Status: Inactive | Publication: CN109214297A | Tags: Accurate separation, Improve recognition accuracy, Input/output for user-computer interaction, Image enhancement, Feature vector, Support vector machine

The invention discloses a static gesture recognition method that combines depth information and skin color information. The method comprises the following steps: acquiring an RGB image and a depth image with a Kinect; obtaining a binary image of the hand using a depth threshold and human skin color information; using a distance transform combined with a palm-cutting circle and a threshold method to judge whether the hand image contains an arm region; removing the arm region by an exclusive-OR operation between images to obtain a binary gesture image; calculating Fourier descriptors and the number of fingertips to form the feature vector of the gesture; and using a support vector machine for gesture classification to achieve gesture recognition. By combining depth and skin color information, the invention segments the hand and overcomes the influence of skin-colored regions in complex backgrounds. Removing the arm region prevents the arm from degrading the classification accuracy of the system. The number of fingertips and the Fourier descriptor features are computed and input to the support vector machine for gesture recognition.

Owner:SOUTH CHINA UNIV OF TECH

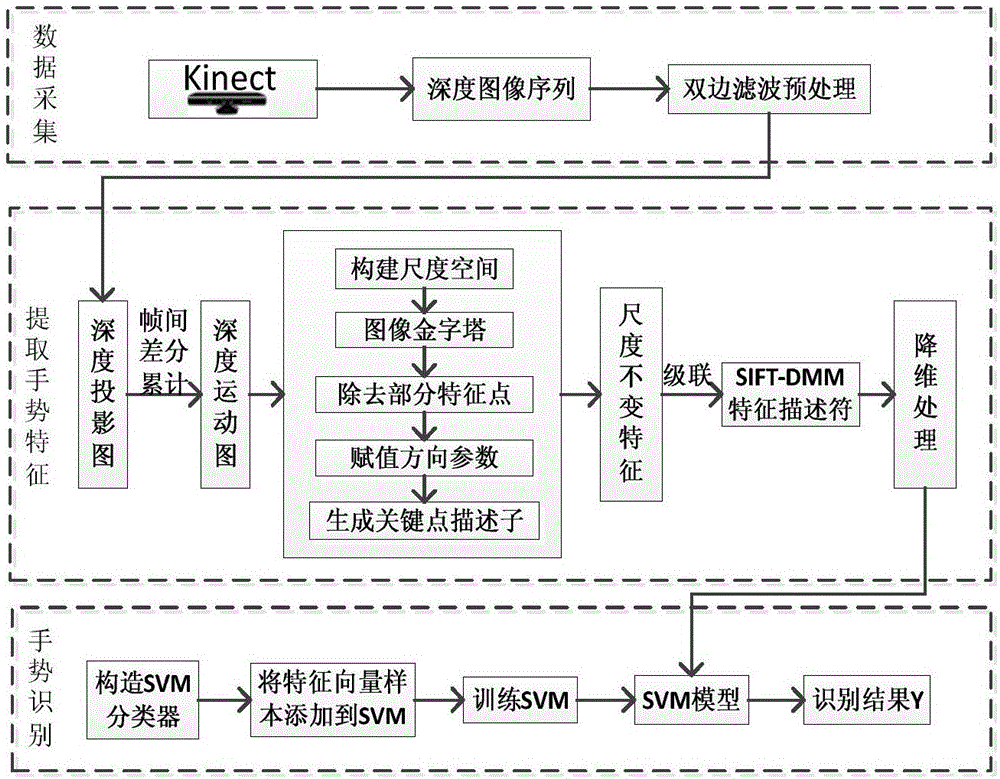

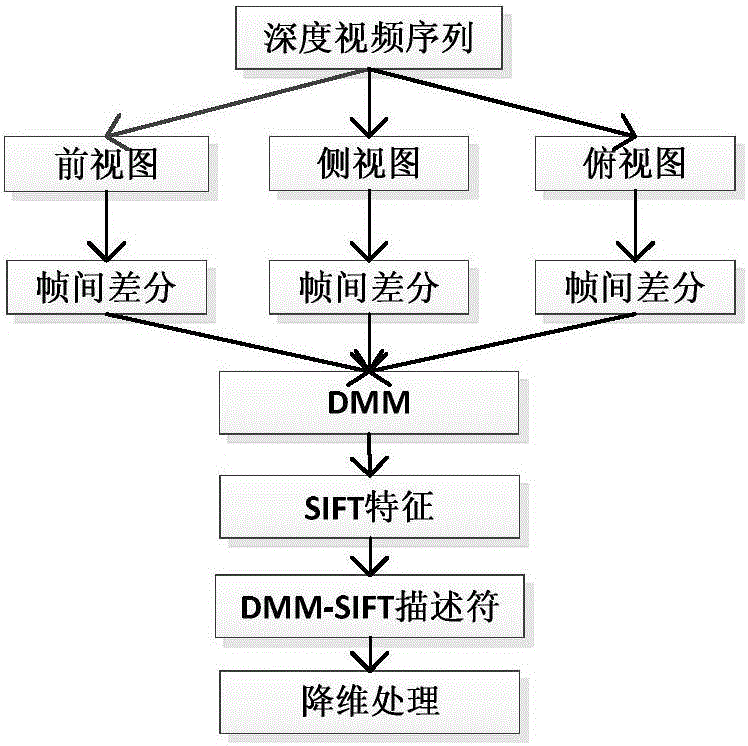

Depth motion map-scale invariant feature transform-based gesture recognition method

Status: Inactive | Publication: CN106815578A | Tags: Efficient identification, Improve robustness, Character and pattern recognition, Reduction treatment, Somatosensory system

The invention relates to a depth motion map-scale invariant feature transform-based gesture recognition method. The method mainly comprises the following three parts: in the motion data acquisition aspect, an original depth image provided by the Kinect somatosensory technology is adopted as the input variable of a gesture recognition system. In the human body gesture feature construction aspect, a depth motion map-scale invariant feature transform-based extraction method is adopted, and data obtained after feature extraction are subjected to dimension-reduction treatment through the supervised locally linear embedding (SLLE) method. In this way, a gesture motion characteristic quantity is represented. In the gesture classifier recognition aspect, a support vector machine based on a discriminant is adopted to realize the sample training and modeling process of the characteristic quantities of a depth image sequence. Meanwhile, an unknown gesture is classified and predicted. The method of the invention can be adapted to different lighting environments, and is stronger in robustness. The method can also efficiently recognize gesture sequences in real time. Therefore, the method can be applied to the real-time gesture recognition field of man-machine interaction.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

Gesture recognition-based mechanical arm pose control system

Status: Active | Publication: CN107943283A | Tags: Improve recognition accuracy, Control the robotic arm accurately, Input/output for user-computer interaction, Programme-controlled manipulator, Filtration, Control system

The invention discloses a gesture recognition-based mechanical arm pose control system. The system comprises a smart wristband module, a remote client module, a Bluetooth communication module, a data processing module, a simulation module, and a mechanical arm execution module, connected in sequence. The smart wristband connects wirelessly to a PC via Bluetooth and transmits the electromyographic signals it acquires to the remote client module; the remote client module forwards the signals to the data processing module, which filters and denoises them, classifies gestures, and, after denoising, solves the joint angles using forward and inverse kinematics. Two smart wristbands are used to obtain the joint angles of the operator's arms. The remote client also transfers the joint-angle signals and operation instruction signals to the smart wristband module. The gesture action signal is transmitted to a simulated mechanical arm in the simulation module, which passes the signal on to the working mechanical arm, and the working mechanical arm executes the command accordingly.

Owner:ZHEJIANG UNIV OF TECH

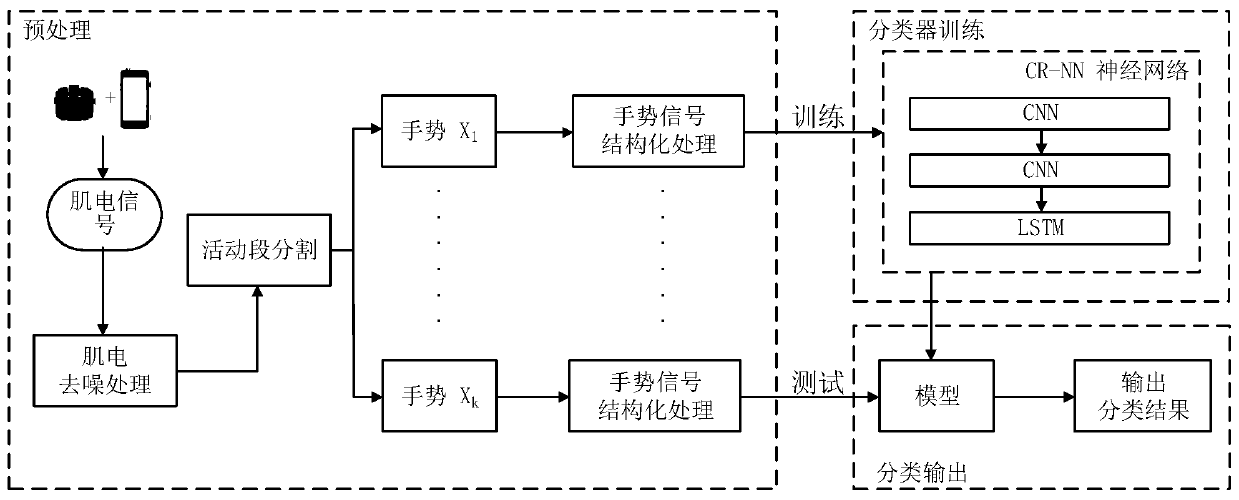

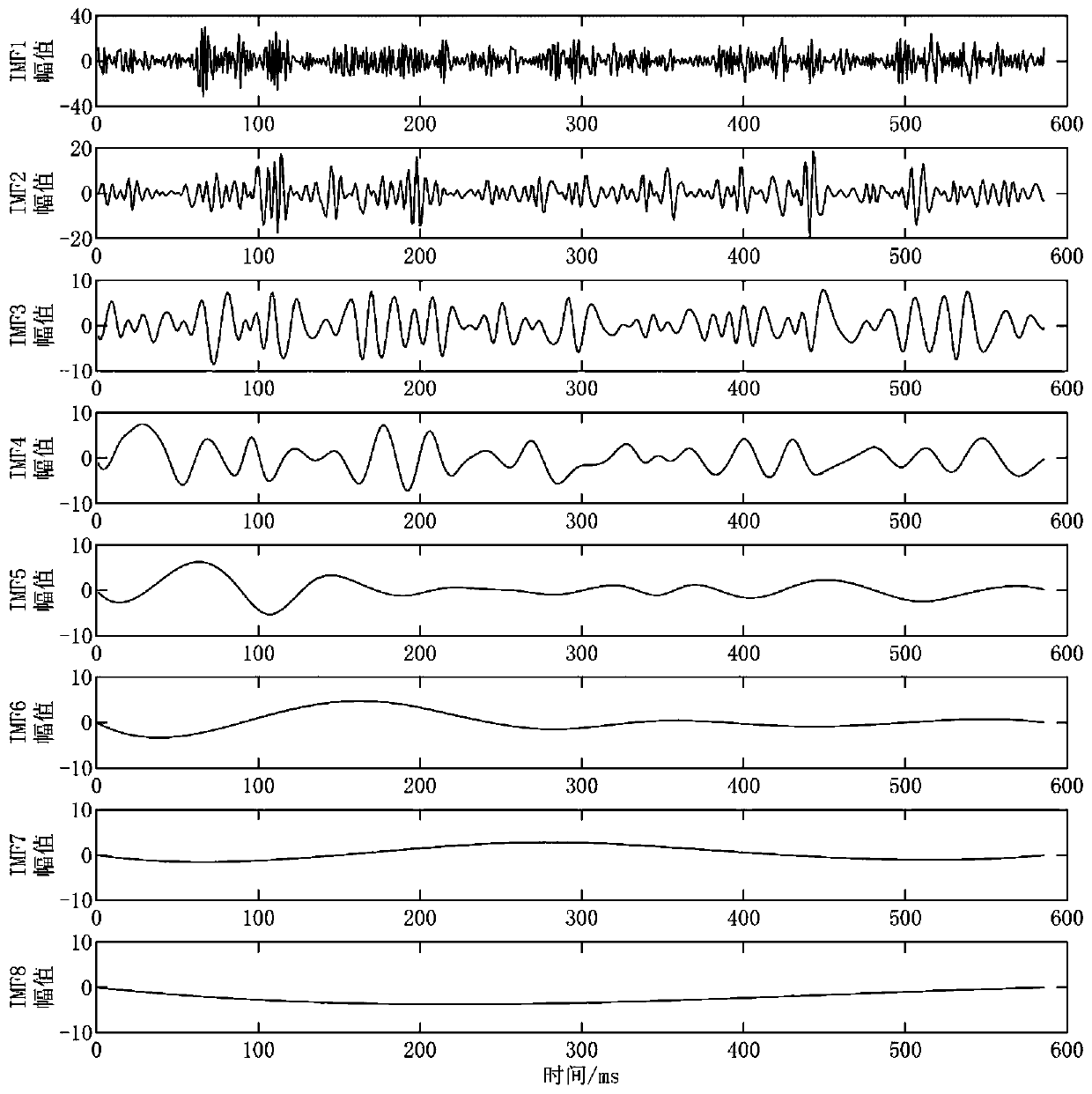

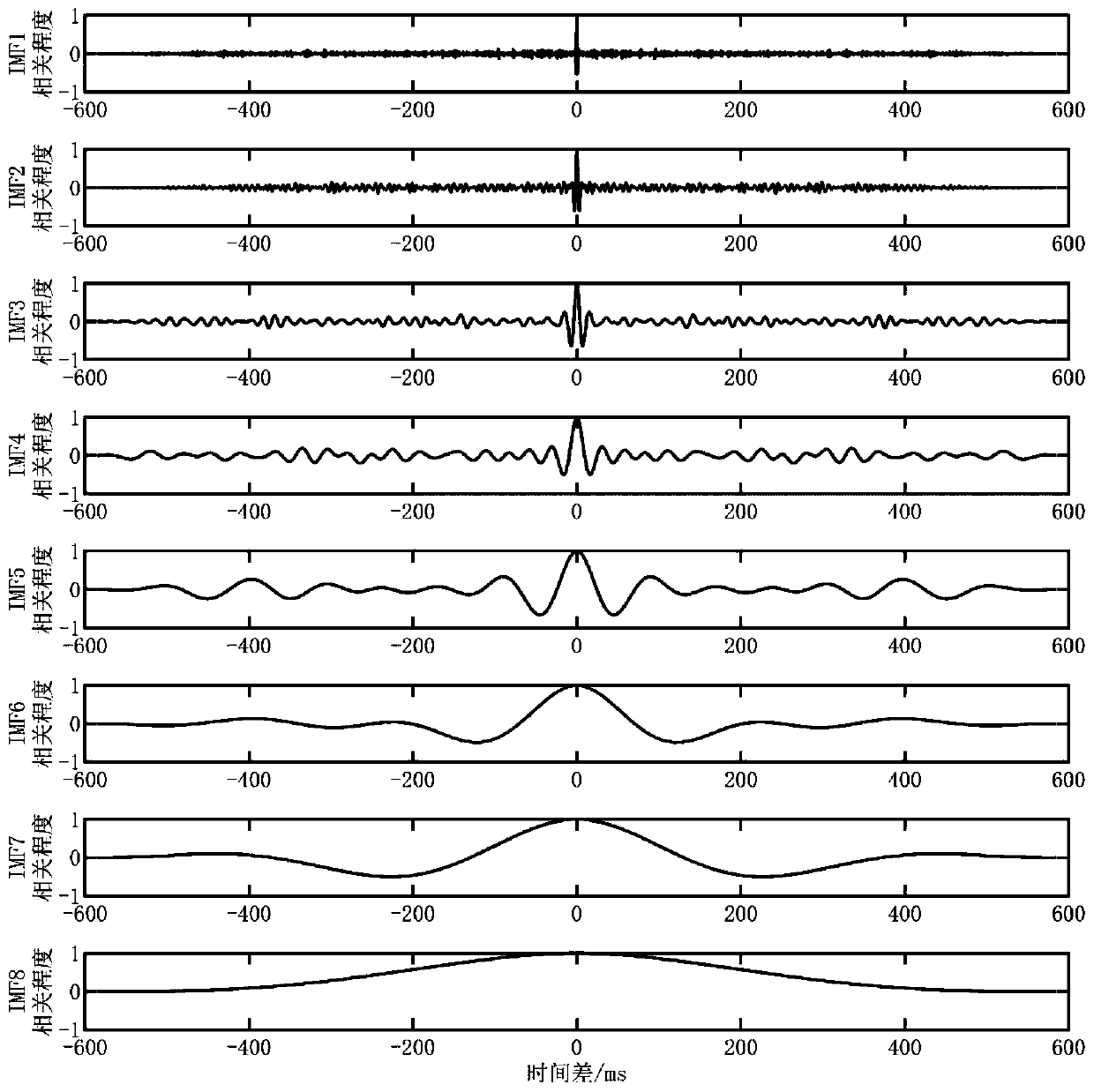

Gesture recognition method based on multichannel electromyographic signal correlation

Status: Inactive | Publication: CN110399846A | Tags: Easy to operate, Improve environmental adaptability, Input/output for user-computer interaction, Character and pattern recognition, Pattern recognition, Time correlation

The invention provides a gesture recognition method based on multichannel electromyographic (EMG) signal correlation. The method comprises the following steps: first, denoising the EMG signal acquired on each channel; detecting the active segment according to the signal amplitude; then structuring the active-segment signal into a time-correlated format by stacking several consecutive time windows; and finally, implementing CRNet, a hybrid CNN + RNN neural network, as the gesture recognition classifier, whose input is the structured signal and whose output is the gesture classification probability, and using the trained classifier to perform gesture recognition. The method needs only a few myoelectric sensors to collect the raw signals and no additional complex equipment, so it is convenient to operate and adapts well to different environments. It effectively removes noise from the signals, and the classifier reduces computing resources and improves recognition efficiency, making the method well suited to engineering applications.

Owner:BEIHANG UNIV +1
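
The structuring step (activity detection, then stacking consecutive windows) can be sketched on a plain list-of-samples signal, where each sample holds one value per channel. The window width, step, stacking depth, and the mean-absolute-value activity rule are assumptions chosen for illustration; the denoising stage and CRNet itself are not shown.

```python
from typing import List, Sequence

Sample = Sequence[float]  # one reading per EMG channel


def windows(signal: Sequence[Sample], width: int, step: int) -> List[List[Sample]]:
    """Slice a (time x channels) signal into overlapping windows."""
    return [list(signal[i:i + width]) for i in range(0, len(signal) - width + 1, step)]


def is_active(window: Sequence[Sample], threshold: float) -> bool:
    """Amplitude-based activity check: mean absolute value over all channels."""
    values = [abs(v) for sample in window for v in sample]
    return sum(values) / len(values) > threshold


def stack_windows(wins: List[List[Sample]], depth: int) -> List[List[List[Sample]]]:
    """Group `depth` consecutive windows into one classifier input for time context."""
    return [wins[i:i + depth] for i in range(len(wins) - depth + 1)]
```

Each stacked sample is a short sequence of windows, which matches what a CNN (within a window) followed by an RNN (across windows) would consume.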

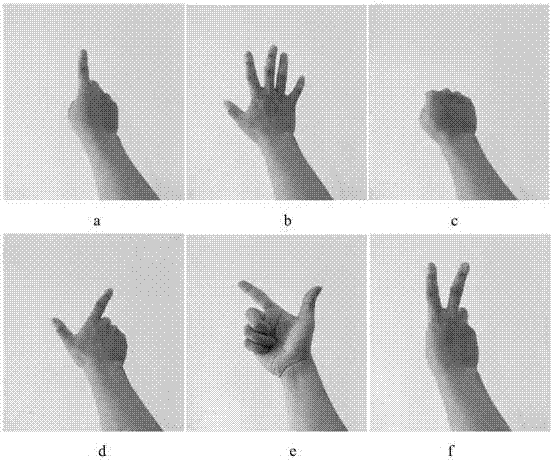

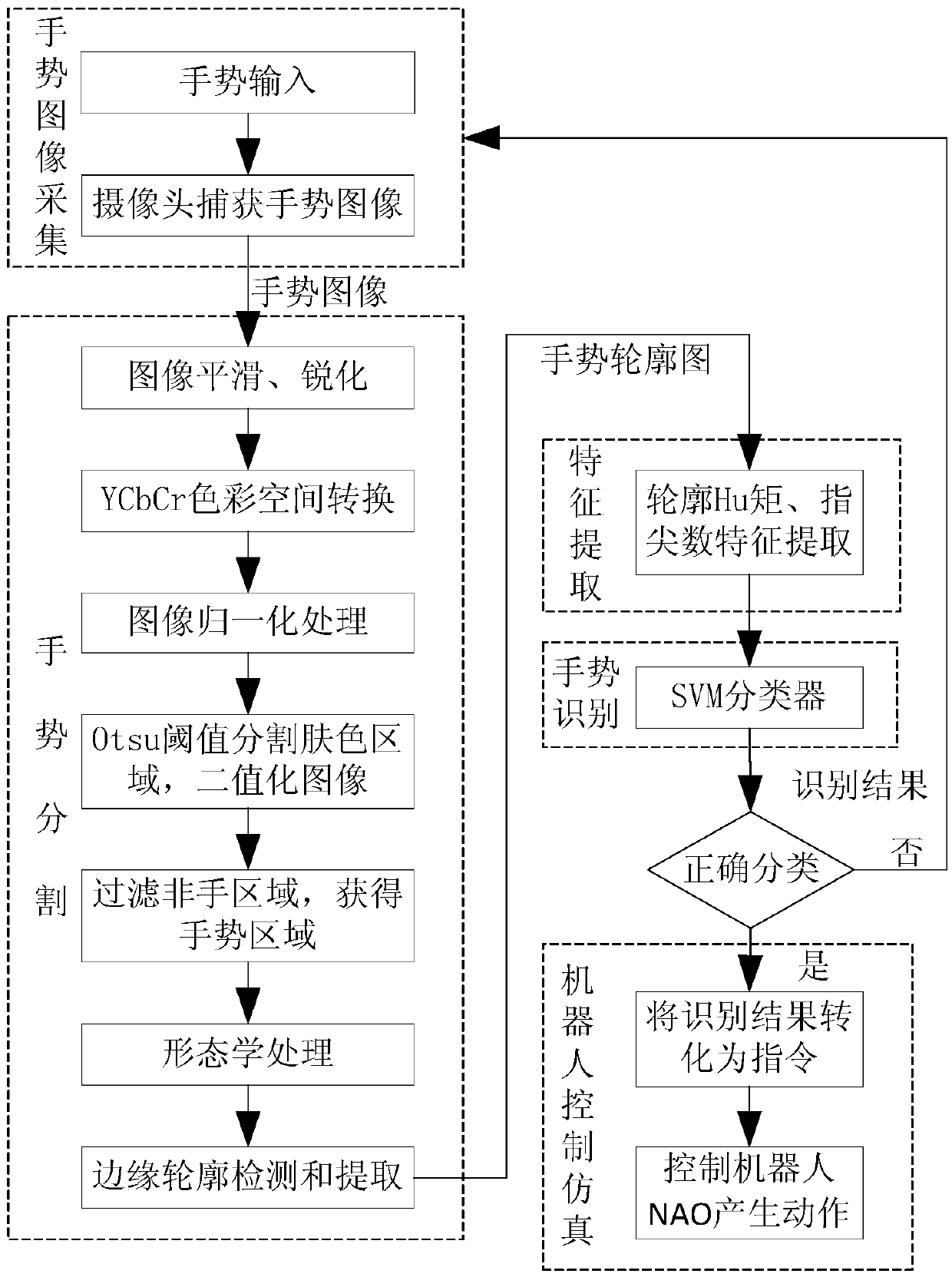

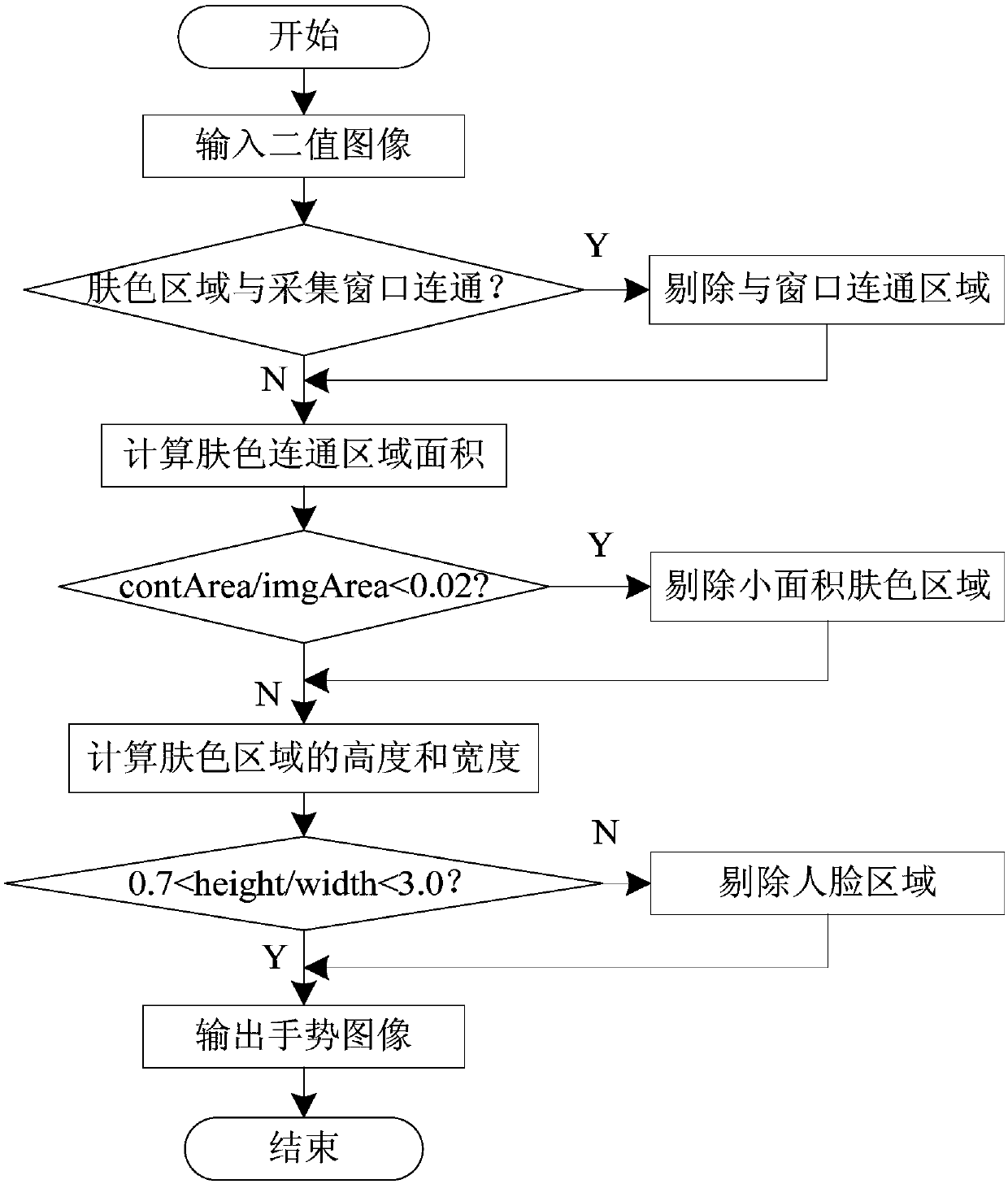

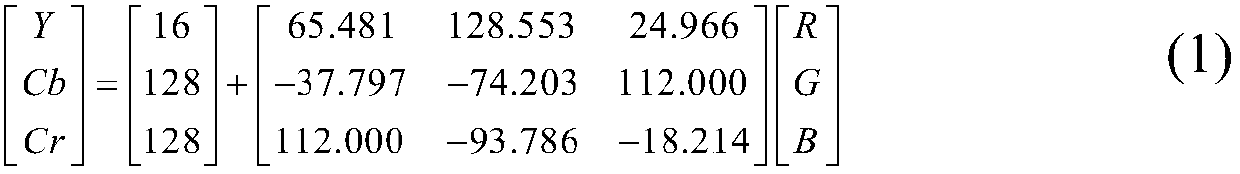

Gesture recognition method based on fused skin color region segmentation and machine learning algorithm and application thereof

Status: Inactive | Publication: CN108846359A | Tags: Guaranteed feasibility, Flexible interaction, Image analysis, Character and pattern recognition, Feature vector, Skin color

The invention discloses a gesture recognition method based on fused skin color region segmentation and a machine learning algorithm, and an application thereof. The method comprises the following steps: after a gesture image is captured and preprocessed, the Otsu adaptive threshold algorithm segments the skin color region in the YCbCr color space; the gesture is then segmented by applying a gesture-region decision condition, and Hu moment features and the number of fingertips are extracted from the gesture contour as feature vectors; finally, an SVM classifier classifies and recognizes six commonly used static gestures. The skin color segmentation and the gesture-region decision condition allow the gesture to be located and segmented accurately; the Hu moment features of the gesture contour and the fingertip count provide more accurate feature vectors for gesture classification; and the mature SVM classifier ensures the gesture recognition rate.

Owner:Xinjiang University College of Science and Technology (新疆大学科学技术学院)
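A minimal Python sketch of the skin segmentation step follows. It assumes the Otsu threshold is applied to the Cr channel alone (a common choice; the abstract does not say which YCbCr channels are thresholded) and uses full-range BT.601 (JPEG) coefficients for the RGB-to-Cr conversion. The function names are hypothetical, and the gesture region decision, Hu moment, fingertip, and SVM stages are not shown.

```python
from typing import List, Sequence, Tuple


def rgb_to_cr(r: int, g: int, b: int) -> int:
    """Full-range BT.601 (JPEG) Cr component, rounded and clamped to 0..255."""
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return max(0, min(255, int(round(cr))))


def otsu_threshold(values: Sequence[int]) -> int:
    """Threshold t in 0..255 that maximises between-class variance for v <= t vs v > t."""
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0, sum0 = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so the split is undefined
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def skin_mask(pixels: Sequence[Tuple[int, int, int]]) -> List[bool]:
    """Mark pixels whose Cr value lies above the Otsu threshold as skin (assumed rule)."""
    crs = [rgb_to_cr(*p) for p in pixels]
    t = otsu_threshold(crs)
    return [cr > t for cr in crs]


# Usage: three skin-toned pixels and five bluish background pixels.
pixels = [(220, 160, 130)] * 3 + [(60, 80, 160)] * 5
mask = skin_mask(pixels)  # -> [True, True, True, False, False, False, False, False]
```

Because Otsu picks the threshold from the image's own histogram, no fixed Cr range has to be tuned in advance, which is the "adaptive" property the abstract relies on.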

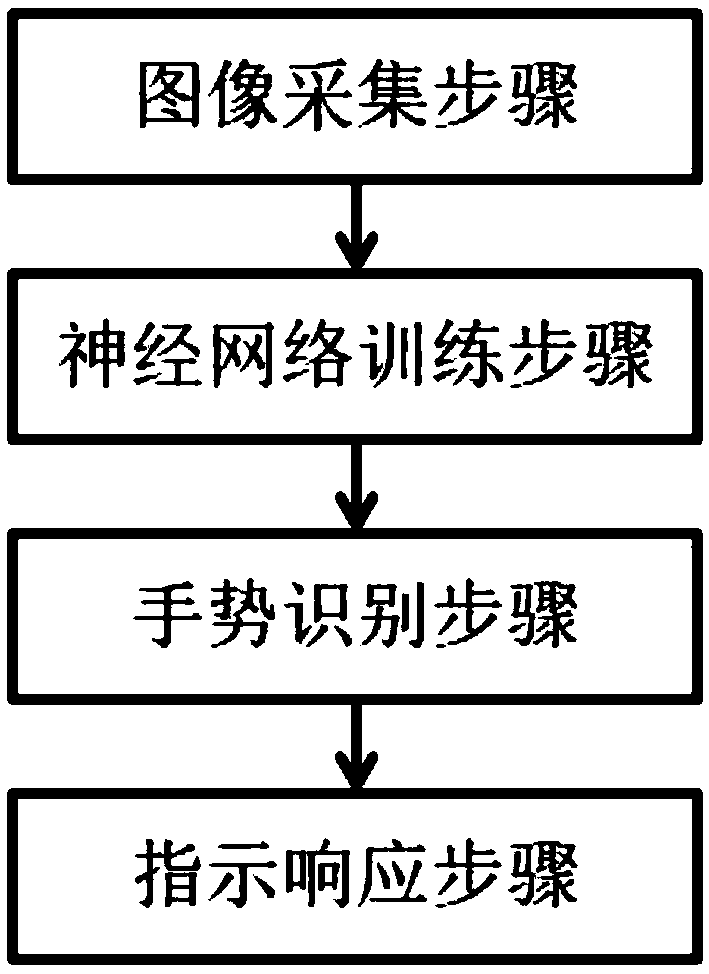

Gesture recognition method based on deep learning

Pending | CN111104820A | Easy to control | Improve reliability | Character and pattern recognition | Pattern recognition | Data set

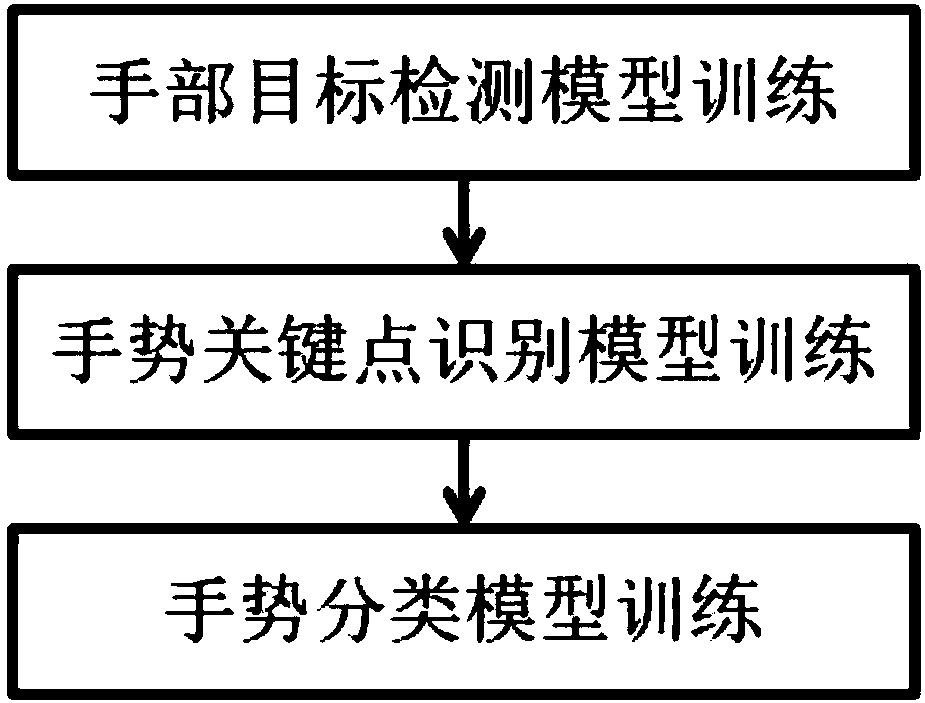

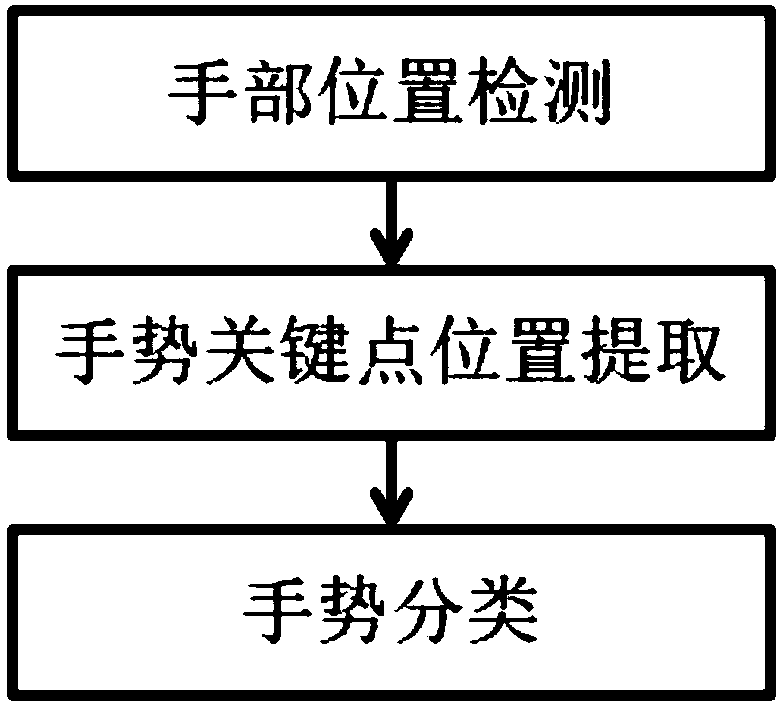

The invention provides a gesture recognition method based on deep learning, comprising: an image collection step, in which a video is captured and decoded to obtain input video data; a neural network training step, in which neural network models are trained to perform hand position detection, gesture key point extraction, and key-point-based gesture classification; a gesture recognition step, in which the trained models perform hand position detection and gesture key point extraction on the input video data and classify the gesture from the key points; and an indication response step, in which a gesture operation instruction is obtained and the system responds to it in real time. In the neural network training step, at least three neural network models are used, and a data set from an online open database and/or a self-collected and annotated data set is used as training samples.

Owner:CSR ZHUZHOU ELECTRIC LOCOMOTIVE RES INST
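Classifying a gesture from extracted key points can be illustrated with a deliberately simple stand-in. The sketch below normalizes a set of 2D key points (assuming index 0 is the wrist) for position and scale, then picks the nearest stored template. The abstract uses trained neural networks for this step, so the nearest-template rule, the template format, and the helper names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def normalize(kps: Sequence[Point]) -> List[Point]:
    """Move key point 0 (assumed wrist) to the origin and scale the farthest point to distance 1."""
    x0, y0 = kps[0]
    shifted = [(x - x0, y - y0) for x, y in kps]
    scale = max(math.hypot(x, y) for x, y in shifted) or 1.0  # avoid dividing by zero
    return [(x / scale, y / scale) for x, y in shifted]


def classify(kps: Sequence[Point], templates: Dict[str, Sequence[Point]]) -> str:
    """Return the label of the template with the smallest summed point-to-point distance."""
    query = normalize(kps)

    def distance(label: str) -> float:
        ref = normalize(templates[label])
        return sum(math.hypot(a[0] - b[0], a[1] - b[1]) for a, b in zip(query, ref))

    return min(templates, key=distance)


# Usage with toy three-point "hands" (real hand-pose models output many more points).
templates = {
    "open": [(0.0, 0.0), (0.0, 2.0), (1.0, 2.0)],
    "fist": [(0.0, 0.0), (1.0, 0.0), (1.0, 0.5)],
}
label = classify([(5.0, 5.0), (5.0, 11.0), (8.0, 11.0)], templates)  # -> "open"
```

Normalizing first makes the comparison independent of where the hand appears in the frame and how large it is, which a learned classifier would otherwise have to absorb from training data.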

Mechanical arm pose control method based on gesture recognition

Active | CN107856014A | Large working space | The calculation result is accurate | Programme-controlled manipulator | After treatment | Bluetooth

The invention discloses a mechanical arm pose control method based on gesture recognition. The pose of the operator's arm is obtained through an intelligent wristband, and the angle of each joint of the working mechanical arm is solved from that pose using forward and inverse kinematics. Specifically, the wristband connects wirelessly to a PC terminal via Bluetooth, and the electromyographic signals collected by the intelligent wristband module are transmitted to a remote client; the remote client passes the signals to a data processing module, where they are filtered and denoised, and the gestures are then classified; after denoising, forward and inverse kinematics are used to solve for the joint angles; the joint angles of the operator's arms are obtained through two intelligent wristbands; the joint-angle signals and operation instruction signals can be sent to the intelligent wristband module through the remote client; gesture action signals are sent to a simulated mechanical arm in a simulation module; and the simulated arm sends signals to the working mechanical arm, which executes the commands.

Owner:ZHEJIANG UNIV OF TECH
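Solving joint angles from a target position is the inverse kinematics step mentioned above. As a simplified, hypothetical illustration, the sketch below handles a planar two-link arm with link lengths `l1`, `l2` and joint angles `q1`, `q2`; the patented system drives a real manipulator whose geometry the abstract does not give. `forward` computes the end-effector position, and `inverse` recovers the joint angles from cos q2 = (x² + y² - l1² - l2²) / (2 l1 l2).

```python
import math
from typing import Tuple


def forward(l1: float, l2: float, q1: float, q2: float) -> Tuple[float, float]:
    """End-effector (x, y) of a planar two-link arm for joint angles q1, q2 (radians)."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def inverse(l1: float, l2: float, x: float, y: float,
            elbow_up: bool = False) -> Tuple[float, float]:
    """Joint angles (q1, q2) that place the end effector at (x, y)."""
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target is out of reach")
    c2 = max(-1.0, min(1.0, c2))  # absorb rounding error at the workspace boundary
    s2 = math.sqrt(1.0 - c2 * c2)
    if elbow_up:
        s2 = -s2  # the other of the two mirror-image solutions
    q2 = math.atan2(s2, c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return q1, q2


# Usage: round-trip a known configuration through forward and inverse kinematics.
target = forward(1.0, 0.8, 0.3, 0.7)
q1, q2 = inverse(1.0, 0.8, *target)
```

A two-link arm generally has two solutions (elbow up and elbow down); choosing one consistently avoids sudden jumps when a stream of poses from the wristband is converted into joint commands.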

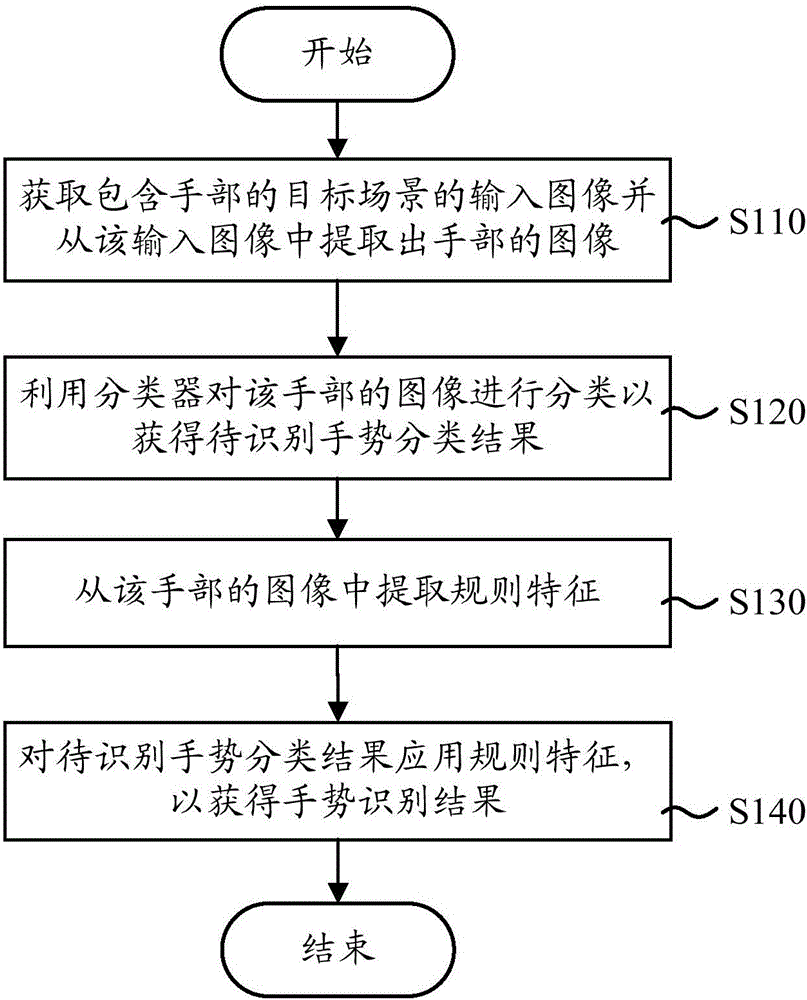

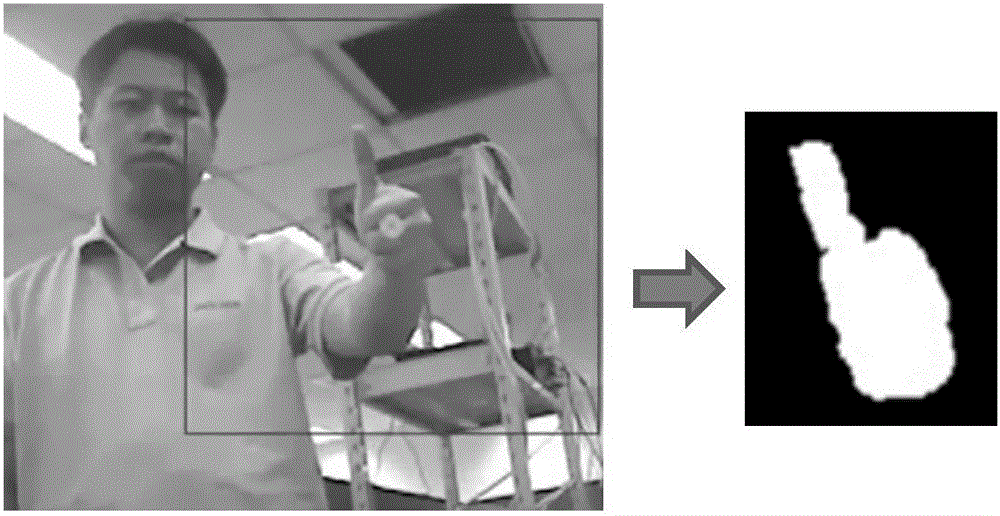

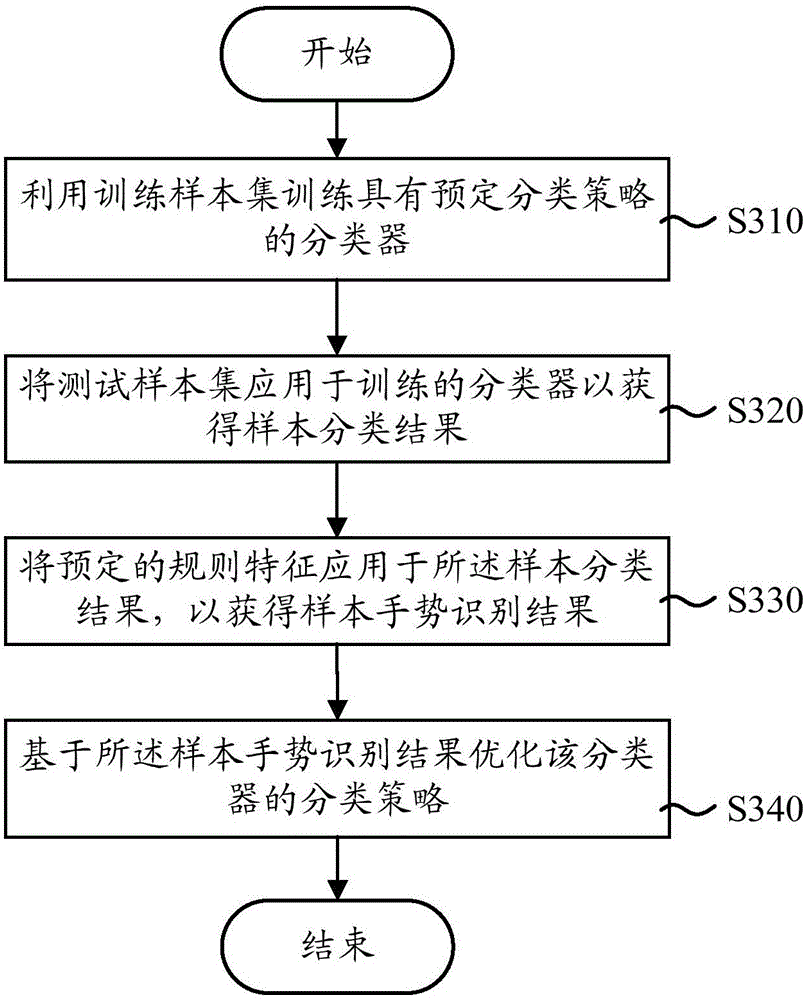

Gesture identification method and apparatus

Inactive | CN106372564A | Improve gesture recognition accuracy | Reduce the possibility of misjudgment | Character and pattern recognition | Pattern recognition | Gesture classification

The invention provides a gesture identification method and apparatus. An input image of a target scene containing a hand is obtained; the hand image is extracted from the input image; the hand image is classified with a classifier to obtain a candidate gesture classification result; a rule feature is extracted from the hand image; and the gesture identification result is obtained by applying the rule feature to the candidate classification result. In this way, a weak classifier is combined with strong rule-based decisions: the classifier is not used directly for the final identification of the various gestures; instead, different gestures are further distinguished by applying a strong rule to the classifier's candidate result, so that the rule feature and the classifier feature complement each other. This improves gesture identification accuracy, reduces the possibility of misjudgment, and achieves robust identification of static gestures.

Owner:RICOH KK
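The interplay between a weak classifier and a strong rule can be sketched as follows, under assumptions the abstract does not state: the classifier returns a score per gesture, the rule feature is a fingertip count, and the rule selects among the classifier's top-k candidates, falling back to the top-scoring gesture when no candidate satisfies it. All names and the fingertip rule are hypothetical.

```python
from typing import Mapping


def refine_with_rule(scores: Mapping[str, float], fingertips: int,
                     expected_tips: Mapping[str, int], top_k: int = 2) -> str:
    """Keep the classifier's top_k candidates, then let a hard rule (fingertip count) choose."""
    candidates = sorted(scores, key=lambda g: scores[g], reverse=True)[:top_k]
    consistent = [g for g in candidates if expected_tips.get(g) == fingertips]
    # Trust the rule when it agrees with a candidate; otherwise keep the classifier's best guess.
    return consistent[0] if consistent else candidates[0]


# Usage: the classifier narrowly prefers "fist", but five fingertips were detected.
scores = {"fist": 0.40, "palm": 0.35, "victory": 0.25}
expected = {"fist": 0, "palm": 5, "victory": 2}
result = refine_with_rule(scores, fingertips=5, expected_tips=expected)  # -> "palm"
```

Restricting the rule to the top candidates keeps the classifier in charge of the coarse decision, while the rule only resolves close calls between gestures it can reliably tell apart.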

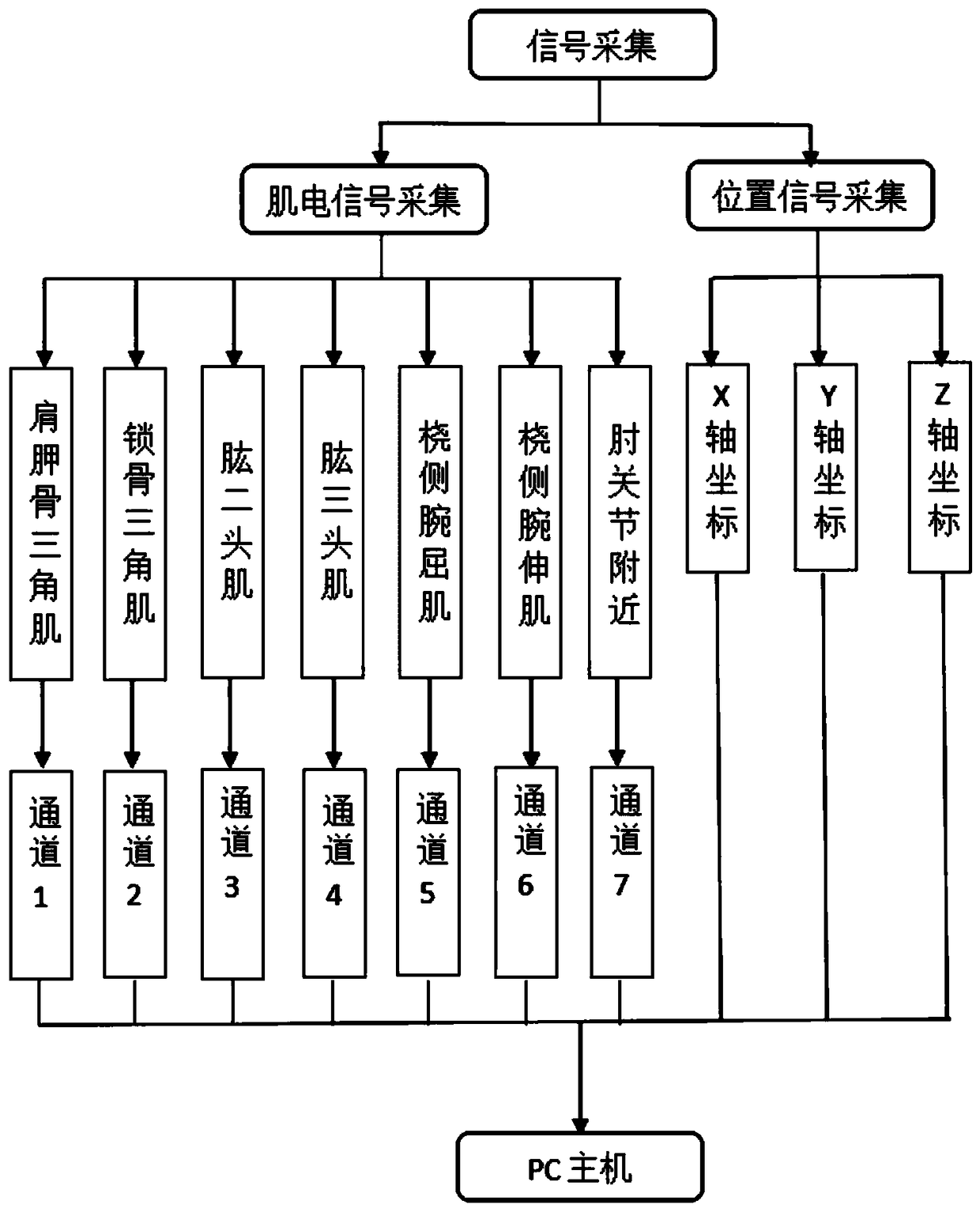

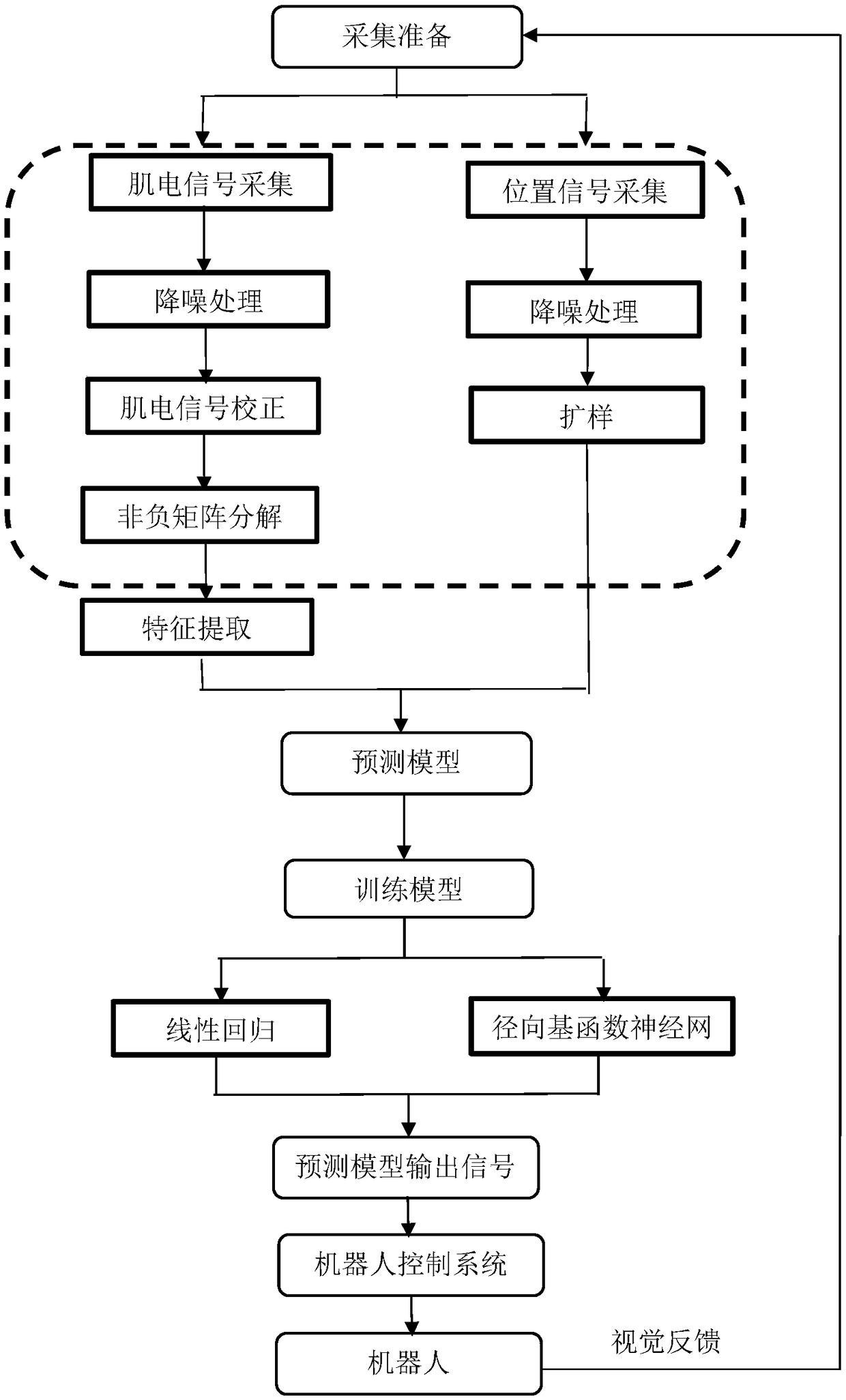

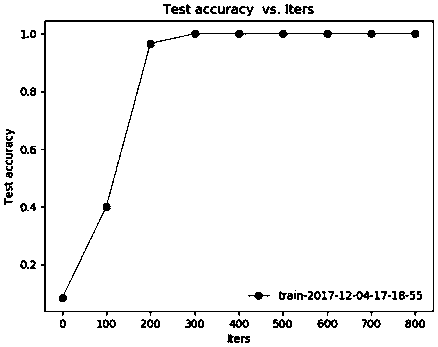

Method for predicting continuous complex motion intention of human body based on electromyographic signal on surface layer

Active | CN109480838A | Effective prediction | Guaranteed accuracy | Programme-controlled manipulator | Diagnostic recording/measuring | Surface layer | Signal on

The invention provides a method for predicting the continuous complex motion intention of the human body based on surface electromyographic (sEMG) signals. Surface EMG signals are collected from the upper limb and processed (for example, to remove noise), and their features are extracted; the sEMG signals are then used to accurately predict the three-dimensional coordinates of the upper limb. The predicted coordinates are transmitted to a robot control system, and the robot acts and provides feedback to the human upper limb, achieving human-robot collaborative operation. This addresses the problem that simple gesture classification from sEMG signals is not suitable for continuous complex robot motion.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
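Feature extraction from surface EMG is often done with standard time-domain features. The sketch below computes mean absolute value (MAV), root mean square (RMS), waveform length (WL), and zero crossings (ZC) for one analysis window per channel and concatenates them into a feature vector. The abstract does not say which features or regression model the patent uses, so this feature set and the helper names are assumptions, and the mapping from features to 3D coordinates is left out.

```python
import math
from typing import Dict, List, Sequence


def td_features(x: Sequence[float], zc_threshold: float = 0.0) -> Dict[str, float]:
    """Common sEMG time-domain features for one window of samples."""
    n = len(x)
    mav = sum(abs(v) for v in x) / n
    rms = math.sqrt(sum(v * v for v in x) / n)
    wl = sum(abs(x[i] - x[i - 1]) for i in range(1, n))  # cumulative waveform length
    zc = sum(1 for i in range(1, n)
             if x[i - 1] * x[i] < 0 and abs(x[i] - x[i - 1]) >= zc_threshold)
    return {"MAV": mav, "RMS": rms, "WL": wl, "ZC": float(zc)}


def feature_vector(channels: Sequence[Sequence[float]], start: int, length: int) -> List[float]:
    """Concatenate MAV, RMS, WL and ZC of the window [start, start + length) for every channel."""
    vec: List[float] = []
    for ch in channels:
        f = td_features(ch[start:start + length])
        vec.extend([f["MAV"], f["RMS"], f["WL"], f["ZC"]])
    return vec


# Usage: two channels, one window of four samples each.
vec = feature_vector([[1.0, -1.0, 1.0, -1.0], [0.5, 0.5, 0.5, 0.5]], start=0, length=4)
# -> [1.0, 1.0, 6.0, 3.0, 0.5, 0.5, 0.0, 0.0]
```

Sliding this window along the recording yields one feature vector per time step, which a regression model could then map to a continuous trajectory rather than a discrete gesture label.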

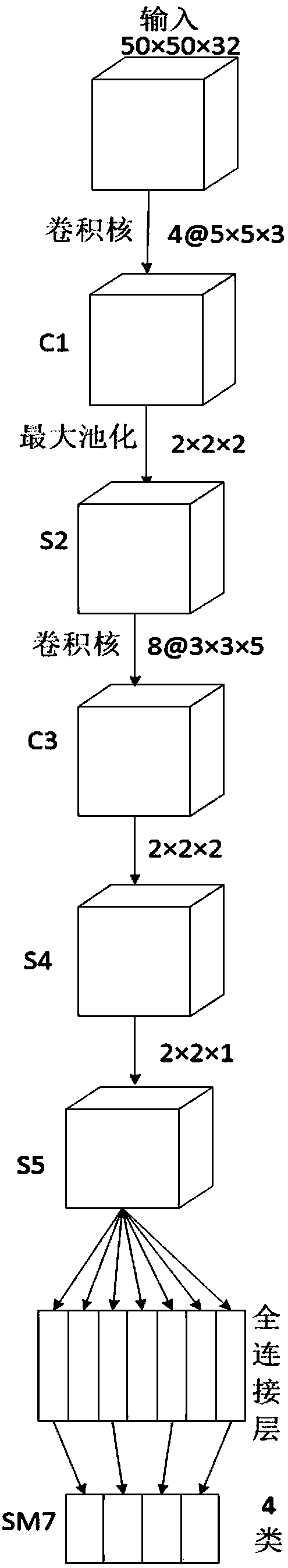

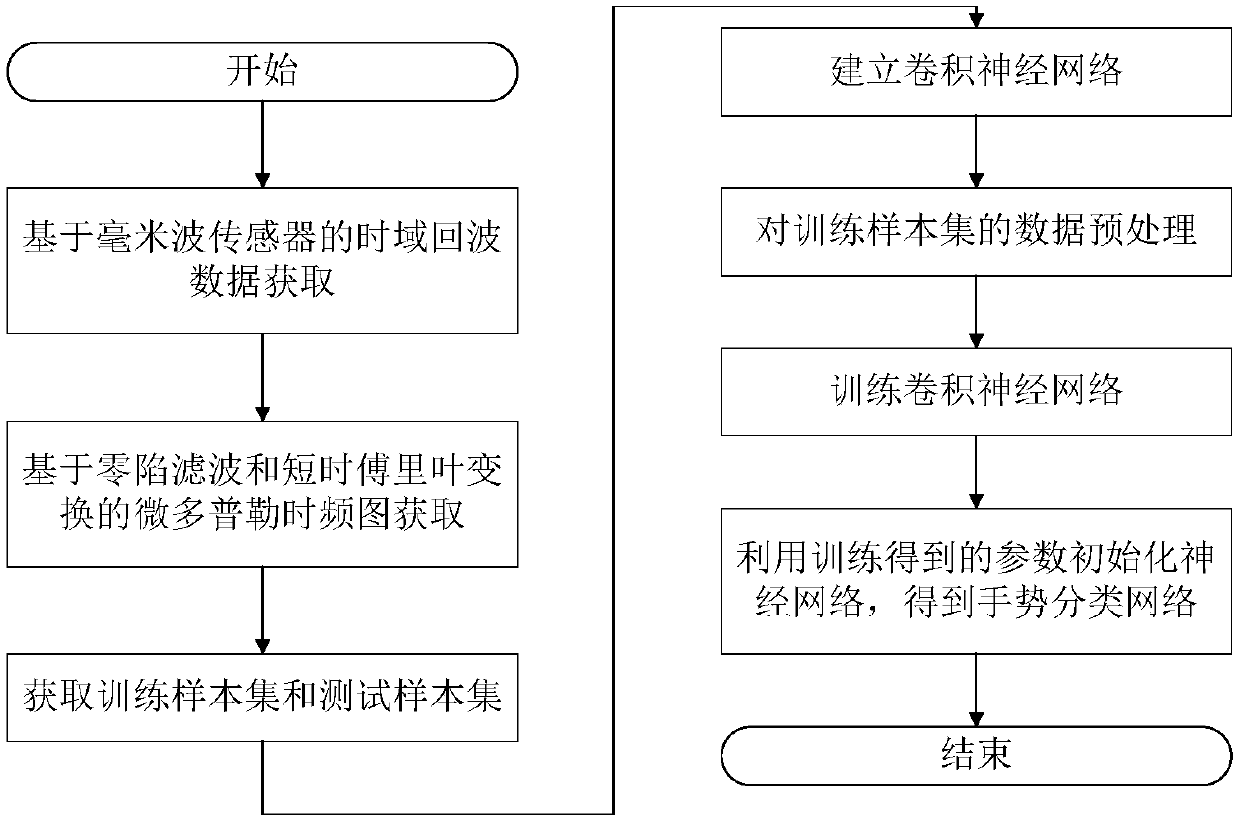

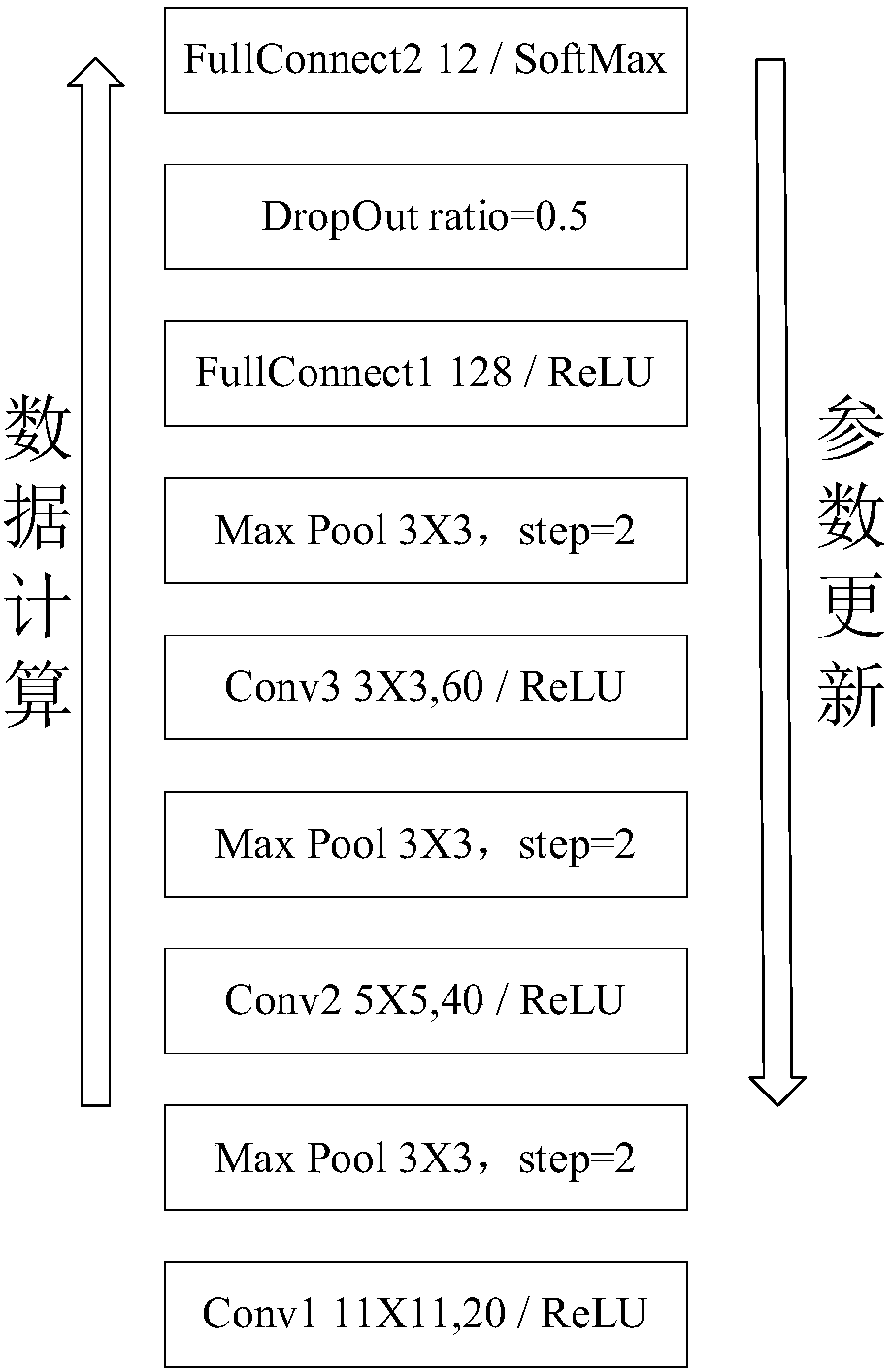

Millimeter wave sensor gesture recognition method based on convolutional neural network

Inactive | CN110262653A | High precision | High speed | Input/output for user-computer interaction | Image enhancement | Time domain | Supervised learning

The invention discloses a millimeter wave sensor gesture recognition method based on a convolutional neural network, comprising the steps of: (1) the millimeter wave sensor emits frequency-modulated continuous wave (FMCW) signals while various gestures are performed in front of it, and a receiving channel obtains the time-domain echo signals of the gestures; (2) obtaining a micro-Doppler time-frequency diagram; (3) acquiring time-frequency diagram sample sets of different gestures; (4) preprocessing the data in the training sample set, feeding the images as training data into the constructed convolutional neural network, and performing supervised learning to obtain the parameters of each layer; and (5) initializing the network with the trained layer parameters to obtain an image recognition network capable of gesture classification. Because gesture classification is performed by the convolutional neural network, manual intervention is avoided; the network can learn deep features of all kinds of actions, generalizes and adapts well, and improves both the precision and the speed of gesture recognition.

Owner:SOUTHEAST UNIV
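Step (2), obtaining the micro-Doppler time-frequency diagram, is commonly done with a short-time Fourier transform (STFT) of the slow-time echo samples from the range bin containing the hand. The Python sketch below assumes that input, a Hann window, and a naive DFT for clarity (a practical system would use an FFT); the window length and hop are illustrative, not taken from the patent. The resulting magnitude matrix is the kind of image that would be fed to the CNN.

```python
import cmath
import math
from typing import List, Sequence


def stft_magnitude(x: Sequence[complex], win_len: int, hop: int) -> List[List[float]]:
    """|STFT| with a symmetric Hann window: rows are time frames, columns are frequency bins."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (win_len - 1)) for n in range(win_len)]
    frames: List[List[float]] = []
    for start in range(0, len(x) - win_len + 1, hop):
        seg = [x[start + n] * window[n] for n in range(win_len)]
        # Naive DFT of the windowed segment, one bin at a time.
        spectrum = [abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / win_len)
                            for n in range(win_len)))
                    for k in range(win_len)]
        frames.append(spectrum)
    return frames


# Usage: a constant Doppler shift of 4 cycles per 32 slow-time samples.
x = [cmath.exp(2j * math.pi * 4 * n / 32) for n in range(128)]
frames = stft_magnitude(x, win_len=32, hop=16)
peaks = [max(range(32), key=f.__getitem__) for f in frames]  # every frame peaks at bin 4
```

For a real gesture the Doppler shift changes as the hand and fingers move, so the peak drifts across bins from frame to frame; that time-varying pattern is what the time-frequency image captures.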

© 2025 PatSnap. All rights reserved.