A static gesture recognition method combining depth information and skin color information

This gesture recognition technology, which uses depth information and is applied in the field of image recognition, addresses problems of existing gesture recognition systems: difficulty in meeting real-time requirements, reliance on a single gesture feature, and long processing time. Its effects are improved recognition accuracy, strong robustness, and removal of arm interference.

Embodiment

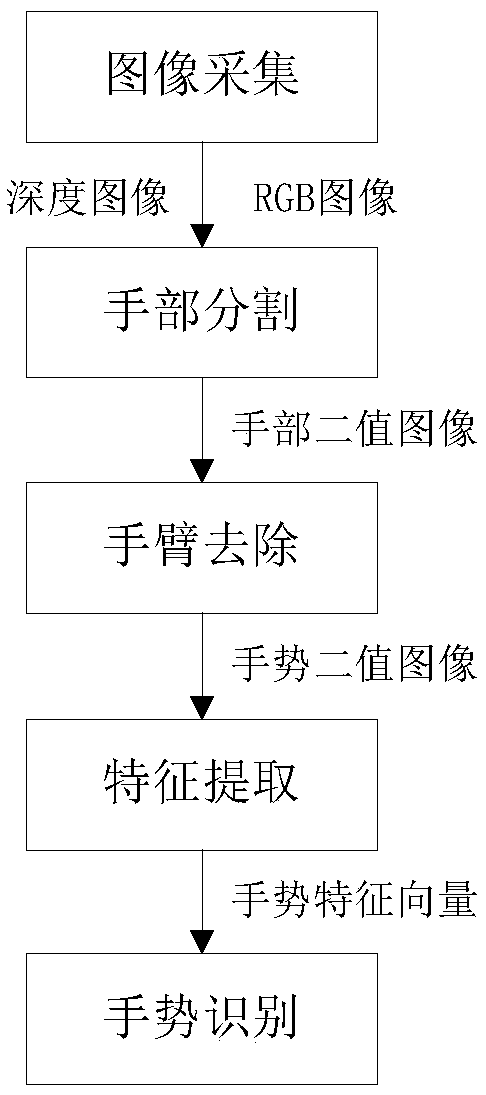

[0054] As shown in Figure 1, the static gesture recognition method combining depth information and skin color information comprises the following steps: an image acquisition step, a hand segmentation step, an arm removal step, a feature extraction step, and a gesture recognition step.

[0055] Image acquisition step:

[0056] The RGB image and its corresponding depth image are captured simultaneously by the Kinect, so that depth information is obtained for every pixel in the RGB image.

[0057] The Kinect should be located 200 mm to 3500 mm in front of the human body, and the hand should be the part of the body closest to the Kinect.
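The working range stated above can be checked on a depth frame before any further processing. The following is a minimal sketch, not the patent's implementation: it assumes the depth image is a NumPy array of distances in millimeters, and the names `valid_depth_mask` and `nearest_valid_pixel` are hypothetical helpers introduced only for illustration.

```python
import numpy as np

MIN_RANGE_MM = 200    # near limit stated in the text
MAX_RANGE_MM = 3500   # far limit stated in the text


def valid_depth_mask(depth_mm: np.ndarray) -> np.ndarray:
    """Boolean mask of pixels whose depth lies inside the working range."""
    return (depth_mm >= MIN_RANGE_MM) & (depth_mm <= MAX_RANGE_MM)


def nearest_valid_pixel(depth_mm: np.ndarray):
    """Return (row, col, depth_mm) of the closest in-range pixel, or None."""
    mask = valid_depth_mask(depth_mm)
    if not mask.any():
        return None
    # Widen the dtype, then push out-of-range readings past the far limit
    # so that argmin can never select them.
    d = depth_mm.astype(np.int64)
    masked = np.where(mask, d, MAX_RANGE_MM + 1)
    row, col = np.unravel_index(np.argmin(masked), masked.shape)
    return int(row), int(col), int(d[row, col])


# Illustrative 2x2 depth frame in millimeters (made-up values).
depth = np.array([[0, 900],
                  [650, 4000]], dtype=np.uint16)
print(nearest_valid_pixel(depth))
```

If the hand is the closest object, as the text requires, the pixel returned by `nearest_valid_pixel` should fall on the hand.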

[0058] Hand segmentation step:

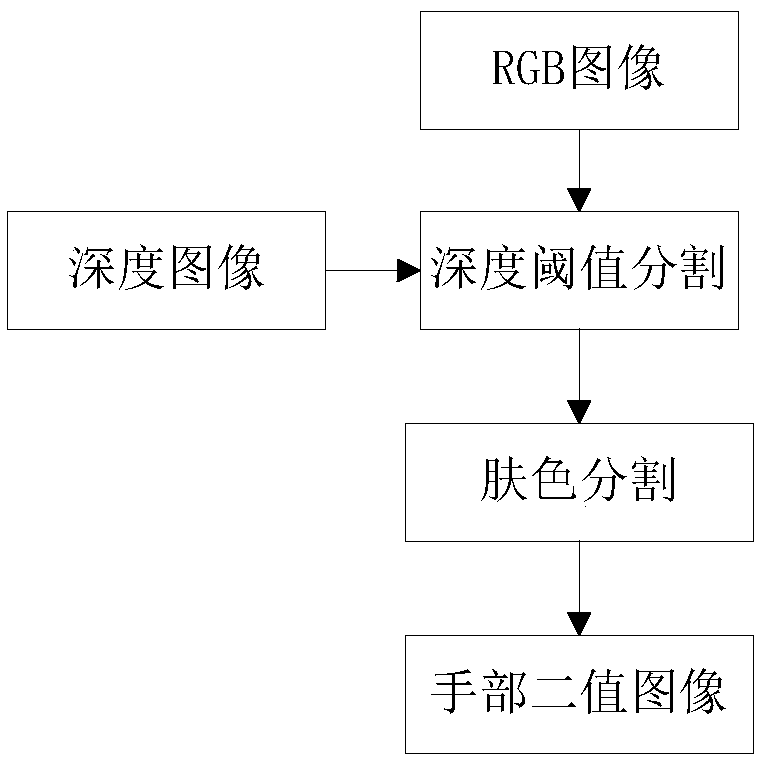

[0059] As shown in Figure 2, the hand segmentation step includes the following sub-steps:

[0060] (1) Combine the depth image and the RGB image, and use depth threshold segmentation to obtain an RGB image containing the hand. The specific process is as follows:

[0061] Locate the pixel closest to the Kinect in the depth image, and c...
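Depth threshold segmentation of this kind can be sketched as follows. The source text is cut off after the nearest-pixel step, so everything beyond that step is an assumption: the sketch keeps all pixels within a fixed depth band behind the nearest pixel. The function name `segment_hand_by_depth`, the parameter `band_mm`, and its default of 150 mm are hypothetical and are not taken from the patent. The sketch also assumes that a depth value of 0 marks a pixel with no reading.

```python
import numpy as np


def segment_hand_by_depth(rgb: np.ndarray, depth_mm: np.ndarray,
                          band_mm: int = 150):
    """Keep RGB pixels whose depth is within band_mm of the nearest pixel.

    rgb:      H x W x 3 color image aligned with the depth image.
    depth_mm: H x W depth image in millimeters (0 assumed to mean no reading).
    band_mm:  hypothetical threshold, not a value from the source.
    """
    valid = depth_mm > 0
    if not valid.any():
        return np.zeros_like(rgb), valid
    d = depth_mm.astype(np.int64)
    nearest = d[valid].min()                   # pixel closest to the sensor
    hand_mask = valid & (d <= nearest + band_mm)
    hand_rgb = rgb.copy()
    hand_rgb[~hand_mask] = 0                   # black out everything else
    return hand_rgb, hand_mask


# Illustrative 2x2 frame (made-up values).
rgb = np.full((2, 2, 3), 200, dtype=np.uint8)
depth = np.array([[600, 700],
                  [1500, 0]], dtype=np.uint16)
hand_rgb, hand_mask = segment_hand_by_depth(rgb, depth)
print(hand_mask)
```

A fixed band is the simplest choice. Because it can also retain part of the forearm, a later arm removal step remains necessary, which matches the pipeline listed in the text.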
