73 patent results for "Gesture segmentation"

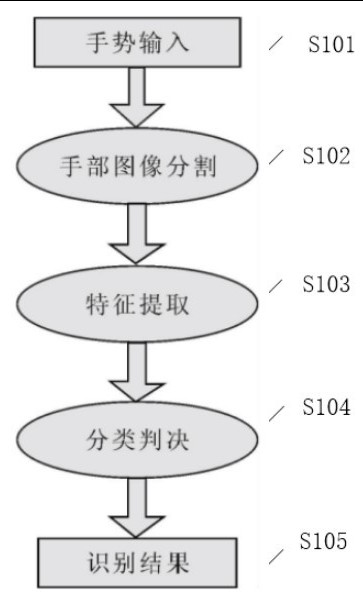

Gesture identification method and system based on visual sense

Inactive | CN101853071A | Improve usability | Improve execution efficiency | Input/output for user-computer interaction | Character and pattern recognition | Vision based | Usability

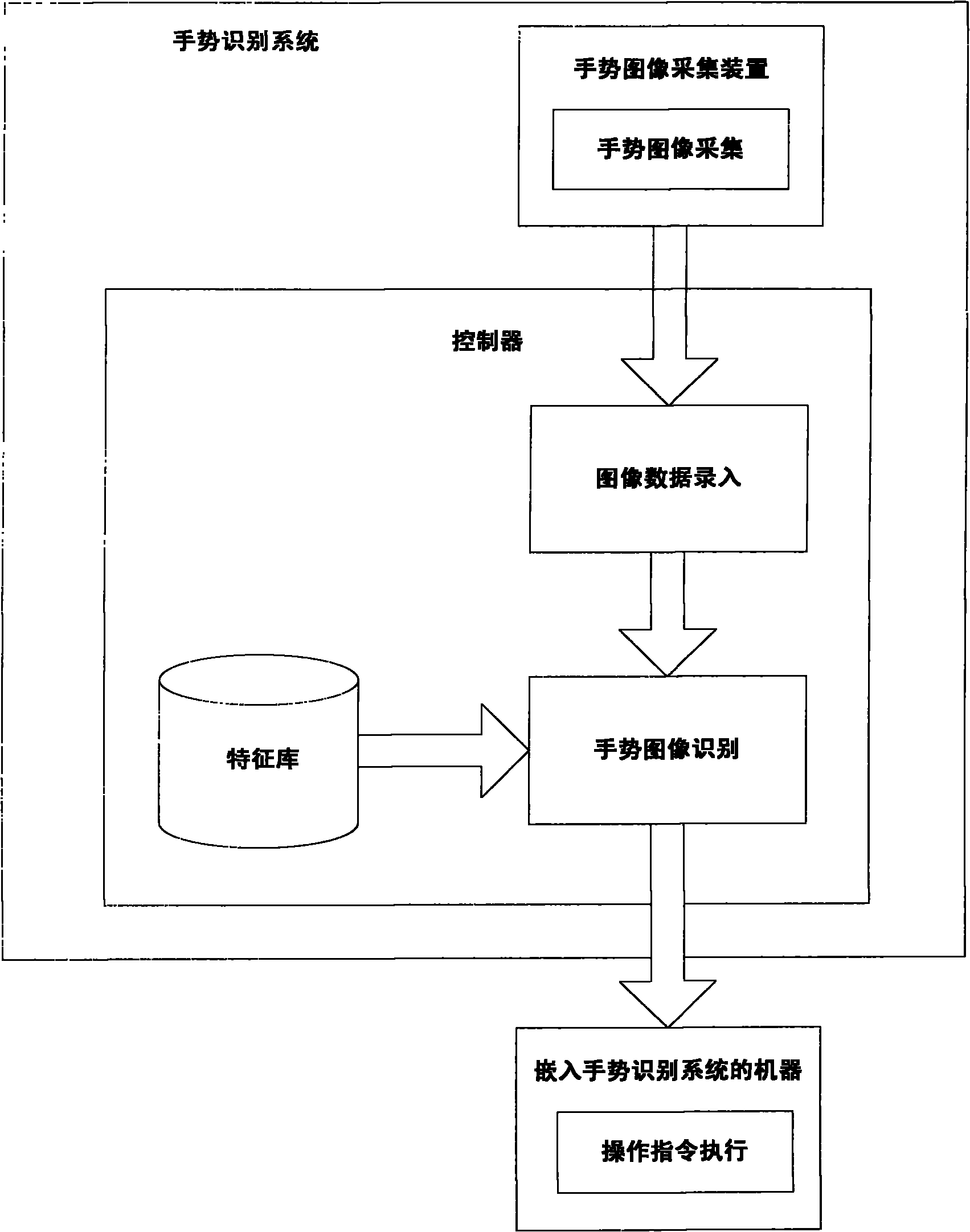

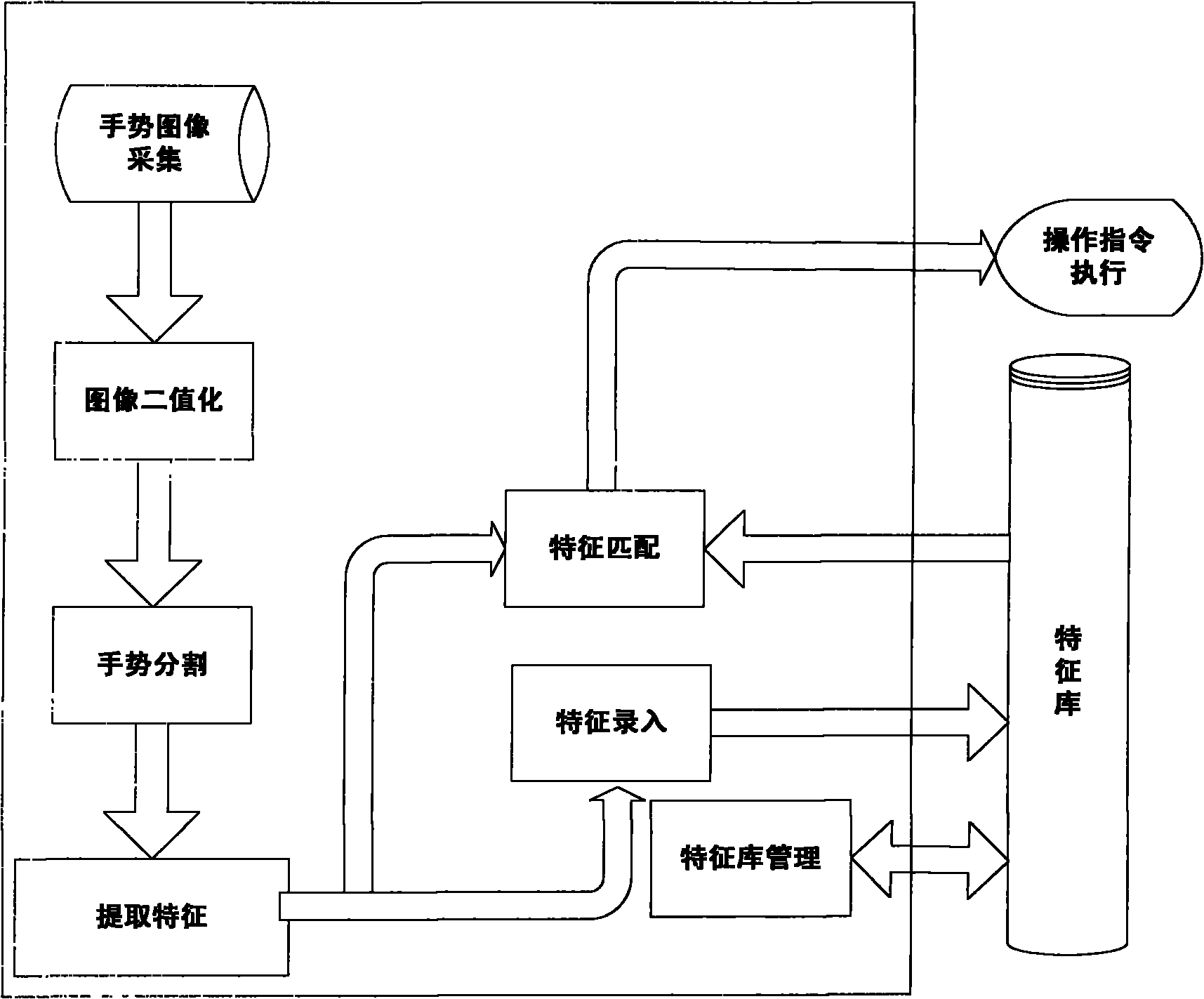

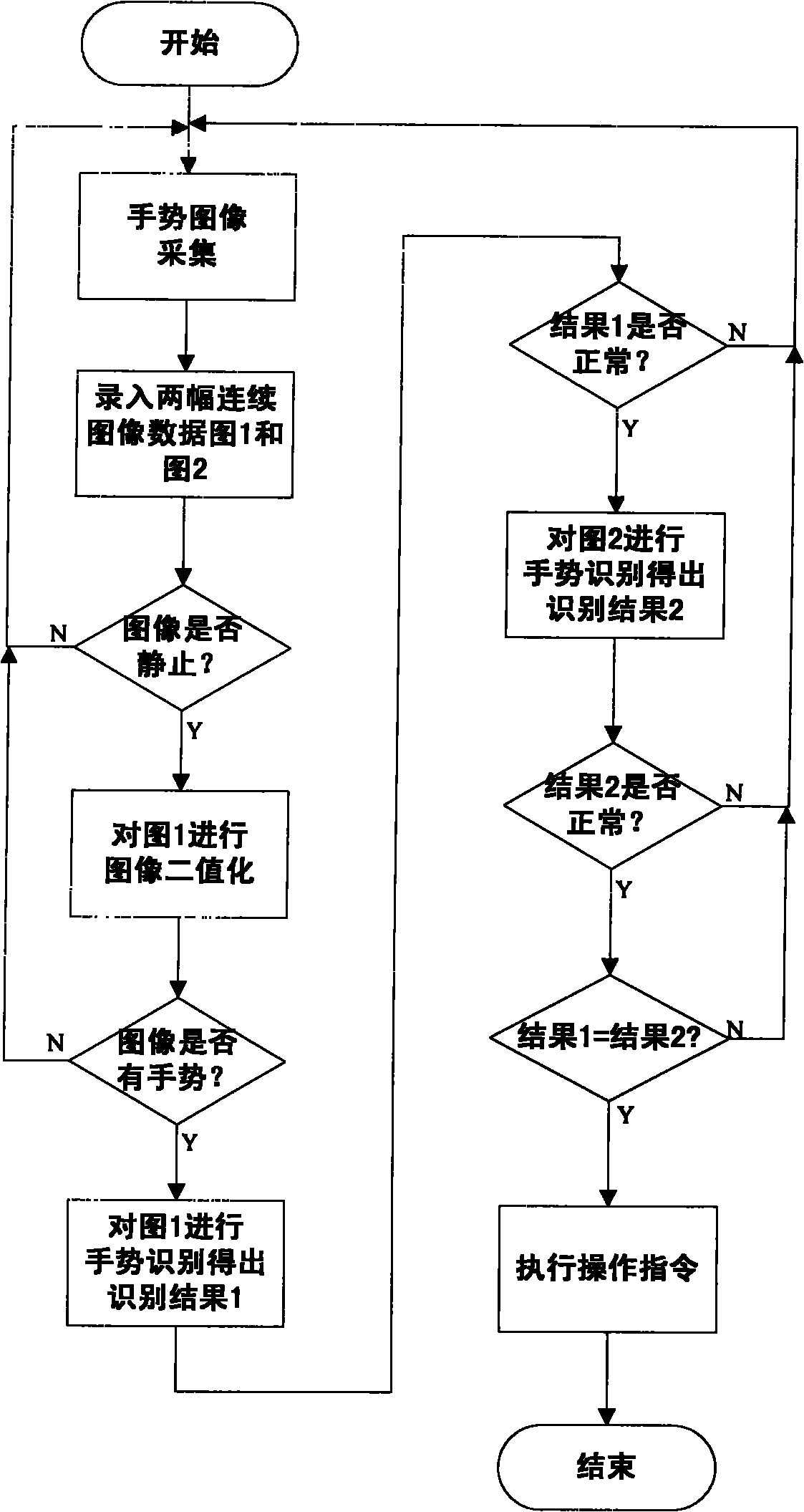

The invention provides a vision-based gesture identification method and system. The system comprises a gesture image acquisition device and a controller, which together perform gesture image acquisition, image data entry, gesture image identification and operation command execution. Gesture image identification comprises image binarization, gesture segmentation, feature extraction and feature matching. The invention operates in real time: it obtains identification results by extracting and matching features of the user's gesture images, and executes the corresponding commands according to those results. Hands serve as the input device; the acquired images need only contain complete gestures, and the gestures may be translated, scaled, and rotated within a certain angle, which greatly improves the convenience of using the device.

Owner:CHONGQING UNIV

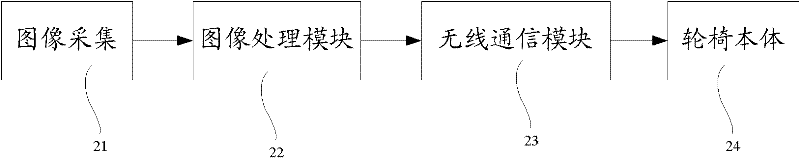

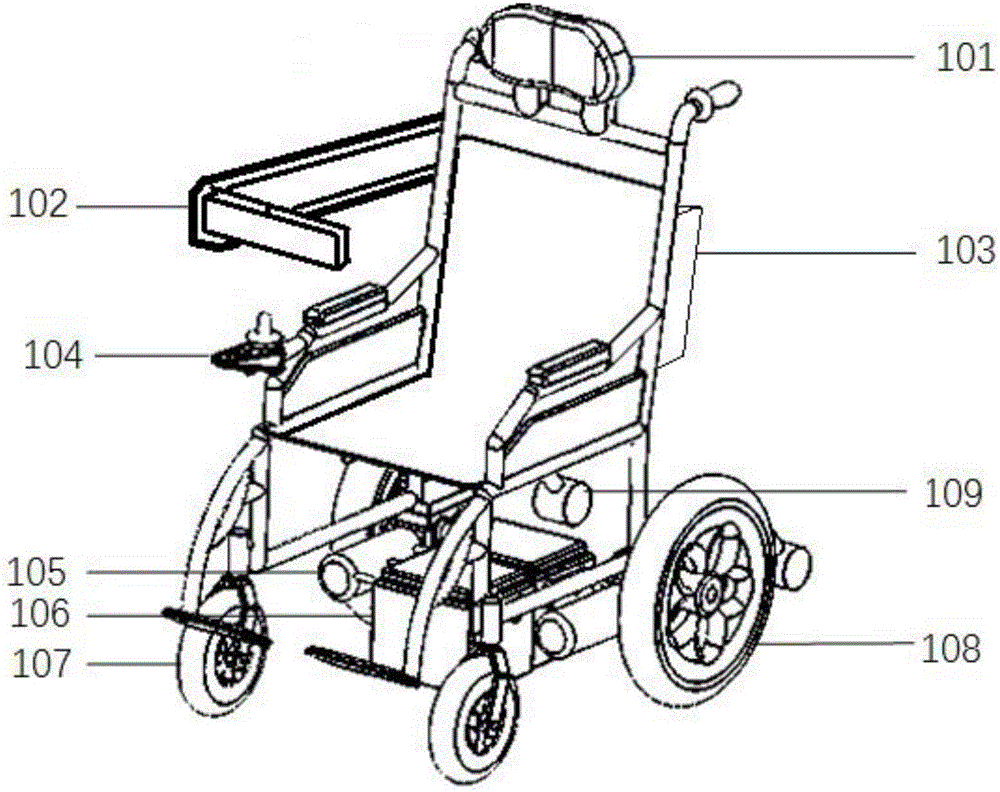

Gesture recognition method and gesture recognition control-based intelligent wheelchair man-machine system

Inactive | CN102339379A | Quick identification | Accurate identification | Wheelchairs/patient conveyance | Character and pattern recognition | Support vector machine | Life quality

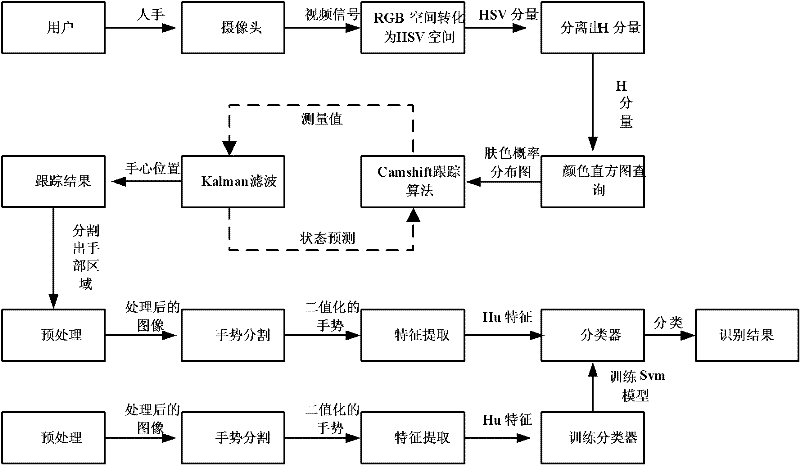

The invention discloses a gesture recognition method and an intelligent wheelchair man-machine system based on gesture recognition control, and relates to the fields of computer vision, man-machine systems and control. The system comprises a video acquisition module, a separator, a query module, a tracking module, a gesture preprocessing module, a feature extraction module, a gesture recognition module and a control module. In the method, the hand is tracked by combining the Camshift tracking algorithm with a Kalman filter, and the gesture is segmented and then recognized by combining Hu moments with a support vector machine (SVM). The method eliminates the influence of skin color interference, occlusion and a complex surrounding environment on gesture segmentation, so that the hand is tracked accurately and recognized quickly and accurately. When the method is used in the intelligent wheelchair man-machine system, gesture commands can be recognized quickly and accurately and the wheelchair can be controlled safely, which extends the activity range and improves the quality of life of elderly and disabled people.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

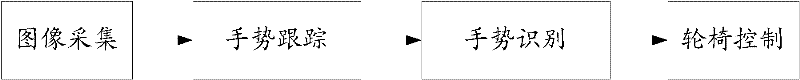

Dynamic gesture identification method in interactive system

Active | CN102063618A | Overcoming complexity | Overcoming real-time | Input/output for user-computer interaction | Character and pattern recognition | Interaction systems | Gray level

The invention discloses a dynamic gesture identification method in an interactive system, comprising the following four steps: acquiring an image and obtaining a current image frame after denoising; obtaining the moving region of the image by calculating the difference between the current image frame and a reference image frame, and obtaining a binary image by a skin color detection method; carrying out gesture segmentation on the binary image by a gray level projection method to obtain the gesture region and its barycentric position; and obtaining the final identification result by a classification method according to the barycentric position and fingertip characteristics. The method overcomes the high complexity, poor real-time performance and low identification rate of traditional algorithms; it is simple to implement, runs in real time, achieves a high identification rate, and is well suited to dynamic gesture identification. Moreover, the method tolerates translation, scaling and rotation of a defined gesture, and is therefore robust.

Owner:CHINA KEY SYST & INTEGRATED CIRCUIT
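
The motion-detection and projection steps of the abstract above can be illustrated with a minimal sketch. It assumes grayscale frames stored as nested lists, a hypothetical `diff_threshold`, and it leaves out the skin color detection and fingertip classification; the function names are illustrative and not taken from the patent.

```python
def motion_mask(current, reference, diff_threshold=30):
    """Binary mask of pixels whose gray value differs from the reference frame."""
    return [
        [1 if abs(c - r) > diff_threshold else 0 for c, r in zip(crow, rrow)]
        for crow, rrow in zip(current, reference)
    ]


def projection_segment(mask):
    """Locate the gesture region from row and column projections of a binary mask.

    Returns ((top, bottom, left, right), (cy, cx)), or None for an empty mask.
    """
    row_proj = [sum(row) for row in mask]        # foreground count per row
    col_proj = [sum(col) for col in zip(*mask)]  # foreground count per column
    rows = [i for i, v in enumerate(row_proj) if v > 0]
    cols = [j for j, v in enumerate(col_proj) if v > 0]
    if not rows:
        return None
    total = sum(row_proj)
    # Barycenter (centroid) computed directly from the two projections.
    cy = sum(i * v for i, v in enumerate(row_proj)) / total
    cx = sum(j * v for j, v in enumerate(col_proj)) / total
    return (rows[0], rows[-1], cols[0], cols[-1]), (cy, cx)
```

The bounding box comes from the first and last non-empty rows and columns, so a single projection pass yields both the gesture region and its barycenter.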

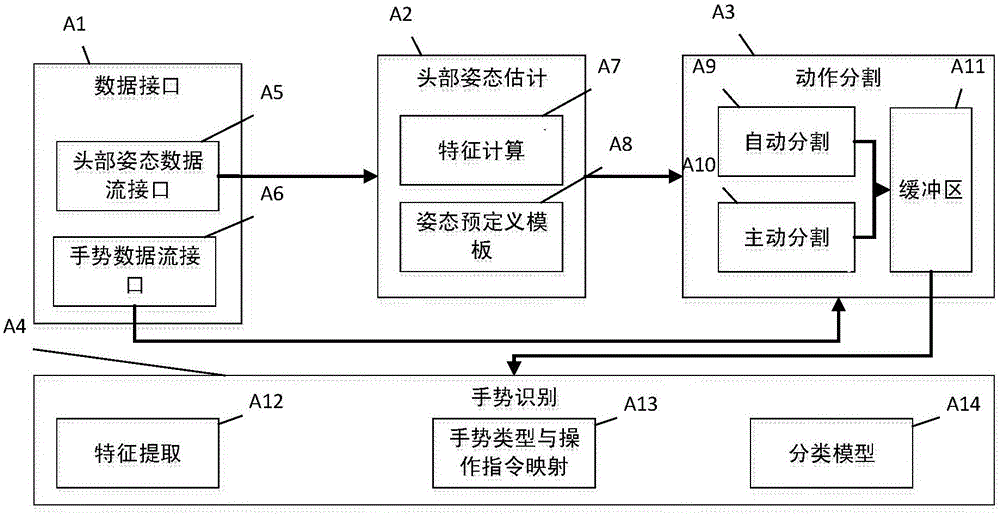

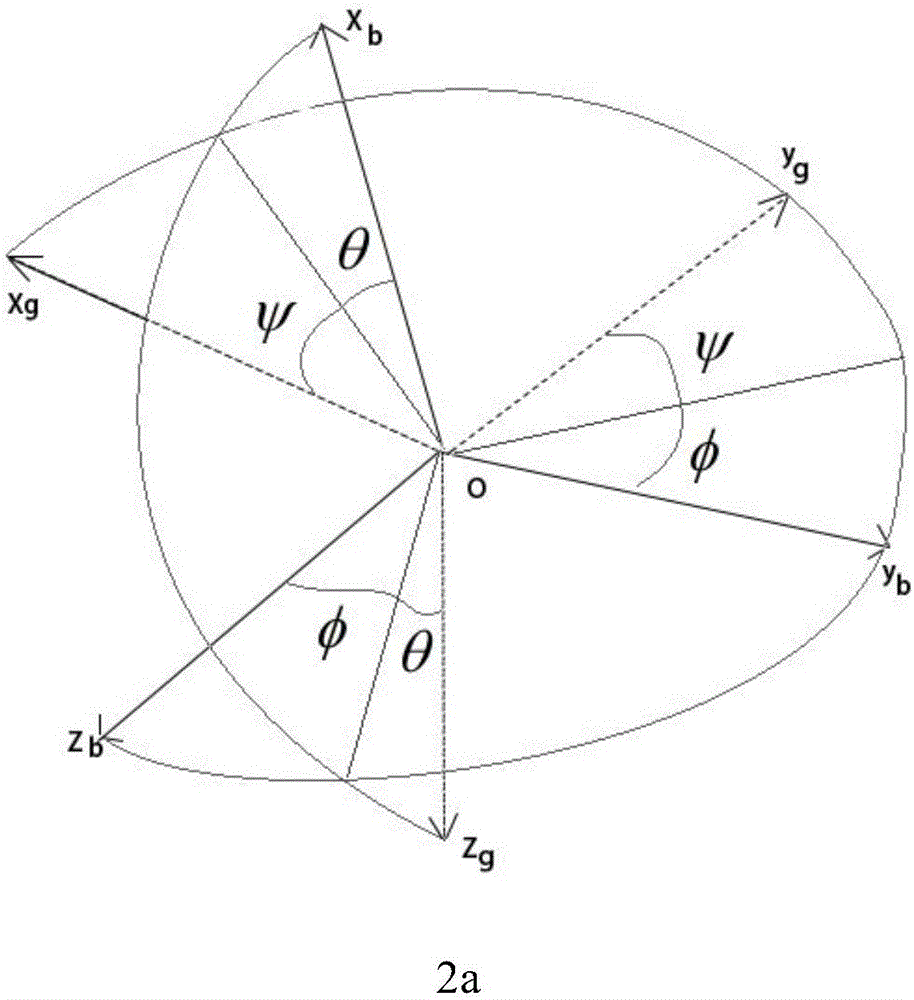

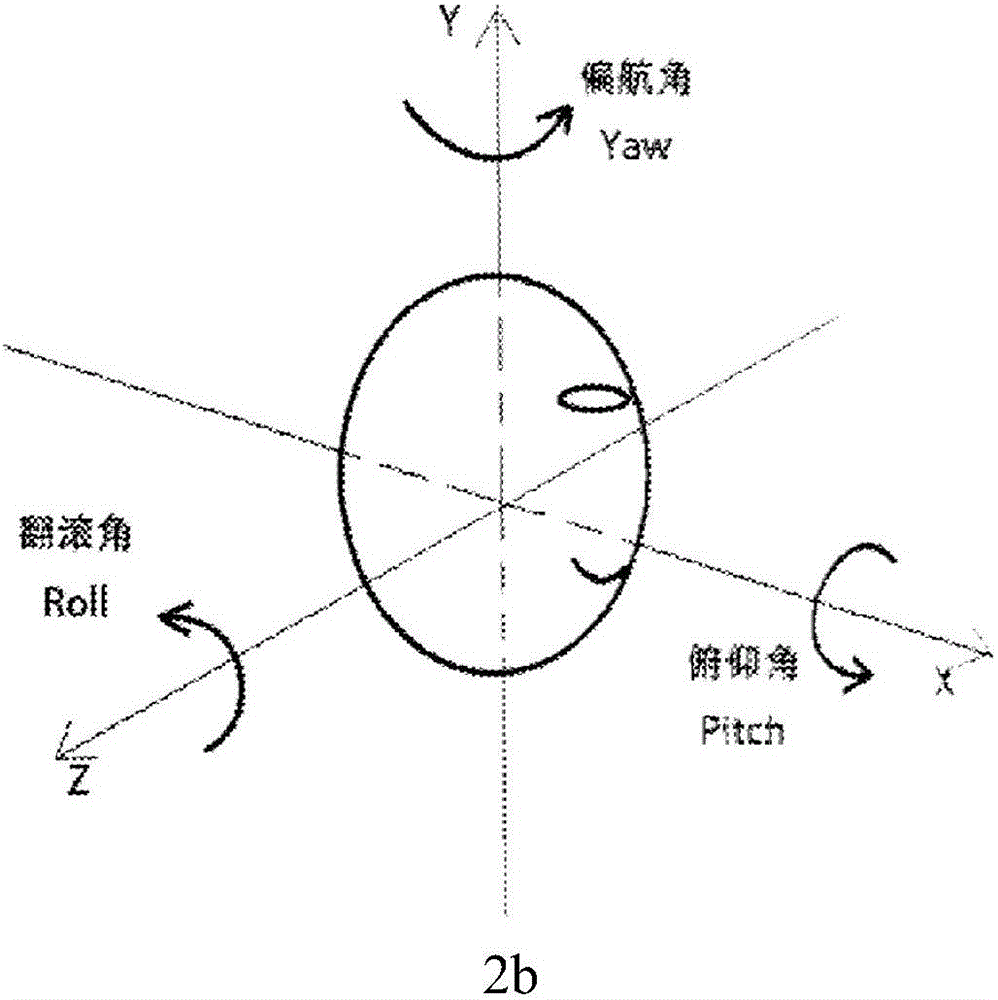

Gesture recognition system and method adopting action segmentation

Active | CN105809144A | High precision | Improve efficiency | Input/output for user-computer interaction | Character and pattern recognition | Head movements | Frame sequence

The invention provides a gesture recognition system and method adopting action segmentation, and relates to the field of machine vision and man-machine interaction. The gesture recognition method comprises the following steps. First, head movements are detected and head posture changes are calculated. Then a segmentation signal is sent according to the posture estimation information, and the beginning and end points of gesture segmentation are judged. If the signal indicates user-initiated action segmentation, gesture video frame sequences are captured within the time interval of gesture execution, and preprocessing and feature extraction are conducted on the gesture frame images. If the signal indicates automatic action segmentation, the video frame sequences are acquired in real time, segmentation points are found automatically by analyzing how the movement changes between adjacent gestures, view-independent features are extracted from the segmented effective element gesture sequences, and a classification result is obtained with a gesture recognition algorithm that eliminates spatial and temporal disparities. The method greatly reduces the redundant information in continuous gestures and the computational cost of the recognition algorithm, and improves both the accuracy and the real-time performance of gesture recognition.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

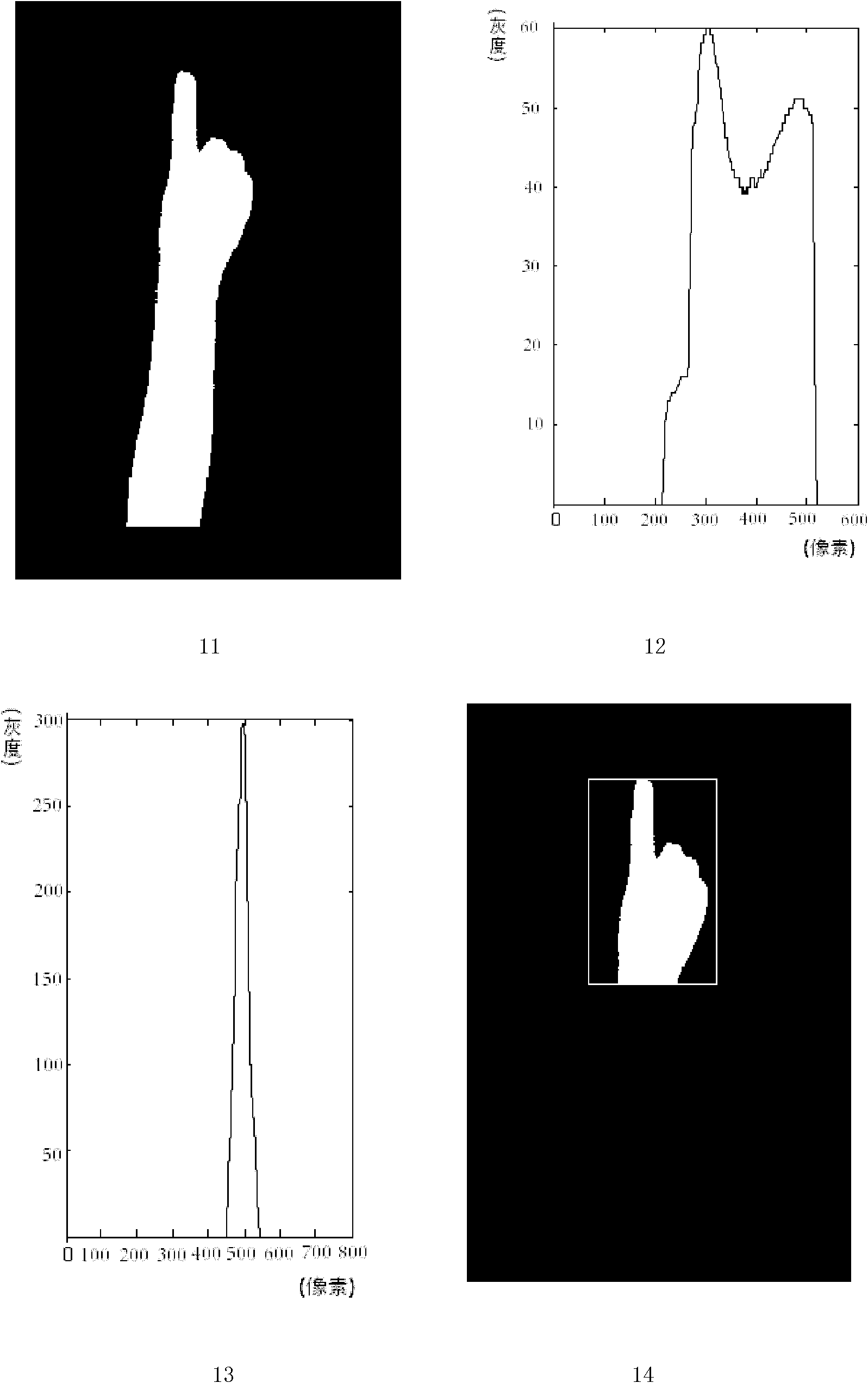

Gesture identification method applied to control of mobile service robot for elder and disabled

Active | CN105787471A | Safe and reliable control | Expand the scope of activities | Input/output for user-computer interaction | Image enhancement | Life quality | Wheelchair

The invention provides a gesture identification method applied to the control of a mobile service robot for elderly and disabled people. Static gesture identification is combined with dynamic gesture identification, which eliminates the interference caused by complex backgrounds, large skin-colored areas or occlusion during gesture segmentation and makes the man-machine interaction natural and friendly. When the method is applied to a mobile service robot system based on gesture identification, gesture instructions can be identified rapidly and accurately and the wheelchair can be controlled safely, which increases the user's range of movement and effectively improves the user's quality of life.

Owner:NANJING UNIV OF POSTS & TELECOMM

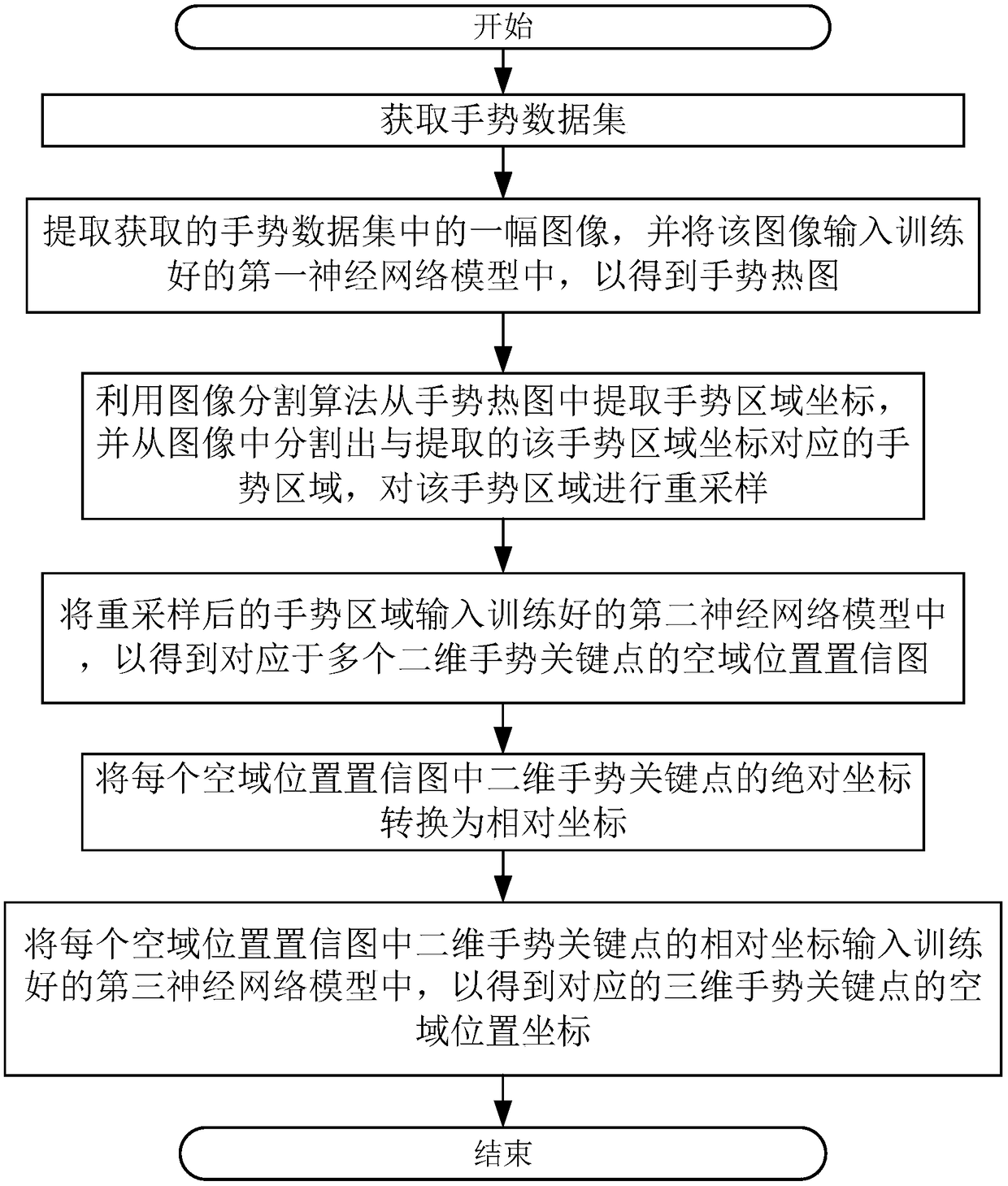

A method and a system for detecting key points of a three-dimensional gesture based on a neural network

Active | CN109214282A | Reduce usage | Reduce hardware costs | Neural architectures | Three-dimensional object recognition | Data set | Nerve network

The invention discloses a neural-network-based method for detecting three-dimensional gesture key points, comprising the following steps: acquiring a gesture data set containing gesture region information and two-dimensional and three-dimensional gesture key point positions; training a gesture segmentation network that takes an RGB image containing a gesture as input and detects the gesture region; cropping the gesture region detected by the segmentation network and up-sampling or down-sampling it; training a two-dimensional gesture key point detection network that detects a plurality of two-dimensional gesture key points in the gesture region image; converting the absolute coordinates of the three-dimensional gesture key points into relative coordinates; and training a 2D-to-3D gesture key point mapping network that maps the two-dimensional key points into three-dimensional space to form three-dimensional gesture key points. The invention can quickly and effectively detect three-dimensional gesture key points from RGB images containing gestures.

Owner:SOUTH CENTRAL UNIVERSITY FOR NATIONALITIES
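
As a small illustration of the absolute-to-relative coordinate conversion mentioned in the abstract above, the sketch below expresses every 3D key point relative to a root key point. Using key point 0 (for example the wrist) as the root is an assumption for illustration; the abstract does not say which reference point the method uses.

```python
def to_relative(keypoints, root_index=0):
    """Express 3D key points relative to a root key point.

    keypoints: list of (x, y, z) tuples in absolute (camera) coordinates.
    root_index: index of the reference key point (assumed, not from the patent).
    """
    rx, ry, rz = keypoints[root_index]
    return [(x - rx, y - ry, z - rz) for x, y, z in keypoints]
```

Root-relative coordinates remove the dependence on where the hand sits in the camera frame, which generally makes the 2D-to-3D mapping easier to learn.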

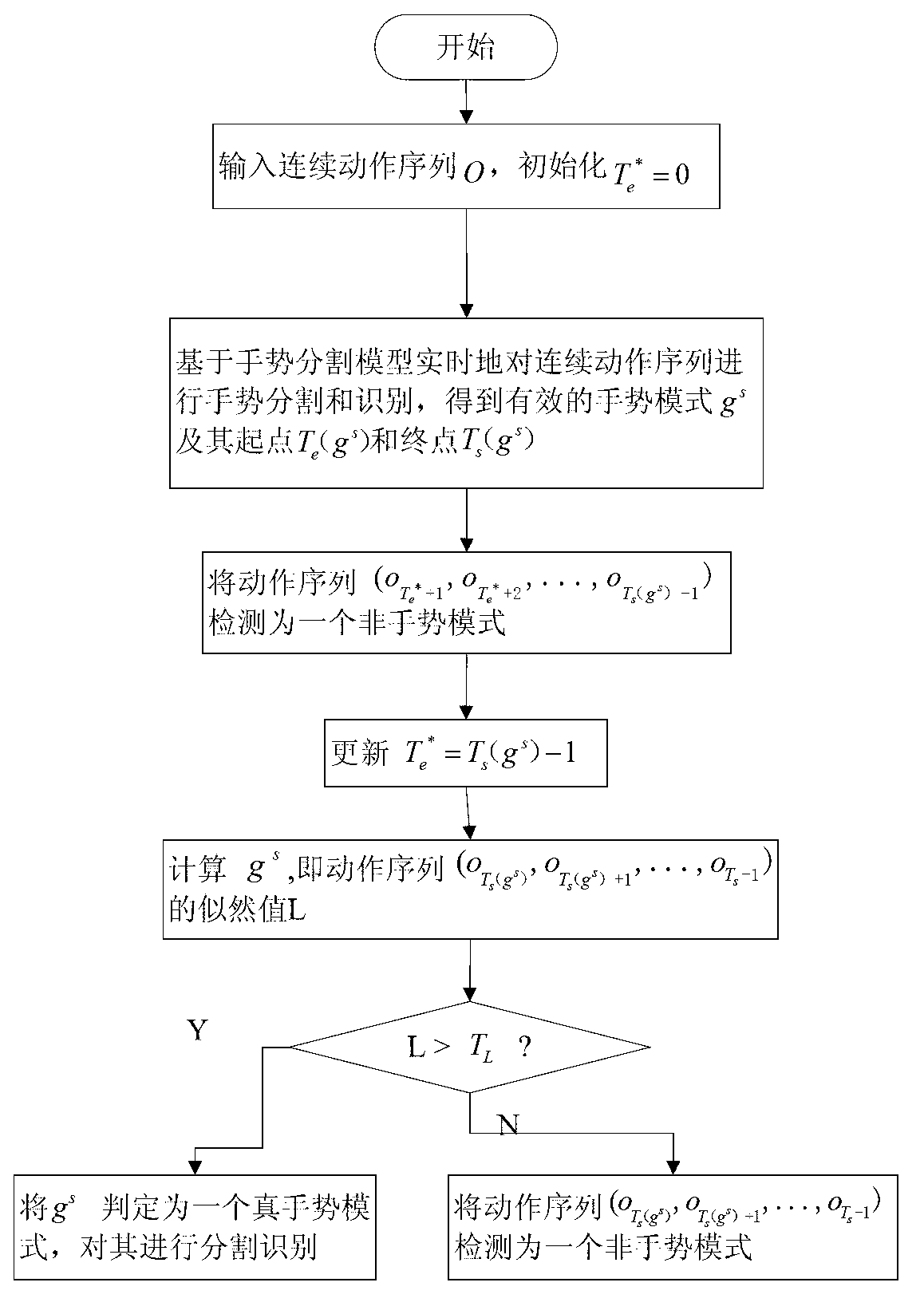

Gesture segmentation recognition method capable of detecting non-gesture modes automatically and gesture segmentation recognition system

Active | CN102982315A | Reduce the amount of manual calibration | Improve accuracy | Character and pattern recognition | Threshold model | Recognition system

The invention discloses a gesture segmentation recognition method capable of detecting non-gesture modes automatically, and a corresponding gesture segmentation recognition system. The method comprises four steps. First, a gesture recognition model is trained on heterogeneous data acquired by a camera and a sensor; the gesture recognition model is used to construct a threshold model, and the two together constitute a gesture segmentation model. Second, the gesture segmentation model is used to detect non-gesture modes automatically in an input continuous action sequence. Third, the detected non-gesture modes are used to train a non-gesture recognition model. Fourth, the gesture segmentation model is expanded with the non-gesture recognition model and used for segmentation recognition of the input continuous action sequence. Because non-gesture modes are detected automatically, the system represents them well, the probability of misjudging a non-gesture mode as a gesture mode is reduced, and the accuracy of the gesture segmentation algorithm is improved.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

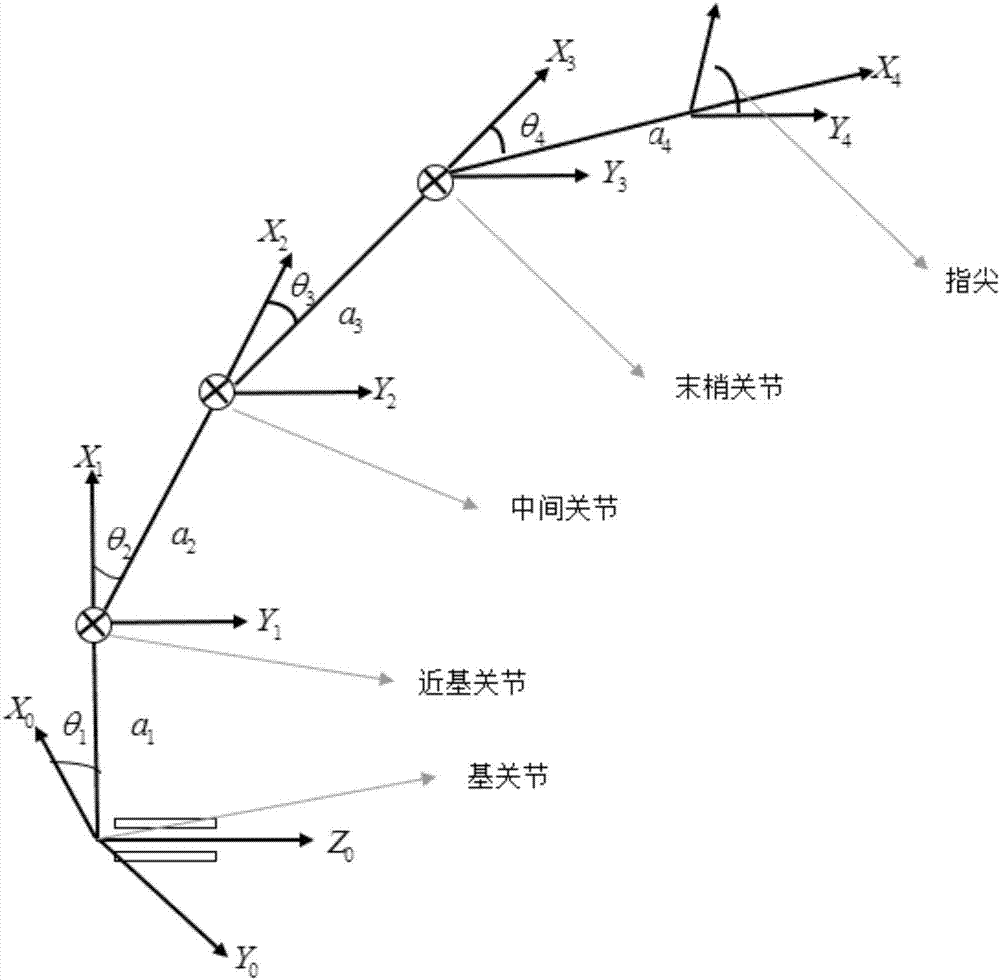

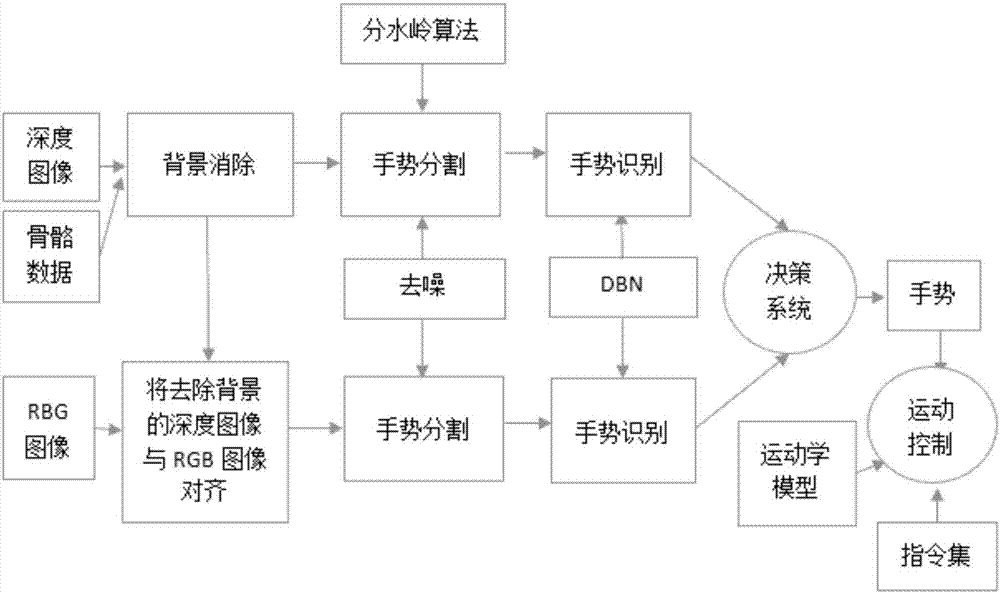

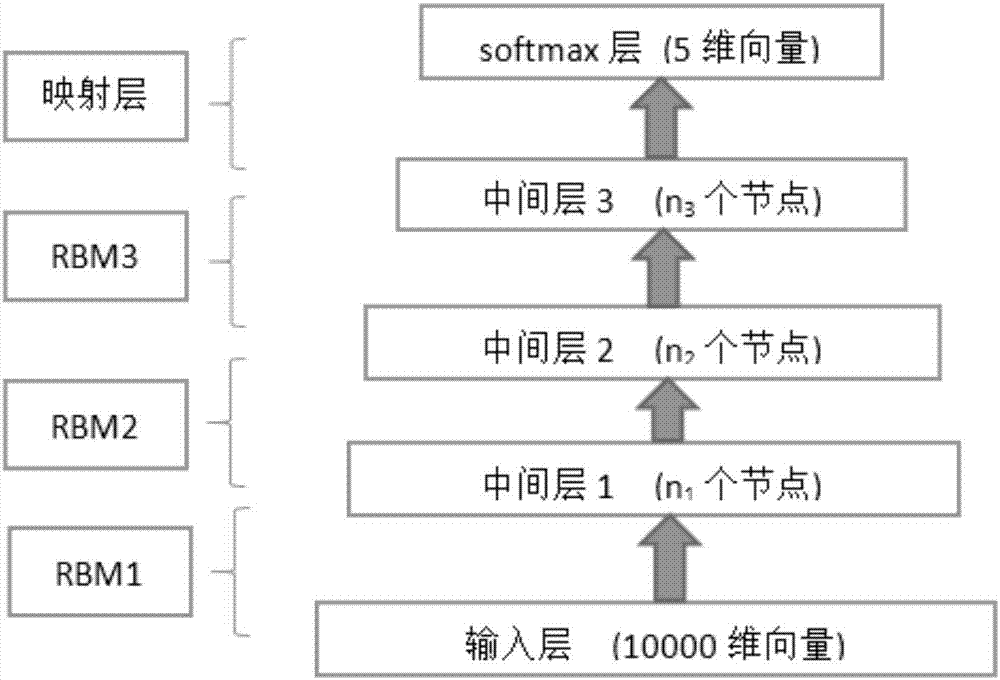

Humanoid mechanical arm control method based on Kinect sensor

Active | CN106909216A | Efficient removal | Easy to operate and control | Input/output for user-computer interaction | Character and pattern recognition | Kinematics | Robotic hand

The invention discloses a humanoid mechanical arm control method based on a Kinect sensor. The method comprises: a first step of collecting data through the Kinect sensor; a second step of preprocessing the collected data and then conducting gesture segmentation; a third step of conducting gesture recognition with a DBN neural network; a fourth step of converting the recognized gestures into instructions in a fixed format; a fifth step of sending the instructions to a server side over TCP; a sixth step in which the server side receives and recognizes the instructions and obtains control parameters through kinematics calculation; and a seventh step in which the server side controls the motion of the mechanical arm according to the control parameters. The method takes into account the practical requirements of cost, accuracy and response speed. It avoids the high cost of data-glove control and the specialized knowledge required by traditional keyboard-based human-computer interaction, and offers natural operation, fast response, high accuracy and good robustness.

Owner:SOUTH CHINA UNIV OF TECH
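
The fourth and fifth steps of the abstract above (fixed-format instructions sent over TCP) could look roughly like the following sketch. The message layout (a two-byte magic value `GC`, a one-byte gesture ID and a two-byte sequence number in network byte order) is entirely hypothetical; the abstract does not describe the actual format. The resulting bytes would be written to a TCP socket, which is omitted here.

```python
import struct

MAGIC = b"GC"      # hypothetical marker identifying a gesture command
FORMAT = "!2sBH"   # network byte order: magic, gesture id, sequence number


def encode_instruction(gesture_id, sequence):
    """Pack a recognized gesture into a fixed 5-byte instruction."""
    return struct.pack(FORMAT, MAGIC, gesture_id, sequence)


def decode_instruction(payload):
    """Unpack an instruction on the server side and validate the marker."""
    magic, gesture_id, sequence = struct.unpack(FORMAT, payload)
    if magic != MAGIC:
        raise ValueError("not a gesture command")
    return gesture_id, sequence
```

A fixed-length layout lets the server read exactly `struct.calcsize(FORMAT)` bytes per instruction without any delimiter parsing.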

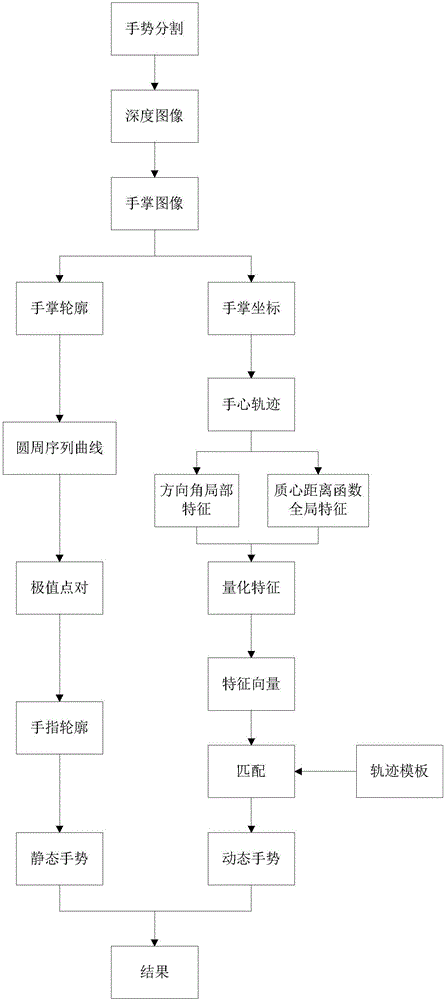

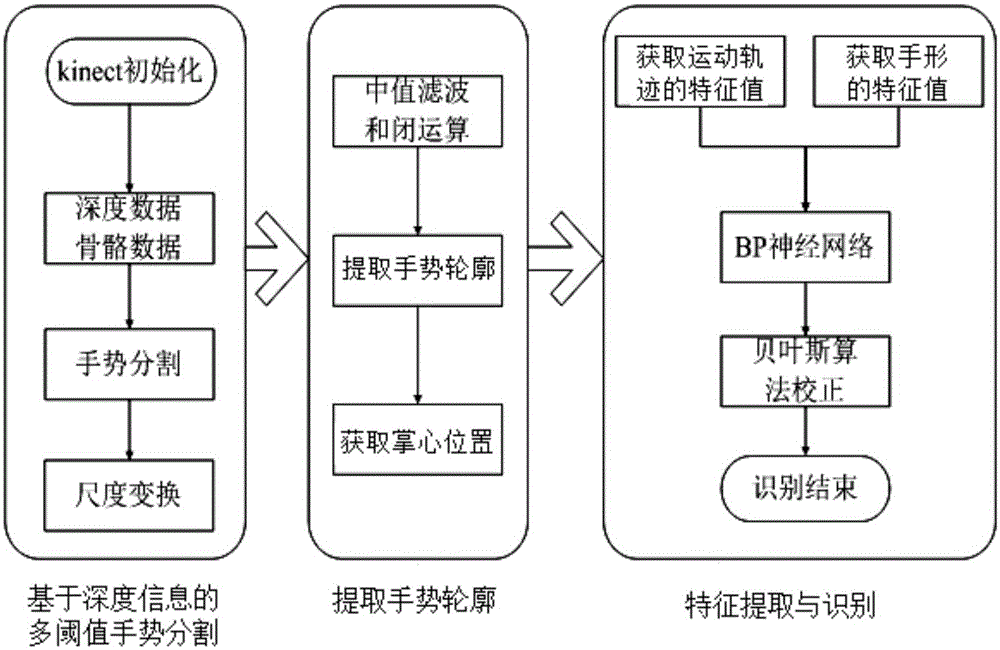

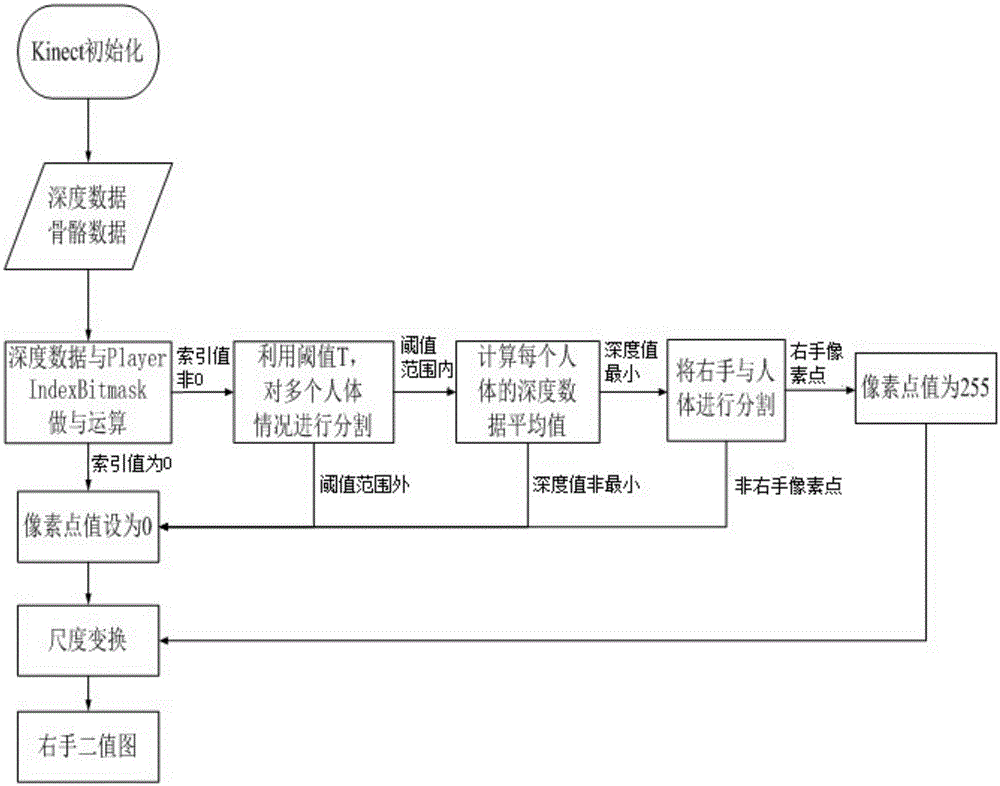

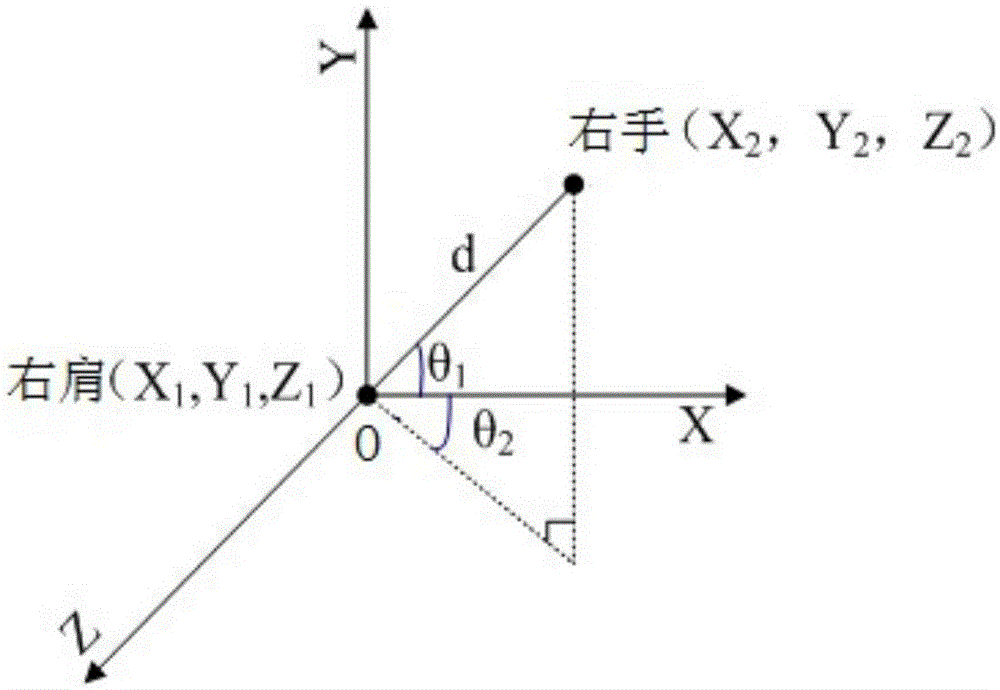

Depth information based sign language recognition method

Active | CN105005769A | Improve naturalness | Avoid interference | Character and pattern recognition | Pattern recognition | Angular velocity

The invention discloses a sign language recognition method based on depth information. The method comprises: (1) recognition of a single gesture: a sign is divided into a hand shape and a motion trajectory; the hand is segmented by multi-threshold segmentation based on depth information, and the feature values of the hand shape are obtained with an improved SURF algorithm; the feature values of the motion trajectory are obtained from motion characteristics based on angular velocity and distance; and the hand shape and trajectory feature values are used as the input of a BP neural network for gesture recognition; and (2) correction of a gesture sequence: based on the recognized gestures, a Bayesian algorithm performs automatic inference to correct gestures that were not recognized correctly or that are ambiguous. Because hand segmentation uses the depth information obtained by a Kinect camera, the method overcomes the interference from illumination that affects conventional vision-based hand segmentation and makes human-computer interaction more natural. The improved SURF algorithm reduces the amount of calculation and increases the recognition speed.

Owner:SHANDONG UNIV

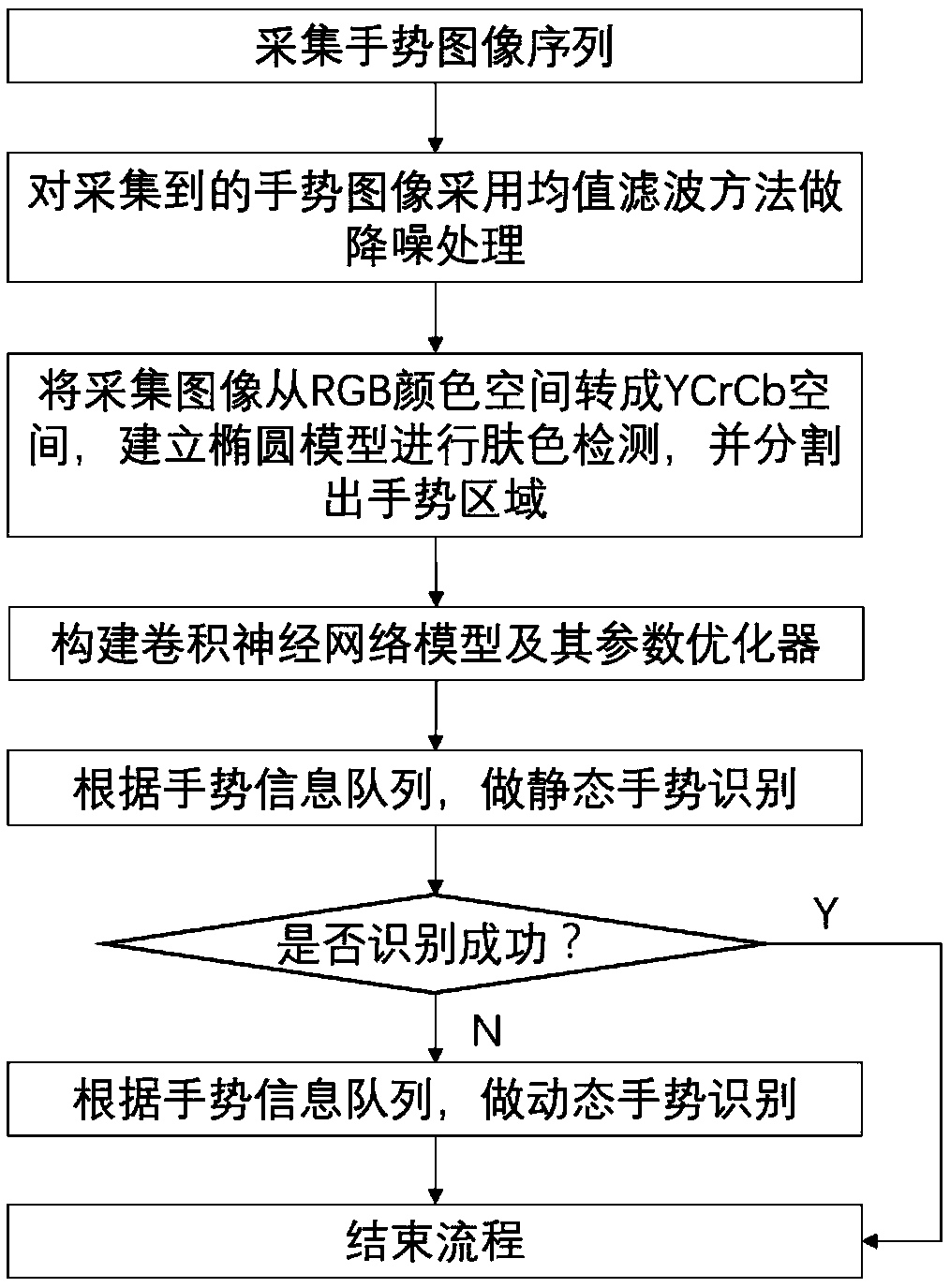

Dynamic and static gesture recognition method and system

Active | CN109614922A | Improve recognition rate | Easy to identify | Character and pattern recognition | RGB color space | Image sequence

The invention discloses a dynamic and static gesture recognition method. The method comprises the steps of: S1, acquiring gesture images to obtain an image sequence; S2, removing image noise from the acquired gesture images with a mean filter; S3, converting the acquired gesture images from the RGB color space into the YCrCb space, establishing an elliptical model, carrying out skin color detection, segmenting the gesture area, and carrying out binarization; S4, constructing a convolutional neural network model and its parameter optimizer, and obtaining the classifier with the best performance from the training data; S5, executing static gesture recognition according to the gesture information in the recognition queue; and S6, executing dynamic gesture recognition according to the gesture information in the recognition queue. The gesture data can be collected with an ordinary camera, and the accuracy and stability of gesture recognition are improved through gesture segmentation, convolutional neural network classification and motion trajectory constraints.

Owner:NANJING FUJITSU NANDA SOFTWARE TECH

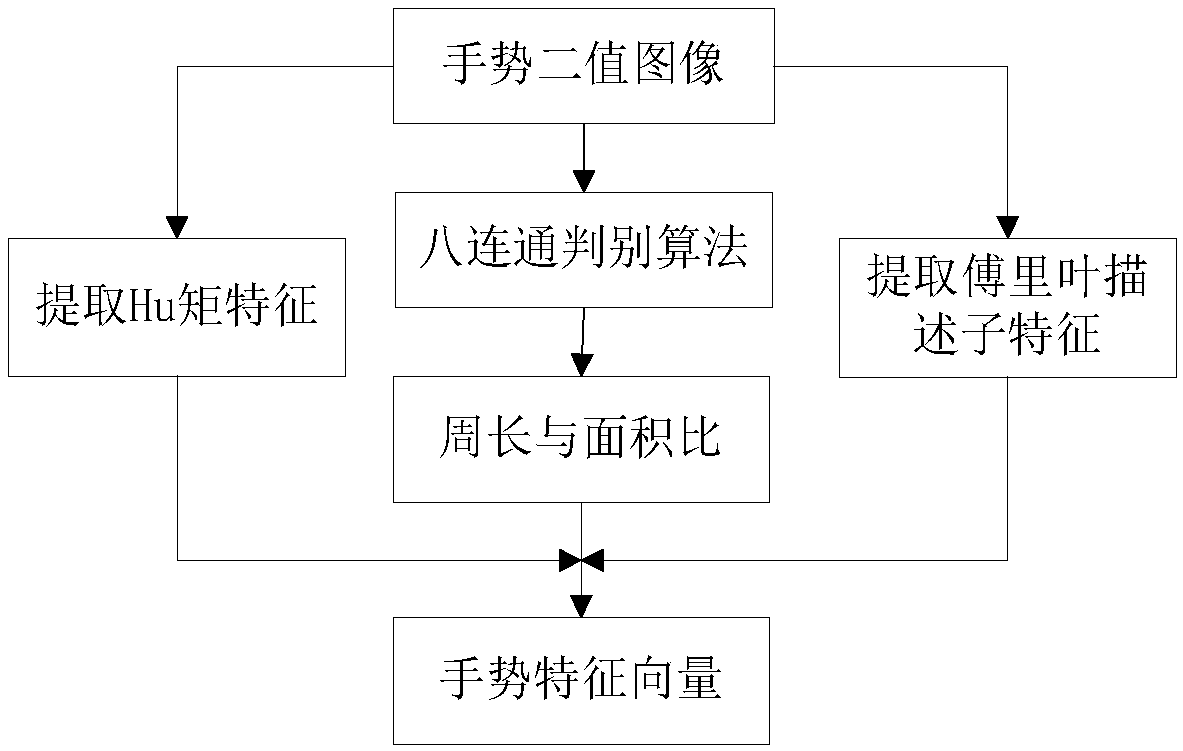

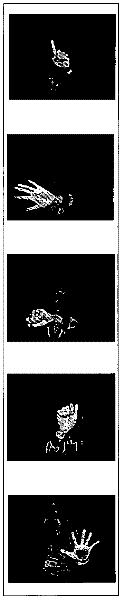

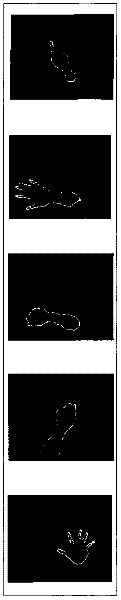

A monocular static gesture recognition method based on multi-feature fusion

Inactive | CN109190496A | Accurate separation | Improve robustness | Image analysis | Character and pattern recognition | Rgb image | Skin color

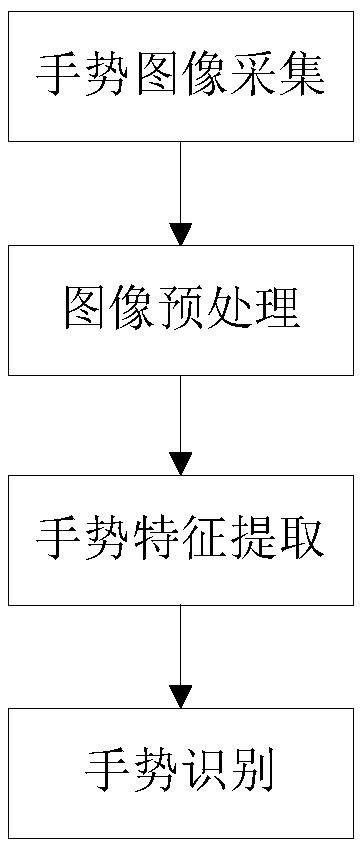

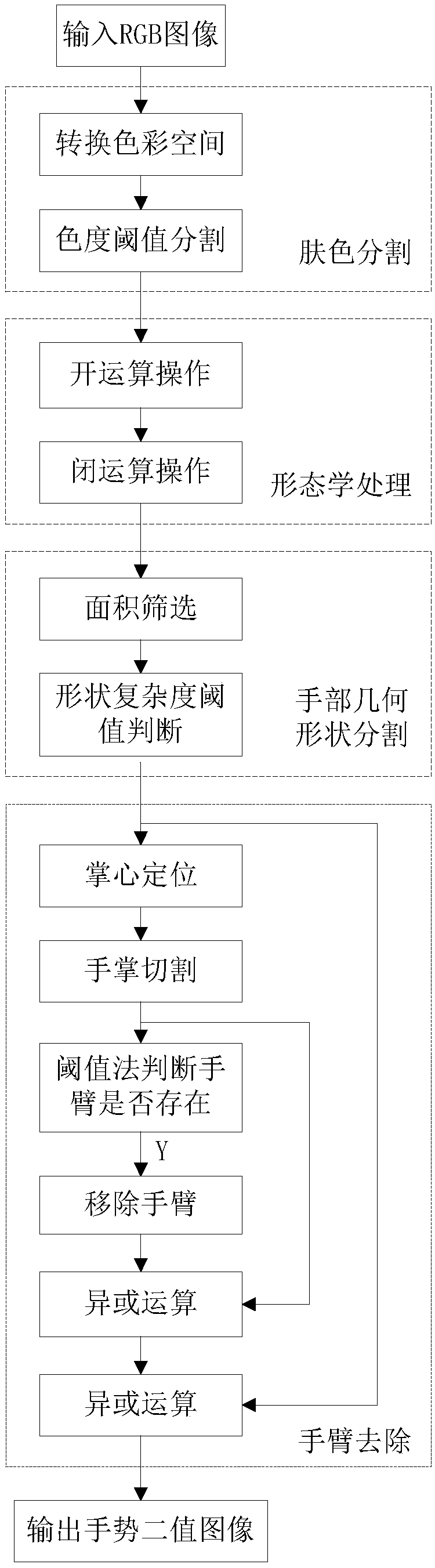

The invention discloses a monocular static gesture recognition method based on multi-feature fusion. The method comprises the following steps. Gesture image collection: an RGB image containing a gesture is collected by a monocular camera. Image preprocessing: skin color information is used for skin color segmentation; morphological processing combined with the geometric characteristics of the hand separates the hand from the complex background; and a distance transform locates the palm center and removes the arm region, yielding a binary gesture image. Gesture feature extraction: the perimeter-to-area ratio, Hu moments and Fourier descriptors of the gesture are calculated and combined into a gesture feature vector. Gesture recognition: the gesture feature vectors are used to train a BP neural network for static gesture classification. By combining skin color information with the geometric characteristics of the hand, and by using morphological processing and the distance transform, the invention achieves accurate gesture segmentation under monocular vision. By fusing several gesture features and training a BP neural network, it obtains a gesture classifier with strong robustness and high accuracy.

Owner:SOUTH CHINA UNIV OF TECH
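
Part of the feature extraction step in the abstract above can be sketched in a few lines. The example computes the perimeter-to-area ratio and the first two Hu moment invariants of a binary mask stored as nested lists. The Fourier descriptors and the BP network are left out, and counting exposed pixel edges as the perimeter is a simplifying assumption rather than the patent's definition.

```python
def shape_features(mask):
    """Return [perimeter/area, hu1, hu2] for a binary mask (nested lists of 0/1)."""
    points = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    area = len(points)
    if area == 0:
        raise ValueError("mask contains no foreground pixels")
    height, width = len(mask), len(mask[0])

    def is_background(y, x):
        return not (0 <= y < height and 0 <= x < width and mask[y][x])

    # Perimeter approximated as the number of pixel edges facing background.
    perimeter = sum(
        is_background(y + dy, x + dx)
        for y, x in points
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1))
    )

    # Central moments about the centroid make the features translation invariant.
    cy = sum(y for y, _ in points) / area
    cx = sum(x for _, x in points) / area
    mu20 = sum((x - cx) ** 2 for _, x in points)
    mu02 = sum((y - cy) ** 2 for y, _ in points)
    mu11 = sum((x - cx) * (y - cy) for y, x in points)

    # Normalized second-order moments: eta_pq = mu_pq / mu_00 ** 2.
    eta20, eta02, eta11 = mu20 / area**2, mu02 / area**2, mu11 / area**2
    hu1 = eta20 + eta02
    hu2 = (eta20 - eta02) ** 2 + 4 * eta11**2
    return [perimeter / area, hu1, hu2]
```

Moving the same shape to another position in the image leaves all three values unchanged, which is the property that makes such features usable as classifier input.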

Method, apparatus and computer program product for providing hand segmentation for gesture analysis

A method for providing hand segmentation for gesture analysis may include determining a target region based at least in part on depth range data corresponding to an intensity image. The intensity image may include data descriptive of a hand. The method may further include determining a point of interest of a hand portion of the target region, determining a shape corresponding to a palm region of the hand, and removing a selected portion of the target region to identify a portion of the target region corresponding to the hand. An apparatus and computer program product corresponding to the method are also provided.

Owner:NOKIA TECHNOLOGIES OY

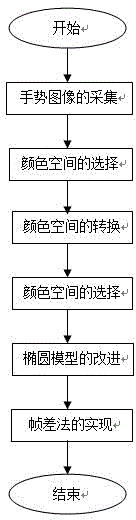

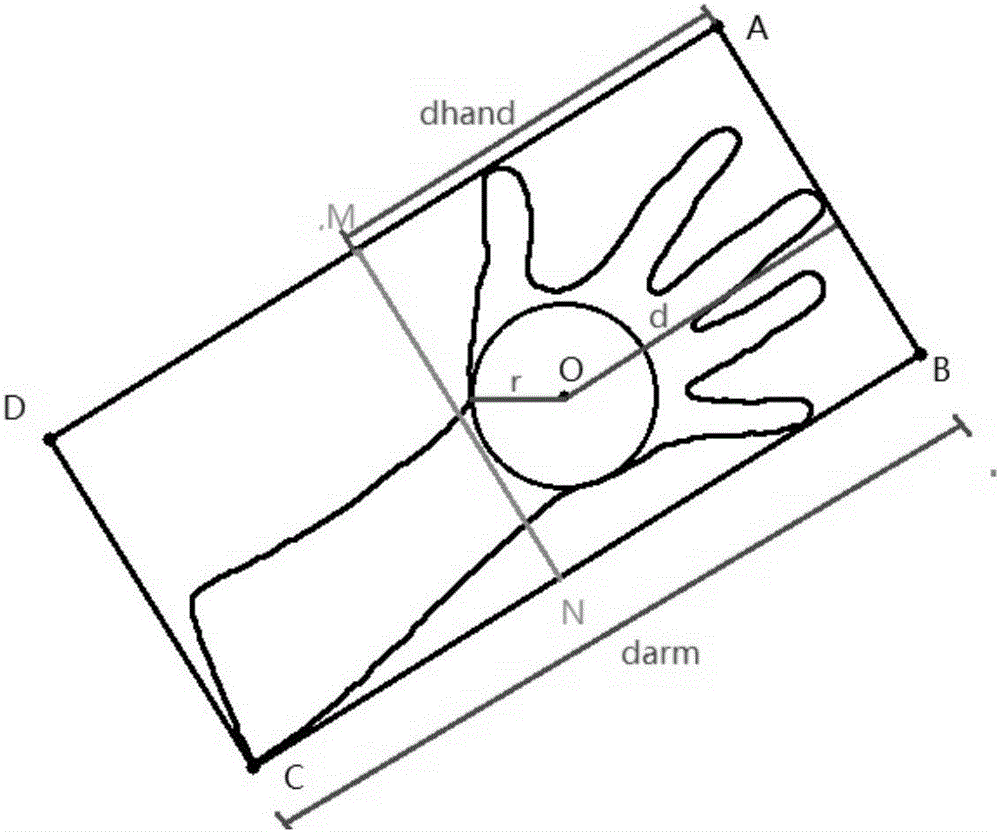

Hand gesture segmentation method based on monocular vision complicated background

Inactive | CN104679242A | Low cost | Input/output for user-computer interaction | Character and pattern recognition | Skin colour | Ophthalmology

The invention relates to a hand gesture segmentation method for monocular vision against a complicated background, suitable for the segmentation stage of hand gesture recognition in man-machine interaction. The method comprises the following steps: (1) obtaining a hand gesture image through a monocular camera; (2) selecting a color space; (3) transforming the color space; (4) extracting the skin color information in the hand gesture image with an improved elliptical model algorithm; and (5) extracting the hand gesture area from the skin color information by frame differencing. In terms of cost, the method needs only an ordinary RGB camera and so avoids the high cost of Kinect equipment. In terms of accuracy, it addresses the low accuracy of monocular hand gesture segmentation against complicated backgrounds, and thus provides a necessary foundation for man-machine interaction tasks such as hand gesture recognition and hand gesture control.

Owner:JILIN JIYUAN SPACE TIME CARTOON GAME SCI & TECH GRP CO LTD

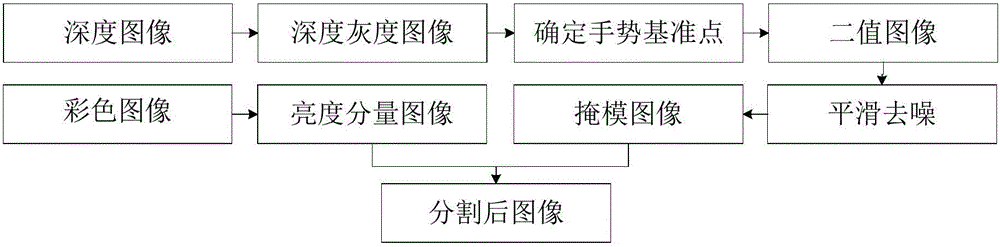

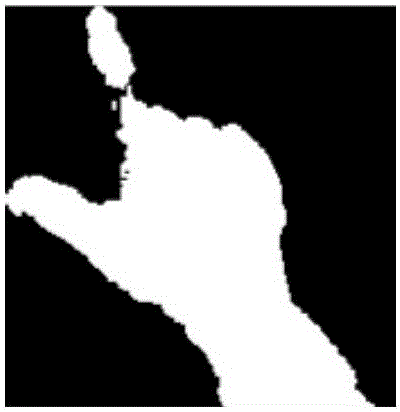

Depth information static gesture segmentation method

Active | CN105893944A | Light evenly | Avoid racial differences | Image enhancement | Image analysis | Contour segmentation | Image conversion

A static gesture segmentation method based on depth information includes the steps of: converting a depth image into a depth gray-scale image of the same size; determining the gray-scale range of the gesture area in the depth gray-scale image; converting the depth gray-scale image into a binary image; smoothing the binary image to obtain a mask image; determining a brightness component image; and segmenting the gesture area. The segmented gesture area is accurate, and segmentation is not affected by non-uniform illumination, differences in skin color, other body parts, or backgrounds of similar color. The method is simple and fast, and provides a technical foundation for man-machine interaction tasks including gesture identification, gesture control and medical surgery.

Owner:江苏思远集成电路与智能技术研究院有限公司
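
A minimal sketch of the depth-based pipeline in the abstract above follows. It assumes that a depth value of 0 means "no measurement", that the hand is the object nearest to the sensor, and that a hypothetical `band` of gray levels behind the nearest pixel belongs to the hand. The smoothing step is omitted, and none of the names come from the patent.

```python
def depth_to_gray(depth, max_depth):
    """Scale raw depth values to a 0-255 gray image of the same size."""
    return [[min(255, d * 255 // max_depth) for d in row] for row in depth]


def hand_mask(gray, band=10):
    """Binary mask of pixels within `band` gray levels of the nearest valid pixel."""
    valid = [g for row in gray for g in row if g > 0]
    if not valid:
        return [[0] * len(row) for row in gray]
    nearest = min(valid)
    return [[1 if 0 < g <= nearest + band else 0 for g in row] for row in gray]


def apply_mask(image, mask):
    """Keep the brightness values inside the mask and zero out everything else."""
    return [
        [p if m else 0 for p, m in zip(irow, mrow)]
        for irow, mrow in zip(image, mask)
    ]
```

Because the mask is derived from depth alone, lighting and skin color do not influence which pixels are kept; they only affect the brightness values that pass through the mask.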

Gesture image key frame extraction method based on deep learning

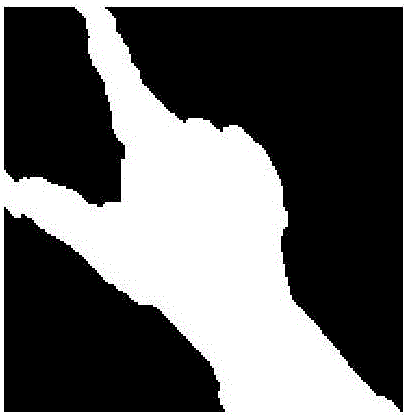

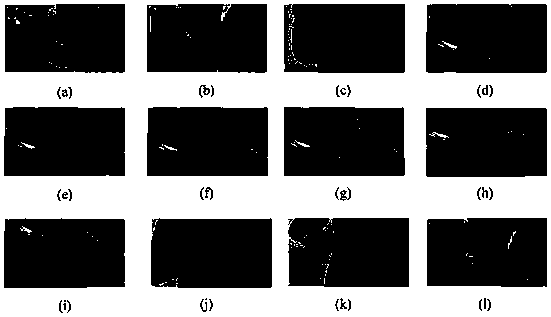

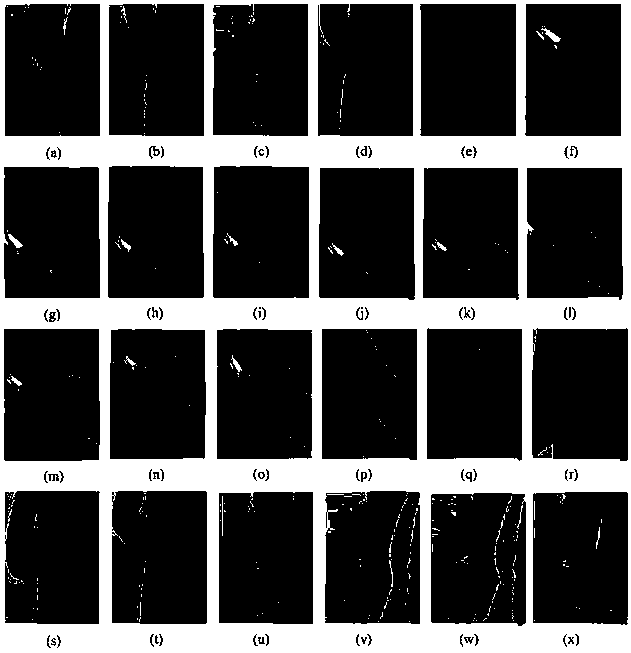

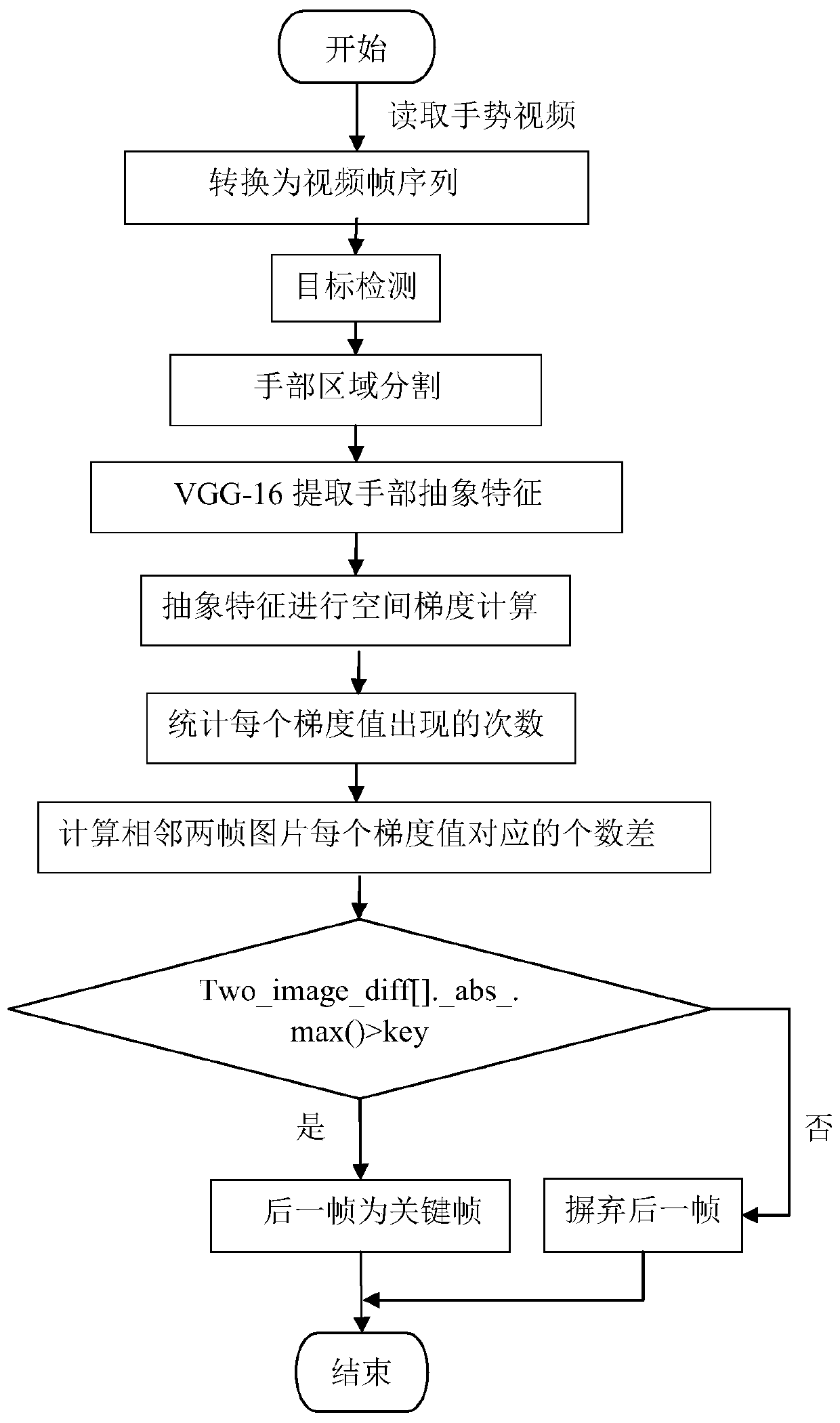

Active | CN110110646A | Reduce complexity | Reduce the amount of parameters | Character and pattern recognition | Neural architectures | Small amplitude | Computer vision

The invention discloses a gesture image key frame extraction method based on deep learning. The method comprises the following steps: reading an input gesture video and converting it into video frame images; detecting the gesture in each video frame image with a Mobilenet-SSD target detection model and segmenting the detected gesture; and processing the segmented gesture images with a VGG16 model to obtain the corresponding abstract features, calculating a spatial gradient, and setting an appropriate threshold to judge key frames according to the gradient difference between two adjacent frames. The Mobilenet-SSD target detection model detects and segments the hand area, which removes background noise. VGG-16 extracts abstract hand features accurately, which greatly enhances the expressive power of the image representation, reduces the number of parameters and lowers the model complexity. The method is suitable for extracting key frames from videos with small-amplitude changes.

Owner:康旭科技有限公司

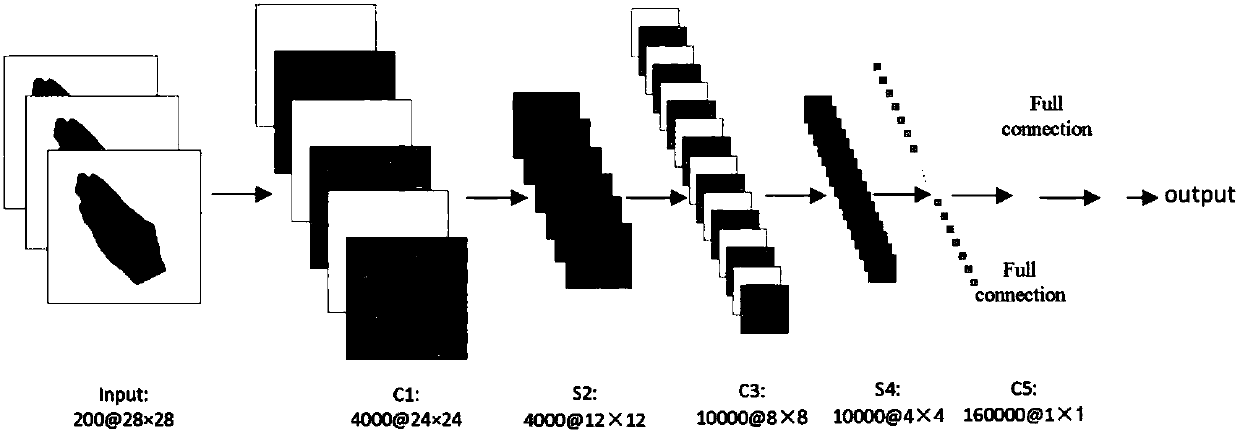

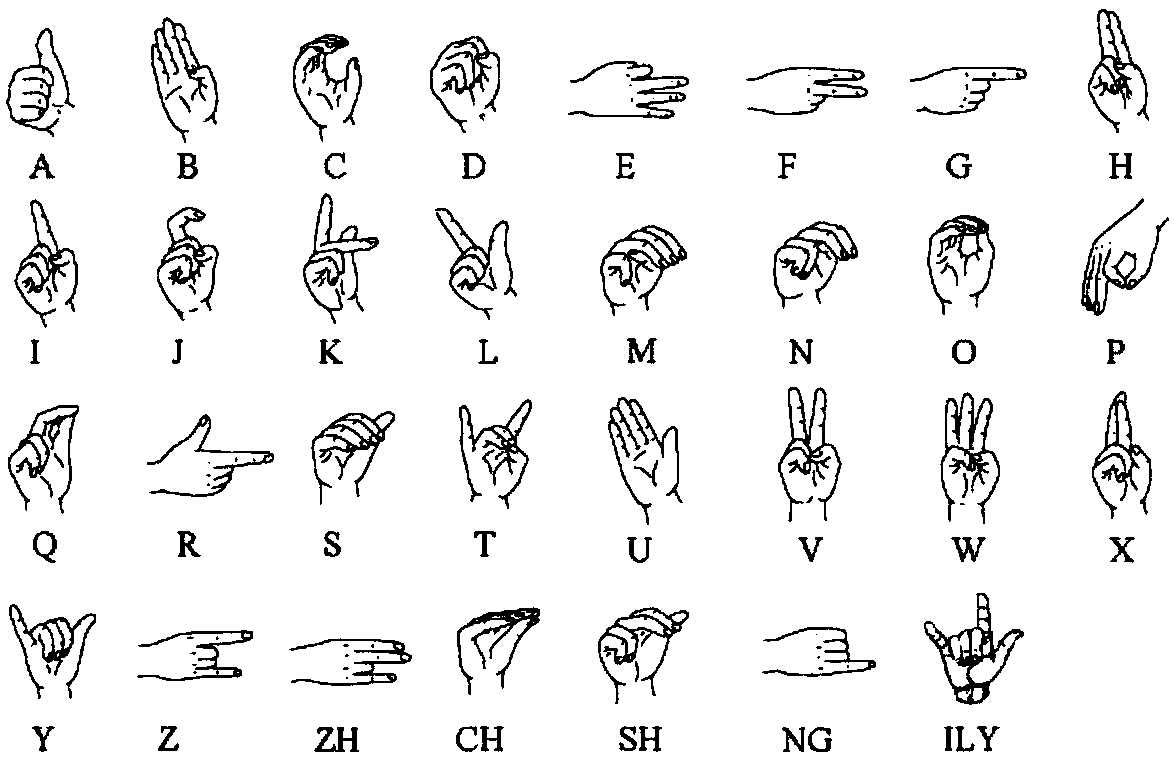

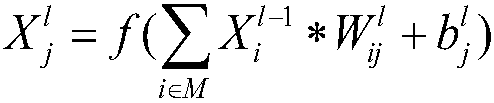

Chinese gesture language recognition method based on convolutional neural network

Inactive | CN107742095A | Improve recognition accuracy | Character and pattern recognition | Neural architectures | Convulsion | Feature vector

The invention relates to a Chinese sign language recognition method based on a convolutional neural network. The method comprises the following steps: collecting pictures of the various gestures of Chinese sign language, and obtaining multiple gesture samples through gesture segmentation and preprocessing; dividing the resulting gesture sample data set into a training set, a validation set and a test set in the proportion 5:1:1; building a seven-layer convolutional neural network (CNN) model that includes three convolutional layers, two pooling layers and one fully connected layer; training the CNN model on the training set in batches, with the batch size setting the number of images per batch, and selecting features after each convolution through max pooling; after the last convolutional layer, classifying the feature vectors with a Softmax function, comparing the classification results with the labels, and updating the weights of the model; and, after each iteration, comparing the accuracy on the validation set with the previous validation result; if the accuracy is lowered, continuing the iteration, and if the accuracy is not lowered, stopping the iteration.

Owner:TIANJIN UNIV
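
The 5:1:1 division of the sample set described in the abstract above can be sketched as follows. Shuffling with a fixed `seed` and assigning any remainder to the training set are assumptions made for illustration; the abstract states only the proportion.

```python
import random


def split_5_1_1(samples, seed=0):
    """Split samples into training, validation and test sets in a 5:1:1 ratio."""
    items = list(samples)
    random.Random(seed).shuffle(items)  # reproducible shuffle
    n_val = len(items) // 7
    n_test = len(items) // 7
    n_train = len(items) - n_val - n_test  # remainder goes to training
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test
```

For example, 70 samples yield 50 training, 10 validation and 10 test samples, and every sample appears in exactly one of the three sets.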

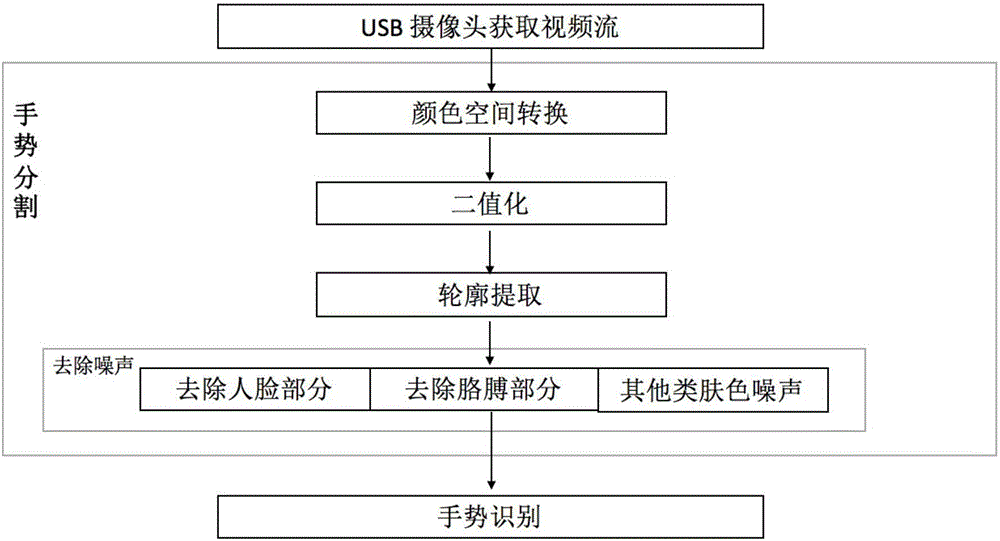

BP neural network-based gesture recognition method

Active | CN106503619A | Remove the influence of gesture recognition | Improve accuracy | Character and pattern recognition | Pattern recognition | Image conversion

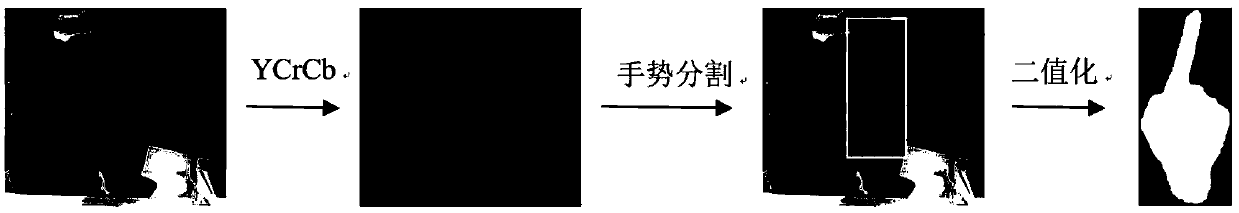

The present invention discloses a BP neural network-based gesture recognition method. The method includes the following steps. Video acquisition: a video containing a hand is acquired through a video acquisition device. Gesture segmentation: the RGB image acquired by the video acquisition device is converted into the YCrCb color space, adaptive thresholding is performed on the Cr channel to convert the image into a binarized image, a gesture contour is obtained, and noise is removed. Gesture recognition: the Hu invariant moments of the denoised binarized image are determined and used as the gesture features, a three-layer BP neural network is constructed, and the network is trained with the Hu invariant moments as input, so that the trained gestures can be recognized in the video. With the method of the invention, the palm can be segmented effectively and a specific gesture can be recognized accurately.

Owner:NANJING UNIV OF SCI & TECH
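
The gesture segmentation step in the abstract above (thresholding the Cr channel) can be illustrated with a short sketch. It uses the full-range JFIF (BT.601) formula for Cr and Otsu's method as the adaptive threshold; the abstract does not say which adaptive thresholding technique the patent uses, so Otsu is a stand-in. Contour extraction and denoising are omitted.

```python
def cr_channel(rgb_image):
    """Cr component (0-255) of each (r, g, b) pixel, using the JFIF/BT.601 formula."""
    return [
        [max(0, min(255, round(128 + 0.5 * r - 0.418688 * g - 0.081312 * b)))
         for r, g, b in row]
        for row in rgb_image
    ]


def otsu_threshold(values):
    """Threshold that maximizes the between-class variance of 8-bit values."""
    hist = [0] * 256
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0 = 0      # number of values at or below t
    sum0 = 0    # sum of values at or below t
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        m0, m1 = sum0 / w0, (sum_all - sum0) / w1
        var = w0 * w1 * (m0 - m1) ** 2
        if var > best_var:
            best_var, best_t = var, t
    return best_t


def skin_mask(rgb_image):
    """Binary mask of pixels whose Cr value lies above the Otsu threshold."""
    cr = cr_channel(rgb_image)
    t = otsu_threshold([v for row in cr for v in row])
    return [[1 if v > t else 0 for v in row] for row in cr]
```

Skin tones tend to have a higher Cr value than most backgrounds, which is why the sketch treats pixels above the threshold as foreground.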

Method for detecting gesture based on RGB-D image

Active | CN103810480A | Effective segmentation | Accurate segmentation | Character and pattern recognition | Pattern recognition | Algorithm robustness

The invention provides a method for detecting a gesture based on an RGB-D image. The method comprises the following steps: step 1, acquiring the RGB-D image; step 2, segmenting the hands from the background; step 3, identifying the gesture; and step 4, finding the optimal segmentation of the gesture. The method segments the hand areas effectively and accurately, obtains good gesture segmentation even when the hands partially occlude themselves or other people interfere in the background, and is robust.

Owner:深圳市微智体技术有限公司

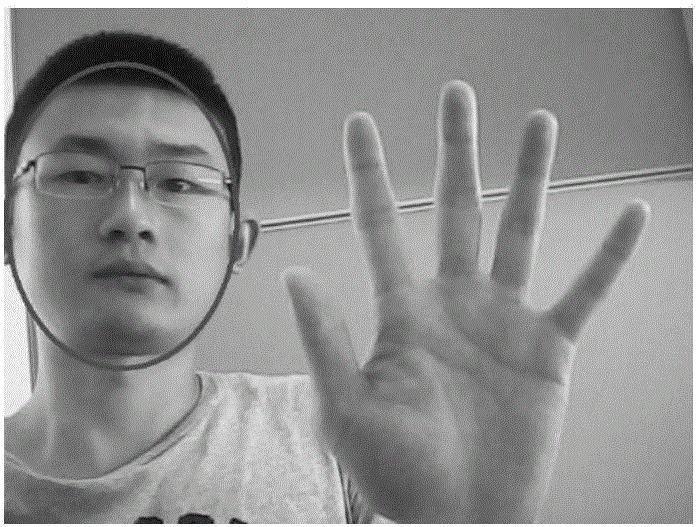

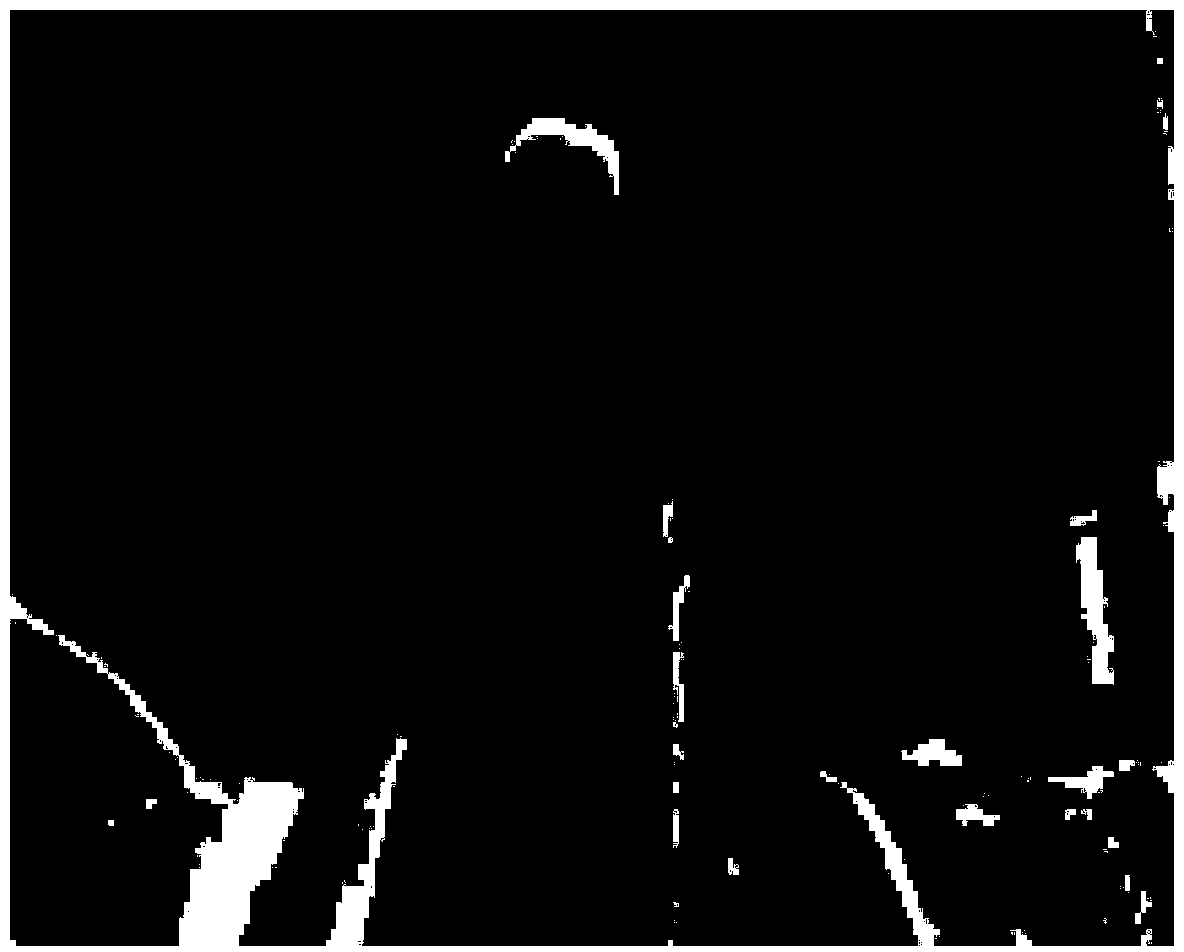

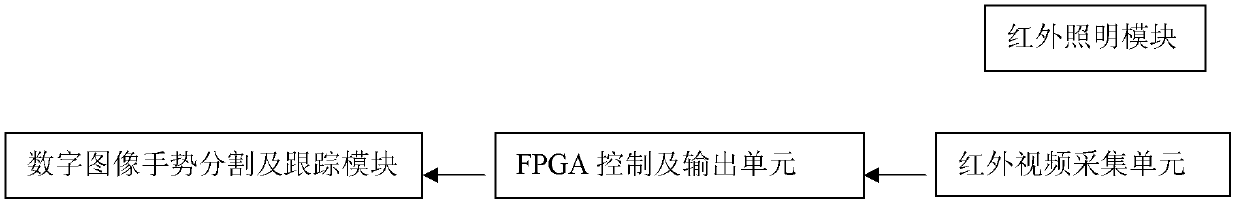

Gesture recognition system based on infrared image and method thereof

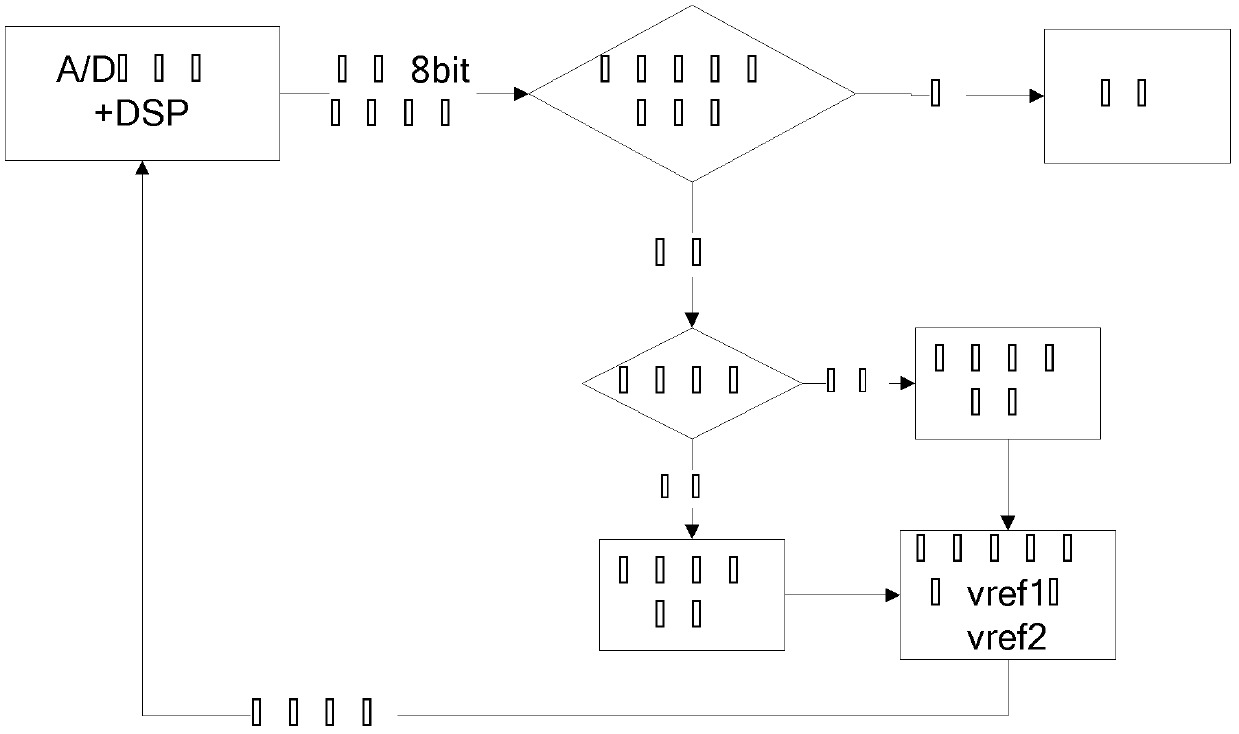

Inactive | CN102629314A | Uniqueness guaranteed | Guaranteed stability | Character and pattern recognition | Photography | Digital video | Camera lens

The invention discloses a gesture recognition system based on an infrared image. The system comprises an infrared illumination module, a front end image processing module and a digital image gesture segmentation and tracking module. The front end image processing module comprises an infrared video acquisition unit and an FPGA control and output unit. The infrared video acquisition unit comprises a CMOS optical sensing chip and a lens assembly. The FPGA control and output unit comprises an image quality assessment module, a reference voltage adjustment module and an output module. The image quality assessment module determines whether the hand area and the area surrounding the hand present the same or similar pixel values in a digital image. If they do, the reference voltage of the CMOS optical sensing chip is adjusted; if they do not, the digital image is output directly. The invention also discloses a gesture recognition method for the system. In the prior art, recognition is unstable under low or variable illumination. The system and method of the invention solve this problem and improve the quality of the infrared digital video data and the stability of the system.

Owner:SOUTH CHINA UNIV OF TECH

Medical model interaction visualization method and system based on gesture recognition

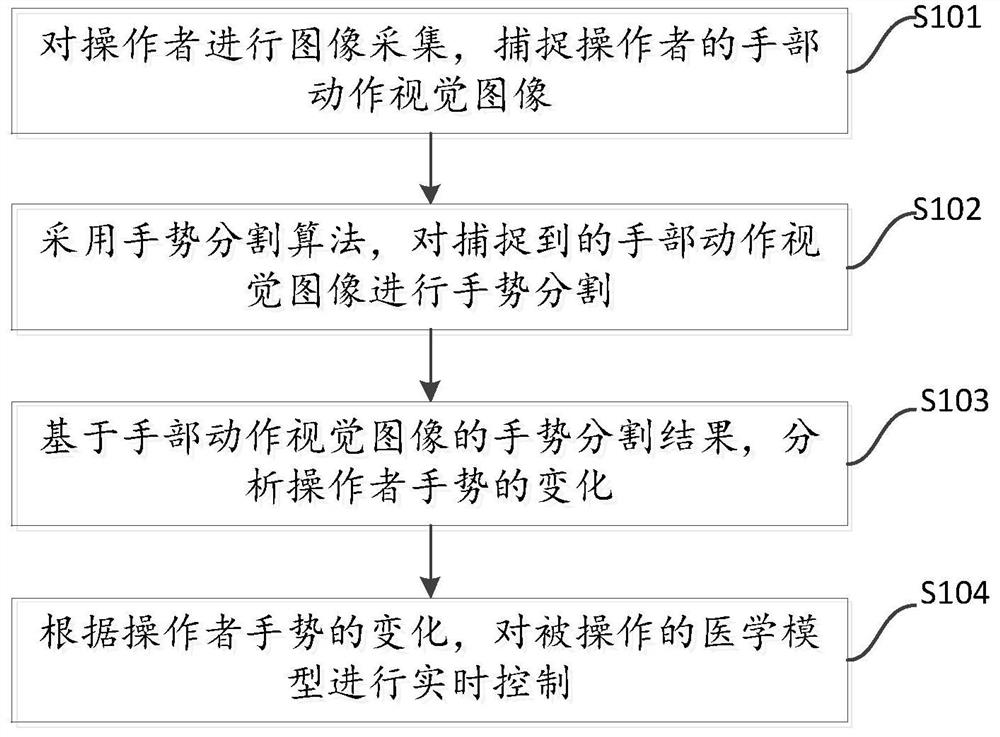

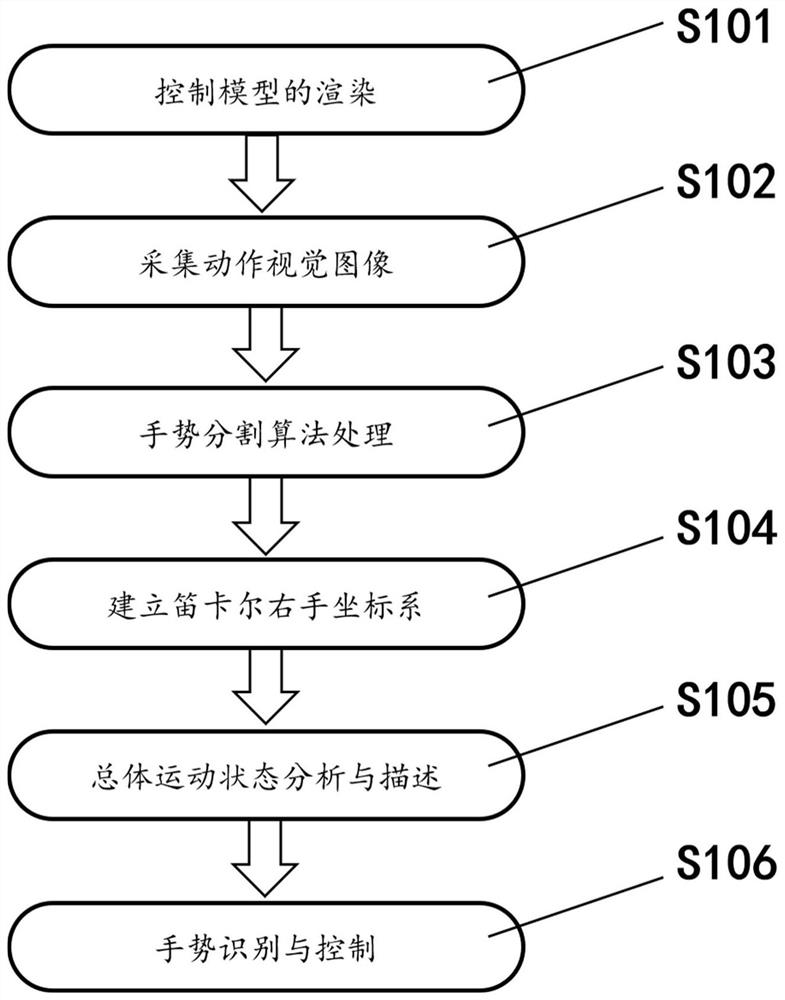

Pending | CN111639531A | Develop processing | Develop Human-Computer Interaction Technology | Input/output for user-computer interaction | Character and pattern recognition | Imaging processing | Computer graphics (images)

The invention provides a medical model interaction visualization method and system based on gesture recognition, and the method comprises the steps: collecting images of an operator, and capturing a hand motion visual image of the operator; performing gesture segmentation on the captured hand action visual image by adopting a preset gesture segmentation algorithm; analyzing the change of the gesture of the operator based on the gesture segmentation result of the hand action visual image; and controlling the operated medical model in real time according to the change of the gesture of the operator. Three-dimensional reconstruction and gesture recognition are combined, the image processing technology and the man-machine interaction technology are further developed in the medical field, and the method and system can be used for displaying a medical three-dimensional model in a gesture control mode.

Owner:GENERAL HOSPITAL OF PLA

Millimeter wave radar gesture recognition method and system based on cross-domain enhancement

Pending | CN113963441A | Improve accuracy | Resolve inconsistencies | Character and pattern recognition | Neural architectures | Sequence model | Network model

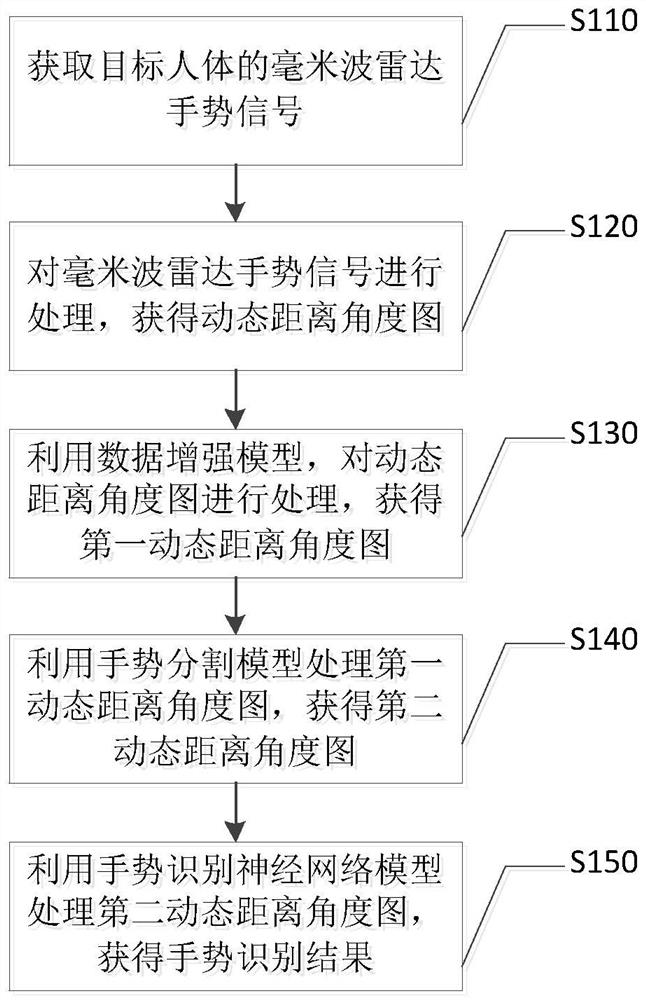

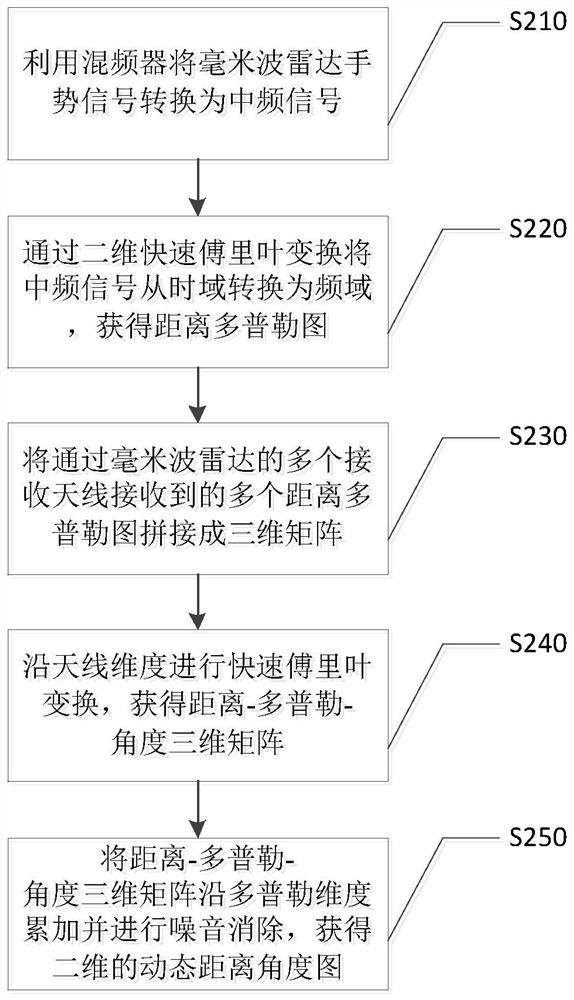

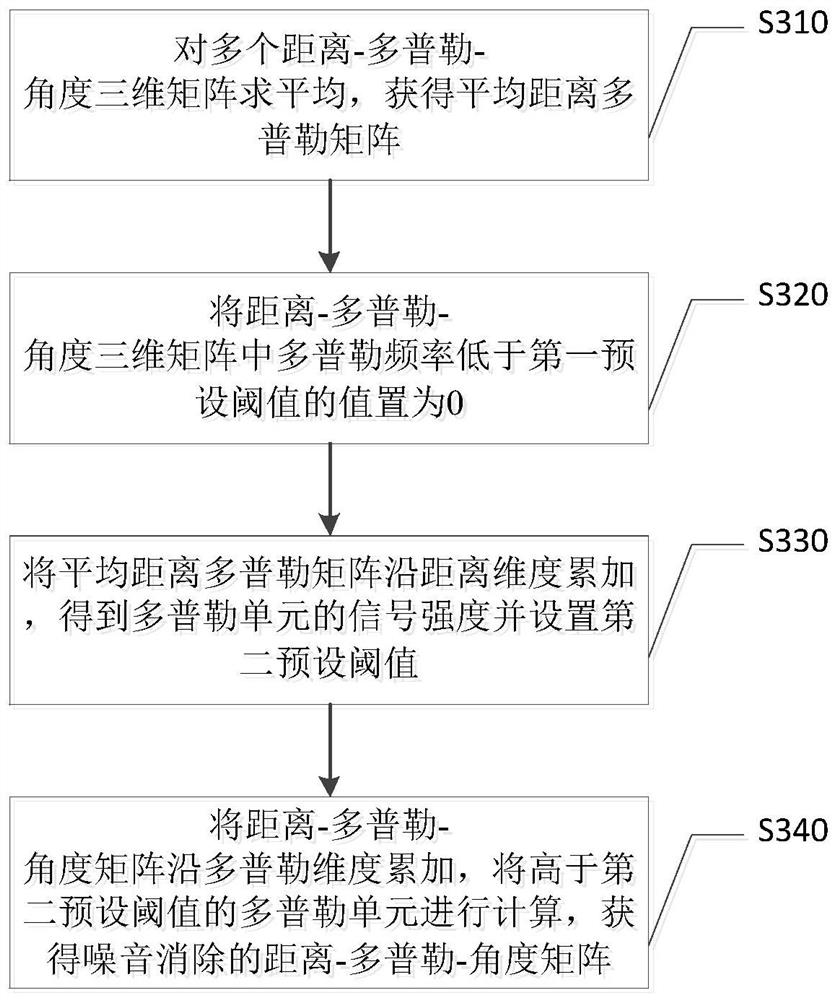

The invention discloses a millimeter wave radar gesture recognition method based on cross-domain enhancement. The method comprises the following steps: acquiring a millimeter wave radar gesture signal of a target human body; processing the millimeter wave radar gesture signal to obtain a dynamic distance angle diagram; using a data enhancement model to process the dynamic distance angle diagram to obtain a first dynamic distance angle diagram, the first dynamic distance angle diagram comprising a plurality of frame matrixes; processing the first dynamic distance angle diagram by using a gesture segmentation model to obtain a second dynamic distance angle diagram which represents a continuous DRAI frame sequence; and using a gesture recognition neural network model to process the second dynamic distance angle diagram to obtain a gesture recognition result, where the gesture recognition neural network model comprises a frame model and a sequence model. The invention further discloses a millimeter wave radar gesture recognition system based on cross-domain enhancement and electronic equipment.

Owner:UNIV OF SCI & TECH OF CHINA

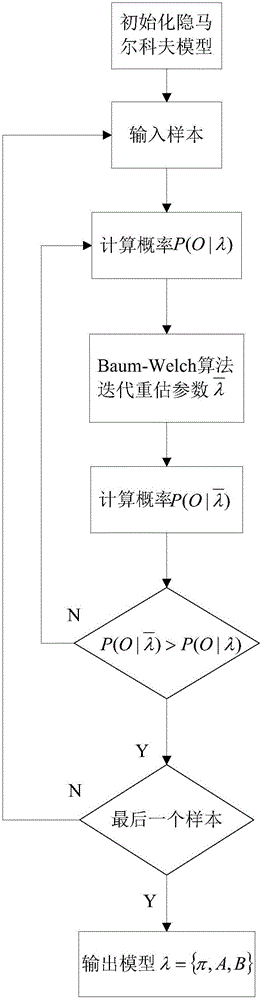

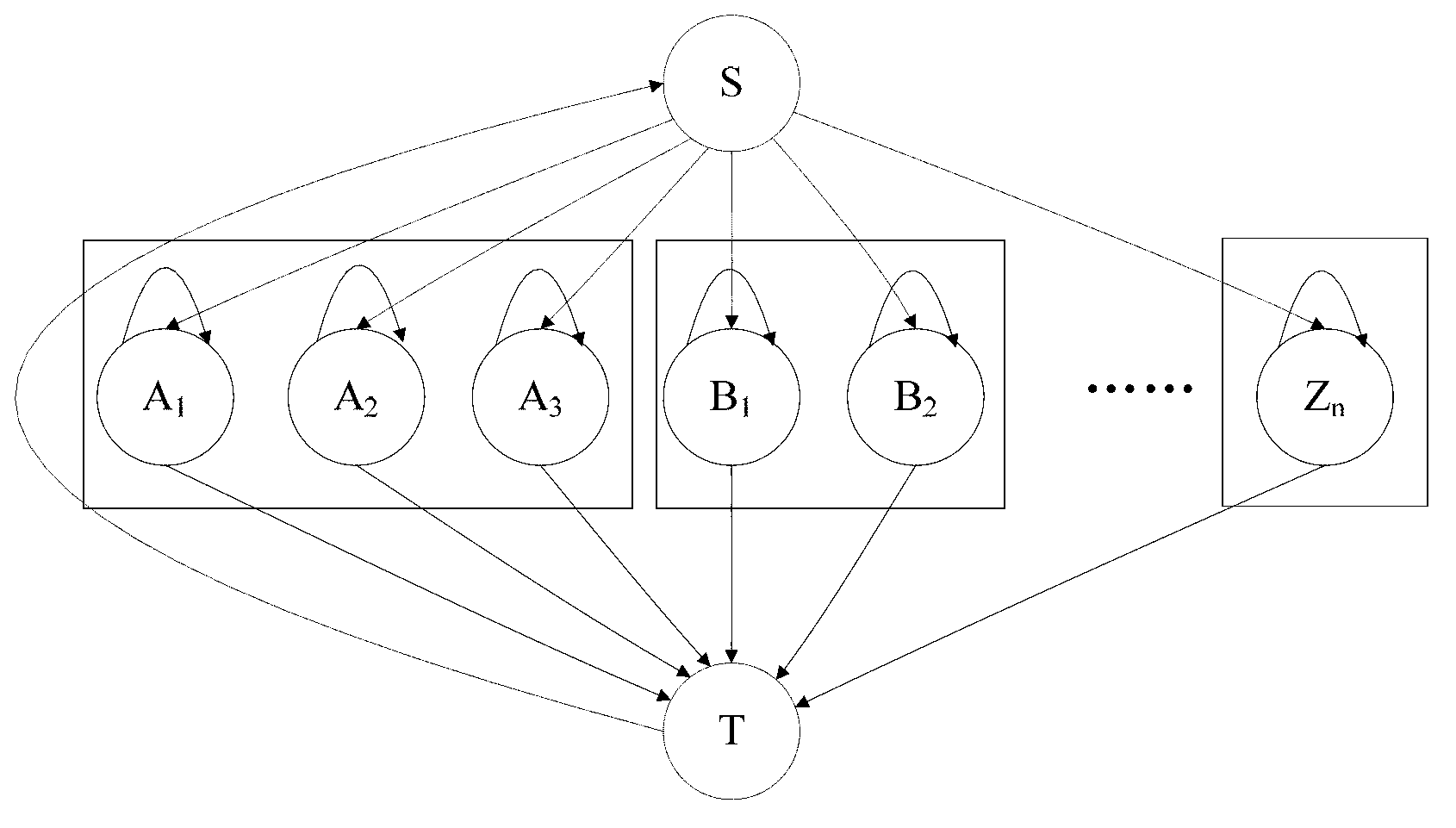

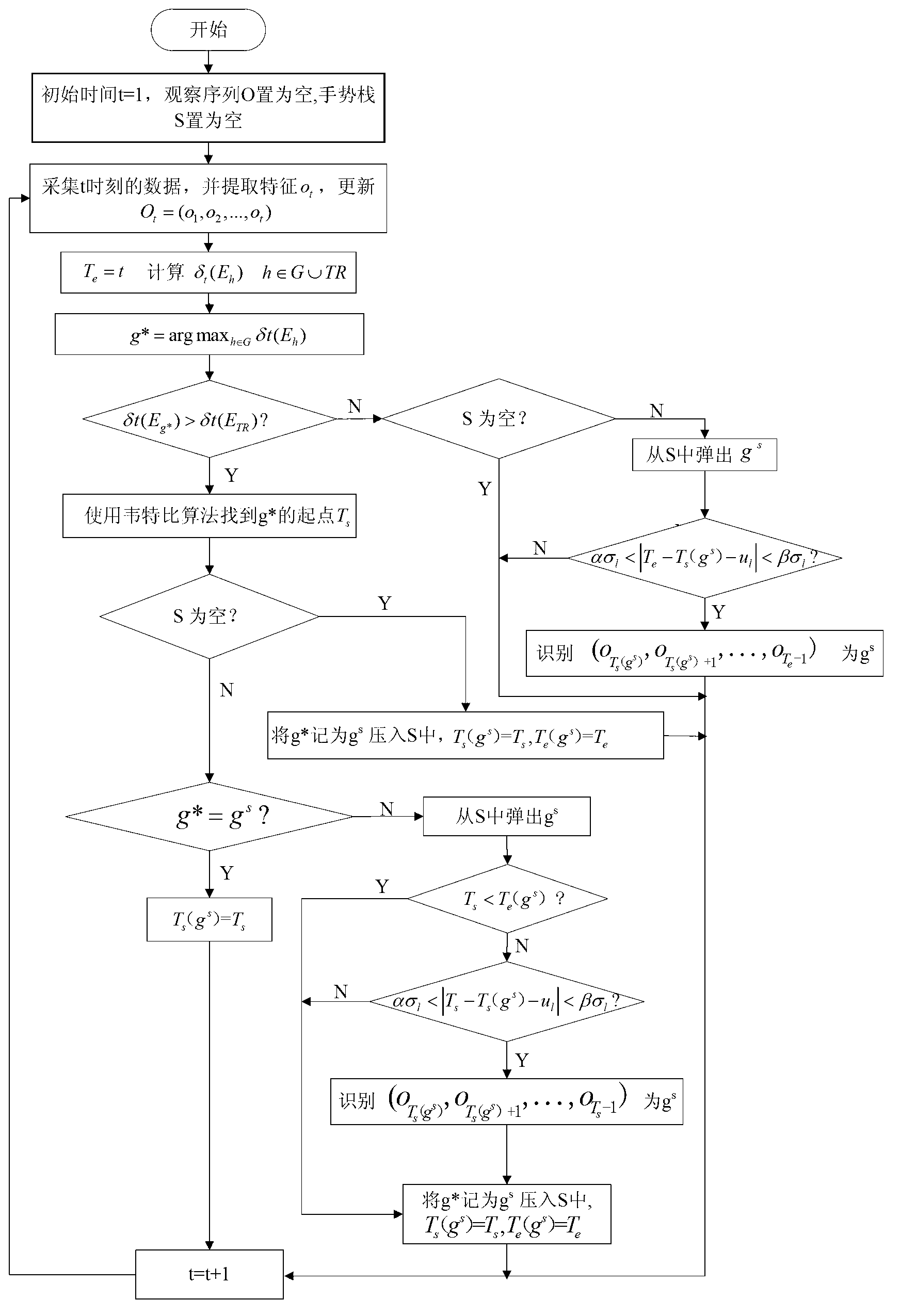

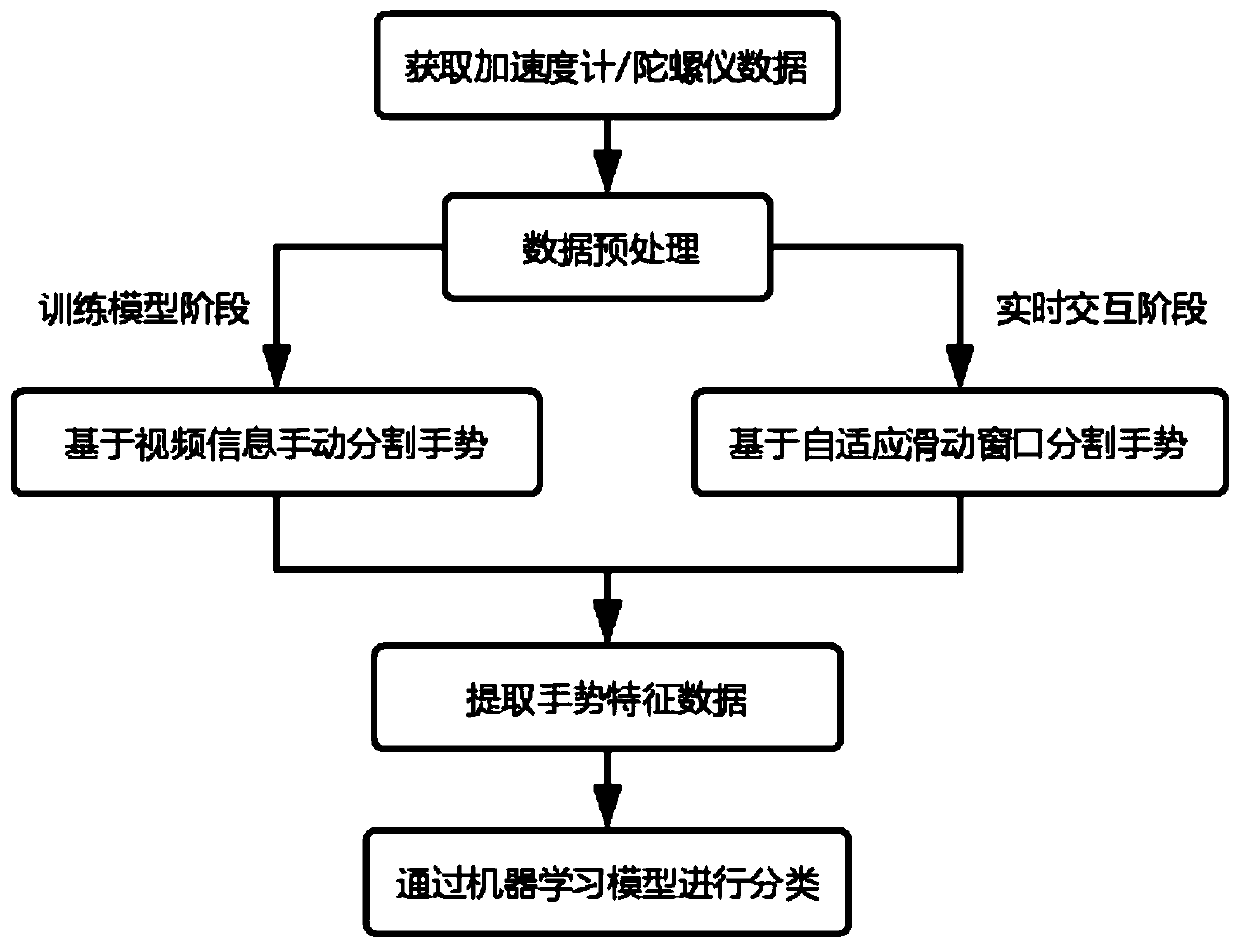

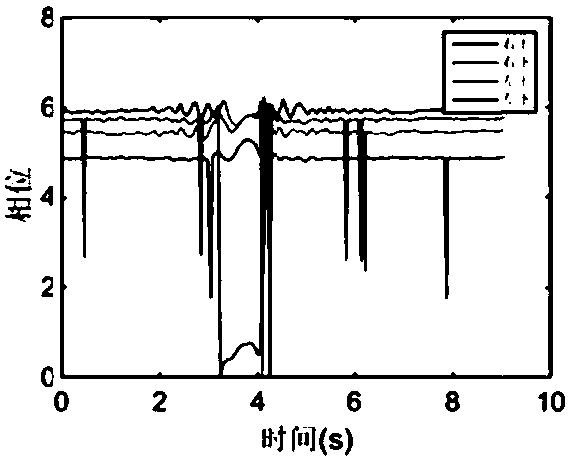

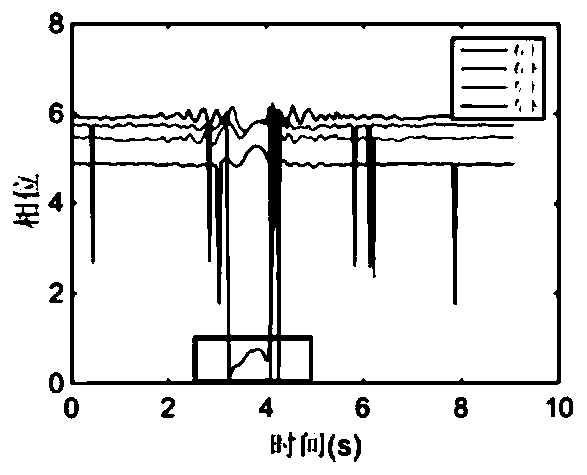

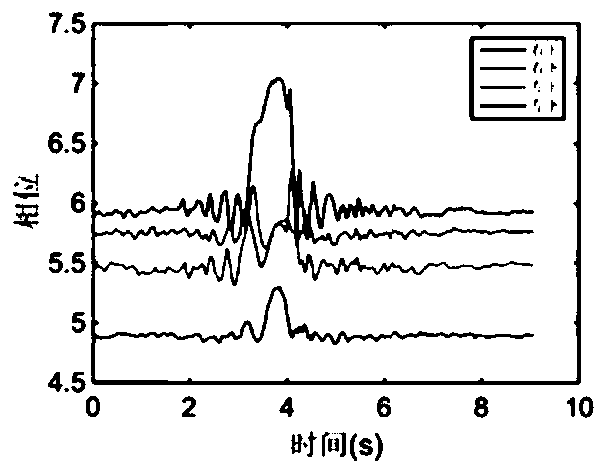

Dynamic gesture segmentation and recognition method based on Hidden Markov Model (HMM)

Active | CN107346207A | Effective segmentation | Effective real-time segmentation | Input/output for user-computer interaction | Character and pattern recognition | Hide markov model | Computer vision

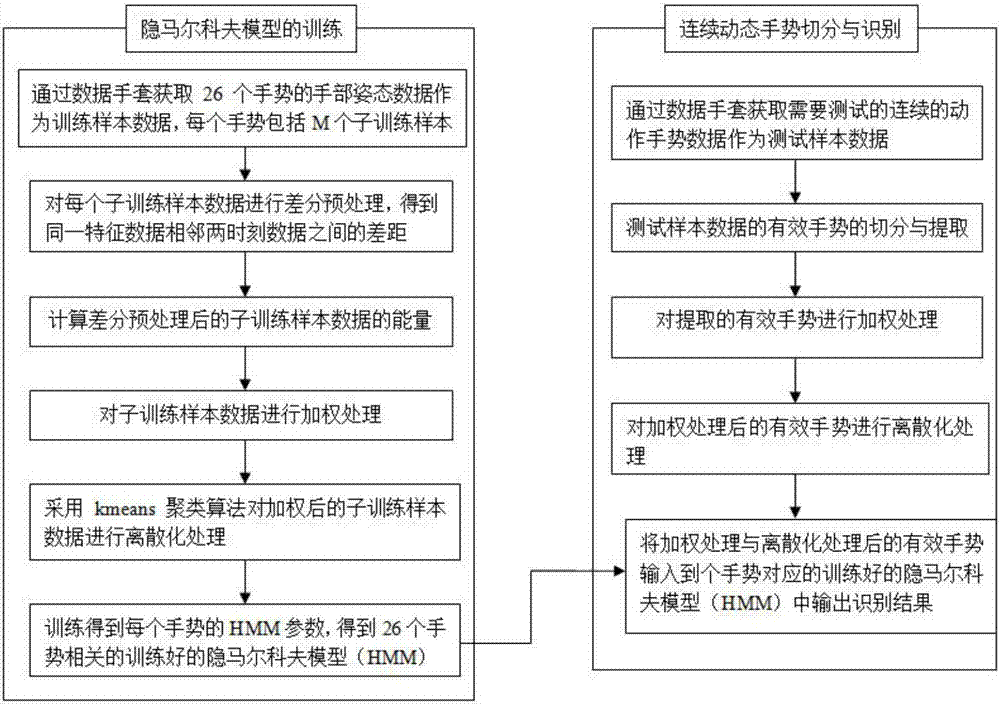

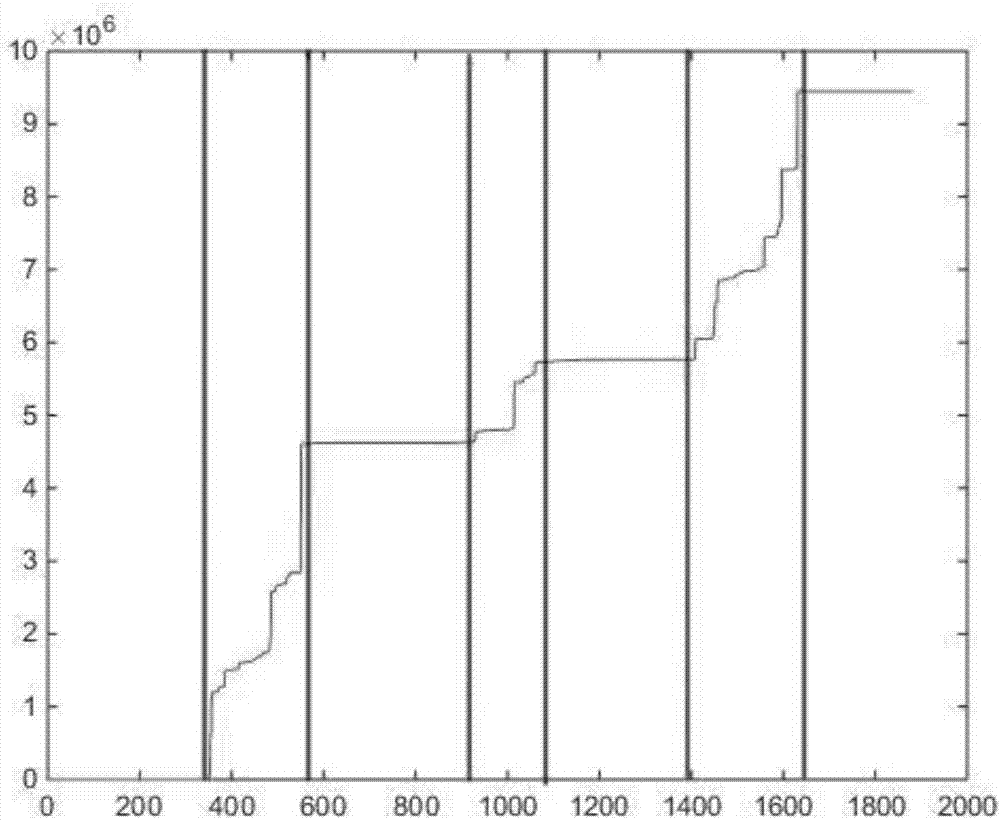

The invention relates to a dynamic gesture segmentation and recognition method based on a Hidden Markov Model (HMM). The method comprises training of the HMM and dynamic gesture segmentation and recognition. The method detects the starting and ending points of a continuous dynamic gesture effectively and in real time, which improves the real-time performance of gesture communication and matches normal gesture conversation habits, so that gesture conversation is natural and smooth. In combination with weighted processing, the composite gesture sequence is segmented effectively, redundant data is reduced, and the effective high-energy gestures within the dynamic gestures are recognized and extracted, which raises the recognition rate after segmentation and improves the precision and efficiency of gesture recognition. The method also effectively addresses, in real-time scenes, the spatio-temporal differences of continuous dynamic gestures and the problem of segmenting a gesture from its start to its end.

Owner:GUANGZHOU HUANTEK CO LTD +1

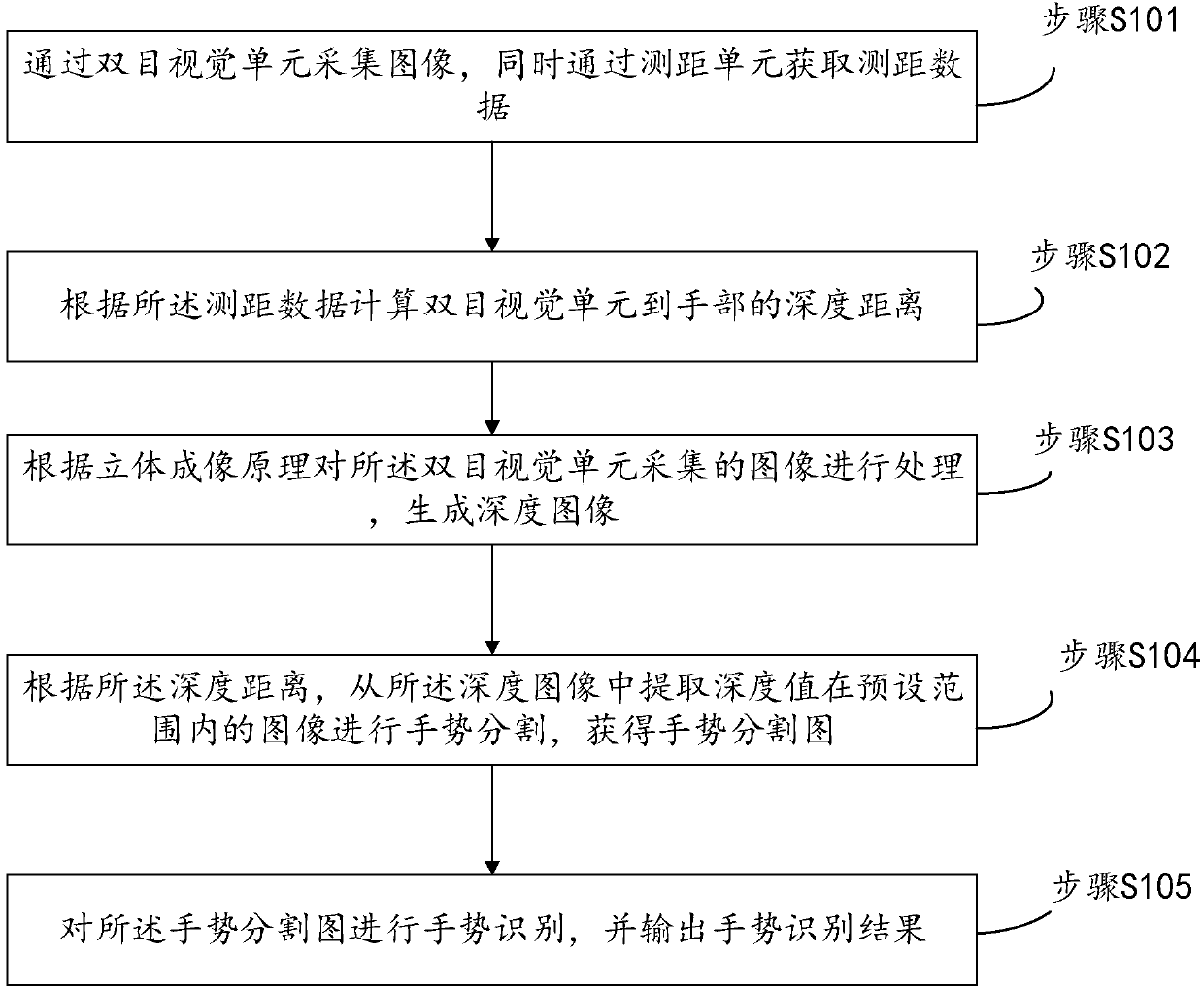

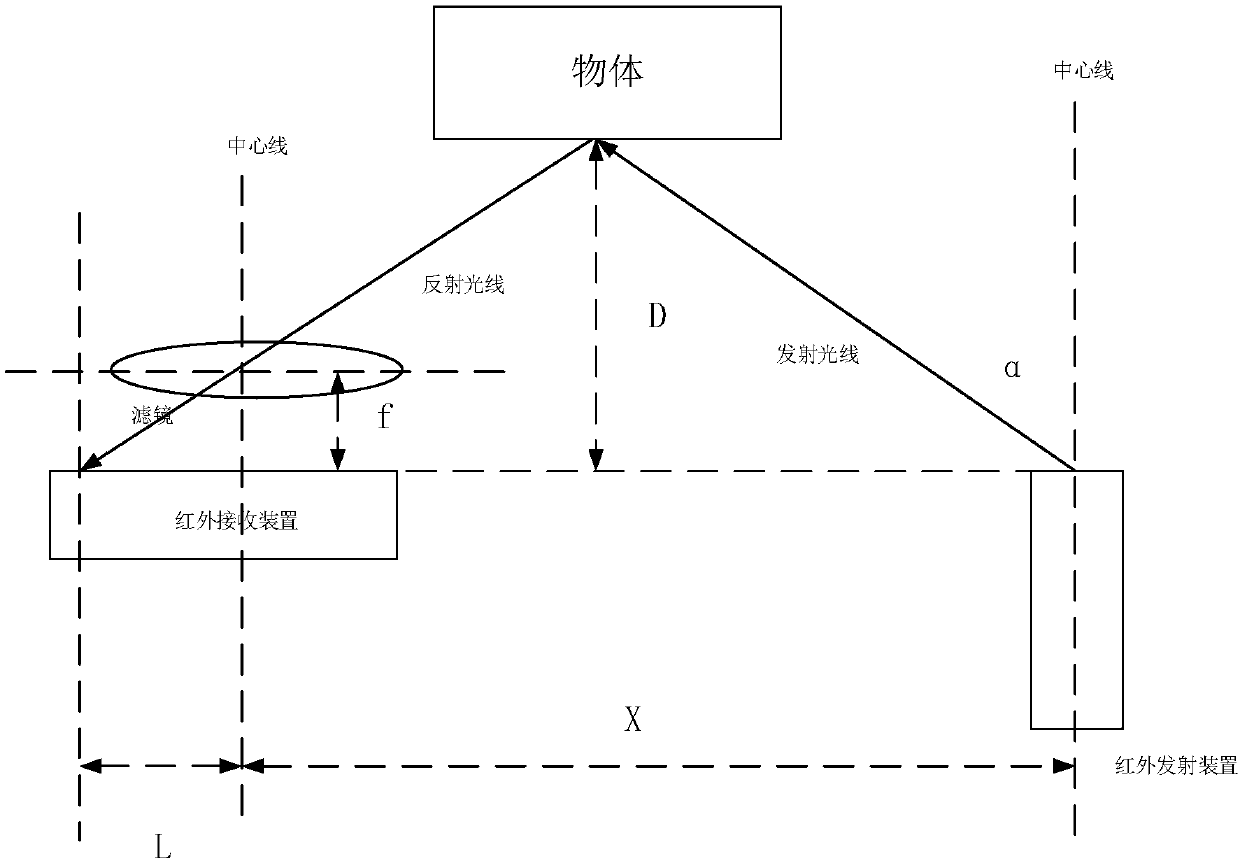

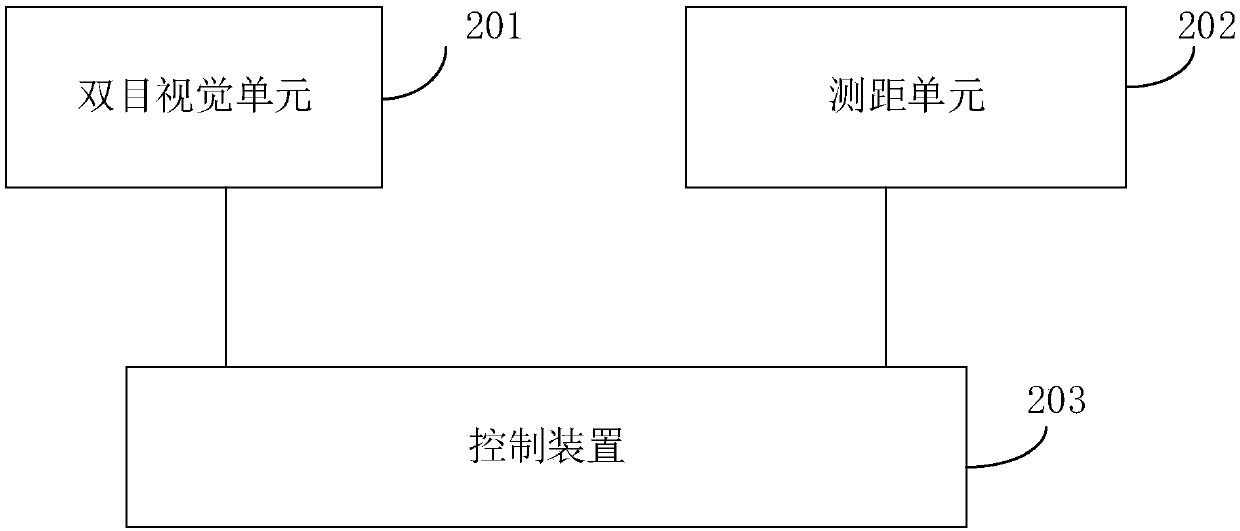

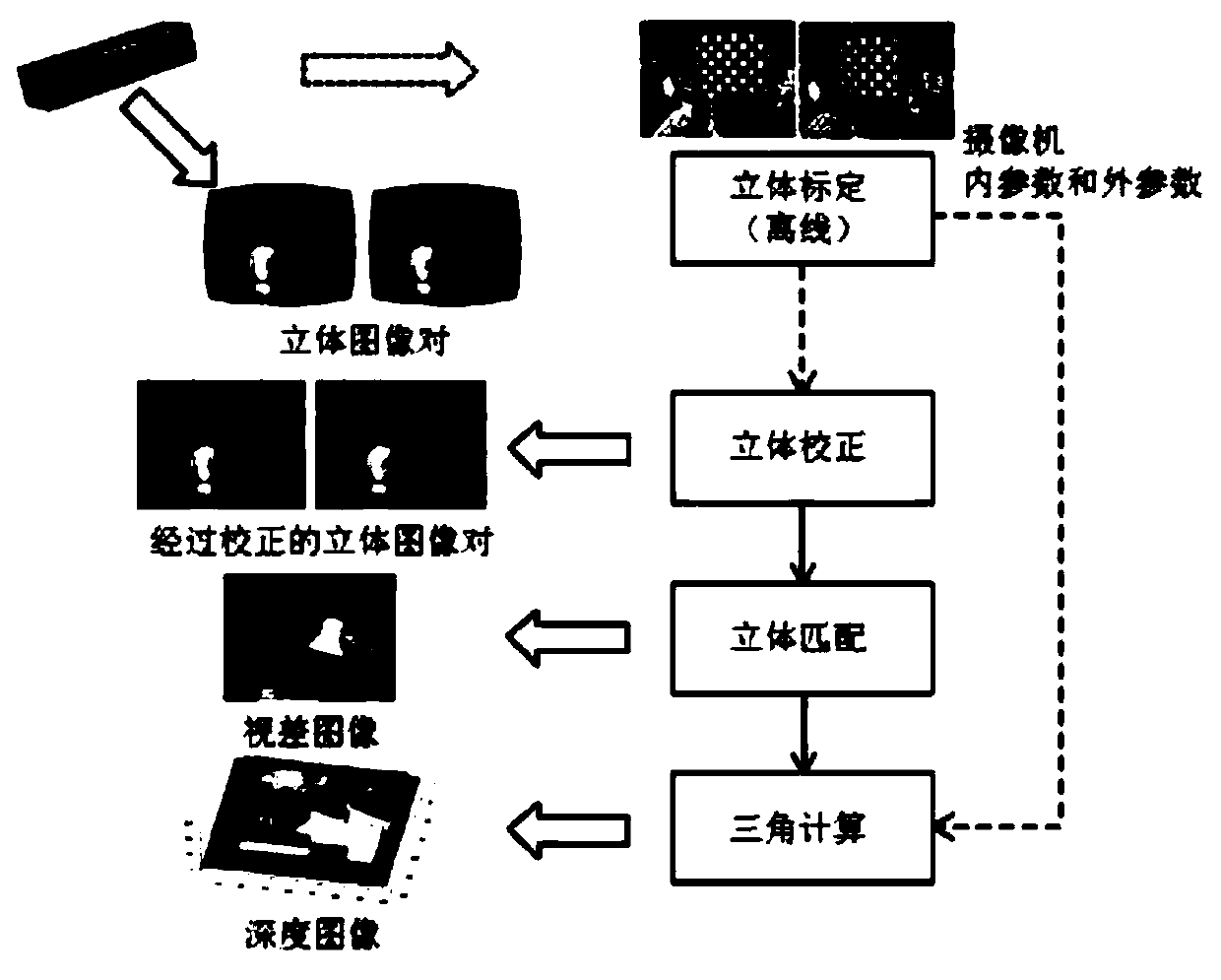

Binocular vision gesture recognition method and device based on range finding assistance

Inactive | CN107563333A | High precision | Improve accuracy | Image analysis | Character and pattern recognition | Stereoscopic imaging | Computer science

The invention provides a binocular vision gesture recognition method based on range finding assistance. The method comprises the steps of: acquiring an image with a binocular vision unit and obtaining range finding data with a range finding unit; calculating the depth distance between the binocular vision unit and the hand according to the range finding data; processing the image acquired by the binocular vision unit according to the stereoscopic imaging principle to generate a depth image; extracting from the depth image, according to the depth distance, the pixels whose depth values lie within a preset range, thereby obtaining a gesture segmentation map; and performing gesture recognition on the gesture segmentation map and outputting the gesture recognition result. Because the range finding unit assists the measurement and the hand region is segmented according to the range finding data, the method improves the accuracy of gesture segmentation and therefore the accuracy of gesture recognition.

Owner:GUANGZHOU UNIVERSITY
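The depth-range extraction step of the range-finding-assisted method above can be illustrated with a small sketch. It assumes the depth image is already available as a NumPy array and that the preset range is a symmetric tolerance around the measured hand distance; the function name, the tolerance value and the toy data are hypothetical.

```python
import numpy as np

def segment_hand_by_depth(depth_map, hand_distance, tolerance=0.10):
    """Boolean mask of pixels whose depth lies within
    hand_distance +/- tolerance (same units as depth_map)."""
    depth_map = np.asarray(depth_map, dtype=float)
    lower, upper = hand_distance - tolerance, hand_distance + tolerance
    return (depth_map >= lower) & (depth_map <= upper)

# Toy 3x3 depth image in metres; the range finder reports the hand at about 0.5 m.
depth = np.array([[2.0, 2.0,  2.0],
                  [2.0, 0.52, 0.48],
                  [2.0, 0.55, 2.0]])
mask = segment_hand_by_depth(depth, hand_distance=0.5, tolerance=0.1)
```

The resulting mask plays the role of the gesture segmentation map that is then passed to the recognition stage.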

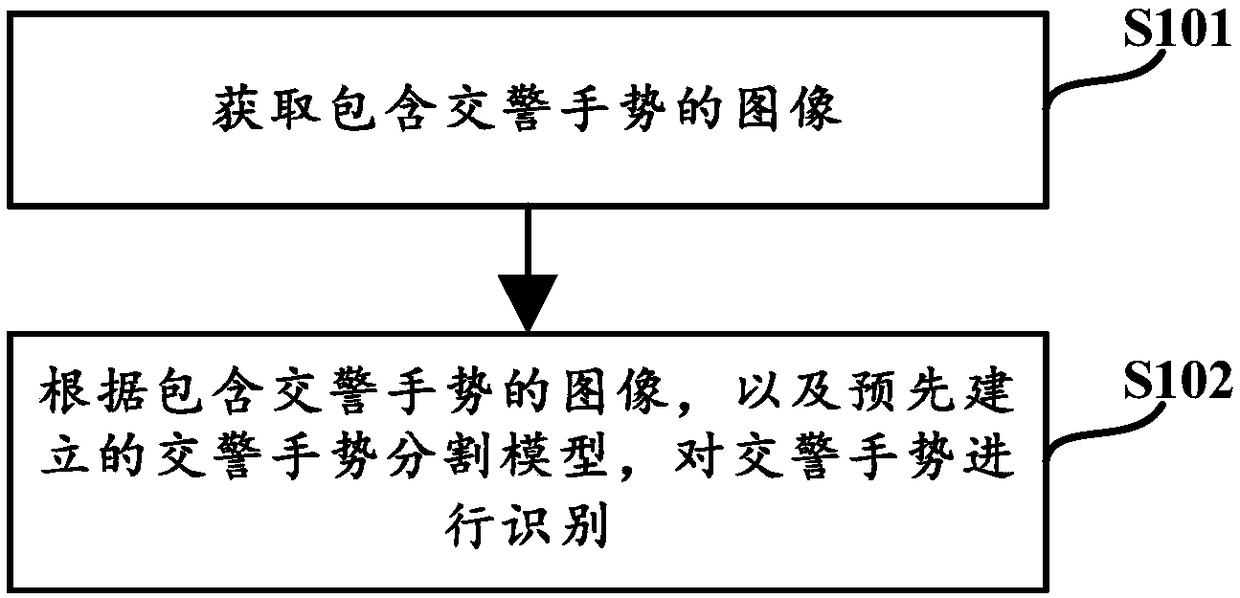

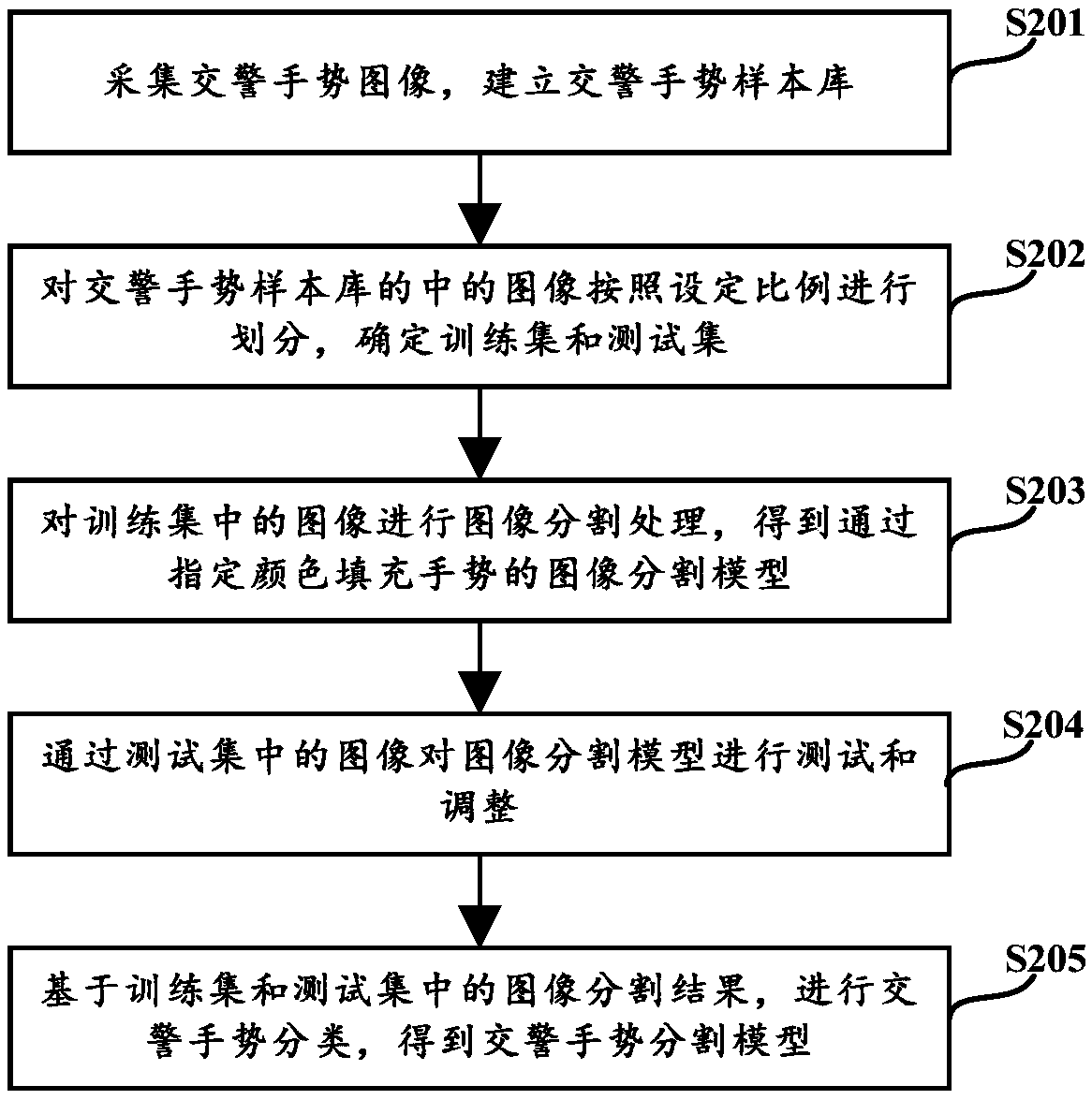

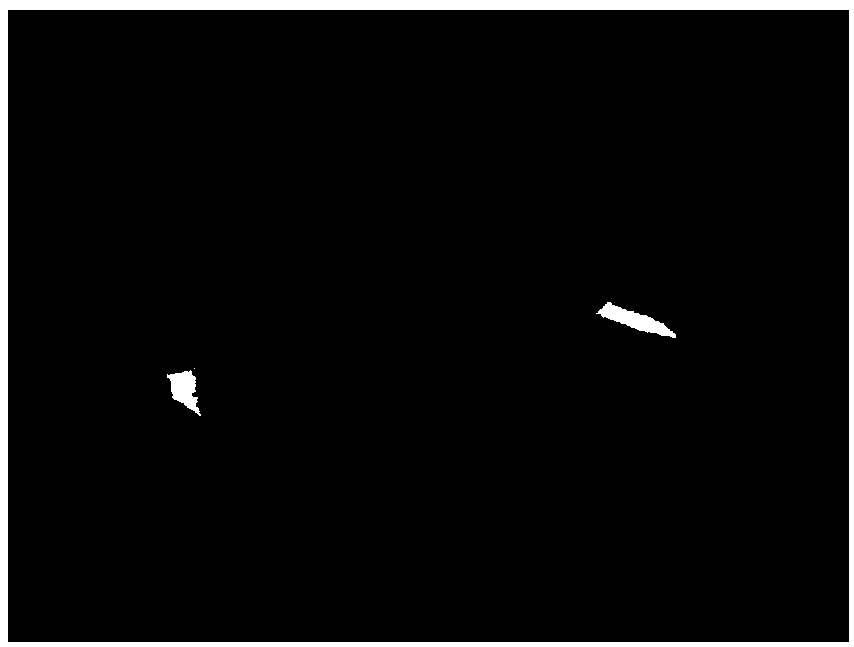

Traffic police gesture recognition method and apparatus

Active | CN108846387A | Reduce identification costs | Easy to implement | Character and pattern recognition | Computer vision | Gesture segmentation

The invention discloses a traffic police gesture recognition method and apparatus, and relates to the field of computers. The method comprises the following steps: processing an image containing a traffic police gesture, and recognizing the traffic police gesture through a pre-established traffic police gesture segmentation model. With this method, traffic police gestures can be recognized in real time without the officer wearing a sensor device, so the recognition cost is low and implementation is relatively convenient. The traffic police gesture segmentation model is established by segmenting traffic police gestures, and it recognizes the gesture from a real-time image, so the recognition accuracy is relatively high.

Owner:BEIHANG UNIV

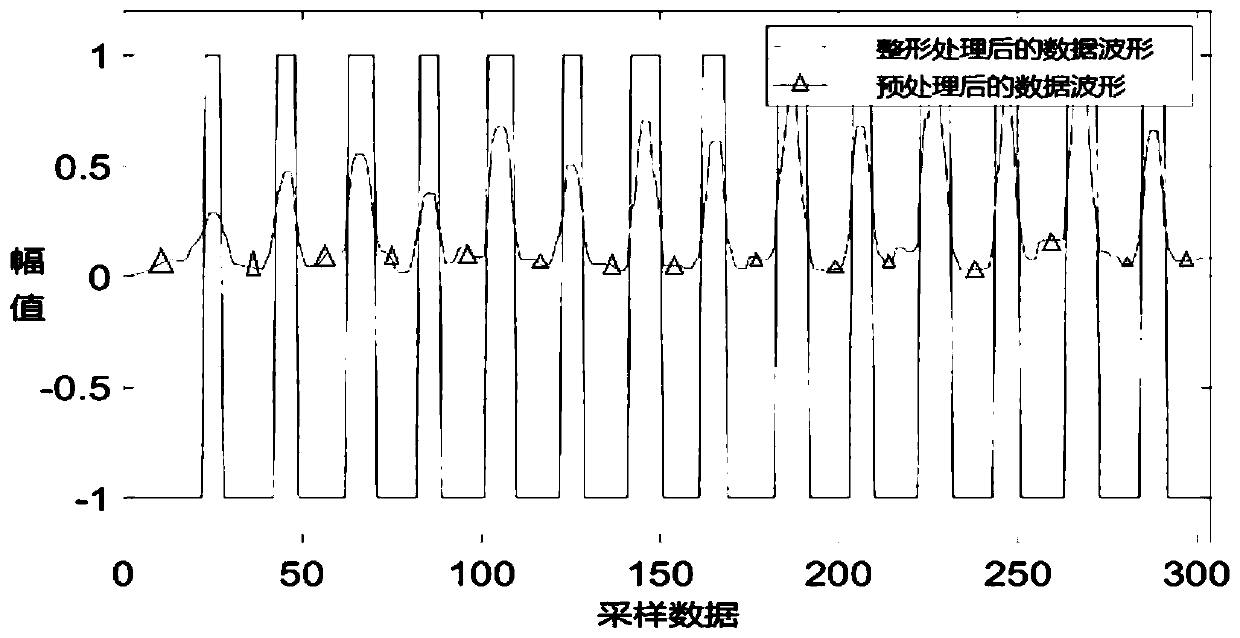

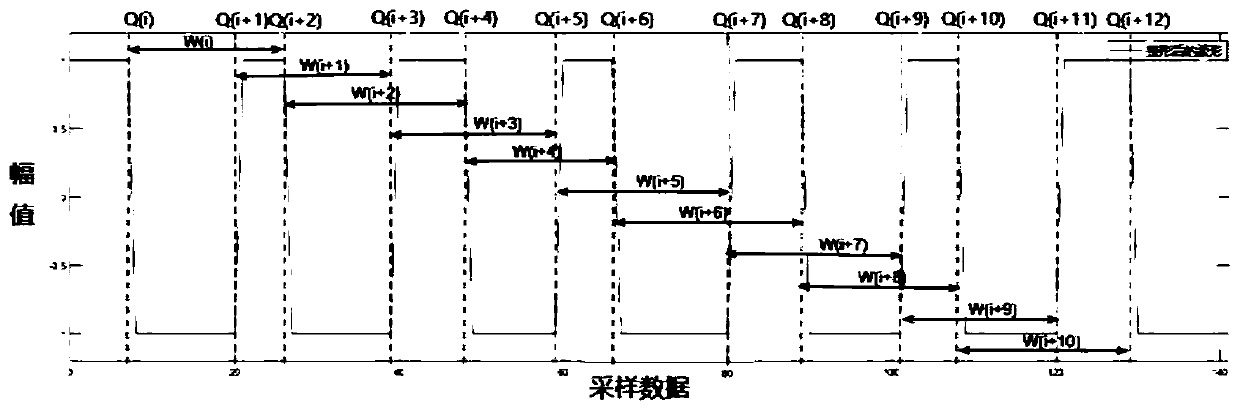

Real-time gesture recognition method and system

Active | CN110163142A | Improve robustness | Fast and Accurate Segmentation | Character and pattern recognition | Self adaptive | Gesture segmentation

The invention discloses a real-time gesture recognition method and system. The method mainly comprises the following steps: 1) establishing a gesture classification model; 2) collecting motion sensing data of the gesture from the intelligent terminal in real time; 3) having the server preprocess the motion sensing data to obtain resultant acceleration data; 4) performing gesture segmentation on the preprocessed resultant acceleration data, splitting it into gesture segments in real time, and extracting feature data of the gestures; and 5) inputting the feature data into the gesture classification model and identifying the gesture in real time to obtain a gesture identification result. The system mainly comprises an intelligent terminal and a server. With the gesture segmentation method based on a self-adaptive sliding window, continuous gestures can be rapidly and accurately segmented into independent effective gestures, and the accuracy and speed of gesture recognition are improved.

Owner:CHONGQING UNIV
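Steps 3) and 4) of the accelerometer-based method above can be sketched as follows. The resultant acceleration is the Euclidean norm of the three axis readings. The segmentation shown here is a deliberately simple fixed-threshold stand-in, not the self-adaptive sliding window the patent describes; the rest level, threshold and minimum length are assumed values.

```python
import numpy as np

def resultant_acceleration(samples):
    """Magnitude sqrt(x^2 + y^2 + z^2) for each (x, y, z) sample."""
    samples = np.asarray(samples, dtype=float)
    return np.sqrt((samples ** 2).sum(axis=1))

def segment_gestures(resultant, rest_level=9.8, threshold=1.0, min_length=2):
    """Return (start, end) index pairs, end exclusive, for runs where the
    resultant acceleration deviates from rest_level by more than threshold."""
    active = np.abs(np.asarray(resultant) - rest_level) > threshold
    segments, start = [], None
    for i, is_active in enumerate(active):
        if is_active and start is None:
            start = i                          # a gesture begins
        elif not is_active and start is not None:
            if i - start >= min_length:        # drop very short bursts
                segments.append((start, i))
            start = None
    if start is not None and len(active) - start >= min_length:
        segments.append((start, len(active)))  # gesture runs to the end
    return segments

# Toy stream: rest, a three-sample movement, rest again (values in m/s^2).
samples = [[0, 0, 9.8]] * 3 + [[3, 4, 9.8]] * 3 + [[0, 0, 9.8]] * 2
resultant = resultant_acceleration(samples)
segments = segment_gestures(resultant)
```

Each returned segment would then be passed to feature extraction and to the gesture classification model.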

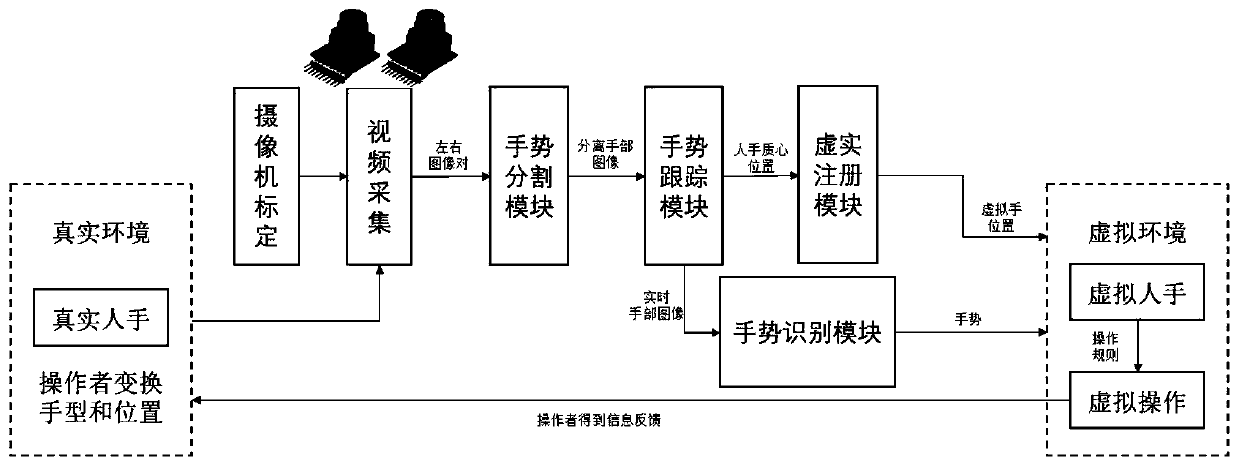

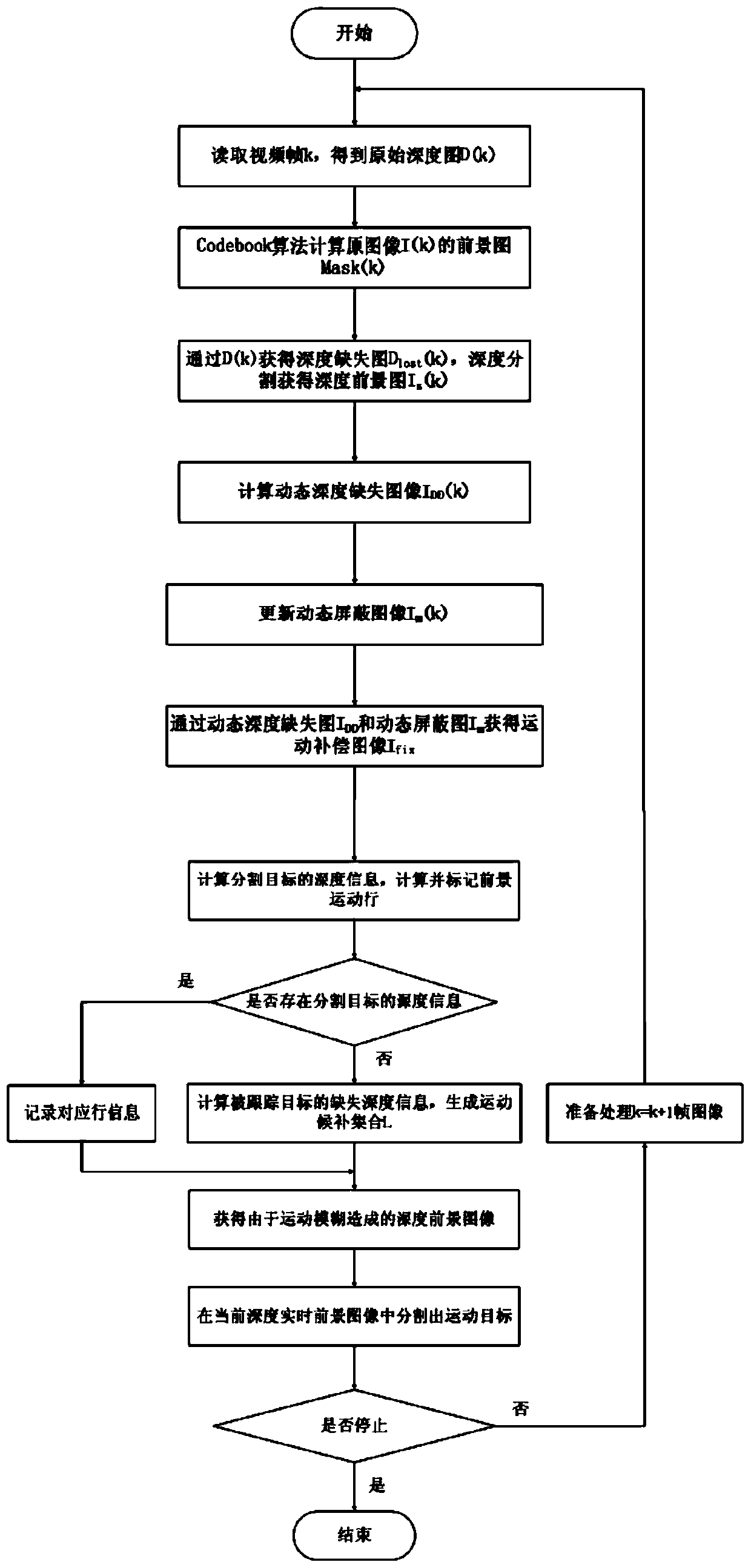

IPT simulation training gesture recognition method based on binocular vision

Active | CN110688965A | View accurately | Smooth human-computer interaction | Input/output for user-computer interaction | Image analysis | Simulation training | Visual perception

The invention discloses an IPT simulation training gesture recognition method based on binocular vision. The method improves the interaction mode according to the characteristics of VR applications and makes improvements in three areas: gesture segmentation, gesture tracking and gesture recognition. During gesture segmentation, target loss in the foreground segmentation image caused by motion blur is compensated through background modeling, target motion trend estimation and other methods. During gesture tracking, chrominance information and depth information are combined, and the moving gesture is tracked through contour fitting while the flight simulator is operated. During gesture recognition, an extended Haar-like feature and an improved Adaboost method are used for hand shape classification, and a state area is defined. The method achieves a good dynamic gesture recognition rate and improves the user experience during operation: the operator can accurately check hand actions while operating, training efficiency is improved, and training cost is reduced.

Owner:QINGDAO RES INST OF BEIHANG UNIV +1

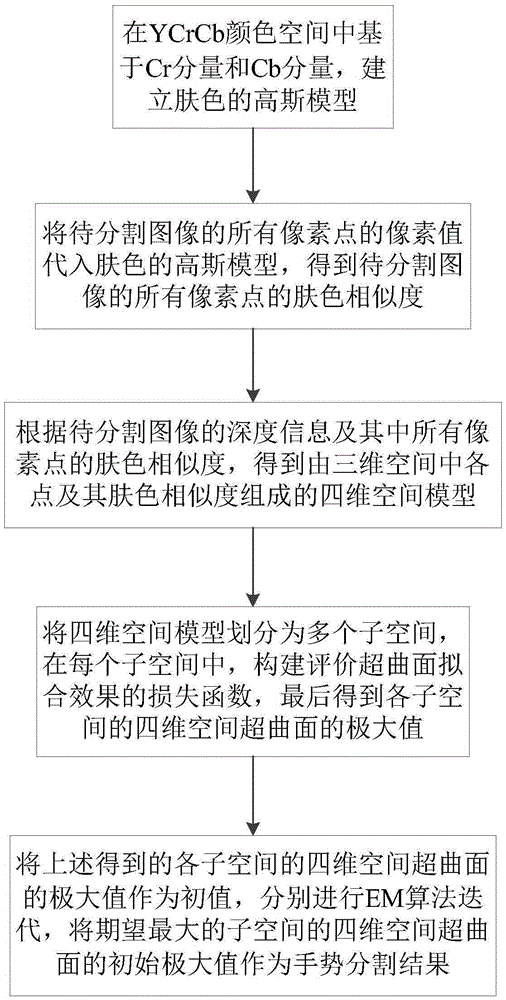

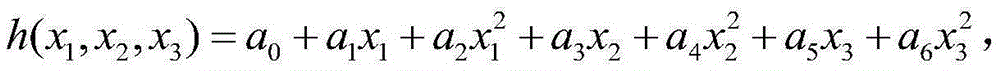

Gesture segmentation method and system based on global expectation-maximization algorithm

Active | CN105405143A | Enhanced basis for judgment | Avoid searching | Image enhancement | Image analysis | Skin complexion | Expectation–maximization algorithm

The present invention discloses a gesture segmentation method and system based on a global expectation-maximization algorithm. The method comprises: establishing a Gaussian model of skin color; substituting the pixel values of all pixels of a to-be-segmented image into the Gaussian skin color model to obtain a skin color similarity for every pixel; combining the depth information of the to-be-segmented image with the skin color similarity of its pixels to obtain a four-dimensional space model consisting of all points in three-dimensional space and their skin color similarity; dividing the four-dimensional space model into a plurality of subspaces; constructing, in each subspace, a loss function that evaluates the hypersurface fitting effect and minimizing it by gradient descent to obtain a four-dimensional hypersurface of the subspace; and finally finding the maximum of the four-dimensional hypersurface of each subspace along the gradient ascent direction. With this method, a comparable mathematical description can be generated and the basis for two-model fusion is established, thereby providing a new basis for fusing data of different modalities.

Owner:HUAZHONG NORMAL UNIV
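The first two steps of the method above, building a Gaussian skin color model and scoring every pixel against it, can be sketched as below. The abstract does not state the color space or the model parameters, so this sketch assumes a two-dimensional chrominance (Cb, Cr) Gaussian with hypothetical mean and covariance, and defines similarity as the unnormalized Gaussian response.

```python
import numpy as np

def skin_similarity(pixels, mean, cov):
    """Similarity exp(-0.5 * d^2), where d is the Mahalanobis distance of each
    pixel's chrominance vector to the skin color Gaussian. Equals 1 at the mean."""
    diff = np.asarray(pixels, dtype=float) - np.asarray(mean, dtype=float)
    inv_cov = np.linalg.inv(np.asarray(cov, dtype=float))
    d2 = np.einsum('...i,ij,...j->...', diff, inv_cov, diff)
    return np.exp(-0.5 * d2)

# Assumed (hypothetical) model parameters in (Cb, Cr) space.
mean = np.array([120.0, 150.0])
cov = np.array([[100.0, 0.0],
                [0.0, 100.0]])

pixels = np.array([[120.0, 150.0],   # exactly at the model mean
                   [130.0, 150.0],   # one standard deviation away
                   [200.0,  90.0]])  # far from skin tones
sim = skin_similarity(pixels, mean, cov)
```

Pairing each similarity value with the pixel's three-dimensional position from the depth data would give the four-dimensional points that the later hypersurface-fitting steps operate on.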

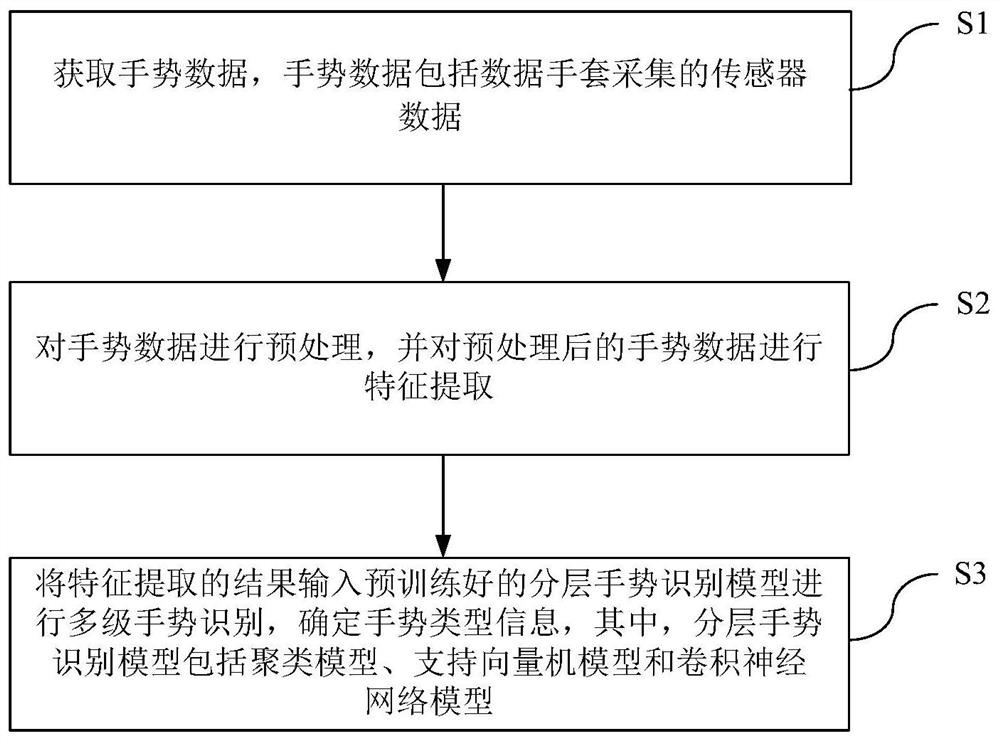

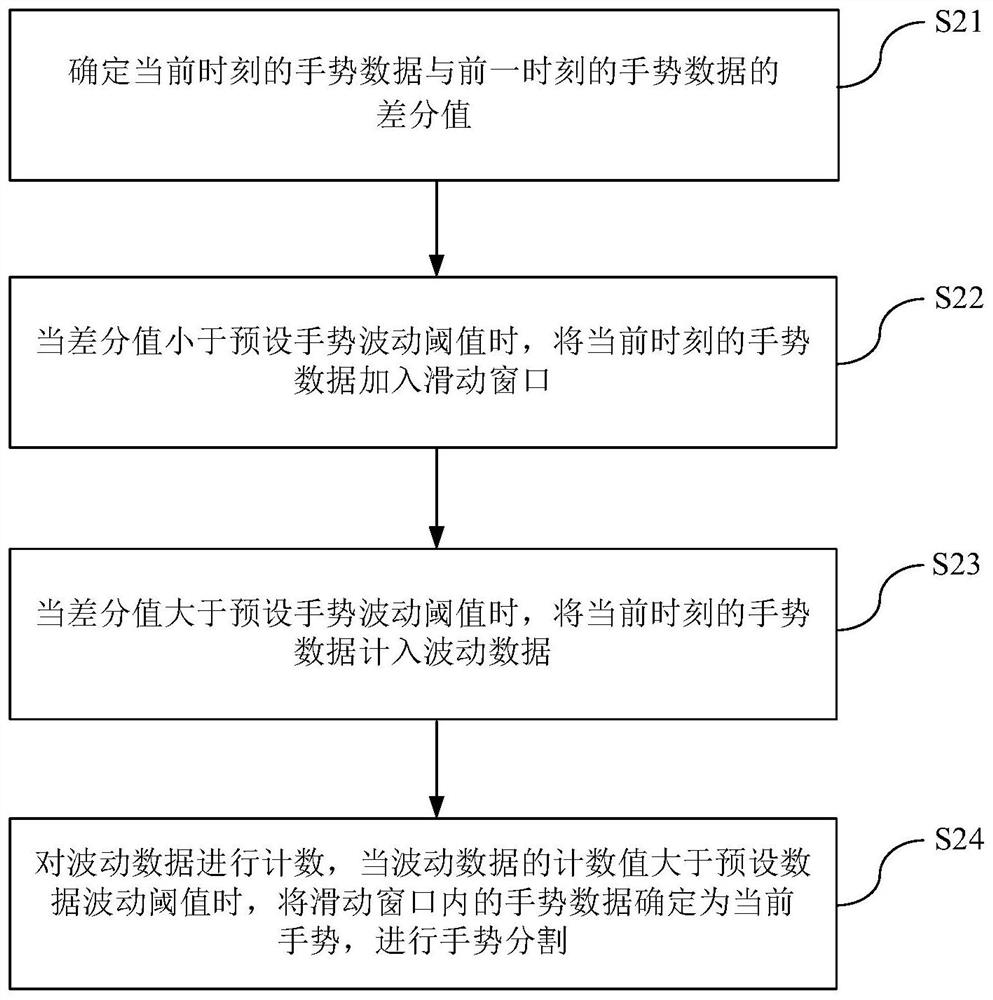

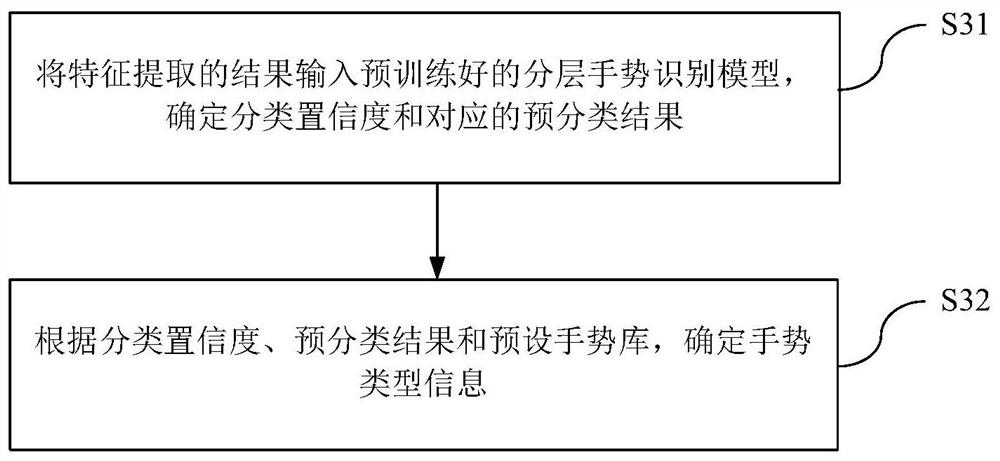

Real-time gesture recognition method and device and human-computer interaction system

Active | CN112148128A | Reduce occupancy | Guaranteed accuracy | Input/output for user-computer interaction | Character and pattern recognition | Data pack | Feature extraction

The invention provides a real-time gesture recognition method and device, a mobile terminal and a human-computer interaction system, and relates to the technical field of gesture recognition. The method comprises the steps of: obtaining gesture data, which comprises sensor data collected by a data glove; preprocessing the gesture data and performing feature extraction on the preprocessed data; and inputting the feature extraction result into a pre-trained hierarchical gesture recognition model for multi-stage gesture recognition to determine gesture type information. The user's gesture data is collected in real time through the data glove, preprocessing operations such as effective gesture segmentation are applied to it, features are extracted by constructing a set of valuable feature information, and the extracted features are input into the lightweight hierarchical gesture recognition model for recognition. While gesture recognition accuracy is maintained, fewer system resources are occupied, so the method and device are suitable for mobile-terminal application scenarios.

Owner:HARBIN INST OF TECH

Real-time accurate non-contact gesture recognition method and system based on RFID system

Active | CN110298263A | Overcome inherent shortcomings | Overcome the price | Character and pattern recognition | High level techniques | Timestamp | Reader writer

The invention discloses a real-time, accurate, non-contact gesture recognition method and system based on an RFID system. The method comprises the steps of: a reader-writer collecting a gesture signal from a user, the gesture signal comprising a timestamp and a phase value; performing preprocessing and gesture segmentation on the gesture signal; extracting coarse-grained statistical features and fine-grained wavelet transform features from the segmented signal to construct the system's classifier; and inputting the acquired data into the constructed classifier to predict the gesture. The invention greatly shortens the recognition time while maintaining recognition precision.

Owner:CENT SOUTH UNIV
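The feature extraction step of the RFID method above combines coarse statistics with wavelet features of the phase signal. The abstract does not name the statistics or the wavelet, so this sketch makes assumptions: it unwraps the phase with `numpy.unwrap`, uses mean, standard deviation and range as coarse features, and uses the energy of a single-level Haar detail band as the fine-grained feature.

```python
import numpy as np

def haar_level1(signal):
    """Single-level orthonormal Haar transform: (approximation, detail)."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) % 2:                 # drop the last sample if length is odd
        signal = signal[:-1]
    even, odd = signal[0::2], signal[1::2]
    approx = (even + odd) / np.sqrt(2)
    detail = (even - odd) / np.sqrt(2)
    return approx, detail

def phase_features(phase):
    """Coarse statistics plus Haar detail energy of an unwrapped phase segment."""
    unwrapped = np.unwrap(np.asarray(phase, dtype=float))
    _, detail = haar_level1(unwrapped)
    return {
        "mean": float(unwrapped.mean()),
        "std": float(unwrapped.std()),
        "range": float(unwrapped.max() - unwrapped.min()),
        "detail_energy": float(np.sum(detail ** 2)),
    }

approx, detail = haar_level1([1.0, 1.0, 3.0, 3.0])
flat = phase_features([0.5, 0.5, 0.5, 0.5])   # a constant phase segment
```

A feature dictionary like this, computed per segmented gesture, would form one training or prediction row for the classifier.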

Static gesture recognition method based on Kinect sensor

Inactive | CN112836662A | High precision | Improve efficiency | Image enhancement | Image analysis | Histogram of oriented gradients | Feature extraction algorithm

The invention discloses a static gesture recognition method based on a Kinect sensor. The method comprises the steps of: obtaining static gesture depth image information with the Kinect sensor; preprocessing the hand depth image on a computer with nonlinear depth median filtering; performing gesture segmentation based on a grey-level histogram algorithm to obtain a hand region image; describing the hand region image with the histogram of oriented gradients (HOG) feature extraction algorithm, which has good robustness; and computing the weighted Euclidean distance between the extracted HOG features and the standard templates, assigning the gesture to the category whose template has the minimum distance, provided that distance does not exceed a set threshold, and reporting the result. The method achieves higher precision and efficiency in static gesture recognition.

Owner:SUZHOU YUTA TECH CO LTD
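The final matching step of the Kinect method above, choosing the template with the minimum weighted Euclidean distance and rejecting matches beyond a threshold, can be sketched as follows. The feature vectors here are short stand-ins for real HOG descriptors, and the template names, weights and threshold are hypothetical.

```python
import numpy as np

def classify_by_template(feature, templates, weights, max_distance):
    """Return (label, distance) for the nearest template under a weighted
    Euclidean distance, or (None, distance) if the nearest one is too far."""
    feature = np.asarray(feature, dtype=float)
    weights = np.asarray(weights, dtype=float)
    best_label, best_dist = None, float("inf")
    for label, template in templates.items():
        diff = feature - np.asarray(template, dtype=float)
        dist = float(np.sqrt(np.sum(weights * diff ** 2)))
        if dist < best_dist:
            best_label, best_dist = label, dist
    if best_dist > max_distance:
        return None, best_dist          # no template is close enough
    return best_label, best_dist

# Hypothetical 4-dimensional "descriptors" standing in for HOG vectors.
templates = {"fist": [0.0, 0.0, 0.0, 0.0],
             "palm": [1.0, 1.0, 1.0, 1.0]}
weights = [1.0, 1.0, 1.0, 1.0]

label, dist = classify_by_template([0.9, 1.0, 1.1, 1.0], templates, weights, 0.5)
rejected, far = classify_by_template([5.0, 5.0, 5.0, 5.0], templates, weights, 0.5)
```

The threshold gives the recognizer a way to report "no gesture" instead of forcing every input onto the nearest template.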

© 2025 PatSnap. All rights reserved.