Depth information-based sign language recognition method

A sign language recognition technology based on depth information, applied in character and pattern recognition, instruments, computing, etc., that addresses problems such as target tracking and segmentation.


Examples

Embodiment 1

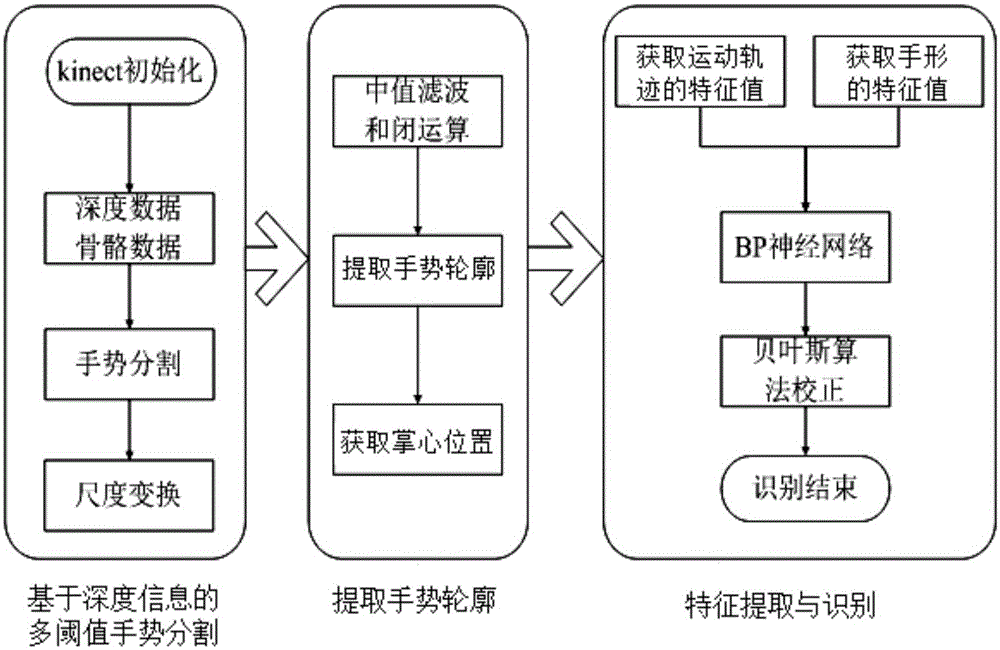

[0066] A sign language recognition method based on depth information, the specific steps include:

[0067] (1) Multi-threshold gesture segmentation based on depth information: use the Kinect camera to obtain the user depth data and skeleton data, perform multi-threshold segmentation on the gesture, and obtain the scale-transformed binary image of the right hand; at the same time, extract the skeleton space coordinates of four skeleton points: the right hand, the right index finger, the right wrist and the right shoulder;

[0068] (2) Smooth the binary image of the right hand obtained in step (1) by applying median filtering with a 5×5 window followed by a morphological closing operation, then extract the gesture contour using the nearest neighbor method;

[0069] (3) Obtain the feature values of the hand shape using the improved SURF algorithm;

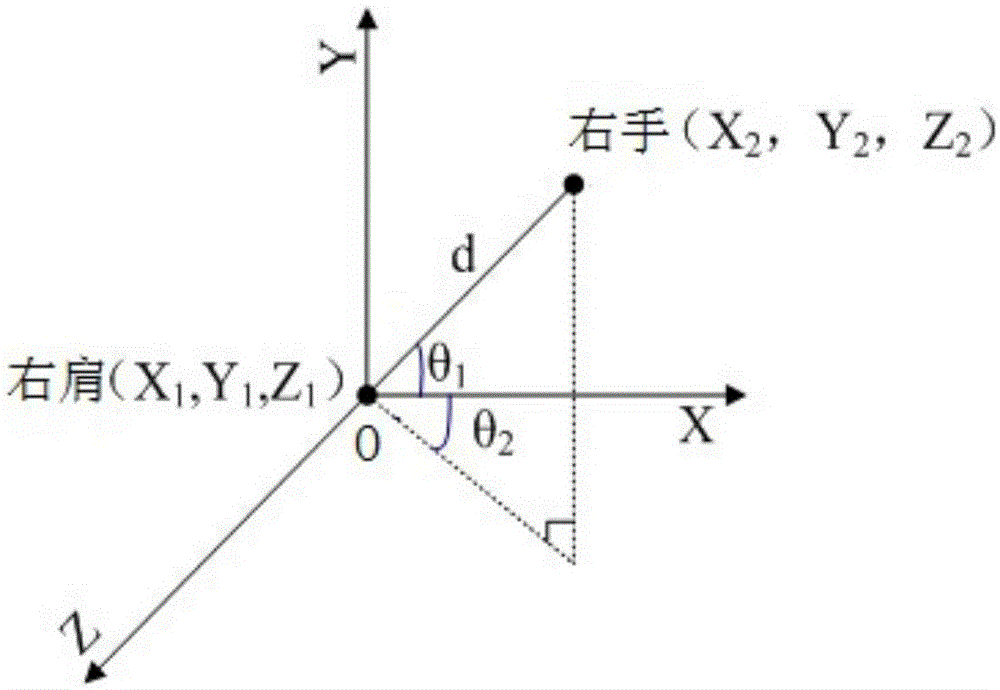

[0070] (4) Motion trajectory feature extraction based on angular velocity and distance:...
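The smoothing in step (2) can be illustrated with a minimal pure-Python sketch. It assumes the binary image is a list of rows of 0/1 values, treats pixels outside the image as 0 in the median filter, and uses a 3×3 structuring element for the closing; the source specifies only the 5×5 median window, so the border handling, the structuring element, and all function names here are illustrative assumptions. Contour extraction is not shown.

```python
def median_filter_binary(img, size=5):
    """Median-filter a 0/1 image with a size x size window.

    Pixels outside the image count as 0 (assumed border handling).
    """
    h, w = len(img), len(img[0])
    r = size // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ones = 0
            for yy in range(max(0, y - r), min(h, y + r + 1)):
                for xx in range(max(0, x - r), min(w, x + r + 1)):
                    ones += img[yy][xx]
            # For 0/1 data the median is 1 exactly when ones are the majority.
            out[y][x] = 1 if ones > (size * size) // 2 else 0
    return out


def dilate(img, r=1):
    """Set a pixel to 1 if any pixel in its (2r+1) x (2r+1) neighborhood is 1."""
    h, w = len(img), len(img[0])
    return [[1 if any(img[yy][xx]
                      for yy in range(max(0, y - r), min(h, y + r + 1))
                      for xx in range(max(0, x - r), min(w, x + r + 1))) else 0
             for x in range(w)] for y in range(h)]


def erode(img, r=1):
    """Keep a pixel at 1 only if every in-image neighbor is 1."""
    h, w = len(img), len(img[0])
    return [[1 if all(img[yy][xx]
                      for yy in range(max(0, y - r), min(h, y + r + 1))
                      for xx in range(max(0, x - r), min(w, x + r + 1))) else 0
             for x in range(w)] for y in range(h)]


def closing(img, r=1):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(img, r), r)


def smooth(img):
    """Step (2) smoothing: 5x5 median filter, then closing."""
    return closing(median_filter_binary(img, size=5))
```

The median filter removes isolated noise pixels, while the closing fills small holes inside the hand region; applying them in this order follows the wording of step (2).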

Embodiment 2

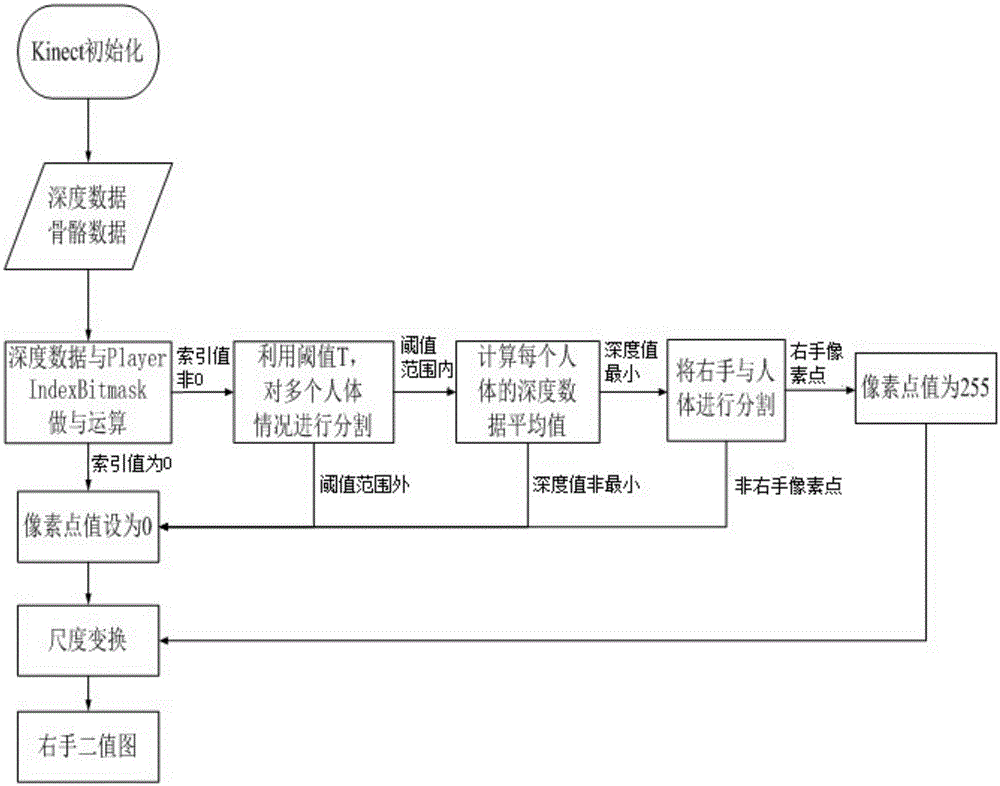

[0075] This embodiment follows the depth information-based sign language recognition method of Embodiment 1, differing in that the multi-threshold gesture segmentation based on depth information comprises the following specific steps:

[0076] a. Perform an AND operation between the user depth data obtained by the Kinect camera and the PlayerIndexBitmask, whose default value is 7, to obtain the user index value. Separate the human body from the background according to the user index value;

[0077] b. When multiple human bodies are within the effective viewing distance of the Kinect camera, which is 1.2 m to 3.5 m, select a threshold T to further segment the depth image obtained in step a; the threshold T is 2.5 m to 3.5 m;

[0078] c. In the depth image processed in step b, if there are still multiple human bodies within the threshold T, calculate the average value of the depth data of each human body, an...
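Step b can be sketched as follows, assuming step a has already produced a user index and a depth in meters for every pixel. The function name, the flat-list input layout, and the rule that pixels farther than T are discarded are assumptions made for illustration; the source states only the 1.2 m to 3.5 m range and the 2.5 m to 3.5 m bounds on T. Step c is truncated in the source and is not implemented here.

```python
EFFECTIVE_RANGE_M = (1.2, 3.5)  # effective viewing distance stated in step b


def threshold_segment(user_index, depth_m, t=3.0):
    """Return a 0/1 mask of human pixels lying no farther than threshold t.

    user_index: per-pixel index from step a (0 = background, 1-7 = human).
    depth_m:    per-pixel distance to the camera in meters (assumed unit).
    t:          threshold T in meters; the source bounds it to 2.5-3.5 m.
    """
    if not 2.5 <= t <= 3.5:
        raise ValueError("threshold T is expected to lie between 2.5 m and 3.5 m")
    nearest = EFFECTIVE_RANGE_M[0]
    return [1 if idx != 0 and nearest <= d <= t else 0
            for idx, d in zip(user_index, depth_m)]
```

For example, with `t=3.0` a human pixel at 2.0 m is kept, while a human pixel at 3.4 m is dropped because it lies beyond the threshold.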

Embodiment 3

[0087] This embodiment follows the depth information-based sign language recognition method of Embodiment 1, differing in that, in step a, each user depth value consists of 2 bytes (16 bits): the upper 13 bits represent the distance from the user to the Kinect camera, and the lower 3 bits represent the user index value. Converting the binary user index value to decimal gives a value from 0 to 7; if the user index value is 0, the pixel belongs to the background, and if the user index value is 1 to 7, the pixel belongs to a human body.
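The bit layout described above can be decoded with a shift and a mask. The sketch below is illustrative: the function names are hypothetical, and treating the upper 13 bits as a distance in millimeters is an assumption, since the source does not state the unit.

```python
PLAYER_INDEX_BITMASK = 7  # 0b111: selects the lower 3 bits
PLAYER_INDEX_BITS = 3     # number of bits occupied by the user index


def decode_pixel(raw):
    """Split a 16-bit depth pixel into (distance, user_index).

    The upper 13 bits hold the distance (assumed to be millimeters);
    the lower 3 bits hold the user index in the range 0-7.
    """
    raw &= 0xFFFF  # keep only the 16 bits described in the text
    return raw >> PLAYER_INDEX_BITS, raw & PLAYER_INDEX_BITMASK


def is_human(raw):
    """A pixel belongs to a human body when its user index is 1-7."""
    return decode_pixel(raw)[1] != 0
```

As a worked case, a pixel packed as `(2000 << 3) | 2` decodes to a distance of 2000 and a user index of 2, so it is classified as part of a human body; the same distance with index 0 is background.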
