Depth information static gesture segmentation method

A static gesture segmentation method based on depth information, applicable to image analysis, image enhancement, instruments, and related fields. It addresses problems such as over-segmentation and the difficulty of segmenting complex gestures. The method is simple, yields an accurate image of the gesture area, and avoids the effects of uneven lighting.

Examples

Embodiment 1

[0047] The gesture images in this embodiment come from the American Sign Language (ASL) dataset, which includes 60,000 color images and 60,000 depth images collected with a Kinect.

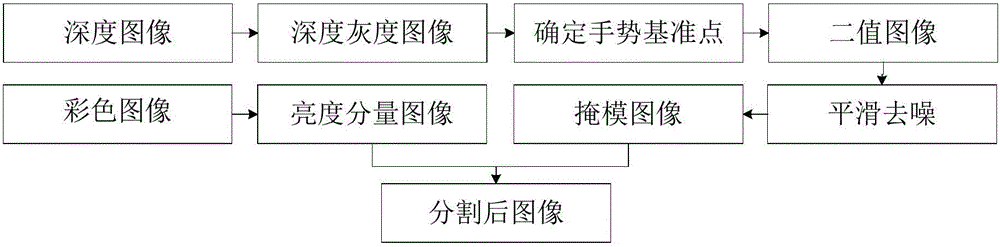

[0048] In Figure 1, this embodiment selects a depth image with a length of 184 and a width of 178, together with the corresponding color image. The segmentation steps of the depth information static gesture segmentation method are as follows:

[0049] 1. Convert the depth image to an equal-sized depth grayscale image

[0050] Adjust the depth value of each pixel in the depth image to a grayscale value of 0 to 255 to obtain a depth grayscale image. The specific steps are:

[0051] (1) Find the maximum depth value dmax of the pixel from the depth image.

[0052] Take the maximum value of each of rows 1 to 178 of the image matrix, then select the largest of these 178 row maxima, 3277, as the dmax value.
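The row-wise search for dmax described above can be sketched in Python with NumPy. This is only an illustrative sketch: the function name `max_depth`, the 178-row by 184-column array layout, and the synthetic depth values are assumptions made for the example and are not taken from the ASL dataset or from the patent text.

```python
import numpy as np

def max_depth(depth: np.ndarray) -> int:
    """Return dmax: the largest of the per-row maxima of a 2-D depth image."""
    row_maxima = depth.max(axis=1)   # one maximum per row of the image matrix
    return int(row_maxima.max())     # largest value among the row maxima

# Synthetic stand-in for a depth image (assumed layout: 178 rows, 184 columns).
depth = np.zeros((178, 184), dtype=np.uint16)
depth[10, 20] = 3277    # hypothetical farthest pixel
depth[100, 50] = 1500   # hypothetical nearer pixel
dmax = max_depth(depth)
```

Taking the maximum of the row maxima gives the same result as a single global maximum over the whole matrix; the two-stage form simply mirrors the wording of step (1).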

[0053] (2) Use formula (1) to convert the depth ima...

Embodiment 2

[0078] The gesture images in this embodiment come from the American Sign Language (ASL) dataset, which includes 60,000 color images and 60,000 depth images collected with a Kinect.

[0079] In this embodiment, a depth image with a length of 184 and a width of 178 and the corresponding color image are selected. The segmentation steps of the depth information static gesture segmentation method are as follows:

[0080] 1. Convert the depth image to an equal-sized depth grayscale image

[0081] This step is the same as in Embodiment 1.

[0082] 2. Determine the grayscale of the gesture area in the depth grayscale image

[0083] This step is the same as in Embodiment 1.

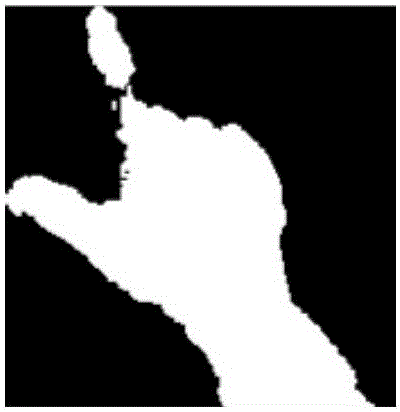

[0084] 3. Convert the depth grayscale image into a binary image

[0085] Based on the relationship among the gray value of the pixel at (x, y) in the depth grayscale image, the grayscale d of the gesture area (54), and the set threshold T (5), use formula (6) to judge the depth grayscale i...
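Formula (6) is not reproduced in this excerpt, so the following Python sketch only illustrates one plausible form of the binarization step. It assumes that a pixel belongs to the gesture area when its gray value lies within T of the gesture-area grayscale d, and that gesture pixels are written as 255 and all others as 0. The function name `binarize`, the band test, the output polarity, and the sample pixel values are all assumptions, not the patent's stated rule.

```python
import numpy as np

def binarize(gray: np.ndarray, d: int, t: int) -> np.ndarray:
    """Return a 0/255 image: 255 where |gray - d| <= t (assumed rule), else 0."""
    # Widen the dtype first so the subtraction cannot wrap around in uint8.
    diff = np.abs(gray.astype(np.int32) - d)
    return np.where(diff <= t, 255, 0).astype(np.uint8)

# Hypothetical depth-grayscale patch; d = 54 and T = 5 follow this embodiment.
gray = np.array([[54, 58, 60],
                 [49, 48, 200]], dtype=np.uint8)
binary = binarize(gray, d=54, t=5)
```

Under this assumed rule, the pixels with gray values 54, 58, and 49 fall inside the band and become 255, while 60, 48, and 200 fall outside it and become 0.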

Embodiment 3

[0096] The gesture images in this embodiment come from the American Sign Language (ASL) dataset, which includes 60,000 color images and 60,000 depth images collected with a Kinect.

[0097] In this embodiment, a depth image with a length of 184 and a width of 178 and the corresponding color image are selected. The segmentation steps of the depth information static gesture segmentation method are as follows:

[0098] 1. Convert the depth image to an equal-sized depth grayscale image

[0099] This step is the same as in Embodiment 1.

[0100] 2. Determine the grayscale of the gesture area in the depth grayscale image

[0101] This step is the same as in Embodiment 1.

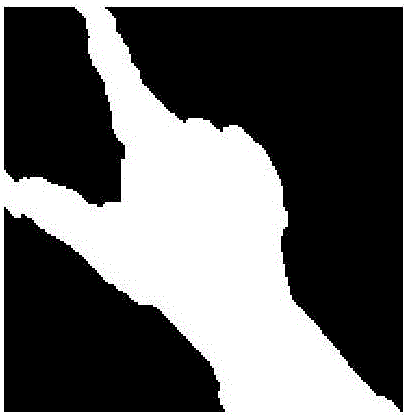

[0102] 3. Convert the depth grayscale image into a binary image

[0103] Based on the relationship among the gray value of the pixel at (x, y) in the depth grayscale image, the grayscale d of the gesture area (54), and the set threshold T (15), use formula (8) to judge the depth grayscale ...
