Medical model interaction visualization method and system based on gesture recognition

Gesture recognition technology, applied in the field of computer vision, that addresses problems such as insufficient interaction.

Examples

Example 1

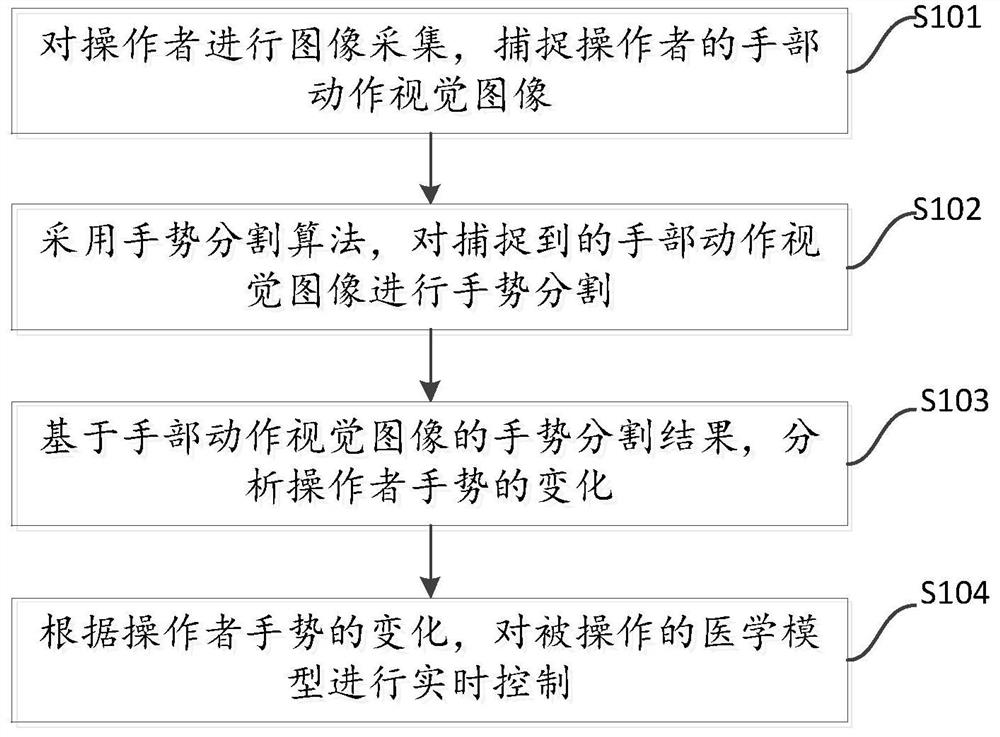

[0046] Referring to Figure 1, this embodiment provides a gesture recognition-based method for interactive visualization of medical models, which includes the following steps:

[0047] S101, collecting images of the operator, and capturing visual images of the operator's hand movements;

[0048] It should be noted that in this step the operator is imaged by a binocular camera. Stereo calibration yields calibrated stereo images, stereo matching on those images yields the parallax (disparity) image, and the depth image is then computed from it to capture the visual image of the operator's hand movement. The hand movement visual image includes a left visual image and a right visual image.
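The source says only that the depth image is "acquired through calculation" and does not give the formula. As a minimal sketch, assuming a rectified stereo pair and the common pinhole relation Z = f * B / d, the conversion from parallax to depth could look like the following. The function name, the parameter names `focal_length_px` and `baseline_m`, and the sample values are illustrative assumptions, not taken from the patent.

```python
from typing import List, Optional


def disparity_to_depth(
    disparity: List[List[float]],
    focal_length_px: float,
    baseline_m: float,
) -> List[List[Optional[float]]]:
    """Convert a disparity map (pixels) to a depth map (metres).

    Assumes a rectified stereo pair, so depth Z = f * B / d.
    Pixels with non-positive disparity have no valid match and map to None.
    """
    depth = []
    for row in disparity:
        depth.append([
            focal_length_px * baseline_m / d if d > 0 else None
            for d in row
        ])
    return depth


# Hypothetical camera: 700 px focal length, 6 cm baseline.
depth_map = disparity_to_depth([[35.0, 0.0], [70.0, 14.0]], 700.0, 0.06)
```

Larger disparities map to smaller depths, so a hand held close to the camera shows up as the region with the highest parallax values.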

[0049] S102, using a gesture segmentation algorithm to perform gesture segmentation on the captured hand movement visual image;

[0050] It should be no...
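The excerpt does not say which gesture segmentation algorithm is used. Purely as an illustration, and not as the patent's method, one simple option when a depth image is available is to treat the hand as the object nearest the camera and keep every pixel within a fixed depth band of the nearest valid pixel. The function name and the `band_m` parameter below are hypothetical.

```python
from typing import List, Optional


def segment_hand_by_depth(
    depth: List[List[Optional[float]]],
    band_m: float = 0.15,
) -> List[List[int]]:
    """Return a binary mask (1 = hand) from a depth map in metres.

    Assumption: the hand is the nearest object to the camera, so any
    pixel within `band_m` of the minimum valid depth is labelled as hand.
    Pixels without a depth value (None) are labelled as background.
    """
    valid = [z for row in depth for z in row if z is not None]
    if not valid:
        return [[0] * len(row) for row in depth]
    limit = min(valid) + band_m
    return [
        [1 if (z is not None and z <= limit) else 0 for z in row]
        for row in depth
    ]


mask = segment_hand_by_depth([[0.50, 0.55, 1.80], [None, 0.62, 2.10]])
```

This nearest-object heuristic fails when something other than the hand is closest to the camera, which is one reason practical systems often combine depth with colour or shape cues.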

Example 2

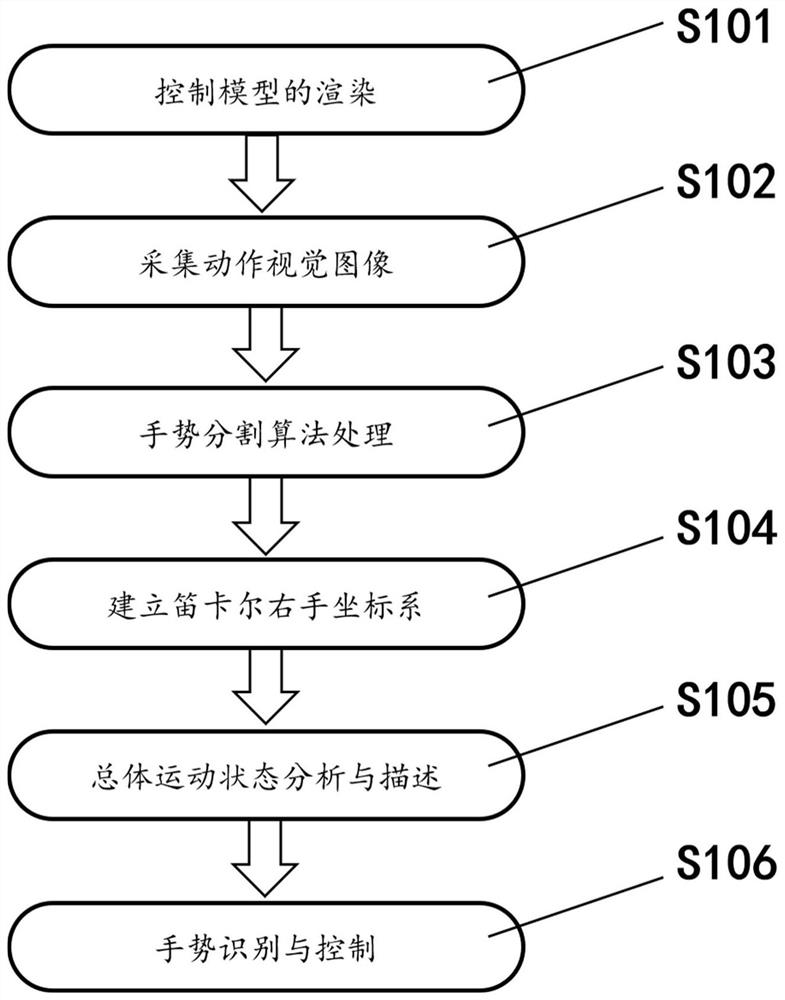

[0058] Referring to Figure 2, this embodiment provides a gesture recognition-based method for interactive visualization of medical models, which includes the following steps:

[0059] S101, implement rendering control of the model: two-dimensional image information stored in the computer can be displayed in three-dimensional form through mouse operations such as dragging and moving, and the keyboard can be used together with the mouse to select a particular region. Model rendering is the main function of this system;

[0060] S102, collect visual images of the operator's actions through the binocular camera and obtain calibrated stereo images through stereo calibration, thereby completing stereo matching and obtaining the parallax image; then, combining the internal and external parameters of the camera, using...

Example 3

[0067] This embodiment provides a gesture recognition-based interactive visualization system for medical models, which includes:

[0068] A binocular camera, used to collect images of the operator and capture visual images of the operator's hand movements;

[0069] A gesture segmentation module, configured to use a preset gesture segmentation algorithm to perform gesture segmentation on the hand movement visual image captured by the binocular camera;

[0070] A gesture analysis and tracking module, used to analyze changes in the operator's gesture based on the gesture segmentation module's segmentation result for the hand movement visual image;

[0071] The gesture recognition and control module is used to control the operated medical model in real time according to the change of the operator's gesture.

[0072] The gesture recognition-based medical model interactive visualization system of this embodiment corresponds to the gesture recognition-based medical mod...
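How the analysis and control modules might fit together can be sketched as follows. This is a hedged illustration only: the class names, the centroid-based tracking, and the `degrees_per_pixel` mapping from hand motion to model rotation are all assumptions, since the excerpt does not specify how gesture changes are measured or translated into model control.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Mask = List[List[int]]


def mask_centroid(mask: Mask) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of nonzero pixels, or None if the mask is empty."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    return (sum_x / count, sum_y / count) if count else None


class GestureTracker:
    """Stand-in for the analysis and tracking module: hand motion between frames."""

    def __init__(self) -> None:
        self._last: Optional[Tuple[float, float]] = None

    def update(self, mask: Mask) -> Optional[Tuple[float, float]]:
        """Return (dx, dy) since the previous frame, or None if unavailable."""
        centroid = mask_centroid(mask)
        delta = None
        if centroid is not None and self._last is not None:
            delta = (centroid[0] - self._last[0], centroid[1] - self._last[1])
        self._last = centroid
        return delta


@dataclass
class ModelView:
    """Hypothetical orientation state of the displayed medical model."""
    yaw_deg: float = 0.0
    pitch_deg: float = 0.0


class ModelController:
    """Stand-in for the recognition and control module: motion drives rotation."""

    def __init__(self, view: ModelView, degrees_per_pixel: float = 0.5) -> None:
        self.view = view
        self.degrees_per_pixel = degrees_per_pixel

    def apply(self, delta: Optional[Tuple[float, float]]) -> None:
        if delta is None:
            return  # no hand or no previous frame: leave the model unchanged
        dx, dy = delta
        self.view.yaw_deg += dx * self.degrees_per_pixel
        self.view.pitch_deg += dy * self.degrees_per_pixel


# Two segmented frames in which the hand moves two pixels to the right.
frame_a = [[1, 0, 0, 0], [1, 0, 0, 0]]
frame_b = [[0, 0, 1, 0], [0, 0, 1, 0]]

view = ModelView()
tracker = GestureTracker()
controller = ModelController(view)
for frame in (frame_a, frame_b):
    controller.apply(tracker.update(frame))
```

Keeping segmentation, tracking, and control as separate objects mirrors the module split described in this embodiment, so each stage can be replaced without touching the others.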
