Gesture recognition method based on deep learning

A gesture recognition method based on deep learning, applied in the field of human-computer interaction. It addresses problems such as the large number of command operations, buttons that are inconvenient for drivers to use, and complex control-screen and button layouts, and achieves a simple recognition process, high reliability, and high adaptability.


Embodiment Construction

[0030] The detailed features and advantages of the present invention are described below in the specific embodiments. Their content is sufficient to enable any person skilled in the art to understand the technical content of the present invention and implement it accordingly, and from the specification, claims, and drawings disclosed herein, those skilled in the art can readily understand the related objects and advantages of the present invention.

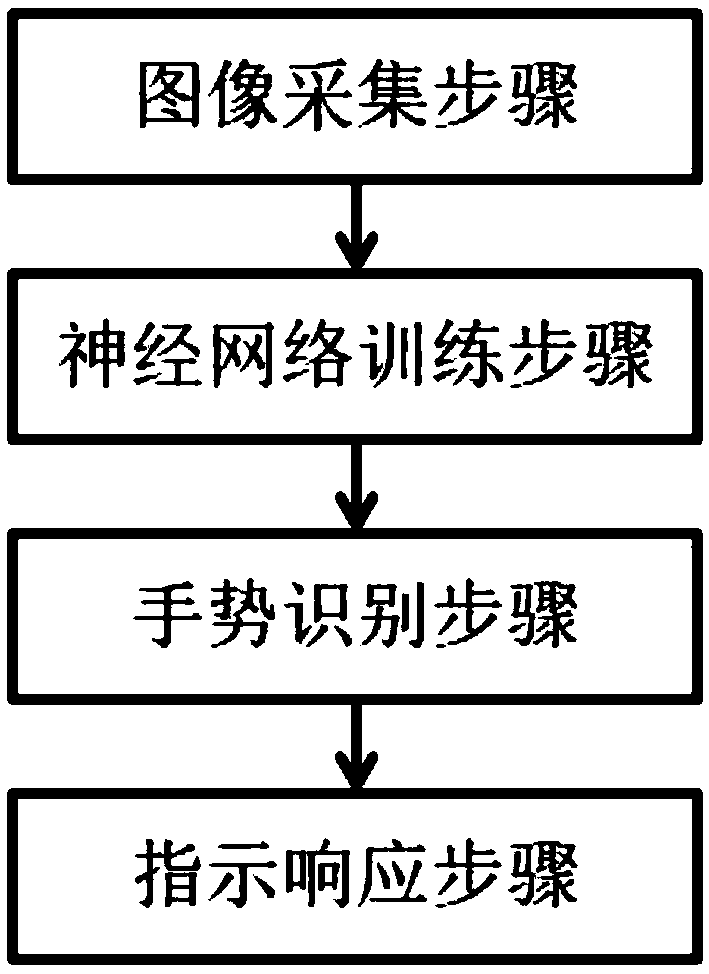

[0031] Referring to Figure 1, as a first aspect, the present invention provides a gesture recognition method based on deep learning, including:

[0032] Image collection step: a camera collects video images, which are decoded to obtain the input video data. The camera can be a network camera, an infrared camera, or a 3D camera;
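
The image collection step can be pictured as a small frame pipeline: encoded packets arrive from one of the three camera types and are decoded one by one into frames of input video data. This is a minimal sketch only, not the patented implementation. It assumes a hypothetical `decode` callable standing in for a real video decoder and a toy frame representation (rows of pixel values); `CameraType`, `CameraSource`, and `collect_input_video` are names invented here for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Iterable, Iterator, List


class CameraType(Enum):
    """The three camera kinds named in the text (enum itself is hypothetical)."""
    NETWORK = "network"
    INFRARED = "infrared"
    DEPTH_3D = "3d"


# Hypothetical toy frame type: a decoded image as rows of pixel values.
Frame = List[List[int]]


@dataclass
class CameraSource:
    """Hypothetical wrapper pairing a camera with its encoded packet stream."""
    camera_type: CameraType
    packets: Iterable[bytes]  # encoded video packets as received from the camera


def collect_input_video(
    source: CameraSource,
    decode: Callable[[bytes], Frame],
) -> Iterator[Frame]:
    """Decode each packet into a frame, yielding the input video data.

    `decode` stands in for a real video decoder (an assumption); packets
    that fail to decode are skipped instead of aborting the stream.
    """
    for packet in source.packets:
        try:
            frame = decode(packet)
        except ValueError:
            # Corrupt or partial packet: drop it and keep streaming.
            continue
        yield frame


def toy_decode(packet: bytes) -> Frame:
    """Toy decoder for the usage example: one row containing the packet's bytes."""
    if not packet:
        raise ValueError("empty packet")
    return [list(packet)]


# Usage: an infrared source whose second packet is empty and gets skipped.
src = CameraSource(CameraType.INFRARED, [b"\x01\x02", b"", b"\x03"])
frames = list(collect_input_video(src, toy_decode))
```

Writing the step as a generator keeps memory bounded for a live camera stream, since each decoded frame can be handed to the recognition stages as soon as it is available.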

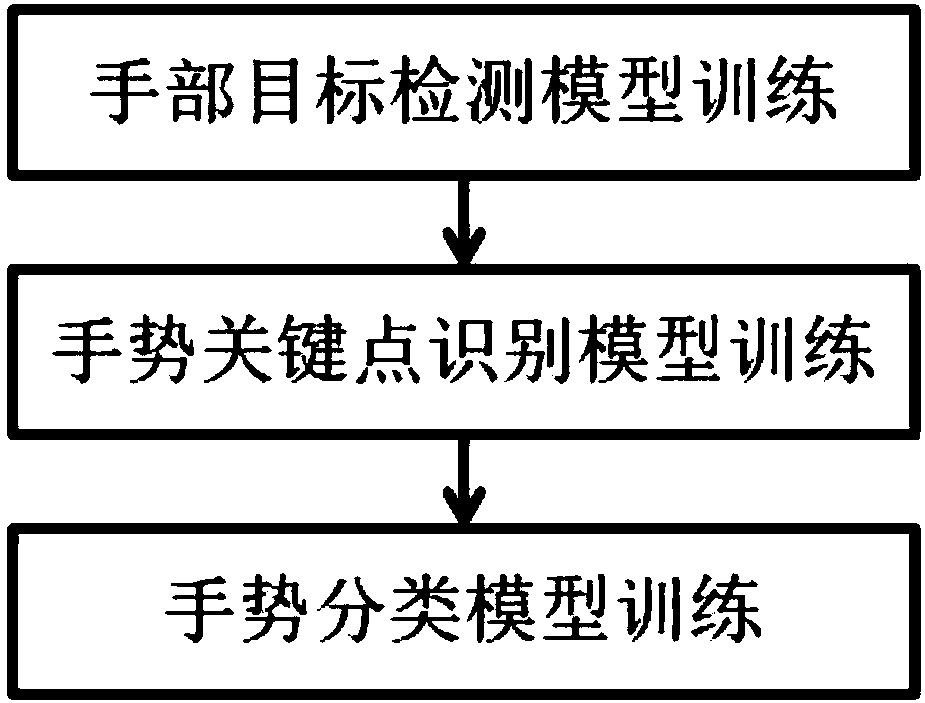

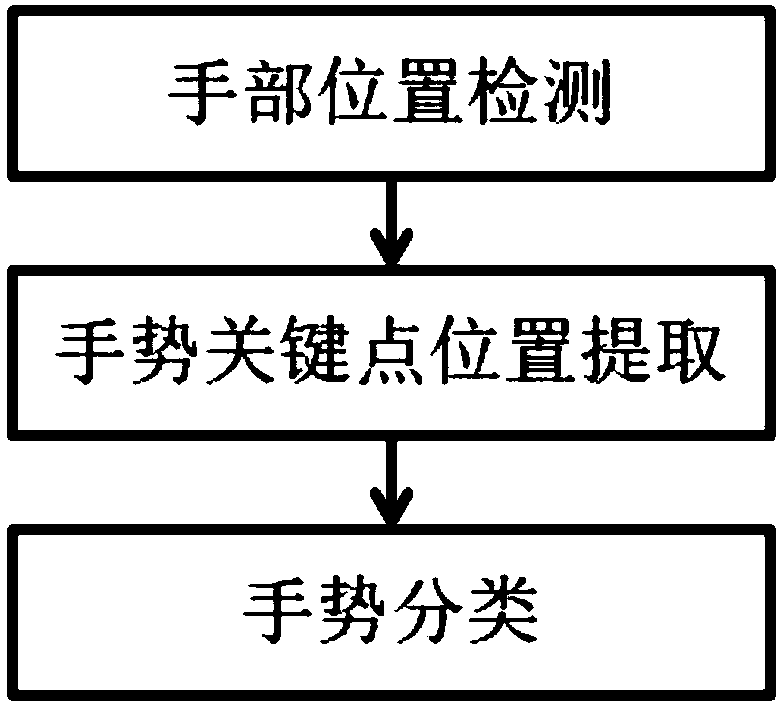

[0033] Neural network training steps: train the neural network model for hand position detection, gesture key point pos...
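
The paragraph above is truncated in the source, so how the hand position detection model and the gesture key point model are connected is not stated. Purely as an illustration of one common two-stage arrangement (an assumption, not the patent's disclosed method), the detector's hand box can be enlarged and clamped to the image before cropping it for the key point model, and the key points predicted inside the crop are then shifted back to full-image coordinates. The helper names, the (x_min, y_min, x_max, y_max) box convention, and the 20% default margin are all hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical box convention: (x_min, y_min, x_max, y_max) in pixels, max exclusive.
Box = Tuple[int, int, int, int]


def expand_hand_box(box: Box, width: int, height: int, margin: float = 0.2) -> Box:
    """Grow a detected hand box by `margin` of its size on each side, clamped to the image.

    The enlarged crop gives a key point model context around fingertips that a
    tight detector box may have clipped. The 0.2 default is an assumed value.
    """
    x0, y0, x1, y1 = box
    dx = int((x1 - x0) * margin)
    dy = int((y1 - y0) * margin)
    return (
        max(0, x0 - dx),
        max(0, y0 - dy),
        min(width, x1 + dx),
        min(height, y1 + dy),
    )


def to_image_coords(keypoints: Sequence[Tuple[int, int]], crop: Box) -> List[Tuple[int, int]]:
    """Map key points predicted inside the crop back to full-image coordinates."""
    cx, cy = crop[0], crop[1]
    return [(x + cx, y + cy) for x, y in keypoints]


# Usage on a 640x480 frame: a 100x100 hand box grows by 20 px per side.
crop = expand_hand_box((100, 50, 200, 150), 640, 480)
points = to_image_coords([(5, 7)], crop)
```

Clamping matters near the frame edges, where an unclamped expansion would produce negative or out-of-range crop coordinates.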
