Multi-model fusion video hand segmentation method based on Kinect

A technique that fuses multiple segmentation models to segment hands in video. It applies to fields such as character and pattern recognition, instruments, and computer components, and addresses problems such as noisy depth information, poor segmentation quality, and inaccurate edges.

Embodiment

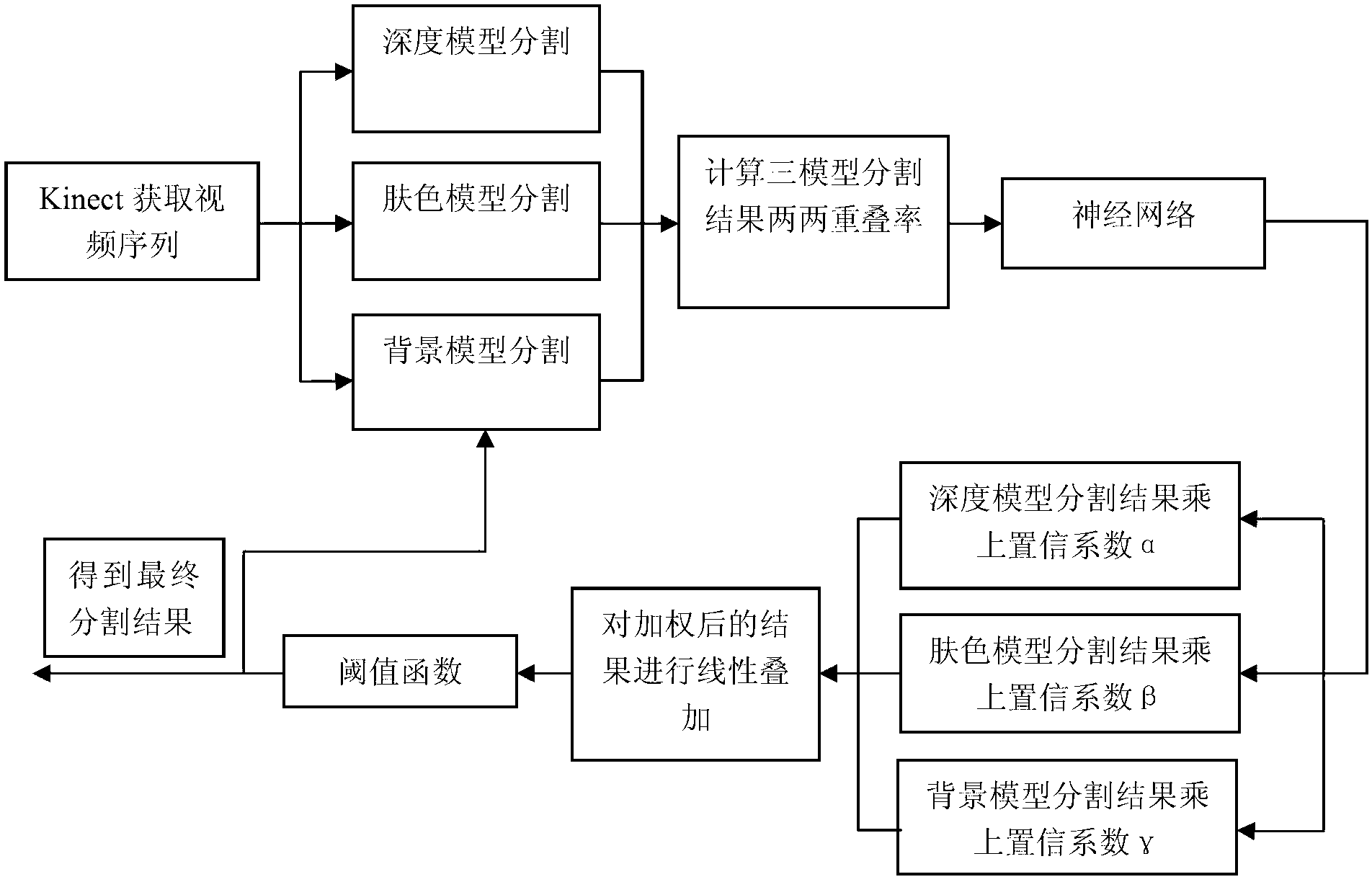

[0061] Figure 1 shows a block diagram of the system structure of the present invention. After the user video is captured with Kinect, the depth, skin color, and background models each segment the hand separately. The pairwise overlap rates of the three segmentation results are calculated and fed to a neural network evaluation system, which outputs a "confidence coefficient" for each of the three models. Each model's segmentation result is weighted by its confidence coefficient, and the weighted results are summed linearly. A threshold function then yields the final segmentation result, and the non-hand area is extracted from that result to update the background model periodically.
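The weighting and thresholding step described in [0061] can be illustrated with a minimal sketch. It is not the patent's implementation: the function name `fuse_masks`, the representation of each segmentation result as a binary mask of nested lists, the example confidence values, and the threshold of 0.5 are all assumptions made for illustration. In the described system the confidence coefficients would come from the neural network rather than being hard-coded.

```python
from typing import List, Sequence

Mask = List[List[int]]  # binary mask: 1 = hand pixel, 0 = non-hand pixel


def fuse_masks(masks: Sequence[Mask],
               confidences: Sequence[float],
               threshold: float = 0.5) -> Mask:
    """Weight each mask by its confidence, sum per pixel, then threshold.

    The threshold value and the use of a simple >= comparison are
    assumptions; the source only states that a threshold function is used.
    """
    if len(masks) != len(confidences):
        raise ValueError("need one confidence coefficient per mask")
    rows, cols = len(masks[0]), len(masks[0][0])
    fused: Mask = []
    for r in range(rows):
        fused_row = []
        for c in range(cols):
            # Linear accumulation of the confidence-weighted results.
            score = sum(w * m[r][c] for m, w in zip(masks, confidences))
            fused_row.append(1 if score >= threshold else 0)
        fused.append(fused_row)
    return fused


# Hypothetical 2x3 segmentation results from the three models.
depth_mask = [[1, 1, 0], [0, 1, 0]]
skin_mask = [[1, 0, 0], [0, 1, 1]]
background_mask = [[1, 1, 0], [0, 0, 0]]

# Hypothetical confidence coefficients (depth, skin color, background).
confidences = [0.5, 0.3, 0.2]

final_mask = fuse_masks([depth_mask, skin_mask, background_mask], confidences)

# Non-hand area, which the text says is used to update the background model.
non_hand = [[1 - p for p in row] for row in final_mask]
```

In this example the pixel at row 1, column 2 is marked only by the skin color model (weighted score 0.3), so it falls below the assumed threshold and is excluded from the final result.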

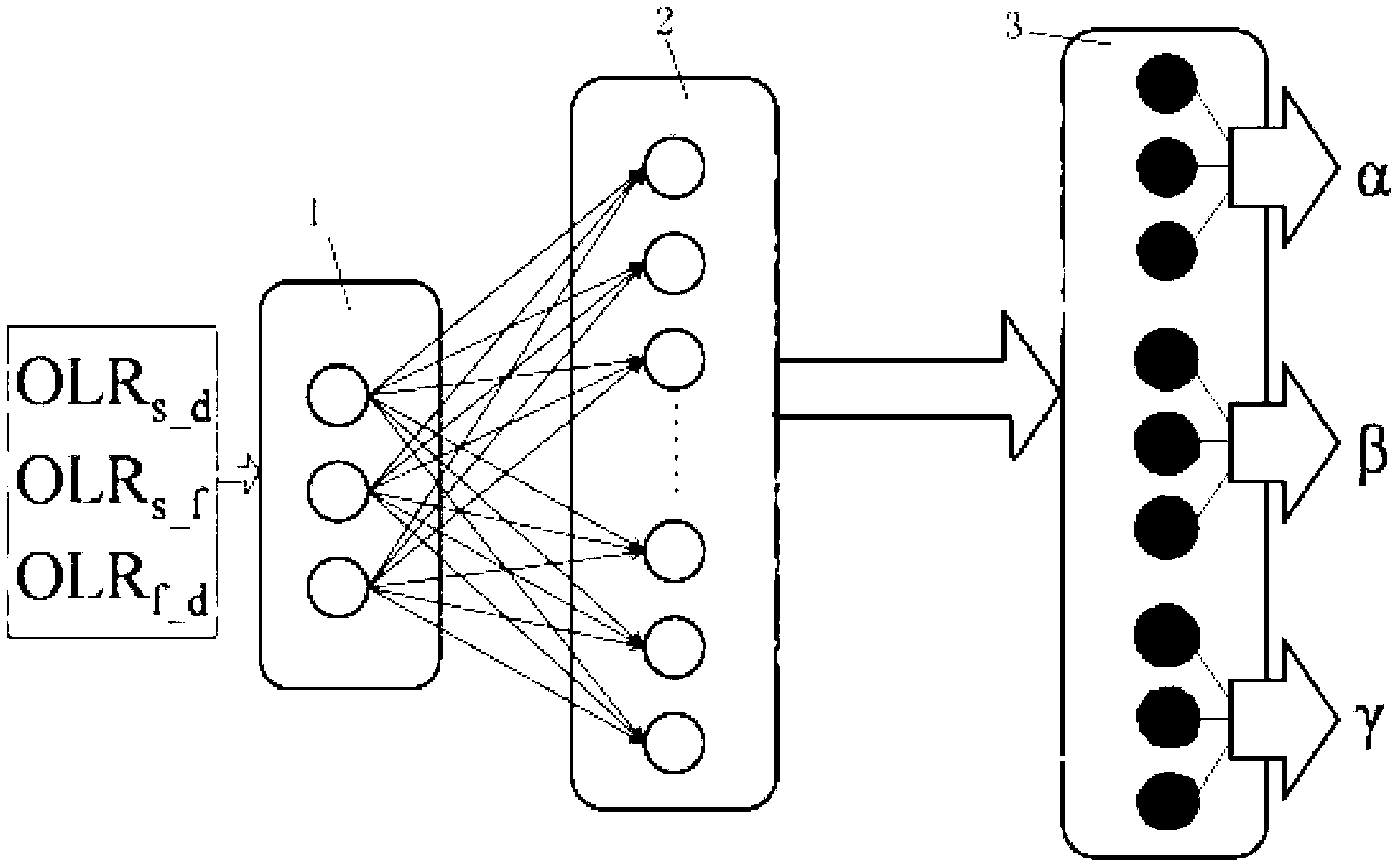

[0062] Figure 2 shows the structure of the neural network evaluation system. Input layer 1 accepts the pairwise overlap rates of the three models' segmentation results as input, that is, OLR s_d, OLR...
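As a rough illustration of the network's inputs, the pairwise overlap rates could be computed as follows. The excerpt does not give the formula for the overlap rate, so this sketch assumes intersection over union of two binary masks; the function names, the dictionary keys, and the convention for two empty masks are likewise hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Mask = List[List[int]]  # binary mask: 1 = hand pixel, 0 = non-hand pixel


def overlap_rate(a: Mask, b: Mask) -> float:
    """Overlap of two masks, assumed here to be intersection over union."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            if pa and pb:
                intersection += 1
            if pa or pb:
                union += 1
    # Assumption: two empty masks are treated as fully agreeing.
    return intersection / union if union else 1.0


def pairwise_overlap_rates(masks: Dict[str, Mask]) -> Dict[Tuple[str, str], float]:
    """Overlap rate for every unordered pair of model outputs."""
    return {
        (name_a, name_b): overlap_rate(masks[name_a], masks[name_b])
        for name_a, name_b in combinations(masks, 2)
    }


# Hypothetical 2x3 segmentation results from the three models.
masks = {
    "skin": [[1, 0, 0], [0, 1, 1]],
    "depth": [[1, 1, 0], [0, 1, 0]],
    "background": [[1, 1, 0], [0, 0, 0]],
}

rates = pairwise_overlap_rates(masks)
# rates[("skin", "depth")] plays the role of OLR s_d under this assumed definition.
```

Three models yield three pairwise rates, which matches an input layer that takes one value per pair.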
