119 results about "Electroencephalogram feature" patented technology

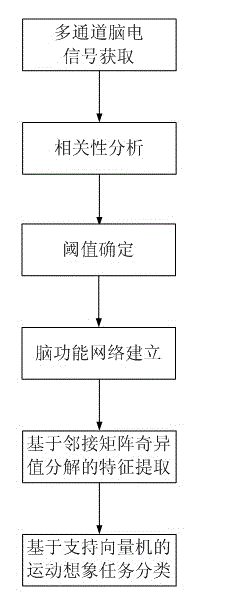

Electroencephalogram feature extracting method based on brain function network adjacent matrix decomposition

Inactive; CN102722727A; Ignore the relationship; Ignore coordination; Character and pattern recognition; Matrix decomposition; Singular value decomposition

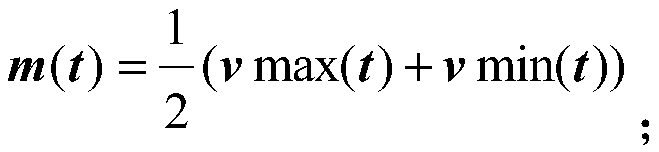

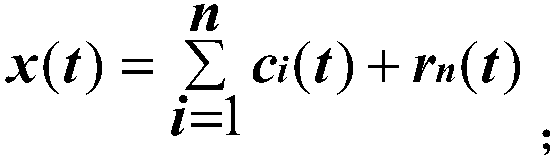

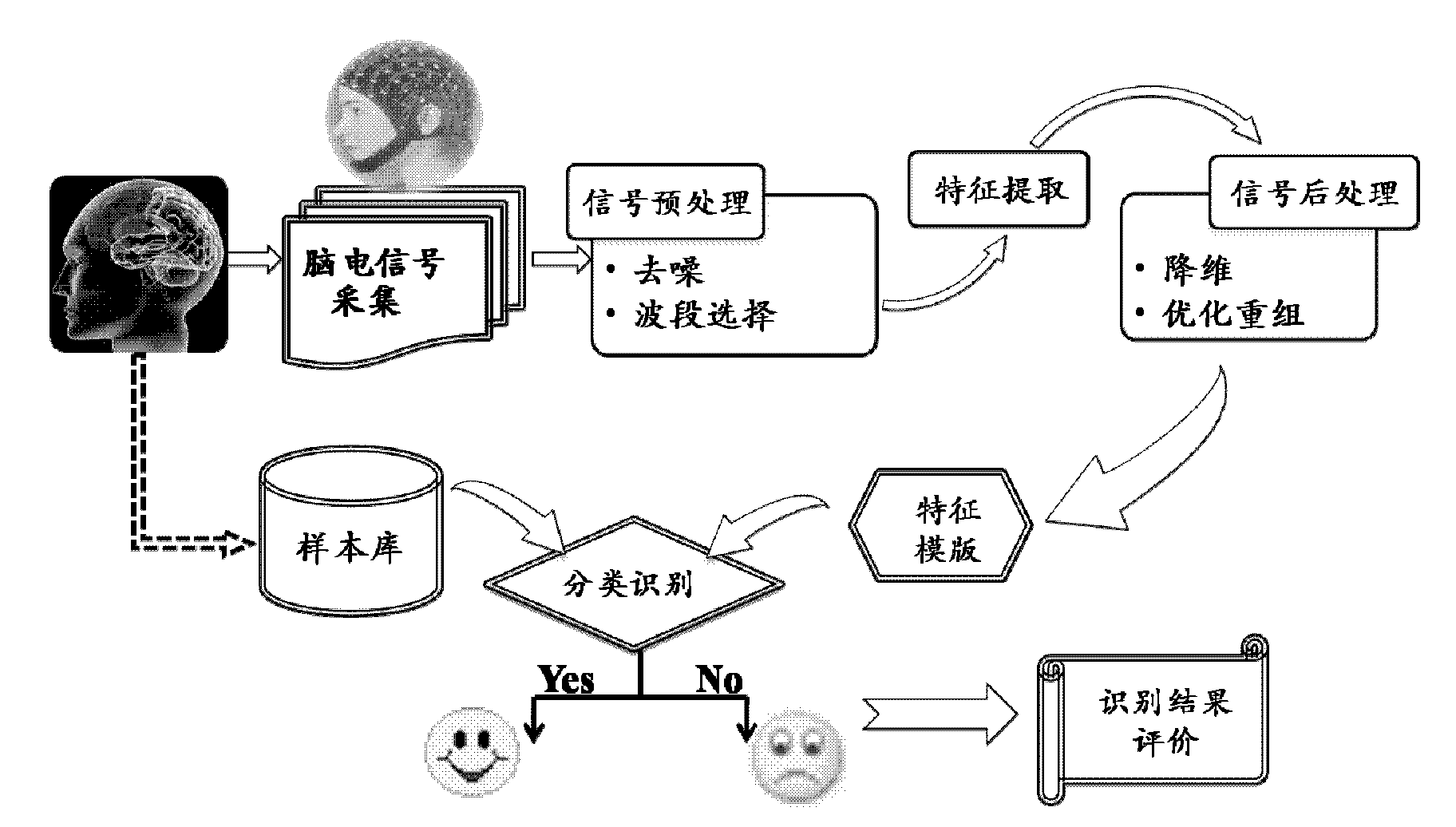

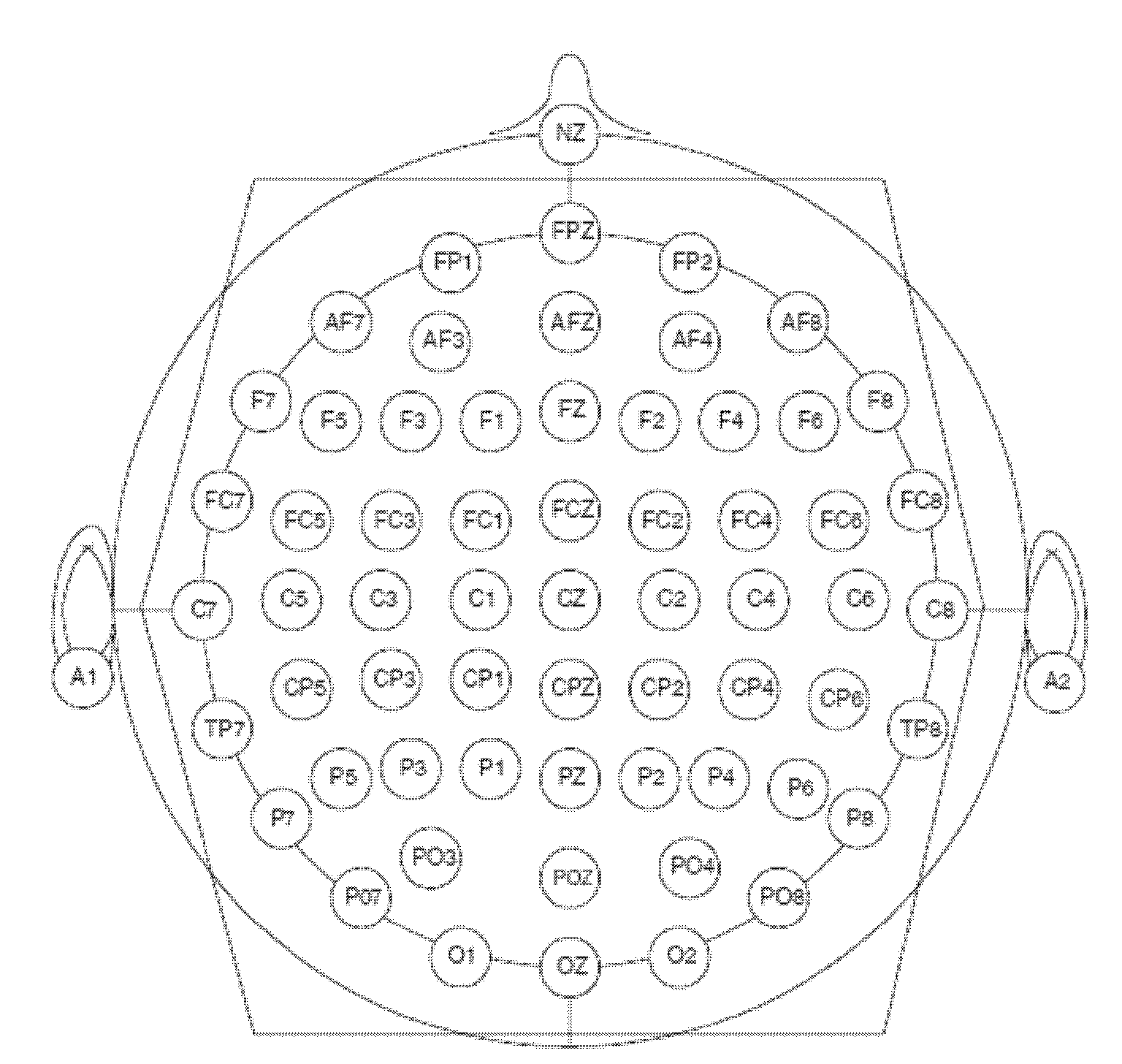

The invention relates to an electroencephalogram feature extraction method based on decomposition of the adjacency matrix of a brain functional network. Current feature extraction algorithms for motor imagery electroencephalogram signals mostly focus on qualitative and quantitative analysis of locally activated brain areas, and ignore the interrelations among brain areas and their overall coordination. Starting from the brain functional network, and building on complex brain network theory based on graph analysis, the method comprises four steps: first, establishing the brain functional network from multi-channel motor imagery electroencephalogram signals; second, carrying out singular value decomposition on the network adjacency matrix; third, deriving a group of feature parameters from the resulting singular values to form the feature vector of the electroencephalogram signal; and fourth, inputting the feature vector into a support vector machine classifier to classify and identify the various motor imagery tasks. The method has broad application prospects for identifying motor imagery tasks in the field of brain-machine interfaces.

Owner:启东晟涵医疗科技有限公司
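
As a rough illustration of the first three steps of the entry above, the sketch below builds a network from channel correlations, thresholds it into an adjacency matrix, and keeps the largest singular values as features. The correlation measure, the `threshold` of 0.5, the number of retained values `k`, and the function name `svd_features` are all assumptions made for illustration; the abstract does not specify them, and the support vector machine step is omitted.

```python
import numpy as np


def svd_features(eeg, threshold=0.5, k=3):
    """Return the k largest singular values of a thresholded correlation network.

    eeg: array of shape (channels, samples).
    threshold, k: illustrative assumptions, not values from the patent.
    """
    # Step 1: functional network from absolute channel-to-channel correlation.
    corr = np.abs(np.corrcoef(eeg))
    adjacency = (corr >= threshold).astype(float)
    np.fill_diagonal(adjacency, 0.0)  # no self-connections

    # Step 2: singular value decomposition of the adjacency matrix.
    singular_values = np.linalg.svd(adjacency, compute_uv=False)

    # Step 3: the leading singular values serve as the feature vector.
    return singular_values[:k]


# Synthetic four-channel example: only channels 0 and 1 are correlated.
t = np.arange(100)
eeg = np.vstack([
    np.sin(2 * np.pi * t / 20),
    np.sin(2 * np.pi * t / 20),
    np.cos(2 * np.pi * t / 20),
    np.sin(2 * np.pi * t / 10),
])
features = svd_features(eeg)
```

The resulting vector could then be passed to any standard support vector machine implementation for the fourth step.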

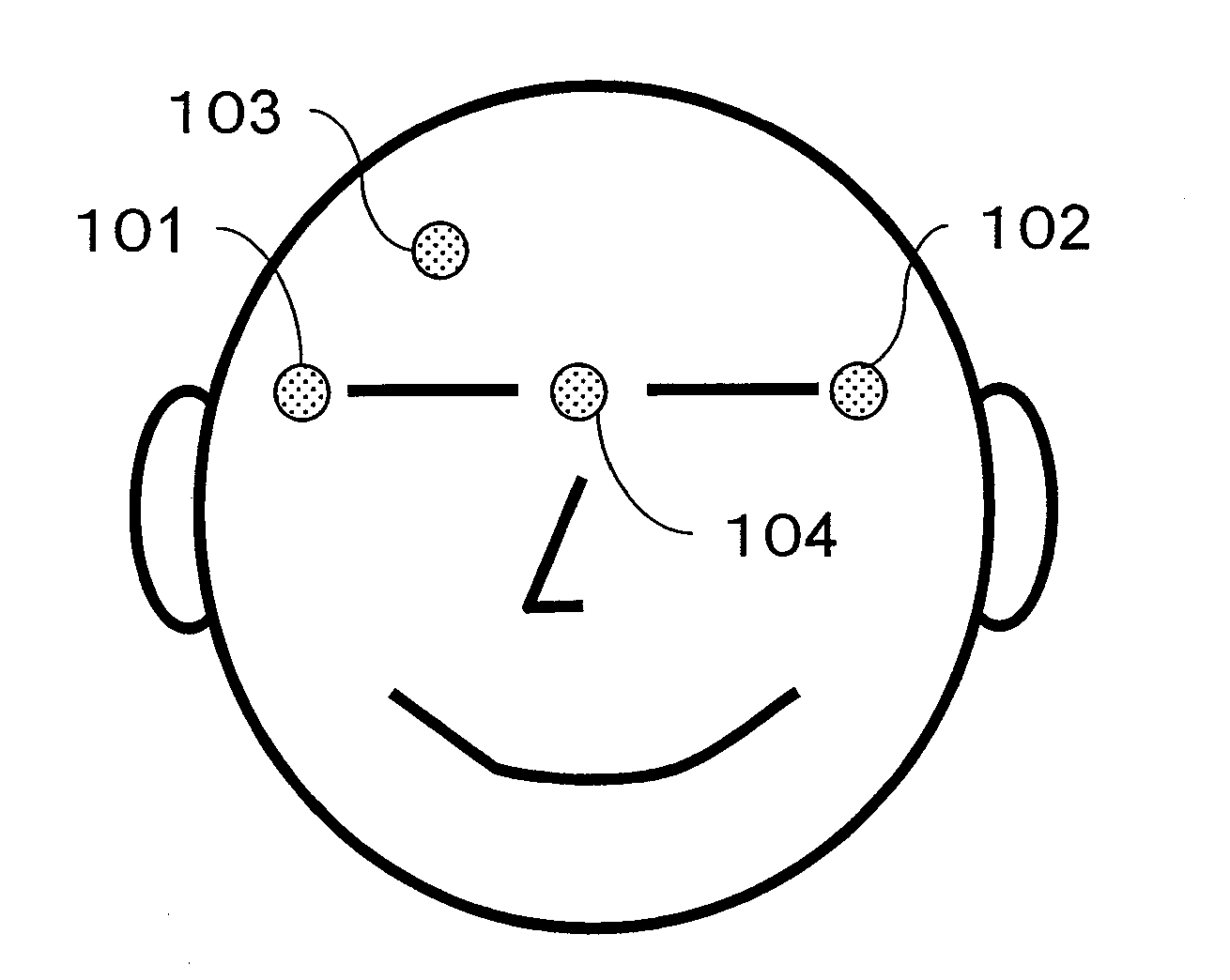

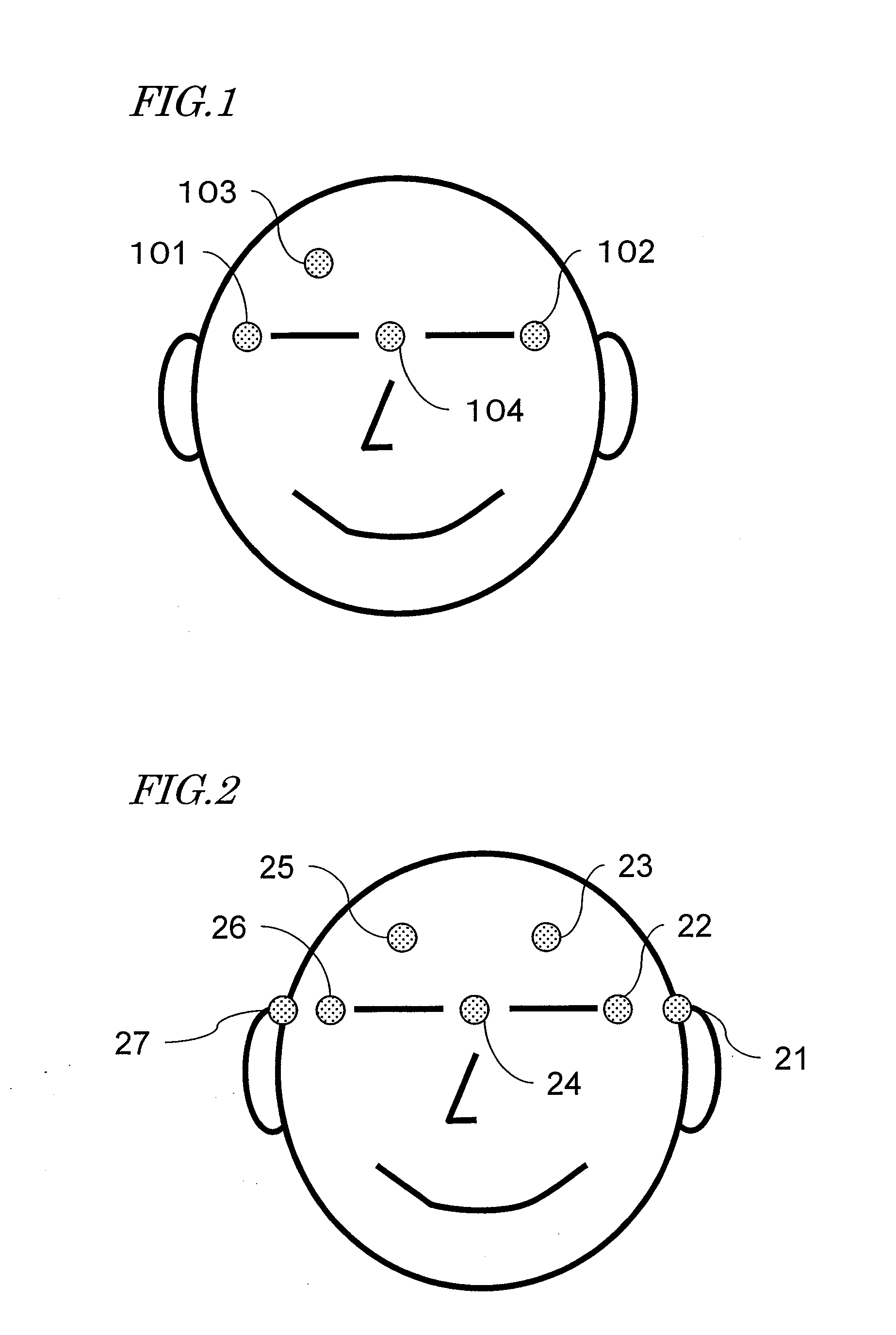

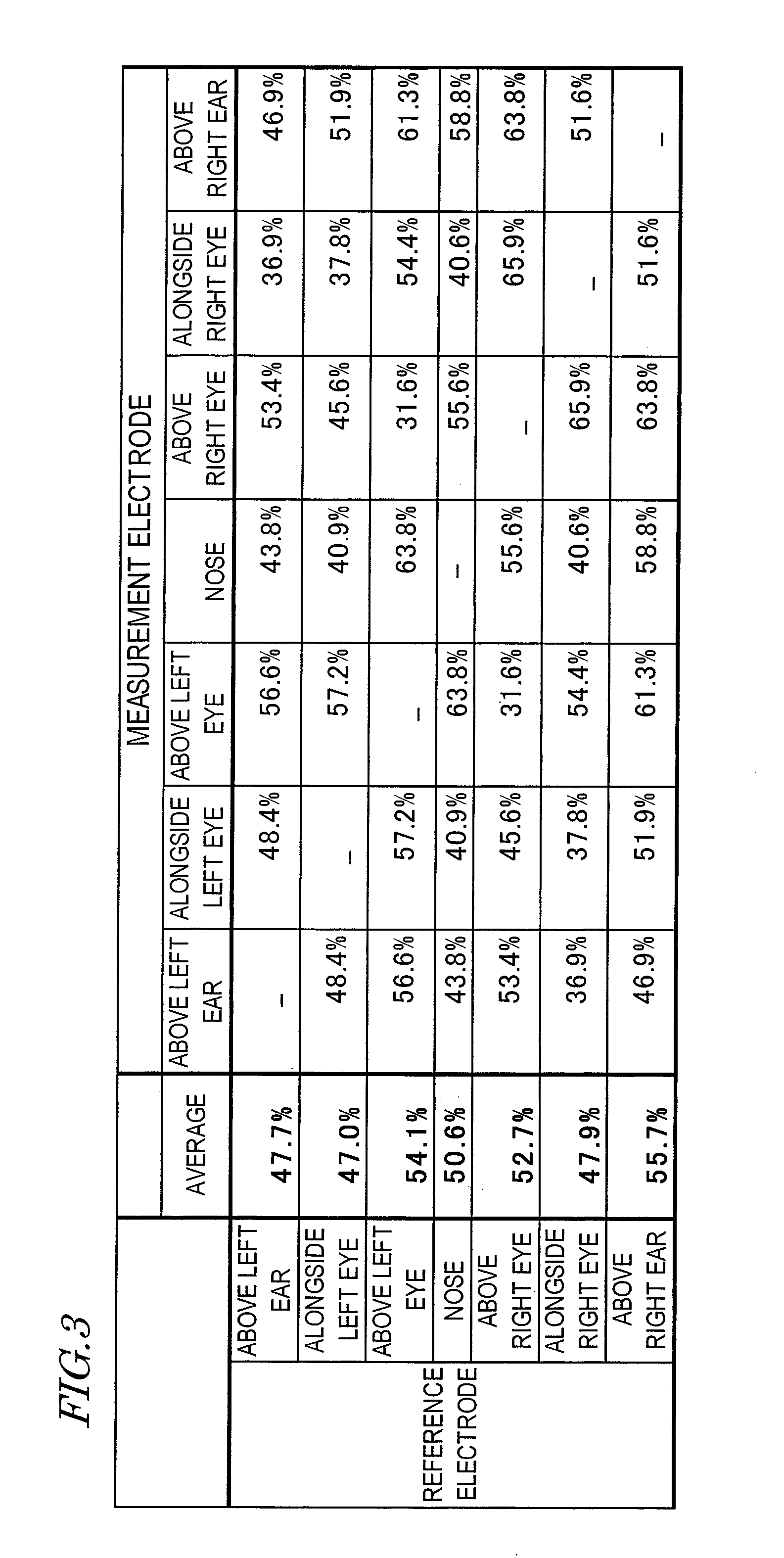

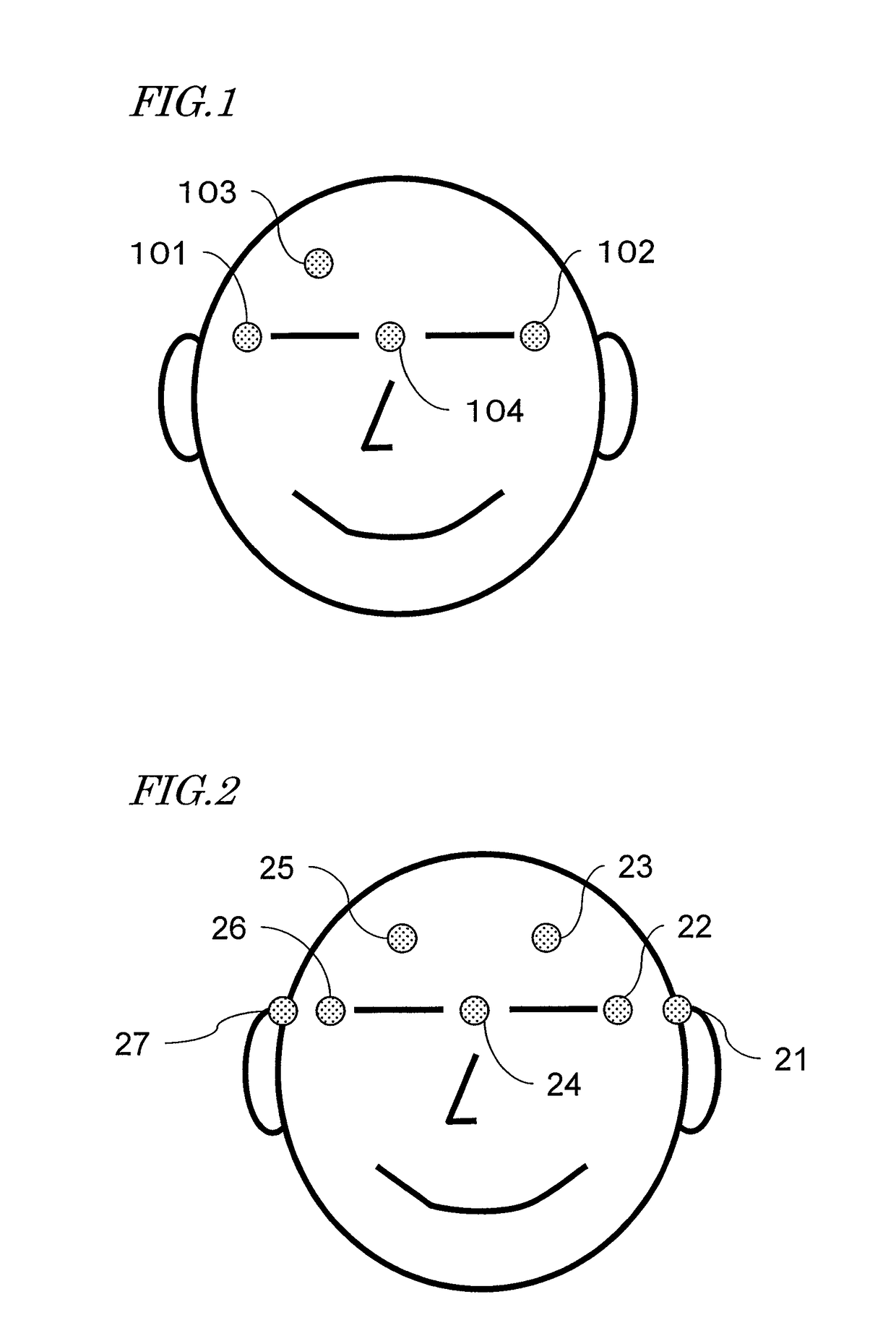

Method for controlling device by using brain wave and brain wave interface system

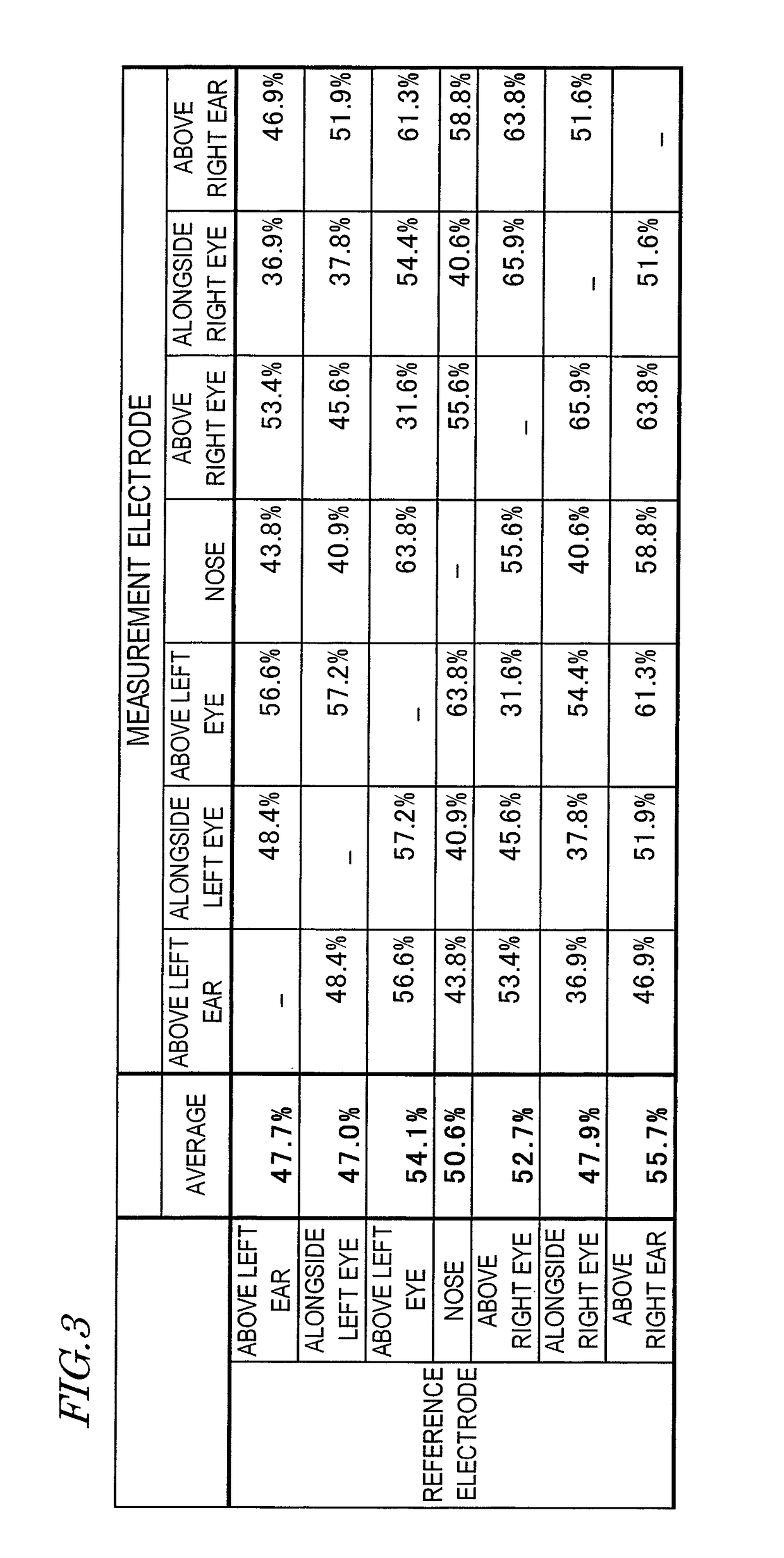

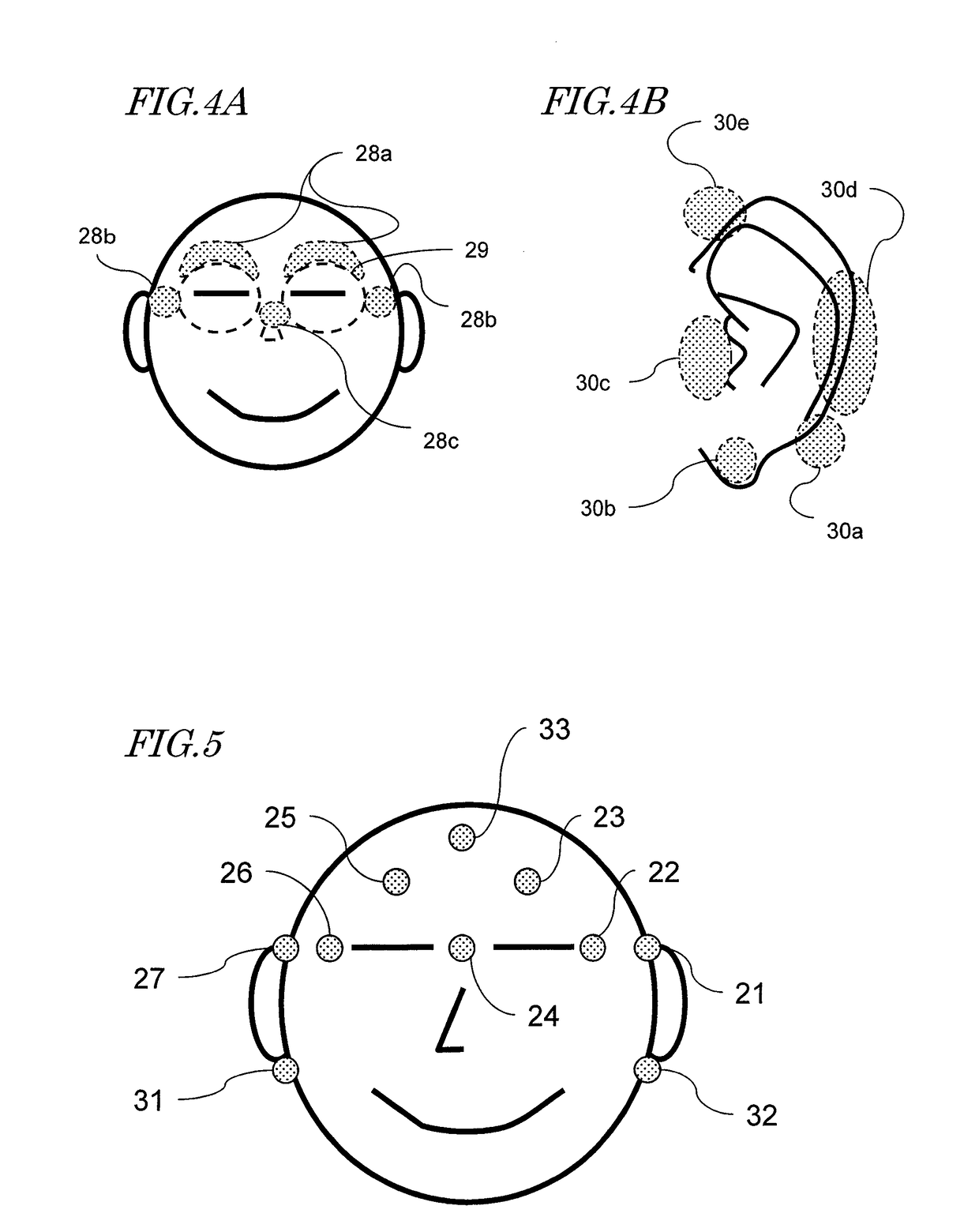

Active; US20100191140A1; Reduce the burden on; Unnecessary wear; Electroencephalography; Sensors; Electroencephalogram feature; Medicine

The control method for a device includes steps of: presenting a visual stimulation concerning a manipulation menu for a device; measuring event-related potentials after the visual stimulation is presented, where event-related potentials based on a timing of presenting the visual stimulation as a starting point are measured from a potential difference between each of electrodes and at least one reference electrode respectively worn on a face and in an ear periphery of a user; from each of the measured event-related potentials, extracting electroencephalogram data which is at 5 Hz or less and contains a predetermined time section, and combining the extracted electroencephalogram data into electroencephalogram characteristic data; comparing the electroencephalogram characteristic data against reference data prepared in advance for determining a desire to select an item in the manipulation menu; and, based on a comparison result, executing a manipulation of the device corresponding to the item.

Owner:PANASONIC CORP

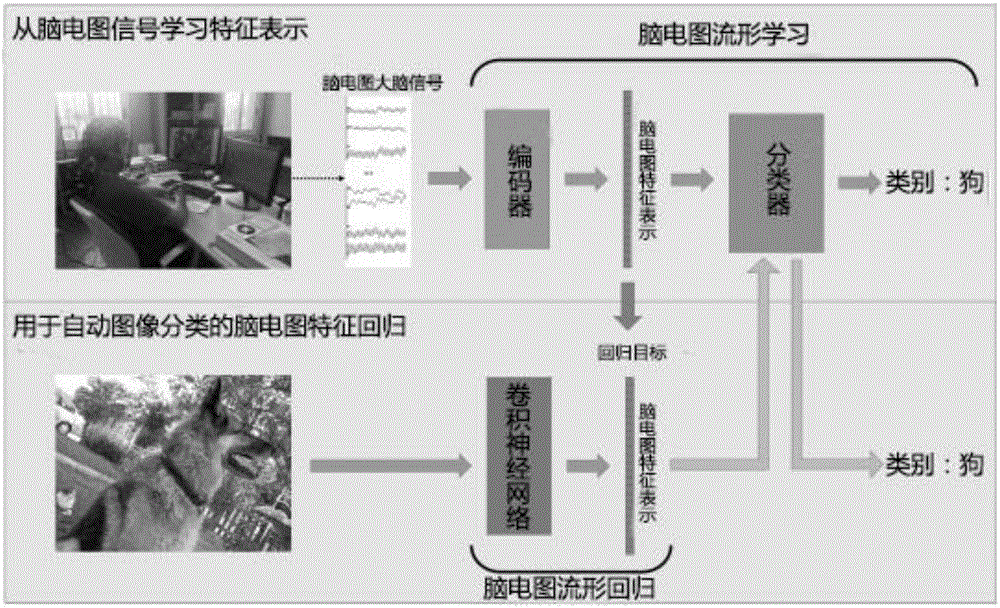

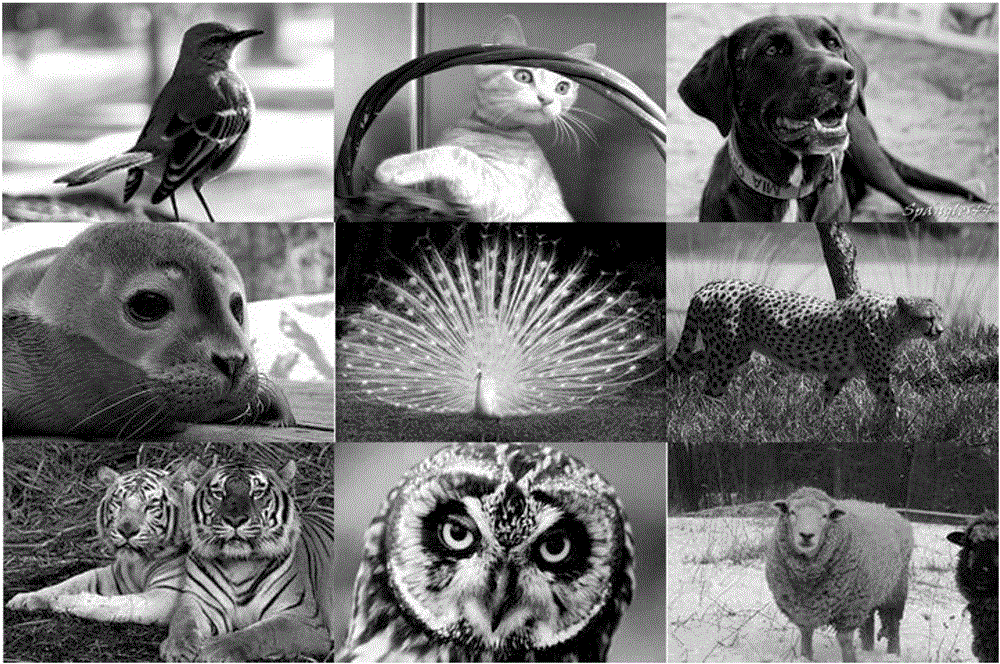

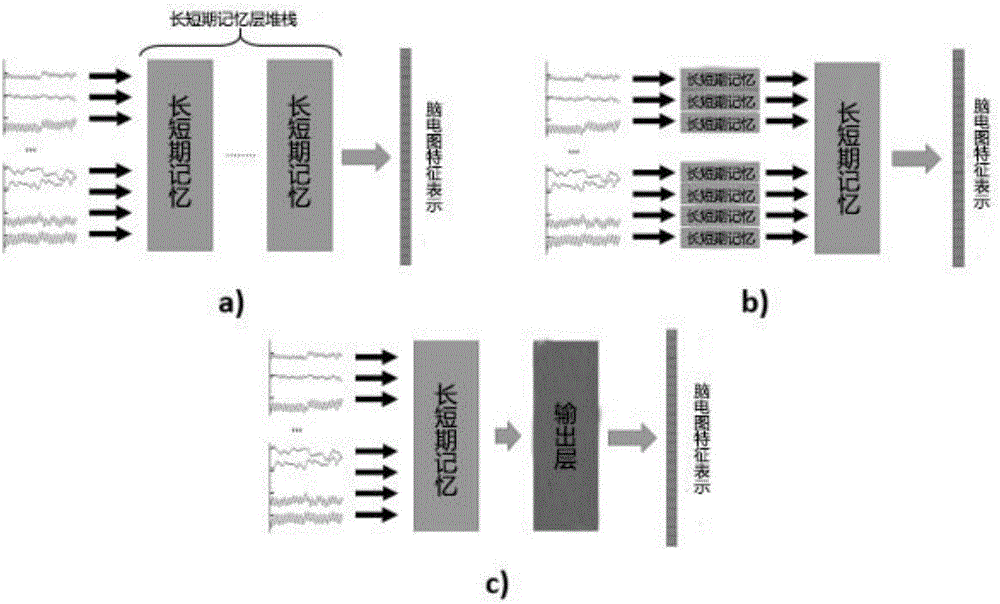

Deep learning vision classifying method based on electroencephalogram data

Inactive; CN106691378A; Diagnostic recording/measuring; Sensors; Electroencephalogram feature; Classification methods

The invention provides a deep learning visual classification method based on electroencephalogram data, comprising electroencephalogram data acquisition, electroencephalogram learning, electroencephalogram feature extraction and automatic classification. The method proceeds as follows: a recurrent neural network learns a discriminative manifold of brain activity for visual classification from electroencephalogram data evoked by visual object stimuli; a regressor based on a convolutional neural network is then trained to map images onto the learned manifold; finally, a computer carries out the automated visual classification task using these human-brain-based features to obtain an image classification result. The stated advantages are that the classification and generalization abilities are competitive with those of a convolutional neural network method; that the method uses a new kind of brain-based image representation and offers meaningful insight into the human visual perception system; and that images can be effectively projected onto a new, biologically grounded manifold, which could fundamentally change how object classifiers are developed.

Owner:SHENZHEN WEITESHI TECH

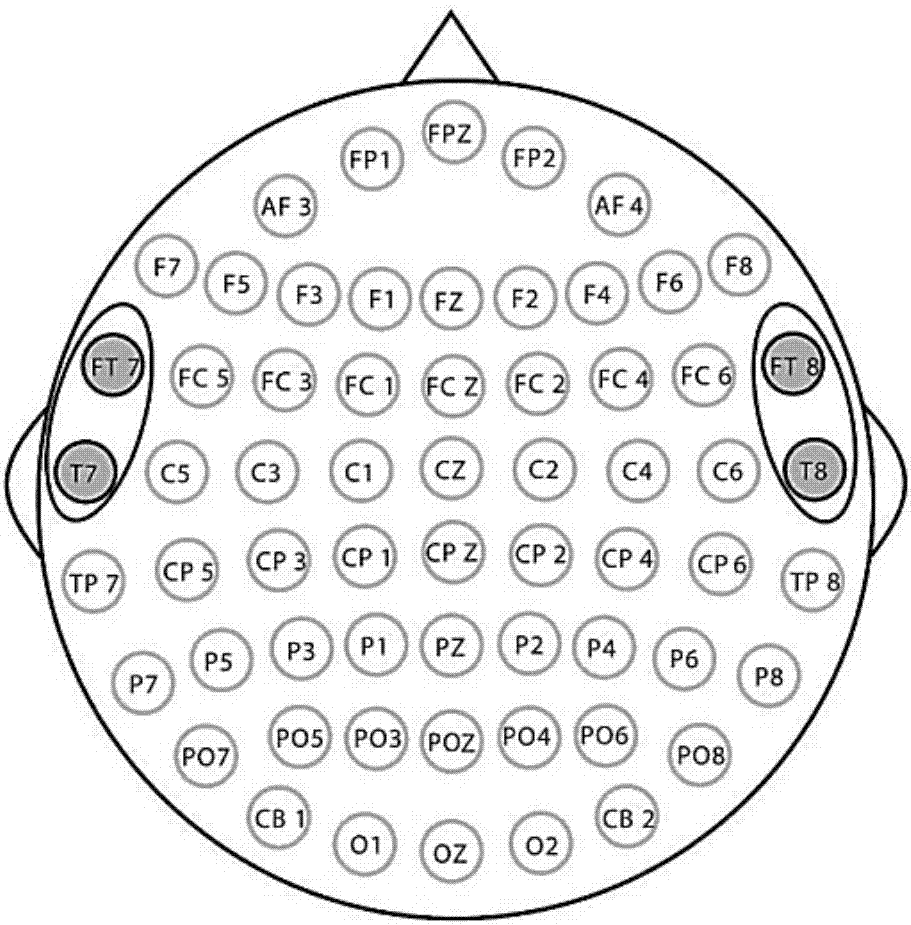

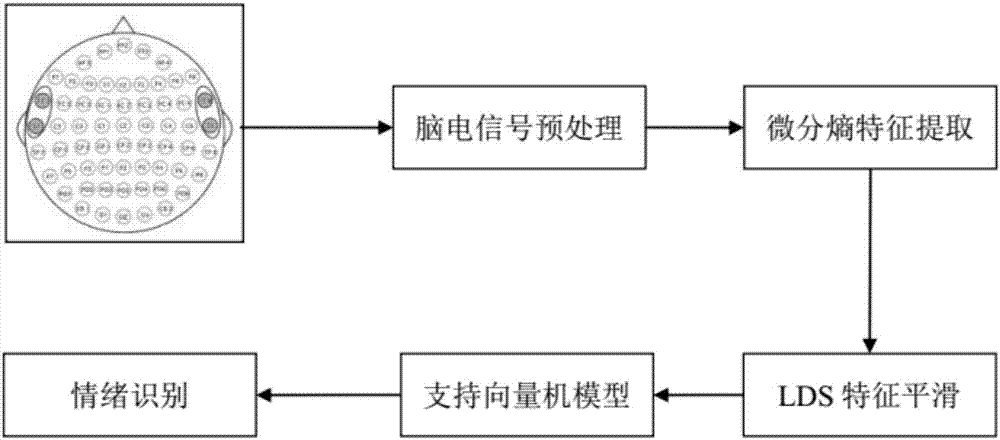

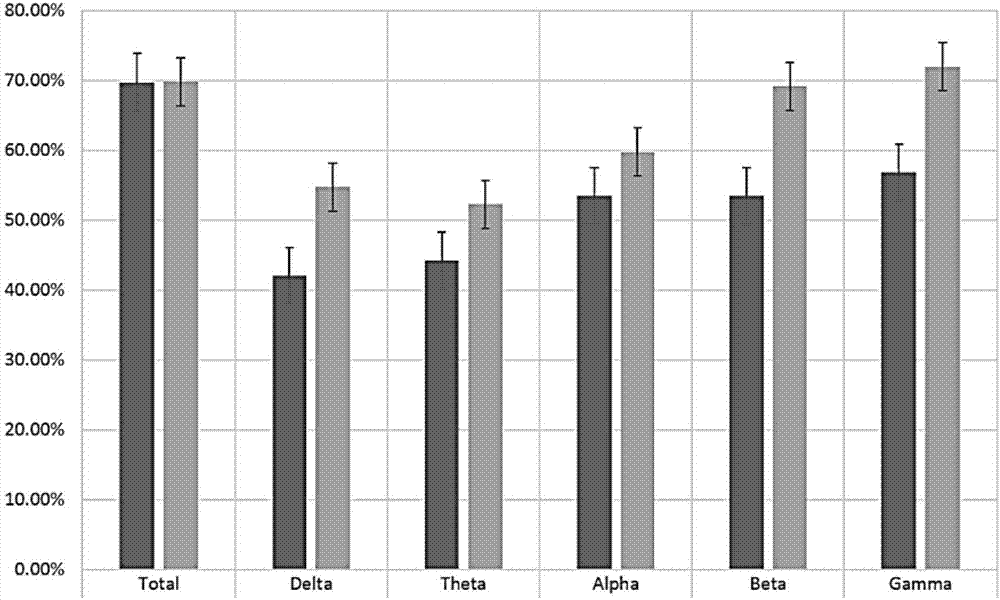

Feature recognition system and method for electroencephalogram signals

Active; CN107157477A; High speed; Reduce dimensionality; Sensors; Psychotechnic devices; Electroencephalogram feature; Mood state

Provided are a feature recognition system and method for electroencephalogram signals. The method comprises the following steps: using four electrodes over the temporal area above the ears to collect original electroencephalogram signals from different people in different emotional states, forming a sample set; obtaining electroencephalogram feature data from the sample set by preprocessing and feature extraction; and smoothing the electroencephalogram feature data to obtain training samples for a support vector machine, which yields an emotion recognition classifier. The system and method greatly reduce the cost and complexity of electroencephalogram acquisition while maintaining relatively high emotion recognition accuracy, and provide a useful reference for emotion recognition on wearable devices.

Owner:上海零唯一思科技有限公司

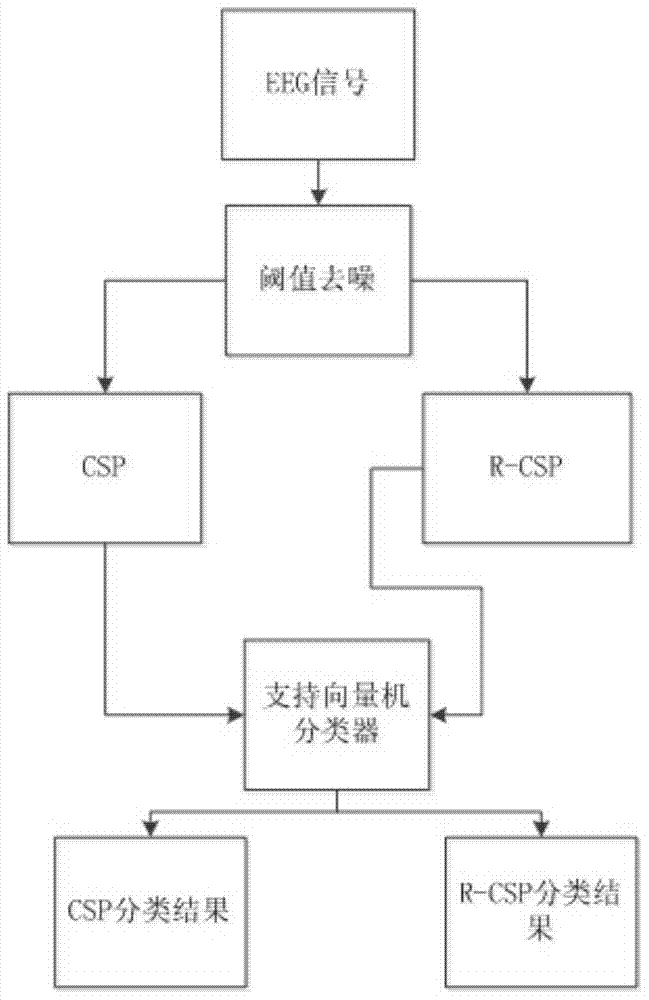

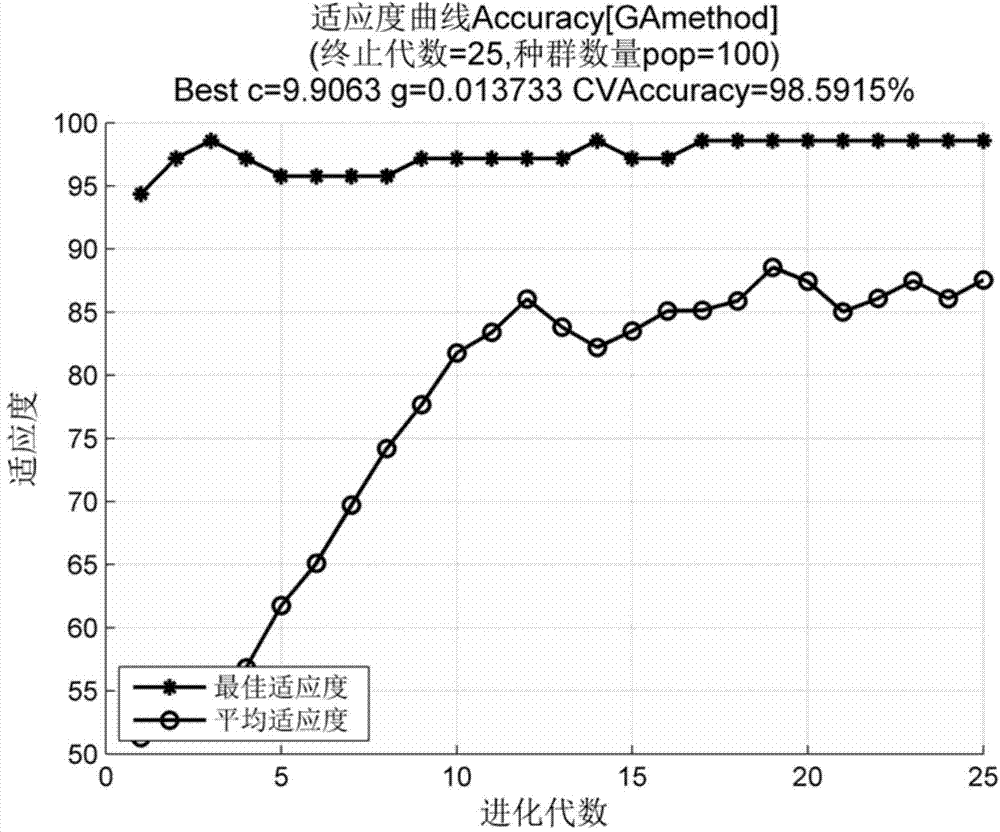

Electroencephalogram feature extraction method based on CSP and R-CSP algorithms

Active; CN104771163A; Improve classification results; Improve adaptability; Diagnostic signal processing; Sensors; Electroencephalogram feature; Small sample

The invention relates to an electroencephalogram feature extraction method based on the CSP (common spatial pattern) and R-CSP algorithms. When the traditional CSP algorithm is used to extract features from small-sample electroencephalograms, its covariance estimate carries a large error; the invention therefore improves on the traditional CSP algorithm and puts forward a regularized CSP algorithm, R-CSP. First, a wavelet threshold denoising algorithm removes noise. Second, the covariance matrices of five subjects are computed; one is selected as the target subject and the rest serve as auxiliary subjects, an optimal spatial filter is constructed by selecting the regularization parameters, and feature vectors are extracted accordingly. Finally, a genetic algorithm optimizes a support vector machine classifier to further improve classification accuracy. The final classification results show that the R-CSP algorithm achieves higher accuracy than the traditional CSP algorithm.

Owner:西安慧脑智能科技有限公司
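
The regularization idea in the entry above can be sketched as follows, under stated assumptions: the target subject's covariance is blended with the mean covariance of the auxiliary subjects (weight `beta`) and then shrunk toward a scaled identity (weight `gamma`), after which ordinary CSP filters are computed. This two-parameter form, the parameter values, and all function names are illustrative choices, not the patent's formulation; wavelet denoising and the genetic-algorithm-tuned classifier are omitted.

```python
import numpy as np


def normalized_cov(trial):
    """Trace-normalized spatial covariance of one trial (channels x samples)."""
    c = trial @ trial.T
    return c / np.trace(c)


def regularized_cov(target_cov, aux_covs, beta, gamma):
    """Blend target and auxiliary covariances, then shrink toward identity."""
    aux_mean = np.mean(aux_covs, axis=0)
    blended = (1.0 - beta) * target_cov + beta * aux_mean
    n = blended.shape[0]
    return (1.0 - gamma) * blended + gamma * (np.trace(blended) / n) * np.eye(n)


def csp_filters(cov_a, cov_b):
    """Rows of the result are spatial filters that jointly diagonalize both classes."""
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whitener = np.diag(evals ** -0.5) @ evecs.T      # whitens the composite covariance
    _, rotation = np.linalg.eigh(whitener @ cov_a @ whitener.T)
    return rotation.T @ whitener


def log_var_features(filters, trial, n_pairs=1):
    """Normalized log-variance of the first and last n_pairs filtered signals."""
    picked = np.vstack([filters[:n_pairs], filters[-n_pairs:]])
    variances = np.var(picked @ trial, axis=1)
    return np.log(variances / variances.sum())
```

In use, `regularized_cov` would be called once per class before `csp_filters`, and `beta` and `gamma` would be chosen by validation on the target subject's small sample.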

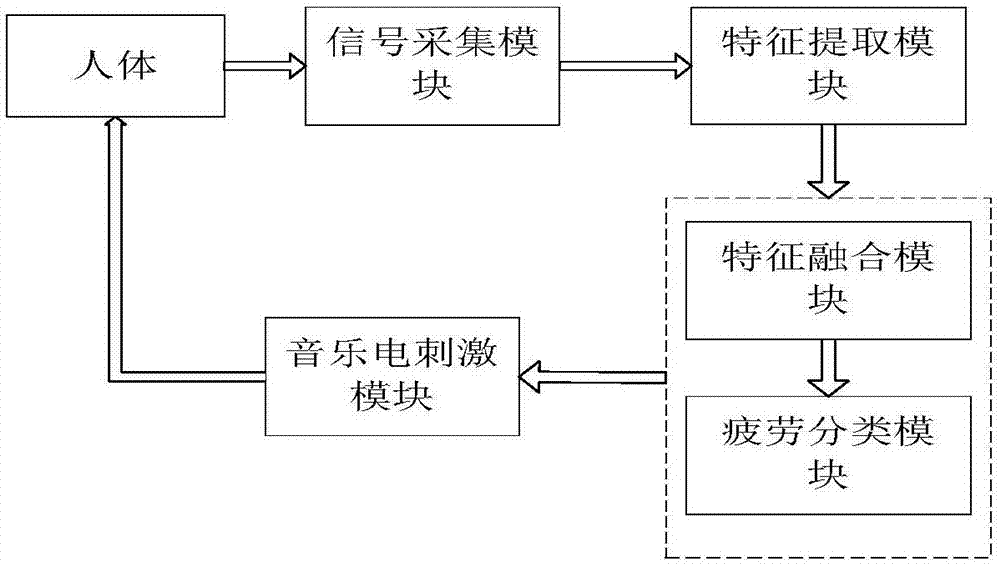

Fatigue detection method based on multi-source information fusion

The invention discloses a fatigue detection method based on multi-source information fusion. Electroencephalogram signals, blink information and electrocardiogram signals of a subject are collected synchronously by an electroencephalogram acquisition device and an electrocardiogram acquisition device. Three groups of features are extracted: electroencephalogram features, namely the relative energy of the alpha, beta, theta and delta rhythms; electro-oculogram features, namely blink frequency E and blink intensity F; and electrocardiogram features, namely heart rate HR, LF and HF. A logistic regression algorithm first divides the fatigue level into three classes (no fatigue, mild fatigue and deep fatigue), and features with large logistic regression weights are selected for feature fusion. The fused feature vectors are then classified again by a bagging algorithm built on support vector machines to determine the subject's current fatigue level, and different fatigue relief methods are applied according to the classification result. The method offers high applicability, high fatigue detection precision and a good improvement effect.

Owner:YANSHAN UNIV
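
The relative energy of the electroencephalogram rhythms mentioned in the entry above can be computed roughly as follows. This is a minimal sketch using a plain discrete Fourier transform; the band limits in `BANDS` are common conventions assumed here rather than values from the abstract, and the function names are hypothetical.

```python
import cmath
import math

# Assumed band limits in Hz (conventional values, not taken from the patent).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}


def power_spectrum(signal, fs):
    """Return (frequency, power) pairs for the non-negative DFT bins."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal)
        )
        spectrum.append((k * fs / n, abs(coeff) ** 2))
    return spectrum


def relative_band_power(signal, fs, bands=BANDS):
    """Share of the summed band power that falls into each rhythm band."""
    spectrum = power_spectrum(signal, fs)
    totals = {
        name: sum(p for f, p in spectrum if lo <= f < hi)
        for name, (lo, hi) in bands.items()
    }
    grand_total = sum(totals.values())
    return {name: value / grand_total for name, value in totals.items()}
```

For real recordings a fast Fourier transform and windowed averaging would normally replace the direct sum, which is written out here only to keep the sketch dependency-free.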

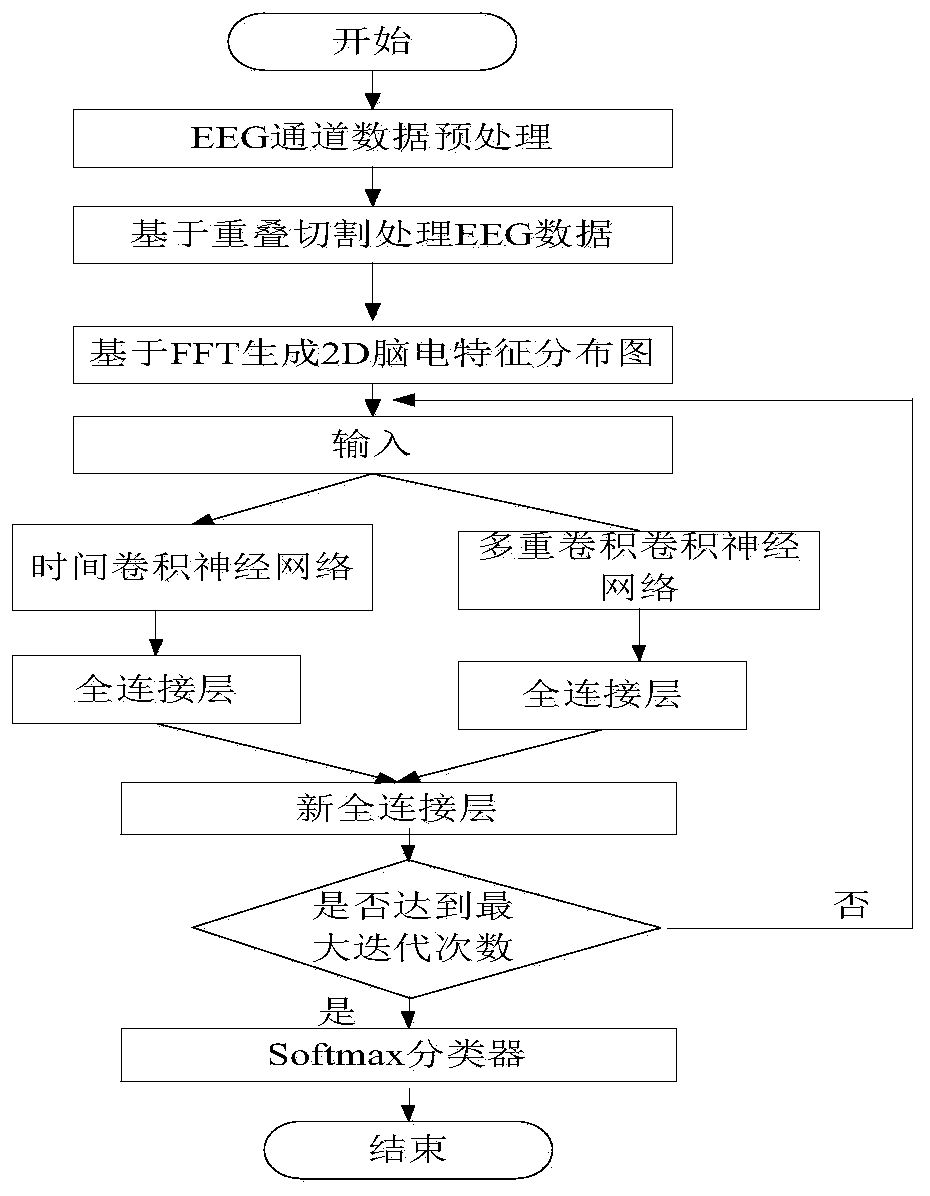

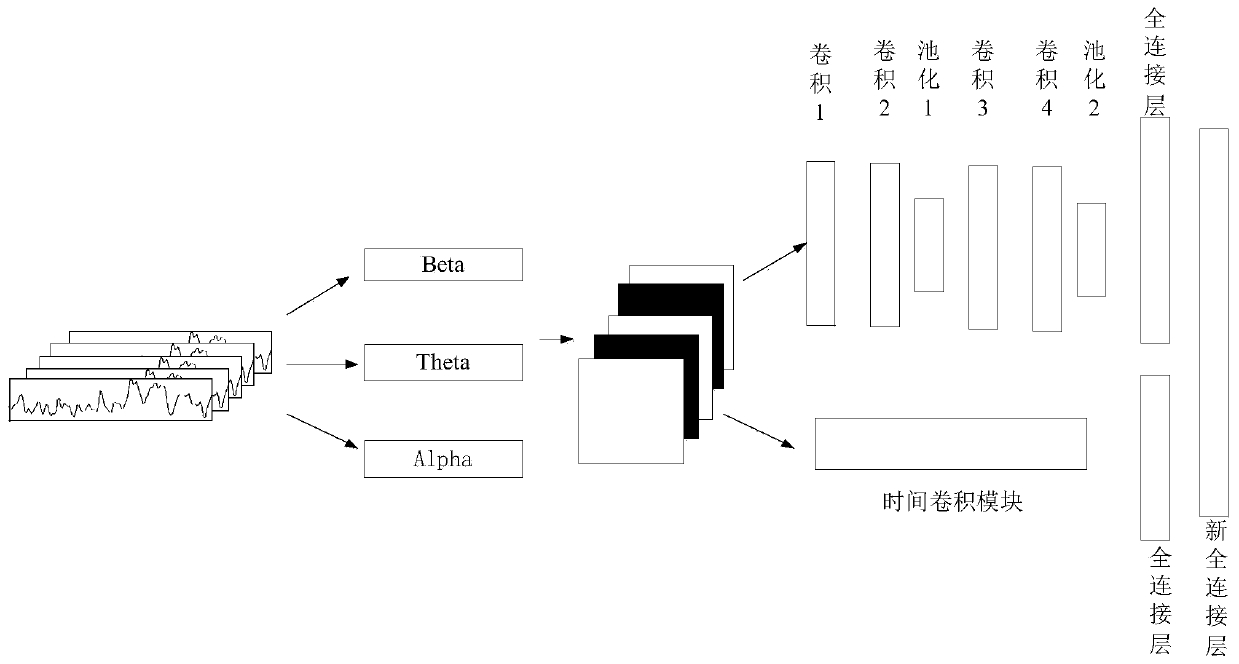

Parallel convolutional neural network motor imagery electroencephalogram classification method based on spatial-temporal feature fusion

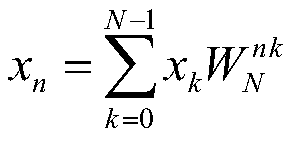

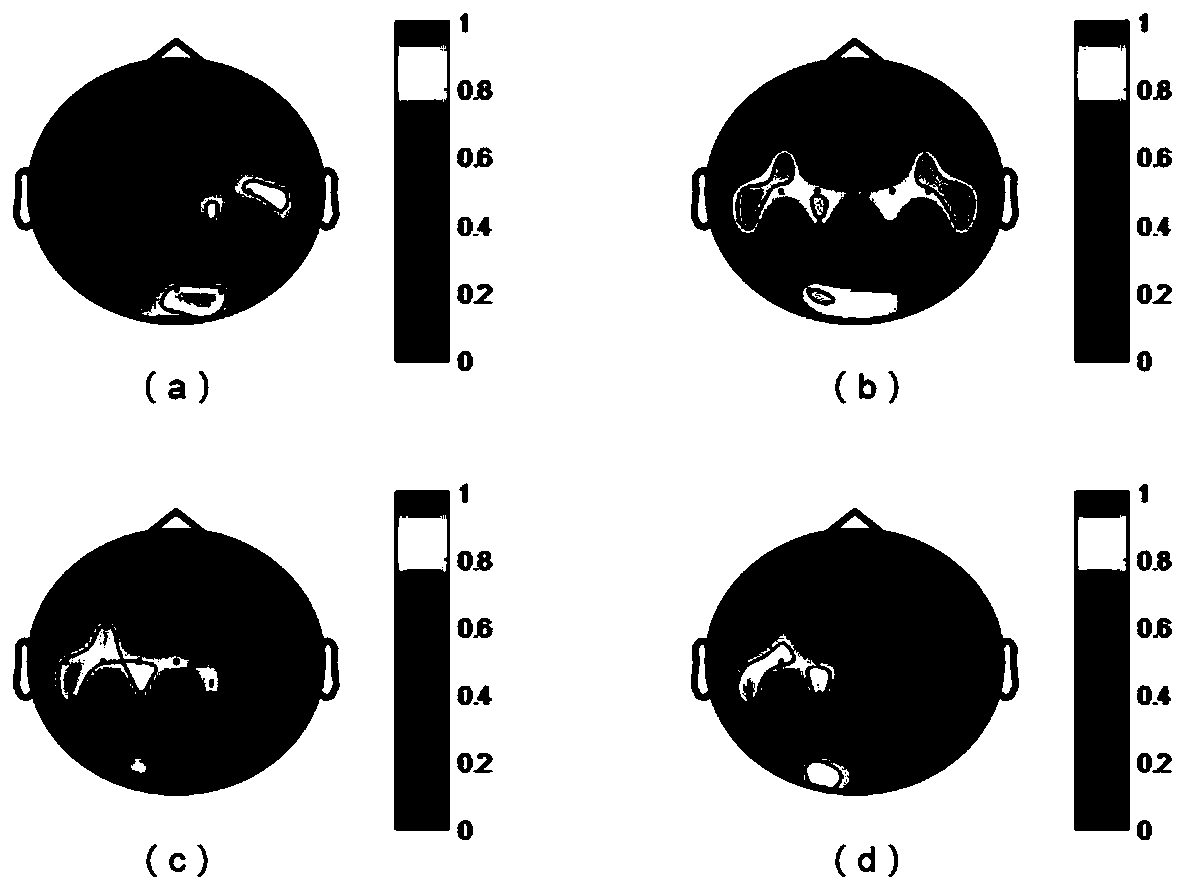

Active; CN111012336A; Spatio-temporal features are fully mined; Improve the problem of only visualizing time series channel data; Geometric image transformation; Character and pattern recognition; Fast Fourier transform; Electroencephalogram feature

The invention provides a parallel convolutional neural network method for classifying motor imagery electroencephalograms based on spatial-temporal feature fusion. Taking motor imagery electroencephalogram signals as the research object, it proposes a novel deep network model, the parallel convolutional neural network, to extract their spatial-temporal features. Unlike traditional electroencephalogram classification algorithms, which often discard spatial feature information, the method extracts theta waves (4-8 Hz), alpha waves (8-12 Hz) and beta waves (12-36 Hz) by fast Fourier transform to generate a 2D electroencephalogram feature map. A multi-convolutional neural network is trained on the feature map to extract spatial features, while a temporal convolutional neural network is trained in parallel to extract temporal features. Finally, the spatial and temporal features are fused and classified with Softmax. Experimental results show that the parallel convolutional neural network achieves good recognition accuracy and outperforms other recent classification algorithms.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
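
The final fusion step of the entry above can be pictured with a small sketch. It assumes fusion by simple concatenation followed by one linear layer and a softmax; the abstract does not say how the two feature sets are combined, and the weights, biases and function names below are hypothetical placeholders for trained parameters.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(spatial, temporal, weights, biases):
    """Concatenate both feature vectors, apply a linear layer, return class probabilities."""
    fused = list(spatial) + list(temporal)
    scores = [
        sum(w * x for w, x in zip(row, fused)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(scores)


# Two hypothetical classes, two spatial features and one temporal feature.
probabilities = fuse_and_classify(
    spatial=[1.0, 0.0],
    temporal=[0.5],
    weights=[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
    biases=[0.0, 0.0],
)
```

In the described network the two input vectors would come from the spatial and temporal convolutional branches rather than being set by hand.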

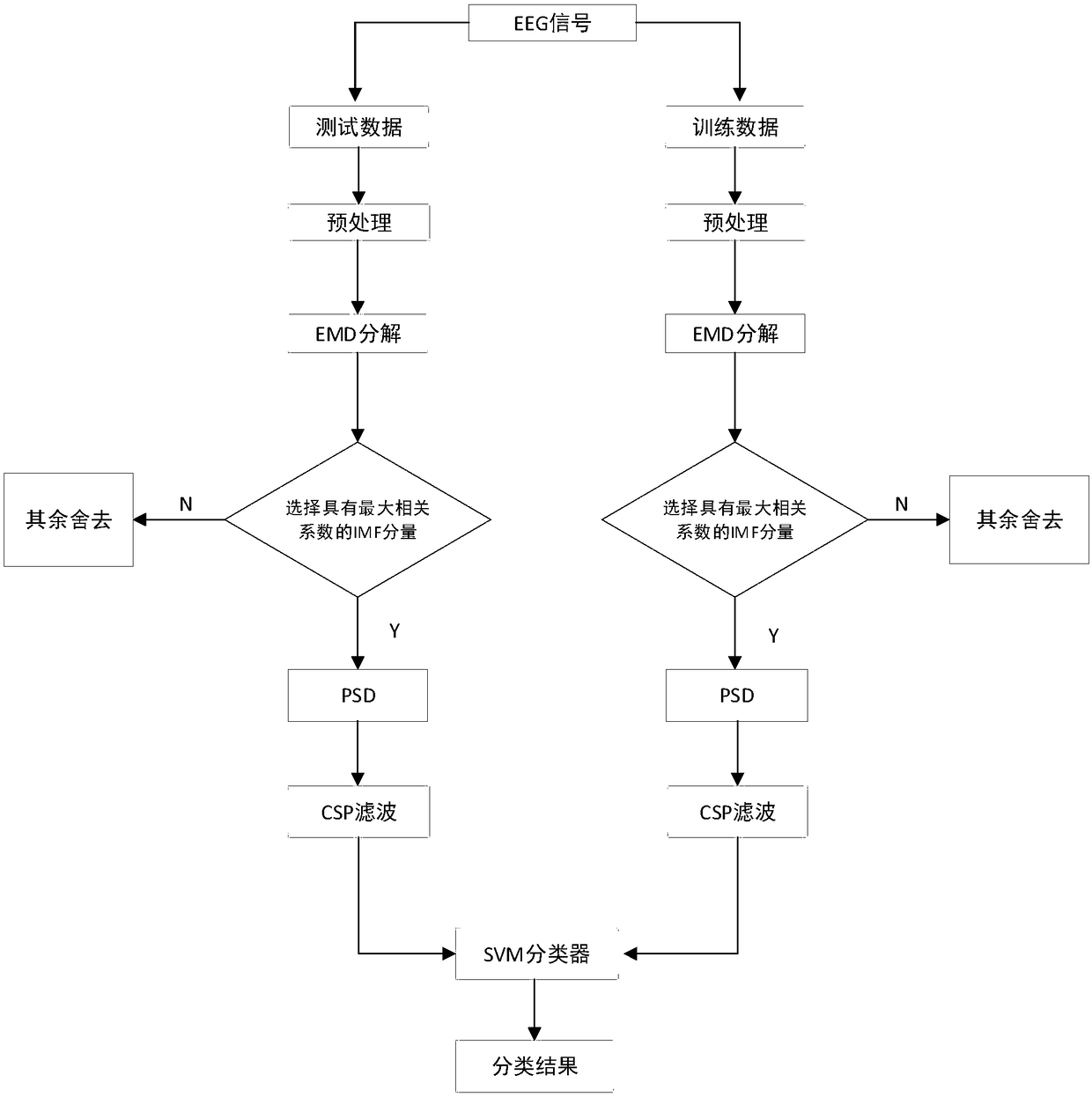

EMD and CSP fusion power spectral density electroencephalogram feature extraction method

Inactive; CN108888264A; Solve the problem of lack of frequency domain information; Solve the problem of requiring a large number of input channels; Diagnostic recording/measuring; Sensors; Correlation coefficient; Feature vector

Owner:NANJING UNIV OF POSTS & TELECOMM

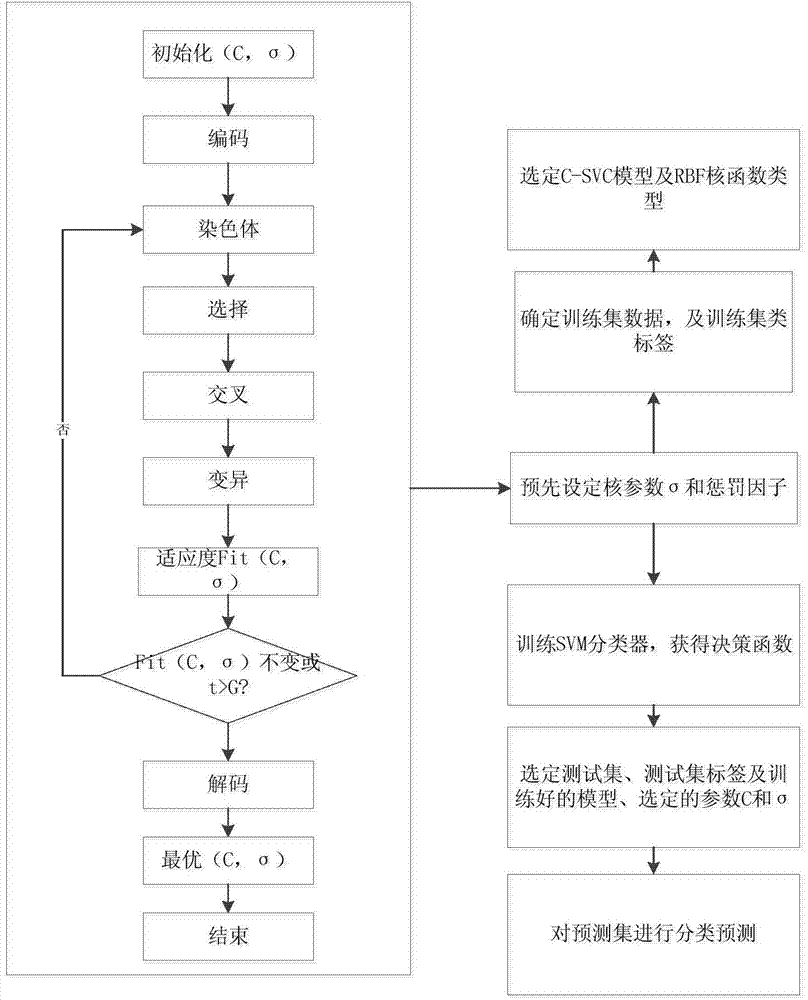

MSVM (multi-class support vector machine) electroencephalogram feature classification based method and intelligent wheelchair system

Active; CN103473294A; Wheelchairs/patient conveyance; Special data processing applications; Experimental validation; Brain computer interfacing

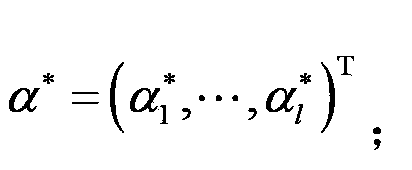

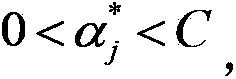

The invention discloses a method based on MSVM (multi-class support vector machine) electroencephalogram feature classification, and relates to feature classification and identification control in brain-computer interface technology. A support vector machine is adopted to classify electroencephalogram features, and an improved parameter optimization method is proposed to address the parameter selection problem of existing support vector machine algorithms. To achieve multi-class classification, the principle and structure of multi-class support vector machines are studied on the basis of binary classification. After analysis and comparison, a multi-class support vector machine in binary tree form is selected for multi-feature classification, and the improved parameter optimization method is verified experimentally in an offline environment.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
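
The binary tree arrangement named in the entry above can be sketched as a tree whose internal nodes each hold one binary decision, so that K classes need K - 1 binary classifiers. In this illustration the trained support vector machines are replaced by hand-set linear rules, and the class labels, weights and names (`Node`, `linear_rule`, `classify`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Union


@dataclass
class Node:
    """Internal tree node: one binary decision between two groups of classes."""
    decide: Callable[[Sequence[float]], bool]  # True sends the sample left
    left: Union["Node", str]                   # subtree or final class label
    right: Union["Node", str]


def linear_rule(weights, bias):
    """Stand-in for a trained binary SVM: the sign of w.x + b."""
    return lambda x: sum(w * xi for w, xi in zip(weights, x)) + bias > 0


def classify(node, features):
    """Walk from the root down to a leaf label."""
    while isinstance(node, Node):
        node = node.left if node.decide(features) else node.right
    return node


# Hypothetical three-class tree: "rest" versus movement, then "left" versus "right".
tree = Node(
    decide=linear_rule([-1.0, 0.0], 0.2),  # fires when features[0] < 0.2
    left="rest",
    right=Node(decide=linear_rule([0.0, 1.0], 0.0), left="left", right="right"),
)
```

A sample reaches its label after at most one decision per tree level, which is one reason such a structure can be attractive for online control.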

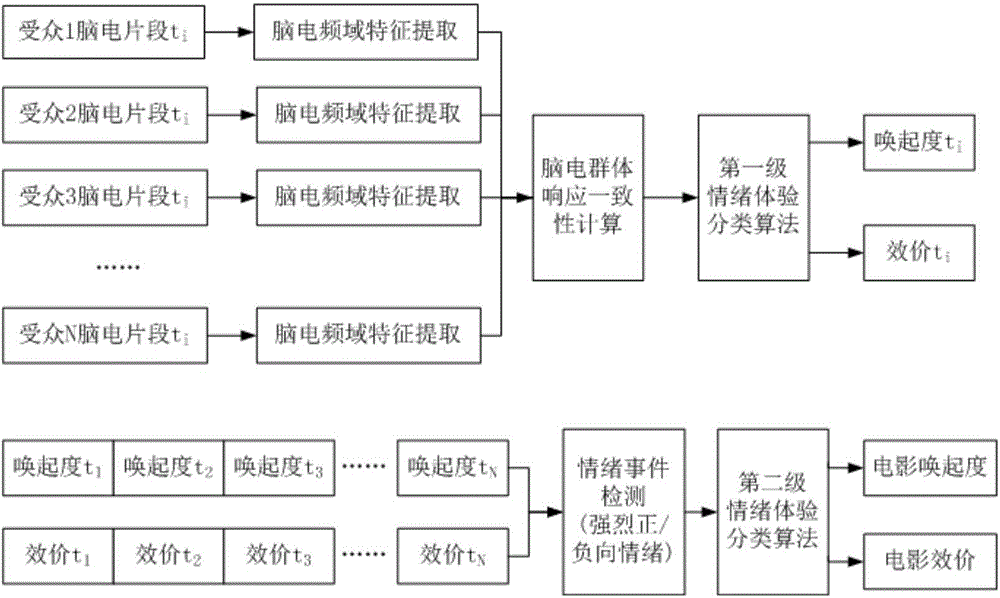

Movie audience experience assessing method based on human-computer interaction

Active; CN104361356A; Fine evaluation; Accurate evaluation; Data processing applications; Character and pattern recognition; Time course; Electroencephalogram feature

The invention discloses a movie audience experience assessment method based on human-computer interaction, comprising the following steps: selecting and playing a certain number of movie clip samples of a certain length with different emotional content; acquiring electroencephalograms from a certain number of viewers watching the clips; performing frequency-domain analysis on the acquired electroencephalograms and extracting the electroencephalogram responses in different frequency bands; splitting the responses into electroencephalogram segments of a fixed duration; calculating, for each segment, the consistency of the viewers' responses on the different electroencephalogram features, and using the result as a multivariate measure of audience consistency; correcting a parameter of the human-computer interaction experience state identification method according to this measure; and finally obtaining the audience's emotional experience assessment of the whole movie from the time course of the experience states identified during playback.

Owner:TSINGHUA UNIV
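
One plausible way to quantify the response consistency described in the entry above is the mean pairwise Pearson correlation between viewers' feature time courses within a segment. The abstract does not name the consistency measure, so this choice and the function names are assumptions for illustration.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def audience_consistency(responses):
    """Mean correlation over all viewer pairs; responses holds one sequence per viewer."""
    pairs = list(combinations(responses, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)
```

Computing this value per feature and per segment would give one vector of consistency scores for each segment, which matches the idea of a multivariate measure.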

Multi-mode fusion video emotion identification method based on kernel-based over-limit learning machine

Active; CN105512609A; Easy to operate; Fast recognition; Physiological signal biometric patterns; Learning machine; Electroencephalogram feature

The invention relates to a multi-modal fusion video emotion recognition method based on a kernel-based over-limit learning machine (more commonly translated as a kernel extreme learning machine). Feature extraction and feature selection are carried out on the image and audio information of a video to obtain video features; preprocessing, feature extraction and feature selection are carried out on collected multi-channel electroencephalogram signals to obtain electroencephalogram features; a multi-modal fusion video emotion recognition model based on the learning machine is established; and the video and electroencephalogram features are input into the model to perform video emotion recognition and obtain the final classification accuracy. The model makes operation simple and recognition fast, and achieves high classification accuracy on three classes of video emotion data. Video and electroencephalogram data together describe the video content more completely, and recognition based on the two modalities improves classification accuracy compared with recognition using a single modality.

Owner:BEIJING UNIV OF TECH

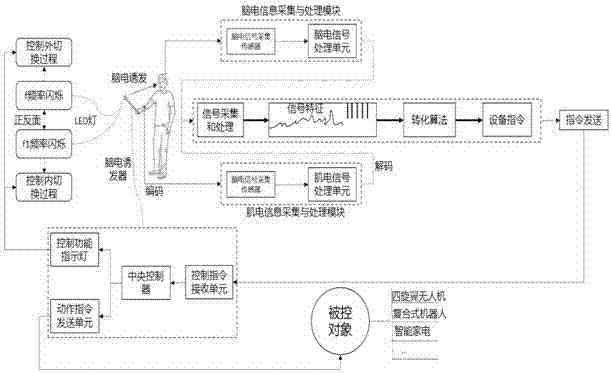

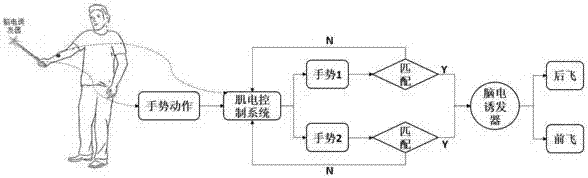

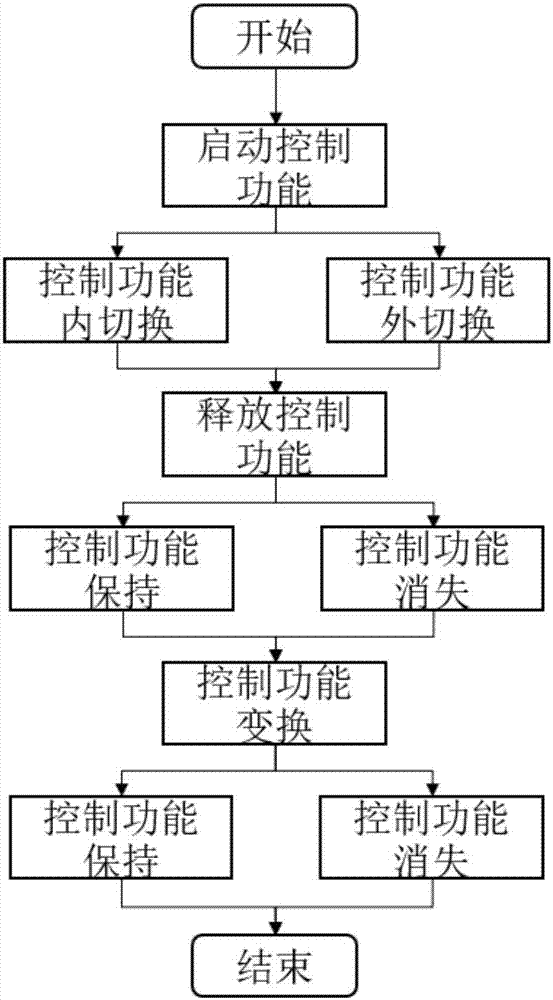

Multi-mode intelligent control system and method based on electroencephalogram and myoelectricity information

Active; CN107957783A; Reliable mapping; Improve the sense of mystery experience; Input/output for user-computer interaction; Graph reading; Electroencephalogram feature; Execution control

The invention discloses a multi-modal intelligent control system and method based on electroencephalogram and myoelectricity information. The system comprises an electroencephalogram inducer, an electroencephalogram signal collecting and processing module, a myoelectricity signal collecting and processing module and an information communication module. The electroencephalogram inducer induces various electroencephalogram signals in the human brain, receives control instructions sent by the electroencephalogram signal collecting and processing module, executes the outer and inner switching processes of the control functions, triggers and switches control functions, and displays them. The electroencephalogram signal collecting and processing module collects and analyzes the electroencephalogram signals, encodes the extracted electroencephalogram feature information into a corresponding control instruction, and sends it to the electroencephalogram inducer. The myoelectricity signal collecting and processing module switches the control function release mode, collects and analyzes the myoelectricity signals generated by specific instruction control actions to generate a corresponding action control instruction, and sends that instruction to the controlled object through the electroencephalogram inducer.

Owner:BEIJING AEROSPACE MEASUREMENT & CONTROL TECH

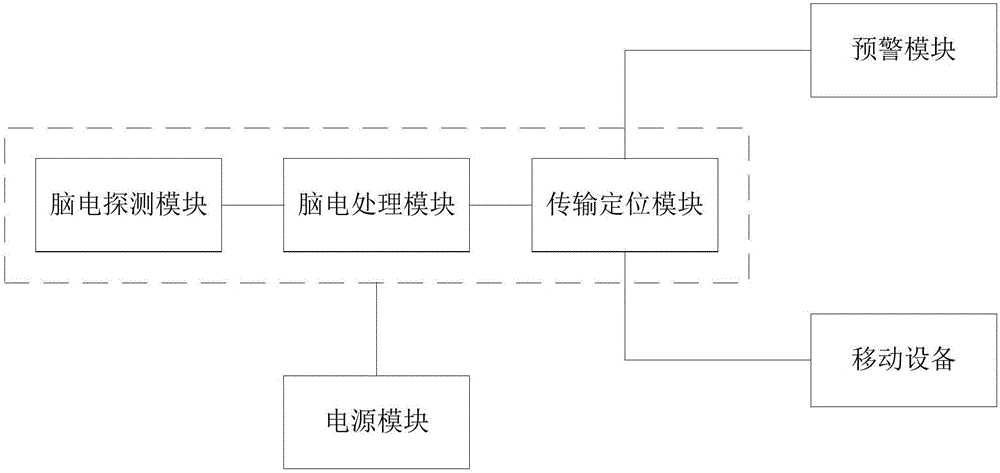

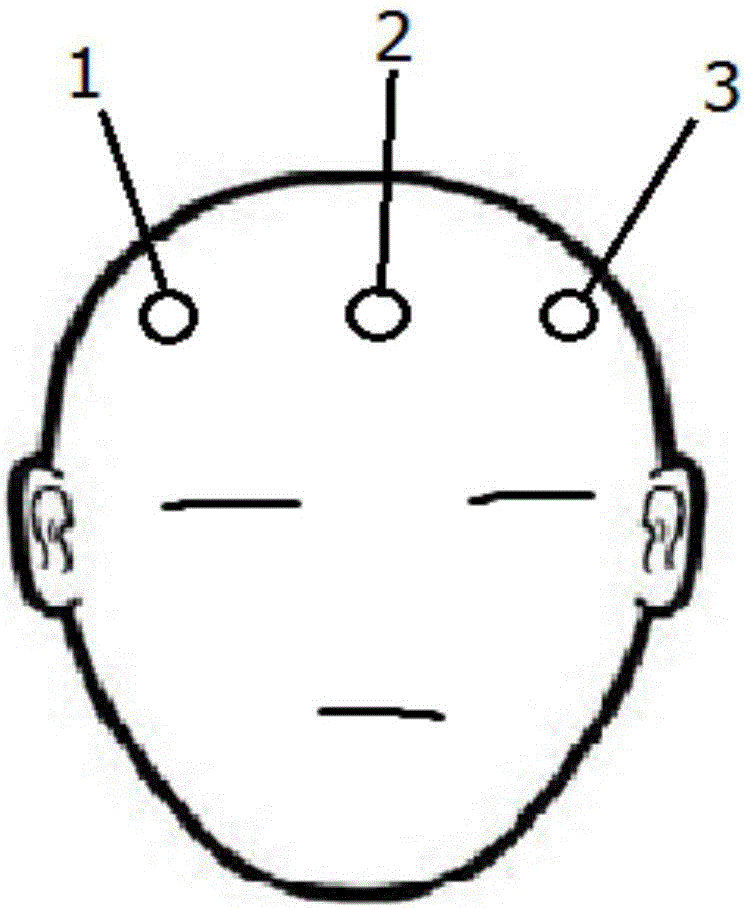

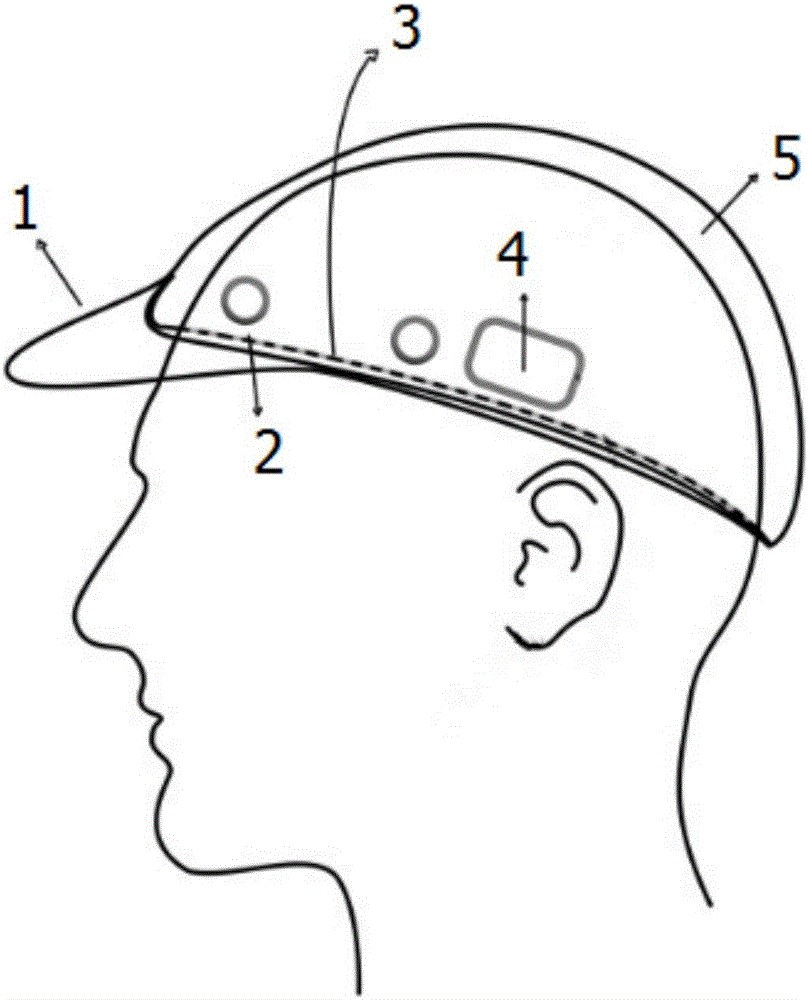

Electroencephalogram-based wearable anti-fatigue intelligent monitoring and pre-warning system for driver

Active; CN106691443A; Does not affect daily driving operation; Reduce volume; Sensors; Telemetric patient monitoring; Electroencephalogram feature; Traffic accident

The invention discloses an electroencephalogram-based wearable anti-fatigue intelligent monitoring and pre-warning system for drivers. While the driver is driving, self-developed wearable electroencephalogram detection equipment measures the driver's electroencephalogram features, and a dedicated fatigue algorithm monitors precisely and in real time whether the driver is fatigued or trending toward fatigue, so that timely warnings can be issued and traffic accidents caused by fatigue driving can be prevented. The system is described as precise, timely, practical and promising. The wearable cap is small, easy to wear and does not interfere with normal driving; the electrode structure does not use the earlobe as the reference voltage, so the driver need not wear an ear clip and discomfort is avoided; and the dedicated fatigue algorithm effectively reduces false alarms and missed alarms.

Owner:UNIV OF SCI & TECH OF CHINA

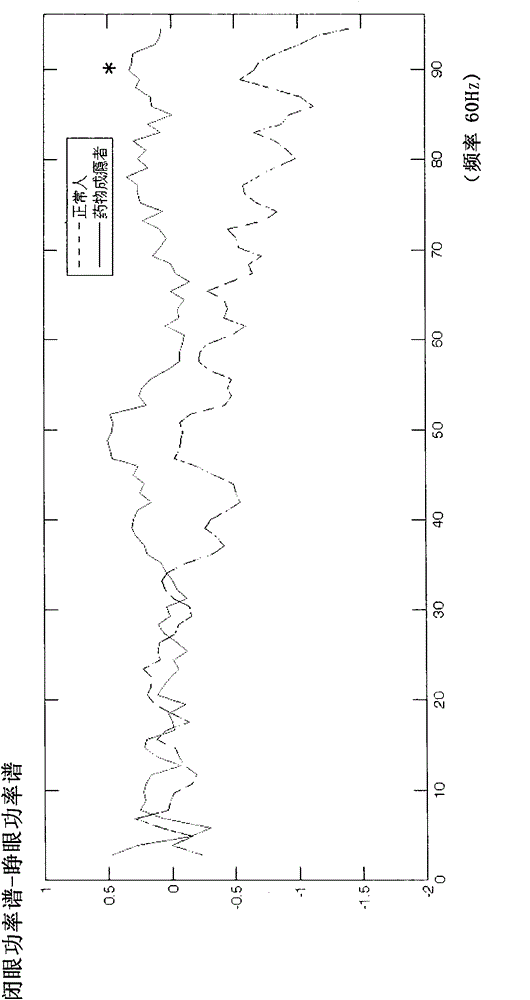

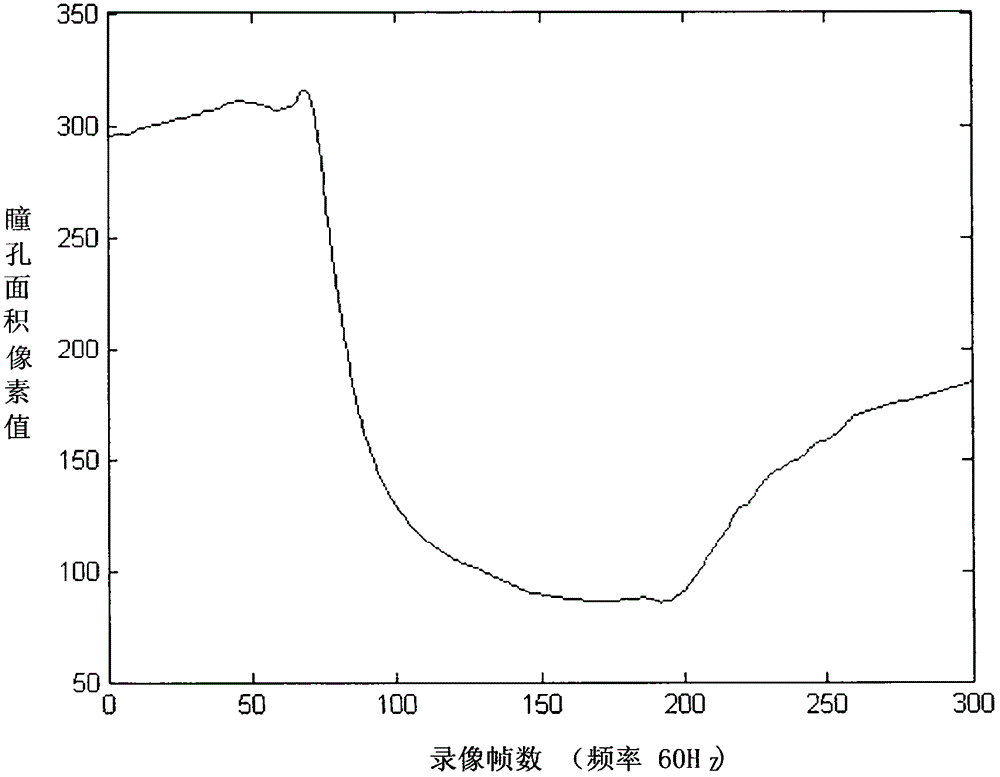

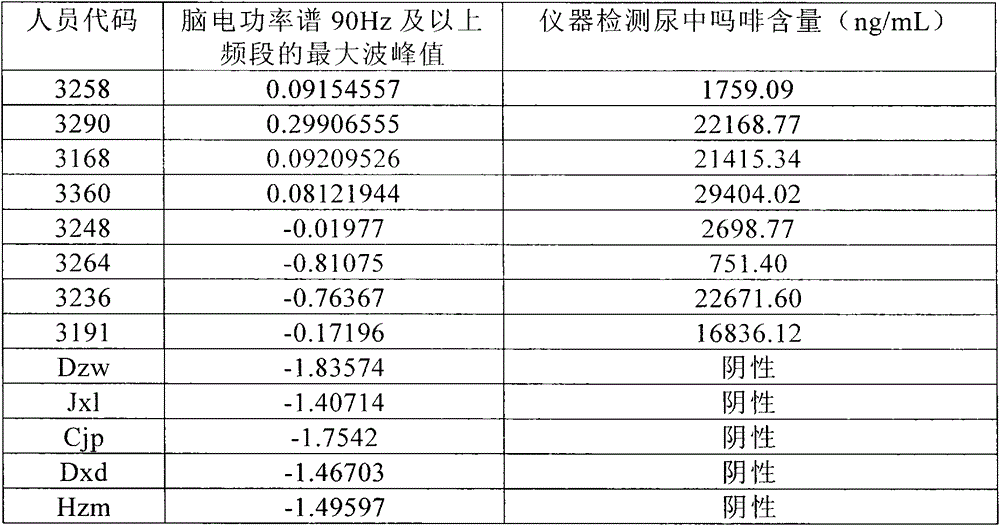

Method for rapidly screening out drug addicts by means of electroencephalogram features and pupil dynamic change features

Inactive; CN104586386A; Improve simplicity; Reduce operation time; Diagnostic recording/measuring; Eye diagnostics; Electroencephalogram feature; Pupil

Disclosed is a detection method for rapidly screening out drug addicts. The method screens using electroencephalogram features and dynamic pupil change features, separately or jointly, measured as the person under test responds to light onset and light offset. The method is easy and convenient to operate, produces results quickly, and offers good accuracy and practicability.

Owner:KUNMING INST OF ZOOLOGY CHINESE ACAD OF SCI

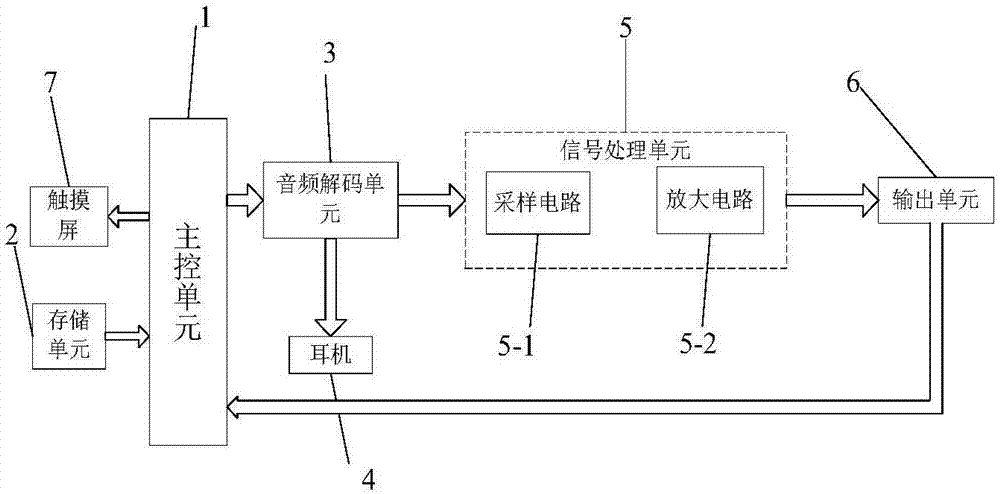

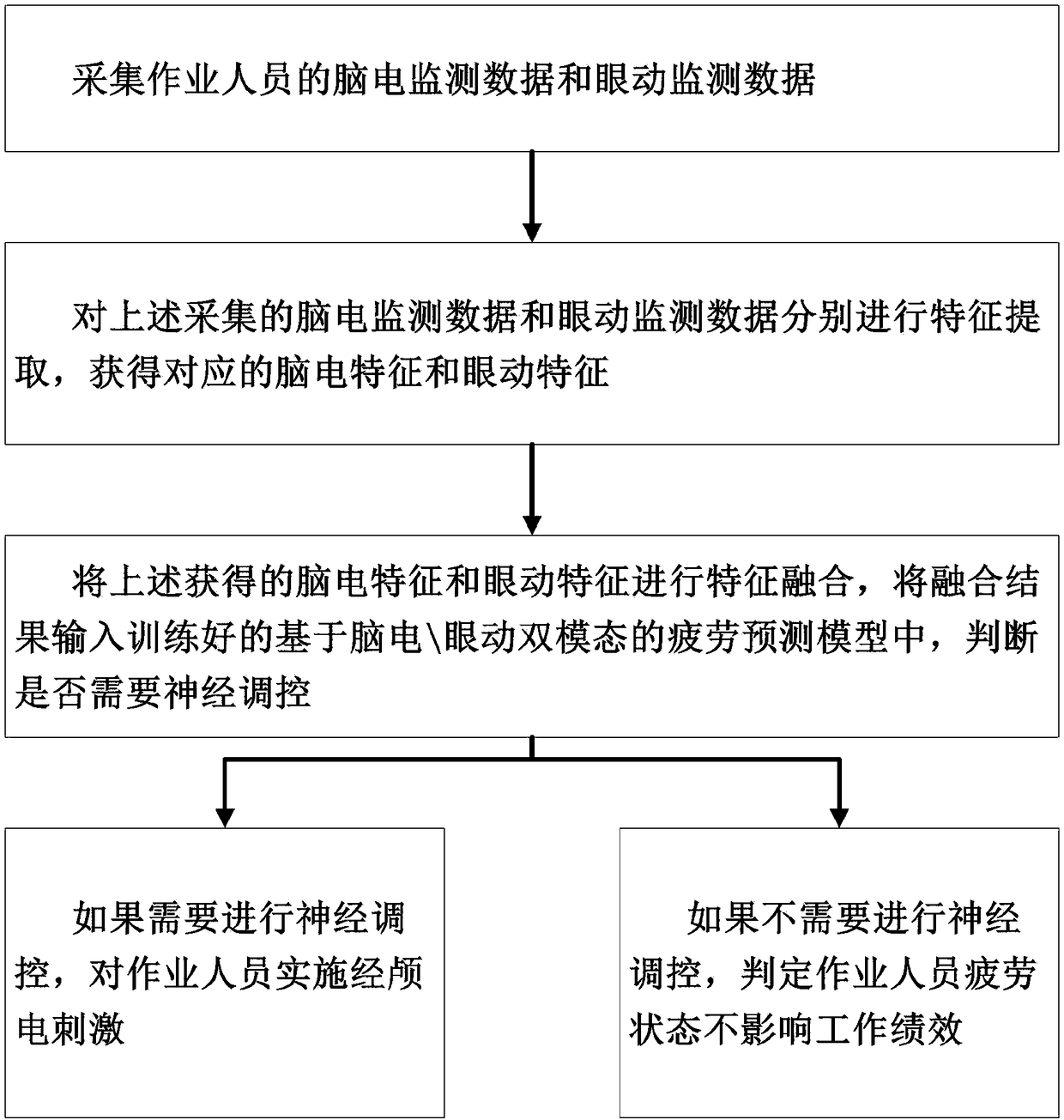

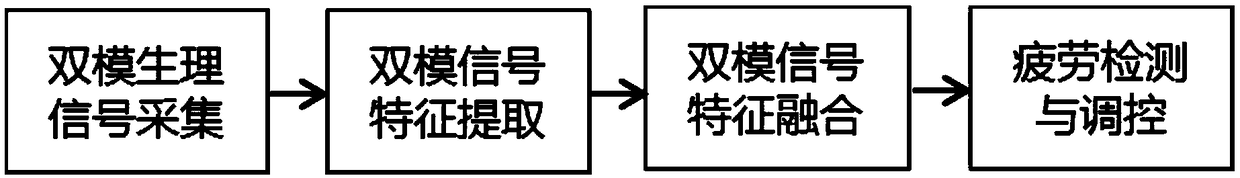

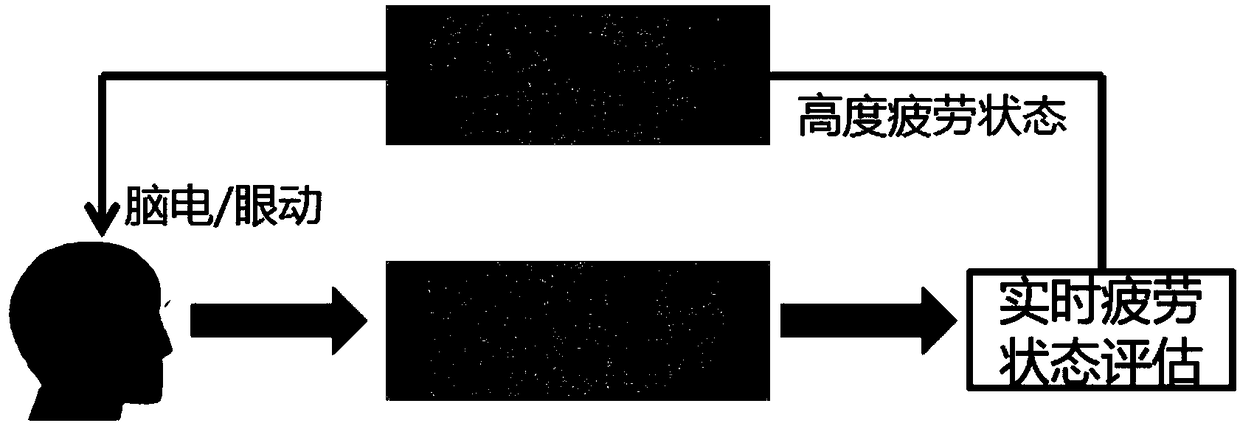

Fatigue detection and regulation method based on electroencephalogram-eye movement bimodal signal

Active; CN109009173A; Improve job performance; Improve physical condition; Electrotherapy; Diagnostic recording/measuring; Electroencephalogram feature; Physiologic States

The invention relates to a fatigue detection and regulation method based on an electroencephalogram and eye movement bimodal signal, belongs to the technical field of fatigue detection and regulation, and solves the prior-art problems that the fatigue level of a high-load operator cannot be monitored continuously and neural regulation is not performed in time. A fatigue prediction model based on the electroencephalogram-eye movement bimodal signal uses a machine learning method to analyze bimodal fusion features, that is, the fusion of electroencephalogram features and eye movement features, recognizes the operator's brain fatigue state, and judges from the detection result whether neuromodulation is required. If it is not required, the operator continues to work and maintains high job performance. If it is required, a brain intervention is applied using transcranial direct current stimulation. Through bidirectional closed-loop fatigue monitoring and adaptive regulation, the excitability of functional areas of the cerebral cortex is adjusted, improving the operator's physiological state and ensuring the efficiency and stability of job performance.

Owner:BEIJING MECHANICAL EQUIP INST

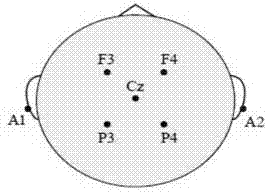

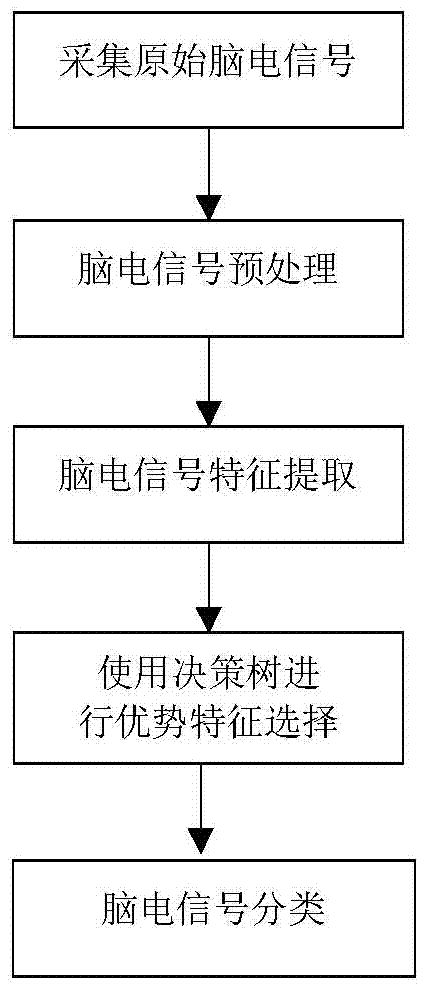

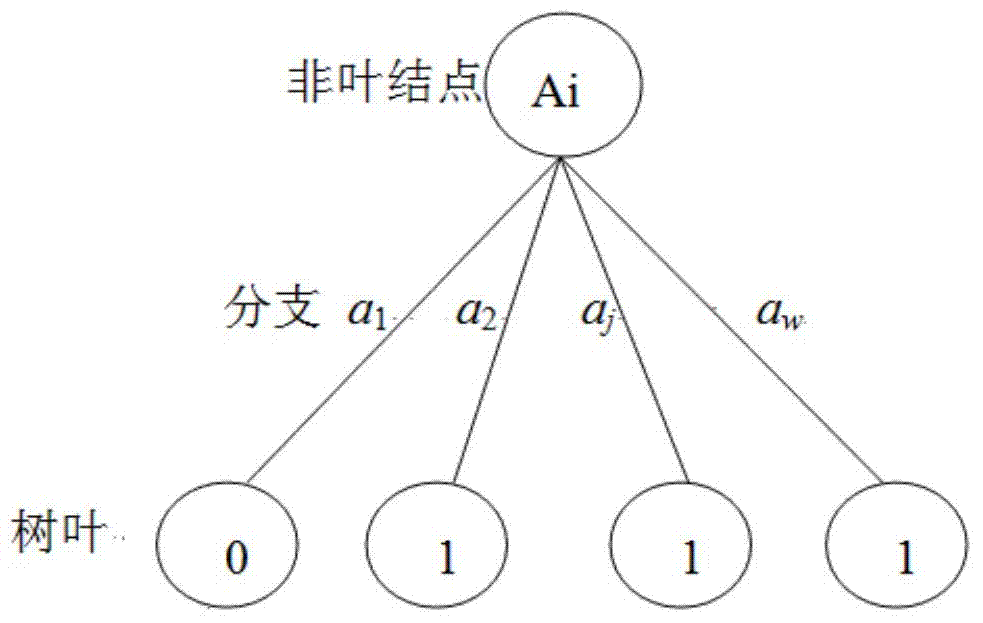

Electroencephalogram feature selection approach based on decision-making tree

Active; CN103876734A; Easy to operate; No human intervention required; Medical data mining; Diagnostic recording/measuring; Feature vector; Electroencephalogram feature

The invention relates to an electroencephalogram feature selection approach based on a decision tree. First, the collected multi-channel electroencephalograms are preprocessed. Second, features are extracted from the preprocessed electroencephalograms by principal component analysis to obtain feature vectors. Third, the feature vectors are input into the decision tree to select the best features. Fourth, the features selected by the decision tree are recombined. Finally, the recombined feature vectors are input into a support vector machine to classify the electroencephalograms and obtain the classification accuracy. Because the decision tree performs the feature selection, operation is simple, no manual participation is needed, and time and labor are saved; the selection also avoids the influence of human subjective factors, so it is more objective and the classification accuracy is higher. Experiments show an average electroencephalogram classification accuracy of 89.1% with this approach, 0.9% higher than the traditional method of recombining the best electrodes.

Owner:BEIJING UNIV OF TECH

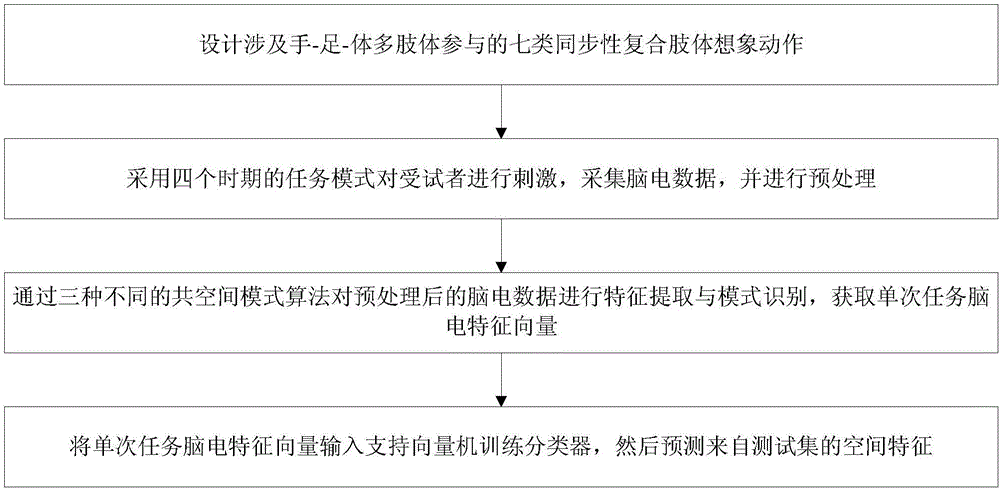

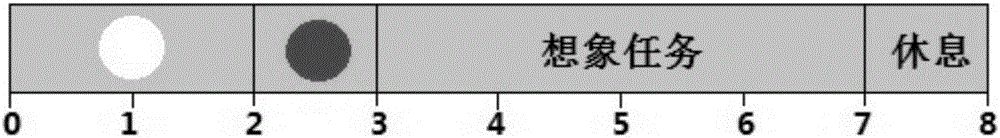

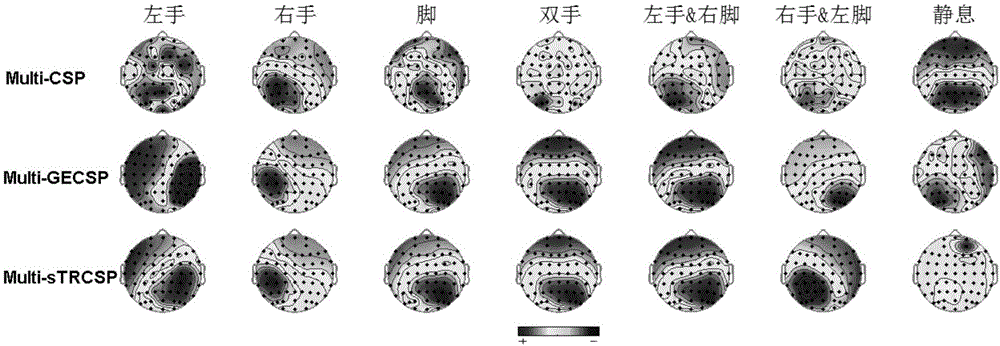

Multi-modal brain-computer interface (BCI) method and system based on synchronic compound limb imaginary movement

Inactive; CN106502405A; Get rid of the limited variety; Get rid of consistency; Input/output for user-computer interaction; Graph reading; Information control; Feature vector

The invention discloses a multi-modal brain-computer interface (BCI) method and system based on synchronous compound limb imaginary movements. The method comprises the following steps: designing seven types of synchronous compound limb imaginary movements involving multiple limbs (hands, feet and body); stimulating a subject with a four-stage task paradigm, collecting electroencephalogram data and preprocessing it; carrying out feature extraction and pattern recognition on the preprocessed data with three different common spatial pattern algorithms to obtain a single-task electroencephalogram feature vector; and inputting that feature vector into a support vector machine to train a classifier, then making predictions from the spatial features of a test set. The invention establishes a novel multi-modal BCI paradigm based on synchronous compound limb imaginary movements. It overcomes the limited variety of existing imaginary movement paradigms and their mismatch with actual movements, realizes a large instruction set for the control output of an MI (motor imagery)-BCI system, and provides a new route and method for promoting the practical application of brain-computer interfaces in rehabilitation engineering.

Owner:TIANJIN UNIV

Resting electroencephalogram identification method based on bilinear model

Inactive; CN101843491A; Improved recognition rate; Person identification; Sensors; Electroencephalogram feature; Linear model

The invention relates to the technical field of identity recognition using electroencephalograms. It provides a method that reflects and analyzes electroencephalogram information more comprehensively, extracts effective electroencephalogram feature parameters with obvious individual variation, and thereby achieves identity recognition. The resting electroencephalogram identification method based on a bilinear model comprises the following steps: collecting the original resting electroencephalogram signals with an electrode cap worn on the subject's head; processing the original signals; establishing a composite model with linear and nonlinear components; reducing the data dimension by principal component analysis (PCA); and performing identification with a support vector machine. The invention is mainly used for identity recognition.

Owner:TIANJIN UNIV

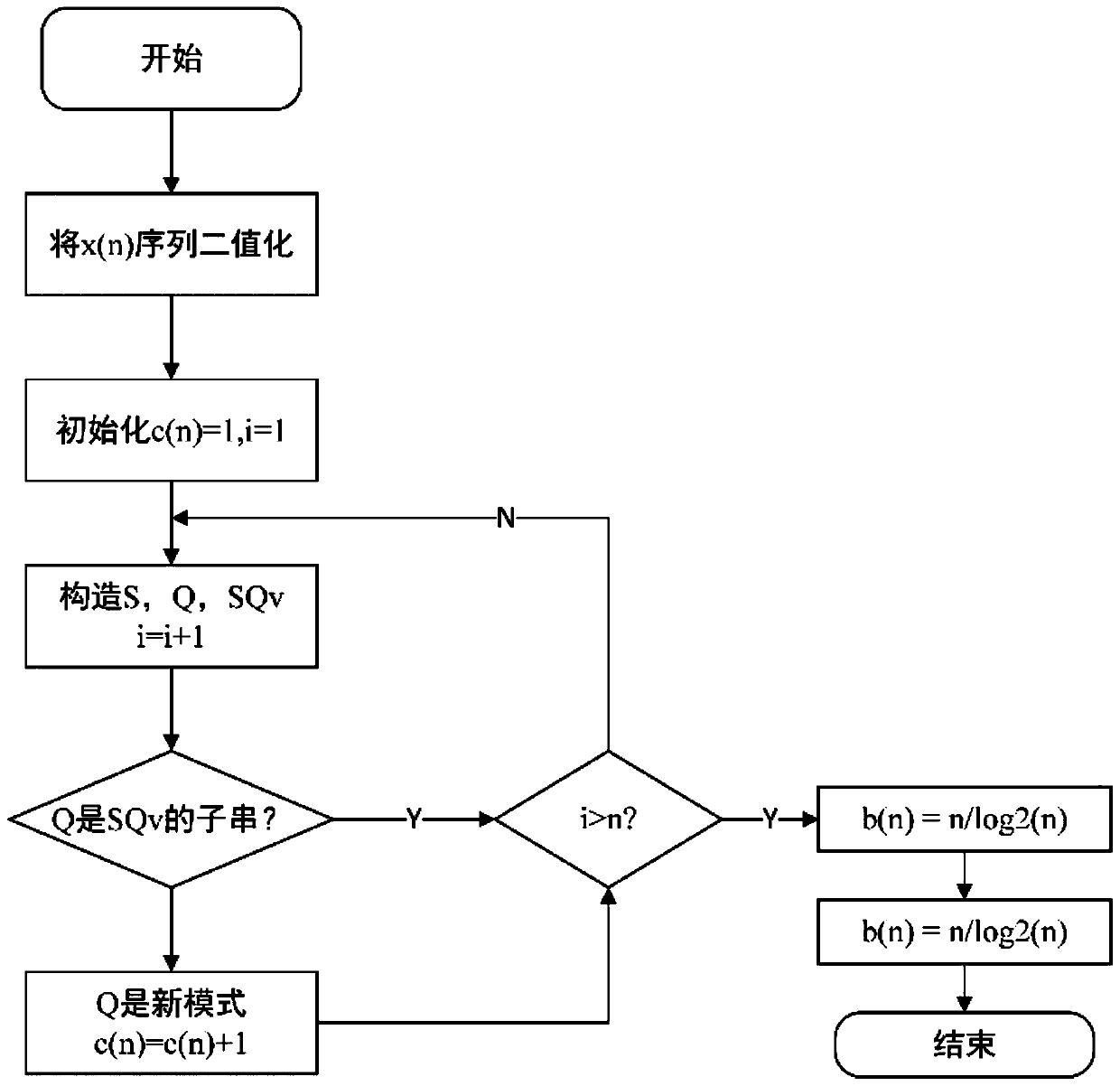

Electroencephalogram feature extraction method based on non-Gaussian time sequence model

Active; CN103690160A; Identify feature changes; Diagnostic recording/measuring; Sensors; Feature vector; Electroencephalogram feature

The invention discloses an electroencephalogram feature extraction method based on a non-Gaussian time sequence model. The method comprises the following steps: acquiring the electroencephalogram data to be processed and two groups of training electroencephalogram data, removing artifacts, and partitioning the resulting effective frequency band into a number of data segments; extracting the time-frequency, morphological and complexity feature values of each data segment, which together form that segment's feature vector; labeling the state value of each feature vector in the first group of training data and training a support vector machine with the labels; inputting the feature vectors of the second group of training data into the support vector machine to obtain that group's state value sequence; establishing an observation equation and a state transition equation, and determining their parameters from the feature vectors and state value sequence of the second group; and obtaining the state value of the data to be processed from its feature vectors and the two equations. The method can accurately distinguish different brain states.

Owner:浙江浙大西投脑机智能科技有限公司

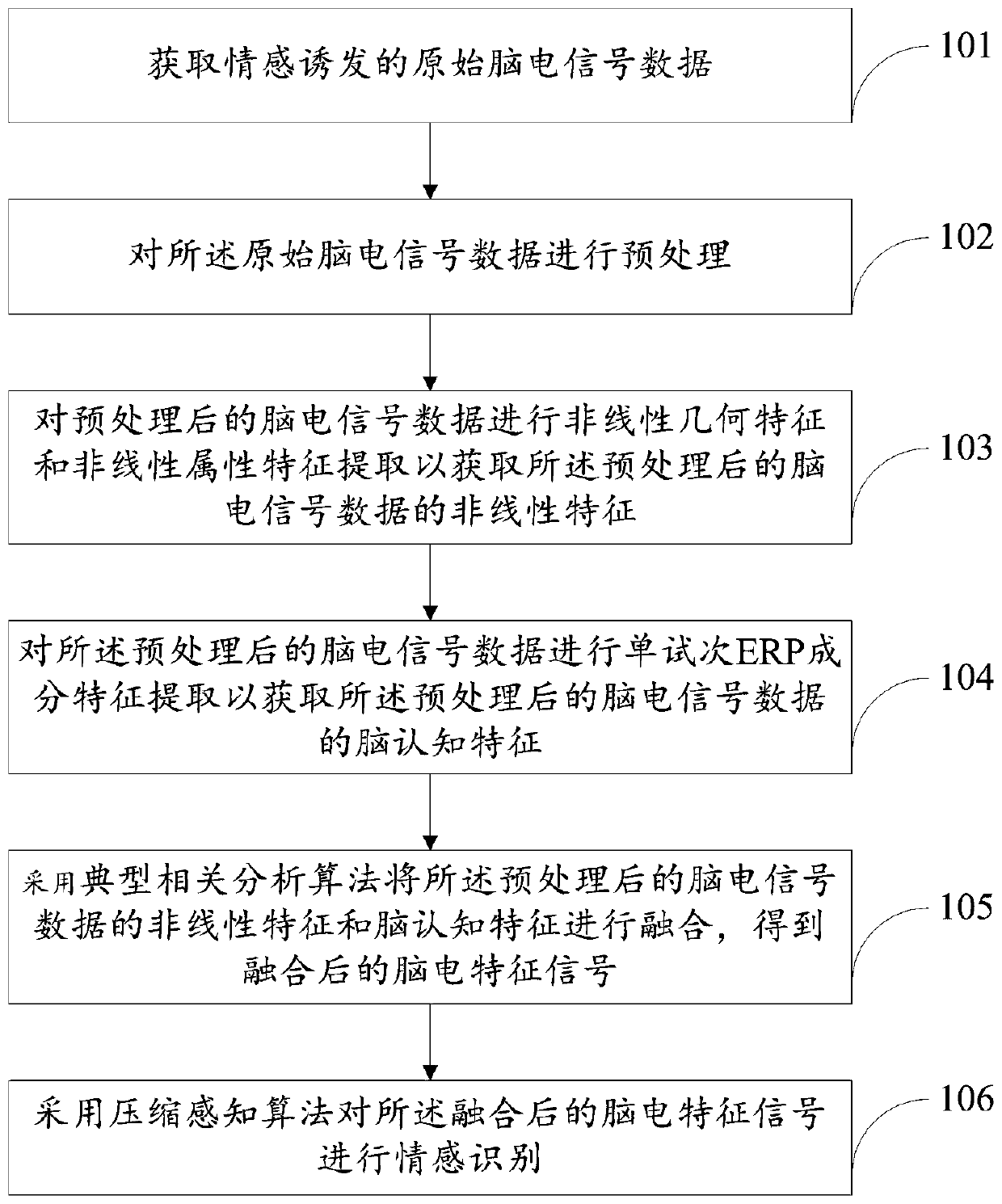

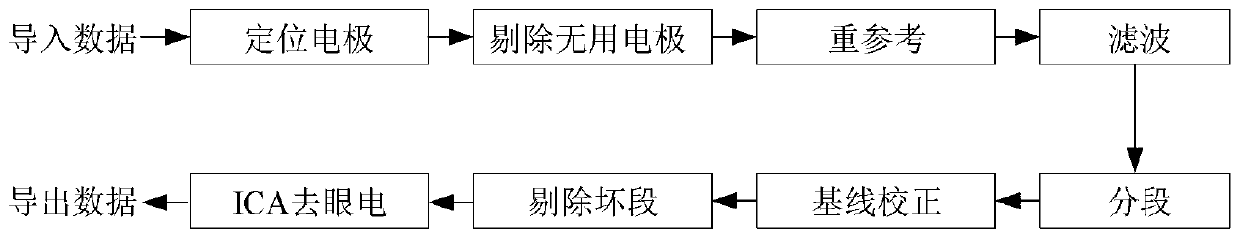

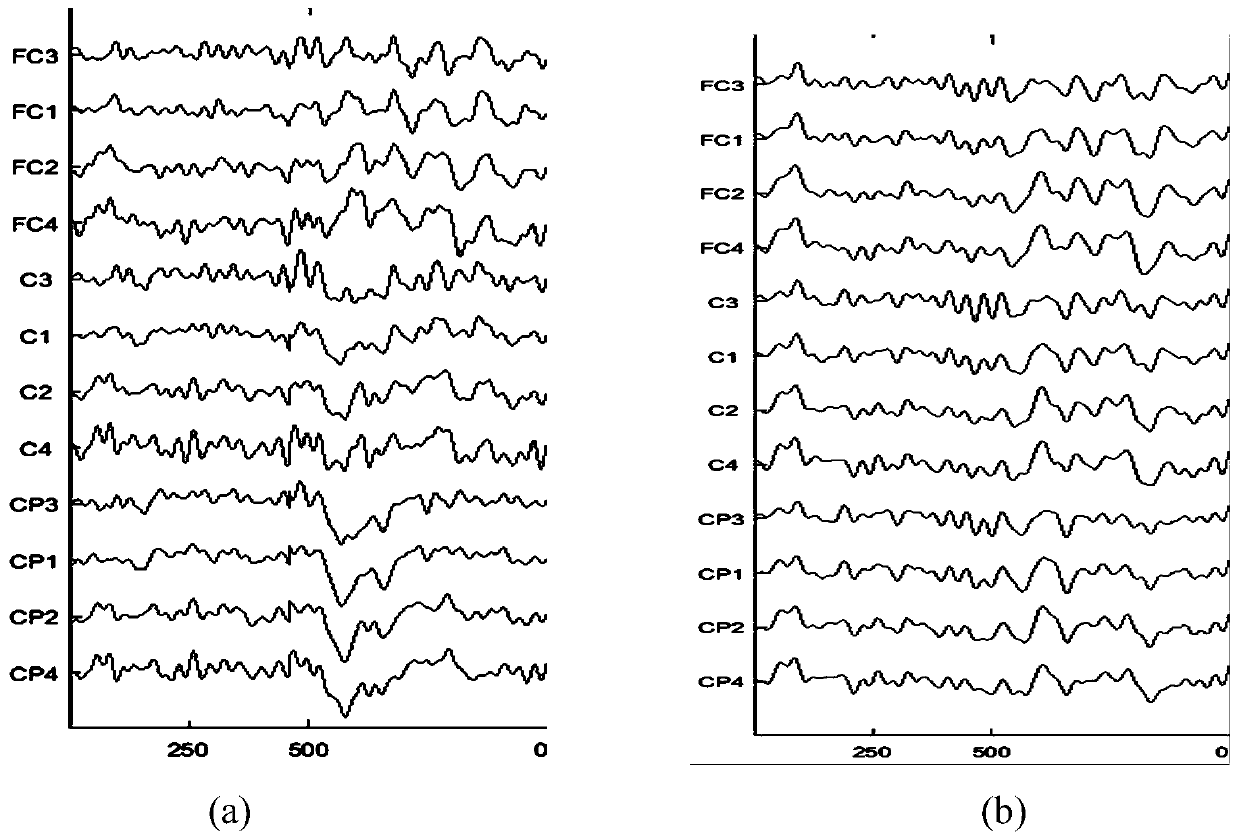

Electroencephalogram signal emotion recognition method and system fusing multiple features

Inactive; CN110781945A; Complete EEG emotion feature parameters; Improve emotion recognition; Character and pattern recognition; Diagnostic recording/measuring; Electroencephalogram feature; Compressed sensing

The invention discloses an electroencephalogram signal emotion recognition method and system fusing multiple features, and relates to the technical field of electroencephalogram signal emotion recognition. The method mainly comprises the following steps: obtaining emotion-induced original electroencephalogram signal data and preprocessing it; extracting nonlinear features and brain cognitive features from the preprocessed data; fusing the nonlinear and brain cognitive features with a canonical correlation analysis algorithm to obtain fused electroencephalogram feature signals; and performing emotion recognition on the fused feature signals with a compressed sensing algorithm. The method can construct the most complete feature parameters with the strongest discriminating capability and improves the emotion recognition performance on electroencephalogram signals, while overcoming the high training complexity and poor noise robustness of traditional recognition models.

Owner:TAIYUAN UNIV OF TECH

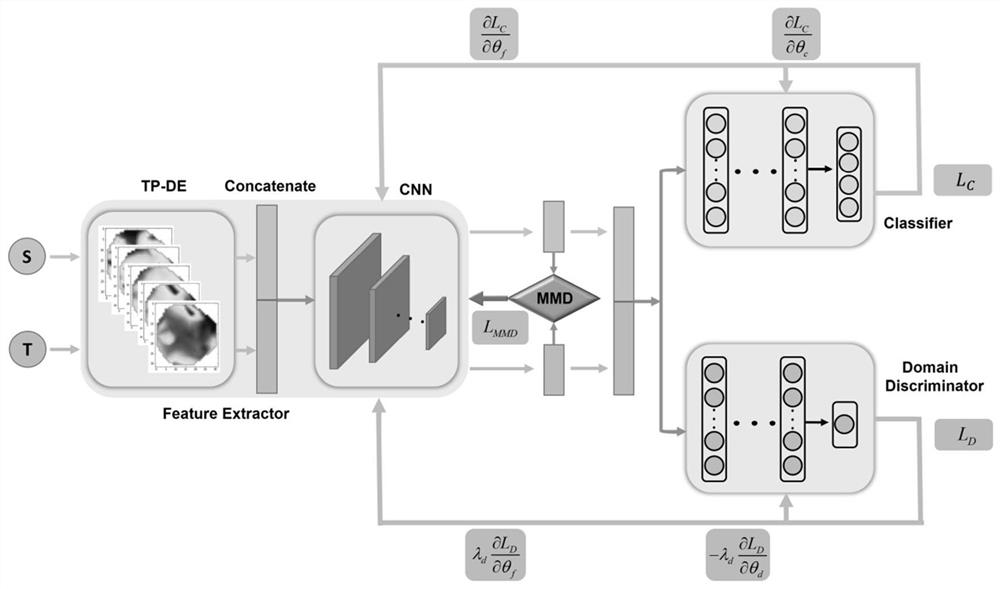

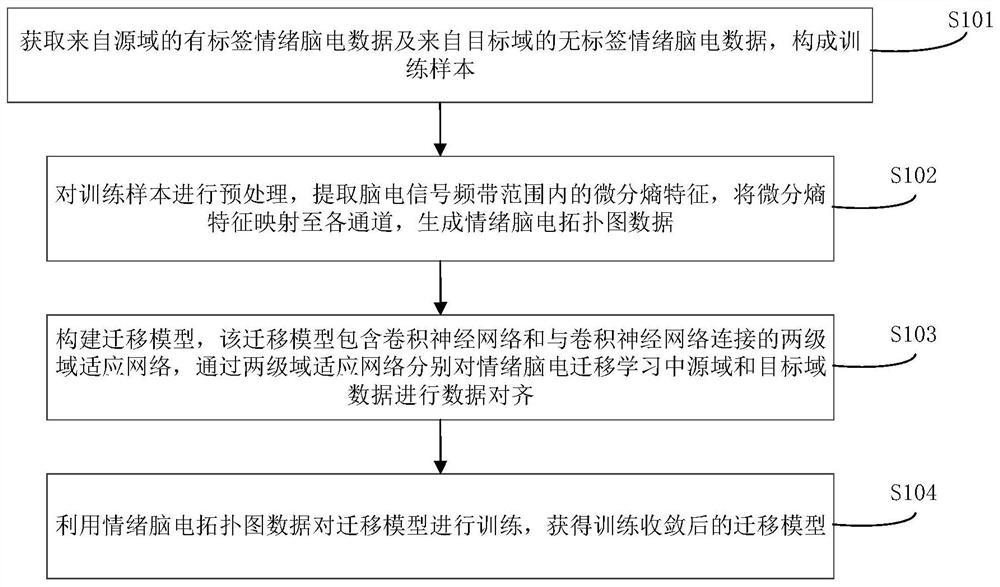

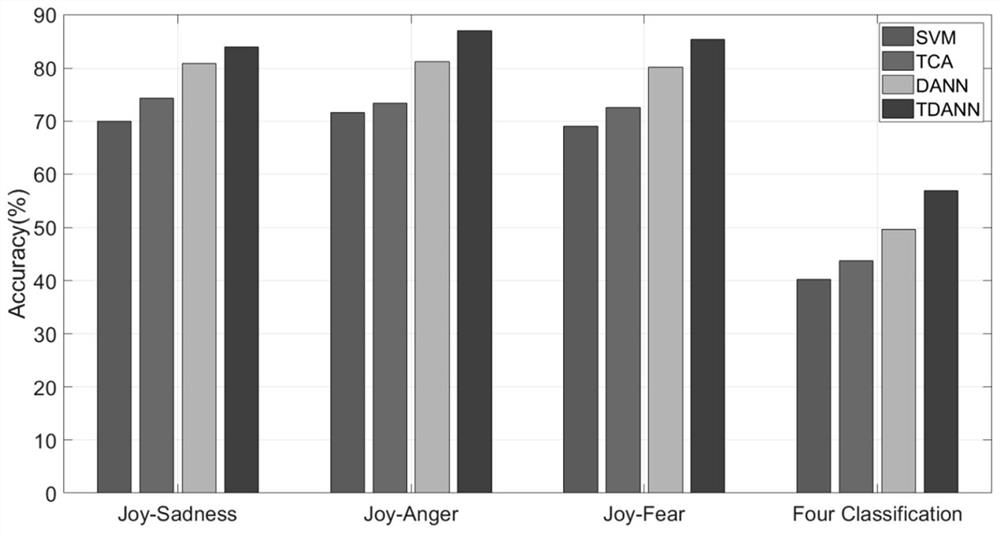

Electroencephalogram emotion migration model training method and system and electroencephalogram emotion recognition method and device

Active; CN112690793A; Improve performance; Improve accuracy; Sensors; Psychotechnic devices; Image extraction; Electroencephalogram feature

The invention belongs to the technical field of electroencephalogram recognition, and particularly relates to an electroencephalogram emotion transfer (migration) model training method and system and an electroencephalogram emotion recognition method and device. It addresses the problem of electroencephalogram emotion transfer and reduces the difficulty and cost of training for electroencephalogram emotion recognition. An electroencephalogram emotion recognition transfer model based on a deep domain adversarial network is built; electroencephalogram features are mapped into an electroencephalogram feature topological graph; a deep convolutional neural network extracts deep features from the feature image; and the extracted deep features are input into a two-stage domain adaptation network. The first stage performs preliminary confusion of the source and target domains using the maximum mean discrepancy, and the second stage increases the between-class distance, which improves the transfer model's performance and the emotion recognition accuracy. The invention effectively addresses electroencephalogram emotion transfer, improves the transfer model's effectiveness in emotion recognition, and has good application prospects.

Owner:PLA STRATEGIC SUPPORT FORCE INFORMATION ENG UNIV PLA SSF IEU
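The first-stage alignment criterion in the abstract above is read here as the maximum mean discrepancy (MMD) between source-domain and target-domain features. The following is a minimal sketch of a biased MMD estimate with a Gaussian kernel. The kernel choice, the `gamma` value, and the toy feature vectors are assumptions for illustration, not details taken from the patent.

```python
import math

def rbf_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

def mmd_squared(source, target, gamma=1.0):
    """Biased estimate of the squared maximum mean discrepancy."""
    def mean_kernel(xs, ys):
        total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
        return total / (len(xs) * len(ys))
    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))

# Hypothetical deep-feature vectors for one source subject and two target subjects.
source = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2]]
near_target = [[0.1, 0.1], [0.0, 0.2], [0.2, 0.1]]
far_target = [[2.0, 2.1], [2.2, 1.9], [1.9, 2.0]]

mmd_near = mmd_squared(source, near_target)
mmd_far = mmd_squared(source, far_target)
print(mmd_near, mmd_far)
```

A domain adaptation network would typically add a term like this to its training loss so that source and target feature distributions are pulled together; the smaller value for `near_target` reflects distributions that already overlap.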

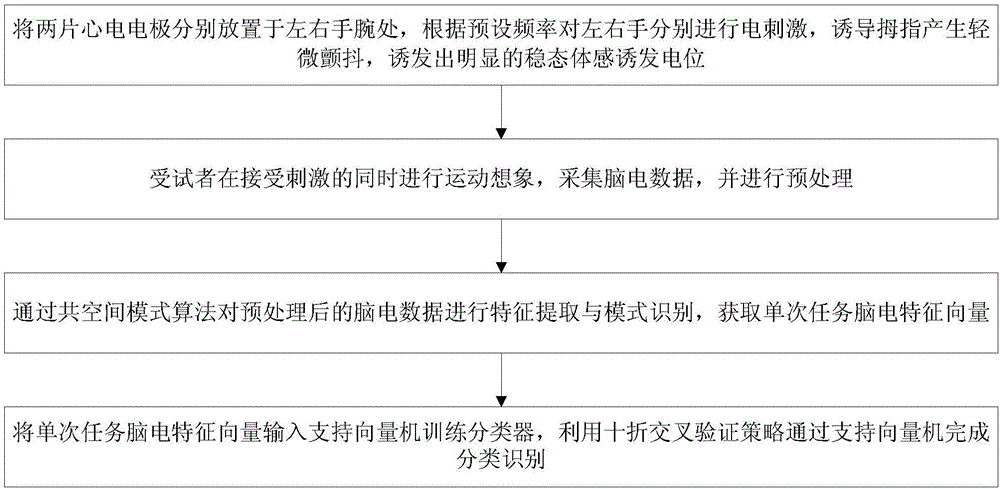

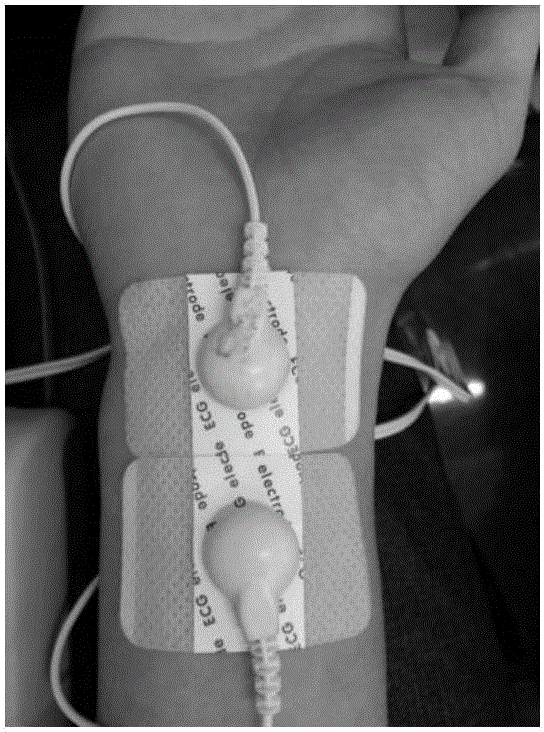

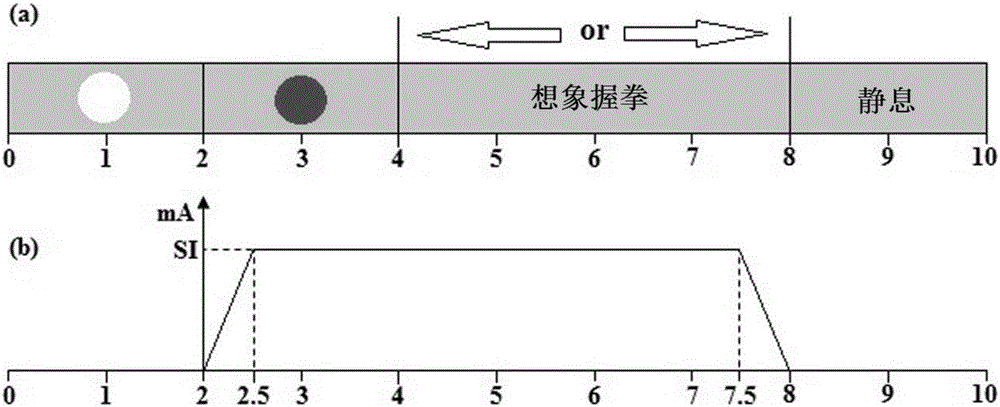

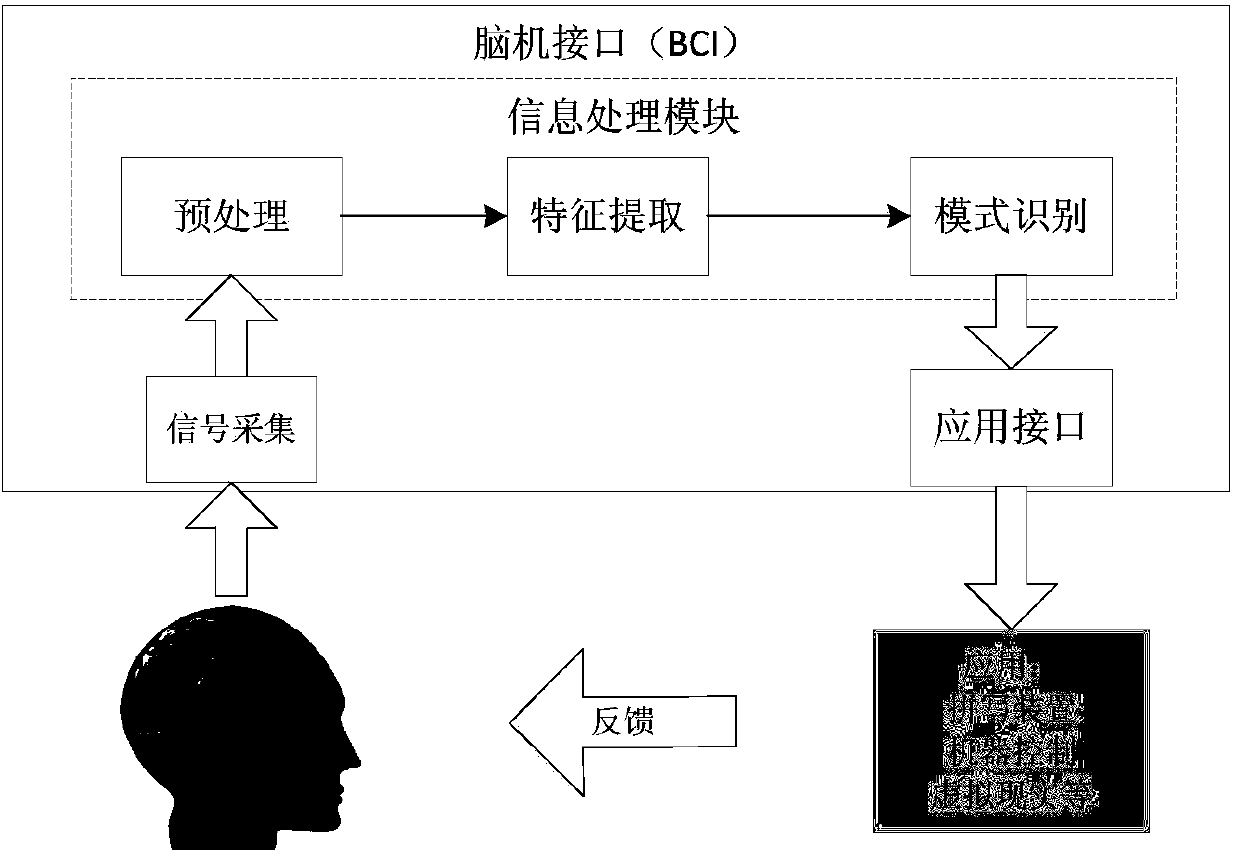

Novel MI-SSSEP mixed brain-computer interface method and system thereof

Inactive | CN106362287A | Improve performance | Effectively extract fusion features | Electrotherapy | Diagnostic recording/measuring | Left wrist | Brain computer interfacing

The invention discloses a novel motor imagery (MI)-steady state somatosensory evoked potential (SSSEP) mixed brain-computer interface method. The method comprises: two electrocardiogram electrodes are placed at the left wrist and the right wrist, and electrical stimulation is applied to the left hand and the right hand at a preset frequency so that the thumbs are induced to tremble slightly, thereby inducing obvious steady-state somatosensory evoked potentials; while being stimulated, the subject performs motor imagery, and electroencephalogram data are collected and preprocessed; feature extraction and pattern recognition are carried out on the electroencephalogram data by using a common spatial pattern algorithm, and a single-task electroencephalogram feature vector is obtained; the single-task electroencephalogram feature vector is input into a support vector machine to train a classifier, and classification identification is completed by the support vector machine based on a ten-fold cross-validation strategy; six sub-bands are built over the 8 to 32 Hz frequency band in 4 Hz steps so as to complete classification identification. With the method, the ERD feature and the SSSEP feature are integrated, thereby realizing performance improvement; and the robustness of neural-feedback-based rehabilitation training is enhanced.

Owner:TIANJIN UNIV
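Two bookkeeping steps from the abstract above can be sketched directly: building the six sub-bands that cover 8 to 32 Hz in 4 Hz steps, and splitting trials for ten-fold cross-validation. The function names and the trial count of 80 are hypothetical; the common spatial pattern and support vector machine stages are not shown.

```python
def build_sub_bands(low_hz=8, high_hz=32, step_hz=4):
    """Split [low_hz, high_hz] into contiguous sub-bands of width step_hz."""
    return [(f, f + step_hz) for f in range(low_hz, high_hz, step_hz)]

def k_fold_indices(n_trials, k=10):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_trials))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_trials // k + (1 if i < n_trials % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

sub_bands = build_sub_bands()
folds = list(k_fold_indices(n_trials=80, k=10))  # 80 trials is an assumed example
print(sub_bands)
# prints [(8, 12), (12, 16), (16, 20), (20, 24), (24, 28), (28, 32)]
```

In a full pipeline each sub-band would be band-pass filtered separately before spatial filtering, and trials would normally be shuffled before the split.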

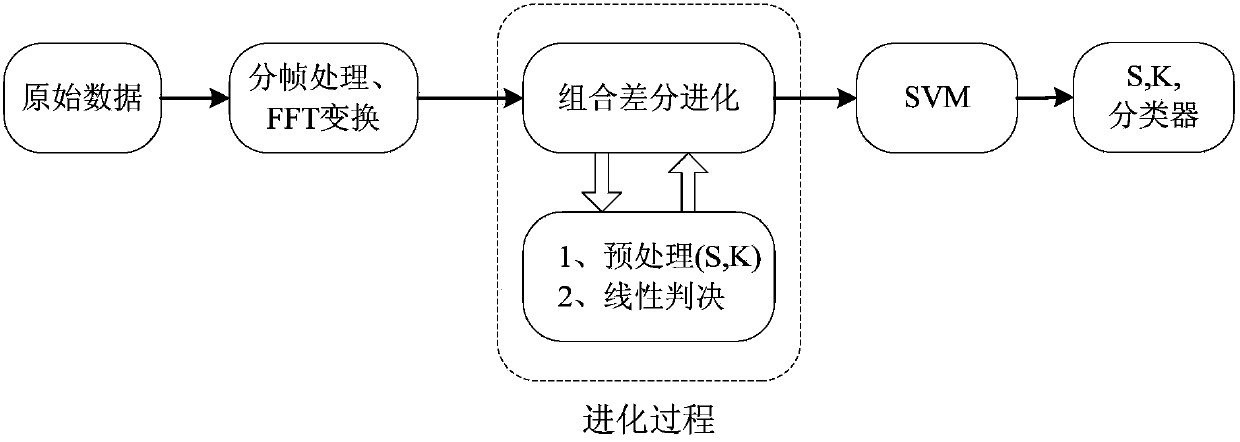

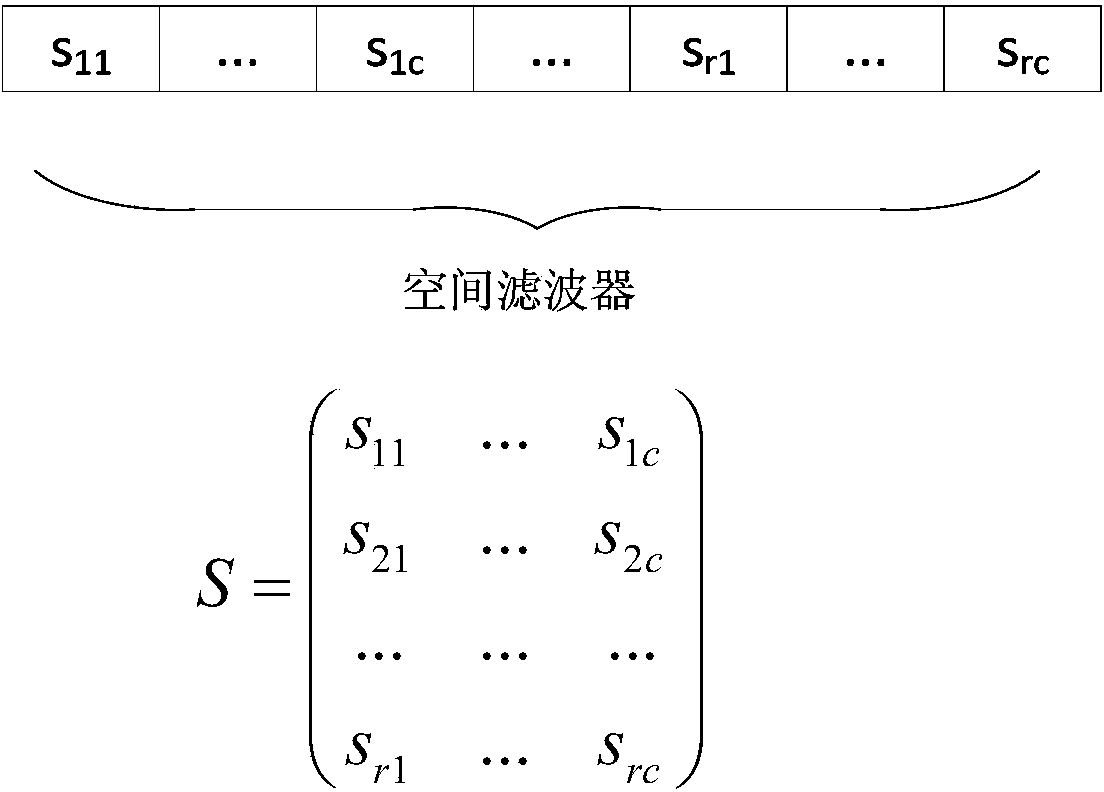

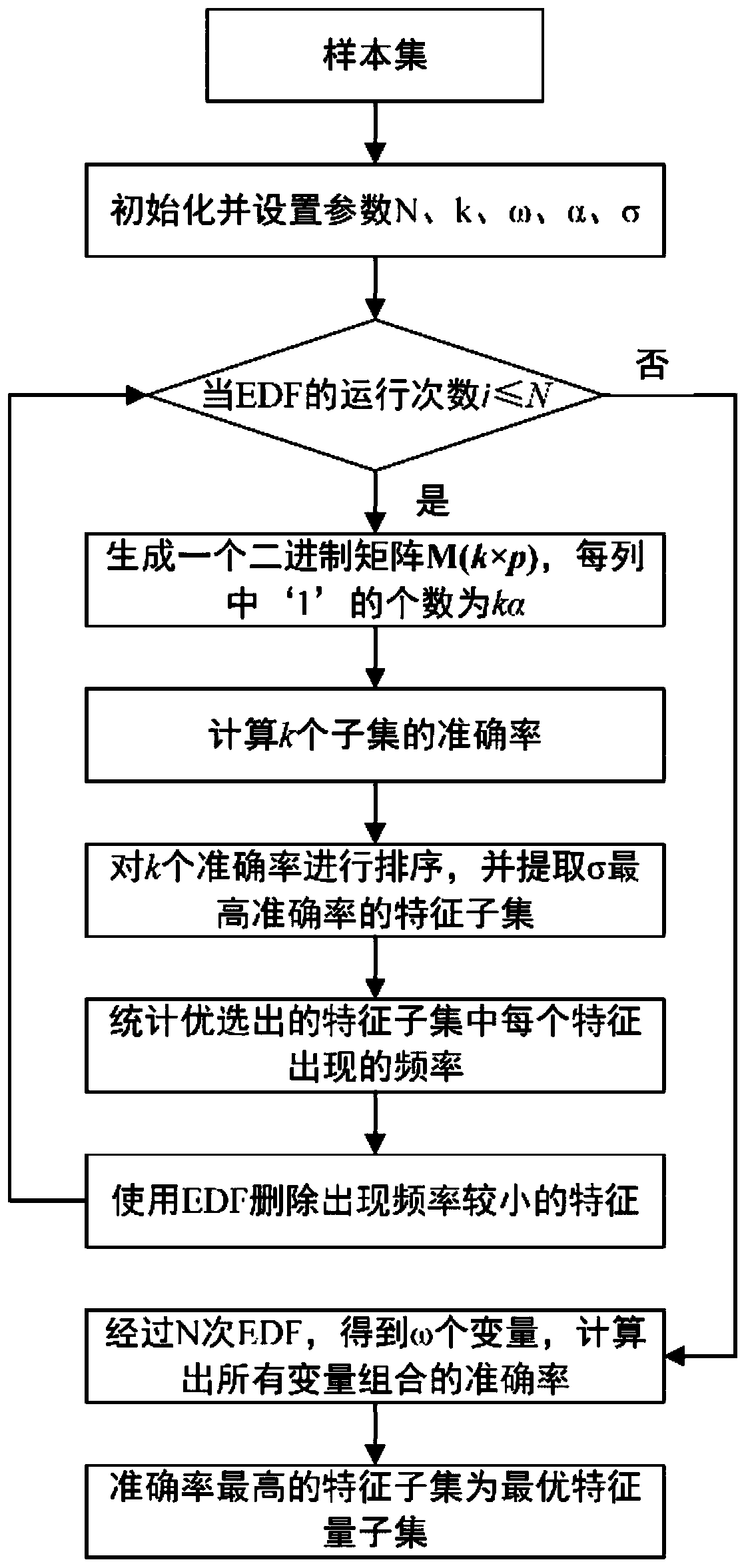

Electroencephalogram feature selecting and classifying method based on combined differential evaluation

Inactive | CN103996054A | Improve recognition rate | Realize automatic identification | Input/output for user-computer interaction | Character and pattern recognition | Feature vector | Electroencephalogram feature

The invention discloses an electroencephalogram feature selecting and classifying method based on combined differential evaluation. Due to the fact that a combined differential evaluation algorithm has the advantages in the global searching ability and the rapid convergence aspect, the combined differential evaluation algorithm is utilized to rapidly find out the optimal spatial filtering coefficients and feature vectors. Thus, the problem of complex and low-efficiency work of relying on manual work to decide spatial filtering coefficients and feature vectors in the prior art is solved, and a classifier is trained according to the searched optimal spatial filtering coefficients and feature vectors to classify electroencephalogram to improve the recognition rate of electroencephalogram. In addition, the purpose of recognizing electroencephalogram automatically is achieved, the labor intensity is reduced, and the processing efficiency of electroencephalogram is greatly improved.

Owner:CENT SOUTH UNIV
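The abstract above describes a population-based search for spatial filtering coefficients. As a rough illustration of that kind of search, here is the classic DE/rand/1/bin differential evolution scheme, which is swapped in as a stand-in and is not the patent's combined variant. The cost function is a hypothetical placeholder for a classification-error measure, and all parameter values are assumptions.

```python
import random

def differential_evolution(cost, bounds, pop_size=20, generations=200,
                           f_scale=0.5, crossover_rate=0.9, seed=0):
    """Minimise cost(vector) with the classic DE/rand/1/bin scheme."""
    rng = random.Random(seed)
    dim = len(bounds)
    population = [[rng.uniform(lo, hi) for lo, hi in bounds]
                  for _ in range(pop_size)]
    costs = [cost(ind) for ind in population]
    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals other than the current one.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            forced = rng.randrange(dim)  # guarantees at least one mutated gene
            trial = []
            for d in range(dim):
                if d == forced or rng.random() < crossover_rate:
                    value = population[a][d] + f_scale * (
                        population[b][d] - population[c][d])
                    lo, hi = bounds[d]
                    value = min(max(value, lo), hi)  # clip to the search box
                else:
                    value = population[i][d]
                trial.append(value)
            trial_cost = cost(trial)
            if trial_cost <= costs[i]:  # greedy selection keeps the better vector
                population[i], costs[i] = trial, trial_cost
    best = min(range(pop_size), key=costs.__getitem__)
    return population[best], costs[best]

def toy_cost(weights):
    """Hypothetical stand-in for classification error; minimum at (1, -2, 0.5)."""
    target = (1.0, -2.0, 0.5)
    return sum((w - t) ** 2 for w, t in zip(weights, target))

best_weights, best_cost = differential_evolution(toy_cost, [(-5.0, 5.0)] * 3)
print(best_weights, best_cost)
```

In practice `toy_cost` would be replaced by a function that applies the candidate coefficients to the electroencephalogram, trains or evaluates a classifier, and returns its error rate.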

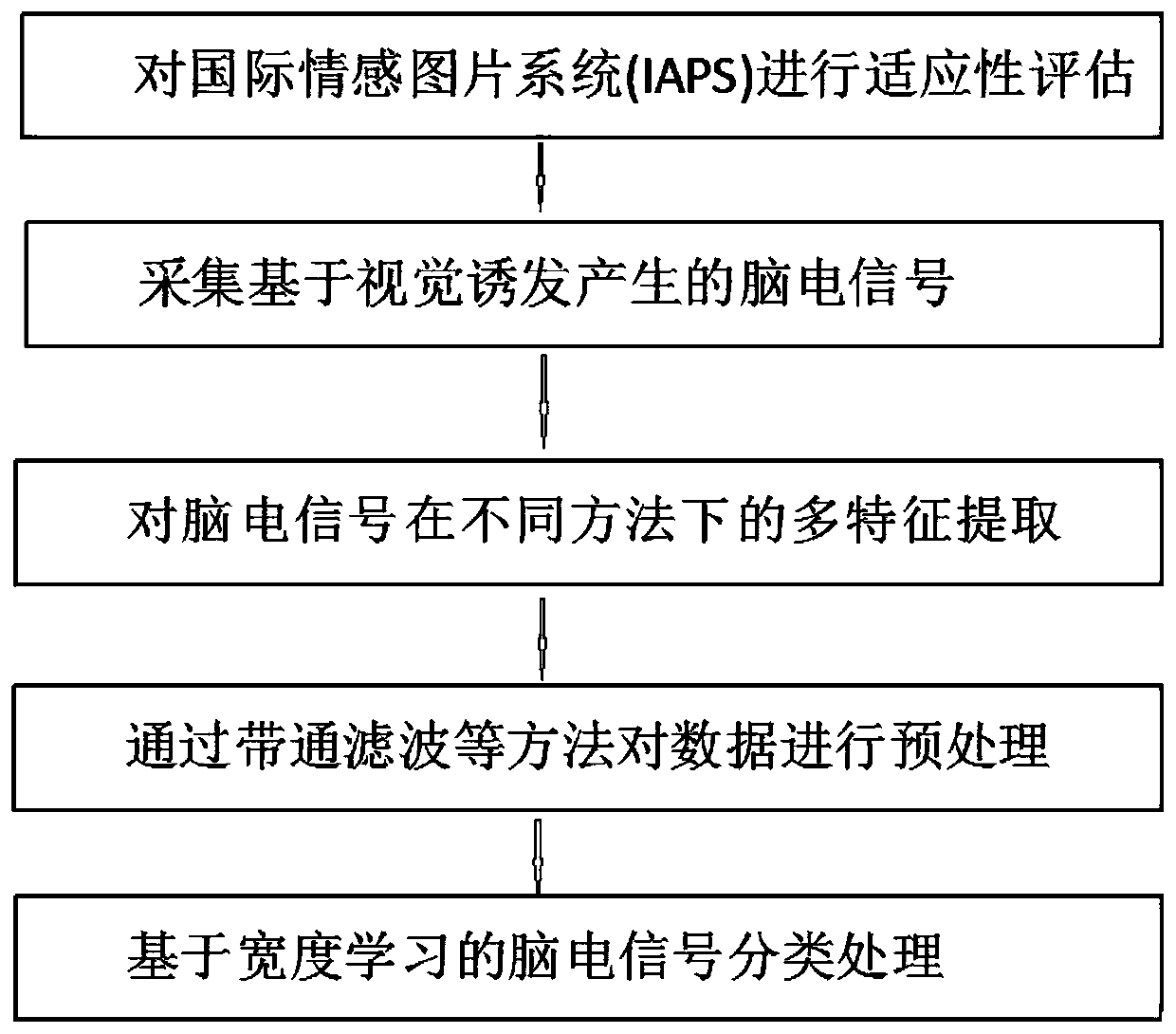

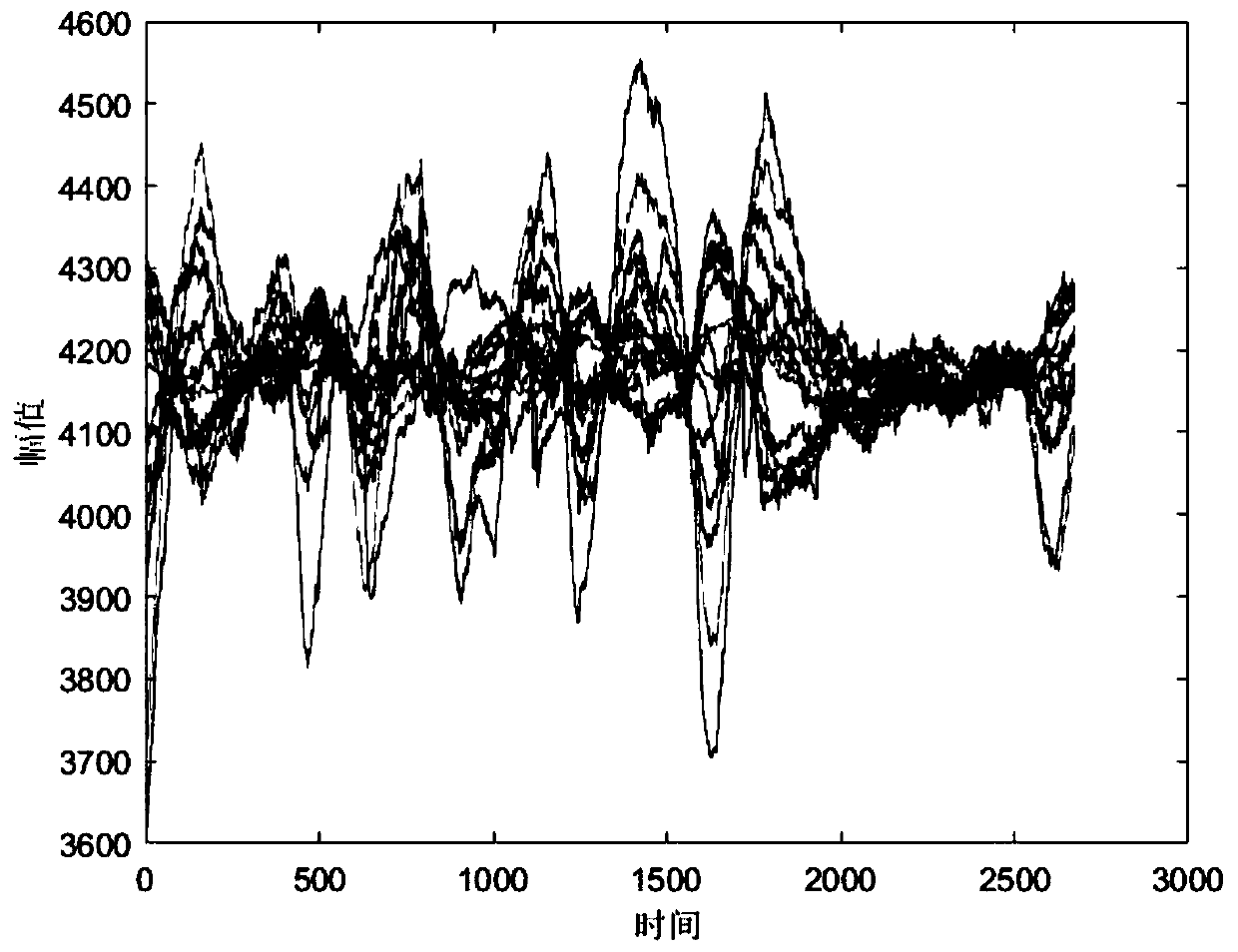

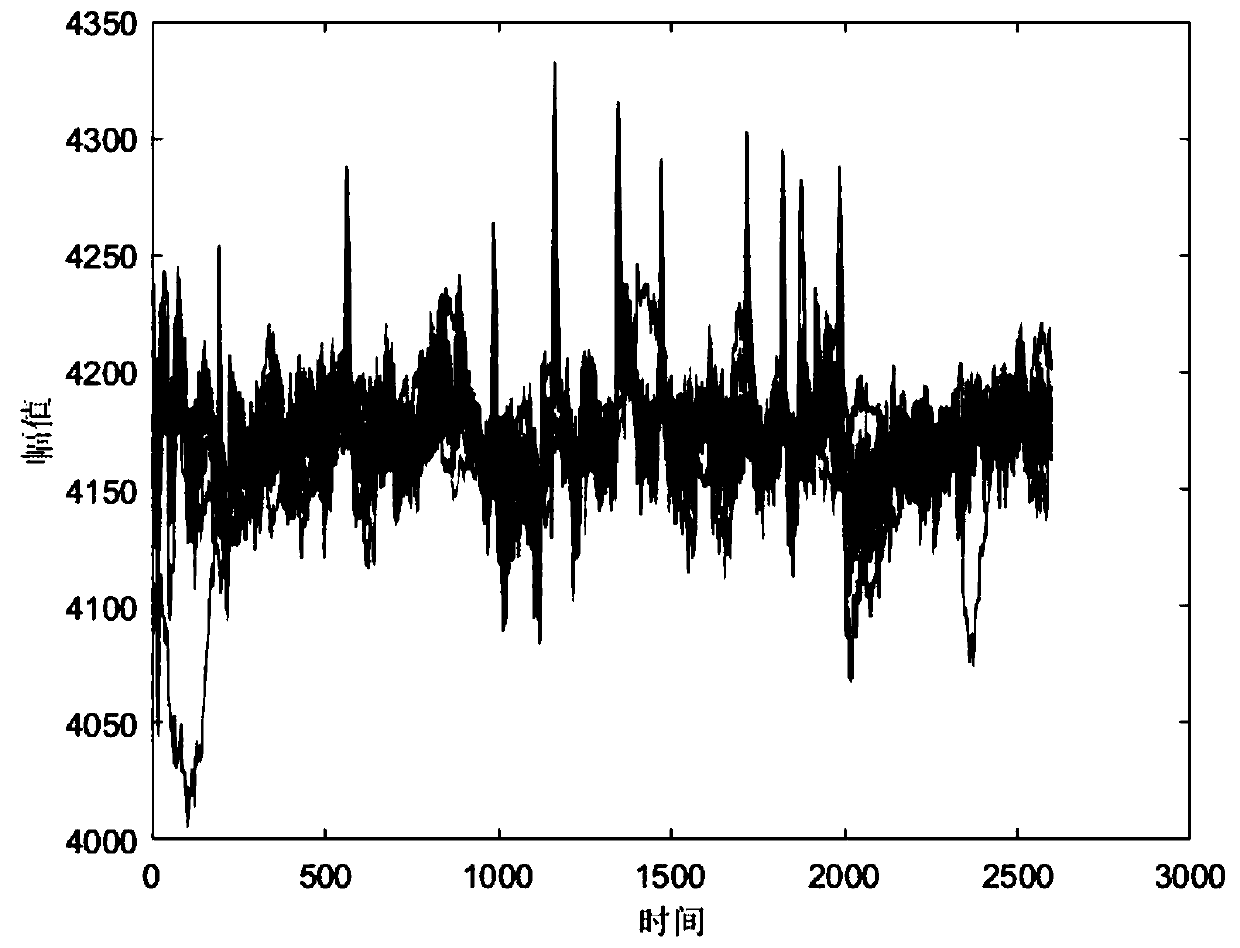

Visual evoked potential affective recognition method based on width learning

Inactive | CN110946576A | Eliminate fluctuations | Eliminate differences | Sensors | Psychotechnic devices | Bandpass filtering | Visual evoked potentials

The invention belongs to electroencephalogram feature classification in the field of biometric feature recognition, and in particular discloses a visual evoked potential affective recognition method based on width learning. The method comprises the following five steps: performing adaptability evaluation on the international affective picture system (IAPS); acquiring the electroencephalogram generated by visual evocation; preprocessing the data by using methods such as band-pass filtering; extracting multiple features of the electroencephalogram by different methods; and classifying the electroencephalogram on the basis of width learning. According to the experiment method designed by the invention, adaptability evaluation on the IAPS is implemented, so that the experiment accuracy can be improved; as the data are preprocessed by using methods such as band-pass filtering, fluctuation and differences of the electroencephalogram at different moments can be eliminated; as multiple features are extracted by using a power spectrum density method, the robustness and the efficiency of electroencephalogram affective classification can be improved; and as affective classification is implemented by using a width learning method, the classification results can be prevented from falling into a local optimum, and in addition, the cost can be reduced.

Owner:XIAN UNIV OF SCI & TECH
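The power spectrum density features mentioned in the abstract above can be illustrated with a direct-DFT periodogram and per-band power sums. This is only a sketch: the band edges, the 128 Hz sampling rate, and the synthetic test signal are assumptions, and the width learning classifier is not shown.

```python
import cmath
import math

def power_spectrum(signal, fs):
    """Periodogram via a direct DFT; returns (freq_hz, power) pairs up to fs / 2."""
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        spectrum.append((k * fs / n, abs(coeff) ** 2 / (fs * n)))
    return spectrum

def band_power(spectrum, low_hz, high_hz):
    """Sum the periodogram bins with low_hz <= f < high_hz."""
    return sum(p for f, p in spectrum if low_hz <= f < high_hz)

fs = 128  # assumed sampling rate in Hz
# Synthetic one-second signal: a 10 Hz component plus a weaker 20 Hz component.
signal = [math.sin(2 * math.pi * 10 * t / fs)
          + 0.3 * math.sin(2 * math.pi * 20 * t / fs) for t in range(fs)]

spectrum = power_spectrum(signal, fs)
features = {
    "theta": band_power(spectrum, 4, 8),
    "alpha": band_power(spectrum, 8, 13),
    "beta": band_power(spectrum, 13, 30),
}
print(features)
```

The direct DFT is used only to keep the sketch dependency-free; an FFT with windowing and segment averaging would be the usual choice on real recordings.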

Method for controlling device by using brain wave and brain wave interface system

Active | US8473045B2 | Reduce the burden on | Unnecessary wear | Electroencephalography | Sensors | Electroencephalogram feature | Medicine

The control method for a device includes steps of: presenting a visual stimulation concerning a manipulation menu for a device; measuring event-related potentials after the visual stimulation is presented, where event-related potentials based on a timing of presenting the visual stimulation as a starting point are measured from a potential difference between each of electrodes and at least one reference electrode respectively worn on a face and in an ear periphery of a user; from each of the measured event-related potentials, extracting electroencephalogram data which is at 5 Hz or less and contains a predetermined time section, and combining the extracted electroencephalogram data into electroencephalogram characteristic data; comparing the electroencephalogram characteristic data against reference data prepared in advance for determining a desire to select an item in the manipulation menu; and, based on a comparison result, executing a manipulation of the device corresponding to the item.

Owner:PANASONIC CORP
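The combine-and-compare steps in the abstract above can be sketched as follows: take a fixed time window from each electrode's event-related potential, concatenate the windows into one characteristic vector, and compare it with reference data. Everything specific here is an assumption: the epochs are taken to be low-pass filtered already, the comparison uses Pearson correlation against two hypothetical reference templates, and all sample values are invented.

```python
import math

def build_feature(epochs, start, stop):
    """Concatenate the [start, stop) sample window of every electrode's epoch."""
    feature = []
    for epoch in epochs:
        feature.extend(epoch[start:stop])
    return feature

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    num = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    den = math.sqrt(sum((a - mean_x) ** 2 for a in x)
                    * sum((b - mean_y) ** 2 for b in y))
    return num / den if den else 0.0

def wants_item(feature, selected_ref, not_selected_ref):
    """Decide 'select' when the feature resembles the selected template more."""
    return pearson(feature, selected_ref) > pearson(feature, not_selected_ref)

# Hypothetical epochs from two electrodes (already filtered to 5 Hz or less).
epochs = [[0, 0, 1, 3, 4, 3, 1, 0],
          [0, 0, 0, 2, 3, 2, 0, 0]]
feature = build_feature(epochs, start=2, stop=6)

# Hypothetical reference data prepared in advance.
selected_ref = [1.0, 2.5, 4.0, 2.5, 0.5, 2.0, 3.0, 1.5]
not_selected_ref = [0.2, 0.1, -0.1, 0.0, 0.1, -0.2, 0.0, 0.1]

decision = wants_item(feature, selected_ref, not_selected_ref)
print(feature, decision)
```

A device controller would run this comparison once per highlighted menu item and execute the manipulation for the item whose response looks most like the selected template.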

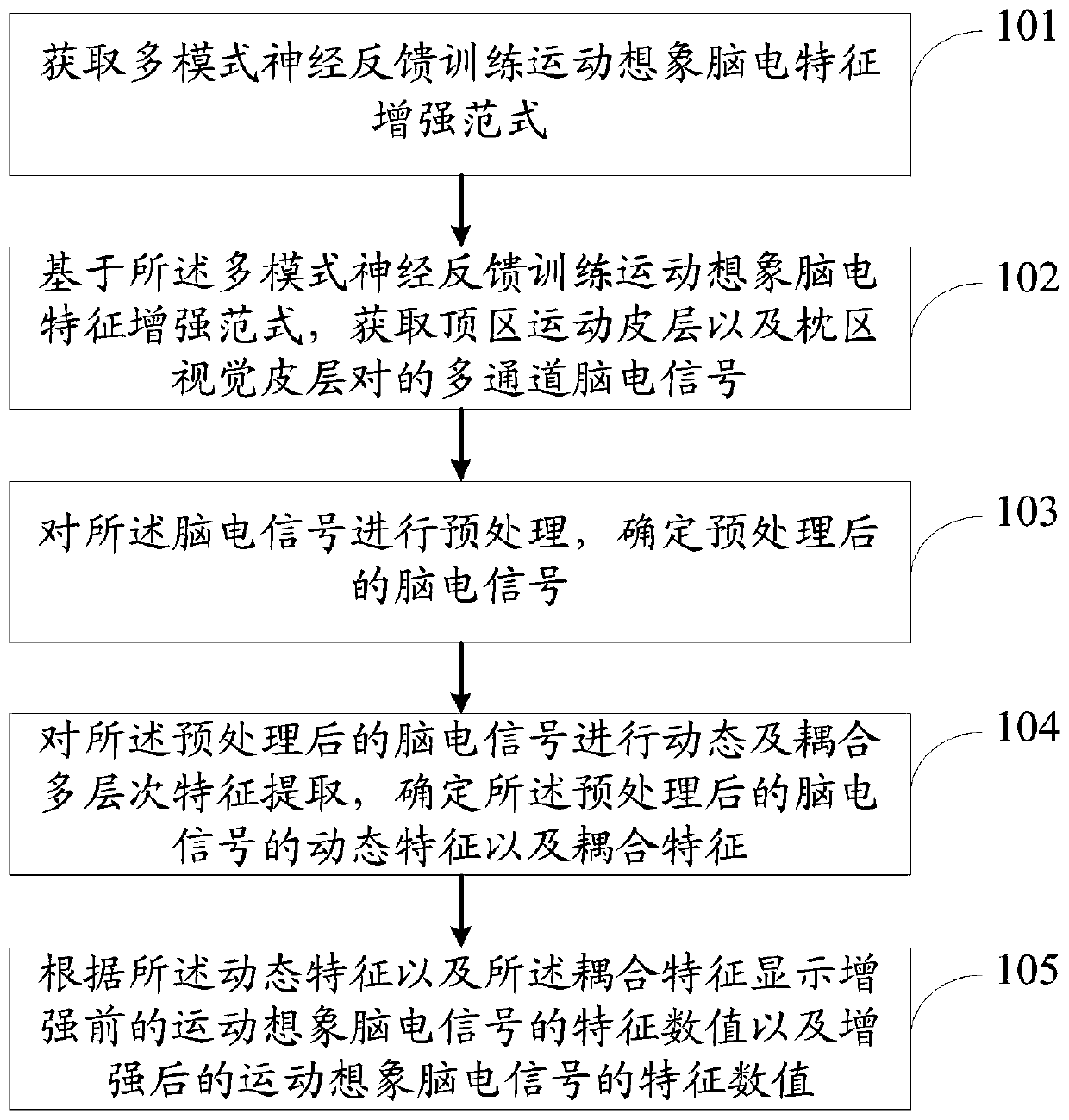

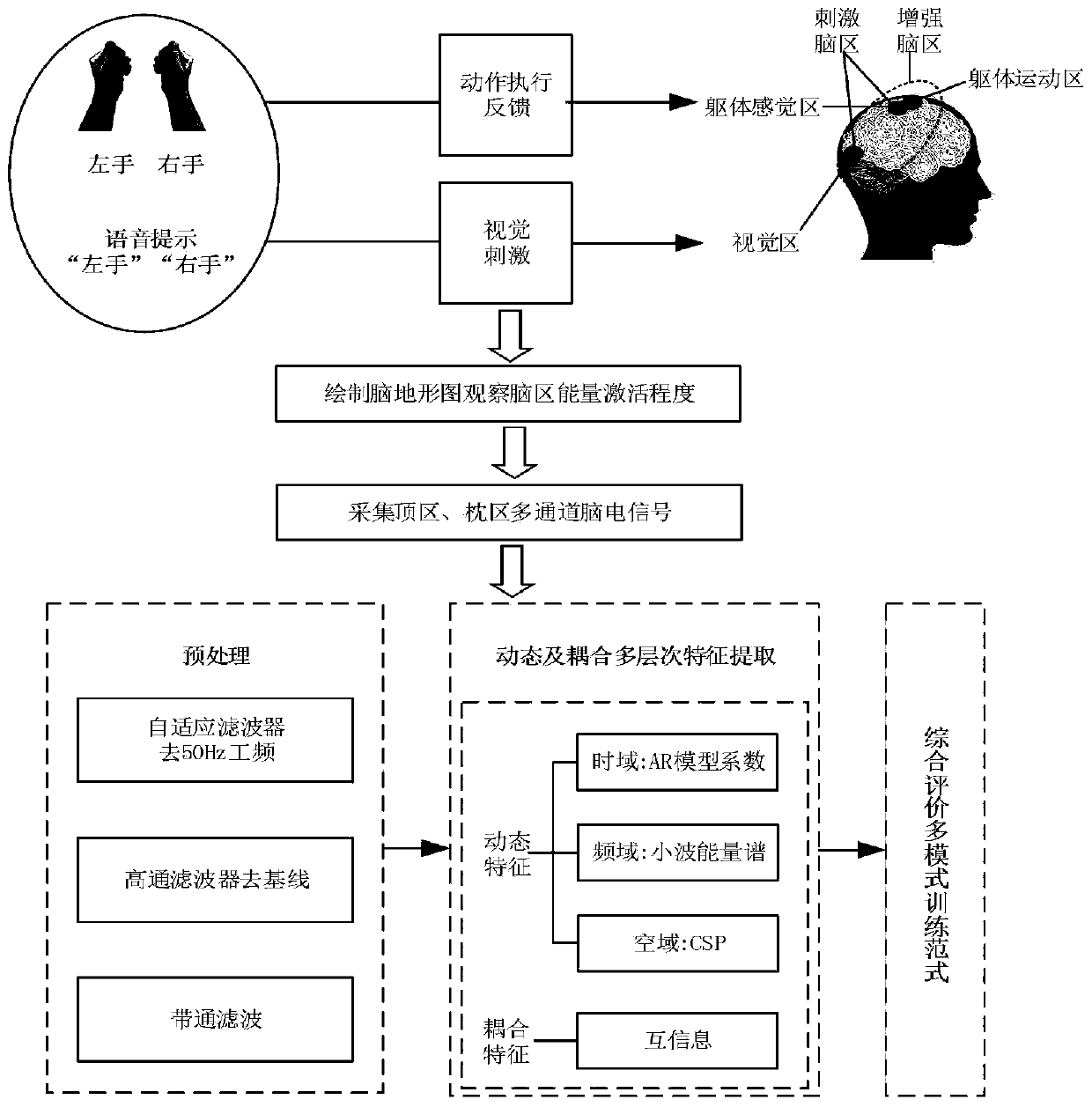

Motor imagery electroencephalogram feature enhancement method and system

Inactive | CN111110230A | Improve recognition rate | Comprehensive | Diagnostic recording/measuring | Sensors | Visual cortex | Electroencephalogram feature

The invention relates to a motor imagery electroencephalogram feature enhancement method and system. The enhancement method comprises the following steps: acquiring a multi-mode neural feedback training motor imagery electroencephalogram feature enhancement paradigm; based on the multi-mode neural feedback training motor imagery electroencephalogram feature enhancement paradigm, acquiring multi-channel electroencephalogram signals of a pair of a parietal region motor cortex and an occipital region visual cortex; preprocessing the electroencephalogram signals, and determining the preprocessed electroencephalogram signals; conducting dynamic and coupled multi-level feature extraction on the preprocessed electroencephalogram signals, and determining dynamic features and coupling features of the preprocessed electroencephalogram signals; and according to the dynamic features and the coupling features, displaying feature values of the motor imagery electroencephalogram signals before enhancement and feature values of the motor imagery electroencephalogram signals after enhancement. By adopting the enhancement method and system provided by the invention, the recognition rate of effective scalp electroencephalogram signals can be improved.

Owner:YANSHAN UNIV

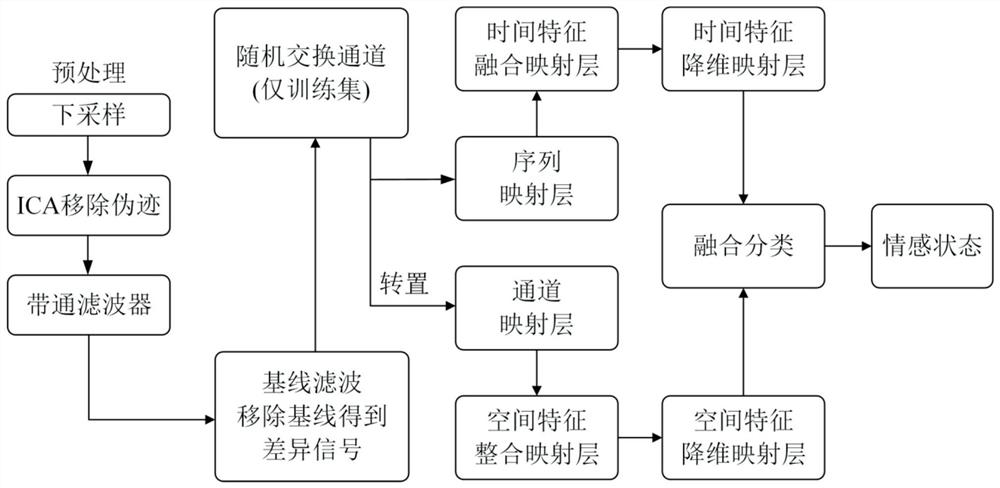

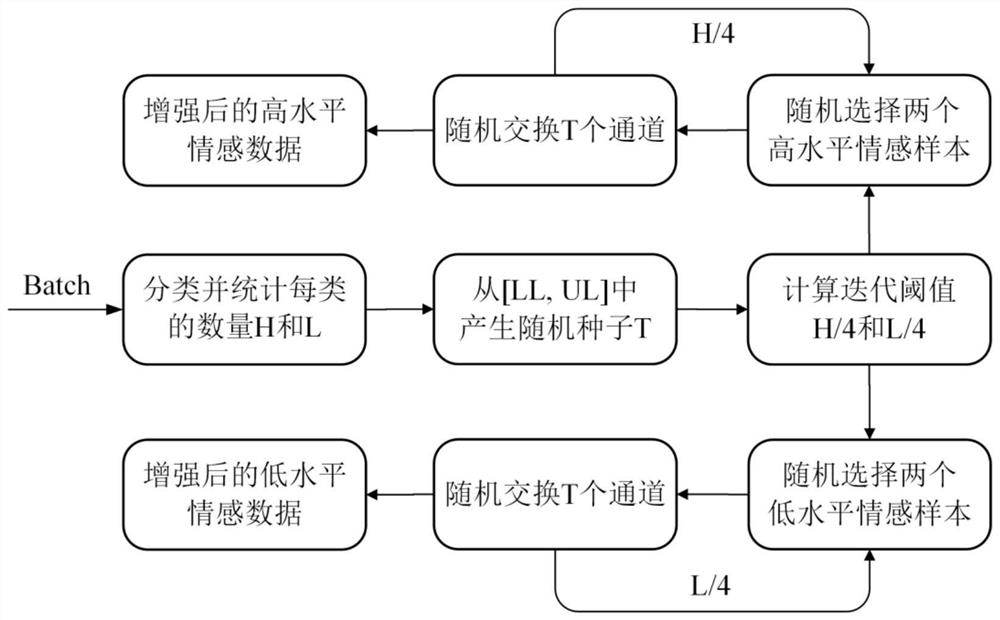

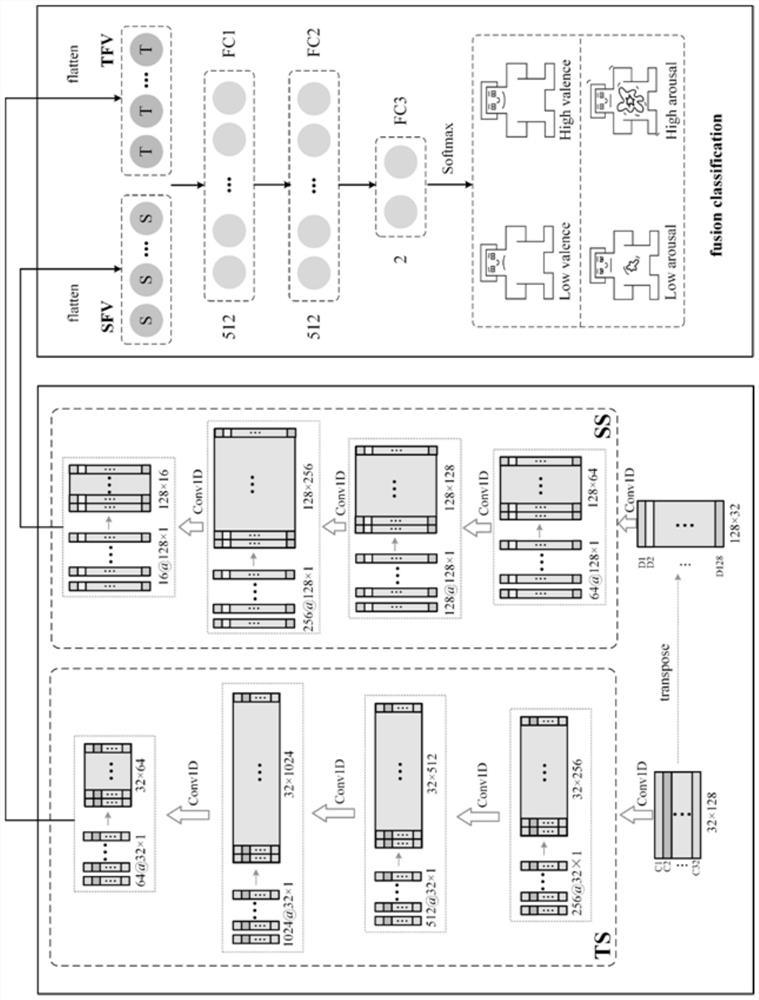

Electroencephalogram emotion recognition method based on parallel sequence channel mapping network

Active | CN112381008A | Accurate identification | Good service | Character and pattern recognition | Neural architectures | Eeg data | Electroencephalogram feature

The invention discloses an electroencephalogram emotion recognition method based on a parallel sequence channel mapping network, and the method comprises the following steps: downsampling EEG data of a subject, removing EOG artifacts and noises, and obtaining a baseline signal and an emotion signal after preprocessing; constructing a baseline filter for screening a stable baseline signal from the baseline signals, and subtracting the stable baseline signal from the emotion signal to obtain a difference signal as an input sample of the network; randomly selecting samples of the same emotion in each training batch by adopting an online data enhancement mode, and randomly exchanging data on an uncertain number of corresponding channels; constructing an electroencephalogram emotion recognition network composed of a time flow sub-network, a space flow sub-network and a fusion classification block; and extracting human electroencephalogram features according to the electroencephalogram emotion recognition network, wherein the electroencephalogram features comprise time and space features. The problems of insufficient space-time information and low efficiency in the feature extraction process are effectively solved.

Owner:TIANJIN UNIV
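The baseline subtraction and the online channel-exchange augmentation described in the abstract above can be sketched as below. The sketch assumes each sample is a list of per-channel sample lists and that the stable baseline segment has the same shape as the emotion segment; the function names and toy values are hypothetical, and the baseline filter and the network itself are not shown.

```python
import random

def subtract_baseline(emotion, baseline):
    """Subtract a same-shaped baseline segment from an emotion segment."""
    return [[e - b for e, b in zip(e_channel, b_channel)]
            for e_channel, b_channel in zip(emotion, baseline)]

def exchange_channels(sample_a, sample_b, rng):
    """Swap a random number of corresponding channels between two samples.

    Both samples are assumed to carry the same emotion label.
    """
    n_channels = len(sample_a)
    n_swap = rng.randint(1, n_channels - 1)  # the "uncertain number" of channels
    swapped = set(rng.sample(range(n_channels), n_swap))
    new_a = [sample_b[c] if c in swapped else sample_a[c] for c in range(n_channels)]
    new_b = [sample_a[c] if c in swapped else sample_b[c] for c in range(n_channels)]
    return new_a, new_b, swapped

rng = random.Random(0)
difference = subtract_baseline([[3.0, 4.0]], [[1.0, 1.5]])

# Two hypothetical four-channel samples of the same emotion.
sample_a = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]
sample_b = [[-1.0, -1.0], [-2.0, -2.0], [-3.0, -3.0], [-4.0, -4.0]]
new_a, new_b, swapped = exchange_channels(sample_a, sample_b, rng)
print(difference, sorted(swapped))
```

Because only whole channels move between samples with the same label, the augmented samples keep their label while the network sees new channel combinations in every batch.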

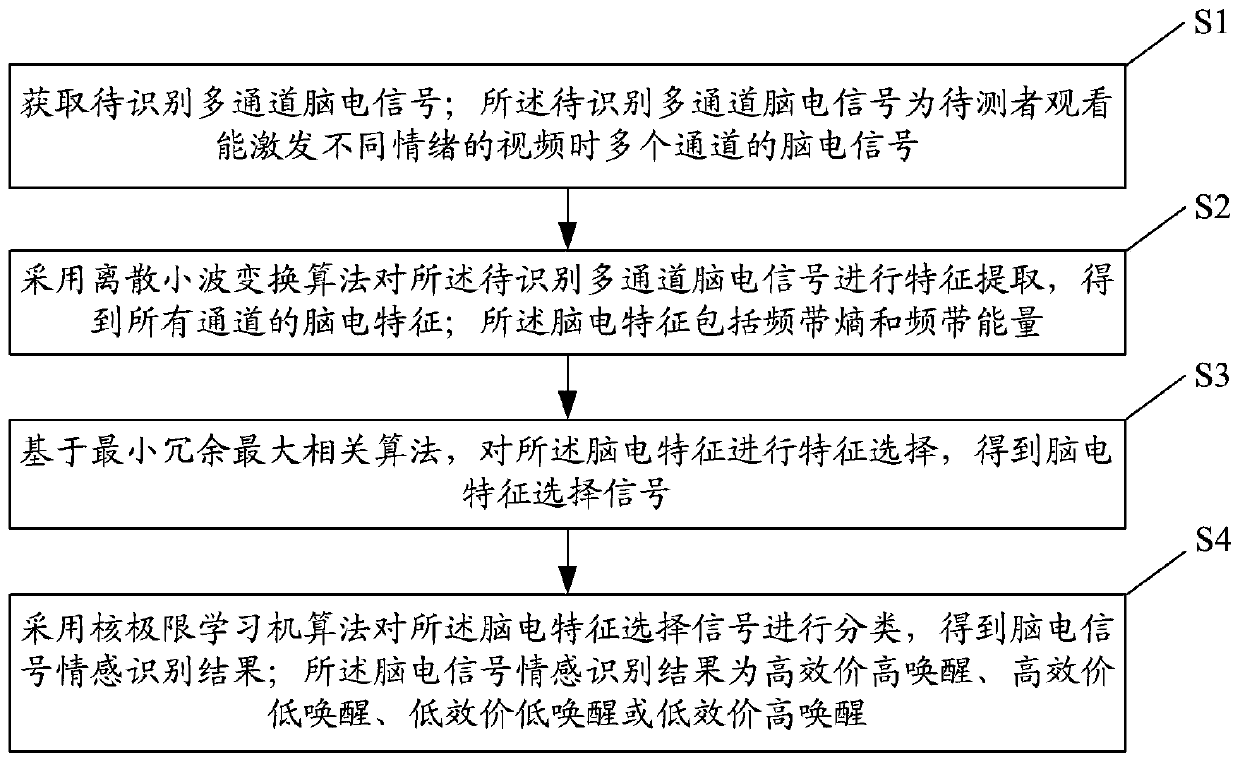

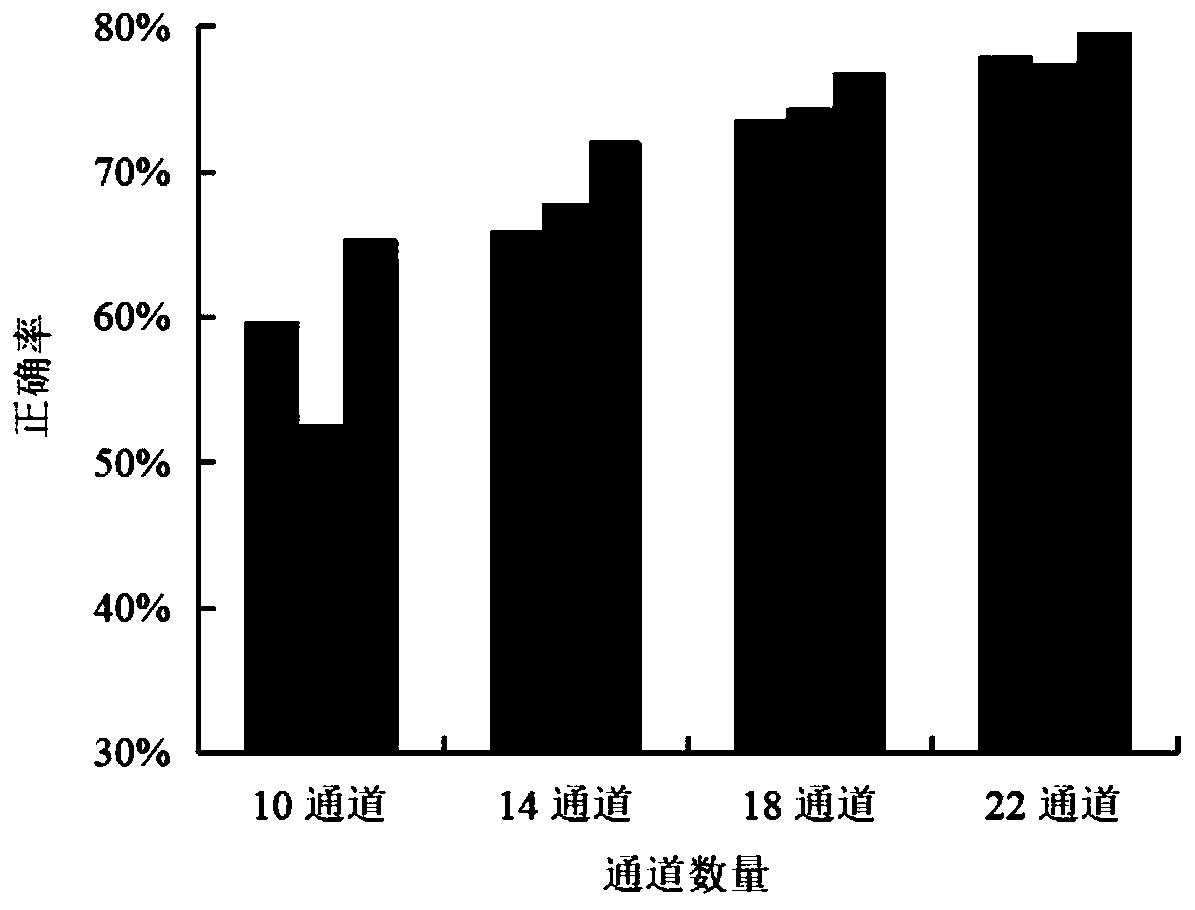

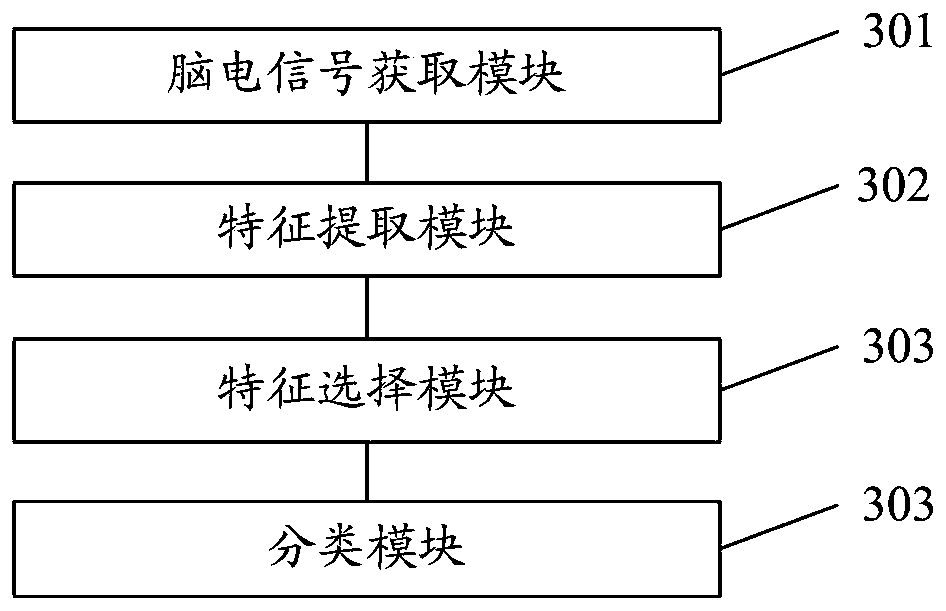

Emotion recognition method and system based on electroencephalogram signals

Inactive | CN110881975A | Improve recognition accuracy | Accurate method | Character and pattern recognition | Sensors | Learning machine | Electroencephalogram feature

The invention discloses an emotion recognition method and system based on electroencephalogram signals. The method comprises the following steps: acquiring to-be-identified multi-channel electroencephalogram signals, which are electroencephalogram signals from multiple channels recorded when a to-be-identified person watches videos capable of stimulating different emotions; carrying out feature extraction on the to-be-identified multi-channel electroencephalogram signals based on a discrete wavelet transform algorithm to obtain electroencephalogram features, including frequency band entropies and frequency band energy, of all channels; according to a minimum redundancy maximum relevance algorithm, performing feature selection on the electroencephalogram features to obtain electroencephalogram feature selection signals; and classifying the electroencephalogram feature selection signals by adopting a kernel extreme learning machine algorithm to obtain an electroencephalogram signal emotion recognition result. The emotion recognition precision can be improved.

Owner:SHANDONG INST OF ADVANCED TECH CHINESE ACAD OF SCI CO LTD
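The wavelet feature step in the abstract above can be sketched as a multi-level discrete wavelet transform followed by per-band energy and entropy. The Haar wavelet, the three decomposition levels, the entropy definition (Shannon entropy of normalised squared coefficients within each band), and the synthetic signal are all assumptions; the feature selection and classification stages are not shown.

```python
import math

def haar_dwt(signal, levels):
    """Multi-level orthonormal Haar DWT.

    Returns [detail_1, ..., detail_L, approx_L]. The signal length must be
    divisible by 2 ** levels.
    """
    bands = []
    approx = list(signal)
    for _ in range(levels):
        pairs = list(zip(approx[0::2], approx[1::2]))
        detail = [(a - b) / math.sqrt(2) for a, b in pairs]
        approx = [(a + b) / math.sqrt(2) for a, b in pairs]
        bands.append(detail)
    bands.append(approx)
    return bands

def band_features(bands):
    """Return one (energy, entropy) pair per band."""
    features = []
    for band in bands:
        energy = sum(c * c for c in band)
        entropy = 0.0
        if energy > 0:
            for c in band:
                p = c * c / energy
                if p > 0:
                    entropy -= p * math.log(p)
        features.append((energy, entropy))
    return features

# Synthetic single-channel signal of 64 samples (assumed example).
signal = [math.sin(2 * math.pi * 5 * t / 64)
          + 0.5 * math.sin(2 * math.pi * 20 * t / 64) for t in range(64)]
bands = haar_dwt(signal, levels=3)
features = band_features(bands)
print(features)
```

Because the Haar transform used here is orthonormal, the band energies add up to the energy of the original signal, which is a convenient sanity check before the features go on to selection.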

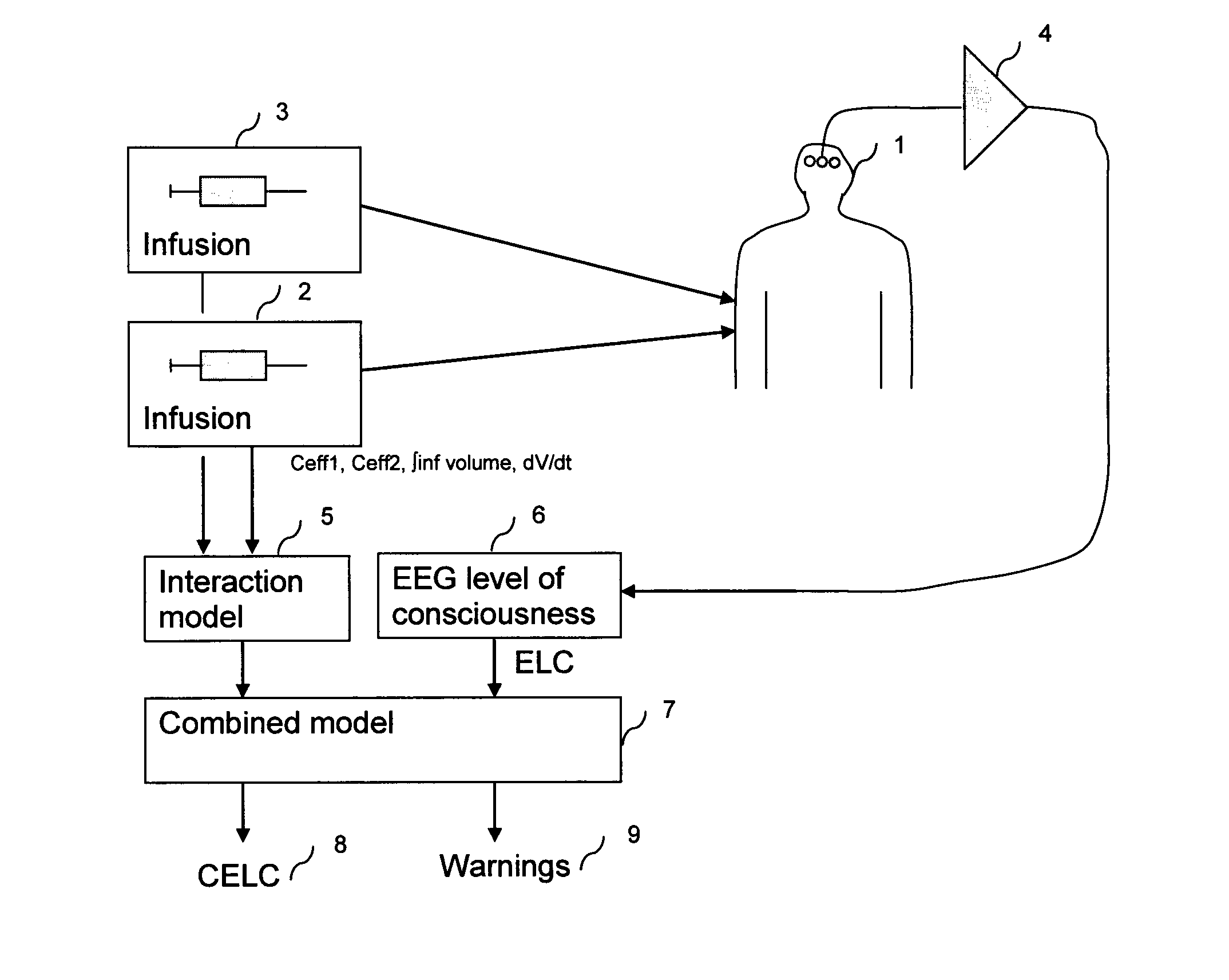

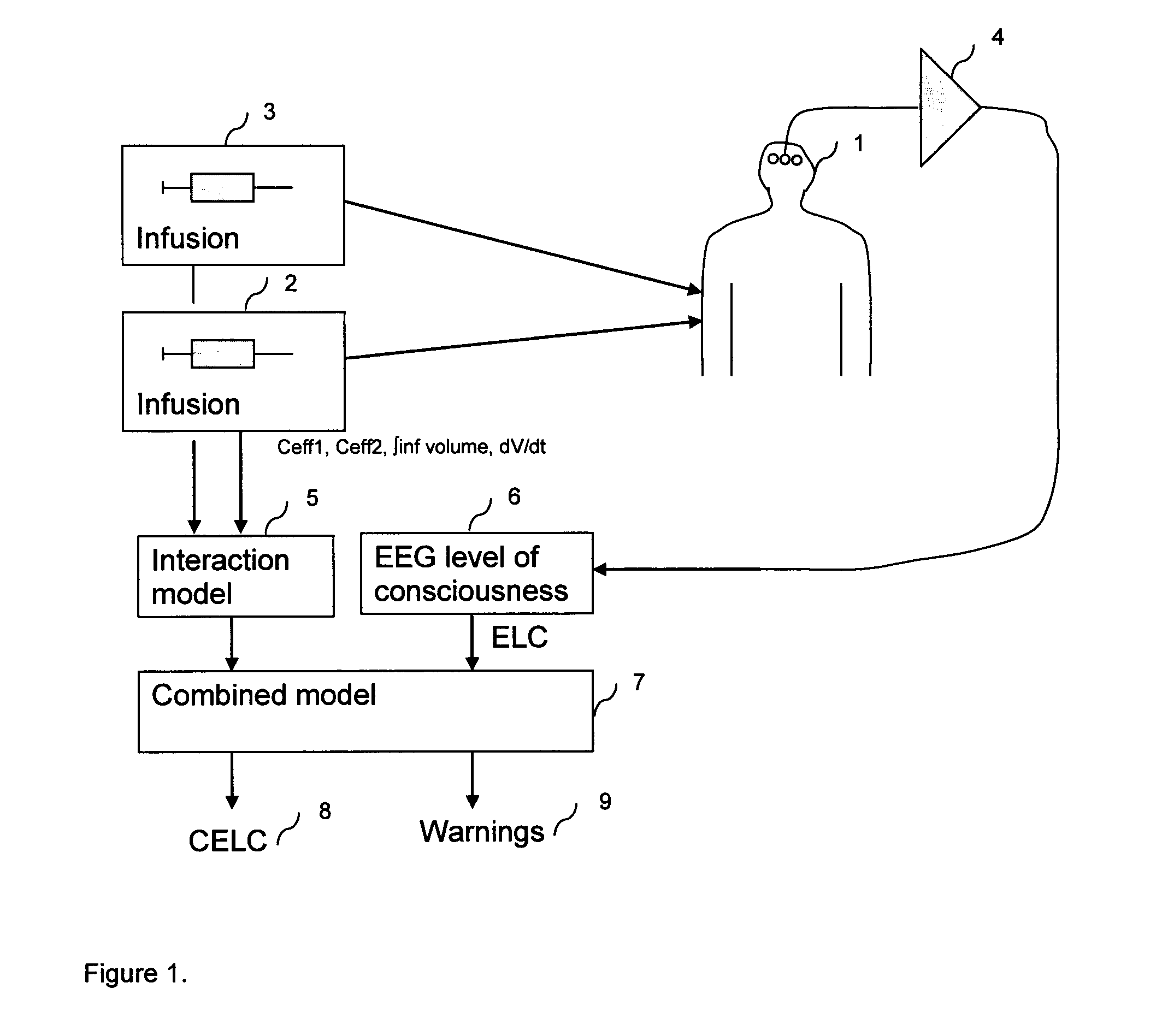

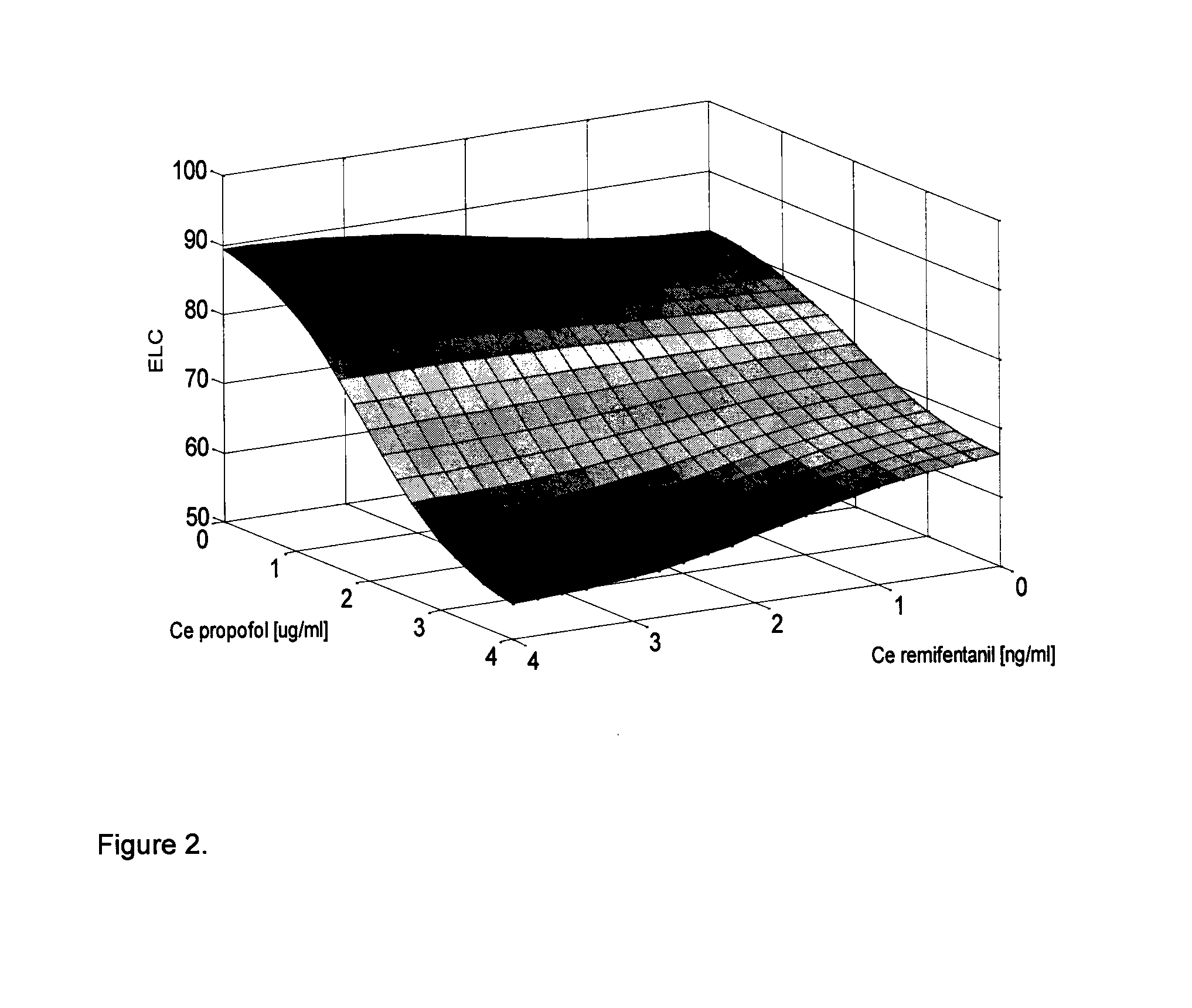

Apparatus for combining drug effect interaction between anaesthetics and analgesics and electroencephalogram features for precise assessment of the level of consciousness during anaesthesia

Inactive | US20130150748A1 | Accurate description | Good compensation | Electroencephalography | Sensors | Instrumentation amplifier | Drug interaction

The present invention consists of an apparatus for the on-line identification of drug effect using drug interactions and physiologic signals, in particular the interaction between anaesthetics and analgesics combined with the electroencephalogram for precise assessment of the level of consciousness in awake, sedated and anaesthetised patients. In a preferred embodiment the apparatus comprises: two infusion devices, for example syringe pumps, which are connected to the patient (1) and adapted to deliver hypnotics (2) and analgesics (3), the infusion data from the pumps being fed into an interaction model (5); an interaction model characterized by a Neural Network which is adapted to estimate the parameters of the model online and in real time for drug interaction between anaesthetics and analgesics; an EEG instrumentation amplifier; a processing unit adapted to calculate an EEG index of the level of consciousness (ELC); and a fuzzy logic reasoner adapted to merge extracted EEG parameters into an index.

Owner:COVIDIEN AG

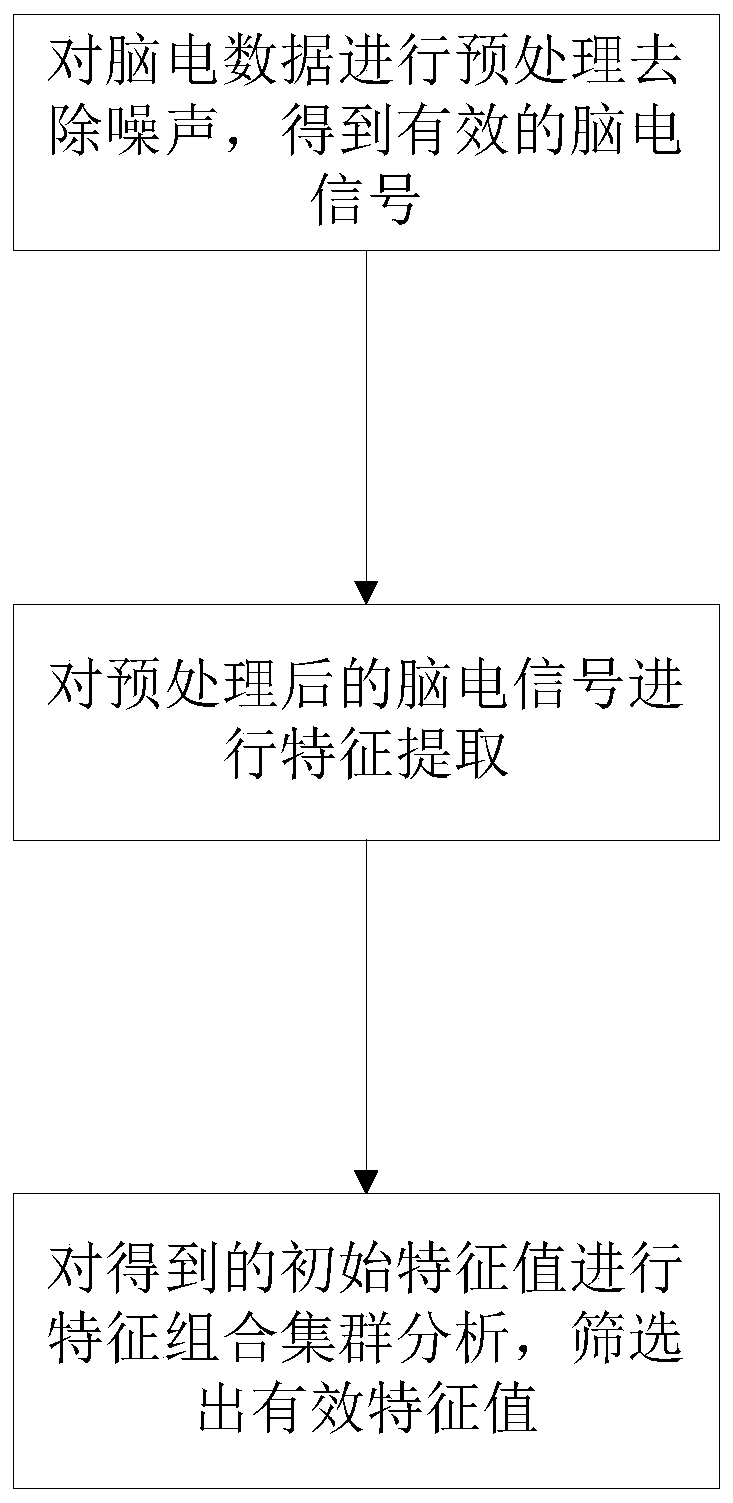

Electroencephalogram feature extraction and selection method

Inactive | CN111214226A | Improve accuracy | Diagnostic recording/measuring | Sensors | Electroencephalogram feature | Eeg signal analysis

The invention discloses an electroencephalogram feature extraction and selection method. The method comprises the following steps of preprocessing electroencephalogram data to remove noise to obtain an effective electroencephalogram signal; performing feature extraction on the preprocessed electroencephalogram signal, and extracting an electroencephalogram time-frequency domain information-based feature and an entropy theory and complexity-based feature to obtain an initial feature value of the electroencephalogram signal; performing feature combination cluster analysis on the obtained initial feature value, and screening out effective feature values. The method provides a powerful technical support for realization and development of an electroencephalogram signal technology so as to improve the accuracy of electroencephalogram signal analysis.

Owner:苏州小蓝医疗科技有限公司
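One common entropy and complexity feature of the kind the abstract above refers to is sample entropy. The abstract does not name a specific measure, so the choice of sample entropy, the embedding length `m=2`, the tolerance of 0.2 standard deviations, and the two synthetic signals below are all assumptions made for illustration.

```python
import math
import random

def sample_entropy(signal, m=2, r_factor=0.2):
    """Sample entropy with tolerance r_factor * standard deviation."""
    n = len(signal)
    mean = sum(signal) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in signal) / n)
    tolerance = r_factor * std

    def count_matches(length):
        # Use n - m templates for both lengths so the two counts are comparable.
        templates = [signal[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                distance = max(abs(a - b)
                               for a, b in zip(templates[i], templates[j]))
                if distance <= tolerance:
                    count += 1
        return count

    matches_m = count_matches(m)
    matches_m1 = count_matches(m + 1)
    if matches_m == 0 or matches_m1 == 0:
        return float("inf")
    return -math.log(matches_m1 / matches_m)

rng = random.Random(0)
regular = [math.sin(2 * math.pi * t / 20) for t in range(200)]  # periodic signal
noise = [rng.gauss(0.0, 1.0) for _ in range(200)]               # irregular signal

regular_entropy = sample_entropy(regular)
noise_entropy = sample_entropy(noise)
print(regular_entropy, noise_entropy)
```

A regular signal yields a lower value than an irregular one, which is what makes the measure usable as a complexity feature alongside time-frequency features before the clustering and screening step.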

© 2025 PatSnap. All rights reserved.