Parallel convolutional neural network motor imagery electroencephalogram classification method based on spatial-temporal feature fusion

A convolutional neural network and motor imagery technology, applied in the fields of biological neural network models, image data processing, and graphics and image conversion, that achieves the effect of improving classification performance.

Embodiment Construction

[0056] The technical solutions in the embodiments of the present invention are described clearly and in detail below with reference to the accompanying drawings. The described embodiments are only some, not all, of the embodiments of the invention.

[0057] The technical solution by which the present invention solves the above technical problems is as follows:

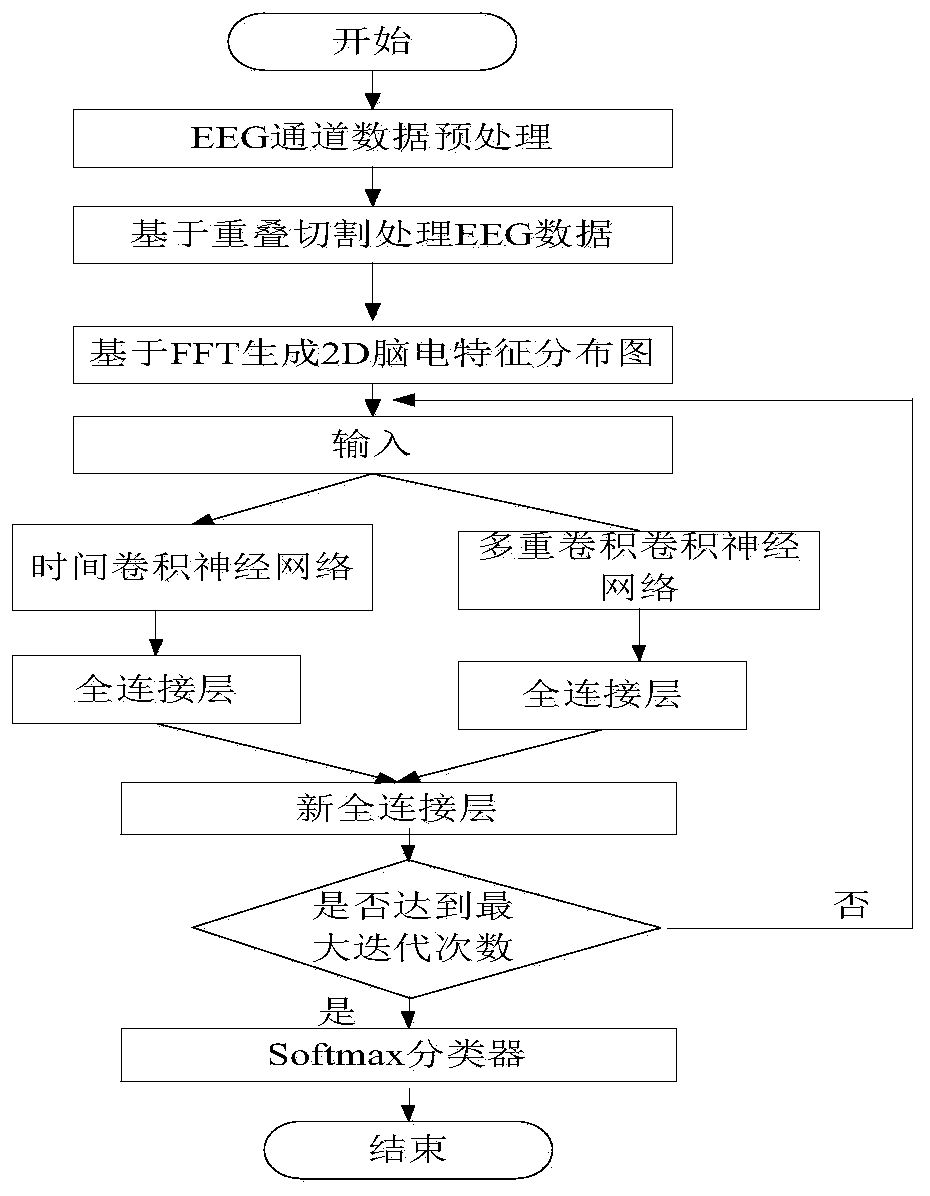

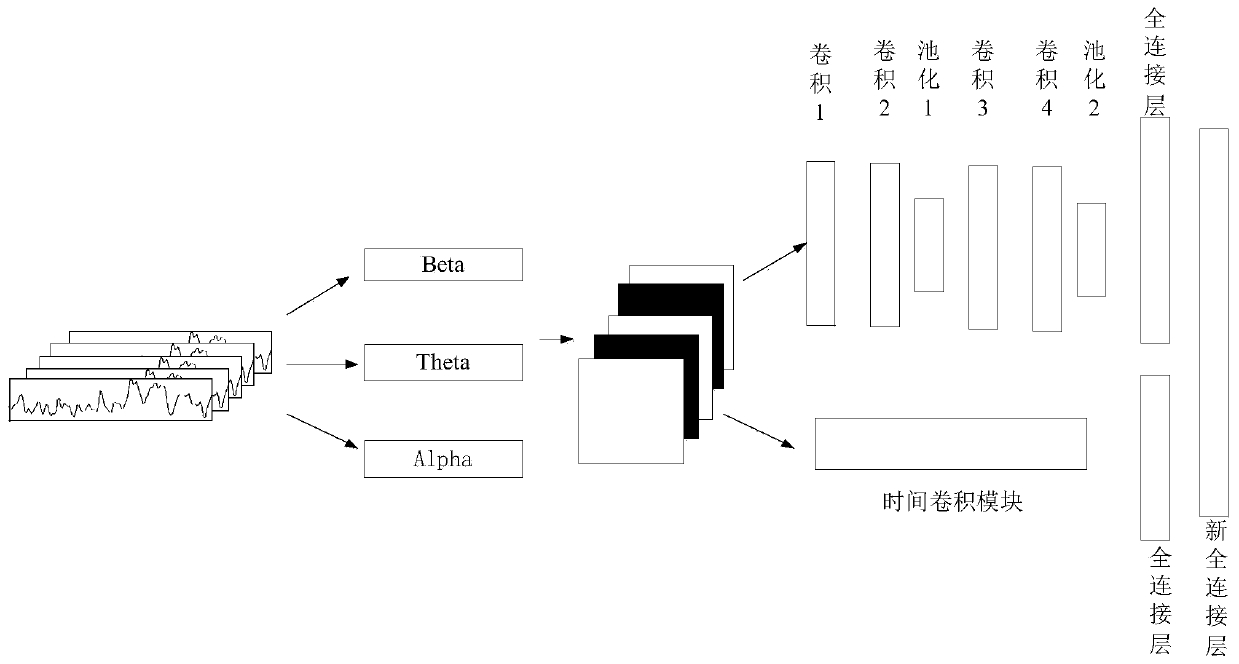

[0058] As shown in the figure, the motor imagery EEG feature extraction and classification method based on spatio-temporal feature fusion provided by the present embodiment includes the following steps:

[0059] Step 1: Preprocess the raw data. The raw EEG channel data recorded in an experiment generally contains noise such as electromyographic (EMG) and electrooculographic (EOG) artifacts, so it is not suitable for direct network training. BCI researchers therefore apply a series of preprocessing steps before feature extraction to improve the signal-to-noise ratio, such as high-pass filter...
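
The high-pass filtering mentioned in Step 1 can be illustrated with a minimal Python sketch. The text available here does not state the filter type, order, or cutoff, so everything specific below is an assumption chosen for illustration: a first-order (RC-style) high-pass filter, a hypothetical function name `high_pass`, a 250 Hz sampling rate, and a 0.5 Hz cutoff. It is not the filter used in the invention; it only shows how slow drift and DC offset can be removed from a single EEG channel while the faster rhythms are kept.

```python
import math


def high_pass(samples, fs, cutoff_hz):
    """Apply a first-order (RC-style) high-pass filter to one EEG channel.

    samples   : sequence of floats, one channel of raw EEG
    fs        : sampling rate in Hz (assumed value, not from the source)
    cutoff_hz : cutoff frequency in Hz (assumed value, not from the source)
    """
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # time constant of the equivalent RC circuit
    dt = 1.0 / fs                           # sampling interval
    alpha = rc / (rc + dt)                  # smoothing coefficient, 0 < alpha < 1

    out = [0.0] * len(samples)
    # The first output is left at 0.0, which treats the signal as having been
    # constant at samples[0] before recording began (no start-up offset).
    for n in range(1, len(samples)):
        # The output follows changes in the input and decays toward zero
        # whenever the input stays flat.
        out[n] = alpha * (out[n - 1] + samples[n] - samples[n - 1])
    return out


if __name__ == "__main__":
    fs = 250  # Hz, illustrative
    # Synthetic channel: a 10 Hz rhythm riding on a constant offset of 50.
    raw = [50.0 + math.sin(2.0 * math.pi * 10.0 * n / fs) for n in range(2 * fs)]
    filtered = high_pass(raw, fs=fs, cutoff_hz=0.5)
    print(max(raw), max(filtered[fs:]))
```

In practice a higher-order zero-phase filter from a signal-processing library would usually be preferred; the first-order form is used here only because it keeps the arithmetic visible.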
