Human action recognition method based on a cyclic (recurrent) convolutional neural network

A human action recognition method based on neural network technology, applied in the fields of image classification, pattern recognition and machine learning, which addresses the low accuracy of existing human action recognition.


Embodiment Construction

[0052] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

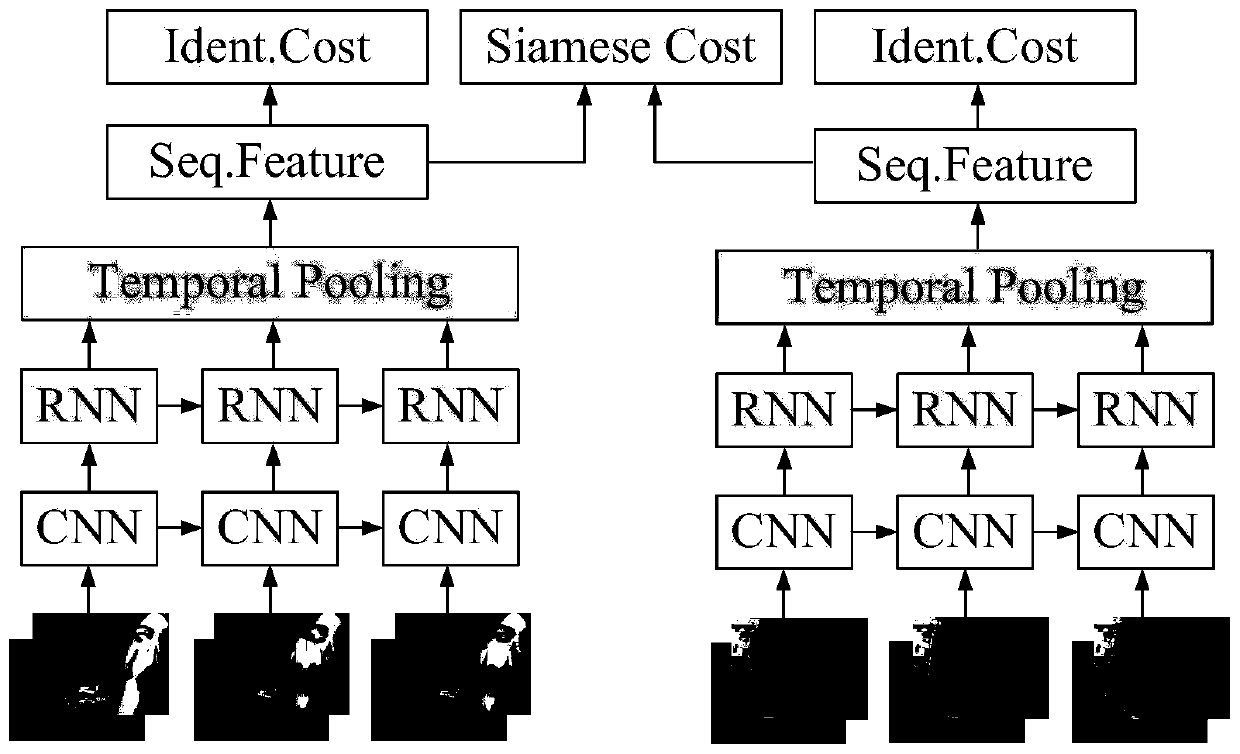

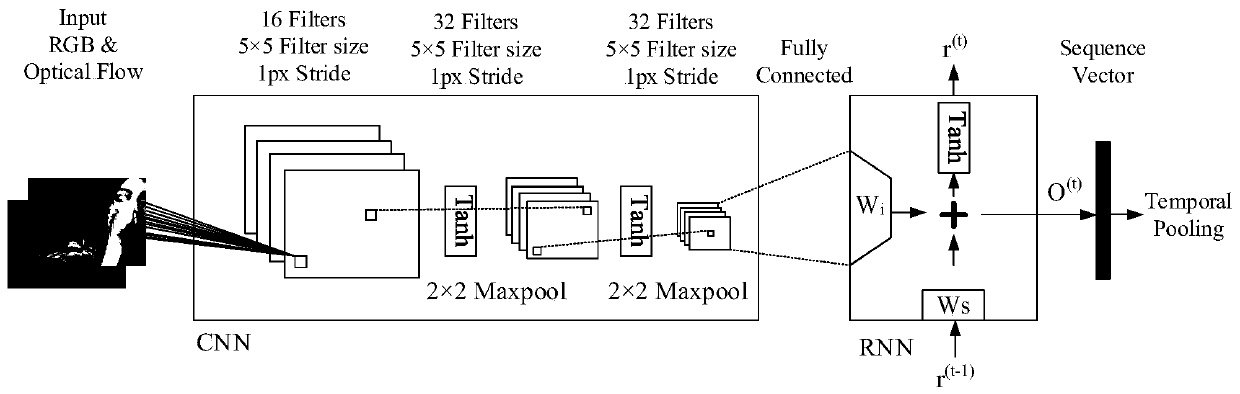

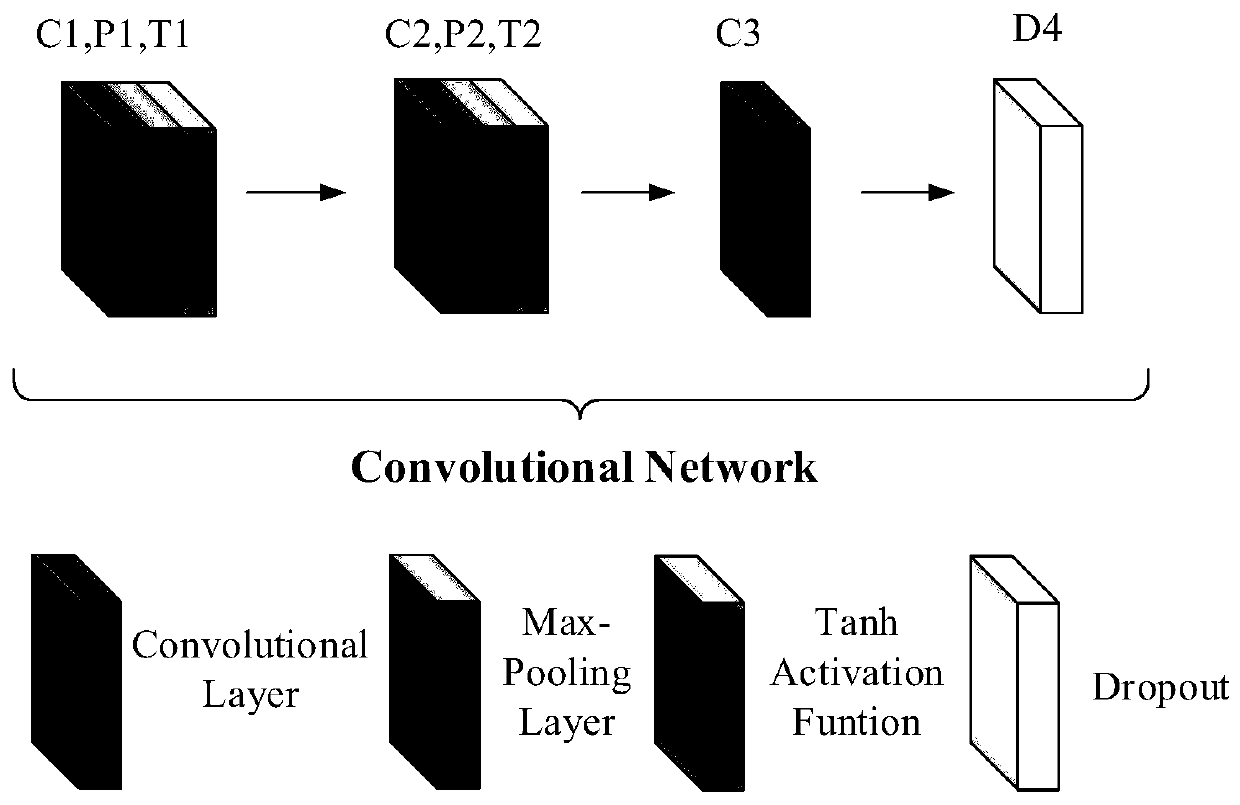

[0053] A human action recognition method based on a cyclic (i.e., recurrent) convolutional neural network, which can be widely used for video-based category similarity recognition, includes the following steps:

[0054] S1. Construct a data set: randomly select pairs of sequences of the same length from a public data set, where each frame in each sequence comprises an RGB image and an optical flow image. The public data set is the UCF101-split1, HMDB51, UCFSPORT or UCF11 data set, and the two action clips in a sequence pair come either from the same action category or from different action categories. Specifically: first cut the video sequences in the public data set into fixed-length action segments to obtain multiple sequences, then randomly select a pair of segments, i.e., a sequence pair, which can be from the same action category (Positive...
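The segmentation and pair sampling described in step S1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation. Segments are assumed to be non-overlapping, and leftover frames that do not fill a whole segment are dropped. A frame is represented only by a hypothetical (RGB image path, optical-flow image path) tuple. Positive and negative pairs are drawn alternately to keep the two classes balanced, which the text above does not specify. All function names (`cut_into_segments`, `group_by_category`, `sample_pair`, `make_pairs`) are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Hypothetical frame record: (RGB image path, optical-flow image path).
Frame = Tuple[str, str]
# A segment is a fixed-length run of frames tagged with its action category.
Segment = Tuple[str, List[Frame]]
# A sequence pair plus its label: 1 = same category (positive), 0 = different.
Pair = Tuple[Segment, Segment, int]


def cut_into_segments(frames: Sequence[Frame], category: str,
                      seg_len: int) -> List[Segment]:
    """Cut one video into non-overlapping segments of exactly seg_len frames.

    Trailing frames that do not fill a whole segment are dropped (assumption).
    """
    n_full = len(frames) // seg_len
    return [(category, list(frames[i * seg_len:(i + 1) * seg_len]))
            for i in range(n_full)]


def group_by_category(segments: List[Segment]) -> Dict[str, List[Segment]]:
    """Index segments by action category for pair sampling."""
    by_cat: Dict[str, List[Segment]] = {}
    for seg in segments:
        by_cat.setdefault(seg[0], []).append(seg)
    return by_cat


def sample_pair(by_cat: Dict[str, List[Segment]], rng: random.Random,
                positive: bool) -> Pair:
    """Draw one sequence pair from the same or from different categories."""
    if positive:
        # Two distinct segments from a category that has at least two.
        cats = sorted(c for c, segs in by_cat.items() if len(segs) >= 2)
        a, b = rng.sample(by_cat[rng.choice(cats)], 2)
    else:
        # One segment from each of two different categories.
        cat_a, cat_b = rng.sample(sorted(by_cat), 2)
        a, b = rng.choice(by_cat[cat_a]), rng.choice(by_cat[cat_b])
    return a, b, int(a[0] == b[0])


def make_pairs(segments: List[Segment], n_pairs: int,
               seed: int = 0) -> List[Pair]:
    """Alternate positive and negative pairs (the balancing is an assumption)."""
    rng = random.Random(seed)
    by_cat = group_by_category(segments)
    return [sample_pair(by_cat, rng, positive=(k % 2 == 0))
            for k in range(n_pairs)]
```

For example, cutting a 10-frame video with `seg_len=4` yields two 4-frame segments and discards the last two frames. Because every segment has the same length, both clips in any sampled pair also have the same length, as step S1 requires.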
