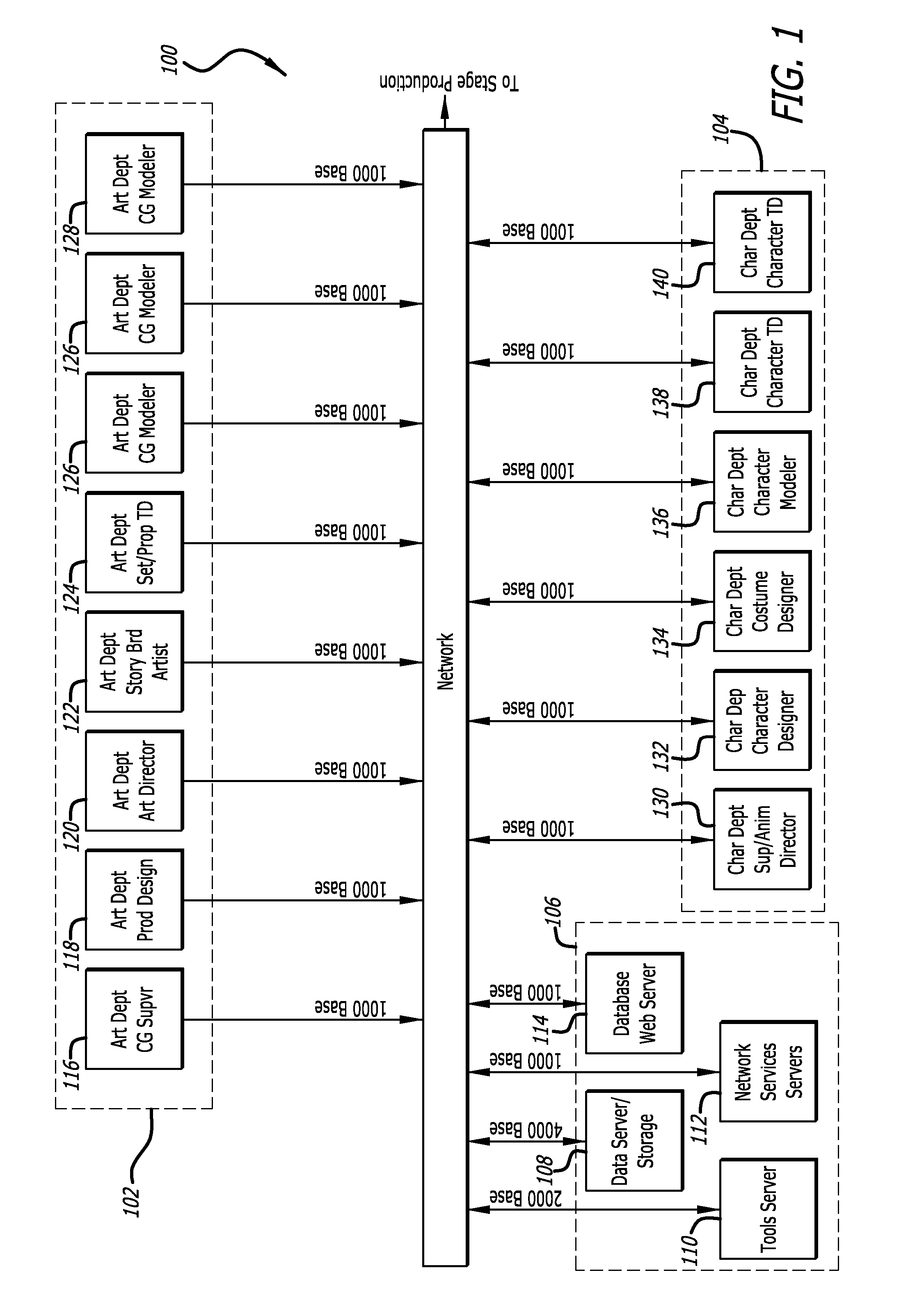

Patents

Literature

110 results about "Facial movement" patented technology

Compositions and delivery methods for the treatment of wrinkles, fine lines and hyperhidrosis

The present invention describes compositions and methods for treating, preventing and improving the appearance of skin, particularly, treating, preventing, ameliorating, reducing and/or eliminating fine lines and/or wrinkles of skin, wherein the compositions include limonoid constituents which inhibit acetylcholine release at neuromuscular junctions of skeletal muscle so as to relax the muscles involved with wrinkling, folding and creasing of skin, e.g., facial movement and expression. The limonoids preferably include the plant alkaloids toosendanin and azadirachtin. The compositions, which also are used to treat hyperhidrosis, are preferably applied to the skin, or are delivered by directed means to a site in need thereof.

Owner:AVON PROD INC

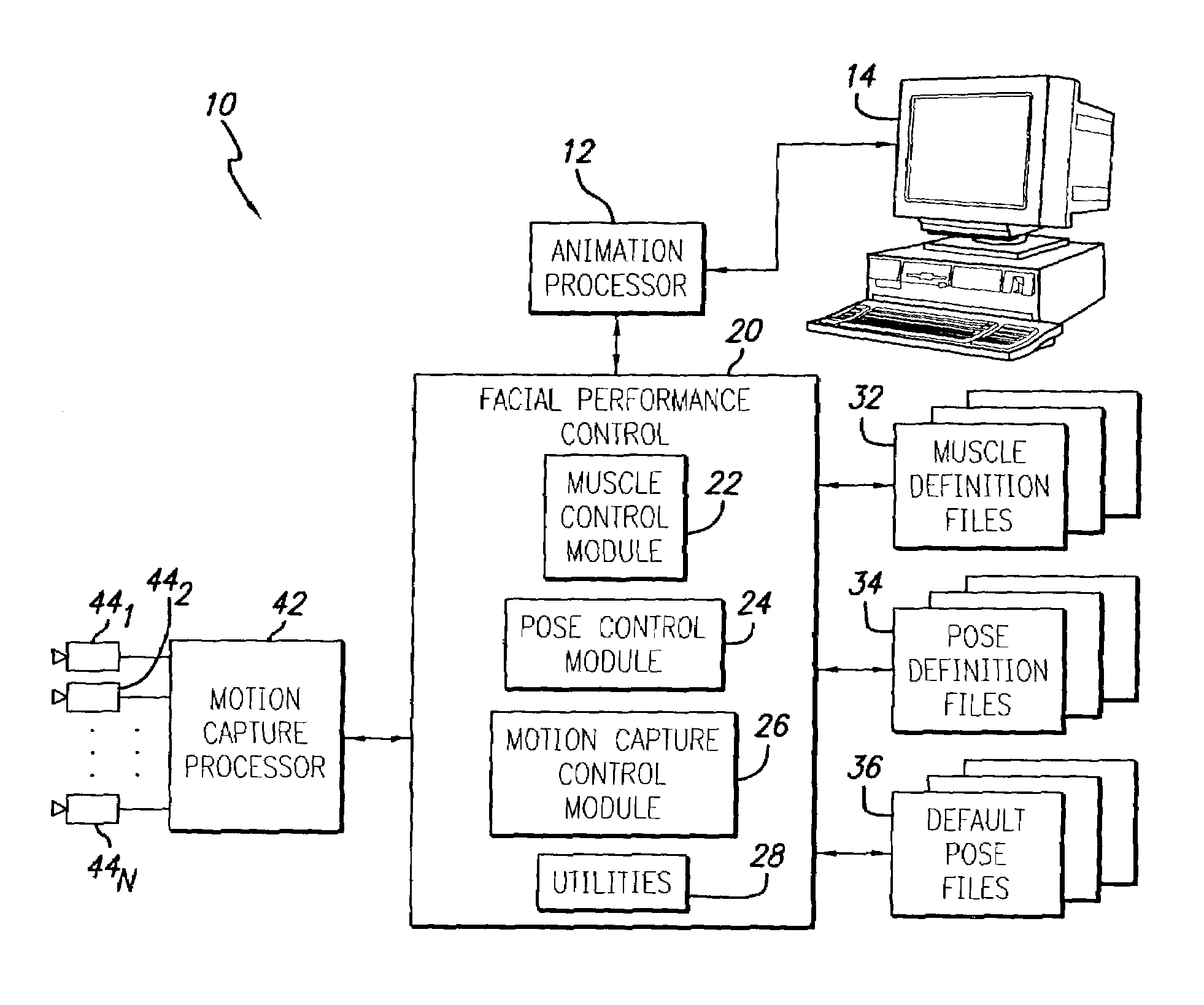

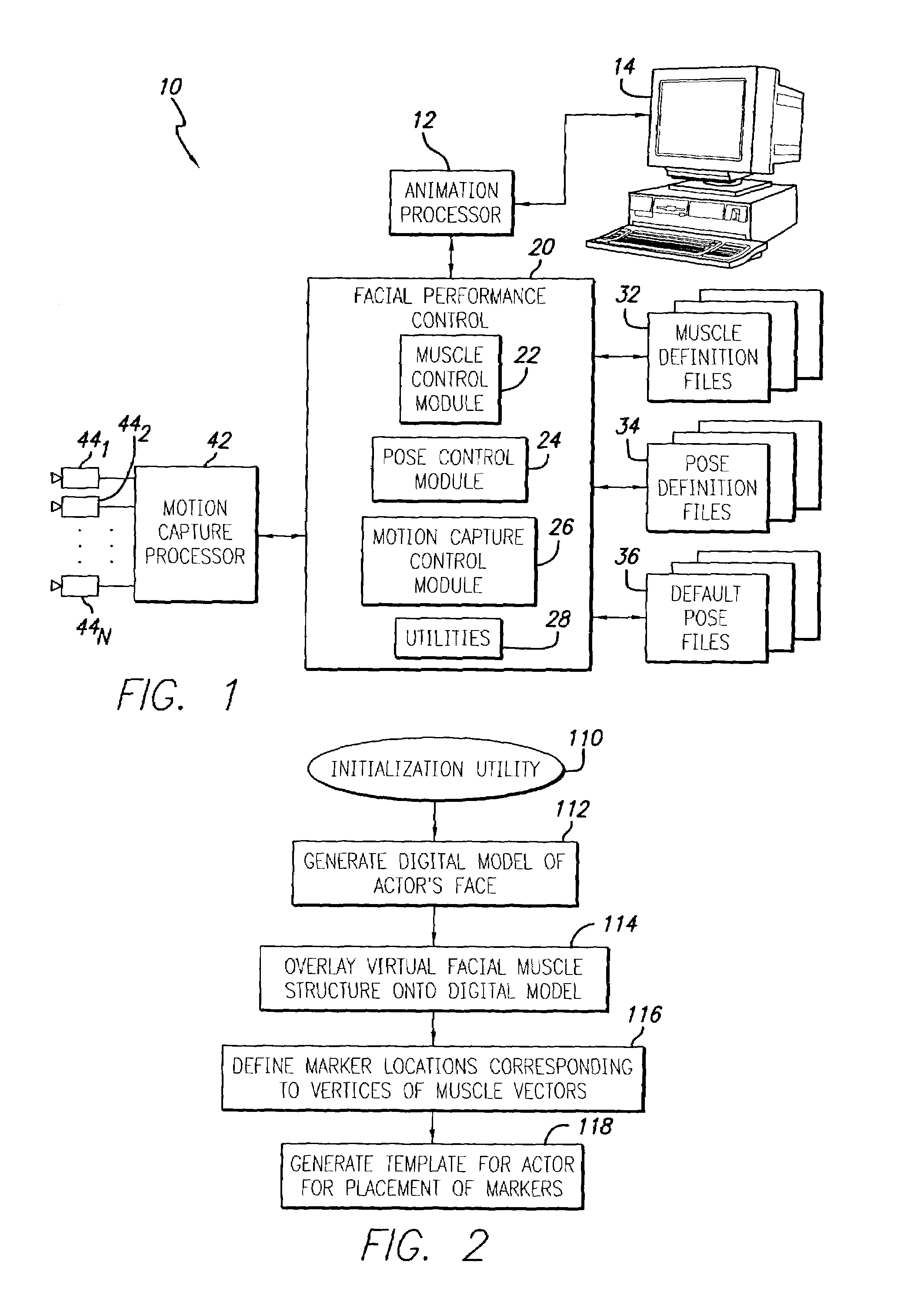

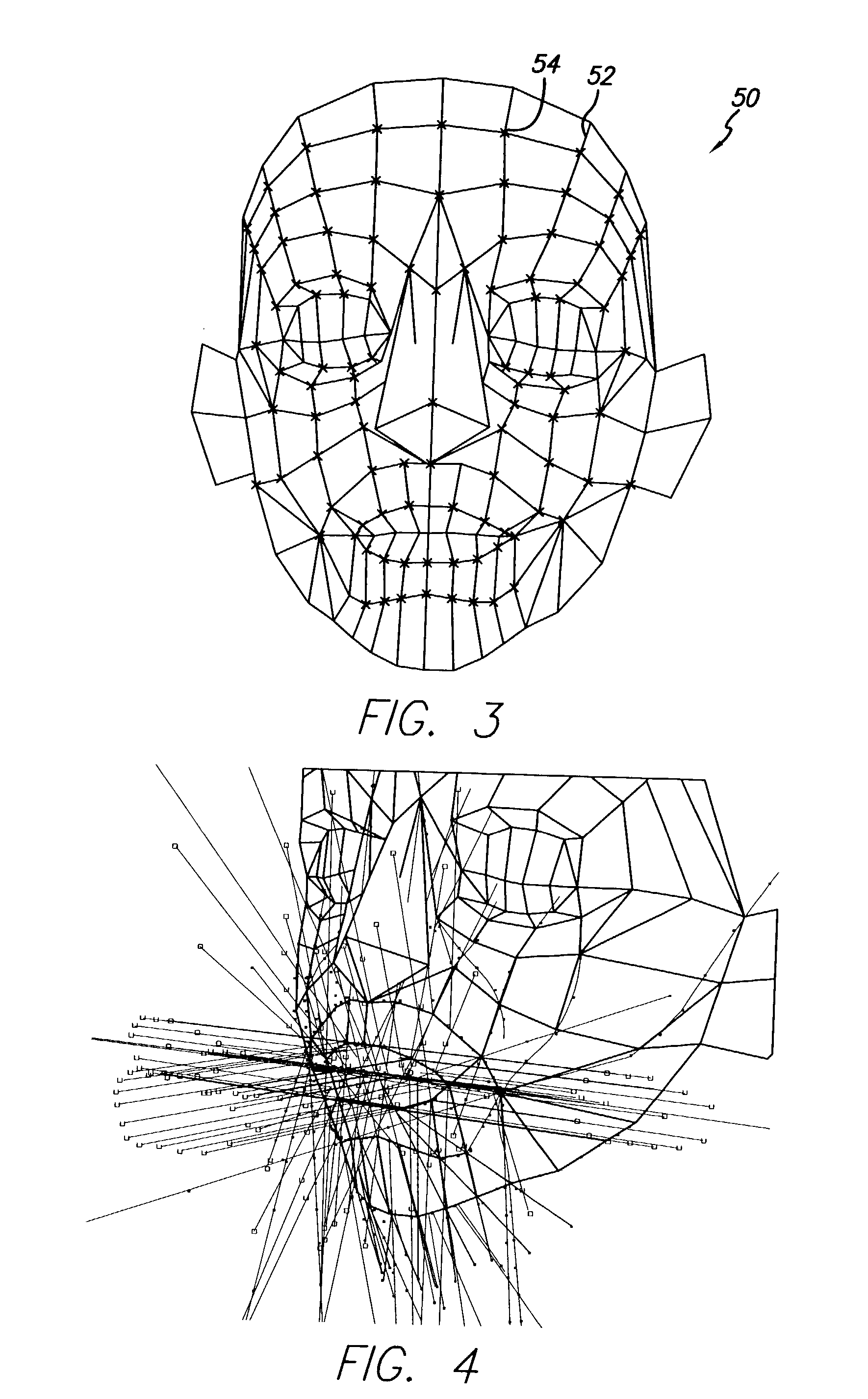

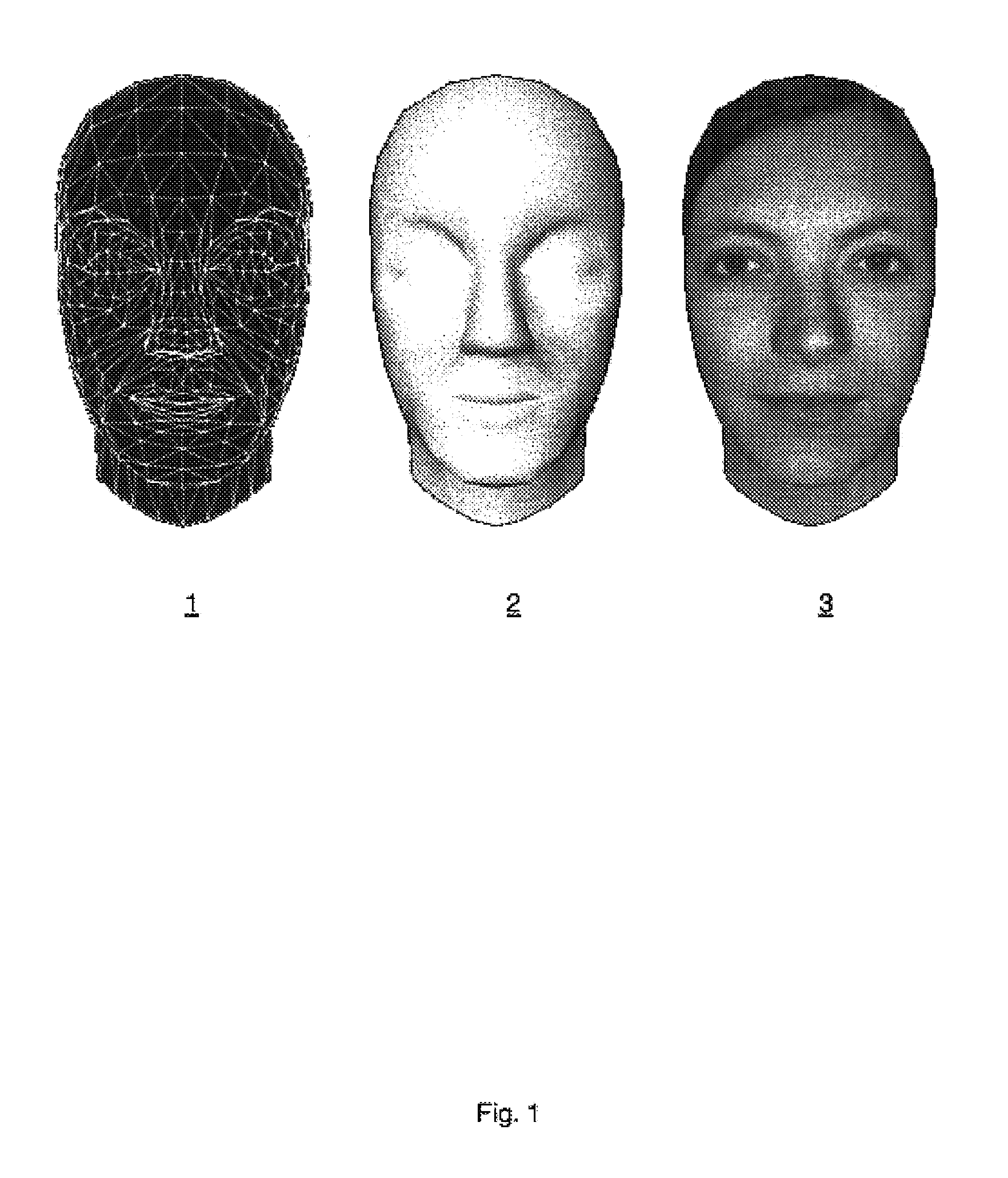

System and method for animating a digital facial model

Inactive | US7068277B2 | Highly realistic | 2D-image generation | Cathode-ray tube indicators | Graphics | Facial movement

A system and method for animating facial motion comprises an animation processor adapted to generate three-dimensional graphical images and having a user interface and a facial performance processing system operative with the animation processor to generate a three-dimensional digital model of an actor's face and overlay a virtual muscle structure onto the digital model. The virtual muscle structure includes plural muscle vectors that each respectively define a plurality of vertices along a surface of the digital model in a direction corresponding to actual facial muscles. The facial performance processing system is responsive to an input reflecting selective actuation of at least one of the plural muscle vectors to thereby reposition corresponding ones of the plurality of vertices and re-generate the digital model in a manner that simulates facial motion. The muscle vectors further include an origin point defining a rigid connection of the muscle vector with an underlying structure corresponding to actual cranial tissue, an insertion point defining a connection of the muscle vector with an overlying surface corresponding to actual skin, and interconnection points with other ones of the plural muscle vectors.

Owner:SONY CORP +1
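The muscle-vector idea in the abstract above can be illustrated with a minimal sketch. This is not the patented implementation: the `MuscleVector` class, the `actuate` function, the `radius` parameter, and the linear falloff are all assumptions chosen for illustration. The sketch only shows how actuating a vector with an origin point and an insertion point might reposition the vertices it governs.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MuscleVector:
    """Hypothetical muscle vector: names and fields are illustrative only."""
    origin: Vec3            # rigid attachment to the underlying structure
    insertion: Vec3         # attachment to the overlying skin surface
    vertex_ids: List[int]   # indices of the mesh vertices this muscle moves
    radius: float           # assumed influence radius around the insertion


def actuate(vertices: List[Vec3], muscle: MuscleVector, activation: float) -> List[Vec3]:
    """Return a copy of the mesh with the muscle's vertices pulled toward its origin."""
    pull = tuple(o - i for o, i in zip(muscle.origin, muscle.insertion))
    length = math.sqrt(sum(c * c for c in pull))
    moved = list(vertices)
    if length == 0.0 or muscle.radius <= 0.0:
        return moved  # degenerate muscle: nothing to do
    unit = tuple(c / length for c in pull)
    for vid in muscle.vertex_ids:
        v = vertices[vid]
        # Assumed linear falloff: full effect at the insertion, none at `radius`.
        weight = max(0.0, 1.0 - math.dist(v, muscle.insertion) / muscle.radius)
        step = activation * weight * length
        moved[vid] = tuple(v[k] + step * unit[k] for k in range(3))
    return moved
```

With an activation of 0.5, a vertex sitting exactly on the insertion point moves halfway toward the origin, while vertices not listed in `vertex_ids` stay where they are.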

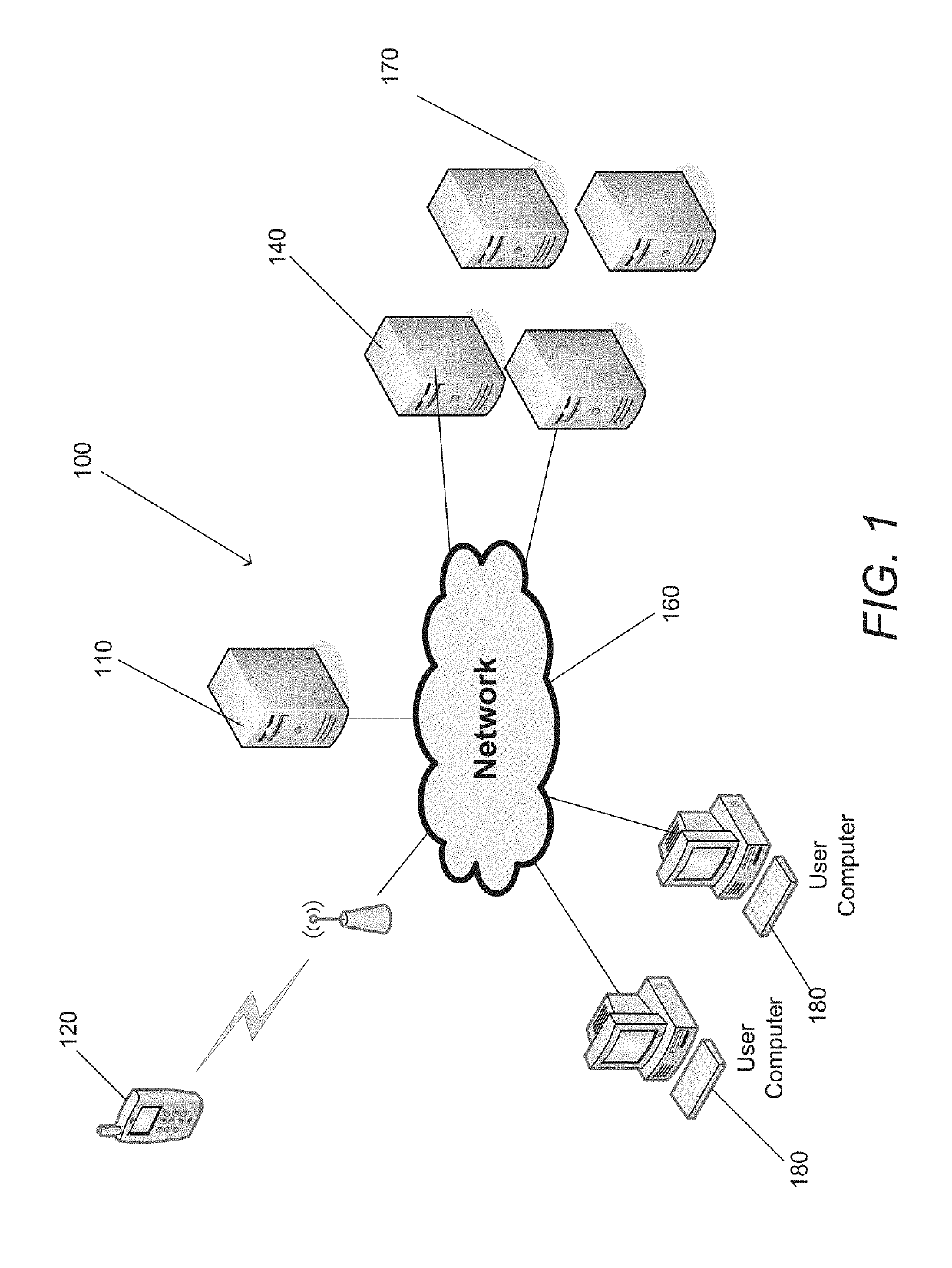

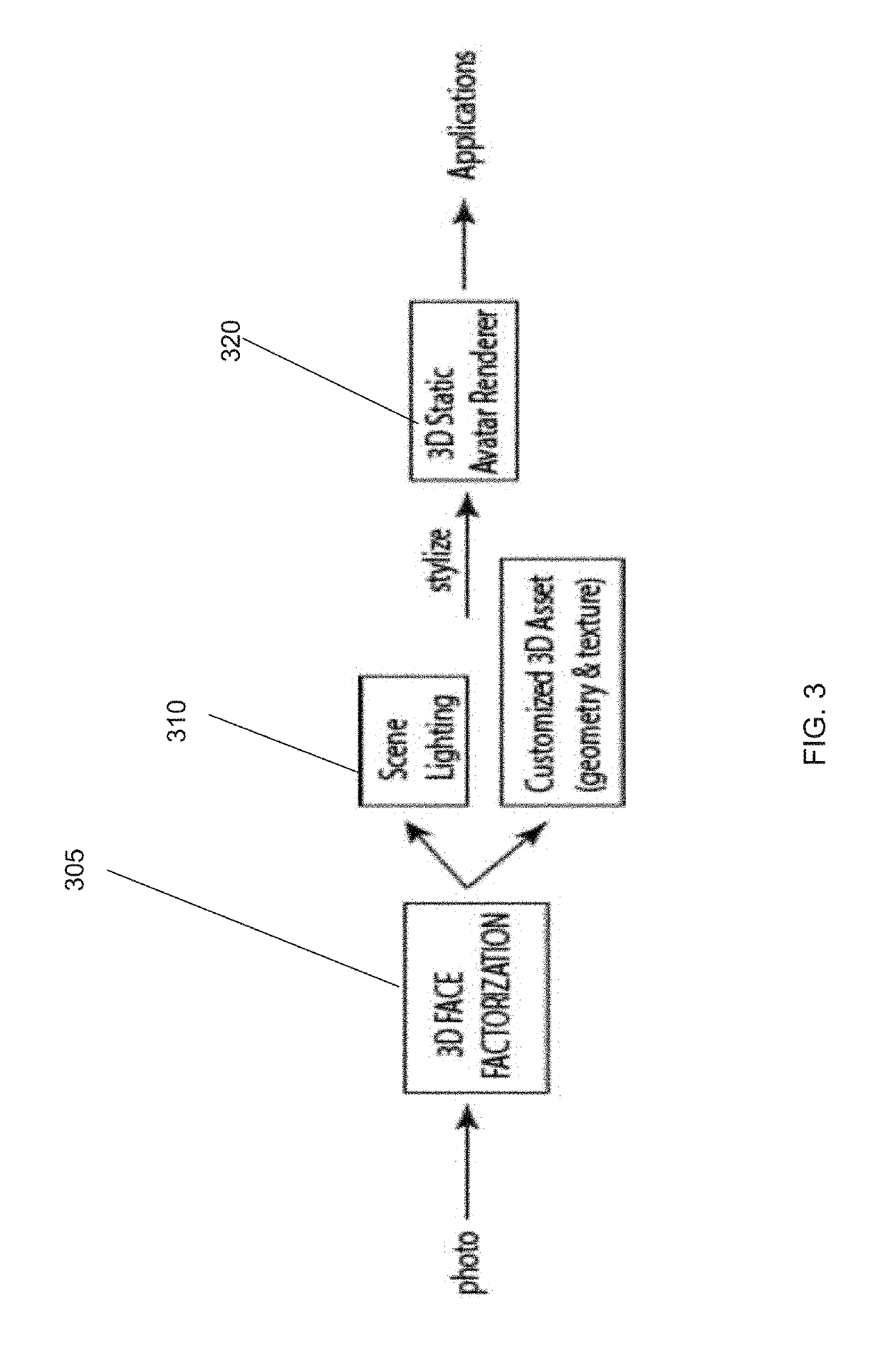

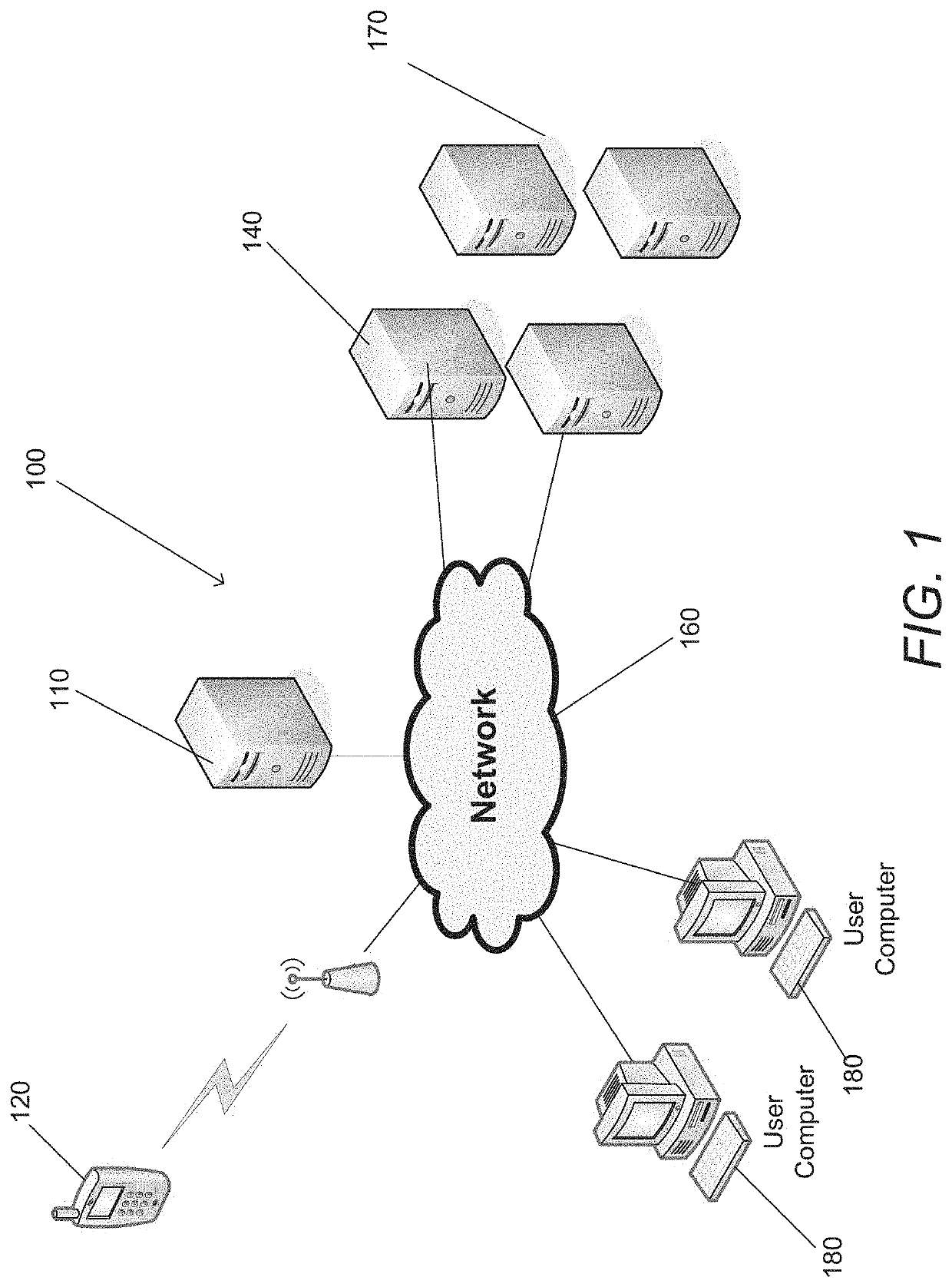

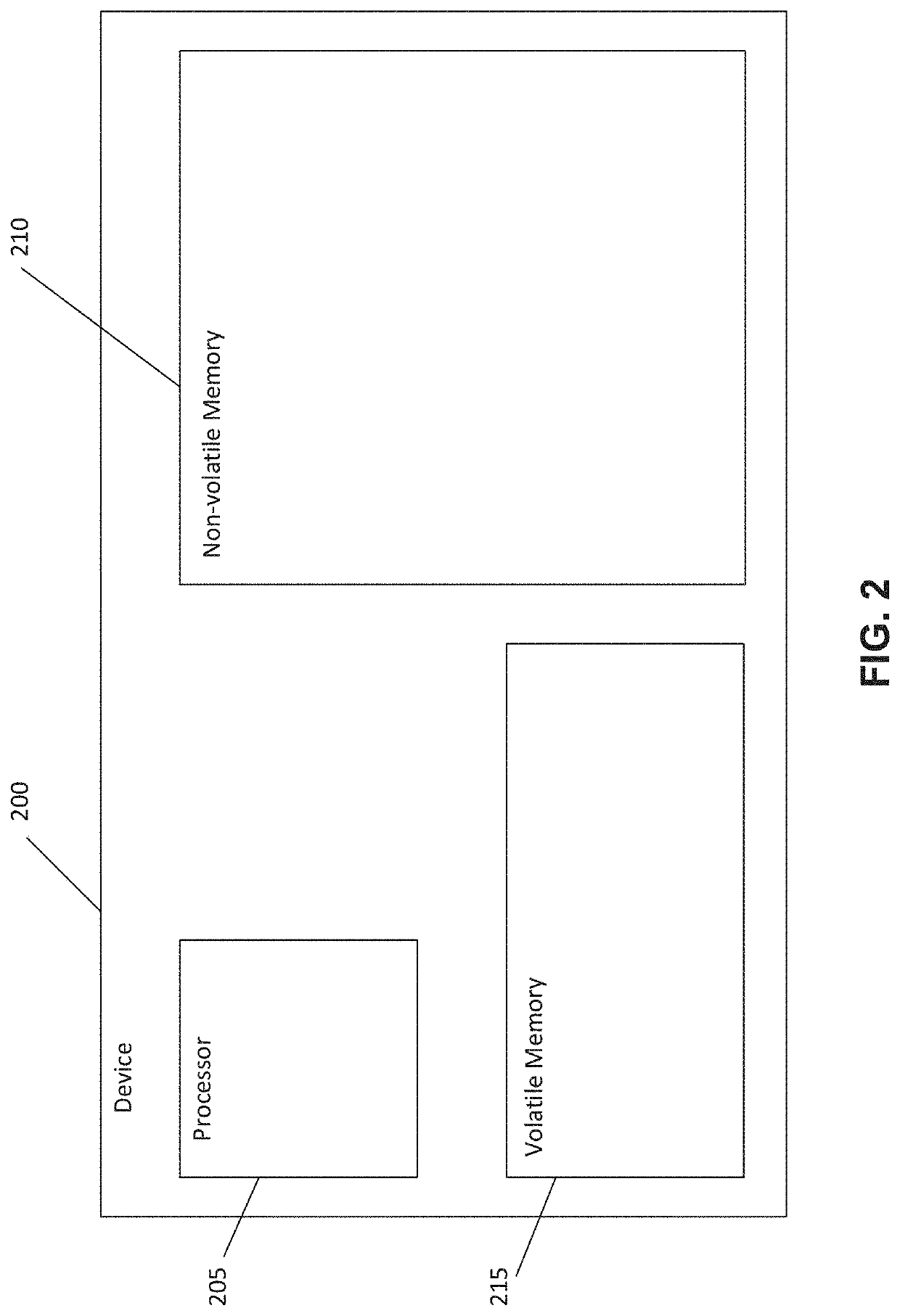

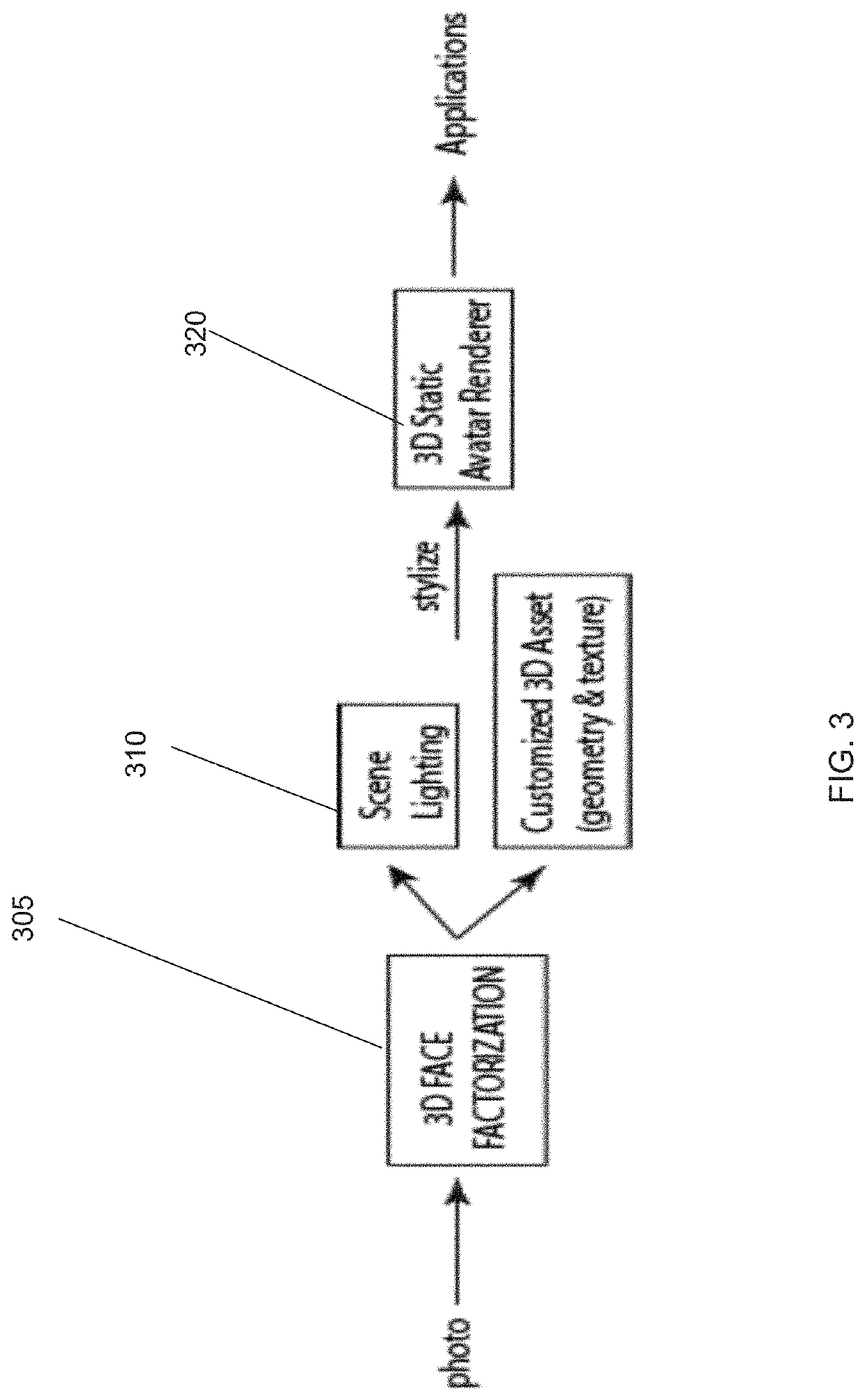

Systems and Methods for Generating Computer Ready Animation Models of a Human Head from Captured Data Images

Systems and methods for computer animation of 3D models of heads generated from images of faces are disclosed. A 2D captured image that includes an image of a face can be received and used to generate a static 3D model of a head. A rig can be fit to the static 3D model to generate an animation-ready 3D generative model. Sets of rig parameters can each map to particular sounds or particular facial movements observed in a video. These mappings can be used to generate a playlist of sets of rig parameters based upon received audio or video content. The playlist may be played in synchronization with an audio rendition of the audio content. Methods can receive a captured image, identify taxonomy attributes from the captured image, select a template model for the captured image, and perform a shape solve for the selected template model based on the identified taxonomy attributes.

Owner:LOOMAI INC

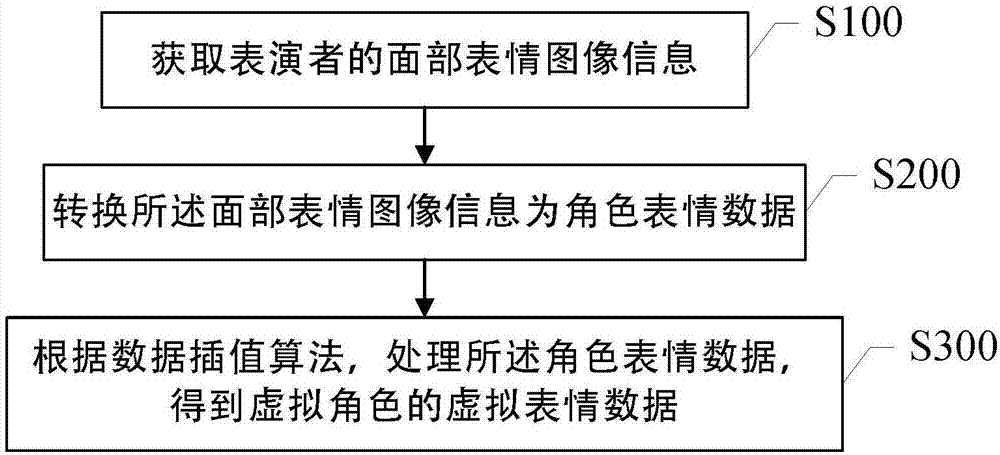

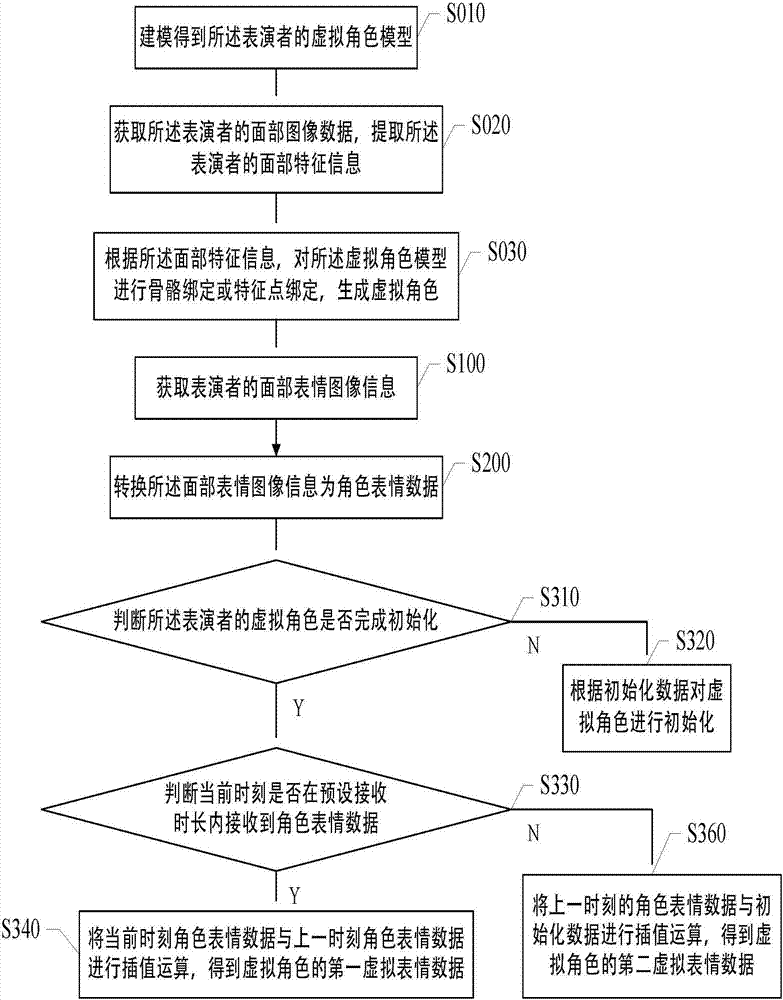

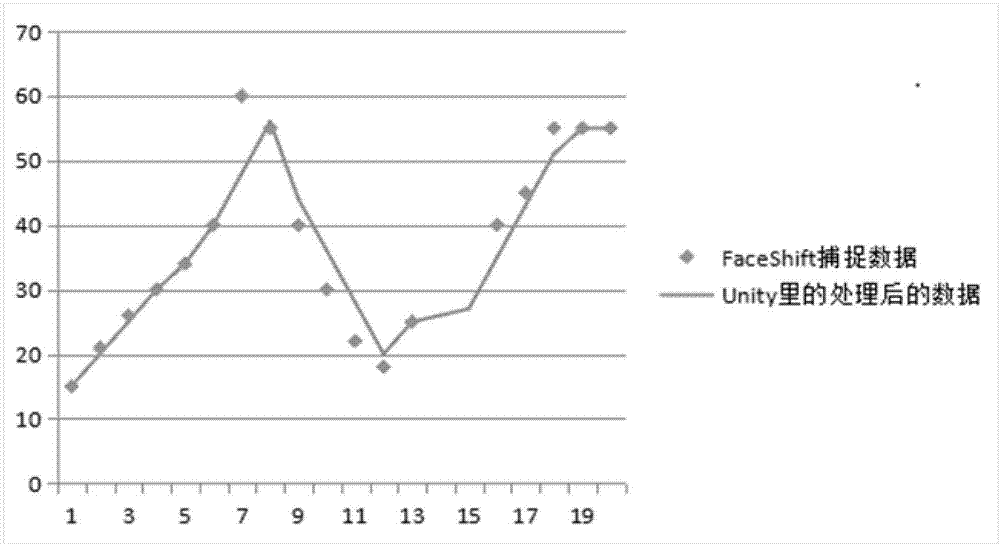

Data processing method and system based on virtual character

The invention discloses a data processing method and system based on a virtual character. The method comprises the following steps: S100, acquiring facial expression image information of a performer; S200, converting the facial expression image information into character expression data; and S300, processing the character expression data according to a data interpolation algorithm to obtain virtual expression data of a virtual character. The facial movements of a human face can be transferred to and demonstrated by any virtual character chosen by the user. The performer's current facial expression changes can be reflected in real time through the virtual character. A more vivid virtual character can be created, interest is added, and the user experience is enhanced. Moreover, when an identification fault occurs, the action of the character can be stably held within an interval, which makes the virtual character demonstration more vivid and natural.

Owner:上海微漫网络科技有限公司
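Step S300 and the "controlled within an interval" behaviour described above can be sketched as follows. The abstract does not say which interpolation algorithm is used, so linear interpolation is an assumption here, as are the function names, the channel names, and the `[0, 1]` interval.

```python
from typing import Dict


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b (assumed; the abstract names no algorithm)."""
    return a + (b - a) * t


def next_expression(previous: Dict[str, float], target: Dict[str, float],
                    t: float, lo: float = 0.0, hi: float = 1.0) -> Dict[str, float]:
    """Move each expression channel toward its target and clamp it to [lo, hi].

    Clamping is one simple way to keep the character stable when the
    recognizer reports an implausible value (an identification fault).
    """
    result = {}
    for name, prev in previous.items():
        value = lerp(prev, target.get(name, prev), t)
        result[name] = min(hi, max(lo, value))
    return result
```

For example, if the recognizer wrongly reports `smile = 3.0`, the interpolated value is clamped to the top of the interval rather than distorting the character, and channels missing from the target keep their previous value.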

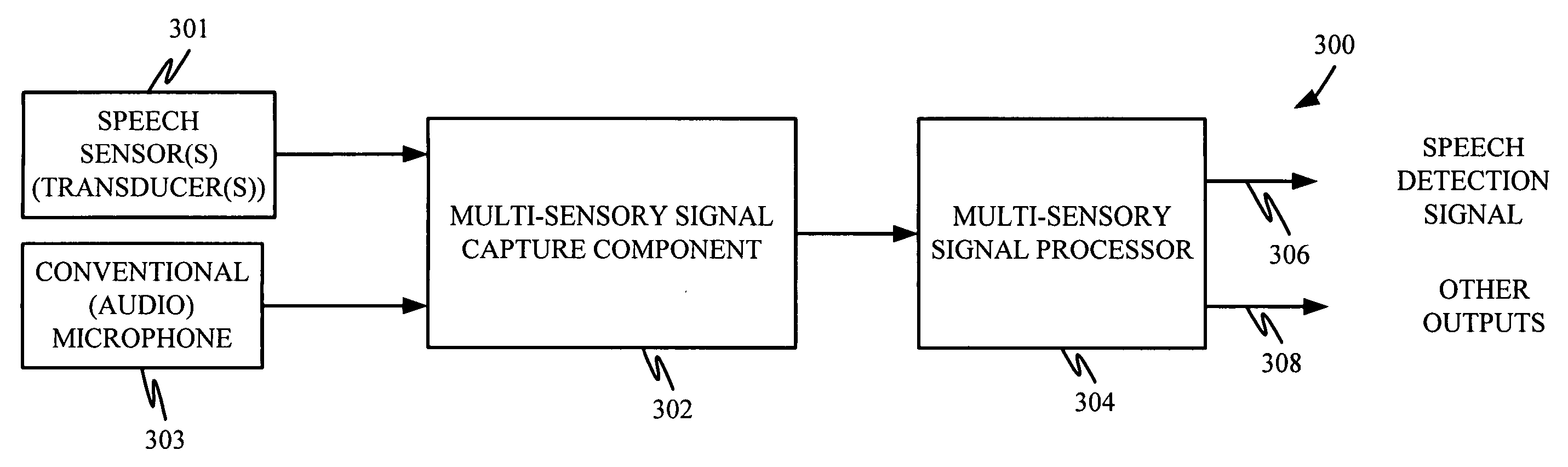

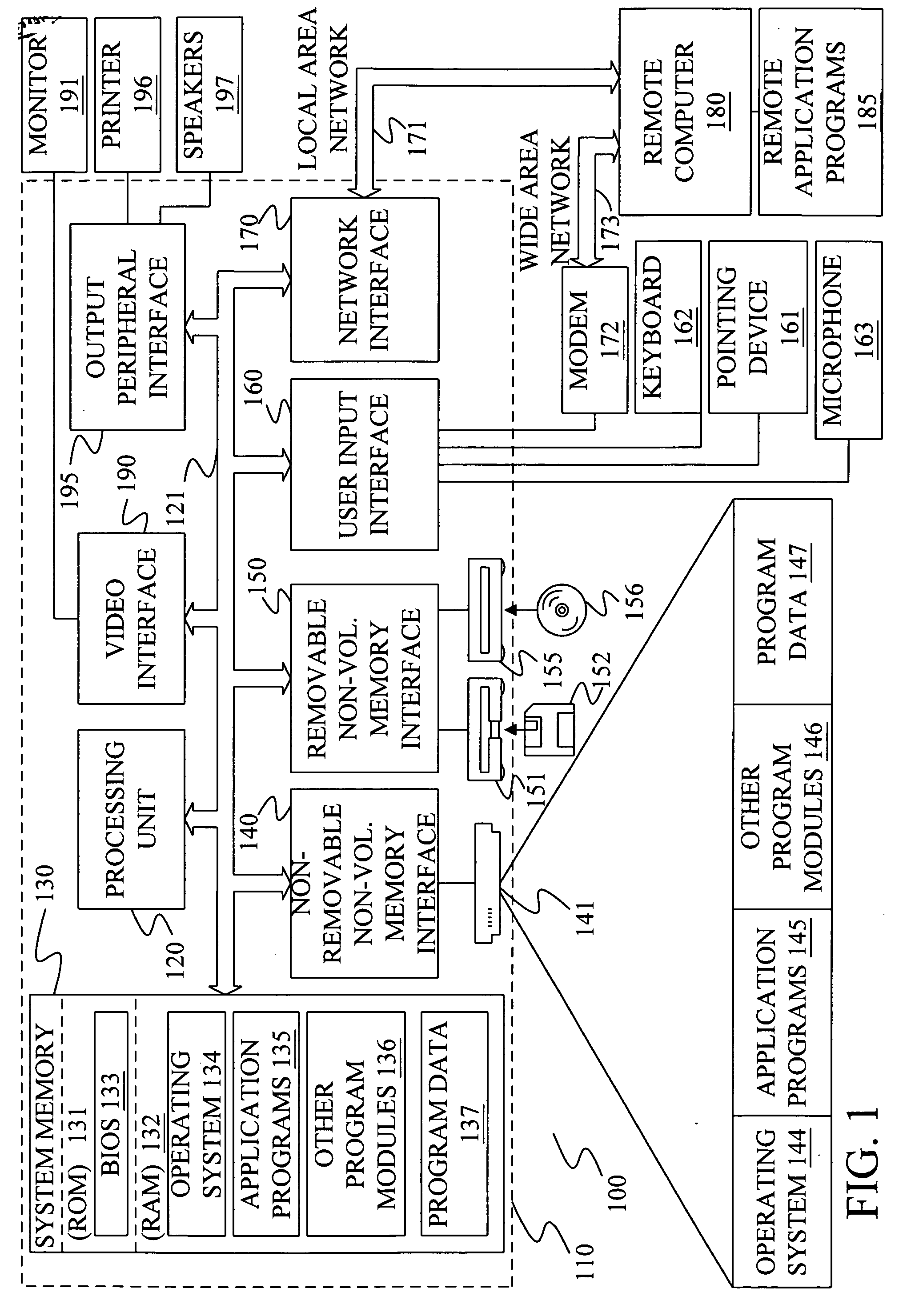

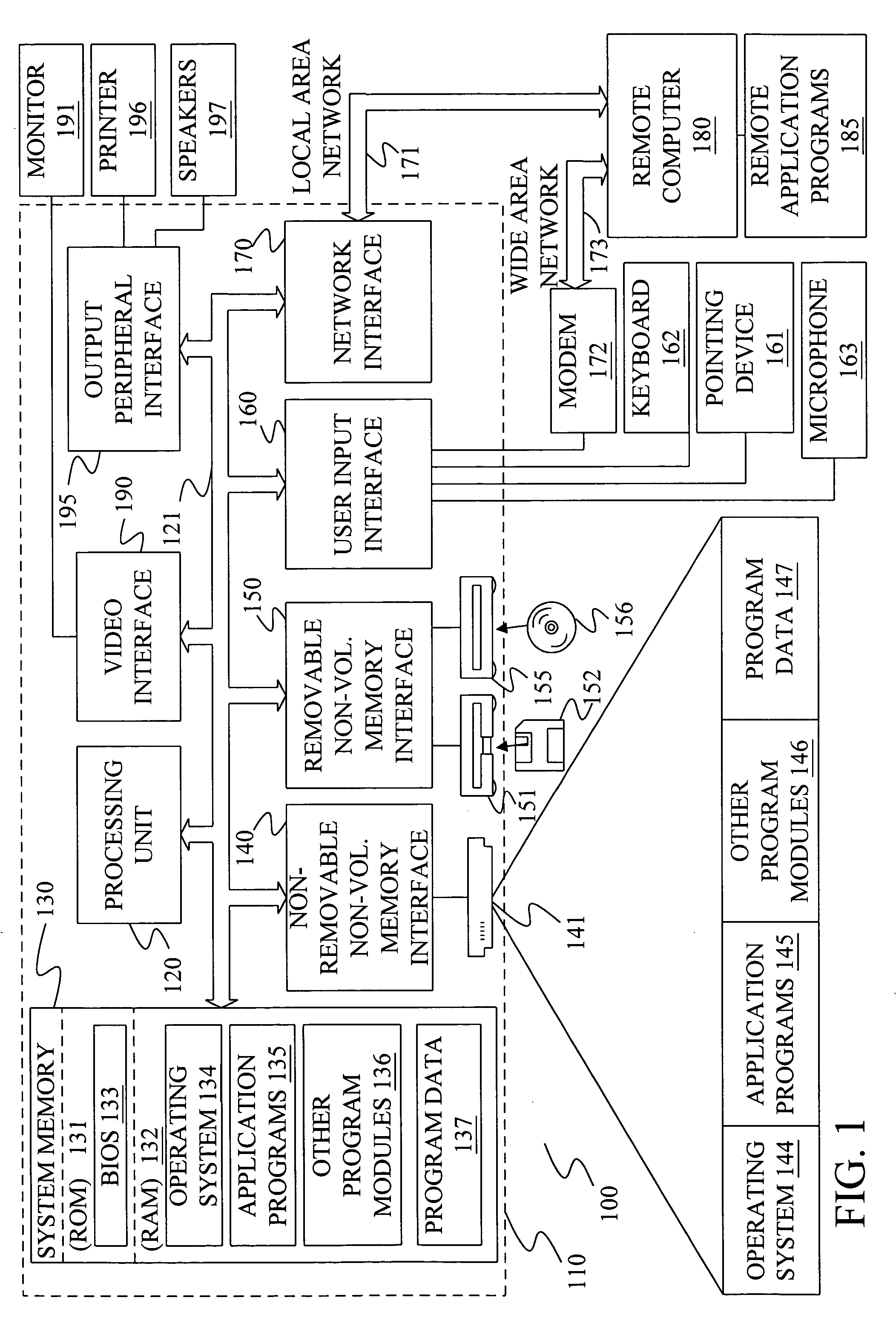

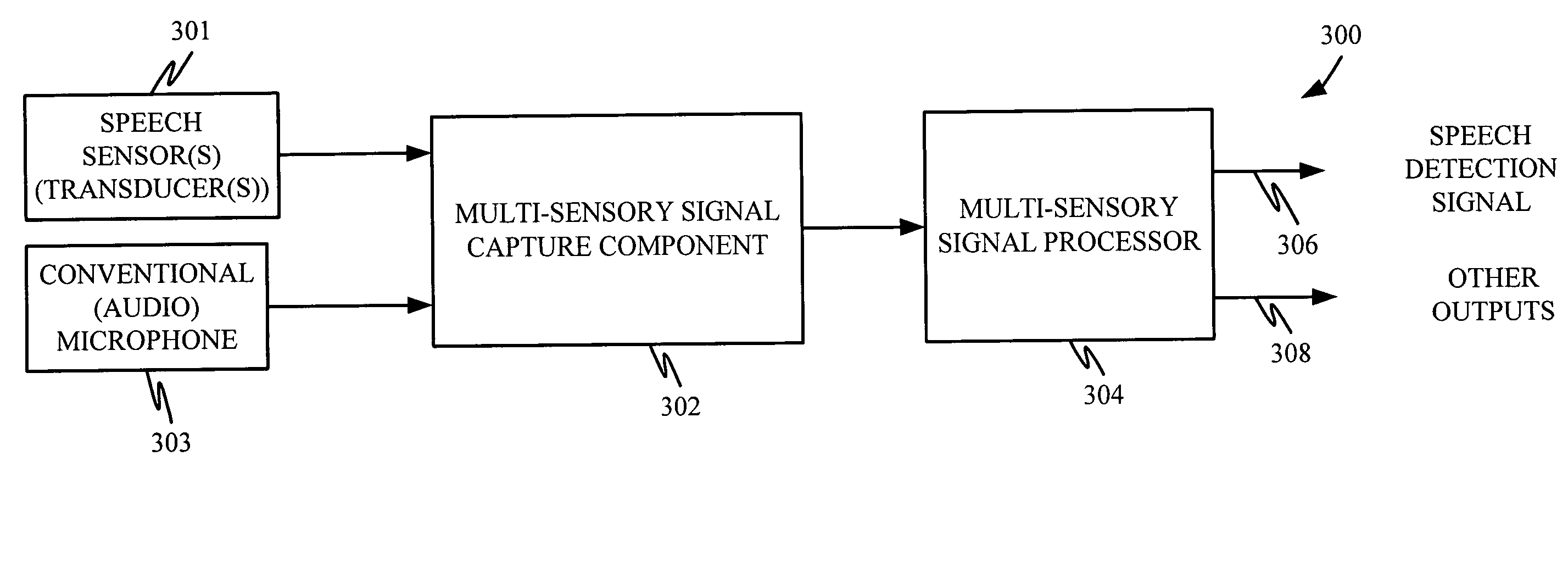

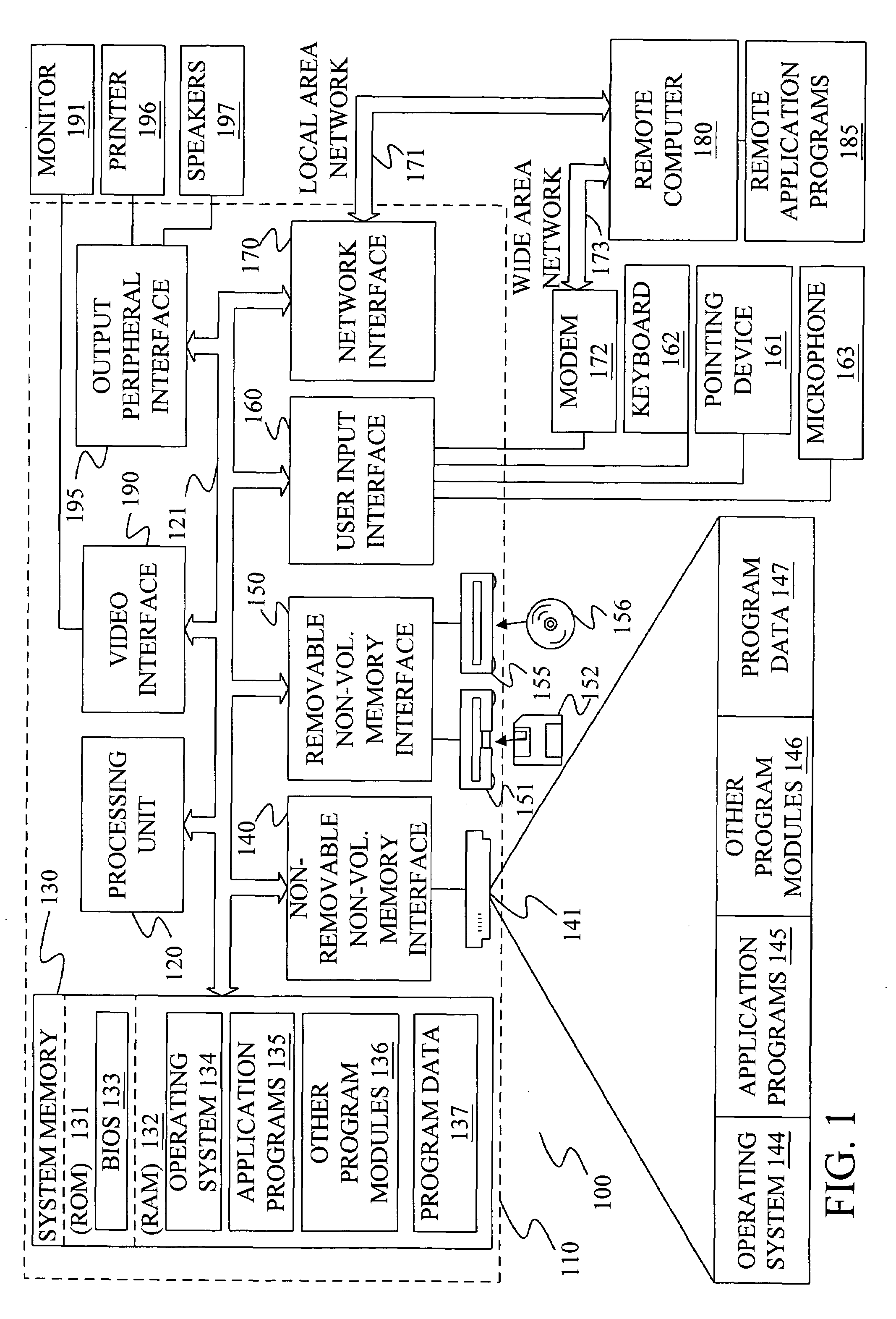

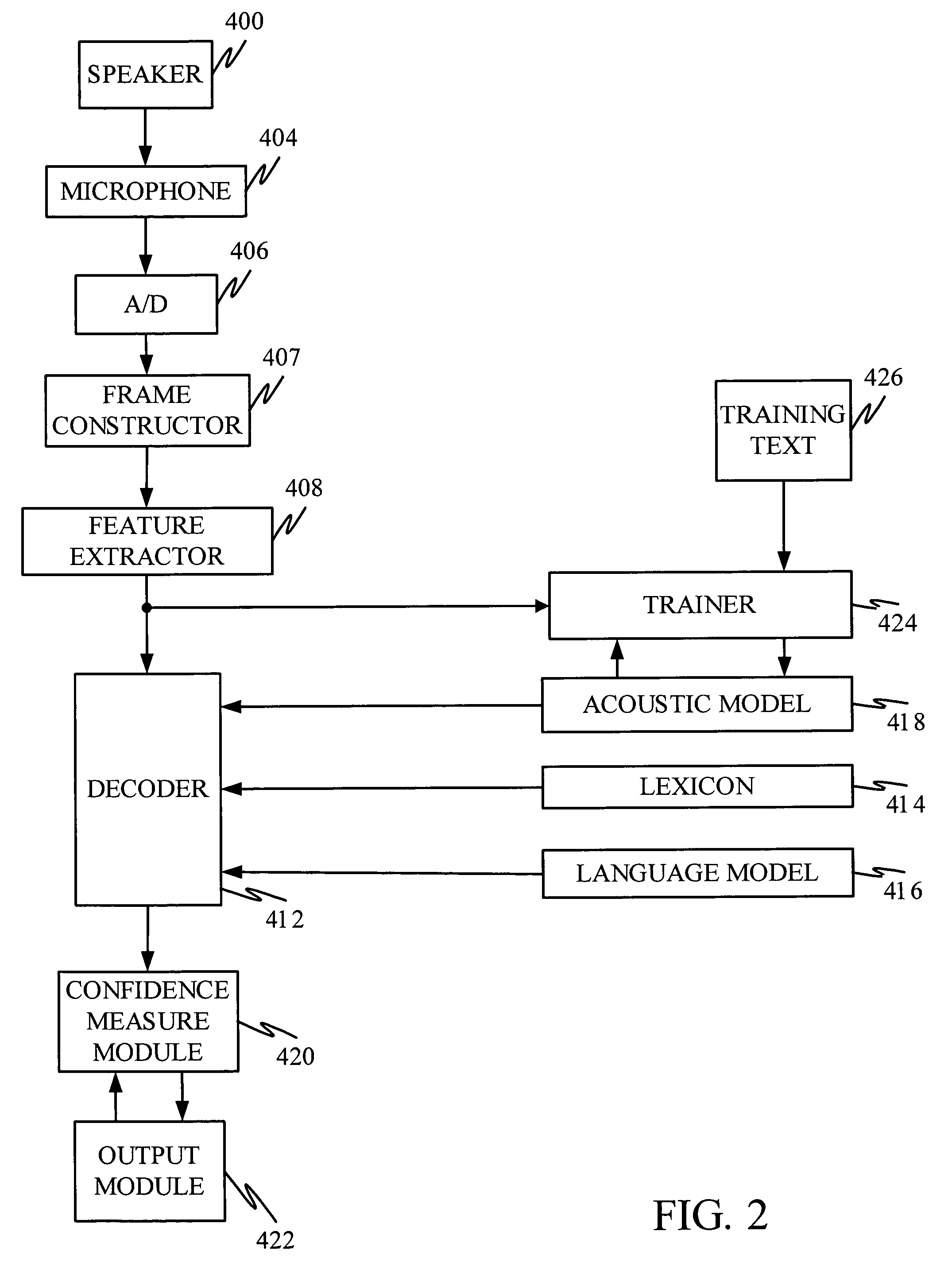

Head mounted multi-sensory audio input system

Inactive | US20050033571A1 | Headphones for stereophonic communication | Bone conduction transducer hearing devices | Throat | Facial movement

The present invention combines a conventional audio microphone with an additional speech sensor that provides a speech sensor signal based on an input. The speech sensor signal is generated based on an action undertaken by a speaker during speech, such as facial movement, bone vibration, throat vibration, throat impedance changes, etc. A speech detector component receives an input from the speech sensor and outputs a speech detection signal indicative of whether a user is speaking. The speech detector generates the speech detection signal based on the microphone signal and the speech sensor signal.

Owner:MICROSOFT TECH LICENSING LLC
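As a rough illustration of a detector that uses both the microphone signal and the speech sensor signal, the sketch below requires energy on both channels before reporting speech. The energy measure, the thresholds, and the function names are assumptions for illustration and are not taken from the patent.

```python
from typing import Sequence


def frame_energy(samples: Sequence[float]) -> float:
    """Mean squared amplitude of one frame (0.0 for an empty frame)."""
    if not samples:
        return 0.0
    return sum(s * s for s in samples) / len(samples)


def is_speaking(mic_frame: Sequence[float], sensor_frame: Sequence[float],
                mic_threshold: float = 0.01, sensor_threshold: float = 0.01) -> bool:
    """Report speech only when both the microphone and the speech sensor are active.

    The thresholds are placeholder values; a real system would calibrate them.
    """
    return (frame_energy(mic_frame) > mic_threshold
            and frame_energy(sensor_frame) > sensor_threshold)
```

Under this assumed rule, loud background noise that reaches the microphone but produces no bone or throat vibration is not counted as the user speaking.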

Systems and methods for generating computer ready animation models of a human head from captured data images

Systems and methods for computer animation of 3D models of heads generated from images of faces are disclosed. A 2D captured image that includes an image of a face can be received and used to generate a static 3D model of a head. A rig can be fit to the static 3D model to generate an animation-ready 3D generative model. Sets of rig parameters can each map to particular sounds or particular facial movements observed in a video. These mappings can be used to generate a playlist of sets of rig parameters based upon received audio or video content. The playlist may be played in synchronization with an audio rendition of the audio content. Methods can receive a captured image, identify taxonomy attributes from the captured image, select a template model for the captured image, and perform a shape solve for the selected template model based on the identified taxonomy attributes.

Owner:LOOMAI INC

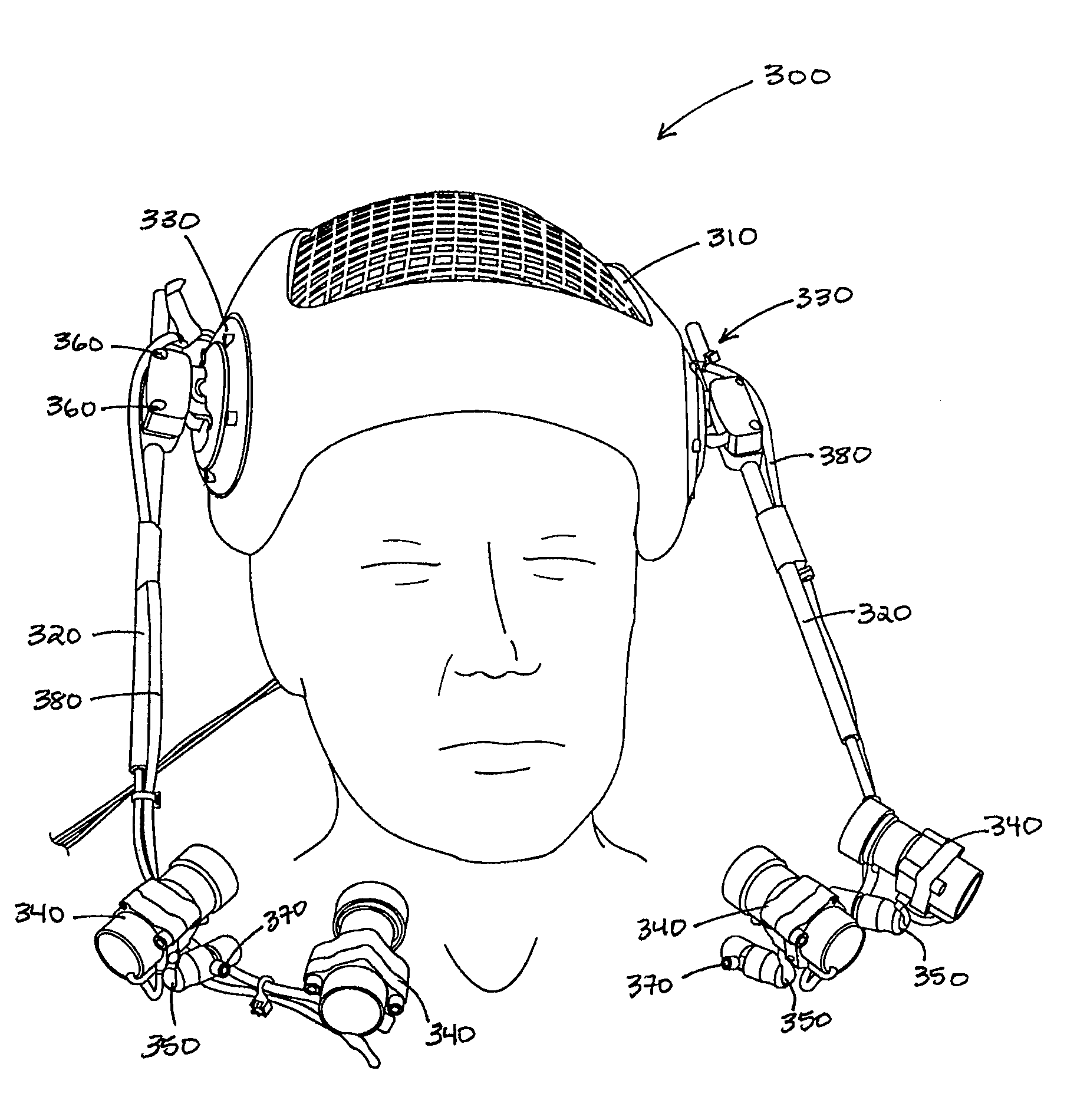

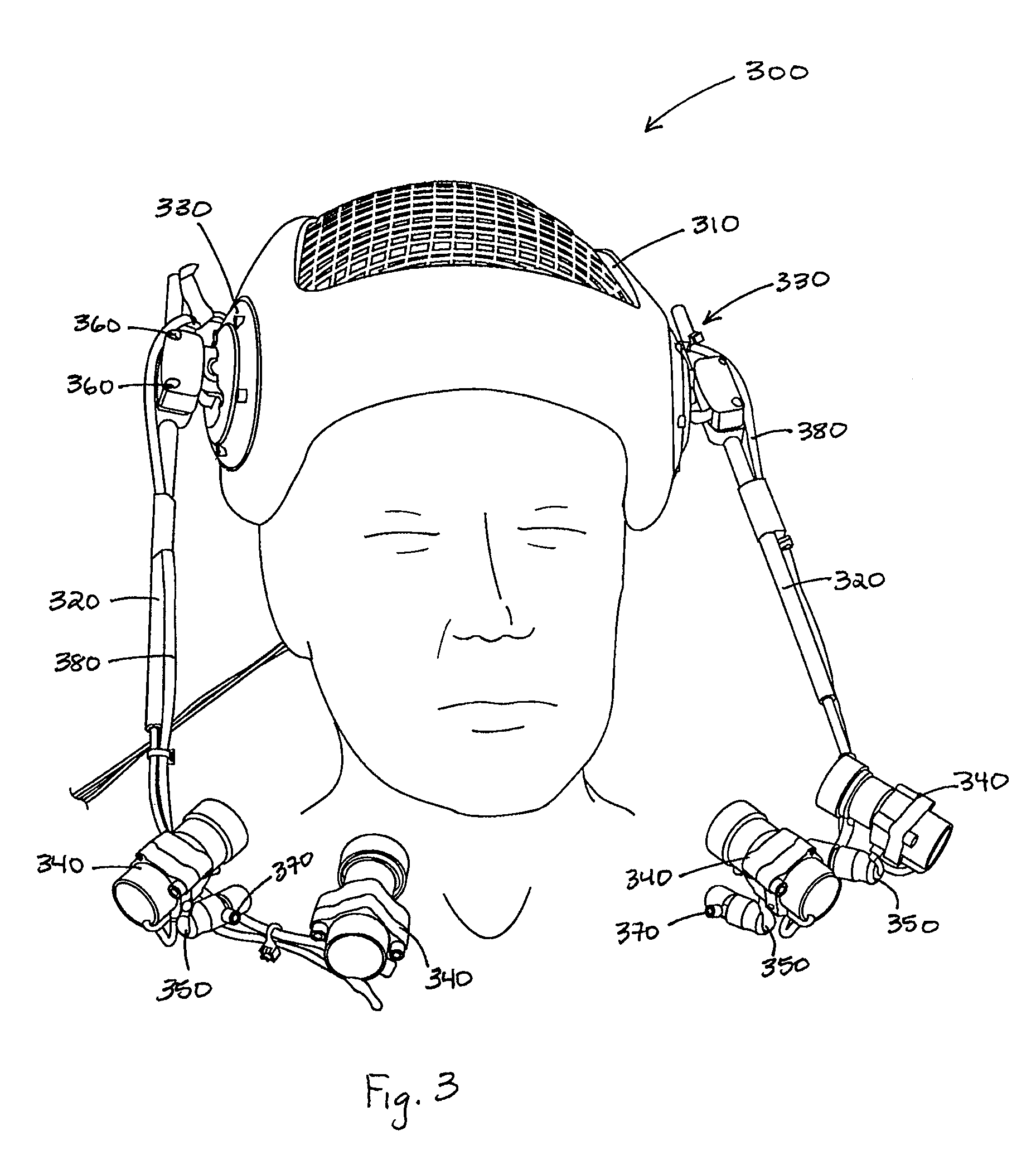

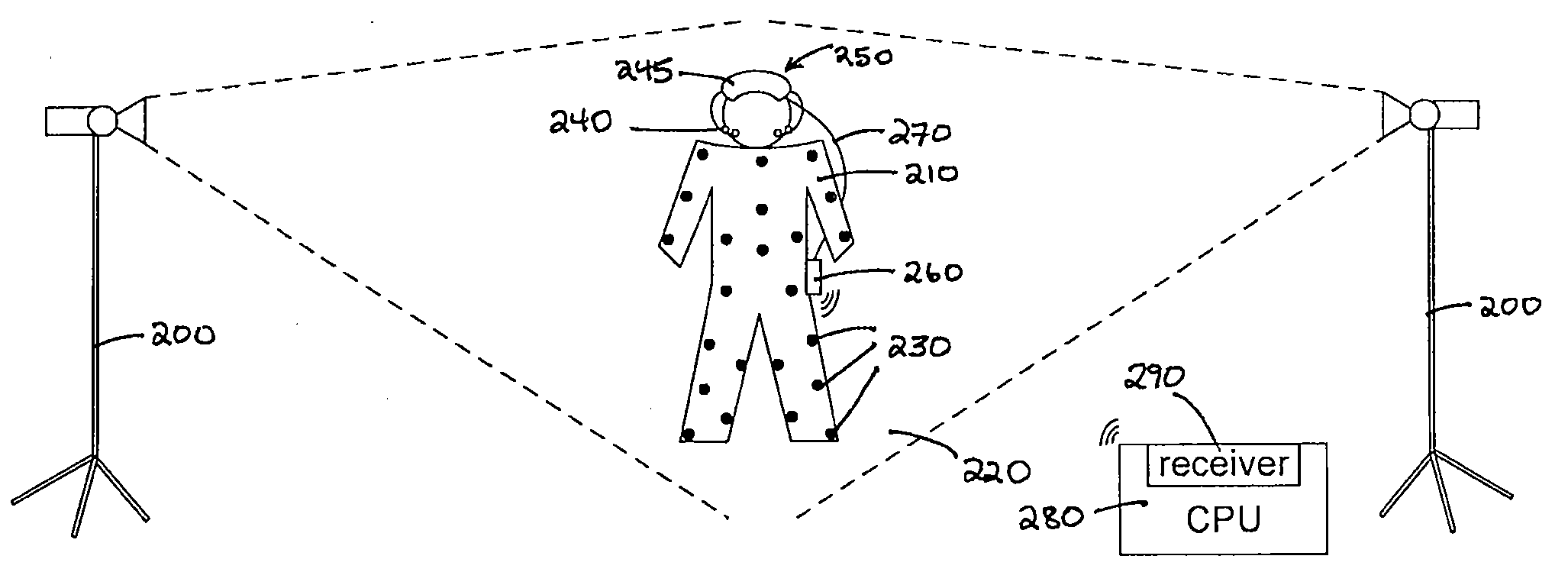

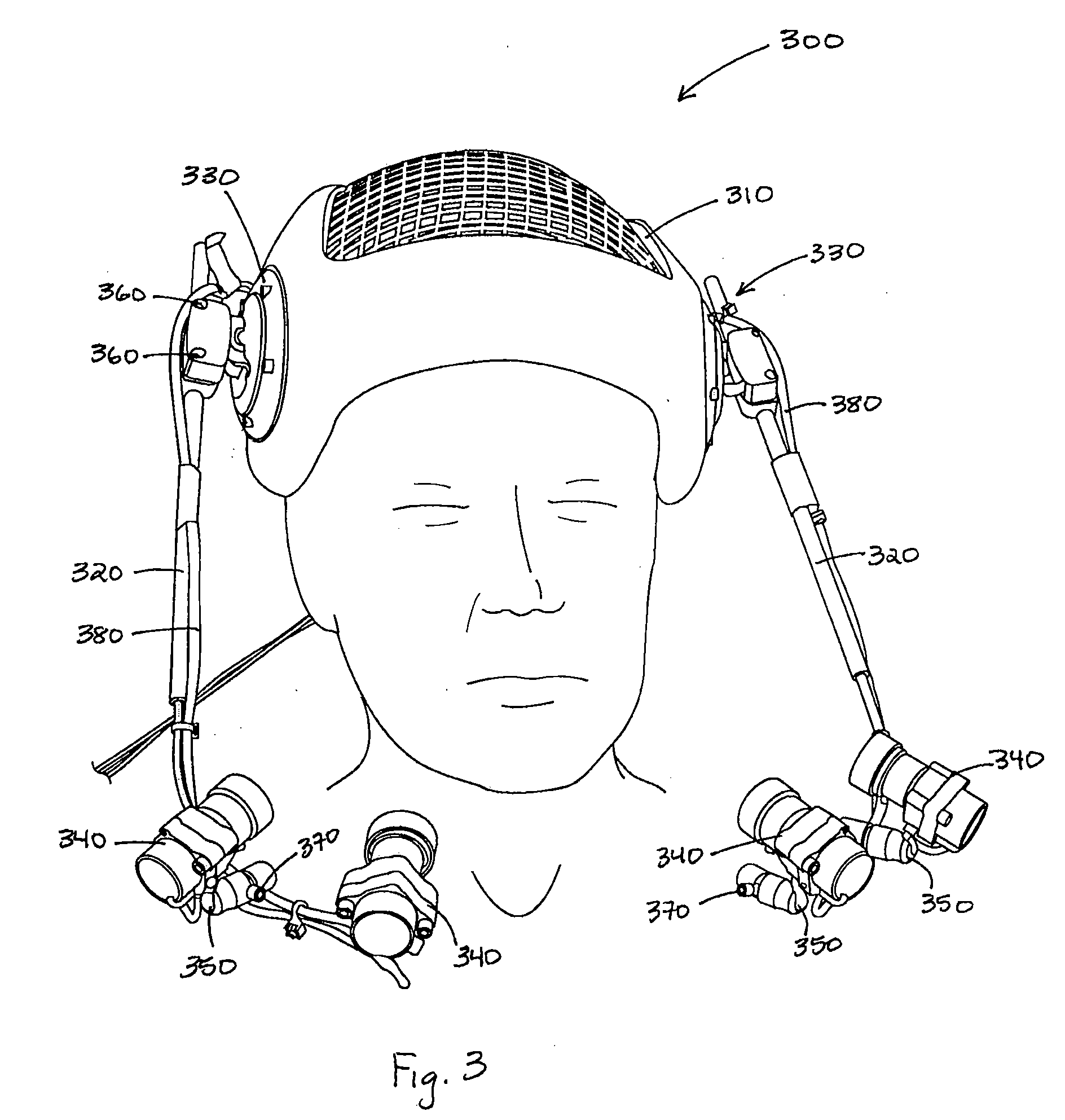

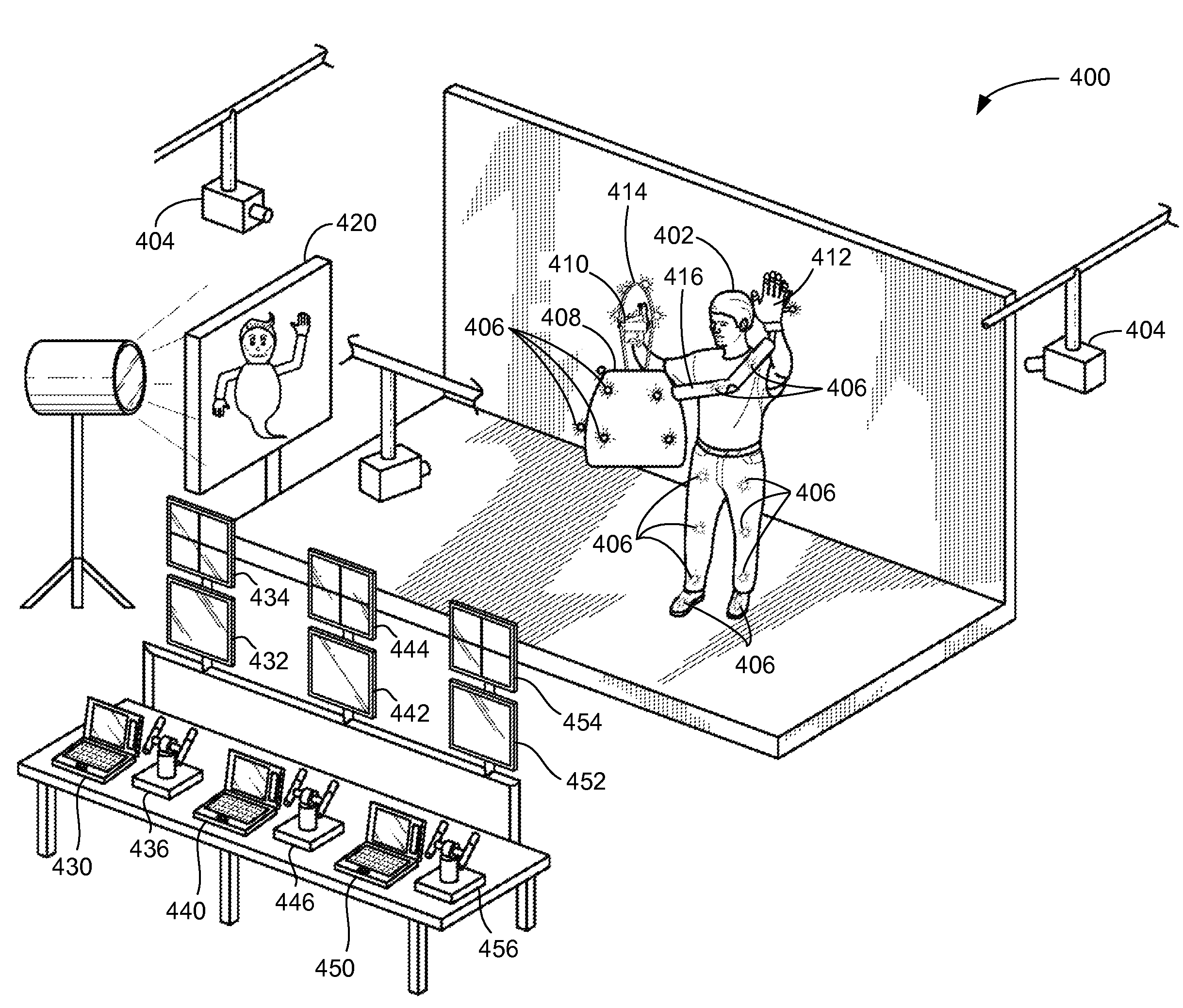

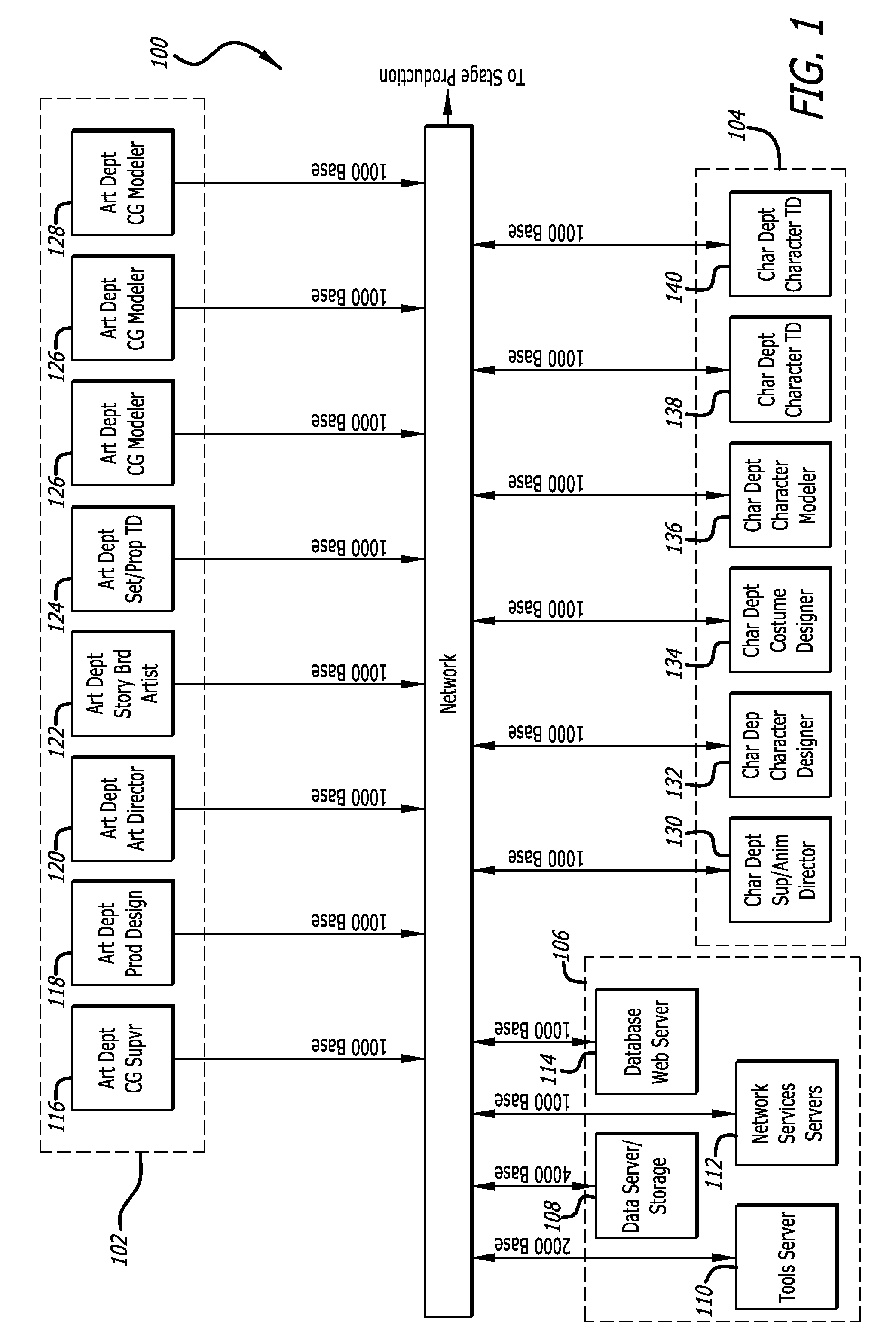

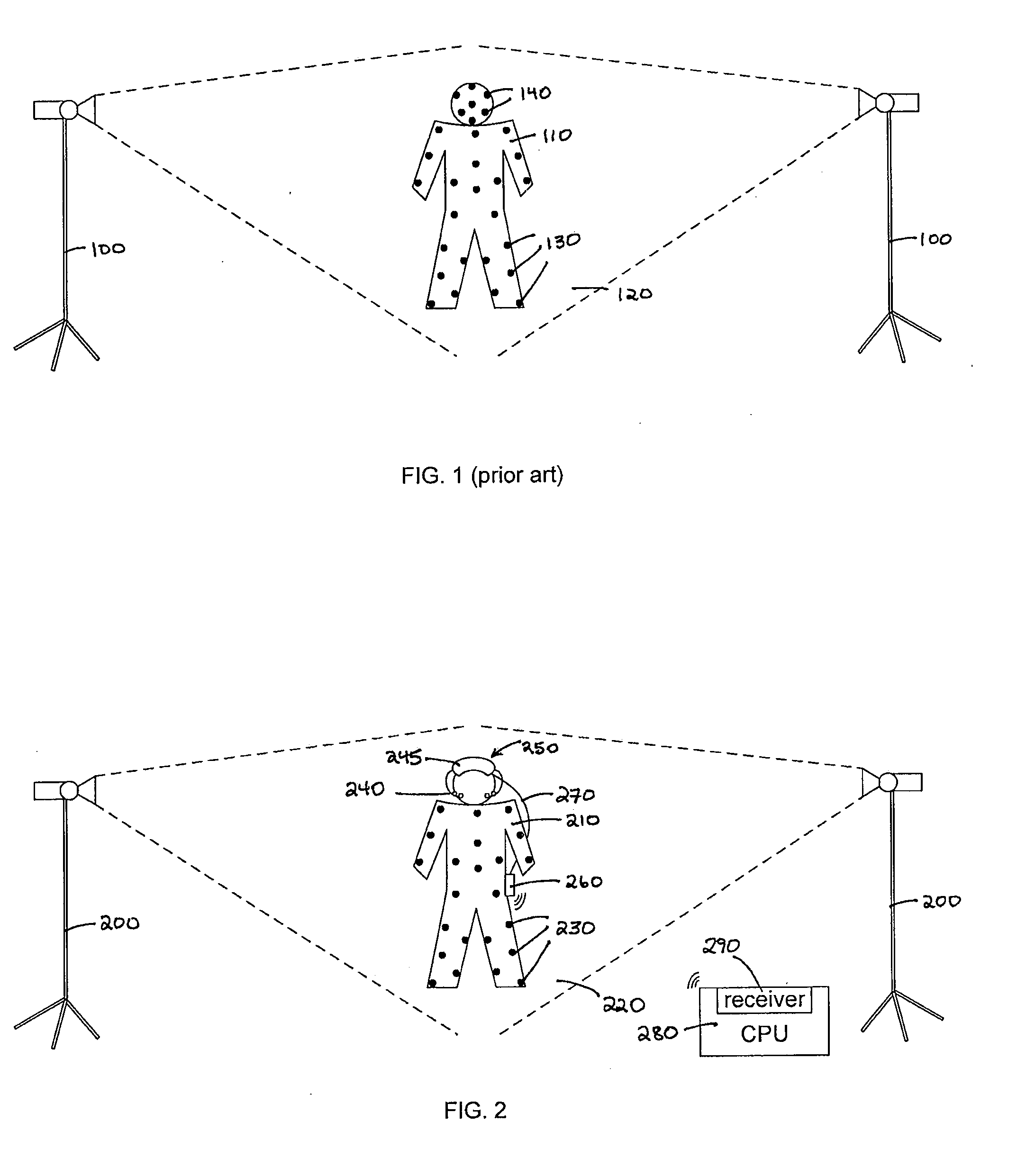

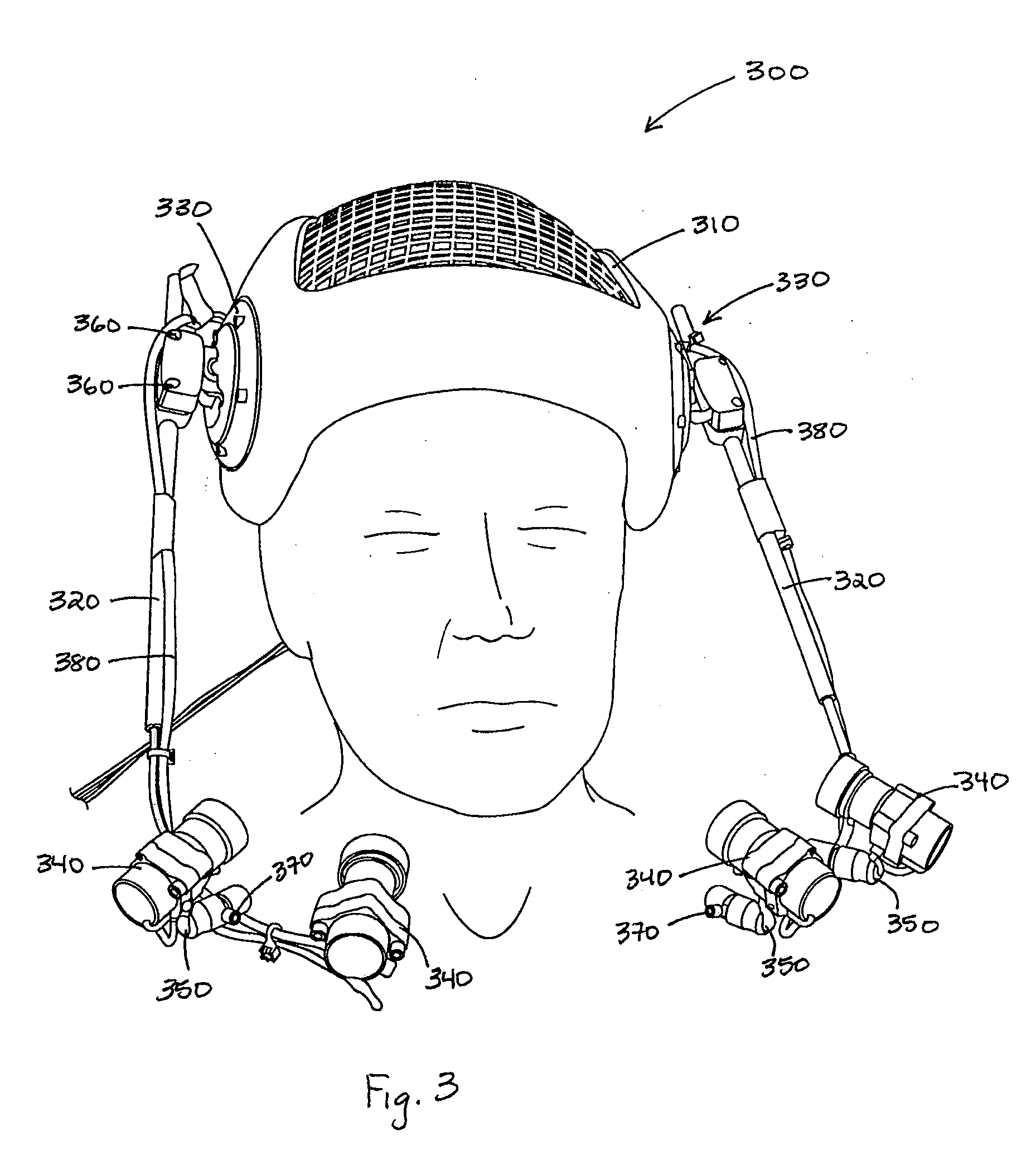

Mounting and bracket for an actor-mounted motion capture camera system

The present invention relates to computer capture of object motion. More specifically, embodiments of the present invention relate to capturing of facial movement or performance of an actor. Embodiments of the present invention provide a mounting bracket for a head-mounted motion capture camera system. In many embodiments, the mounting bracket includes a helmet that is positioned on the actor's head and mounting rods on either side of the helmet that project toward the front of the actor that may be flexibly repositioned. Cameras positioned on the mounting rods capture images of the actor's face.

Owner:TWO PIC MC

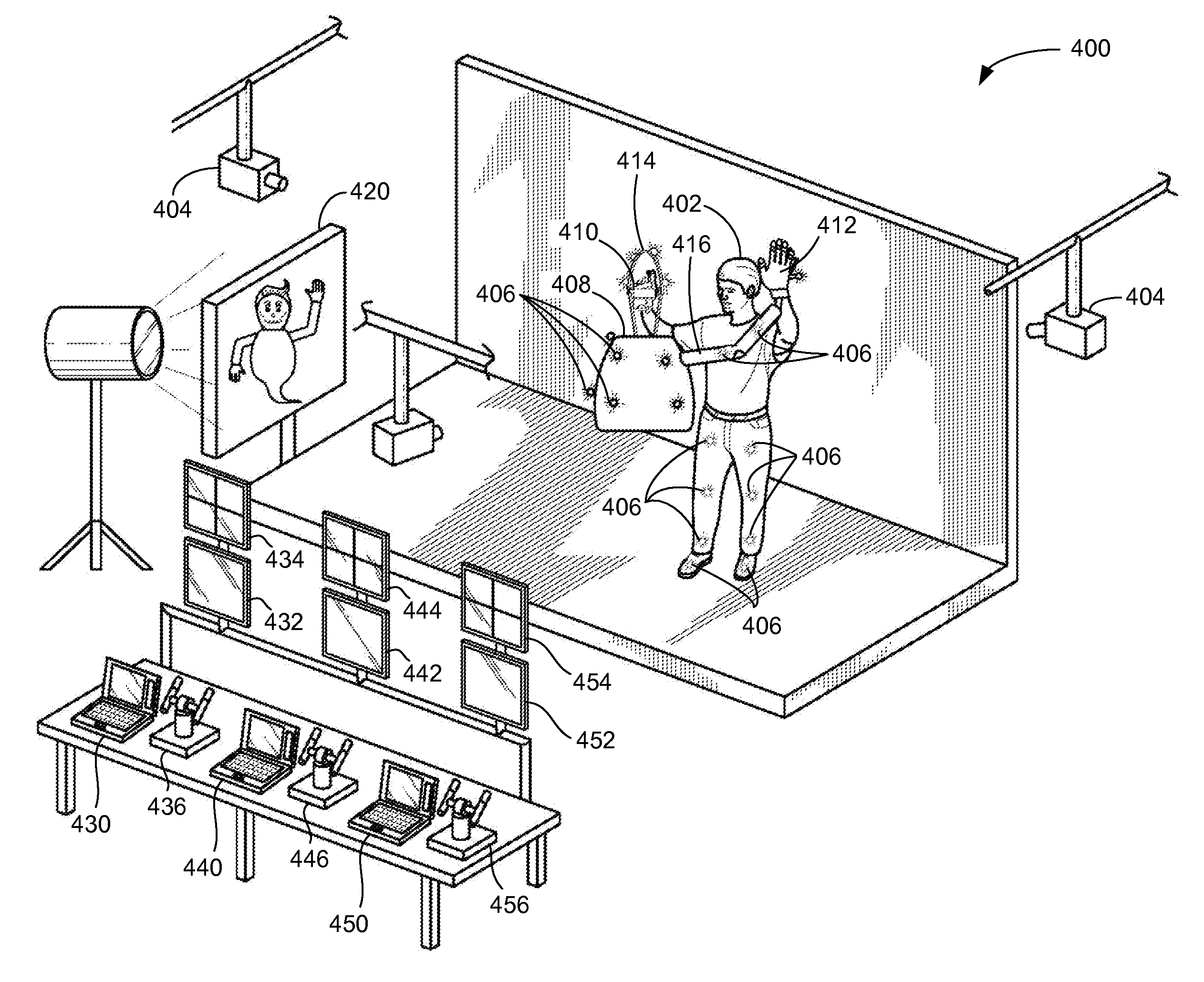

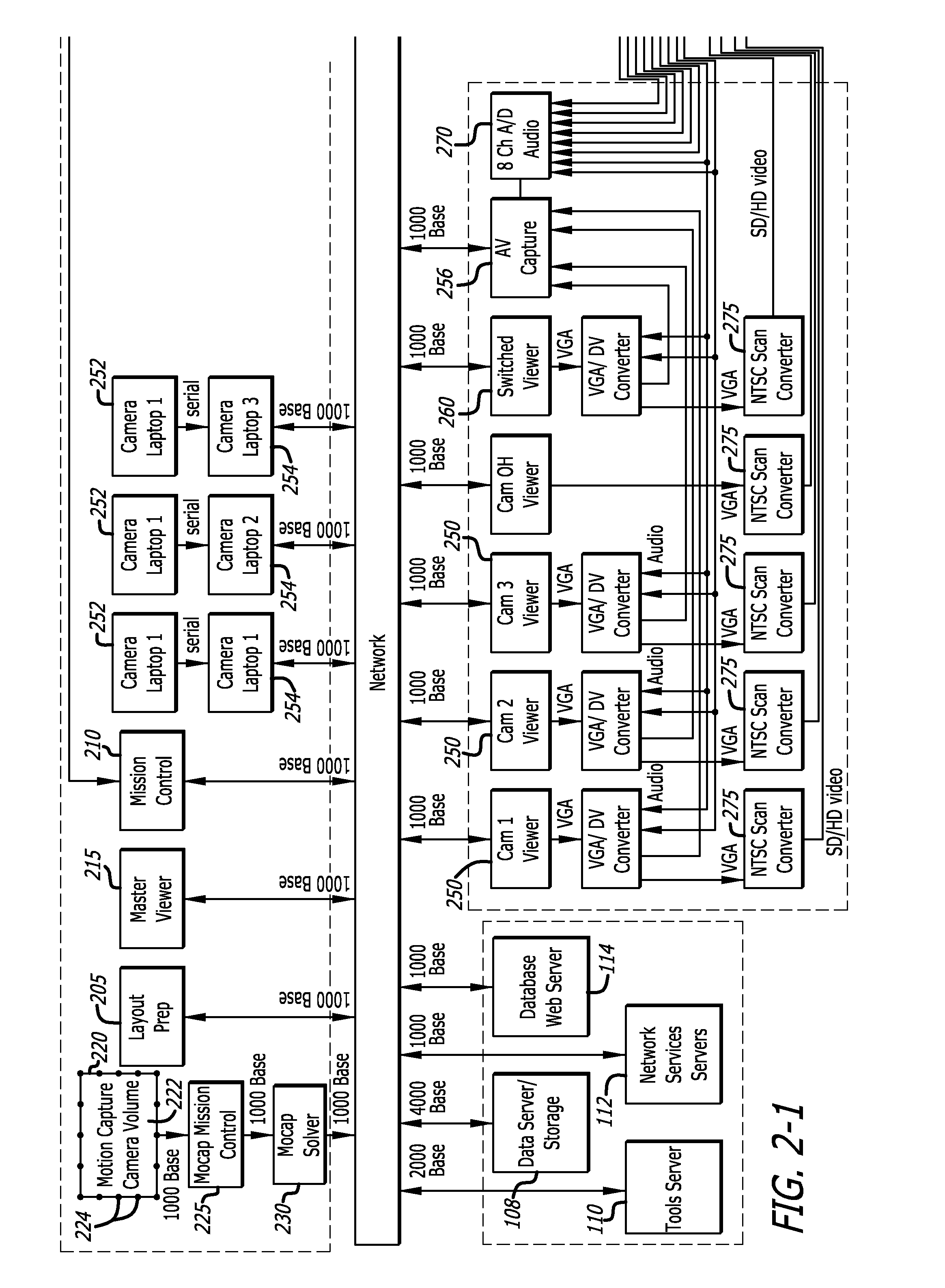

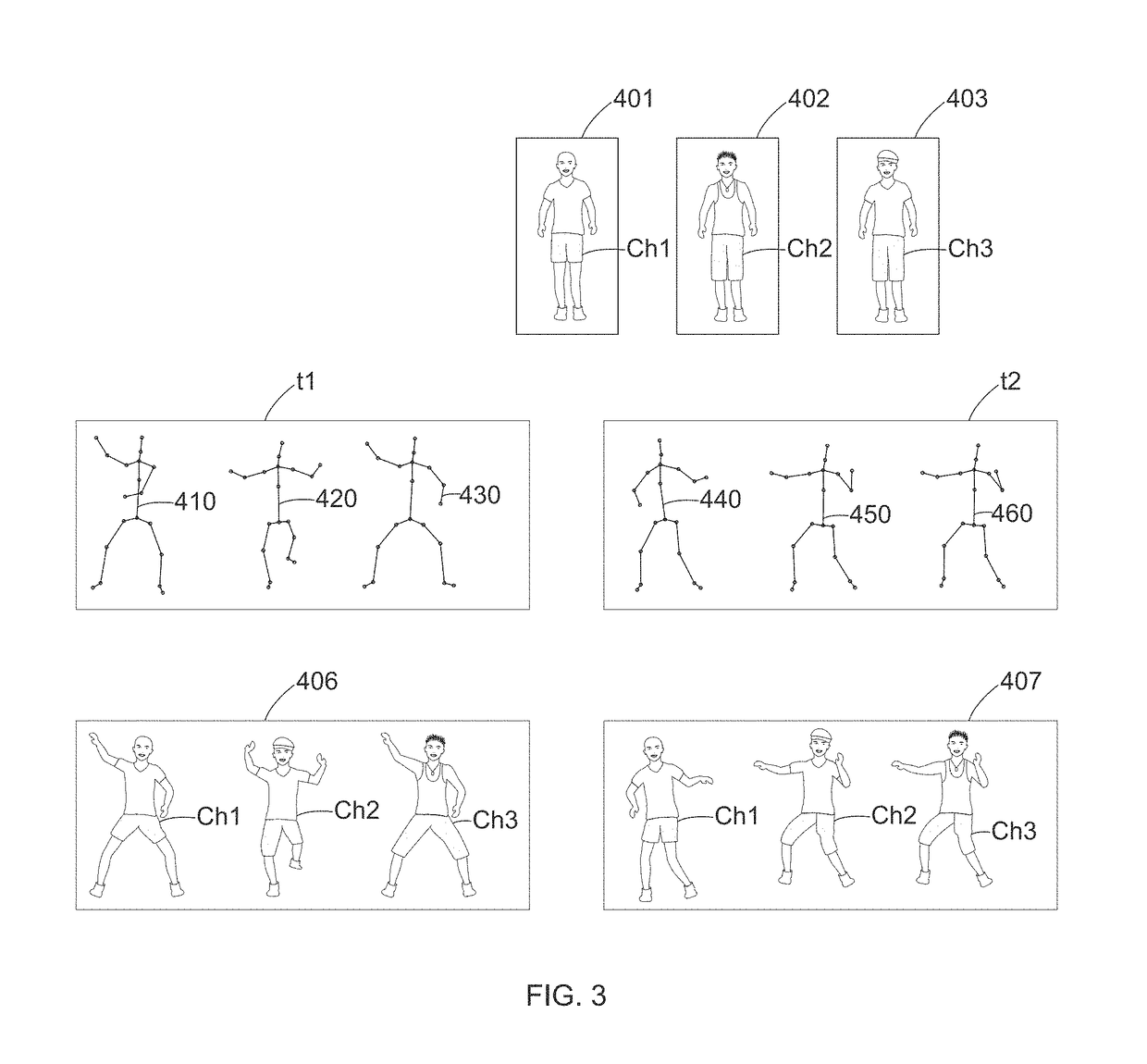

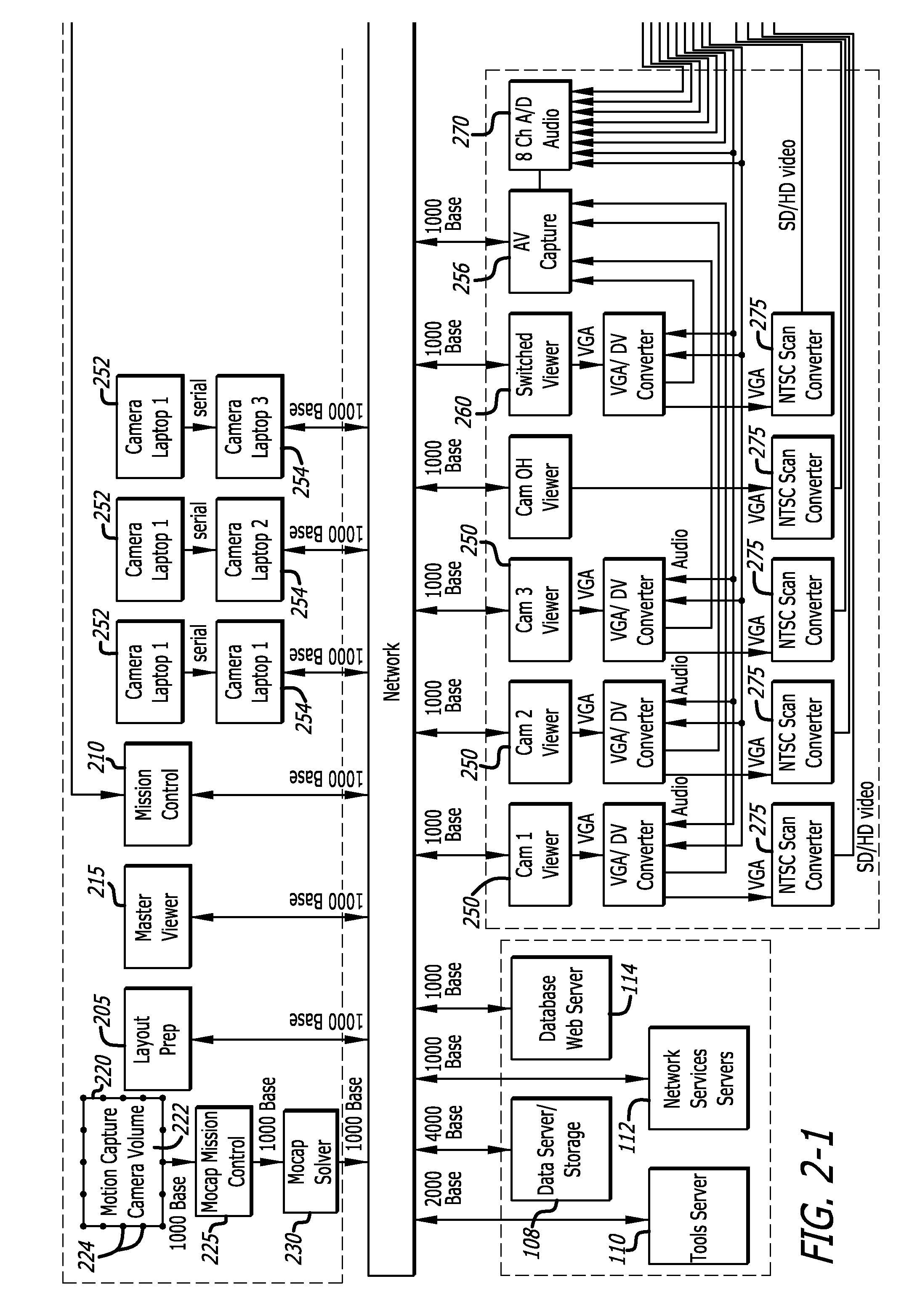

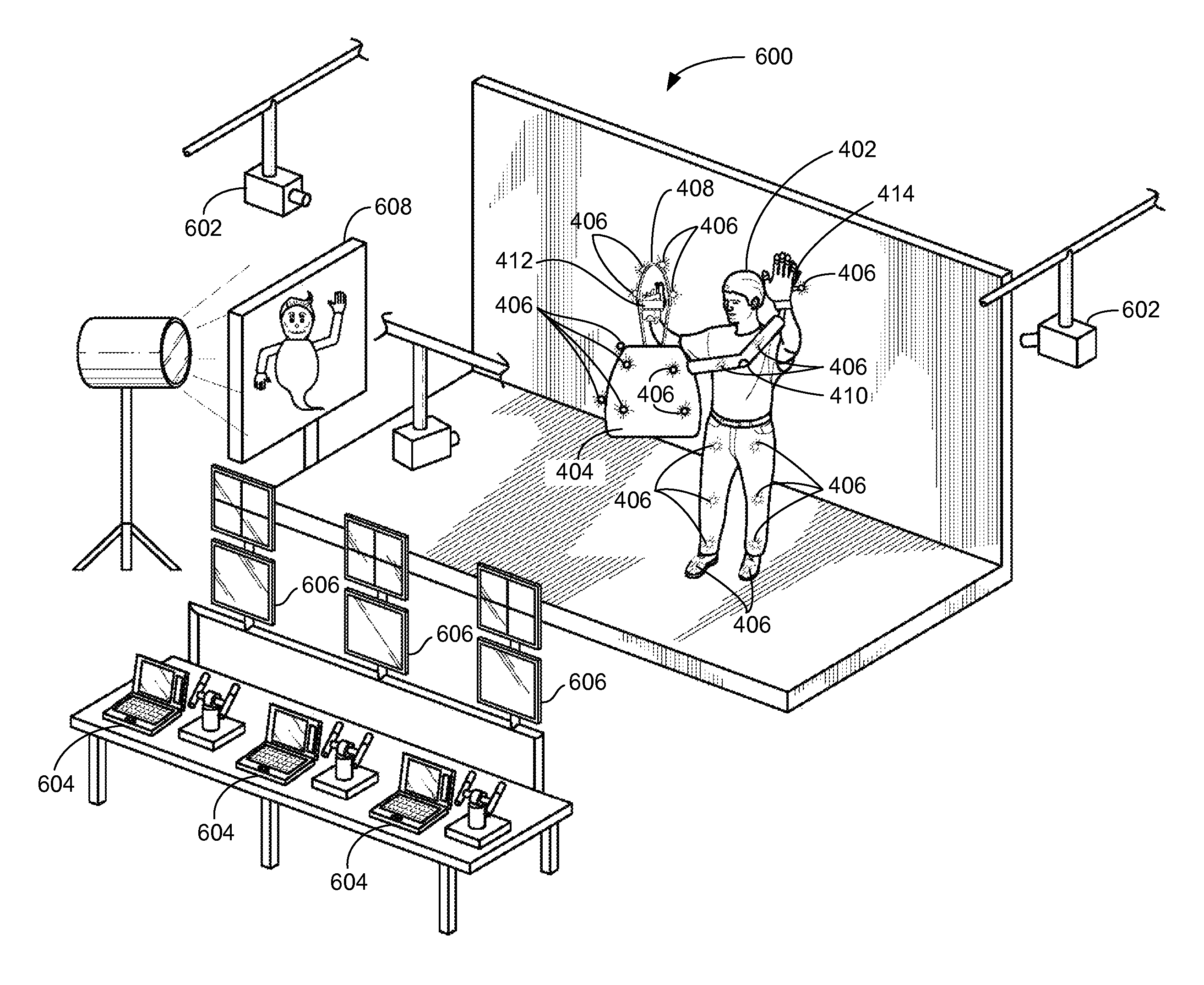

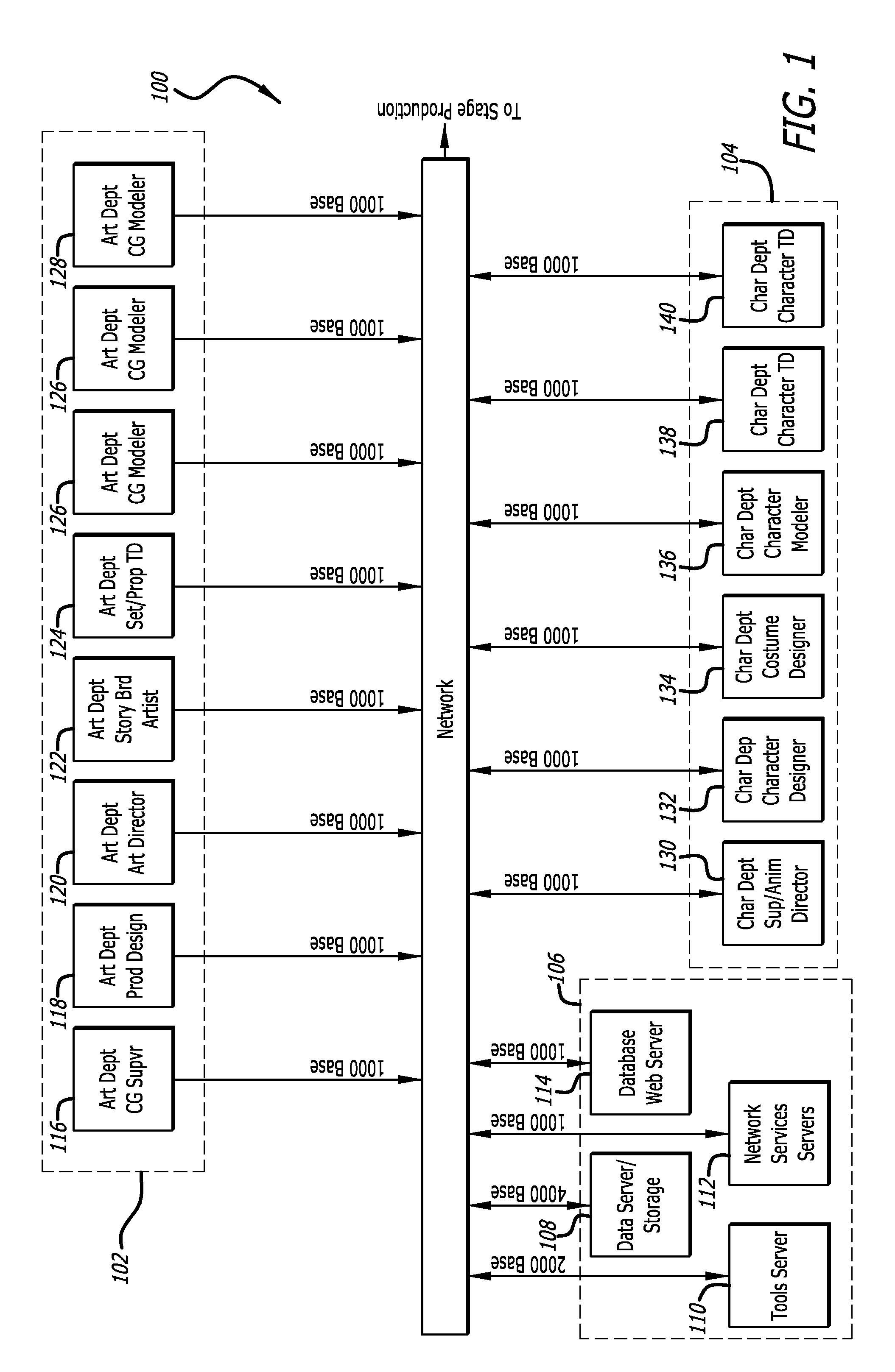

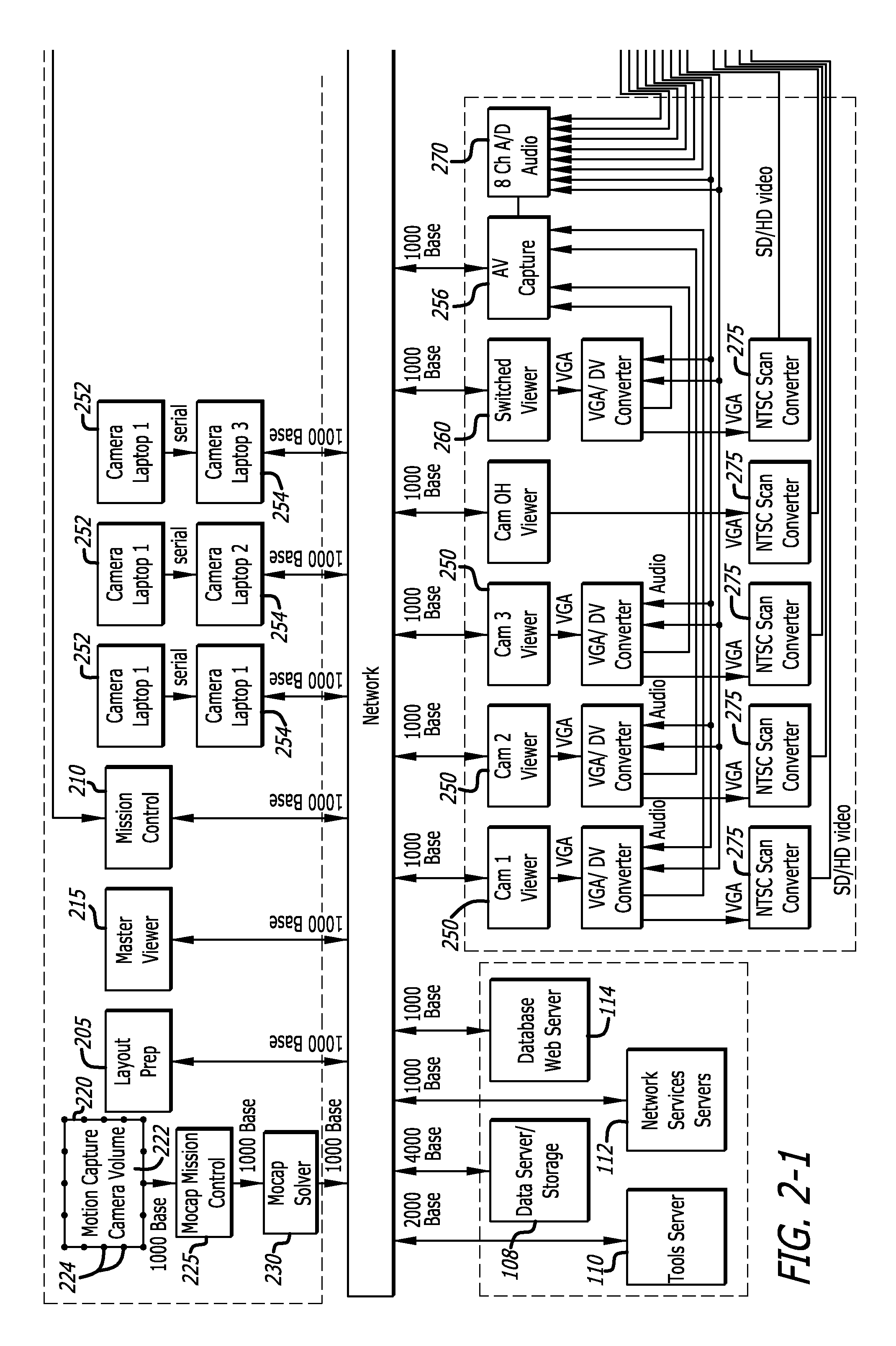

System and method of producing an animated performance utilizing multiple cameras

A real-time method for producing an animated performance is disclosed. The real-time method involves receiving animation data, the animation data used to animate a computer generated character. The animation data may comprise motion capture data, or puppetry data, or a combination thereof. A computer generated animated character is rendered in real-time with receiving the animation data. A body movement of the computer generated character may be based on the motion capture data, and a head and a facial movement are based on the puppetry data. A first view of the computer generated animated character is created from a first reference point. A second view of the computer generated animated character is created from a second reference point that is distinct from the first reference point. One or more of the first and second views of the computer generated animated character are displayed in real-time with receiving the animation data.

Owner:THE JIM HENSON COMPANY

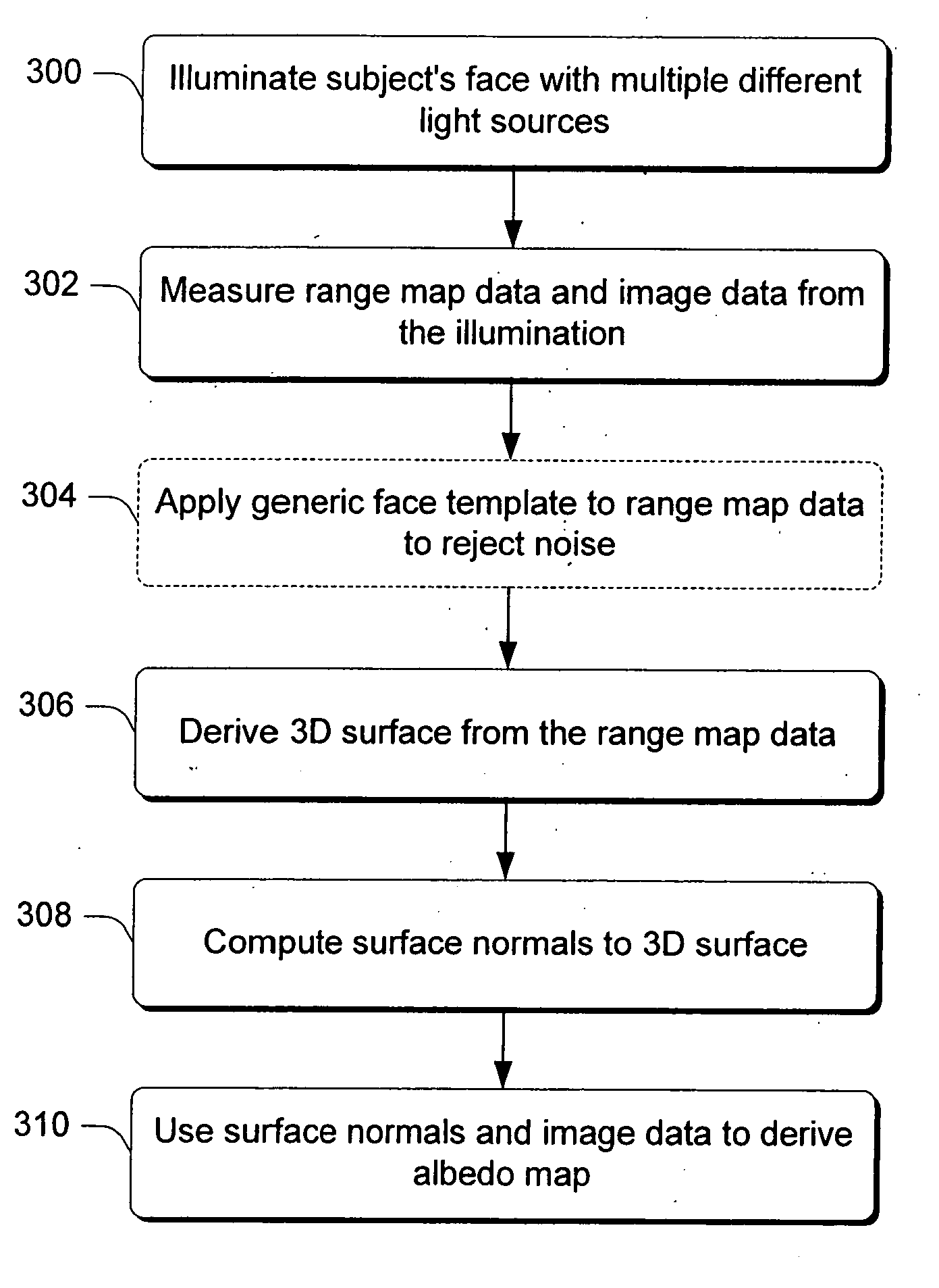

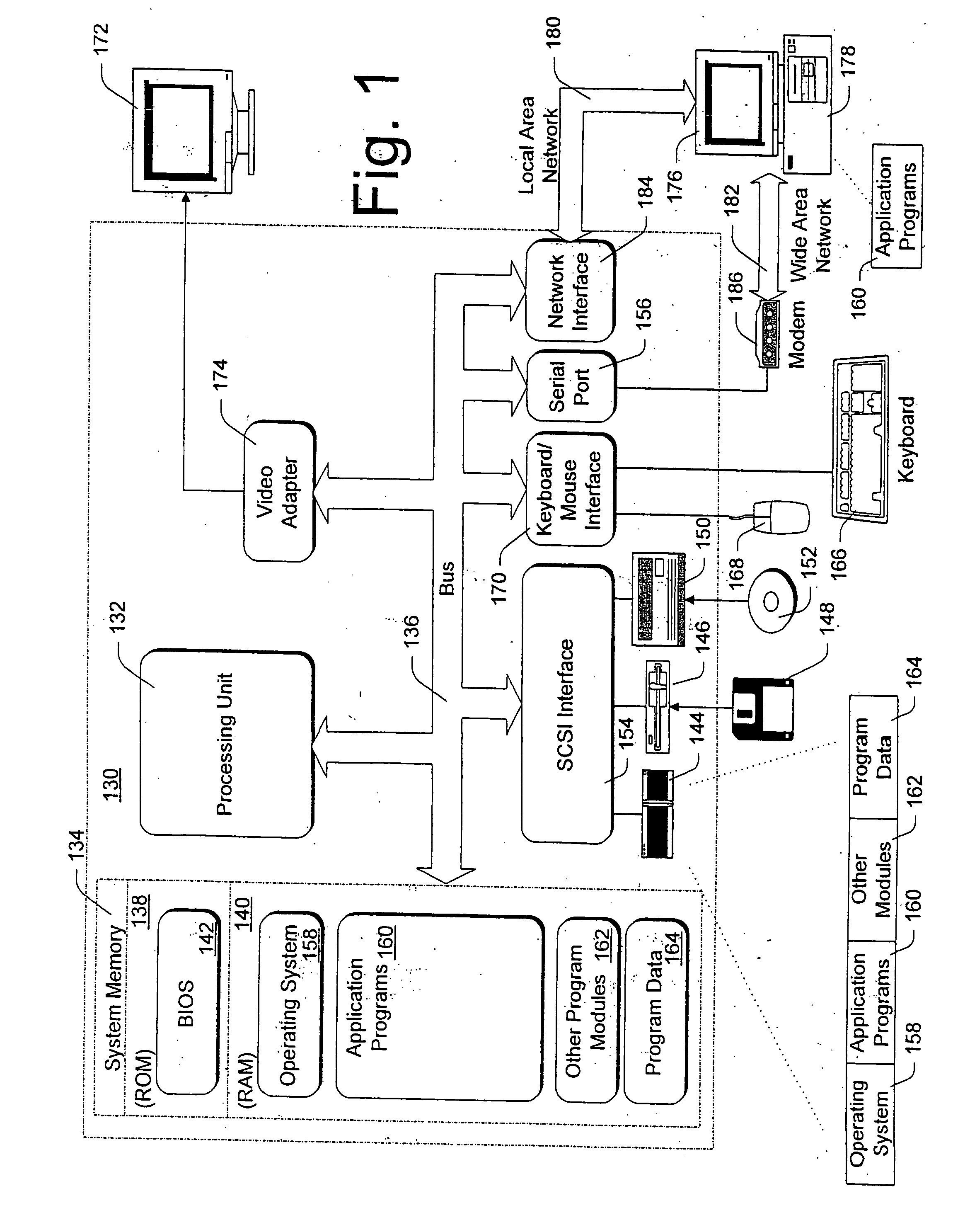

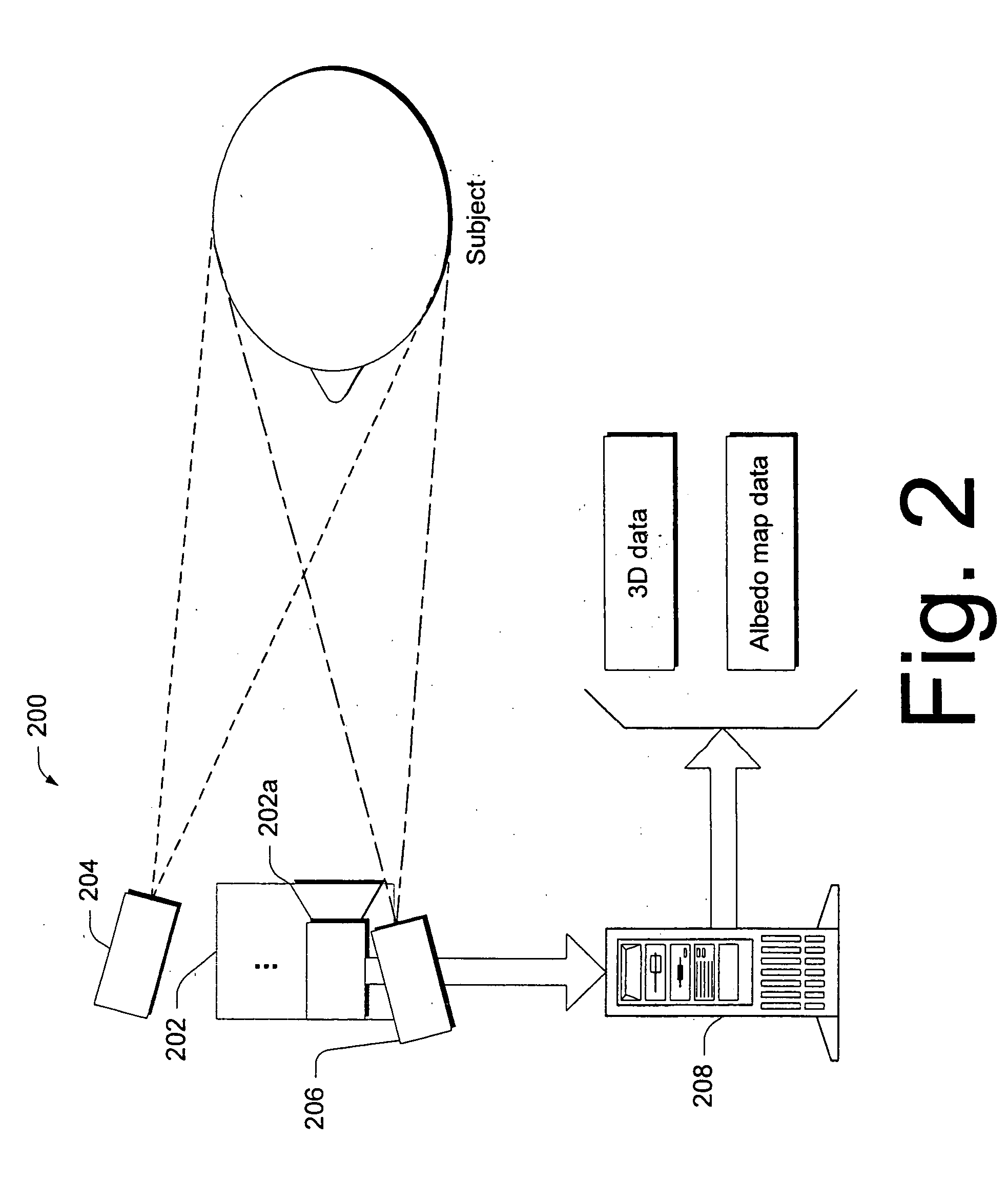

Methods and systems for animating facial features and methods and systems for expression transformation

The illustrated and described embodiments describe techniques for capturing data that describes 3-dimensional (3-D) aspects of a face, transforming facial motion from one individual to another in a realistic manner, and modeling skin reflectance.

Owner:ZHIGU HLDG

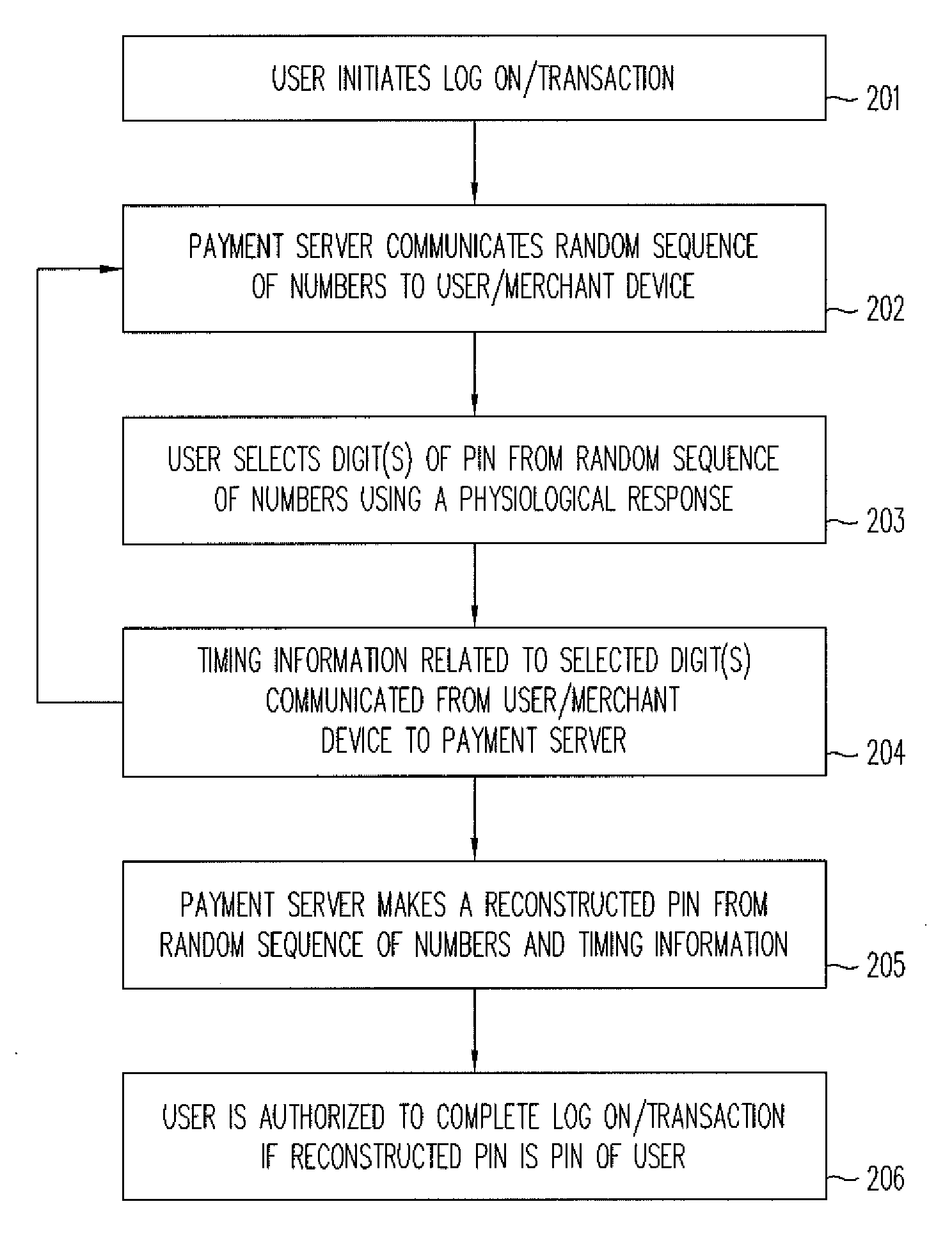

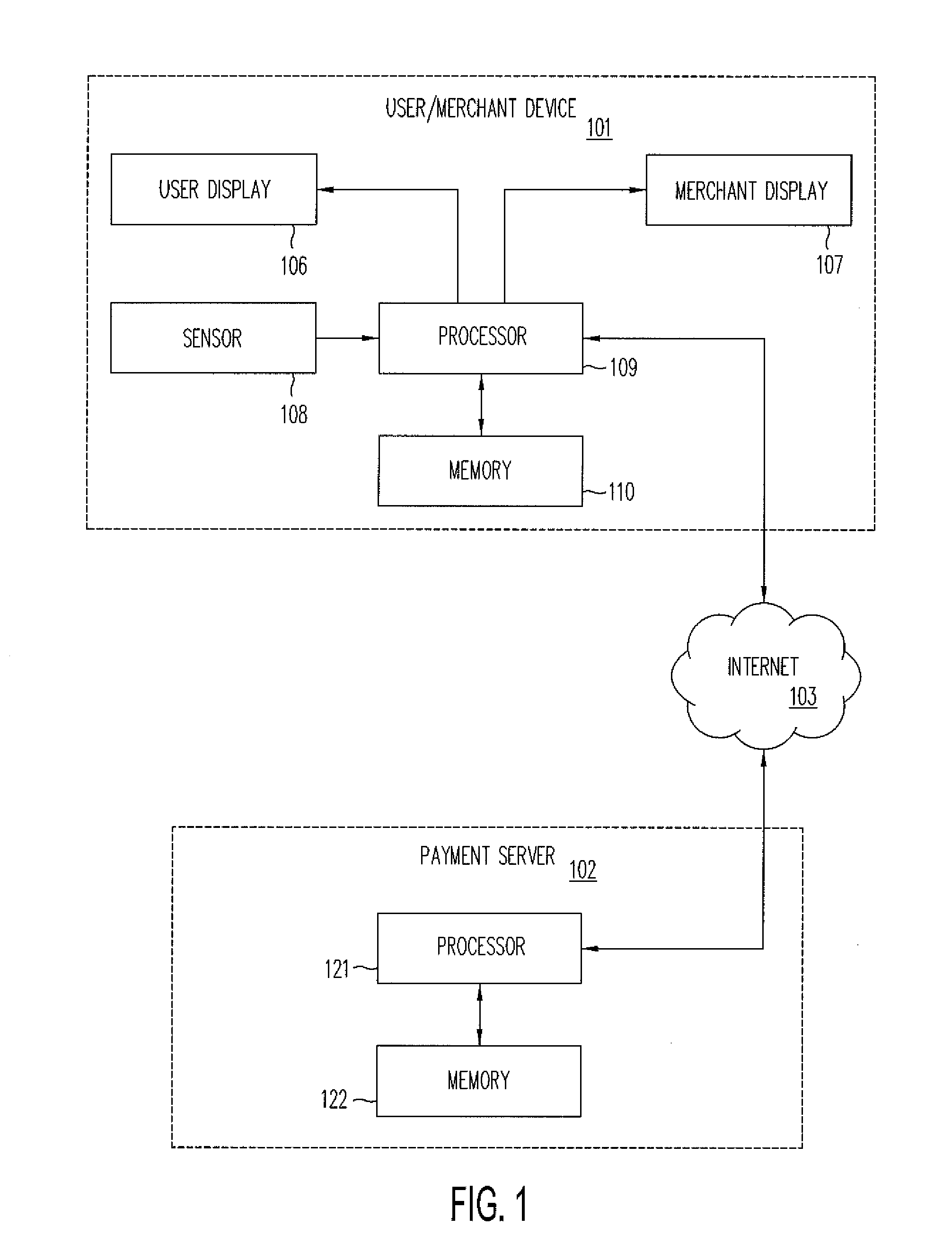

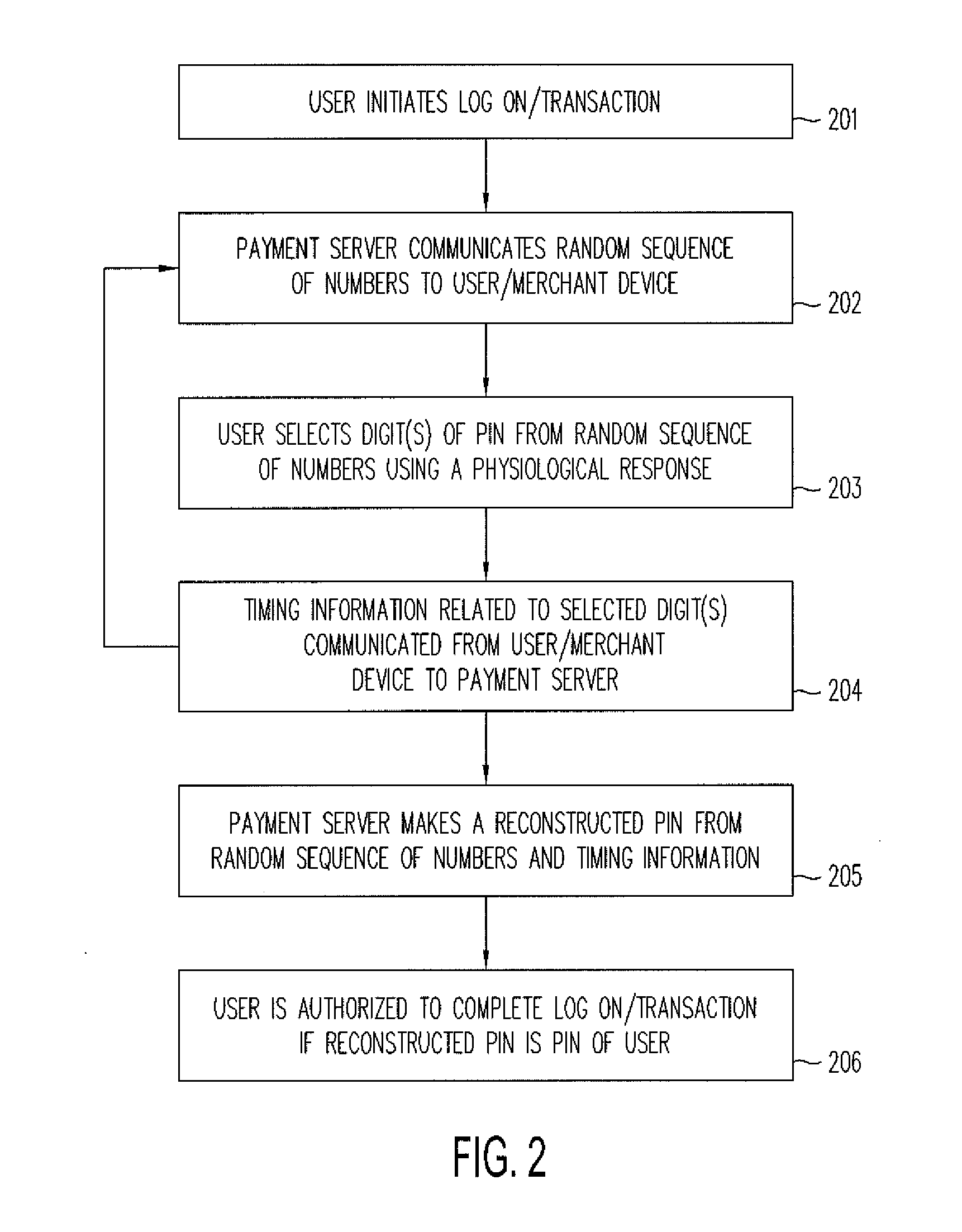

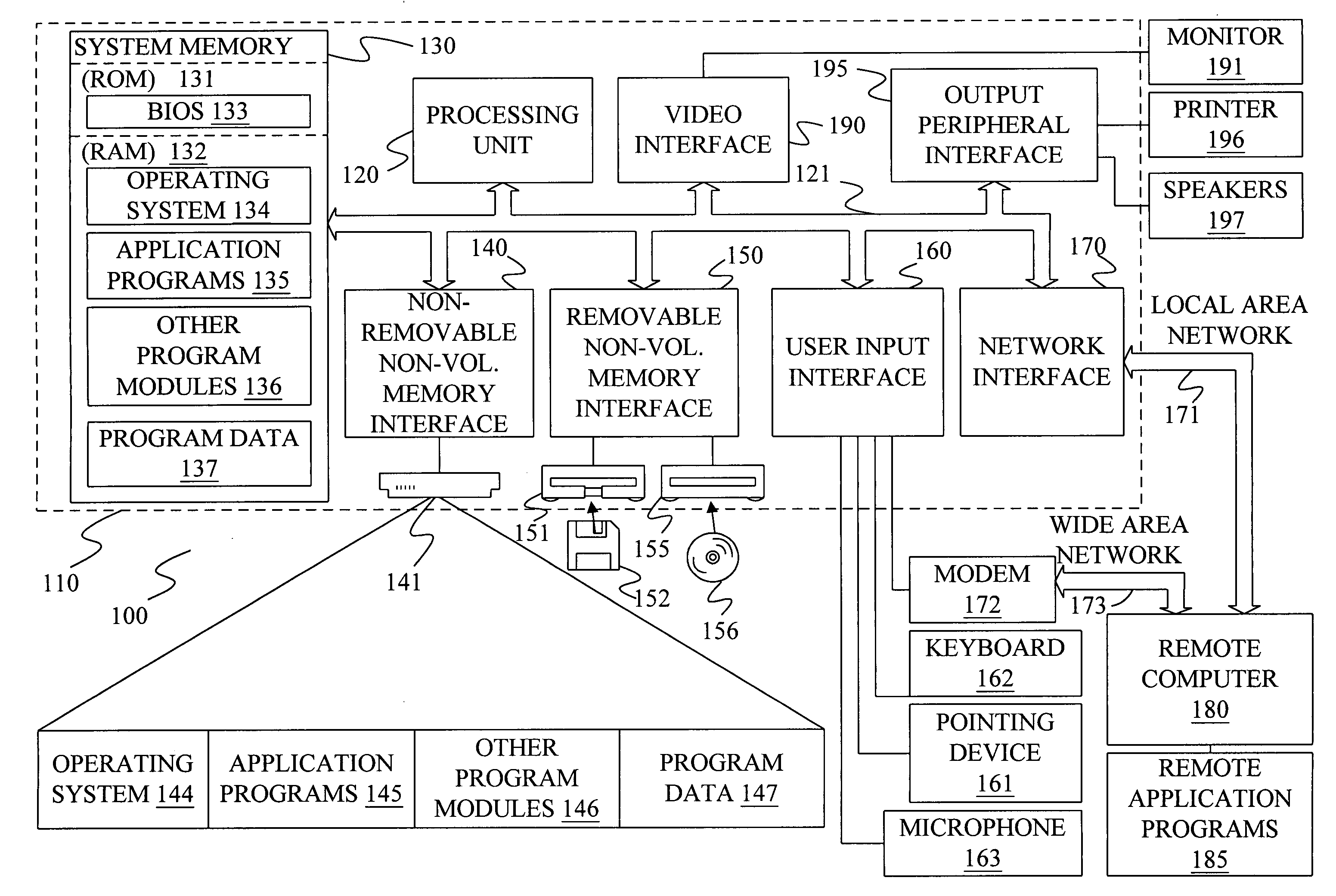

Physiological Response PIN Entry

Methods and systems are provided for facilitating the secure entry of a user's PIN for electronic transactions such as merchant checkout, payment authorization, or access authorization. A physiological response of the user can indicate which one of a random sequence of numbers is a number of the user's PIN. For example, the user can blink, wink, or make a subtle facial movement to provide the indication.

Owner:PAYPAL INC
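One way to picture the PIN-entry scheme above: the terminal shows a random sequence of digits for each PIN position, and the position at which the user blinks selects the digit. The sketch below assumes that reading of the abstract; `random_digit_sequence`, `read_pin`, and the data layout are hypothetical.

```python
import secrets
from typing import List, Sequence


def random_digit_sequence() -> List[int]:
    """Return the digits 0-9 in a random order, drawn from the OS entropy source."""
    return secrets.SystemRandom().sample(range(10), 10)


def read_pin(displayed: Sequence[Sequence[int]], response_positions: Sequence[int]) -> str:
    """Rebuild the PIN from the digit that was on display when the user responded.

    displayed[i] is the sequence shown for PIN position i, and
    response_positions[i] is the index at which a blink (or other
    physiological response) was detected for that position.
    """
    return "".join(str(seq[pos]) for seq, pos in zip(displayed, response_positions))
```

Because the order is reshuffled for every entry, an observer who sees only the timing of a blink learns little about the digit it selected.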

Multi-sensory speech detection system

The present invention combines a conventional audio microphone with an additional speech sensor that provides a speech sensor signal based on an input. The speech sensor signal is generated based on an action undertaken by a speaker during speech, such as facial movement, bone vibration, throat vibration, throat impedance changes, etc. A speech detector component receives an input from the speech sensor and outputs a speech detection signal indicative of whether a user is speaking. The speech detector generates the speech detection signal based on the microphone signal and the speech sensor signal.

Owner:MICROSOFT TECH LICENSING LLC

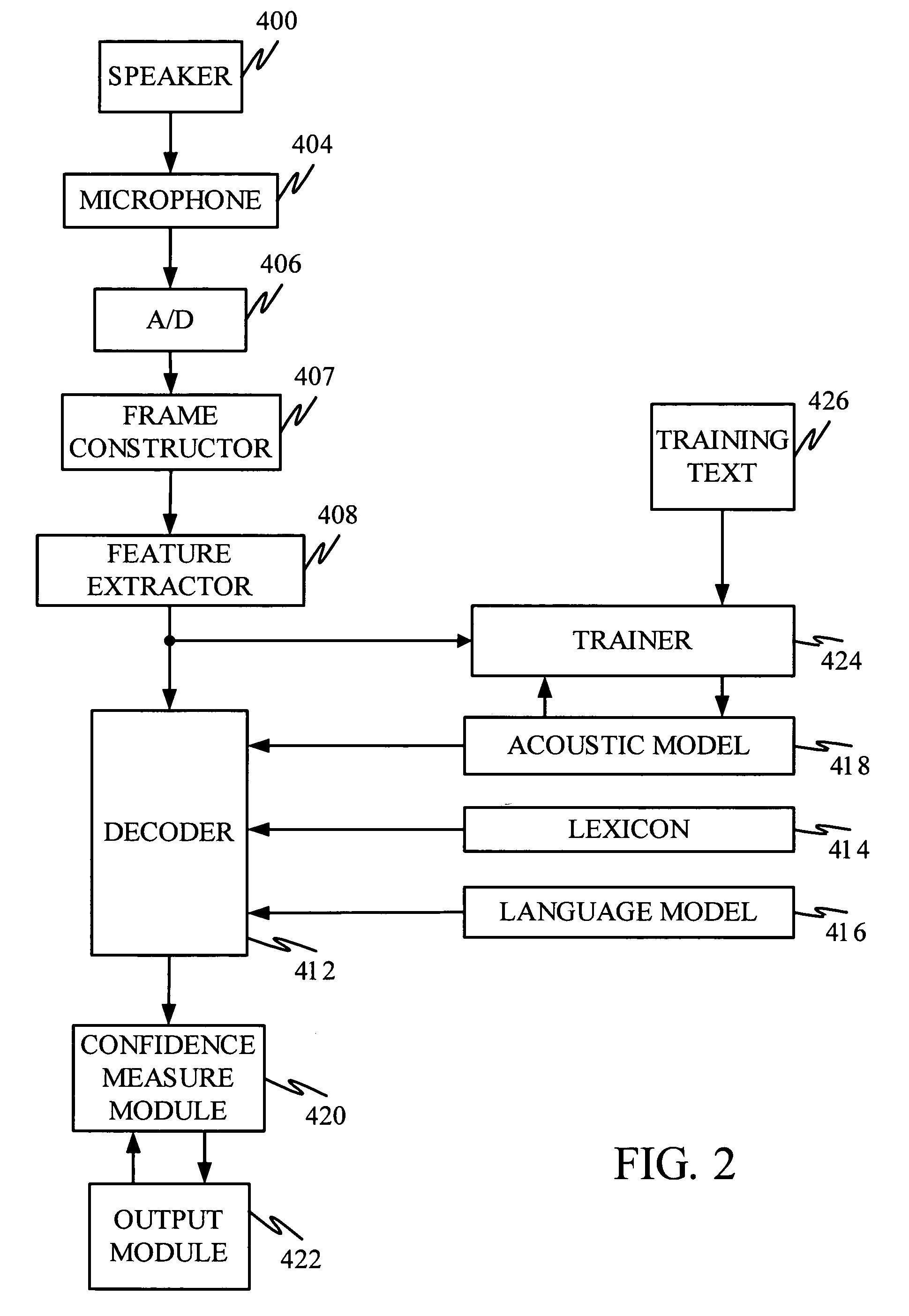

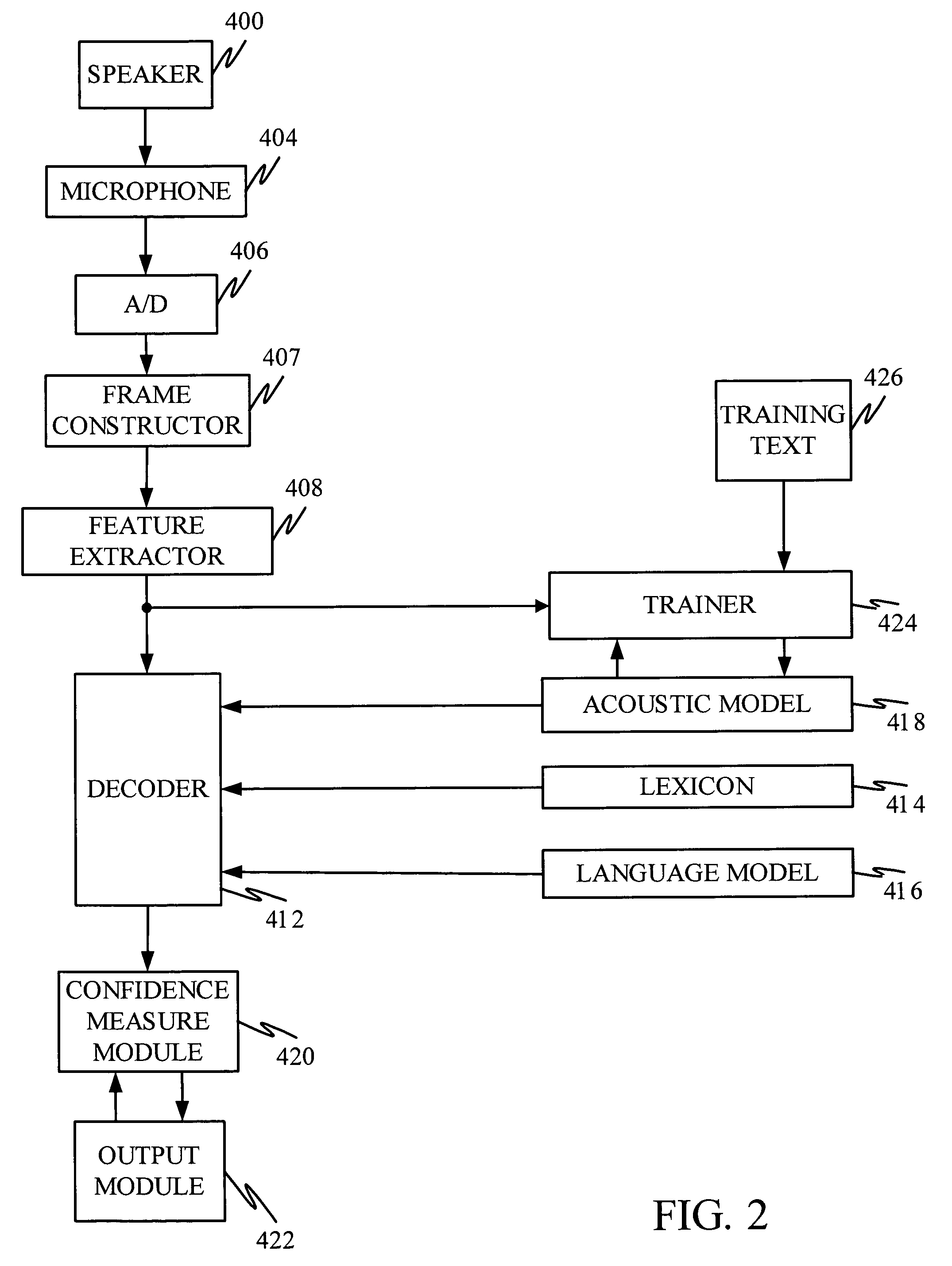

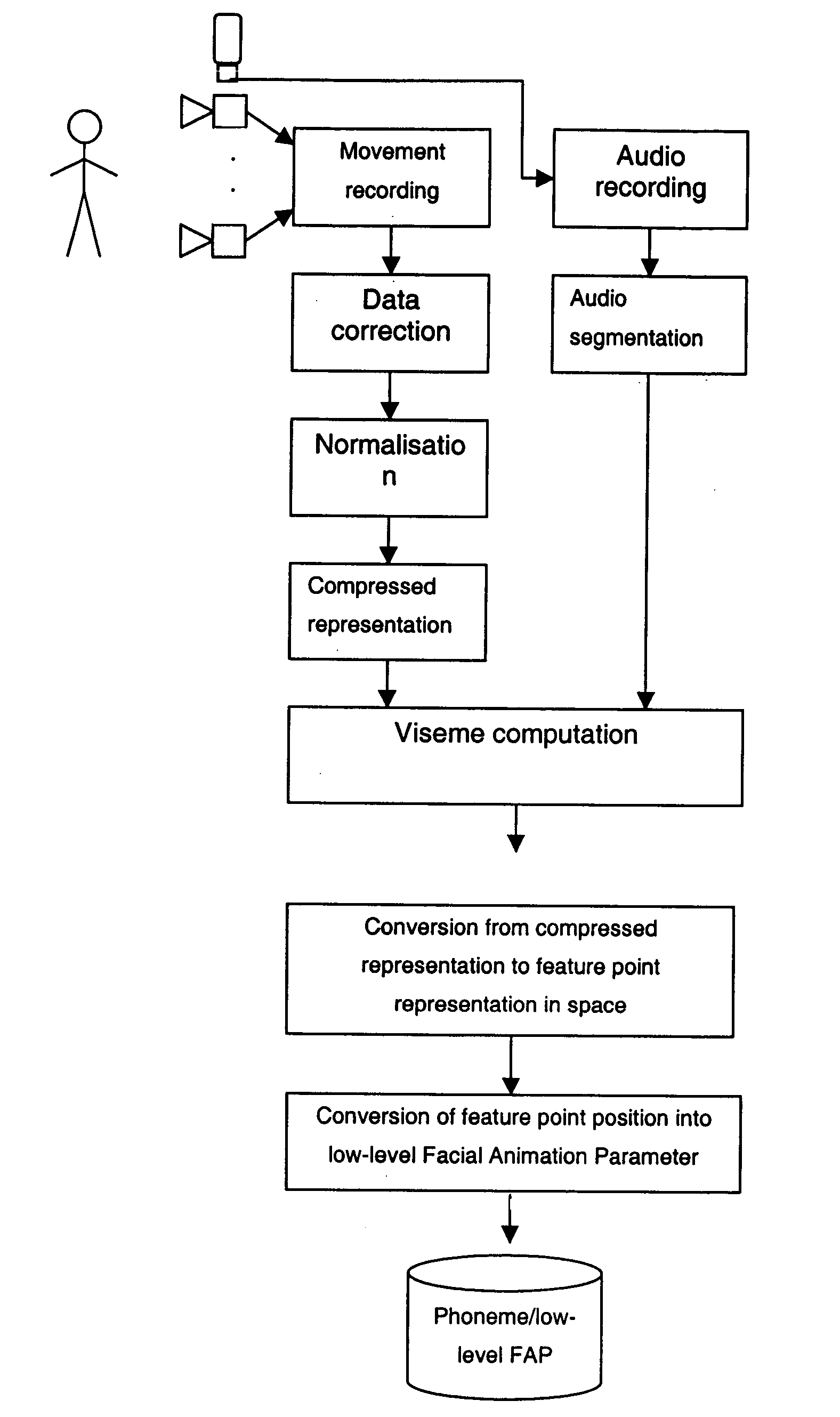

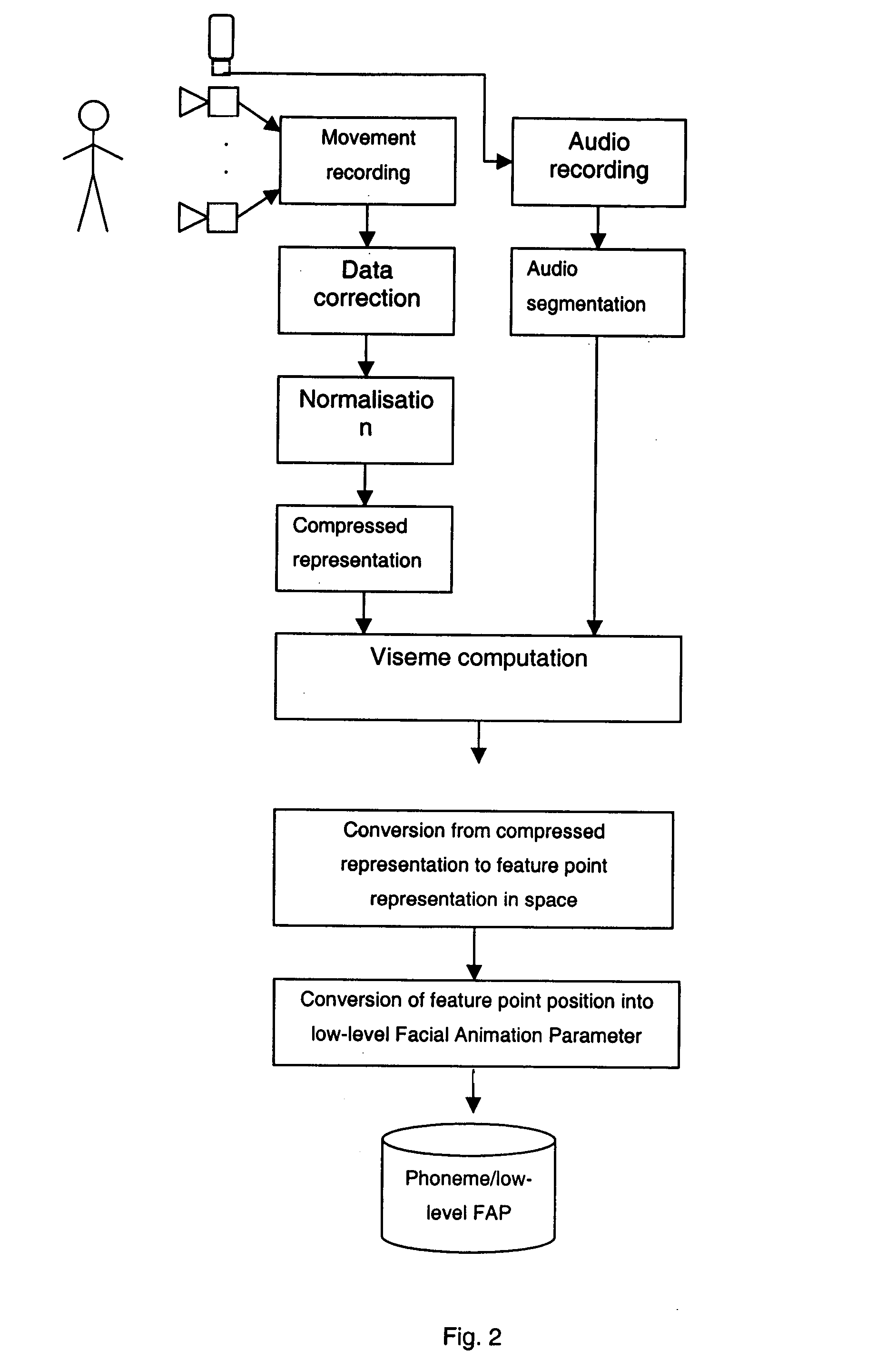

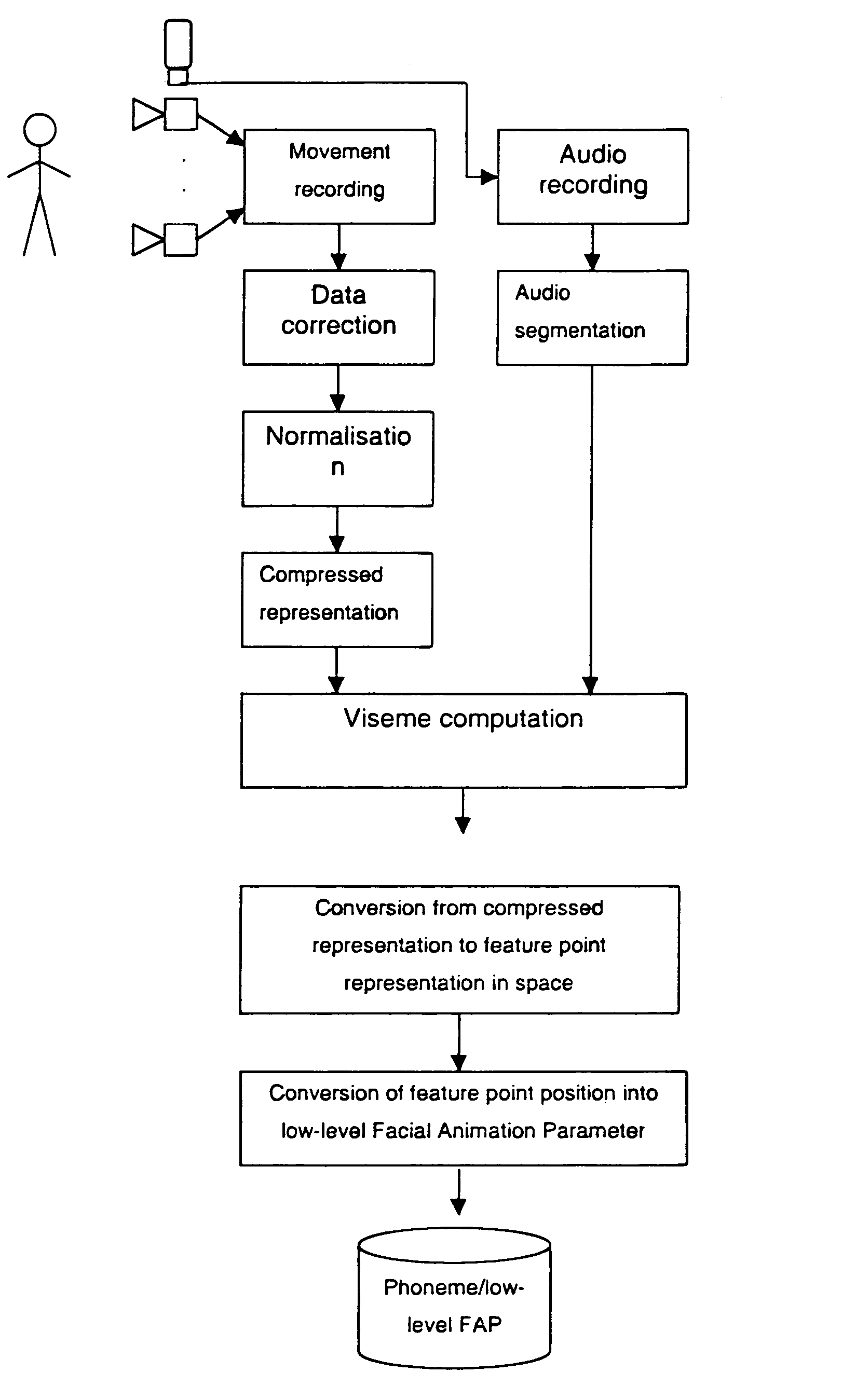

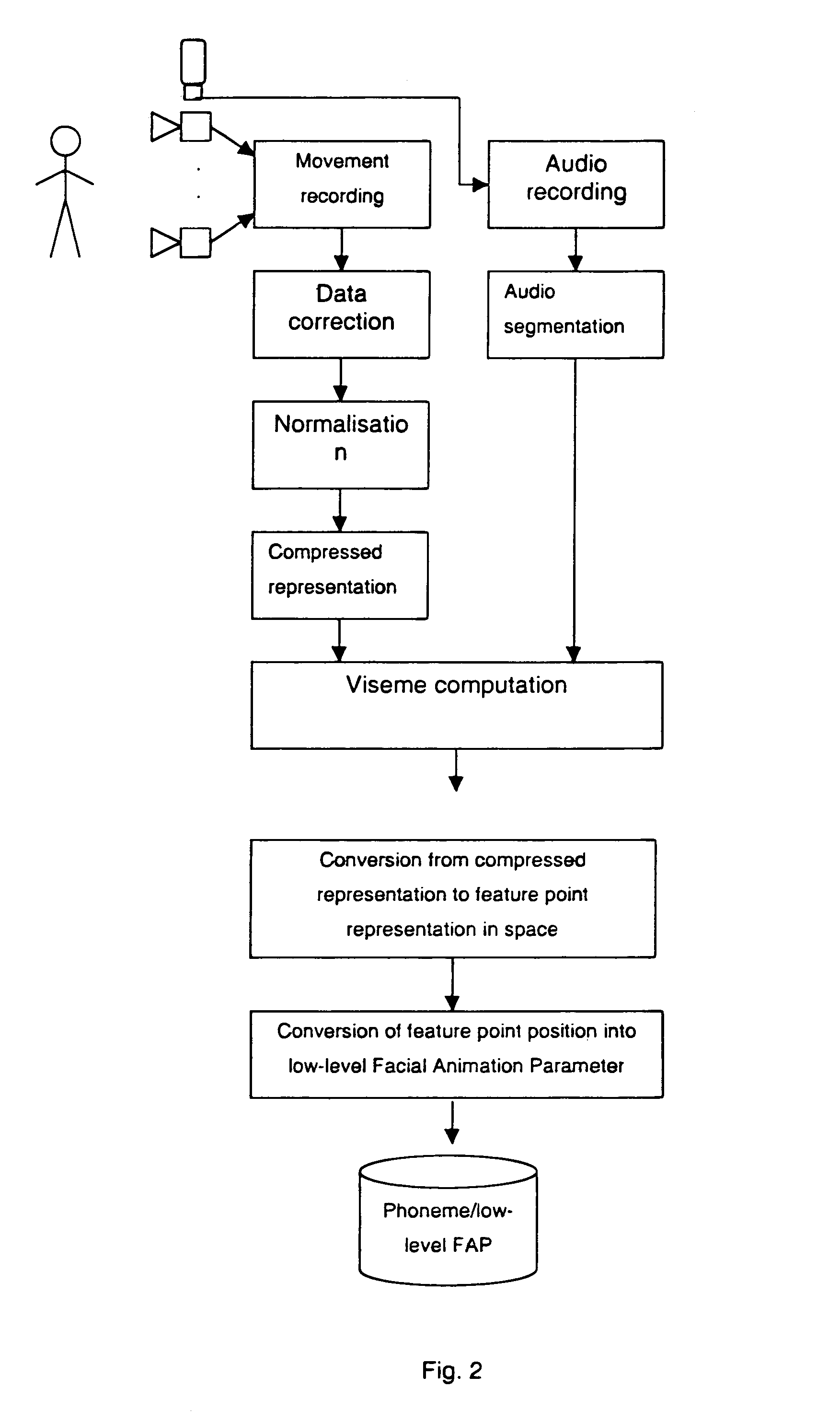

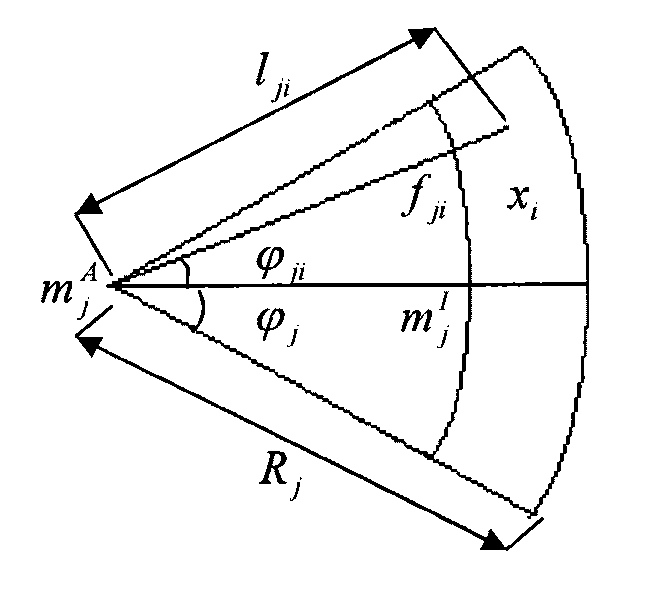

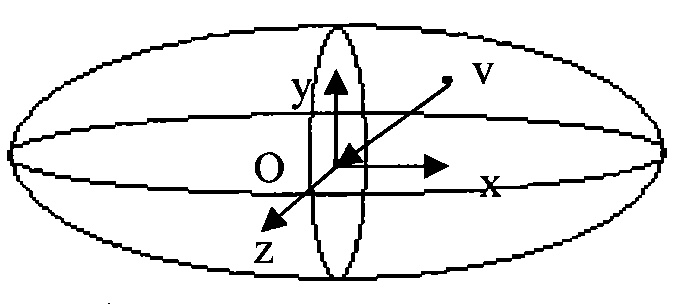

Method of animating a synthesised model of a human face driven by an acoustic signal

The method permits the animation of a synthesised model of a human face in relation to an audio signal. The method is not language dependent and provides a very natural animated synthetic model, being based on the simultaneous analysis of voice and facial movements, tracked on real speakers, and on the extraction of suitable visemes. The subsequent animation consists in transforming the sequence of visemes corresponding to the phonemes of the driving text into the sequence of movements applied to the model of the human face.

Owner:TELECOM ITALIA SPA

Method of animating a synthesized model of a human face driven by an acoustic signal

The method permits the animation of a synthesised model of a human face in relation to an audio signal. The method is not language dependent and provides a very natural animated synthetic model, being based on the simultaneous analysis of voice and facial movements, tracked on real speakers, and on the extraction of suitable visemes. The subsequent animation consists in transforming the sequence of visemes corresponding to the phonemes of the driving text into the sequence of movements applied to the model of the human face.

Owner:TELECOM ITALIA SPA

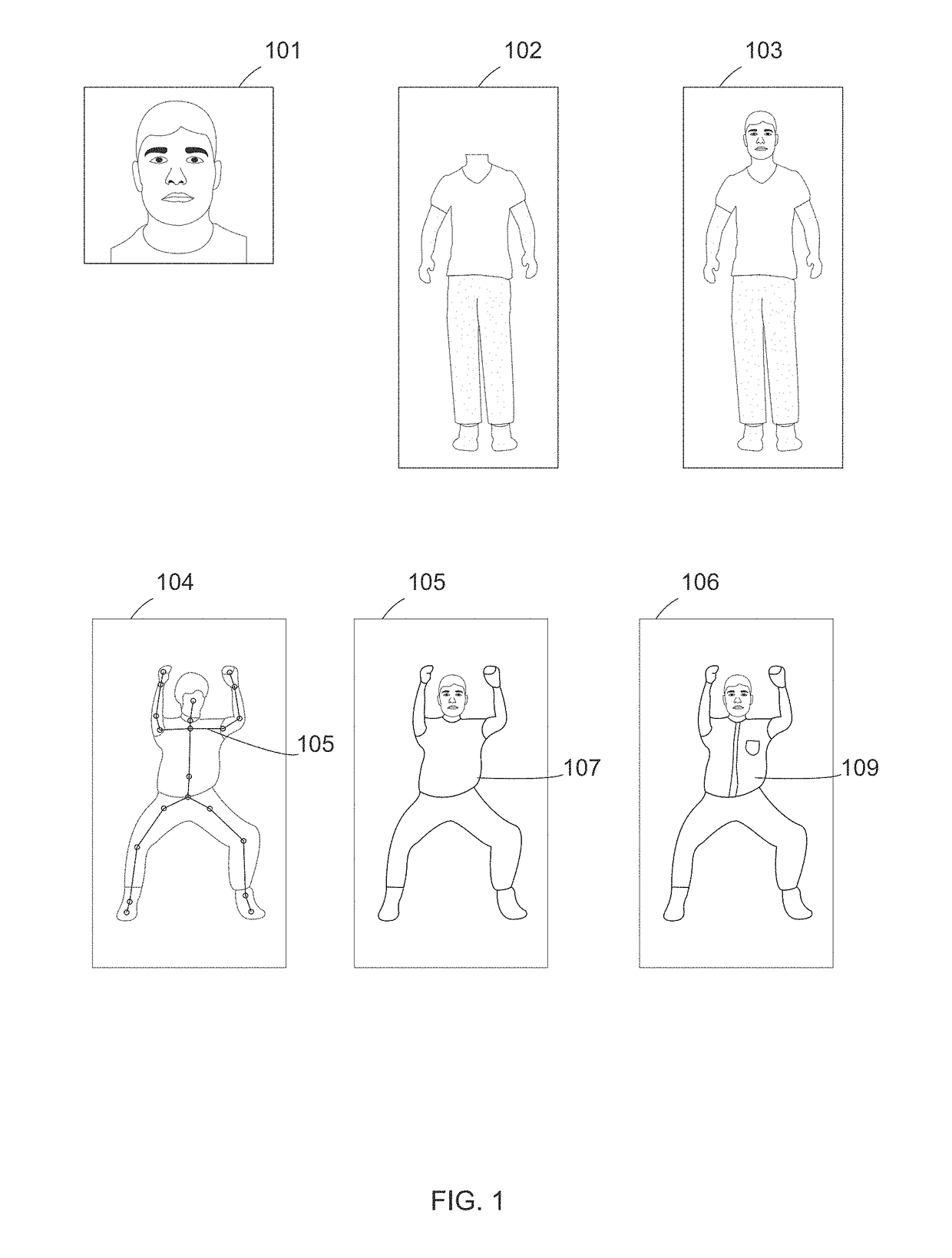

Producing realistic body movement using body images

Active | US20190082211A1 | Reduce the amount of information | Special service provision for substation | Input/output for user-computer interaction | Pattern recognition | Human body

A method for providing visual sequences using one or more images, comprising: receiving one or more images of a person showing at least one face; using human body information to identify the requirement for other body part(s); receiving at least one image or photograph of other human body part(s) based on the identified requirement; processing the image(s) of the person with the image(s) of the other human body part(s) using the human body information to generate a body model of the person, the virtual model comprising the face of the person; receiving a message to be enacted by the person, wherein the message comprises at least a text or an emotional and movement command; processing the message to extract or receive audio data related to the voice of the person and facial movement data related to the expression to be carried on the face of the person; and processing the body model, the audio data, and the facial movement data to generate an animation of the body model of the person enacting the message, wherein the emotional and movement command is a GUI- or multimedia-based instruction to invoke the generation of facial expression(s) and/or body part movement(s).

Owner:VATS NITIN

Asynchronous streaming of data for validation

The present invention relates to computer capture of object motion. More specifically, embodiments of the present invention relate to capturing of facial movement or performance of an actor. Embodiments of the present invention provide a head-mounted camera system that allows the movements of an actor's face to be captured separately from, but simultaneously with, the movements of the actor's body. In some embodiments of the present invention, a method of motion capture of an actor's performance is provided. A self-contained system is provided for recording the data; it is free of tethers or other hard-wiring and is remotely operated by a motion-capture team, without any intervention by the actor wearing the device. Embodiments of the present invention also provide a method of validating that usable data is being acquired and recorded by the remote system.

Owner:TWO PIC MC

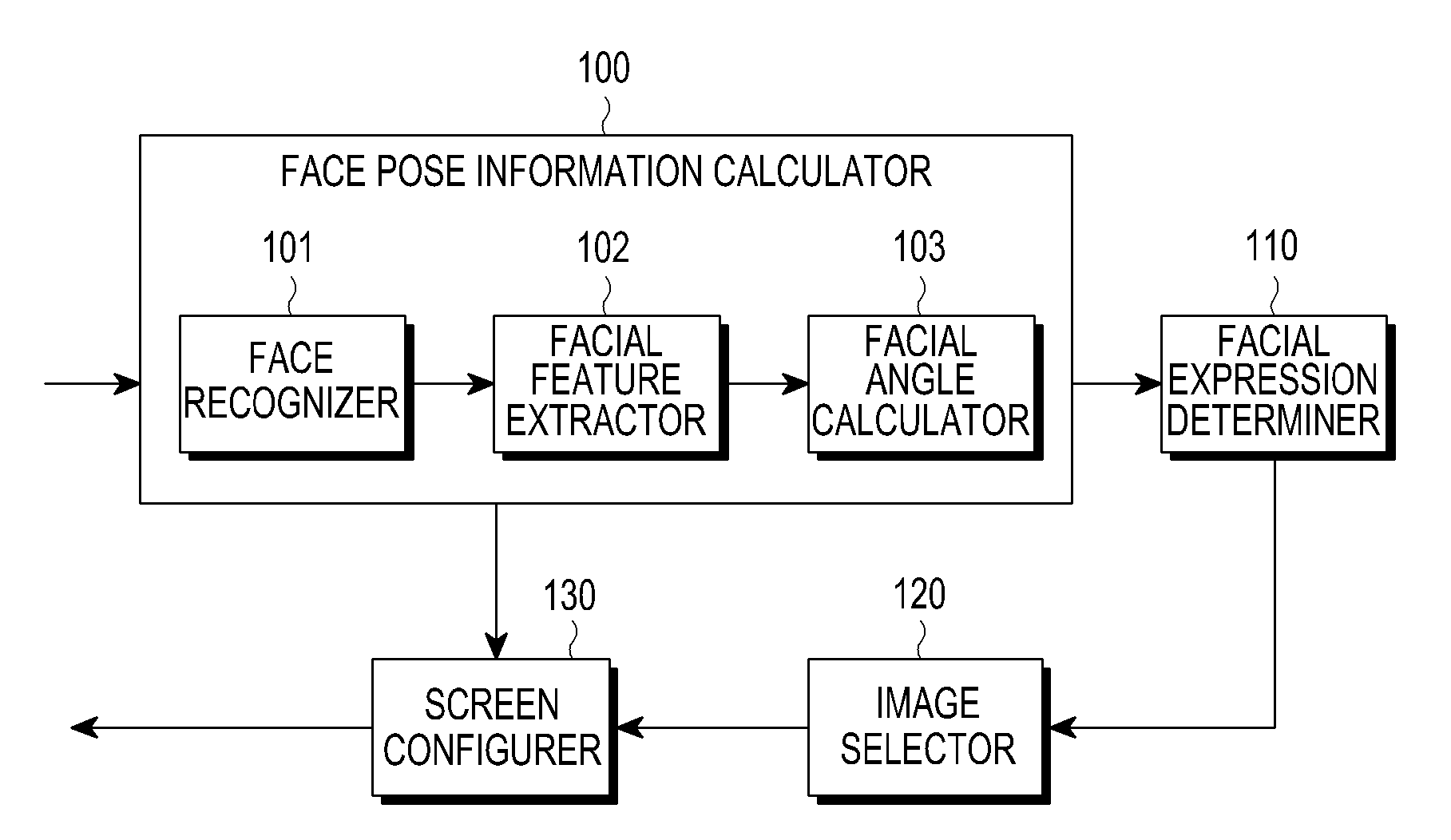

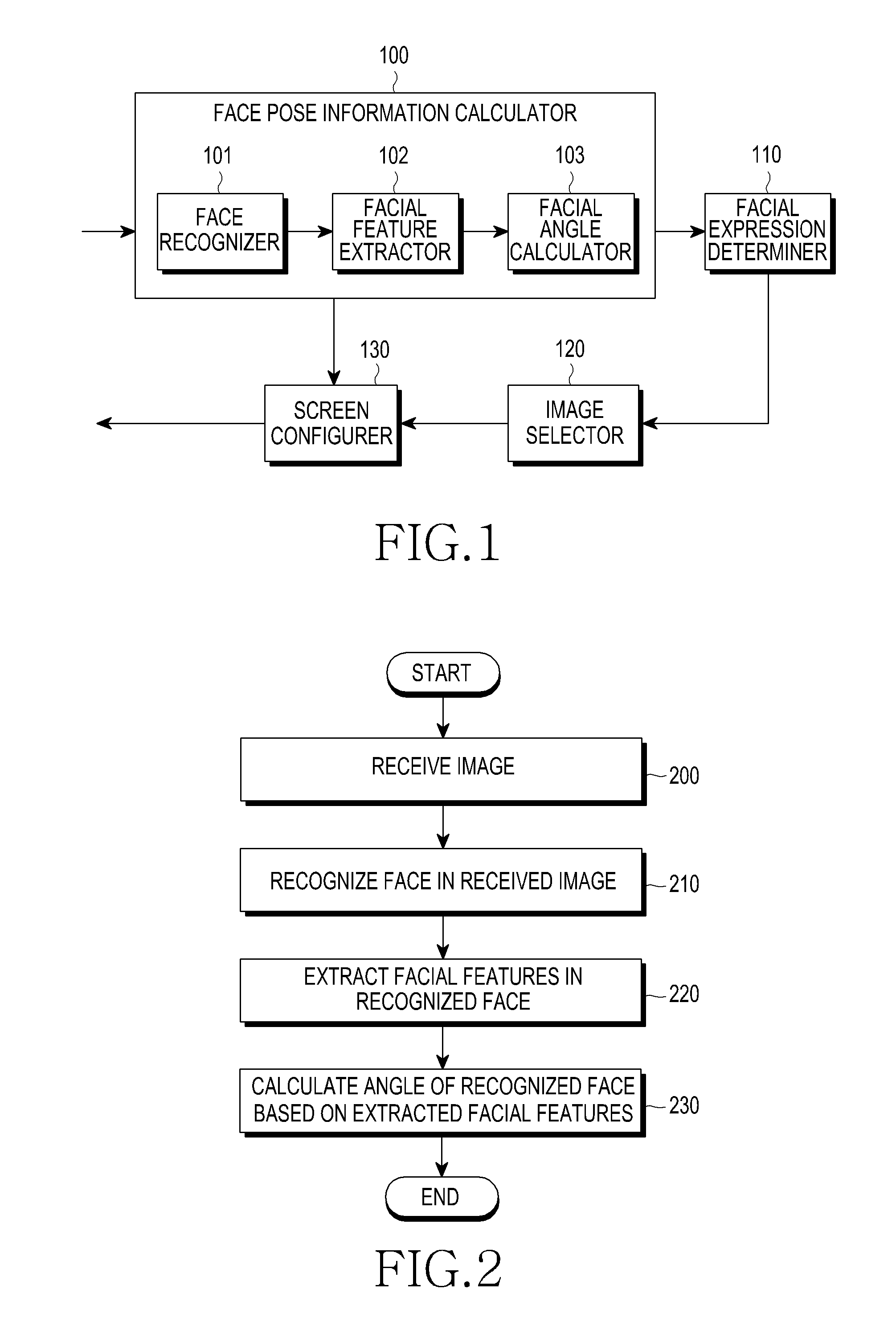

Apparatus and method for configuring screen for video call using facial expression

An apparatus and method configure a screen for a video call using a facial expression by recognizing a face from an image, calculating facial expression information for an expression of the recognized face, and determining whether there is a change in expression of the recognized face by comparing the calculated facial expression information with reference expression information preset to determine a change in expression of the face. If there is a change in expression of the recognized face, the apparatus and method select a video image corresponding to the changed expression in the video call screen and reconfigure the video call screen using the selected video image. This makes it possible for a user to conveniently select an image of the person of interest without taking extensive action, and prevents a wrong image from being selected due to unintended facial movement by the user.

Owner:SAMSUNG ELECTRONICS CO LTD

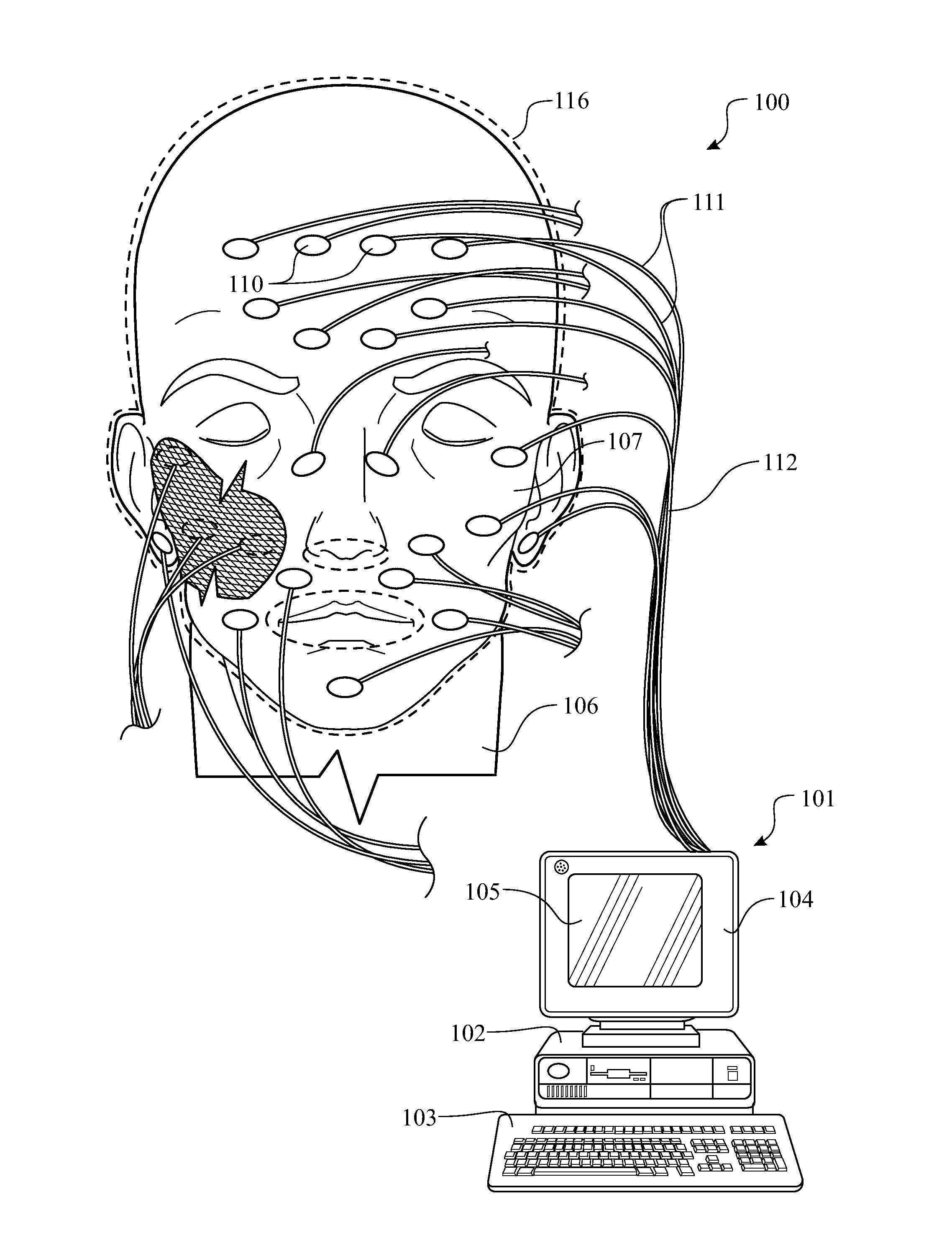

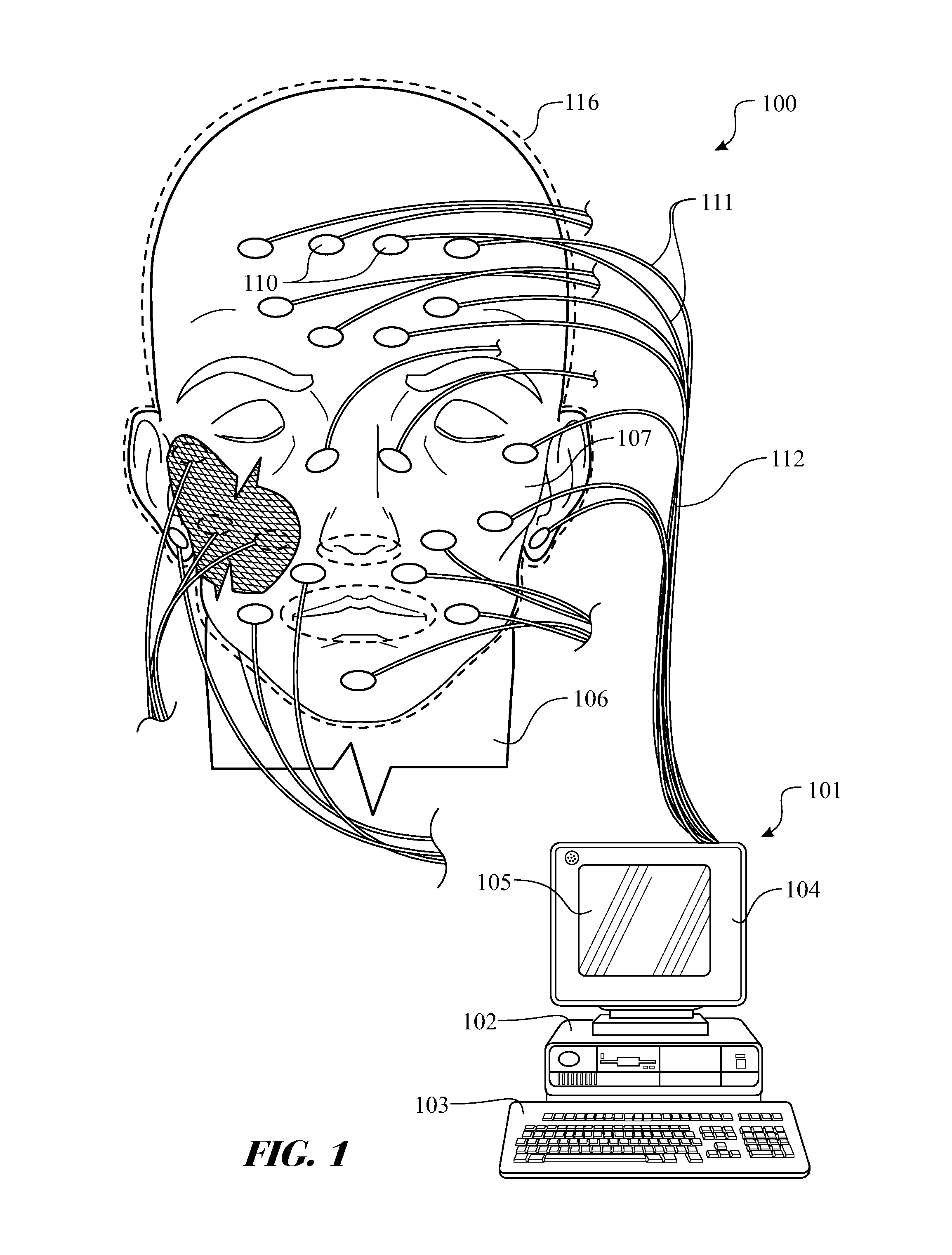

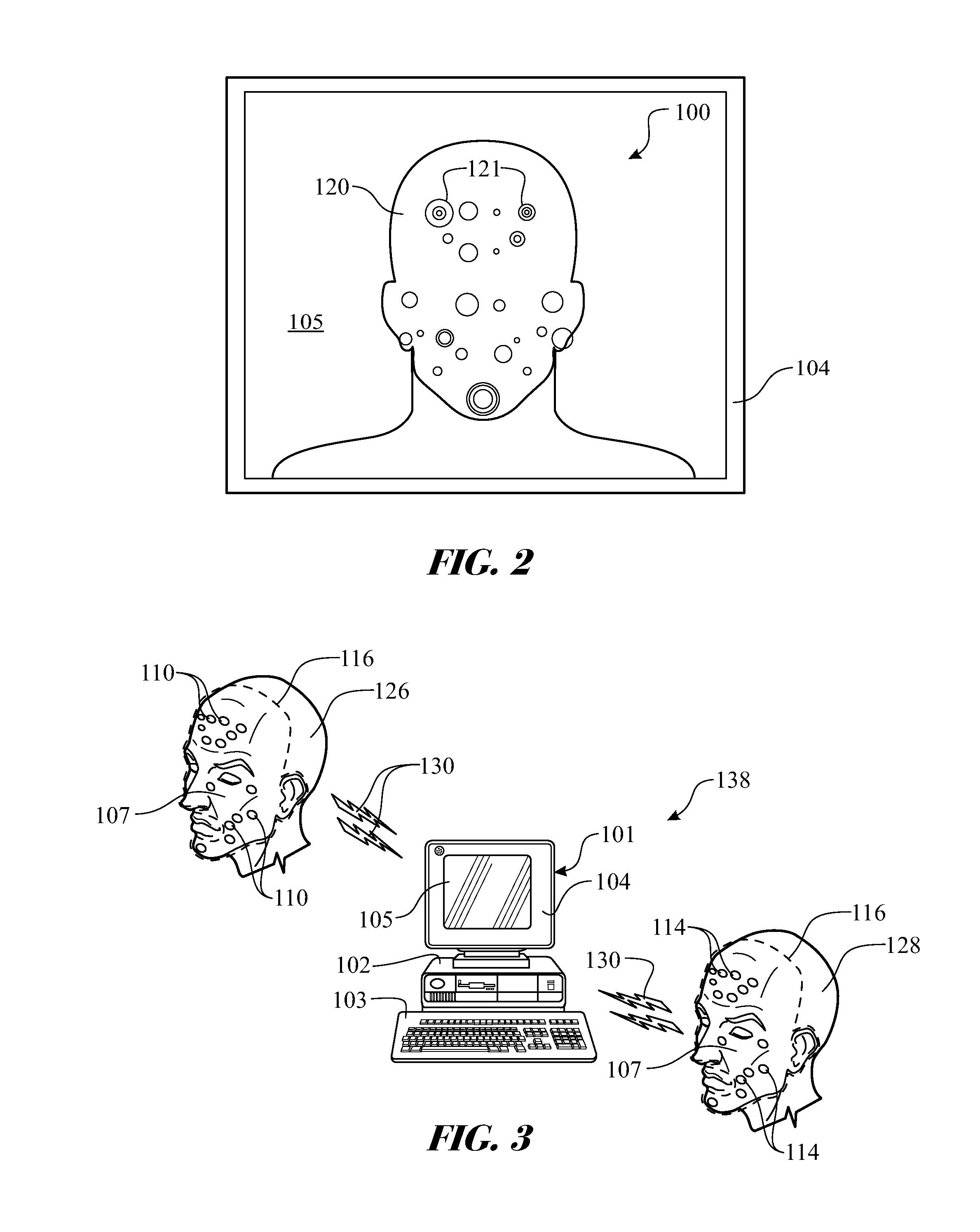

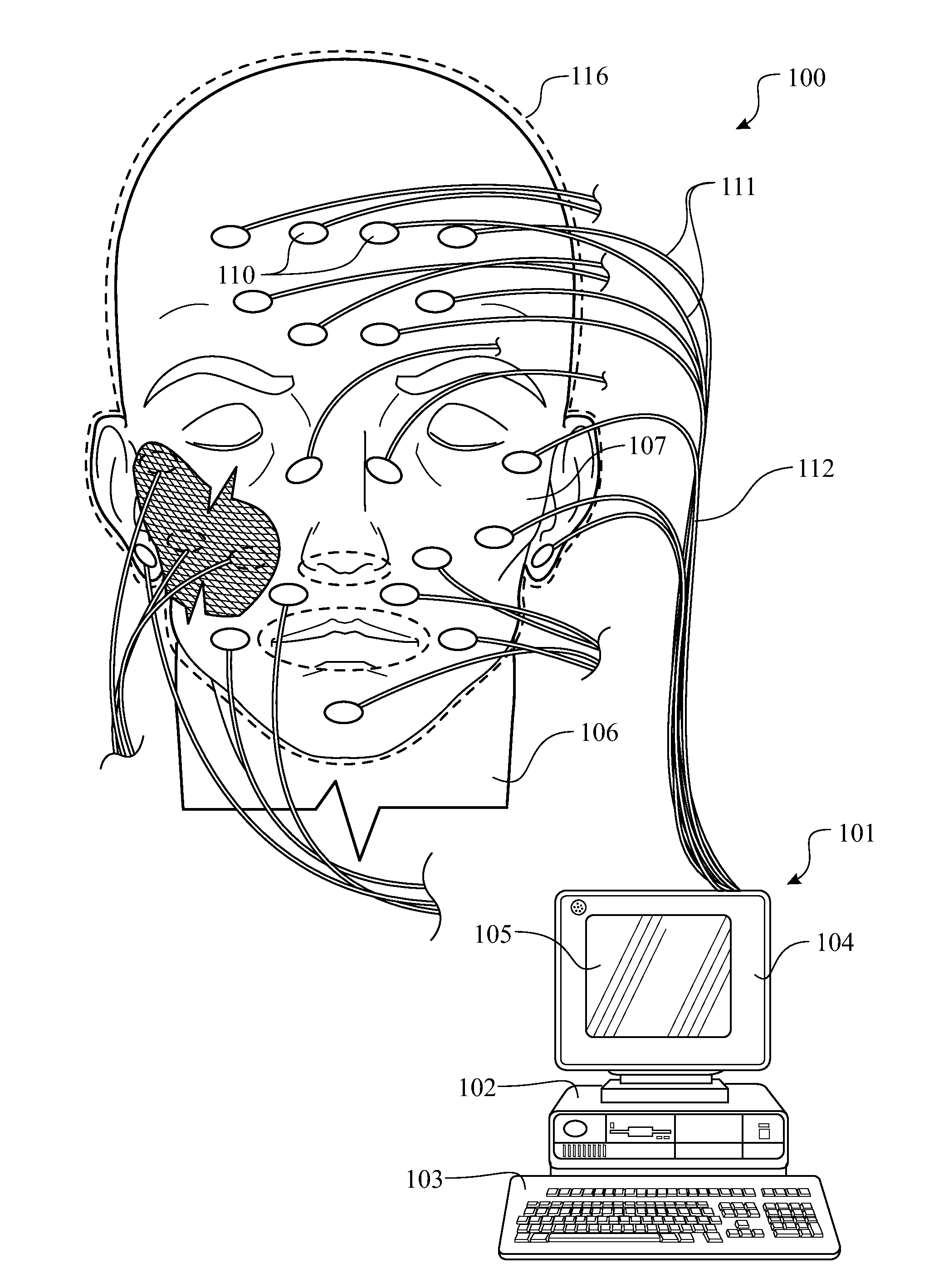

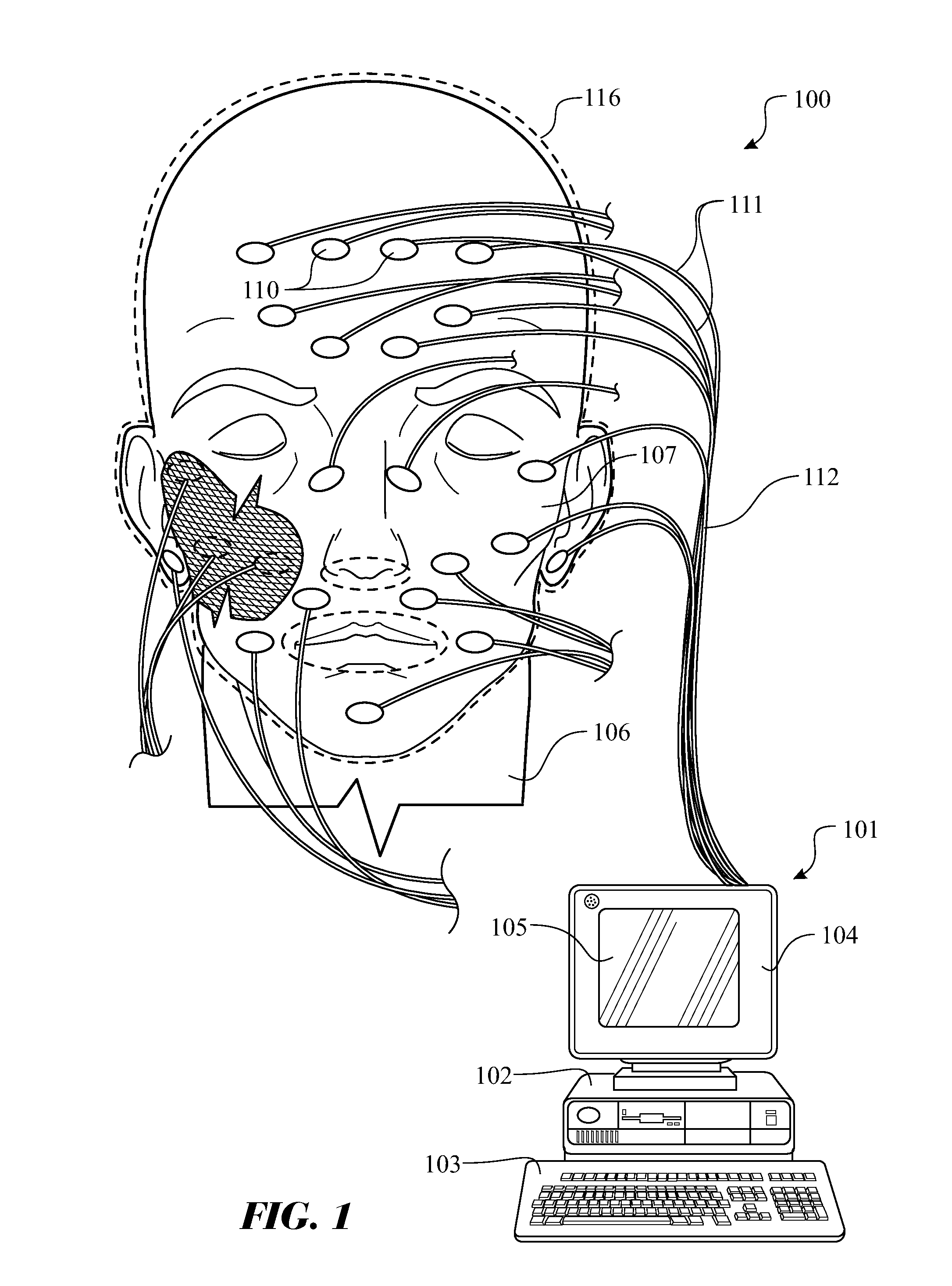

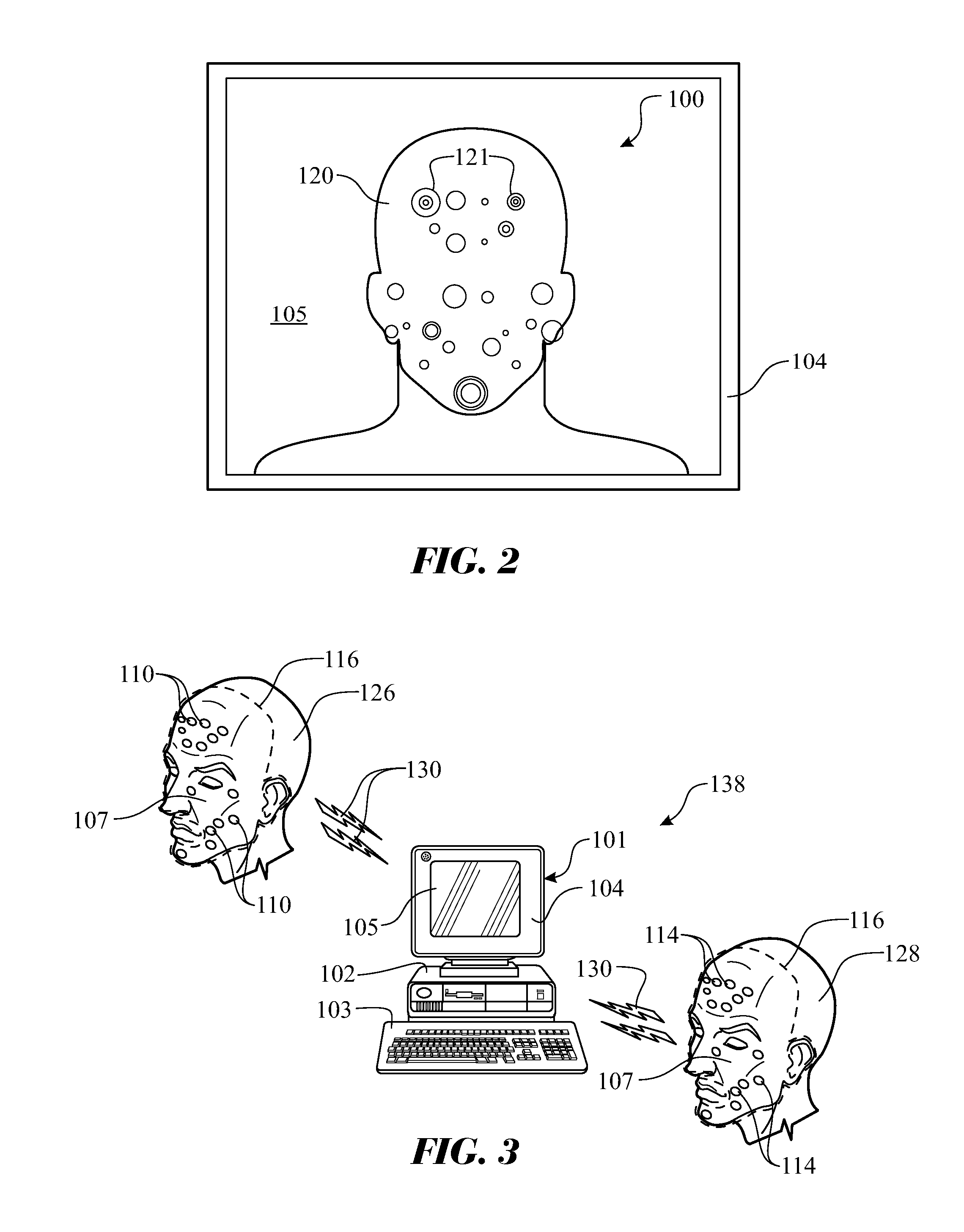

Facial movement measurement and stimulation apparatus and method

Active | US8493286B1 | Easy to share | Easy transfer | Electrotherapy | Electromyography | Physical medicine and rehabilitation | Facial movement

An illustrative embodiment of a facial movement measurement and stimulation apparatus includes at least one facial movement sensor adapted to sense facial movement in a subject and a device interfacing with the facial movement sensor or sensors and adapted to receive at least one signal from the facial movement sensor or sensors and indicate facial movement of the subject. A facial movement measurement and stimulation method is also disclosed.

Owner:AGRAMA MARK T

Multi-sensory speech detection system

The present invention combines a conventional audio microphone with an additional speech sensor that provides a speech sensor signal based on an input. The speech sensor signal is generated based on an action undertaken by a speaker during speech, such as facial movement, bone vibration, throat vibration, throat impedance changes, etc. A speech detector component receives an input from the speech sensor and outputs a speech detection signal indicative of whether a user is speaking. The speech detector generates the speech detection signal based on the microphone signal and the speech sensor signal.

Owner:MICROSOFT TECH LICENSING LLC

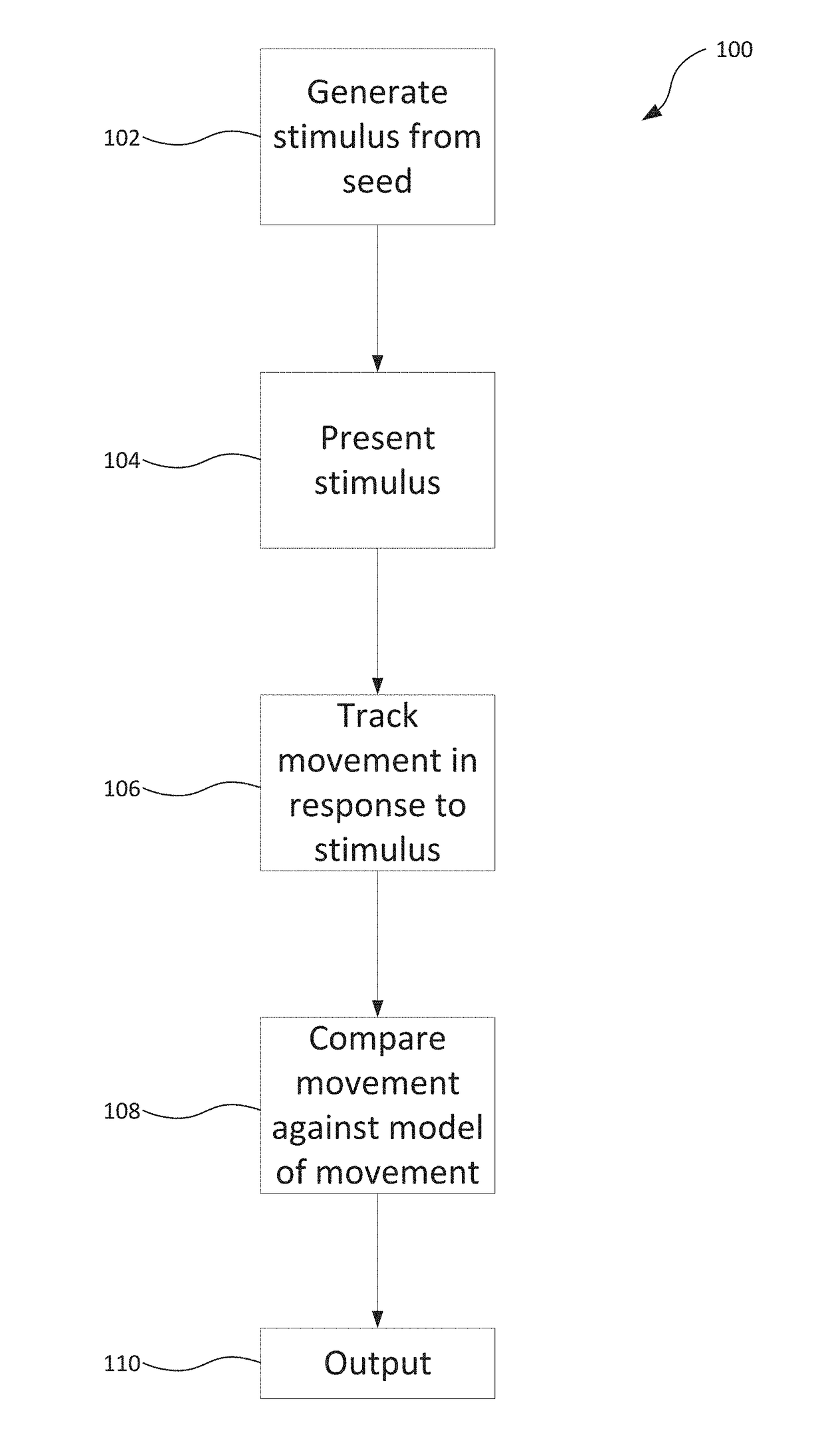

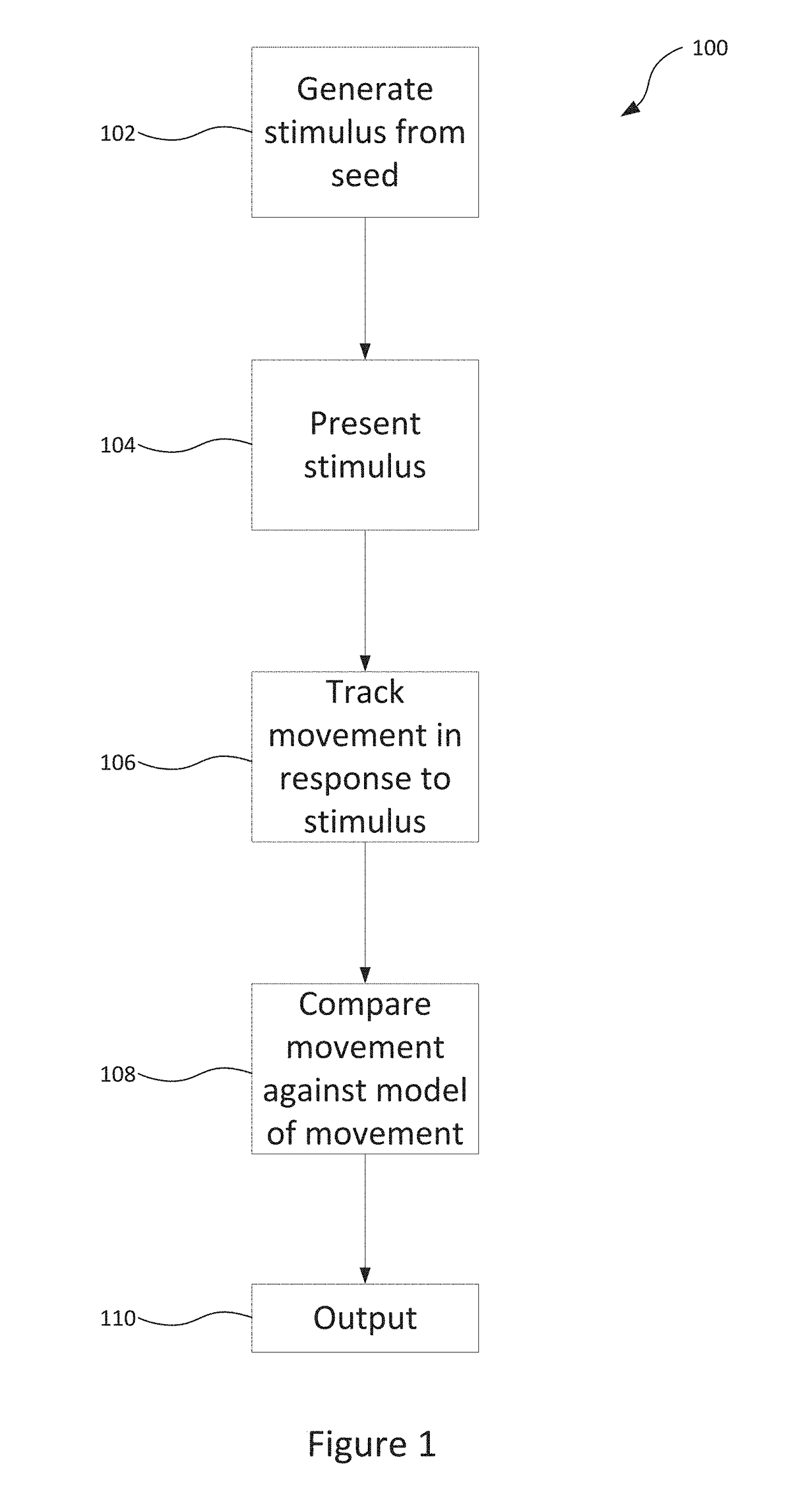

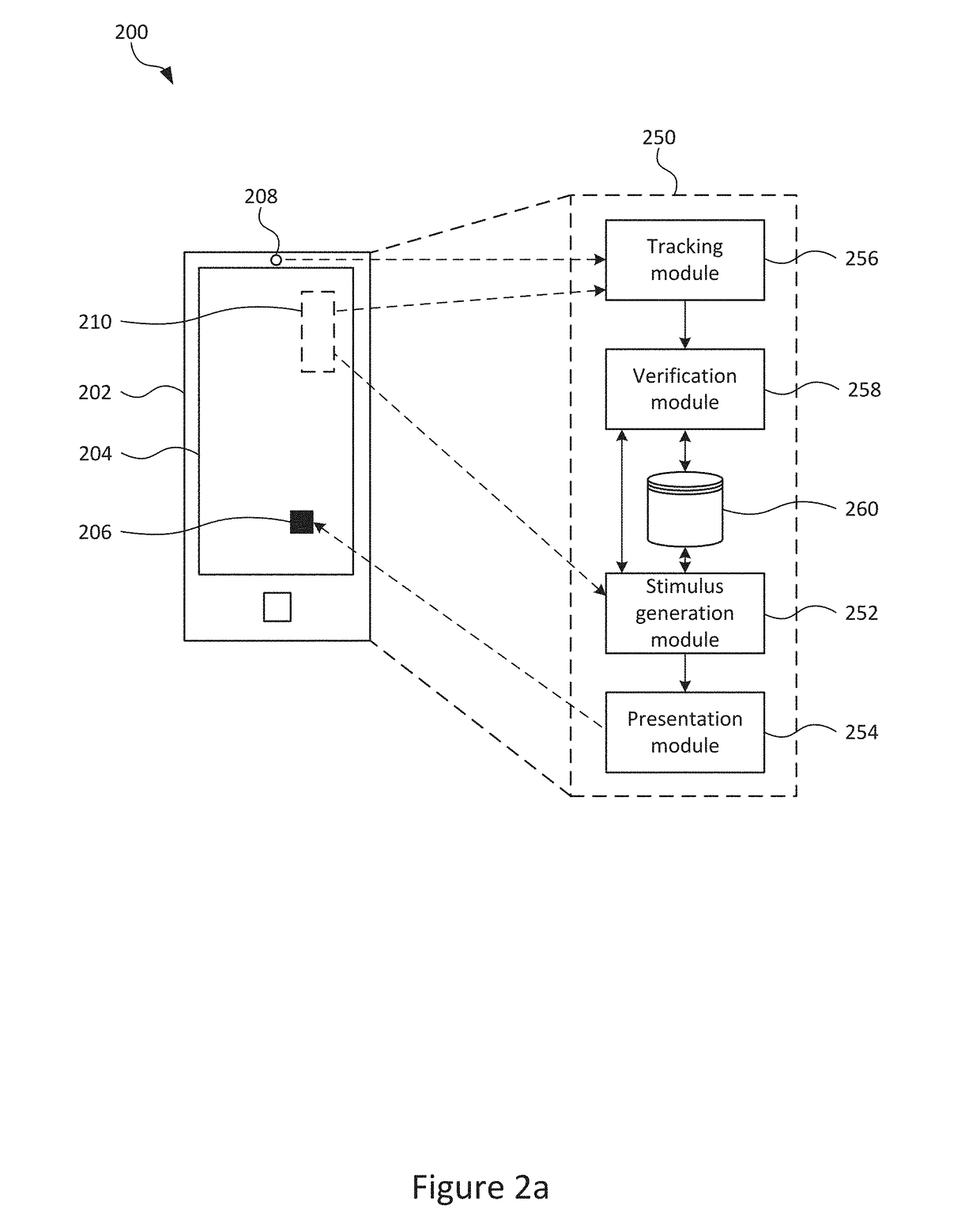

Verification method and system

The present invention relates to a method of verifying that (or determining whether) a live human face is present. More particularly, the present invention relates to a method of verifying that (or determining whether) a live human face is present for use as part of a facial recognition system and/or method. In particular, the invention relates to a method (100) of determining whether a live human face is present, comprising: generating (102) a stimulus (206); predicting, using a model (214), human face movement in response to said generated stimulus; presenting (104) the stimulus to a face of a person; tracking (106) a movement of the face in response to the stimulus using a camera; and determining (108) whether a live human face is present by comparing the movement of the face against said prediction.

Owner:ONFIDO LTD
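The final comparison step in the abstract above (tracked movement versus predicted movement) could be sketched as a simple trajectory distance check. The error metric, the threshold, and the function name `is_live` are assumptions for illustration; the abstract does not state how the comparison is performed.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def is_live(predicted: Sequence[Point], observed: Sequence[Point],
            max_mean_error: float = 0.1) -> bool:
    """Accept the face as live if the tracked path stays close to the predicted path.

    Both paths are assumed to be sampled at the same instants. The mean
    Euclidean distance and the threshold are placeholder choices.
    """
    if not predicted or len(predicted) != len(observed):
        return False
    mean_error = sum(math.dist(p, o) for p, o in zip(predicted, observed)) / len(predicted)
    return mean_error <= max_mean_error
```

A static photograph held in front of the camera would produce a flat observed path, which in this sketch fails the check whenever the model predicts movement larger than the threshold.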

Control method of three-dimensional facial animation

Inactive | CN101739709A | High degree of simulation | Improve the simulation effect | 3D-image rendering | 3D modelling | State of art | Facial movement

The invention discloses a control method of three-dimensional facial animation, which is characterized in that the control method comprises the following steps: obtaining a three-dimensional facial feature network model; dividing the functional zones of the three-dimensional facial feature network model; according to the zone division, arranging a movement control point, and calculating the effect of the control point on the movement of the three-dimensional facial feature network model; by driving the movement of the control point, driving the movement of the three-dimensional facial feature network model; and simulating real facial movement. The method can overcome the defects of the prior art and deforms the three-dimensional facial model according to various expression requirements, so that the three-dimensional face shows expression animation with a strong sense of reality.

Owner:SICHUAN UNIV

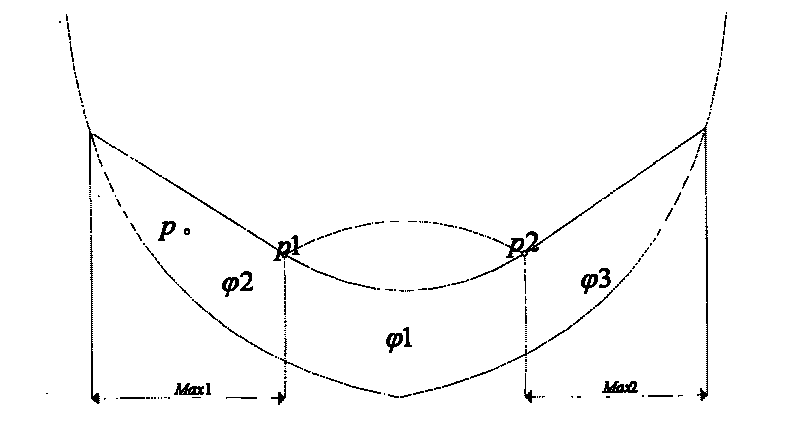

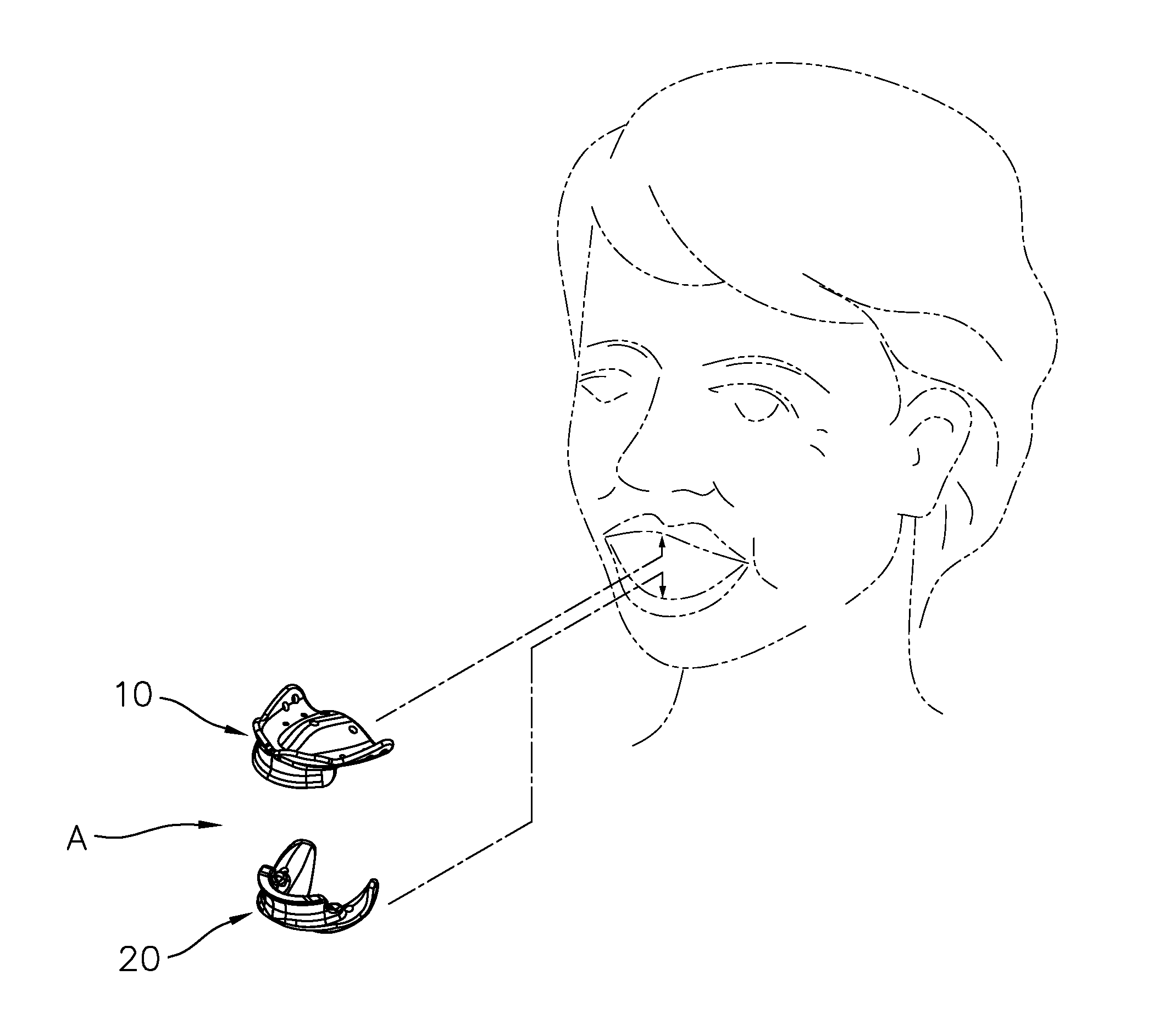

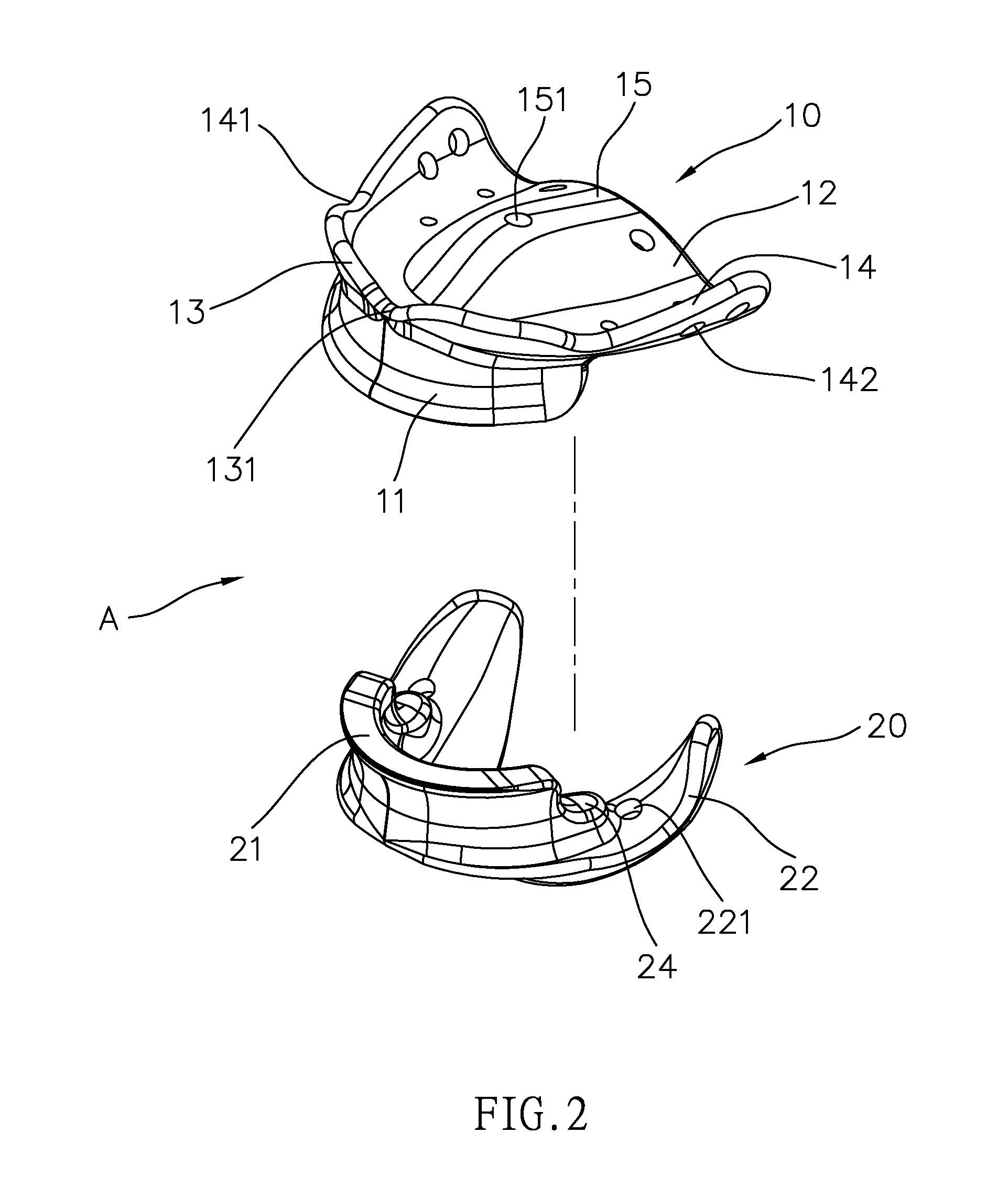

Method and apparatus of full mouth reconstruction

A full mouth reconstruction method and apparatus includes a denture molding structure mounted onto a patient's maxilla and mandible after being filled with an impression material, so that a static molding of the patient's gum can be performed based on the patient's occlusion. Important reference data including the vertical height of occlusion, facial midline, horizontal occlusion line, connecting line between two pupils and nose-ear horizontal line are recorded for facial and oral reconstructions while the patient is biting a first recording device, and the facial movements of the patient's chewing and speaking are recorded quickly while the patient is biting a second recording device. Records of a metal calibration are applied to obtain data of a patient's front-and-rear, left-and-right, and up-and-down maxillary and mandibular movements through facial movements of a patient's chewing and speaking, and these data are provided to a dental mold technician for producing the denture.

Owner:CHIOU WEN HSIN +1

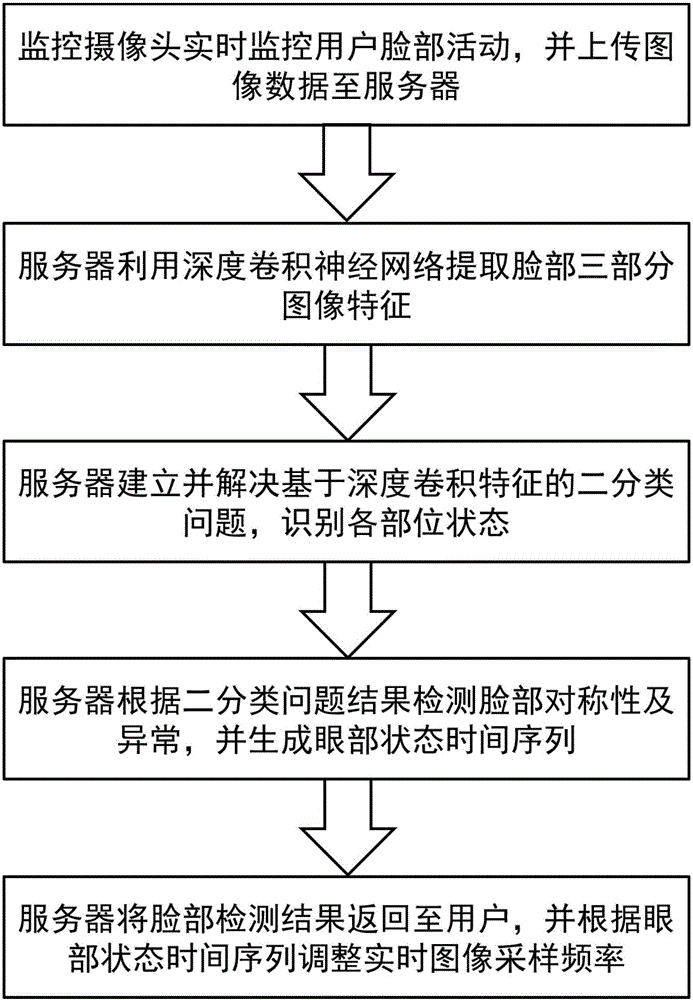

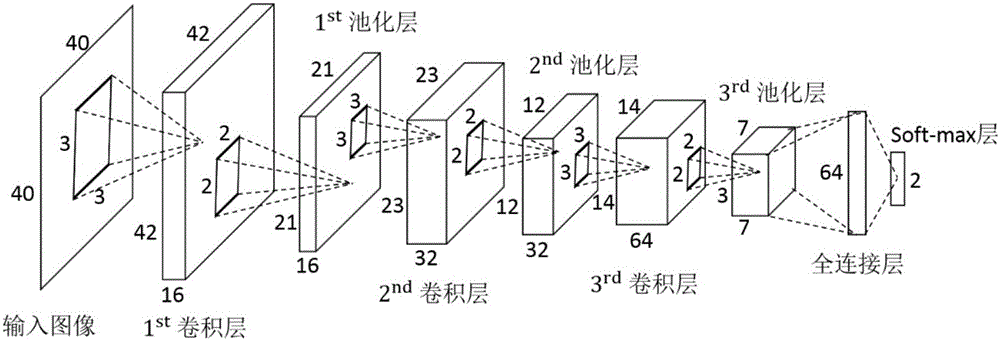

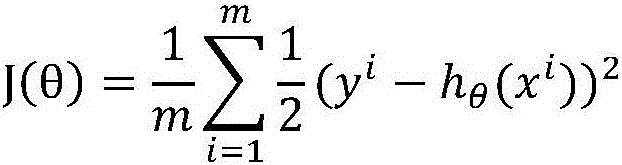

Method for detecting face symmetry and anomaly based on real-time face detection

Inactive | CN106250819A | Realize real-time detection | Improve stability | Character and pattern recognition | Face detection | Eye state

The invention relates to a method for detecting face symmetry and anomaly based on real-time face detection. The method includes a training stage and a testing stage. The training stage includes the following steps: a deep convolutional neural network model is established; the image data of the main parts of the user's face are acquired; and an optimal classification strategy is provided according to the image data given by the user. The testing stage includes the following steps: the facial movement of the user is monitored in real time; the image data of the main parts of the user's face are acquired; the images of the main parts of the user's face are processed through the deep convolutional neural network model, and deep convolutional features are extracted; a binary classification problem based on the deep convolutional features is solved, state identification is carried out on the main parts of the user's face, and then real-time symmetry detection and anomaly detection are carried out on the main parts; and the eye state time sequence of the user is recorded, and the sampling frequency of a monitoring camera is adjusted in real time. With the method of the invention, real-time detection of face symmetry and anomaly can be carried out accurately under different lighting conditions and for different users. The method has high stability and high universality.

Owner:SHANGHAI JIAO TONG UNIV

System and method of producing an animated performance utilizing multiple cameras

A real-time method for producing an animated performance is disclosed. The real-time method involves receiving animation data, the animation data used to animate a computer generated character. The animation data may comprise motion capture data, or puppetry data, or a combination thereof. A computer generated animated character is rendered in real-time with receiving the animation data. A body movement of the computer generated character may be based on the motion capture data, and a head and a facial movement are based on the puppetry data. A first view of the computer generated animated character is created from a first reference point. A second view of the computer generated animated character is created from a second reference point that is distinct from the first reference point. One or more of the first and second views of the computer generated animated character are displayed in real-time with receiving the animation data.

Owner:THE JIM HENSON COMPANY

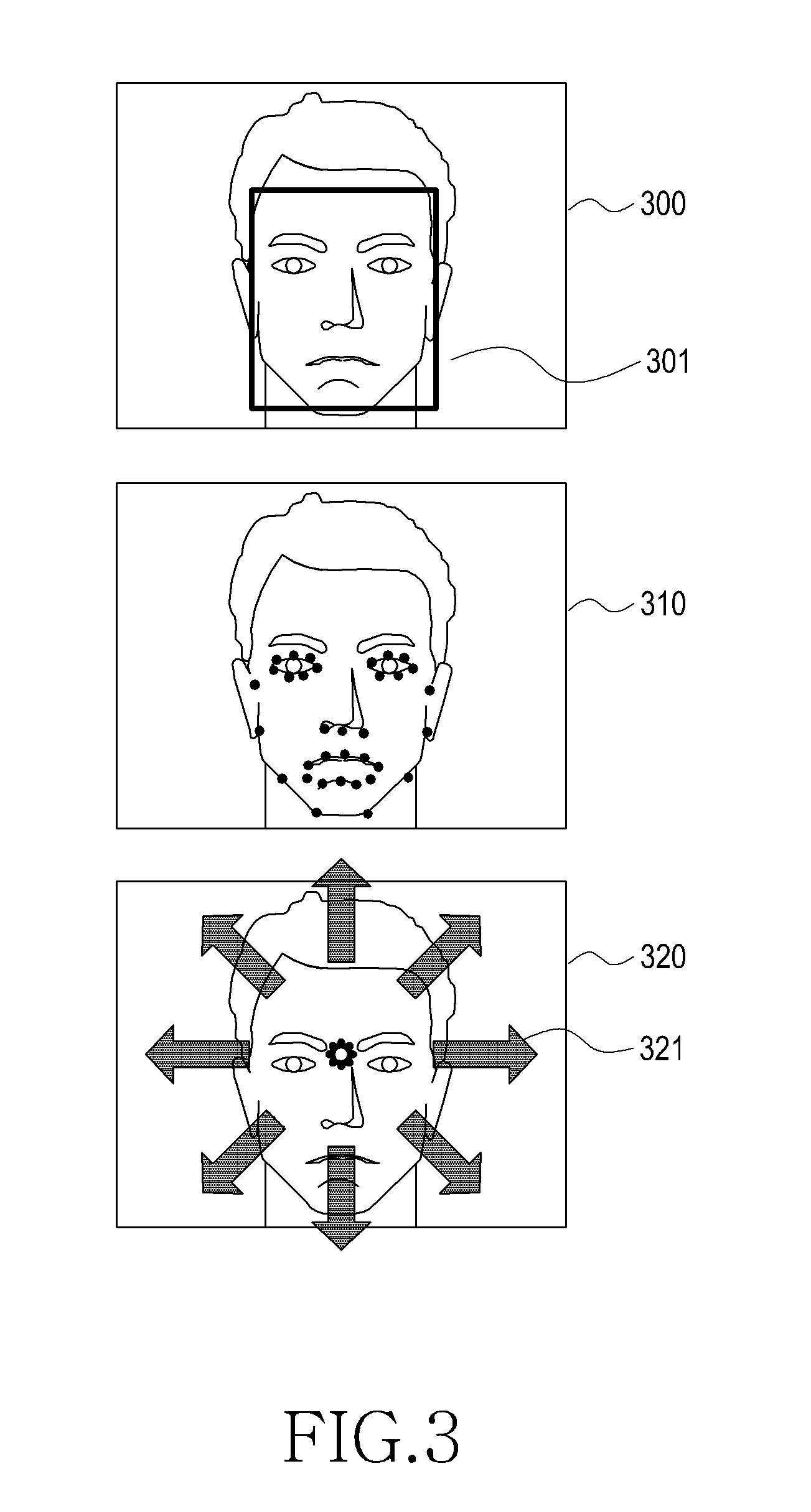

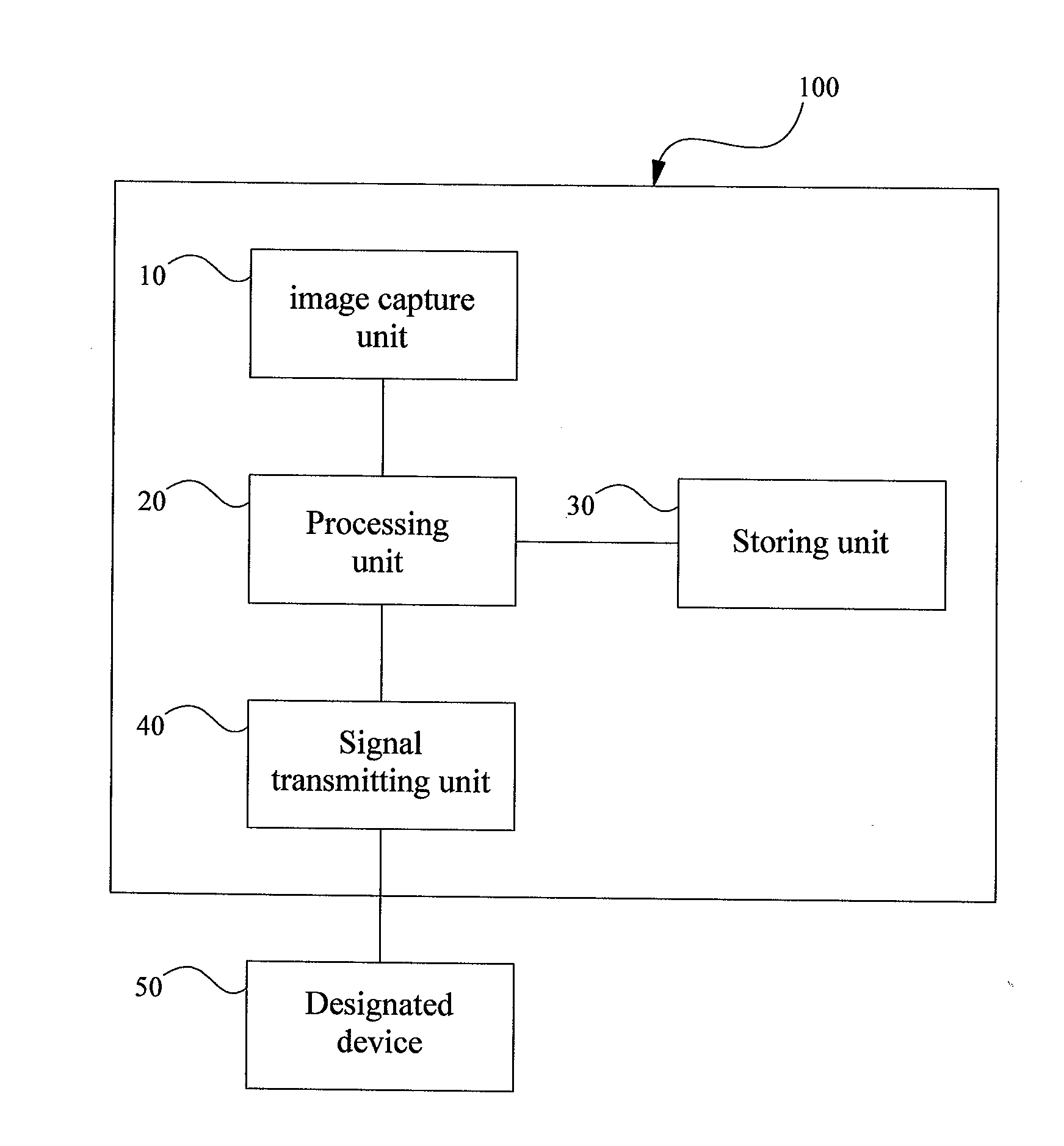

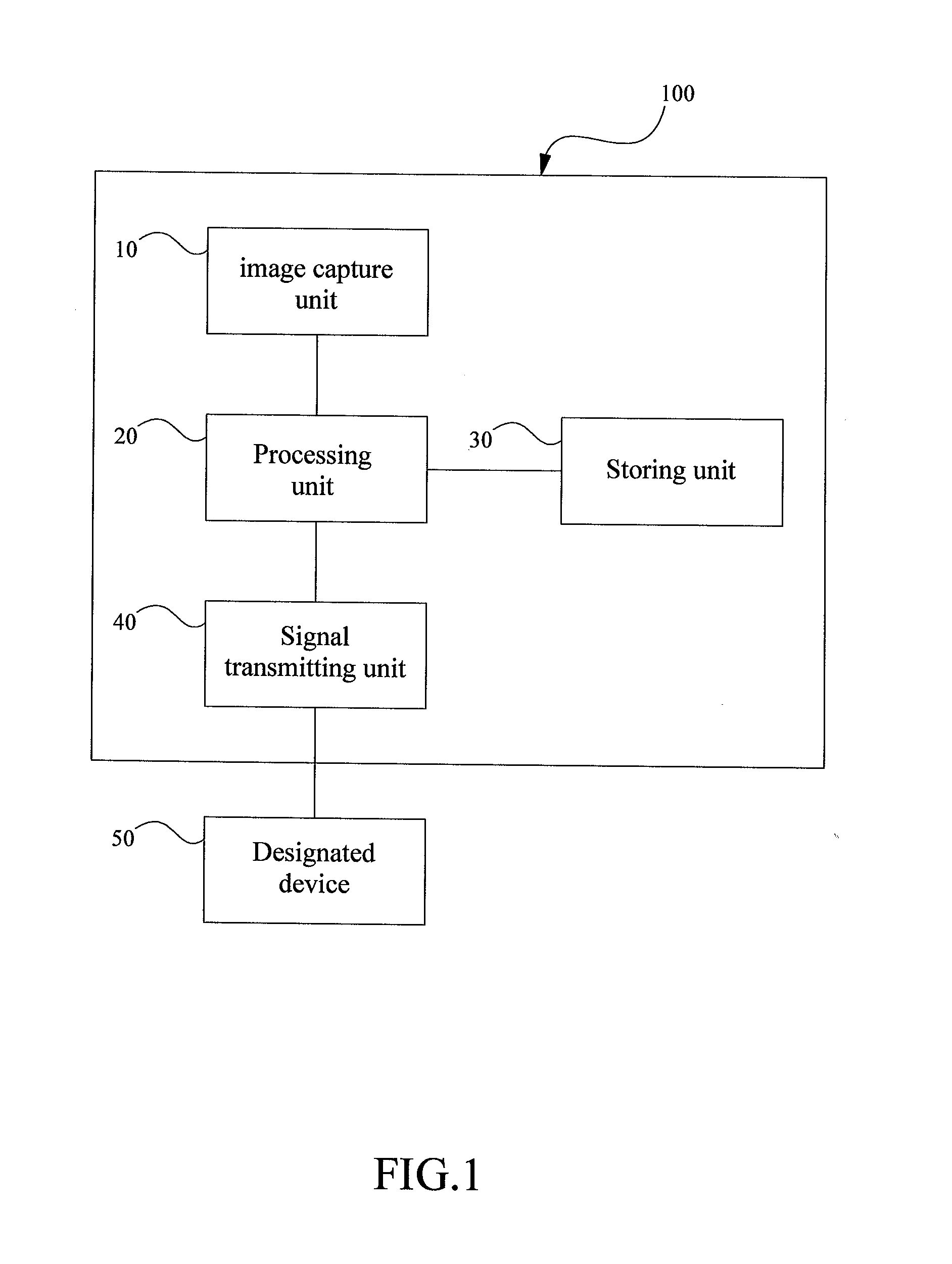

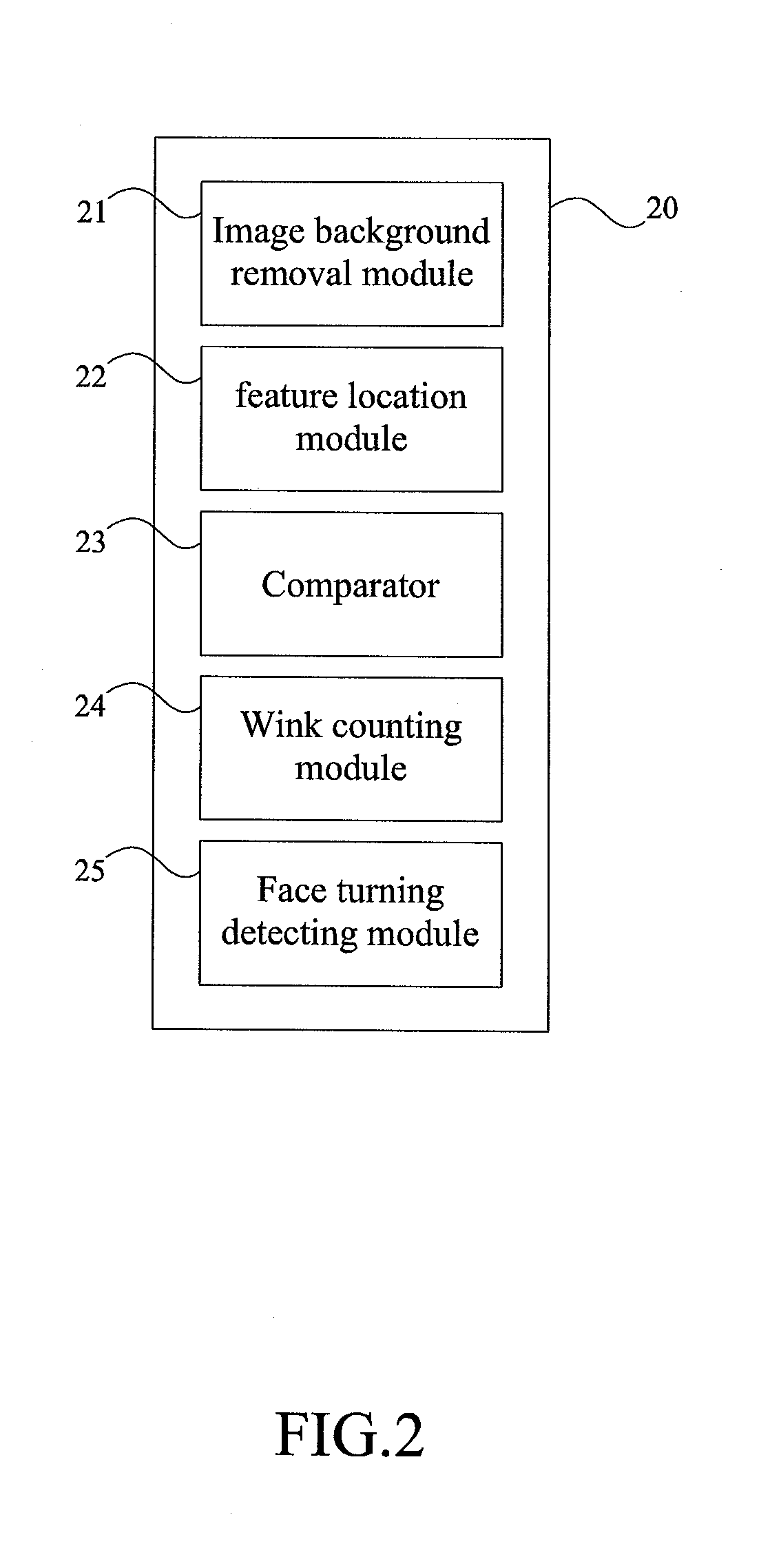

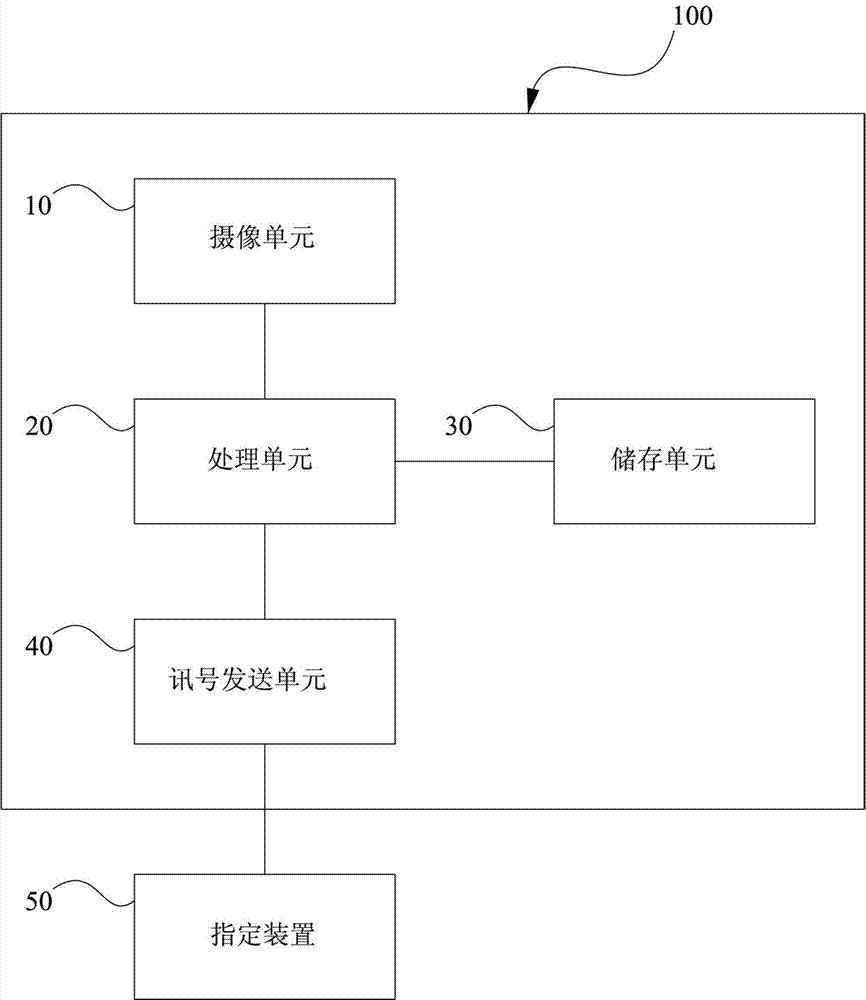

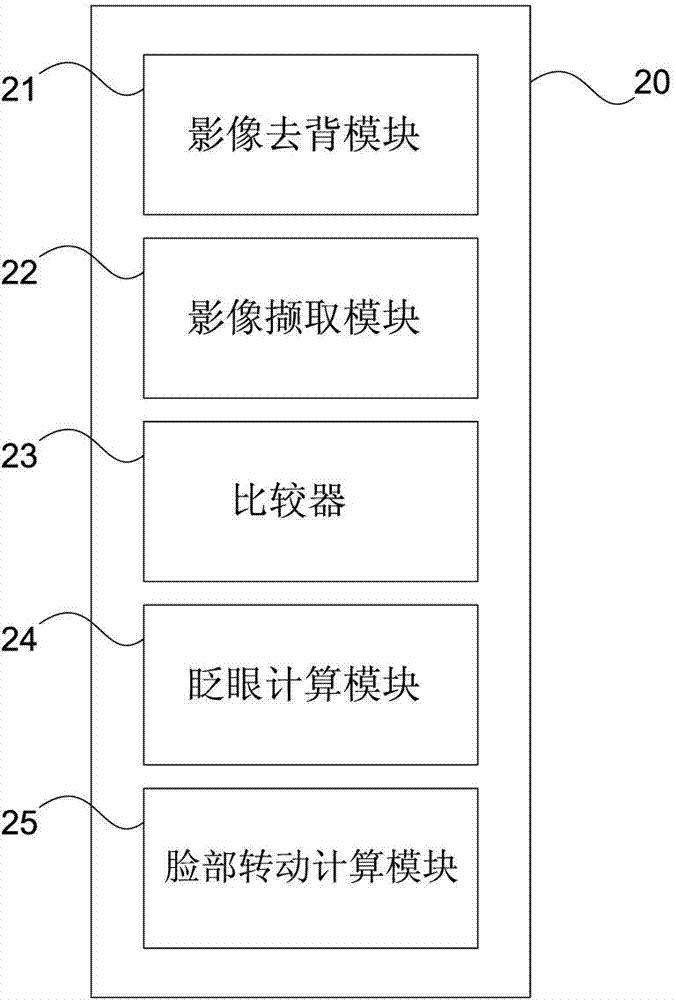

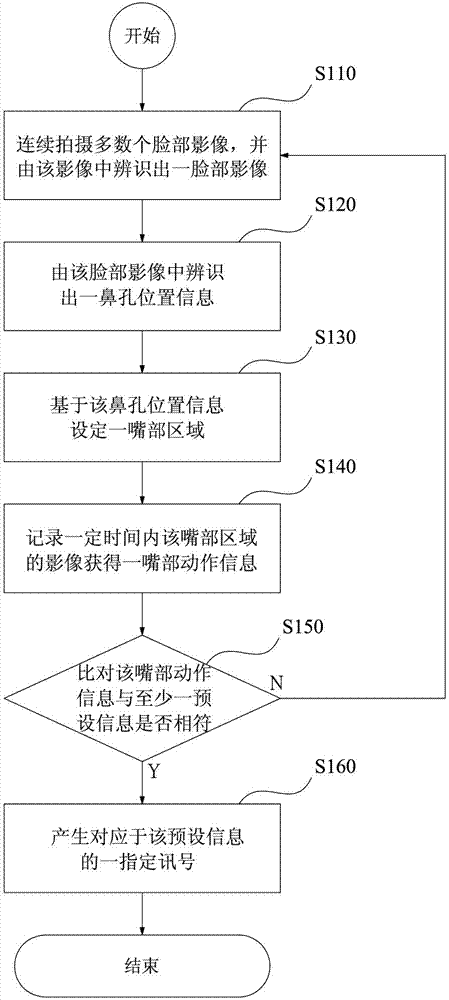

Device, operating method and computer-readable recording medium for generating a signal by detecting facial movement

Active | US20140376772A1 | Avoid distraction of driver | Reduce accident rate | Element comparison | Acquiring/recognising eyes | Pattern recognition | Nostril

A device for generating a signal by detecting facial movement, and an operating method thereof, are provided. The device includes an image capture unit and a processing unit. The image capture unit obtains an image series. The processing unit receives the image series from the image capture unit and includes an image background removal module, an image extracting module, and a comparator. The image background removal module processes each image of the series to obtain a facial image; the feature location module determines the location of a pair of nostrils in the facial image, defines a mouth searching frame, and acquires mouth movement data through the mouth searching frame; and the comparator compares the mouth movement data with predetermined facial information and generates a designated signal according to the comparison result.

Owner:UTECHZONE CO LTD
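The comparator stage described above can be pictured as matching a sequence of mouth states against stored patterns. The sketch below is an assumption-heavy illustration: the openness measure, the threshold, the pattern format, and the names `mouth_code` and `designated_signal` do not come from the patent.

```python
from itertools import groupby
from typing import Dict, List, Optional, Sequence


def mouth_code(openness_series: Sequence[float], threshold: float = 0.5) -> List[str]:
    """Turn per-frame mouth openness into a run-collapsed list of 'open'/'closed' states."""
    states = ["open" if value > threshold else "closed" for value in openness_series]
    return [state for state, _run in groupby(states)]


def designated_signal(openness_series: Sequence[float],
                      patterns: Dict[str, List[str]],
                      threshold: float = 0.5) -> Optional[str]:
    """Return the name of the first stored pattern that matches, or None."""
    code = mouth_code(openness_series, threshold)
    for name, pattern in patterns.items():
        if code == pattern:
            return name
    return None
```

Collapsing consecutive identical states makes the match independent of how long each mouth position is held, which is one plausible way to tolerate different frame rates.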

Mounting and bracket for an actor-mounted motion capture camera system

The present invention relates to computer capture of object motion. More specifically, embodiments of the present invention relate to capturing of facial movement or performance of an actor. Embodiments of the present invention provide a mounting bracket for a head-mounted motion capture camera system. In many embodiments, the mounting bracket includes a helmet that is positioned on the actor's head and mounting rods on either side of the helmet that project toward the front of the actor that may be flexibly repositioned. Cameras positioned on the mounting rods capture images of the actor's face.

Owner:TWO PIC MC

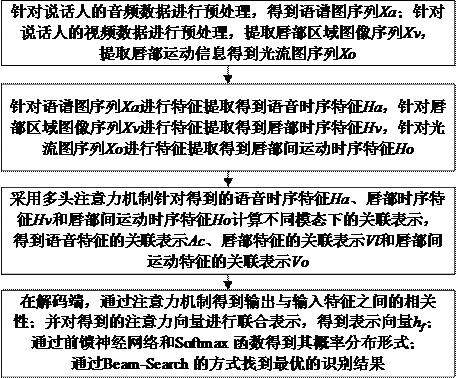

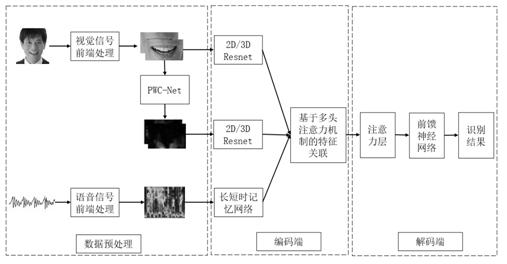

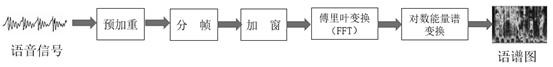

Cross-modal multi-feature fusion audio and video speech recognition method and system

Pending | CN112053690A | Accurate acquisition | Improve learning effect | Image enhancement | Image analysis | Environmental noise | Facial movement

The invention relates to audio and video speech recognition technology, and provides a cross-modal multi-feature fusion audio and video speech recognition method and system, considering that speech interaction is easily affected by complex environmental noise, whereas facial motion information acquired through video is relatively stable in an actual robot application environment. According to the method, speech information, visual information and visual motion information are fused through an attention mechanism, and the speech content expressed by a user is acquired more accurately by using the relevance among different modes, so that the speech recognition precision under complex background noise is improved, the speech recognition performance in human-computer interaction is improved, and the problem of low pure-speech recognition accuracy in a noisy environment is effectively solved.

Owner:HUNAN UNIV

Device, operating method and computer-readable recording medium for generating a signal by detecting facial movement

Active | CN104238732A | Achieve the effect of distress signal | Master quickly | Input/output for user-computer interaction | Element comparison | Pattern recognition | Facial movement

Provided are a device, an operating method and a computer-readable recording medium for generating a signal by detecting facial movement. The device includes an image capture unit and a processing unit. The image capture unit obtains an image series. The processing unit receives the image series from the image capture unit and includes an image background removal module, an image extracting module, and a comparator. The image background removal module processes each image of the series to obtain a facial image; the feature location module determines the location of a pair of nostrils in the facial image, defines a mouth searching frame, and acquires mouth movement data through the mouth searching frame; and the comparator compares the mouth movement data with predetermined facial information and generates a designated signal according to the comparison result.

Owner:UTECHZONE CO LTD

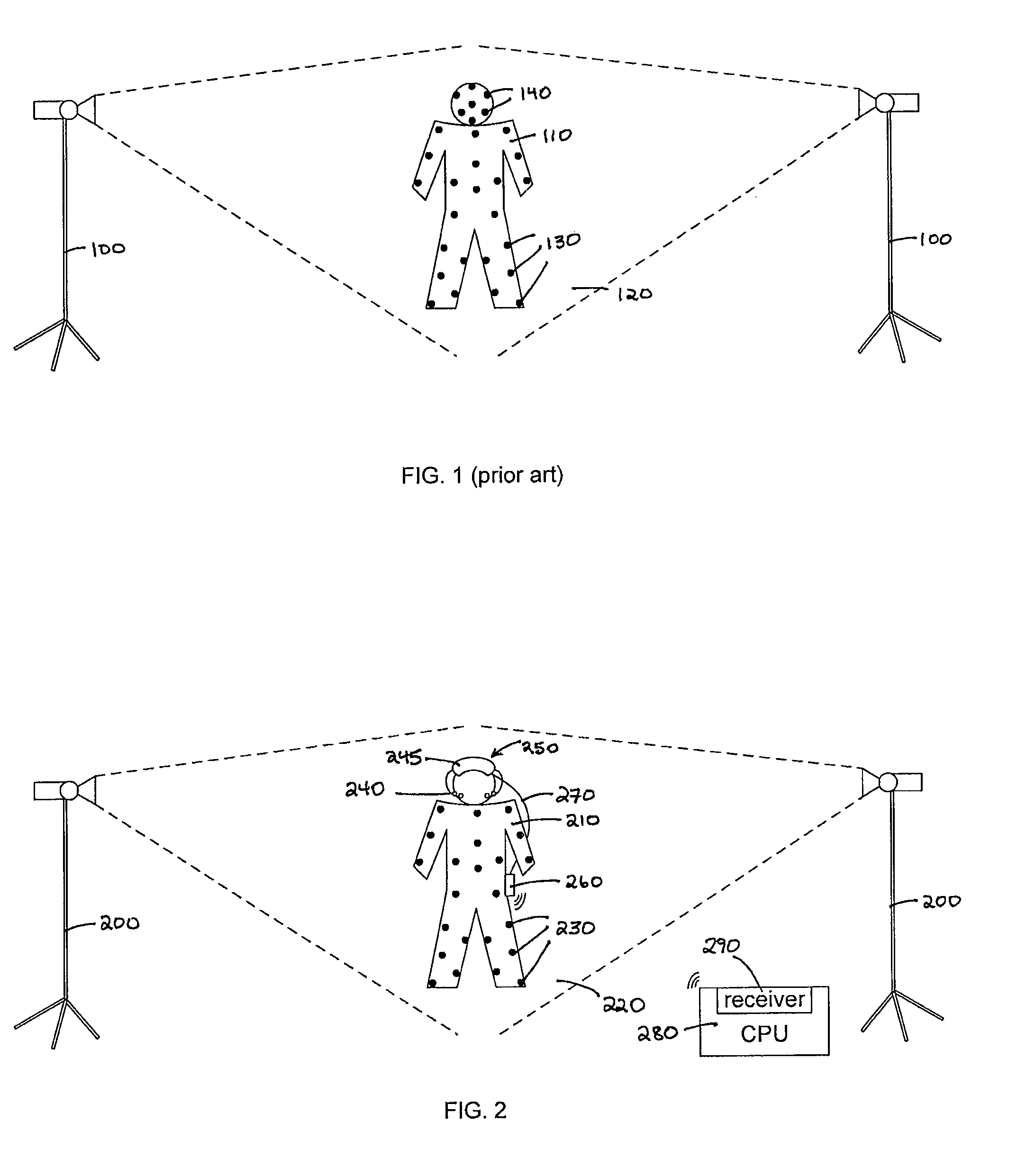

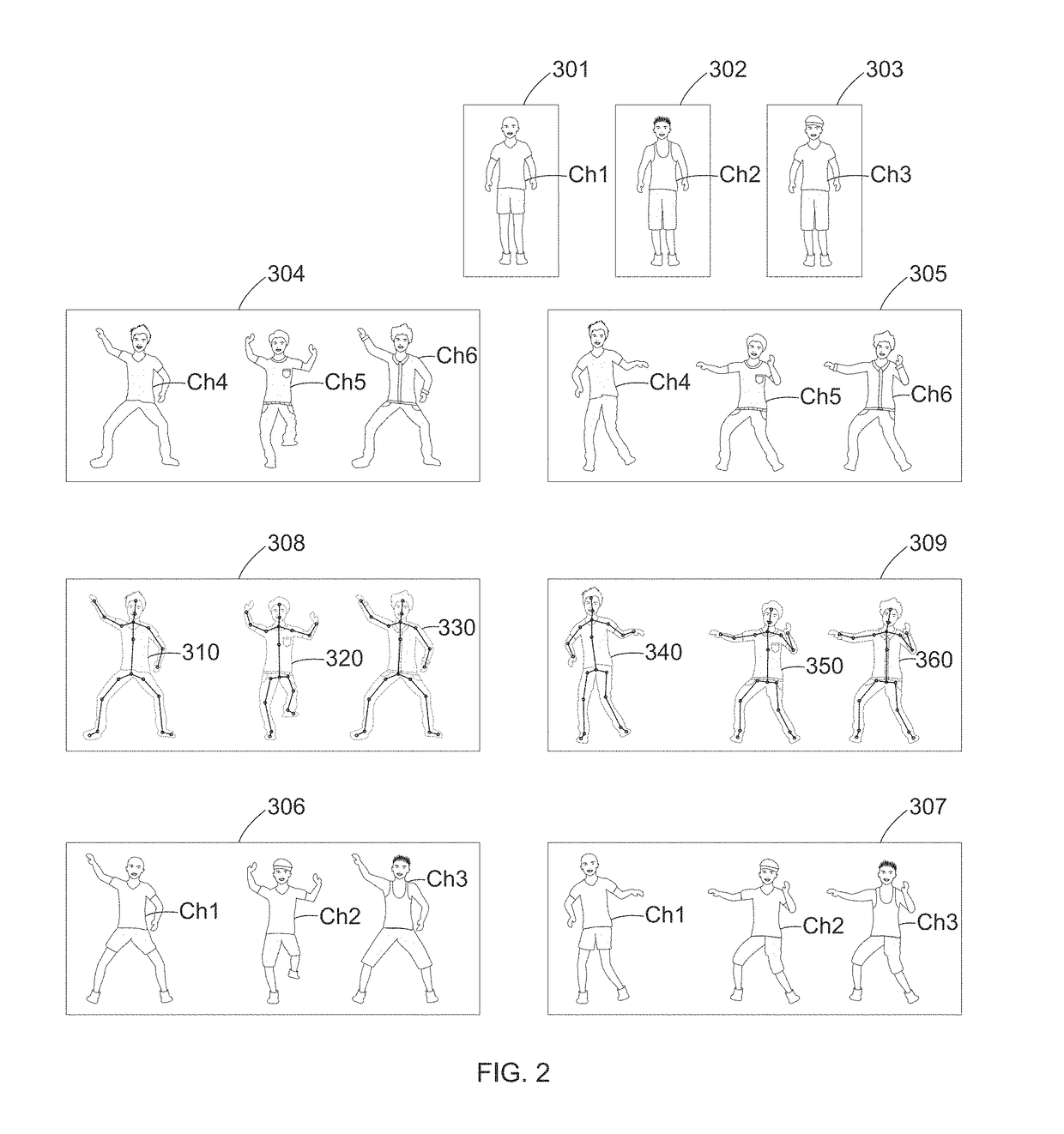

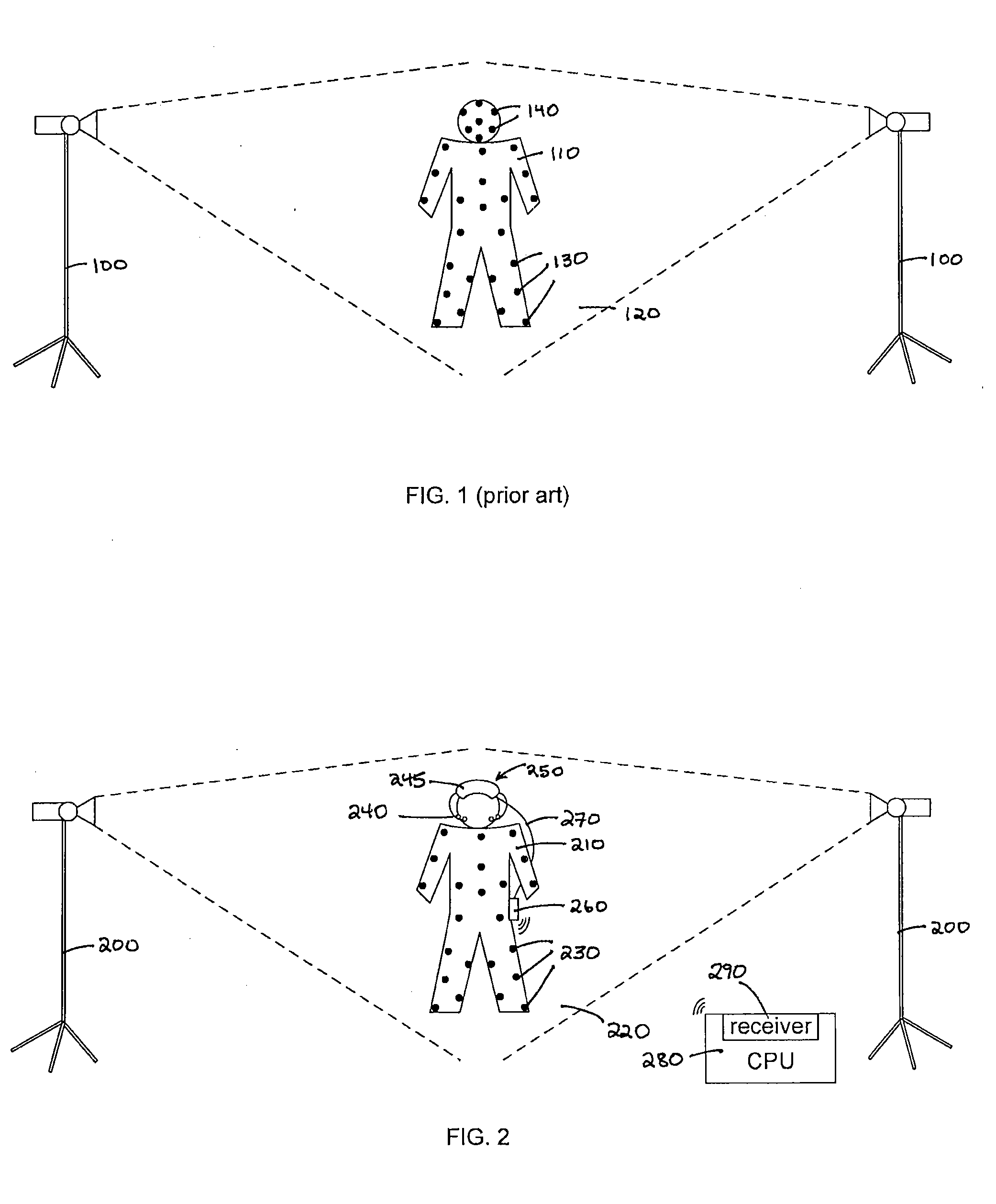

System and method of animating a character through a single person performance

A method of animating a computer generated character in real-time through a single person performance is disclosed. The method provides a mobile input device configured to receive a hand puppetry movement as an input from a performer. Further, the method provides a motion capture device that includes a plurality of markers. The motion capture device is configured to be worn on the body of the performer. Motion capture data is received at a computer. The motion capture data is representative of the positions of the plurality of markers. In addition, input device data is received from the mobile input device at the computer. A computer generated animated character is then generated, the body movements of the character being based on the motion capture data, and head and facial movements being based on the input device data received from the mobile input device.

Owner:THE JIM HENSON COMPANY

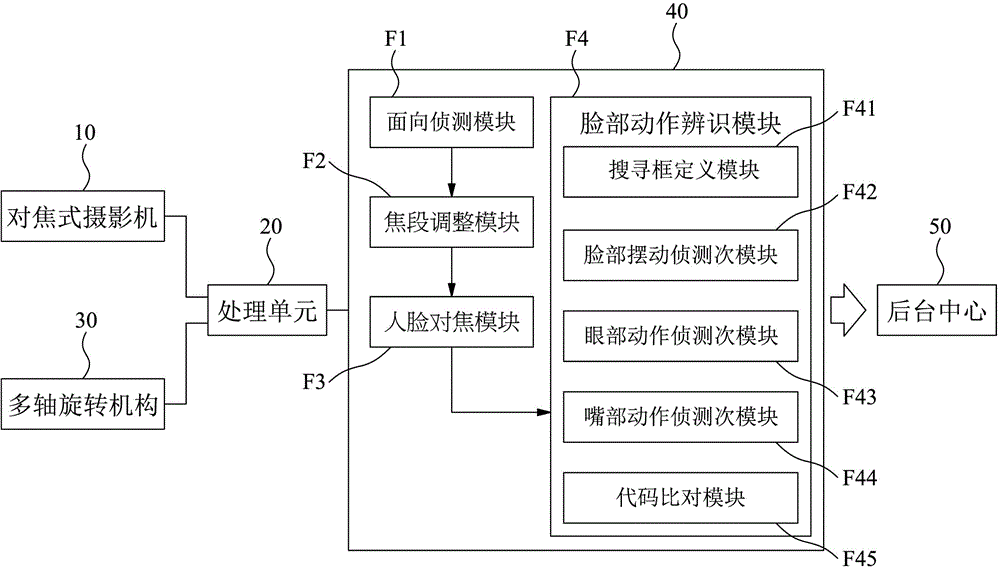

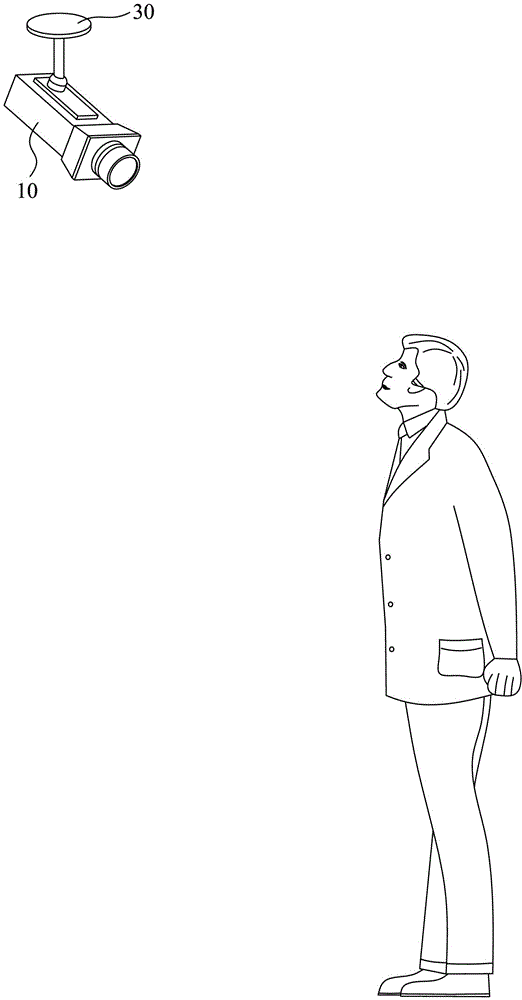

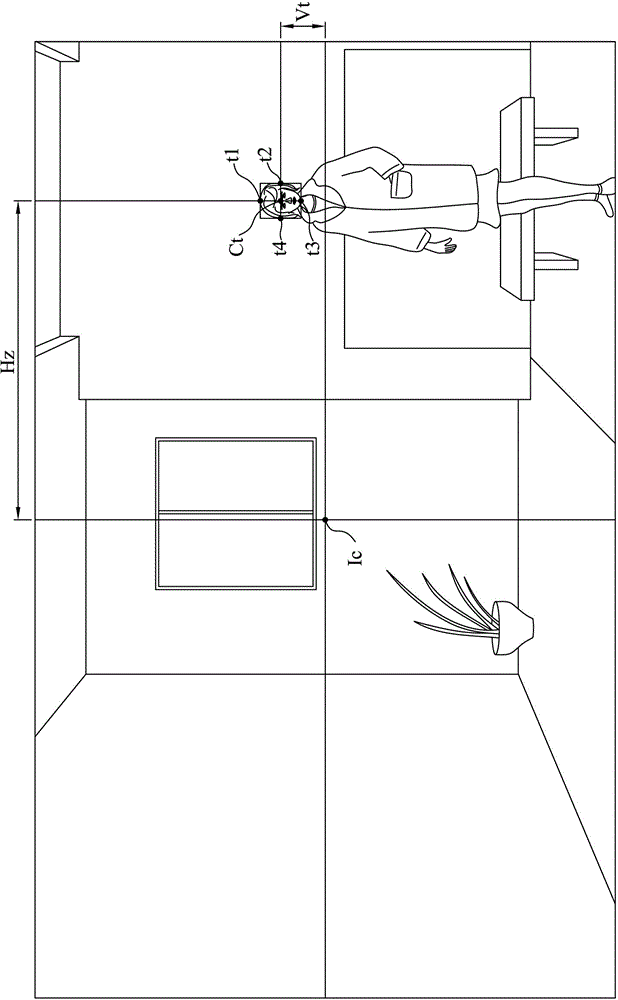

Distant facial monitoring system and method

Inactive | CN106034217A | Save wiring | Transfer unit | Television system details | Color television details | Computer graphics (images) | Control signal

A remote face monitoring system and method are provided. The system includes a focusing camera and a processing unit connected to the focusing camera. The focusing camera shoots a preset environment. The processing unit loads and executes the following programs: a face detection module, which detects face features in the image of the preset environment and triggers a control command within a preset time; and a face motion recognition module, which continuously captures specific motion information of the face features upon receiving the control command and triggers a distress signal to be sent to the background center when the specific motion information matches preset information.

Owner:UTECHZONE CO LTD

Facial movement measurement and stimulation apparatus and method

Active | US9372533B1 | Facilitating sharing and transfer | Accurately reflect | Input/output for user-computer interaction | Electromyography | Facial movement | Display device

An illustrative embodiment of a facial movement measurement and stimulation apparatus includes at least one facial movement sensor adapted to sense facial movement in a subject and a wearable computing device interfacing with the facial movement sensor or sensors and adapted to receive at least one signal from the facial movement sensor or sensors and indicate facial movement of the subject on a display of the wearable computing device. A facial movement measurement and stimulation method utilizing the wearable computing device is also disclosed.

Owner:AGRAMA MARK
