147 results about "Facial motion" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

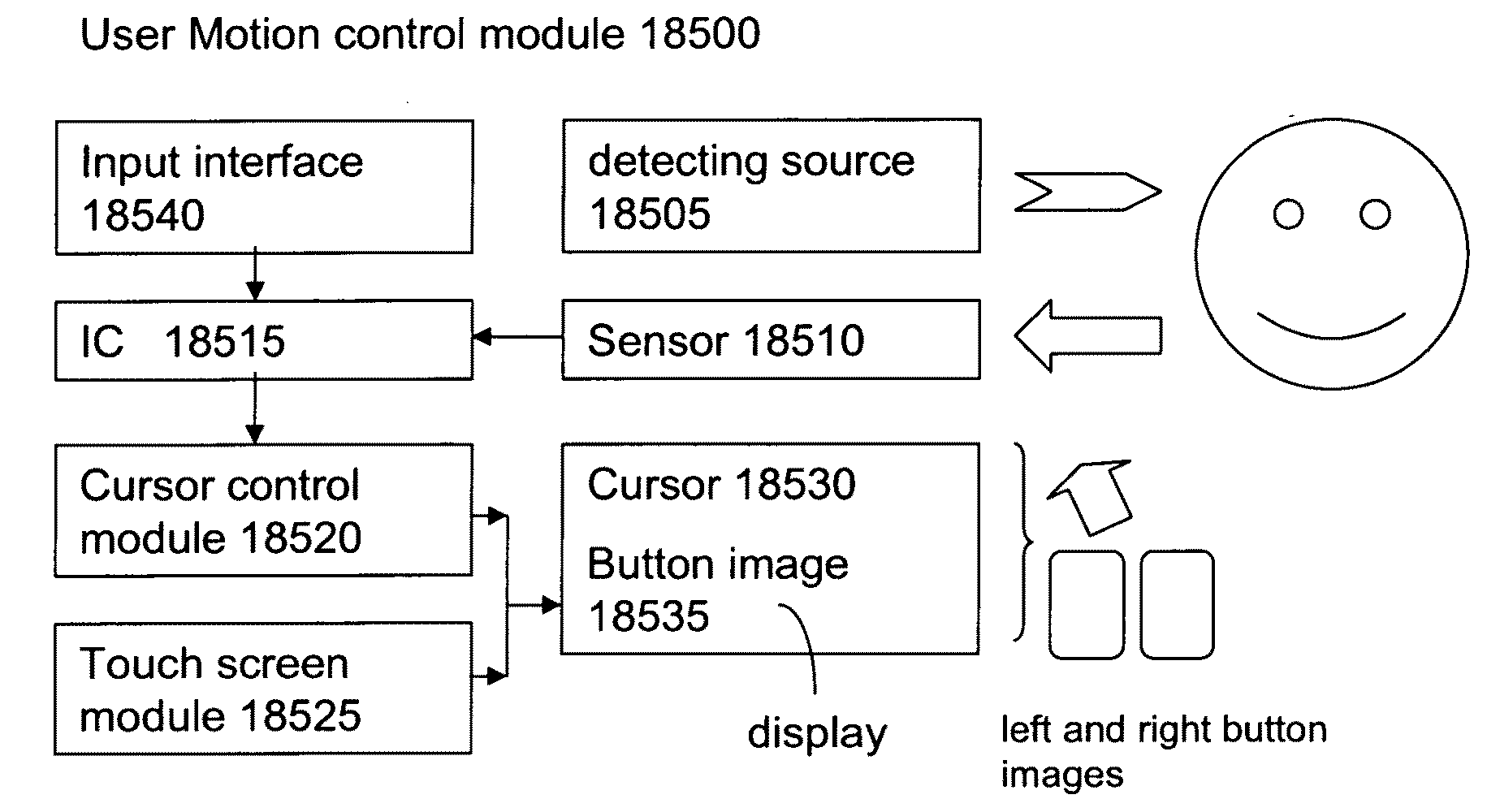

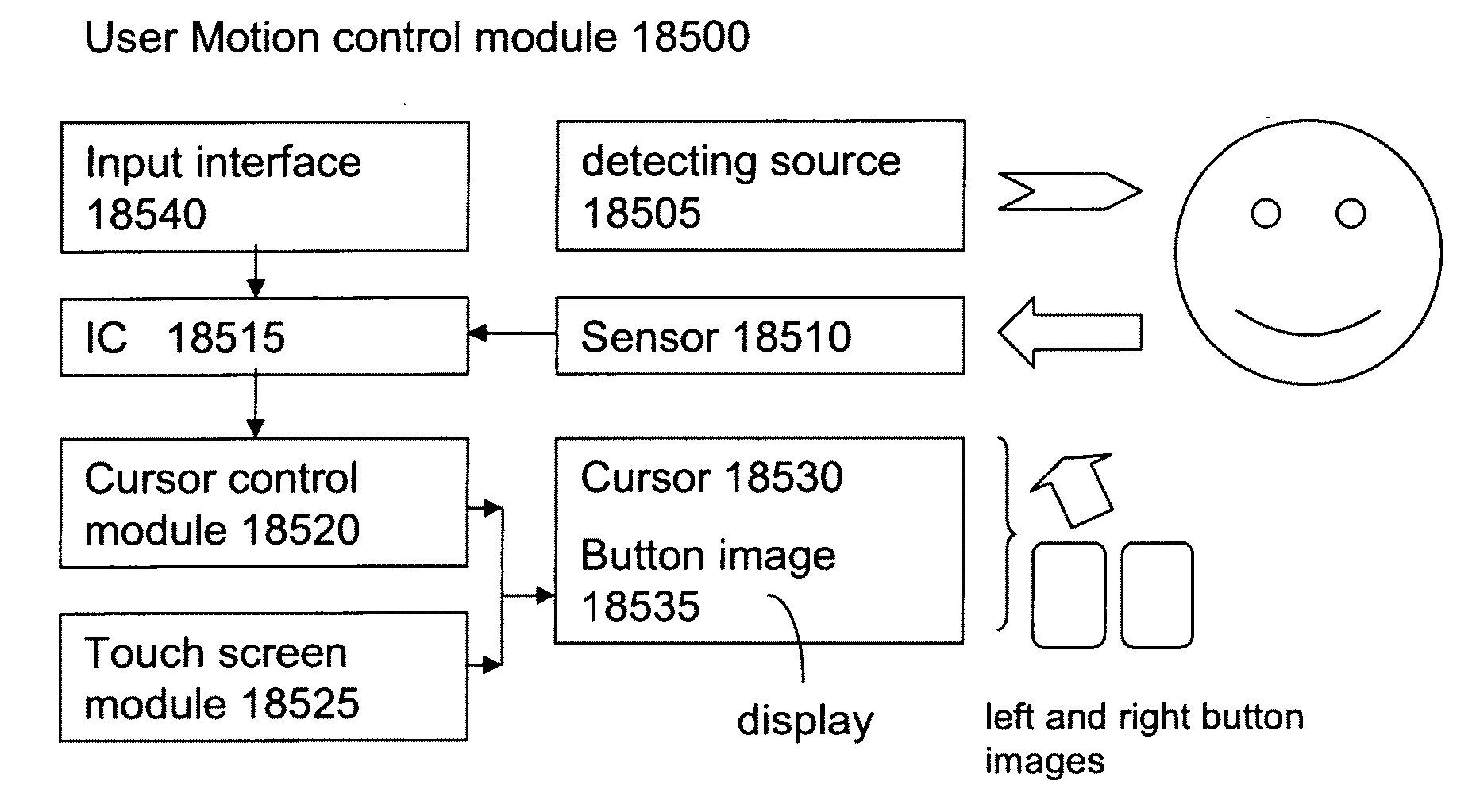

Method of controlling an object by user motion for electronic device

Active | US20090295738A1 | Minimize shielding effect; Input/output for user-computer interaction; Assess restriction; CMOS; Control signal

The present invention provides a method of controlling a virtual object or instruction for a computing device, comprising: detecting a user activity by a detecting device; generating a control signal in response to the detected user activity; and controlling an object displayed on a display in response to the control signal to execute the instruction. The user activity is detected by a CMOS or CCD sensor. The user activity includes facial motion, eye motion, or finger motion.

Owner:TAIWAN SEMICON MFG CO LTD

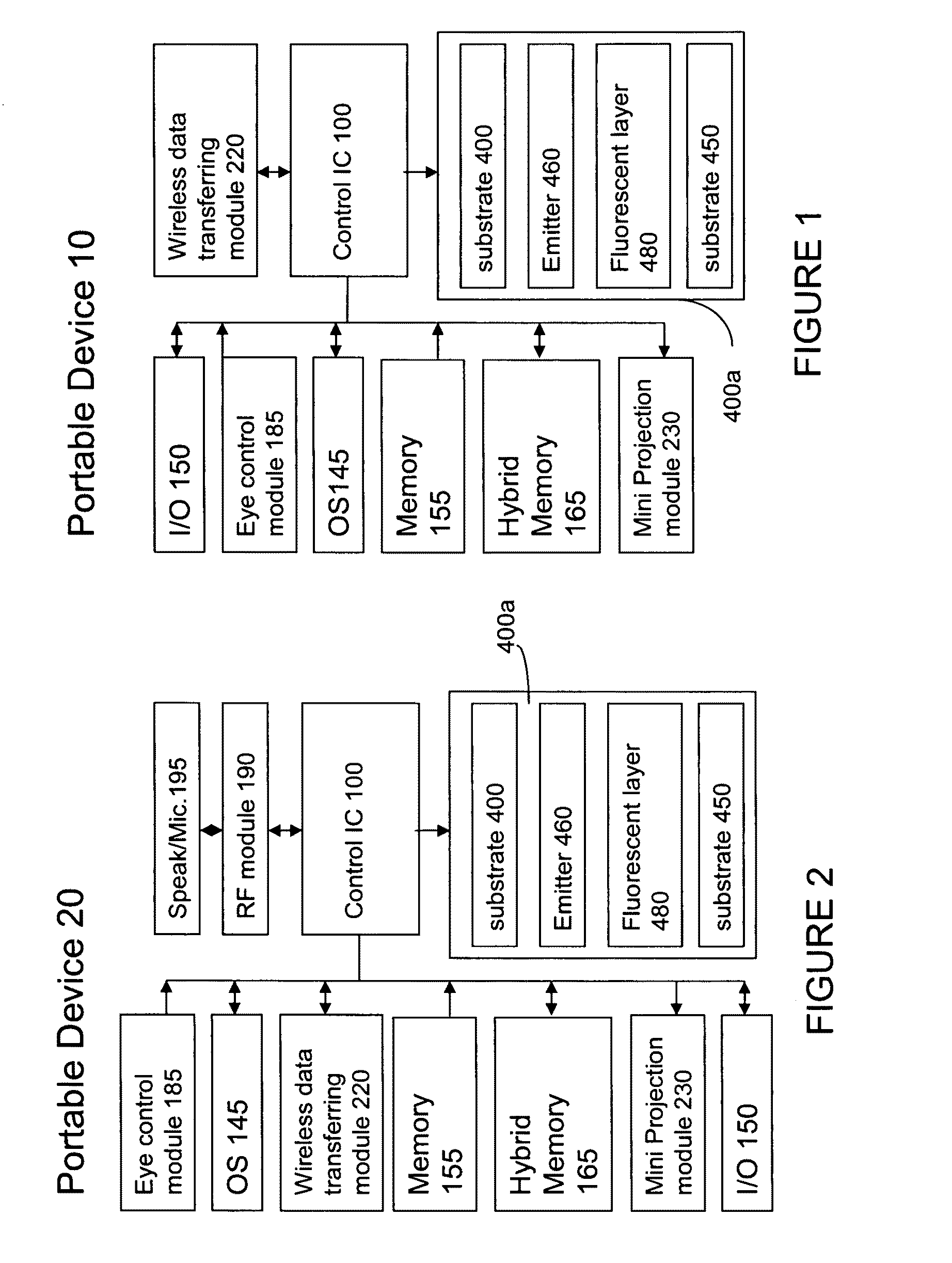

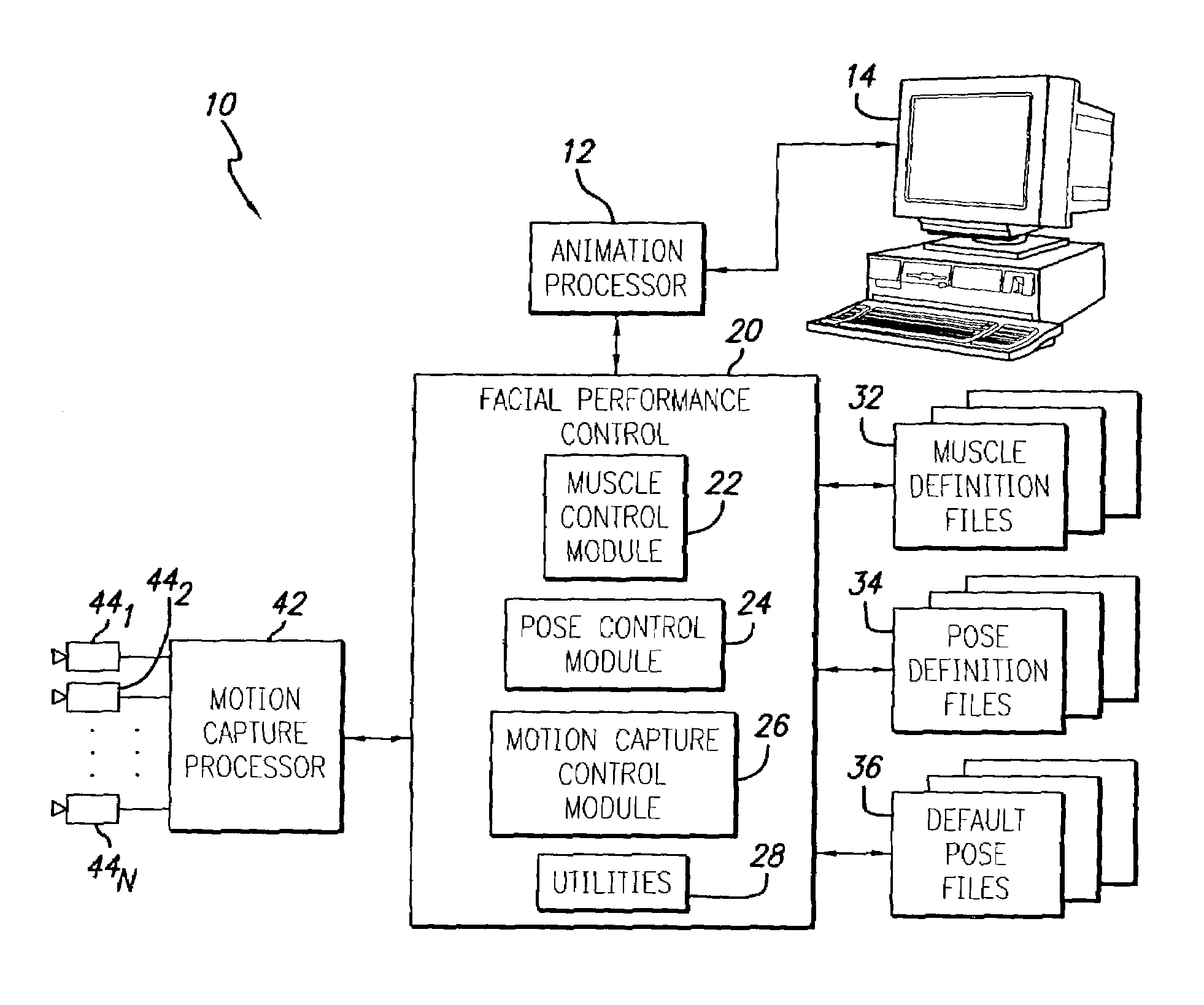

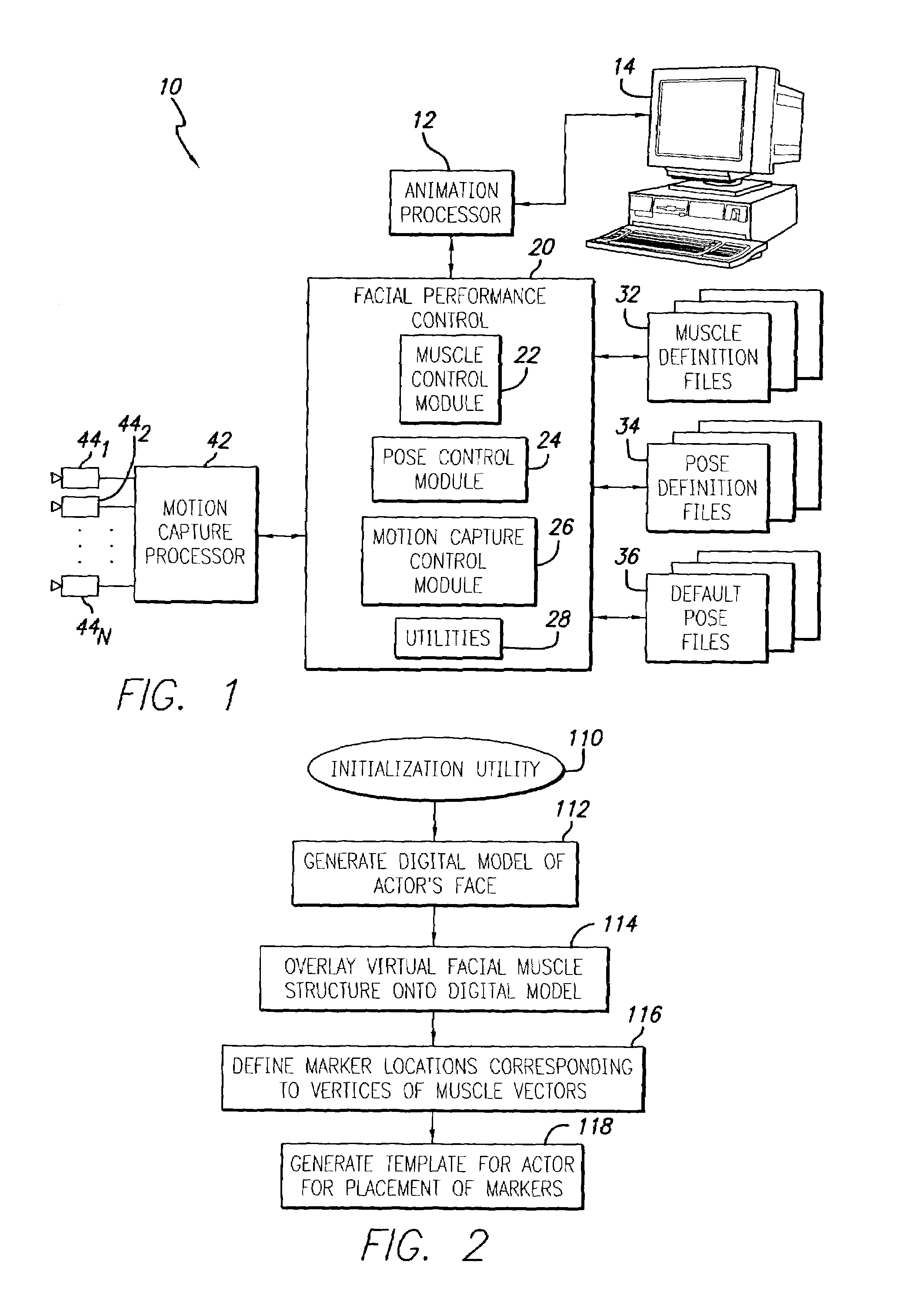

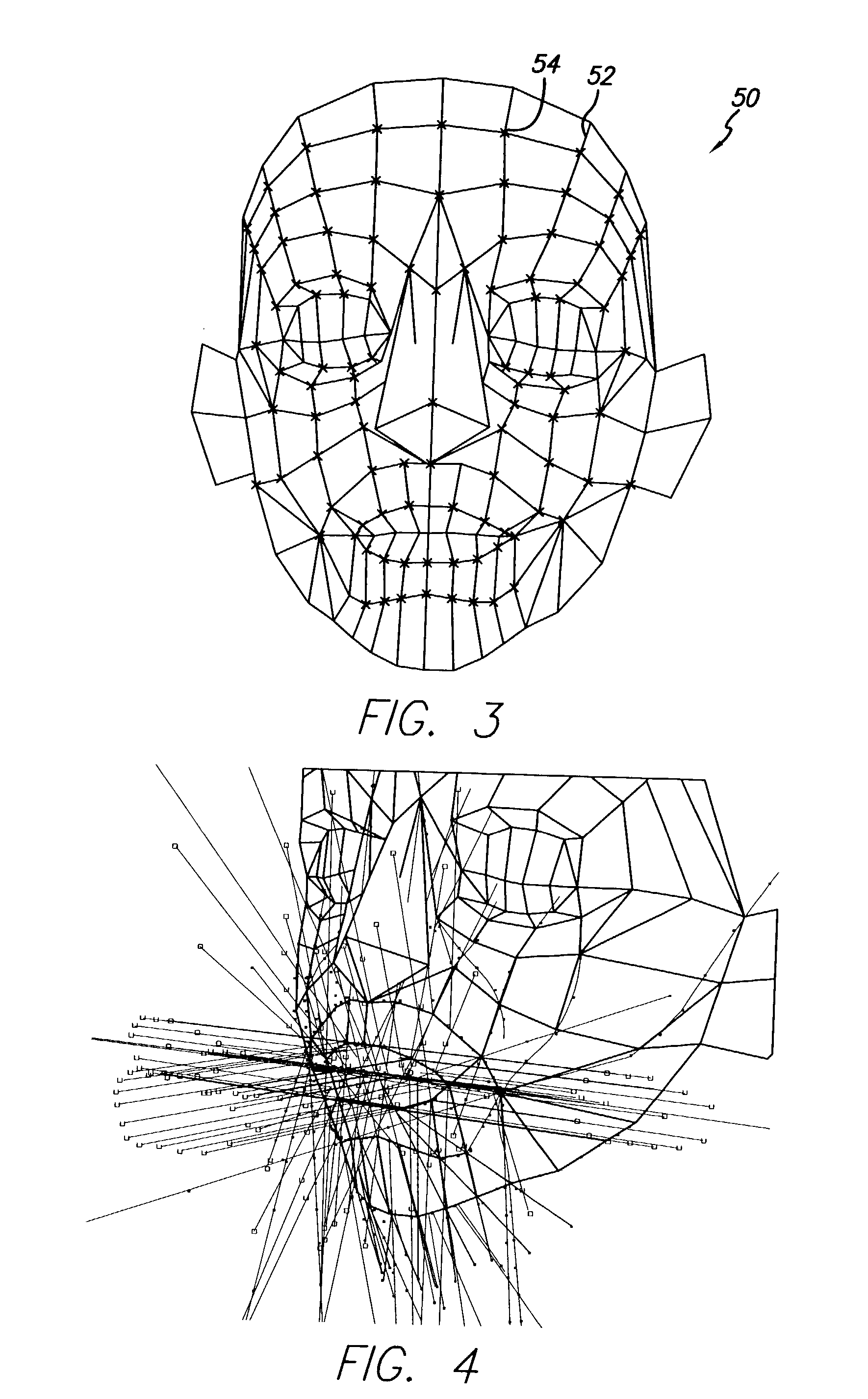

System and method for animating a digital facial model

Inactive | US7068277B2 | Highly realistic; 2D-image generation; Cathode-ray tube indicators; Graphics; Facial movement

A system and method for animating facial motion comprises an animation processor adapted to generate three-dimensional graphical images and having a user interface and a facial performance processing system operative with the animation processor to generate a three-dimensional digital model of an actor's face and overlay a virtual muscle structure onto the digital model. The virtual muscle structure includes plural muscle vectors that each respectively define a plurality of vertices along a surface of the digital model in a direction corresponding to actual facial muscles. The facial performance processing system is responsive to an input reflecting selective actuation of at least one of the plural muscle vectors to thereby reposition corresponding ones of the plurality of vertices and re-generate the digital model in a manner that simulates facial motion. The muscle vectors further include an origin point defining a rigid connection of the muscle vector with an underlying structure corresponding to actual cranial tissue, an insertion point defining a connection of the muscle vector with an overlying surface corresponding to actual skin, and interconnection points with other ones of the plural muscle vectors.

Owner:SONY CORP +1
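
The muscle-vector mechanism in this abstract can be made concrete with a minimal Python sketch of a single linear muscle. It assumes a simple model that the abstract does not specify: vertices within a hypothetical `radius` of the insertion point are pulled toward the rigid origin point, with the pull falling off linearly with distance from the insertion. `MuscleVector` and `actuate` are illustrative names, not Sony's implementation.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class MuscleVector:
    origin: Vec3      # rigid attachment to the underlying (cranial) structure
    insertion: Vec3   # attachment to the overlying skin surface
    radius: float     # hypothetical influence radius around the insertion point


def actuate(vertices: list[Vec3], muscle: MuscleVector, contraction: float) -> list[Vec3]:
    """Return new vertex positions after contracting one muscle vector.

    contraction is in [0, 1]; vertices farther than `radius` from the
    insertion point are left unchanged.
    """
    moved = []
    for v in vertices:
        d = math.dist(v, muscle.insertion)
        if d >= muscle.radius:
            moved.append(v)
            continue
        # Linear falloff: full pull at the insertion, none at the radius edge.
        weight = contraction * (1.0 - d / muscle.radius)
        moved.append(tuple(vi + weight * (oi - vi) for vi, oi in zip(v, muscle.origin)))
    return moved


if __name__ == "__main__":
    cheek = MuscleVector(origin=(0.0, 0.0, 0.0), insertion=(1.0, 0.0, 0.0), radius=1.0)
    print(actuate([(1.0, 0.0, 0.0), (3.0, 0.0, 0.0)], cheek, contraction=0.5))
    # → [(0.5, 0.0, 0.0), (3.0, 0.0, 0.0)]
```

Interconnections between muscle vectors, which the abstract also mentions, are not modelled here; several muscles could be applied in sequence as a rough approximation.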

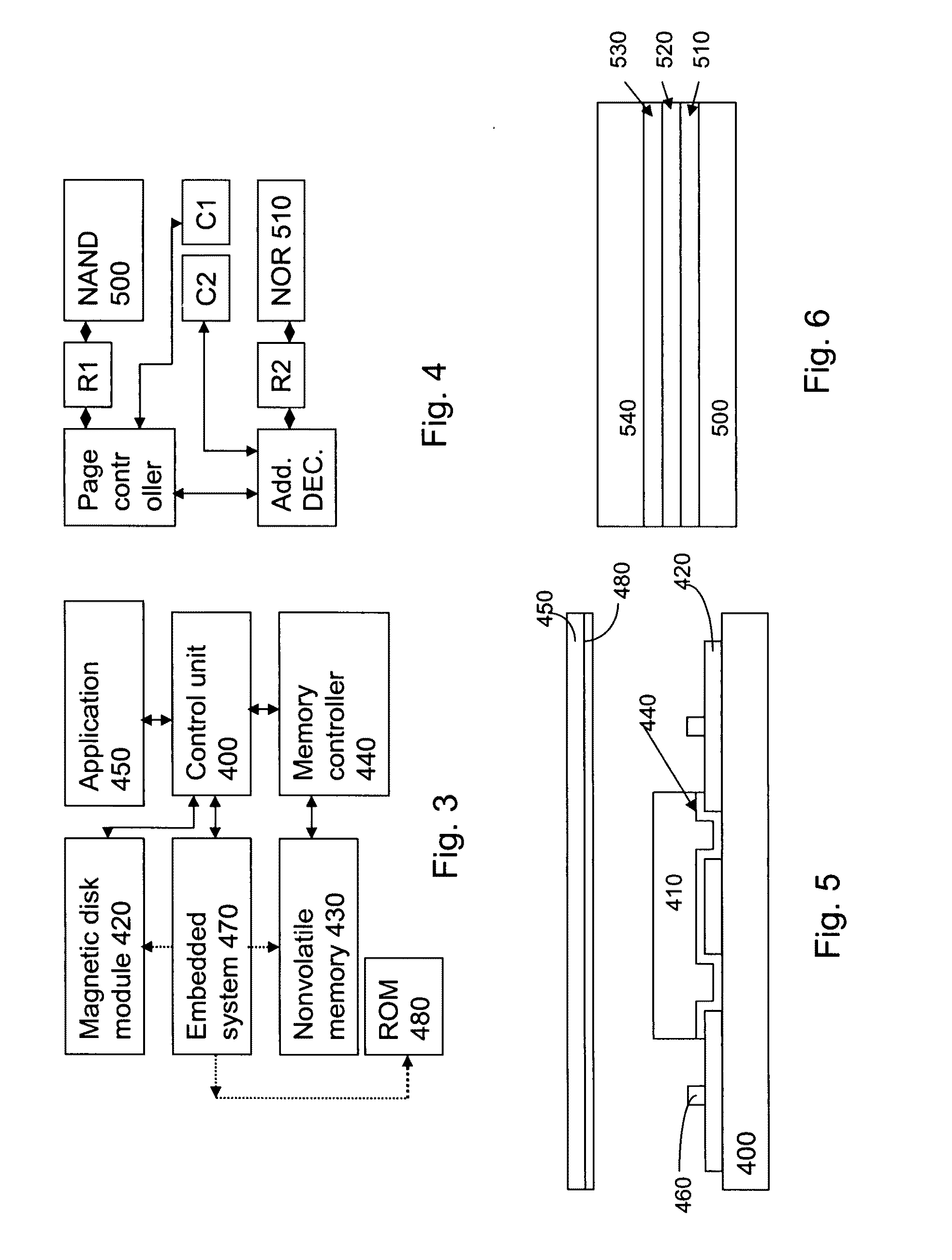

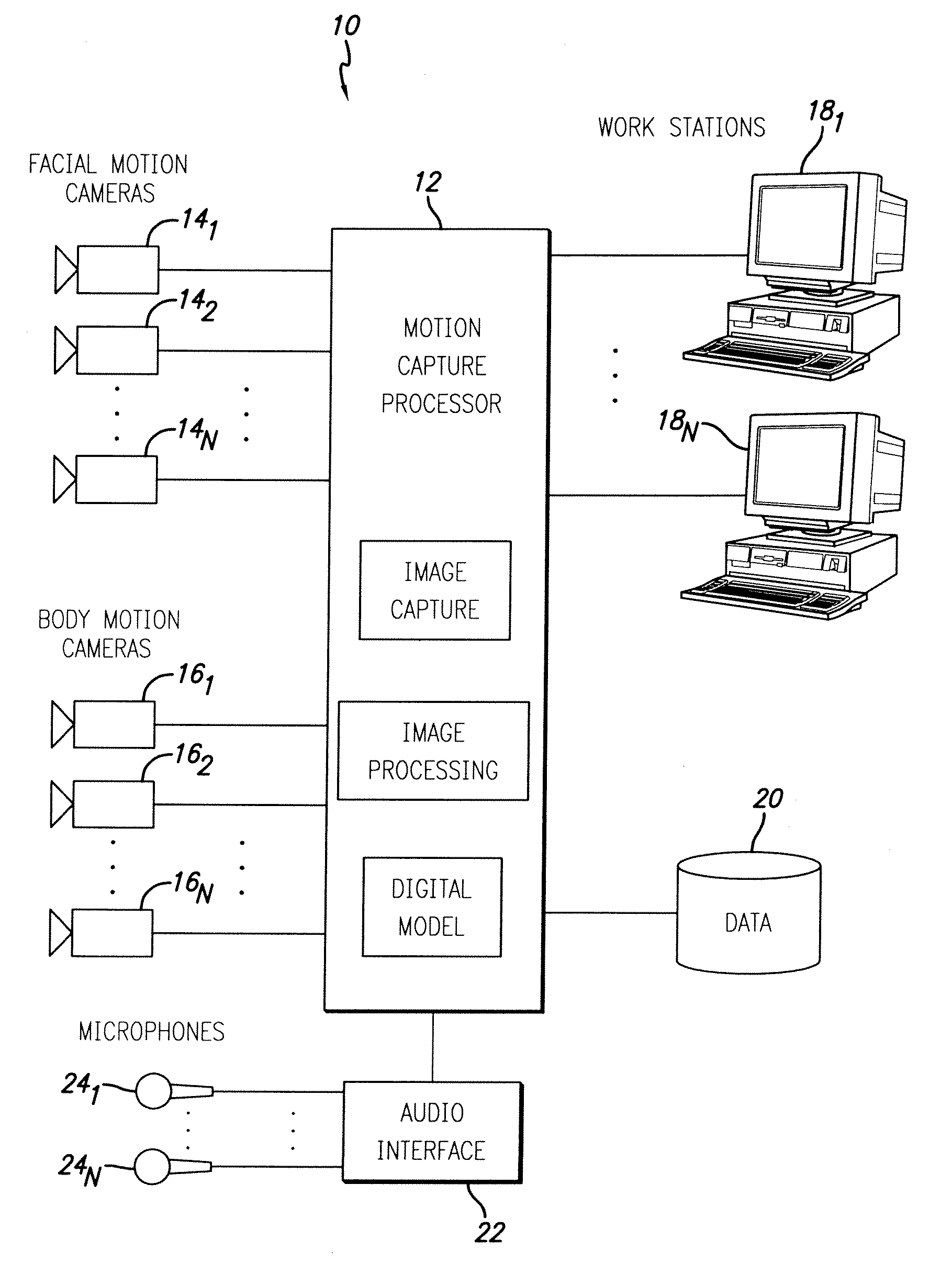

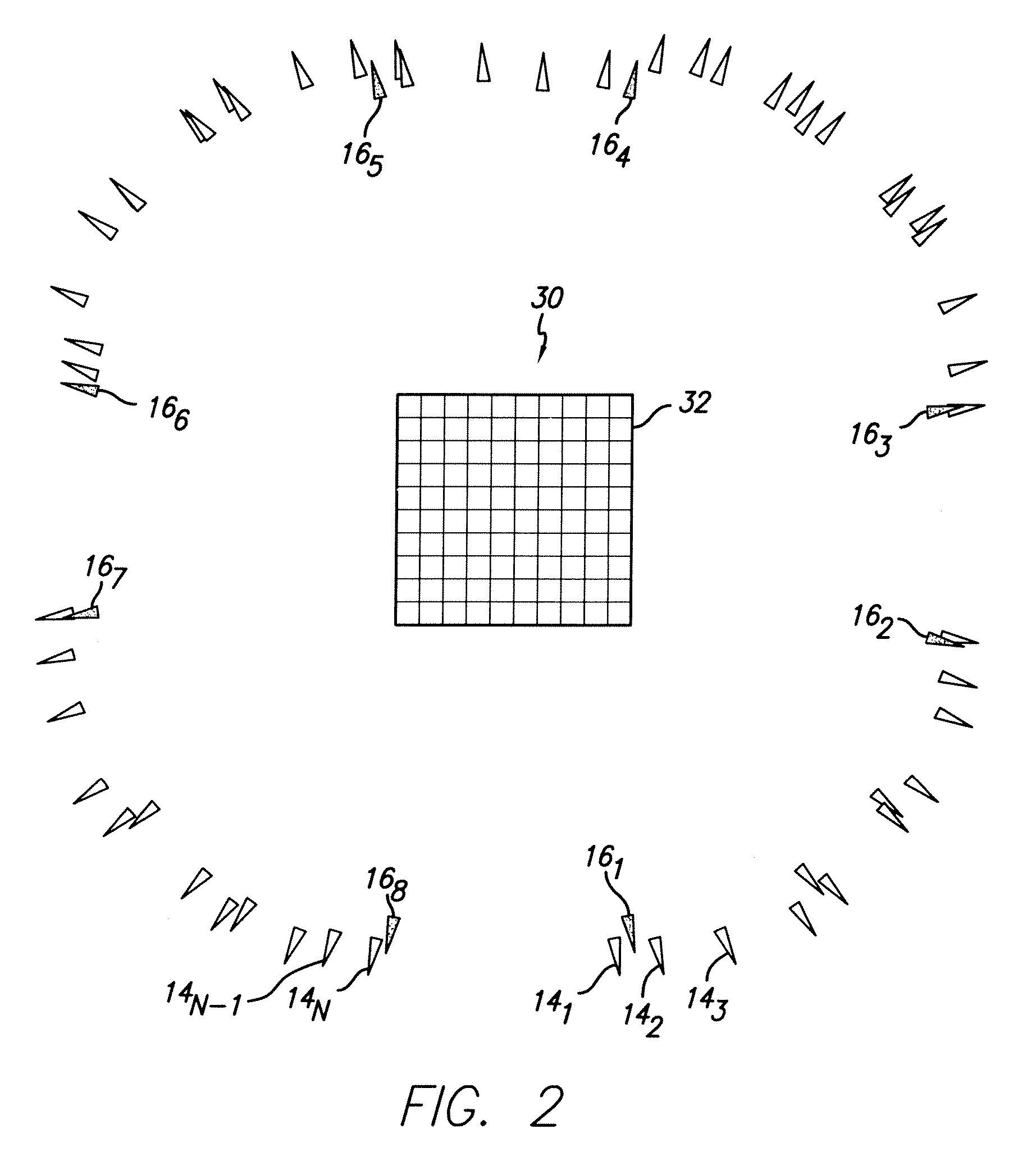

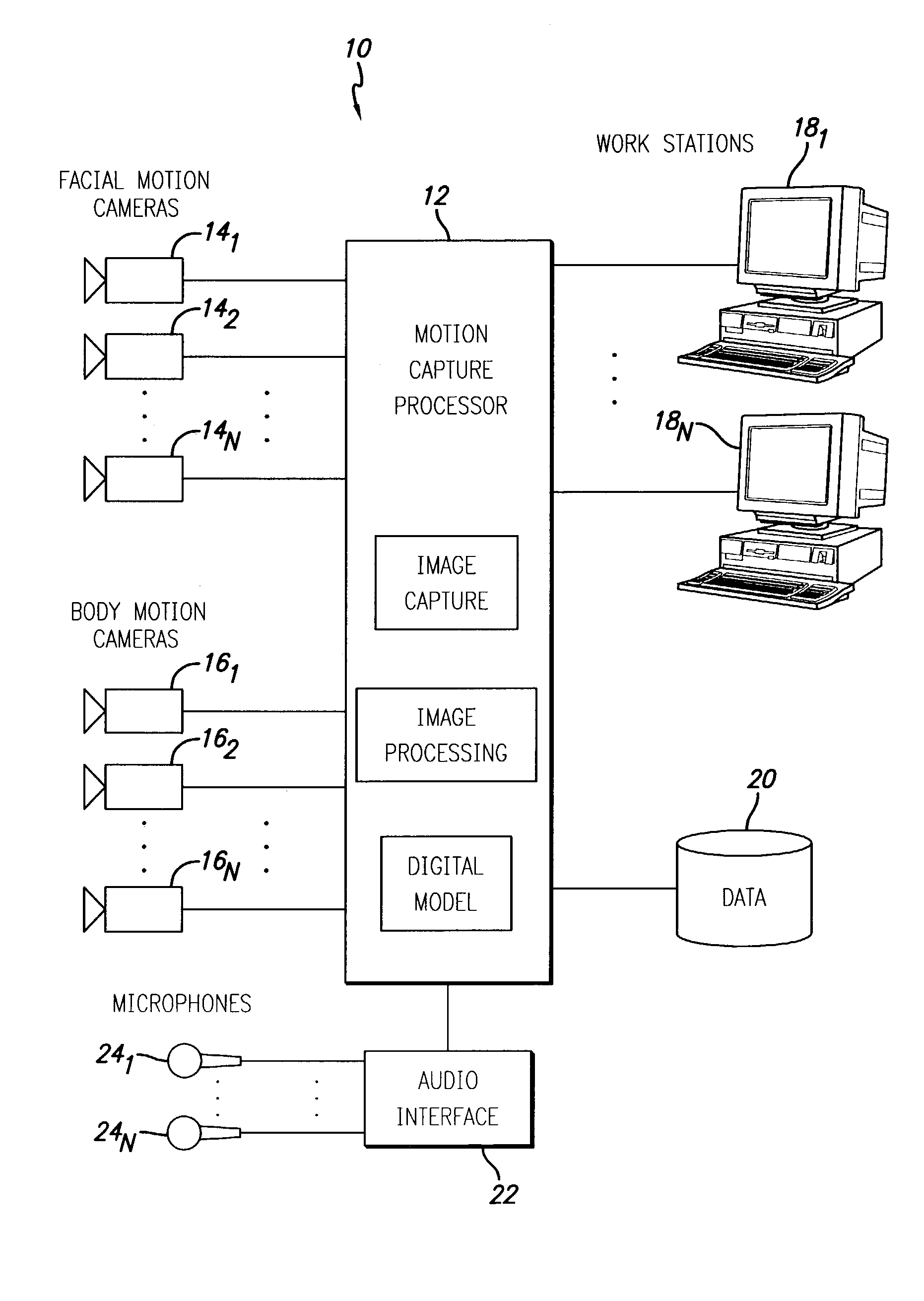

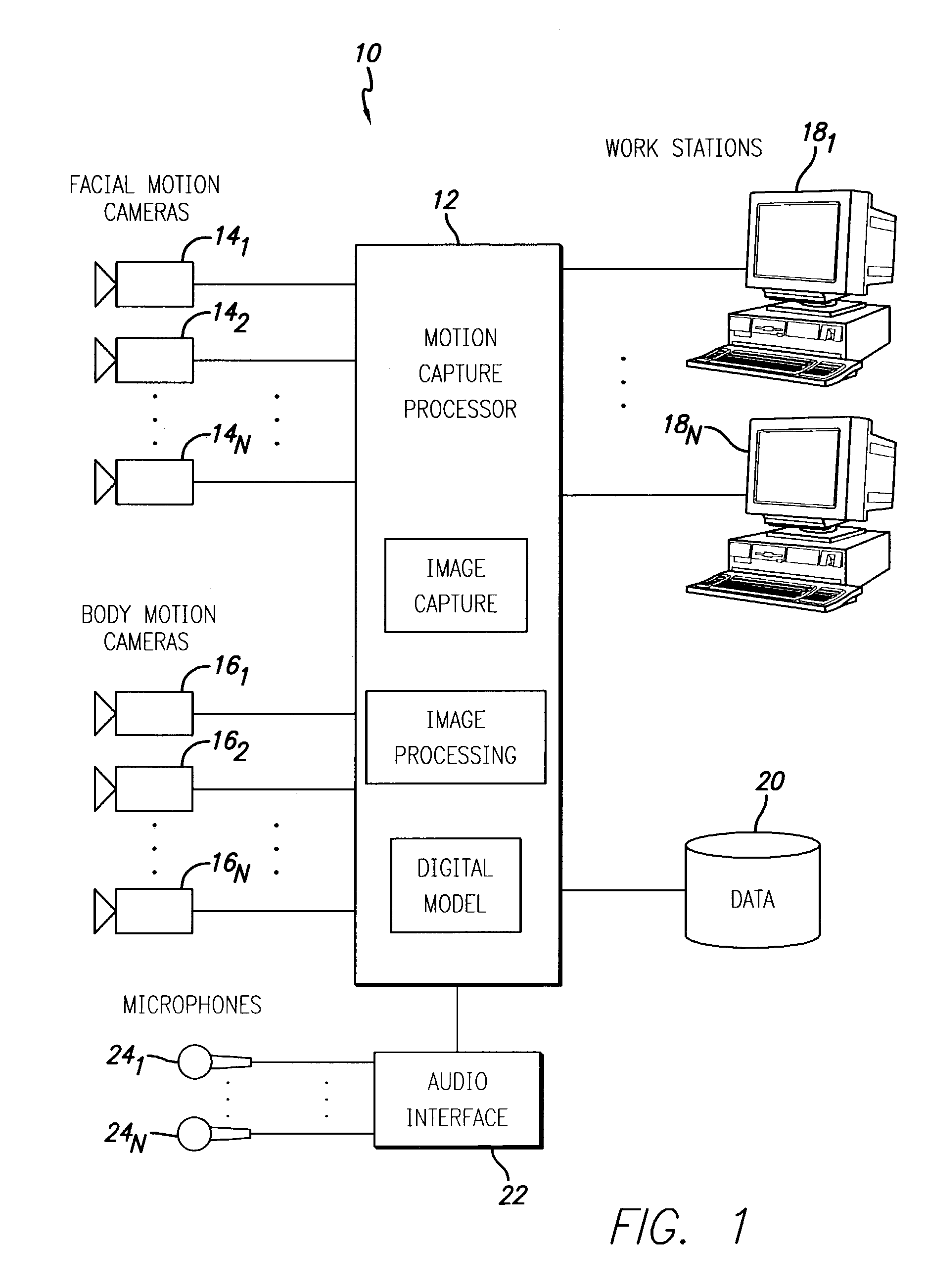

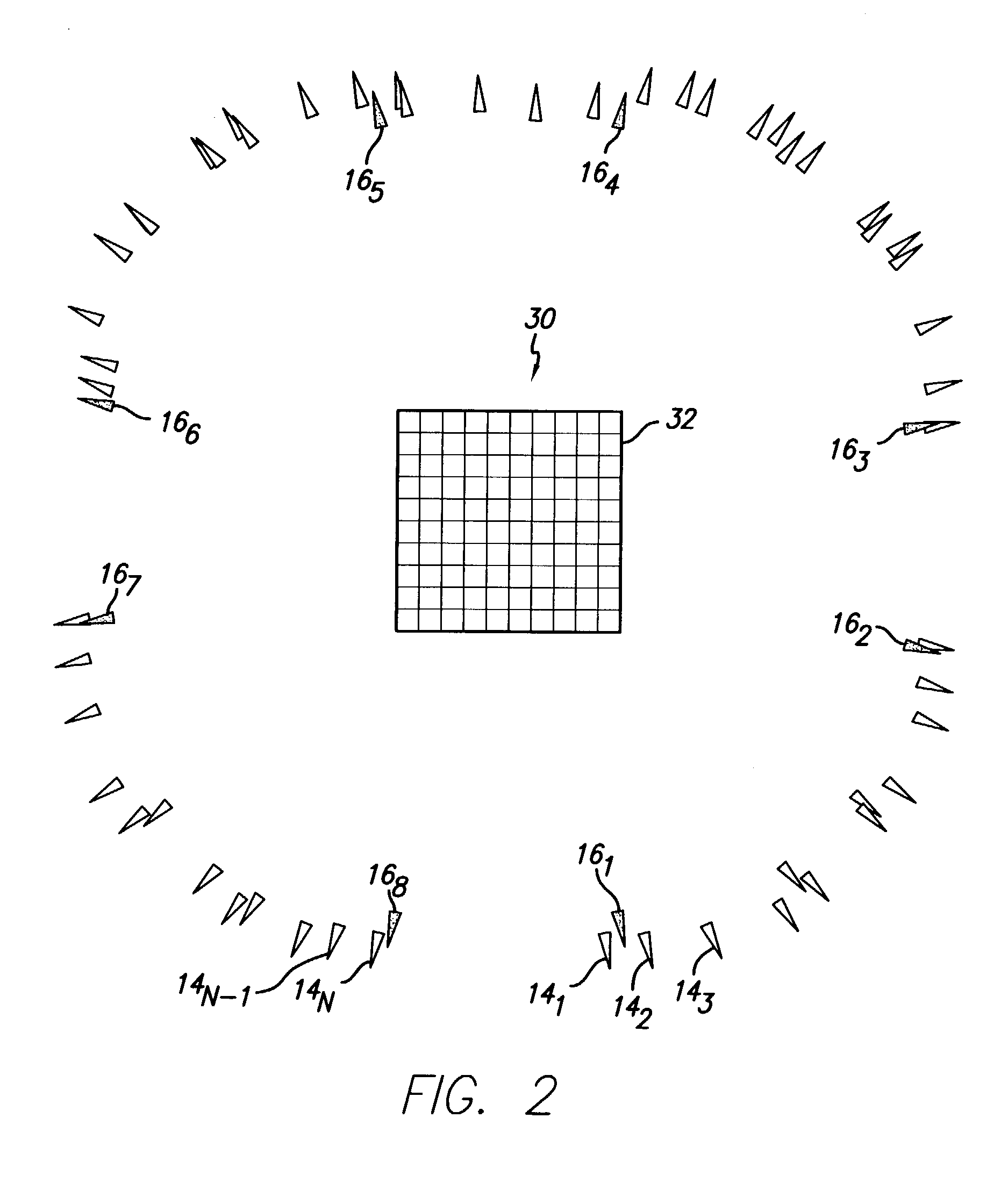

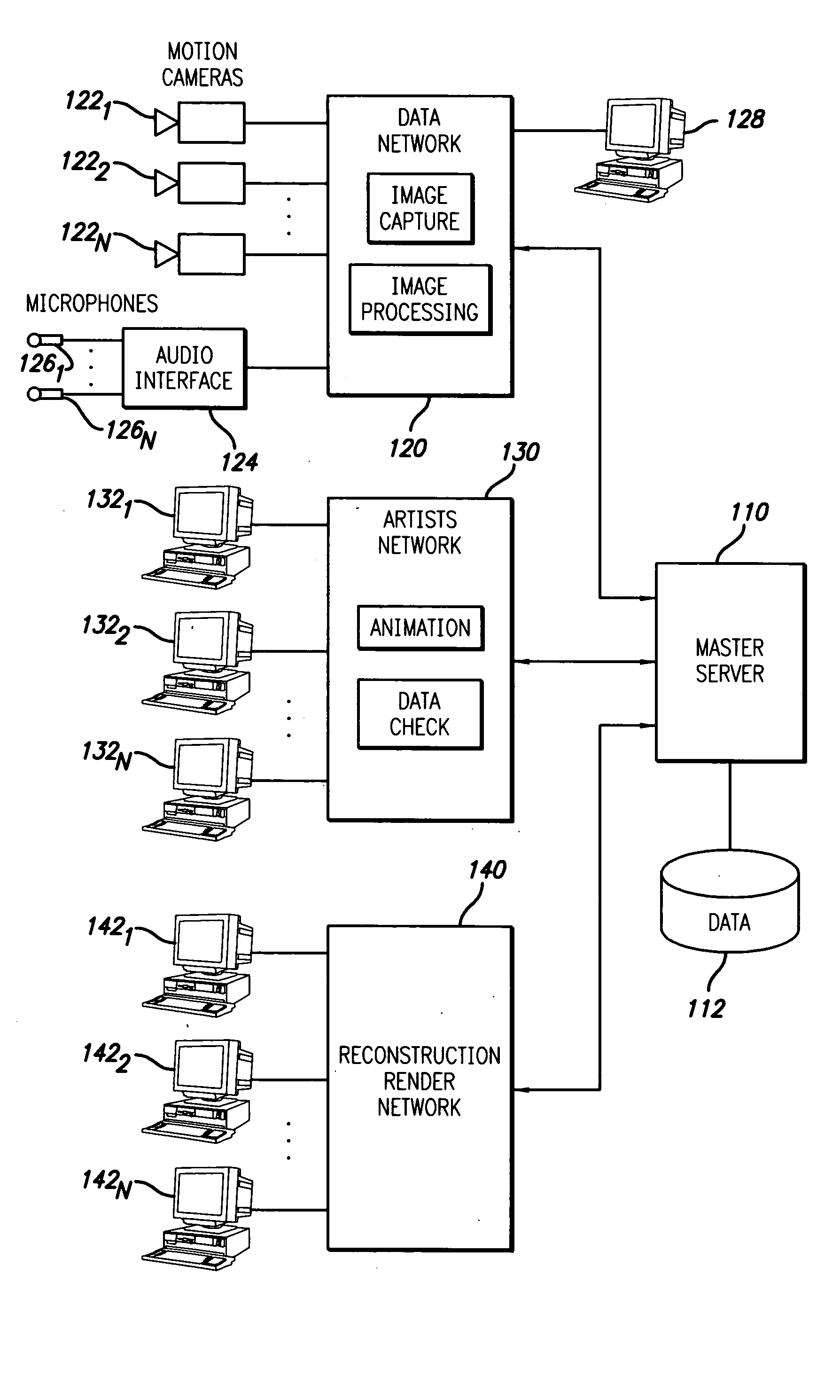

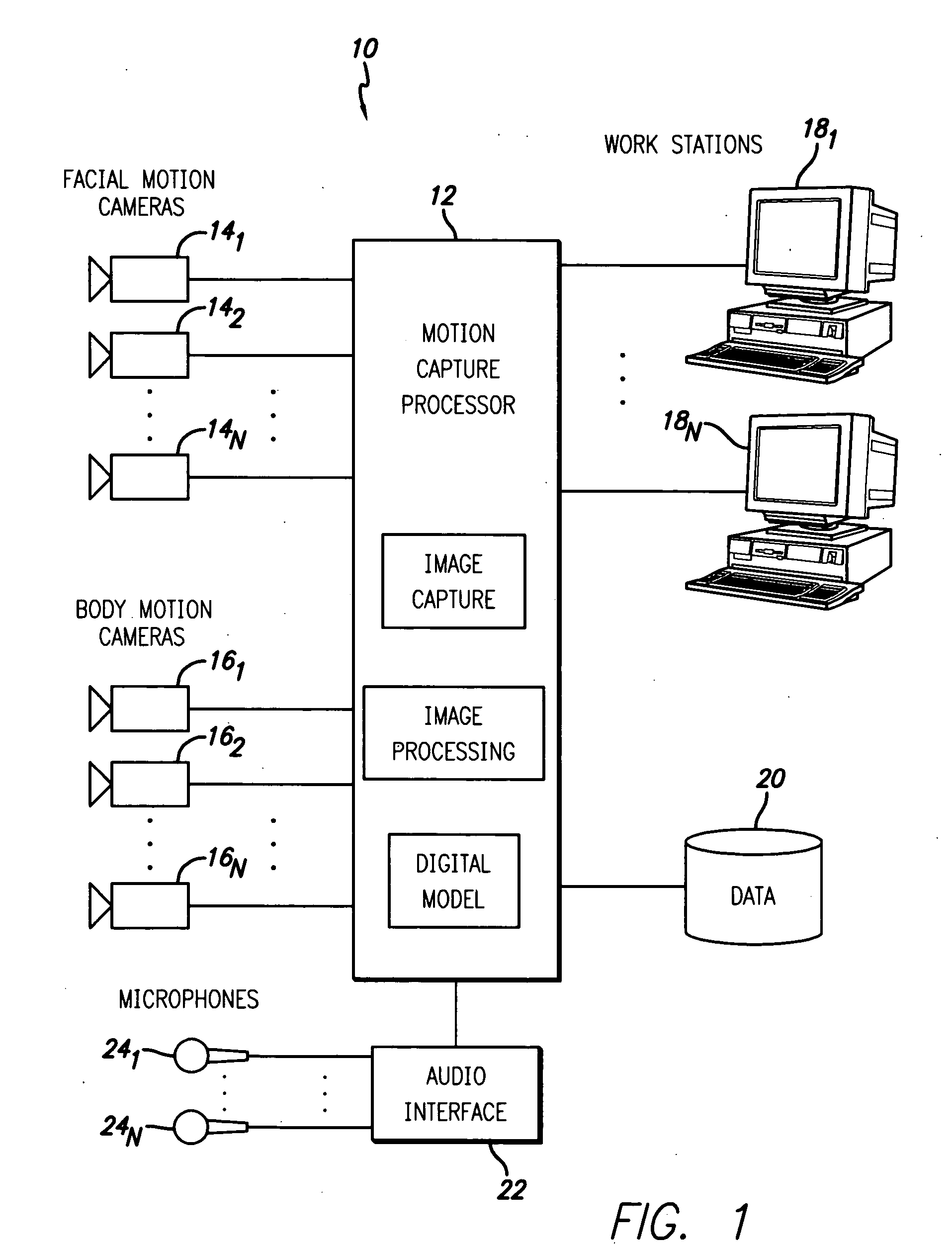

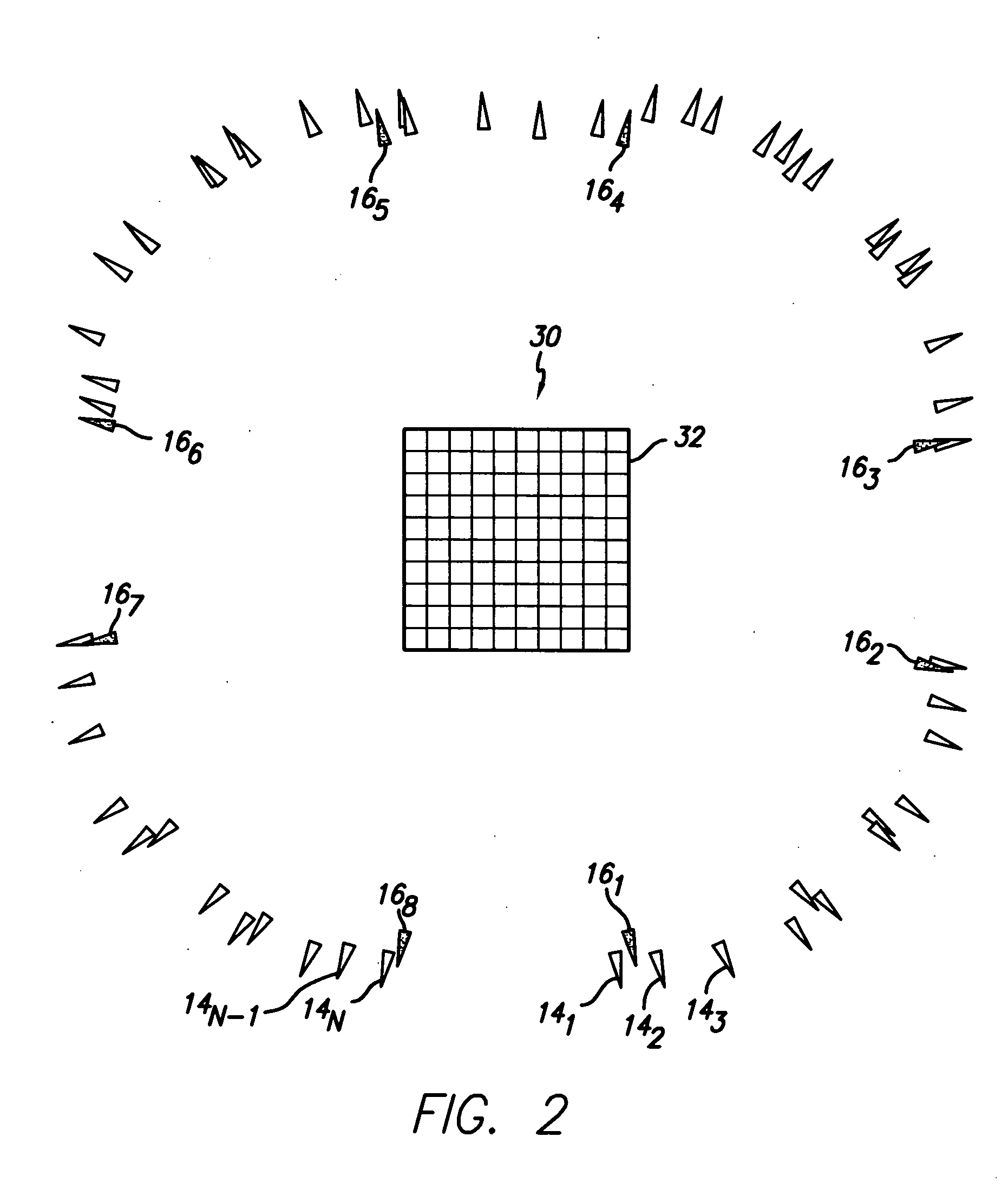

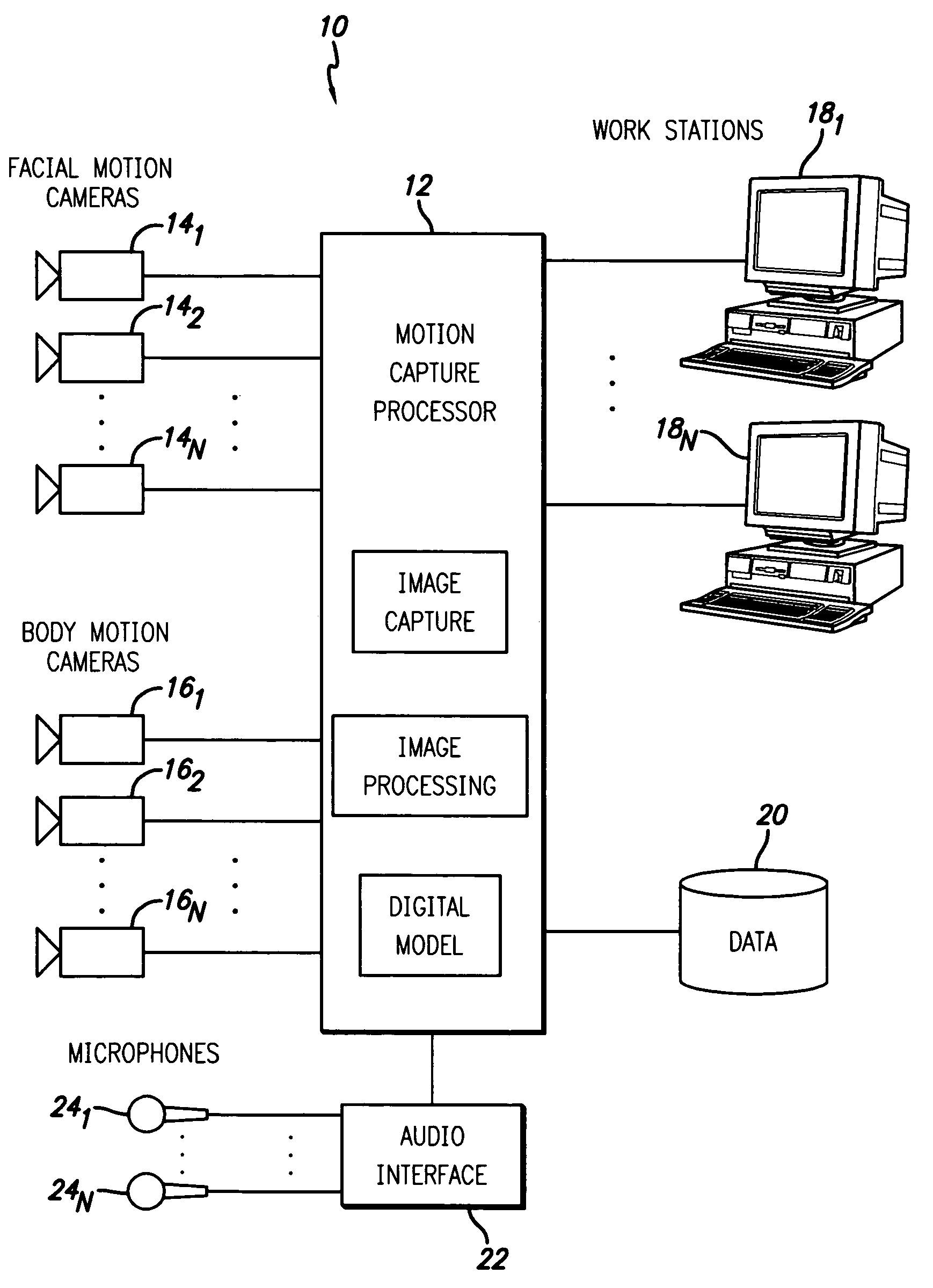

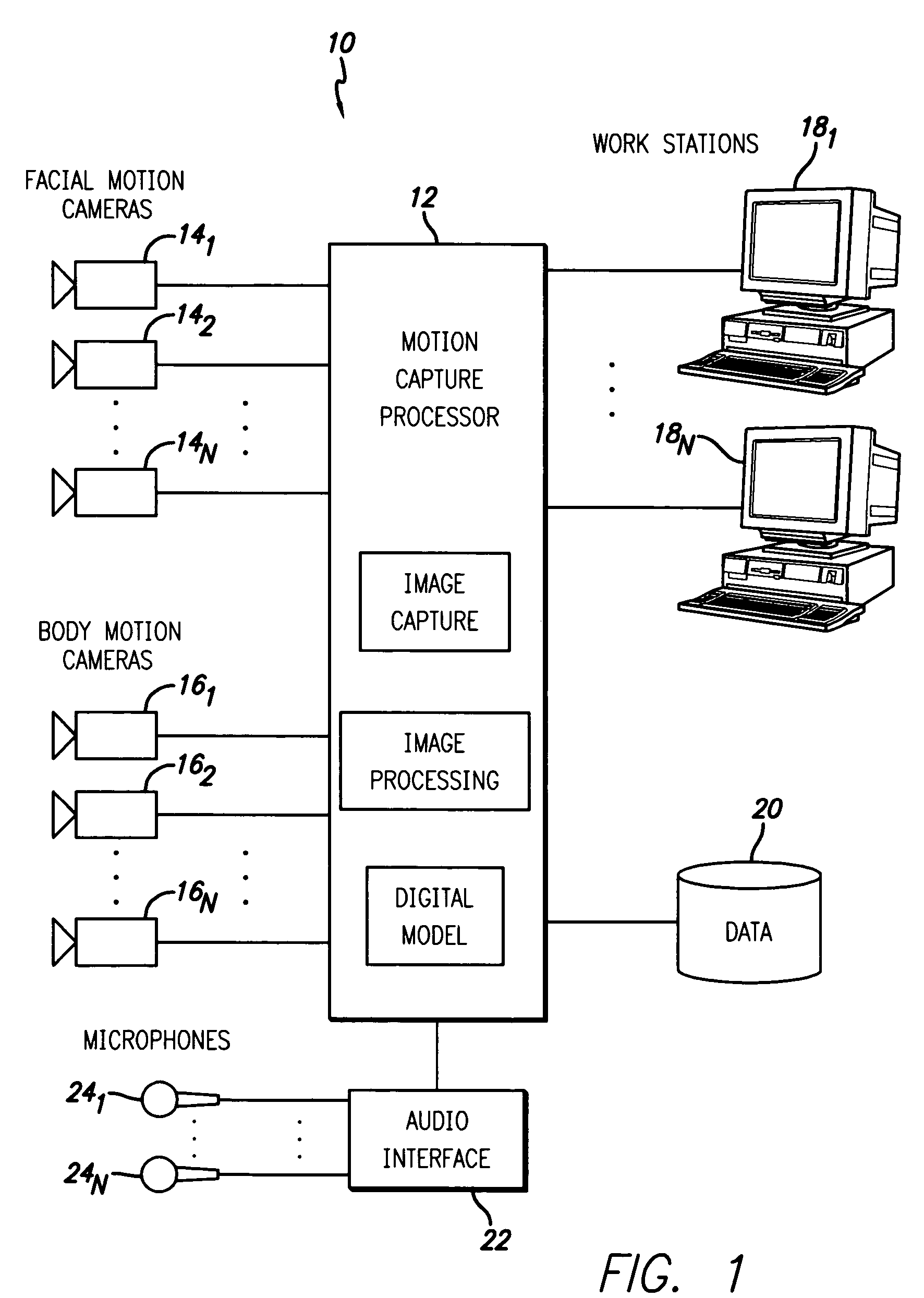

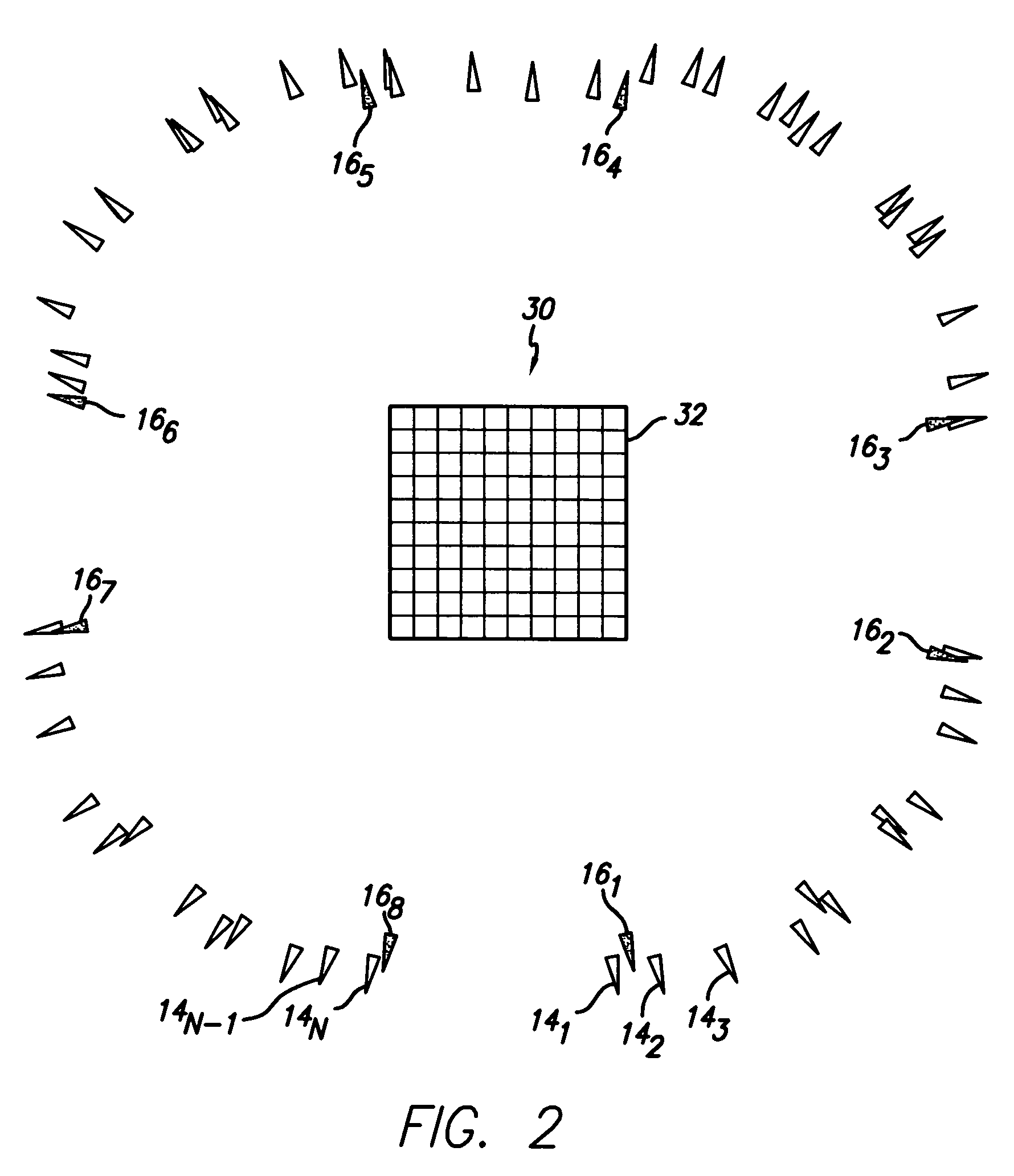

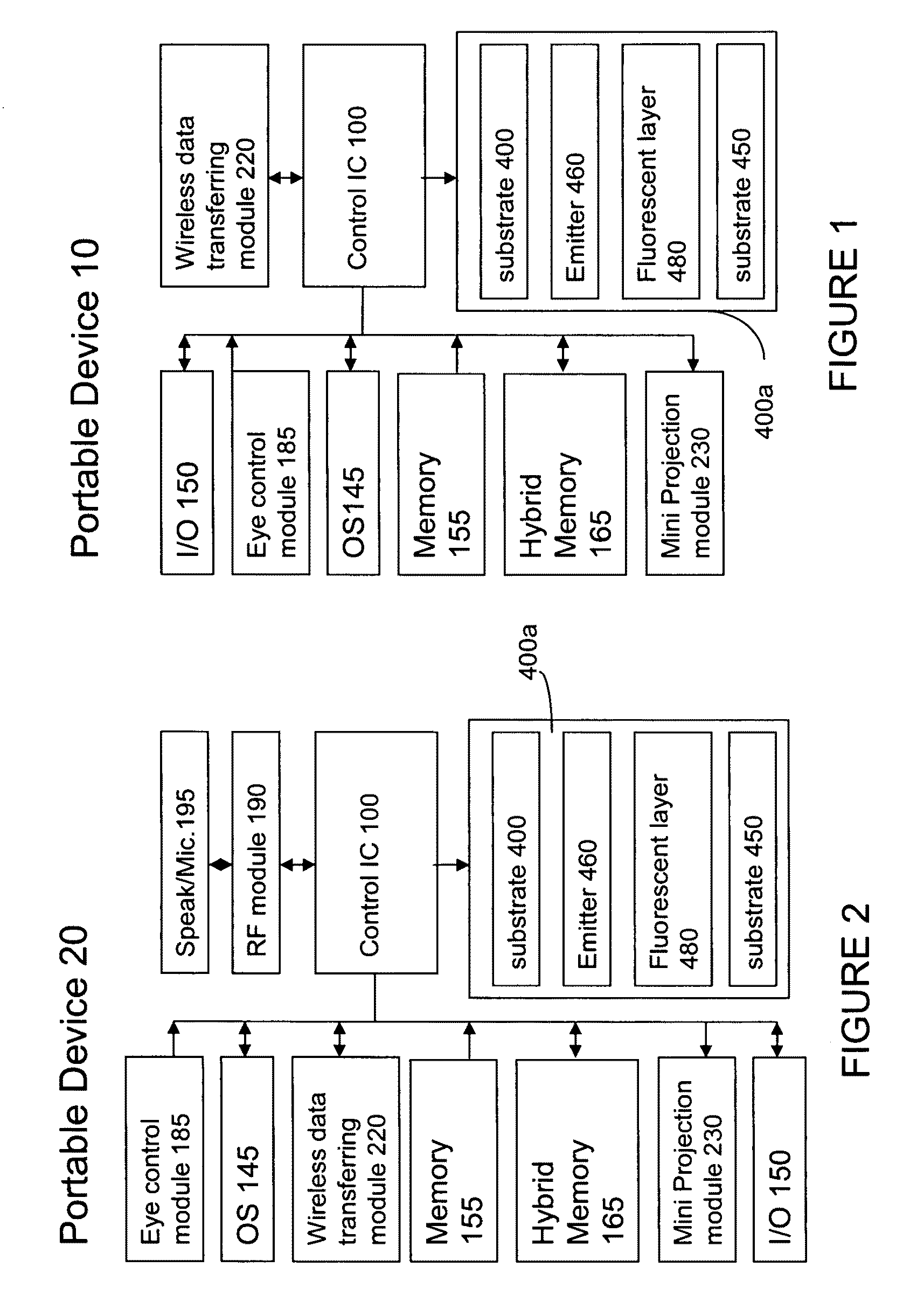

System and method for capturing facial and body motion

Inactive | US7358972B2 | Image analysis; Character and pattern recognition; Computer graphics (images); Motion capture

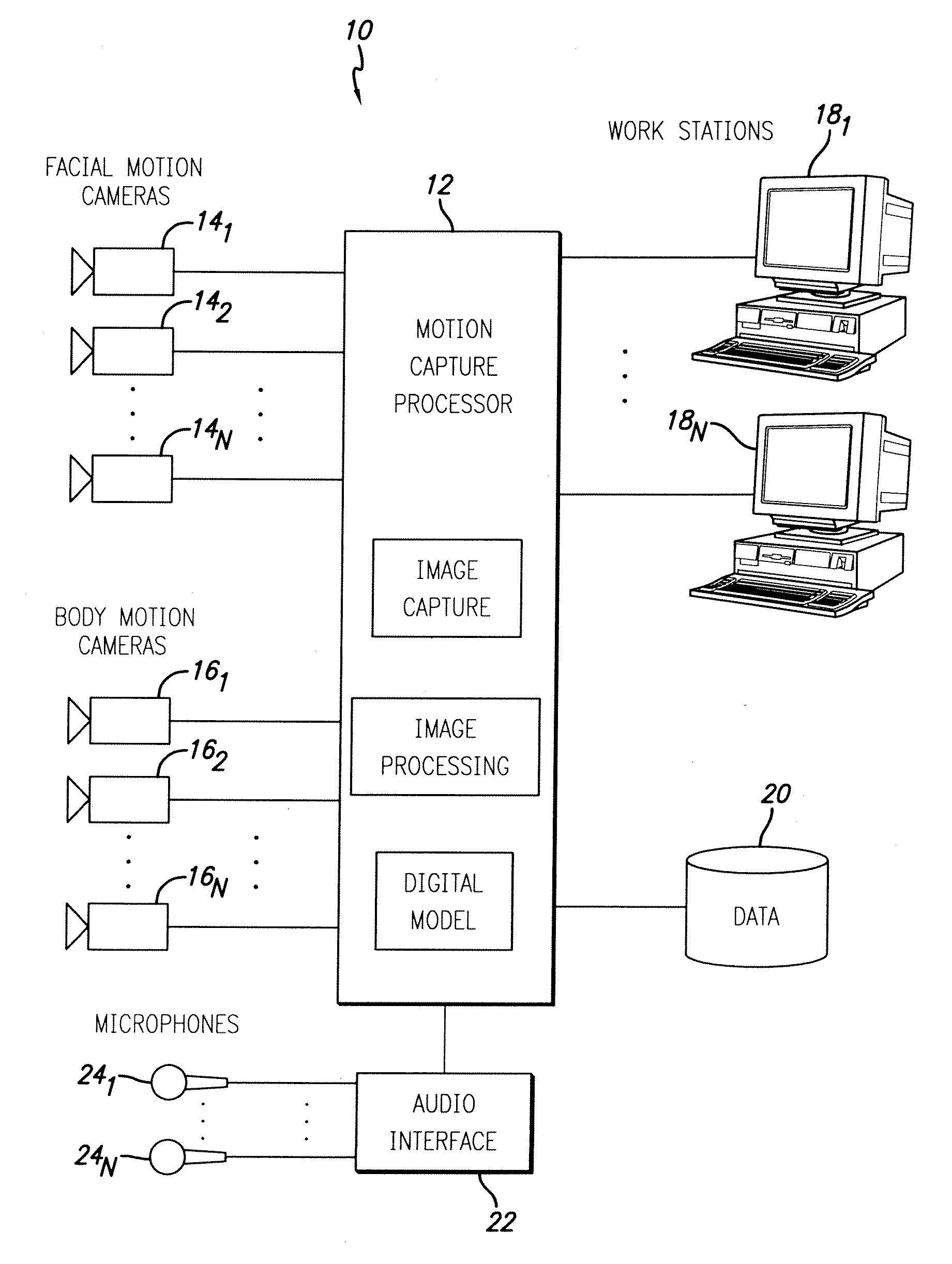

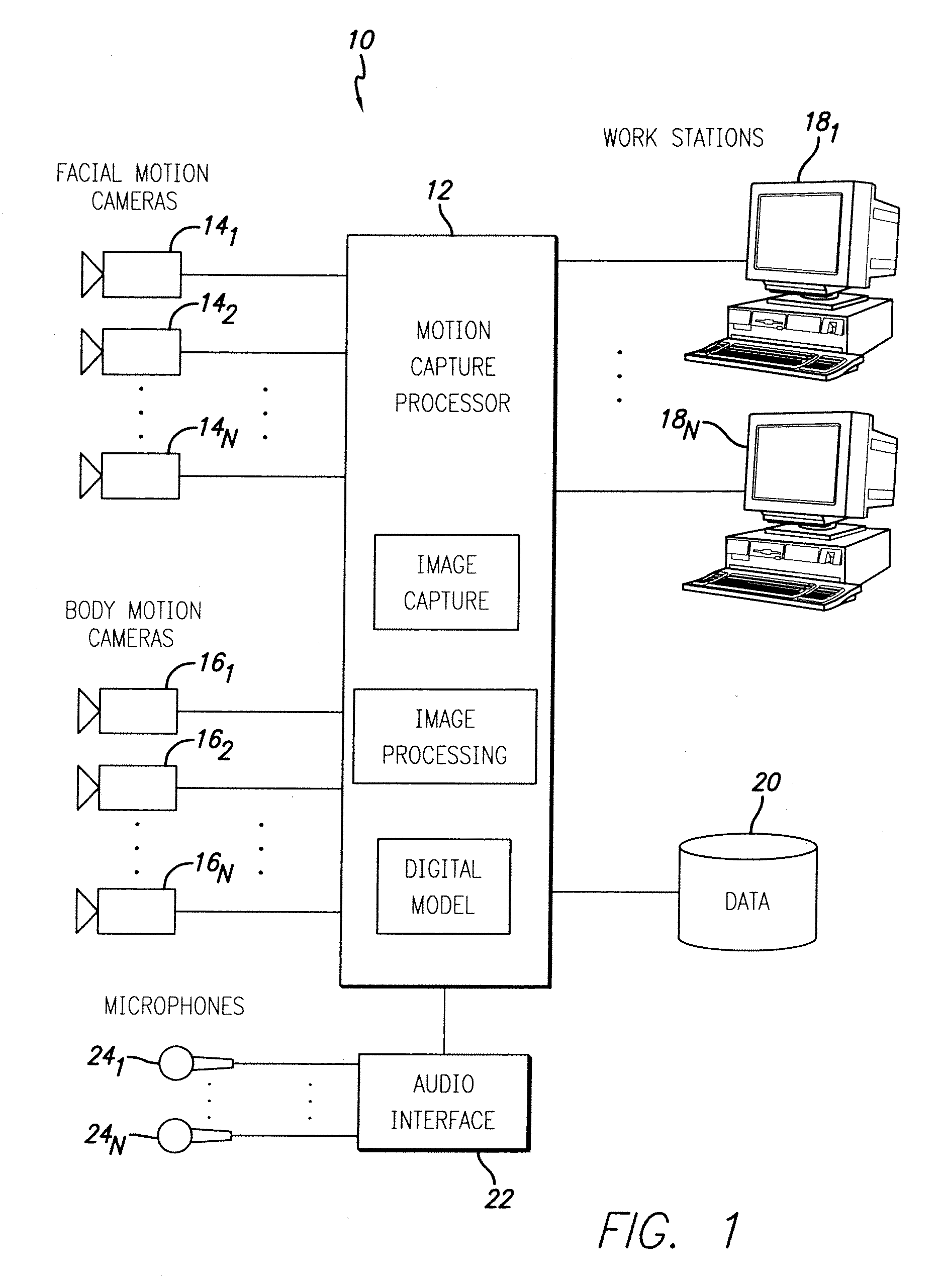

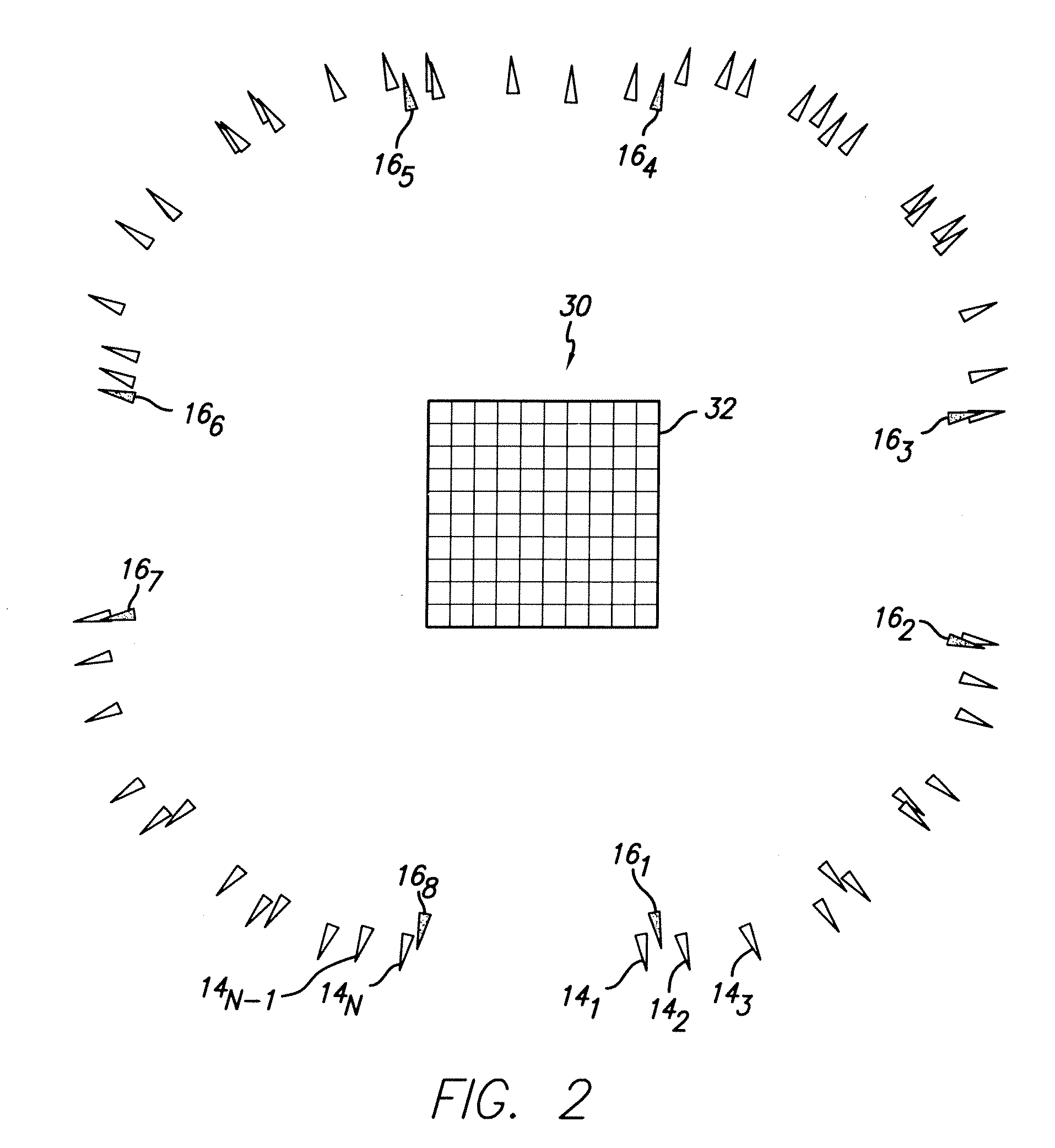

A system and method for capturing motion comprises a motion capture volume adapted to contain at least one actor having body markers defining plural body points and facial markers defining plural facial points. A plurality of body motion cameras and a plurality of facial motion cameras are arranged around a periphery of the motion capture volume. The facial motion cameras each have a respective field of view narrower than a corresponding field of view of the body motion cameras. The facial motion cameras are arranged such that all laterally exposed surfaces of the actor while in motion within the motion capture volume are within the field of view of at least one of the plurality of facial motion cameras at substantially all times. A motion capture processor is coupled to the plurality of facial motion cameras and the plurality of body motion cameras to produce a digital model reflecting combined body and facial motion of the actor. At least one microphone may be oriented to pick up audio from the motion capture volume.

Owner:SONY CORP +1
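
The coverage requirement above (every laterally exposed surface within the field of view of at least one narrow-field facial camera) can be checked with a small top-down 2D sketch. This is an illustrative simplification, not the patent's method: it assumes cameras evenly spaced on a circle and aimed at the centre of the volume, ignores occlusion by the actor's own body, and uses hypothetical names (`camera_ring`, `sees`, `covered`).

```python
import math

Point = tuple[float, float]


def camera_ring(n: int, radius: float) -> list[Point]:
    """Place n cameras evenly on a circle around the capture volume (top-down view)."""
    return [(radius * math.cos(2 * math.pi * k / n), radius * math.sin(2 * math.pi * k / n))
            for k in range(n)]


def sees(cam: Point, point: Point, normal: Point, half_fov_deg: float) -> bool:
    """True if a camera aimed at the volume centre (0, 0) sees a surface point.

    The surface must face the camera (positive dot product with its outward
    normal) and lie inside the camera's horizontal field of view.
    """
    to_cam = (cam[0] - point[0], cam[1] - point[1])
    if to_cam[0] * normal[0] + to_cam[1] * normal[1] <= 0:
        return False  # surface faces away from this camera
    axis = (-cam[0], -cam[1])                       # optical axis: camera -> centre
    to_pt = (point[0] - cam[0], point[1] - cam[1])  # ray: camera -> surface point
    cos_angle = (axis[0] * to_pt[0] + axis[1] * to_pt[1]) / (math.hypot(*axis) * math.hypot(*to_pt))
    return cos_angle >= math.cos(math.radians(half_fov_deg))


def covered(cams: list[Point], point: Point, normal: Point, half_fov_deg: float) -> bool:
    """True if at least one camera sees the surface point."""
    return any(sees(c, point, normal, half_fov_deg) for c in cams)
```

For example, with `cams = camera_ring(8, 10.0)` one could sweep outward normals around an actor standing near the centre and flag any direction for which `covered(...)` returns `False`; a narrower `half_fov_deg` (as for the facial cameras) shrinks the region each camera covers.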

System and method for capturing facial and body motion

Inactive | US7218320B2 | Input/output for user-computer interaction; Image enhancement; Computer graphics (images); Body movement

A system and method for capturing motion comprises a motion capture volume adapted to contain at least one actor having body markers defining plural body points and facial markers defining plural facial points. A plurality of body motion cameras and a plurality of facial motion cameras are arranged around a periphery of the motion capture volume. The facial motion cameras each have a respective field of view narrower than a corresponding field of view of the body motion cameras. The facial motion cameras are arranged such that all laterally exposed surfaces of the actor while in motion within the motion capture volume are within the field of view of at least one of the plurality of facial motion cameras at substantially all times. A motion capture processor is coupled to the plurality of facial motion cameras and the plurality of body motion cameras to produce a digital model reflecting combined body and facial motion of the actor. At least one microphone may be oriented to pick up audio from the motion capture volume.

Owner:SONY CORP +1

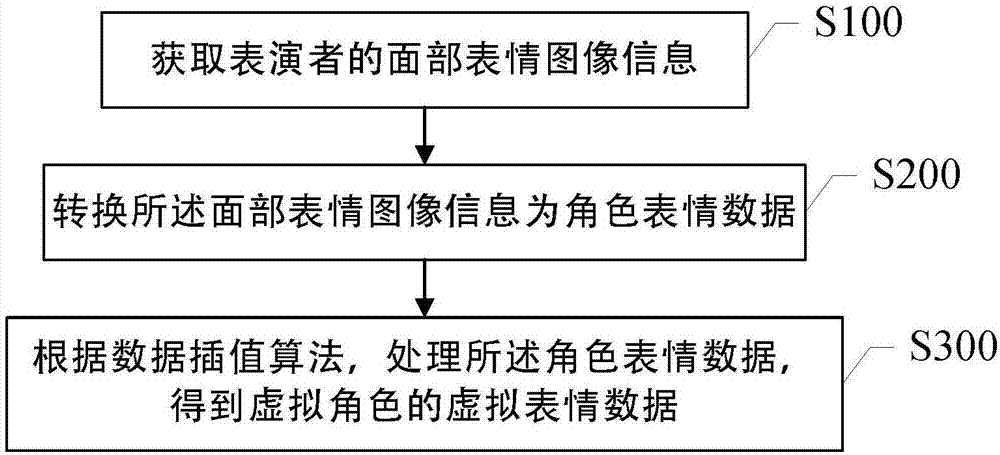

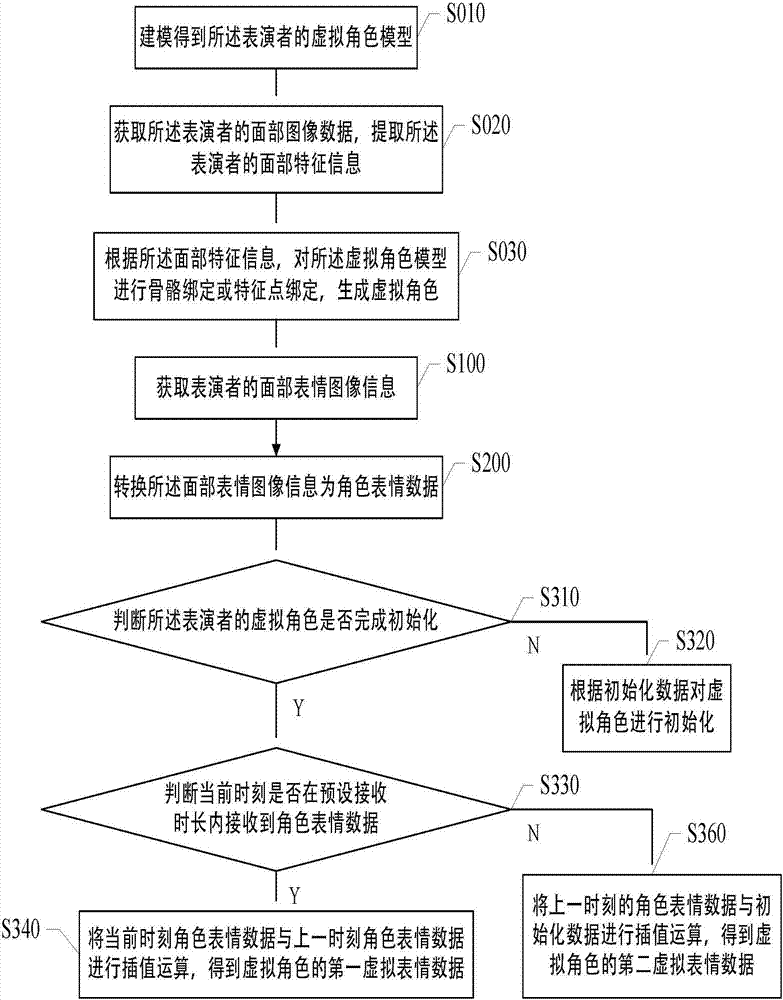

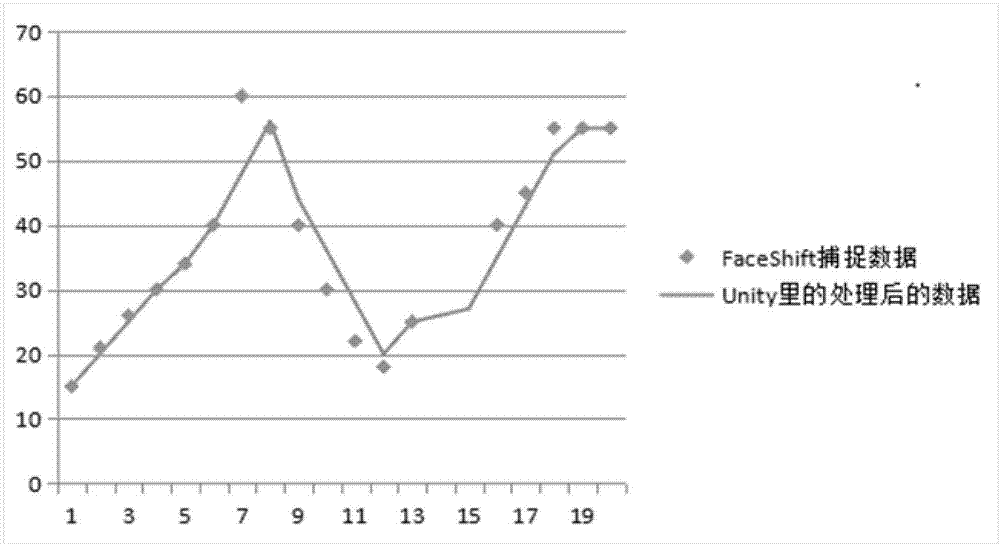

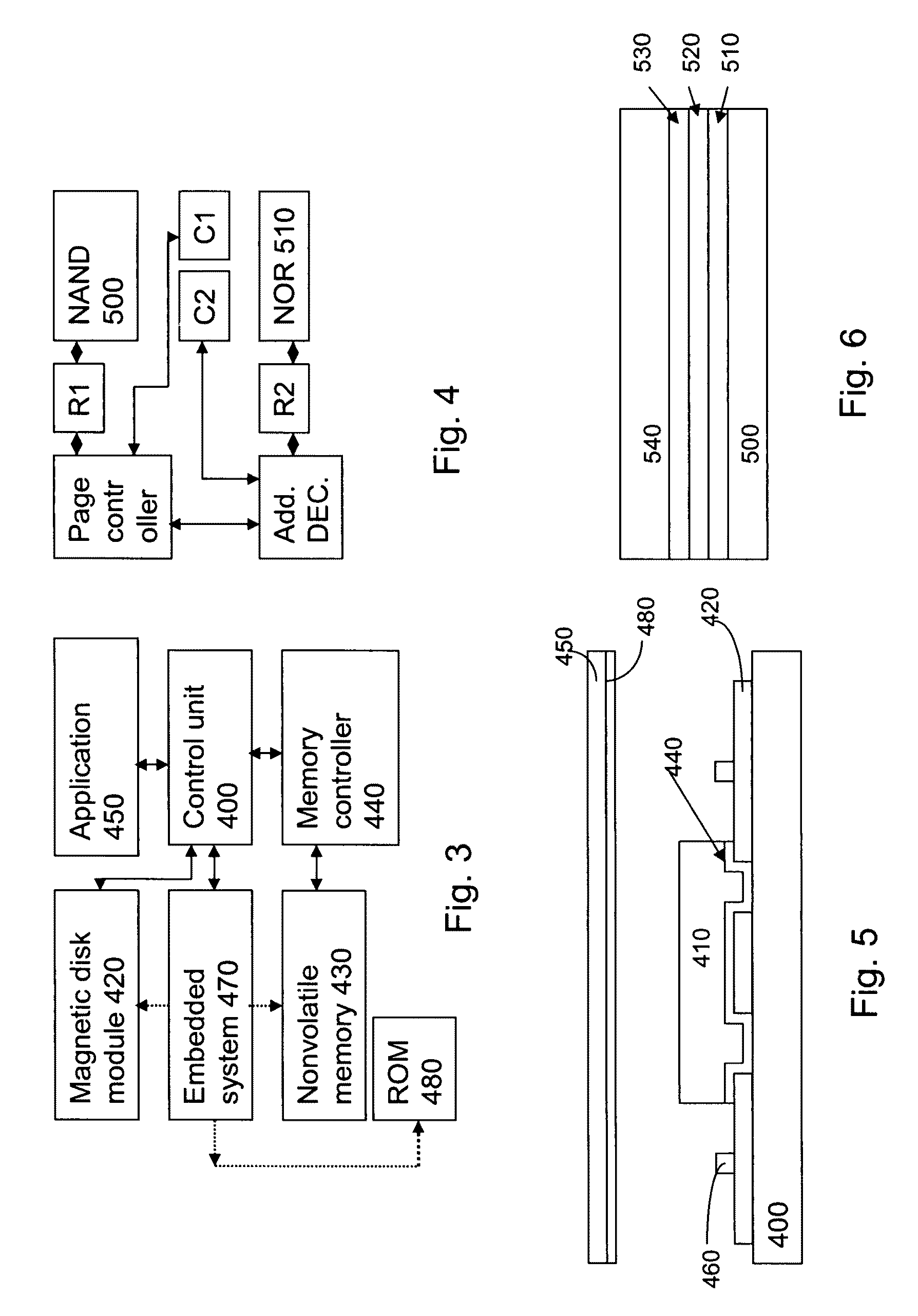

Data processing method and system based on virtual character

The invention discloses a data processing method and system based on a virtual character. The method comprises the following steps: S100, acquiring facial expression image information of a performer; S200, converting the facial expression image information into character expression data; and S300, processing the character expression data with a data interpolation algorithm to obtain virtual expression data for the virtual character. The facial movements of a human face can be transferred to, and displayed on, any virtual character chosen by the user, so that the performer's current facial expression changes are reflected through the virtual character in real time. This produces a more vivid virtual character, makes the interaction more engaging, and improves the user experience. Moreover, when a recognition fault occurs, the character's motion can be kept stably within an interval, making the virtual character's display more vivid and natural.

Owner:上海微漫网络科技有限公司
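
Step S300 and the fault-handling behaviour described above can be sketched as a per-frame update of named expression weights. This is a minimal sketch under stated assumptions: the abstract does not say which interpolation algorithm is used, so it substitutes simple linear interpolation toward the new value, clamps each weight into a fixed interval, and holds the last output when recognition fails (signalled here by `None`). The function name and the `alpha`, `lo`, and `hi` parameters are hypothetical.

```python
from typing import Optional


def update_expression(prev: dict[str, float],
                      target: Optional[dict[str, float]],
                      alpha: float = 0.5,
                      lo: float = 0.0,
                      hi: float = 1.0) -> dict[str, float]:
    """Blend the previous character expression toward the newly recognised one.

    prev   -- expression weights currently shown on the virtual character
    target -- weights converted from the performer's face this frame, or None
              if recognition failed
    """
    if target is None:
        # Recognition fault: keep the character in its last stable pose.
        return dict(prev)
    out = {}
    for name, p in prev.items():
        t = target.get(name, p)          # missing channels keep their old value
        t = min(hi, max(lo, t))          # confine the target to [lo, hi]
        out[name] = p + alpha * (t - p)  # linear interpolation toward the target
    return out
```

Because only the channels already present in `prev` are updated, the character's set of expression channels stays fixed from frame to frame; a smaller `alpha` gives smoother but laggier motion.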

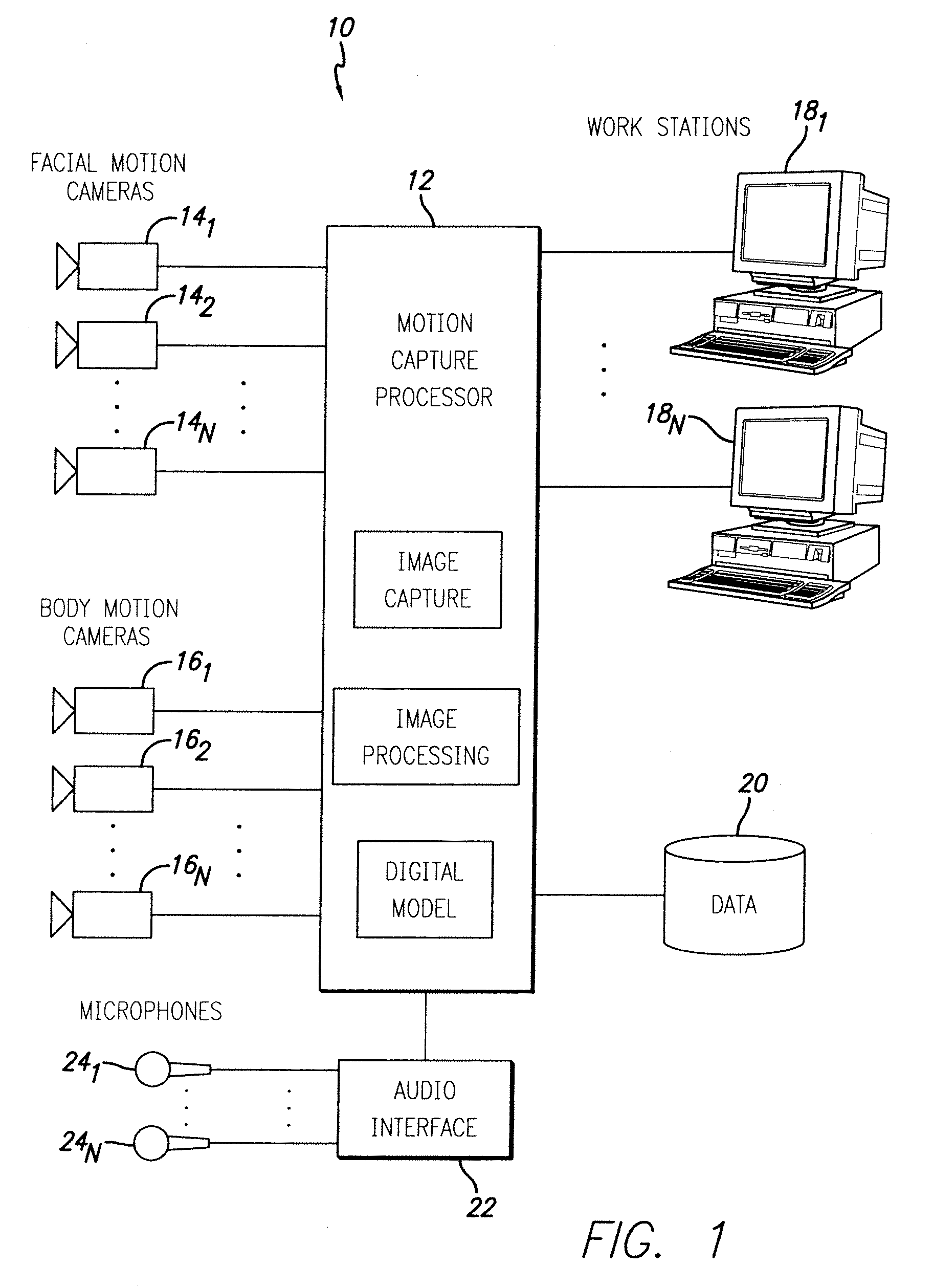

System and method for capturing facial and body motion

Inactive | US20050083333A1 | Television system details; Image analysis; Computer graphics (images); Motion capture

A system and method for capturing motion comprises a motion capture volume adapted to contain at least one actor having body markers defining plural body points and facial markers defining plural facial points. A plurality of motion cameras are arranged around a periphery of the motion capture volume. The motion cameras are arranged such that all laterally exposed surfaces of the actor while in motion within the motion capture volume are within the field of view of at least one of the plurality of the motion cameras at substantially all times. A motion capture processor is coupled to the plurality of motion cameras to produce a digital model reflecting combined body and facial motion of the actor. At least one microphone may be oriented to pick up audio from the motion capture volume.

Owner:SONY CORP +1

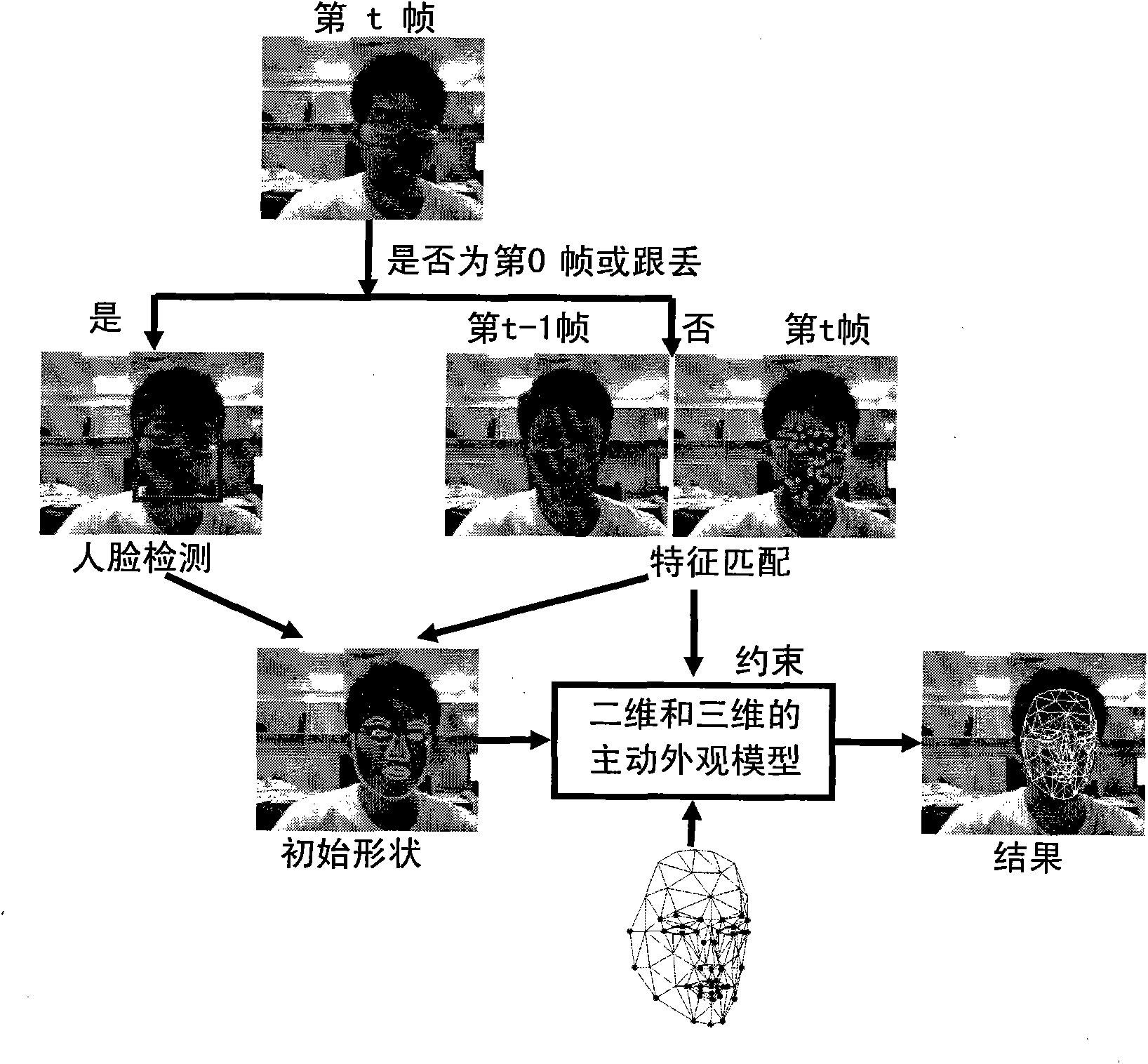

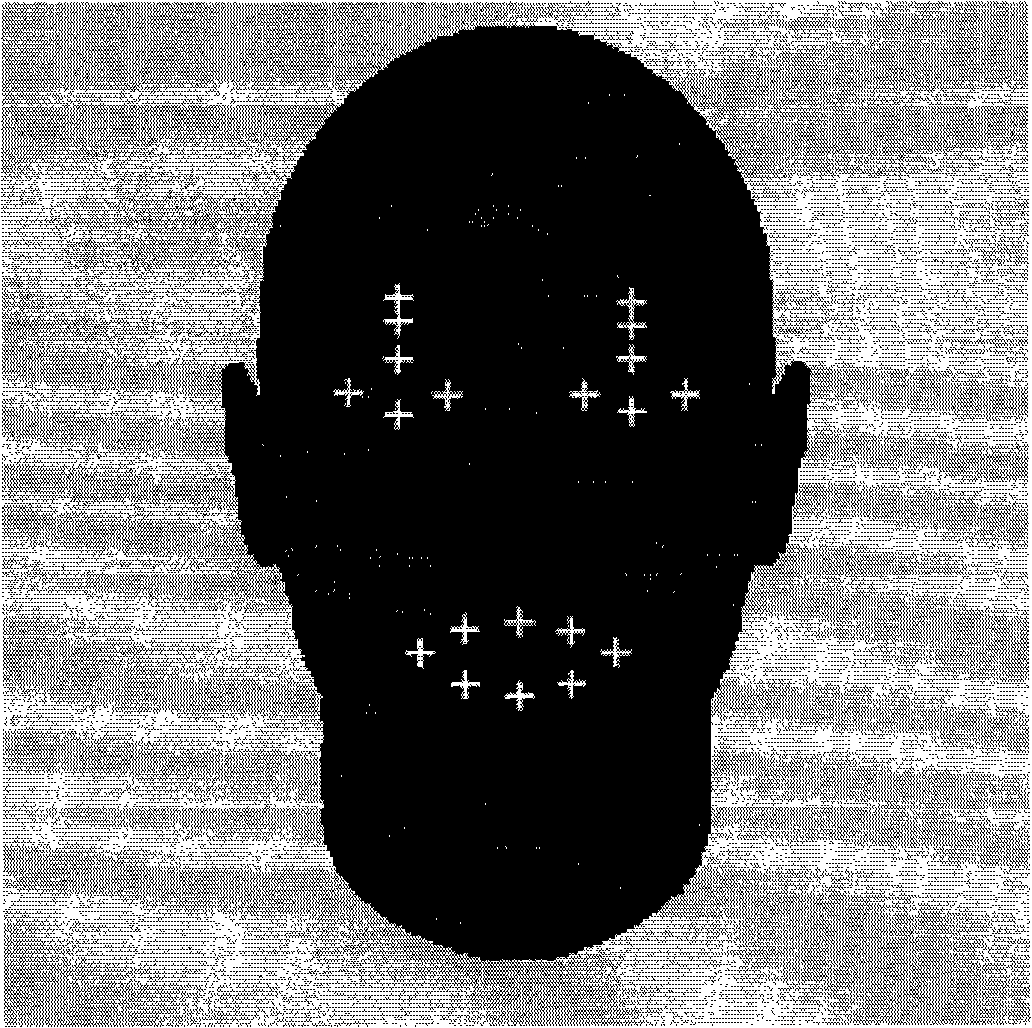

Method for tracking gestures and actions of human face

Inactive | CN102402691A | No human intervention required; Fast tracking; Image analysis; Character and pattern recognition; Face detection; Feature point matching

The invention discloses a method for tracking the pose and actions of a human face, comprising the following steps. Step S1: frames are extracted one by one from a video stream, and face detection is performed on the first frame of the input video, or whenever tracking fails, to obtain a face bounding box. Step S2: during normal tracking, the most salient texture feature points in the face region of the previous frame (after its fitting iterations have converged) are matched with the corresponding feature points found in the current frame, giving feature point matching results. Step S3: the shape of an active appearance model is initialized from the face bounding box or from the feature point matching results, giving an initial estimate of the face shape in the current frame. Step S4: the active appearance model is fitted with an inverse compositional algorithm, yielding the three-dimensional face pose and facial action parameters. With this method, online tracking can be performed fully automatically and in real time under ordinary illumination.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
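
The control flow of steps S1 to S4 (detect on the first frame or after a failure, otherwise initialise from feature matching against the previous frame, then fit the active appearance model) can be sketched as a loop. The detector, feature matcher, and AAM fitter are passed in as hypothetical callables; the sketch shows only the re-detection logic, not the feature matching or model fitting themselves.

```python
from typing import Any, Callable, Iterable, Optional


def track_faces(frames: Iterable[Any],
                detect: Callable[[Any], Optional[Any]],
                match_features: Callable[[Any, Any, Any], Optional[Any]],
                fit_aam: Callable[[Any, Any], Optional[Any]]) -> list[Optional[Any]]:
    """Run detection/tracking over a frame sequence and return one fitted shape per frame.

    detect(frame)                      -> face box or None             (S1)
    match_features(prev, frame, shape) -> initial shape or None        (S2)
    fit_aam(frame, init)               -> fitted shape/params or None  (S3 + S4)
    """
    results = []
    prev_frame, prev_shape = None, None
    for frame in frames:
        init = None
        if prev_shape is not None:
            # Normal tracking: initialise from feature points matched against the previous frame.
            init = match_features(prev_frame, frame, prev_shape)
        if init is None:
            # First frame, or tracking failed: fall back to face detection.
            init = detect(frame)
        shape = fit_aam(frame, init) if init is not None else None
        results.append(shape)
        prev_frame, prev_shape = frame, shape
    return results
```

With stub callables, for instance a matcher that fails on one frame, the loop re-runs detection on exactly that frame and resumes tracking on the next.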

System and method for capturing facial and body motion

Inactive | US20070058839A1 | Image analysis; Character and pattern recognition; Computer graphics (images); Motion capture

A system and method for capturing motion comprises a motion capture volume adapted to contain at least one actor having body markers defining plural body points and facial markers defining plural facial points. A plurality of body motion cameras and a plurality of facial motion cameras are arranged around a periphery of the motion capture volume. The facial motion cameras each have a respective field of view narrower than a corresponding field of view of the body motion cameras. The facial motion cameras are arranged such that all laterally exposed surfaces of the actor while in motion within the motion capture volume are within the field of view of at least one of the plurality of facial motion cameras at substantially all times. A motion capture processor is coupled to the plurality of facial motion cameras and the plurality of body motion cameras to produce a digital model reflecting combined body and facial motion of the actor. At least one microphone may be oriented to pick up audio from the motion capture volume.

Owner:SONY CORP +1

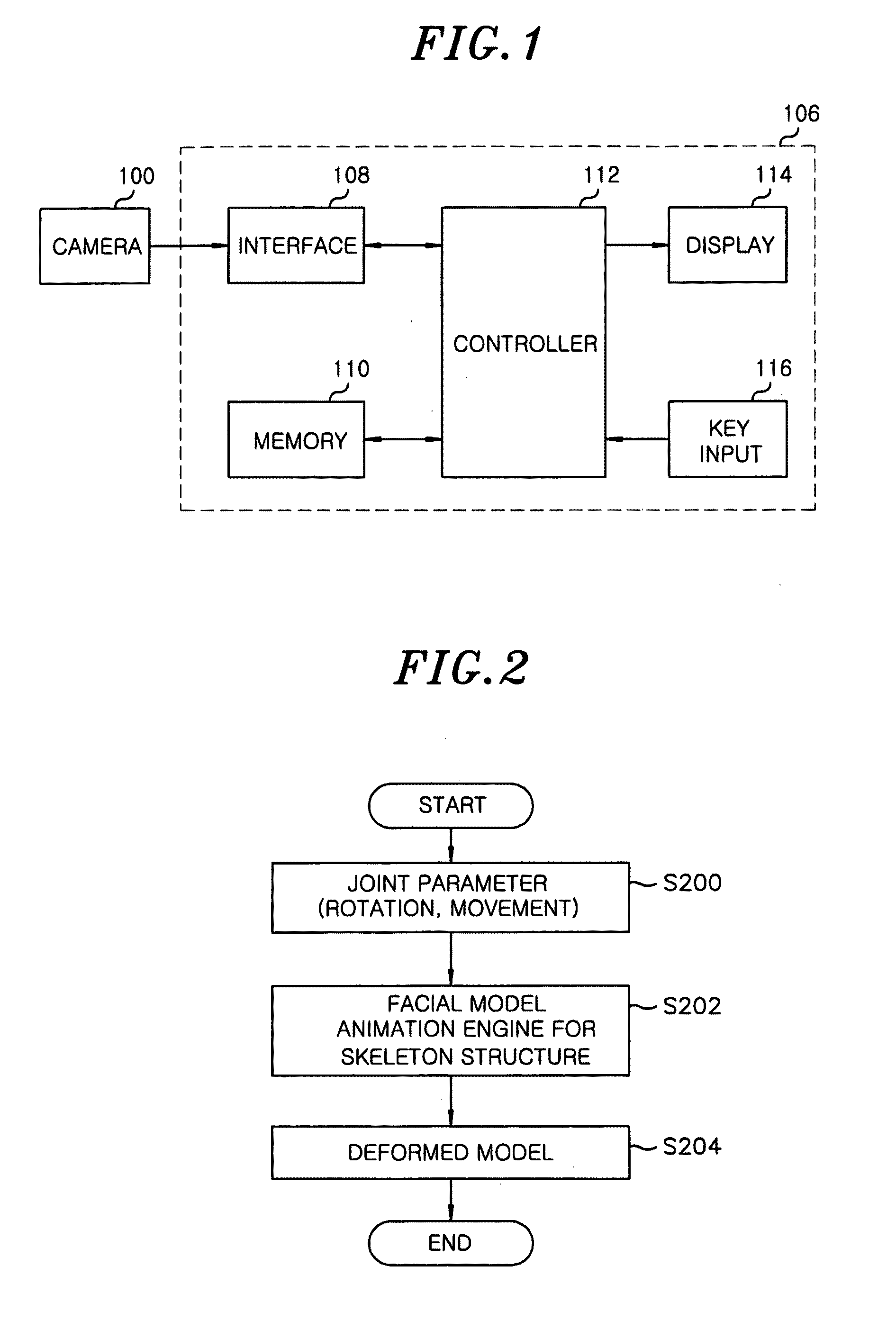

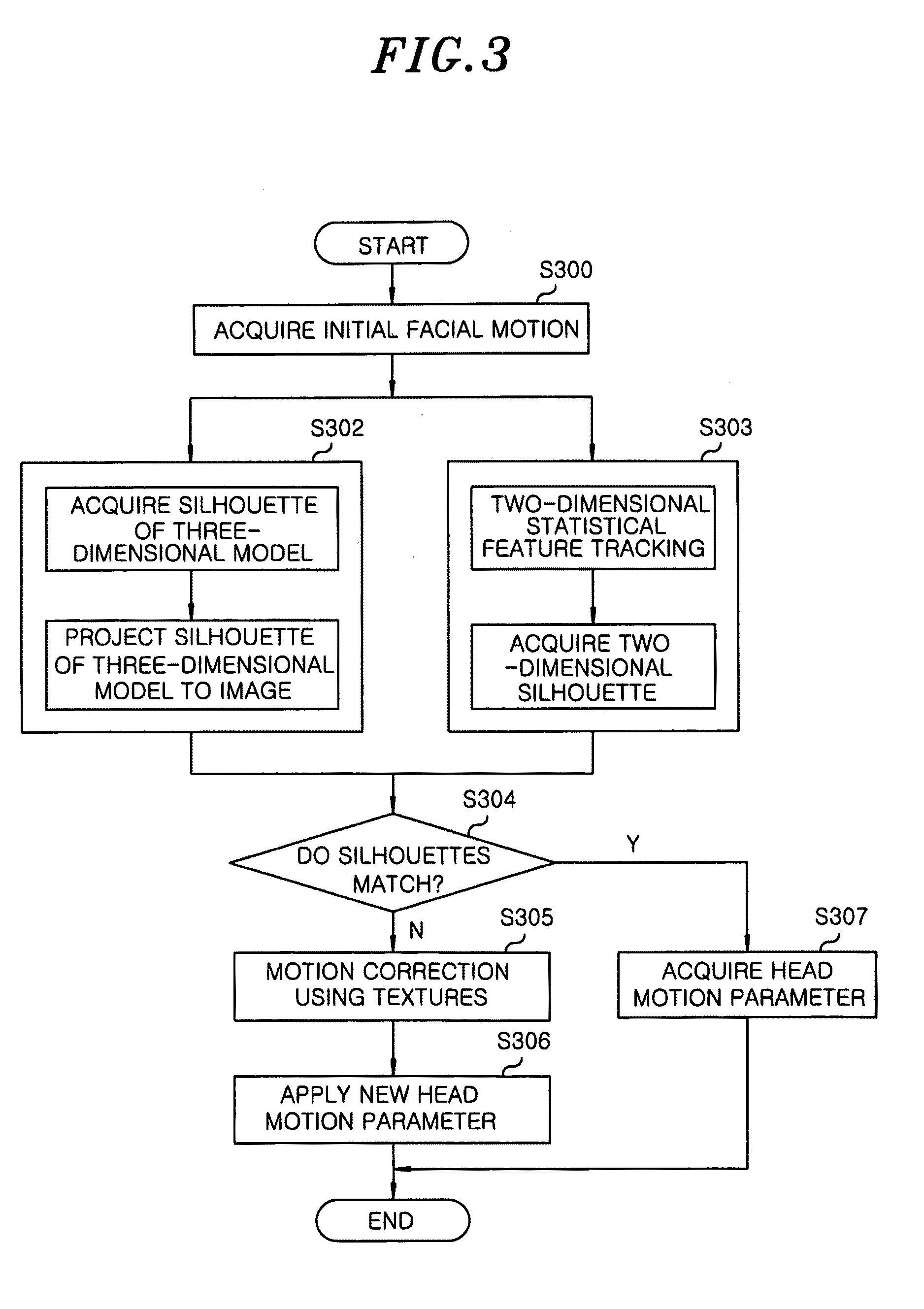

Method for tracking head motion for 3D facial model animation from video stream

Inactive | US20090153569A1 | Shorten the time; Low cost; Image enhancement; Image analysis; Head movements; Animation

A head motion tracking method for three-dimensional facial model animation includes: acquiring, from an image input by a video camera, the initial facial motion to be fitted to an image of a three-dimensional model; creating and projecting a silhouette of the three-dimensional model; matching the silhouette created from the three-dimensional model with a silhouette acquired by a statistical feature point tracking scheme; and obtaining a motion parameter for the image of the three-dimensional model through texture-based motion correction, thereby performing three-dimensional head motion tracking. In accordance with the present invention, natural three-dimensional facial model animation based on real images acquired with a video camera can be performed automatically, reducing time and cost.

Owner:ELECTRONICS & TELECOMM RES INST

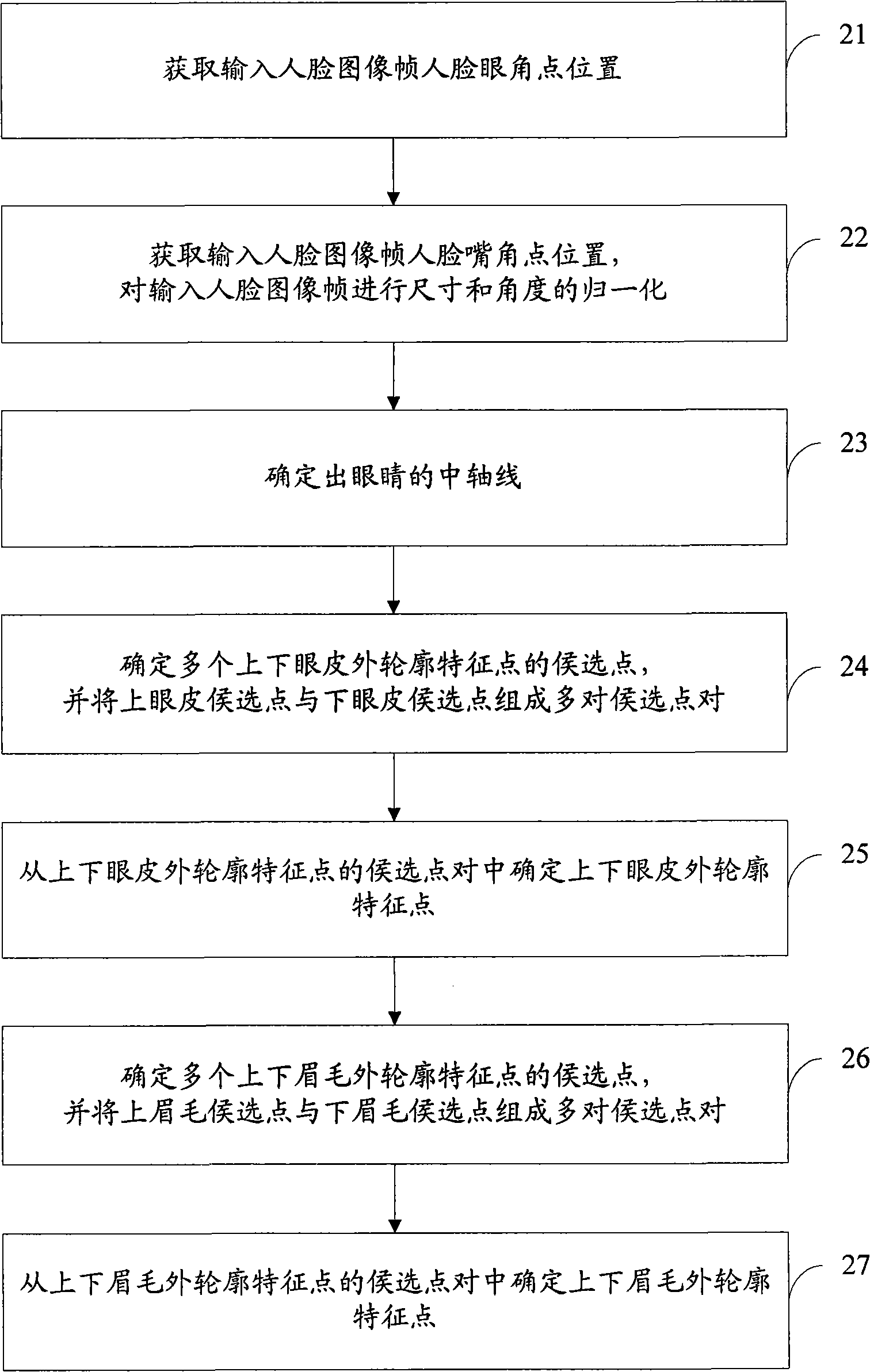

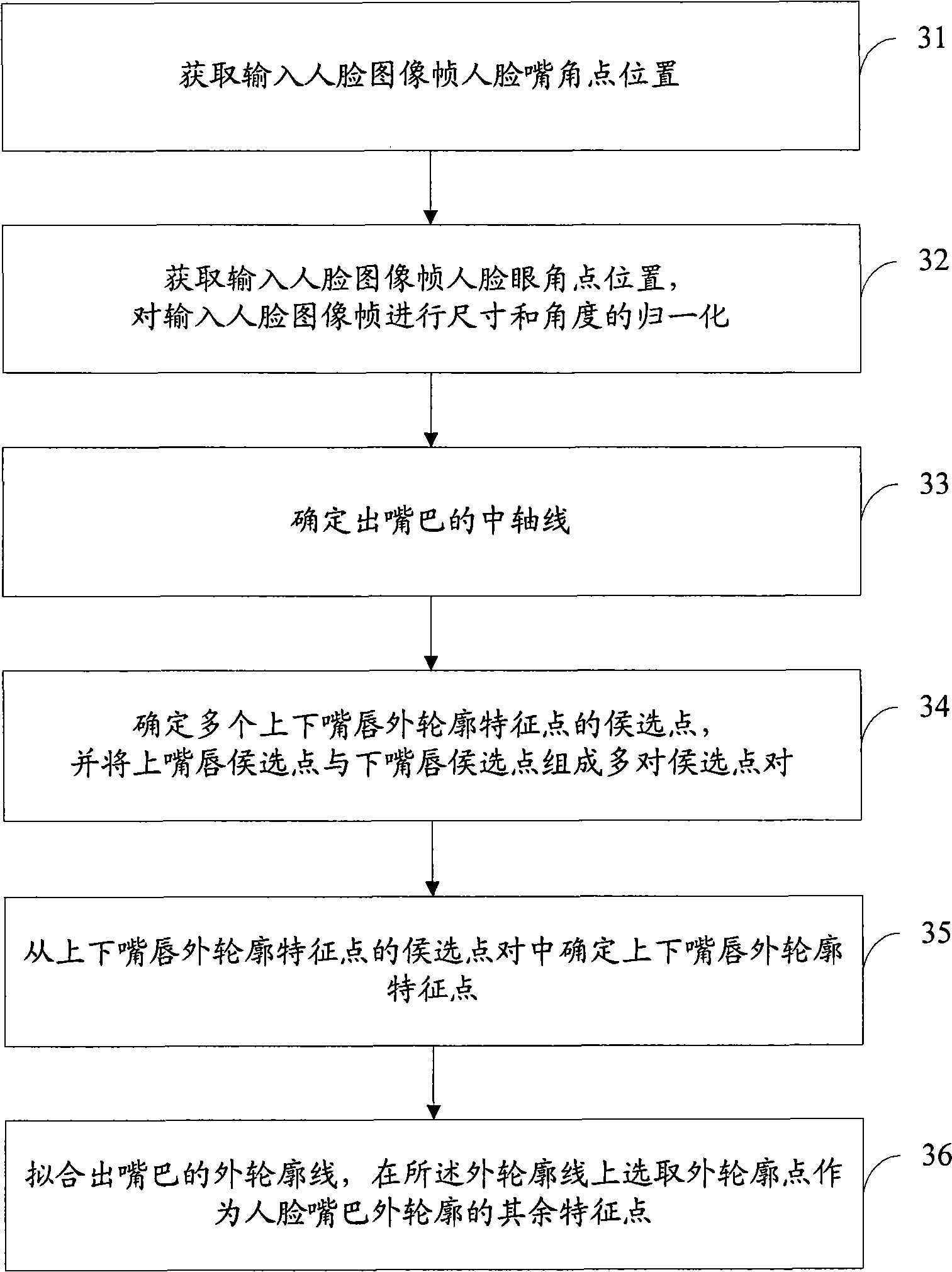

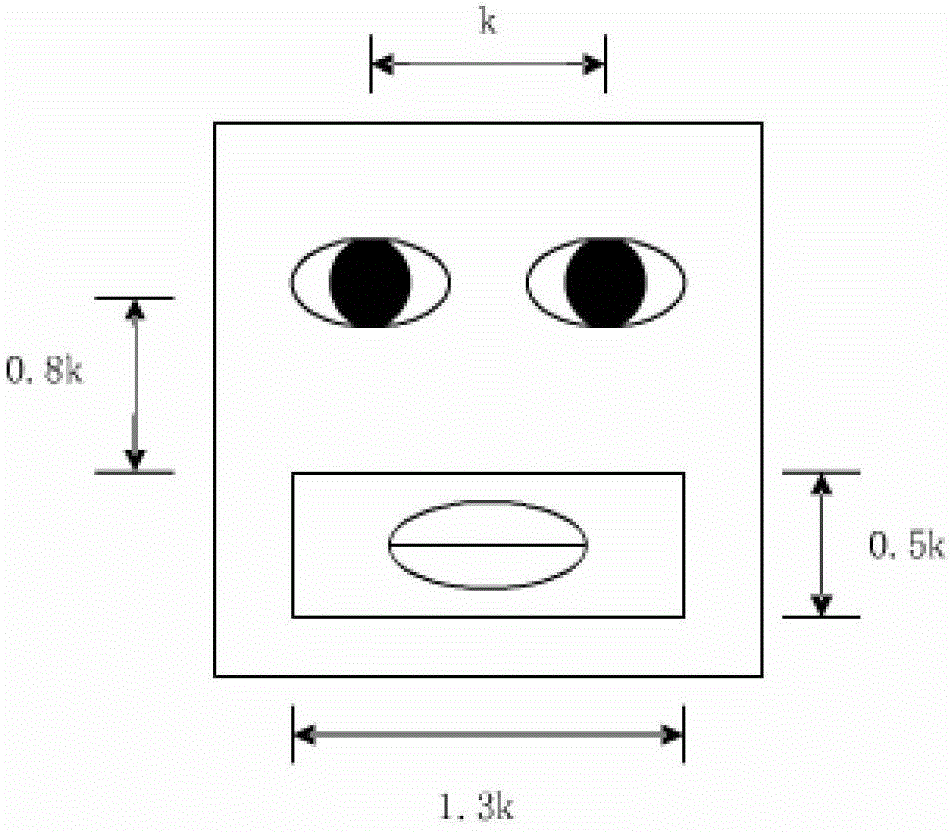

Human face critical organ contour characteristic points positioning and tracking method and device

The invention discloses a method and device for locating and tracking the outer contour feature points of key facial organs. First, the positions of the eye corner (canthus) points and mouth corner (angulus oris) points on the face are obtained; the medial axes of the eyes and the mouth are determined from these positions; the outer contour feature points of the eyes and eyebrows are located on the medial axis of the eyes, and the outer contour feature points of the mouth are located on the medial axis of the mouth. On this basis, the outer contour feature points of the key facial organs are located and tracked consistently. The technical solution provided by the embodiment solves the problem of inaccurate localization of the outer contour feature points of the eyes, mouth, and eyebrows when people make various facial expressions. Based on the located feature points, existing two-dimensional and three-dimensional face models can be driven in real time, and the expressions and motions of a person in front of the camera, such as frowning, blinking, and opening the mouth, can be simulated in real time, producing a variety of realistic and vivid face animations.

Owner:BEIJING VIMICRO ARTIFICIAL INTELLIGENCE CHIP TECH CO LTD

System and method for capturing facial and body motion

A system and method for capturing motion comprises a motion capture volume adapted to contain at least one actor having body markers defining plural body points and facial markers defining plural facial points. A plurality of motion cameras are arranged around a periphery of the motion capture volume. The motion cameras are arranged such that all laterally exposed surfaces of the actor while in motion within the motion capture volume are within the field of view of at least one of the plurality of the motion cameras at substantially all times. A motion capture processor is coupled to the plurality of motion cameras to produce a digital model reflecting combined body and facial motion of the actor. At least one microphone may be oriented to pick up audio from the motion capture volume.

Owner:SONY CORP +1

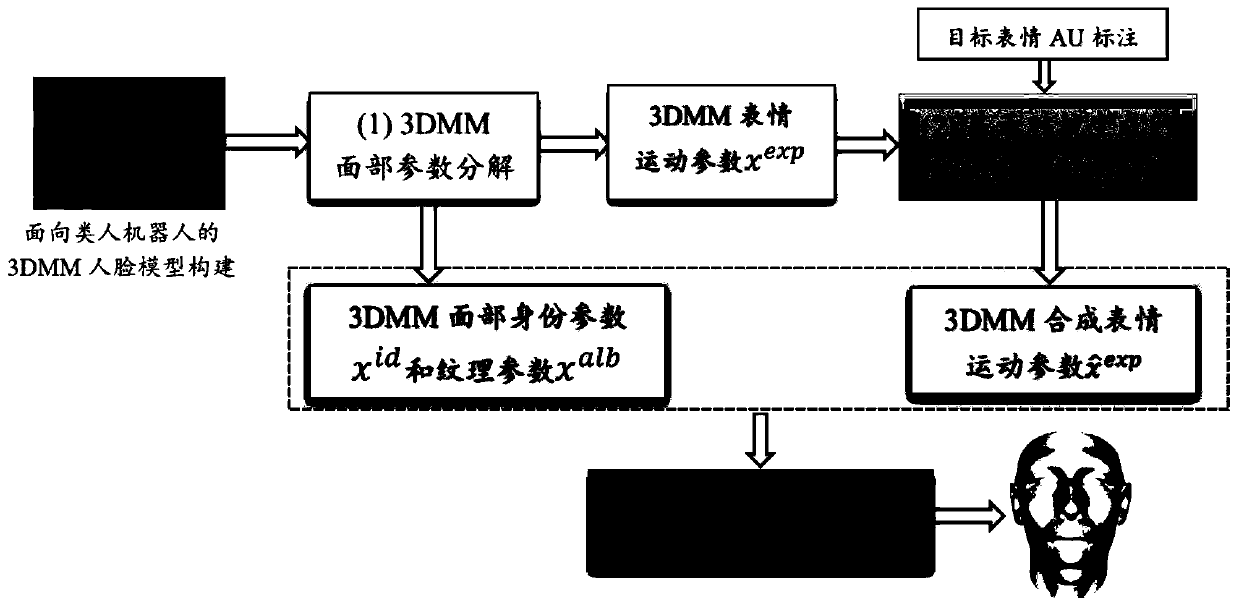

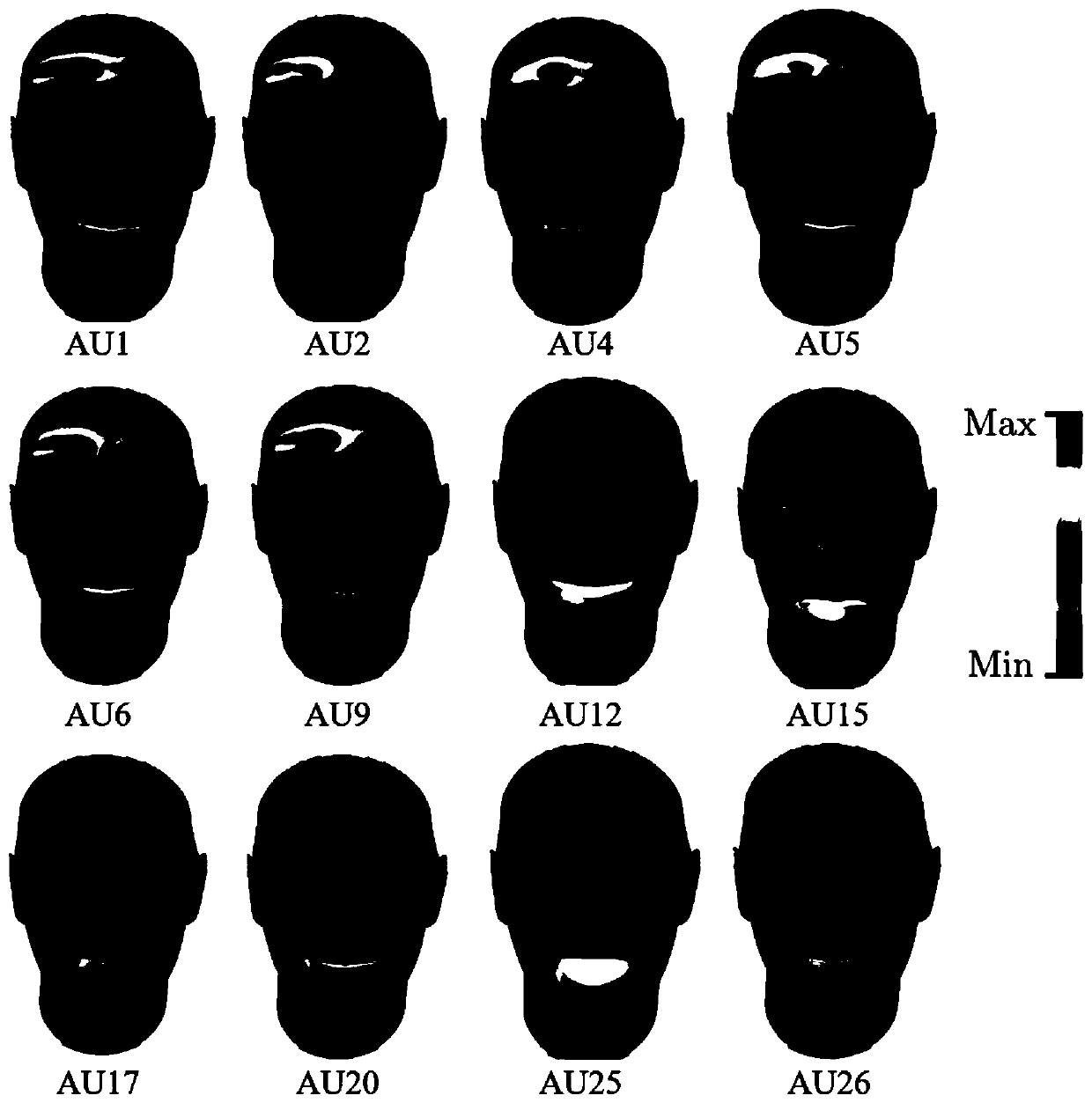

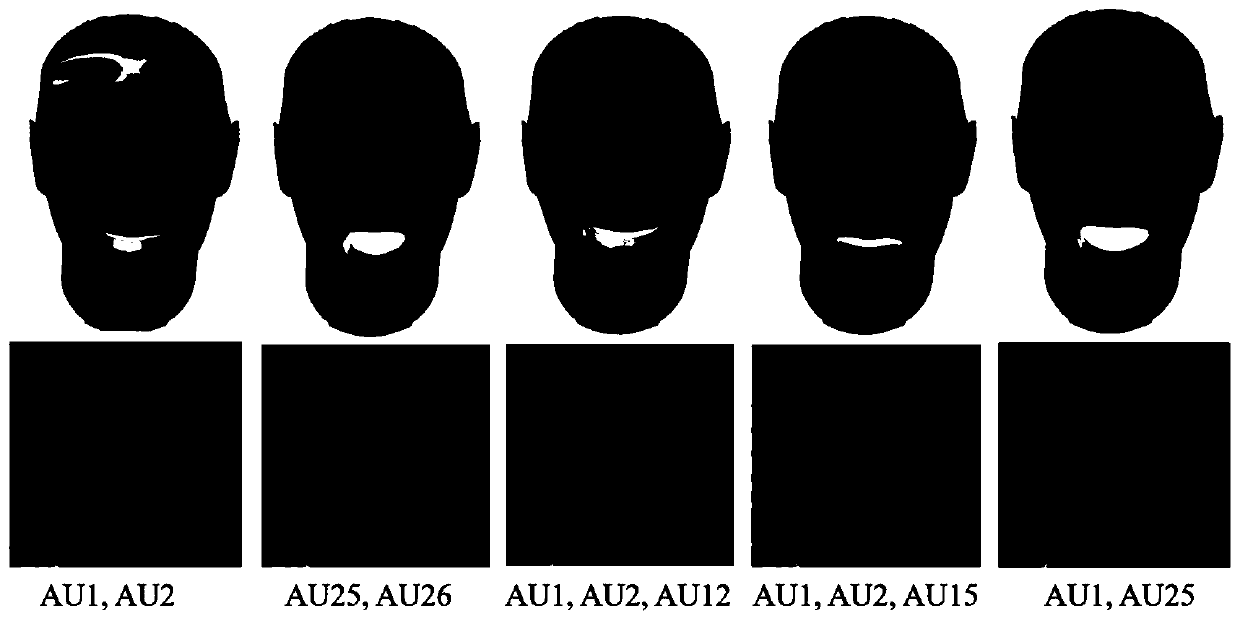

A conditional generative adversarial network three-dimensional facial expression motion unit synthesis method

The invention relates to human-machine emotional interaction, intelligent robots, and related fields, and aims to provide a solution for generating and controlling natural facial expressions in humanoid robots, using a three-dimensional face model for a virtual or physical humanoid robot as the carrier. To this end, the conditional generative adversarial network three-dimensional facial expression motion unit synthesis method comprises the following steps: (1) establishing an effective mapping between facial motion unit labels of different intensities and combinations and the distribution of facial expression parameters; (2) performing adversarial (game) optimization on the output of the expression motion parameter generation model; and (3) applying the generated target expression parameters to the three-dimensional face model of the humanoid robot to generate and control complex three-dimensional facial expressions of the humanoid robot. The method is mainly applied in intelligent robot design and manufacturing.

Owner:TIANJIN UNIV

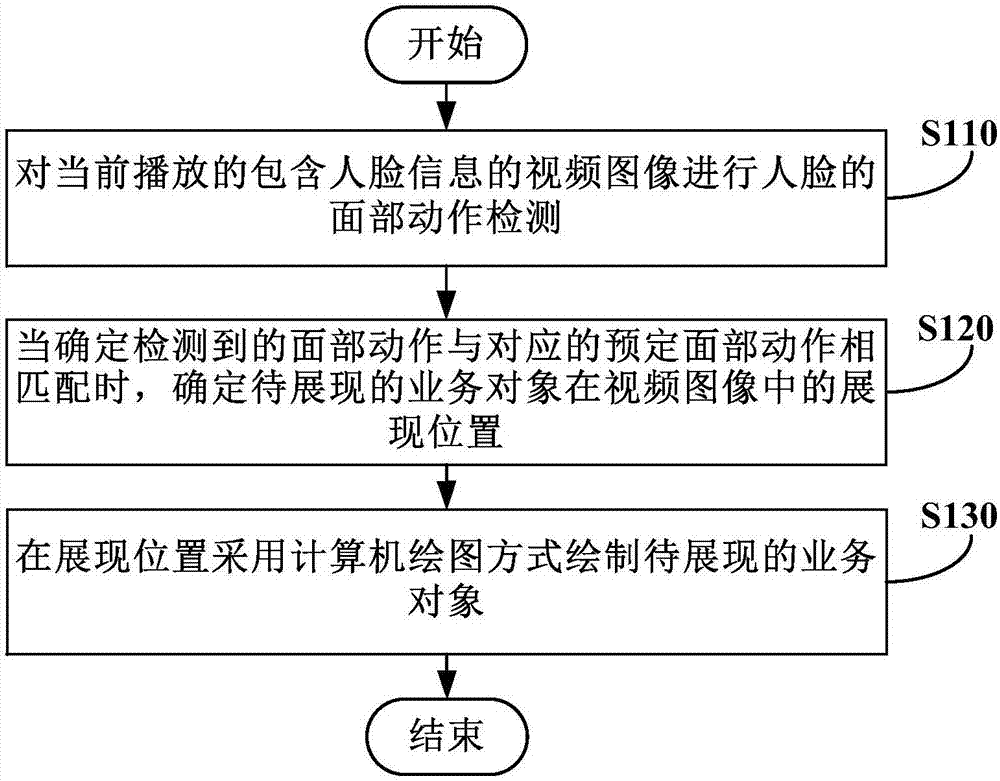

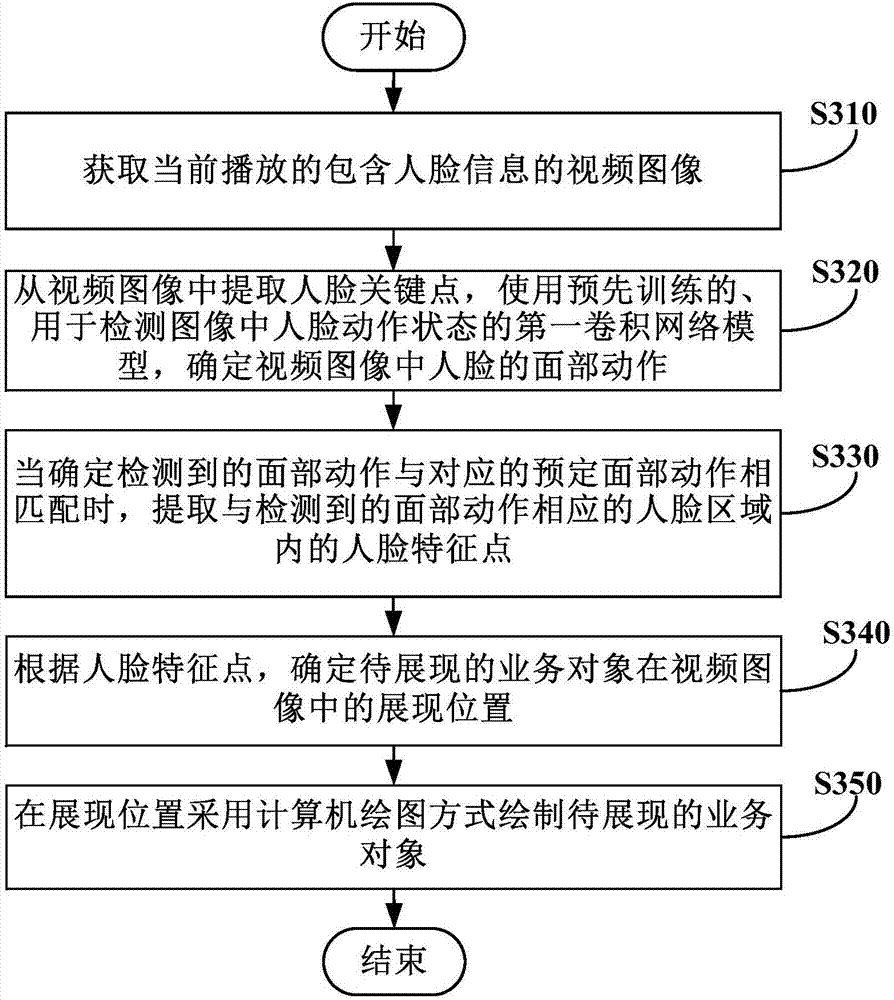

Video image processing method and device, and terminal device

Inactive | CN107341435A | Add fun; Reduce disgust; Character and pattern recognition; Execution for user interfaces; Computer graphics (images); Terminal equipment

The embodiment of the invention provides a video image processing method and device, and a terminal device. The video image processing method comprises: performing facial motion detection on a current video image containing face information; when the detected facial motion matches a corresponding predetermined facial motion, determining the display position of a business object to be displayed in the video image; and drawing the business object at that display position using computer graphics. The embodiment can save network resources and/or client system resources and make the video more entertaining without disturbing normal viewing. This reduces users' aversion to business objects displayed in the video, draws the audience's attention to some extent, and increases the influence of the business object.

Owner:BEIJING SENSETIME TECH DEV CO LTD
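
The trigger-then-place logic described above can be sketched as a lookup from a predetermined facial motion to an anchor point relative to the detected face box. The motion names, offsets, and function name below are hypothetical; the abstract does not say how the display position is computed.

```python
from typing import Optional

Box = tuple[float, float, float, float]  # x, y, width, height of the detected face

# Hypothetical trigger table: facial motion -> anchor offset as fractions of the
# face box (x from the left edge, y from the top edge; image y grows downward).
TRIGGERS: dict[str, tuple[float, float]] = {
    "open_mouth": (0.5, 1.25),       # centred, below the chin
    "raise_eyebrows": (0.5, -0.25),  # centred, above the forehead
}


def overlay_position(detected_motion: str, face_box: Box) -> Optional[tuple[float, float]]:
    """Return where to draw the business object, or None if the motion is not a trigger."""
    offset = TRIGGERS.get(detected_motion)
    if offset is None:
        return None
    x, y, w, h = face_box
    return (x + offset[0] * w, y + offset[1] * h)
```

Expressing offsets as fractions of the face box keeps the overlay attached to the face as it moves or changes scale between frames.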

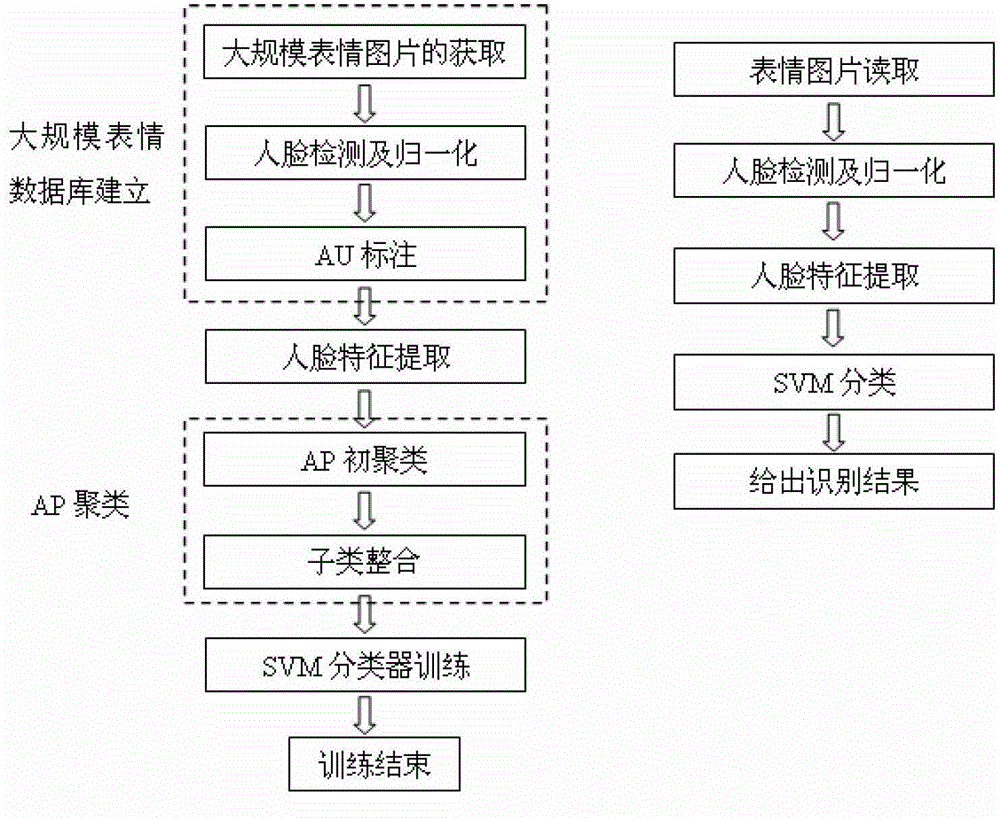

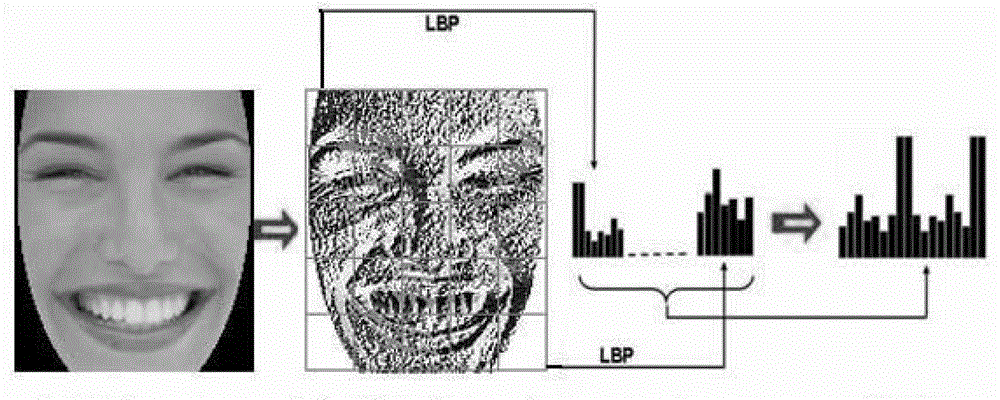

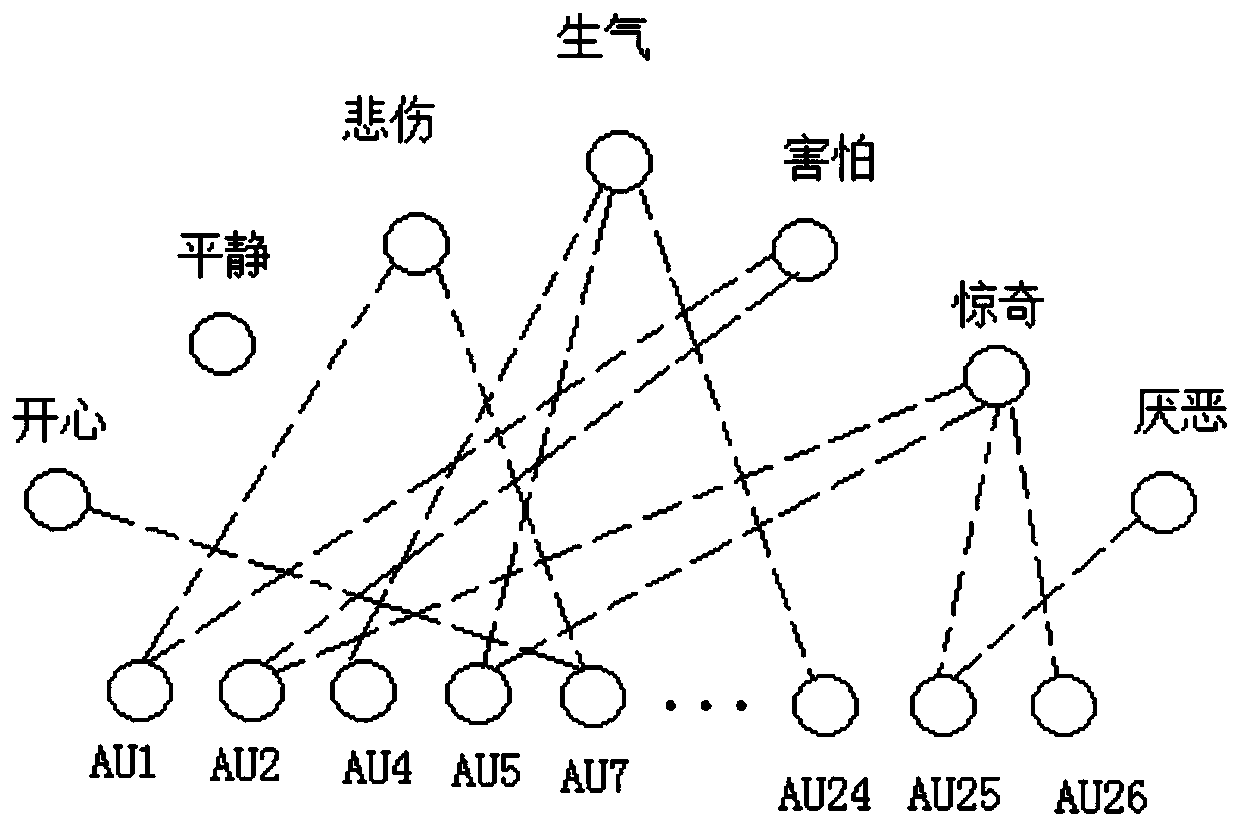

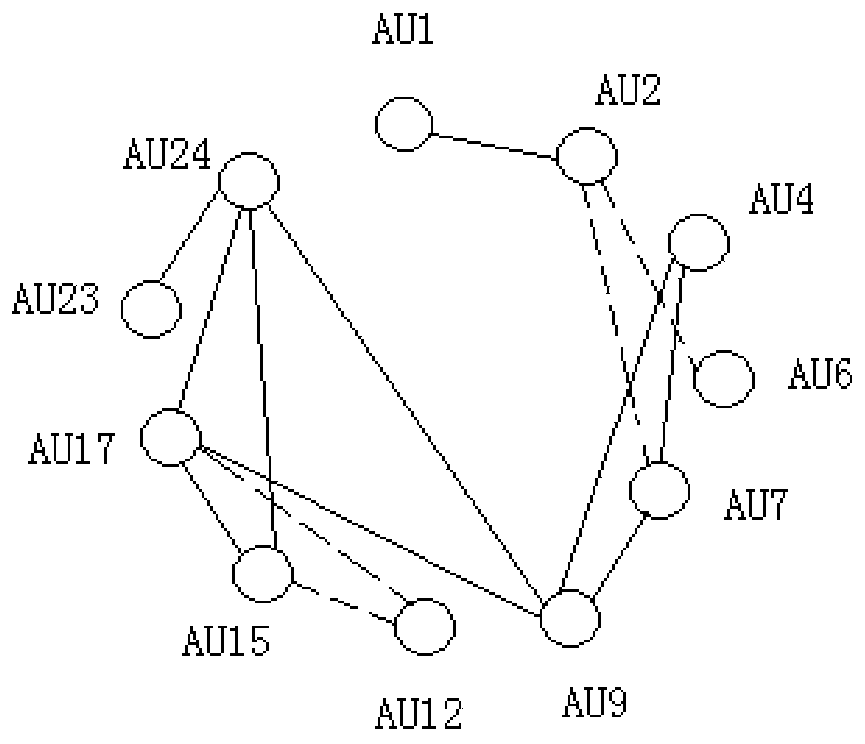

Facial expression recognition method based on facial motion unit combination features

Inactive | CN103065122A | Improve recognition rate; Character and pattern recognition; Support vector machine; Cluster algorithm

The invention discloses a facial expression recognition method based on facial motion unit combination features. It addresses the low recognition rate for single facial motion units in existing facial-motion-unit-based expression recognition methods. The method comprises: building a large-scale facial expression database; clustering the training samples of each facial expression category with the affinity propagation (AP) clustering algorithm; determining the action unit (AU) combinations in each sub-category; determining the number of sub-categories for each expression from its main AU combinations; combining the sub-categories of all expressions to obtain the number of training-sample categories; and performing classification training with the support vector machine (SVM) method. The method improves the recognition rate for single facial motion units: the average recognition rate for a single AU rises from 87.5% in the prior art to 90.1%, an improvement of 2.6 percentage points.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

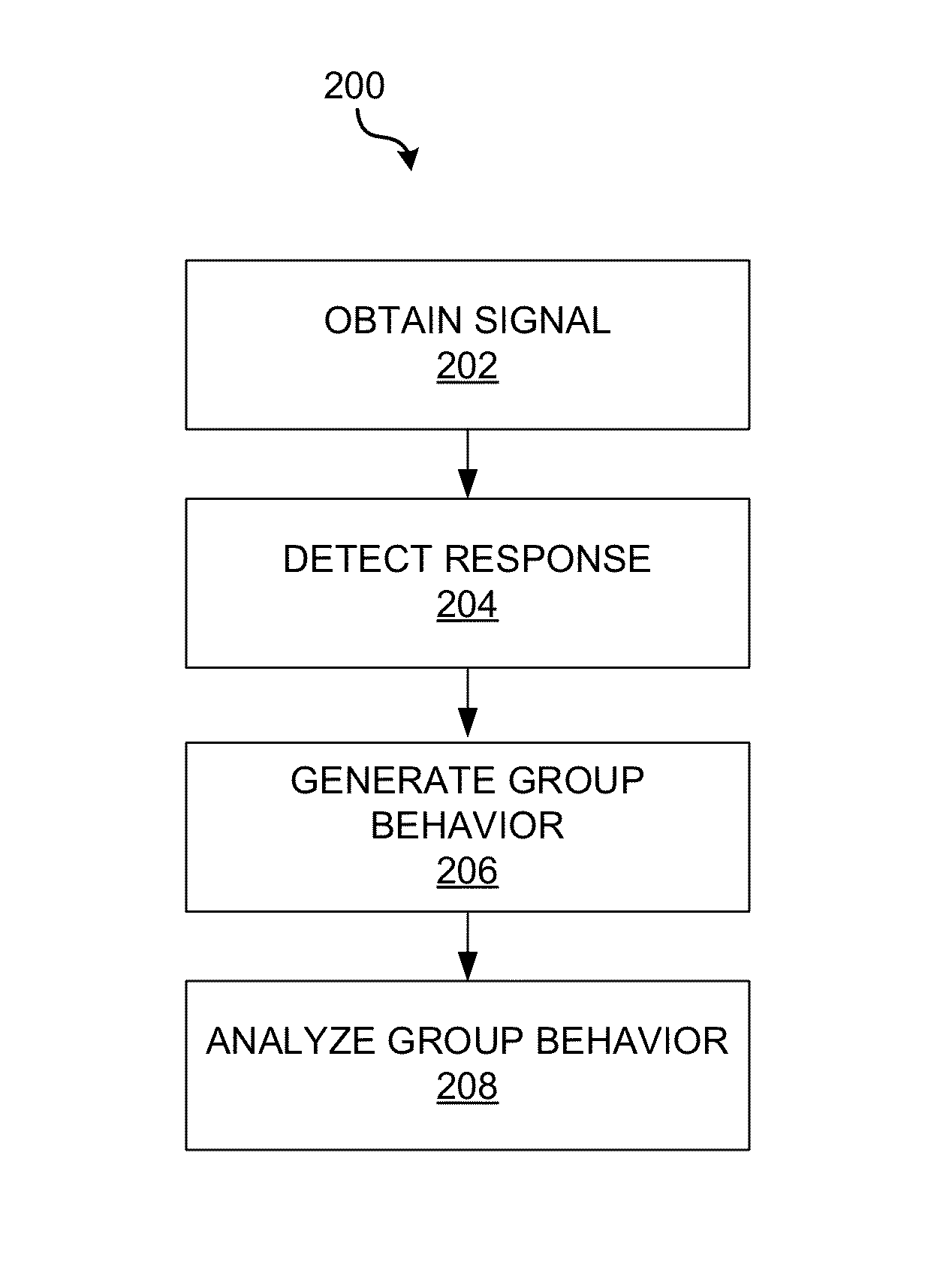

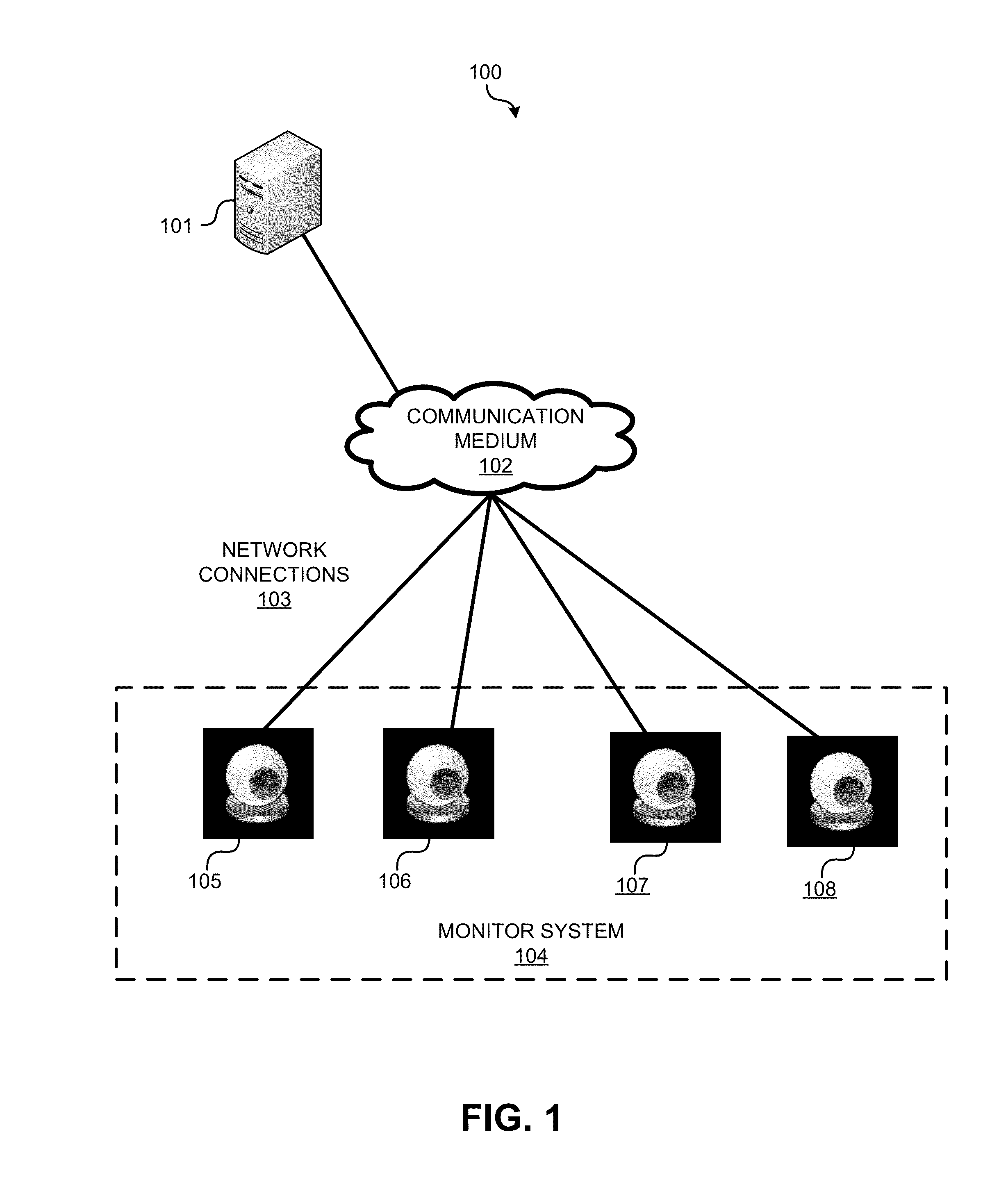

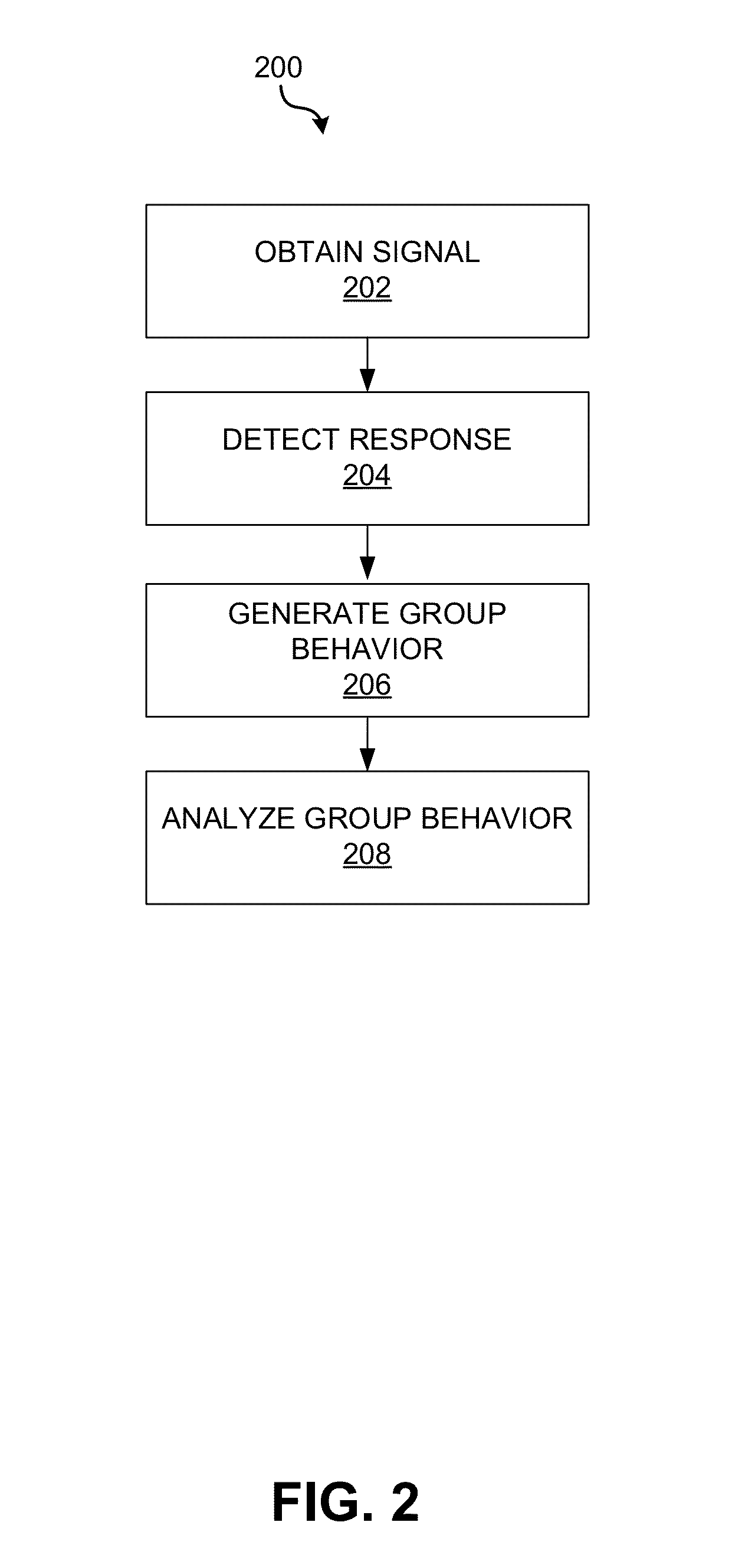

Methods and systems for measuring group behavior

Methods and systems for measuring group behavior are provided. Group behavior of different groups may be measured objectively and automatically in different environments including a dark environment. A uniform visible signal comprising images of members of a group may be obtained. Facial motion and body motions of each member may be detected and analyzed from the signal. Group behavior may be measured by aggregating facial motions and body motions of all members of the group. A facial motion such as a smile may be detected by using the Fourier Lucas-Kanade (FLK) algorithm to register and track faces of each member of a group. A flow-profile for each member of the group is generated. Group behavior may be further analyzed to determine a correlation of the group behavior and the content of the stimulus. A prediction of the general public's response to the stimulus based on the analysis of the group behavior is also provided.

Owner:DISNEY ENTERPRISES INC
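
The aggregation step (combining members' facial and body motions into a group measure, then relating it to the stimulus) can be illustrated with per-frame scores. This minimal sketch assumes each member already has a per-frame smile score from some tracker (the FLK registration itself is not reproduced), takes the group measure as the per-frame mean, and uses the Pearson correlation coefficient as one possible way to relate the group signal to a per-frame stimulus intensity. Function names are hypothetical.

```python
import math


def group_signal(member_scores: list[list[float]]) -> list[float]:
    """Average per-frame scores across members (one row per member, one column per frame)."""
    n_members = len(member_scores)
    n_frames = len(member_scores[0])
    return [sum(row[t] for row in member_scores) / n_members for t in range(n_frames)]


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)
```

A correlation near 1 would indicate that the group's smiling rises and falls with the stimulus; the abstract's prediction of general-public response would need a model beyond this sketch.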

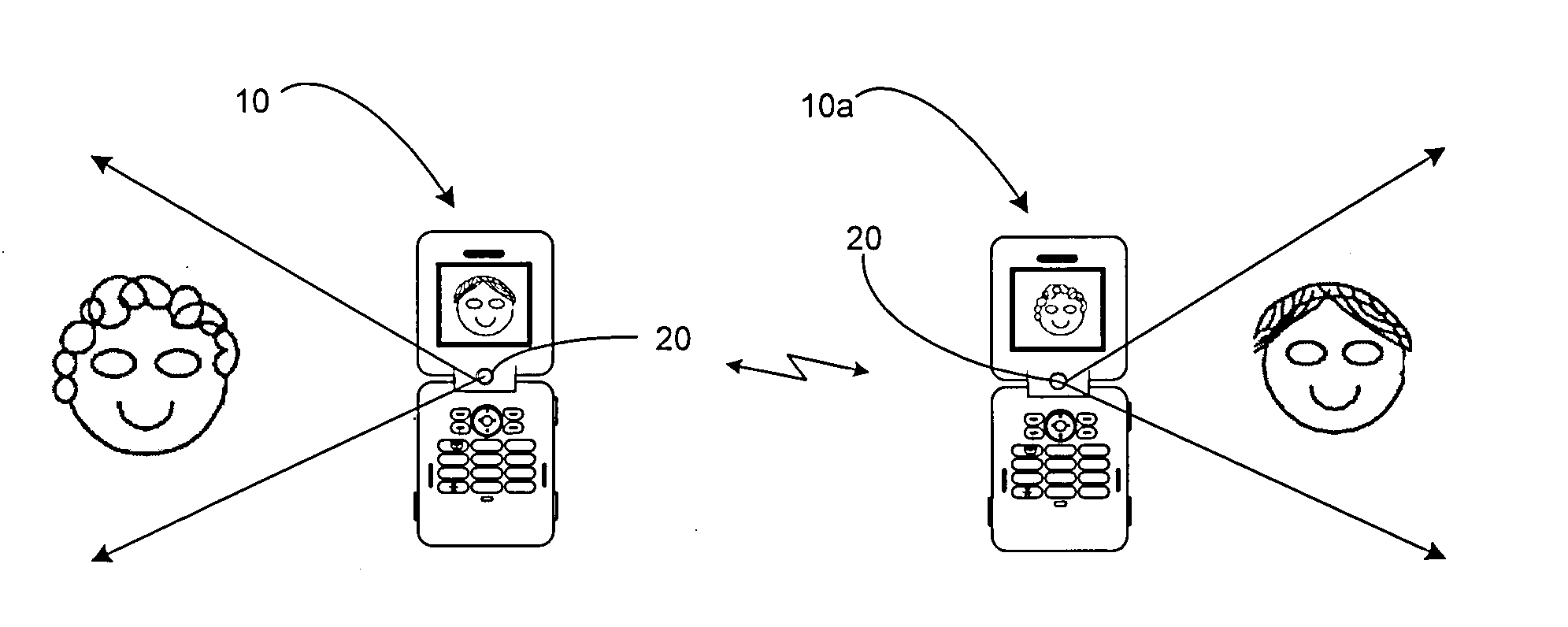

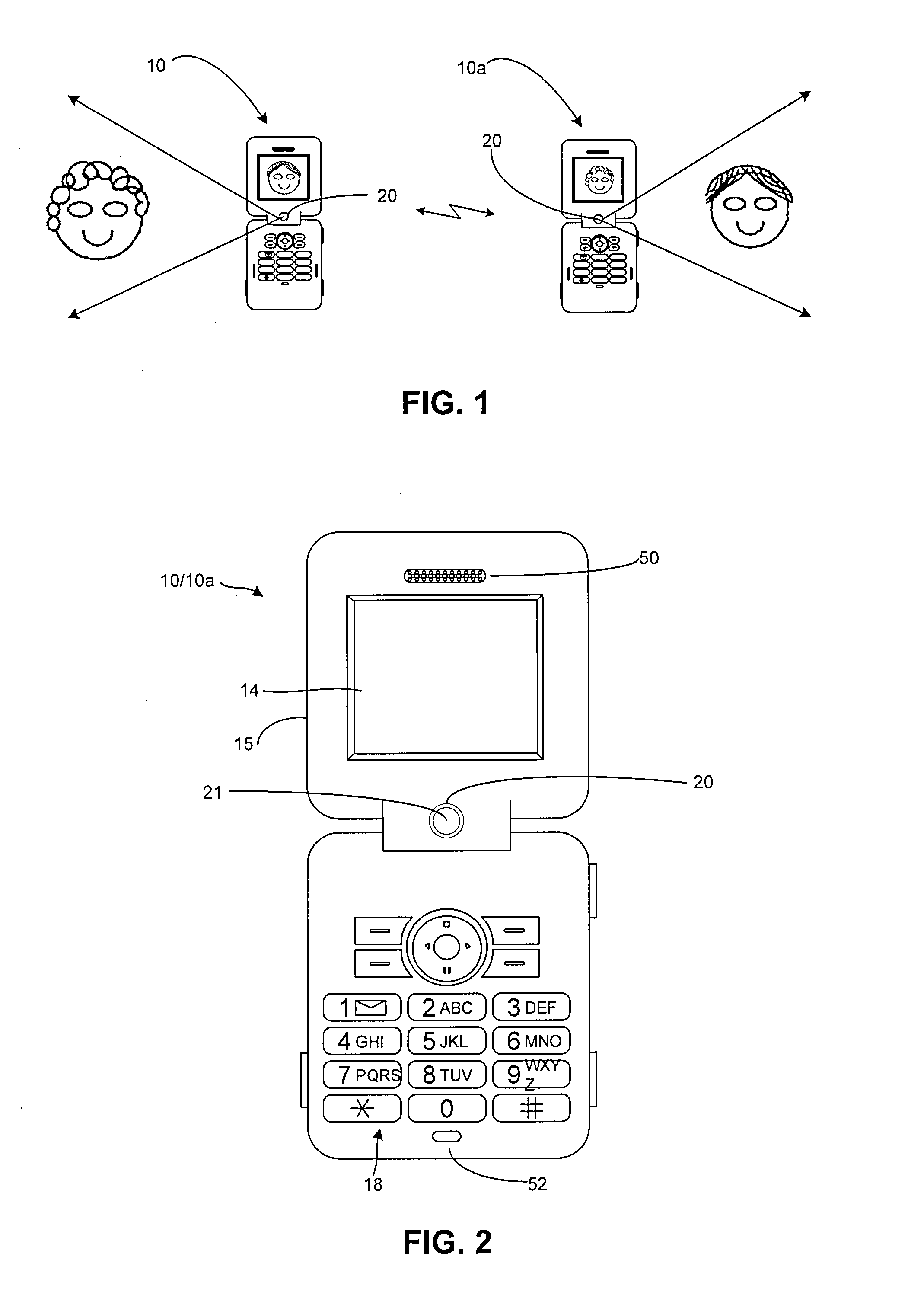

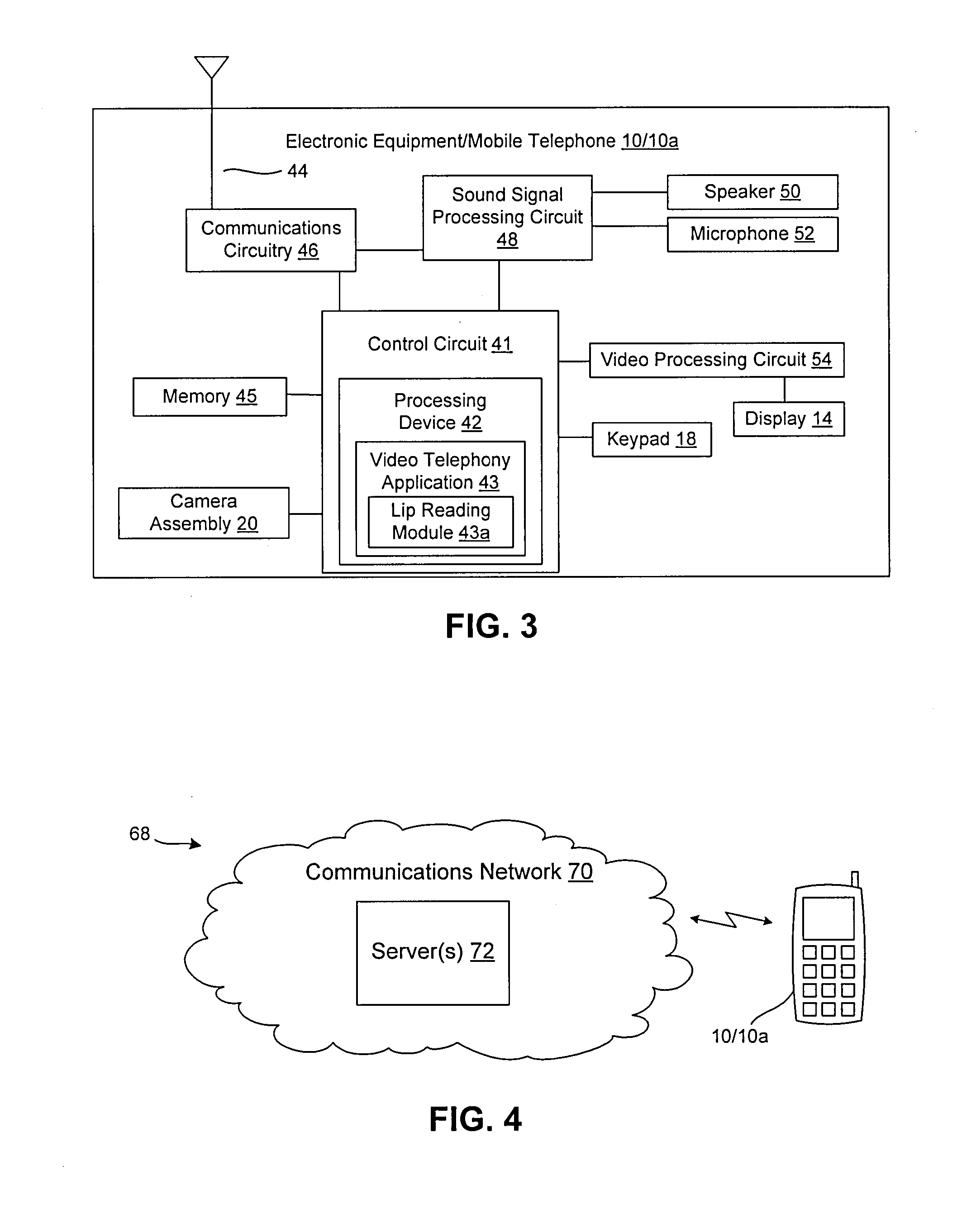

System and method for video telephony by converting facial motion to text

Inactive | US20100079573A1 | Enhanced video telephony; Devices with voice recognition; Alphabetical characters entering; Display device; Loudspeaker

A video telephony system includes an electronic device having communications circuitry to establish a communication with a second electronic device. The second electronic device may include an image generating device for generating a sequence of images of a user of the second electronic device. The first electronic device may receive the sequence of images as part of the communication. Based on the sequence of images, a lip reading module within the first electronic device analyzes changes in the second user's facial features to generate text corresponding to a communication portion of the second user. The text is then displayed on a display of the first electronic device so that the first user may follow along with the conversation in a text format without the need to employ a speaker telephone function. The sequence of images may be displayed with the text for enhanced video telephony.

Owner:SONY ERICSSON MOBILE COMM AB

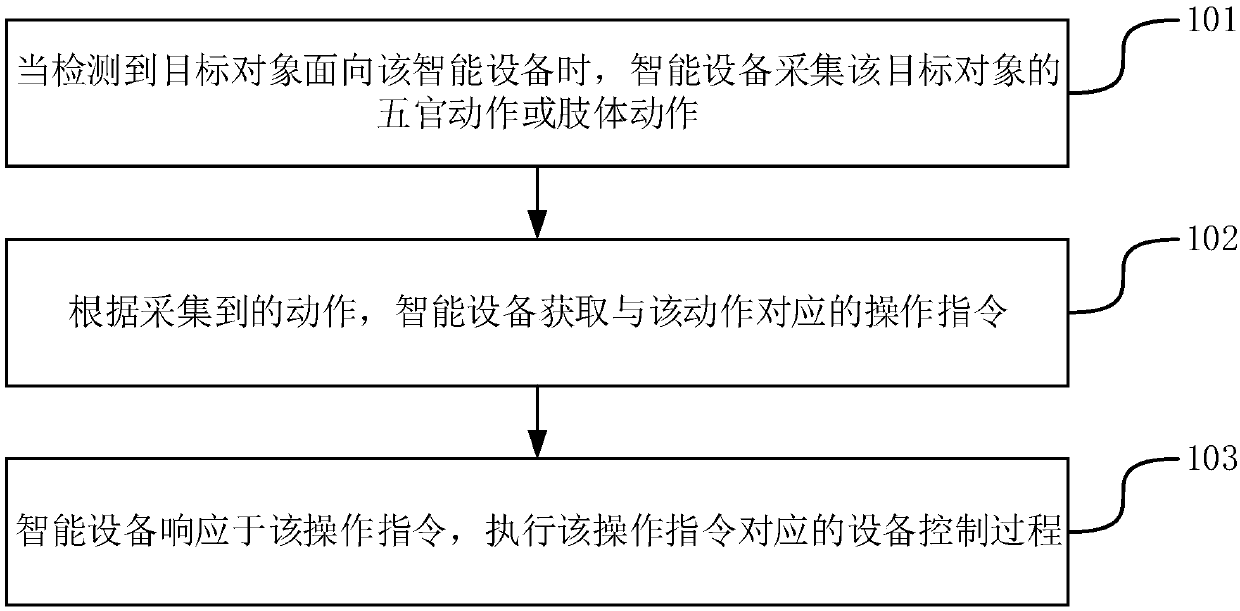

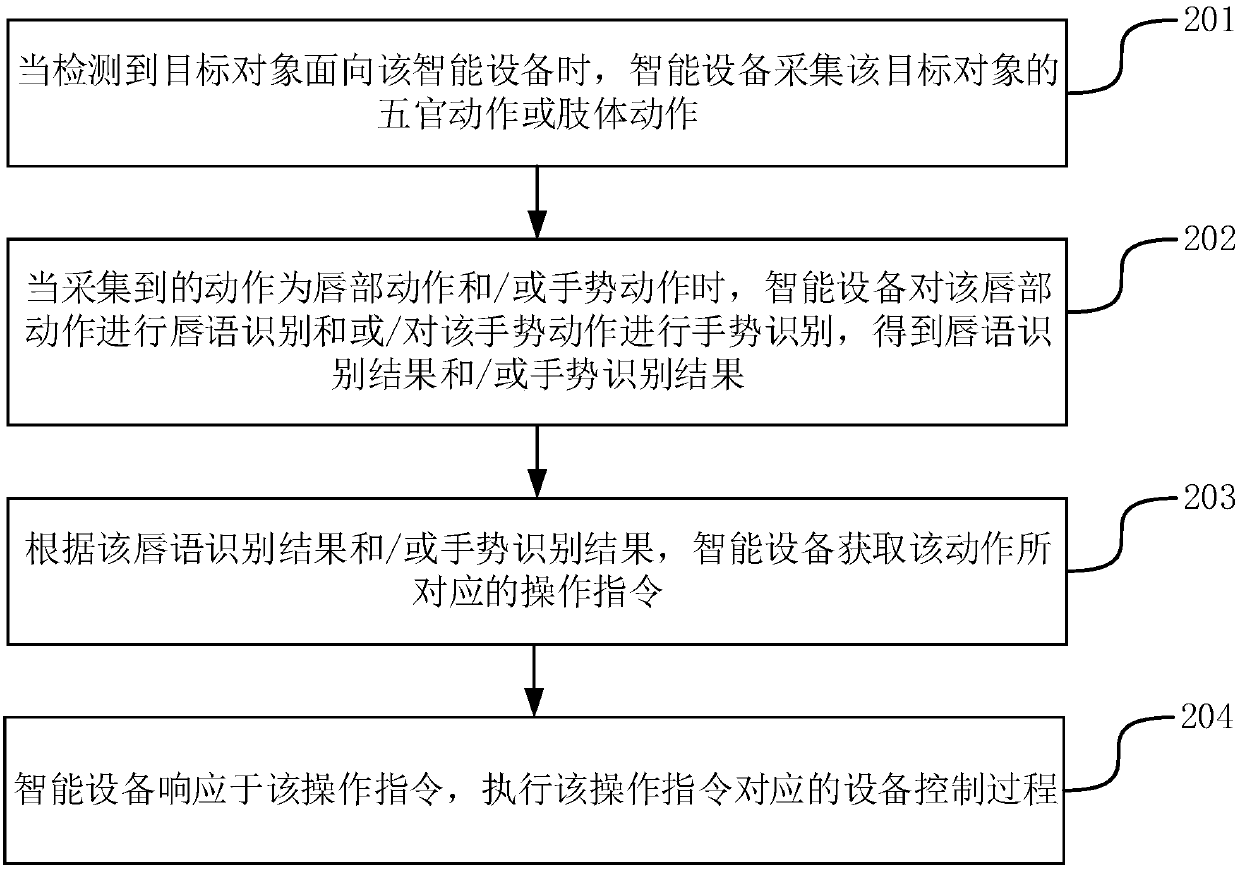

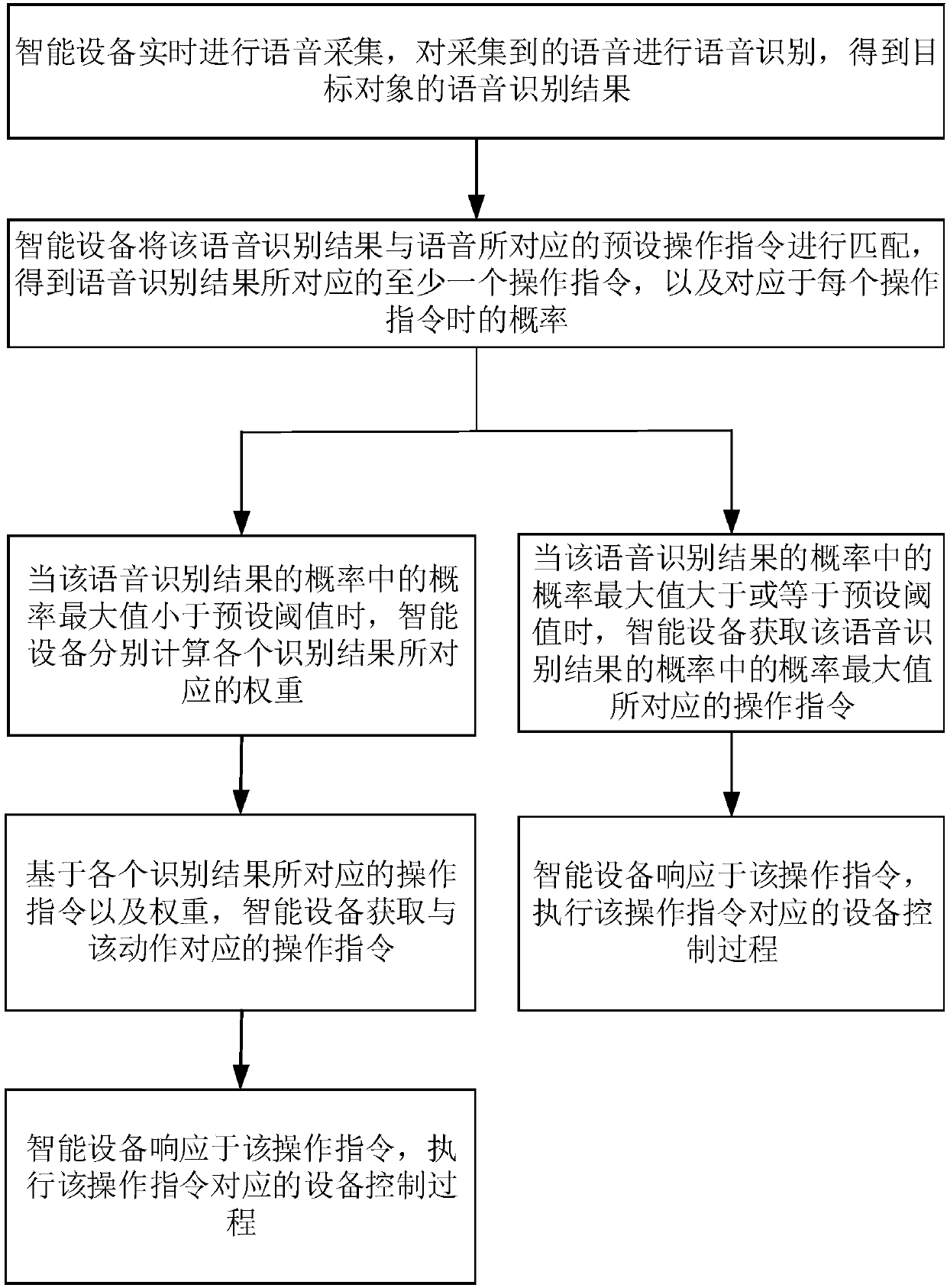

Equipment control method and device, equipment control device, and storage medium

Active | CN108052079A | Accurate reception; Increase success rate; Computer control; Total factory control; Intelligent equipment; Embedded system

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD

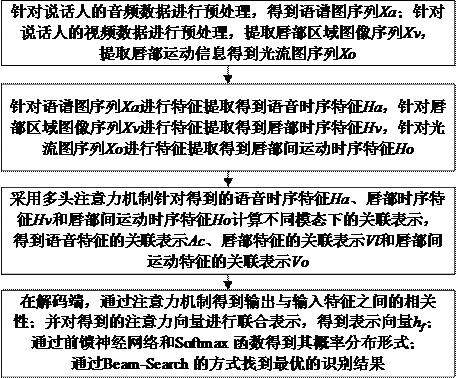

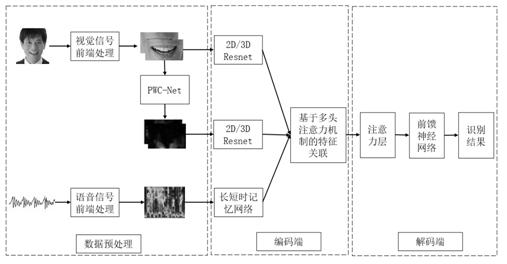

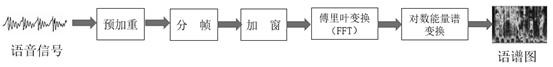

Cross-modal multi-feature fusion audio and video speech recognition method and system

Pending | CN112053690A | Accurate acquisition; Improve learning effect; Image enhancement; Image analysis; Environmental noise; Facial movement

The invention relates to audio-visual speech recognition and provides a cross-modal multi-feature fusion audio-visual speech recognition method and system. It is motivated by the fact that speech interaction is easily affected by complex environmental noise, whereas facial motion information acquired from video is relatively stable in real robot application environments. The method fuses speech information, visual information, and visual motion information through an attention mechanism and exploits the correlations among the modalities to recognize the user's speech content more accurately. This improves speech recognition accuracy under complex background noise, improves speech recognition performance in human-computer interaction, and effectively addresses the low accuracy of audio-only speech recognition in noisy environments.

Owner:HUNAN UNIV
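
The attention-based fusion described above can be illustrated with generic scaled dot-product attention over one feature vector per modality. This is a sketch under assumptions: the abstract does not give the network architecture, so the query vector, the equal feature dimensions, and the modality names (`audio`, `lips`, `lip_motion`) are hypothetical, and in a real system all of these vectors would come from learned encoders.

```python
import math


def attention_fuse(features: dict[str, list[float]],
                   query: list[float]) -> tuple[list[float], dict[str, float]]:
    """Fuse per-modality feature vectors with softmax attention weights.

    Returns the fused vector and the weight assigned to each modality.
    """
    d = len(query)
    # Scaled dot-product score of each modality against the query.
    scores = {m: sum(q * f for q, f in zip(query, vec)) / math.sqrt(d)
              for m, vec in features.items()}
    top = max(scores.values())
    exps = {m: math.exp(s - top) for m, s in scores.items()}  # subtract max for stability
    total = sum(exps.values())
    weights = {m: e / total for m, e in exps.items()}
    fused = [sum(weights[m] * features[m][i] for m in features) for i in range(d)]
    return fused, weights


if __name__ == "__main__":
    feats = {"audio": [0.2, 0.1], "lips": [0.9, 0.4], "lip_motion": [0.5, 0.8]}
    fused, weights = attention_fuse(feats, query=[1.0, 1.0])
    print(weights)
```

Here the weights simply follow the dot products with the query; in a trained model the query would be learned so that, under heavy noise, weight can shift toward the visual streams.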

Method of controlling virtual object by user's figure or finger motion for electronic device

Active | US8203530B2 | Minimize shielding effect; Input/output for user-computer interaction; Assess restriction; CMOS; Control signal

The present invention provides a method of controlling a virtual object or instruction for a computing device, comprising: detecting a user activity by a detecting device; generating a control signal in response to the detected user activity; and controlling an object displayed on a display in response to the control signal to execute the instruction. The user activity is detected by a CMOS or CCD sensor. The user activity includes facial motion, eye motion, or finger motion.

Owner:TAIWAN SEMICON MFG CO LTD

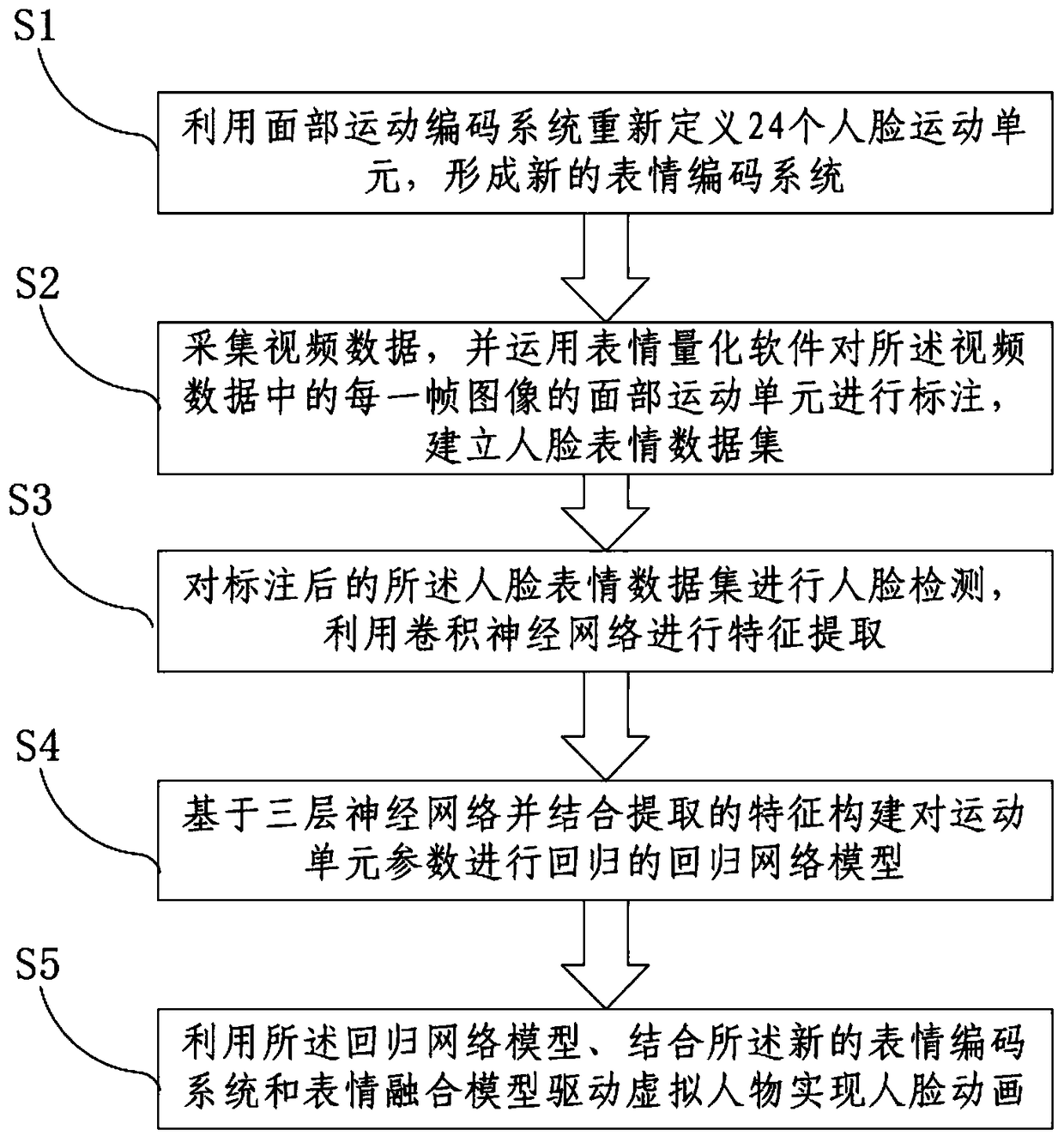

Method for realizing face animation based on motion unit expression mapping

Pending | CN109493403A | Accurate acquisition; Improve applicability; Animation; Neural architectures; Face detection; Data set

The embodiment of the invention discloses a method for realizing face animation based on motion unit expression mapping, relating to the technical fields of deep learning and face animation. The method comprises the following steps: redefining 24 facial motion units using a facial action coding system; collecting video data, labeling the facial motion units in each frame of the video data with expression quantification software, and building a facial expression data set; performing face detection on the labeled facial expression data set and extracting features with a convolutional neural network; building a regression network model, based on a three-layer neural network, that regresses the motion unit parameters from the extracted features; and driving a virtual character to realize face animation by combining the regression network model with the new expression coding system and the expression fusion model. The method addresses the problems that existing facial animation methods have limited applicability and cannot accurately describe facial expression changes when they rely directly on two-dimensional features.

Owner:北京中科嘉宁科技有限公司
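
The final step, driving a virtual character from regressed parameters through an expression fusion model, is commonly realised with a linear blendshape model; the abstract does not confirm that, so treat it as an assumption. The sketch below computes `neutral + sum(w_i * (target_i - neutral))` per vertex with weights clamped to [0, 1]; the function and target names are hypothetical.

```python
Vec3 = tuple[float, float, float]


def blend(neutral: list[Vec3],
          targets: dict[str, list[Vec3]],
          weights: dict[str, float]) -> list[Vec3]:
    """Linear blendshape evaluation: neutral mesh plus weighted target offsets."""
    out = []
    for i, (nx, ny, nz) in enumerate(neutral):
        x, y, z = nx, ny, nz
        for name, w in weights.items():
            w = min(1.0, max(0.0, w))  # regressed values may overshoot; clamp to [0, 1]
            tx, ty, tz = targets[name][i]
            x += w * (tx - nx)
            y += w * (ty - ny)
            z += w * (tz - nz)
        out.append((x, y, z))
    return out
```

In this setup the regression network's job reduces to predicting one weight per target shape per frame, which keeps the character rig independent of the network.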

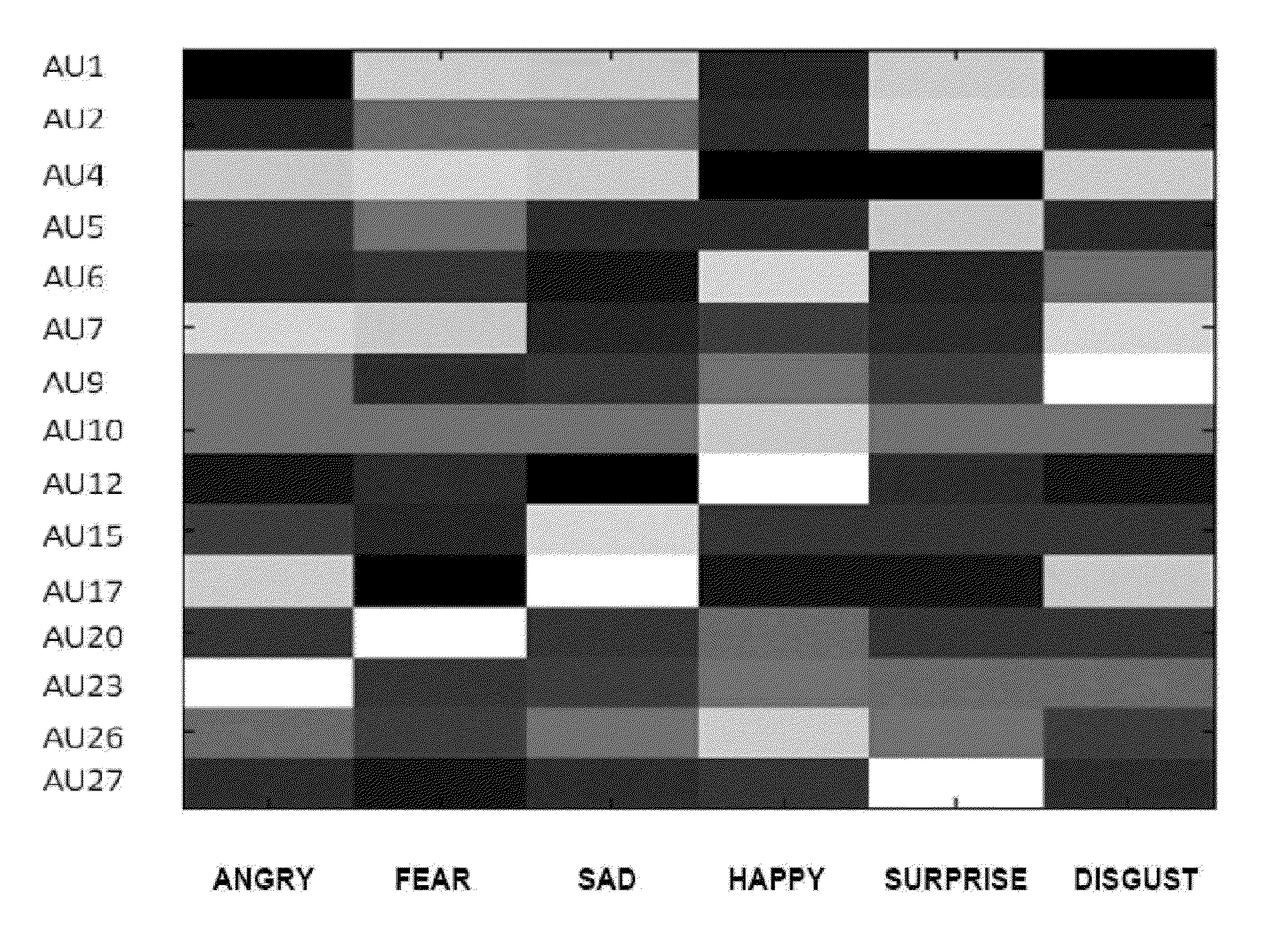

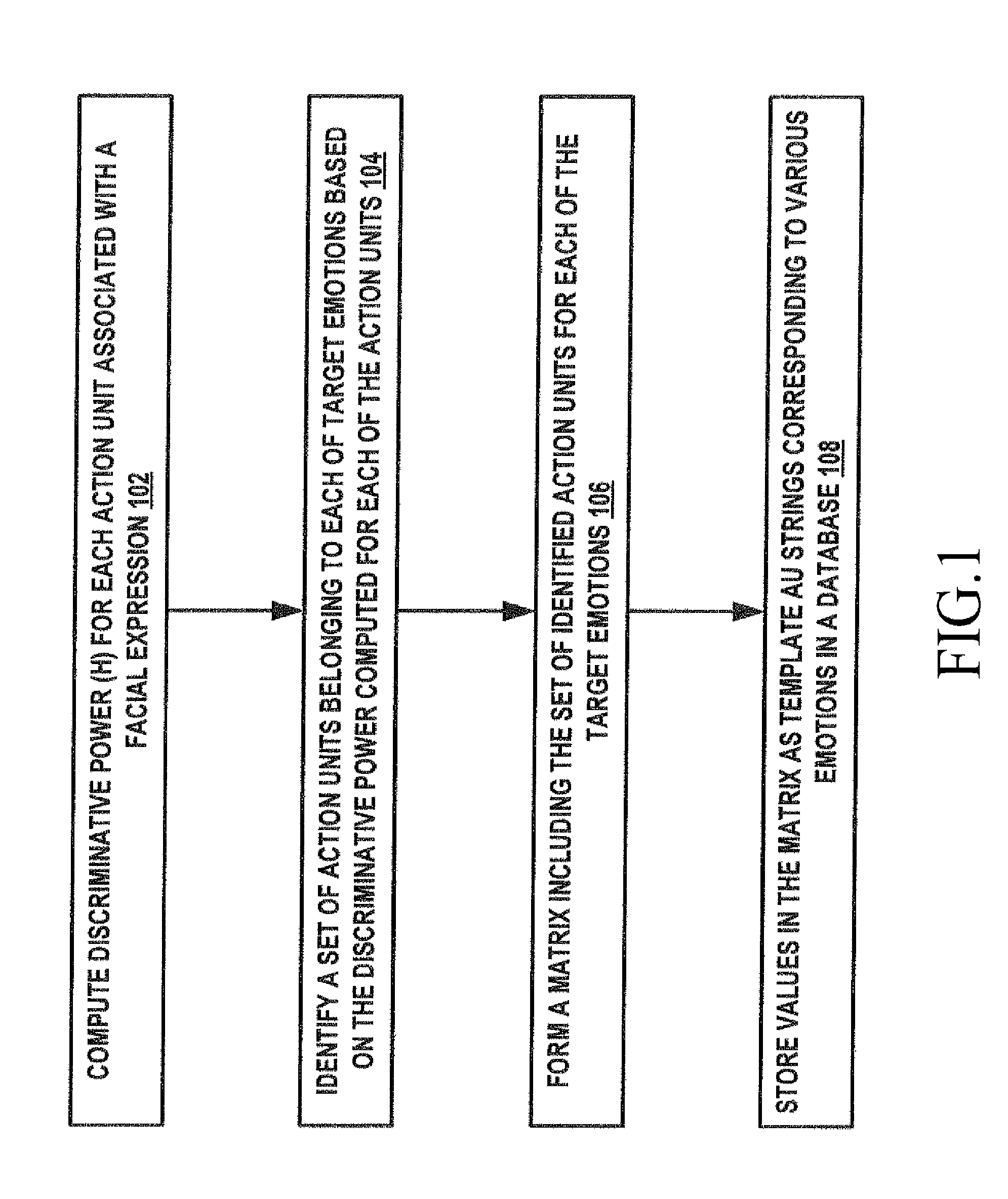

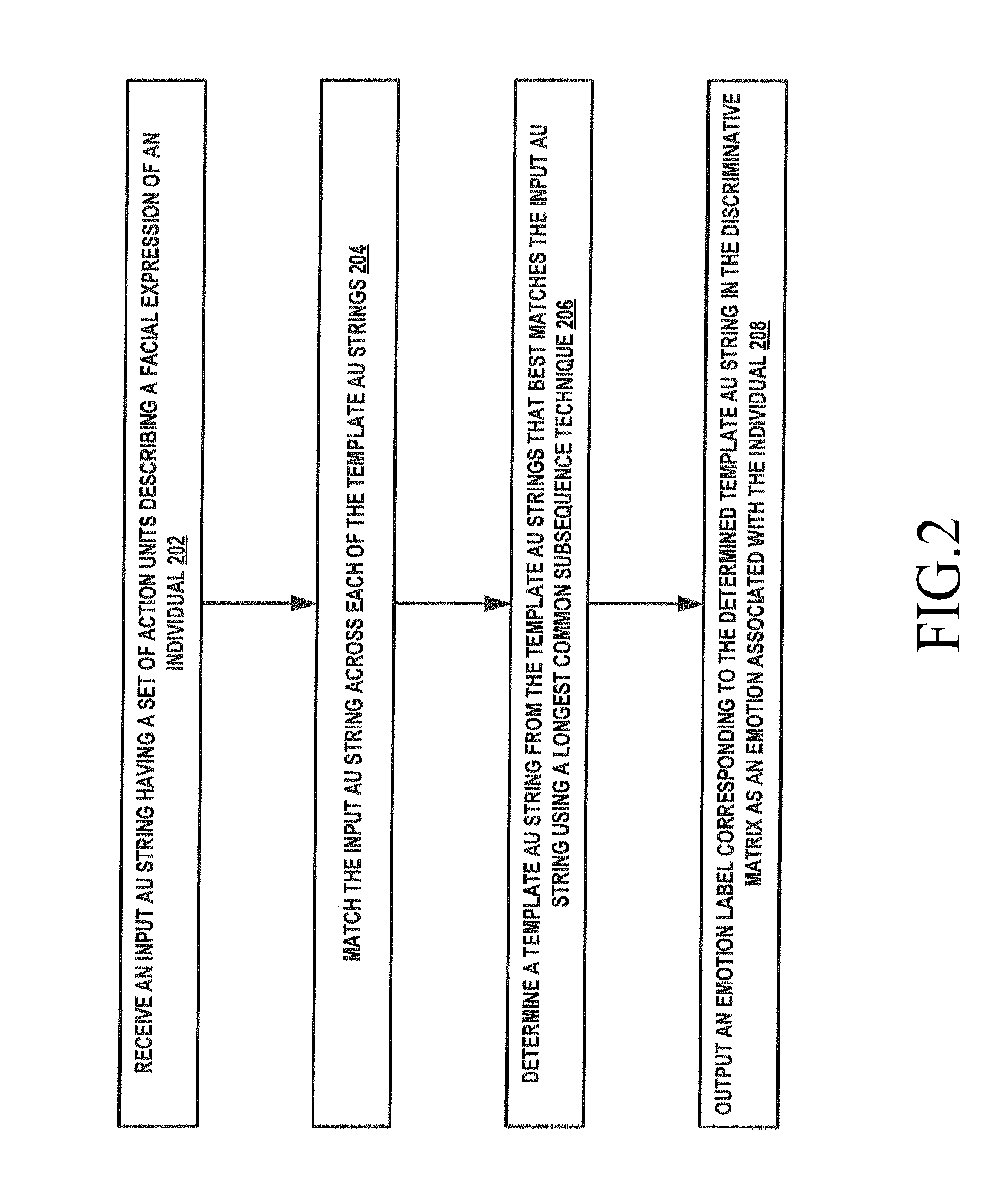

Method and apparatus for recognizing an emotion of an individual based on facial action units

Inactive | US20120101735A1 | Improve match; Image analysis; Biological testing; Pattern recognition; Facial expression

An apparatus and method are provided for recognizing an emotion of an individual based on Action Units. The method includes receiving an input AU string including one or more AUs that represents a facial expression of an individual from an AU detector; matching the input AU string with each of a plurality of AU strings, wherein each of the plurality of AU strings includes a set of highly discriminative AUs, each representing an emotion; identifying an AU string from the plurality of AU strings that best matches the input AU string; and outputting an emotion label corresponding to the best matching AU string that indicates the emotion of the individual.

Owner:SAMSUNG ELECTRONICS CO LTD
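
The matching step can be sketched by treating each AU string as a set of AU numbers and scoring it against per-emotion templates with Jaccard similarity. Assumptions: the abstract does not give the similarity measure or the discriminative AU strings, so the scoring function is a stand-in and the templates below are commonly cited FACS-style prototypes used purely for illustration. `parse_aus`, `recognise`, and `TEMPLATES` are hypothetical names.

```python
def parse_aus(s: str) -> frozenset[int]:
    """Parse an AU string such as 'AU6+AU12' or '6+12' into a set of AU numbers."""
    return frozenset(int(tok.strip().upper().removeprefix("AU"))
                     for tok in s.split("+") if tok.strip())


# Illustrative templates only, not the patent's AU strings.
TEMPLATES = {
    "happiness": "6+12",
    "sadness": "1+4+15",
    "surprise": "1+2+5+26",
    "anger": "4+5+7+23",
}


def jaccard(a: frozenset[int], b: frozenset[int]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recognise(input_aus: str, templates: dict[str, str] = TEMPLATES) -> tuple[str, float]:
    """Return the emotion label whose template best matches the input AU string, and its score."""
    query = parse_aus(input_aus)
    scored = {label: jaccard(query, parse_aus(s)) for label, s in templates.items()}
    best = max(scored, key=scored.get)  # ties resolve to the first template listed
    return best, scored[best]
```

For example, `recognise("AU6+AU12+AU25")` picks `"happiness"` because two of the three input AUs match that template and none match the others.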

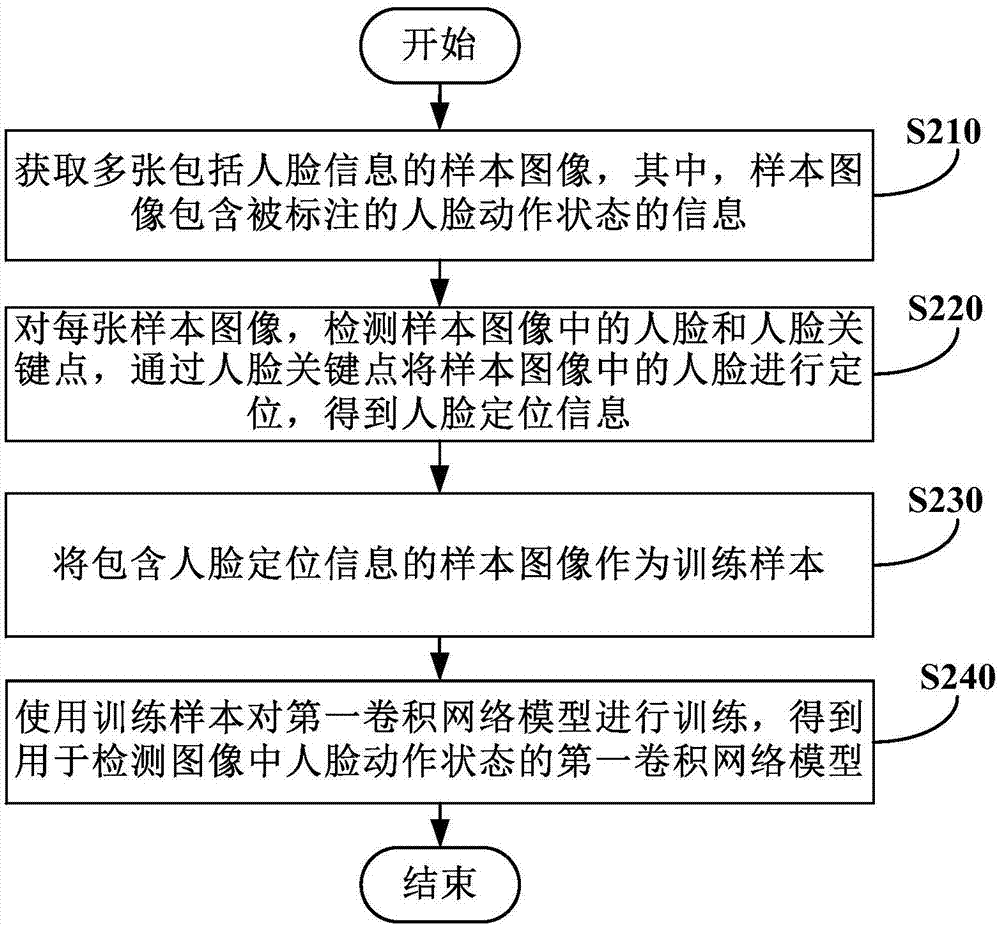

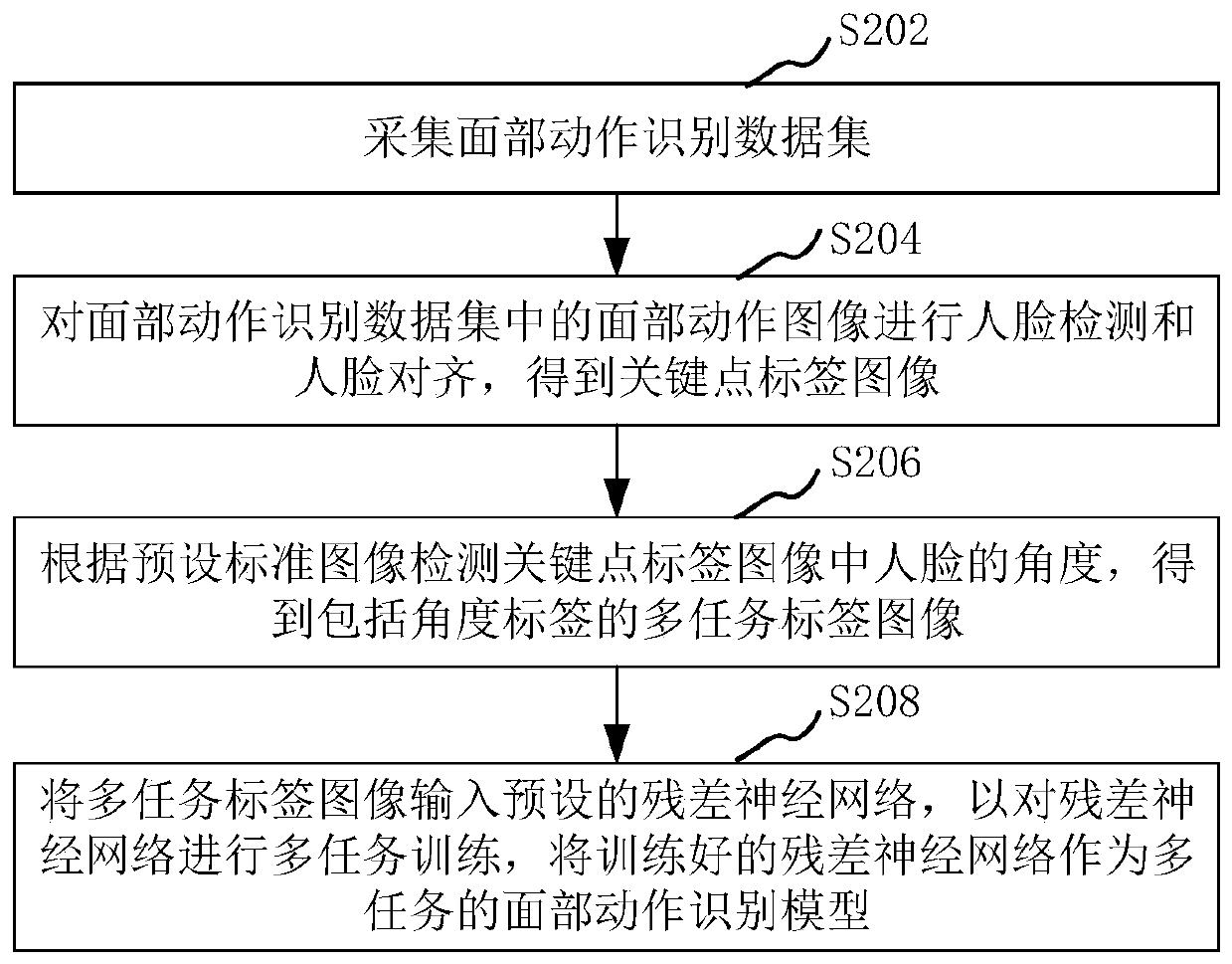

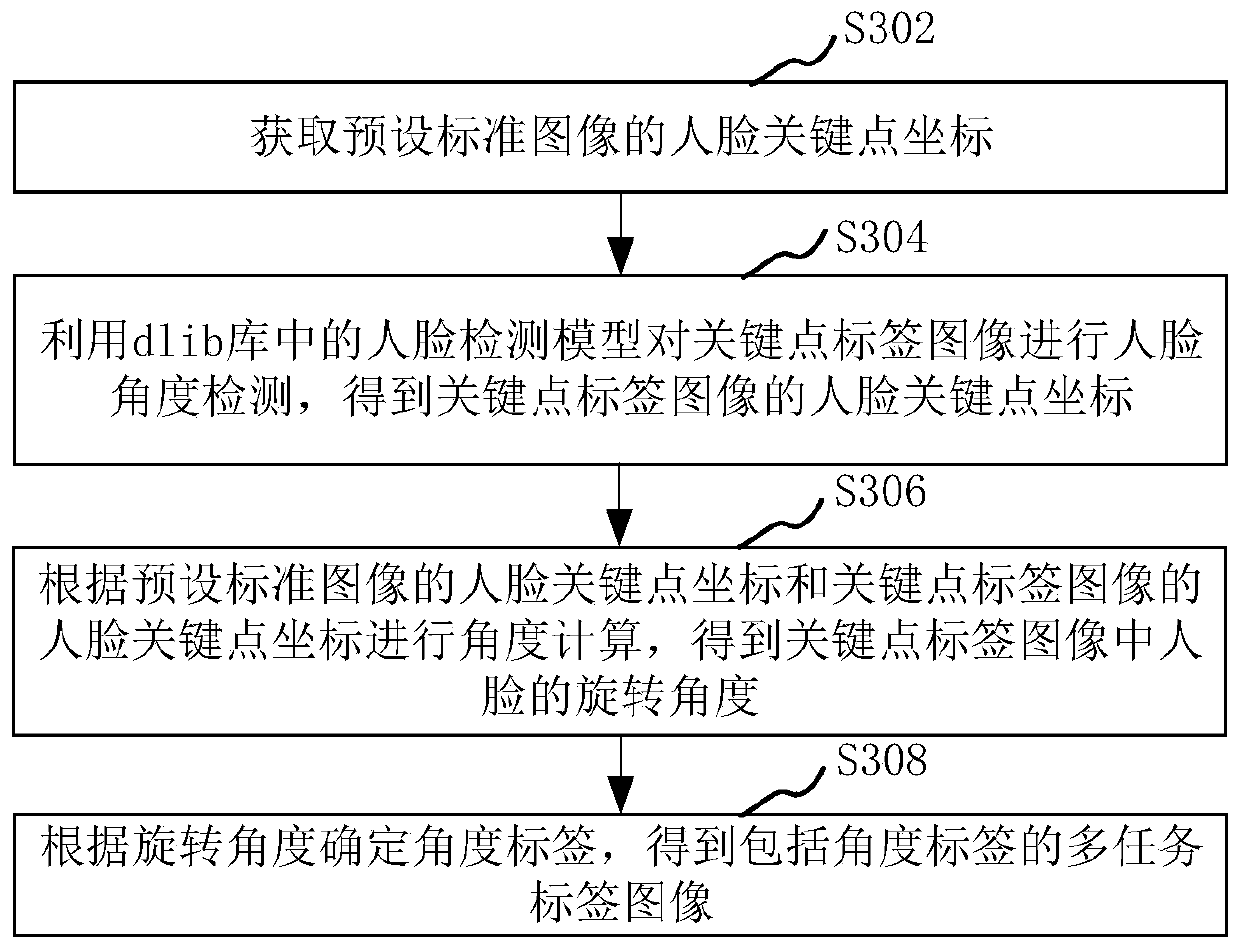

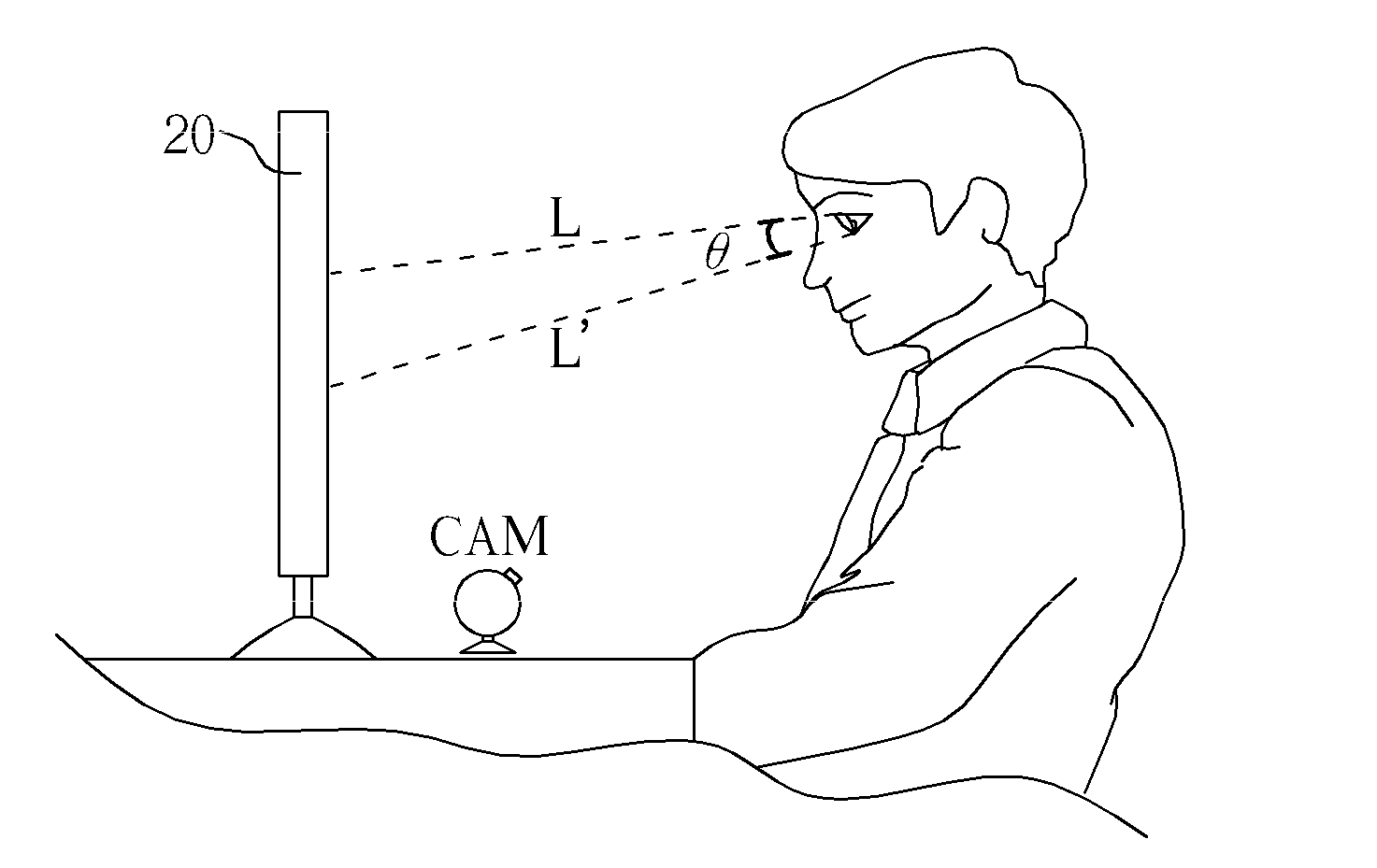

Multi-task facial action recognition model training and multi-task facial action recognition method

Active | CN110889325A | Increase diversity; Character and pattern recognition; Neural architectures; Face detection; Data set

The invention relates to a neural-network-based training method for a multi-task facial action recognition model, a multi-task facial action recognition method, computer equipment, and a storage medium. The method comprises: acquiring a facial action recognition data set; performing face detection and face alignment on the facial action images in the data set to obtain key point label images; detecting the angle of the face in each key point label image with respect to a preset standard image to obtain multi-task label images that include an angle label; and inputting the multi-task label images into a preset residual neural network for multi-task training, then using the trained residual neural network as the multi-task facial action recognition model. The method improves the diversity of facial action recognition.

Owner:PING AN TECH (SHENZHEN) CO LTD
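
The angle-labelling step can be approximated, for in-plane rotation only, by comparing the eye line in an aligned image with the eye line in a preset standard image. This is a hypothetical sketch: the abstract does not say which angles are labelled or how they are binned, so the use of eye centres, the 15-degree bins, and the function names are assumptions.

```python
import math

Point = tuple[float, float]


def roll_degrees(left_eye: Point, right_eye: Point) -> float:
    """In-plane angle of the line from the left to the right eye centre, in degrees.

    With image coordinates (y grows downward) a positive angle means a clockwise tilt on screen.
    """
    return math.degrees(math.atan2(right_eye[1] - left_eye[1], right_eye[0] - left_eye[0]))


def angle_label(left_eye: Point, right_eye: Point,
                ref_left: Point, ref_right: Point,
                bin_deg: float = 15.0) -> int:
    """Quantise the roll relative to the standard image into a class index (0 = upright).

    Wrap-around near +/-180 degrees is not handled; fine for faces that are roughly upright.
    """
    delta = roll_degrees(left_eye, right_eye) - roll_degrees(ref_left, ref_right)
    return round(delta / bin_deg)
```

The resulting integer can serve as the extra classification target alongside the facial action labels in multi-task training.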

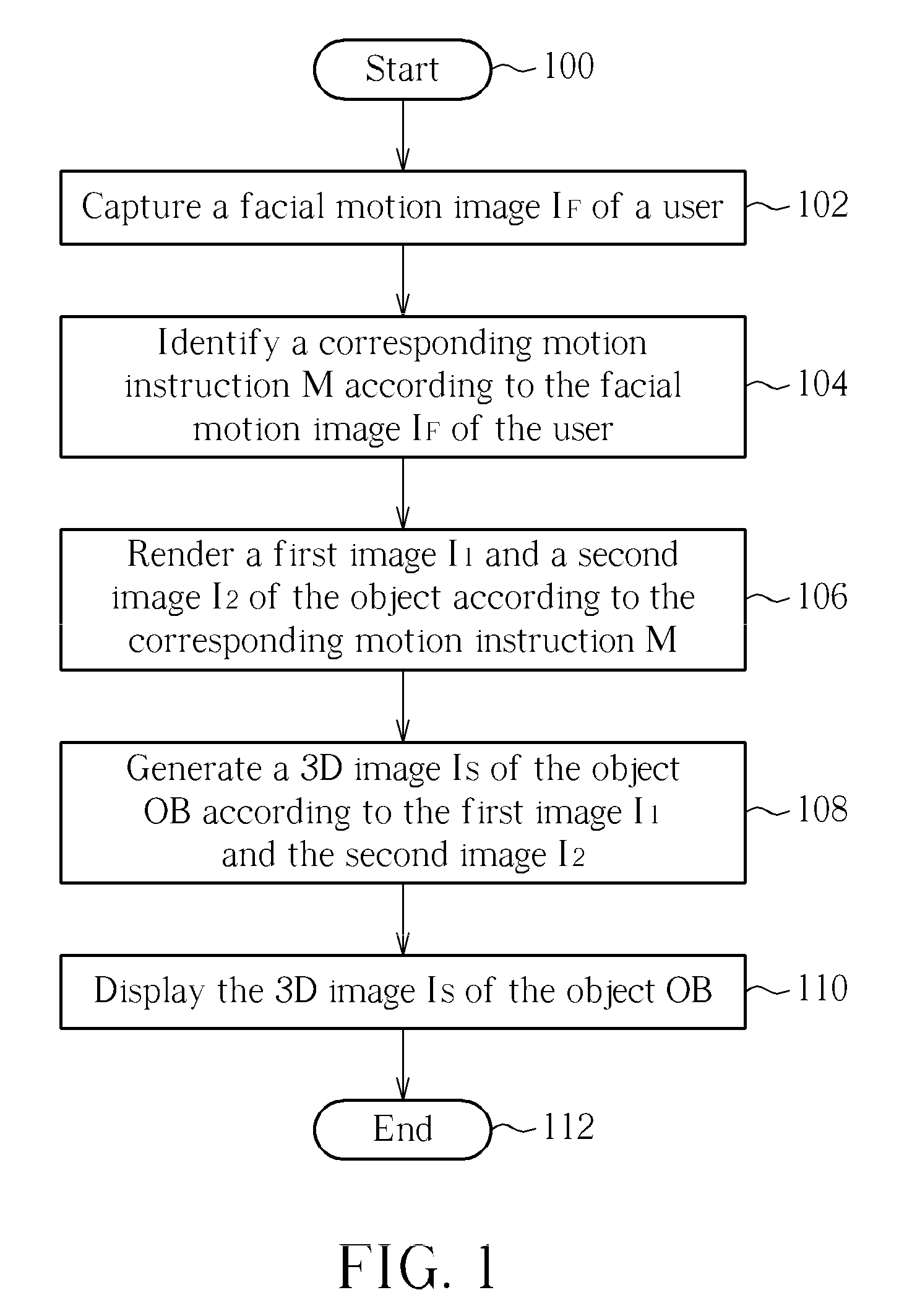

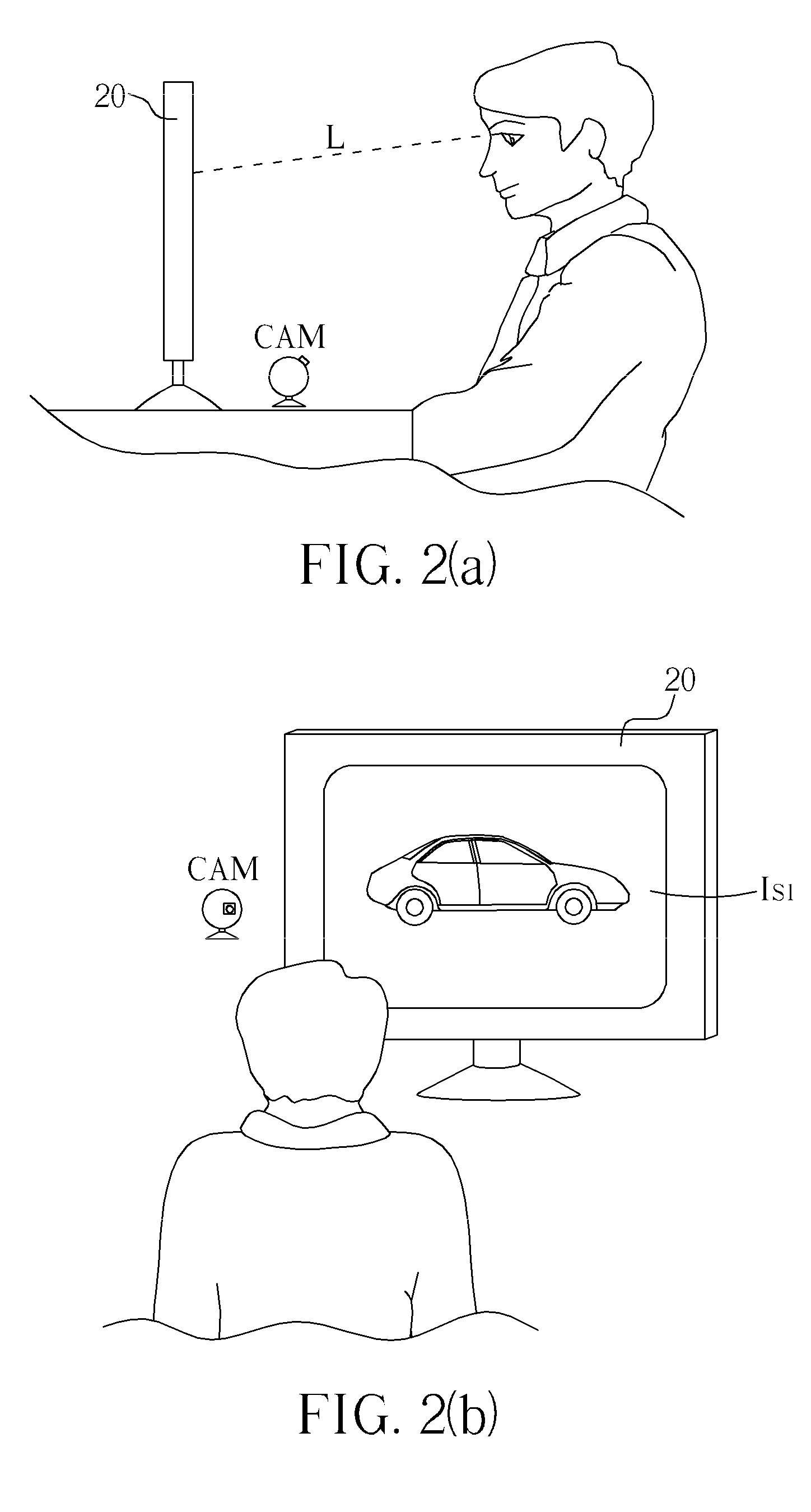

Interactive 3D image Display method and Related 3D Display Apparatus

An interactive 3D image display method for displaying a 3D image of an object, which includes capturing a facial motion image of a user, identifying a corresponding motion instruction according to the facial motion image of the user, rendering a first image and a second image of the object according to the corresponding motion instruction, generating the 3D image of the object according to the first image and the second image, and displaying the 3D image of the object.

Owner:WISTRON CORP

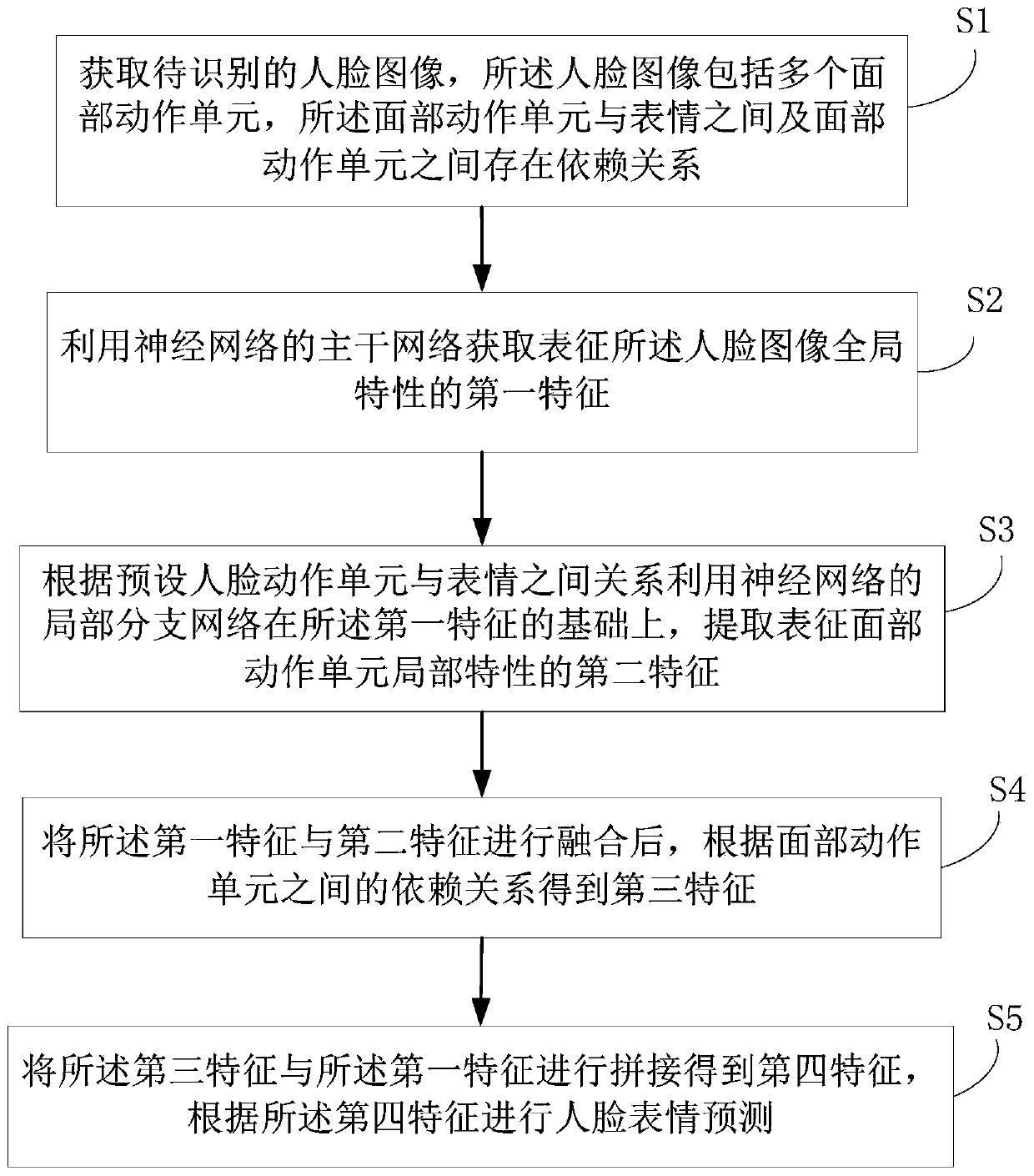

Face recognition method and system

Active | CN110738102A | Overcome the defect of poor facial expression recognition effect; Accurate identification; Acquiring/recognising facial features; Feature extraction; Medicine

The invention discloses a facial expression recognition method and system. The method comprises: obtaining a face image to be recognized that contains multiple facial action units, where dependency relationships exist between the action units and the expressions and among the action units themselves; using the backbone network of a neural network to obtain a first feature representing the global features of the face image; extracting, from the first feature, a second feature representing local features of the facial action units according to a preset relationship between the action units and the expressions; fusing the first and second features and then obtaining a third feature according to the dependency relationships among the action units; and concatenating the third feature with the first feature to obtain a fourth feature, from which the facial expression is predicted. By introducing the expression-to-action-unit and action-unit-to-action-unit relationships and letting expression knowledge and action unit knowledge interact during feature extraction, the embodiment recognizes facial expressions more accurately.

Owner:暗物智能科技(广州)有限公司

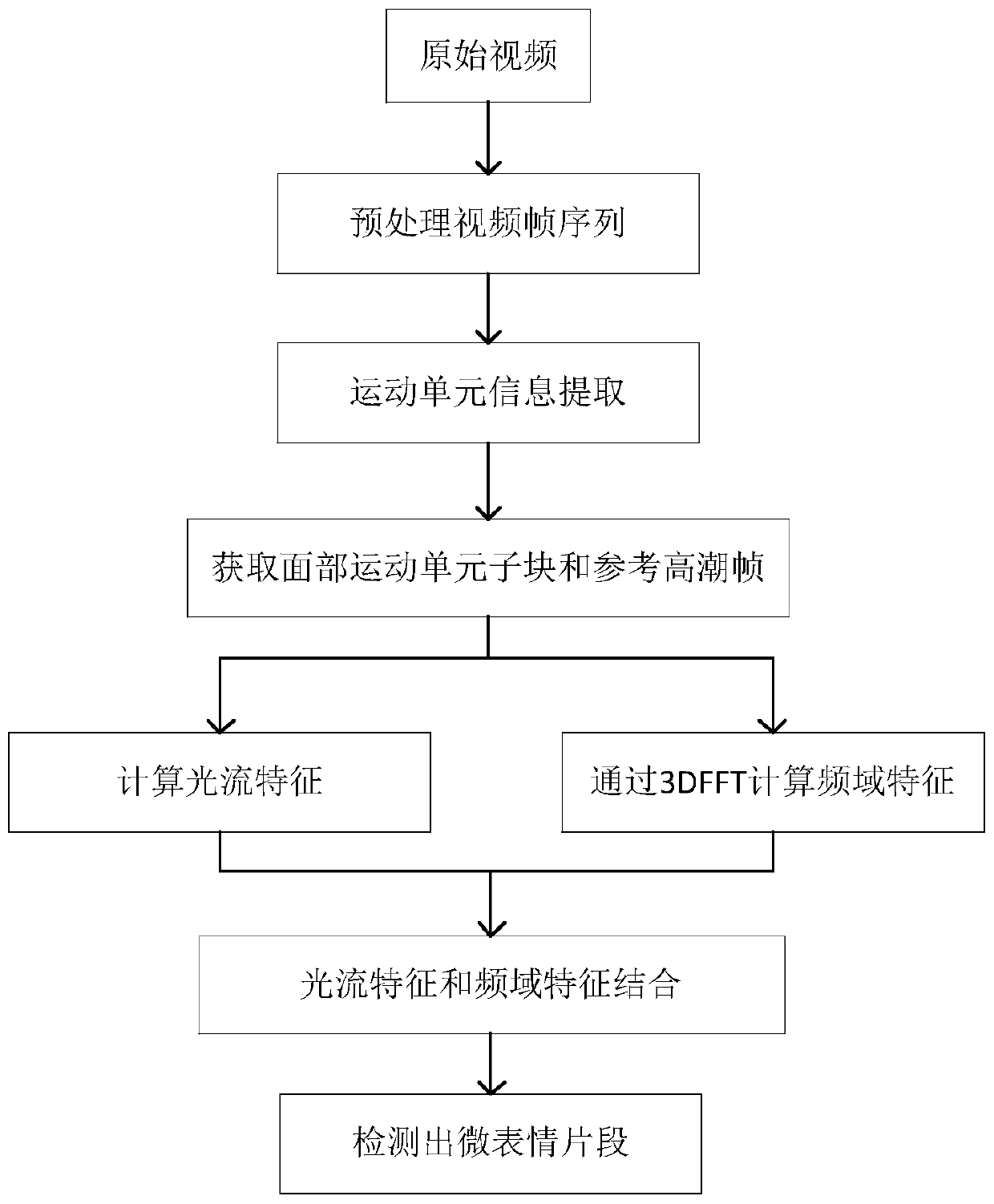

Multi-domain fusion micro-expression detection method based on motion unit

Active | CN111582212A | Precise positioning; Small amount of calculation; Acquiring/recognising facial features; Face detection; Frame sequence

The invention relates to a multi-domain fusion micro-expression detection method based on motion units, comprising the following steps: (1) preprocessing a micro-expression video by obtaining the video frame sequence, performing face detection and localization, and aligning the face; (2) performing motion unit detection on the video frame sequence to obtain its motion unit information; (3) based on the motion unit information, using a semi-decision algorithm to select the facial motion unit sub-block with the largest micro-expression motion unit information amount ME as the micro-expression detection region, and, by setting a dynamic threshold, extracting multiple peak frames of ME as reference climax frames for micro-expression detection; and (4) performing micro-expression detection with the multi-domain fusion micro-expression detection method. The method reduces the influence of redundant information on micro-expression detection, reduces the amount of computation, and gives the detector stronger overall discrimination, with fast computation and high detection precision.

Owner:SHANDONG UNIV
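
The dynamic-threshold step in (3) can be sketched on a per-frame series of the motion unit information amount ME. The threshold rule here (the mean plus `k` population standard deviations of the series) is an assumption chosen for illustration, since the abstract does not define how the dynamic threshold is set; `peak_frames` is a hypothetical name.

```python
import statistics


def peak_frames(me: list[float], k: float = 1.0) -> list[int]:
    """Indices of local maxima of ME that exceed a dynamic threshold.

    Threshold (assumed): mean(ME) + k * population std(ME) over the sequence.
    Flat-topped peaks report every frame on the plateau.
    """
    threshold = statistics.fmean(me) + k * statistics.pstdev(me)
    return [i for i in range(1, len(me) - 1)
            if me[i] > threshold and me[i] >= me[i - 1] and me[i] >= me[i + 1]]
```

Because the threshold is recomputed from each sequence, a quiet clip and a very expressive clip each yield their own relative peaks; raising `k` keeps only the strongest climax frames.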

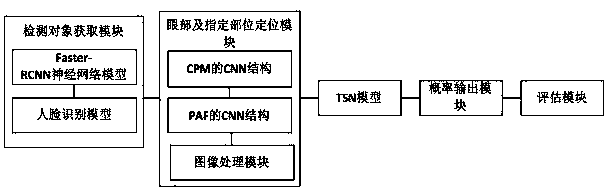

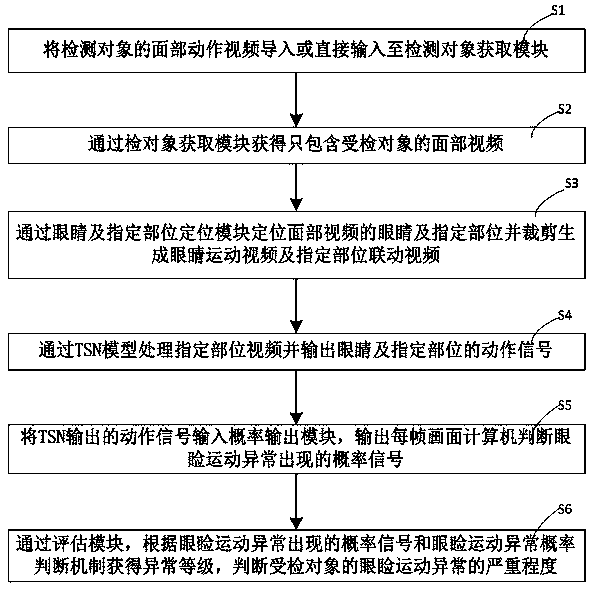

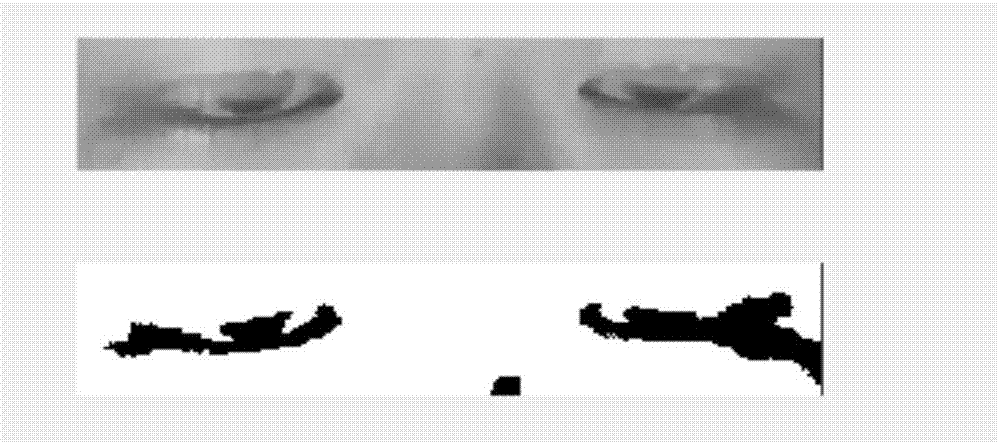

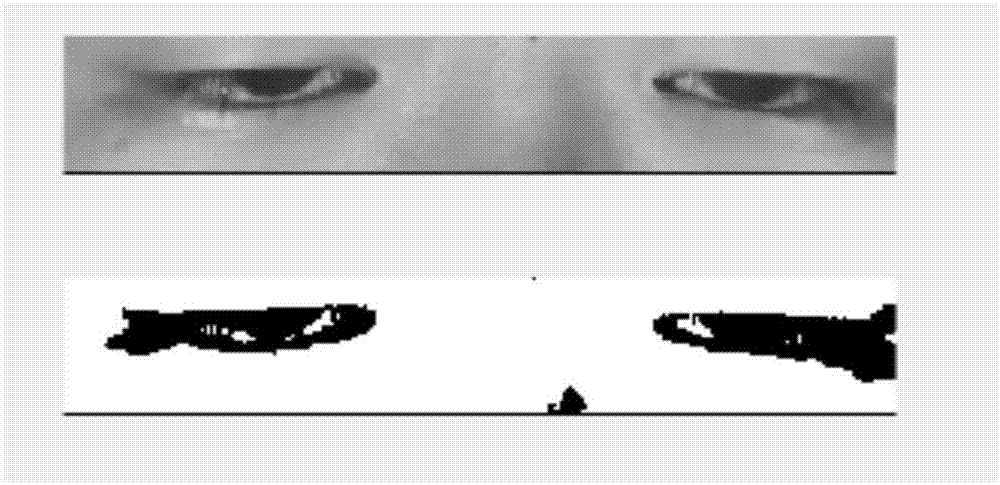

Artificial-intelligence-based eyelid movement function evaluation system

Active | CN110428908A | Universally acceptable; Highly corporated; Medical data mining; Medical automated diagnosis; Pattern recognition; Eyelid

The invention discloses an artificial-intelligence-based system for evaluating abnormal eyelid movement. The system comprises an examined-object acquisition module, an eye and specific-part localization module, a TSN model, a probability output module, and an evaluation module. The acquisition module extracts, from the input facial movement video of the examined subject, a facial video that contains only that subject. The localization module locates the eyes and specific parts in the facial video and produces an eye movement video and a specific-part linkage video containing only the examined subject. The TSN model processes these two videos and outputs movement signals for the eyes and the specific parts. The probability output module outputs, for each frame, the probability that the computer judges the eyelid movement to be abnormal, and the evaluation module determines the abnormality level from these probabilities using an abnormal-eyelid-movement probability judging mechanism. The system is therefore broadly acceptable, convenient, accurate, objective, and repeatable, and has high clinical applicability.

Owner:THE PEOPLES HOSPITAL OF GUANGXI ZHUANG AUTONOMOUS REGION
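
A TSN (Temporal Segment Network) divides a video into equal segments, samples a snippet from each, and aggregates the per-snippet scores with a consensus function. The sketch below shows deterministic centre-of-segment sampling with average consensus over per-frame abnormality probabilities, followed by a grading step. The patent does not specify its probability judging mechanism, so the two cutoffs mapping the score to levels 0, 1, and 2 are hypothetical.

```python
import numpy as np


def tsn_segment_indices(num_frames, num_segments):
    """Centre frame of each of `num_segments` equal-length segments."""
    seg_len = num_frames / num_segments
    return [int(seg_len * i + seg_len / 2) for i in range(num_segments)]


def abnormal_level(frame_probs, num_segments=3, cutoffs=(0.3, 0.6)):
    """Average the abnormality probabilities of TSN-sampled frames and map
    the consensus score to a level (0 = normal, higher = more abnormal).

    cutoffs: hypothetical thresholds, not values taken from the patent.
    """
    probs = np.asarray(frame_probs, dtype=float)
    idx = tsn_segment_indices(len(probs), num_segments)
    score = float(probs[idx].mean())  # average segmental consensus
    level = int(np.searchsorted(cutoffs, score, side="right"))
    return score, level
```

With six frames and three segments the sampled frames are 1, 3, and 5; if those frames all have probability 0.8, the consensus score is 0.8 and the level is 2. Sparse segment sampling keeps the cost independent of video length while still covering the whole recording.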

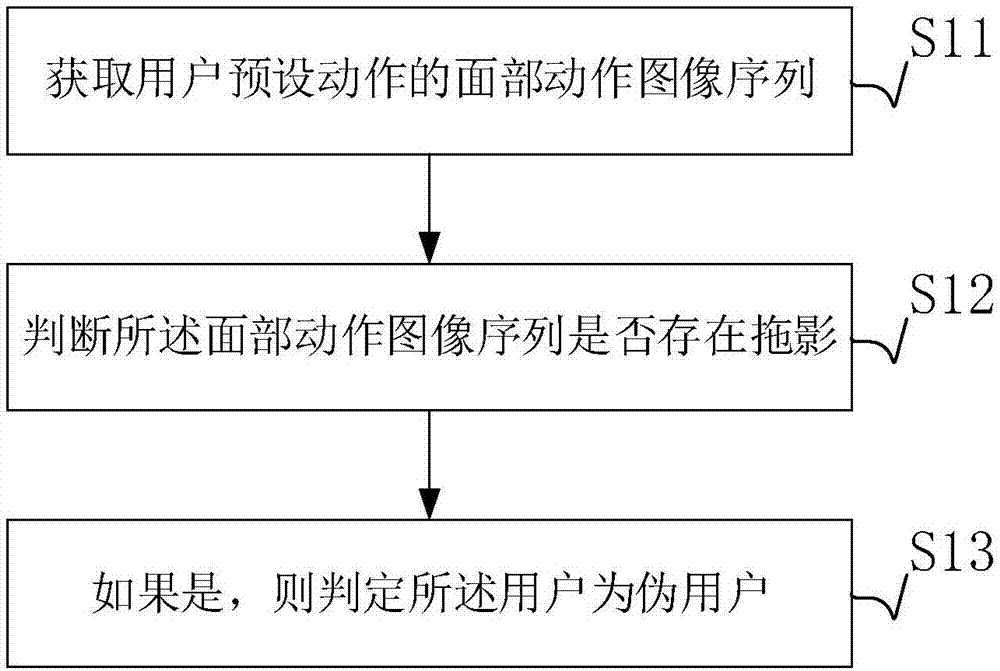

Facial recognition and discrimination method and device and computer device

Inactive | CN107992845A | Prevent counterfeiting | Realize the purpose of counterfeiting | Character and pattern recognition | Video sequence | Facial recognition system

The invention provides a facial recognition and discrimination method comprising the steps of: acquiring a facial motion image sequence of the user performing a preset motion; judging whether smear appears in the facial motion image sequence; and, if so, determining that the user is a pseudo user. The method exploits the fact that a display screen replaying a video attack produces smear: because the screen is made up of pixels, smear forms during pixel transitions when a motion is played back on the screen to deceive the facial recognition device. By capturing this smear and using it to distinguish replayed motion from the facial motion of a real person, the method achieves facial recognition discrimination. A real human face can thus be recognized during facial recognition, and spoofing with high-resolution photos, videos, realistic 3D face masks, or video sequences is prevented.

Owner:GUANGDONG UNIV OF TECH
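
The abstract does not say how smear is detected. One hypothetical heuristic: a smeared frame from a screen replay resembles a linear blend of its neighbouring frames (ghosting), whereas a camera frame of a real moving face generally does not. The sketch fits the blend weight alpha by least squares for each interior frame and flags frames whose residual is small relative to the change between neighbours. All thresholds are assumptions, and natural motion blur could also trigger this test, so it is an illustration rather than the patented detector.

```python
import numpy as np


def blended_frames(frames, rel_tol=0.05, min_change=1e-3, alpha_range=(0.1, 0.9)):
    """Indices of interior frames close to a linear blend of their neighbours.

    frames: sequence of equally shaped grayscale frames.
    rel_tol, min_change, alpha_range: hypothetical tuning values.
    """
    frames = np.asarray(frames, dtype=float)
    flagged = []
    for t in range(1, len(frames) - 1):
        a = (frames[t - 1] - frames[t + 1]).ravel()  # change across neighbours
        b = (frames[t] - frames[t + 1]).ravel()
        norm_a = np.linalg.norm(a)
        if norm_a < min_change:  # (near-)static scene: nothing to smear
            continue
        # Least-squares alpha for frames[t] = alpha*prev + (1 - alpha)*next.
        alpha = float(a @ b) / norm_a ** 2
        residual = np.linalg.norm(b - alpha * a)
        if alpha_range[0] <= alpha <= alpha_range[1] and residual / norm_a < rel_tol:
            flagged.append(t)
    return flagged


def is_pseudo_user(frames, **kwargs):
    """Treat the user as a pseudo user if any smeared (blended) frame is found."""
    return len(blended_frames(frames, **kwargs)) > 0
```

A bright pixel moving cleanly across `[[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]` is not flagged, while `[[1, 0, 0, 0], [0.5, 0, 0.5, 0], [0, 0, 1, 0]]`, whose middle frame is an even blend of its neighbours, flags frame 1.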

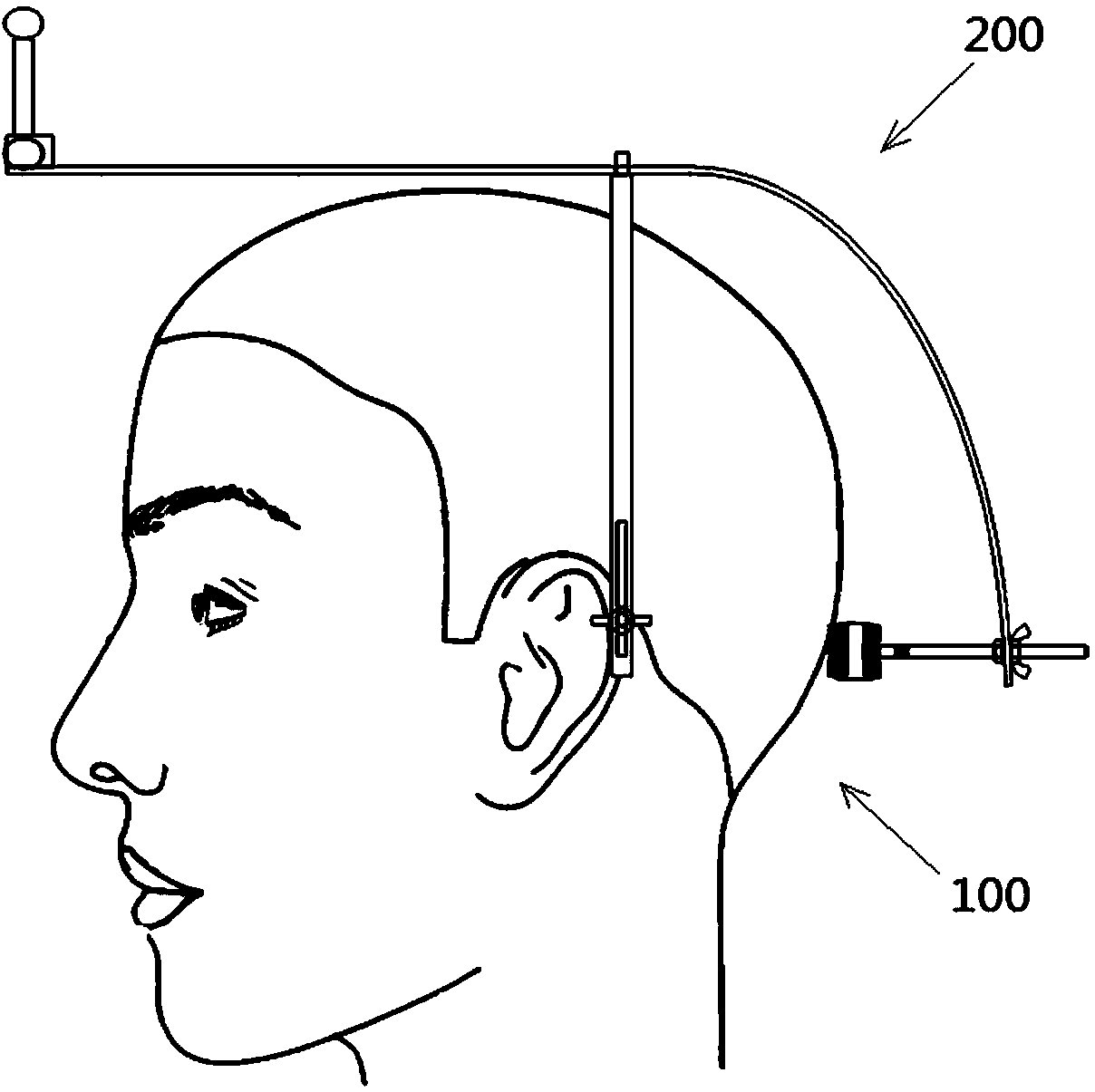

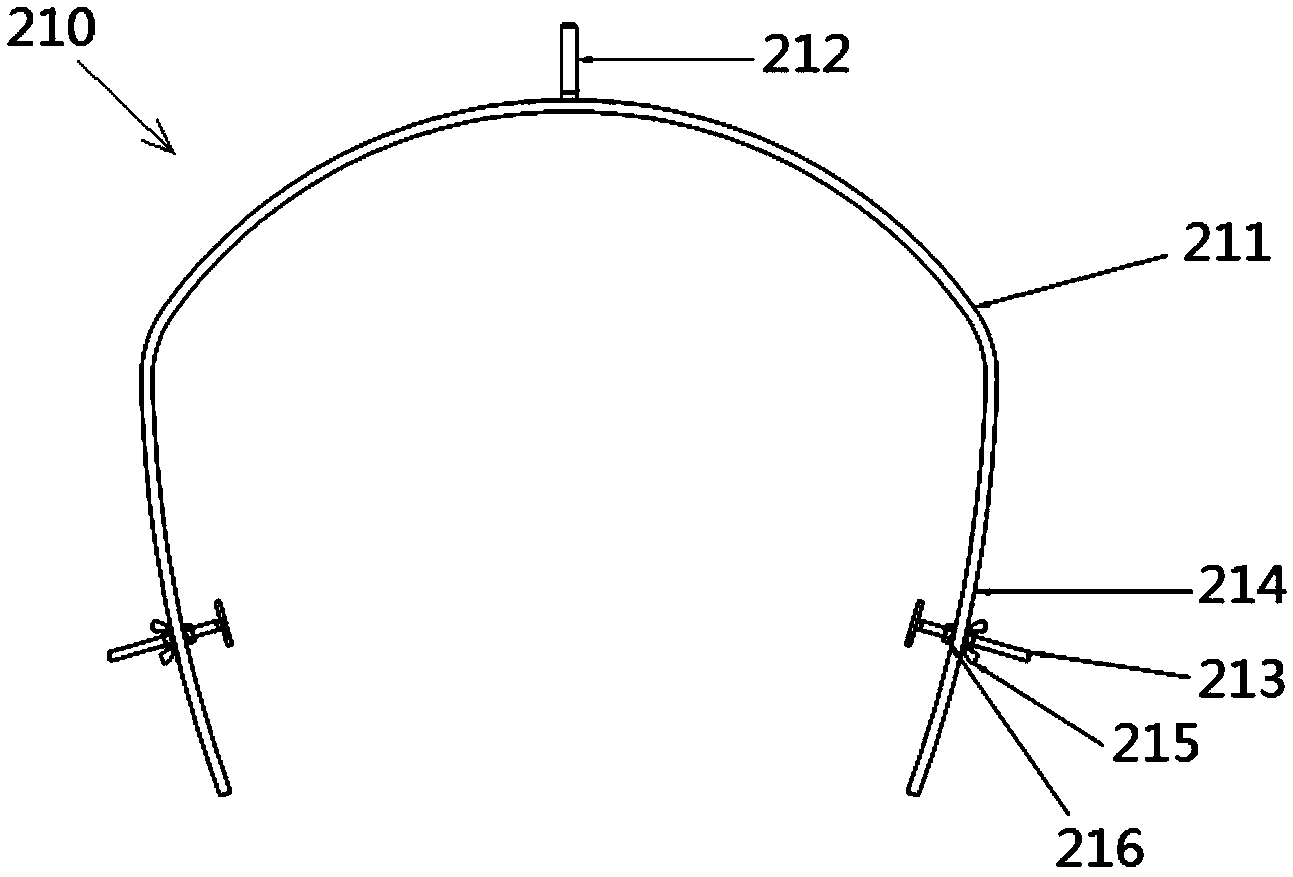

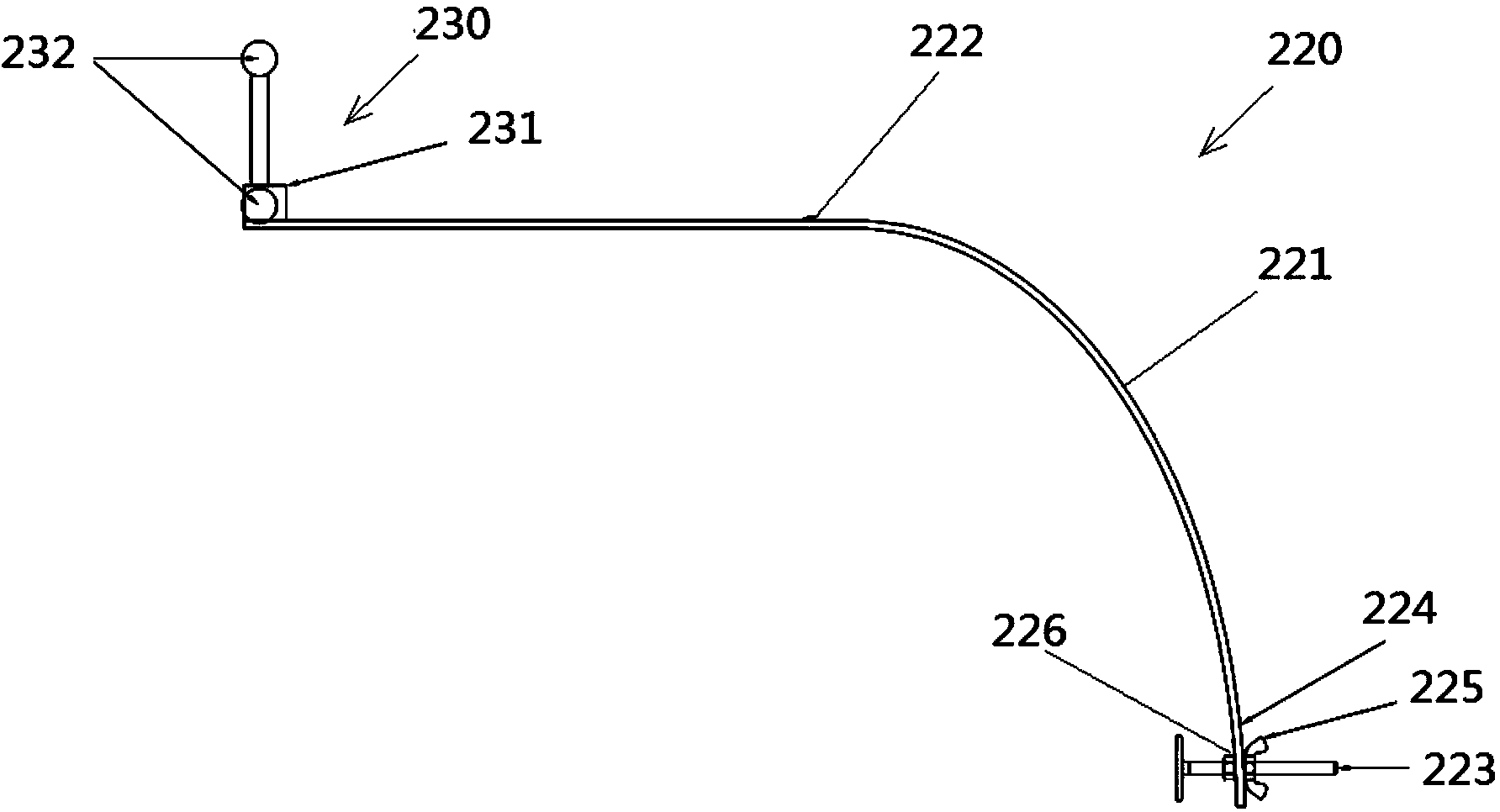

Musculus facialis three-dimensional motion measuring device on the basis of motion capture

Active | CN104107048A | Quick measurement | Easy to measure | Diagnostic recording/measuring | Sensors | Gross motor functions | Observation point

The invention relates to a device for measuring the three-dimensional motion of the facial muscles (musculus facialis) on the basis of motion capture. The device comprises a fixed head support and a motion capture device; the head support is fixed to the skull of the measured user through several bony fulcrums of the skull. The motion capture device comprises motion capture cameras arranged in front of the user's face, which capture the positions of the facial motion observation points on the face relative to the fixed head support; from these relative positions, the motion capture device calculates static parameters of the facial muscles and dynamic parameters of the facial motion observation points. Because it is fixed through bony fulcrums, the head support sits stably on the skull and moves with it without being affected by facial expressions, so it can serve as an 'absolute' reference frame for the static state of the facial muscles and the dynamic state of the facial observation points. The static parameters of the facial muscles and the dynamic parameters of the observation points can therefore be measured quickly, conveniently, and accurately, enabling objective evaluation of facial muscle motor function.

Owner:PEKING UNION MEDICAL COLLEGE HOSPITAL CHINESE ACAD OF MEDICAL SCI
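
Using the skull-fixed support as a reference frame amounts to removing rigid head motion before measuring the facial points. A minimal sketch, assuming the motion capture system reports 3-D coordinates for at least three non-collinear support markers and for each observation point: the Kabsch algorithm estimates the rigid transform that maps the current support markers back onto their reference pose, that transform is applied to the facial points, and the displacement of each point from its reference position is reported. The function names and the choice of displacement magnitude as the "dynamic parameter" are illustrative assumptions.

```python
import numpy as np


def kabsch(src, dst):
    """Rotation R and translation t minimising sum ||R @ src_i + t - dst_i||^2."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = cd - R @ cs
    return R, t


def facial_displacements(support_ref, support_now, face_ref, face_now):
    """Displacement of each observation point relative to the head support.

    Current facial points are mapped into the support's reference pose,
    so rigid head movement cancels and only facial motion remains.
    """
    R, t = kabsch(support_now, support_ref)
    face_in_ref = np.asarray(face_now, dtype=float) @ R.T + t
    return np.linalg.norm(face_in_ref - np.asarray(face_ref, dtype=float), axis=1)
```

If the whole head rotates 90° about the vertical axis and shifts by (1, 2, 3) while one observation point also moves 0.1 units relative to the skull, the function reports a displacement of 0.1 for that point and 0 for a point that stayed put.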

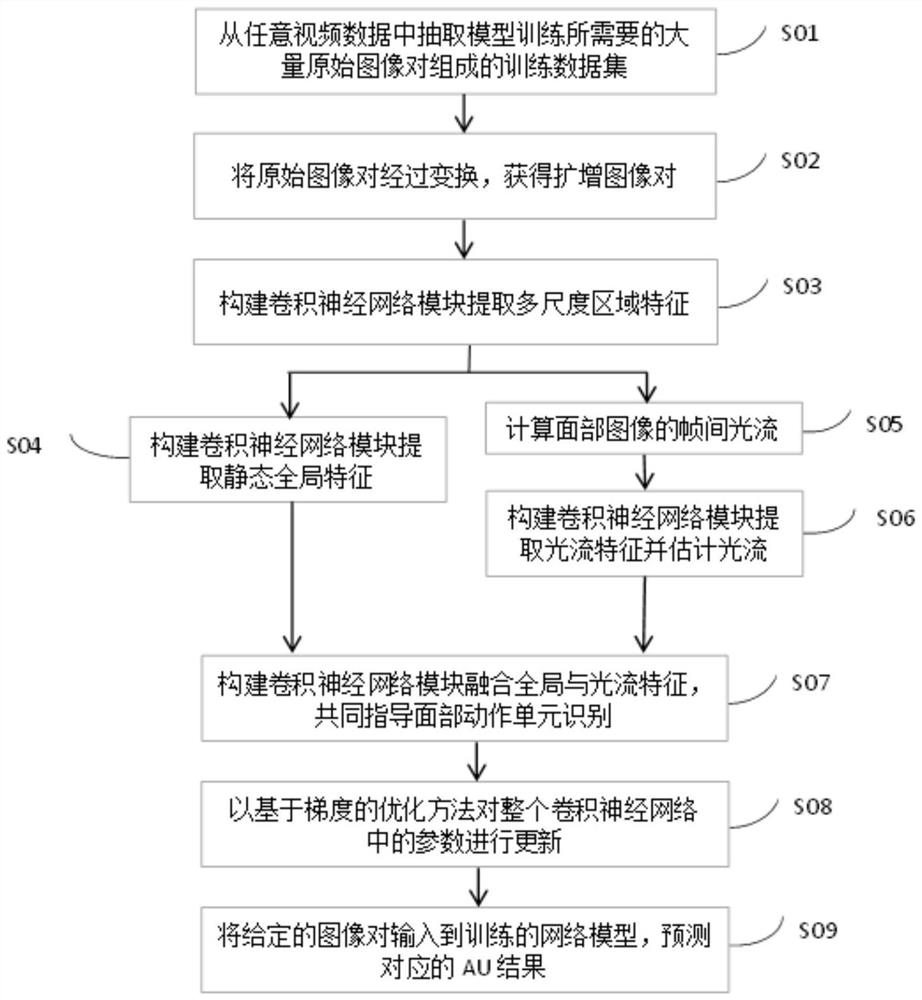

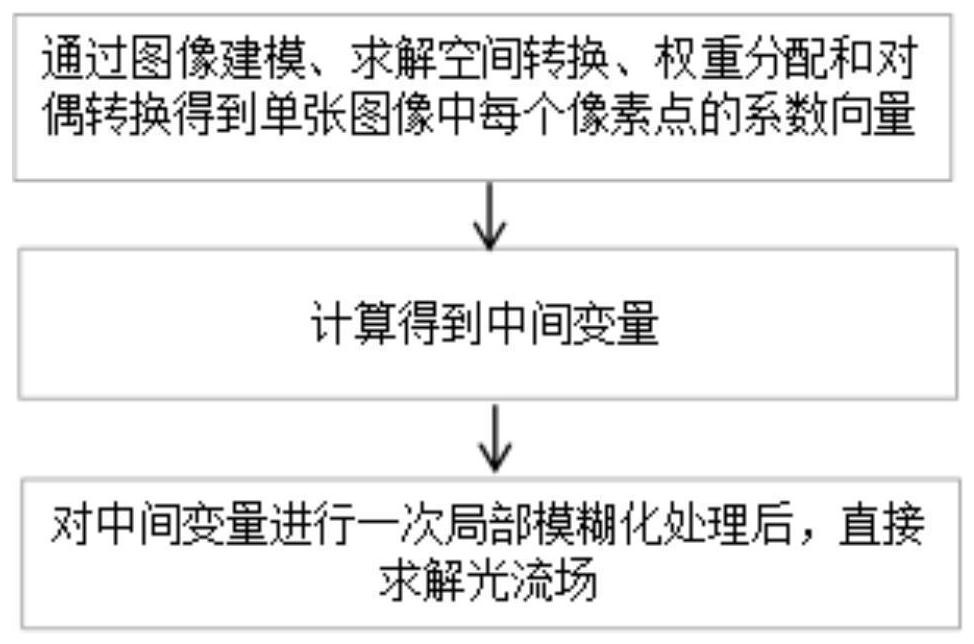

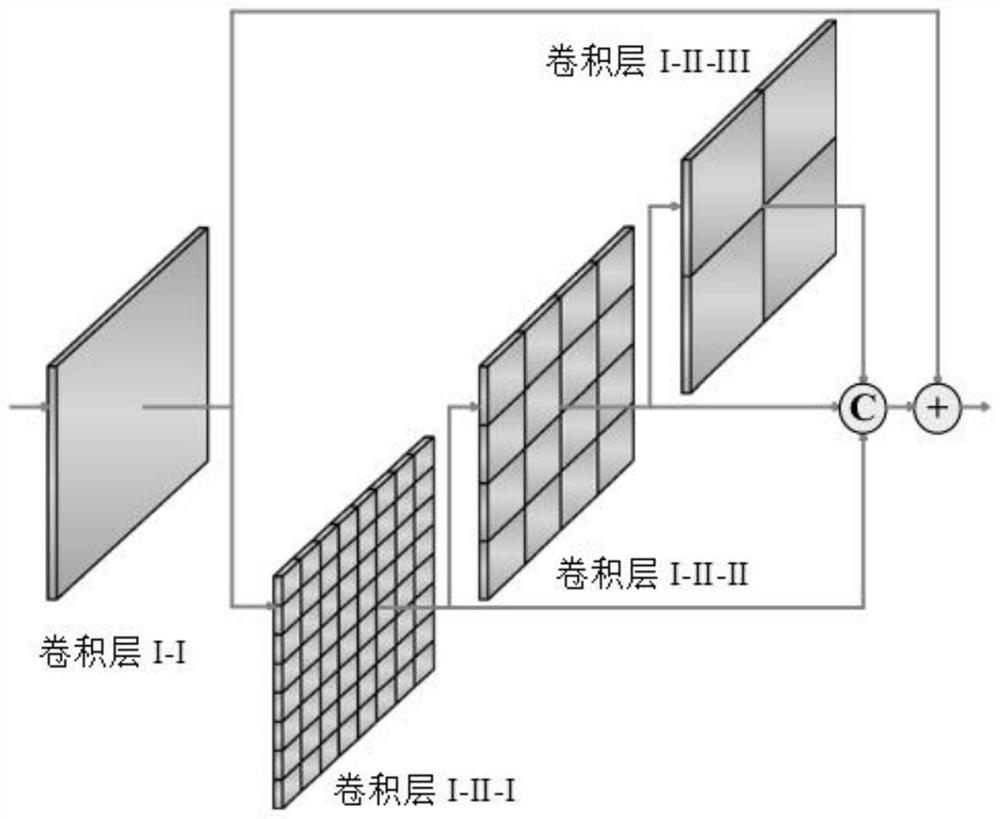

Facial action unit recognition method and device based on joint learning and optical flow estimation

Active | CN112990077A | Enhance expressive ability | Improve recognition accuracy | Character and pattern recognition | Neural architectures | Data set | Engineering

The invention discloses a facial action unit recognition method and device based on joint learning and optical flow estimation. The method comprises the following steps: first, extracting the original image pairs needed for model training from video data to form a training data set; then preprocessing the original image pairs to obtain amplified image pairs; constructing convolutional neural network module I to extract multi-scale regional features of the amplified image pairs, module II to extract their static global features, and module III to extract their optical flow features; and finally, constructing convolutional neural network module IV to fuse the static global features with the optical flow features and perform facial action unit recognition. An end-to-end deep learning framework jointly learns action unit recognition and optical flow estimation, using the correlation between the two tasks to improve action unit recognition. The motion of facial muscles in a two-dimensional image can thus be recognized effectively, and a unified facial action unit recognition system is constructed.

Owner:CHINA UNIV OF MINING & TECH
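
A common way to express joint learning of two tasks is a weighted sum of their losses, so that gradients from optical flow estimation also shape the shared features used for AU recognition. The sketch below is an assumption, not the patent's stated loss: it combines a multi-label binary cross-entropy over AUs (each AU is independently present or absent) with the average endpoint error (EPE) of the predicted flow, assumes a reference flow field is available for supervision, and uses a hypothetical weight `lam`.

```python
import numpy as np


def au_bce(logits, labels, eps=1e-7):
    """Mean binary cross-entropy over AUs (multi-label: each AU on/off)."""
    p = 1.0 / (1.0 + np.exp(-np.asarray(logits, dtype=float)))  # sigmoid
    p = np.clip(p, eps, 1.0 - eps)  # avoid log(0)
    y = np.asarray(labels, dtype=float)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


def flow_epe(pred_flow, ref_flow):
    """Average endpoint error between flow fields of shape (H, W, 2)."""
    diff = np.asarray(pred_flow, dtype=float) - np.asarray(ref_flow, dtype=float)
    return float(np.mean(np.linalg.norm(diff, axis=-1)))


def joint_loss(au_logits, au_labels, pred_flow, ref_flow, lam=0.5):
    """AU recognition loss plus a hypothetically weighted flow loss."""
    return au_bce(au_logits, au_labels) + lam * flow_epe(pred_flow, ref_flow)
```

With zero logits for two AUs labelled `[1, 0]`, the AU term is ln 2; a zero flow prediction against a reference flow of (3, 4) at every pixel has an EPE of 5, so with `lam=0.5` the joint loss is ln 2 + 2.5. In practice `lam` would be tuned so neither task dominates training.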

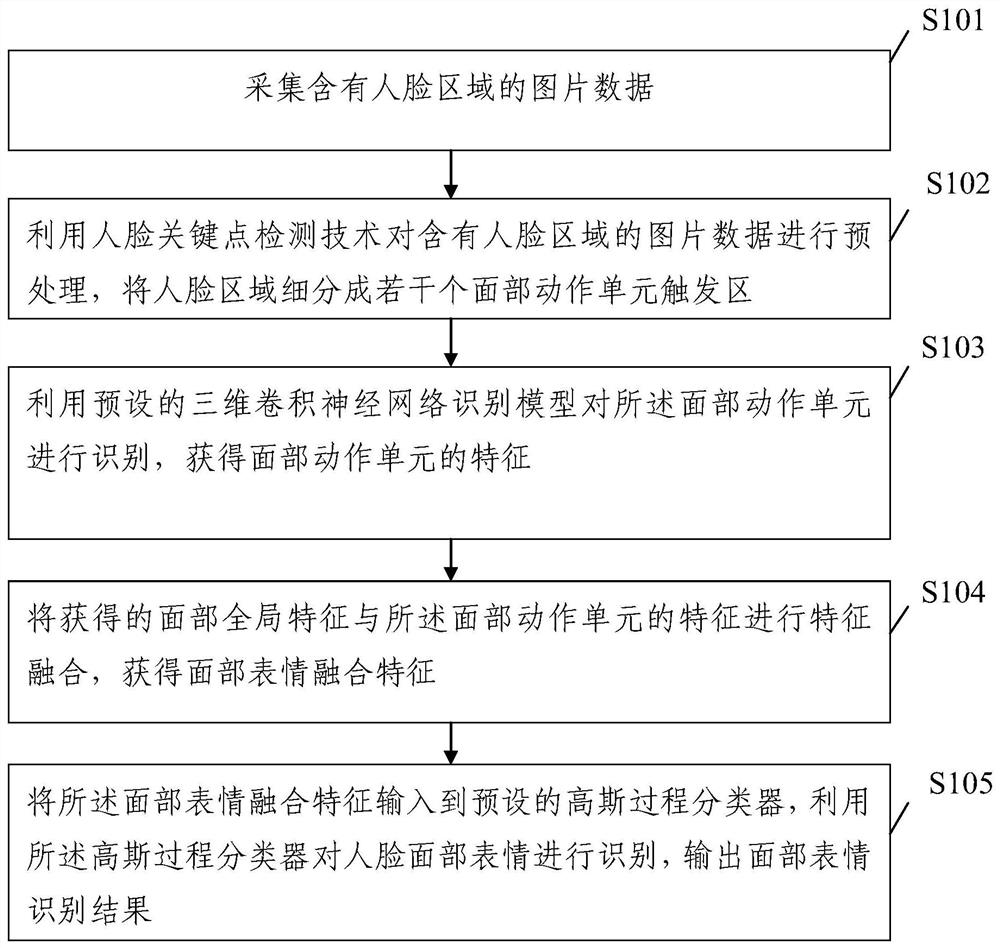

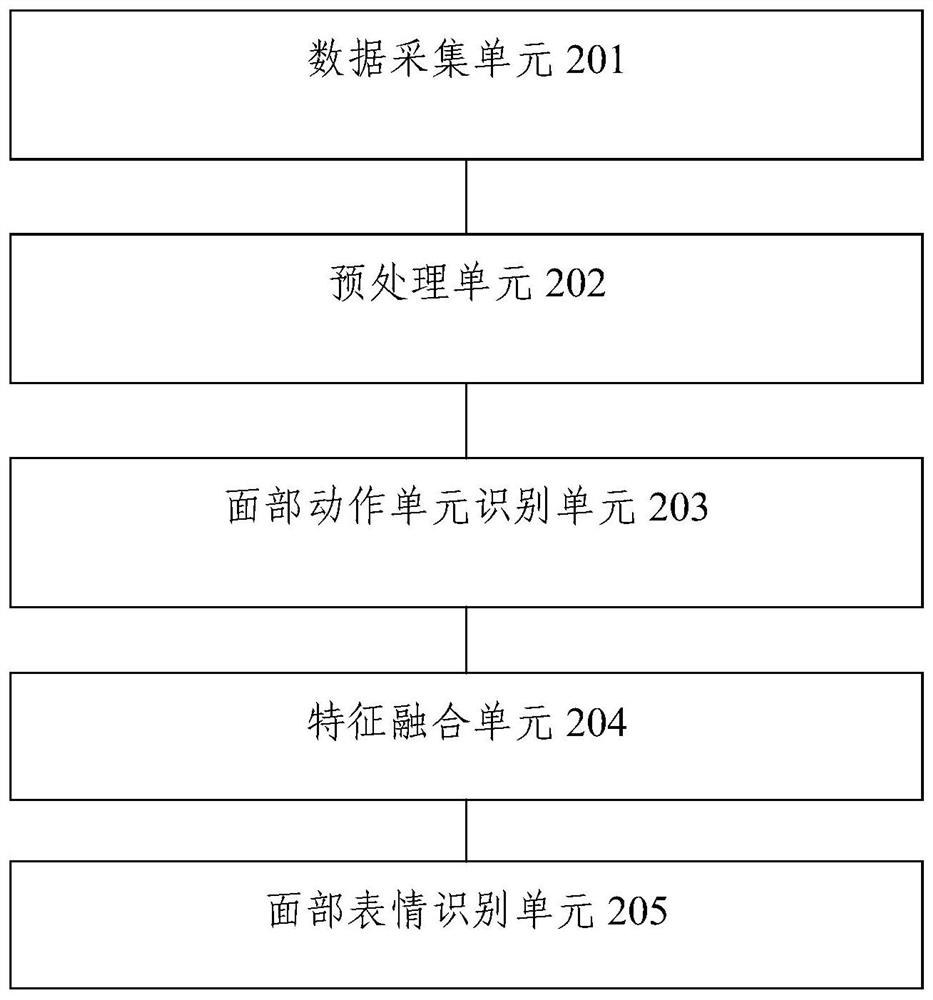

Facial expression recognition method and device based on facial action unit

Pending | CN111626113A | Easy to identify | Improve robustness | Character and pattern recognition | Computer vision | Feature fusion

An embodiment of the invention discloses a facial expression recognition method and device based on facial action units. The method comprises: preprocessing collected image data containing a face region using face keypoint detection, so that the face region is subdivided into a plurality of facial action unit trigger regions; recognizing the facial action units with a preset three-dimensional convolutional neural network recognition model to obtain facial action unit features; fusing the facial global features with the facial action unit features to obtain facial expression fusion features; and inputting the fusion features into a preset Gaussian process classifier, which recognizes the facial expression and outputs the recognition result. Because recognition is based on facial action units, the method handles subtle expressions better and improves facial expression recognition performance and robustness.

Owner:Beijing Xicheng District Peizhi Center School (北京市西城区培智中心学校) +1
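
The preprocessing step subdivides the face into AU trigger regions from detected keypoints. A minimal sketch, assuming the widely used 68-point landmark layout (iBUG 300-W indexing: brows 17 to 26, nose 27 to 35, eyes 36 to 47, mouth 48 to 67) and a hypothetical grouping into four regions, each cropped with a padded bounding box. The region names, grouping, and margin are illustrative; the patent's actual regions are not given in the abstract.

```python
import numpy as np

# 68-point (iBUG 300-W) index ranges; the grouping into AU trigger
# regions is a hypothetical choice for illustration.
REGIONS = {
    "brows": range(17, 27),
    "nose": range(27, 36),
    "eyes": range(36, 48),
    "mouth": range(48, 68),
}


def au_region_boxes(landmarks, margin=0.1):
    """Padded (x0, y0, x1, y1) box per region from a (68, 2) landmark array."""
    pts = np.asarray(landmarks, dtype=float)
    boxes = {}
    for name, idx in REGIONS.items():
        sub = pts[list(idx)]
        (x0, y0), (x1, y1) = sub.min(axis=0), sub.max(axis=0)
        pad_x, pad_y = margin * (x1 - x0), margin * (y1 - y0)
        boxes[name] = (x0 - pad_x, y0 - pad_y, x1 + pad_x, y1 + pad_y)
    return boxes


def crop_regions(image, landmarks, margin=0.1):
    """Crop each region from an (H, W) or (H, W, C) image, clamped to bounds."""
    h, w = image.shape[:2]
    crops = {}
    for name, (x0, y0, x1, y1) in au_region_boxes(landmarks, margin).items():
        c0, r0 = max(int(x0), 0), max(int(y0), 0)
        c1, r1 = min(int(np.ceil(x1)), w), min(int(np.ceil(y1)), h)
        crops[name] = image[r0:r1, c0:c1]
    return crops
```

If the mouth landmarks span x from 10 to 30 and y from 40 to 50, the padded mouth box is (8, 39, 32, 51) and its crop from a 64×64 image is 12 rows by 24 columns. Each crop could then be passed to a per-region feature extractor before fusion with the global features.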

© 2025 PatSnap. All rights reserved.