Method for realizing face animation based on motion unit expression mapping

A technology involving motion units and facial expressions, applied in the fields of deep learning and facial animation. It addresses two problems of existing methods, their inability to describe facial expression changes and their poor applicability, and achieves good animation effects and strong applicability.

Pending Publication Date: 2019-03-19

Beijing Zhongke Jianing Technology Co., Ltd. (北京中科嘉宁科技有限公司)

Cites: 6; Cited by: 11


AI Technical Summary

Problems solved by technology

[0004] The purpose of the embodiments of the present invention is to provide a method for realizing facial animation based on motion unit expression mapping. The method addresses two problems of existing methods for realizing human facial animation: their poor applicability, and their inability to accurately describe changes in human facial expressions directly from two-dimensional features.


Examples


Embodiment 1

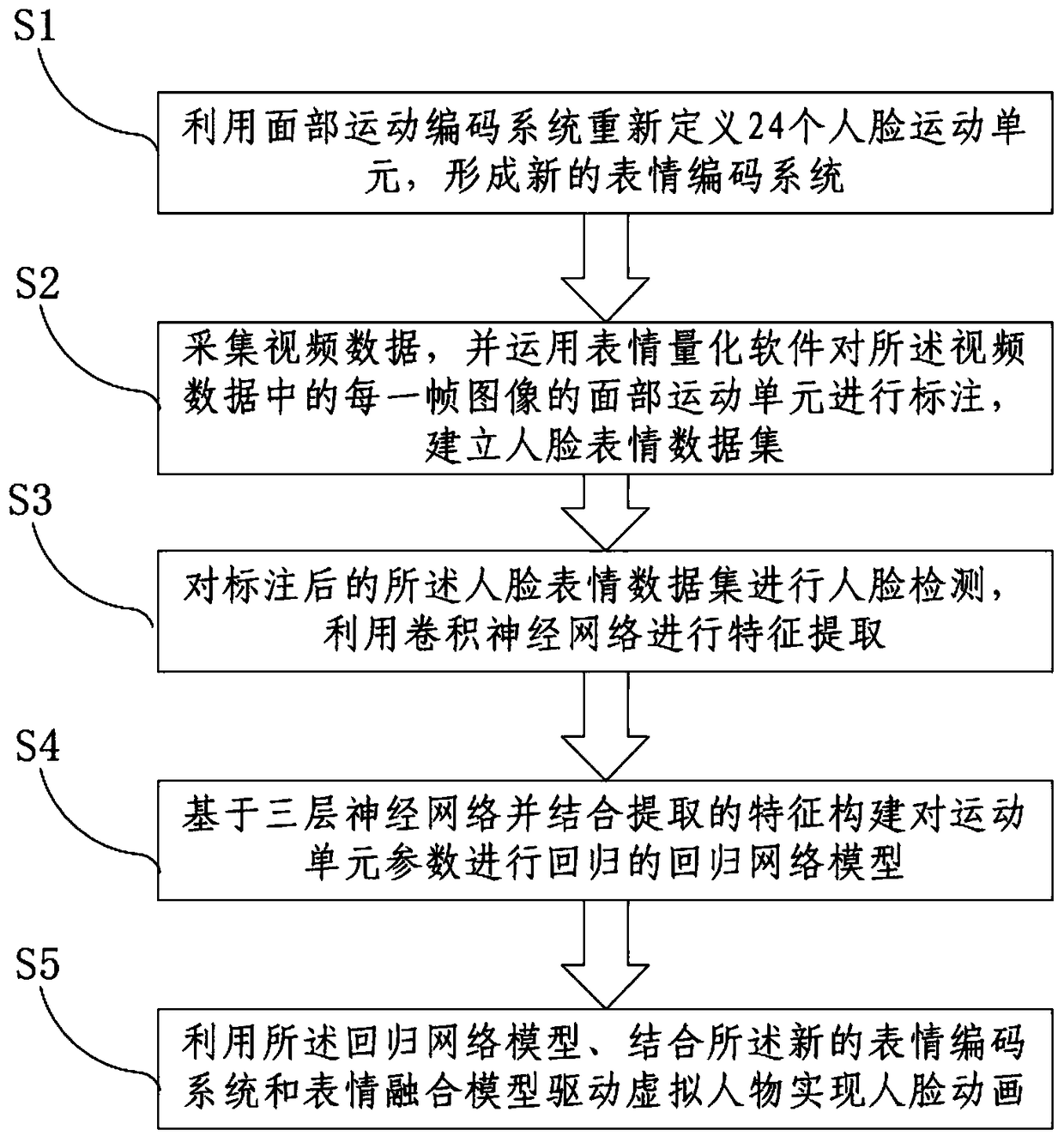

[0021] This embodiment provides a method for realizing facial animation based on motion unit expression mapping, including:

[0022] S1: Use the facial motion coding system (the Facial Action Coding System, FACS) to redefine 24 facial motion units, forming a new expression coding system;

[0023] S2: Collect video data, use expression quantification software to label the facial motion units in each frame of the video data, and establish a facial expression data set;
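Steps S1 and S2 amount to attaching a fixed-length vector of 24 motion unit values to every video frame. The sketch below shows one way such a labeled data set could be held in memory. The class name `FrameLabel`, the helpers `make_label` and `build_dataset`, the assumed [0, 1] intensity range, and the duplicate-frame rule are all hypothetical; the embodiment does not specify the label format or what the expression quantification software outputs.

```python
from dataclasses import dataclass
from typing import Iterable, List, Sequence, Tuple

NUM_MOTION_UNITS = 24  # S1: size of the redefined motion unit set


@dataclass(frozen=True)
class FrameLabel:
    """Hypothetical per-frame annotation: one intensity per motion unit."""
    video_id: str
    frame_index: int
    intensities: Tuple[float, ...]


def make_label(video_id: str, frame_index: int, values: Sequence[float]) -> FrameLabel:
    """Check one frame's annotation (range [0, 1] is an assumption) and wrap it."""
    if len(values) != NUM_MOTION_UNITS:
        raise ValueError(f"expected {NUM_MOTION_UNITS} values, got {len(values)}")
    if any(not 0.0 <= v <= 1.0 for v in values):
        raise ValueError("motion unit intensities must lie in [0, 1]")
    return FrameLabel(video_id, frame_index, tuple(float(v) for v in values))


def build_dataset(annotations: Iterable[Tuple[str, int, Sequence[float]]]) -> List[FrameLabel]:
    """S2 sketch: turn (video_id, frame_index, values) triples into a data set
    ordered by video and frame; a repeated frame keeps its first annotation."""
    seen = set()
    dataset = []
    for video_id, frame_index, values in annotations:
        key = (video_id, frame_index)
        if key in seen:
            continue
        seen.add(key)
        dataset.append(make_label(video_id, frame_index, values))
    dataset.sort(key=lambda r: (r.video_id, r.frame_index))
    return dataset


# Usage: two frames of one clip, supplied out of order.
frames = build_dataset([
    ("clip01", 1, [0.0] * NUM_MOTION_UNITS),
    ("clip01", 0, [0.5 if i == 5 else 0.0 for i in range(NUM_MOTION_UNITS)]),
])
print([f.frame_index for f in frames])  # [0, 1]
```

Keeping the vector length fixed at 24 means every frame can later serve directly as a regression target for the network described in the abstract.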


Abstract

The embodiment of the invention discloses a method for realizing face animation based on motion unit expression mapping, and relates to the technical fields of deep learning and face animation. The method comprises the following steps: redefining 24 facial motion units by using a facial motion coding system; collecting video data, labeling the facial motion units of each frame of the video data with expression quantification software, and establishing a facial expression data set; performing face detection on the labeled facial expression data set and extracting features with a convolutional neural network; constructing, from the extracted features, a regression network model based on a three-layer neural network to regress the motion unit parameters; and driving a virtual character to realize face animation by using the regression network model together with the new expression coding system and the expression fusion model. The method addresses two problems of existing face animation methods: their limited applicability, and their inability to accurately describe facial expression changes directly from two-dimensional features.
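The central learning step regresses motion unit parameters from CNN features with a three-layer neural network. The sketch below reads "three-layer" as input, one ReLU hidden layer, and a 24-unit sigmoid output, trained with mean squared error by plain stochastic gradient descent. The class `ThreeLayerRegressor`, the layer sizes, activations, loss, and optimizer are all assumptions, since the source does not specify them, and the CNN feature extractor is replaced by a fixed stand-in vector.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


class ThreeLayerRegressor:
    """Hypothetical regressor: CNN feature -> ReLU hidden layer -> sigmoid
    outputs, one per motion unit (values in (0, 1))."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int = 24, seed: int = 0):
        rng = random.Random(seed)
        s1, s2 = 1.0 / math.sqrt(n_in), 1.0 / math.sqrt(n_hidden)
        self.w1 = [[rng.uniform(-s1, s1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-s2, s2) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def _forward(self, x: Vector) -> Tuple[Vector, Vector]:
        # Hidden layer: h = ReLU(W1 x + b1)
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Output layer: y = sigmoid(W2 h + b2)
        y = [1.0 / (1.0 + math.exp(-(sum(w * hj for w, hj in zip(row, h)) + b)))
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def predict(self, x: Vector) -> Vector:
        return self._forward(x)[1]

    def train_step(self, x: Vector, target: Vector, lr: float = 0.1) -> float:
        """One SGD step on mean squared error; returns the loss before the update."""
        h, y = self._forward(x)
        n = len(y)
        loss = sum((yk - tk) ** 2 for yk, tk in zip(y, target)) / n
        # Gradient w.r.t. output pre-activations (MSE through the sigmoid)
        dz2 = [2.0 * (yk - tk) / n * yk * (1.0 - yk) for yk, tk in zip(y, target)]
        # Back-propagate to the hidden layer before W2 is modified
        dz1 = [sum(dz2[k] * self.w2[k][j] for k in range(n)) if h[j] > 0.0 else 0.0
               for j in range(len(h))]
        for k in range(n):
            for j in range(len(h)):
                self.w2[k][j] -= lr * dz2[k] * h[j]
            self.b2[k] -= lr * dz2[k]
        for j in range(len(h)):
            for i in range(len(x)):
                self.w1[j][i] -= lr * dz1[j] * x[i]
            self.b1[j] -= lr * dz1[j]
        return loss


# Usage with stand-in data (a real pipeline would feed CNN features and labels).
model = ThreeLayerRegressor(n_in=8, n_hidden=16, seed=1)
feature = [0.5] * 8
target = [0.2] * 24
first_loss = model.train_step(feature, target, lr=0.5)
for _ in range(200):
    last_loss = model.train_step(feature, target, lr=0.5)
print(first_loss, last_loss)
```

A sigmoid output layer is one simple way to keep each regressed motion unit value bounded, which suits blendshape-style weights; a linear output with clipping would be an equally plausible choice.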

Description

Technical field

[0001] The invention relates to the technical field of deep learning and facial animation, in particular to a method for realizing facial animation based on motion unit expression mapping.

Background

[0002] In computer graphics and computer vision, facial animation techniques aim to capture the facial expressions of a source subject and map them onto the face of an avatar. The most common approaches are depth-camera-based and video-image-based facial animation. Depth-camera-based methods mainly use a dynamic expression model to capture the rigid and non-rigid parameters of the face in real time, estimate facial expression data from them, and use that data to produce facial animation. However, depth cameras are expensive and their application scenarios are limited, so these methods have poor applicability.

[0003] The face anim...
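Facial animation maps a source subject's expression onto an avatar, and in this invention that final step drives the virtual character from the regressed motion unit parameters together with an expression fusion model. Assuming the expression fusion model is a standard linear (delta) blendshape rig, the step can be sketched as below. The functions `map_motion_units` and `blend`, the motion-unit-to-blendshape table, its gains, and the clamping are hypothetical; the source does not describe how the 24 motion units are mapped onto the rig.

```python
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def map_motion_units(au: Sequence[float],
                     mapping: Dict[int, List[Tuple[int, float]]],
                     n_shapes: int) -> List[float]:
    """Hypothetical motion-unit-to-rig mapping: each motion unit index feeds
    one or more avatar blendshapes with a fixed gain; results clamped to [0, 1]."""
    weights = [0.0] * n_shapes
    for unit, value in enumerate(au):
        for shape, gain in mapping.get(unit, []):
            weights[shape] += gain * value
    return [min(1.0, max(0.0, w)) for w in weights]


def blend(neutral: Sequence[Vertex],
          targets: Sequence[Sequence[Vertex]],
          weights: Sequence[float]) -> List[Vertex]:
    """Linear delta blendshapes: v_i = n_i + sum_k w_k * (t_k,i - n_i)."""
    out = []
    for i, (nx, ny, nz) in enumerate(neutral):
        dx = dy = dz = 0.0
        for w, target in zip(weights, targets):
            tx, ty, tz = target[i]
            dx += w * (tx - nx)
            dy += w * (ty - ny)
            dz += w * (tz - nz)
        out.append((nx + dx, ny + dy, nz + dz))
    return out


# Usage: a two-vertex "mesh" with one hypothetical target shape.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = [[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]]
au = [0.0] * 24
au[3] = 0.5  # arbitrary motion unit index, for illustration only
weights = map_motion_units(au, {3: [(0, 1.0)]}, n_shapes=1)
result = blend(neutral, targets, weights)
print(result)  # [(0.0, 0.5, 0.0), (1.0, 0.0, 0.0)]
```

Separating the motion unit mapping from the blending keeps the regressed parameters rig-independent: retargeting to a different avatar would, under this assumption, only require a new mapping table and target shapes.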


Application Information


Patent Type & Authority: Applications (China)

IPC(8): G06T13/40; G06N3/04; G06N3/08

CPC: G06N3/08; G06T13/40; G06N3/045

Inventors: Lü Ke (吕科), Yan Yanfu (闫衍芙), Xue Jian (薛健)

Owner: Beijing Zhongke Jianing Technology Co., Ltd. (北京中科嘉宁科技有限公司)
