597 results about "Facial expression recognition" patented technology

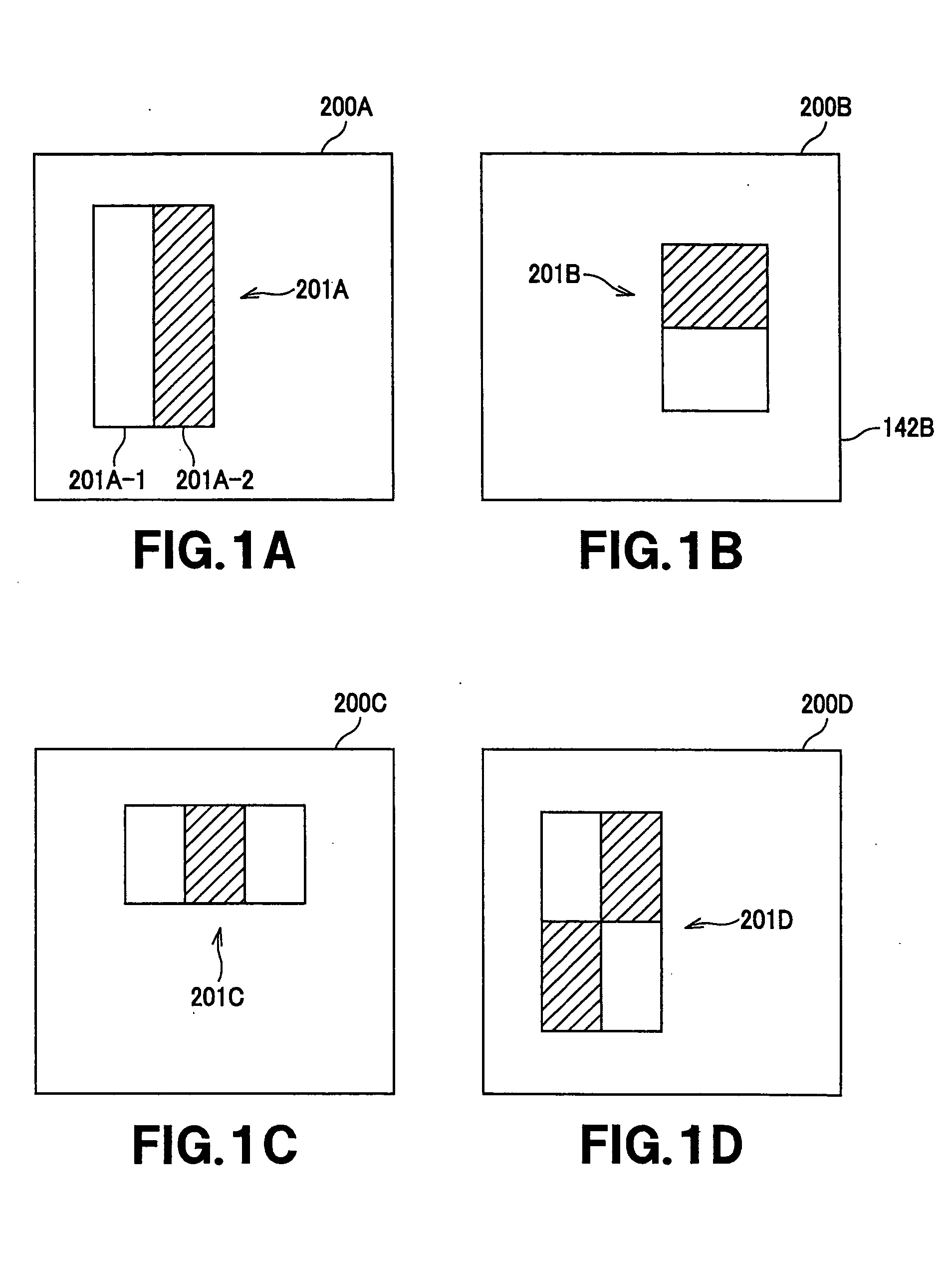

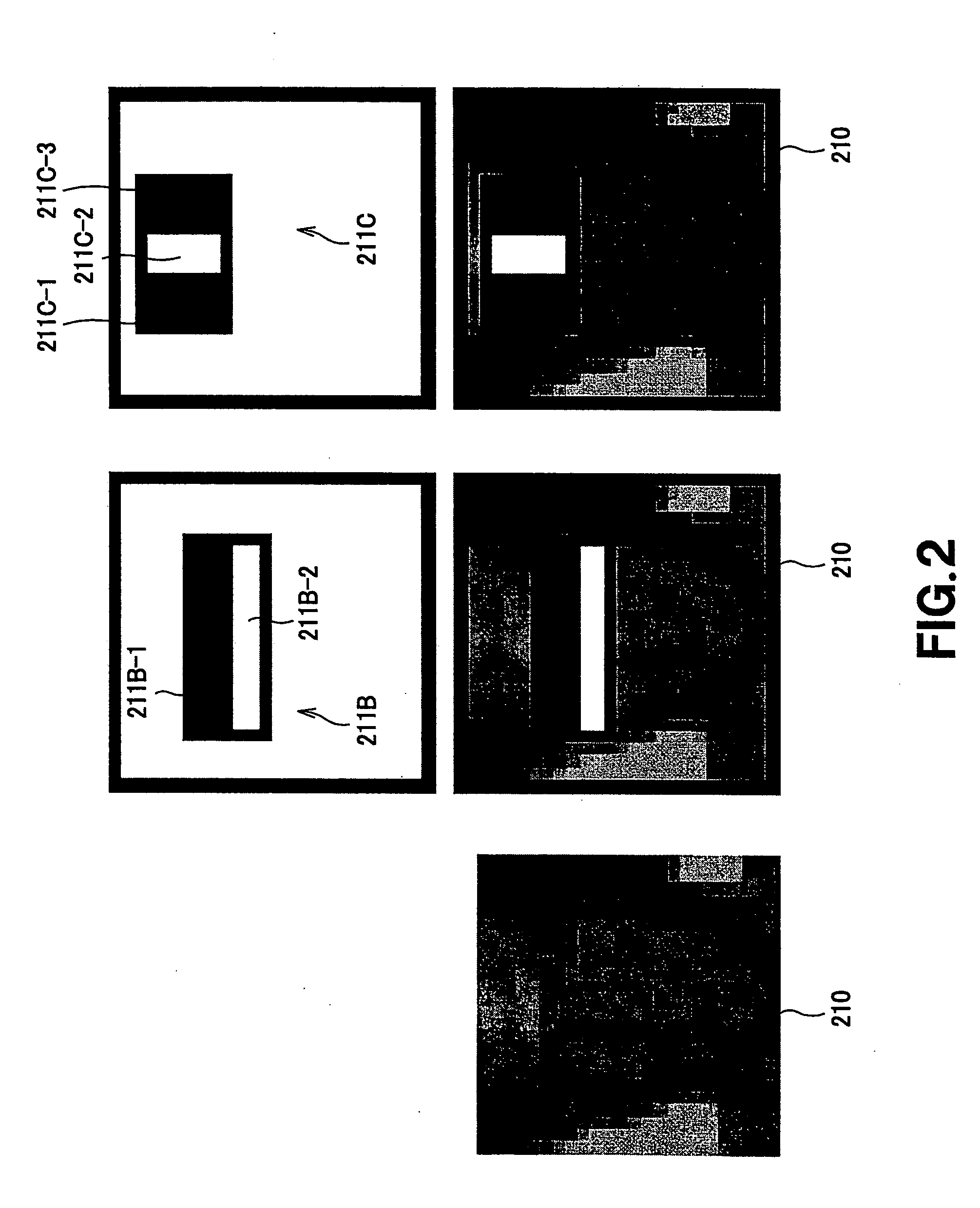

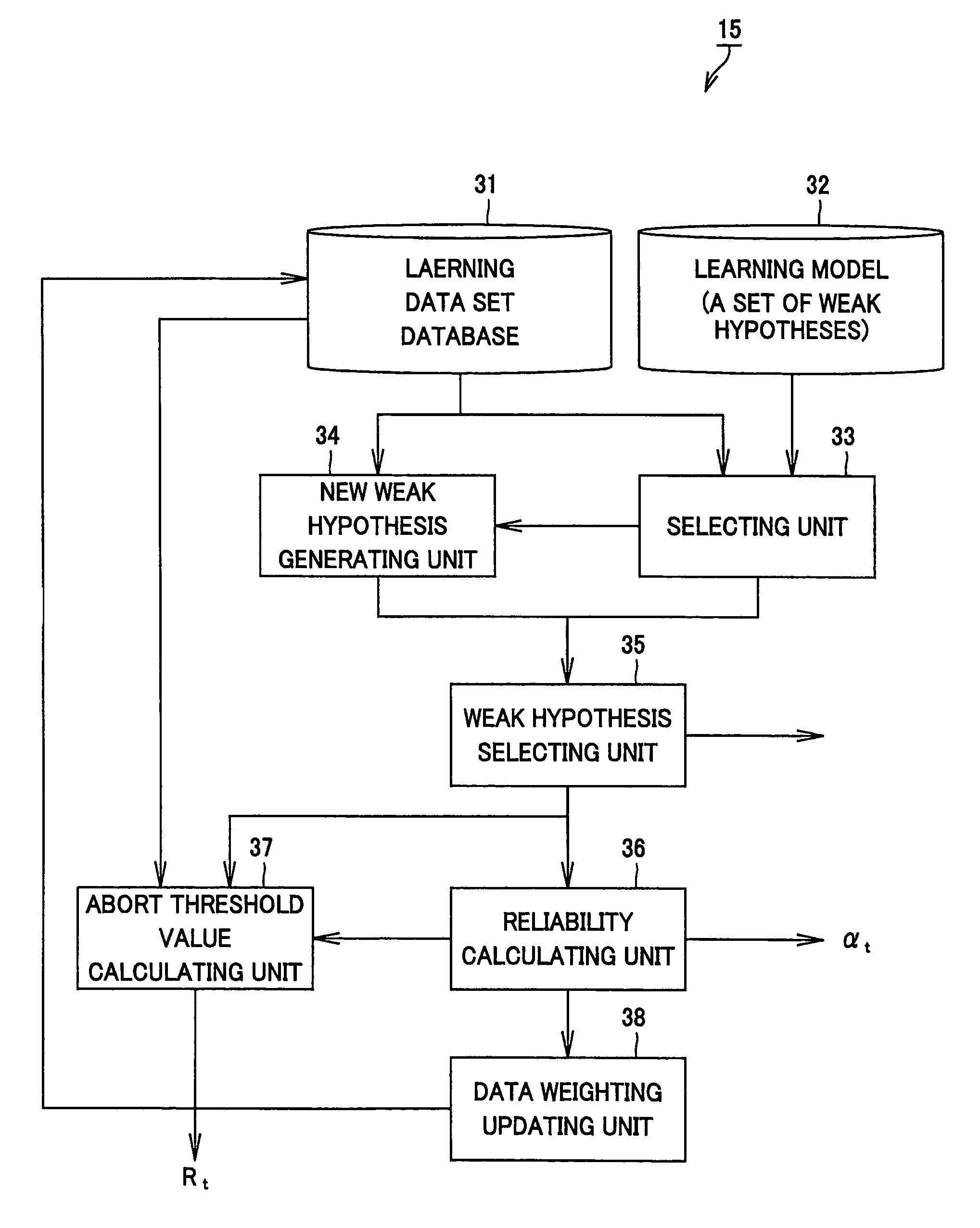

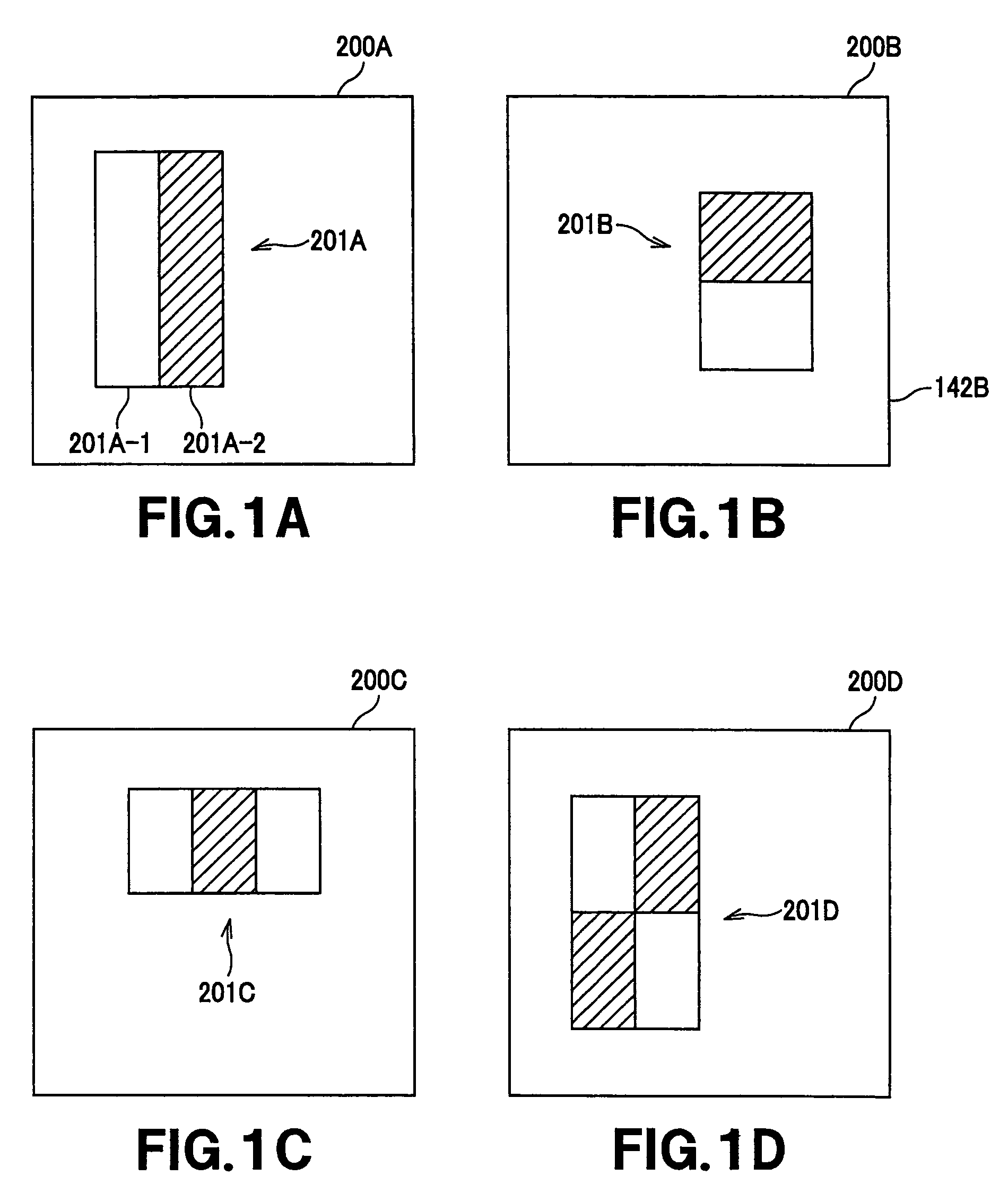

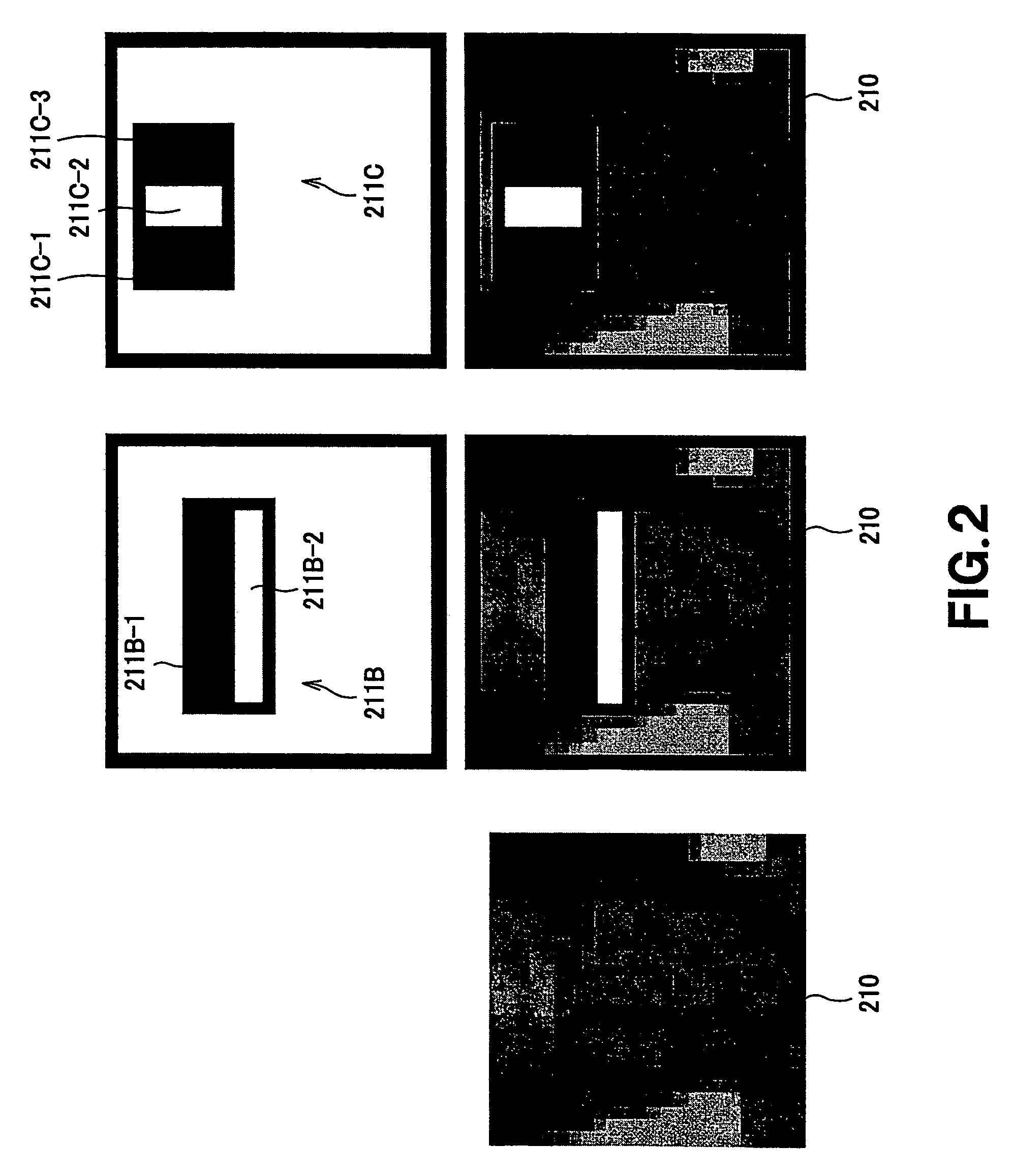

Weak hypothesis generation apparatus and method, learning apparatus and method, detection apparatus and method, facial expression learning apparatus and method, facial expression recognition apparatus and method, and robot apparatus

Active | US20050102246A1 | Lower performance requirements | Increase speed | Image analysis | Digital computer details | Face detection | Hypothesis

A facial expression recognition system that uses a face detection apparatus realizing efficient learning and high-speed detection processing based on ensemble learning when detecting an area representing a detection target and that is robust against shifts of face position included in images and capable of highly accurate expression recognition, and a learning method for the system, are provided. When learning data to be used by the face detection apparatus by Adaboost, processing to select high-performance weak hypotheses from all weak hypotheses, then generate new weak hypotheses from these high-performance weak hypotheses on the basis of statistical characteristics, and select one weak hypothesis having the highest discrimination performance from these weak hypotheses, is repeated to sequentially generate a weak hypothesis, and a final hypothesis is thus acquired. In detection, using an abort threshold value that has been learned in advance, whether provided data can be obviously judged as a non-face is determined every time one weak hypothesis outputs the result of discrimination. If it can be judged so, processing is aborted. A predetermined Gabor filter is selected from the detected face image by an Adaboost technique, and a support vector for only a feature quantity extracted by the selected filter is learned, thus performing expression recognition.

Owner:SAN DIEGO UNIV OF CALIFORNIA +1
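The abort-threshold detection step described in the abstract above can be illustrated with a minimal sketch. It assumes each weak hypothesis votes +1 (face) or -1 (non-face) and that each stage carries its own learned abort threshold; the names `classify_with_abort` and `make_stump`, the weights, and the threshold values are all hypothetical and are not taken from the patent.

```python
from typing import Callable, Sequence, Tuple

# A weak hypothesis maps a feature vector to a vote: +1 (face) or -1 (non-face).
WeakHypothesis = Callable[[Sequence[float]], int]
Stage = Tuple[WeakHypothesis, float, float]  # (hypothesis, weight, abort threshold)


def classify_with_abort(x: Sequence[float], stages: Sequence[Stage]) -> Tuple[bool, int]:
    """Evaluate a boosted ensemble, rejecting obvious non-faces early.

    After every weak hypothesis votes, the running weighted sum is compared
    with that stage's abort threshold. Returns (is_face, hypotheses_evaluated).
    """
    score = 0.0
    for evaluated, (hypothesis, weight, abort_threshold) in enumerate(stages, start=1):
        score += weight * hypothesis(x)
        if score < abort_threshold:
            return False, evaluated  # judged a non-face, stop processing
    return score >= 0.0, len(stages)


def make_stump(index: int, cutoff: float) -> WeakHypothesis:
    """Hypothetical weak hypothesis: threshold a single feature."""
    return lambda x: 1 if x[index] > cutoff else -1


# Illustrative values only; a real detector would learn these from data.
stages = [
    (make_stump(0, 0.5), 1.0, -0.5),
    (make_stump(1, 0.5), 0.8, -0.5),
    (make_stump(2, 0.5), 0.6, -0.5),
]

print(classify_with_abort([0.1, 0.9, 0.9], stages))
print(classify_with_abort([0.9, 0.9, 0.1], stages))
```

With these illustrative values, the first input is rejected after one weak hypothesis, while the second passes through all three stages. The saving comes from most image windows being non-faces that can be discarded after only a few evaluations.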

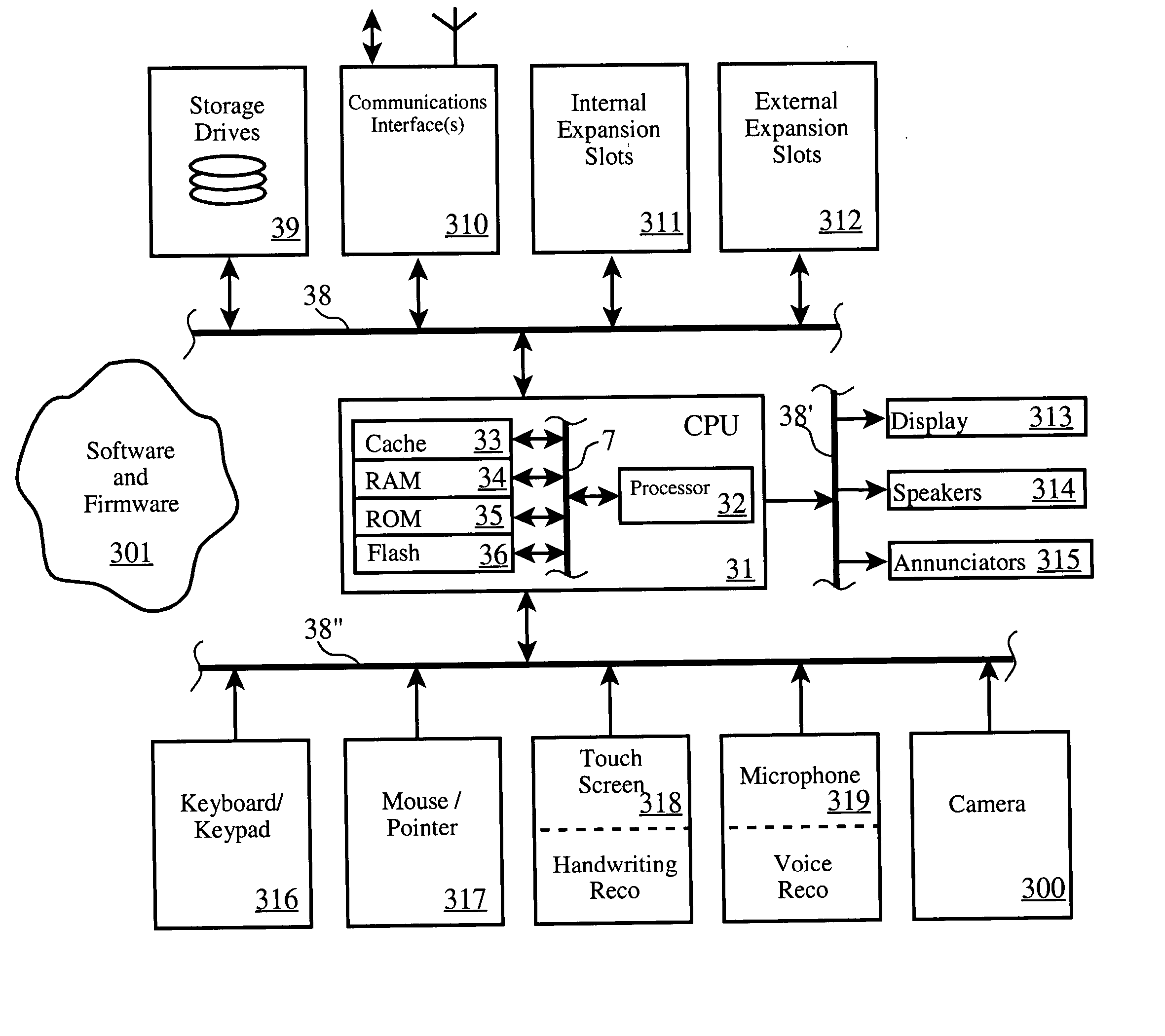

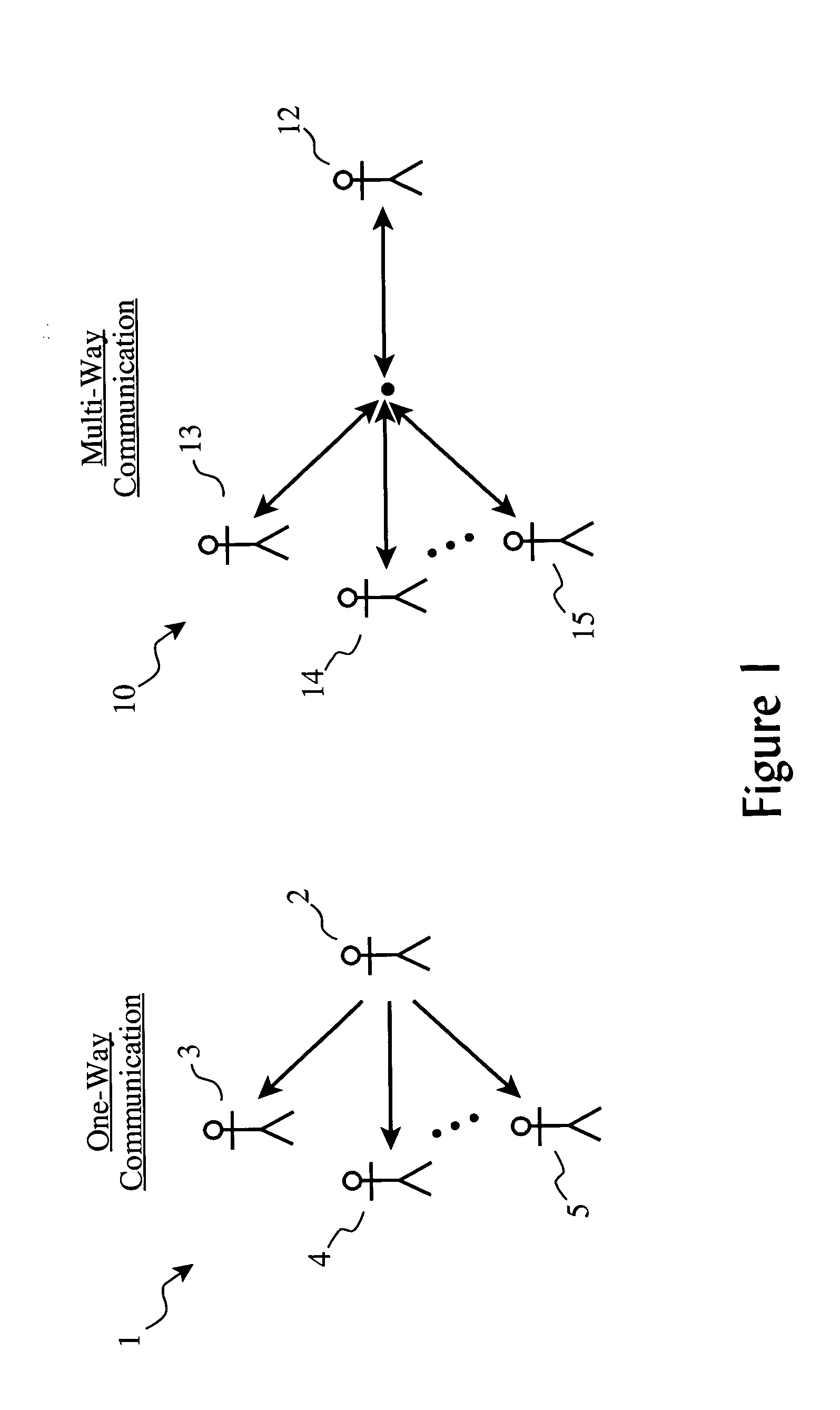

Translating emotion to braille, emoticons and other special symbols

Inactive | US20050069852A1 | Substation equipment | Input/output processes for data processing | Graphics | Pattern recognition

A system for incorporating emotional information in a communication stream from a first person to a second person, including an electronic image analyzer for determining an emotional component of a speaker or presenter using subsystems such as facial expression recognition, hand gesture recognition, body movement recognition, and voice pitch analysis. A symbol generator generates one or more symbols such as emoticons, graphic symbols, or text modifications (bolding, underlining, etc.) corresponding to the emotional aspects of the presenter or speaker. The emotional symbols are merged with the audio or visual information from the speaker and are presented to one or more recipients.

Owner:IBM CORP +1

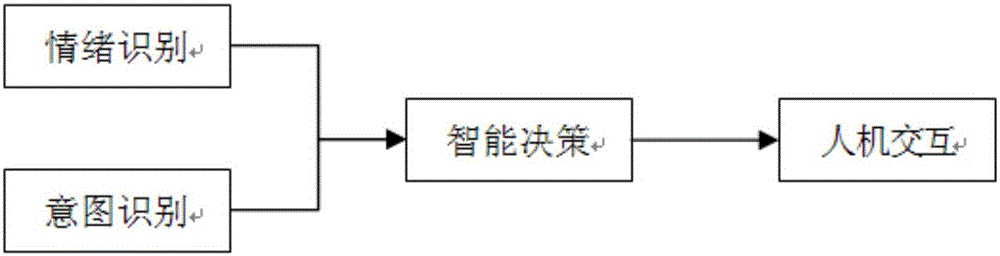

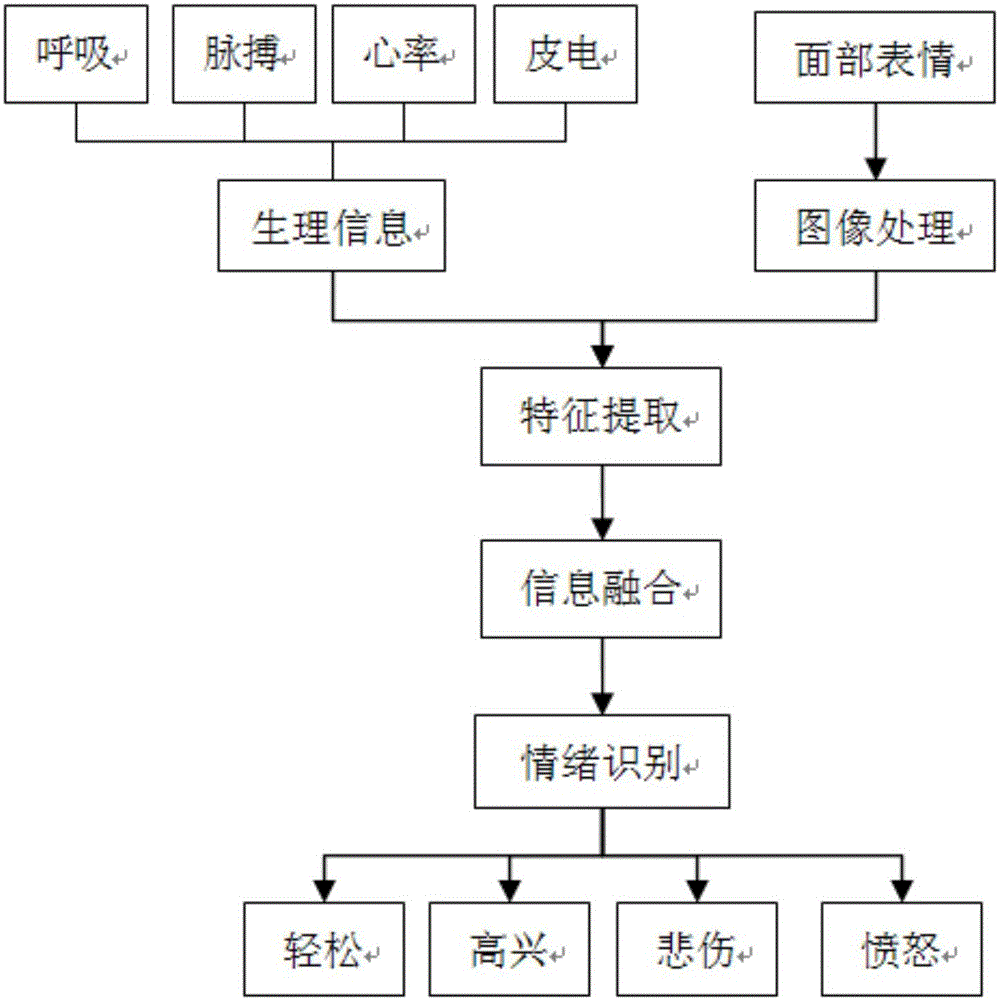

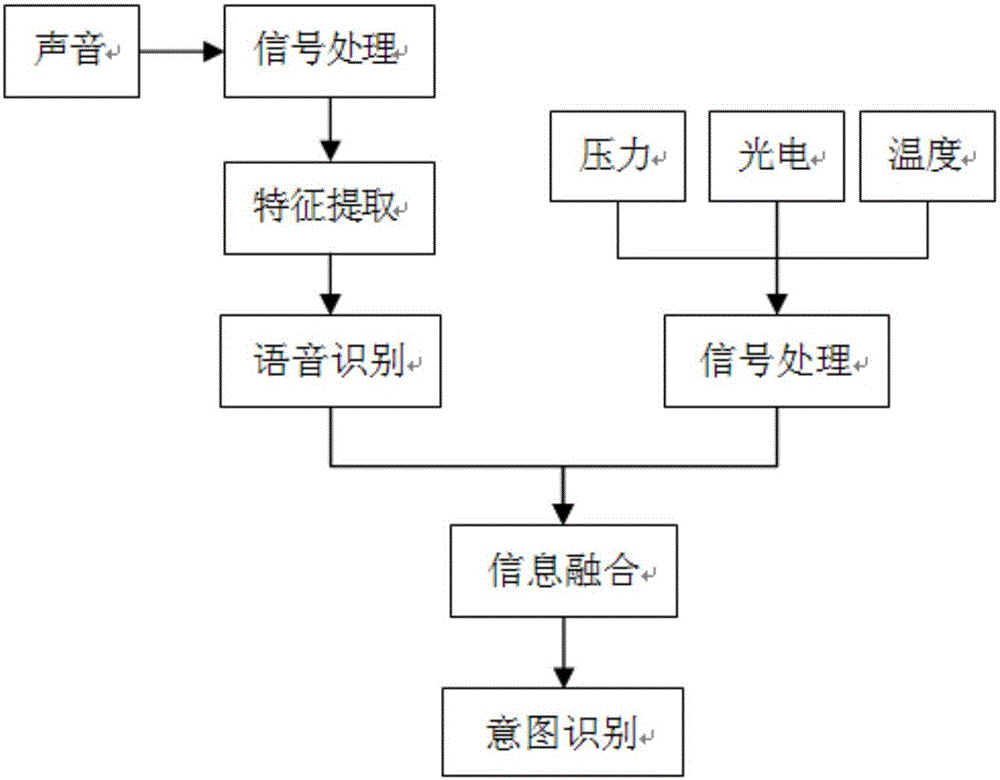

Robot man-machine interaction method based on user mood and intention recognition

The invention relates to a robot man-machine interaction method based on user mood and intention recognition. User mood is recognized by combining physiological signals such as respiration, heart rate and electrodermal activity with facial expression recognition. User intention is recognized from physical sensor information such as pressure, photoelectric and temperature readings. Intelligent control and decision making are carried out according to the mood and intention recognition results, and the corresponding actuators of the robot are controlled to complete limb movements and voice interaction. With this method, the robot can understand the user's intention more thoroughly, track the user's mental changes, meet the functional requirement of emotional care for the user, and better participate in the lives of users such as elderly people and children.

Owner:国家康复辅具研究中心

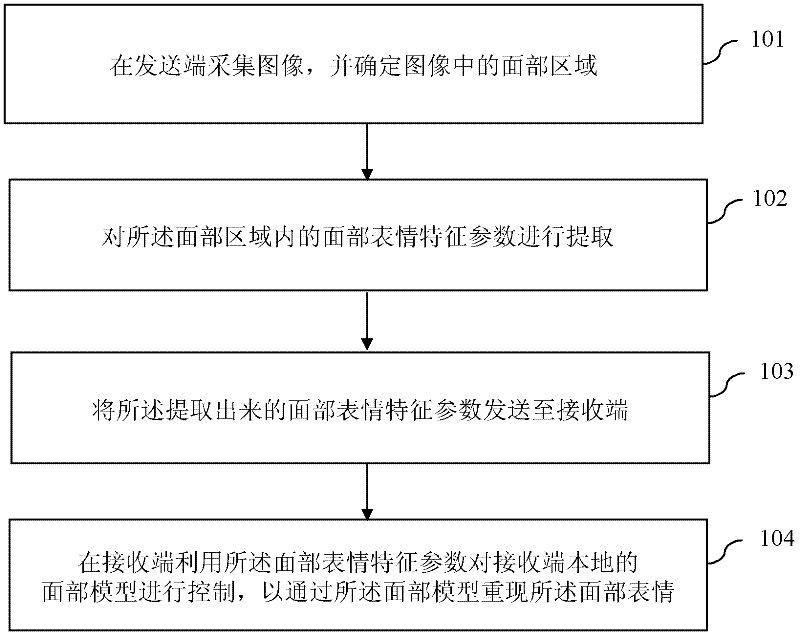

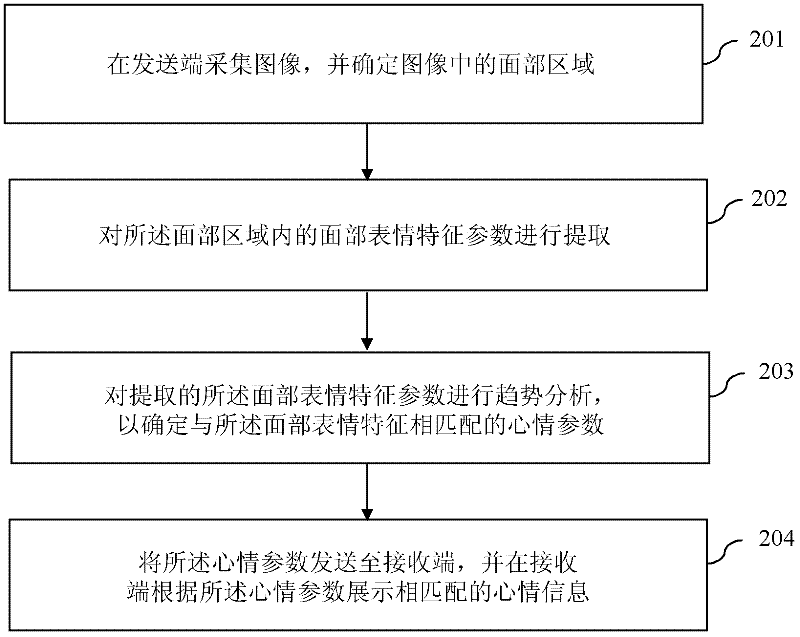

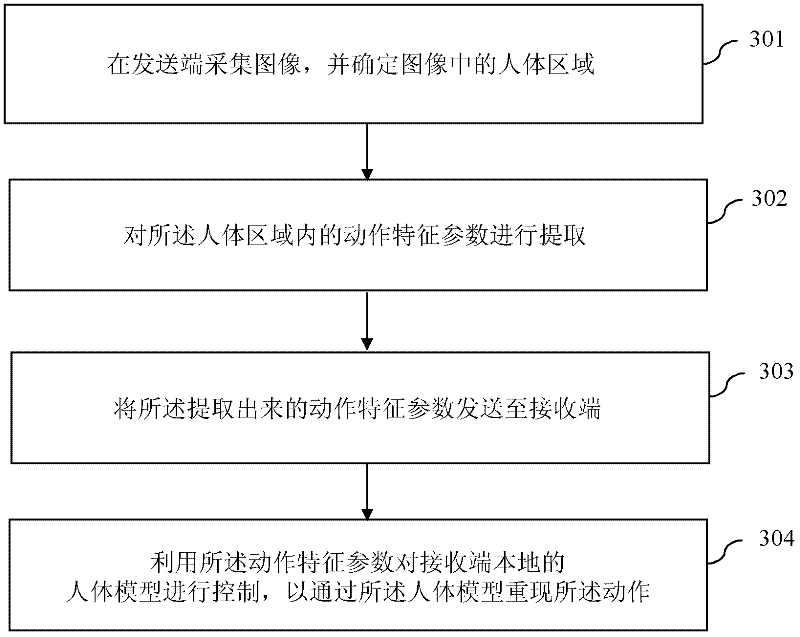

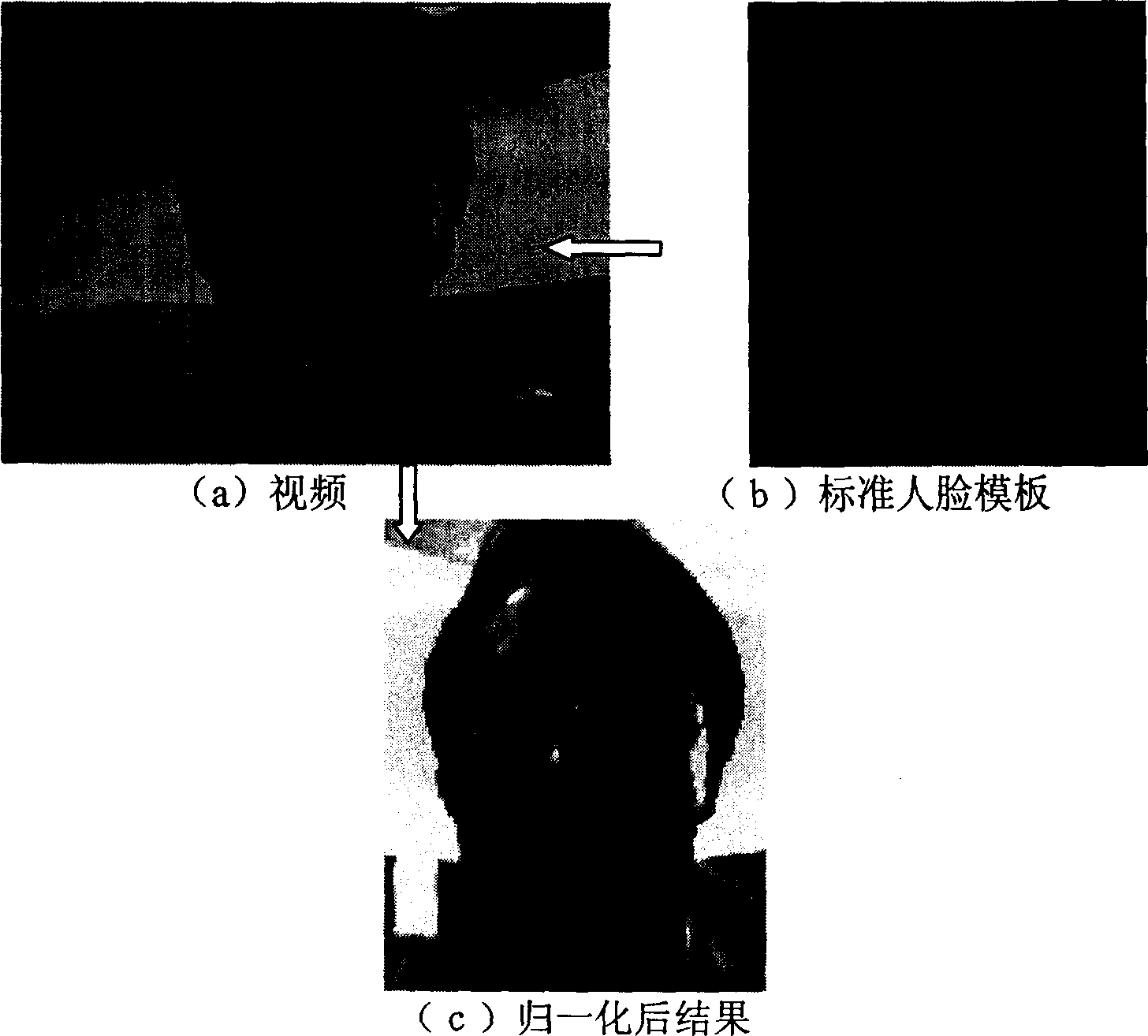

Image communication method and system based on facial expression/action recognition

Inactive | CN102271241A | Reduced transfer rate requirements | Television conference systems | Character and pattern recognition | Face model | Feature parameter

The invention discloses an image communication method and system based on facial expression/action recognition. In the method, an image is first collected at the sending end and the facial area in the image is determined; the facial expression feature parameters in that area are extracted and sent to the receiving end; finally, the receiving end uses the feature parameters to control its local facial model so that the model reproduces the facial expression. The invention can not only enhance user experience but also greatly reduce the transmission rate required of the video bearer network, enabling more efficient video communication. It is especially suitable for wireless communication networks with limited bandwidth and capacity.

Owner:BEIJING UNIV OF POSTS & TELECOMM

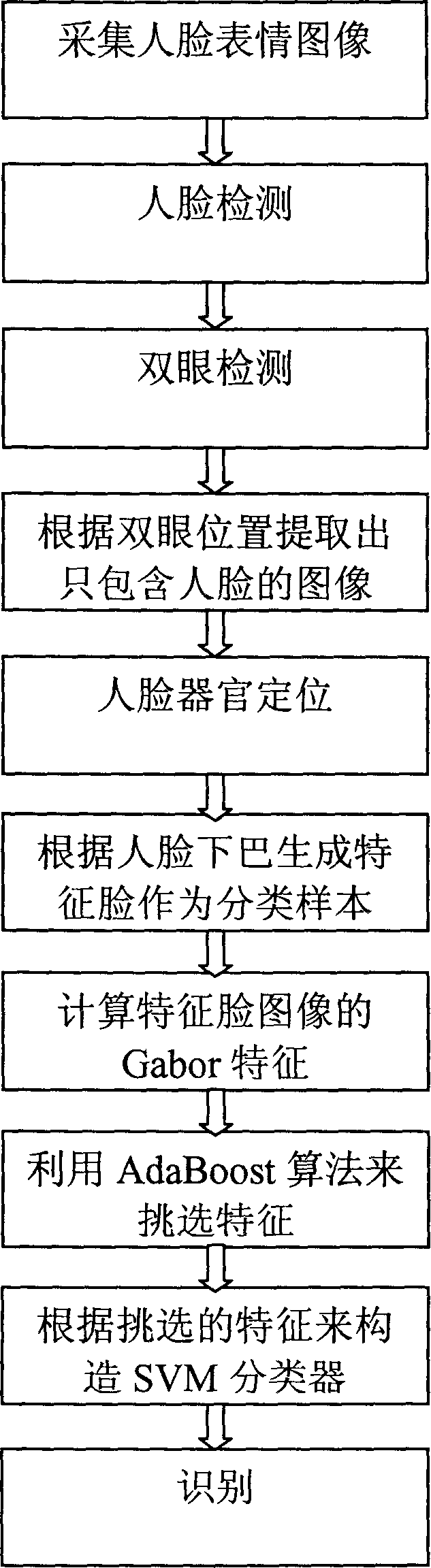

Method and device for distinguishing face expression based on video frequency

Active | CN1794265A | Avoid being affected by factors such as light | Eliminate the effects of | Character and pattern recognition | Chin | Adaboost algorithm

This invention provides a video-based facial expression recognition method and device. It applies the ASM contour extraction algorithm to the extraction of feature vectors, extracts the face image based on the position of the eyes, generates a normalized feature face starting from the chin, and selects the most effective features in the feature face to recognize the expression. This can eliminate the influence of illumination, so that the gray values and variance of the left and right sides are almost the same.

Owner:GUANGDONG VIMICRO

Weak hypothesis generation apparatus and method, learning apparatus and method, detection apparatus and method, facial expression learning apparatus and method, facial expression recognition apparatus and method, and robot apparatus

Active | US7379568B2 | Lower performance requirements | Increase speed | Image analysis | Digital computer details | Face detection | Hypothesis

A facial expression recognition system that uses a face detection apparatus realizing efficient learning and high-speed detection processing based on ensemble learning when detecting an area representing a detection target and that is robust against shifts of face position included in images and capable of highly accurate expression recognition, and a learning method for the system, are provided. When learning data to be used by the face detection apparatus by Adaboost, processing to select high-performance weak hypotheses from all weak hypotheses, then generate new weak hypotheses from these high-performance weak hypotheses on the basis of statistical characteristics, and select one weak hypothesis having the highest discrimination performance from these weak hypotheses, is repeated to sequentially generate a weak hypothesis, and a final hypothesis is thus acquired. In detection, using an abort threshold value that has been learned in advance, whether provided data can be obviously judged as a non-face is determined every time one weak hypothesis outputs the result of discrimination. If it can be judged so, processing is aborted. A predetermined Gabor filter is selected from the detected face image by an Adaboost technique, and a support vector for only a feature quantity extracted by the selected filter is learned, thus performing expression recognition.

Owner:SAN DIEGO UNIV OF CALIFORNIA +1

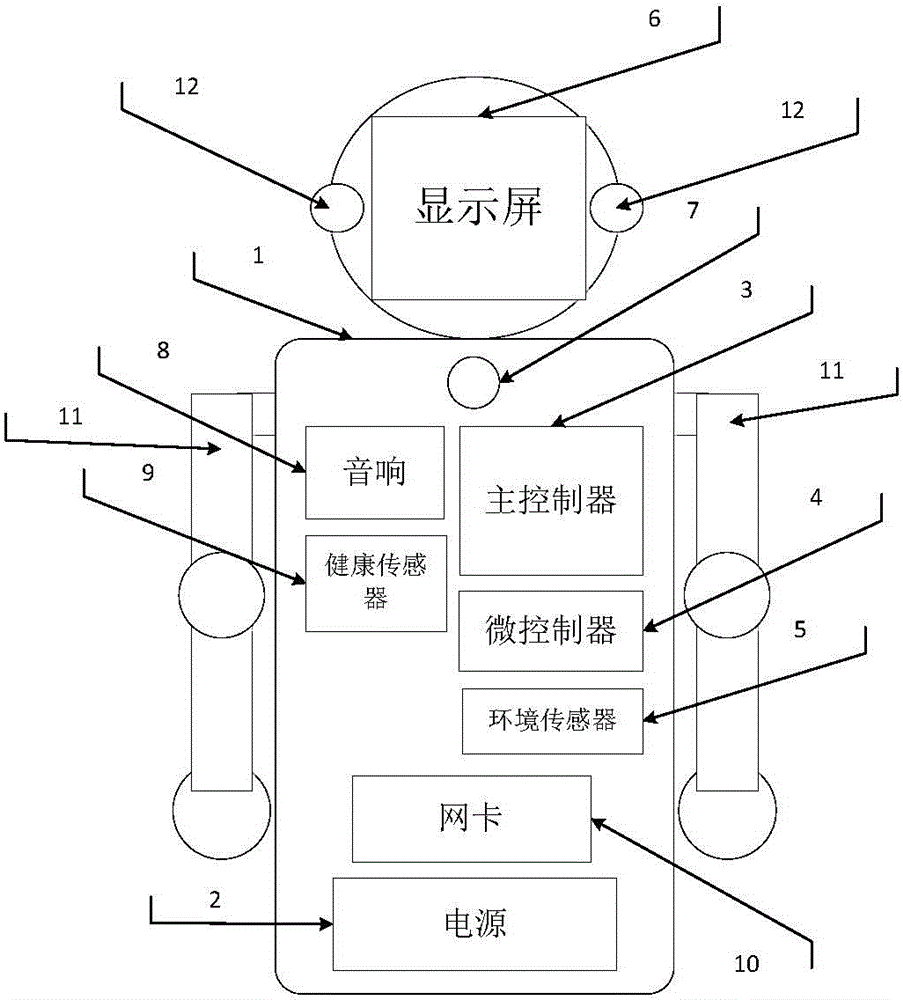

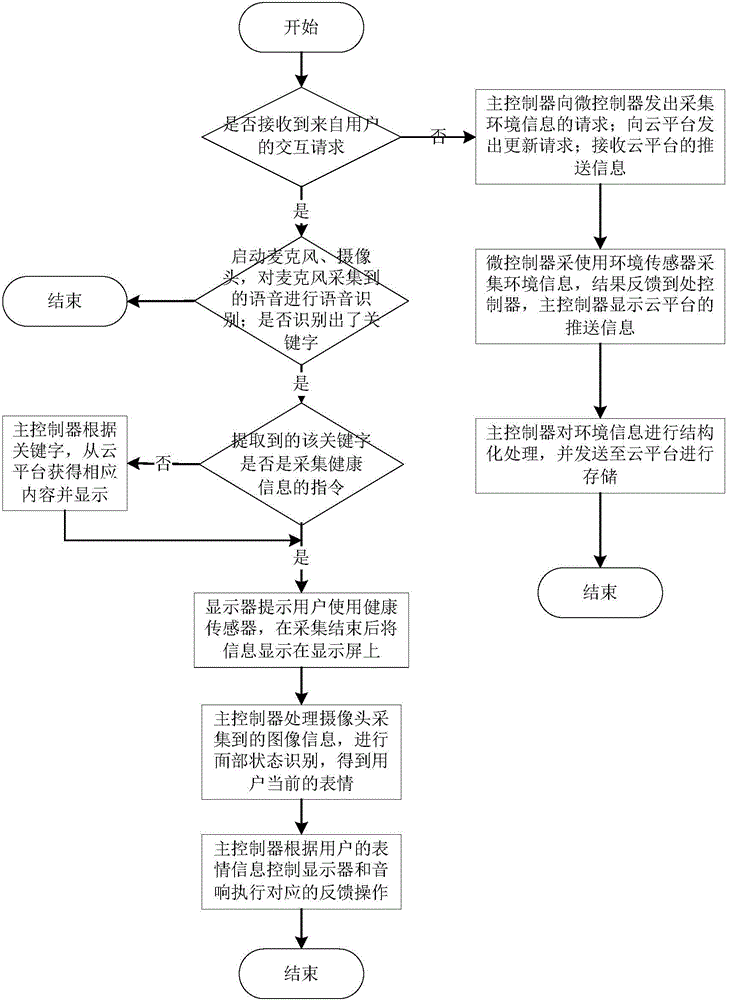

Household information acquisition and user emotion recognition equipment and working method thereof

Inactive | CN104102346A | Various functions | Improve scalability | Programme control | Input/output for user-computer interaction | Microcontroller | Audio electronics

The invention discloses household information acquisition and user emotion recognition equipment, which comprises a shell, a power supply, a main controller, a microcontroller, multiple environmental sensors, a screen, a microphone, an audio unit, multiple health sensors, a pair of robot arms and a pair of cameras. The microphone is arranged on the shell; the power supply, the main controller, the microcontroller, the environmental sensors, the audio unit and the pair of cameras are arranged symmetrically on the left and right sides of the screen; the robot arms are arranged on the two sides of the shell. The main controller is in communication connection with the microcontroller and directs it to control the movements of the robot arms through the arms' motors. The power supply is connected with the main controller and the microcontroller and mainly provides energy for them. The equipment integrates intelligent speech recognition, speech synthesis and facial expression recognition technologies, which makes it more convenient to use and its feedback more reasonable.

Owner:HUAZHONG UNIV OF SCI & TECH

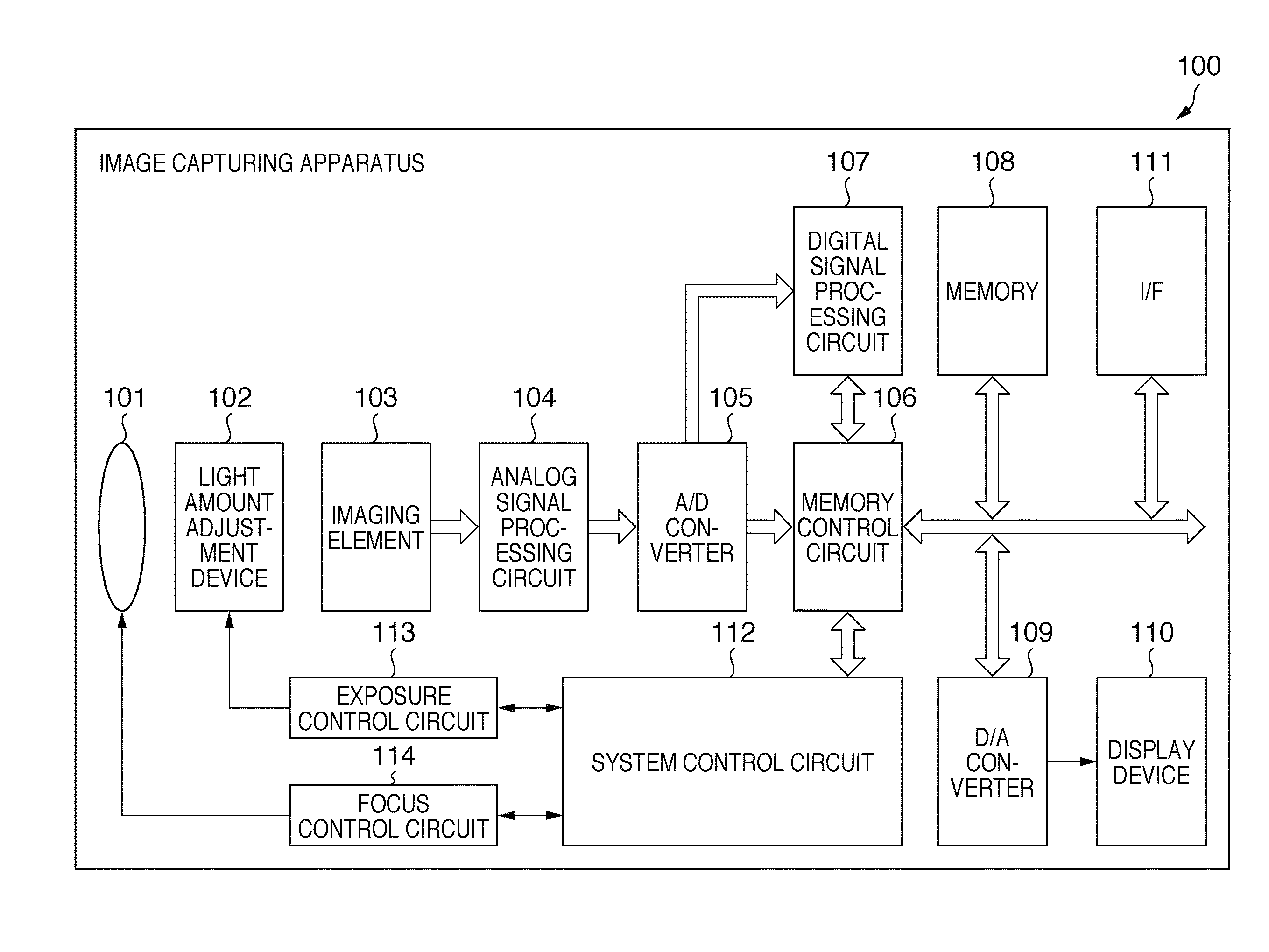

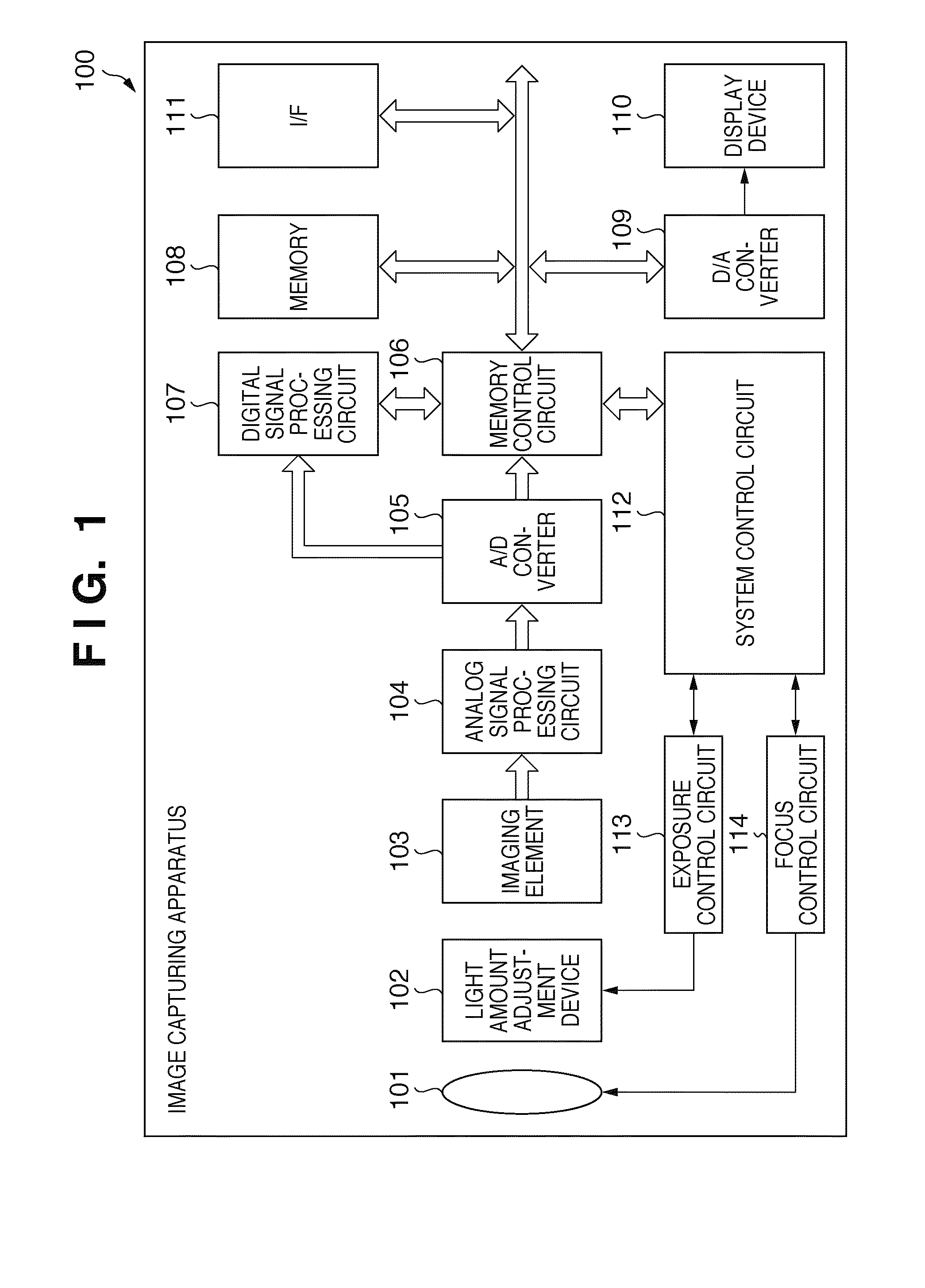

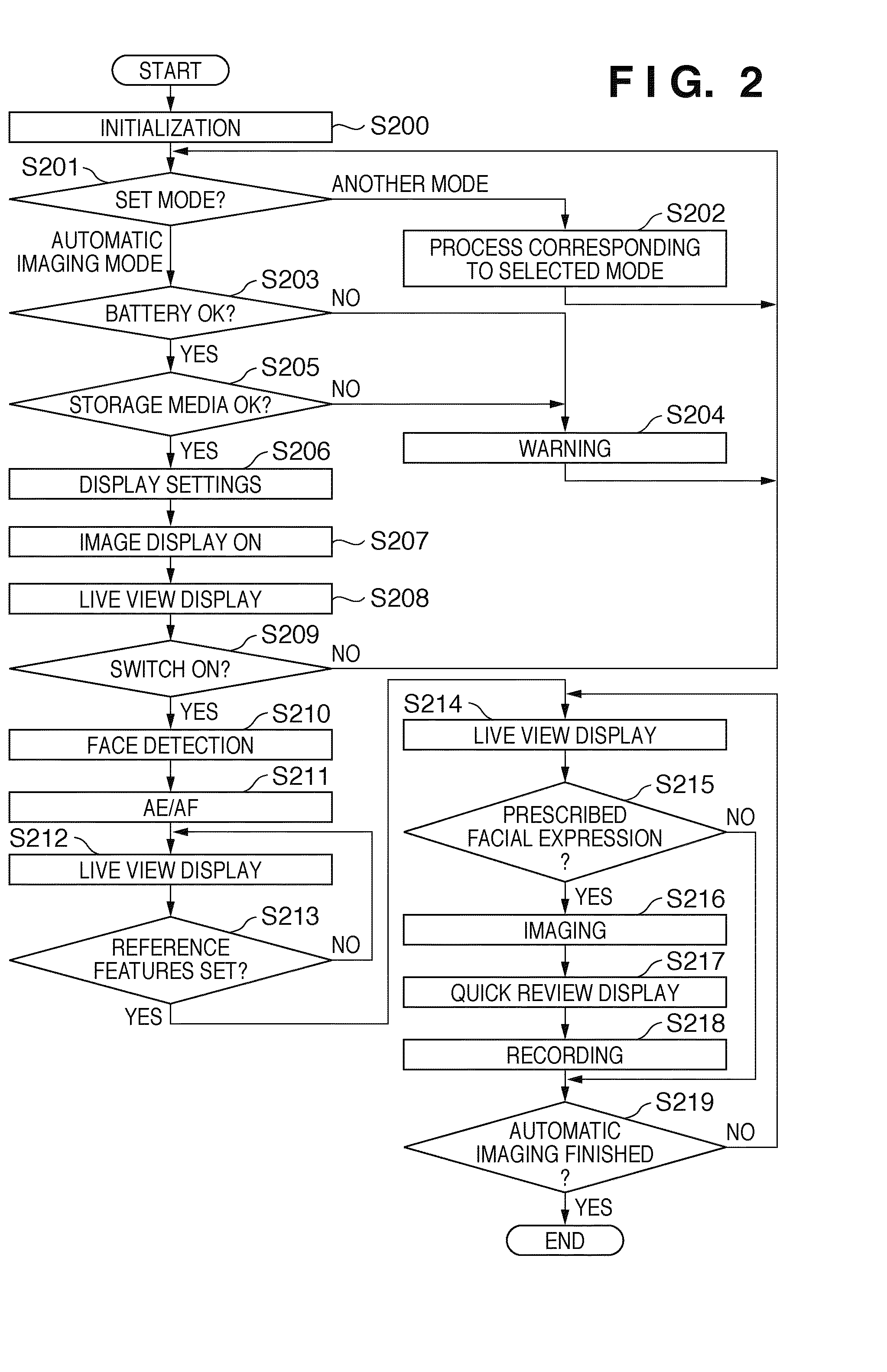

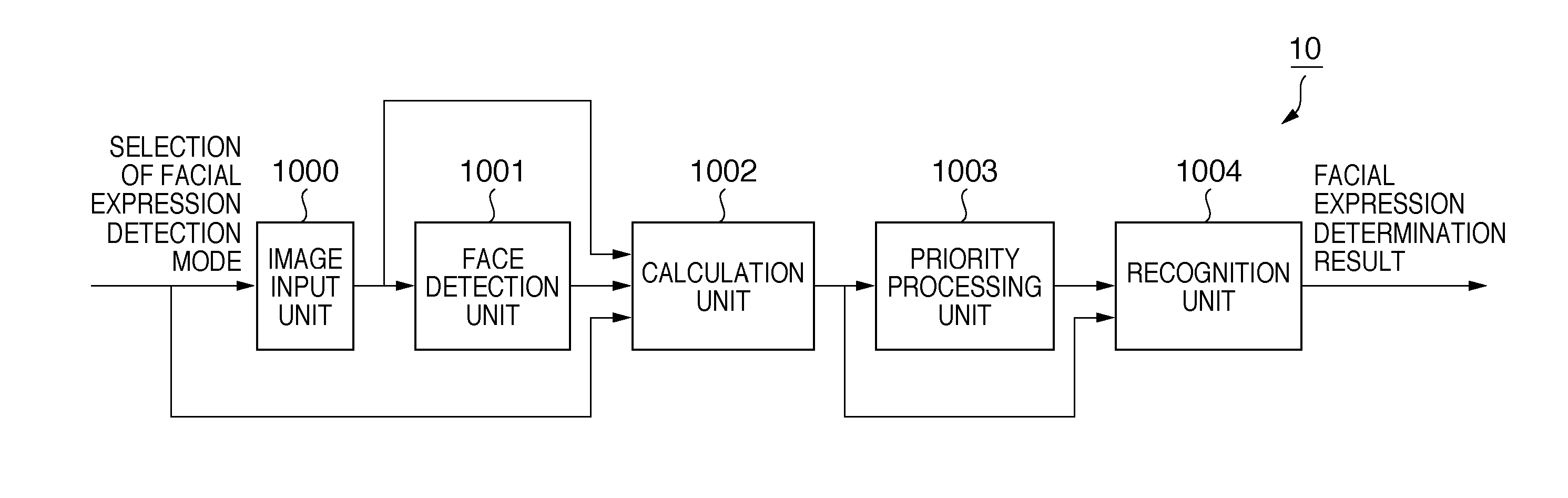

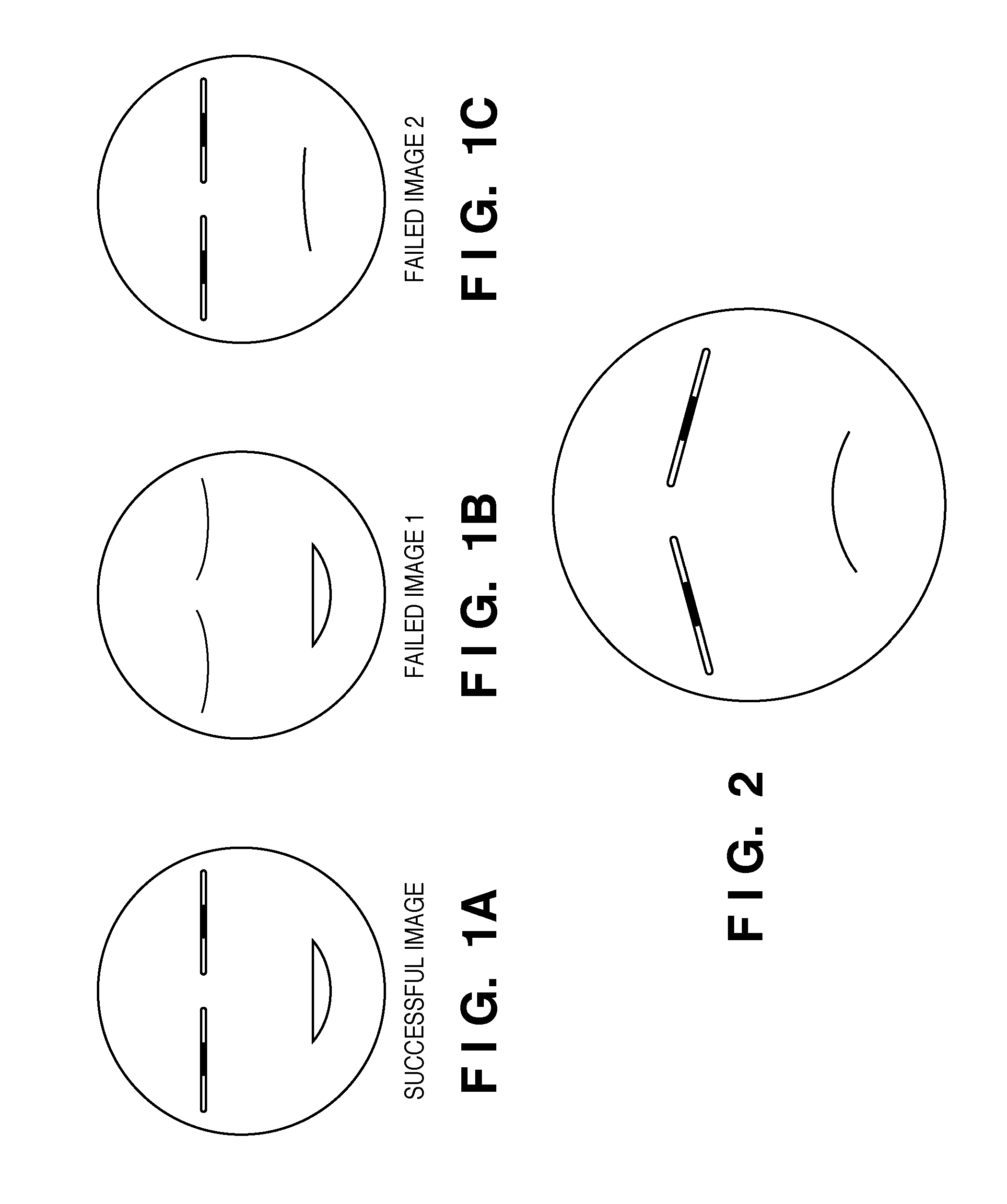

Facial expression recognition apparatus and method, and image capturing apparatus

Active | US20100189358A1 | Convenient registration | Television system details | Character and pattern recognition | Face detection | Pattern recognition

A facial expression recognition apparatus includes an image input unit configured to sequentially input images, a face detection unit configured to detect faces in images obtained by the image input unit, and a start determination unit configured to determine whether to start facial expression determination based on facial image information detected by the face detection unit. When the start determination unit determines that facial expression determination should be started, an acquisition unit acquires reference feature information based on the facial image information detected by the face detection unit and a facial expression determination unit extracts feature information from the facial image information detected by the face detection unit and determines facial expressions of the detected faces based on the extracted feature information and the reference feature information.

Owner:CANON KK

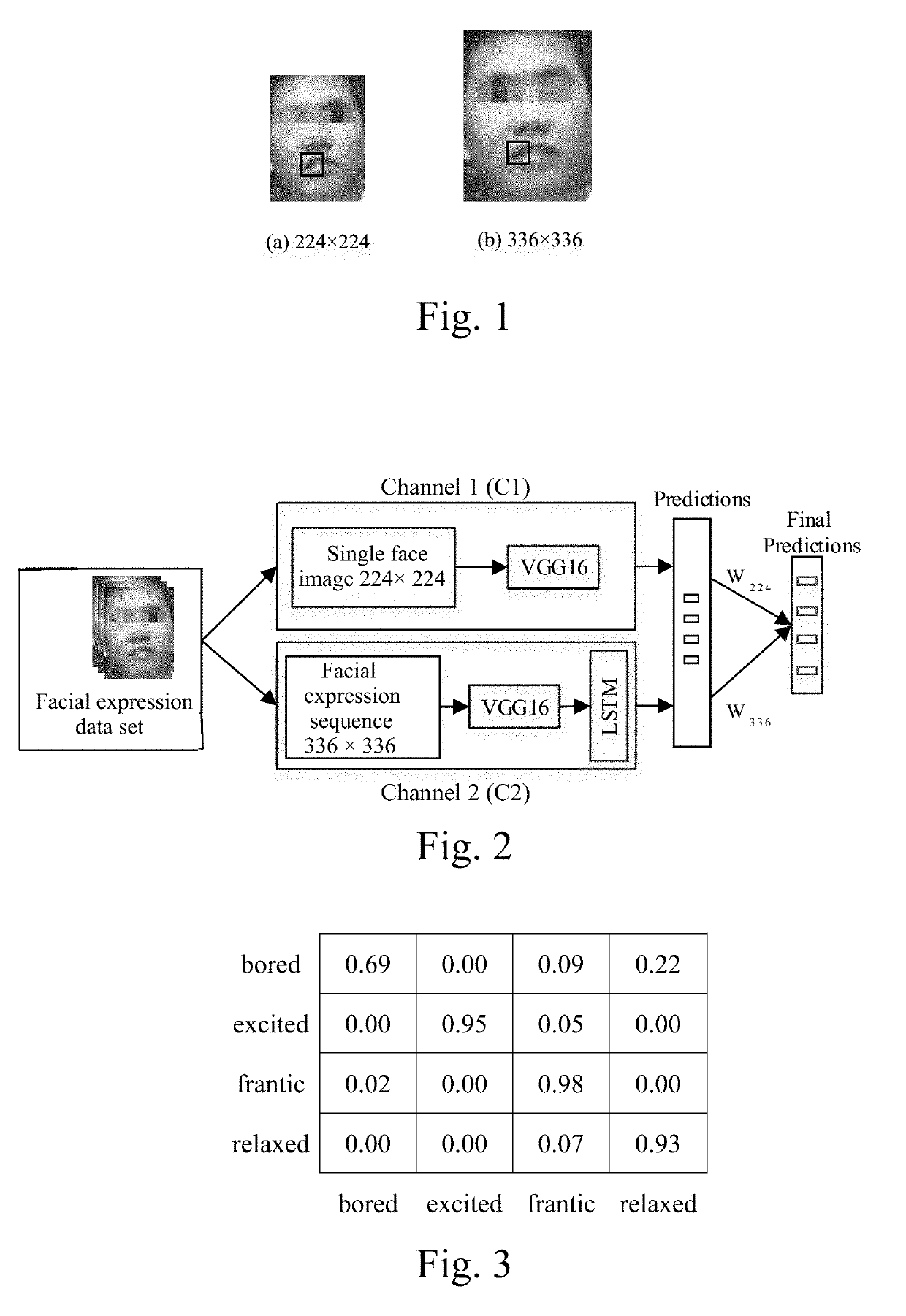

Face emotion recognition method based on dual-stream convolutional neural network

Active | US20190311188A1 | Low accuracy | Promote results | Neural architectures | Neural learning methods | Manual extraction | Channel network

A face emotion recognition method based on a dual-stream convolutional neural network applies a multi-scale facial expression recognition network to single-frame face images and face sequences for learning and classification. The method includes constructing a multi-scale facial expression recognition network comprising a channel network with a resolution of 224×224 and a channel network with a resolution of 336×336, extracting facial expression features at the different resolutions through the recognition network, combining the static features of images with the dynamic features of expression sequences for training and learning, fusing the two channel models, and testing to obtain the classification result for facial expressions. The invention makes full use of deep learning, avoids the feature deviations and long processing time associated with manual feature extraction, and is therefore more adaptable. It also improves the accuracy and productivity of expression recognition.

Owner:SICHUAN UNIV
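The abstract above mentions fusing the two channel models without saying how. One common option is late fusion, a weighted average of the class probabilities produced by each channel. The sketch below assumes that choice; `fuse_predictions`, `predict_label`, the label set and the probability values are hypothetical and are not the patented fusion scheme.

```python
from typing import List, Sequence


def fuse_predictions(p_a: Sequence[float], p_b: Sequence[float],
                     weight_a: float = 0.5) -> List[float]:
    """Weighted average of two per-class probability vectors (late fusion)."""
    if len(p_a) != len(p_b):
        raise ValueError("both channels must score the same set of classes")
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must lie in [0, 1]")
    return [weight_a * a + (1.0 - weight_a) * b for a, b in zip(p_a, p_b)]


def predict_label(probs: Sequence[float], labels: Sequence[str]) -> str:
    """Return the label with the highest fused probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best]


# Illustrative outputs of the two channels (assumed values).
labels = ["happy", "neutral", "sad"]
channel_224 = [0.6, 0.3, 0.1]
channel_336 = [0.2, 0.7, 0.1]

fused = fuse_predictions(channel_224, channel_336)
print(predict_label(fused, labels))
```

Averaging keeps the fused vector a valid probability distribution as long as both inputs are, which makes the weight easy to tune on validation data.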

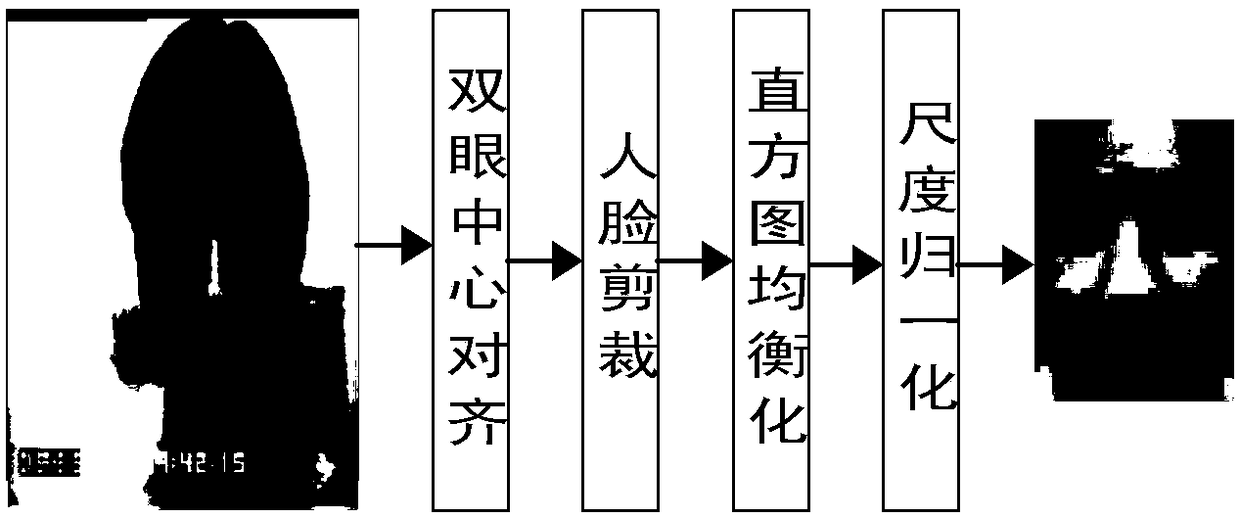

Improved CNN-based facial expression recognition method

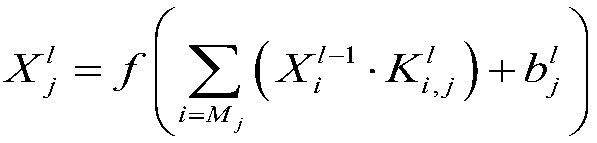

Inactive | CN108108677A | Reduce training parameters | Small amount of calculation | Image enhancement | Image analysis | Face detection | Svm classifier

The invention provides an improved CNN-based facial expression recognition method and relates to the field of image classification and recognition. The method comprises the following steps: s1, acquiring a facial expression image from a video stream using the JDA algorithm, which integrates face detection and alignment; s2, for the facial expression image obtained in step s1, correcting the face pose found in a real environment, removing background information irrelevant to the expression, and applying scale normalization; s3, training the convolutional neural network model to obtain and store the optimal network parameters before extracting features from the normalized facial expression image obtained in step s2; s4, loading a CNN model with the optimal network parameters obtained in step s3 and performing feature extraction on the normalized facial expression image obtained in step s2; s5, classifying and recognizing the facial expression features obtained in step s4 with an SVM classifier. The method has high robustness and good generalization performance.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

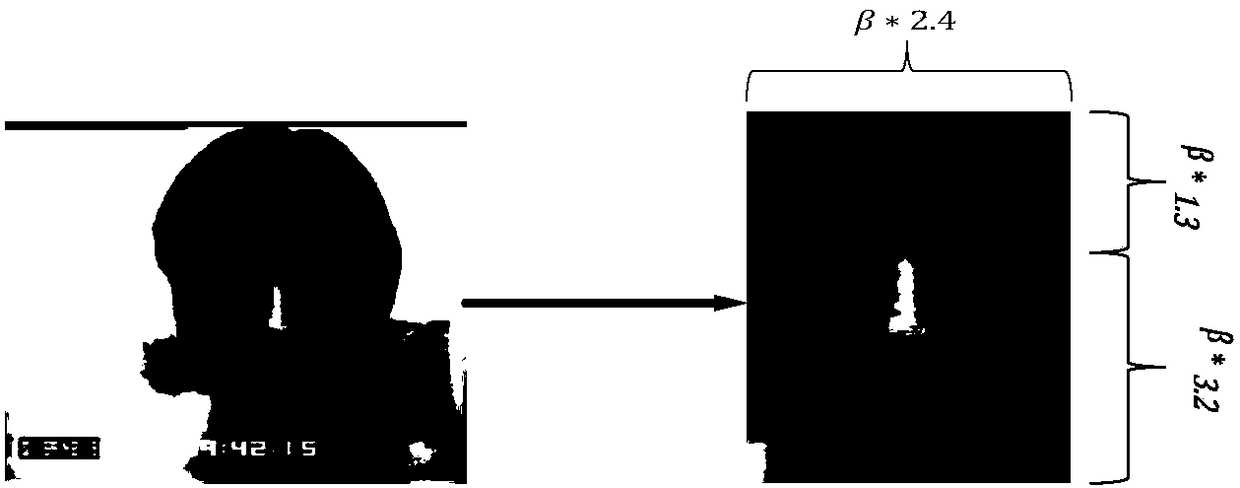

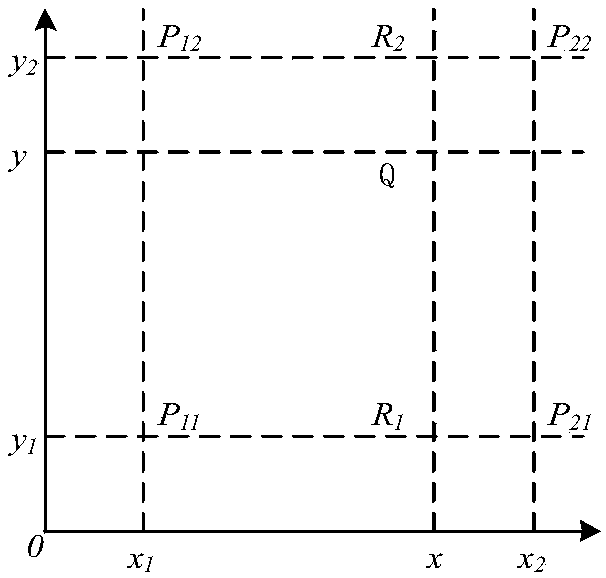

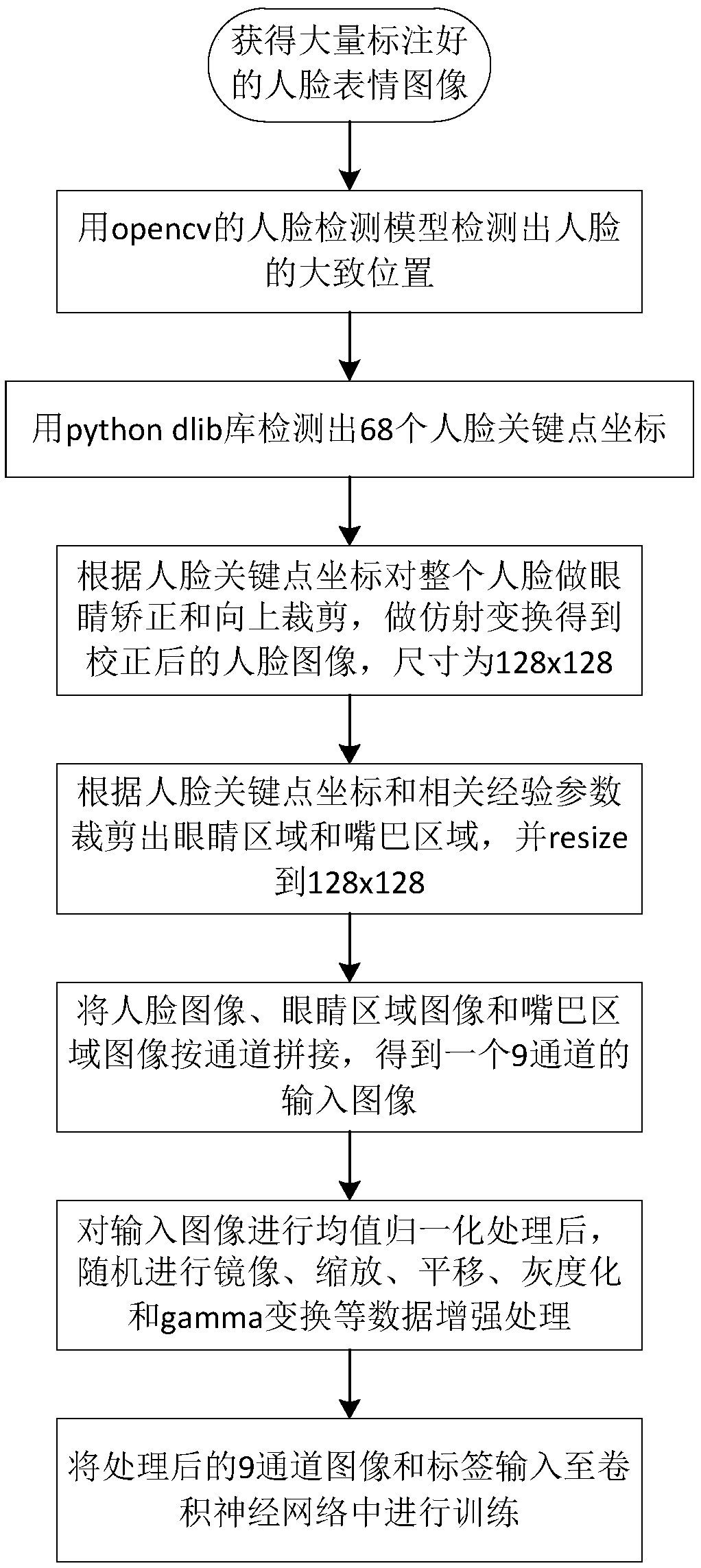

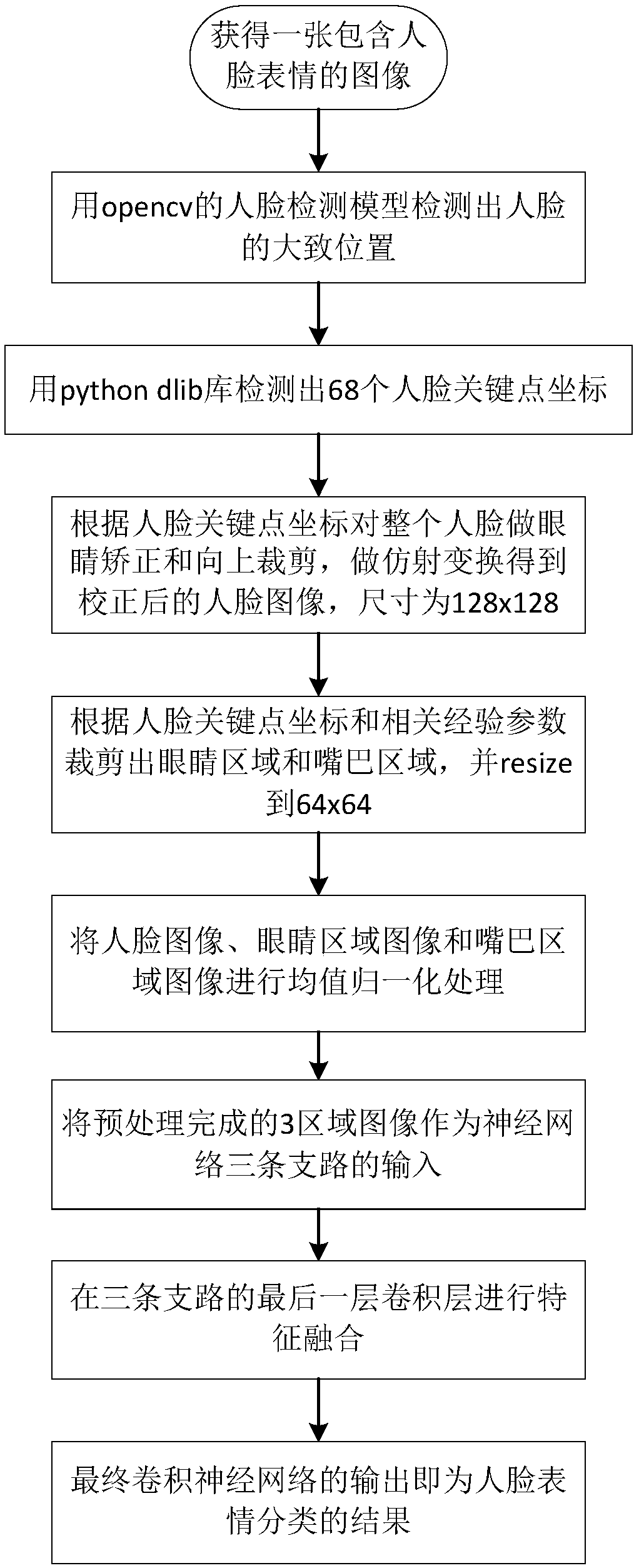

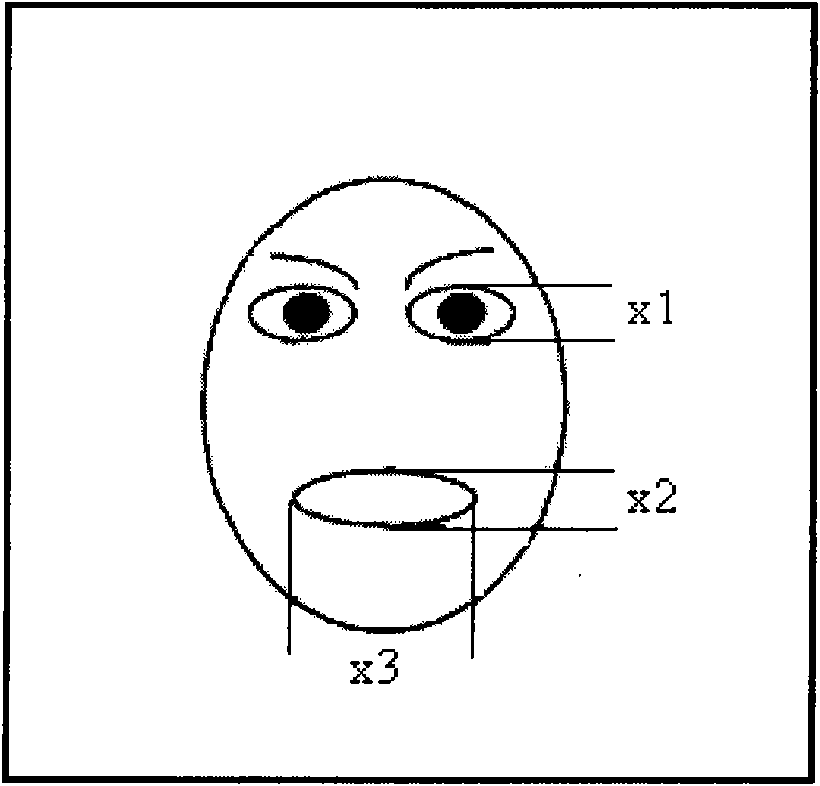

A face multi-area fusion expression recognition method based on deep learning

Active | CN109344693A | Improve robustness | Improve stability | Neural architectures | Acquiring/recognising facial features | Semantic feature | Computer science

The invention discloses a face multi-area fusion expression recognition method based on deep learning, which comprises the following steps: detecting the face position with a detection model; obtaining the key point coordinates with a key point model; aligning the eyes according to the eye key points, then aligning the face according to the key point coordinates of the whole face, and cropping the face region by affine transformation; cropping the eye and mouth regions of the image at a certain proportion; dividing the convolutional neural network into one backbone network and two branch networks; fusing features at the last convolutional layer; and finally obtaining the expression classification result from the classifier. The method uses prior information: besides the whole face, the eye and mouth regions are also fed to the network, so that through model fusion the network learns both the global semantic features and the local features of facial expressions. This reduces the difficulty of facial expression recognition and the influence of external noise, and offers strong robustness, high accuracy and low algorithmic complexity.

Owner:SOUTH CHINA UNIV OF TECH
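The eye-alignment step in the abstract above can be sketched as a rotation that makes the line between the two eye key points horizontal. This is only one part of a full affine alignment (no scaling or cropping), and the function names and coordinates below are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def eye_angle(left_eye: Point, right_eye: Point) -> float:
    """Angle (radians) of the line from the left eye to the right eye."""
    return math.atan2(right_eye[1] - left_eye[1], right_eye[0] - left_eye[0])


def rotate_about(point: Point, center: Point, angle: float) -> Point:
    """Rotate `point` around `center` by `angle` radians."""
    dx, dy = point[0] - center[0], point[1] - center[1]
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    return (center[0] + cos_a * dx - sin_a * dy,
            center[1] + sin_a * dx + cos_a * dy)


def align_eyes(left_eye: Point, right_eye: Point) -> Tuple[Point, Point]:
    """Rotate both eyes about their midpoint so that they share the same y."""
    center = ((left_eye[0] + right_eye[0]) / 2.0,
              (left_eye[1] + right_eye[1]) / 2.0)
    angle = -eye_angle(left_eye, right_eye)  # undo the measured tilt
    return (rotate_about(left_eye, center, angle),
            rotate_about(right_eye, center, angle))


# Assumed key point coordinates in pixels.
aligned_left, aligned_right = align_eyes((30.0, 40.0), (70.0, 60.0))
print(aligned_left, aligned_right)
```

In practice the same rotation would be applied to the whole image, typically combined with scaling and translation into a single affine matrix.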

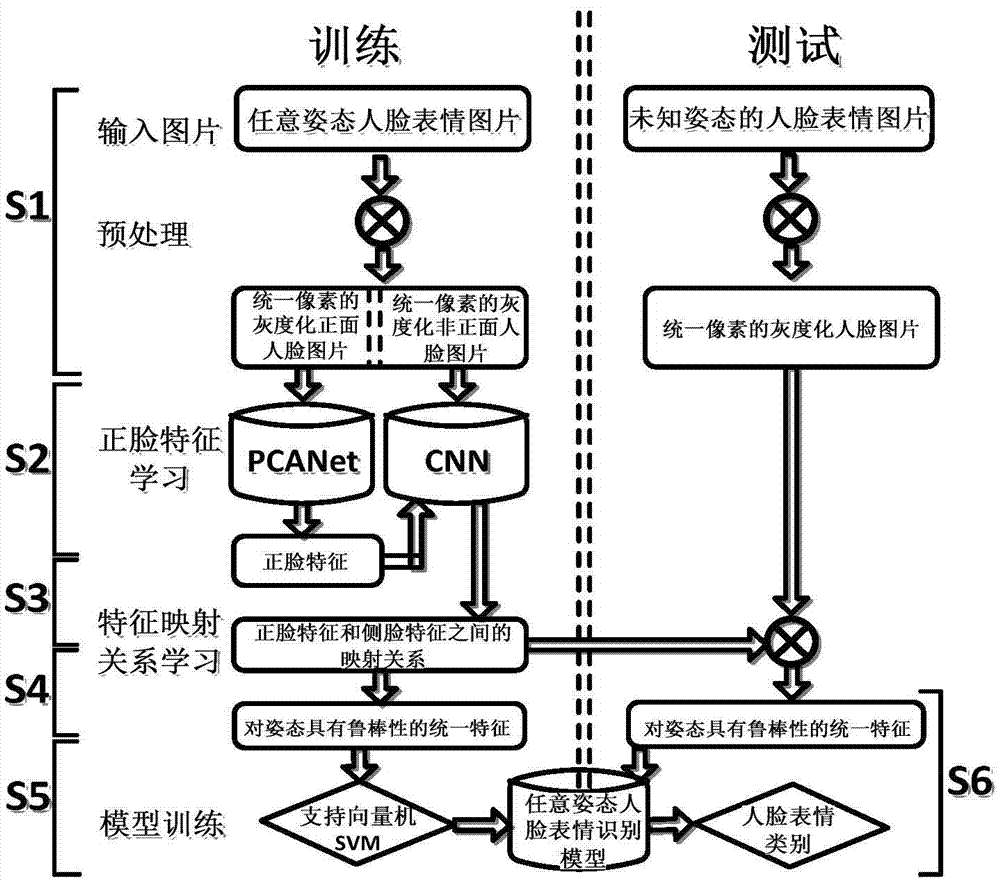

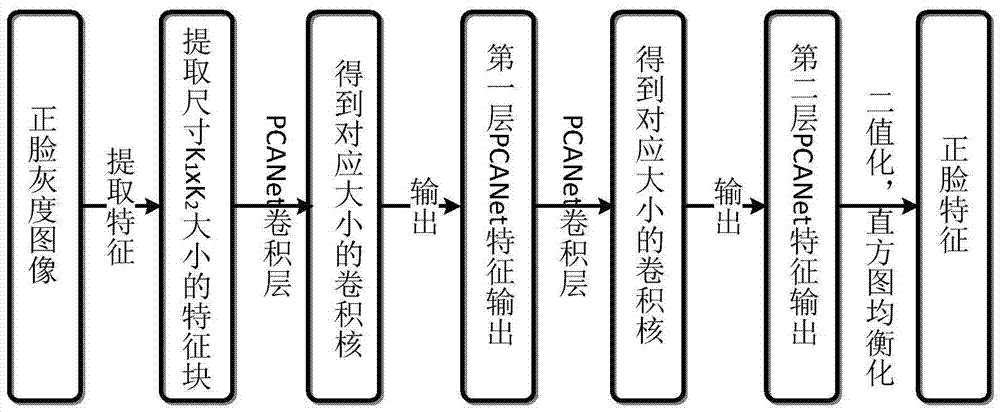

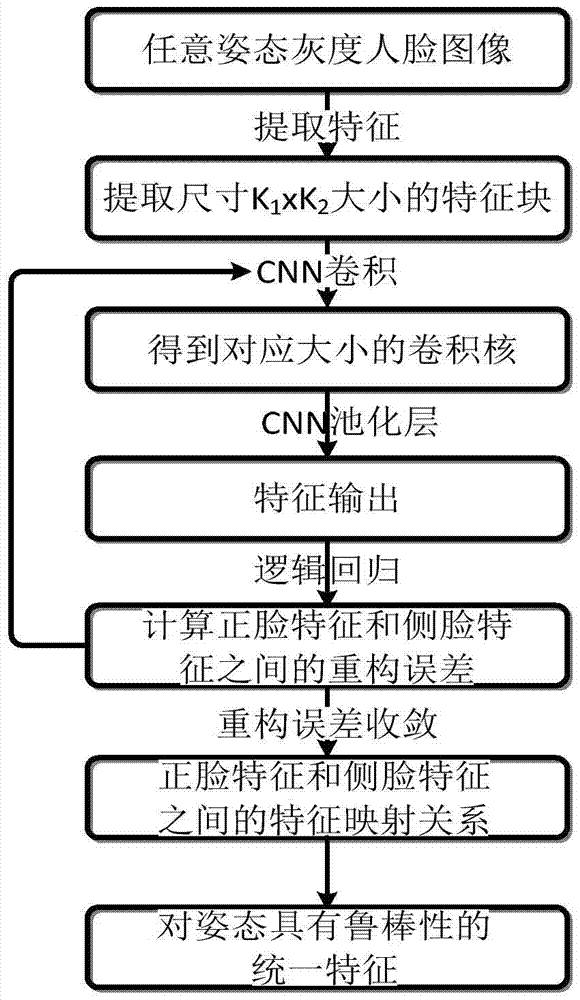

PCANet-CNN-based arbitrary attitude facial expression recognition method

Active | CN105447473A | Improve efficiency | Easy to identify | Acquiring/recognising facial features | Gray level | Facial characteristic

The invention discloses a PCANet-CNN-based facial expression recognition method for arbitrary poses (attitudes). The method comprises the following steps: first, the original images are preprocessed to obtain gray-level face images of uniform size, comprising frontal face images and side face images; the frontal face images are input into the unsupervised feature learning model PCANet to learn the features corresponding to the frontal face images; the side face images are input into the supervised feature learning model CNN, which is trained using the frontal face features obtained through unsupervised feature learning as labels, yielding a mapping between frontal face features and side face features; through this mapping, unified frontal face features are obtained for face images at arbitrary poses, and these features are finally fed into an SVM for training to obtain a unified recognition model for arbitrary poses. The method addresses the low recognition rate that traditional multi-pose facial expression recognition suffers from when modeling each pose separately and from factors such as pose variation, and can effectively improve the accuracy of multi-pose facial expression recognition.

Owner:JIANGSU UNIV

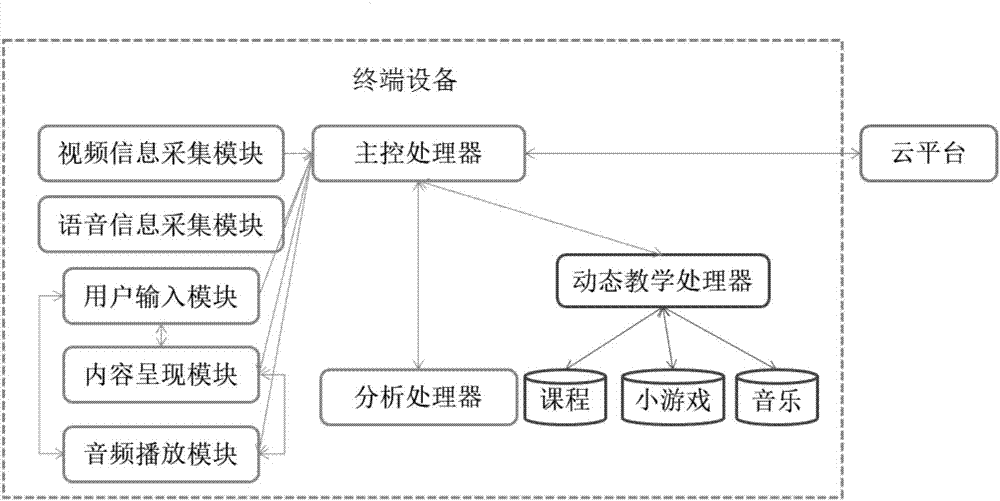

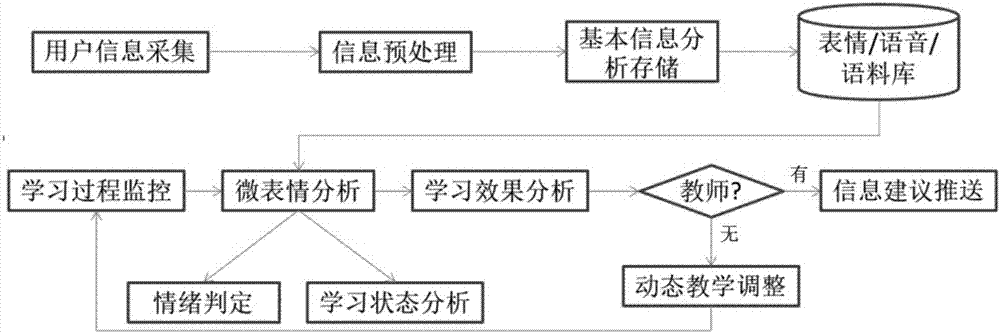

Teaching system based on internet, facial expression recognition and speech recognition and realizing method of teaching system

Active | CN107203953A | Mobile | Entertaining | Data processing applications | Speech recognition | Data information | The Internet

The invention discloses a teaching system based on the internet, facial expression recognition and speech recognition, and a method for realizing the teaching system. The realizing method comprises the following steps: S1, playing the contents of teaching courses on a first terminal; S2, acquiring the user's video data, speech data and operations during playback; S3, transmitting the information and the user operations to a main control processor; S4, extracting, by the main control processor, the facial features and pronunciation features of the user and passing them to an analysis processor; S5, comparing, by the analysis processor, the facial features and the pronunciation features with standard templates respectively; S6, dynamically adjusting, by the main control processor, the played course contents and/or teaching procedures according to the user's current operation and the feedback of the analysis processor, or transmitting the comparison result to a second terminal through a cloud platform in real time. The teaching system is teaching software characterized by mobility, entertainment and sociability, and gives students a chance to study independently outside class at any time and place; by means of an online teaching mode for assisting real persons, the traditional mode of teaching Chinese as a foreign language is improved.

Owner:深圳极速汉语网络教育有限公司

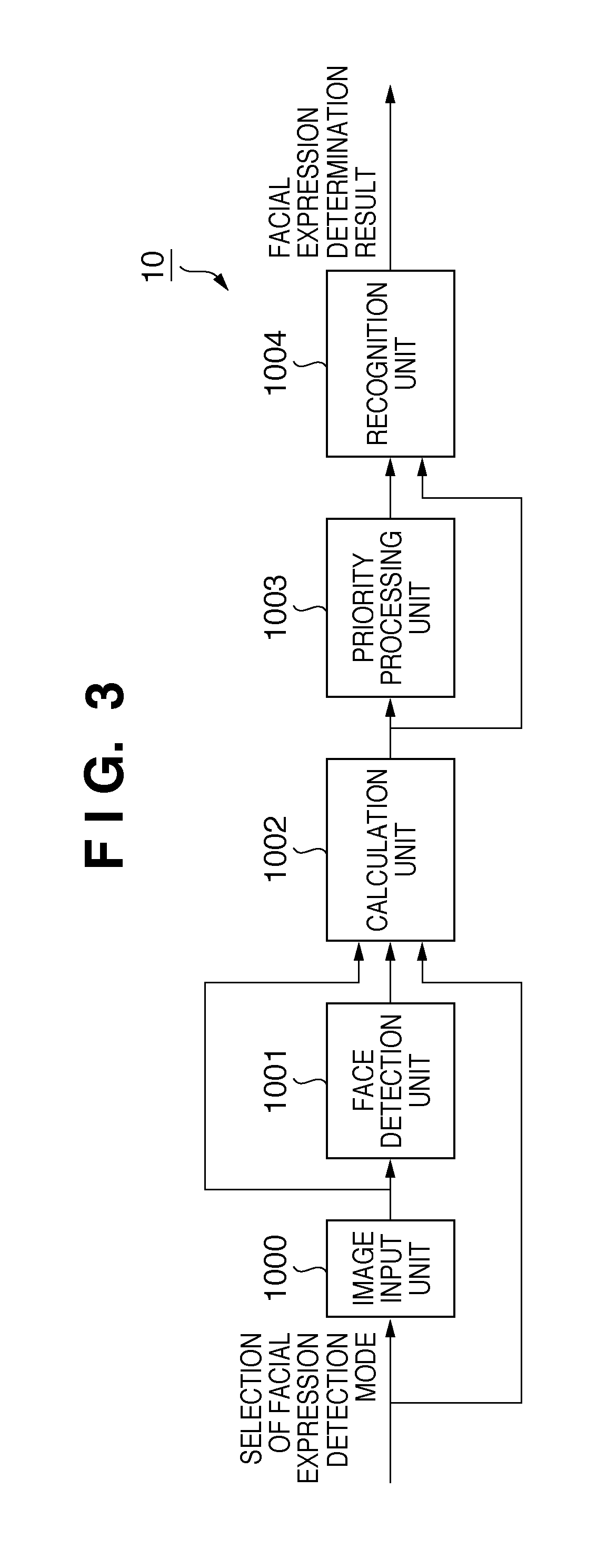

Facial expression recognition apparatus, image sensing apparatus, facial expression recognition method, and computer-readable storage medium

Active | US20110032378A1 | Accurate identification | Television system details | Color television details | Pattern recognition | Image sensing

A facial expression recognition apparatus (10) detects a face image of a person from an input image, calculates a facial expression evaluation value corresponding to each facial expression from the detected face image, updates, based on the face image, the relationship between the calculated facial expression evaluation value and a threshold for determining a facial expression set for the facial expression evaluation value, and determines the facial expression of the face image based on the updated relationship between the facial expression evaluation value and the threshold for determining a facial expression.

Owner:CANON KK

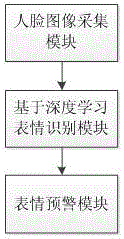

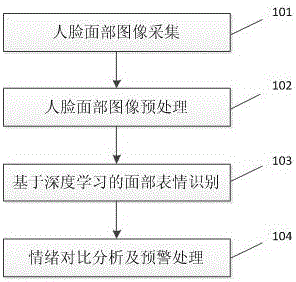

Deep learning-based emotion recognition method and system

Inactive | CN106650621A | Stable working condition | Character and pattern recognition | Learning based | Pattern recognition

The invention provides a deep learning-based emotion recognition method and system. The system includes a face image acquisition module, a deep learning-based facial expression recognition module and a facial expression early warning module. The face images of employees are acquired each time the employees punch in; their emotions are analyzed with a deep learning-based facial expression analysis algorithm and compared with their historical emotions; when the emotions are abnormal, the system sends alarm information to the relevant personnel. Because the deep learning algorithm is used to analyze the emotions of employees, deep-level humanistic care can be provided for them.

Owner:GUANGDONG POLYTECHNIC NORMAL UNIV
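The abstract above does not define what counts as an abnormal emotion. As a minimal sketch, assume each punch-in yields a single numeric mood score and that a reading is abnormal when it deviates from the employee's history by more than a chosen number of standard deviations. The scoring scale, the function name `is_abnormal` and the threshold are all assumptions made for illustration.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_abnormal(history: Sequence[float], current: float,
                z_threshold: float = 2.0) -> bool:
    """Flag `current` if it lies far from the historical mood scores.

    Uses a z-score against the employee's own history; with a perfectly
    constant history, any different value is treated as abnormal.
    """
    if not history:
        return False  # nothing to compare against yet
    centre = mean(history)
    spread = pstdev(history)
    if spread == 0.0:
        return current != centre
    return abs(current - centre) / spread > z_threshold


# Assumed mood scores in [0, 1] from earlier punch-ins.
past_scores = [0.60, 0.70, 0.65, 0.70, 0.60]
print(is_abnormal(past_scores, 0.10))
print(is_abnormal(past_scores, 0.66))
```

A per-employee baseline of this kind avoids flagging people whose neutral expression simply differs from the group average.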

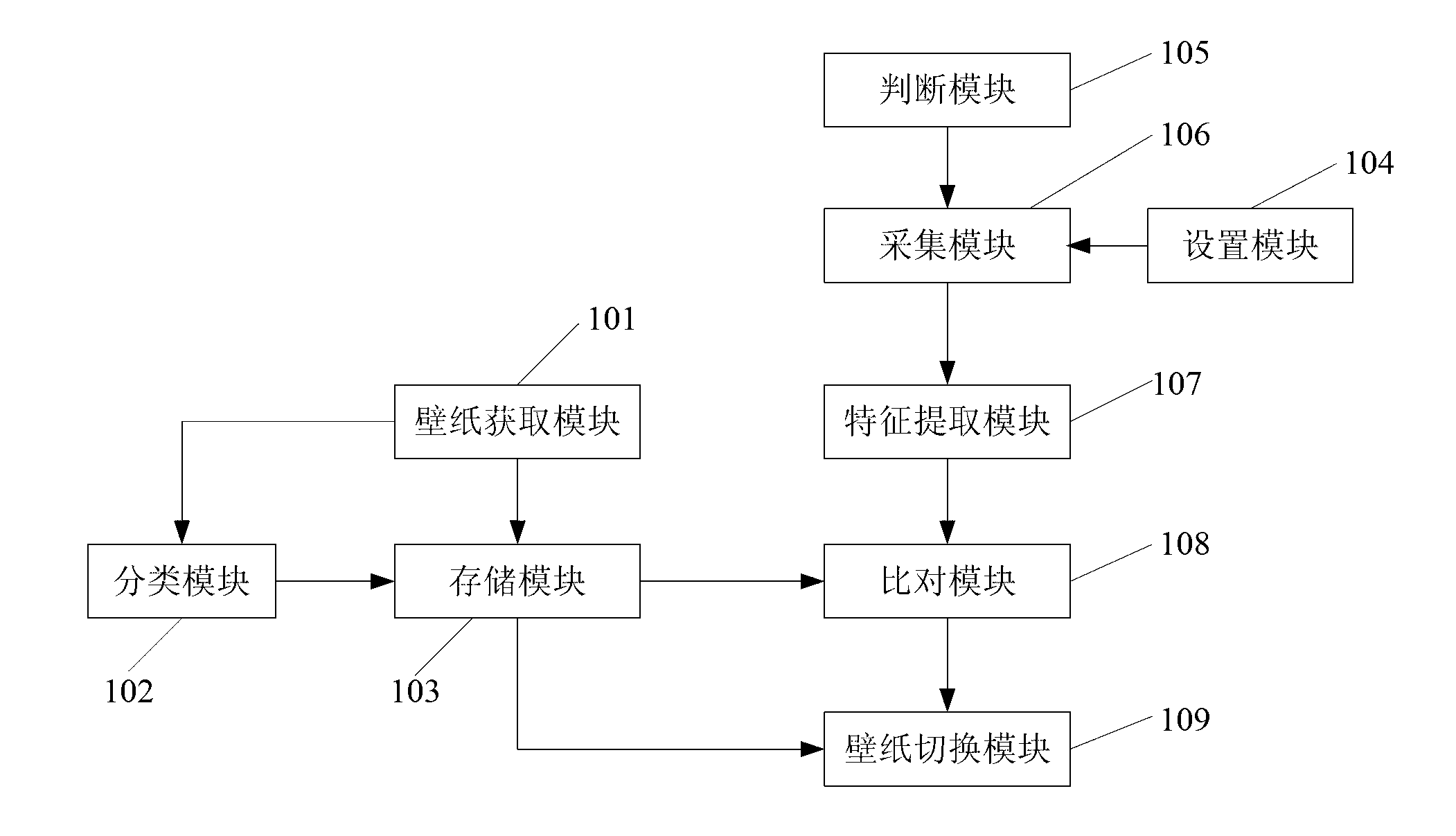

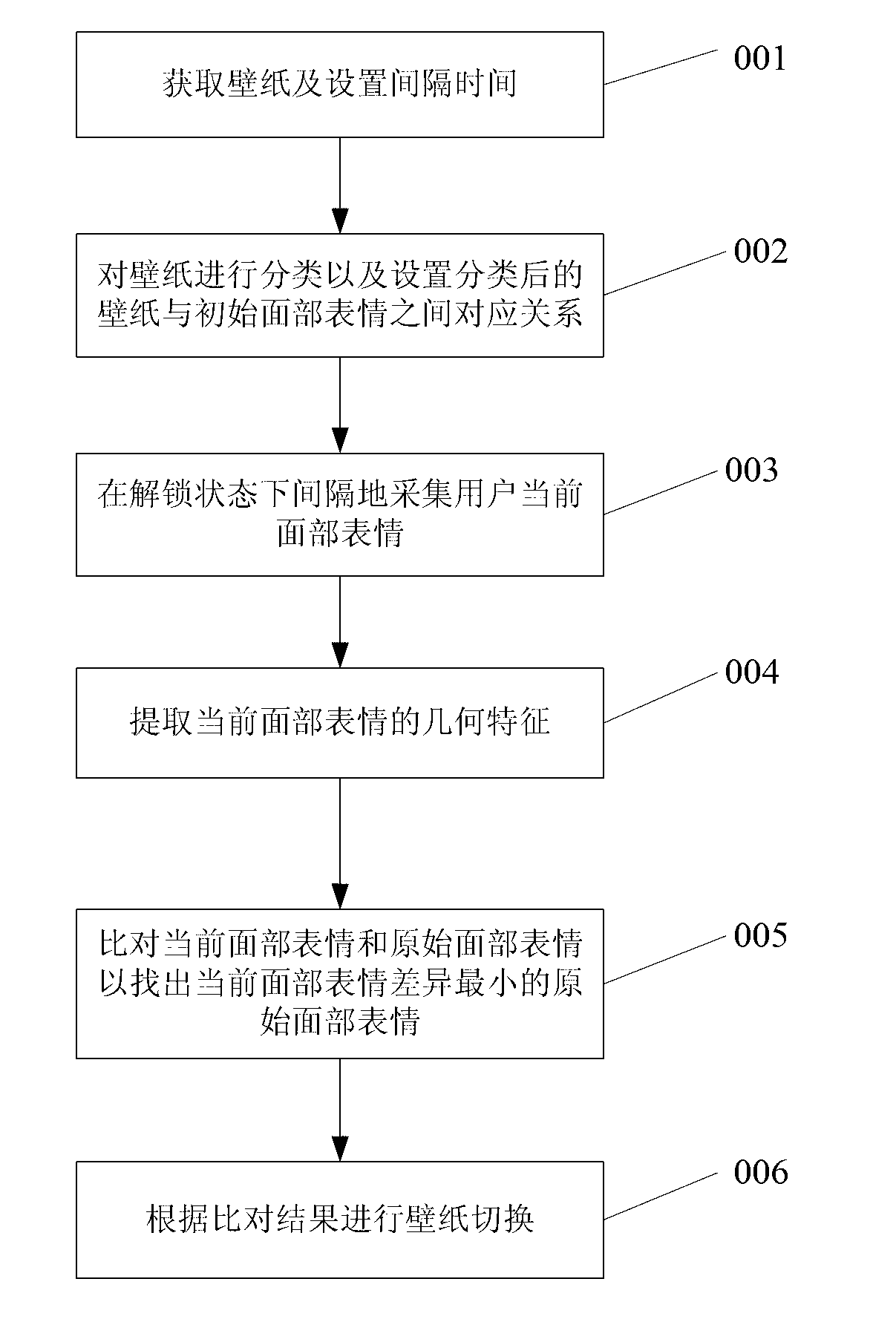

Mobile terminal and method for automatically switching wall paper based on facial expression recognition

Inactive | CN103309449A | Input/output for user-computer interaction | Substation equipment | Switched current | Computer module

The invention provides a mobile terminal that automatically switches wallpaper based on facial expression recognition. The mobile terminal comprises a storage module, a collection module, a comparison module and a wallpaper switching module. The storage module stores the wallpapers, the initial facial expressions and, after classification, the correspondence between wallpapers and initial facial expressions; the collection module collects the current facial expression; the comparison module is connected with the storage module and the collection module and compares the current facial expression with the initial facial expressions; the wallpaper switching module is connected with the storage module and the comparison module and switches the wallpaper according to the comparison result. Compared with the prior art, the mobile terminal can detect and recognize the user's facial expression and automatically switch the current wallpaper to one that matches the user's current mood, so as to improve that mood. The invention also provides a method for automatically switching wallpaper based on facial expression recognition.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

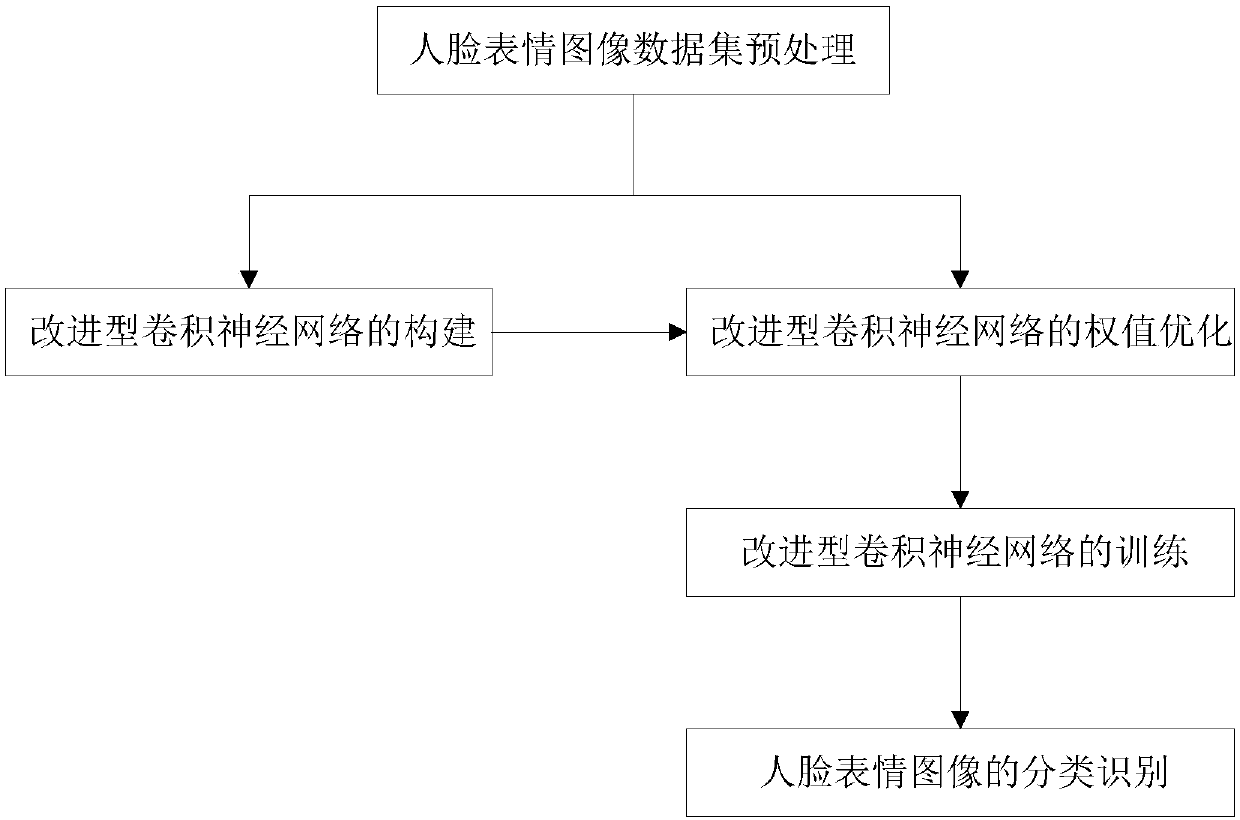

Facial expression recognition method based on convolutional neural network

Inactive | CN108304826A | Enhancing Non-Linear Expression Capabilities | High precision | Neural architectures | Acquiring/recognising facial features | Data set | Nerve network

The invention discloses a facial expression recognition method based on a convolutional neural network. The method includes preprocessing of the facial expression image data set, construction of an improved convolutional neural network, weight optimization and training, and classification of facial expressions. The method introduces continuous convolution into a conventional convolutional neural network to obtain the improved network, which uses small-scale convolution kernels for feature extraction so that the extracted facial expression features are more precise; the two continuous convolution layers also enhance the nonlinear expression capability of the network. In addition, the convolutional neural network and an SOM neural network are cascaded to form a pretraining network for pre-learning, and the neurons with the best learning result are used to initialize the improved convolutional neural network. The method can effectively improve the precision of facial expression image recognition.

Owner:HOHAI UNIV

Face recognition method and system based on large-scale face database

Active | CN104978550A | High speed | Improve performance | Character and pattern recognition | Feature vector | Face detection

The invention discloses a face recognition method and system based on a large-scale face database. The recognition method comprises the following steps: obtaining an image to be recognized; image preprocessing: carrying out illumination compensation, graying, filtering, denoising and normalization on the image to be detected to obtain a high-quality gray-level image; face detection: detecting and locating a face in the scene image and separating it from the background; feature extraction and representation: describing and modeling the face pattern and representing the face by a feature vector; face matching: computing the similarity between the feature vector of the face image to be detected and the training samples to obtain discrimination information; and outputting a result. The method and system improve the speed and performance of face recognition on a large-scale database and can also be conveniently applied to other recognition tasks, including facial expression recognition and face tracking.

Owner:SHANGHAI JUNYU DIGITAL TECH

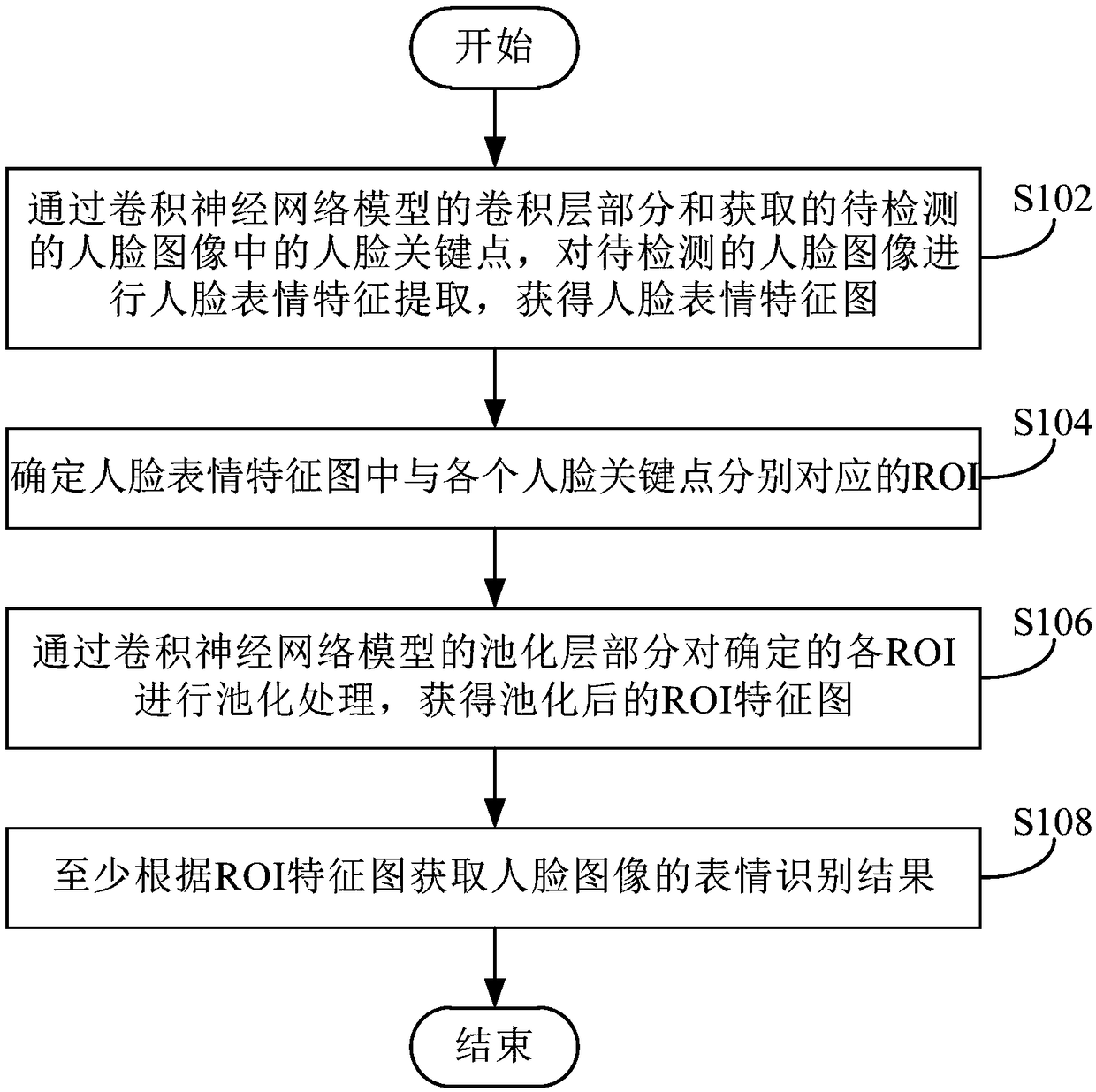

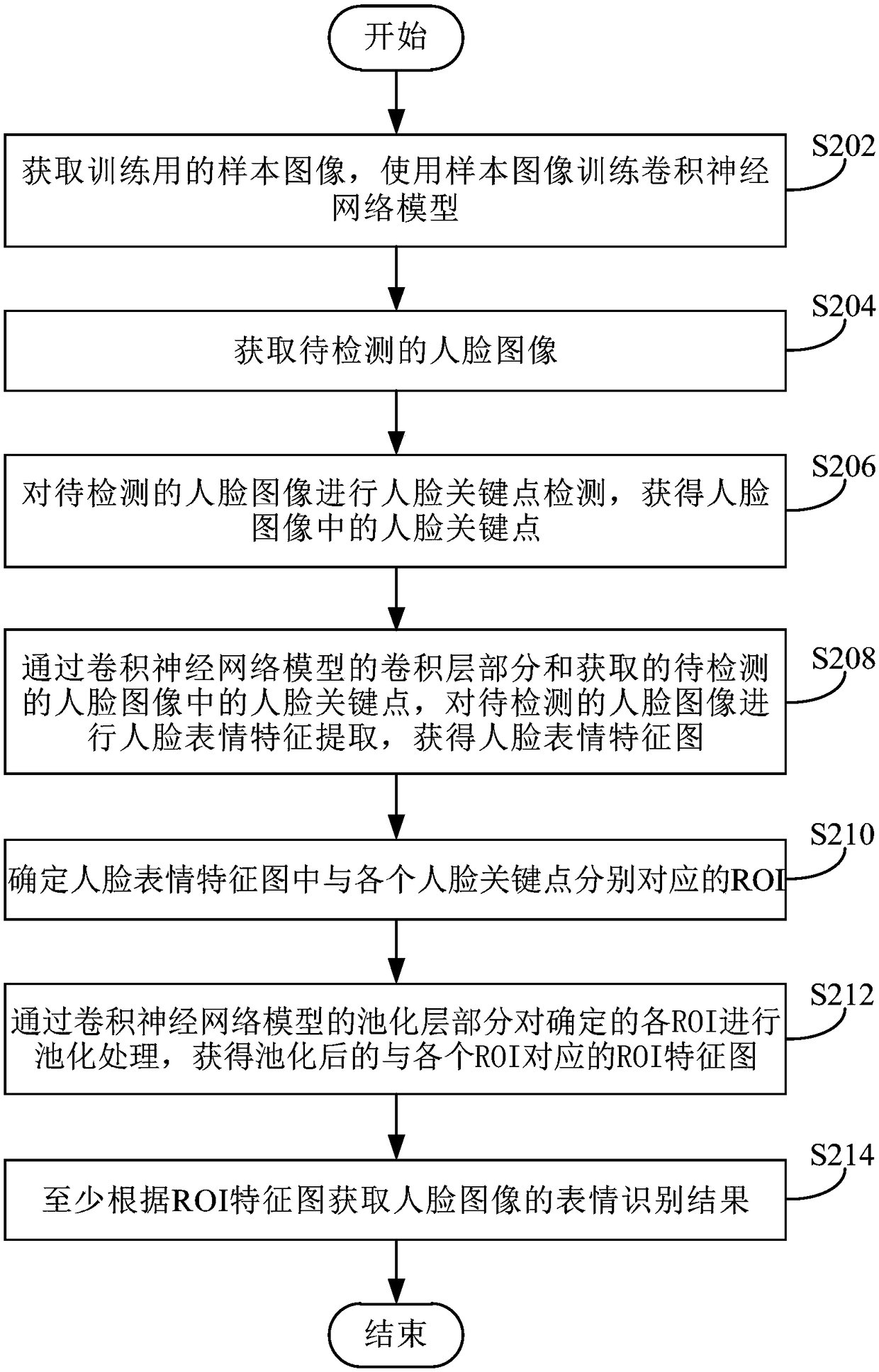

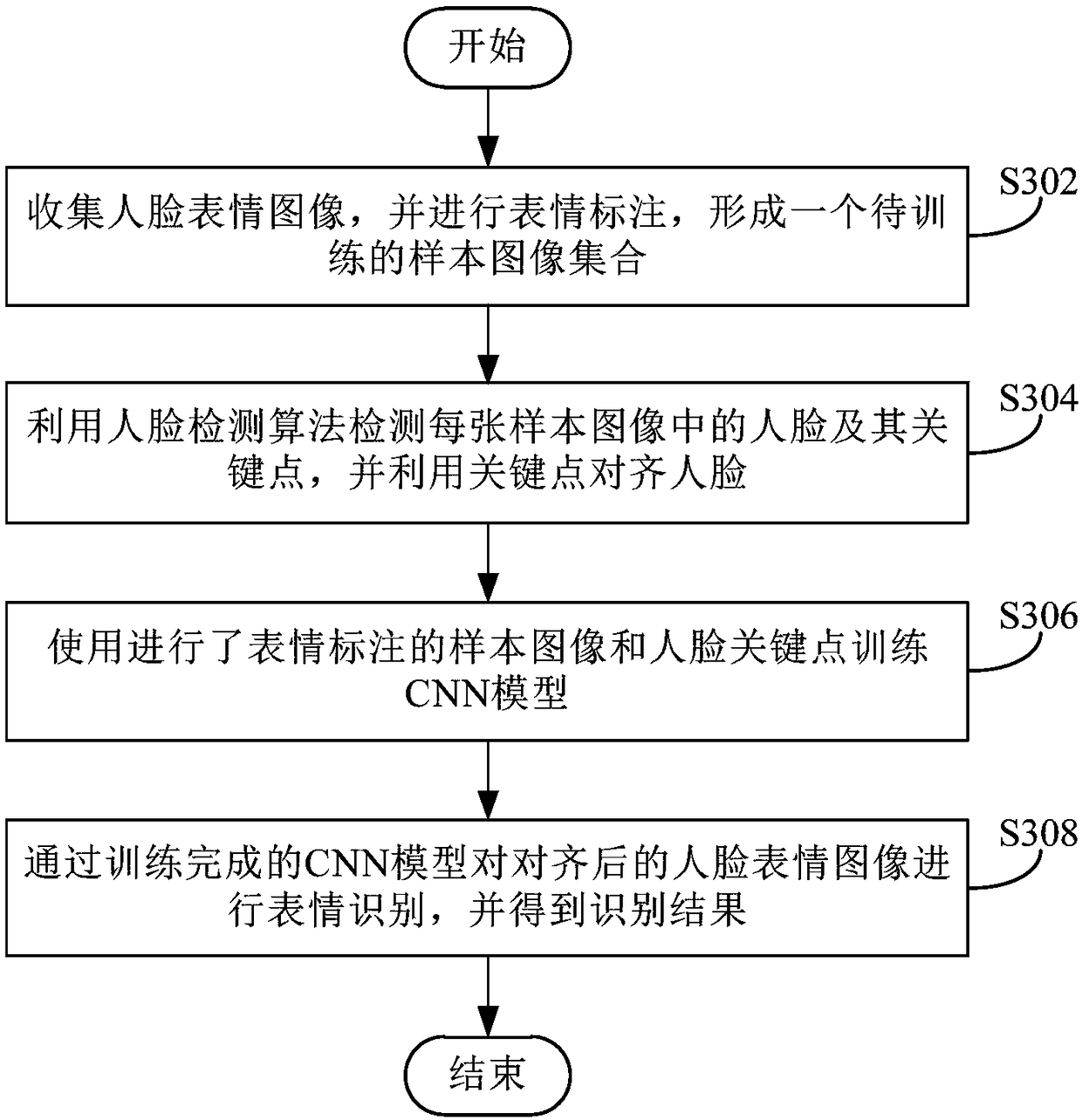

Facial expression recognition method, convolutional neural network model training method, devices and electronic apparatus

Inactive | CN108229268A | Accurate identification | Efficient capture | Neural learning methods | Acquiring/recognising facial features | Minutiae | Region of interest

The embodiments of the present invention provide a facial expression recognition method, a convolutional neural network model training method, a facial expression recognition device, a convolutional neural network model training device and an electronic apparatus. The facial expression recognition method includes the following steps: facial expression features are extracted from a face image to be detected by means of the convolutional layer portion of a convolutional neural network model and the acquired face key points in that image, so that a facial expression feature map is obtained; the regions of interest (ROIs) corresponding to the face key points in the facial expression feature map are determined; pooling is performed on the determined ROIs by the pooling layer of the convolutional neural network model, so that a pooled ROI feature map is obtained; and the facial expression recognition result of the face image is obtained at least according to the ROI feature map. With this method, subtle facial expression changes can be effectively captured and differences caused by different face poses can be better handled; the detailed information about changes in multiple regions of the face is fully utilized, so that subtle facial expression changes and faces in different poses can be recognized more accurately.

Owner:SENSETIME GRP LTD
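The ROI pooling step described in the abstract above can be sketched as taking the maximum of a feature map over a small window around each face key point. This simplified version reduces each ROI to a single value, whereas practical ROI pooling layers usually produce a fixed-size grid per region. The function names, window size and feature values are hypothetical.

```python
from typing import List, Sequence, Tuple

FeatureMap = Sequence[Sequence[float]]


def roi_max_pool(feature_map: FeatureMap, center: Tuple[int, int],
                 half_size: int) -> float:
    """Max over a square window around `center` (row, col), clipped to the map."""
    rows, cols = len(feature_map), len(feature_map[0])
    row, col = center
    r0, r1 = max(0, row - half_size), min(rows, row + half_size + 1)
    c0, c1 = max(0, col - half_size), min(cols, col + half_size + 1)
    return max(feature_map[r][c] for r in range(r0, r1) for c in range(c0, c1))


def pool_key_points(feature_map: FeatureMap,
                    key_points: Sequence[Tuple[int, int]],
                    half_size: int = 1) -> List[float]:
    """One pooled value per key point, in the order the key points are given."""
    return [roi_max_pool(feature_map, kp, half_size) for kp in key_points]


# Toy 5x5 feature map whose value at (r, c) is r * 5 + c.
toy_map = [[float(r * 5 + c) for c in range(5)] for r in range(5)]
# Assumed key point locations (row, col), e.g. an eye corner and a mouth corner.
pooled = pool_key_points(toy_map, [(2, 2), (0, 0)])
print(pooled)
```

Pooling around key points rather than over a fixed grid lets the features follow the eyes and mouth as the face pose changes.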

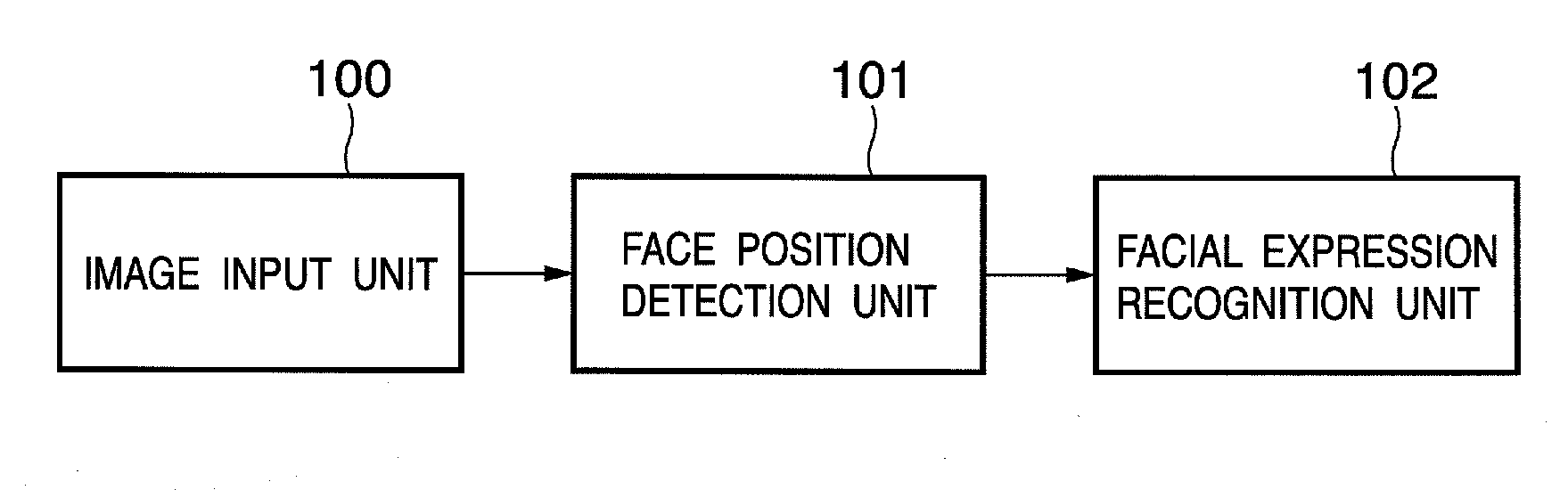

Information processing apparatus and control method therefor

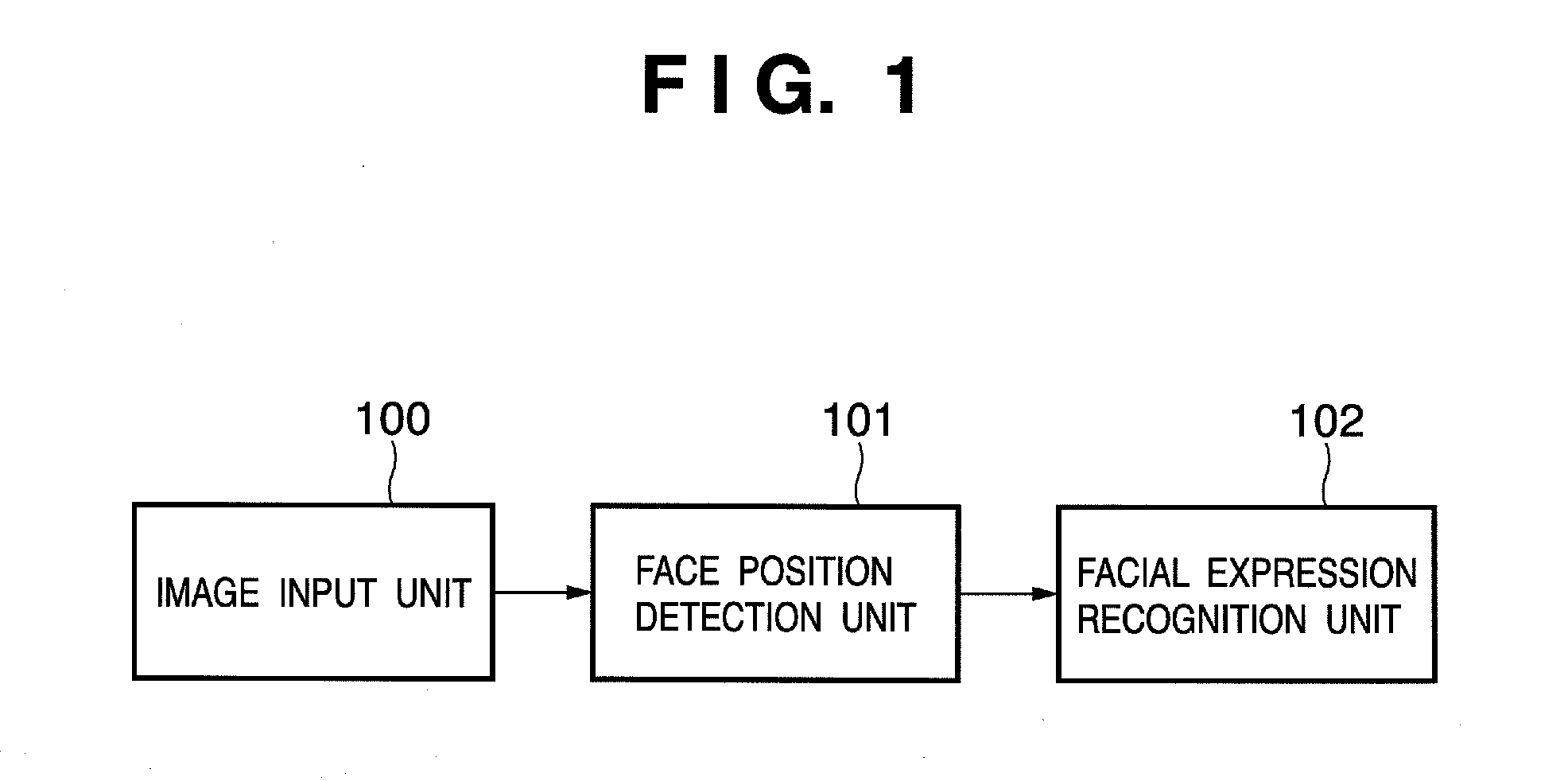

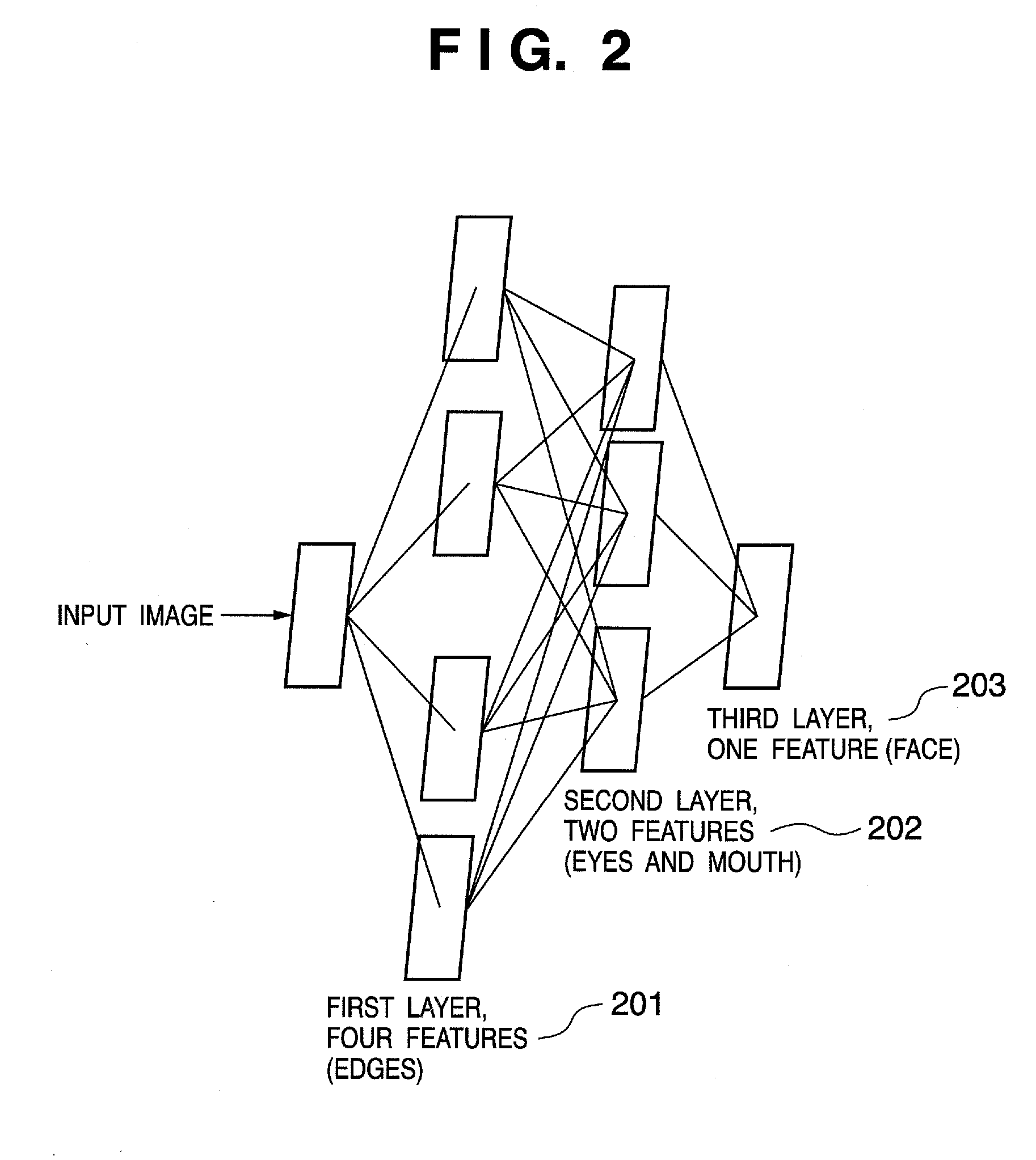

Inactive | US20070122036A1 | Accurate identification | Acquiring/recognising facial features | Pattern recognition | Information processing

An information processing apparatus includes an image input unit which inputs image data containing a face, a face position detection unit which detects, from the image data, the position of a specific part of the face, and a facial expression recognition unit which detects a feature point of the face from the image data on the basis of the detected position of the specific part and determines facial expression of the face on the basis of the detected feature point. The feature point is detected at a detection accuracy higher than detection of the position of the specific part. Detection of the position of the specific part is robust to a variation in the detection target.

Owner:CANON KK

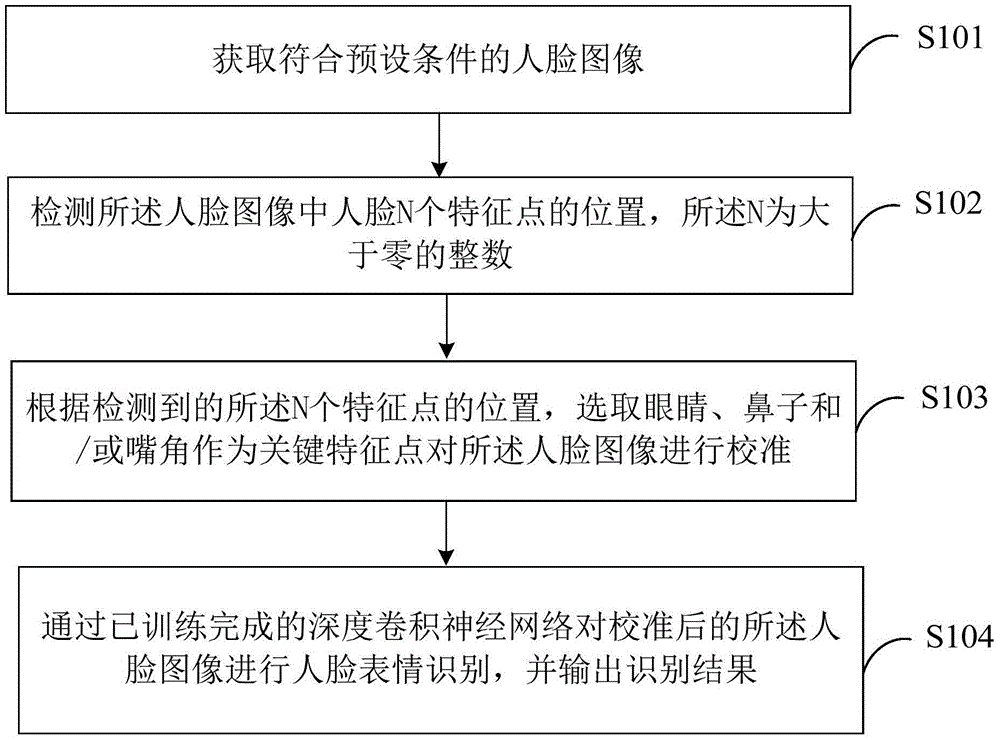

Facial expression recognition method and device

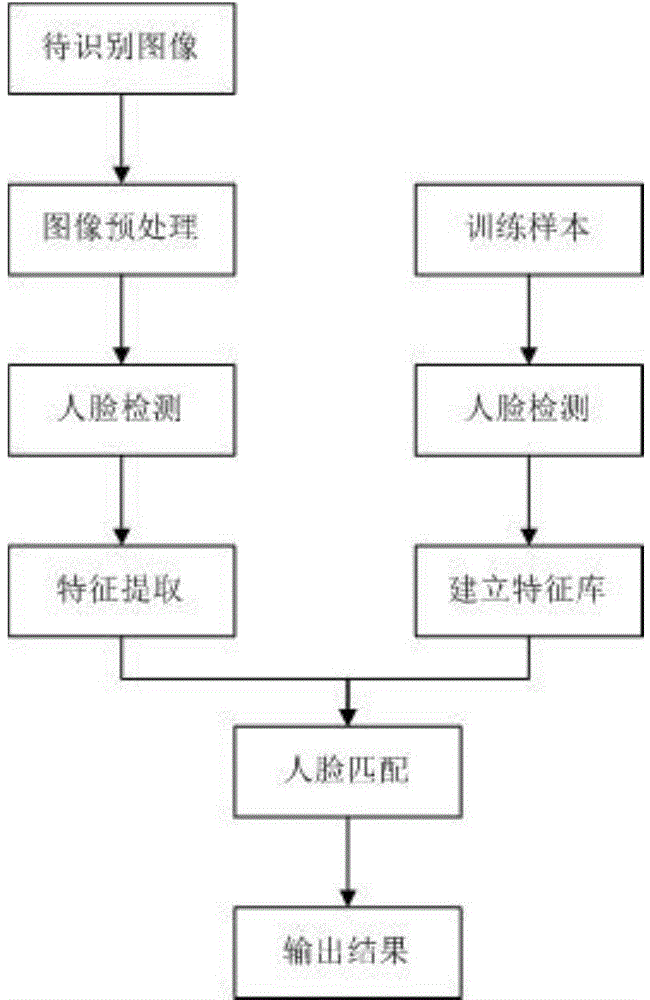

Active | CN105654049A | Improve accuracy | High expression recognition rate | Acquiring/recognising facial features | Image resolution | Nose

The invention belongs to the technical field of machine learning and provides a facial expression recognition method and device. The method includes the following steps: a face image satisfying a preset condition is obtained; the locations of N feature points of the face in the face image are detected, where N is an integer greater than zero; the eyes, the nose and/or the corners of the mouth are selected as key feature points according to the detected locations of the N feature points, so that the face image can be calibrated; facial expression recognition is performed on the calibrated face image by a trained deep convolutional neural network, and a recognition result is output. The method and device can effectively improve the accuracy of facial expression recognition, including for images with large-area occlusion, low-resolution images and images containing side faces.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

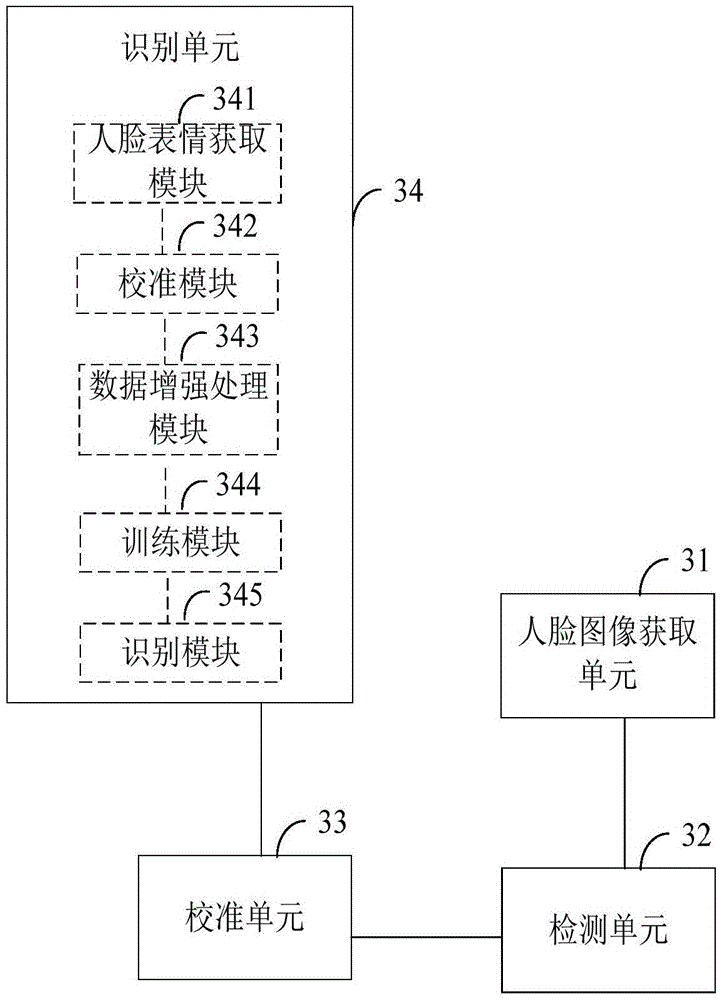

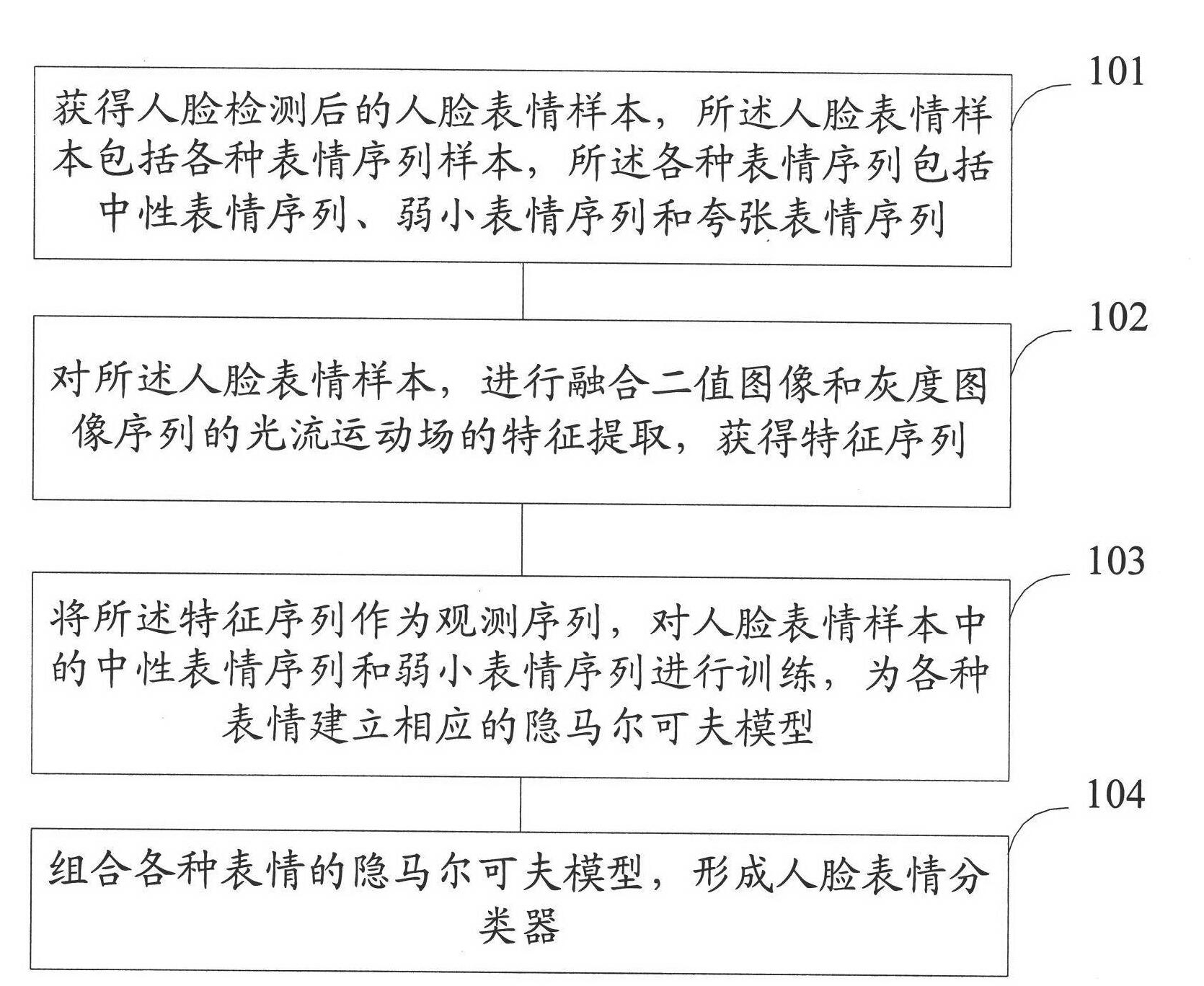

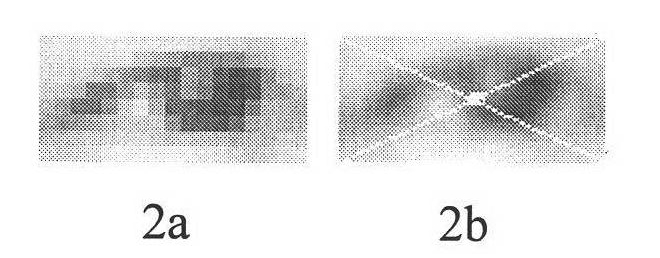

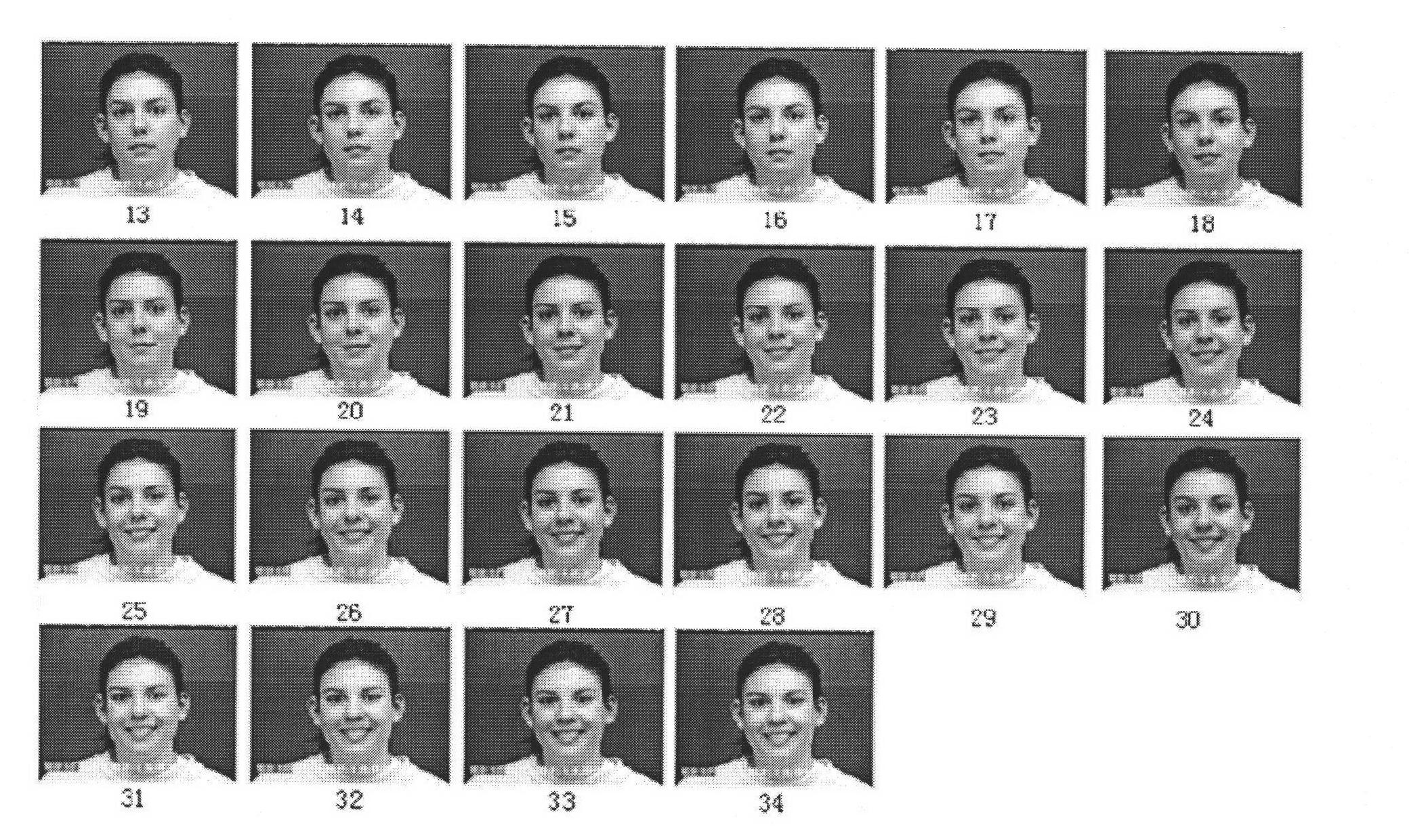

Facial expression recognition method and system, and training method and system of expression classifier

Inactive | CN101877056A | Realize identification | Complete sequence of continuous expressions | Character and pattern recognition | Motion field | Hide markov model

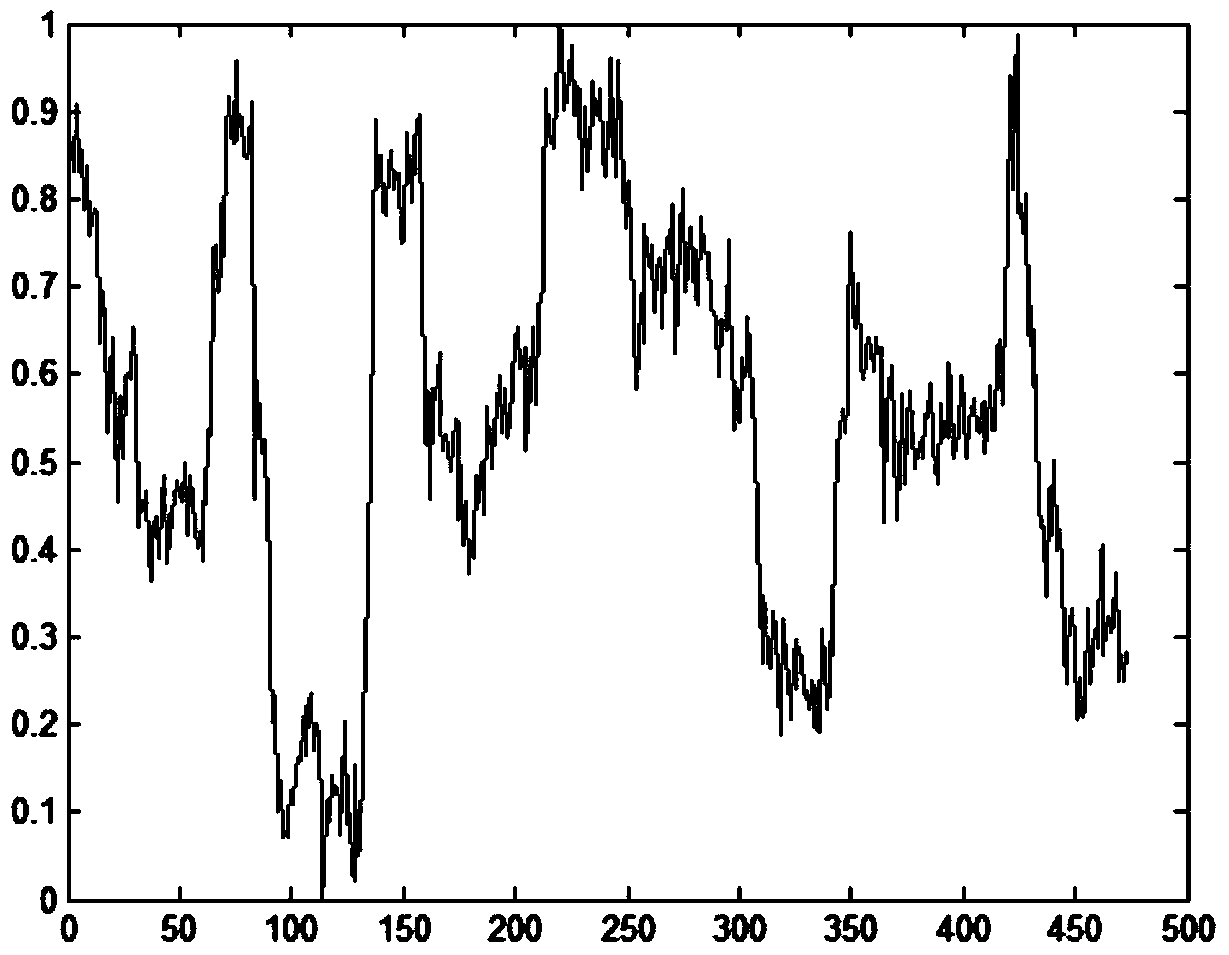

The invention provides a facial expression recognition method and system, and a training method and system for a facial expression classifier. The facial expression recognition method comprises the following steps: extracting features from an optical flow motion field that fuses the binary image sequence and the gray-scale image sequence to obtain a feature sequence; and taking the input feature sequence as an observation sequence and judging its expression category with the facial expression classifier. The facial expression classifier is obtained by combining hidden Markov models of a variety of expressions, and the hidden Markov models are obtained by training on neutral expression sequences and weak expression sequences in facial expression samples. The invention overcomes the discrete, jumpy and unnatural results of existing expression recognition.

Owner:VIMICRO CORP
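The classification step in the abstract above, judging an observation sequence against a set of per-expression hidden Markov models, can be sketched with the forward algorithm: compute the likelihood of the sequence under each model and pick the largest. The sketch assumes discrete observation symbols and hand-written toy parameters; the patent's optical-flow features, training procedure and model sizes are not reproduced here, and all names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

# An HMM is (start probabilities, transition matrix, emission matrix).
HMM = Tuple[List[float], List[List[float]], List[List[float]]]


def forward_likelihood(obs: Sequence[int], model: HMM) -> float:
    """P(obs | model) computed with the forward algorithm."""
    if not obs:
        raise ValueError("observation sequence must not be empty")
    start, trans, emit = model
    n = len(start)
    alpha = [start[s] * emit[s][obs[0]] for s in range(n)]
    for symbol in obs[1:]:
        alpha = [sum(alpha[p] * trans[p][s] for p in range(n)) * emit[s][symbol]
                 for s in range(n)]
    return sum(alpha)


def classify(obs: Sequence[int], models: Dict[str, HMM]) -> str:
    """Return the expression whose HMM gives the sequence the highest likelihood."""
    return max(models, key=lambda name: forward_likelihood(obs, models[name]))


# Toy models: state 0 ~ neutral face, state 1 ~ expression apex;
# symbol 0 ~ little motion, symbol 1 ~ strong motion (assumed encoding).
emission = [[0.9, 0.1], [0.1, 0.9]]
models = {
    "neutral": ([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], emission),
    "smile": ([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], emission),
}

print(classify([0, 0, 1, 1], models))
print(classify([0, 0, 0, 0], models))
```

For long sequences the products underflow, so a practical implementation would scale `alpha` at each step or work in log space.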

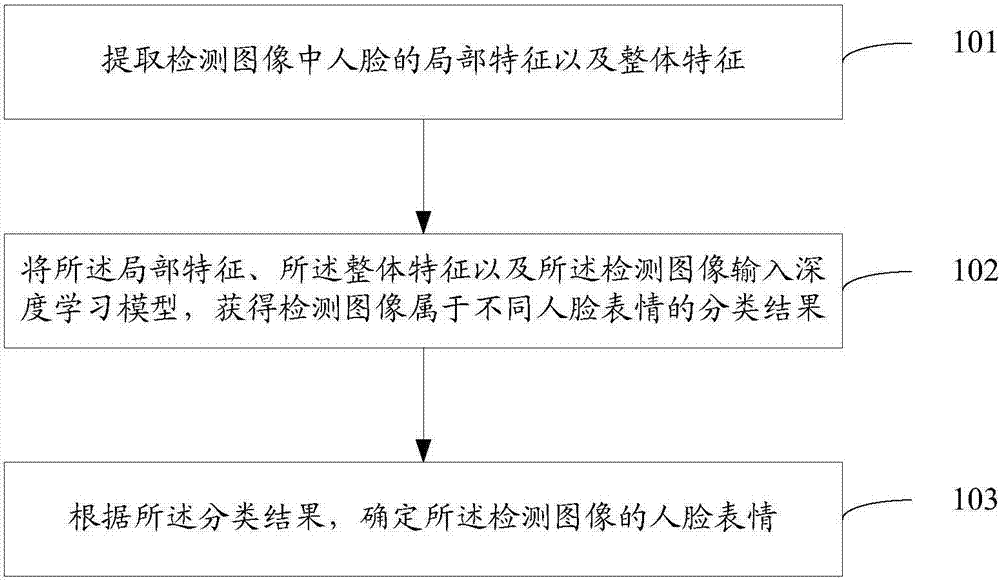

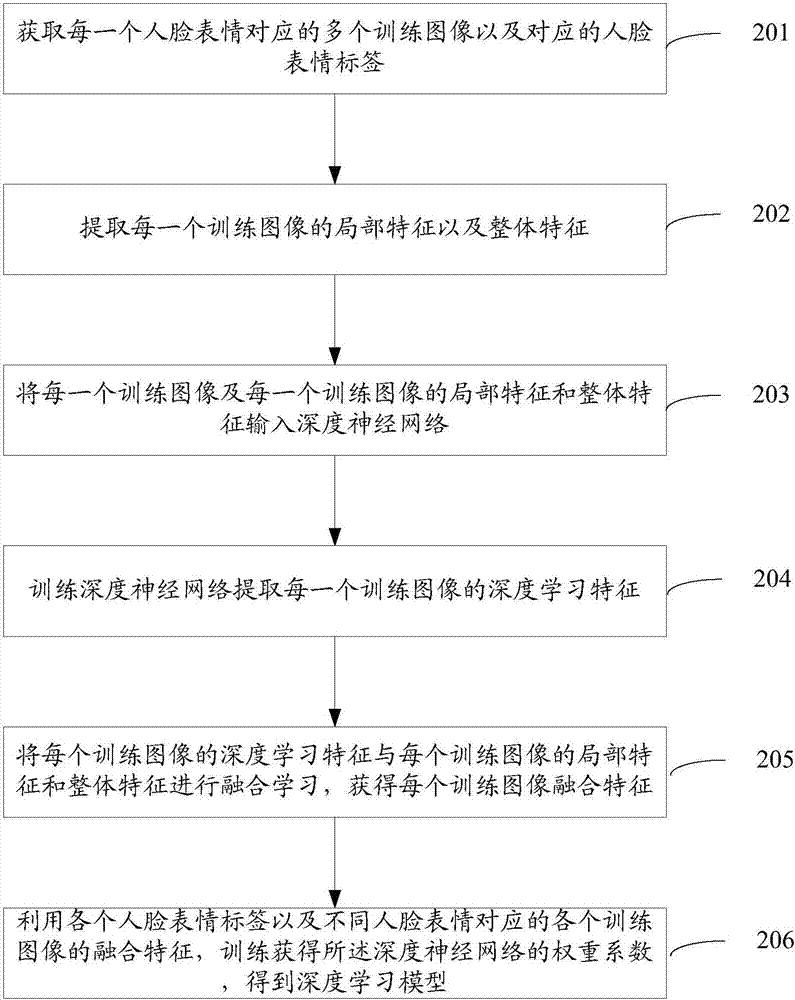

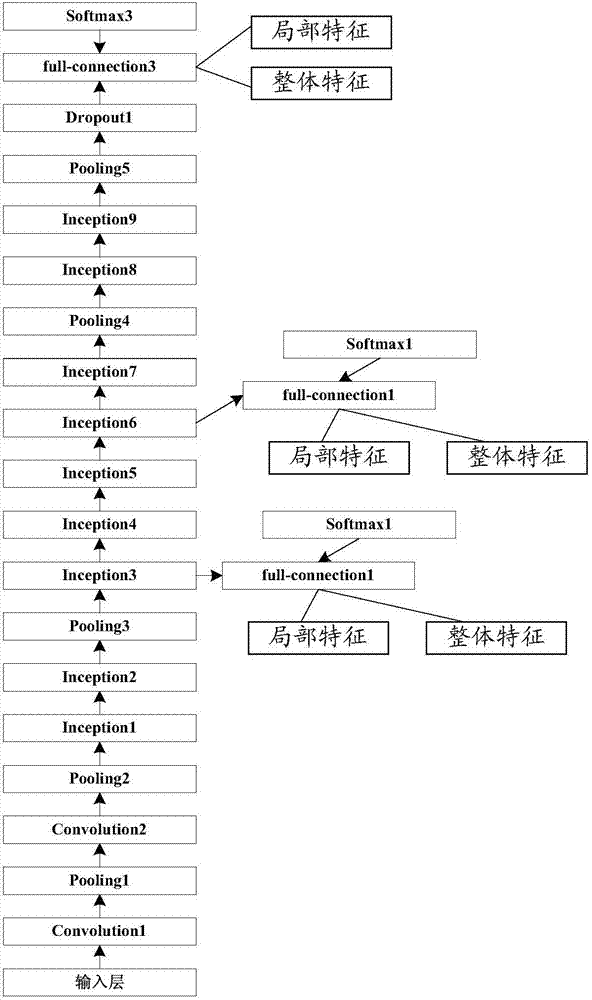

Facial expression recognition method and device

Active | CN107045618A | High precision | Acquiring/recognising facial features | Neural learning methods | Computer science | Facial expression recognition

The present invention discloses a facial expression recognition method and device. The method comprises the following steps that: the local features and overall features of a face in a detection image are extracted; the local features, overall features and detection image are inputted into a deep learning model, so that the classification results of the detection image belonging to different facial expressions are obtained, wherein the deep learning model is obtained through training a deep neural network in advance according to the training images of different facial expressions and the local features and overall features of the training images; and the facial expression of the detection image is determined according to the classification results. With the facial expression recognition method and device of the invention adopted, the accuracy of facial expression recognition can be improved.

Owner:BEIJING MOSHANGHUA TECH CO LTD

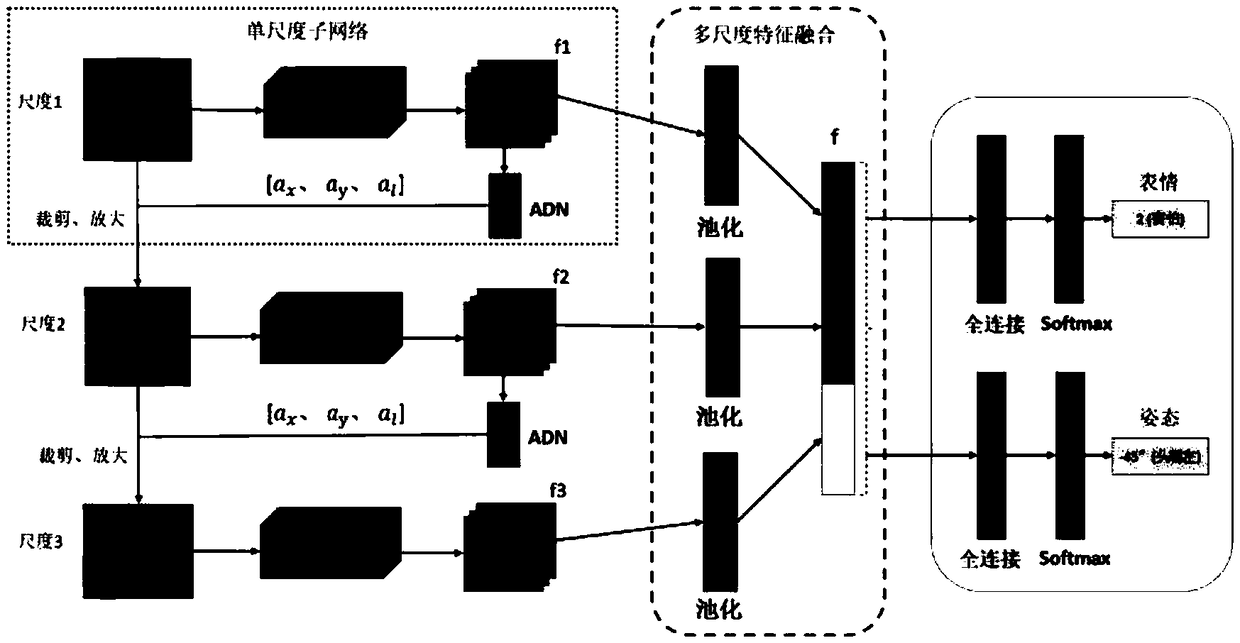

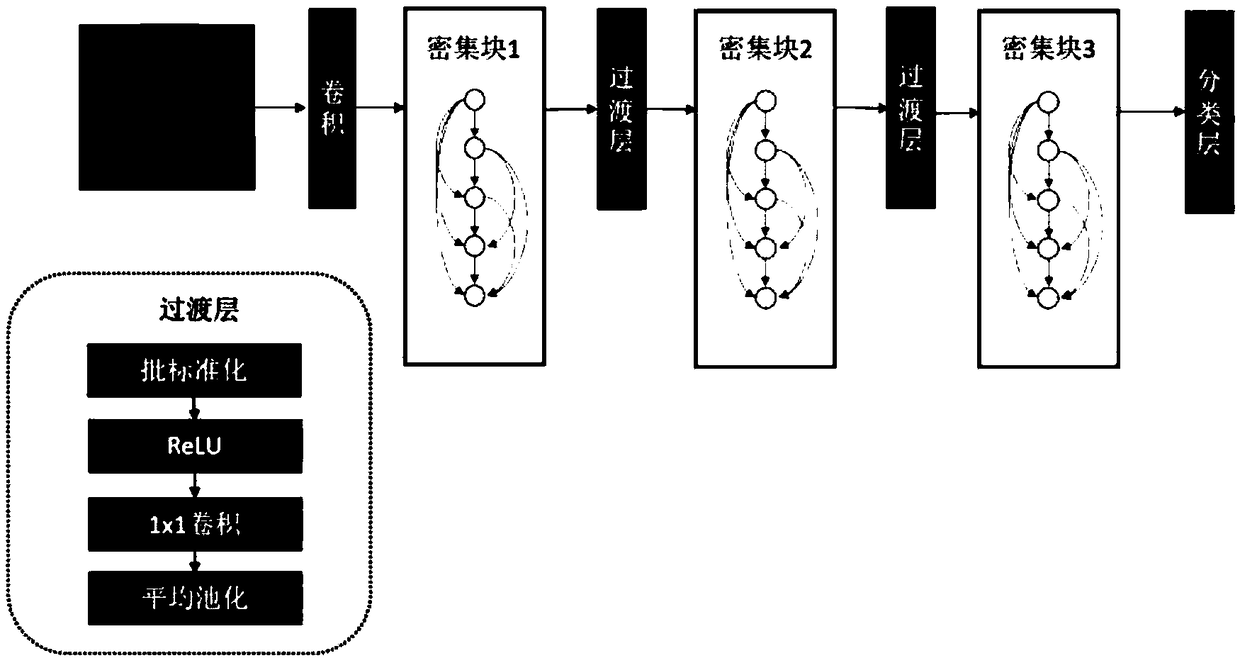

A multi-view facial expression recognition method based on mobile terminal

InactiveCN109409222AImproved expression recognition accuracyImprove recognition accuracyAcquiring/recognising facial featuresPattern recognitionData set

The invention discloses a multi-view facial expression recognition method based on a mobile terminal. A face region is cut out from each image and data augmentation is applied to obtain the data set used to train the AA-MDNet model; a multi-pose data set is obtained by extending the data with a GAN model. The images are cropped with the ADN multi-scale cropping method, and the cropped images are fed into the AA-MDNet model. Features are extracted from the input image by DenseNet, a densely connected subnetwork. Then, based on the extracted features, an attention adaptive network (ADN) obtains the position parameters of the attention area for expression and pose, and the image of that area is scaled from the input image according to the position parameters and used as the input of the next scale. By learning multi-scale high-level feature fusion, high-level features that fuse global and local information are obtained. Finally, the facial pose and expression categories are classified. The invention is of significance in the fields of human-computer interaction, face recognition, computer vision and the like.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

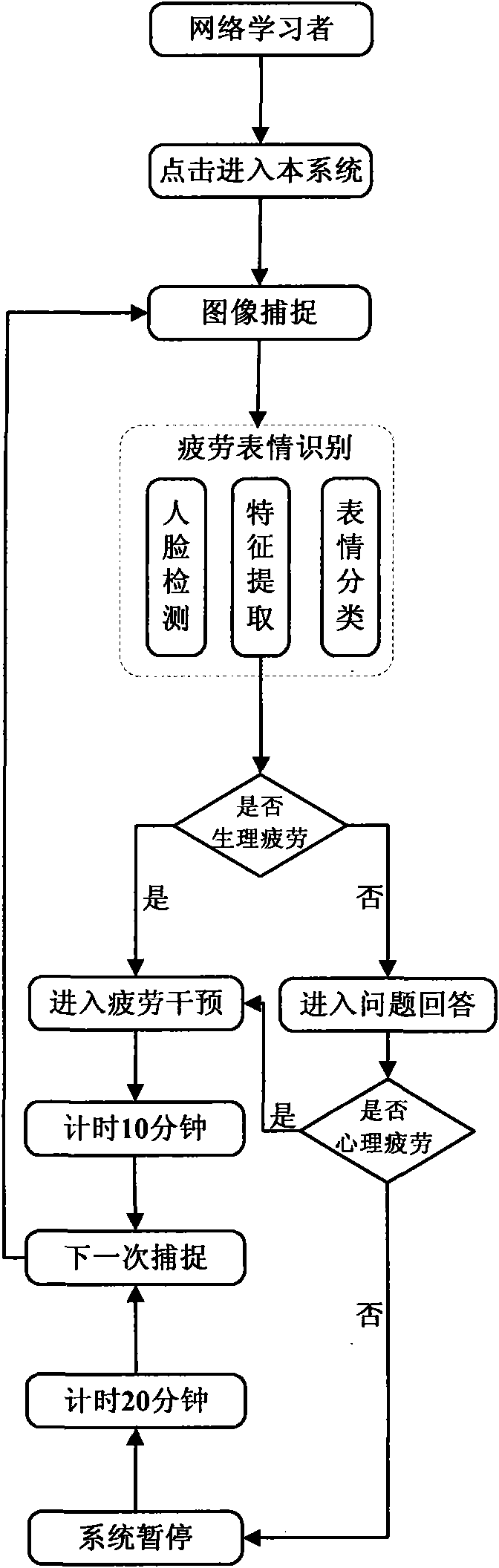

Learning fatigue recognition interference method based on facial expression recognition

InactiveCN101604382AQuick identificationFriendly human-computer interactionCharacter and pattern recognitionElectrical appliancesMental fatigueRegion detection

A learning fatigue recognition interference method based on facial expression recognition comprises the following steps: face region detection; facial feature extraction, including establishing a facial expression model; expression classification, namely classifying the expression based on that model; when a fatigued expression or mental fatigue is recognized, carrying out a fatigue intervention and, after a preset shorter time, returning to capture an image of the network learner for the next recognition; when the learner is determined to have a neutral or absorbed expression, or no mental fatigue, suspending the system for a preset longer time and then returning to capture the learner's image for the next recognition; and when an error message is returned, returning to capture the learner's image for the next recognition. The technical scheme provided by the invention can rapidly recognize the expressions of network learners and realize real-time learning fatigue intervention.
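The branching described in the abstract above (intervene and re-check soon, suspend and re-check later, or retry on error) can be sketched as a small decision function. This is only an illustration under stated assumptions: the result labels, the function name `next_step`, and the two interval values are hypothetical, since the abstract says only that one preset time is shorter than the other.

```python
# Hypothetical interval values; the abstract only requires SHORT < LONG.
SHORT_WAIT_S = 30
LONG_WAIT_S = 300

def next_step(result):
    """Map a recognition result to (intervene, seconds_until_next_capture)."""
    if result in ("fatigued_expression", "mental_fatigue"):
        # Fatigue detected: intervene, then re-check after the shorter time.
        return True, SHORT_WAIT_S
    if result in ("neutral", "absorbed", "no_mental_fatigue"):
        # Learner is fine: suspend for the longer time before re-checking.
        return False, LONG_WAIT_S
    if result == "error":
        # Recognition failed: capture a new image without waiting.
        return False, 0
    raise ValueError(f"unknown recognition result: {result!r}")

print(next_step("fatigued_expression"))
```

A surrounding loop would capture a frame, run detection and classification, call `next_step`, and sleep for the returned number of seconds before the next capture.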

Owner:HUAZHONG NORMAL UNIV

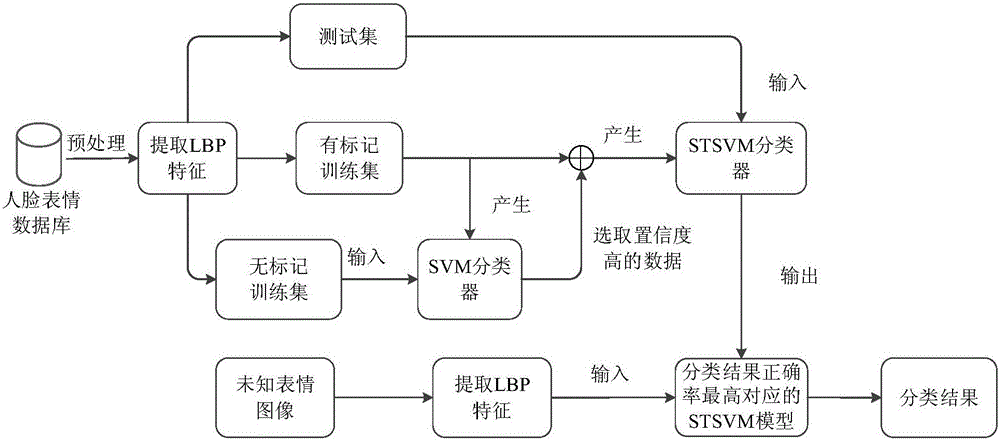

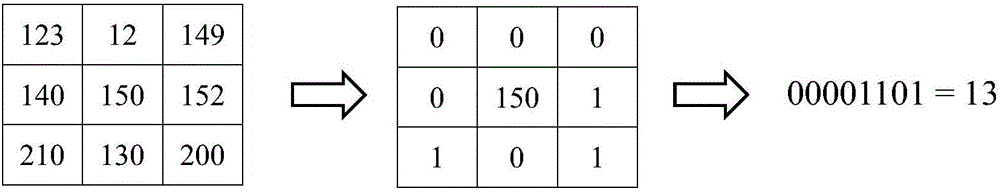

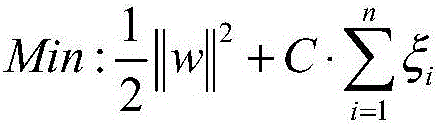

Semi-supervised learning facial expression recognition method based on fuzzy training samples

ActiveCN106096557AImprove recognition accuracyEasy to identifyAcquiring/recognising facial featuresSvm classifierSupervised learning

The invention discloses a semi-supervised learning facial expression recognition method based on fuzzy training samples. First, data in a face database is preprocessed; then, facial expressions are recognized using an improved SVM algorithm; and finally, unknown expression images are recognized. With the same number of labeled samples, the expression recognition rate is improved by 3-7% by adding a large number of unlabeled samples. With only a few labeled samples, the STSVM algorithm raises the recognition rate to a level equivalent to that of an SVM classifier trained on a large number of labeled samples.

Owner:ZHEJIANG UNIV

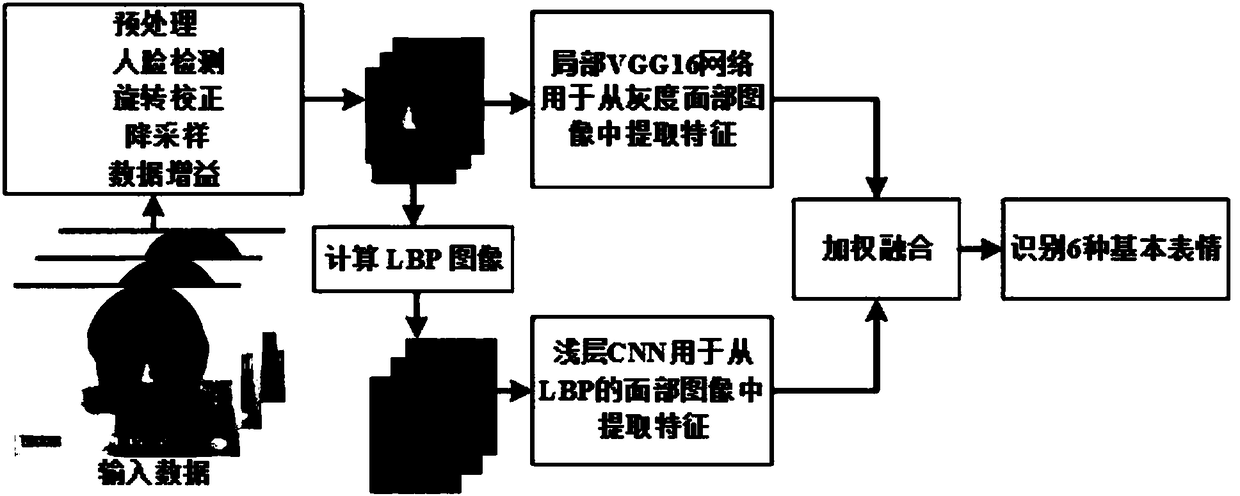

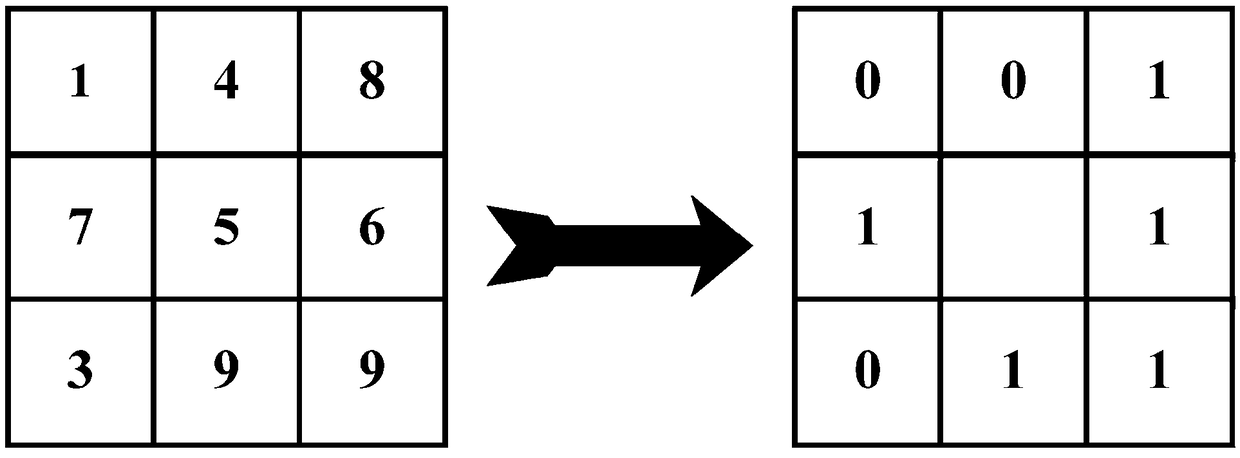

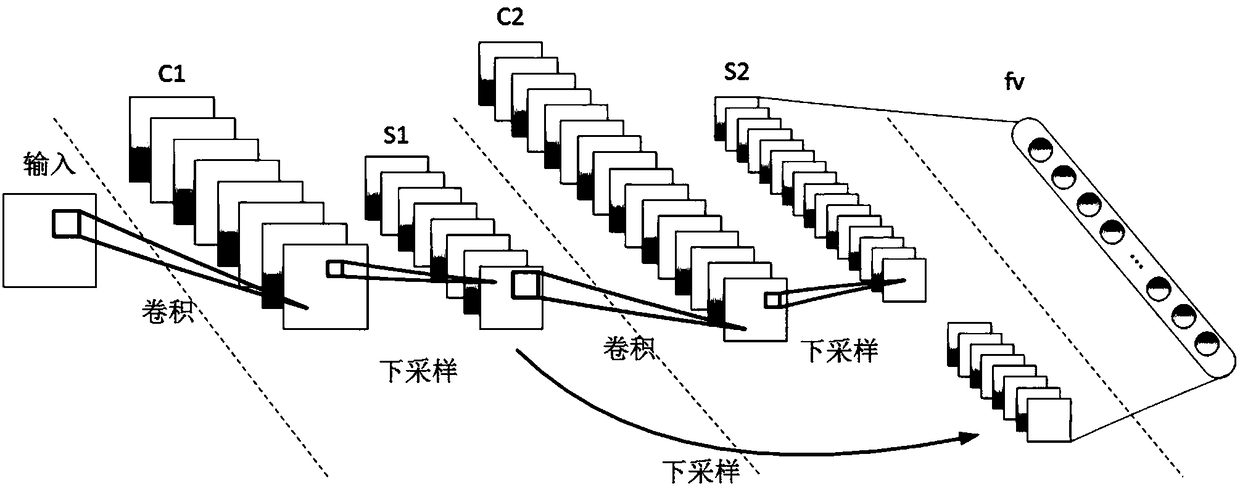

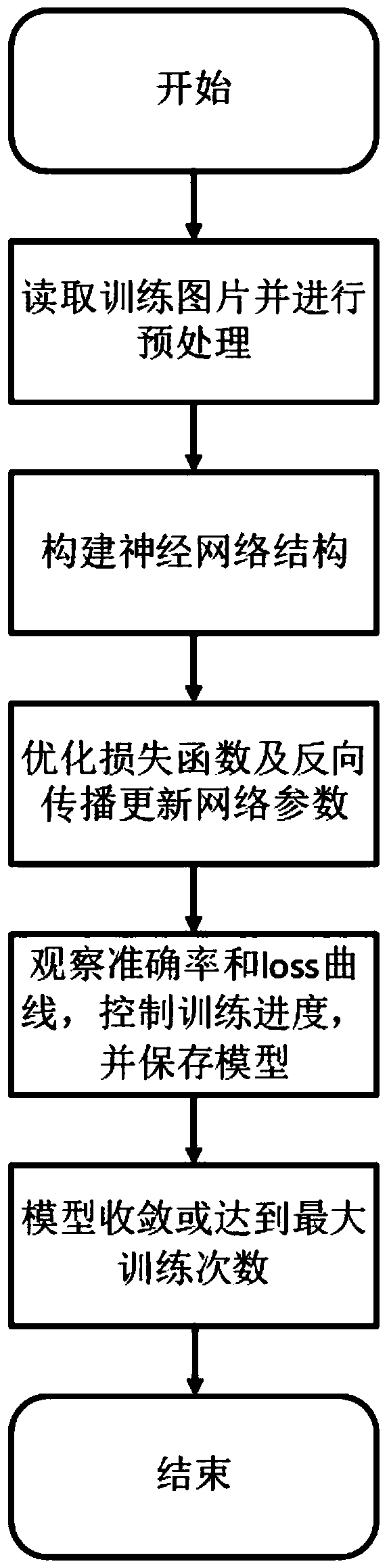

Binary-channel convolutional neural network for facial expression recognition

ActiveCN108491835AReduce background informationEnhance expressive abilityNeural architecturesAcquiring/recognising facial featuresFace detectionComputation complexity

The invention discloses a method for recognizing human facial expressions with a binary-channel convolutional neural network. First, the input signals are preprocessed with face detection, rotation correction, downsampling and data sample expansion (RGB inputs are converted to grayscale to reduce computational complexity), which improves face detection precision. Second, the LBP images corresponding to the sample-expanded gray images are computed, forming a binary-channel sample set for subsequent model training and testing. A binary channel feature extraction network (Binary Channel-Feature Extraction Network, BC-FEN) then extracts the overall and local features of the face image. Finally, a weighted merge classify network (Weighted Merge Classify Network, WMCN) performs feature fusion and expression classification, improving facial expression recognition precision.
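The second channel in the abstract above is an LBP (local binary pattern) image computed from each gray image. A minimal sketch of that computation is shown below. It assumes the basic 3x3, 8-neighbour LBP operator, because the abstract does not say which variant is used; the function name `lbp_image` and the choice to leave border pixels at 0 are likewise assumptions.

```python
def lbp_image(gray):
    """Basic 3x3 LBP: one 8-bit code per interior pixel, borders left at 0."""
    h, w = len(gray), len(gray[0])
    # Neighbours visited clockwise, starting at the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            center = gray[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(offsets):
                # Set the bit when the neighbour is at least as bright.
                if gray[y + dy][x + dx] >= center:
                    code |= 1 << bit
            out[y][x] = code
    return out

gray = [[9, 0, 0],
        [0, 5, 0],
        [0, 0, 0]]
# One two-channel sample: the gray image paired with its LBP image.
sample = (gray, lbp_image(gray))
print(sample[1][1][1])
```

Pairing each gray image with its LBP image, as in `sample`, gives the two inputs that the two feature extraction branches would consume.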

Owner:CHANGZHOU UNIV

A facial expression recognition method based on multi-scale feature extraction and global feature fusion is proposed

InactiveCN109492529AAuthoritativeImprove robustnessNeural architecturesAcquiring/recognising facial featuresData setTest set

The invention discloses a facial expression recognition method with multi-scale feature extraction and global feature fusion. A facial expression data set is selected as raw data and divided into training set data and test set data. The TensorFlow artificial intelligence learning system is used to construct a convolutional neural network with multi-scale feature extraction and global feature fusion. The network reads the training set data, preprocesses it and performs model training, then reads the test set data, recognizes the expression type of each expression in the test set in turn, and, after all expressions have been recognized, calculates the average accuracy and average F1 score over all expressions, which completes the facial expression recognition process. The invention offers high recognition speed while maintaining high recognition accuracy, and adapts robustly to multiple illumination environments, so as to meet practical application requirements.
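The evaluation step in the abstract above reports an average accuracy and an average F1 score over all expressions. The sketch below shows one plausible reading of those metrics: overall accuracy on the test set and the macro-averaged (unweighted per-class mean) F1 score. The abstract does not define the averaging, so this interpretation, the function names and the toy labels are assumptions.

```python
def accuracy(y_true, y_pred):
    """Fraction of test samples whose predicted expression is correct."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-expression F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

y_true = ["happy", "happy", "sad", "sad"]
y_pred = ["happy", "sad", "sad", "sad"]
print(accuracy(y_true, y_pred), macro_f1(y_true, y_pred))
```

Macro averaging weights every expression equally, which matters when some expressions are much rarer than others in the test set.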

Owner:CHINA UNIV OF MINING & TECH +1

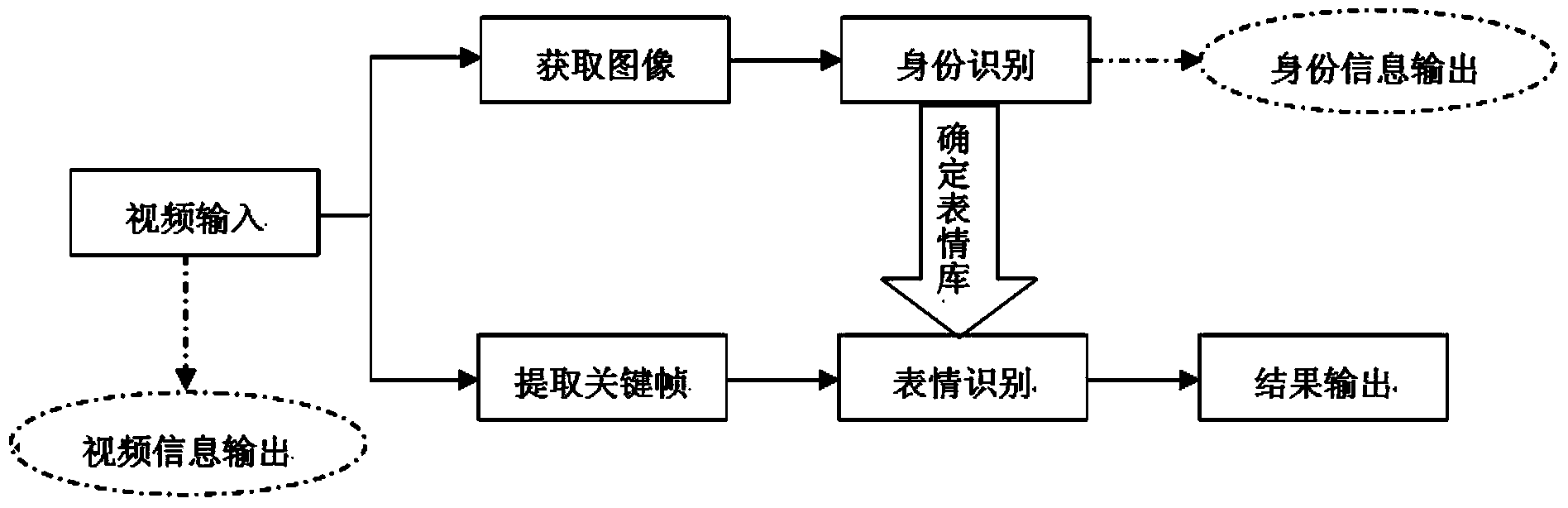

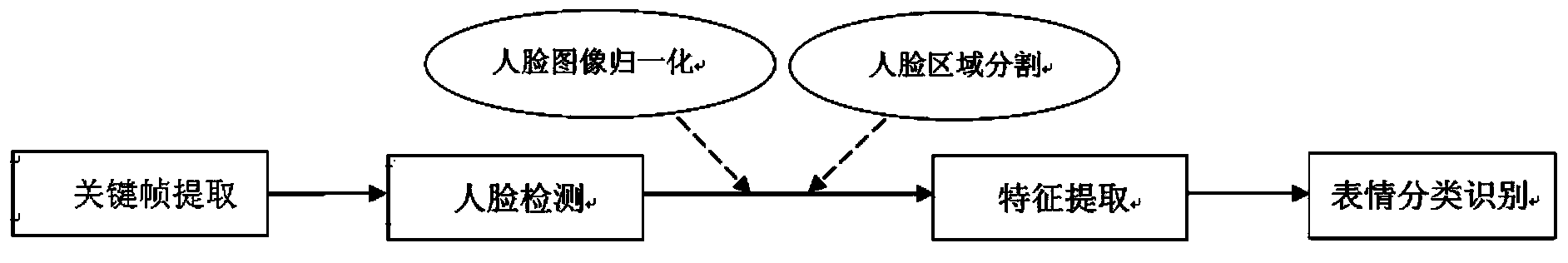

Facial expression recognition method based on video image sequence

InactiveCN103824059AImprove recognition resultsSuppress interferenceCharacter and pattern recognitionComputation complexityExpression Library

The invention discloses a facial expression recognition method based on a video image sequence, and relates to the field of face recognition. The method includes the following steps: (1) identity verification, wherein an image is captured from a video, user information in the video is obtained, identity verification is carried out by comparing the user information with a facial training sample, and a user expression library is determined; (2) expression recognition, wherein texture feature extraction is carried out on the video, the key frame at which the degree of the user expression is maximized is obtained, the image of the key frame is compared with the expression training samples in the user expression library determined in step (1) to recognize the expression, and ultimately a statistical result of expression recognition is output. By analyzing the key frame obtained from the video by means of texture features, and by building the user expression library so that the user expression can be recognized, interference is effectively suppressed, computational complexity is reduced and the recognition rate is improved.

Owner:SOUTHEAST UNIV

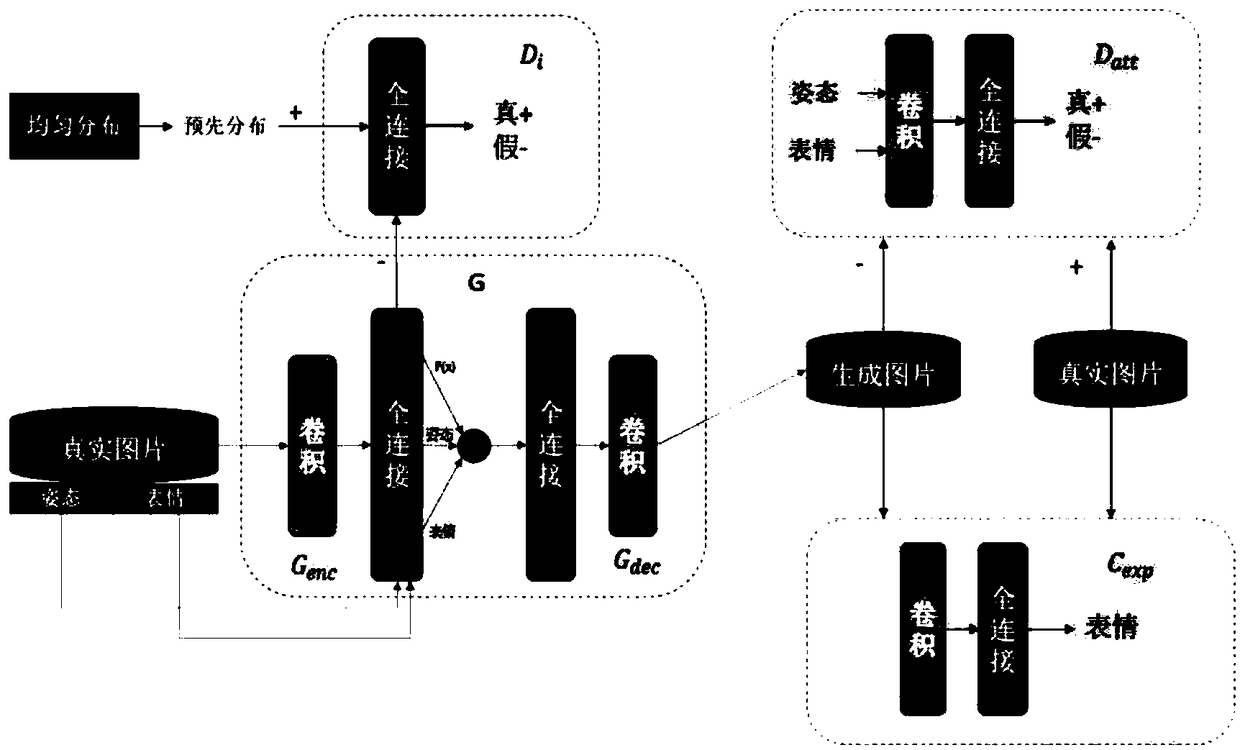

A facial expression recognition method based on generative antagonistic network

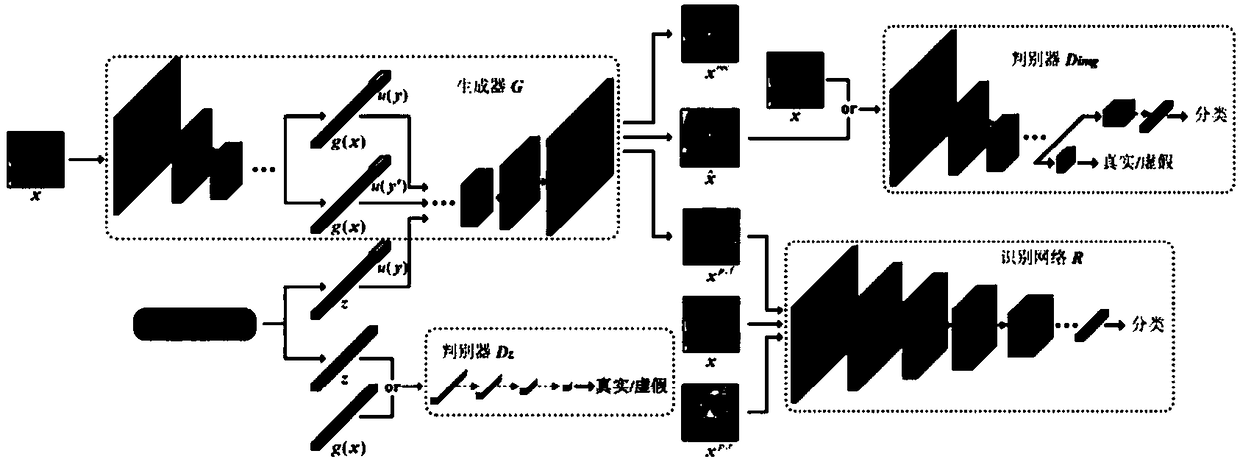

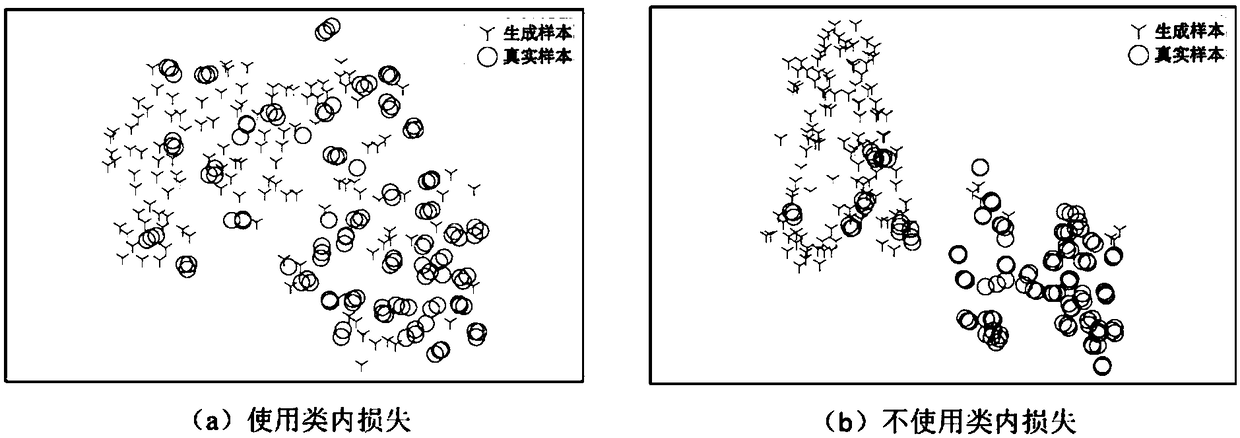

ActiveCN109508669AIncrease diversityEfficient extractionNeural architecturesNeural learning methodsDiscriminatorFeature learning

The invention relates to a facial expression recognition method based on a generative adversarial network (GAN), in the field of computer vision. First, a GAN-based facial expression generation network is designed and pre-trained. It is composed of a generator and two discriminators and can generate face images with random identities and specified facial expressions. Then a facial expression recognition network is designed, which receives facial expression images from the training set together with random facial expression images produced by the generation network, and uses an intra-class loss to reduce the facial expression feature differences between the real samples and the generated samples. At the same time, a real-sample-oriented gradient updating method is used to promote feature learning on the generated samples. Finally, the facial expression recognition results are obtained from the final softmax classification layer of the trained recognition network.
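The abstract above does not give the formula for its intra-class loss, so the sketch below shows only one plausible form of the idea: for each expression class, penalise the squared Euclidean distance between the mean feature of the real samples and the mean feature of the generated samples. The formulation, the function names and the toy feature vectors are all assumptions made for illustration, not the patented loss.

```python
def class_means(features, labels):
    """Mean feature vector for each expression label."""
    sums, counts = {}, {}
    for feat, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(feat))
        for i, value in enumerate(feat):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc]
            for label, acc in sums.items()}

def intra_class_loss(real_feats, real_labels, gen_feats, gen_labels):
    """Mean squared distance between real and generated class means."""
    real_means = class_means(real_feats, real_labels)
    gen_means = class_means(gen_feats, gen_labels)
    shared = real_means.keys() & gen_means.keys()
    if not shared:
        return 0.0  # no class appears in both batches
    total = sum(
        sum((a - b) ** 2 for a, b in zip(real_means[c], gen_means[c]))
        for c in shared
    )
    return total / len(shared)

real = [[0.0, 0.0], [2.0, 2.0]]   # two real "happy" features, mean [1, 1]
fake = [[2.0, 1.0]]               # one generated "happy" feature
print(intra_class_loss(real, ["happy", "happy"], fake, ["happy"]))
```

In training, a term like this would be added to the classification loss so that generated faces of a given expression land near real faces of the same expression in feature space.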

Owner:XIAMEN UNIV
