234 results about how to "Improve recognition results" patented technology

License Plate Recognition

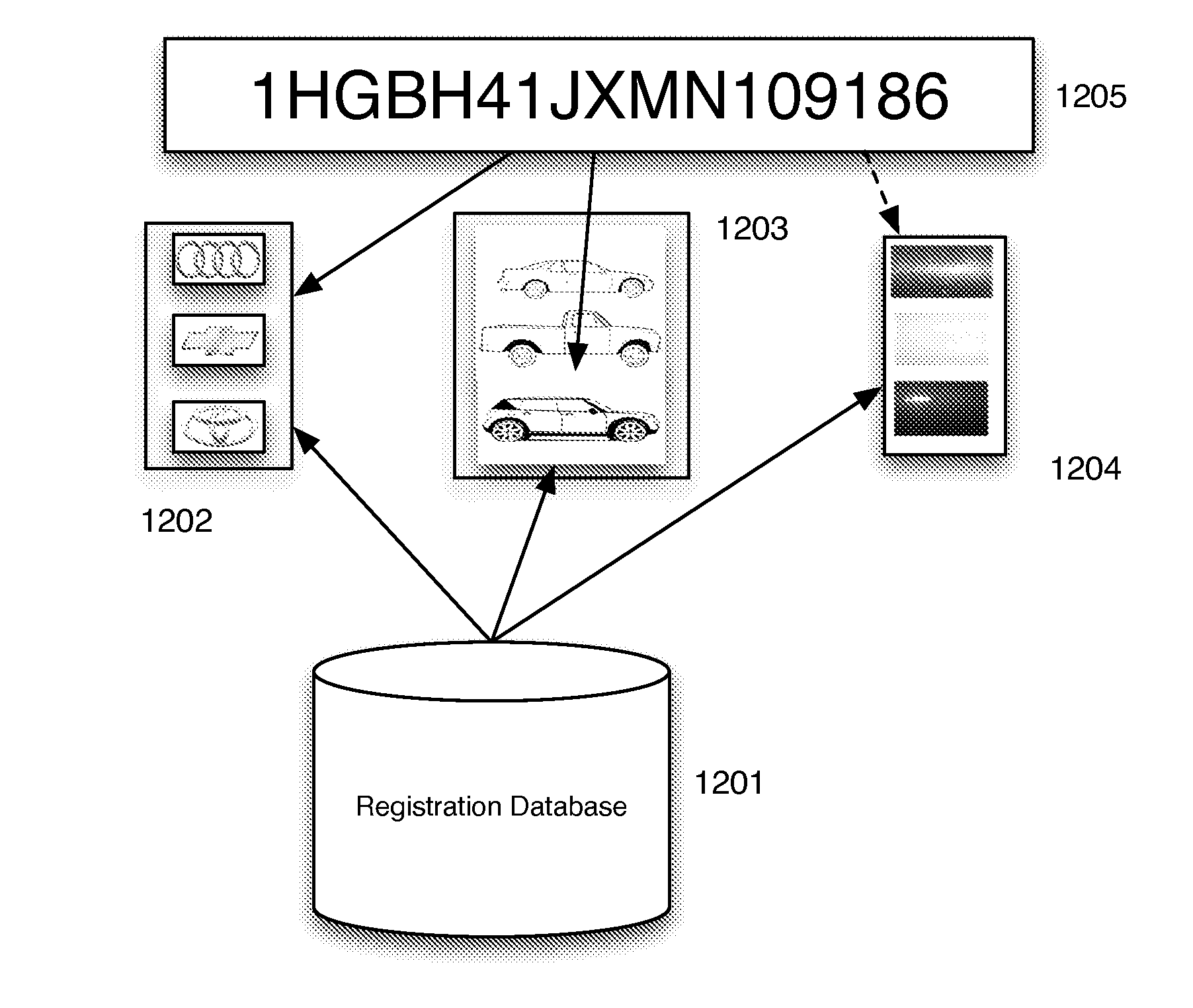

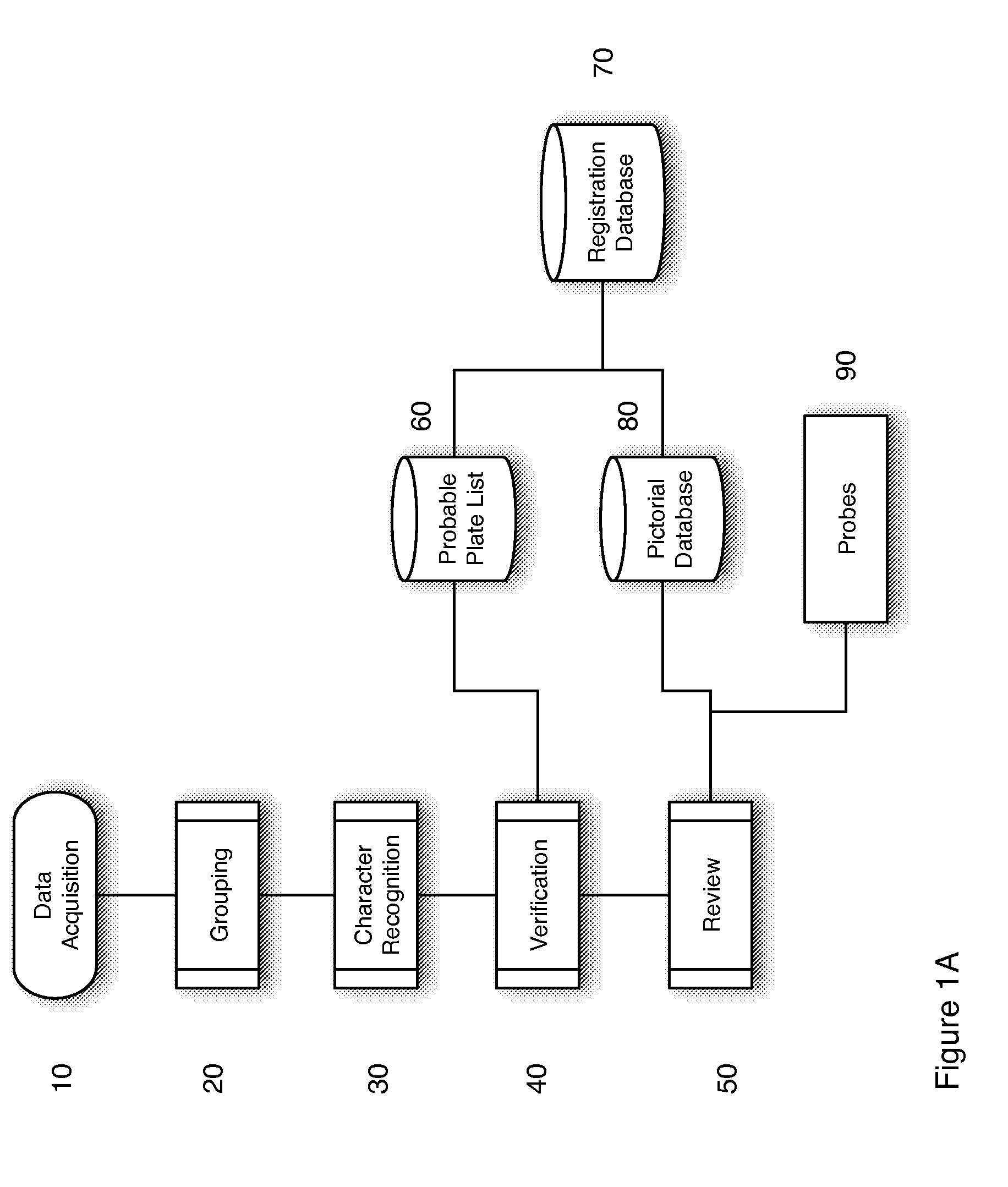

Active | US20150049914A1 | Improve recognition results | Make up for deficiencies | Character and pattern recognition | Pattern recognition | Computer science

A license plate recognition and image review system and its processes are described. The system groups images that are determined to be of the same vehicle and uses an image encoded database, so that a license plate read is verified by comparing images of the actual vehicle with images from the encoded database. The accuracy of the manual review process is tested by interspersing previously identified images with the real images being reviewed in a batch process.

Owner:ALVES JAMES

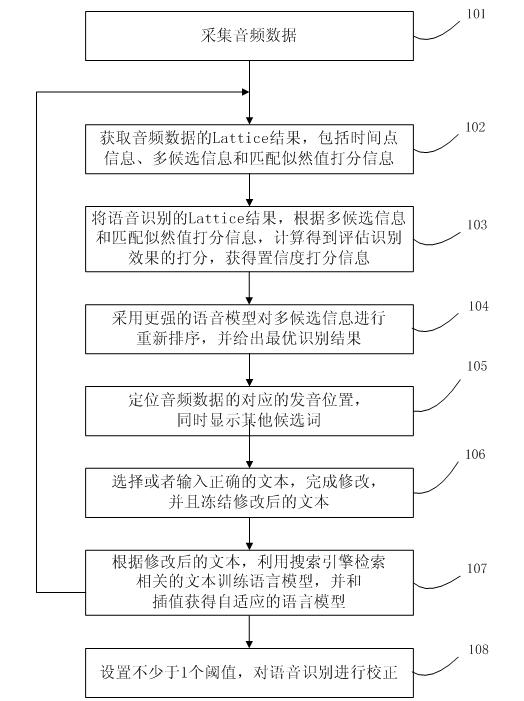

Method for recognizing voice

Inactive | CN102122506A | Improve recognition results | Improve adaptability | Speech recognition | Workload | Speech sound

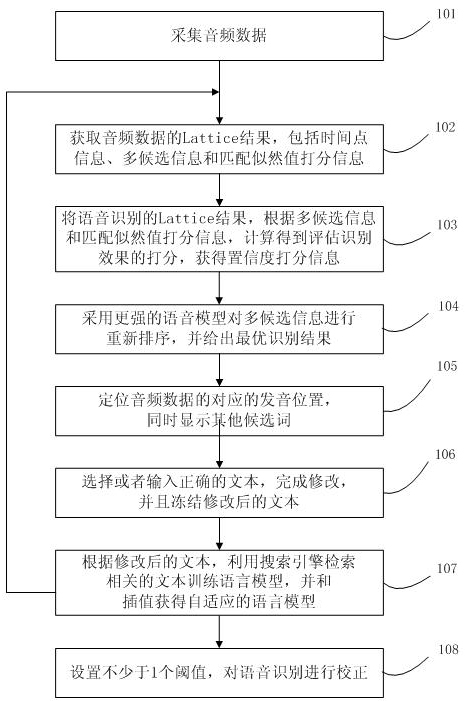

The invention discloses a method for recognizing voice. The method comprises the following steps: acquiring audio data; acquiring a Lattice result of the audio data, wherein the Lattice result comprises time point information, a plurality of pieces of candidate information and matching likelihood scoring information; acquiring confidence scoring information according to the plurality of pieces of candidate information and the matching likelihood scoring information; rearranging the plurality of pieces of candidate information by using a stronger voice model and providing the optimal recognition result; locating the voicing position corresponding to the audio data and simultaneously displaying other candidate words; selecting or inputting the correct text to finish the amendment and freezing the amended text; and, using the amended text as a keyword, searching for related text with a search engine to train a language model, interpolating to acquire an adaptive language model, and re-recognizing the remaining audio data by using the adaptive model. With this technical scheme, the voice recognition rate can be improved and the workload of manual checking can be reduced.

Owner:TVMINING BEIJING MEDIA TECH
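The interpolation step in the abstract above can be illustrated with a minimal sketch. It assumes unigram models stored as plain dictionaries and a simple product of acoustic score and language-model probability for re-ranking; the function names, the `weight` parameter and the scoring rule are hypothetical choices for illustration and are not taken from the patent.

```python
def interpolate_lm(general, adapted, weight):
    """Linearly interpolate two unigram models given as {word: probability}.

    `weight` is the share given to the adapted model (hypothetical parameter).
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    vocab = set(general) | set(adapted)
    return {
        w: weight * adapted.get(w, 0.0) + (1.0 - weight) * general.get(w, 0.0)
        for w in vocab
    }


def rerank(candidates, lm):
    """Sort (word, acoustic_score) pairs by acoustic score times LM probability."""
    return sorted(candidates, key=lambda c: c[1] * lm.get(c[0], 0.0), reverse=True)


# Toy models: the adapted model was "trained" on text found via the amended keyword.
general = {"whether": 0.6, "weather": 0.4}
adapted = {"weather": 0.9, "forecast": 0.1}
adaptive = interpolate_lm(general, adapted, 0.5)
ranked = rerank([("whether", 0.5), ("weather", 0.45)], adaptive)
```

With the general model alone, "whether" would rank first in this toy case; after interpolation with the adapted model, "weather" moves ahead, which is the kind of effect the re-recognition step relies on.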

Multi-modal emotion recognition method based on attention feature fusion

Inactive | CN109614895A | Maximize Utilization | Fully reflect the degree of influence | Character and pattern recognition | Neural architectures | Speech sound | Computer science

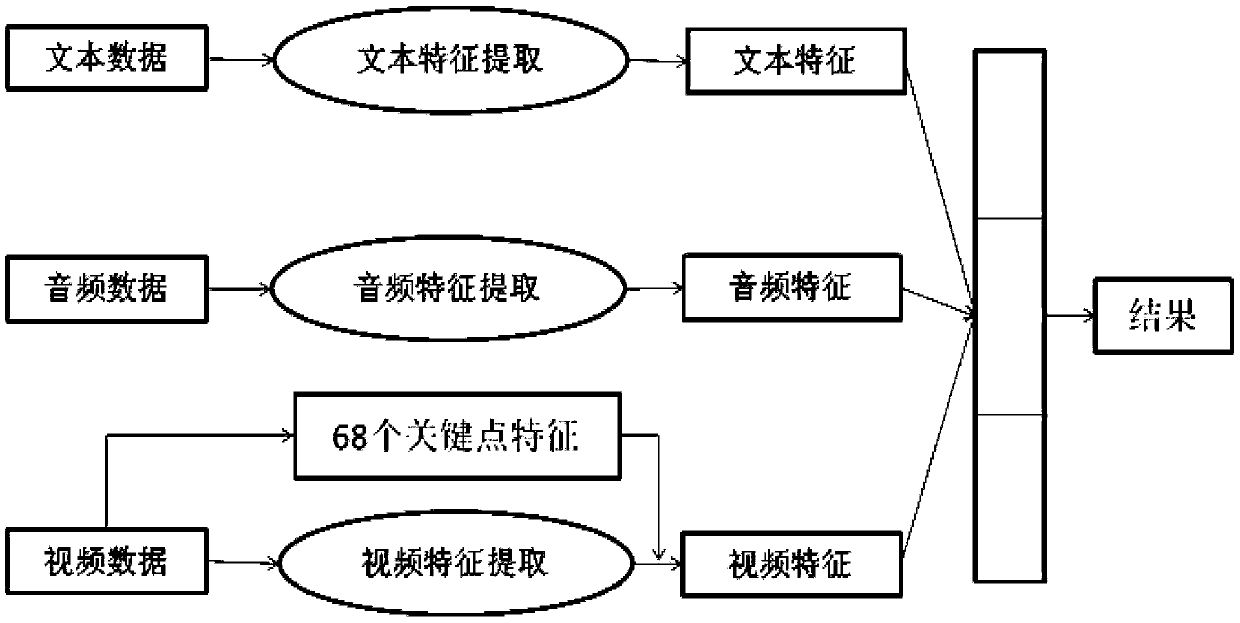

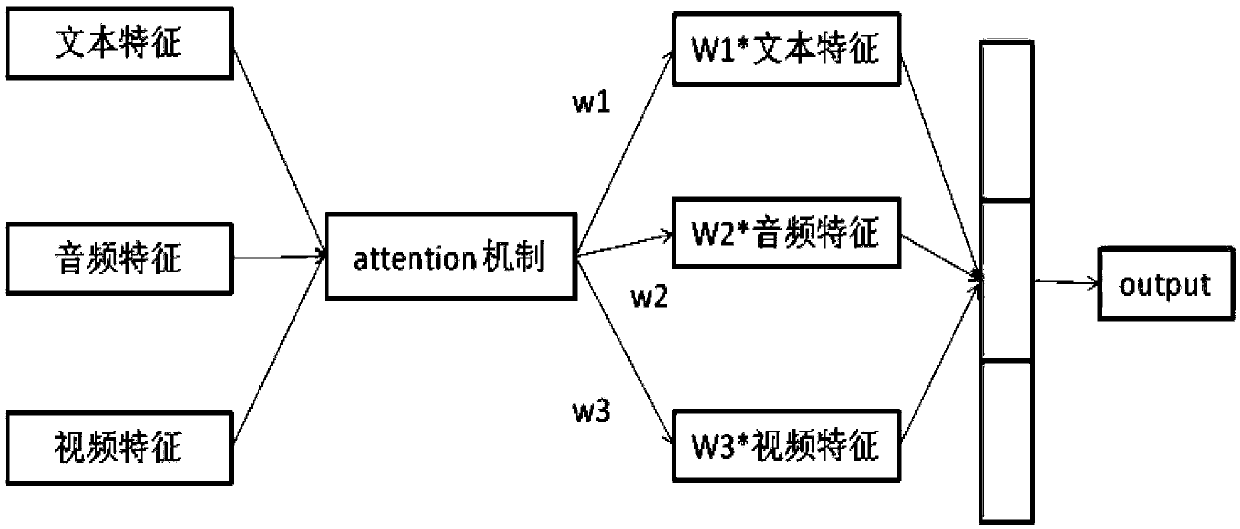

The invention relates to a multi-modal emotion recognition method based on attention feature fusion. The method performs final emotion recognition mainly by using data from three modes: text, voice and video. First, feature extraction is performed on the data of the three modes: a bidirectional LSTM extracts text features, a convolutional neural network extracts voice features, and a three-dimensional convolutional neural network model extracts video features. The features of the three modes are then fused by an attention-based feature layer fusion mode. This changes the traditional feature layer fusion mode: complementary information between the different modes is fully utilized, weights are assigned to the features of the different modes, and the weights are learned together with the network during training. The method therefore better conforms to the overall data distribution, and the final recognition effect is improved.

Owner:SHANDONG UNIV
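As a rough illustration of the attention-based fusion described in the abstract above, the sketch below scores each mode's feature vector against a query vector, turns the scores into weights with a softmax, and returns the weighted sum. In the patent the weights are learned jointly with the network; here the `query` vector is a fixed, hypothetical stand-in for those learned parameters, and dot-product scoring is an assumption.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(features, query):
    """Fuse per-mode feature vectors into one vector.

    features: dict mapping mode name -> feature vector (all the same length).
    query:    vector of that length (stand-in for learned attention parameters).
    Returns (weights per mode, fused vector).
    """
    names = list(features)
    scores = [sum(f * q for f, q in zip(features[n], query)) for n in names]
    weights = softmax(scores)
    dim = len(query)
    fused = [
        sum(w * features[n][i] for w, n in zip(weights, names)) for i in range(dim)
    ]
    return dict(zip(names, weights)), fused


features = {"text": [1.0, 0.0], "audio": [0.0, 1.0], "video": [0.0, 0.0]}
weights, fused = attention_fuse(features, query=[1.0, 0.0])
```

The weights always sum to one, so the fused vector stays on the same scale as the inputs while modes that align with the query contribute more.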

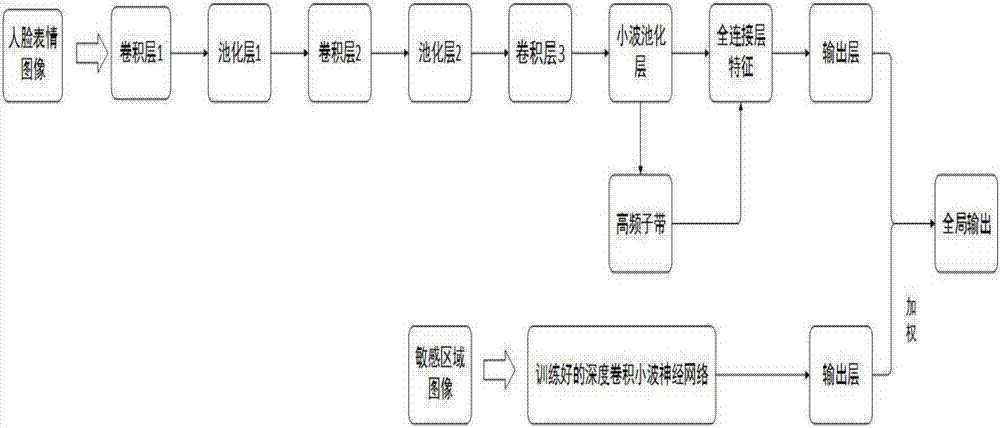

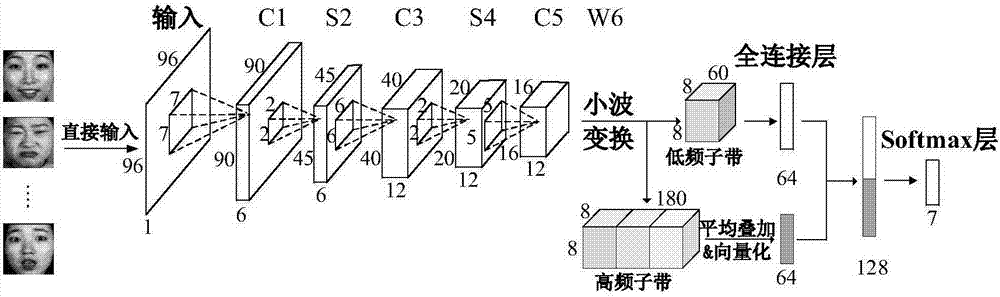

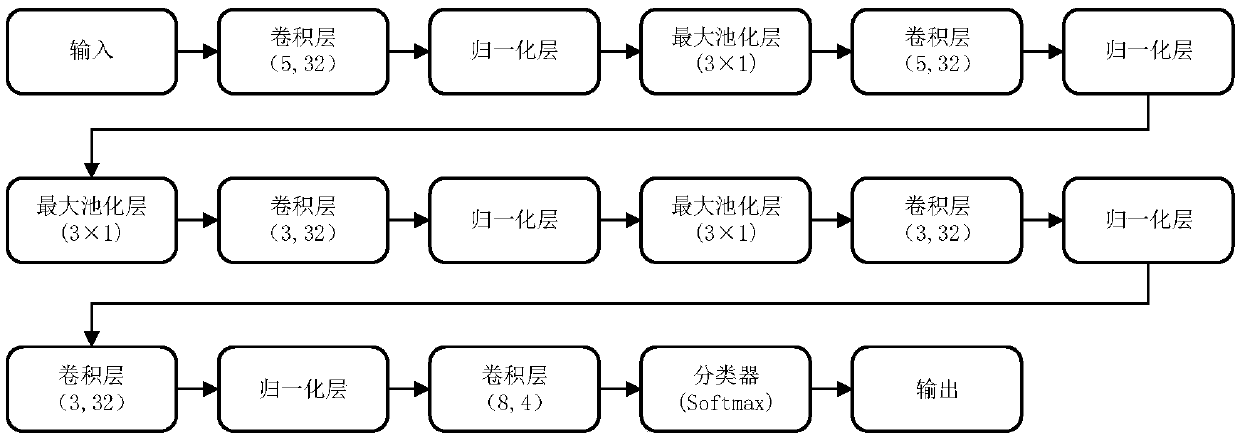

Depth convolution wavelet neural network expression identification method based on auxiliary task

Active | CN107292256A | Improve generalization ability | Efficient complete feature transfer | Neural architectures | Acquiring/recognising facial features | Feature selection | Expression Feature

The invention discloses a depth convolution wavelet neural network expression identification method based on auxiliary tasks. It addresses the problems that existing feature selection operators cannot efficiently learn expression features and cannot extract more classification features from image expression information. The method comprises: establishing a depth convolution wavelet neural network; establishing a face expression set and a corresponding expression sensitive area image set; inputting a face expression image to the network; training the depth convolution wavelet neural network; back-propagating the network errors; updating each convolution kernel and bias vector of the network; inputting an expression sensitive area image to the trained network; learning the weighting proportion of the auxiliary task; obtaining the network's global classification labels; and computing the identification accuracy rate according to the global labels. The method takes into account both the abstract and the detailed information of expression images, strengthens the influence of the expression sensitive area in expression feature learning, clearly improves the accuracy rate of expression identification, and can be applied to expression identification of face expression images.

Owner:XIDIAN UNIV

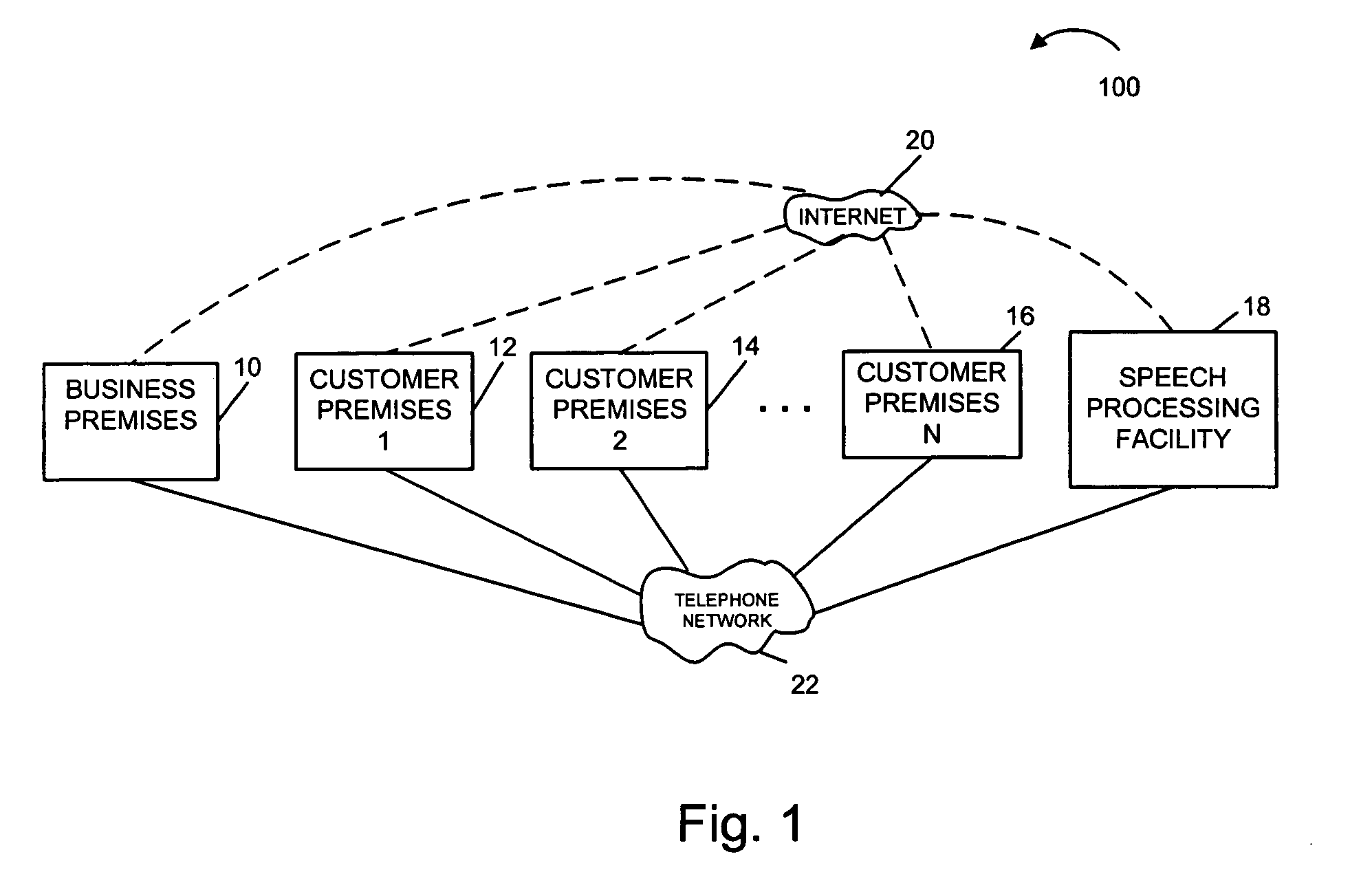

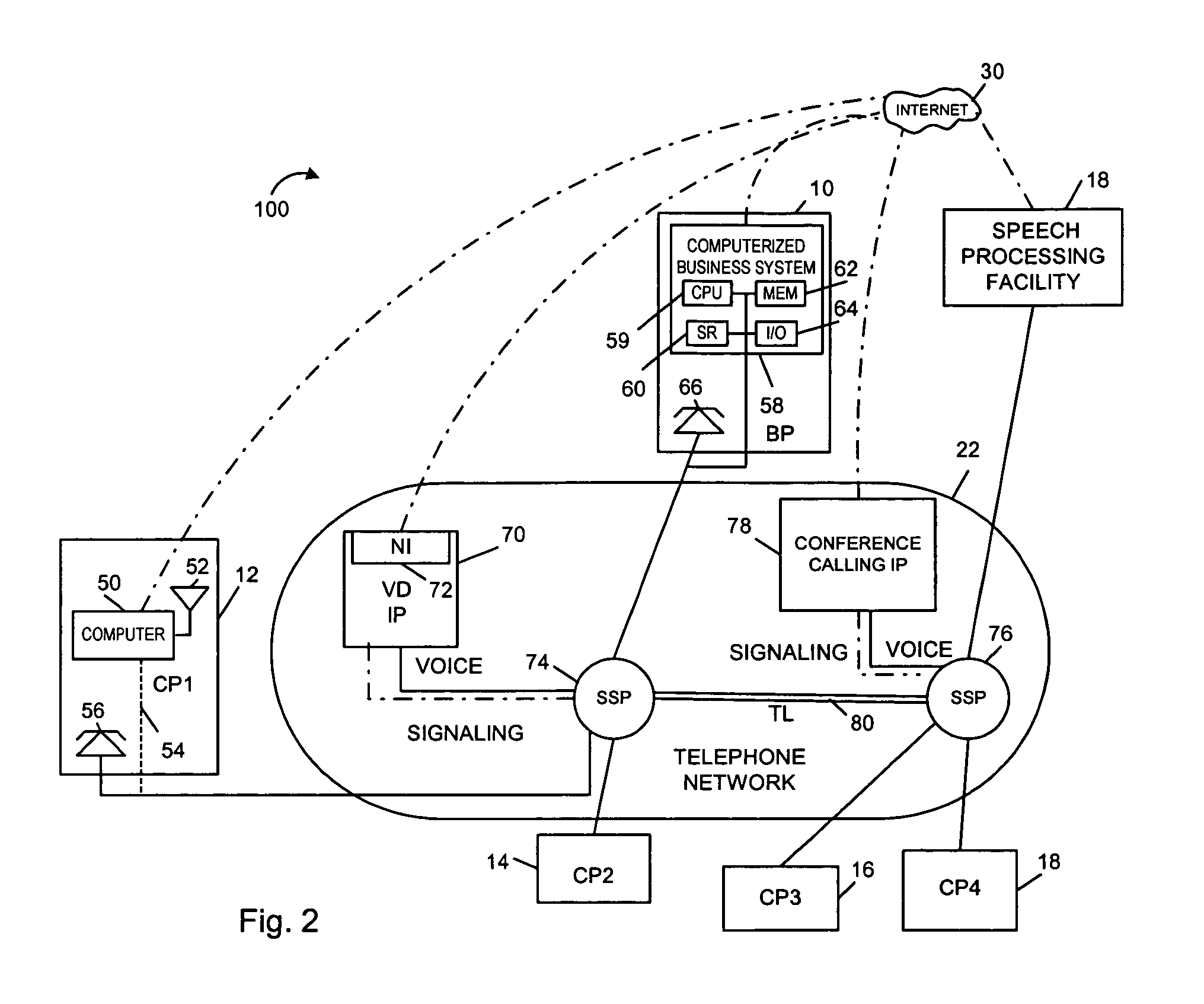

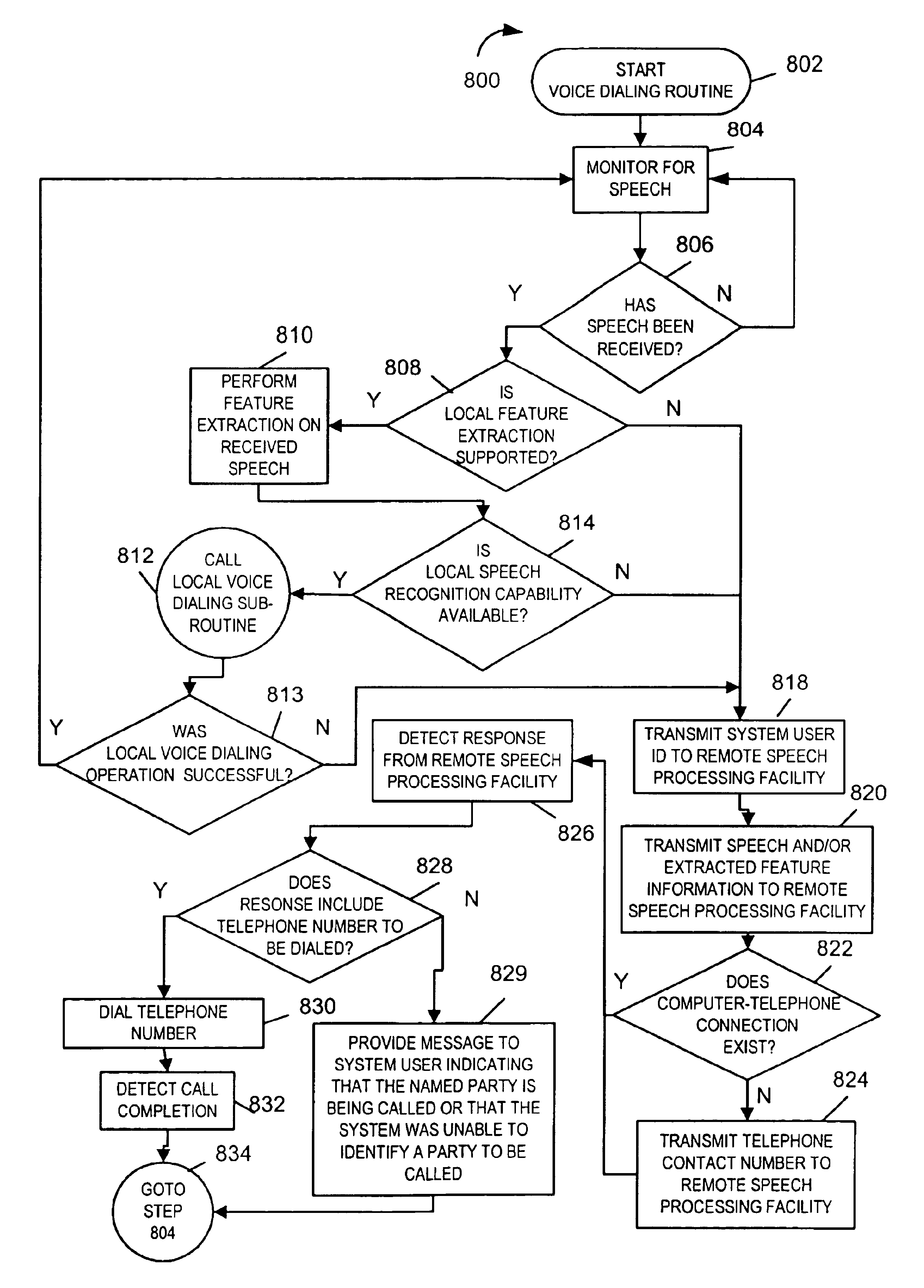

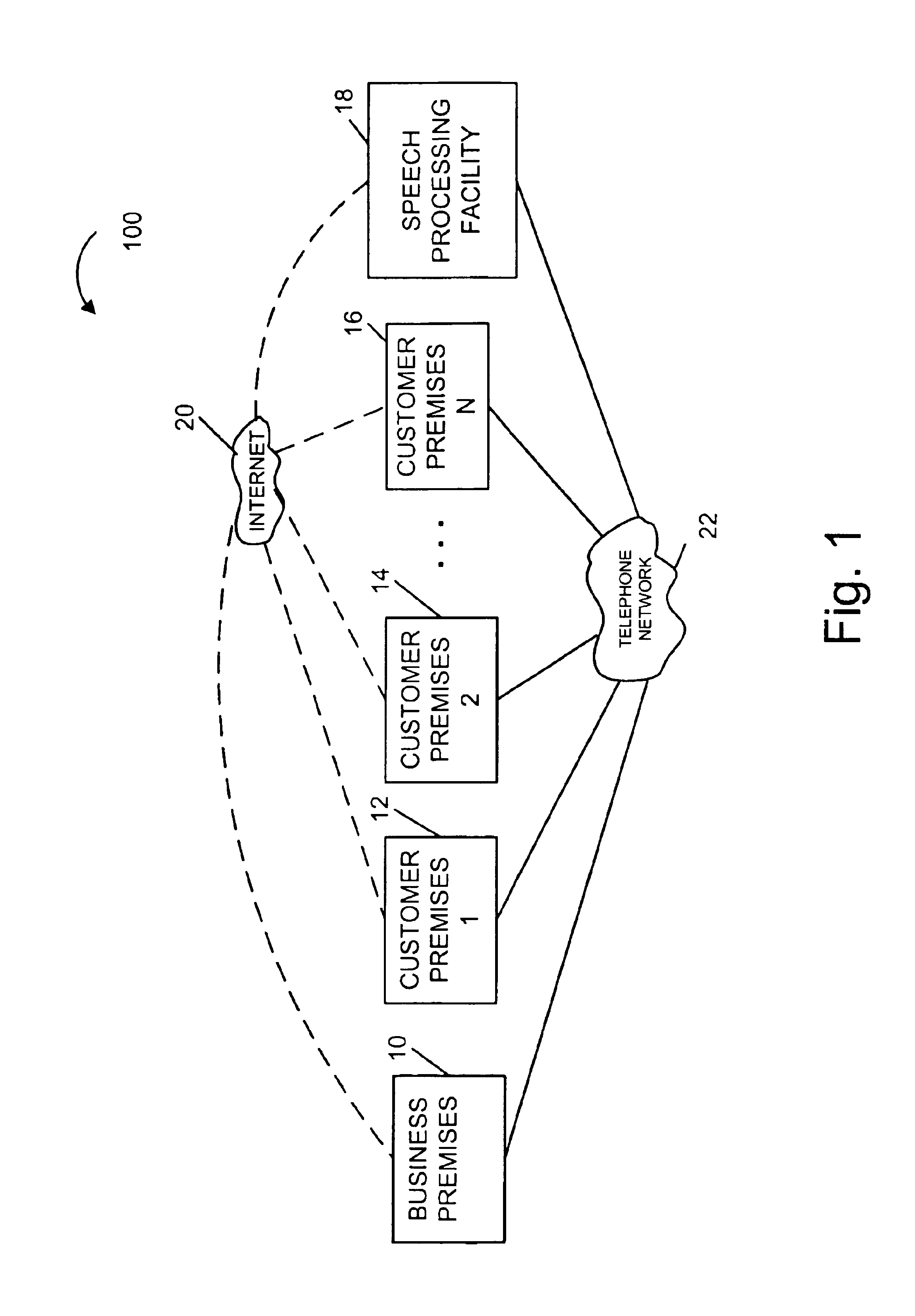

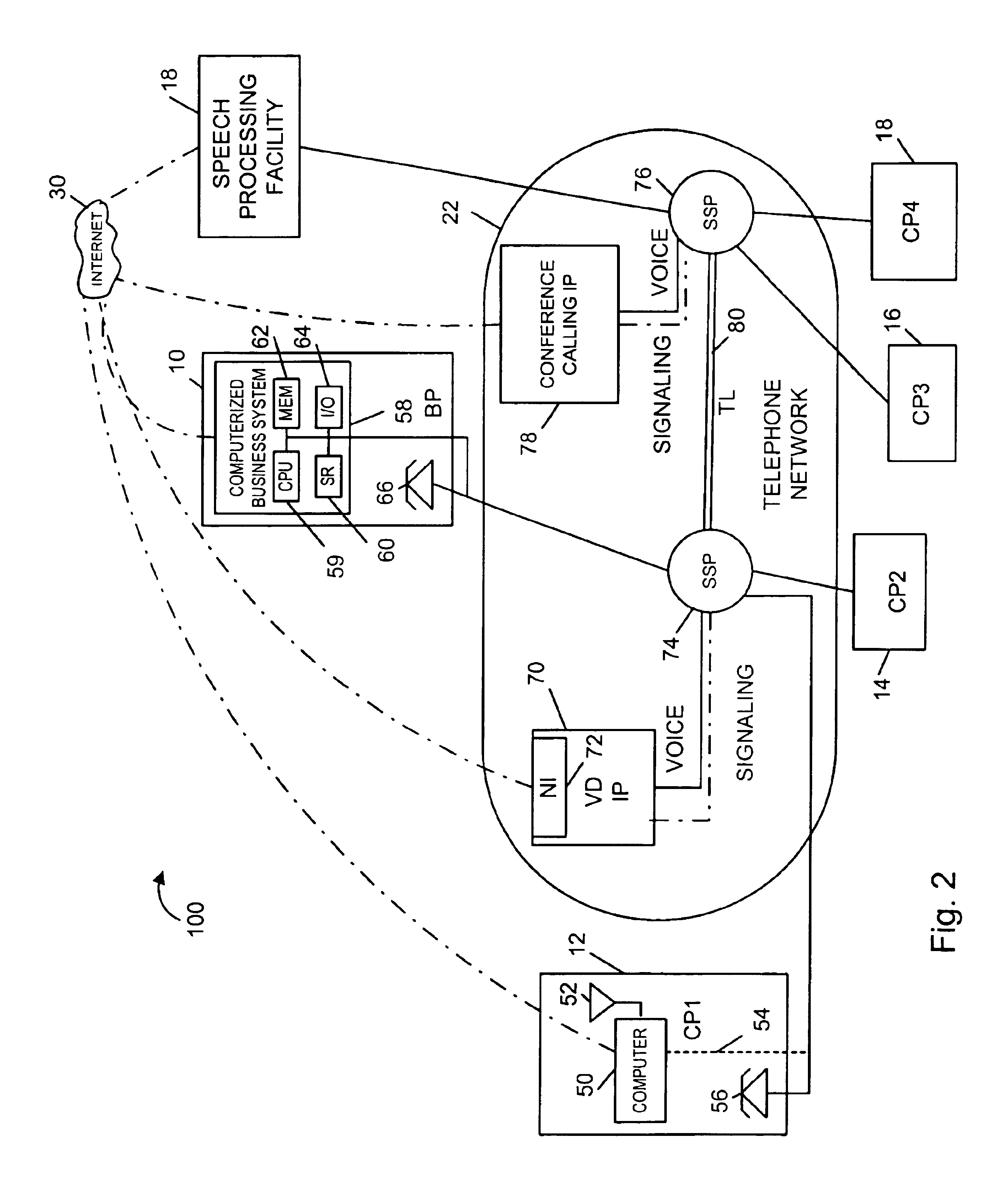

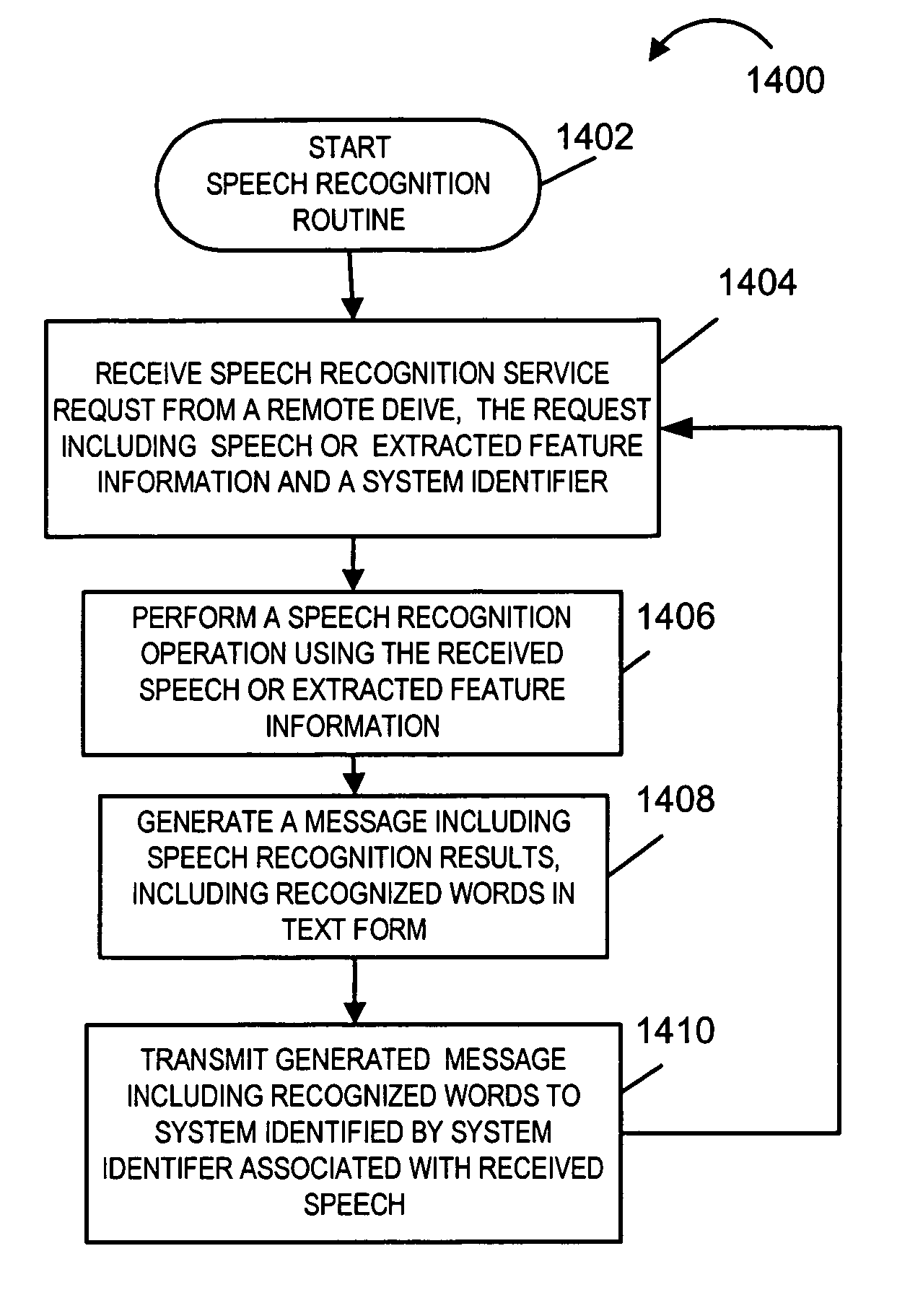

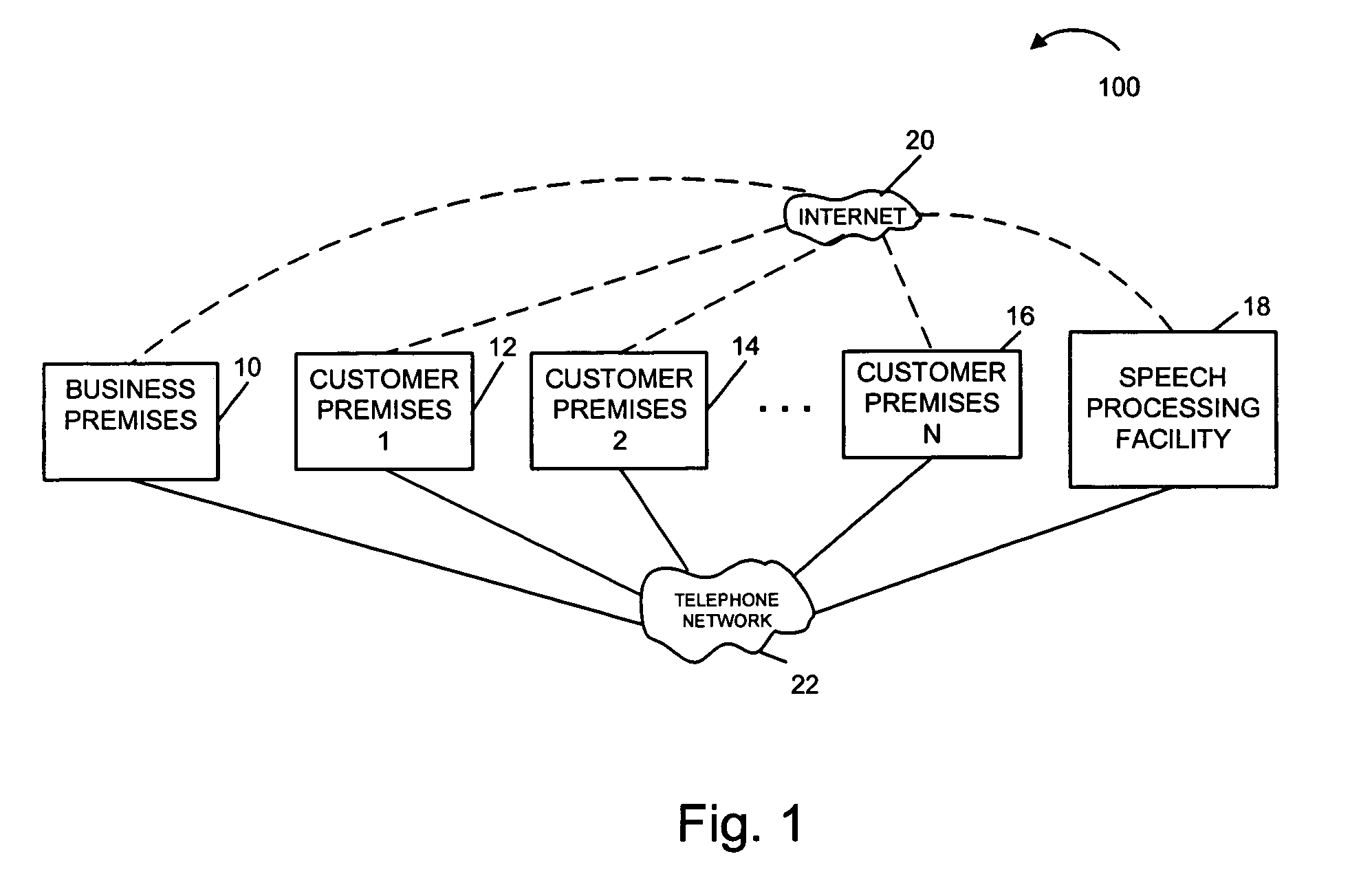

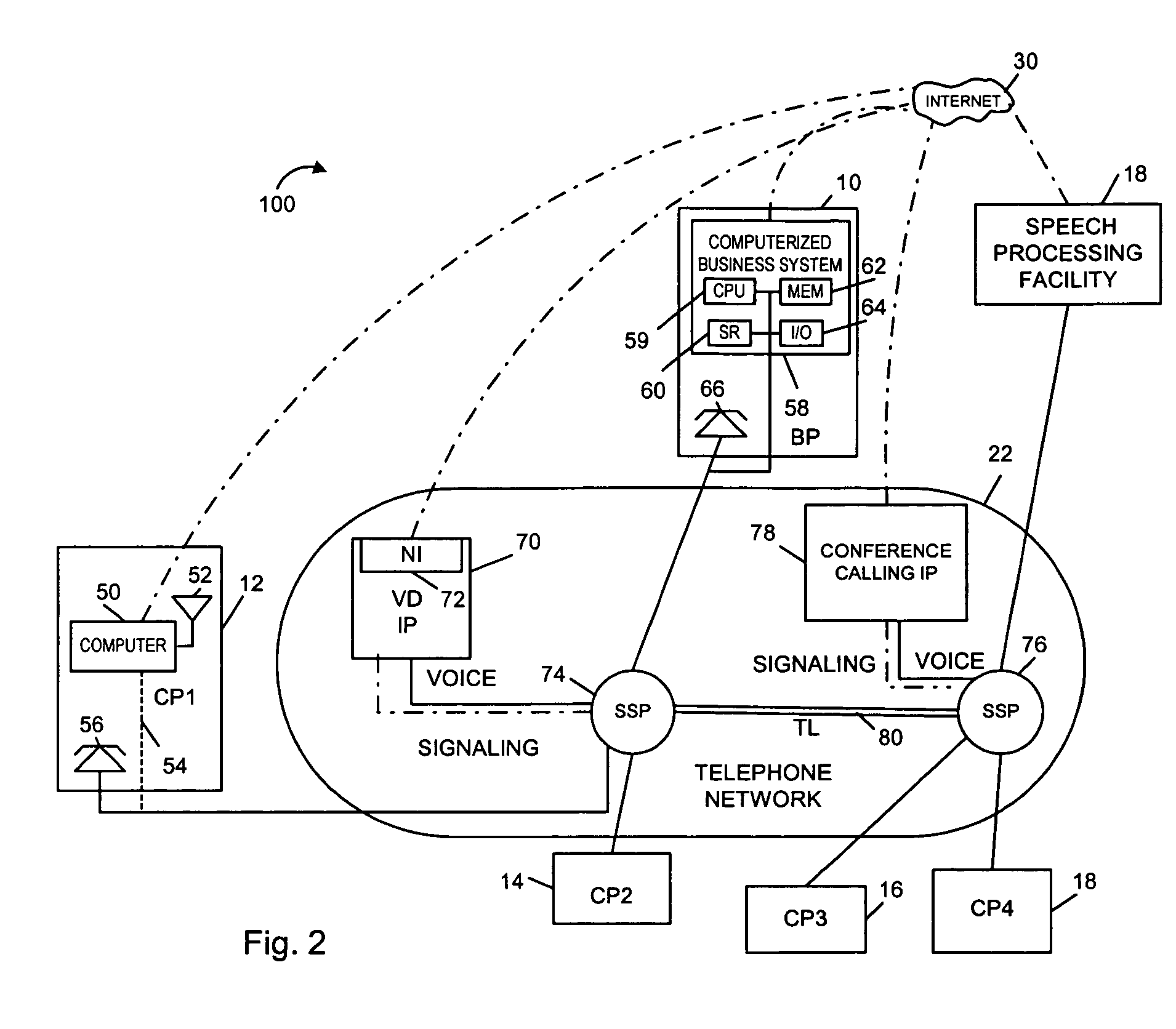

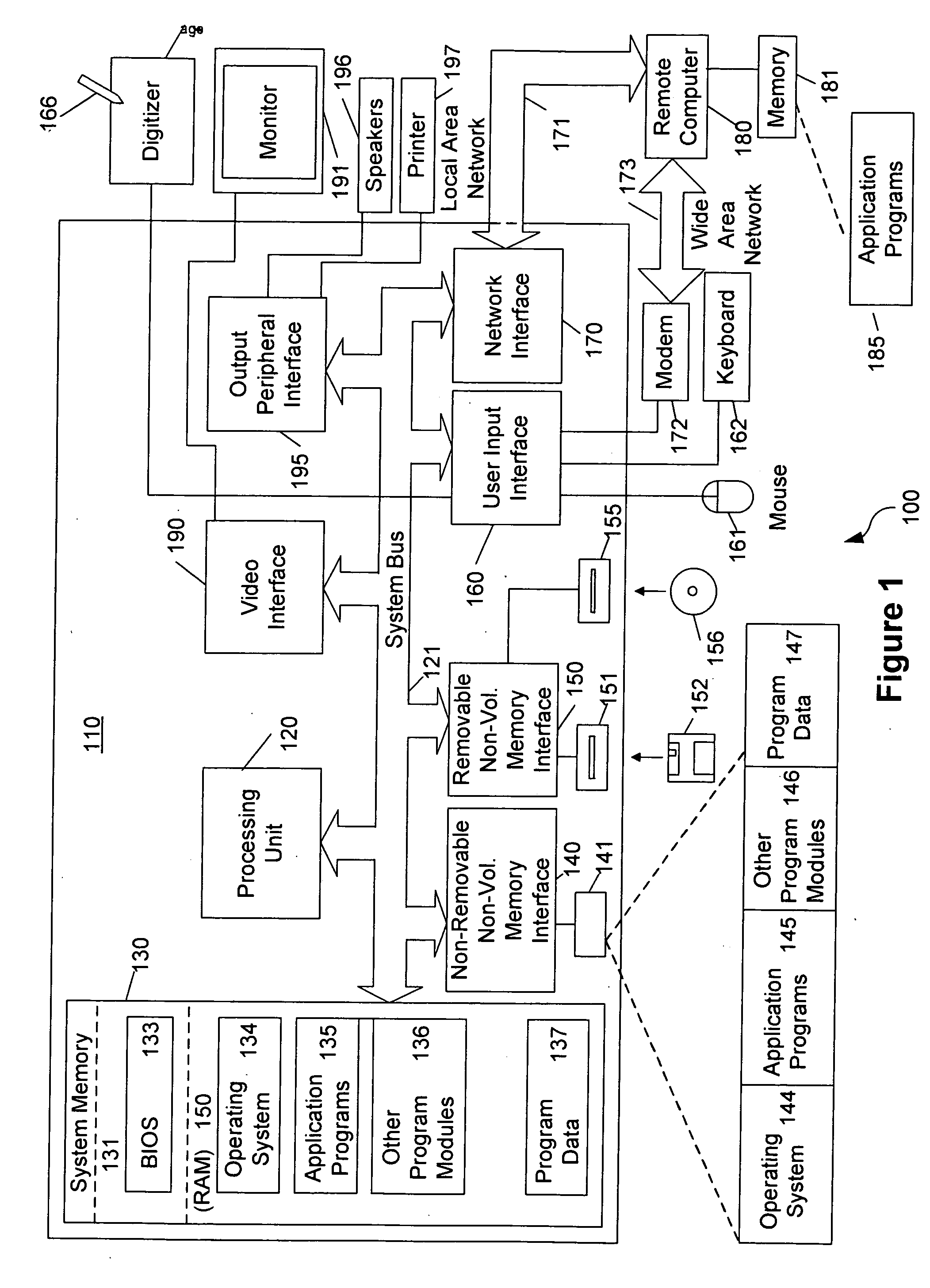

Methods and apparatus for performing speech recognition over a network and using speech recognition results

Inactive | US20050216273A1 | Improve recognition results | Automatic call-answering/message-recording/conversation-recording | Automatic exchanges | The Internet | Audio frequency

Techniques for generating, distributing, and using speech recognition models are described. A shared speech processing facility is used to support speech recognition for a wide variety of devices with limited capabilities including business computer systems, personal data assistants, etc., which are coupled to the speech processing facility via a communications channel, e.g., the Internet. Devices with audio capture capability record and transmit to the speech processing facility, via the Internet, digitized speech and receive speech processing services, e.g., speech recognition model generation and/or speech recognition services, in response. The Internet is used to return speech recognition models and/or information identifying recognized words or phrases. Thus, the speech processing facility can be used to provide speech recognition capabilities to devices without such capabilities and/or to augment a device's speech processing capability. Voice dialing, telephone control and/or other services are provided by the speech processing facility in response to speech recognition results.

Owner:GOOGLE LLC

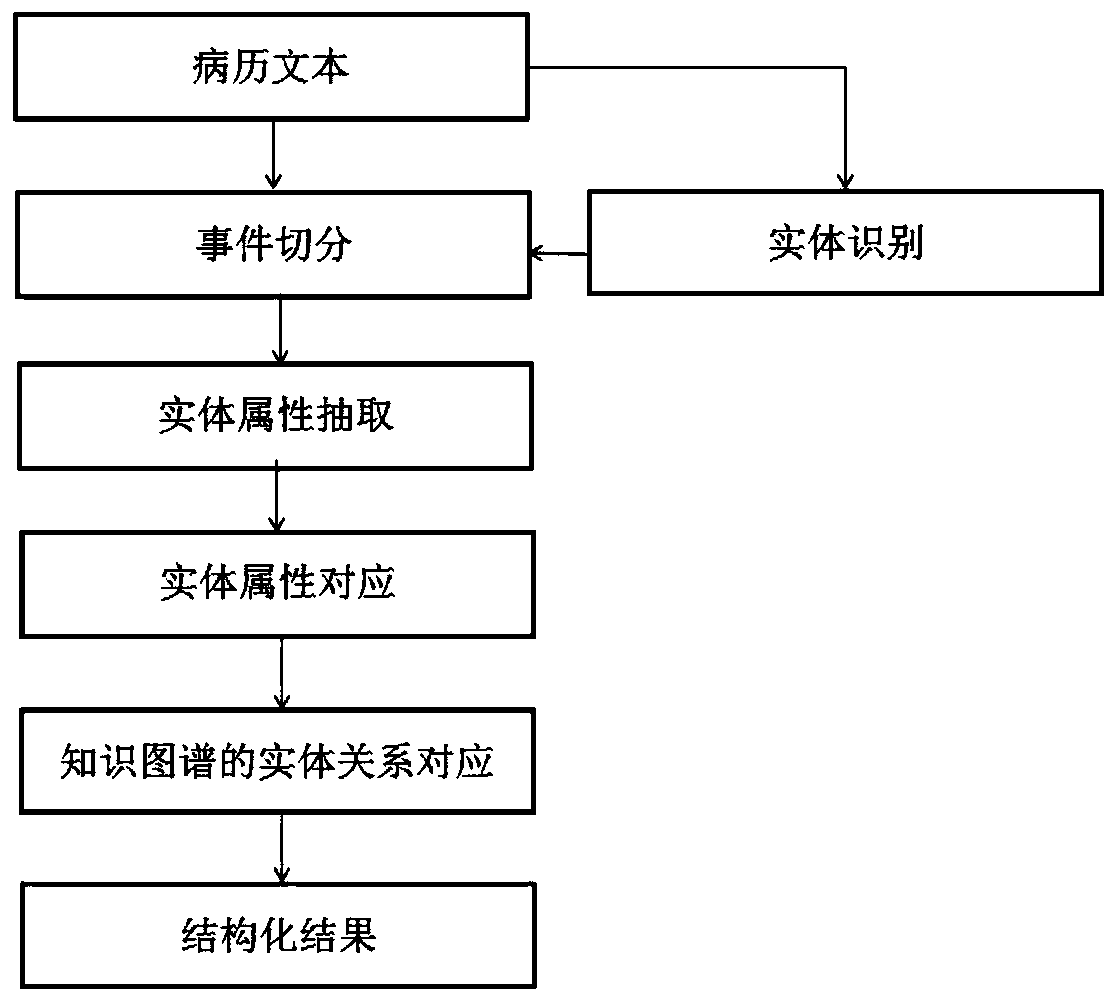

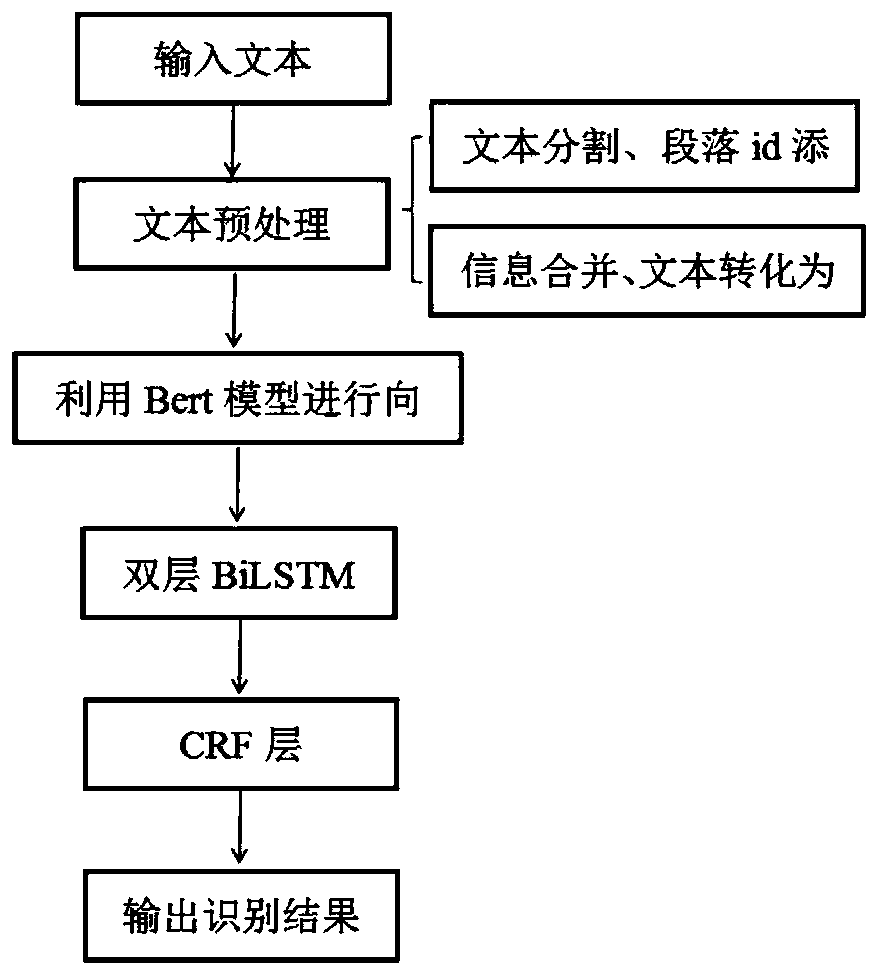

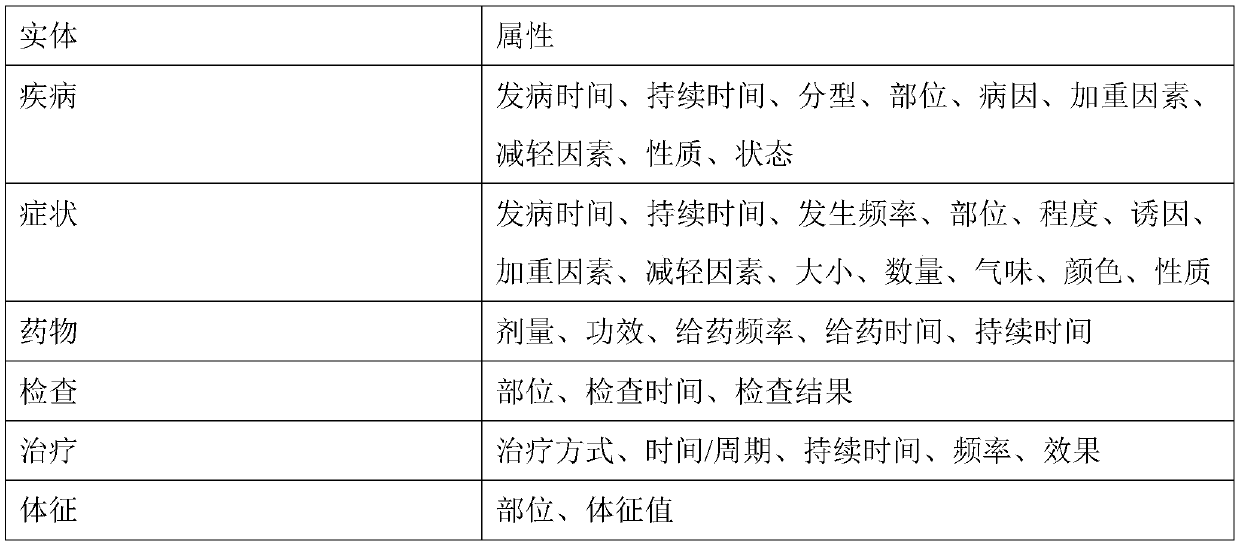

Medical record structured analysis method based on medical field entities

Active | CN110032648A | Avoid ambiguity | Good recognition result | Semantic analysis | Special data processing applications | Structured analysis | Knowledge graph

The invention discloses a medical record structured analysis method based on medical field entities. The method comprises the steps of: 1) building a medical entity and attribute category table for common medical record text, and mapping the corresponding relations; 2) identifying the medical entities in the medical record text by adopting a Bert_BiLSTM_CRF model; 3) segmenting the medical record text according to semantics to form events; 4) recombining the events; 5) constructing an attribute recognition model, and extracting the attributes in the segmented events; 6) connecting the medical entities of the events in the same sentence by utilizing the knowledge graph to obtain the relationships between the entities; and 7) customizing different attribute recognition models for different types of medical record text segments, and finally forming the final medical record structured analysis text by accumulating the structured analysis results in text order.

Owner:微医云(杭州)控股有限公司

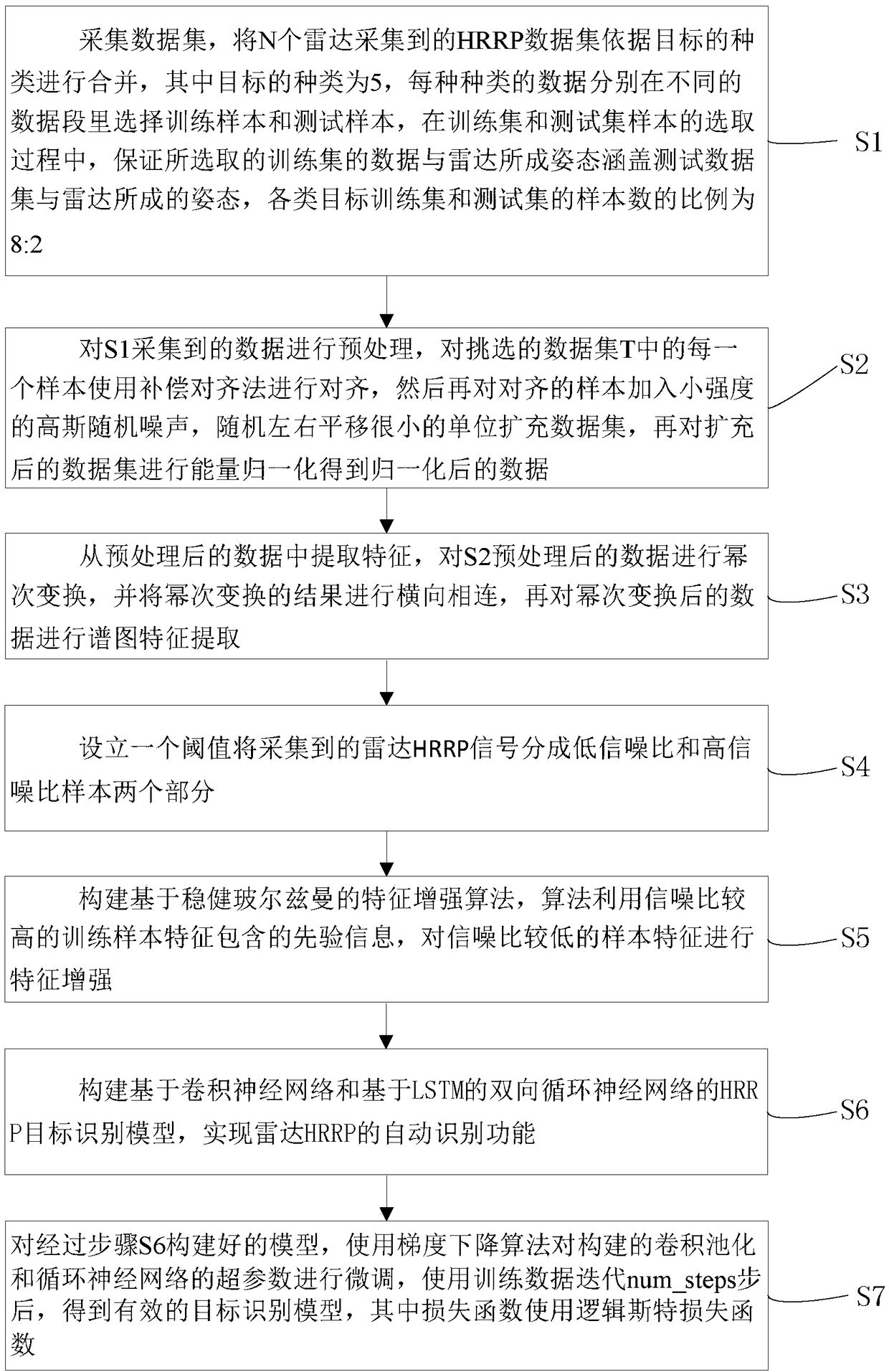

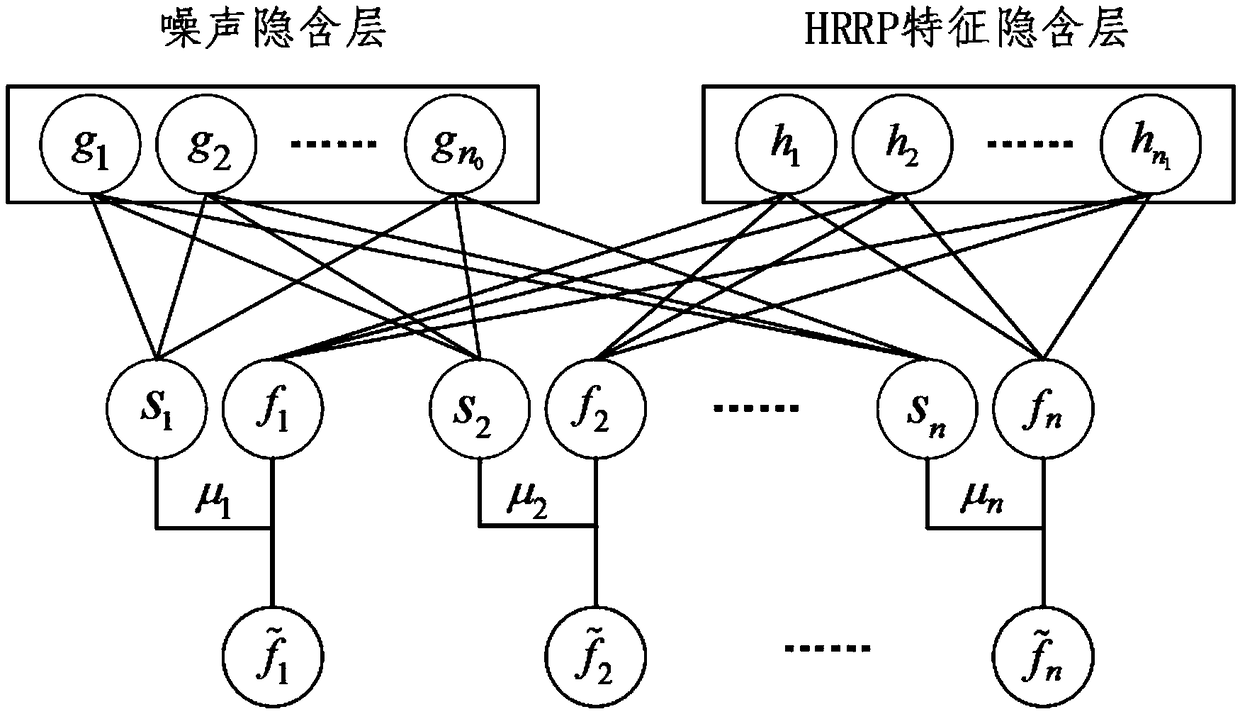

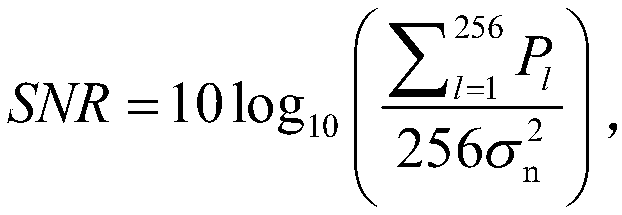

Radar one-dimensional range profile target recognition method based on depth convolution neural network

Active | CN109086700A | Easy to identify | Good noise robustness | Wave based measurement systems | Scene recognition | Small sample | Data set

The invention discloses a radar one-dimensional range profile target recognition method based on a depth convolution neural network. The method includes the following steps: a data set is collected; the collected data is preprocessed; features are extracted from the preprocessed data; the HRRP signal is divided into two parts, low SNR and high SNR; a feature enhancement algorithm based on robust Boltzmann is constructed; and an HRRP target recognition model based on a convolutional neural network and an LSTM-based bidirectional recurrent neural network is constructed. The parameters of the network model are fine-tuned by using a gradient descent algorithm, and an effective target recognition model is obtained. The radar HRRP automatic target recognition technology constructed by the invention is robust to small samples and to noise and has strong engineering practicability. The radar one-dimensional range profile target recognition model, based on a convolutional neural network and a recurrent neural network, is proposed from the perspectives of feature extraction and classifier design.

Owner:HANGZHOU DIANZI UNIV

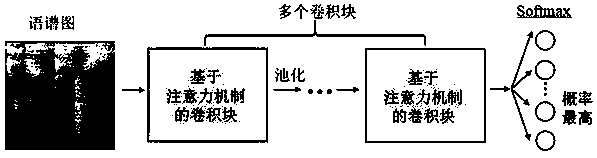

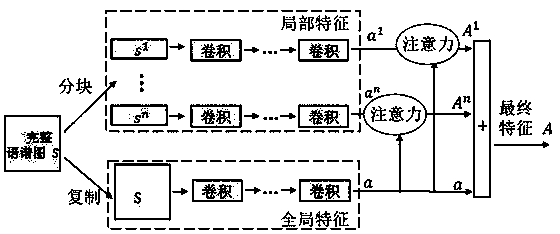

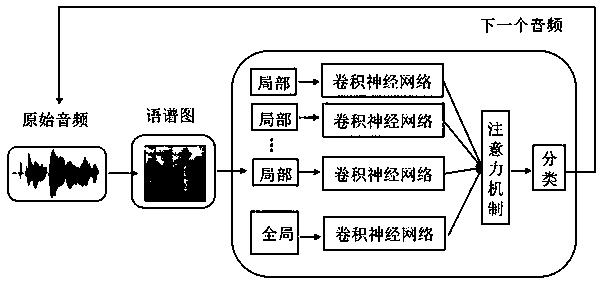

Speech classification method based on deep neural network

Active | CN108010514A | Improve recognition rate | Improve recognition results | Speech recognition | Neural architectures | High probability | Back propagation algorithm

The invention discloses a speech classification method based on a deep neural network, and aims at solving different speech classification problems through a unified algorithm model. The method of the invention includes the steps of: S1, converting a speech signal into a corresponding spectrogram, and segmenting the complete spectrogram along the frequency domain into blocks to obtain a local frequency domain information set; S2, taking the complete and the local frequency domain information as separate inputs of a model, so that, based on the different inputs, the convolutional neural network can extract local and global features; S3, using an attention mechanism to fuse the global and local feature expressions to form a final feature expression; S4, using tagged data to train the network by gradient descent and back propagation algorithms; and S5, applying the trained parameters to an untagged speech signal and taking the class with the highest probability output by the model as the prediction result. The method of the invention realizes a unified algorithm model for different speech classification problems, and improves the accuracy on multiple speech classification problems.

Owner:SICHUAN UNIV
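Step S1 of the abstract above, segmenting a spectrogram along the frequency axis into blocks, can be sketched as follows. The representation (a list of rows, one row per frequency bin) and the rule for distributing leftover bins are assumptions made for illustration; the patent does not specify block sizes.

```python
def split_frequency_blocks(spectrogram, n_blocks):
    """Split a spectrogram into contiguous blocks along the frequency axis.

    spectrogram: list of rows, one row per frequency bin, one column per time frame.
    n_blocks:    number of blocks; leftover bins go to the first blocks.
    """
    n_bins = len(spectrogram)
    if n_blocks <= 0 or n_blocks > n_bins:
        raise ValueError("n_blocks must be between 1 and the number of frequency bins")
    base, extra = divmod(n_bins, n_blocks)
    blocks, start = [], 0
    for b in range(n_blocks):
        size = base + (1 if b < extra else 0)
        blocks.append(spectrogram[start:start + size])
        start += size
    return blocks


# Toy spectrogram: 5 frequency bins x 3 time frames.
spec = [[r * 10 + c for c in range(3)] for r in range(5)]
blocks = split_frequency_blocks(spec, 2)
```

Each block keeps the full time axis, so a model can take the complete spectrogram as the global input and the blocks as the local inputs described in step S2.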

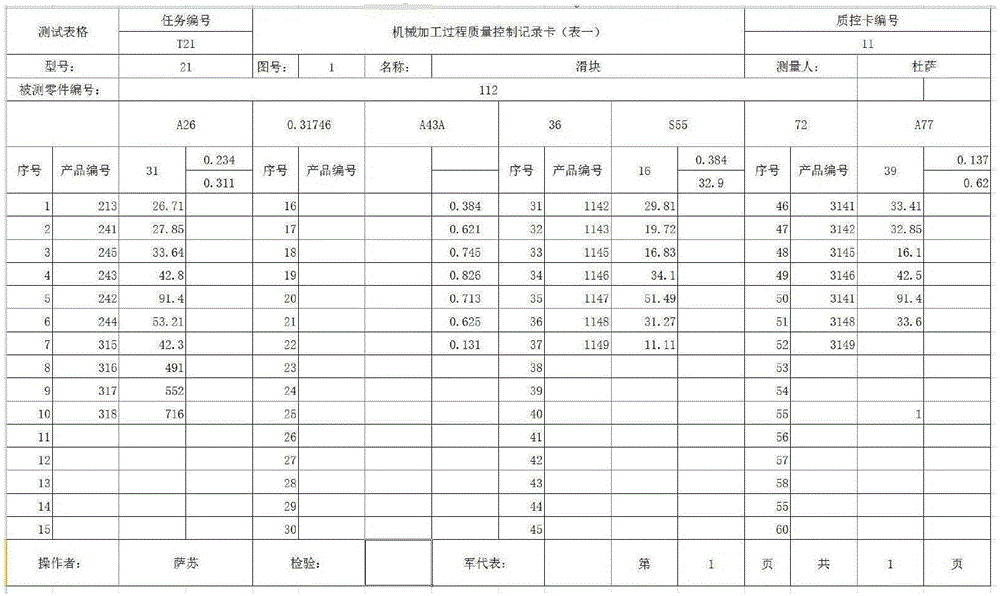

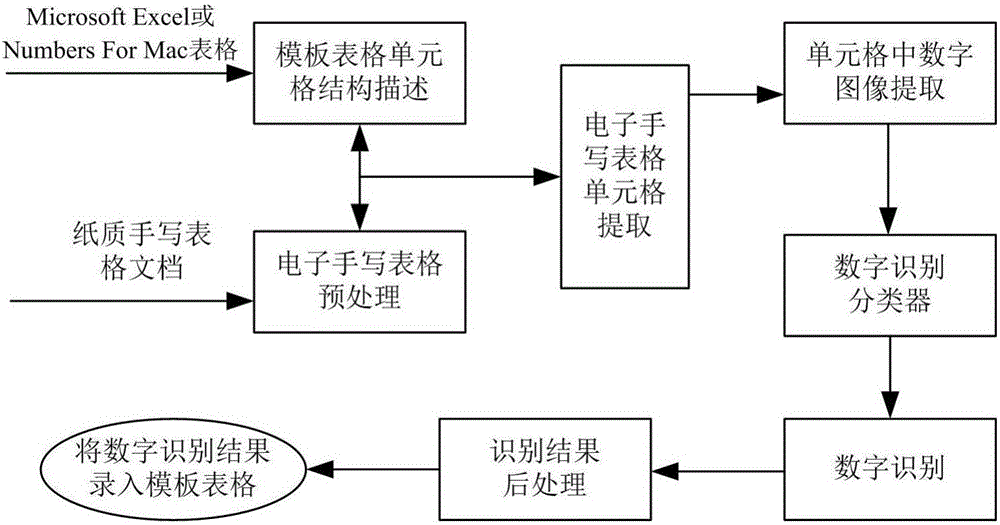

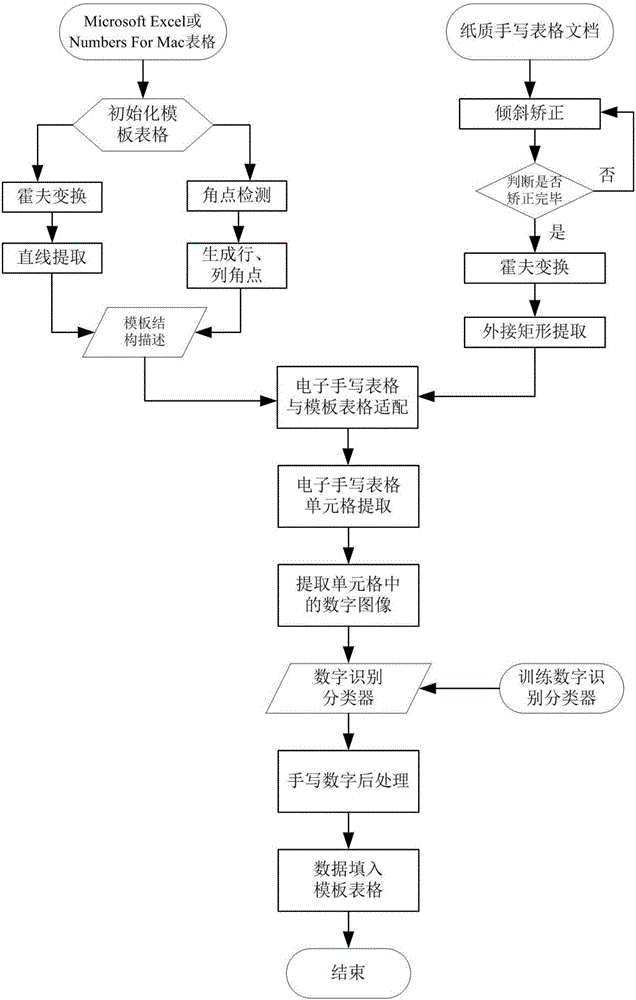

Complex table and method for identifying handwritten numbers in complex table

Active | CN106407883A | Improve recognition rate | Easy to identify | Character and pattern recognition | Data set | Angular point

The invention discloses a complex table and a method for identifying handwritten numbers in the complex table. A complex template table is preprocessed by line detection and angular point detection to realize classified row and column ordering and obtain structural description of cells of the template table; after an electronic handwritten table is obtained, inclination of the electronic handwritten table is corrected, and the electronic handwritten table matches the template table to obtain cell position description thereof; each cell is processed by removing the edge and reserving characters in the cell as completely as possible; number images are extracted from the cells, and a trained classifier of a data set is used to identify the number images; and the handwritten characters are post-processed, and an identification result is filled into the template table. The method of the invention is easy to realize, and better in identification effect, and provides a good solution to automatic identification and table filling later.

Owner:BEIJING UNIV OF TECH +1

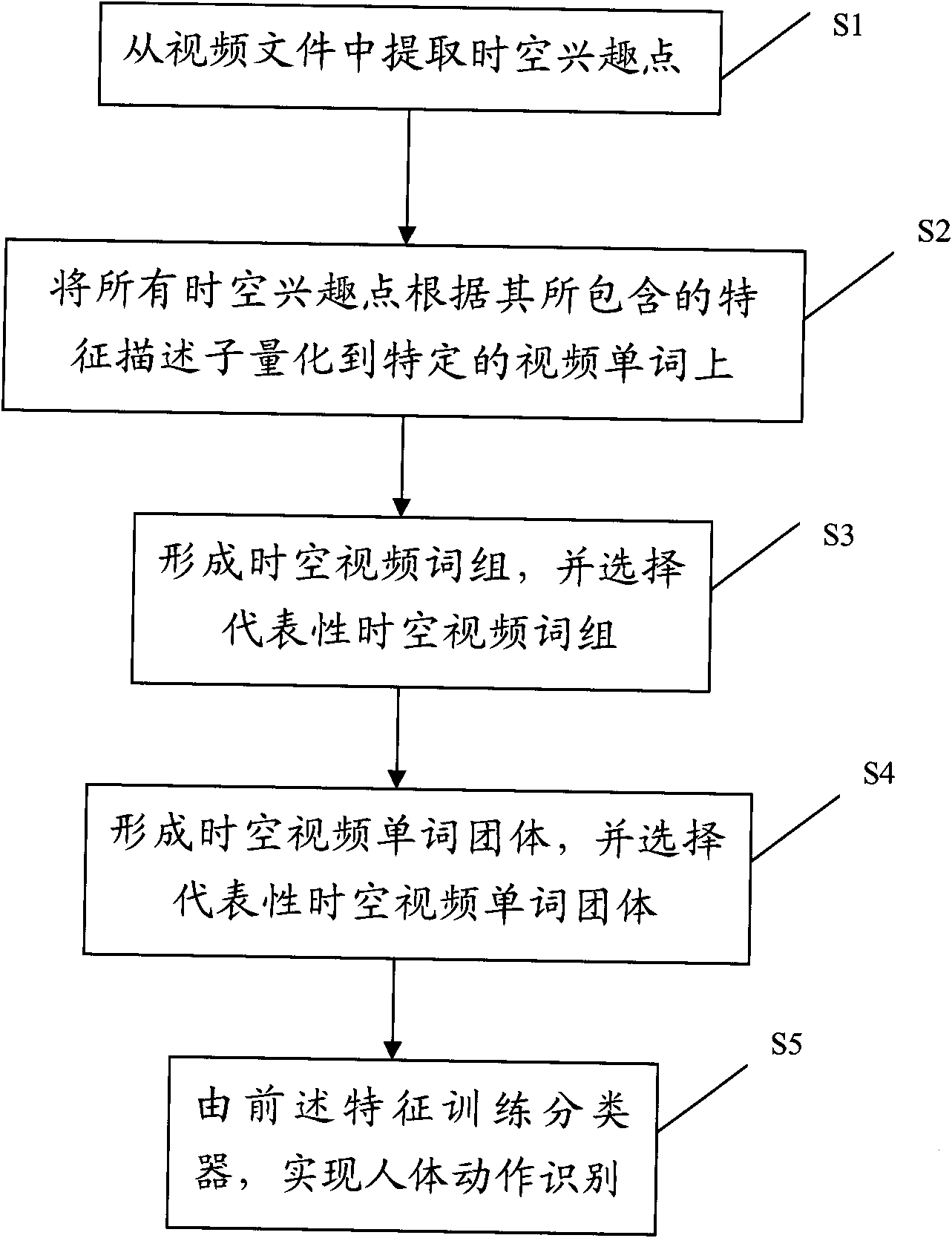

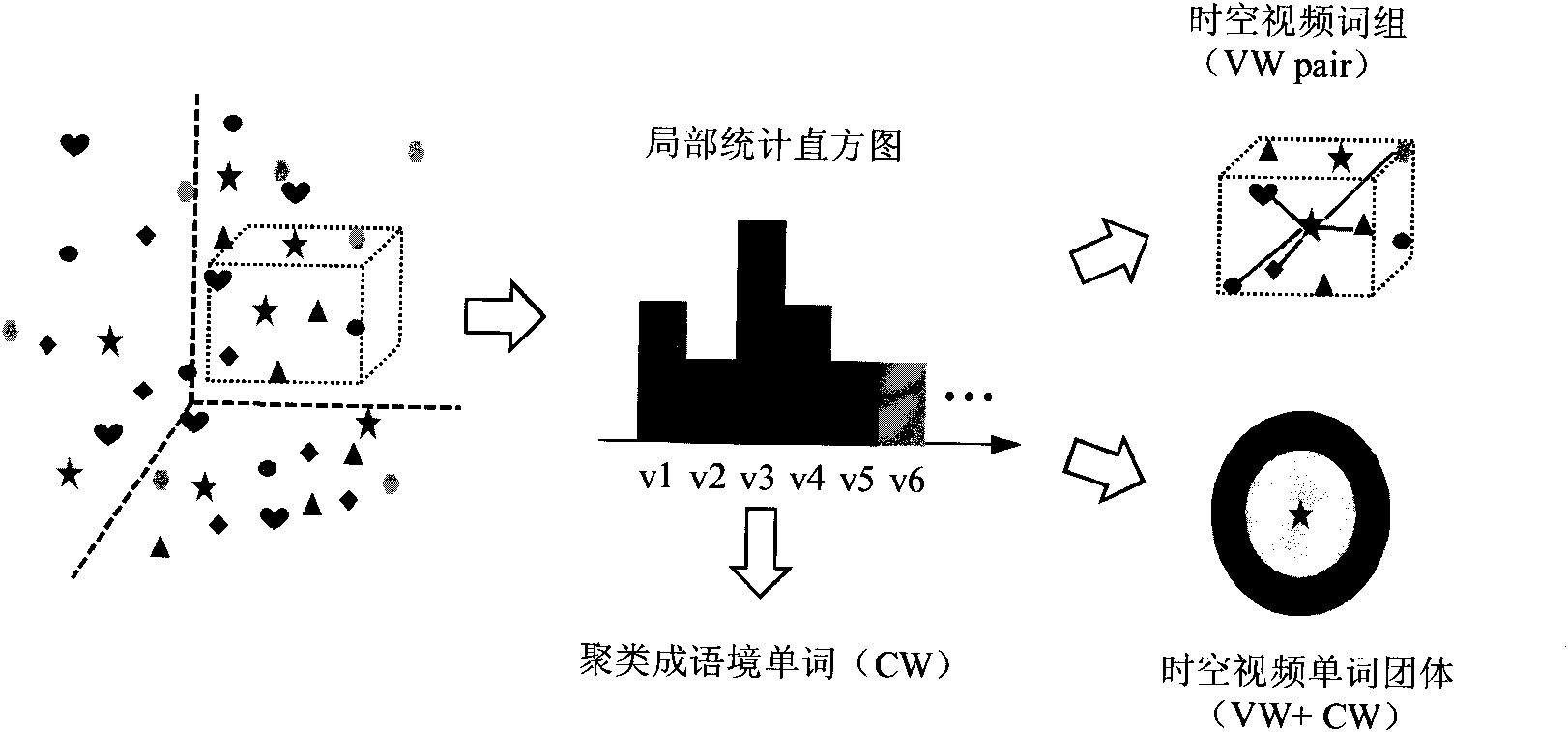

Training method of human action recognition and recognition method

Inactive | CN101894276A | Efficient integration | Capture essential properties | Character and pattern recognition | Word group | Spacetime

The invention provides a training method of human action recognition, comprising the following steps: extracting space-time interest points from a video file; quantizing all the space-time interest points to corresponding video words according to the feature descriptors contained by the space-time interest points and generating a statistical histogram for the video words; obtaining other video words in the space-time neighborhood of the video words according to the space-time context information in the space-time neighborhood of the video words and forming space-time video phrases by the video words and one of other video words which meets space-time constraint; clustering the space-time contexts in the space-time neighborhood of the video words to obtain context words and forming space-time video word groups by the video words and the context words; selecting the representative space-time video phrase from the space-time video phrases and selecting the representative space-time video word group from the space-time video word groups; and training a classifier by utilizing the result after one or more features in the video words, the representative space-time video phrase and the representative space-time video word group are fused.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

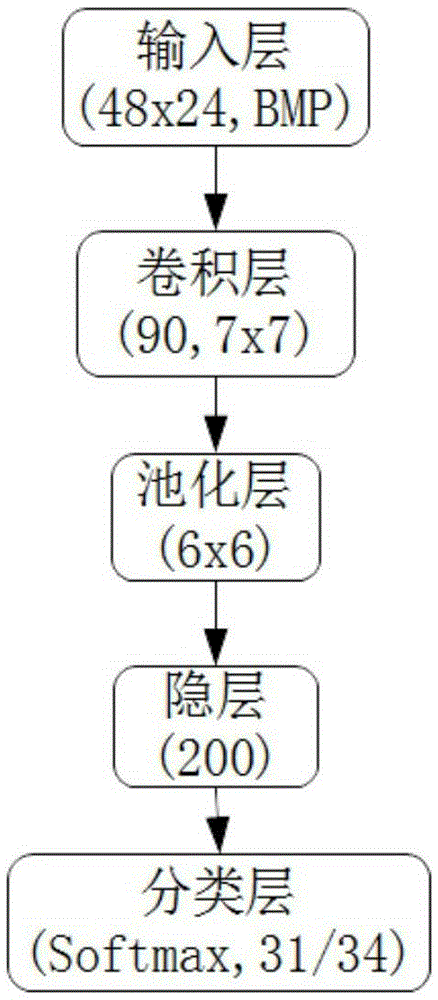

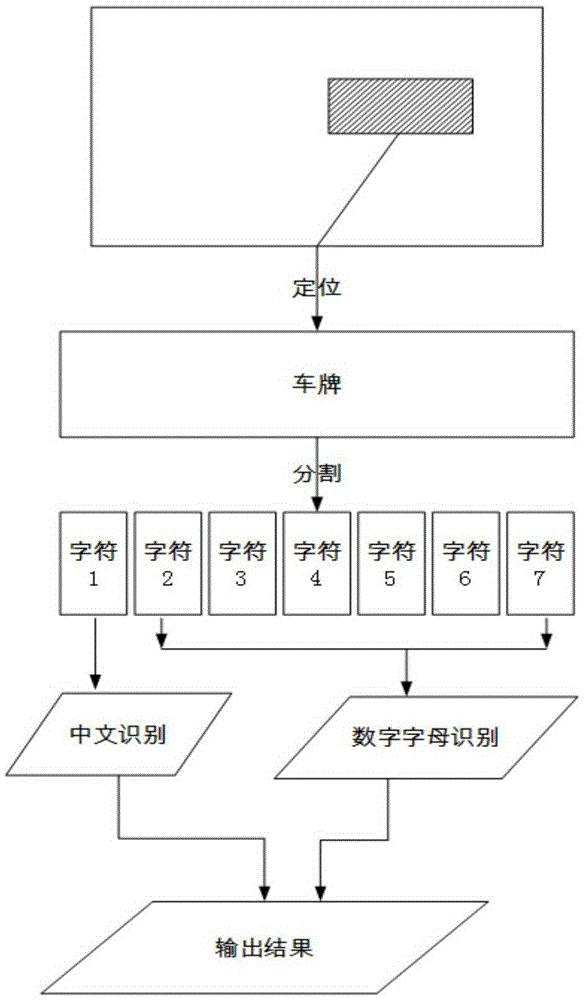

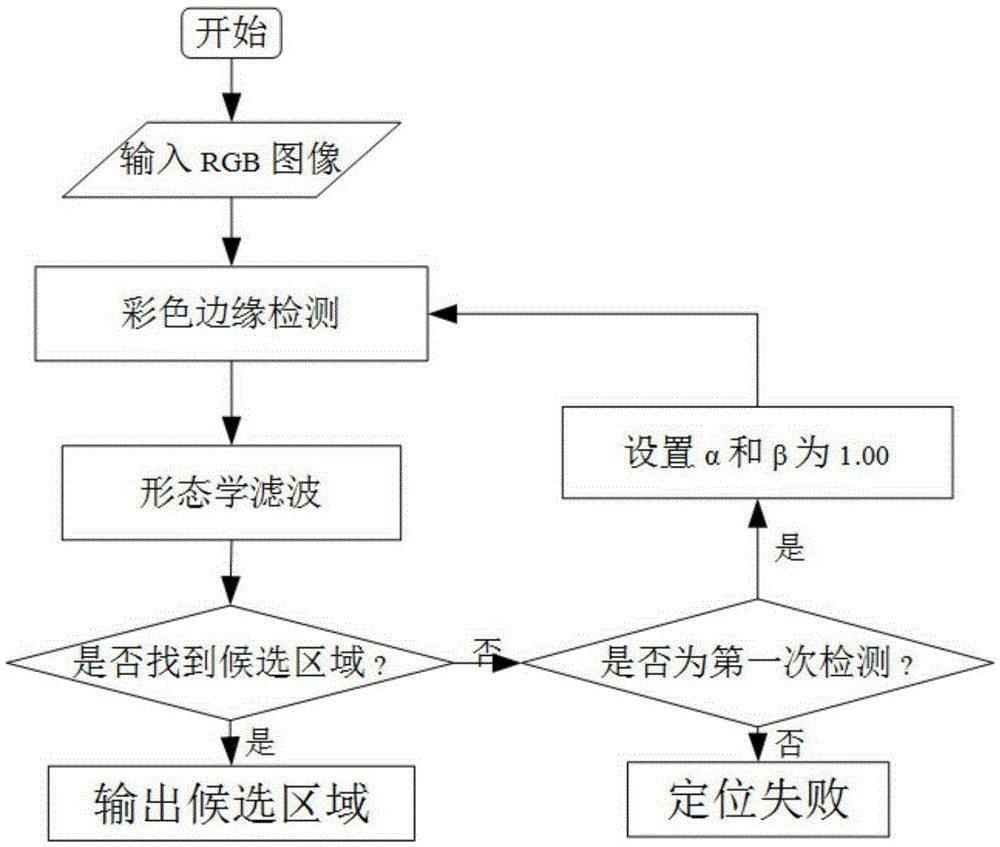

Automatic identification system of number plate on the basis of simplified convolutional neural network

Active | CN105354572A | Achieve positioning | Suppress noise | Character and pattern recognition | Hidden layer | Nerve network

The invention discloses an automatic identification system of a number plate on the basis of a simplified convolutional neural network. The convolutional neural network comprises an input layer, a convolutional layer, a pooling layer, a hidden layer and a classification output layer, and solves the problem of number plate identification against everyday backgrounds. The number plate identification comprises the following steps: positioning, segmenting and identifying. The invention puts forward a positioning method which extracts colorful edges by using colorful edge information and colorful information. Since the parameters in the method are set on the basis of color features, noise in the everyday background can be effectively suppressed, and number plates can be extracted from input images of different sizes. The automatic identification system omits the front convolutional layers of a traditional depth convolutional neural network and keeps only one convolutional layer and one hidden layer. To compensate for the missing convolutional layers and to strengthen the input features, a gray level edge image obtained by a Sobel operator is used as an input alongside the colorful image, i.e., manually extracted coarse features replace the features extracted by the multiple convolutional layers of the traditional convolutional neural network.

Owner:SUZHOU UNIV
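The gray level edge image mentioned in the abstract above can be approximated with the standard 3x3 Sobel kernels. This is a minimal pure-Python sketch that computes the gradient magnitude on a small grayscale image; leaving border pixels at zero is an assumption, and the patent does not state how borders or normalization are handled.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
KX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
KY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(gray):
    """Return the Sobel gradient magnitude of a 2-D list of gray levels.

    Border pixels are left at 0.0 (assumed convention for this sketch).
    """
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(KX[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(KY[j][i] * gray[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = math.hypot(gx, gy)
    return out


# Toy image with a vertical edge between the dark left half and bright right half.
image = [[0, 0, 255, 255] for _ in range(4)]
edges = sobel_edges(image)
```

The resulting map is large where the gray level changes sharply and zero in flat regions, which is what makes it usable as a hand-crafted input feature.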

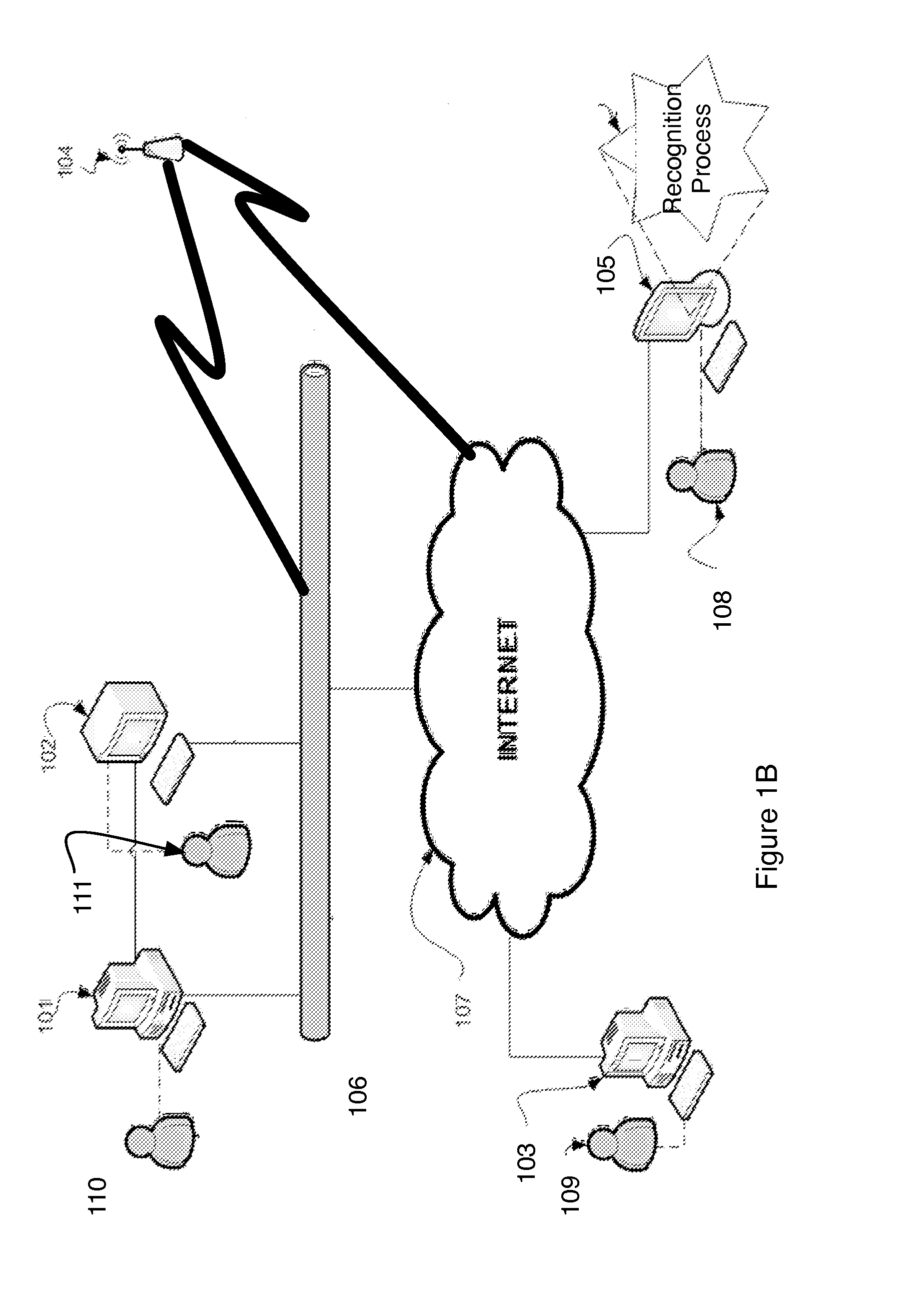

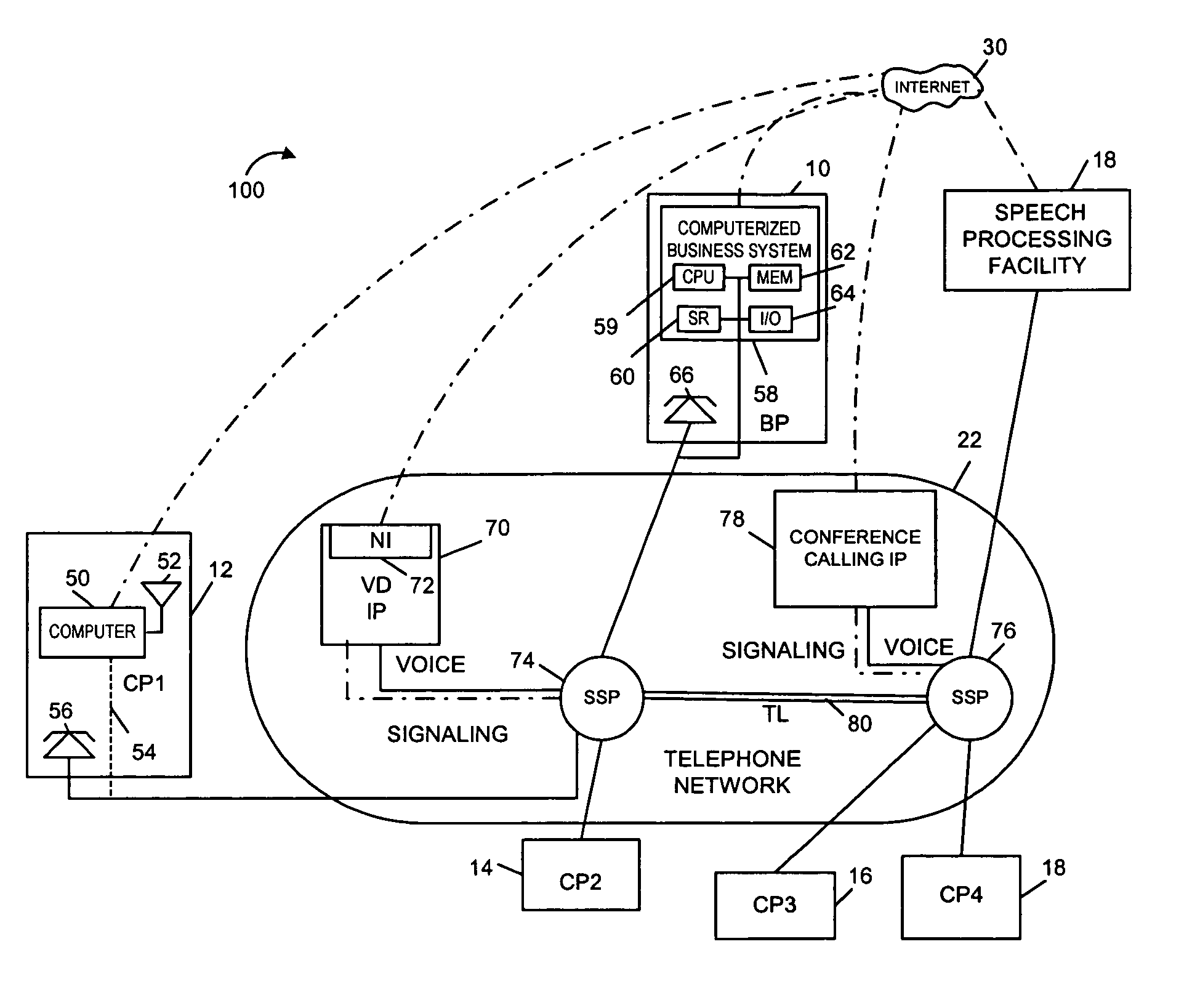

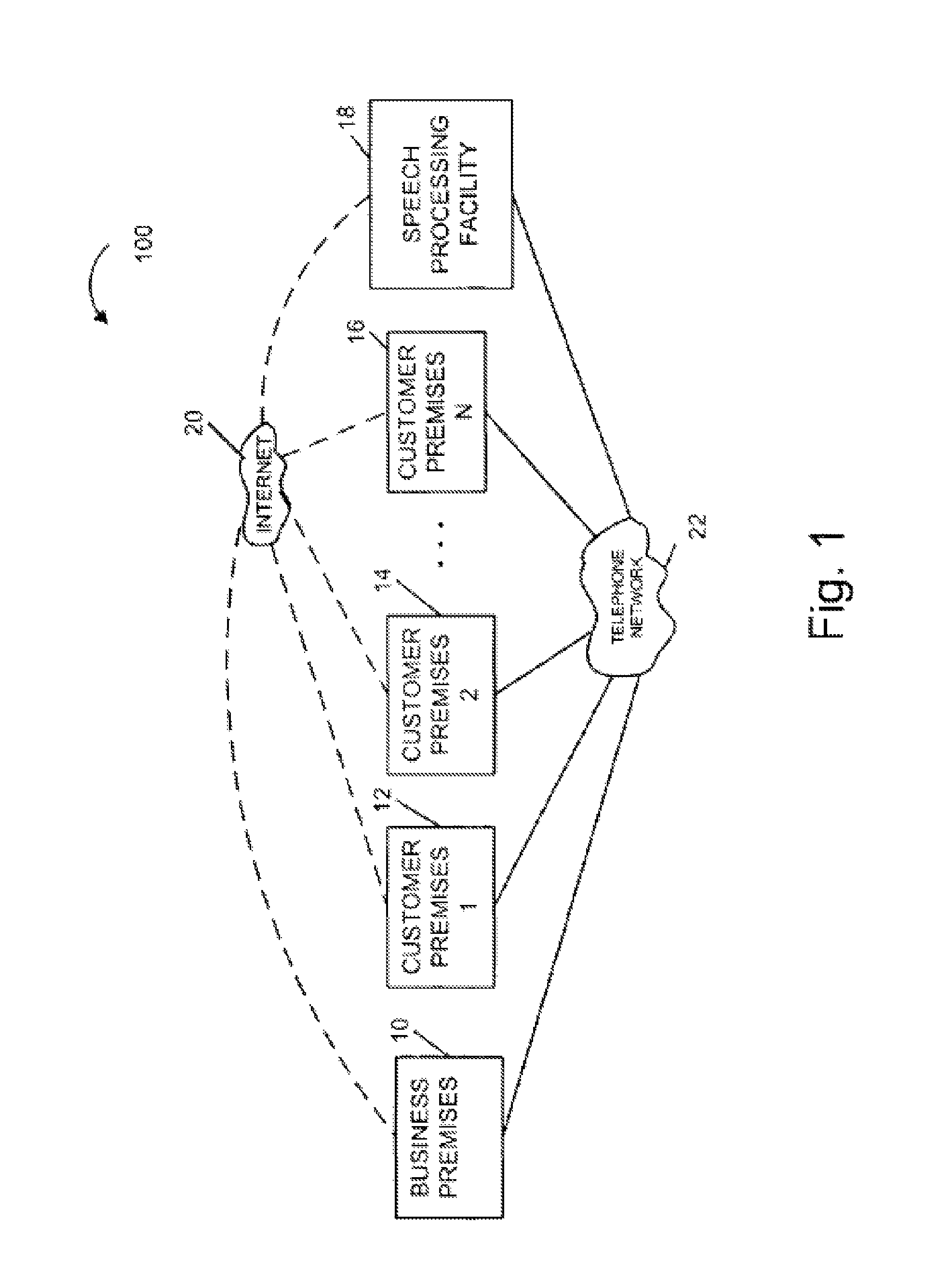

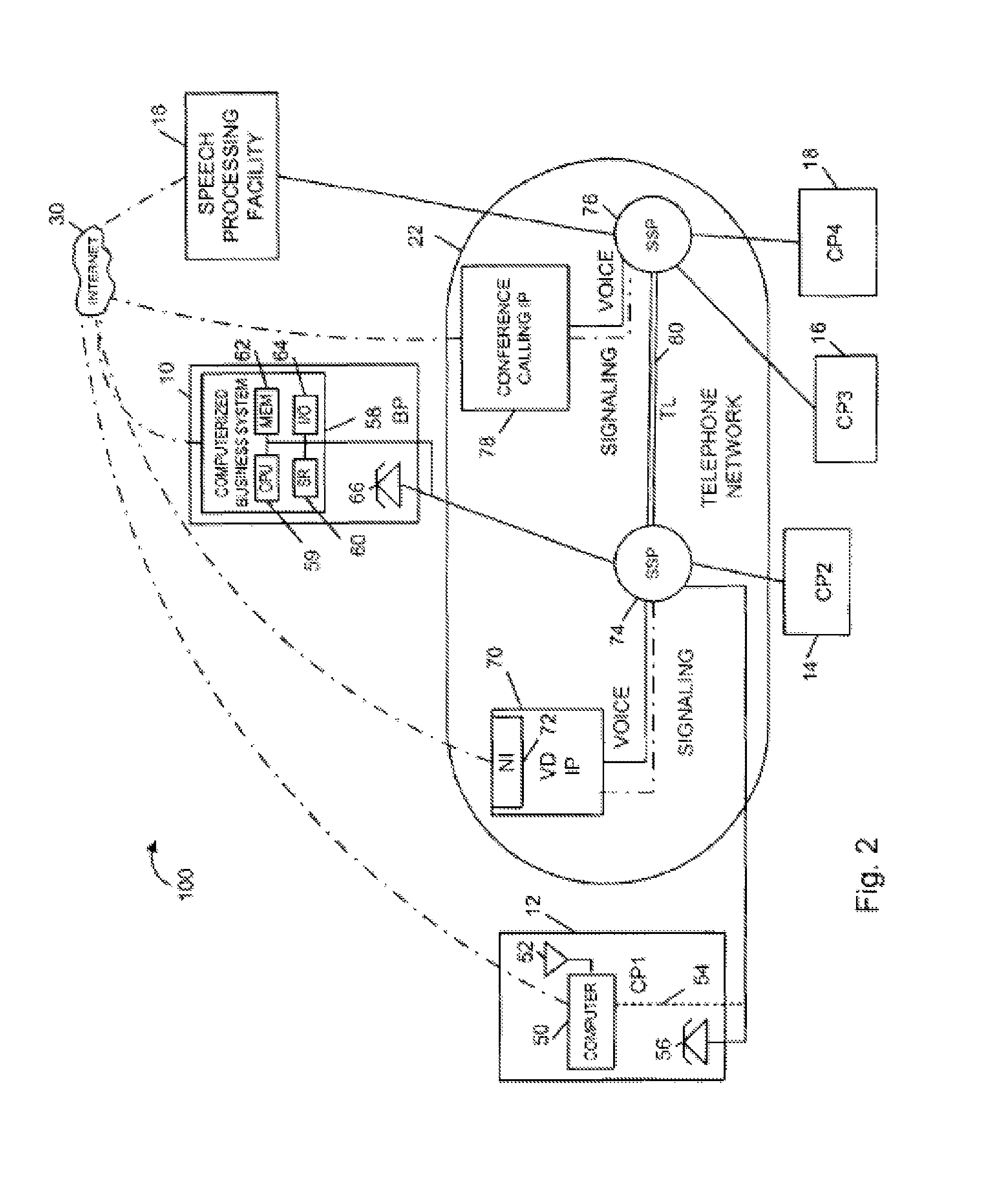

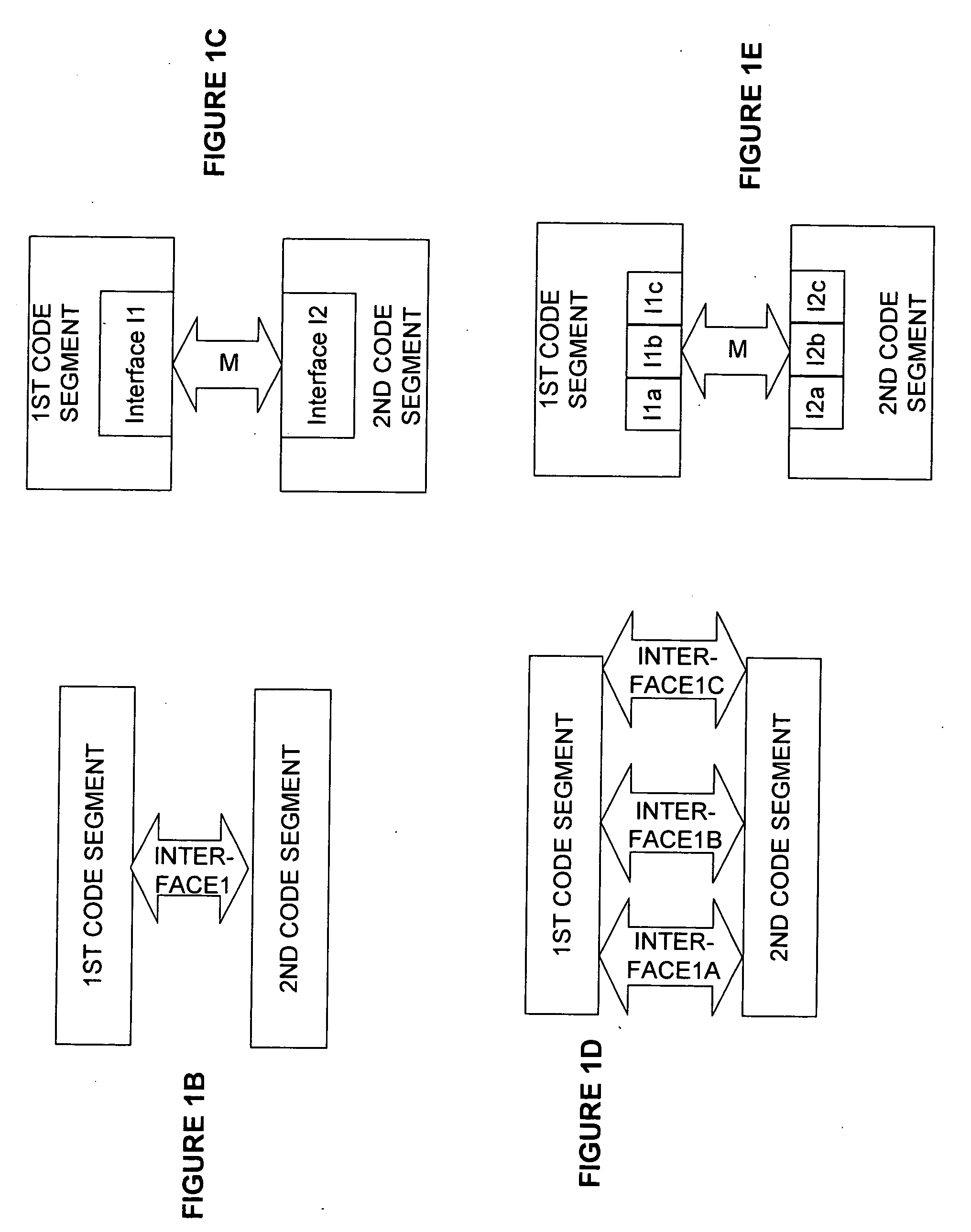

Methods and apparatus for performing speech recognition and using speech recognition results

Inactive | US6915262B2 | Improve recognition results | Automatic exchanges | Speech recognition | The Internet | Computerized system

Techniques for generating, distributing, and using speech recognition models are described. A shared speech processing facility is used to support speech recognition for a wide variety of devices with limited capabilities including business computer systems, personal data assistants, etc., which are coupled to the speech processing facility via a communications channel, e.g., the Internet. Devices with audio capture capability record and transmit to the speech processing facility, via the Internet, digitized speech and receive speech processing services, e.g., speech recognition model generation and/or speech recognition services, in response. The Internet is used to return speech recognition models and/or information identifying recognized words or phrases. Thus, the speech processing facility can be used to provide speech recognition capabilities to devices without such capabilities and/or to augment a device's speech processing capability. Voice dialing, telephone control and/or other services are provided by the speech processing facility in response to speech recognition results.

Owner:GOOGLE LLC

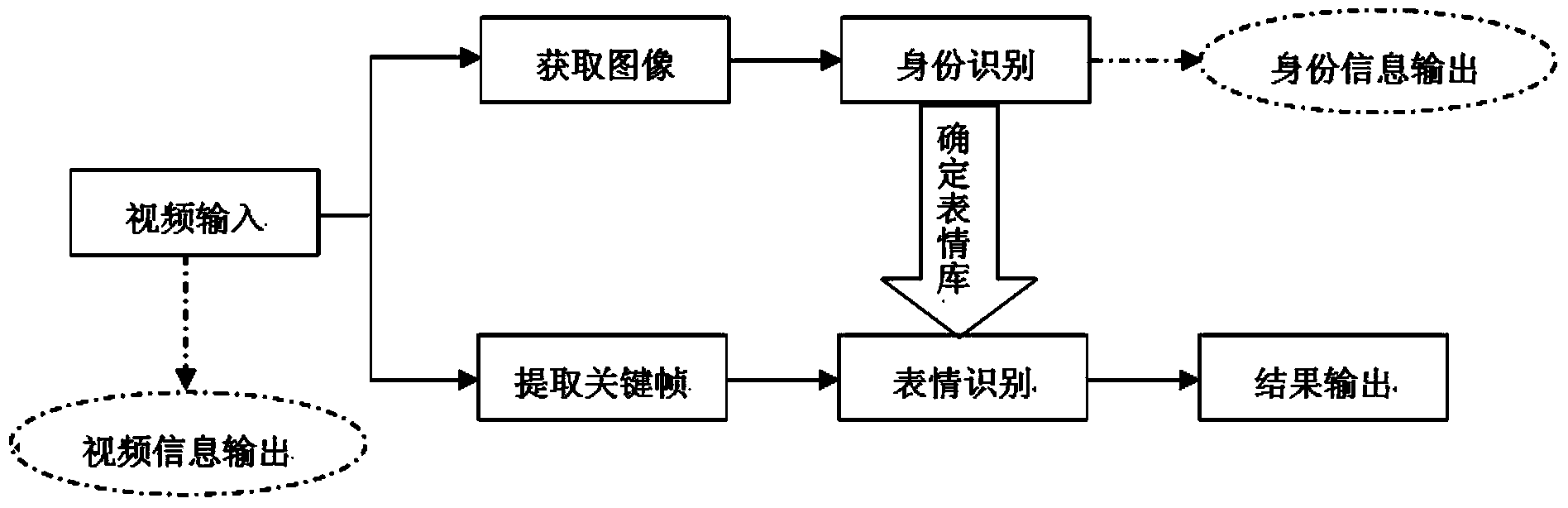

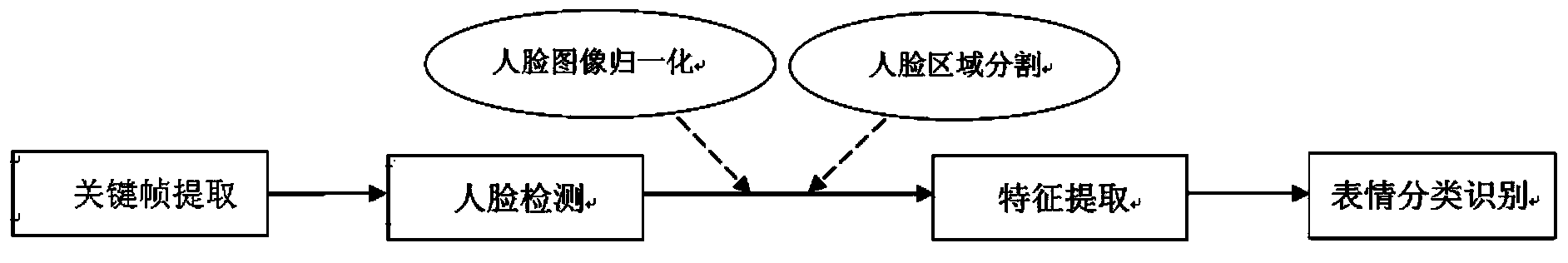

Facial expression recognition method based on video image sequence

Inactive | CN103824059A | Improve recognition results | Suppress interference | Character and pattern recognition | Computation complexity | Expression Library

The invention discloses a facial expression recognition method based on a video image sequence, and relates to the field of face recognition. The method includes the following steps: (1) identity verification, wherein an image is captured from a video, user information in the video is obtained, identity verification is carried out by comparing the user information with a facial training sample, and a user expression library is determined; (2) expression recognition, wherein texture feature extraction is carried out on the video, the key frame produced when the degree of the user's expression is at its maximum is obtained, the image of the key frame is compared with the expression training samples in the user expression library determined in step (1) to recognize the expression, and finally a statistical result of expression recognition is output. By analyzing the key frame obtained from the video by means of texture characteristics and building the user expression library so that the user's expression can be recognized, interference is effectively suppressed, calculation complexity is reduced and the recognition rate is improved.

Owner:SOUTHEAST UNIV

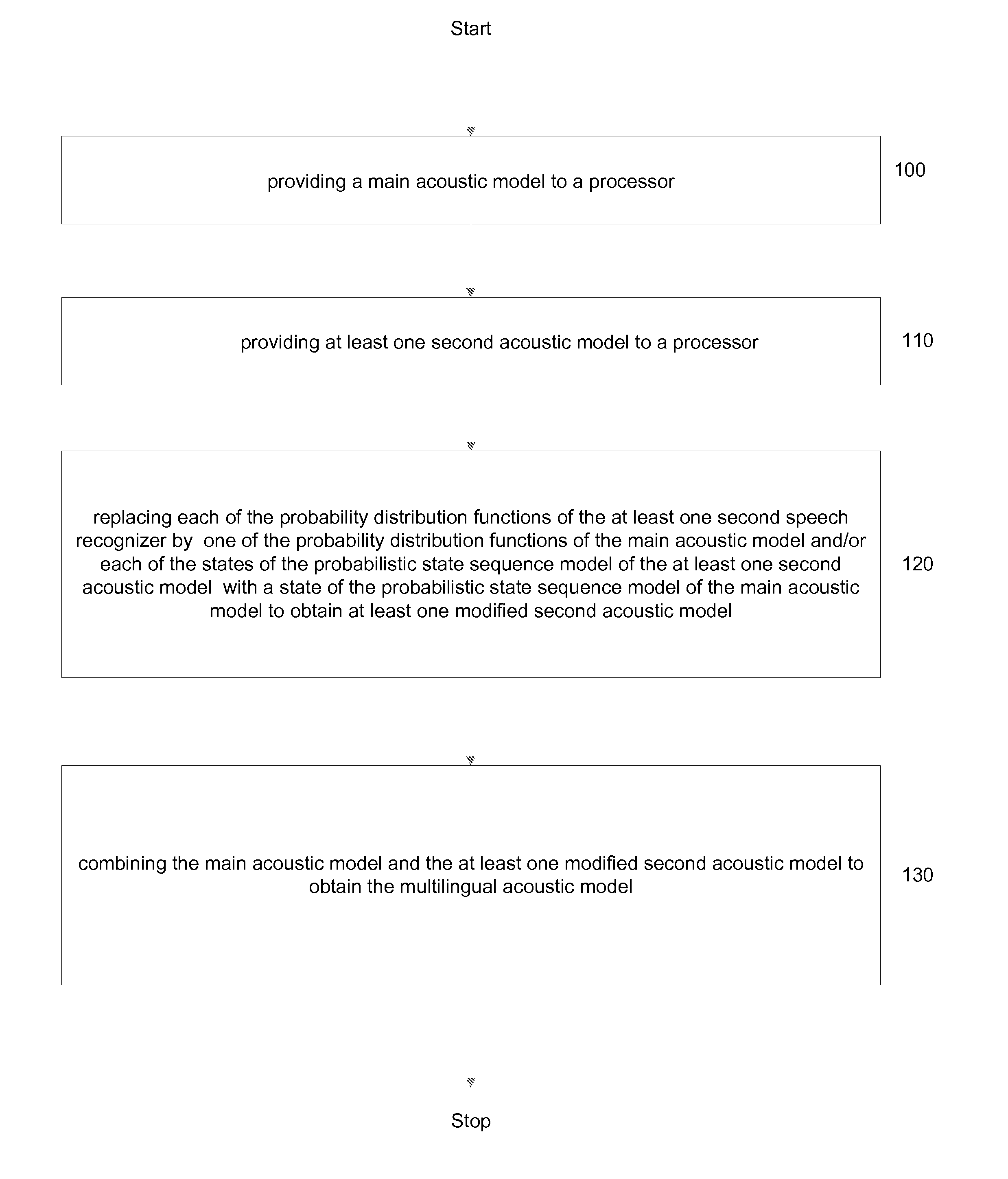

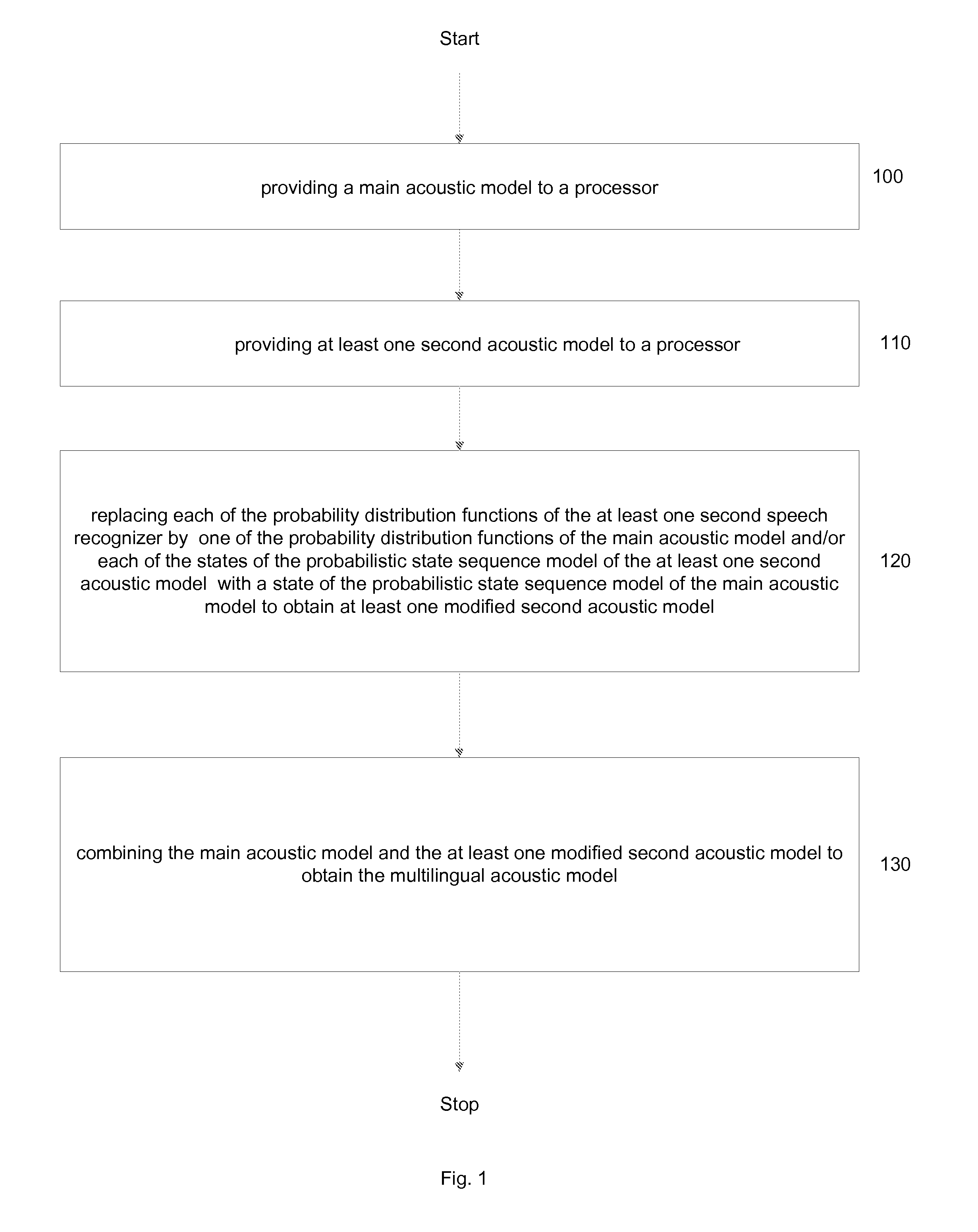

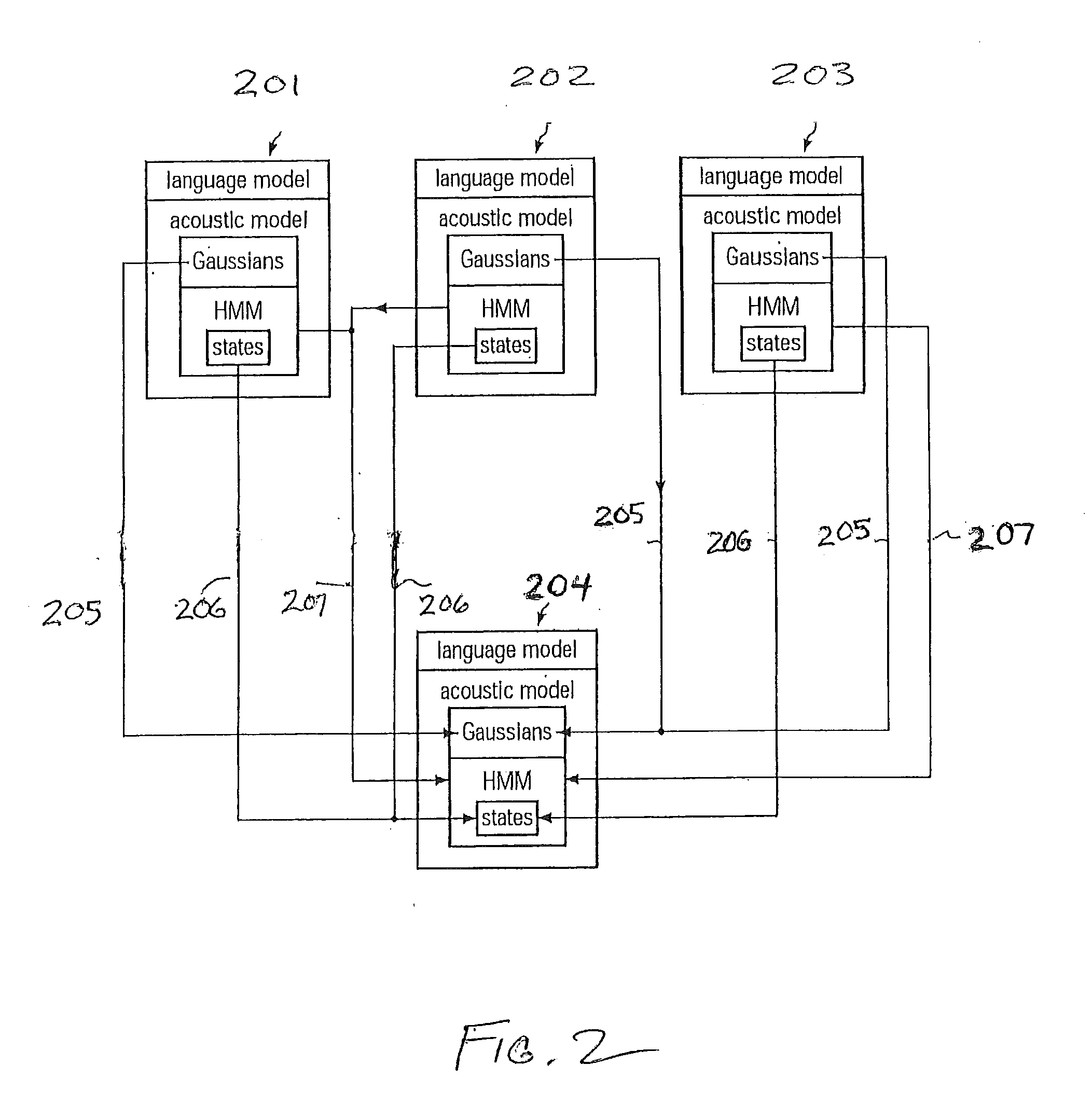

Speech Recognition Based on a Multilingual Acoustic Model

Active | US20100131262A1 | Fast and relatively reliable multilingual | Convenience to work | Natural language data processing | Speech recognition | Speech identification | Acoustic model

Embodiments of the invention relate to methods for generating a multilingual acoustic model. A main acoustic model having probability distribution functions and a probabilistic state sequence model including first states is provided to a processor. At least one second acoustic model including probability distribution functions and a probabilistic state sequence model including states is also provided to the processor. The processor replaces each of the probability distribution functions of the at least one second acoustic model by one of the probability distribution functions and/or each of the states of the probabilistic state sequence model of the at least one second acoustic model with the state of the probabilistic state sequence model of the main acoustic model based on a criteria set to obtain at least one modified second acoustic model. The criteria set may be a distance measurement. The processor then combines the main acoustic model and the at least one modified second acoustic model to obtain the multilingual acoustic model.

Owner:CERENCE OPERATING CO

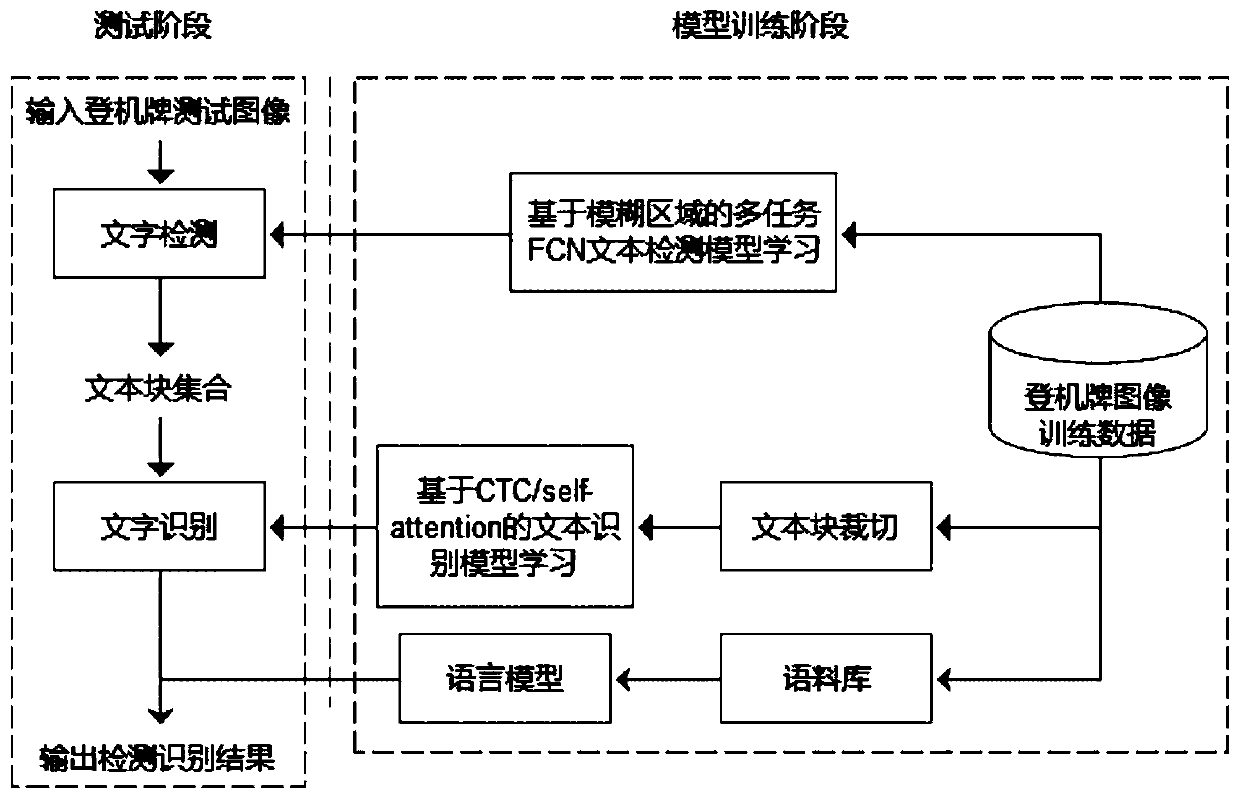

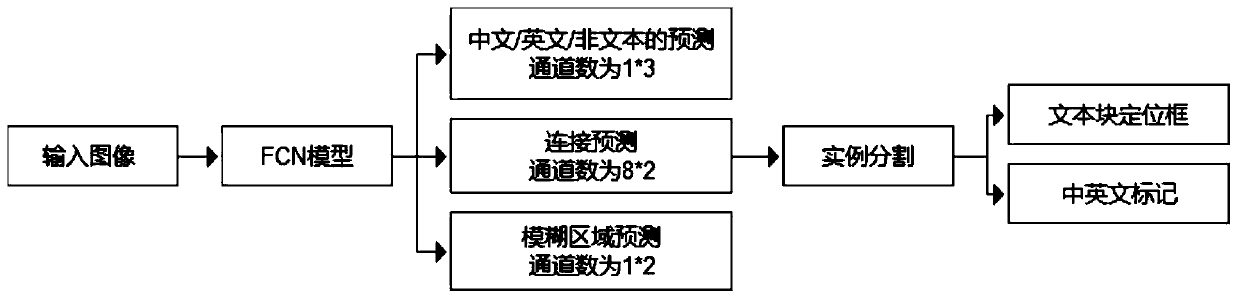

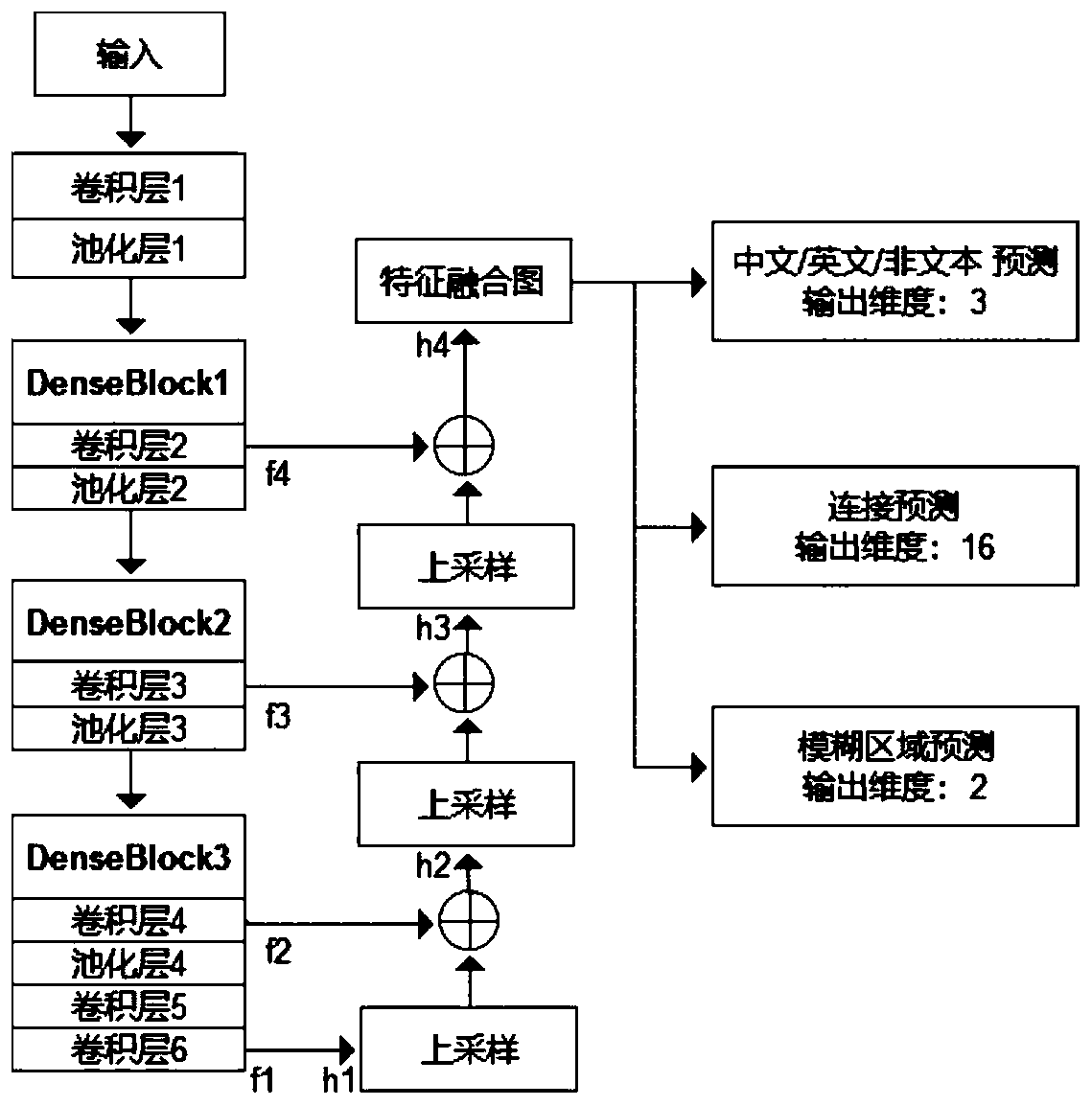

A character detection and recognition method for boarding pass information verification

Active | CN109902622A | Optimize text line recognition results | Comprehensive personal information | Character and pattern recognition | Neural architectures | Model learning | Self attention

The invention relates to a character detection and recognition method for boarding pass information verification, and belongs to the field of computer vision. The method comprises the following steps: S1, reading a boarding pass image, and obtaining a boarding pass test image and a training image; S2, locating each text block through a text line detection method of a multi-task full convolutional neural network model based on a fuzzy region; S3, recognizing each text line, namely each located text block, through text recognition model learning based on CTC and a self-attention mechanism; S4, establishing a library of common boarding pass text so as to learn an n-gram language model that assists in optimizing the text line recognition result. The boarding pass character information is automatically detected and recognized, recognition of mixed Chinese and English text lines is achieved, and more comprehensive personal information is obtained.

Owner:CHONGQING INST OF GREEN & INTELLIGENT TECH CHINESE ACADEMY OF SCI

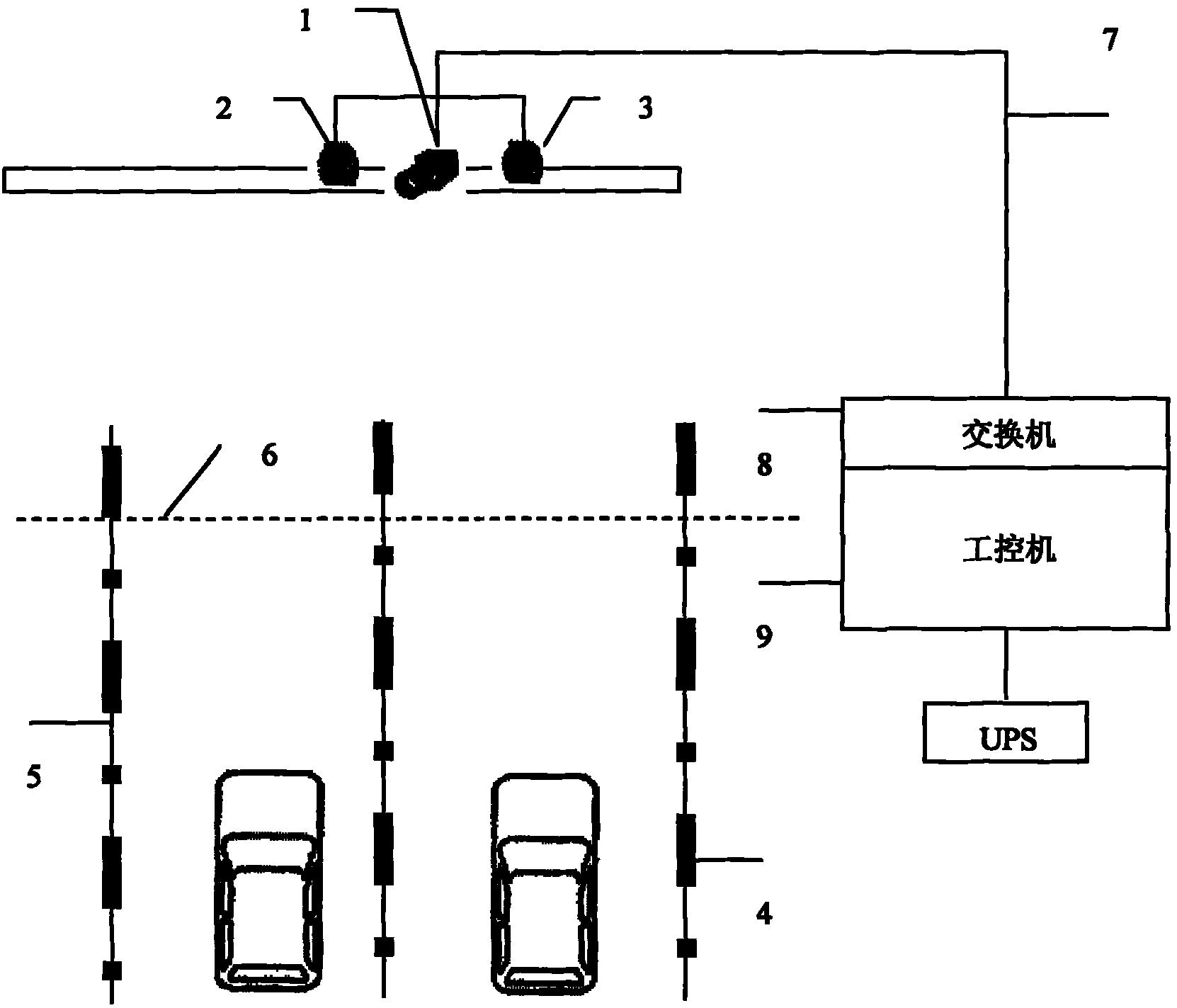

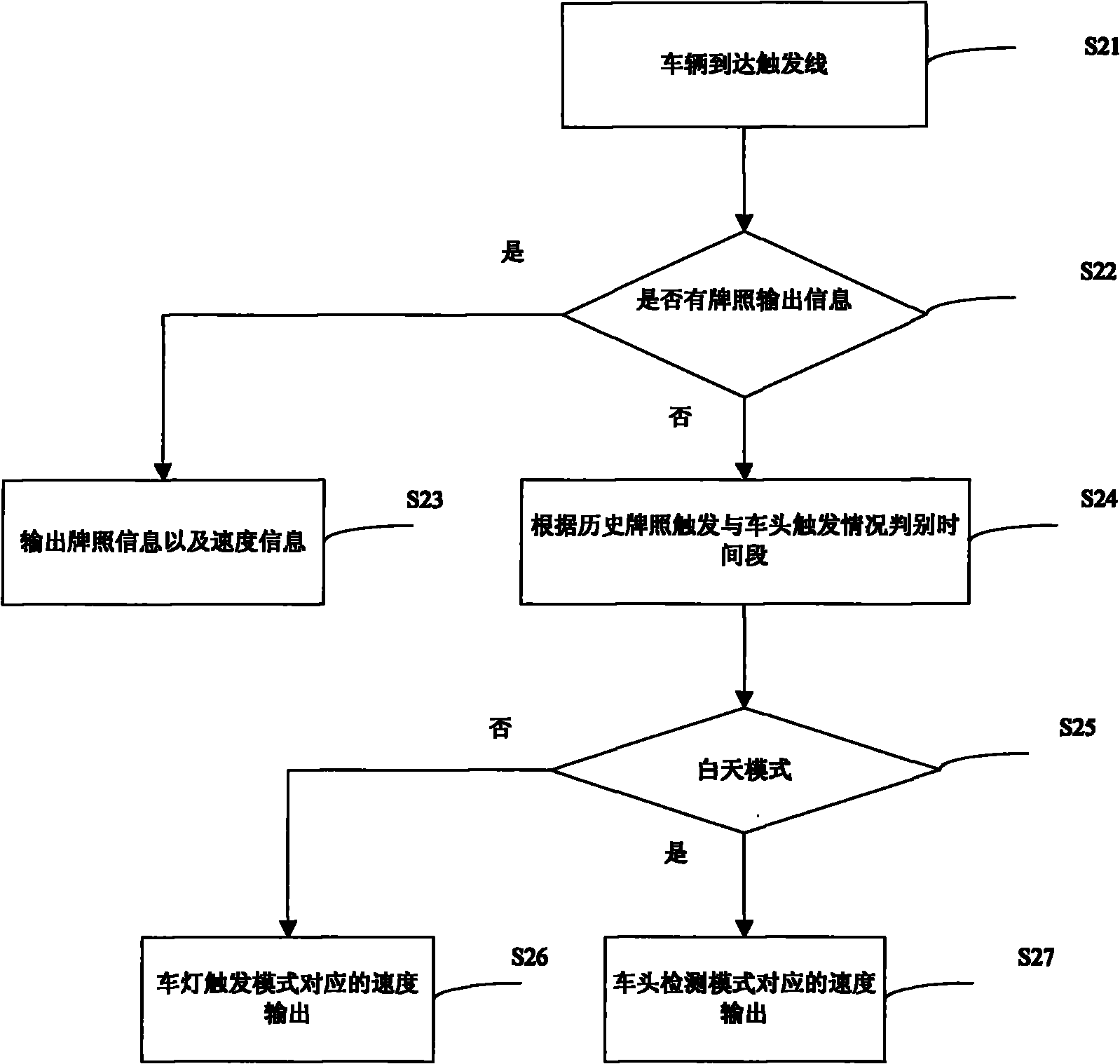

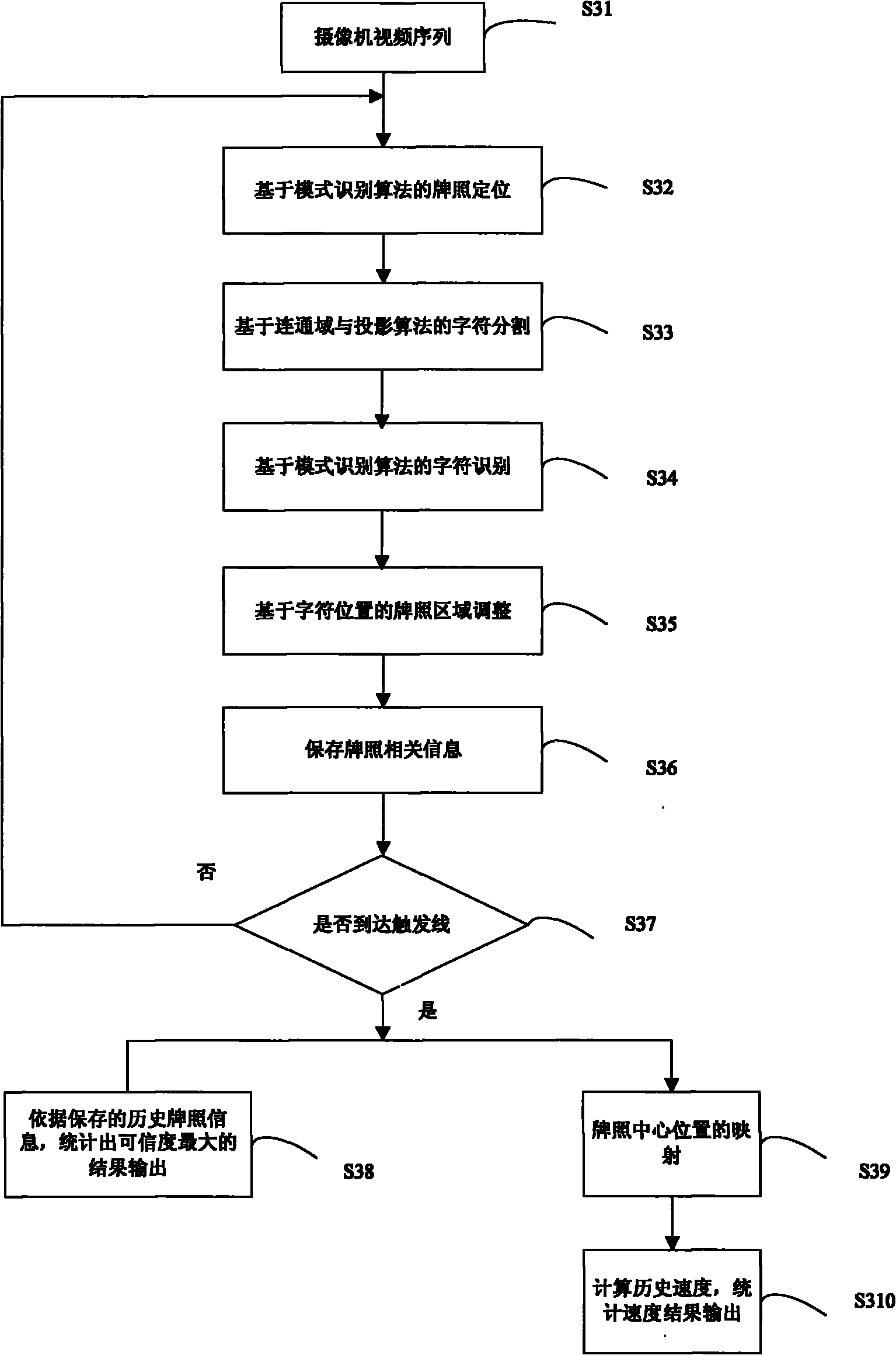

License tag recognizing and vehicle speed measuring method based on videos

Active | CN102074113A | Simple structure | Easy interface setting | Road vehicles traffic control | Closed circuit television systems | Engineering | Video image

The invention discloses a license tag recognizing and vehicle speed measuring method based on videos. With the method, license tags are recognized in every frame of a video image, and the license tag recognition result is calculated from this historical information, so that a higher recognition rate is realized. Meanwhile, the central position of the characters can be located and tracked with the method to realize accurate measurement of the vehicle speed. A vehicle head lower edge line detecting and tracking module locates the vehicle head lower edge line according to prospective motion and prospective edge detecting results, efficiently avoids the interference of shadows, captures the position of the vehicle head lower edge line, and tracks it. After a trigger line is reached, the vehicle head lower edge line detecting and tracking module carries out space mapping on the historical tracking positions to realize accurate measurement of the vehicle speed. A vehicle lamp pair detecting and tracking module eliminates the interference of the surroundings and the headlamps of the vehicle in night mode, accurately detects the vehicle lamp pair, and tracks it. After the trigger line is reached, the vehicle lamp pair detecting and tracking module carries out space mapping on the historical tracking positions to realize accurate measurement of the vehicle speed.

Owner:ZHEJIANG DAHUA TECH CO LTD
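The abstract above does not say how the per-frame reads are combined or how the mapped positions become a speed, so the following is only one plausible sketch. It assumes a per-character majority vote over the reads that share the most common length, and a speed computed from two road-plane positions (in metres, after space mapping) and their timestamps. Both functions and their names are hypothetical.

```python
from collections import Counter


def vote_plate(frame_reads):
    """Combine per-frame plate reads by per-character majority vote.

    Only reads with the most common length are used (assumed alignment rule).
    """
    if not frame_reads:
        return ""
    length = Counter(len(r) for r in frame_reads).most_common(1)[0][0]
    aligned = [r for r in frame_reads if len(r) == length]
    return "".join(Counter(chars).most_common(1)[0][0] for chars in zip(*aligned))


def speed_kmh(pos_start_m, pos_end_m, t_start_s, t_end_s):
    """Average speed in km/h between two tracked road-plane positions."""
    if t_end_s <= t_start_s:
        raise ValueError("end time must be after start time")
    return (pos_end_m - pos_start_m) / (t_end_s - t_start_s) * 3.6


plate = vote_plate(["A8123", "AB123", "AB128", "AB12"])
speed = speed_kmh(0.0, 10.0, 0.0, 0.5)
```

A vote like this tolerates isolated misreads in single frames, which is one way accumulated history could raise the recognition rate.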

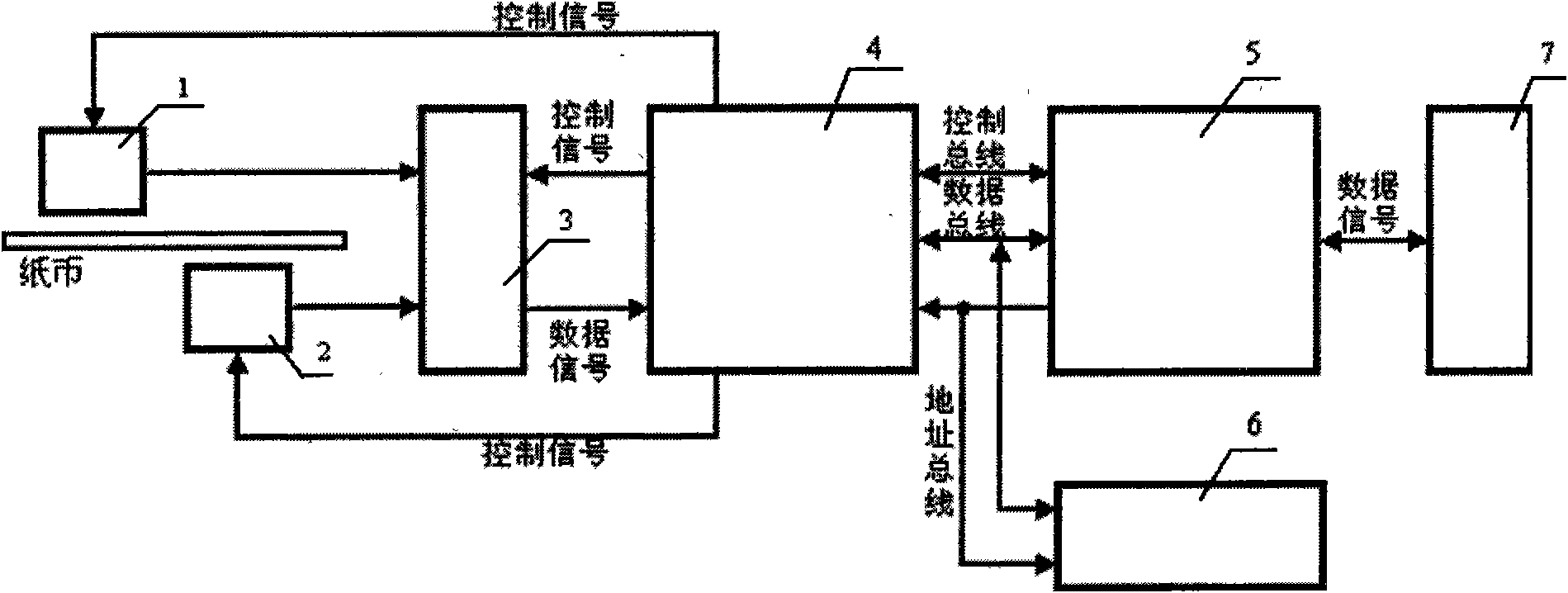

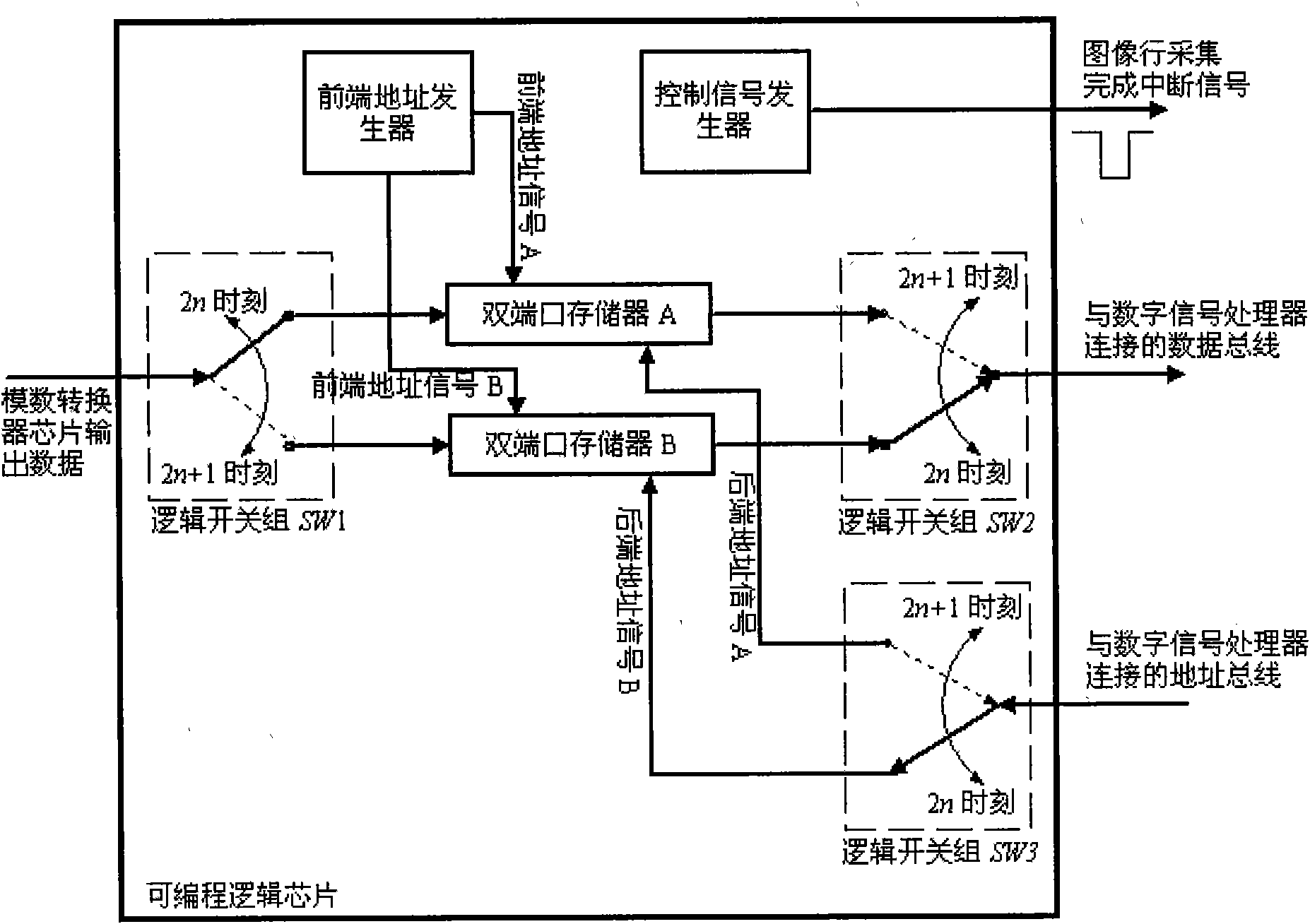

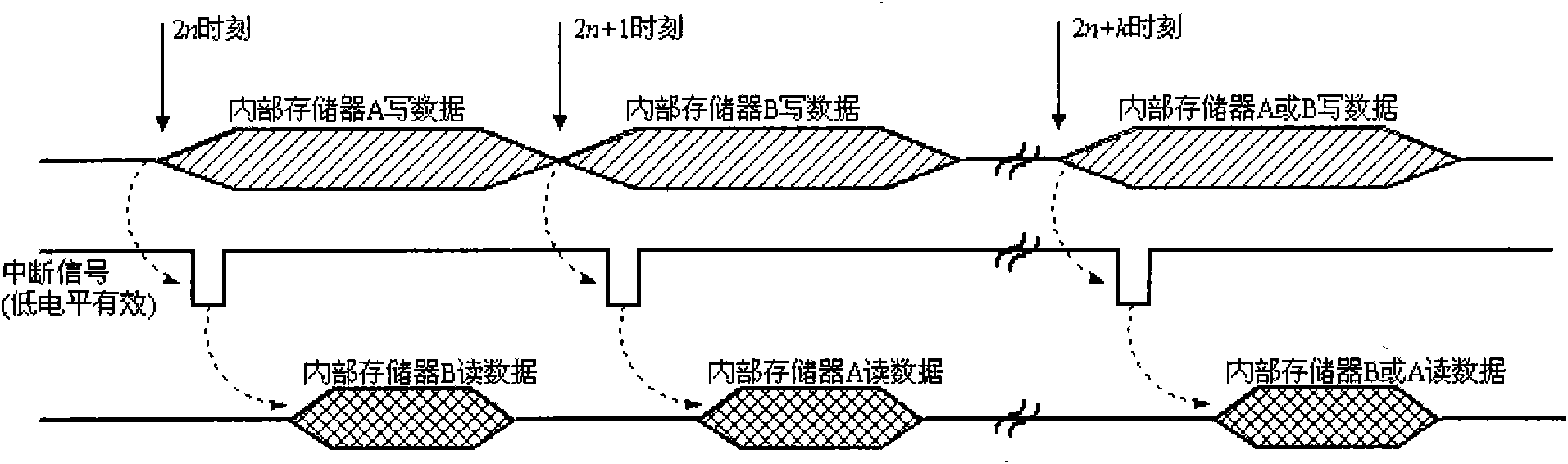

High-speed and high-resolution number collecting device of banknote sorting machine and identification method

The invention provides a high-speed and high-resolution number collecting device of a banknote sorting machine and an identification method. The high-speed and high-resolution number collecting device of the banknote sorting machine comprises image sensors, a multichannel A/D (analog to digital) converter chip, a programmable logic chip, a digital signal processor chip, a dynamic memory chip and a communication structure chip, wherein the image sensors are respectively connected with the multichannel A/D converter chip, the multichannel A/D converter chip is connected with the programmable logic chip, and the programmable logic chip is respectively connected with the digital signal processor chip and the dynamic memory chip. The invention realizes high-resolution collection of banknote images while the banknotes are moving at high speed. The contact type image sensors have the advantages of low cost, small volume and low requirements for light source performance. The identification method for banknote numbers does not require positioning and cutting thresholds to be set, so that an accurate positioning and cutting position and ideal identification results can be obtained even when the banknotes are worn and soiled.

Owner:HARBIN INST OF TECH

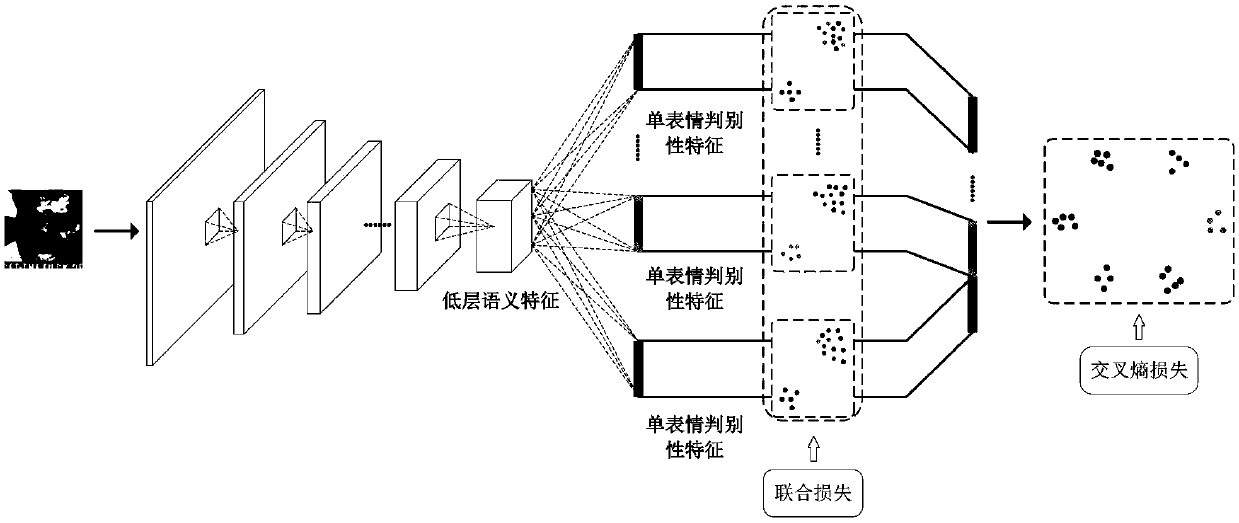

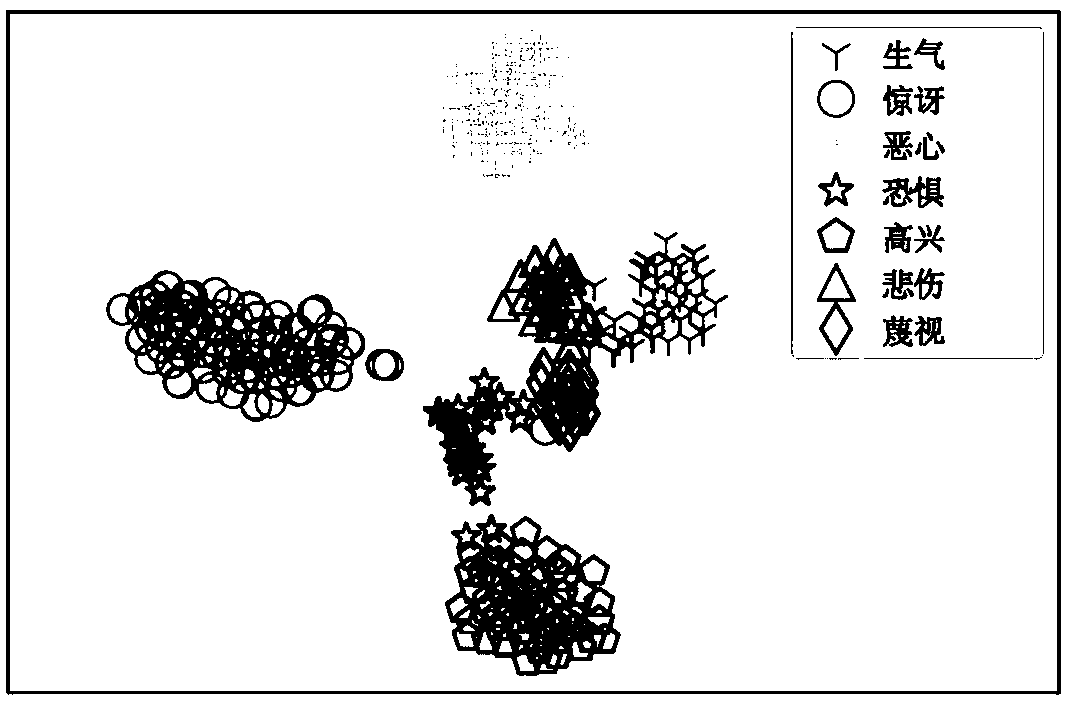

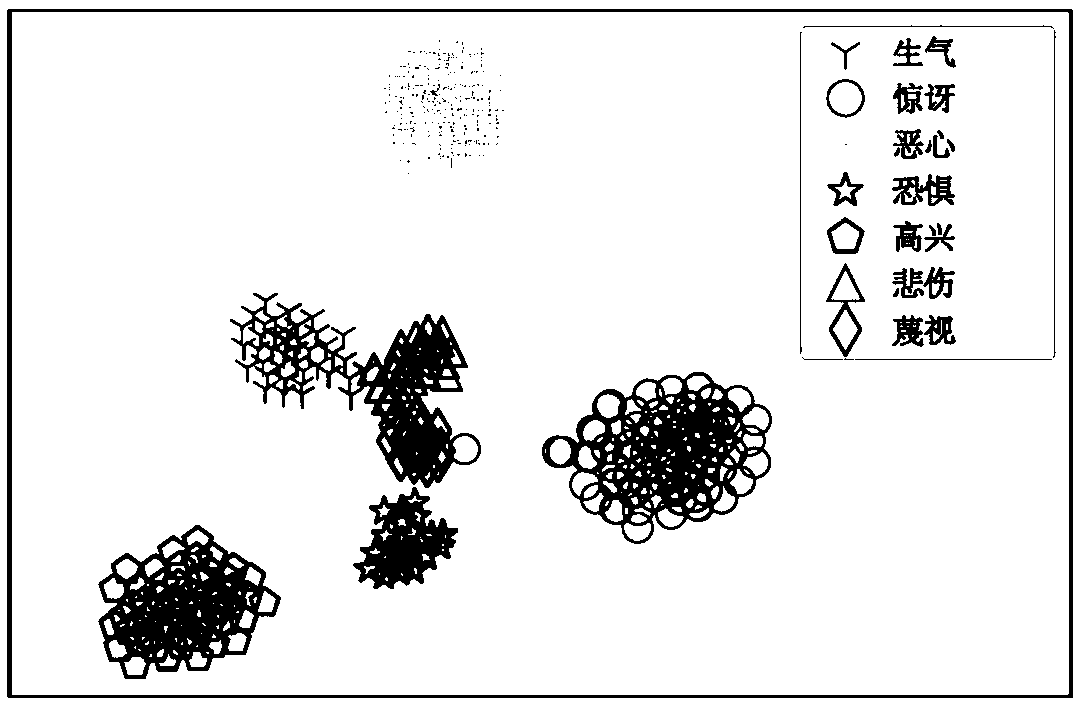

Facial expression identification method based on multi-task convolutional neural network

Active | CN108764207A | Improve the difference between classes | Improve discrimination ability | Character and pattern recognition | Neural architectures | Data set | Feature extraction

The invention discloses a facial expression identification method based on a multi-task convolutional neural network. The expression identification method comprises the following steps: first, designing a multi-task convolutional neural network structure that sequentially extracts the low-level semantic features shared by all expressions and a plurality of single-expression distinguishing characteristics; then adopting multi-task learning to learn the tasks for the plurality of single-expression distinguishing characteristics and the multi-expression identification task simultaneously; supervising all tasks of the network by using a combined loss, and balancing the loss of the network by using two loss weights; finally, obtaining the final facial expression identification result from a softmax classification layer arranged at the end of the trained network model. Characteristic extraction and expression classification are learned in an end-to-end framework, the distinguishing characteristics are extracted from input images, and expression identification on the input images is reliably carried out. Experimental analysis shows that the algorithm performs well, that complicated facial expressions can be effectively distinguished, and that good identification performance can be achieved on a plurality of published data sets.

Owner:XIAMEN UNIV
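The combined loss with two balancing weights mentioned in the abstract above might look like the sketch below. The specific loss terms are assumptions: categorical cross-entropy for the multi-expression task and the mean binary cross-entropy over per-expression detectors for the single-expression tasks. The names `w_multi` and `w_single` are hypothetical; the patent does not give the formula.

```python
import math

EPS = 1e-12  # clamp to avoid log(0)


def _clamp(p):
    return min(max(p, EPS), 1.0 - EPS)


def cross_entropy(probs, label):
    """Categorical cross-entropy for one sample."""
    return -math.log(_clamp(probs[label]))


def binary_cross_entropy(p, target):
    """Binary cross-entropy for one probability and a 0/1 target."""
    p = _clamp(p)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def joint_loss(multi_probs, single_probs, label, w_multi, w_single):
    """Weighted sum of the multi-expression loss and the mean single-expression loss.

    multi_probs:  class probabilities from the multi-expression head.
    single_probs: one probability per expression from the single-expression heads.
    """
    multi = cross_entropy(multi_probs, label)
    single = sum(
        binary_cross_entropy(p, 1.0 if k == label else 0.0)
        for k, p in enumerate(single_probs)
    ) / len(single_probs)
    return w_multi * multi + w_single * single


loss = joint_loss([0.5, 0.5], [0.5, 0.5], label=0, w_multi=1.0, w_single=0.5)
```

Raising `w_single` pushes the shared layers toward features that separate individual expressions, while raising `w_multi` favors the overall classification task.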

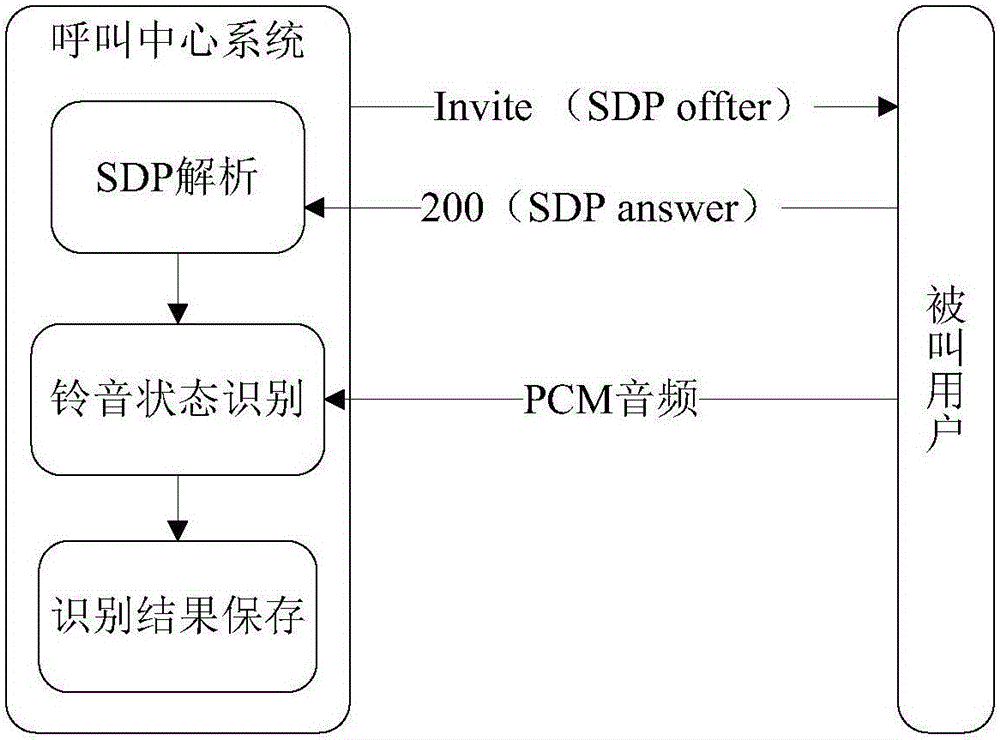

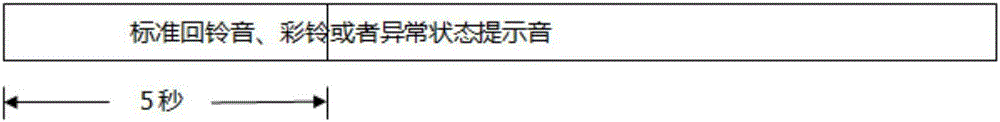

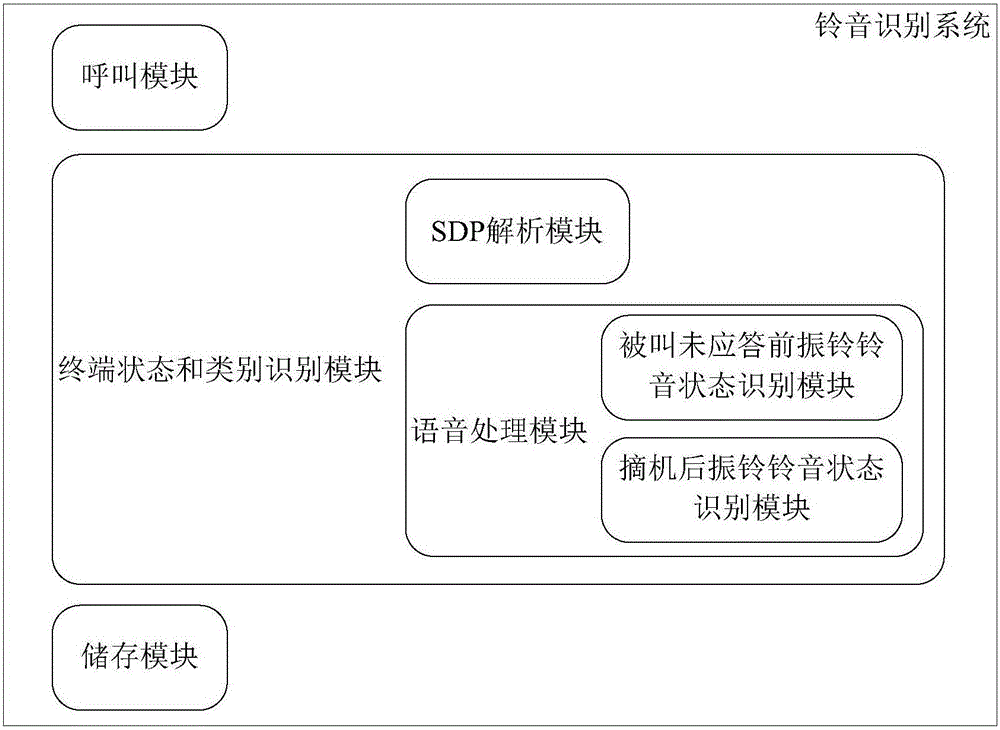

Ring tone recognition method and system for call center system

Active | CN105979106A | Small amount of calculation | Improve recognition efficiency | Special service for subscribers | Manual exchanges | Facsimile | Speech sound

The invention relates to a ring tone recognition method and system for a call center system. The method comprises the following steps: first, calling the input telephone number; then, initiating a fax request to the called user and preliminarily recognizing a fax machine via SDP (Session Description Protocol) analysis; next, recognizing the ring tone state, wherein, based on an audio fragment within 5 seconds after calling, the ring state before the called user answers is recognized as either a standard ring-back tone or a color ring tone; and, after the called user goes off-hook, recognizing fax machine off-hook, automatic responder off-hook, natural person off-hook, and connected-but-unanswered states based on voice analysis of a voice fragment after the off-hook answer. By adopting the method, the number state and the terminal type can be accurately recognized, the amount of calculation for ring tone state recognition is reduced, the recognition result is given quickly, and the recognition efficiency is improved.

Owner:BEIJING RONGLIAN YITONG INFORMATION TECH CO LTD

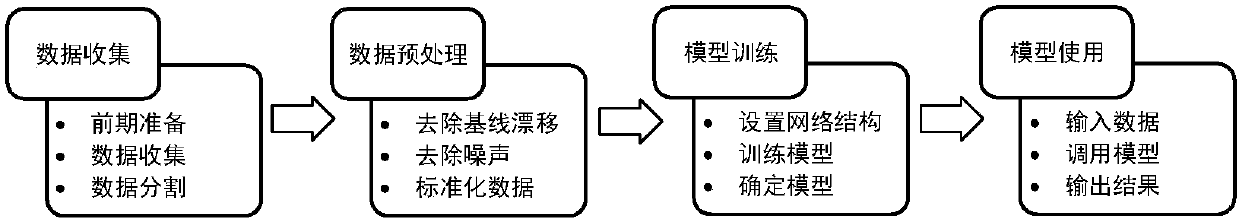

Electrocardio-signal emotion recognition method based on deep learning

Inactive | CN107736894A | Improve features | High expression | Character and pattern recognition | Sensors | Ecg signal | Noise removal

The invention relates to an electrocardio-signal emotion recognition method based on deep learning. The electrocardio-signal emotion recognition method comprises the following steps: under various emotion picture inducing conditions, electrocardio data of a testee under different emotions is acquired through the chest or the wrist, the acquired electrocardio data is segmented into electrocardio signals of fixed length, and corresponding labels are made for the electrocardio data obtained under different emotions; the acquired electrocardio data is preprocessed, with baseline drift removal based on wavelet transformation performed first, followed by noise removal; data standardization is performed, with normalization processing conducted on the noise-removed electrocardio data; and a model training stage is performed.

Owner:TIANJIN UNIV
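The segmentation and normalization steps in the abstract above can be sketched as follows. The choices here are assumptions: non-overlapping windows with any trailing remainder dropped, and z-score normalization per segment. The wavelet-based baseline drift removal is not shown, and the patent does not name the normalization it uses.

```python
import statistics


def segment(signal, length):
    """Cut a signal into non-overlapping fixed-length segments (remainder dropped)."""
    if length <= 0:
        raise ValueError("length must be positive")
    return [signal[i:i + length] for i in range(0, len(signal) - length + 1, length)]


def zscore(samples):
    """Normalize one segment to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(samples)
    std = statistics.pstdev(samples)
    if std == 0:
        return [0.0] * len(samples)
    return [(x - mean) / std for x in samples]


# Toy signal of 10 samples cut into segments of 4; each segment shares its label
# with the recording it came from.
segments = segment(list(range(10)), 4)
normalized = [zscore(s) for s in segments]
```

Normalizing each segment separately removes amplitude differences between recordings, so a model sees comparable inputs regardless of electrode placement.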

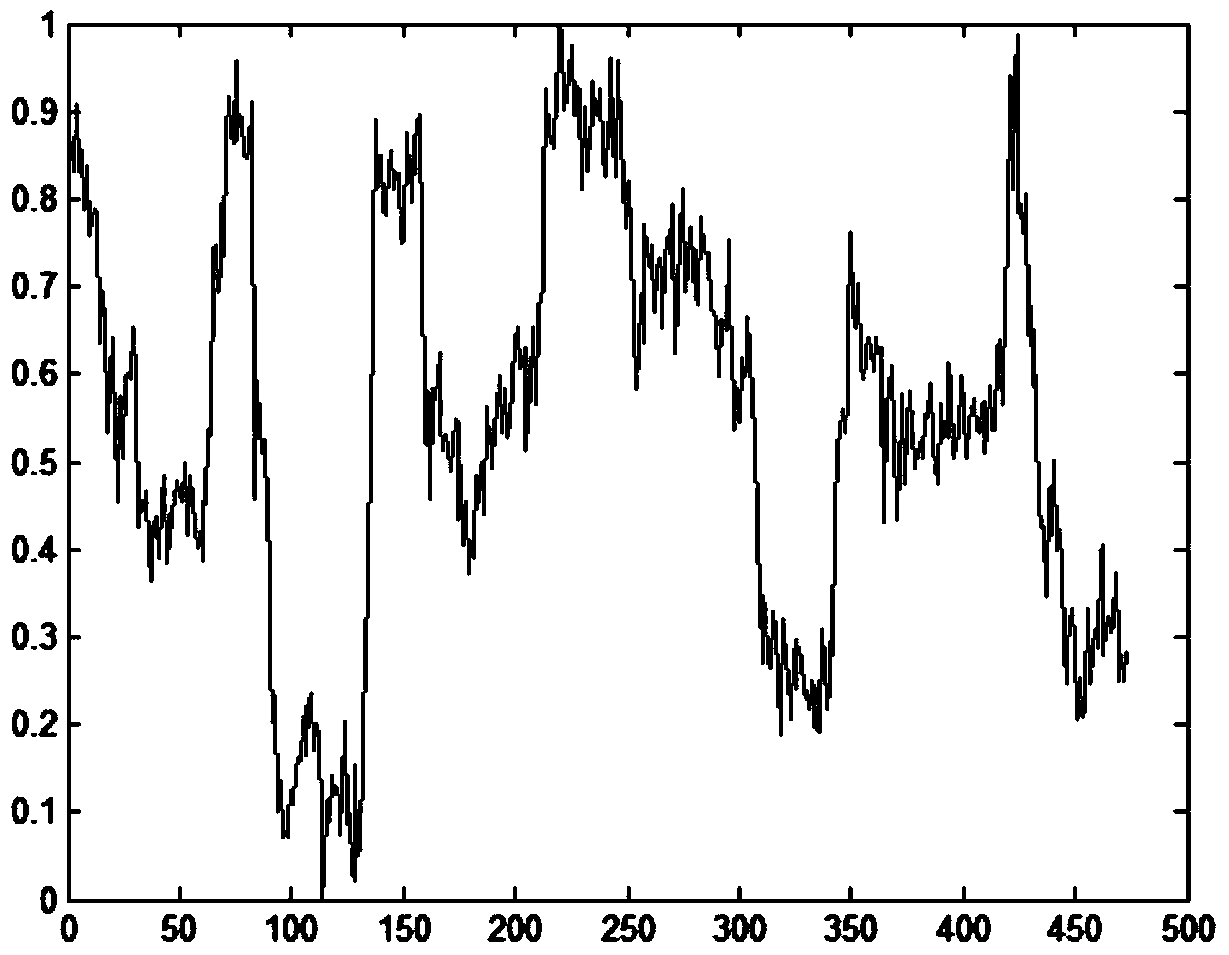

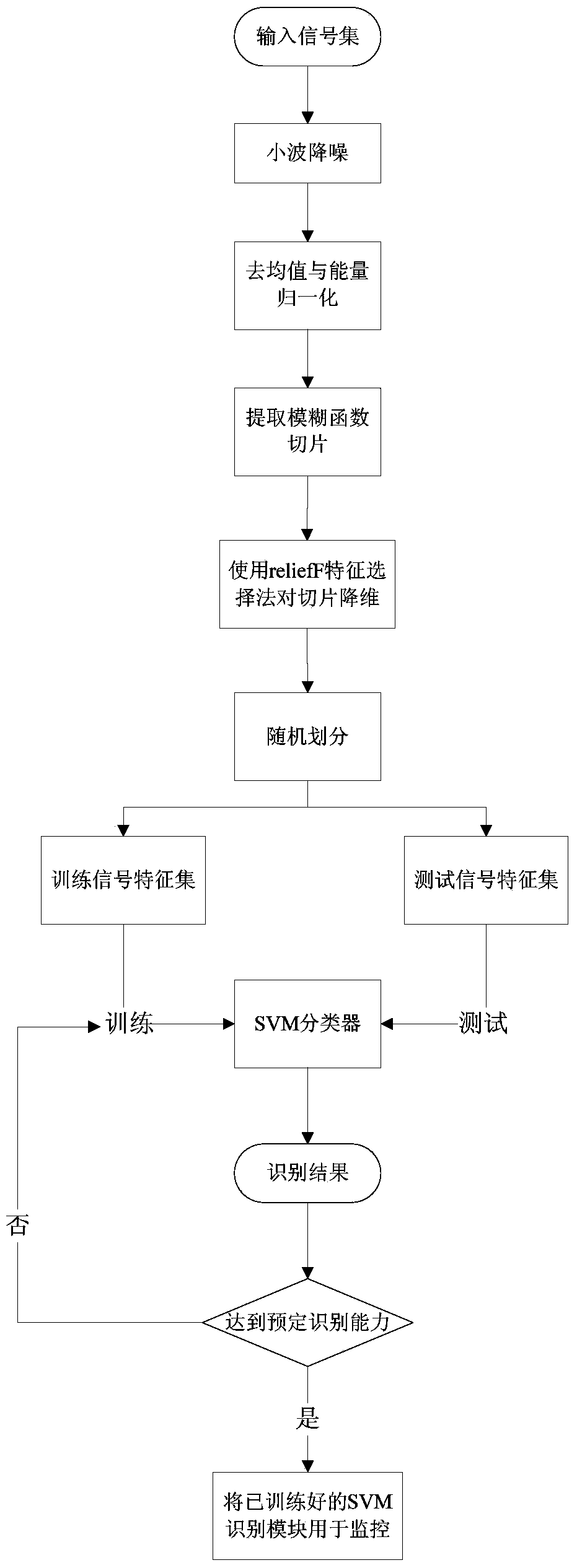

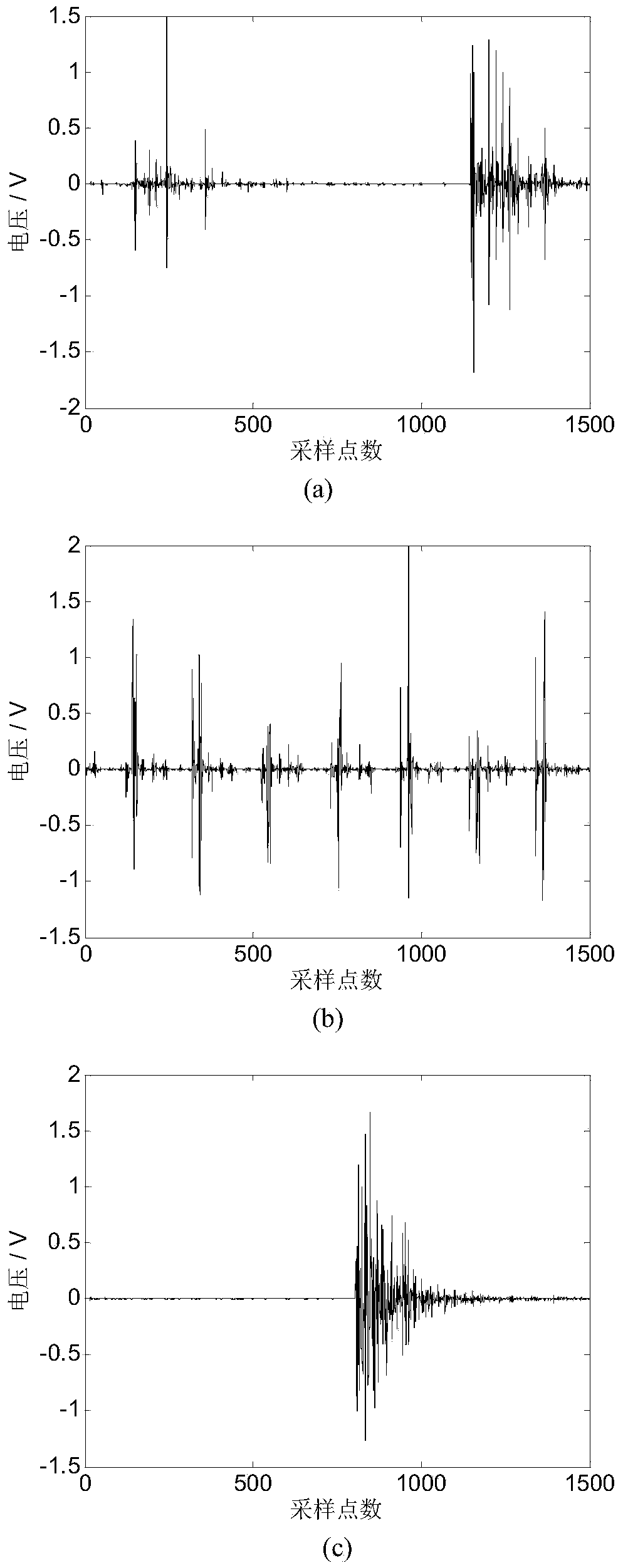

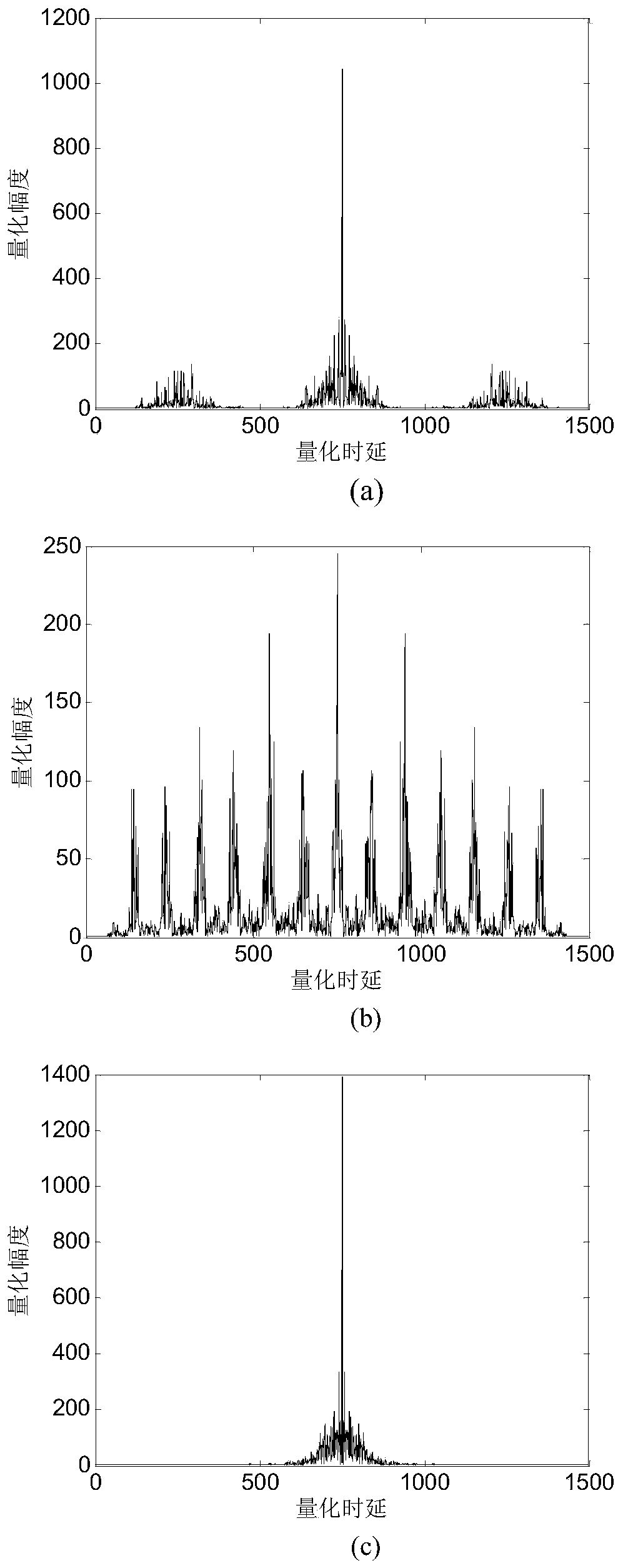

Fuzzy domain characteristics based optical fiber vibration signal identifying method

Active | CN103968933A | Robust | Overcoming high dimensionality | Subsonic/sonic/ultrasonic wave measurement | Using wave/particle radiation means | Identification rate | Signal characteristic

Owner:西安雷谱汇智科技有限公司

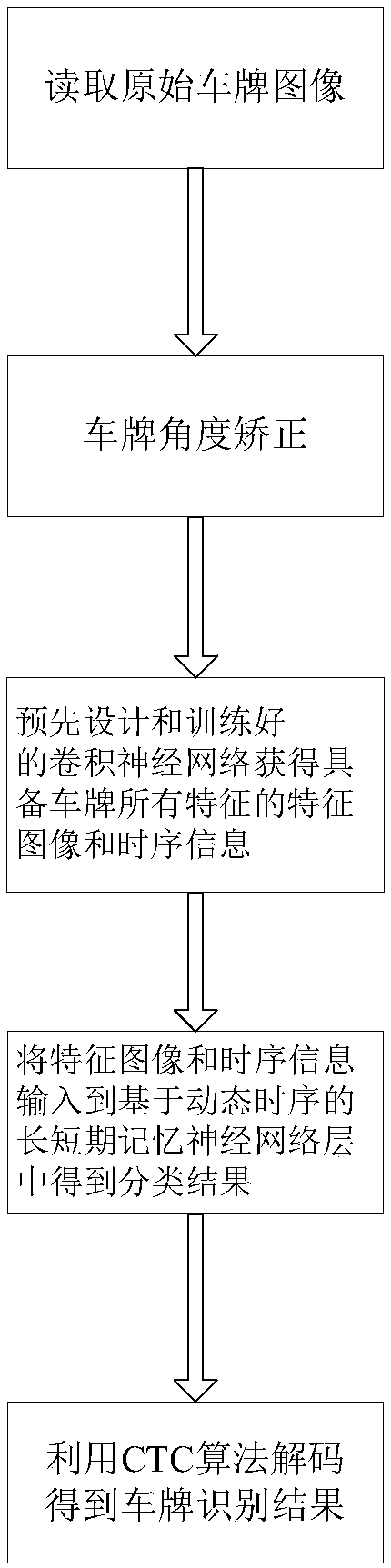

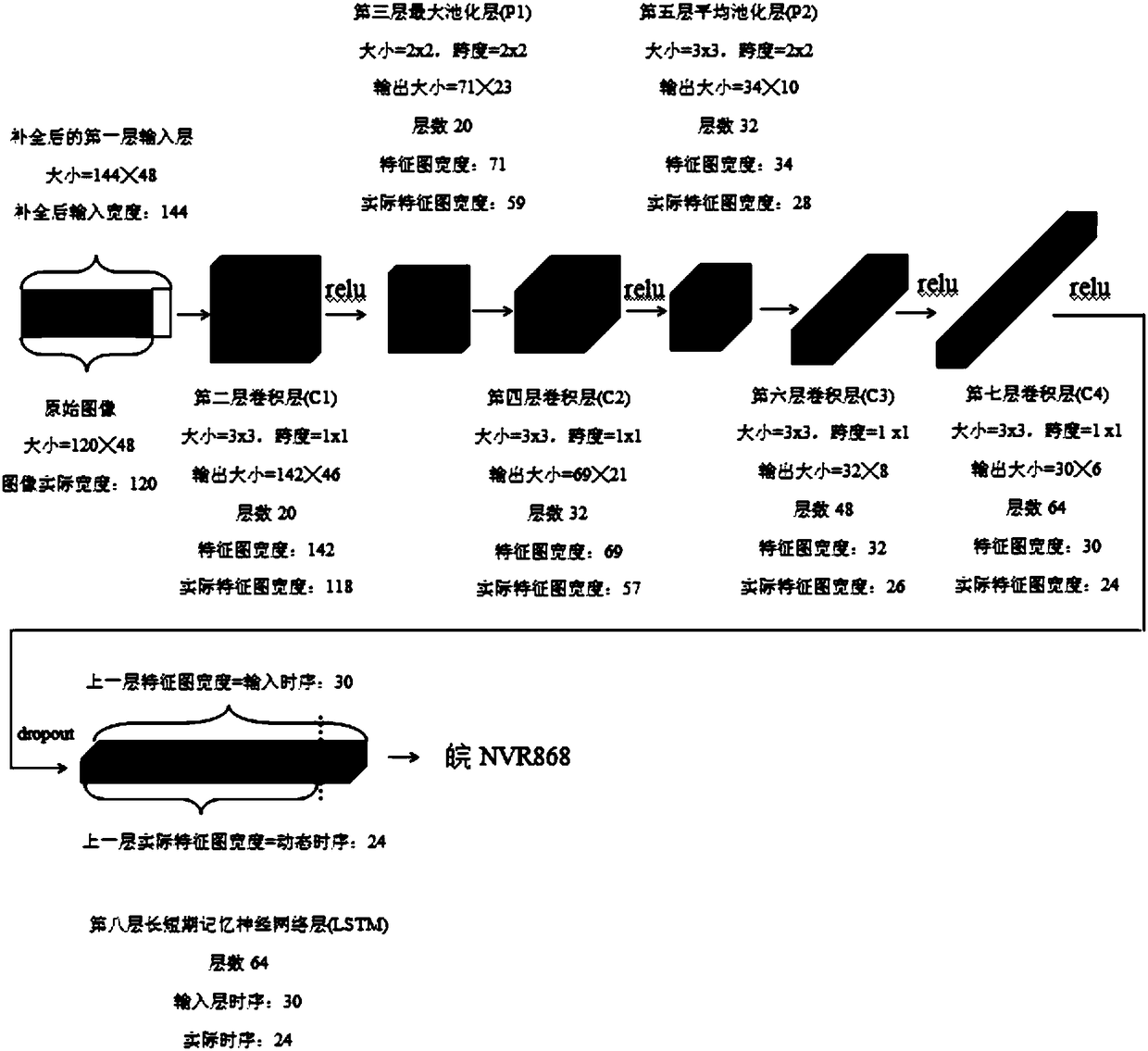

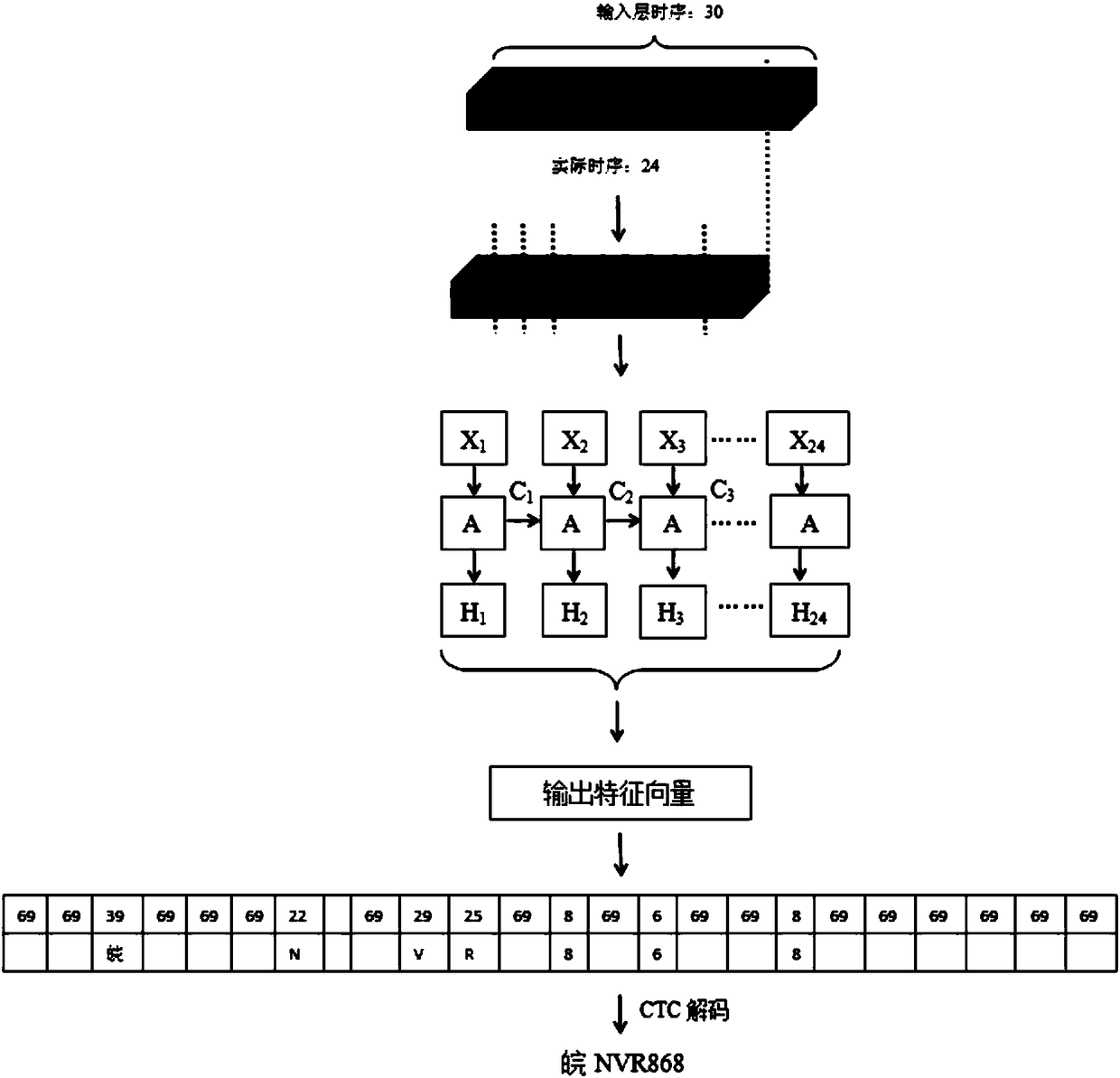

Dynamic time sequence convolutional neural network-based license plate recognition method

Active | CN108388896A | Reduce training parameters | Solve the problem of low accuracy rate and wrong recognition results | Character and pattern recognition | Neural architectures | Short-term memory | Leak detection

The invention discloses a dynamic time sequence convolutional neural network-based license plate recognition method. The method comprises the following steps: reading an original license plate image; carrying out license plate angle correction to obtain a to-be-recognized license plate image; inputting the to-be-recognized license plate image into a previously designed and trained convolutional neural network so as to obtain a feature image and time sequence information, wherein the feature image comprises all the features of the license plate; and carrying out character recognition by inputting the feature image into a long short-term memory neural network layer, on the basis of the time sequence information of the last layer, so as to obtain a classification result, and decoding with a CTC algorithm so as to obtain the final license plate character result. According to the method, visual patterns are recognized directly from original images by using convolutional neural networks, with self-learning and correction; the convolutional neural networks can be reused after being trained once, and a single recognition takes on the order of milliseconds, so that the method can be applied to scenes that require real-time license plate recognition. The dynamic time sequence-based long short-term memory neural network layer is combined with CTC algorithm-based decoding, so that recognition errors such as missed detection and repeated detection are effectively avoided, and the robustness of the algorithm is improved.

Owner:浙江芯劢微电子股份有限公司
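The CTC decoding step in the abstract above can be illustrated with greedy (best-path) decoding: take the most likely symbol per time step, merge consecutive repeats, then drop blanks. Greedy decoding is an assumption here, since the patent only says a CTC algorithm is used; the alphabet and the blank index are hypothetical.

```python
def ctc_greedy_decode(frame_probs, alphabet, blank=0):
    """Greedy CTC decoding.

    frame_probs: list of per-time-step probability lists over the alphabet.
    alphabet:    list of symbols; alphabet[blank] is the CTC blank.
    """
    # Most likely symbol index at each time step.
    best = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    decoded, prev = [], None
    for idx in best:
        # Emit only when the symbol changes and is not the blank.
        if idx != prev and idx != blank:
            decoded.append(alphabet[idx])
        prev = idx
    return "".join(decoded)


def one_hot(index, size):
    """Helper for the toy example: a probability vector with all mass on one symbol."""
    return [1.0 if j == index else 0.0 for j in range(size)]


alphabet = ["-", "A", "B", "1"]  # index 0 is the blank
path = [1, 1, 0, 1, 2, 2, 0, 3]  # A A - A B B - 1
text = ctc_greedy_decode([one_hot(i, len(alphabet)) for i in path], alphabet)
```

Merging repeats removes duplicated detections of the same character across adjacent time steps, while the blank between the two "A" frames keeps a genuinely doubled character from being collapsed.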

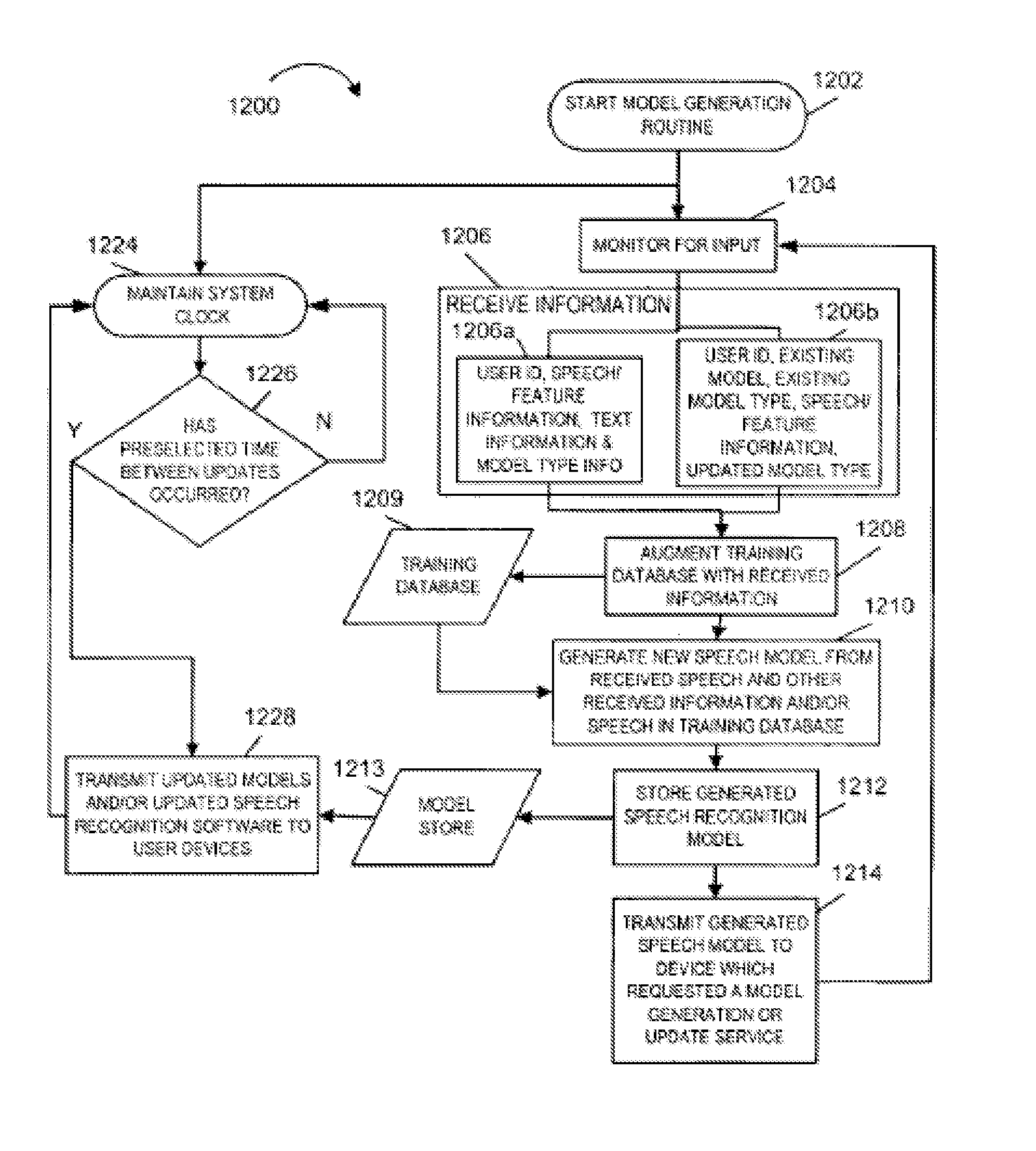

Methods and apparatus for performing speech recognition over a network and using speech recognition results

Inactive | US7302391B2 | Improve recognition results | Automatic call-answering/message-recording/conversation-recording | Automatic exchanges | The Internet | Computerized system

Techniques for generating, distributing, and using speech recognition models are described. A shared speech processing facility is used to support speech recognition for a wide variety of devices with limited capabilities including business computer systems, personal data assistants, etc., which are coupled to the speech processing facility via a communications channel, e.g., the Internet. Devices with audio capture capability record and transmit to the speech processing facility, via the Internet, digitized speech and receive speech processing services, e.g., speech recognition model generation and/or speech recognition services, in response. The Internet is used to return speech recognition models and/or information identifying recognized words or phrases. Thus, the speech processing facility can be used to provide speech recognition capabilities to devices without such capabilities and/or to augment a device's speech processing capability. Voice dialing, telephone control and/or other services are provided by the speech processing facility in response to speech recognition results.

Owner:GOOGLE LLC

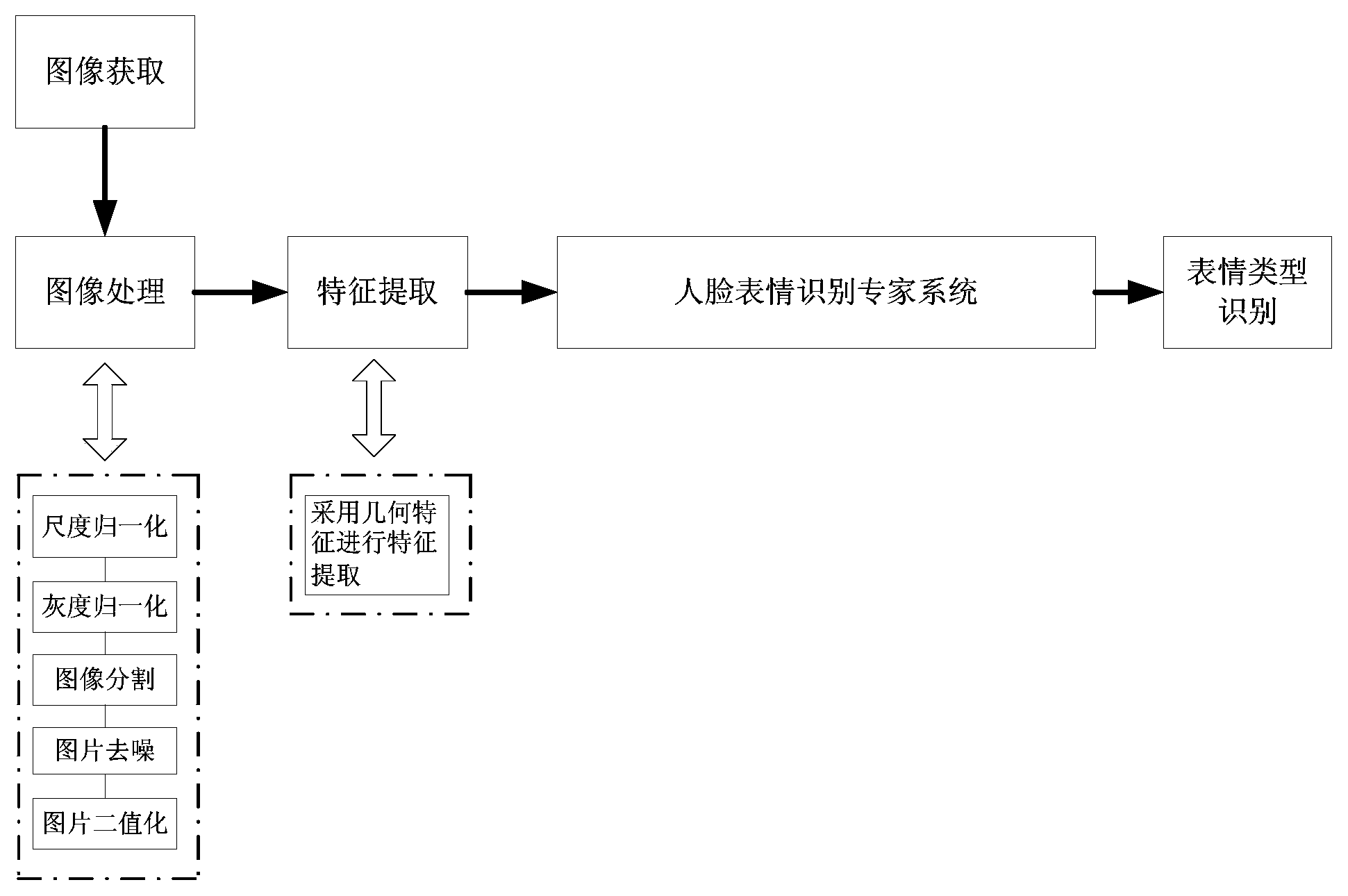

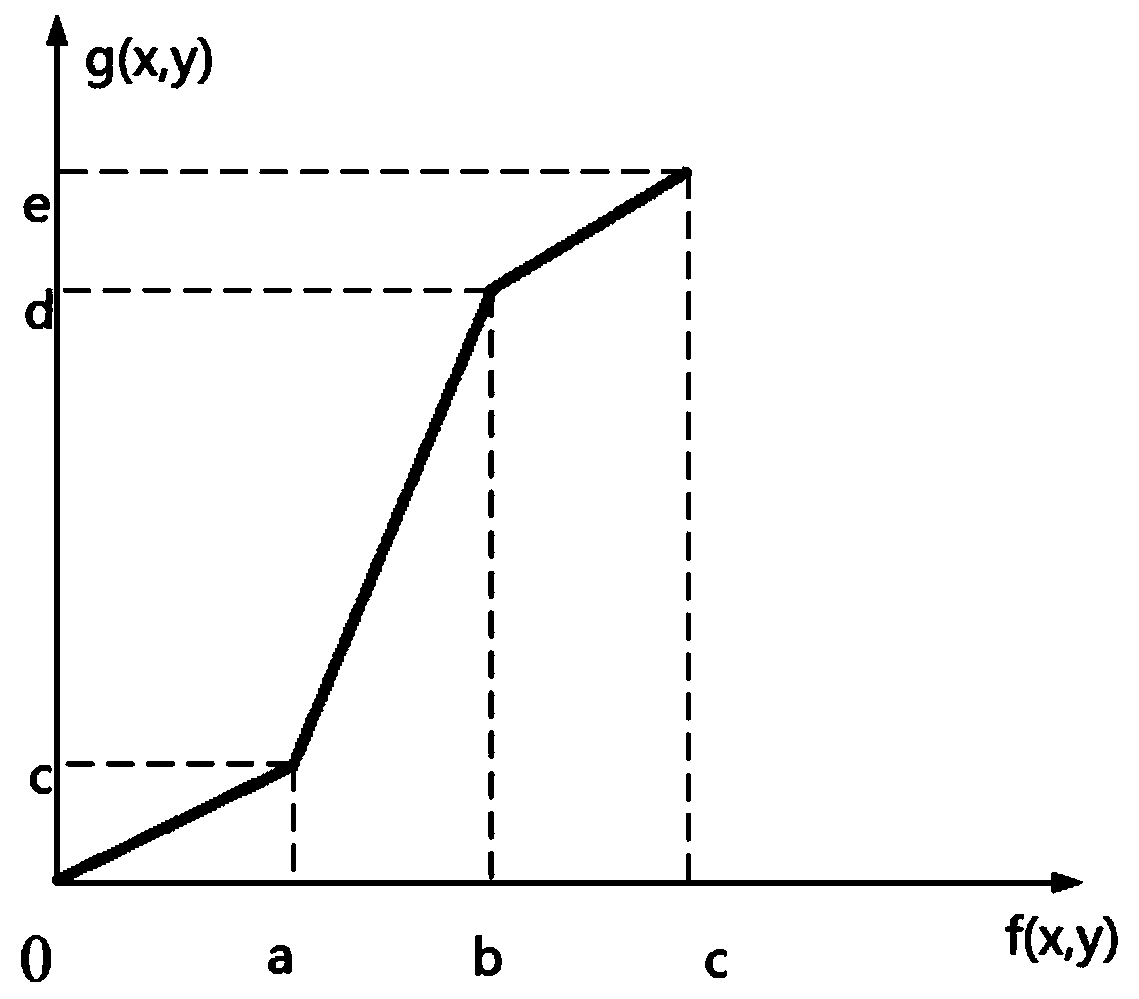

Facial expression image recognition method based on expert system

Active | CN104077579A | Improve recognition results | Effective Ways | Character and pattern recognition | Expression Library | Imaging processing

The invention relates to a facial expression image recognition method based on an expert system. The expert system, built on an expression image processing method and the functions of a traditional computer program, infers and recognizes the facial expression in a preprocessed image. The method comprises the following steps: (1) capturing an image from a video, acquiring user information in the video, carrying out identity verification through image processing and image feature extraction, acquiring the characteristic parameters of the user's expression image, determining a user expression library, and establishing the expert system for facial expression recognition; (2) carrying out image processing and image feature extraction on the image captured from the video, acquiring the characteristic parameters generated when the user's expression is at its maximum intensity, comparing them with the parameters of the image training samples in the user expression library determined in step (1), and finally outputting the statistical result of facial expression recognition through the inference engine of the expert system. Compared with the prior art, the method offers advantages such as high recognition speed.

Owner:SHANGHAI UNIV OF ENG SCI

Performing speech recognition over a network and using speech recognition results

Systems, methods and apparatus for generating, distributing, and using speech recognition models are described. A shared speech processing facility is used to support speech recognition for a wide variety of devices with limited capabilities including business computer systems, personal data assistants, etc., which are coupled to the speech processing facility via a communications channel, e.g., the Internet. Devices with audio capture capability record and transmit to the speech processing facility, via the Internet, digitized speech and receive speech processing services, e.g., speech recognition model generation and/or speech recognition services, in response. The Internet is used to return speech recognition models and/or information identifying recognized words or phrases. The speech processing facility can be used to provide speech recognition capabilities to devices without such capabilities and/or to augment a device's speech processing capability. Voice dialing, telephone control and/or other services are provided by the speech processing facility in response to speech recognition results.

Owner:GOOGLE LLC

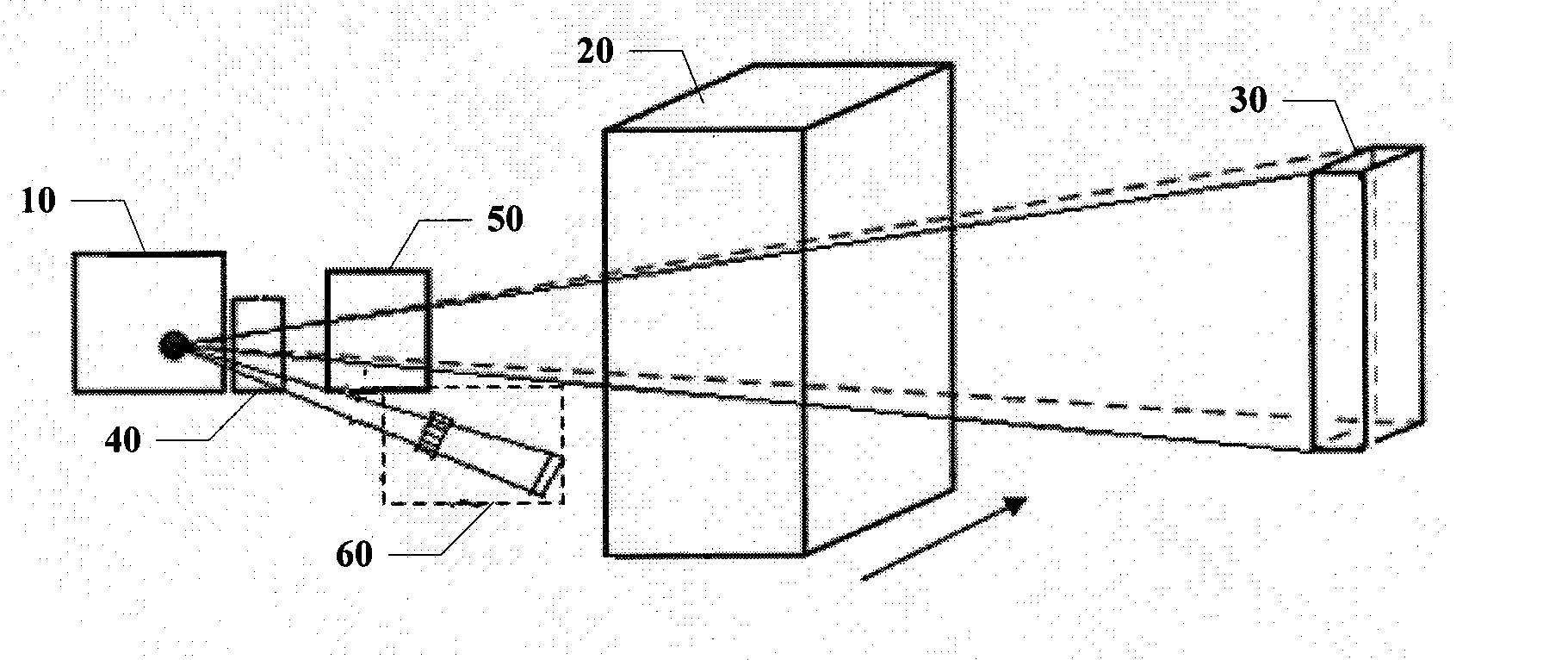

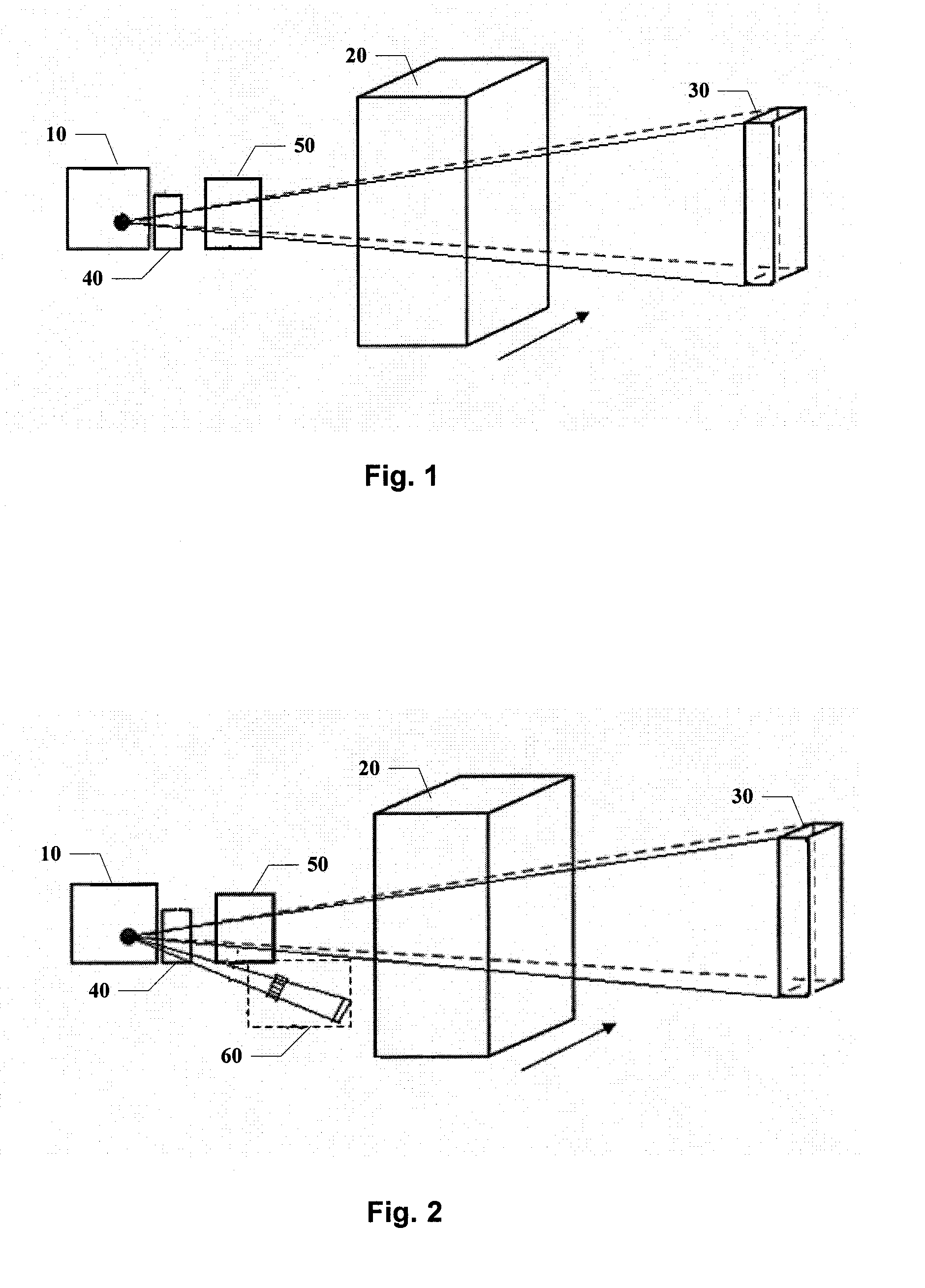

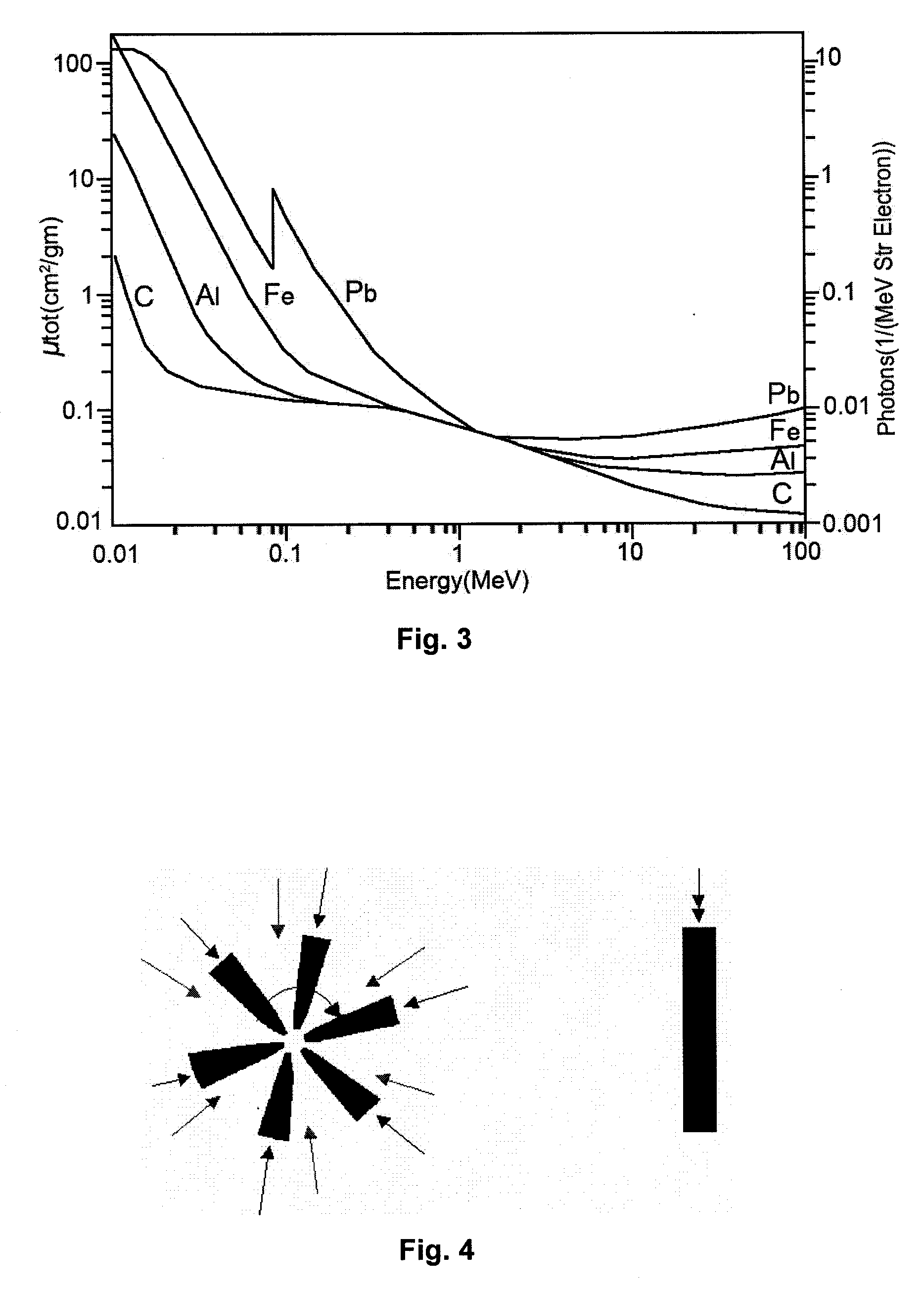

Device and method for real-time mark of substance identification system

Active | US20090323894A1 | Simplifying mark procedure | Improve stability | Using wave/particle radiation means | X-ray apparatus | Time mark | High energy

Disclosed are a method and a device for real-time mark for a high-energy X-ray dual-energy imaging container inspection system in the radiation imaging field. The method comprises the steps of: emitting a first main beam of rays and a first auxiliary beam of rays having a first energy, and a second main beam of rays and a second auxiliary beam of rays having a second energy; causing the first and second main beams of rays to transmit through the article to be inspected; causing the first and second auxiliary beams of rays to transmit through at least one real-time mark material block; collecting values of the first and second main beams of rays that have transmitted through the article to be inspected as dual-energy data; collecting values of the first and second auxiliary beams of rays that have transmitted through the real-time mark material block as adjustment parameters; adjusting the set of classification parameters based on the adjustment parameters; and identifying the substance from the dual-energy data using the adjusted classification parameters. The method simplifies the mark procedure for a substance identification subsystem in a high-energy dual-energy system while improving the stability of the system's material differentiation result.

Owner:TSINGHUA UNIV +1

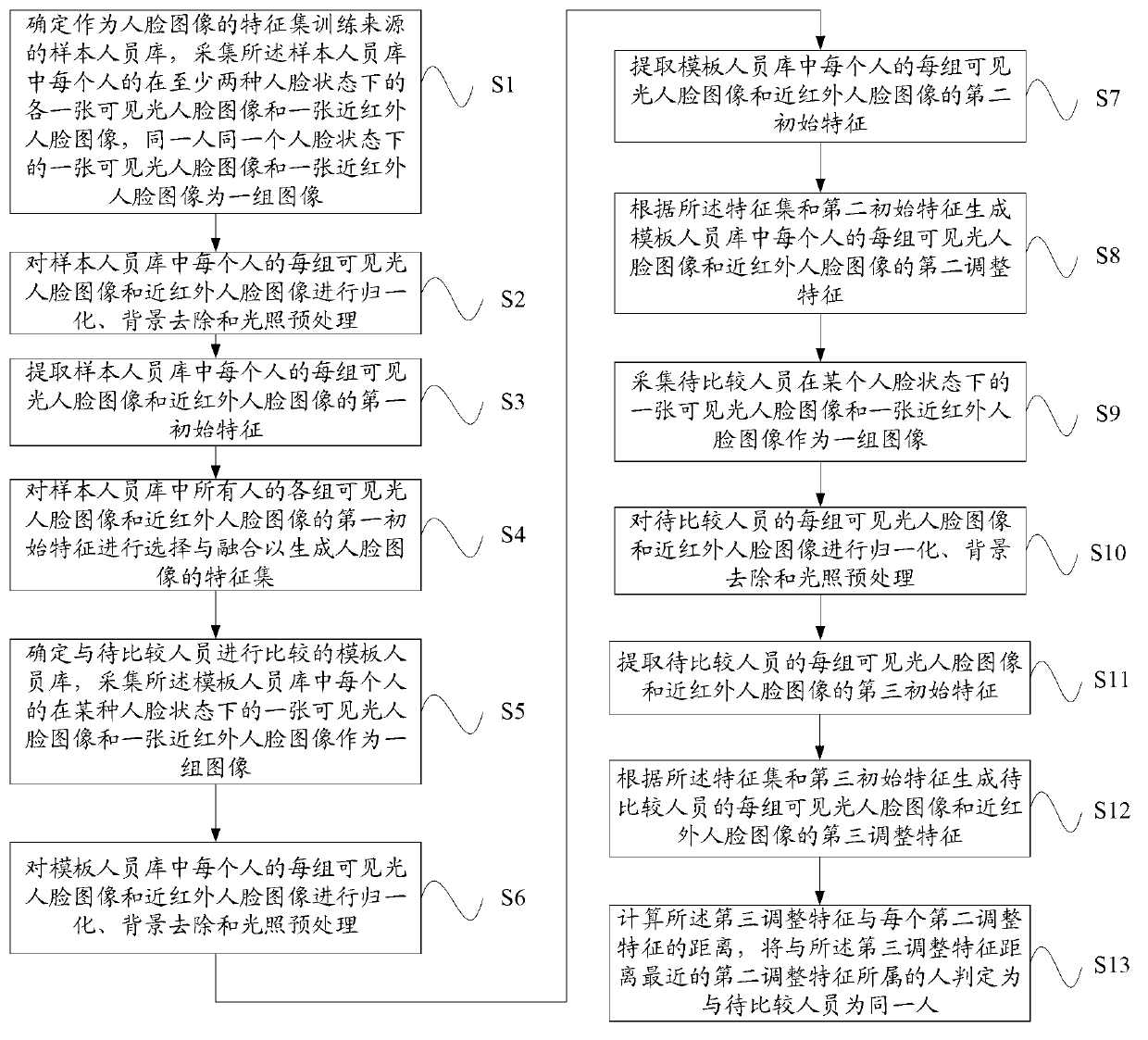

Face recognition method and system fusing visible light and near-infrared information

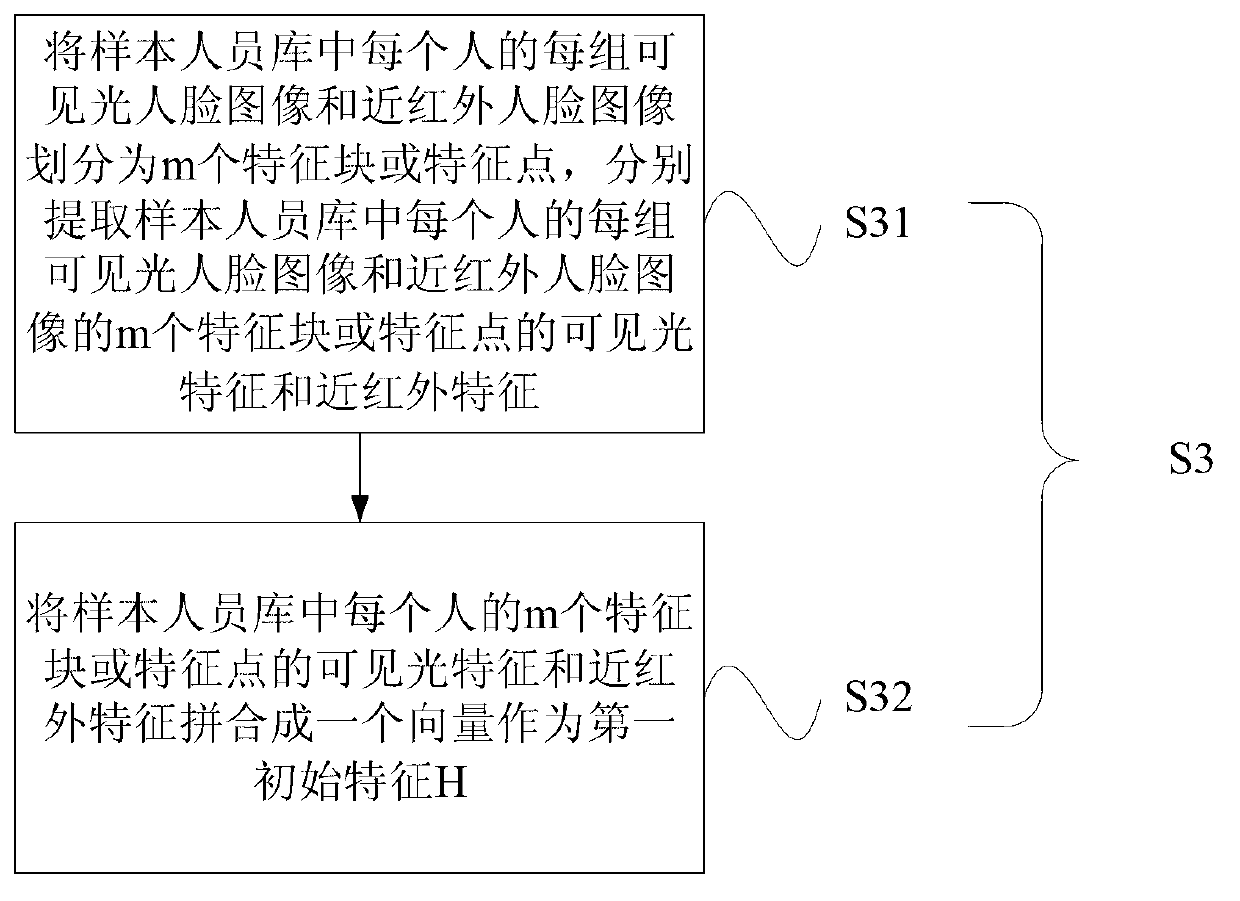

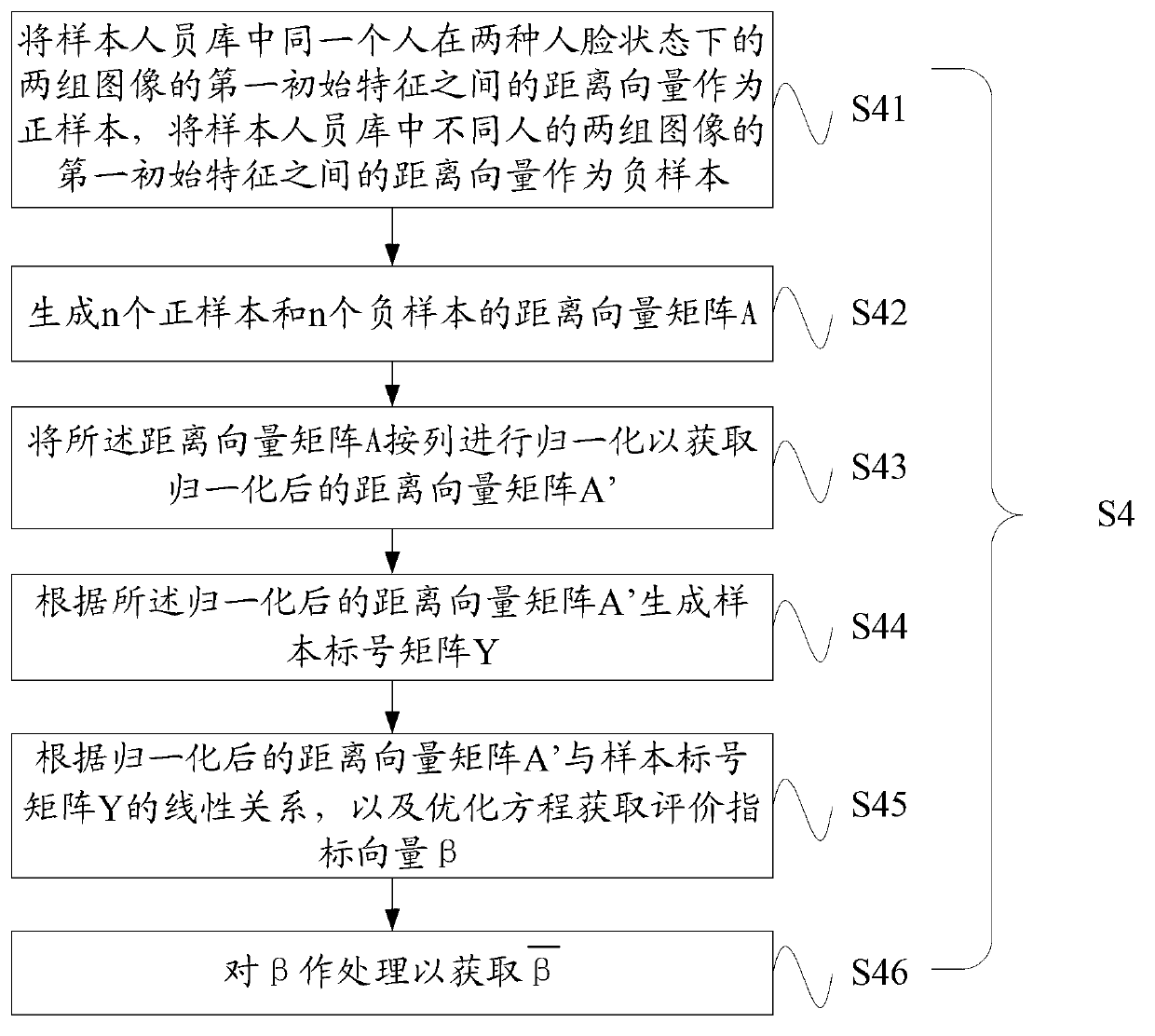

Active | CN103136516A | Improve robustness | Improve recognition results | Character and pattern recognition | Recognition system | Visible spectrum

The invention provides a face recognition method and a face recognition system fusing visible light and near-infrared information. The face recognition method includes the following steps: extracting a first initial feature of each group of visible light face images of each person and a first initial feature of each group of near-infrared face images of each person in a sample personnel library, generating a feature set of the face images through selecting and fusing the first initial features, extracting a second initial feature of each group of visible light face images of each person and a second initial feature of each group of near-infrared face images of each person in a template personnel library, generating a second adjusting feature according to the feature set and the second initial features, extracting a third initial feature of each group of visible light face images and a third initial feature of each group of near-infrared face images of a person to be compared, generating a third adjusting feature according to the feature set and the third initial features, calculating distances between the third adjusting feature and each second adjusting feature, and judging a person with a second adjusting feature which is closest to the third adjusting feature to be the same person as the person to be compared. The face recognition method fusing the visible light and the near-infrared information can effectively improve the performance of face recognition.

Owner:SHANGHAI LINGZHI TECH CO LTD
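
The final matching step in the abstract above (compute the distance from the probe's adjusted feature to every template's adjusted feature, then pick the closest) can be sketched as follows. The abstract does not name a distance metric, so Euclidean distance is an assumption here, as are the function names `euclidean` and `identify` and the dictionary layout of the template library.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(probe_feature, template_features):
    """Return (person_id, distance) for the template closest to the probe.

    probe_feature:     adjusted feature of the person to be compared.
    template_features: dict mapping person_id -> adjusted feature from the
                       template library (hypothetical data layout).
    """
    if not template_features:
        raise ValueError("template library is empty")
    scored = (
        (person_id, euclidean(probe_feature, feature))
        for person_id, feature in template_features.items()
    )
    # The closest template is judged to be the same person as the probe.
    return min(scored, key=lambda pair: pair[1])
```

A practical system would usually add a distance threshold so that a probe far from every template is rejected rather than matched; the abstract does not describe one, so it is left out.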

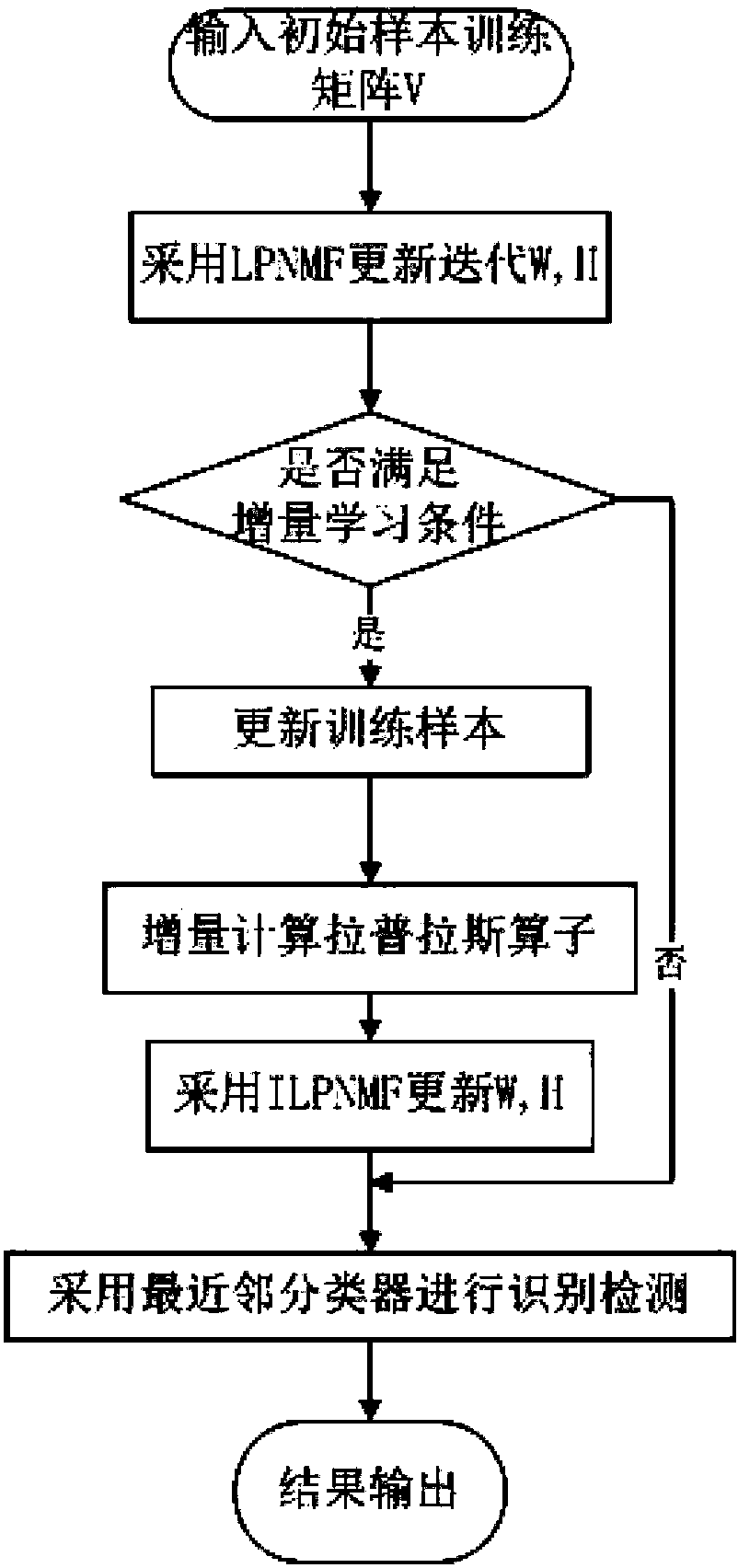

Incremental learning and face recognition method based on locality preserving nonnegative matrix factorization (LPNMF)

Active | CN103413117A | Maintain local structure | Improve recognition accuracy | Character and pattern recognition | Incremental learning | Online learning

The invention discloses an incremental learning and face recognition method based on locality preserving nonnegative matrix factorization (LPNMF), and relates to the technical field of pattern recognition. The incremental learning and face recognition method is essentially a face recognition method based on on-line learning. The method comprises the step (a) of face image preprocessing, the step (b) of initial sample training, the step (c) of incremental learning and the step (d) of face recognition. The incremental learning and face recognition method can be applied to a linear face recognition system and a non-linear face recognition system, keeps local structures of original space of face images, greatly improves recognition rate, and can be actually applied in an on-line mode.

Owner:HANGZHOU HAILIANG INFORMATION TECH CO LTD

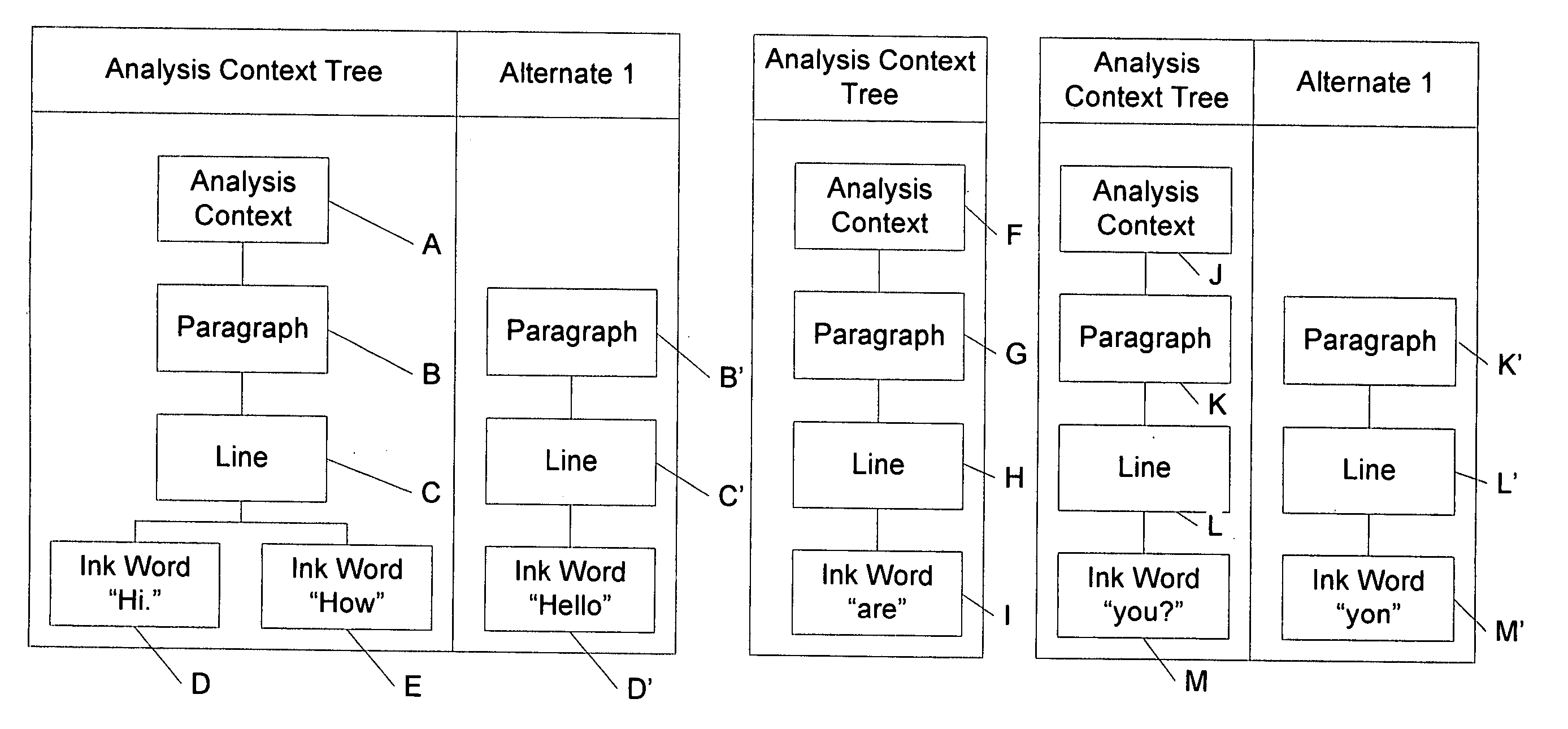

Analysis hints

A system and method for assisting with analysis and recognition of ink is described. Analysis hints may be associated with a field. The field may receive electronic ink. Based on the identity of the field and the analysis hint associated with it, at least one of analysis and recognition of ink may be assisted.

Owner:TELEFON AB LM ERICSSON (PUBL)

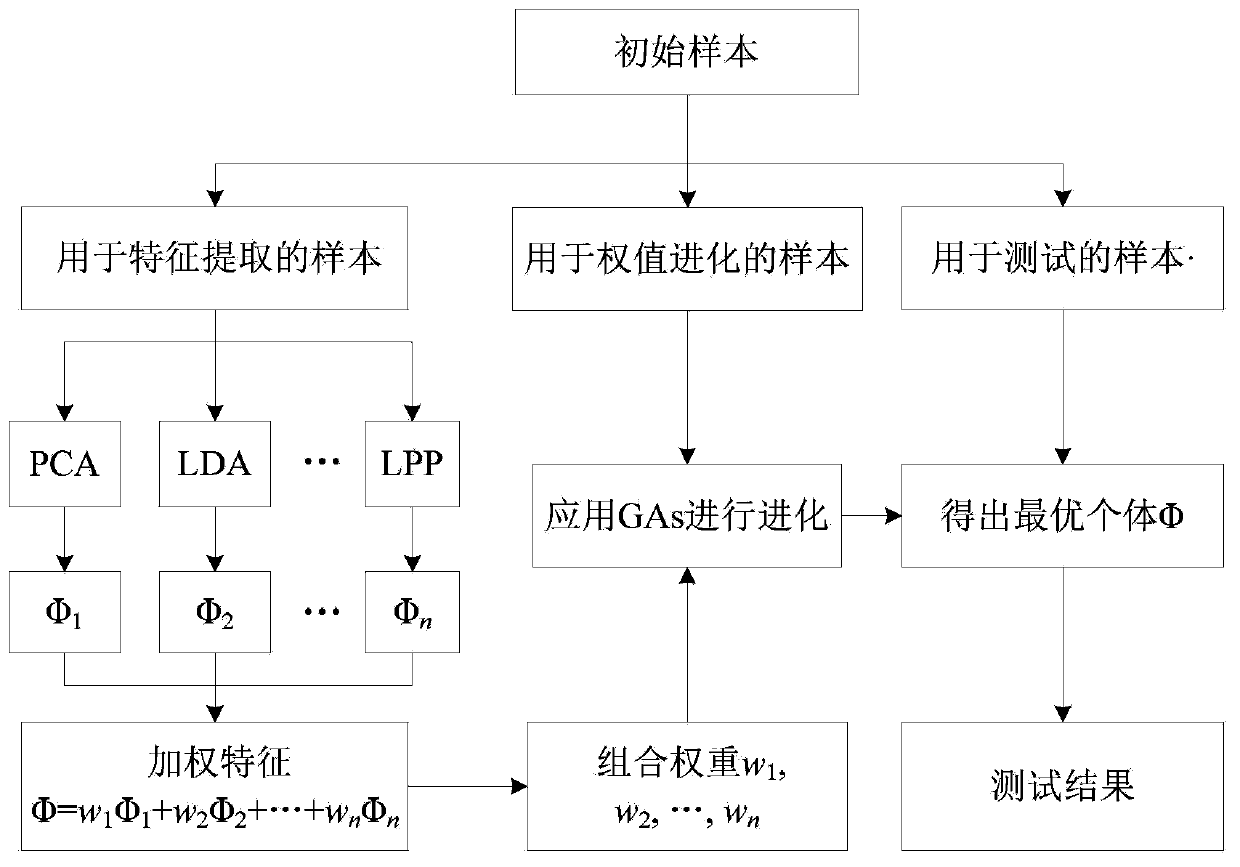

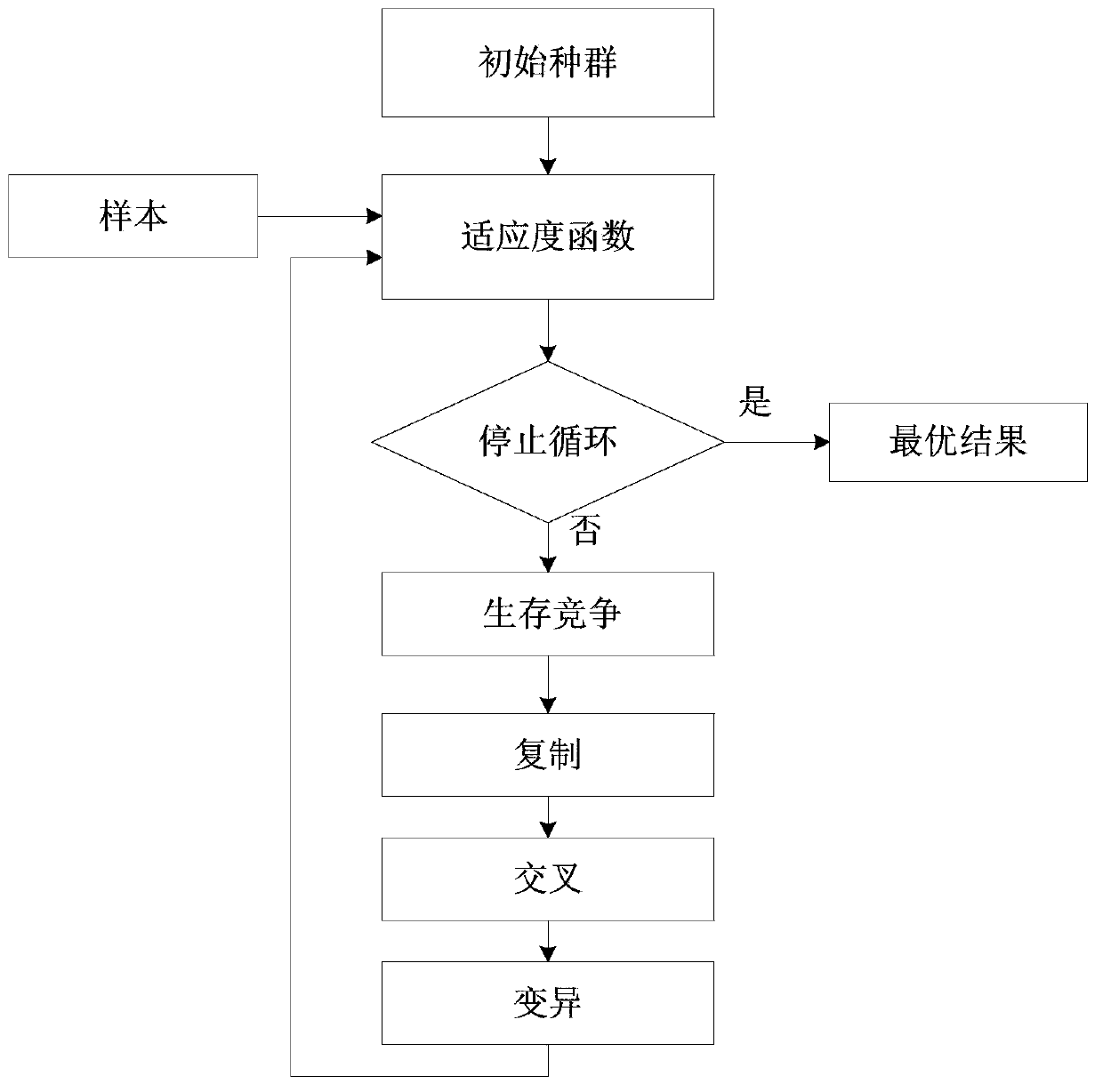

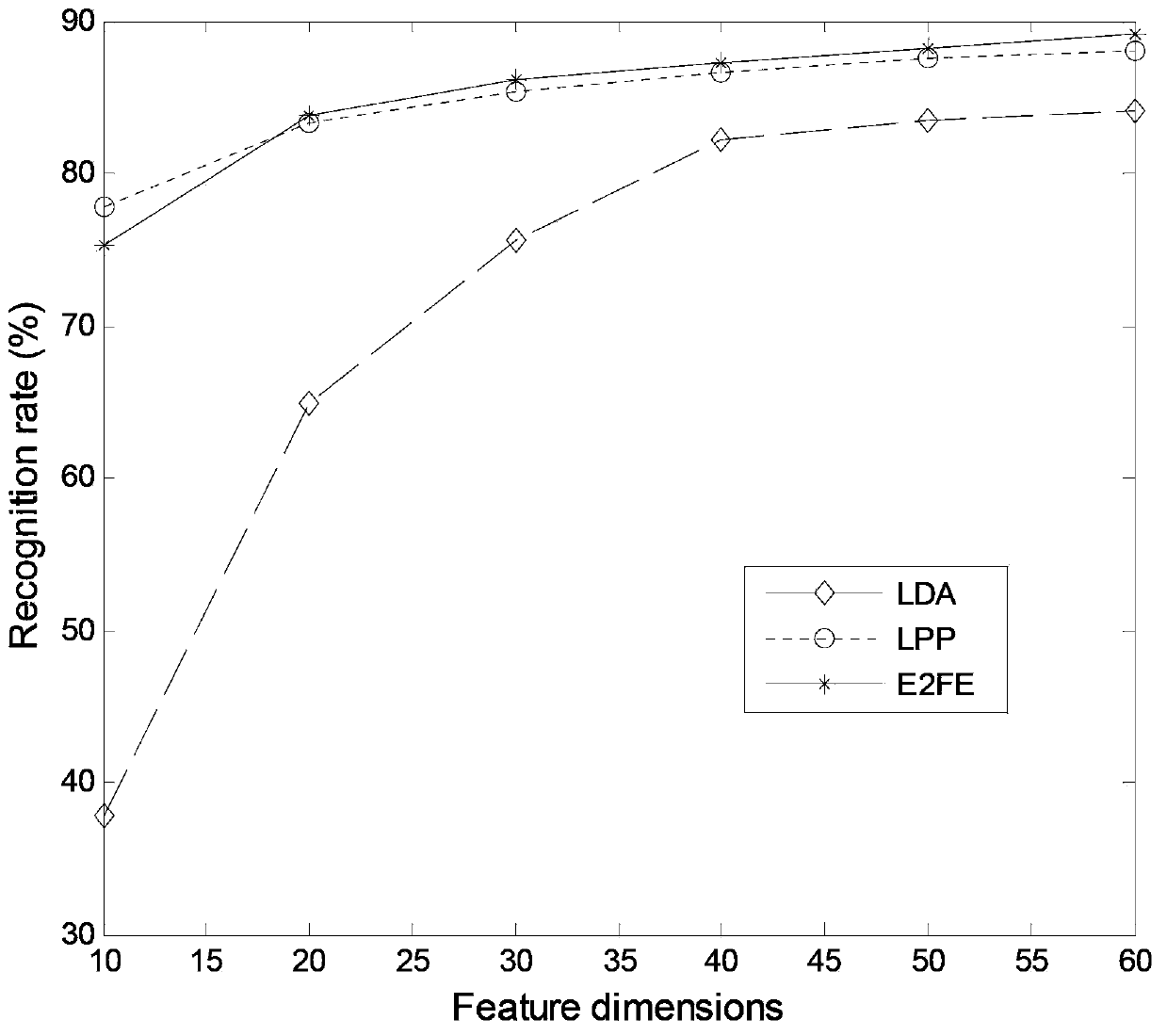

Face recognition method based on extraction of multiple evolution features

Inactive | CN103390154A | The principle is simple | Easy to implement | Character and pattern recognition | Spatial methods | Feature fusion

The invention discloses a face recognition method based on extraction of multiple evolution features. The method comprises the following steps: (1) classification of initial samples: the initial samples are divided into three parts, namely training samples for feature extraction, training samples for weight evolution, and test samples; (2) feature extraction of the training samples: features are extracted from the training samples with multiple subspace methods, such as PCA (principal component analysis), LDA (linear discriminant analysis), or LPP (locality preserving projection); and (3) multiple feature fusion evolution: the features obtained with the different extraction methods are fused in the form $\Phi = \omega_1\Phi_1 + \omega_2\Phi_2 + \dots + \omega_n\Phi_n$, where each $\omega_i$ is a weight coefficient. The optimal weight coefficients are obtained with a genetic algorithm, so that the fused features give better recognition results than the individual features. The method has the advantages of a simple principle, a distinctive approach, and easy application.

Owner:NAT UNIV OF DEFENSE TECH
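
The weighted fusion and weight evolution described in the abstract above can be sketched with a very small genetic algorithm. This is an illustrative sketch under stated assumptions, not the patented procedure: the feature vectors are assumed to have equal length, the GA operators (truncation selection, uniform crossover, Gaussian mutation, elitism) are generic choices, and `fuse`, `evolve_weights`, and the caller-supplied `fitness` function are hypothetical names. In the setting of the abstract, `fitness` would presumably score recognition accuracy on the training samples reserved for weight evolution.

```python
import random


def fuse(features, weights):
    """Weighted sum of feature vectors: w1*Phi1 + w2*Phi2 + ... + wn*Phin."""
    length = len(features[0])
    return [
        sum(w * feat[k] for w, feat in zip(weights, features)) for k in range(length)
    ]


def evolve_weights(fitness, n_weights, pop_size=20, generations=30,
                   mutation_scale=0.1, seed=0):
    """Search for weights that maximise `fitness` with a minimal genetic algorithm."""
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_weights)] for _ in range(pop_size)]
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[: pop_size // 2]   # truncation selection: keep the top half
        children = [ranked[0]]              # elitism: the best survives unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover: each weight comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(a, b)]
            # Gaussian mutation, clipped so weights stay non-negative.
            child = [max(0.0, w + rng.gauss(0.0, mutation_scale)) for w in child]
            children.append(child)
        population = children
    return max(population, key=fitness)
```

Because the best individual is copied into each new generation, the best fitness never decreases from one generation to the next.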
