2095 results about "Spacetime" patented technology

In physics, spacetime is any mathematical model that fuses the three dimensions of space and the one dimension of time into a single four-dimensional continuum. Spacetime diagrams can be used to visualize relativistic effects such as why different observers perceive where and when events occur differently.

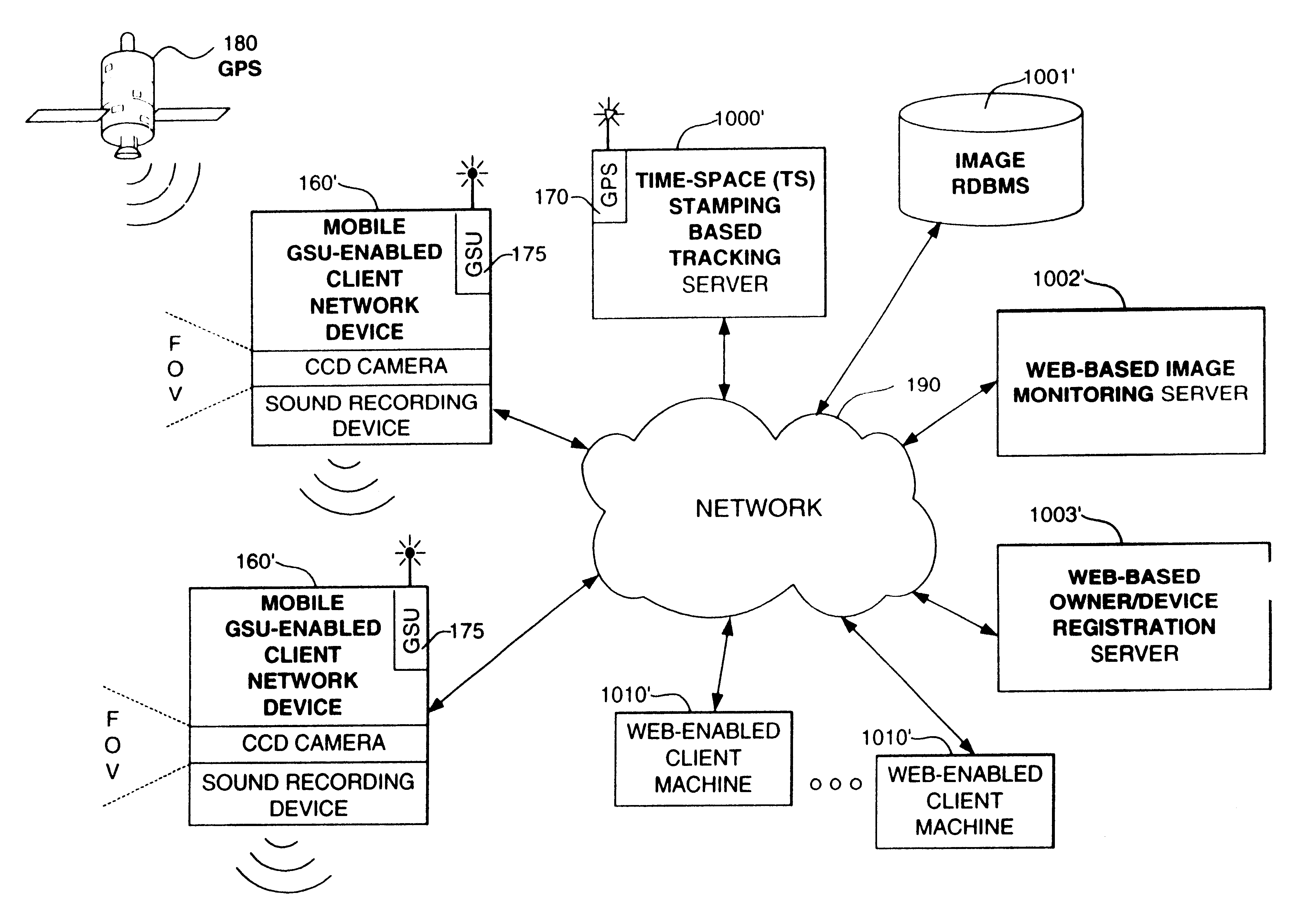

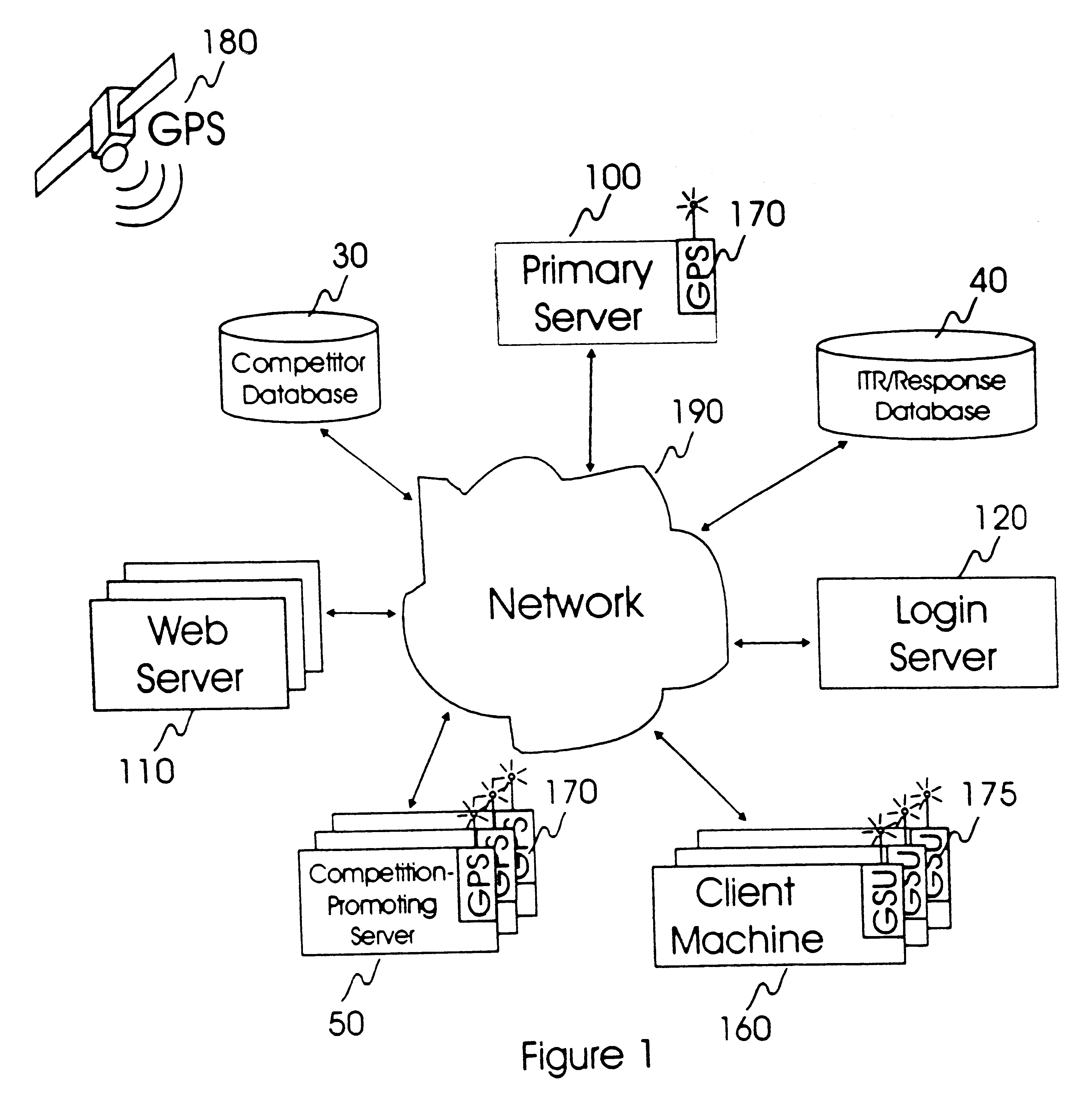

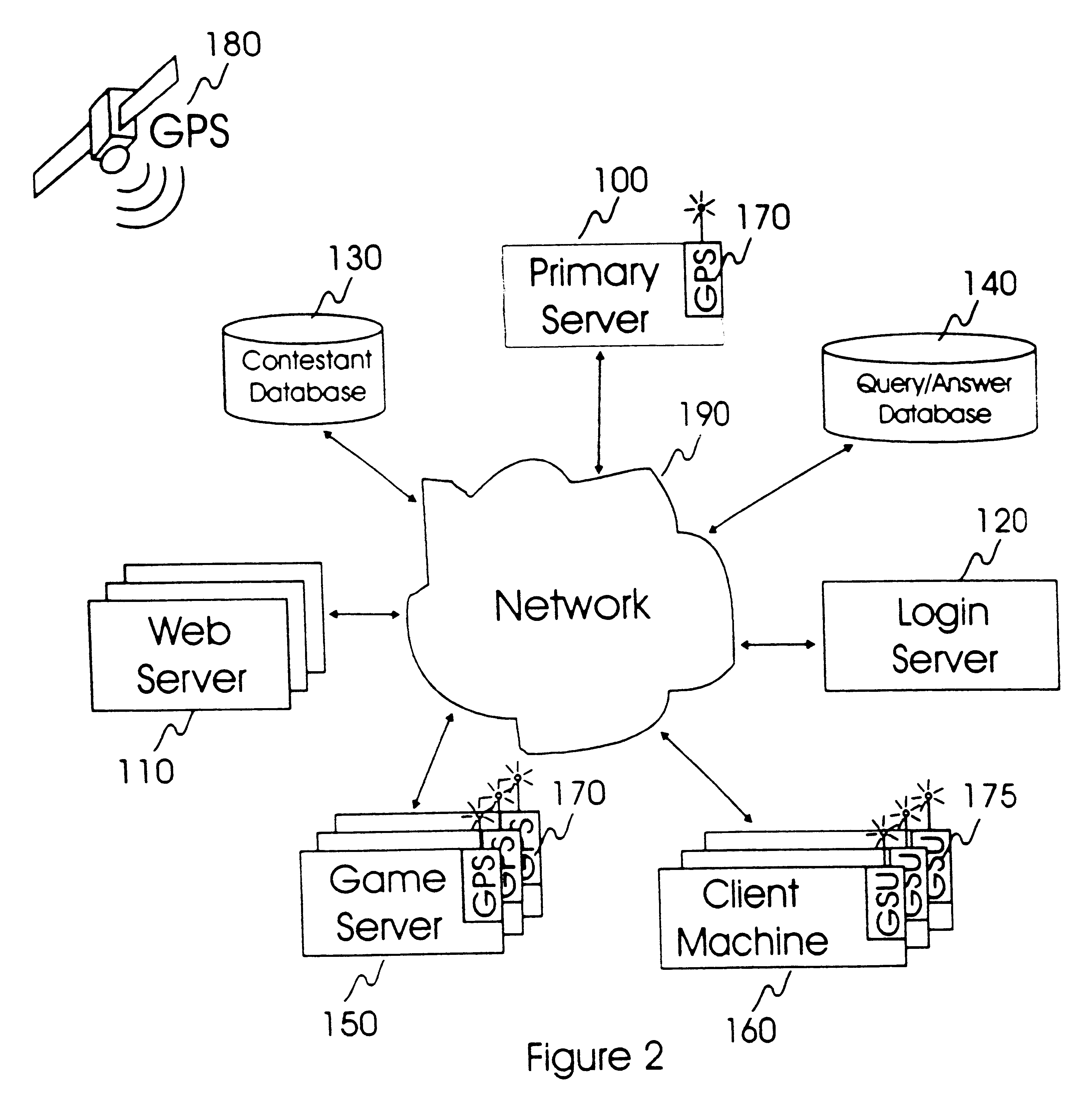

Internet-based method of and system for monitoring space-time coordinate information and biophysiological state information collected from an animate object along a course through the space-time continuum

Inactive | US6677858B1 | Avoiding shortcoming | Avoiding shortcoming and drawback | Instruments for road network navigation | Information format | Animation | Wireless data

An Internet-based method of and system for monitoring space-time coordinate information and biophysiological state information collected from an animate object moving along a course through the space-time continuum. The Internet-based system comprises a wireless GSU-enabled client network device affixed to the body of an animate object. The wireless device includes a global synchronization unit (GSU) for automatically generating time and space (TS) coordinate information corresponding to the time and space coordinate of the animate object with respect to a globally referenced coordinate system, as the animate object moves along a course through the space-time continuum. The device also includes a biophysiological state sensor affixed to the body of the animate object, for automatically sensing the biophysiological state of the animate object and generating biophysiological state information indicative of the sensed biophysiological state of the animate object along its course. The wireless device also includes a wireless data transmitter for transmitting the TS coordinate information and the biophysiological state information through free space. A TS-stamping based tracking server receives the TS coordinate information and the biophysiological state information in a wireless manner, and stores the same as the animate object moves along its course. An Internet information server serves Internet-based documents containing the collected TS coordinate and biophysiological state information. An Internet-enabled client system enables authorized persons to view the served Internet-based documents and monitor the collected TS coordinate and biophysiological state information, for various purposes.

Owner:REVEO

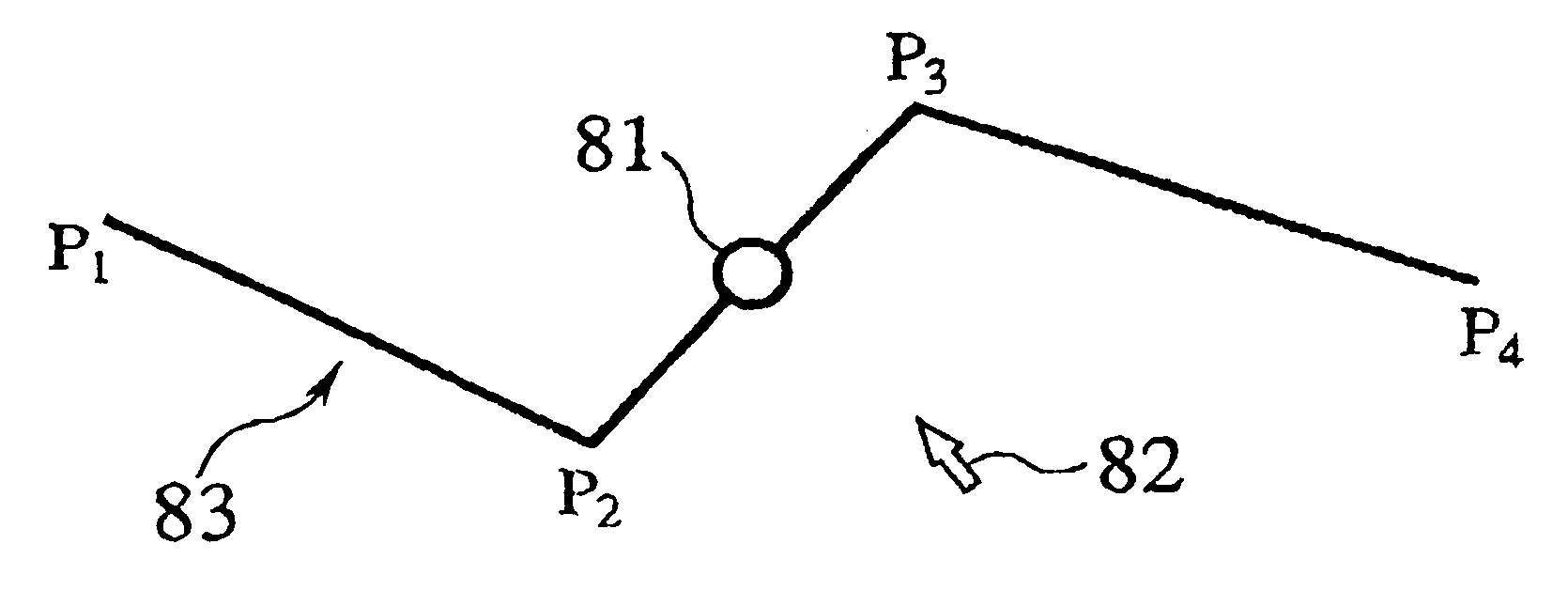

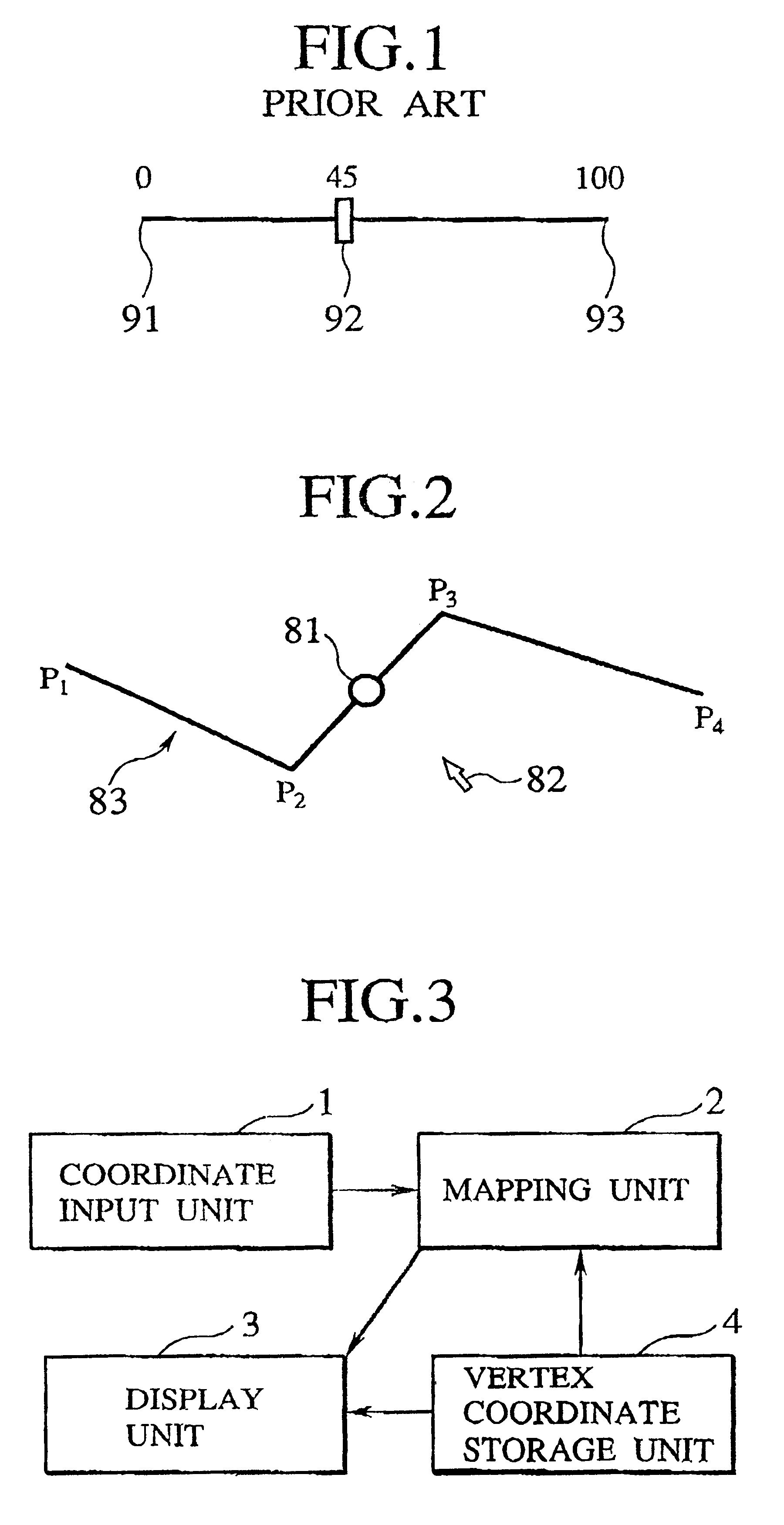

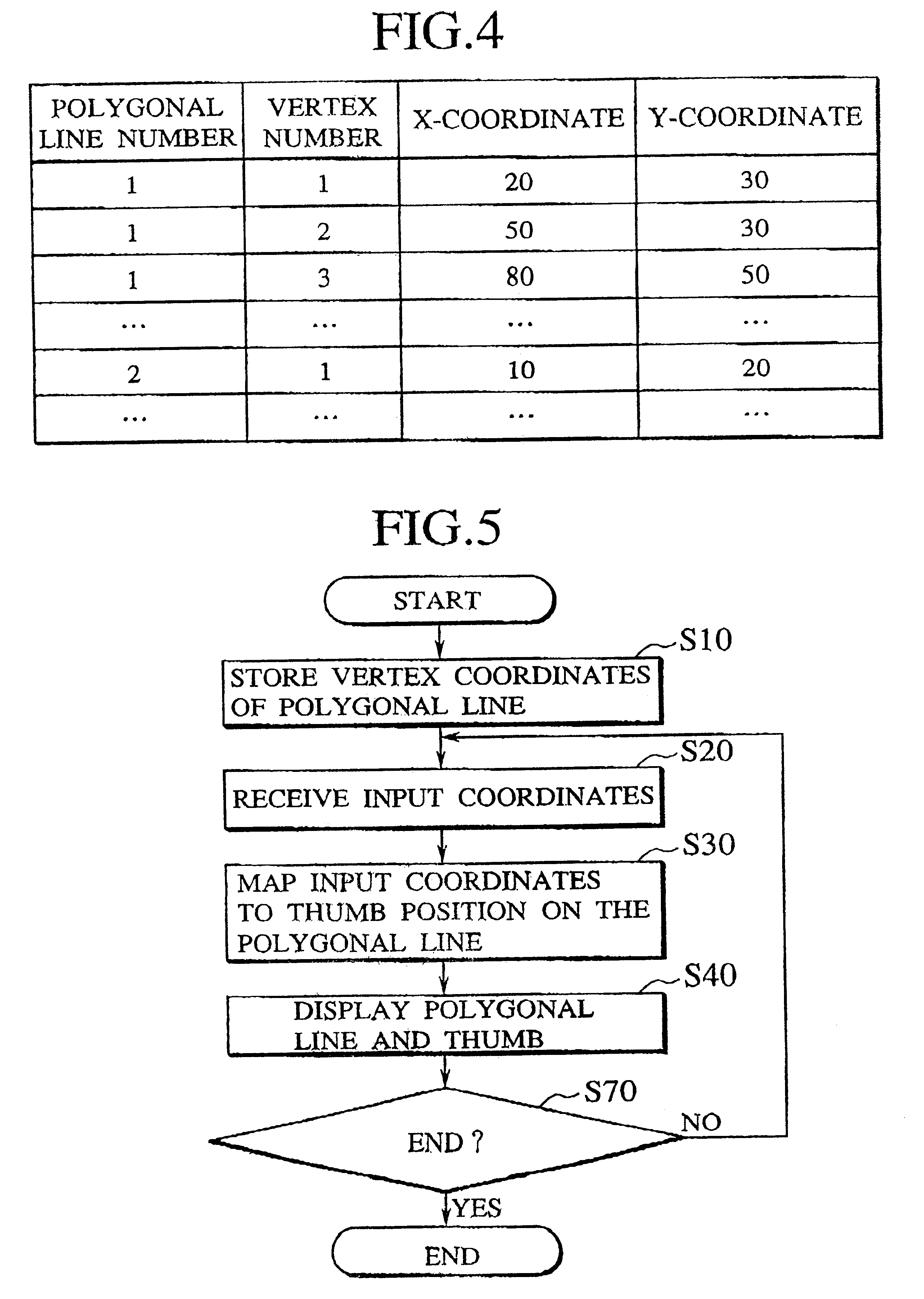

Scheme for graphical user interface using polygonal-shaped slider

Inactive | US6542171B1 | Easy to handle | Gymnastic exercising | Cathode-ray tube indicators | Graphics | Polygonal line

A scheme for a graphical user interface using a polygonal-line-shaped slider is disclosed. The slider enables a user to intuitively manipulate and play back spatio-temporal media data, such as video data and animation data, without deteriorating the temporal continuity of the data during interactive manipulation and playback. In this scheme, the slider is composed of a polygonal line composed of at least one segment and a coordinate indicator that is moved along the polygonal line. Data corresponding to coordinates specified by the coordinate indicator is entered. The apparatus according to the present invention stores the coordinates of vertexes of the polygonal line, stores input coordinates, maps the input coordinates onto a point on the polygonal line, and positions the coordinate indicator at the point.

Owner:NIPPON TELEGRAPH & TELEPHONE CORP
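
The mapping step in the abstract above (input coordinates mapped onto a point on the polygonal line) can be illustrated with a nearest-point projection. This is only a minimal sketch under assumptions: the abstract does not say how the mapping is done, so the closest-point rule and the names `project_onto_polyline` and `slider` are hypothetical and not taken from the patent.

```python
from math import hypot


def project_onto_polyline(vertices, point):
    """Return (closest_point, segment_index, t) for the nearest point on a polyline.

    Assumption: "mapping onto the polygonal line" is modeled here as the
    Euclidean nearest point; the patent may use a different rule.
    """
    if len(vertices) < 2:
        raise ValueError("polyline needs at least two vertices")
    px, py = point
    best = None
    for i in range(len(vertices) - 1):
        (ax, ay), (bx, by) = vertices[i], vertices[i + 1]
        dx, dy = bx - ax, by - ay
        seg_len_sq = dx * dx + dy * dy
        # Parameter of the orthogonal projection, clamped to the segment ends.
        t = 0.0 if seg_len_sq == 0 else ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
        t = max(0.0, min(1.0, t))
        cx, cy = ax + t * dx, ay + t * dy
        dist = hypot(px - cx, py - cy)
        if best is None or dist < best[0]:
            best = (dist, (cx, cy), i, t)
    _, closest, index, t = best
    return closest, index, t


# Hypothetical L-shaped slider and a pointer position near its first segment.
slider = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0)]
indicator, segment, t = project_onto_polyline(slider, (4.0, 3.0))
```

The returned segment index and parameter `t` are enough to position the coordinate indicator and to look up the media data for that position.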

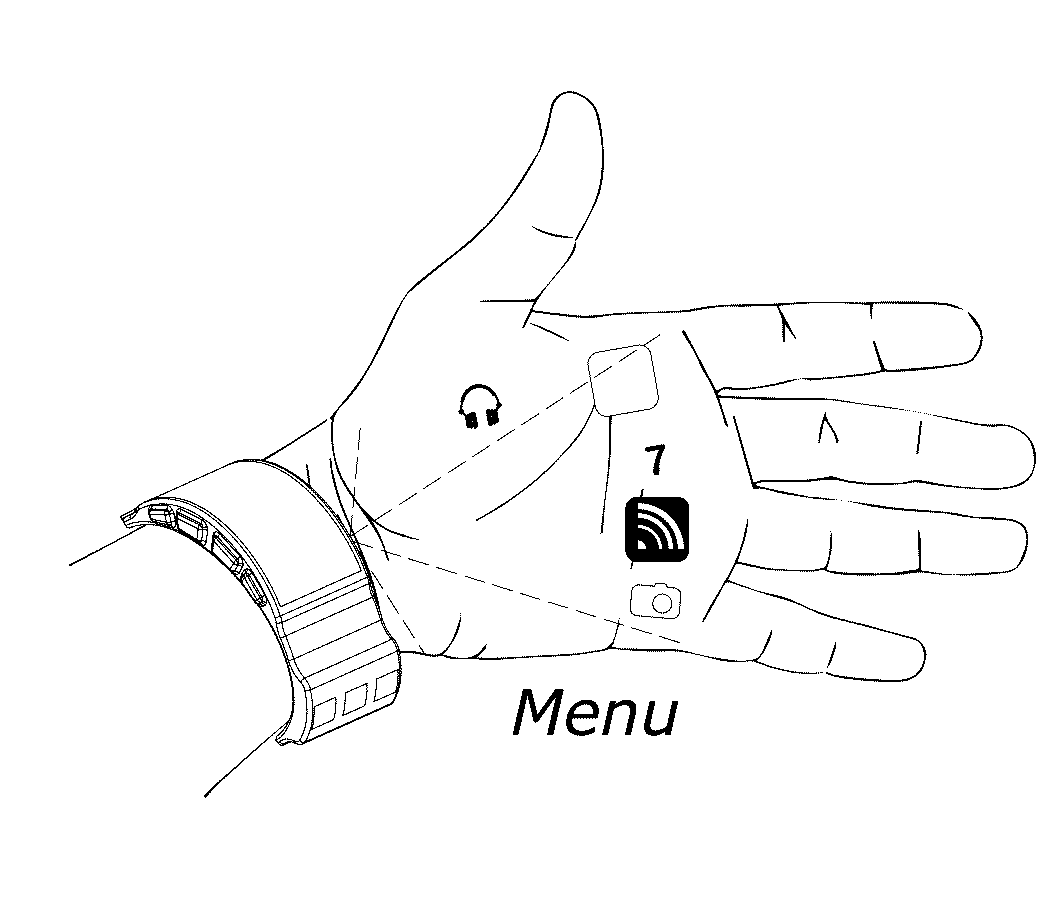

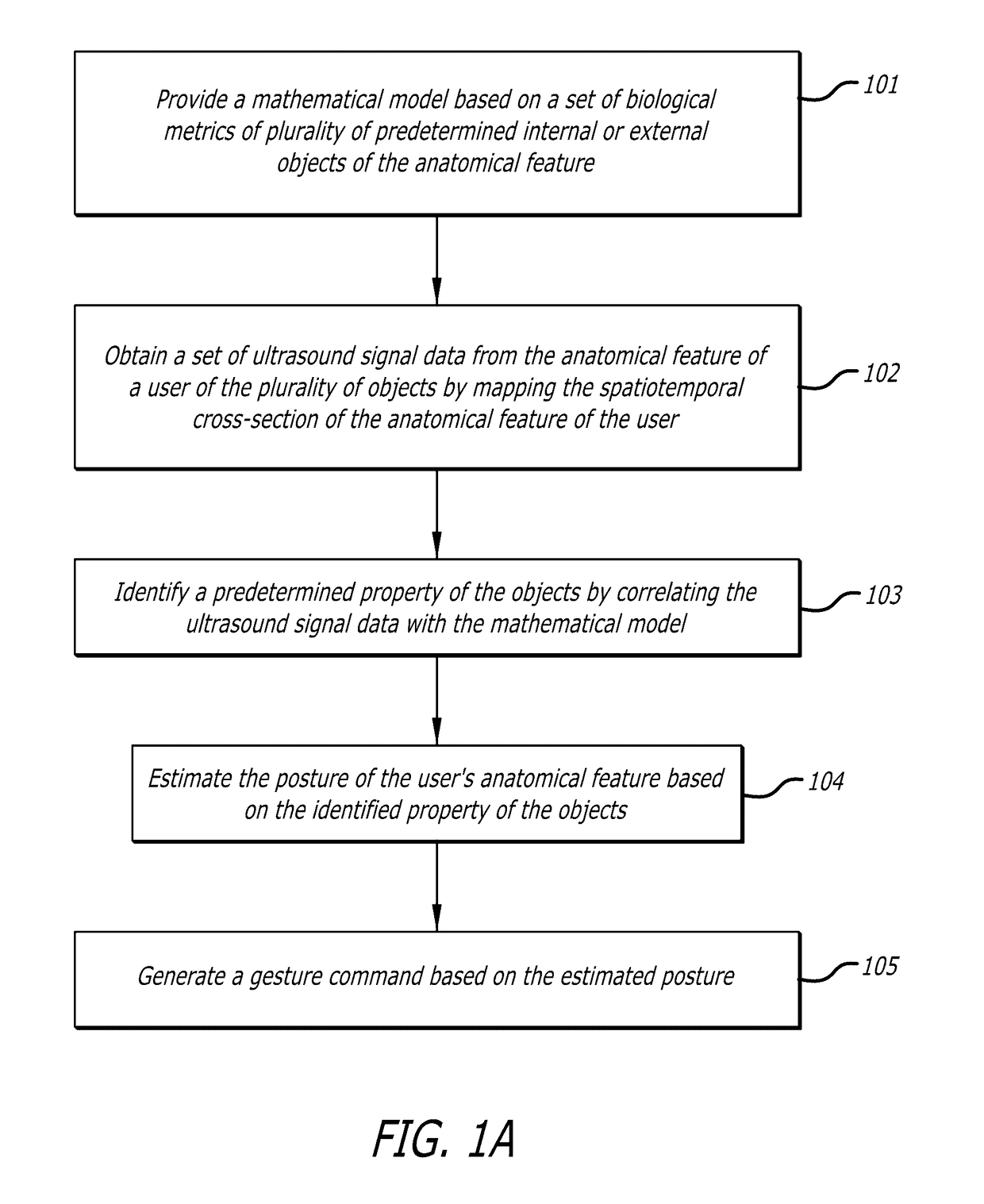

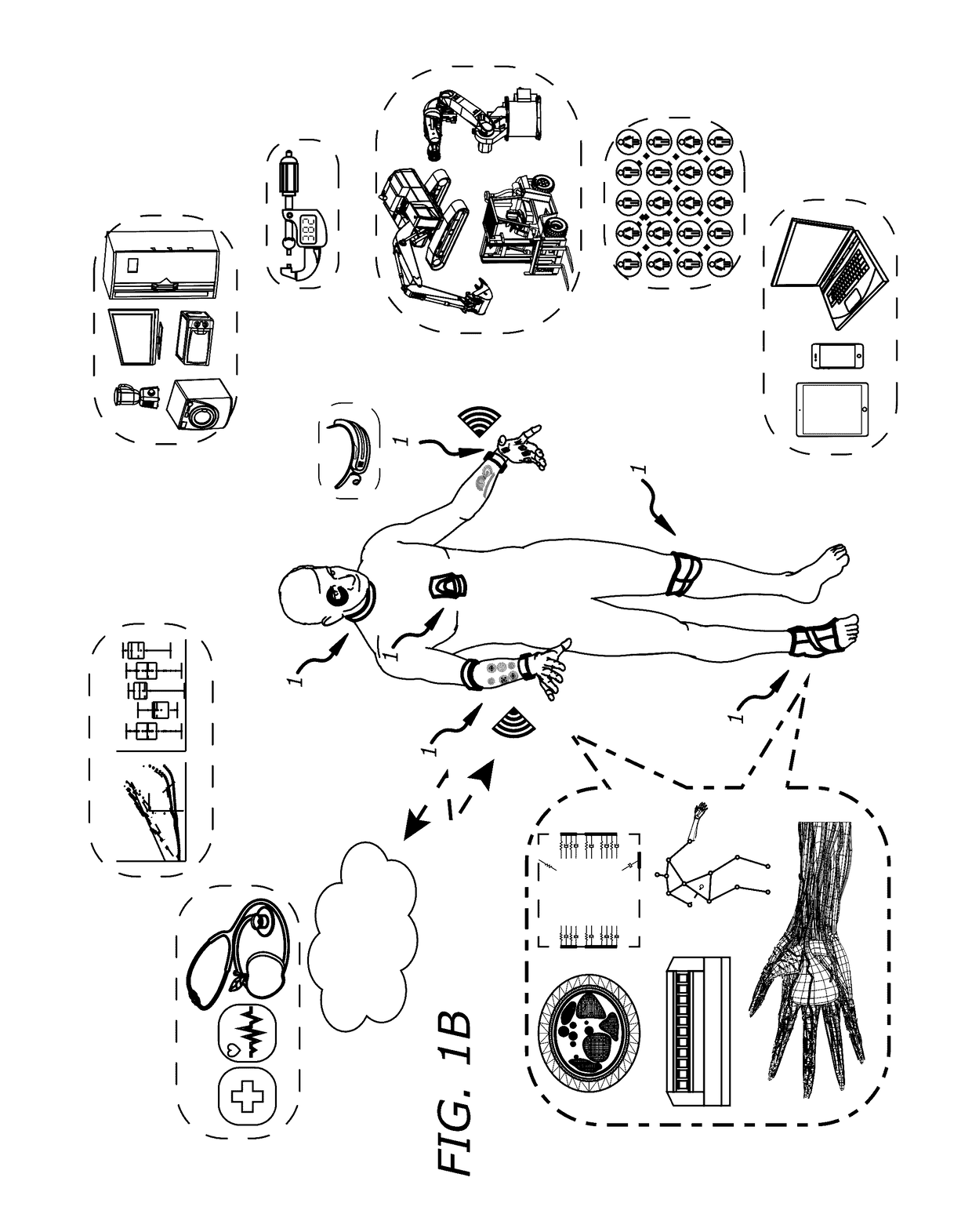

System and methods for on-body gestural interfaces and projection displays

Active | US20170123487A1 | Input/output for user-computer interaction | Details for portable computers | Sonification | Transceiver

A wearable system with a gestural interface for wearing on, for instance, the wrist of a user. The system comprises an ultrasonic transceiver array structure and may comprise a pico projector display element for displaying an image on a surface. User anatomical feature inputs are received in the form of ultrasound signals representative of a spatio-temporal cross-section of the wrist of the user by articulating wrist, finger and hand postures, which articulations are translated into gestures. Inputs from inertial and other sensors are used by the system as part of the anatomical feature posture identification method and device. Gestures are recognized using a mathematically-modeled, simulation-based set of biological metrics of tissue objects, which gestures are converted to executable computer instructions. Embodiments of the system disclosed herein may also be used to monitor biometric and health data over computer networks or using onboard systems.

Owner:OSTENDO TECH INC

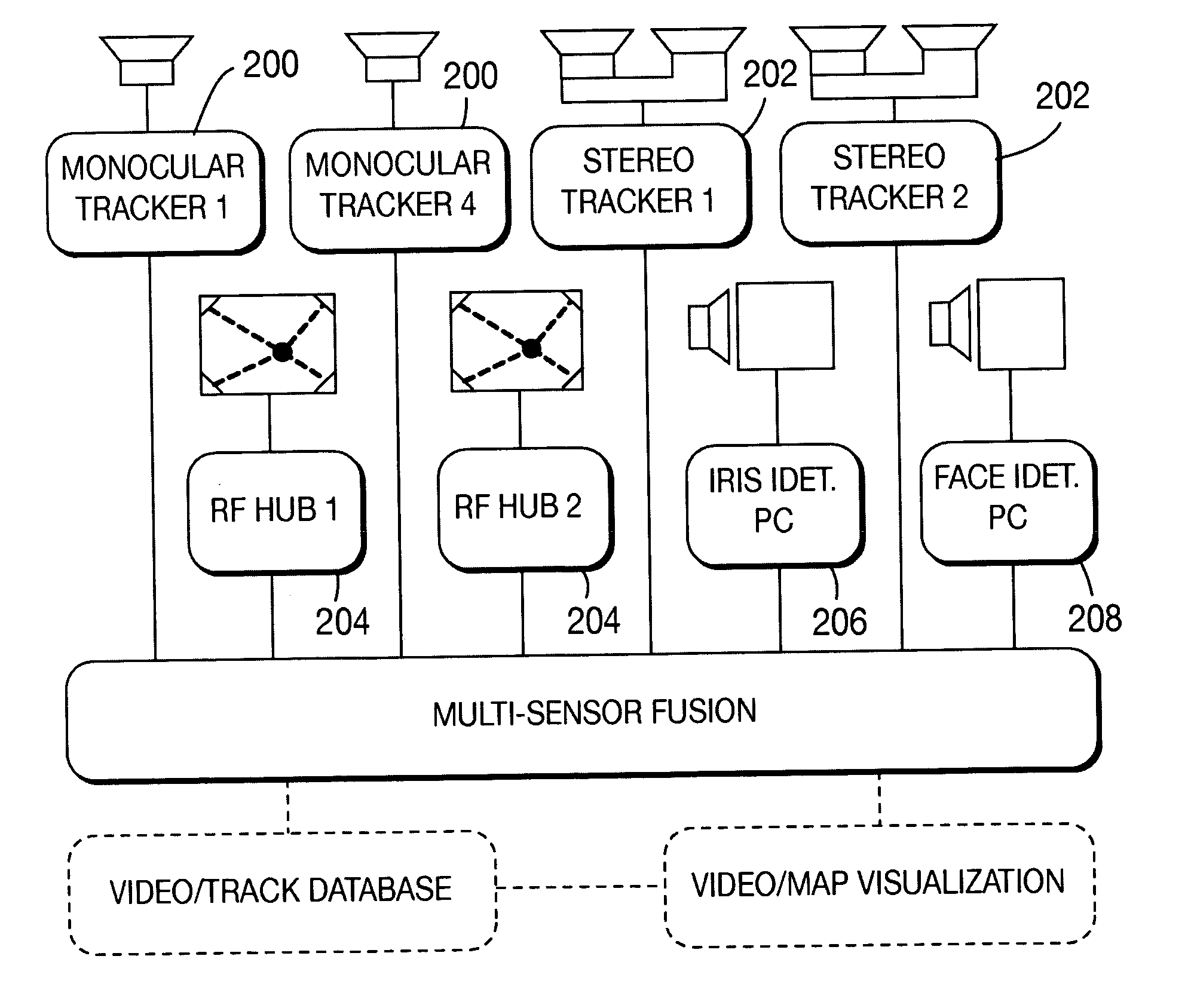

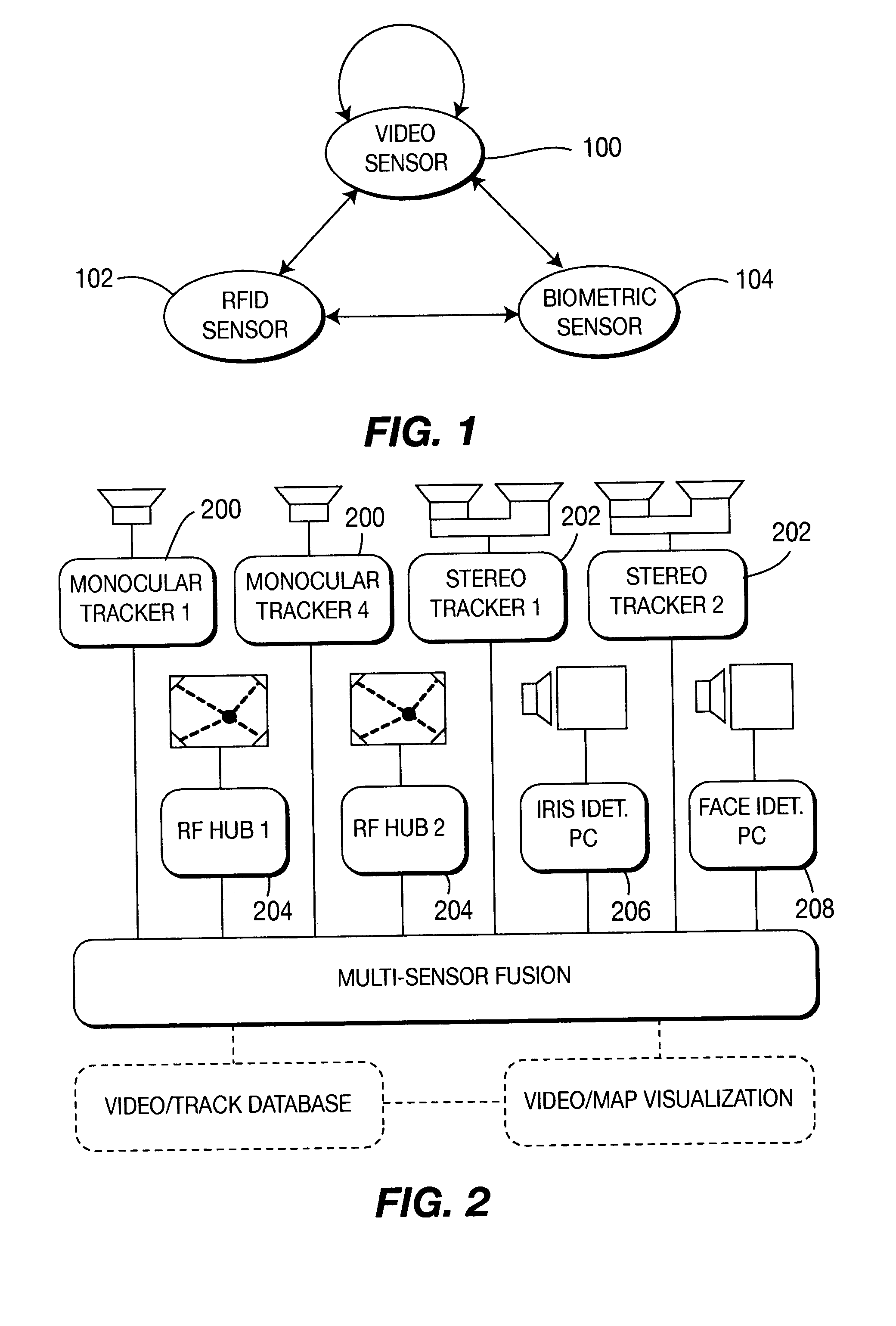

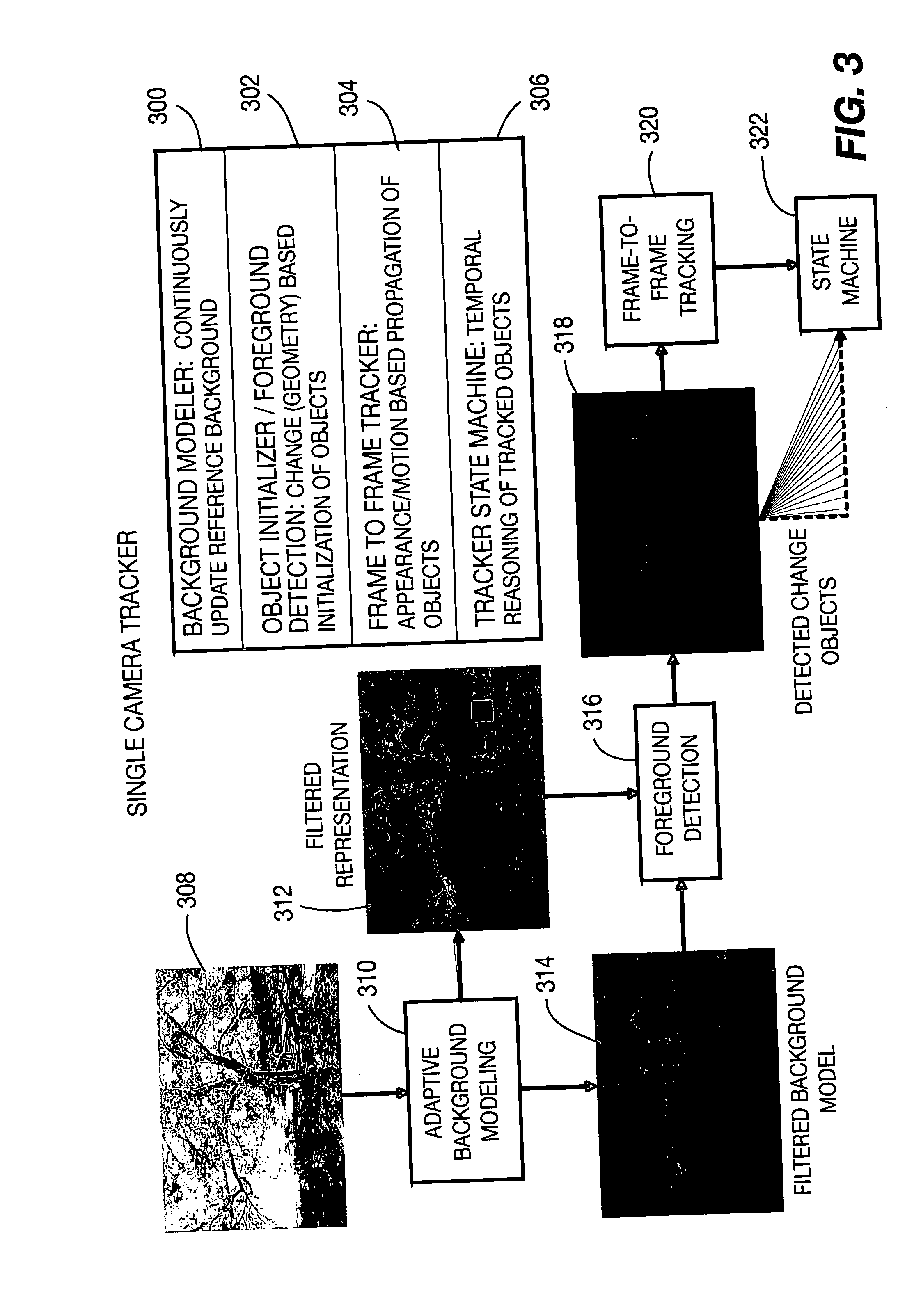

Method and apparatus for stereo, multi-camera tracking and RF and video track fusion

A unified approach, a fusion technique, a space-time constraint, a methodology, and a system architecture are provided. The unified approach is to fuse the outputs of monocular and stereo video trackers, RFID and localization systems, and biometric identification systems. The fusion technique is based on the transformation of the sensory information from heterogeneous sources into a common coordinate system, with rigorous uncertainty analysis to account for various sensor noises and ambiguities. The space-time constraint is used to fuse different sensors using the location and velocity information. Advantages include the ability to continuously track multiple humans with their identities in a large area. The methodology is general so that other sensors can be incorporated into the system. The system architecture is provided for the underlying real-time processing of the sensors.

Owner:SRI INTERNATIONAL

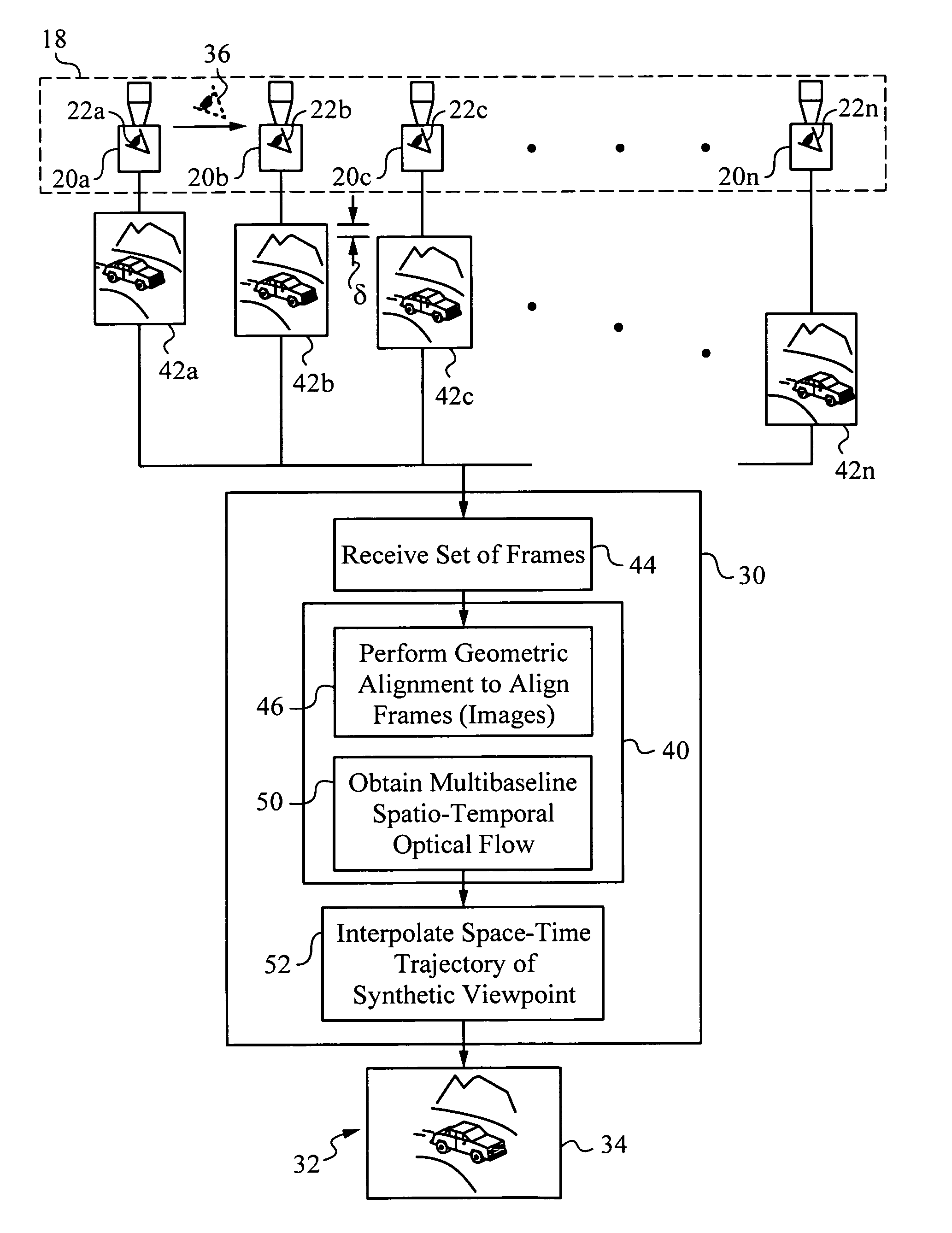

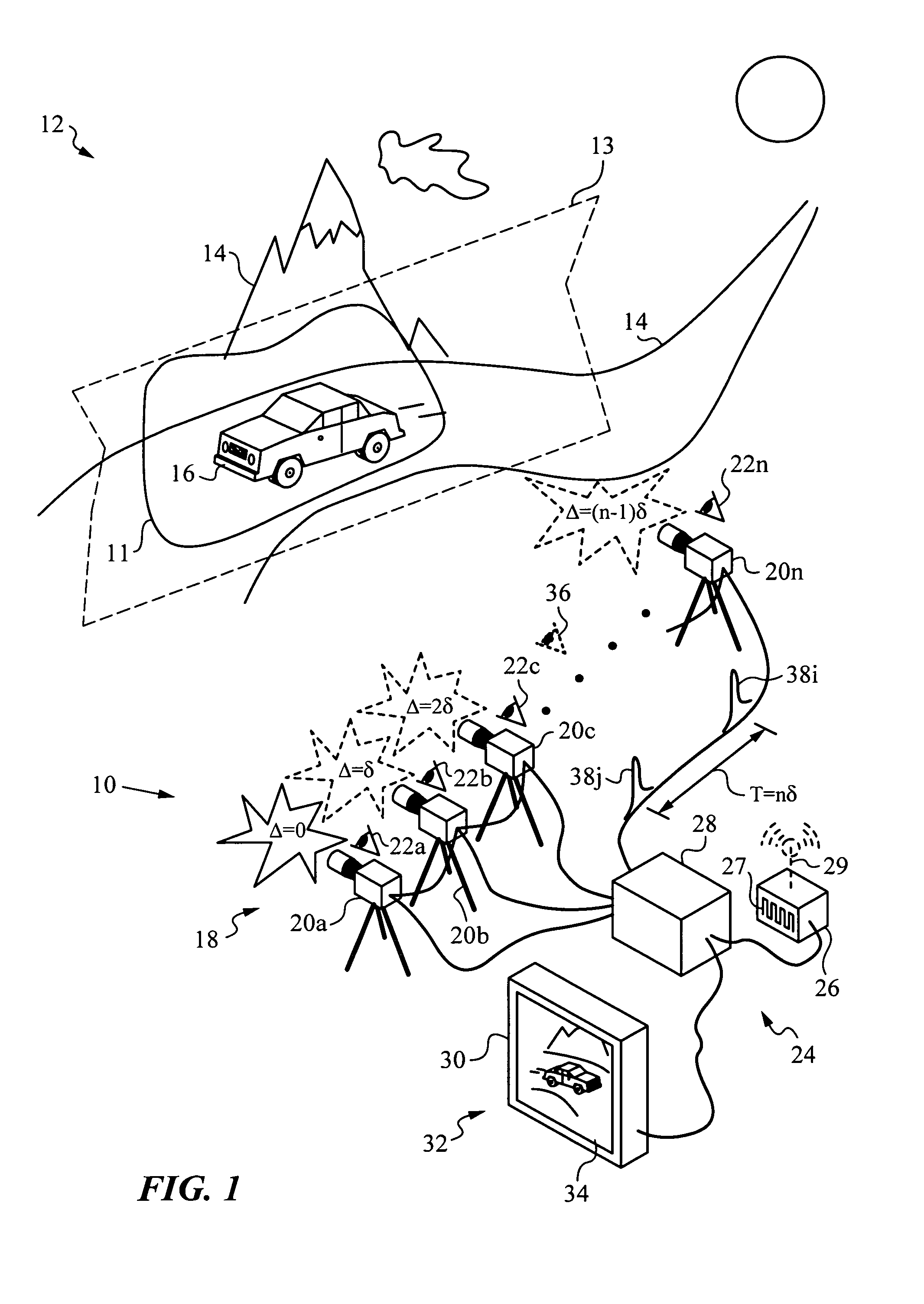

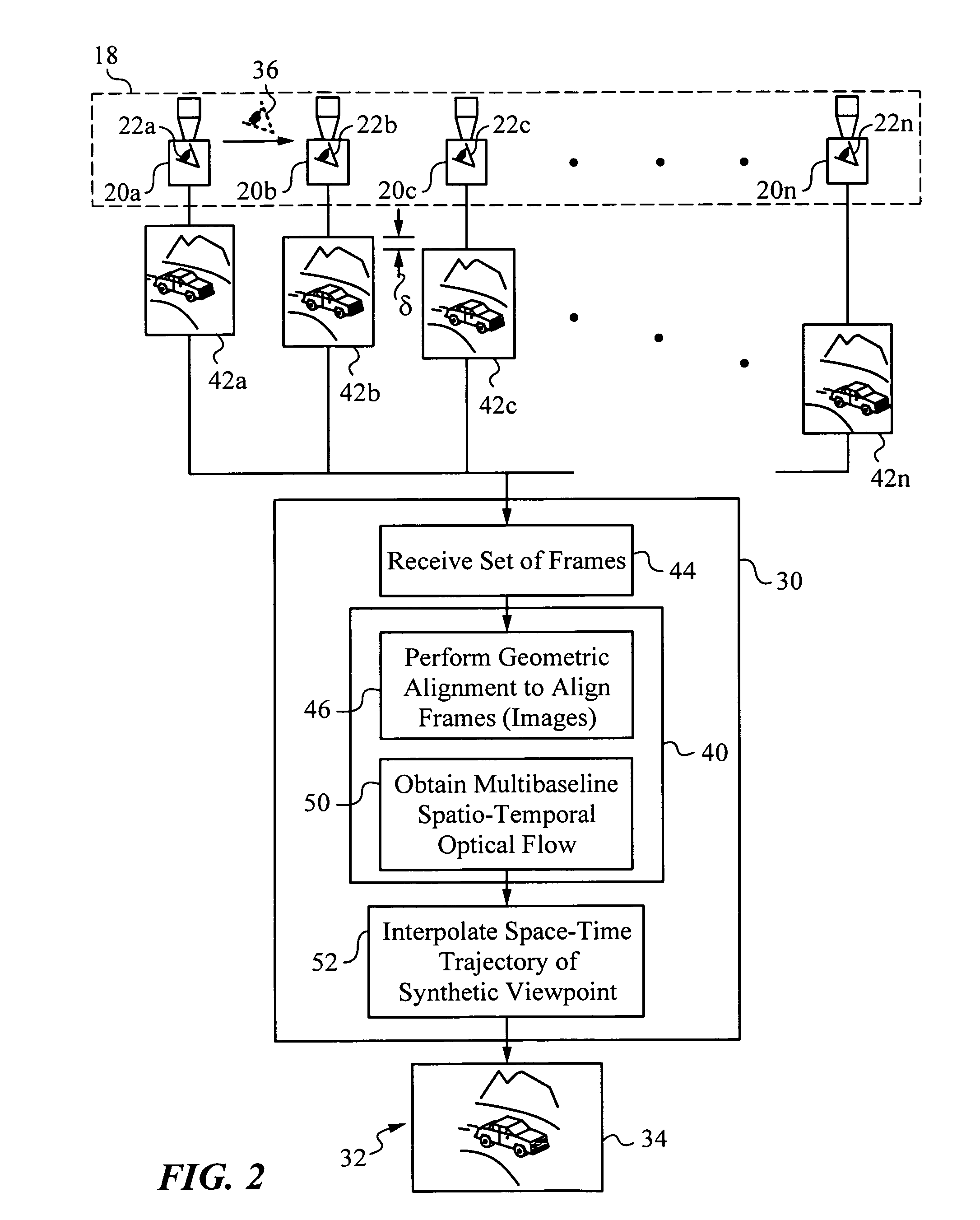

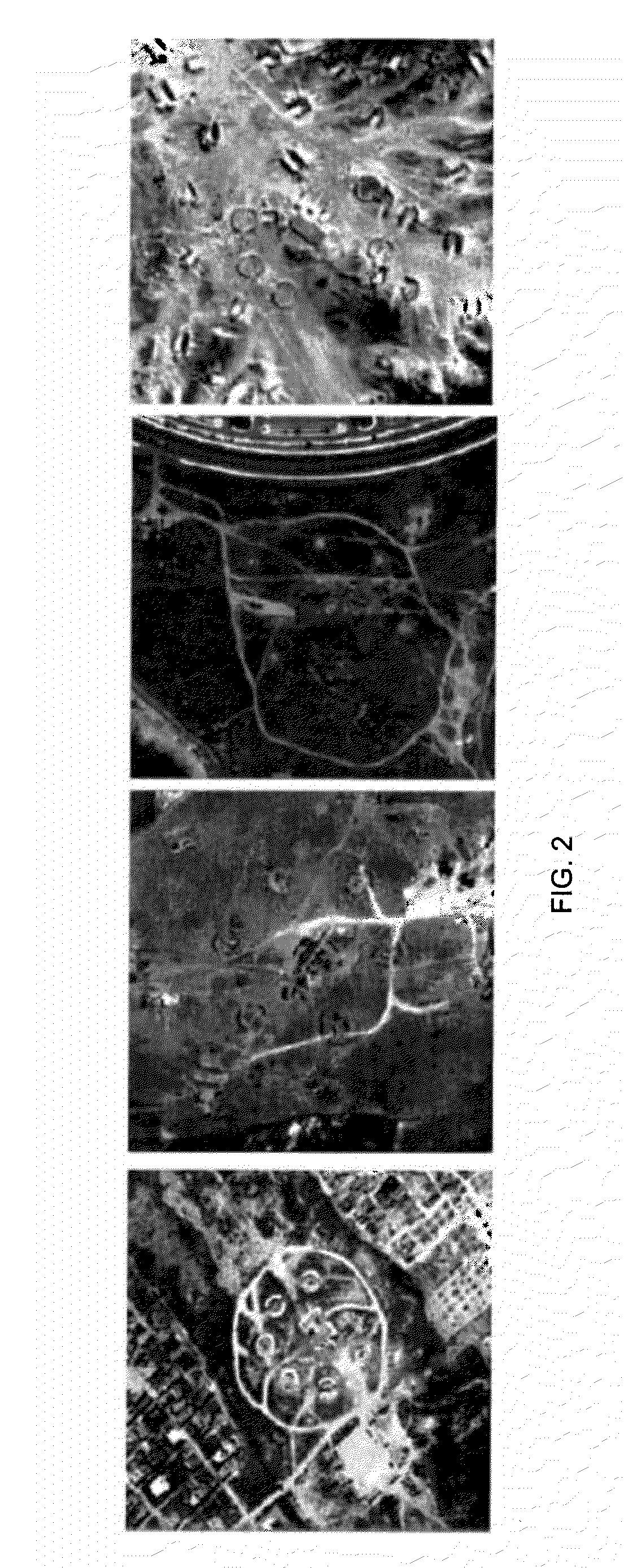

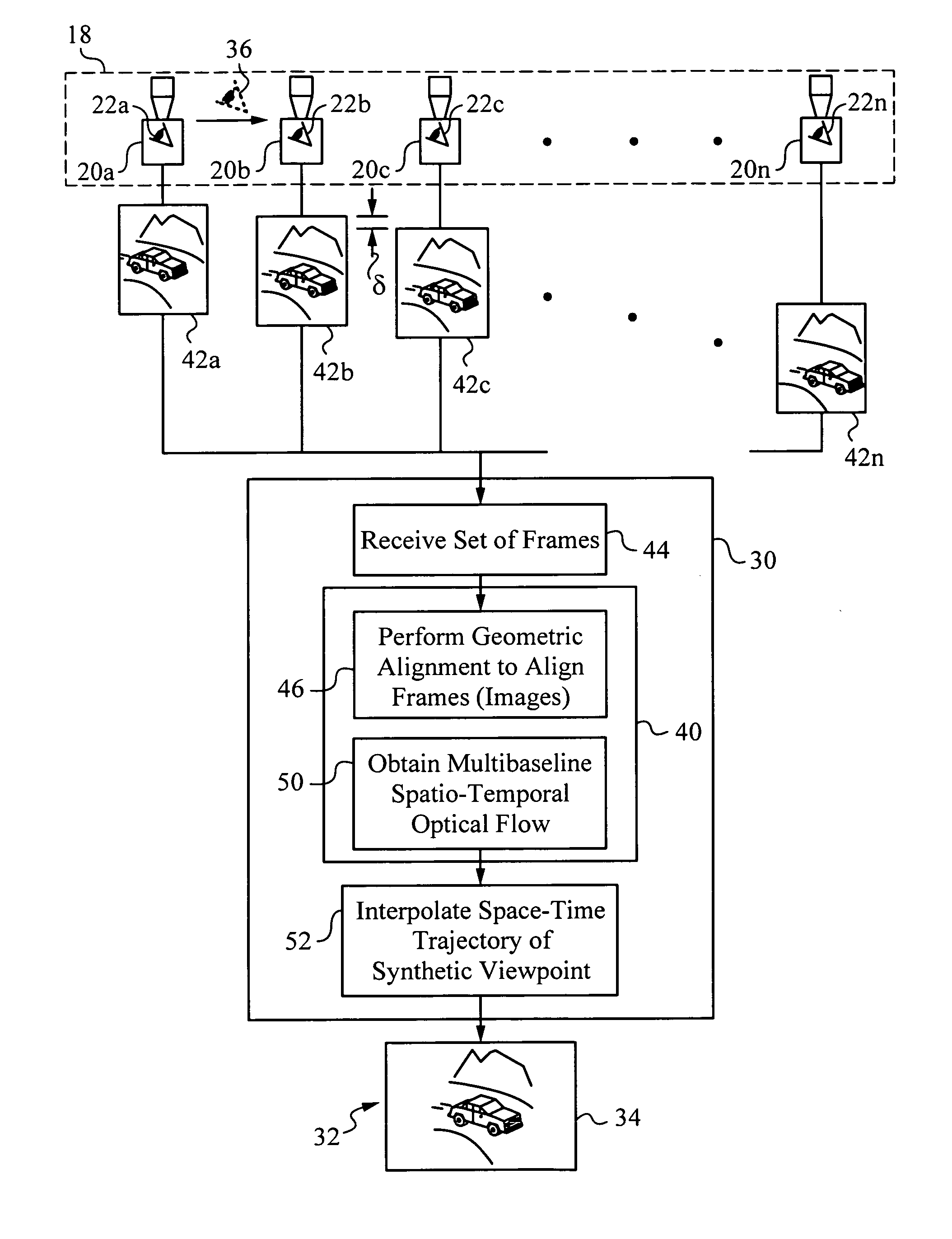

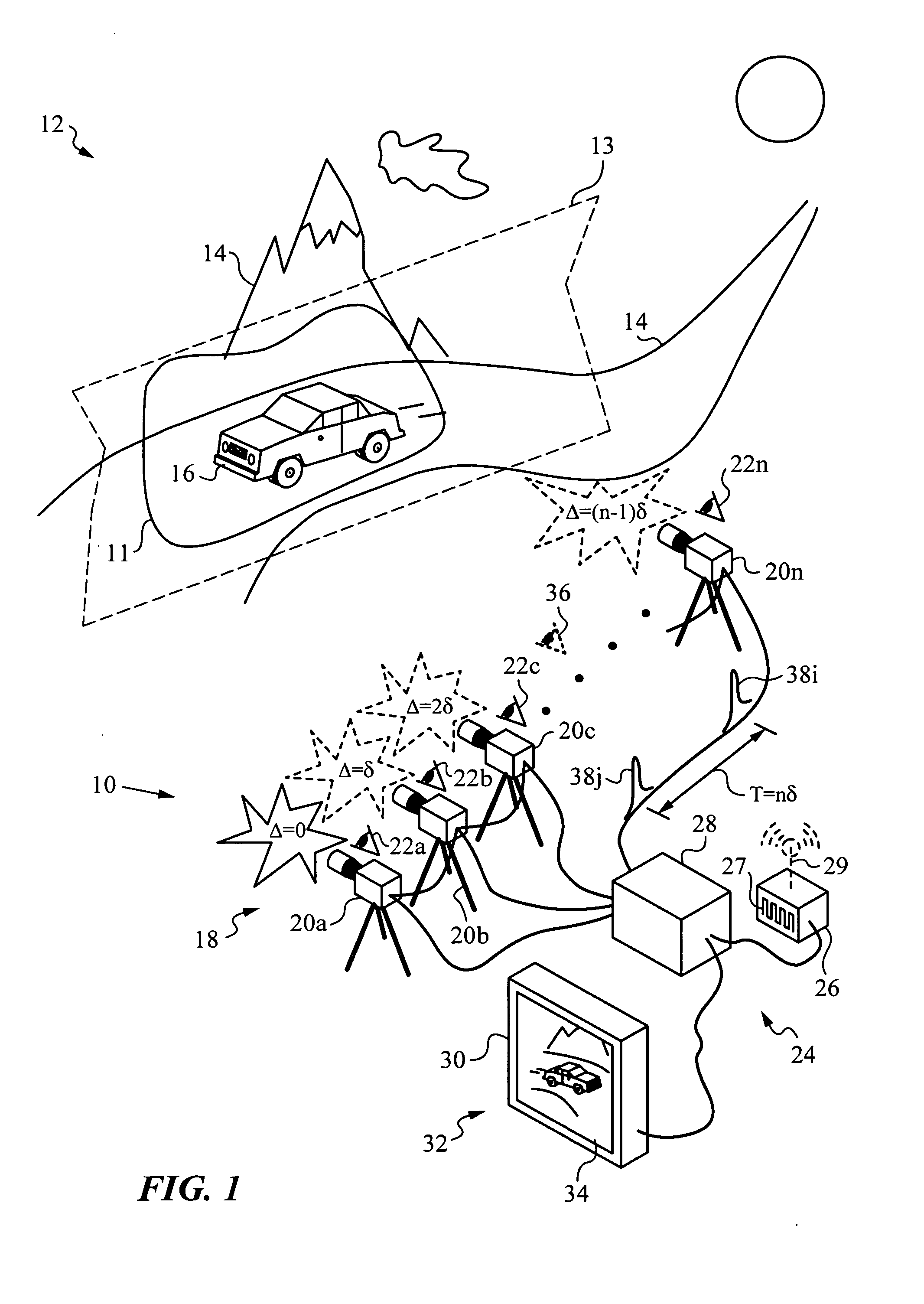

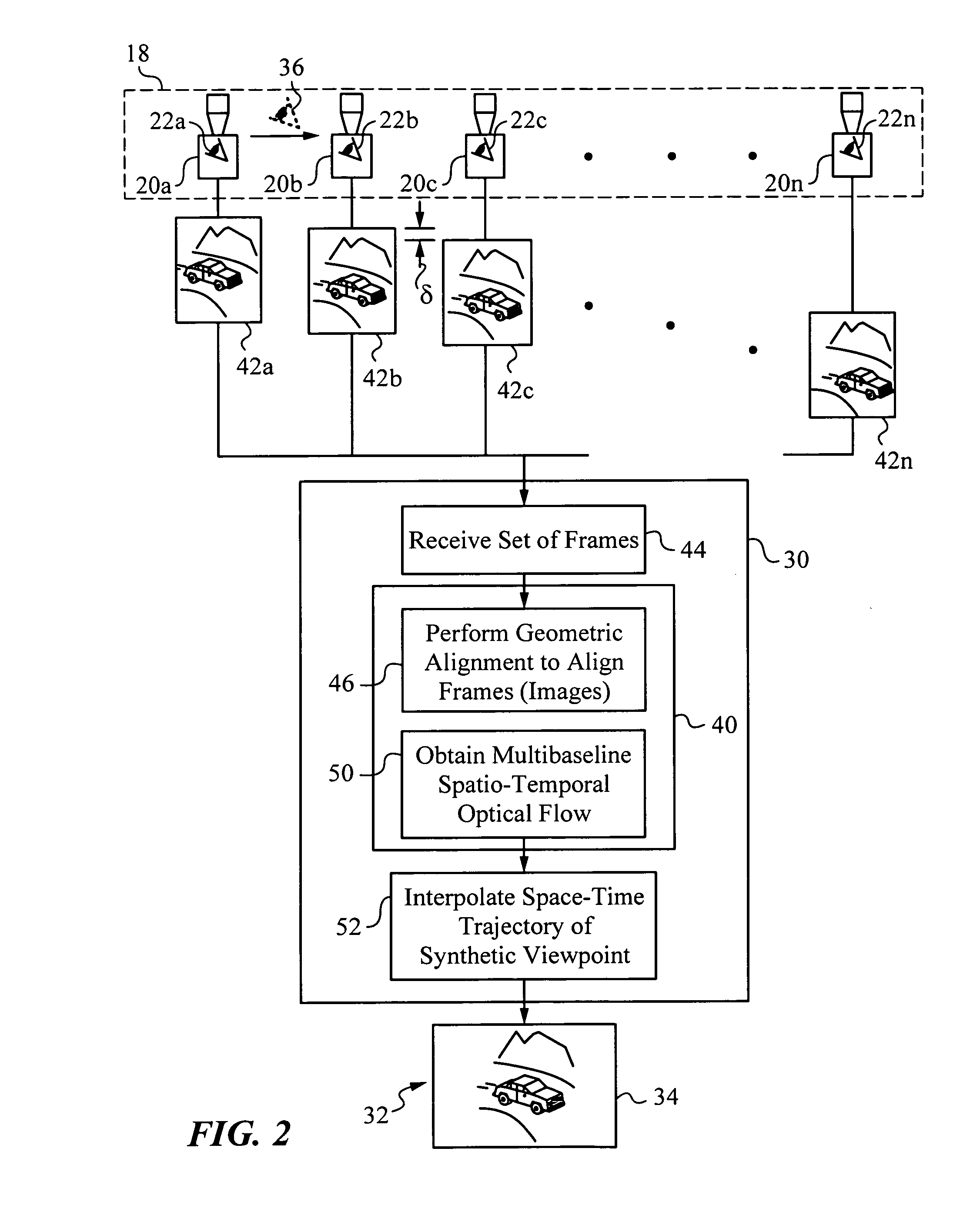

Apparatus and method for capturing a scene using staggered triggering of dense camera arrays

This invention relates to an apparatus and a method for video capture of a three-dimensional region of interest in a scene using an array of video cameras. The video cameras of the array are positioned for viewing the three-dimensional region of interest in the scene from their respective viewpoints. A triggering mechanism is provided for staggering the capture of a set of frames by the video cameras of the array. The apparatus has a processing unit for combining and operating on the set of frames captured by the array of cameras to generate a new visual output, such as high-speed video or spatio-temporal structure and motion models, that has a synthetic viewpoint of the three-dimensional region of interest. The processing involves spatio-temporal interpolation for determining the synthetic viewpoint space-time trajectory. In some embodiments, the apparatus computes a multibaseline spatio-temporal optical flow.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

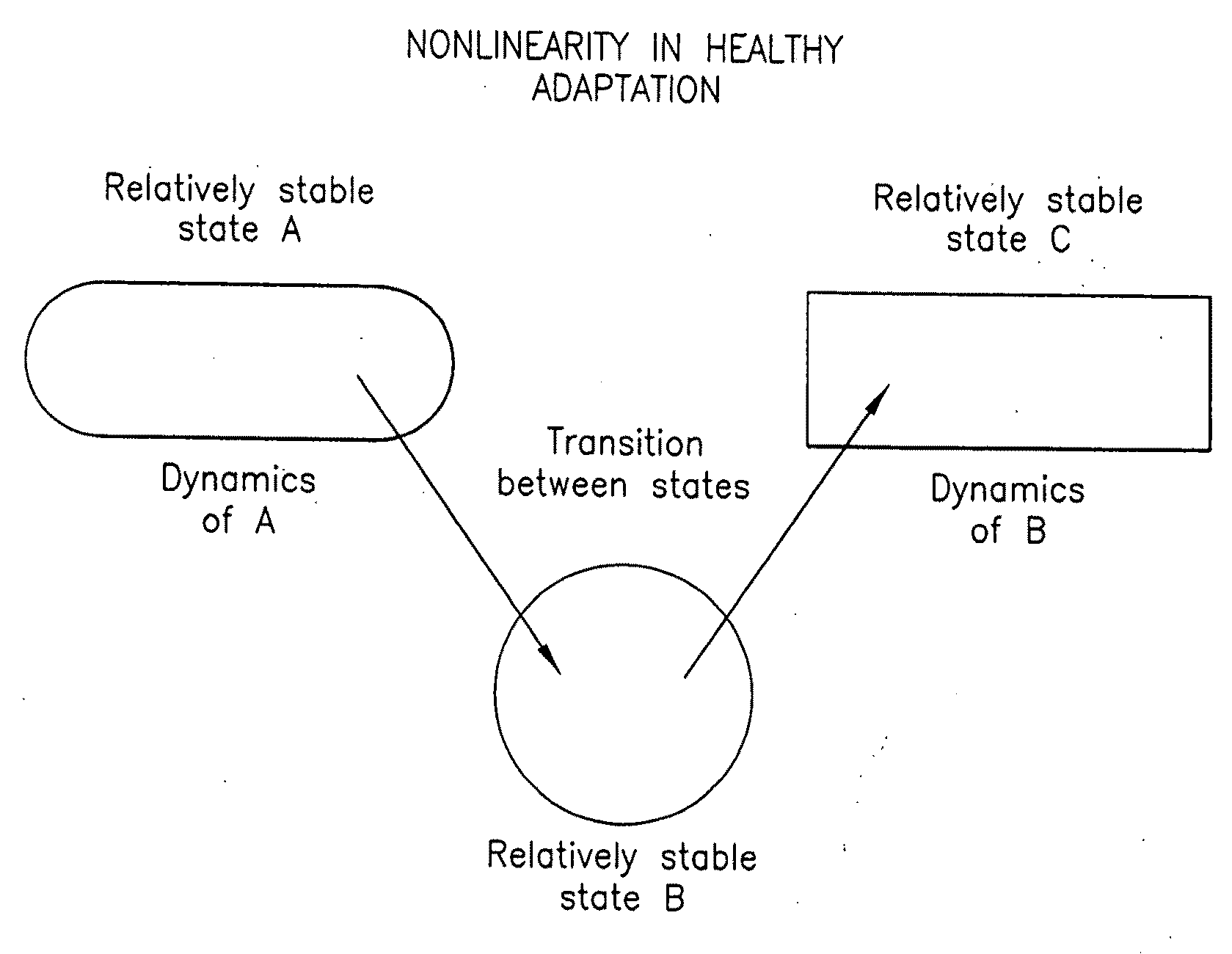

Method and Apparatus for Analysis of Psychiatric and Physical Conditions

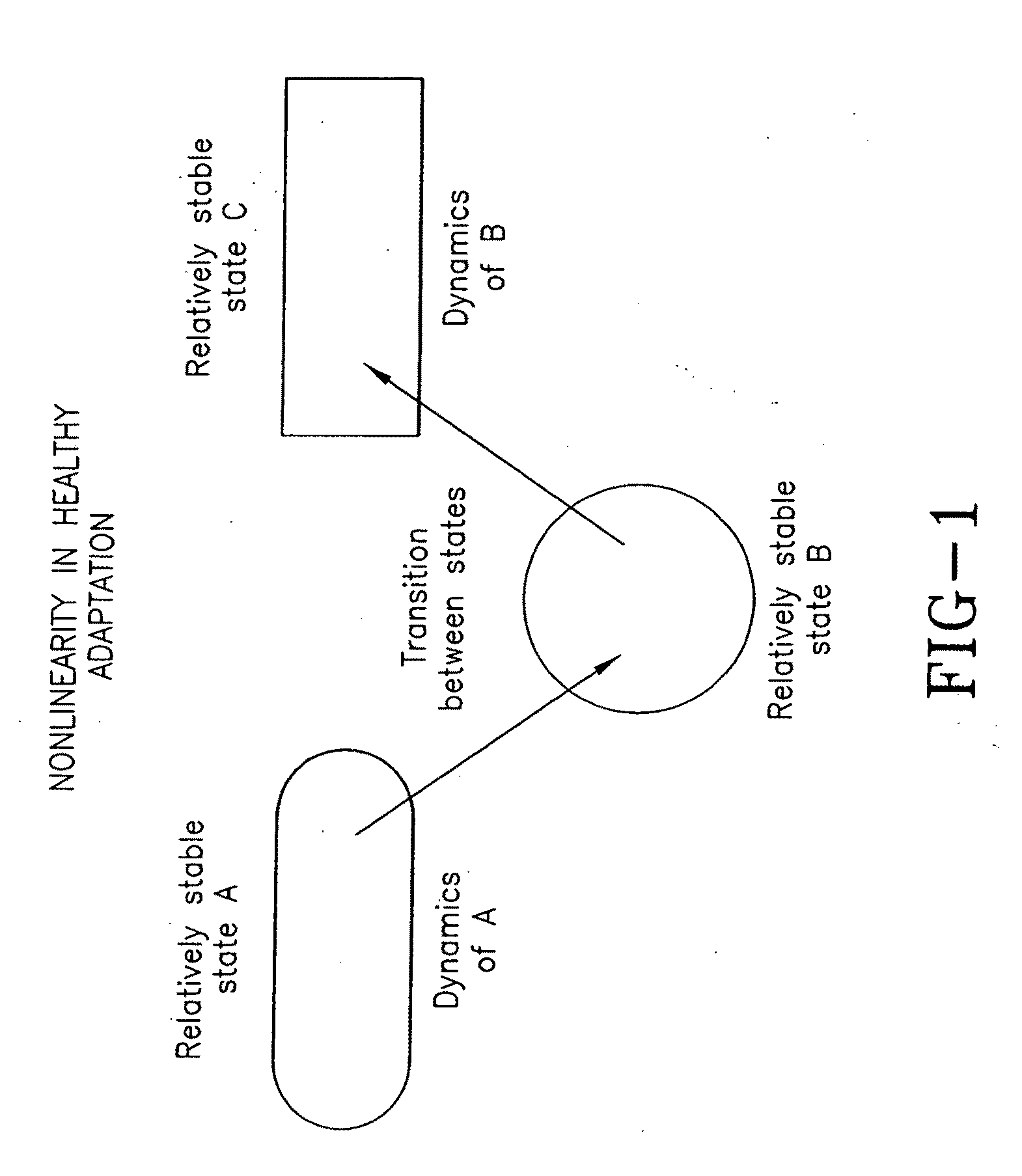

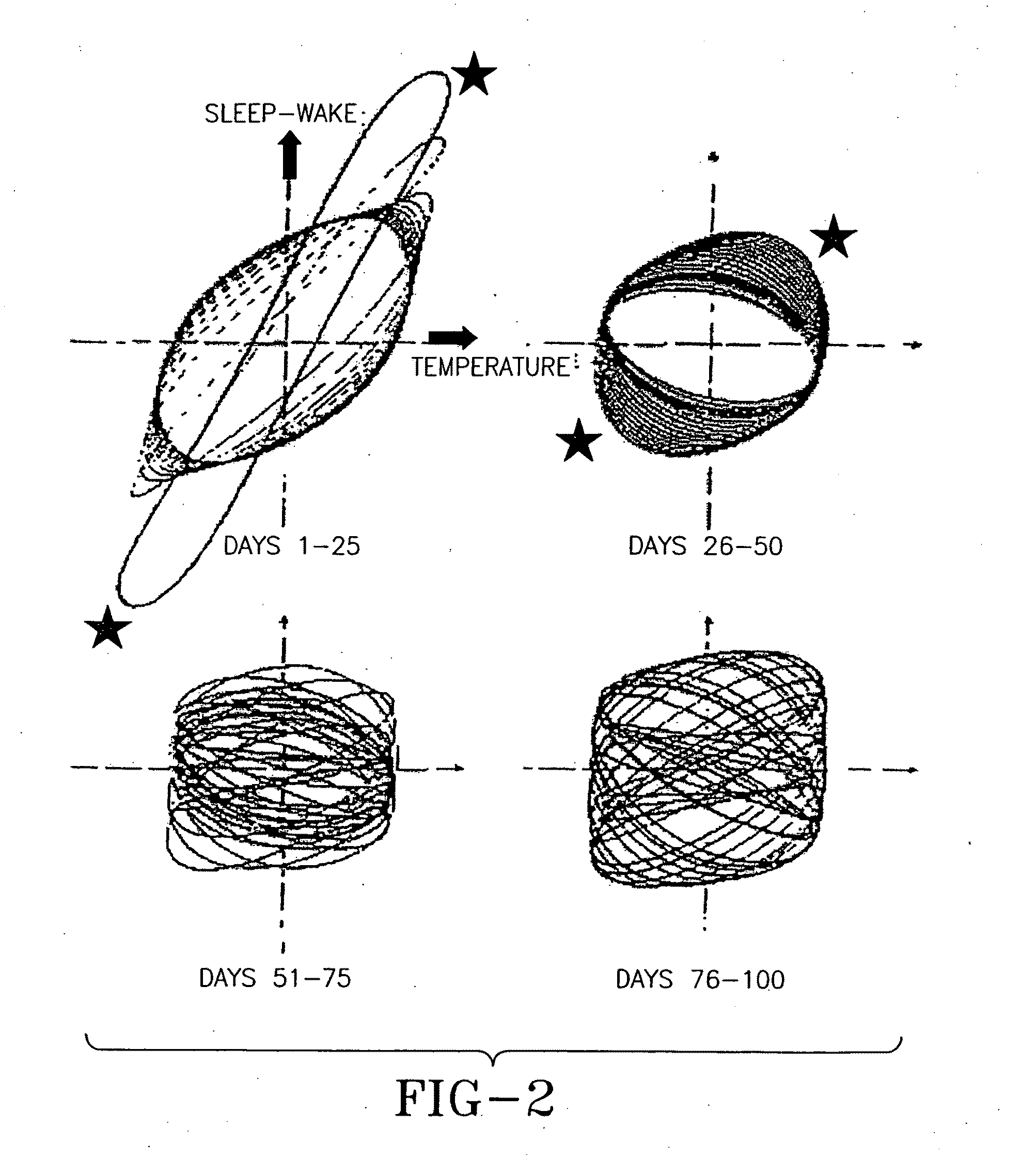

Active | US20090292180A1 | Determine psychiatric | Accurate and effective | Computer-assisted medical data acquisition | Diagnostic recording/measuring | Spatial organization | Engineering

A method, apparatus and software for diagnosing the state or condition of a human, animal or other living thing, which always generates physiological modulating signals having temporal-spatial organization, the organization having dynamic patterns whose structure is fractal, involving the monitoring of at least one physiological modulating signal and obtaining a set of temporal-spatial values of each of said physiological modulating signals, and processing the respective temporal-spatial values using linear and nonlinear tools to determine the linear and nonlinear characteristics established for known criteria to determine the state or condition of the person, being or living things, and to use this data for diagnosis, tracking, and treatment and developmental issues.

Owner:MIROW SUSAN

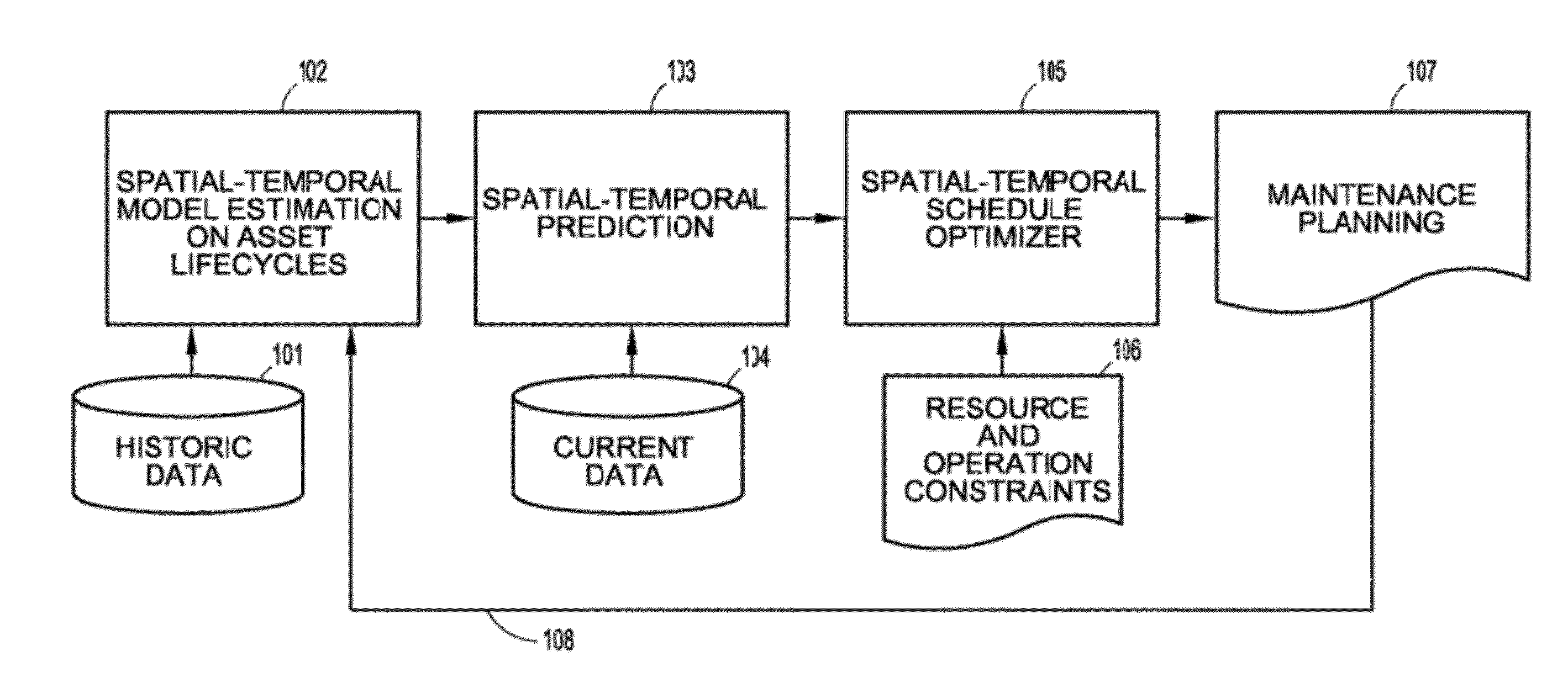

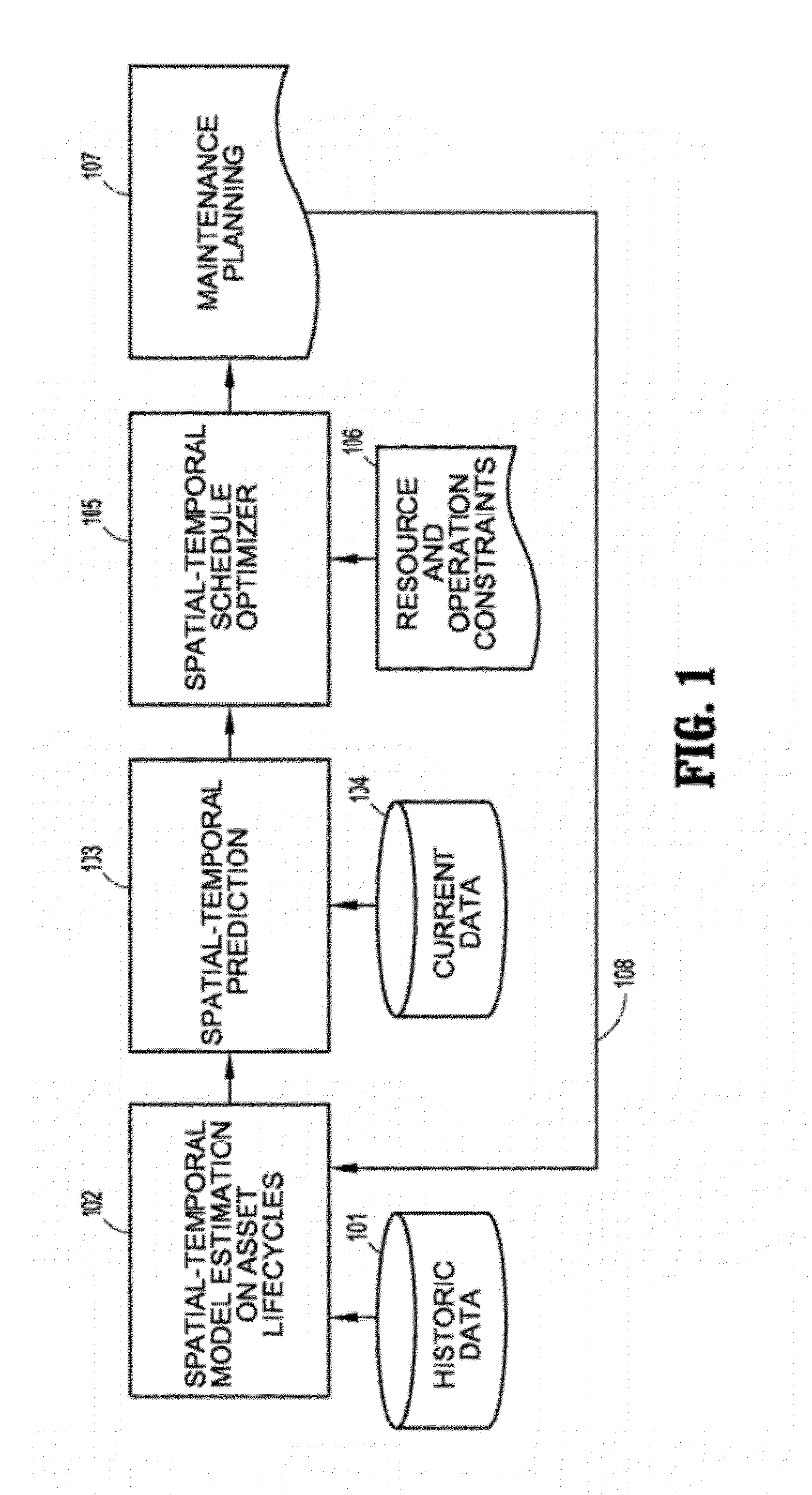

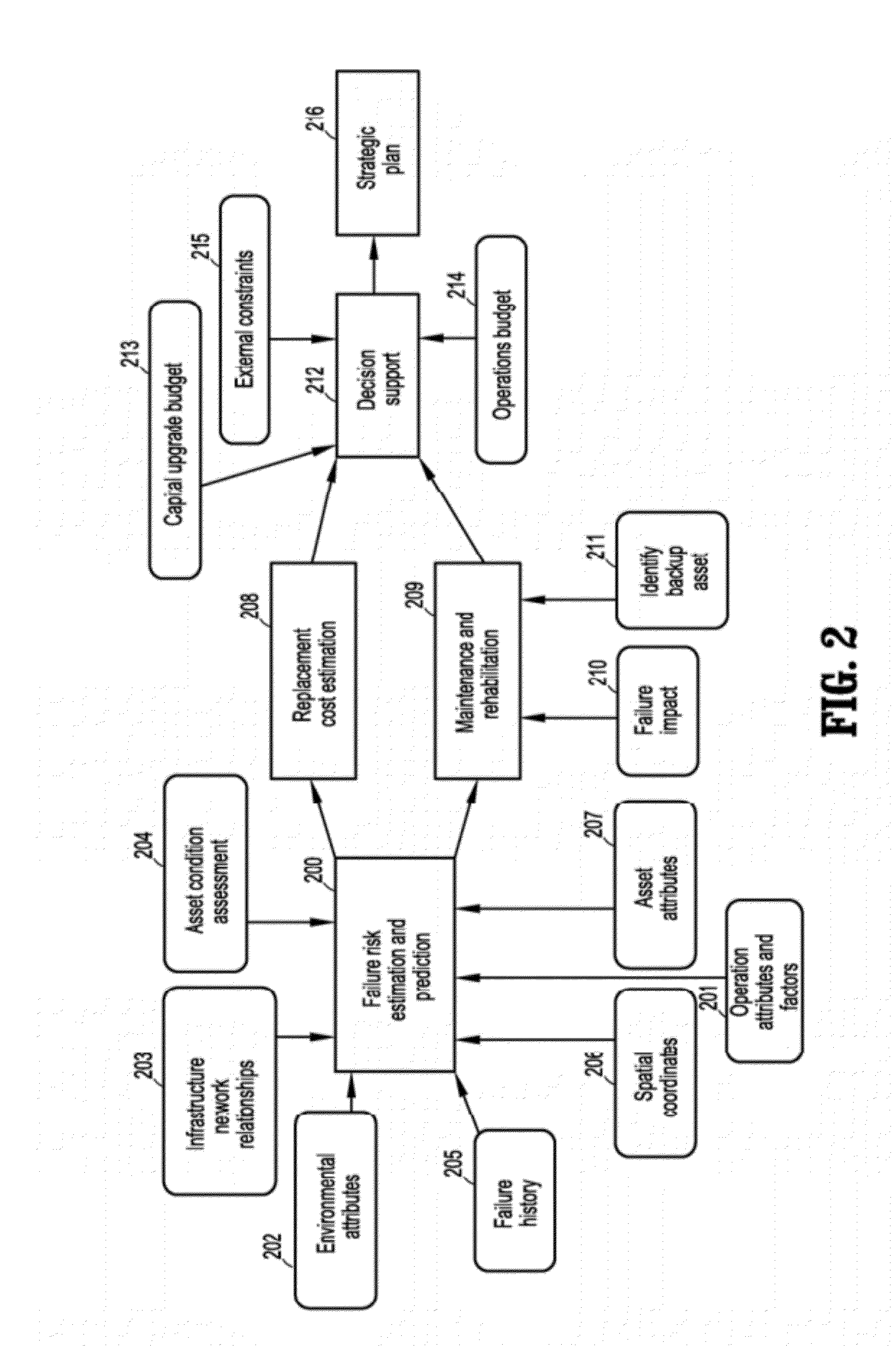

Spatial-Temporal Optimization of Physical Asset Maintenance

A method for determining a maintenance schedule of geographically dispersed physical assets includes receiving asset data including infrastructure relationships between the assets, modeling failure risk of the assets based on spatial, temporal and network relationships, and producing the maintenance schedule according to a combination of the risk model, asset data, maintenance, and external operation constraints. The maintenance schedule may be corrective and / or strategic.

Owner:IBM CORP

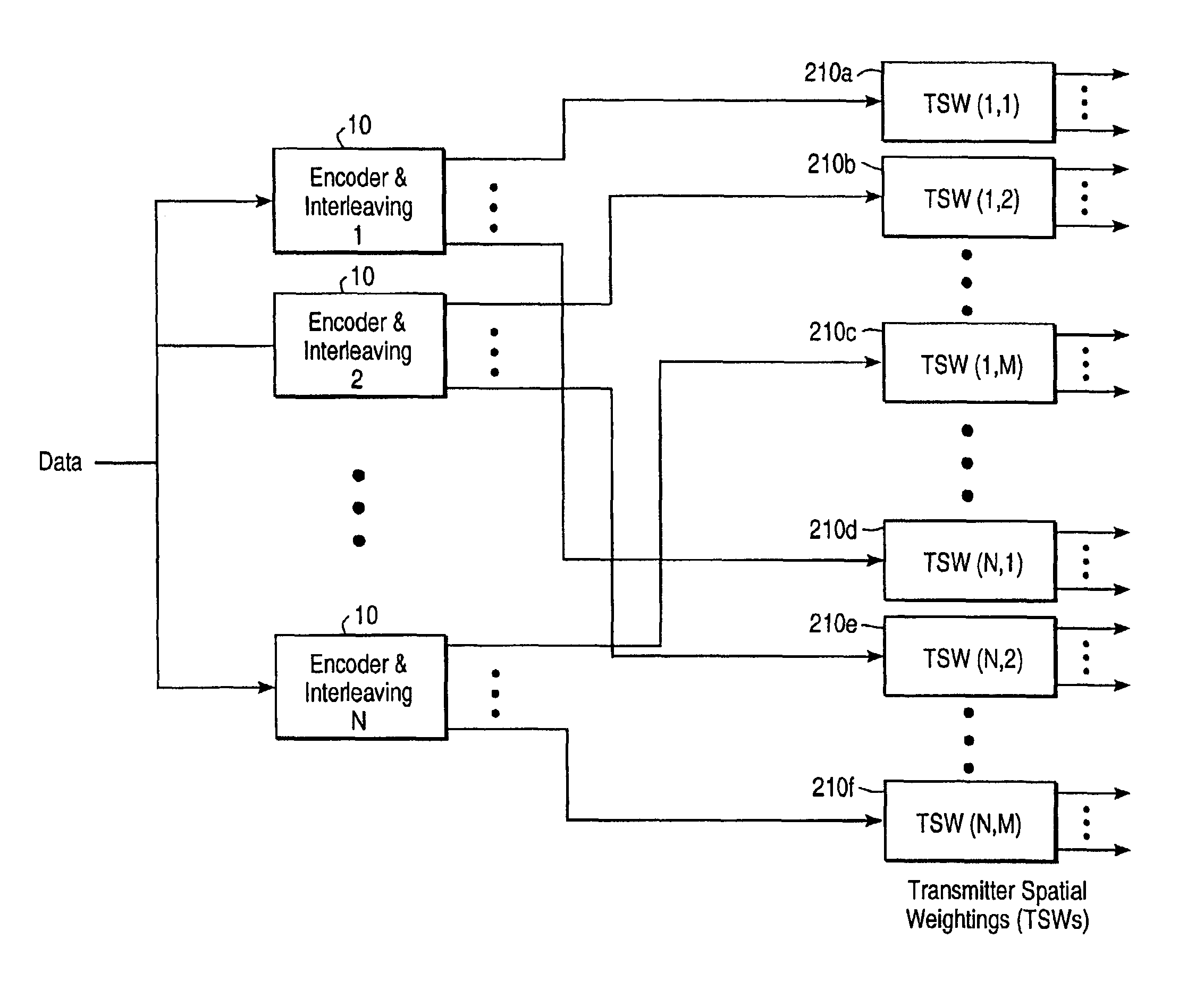

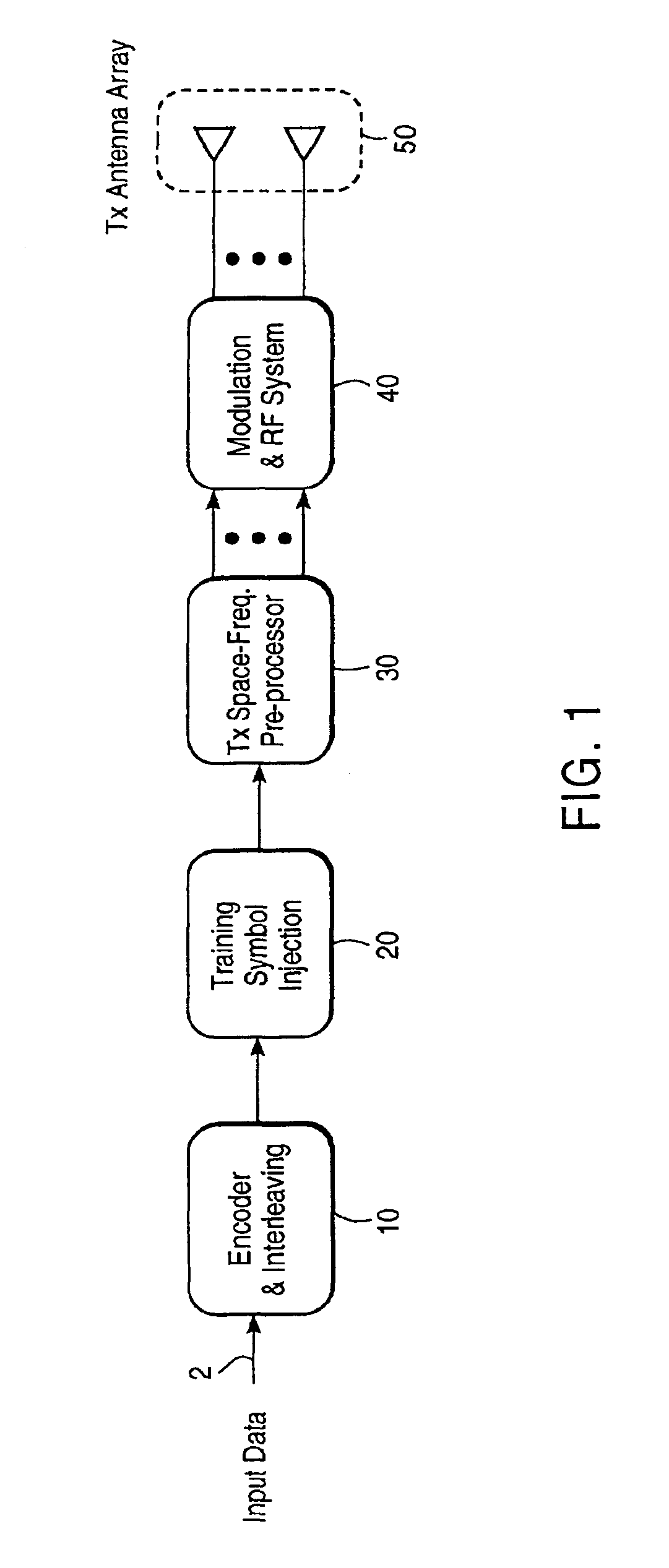

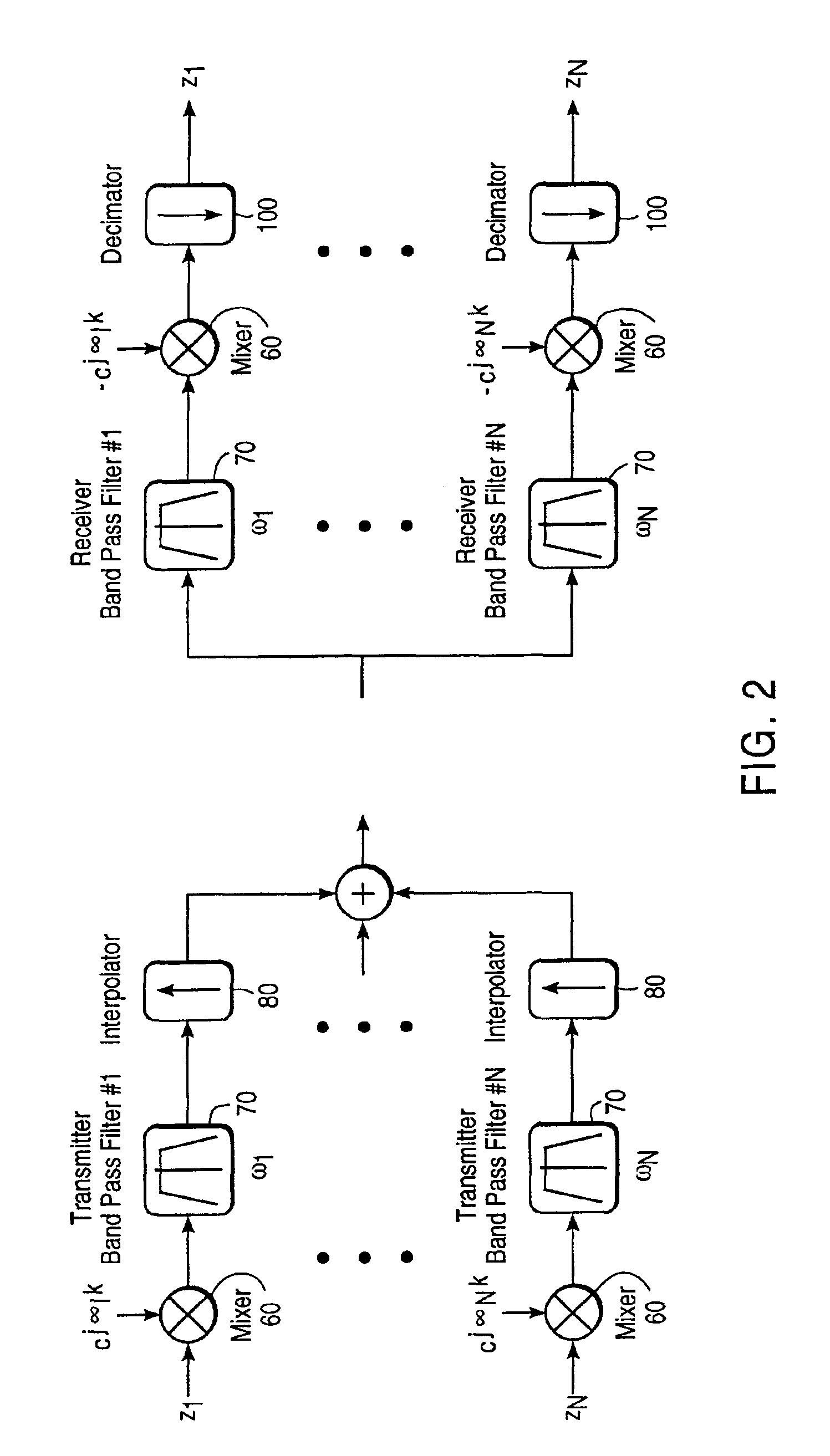

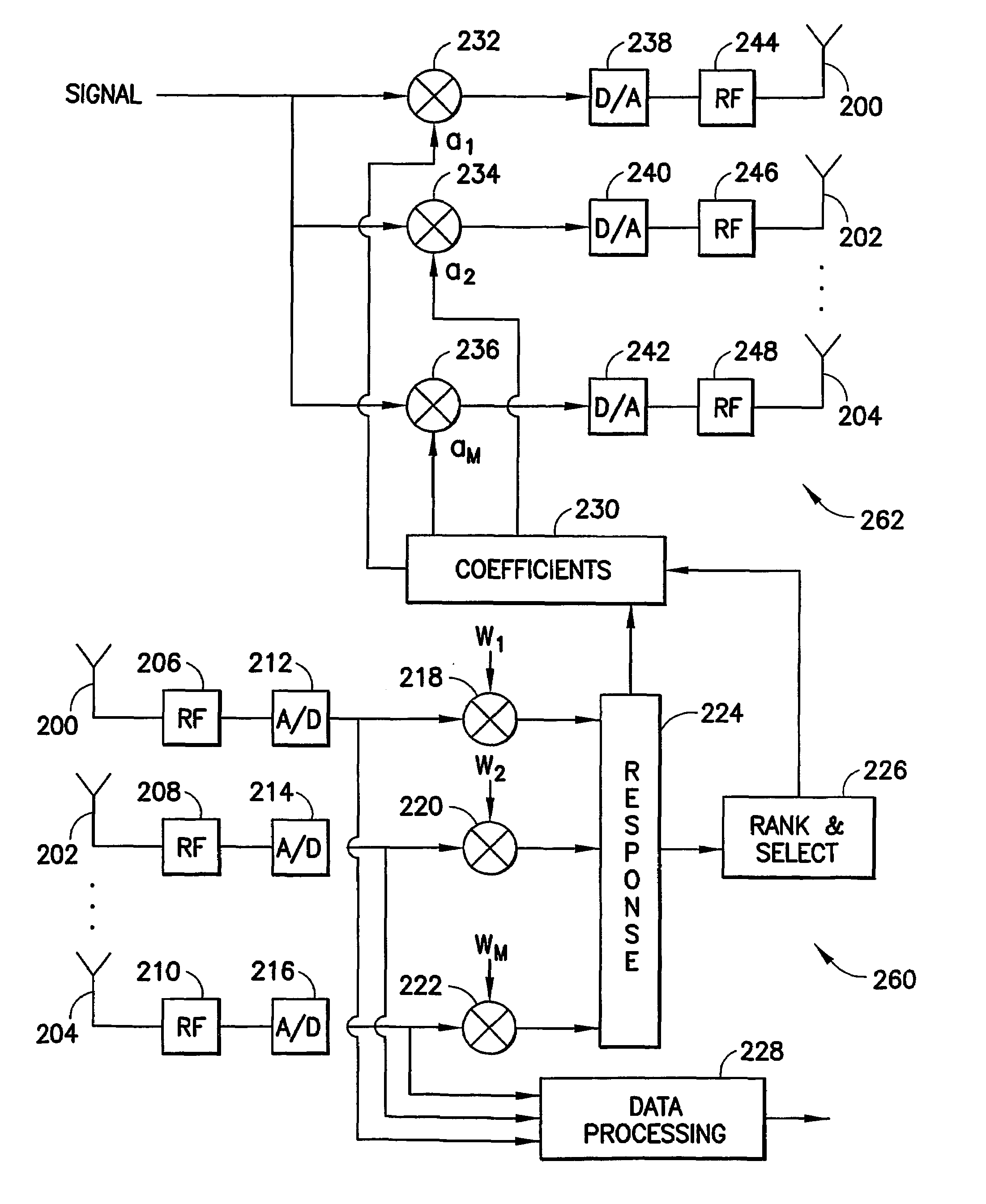

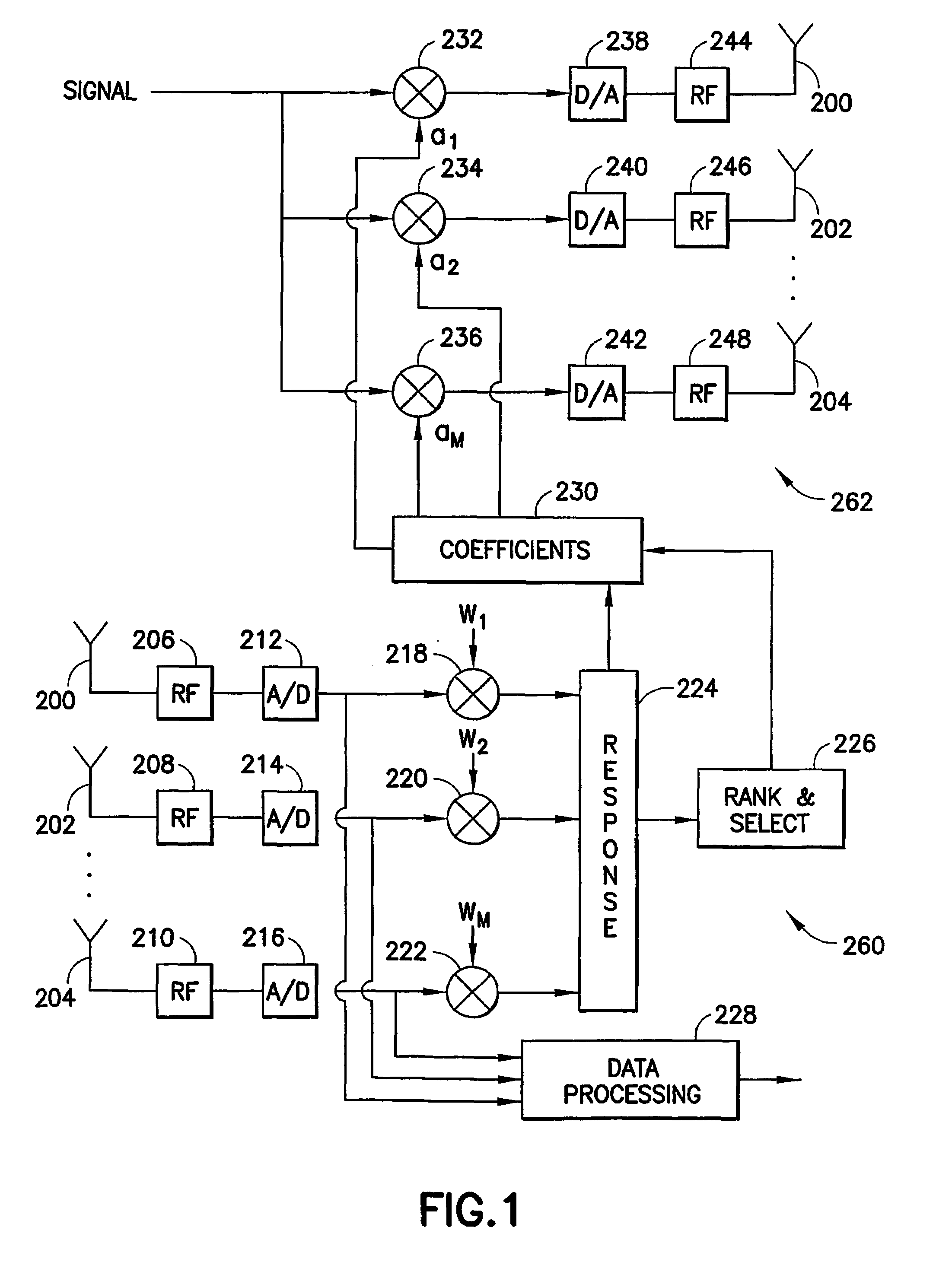

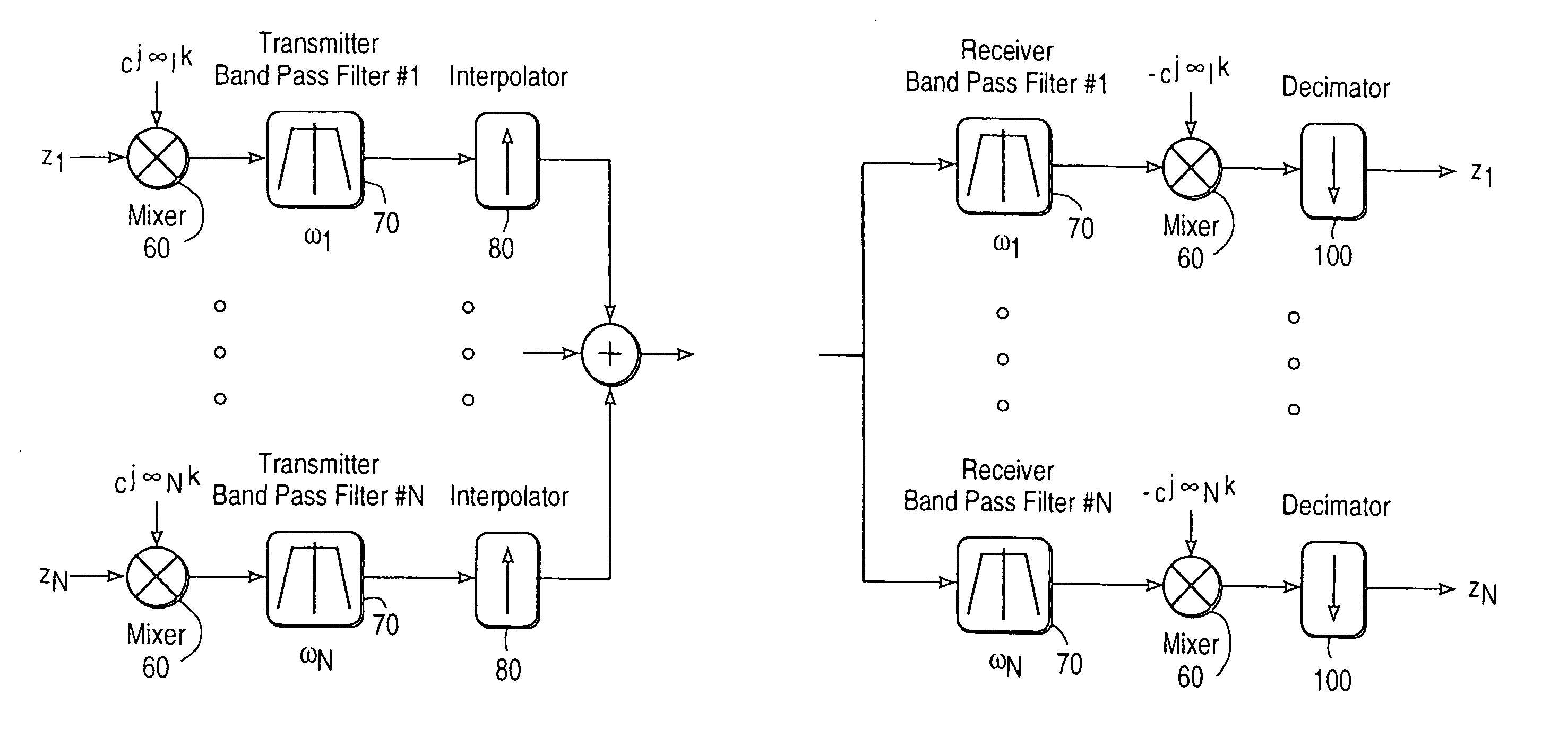

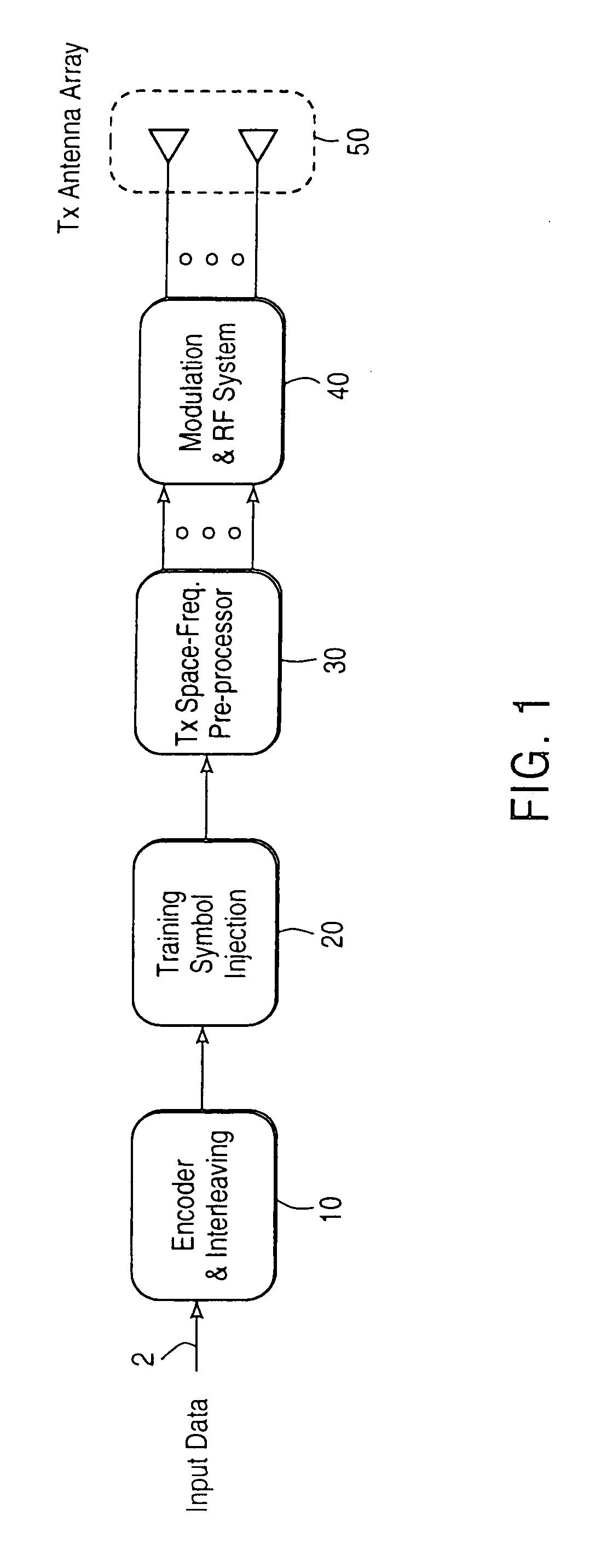

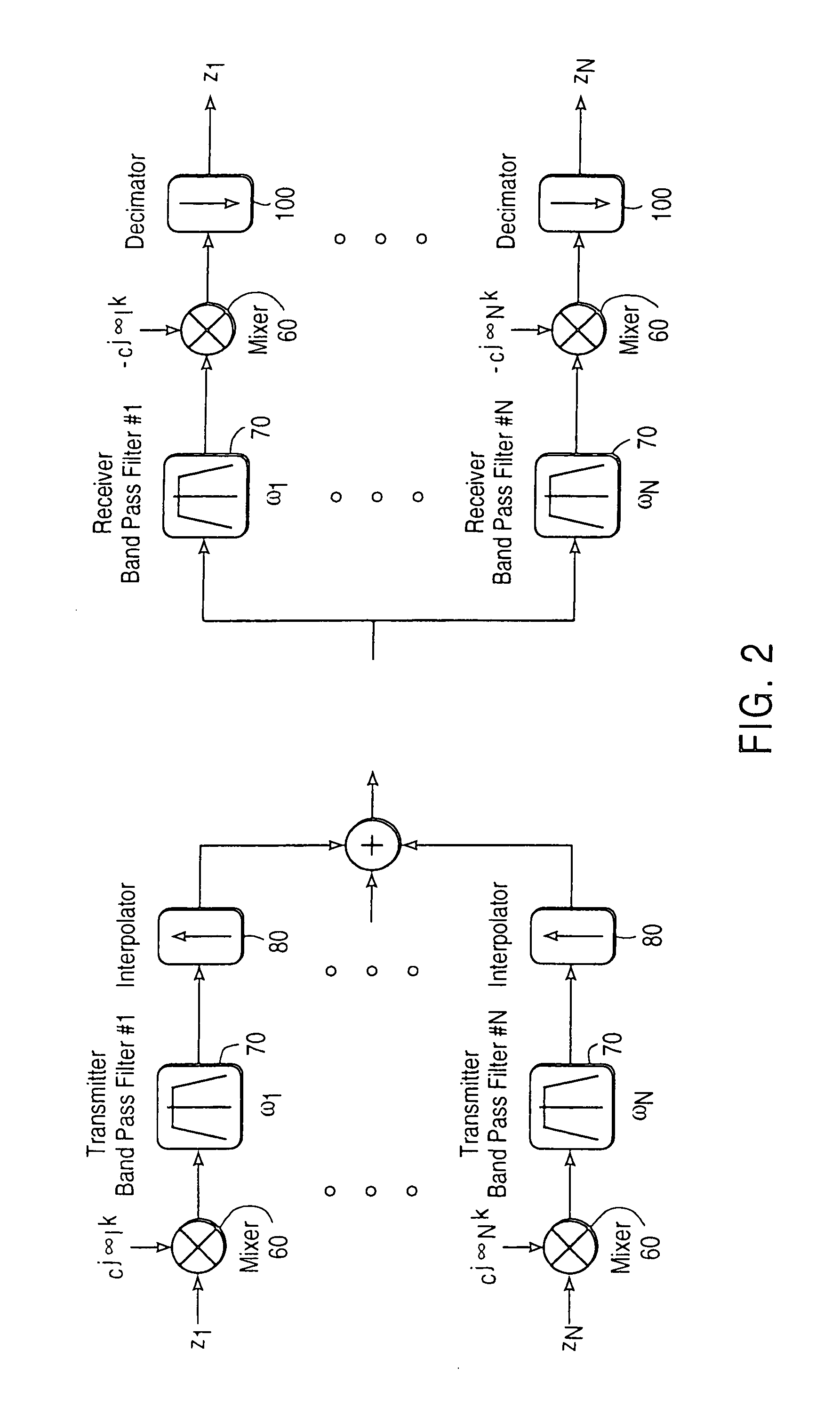

Spatio-temporal processing for communication

Inactive | US6888899B2 | Reduce complexity | Efficiency benefit | Error prevention/detection by using return channel | Spatial transmit diversity | Transmitter antenna | Handling system

A space-time signal processing system with advantageously reduced complexity. The system may take advantage of multiple transmitter antenna elements and / or multiple receiver antenna elements, or multiple polarizations of a single transmitter antenna element and / or single receiver antenna element. The system is not restricted to wireless contexts and may exploit any channel having multiple inputs or multiple outputs and certain other characteristics. Multi-path effects in a transmission medium cause a multiplicative increase in capacity.

Owner:CISCO TECH INC

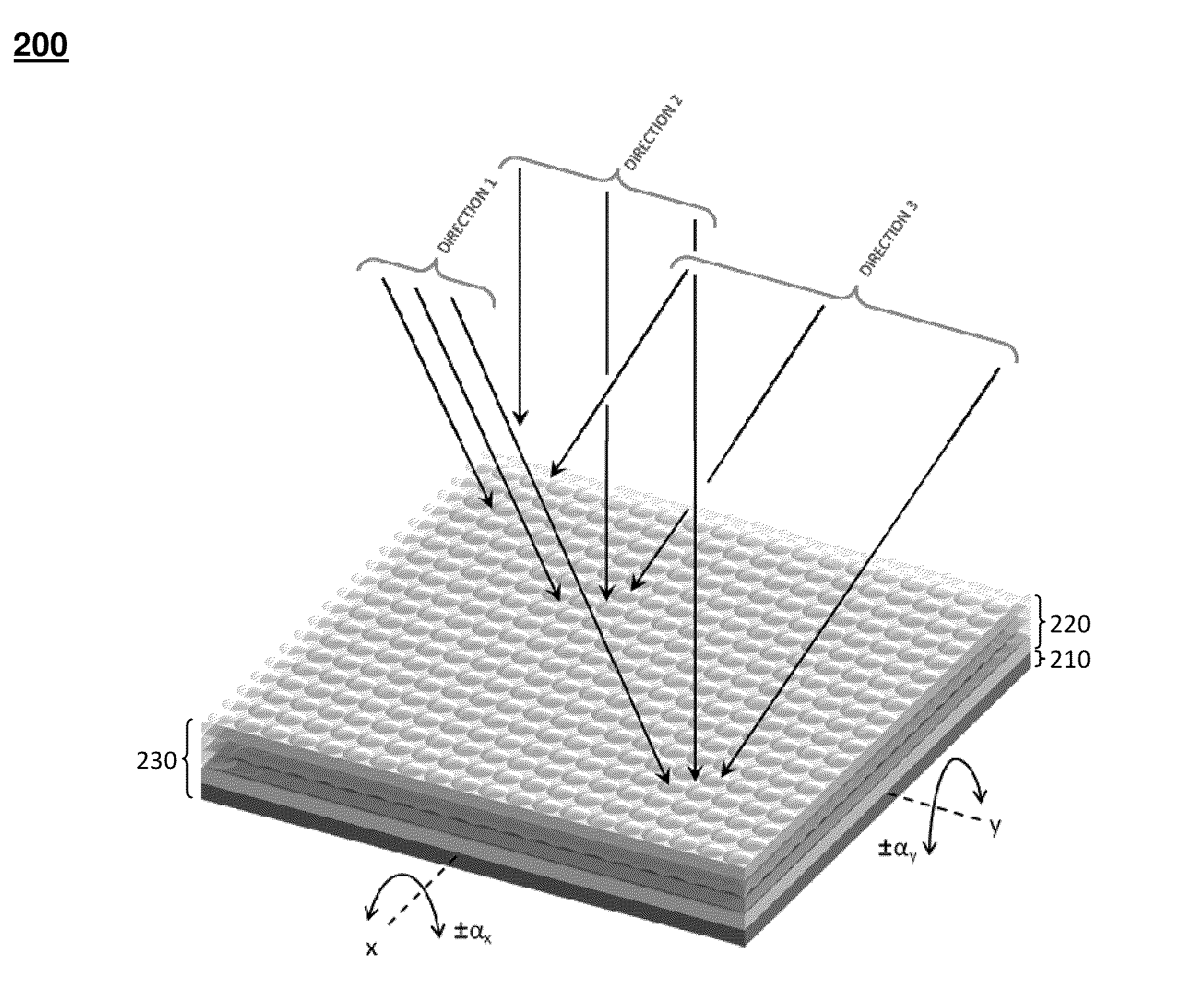

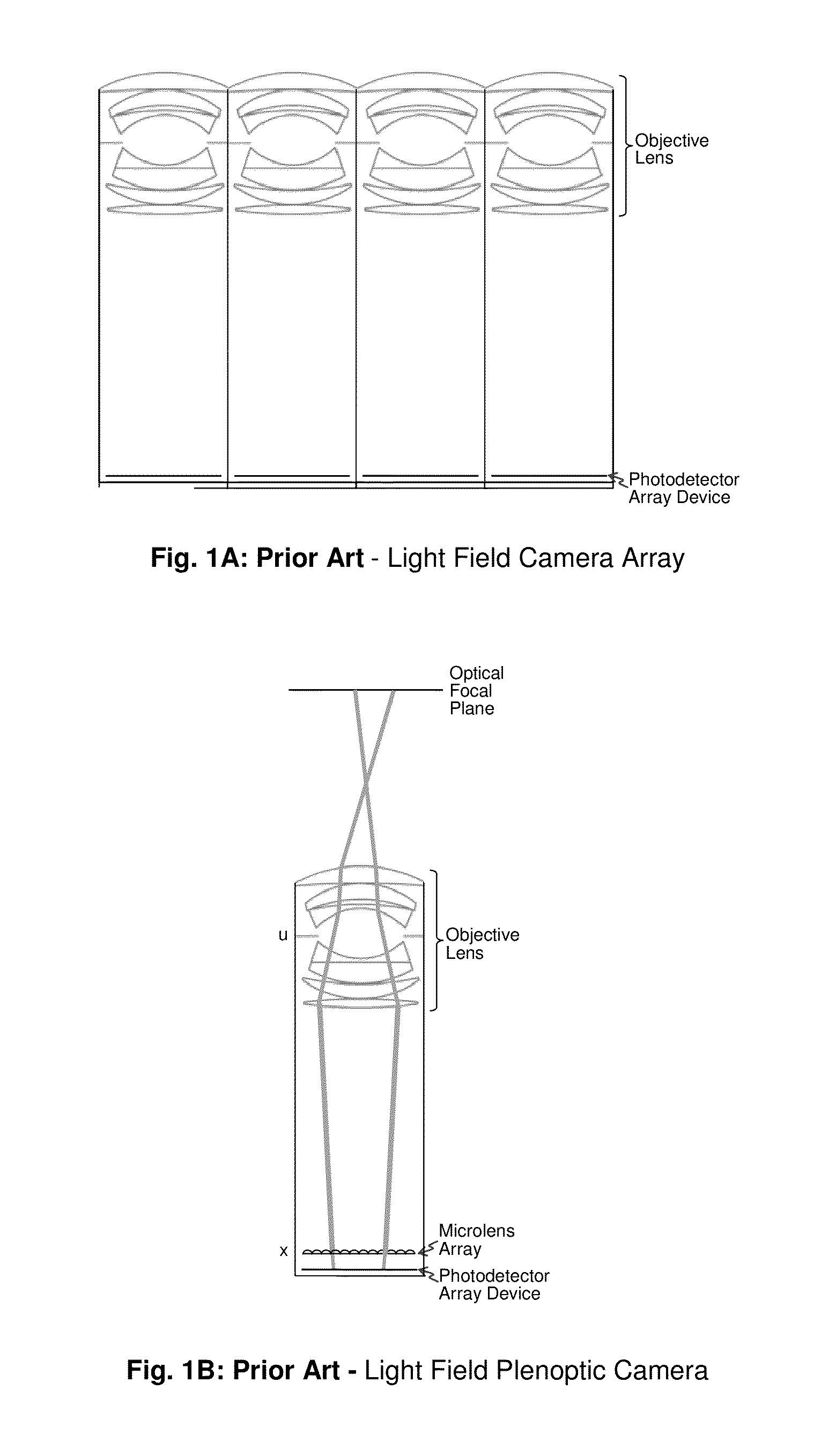

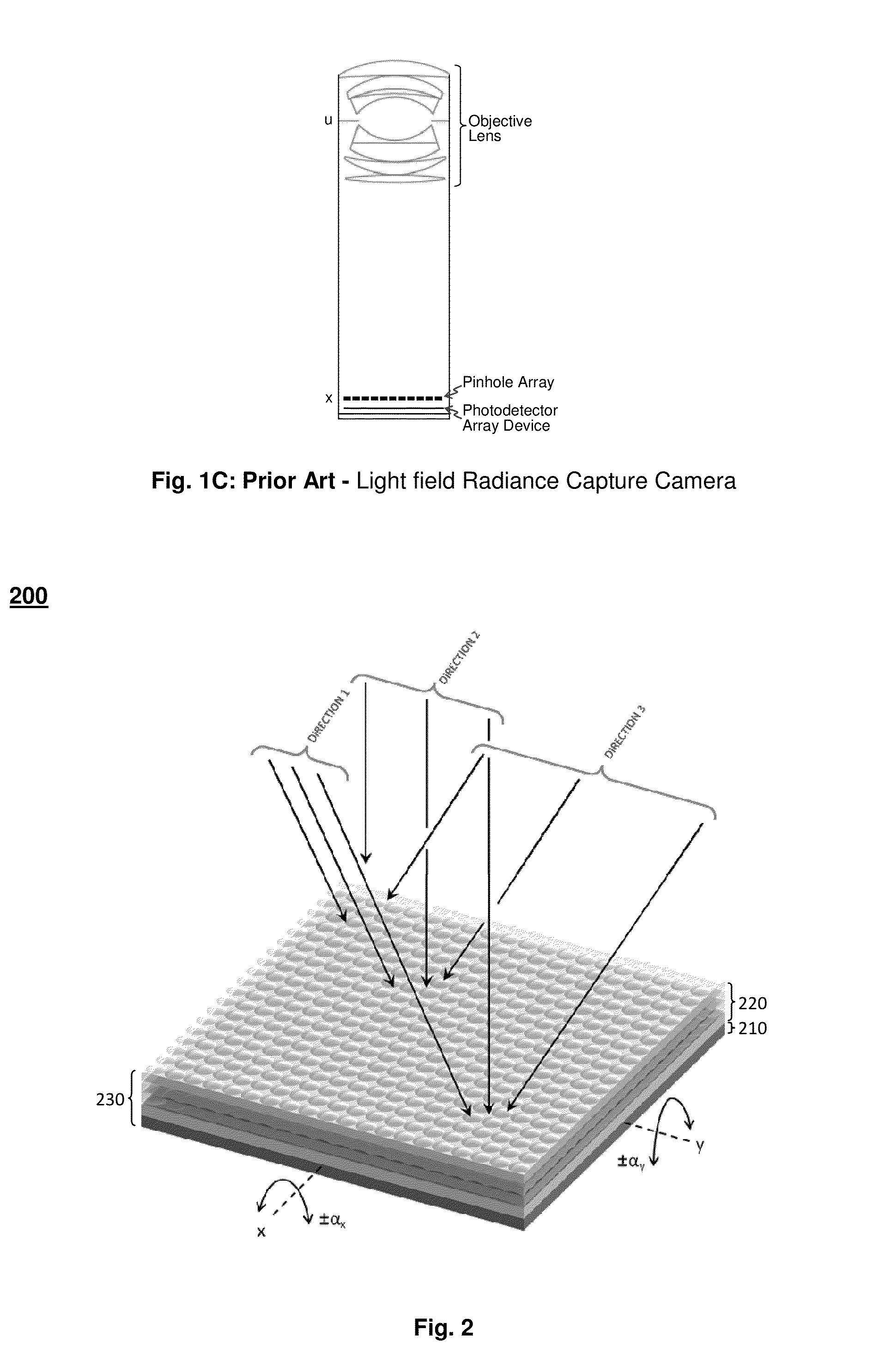

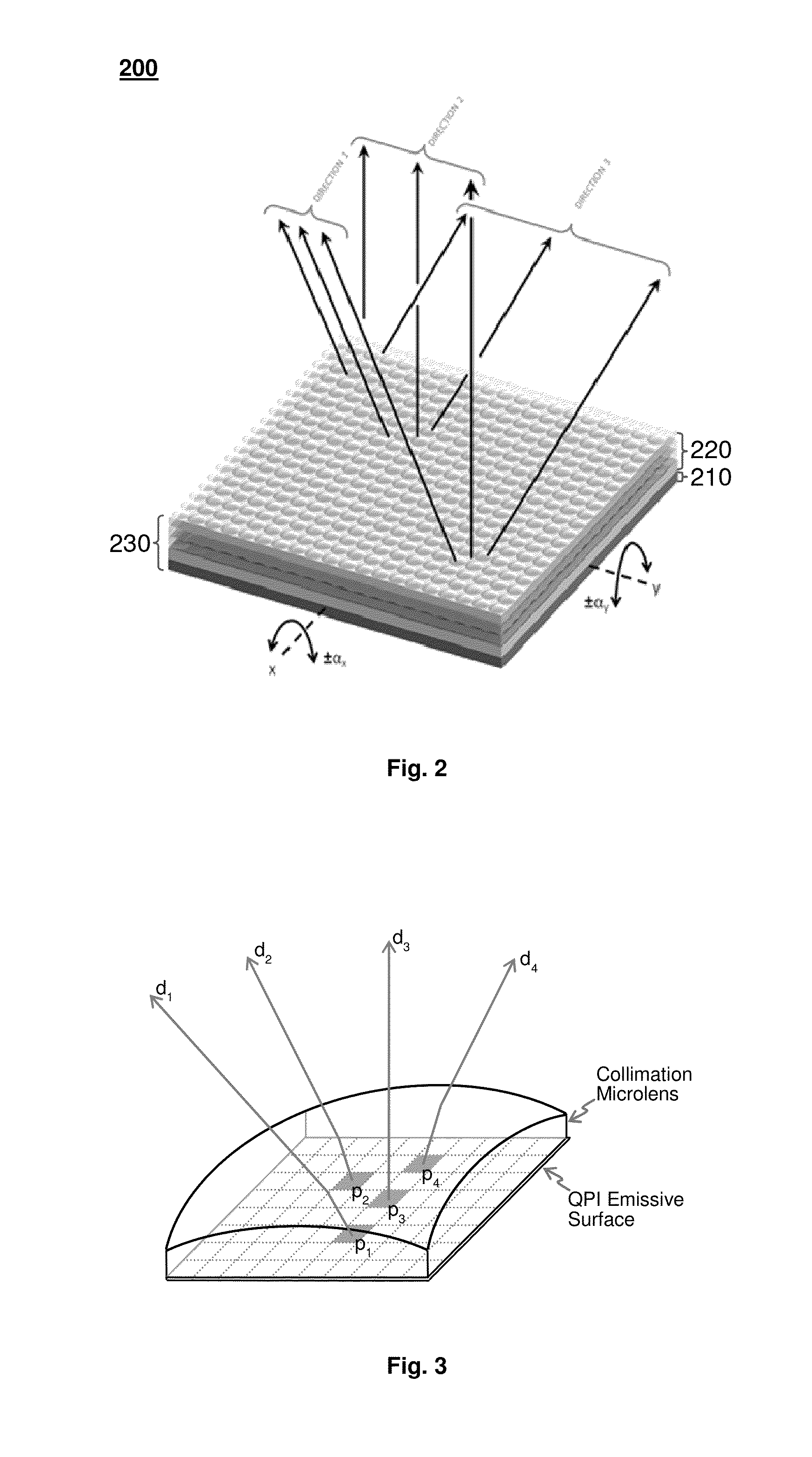

Spatio-Temporal Light Field Cameras

Inactive | US20130321581A1 | Convenient lighting | Television system details | Image enhancement | Multiple perspective | Image resolution

Spatio-temporal light field cameras that can be used to capture the light field within their spatio-temporally extended angular extent. Such cameras can be used to record 3D images, 2D images that can be computationally focused, or wide angle panoramic 2D images with relatively high spatial and directional resolutions. The light field cameras can also be used as 2D / 3D switchable cameras with extended angular extent. The spatio-temporal aspects of the novel light field cameras allow them to capture and digitally record the intensity and color from multiple directional views within a wide angle. The inherent volumetric compactness of the light field cameras makes it possible to embed them in small mobile devices to capture either 3D images or computationally focusable 2D images. The inherent versatility of these light field cameras makes them suitable for multiple perspective light field capture for 3D movies and video recording applications.

Owner:OSTENDO TECH INC

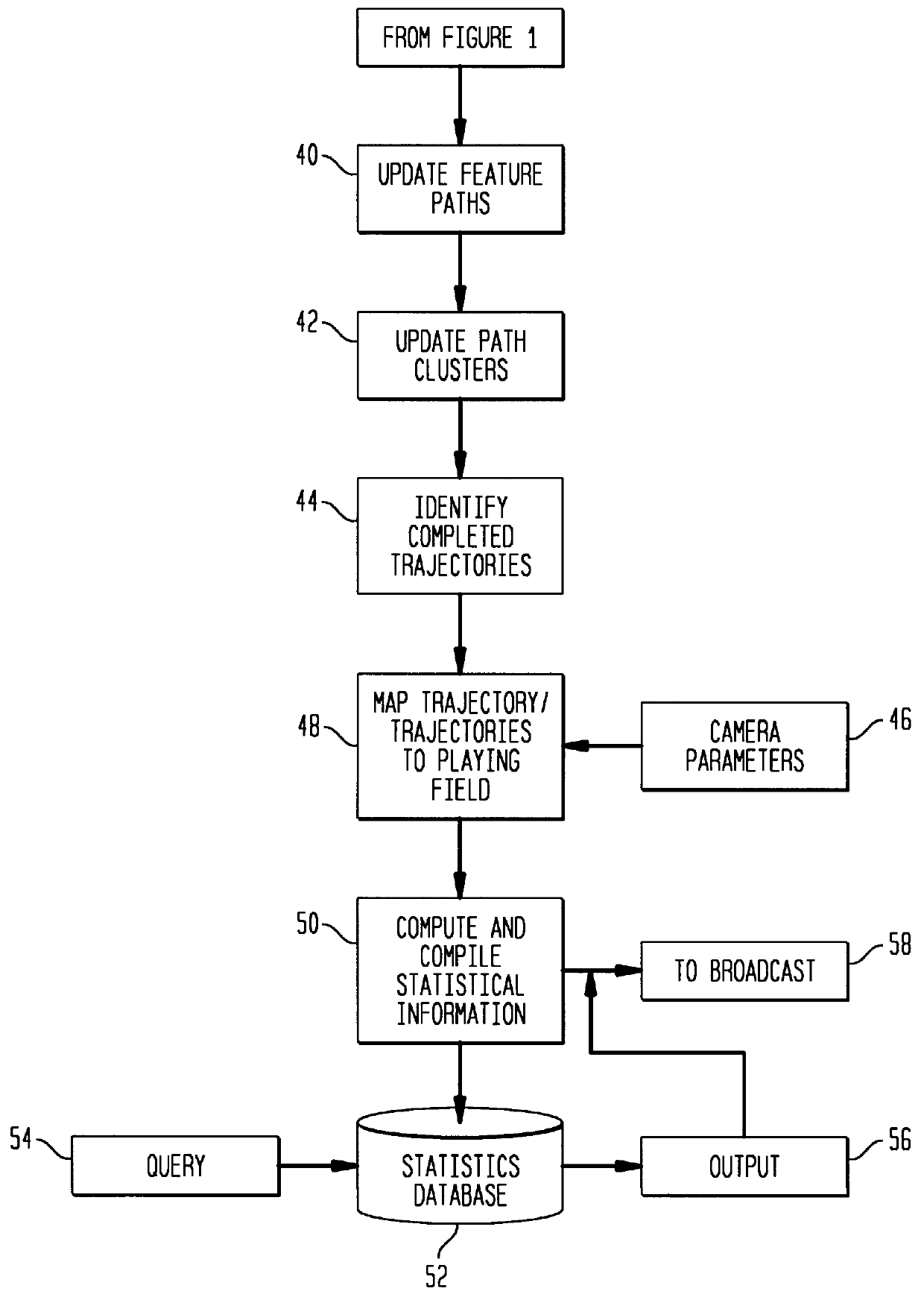

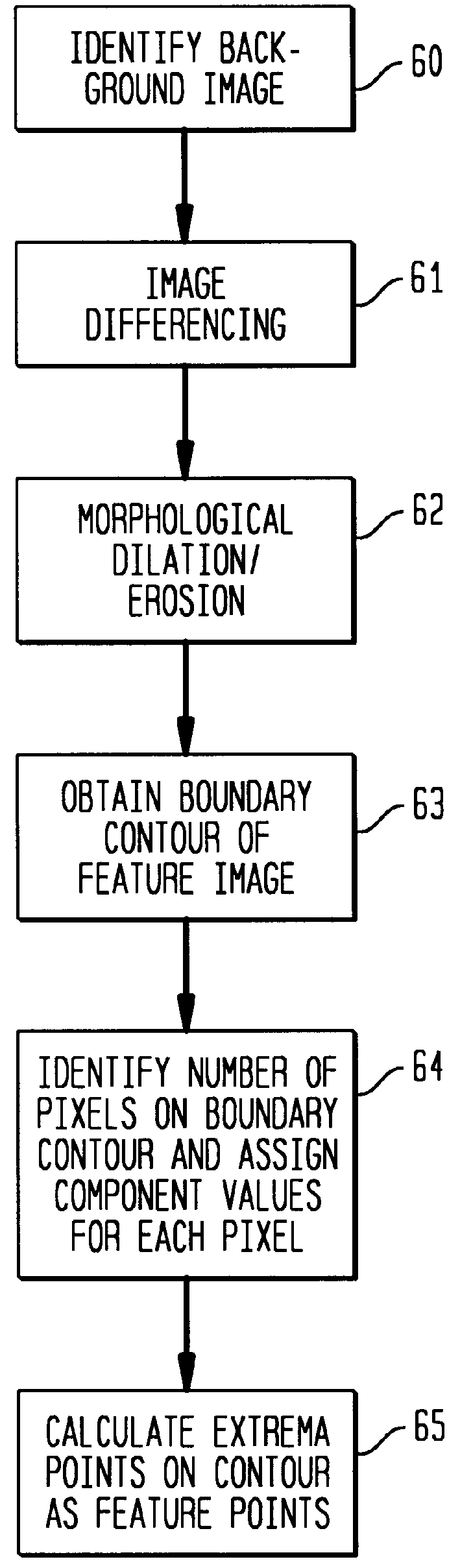

Method and apparatus for determination and visualization of player field coverage in a sporting event

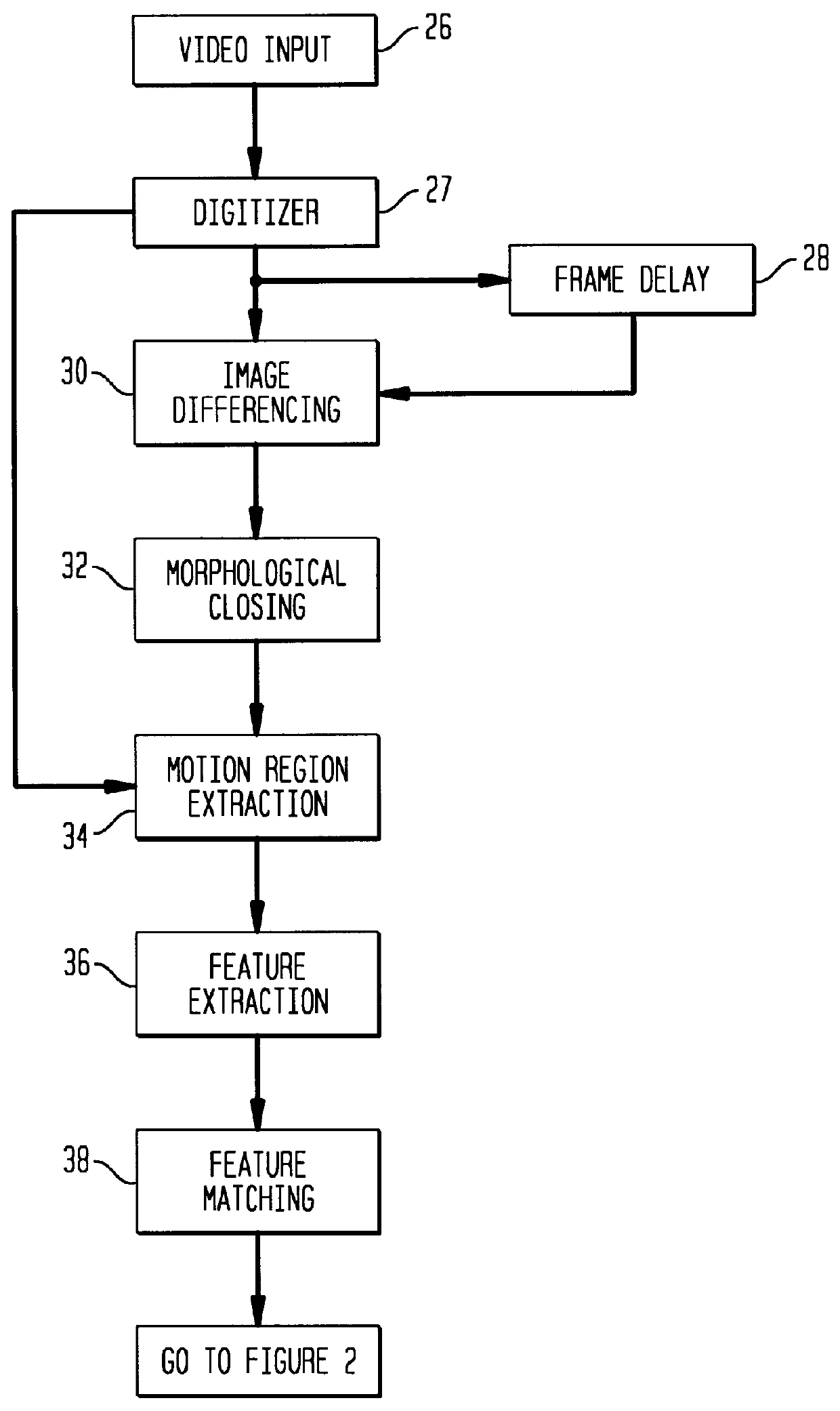

A method and apparatus for deriving an occupancy map reflecting an athlete's coverage of a playing area based on real time tracking of a sporting event. The method according to the present invention includes a step of obtaining a spatio-temporal trajectory corresponding to the motion of an athlete and based on real time tracking of the athlete. The trajectory is then mapped over the geometry of the playing area to determine a playing area occupancy map indicating the frequency with which the athlete occupies certain areas of the playing area, or the time spent by the athlete in certain areas of the playing area. The occupancy map is preferably color coded to indicate different levels of occupancy in different areas of the playing area, and the color coded map is then overlaid onto an image (such as a video image) of the playing area. The apparatus according to the present invention includes a device for obtaining the trajectory of an athlete, a computational device for obtaining the occupancy map based on the obtained trajectory and the geometry of the playing area, and devices for transforming the map to a camera view, generating a color (or other visually differentiable) coded version of the occupancy map, and overlaying the color coded map on a video image of the playing area. In particular, the spatio-temporal trajectory may be obtained by an operation on a video image of the sporting event, in which motion regions in the image are identified, and feature points on the regions are tracked as they move, thereby defining feature paths. The feature paths, in turn, are associated in clusters, which clusters generally correspond to the motion of some portion of the athlete (e.g., arms, legs, etc.). The collective plurality of clusters (i.e., the trajectory) corresponds with the motion of the athlete as a whole.

Owner:LUCENT TECH INC +1
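
The occupancy map described in the abstract above (time spent by the athlete in each area of the playing field) can be sketched as a simple grid accumulation. This is an illustrative sketch only: the rectangular grid, the `(time, x, y)` sample format, and the names `occupancy_map` and `track` are assumptions, not details from the patent.

```python
import math


def occupancy_map(trajectory, field_w, field_h, cell_size):
    """Accumulate time spent per grid cell from (time, x, y) samples.

    Assumptions: the playing area is an axis-aligned rectangle with its origin
    at (0, 0), coordinates are non-negative, and the interval between two
    samples is credited to the cell of the earlier sample.
    """
    cols = math.ceil(field_w / cell_size)
    rows = math.ceil(field_h / cell_size)
    grid = [[0.0] * cols for _ in range(rows)]
    for (t0, x, y), (t1, _, _) in zip(trajectory, trajectory[1:]):
        # Clamp so that samples exactly on the far boundary stay in the grid.
        col = min(int(x // cell_size), cols - 1)
        row = min(int(y // cell_size), rows - 1)
        grid[row][col] += t1 - t0
    return grid


# Hypothetical track: seconds, then metres along and across the field.
track = [(0.0, 1.0, 1.0), (1.0, 2.0, 1.5), (2.5, 12.0, 1.0), (3.0, 12.0, 6.0)]
grid = occupancy_map(track, field_w=20.0, field_h=10.0, cell_size=5.0)
```

Each cell value could then be mapped to a color and warped into the camera view for the overlay step; that part is not shown here.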

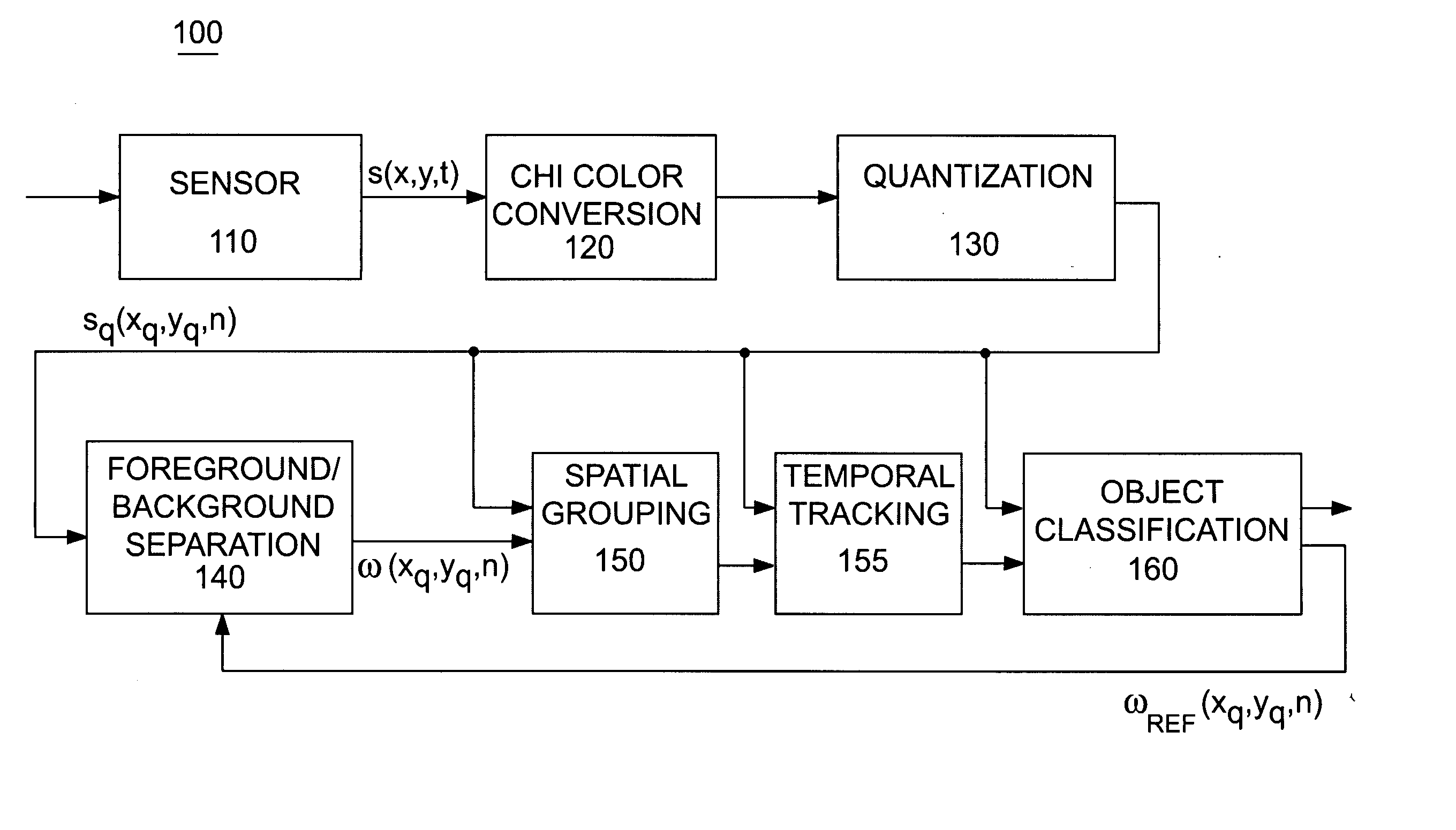

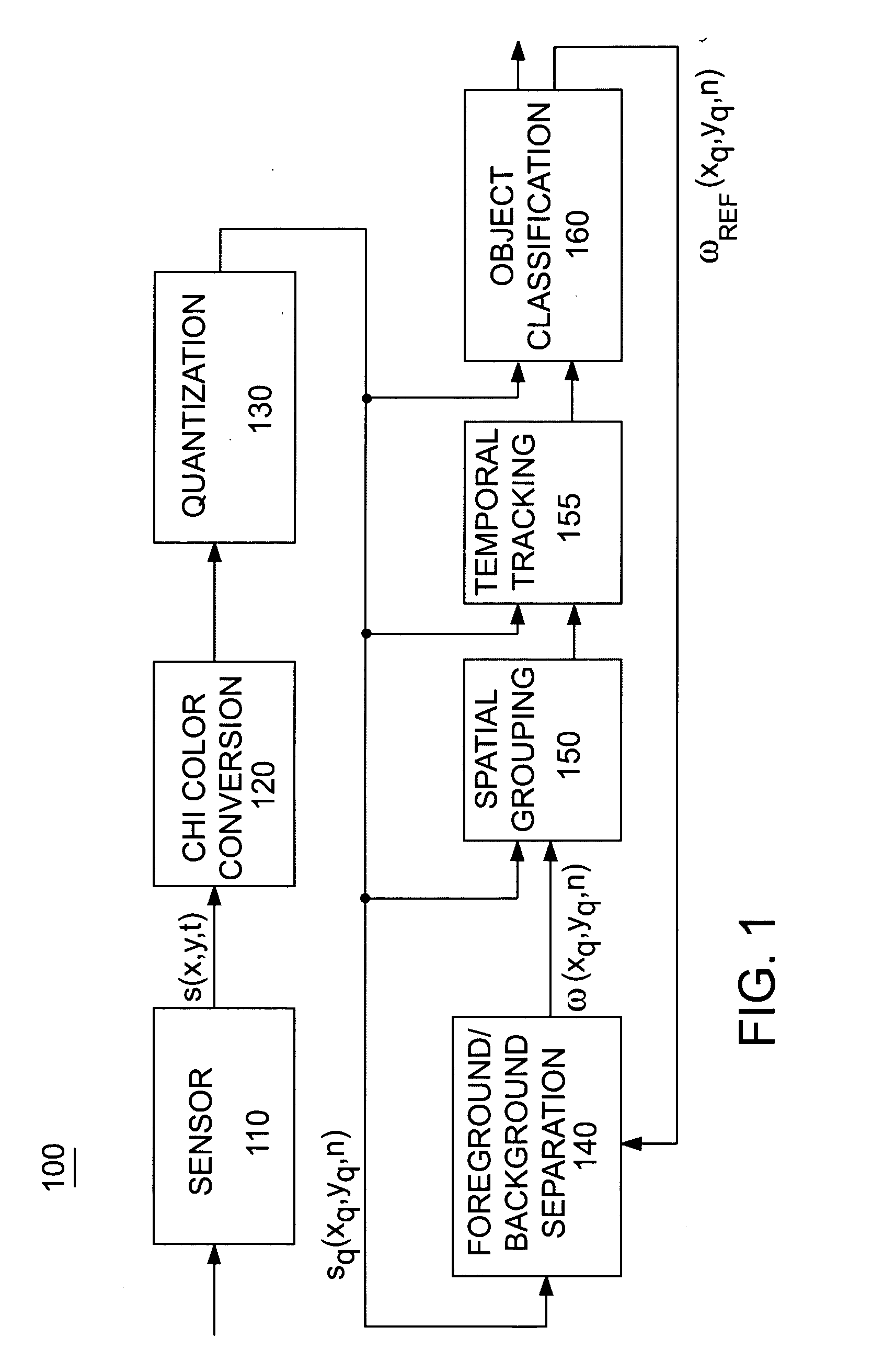

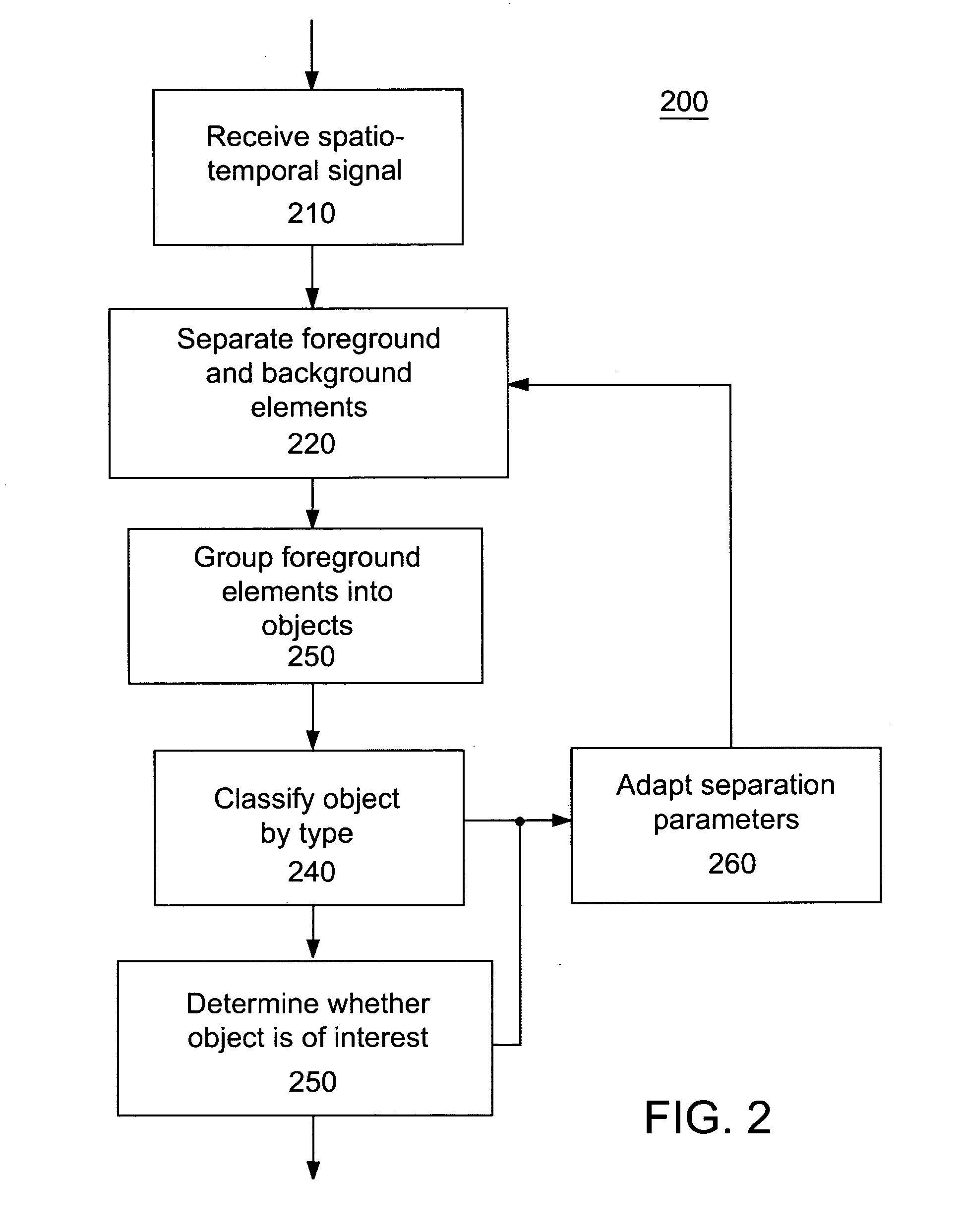

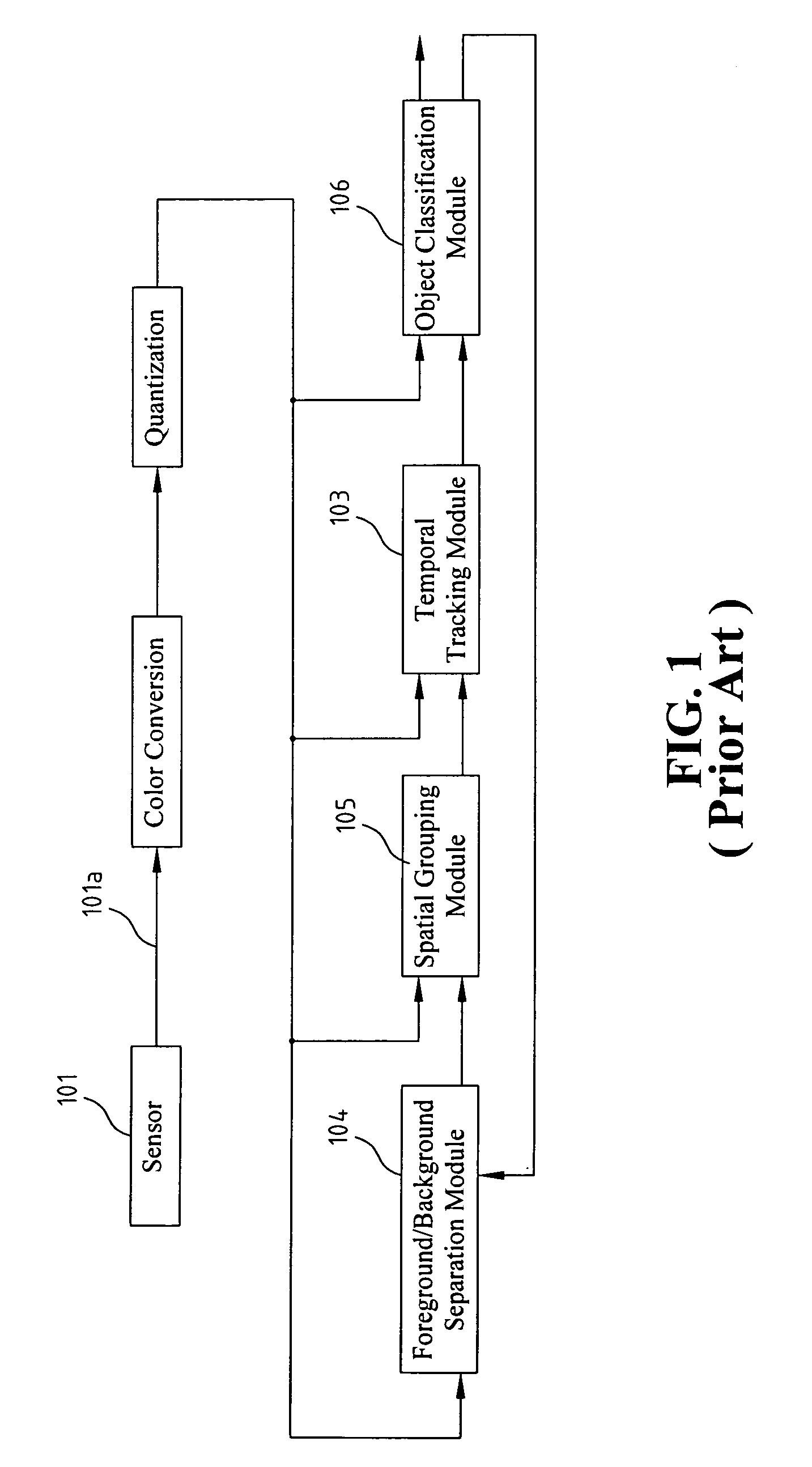

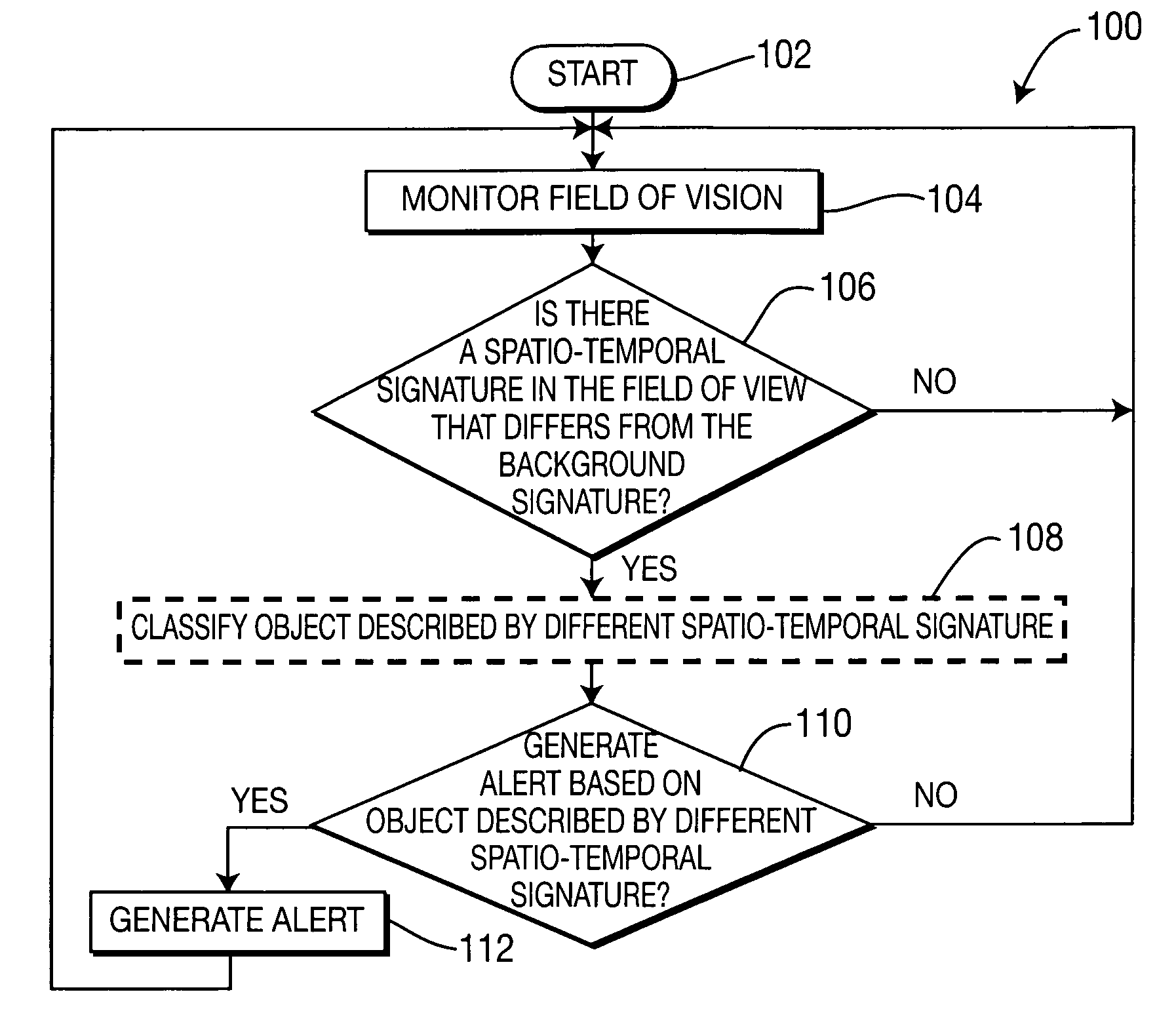

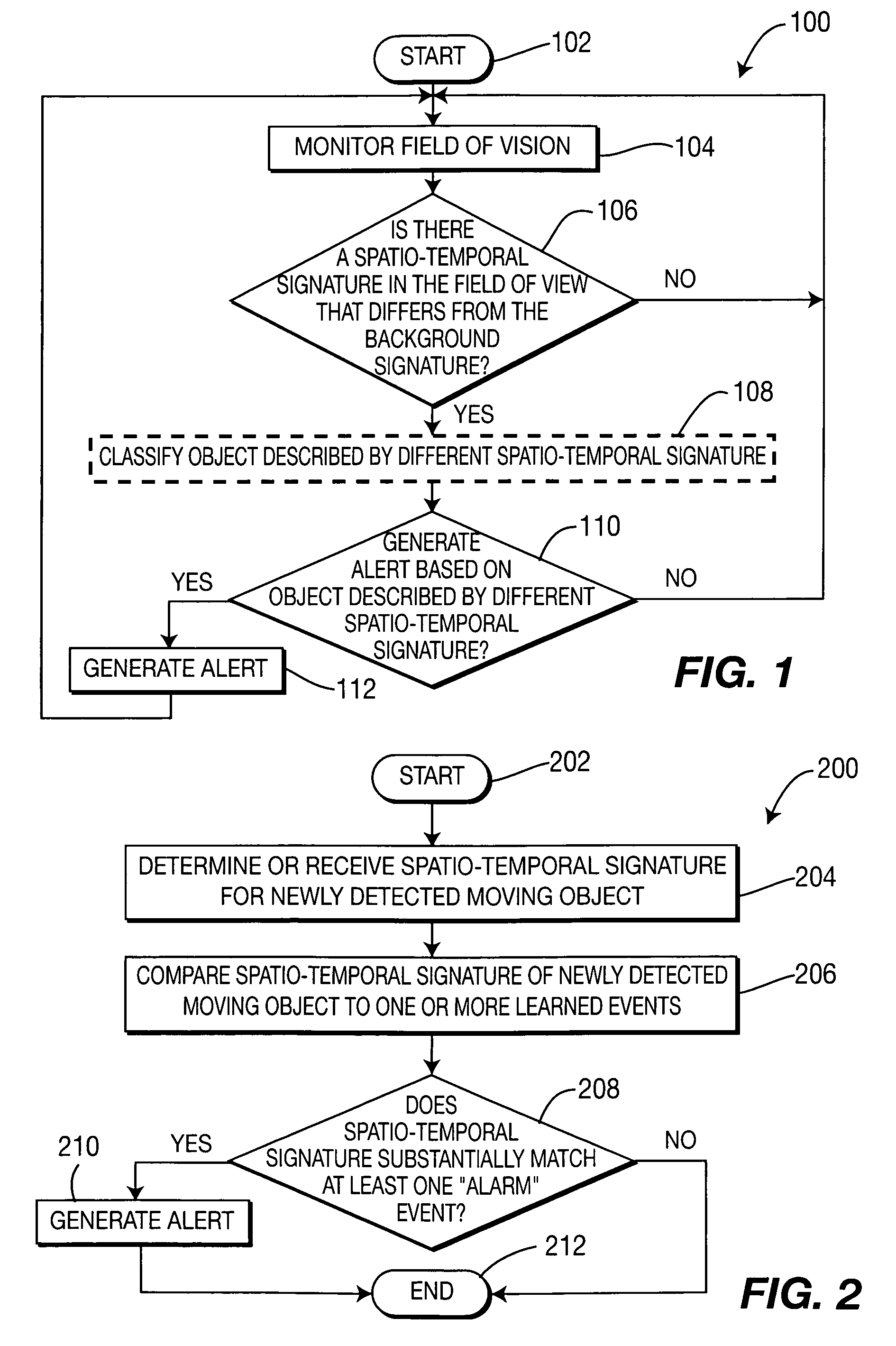

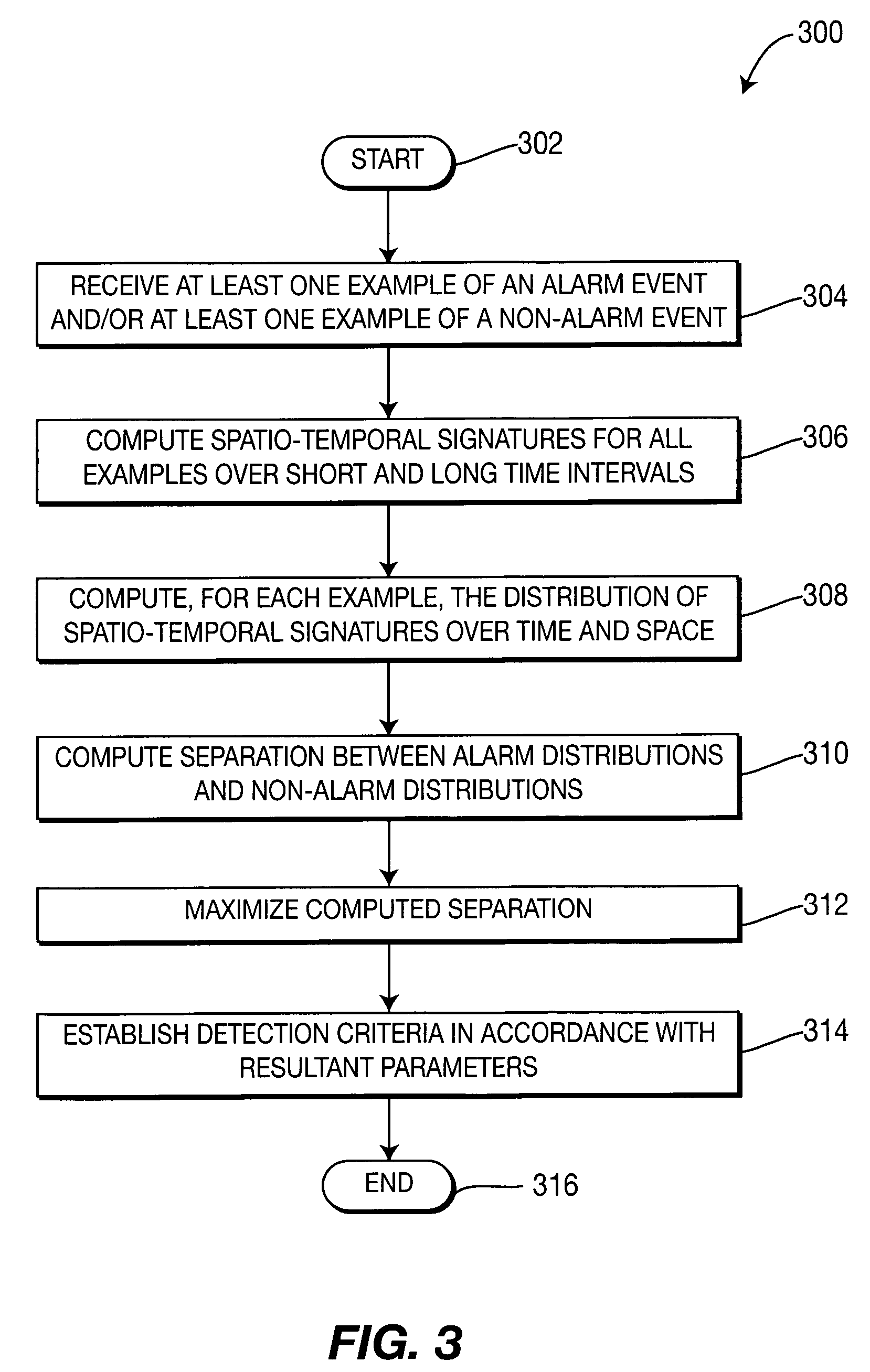

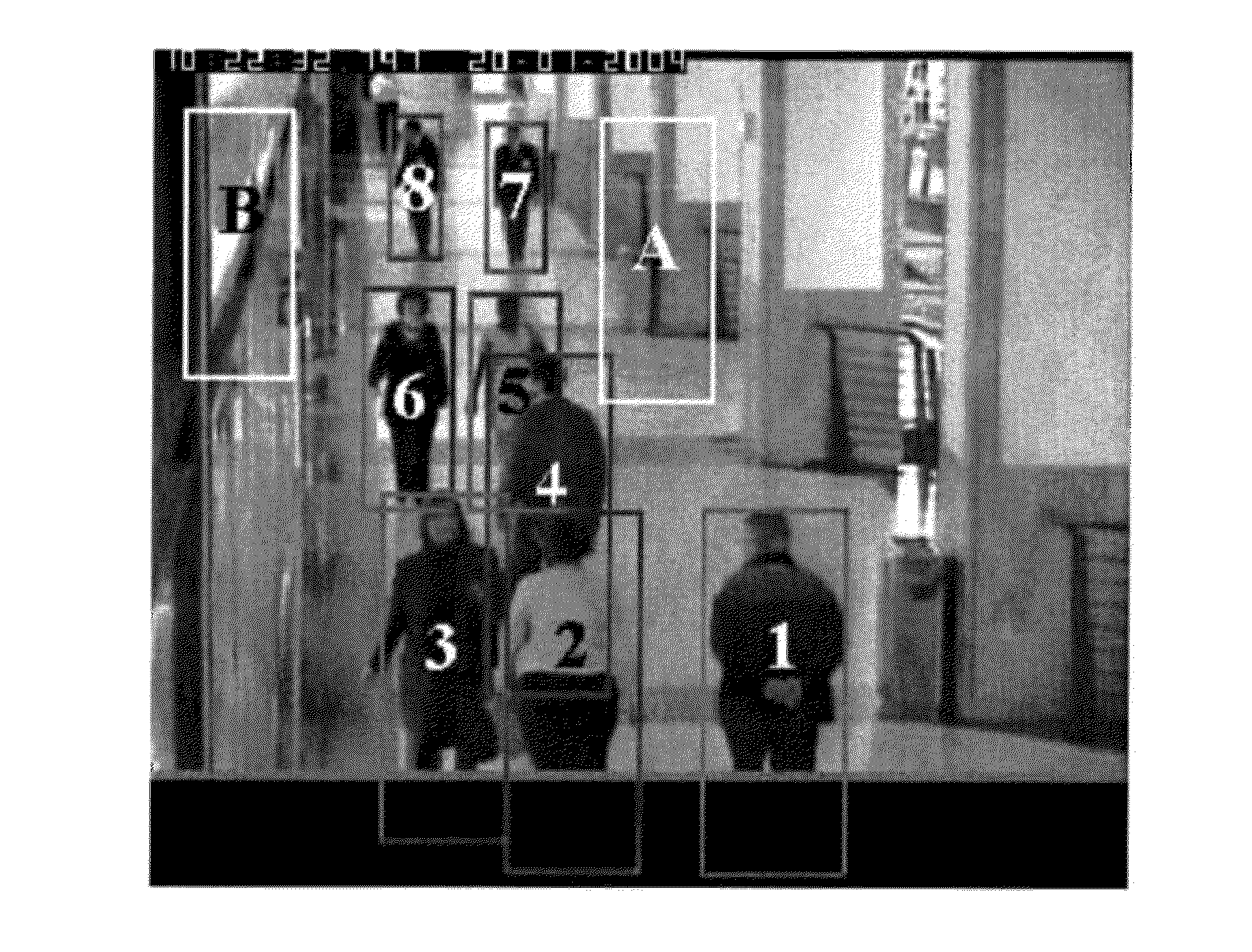

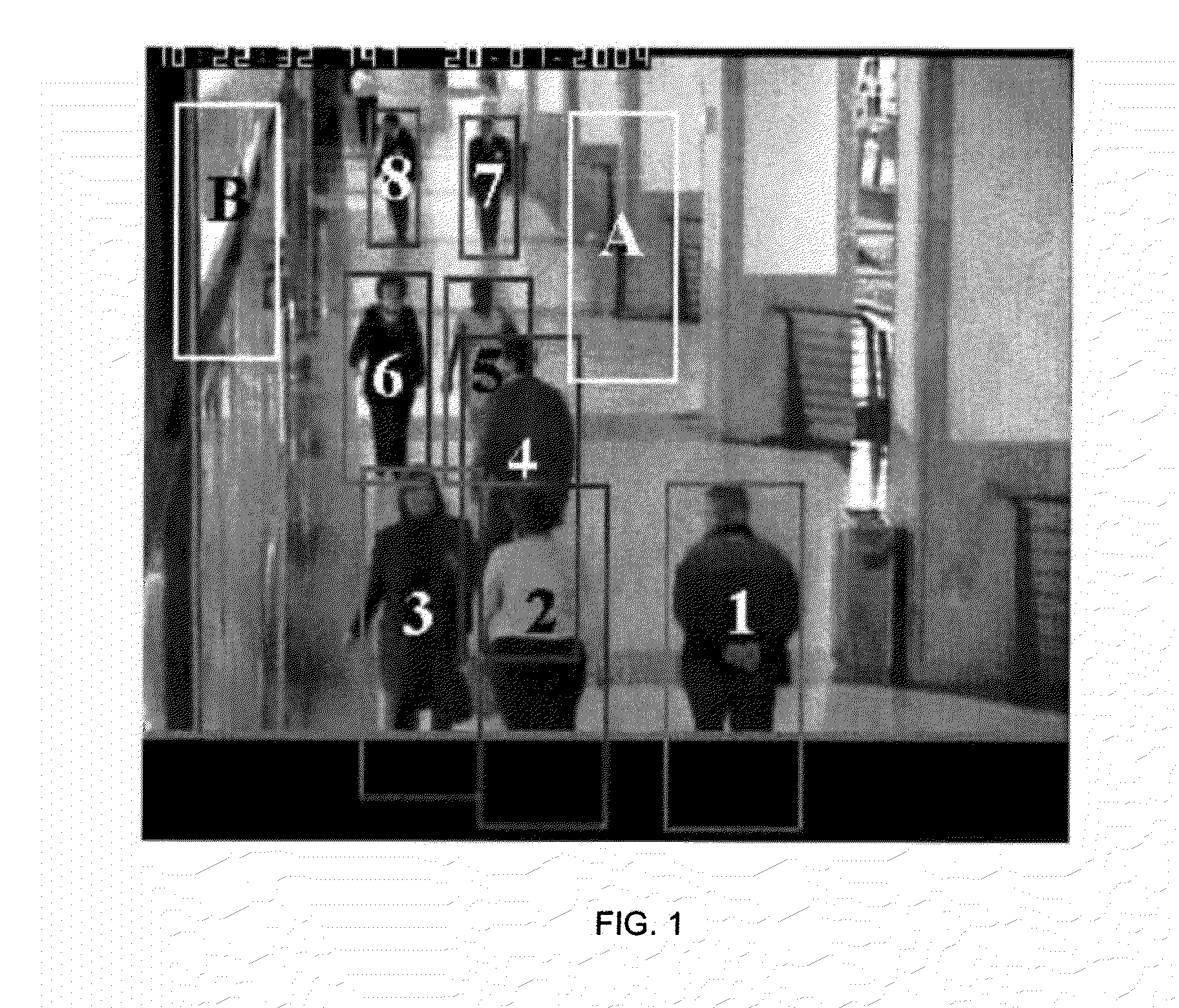

Methods and systems for detecting objects of interest in spatio-temporal signals

Methods and systems detect objects of interest in a spatio-temporal signal. According to one embodiment, a system processes a digital spatio-temporal input signal containing zero or more foreground objects of interest superimposed on a background. The system comprises a foreground / background separation module, a foreground object grouping module, an object classification module, and a feedback connection. The foreground / background separation module receives the spatio-temporal input signal as an input and, according to one or more adaptable parameters, produces as outputs foreground / background labels designating elements of the spatio-temporal input signal as either foreground or background. The foreground object grouping module is connected to the foreground / background separation module and identifies groups of selected foreground-labeled elements as foreground objects. The object classification module is connected to the foreground object grouping module and generates object-level information related to the foreground object. The object-level information adapts the one or more adaptable parameters of the foreground / background separation module, via the feedback connection.

Owner:MOTOROLA SOLUTIONS INC

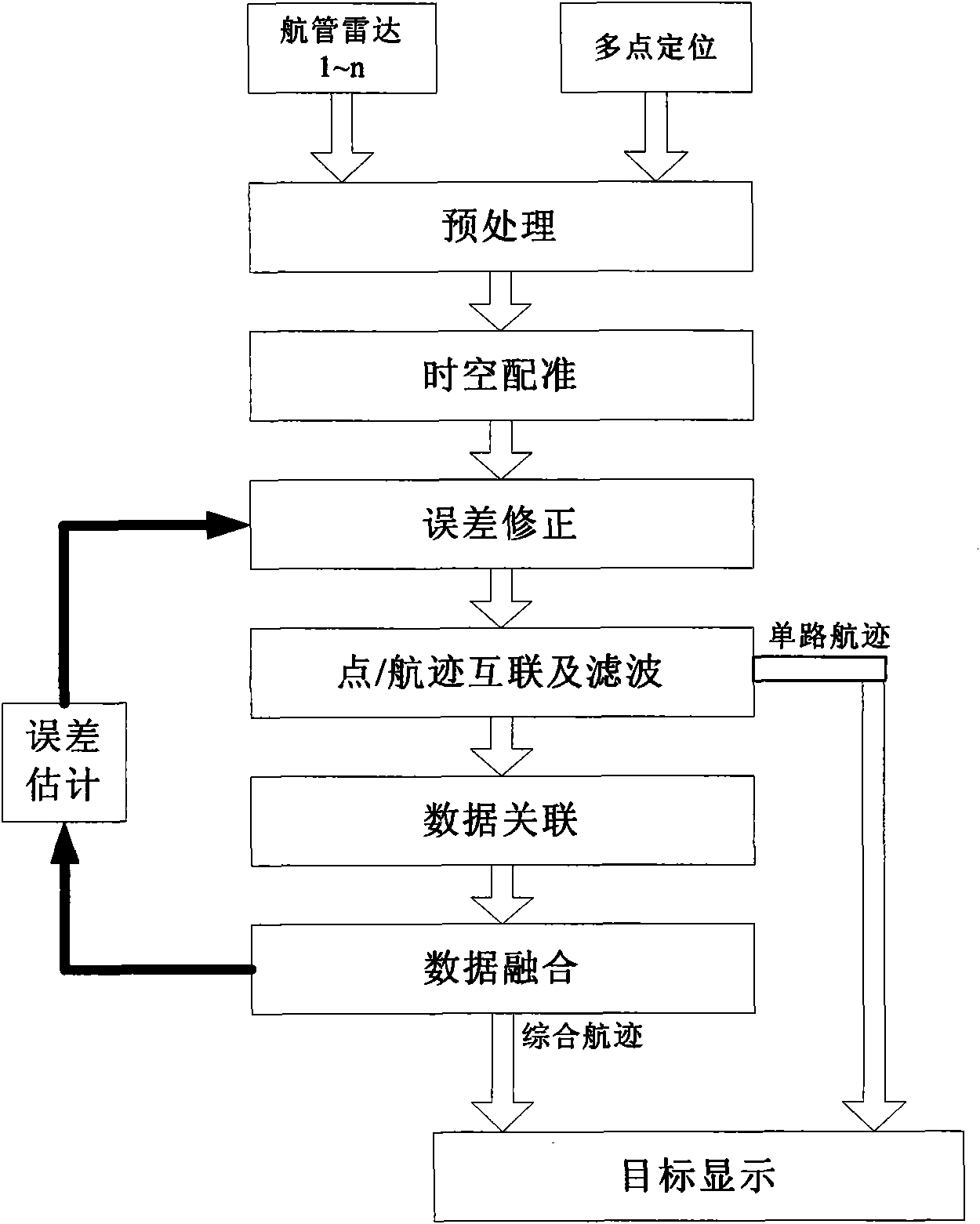

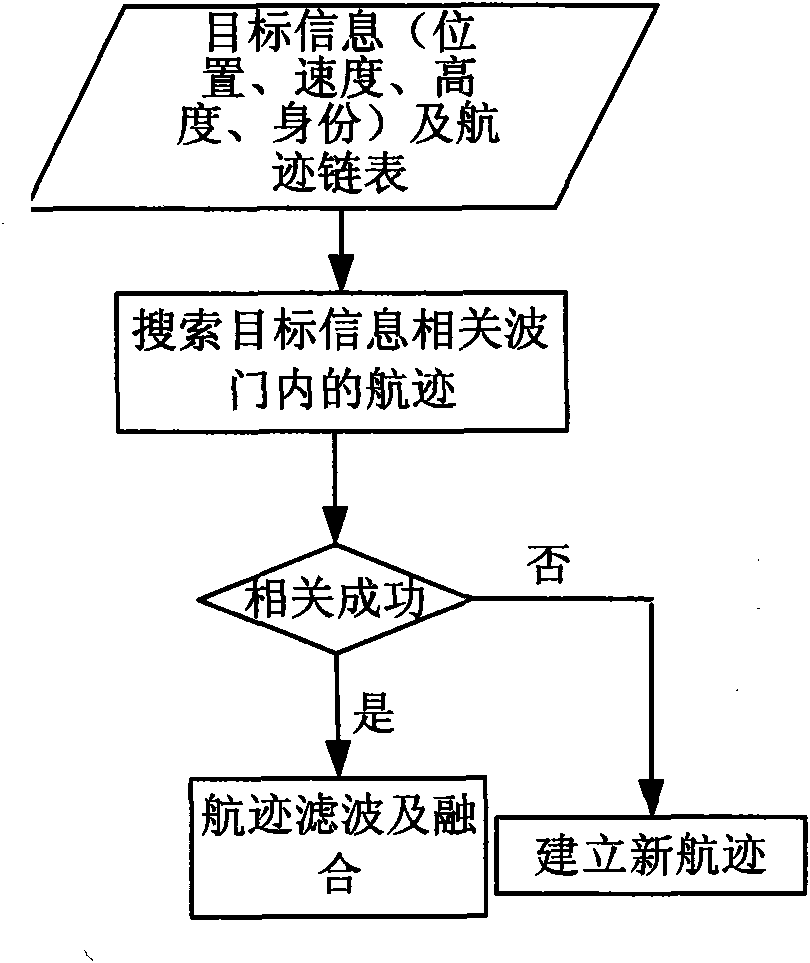

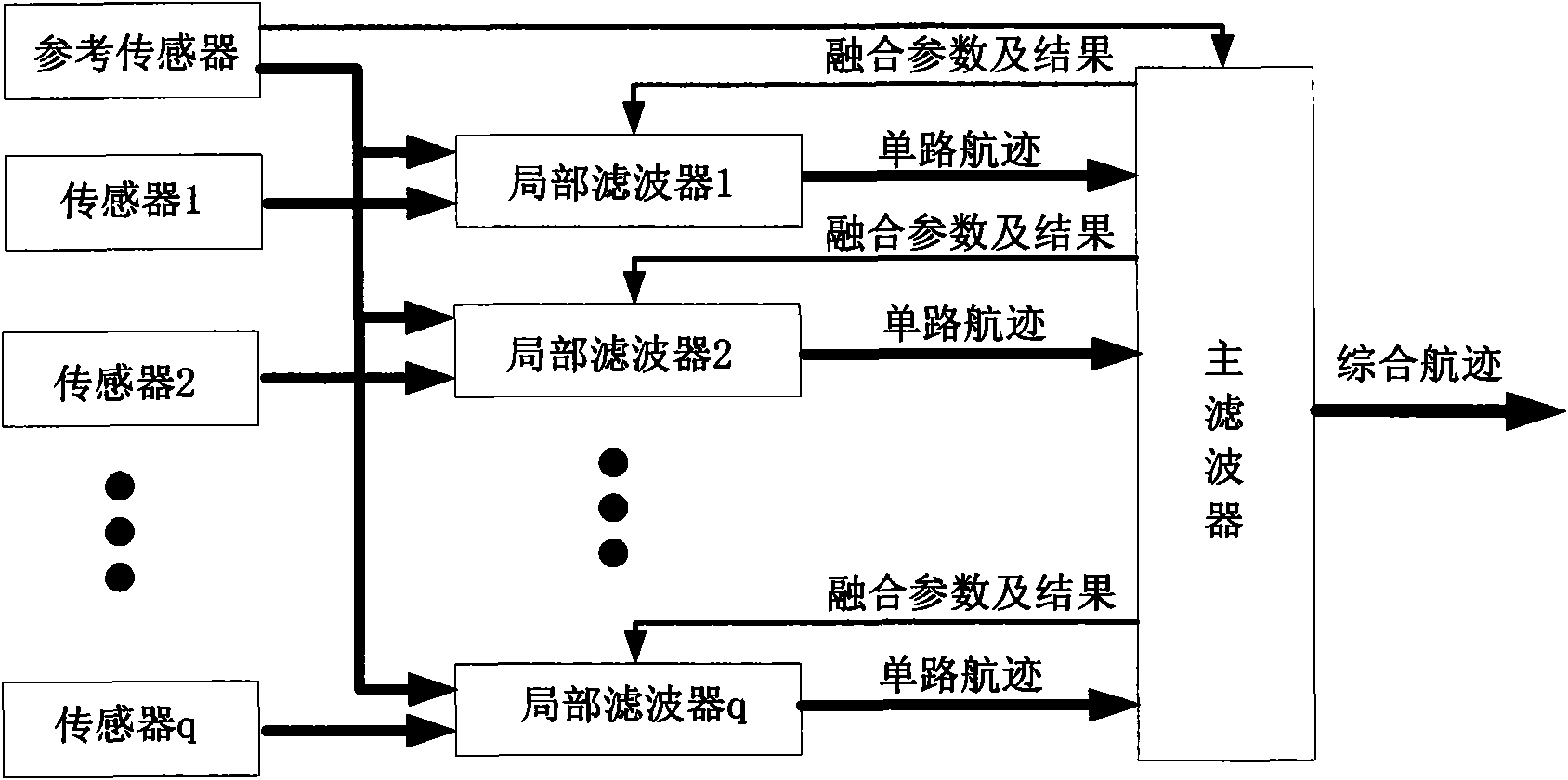

Federated Kalman filtering-based method for fusing multilateration data and radar data

Inactive | CN101655561A | Reduce performance | Correction error | Radio wave reradiation/reflection | Radar | Filtration

The invention relates to a Federated Kalman filtering-based method for fusing multilateration data and radar data, comprising seven processes: pretreatment, time and space registration, error correction, single-sensor line tracking or airborne trace interconnection and filtration, data association, data fusion, and error estimation. On the basis of multiclass monitoring information, the technical scheme of the invention establishes data association mapping relations among all classes of targets, constructs a process method fusing the multilateration system data and the radar data, realizes deep fusion of all classes of information and comprehensive utilization of effective information, ensures a high updating rate of the multilateration system and the overall precision of system monitoring when the period is less than or equal to 1 sec, reduces the impact of radar measuring error on system monitoring precision, and significantly improves the tracking precision of the system.

Owner:NANJING LES INFORMATION TECH
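
The data fusion step named in the abstract above can be illustrated with information-weighted (inverse-variance) fusion of local estimates, a combination rule commonly associated with federated filter architectures. The abstract does not give the patent's formulas, so this scalar sketch, the function name `fuse_estimates`, and the sample numbers are all assumptions.

```python
def fuse_estimates(estimates):
    """Fuse independent scalar estimates given as (value, variance) pairs.

    Assumption: local estimates are uncorrelated, so the fused information
    (inverse variance) is the sum of the local informations.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    information = sum(1.0 / variance for _, variance in estimates)
    fused_value = sum(value / variance for value, variance in estimates) / information
    return fused_value, 1.0 / information


# Hypothetical position estimates (metres, variance in square metres).
mlat = (100.0, 4.0)    # multilateration track: lower variance
radar = (106.0, 12.0)  # radar track: higher variance
fused_value, fused_var = fuse_estimates([mlat, radar])
```

The fused value lies closer to the lower-variance source, and the fused variance is smaller than either input, which is the intuition behind reducing the impact of radar measuring error.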

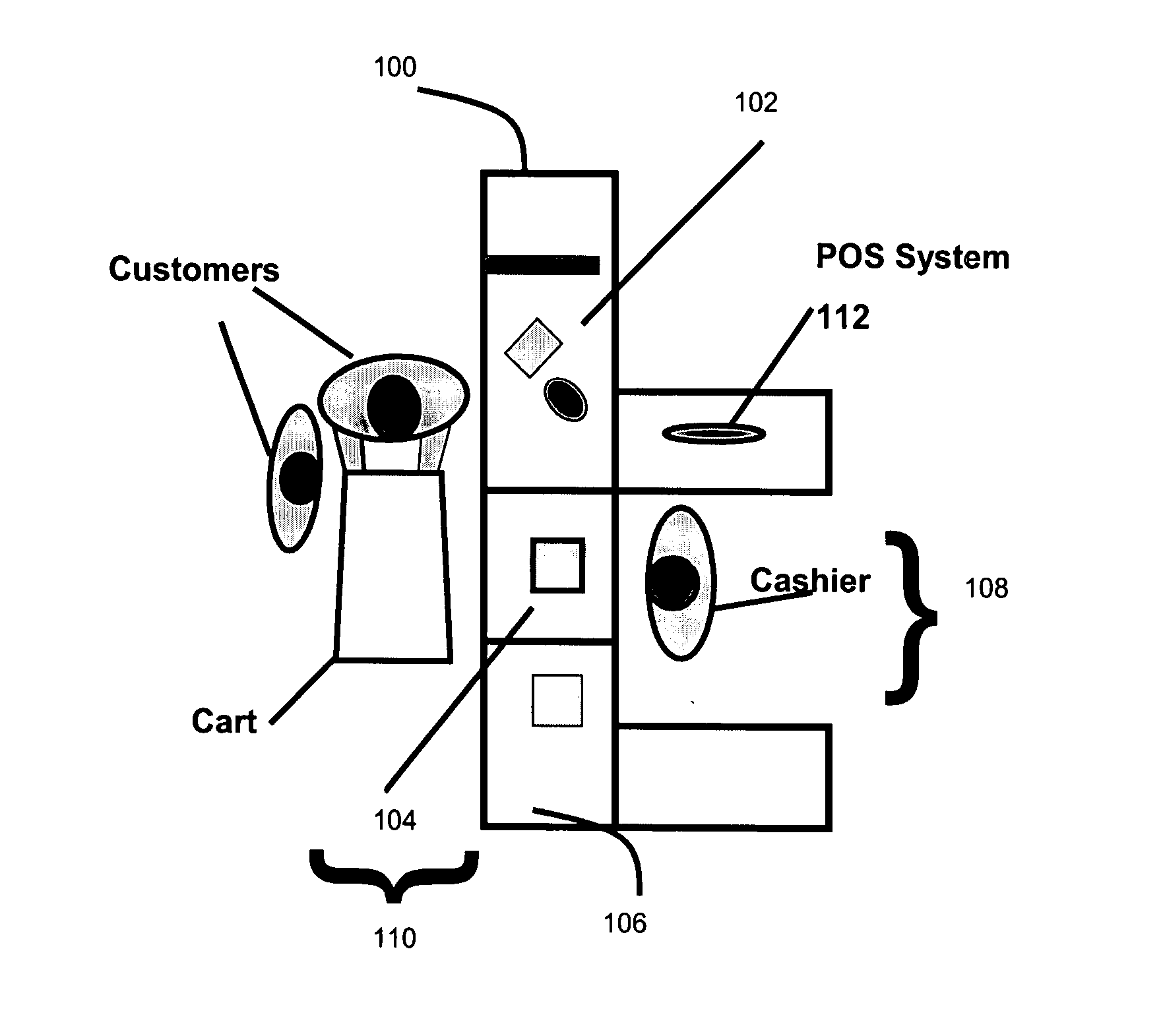

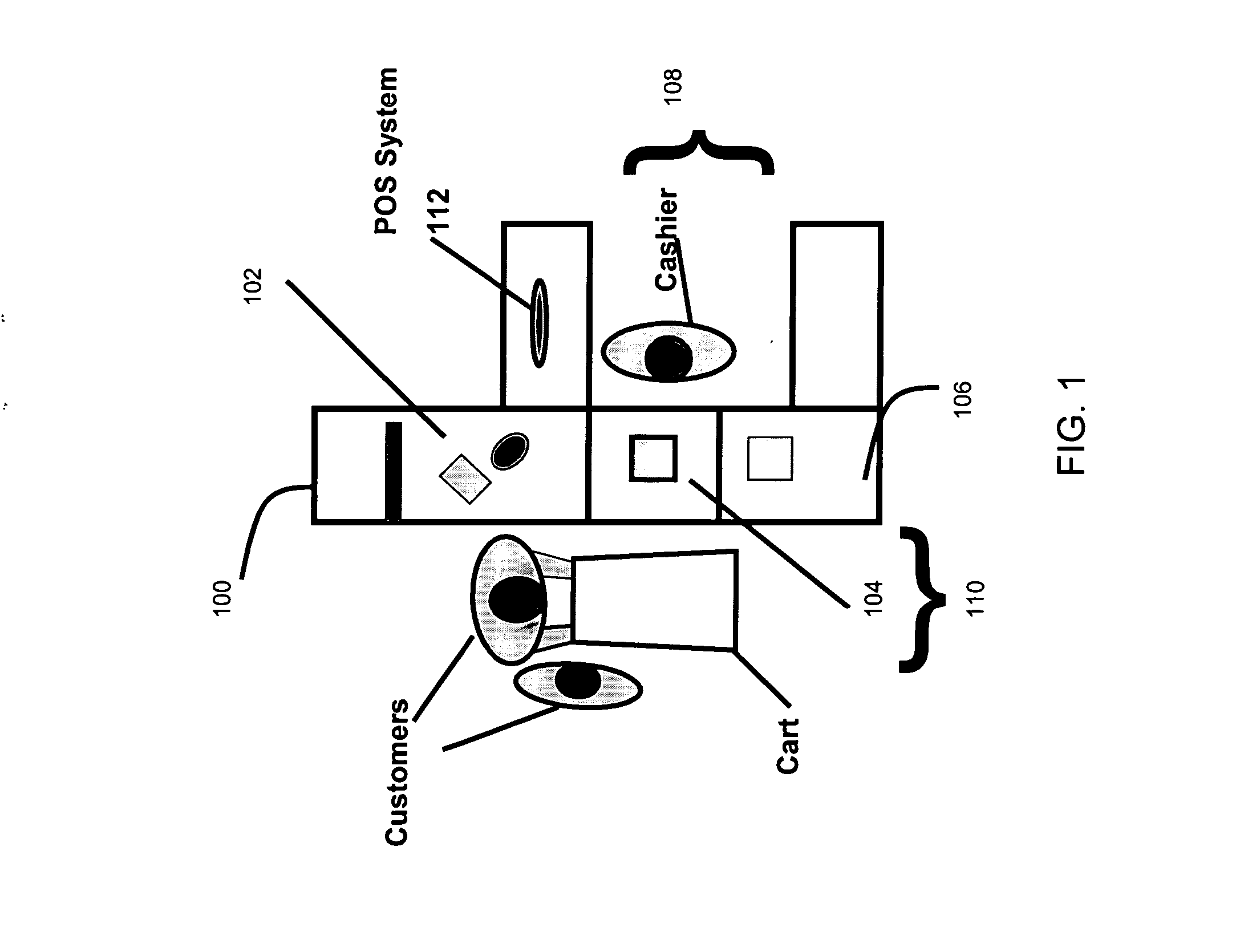

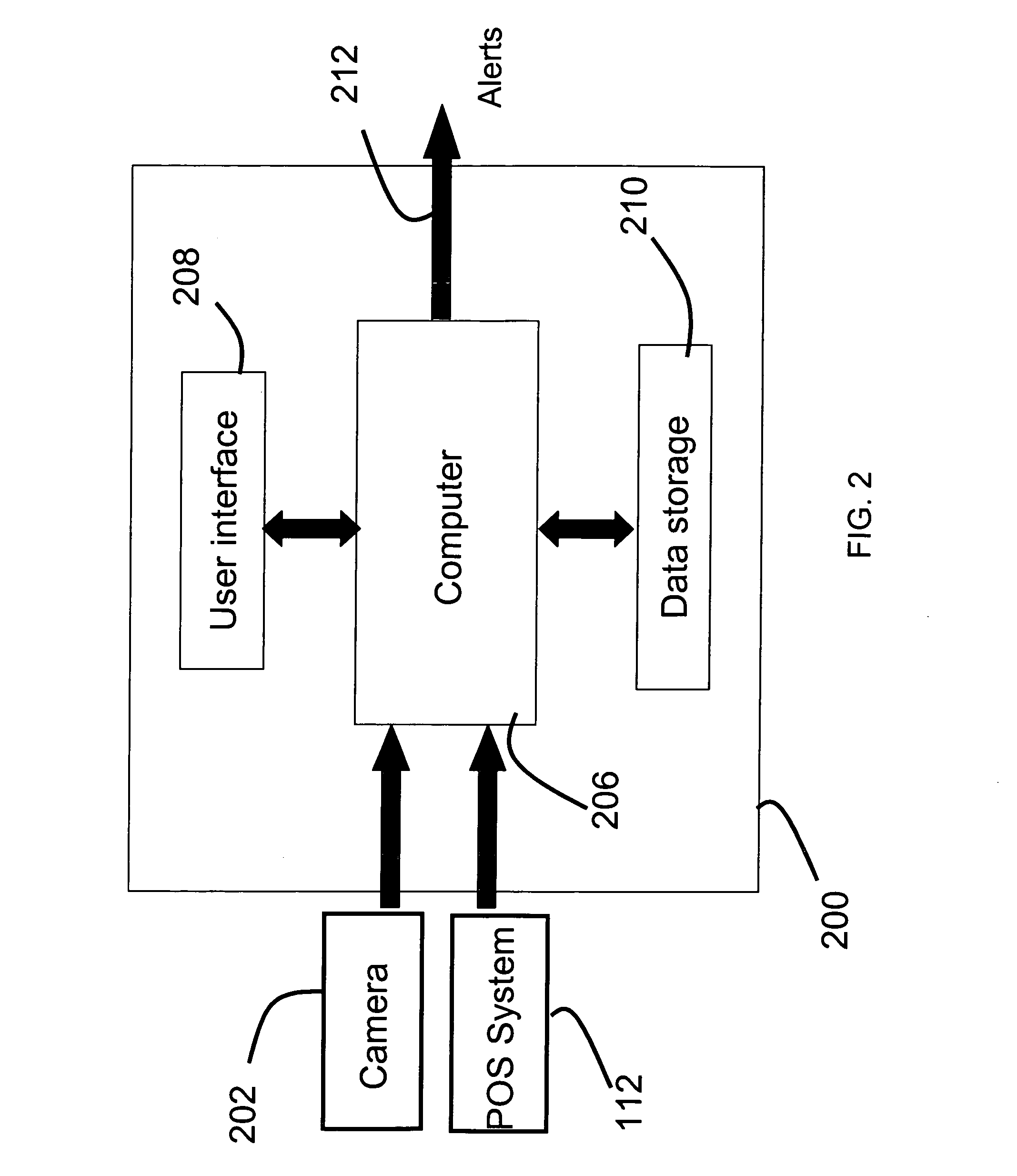

Video surveillance using spatial-temporal motion analysis

Detection of an omitted process in an event having a sequence of processes includes: receiving video of an action area; receiving transaction data regarding a transaction occurring at the action area; detecting at least two different actor motion states in the video; detecting an event based on the motion states; and detecting the omitted process based on the detected event and the transaction data.

Owner:OBJECTVIDEO

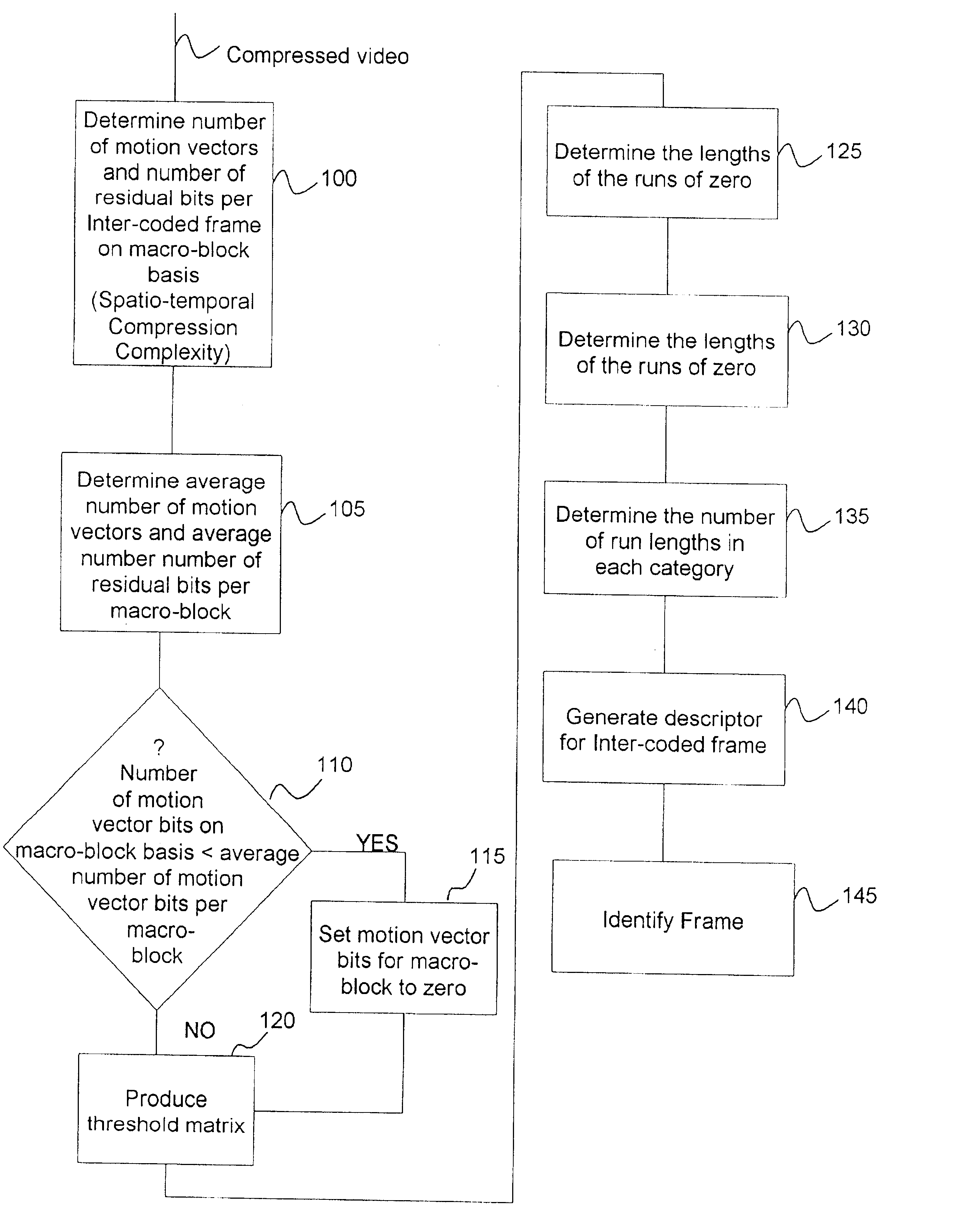

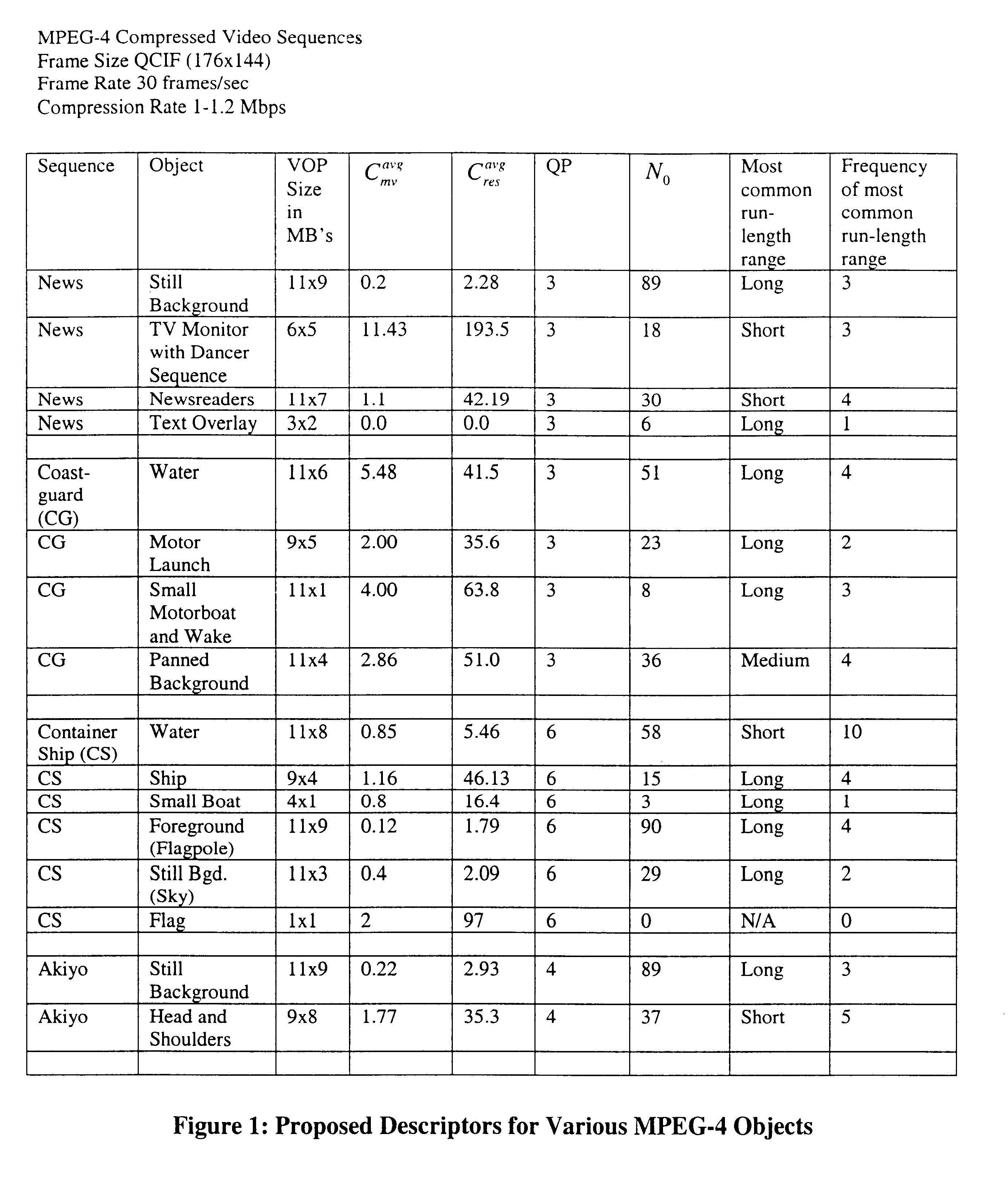

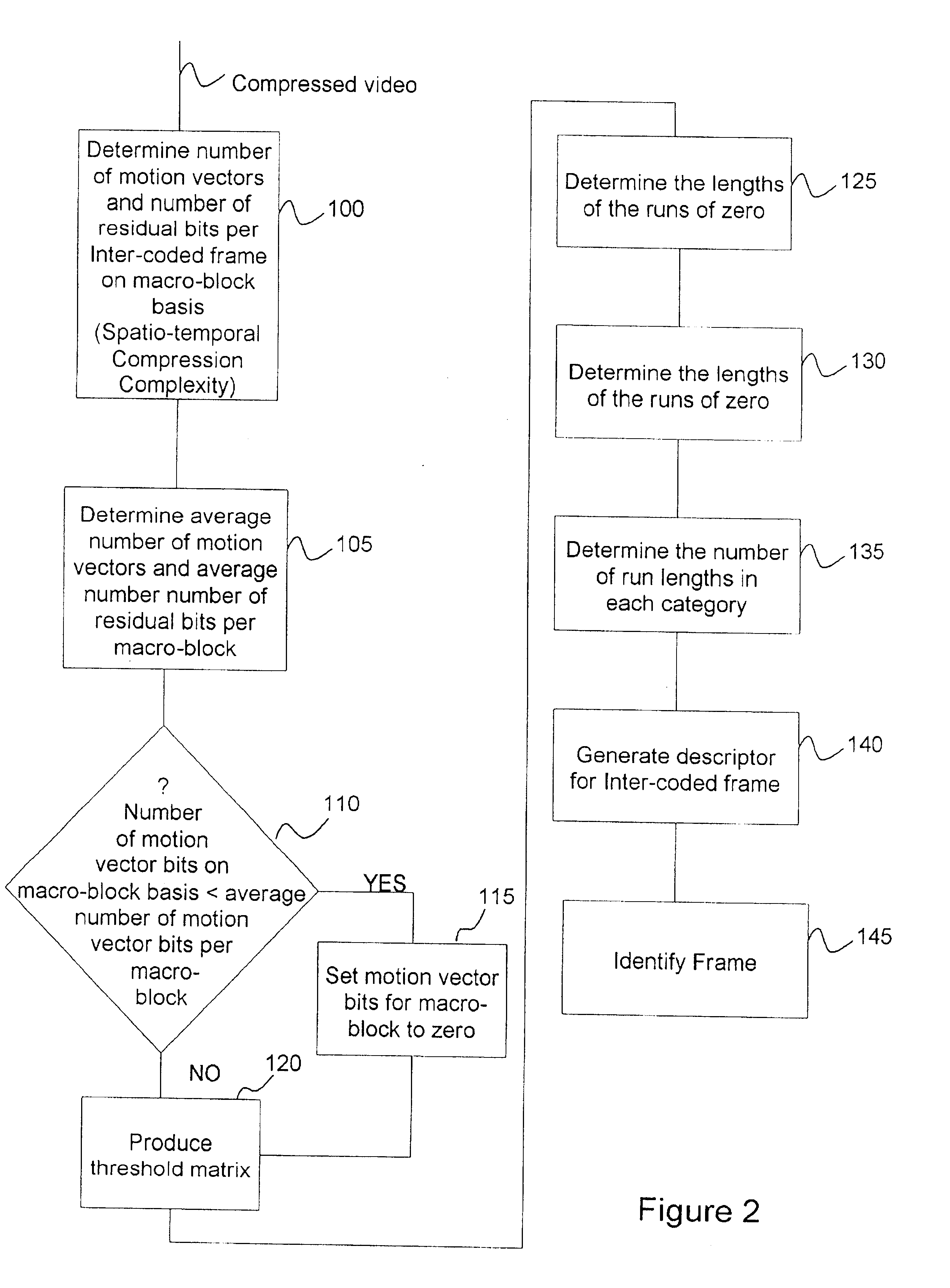

Methods of feature extraction of video sequences

Inactive | US6618507B1 | Digital data information retrieval | Character and pattern recognition | Feature extraction | Video sequence

This invention relates to methods of feature extraction from MPEG-2 and MPEG-4 compressed video sequences. The spatio-temporal compression complexity of video sequences is evaluated for feature extraction by inspecting the compressed bitstream and the complexity is used as a descriptor of the spatio-temporal characteristics of the video sequence. The spatio-temporal compression complexity measure is used as a matching criterion and can also be used for absolute indexing. Feature extraction can be accomplished in conjunction with scene change detection techniques and the combination has reasonable accuracy and the advantage of high simplicity since it is based on entropy decoding of signals in compressed form and does not require computationally expensive inverse Discrete Cosine Transformation (DCT).

Owner:MICRO COMPACT CAR AG +1

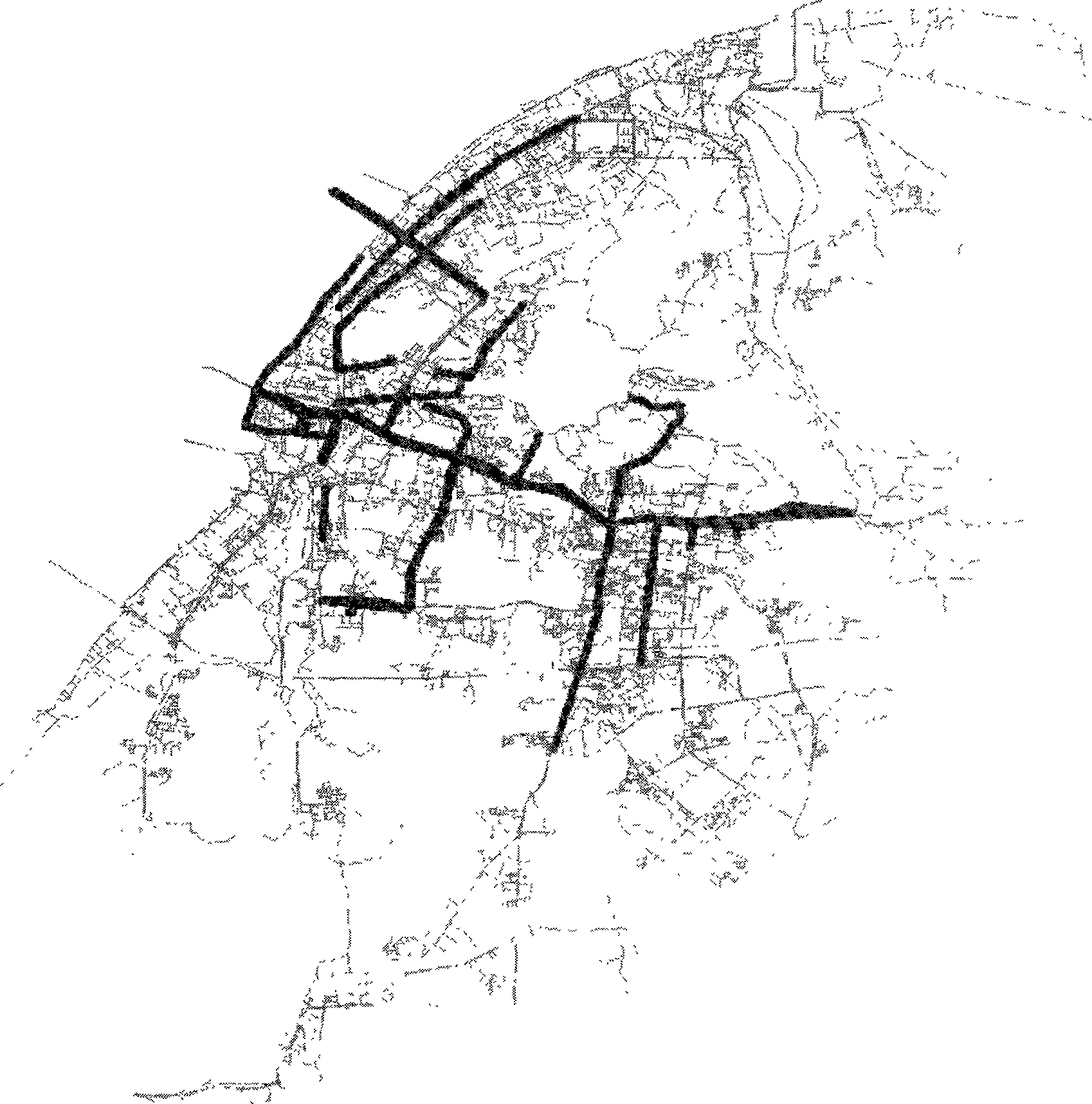

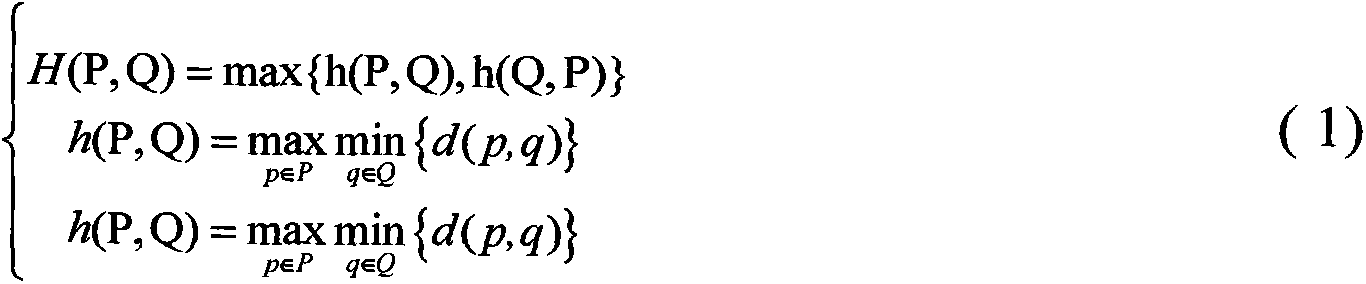

Clustering method based on mobile object spatiotemporal information trajectory subsections

Inactive | CN103593430A | Improve clustering effect | Strong application value | Relational databases | Special data processing applications | Spacetime | Computer science

The invention discloses a clustering method based on mobile object spatiotemporal information trajectory subsections. The method comprises the following steps: the three attributes of time, speed and direction are introduced, and a similarity calculation formula for time, speed and direction is provided for analyzing the internal structure and external structure of a mobile object trajectory; first, according to the space density of the trajectory, the trajectory is divided into a plurality of trajectory subsections; then the similarities of the trajectory subsections are judged by calculating the differences of the trajectory subsections in space, time, speed and direction; finally, trajectory subsections in a non-significant cluster are deleted or are merged into adjacent significant clusters on the basis of a first cluster result, so that an overall moving rule is displayed in the clustering spatial form. According to the method, the clustering result is improved, higher application value is provided, a space quadtree is adopted to index the trajectory subsections, clustering efficiency is greatly improved under the environment of a large-scale trajectory number set, and trajectories can be effectively clustered.

Owner:胡宝清

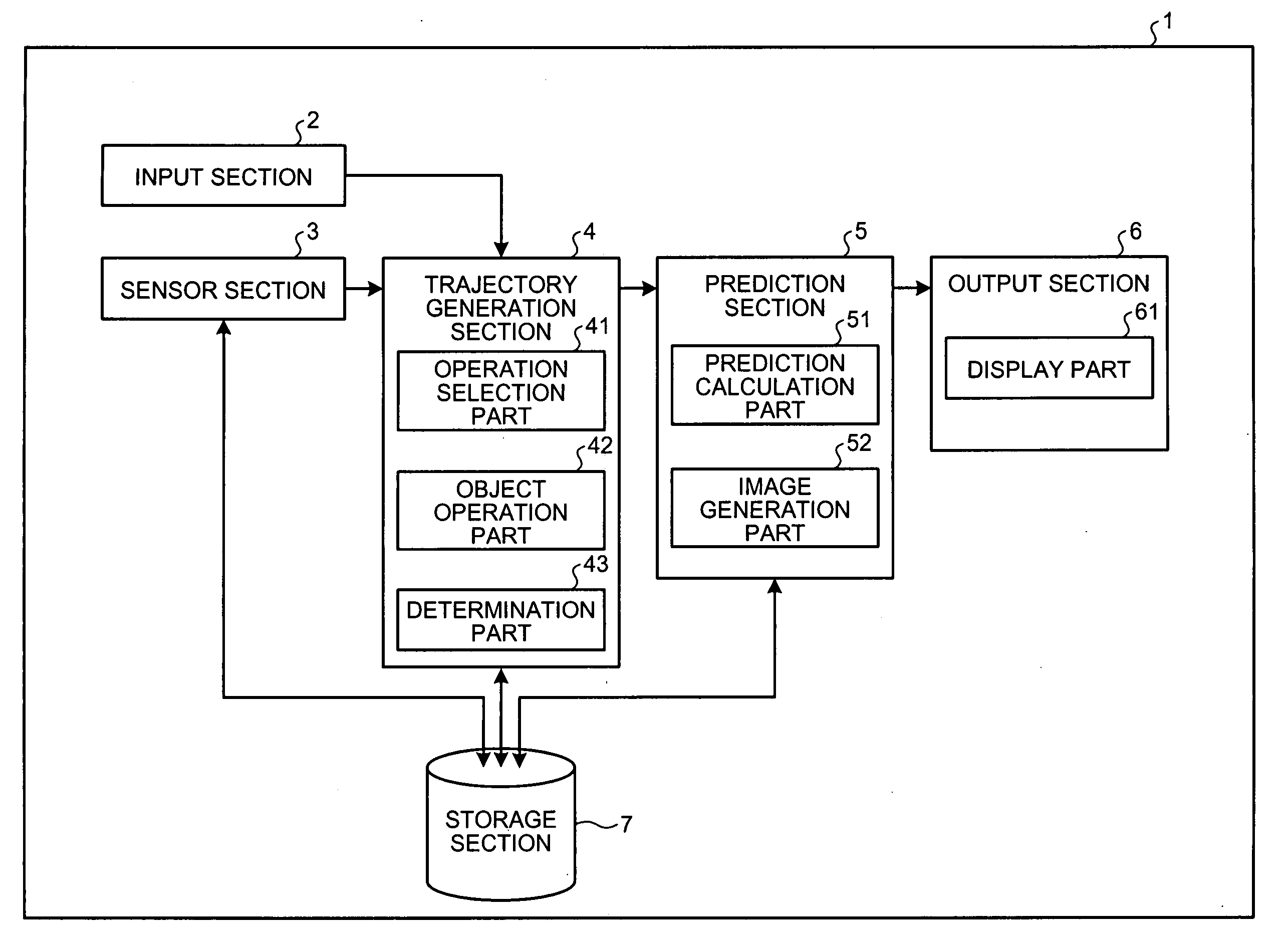

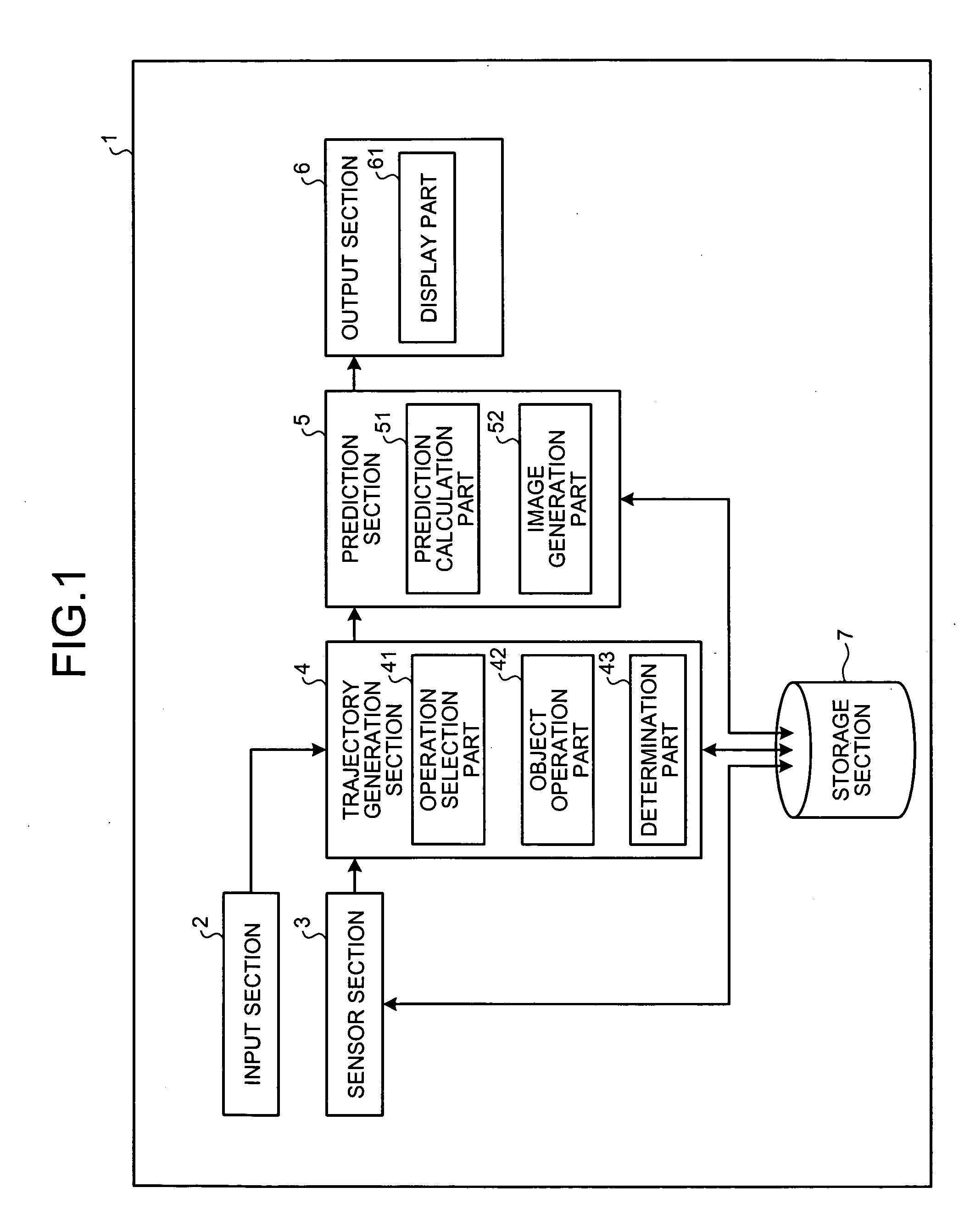

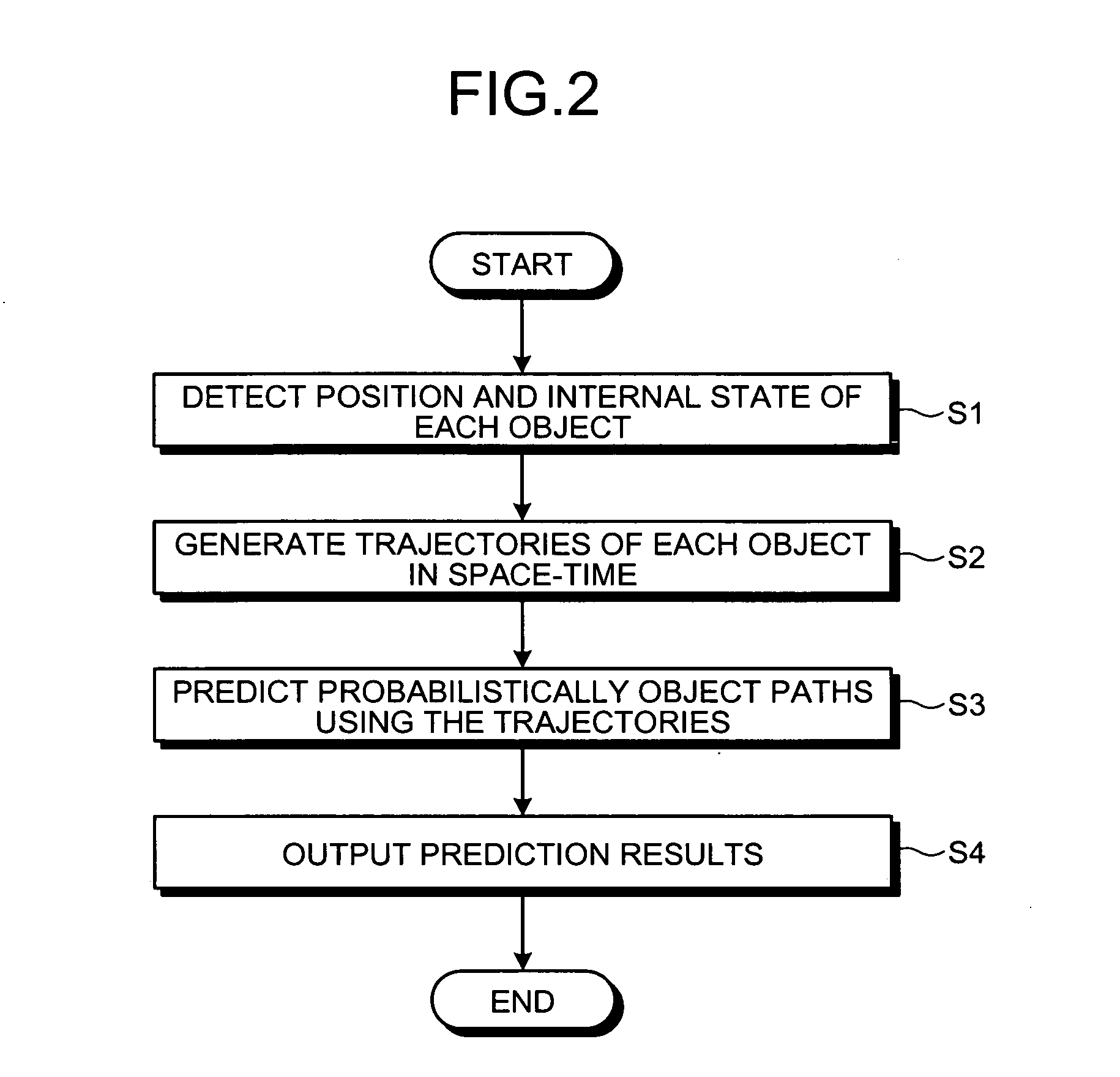

Object Path Prediction Method, Apparatus, and Program, and Automatic Operation System

Active | US20090024357A1 | Ensure safety | Safety can be secured | Anti-collision systems | Digital computer details | Object based | Operational system

An object path prediction method, apparatus, and program and an automatic operation system that can secure safety even in situations that can actually occur are provided. For this purpose, a computer having a storage unit that stores the position of an object and the internal state including the speed of the object reads the position and internal state of the object from the storage unit, generates trajectories in a space-time consisting of time and space from changes of the positions that can be taken by the object with the passage of time based on the read position and internal state of the object, and predicts probabilistically paths of the object by using the generated trajectories.

Owner:TOYOTA JIDOSHA KK

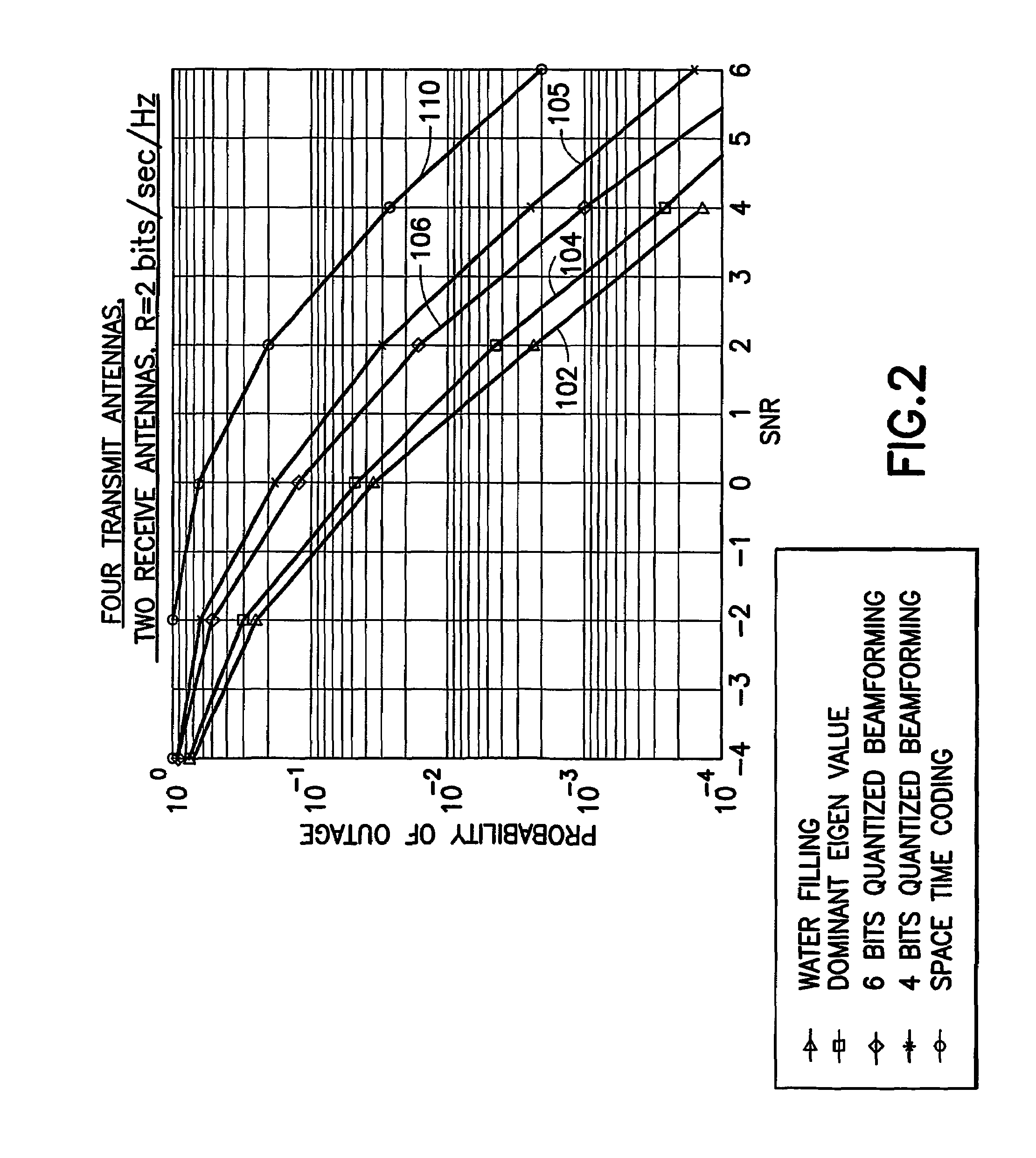

Low complexity beamformers for multiple transmit and receive antennas

Inactive | US7330701B2 | Power management | Spatial transmit diversity | Channel state information | Round complexity

Beamforming systems having a few bits of channel state information fed back to the transmitter benefit from low complexity decoding structures and performance gains compared with systems that do not have channel state feedback. Both unit rank and higher rank systems are implemented. Substantial design effort may be avoided by following a method of using functions formulated for space-time systems with the change that the channel coherence time is equated to the number of transmit antennas and the number of antennas in the space-time formulation is fixed at one.

Owner:NOKIA CORP

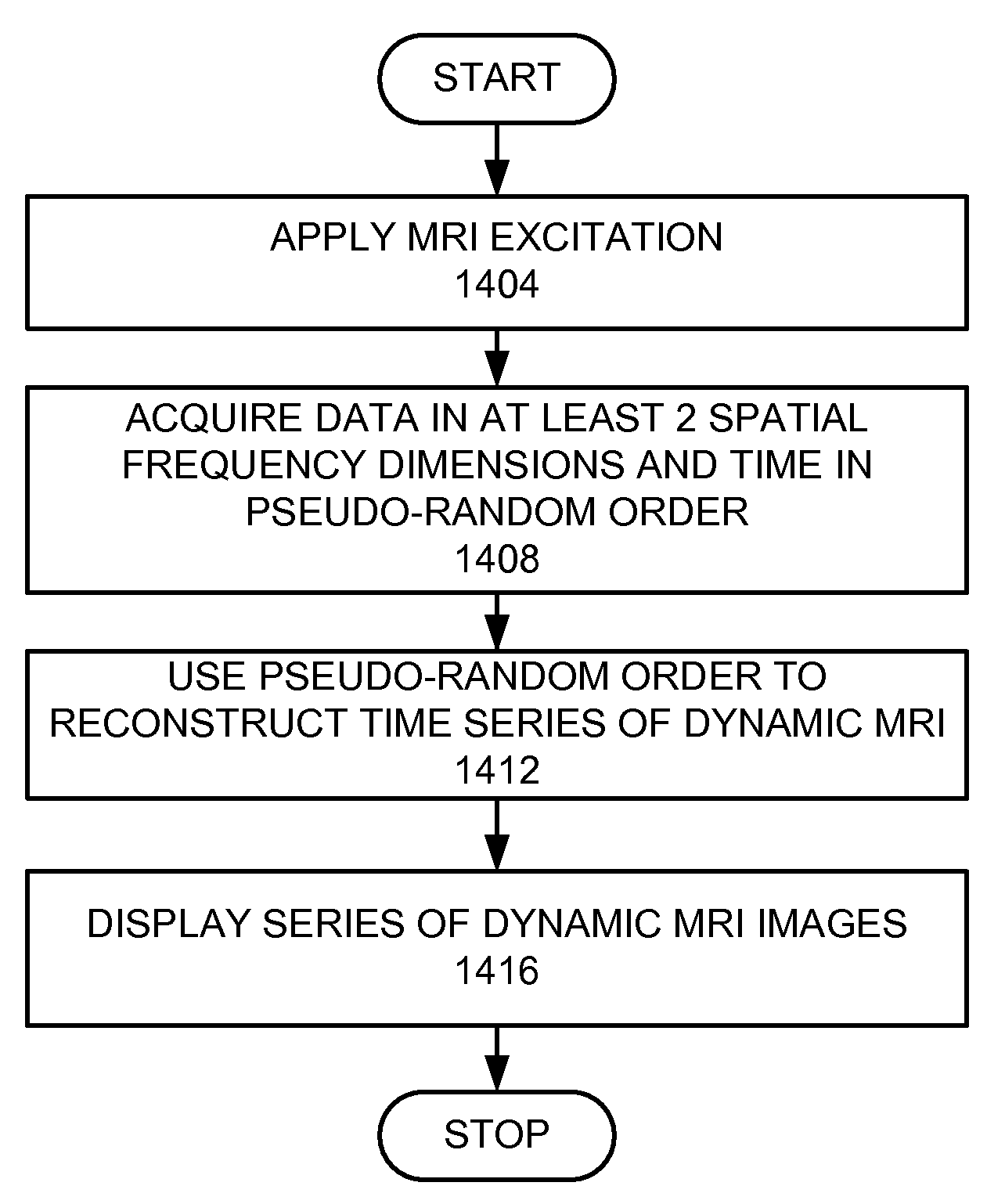

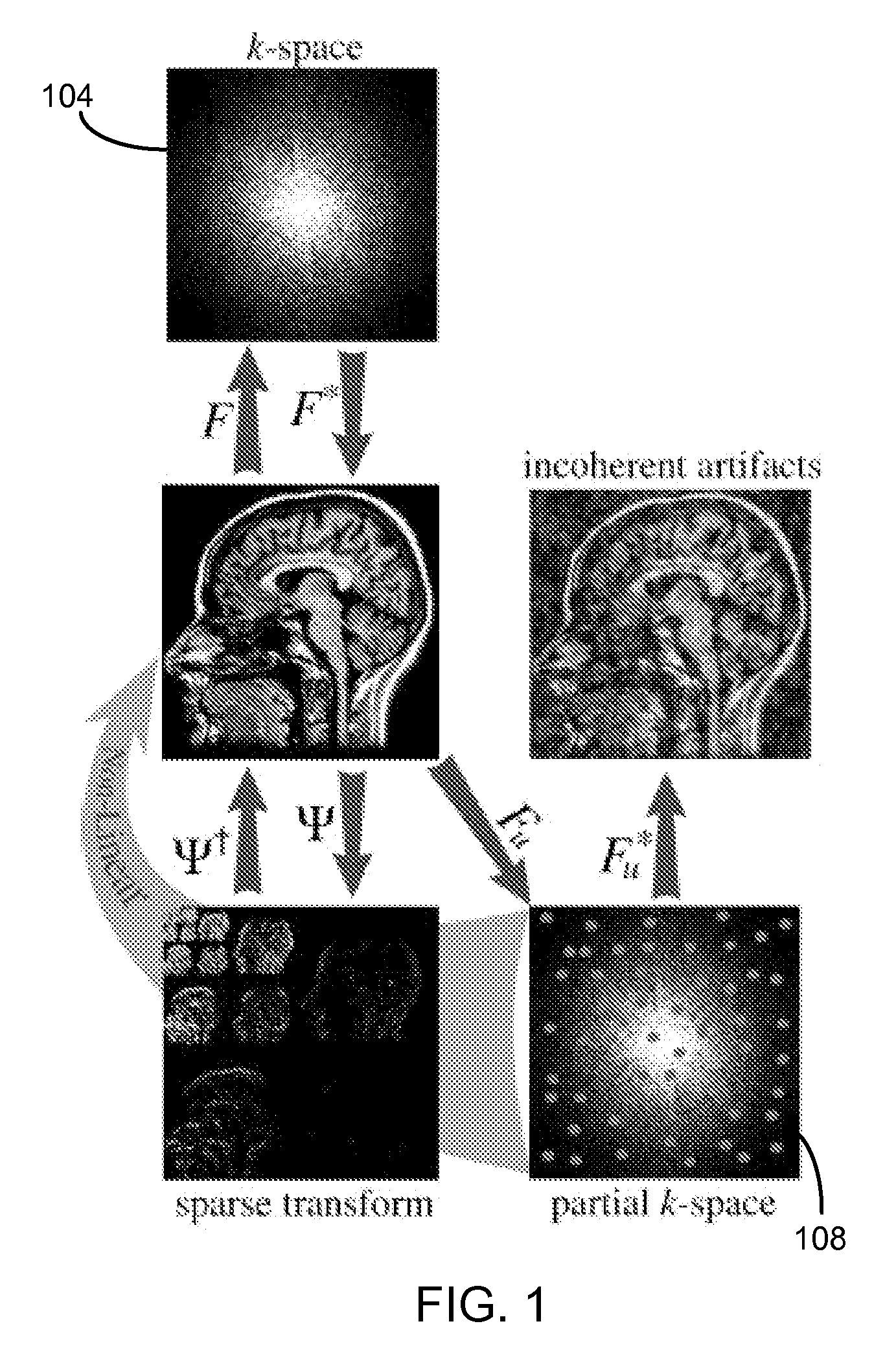

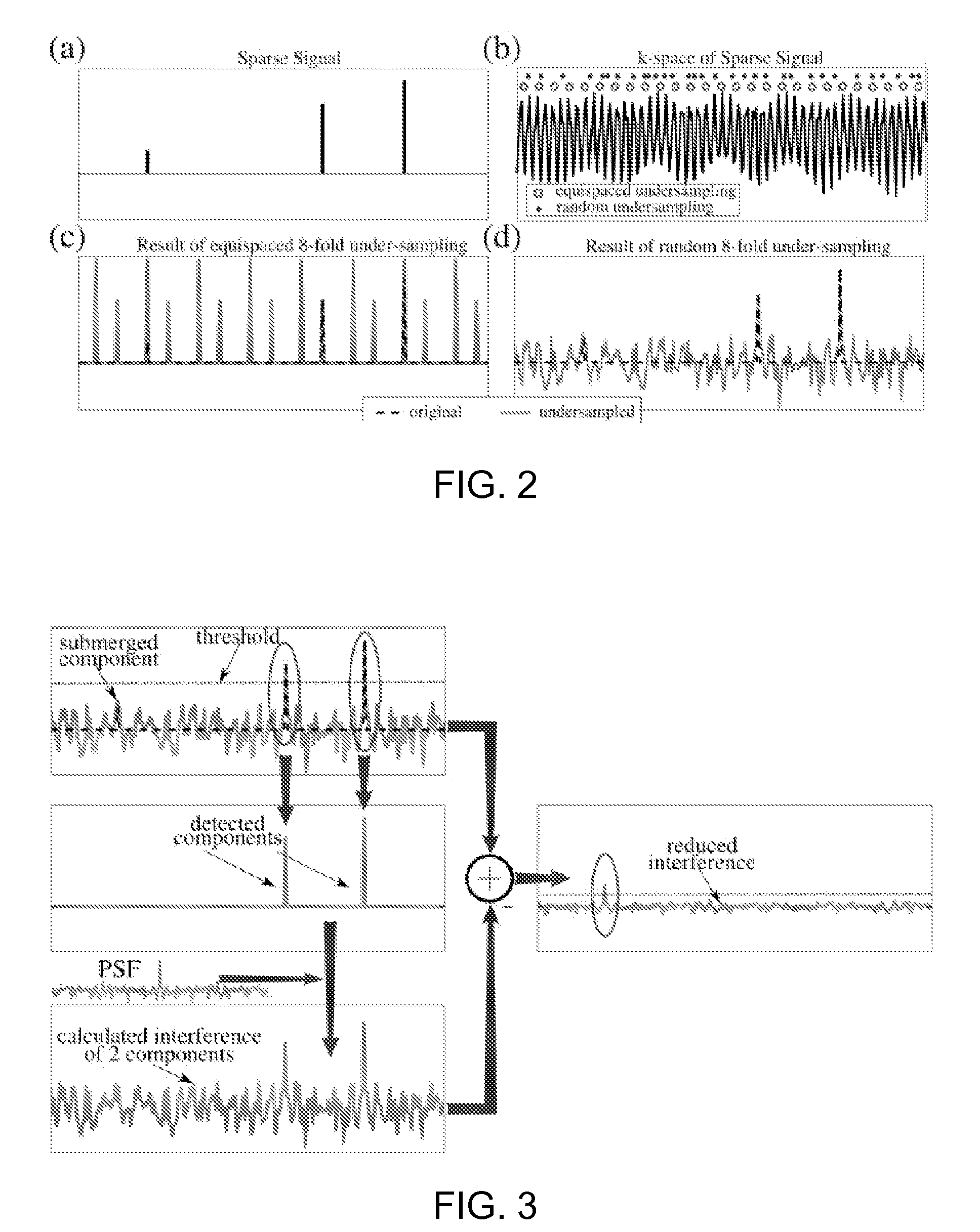

K-t sparse: high frame-rate dynamic magnetic resonance imaging exploiting spatio-temporal sparsity

Active | US20080197842A1 | Measurements using NMR imaging systems | Electric/magnetic detection | High frame rate | Resonance

A method of dynamic resonance imaging is provided. A magnetic resonance imaging excitation is applied. Data in 2 or 3 spatial frequency dimensions, and time is acquired, where an acquisition order in at least one spatial frequency dimension and the time dimension are in a pseudo-random order. The pseudo-random order and enforced sparsity constraints are used to reconstruct a time series of dynamic magnetic resonance images.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

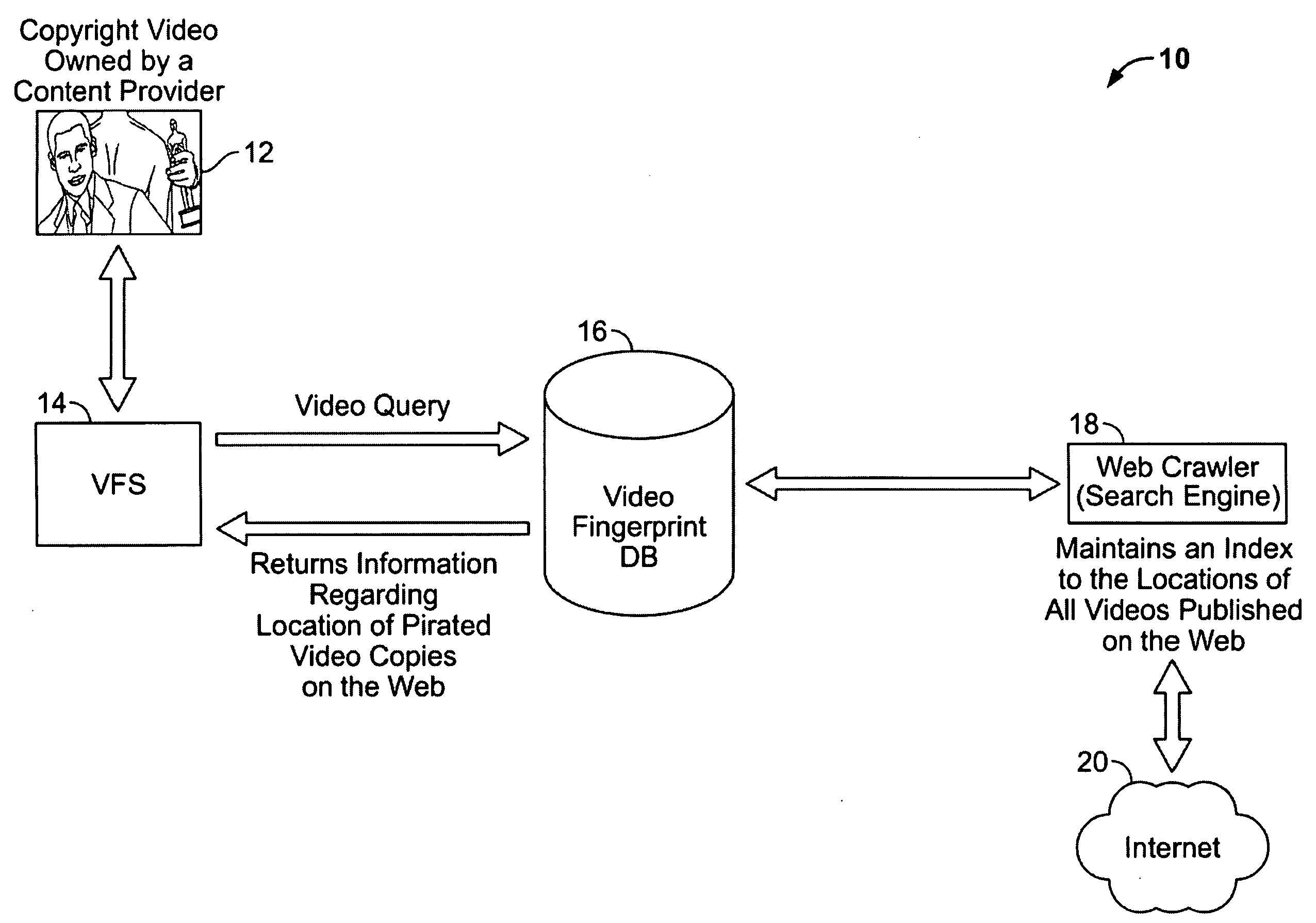

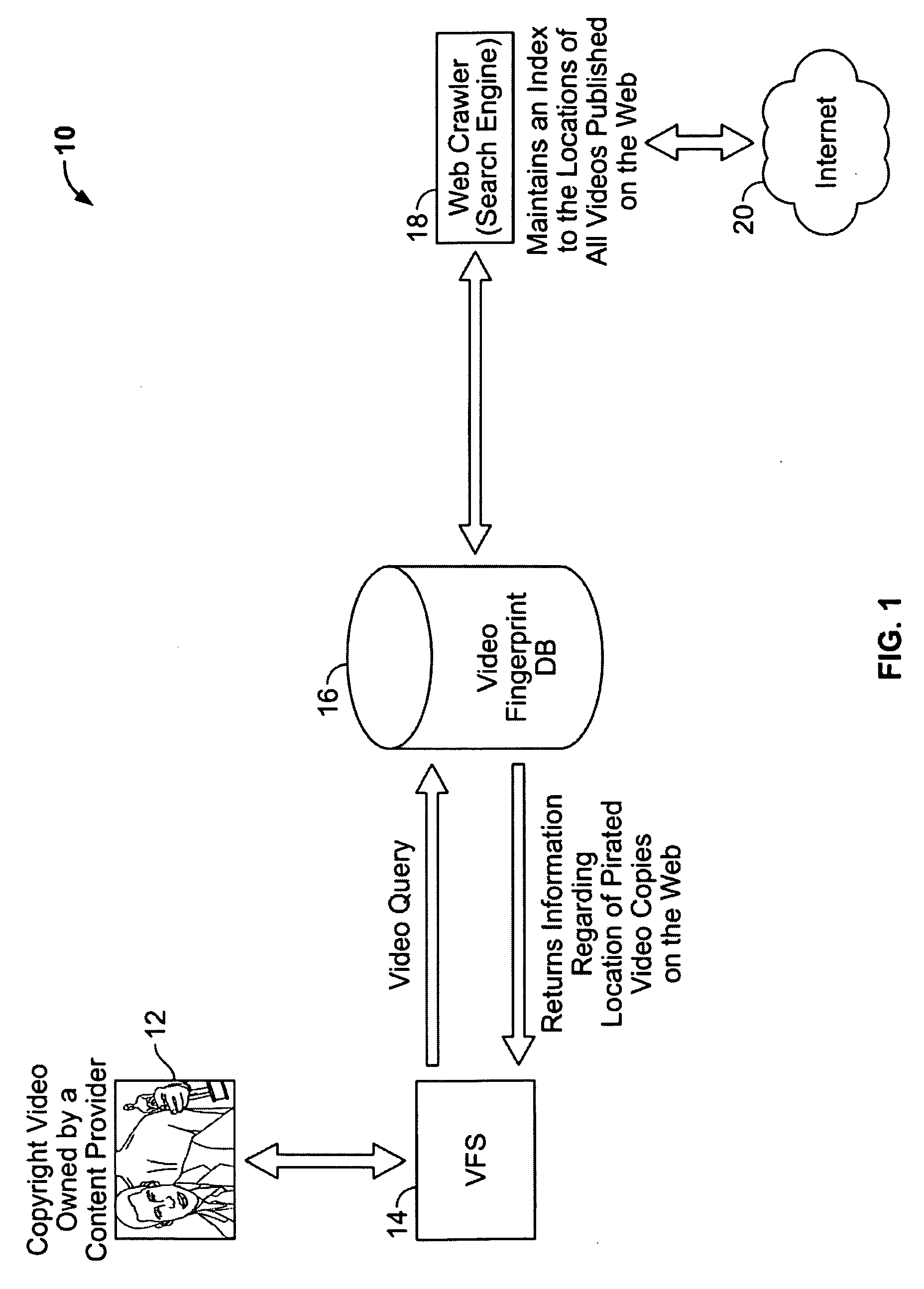

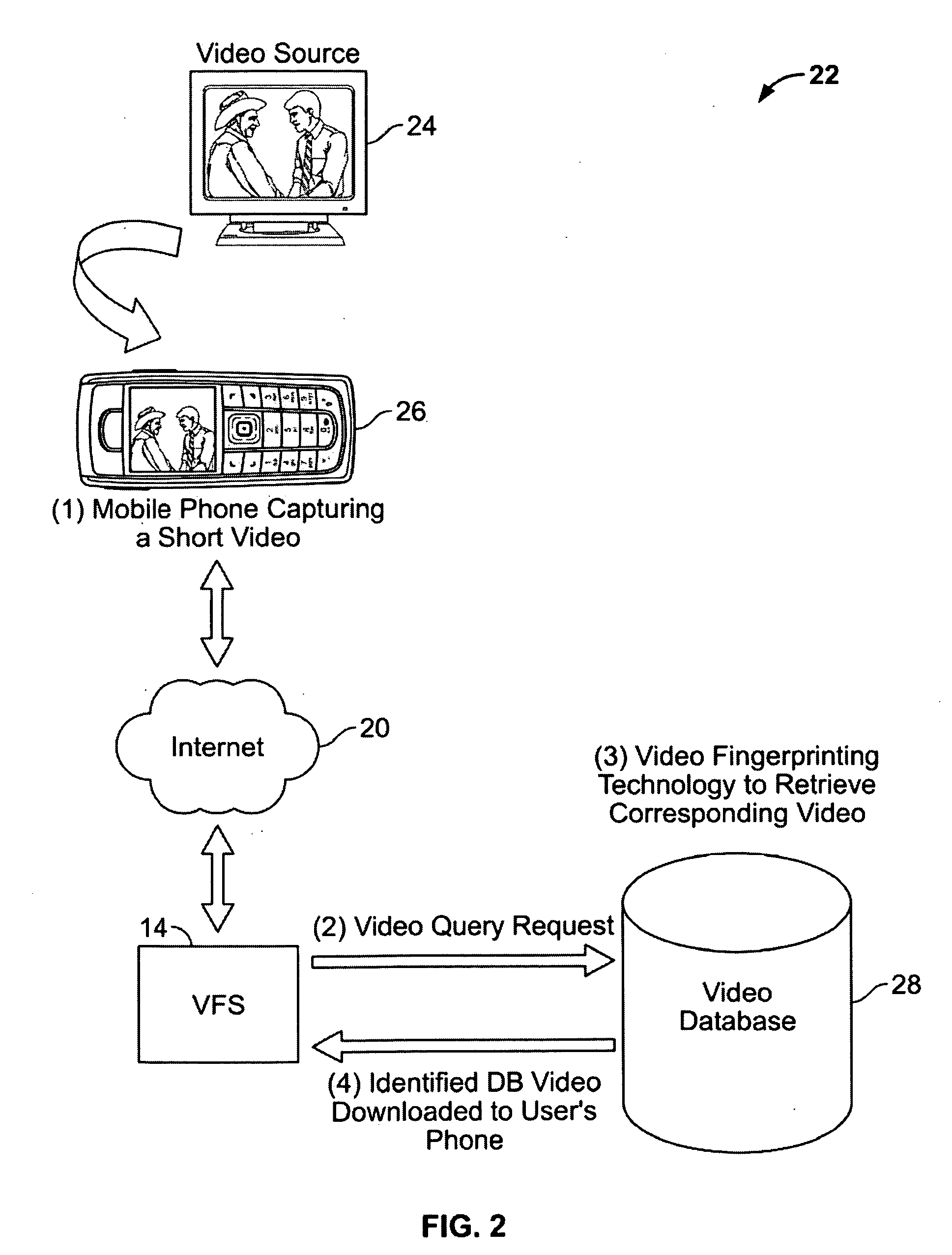

Content-based matching of videos using local spatio-temporal fingerprints

Active | US20100049711A1 | Poor discrimination | Improve discrimination | Digital data processing details | Picture reproducers using cathode ray tubes | Data matching | Pattern recognition

A computer implemented method for deriving a fingerprint from video data is disclosed, comprising the steps of receiving a plurality of frames from the video data; selecting at least one key frame from the plurality of frames, the at least one key frame being selected from two consecutive frames of the plurality of frames that exhibit a maximal cumulative difference in at least one spatial feature of the two consecutive frames; detecting at least one 3D spatio-temporal feature within the at least one key frame; and encoding a spatio-temporal fingerprint based on mean luminance of the at least one 3D spatio-temporal feature. The at least one spatial feature can be intensity. The at least one 3D spatio-temporal feature can be at least one Maximally Stable Volume (MSV). Also disclosed is a method for matching video data to a database containing a plurality of video fingerprints of the type described above, comprising the steps of calculating at least one fingerprint representing at least one query frame from the video data; indexing into the database using the at least one calculated fingerprint to find a set of candidate fingerprints; applying a score to each of the candidate fingerprints; selecting a subset of candidate fingerprints as proposed frames by rank ordering the candidate fingerprints; and attempting to match at least one fingerprint of at least one proposed frame based on a comparison of gradient-based descriptors associated with the at least one query frame and the at least one proposed frame.

Owner:SRI INTERNATIONAL
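
The key frame selection step in the abstract above (two consecutive frames with maximal cumulative difference in a spatial feature such as intensity) can be sketched as follows. This is a hedged illustration: frames are modeled as nested lists of intensities, the cumulative difference is taken as a sum of absolute differences, and returning the later frame of the pair is an assumption. The names `cumulative_difference` and `select_key_frame` are hypothetical.

```python
def cumulative_difference(frame_a, frame_b):
    """Sum of absolute per-pixel intensity differences between two frames."""
    return sum(
        abs(a - b)
        for row_a, row_b in zip(frame_a, frame_b)
        for a, b in zip(row_a, row_b)
    )


def select_key_frame(frames):
    """Return (index, difference) for the frame following the largest change.

    Assumption: the later frame of the maximally different consecutive pair
    is used as the key frame; the abstract leaves this choice open.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    diffs = [cumulative_difference(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    best = max(range(len(diffs)), key=diffs.__getitem__)
    return best + 1, diffs[best]


# Four hypothetical 2x2 grayscale frames with a sharp change before frame 2.
frames = [
    [[10, 10], [10, 10]],
    [[11, 10], [10, 12]],
    [[90, 80], [10, 12]],
    [[91, 80], [11, 12]],
]
key_index, key_diff = select_key_frame(frames)
```

Detecting Maximally Stable Volumes and encoding the fingerprint from mean luminance are separate steps that this sketch does not attempt.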

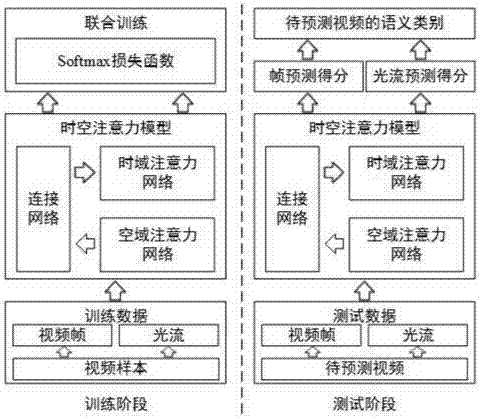

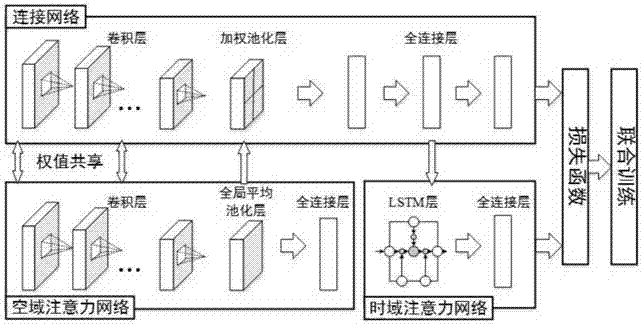

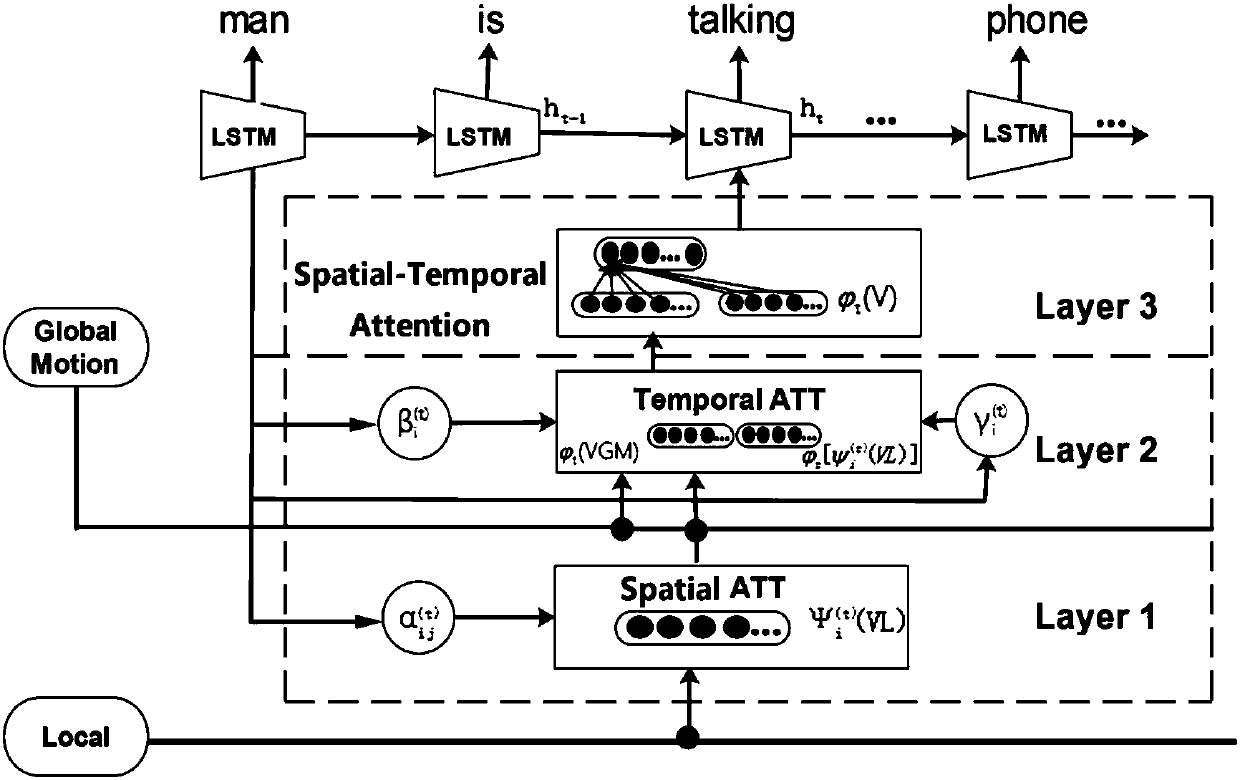

Space-time attention based video classification method

Active | CN107330362A | Improve classification performance | Time-domain saliency information is accurate | Character and pattern recognition | Attention model | Time domain

The invention relates to a space-time attention based video classification method, which comprises the steps of extracting frames and optical flows for training video and video to be predicted, and stacking a plurality of optical flows into a multi-channel image; building a space-time attention model, wherein the space-time attention model comprises a space-domain attention network, a time-domain attention network and a connection network; training the three components of the space-time attention model in a joint manner so as to enable the effects of the space-domain attention and the time-domain attention to be simultaneously improved and obtain a space-time attention model capable of accurately modeling the space-domain saliency and the time-domain saliency and being applicable to video classification; extracting the space-domain saliency and the time-domain saliency for the frames and optical flows of the video to be predicted by using the space-time attention model obtained by learning, performing prediction, and integrating prediction scores of the frames and the optical flows to obtain a final semantic category of the video to be predicted. According to the space-time attention based video classification method, modeling can be performed on the space-domain attention and the time-domain attention simultaneously, and the cooperative performance can be sufficiently utilized through joint training, thereby learning more accurate space-domain saliency and time-domain saliency, and thus improving the accuracy of video classification.

Owner:PEKING UNIV

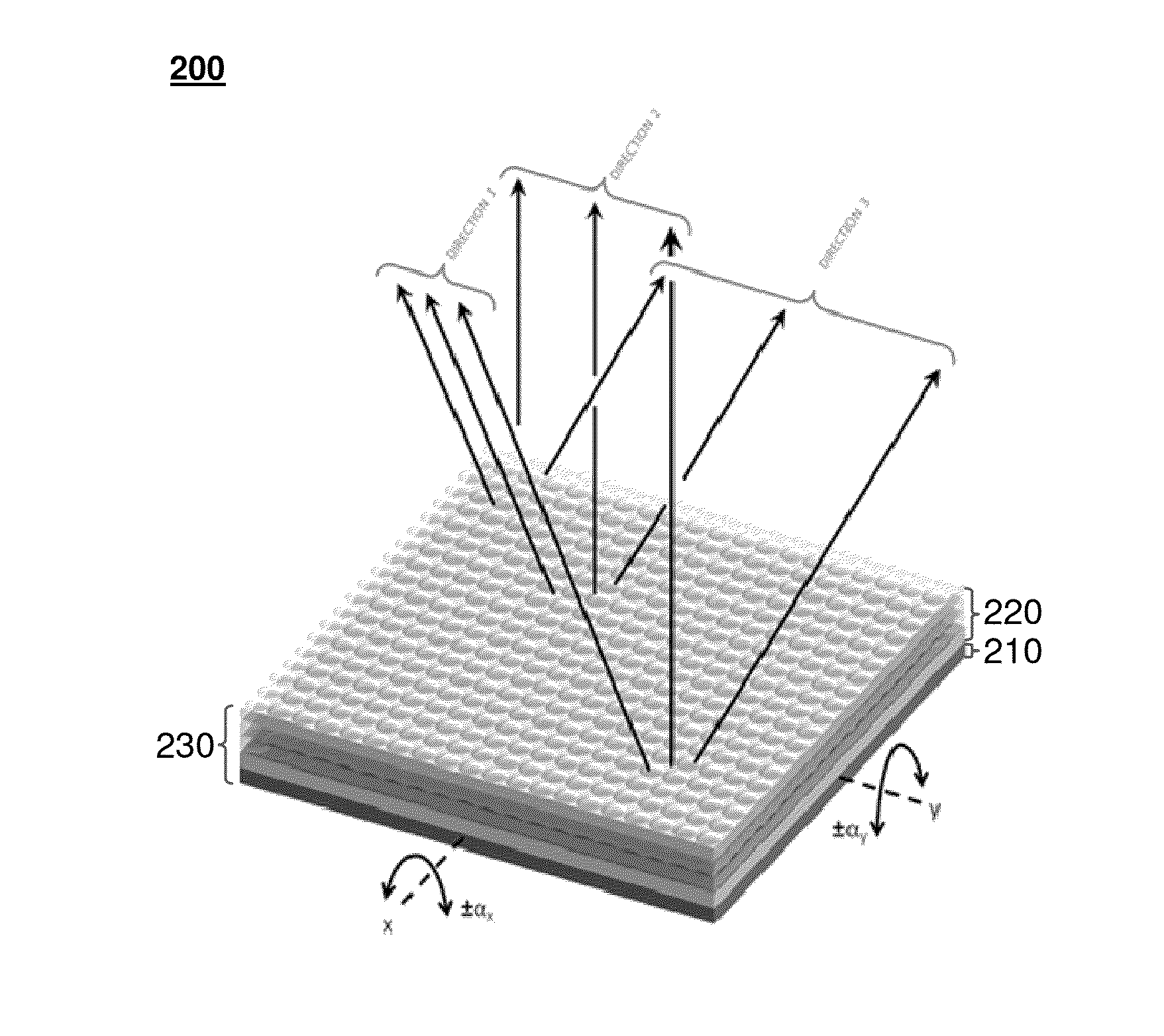

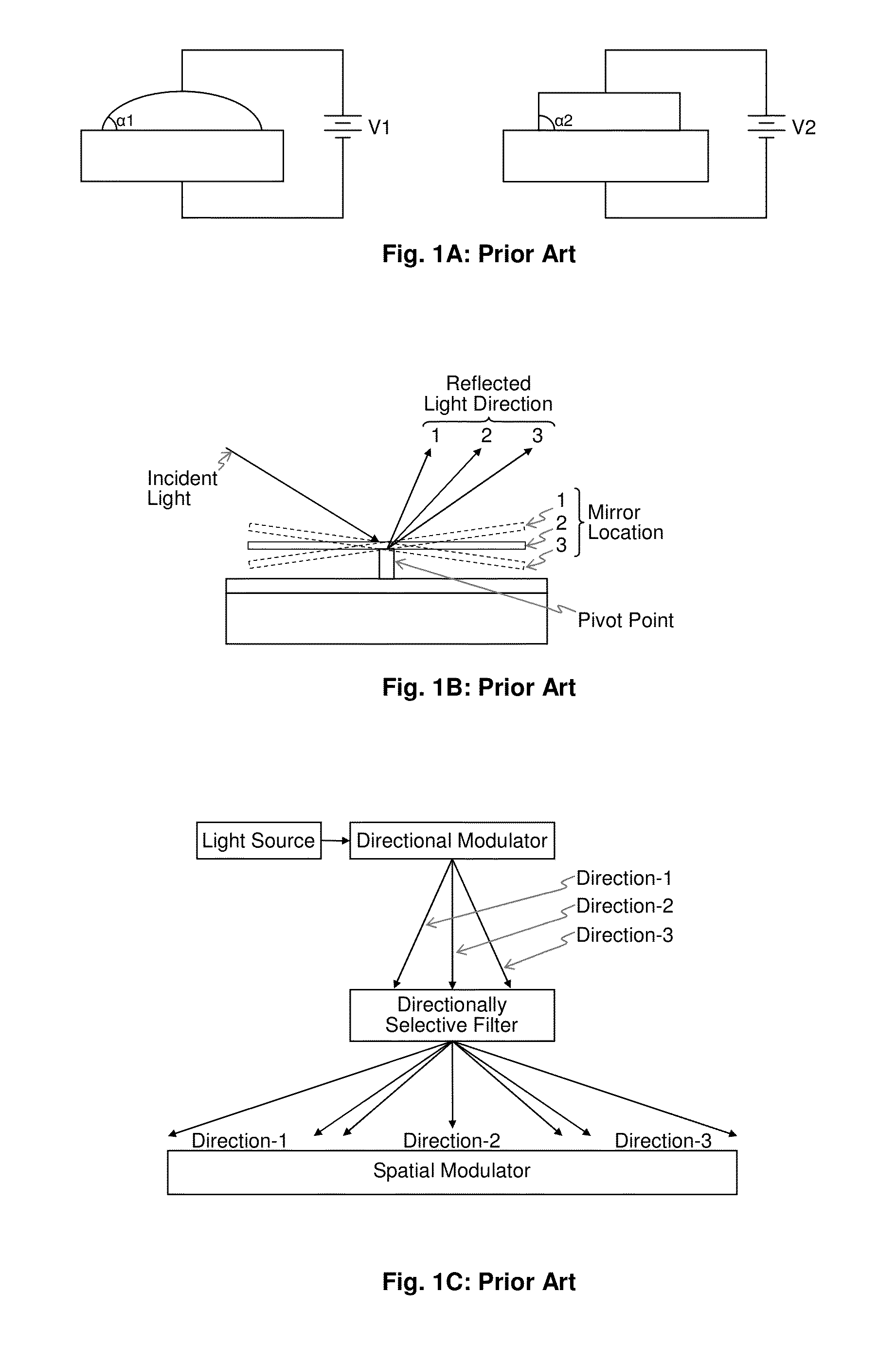

Spatio-Temporal Directional Light Modulator

A spatio-temporal directional light modulator is introduced. This directional light modulator can be used to create 3D displays, ultra-high resolution 2D displays or 2D / 3D switchable displays with extended viewing angle. The spatio-temporal aspects of this novel light modulator allow it to modulate the intensity, color and direction of the light it emits within a wide viewing angle. The inherently fast modulation and wide angular coverage capabilities of this directional light modulator increase the achievable viewing angle and directional resolution, making the 3D images created by the display more realistic or, alternatively, giving the 2D images created by the display ultra-high resolution.

Owner:OSTENDO TECH INC

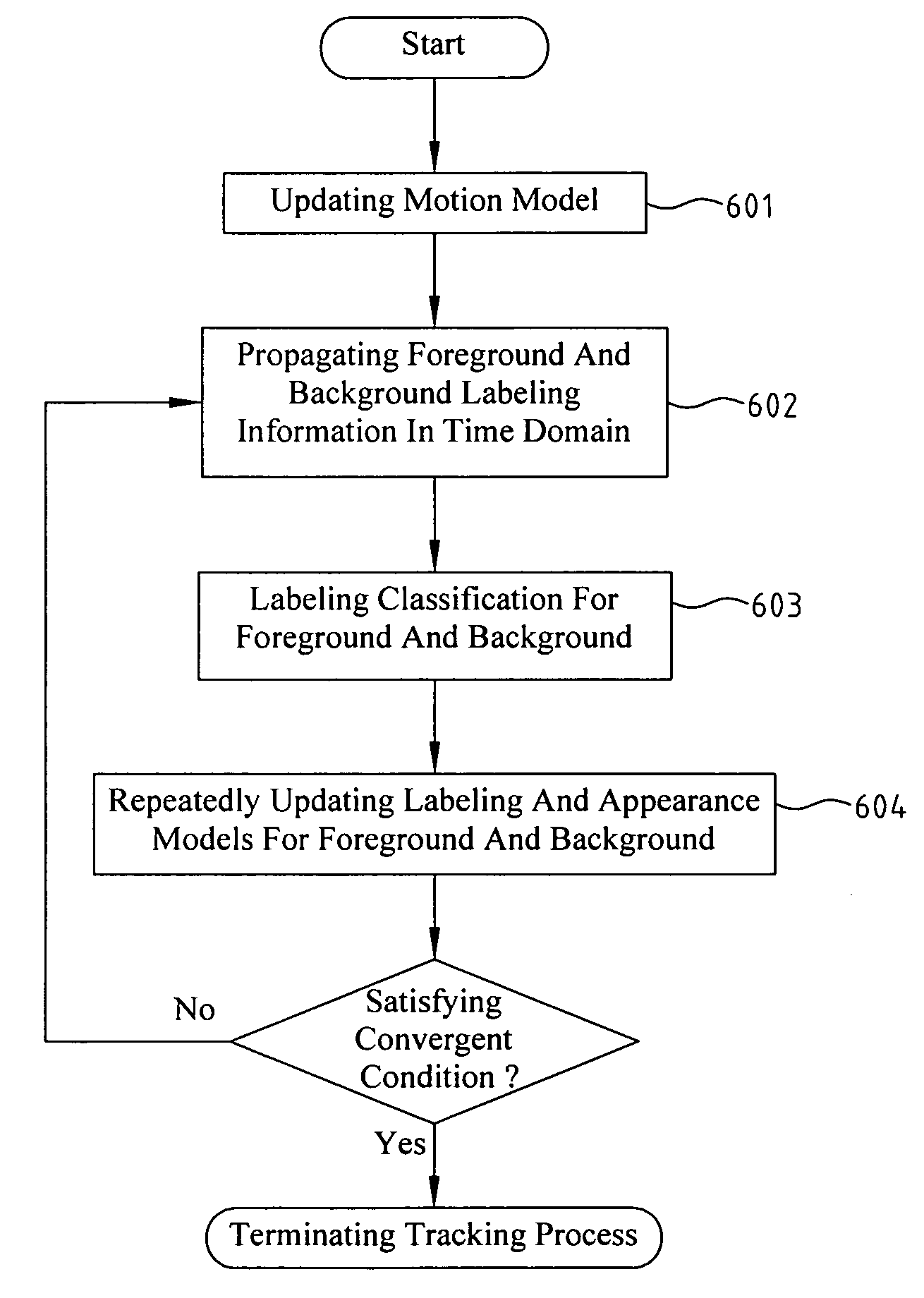

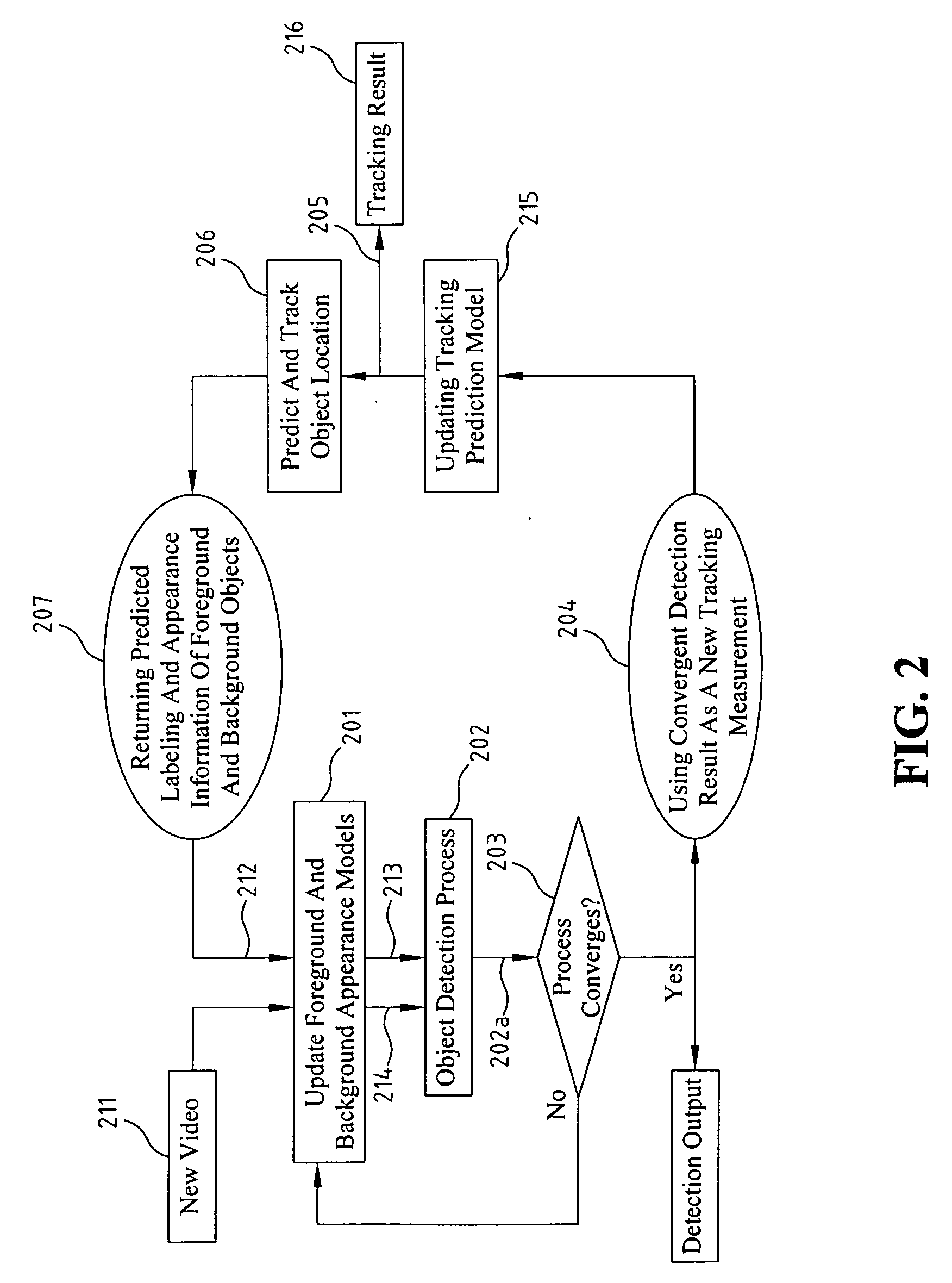

Method And System For Object Detection And Tracking

Disclosed is a method and system for object detection and tracking. Spatio-temporal information for a foreground / background appearance module is updated, based on a new input image and the accumulated previous appearance information and foreground / background information module labeling information over time. Object detection is performed according to the new input image and the updated spatio-temporal information and transmitted previous information over time, based on the labeling result generated by the object detection. The information for the foreground / background appearance module is repeatedly updated until a convergent condition is reached. The labeling result produced by object detection is considered as a new tracking measurement for further updating a tracking prediction module. A final tracking result may be obtained through the updated tracking prediction module, which is determined by the current tracking measurement and the previously observed tracking results. The tracking object location at the next time is predicted. The returned predicted appearance information for the foreground / background object is used as the input for updating the foreground and background appearance module. The returned labeling information is used as the information over time for the object detection.

Owner:IND TECH RES INST

Method and apparatus for improved video surveillance through classification of detected objects

A method and apparatus for video surveillance is disclosed. In one embodiment, a sequence of scene imagery representing a field of view is received. One or more moving objects are identified within the sequence of scene imagery and then classified in accordance with one or more extracted spatio-temporal features. This classification may then be applied to determine whether the moving object and / or its behavior fits one or more known events or behaviors that are causes for alarm.

Owner:SRI INTERNATIONAL

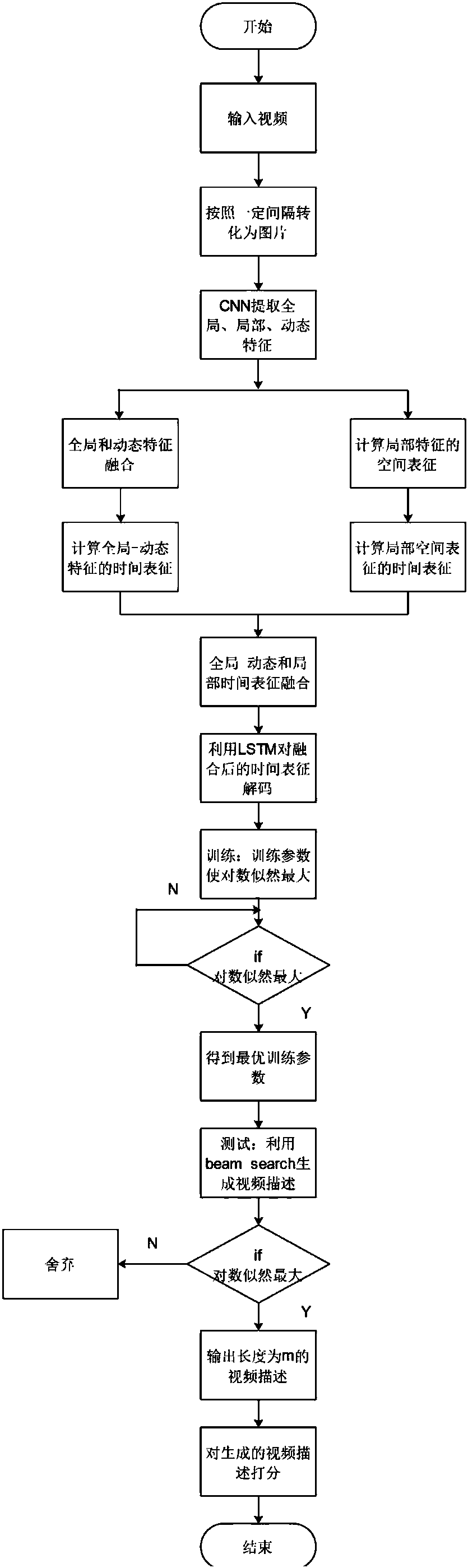

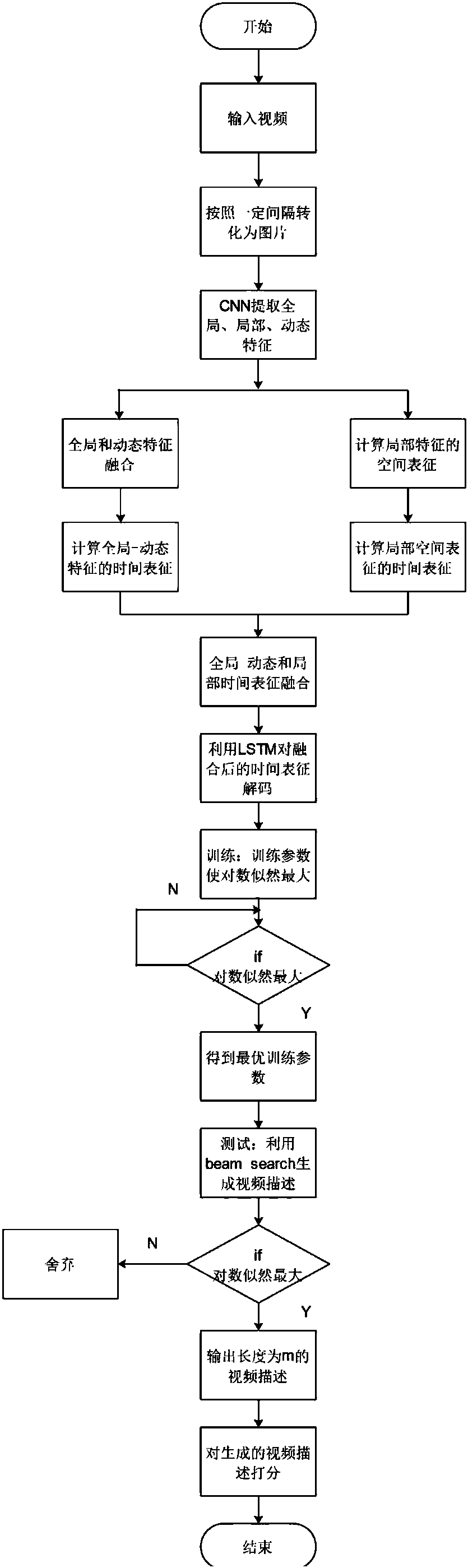

Video content description method by means of space and time attention models

Active | CN107066973A | Character and pattern recognition | Neural architectures | Attention model | Time structure

The invention discloses a video content description method by means of space and time attention models. The global time structure in the video is captured by means of the time attention model, the space structure on each frame of picture is captured by the space attention model, and the method aims to realize that the video description model masters the main event in the video and enhances the identification capability of the local information. The method includes preprocessing the video format; establishing the time and space attention models; and training and testing the video description model. By means of the time attention model, the main time structure in the video can be maintained, and by means of the space attention model, some key areas in each frame of picture are focused on, so that the generated video description can capture some key but easily neglected detailed information while mastering the main event in the video content.

Owner:HANGZHOU DIANZI UNIV

Spatio-temporal processing for communication

Inactive | US20050157810A1 | Reduce complexity | Efficiency benefit | Error prevention/detection by using return channel | Spatial transmit diversity | Engineering | Handling system

Owner:CISCO TECH INC

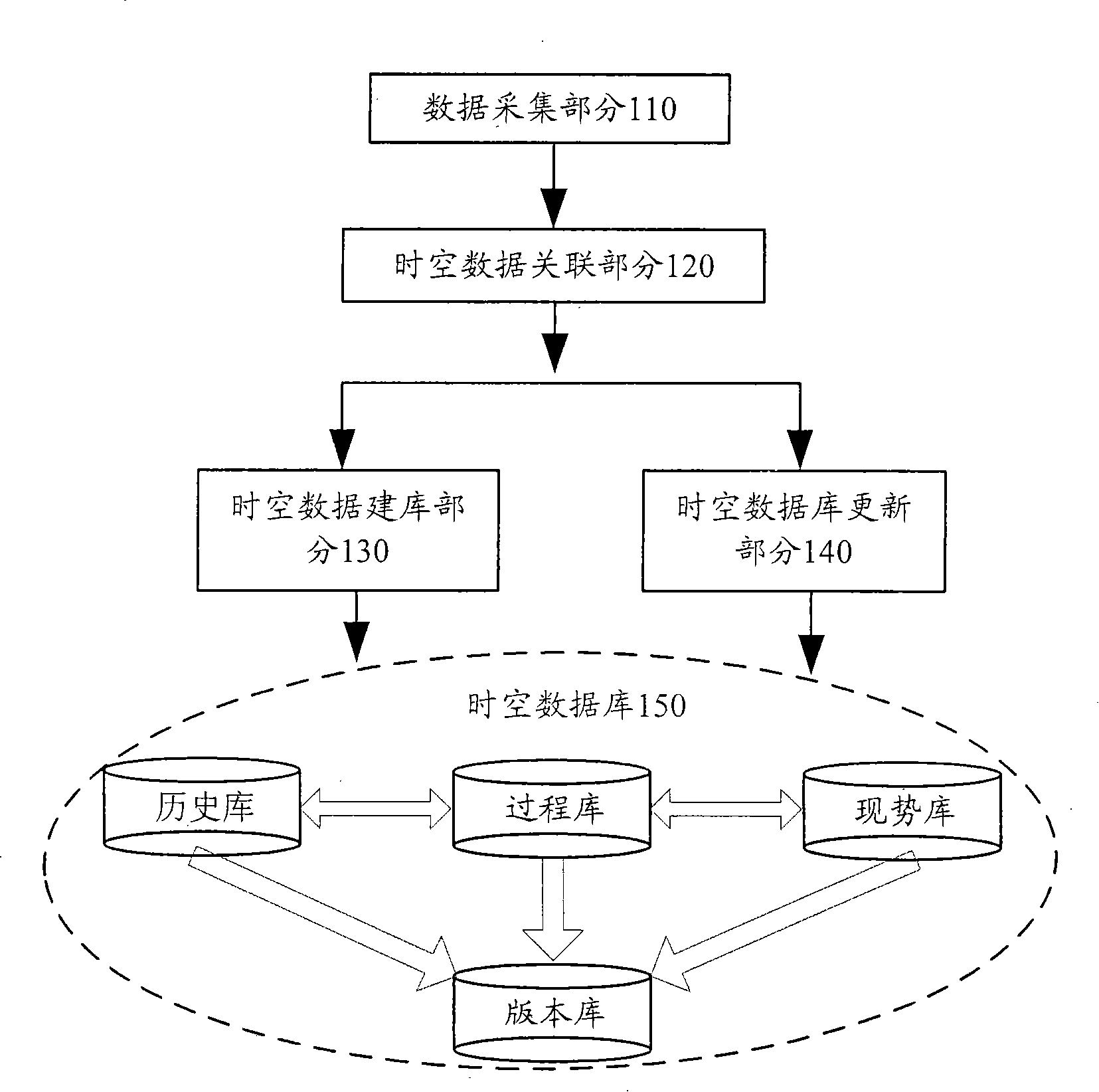

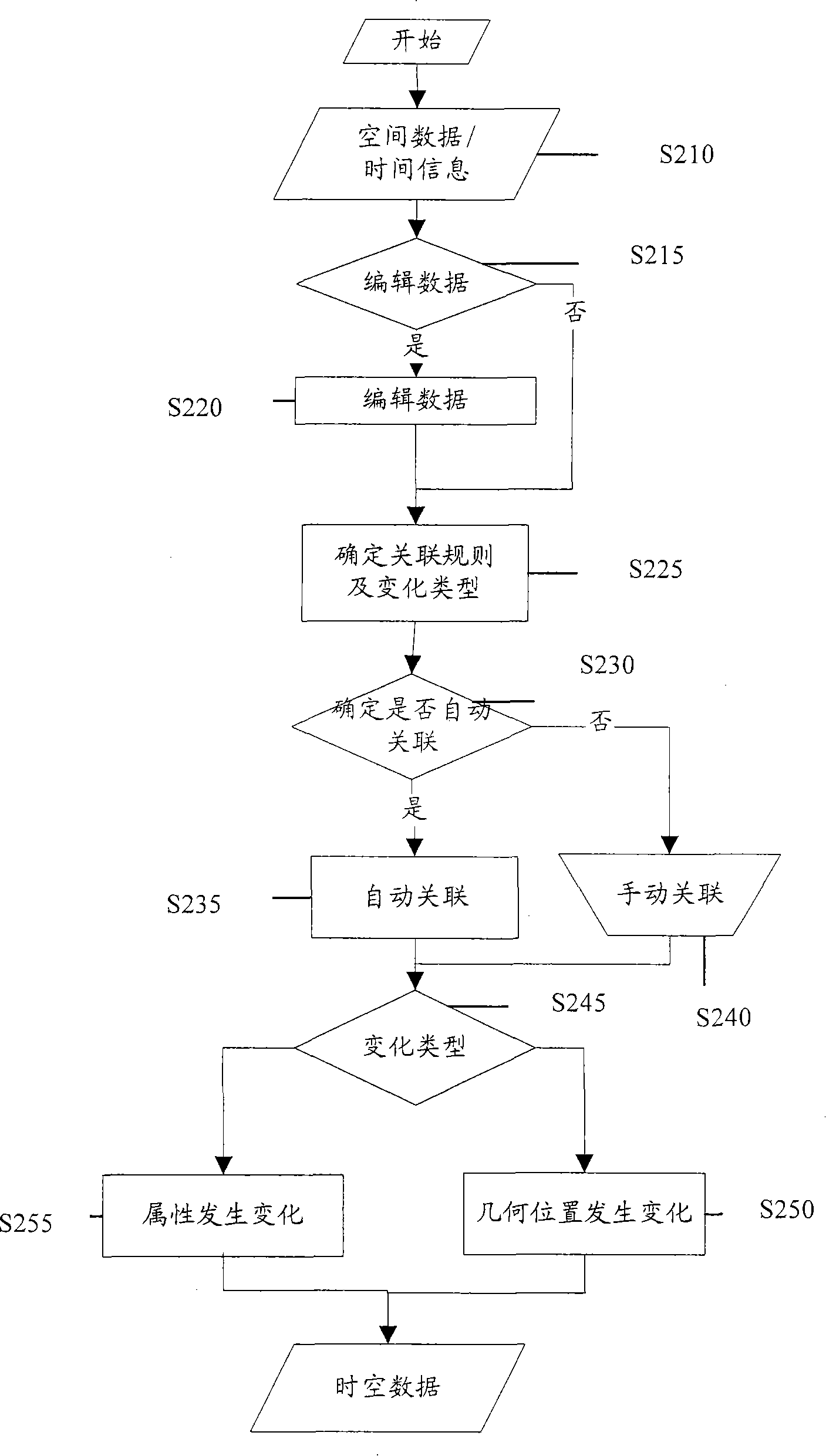

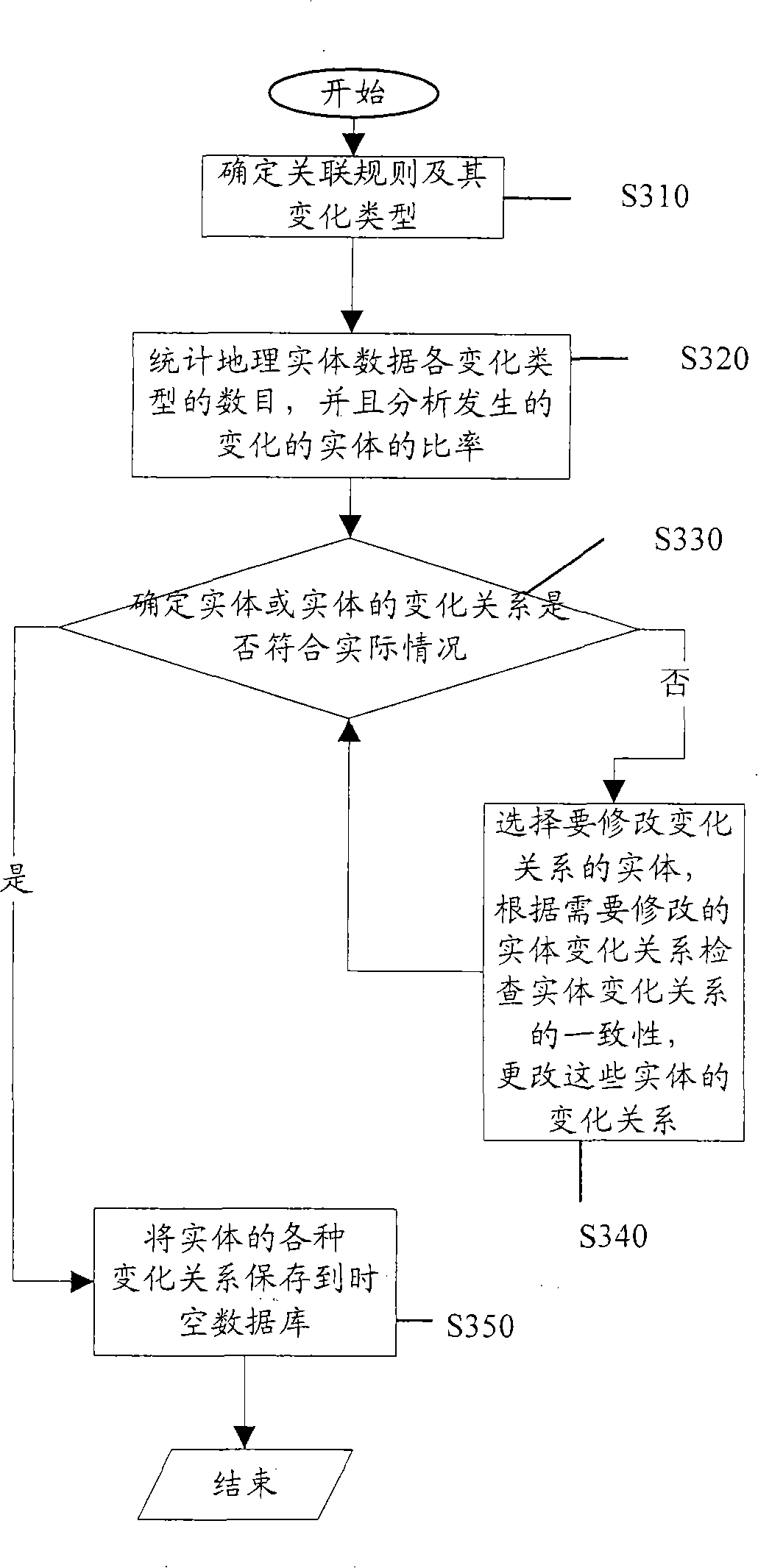

Space-time database administration method and system

Inactive | CN101231642A | Reduce storage | Efficient integration | Special data processing applications | Temporal information | Data acquisition

The invention discloses a spatiotemporal database management method, as well as a management system and a program product thereof. The spatiotemporal database management system comprises a data collection part, a spatiotemporal data association part and a spatiotemporal data management part, wherein, the data collection part is used to collect or receive the spatial information and the timing information about a geographical entity from external systems, and the produced and existed object timing information of the data are arranged; the spatiotemporal data association part is used to associate various data obtained from the data collection part with the object timing information, and store the association into a corresponding spatiotemporal data file, so as to express the evolution history of the geographical entity and predict the change of the geographical entity; the spatiotemporal data management part is used to respectively store the spatiotemporal data file produced by the spatiotemporal data association part according to the temporal information recorded by the timing information of the geographical entity and the evolution trend recorded by the spatial information of the geographical entity, so as to compose a spatiotemporal database.

Owner:CHINESE ACAD OF SURVEYING & MAPPING

Predicate Logic based Image Grammars for Complex Visual Pattern Recognition

InactiveUS20100278420A1Quick and efficient system setupImprove performanceCharacter and pattern recognitionChaos modelsNerve networkAlgorithm

First-order predicate logics, extended with a bilattice-based uncertainty handling formalism, are provided as a means of formally encoding pattern grammars to parse a set of image features and detect the presence of different patterns of interest, implemented on a processor. Information from different sources and uncertainties from detections are integrated within the bilattice framework. Automated logical rule weight learning in the computer vision domain applies a rule weight optimization method which casts the instantiated inference tree as a knowledge-based neural network, to converge upon a set of rule weights that give optimal performance within the bilattice framework. Applications are in (a) detecting the presence of humans under partial occlusions, (b) detecting large complex man-made structures in satellite imagery, (c) detecting spatio-temporal human and vehicular activities in video, and (d) parsing Graphical User Interfaces.

Owner:SIEMENS CORP

Apparatus and method for capturing a scene using staggered triggering of dense camera arrays

ActiveUS20070030342A1Minimal computational loadEasy to sampleTelevision system detailsCharacter and pattern recognitionViewpointsOptical flow

This invention relates to an apparatus and a method for video capture of a three-dimensional region of interest in a scene using an array of video cameras. The video cameras of the array are positioned for viewing the three-dimensional region of interest in the scene from their respective viewpoints. A triggering mechanism is provided for staggering the capture of a set of frames by the video cameras of the array. The apparatus has a processing unit for combining and operating on the set of frames captured by the array of cameras to generate a new visual output, such as high-speed video or spatio-temporal structure and motion models, that has a synthetic viewpoint of the three-dimensional region of interest. The processing involves spatio-temporal interpolation for determining the synthetic viewpoint space-time trajectory. In some embodiments, the apparatus computes a multibaseline spatio-temporal optical flow.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

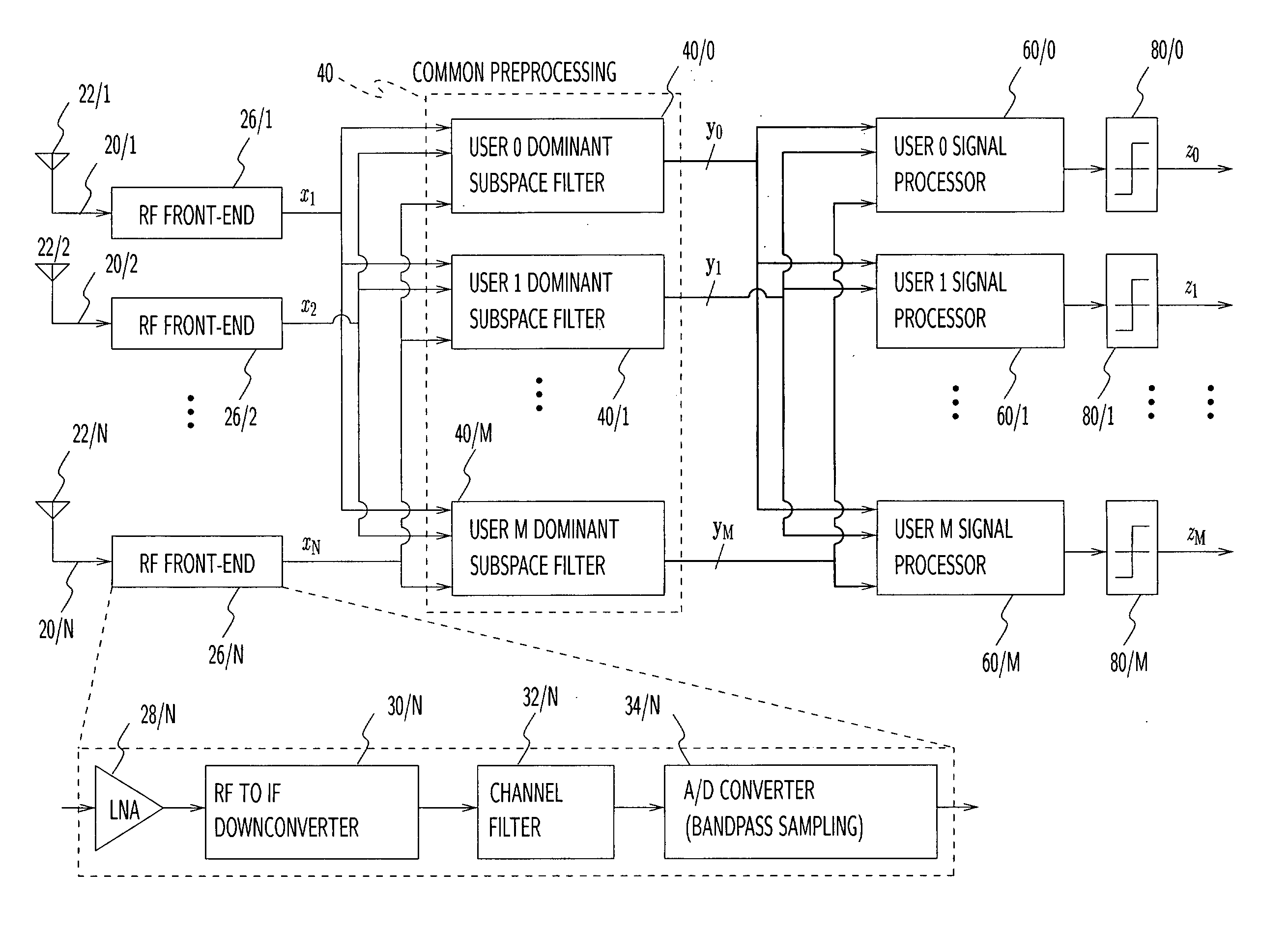

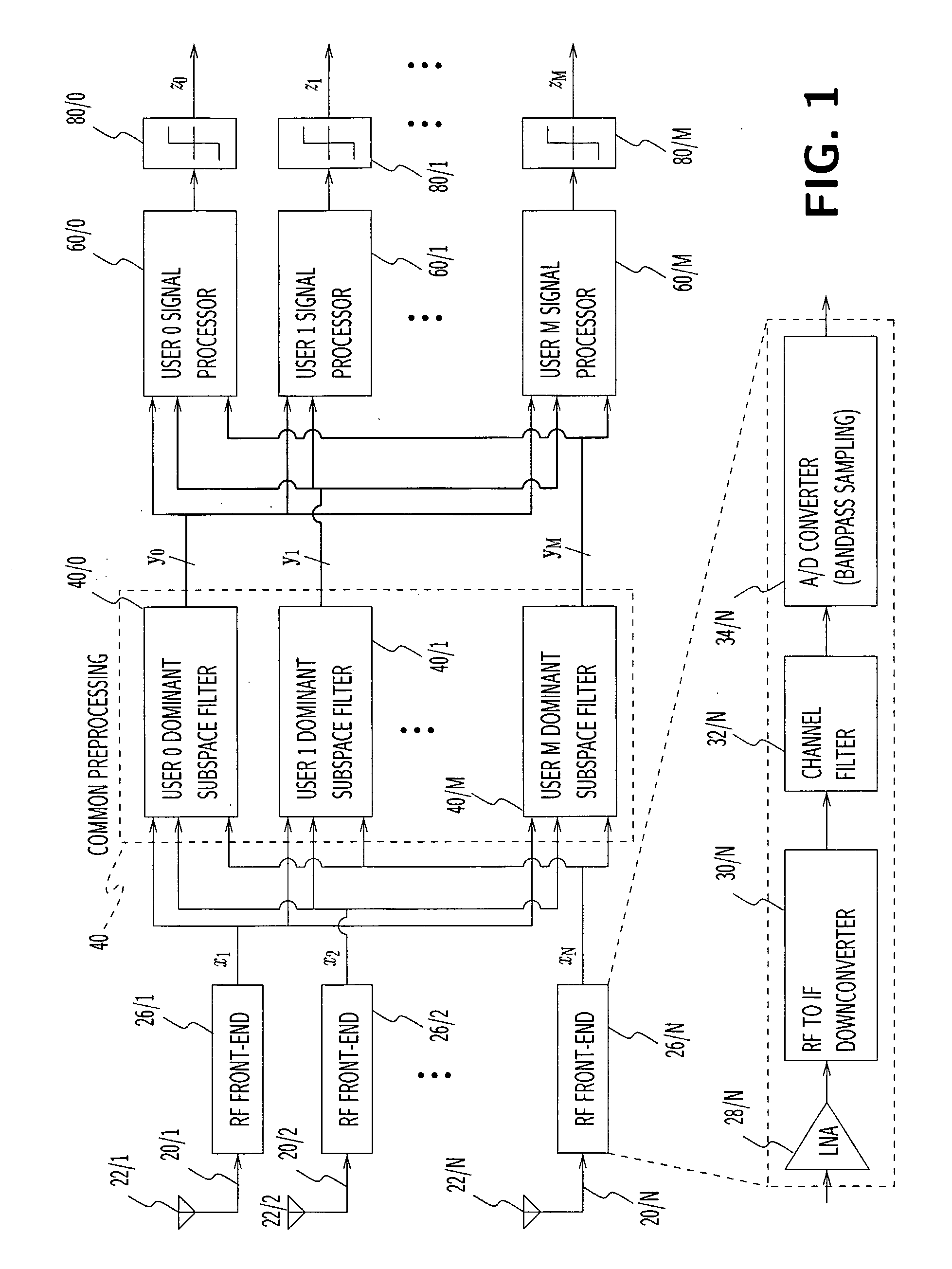

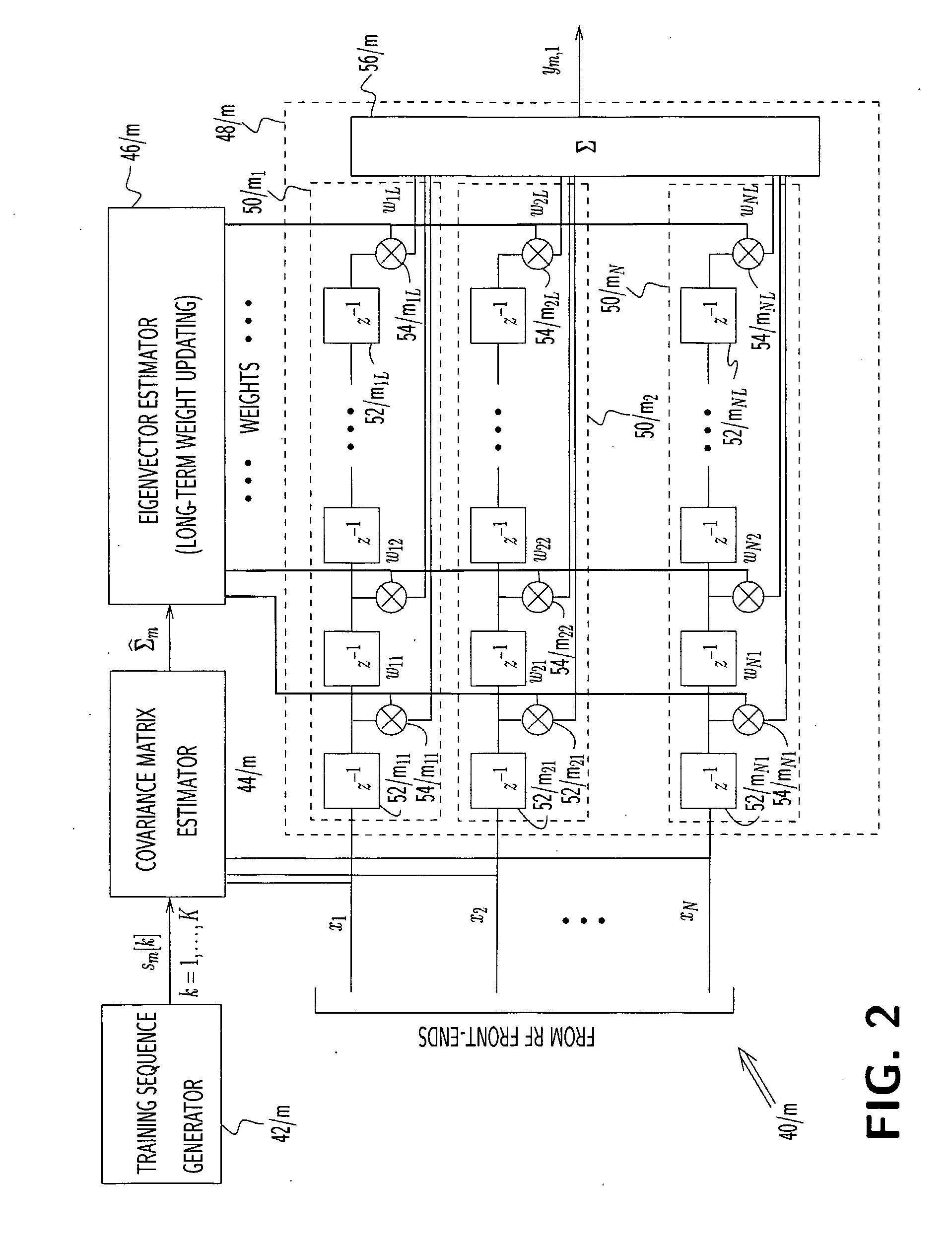

Multi-user adaptive array receiver and method

InactiveUS20070142089A1Reduce disadvantagesReduce complexitySpatial transmit diversityMultiplex communicationEngineeringSelf adaptive

An array receiver reduces complexity and cost by exploiting multiuser information in signals received from a plurality of transmitting users. It preprocesses (40) samples of antenna signals (x1, x2, ..., xN) from the antenna elements (22/1, ..., 22/N) to form basis signals (y0, ..., yM) that together have fewer space-time dimensions than the combined antenna signals. The receiver processes and combines the basis signals to produce sets of estimated received signals (z0, ..., zM), each for a corresponding one of the users. Each of the basis signals comprises a different combination of the antenna signals. The receiver combines the basis signals to provide a user-specific output signal, and periodically updates parameters of the filters (40/0, ..., 40/M) used for deriving each particular basis signal such that each user-specific output signal will exhibit a desired optimized concentration of energy of that user's received signal as received by the array antenna.

Owner:UNIV LAVAL

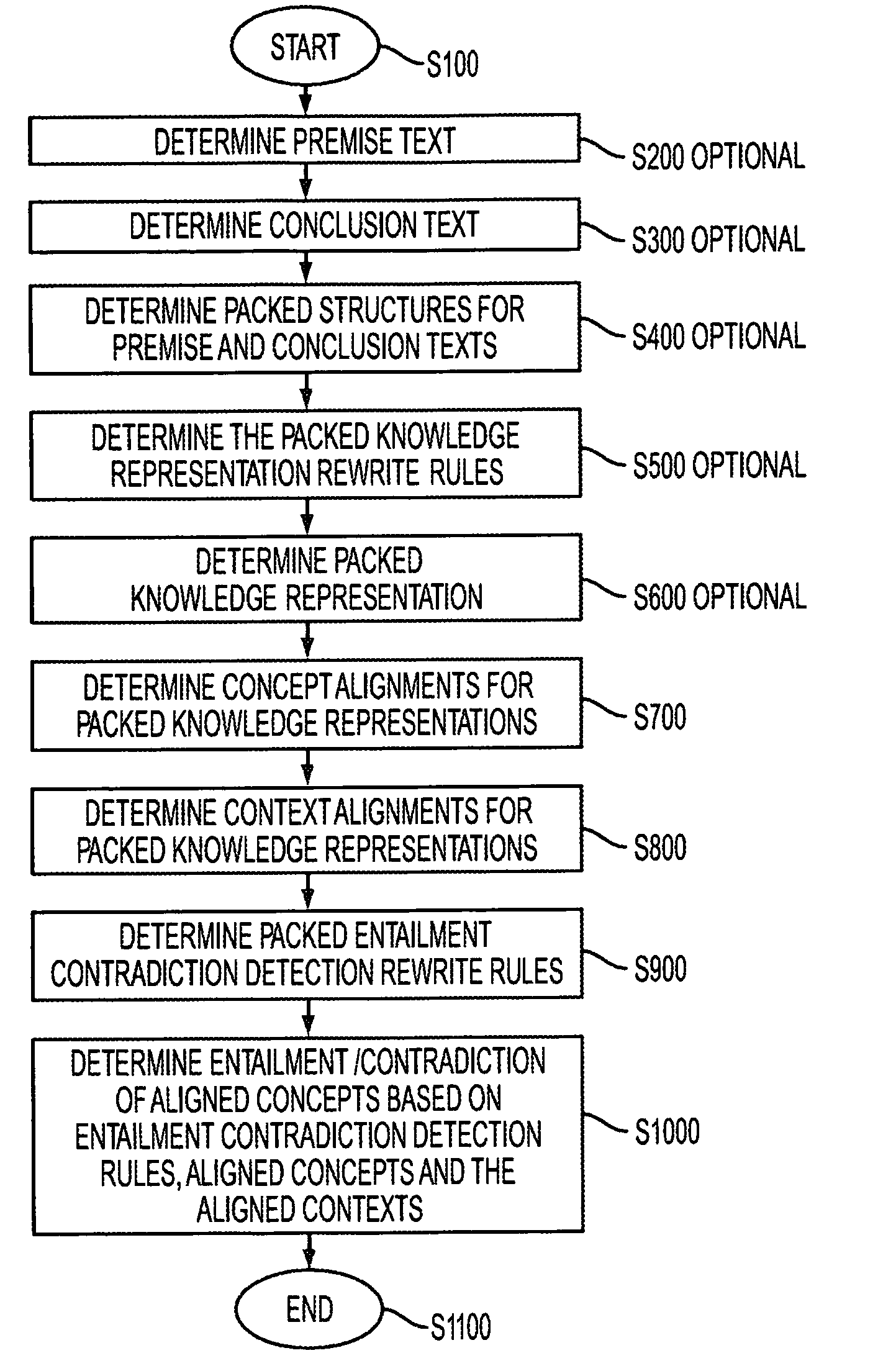

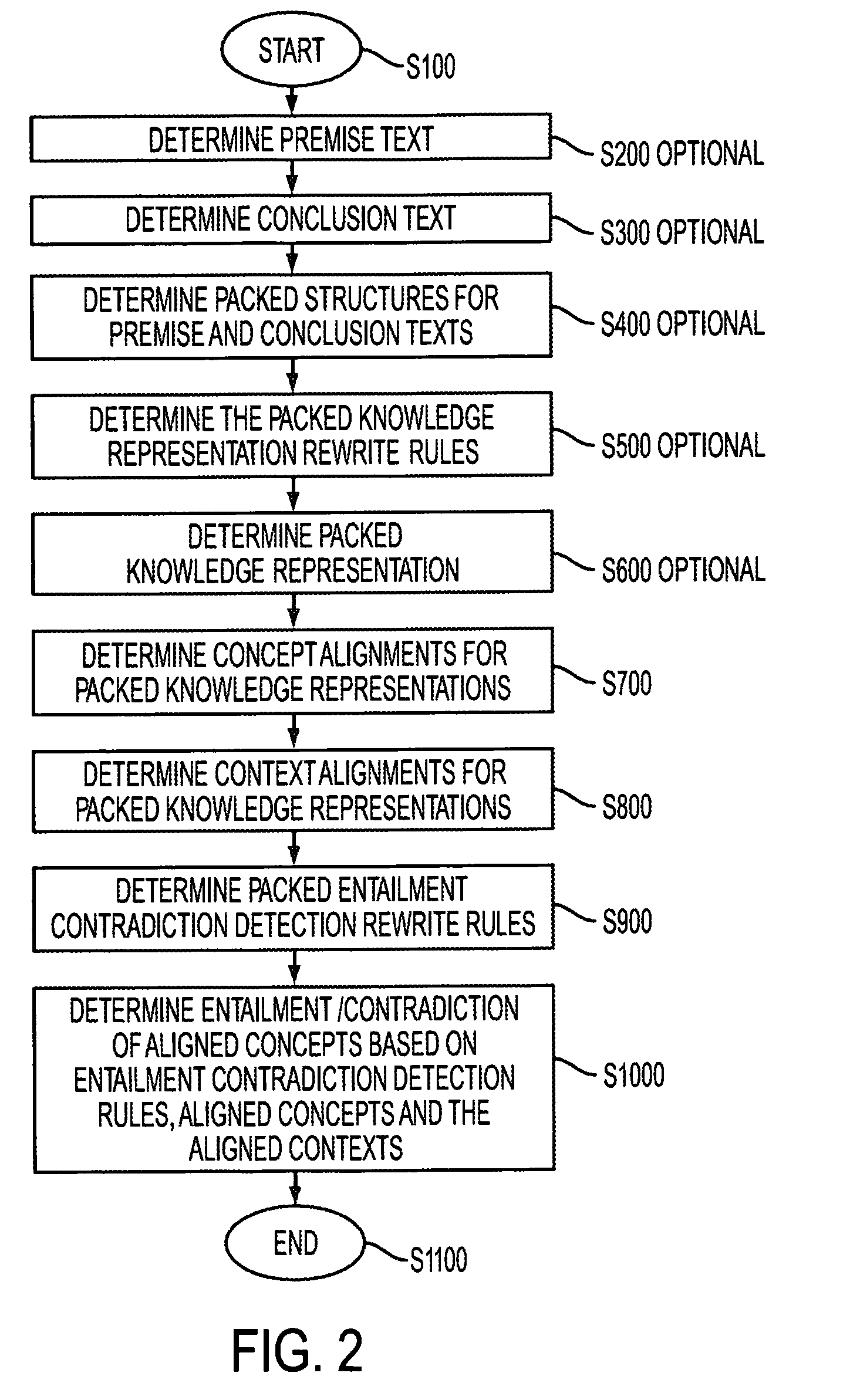

Systems and methods for detecting entailment and contradiction

InactiveUS7313515B2Knowledge representationSpecial data processing applicationsAlgorithmRewrite rule

Owner:PALO ALTO RES CENT INC


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com