Training method and recognition method for human action recognition

A human action recognition technique and training method, applied in the field of video analysis, that addresses problems such as methods no longer being applicable, degraded performance, and features that are insufficient to capture all the characteristics of human action, and that achieves good recognition results.

AI Technical Summary

Problems solved by technology

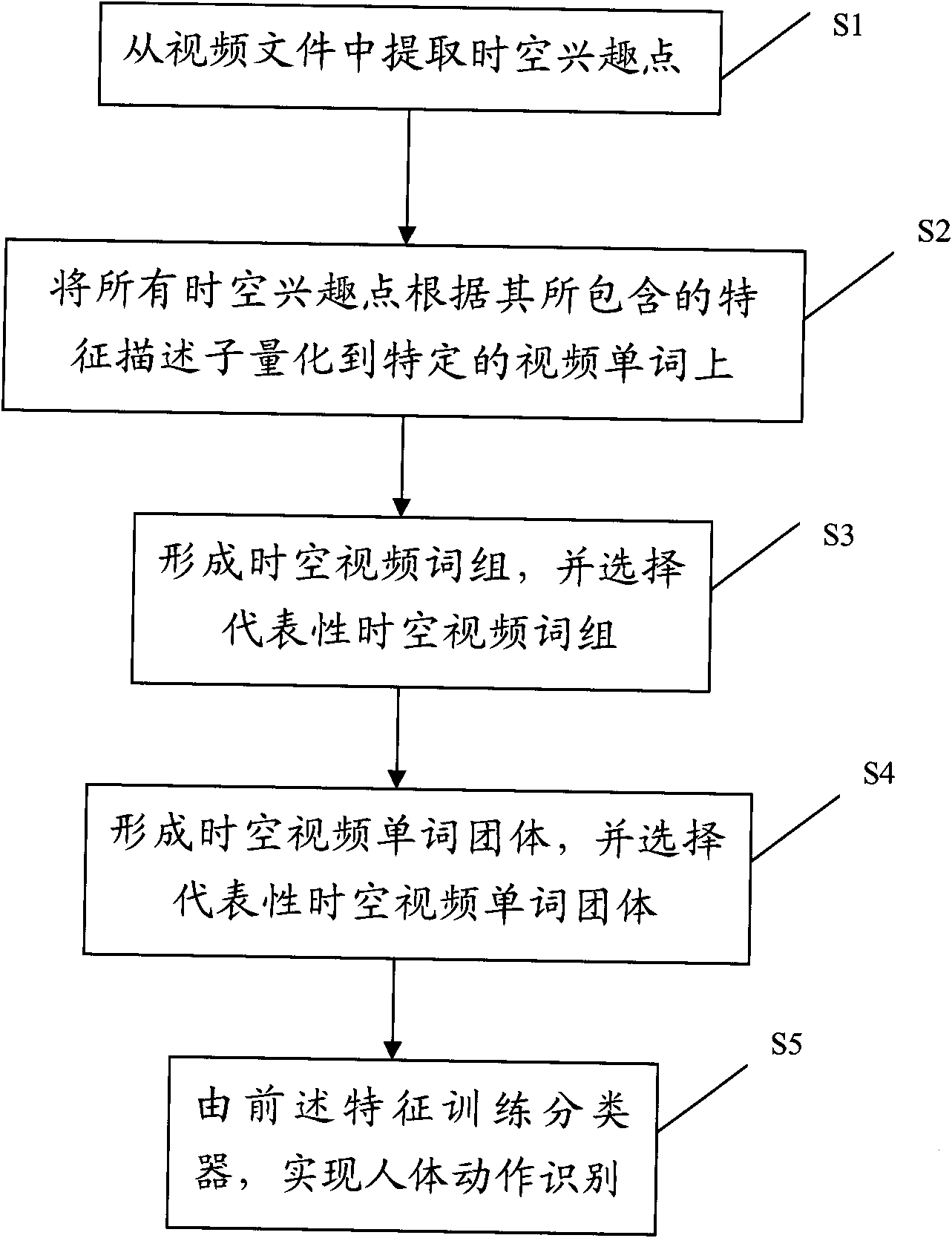

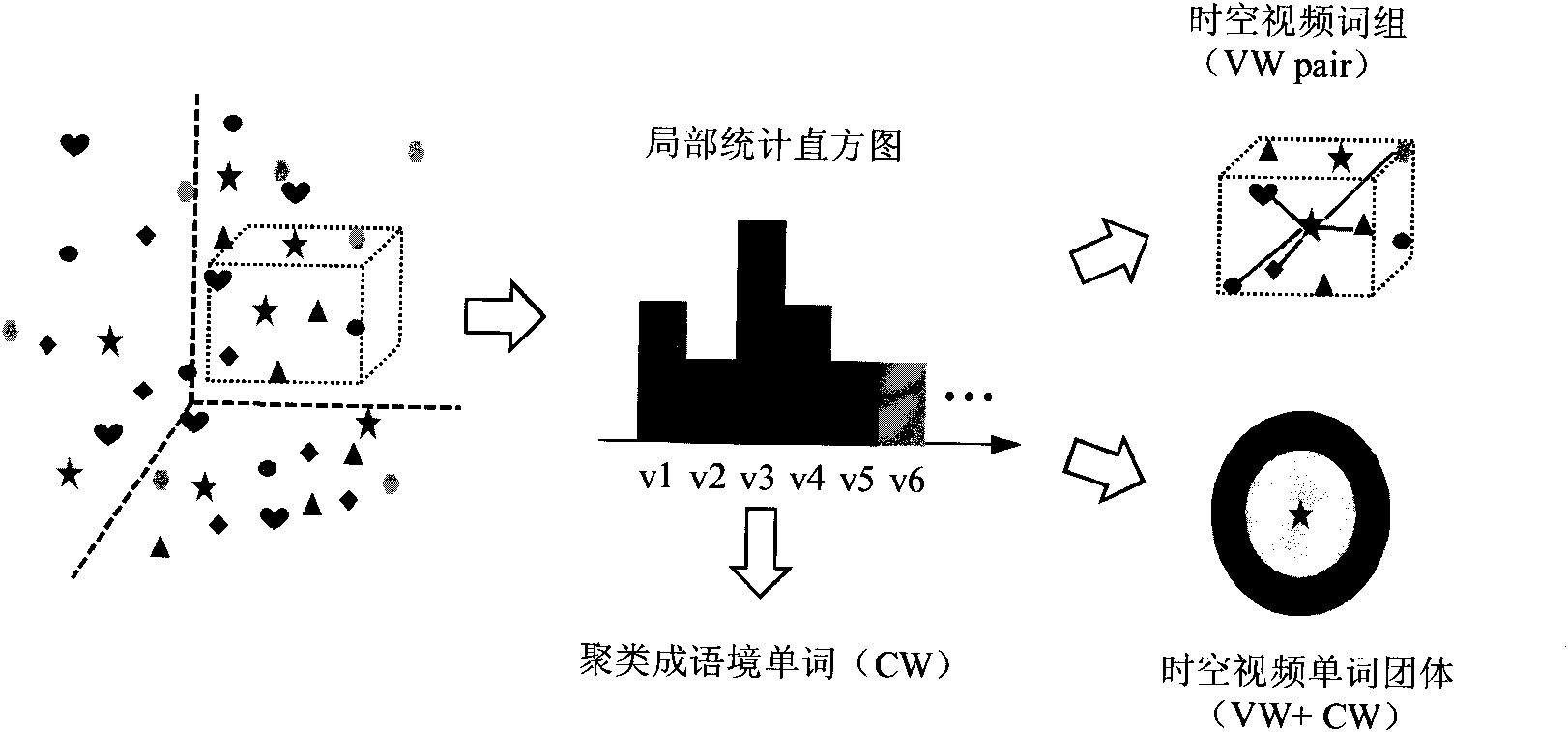

Embodiment Construction

[0052] Before describing the present invention in detail, some related concepts in the present invention will be described in a unified manner.

[0053] Spatiotemporal interest points (STIPs): A given video sequence is processed by a spatiotemporal interest point detector (such as those proposed in the aforementioned references 2 and 4). The local maxima of the detector's response function that fall within a certain threshold range and survive non-maximal suppression are defined as spatiotemporal interest points. Spatiotemporal interest points exhibit large changes in both the temporal and spatial dimensions, and are generally described by optical flow histograms or gradient histograms. Because they are local, they have good invariance to rotation, translation and scaling, but they provide no description of the global motion.
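The selection step described in [0053] can be illustrated with a minimal sketch. It assumes the detector's response has already been computed and stored as a nested list indexed `response[t][y][x]`. The function name `detect_interest_points`, the single lower `threshold`, and the cubic neighbourhood of size `radius` are illustrative assumptions, not details taken from the patent or from references 2 and 4.

```python
from itertools import product


def detect_interest_points(response, threshold, radius=1):
    """Return (t, y, x) positions that are thresholded local maxima.

    Assumptions (not from the source text): `response[t][y][x]` holds a
    precomputed detector response, a single lower `threshold` stands in
    for the "threshold range", and suppression uses a cubic neighbourhood
    of half-width `radius`. Tied neighbours are both kept.
    """
    T, H, W = len(response), len(response[0]), len(response[0][0])
    # All neighbour offsets in the (2*radius+1)^3 cube, excluding the centre.
    offsets = [o for o in product(range(-radius, radius + 1), repeat=3)
               if o != (0, 0, 0)]
    points = []
    for t, y, x in product(range(T), range(H), range(W)):
        value = response[t][y][x]
        if value <= threshold:
            continue  # too weak to be an interest point
        is_peak = True
        for dt, dy, dx in offsets:
            nt, ny, nx = t + dt, y + dy, x + dx
            inside = 0 <= nt < T and 0 <= ny < H and 0 <= nx < W
            if inside and response[nt][ny][nx] > value:
                is_peak = False  # suppressed by a stronger neighbour
                break
        if is_peak:
            points.append((t, y, x))
    return points


# Toy 3x3x3 response volume with one strong peak in the centre.
volume = [[[0.0] * 3 for _ in range(3)] for _ in range(3)]
volume[1][1][1] = 0.9
volume[0][0][0] = 0.2  # below the threshold, so it is discarded
print(detect_interest_points(volume, threshold=0.5))  # → [(1, 1, 1)]
```

Each returned position would then be described by a local descriptor such as an optical flow or gradient histogram. That step is omitted here.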

[0054] Video words: In the set of spatio-temporal interest point descriptors extracted from all training videos, a subset is randomly selected and clustered us...
