13,986 results for "Training methods" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

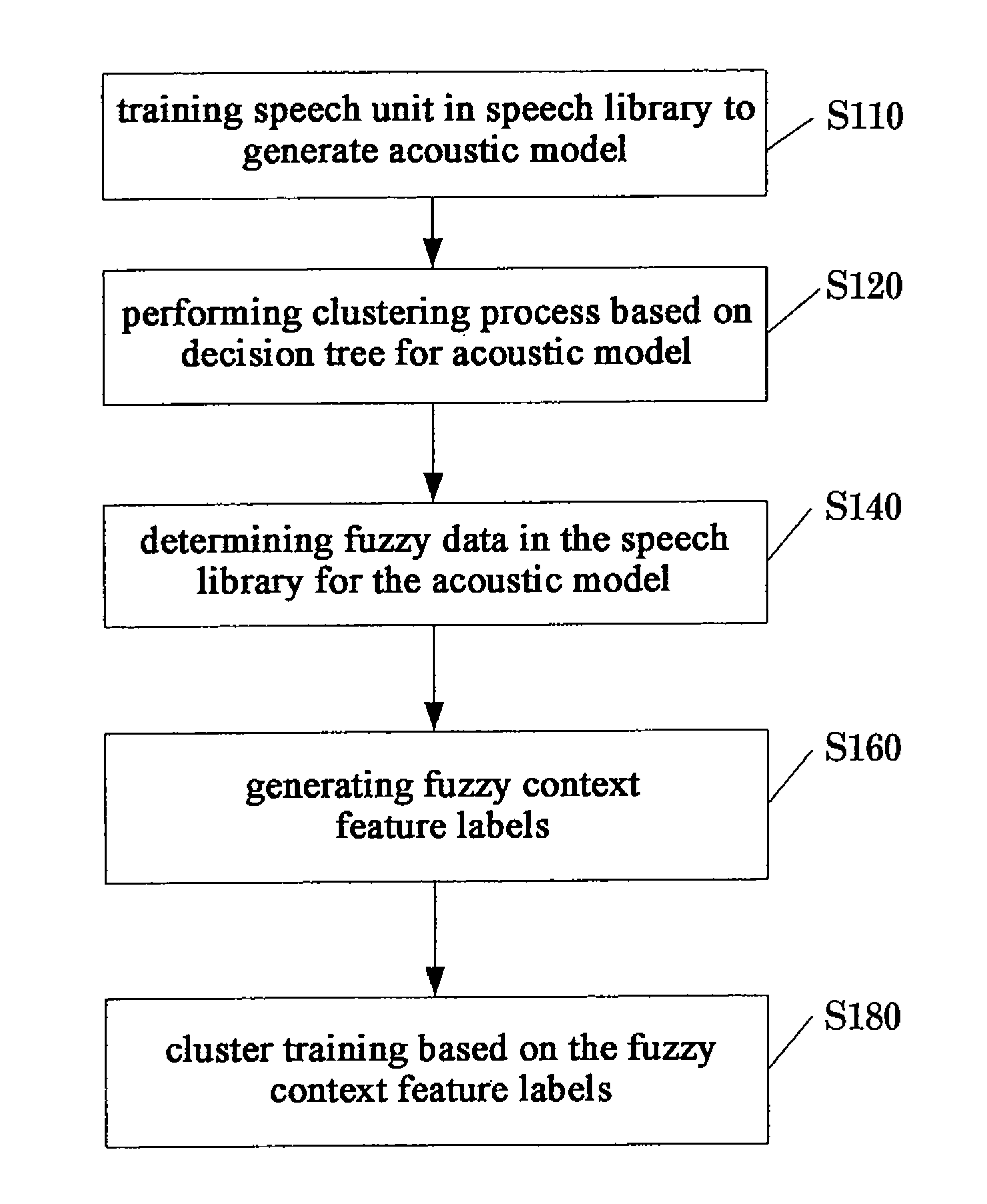

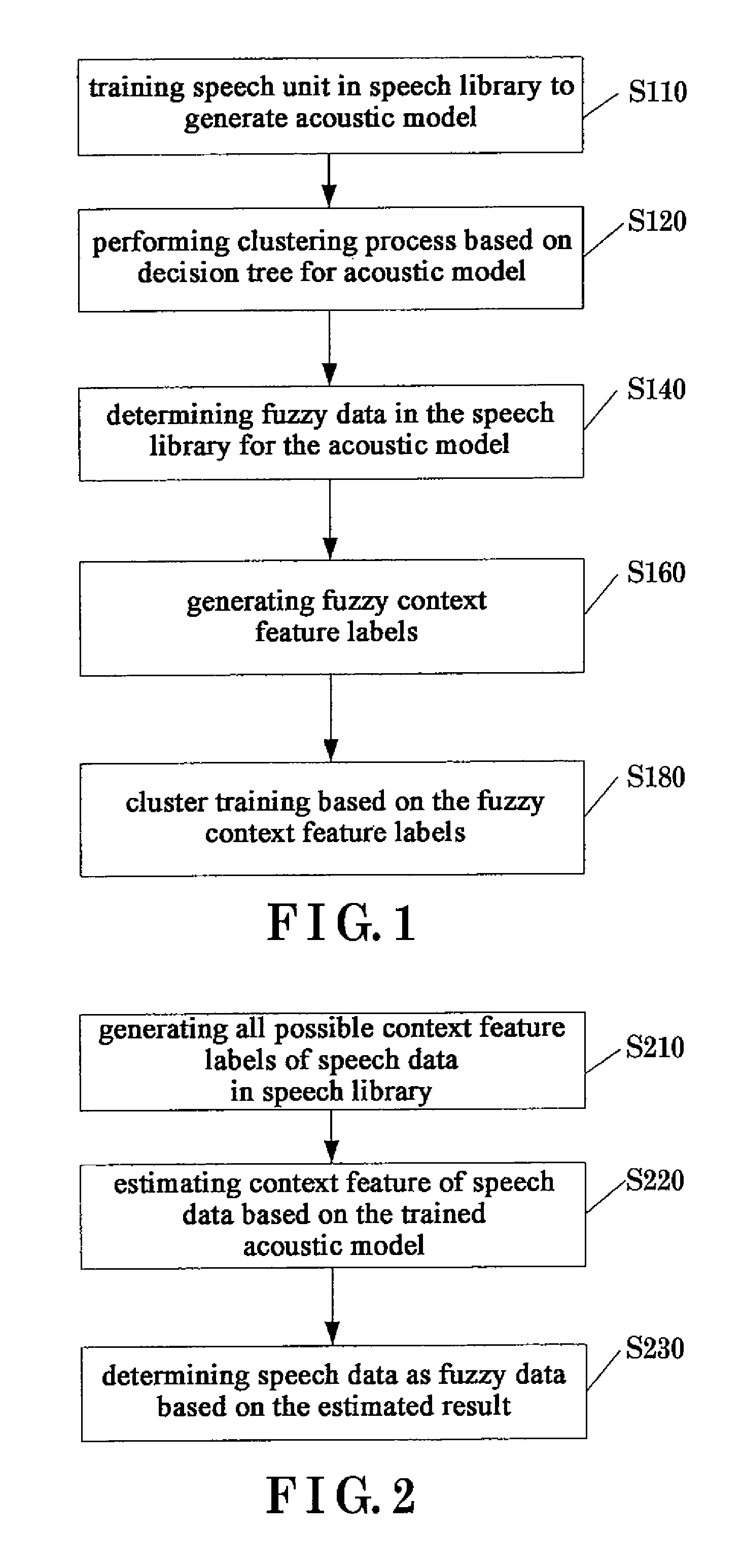

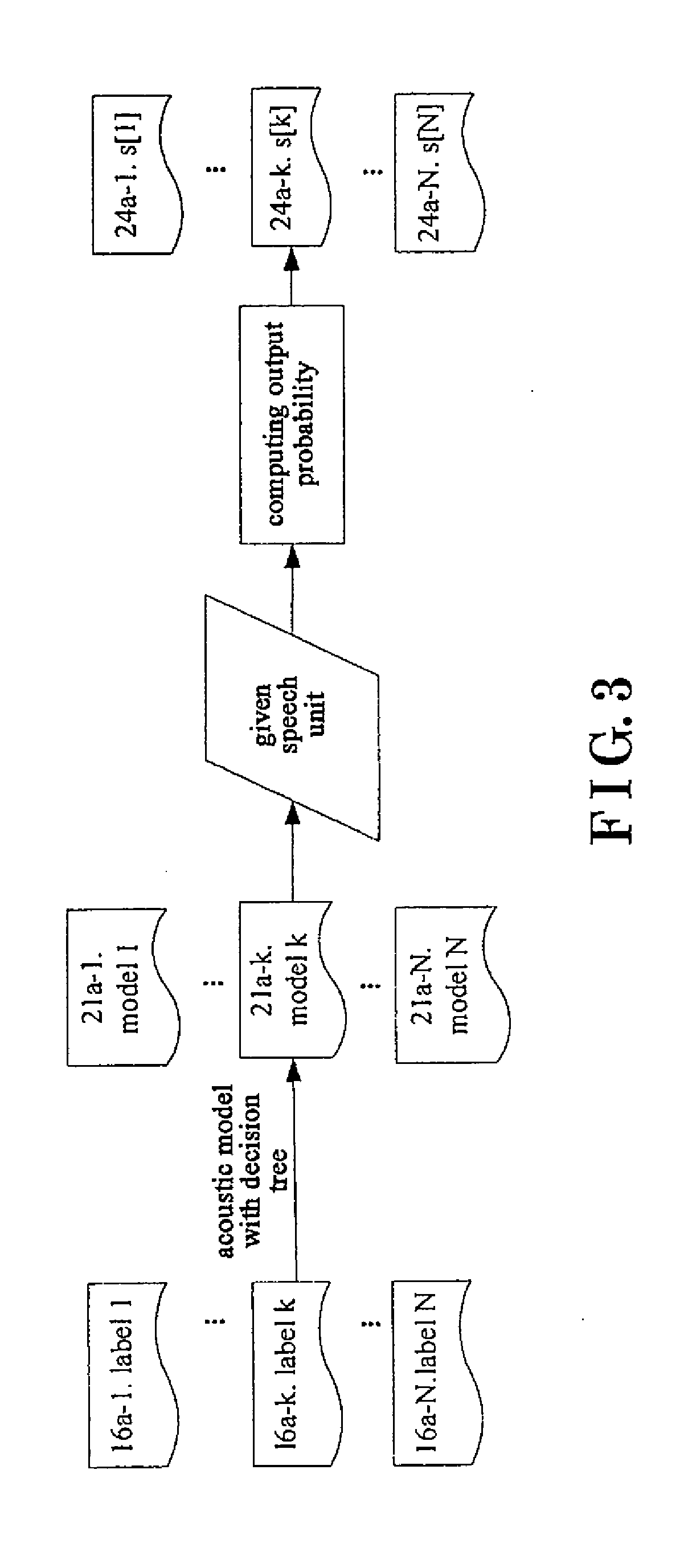

Method, apparatus for synthesizing speech and acoustic model training method for speech synthesis

According to one embodiment, a method and an apparatus for synthesizing speech, and a method for training an acoustic model used in speech synthesis, are provided. The method for synthesizing speech may include: determining data generated by text analysis to be fuzzy heteronym data; performing fuzzy heteronym prediction on the fuzzy heteronym data to output a plurality of candidate pronunciations and their probabilities; generating fuzzy context feature labels based on the candidate pronunciations and their probabilities; determining model parameters for the fuzzy context feature labels using an acoustic model with a fuzzy decision tree; generating speech parameters from the model parameters; and synthesizing speech from the speech parameters with a synthesizer.

Owner:KK TOSHIBA

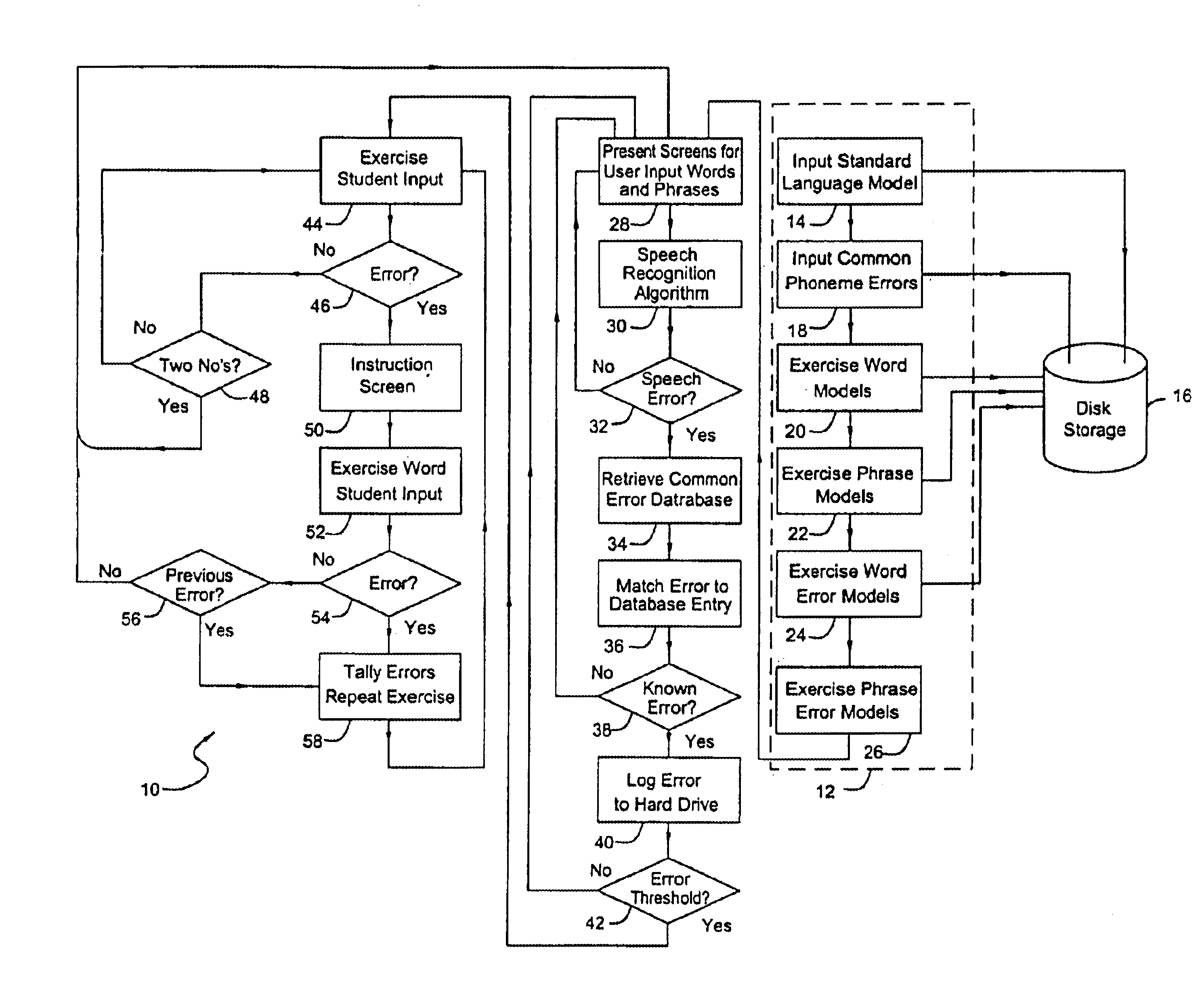

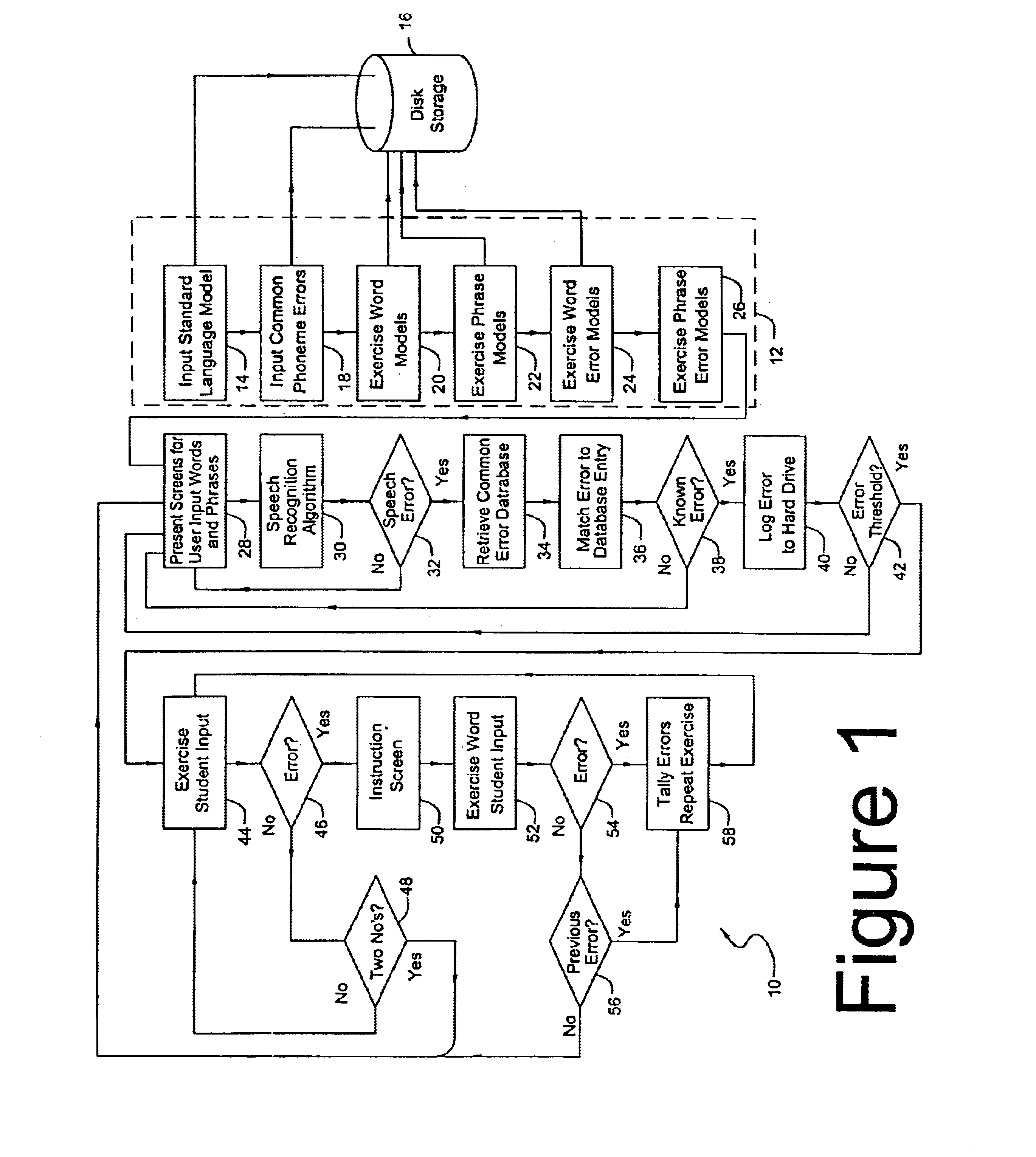

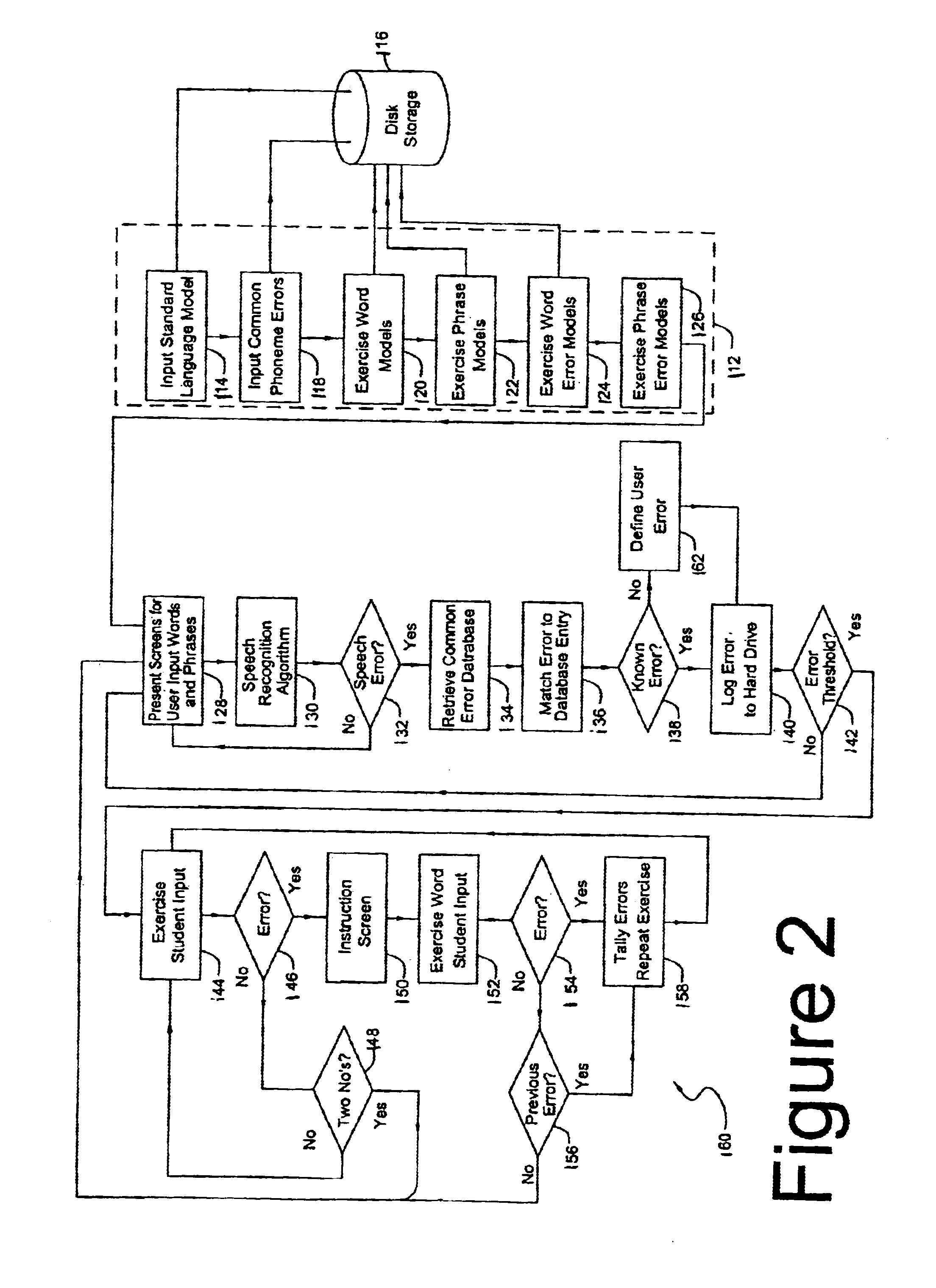

Speech training method with alternative proper pronunciation database

Status: Inactive | Publication: US6963841B2 | Tags: Improving speech pattern; Speech recognition; Electrical appliances; Speech training; User input

A speech training system is disclosed. A microphone receives audible sounds input by a user into a first computing device running a program with a database consisting of (i) digital representations of known audible sounds and the associated alphanumeric representations of those sounds, and (ii) digital representations of known audible sounds corresponding to mispronunciations from known classes of mispronounced words and phrases. The method receives the audible sounds as the electrical output of the microphone and converts a particular audible sound to be recognized into a digital representation. That representation is compared with the digital representations of the known audible sounds to determine which known sound it most likely is. If the result corresponds to a known type or instance of mispronunciation, the computer presents an interactive training program that enables the user to correct the mispronunciation.

Owner:LESSAC TECH INC
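The matching step above amounts to a nearest-neighbor lookup against a database that mixes correct pronunciations with known mispronunciations. The sketch below assumes each sound has already been converted to a fixed-length feature vector and uses Euclidean distance; `KnownSound`, `check_pronunciation`, and the toy entries are hypothetical illustrations, not the patented implementation.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class KnownSound:
    text: str                                  # alphanumeric representation of the sound
    features: Tuple[float, ...]                # hypothetical feature-vector encoding
    mispronunciation_of: Optional[str] = None  # set only for known mispronunciations


def closest_sound(features: Sequence[float], database: Sequence[KnownSound]) -> KnownSound:
    """Return the database entry nearest to `features` (Euclidean distance)."""
    return min(database, key=lambda entry: math.dist(features, entry.features))


def check_pronunciation(features: Sequence[float], database: Sequence[KnownSound]) -> str:
    match = closest_sound(features, database)
    if match.mispronunciation_of is not None:
        # Matched a known mispronunciation: hand off to the interactive drill.
        return f"mispronounced '{match.mispronunciation_of}': start training"
    return f"recognized '{match.text}'"


# Toy database: one correct entry and one known mispronunciation of the same word.
DATABASE = [
    KnownSound("ask", (1.0, 0.0)),
    KnownSound("aks", (0.0, 1.0), mispronunciation_of="ask"),
]

print(check_pronunciation((0.9, 0.1), DATABASE))  # recognized 'ask'
print(check_pronunciation((0.2, 0.8), DATABASE))  # mispronounced 'ask': start training
```

Storing mispronunciations as ordinary database entries means the same lookup both recognizes the word and diagnoses the error class.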

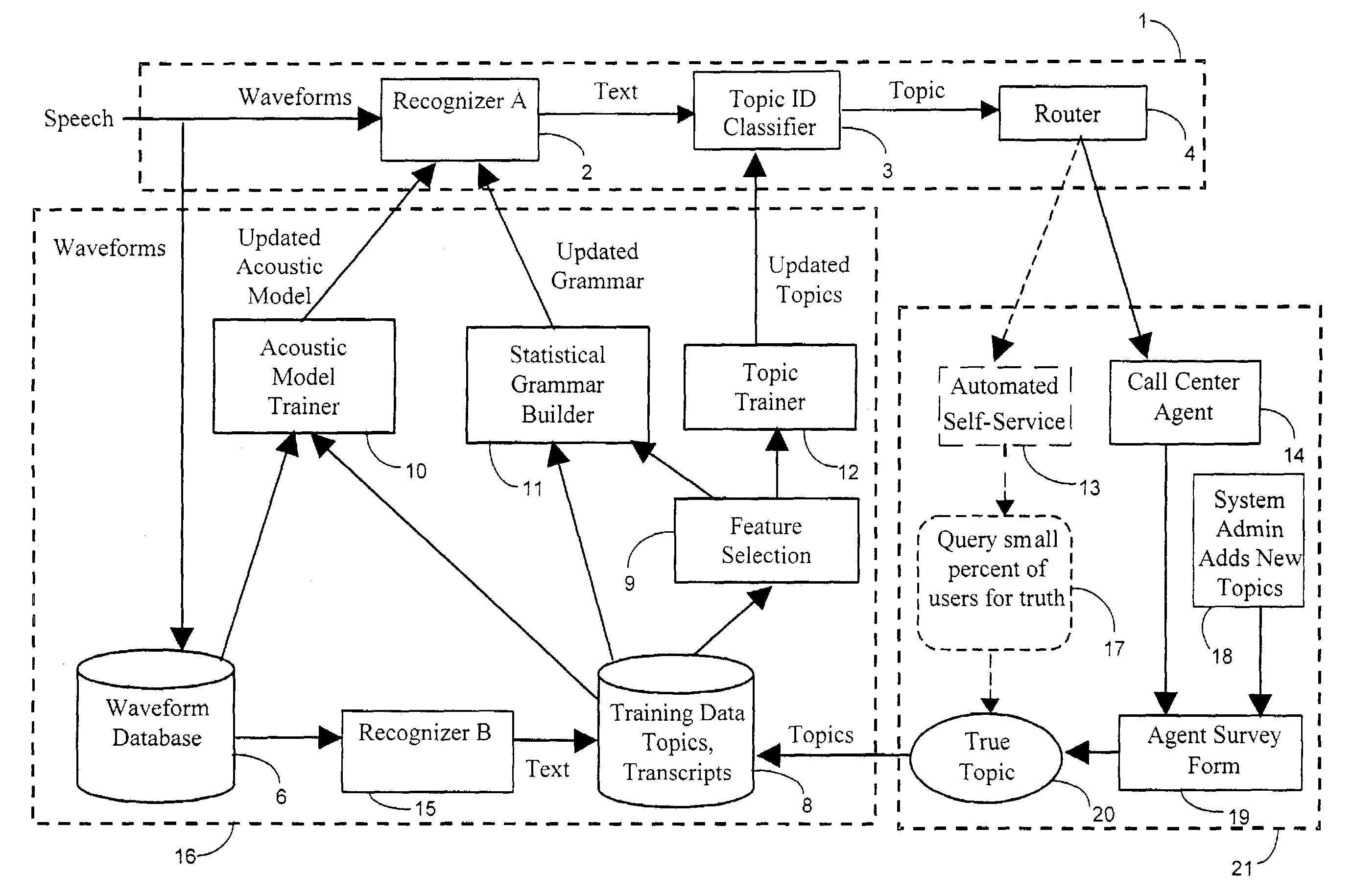

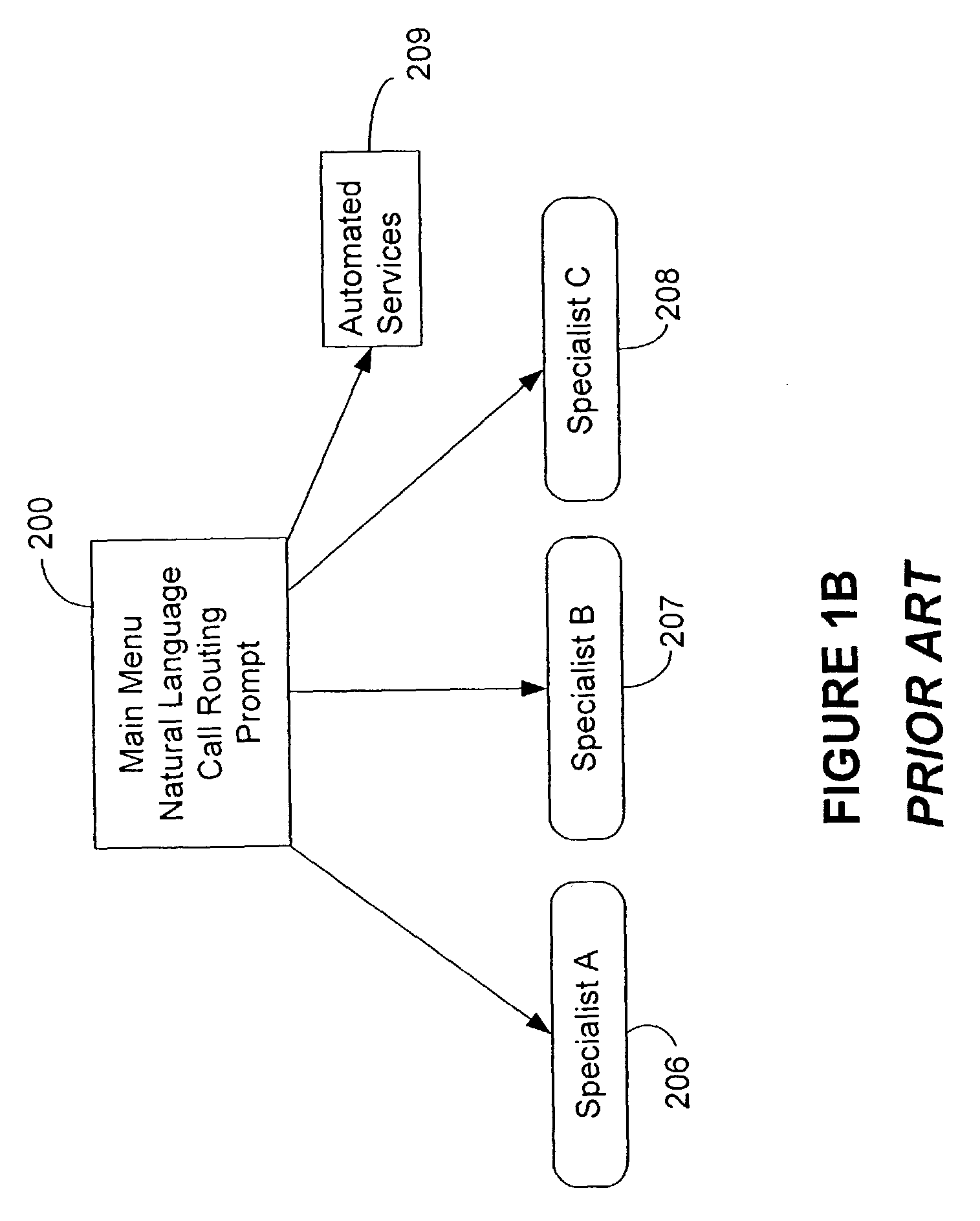

Unsupervised training in natural language call routing

Status: Inactive | Publication: US7092888B1 | Tags: Tune performance; Automatic call-answering/message-recording/conversation-recording; Automatic exchanges; Unique identifier; Speech identification

A method of training a natural language call routing system with an unsupervised trainer is provided. The unsupervised trainer tunes the performance of the call routing system based on feedback and new topic information. The training method comprises: storing audio data from an incoming call in a waveform database, together with a unique identifier for the call; applying a second, highly accurate speech recognizer to the stored audio to produce a text transcription of the call; forwarding the outputs of this second recognizer to a training database, which stores the text transcripts together with their unique call identifiers and topic data; for a call routed to an agent, having the agent enter the call topic into a form and supplying that topic, with the associated unique call identifier, to the training database; for a call routed to automated fulfillment, asking the caller for the true topic of the call and adding that topic, with the associated unique call identifier, to the training database; and training the topic identification model and the statistical grammar model on the topic and transcription information stored in the training database.

Owner:RAYTHEON BBN TECH CORP +1

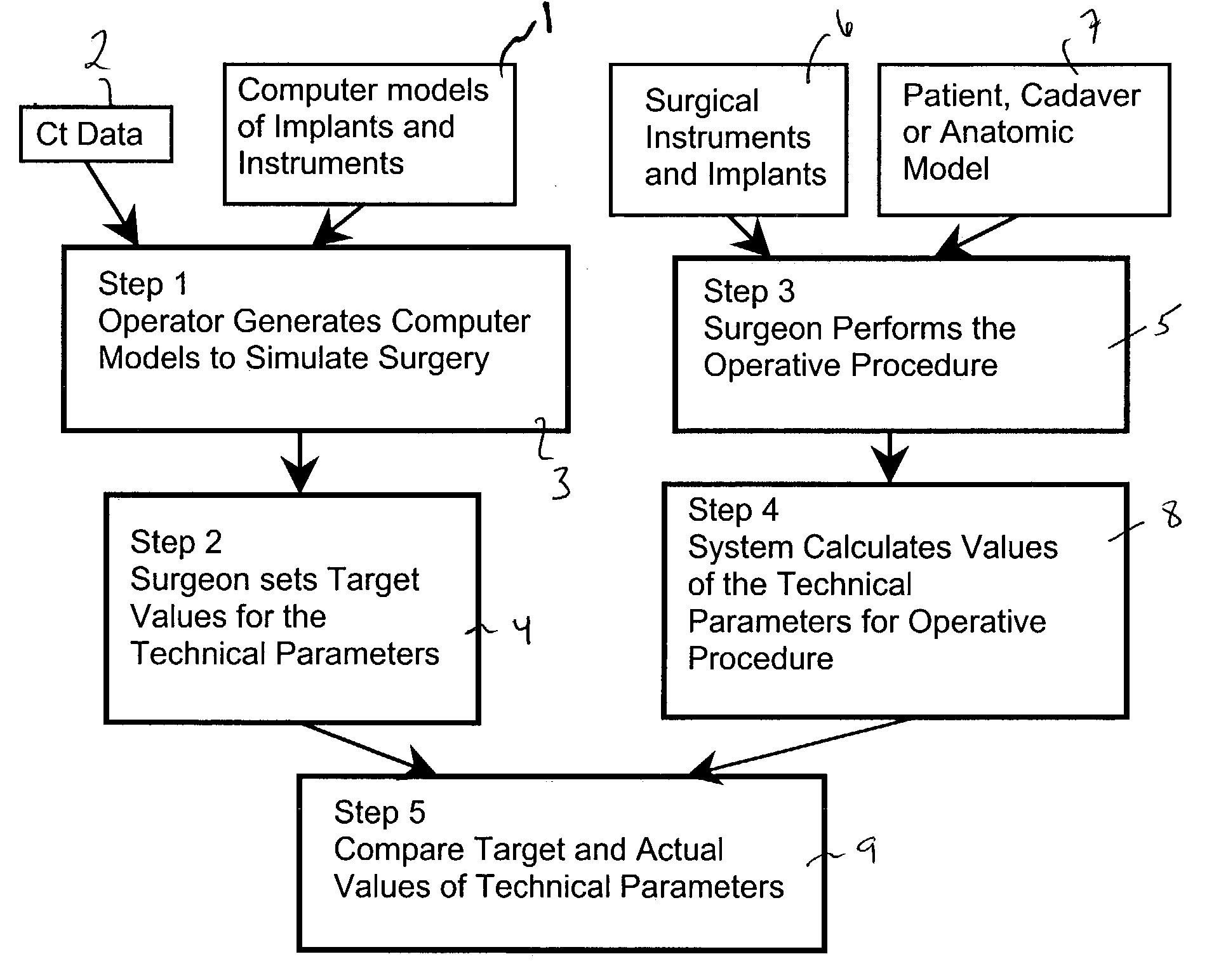

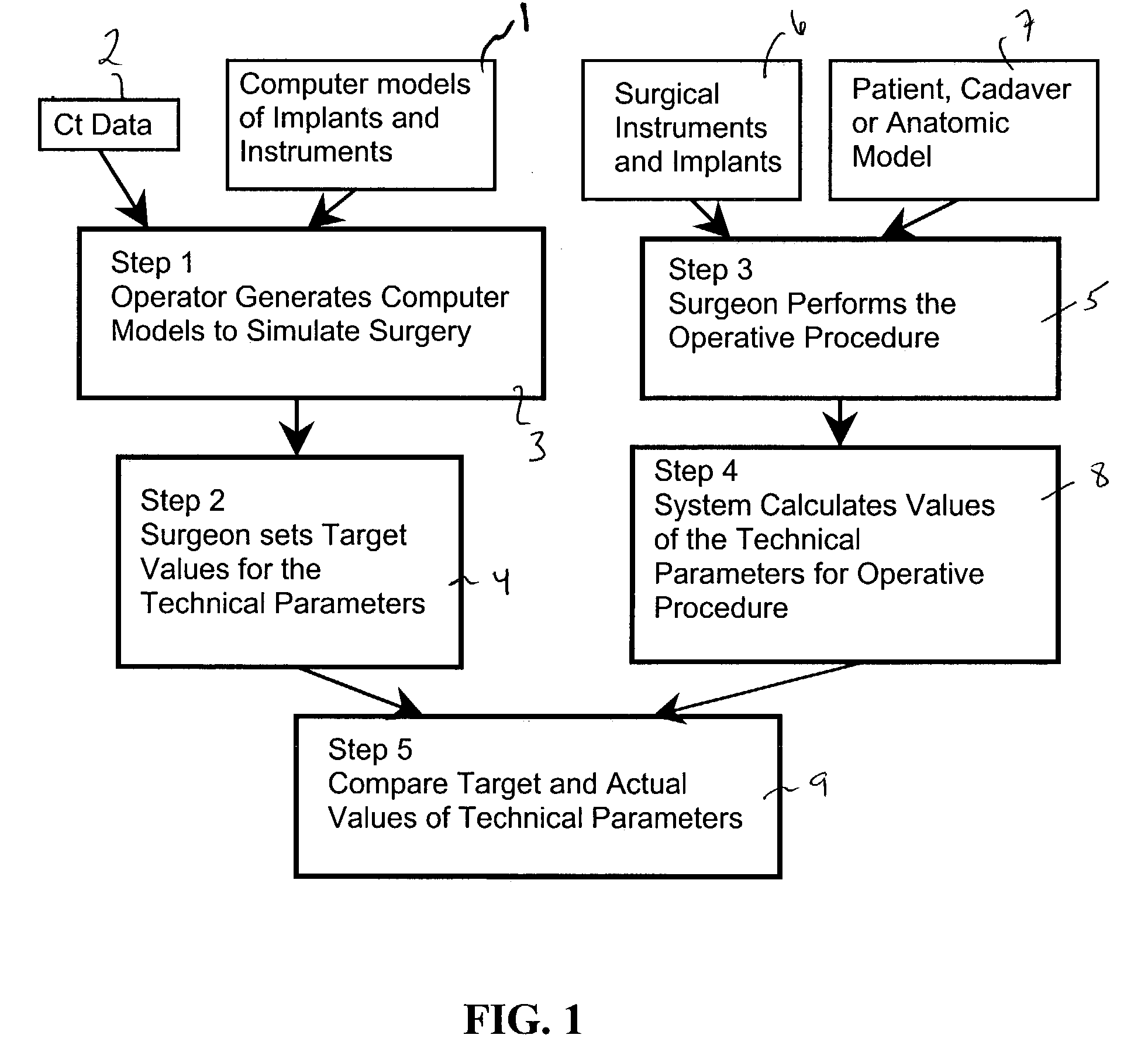

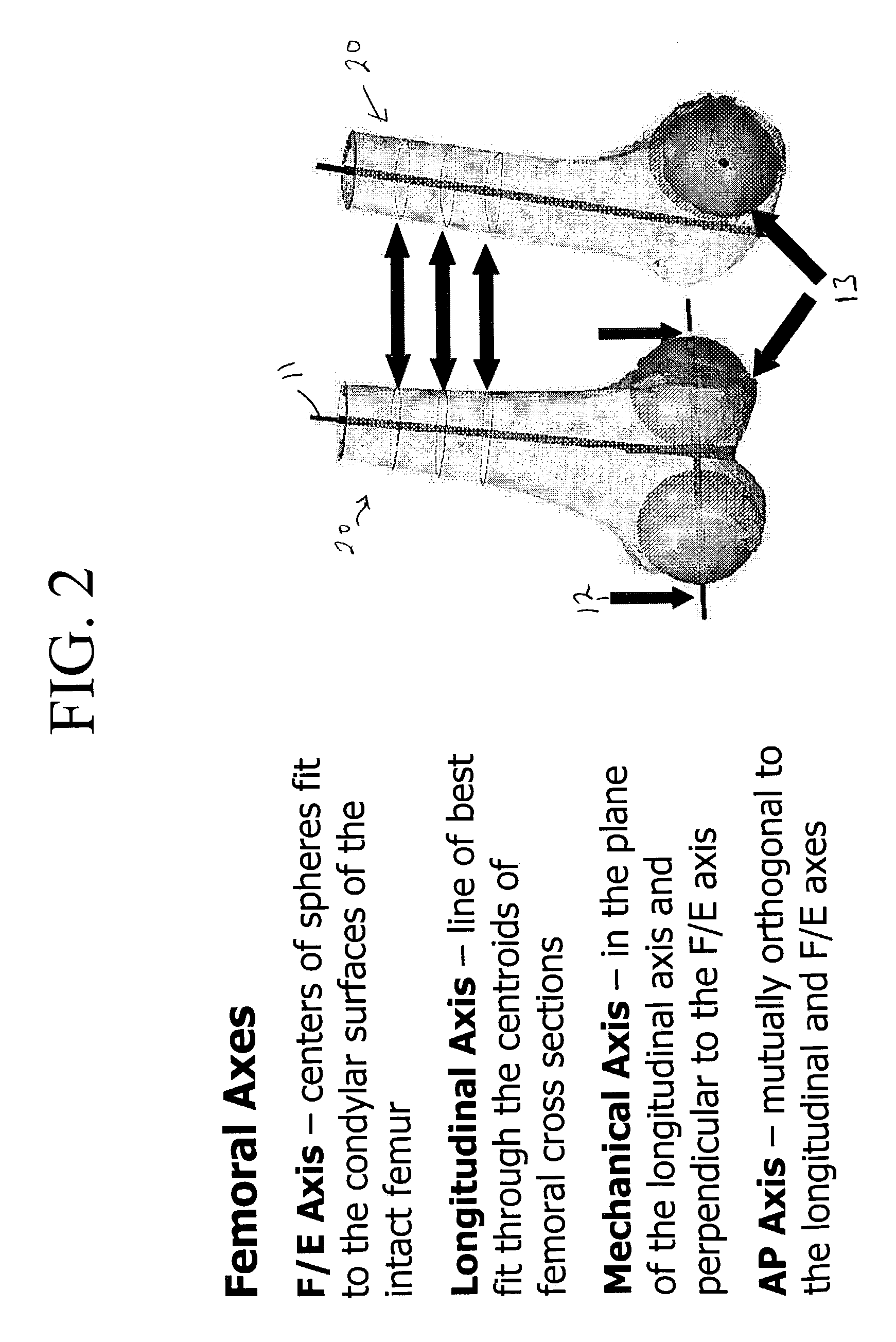

Computer-based training methods for surgical procedures

Status: Active | Publication: US7427200B2 | Tags: Without expense; Material analysis using wave/particle radiation; Radiation/particle handling; Systems analysis; Technical success

A method is disclosed for analyzing surgical technique using a computer system that gathers and analyzes surgical data acquired during a surgical procedure on a body portion and compares that data with pre-selected target values for the particular procedure. The method allows the surgeon, for example, to measure the technical success of a procedure in terms of quantifiable geometric, spatial, kinematic or kinetic parameters. It comprises calculating these parameters from data collected during the procedure and comparing the results with target values for the same parameters, defined by the surgeon, by surgical convention, or by computer simulation of the same procedure before the operation itself.

Owner:NOBLE PHILIP C +1
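The core comparison reduces to computing each measured parameter's deviation from its pre-operative target. A minimal sketch, assuming each target carries a tolerance; the parameter names (`cup_inclination_deg`, `leg_length_change_mm`) and tolerances are hypothetical examples, not values from the patent.

```python
from typing import Dict, Tuple


def compare_to_targets(
    measured: Dict[str, float], targets: Dict[str, Tuple[float, float]]
) -> Dict[str, Tuple[float, bool]]:
    """For each parameter, report (measured - target, within_tolerance).

    `targets` maps a parameter name to (target_value, tolerance); both are
    hypothetical inputs that a surgeon or a pre-operative simulation would supply.
    """
    report = {}
    for name, (target, tolerance) in targets.items():
        deviation = measured[name] - target
        report[name] = (deviation, abs(deviation) <= tolerance)
    return report


measured = {"cup_inclination_deg": 47.0, "leg_length_change_mm": 6.0}
targets = {"cup_inclination_deg": (45.0, 5.0), "leg_length_change_mm": (0.0, 4.0)}
print(compare_to_targets(measured, targets))
# {'cup_inclination_deg': (2.0, True), 'leg_length_change_mm': (6.0, False)}
```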

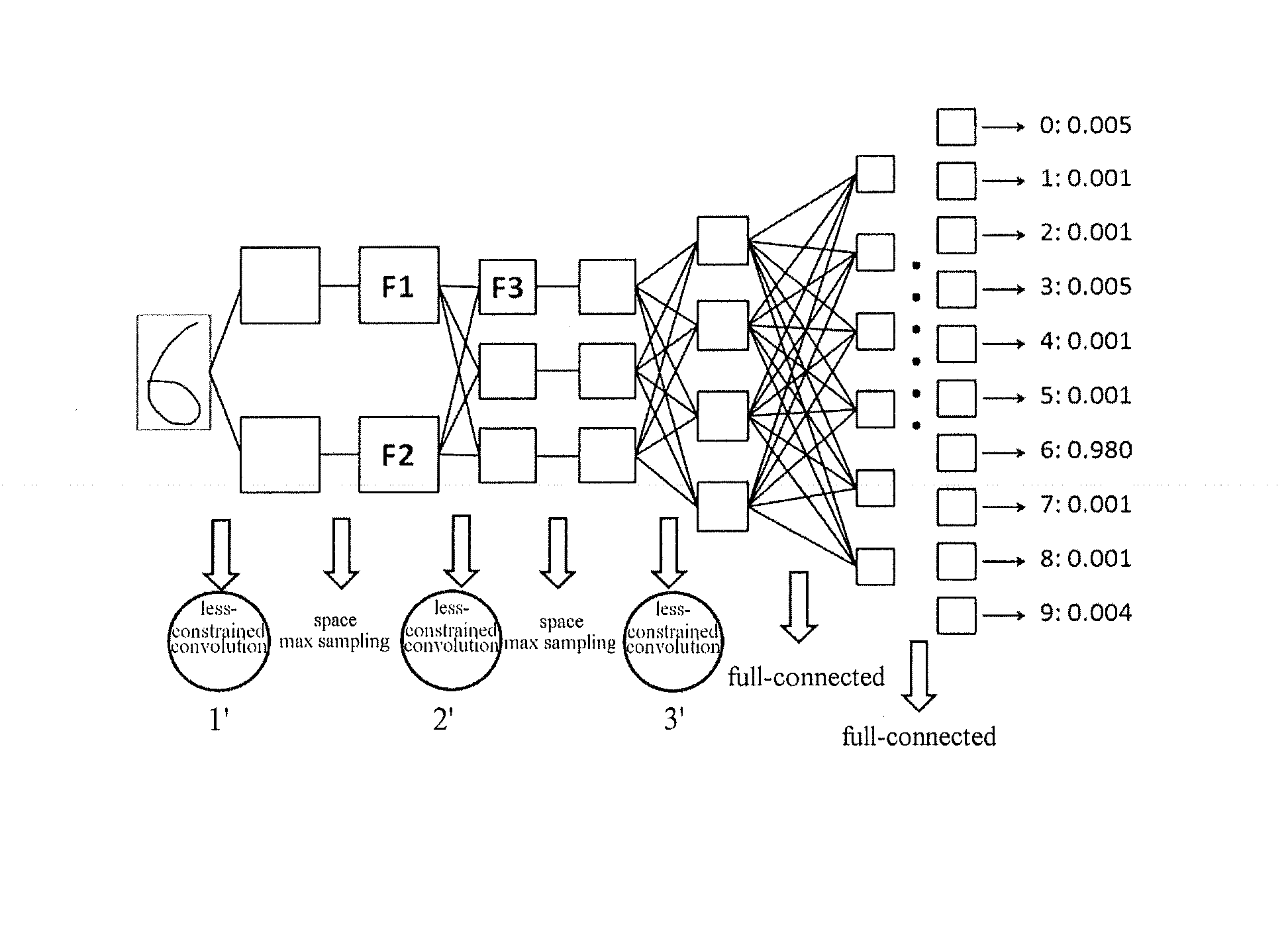

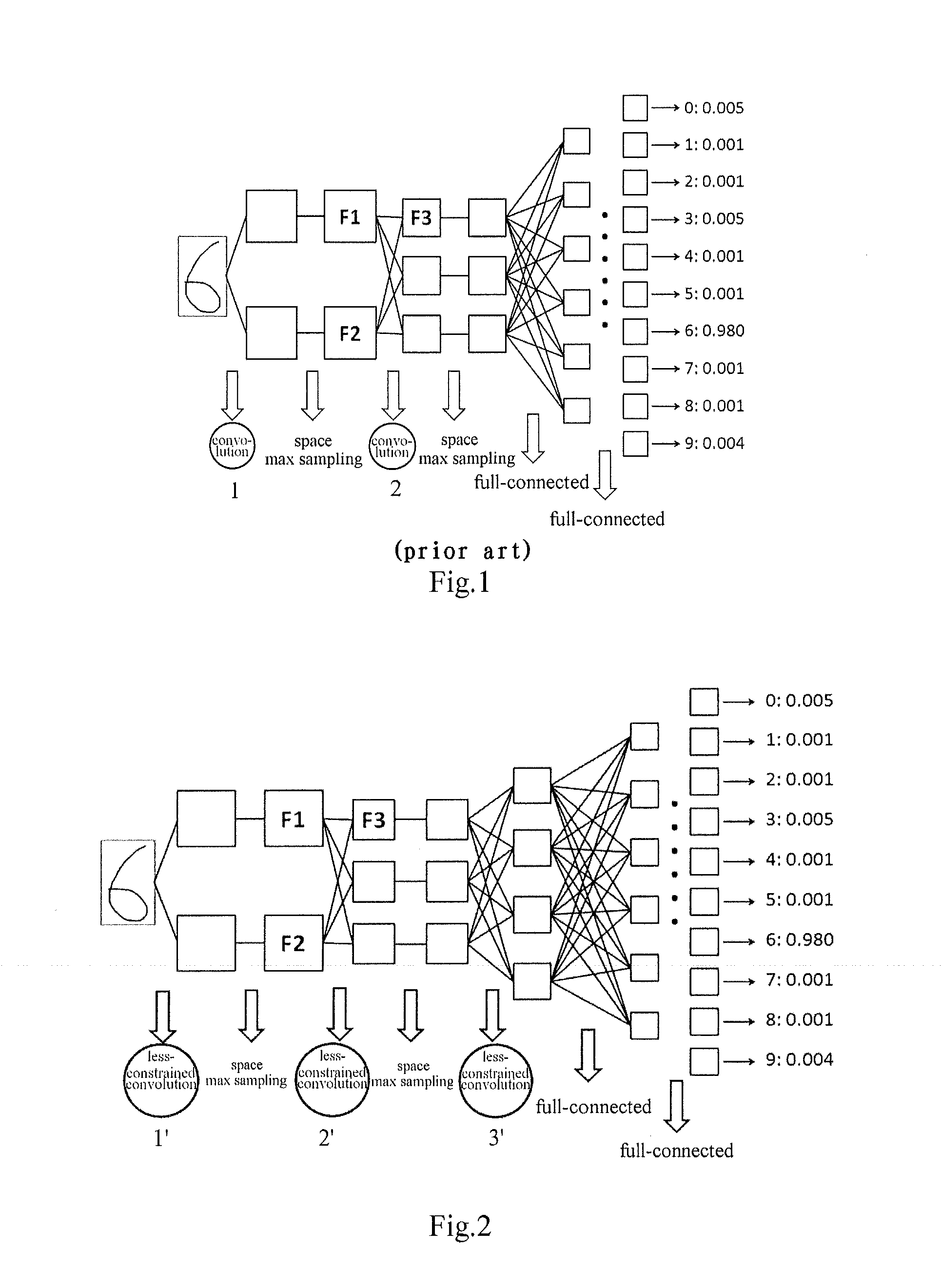

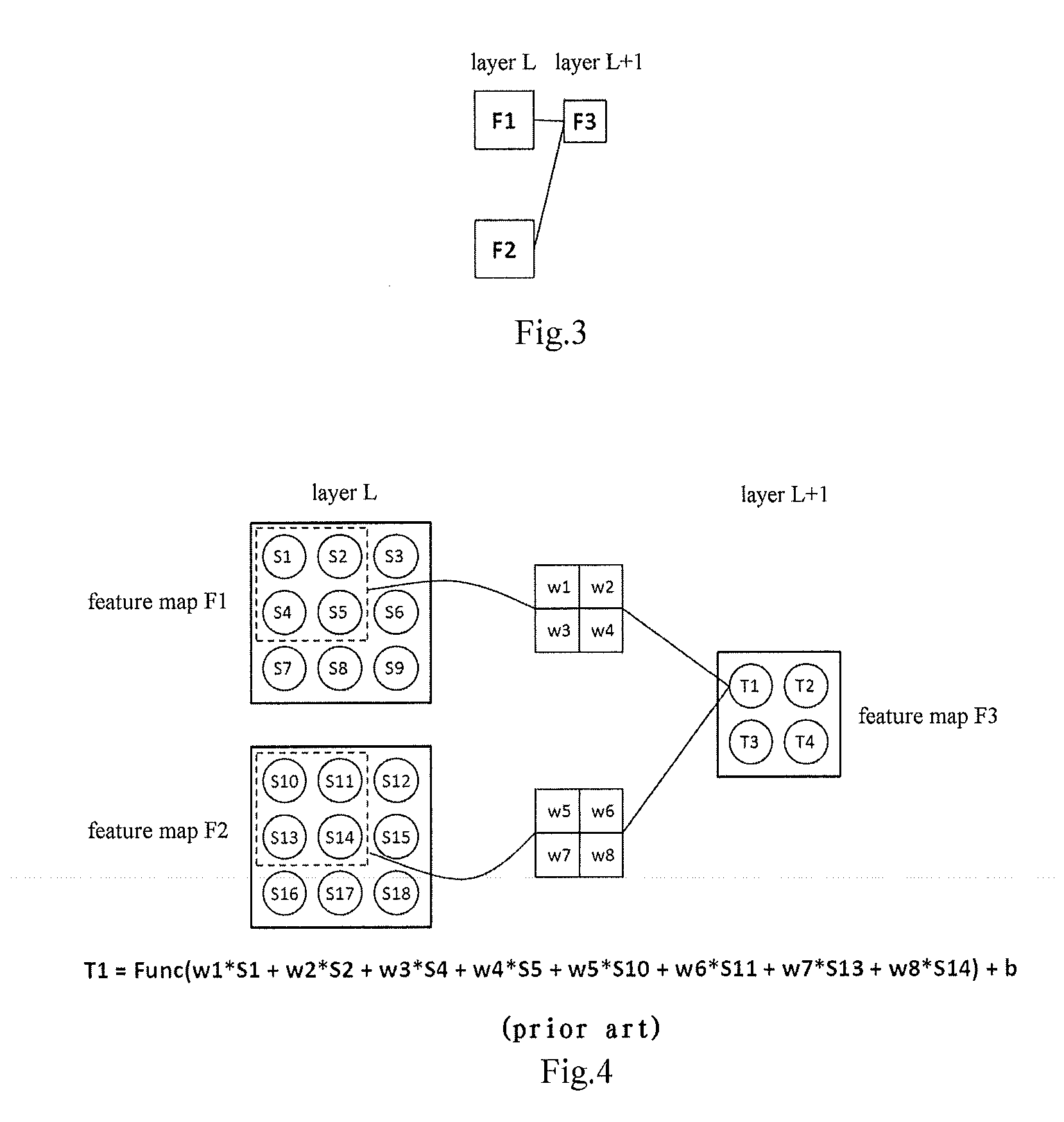

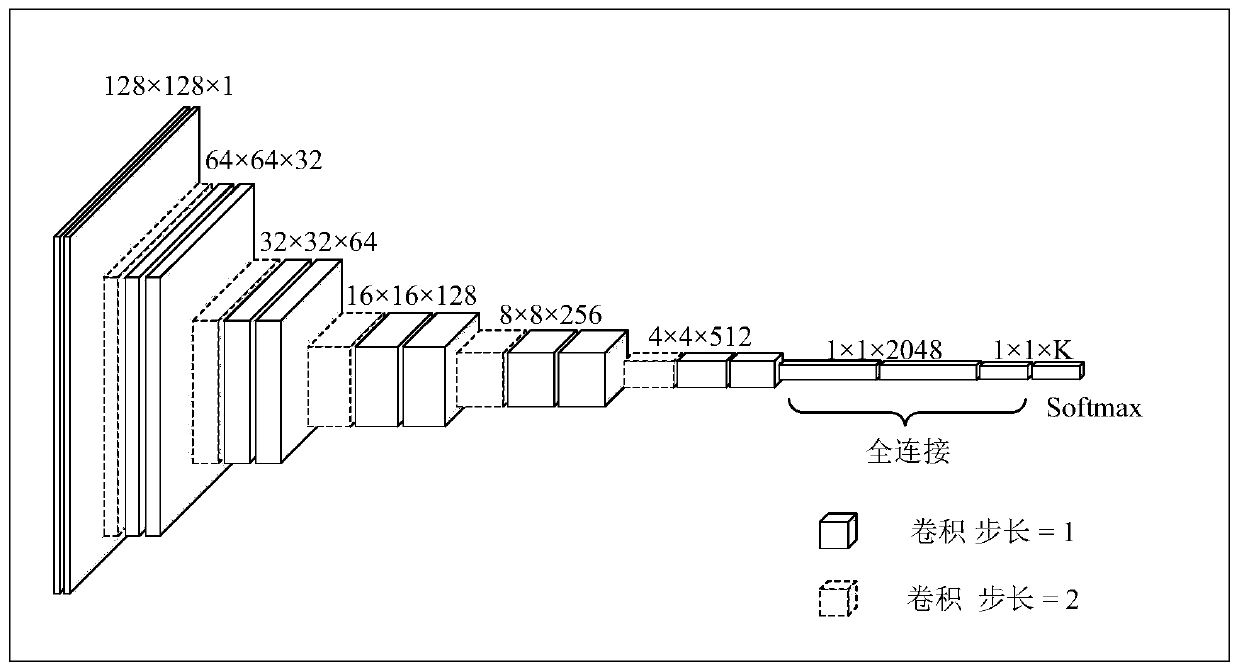

Convolutional-neural-network-based classifier and classifying method and training methods for the same

Status: Inactive | Publication: US20150036920A1 | Tags: Character and pattern recognition; Neural learning methods; Classification methods; Neuron

The present invention relates to a convolutional-neural-network-based classifier, a classifying method using such a classifier, and a method for training it. The classifier comprises: a plurality of feature map layers, in which at least one feature map in at least one of the layers is divided into a plurality of regions; and a plurality of convolutional templates corresponding respectively to those regions, each template being used to obtain the response value of a neuron in its corresponding region.

Owner:FUJITSU LTD
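A sketch of the region-wise idea, assuming a single 2-D feature map split into a regular grid of equal, non-overlapping regions, each convolved with its own template instead of one kernel shared across the whole map. Pure Python lists stand in for tensors; `region_wise_responses` and the grid layout are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]


def correlate_valid(patch: Matrix, kernel: Matrix) -> Matrix:
    """2-D 'valid' cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(patch) - kh + 1, len(patch[0]) - kw + 1
    return [
        [sum(patch[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]


def region_wise_responses(
    feature_map: Matrix,
    templates: Dict[Tuple[int, int], Matrix],
    region_h: int,
    region_w: int,
) -> Dict[Tuple[int, int], Matrix]:
    """Split `feature_map` into non-overlapping region_h x region_w blocks and
    convolve each block only with its own template, templates[(row, col)]."""
    responses = {}
    for r in range(len(feature_map) // region_h):
        for c in range(len(feature_map[0]) // region_w):
            block = [row[c * region_w:(c + 1) * region_w]
                     for row in feature_map[r * region_h:(r + 1) * region_h]]
            responses[(r, c)] = correlate_valid(block, templates[(r, c)])
    return responses


fmap = [[1.0] * 4 for _ in range(4)]
templates = {(0, 0): [[1.0]], (0, 1): [[2.0]], (1, 0): [[0.0]],
             (1, 1): [[1.0, 1.0], [1.0, 1.0]]}
out = region_wise_responses(fmap, templates, 2, 2)
print(out[(1, 1)])  # [[4.0]]
```

Because each region has its own weights, the layer can respond differently to the same pattern depending on where it appears, at the cost of more parameters than a shared kernel.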

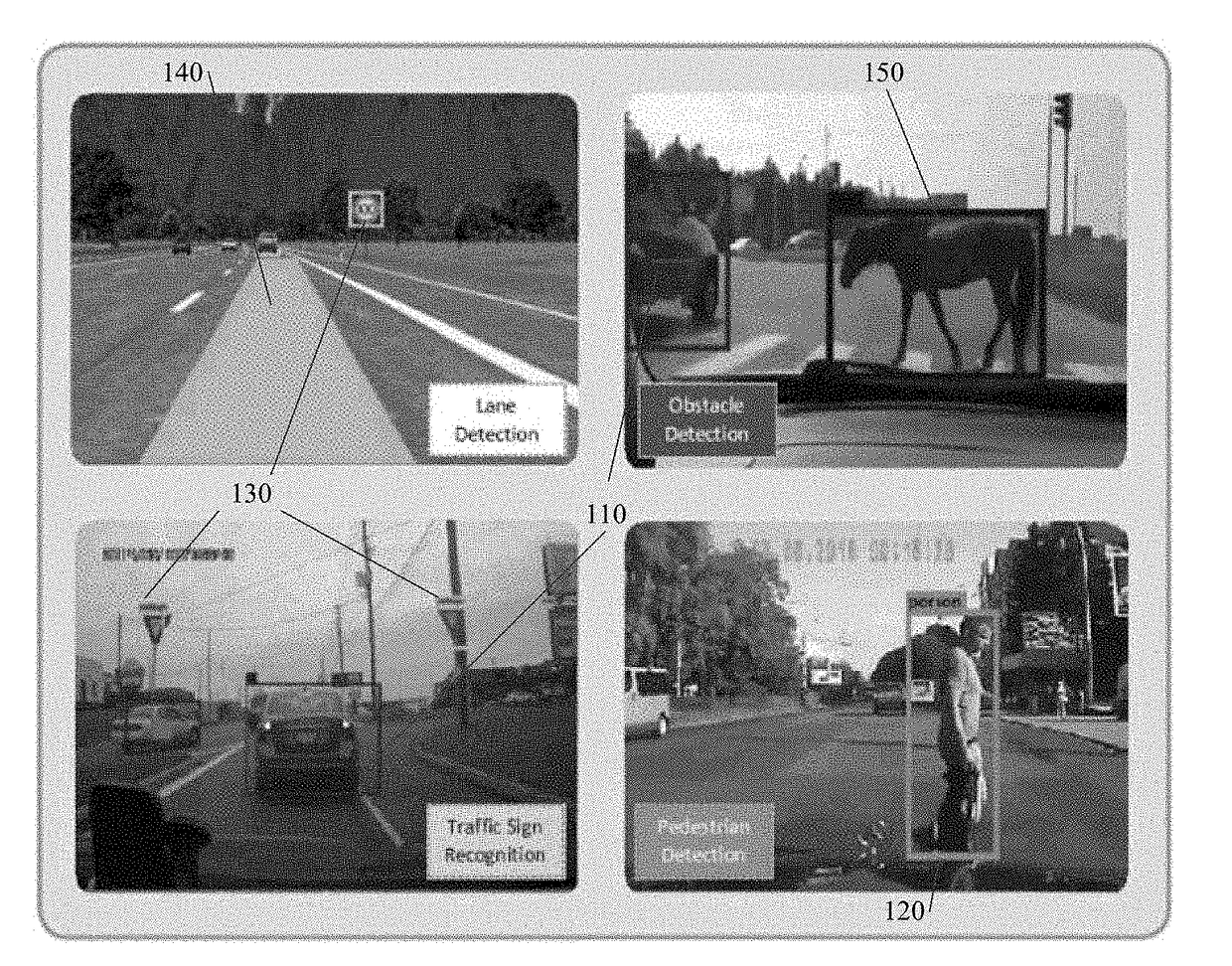

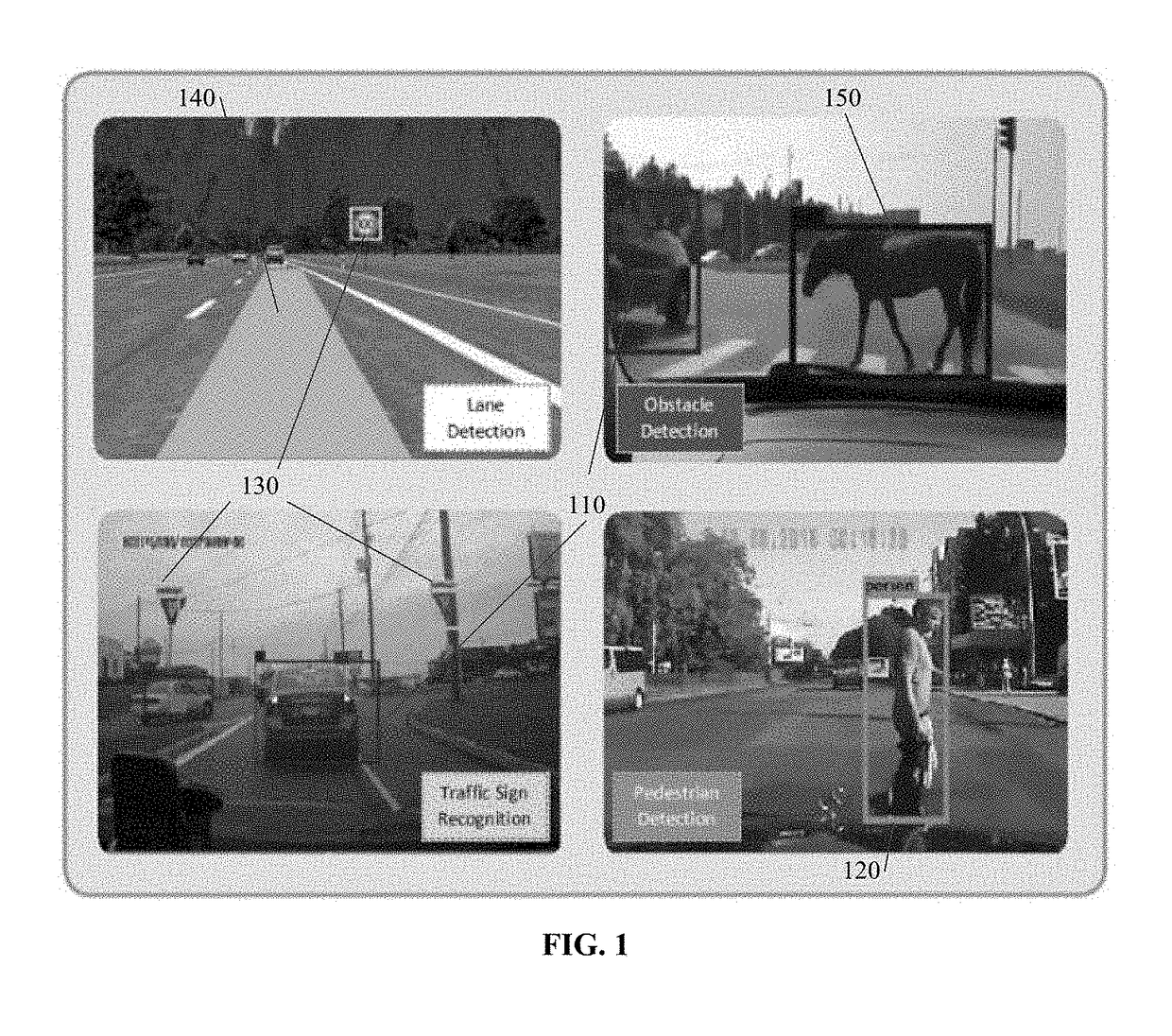

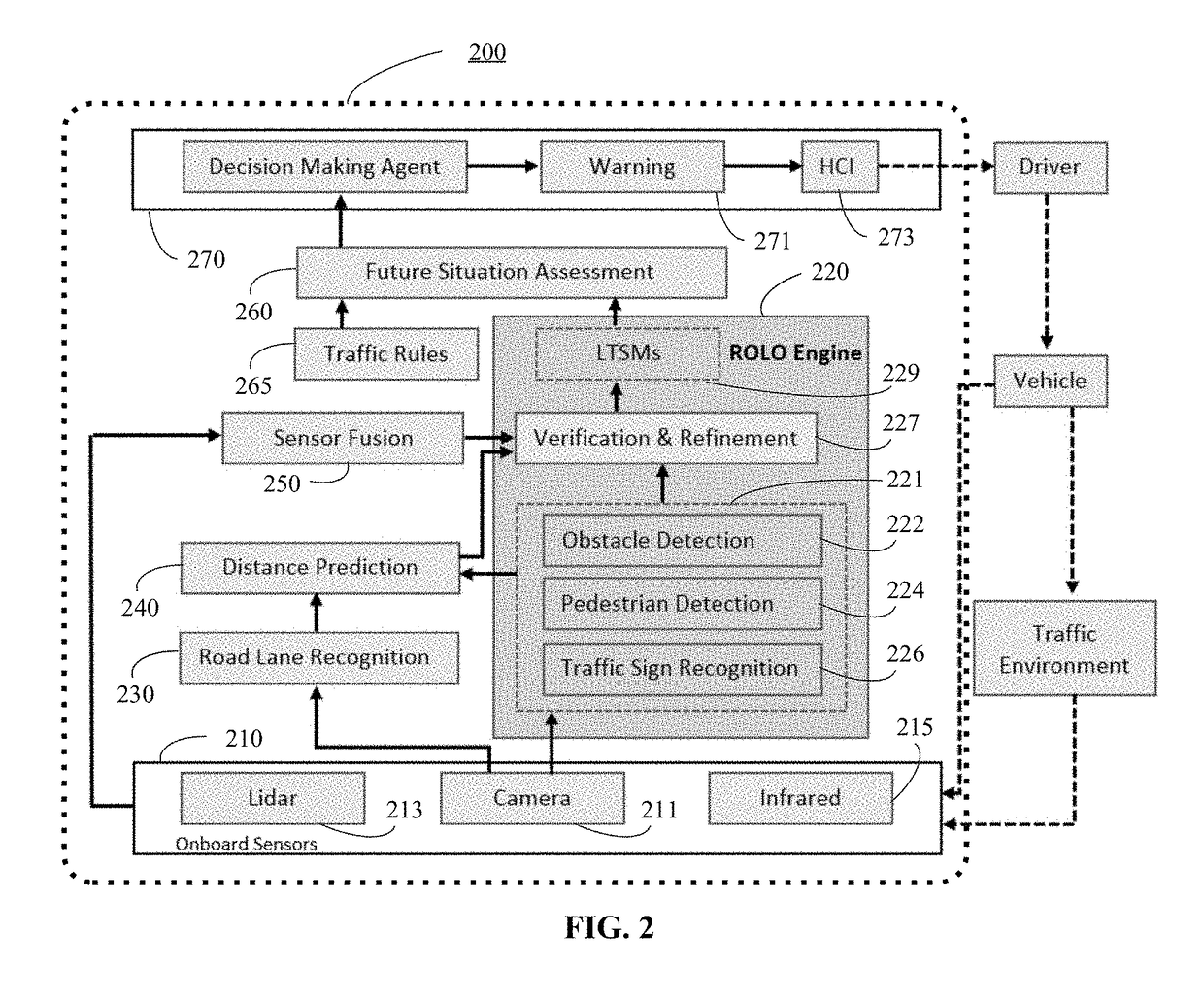

Method and system for vision-centric deep-learning-based road situation analysis

In accordance with various embodiments of the disclosed subject matter, a method and a system for vision-centric deep-learning-based road situation analysis are provided. The method can include: receiving real-time traffic environment visual input from a camera; determining, using a ROLO engine, at least one initial region of interest from the real-time traffic environment visual input by using a CNN training method; verifying the at least one initial region of interest to determine if a detected object in the at least one initial region of interest is a candidate object to be tracked; using LSTMs to track the detected object based on the real-time traffic environment visual input, and predicting a future status of the detected object by using the CNN training method; and determining if a warning signal is to be presented to a driver of a vehicle based on the predicted future status of the detected object.

Owner:TCL CORPORATION

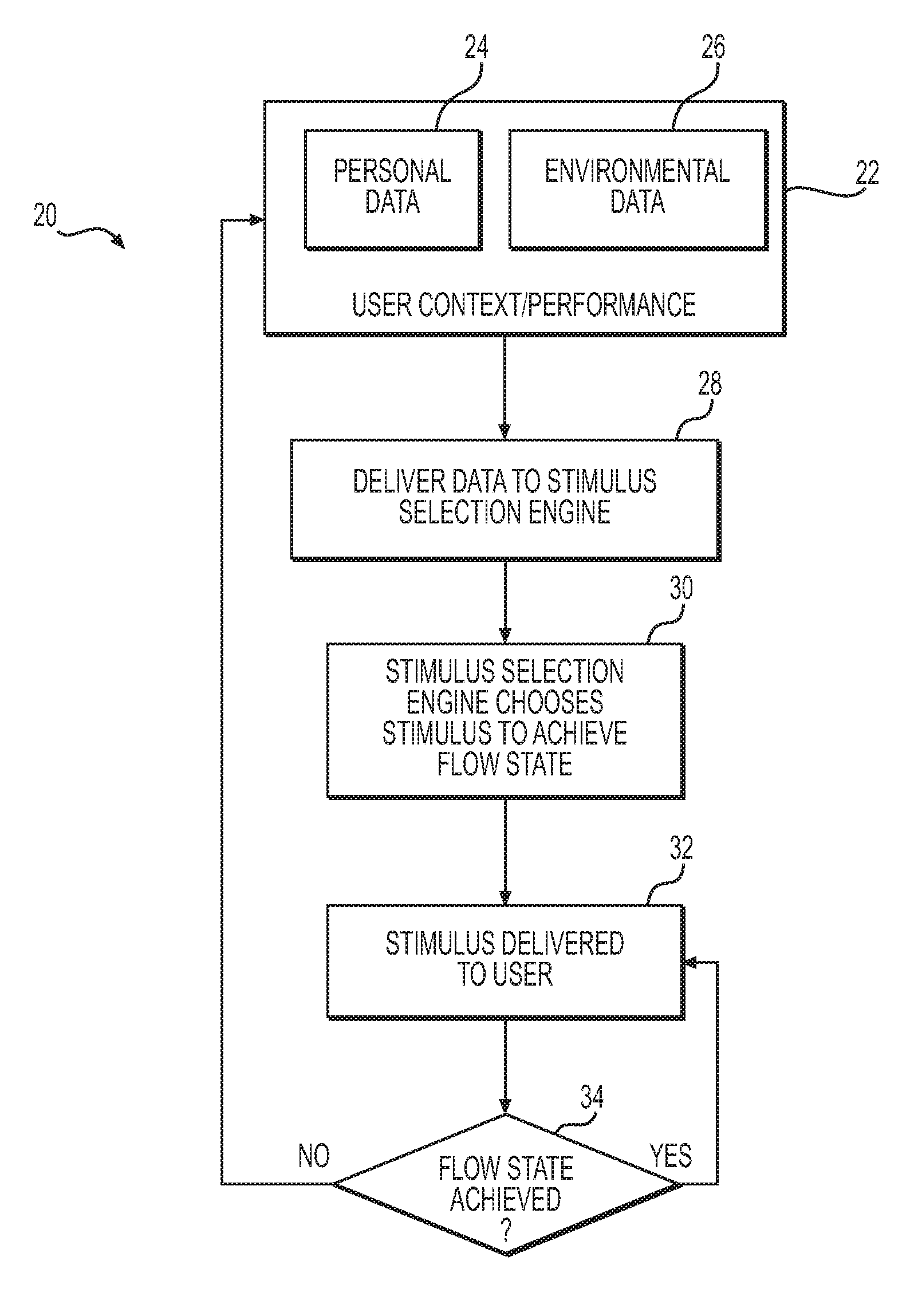

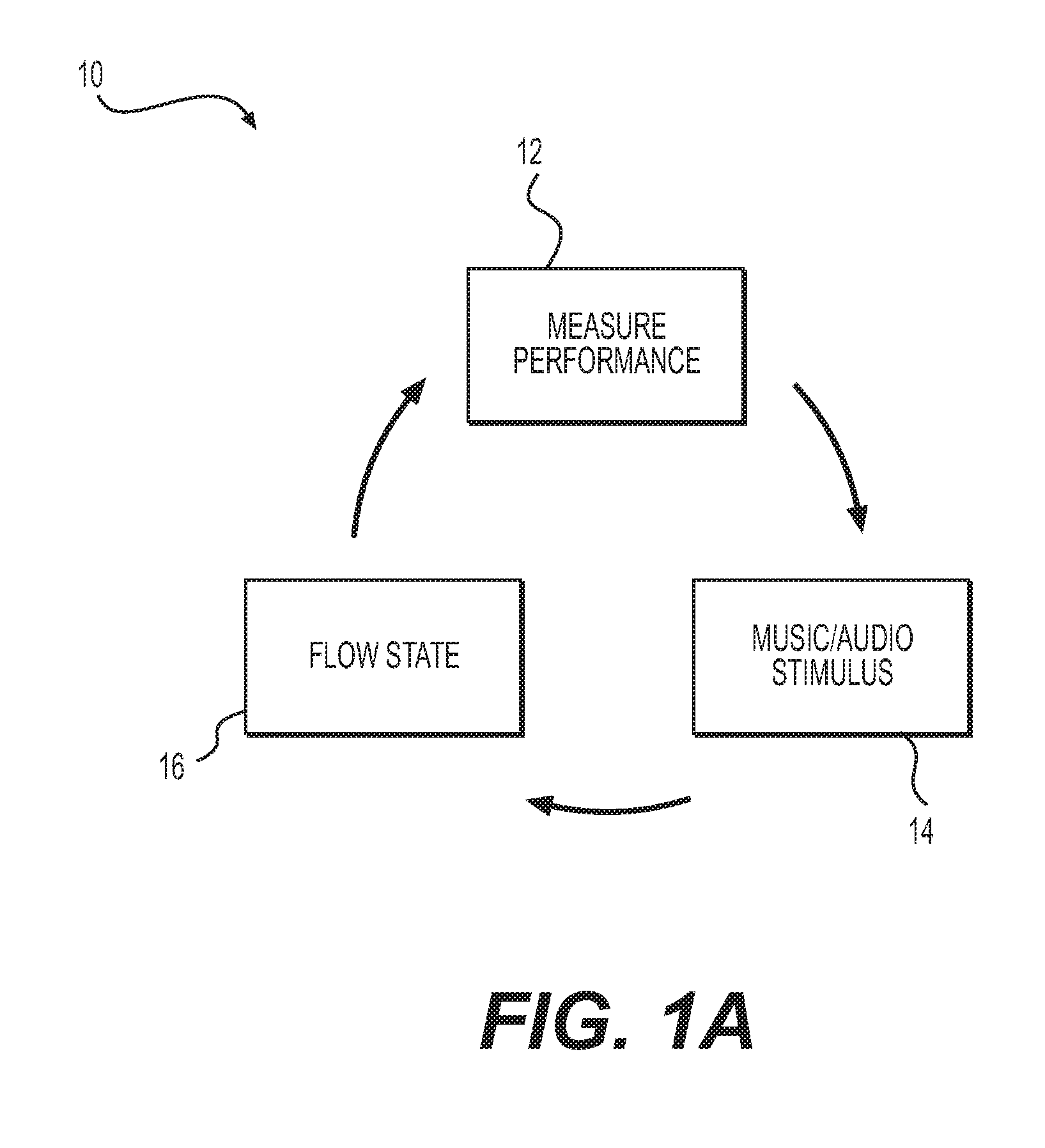

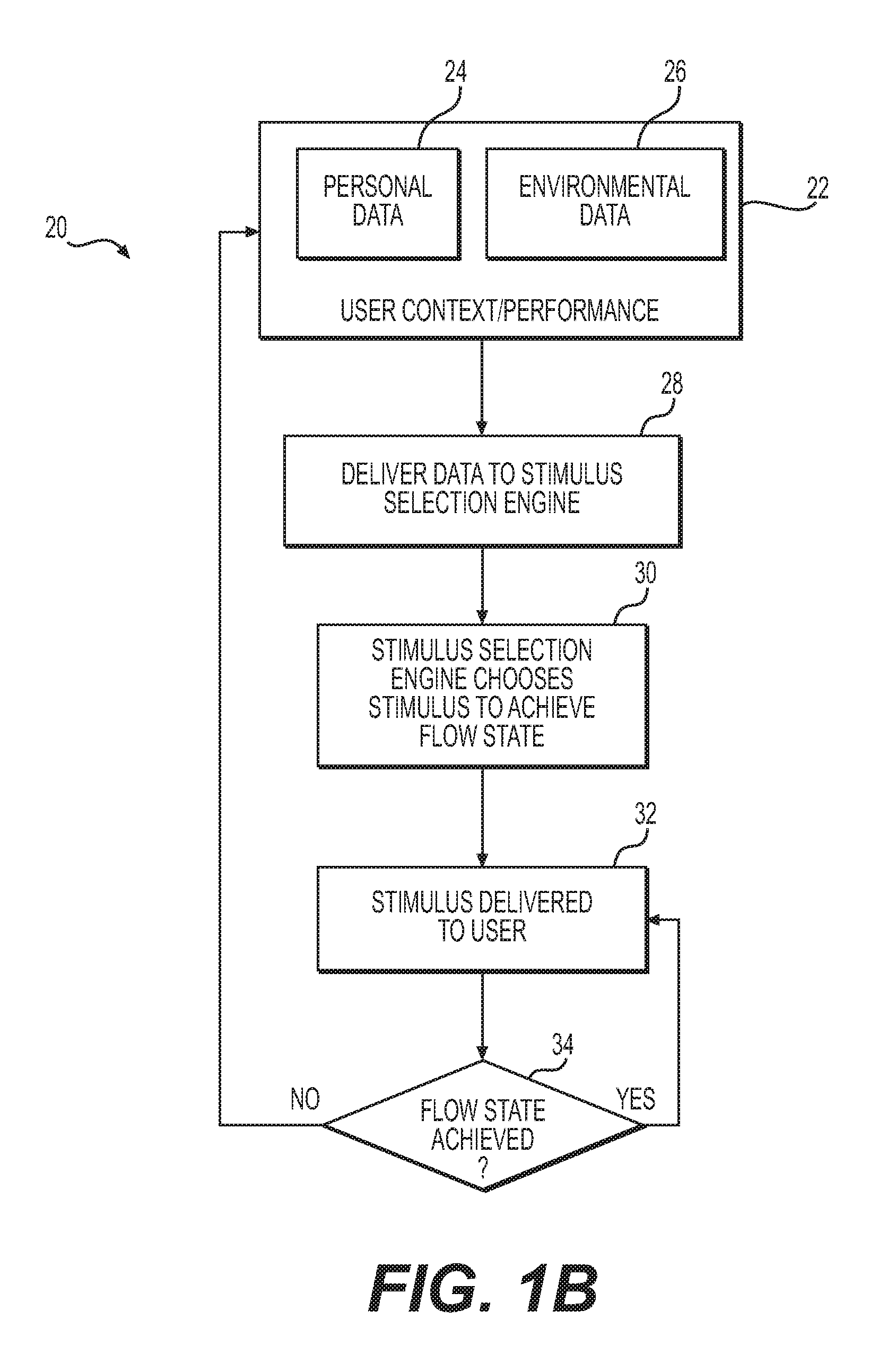

Human performance optimization and training methods and systems

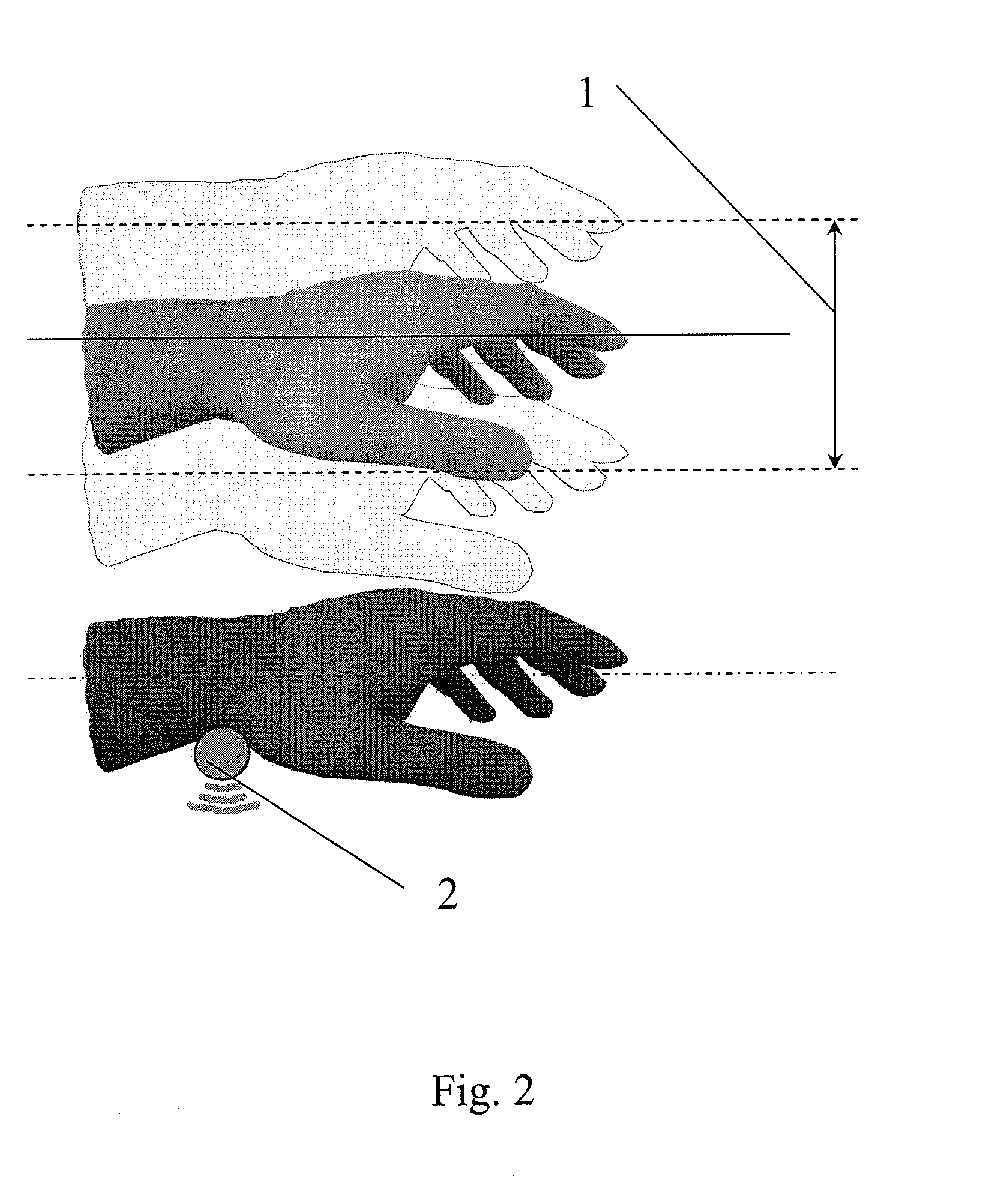

Status: Active | Publication: US20160196758A1 | Tags: Improve performance; Electroencephalography; Physical therapies and activities; Horizon; Touch Perception

Owner:SKULLCANDY

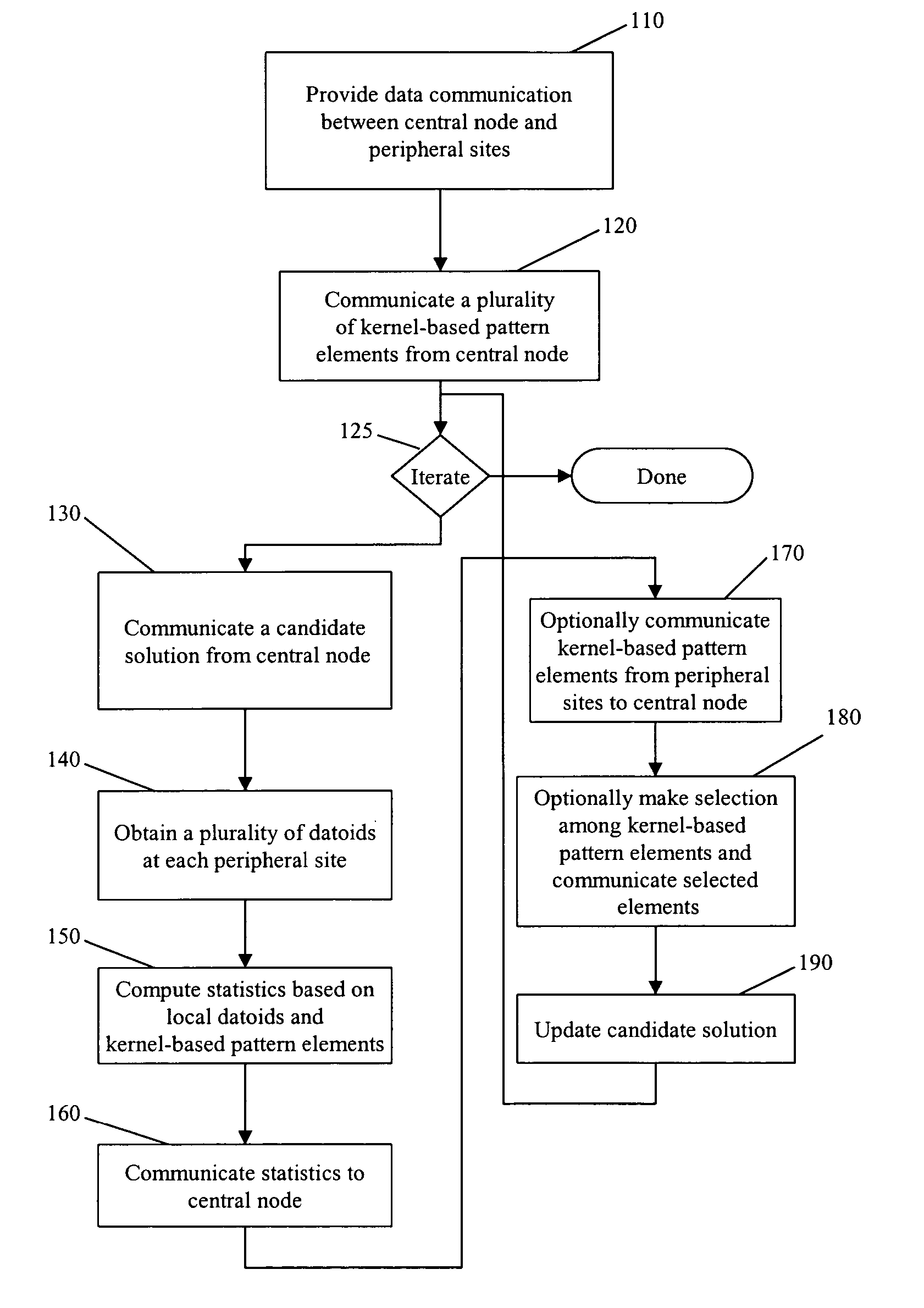

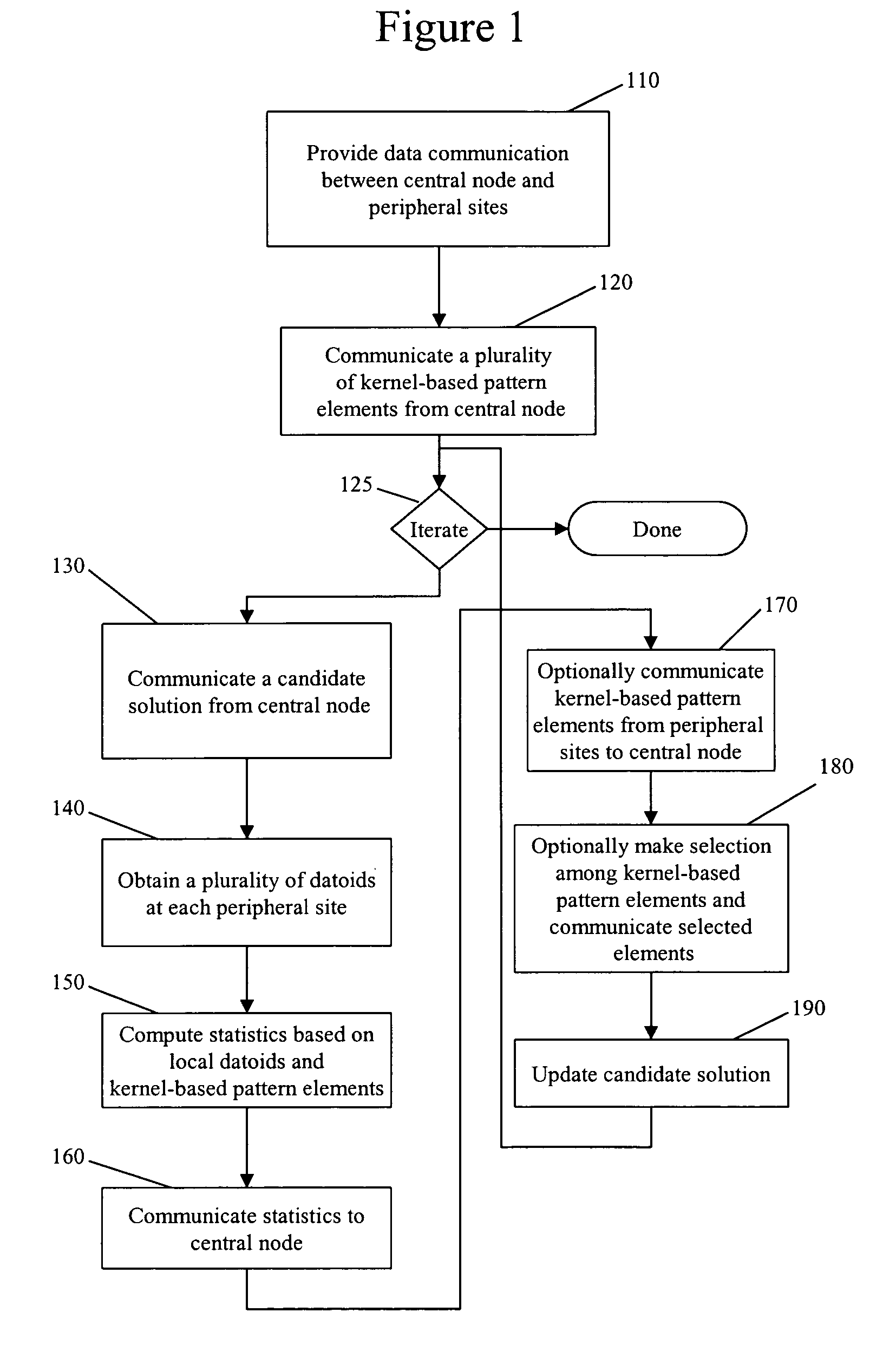

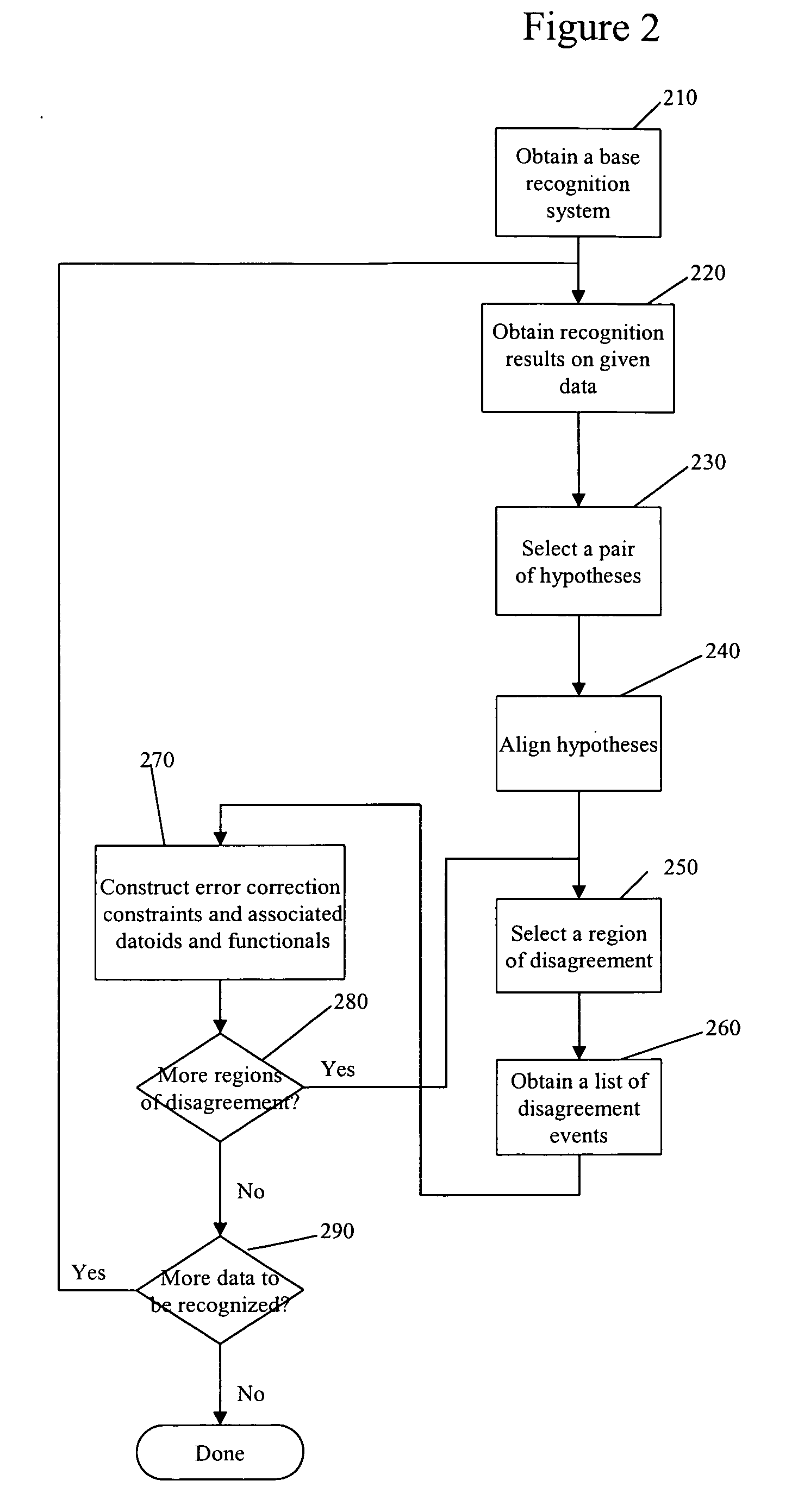

Distributed pattern recognition training method and system

Status: Active | Publication: US20060015341A1 | Tags: Multiple digital computer combinations; Speech recognition; Pattern recognition; Training methods

A distributed pattern recognition training method includes providing data communication between at least one central pattern analysis node and a plurality of peripheral data analysis sites. The method also includes communicating a plurality of kernel-based pattern elements from the at least one central pattern analysis node to the plurality of peripheral data analysis sites. The method further includes performing a plurality of iterations of pattern template training at each of the peripheral data analysis sites.

Owner:AURILAB

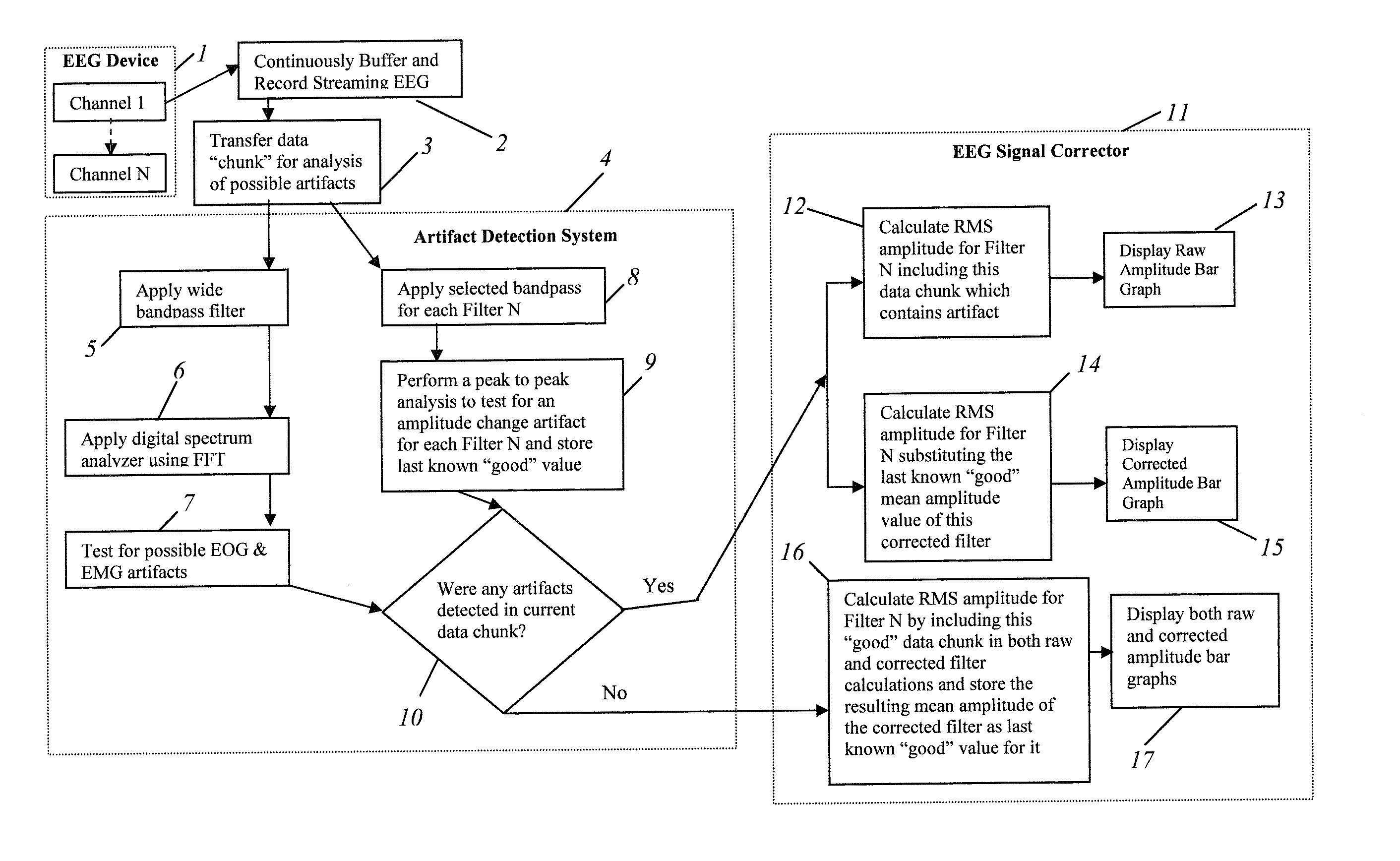

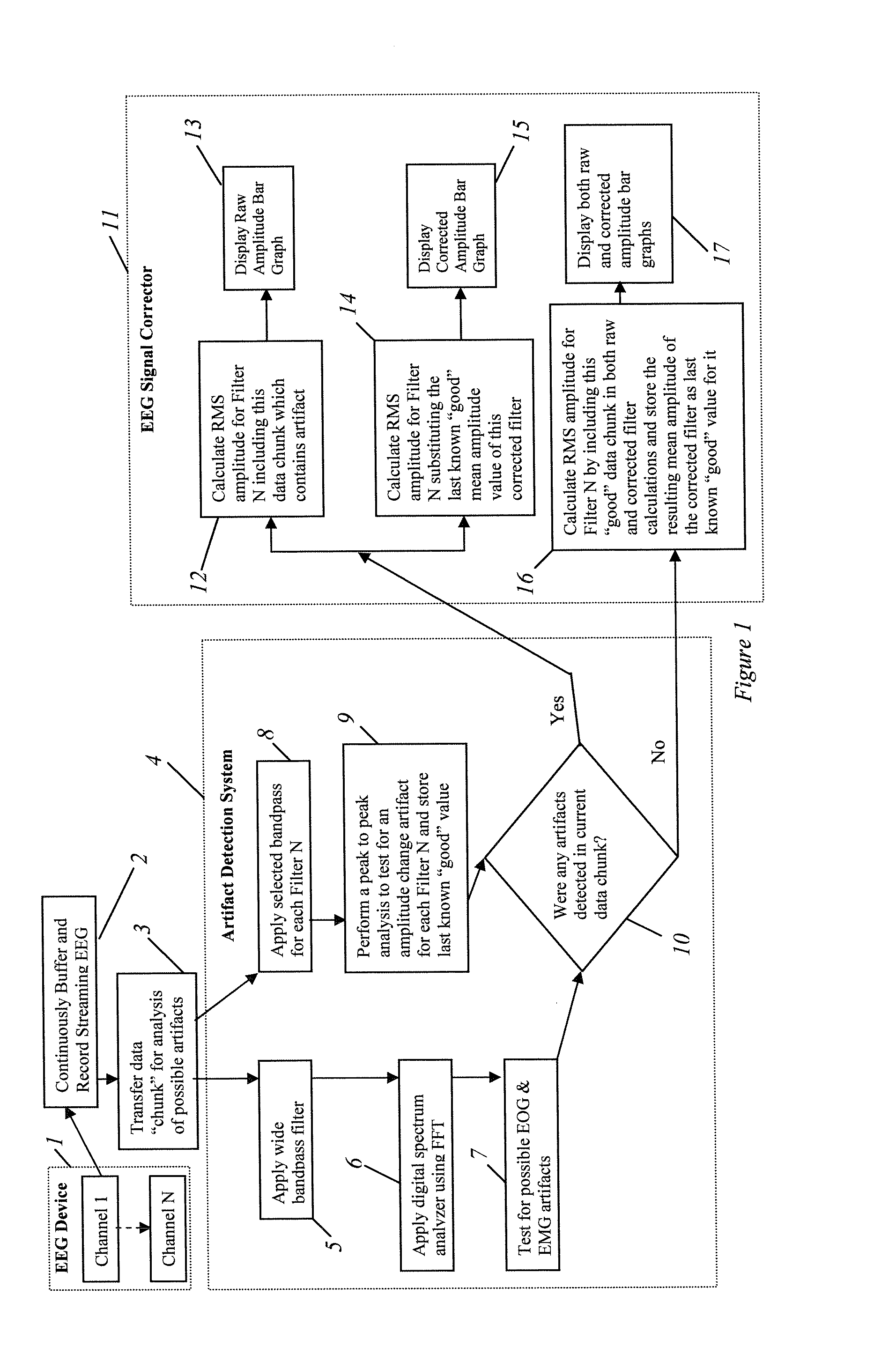

Artifact detection and correction system for electroencephalograph neurofeedback training methodology

Status: Inactive | Publication: US20090062680A1 | Tags: Accurate feedback; Easy to process; Electroencephalography; Electro-oculography; Electrode placement; Pattern recognition

The method simultaneously identifies and quantifies a wide variety of facial electromyographic (EMG) and eye-movement electrooculographic (EOG) activity, which naturally contaminates electroencephalographic (EEG) waveforms, in order to significantly improve the accuracy of the real-time calculation of the amplitude and/or coherence of brainwave activity in any chosen frequency band for any number of electrode placements. This multi-level, widely applicable artifact detection and correction system, based on pre-defined pattern recognition, enhances EEG biofeedback training by detecting brief contaminated epochs of EEG activity and excluding them from the calculation and analysis of the EEG signal. The disclosed method and apparatus provide uninterrupted visual, auditory and/or tactile feedback of a “true” EEG signal which, through operant-conditioning learning principles, enables individuals to learn to control their brainwave activity more quickly and easily using neurofeedback.

Owner:BRAIN TRAIN
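The rejection step can be illustrated with a simple epoch filter. This sketch substitutes a peak-to-peak amplitude threshold for the patent's pre-defined EMG/EOG pattern recognizers and computes RMS amplitude without band-pass filtering; both simplifications, the threshold value, and the function name are assumptions.

```python
import math
from typing import List, Optional, Tuple


def clean_epoch_amplitude(
    epochs: List[List[float]], max_peak_to_peak: float
) -> Tuple[Optional[float], int]:
    """Drop epochs whose peak-to-peak swing exceeds the threshold (a crude
    stand-in for EMG/EOG pattern recognition) and return the mean RMS
    amplitude of the remaining epochs plus the number of rejected epochs."""
    clean = [e for e in epochs if max(e) - min(e) <= max_peak_to_peak]
    rejected = len(epochs) - len(clean)
    if not clean:
        return None, rejected
    rms = [math.sqrt(sum(x * x for x in e) / len(e)) for e in clean]
    return sum(rms) / len(rms), rejected


# The middle epoch mimics an eye-blink burst and is excluded from the average.
epochs = [[1, -1, 1, -1], [100, -100, 0, 0], [2, -2, 2, -2]]
print(clean_epoch_amplitude(epochs, 10.0))  # (1.5, 1)
```

Excluding a contaminated epoch, rather than pausing the session, is what lets feedback continue without interruption.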

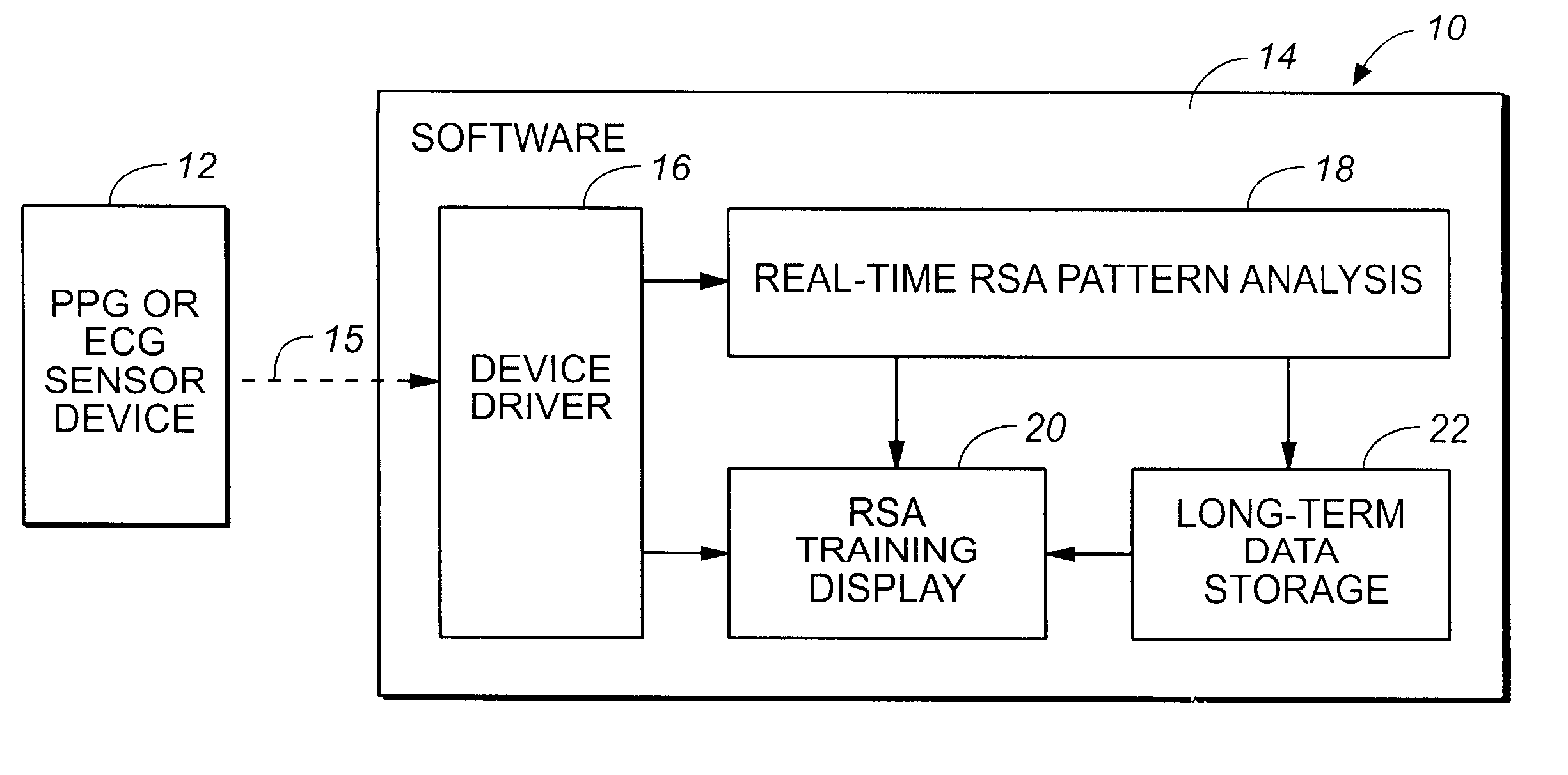

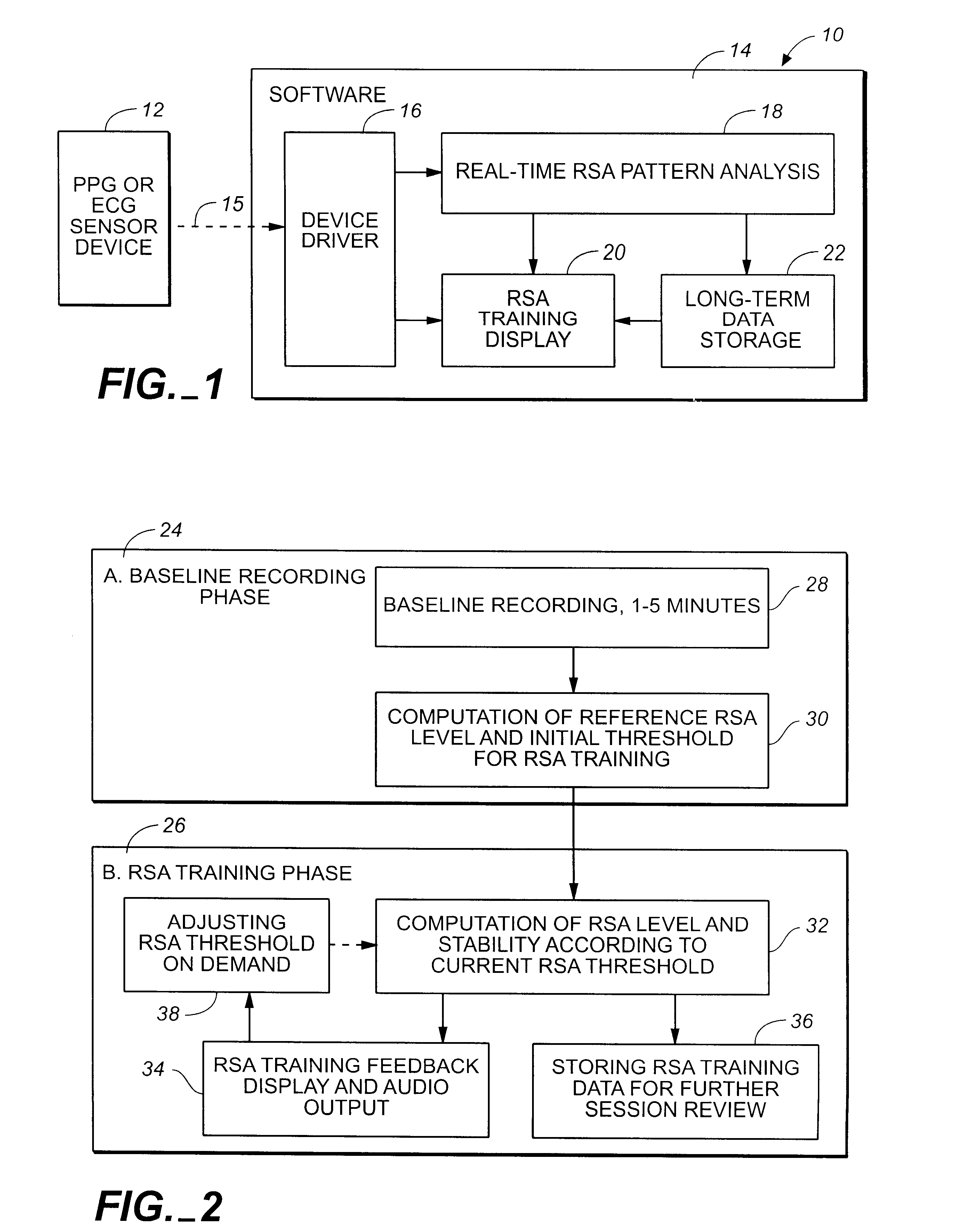

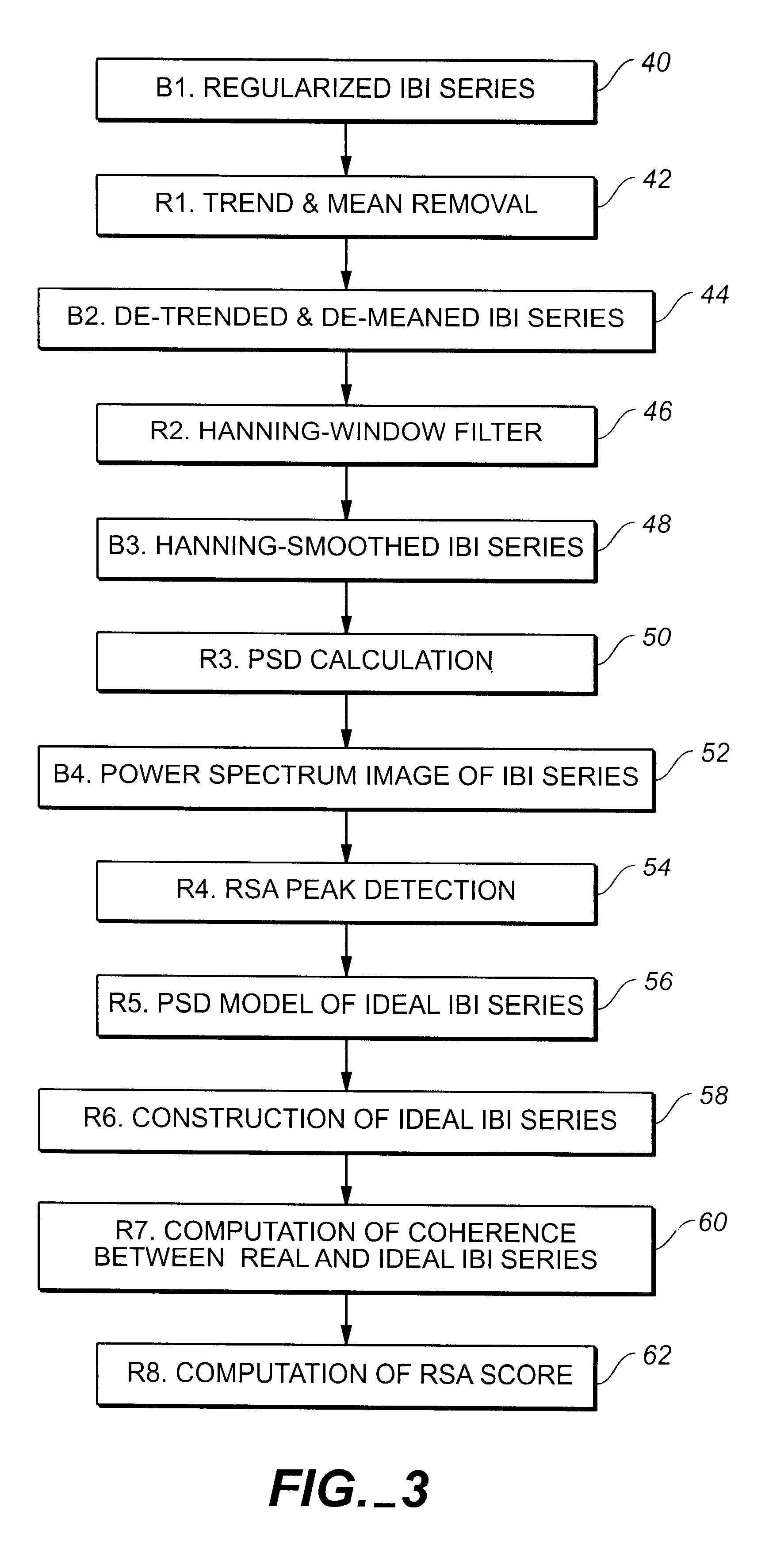

Respiratory sinus arrhythmia training system

Status: Inactive | Publication: US6305943B1 | Tags: Cosmonautic condition simulations; Respiratory organ evaluation; Biofeedback training; Graphics

A biofeedback training method and apparatus increase and reinforce respiratory sinus arrhythmia (RSA) as an outcome of a breath training technique. The apparatus comprises a physiological monitoring device that supplies raw physiological data to a computer running a software program. The program uses the heartbeat interbeat-interval information supplied by the physiological sensors: it calculates the interval after every heartbeat, computes RSA parameters from the interbeat intervals, displays the raw instantaneous heart rate values and presents the RSA parameters as graphs, and compares the current RSA training score with the currently set threshold to generate training feedback. It provides continuous audiovisual feedback that rewards training attempts based on the threshold comparisons, displays a breath pacer to induce the breathing pattern recommended by the selected breathing technique, saves the interbeat intervals and RSA parameters in a built-in database for later review of training sessions, and allows the user to make several adjustments.

Owner:MEDDORNA
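A minimal sketch of the threshold-feedback loop, assuming RSA is scored as the peak-to-trough swing in instantaneous heart rate over one breathing cycle (the abstract does not say which RSA parameters are used). The function names and threshold are hypothetical.

```python
from typing import List, Tuple


def instantaneous_heart_rate(ibis_ms: List[float]) -> List[float]:
    """Convert interbeat intervals (milliseconds) to heart rate (beats per minute)."""
    return [60000.0 / ibi for ibi in ibis_ms]


def rsa_score(ibis_ms: List[float]) -> float:
    """Peak-to-trough heart-rate swing over one breathing cycle (assumed RSA measure)."""
    hr = instantaneous_heart_rate(ibis_ms)
    return max(hr) - min(hr)


def training_feedback(ibis_ms: List[float], threshold_bpm: float) -> Tuple[float, bool]:
    """Return the RSA score and whether it meets the current threshold (reward cue)."""
    score = rsa_score(ibis_ms)
    return score, score >= threshold_bpm


# Heart rate rises on inhalation (shorter intervals) and falls on exhalation.
cycle = [1000, 800, 750, 1000]            # -> 60, 75, 80, 60 bpm
print(training_feedback(cycle, 15.0))     # (20.0, True)
```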

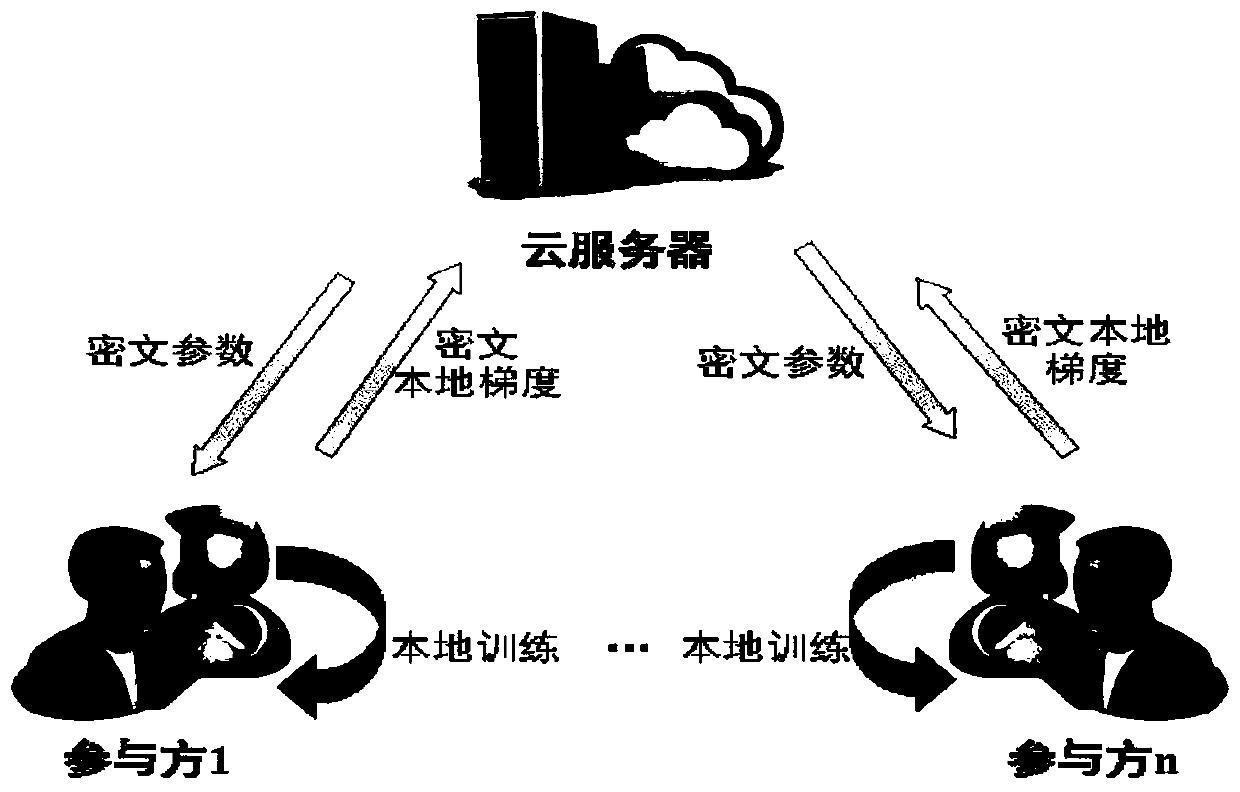

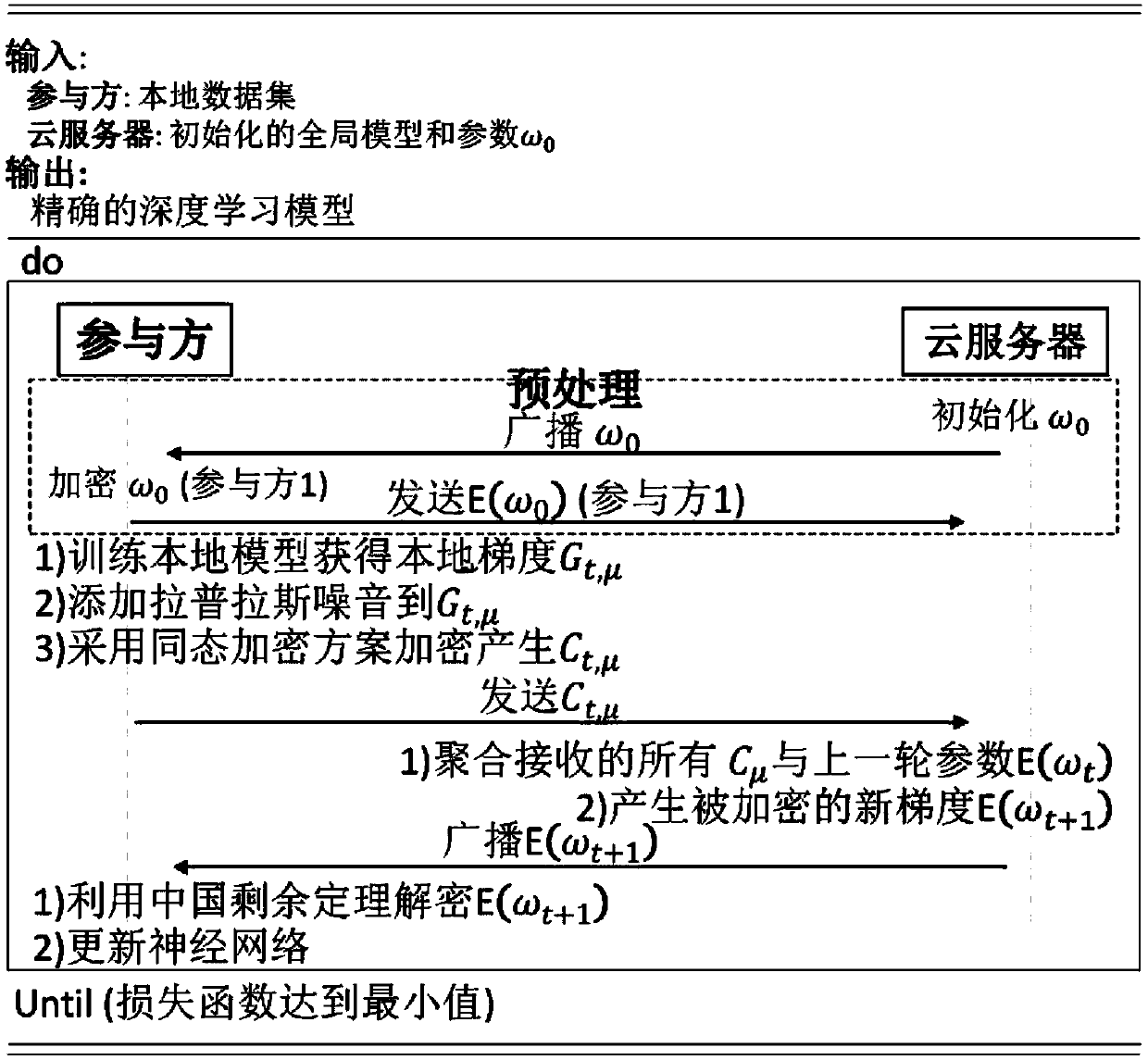

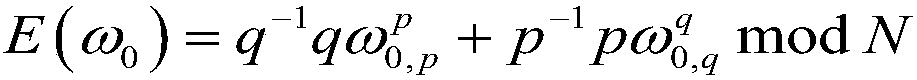

A combined deep learning training method based on a privacy protection technology

Status: Active | Publication: CN109684855A | Tags: Avoid getting; Safe and efficient deep learning training method; Digital data protection; Communication with homomorphic encryption; Pattern recognition; Data set

The invention belongs to the technical field of artificial intelligence and relates to an efficient combined (joint) deep learning training method based on privacy protection technology. Each participant first trains a local model on its private data set to obtain a local gradient, perturbs the local gradient with Laplace noise, encrypts it, and sends the encrypted local gradient to a cloud server. The cloud server aggregates all the received local gradients with the ciphertext parameters of the previous round and broadcasts the resulting ciphertext parameters. Finally, each participant decrypts the received ciphertext parameters and updates its local model for subsequent training. By combining a homomorphic encryption scheme with differential privacy, the method provides safe and efficient deep learning training that maintains model accuracy while preventing the server from inferring model parameters or private training data and preventing internal attackers from obtaining private information.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
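The client-side perturbation and server-side aggregation can be sketched in plain Python. This assumes the standard Laplace mechanism (noise scale = sensitivity / epsilon) and simple gradient averaging; homomorphic encryption is omitted, so the aggregation here runs on plaintext where the patent's server would operate on ciphertexts. All names and parameters are illustrative.

```python
import random
from typing import List

Vector = List[float]


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def perturb_gradient(grad: Vector, sensitivity: float, epsilon: float,
                     rng: random.Random) -> Vector:
    """Participant side: Laplace mechanism applied to each gradient coordinate."""
    scale = sensitivity / epsilon
    return [g + laplace_noise(scale, rng) for g in grad]


def aggregate(gradients: List[Vector]) -> Vector:
    """Server side: coordinate-wise mean (done on ciphertexts in the patent)."""
    n = len(gradients)
    return [sum(column) / n for column in zip(*gradients)]


def federated_round(params: Vector, local_grads: List[Vector], lr: float,
                    sensitivity: float, epsilon: float, seed: int = 0) -> Vector:
    rng = random.Random(seed)
    noisy = [perturb_gradient(g, sensitivity, epsilon, rng) for g in local_grads]
    avg = aggregate(noisy)
    # Each participant applies the same aggregated update to its local model.
    return [p - lr * a for p, a in zip(params, avg)]


print(aggregate([[1.0, 2.0], [3.0, 4.0]]))  # [2.0, 3.0]
```

A smaller `epsilon` means more noise and stronger privacy; averaging over many participants partly cancels the independent noise terms.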

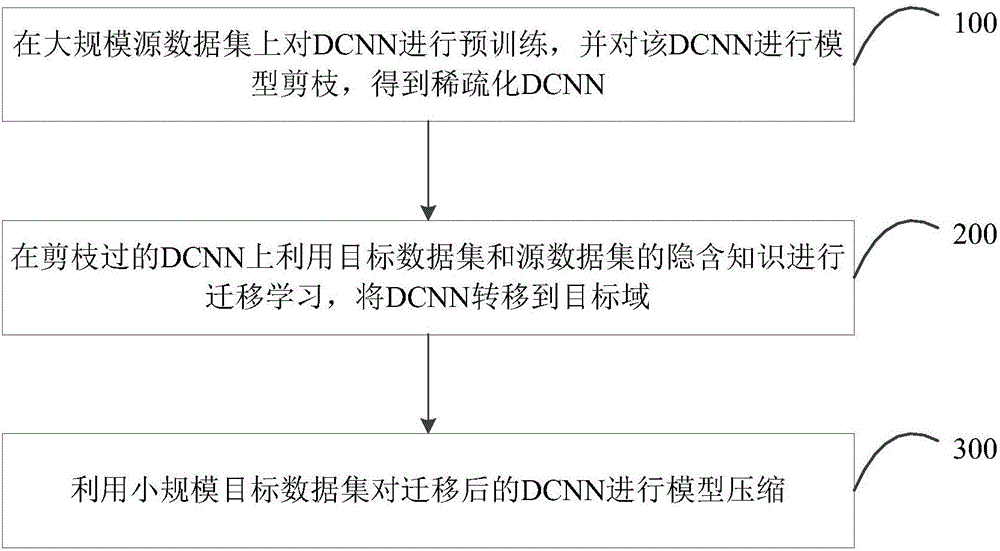

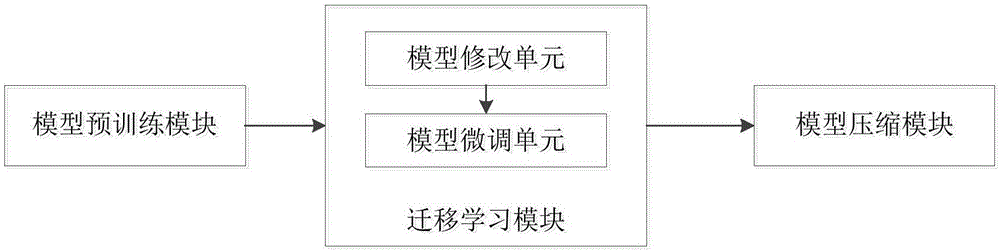

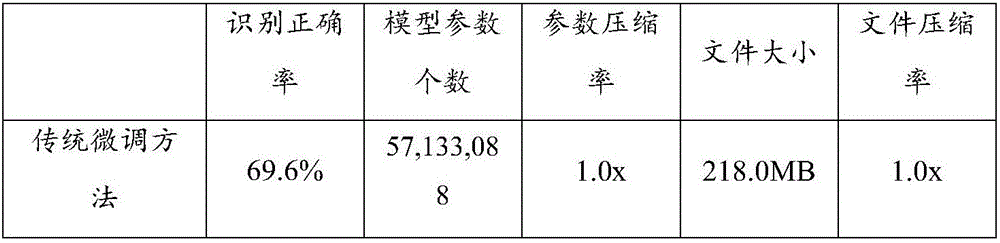

Deep convolution neural network training method and device

Status: Inactive | Publication: CN106355248A | Tags: Improve predictive performance; Improve transfer learning capabilities; Physical realisation; Neural learning methods; Data set; Algorithm

The present invention relates to the field of deep learning, in particular to a deep convolutional neural network (DCNN) training method and device. The method comprises the steps of: (a) pretraining the DCNN on a large-scale data set and pruning it; (b) performing transfer learning on the pruned DCNN; and (c) performing model compression and pruning on the transferred DCNN using the small-scale target data set. By combining transfer learning with model compression while transferring from a large-scale source data set to a small-scale target data set, the method improves transfer-learning ability, reduces the risk of overfitting and the difficulty of deployment on the small-scale target data set, and improves the model's predictive ability on the target data set.

Owner:SHENZHEN INST OF ADVANCED TECH
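Pruning in steps (a) and (c) could take many forms; a common choice, assumed here, is magnitude pruning, which zeroes the smallest-magnitude weights and keeps them at zero during later fine-tuning. Weights are a flat list for brevity, and the function names are hypothetical.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> Tuple[List[float], List[bool]]:
    """Zero the `sparsity` fraction of weights with the smallest |w|.

    Returns the pruned weights and a keep-mask (True = weight survives)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    mask = [i not in smallest for i in range(len(weights))]
    return [w if keep else 0.0 for w, keep in zip(weights, mask)], mask


def apply_mask(weights: List[float], mask: List[bool]) -> List[float]:
    """Re-apply the mask after each fine-tuning update so pruned weights stay zero."""
    return [w if keep else 0.0 for w, keep in zip(weights, mask)]


pruned, mask = magnitude_prune([0.5, -0.1, 0.05, -0.9], 0.5)
print(pruned)  # [0.5, 0.0, 0.0, -0.9]
print(mask)    # [True, False, False, True]
```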

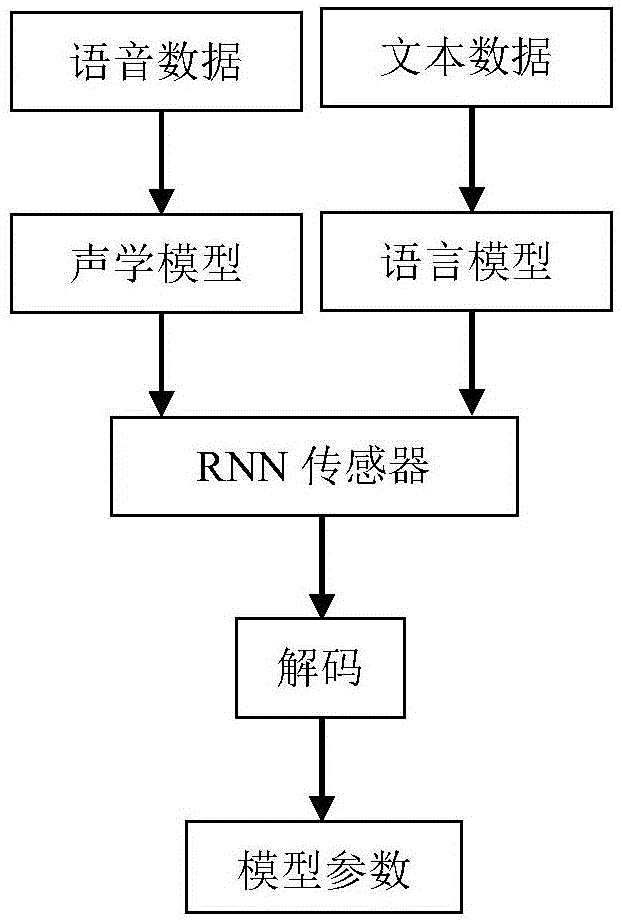

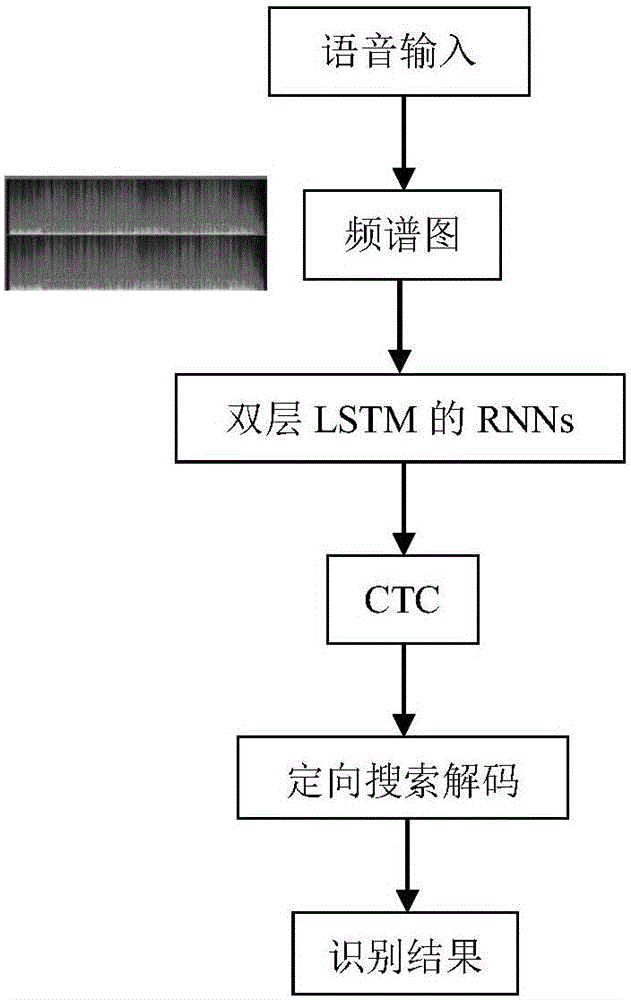

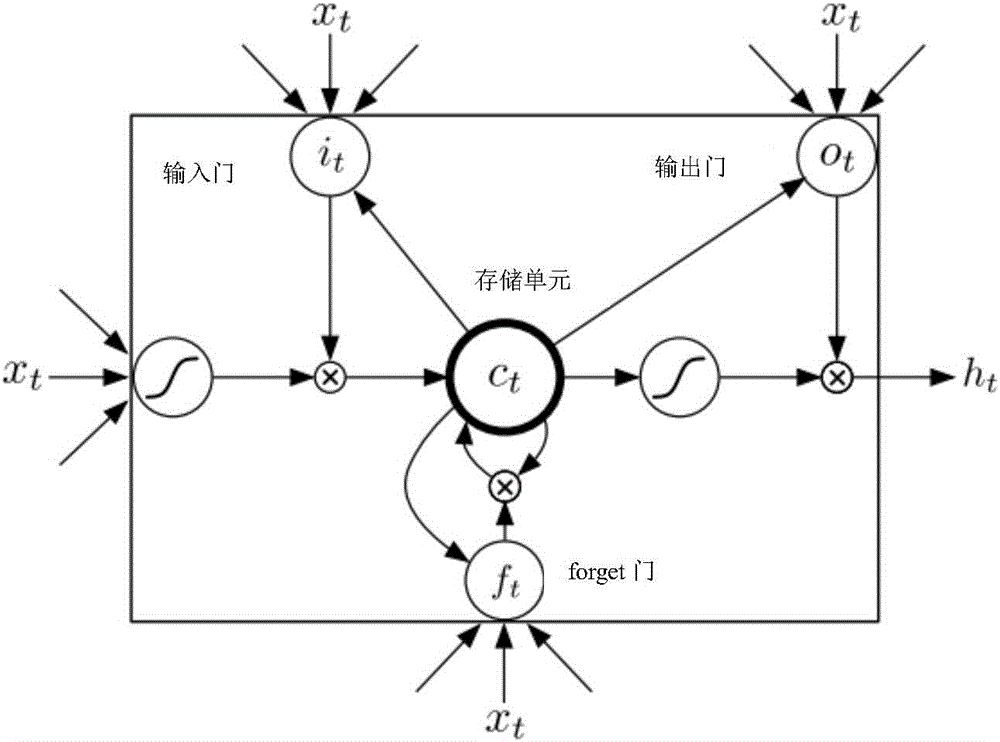

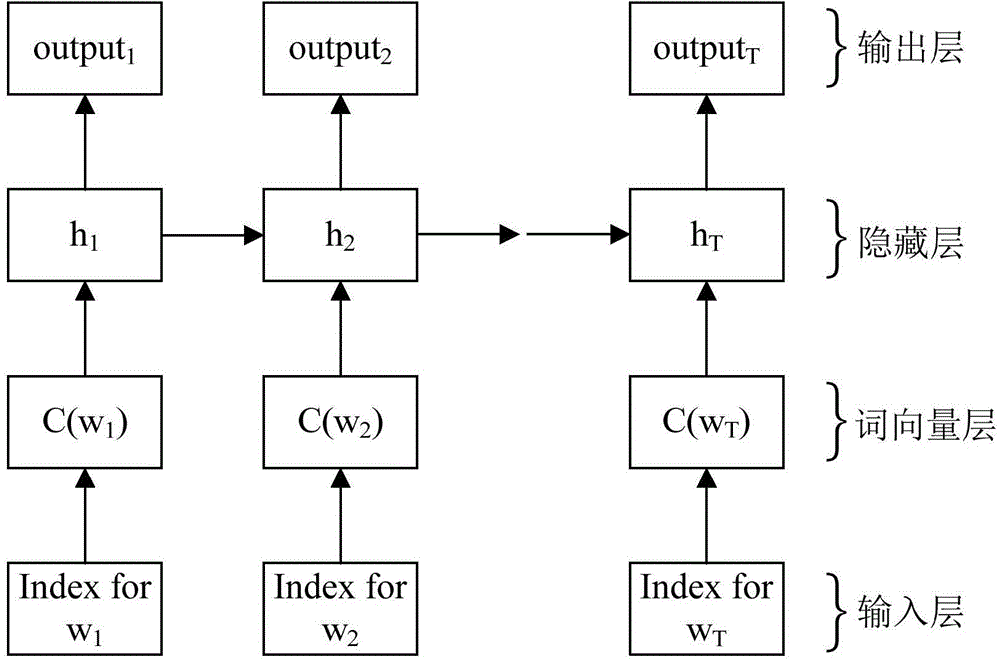

Voice identification method using long-short term memory model recurrent neural network

The invention discloses a speech recognition method using a long short-term memory (LSTM) recurrent neural network. The method comprises training and recognition. Training comprises feeding in speech data and text data to produce a jointly trained acoustic and language model, and decoding with an RNN transducer to form the model parameters. Recognition comprises converting the speech input into a spectrogram via the Fourier transform, performing beam search decoding with the LSTM recurrent neural network, and finally producing the recognition result. The method uses recurrent neural networks (RNNs) trained end to end with connectionist temporal classification (CTC). LSTM units, combined with multi-level representations, have proven effective in deep networks. A single end-to-end neural network model maps speech features at the input to a character string at the output, and it can be trained directly on an objective function that serves as a proxy for word error rate (WER), which avoids the wasted effort of optimizing separate objective functions for individual components.

Owner:SHENZHEN WEITESHI TECH
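CTC output is decoded by collapsing repeated symbols and removing a special blank symbol. The patent describes beam search decoding; the sketch below swaps in the simpler greedy (best-path) decoder to show the collapsing rule, assuming per-frame probability vectors with the blank at index 0.

```python
from typing import List, Sequence


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]],
                      alphabet: Sequence[str], blank: int = 0) -> str:
    """Best-path CTC decoding: take the most probable symbol per frame,
    merge consecutive repeats, then drop the blank symbol."""
    best = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    out: List[str] = []
    prev = None
    for idx in best:
        if idx != prev and idx != blank:
            out.append(alphabet[idx])
        prev = idx
    return "".join(out)


alphabet = ["_", "a", "b"]  # index 0 is the CTC blank
frames = [
    [0.1, 0.8, 0.1], [0.1, 0.8, 0.1],   # a a   -> merged into one "a"
    [0.9, 0.05, 0.05],                  # blank -> separates the next "a"
    [0.1, 0.8, 0.1],                    # a
    [0.1, 0.1, 0.8], [0.1, 0.1, 0.8],   # b b   -> merged into one "b"
    [0.9, 0.05, 0.05],                  # blank
]
print(ctc_greedy_decode(frames, alphabet))  # aab
```

The blank is what lets CTC emit a genuinely doubled letter: without the blank frame between them, the two "a" runs would collapse into one.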

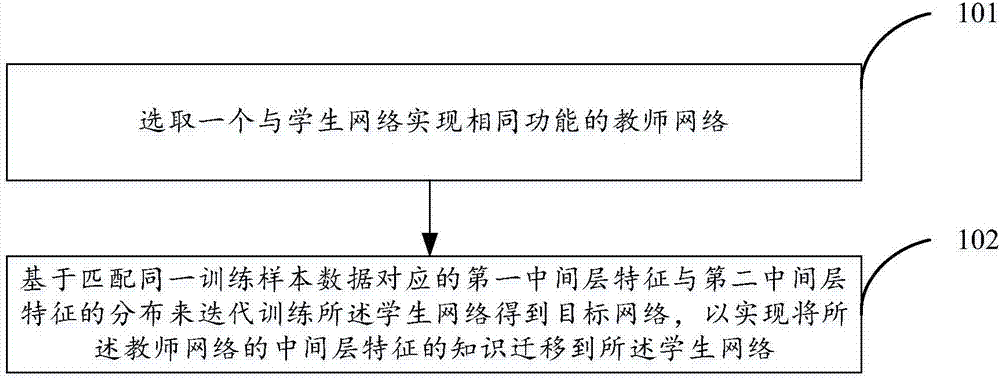

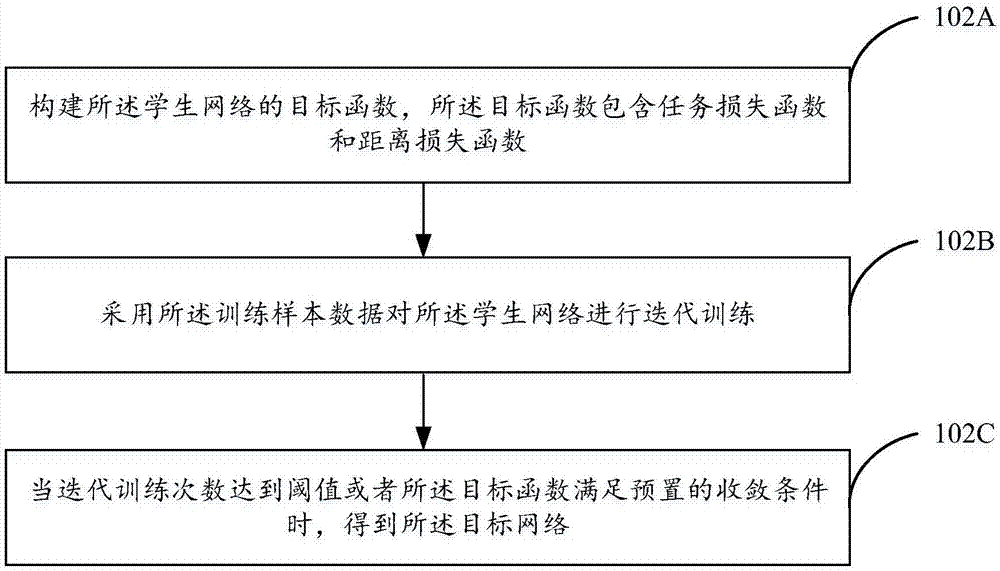

Neural network training method and device

Status: Active | Publication: CN107247989A | Tags: Improve performance; Improve accuracy; Kernel methods; Neural architectures; Applicability domain; Large applications

The invention discloses a neural network training method and device that address the problem that student networks trained with existing knowledge-transfer approaches cannot achieve both a wide application range and high accuracy. The training method comprises: selecting a teacher network that performs the same function as the student network; and iteratively training the student network by matching the distributions of first and second intermediate-layer features produced from the same training sample data, to obtain a target network, thereby transferring the knowledge in the teacher network's intermediate-layer features to the student network. The first intermediate-layer features are the feature map output by a first specific layer of the teacher network when the training sample data is input to it, and the second intermediate-layer features are the feature map output by a second specific layer of the student network when the same training sample data is input to it. A neural network obtained with this technical solution has both a wide application range and excellent performance.

Owner:BEIJING TUSEN ZHITU TECH CO LTD
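One way to compare the distributions of teacher and student intermediate features is maximum mean discrepancy (MMD). The sketch below assumes MMD with a degree-2 polynomial kernel over L2-normalized per-channel activation maps, each channel flattened to a vector of the same spatial size; the abstract does not specify the distance, so this choice and the function names are assumptions.

```python
import math
from typing import Callable, List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def poly_kernel(x: Vector, y: Vector, degree: int = 2, c: float = 0.0) -> float:
    return (sum(a * b for a, b in zip(x, y)) + c) ** degree


def mmd2(teacher_channels: List[Vector], student_channels: List[Vector],
         kernel: Callable[[Vector, Vector], float] = poly_kernel) -> float:
    """Biased squared MMD between two sets of flattened, normalized channel maps."""
    t = [l2_normalize(ch) for ch in teacher_channels]
    s = [l2_normalize(ch) for ch in student_channels]

    def mean_k(a: List[Vector], b: List[Vector]) -> float:
        return sum(kernel(x, y) for x in a for y in b) / (len(a) * len(b))

    return mean_k(t, t) + mean_k(s, s) - 2.0 * mean_k(t, s)


# Two channels, each a flattened 1x2 spatial map.
print(mmd2([[1.0, 0.0]], [[0.0, 1.0]]))  # 2.0 (maximally different)
```

In training, a term like this would typically be added to the student's task loss with a weighting factor, so the student mimics where the teacher's channels activate without having to match the teacher's channel count.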

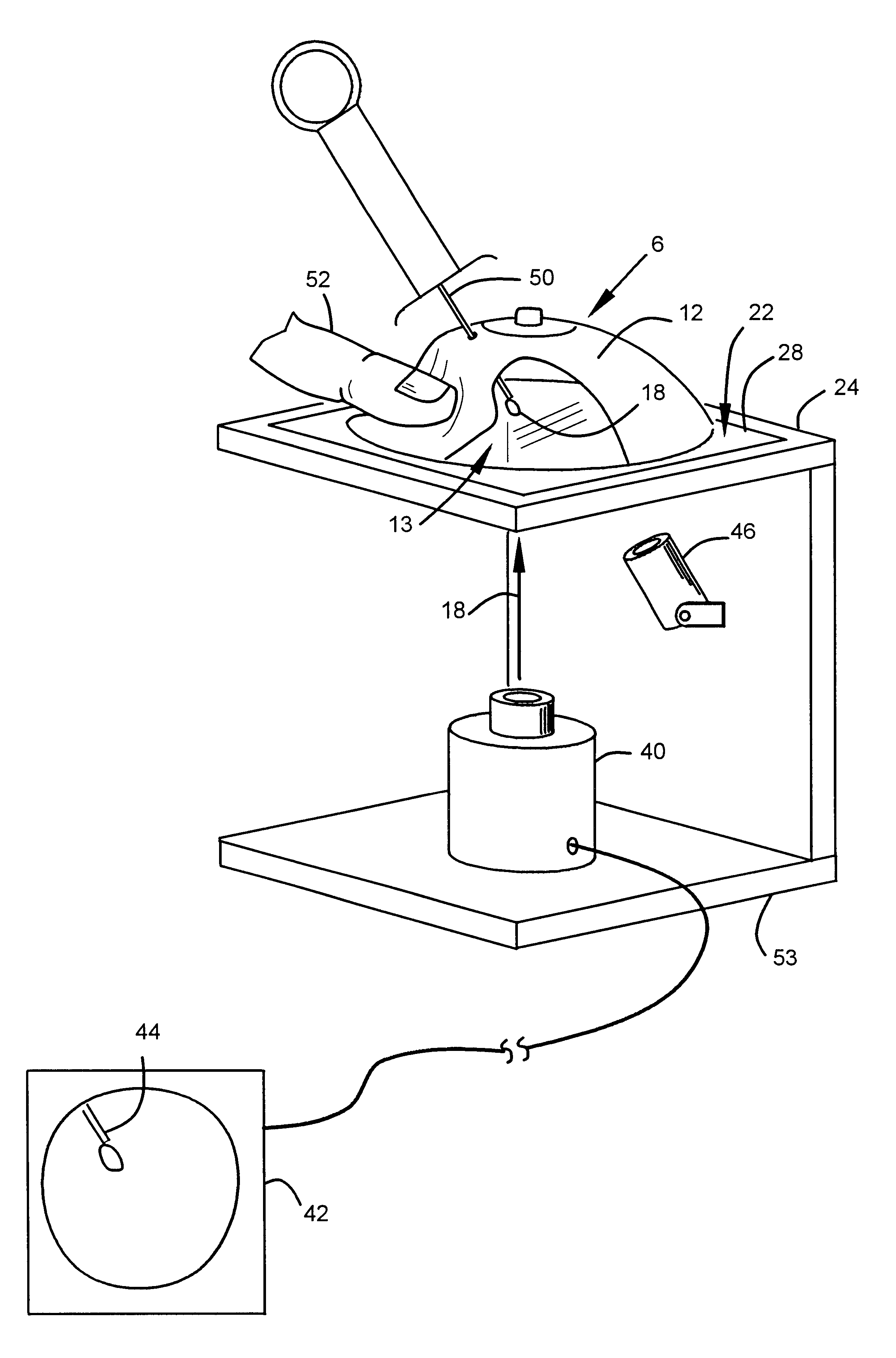

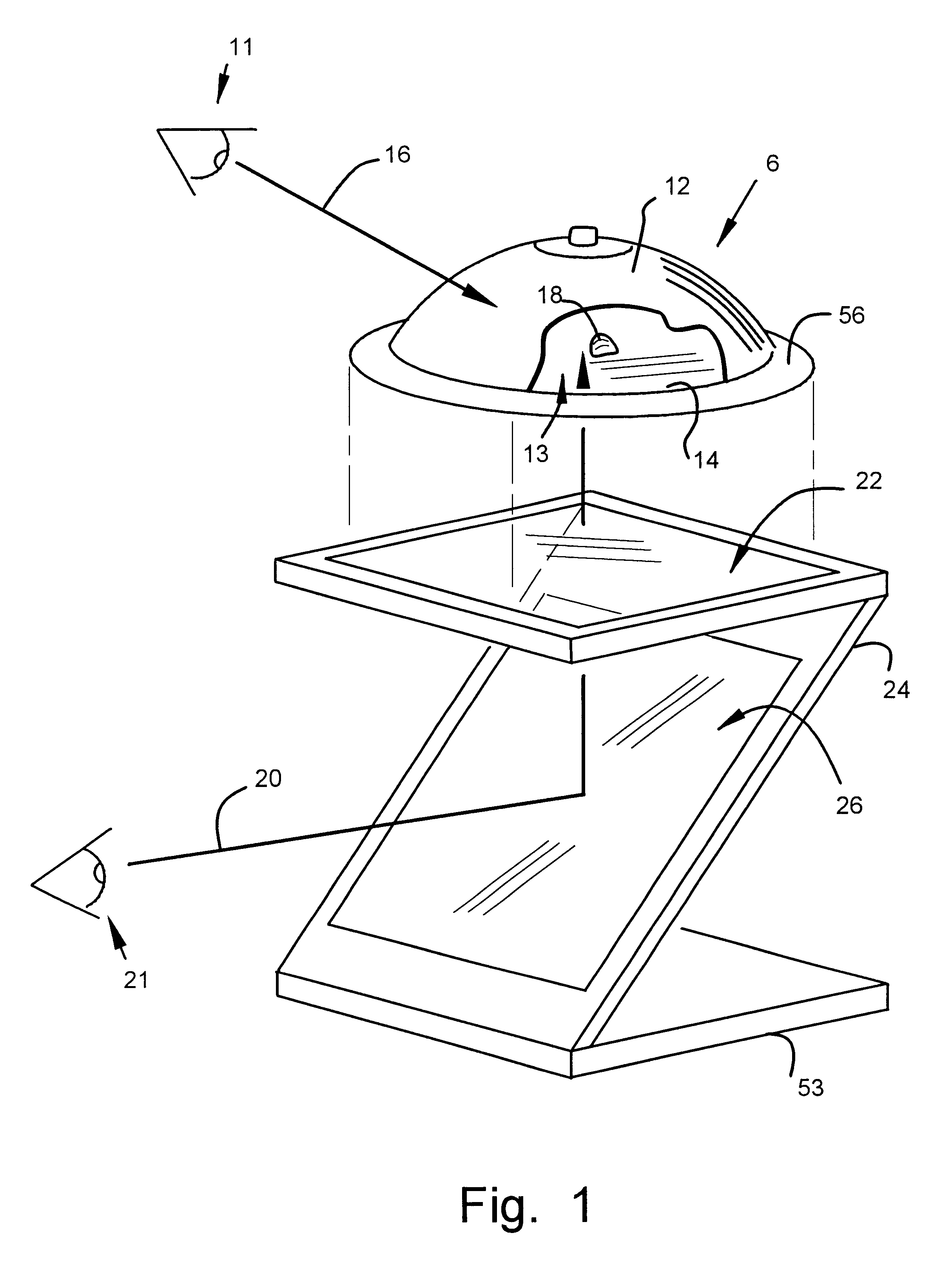

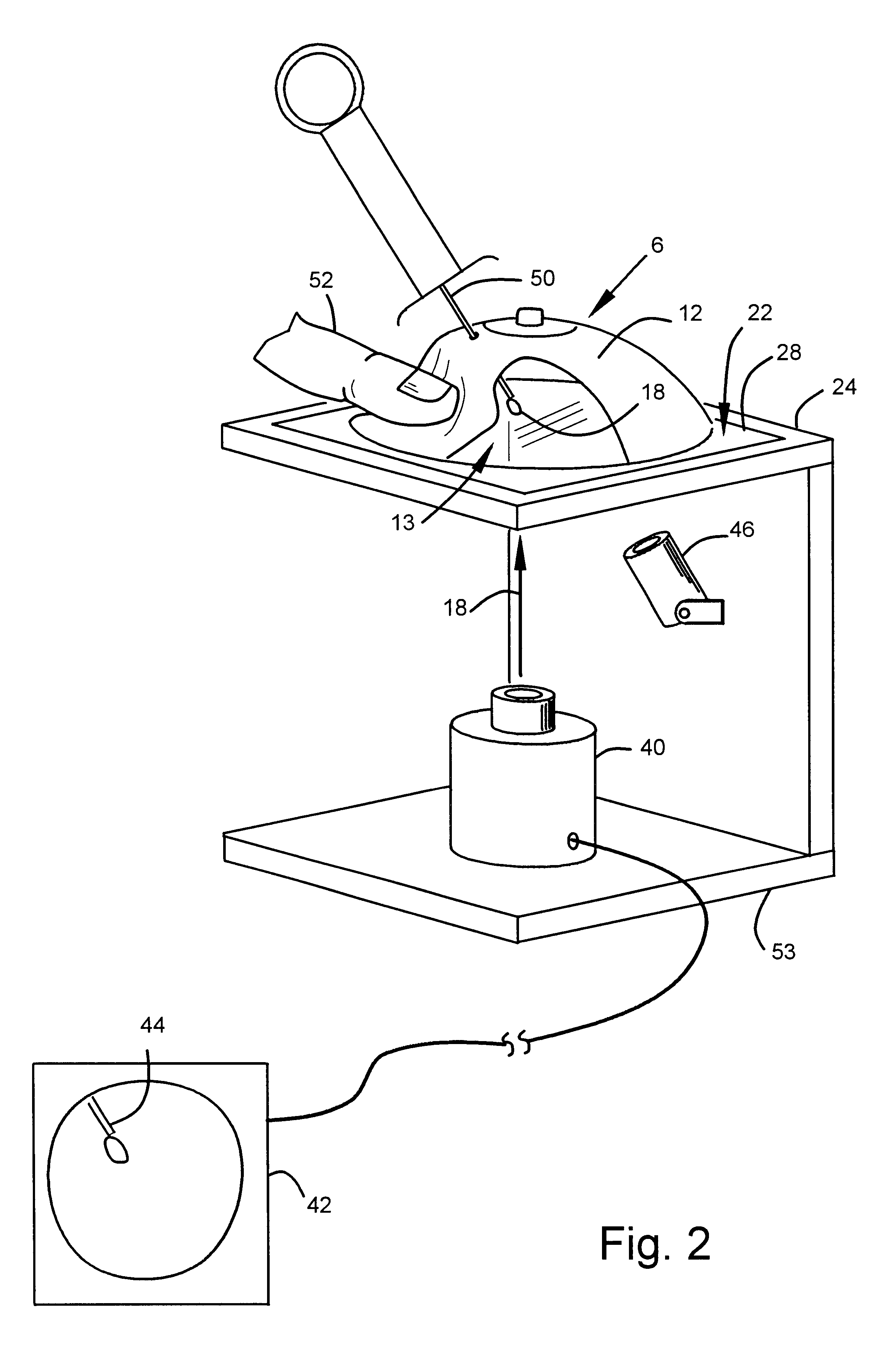

Training aids and methods for needle biopsy

Status: Inactive | Publication: US6568941B1 | Tags: Accurate tactile sensation; Facilitate tactile learning; Educational models; Human body; Palpation

A training aid for teaching needle biopsy of the human breast. The inventive methods use breast models with lifelike properties that provide accurate tactile sensation during palpation, enabling a trainee to learn to locate modeled internal lesions and similar tissues in the breast. The same properties allow the trainee to learn the tactile sensations that indicate the relative position and motion of biopsy needles during needling procedures. To facilitate tactile learning, the breast model has an opaque skin that blocks the trainee's view of a breast cavity containing modeled lesions, ensuring that needling is performed solely by "feel". The invention also includes alternative training methods using a second breast model that is transparent enough to allow viewing of the modeled lesions from any relative position, as well as training systems that combine breast models with viewing stands, and methods of training using these aids.

Owner:GOLDSTEIN MARK K

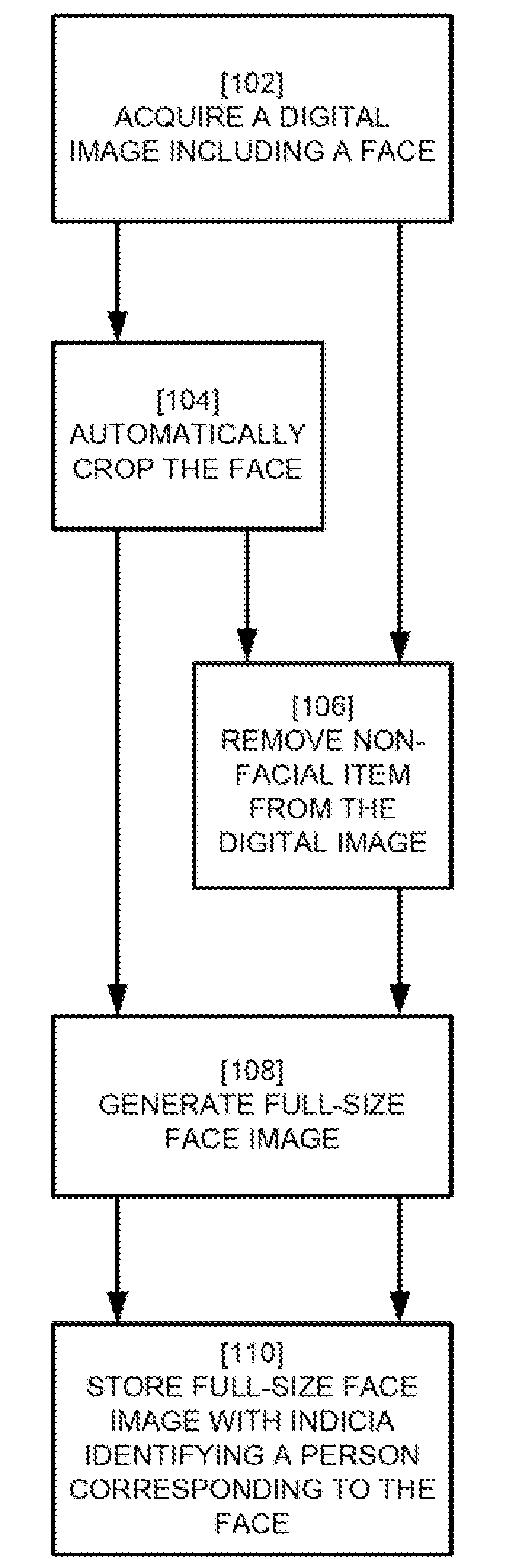

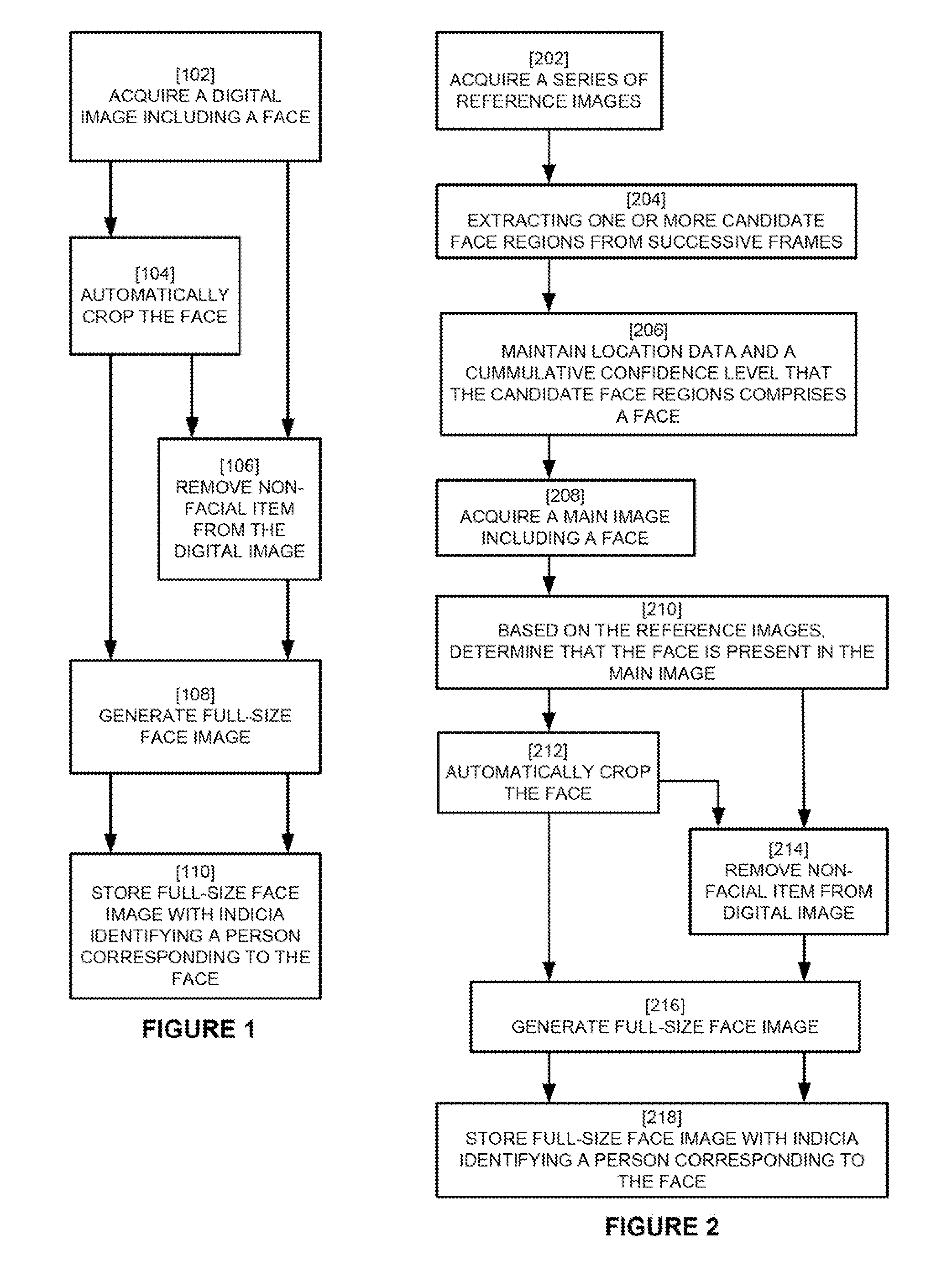

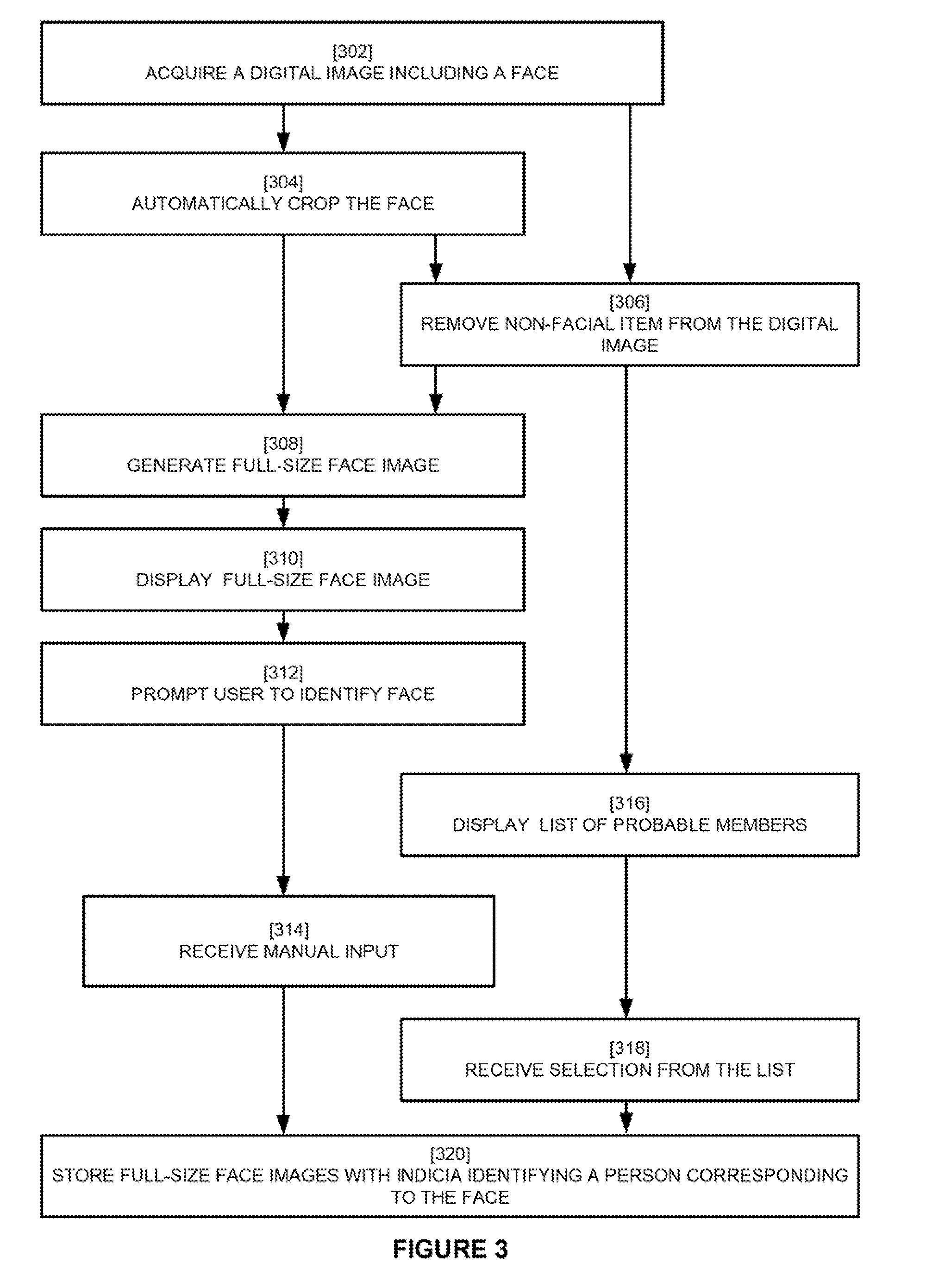

Face recognition training method and apparatus

Status: Active | Publication: US20090238419A1 | Tags: Convenient for user; Television system details; Character and pattern recognition; Pattern recognition; Biometric data

Owner:FOTONATION LTD

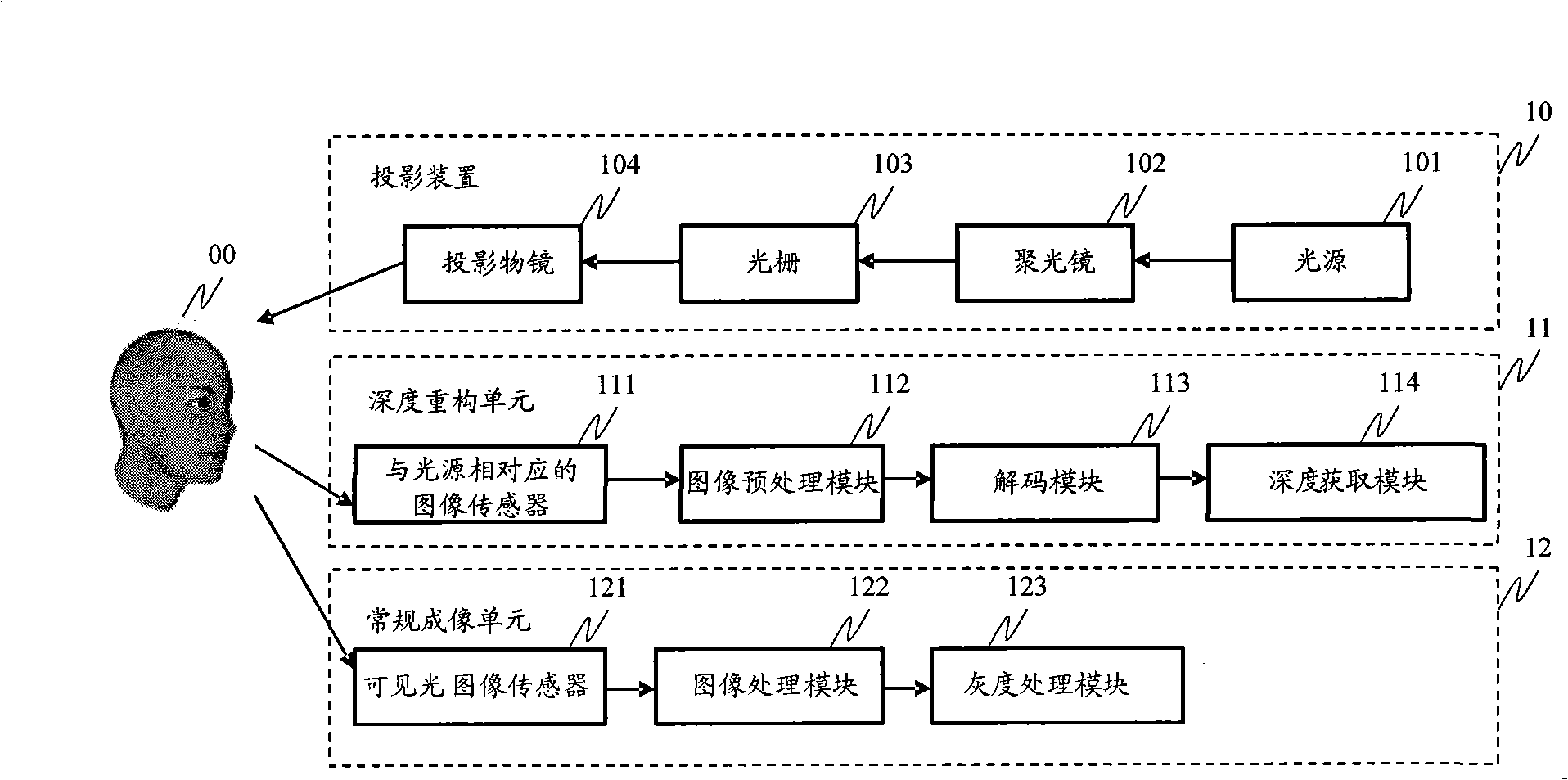

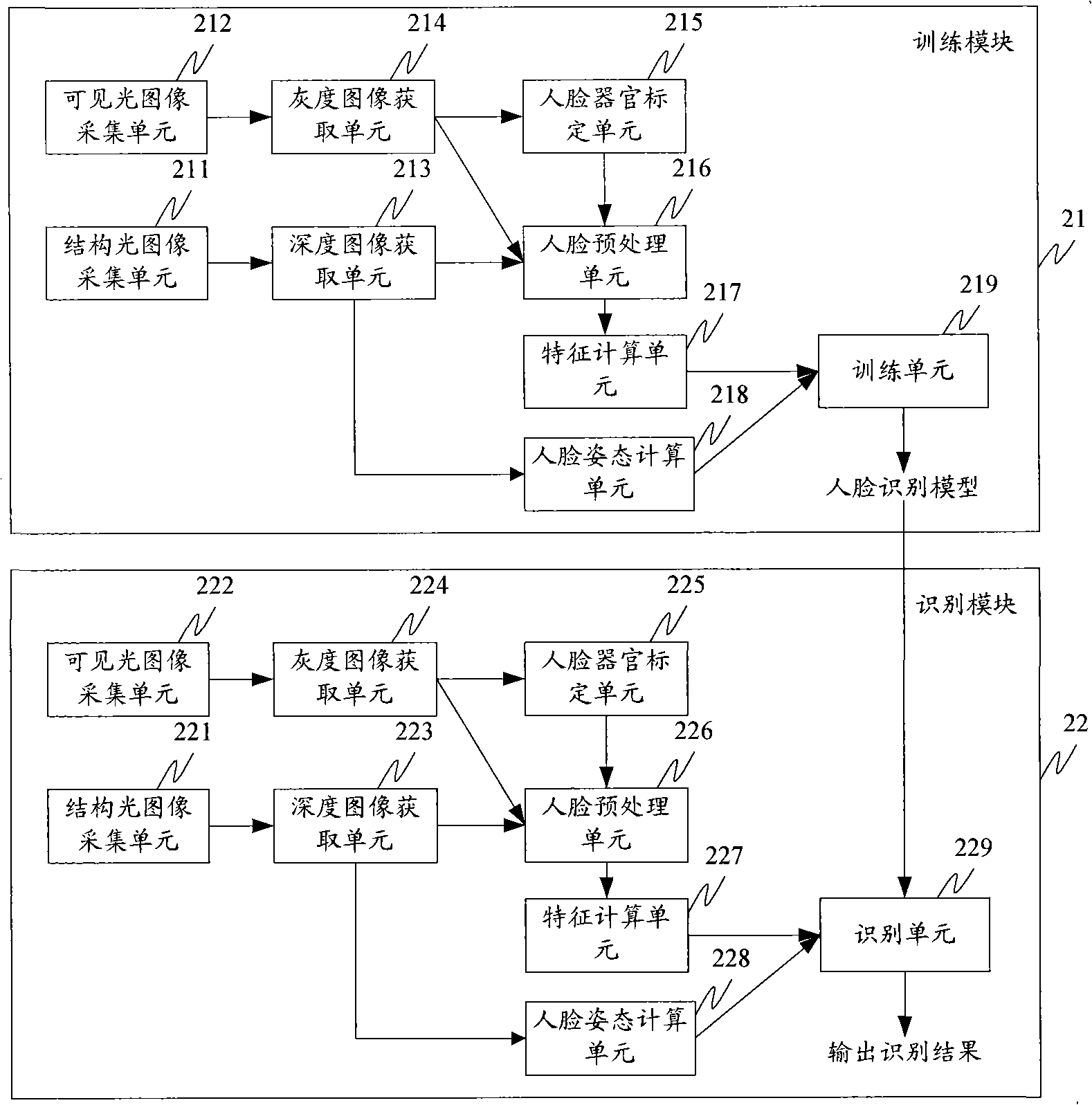

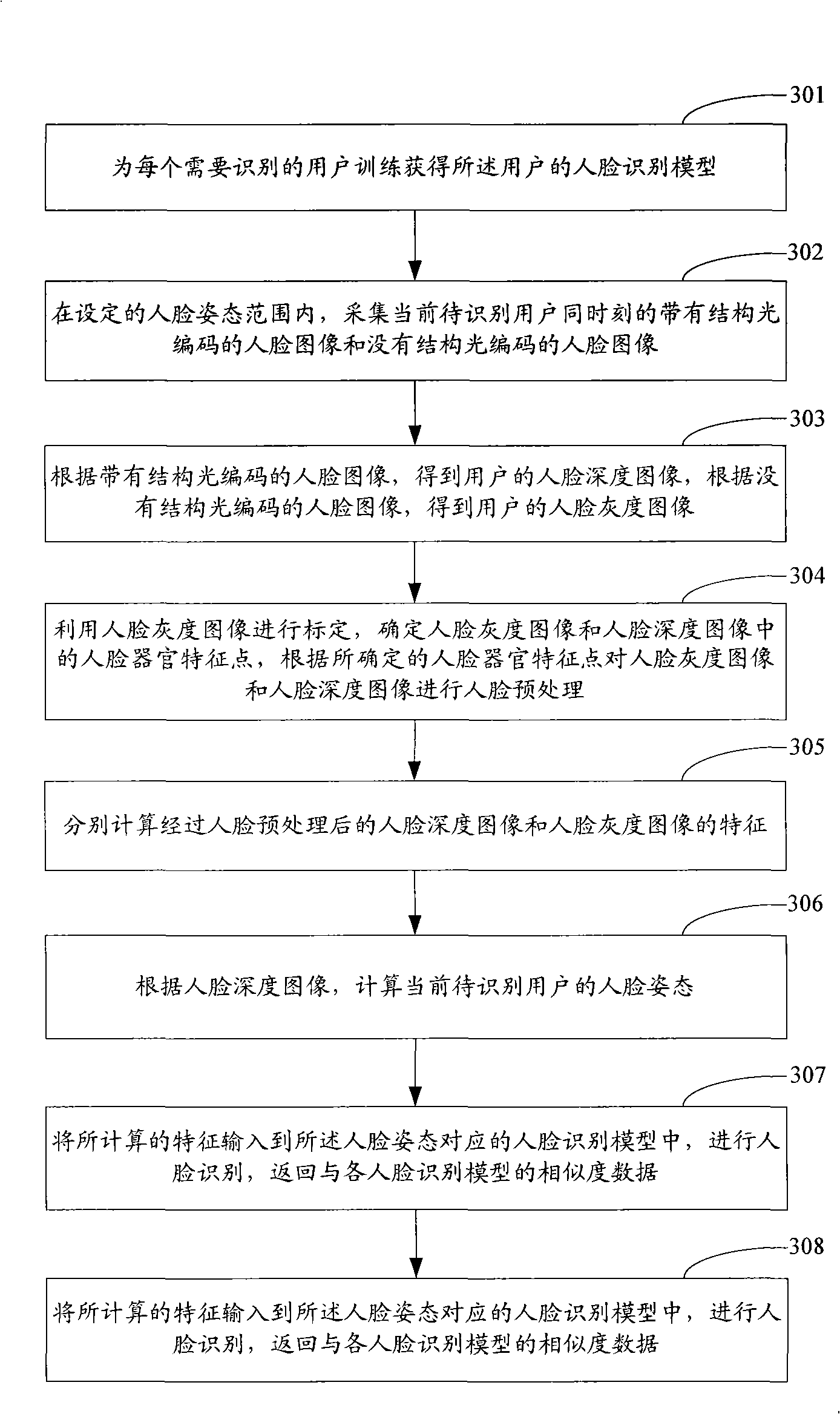

Human face recognition method and system, human face recognition model training method and system

Status: Active | Publication: CN101339607A | Tags: Strong ability to resist light interference; Low cost; Character and pattern recognition; Pattern recognition; Calibration result

The invention discloses a face recognition method and system, as well as a face recognition model training method and system. The face recognition method comprises the following steps: a face recognition model is trained for each user to be recognized; within a set range of face poses, a structured-light-coded face image and a face image without structured-light coding are captured simultaneously for the current user to be recognized; a face range (depth) image is obtained from the structured-light-coded image, and a face grayscale image is obtained from the uncoded image; the grayscale image is used for calibration, and both the grayscale and range images are preprocessed based on the calibration results; features of the preprocessed range and grayscale images are computed separately; and the features are input to the face recognition model, which recognizes the face and outputs the result. The technical solution reduces the interference of lighting with face recognition while also reducing cost.

Owner:BEIJING VIMICRO ARTIFICIAL INTELLIGENCE CHIP TECH CO LTD

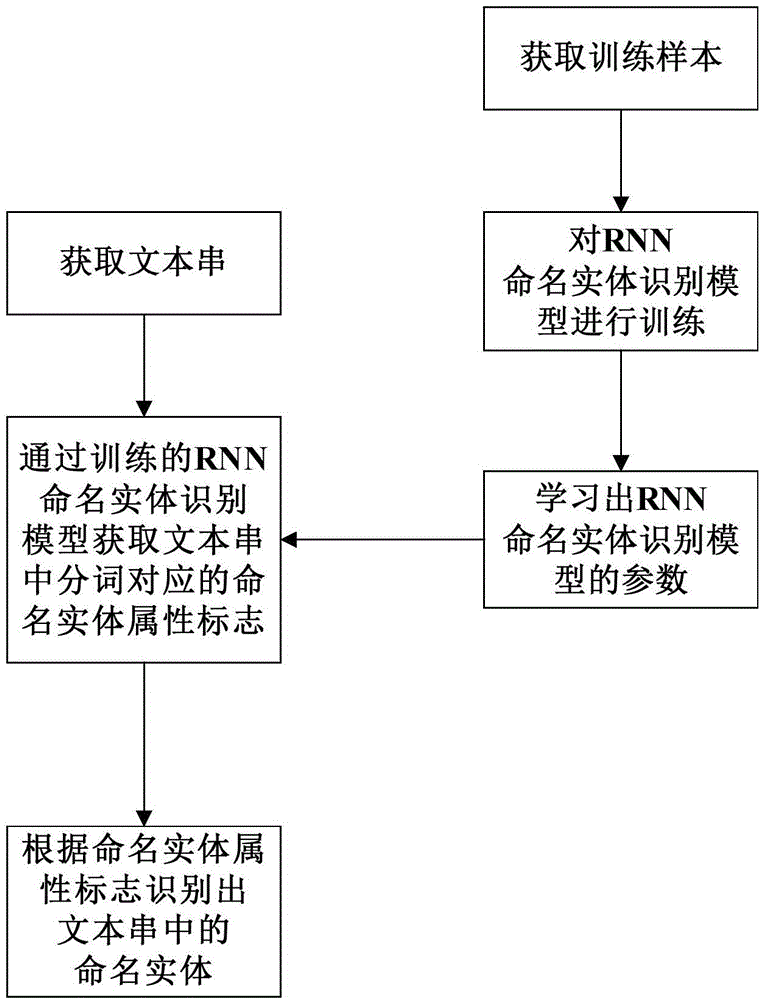

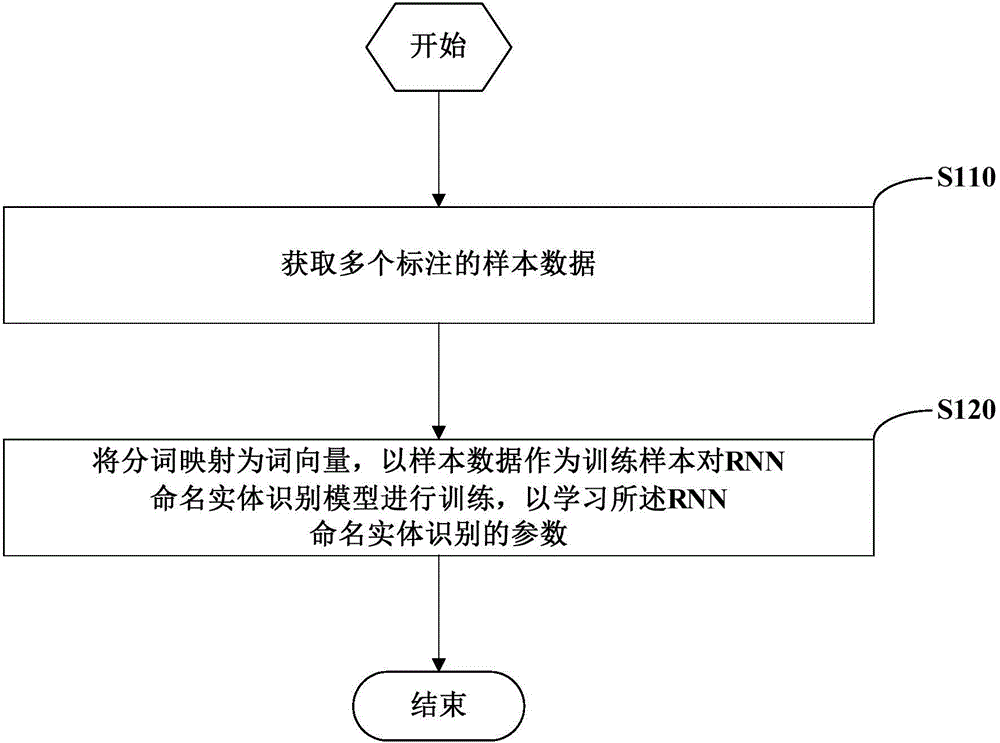

Named-entity recognition model training method and named-entity recognition method and device

Status: Inactive | Publication: CN104615589A | Tags: Improve recognition accuracy; Improve generalization ability; Special data processing applications; Named-entity recognition; Labeled data

An embodiment of the invention provides a named-entity recognition model training method, and a named-entity recognition method and device. The method for training a recurrent neural network (RNN) named-entity recognition model includes: acquiring multiple labeled samples, where each sample comprises a text string and multiple labeled term segments, each consisting of terms segmented from the text string and their named-entity attribute tags; mapping the segmented terms in the labeled samples to term vectors; and using the samples as training samples to train the RNN named-entity recognition model and learn its parameters. The trained model generalizes better, recognizes named entities in natural language text quickly, and improves named-entity recognition accuracy.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

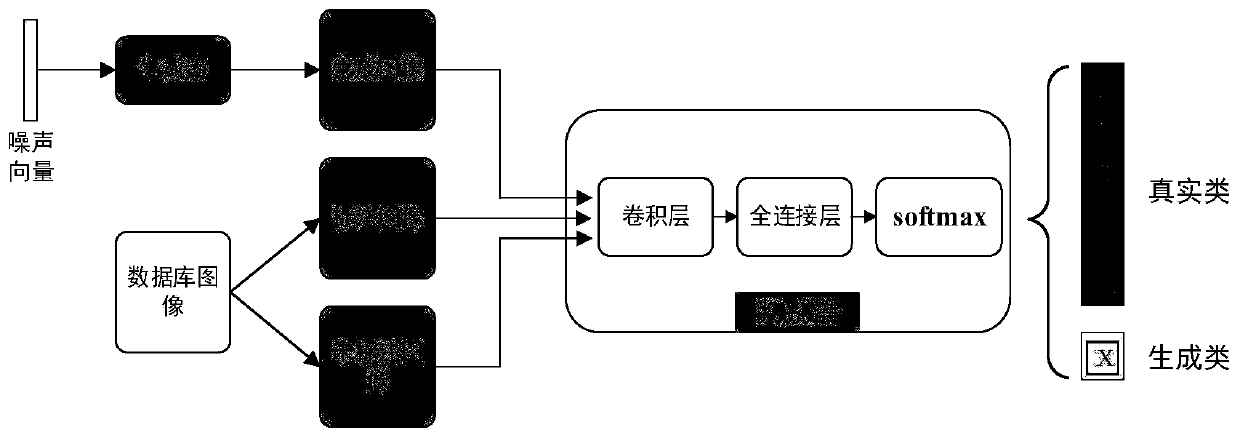

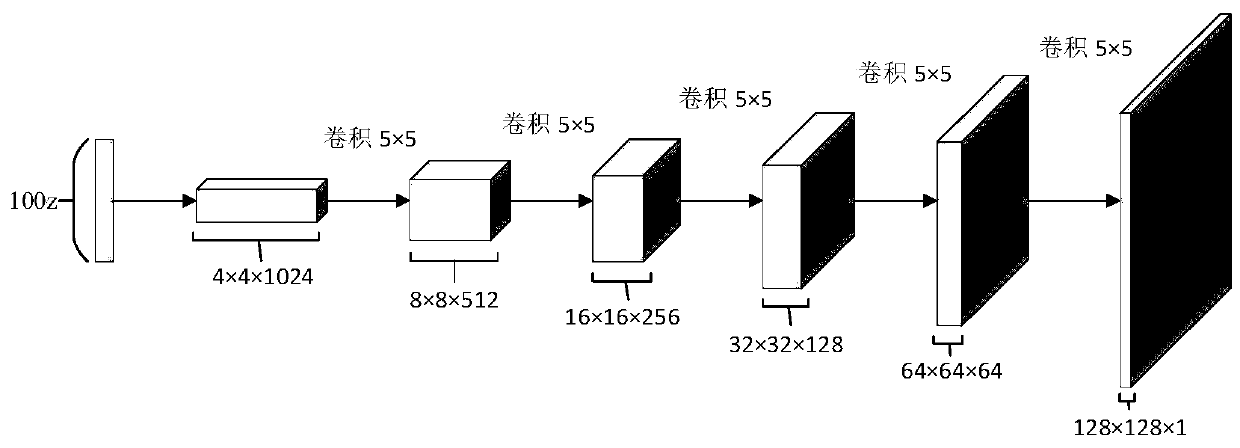

Semi-supervised X-ray image automatic labeling based on generative adversarial network

Status: Inactive | Publication: CN110110745A | Tags: Character and pattern recognition; Neural architectures; X-ray; Generative adversarial network

The invention provides a semi-supervised X-ray image automatic labeling method based on a generative adversarial network (GAN). It improves the traditional training method of existing GANs, using a semi-supervised training method that combines a supervised loss and an unsupervised loss to classify images from a small number of labeled samples, and thereby addresses the scarcity of annotated X-ray images. The method comprises: first, starting from a traditional unsupervised GAN, replacing the final output layer with a softmax; extending the network to a semi-supervised GAN for X-ray images, with an additional class label defined for generated samples to guide training; optimizing the network parameters through semi-supervised training; and finally, automatically labeling X-ray images with the trained discriminator network. Compared with traditional supervised learning and other semi-supervised learning algorithms, the method improves performance on automatic labeling of medical X-ray images.

Owner:SHANGHAI MARITIME UNIVERSITY
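The supervised/unsupervised loss combination can be sketched with a K+1-class discriminator, where the extra (last) class means "generated". This follows the widely used semi-supervised GAN formulation and is an assumption about the patent's exact losses; logits are plain lists, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def supervised_loss(logits: Sequence[float], label: int) -> float:
    """Cross-entropy over the K real classes only (last logit = 'generated')."""
    return -math.log(softmax(logits[:-1])[label])


def unsupervised_loss(real_logits: Sequence[float], fake_logits: Sequence[float]) -> float:
    """Real images should not look generated; generated images should."""
    p_fake_on_real = softmax(real_logits)[-1]
    p_fake_on_fake = softmax(fake_logits)[-1]
    return -math.log(1.0 - p_fake_on_real) - math.log(p_fake_on_fake)


def discriminator_loss(labeled: List[Tuple[List[float], int]],
                       unlabeled_logits: List[List[float]],
                       generated_logits: List[List[float]],
                       weight: float = 1.0) -> float:
    sup = sum(supervised_loss(z, y) for z, y in labeled) / len(labeled)
    pairs = list(zip(unlabeled_logits, generated_logits))
    unsup = sum(unsupervised_loss(r, f) for r, f in pairs) / len(pairs)
    return sup + weight * unsup


def auto_label(logits: Sequence[float]) -> int:
    """Labeling with the trained discriminator: argmax over the real classes."""
    real = list(logits[:-1])
    return max(range(len(real)), key=real.__getitem__)


print(auto_label([0.1, 2.0, 5.0]))  # 1 (the 'generated' logit is ignored)
```

Unlabeled X-ray images contribute only through the real-versus-generated term, which is how the few labeled samples are supplemented.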

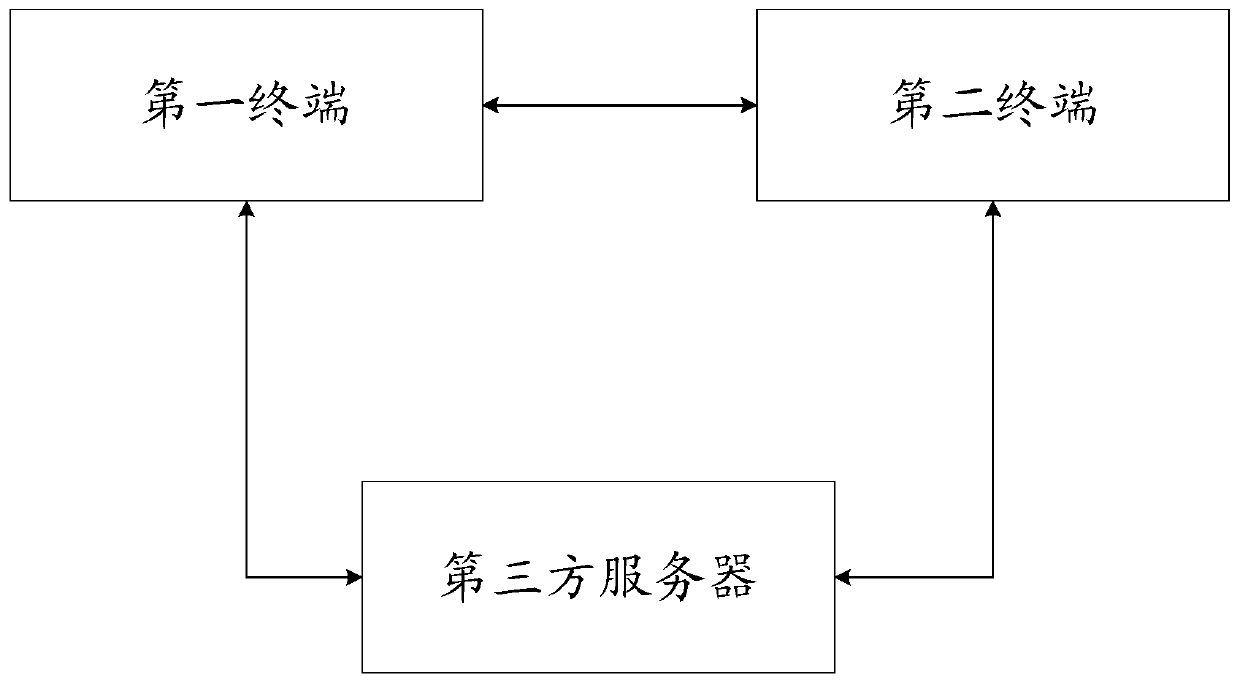

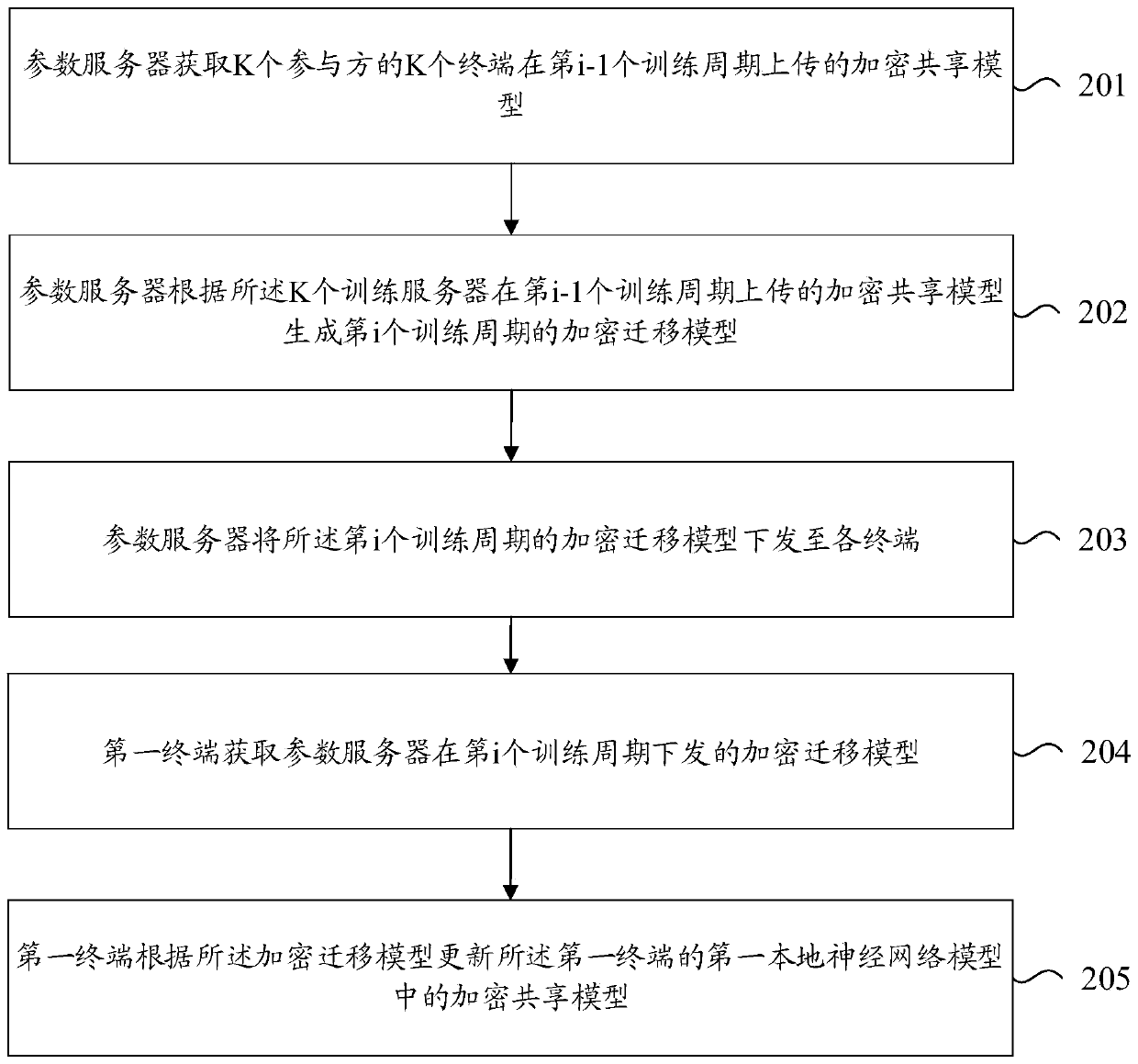

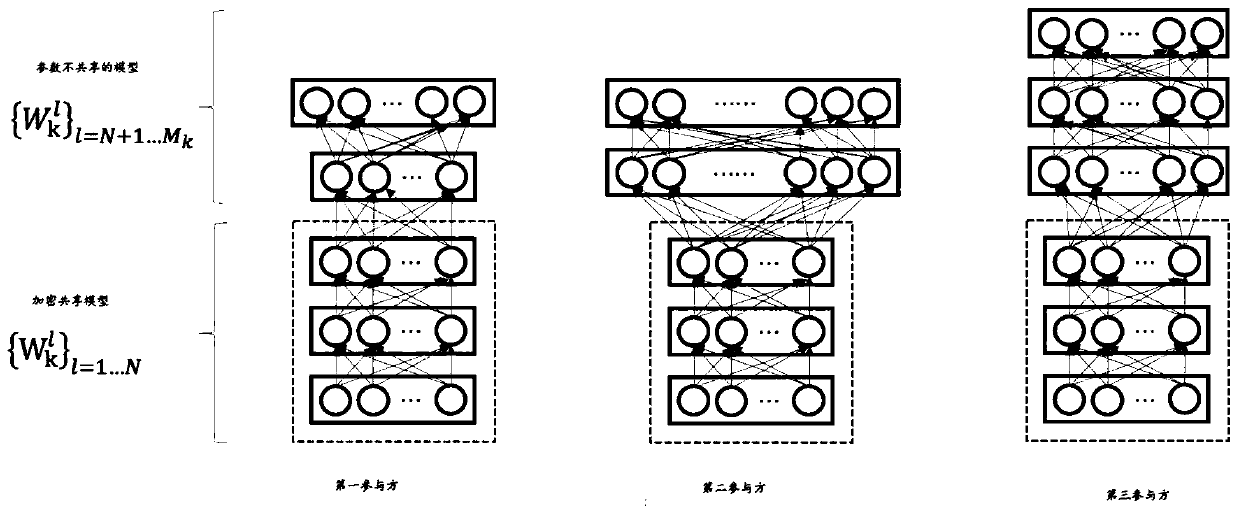

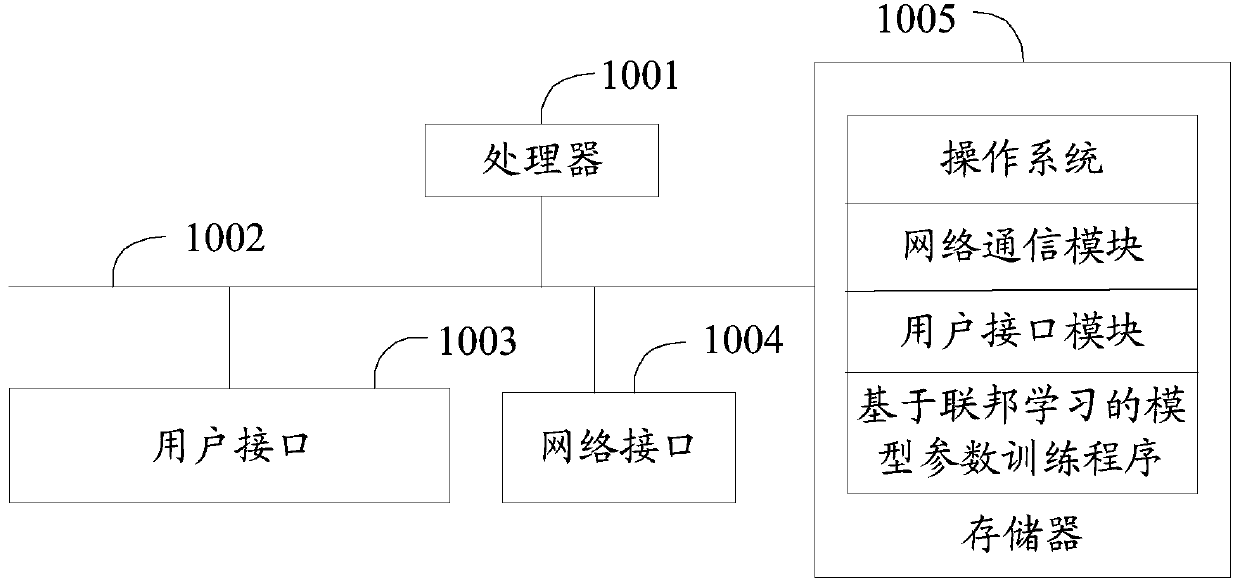

Training and prediction methods and devices of federal transfer learning model

Status: Active | Publication: CN110399742A | Tags: Improve training efficiency; Guaranteed privacy; Key distribution for secure communication; Digital data protection; Training period; Network model

The invention relates to the technical field of artificial intelligence, in particular to training and prediction methods and devices for a federated transfer learning model. In the training method, a first terminal acquires, in the ith training period, an encrypted transfer model issued by a parameter server, which generated it from the K encrypted shared models uploaded by the terminals of the K participants in the (i-1)th training period. In the ith training period, the first terminal updates the encrypted shared model within its first local neural network model according to the issued encrypted transfer model, and then trains and updates the first local neural network model on its first data. In this way, the federated transfer learning process protects the privacy of each participant's data, effectively improves training efficiency, and preserves the uniqueness of each terminal's model while maintaining the model's generalization ability.

Owner:WEBANK (CHINA)

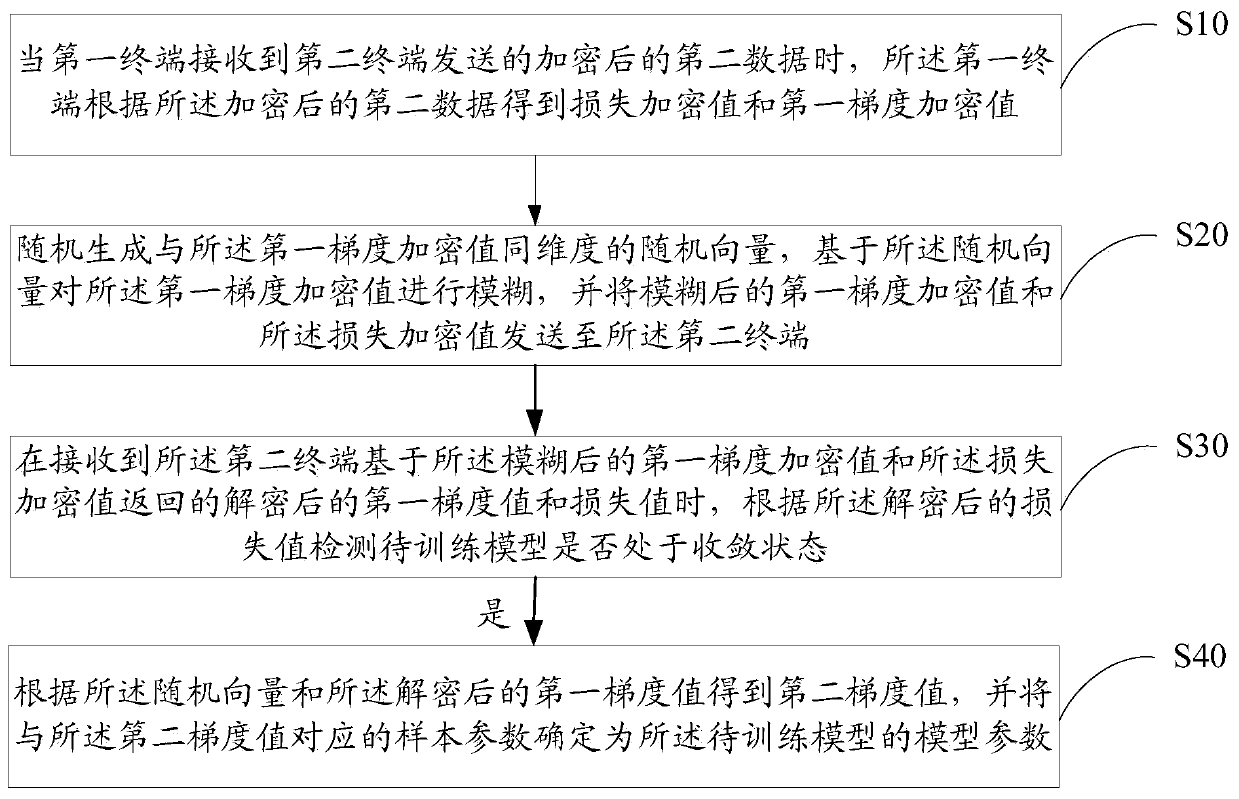

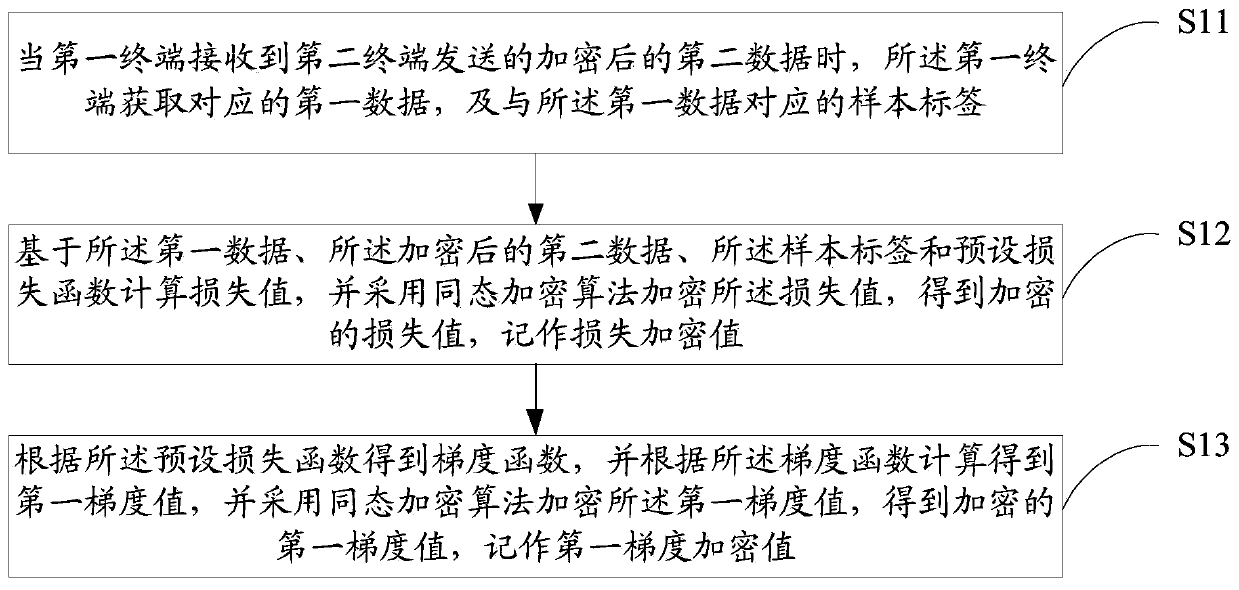

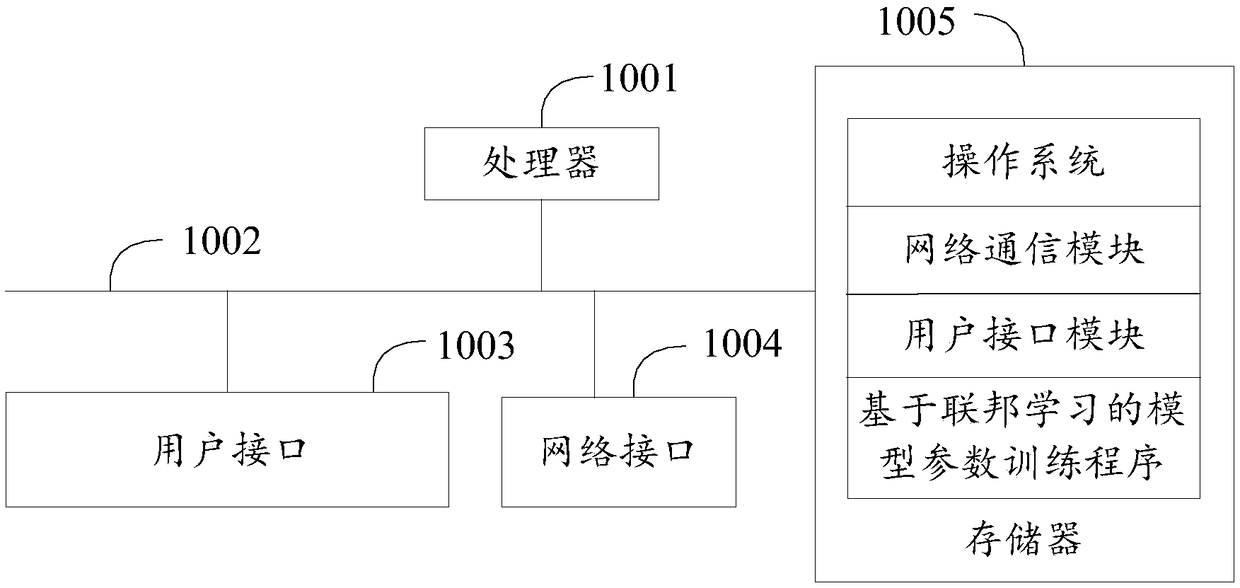

Model parameter training method and device based on federated learning, equipment and medium

Status: Pending | Publication: CN109886417A | Tags: Solve application limitations; Won't leak; Public key for secure communication; Digital data protection; Trusted third party; Model parameters

The invention discloses a model parameter training method, device, equipment and medium based on federated learning. The method comprises the following steps: when a first terminal receives encrypted second data sent by a second terminal, it obtains the corresponding encrypted loss value and encrypted first gradient value; it randomly generates a random vector with the same dimension as the encrypted first gradient value, uses the random vector to obfuscate (blind) the encrypted first gradient value, and sends the blinded encrypted first gradient value and the encrypted loss value to the second terminal; when it receives the decrypted first gradient value and loss value returned by the second terminal, it checks, based on the decrypted loss value, whether the model to be trained has converged; and if so, it obtains a second gradient value from the random vector and the decrypted first gradient value, and takes the sample parameter corresponding to the second gradient value as the model parameter. The method trains the model using only the data of the two federated parties, without a trusted third party, which removes that limitation on its application.

Owner:WEBANK (CHINA)
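The blinding step works because the masking is additive: the second terminal only ever sees the gradient plus a random vector, and the first terminal subtracts its own vector afterwards. The sketch below shows that arithmetic on plain floats, assuming the encryption layer (which the patent applies before blinding) is stripped away; `blind`, `unblind`, and the convergence tolerance are hypothetical.

```python
import random
from typing import List, Tuple

Vector = List[float]


def blind(gradient: Vector, rng: random.Random) -> Tuple[Vector, Vector]:
    """First terminal: add a random vector of the same dimension to the gradient.
    In the patent this addition happens on homomorphic ciphertexts."""
    mask = [rng.uniform(-1e3, 1e3) for _ in gradient]
    return [g + m for g, m in zip(gradient, mask)], mask


def unblind(blinded: Vector, mask: Vector) -> Vector:
    """First terminal: remove its own random vector from the value that the
    second terminal decrypted and returned."""
    return [b - m for b, m in zip(blinded, mask)]


def has_converged(prev_loss: float, loss: float, tol: float = 1e-4) -> bool:
    """Hypothetical convergence test on the decrypted loss value."""
    return abs(prev_loss - loss) < tol


rng = random.Random(42)
grad = [0.5, -1.25]
sent, mask = blind(grad, rng)    # what the second terminal would see after decryption
recovered = unblind(sent, mask)  # equals grad up to floating-point rounding
```

With real-valued masks the hiding is only statistical; a production scheme would mask within the encryption scheme's plaintext space instead.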

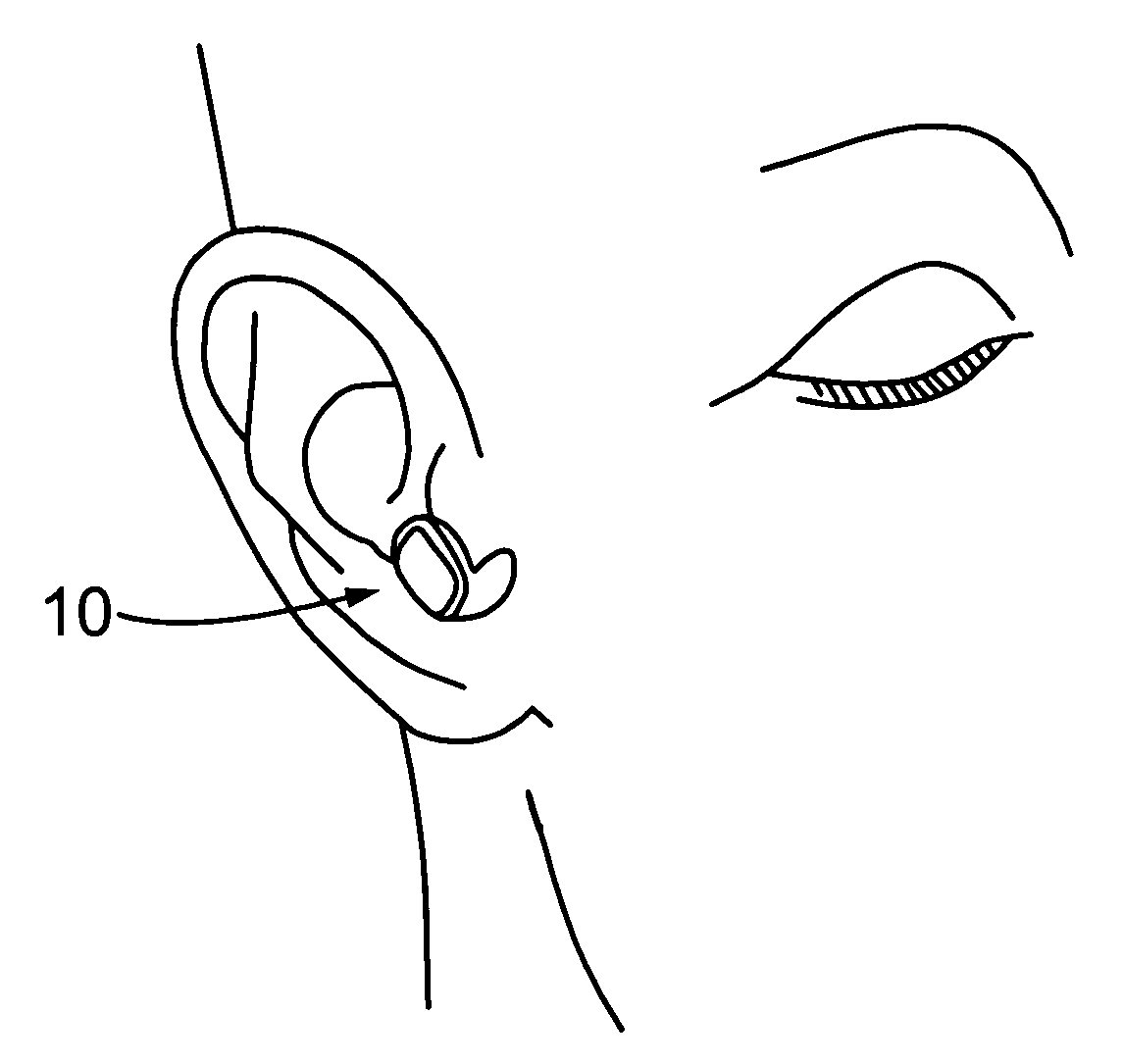

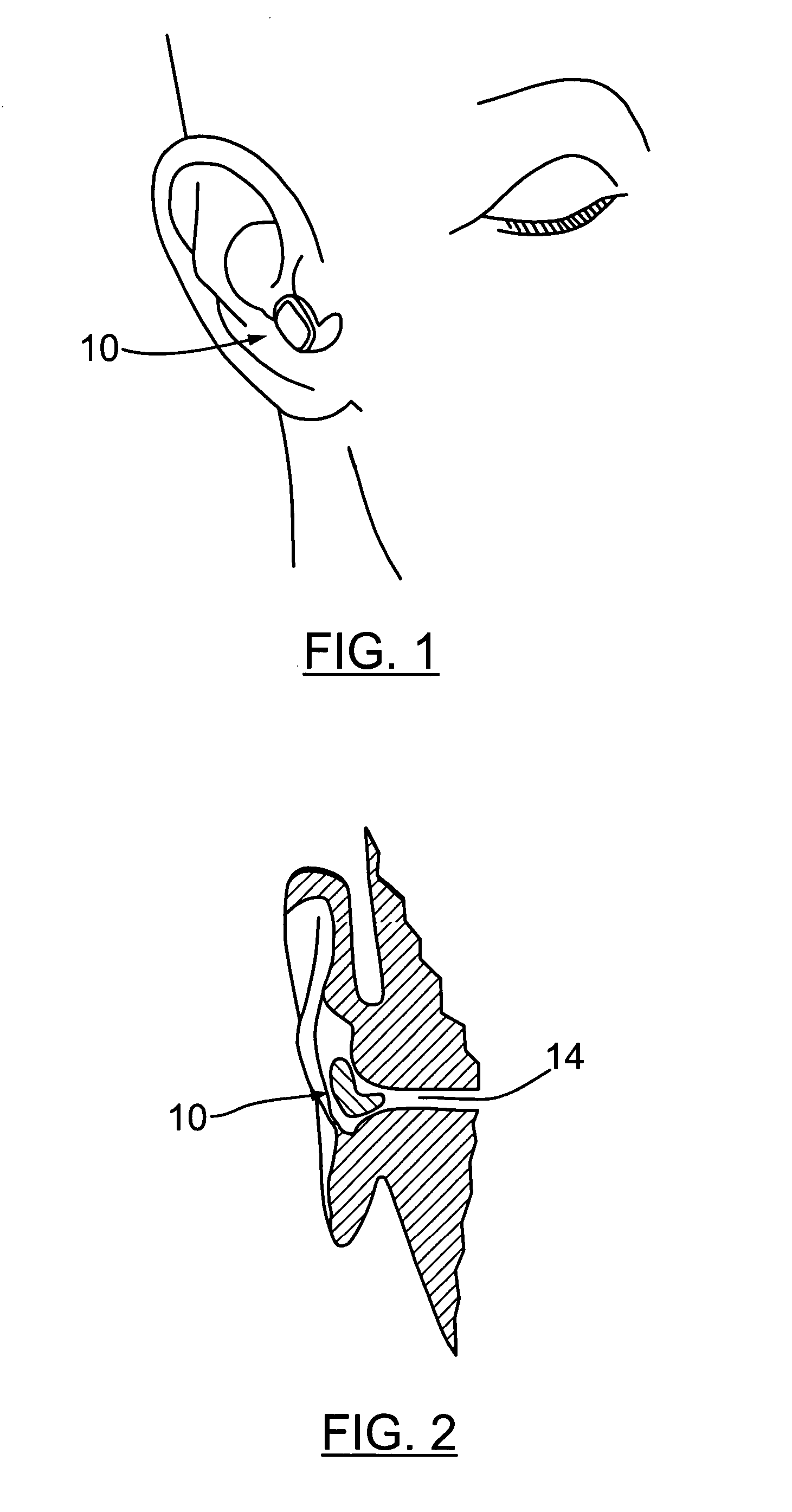

Training device and method to suppress sounds caused by sleep and breathing disorders

A training method and device to suppress sounds caused by sleep and breathing disorders, such as snoring and sleep apnea. The system records the subject's actual snoring, modifies it with a time delay and possibly other sound-modification techniques, and plays it back to the subject through a speaker or earphone. This trains the person to attend to his/her own snoring, so that he/she may stop it by moving parts of the body or changing breathing patterns. The method and device may combine or integrate hearing aid functionality and means for detecting snoring sounds.

Owner:SARINGER RES

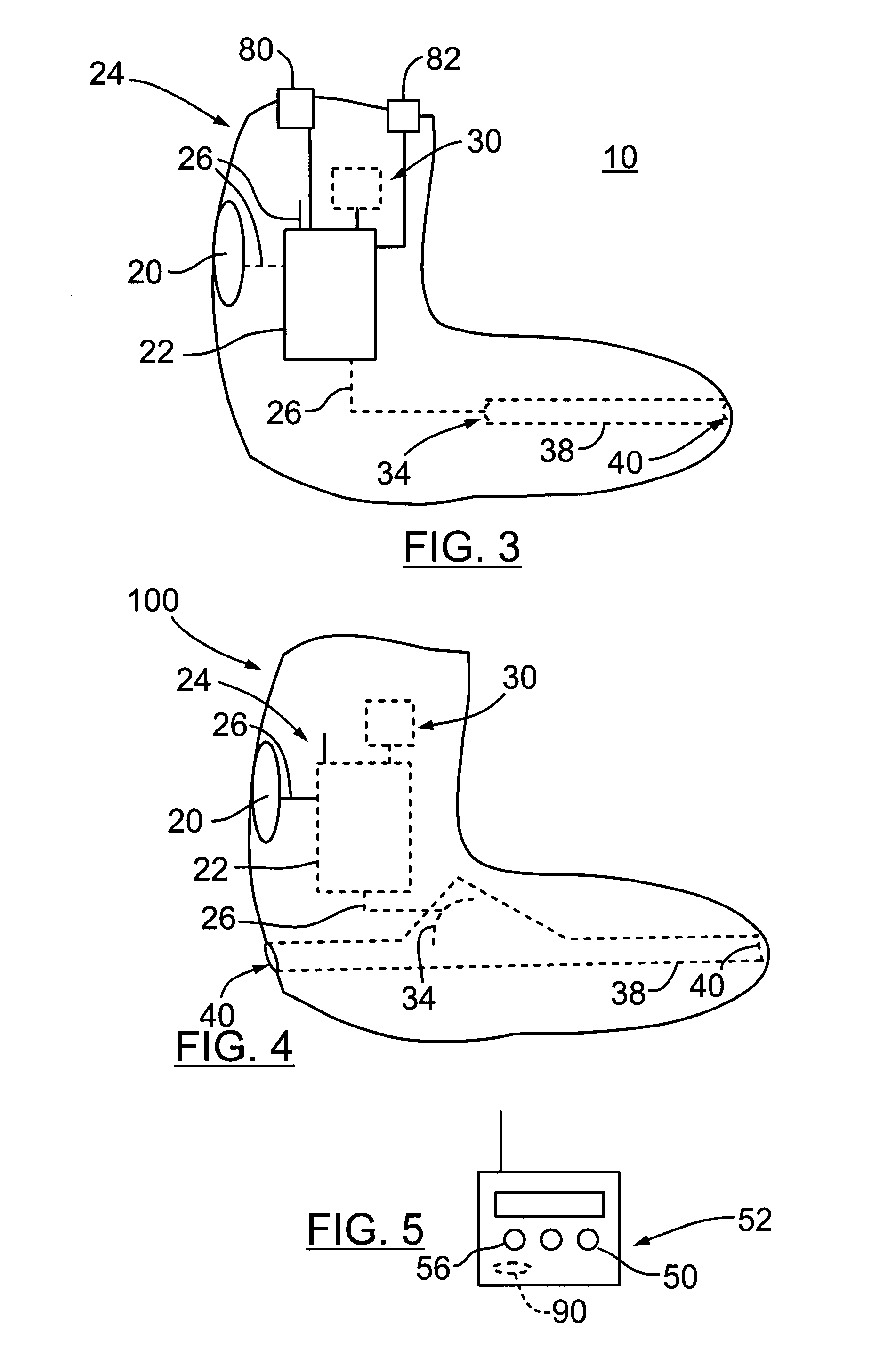

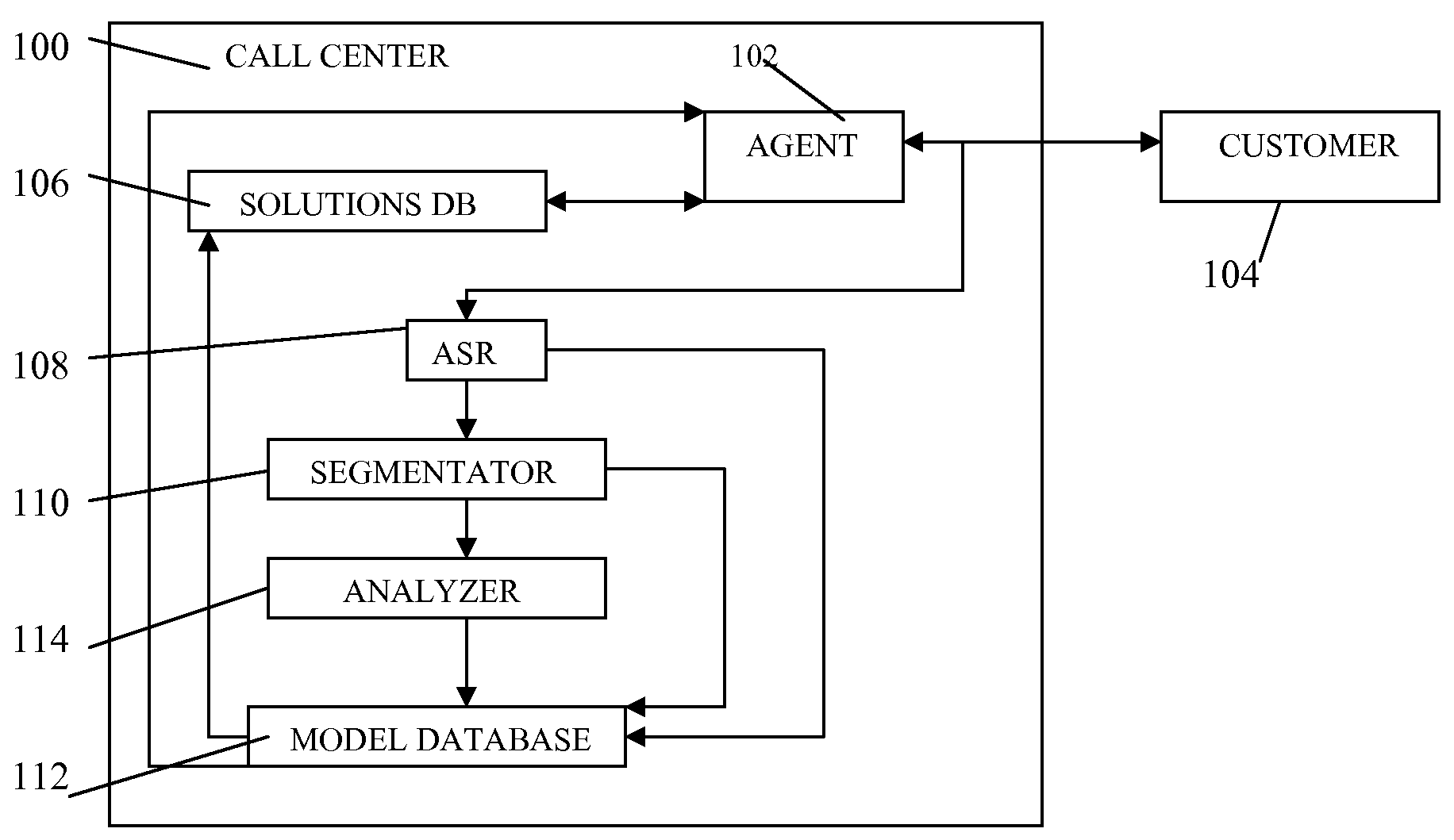

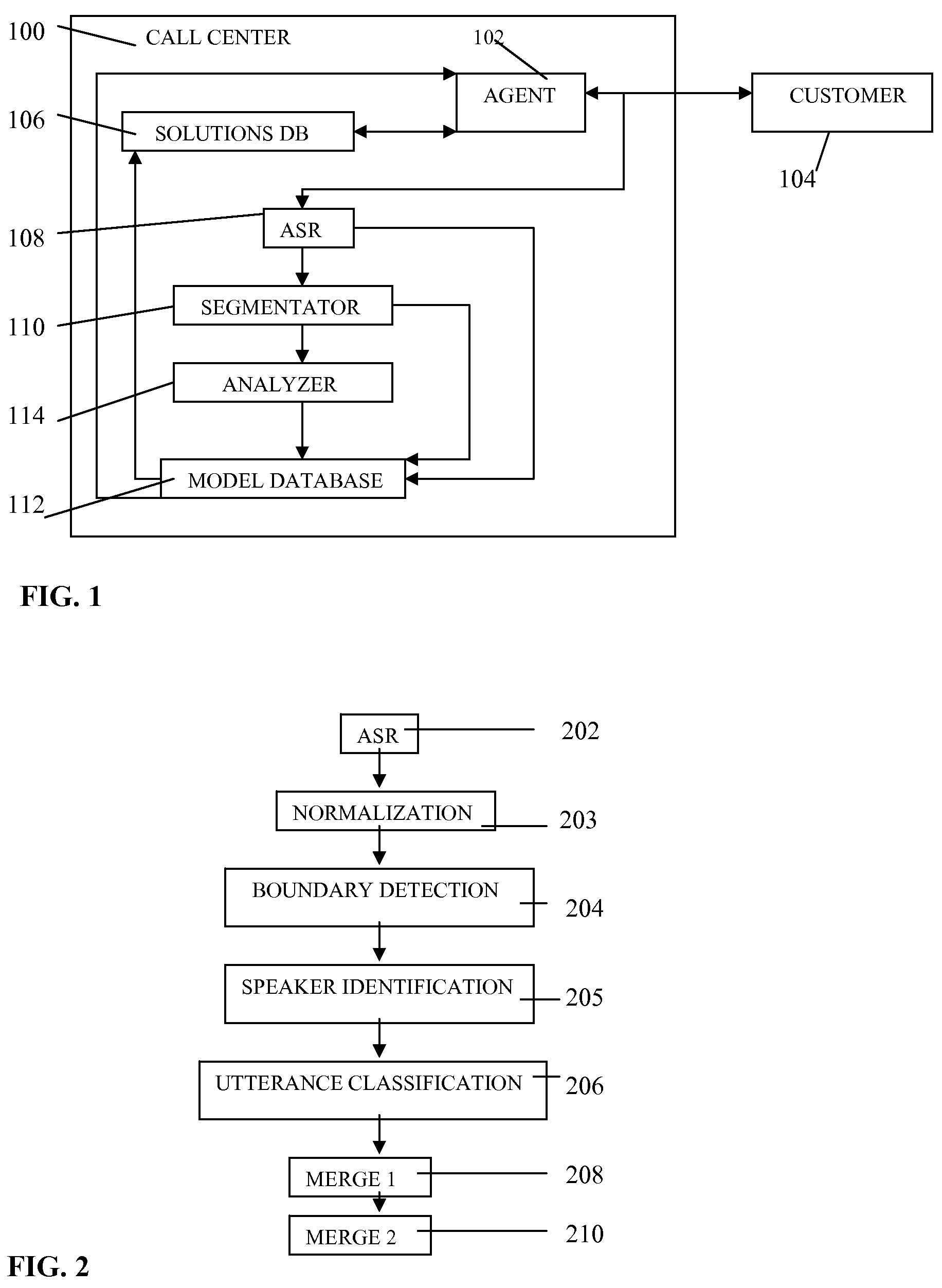

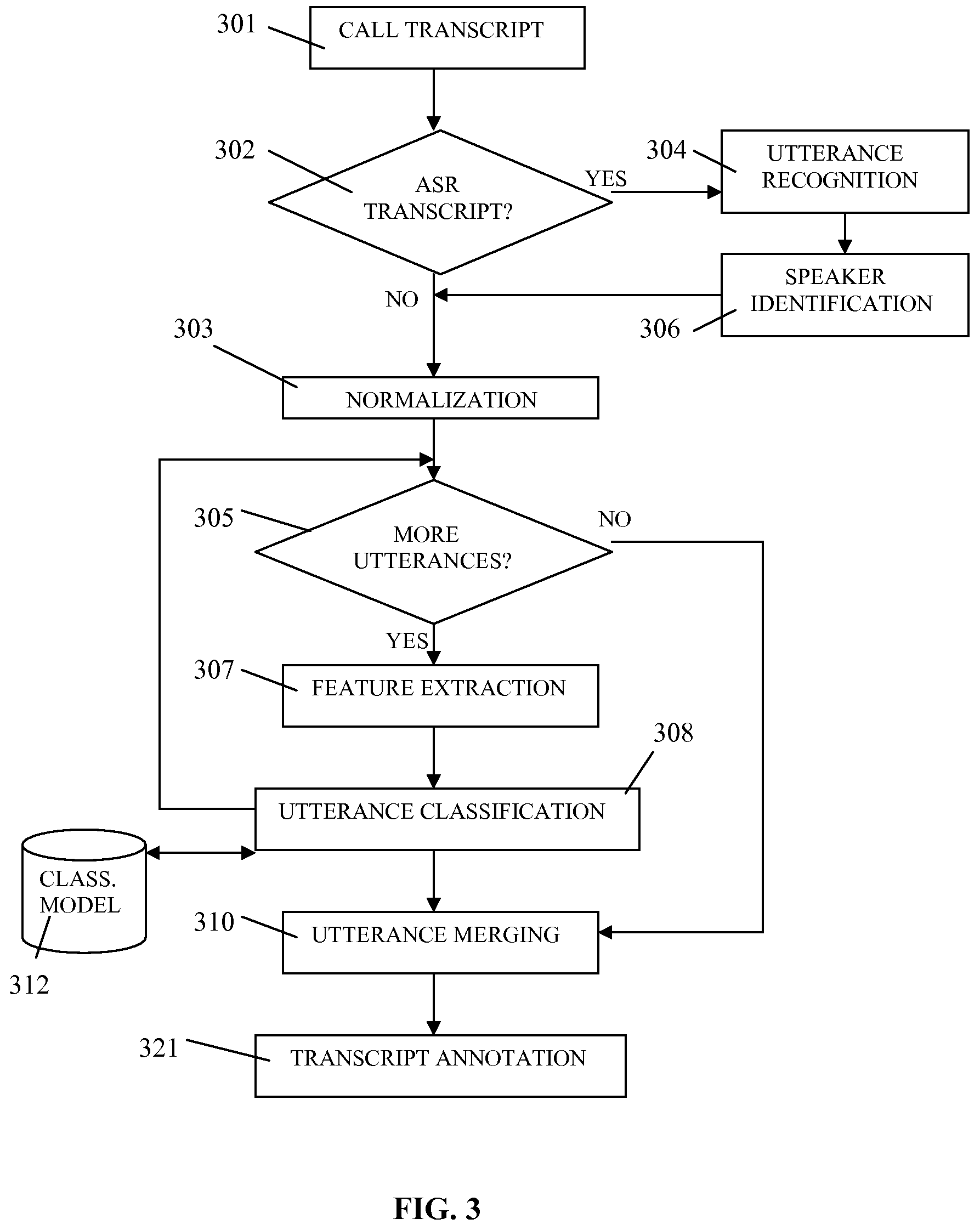

System and method for automatic call segmentation at call center

Status: Active | Publication: US20100104086A1 | Tags: Special service for subscribers; Manual exchanges; Statistical analysis; Computer science

A system and method for automatic call segmentation including steps and means for automatically detecting boundaries between utterances in the call transcripts; automatically classifying utterances into target call sections; automatically partitioning the call transcript into call segments; and outputting a segmented call transcript. A training method and apparatus for training the system to perform automatic call segmentation includes steps and means for providing at least one training transcript with annotated call sections; normalizing the at least one training transcript; and performing statistical analysis on the at least one training transcript.

Owner:IBM CORP
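A sketch of the classify-then-partition steps, assuming utterance boundaries have already been detected. A keyword lookup stands in for the trained statistical classifier the abstract describes; the section names and cue phrases are hypothetical.

```python
from itertools import groupby
from typing import Callable, List, Tuple

# Hypothetical cue phrases; a classifier trained on annotated transcripts would replace these.
SECTION_CUES = {
    "greeting": ("hello", "calling"),
    "closing": ("anything else", "goodbye"),
}


def keyword_classifier(utterance: str) -> str:
    text = utterance.lower()
    for section, cues in SECTION_CUES.items():
        if any(cue in text for cue in cues):
            return section
    return "problem_handling"


def segment_call(utterances: List[str],
                 classify: Callable[[str], str]) -> List[Tuple[str, List[str]]]:
    """Label each utterance, then merge runs of consecutive utterances sharing a label."""
    labeled = [(classify(u), u) for u in utterances]
    return [(section, [u for _, u in run])
            for section, run in groupby(labeled, key=lambda pair: pair[0])]


transcript = ["Hello, thanks for calling", "My card was declined",
              "Let me check that", "Anything else?", "Goodbye"]
for section, lines in segment_call(transcript, keyword_classifier):
    print(section, lines)
```

`itertools.groupby` only merges adjacent items, which matches the requirement that each call segment be a contiguous stretch of the transcript.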

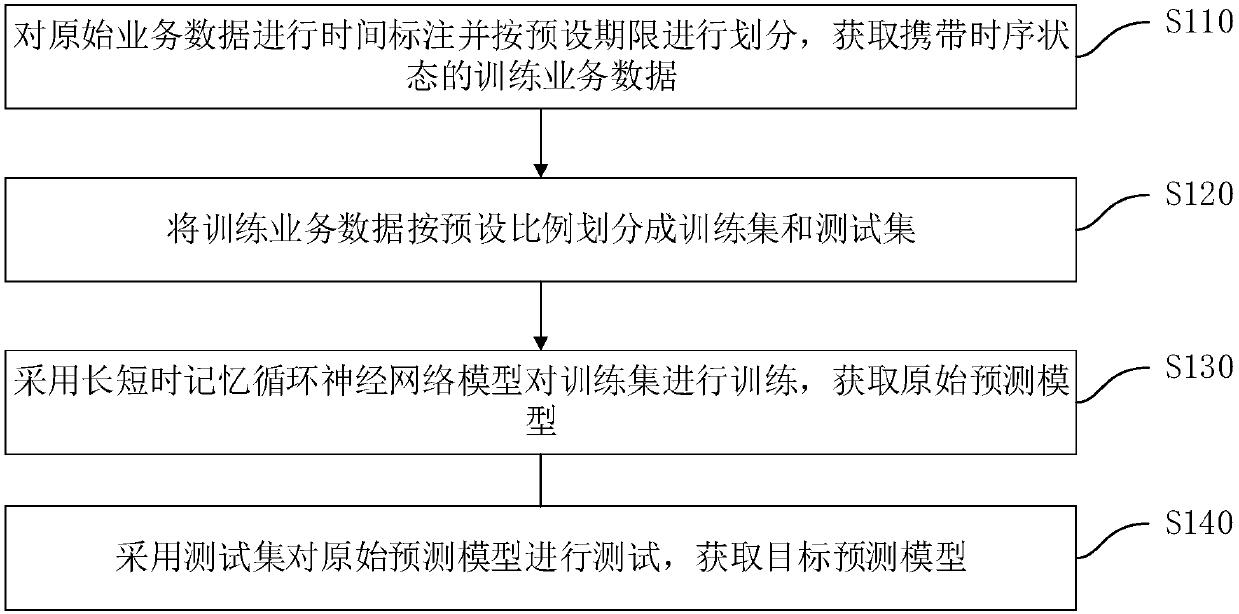

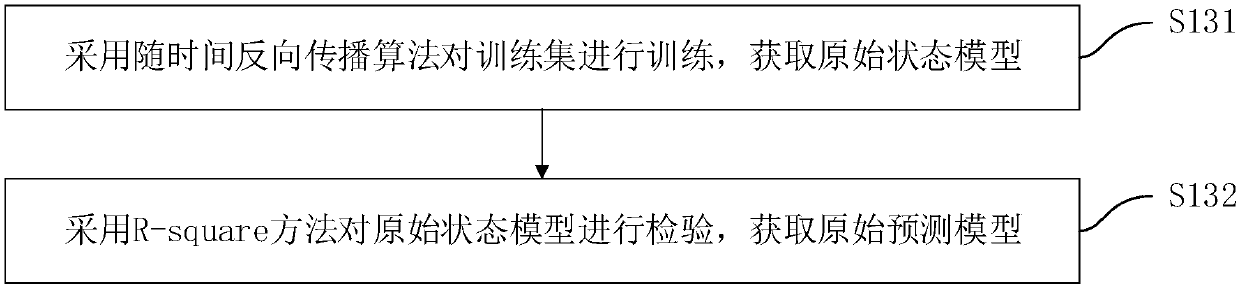

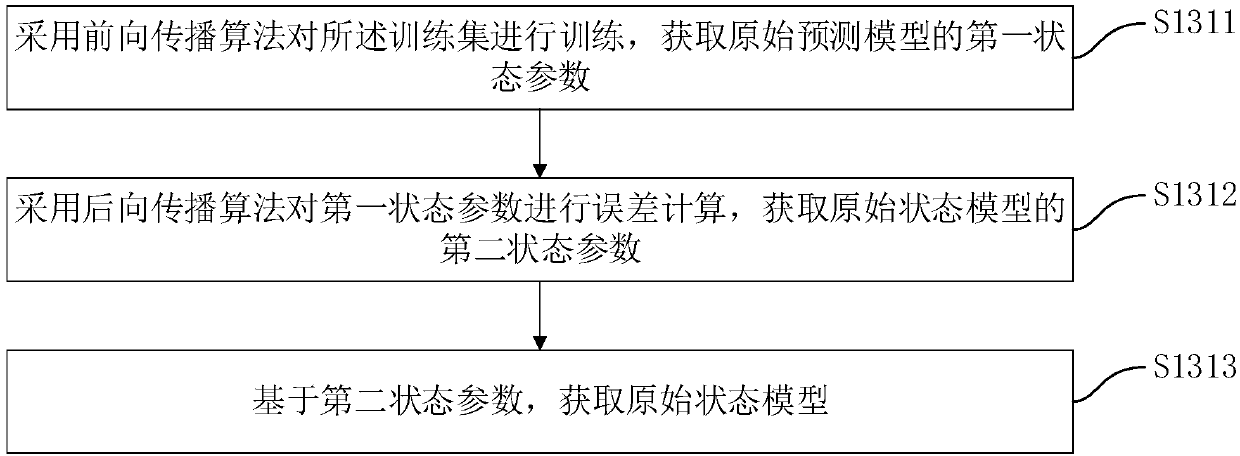

Prediction model training method, data monitoring method and device, equipment and medium

Status: Inactive | Publication: CN107730087A | Tags: Improve accuracy; Improve efficiency; Finance; Character and pattern recognition; Network model; Test set

The invention discloses a prediction model training method, a data monitoring method and device, equipment and a medium. The prediction model training method comprises the steps of: time-stamping original service data and partitioning the data according to a preset cutoff time to obtain time-ordered training service data; dividing the training service data into a training set and a test set according to a preset ratio; training a long short-term memory (LSTM) recurrent neural network model on the training set to obtain an original prediction model; and testing the original prediction model on the test set to obtain a target prediction model. The resulting prediction model preserves the time ordering of the data and predicts with high accuracy.

Owner:PING AN TECH (SHENZHEN) CO LTD
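The time-ordered preparation in the first two steps can be sketched as a chronological split: records after the cutoff are dropped, the rest are sorted by timestamp, and the earliest fraction becomes the training set, so the test set always lies later in time. Interpreting the preset cutoff as an upper bound on timestamps is an assumption, as is the function name.

```python
from datetime import datetime
from typing import Any, List, Tuple

Record = Tuple[datetime, Any]


def time_ordered_split(records: List[Record], cutoff: datetime,
                       train_ratio: float) -> Tuple[List[Record], List[Record]]:
    """Keep records up to `cutoff`, sort them by timestamp, and split
    chronologically so no test record precedes a training record."""
    kept = sorted((r for r in records if r[0] <= cutoff), key=lambda r: r[0])
    split = int(len(kept) * train_ratio)
    return kept[:split], kept[split:]


records = [(datetime(2020, 1, day), day) for day in (3, 1, 5, 2, 4)]
train, test = time_ordered_split(records, datetime(2020, 1, 4), 0.75)
print([v for _, v in train], [v for _, v in test])  # [1, 2, 3] [4]
```

A random shuffle would leak future observations into training for a sequence model such as an LSTM; splitting by time avoids that.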

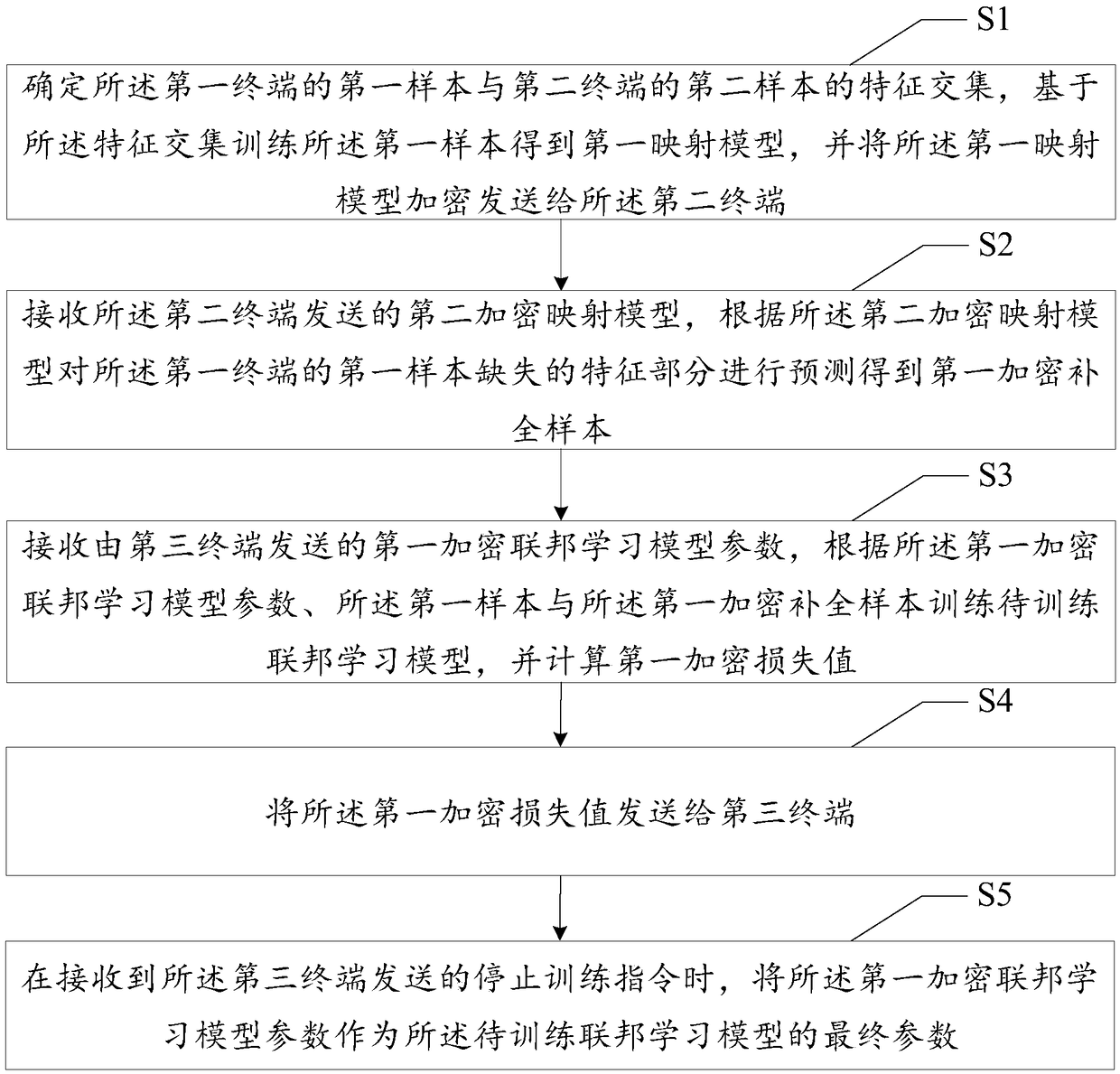

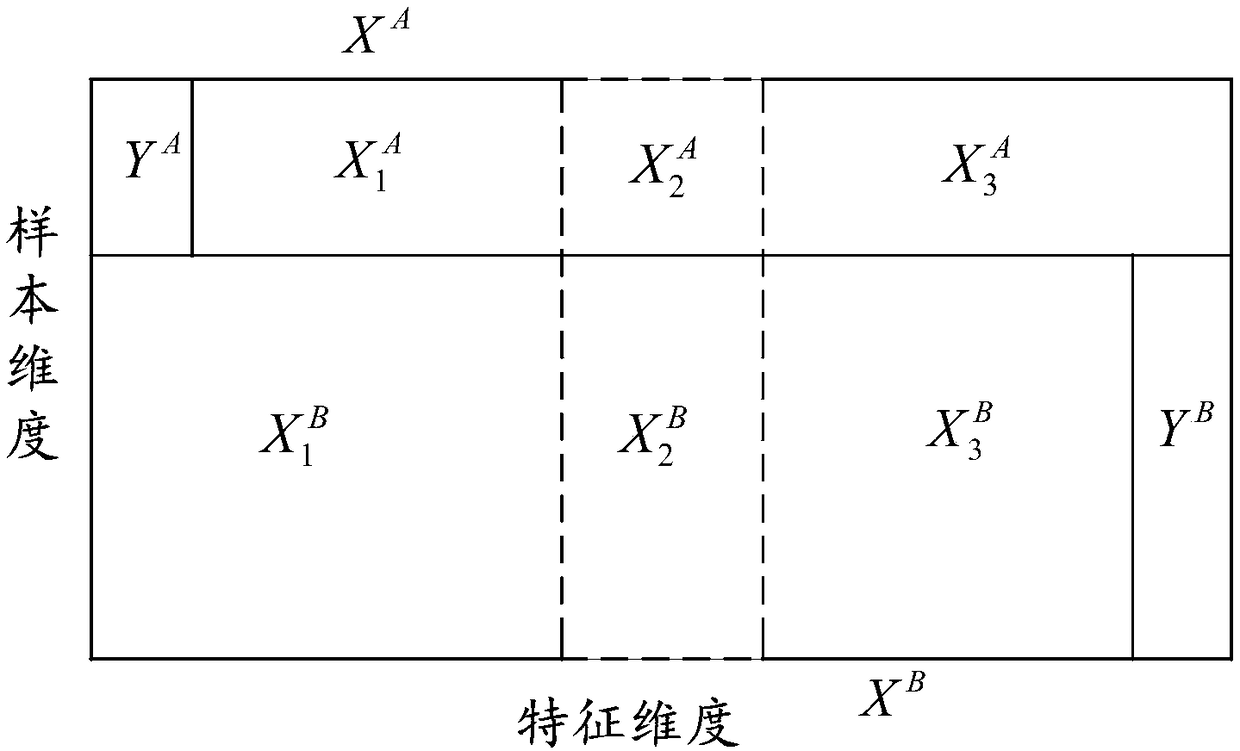

Model parameter training method, terminal, system and medium based on federated learning

Status: Active | Publication: CN109492420A | Tags: Improve predictive ability; Feature Space Expansion; Ensemble learning; Character and pattern recognition; Characteristic space; Model parameters

The invention discloses a model parameter training method, terminal, system and medium based on federated learning. The method comprises: determining the feature intersection of a first sample on a first terminal and a second sample on a second terminal, training on the first sample based on the feature intersection to obtain a first mapping model, and sending the first mapping model to the second terminal; receiving a second encrypted mapping model sent by the second terminal and using it to predict the missing feature part of the first sample, obtaining a first encrypted completed sample; receiving first encrypted federated learning model parameters sent by a third terminal, training the federated learning model to be trained according to those parameters, and calculating a first encrypted loss value; sending the first encrypted loss value to the third terminal; and, upon receiving a training-stop instruction from the third terminal, taking the first encrypted federated learning model parameters as the final parameters of the federated learning model. The invention uses transfer learning to expand the feature space of the two federated parties and improves the predictive capability of the federated model.

Owner:WEBANK (CHINA)

Training method and a device for carrying out said method

Owner:VASIN MAXIM ALEXEEVICH

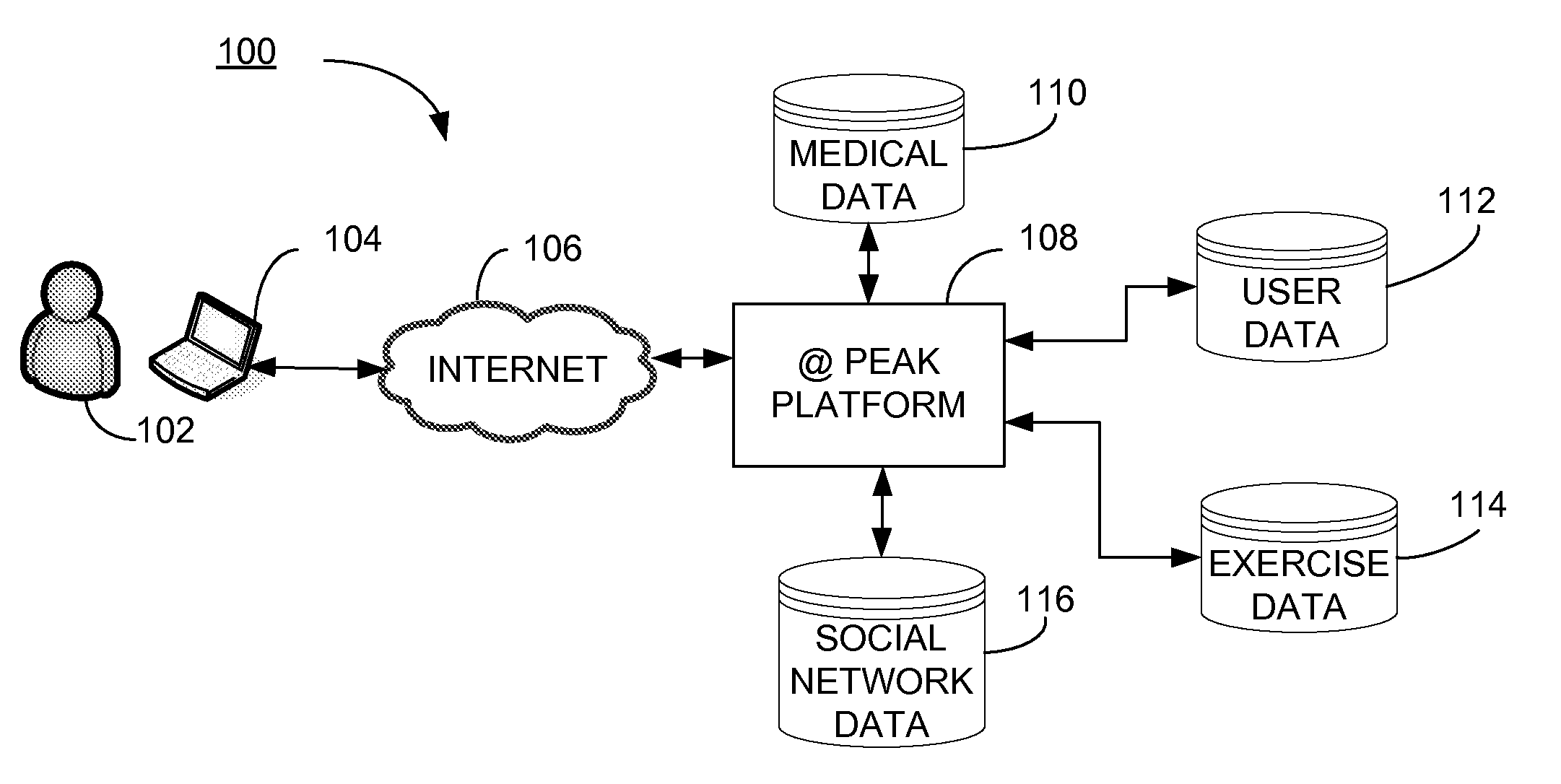

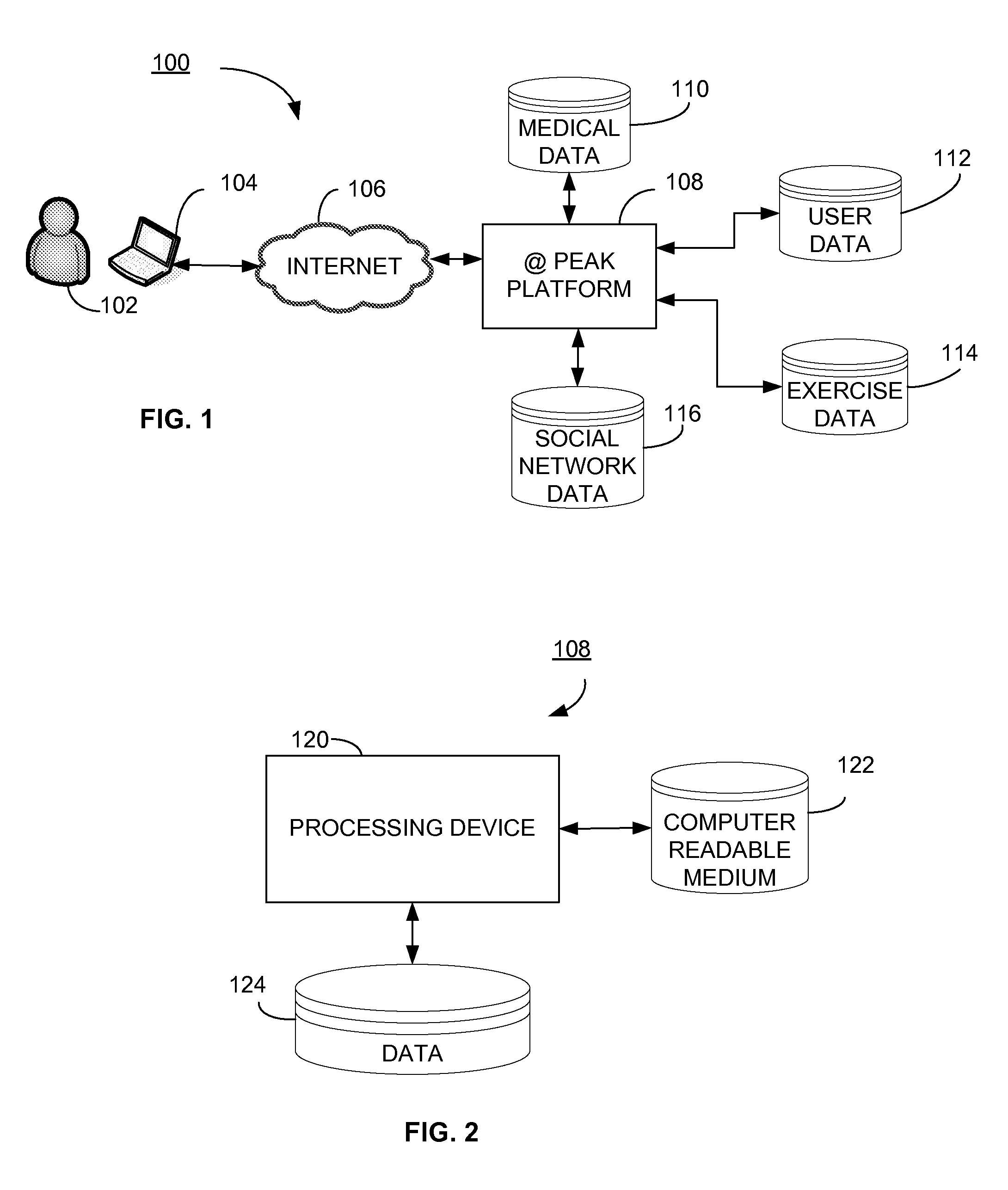

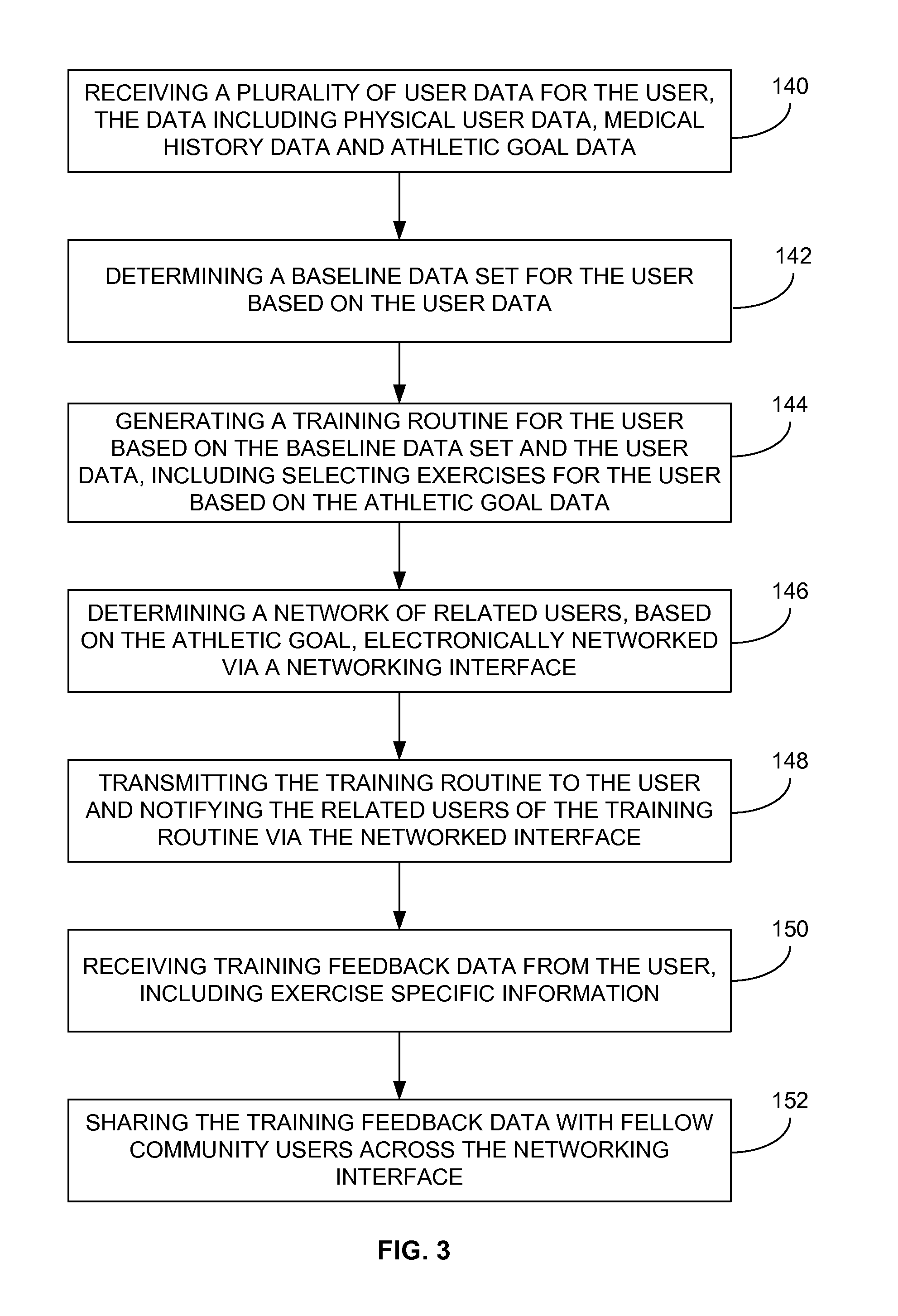

Interactive training method and system for developing peak user performance

Status: Inactive | Publication: US20130138734A1 | Tags: Gymnastic exercising; Multiple digital computer combinations; Baseline data; Data set

The present invention provides a computerized interactive training method and system that receives user data, including physical user data, medical history data and athletic goal data. The method and system further determine a baseline data set for the user and generate a training routine based on the baseline data set and the user data, including selecting a plurality of exercises directed toward the user's athletic goals. They also determine a network of related users connected through a networking interface, transmit the training routine to the user, and notify the related users of it. Finally, they receive training feedback data from the user, including exercise-specific information, and share it with fellow community users.

Owner:NEODEV SPORTS INC
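
The routine-generation and notification steps can be sketched as a filter over an exercise catalog. Everything concrete here is a hypothetical assumption, not taken from the patent: the resting-heart-rate rule used as the "baseline", the difficulty scale, the injury check standing in for medical history, and the message format.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Exercise:
    name: str
    goals: Set[str]        # athletic goals the exercise serves
    difficulty: int        # hypothetical scale: 1 (easy) to 5 (hard)
    body_parts: Set[str]   # body parts the exercise loads


@dataclass
class User:
    name: str
    resting_heart_rate: int   # physical user data
    injuries: Set[str]        # medical history data
    goal: str                 # athletic goal data
    related_users: List[str] = field(default_factory=list)


def baseline_level(user: User) -> int:
    """Hypothetical baseline: a lower resting heart rate allows harder exercises."""
    if user.resting_heart_rate < 60:
        return 4
    if user.resting_heart_rate < 75:
        return 3
    return 2


def generate_routine(user: User, catalog: List[Exercise], max_items: int = 3) -> List[Exercise]:
    level = baseline_level(user)
    candidates = [
        e for e in catalog
        if user.goal in e.goals                  # directed towards the athletic goal
        and e.difficulty <= level                # within the baseline capacity
        and not (e.body_parts & user.injuries)   # avoid injured body parts
    ]
    # Hardest suitable exercises first; the name breaks ties deterministically.
    candidates.sort(key=lambda e: (-e.difficulty, e.name))
    return candidates[:max_items]


def notifications(user: User, routine: List[Exercise]) -> List[str]:
    """One message per related user announcing the new routine."""
    names = ", ".join(e.name for e in routine)
    return [f"{friend}: {user.name} started a routine: {names}" for friend in user.related_users]
```

A real system would derive the baseline from measured data and incorporate the training feedback the abstract mentions; this sketch only shows how user data, baseline and goal can jointly constrain exercise selection.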

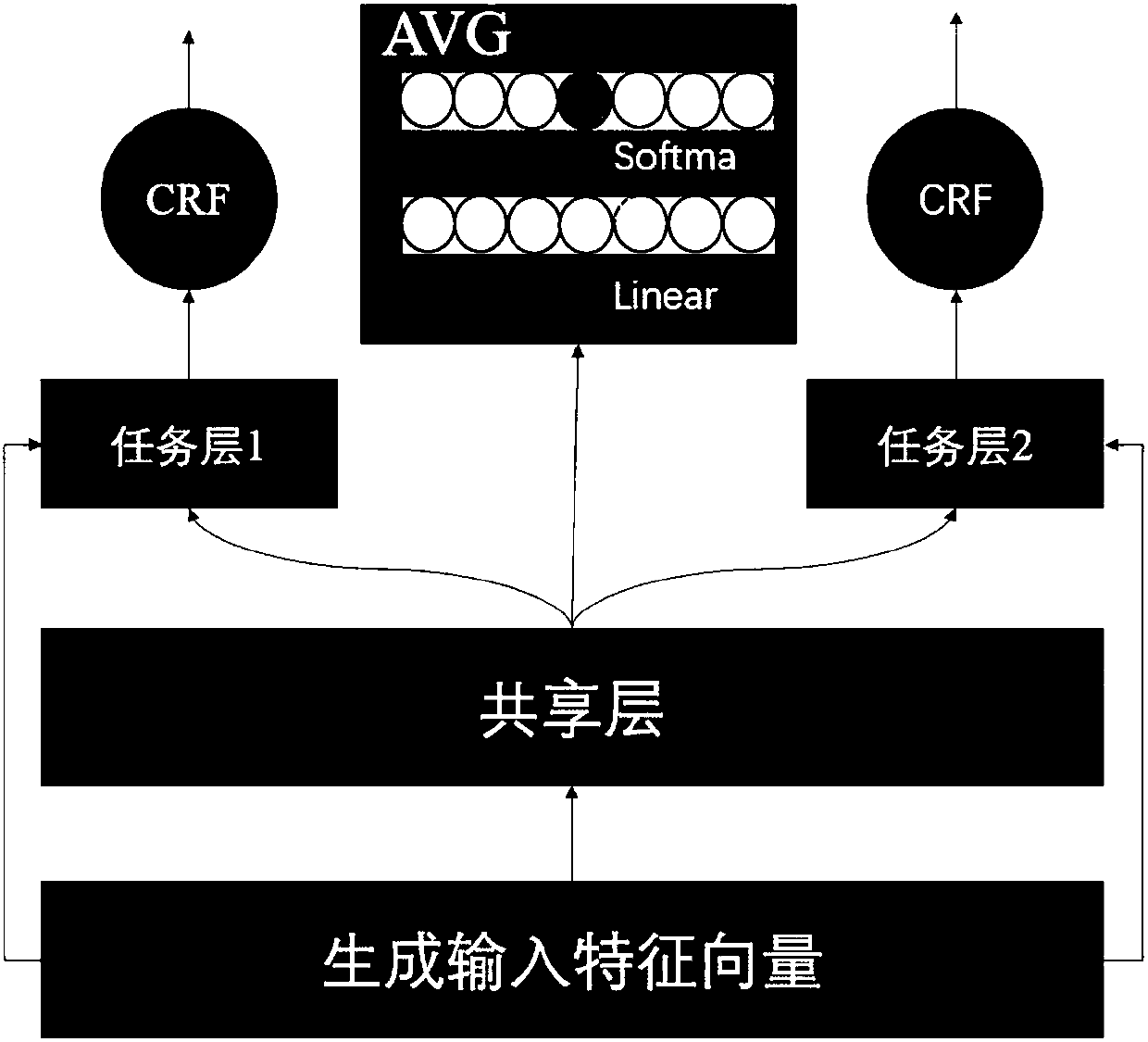

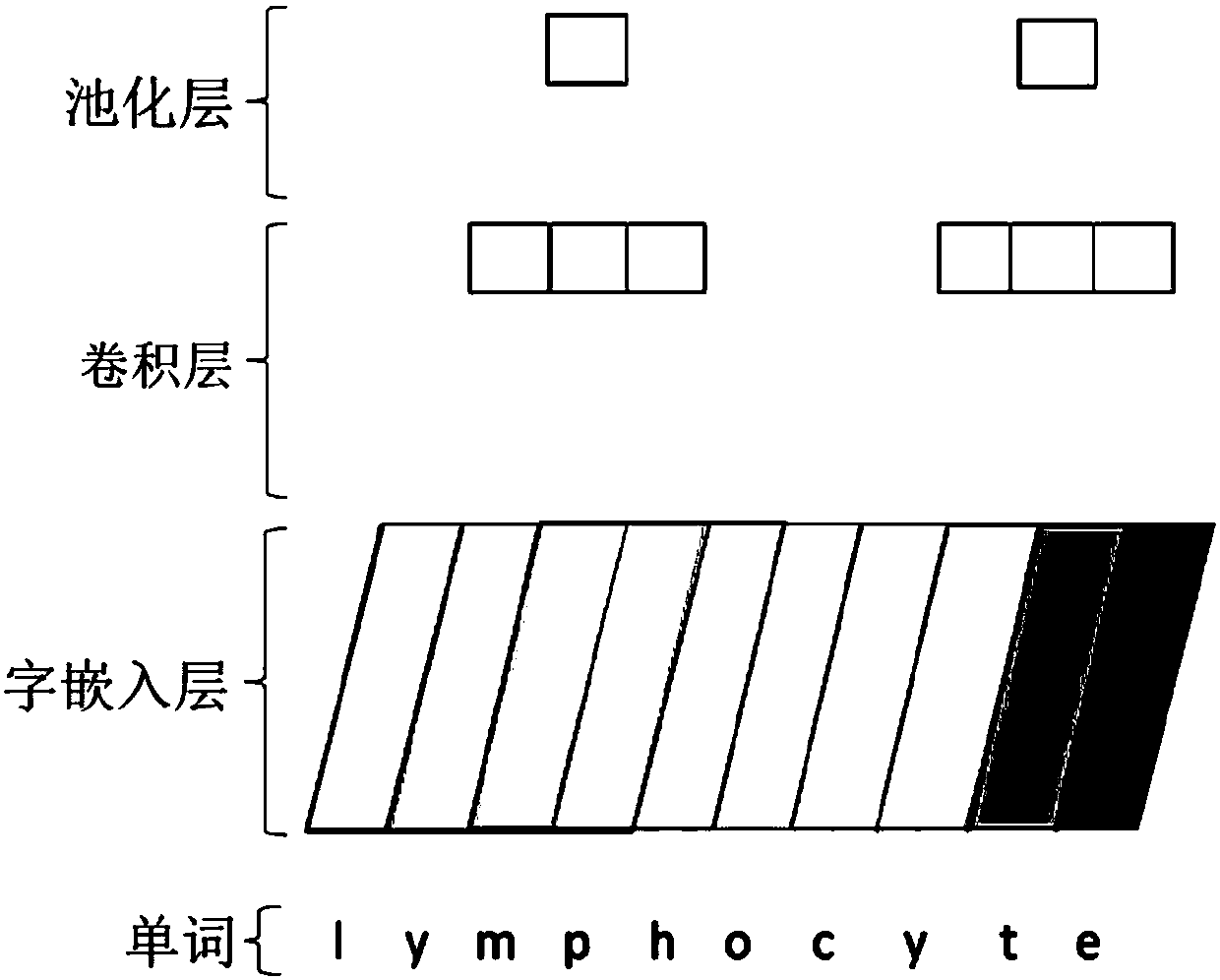

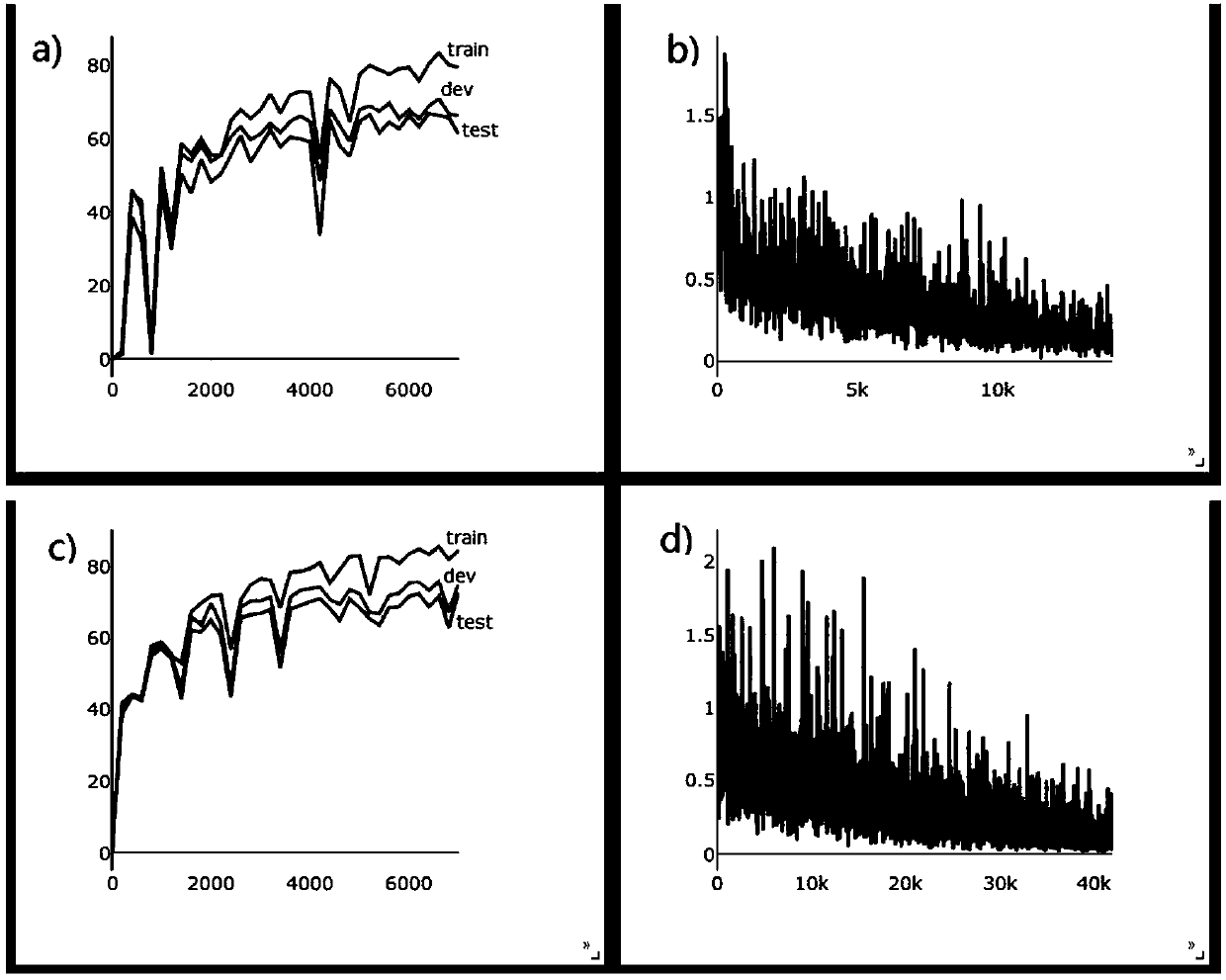

Multi-task named entity recognition and adversarial training method for the medical field

Inactive | CN108229582A | Entity Recognition Facilitation; Improve accuracy; Character and pattern recognition; Neural architectures; Conditional random field; Data set

The invention discloses a multi-task named entity recognition and adversarial training method for the medical field. The method includes the following steps: (1) collecting and processing data sets so that each row consists of a word and its label; (2) using a convolutional neural network to encode character-level information of each word to obtain character vectors, and concatenating them with word vectors to form input feature vectors; (3) constructing a shared layer, in which a bidirectional long short-term memory (BiLSTM) network models the input feature vectors of each word in a sentence to learn features common to all tasks; (4) constructing a task layer, in which a BiLSTM network models the input feature vectors together with the output of step (3) to learn the private features of each task; (5) using conditional random fields to decode labels from the outputs of steps (3) and (4); (6) using the information of the shared layer to train an adversarial network that reduces the private features mixed into the shared layer. The method performs multi-task learning on data sets from multiple disease domains and introduces adversarial training to make the features of the shared layer and the task layer more independent, so that multiple named entity recognition tasks in a specific domain can be trained simultaneously, quickly and efficiently.

Owner:ZHEJIANG UNIV
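
The CRF decoding in step (5) amounts to finding the highest-scoring tag sequence under a linear-chain CRF, which is usually done with the Viterbi algorithm. The sketch below assumes per-token emission scores have already been produced (in the patent's pipeline they would come from the shared and task-specific BiLSTM layers) and that `transitions[i][j]` scores moving from tag `i` to tag `j`; the tag set and all numbers used with it are made up for illustration.

```python
from typing import List, Sequence, Tuple


def viterbi_decode(
    emissions: Sequence[Sequence[float]],    # [time][tag] scores from the encoder
    transitions: Sequence[Sequence[float]],  # [from_tag][to_tag] CRF transition scores
    tags: Sequence[str],
) -> Tuple[List[str], float]:
    """Return the best tag path and its total score under a linear-chain CRF."""
    n_tags = len(tags)
    score = list(emissions[0])  # best score of any path ending in each tag at t = 0
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score, bp = [], []
        for j in range(n_tags):
            # Best previous tag i for landing on tag j at time t.
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            bp.append(best_i)
        score = new_score
        backpointers.append(bp)
    # Trace the best path backwards from the best final tag.
    best_last = max(range(n_tags), key=lambda j: score[j])
    path = [best_last]
    for bp in reversed(backpointers):
        path.append(bp[path[-1]])
    path.reverse()
    return [tags[i] for i in path], score[best_last]
```

A large negative transition score (for example from `O` directly to `I-DIS`) lets the CRF rule out invalid BIO sequences that a per-token argmax could produce.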

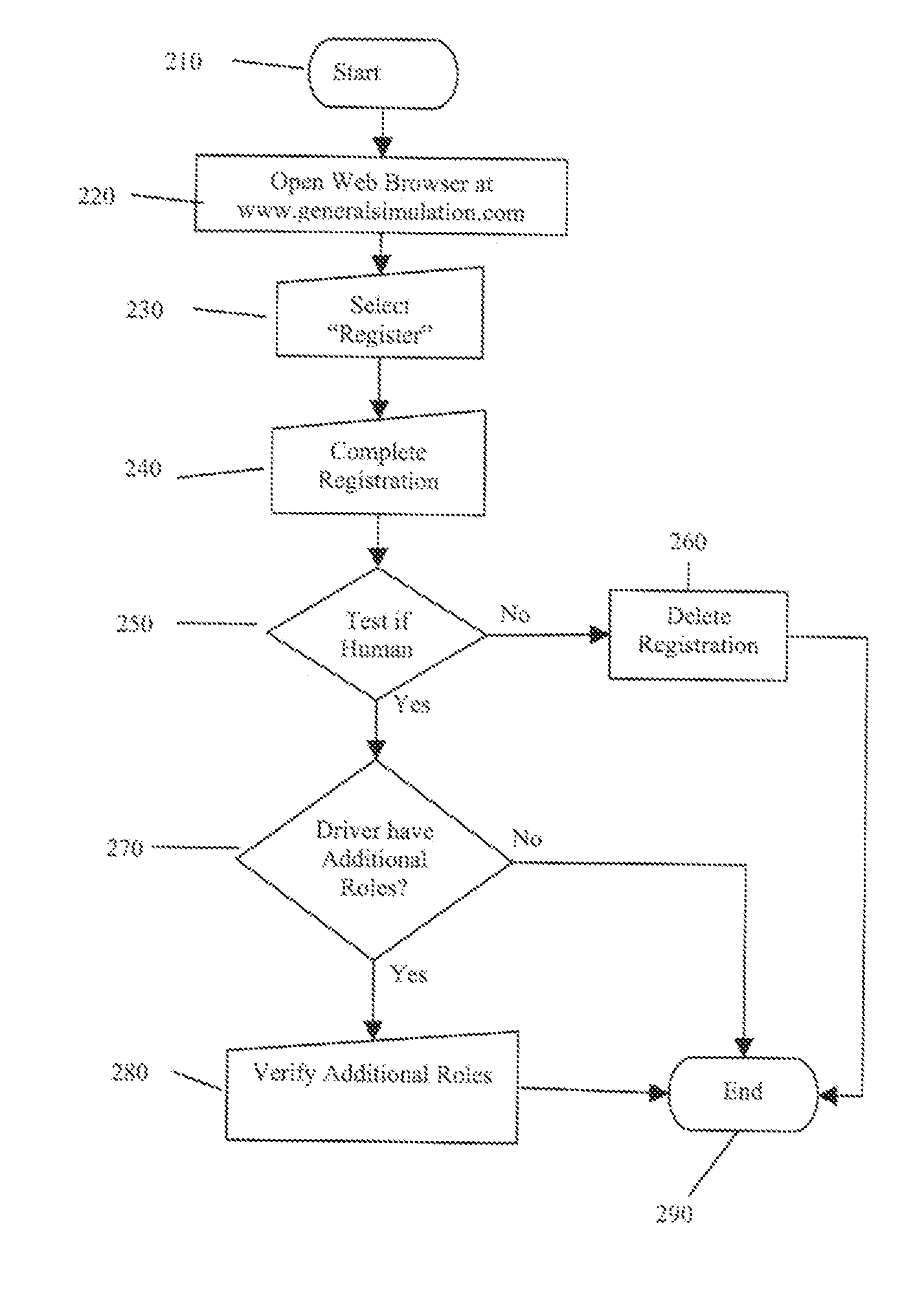

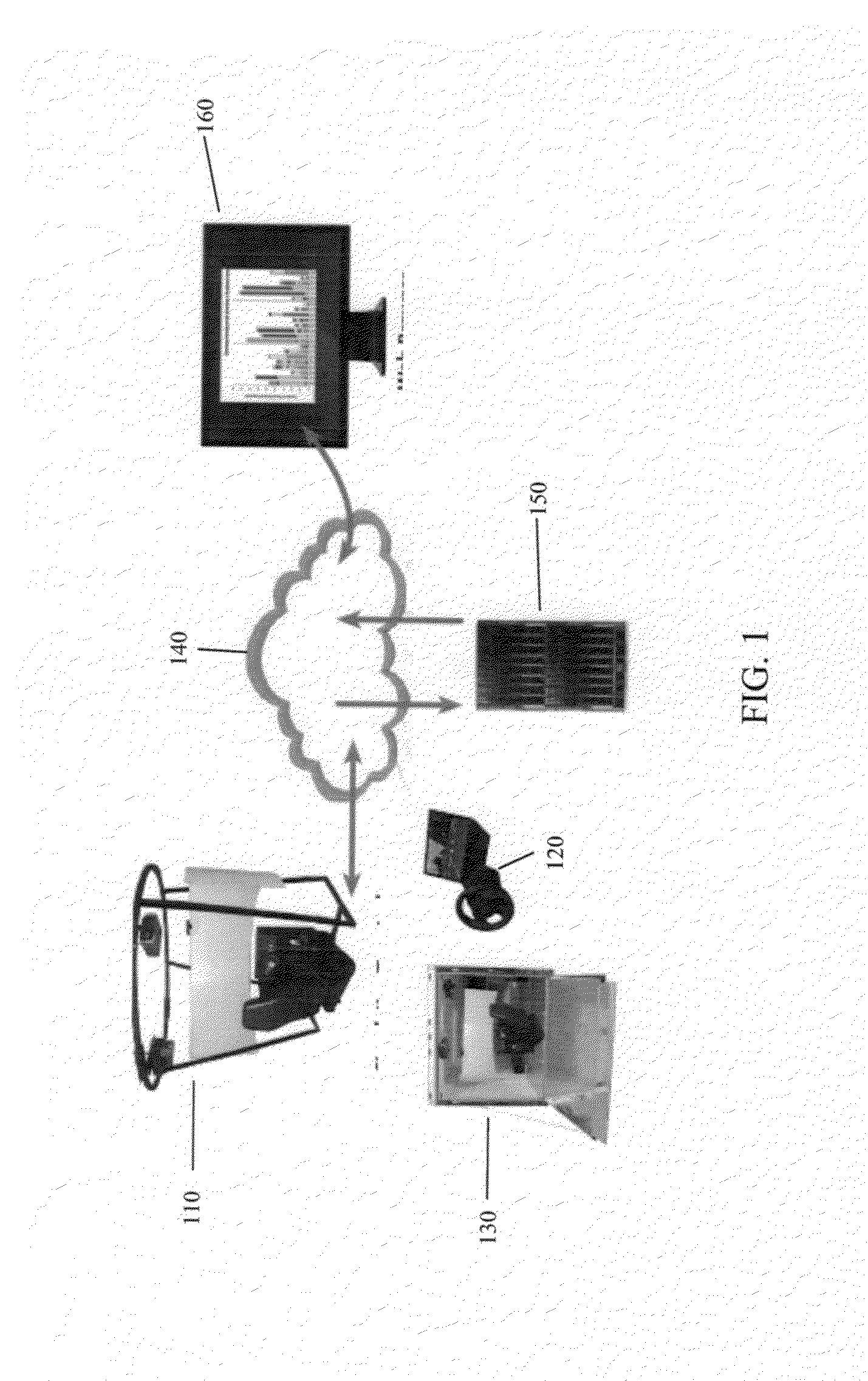

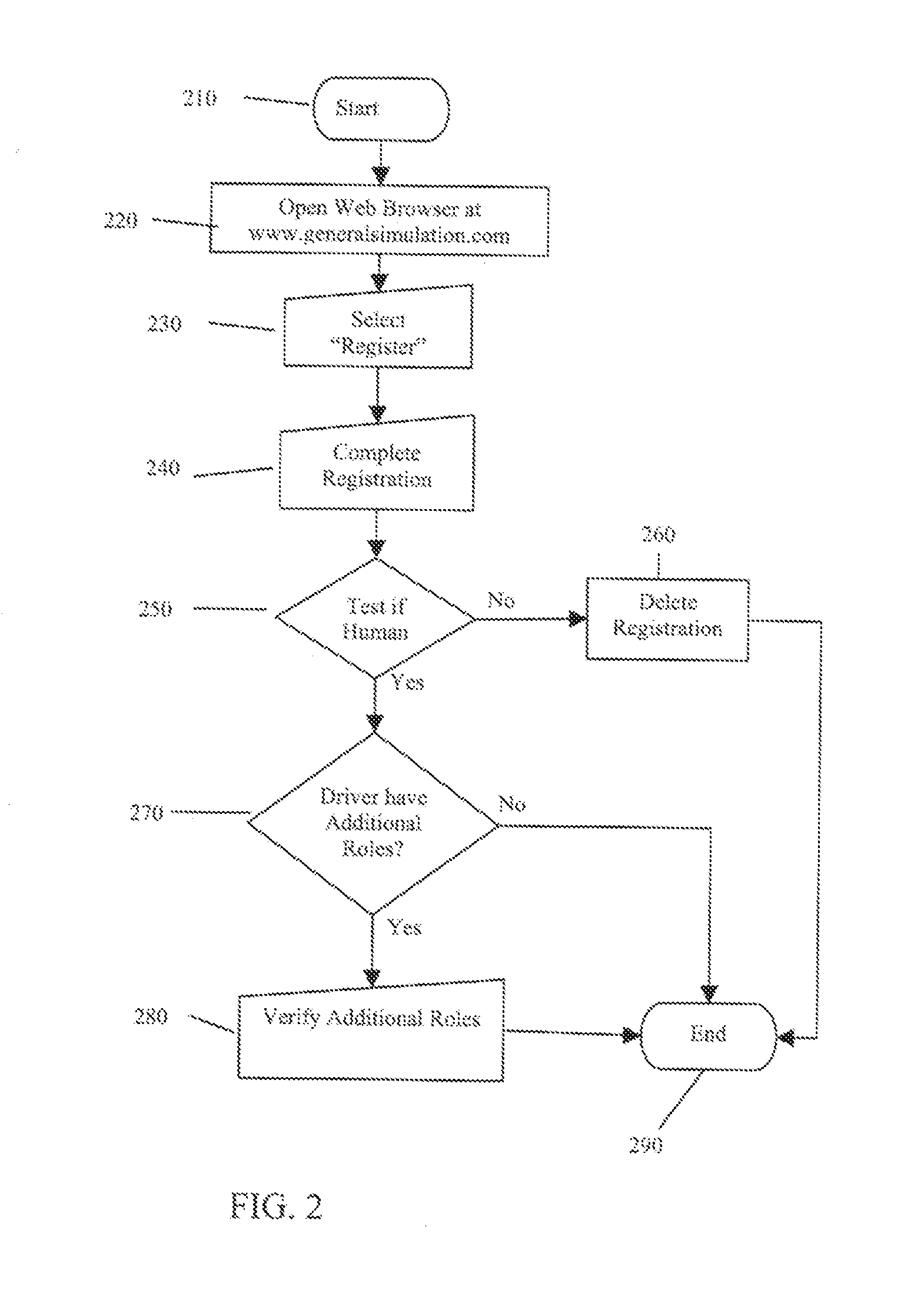

Driving assessment and training method and apparatus

Inactive | US20150104757A1 | Big amount of data; Cosmonautic condition simulations; Simulators; Driver/operator; Simulation

A system for driver assessment and training comprises a simulator that can be operated by a driver or pilot under test or in training; the simulator displays scenarios that the driver or pilot must drive or fly through. The driver's or pilot's inputs in reaction to the displayed scenario are fed to a free-body model, which calculates the resulting movement of the simulated vehicle in the displayed world. Scoring can be performed by analyzing calculated Fonda curves, which compare the driver's performance with the performances of one or more normative drivers, plotted as standard deviation from the norm on the vertical axis against sample point on the horizontal axis. Simulator sickness can be mitigated by calculating and displaying a mitigation object that partially obscures the virtual scene being displayed. Signature curves show the driver's or pilot's performance at a glance.

Owner:MBFARR
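
The vertical axis of those curves, standard deviation from the norm at each sample point, can be computed as a per-point z-score against the normative drivers. This is a minimal sketch assuming every run is sampled at the same points and that the sample standard deviation is used; the abstract does not define Fonda curves beyond this, so the function names and the summary score are hypothetical.

```python
import statistics
from typing import List, Sequence


def deviation_curve(driver: Sequence[float], normative_runs: Sequence[Sequence[float]]) -> List[float]:
    """For each sample point, how many standard deviations the driver is from the norm."""
    if len(normative_runs) < 2:
        raise ValueError("need at least two normative runs to estimate a spread")
    curve = []
    for i, value in enumerate(driver):
        samples = [run[i] for run in normative_runs]
        mean = statistics.mean(samples)
        spread = statistics.stdev(samples)  # sample standard deviation
        # A zero spread gives no scale, so this sketch reports 0.0 at that point.
        curve.append(0.0 if spread == 0 else (value - mean) / spread)
    return curve


def worst_deviation(curve: Sequence[float]) -> float:
    """Largest absolute deviation: one possible single-number summary of a curve."""
    return max(abs(z) for z in curve)
```

Plotting `deviation_curve(...)` against the sample index gives the layout the abstract describes: standard deviation from the norm on the vertical axis and sample point on the horizontal axis.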

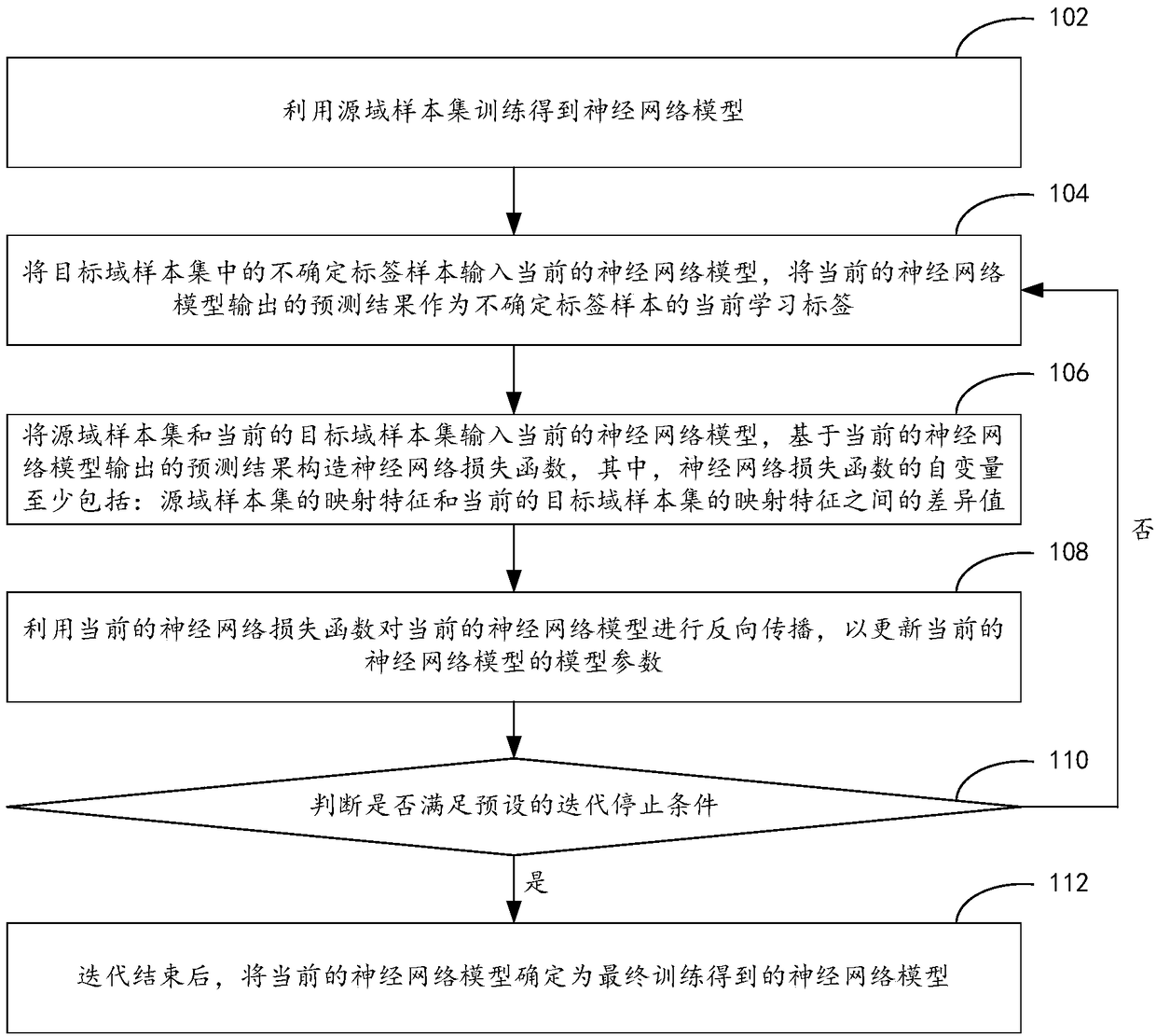

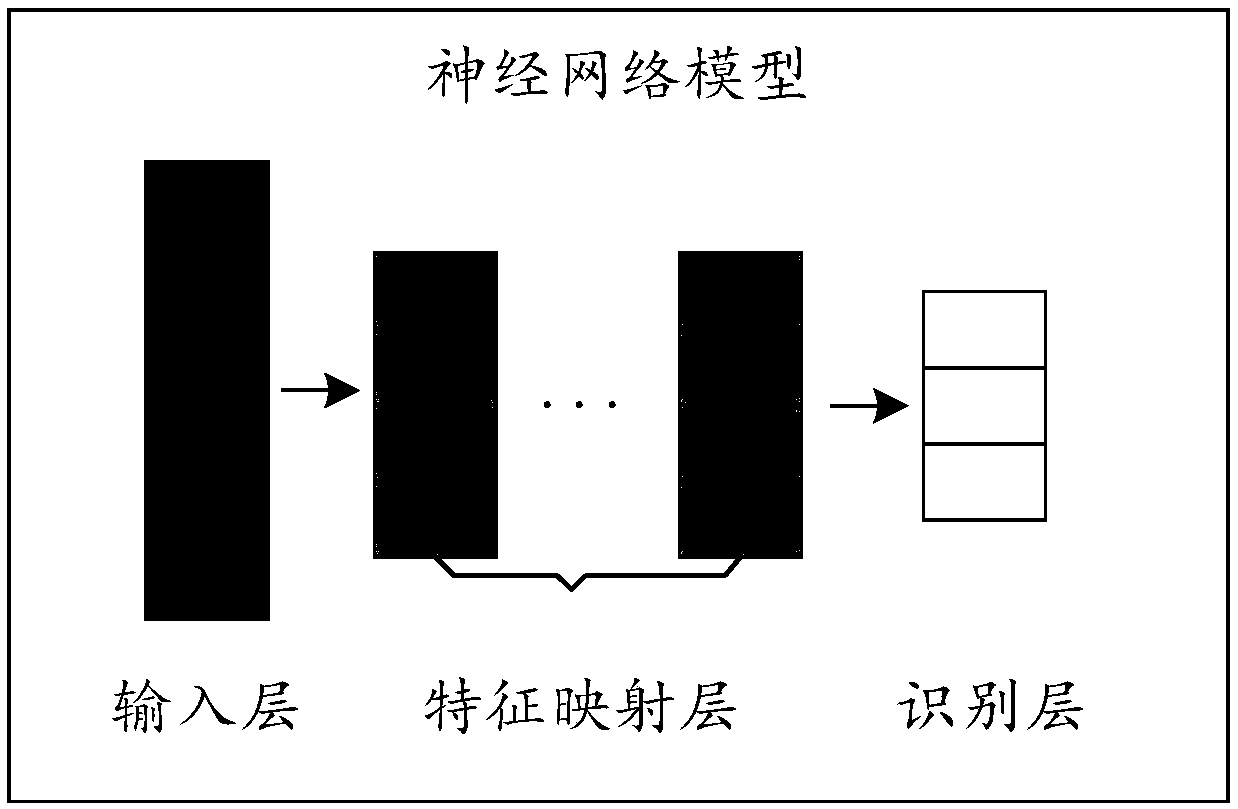

Neural network model training method and device, and computer device

Inactive | CN108898218A | Examples cannot be limited; Implement transfer learning; Neural learning methods; Nerve network; Network model

A neural network model training method and device, and a computer device, are disclosed. The neural network model training method includes: performing training with a source-domain sample set to obtain a neural network model; then repeating the following steps until an iteration stop condition is satisfied: inputting the uncertain-label samples in a target-domain sample set into the current neural network model, and using the output prediction results as the current learning labels of those samples; inputting the source-domain sample set and the current target-domain sample set into the current neural network model, and constructing a neural network loss function from the output prediction results, where the independent variables of the loss function include at least the difference between the mapped features of the source-domain sample set and the mapped features of the current target-domain sample set; and using the current loss function to perform back-propagation so as to update the model parameters of the current neural network model. After the iteration ends, the current neural network model is taken as the final trained neural network model.

Owner:ALIBABA GRP HLDG LTD
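
The loss constructed in each iteration can be sketched as cross-entropy over the source labels and the target pseudo-labels plus a penalty on the difference between mapped features. The abstract does not say how that difference is measured; this sketch assumes the squared distance between mean feature vectors (a linear maximum mean discrepancy) with a hypothetical weight `lam`. In practice the probabilities and features would come from the network and an autodiff framework would back-propagate the loss; both are outside this sketch.

```python
import math
from typing import List, Sequence


def pseudo_labels(probs: Sequence[Sequence[float]]) -> List[int]:
    """Current learning labels: argmax of the model's predicted class probabilities."""
    return [max(range(len(p)), key=lambda k: p[k]) for p in probs]


def mean_feature_discrepancy(src: Sequence[Sequence[float]], tgt: Sequence[Sequence[float]]) -> float:
    """Squared Euclidean distance between mean mapped features (a linear MMD)."""
    dim = len(src[0])
    mu_s = [sum(f[d] for f in src) / len(src) for d in range(dim)]
    mu_t = [sum(f[d] for f in tgt) / len(tgt) for d in range(dim)]
    return sum((a - b) ** 2 for a, b in zip(mu_s, mu_t))


def total_loss(
    src_probs: Sequence[Sequence[float]], src_labels: Sequence[int],
    tgt_probs: Sequence[Sequence[float]], tgt_labels: Sequence[int],
    src_feats: Sequence[Sequence[float]], tgt_feats: Sequence[Sequence[float]],
    lam: float = 0.1,
) -> float:
    """Mean cross-entropy over all (pseudo-)labelled samples plus a weighted feature gap."""
    probs = list(src_probs) + list(tgt_probs)
    labels = list(src_labels) + list(tgt_labels)
    ce = -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)
    return ce + lam * mean_feature_discrepancy(src_feats, tgt_feats)
```

One iteration would call `pseudo_labels` on the target predictions, evaluate `total_loss`, and update the parameters; the loop stops when the chosen stop condition (for example a fixed iteration count) is met.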

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com