Neural network training method and device

A neural network training method and device, applied in the field of computer vision. It addresses the problem that a student network cannot achieve both a wide application range and high accuracy, and it achieves the effects of a wide application range, improved performance, and improved accuracy.


Embodiment 1

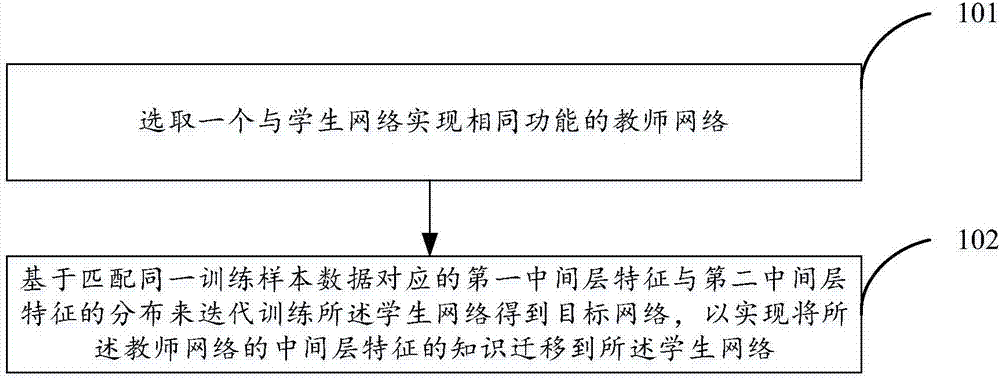

[0038] See Figure 1, which is a flowchart of a neural network training method in an embodiment of the present invention. The method comprises:

[0039] Step 101. Select a teacher network that realizes the same function as the student network.

[0040] The implemented function may be, for example, image classification, object detection, or image segmentation. The teacher network has excellent performance and high accuracy but, compared with the student network, it has a more complex structure, more parameters (weights), and slower computation. The student network computes quickly and has a simple structure, but its performance is average or poor. A network that implements the same function as the student network and has excellent performance can be selected as the teacher network from a set of preset neural network models.
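The selection in Step 101 can be illustrated with a minimal sketch. The source only says that a well-performing network with the same function is chosen from a preset set; the `CandidateModel` record, its fields, the example entries, and the "highest accuracy wins" rule below are all hypothetical assumptions made for illustration, not the patent's stated procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateModel:
    """Hypothetical description of one model in the preset model set."""
    name: str
    task: str          # e.g. "image_classification"
    accuracy: float    # validation accuracy in [0, 1] (assumed metric)
    num_params: int    # parameter count, a rough proxy for complexity


def select_teacher(student_task, candidates):
    """Pick the most accurate candidate that implements the student's task.

    Assumption: "excellent performance" is approximated by highest accuracy.
    """
    same_task = [m for m in candidates if m.task == student_task]
    if not same_task:
        raise ValueError(f"no preset model implements task {student_task!r}")
    return max(same_task, key=lambda m: m.accuracy)


# Hypothetical preset model set; names and numbers are made up.
PRESET_MODELS = [
    CandidateModel("small_classifier", "image_classification", 0.71, 4_000_000),
    CandidateModel("large_classifier", "image_classification", 0.79, 60_000_000),
    CandidateModel("large_detector", "object_detection", 0.82, 90_000_000),
]

teacher = select_teacher("image_classification", PRESET_MODELS)
print(teacher.name)
```

In practice the ranking criterion could be any task-appropriate metric; accuracy is used here only because the text describes the teacher as highly accurate.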

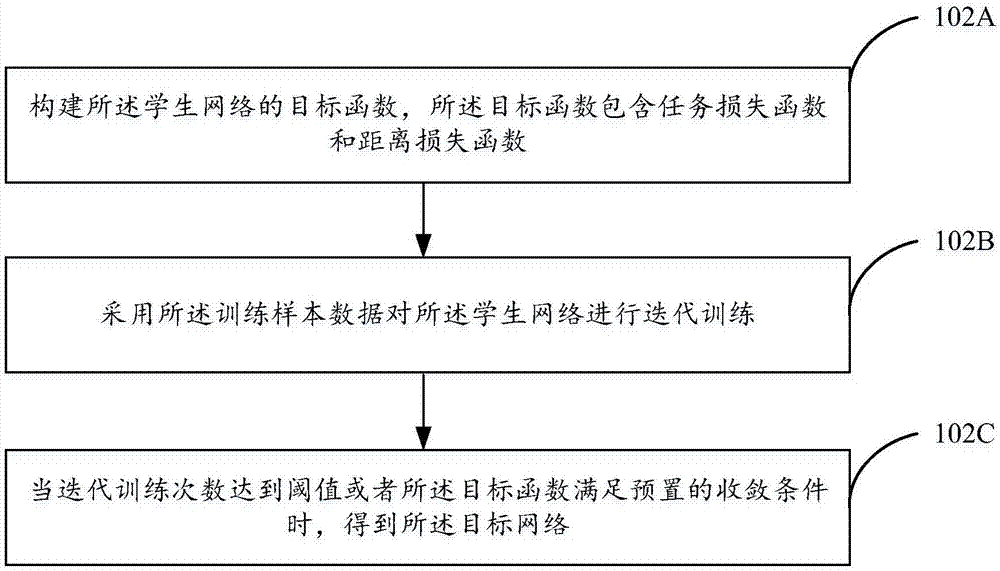

[0041] Step 102: Iteratively train the student network to obtain a target network based on matching the distribution of the first intermediate layer features and the second ...

Specific example

[0066] A concrete example is as follows: k(·,·) is the linear kernel function shown in formula (7), the polynomial kernel function shown in formula (8), or the Gaussian kernel function shown in formula (9).

[0067] $k(x, y) = x^{\top} y$    Formula (7)

[0068] $k(x, y) = (x^{\top} y + c)^{d}$    Formula (8)

[0069]
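The kernel choices listed above can be sketched in plain Python. The linear and polynomial kernels follow formulas (7) and (8). Formula (9) is not reproduced in this text, so the Gaussian kernel below uses the common form exp(-||x - y||² / (2σ²)) as an assumption. The `mmd_squared` helper shows one common way a kernel can be used to compare two feature distributions (a biased squared maximum mean discrepancy estimate); whether the patent uses exactly this measure is likewise an assumption, and all function names and default parameter values are hypothetical.

```python
import math


def _dot(x, y):
    """Inner product x^T y of two equal-length vectors."""
    return sum(a * b for a, b in zip(x, y))


def linear_kernel(x, y):
    """Formula (7): k(x, y) = x^T y."""
    return _dot(x, y)


def polynomial_kernel(x, y, c=1.0, d=2):
    """Formula (8): k(x, y) = (x^T y + c)^d; c and d defaults are assumed."""
    return (_dot(x, y) + c) ** d


def gaussian_kernel(x, y, sigma=1.0):
    """Assumed standard Gaussian form; formula (9) is missing in the text."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def mmd_squared(xs, ys, kernel):
    """Biased estimate of squared MMD between two sets of feature vectors."""
    def mean_k(a, b):
        return sum(kernel(p, q) for p in a for q in b) / (len(a) * len(b))
    return mean_k(xs, xs) - 2.0 * mean_k(xs, ys) + mean_k(ys, ys)


# Toy "teacher" and "student" feature samples (made-up values).
teacher_feats = [[0.0, 0.0], [2.0, 0.0]]
student_feats = [[1.0, 1.0], [3.0, 1.0]]
print(mmd_squared(teacher_feats, student_feats, linear_kernel))
```

With the linear kernel this estimate reduces to the squared distance between the two sample means, which makes it easy to check by hand; the polynomial and Gaussian kernels also compare higher-order statistics.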

Embodiment 2

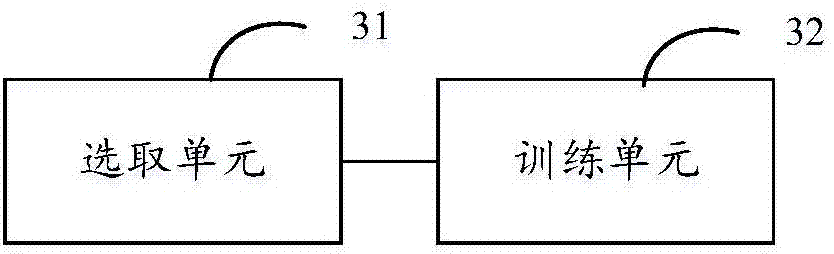

[0071] Based on the same concept as the neural network training method provided in Embodiment 1, Embodiment 2 of the present invention also provides a neural network training device. Its structure is shown in Figure 3 and comprises a selection unit 31 and a training unit 32, wherein:

[0072] The selection unit 31 is configured to select a teacher network that realizes the same function as the student network.

[0073] The implemented function may be, for example, image classification, object detection, or image segmentation. The teacher network has excellent performance and high accuracy but, compared with the student network, it has a more complex structure, more parameters (weights), and slower computation. The student network computes quickly and has a simple structure, but its performance is average or poor. A network that implements the same function as the student network and has excellent performance can be selected as the teacher network from the set of prese...

