1612 results about "Communication with homomorphic encryption" patented technology

Fully homomorphic encryption method based on a bootstrappable encryption scheme, computer program and apparatus

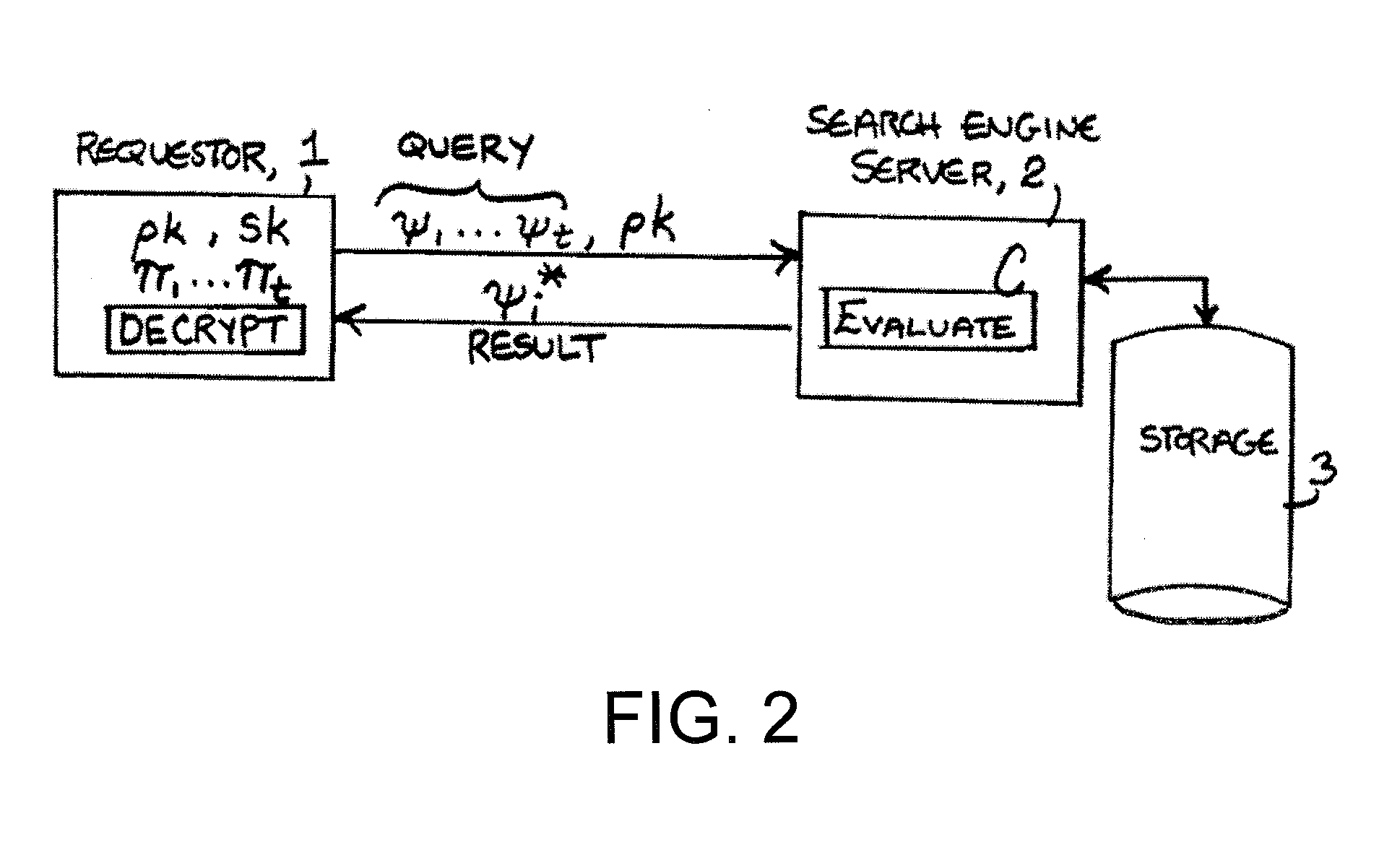

Active | US20110110525A1 | Key distribution for secure communication | Multiple keys/algorithms usage | Assessment data | Data application

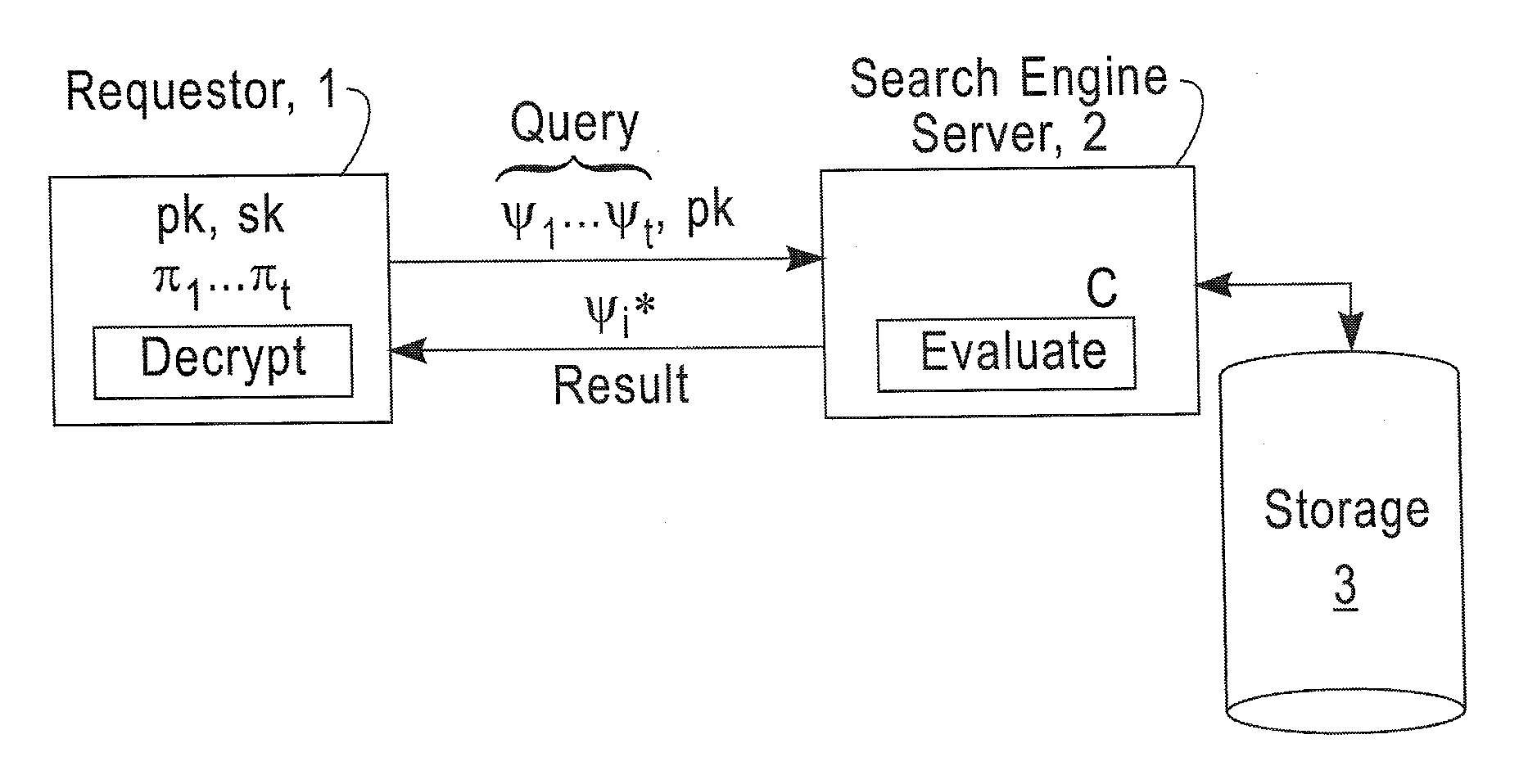

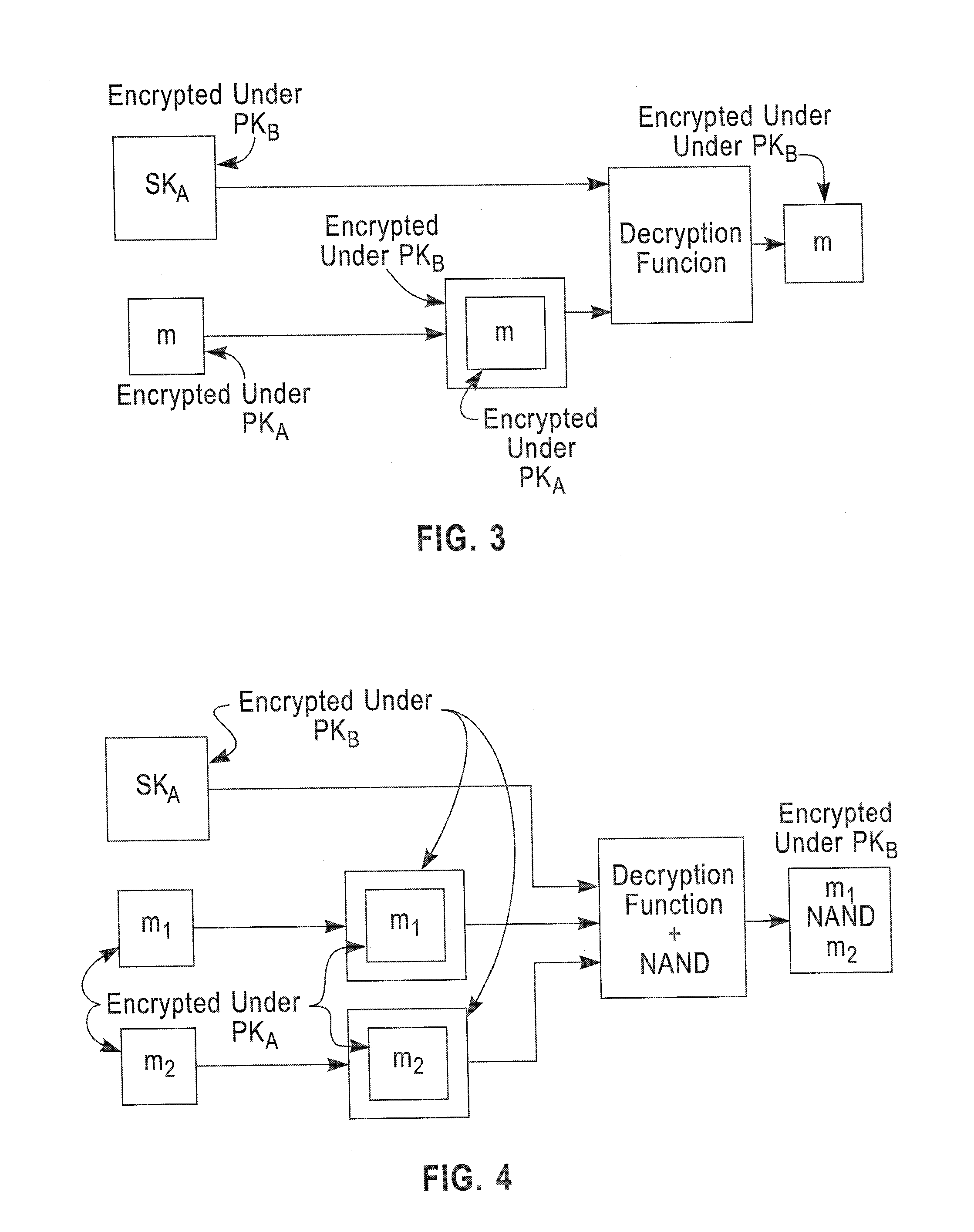

A method includes encrypting information in accordance with an encryption scheme that uses a public key; encrypting a plurality of instances of a secret key, each being encrypted using at least one additional instance of the public key; sending the encrypted information and the plurality of encrypted instances of the secret key to a destination; receiving an encrypted result from the destination; and decrypting the encrypted result. A further method includes receiving a plurality of encrypted secret keys and information descriptive of a function to be performed on data; converting the information to a circuit configured to perform the function on the data; and applying the data to inputs of the circuit and evaluating the data using, in turn, the plurality of encrypted secret keys.

Owner:IBM CORP

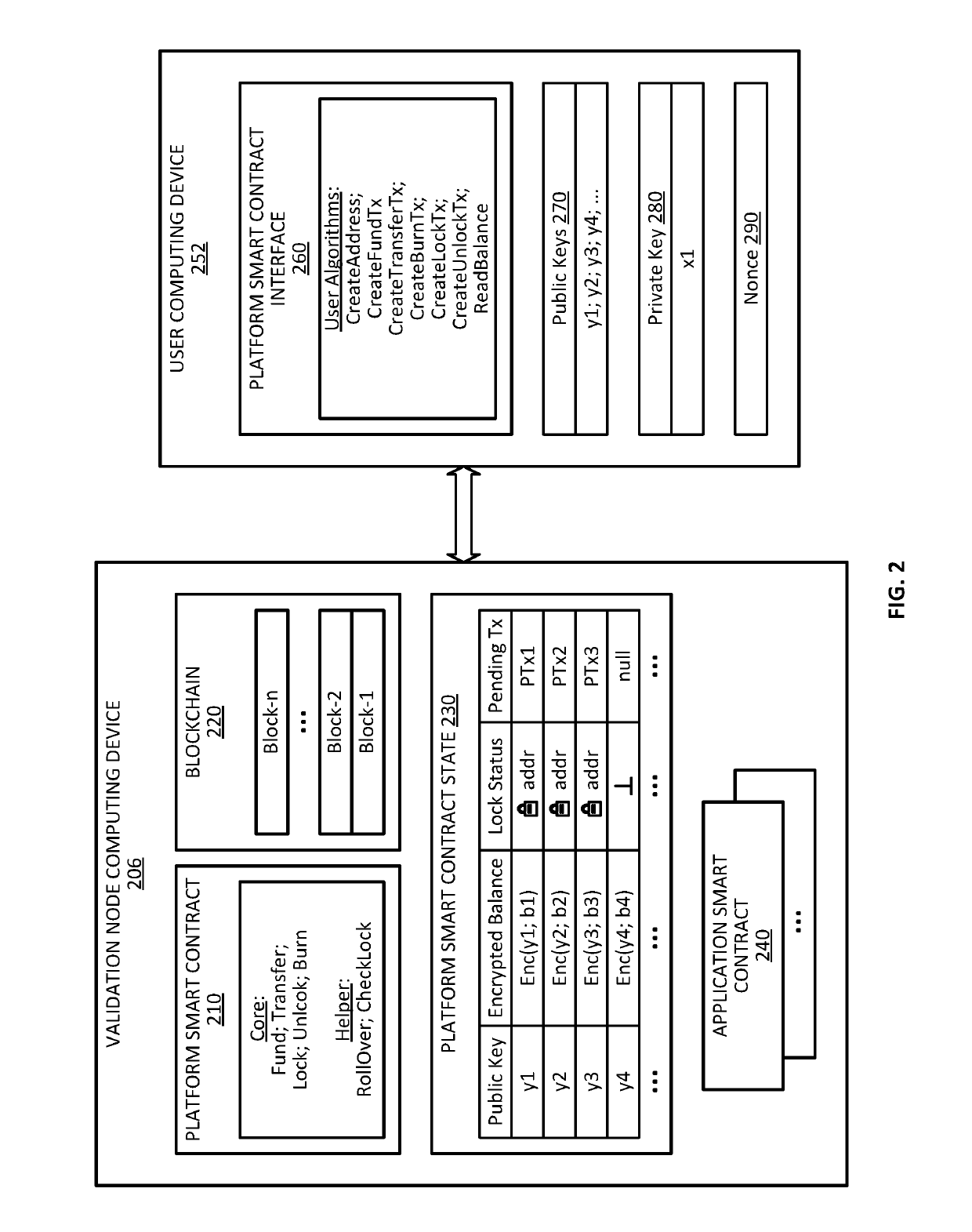

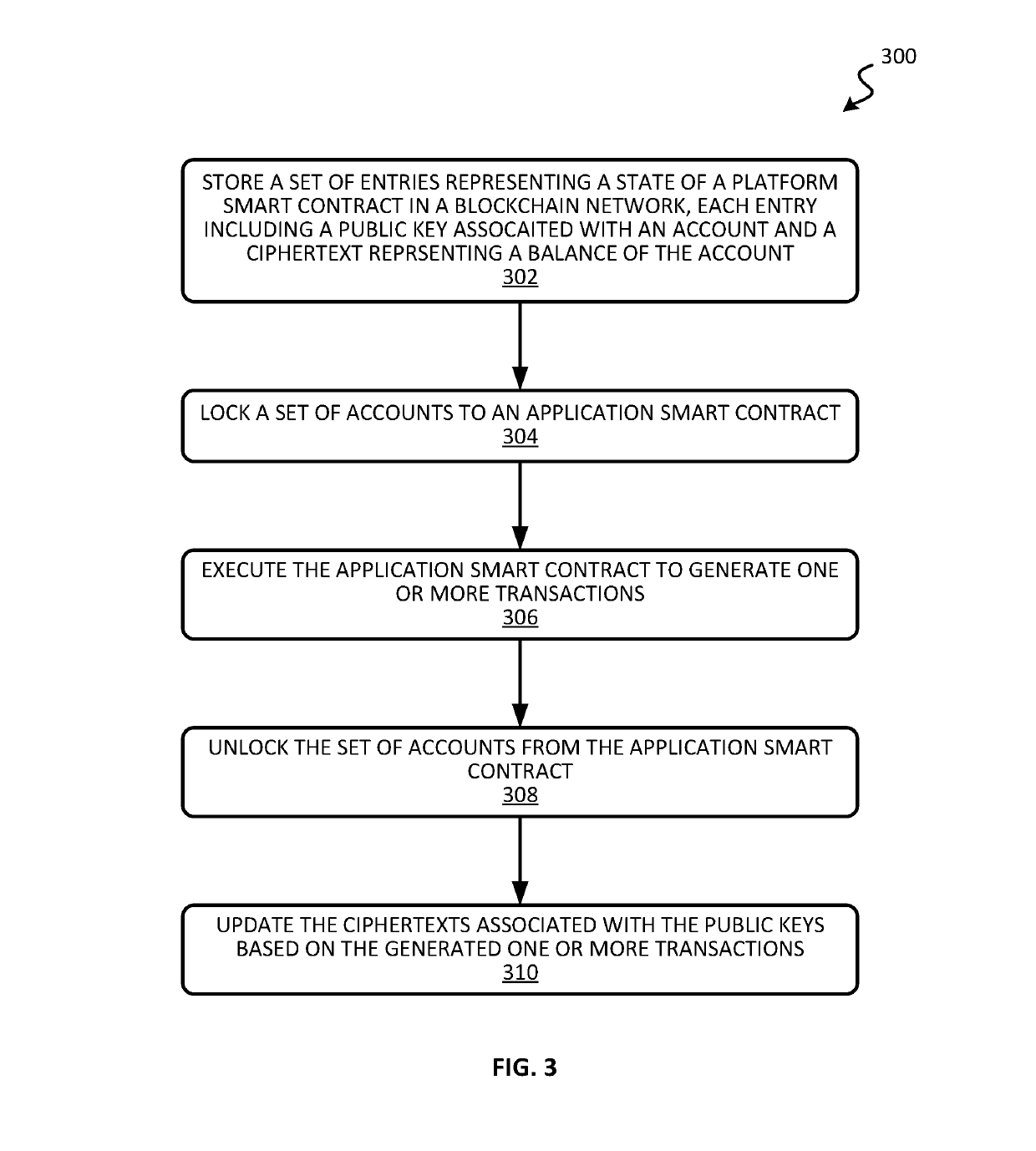

Blockchain system for confidential and anonymous smart contracts

Active | US20190164153A1 | Improve confidentiality | Enhanced anonymity | Encryption apparatus with shift registers/memories | Cryptography processing | Internet privacy | Privacy preserving

Blockchain-based smart contract platforms have great promise to remove trust and add transparency to distributed applications. However, this benefit often comes at the cost of greatly reduced privacy. Techniques for implementing a privacy-preserving smart contract are described. The system can keep accounts private while not losing functionality and with only a limited performance overhead. This is achieved by building a confidential and anonymous token on top of a cryptocurrency. Multiple complex applications can also be built using the smart contract system.

Owner:VISA INT SERVICE ASSOC +1

Bootstrappable homomorphic encryption method, computer program and apparatus

Active | US8515058B1 | Secret communication | Communication with homomorphic encryption | Computer hardware | Computer program

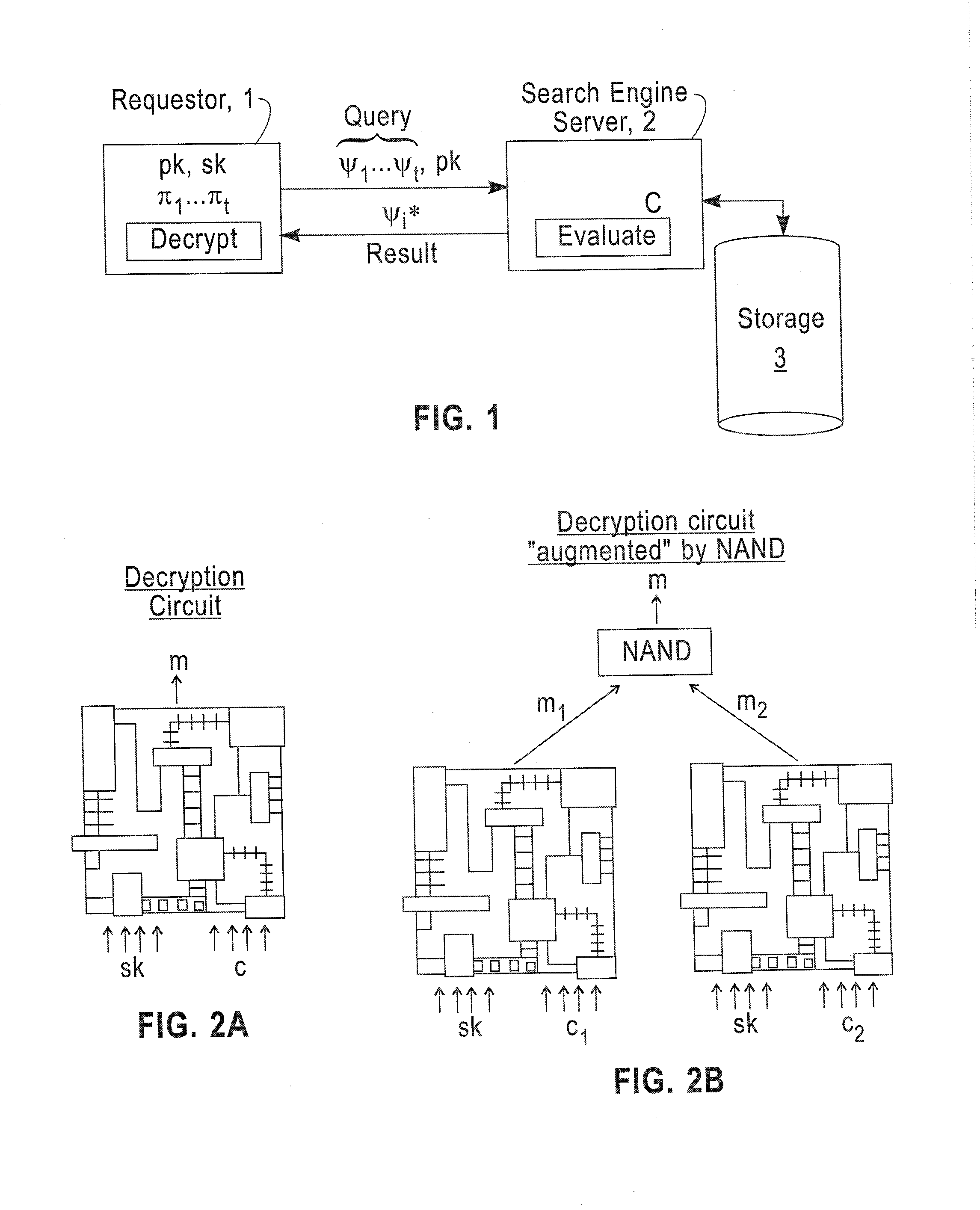

Embodiments of the present invention describe a fully homomorphic encryption scheme built from a “bootstrappable” homomorphic encryption scheme, that is, one that can evaluate a function ƒ when ƒ is the encryption scheme's own decryption function. Specifically, the fully homomorphic encryption scheme uses the “bootstrappable” homomorphic encryption scheme to determine the decryption function to decrypt data encrypted under the fully homomorphic encryption scheme.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

Fully Homomorphic Encryption

Inactive | US20130170640A1 | Reduce noise level | Growth inhibition | Public key for secure communication | Secret communication | Rounding | Noise level

In one exemplary embodiment of the invention, a method and computer program include receiving first and second ciphertexts in which first and second data are encrypted under an encryption scheme. The encryption scheme has public/secret keys and encryption, decryption, operation and refresh functions: the encryption function encrypts data, the decryption function decrypts ciphertext, the operation function receives ciphertexts and performs operation(s) on them, and the refresh function prevents growth of the magnitude of noise for a ciphertext while reducing the modulus of the ciphertext, without using the secret key. The refresh function uses a modulus switching technique that transforms a first ciphertext c modulo q into a second ciphertext c′ modulo p while preserving correctness; the technique includes scaling by p/q and rounding, where p < q. The method further includes using the operation function(s) to perform operation(s) on the first and second ciphertexts to obtain a third ciphertext, and reducing a noise level of the third ciphertext using the refresh function.
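The "scale by p/q and round" step can be illustrated with a minimal sketch. It assumes a toy LWE-style bit encryption with the message in the high-order bit and a binary secret key; this is not the scheme claimed in the patent, and the function names and parameters (`keygen`, `mod_switch`, `q = 2**20`, `p = 2**10`) are hypothetical choices made for the illustration.

```python
import random

def keygen(n, rng):
    """Binary secret key of length n (toy parameter, not secure)."""
    return [rng.randrange(2) for _ in range(n)]

def encrypt(bit, s, q, rng, noise=4):
    """Toy LWE encryption: b = <a, s> + e + bit * q/2 (mod q)."""
    a = [rng.randrange(q) for _ in s]
    e = rng.randint(-noise, noise)
    b = (sum(x * y for x, y in zip(a, s)) + e + bit * (q // 2)) % q
    return a, b

def decrypt(ct, s, modulus):
    """Recover the bit by checking which half of the modulus the phase is near."""
    a, b = ct
    phase = (b - sum(x * y for x, y in zip(a, s))) % modulus
    return 1 if modulus // 4 <= phase < 3 * modulus // 4 else 0

def scale_round(x, p, q):
    """round(x * p / q) in integer arithmetic, reduced mod p."""
    return ((x * p + q // 2) // q) % p

def mod_switch(ct, p, q):
    """Map a ciphertext mod q to one mod p (p < q); the secret key is not used."""
    a, b = ct
    return [scale_round(x, p, q) for x in a], scale_round(b, p, q)

if __name__ == "__main__":
    rng = random.Random(0)
    q, p, n = 2**20, 2**10, 16
    s = keygen(n, rng)
    for bit in (0, 1):
        ct = encrypt(bit, s, q, rng)
        print(bit, decrypt(ct, s, q), decrypt(mod_switch(ct, p, q), s, p))
```

With a binary key of length 16, the rounding error added by the switch is at most 8.5, well below the decoding margin p/4 = 256, so the switched ciphertext still decrypts to the same bit under the same key.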

Owner:IBM CORP

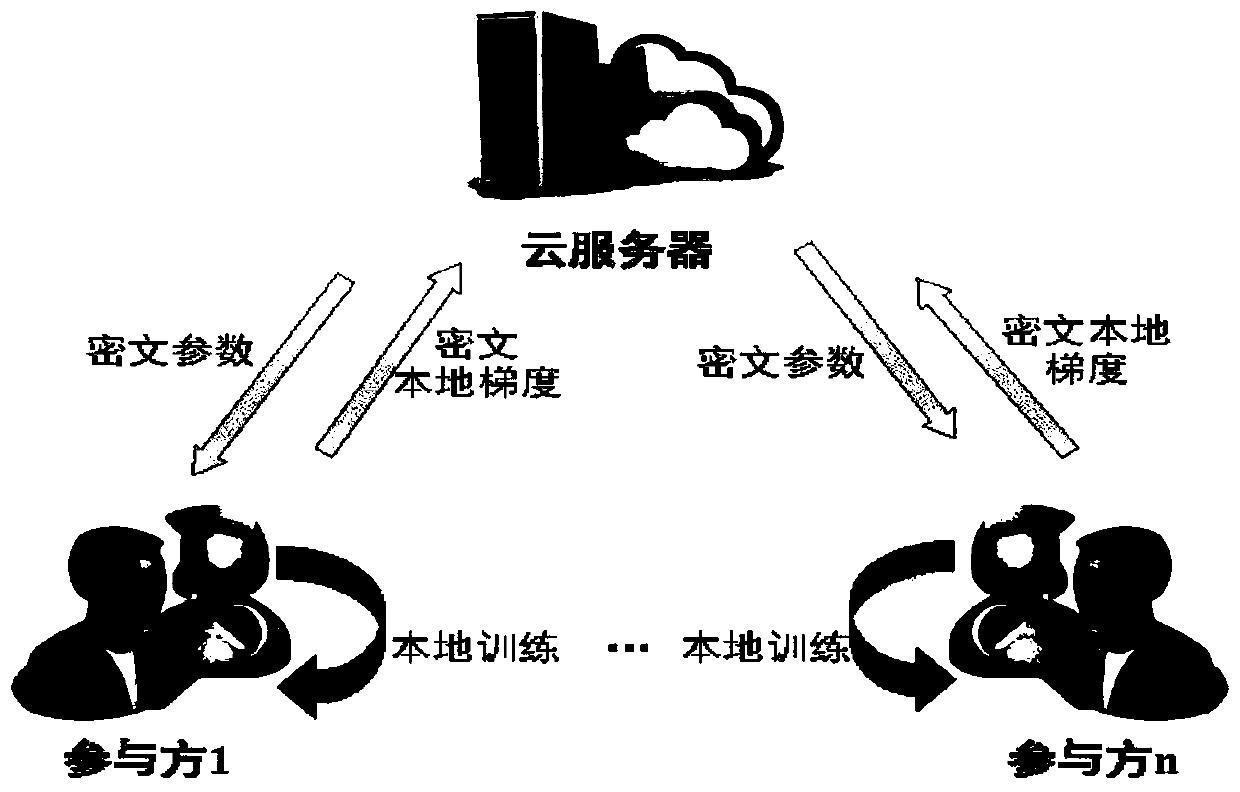

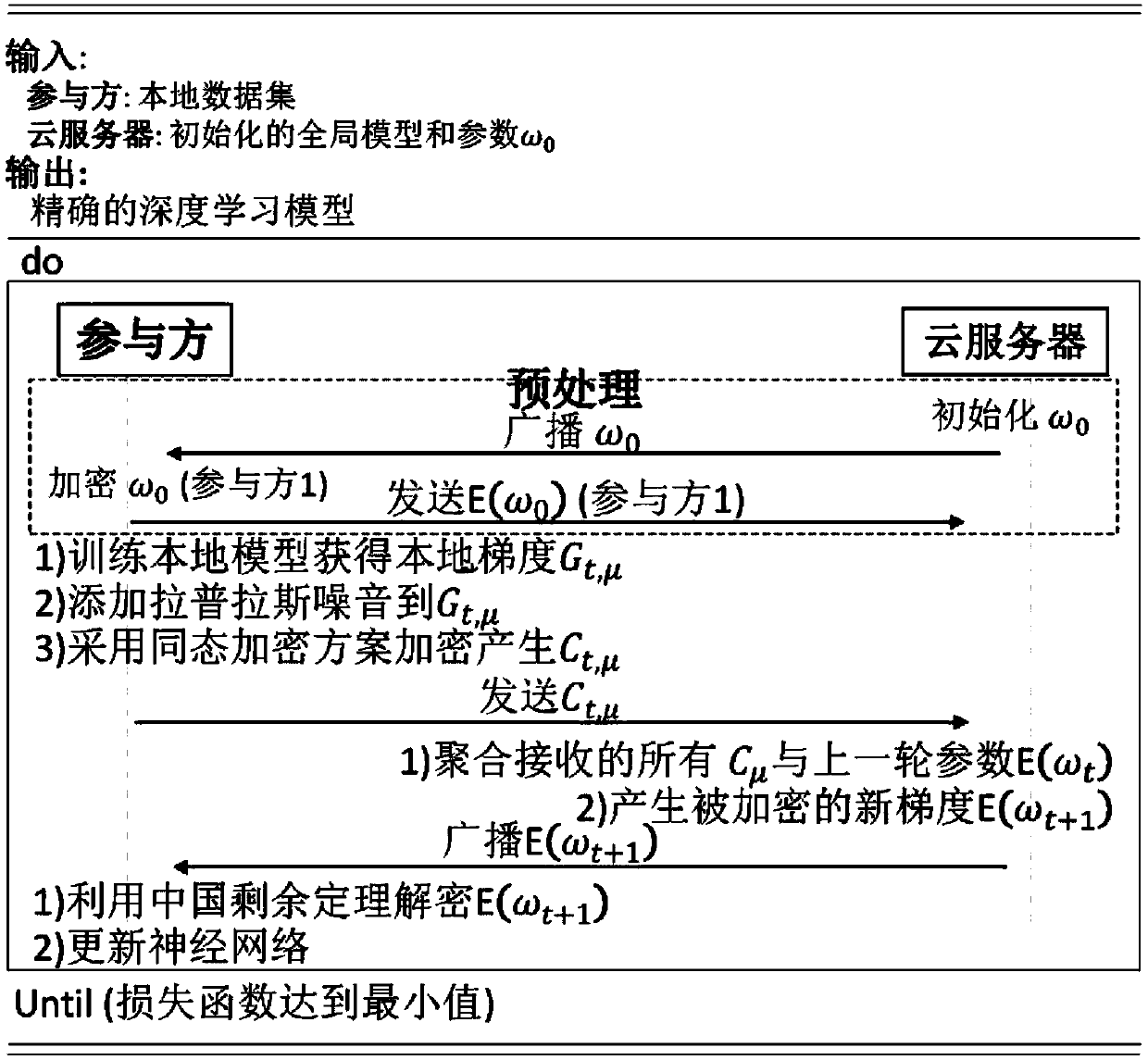

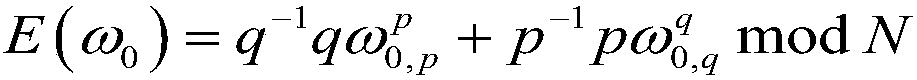

A combined deep learning training method based on a privacy protection technology

Active | CN109684855A | Avoid getting | Safe and efficient deep learning training method | Digital data protection | Communication with homomorphic encryption | Pattern recognition | Data set

The invention belongs to the technical field of artificial intelligence, and relates to an efficient combined deep learning training method based on a privacy protection technology. In the invention, each participant first trains a local model on a private data set to obtain a local gradient, then perturbs the local gradient with Laplace noise, encrypts the local gradient and sends the encrypted local gradient to a cloud server. The cloud server performs an aggregation operation on all the received local gradients and the ciphertext parameters of the last round, and broadcasts the generated ciphertext parameters. Finally, the participant decrypts the received ciphertext parameters and updates the local model so as to carry out subsequent training. The method combines a homomorphic encryption scheme with a differential privacy technology to provide a safe and efficient deep learning training method that guarantees the accuracy of the trained model while preventing the server from inferring model parameters and training data, and preventing internal attacks from obtaining private information.
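The perturb, encrypt and aggregate flow in this abstract can be sketched as follows. The abstract does not name the homomorphic scheme, so this sketch assumes textbook Paillier (additively homomorphic) with tiny, insecure toy primes, a fixed-point encoding of gradients, and a key shared by all participants. All names (`perturb`, `encrypt_vector`, `aggregate`, `SCALE`) are hypothetical, and the aggregation with the previous round's ciphertext parameters is left out.

```python
import math
import random

# Toy Paillier parameters: two Mersenne primes, far too small for real use.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # private key: lcm(P-1, Q-1)
MU = pow(LAM, -1, N)   # decryption constant for generator g = N + 1
SCALE = 10**6          # fixed-point scale for real-valued gradients

def encrypt(m, rng):
    """Paillier encryption of a (possibly negative) integer m."""
    r = rng.randrange(1, N)
    return (1 + (m % N) * N) * pow(r, N, N2) % N2

def decrypt(c):
    """Paillier decryption, mapped back to a signed integer."""
    m = (pow(c, LAM, N2) - 1) // N * MU % N
    return m - N if m > N // 2 else m

def laplace(scale, rng):
    """Laplace(0, scale) sample as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def perturb(gradient, noise_scale, rng):
    """Participant side: add Laplace noise to each gradient coordinate."""
    return [g + laplace(noise_scale, rng) for g in gradient]

def encrypt_vector(values, rng):
    """Participant side: fixed-point encode and encrypt each coordinate."""
    return [encrypt(round(v * SCALE), rng) for v in values]

def aggregate(uploads):
    """Server side: multiplying ciphertexts adds the underlying plaintexts."""
    total = uploads[0]
    for upload in uploads[1:]:
        total = [a * b % N2 for a, b in zip(total, upload)]
    return total

def decrypt_vector(ciphertexts):
    """Participant side: decrypt and undo the fixed-point scaling."""
    return [decrypt(c) / SCALE for c in ciphertexts]

if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[0.5, -1.25], [0.25, 2.0], [-0.75, 0.5]]
    uploads = [encrypt_vector(perturb(g, 0.01, rng), rng) for g in grads]
    print(decrypt_vector(aggregate(uploads)))  # noisy sum of the gradients
```

The server only ever multiplies ciphertexts; it never sees a plaintext gradient, and the decrypted aggregate already contains the Laplace noise added by each participant.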

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

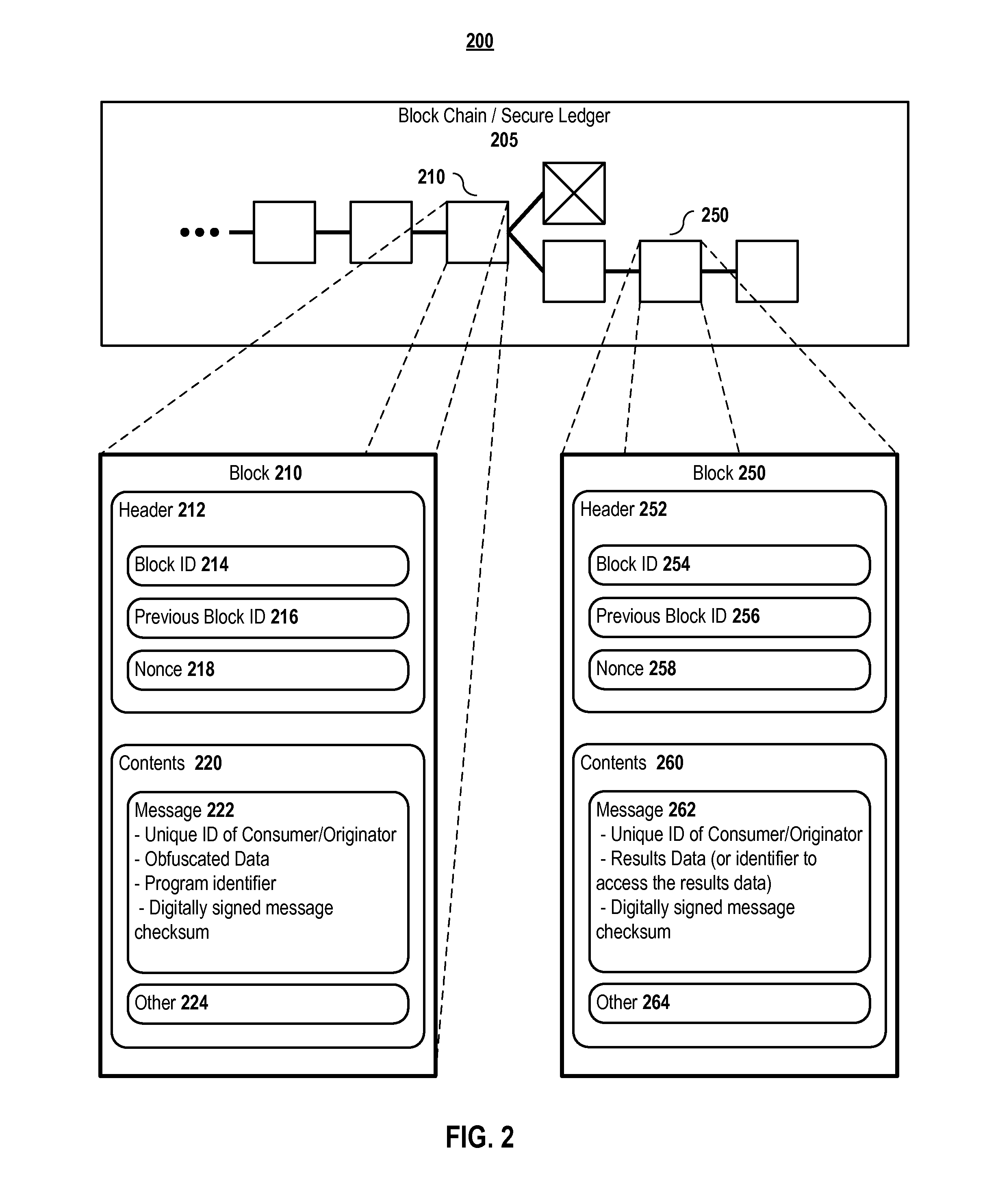

Methods and systems for obfuscating data and computations defined in a secure distributed transaction ledger

Active | US20160261404A1 | Digital data protection | Communication with homomorphic encryption | Confidentiality | Distributed transaction

Aspects of the present invention provide systems and methods that facilitate computations that are publicly defined while assuring the confidentiality of the input data provided, the generated output data, or both, using homomorphic encryption on the contents of the secure distributed transaction ledger. Fully homomorphic encryption schemes protect data while still enabling programs to accept it as input. In embodiments, inputting homomorphically encrypted data into a secure distributed transaction ledger allows a consumer to employ highly motivated entities with excess compute capability to perform calculations on the consumer's behalf while assuring data confidentiality, correctness, and integrity as it propagates through the network.

Owner:DELL PROD LP

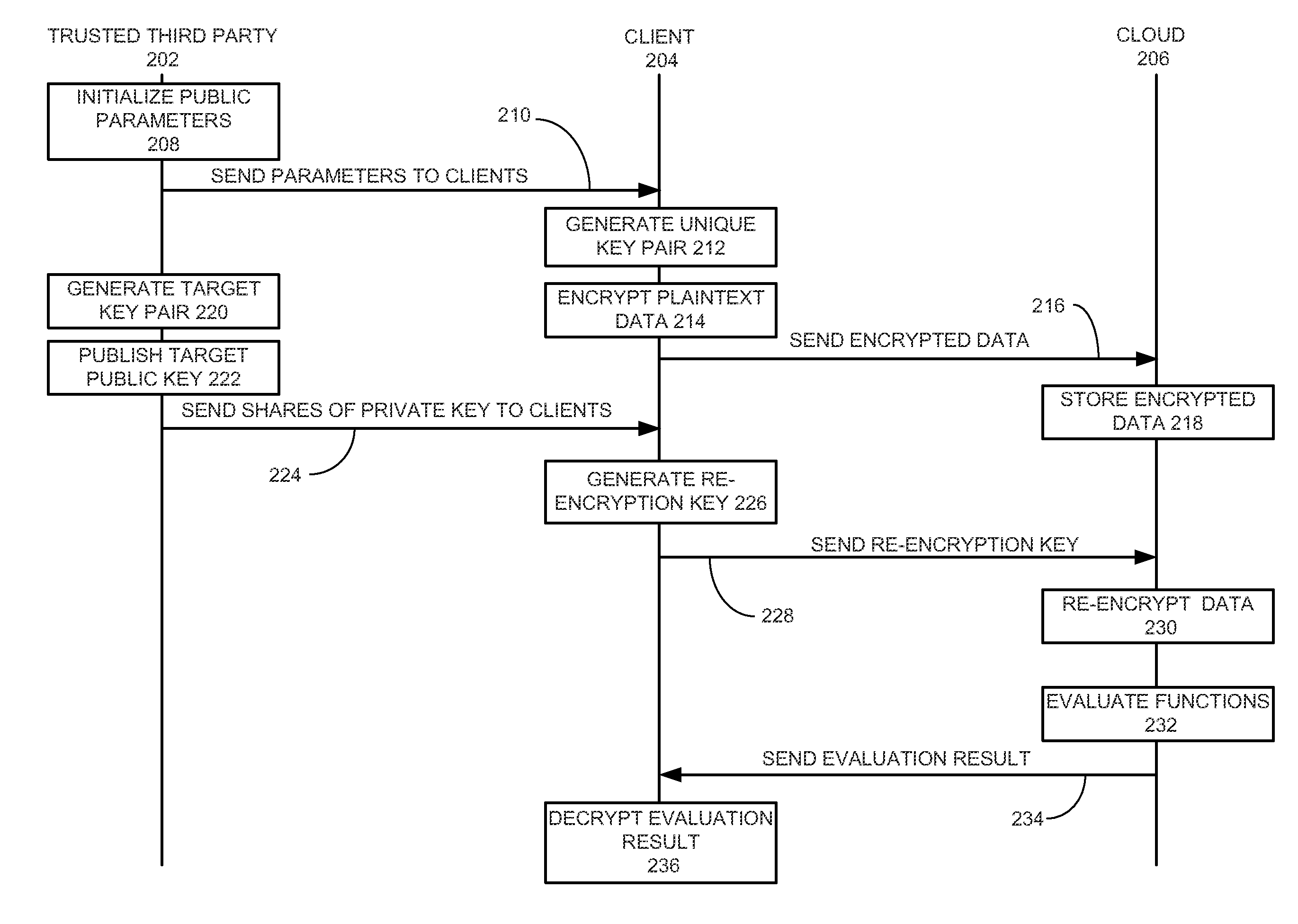

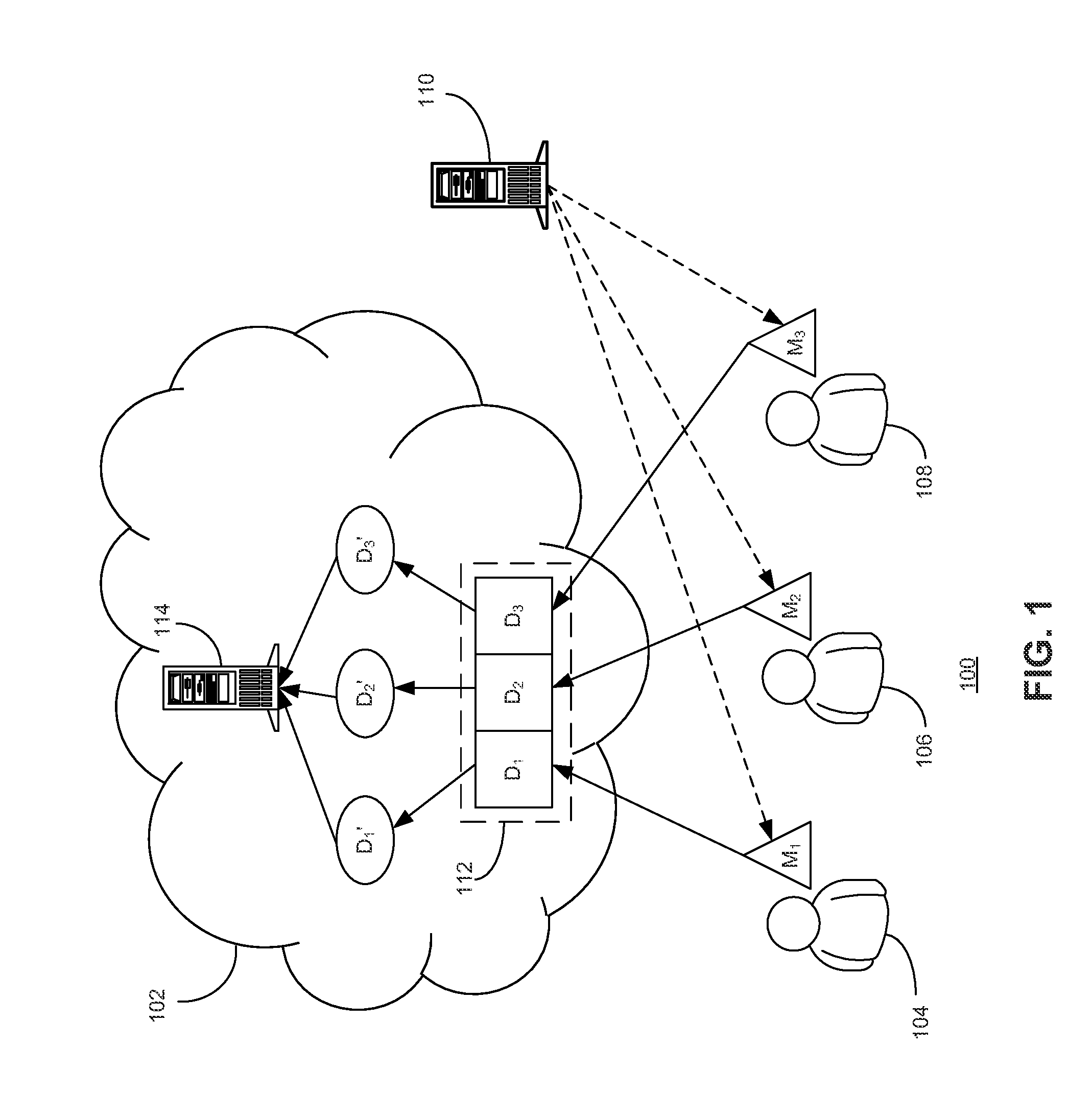

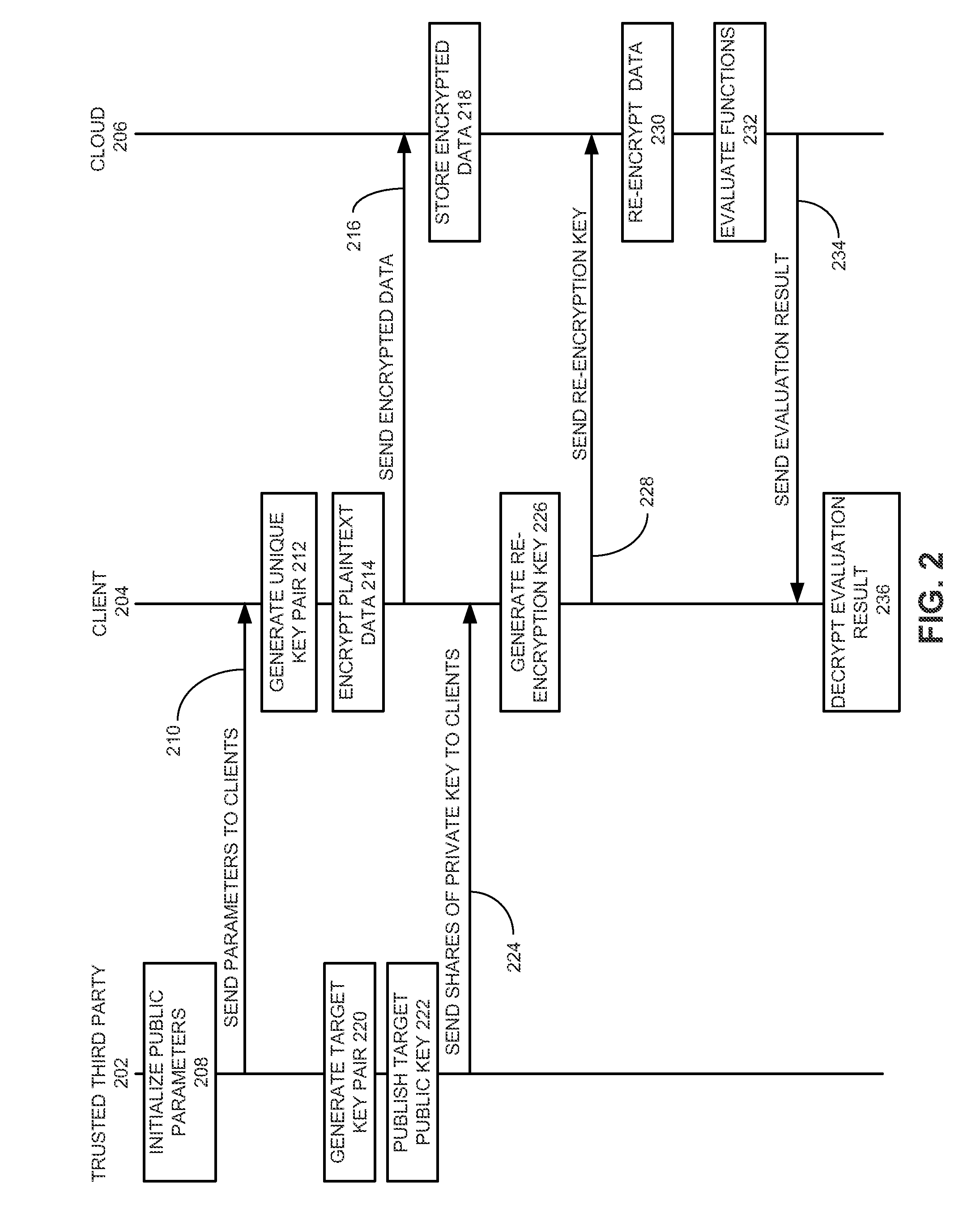

Method and system for secure multiparty cloud computation

One embodiment of the present invention provides a system for performing secure multiparty cloud computation. During operation, the system receives multiple encrypted datasets from multiple clients. An encrypted dataset associated with a client is encrypted from a corresponding plaintext dataset using a unique, client-specific encryption key. The system re-encrypts the multiple encrypted datasets to a target format, evaluates a function based on the re-encrypted multiple datasets to produce an evaluation outcome, and sends the evaluation outcome to the multiple clients, which are configured to cooperatively decrypt the evaluation outcome to obtain a plaintext evaluation outcome.

Owner:FUTUREWEI TECH INC

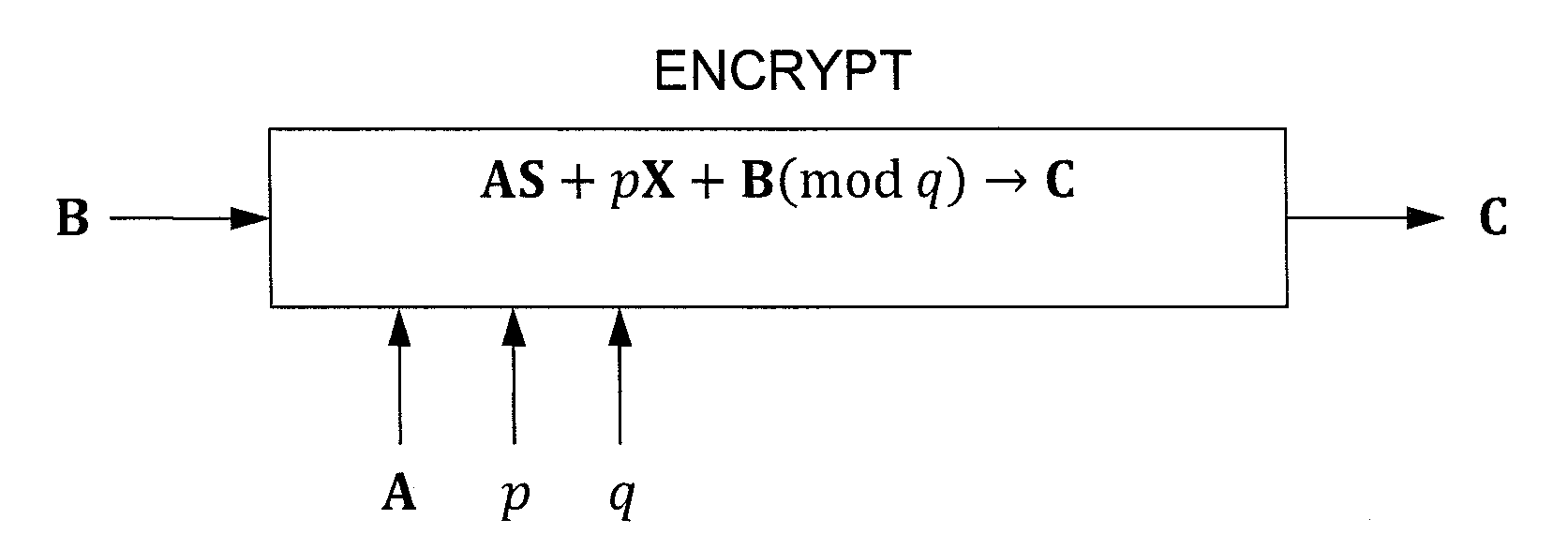

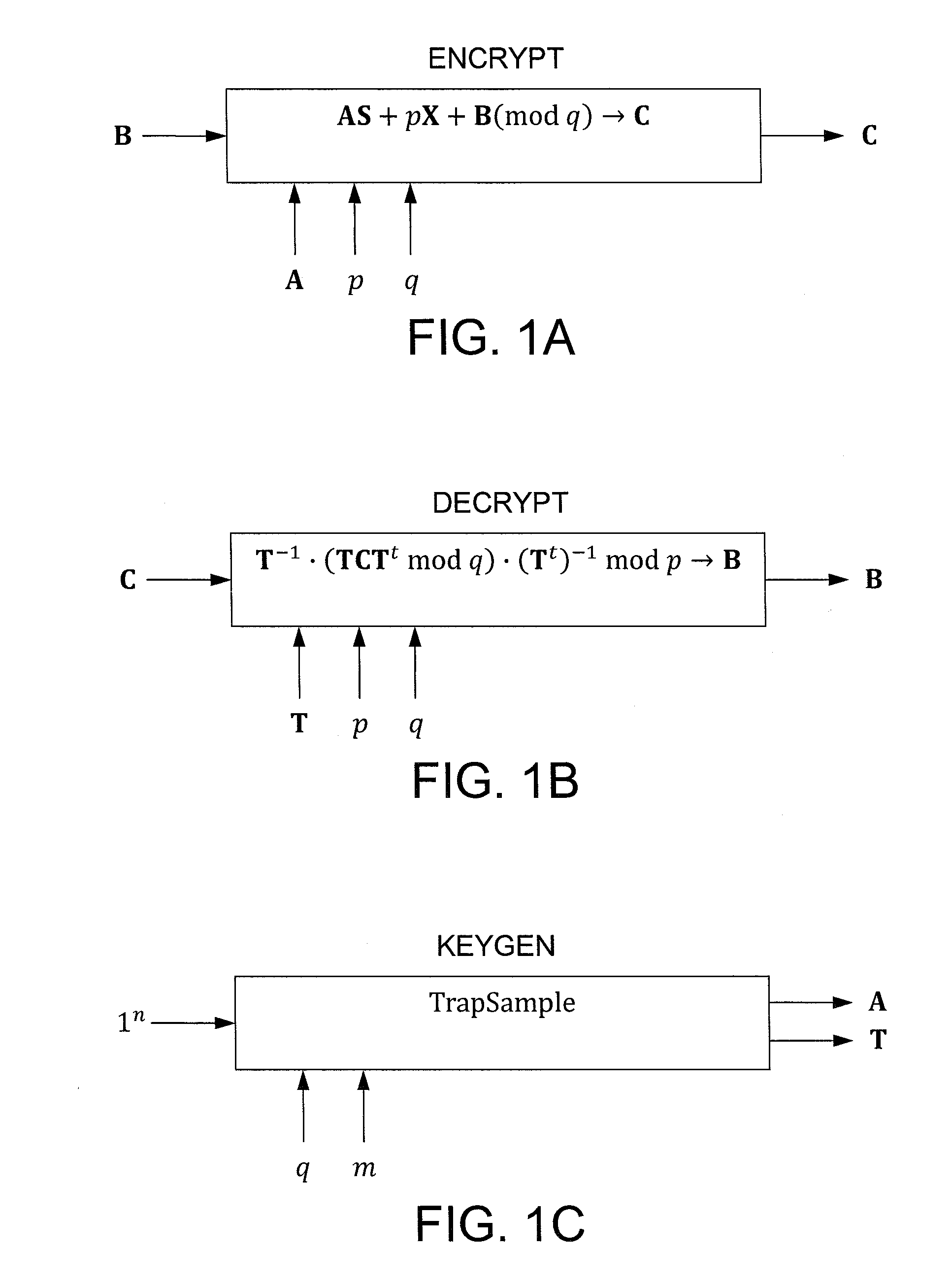

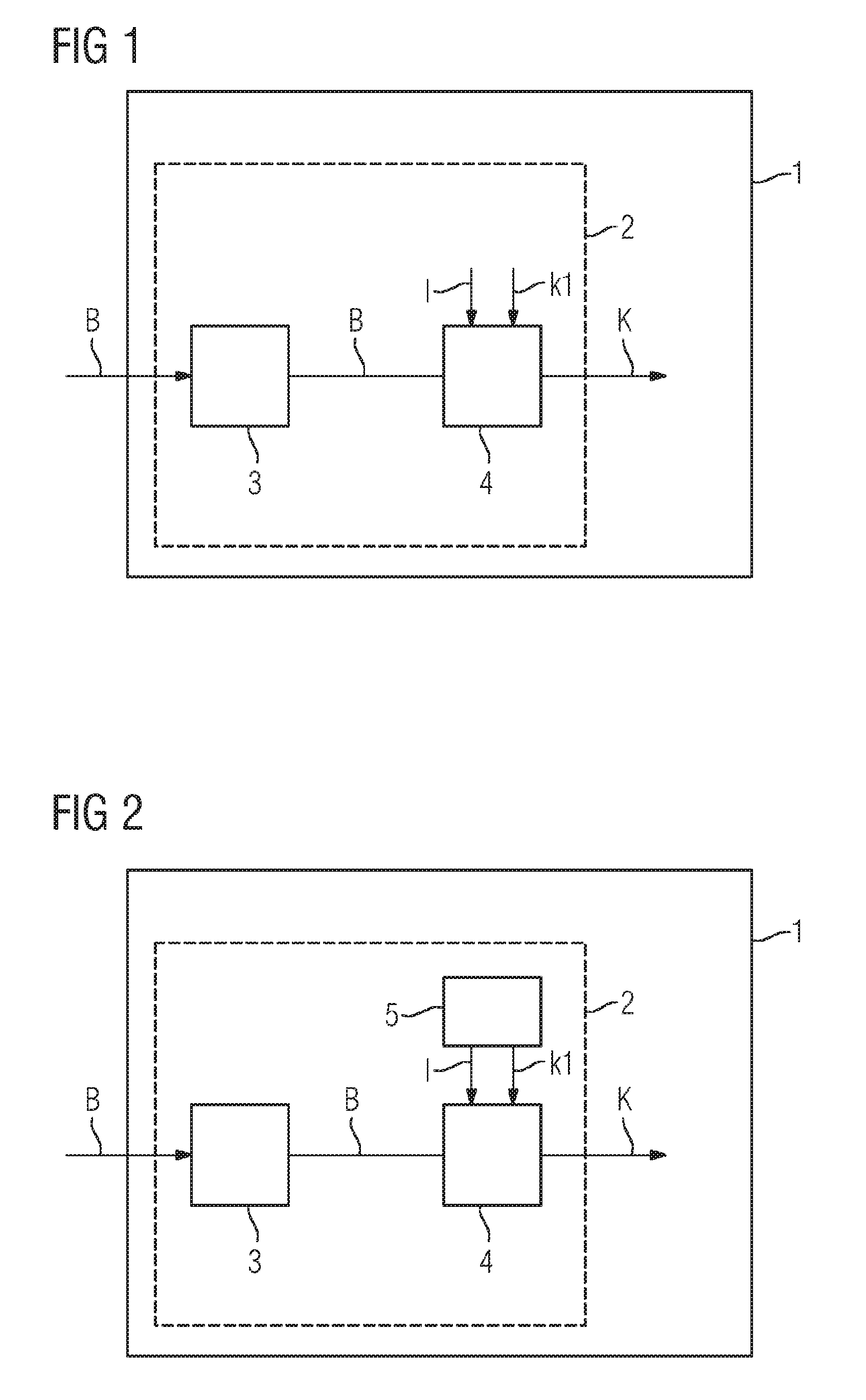

Efficient Homomorphic Encryption Scheme For Bilinear Forms

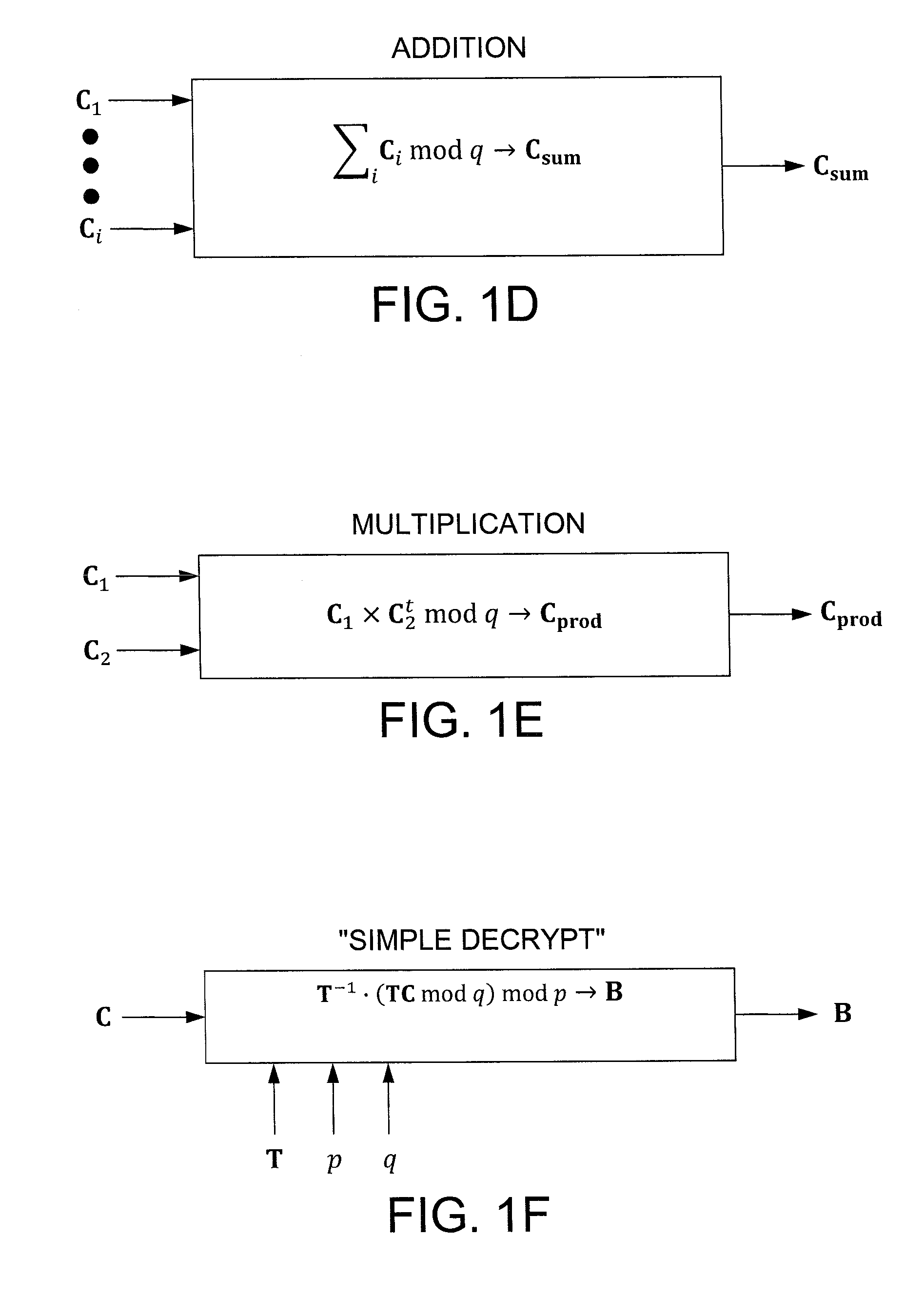

Inactive | US20110243320A1 | Public key for secure communication | Secret communication | Computer hardware | Ciphertext

In one exemplary embodiment, a computer readable storage medium tangibly embodies a program of instructions executable by a machine for performing operations including: receiving information B to be encrypted as a ciphertext C in accordance with an encryption scheme having an encrypt function; and encrypting B in accordance with the encrypt function to obtain C. The scheme utilizes at least one public key A, where B, C, and A are matrices. The encrypt function receives as inputs A and B and outputs C as $C \leftarrow AS + pX + B \pmod{q}$, where S is a random matrix, X is an error matrix, p is an integer, and q is an odd prime number. In other exemplary embodiments, the encryption scheme includes a decrypt function that receives as inputs at least one private key T (a matrix) and C and outputs B as $B = T^{-1} \cdot (T C T^{t} \bmod q) \cdot (T^{t})^{-1} \bmod p$.

Owner:IBM CORP

System and method for confidentiality-preserving rank-ordered search

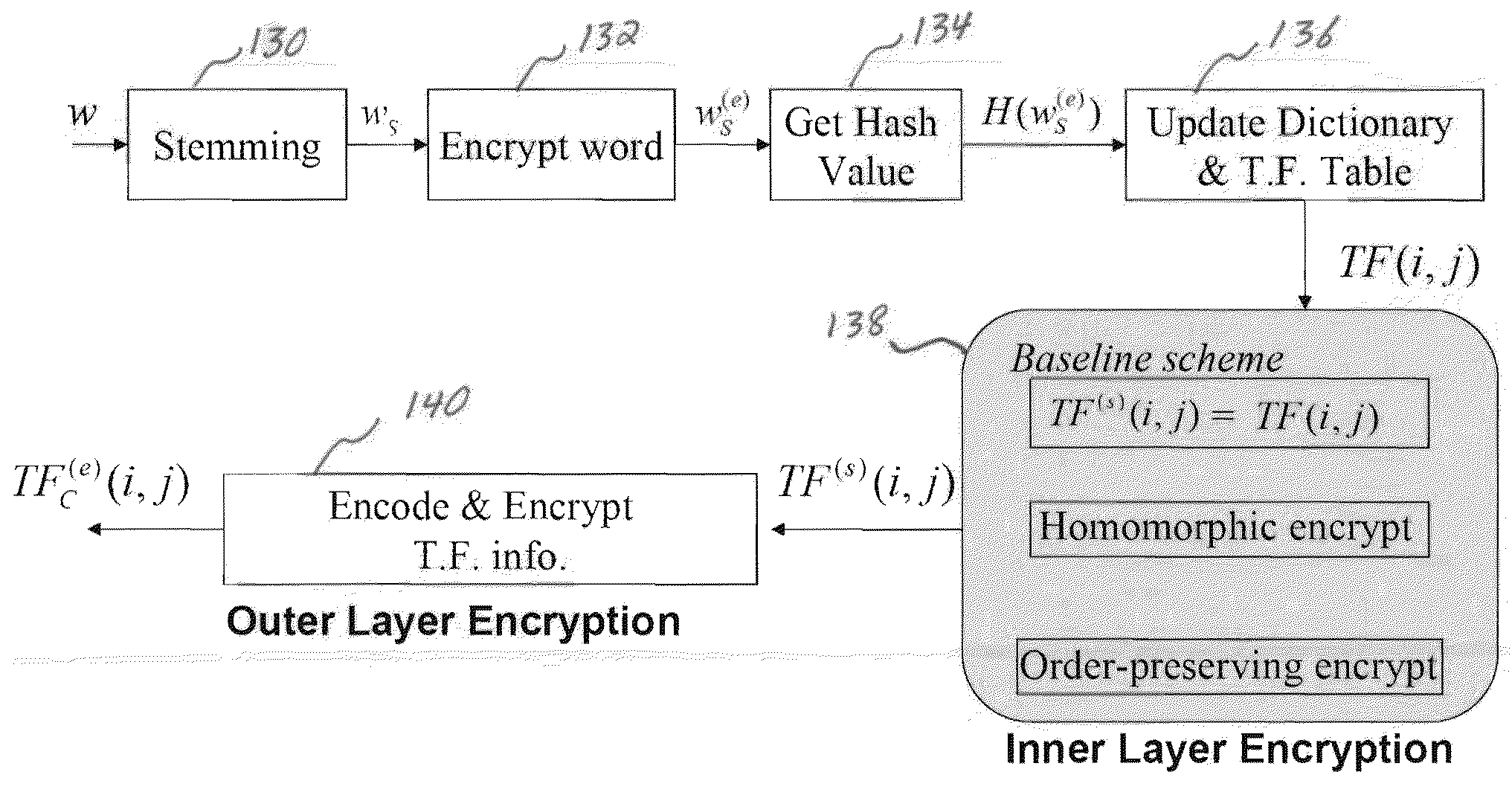

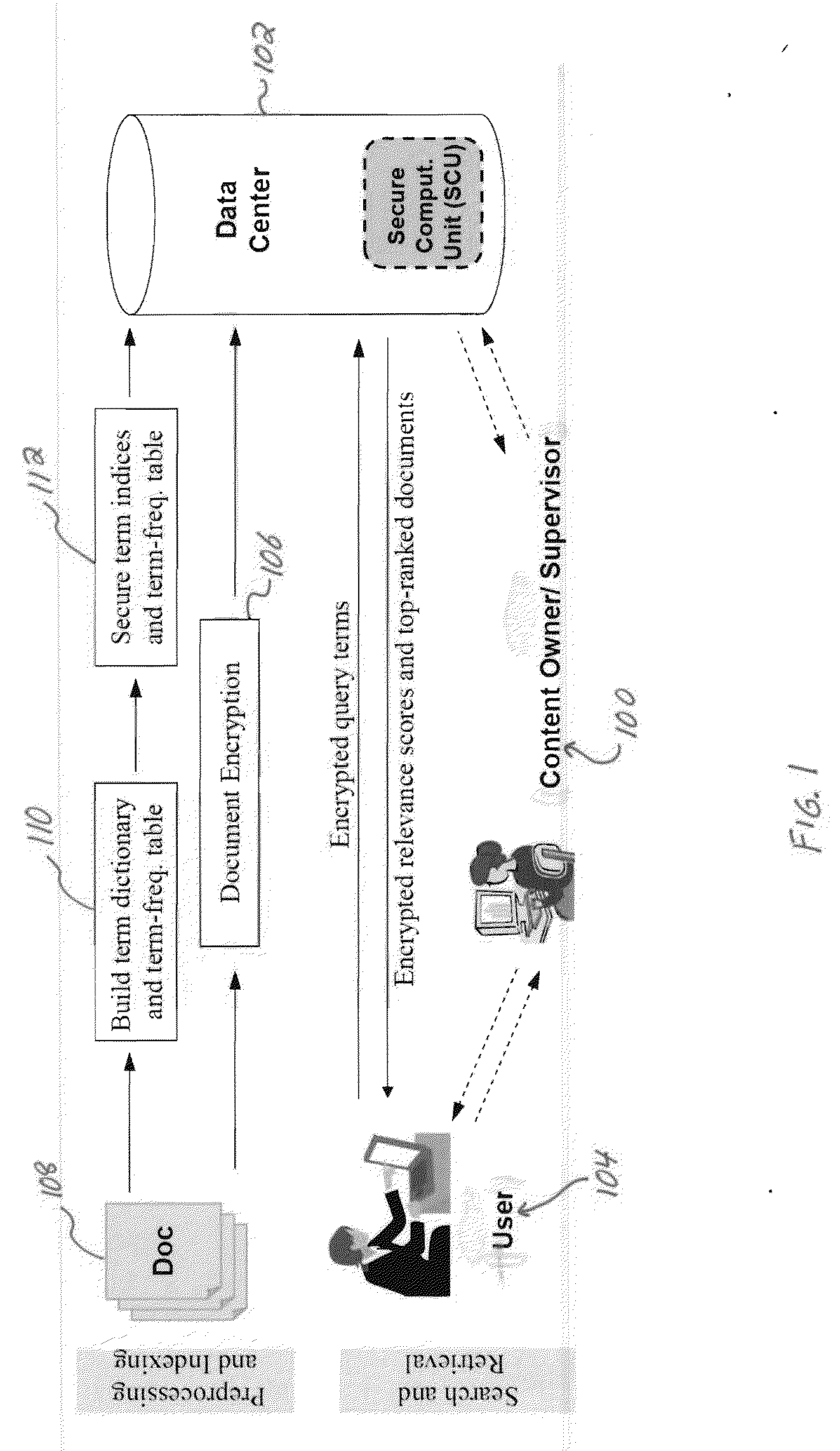

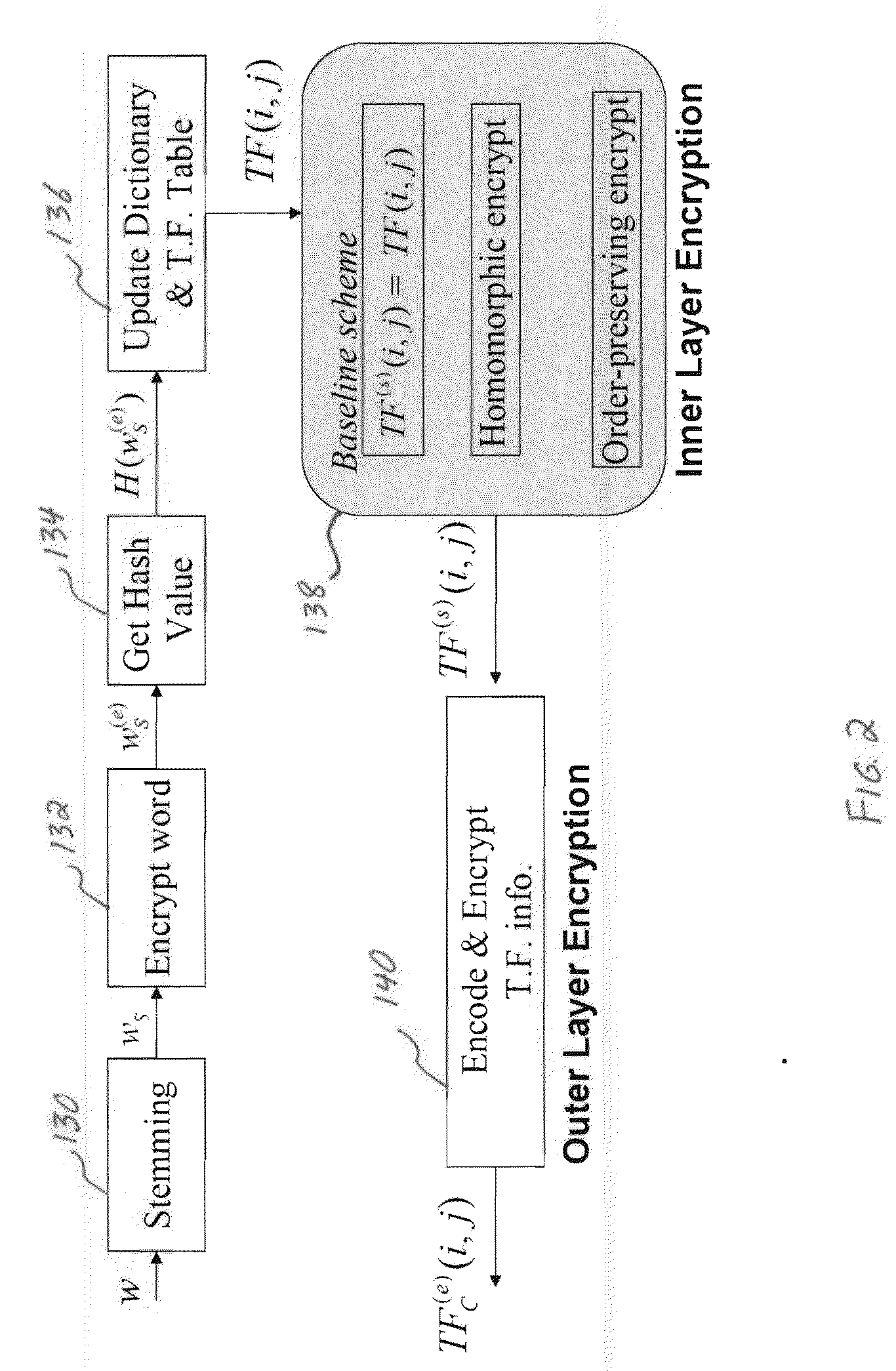

Inactive | US20100146299A1 | Avoid contact | Web data indexing | Digital data processing details | Confidentiality | Computerized system

A confidentiality preserving system and method for performing a rank-ordered search and retrieval of contents of a data collection. The system includes at least one computer system including a search and retrieval algorithm using term frequency and/or similar features for rank-ordering selective contents of the data collection, and enabling secure retrieval of the selective contents based on the rank-order. The search and retrieval algorithm includes a baseline algorithm, a partially server oriented algorithm, and/or a fully server oriented algorithm. The partially and/or fully server oriented algorithms use homomorphic and/or order preserving encryption for enabling search capability from a user other than an owner of the contents of the data collection. The confidentiality preserving method includes using term frequency for rank-ordering selective contents of the data collection, and retrieving the selective contents based on the rank-order.

Owner:UNIV OF MARYLAND

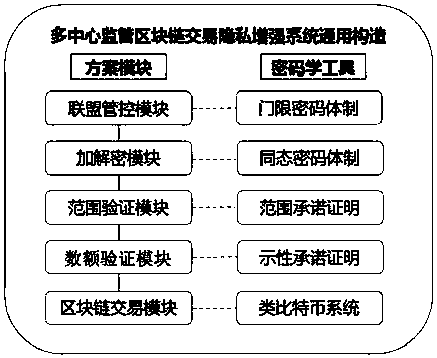

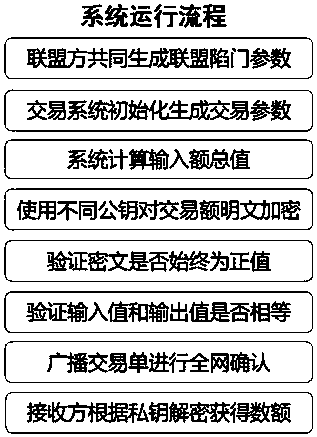

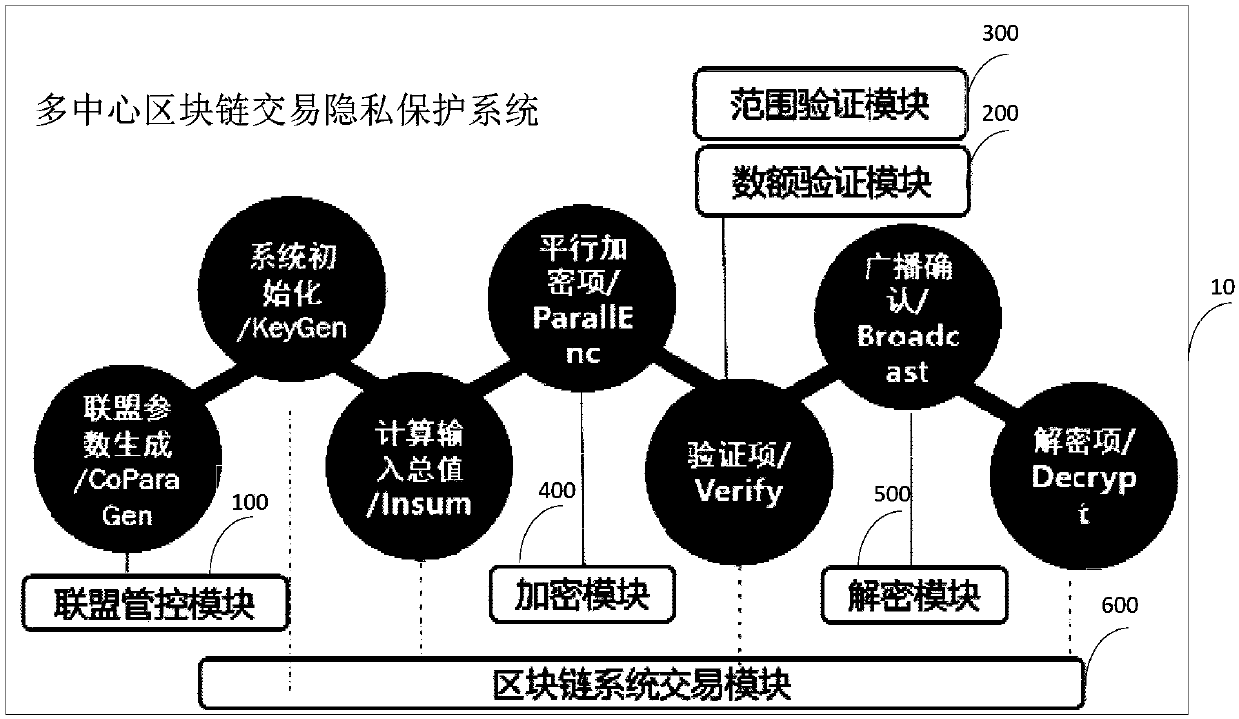

Multi-center block chain transaction privacy protection system and method

Inactive | CN108021821A | Realize privacy protection | Enhance the security of plaintext amount | Digital data protection | Communication with homomorphic encryption | Digital currency | Ciphertext

The invention discloses a multi-center block chain transaction privacy protection system and method. The system comprises an alliance control module, an amount verification module, a range verification module, an encryption module, a decryption module and a block chain system transaction module. The alliance control module is used by multiple participants to generate alliance parameters; the amount verification module verifies that the input and output of an encrypted ciphertext amount in a transaction are equal; the range verification module verifies that the encrypted ciphertext amount in the transaction lies in a specific interval and is always positive; the encryption module and the decryption module carry out homomorphic encryption and decryption on the amount during transmission and reception; and the block chain system transaction module implements a complete bitcoin-like digital currency transaction system with a complete transaction process comprising transmission, reception, broadcasting and block confirmation. The system strengthens the general structure of block chain transaction privacy under a multi-center supervision mode, so as to realize privacy protection for trapdoor parameters under joint control of multiple parties and for transaction metadata in the transaction process, and to effectively strengthen the safety of plaintext amounts in the multi-center block chain system transaction process.

Owner:BEIHANG UNIV

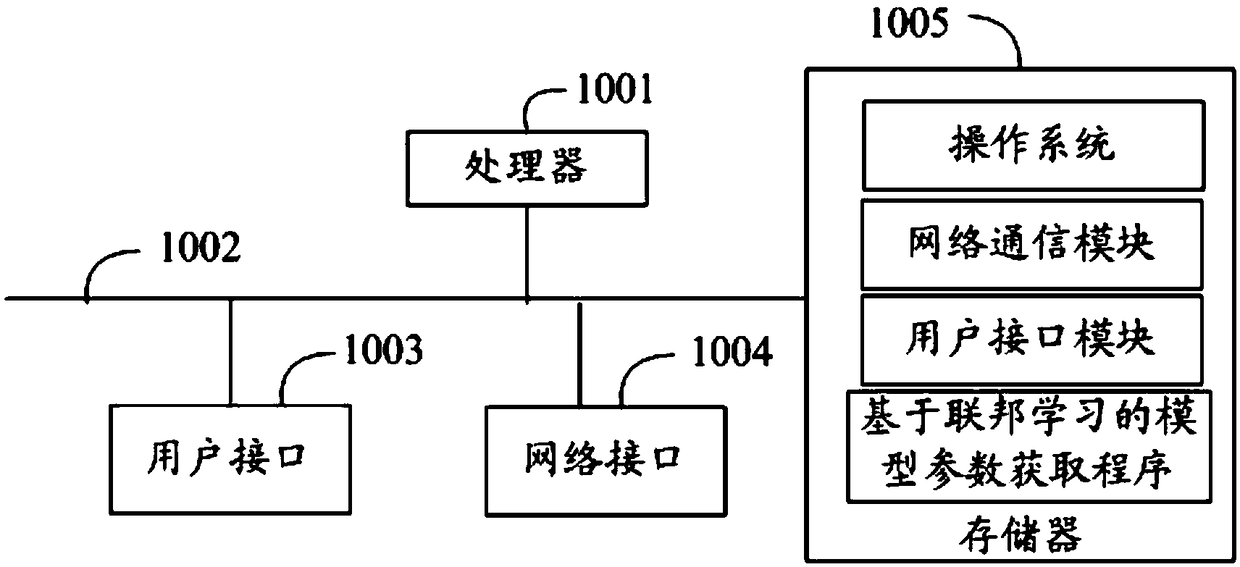

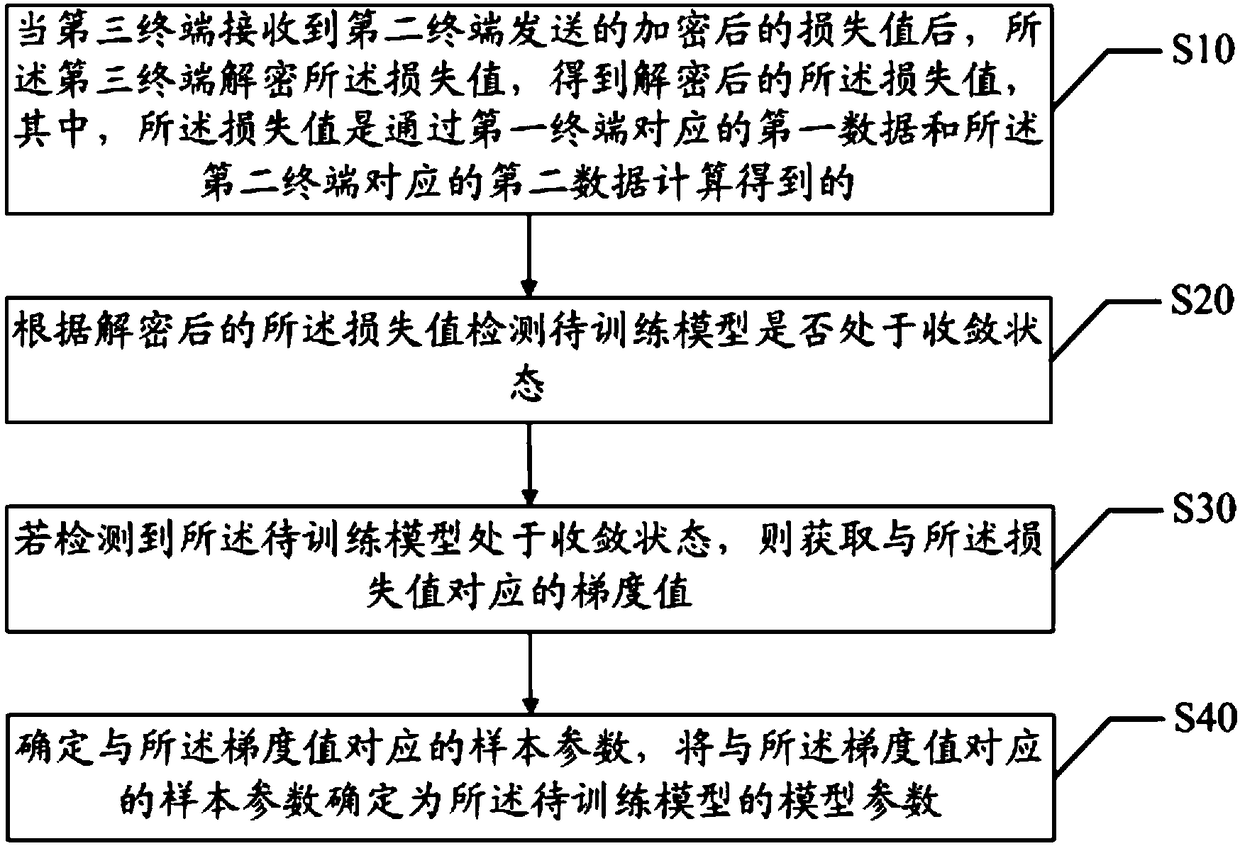

Model parameter obtaining method and system based on federation learning and readable storage medium

Pending | CN109165515A | Improve accuracy | Public key for secure communication | Digital data protection | Algorithm | Model parameters

The invention discloses a model parameter obtaining method and system based on federated learning, and a readable storage medium. The method comprises the following steps: when a third terminal receives an encrypted loss value sent by a second terminal, the third terminal decrypts the loss value to obtain the decrypted loss value; whether the model to be trained is in a convergent state is detected according to the decrypted loss value; if the model to be trained is detected to be in a convergent state, a gradient value corresponding to the loss value is obtained; and the sample parameters corresponding to the gradient value are determined and taken as the model parameters of the model to be trained. The invention calculates the loss value by combining the sample data of the first terminal and the second terminal, which helps to learn and determine the model parameters of the model to be trained from the combined sample data of both terminals, and improves the accuracy of the trained model.

Owner:WEBANK (CHINA)

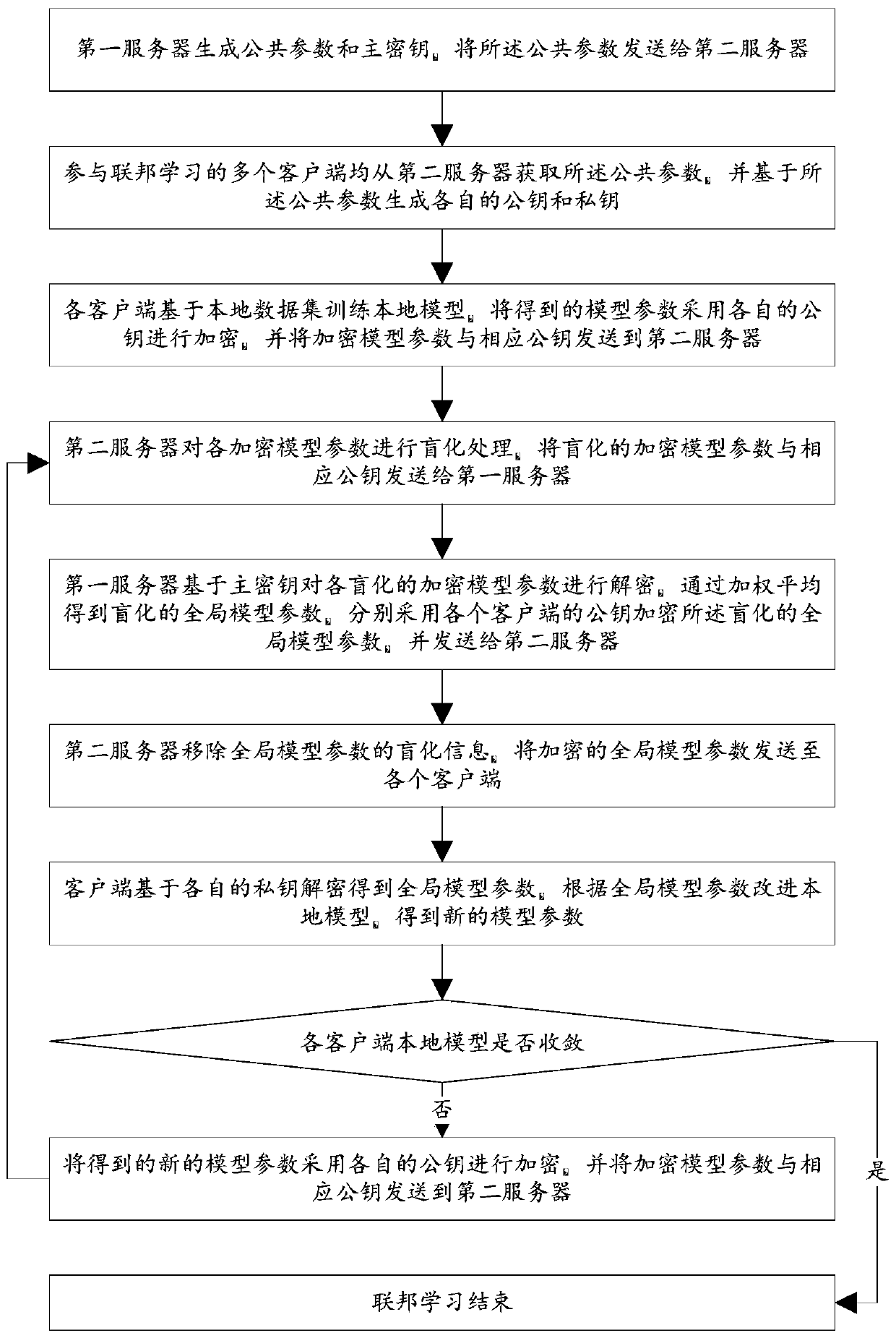

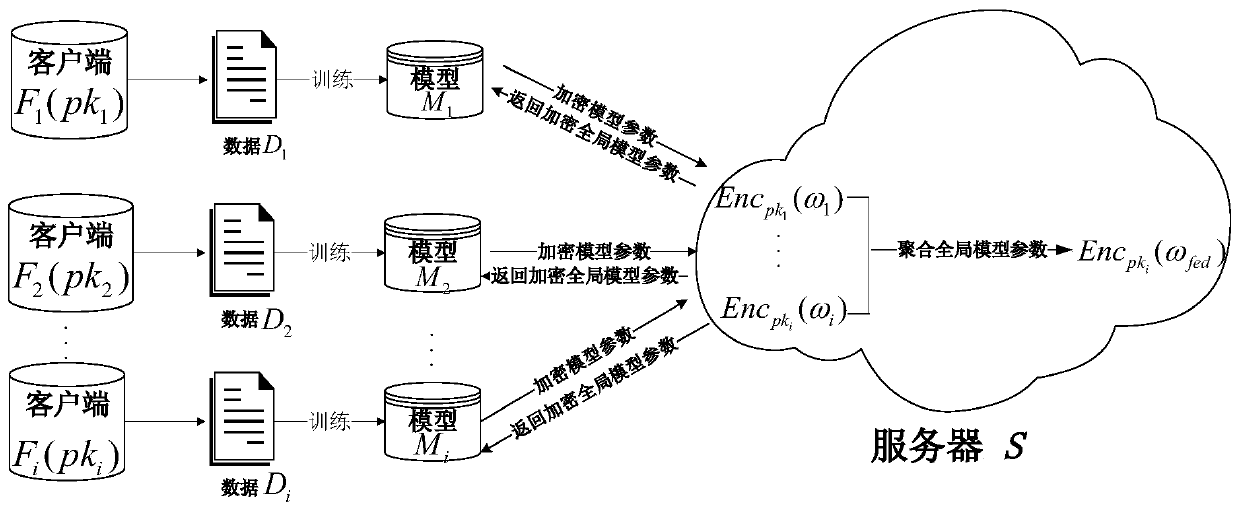

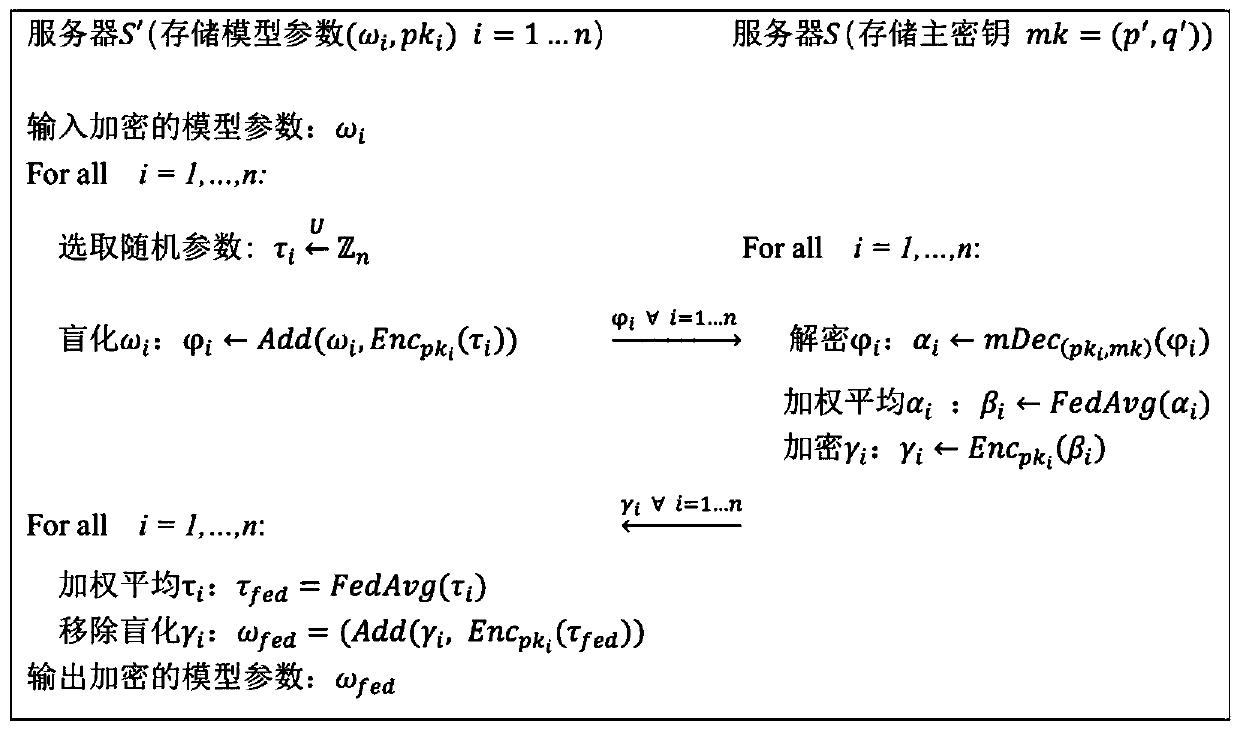

Federated learning training data privacy enhancement method and system

Active | CN110572253A | Ensure safety | Easy to join | Communication with homomorphic encryption | Model parameters | Master key

The invention discloses a federated learning training data privacy enhancement method and system. In the method, a first server generates a public parameter and a master secret key, and transmits the public parameter to a second server; a plurality of clients participating in federated learning generate respective public key and private key pairs based on the public parameter. The federated learning process is as follows: each client encrypts a model parameter obtained by local training by using its own public key, and sends the encrypted model parameter and the corresponding public key to the first server through the second server; the first server carries out decryption based on the master key, obtains global model parameters through weighted averaging, carries out encryption by using the public key of each client, and sends the global model parameters to each client through the second server; and the clients decrypt with their respective private keys to obtain the global model parameters and improve their local models. The process is repeated until the local models of the clients converge. The method combines a dual-server mode with multi-key homomorphic encryption, so that the security of data and model parameters is ensured.

Owner:UNIV OF JINAN

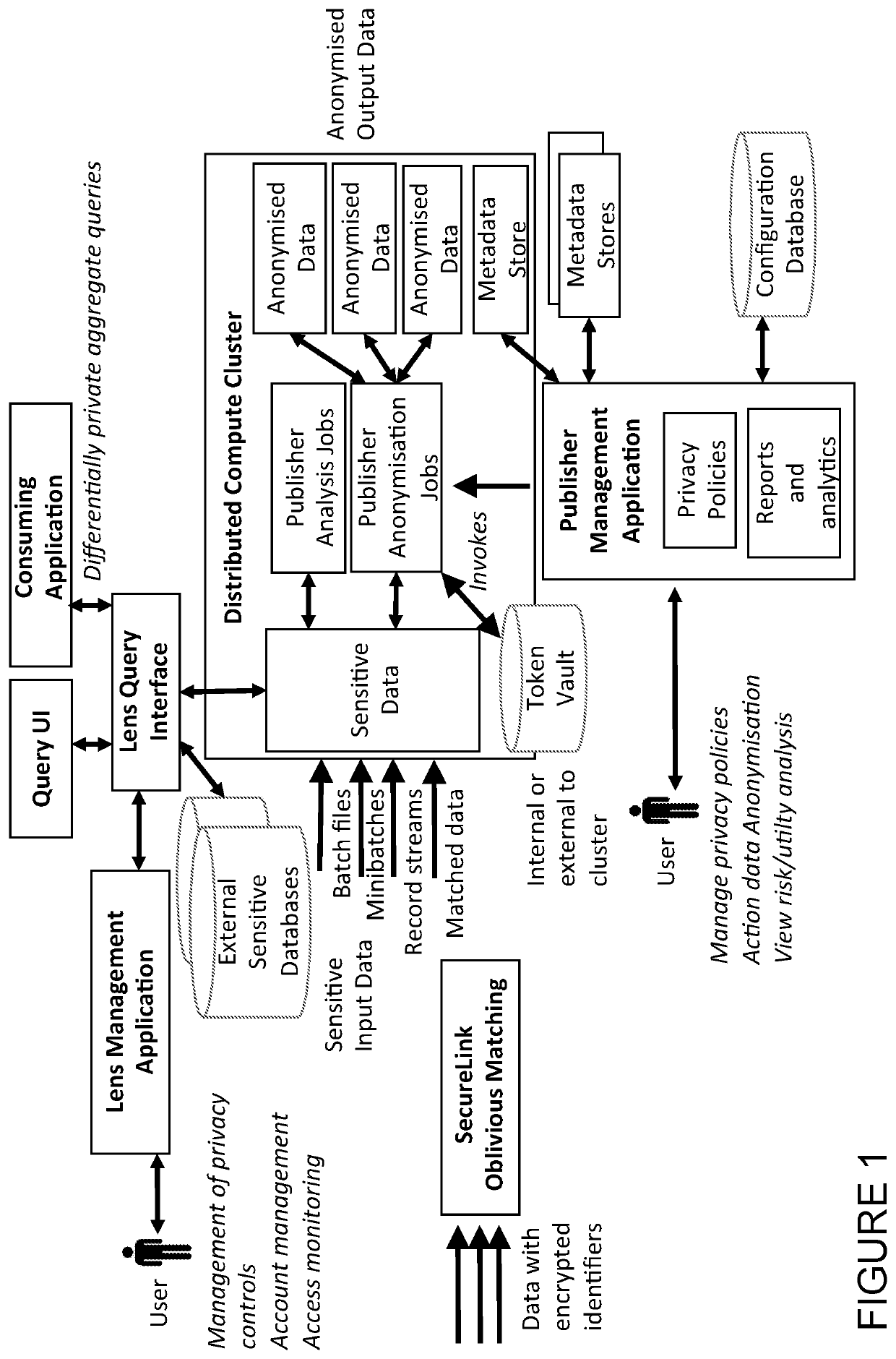

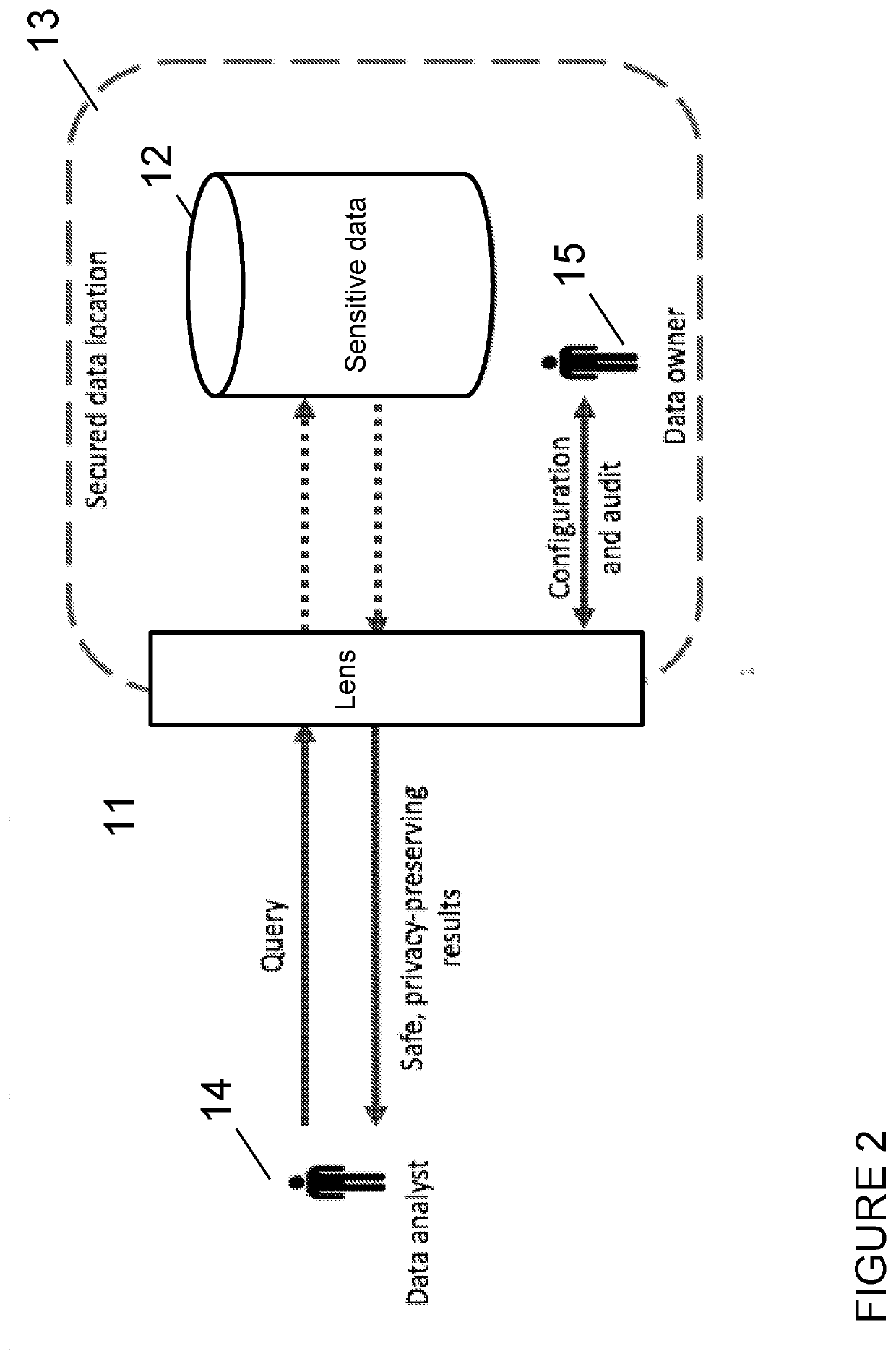

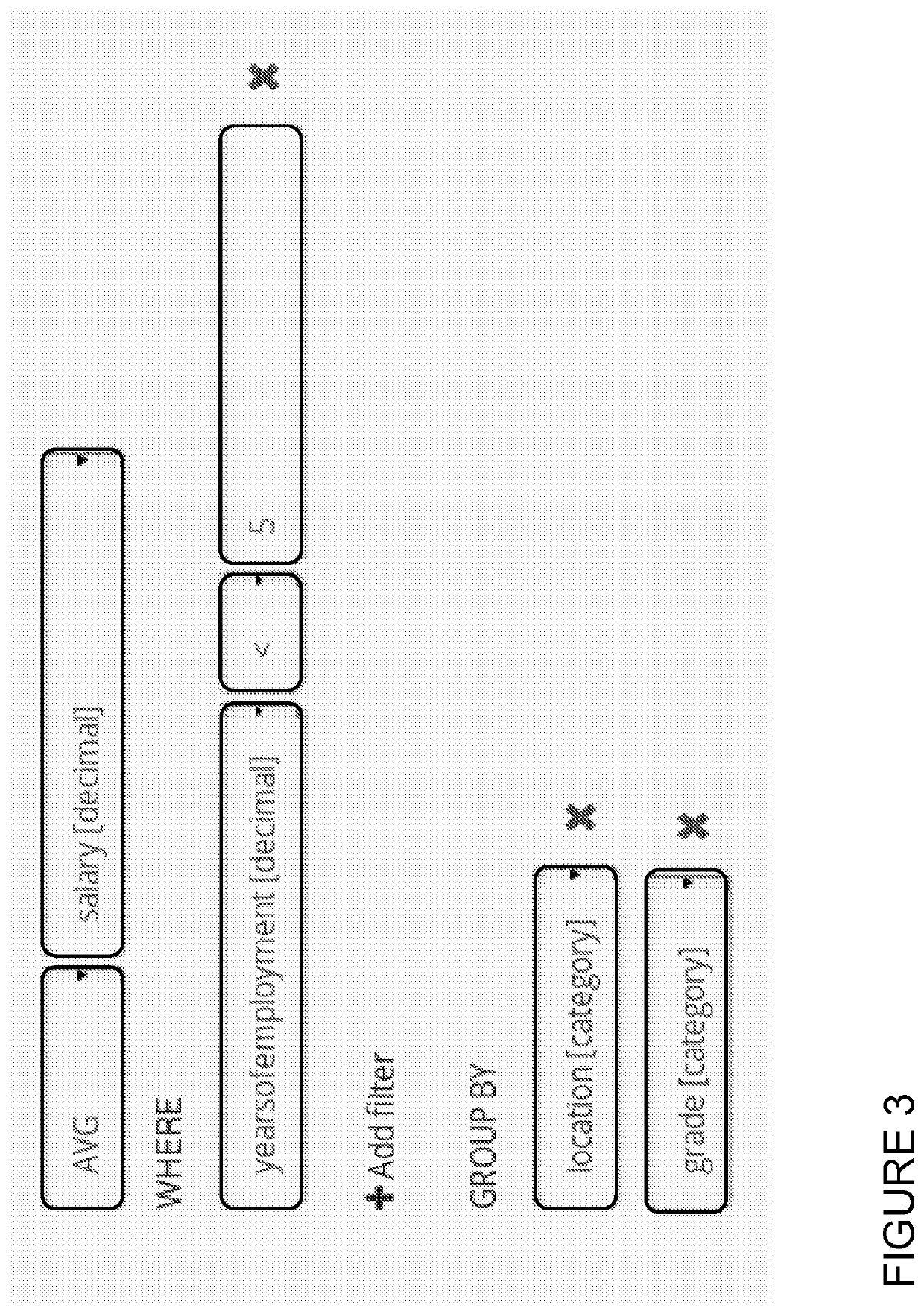

Computer-implemented privacy engineering system and method

Active | US20200327252A1 | Avoid problems | Reduce prevent risk | Key distribution for secure communication | User identity/authority verification | Data set | Engineering

A system allows the identification and protection of sensitive data in multiple ways, which can be combined for different workflows, data situations or use cases. The system scans datasets to identify sensitive data or identifying datasets, and to enable the anonymisation of sensitive or identifying datasets by processing that data to produce a safe copy. Furthermore, the system prevents access to a raw dataset. The system enables privacy preserving aggregate queries and computations. The system uses differentially private algorithms to reduce or prevent the risk of identification or disclosure of sensitive information. The system scales to big data and is implemented in a way that supports parallel execution on a distributed compute cluster.

Owner:PRIVITAR LTD

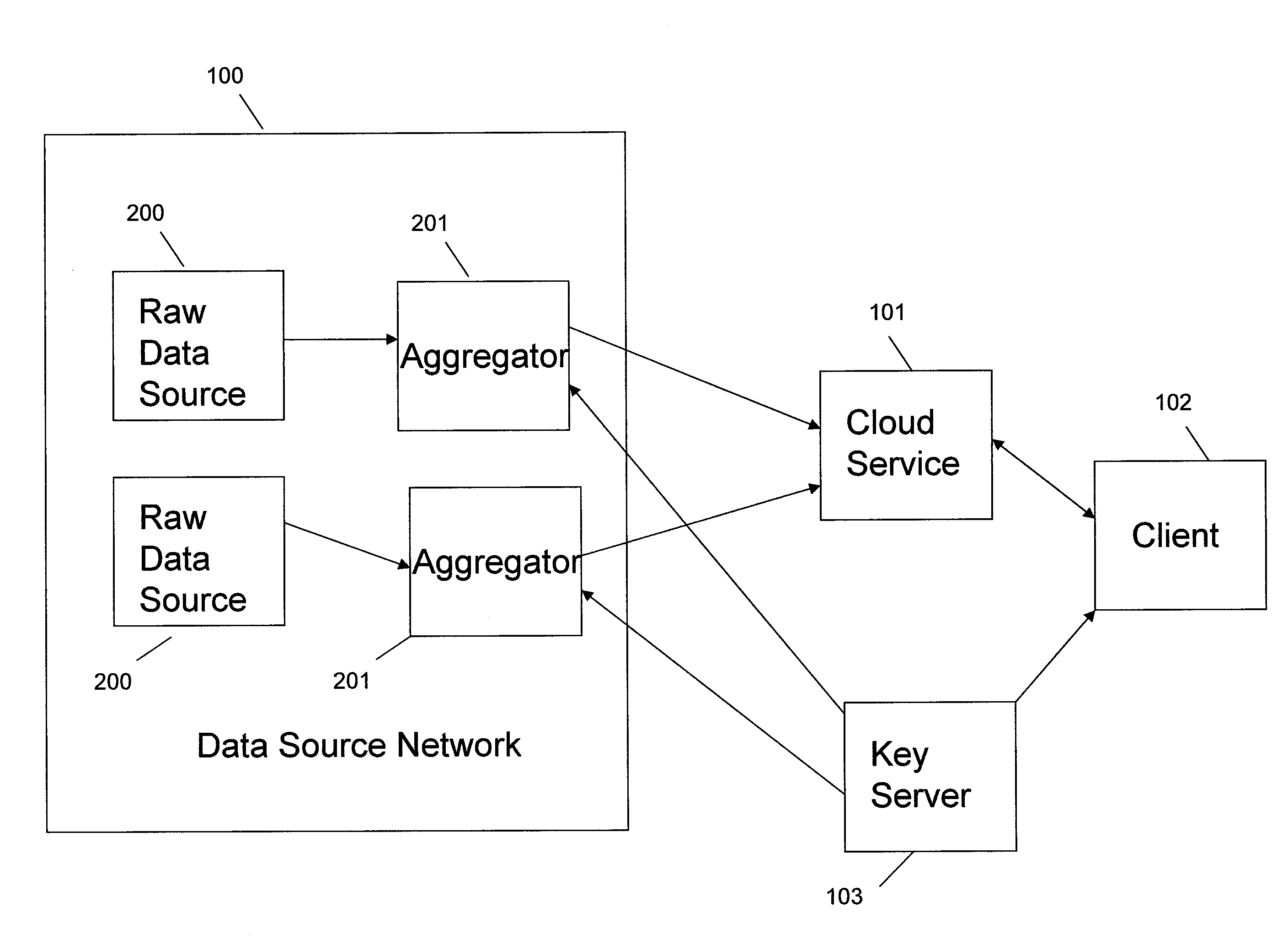

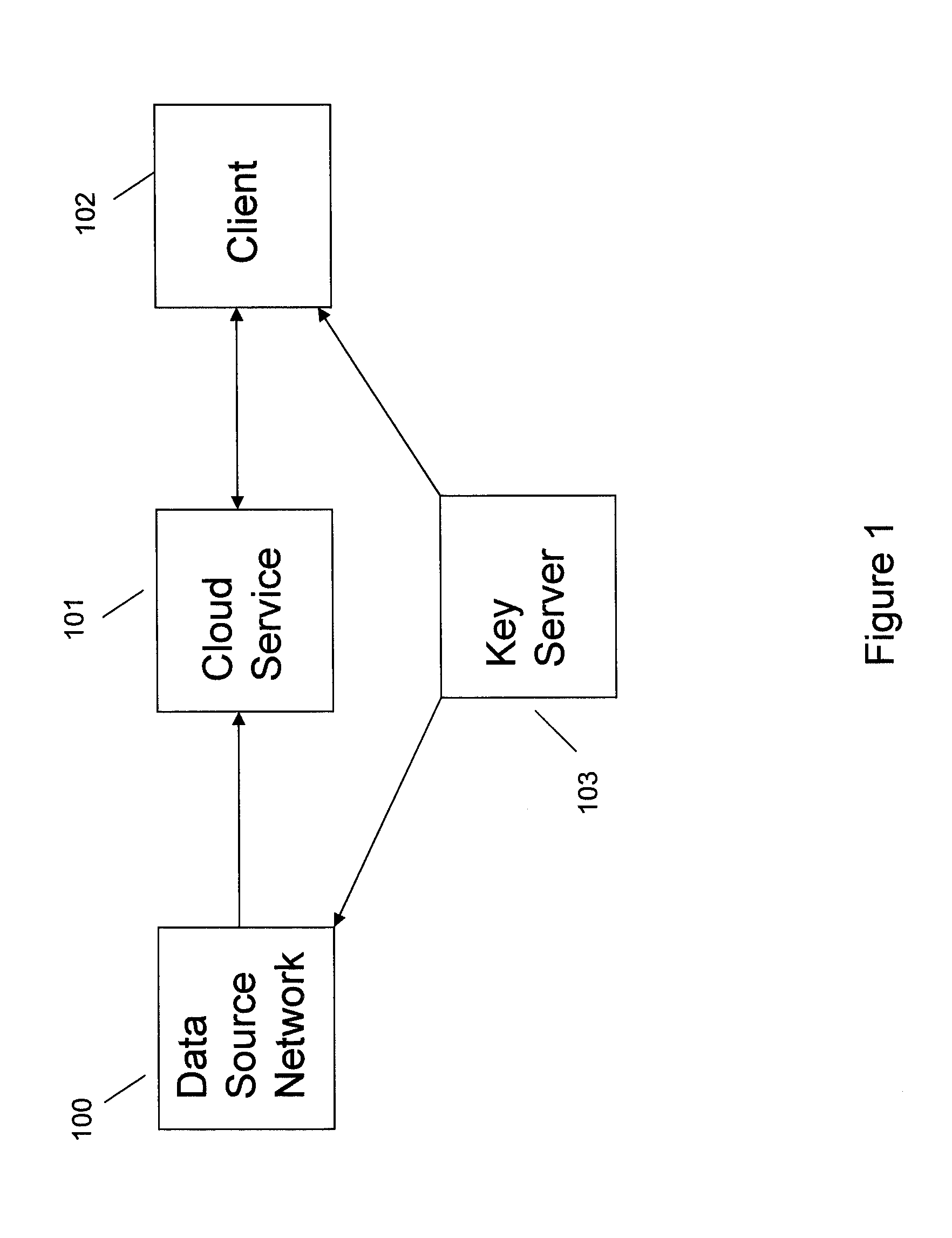

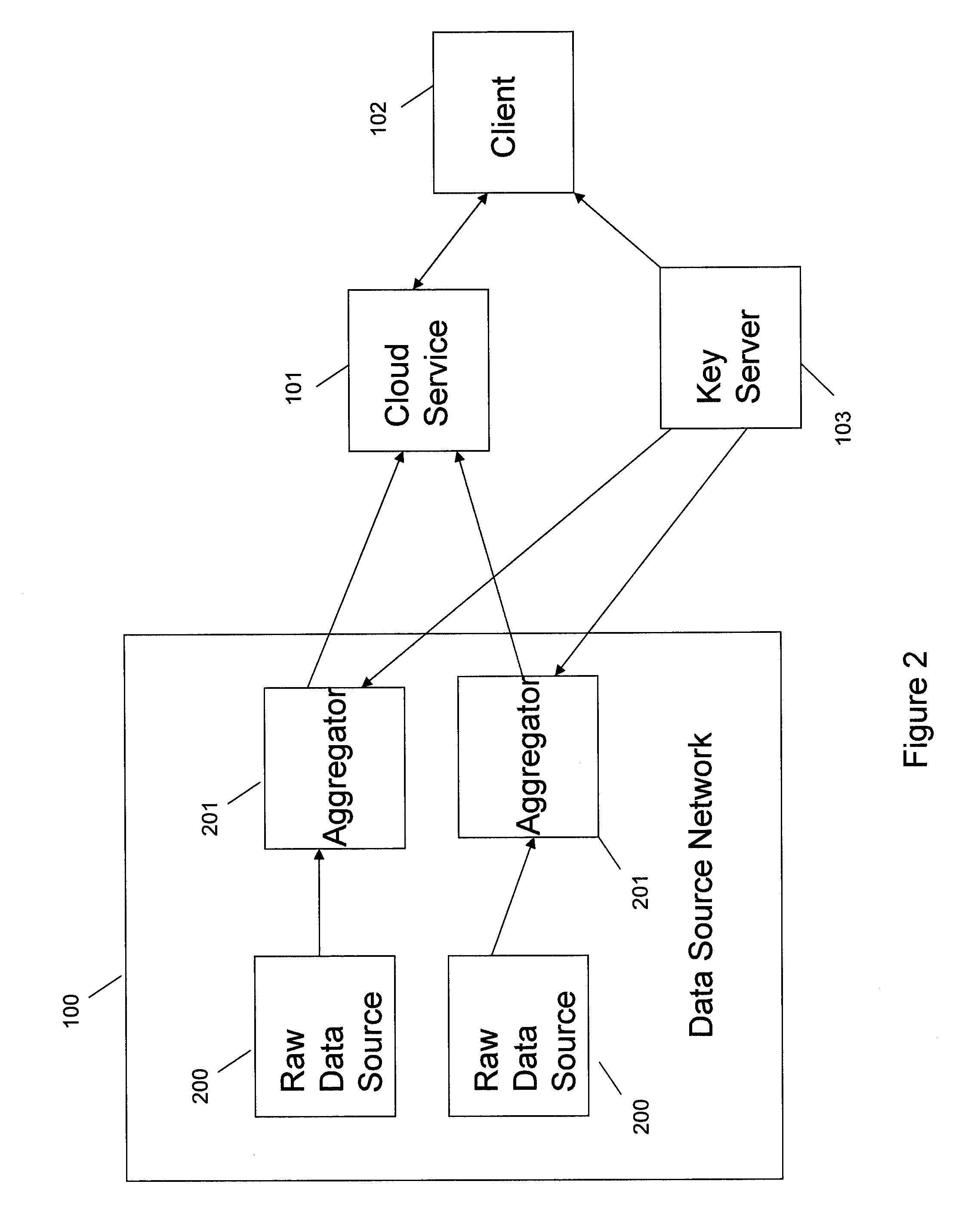

Systems and methods for communication, storage, retrieval, and computation of simple statistics and logical operations on encrypted data

Inactive | US20110264920A1 | Unauthorized memory use protection | Hardware monitoring | Logical query | Data system

Systems and methods provide for a symmetric homomorphic encryption based protocol supporting communication, storage, retrieval, and computation on encrypted data stored off-site. The system may include a private, trusted network which uses aggregators to encrypt raw data that is sent to a third party for storage and processing, including computations that can be performed on the encrypted data. A client on a private or public network may request computations on the encrypted data, and the results may then be sent to the client for decryption or further computations. The third party aids in computation of statistical information and logical queries on the encrypted data, but is not able to decrypt the data on its own. The protocol provides a means for a third party to aid in computations on sensitive data without learning anything about those data values.

Owner:FUJIFILM BUSINESS INNOVATION CORP

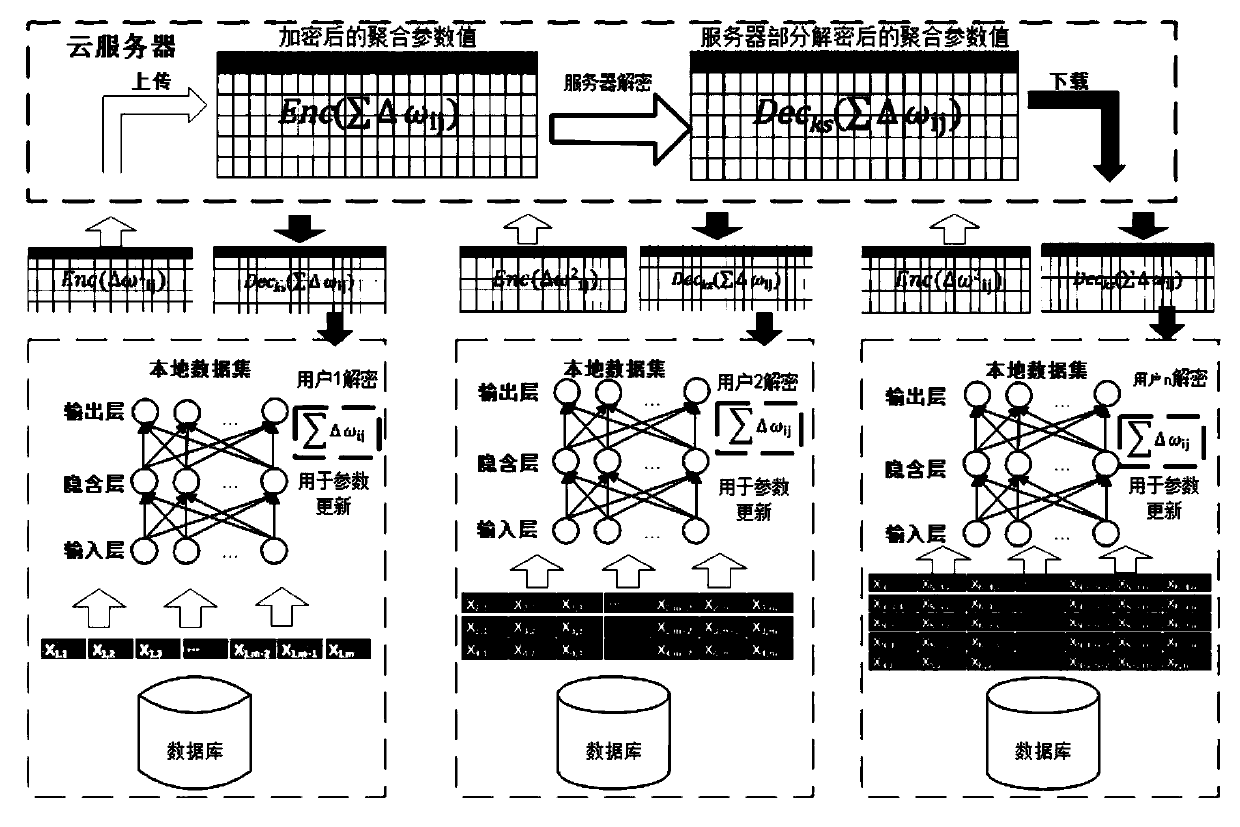

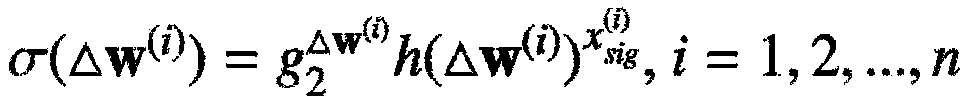

Privacy-protecting multi-party deep learning computation proxy method under cloud environment

Inactive | CN108712260A | Improve accuracy | User identity/authority verification | Digital data protection | Data set | Ciphertext

The invention belongs to the technical field of cloud computing, and aims to achieve data sharing under the premise of protecting privacy, and deep learning applications on the basis of that data sharing. The technical scheme adopted is a privacy-protecting multi-party deep learning computation proxy method under a cloud environment. Each participant runs a deep learning algorithm on its own data set to compute a gradient parameter value, and uploads the gradient parameter, encrypted by a multiplicative homomorphic ElGamal encryption scheme, to a server. When uploading the gradient parameter to the cloud server, the participant simultaneously generates a signature of the parameter; the signature supports aggregation, that is to say the cloud server can compute over both the gradient parameter and the signature. The cloud computing server computes the sum of the gradient parameters of all users on the ciphertext and returns the result to the user; the user obtains the final gradient parameter sum after decrypting, and verifies the validity of the sum by checking whether the result and the aggregated signature form a valid message and signature pair. The method provided by the invention is mainly applied to cloud computing occasions.
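The multiplicative homomorphism of ElGamal that this abstract relies on can be shown with a minimal sketch. The modulus `P`, base `G` and function names are hypothetical toy choices (the parameters are far too small to be secure), and the sketch does not cover the aggregatable signature or how the abstract's gradient sum is derived from the multiplicative property, which the source does not spell out.

```python
import random

P = 2**61 - 1   # toy prime modulus (a Mersenne prime); not a secure choice
G = 3           # toy base; correctness below does not depend on its order

def keygen(rng):
    """Return (private key x, public key h = G^x mod P)."""
    x = rng.randrange(2, P - 1)
    return x, pow(G, x, P)

def encrypt(m, h, rng):
    """ElGamal encryption of m in [1, P-1] under public key h."""
    k = rng.randrange(2, P - 1)
    return pow(G, k, P), m * pow(h, k, P) % P

def decrypt(ct, x):
    """Compute c2 * c1^(-x) mod P, using Fermat's little theorem for the inverse."""
    c1, c2 = ct
    return c2 * pow(c1, P - 1 - x, P) % P

def multiply(ct_a, ct_b):
    """Component-wise product of ciphertexts encrypts the product of plaintexts."""
    return ct_a[0] * ct_b[0] % P, ct_a[1] * ct_b[1] % P

if __name__ == "__main__":
    rng = random.Random(0)
    x, h = keygen(rng)
    product = multiply(encrypt(6, h, rng), encrypt(7, h, rng))
    print(decrypt(product, x))  # the product of the two plaintexts, 6 * 7
```

The server-side `multiply` never touches the private key, which is the property that lets an untrusted cloud server combine uploads.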

Owner:QUFU NORMAL UNIV

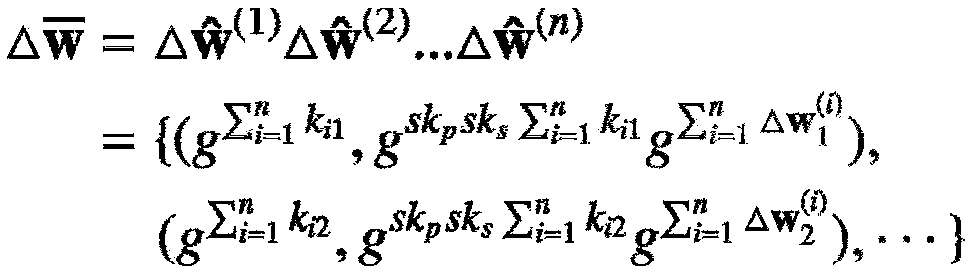

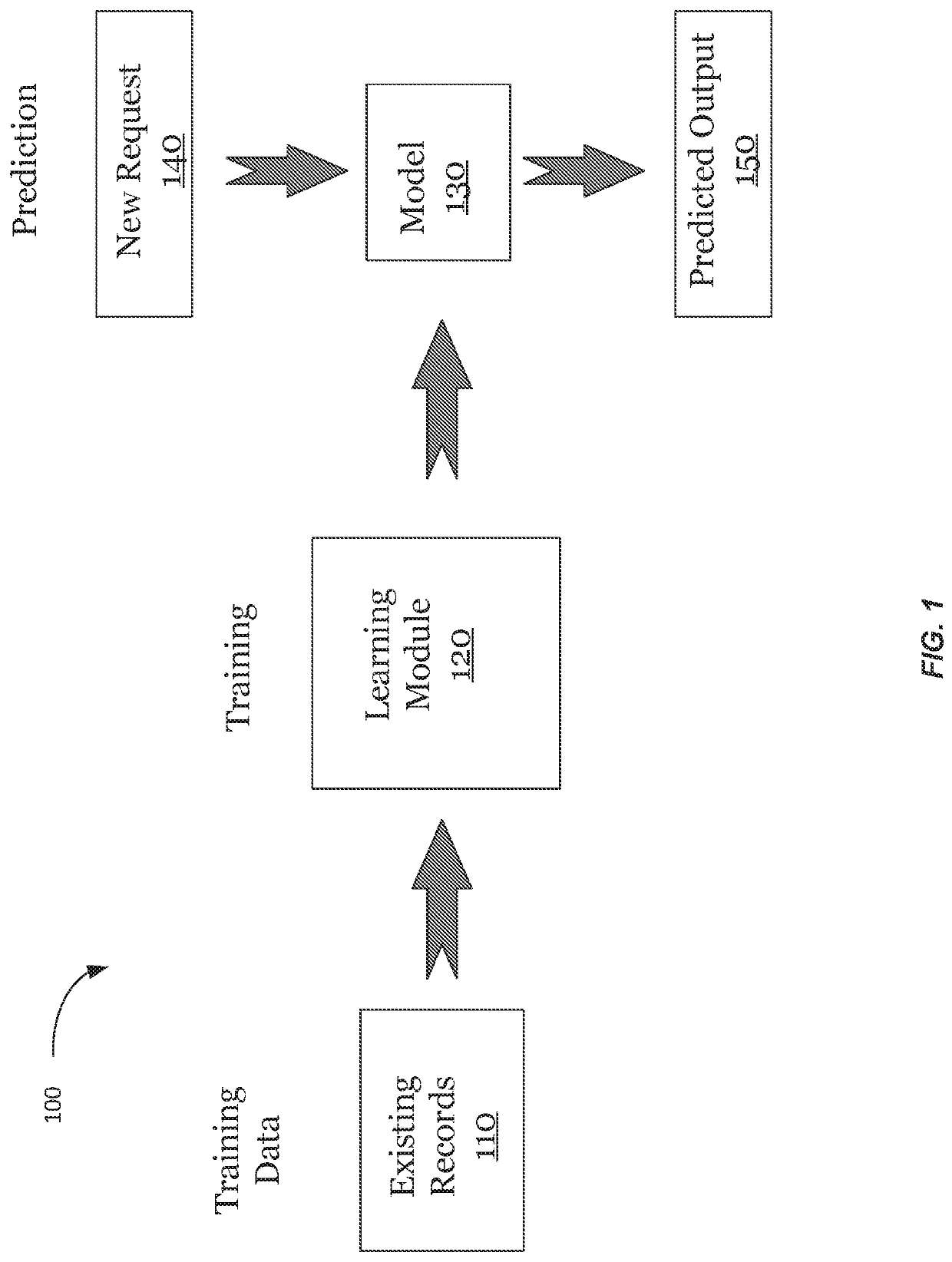

Privacy-preserving machine learning

Active | US20200242466A1 | Efficiently determined | Limited amount of memory | Key distribution for secure communication | Digital data protection | Stochastic gradient descent | Algorithm

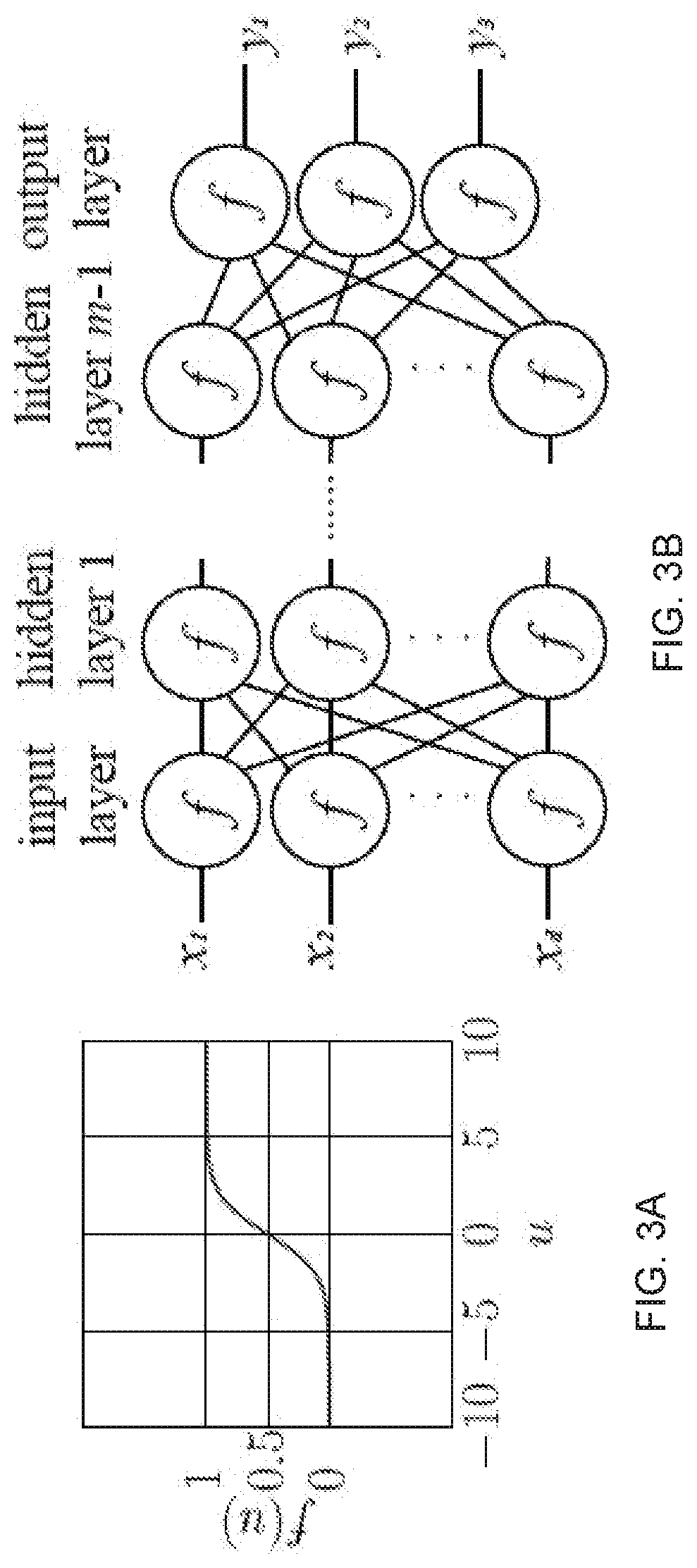

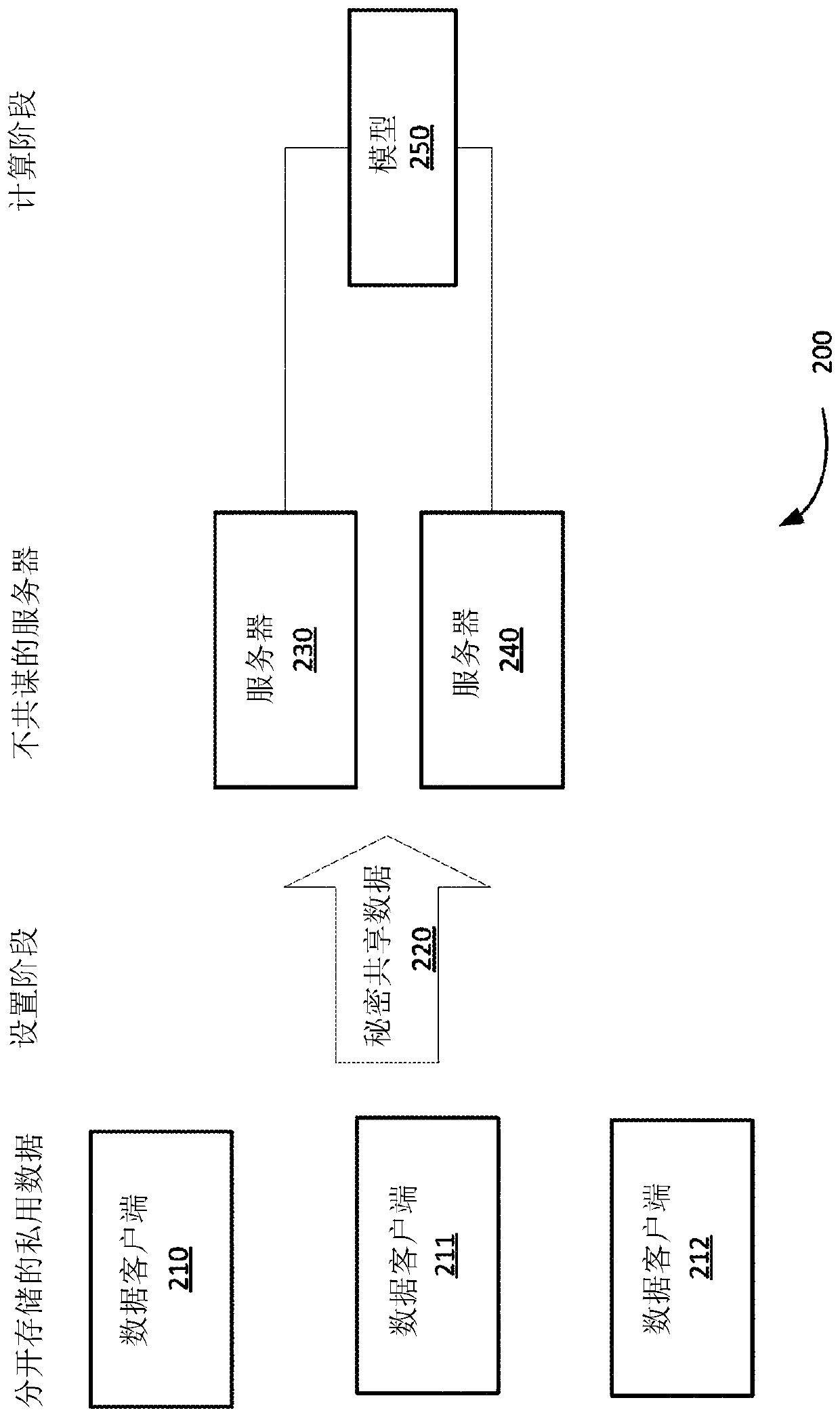

New and efficient protocols are provided for privacy-preserving machine learning training (e.g., for linear regression, logistic regression and neural networks using the stochastic gradient descent method). The protocols can use the two-server model, where data owners distribute their private data among two non-colluding servers, which train various models on the joint data using secure two-party computation (2PC). New techniques support secure arithmetic operations on shared decimal numbers, and propose MPC-friendly alternatives to non-linear functions, such as sigmoid and softmax.
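The idea of distributing decimal data between two non-colluding servers can be sketched with additive secret sharing over a fixed-point encoding. This is a generic illustration, not the patent's protocol: the ring size `MOD`, the number of fractional bits and the function names are assumptions, only addition is shown (multiplication needs extra machinery such as precomputed triples), and a real system would draw randomness from a cryptographic source rather than `random`.

```python
import random

MOD = 2**64        # shares live in the ring of integers mod 2^64
FRAC_BITS = 16     # fixed-point precision for decimal numbers

def encode(x):
    """Map a real number to a fixed-point ring element."""
    return round(x * 2**FRAC_BITS) % MOD

def decode(v):
    """Map a ring element back to a signed real number."""
    if v >= MOD // 2:
        v -= MOD
    return v / 2**FRAC_BITS

def share(x, rng):
    """Data owner: split x into two shares, one per server."""
    s0 = rng.randrange(MOD)
    return s0, (encode(x) - s0) % MOD

def add_shares(a, b):
    """Each server adds its own shares locally, with no communication."""
    return (a + b) % MOD

def reconstruct(s0, s1):
    """Combine the two servers' shares to reveal the result."""
    return decode((s0 + s1) % MOD)

if __name__ == "__main__":
    rng = random.Random(0)
    x0, x1 = share(1.5, rng)
    y0, y1 = share(-2.25, rng)
    print(reconstruct(add_shares(x0, y0), add_shares(x1, y1)))  # sum of the inputs
```

Each share on its own is uniformly distributed in the ring, so a single server learns nothing about the owner's value.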

Owner:VISA INT SERVICE ASSOC

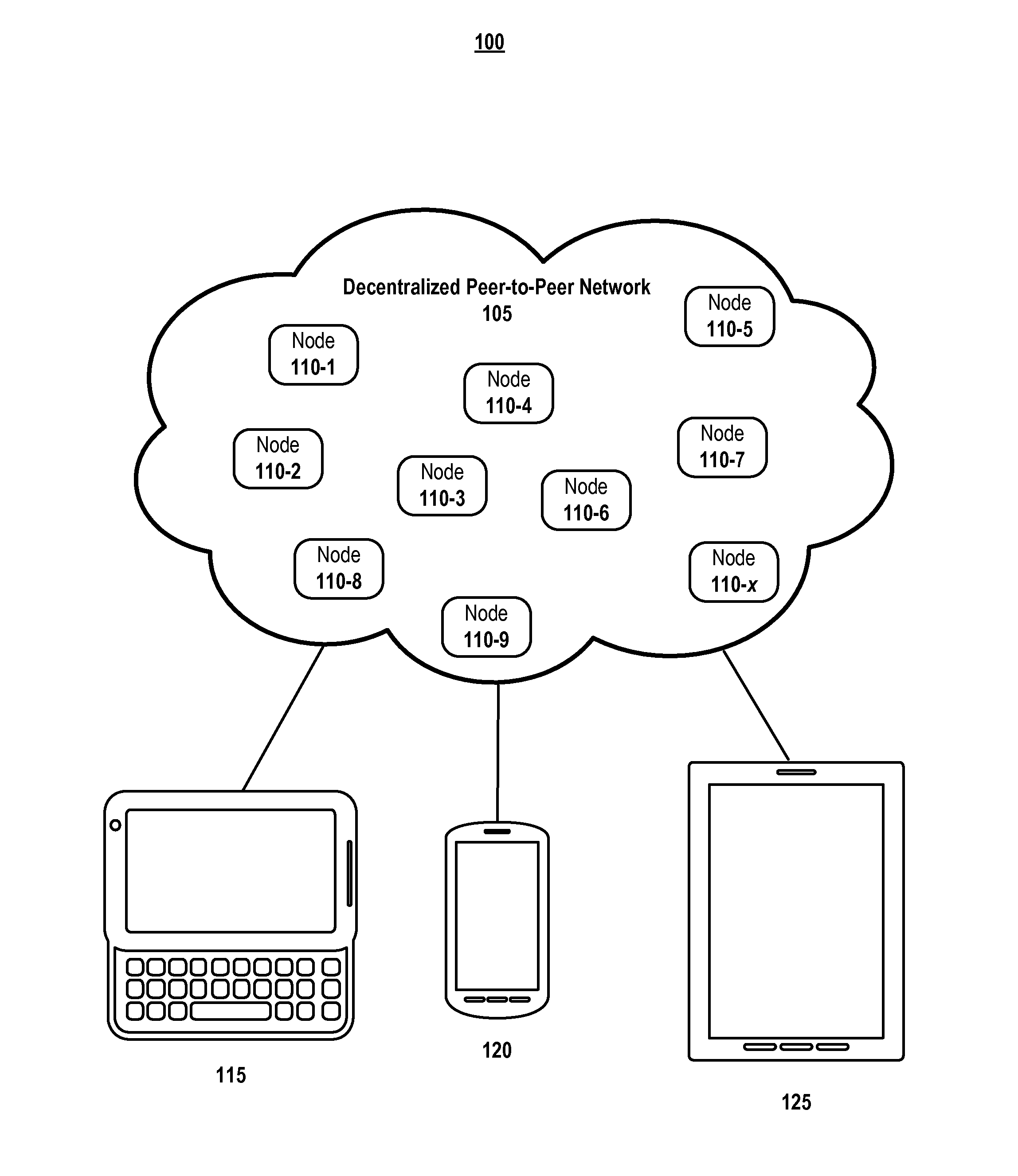

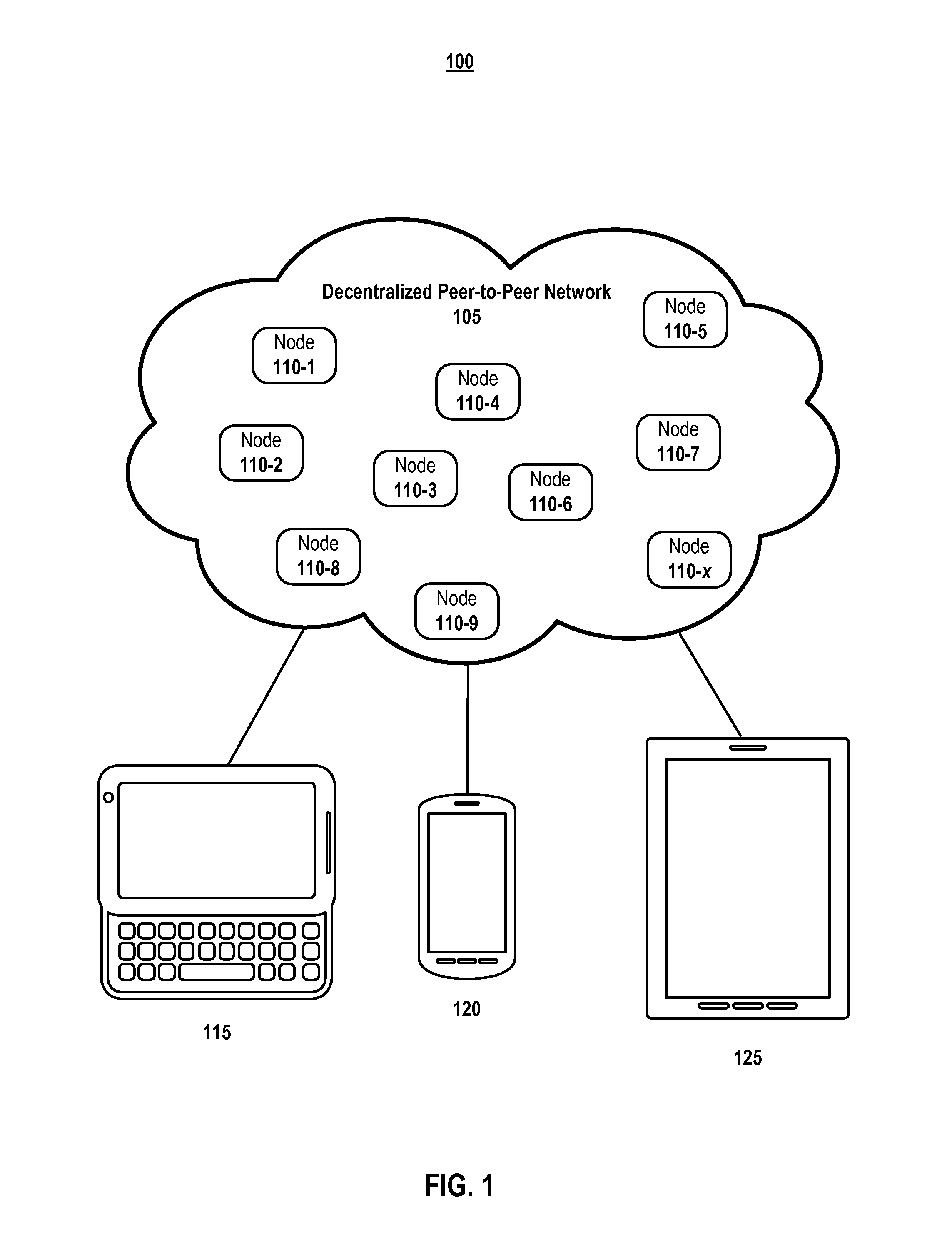

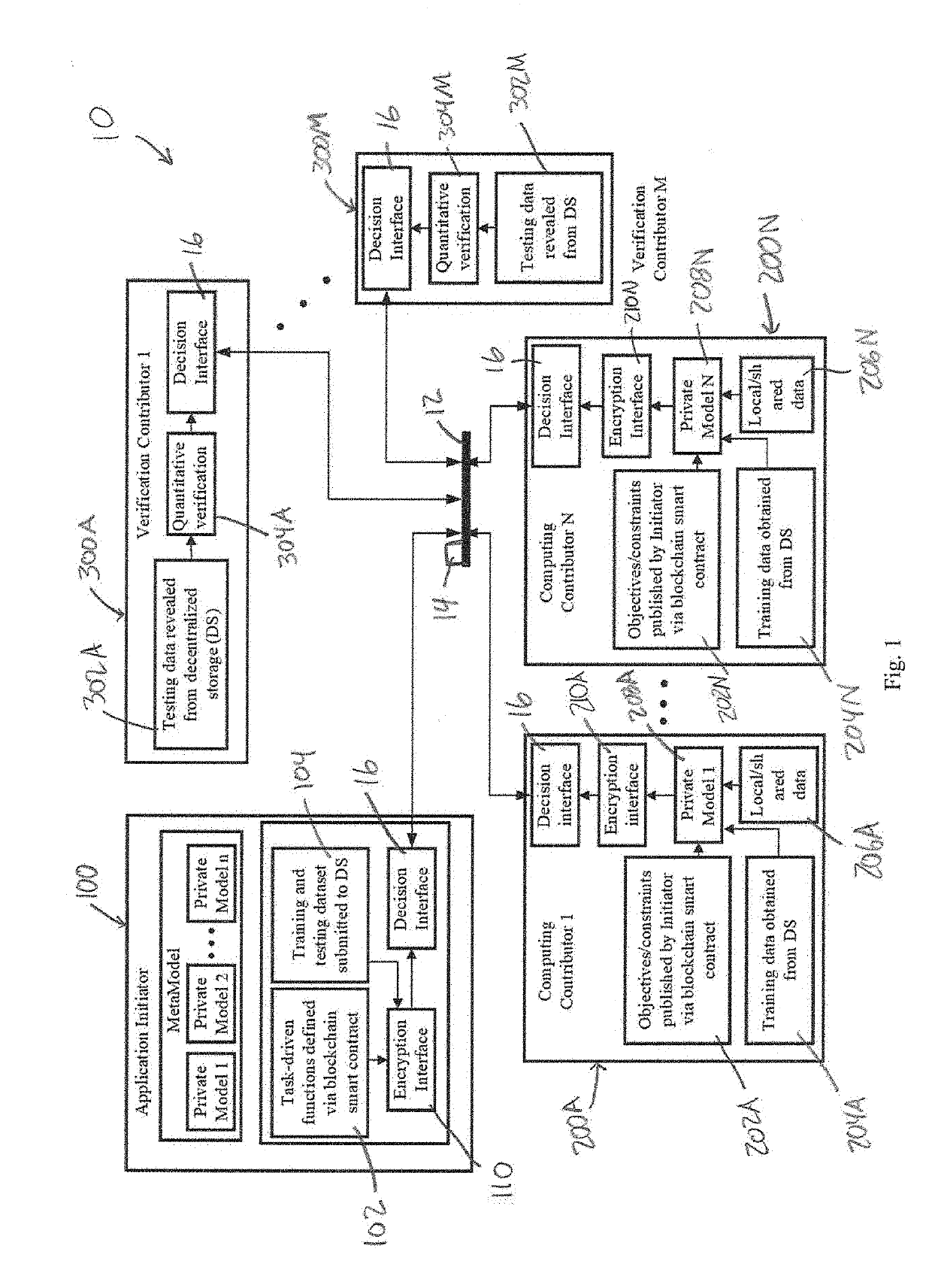

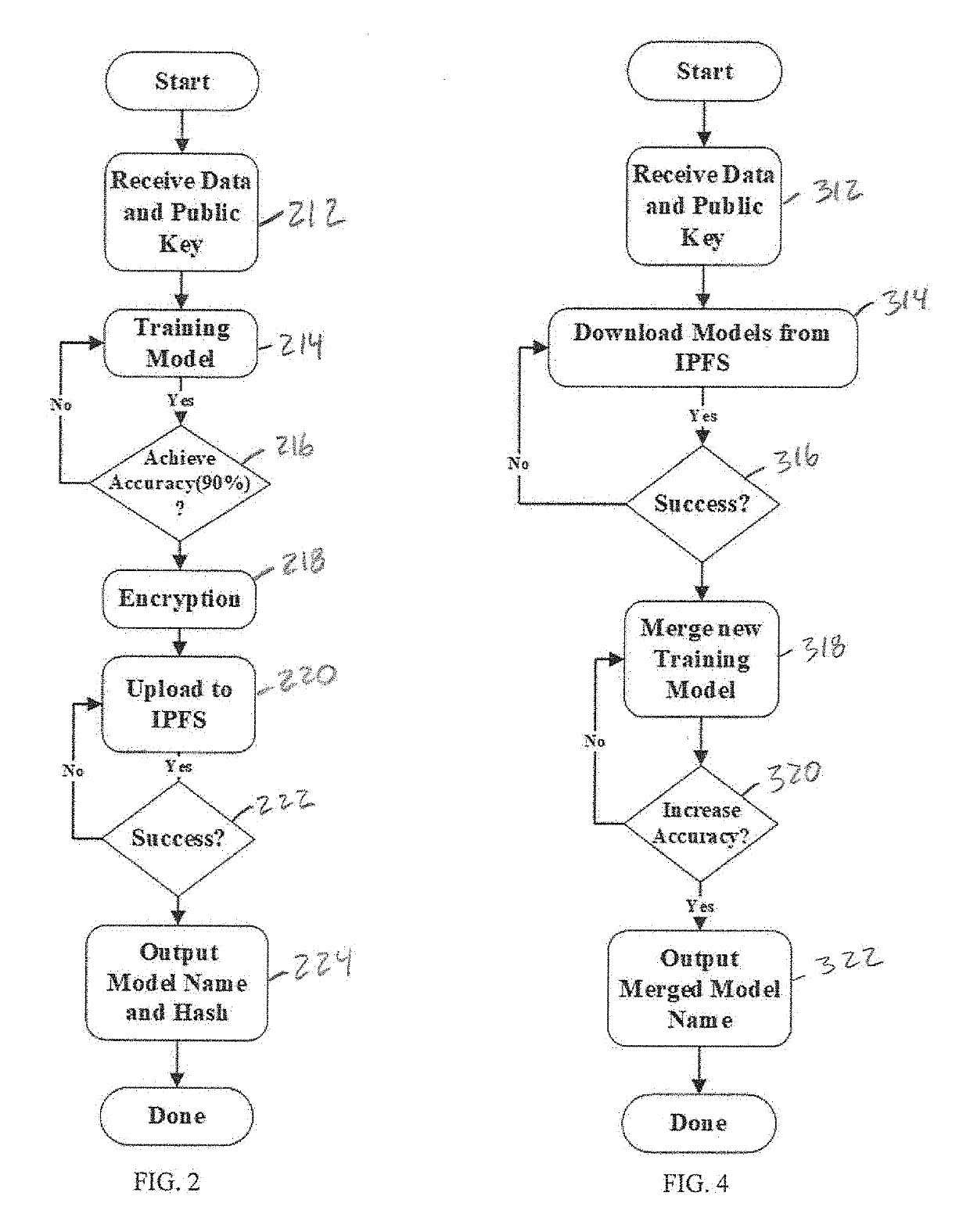

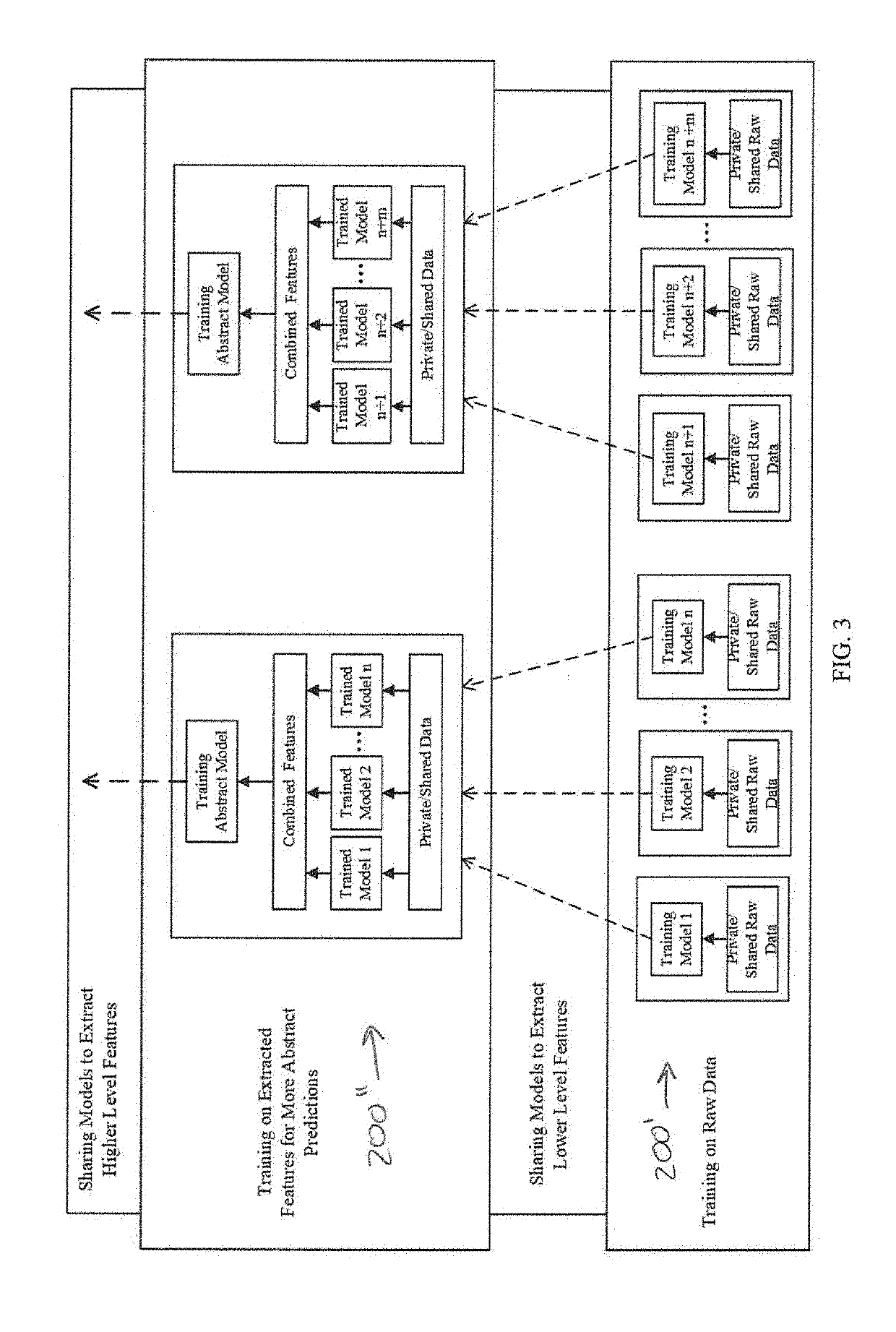

Blockchain-empowered crowdsourced computing system

Active | US20190334716A1 | User identity/authority verification | Machine learning | Interface design | Privacy preserving

In various embodiments, the present invention is directed to a decentralized and secure method for developing machine learning models using homomorphic encryption and blockchain smart contract technology to realize a secure, decentralized and privacy-preserving computing system that incentivizes the sharing of private data, or at least the sharing of resultant machine learning models from the analysis of private data. In various embodiments, the method uses a homomorphic encryption (HE)-based encryption interface designed to ensure the security and the privacy-preservation of the shared learning models, while minimizing the computation overhead for performing calculations in the encrypted domain and, at the same time, ensuring the accuracy of the quantitative verifications obtained by the verification contributors in the cipherspace.

Owner:THE UNIVERSITY OF AKRON

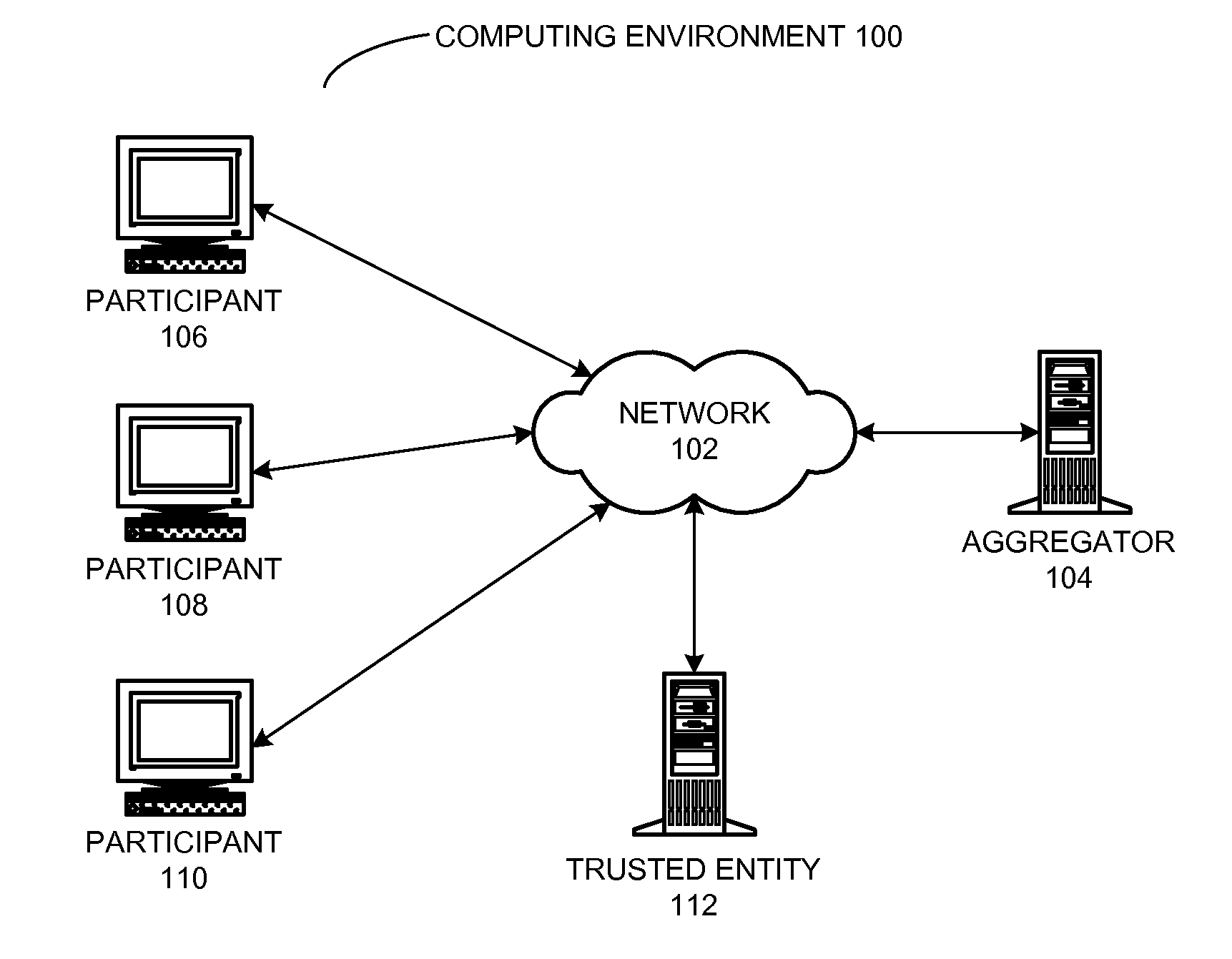

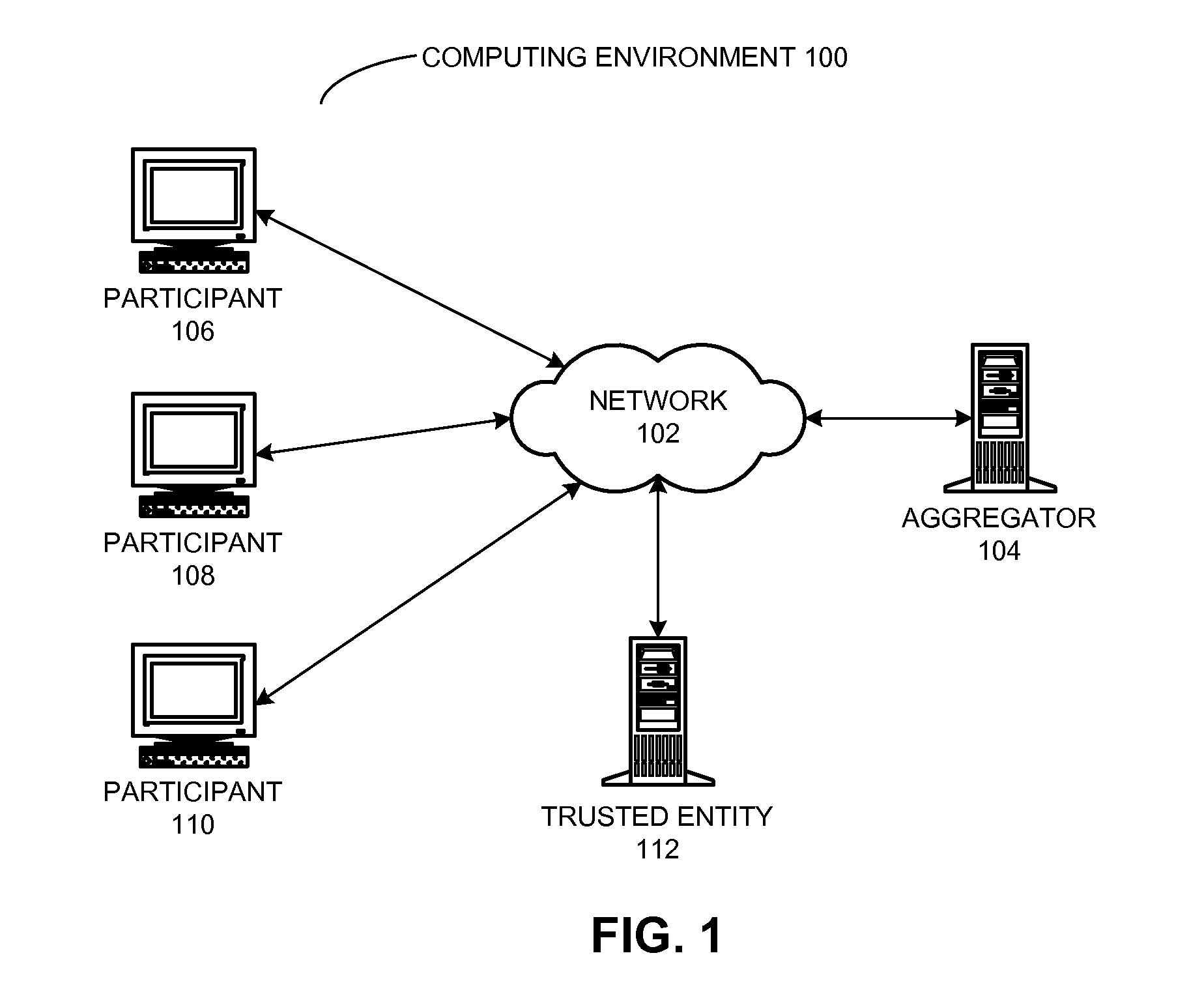

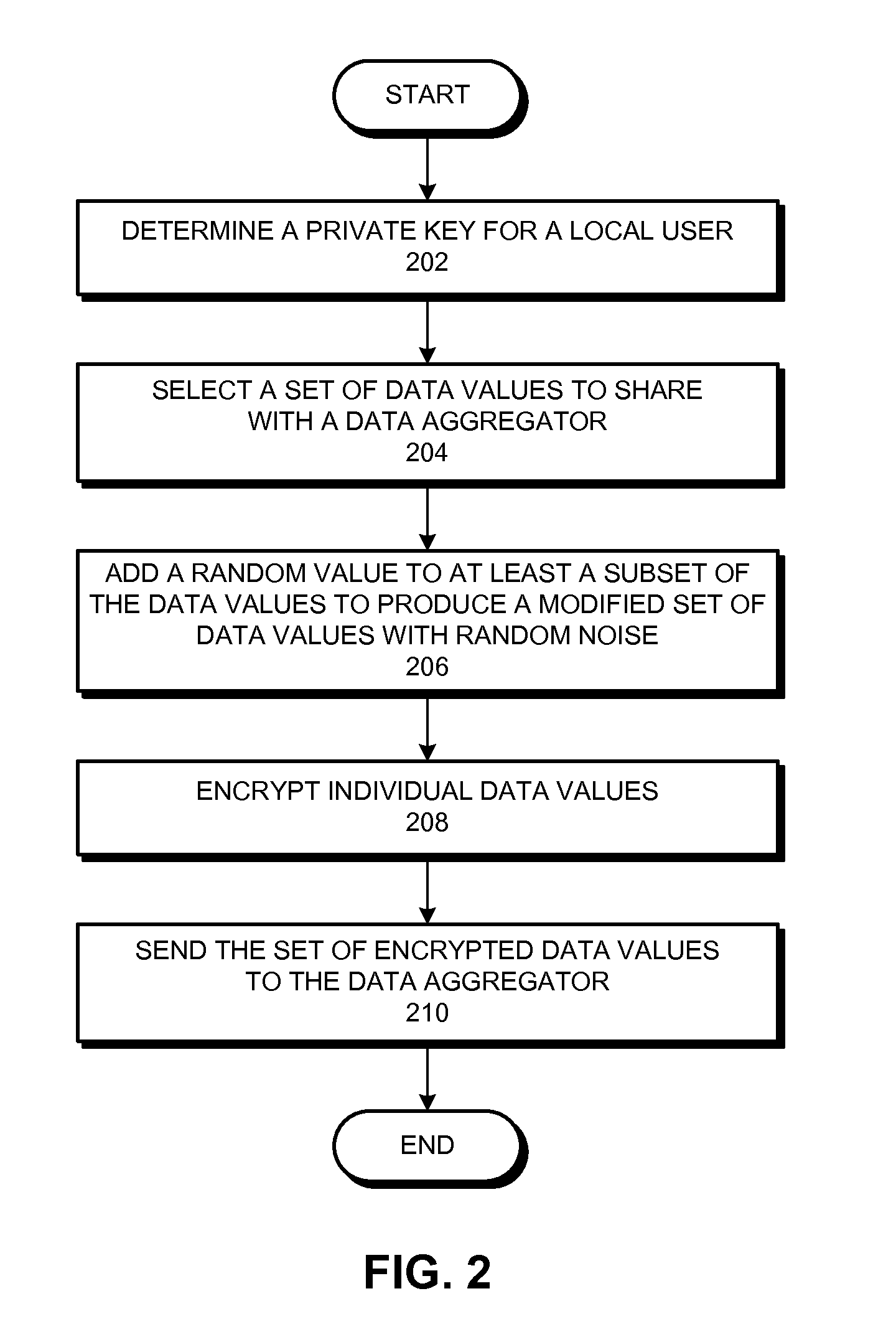

Privacy-preserving aggregation of time-series data

Active | US20120204026A1 | Key distribution for secure communication | User identity/authority verification | Data aggregator | Privacy preserving

A private stream aggregation (PSA) system contributes a user's data to a data aggregator without compromising the user's privacy. The system can begin by determining a private key for a local user in a set of users, wherein the sum of the private keys associated with the set of users and the data aggregator is equal to zero. The system also selects a set of data values associated with the local user. Then, the system encrypts individual data values in the set based in part on the private key to produce a set of encrypted data values, thereby allowing the data aggregator to decrypt an aggregate value across the set of users without decrypting individual data values associated with the set of users, and without interacting with the set of users while decrypting the aggregate value. The system also sends the set of encrypted data values to the data aggregator.

Owner:XEROX CORP

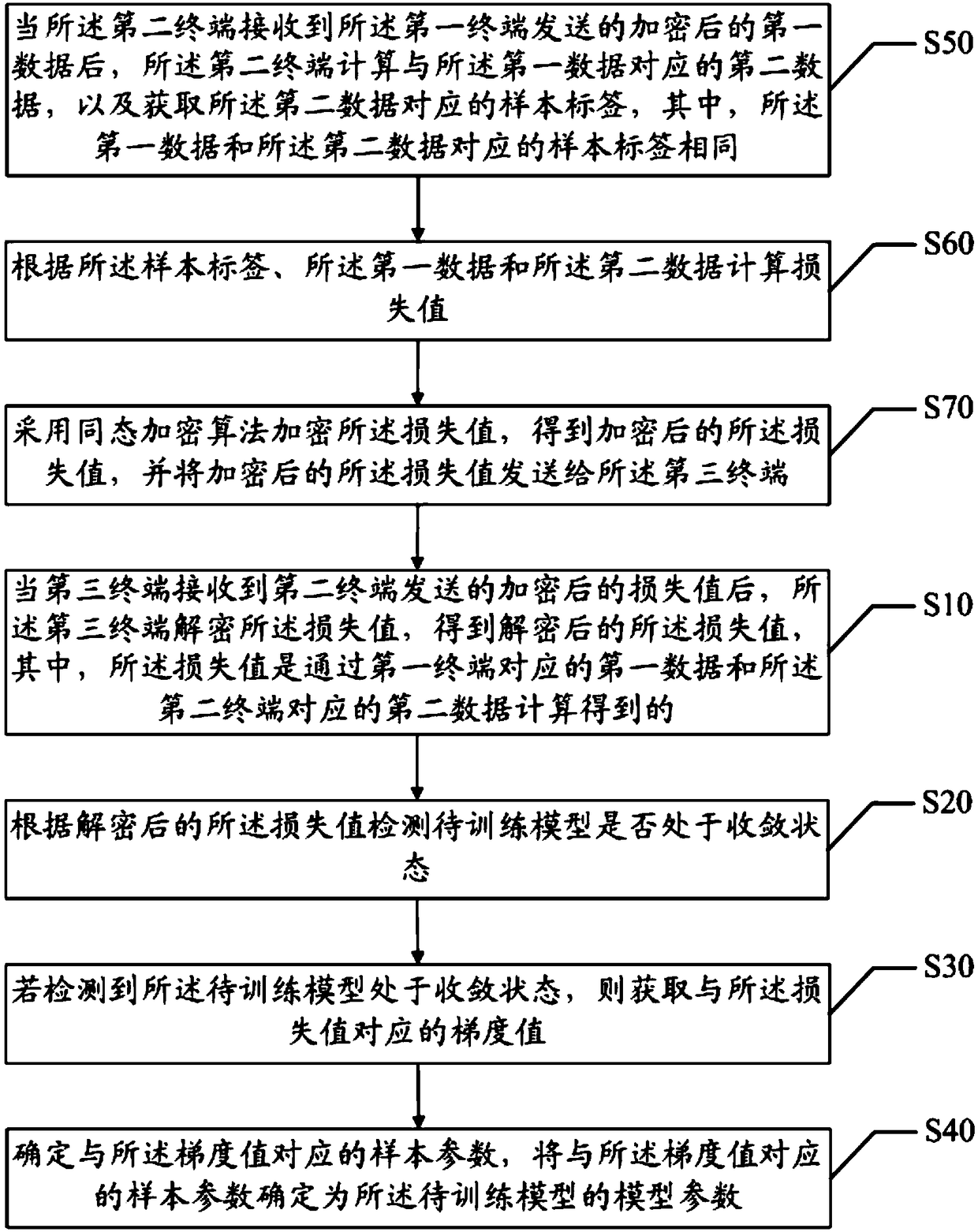

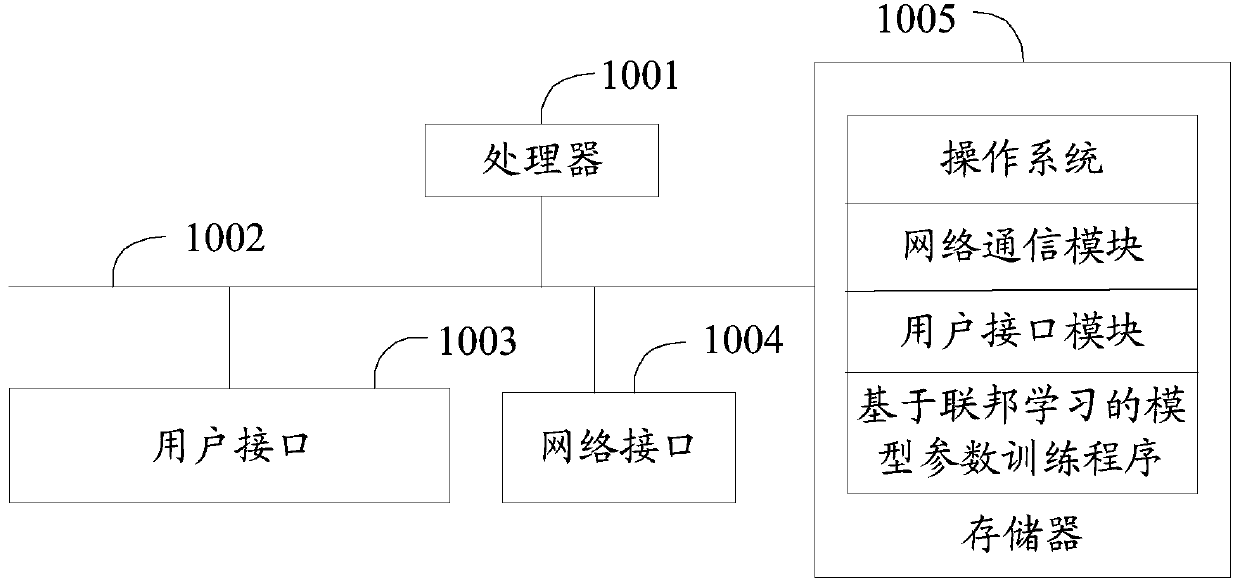

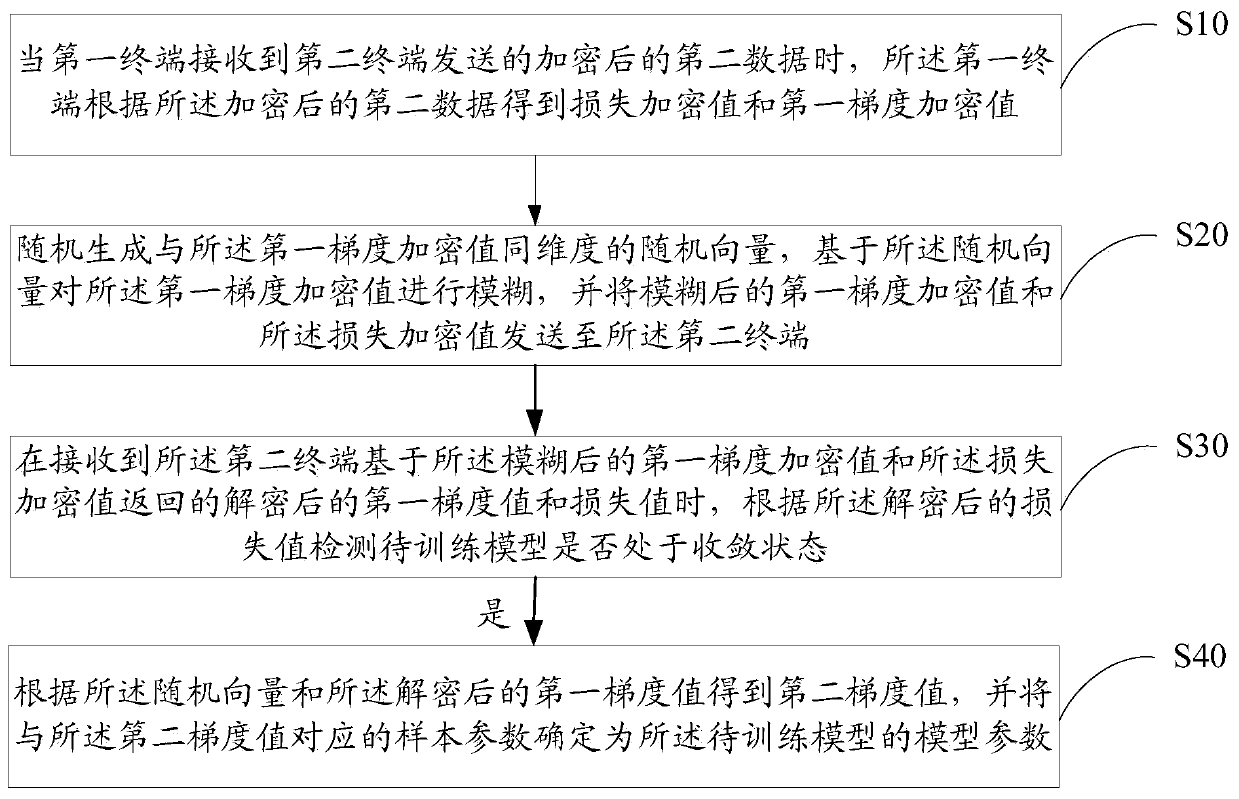

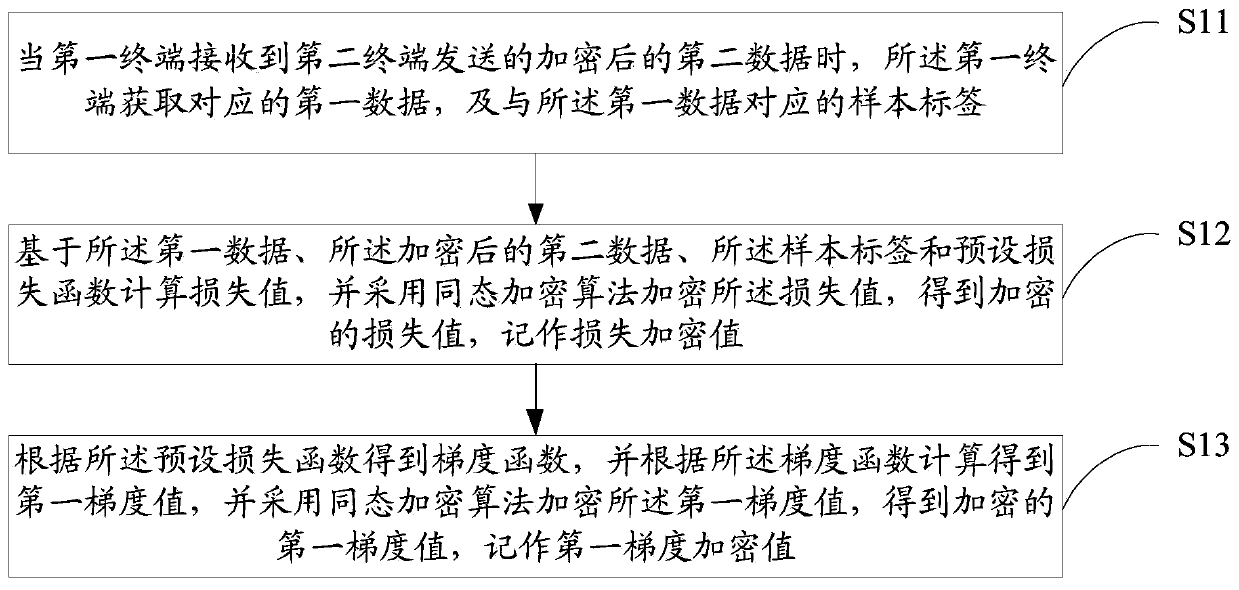

Model parameter training method and device based on federated learning, equipment and medium

Pending | CN109886417A | Solve application limitations | Won't leak | Public key for secure communication | Digital data protection | Trusted third party | Model parameters

The invention discloses a model parameter training method and device based on federated learning, equipment and a medium. The method comprises the following steps: when a first terminal receives encrypted second data sent by a second terminal, obtaining a corresponding loss encryption value and a first gradient encryption value; randomly generating a random vector with the same dimension as the first gradient encryption value, blurring the first gradient encryption value with the random vector, and sending the blurred first gradient encryption value and the loss encryption value to the second terminal; when the decrypted first gradient value and the loss value returned by the second terminal are received, detecting whether the model to be trained is in a convergence state according to the decrypted loss value; and if so, obtaining a second gradient value according to the random vector and the decrypted first gradient value, and determining the sample parameter corresponding to the second gradient value as the model parameter. According to the method, model training can be carried out using only the data of the two federated parties, without a trusted third party, so that application limitations are avoided.

Owner:WEBANK (CHINA)

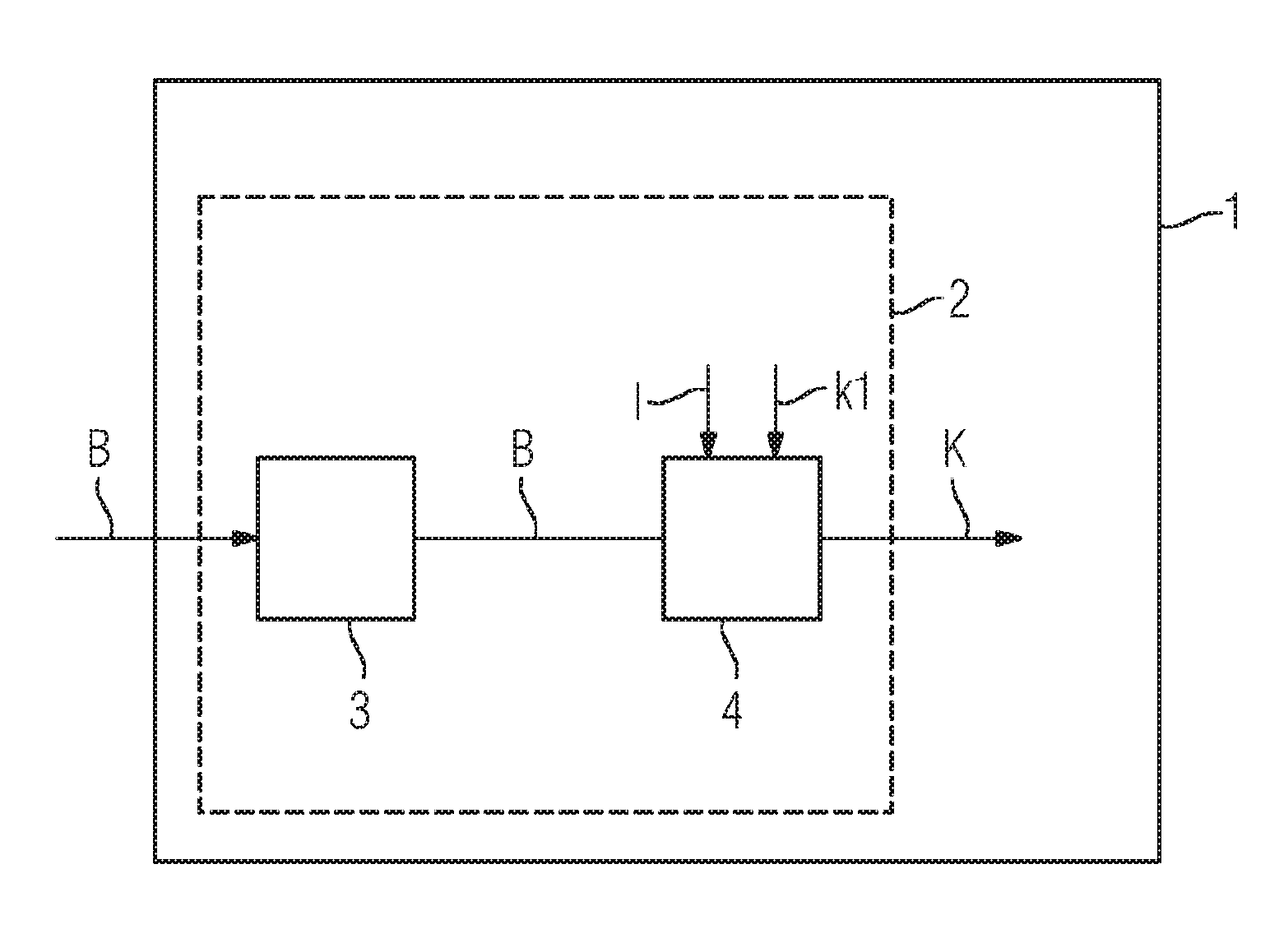

Decrypting data

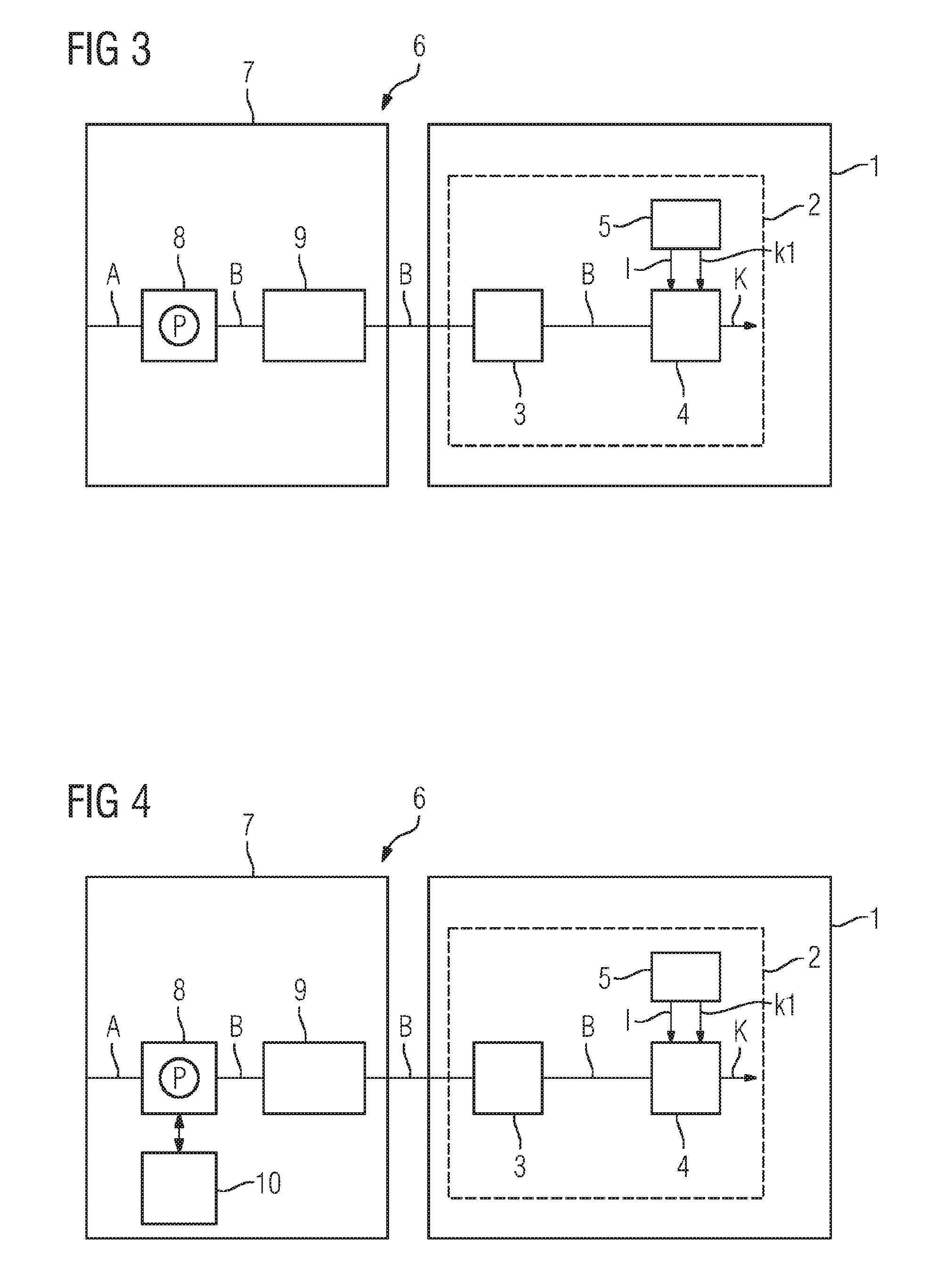

Active | US9584311B2 | Prevent tampering | Guaranteed normal execution | Secret communication | Communication with homomorphic encryption | Homomorphic encryption | Computer program

A device for decrypting data includes a number of devices secured by at least one security device. The secured devices include a receiver for receiving calculation data encrypted using a homomorphic encryption function and a decryptor for decrypting the encrypted calculation data by carrying out the inverse of the homomorphic encryption function on the encrypted calculation data using a private key assigned to the homomorphic encryption function. A method and a computer program product for decrypting data are also provided.

Owner:SIEMENS AG

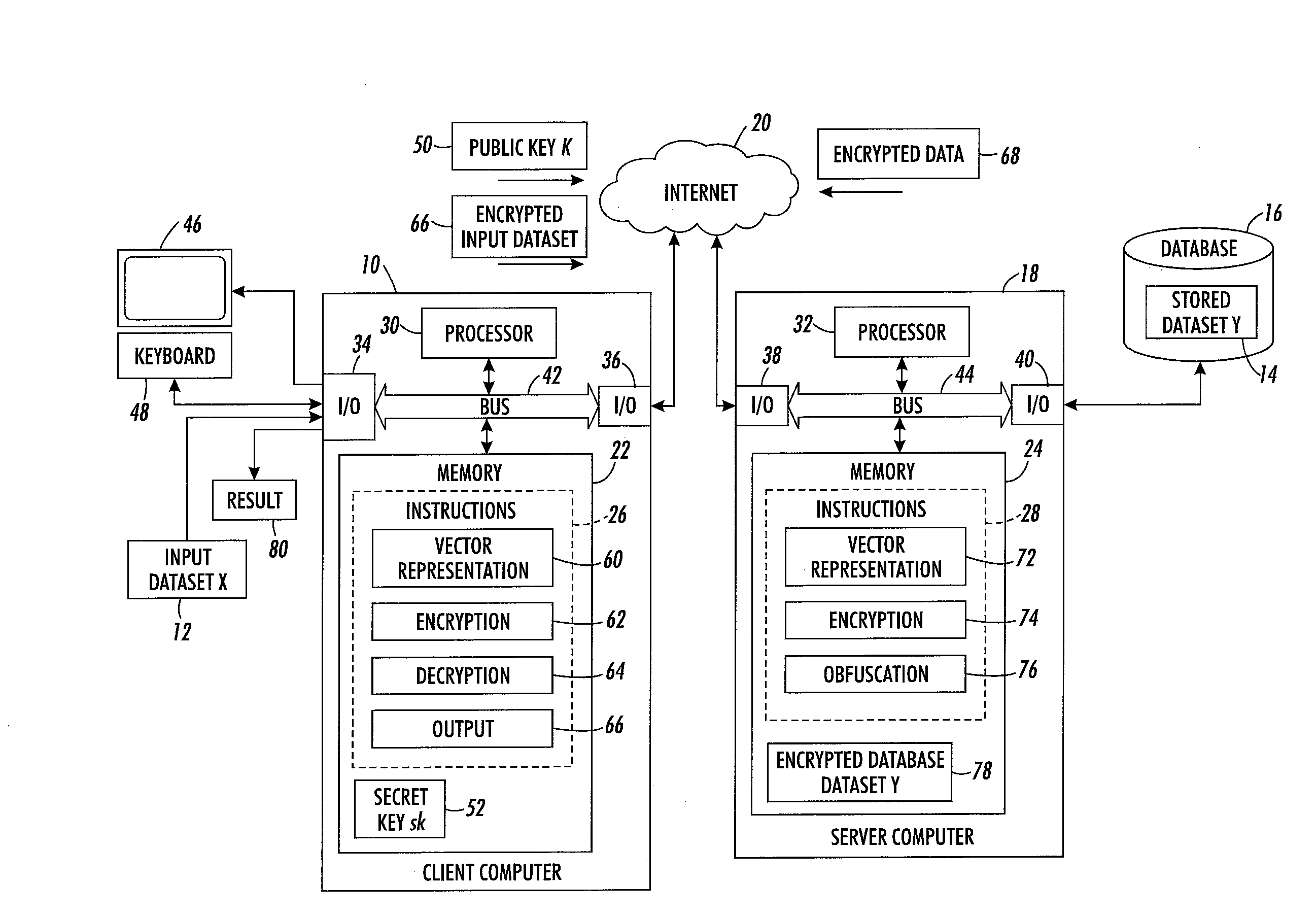

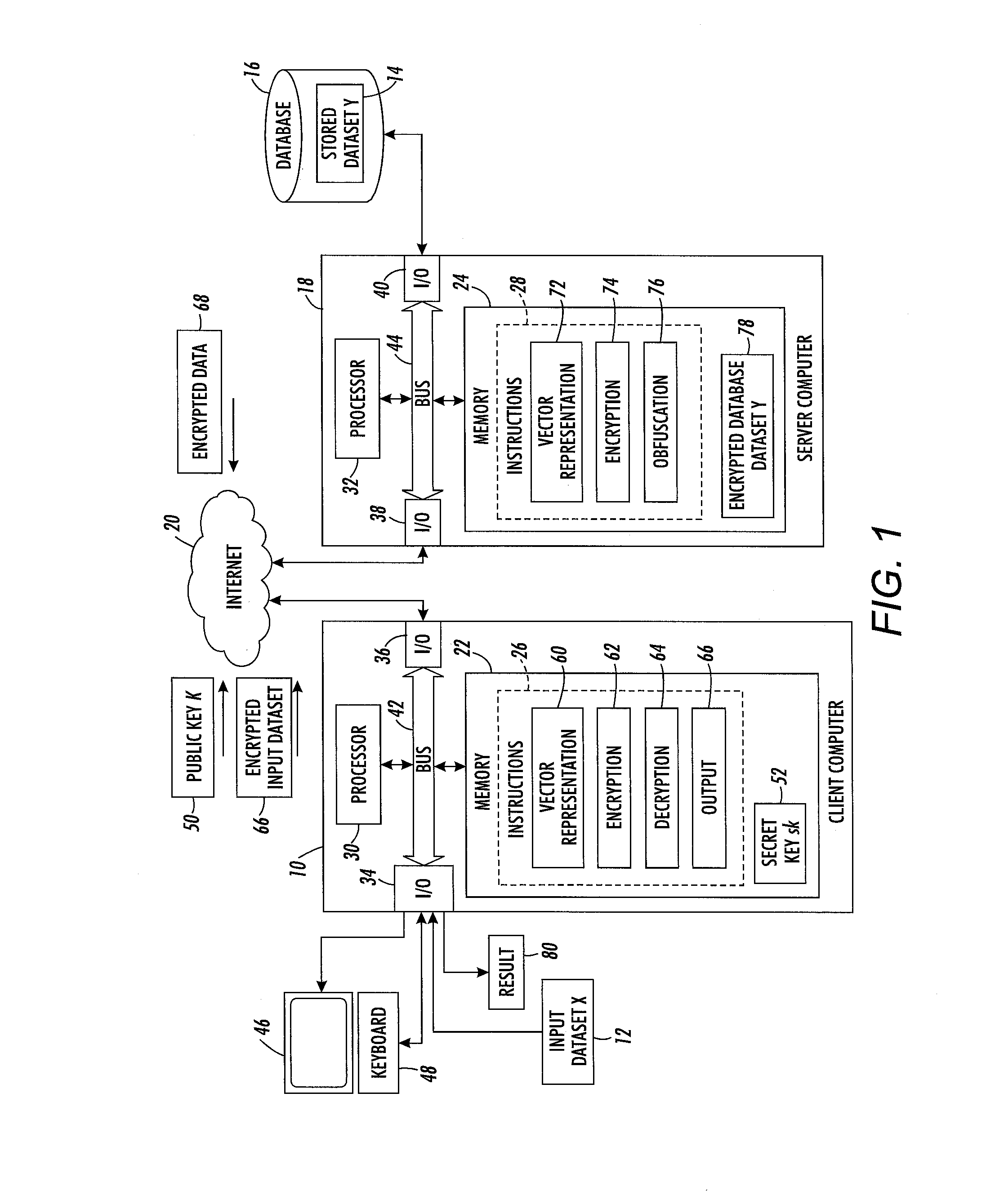

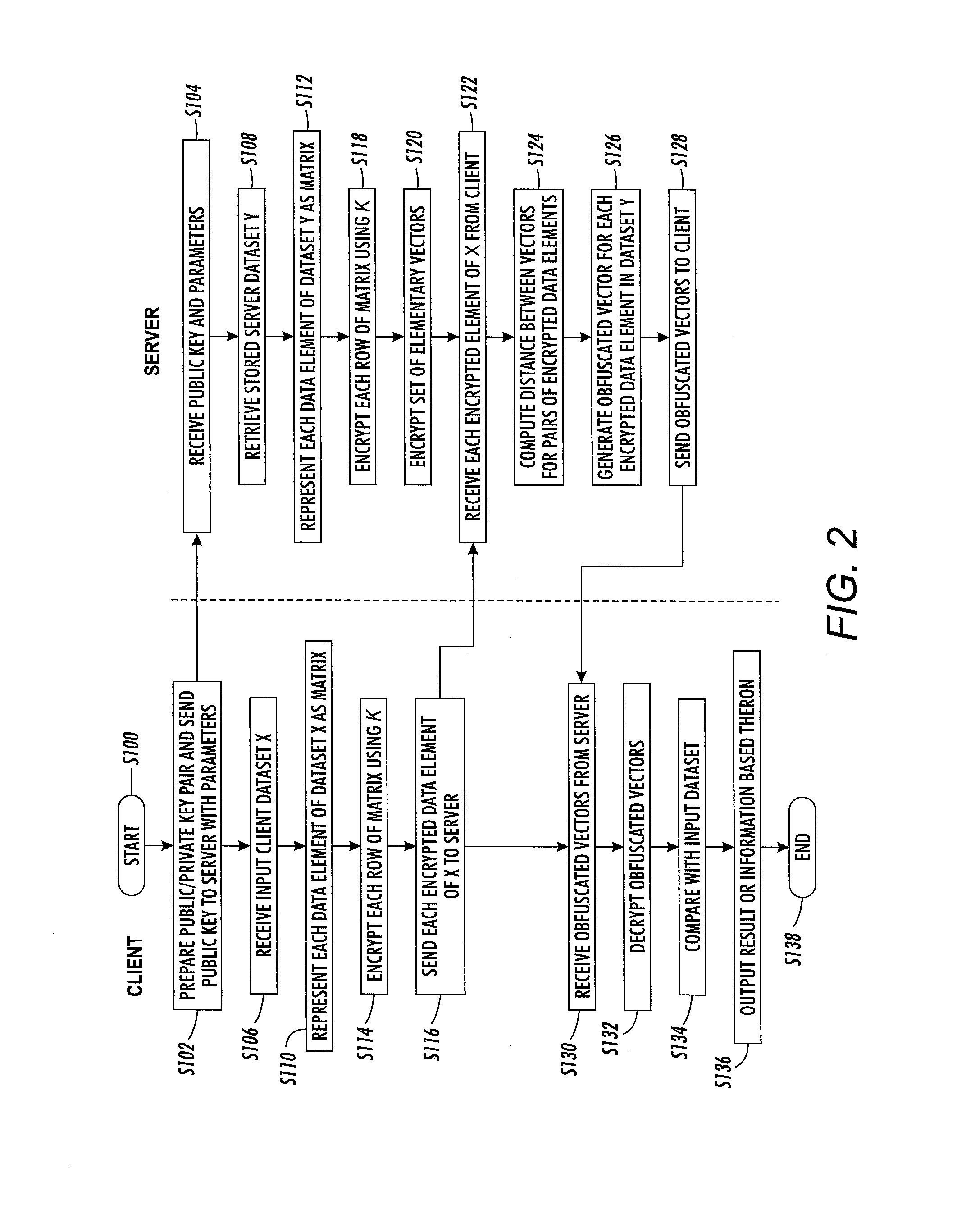

Compact fuzzy private matching using a fully-homomorphic encryption scheme

Active | US20160119119A1 | Public key for secure communication | Computer security arrangements | Data matching | Data element

A method for data matching includes providing two sets of encrypted data elements by converting data elements to respective sets of vectors and encrypting each vector with a public key of a homomorphic encryption scheme. Each data element includes a sequence of characters drawn from an alphabet. For pairs of encrypted data elements, a comparison measure is computed between the sets of encrypted vectors. An obfuscated vector is generated for each encrypted data element in the first set, which renders the first encrypted data element indecipherable when the comparison measure does not meet a threshold for at least one of the pairs of encrypted data elements comprising that encrypted data element. The obfuscated vectors can be decrypted with a private key, allowing data elements in the first set to be deciphered if the comparison measure meets the threshold for at least one of the data elements in the second set.

Owner:XEROX CORP

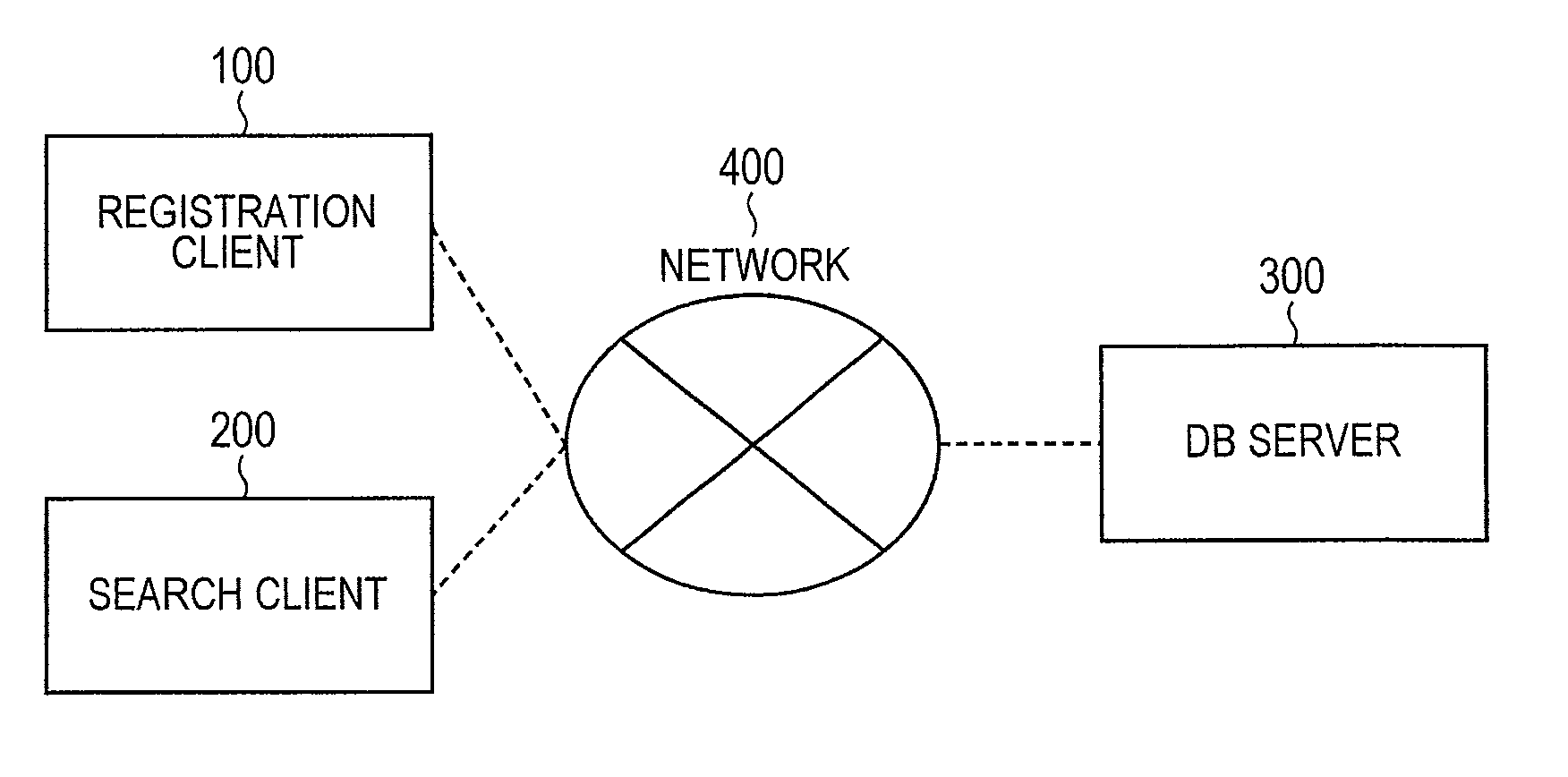

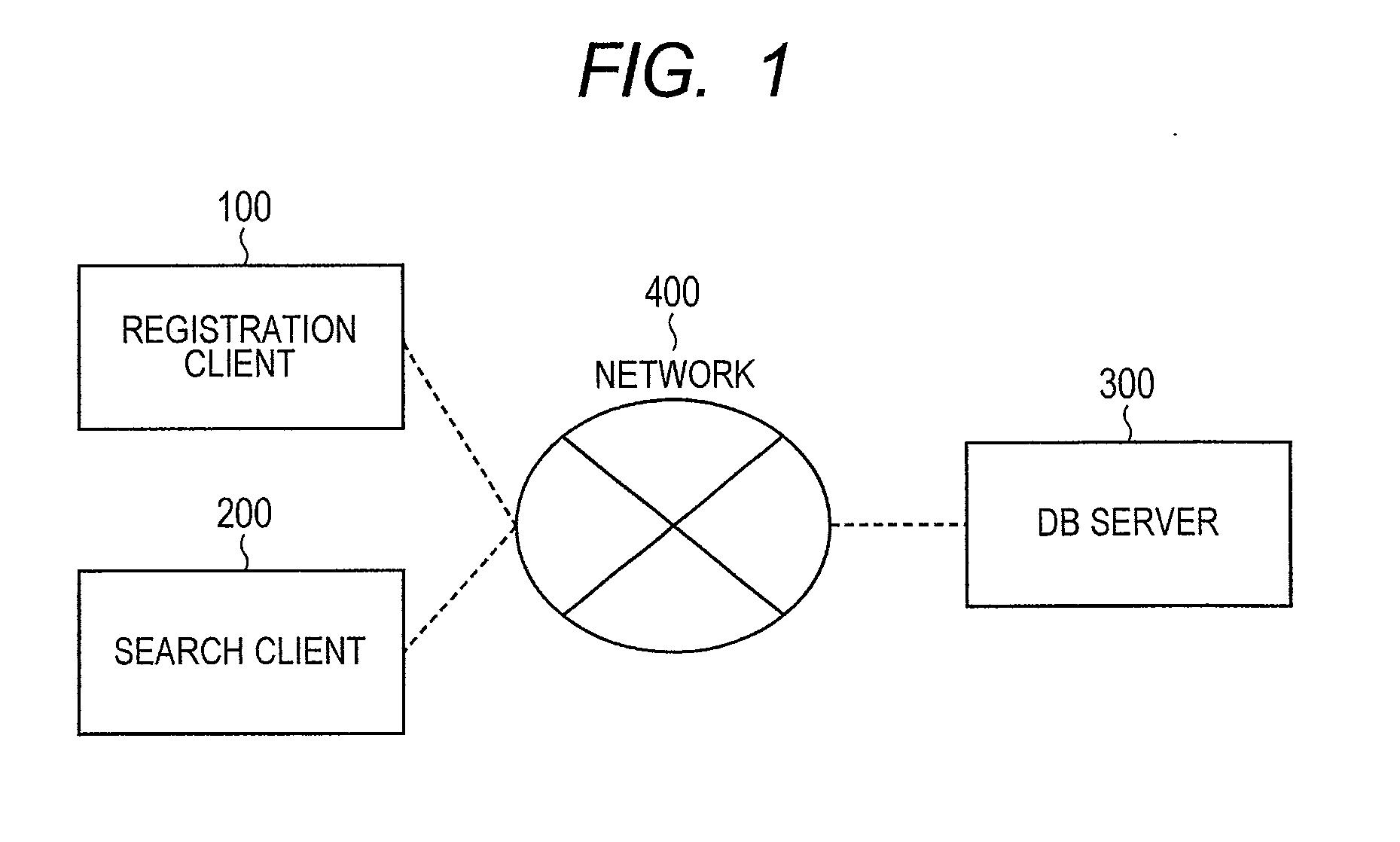

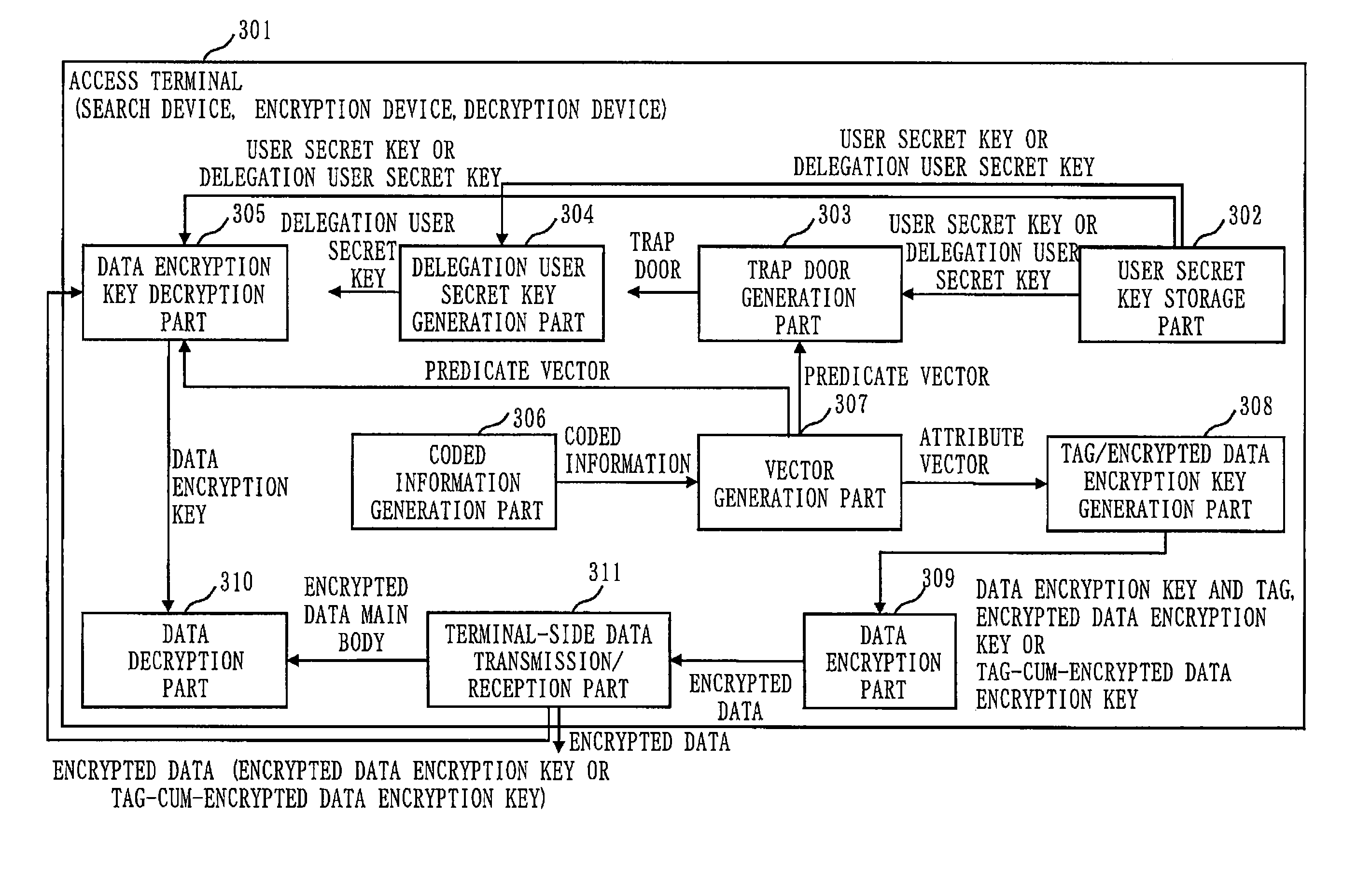

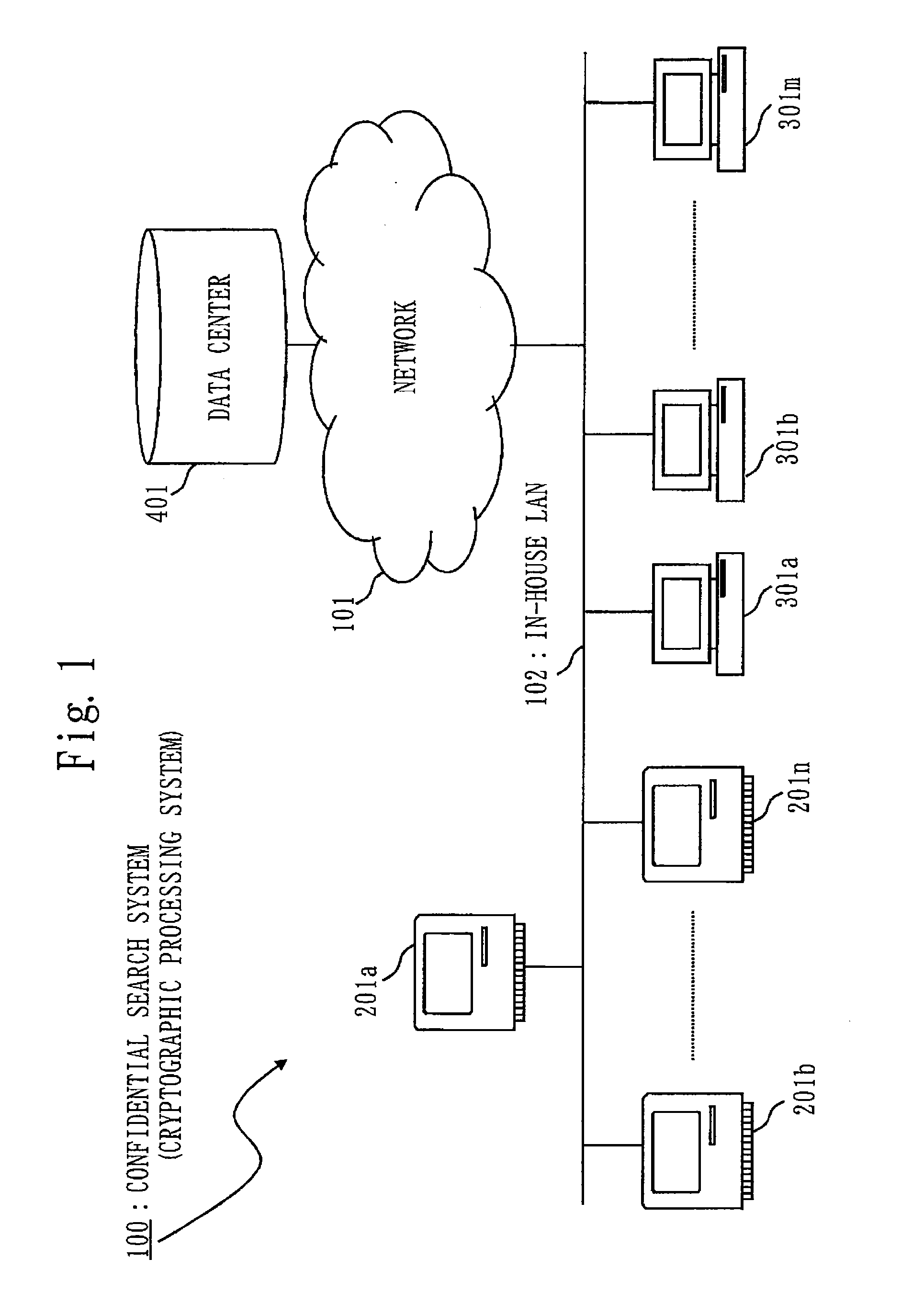

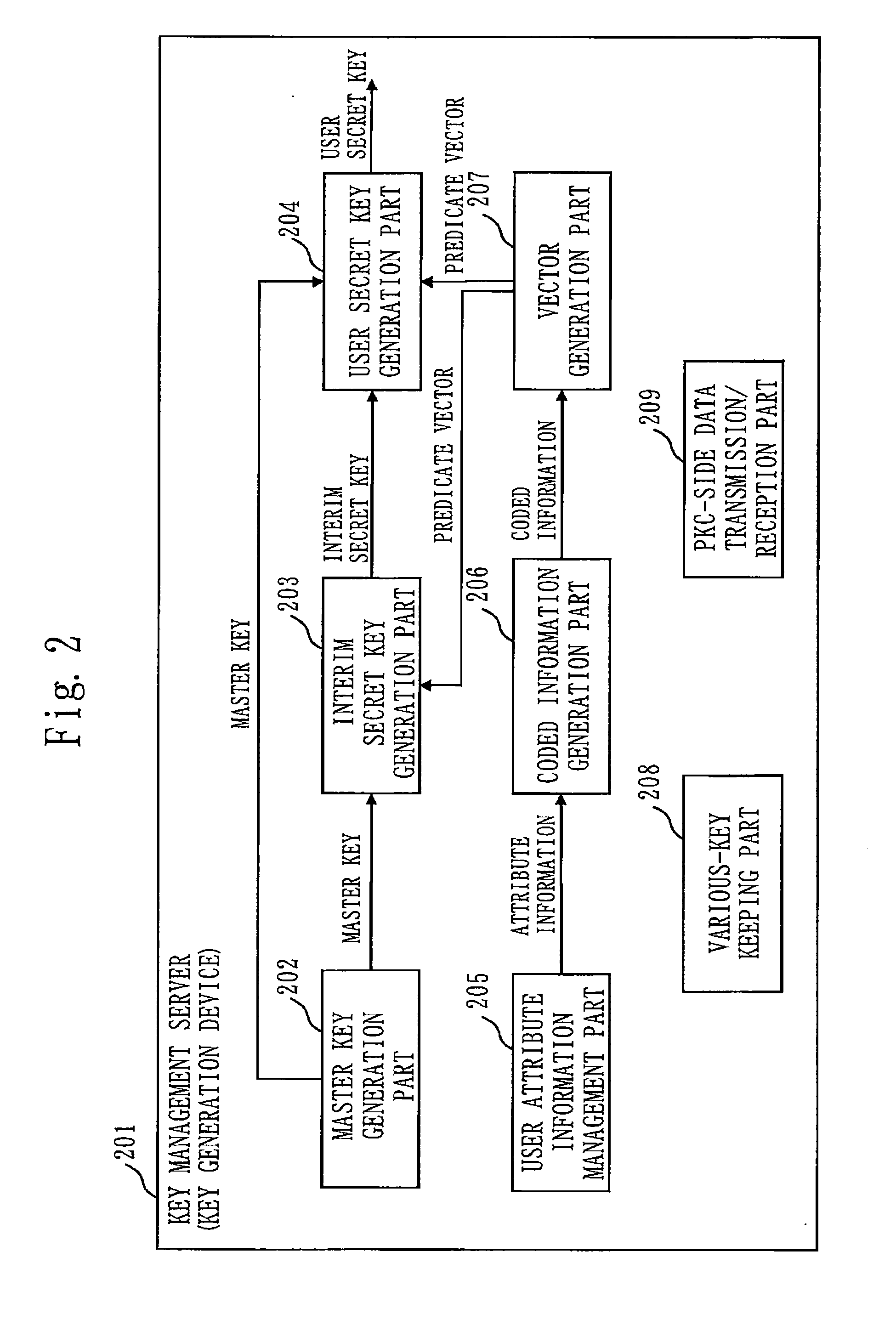

Searchable encryption processing system

Active | US20130262863A1 | Efficiently be decrypted | Improve security | Digital data information retrieval | Digital data protection | Probabilistic encryption | Database server

In the searchable encryption processing system, a data base server retaining data, a registration client which deposits the data into the data base server, and a search client which causes the data base server to search the data collaborate across a network, wherein the registration client, using a probabilistic encryption method which uses a mask using a homomorphic function and a hash value, deposits the encrypted data into the server, whereupon the search client, using probabilistic encryption which uses the mask which uses the homomorphic function for encryption of the search query, outputs the search query and non-corresponding data as search results without causing the data base server to unmask the mask and without allowing the frequency of occurrences of the data corresponding to the search to leak to the data base server.

Owner:HITACHI LTD

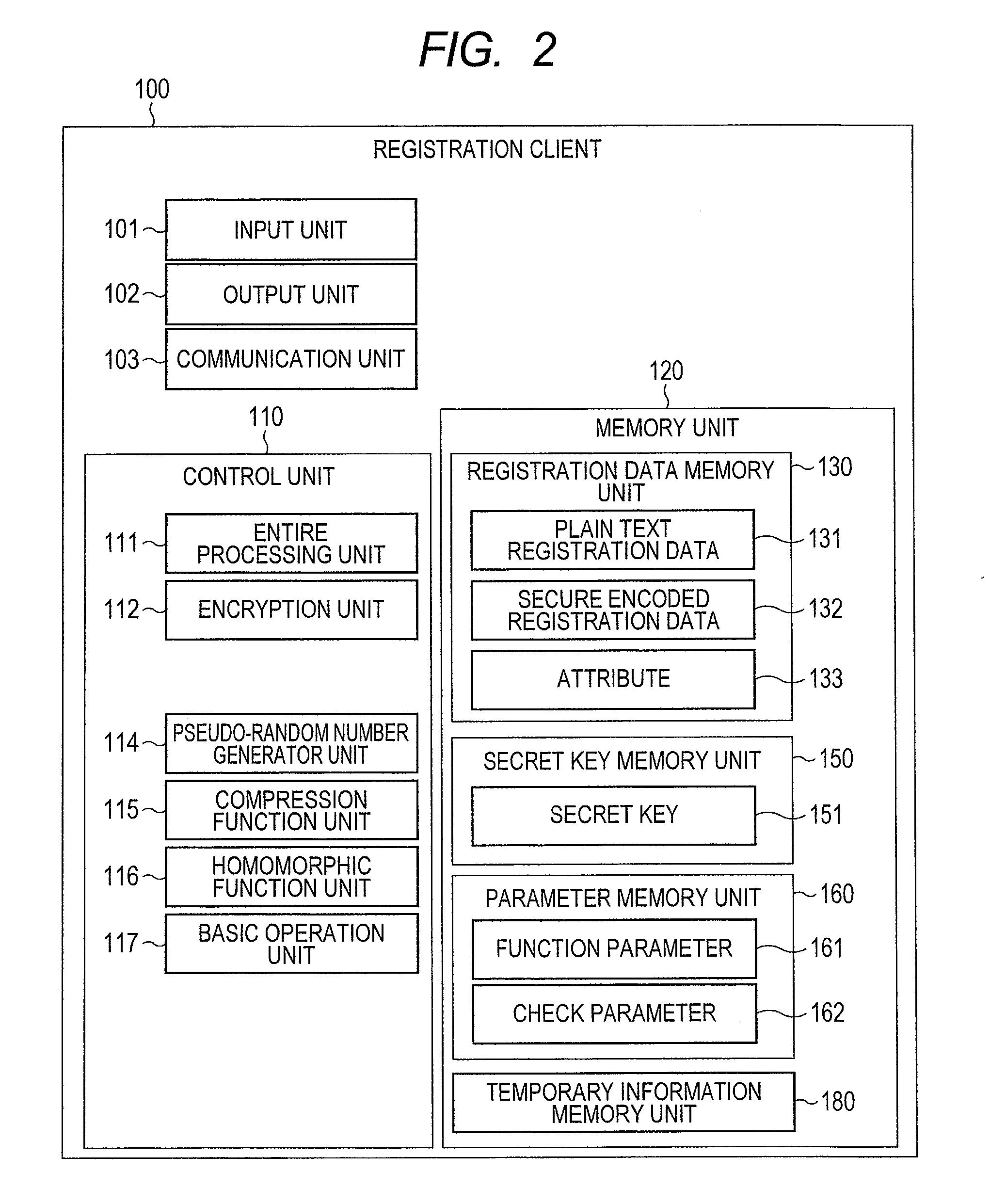

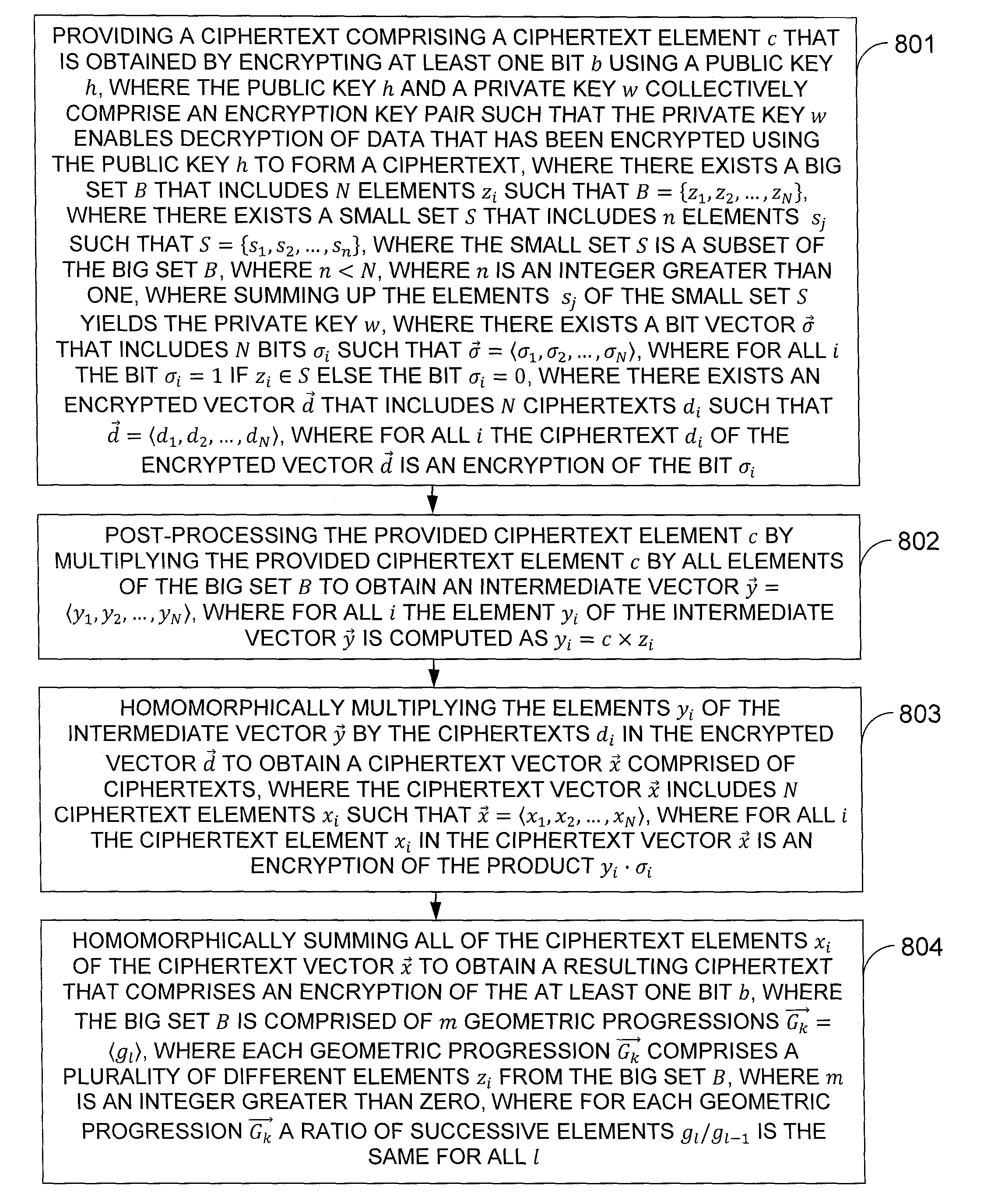

Efficient Implementation Of Fully Homomorphic Encryption

Active | US20120039473A1 | Public key for secure communication | User identity/authority verification | Ciphertext | Computer science

In one exemplary embodiment of the invention, a method for homomorphic decryption includes: providing a ciphertext with element $c$, where there exists a big set $B$ having $N$ elements $z_i$, so $B = \{z_1, z_2, \ldots, z_N\}$; there exists a small set $S$ having $n$ elements $s_j$, so $S = \{s_1, s_2, \ldots, s_n\}$; the small set is a subset of the big set; summing up the elements of the small set yields the private key; there exists a bit vector $\vec{\sigma}$ having $N$ bits $\sigma_i$, so $\vec{\sigma} = (\sigma_1, \sigma_2, \ldots, \sigma_N)$, with $\sigma_i = 1$ if $z_i \in S$ and $\sigma_i = 0$ otherwise; and there exists an encrypted vector $\vec{d}$ having $N$ ciphertexts $d_i$, so $\vec{d} = (d_1, d_2, \ldots, d_N)$, where $d_i$ is an encryption of $\sigma_i$; post-processing $c$ by multiplying it by all $z_i$ to obtain an intermediate vector $\vec{y} = (y_1, y_2, \ldots, y_N)$ with $y_i = c \times z_i$; homomorphically multiplying $y_i$ by $d_i$ to obtain a ciphertext vector $\vec{x}$ having $N$ ciphertexts $x_i$, so $\vec{x} = (x_1, x_2, \ldots, x_N)$, where $x_i$ is an encryption of the product $y_i \cdot \sigma_i$; and homomorphically summing all $x_i$ to obtain a resulting ciphertext that is an encryption of the at least one bit. The big set is partitioned into $n$ parts, with each part having a plurality of different elements from the big set, and the elements of the small set are one element from each part.
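The arithmetic identity behind this procedure, that summing $y_i \cdot \sigma_i$ over the big set equals $c$ times the private key, can be checked with a plaintext-only sketch. Ordinary integer arithmetic stands in for the homomorphic multiplications and additions, so nothing here is encrypted; in the patent the bits $\sigma_i$ are only available as ciphertexts $d_i$. The helper names and set sizes are hypothetical.

```python
import random

def build_sets(n_parts, part_size, rng):
    """Partition the big set into n parts and pick one element per part for the small set."""
    parts = [[rng.randrange(1, 10**6) for _ in range(part_size)]
             for _ in range(n_parts)]
    picks = [rng.randrange(part_size) for _ in range(n_parts)]
    big = [z for part in parts for z in part]
    # sigma[i] = 1 exactly when the i-th element of the big set was picked.
    sigma = [1 if j == picks[i] else 0
             for i in range(n_parts) for j in range(part_size)]
    secret = sum(parts[i][picks[i]] for i in range(n_parts))
    return big, sigma, secret

def squashed_product(c, big, sigma):
    """Compute sum_i (c * z_i) * sigma_i, mirroring the y_i, x_i steps in the clear."""
    y = [c * z for z in big]                      # post-processing: y_i = c * z_i
    x = [yi * si for yi, si in zip(y, sigma)]     # stand-in for y_i times Enc(sigma_i)
    return sum(x)                                 # stand-in for the homomorphic sum

if __name__ == "__main__":
    rng = random.Random(0)
    big, sigma, secret = build_sets(n_parts=4, part_size=8, rng=rng)
    c = 12345
    print(squashed_product(c, big, sigma) == c * secret)
```

Because exactly one $\sigma_i$ per part is 1, the sum picks out the small set, so the result equals $c$ multiplied by the sum of the small set's elements.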

Owner:IBM CORP

Method of authorizing an operation to be performed on a targeted computing device

Active | US20150074764A1 | Digital data processing details | Computer security arrangements | Authorization Mode | Authentication server

A method of authorizing an operation to be performed on a targeted computing device is provided. The method includes generating a request to perform an operation on the targeted computing device, signing the request with a private key of a first private, public key pair, transmitting the request to an authentication server, receiving an authorization response from the authentication server that includes the request and an authorization token, and transmitting the authorization response to the targeted computing device.

Owner:THE BOEING CO

Confidential search system and cryptographic processing system

Active | US20120297201A1 | Searchable data can be searched | Search is limited | Unauthorized memory use protection | Hardware monitoring | Internet privacy | Predicate encryption

A confidential search that can flexibly control searchable data depending on a role or authority of a user when the data is shared in a group. When the inner product of an attribute vector and a predicate vector is a predetermined value, the confidential search system conducts pairing computation of decrypted data generated based on the attribute vector and a decryption key generated based on the predicate vector, so as to realize confidential search by utilizing an inner-product predicate encryption process that can decrypt the encrypted data. In particular, the confidential search system enables flexible control of searchable data depending on the role or authority of the user, by devising a method of generating the attribute vector and the predicate vector.

Owner:MITSUBISHI ELECTRIC CORP

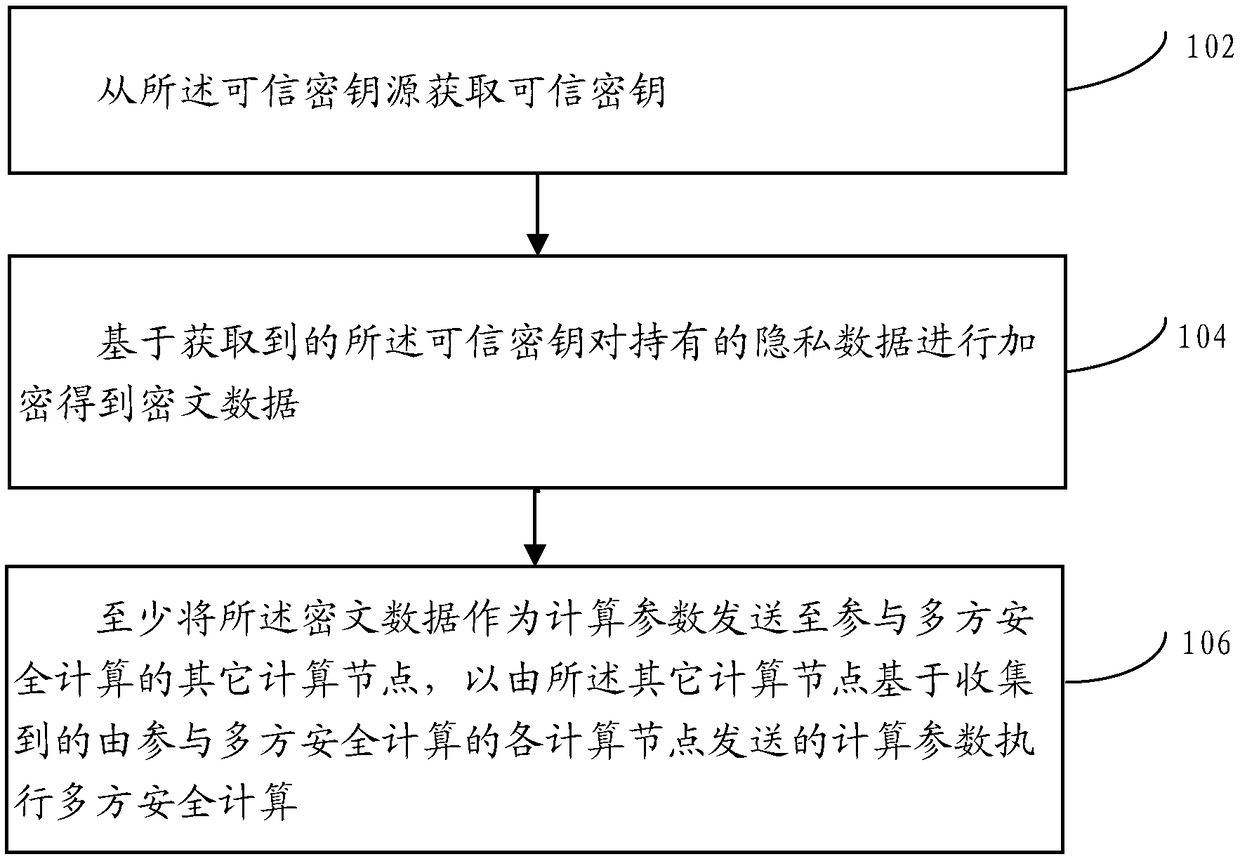

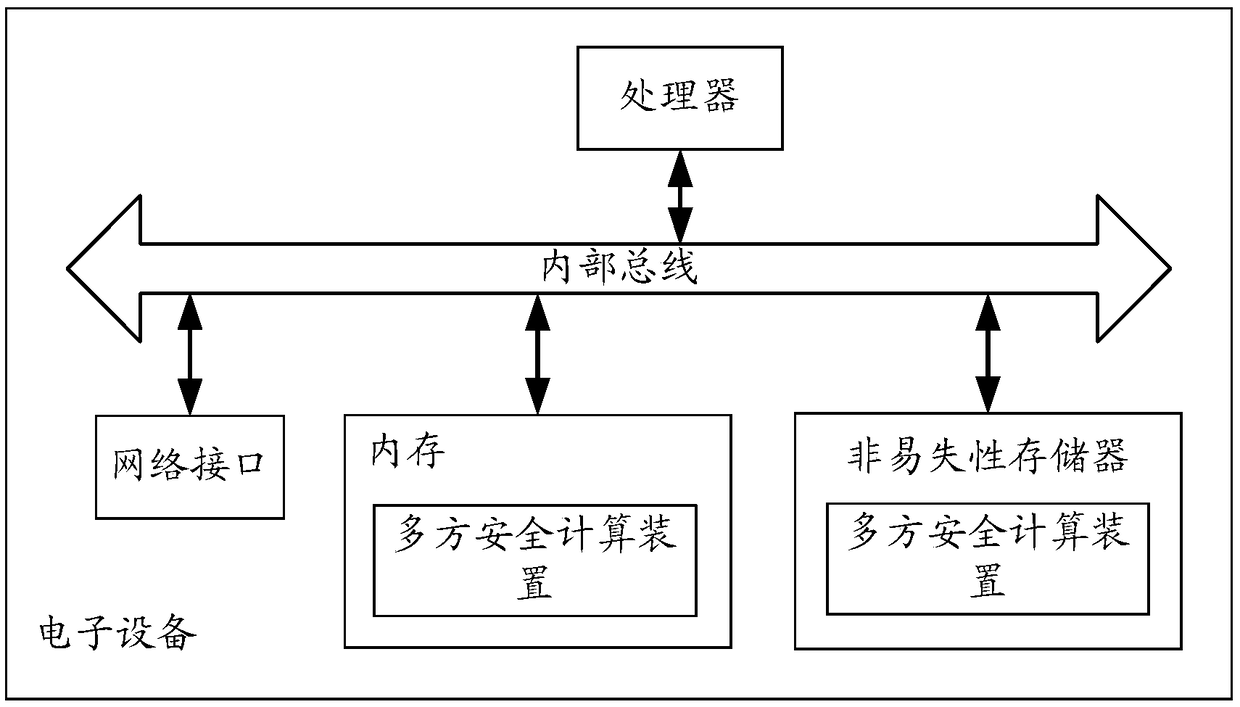

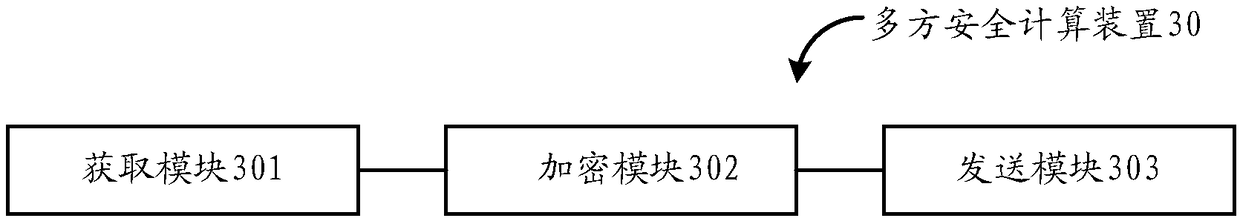

Multi-party safety calculation method and device, and electronic equipment

Active | CN109241016A | Avoid the Risk of Privacy Leakage | Key distribution for secure communication | Data stream serial/continuous modification | Ciphertext | Calculation methods

A multi-party secure computing method is applied to any computing node deployed in a distributed network. A plurality of computing nodes are deployed in the distributed network, and the plurality of computing nodes participate in the multi-party secure computing together based on the privacy data held by each of them, wherein the computing node corresponds to a trusted key source. The method includes obtaining a trusted key from the trusted key source; encrypting the held privacy data based on the obtained trusted key to obtain ciphertext data; and sending at least the ciphertext data as computational parameters to the other computing nodes participating in the multi-party secure computation, so that the other computing nodes perform the multi-party secure computation based on the collected computational parameters sent by each participating computing node.

Owner:ADVANCED NEW TECH CO LTD

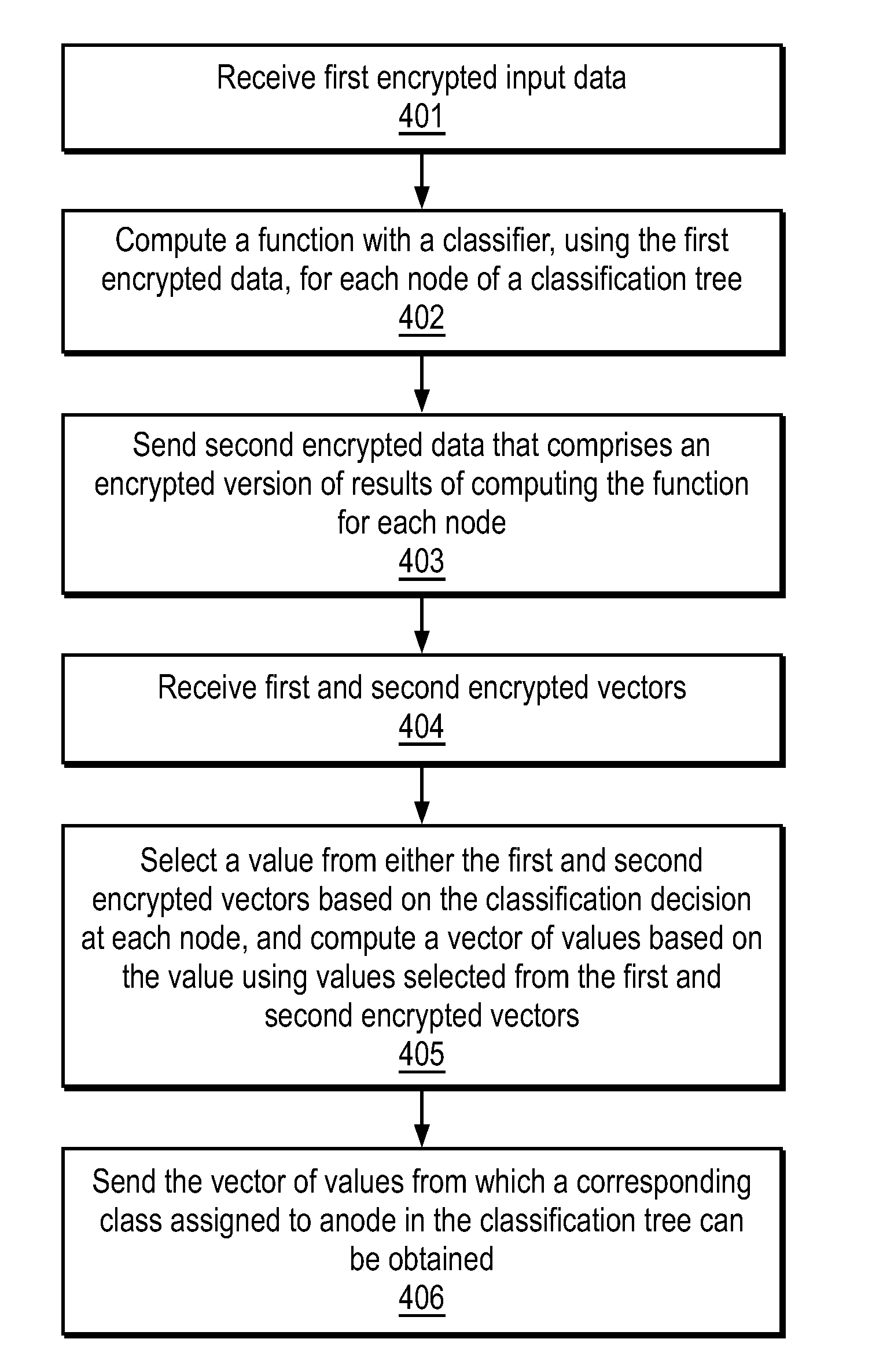

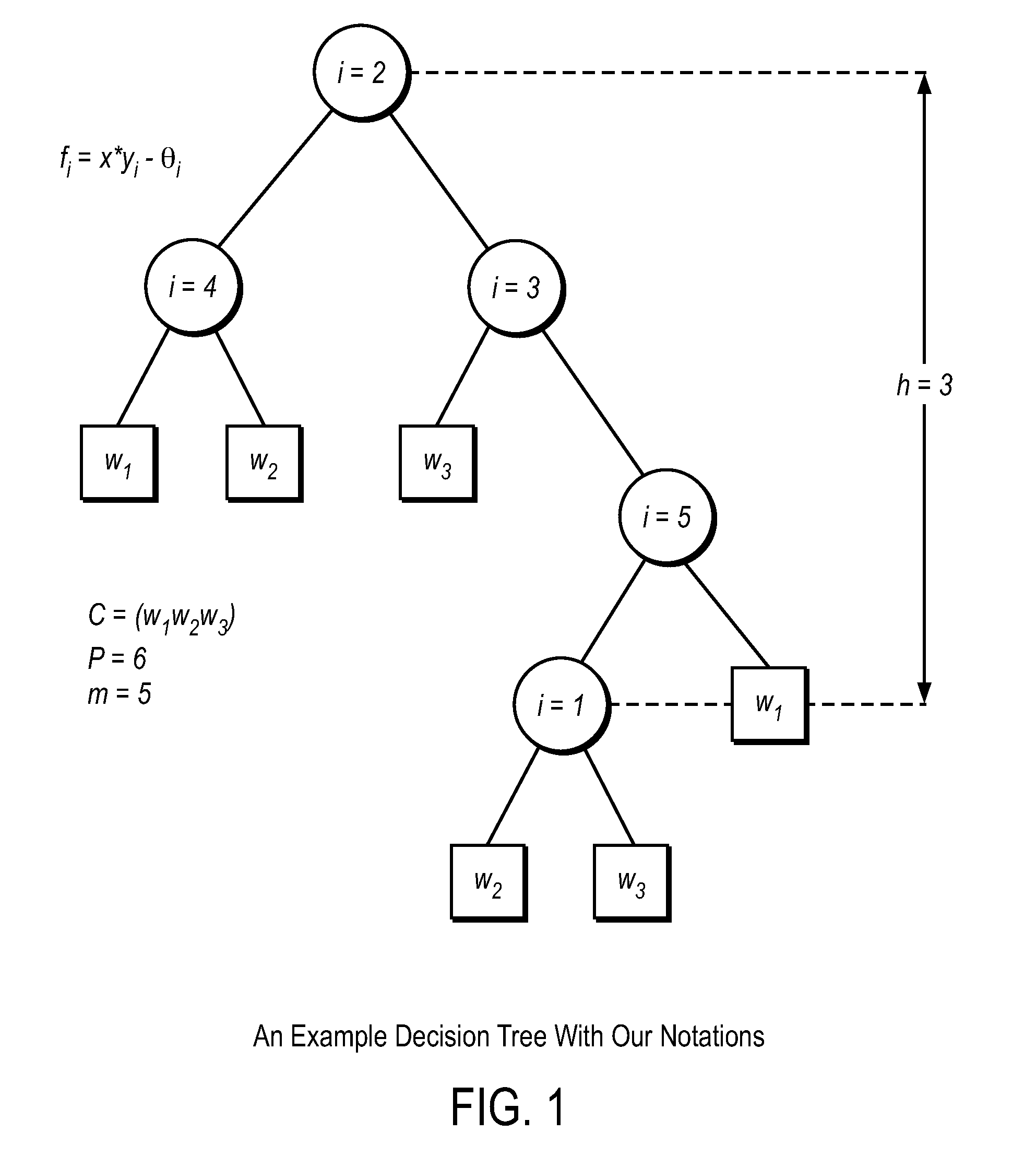

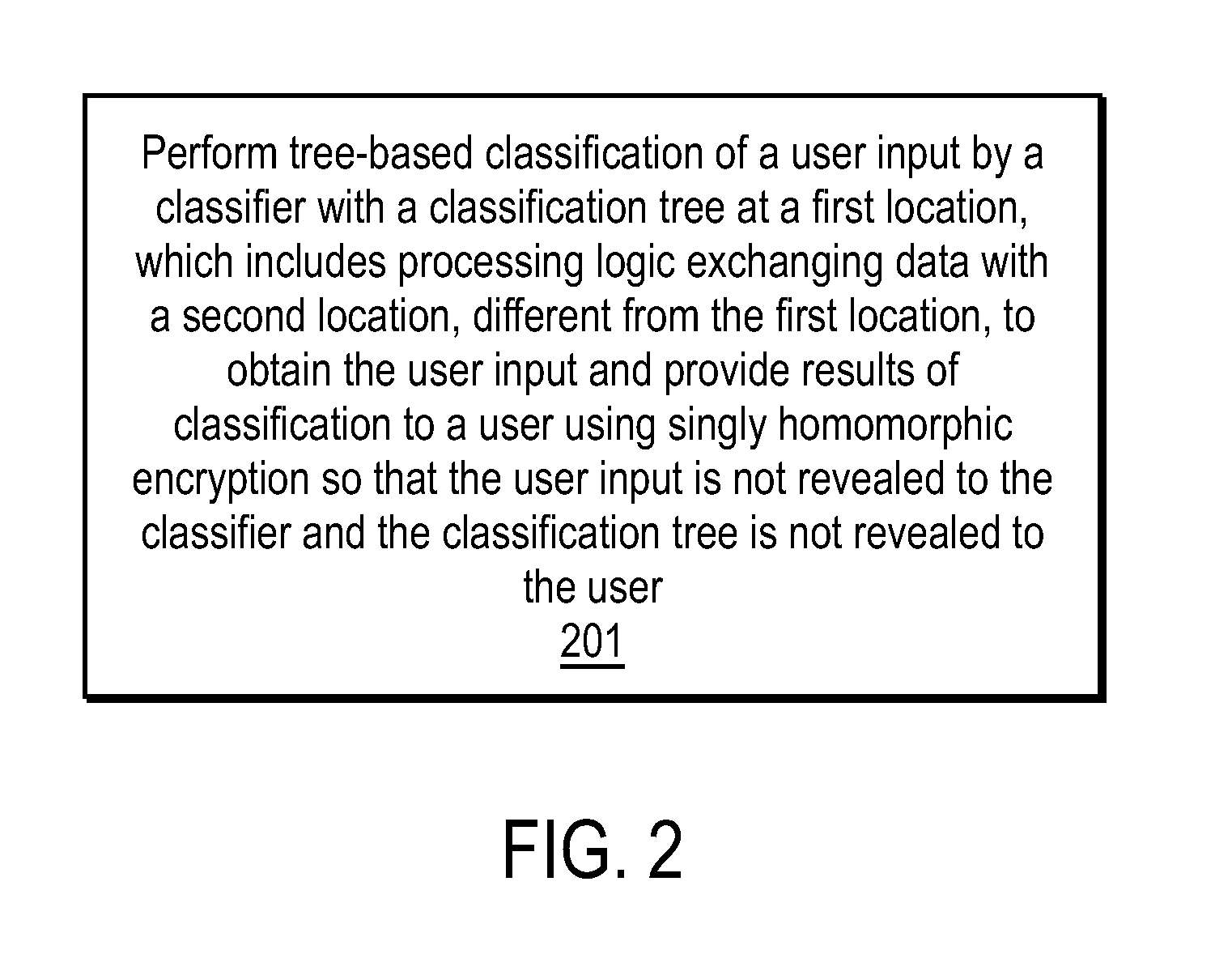

Efficient, remote, private tree-based classification using cryptographic techniques

Inactive | US20120201378A1 | Digital data protection | Internal/peripheral component protection | User input | Data mining

A method and apparatus are disclosed herein for classification. In one embodiment, the method comprises performing tree-based classification of a user input by a classifier with a classification tree at a first location, including exchanging data with a second location, different from the first location, to obtain the user input and provide results of classification to a user using singly homomorphic encryption so that the user input is not revealed to the classifier, the classification tree is not revealed to the user and the classifier's output is not revealed to the classifier.

Owner:RICOH KK

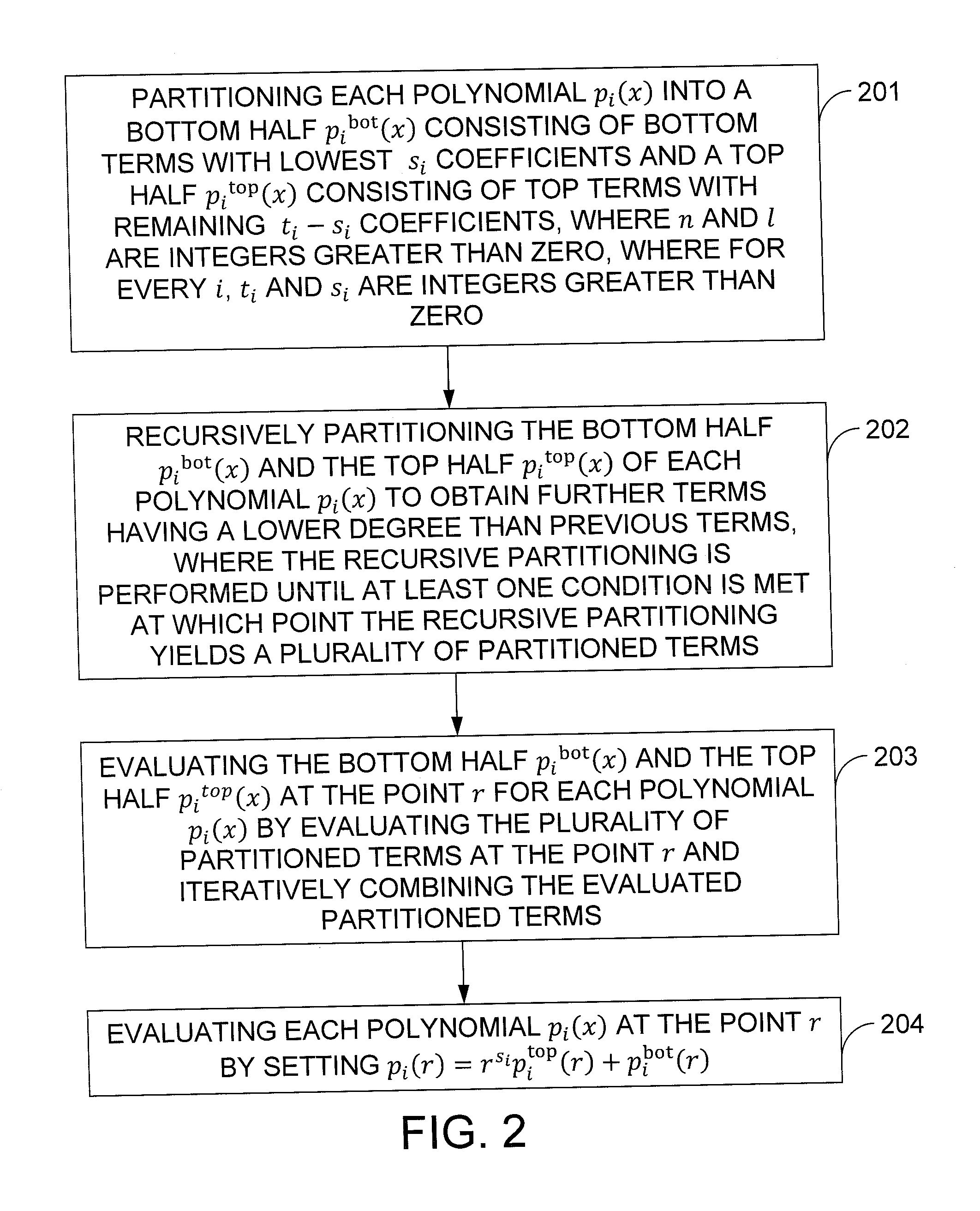

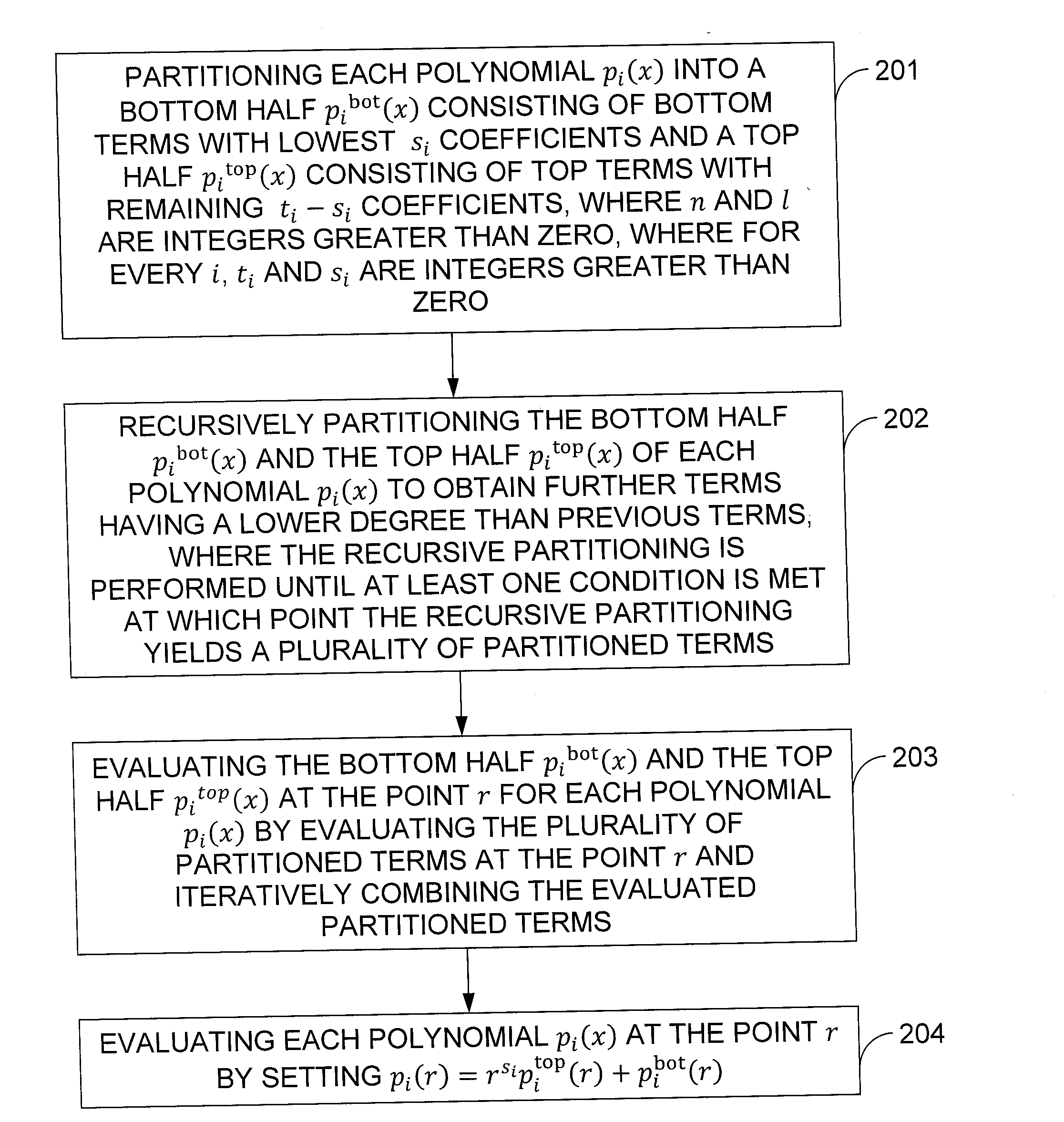

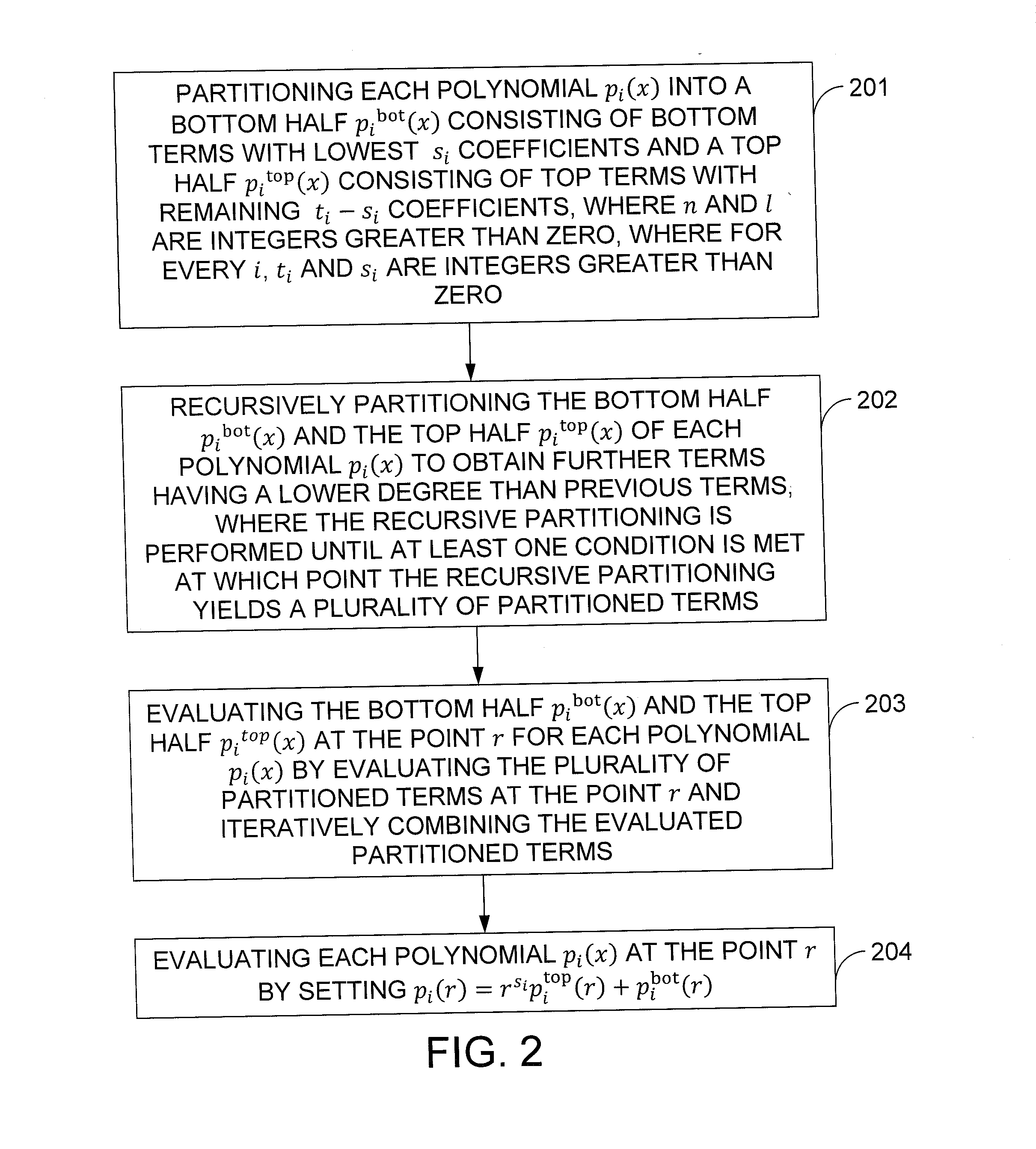

Fast Evaluation Of Many Polynomials With Small Coefficients On The Same Point

Inactive | US20120039463A1 | Degree of reduction | Secret communication | Communication with homomorphic encryption | Computational physics | Recursion

In one exemplary embodiment of the invention, a method for evaluating at point $r$ one or more polynomials $p_1(x), \ldots, p_l(x)$ of maximum degree up to $n-1$, where the polynomial $p_i(x)$ has a degree of $t_i - 1$, the method including: partitioning each polynomial $p_i(x)$ into a bottom half $p_i^{bot}(x)$ with bottom terms of lowest $s_i$ coefficients and a top half $p_i^{top}(x)$ with top terms of remaining $t_i - s_i$ coefficients; recursively partitioning the bottom half $p_i^{bot}(x)$ and the top half $p_i^{top}(x)$ of each polynomial $p_i(x)$ obtaining further terms having a lower degree than previous terms, performed until at least one condition is met yielding a plurality of partitioned terms; evaluating the bottom half $p_i^{bot}(x)$ and the top half $p_i^{top}(x)$ at the point $r$ for each polynomial $p_i(x)$ by evaluating the partitioned terms at the point $r$ and iteratively combining the evaluated partitioned terms; and evaluating each polynomial $p_i(x)$ at the point $r$ by setting $p_i(r) = r^{s_i}\, p_i^{top}(r) + p_i^{bot}(r)$.
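The recursive split can be sketched for a single polynomial as below. The sketch assumes coefficients are stored lowest degree first, splits at the midpoint, and stops when a block has at most `threshold` coefficients; those choices, and the function names, are assumptions for illustration rather than the patent's stopping condition, and the sharing of work across many polynomials is not modelled.

```python
def horner(coeffs, r):
    """Direct evaluation of sum(coeffs[k] * r**k), used as the base case."""
    acc = 0
    for c in reversed(coeffs):
        acc = acc * r + c
    return acc

def eval_split(coeffs, r, threshold=4):
    """Evaluate p(r) via p(r) = r**s * p_top(r) + p_bot(r), splitting recursively."""
    t = len(coeffs)
    if t <= threshold:
        return horner(coeffs, r)
    s = t // 2                                       # bottom half keeps the lowest s coefficients
    bottom = eval_split(coeffs[:s], r, threshold)    # p_bot(r)
    top = eval_split(coeffs[s:], r, threshold)       # p_top(r), remaining t - s coefficients
    return r**s * top + bottom

if __name__ == "__main__":
    coeffs = list(range(1, 20))   # 1 + 2x + 3x^2 + ... + 19x^18
    print(eval_split(coeffs, 3) == horner(coeffs, 3))
```

For example, with coefficients `[1, 2, 3]`, `r = 2` and `threshold=1`, the split gives bottom `1` and top `2 + 3x`, so the result is `2 * 8 + 1 = 17`, matching `1 + 2*2 + 3*4`.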

Owner:IBM CORP

Secure distributed computing using containers

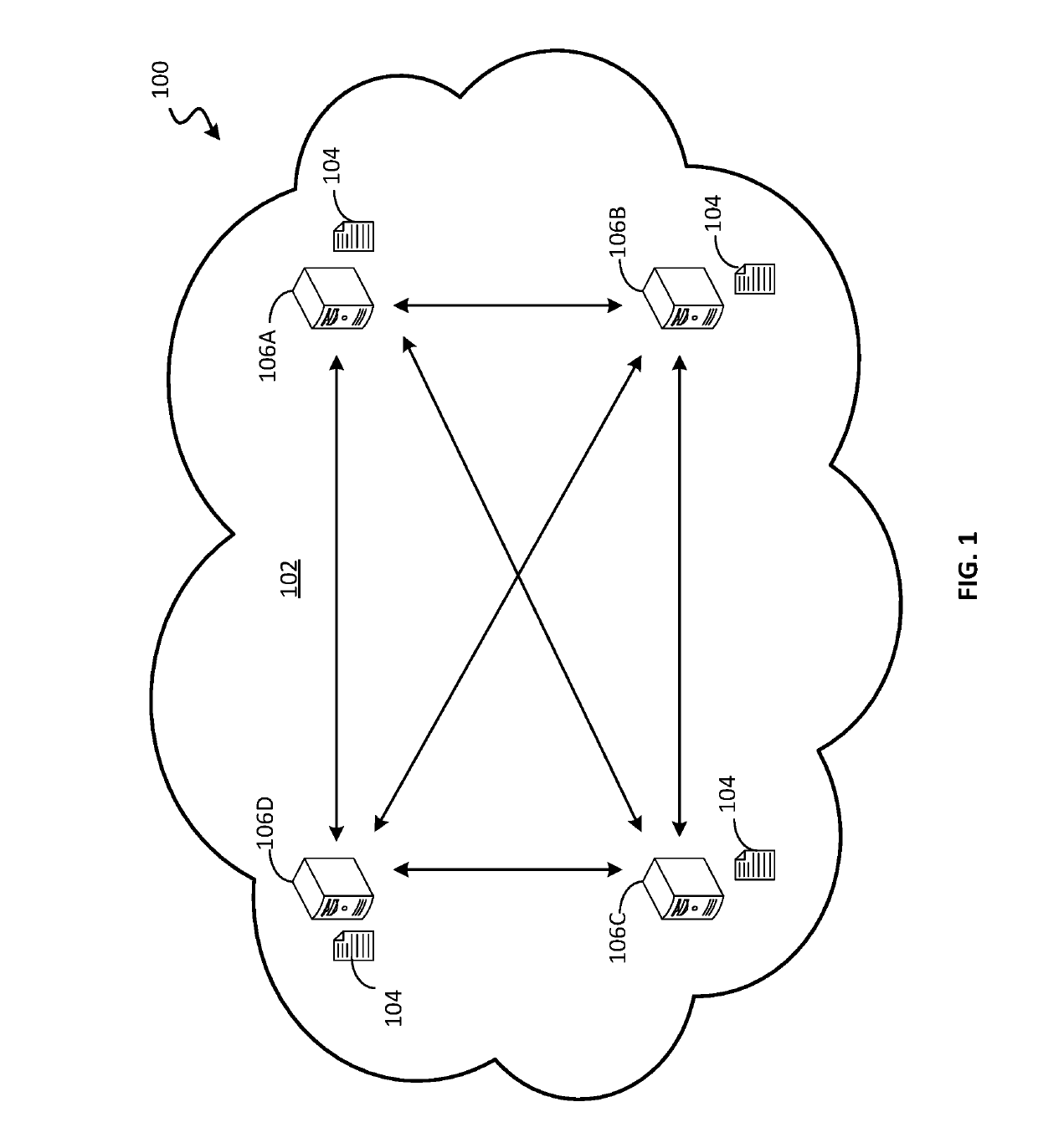

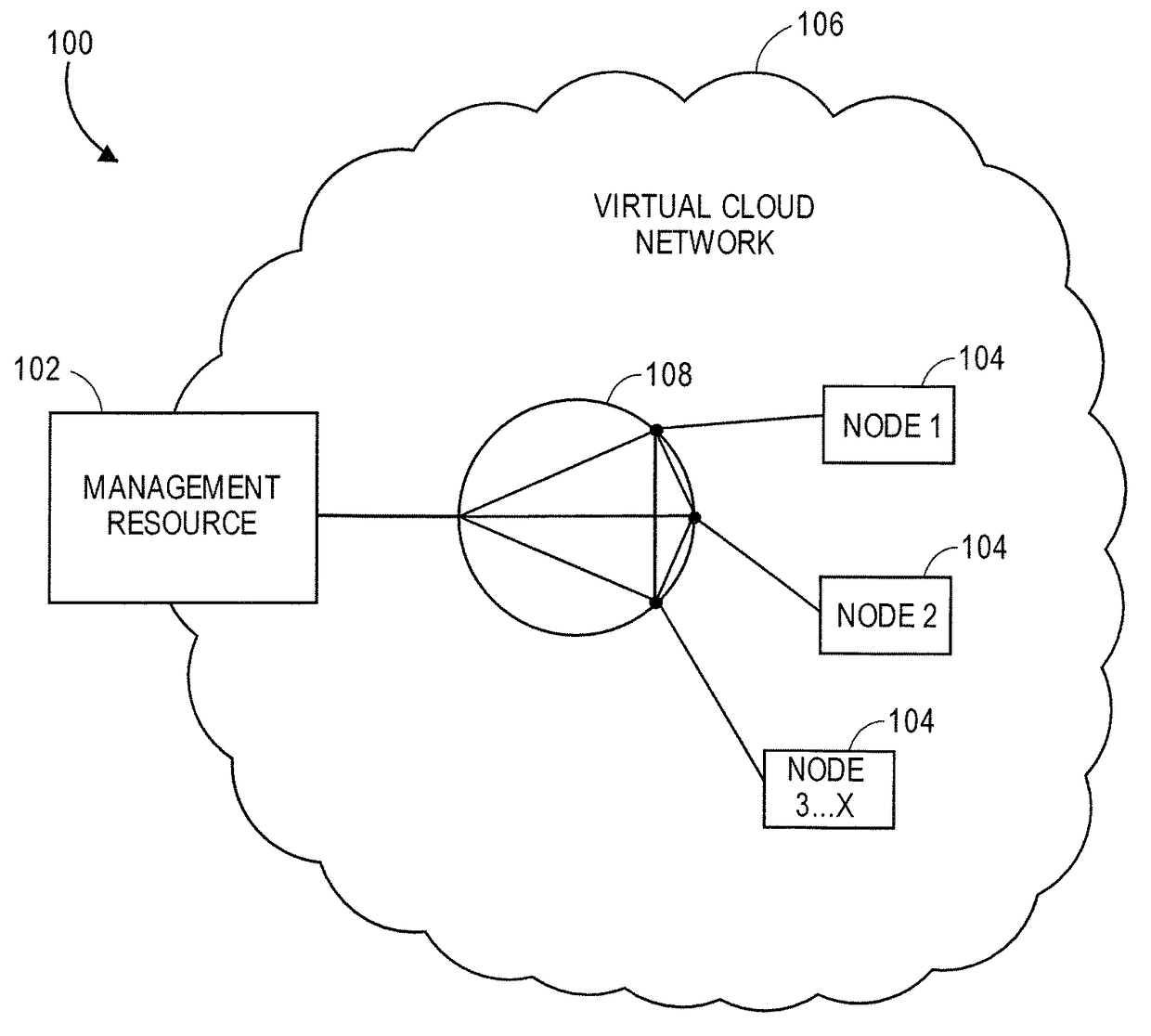

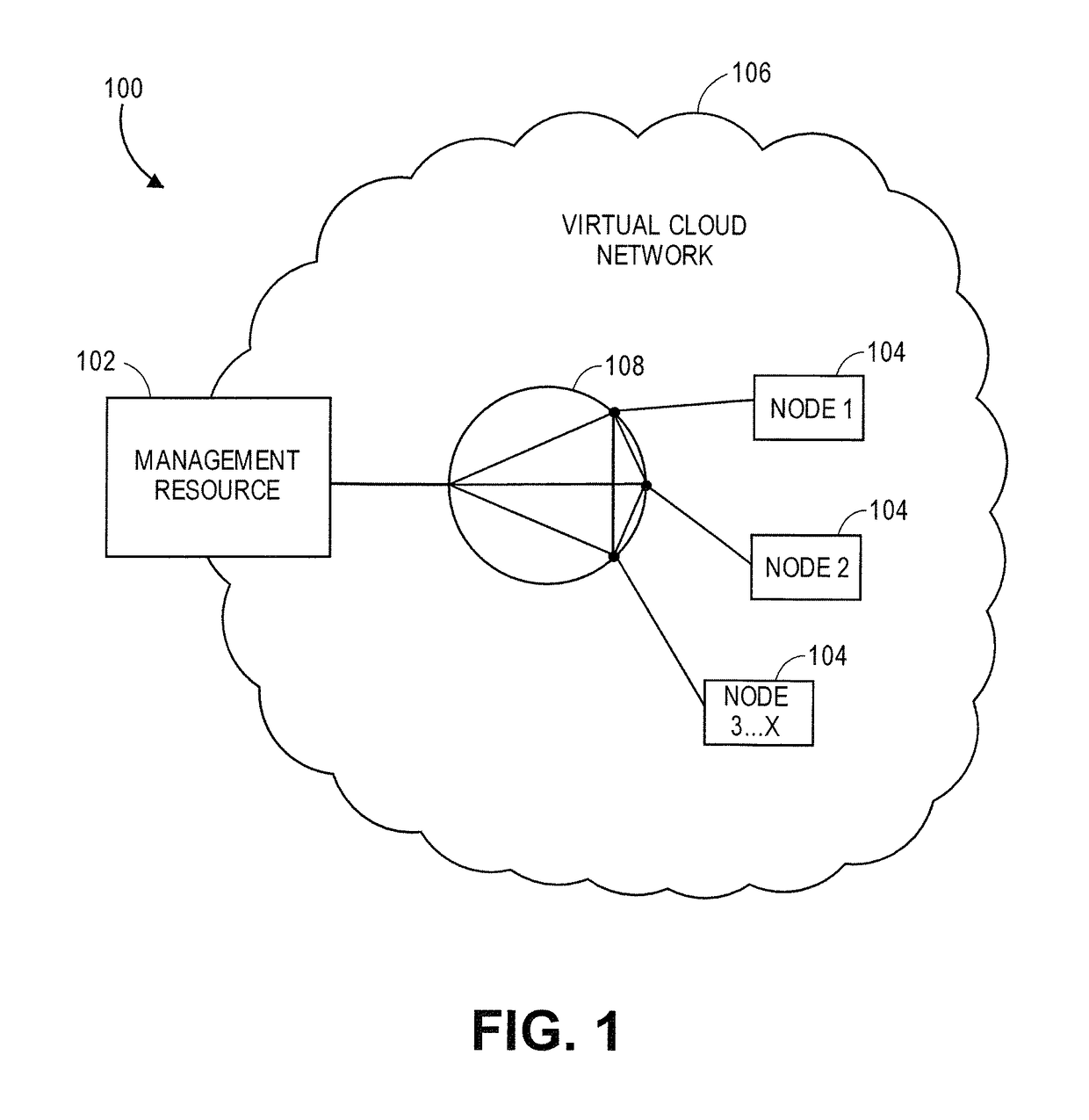

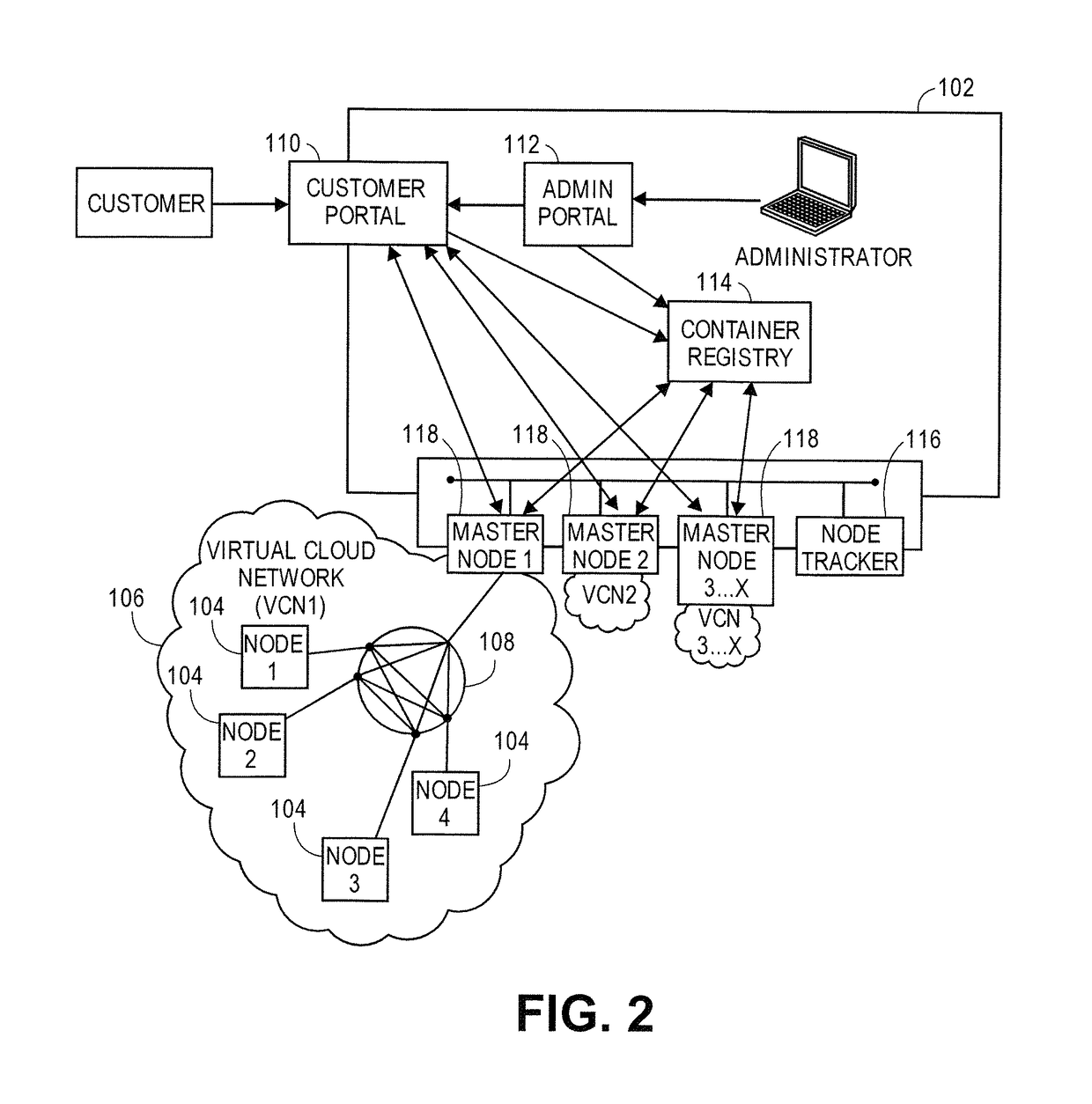

A system for performing distributed computing. The system comprises a plurality of compute node resources for performing computations for the distributed computing, a management resource for managing each of the compute node resources in the plurality, and a virtual cloud network. The management resource and the plurality of compute node resources are interconnected via the virtual cloud network.

Owner:ANDERSON NEIL

Privacy-preserving machine learning

Inactive | CN110537191A | Digital data protection | Neural architectures | Stochastic gradient descent | Nerve network

Owner:VISA INT SERVICE ASSOC
