1369 results about "Angular point" patented technology

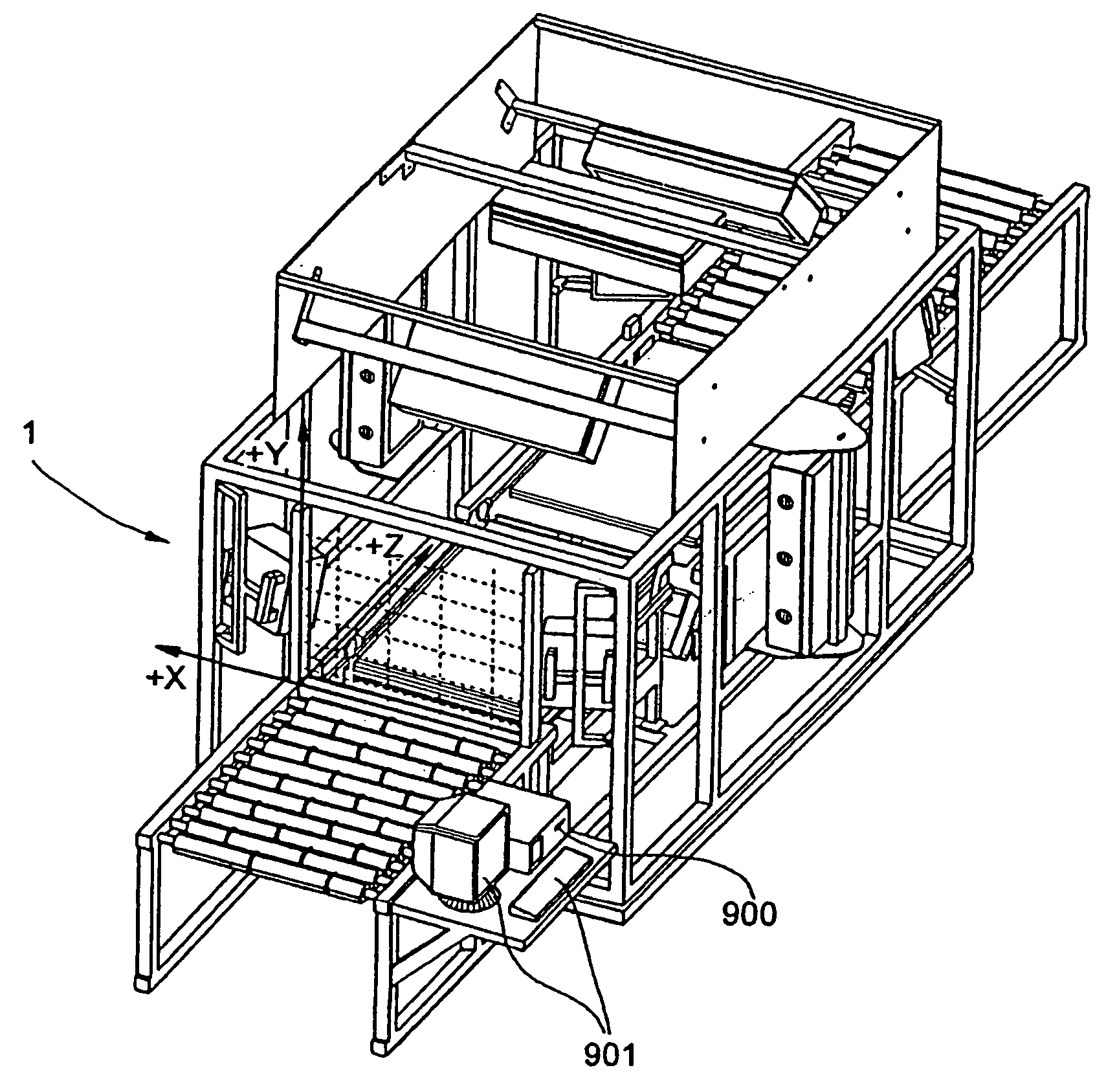

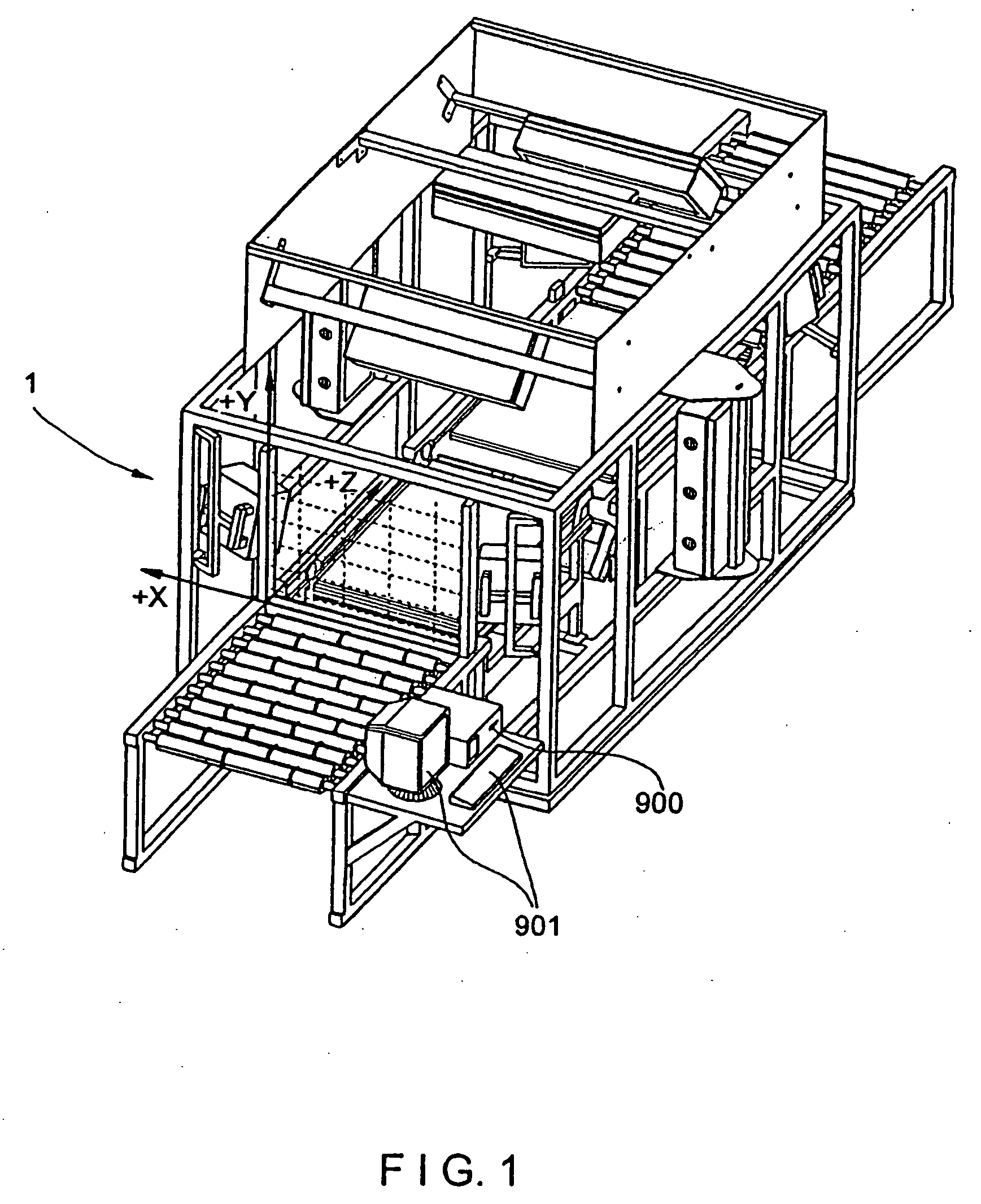

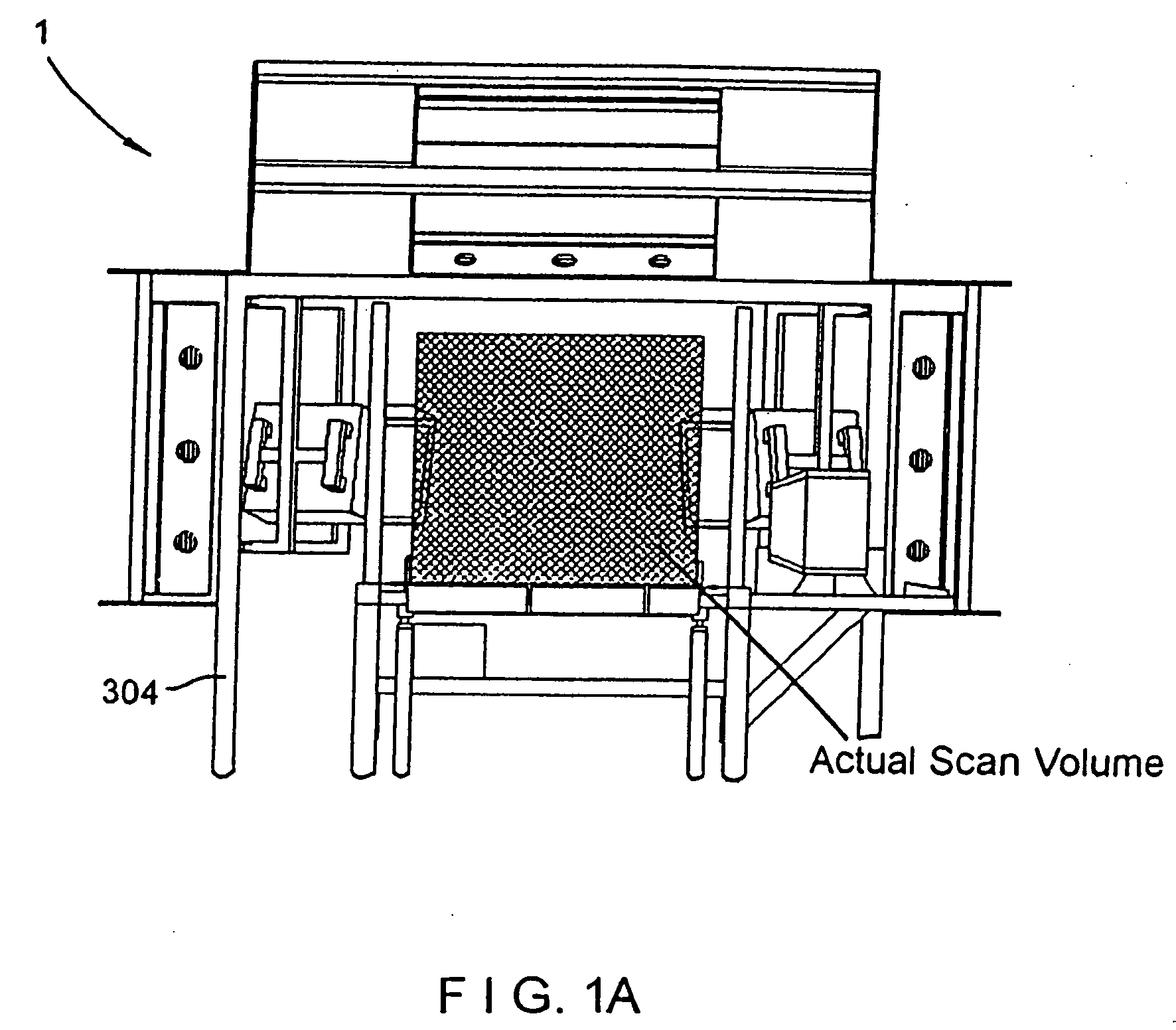

Automated method of and system for dimensioning objects over a conveyor belt structure by applying contouring tracing, vertice detection, corner point detection, and corner point reduction methods to two-dimensional range data maps of the space above the conveyor belt captured by an amplitude modulated laser scanning beam

A fully automated package identification and measuring system, in which an omni-directional holographic scanning tunnel is used to read bar codes on packages entering the tunnel, while a package dimensioning subsystem is used to capture information about the package prior to entry into the tunnel. Mathematical models are created on a real-time basis for the geometry of the package and the position of the laser scanning beam used to read the bar code symbol thereon. The mathematical models are analyzed to determine whether collected and queued package identification data is spatially and/or temporally correlated with package measurement data, using vector-based ray-tracing methods, homogeneous transformations, and object-oriented decision logic, so as to enable simultaneous tracking of multiple packages being transported through the scanning tunnel.

Owner:METROLOGIC INSTR

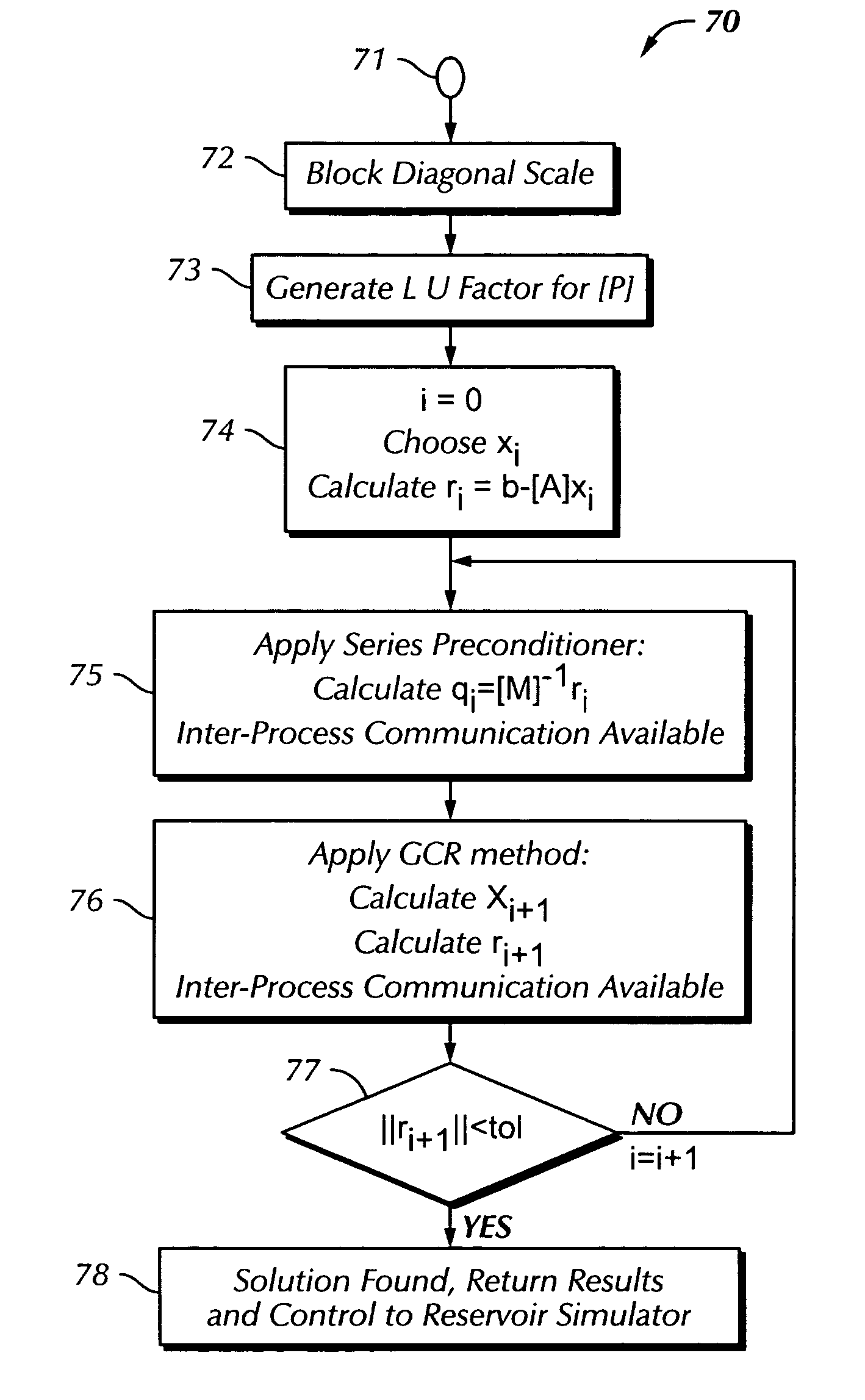

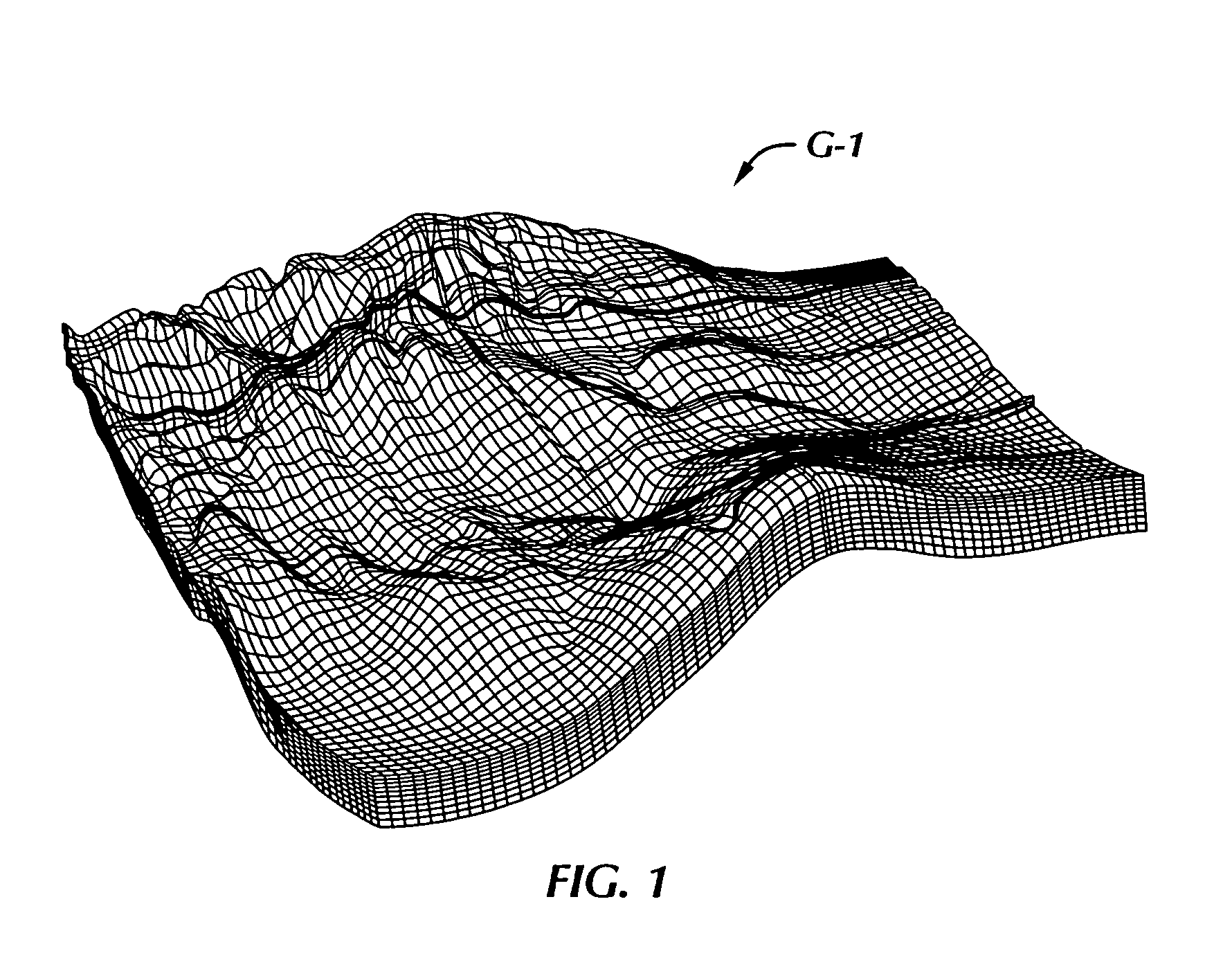

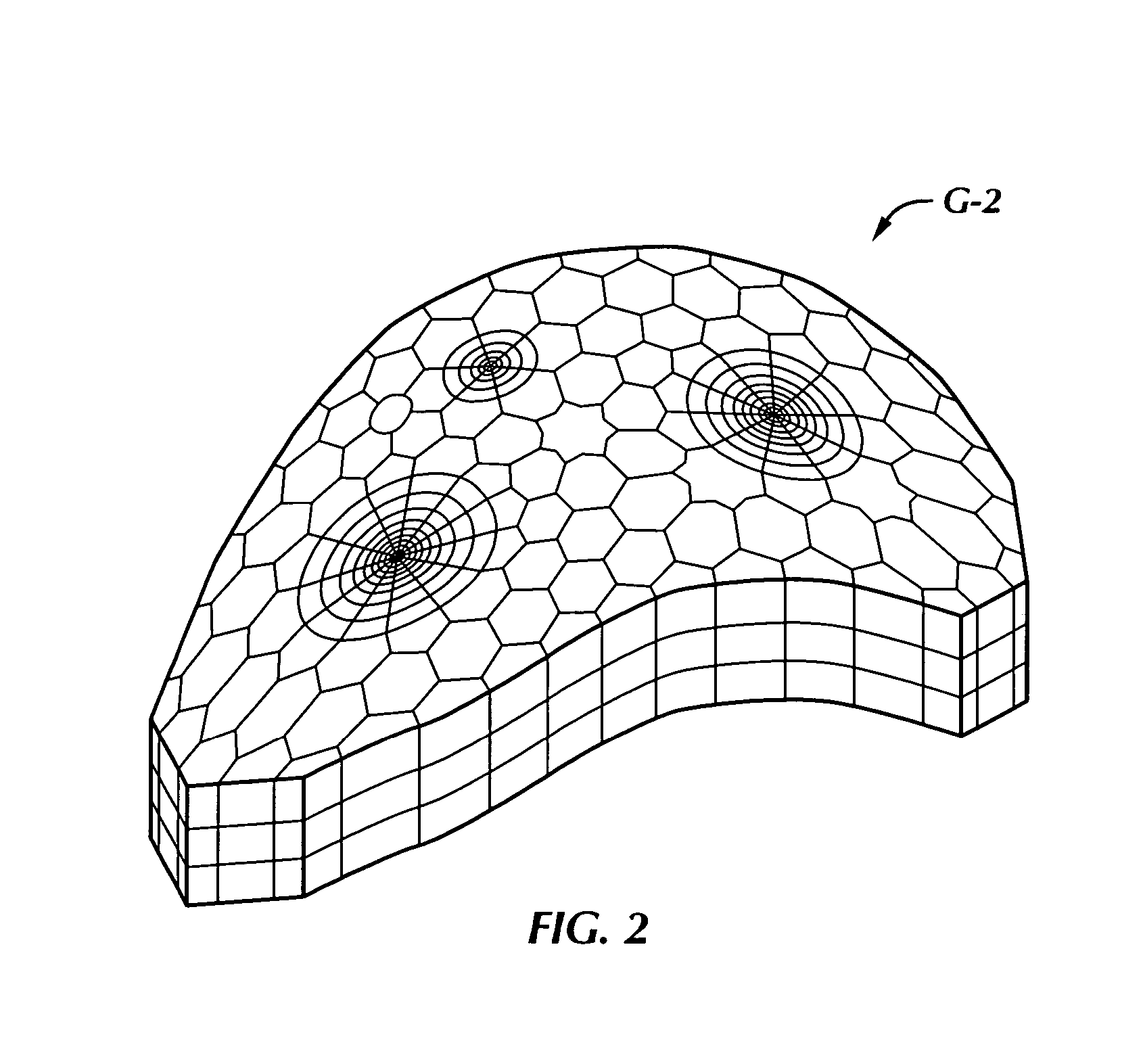

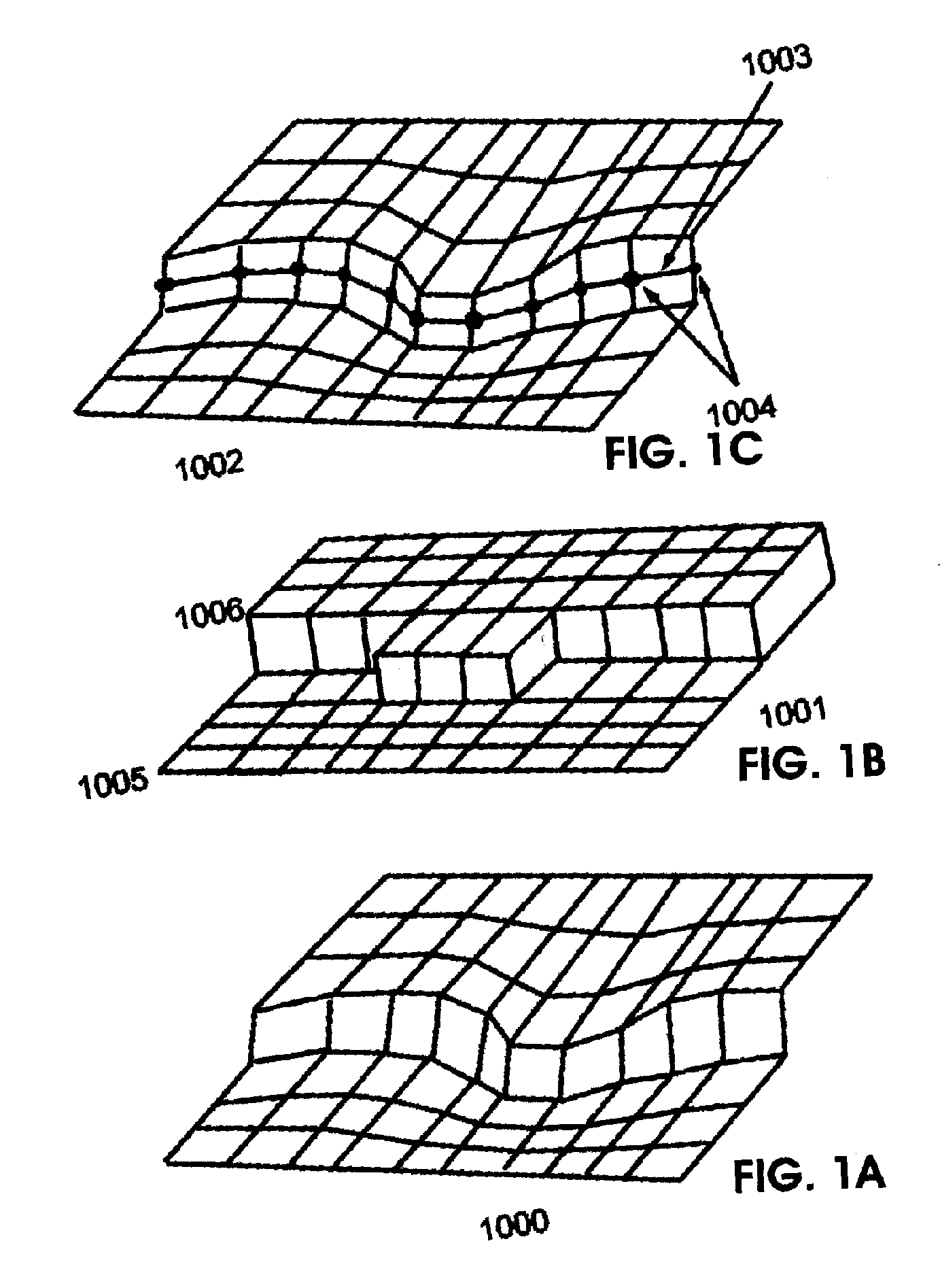

Solution method and apparatus for large-scale simulation of layered formations

Active; US20060235667A1; Design optimisation/simulation; Special data processing applications; Supercomputer; Angular point

A targeted heterogeneous medium in the form of an underground layered formation is gridded into a layered structured grid or a layered semi-unstructured grid. The structured grid can be of the irregular corner-point-geometry grid type or the simple Cartesian grid type. The semi-unstructured grid is areally unstructured, formed by arbitrarily connected control-volumes derived from the dual grid of a suitable triangulation; but the connectivity pattern does not change from layer to layer. Problems with determining fluid movement and other state changes in the formation are solved by exploiting the layered structure of the medium. The techniques are particularly suited for large-scale simulation by parallel processing on a supercomputer with multiple central processing units (CPU's).

Owner:SAUDI ARABIAN OIL CO

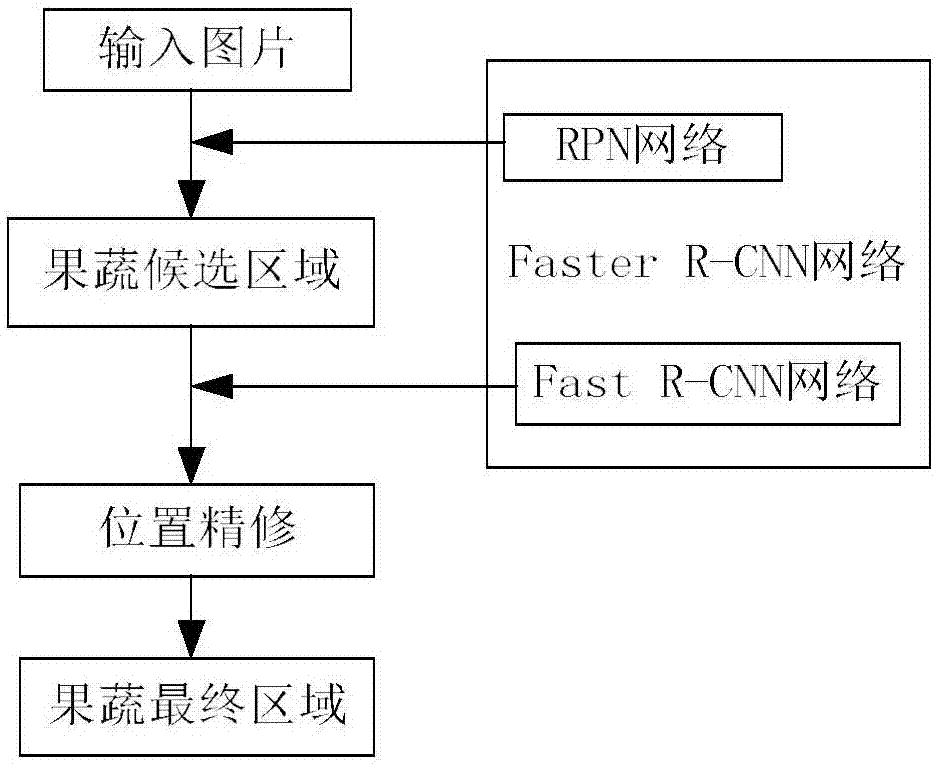

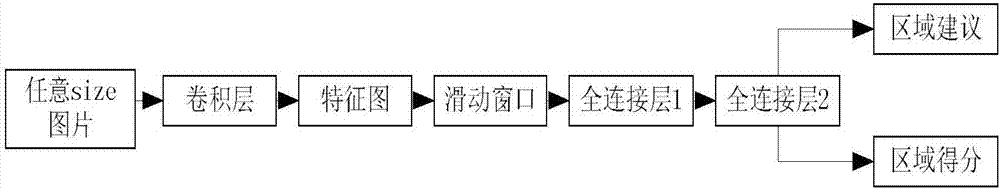

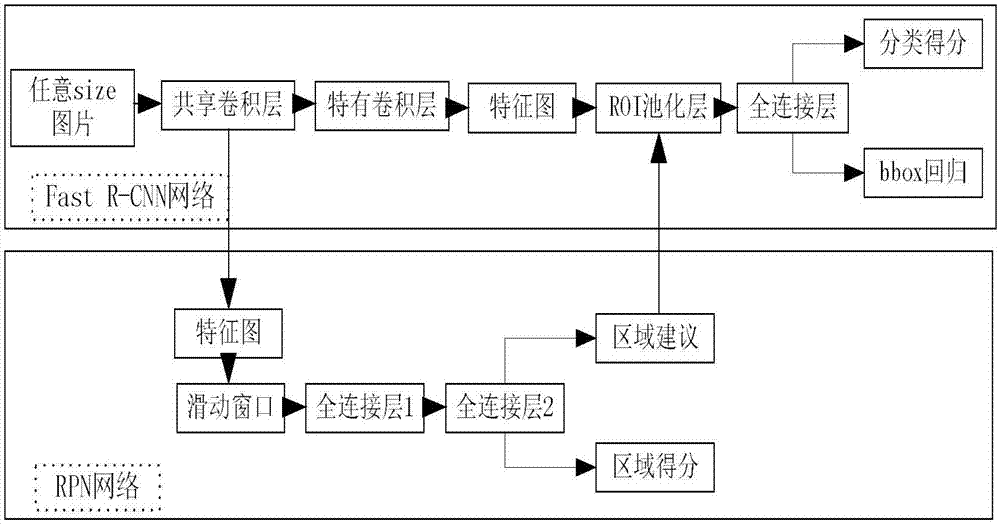

Fruit and vegetable detection method based on deep learning

Inactive; CN107451602A; Improve detection accuracy; Guaranteed quality; Character and pattern recognition; Angular point; Test tests

The invention discloses a fruit and vegetable detection method based on deep learning. The method comprises the following steps: S1: preprocess the data by manually annotating each original picture in advance to obtain a segmentation tag, where the annotation gives the coordinates of the upper-left and lower-right angular points of each target frame in the original picture, and the tag indicates whether the target in each annotated frame is a fruit or vegetable and, if so, its category; S2: train the model, using the original pictures and their tags as the training set of a deep learning neural network and combining an RPN (Region Proposal Network) with Fast R-CNN to obtain the final fruit and vegetable detection model; and S3: test the model by calling the final detection model and a test program, running fruit and vegetable detection on test pictures, and evaluating the model's effect by inspecting the test results.

Owner:ZHEJIANG UNIV OF TECH

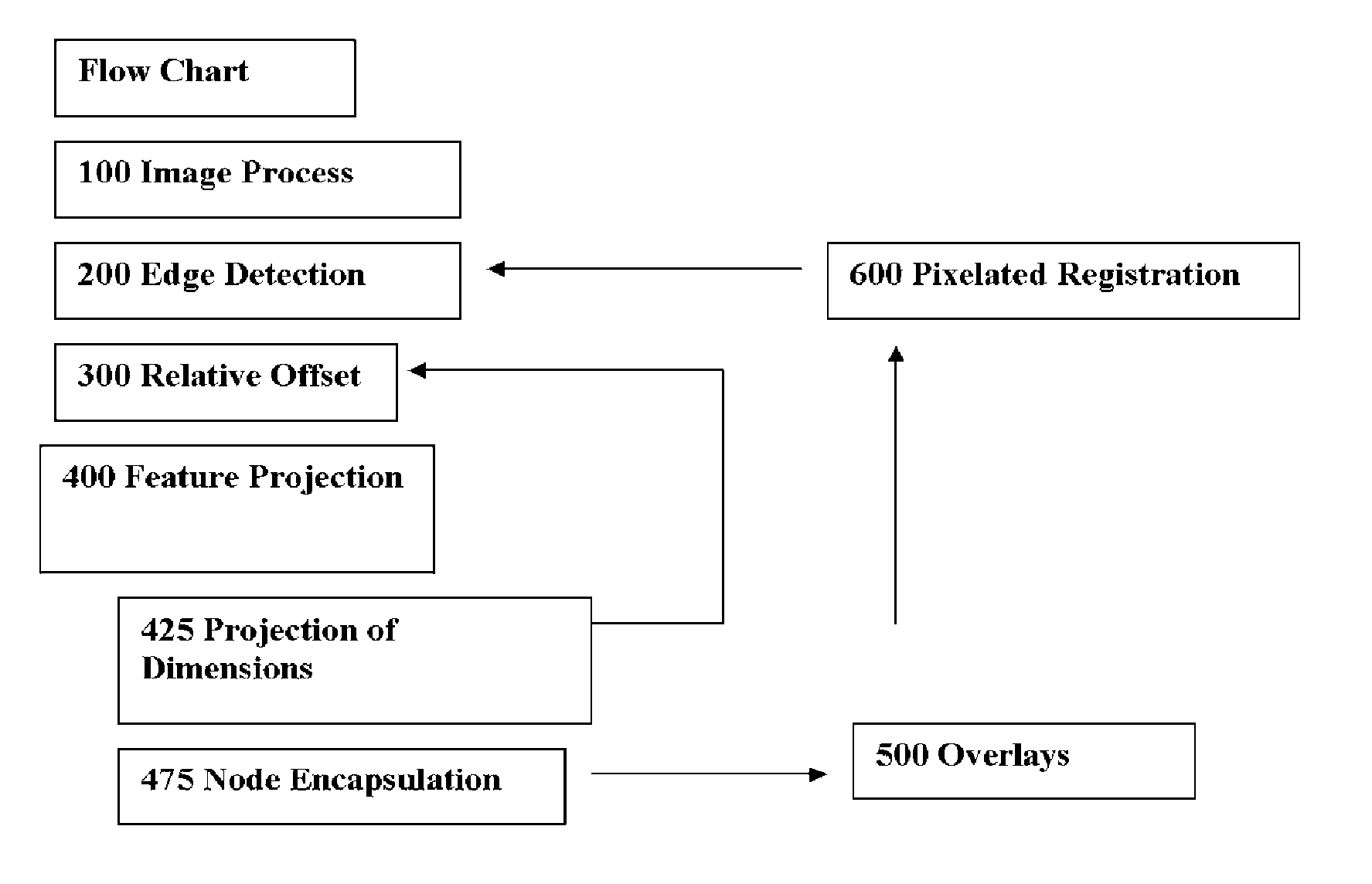

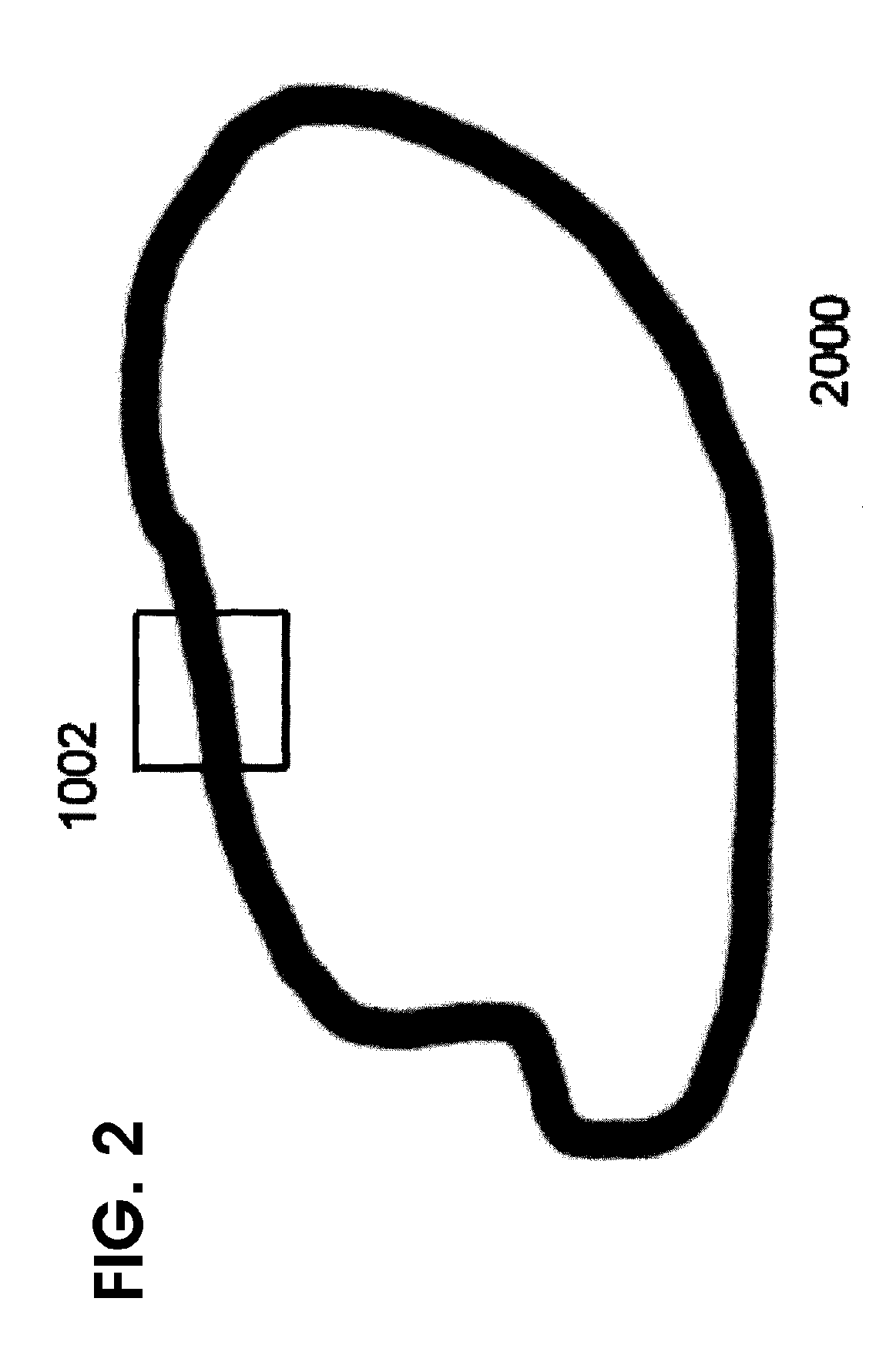

Method and apparatus for determining offsets of a part from a digital image

Inactive; US20060056732A1; Improve good performance; High resolution; Image enhancement; Image analysis; Graphics; Crucial point

A method for image recognition of a material object that utilizes graphical modeling of the corner points of a vertex which includes projecting a point on a digital display to an inward depth, a one half pixel distance in the plane of the display, with a conic to a digital display, and a square block containing one half size child blocks that are scaled to depth, projecting the corner points of a vertex and replacing the bisecting points of edge features detected in a digital display scaled at an increasing rate of congruency to the dimensions of an object. The method may further include producing a digital image of the material object, providing a central processing unit, providing memory associated with a central processing unit; providing a display associated with a central processing unit; loading the digital image into the memory; defining the edges of features within the digital image; and a finding fight crucial points from registrations projected on to an edge feature display.

Owner:HOLMES DAVID

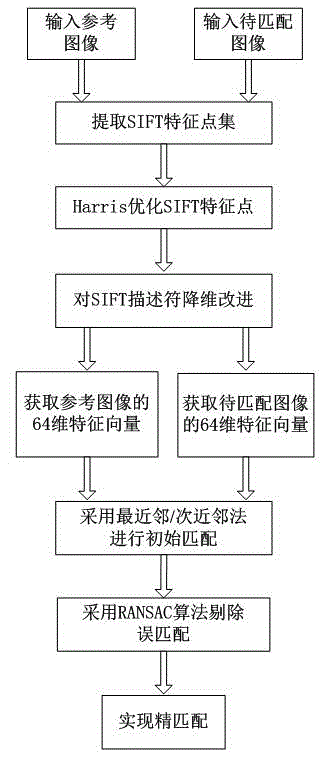

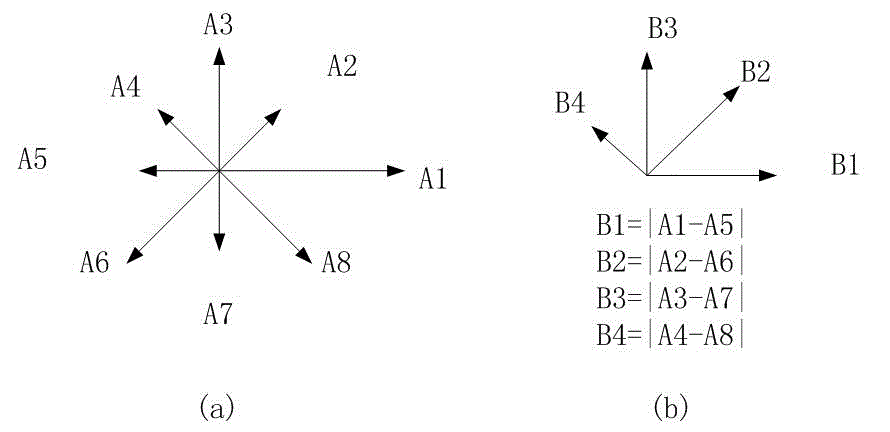

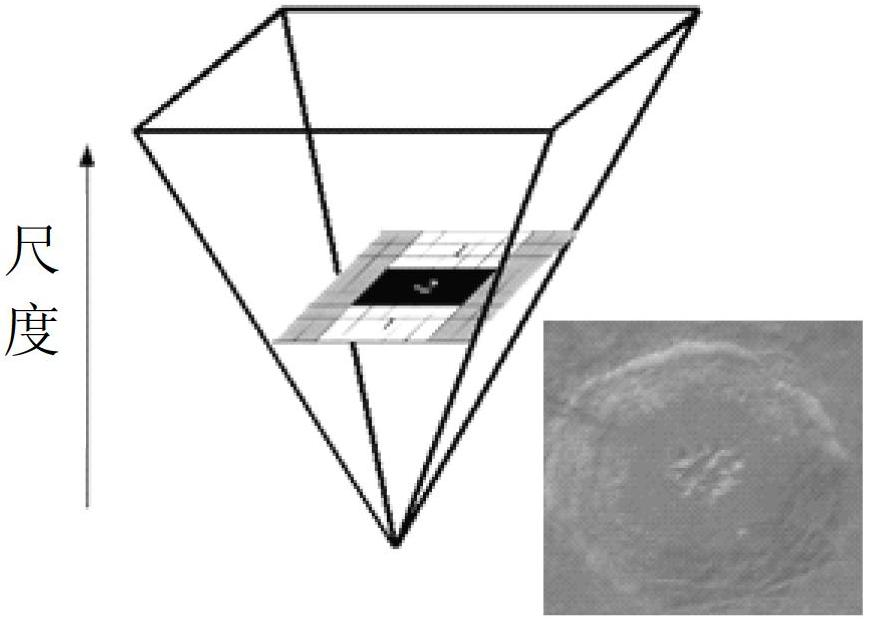

Efficient image matching method based on improved scale invariant feature transform (SIFT) algorithm

Inactive; CN102722731A; Improve real-time performance; Reduce overhead; Character and pattern recognition; Feature vector; Scale-invariant feature transform

The invention discloses an efficient image matching method based on an improved scale invariant feature transform (SIFT) algorithm. The method comprises the following steps of: (1) extracting feature points of an input reference image and an image to be matched by using an SIFT operator; (2) by using a Harris operator, optimizing the feature points which are extracted by the SIFT operator, and screening representative angular points as final feature points; (3) performing dimensionality reduction on an SIFT feature descriptor, and acquiring 64-dimension feature vector descriptors of the reference image and the image to be matched; and (4) initially matching the reference image and the image to be matched by using a nearest neighbor / second choice neighbor (NN / SCN) algorithm, and eliminating error matching by using a random sample consensus (RANSAC) algorithm, so the images can be accurately matched. The method has the advantages that by selecting points which can well represent or reflect image characteristics for image matching, matching accuracy is ensured, and the real-time performance of SIFT matching is improved.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
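
The initial matching step (4) in the abstract above compares each descriptor's nearest neighbour with its second-nearest neighbour. A minimal sketch of that ratio test in plain Python follows; the function name `ratio_test_matches` and the 0.8 ratio are illustrative assumptions, not values from the patent, and the RANSAC outlier-rejection stage is not shown.

```python
import math


def ratio_test_matches(ref_descriptors, query_descriptors, ratio=0.8):
    """Return (ref_index, query_index) pairs that pass the ratio test.

    A reference descriptor is matched to its nearest query descriptor only
    when that distance is clearly smaller than the distance to the
    second-nearest one. The 0.8 default is a hypothetical setting.
    """
    matches = []
    for i, ref in enumerate(ref_descriptors):
        # Euclidean distance from this descriptor to every query descriptor.
        distances = sorted(
            (math.dist(ref, query), j)
            for j, query in enumerate(query_descriptors)
        )
        if len(distances) < 2:
            continue  # the test needs a second neighbour to compare against
        (best, j), (second, _) = distances[0], distances[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches
```

Descriptors here are plain tuples of floats, so 64-dimension vectors like those in step (3) could be passed in unchanged.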

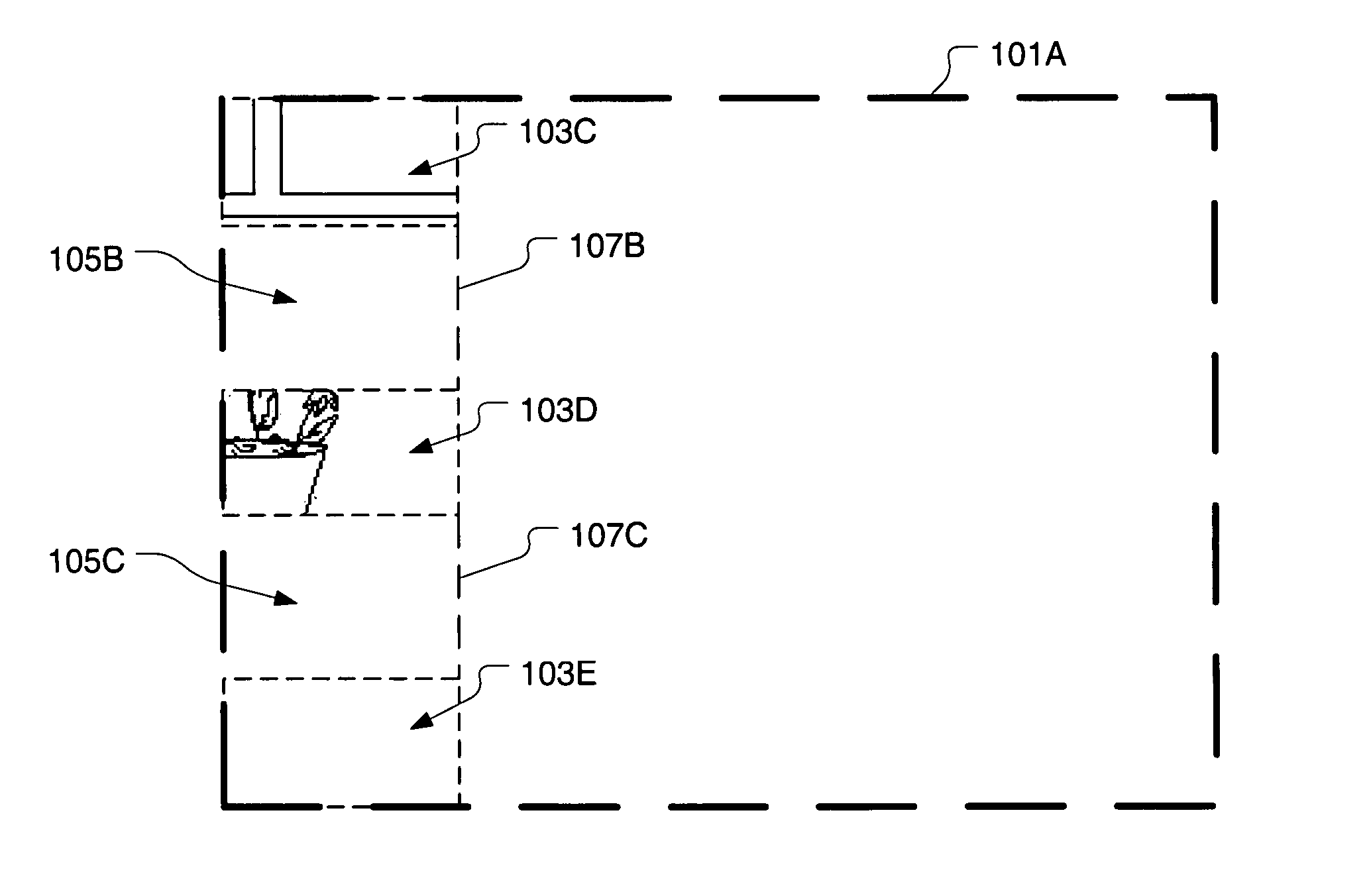

Method for digital image stitching and apparatus for performing the same

Active; US20060050152A1; Television system details; Character and pattern recognition; Digital imaging; Angular point

A digital imaging device is operated to capture a first image in a digital format. At least two non-contiguous portions of the first image are rendered in a display of the digital imaging device. The at least two non-contiguous portions of the first image are used to align a live version of a second image in the display, wherein the second image is an extension of the first image. The second image is captured in a digital format. A corner matching algorithm is applied to determine an offset of the second image with respect to the first image, wherein the offset is required to achieve a substantially accurate alignment of the first and second images. Application of the corner matching algorithm is limited to an overlap region intervening between the at least two non-contiguous portions of the first image.

Owner:ADVANCED INTERCONNECT SYST LTD

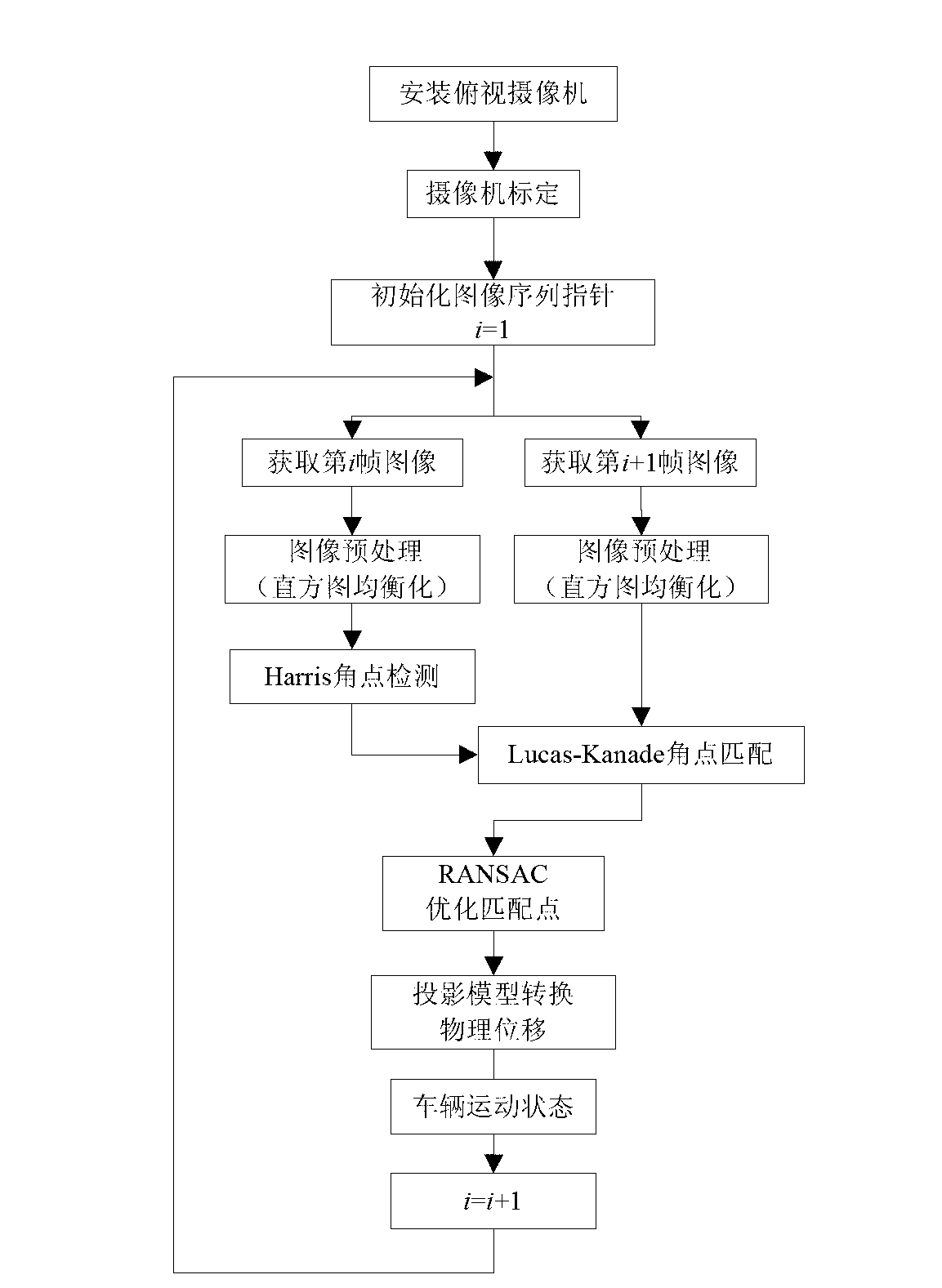

Light stream based vehicle motion state estimating method

Inactive; CN102999759A; High measurement accuracy; Improve real-time performance; Image analysis; Character and pattern recognition; Road surface; Histogram equalization

The invention discloses an optical flow based vehicle motion state estimating method, applicable to estimating the motion of vehicles running at low speed on flat asphalt pavement in a road traffic environment. The method includes: mounting a high-precision, downward-looking monocular video camera at the center of the rear axle of a vehicle and acquiring the camera parameters by means of a calibration algorithm; preprocessing the acquired image sequence by histogram equalization so as to highlight the angular point features of the asphalt pavement and reduce the adverse effects of pavement conditions and lighting variation; detecting the angular point features of the pavement in real time with an efficient Harris angular point detection algorithm; matching and tracking angular points between the previous frame and the next frame with the Lucas-Kanade optical flow algorithm, then refining the matched angular points with the RANSAC (random sample consensus) algorithm to obtain more accurate optical flow information; and finally reconstructing real-time motion parameters of the vehicle, such as longitudinal velocity, transverse velocity and side slip angle, in the vehicle carrier coordinate system, thereby realizing high-precision estimation of the vehicle's ground motion state.

Owner:SOUTHEAST UNIV
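
The last step of the abstract above turns tracked pavement motion into longitudinal velocity, transverse velocity and side slip angle. The sketch below shows only that final arithmetic, under assumptions the abstract does not state: the flow displacement has already been averaged, expressed along the vehicle axes and sign-corrected, and `metres_per_pixel` (a hypothetical parameter) comes from the camera calibration.

```python
import math


def motion_from_flow(flow_px, metres_per_pixel, frame_interval_s):
    """Convert a mean flow displacement into velocities and side slip angle.

    flow_px is the (longitudinal, transverse) displacement in pixels between
    two consecutive frames. Returns (v_long, v_trans, side_slip), with the
    velocities in m/s and the side slip angle in radians.
    """
    dx_px, dy_px = flow_px
    v_long = dx_px * metres_per_pixel / frame_interval_s
    v_trans = dy_px * metres_per_pixel / frame_interval_s
    # Side slip angle: angle between the velocity vector and the
    # longitudinal axis of the vehicle.
    side_slip = math.atan2(v_trans, v_long)
    return v_long, v_trans, side_slip
```

For example, a displacement of 10 pixels along the longitudinal axis at 1 mm per pixel and 100 frames per second corresponds to roughly 1 m/s with zero side slip.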

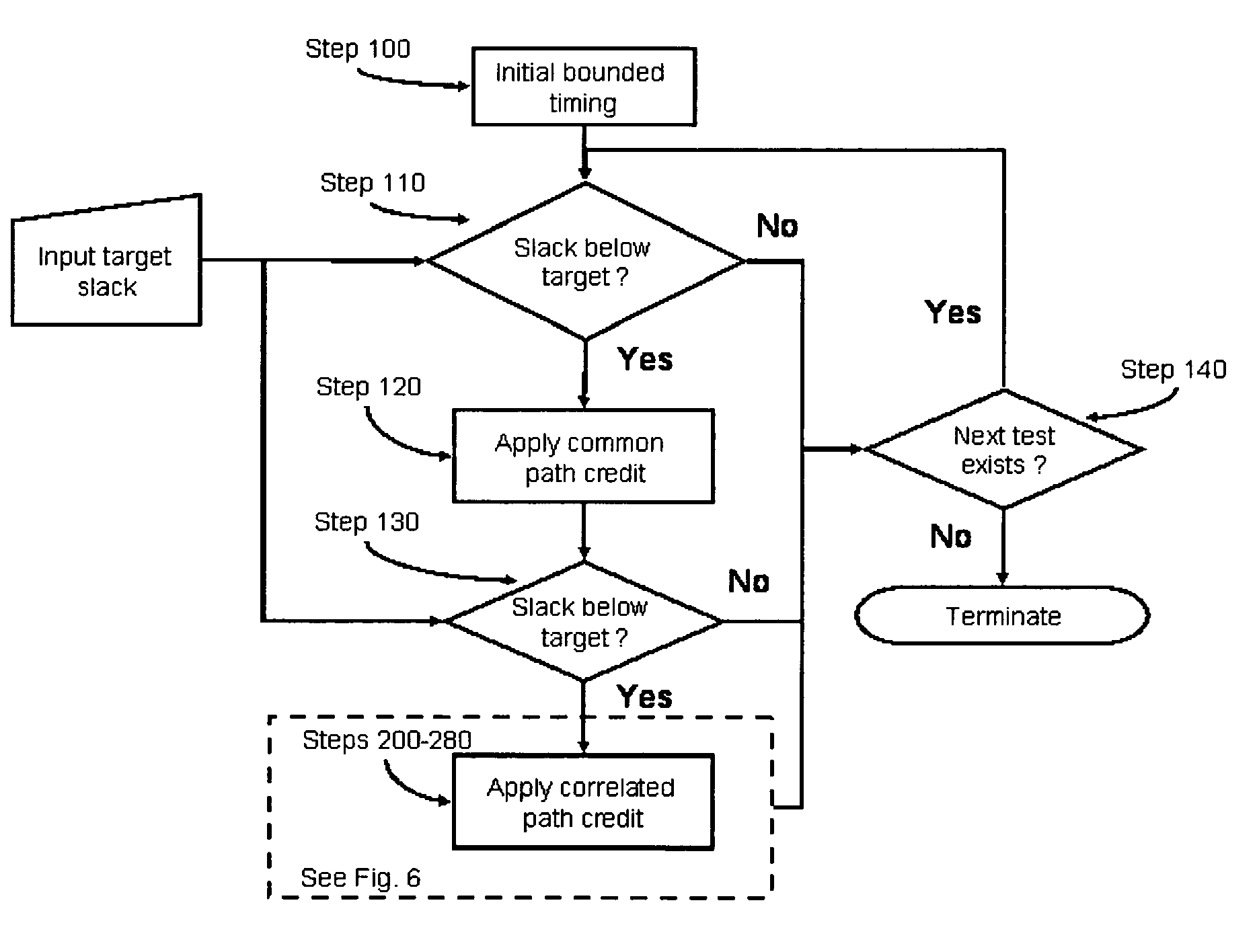

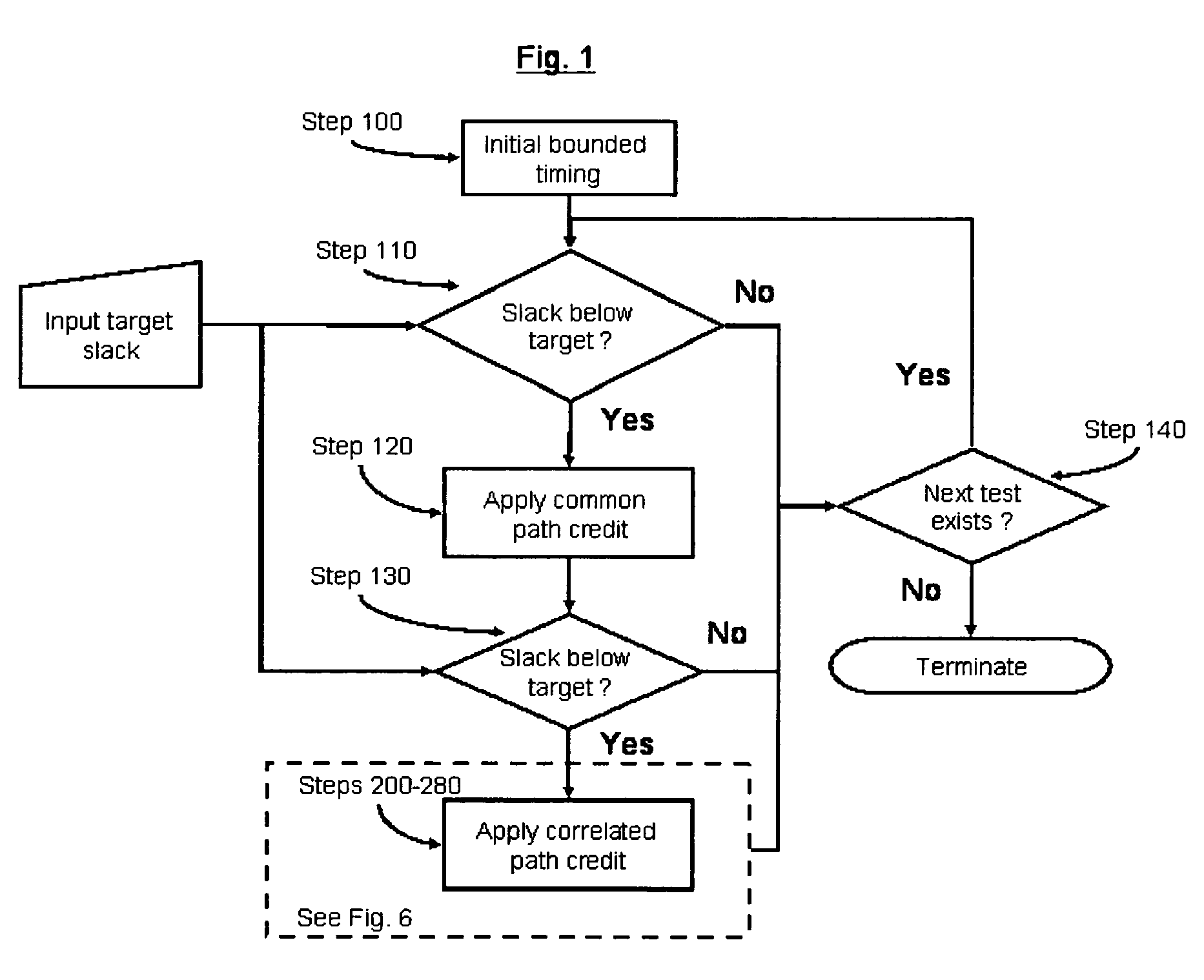

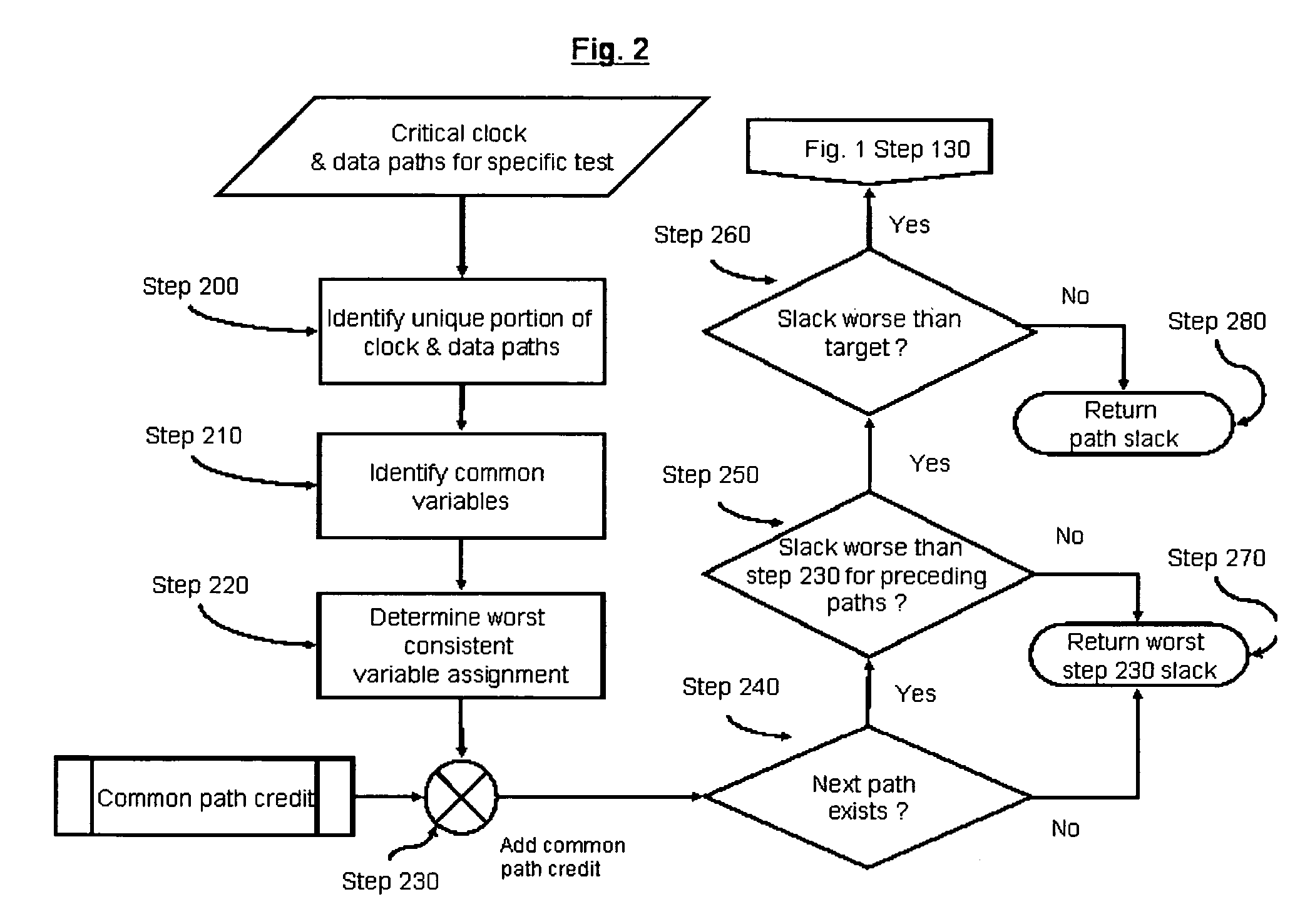

System and method for correlated process pessimism removal for static timing analysis

Inactive; US7117466B2; Reduce pessimism; Computer aided design; Software simulation/interpretation/emulation; Static timing analysis; Angular point

A method of removing pessimism in static timing analysis is described. Delays are expressed as a function of discrete parameter settings allowing for both local and global variation to be taken in to account. Based on a specified target slack, each failing timing test is examined to determine a consistent set of parameter settings which produces the worst possible slack. The analysis is performed on a path basis. By considering only parameters which are in common to a particular data / clock path-pair, the number of process combinations that need to be explored is reduced when compared to analyzing all combinations of the global parameter settings. Further, if parameters are separable and linear, worst-case variable assignments for a particular clock / data path pair can be computed in linear time by independently assigning each parameter value. In addition, if available, the incremental delay change with respect to each physically realizable process variable may be used to project the worst-case variable assignment on a per-path basis without the need for performing explicit corner enumeration.

Owner:GLOBALFOUNDRIES INC
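
The abstract above notes that when parameters are separable and linear, the worst-case assignment can be found in linear time by setting each parameter independently. A minimal sketch of that idea follows, assuming a slack model of the form nominal + sum(s_i * p_i) with each parameter limited to a (low, high) range; the function names and the model itself are illustrative assumptions rather than the patented procedure. The brute-force corner enumeration is included only for comparison.

```python
from itertools import product


def worst_case_slack(nominal_slack, sensitivities, bounds):
    """Minimum slack and the parameter assignment producing it, in O(n).

    Each parameter is pushed to whichever end of its range lowers the
    slack: the low end for a positive sensitivity, the high end otherwise.
    """
    slack = nominal_slack
    assignment = []
    for sensitivity, (low, high) in zip(sensitivities, bounds):
        value = low if sensitivity >= 0 else high
        assignment.append(value)
        slack += sensitivity * value
    return slack, assignment


def worst_case_slack_enumerated(nominal_slack, sensitivities, bounds):
    """Same minimum found by explicit enumeration of all 2**n corners."""
    return min(
        nominal_slack + sum(s * p for s, p in zip(sensitivities, corner))
        for corner in product(*bounds)
    )
```

With three parameters the enumeration visits 8 corners while the independent assignment makes 3 choices; the gap grows exponentially with the number of parameters.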

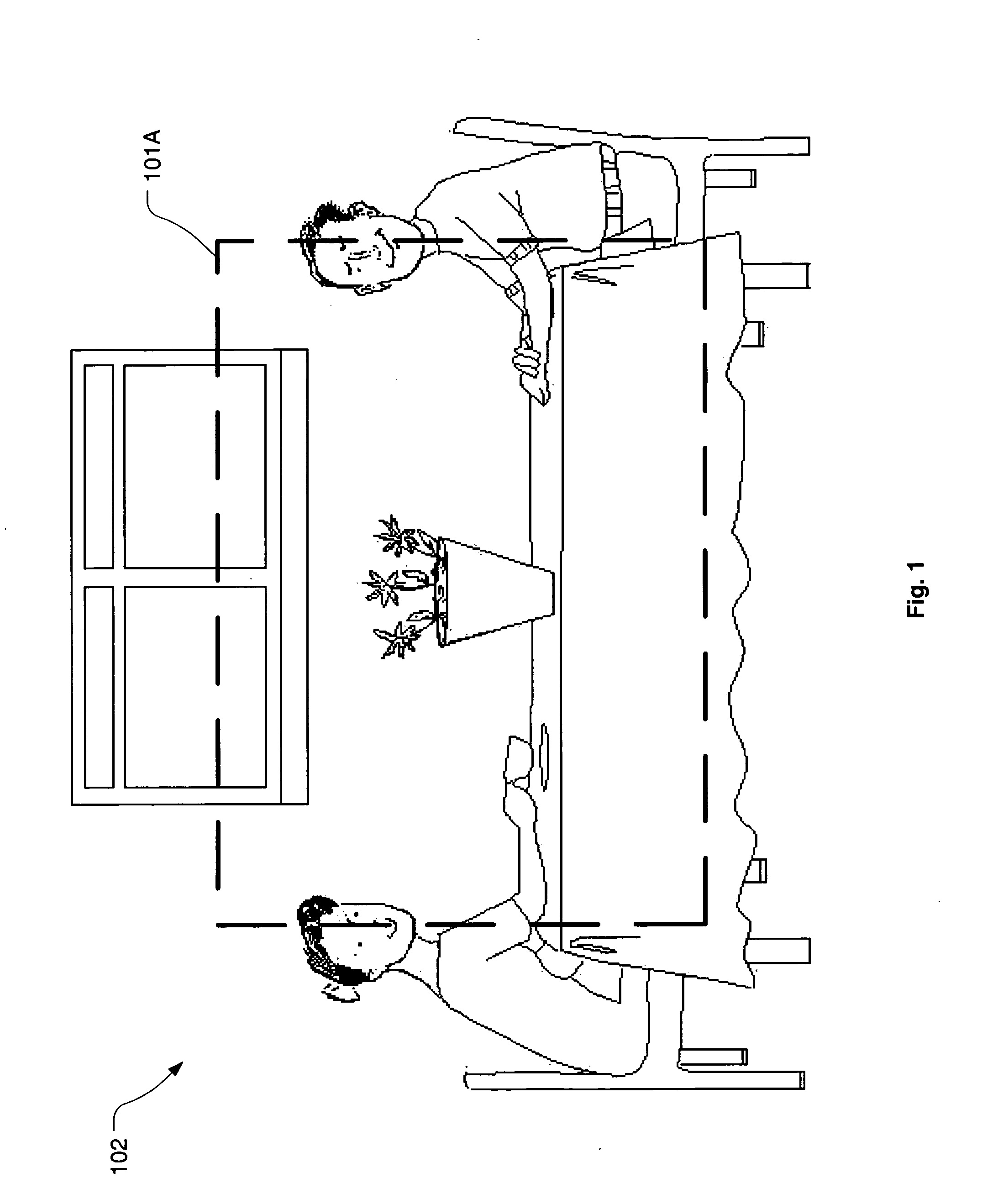

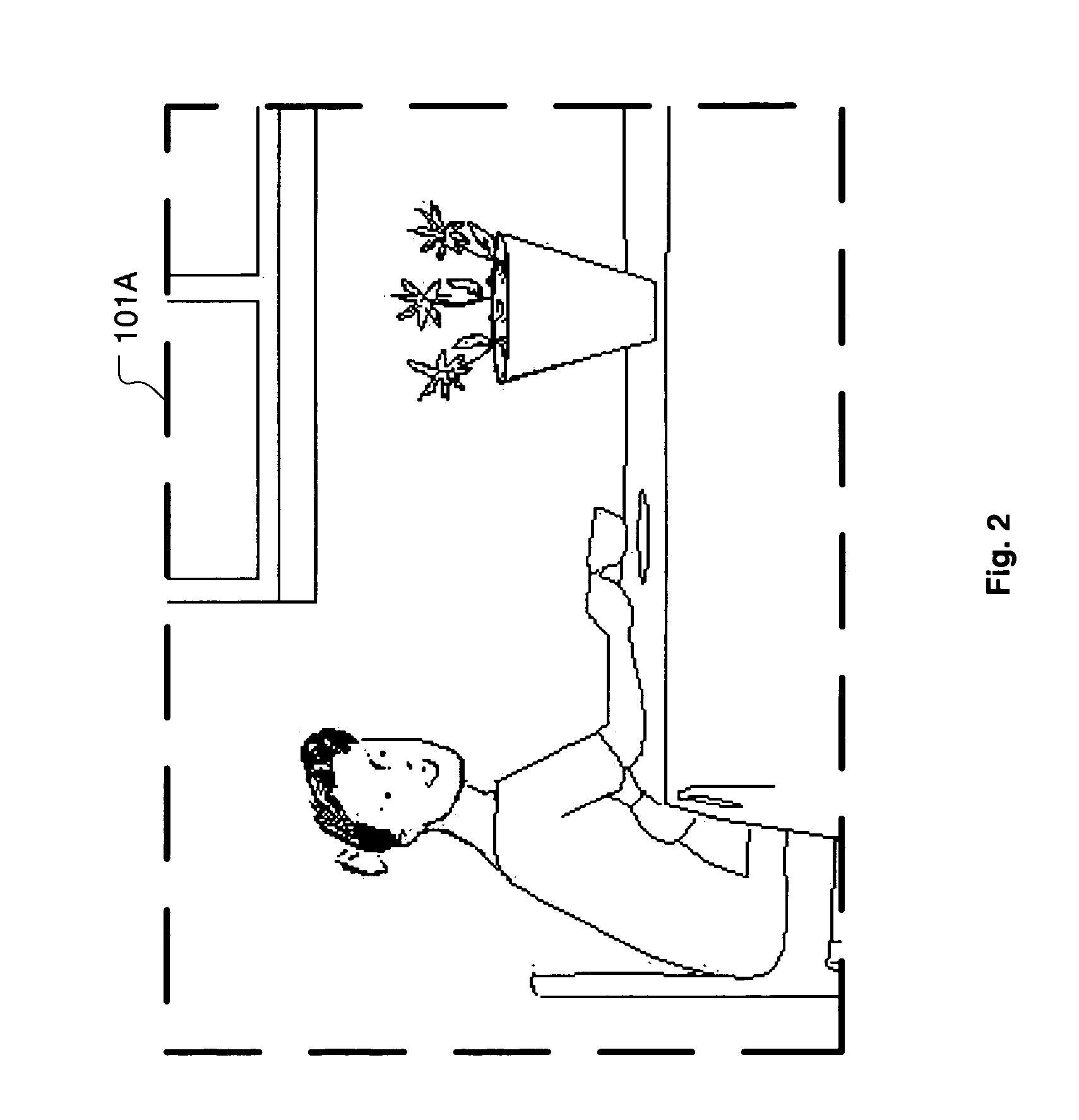

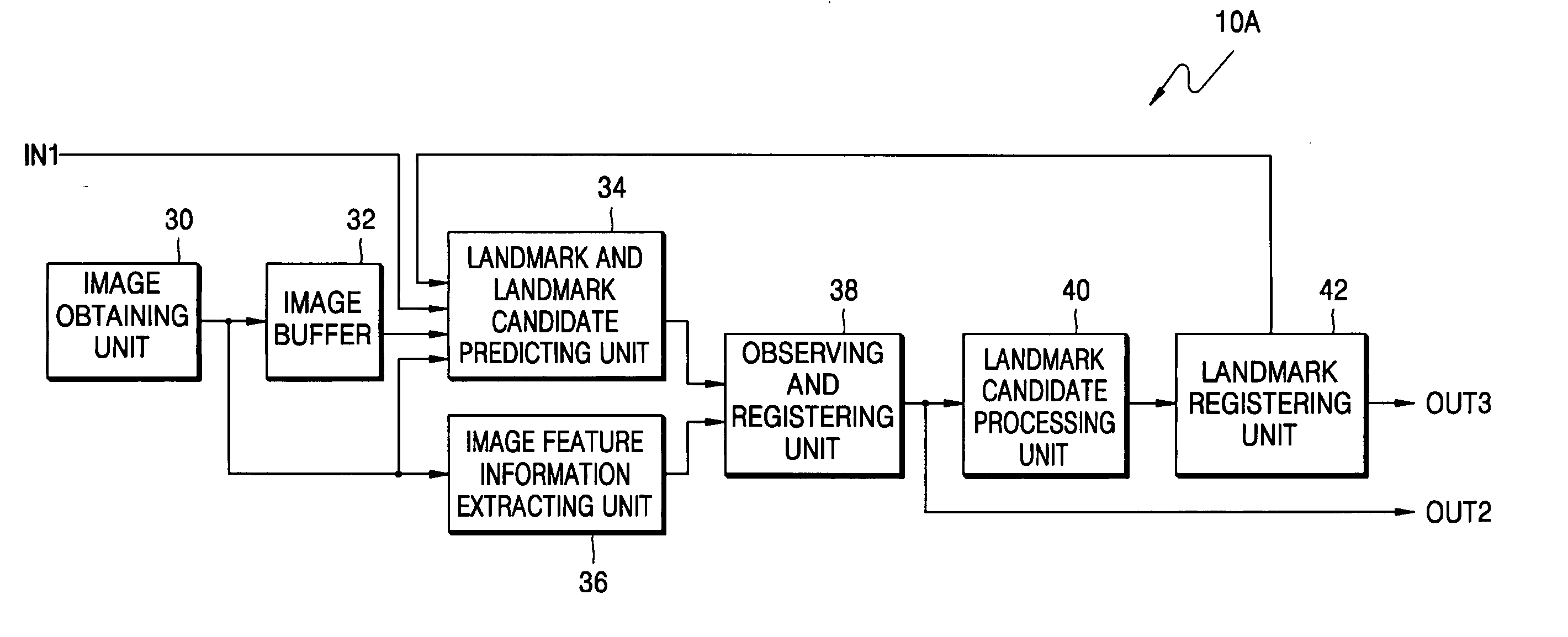

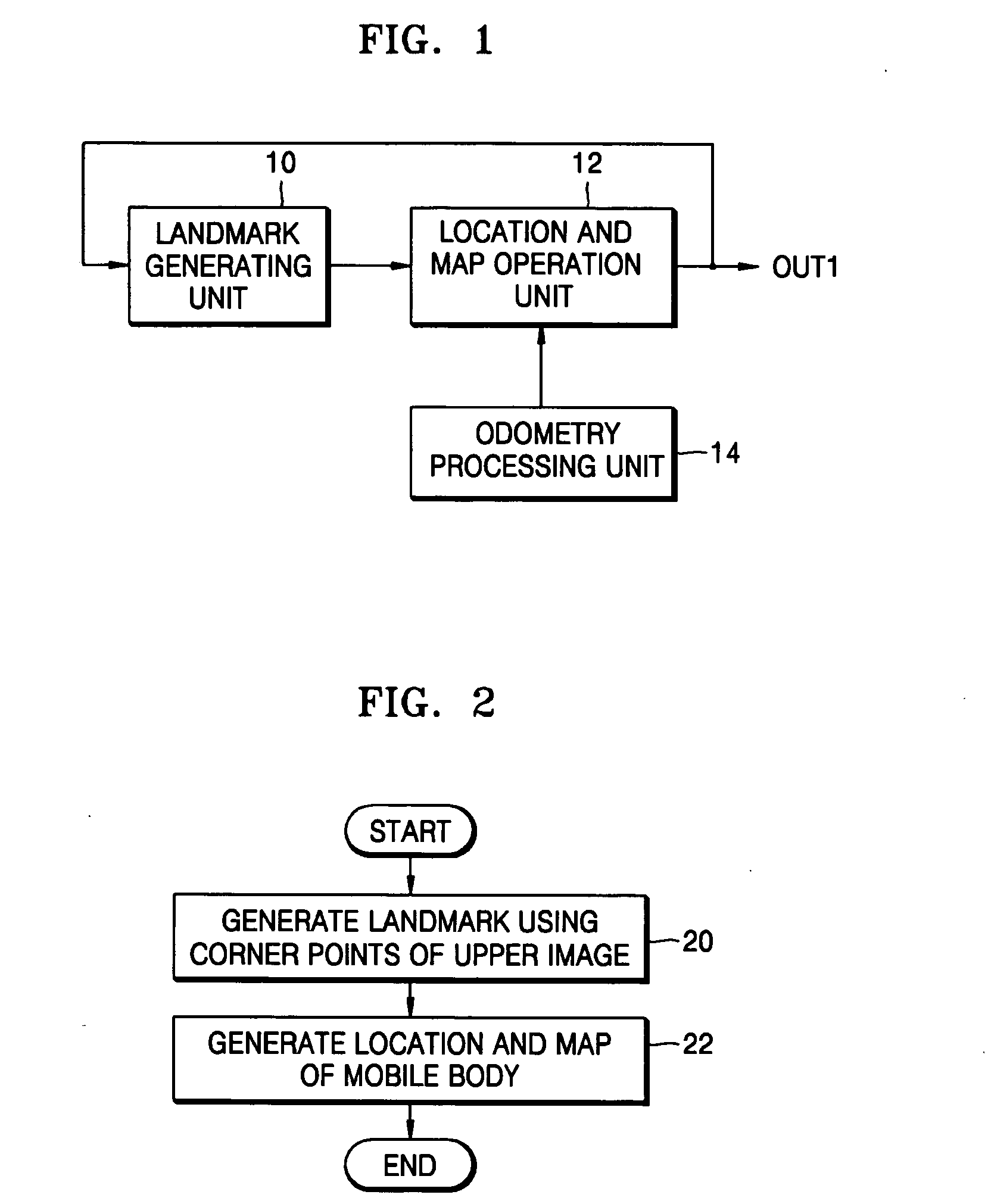

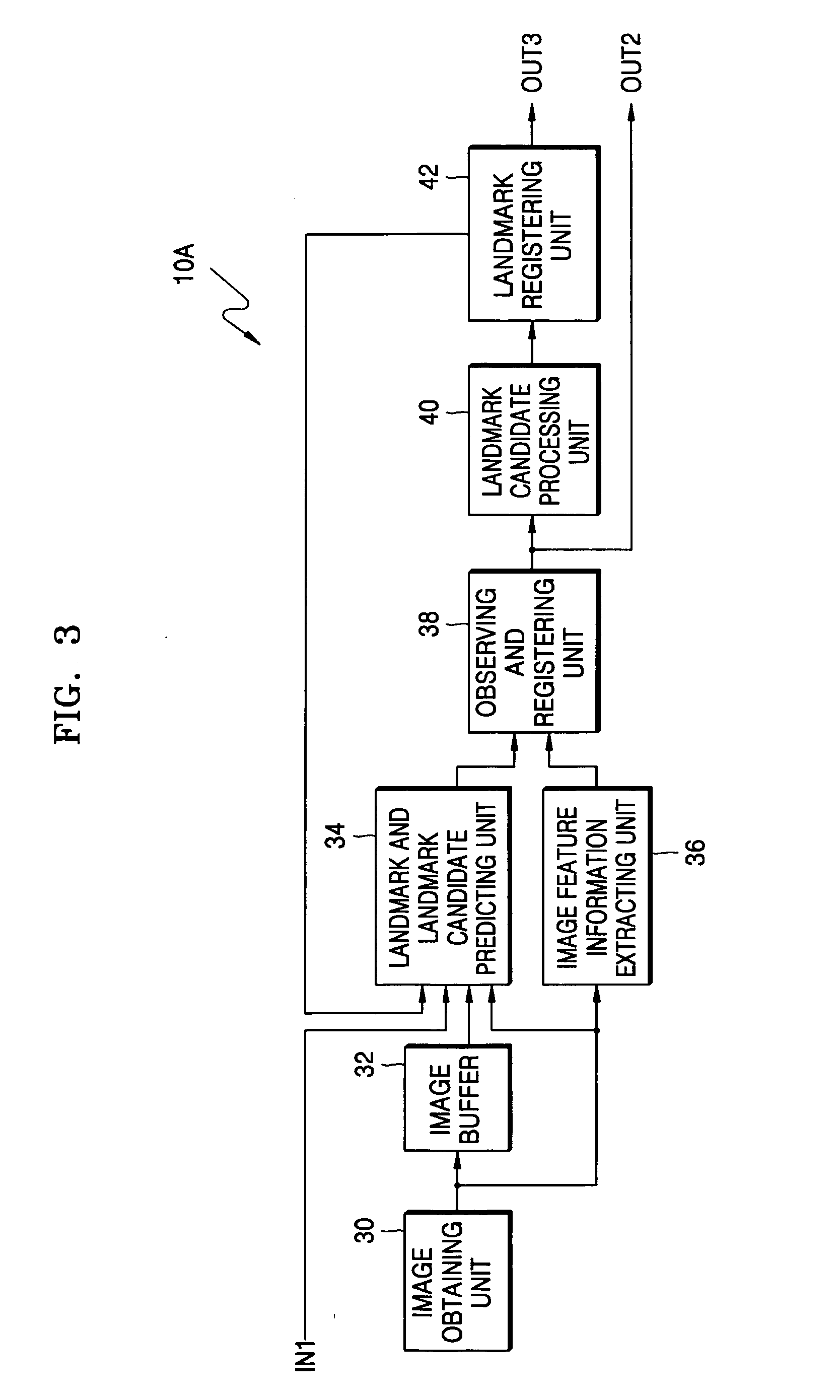

Apparatus and method for estimating location of mobile body and generating map of mobile body environment using upper image of mobile body environment, and computer readable recording medium storing computer program controlling the apparatus

Inactive; US20060165276A1; Television system details; Character and pattern recognition; Angular point; Computer science

An apparatus and method for estimating a location of a mobile body and generating a map of a mobile body environment using an upper image of the mobile body environment, and a computer readable recording medium storing a computer program for controlling the apparatus. The apparatus includes: a landmark generating unit observing corner points from the upper image obtained by photographing in an upper vertical direction in the mobile body environment and respectively generating landmarks from the observed corner points; and a location and map operation unit estimating the location of the mobile body and generating the map of the mobile body environment from the landmarks.

Owner:SAMSUNG ELECTRONICS CO LTD

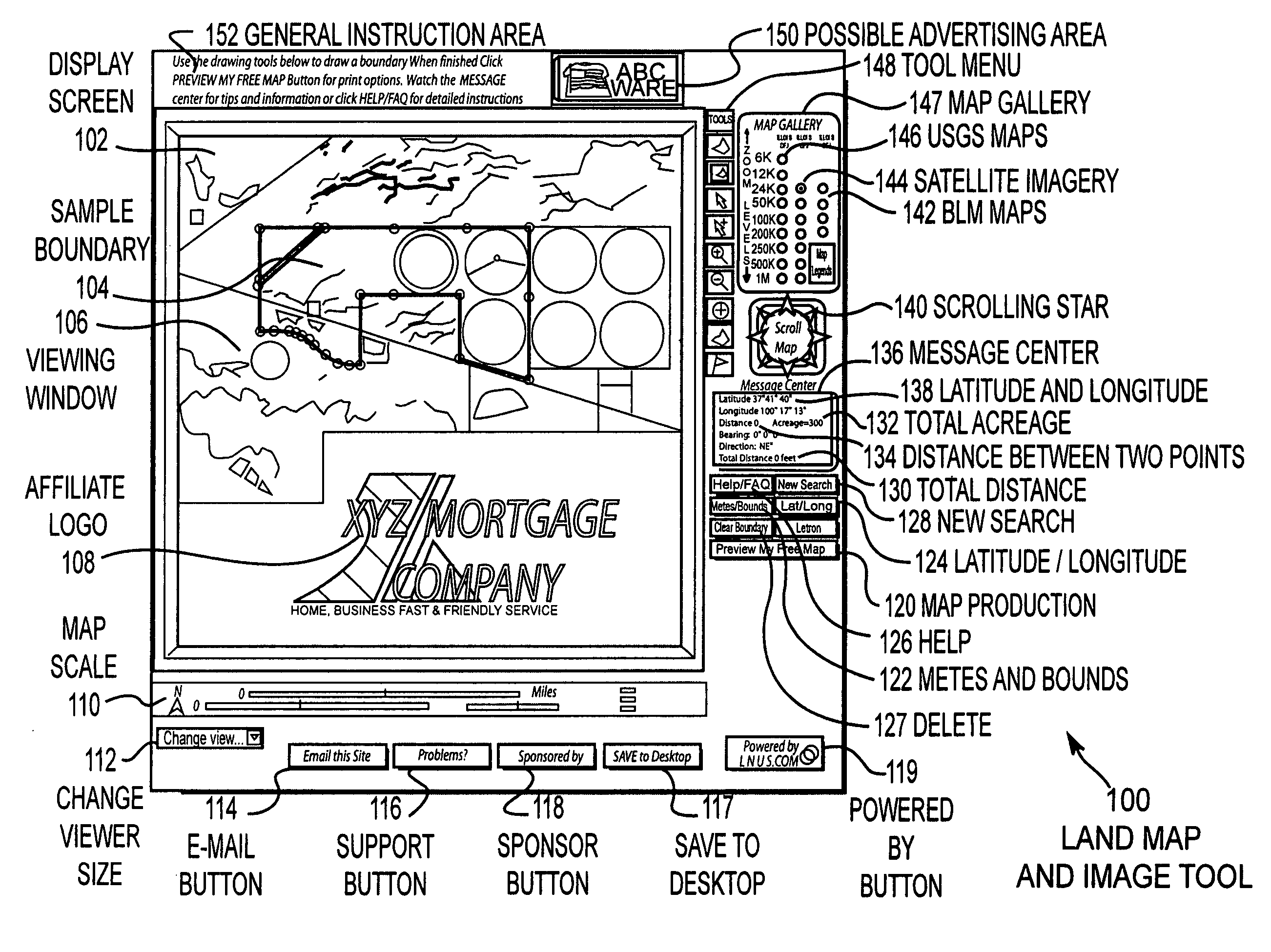

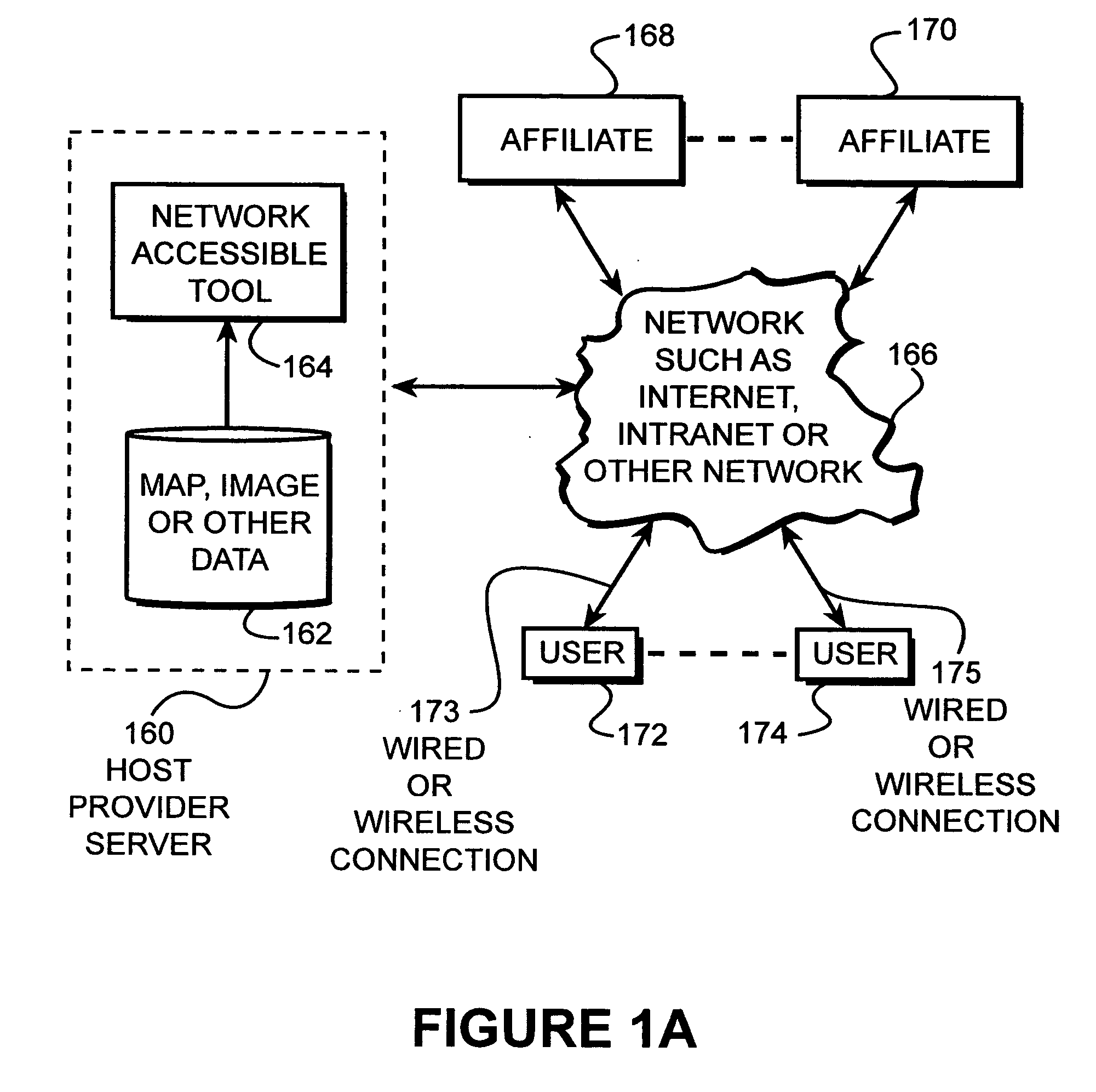

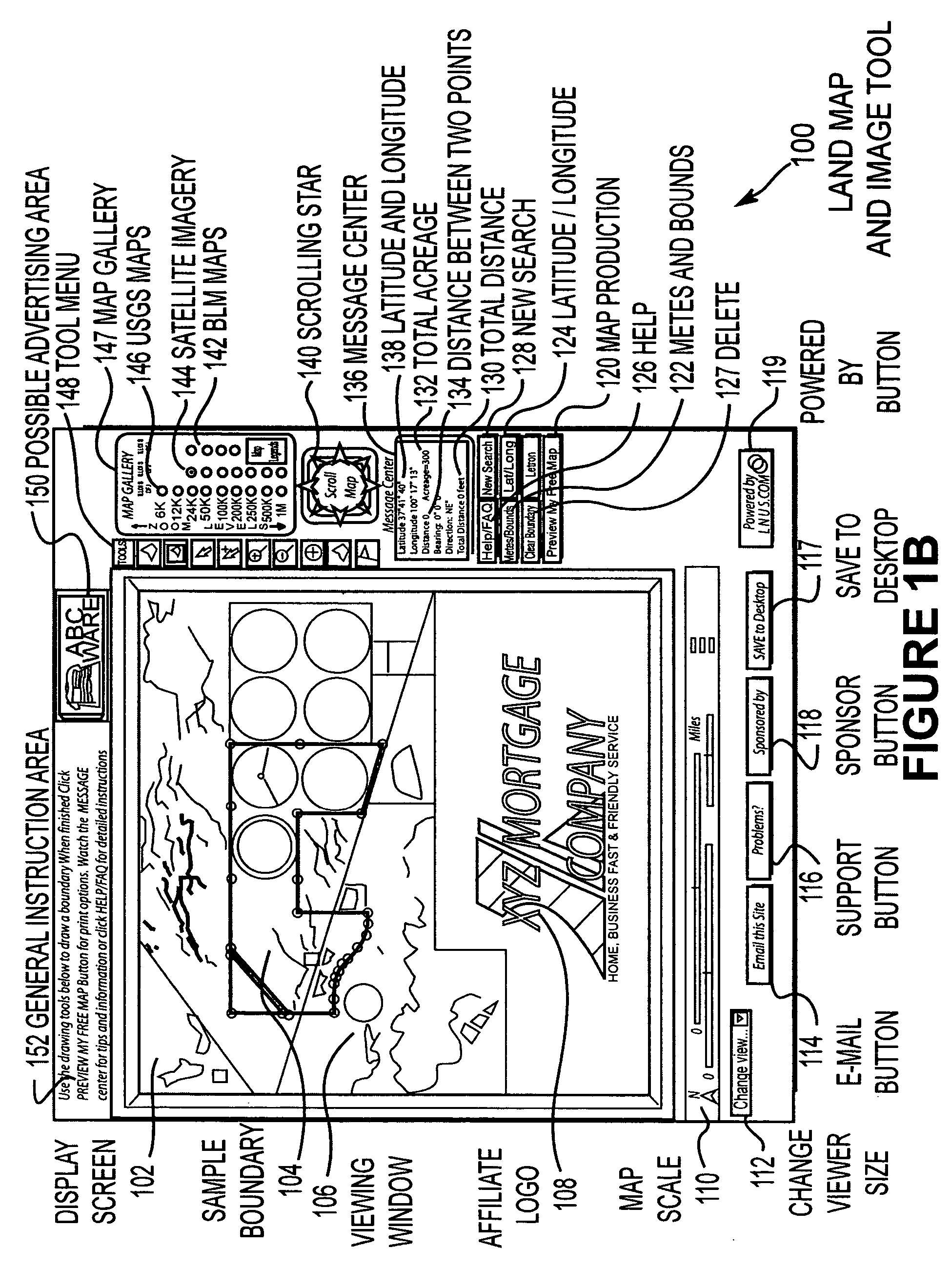

Land software tool

Inactive; US20060125828A1; Accurate location; Drawing from basic elements; Finance; Internet users; Angular point

Disclosed is a network accessible tool that is capable of providing map and satellite image data, as well as other photographic image data, to locate, identify, measure, view, and communicate information about land over the Internet to Internet users. The network accessible tool includes a location tool that allows the user to locate areas on a map using geographic names, township, range and section descriptions, county names, latitude and longitude coordinates or zip codes. The network accessible tool also includes a metes and bounds tool that draws boundaries on the map and image data in response to metes and bounds descriptions that have been entered by the Internet user. The network accessible tool also includes a lat/long drawing tool that draws boundaries on the map and image data based upon latitude and longitude coordinate pairs that have been entered by the Internet user. A cursor drawing tool allows the Internet user to draw and edit boundaries on the map and image data by simply clicking the cursor on the corner points of the boundary. An acreage calculation tool is also provided that calculates the acreage of an enclosed boundary. A distance measurement tool is also provided. The cursor information tool provides information relating to the name and creation date of the map and image data in accordance with the location of the cursor on the screen. The information can be communicated by printing, downloading, or e-mailing.

Owner:LANDNET CORP
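
The acreage calculation described above works from the corner points of an enclosed boundary. One common way to do this, shown here as a sketch and not as the tool's actual implementation, is the shoelace formula; it assumes the corner points are already in a planar coordinate system measured in feet, and the function names are hypothetical.

```python
SQUARE_FEET_PER_ACRE = 43_560.0


def polygon_area(corners):
    """Area of a simple polygon from its corner points (shoelace formula)."""
    twice_area = 0.0
    count = len(corners)
    for i in range(count):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % count]  # wrap around to close the boundary
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def acreage(corners_ft):
    """Acreage of a boundary whose corner coordinates are given in feet."""
    return polygon_area(corners_ft) / SQUARE_FEET_PER_ACRE
```

A 660 ft by 66 ft rectangle, for instance, comes out as exactly one acre. Corner points entered as latitude and longitude would need to be projected to planar coordinates first.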

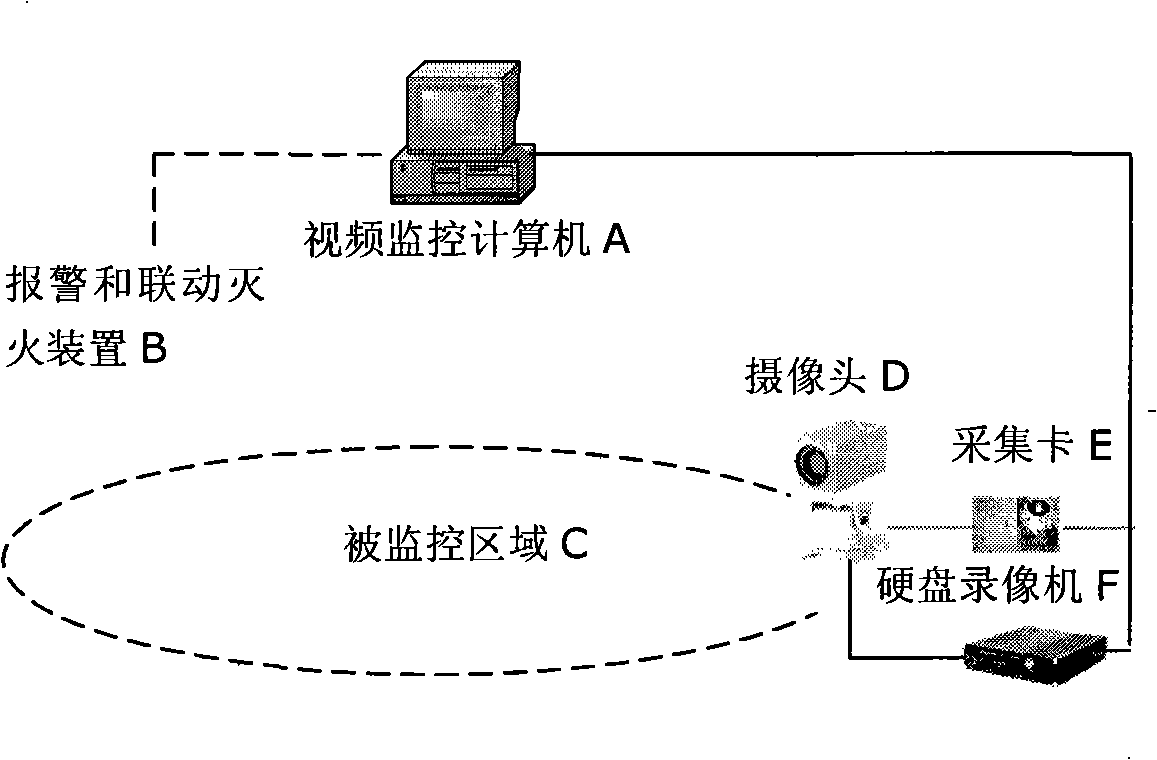

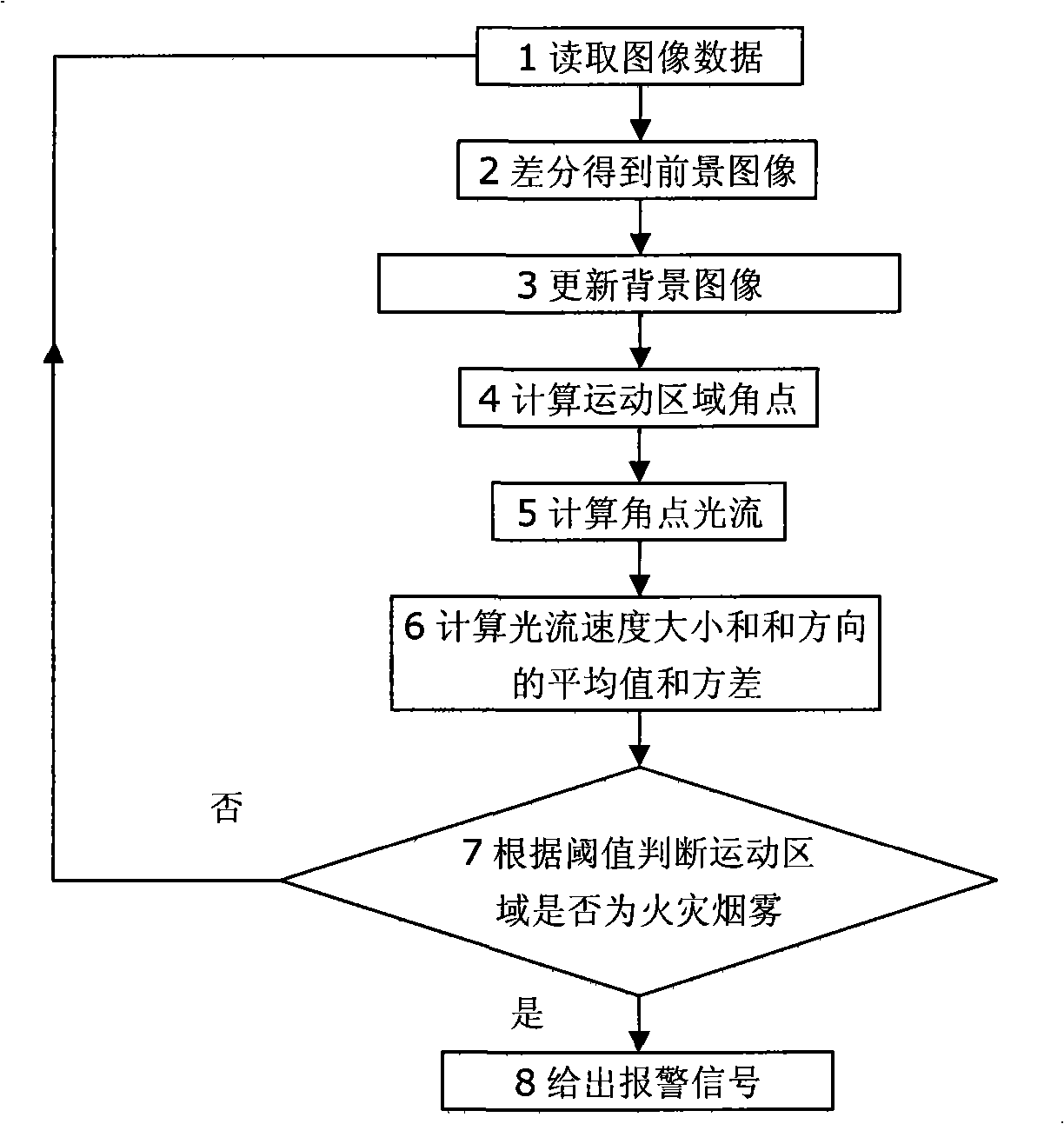

Video frequency fire hazard aerosol fog image recognition method based on light stream method

Inactive; CN101339602A; Accurate identification; Accurately reflect; Character and pattern recognition; Fire alarm smoke/gas actuation; Array data structure; Surveillance camera

The invention discloses a method for recognizing fire smoke in video images based on optical flow, characterized in that: a computer extracts the optical flow of the corner points in smoke motion regions from the video image obtained by a surveillance camera; whether the smoke is fire smoke is judged against set thresholds by calculating the average and variance of the array consisting of the optical flow speeds of all corner points in the foreground image, and the average and variance of the array consisting of their optical flow directions; if fire smoke is identified, the computer sends commands that cause the alarm to issue alarm signals and the interlocked fire extinguisher to start extinguishing quickly; if fire smoke is not identified, the method returns to the first step. The method can accurately reflect the motion characteristics of smoke, reducing light interference and the impact of similar pixels on the results, while also reducing computation and improving practicality. It can greatly reduce the false alarm rate and detect fire smoke quickly and accurately.

Owner:UNIV OF SCI & TECH OF CHINA
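
The decision in the abstract above rests on four numbers: the average and variance of the flow speeds, and the average and variance of the flow directions. The sketch below computes them from a list of per-point flow vectors. The abstract does not give the threshold values or the exact decision rule, so `looks_like_smoke` and its `limits` argument are purely hypothetical, and directions are treated as plain numbers without handling the wrap-around at plus or minus pi.

```python
import math
from statistics import fmean, pvariance


def flow_statistics(flows):
    """Mean and variance of speed and direction for (u, v) flow vectors."""
    speeds = [math.hypot(u, v) for u, v in flows]
    directions = [math.atan2(v, u) for u, v in flows]  # radians
    return {
        "speed_mean": fmean(speeds),
        "speed_var": pvariance(speeds),
        "dir_mean": fmean(directions),
        "dir_var": pvariance(directions),
    }


def looks_like_smoke(stats, limits):
    """Check each listed statistic against an inclusive (low, high) range.

    limits is a hypothetical mapping such as {"speed_mean": (0.5, 5.0)}.
    """
    return all(
        low <= stats[name] <= high for name, (low, high) in limits.items()
    )
```

In use, `flows` would hold one vector per tracked point in the moving foreground region of a frame pair.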

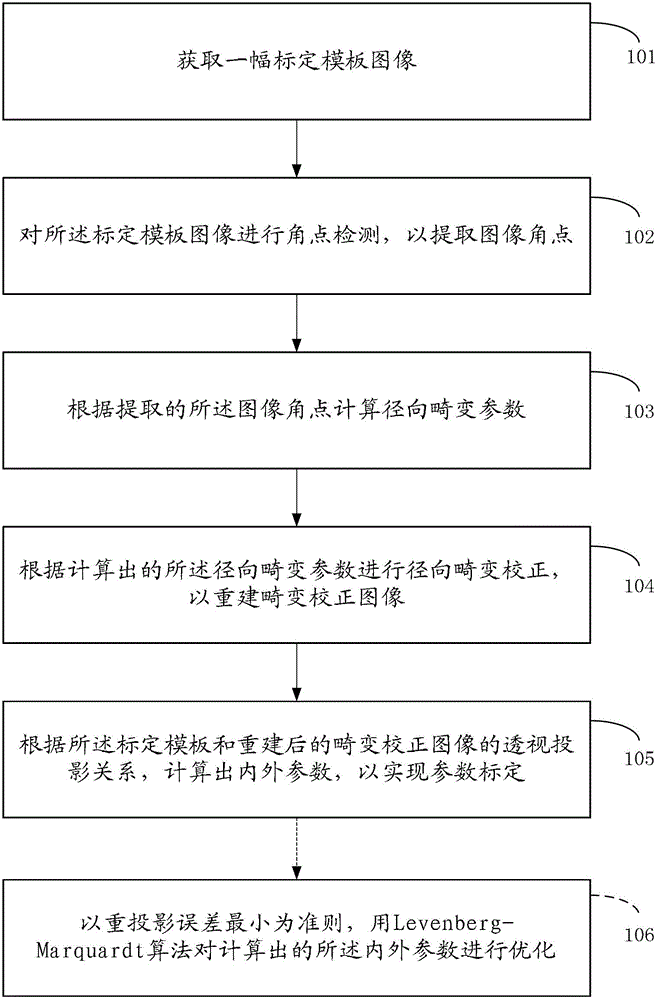

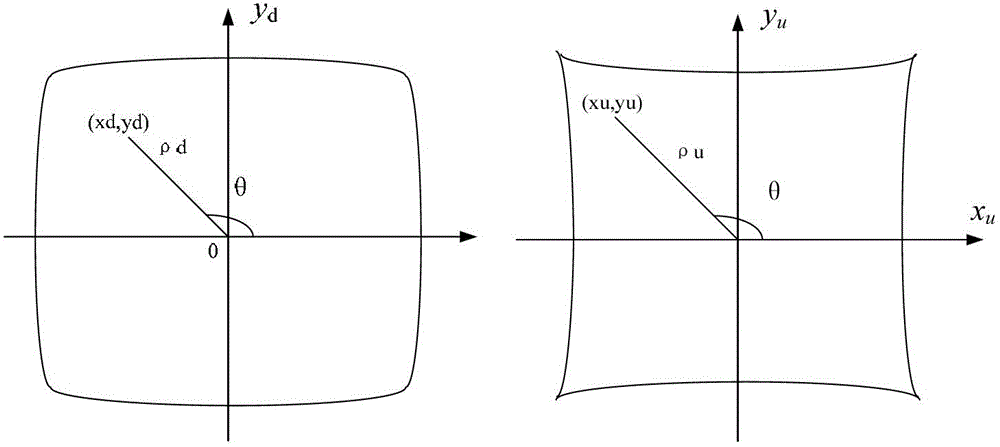

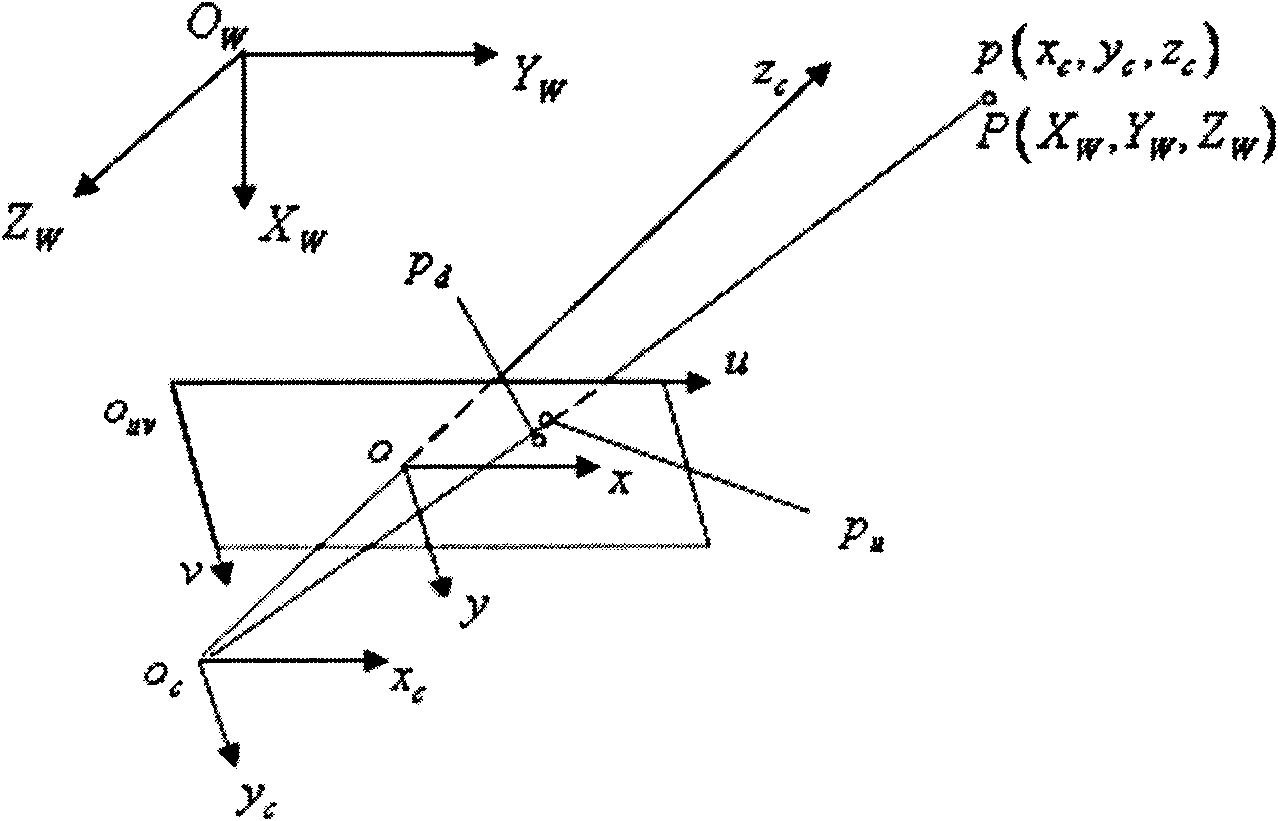

Parameter calibration method and device

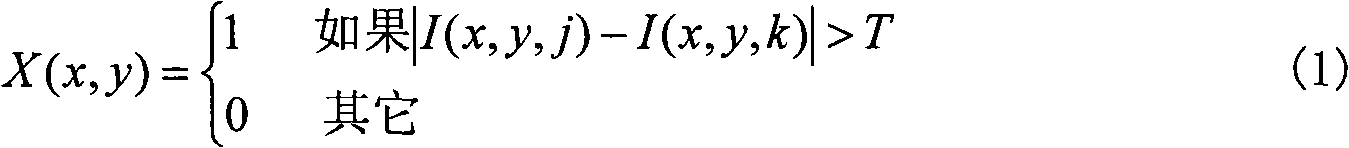

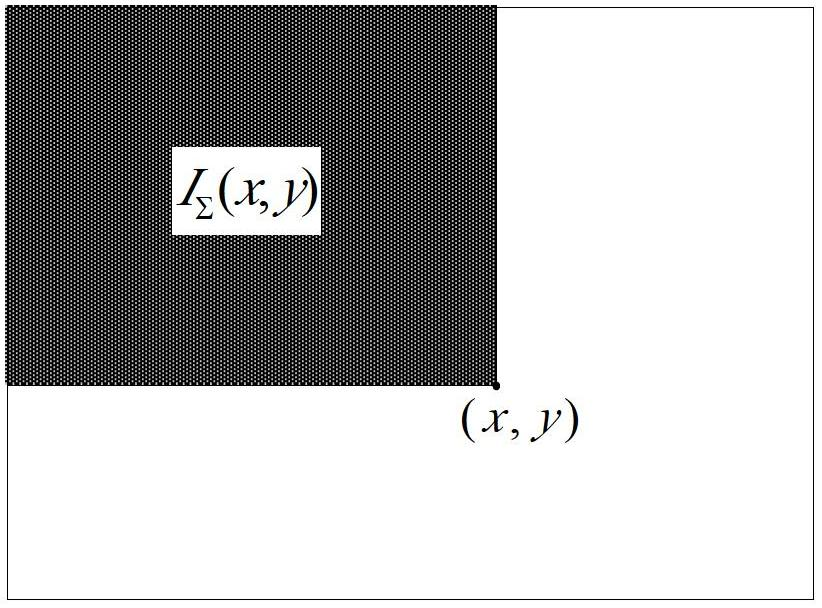

Inactive; CN102750697A; High precision; The calibration result is accurate; Image enhancement; Image analysis; Angular point; Corner detection

The invention discloses a parameter calibration method. The method comprises the steps of: acquiring a calibration template image obtained by photographing a calibration template; performing corner detection on the calibration template image so as to extract the image corners; calculating radial distortion parameters from the extracted image corners; performing radial distortion correction with the calculated radial distortion parameters so as to reconstruct a distortion-corrected image; and, from the perspective projection relation between the calibration template and the reconstructed distortion-corrected image, calculating internal parameters and external parameters so as to achieve parameter calibration, wherein the internal and external parameters comprise internal parameter matrixes, rotation vectors and translation vectors. The invention also provides a parameter calibration device. The method and the device can be applied to parameter calibration of imaging devices such as video cameras or still cameras under high distortion; the operation is simple and the precision is high.

Owner:HUAWEI TECH CO LTD

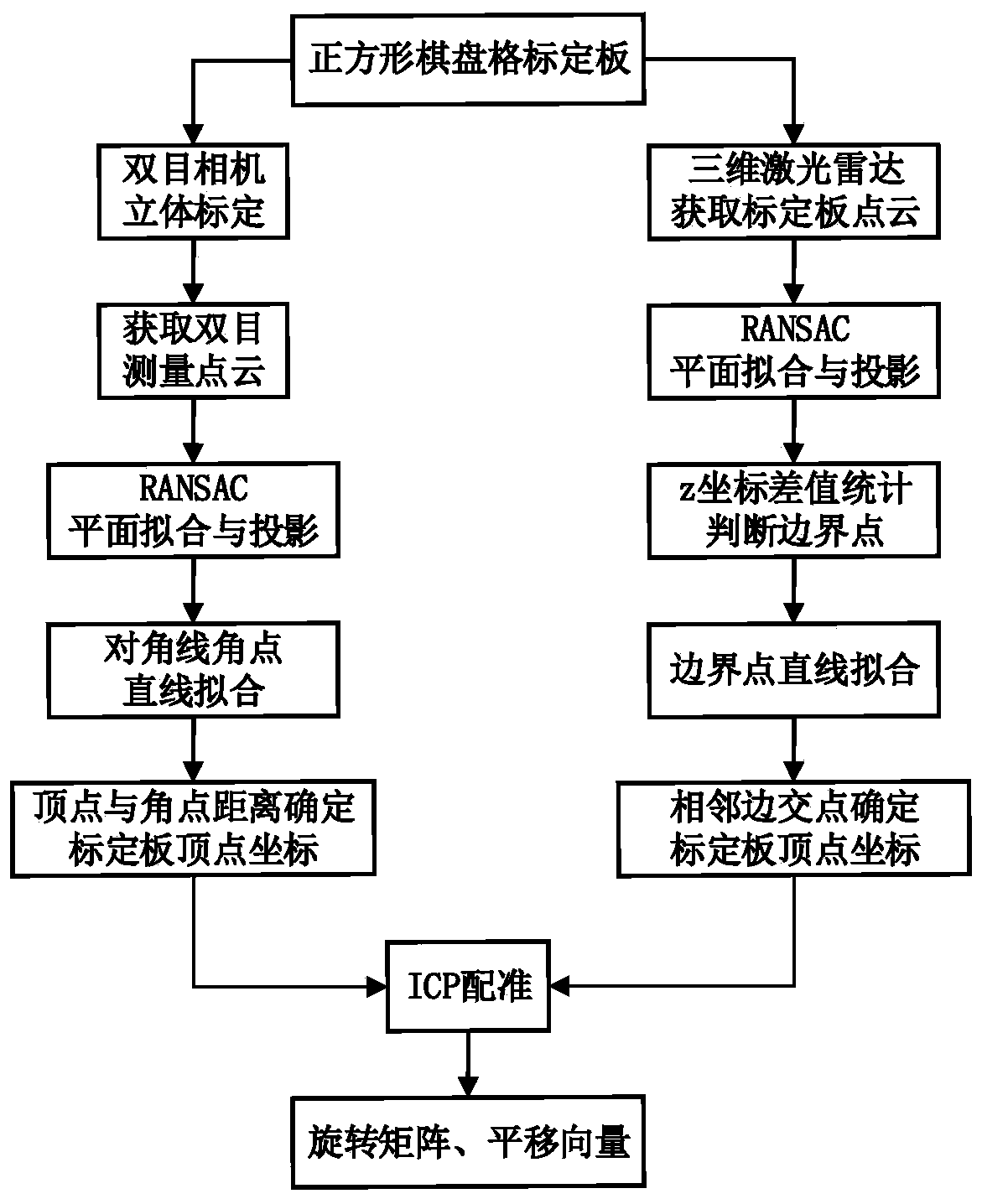

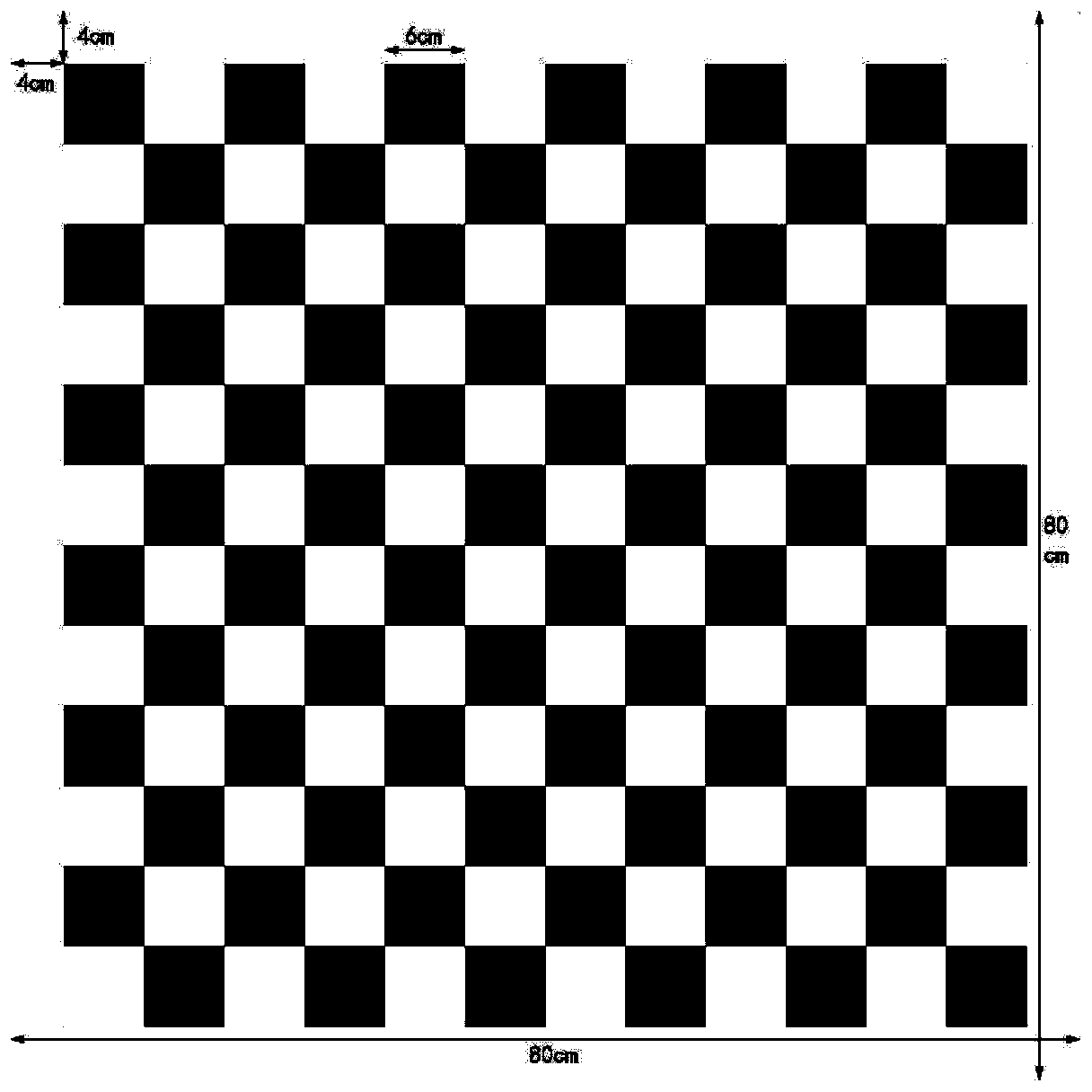

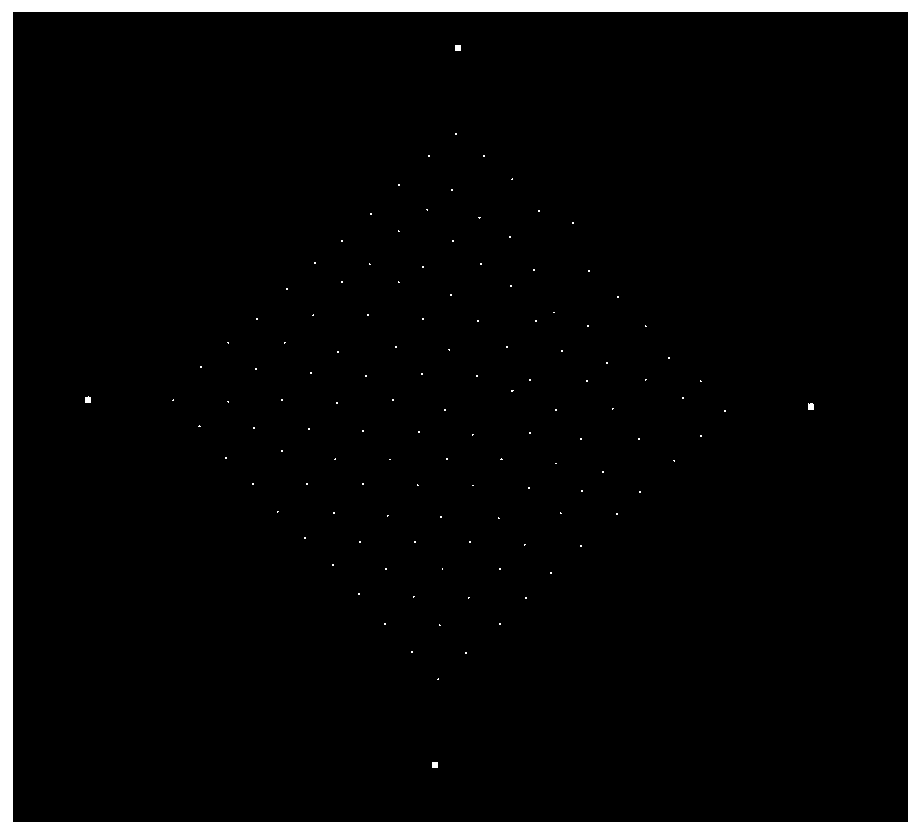

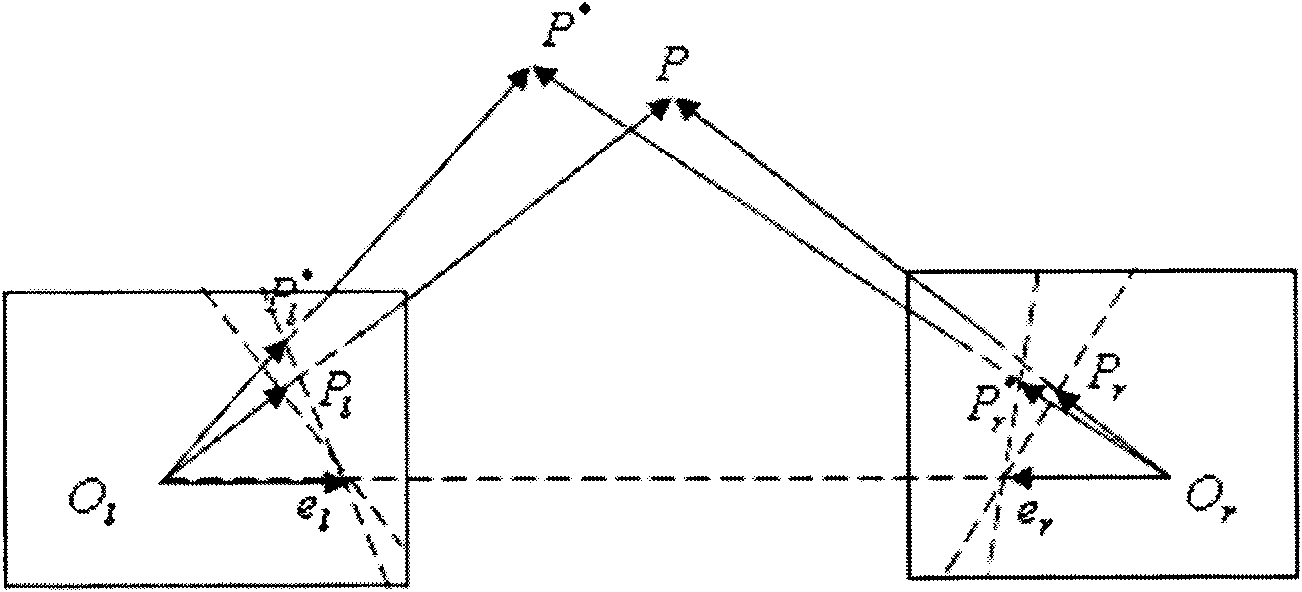

Fusion calibration method of three-dimensional laser radar and binocular visible light sensor

The invention discloses a fusion calibration method of a three-dimensional laser radar and a binocular visible light sensor. According to the invention, the laser radar and the binocular visible light sensor are used to obtain the three-dimensional coordinates of the plane vertex of the square calibration plate, and then registration is carried out to obtain the conversion relation of the two coordinate systems. In the calibration process, an RANSAC algorithm is adopted to carry out plane fitting on the point cloud of the calibration plate, and the point cloud is projected to a fitting plane, so that the influence of measurement errors on vertex coordinate calculation is reduced. For the binocular camera, the vertex of the calibration plate is obtained by adopting an angular point diagonal fitting method; for the laser radar, a distance difference statistical method is adopted to judge boundary points of the point cloud on the calibration board. By utilizing the obtained vertex coordinates of the calibration plate, fusion calibration can be accurately carried out on the three-dimensional laser radar and the binocular visible light sensor, a rotation matrix and a translation vector of coordinate systems of the three-dimensional laser radar and the binocular visible light sensor are obtained, and a foundation is laid for realizing data fusion of three-dimensional point cloud and a two-dimensional visible light image.

Owner:BEIHANG UNIV

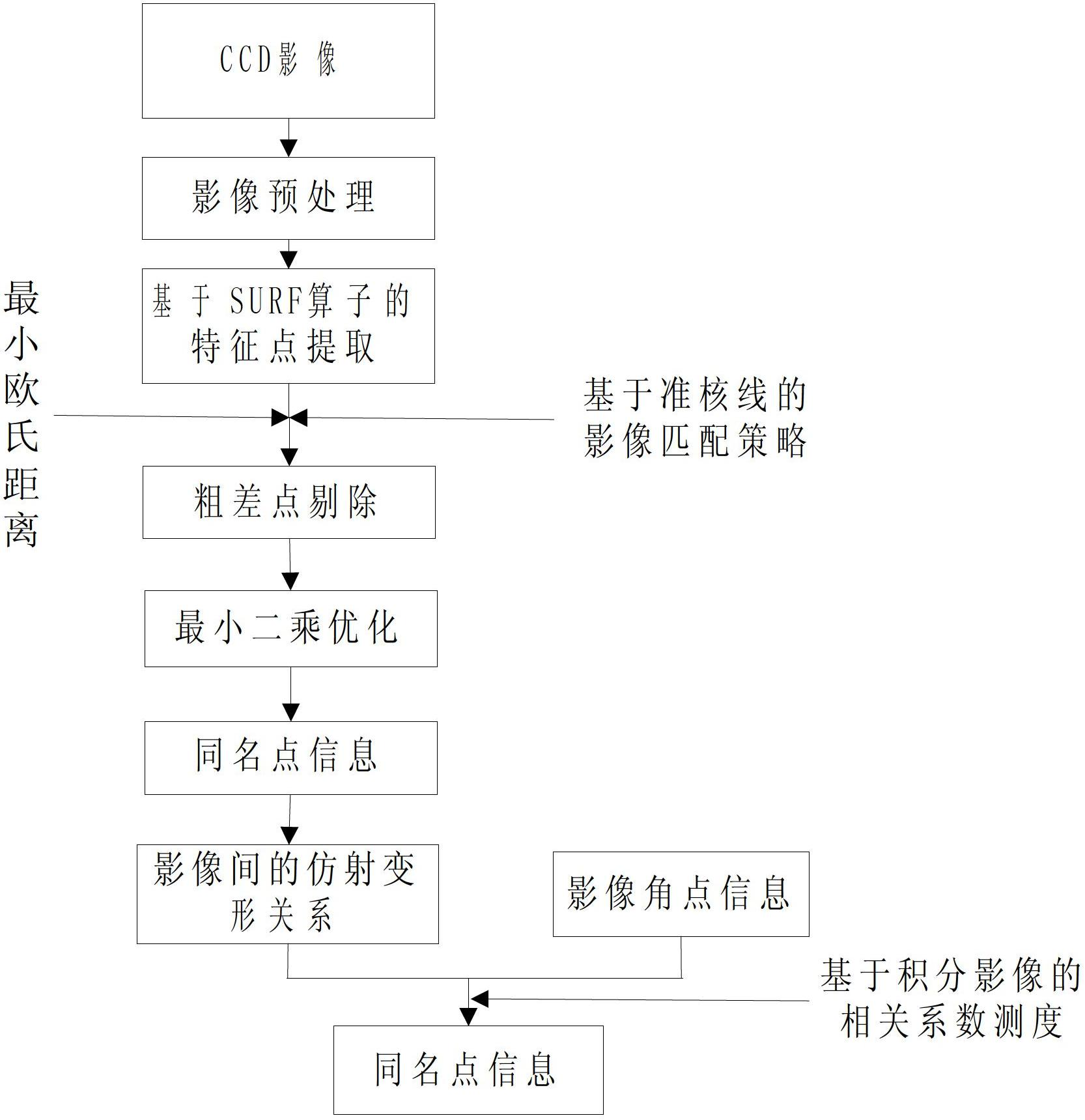

Image characteristic matching method

Inactive; CN102693542A; High precision; Evenly distributed; Image analysis; Correlation coefficient; Space environment

The invention relates to an image characteristic matching method. The method includes the steps of: pre-processing the obtained CCD (charge coupled device) images; extracting characteristic points from the pre-processed CCD images with a SURF operator and matching the images under the quasi-epipolar-line constraint and the minimum Euclidean distance condition to obtain identical point information; establishing the affine deformation relation between the CCD images from the obtained identical point information; extracting the characteristic points of a reference image with the Harris corner extraction operator and projecting them onto a search image through the affine transformation to obtain points to be matched; in the neighbourhood around each point to be matched, computing the correlation coefficient between the characteristic point and the points in the neighbourhood and taking the extreme points as identical points; and using the combined results of the two matching passes as the final identical point information. The method can be used to match surface images of deep-space stars obtained in a deep-space environment, yielding high-precision identical point information for the CCD images and thereby realizing characteristic matching.

Owner:THE PLA INFORMATION ENG UNIV

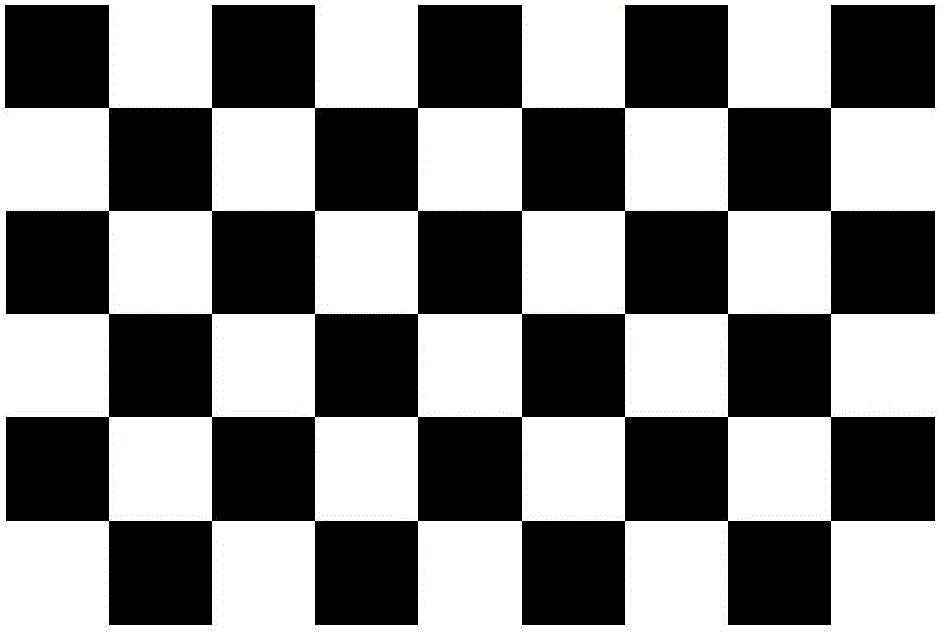

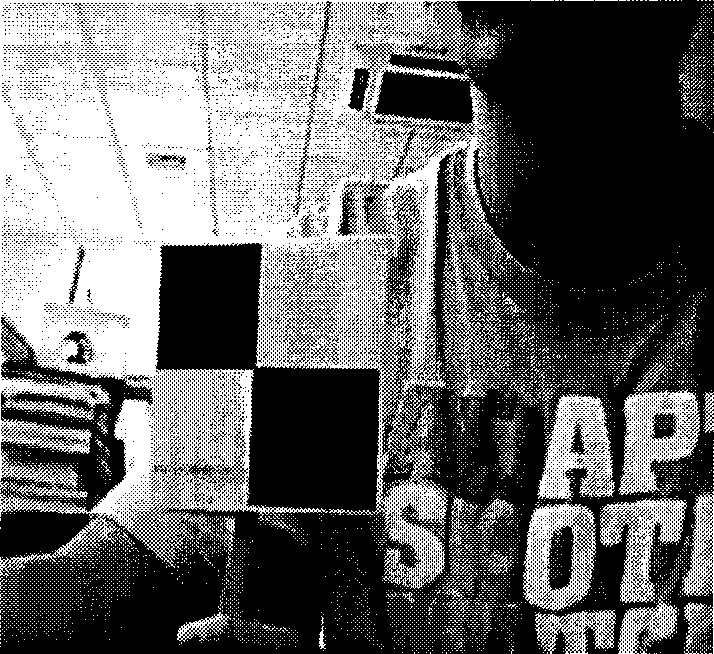

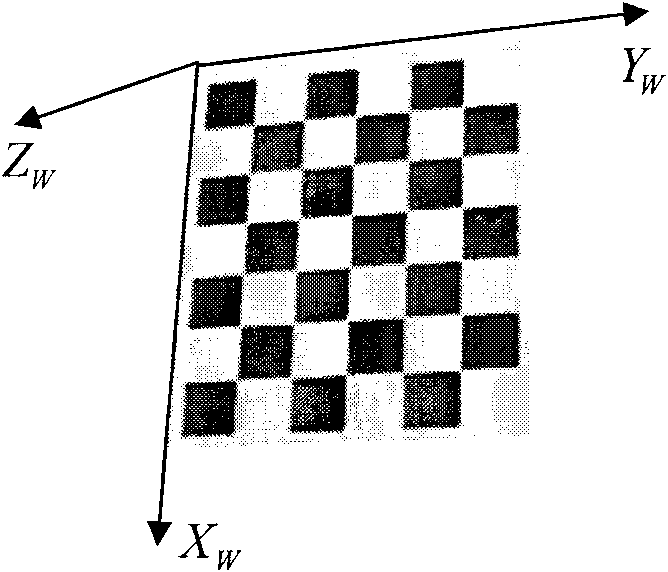

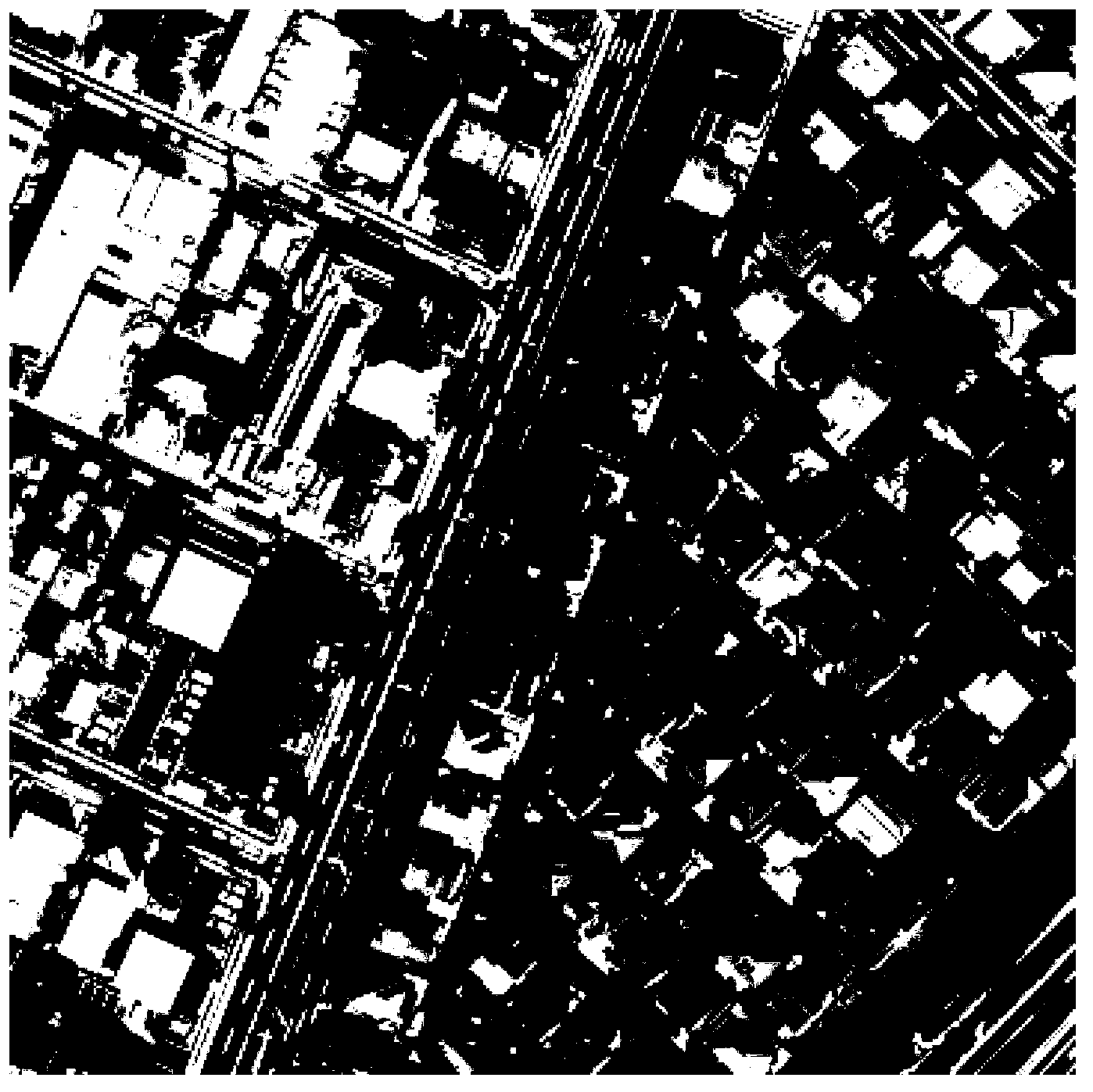

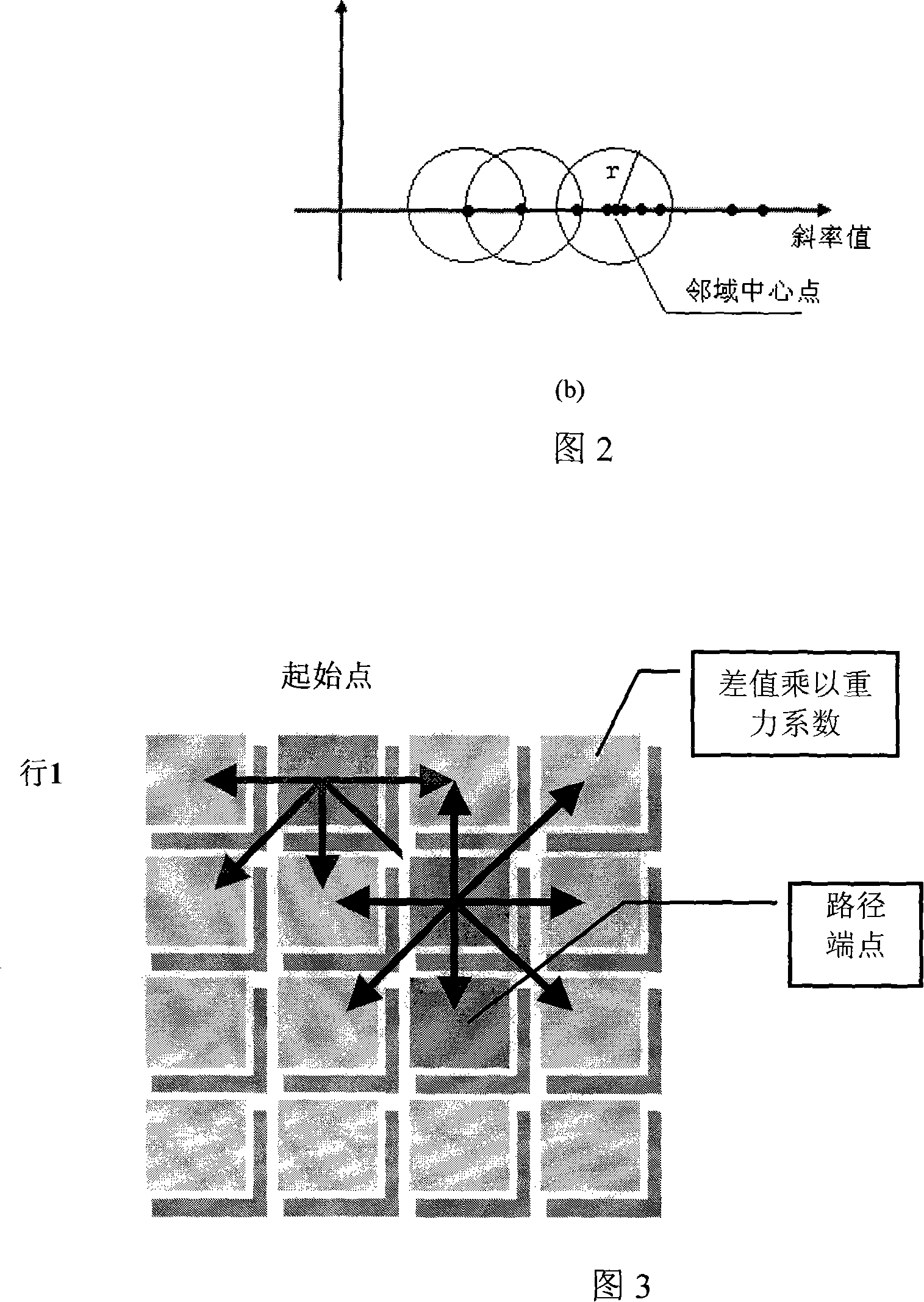

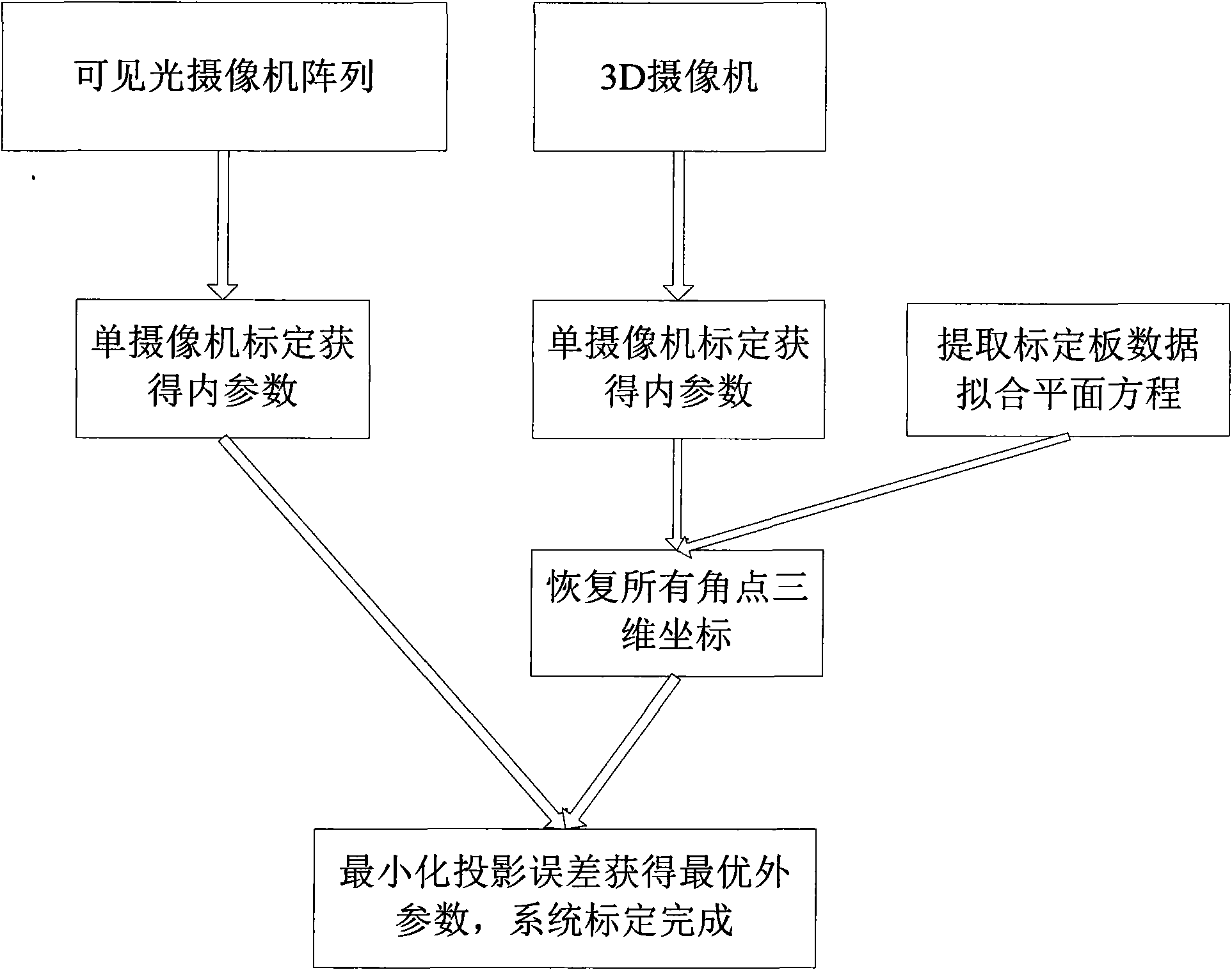

Checkerboard angle point detection process under complex background

Inactive; CN101477687A; Reduce selection; Simple method; Image analysis; Character and pattern recognition; Information processing; Imaging processing

The invention relates to a method for detecting the angular points of checkerboards under a complicated background, and belongs to the technical field of computer information image processing. The method comprises the following steps: first down-sampling the image and then up-sampling it, while splitting the image into its three RGB channels and detecting the regions in each channel separately; extracting the edges of the image with the Canny operator and then segmenting the image with an adaptive threshold; approximating the rectangles in the image with the Douglas-Peucker method so as to detect the rectangular regions in the image; obtaining the vertices of each detected rectangle and clustering angular points of the same type; and, within a neighbourhood, statistically averaging the angular points of the most numerous type to obtain the detected angular points. The invention provides a rectangle-based method for detecting angular points. By exploiting the symmetry and rectangular structure of a checkerboard image to detect specific angular points, the method offers good real-time performance and reliability.

Owner:SHANGHAI JIAO TONG UNIV
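
The rectangle detection above relies on the Douglas-Peucker method to reduce a traced contour to a few vertices. A minimal plain-Python sketch of that simplification follows; the function names and the tolerance `epsilon` are illustrative, and the patent's surrounding steps (Canny edges, adaptive thresholding, clustering) are not shown.

```python
import math


def _distance_to_chord(point, start, end):
    """Perpendicular distance from point to the line through start and end."""
    (px, py), (ax, ay), (bx, by) = point, start, end
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0.0:
        # Degenerate chord (start == end): fall back to point distance.
        return math.hypot(px - ax, py - ay)
    return abs(dx * (ay - py) - dy * (ax - px)) / length


def douglas_peucker(points, epsilon):
    """Simplify a polyline, keeping points farther than epsilon from the chord."""
    if len(points) < 3:
        return list(points)
    start, end = points[0], points[-1]
    index, worst = 0, 0.0
    for i in range(1, len(points) - 1):
        distance = _distance_to_chord(points[i], start, end)
        if distance > worst:
            index, worst = i, distance
    if worst <= epsilon:
        return [start, end]  # every interior point is close enough to drop
    left = douglas_peucker(points[: index + 1], epsilon)
    right = douglas_peucker(points[index:], epsilon)
    return left[:-1] + right  # the split point appears in both halves
```

A contour that simplifies to four vertices with near-right angles would then be a candidate rectangle, and its vertices candidate angular points.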

Vehicle intelligent method for automatically identifying road pit or obstruction

Inactive; CN101549683A; Ensure driving safety; Reduce incidence; Automatic initiations; Character and pattern recognition; Information processing; Cyclic process

A vehicle intelligent method for automatically identifying a road pit or obstruction, in the technical field of information processing, includes the following steps: step one, two pinhole cameras are respectively installed at the inner edges of the vehicle's front high-beam lamps; step two, the two pinhole cameras collect road surface scene images in real time, and the collected images are respectively sent to a signal processor; step three, the images are processed to eliminate image distortion; step four, the corner points already found are automatically matched between the left and right views under the guidance of the epipolar constraint; step five, the three-dimensional coordinates of the object points are determined; step six, a pit or obstruction is identified; step seven, the driving of the vehicle is controlled; step eight, steps two to seven are repeated as a cyclic process. The invention can further raise the level of automation and intelligence in guaranteeing vehicle driving safety, avoiding vehicle damage or traffic accidents caused by pits or obstructions appearing on the road.

Owner:SHANGHAI JIAO TONG UNIV

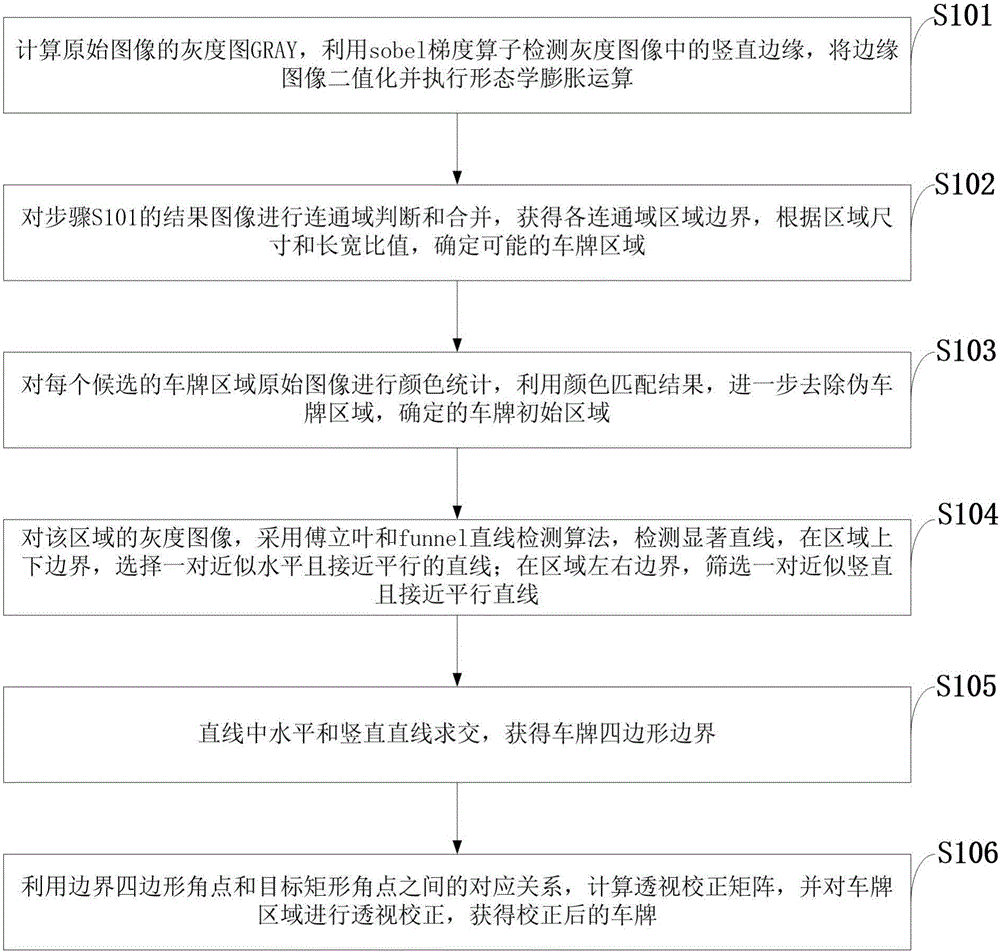

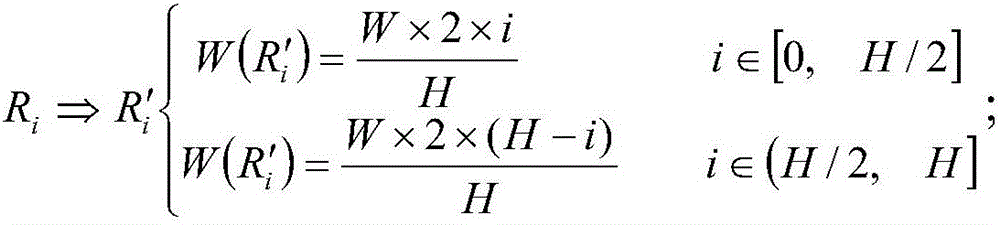

Method for automatic extraction of license plate position in vehicle monitoring image and perspective correction

Inactive; CN106203433A; Perspective Correction Accurately Implemented; Precise positioning; Character and pattern recognition; In vehicle; Angular point

The invention discloses a method for the automatic extraction of the position of a license plate in a vehicle monitoring image and perspective correction, and the method comprises the steps: finding an approximate range of the license plate in the image by employing the edge and color information; carrying out straight-line detection on the image; determining the four boundary lines forming the perspective-deformed license plate by employing the regional boundary information in combination with the significant straight lines; solving for the four intersection points of the significant straight lines, and building the mapping relation between the four intersection points and the four corners of a target rectangle; carrying out the reverse calculation of a perspective correction matrix, and completing the perspective correction of the license plate. The method obtains the positions of the four corners of a license plate with perspective distortion, builds the mapping relation between those four corners and the four corners of the target rectangle, calculates a perspective transformation matrix, and completes the perspective correction of the license plate. Compared with an affine transformation method based on horizontal and vertical rotation, the method truly describes the perspective transformation and guarantees higher license plate image correction precision. Compared with an affine transformation algorithm, the method can detect the perspective distortion information of the license plate, can also precisely locate the boundary corners of the license plate, calculates the perspective transformation matrix, and accurately achieves the perspective correction of the license plate.

Owner:XIDIAN UNIV
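
The correction described above maps the four detected plate corners onto the four corners of a target rectangle and derives a perspective matrix from that mapping. The sketch below shows one standard way to compute such a matrix from four point pairs, by solving an 8x8 linear system with the bottom-right entry fixed to 1. The function names are hypothetical and the patent does not say which solver it uses.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def perspective_matrix(src, dst):
    """3x3 matrix mapping four src points (x, y) onto four dst points (u, v)."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        rhs.append(v)
    p = solve_linear(rows, rhs)
    return [p[0:3], p[3:6], [p[6], p[7], 1.0]]


def warp_point(matrix, point):
    """Apply the perspective matrix to one point (with homogeneous divide)."""
    x, y = point
    (a, b, c), (d, e, f), (g, h, i) = matrix
    w = g * x + h * y + i
    return ((a * x + b * y + c) / w, (d * x + e * y + f) / w)
```

Here `src` would hold the four intersection points found on the distorted plate and `dst` the corners of the target rectangle; resampling the image through the matrix is a separate step not shown.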

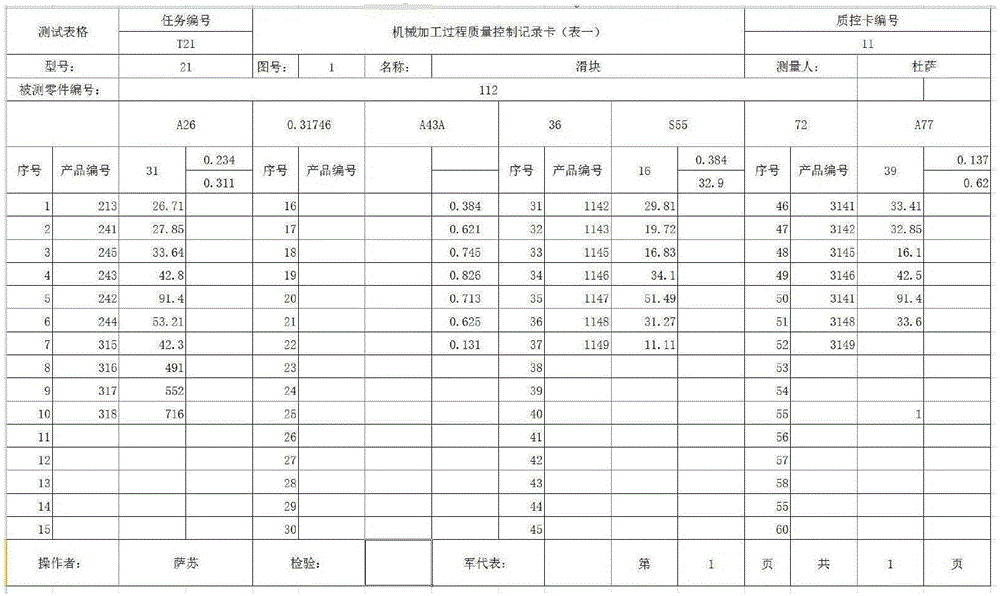

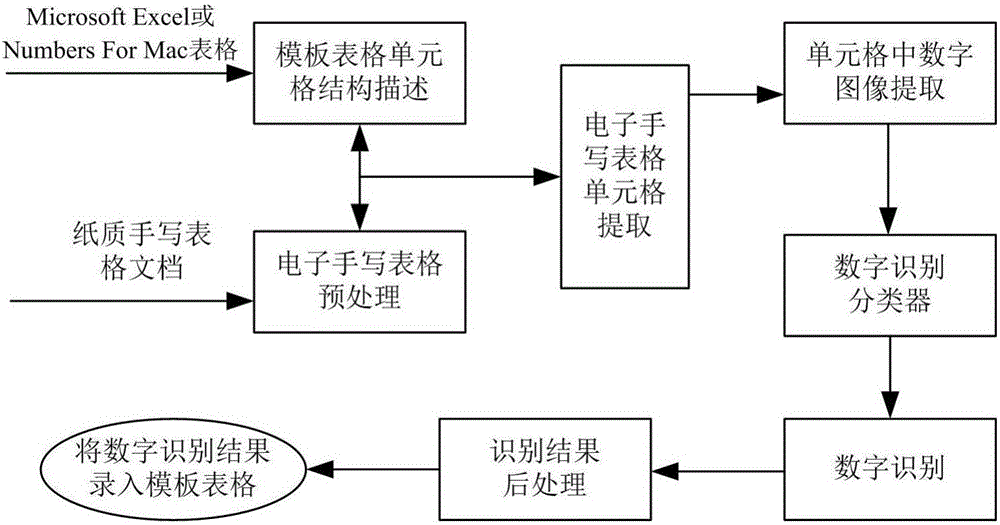

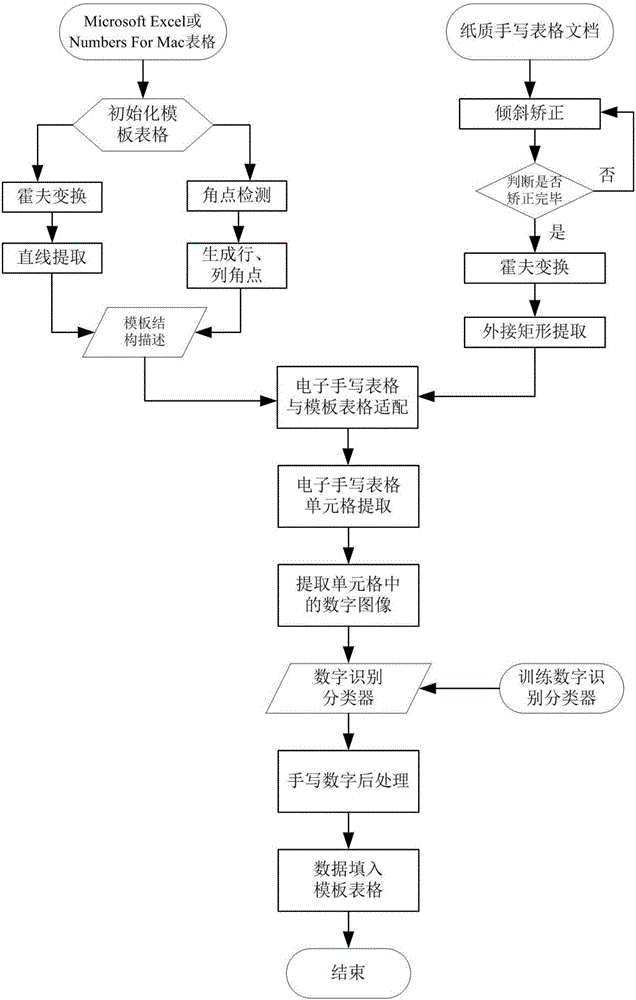

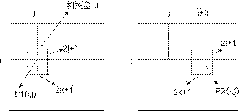

Complex table and method for identifying handwritten numbers in complex table

Active; CN106407883A; Improve recognition rate; Easy to identify; Character and pattern recognition; Data set; Angular point

The invention discloses a complex table and a method for identifying handwritten numbers in the complex table. A complex template table is preprocessed by line detection and angular point detection to realize classified row and column ordering and obtain structural description of cells of the template table; after an electronic handwritten table is obtained, inclination of the electronic handwritten table is corrected, and the electronic handwritten table matches the template table to obtain cell position description thereof; each cell is processed by removing the edge and reserving characters in the cell as completely as possible; number images are extracted from the cells, and a trained classifier of a data set is used to identify the number images; and the handwritten characters are post-processed, and an identification result is filled into the template table. The method of the invention is easy to realize, and better in identification effect, and provides a good solution to automatic identification and table filling later.

Owner:BEIJING UNIV OF TECH +1

Rapid and self-adaptive generation algorithm of intermediate viewpoint based on left and right viewpoint images

Inactive; CN101799939A; Promote generation; Reduce matching complexity; 3D modelling; Imaging processing; Viewpoints

The invention belongs to the field of image processing and particularly relates to a rapid and self-adaptive generation algorithm of an intermediate viewpoint based on left and right viewpoint images. Its aim is to obtain the image at the true virtual viewpoint so that multi-viewpoint three-dimensional images can be obtained accurately from a twin-lens camera. The technical scheme comprises the following steps: inputting the left and right viewpoint images picked up by a camera and extracting the angular points of both images; extracting the angular point pairs matched between the left and right views using a normalized covariance method; performing Delaunay triangulation on the left and right views from the extracted accurately matched points by a point-by-point insertion method; computing an inverse transformation matrix for each triangle mesh of the virtual viewpoint image; finding, for each point in a triangle mesh, the corresponding points in the left and right viewpoint images and assigning the pixel value of the corresponding point to the point in the intermediate virtual viewpoint mesh; and finally generating the image of the intermediate virtual viewpoint. The invention is mainly applied to image processing.

Owner:TIANJIN UNIV

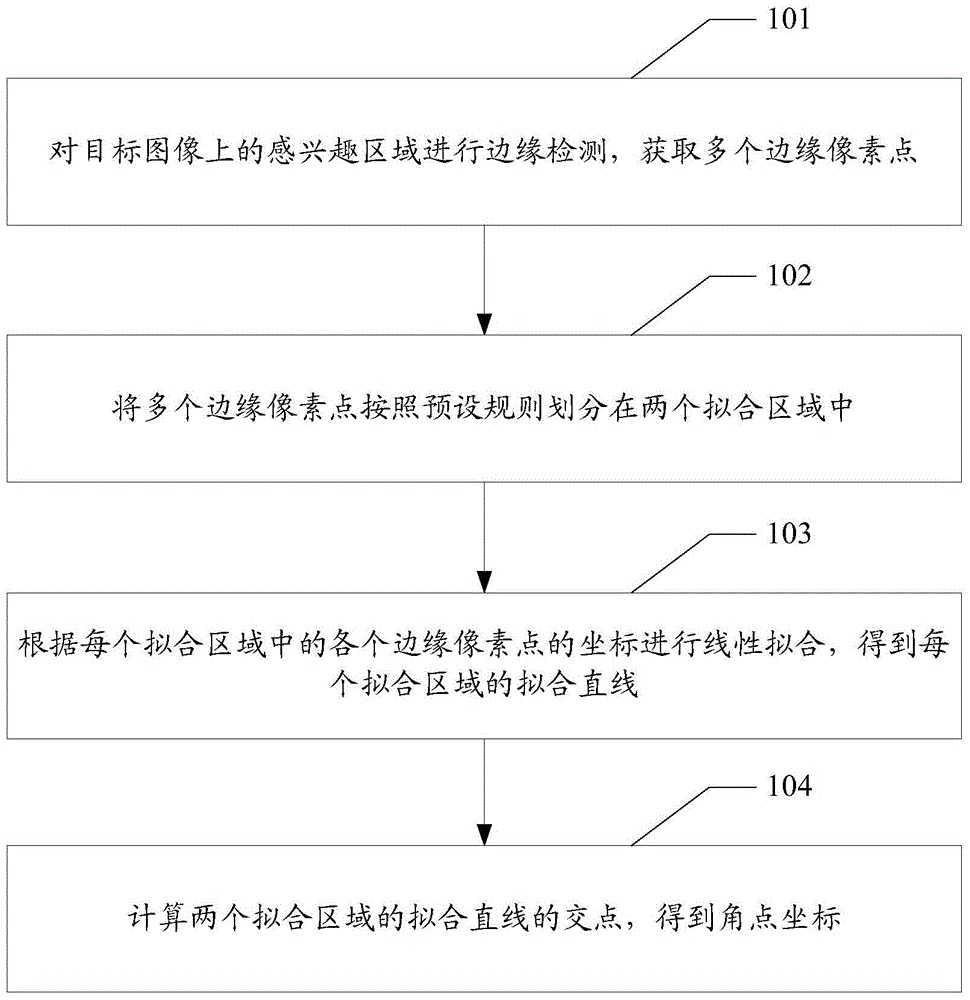

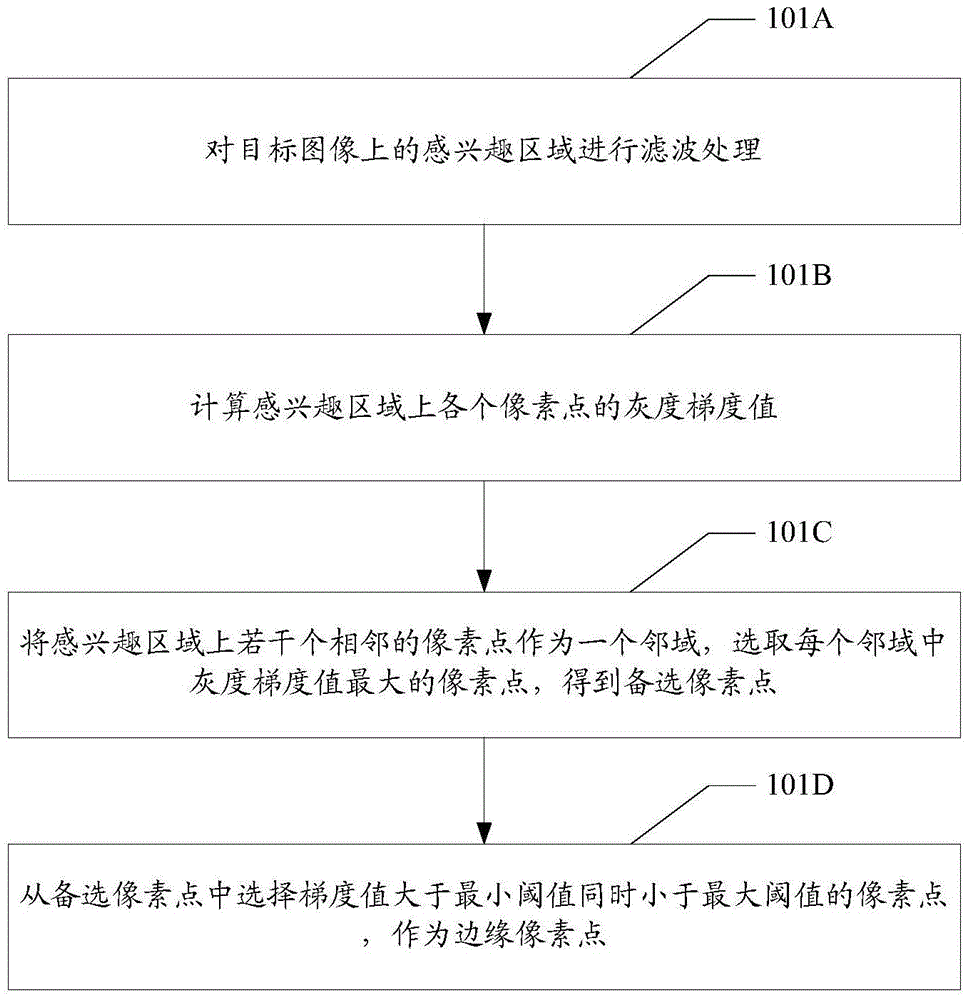

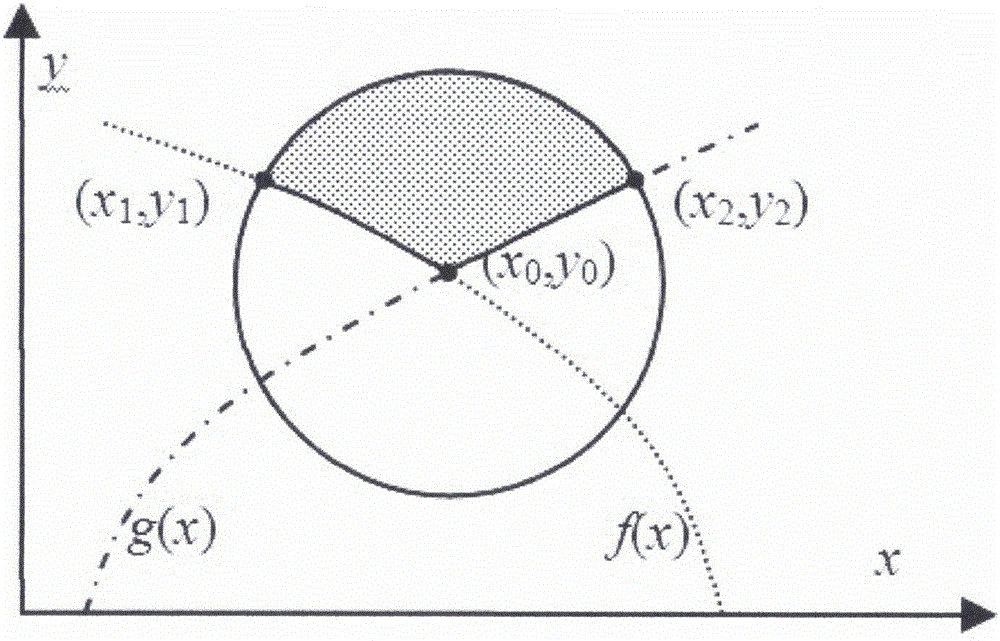

Angular point positioning method and apparatus

Active; CN105069799A; Precise positioning; Improve accuracy; Image enhancement; Image analysis; Angular point; Intersection of a polyhedron with a line

In the angular point positioning method and apparatus provided by the present invention, edge detection is first performed on a region of interest in a target image to acquire a plurality of edge pixel points; the edge pixel points are then partitioned into two fitting areas according to preset rules; the coordinates of the edge pixel points in each fitting area are subjected to linear fitting to obtain two fitted straight lines; and finally the angular point coordinates are obtained by calculating the point of intersection of the two fitted straight lines. Thus, in the image matching process, the required angular point can be positioned accurately without manually presetting an offset, which not only reduces manual operation but also greatly improves the accuracy of angular point positioning and image matching, so that the system accuracy of the mechanism is improved.

Owner:SHENZHEN HUAHAN WEIYE TECH
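
The core of the method above is to fit a straight line to each of two groups of edge pixels and take the intersection as the angular point. The sketch below illustrates this with a total-least-squares fit, chosen here as an assumption because it also handles vertical edges; the abstract says only "linear fitting", and the function names are hypothetical. Edge detection and the partitioning rules are not shown.

```python
import math


def fit_line(points):
    """Total-least-squares line through points, as (a, b, c) with a*x + b*y = c."""
    count = len(points)
    cx = sum(x for x, _ in points) / count
    cy = sum(y for _, y in points) / count
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # direction of the line
    a, b = -math.sin(theta), math.cos(theta)        # unit normal to the line
    return a, b, a * cx + b * cy


def intersect(line1, line2):
    """Intersection point of two lines given in (a, b, c) form."""
    a1, b1, c1 = line1
    a2, b2, c2 = line2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("lines are parallel")
    return ((c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det)
```

For example, edge pixels along y = 1 and along x = 3 yield an angular point at approximately (3, 1), at sub-pixel resolution.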

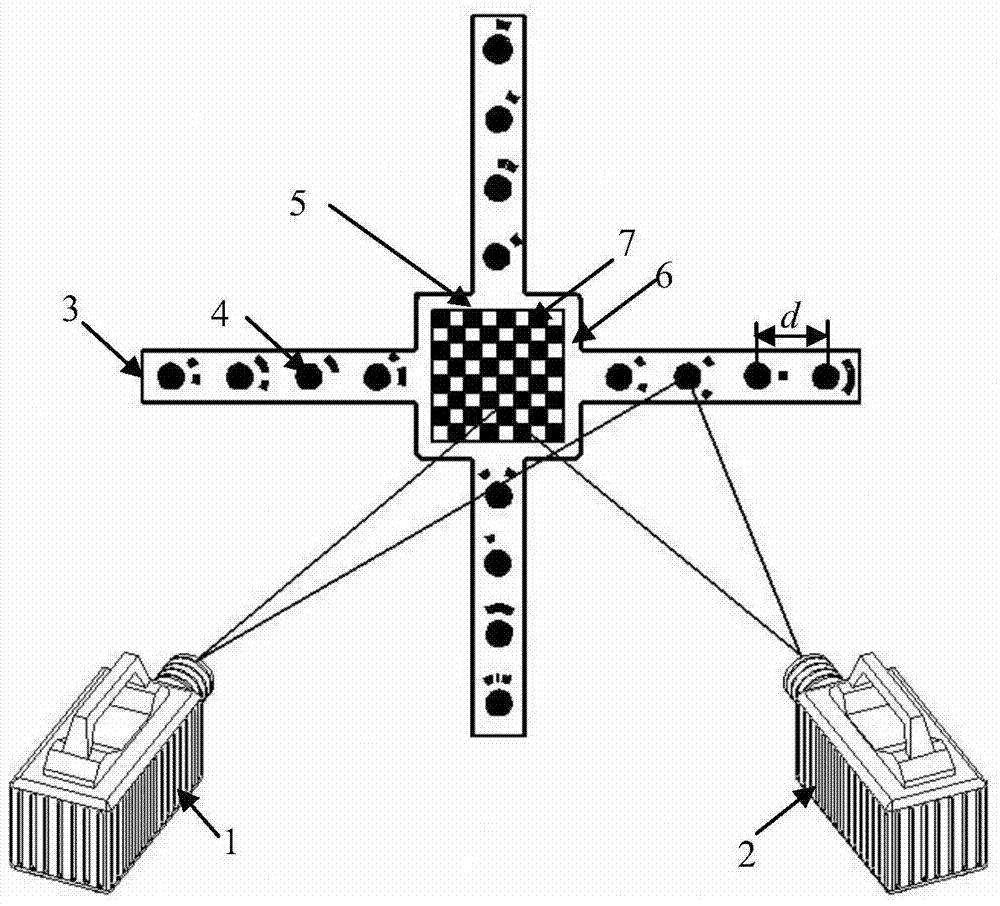

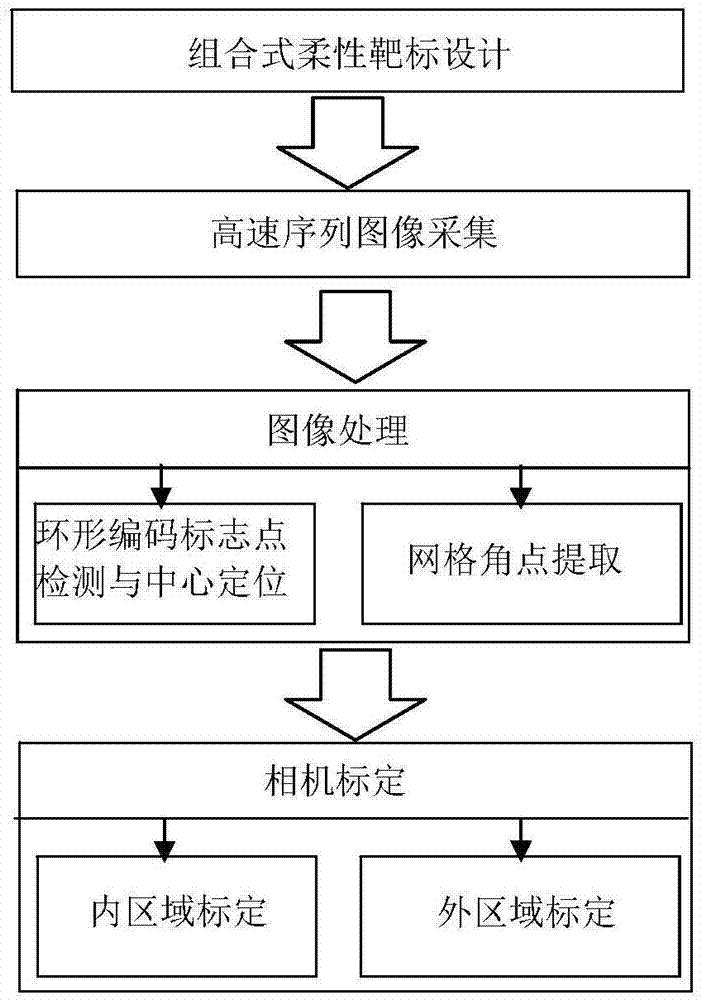

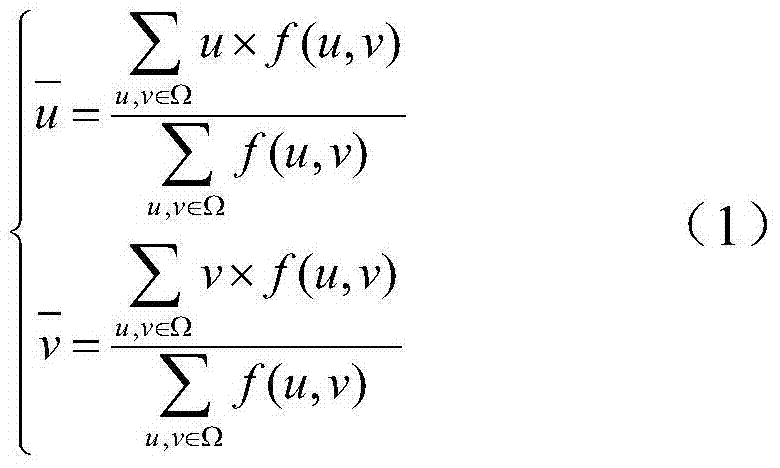

Flexible-target-based close-range large-field-of-view calibrate method of high-speed camera

The invention, which belongs to the computer vision field, provides a flexible-target-based close-range large-field-of-view calibration method for a high-speed camera and relates to a close-range large-field-of-view binocular vision camera calibration method in a wind tunnel. According to the method, a flexible target is used to fill the overall calibration field of view; the internal region of the target is formed by a planar chessboard mesh, and the distances between the angular points of the chessboard mesh are known; the external region of the target is formed by cross target rods perpendicular to each other, with a plurality of coded marking points at known distances distributed uniformly on the target rods. During calibration, region-by-region, constraint-based calibration is carried out on the high-speed camera using the different constraint information provided by the different regions of the target. The internal region of the target is calibrated using a homography matrix, and the external region is calibrated using the distance constraints of the coded marking points. The invention lowers the cost and makes the operation portable. Because the regional, constraint-based calibration method accounts for the distortion of different regions, the calibration precision is improved.

Owner:DALIAN UNIV OF TECH

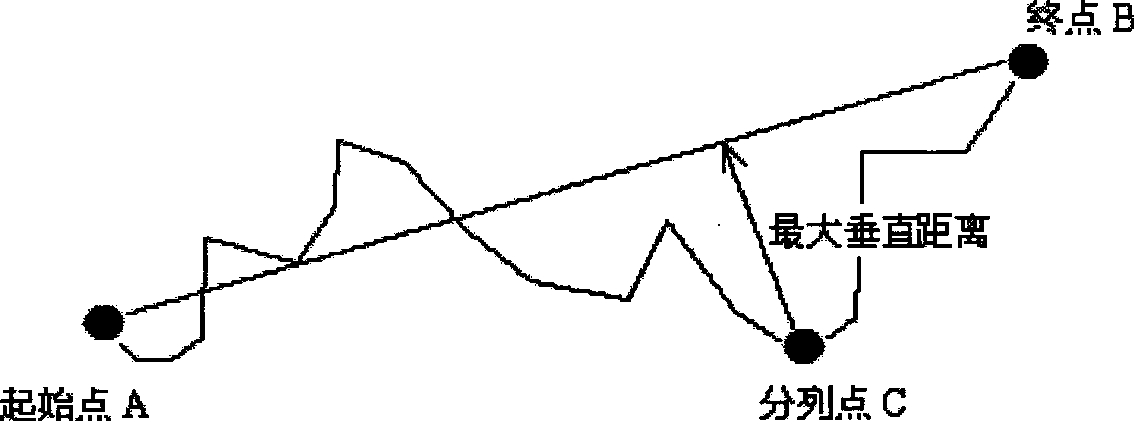

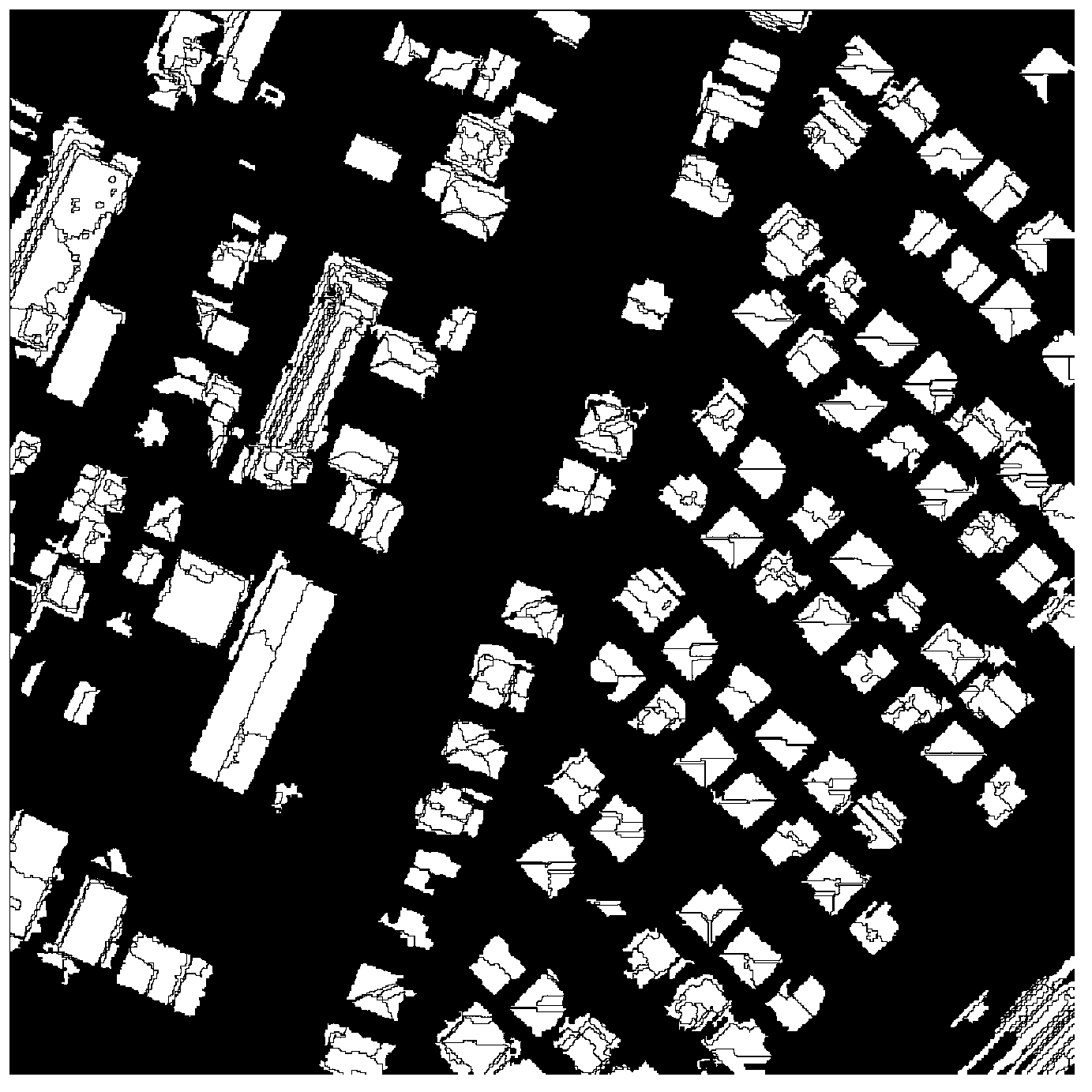

Method for reconstructing outer outline polygon of building based on multivariate data

Inactive; CN102938066A; Solve the problem of contour polygon reconstruction; Effective positioning; Character and pattern recognition; Angular point; Intersection of a polyhedron with a line

The invention discloses a method for reconstructing an outer outline polygon of a building based on multivariate data. The method comprises the following steps: segmenting DSM (Digital Surface Model) data and image data respectively so as to obtain a mask image of the region of interest of the building and an image segmentation object; combining the mask image with the image segmentation object so as to obtain a complete building object; carrying out boundary tracing on the building object so as to obtain the boundary curves of the building; using the points corresponding to local maximum curvature values of the curves as angular points; connecting the angular points in sequence so as to obtain the outline polygon of the building; dividing the building object into regions by a hierarchical clustering method and calculating the main direction of the building; establishing a linear model of the polygon of the building, and correcting and regularizing the linear model of the outline using the main direction of the building together with the gradient information of the image data; calculating the intersection point of every two adjacent straight line sections using the linear model of each line section of the polygon; and, taking the intersection points as the angular points, connecting them in sequence so as to form the final polygon of the building. In this method the DSM data and the image data are combined and complement each other throughout the process, so that the problem of reconstructing the outline polygon of a building is solved well, and the method is highly robust for two-dimensional outline modeling of buildings.

Owner:NANJING UNIV

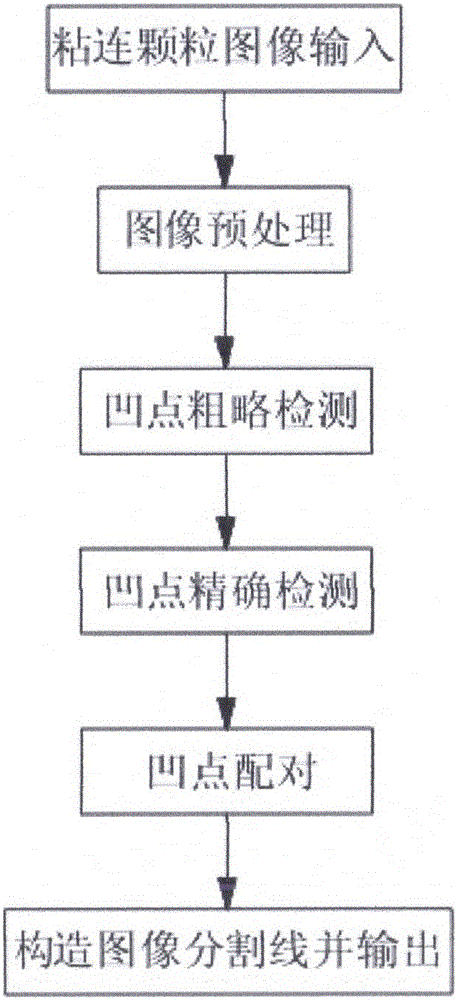

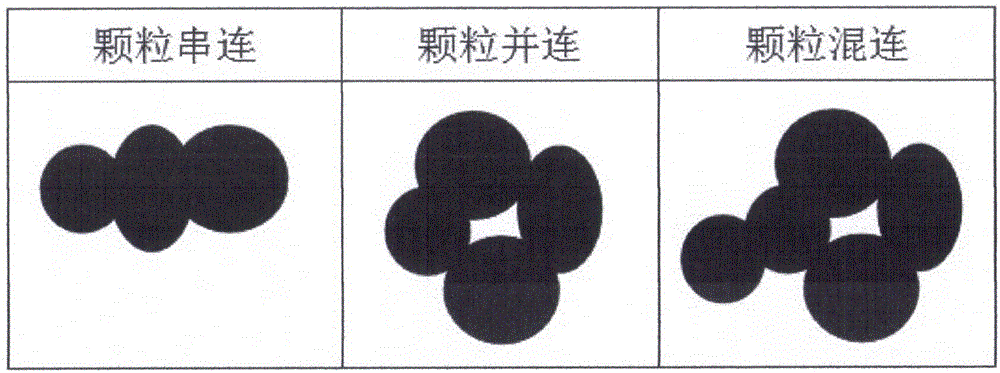
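The final step of the building outline entry above (intersecting every two adjacent line segments and connecting the intersections in order) can be sketched in a few lines of Python. This is a minimal sketch, not the patented implementation: the `(a, b, c)` line representation, the function names `intersect` and `polygon_from_lines`, and the rectangle values are all assumptions made for illustration.

```python
from typing import List, Tuple

# A line is stored as (a, b, c), meaning a*x + b*y = c (assumed representation).
Line = Tuple[float, float, float]


def intersect(l1: Line, l2: Line) -> Tuple[float, float]:
    """Intersect two lines with Cramer's rule."""
    a1, b1, c1 = l1
    a2, b2, c2 = l2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("adjacent edges are parallel, no unique corner")
    x = (c1 * b2 - c2 * b1) / det
    y = (a1 * c2 - a2 * c1) / det
    return (x, y)


def polygon_from_lines(lines: List[Line]) -> List[Tuple[float, float]]:
    """Corner i is the intersection of edge i and edge i+1 (cyclic order)."""
    n = len(lines)
    return [intersect(lines[i], lines[(i + 1) % n]) for i in range(n)]


# Four regularized edges of a 4 x 3 axis-aligned footprint (made-up values).
edges = [
    (0.0, 1.0, 0.0),  # y = 0
    (1.0, 0.0, 4.0),  # x = 4
    (0.0, 1.0, 3.0),  # y = 3
    (1.0, 0.0, 0.0),  # x = 0
]
corners = polygon_from_lines(edges)
```

Because each corner comes from two fitted lines rather than from a single traced boundary pixel, noise on individual boundary points has less influence on the final polygon.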

Circular masking-out area rate determination-based adhesive particle image concave point segmentation method

The present invention relates to a concave point segmentation method for images of adhered (touching) particles, based on an area ratio computed under a circular mask. The method comprises the following steps: 1) image pre-processing, to obtain a binary image of the particle image; 2) coarse concave point detection, to obtain an angular point image of the particle image; 3) fine concave point detection, in which an area ratio method is used to find all concave points on the region contour that can be used for particle segmentation; 4) concave point pairing, in which the selected concave point pairs serve as the segmentation points of the adhered particle image; 5) construction of the segmentation lines between particles, obtaining the contour coordinates of the individual particles and combining them with the coordinates of the two segmentation points to obtain each complete particle contour. By combining angular point detection with concave point analysis, the method avoids the computational cost of a purely area-based concave point search; it needs only a few parameters and no large training set; and the segmentation paths can be replanned, so the method adapts to particles of different shapes and sizes.

Owner:WEIFANG UNIVERSITY

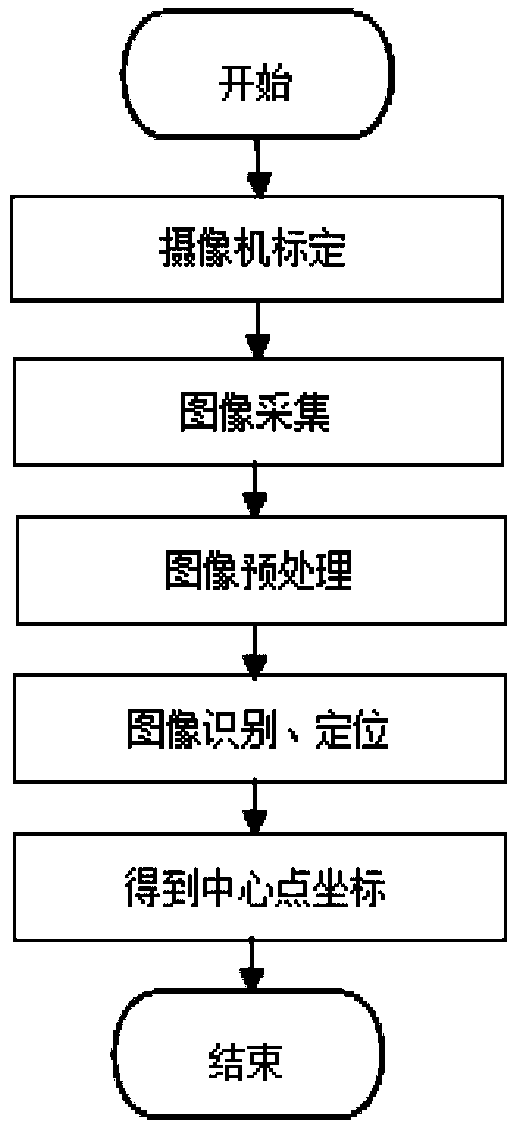

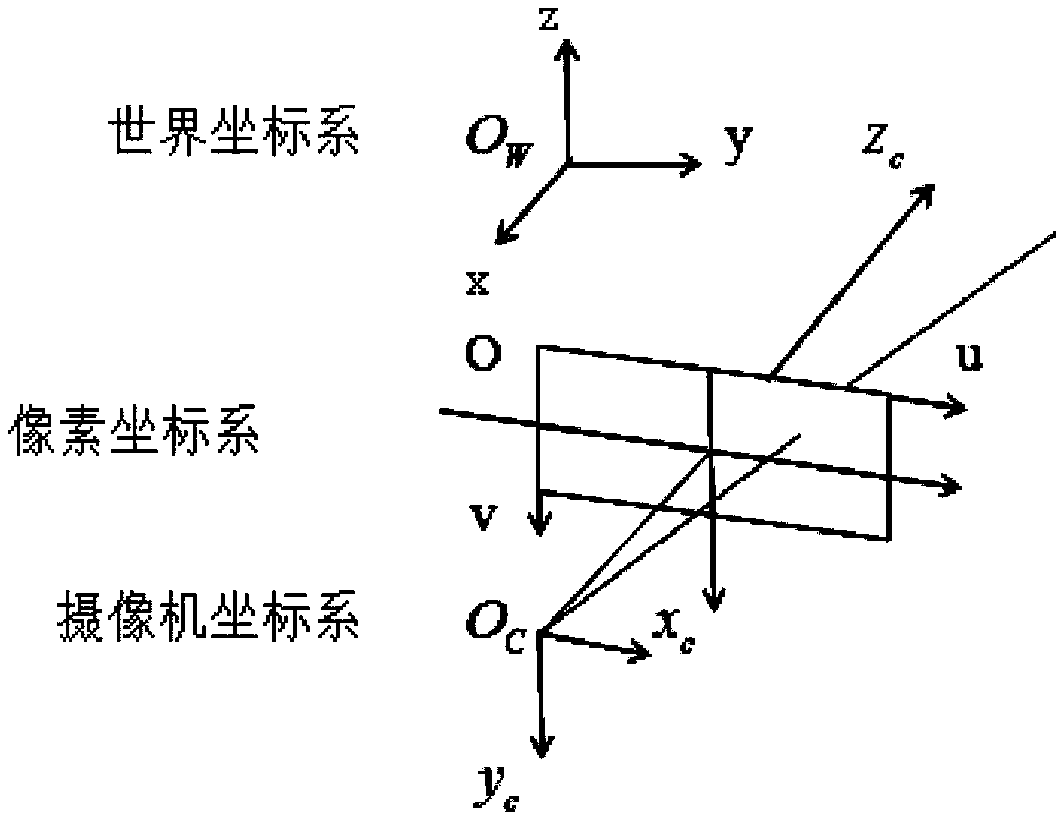
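The abstract of the concave point entry above does not define its area ratio. One plausible reading, sketched here, is the fraction of foreground pixels under a circular mask centred on a candidate contour point: the ratio is high at a concave point and low at a convex corner. The function names, the mask radius, the L-shaped test region and the 0.75 threshold are all hypothetical choices for this sketch.

```python
def area_ratio(mask, row, col, radius):
    """Fraction of pixels inside a circular mask that are foreground.

    Pixels outside the image are counted as background.
    """
    inside = 0
    foreground = 0
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            if dr * dr + dc * dc > radius * radius:
                continue  # outside the circular mask
            inside += 1
            r, c = row + dr, col + dc
            if 0 <= r < len(mask) and 0 <= c < len(mask[0]) and mask[r][c]:
                foreground += 1
    return foreground / inside


def make_l_shape(size=16):
    """Binary L-shaped region: a square with its top-right part removed."""
    mask = []
    for r in range(size):
        row = []
        for c in range(size):
            in_square = 2 <= r <= 13 and 2 <= c <= 13
            in_notch = r <= 7 and c >= 8
            row.append(1 if in_square and not in_notch else 0)
        mask.append(row)
    return mask


CONCAVE_THRESHOLD = 0.75  # hypothetical; would need tuning on real images

mask = make_l_shape()
ratio_concave = area_ratio(mask, 8, 7, 3)  # inner corner of the L
ratio_edge = area_ratio(mask, 5, 7, 3)     # point on a straight edge
ratio_convex = area_ratio(mask, 2, 2, 3)   # outer corner of the square
is_concave = ratio_concave > CONCAVE_THRESHOLD
```

On this toy region the ratio is about 0.86 at the concave corner, 0.62 on the straight edge and 0.38 at the convex corner, so a single threshold separates the concave point from the other two. Evaluating the ratio only at previously detected angular points, rather than at every contour pixel, is what keeps the search cheap.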

Computer vision based automatic labelling positioning method

Inactive | CN109018591A | High speed | High precision | Image analysis | Labelling machines | Vision based | Visual perception

The invention relates to a computer vision based positioning method for automatic labelling. The method comprises the following steps: acquiring an image of a sample product to be labelled with an image acquisition module; preprocessing the product image with an image recognition module; determining a region of interest based on the position to be labelled; performing corner point detection in the region of interest to obtain the pixel coordinates of the corner points of the label; from these, accurately locating the pixel coordinates of the center point of the label; calibrating the camera; transforming the pixel coordinates of the center point in the paper carton image into world coordinates, using the obtained camera parameters, in a data processing module of the computer processing controller; returning the resulting spatial position of the label center point to a manipulator as known information; having the manipulator plan a moving route from the input position information; and finding the position point to be labelled so as to carry out the automatic labelling operation. The method can run continuously for long periods at high speed, with high accuracy and high resolution; the recognition rate is high and the measurement results are accurate.

Owner:SHENYANG JIANZHU UNIVERSITY

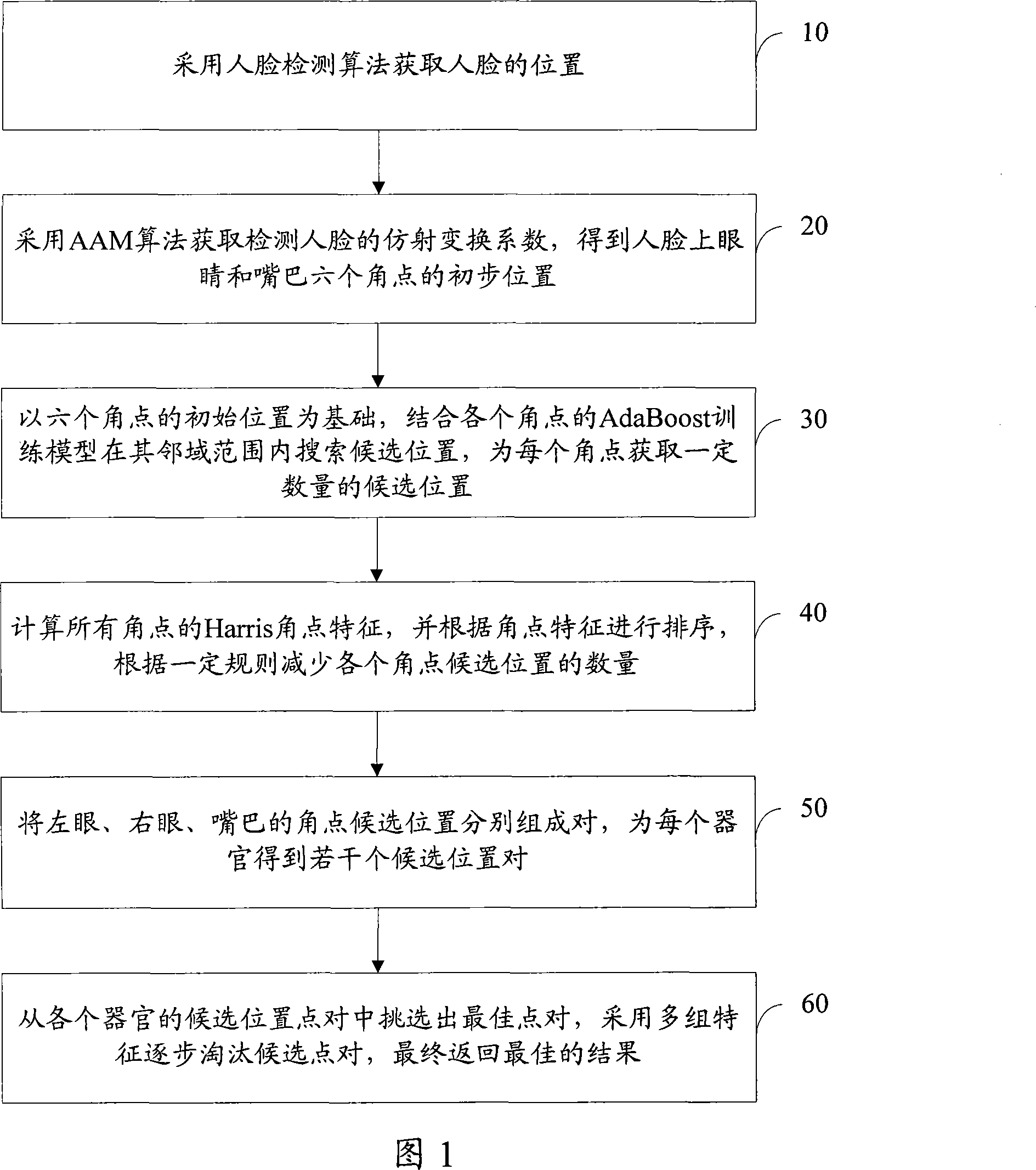

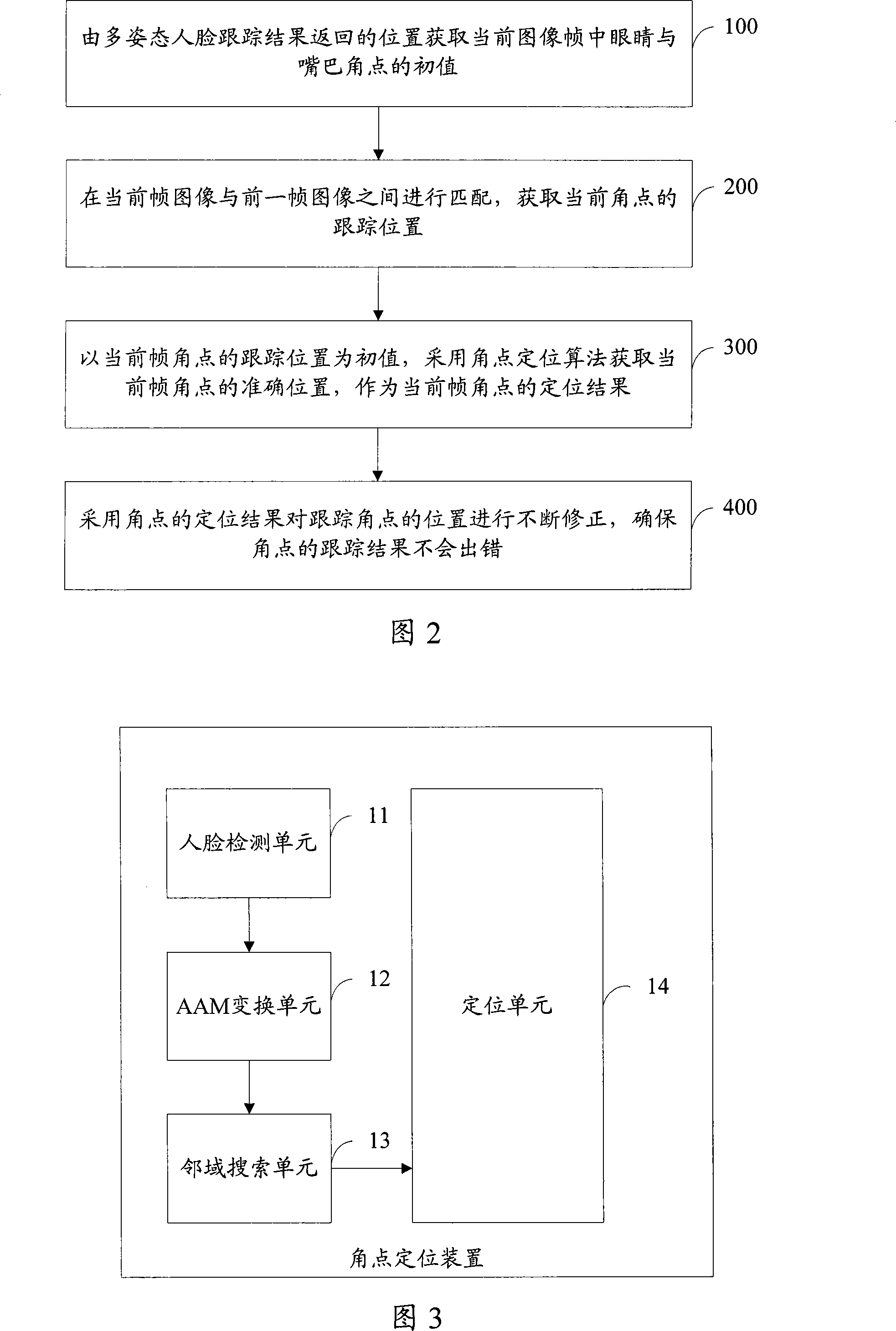

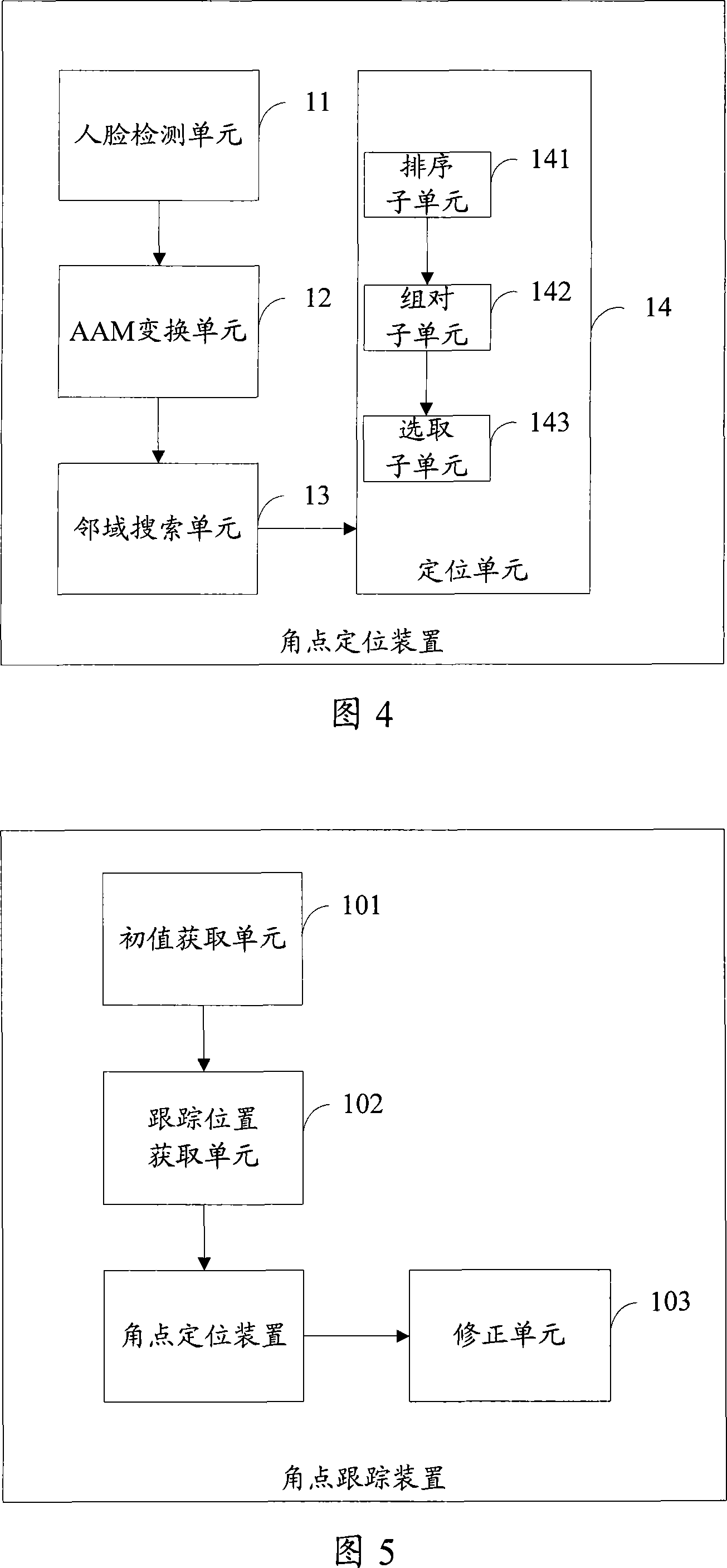
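Two steps of the labelling entry above lend themselves to a short illustration: locating the label center from its detected corner points, and mapping that pixel position to world coordinates. The sketch below assumes the label lies on a known plane, so that calibration can be summarized by a 3x3 homography `H`; the abstract only says that camera parameters are used, so the homography, its values and the function names are assumptions.

```python
def label_center(corners):
    """Mean of the detected label corner points, in pixels."""
    n = len(corners)
    return (sum(p[0] for p in corners) / n, sum(p[1] for p in corners) / n)


def apply_homography(H, u, v):
    """Map pixel (u, v) to plane coordinates with a 3x3 homography."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return (x / w, y / w)


# Hypothetical calibration result: 0.5 mm per pixel plus an origin shift.
H = [
    [0.5, 0.0, 100.0],
    [0.0, 0.5, 50.0],
    [0.0, 0.0, 1.0],
]

# Made-up corner detections for one label, in pixels.
corners_px = [(200.0, 100.0), (400.0, 100.0), (400.0, 220.0), (200.0, 220.0)]
center_px = label_center(corners_px)
center_world = apply_homography(H, *center_px)
```

The corner mean equals the true label center only when the label appears as a parallelogram in the image; under strong perspective, intersecting the diagonals would be the safer choice.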

A method and device for positioning and tracking on corners of the eyes and mouths of human faces

Inactive | CN101216882A | Improve synthesis ability | Solve the problem of inaccurate corner positioning | Character and pattern recognition | Face detection | Angular point

The invention discloses a method and a device for positioning and tracking the eye corners and mouth corners of a human face. First, a face detection algorithm is used to obtain the position of the face. An AAM (active appearance model) algorithm is then applied to the detected face to obtain an affine transformation coefficient and preliminary positions of the six corner points of the eyes and mouth. AdaBoost-trained models for each corner point are used to search the neighborhood of each preliminary position, yielding a certain number of candidate points per corner point. Harris corner point features are calculated for all the corner points, and the number of candidate points per corner point is reduced according to certain rules. The candidate points for the corner points of the left eye, the right eye and the mouth are then combined into pairs; the point pairs are progressively eliminated using several features, and the best result is returned. The scheme solves the problem of inaccurate positioning of the eye and mouth corner points under various face poses and locates the outer contours of the eyes and mouth, thereby providing a feasible scheme for driving two-dimensional and three-dimensional face models.

Owner:VIMICRO CORP

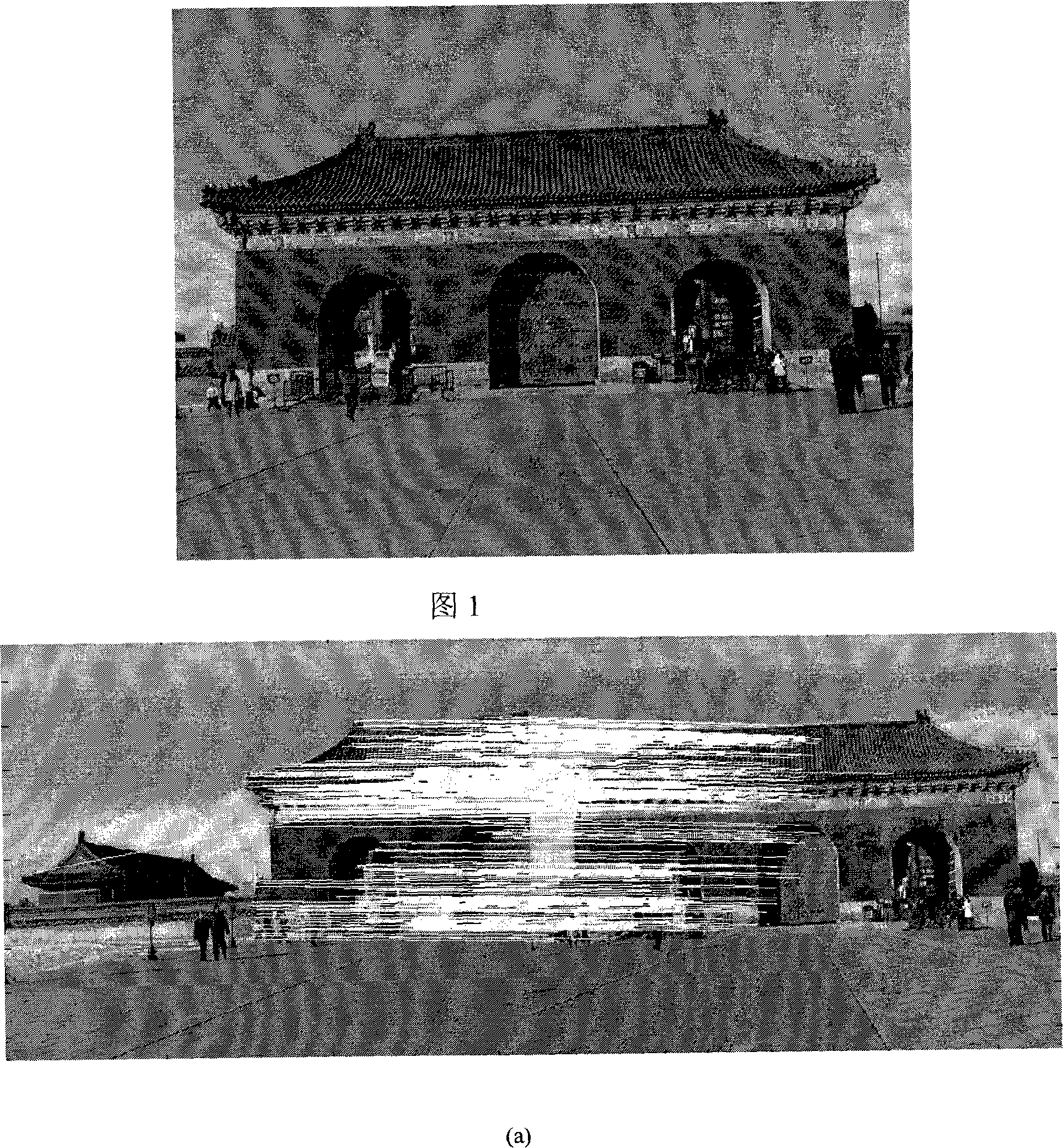

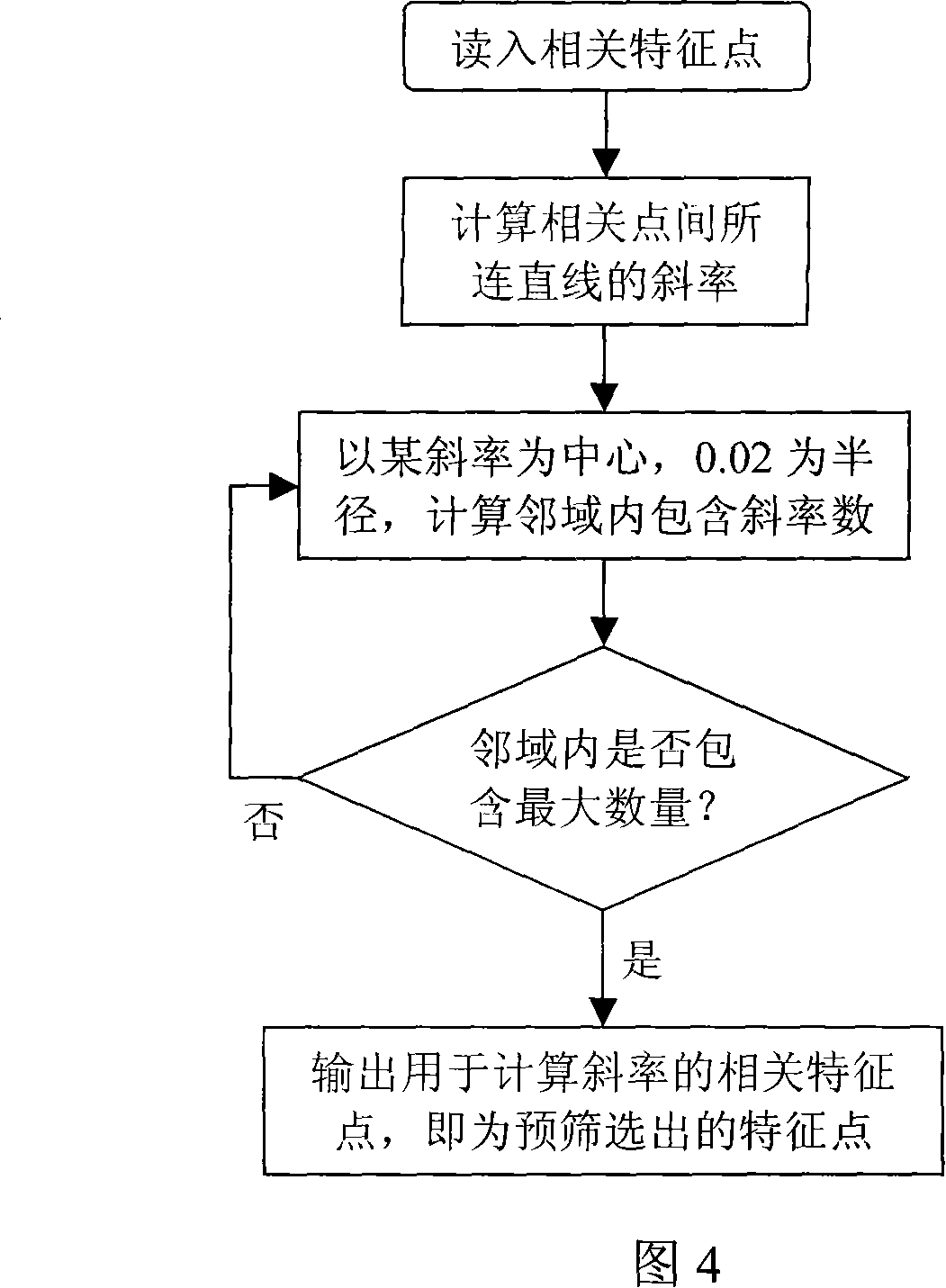
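The face entry above scores candidate points with Harris corner features but does not spell out the computation. As a reminder of what such a score looks like, here is a minimal pure-Python Harris response, `R = det(M) - k * trace(M)^2`, where `M` is the structure tensor summed over a small window. It uses central differences and an unweighted window instead of the usual Gaussian weighting, and `k = 0.04` is a commonly used value, not one taken from the patent; the synthetic image is made up.

```python
def harris_response(img, row, col, half_window=1, k=0.04):
    """Harris corner response at (row, col).

    The point must lie at least half_window + 1 pixels from the border.
    """
    sxx = syy = sxy = 0.0
    for r in range(row - half_window, row + half_window + 1):
        for c in range(col - half_window, col + half_window + 1):
            ix = (img[r][c + 1] - img[r][c - 1]) / 2.0  # horizontal gradient
            iy = (img[r + 1][c] - img[r - 1][c]) / 2.0  # vertical gradient
            sxx += ix * ix
            syy += iy * iy
            sxy += ix * iy
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace


# Synthetic 12 x 12 image: a bright quadrant whose corner sits at (6, 6).
img = [[1.0 if r >= 6 and c >= 6 else 0.0 for c in range(12)] for r in range(12)]

r_corner = harris_response(img, 6, 6)  # at the corner
r_edge = harris_response(img, 6, 9)    # on a straight edge
r_flat = harris_response(img, 2, 2)    # in a uniform area
```

The response is positive at the corner, negative on the edge and zero in the flat area, which is the property that makes it usable for ranking or discarding candidate points.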

Large cultural heritage picture pattern split-joint method based on characteristic

Inactive | CN101110122A | Improve efficiency | Fusion with nature | Image enhancement | Image mosaicking | Angular point

A feature-based mosaicking method for large-format cultural heritage images is provided, relating to the technical field of image matching and image mosaicking. The method comprises the following steps: 1) given the characteristics of cultural heritage images, use an angular point detection method to extract the angular points of each image, which serve as the image features for matching; 2) calculate the slope of the straight line connecting each pair of matched points in adjacent images, and use a clustering method to pre-select the corresponding points between images based on the similarity of these slopes; 3) use an optimal path method to generate a mosaicking path along which the pixel differences are small, so as to avoid areas with large pixel differences and remove ghosting; 4) take the brightness component in HIS color space and blend the brightness with a weighting function along the mosaicking path. Compared with other mosaicking methods, the method greatly improves mosaicking speed, eliminates brightness differences between images, removes ghosting, and achieves a good mosaicking result.

Owner:BEIJING UNIV OF TECH

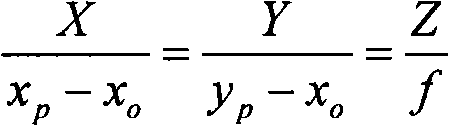
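Step 2 of the mosaicking entry above pre-selects matches by the similarity of the slopes of the lines joining matched points. The sketch below illustrates the idea with a deliberately simple stand-in for the clustering step: it keeps the matches whose slope lies close to the median slope. The side-by-side layout (second image shifted right by the first image's width), the tolerance and the sample matches are assumptions.

```python
from statistics import median


def match_slopes(matches, width):
    """Slope of the line joining each matched pair.

    The second image is imagined placed to the right of the first,
    shifted by `width` pixels, so the horizontal distance is positive.
    """
    return [(y2 - y1) / (x2 + width - x1) for (x1, y1), (x2, y2) in matches]


def filter_by_slope(matches, width, tol=0.05):
    """Keep matches whose slope is within `tol` of the median slope."""
    slopes = match_slopes(matches, width)
    centre = median(slopes)
    return [m for m, s in zip(matches, slopes) if abs(s - centre) <= tol]


# Made-up matches between two 100-pixel-wide images; the last is an outlier.
matches = [
    ((80.0, 20.0), (10.0, 22.0)),
    ((90.0, 50.0), (20.0, 52.0)),
    ((70.0, 70.0), (0.0, 72.0)),
    ((85.0, 30.0), (15.0, 75.0)),
]
kept = filter_by_slope(matches, width=100.0)
```

When adjacent images differ mainly by a translation, correct matches produce nearly parallel connecting lines, so a wrong match stands out by its slope before any more expensive geometric check is run.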

Camera array calibration algorithm based on gray level image and spatial depth data

The invention provides a camera array calibration algorithm that can calibrate a 3D camera and obtain relatively precise extrinsic parameters between the 3D camera and a visible light camera. The algorithm comprises the following steps: first, performing intrinsic calibration of the visible light camera and the 3D camera to obtain the intrinsic parameters of each camera; second, using the intrinsic parameters of the 3D camera and the depth map it provides to recover the spatial locations of the corner points of the calibration boards, then minimizing the corner point projection error to obtain the optimal extrinsic parameters from the 3D camera to the required visible light camera, thereby completing the calibration of the whole camera array.

Owner:ZHEJIANG UNIV
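The quantity minimized in the calibration entry above can be made concrete with a small sketch: back-project a calibration board corner from the depth map into 3D, transform it with candidate extrinsics, project it into the visible light camera, and measure the pixel error. The pinhole model without distortion, the intrinsic values and the function names are assumptions, and the optimizer that searches over the extrinsics is not shown.

```python
import math


def back_project(u, v, depth, fx, fy, cx, cy):
    """Pixel plus depth to a 3D point in the depth camera frame."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def transform(R, t, p):
    """Apply rotation matrix R (3x3) and translation t to point p."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(p, fx, fy, cx, cy):
    """Project a 3D point to pixel coordinates with a pinhole model."""
    x, y, z = p
    return (fx * x / z + cx, fy * y / z + cy)


def mean_reprojection_error(points_3d, observed_px, R, t, intrinsics):
    """Mean pixel distance between projected and observed corners."""
    total = 0.0
    for p, (u, v) in zip(points_3d, observed_px):
        pu, pv = project(transform(R, t, p), *intrinsics)
        total += math.hypot(pu - u, pv - v)
    return total / len(points_3d)


depth_intr = (500.0, 500.0, 320.0, 240.0)    # hypothetical 3D camera
color_intr = (1000.0, 1000.0, 640.0, 360.0)  # hypothetical visible camera
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

corner_3d = back_project(420.0, 240.0, 2.0, *depth_intr)
observed = [(890.0, 360.0)]  # where the visible camera saw that corner

err_good = mean_reprojection_error([corner_3d], observed, identity, (0.1, 0.0, 0.0), color_intr)
err_bad = mean_reprojection_error([corner_3d], observed, identity, (0.0, 0.0, 0.0), color_intr)
```

In this toy setup a 0.1 m baseline reproduces the observation exactly, while a zero baseline is off by 50 pixels; an optimizer would adjust `R` and `t` to drive this error down over all board corners.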

Motion estimation method under violent illumination variation based on corner matching and optic flow method

Inactive | CN101216941A | Overcoming the disadvantages of failure | Overcoming misjudgments of motion vectors | Image analysis | Character and pattern recognition | Visual technology | Angular point

A motion estimation method under violent illumination change, based on angular point matching and the optical flow method, relating to the field of computer vision. The method comprises the following steps: first, performing angular point detection on the current frame; next, performing normalized, rotation-invariant angular point matching between the current frame and the previous frame; then dividing the matched frame into blocks, calculating the affine transformation parameters in each block, estimating the block-wise global motion vector of the current frame from these parameters, and using the estimate to motion-compensate the previous frame; then applying block-wise linear illumination compensation to the motion-compensated previous frame; finally, estimating the global motion vector of the next frame by optical flow computation between the illumination-compensated image and the current frame. By overcoming the failure of the optical flow method under adverse conditions such as violent illumination change and large rotation, the invention achieves higher accuracy.

Owner:SHANGHAI JIAO TONG UNIV

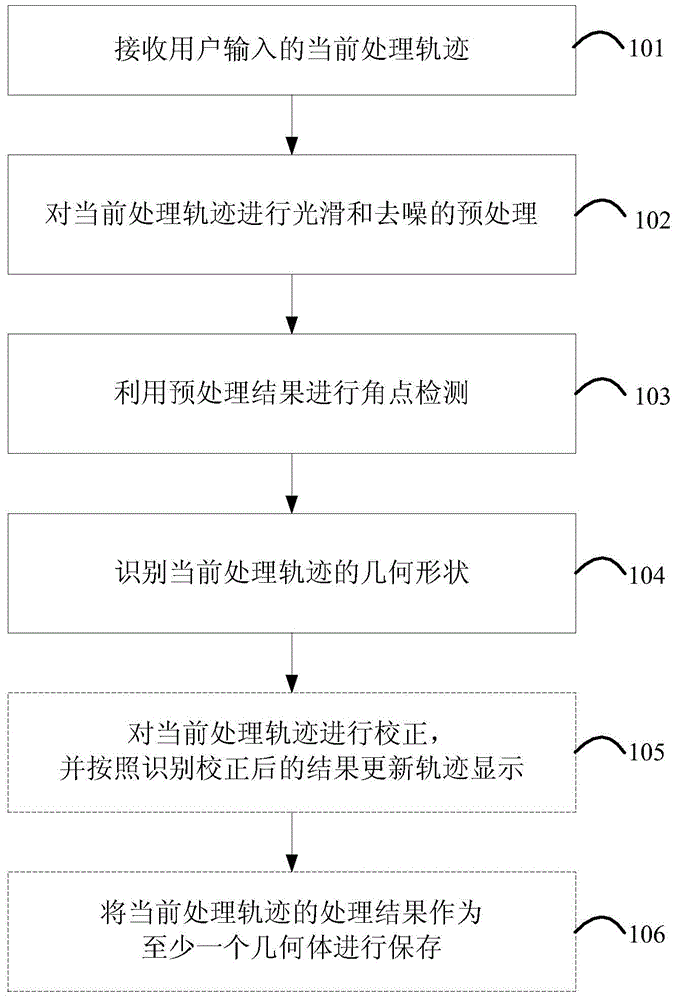

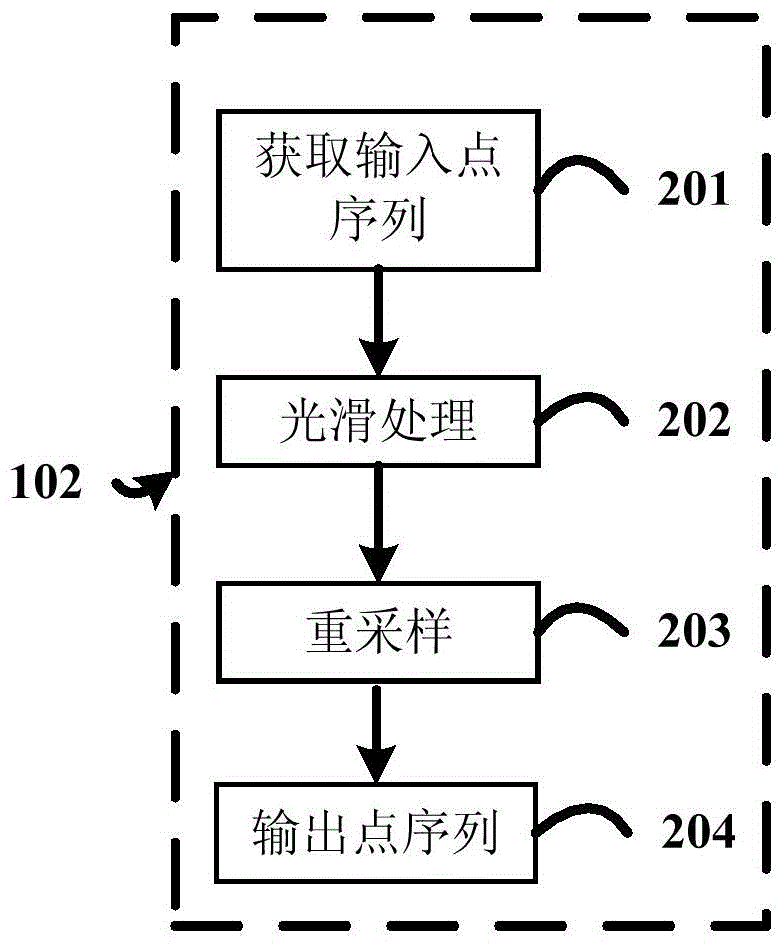

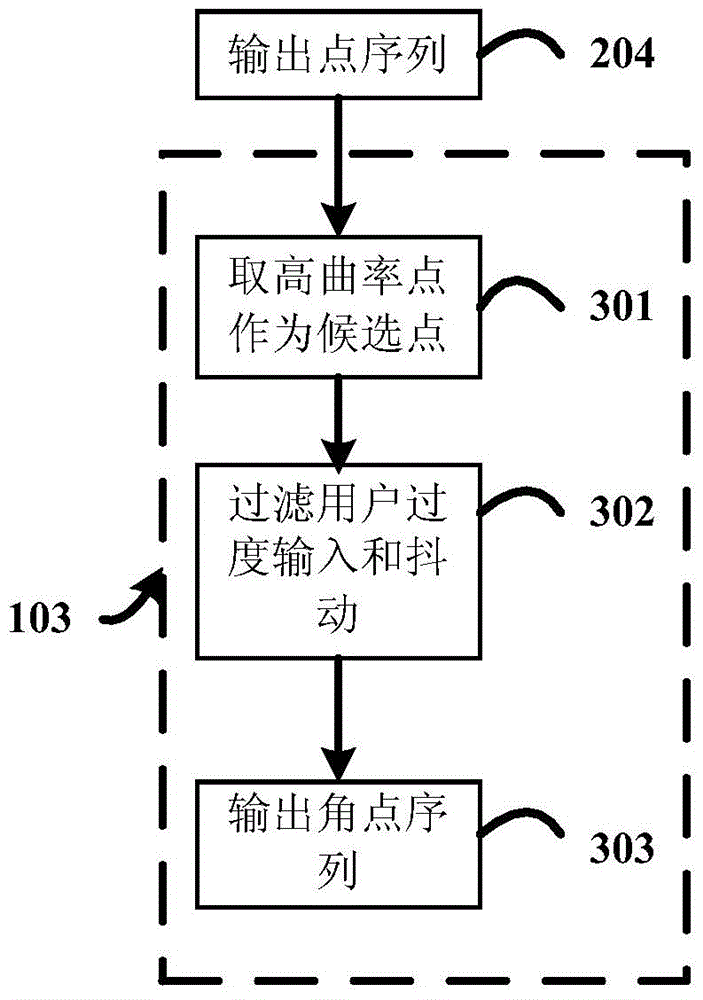
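The block-wise linear illumination compensation in the motion estimation entry above can be read as fitting, for each block, a gain `a` and an offset `b` such that the current block is approximately `a * previous + b`. The sketch below fits the two values by ordinary least squares; the abstract does not say how the linear model is estimated, so the fitting method, the function names and the sample intensities are assumptions.

```python
def fit_gain_offset(prev_block, cur_block):
    """Least-squares fit of cur ~= a * prev + b over one block.

    Blocks are flat lists of pixel intensities of equal length.
    """
    n = len(prev_block)
    mean_p = sum(prev_block) / n
    mean_c = sum(cur_block) / n
    var = sum((p - mean_p) ** 2 for p in prev_block)
    if var == 0:
        # Uniform block: the gain cannot be estimated, correct the offset only.
        return 1.0, mean_c - mean_p
    cov = sum((p - mean_p) * (c - mean_c) for p, c in zip(prev_block, cur_block))
    a = cov / var
    b = mean_c - a * mean_p
    return a, b


def compensate(prev_block, a, b):
    """Apply the fitted linear illumination model to the previous block."""
    return [a * p + b for p in prev_block]


# Made-up block: the current frame is 1.5 times brighter, plus an offset of 5.
prev_block = [10.0, 20.0, 30.0, 40.0]
cur_block = [20.0, 35.0, 50.0, 65.0]
a, b = fit_gain_offset(prev_block, cur_block)
compensated = compensate(prev_block, a, b)
```

After compensation the two blocks satisfy the brightness constancy assumption that optical flow relies on, which is why this step precedes the optical flow computation.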

Method and device for identifying and editing freehand sketch

Inactive | CN104424473A | Implement editing operations | Character and pattern recognition | Modifying/creating image using manual input | User input | Angular point

The invention discloses a method for identifying a freehand sketch. The method comprises: acquiring the track (stroke) currently input by a user and preprocessing it; detecting valid angular points in the preprocessed result; segmenting the preprocessed result at the valid angular points, fitting a curve to each track segment, and identifying the geometric shape of the current track from all the fitting results; and storing the processing result of the current track as at least one geometry. The invention also discloses a method for editing the freehand sketch, as well as a device for identifying and editing the freehand sketch.

Owner:BEIJING SAMSUNG TELECOM R&D CENT +1

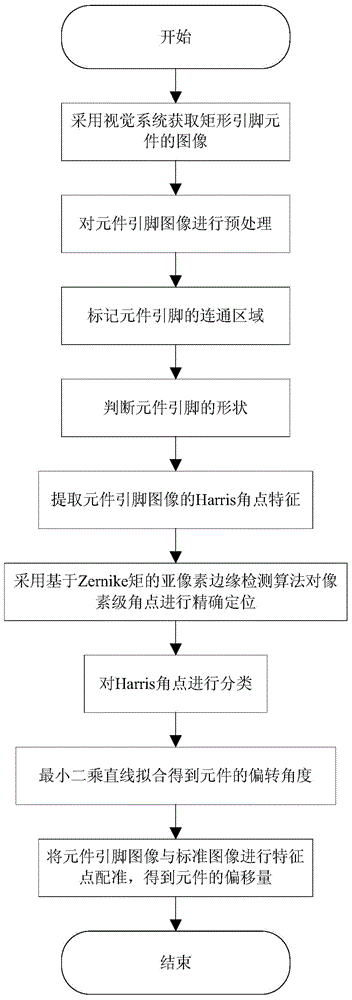

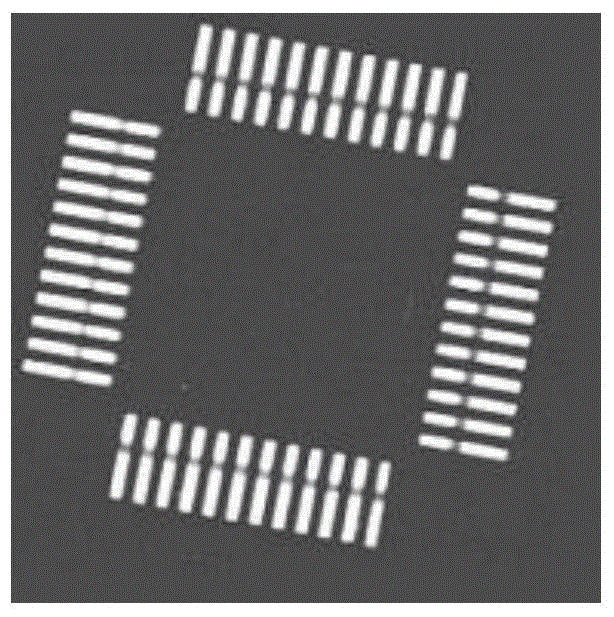
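The angular point detection and segmentation steps of the freehand sketch entry above can be illustrated with a turning-angle detector. The abstract does not say how valid angular points are found; the sketch below marks a stroke point as an angular point when the direction change across it is a local maximum above a threshold, then splits the stroke there. The `step`, the 60 degree threshold and the sample stroke are hypothetical, and the curve fitting of each segment is left out.

```python
import math


def turning_angle(p_prev, p, p_next):
    """Absolute change of direction (radians) when passing through p."""
    a1 = math.atan2(p[1] - p_prev[1], p[0] - p_prev[0])
    a2 = math.atan2(p_next[1] - p[1], p_next[0] - p[0])
    d = abs(a2 - a1)
    return min(d, 2 * math.pi - d)


def detect_corners(points, step=2, threshold_deg=60.0):
    """Indices where the turning angle is a local maximum above threshold."""
    n = len(points)
    angles = [0.0] * n
    for i in range(step, n - step):
        angles[i] = math.degrees(
            turning_angle(points[i - step], points[i], points[i + step])
        )
    return [
        i
        for i in range(1, n - 1)
        if angles[i] >= threshold_deg
        and angles[i] >= angles[i - 1]
        and angles[i] > angles[i + 1]
    ]


def split_at(points, corner_indices):
    """Split a stroke into segments that share the corner points."""
    bounds = [0] + list(corner_indices) + [len(points) - 1]
    return [points[bounds[k]:bounds[k + 1] + 1] for k in range(len(bounds) - 1)]


# Made-up L-shaped stroke: right along y = 0, then along x = 4.
stroke = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (4, 1), (4, 2), (4, 3), (4, 4)]
corners = detect_corners(stroke)
segments = split_at(stroke, corners)
```

Comparing points `step` samples apart makes the angle less sensitive to jitter in hand-drawn input, and the local-maximum test keeps a single angular point where neighbouring samples also exceed the threshold.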

Detection method for rectangular pin component visual positioning

Inactive | CN104359402A | Accurate detection | High precision | Using optical means | Angular point | Visual positioning

The invention provides a detection method for the visual positioning of rectangular pin components, and relates to the field of visual positioning and detection of such components. The method addresses the problems of traditional rectangular pin component detection: low positioning precision, pins appearing broken in the image, and the offset and the deviation angle being detected separately. A component image is acquired by the vision system of a chip mounter; a binarized, pre-processed image is obtained by threshold segmentation; the connected regions of the component pins are labelled; the shape of the component pins is judged; pixel-level Harris angular point coordinates are extracted from the pin image; sub-pixel coordinates of the Harris angular points are solved and the points are classified; the deviation angle of the component is calculated; the pin image is matched against a standard image; and the offset of the component is calculated. The method is mainly used for pin detection, deviation angle detection and offset detection of rectangular pin components.

Owner:NANJING UNIV OF TECH
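Once the angular points of the rectangular pin component above have been classified, the deviation angle and the offset follow from simple geometry. The sketch below assumes the four outer corner points are already available in the order top-left, top-right, bottom-right, bottom-left, takes the angle from the top edge and the offset from the corner mean relative to a nominal center. The abstract computes the offset by matching against a standard image instead, so this is a simplified stand-in, and every name and value is hypothetical.

```python
import math


def pose_from_corners(corners):
    """Rotation angle (degrees) of the top edge and centre of the corners.

    Corners are ordered top-left, top-right, bottom-right, bottom-left.
    """
    (x0, y0), (x1, y1) = corners[0], corners[1]
    angle = math.degrees(math.atan2(y1 - y0, x1 - x0))
    cx = sum(p[0] for p in corners) / len(corners)
    cy = sum(p[1] for p in corners) / len(corners)
    return angle, (cx, cy)


def rotated_rect(center, width, height, angle_deg):
    """Corner points of a rectangle rotated about its center (test helper)."""
    t = math.radians(angle_deg)
    c, s = math.cos(t), math.sin(t)
    local = [
        (-width / 2, -height / 2),
        (width / 2, -height / 2),
        (width / 2, height / 2),
        (-width / 2, height / 2),
    ]
    return [(center[0] + x * c - y * s, center[1] + x * s + y * c) for x, y in local]


nominal_center = (100.0, 50.0)  # hypothetical placement target, in pixels

# Simulated sub-pixel corners: component shifted by (2, 1) and rotated 10 degrees.
corners = rotated_rect((102.0, 51.0), 40.0, 20.0, 10.0)
angle, center = pose_from_corners(corners)
offset = (center[0] - nominal_center[0], center[1] - nominal_center[1])
```

Deriving both quantities from the same set of corner points is one way to obtain the angle and the offset together rather than in separate detection passes.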
