Camera array calibration algorithm based on gray level image and spatial depth data

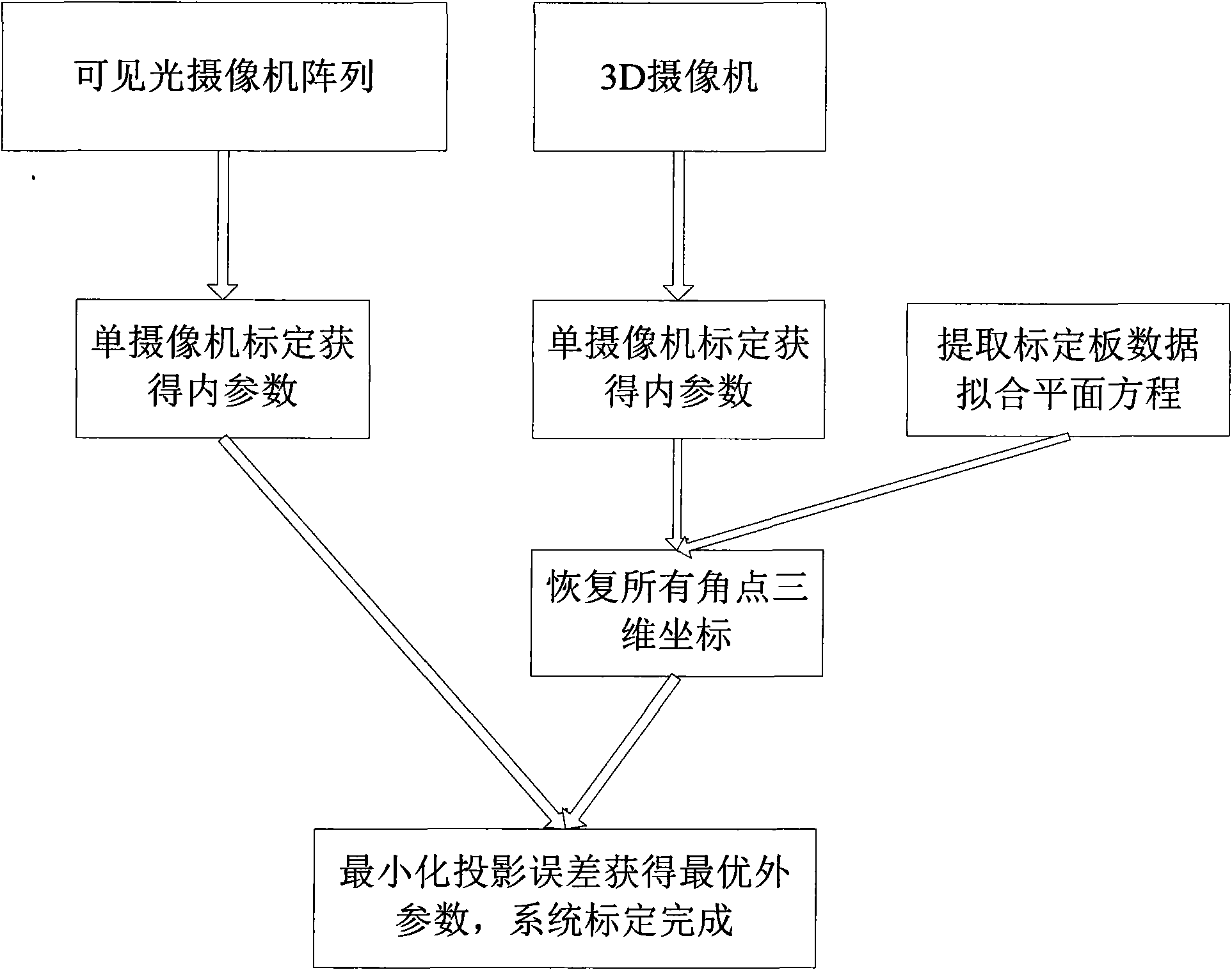

The technology uses spatial depth data and grayscale images and applies to calibration algorithms for camera arrays that include 3D cameras. It addresses problems such as low resolution and large errors that degrade calibration accuracy, and it achieves high precision with a simple procedure.

Embodiment Construction

[0015] Below, the present invention will be further described in conjunction with the accompanying drawings and specific embodiments.

[0016] The visible light camera used in this embodiment has a CCD sensor and can provide color or grayscale images at a resolution of 768×576. The 3D camera is a Swiss Ranger 3000 (a TOF camera), which provides depth and grayscale images at a resolution of 176×144.

[0017] When capturing calibration plate images, to improve the robustness of corner detection and the stability of the image data, multiple frames can be captured consecutively with the calibration plate at the same position. Their average is then used as the actual image for calibration.
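The frame averaging described in [0017] can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function name `average_frames`, the synthetic 176×144 test image, the noise level, and the frame count of 20 are all assumptions chosen for the example.

```python
import numpy as np

def average_frames(frames):
    """Average same-sized grayscale frames pixel by pixel.

    Frames are converted to float64 first so that integer images
    do not overflow or lose precision while being averaged.
    """
    stack = np.stack([np.asarray(f, dtype=np.float64) for f in frames], axis=0)
    return stack.mean(axis=0)

# Synthetic stand-in for repeated shots of a static calibration plate:
# a constant image (144 rows x 176 columns) plus independent Gaussian noise.
rng = np.random.default_rng(0)
clean = np.full((144, 176), 100.0)
frames = [clean + rng.normal(0.0, 5.0, clean.shape) for _ in range(20)]

averaged = average_frames(frames)

# Compare the mean absolute error of one frame with that of the average.
single_error = np.abs(frames[0] - clean).mean()
averaged_error = np.abs(averaged - clean).mean()
print(f"single frame error: {single_error:.3f}, averaged error: {averaged_error:.3f}")
```

For independent zero-mean noise, averaging N frames reduces the noise standard deviation by roughly a factor of √N, which is why corner detection on the averaged image tends to be more stable than on any single frame.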

[0018] For the intrinsic calibration of each camera, the tool used is the Matlab calibration toolkit ToolBox_Calib. The toolkit's internal algorithm is based on the two-step calibration method of Zhang...
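As background for [0018], Zhang's planar calibration method starts by estimating a homography between the calibration plate and each image of it. The sketch below shows only that first step, using a basic direct linear transform (DLT) in NumPy. It is not code from ToolBox_Calib, and the function name `estimate_homography`, the matrix `H_true`, and the 4×3 grid of plate points are hypothetical values chosen for the example.

```python
import numpy as np

def estimate_homography(plane_pts, image_pts):
    """Estimate the 3x3 homography H with image ~ H @ plane (basic DLT).

    Each correspondence (X, Y) -> (u, v) contributes two linear equations
    in the nine entries of H. The solution is the right singular vector
    belonging to the smallest singular value. No coordinate normalization
    is applied, so this is suitable only as an illustration.
    """
    rows = []
    for (X, Y), (u, v) in zip(plane_pts, image_pts):
        rows.append([-X, -Y, -1, 0, 0, 0, u * X, u * Y, u])
        rows.append([0, 0, 0, -X, -Y, -1, v * X, v * Y, v])
    A = np.asarray(rows, dtype=np.float64)
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the arbitrary scale so that H[2, 2] == 1

# Hypothetical ground-truth homography and a 4x3 grid of plate corners
# expressed in units of one checkerboard square.
H_true = np.array([[50.0, 2.0, 100.0],
                   [-3.0, 48.0, 80.0],
                   [0.01, 0.02, 1.0]])
plane_pts = [(float(x), float(y)) for y in range(3) for x in range(4)]

# Project the plate corners into the image using H_true.
image_pts = []
for X, Y in plane_pts:
    p = H_true @ np.array([X, Y, 1.0])
    image_pts.append((p[0] / p[2], p[1] / p[2]))

H_est = estimate_homography(plane_pts, image_pts)
print(np.round(H_est, 4))
```

In a full calibration, the homographies from several plate poses constrain the intrinsic parameters, and a nonlinear refinement follows. Those later steps are omitted here.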
