385 results about how to "Improve the ability to distinguish" patented technology

Multi-mode-characteristic-fusion-based remote-sensing image classification method

Active | CN105512661A | Implement classification | Improve classification accuracy | Biological neural network models | Character and pattern recognition | Classification methods

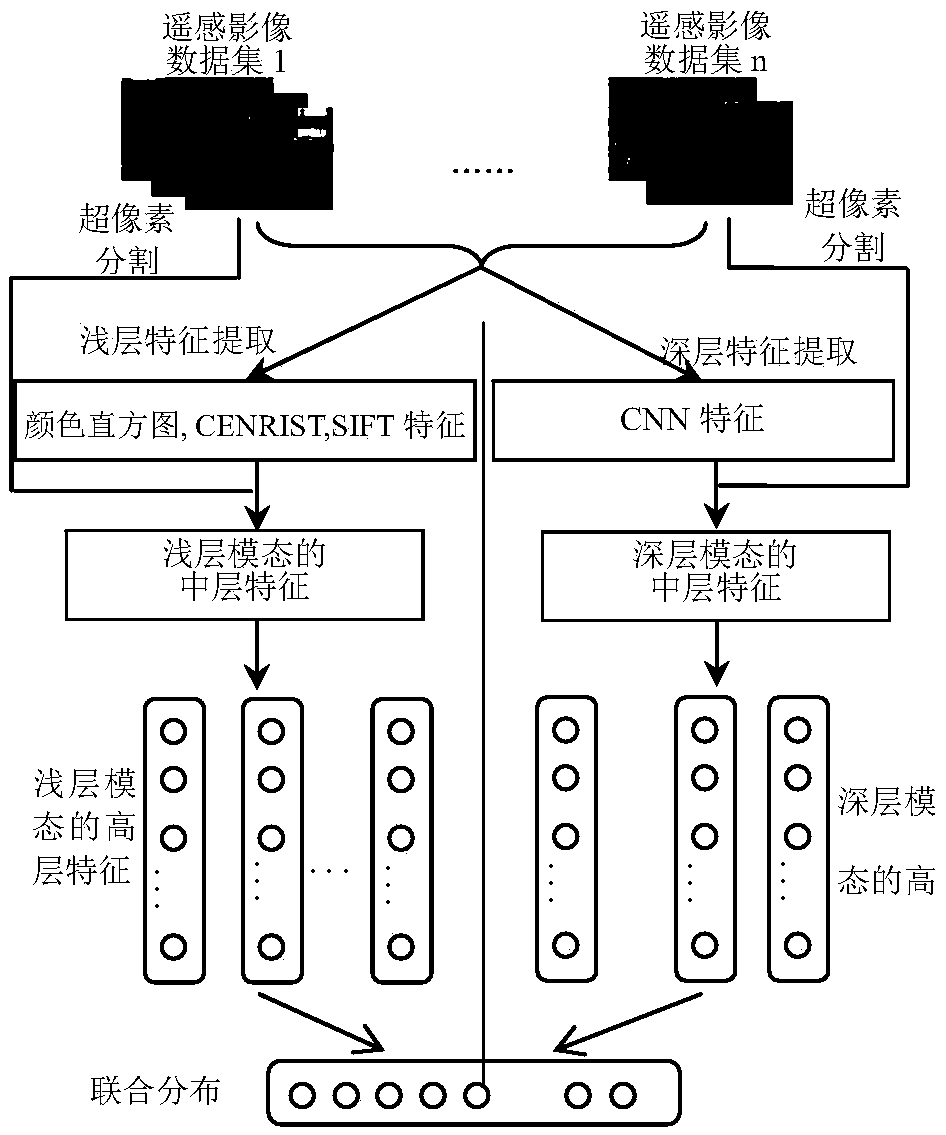

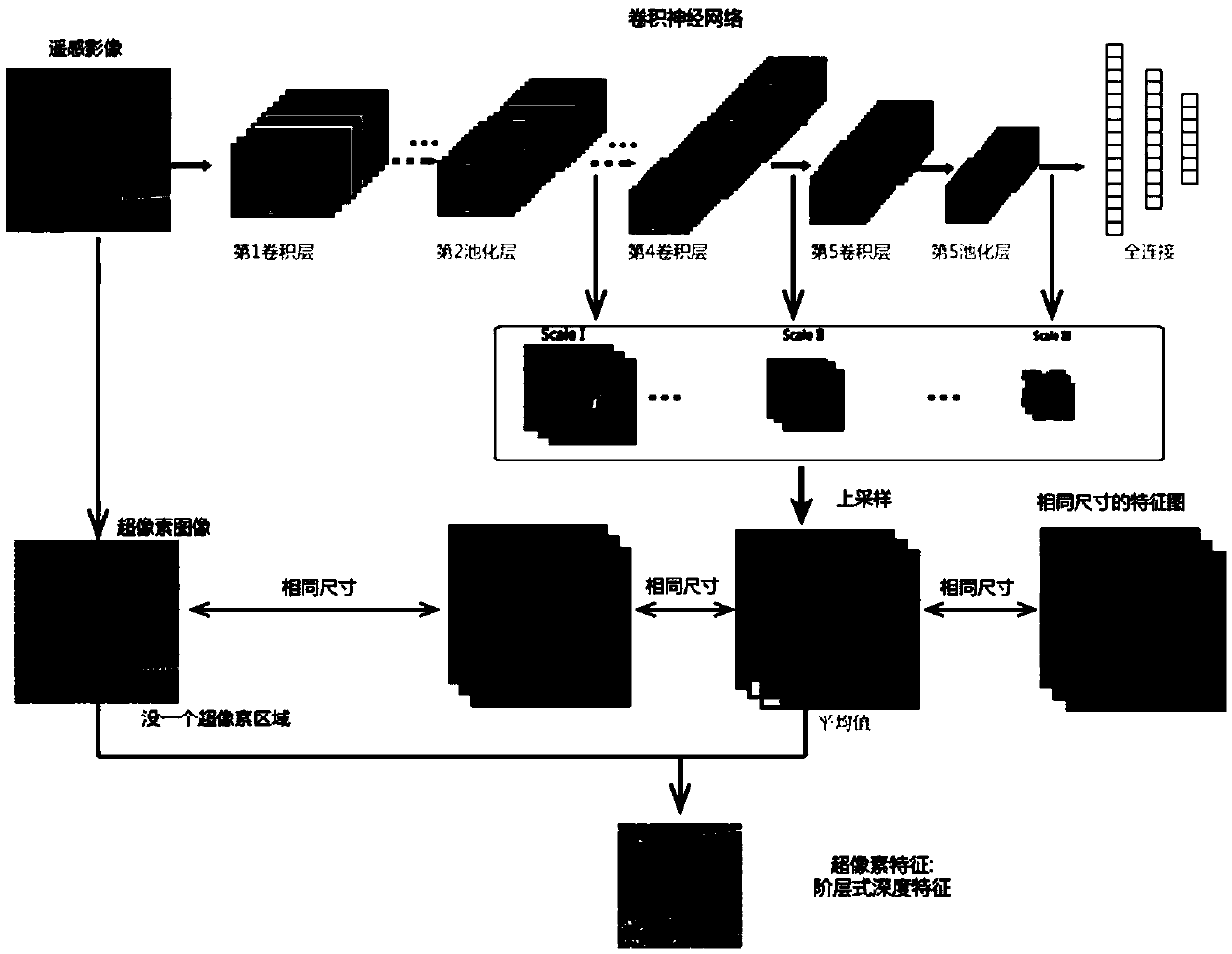

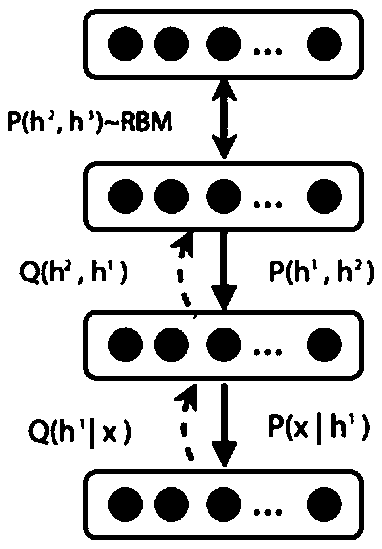

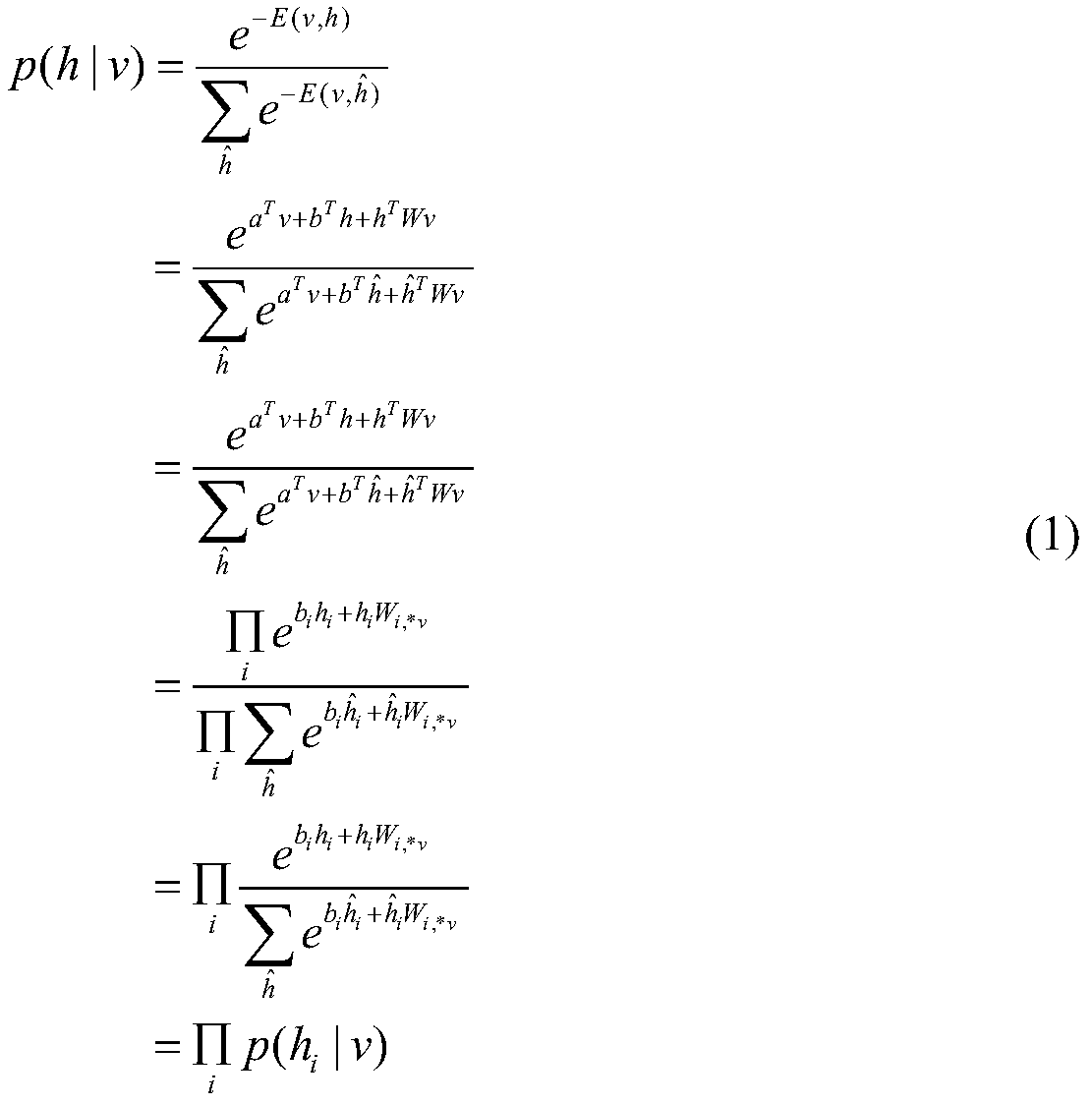

The invention, which belongs to the technical field of remote-sensing image classification, relates to a multi-mode-characteristic-fusion-based remote-sensing image classification method. Characteristics of at least two modes are extracted; the obtained characteristics of the modes are inputted into an RBM model to carry out fusion to obtain a combined expression of characteristics of the modes; and according to the combined expression, type estimation is carried out on each super-pixel area, thereby realizing remote-sensing image classification. According to the invention, the characteristics, including a superficial-layer mode characteristic and a deep-layer mode characteristic, of various modes are combined by the RBM model to obtain the corresponding combined expression, wherein the combined expression not only includes the layer expression of the remote-sensing image deep-layer mode characteristic but also includes external visible similarity of the superficial-layer mode characteristic. Therefore, the distinguishing capability is high and the classification precision of remote-sensing images is improved.

Owner:THE PLA INFORMATION ENG UNIV

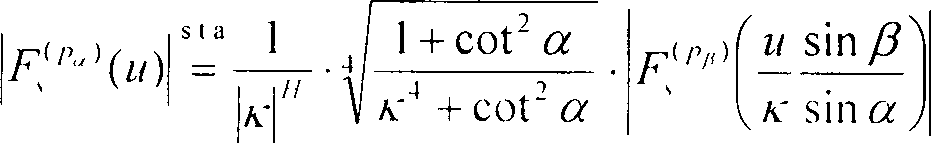

Multi-fractal detection method of targets in FRFT (Fractional Fourier Transformation) domain sea clutter

Active | CN102967854A | Reduce the demand for signal-to-clutter ratio in target detection | The need to reduce the signal-to-clutter ratio | Wave based measurement systems | Time domain | Target signal

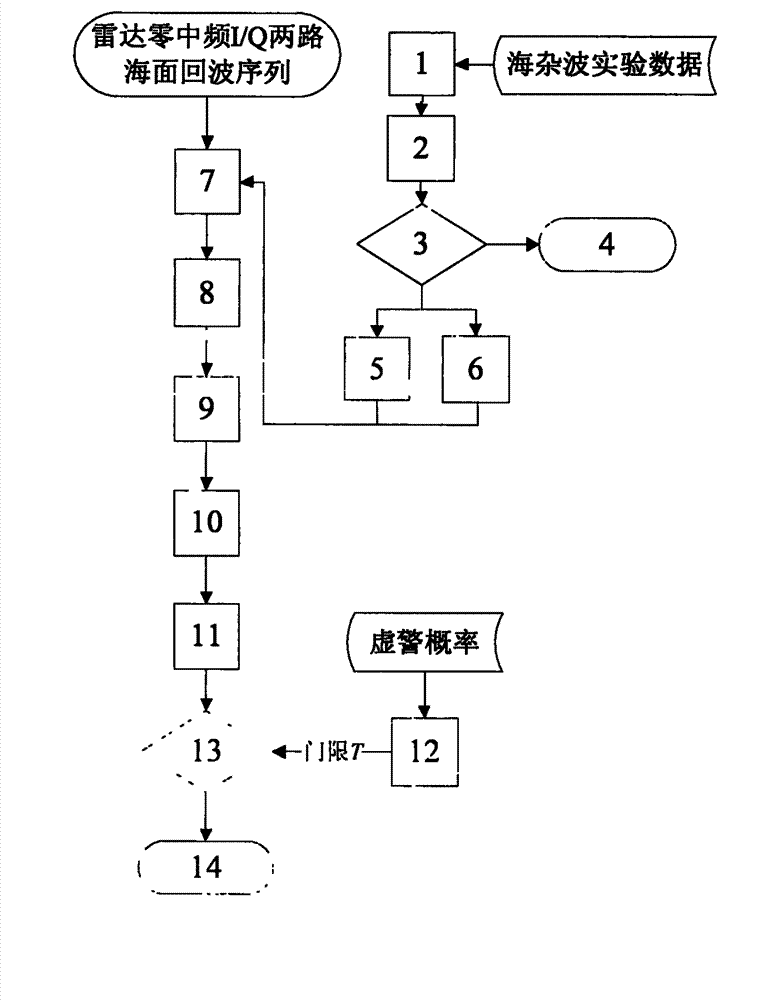

The invention discloses a multi-fractal detection method of targets in FRFT (Fractional Fourier Transformation) domain sea clutter and belongs to the radar signal processing field. According to the conventional multi-fractal detection methods of targets in sea clutter, echo sequences in the radar time domain are processed directly, and therefore detection performance of weak moving targets in strong sea clutter background is poor. The multi-fractal detection method of targets in FRFT domain sea clutter is characterized in that fractional Fourier transformation is organically combined with the multi-fractal processing method, and the generalized Hurst index number of the sea-clutter fractional Fourier transformation spectrum is extracted to form detection statistics by comprehensively utilizing the advantage that the fractional Fourier transformation is capable of effectively improving the signal to clutter ratio of the moving target on the sea surface and the feature that the multi-fractal characteristic is capable of breaking the tether of the signal to clutter ratio to a certain extent. The detection method comprehensively utilizes the advantages of phase-coherent accumulation and multi-fractal theory and has excellent separating capability on the weak moving targets in sea clutter; and simultaneously, the method is also suitable for tracking target signals in nonuniform fractal clutter and has popularization and application values.

Owner:NAVAL AERONAUTICAL & ASTRONAUTICAL UNIV PLA

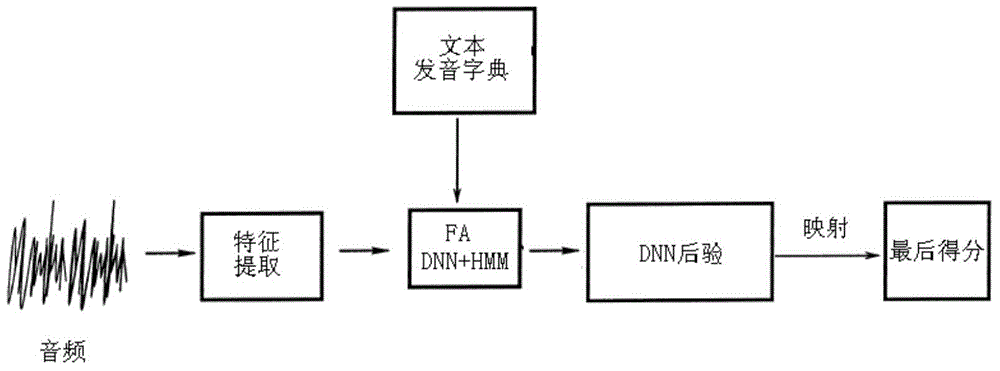

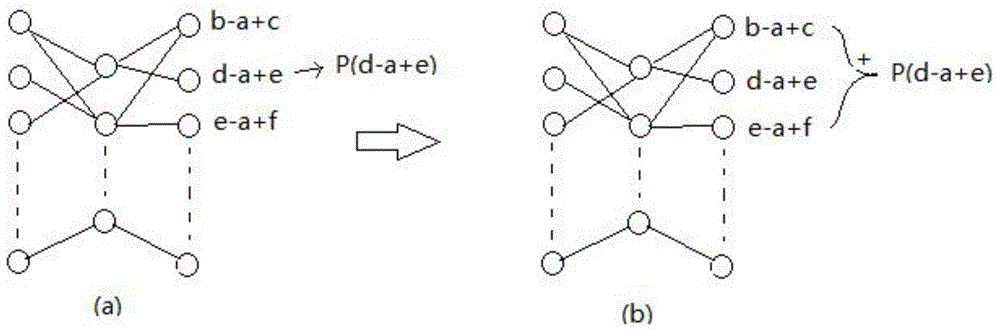

Spoken language pronunciation detecting and evaluating method based on deep neural network posterior probability algorithm

Active | CN104575490A | Improve the ability to distinguish | Reduce computational complexity | Speech recognition | Frame size | Deep neural networks

The invention discloses a spoken language pronunciation detecting and evaluating method based on a deep neural network posterior probability algorithm. The method comprises the following steps: firstly, extracting the speech frame by frame into an audio feature vector sequence; secondly, inputting the audio features, together with the spoken language detecting and evaluating text and a corresponding word pronunciation dictionary, into a model which is trained in advance, and determining the time boundaries of the phoneme states, wherein the model is a DNN plus HMM model; thirdly, after the time boundaries are determined, extracting all frames within each time boundary, averaging over those voice frames, taking the average value as the posterior probability of the phoneme state, and obtaining a word posterior score based on the phoneme state posteriors, wherein the word posterior score is the average value of the posterior scores of the phoneme states contained in the word.
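The scoring step can be sketched as follows. This is a minimal illustration, assuming the third step averages frame-level posterior probabilities inside each phoneme-state boundary (the abstract's wording is ambiguous on this point). The function names, the `(start, end)` boundary format, and the sample values are hypothetical and do not come from the patent.

```python
from statistics import mean


def phoneme_scores(frame_posteriors, boundaries):
    """Average the per-frame posteriors inside each (start, end) boundary.

    `end` is exclusive; the boundaries are assumed to come from a forced
    alignment that is not reproduced here.
    """
    return [mean(frame_posteriors[start:end]) for start, end in boundaries]


def word_score(frame_posteriors, boundaries):
    """Word posterior score: mean of the phoneme-state scores in the word."""
    return mean(phoneme_scores(frame_posteriors, boundaries))


# Hypothetical frame posteriors for one word made of two phoneme states.
frames = [0.9, 0.8, 0.7, 0.4, 0.6, 0.5]
bounds = [(0, 3), (3, 6)]
print(phoneme_scores(frames, bounds))
print(word_score(frames, bounds))
```

Averaging twice (frames into states, states into words) keeps long phoneme states from dominating the word score, which is one plausible reason for the two-level design.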

Owner:SUZHOU CHIVOX INFORMATION TECH CO LTD

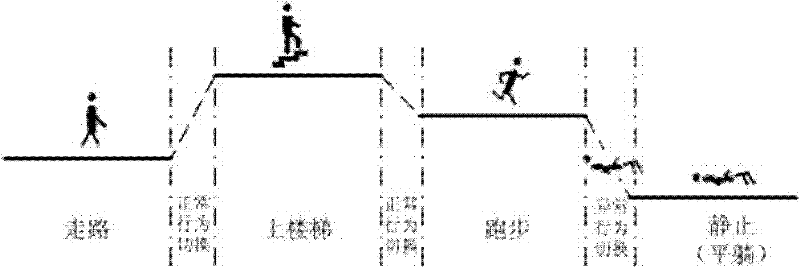

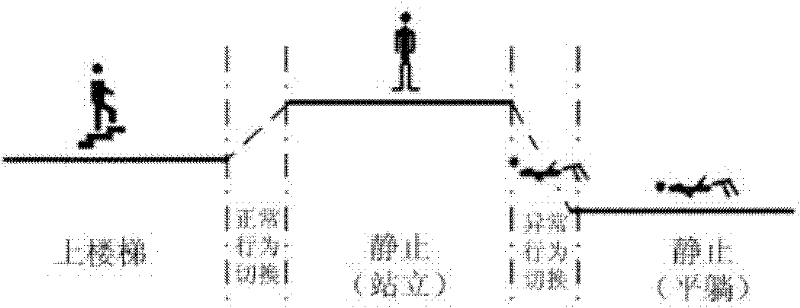

Method and device for detecting tumbling

Active | CN102302370A | Reduce complexity | Effective filtering | Diagnostic recording/measuring | Sensors | Feature vector | Characteristic space

The invention provides a method and device for detecting tumbling. The method provided by the invention comprises the steps of: firstly, collecting behavioral data of a user; secondly, identifying behavior of the user according to the behavioral data of the user; thirdly, segmenting out behavioral switching data from the collected data according to a behavioral identification result, and warping the behavioral switching data into equilong characteristic vectors; and fourthly, performing tumbling detection according to the warped characteristic vectors. According to the method and device provided by the invention, tumbling detection is performed on the basis of behavioral switching, a great amount of normal behavioral data can be filtered, the complexity of a feature space is reduced, the separating capacity of models is enhanced, and the model detection rate is improved.
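The third step, warping variable-length behavioral switching segments into equal-length feature vectors, can be illustrated with a short sketch. The abstract does not say which warping technique is used, so linear interpolation is assumed here purely for illustration; `resample` and the sample readings are hypothetical.

```python
def resample(sequence, length):
    """Warp a 1-D sequence to `length` samples by linear interpolation.

    Assumes length >= 2. This is a stand-in for the patent's warping
    step, whose exact form is not given in the abstract.
    """
    if len(sequence) == 1:
        return [float(sequence[0])] * length
    step = (len(sequence) - 1) / (length - 1)
    warped = []
    for i in range(length):
        pos = i * step
        lo = int(pos)
        hi = min(lo + 1, len(sequence) - 1)
        frac = pos - lo
        warped.append(sequence[lo] * (1 - frac) + sequence[hi] * frac)
    return warped


# Two hypothetical switching segments of different duration.
short_segment = [0, 10]
long_segment = [0, 1, 2, 3, 4]
print(resample(short_segment, 5))
print(resample(long_segment, 3))
```

After resampling, every segment has the same dimensionality and can be fed to a single classifier.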

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

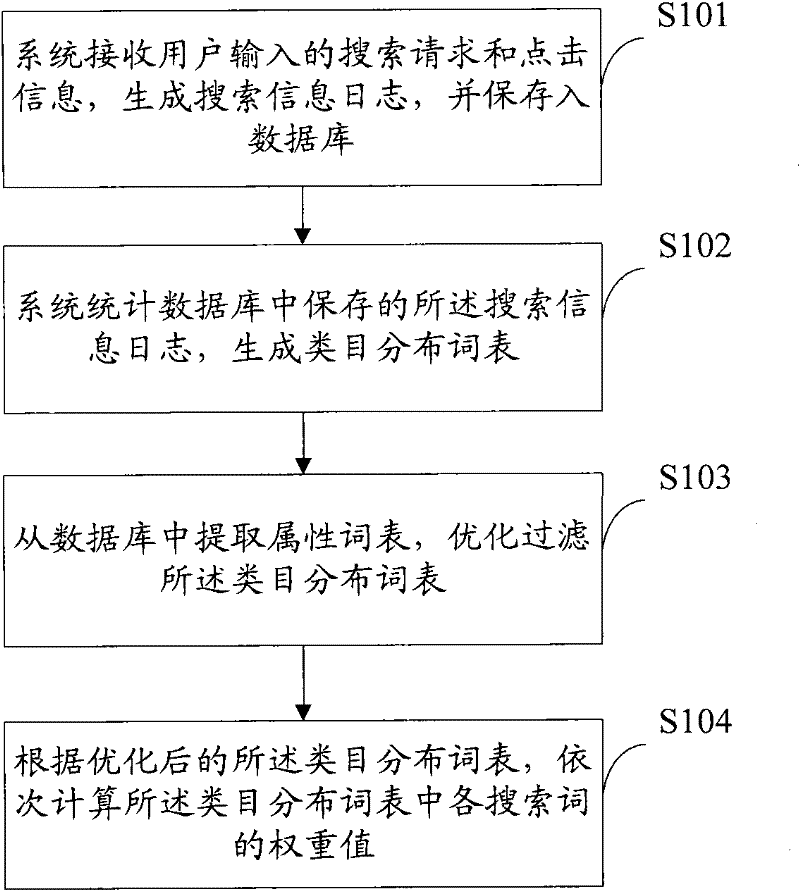

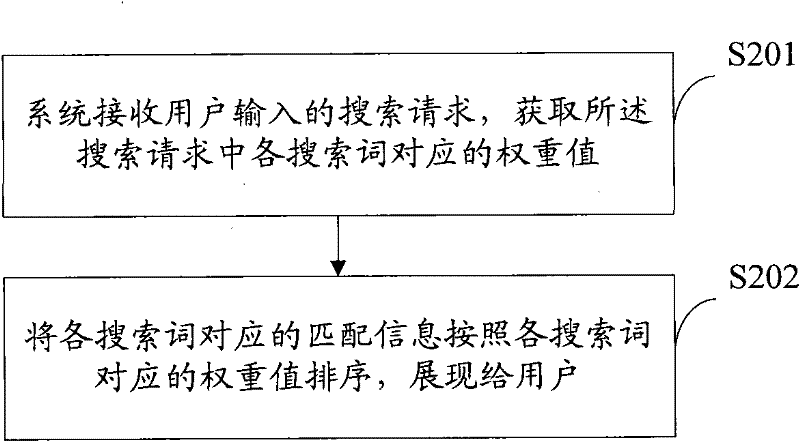

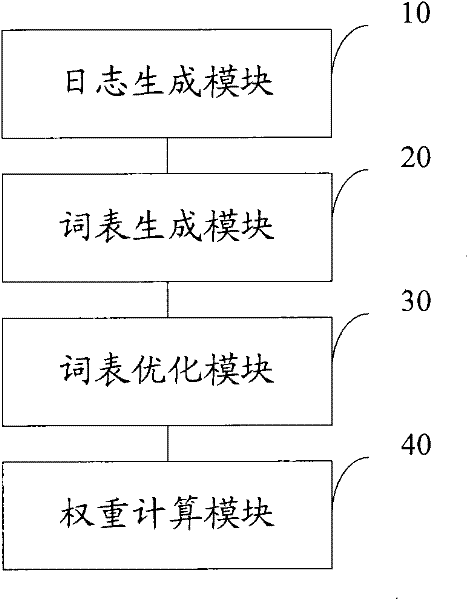

Method and device for determining search word weight value, method and device for generating search results

Inactive | CN102289436A | Improve versatility | Less important | Digital data information retrieval | Special data processing applications | Search words | Statistical database

Owner:ALIBABA GRP HLDG LTD

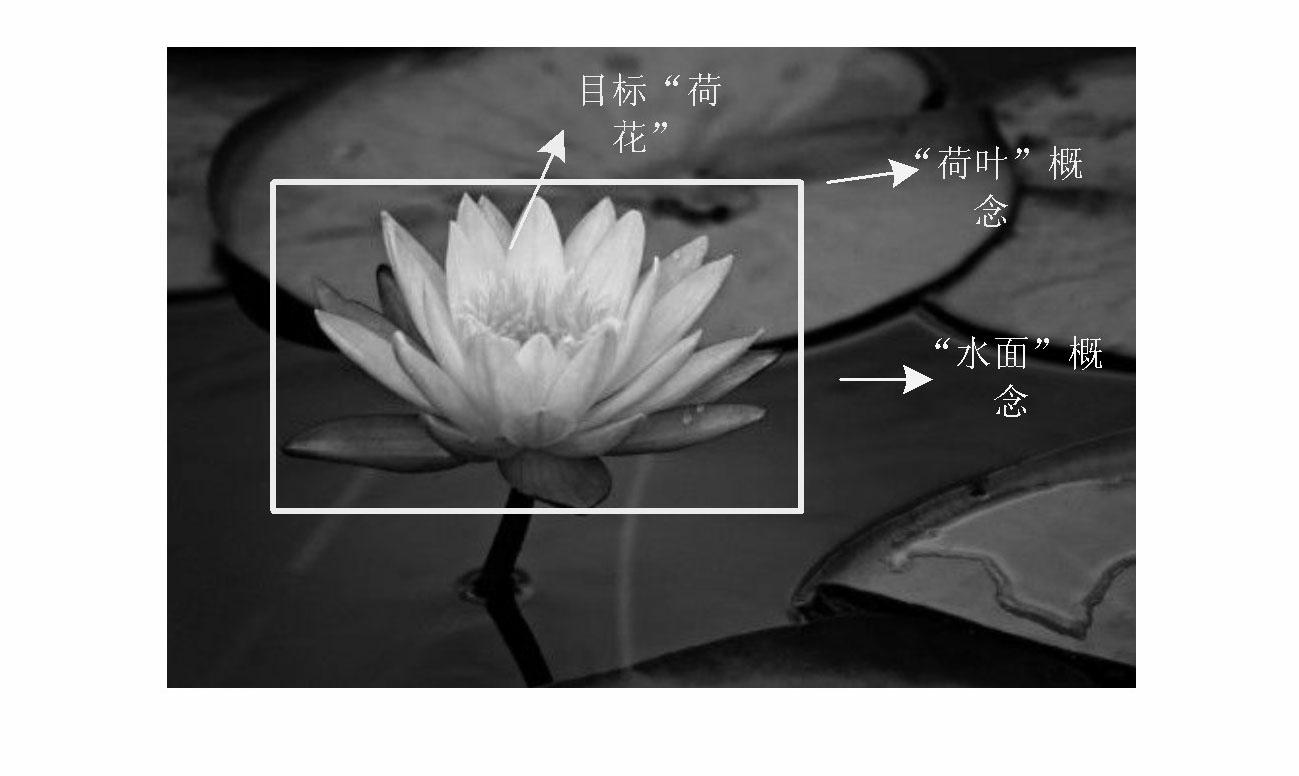

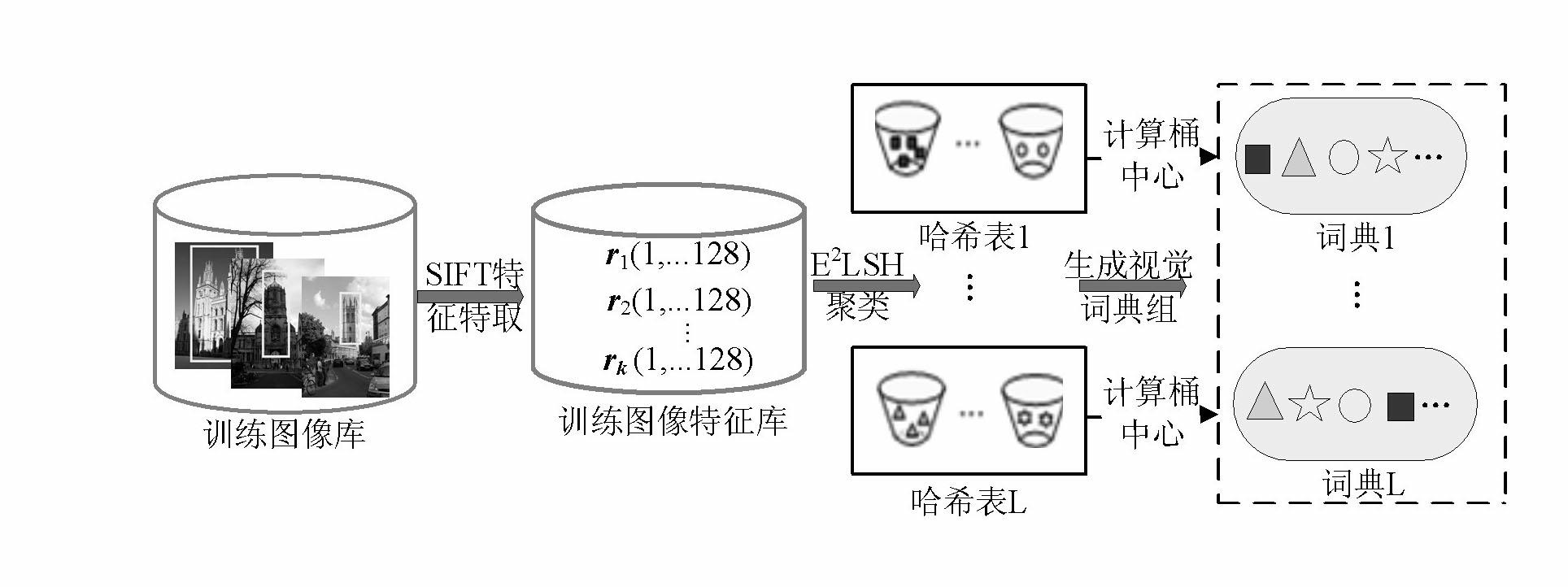

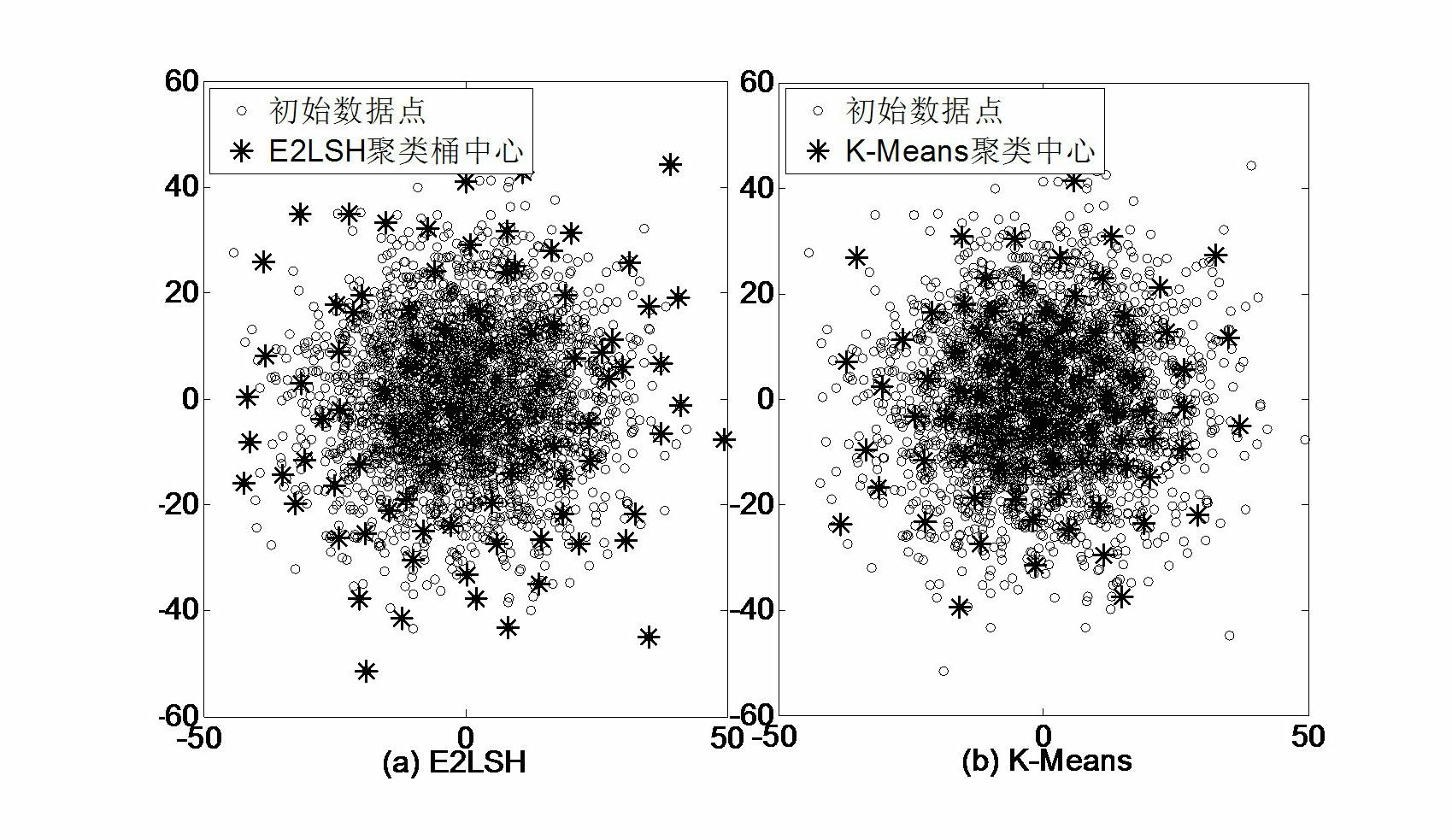

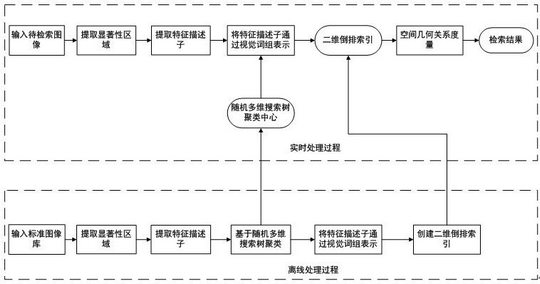

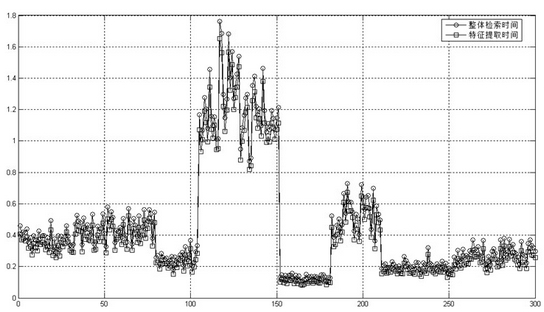

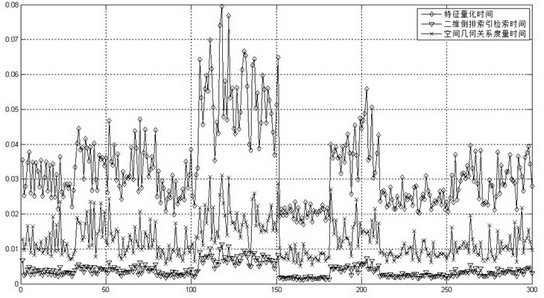

Target retrieval method based on group of randomized visual vocabularies and context semantic information

Inactive | CN102693311A | Addressing operational complexity | Reduce the semantic gap | Character and pattern recognition | Special data processing applications | Image database | Similarity measure

The invention relates to a target retrieval method based on a group of randomized visual vocabularies and context semantic information. The target retrieval method includes the following steps: clustering local features of a training image library with an exact Euclidean locality sensitive hashing (E2LSH) function to obtain a group of dynamically scalable randomized visual vocabularies; selecting a query image, marking the target area with a rectangular frame, extracting SIFT (scale invariant feature transform) features of the query image and the image database, and subjecting the SIFT features to E2LSH mapping to match feature points with the visual vocabularies; using the query target area and the definition of peripheral visual units to calculate a retrieval score for each visual word in the query image and construct a target model carrying target context semantic information on the basis of a language model; and storing the feature vectors of the image library as an index document, measuring the similarity between the language model of the target and the language model of any image in the image library by introducing the K-L divergence over the index document, and obtaining the retrieval result.
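The final similarity step can be sketched with a small example. It assumes each image is reduced to a bag of visual-word identifiers and that the models are unigram distributions with additive smoothing; the smoothing scheme, the function names, and the toy data are illustrative assumptions, not details from the patent.

```python
import math
from collections import Counter


def language_model(visual_words, vocabulary, alpha=1.0):
    """Unigram distribution over the vocabulary with additive smoothing."""
    counts = Counter(visual_words)
    total = len(visual_words) + alpha * len(vocabulary)
    return {w: (counts[w] + alpha) / total for w in vocabulary}


def kl_divergence(p, q):
    """K-L divergence D(p || q); smaller means the models are more alike."""
    return sum(p[w] * math.log(p[w] / q[w]) for w in p if p[w] > 0)


vocabulary = ["v1", "v2", "v3"]          # hypothetical visual words
query = language_model(["v1", "v1", "v2"], vocabulary)
image_a = language_model(["v1", "v1", "v2", "v1"], vocabulary)
image_b = language_model(["v3", "v3", "v3", "v2"], vocabulary)

# Rank library images by ascending divergence from the query model.
ranking = sorted(
    [("image_a", kl_divergence(query, image_a)),
     ("image_b", kl_divergence(query, image_b))],
    key=lambda item: item[1],
)
print(ranking)
```

Smoothing keeps every probability strictly positive, so the logarithm is always defined even when an image lacks a visual word that appears in the query.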

Owner:THE PLA INFORMATION ENG UNIV

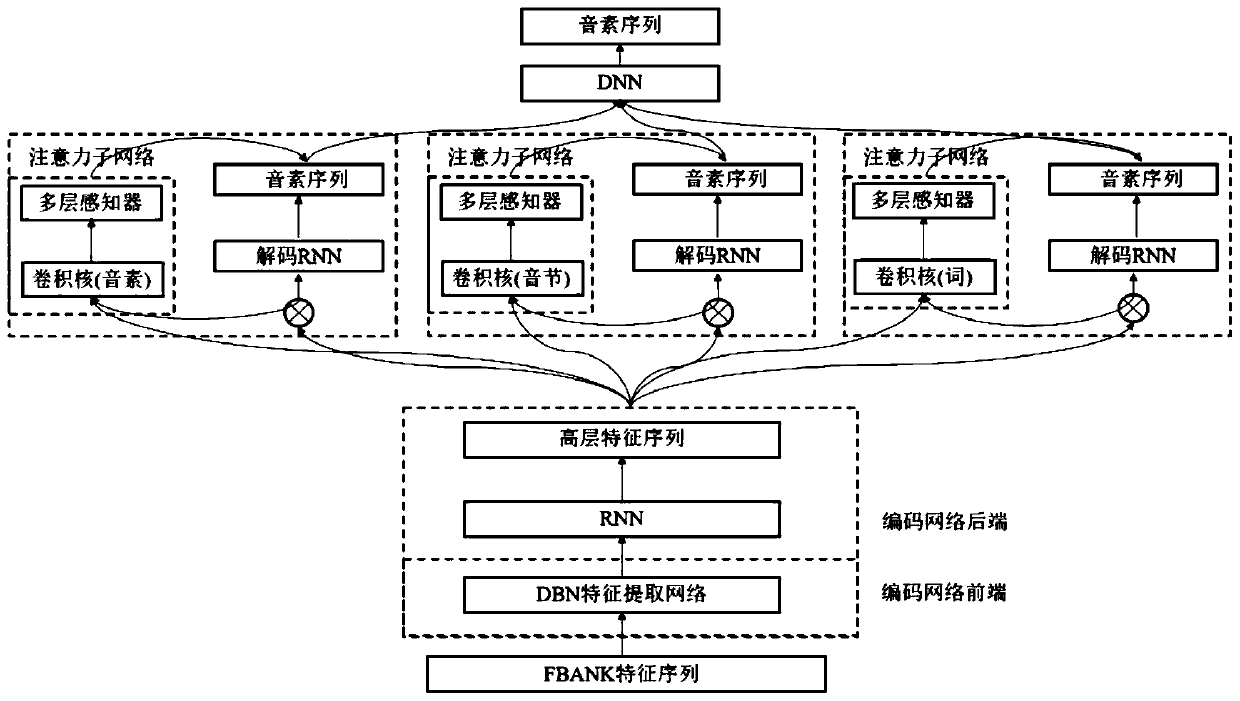

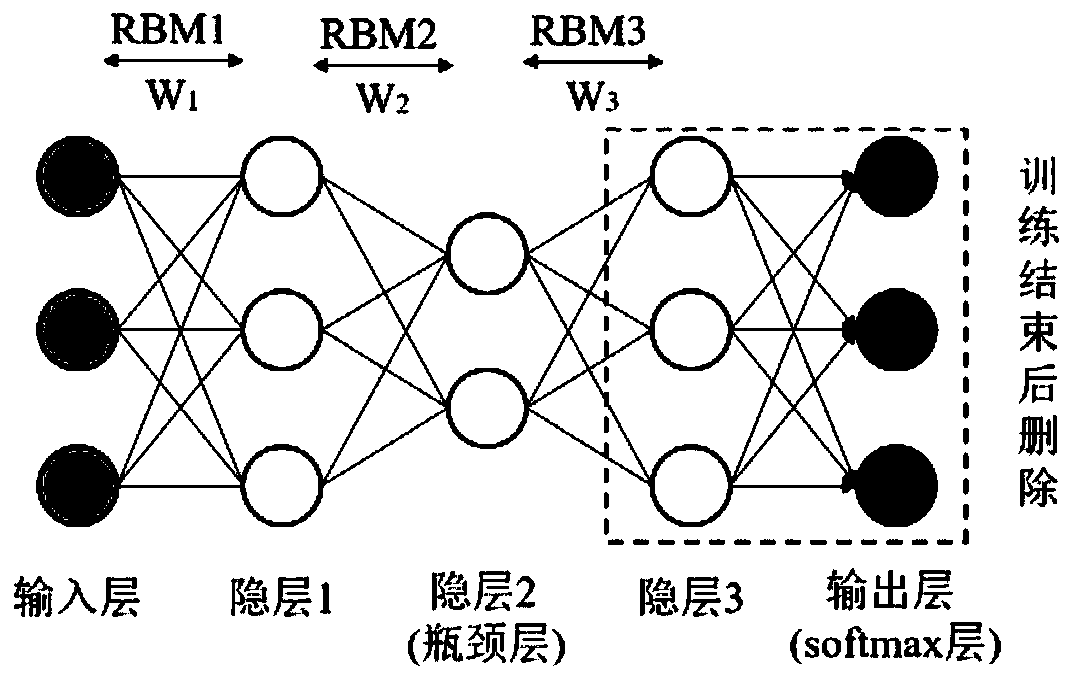

Speech recognition model establishing method based on bottleneck characteristics and multi-scale and multi-headed attention mechanism

The invention provides a speech recognition model establishing method based on bottleneck characteristics and a multi-scale and multi-headed attention mechanism, and belongs to the field of model establishing methods. A traditional attention model suffers from poor recognition performance and a single attention scale. According to the speech recognition model establishing method based on the bottleneck characteristics and the multi-scale and multi-headed attention mechanism, the bottleneck characteristics are extracted through a deep belief network to serve as a front end, so that the robustness of the model can be improved; a multi-scale and multi-headed attention model constituted by convolution kernels of different scales is adopted as a rear end, model establishing is conducted on speech elements at the levels of phoneme, syllable, word and the like, and recurrent neural network hidden layer state sequences and output sequences are calculated one by one; and elements of the positions where the output sequences are located are calculated through decoding networks corresponding to the attention networks of all heads, and finally all the output sequences are integrated into a new output sequence. The recognition effect of a speech recognition system can be improved.

Owner:HARBIN INST OF TECH

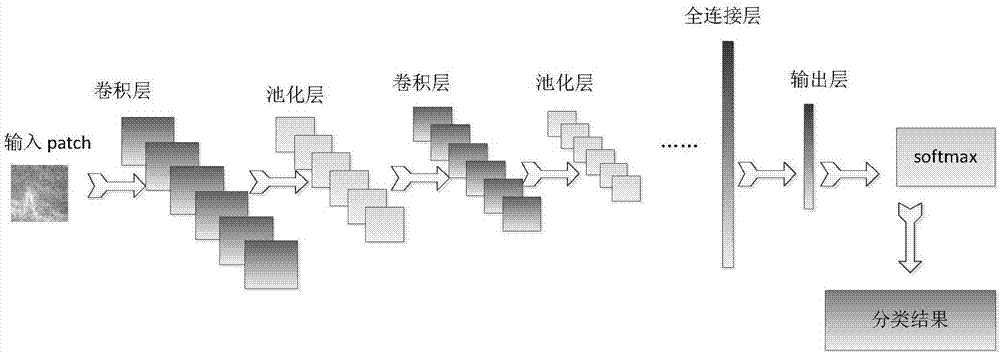

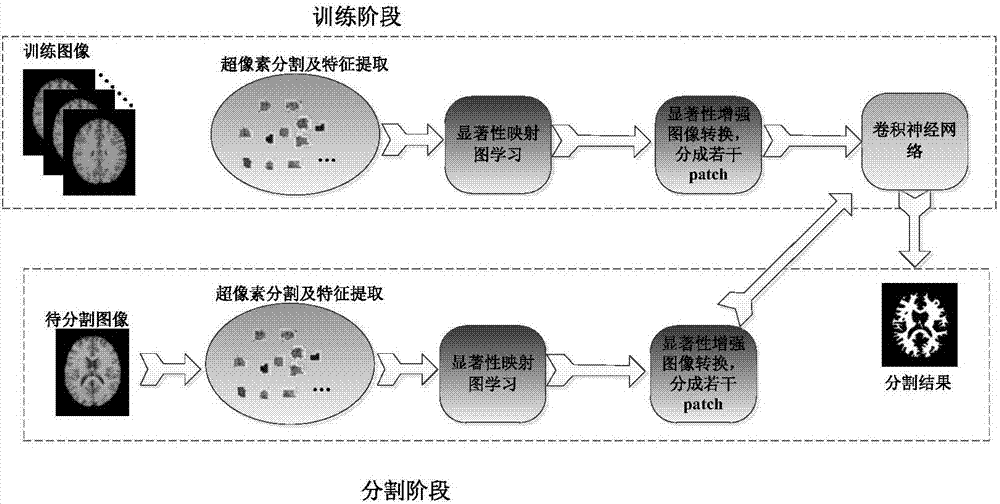

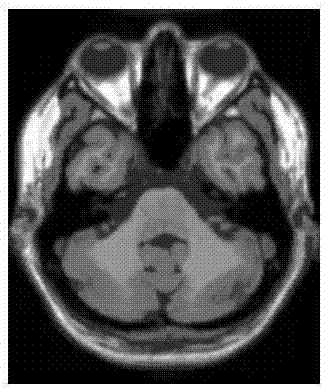

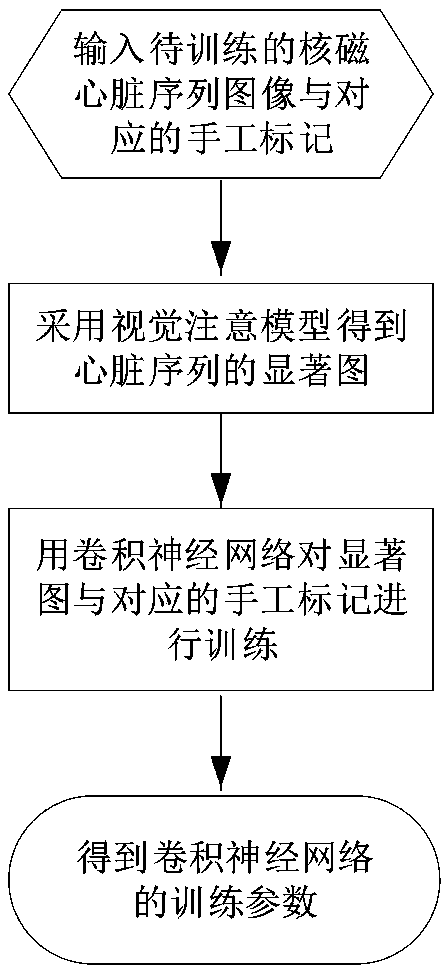

Brain image segmentation method and system based on saliency learning convolution nerve network

Active | CN107506761A | Improve classification performance | Good segmentation result | Image enhancement | Image analysis | Nerve network | Saliency map

The invention discloses a brain image segmentation method and system based on a saliency learning convolution nerve network; the brain image segmentation method comprises the following steps: firstly proposing a saliency learning method to obtain a MR image saliency map; carrying out saliency enhanced transformation according to the saliency map, thus obtaining a saliency enhanced image; splitting the saliency enhanced image into a plurality of image blocks, and training a convolution nerve network so as to serve as the final segmentation model. The saliency learning model can form the saliency map, and said class information is obtained according to a target space position, and has no relation with image gray scale information; the saliency information can obviously enhance the target saliency, thus improving the target class and background class discrimination, and providing certain robustness for gray scale inhomogeneity. The convolution nerve network trained by the saliency enhanced images can be employed to learn the saliency enhanced image discrimination information, thus more effectively solving the gray scale inhomogeneity problems in the brain MR image.

Owner:SHANDONG UNIV

Image retrieval method based on visual phrases

Inactive | CN102254015A | Reduce in quantity | Improve the ability to distinguish | Special data processing applications | Information processing | Vision based

Owner:SHANGHAI JIAO TONG UNIV

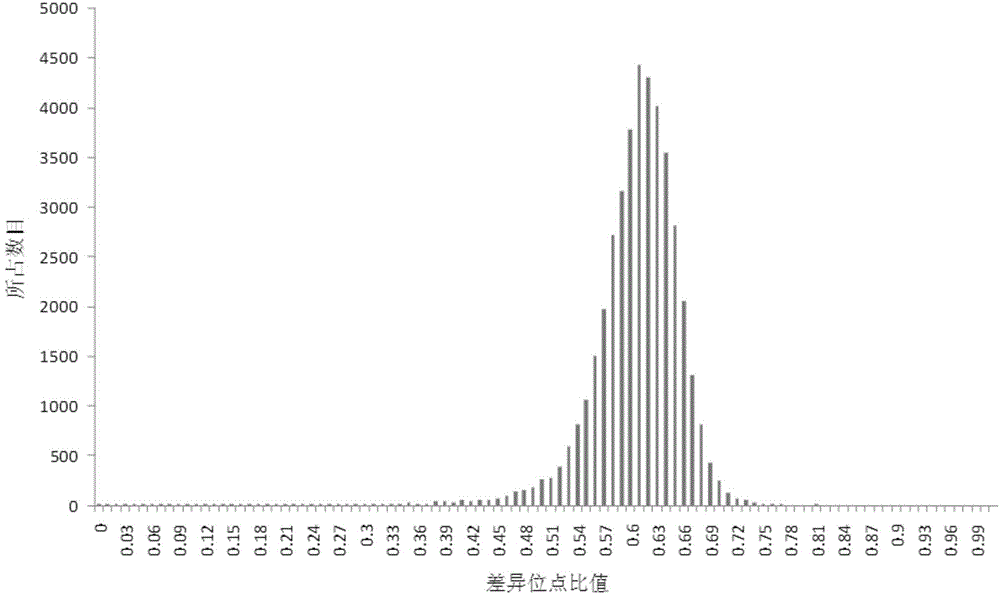

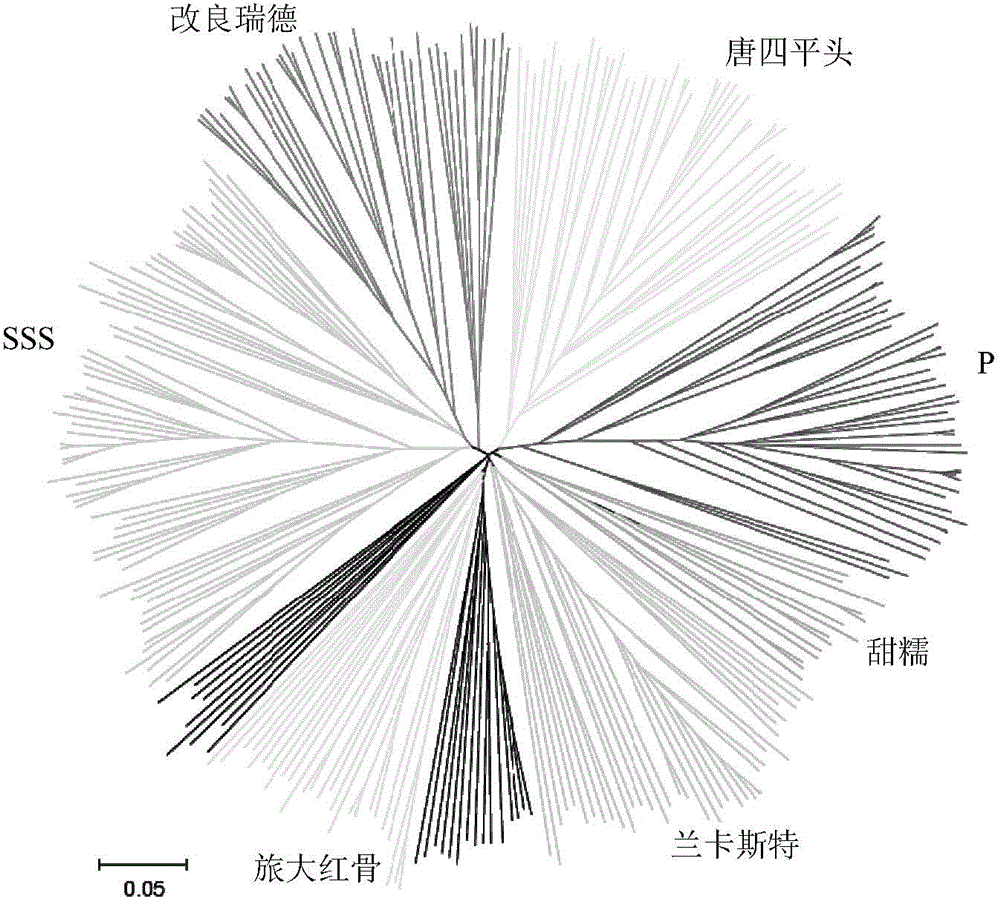

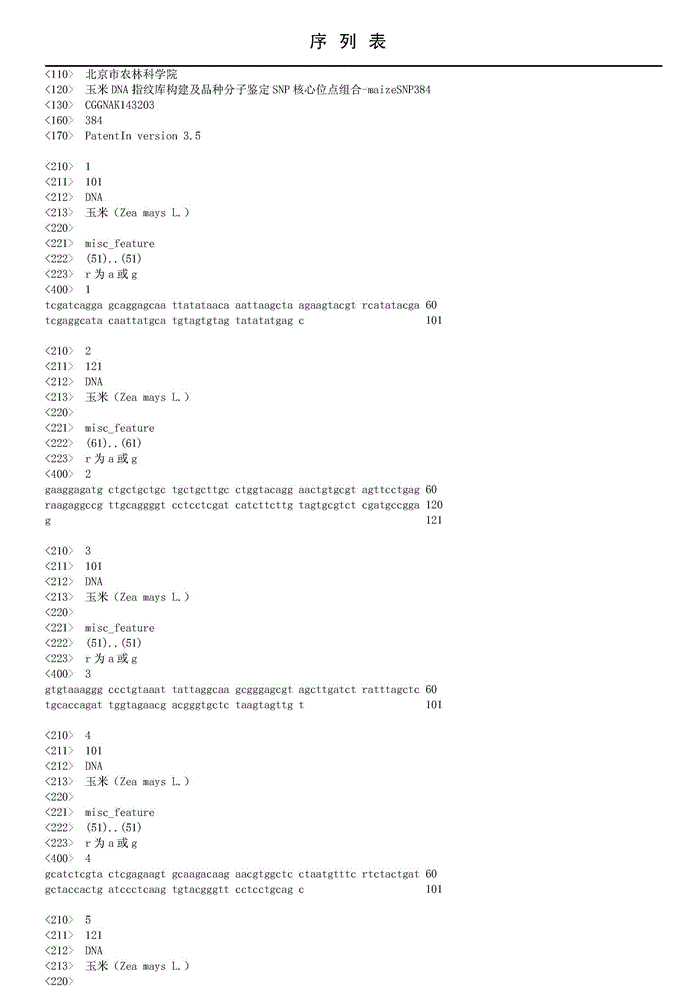

Core SNP sites combination maizeSNP384 for building of maize DNA fingerprint database and molecular identification of varieties

Active | CN104532359A | Improve stability | Good repeatability | Nucleotide libraries | Microbiological testing/measurement | Molecular identification | Agricultural science

The invention discloses a core SNP sites combination maizeSNP384 for building of a maize DNA fingerprint database and molecular identification of varieties, and an application of the core SNP sites combination. The invention provides applications of 384 SNP sites in any one of the following conditions: (1) building of the maize DNA fingerprint database; (2) detecting of the authenticity of maize varieties; (3) genetic analysis of corn germplasm resources; and (4) molecular breeding of maize, wherein the physical positions of the 384 SNP sites are determined by comparison on the basis of a whole genome sequence of the maize variety B73; the version number of the whole genome sequence of the maize variety B73 is B73 RefGen V1; and the 384 SNP sites are MG001-MG384. An experiment proves that the 384 SNP sites can be applied to building of the maize variety DNA fingerprint database, identification of the variety authenticity, dividing of germplasm resource groups, and other related researches.

Owner:BEIJING ACADEMY OF AGRICULTURE & FORESTRY SCIENCES

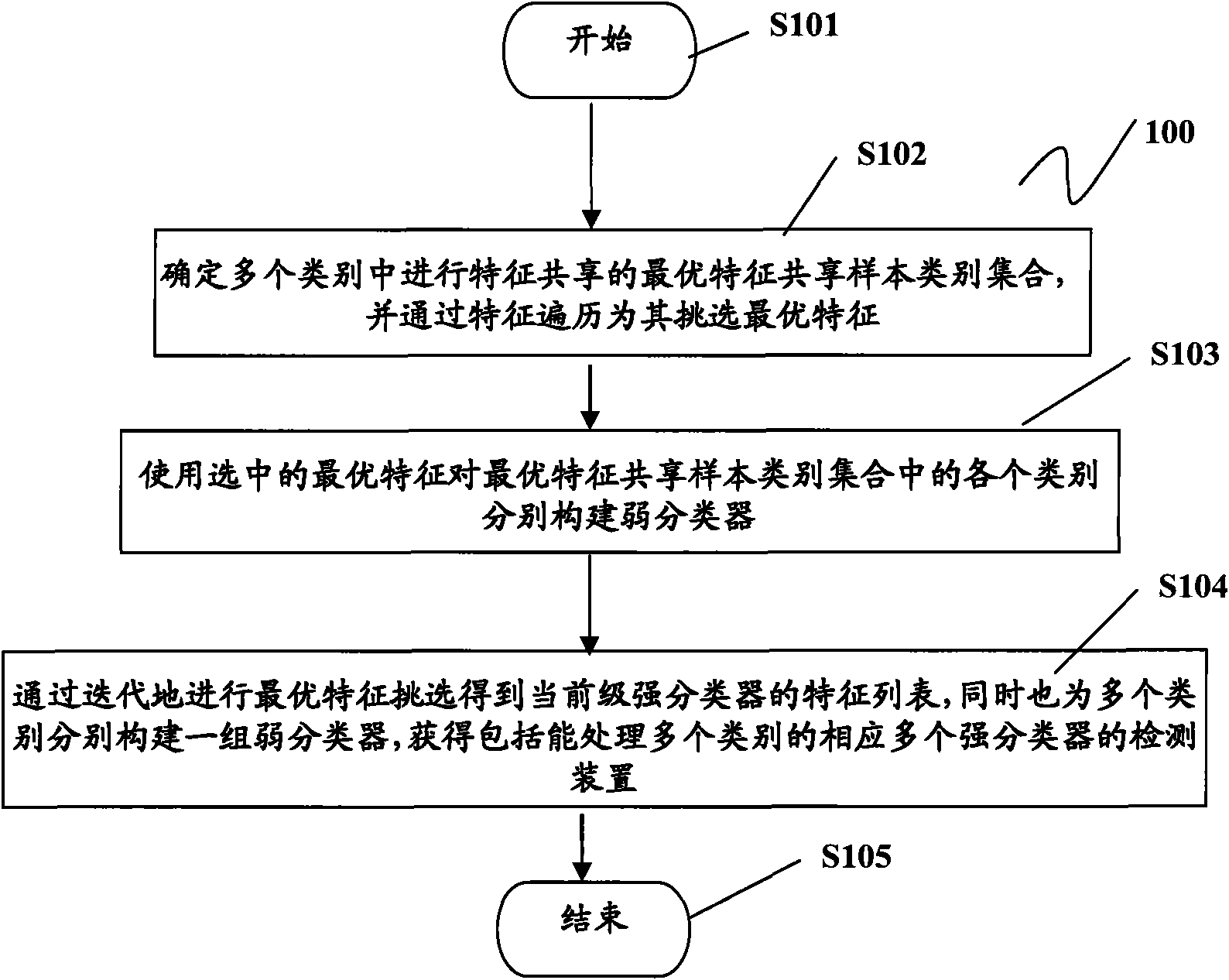

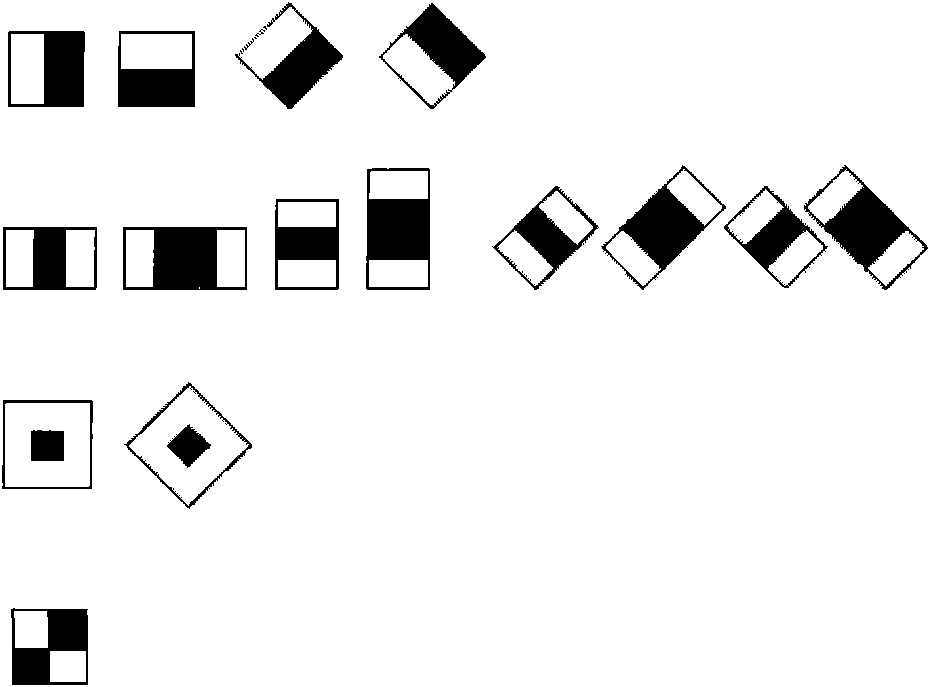

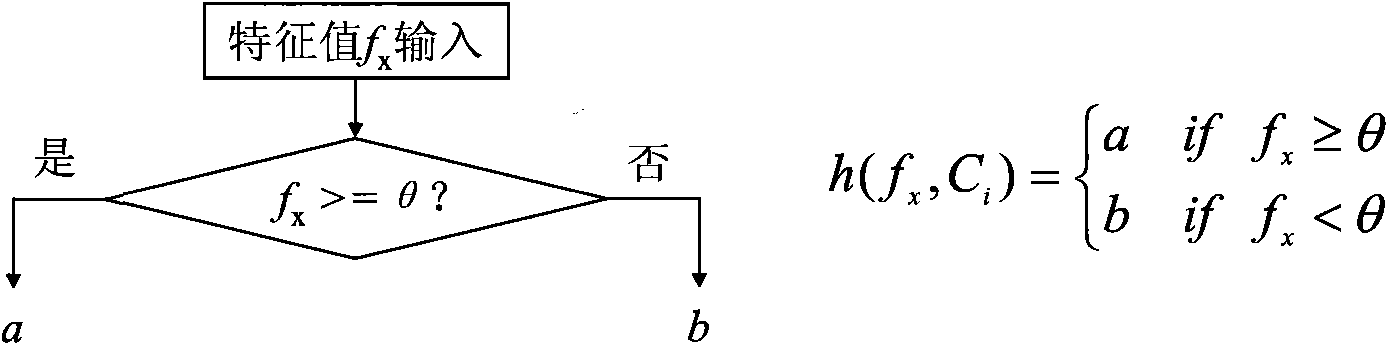

Detection device and method for multi-class targets

Inactive | CN101853389A | Fast convergence | Improve the ability to distinguish | Character and pattern recognition | Multiple category | Linear classifier

The invention relates to detection device and method for multi-class targets. The detection device comprises an input unit, a joint classifier and a discrimination unit, wherein the input unit is configured to input data to be detected; and the joint classifier comprises multiple strong classifiers which can treat multiple classes of target data, wherein each strong classifier is formed by summarizing a group of weak classifiers, and each weak classifier carries out weak classifying on the data to be detected by using one feature; the discrimination unit is configured to discriminate which class of target data the data to be detected belongs to based on the classifying results of the multiple strong classifiers, the joint classifier contains a shared feature list, and each feature in the list is respectively shared by one or more weak classifiers belonging to different strong classifiers; and the weak classifiers which have the same feature but belong to different strong classifiers have mutually different parameter values.
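The shared-feature structure described above can be sketched as follows. This is a simplified illustration: the weak classifiers are modelled as threshold stumps, and the discrimination rule (highest positive score wins) is an assumption, since the abstract does not specify either. All names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WeakClassifier:
    feature_index: int  # position in the shared feature list
    threshold: float    # class-specific parameter
    weight: float       # class-specific parameter

    def vote(self, features):
        """Return +weight if the shared feature exceeds the threshold."""
        passed = features[self.feature_index] > self.threshold
        return self.weight if passed else -self.weight


def strong_score(weak_classifiers, features):
    """A strong classifier sums the votes of its weak classifiers."""
    return sum(w.vote(features) for w in weak_classifiers)


def discriminate(joint_classifier, features):
    """Pick the class whose strong classifier scores highest, if positive."""
    scores = {label: strong_score(ws, features)
              for label, ws in joint_classifier.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


# Both strong classifiers reuse features 0 and 1 with different parameters.
joint = {
    "car": [WeakClassifier(0, 0.5, 1.0), WeakClassifier(1, 0.2, 0.5)],
    "person": [WeakClassifier(0, 0.8, 0.7), WeakClassifier(1, 0.6, 1.0)],
}
print(discriminate(joint, [0.6, 0.7]))
print(discriminate(joint, [0.1, 0.1]))
```

Because each feature value is computed once and reused by every strong classifier, the per-sample feature cost does not grow with the number of target classes.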

Owner:SONY GRP CORP

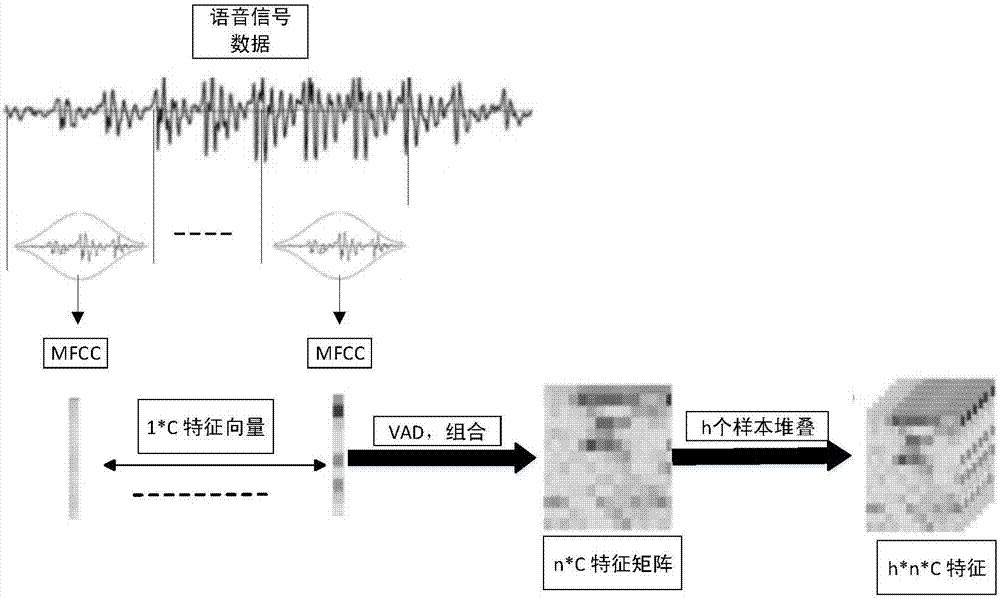

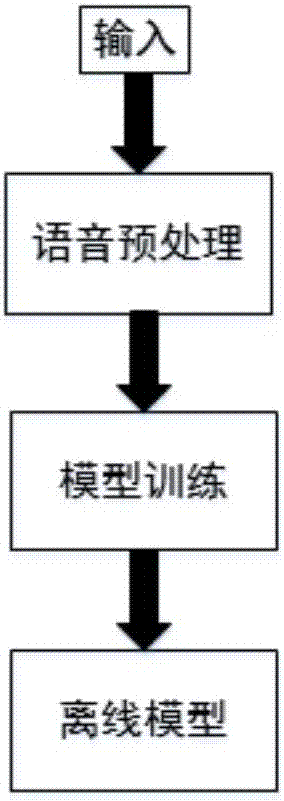

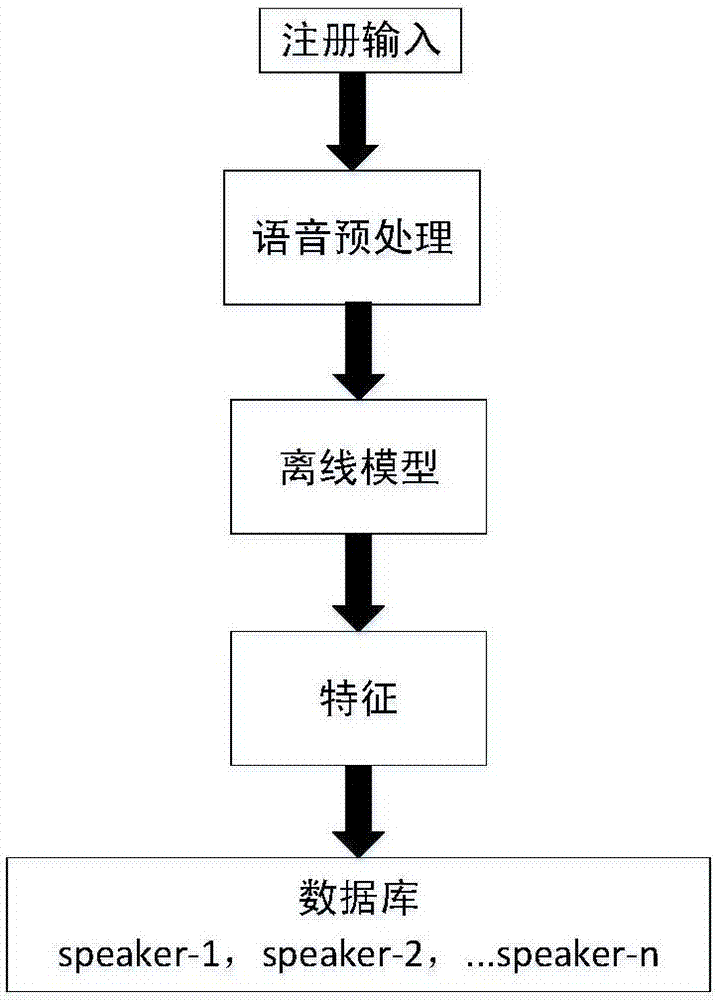

Speaker recognition method based on three-dimensional convolutional neural network text independence and system

Active | CN107464568A | Improve experience | Improve the ability to distinguish | Speech analysis | Speaker recognition system | Audio frequency

The invention discloses a text-independent speaker recognition system based on a three-dimensional convolutional neural network. The speaker recognition system comprises a module I, namely a voice acquisition module, a module II, namely a voice preprocessing module, a module III, namely a speaker recognition model training module, and a module IV, namely a speaker recognition module, wherein the voice acquisition module is used for acquiring voice data; the voice preprocessing module is used for extracting mel-frequency cepstrum coefficient features from the original voice data and for removing non-voice data from the features, so that the final training data are acquired; the speaker recognition model training module is used for training the speaker recognition model offline; and the speaker recognition module is used for recognizing the identity of a speaker in real time. The invention further discloses a text-independent speaker recognition method based on a three-dimensional convolutional neural network. By adopting the speaker recognition method and system, user registration is made independent of the recognized text, and thus the user experience can be improved.

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD

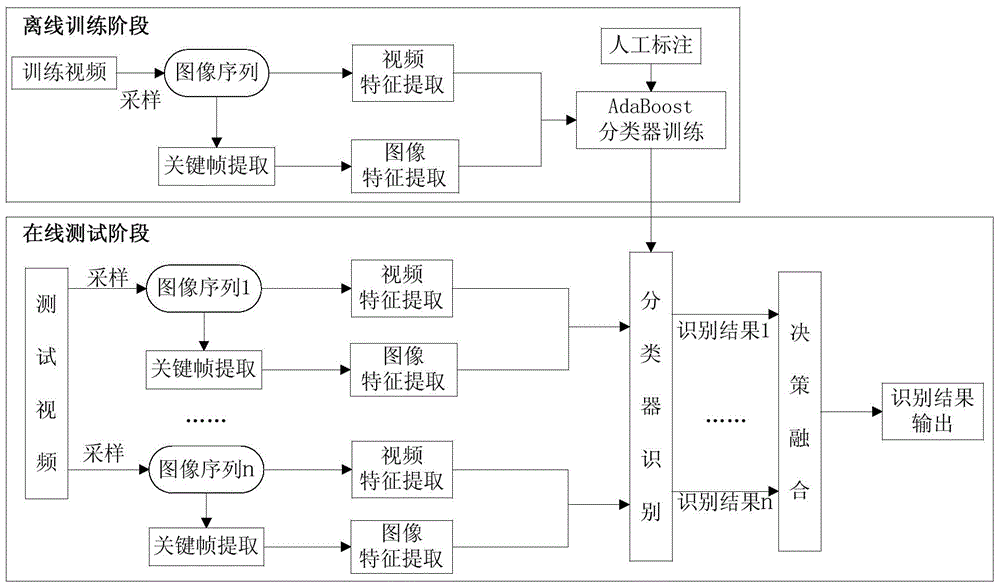

Video-based weather phenomenon recognition method

Active | CN104463196A | Informative | Improve the ability to distinguish | Character and pattern recognition | Pattern recognition | Manual annotation

The invention discloses a video-based weather phenomenon recognition method. The method achieves classification recognition of common weather phenomena such as sun, cloud, rain, snow and fog. The method comprises the steps of training an off-line classifier, wherein an image sequence is sampled for a given training video; on the one hand, video characteristics of the image sequence are extracted; on the other hand, key frame images and image characteristics of the key frame images are extracted from the image sequence, the AdaBoost is adopted for conducting learning and training on the extracted video characteristics, the extracted image characteristics and manual annotations to obtain the classifier; recognizing the weather phenomena in an online mode, wherein a plurality of sets of image sequences are sampled for a testing video, video characteristics and image characteristics of each set of image sequences are extracted, the characteristics are sent into the classifier for classification to obtain a corresponding recognition result, then decision fusion is carried out in a voting mode, and the voting result is used as the weather phenomenon recognition result of the testing video.
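The online decision-fusion step can be sketched in a few lines. The per-sequence labels below stand in for the output of the trained classifier, which is not reproduced here; `fuse_by_vote` is a hypothetical helper, and the tie-breaking behaviour (first label seen wins) is an assumption rather than something the abstract specifies.

```python
from collections import Counter


def fuse_by_vote(sequence_predictions):
    """Return the label predicted for the most image sequences.

    Ties are resolved in favour of the label that appeared first.
    """
    return Counter(sequence_predictions).most_common(1)[0][0]


# Hypothetical classifier outputs for five sampled image sequences.
predictions = ["rain", "fog", "rain", "cloud", "rain"]
print(fuse_by_vote(predictions))
```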

Owner:PLA UNIV OF SCI & TECH

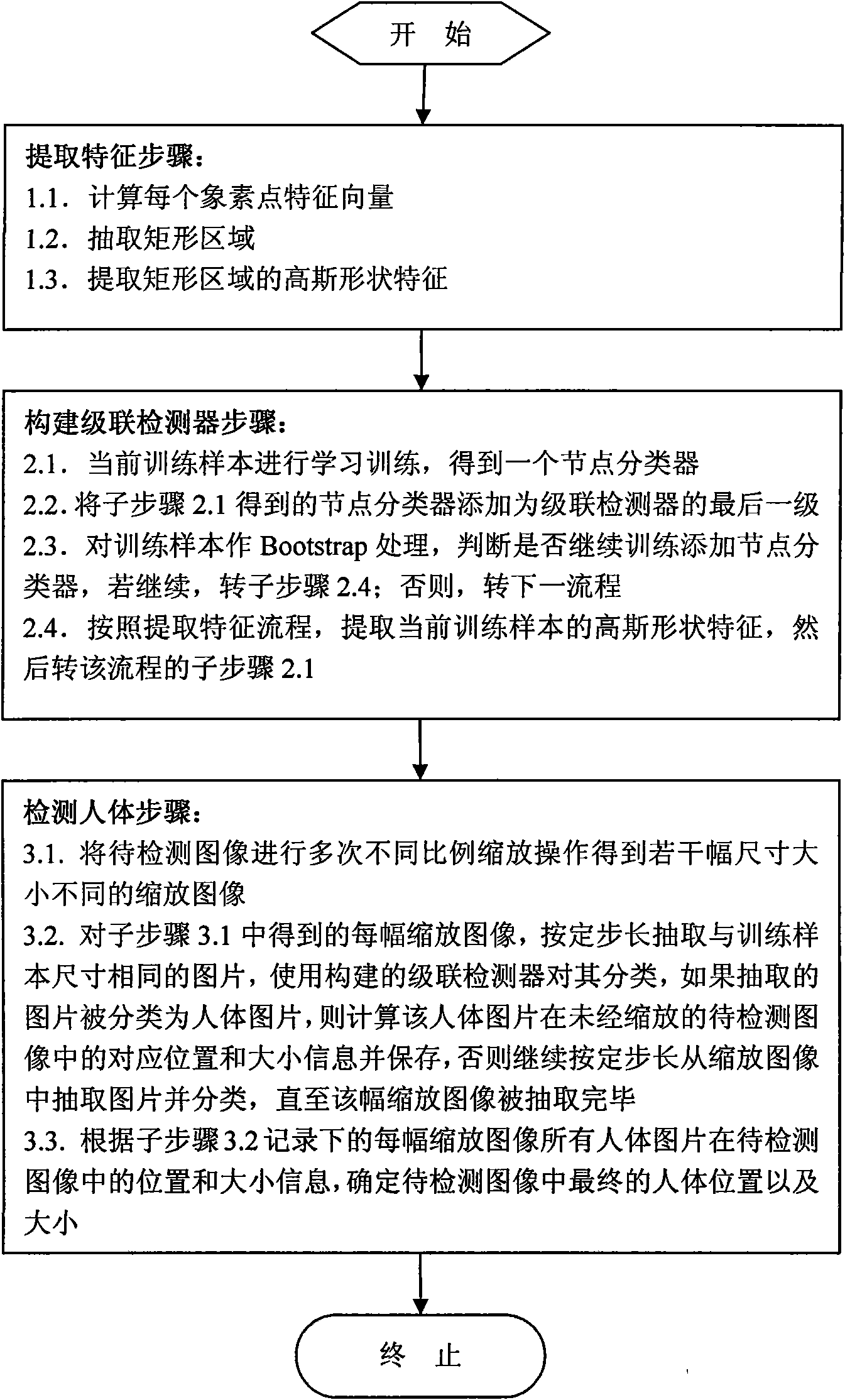

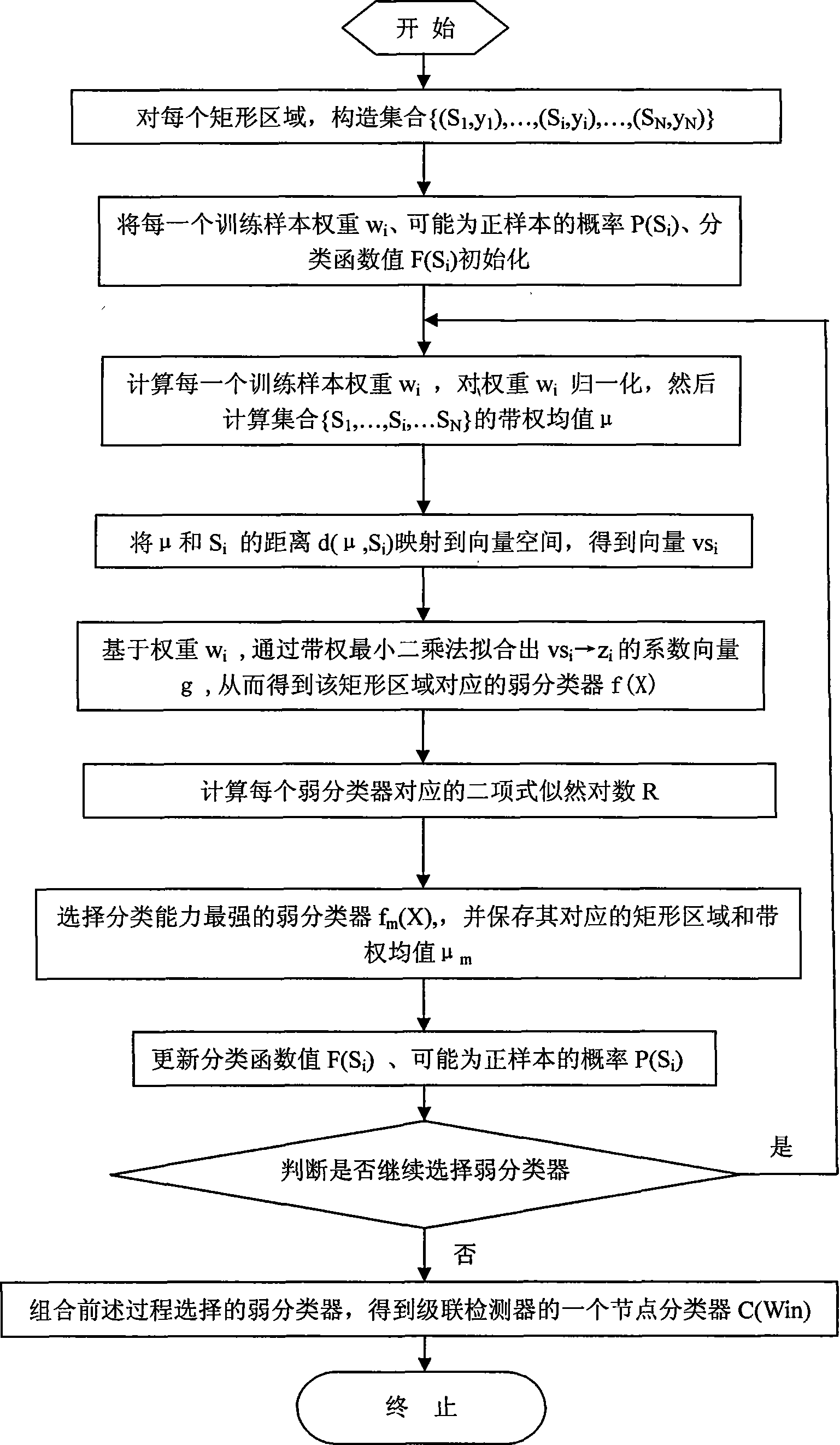

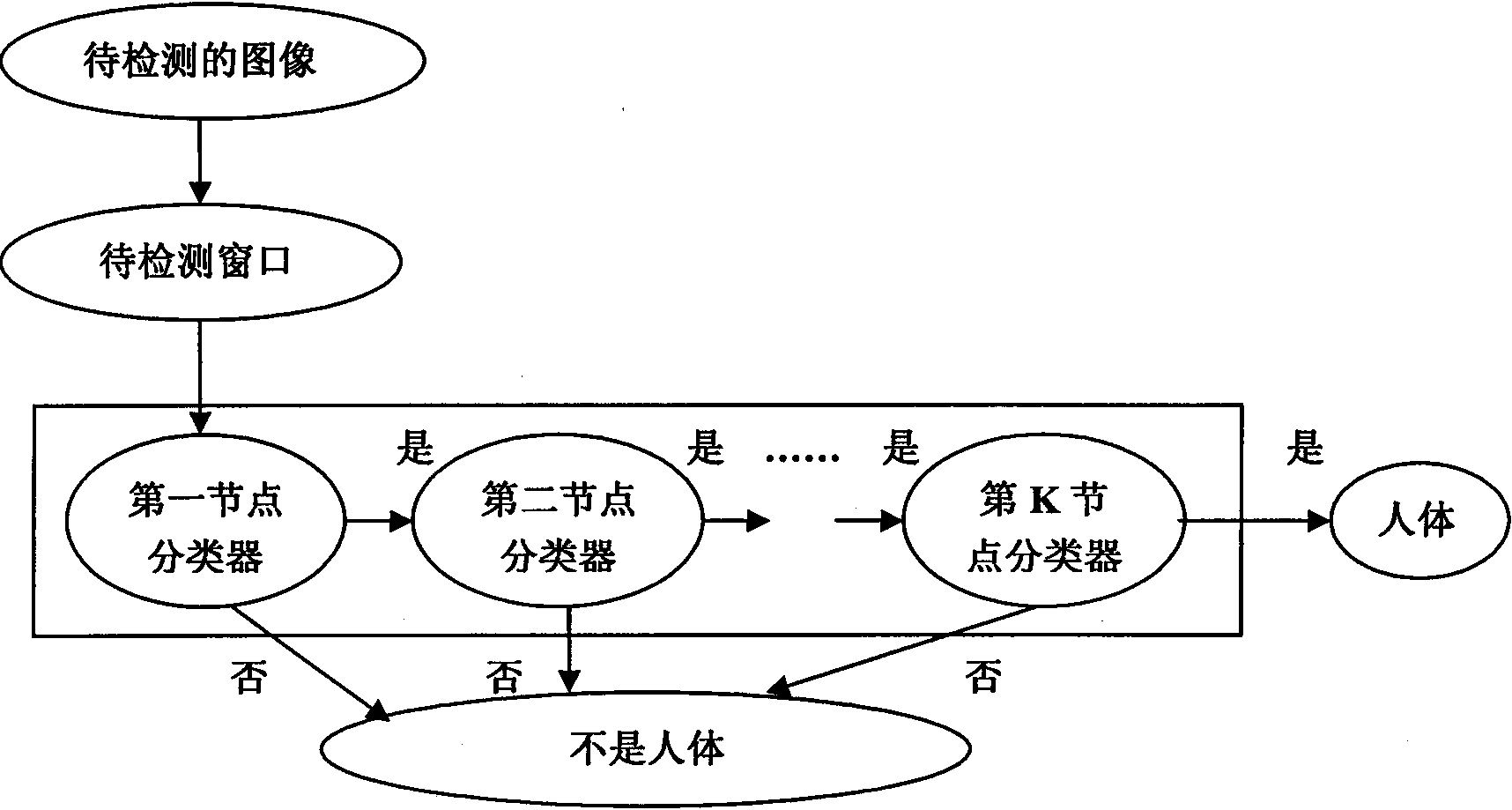

Human body detection method based on Gauss shape feature

Inactive | CN101561867A | Improve detection rate | Strong robustness | Character and pattern recognition | Human body | Feature Dimension

The invention relates to a human body detection method based on Gauss shape feature, belongs to the field of computer vision and pattern recognition, and solves the problem that the detection rate and detection speed of prior detection methods are low. The method comprises: a first step of extracting features, namely extracting the Gauss shape feature of each rectangular area of each training sample; a second step of constructing cascade detectors, namely learning from the current training samples to construct the cascade detectors; and a third step of detecting the human body, namely using the cascade detectors to scan an image to be detected and determine the position and size of the human body in the image. By using the constructed Gauss shape feature, the method is strongly robust to changes of illumination, background and the like, and has a low feature dimension; a mean value of the areas is added to the construction of the Gauss shape feature so as to enhance the capability of distinguishing the human body from the background; therefore, the constructed cascade detectors can greatly improve the detection rate of the human body, and can be applied to intelligent monitoring, assisted driving and human-computer interaction systems.

Owner:HUAZHONG UNIV OF SCI & TECH

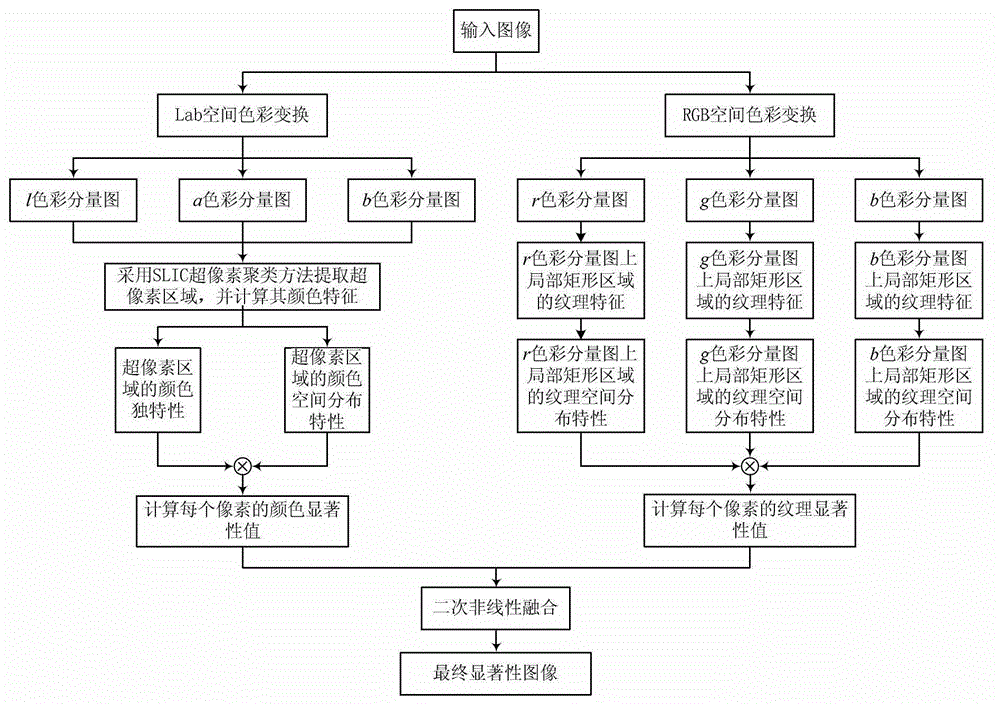

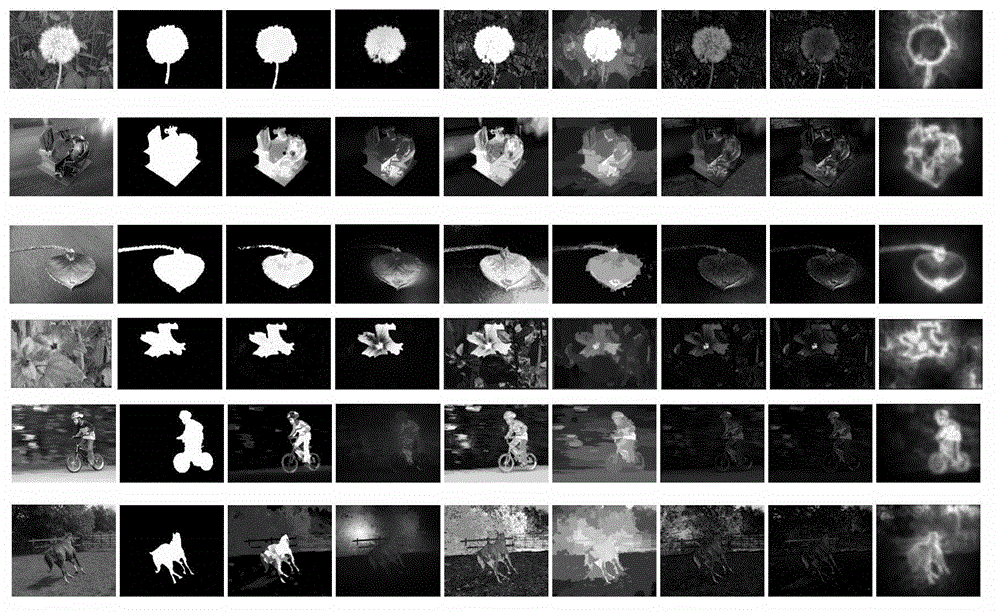

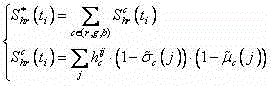

Visual saliency detection method with fusion of region color and HoG (histogram of oriented gradient) features

Inactive | CN102867313A | Improve the ability to distinguish | Image analysis | Visual saliency | Component diagram

The invention relates to a visual saliency detection method with fusion of region color and HoG (histogram of oriented gradient) features. At present, the existing method is generally based on a pure calculation model of the region color feature and is insensitive to salient difference of texture. The method disclosed by the invention comprises the following steps of: firstly calculating a color saliency value of each pixel by analyzing color contrast and distribution feature of a superpixel region on a CIELAB (CIE 1976 L*, a*, b*) space color component diagram of an original image; then extracting an HoG-based local rectangular region texture feature on an RGB (red, green and blue) space color component diagram of the original image, and calculating a texture saliency value of each pixel by analyzing texture contrast and distribution feature of a local rectangular region; and finally fusing the color saliency value and the texture saliency value of each pixel into a final saliency value of the pixel by adopting a secondary non-linear fusion method. According to the method disclosed by the invention, a full-resolution saliency image which is in line with sense of sight of human eyes can be obtained, and the distinguishing capability against a saliency object is further stronger.

Owner:海宁鼎丞智能设备有限公司

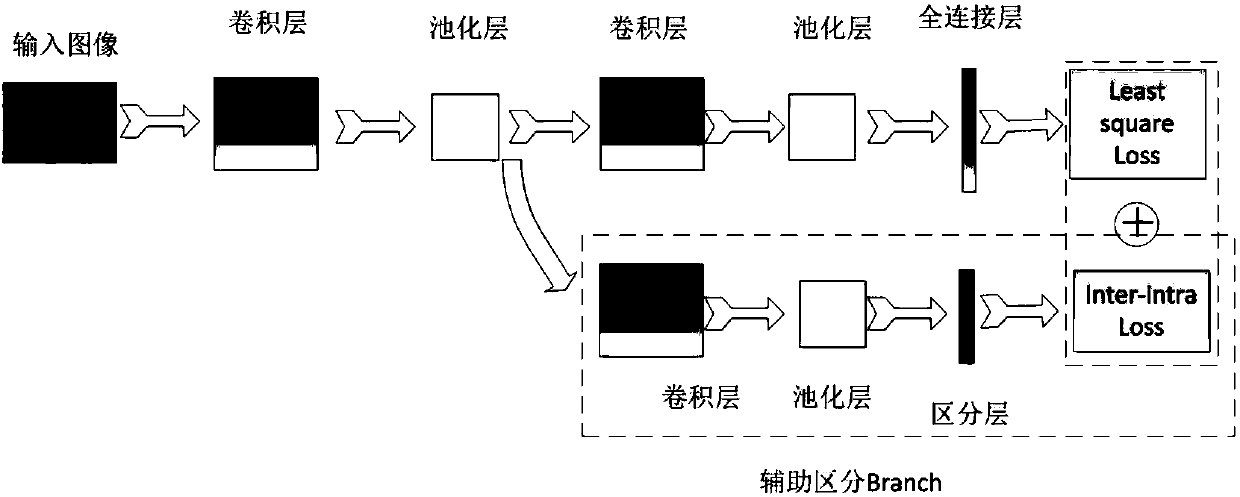

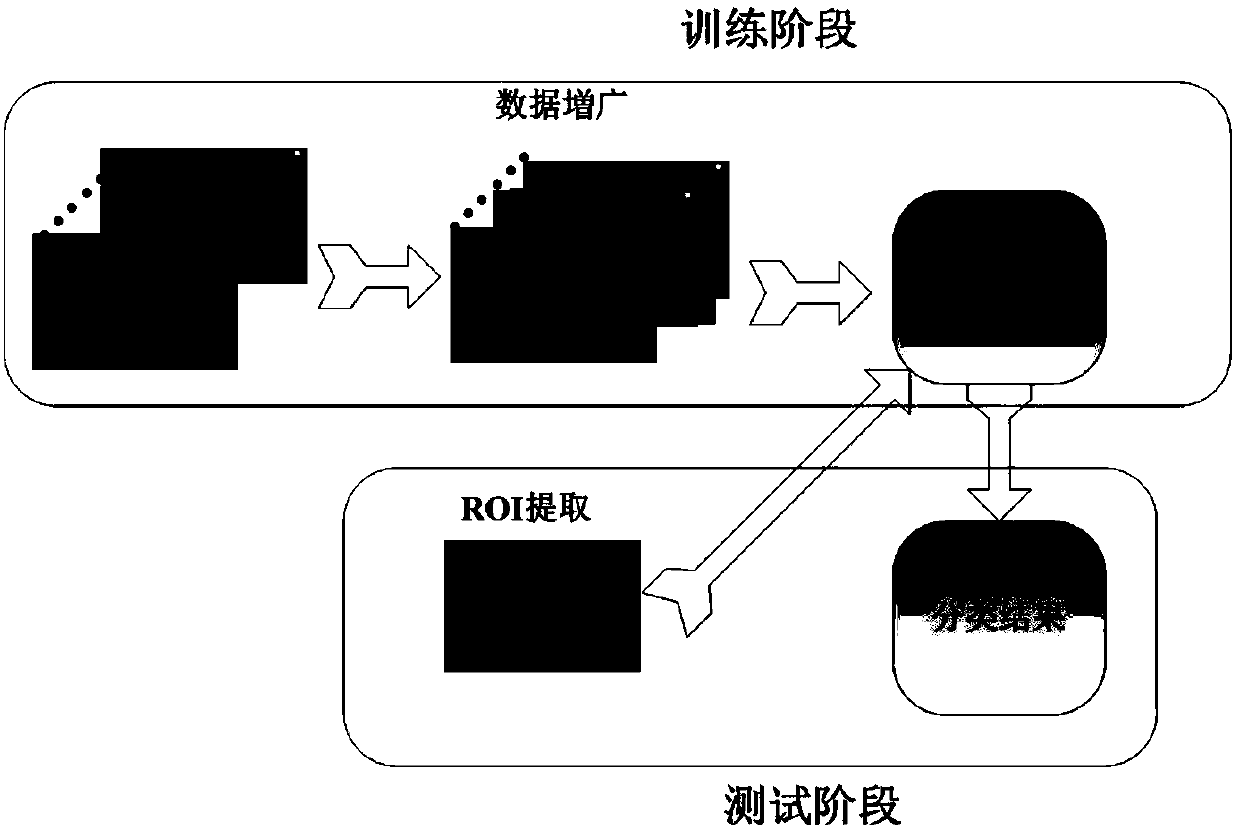

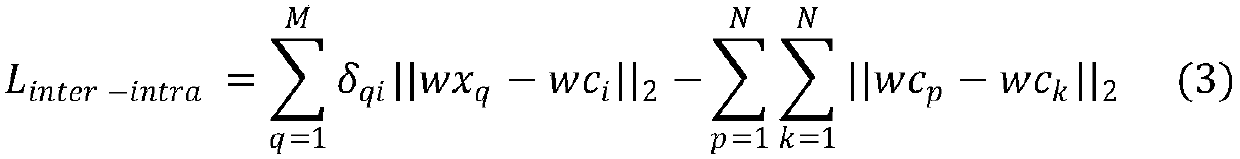

Breast tumor classification method based on differentiated convolutional neural network and breast tumor classification device based on differentiated convolutional neural network

Active | CN107748900A | Avoid artificially designed features | Improve differentiation | Character and pattern recognition | Neural architectures | Tumour classification | Region of interest

The invention discloses a breast tumor classification method based on a differentiated convolutional neural network and a breast tumor classification device based on a differentiated convolutional neural network. The method comprises the steps that the tumor in multiple ultrasonic images is segmented to acquire an area of interest, and data augmentation is performed so that a training set is obtained; a differentiated convolutional neural network model is constructed, and the model parameters of the differentiated convolutional neural network are calculated based on training images, wherein the structure of the differentiated convolutional neural network model is that differentiated auxiliary branches are additionally arranged on the basis of the convolutional neural network, a convolutional layer, a pooling layer and a full connection layer are accessed, and an Inter-intra Loss function is introduced for increasing the similarity between the same classes and the differentiation between different classes; a breast ultrasonic image to be classified is acquired, the ultrasonic image is segmented and the area of interest is acquired; and the area of interest is inputted to the differentiated convolutional neural network so as to obtain the classification result. According to the classification method, the tumor classification performance in the breast ultrasonic image can be effectively enhanced.

Owner:SHANDONG UNIV OF FINANCE & ECONOMICS

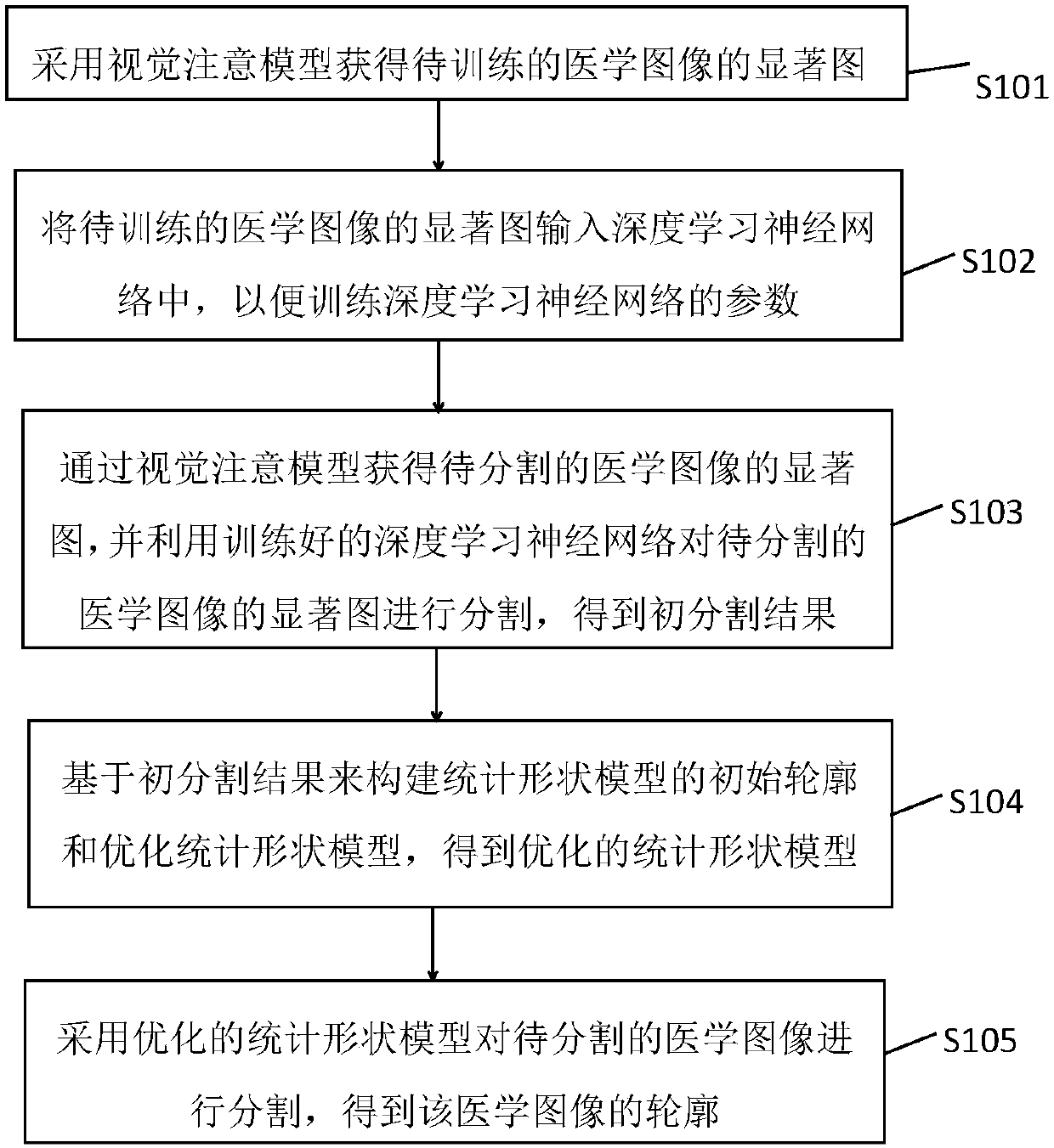

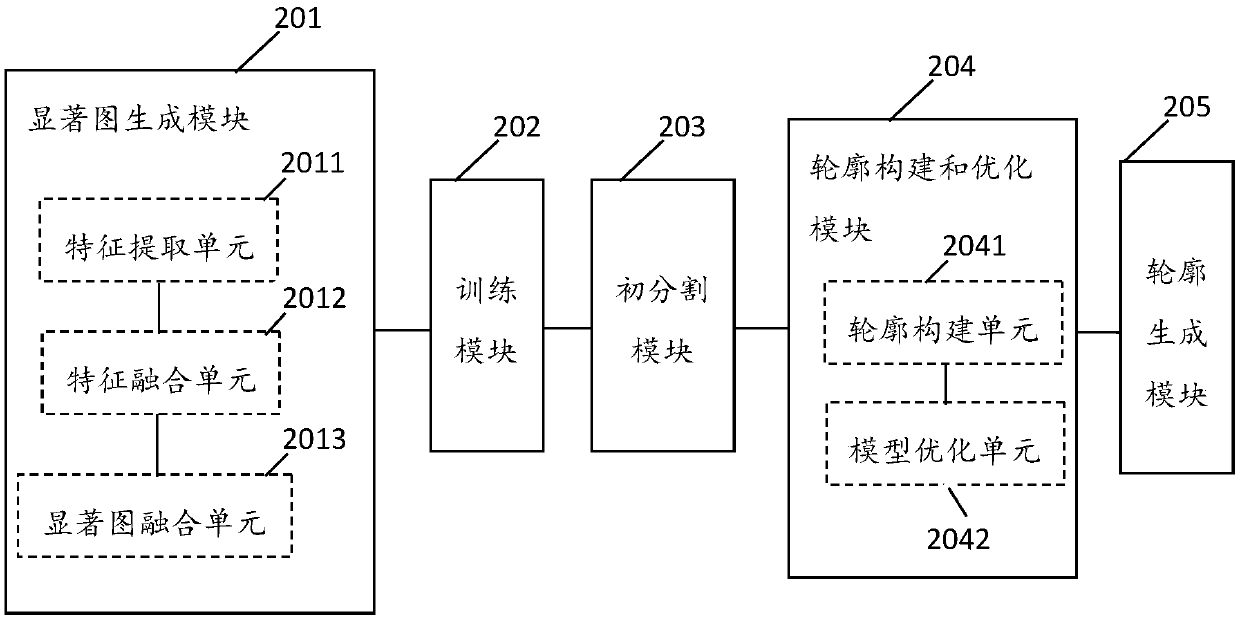

Method and system for medical image automatic segmentation, apparatus and storage medium

Active | CN108898606A | Reduce information processing | Improve classification performance | Image enhancement | Image analysis | Visual attention | Accurate segmentation

The present invention provides a method and a system for medical image automatic segmentation. The method for medical image automatic segmentation comprises: obtaining a salient image of a to-be-trained medical image by using a visual attention model, and using the salient image to train parameters of a deep learning neural network; obtaining a salient image of a to-be-segmented medical image by the visual attention model, and inputting the to-be-segmented medical image to the trained deep learning neural network for segmentation, to obtain an initial segmentation result; using the initial segmentation result to construct an initial contour of a statistical shape model and to optimize the statistical shape model, and using the optimized statistical shape model to segment the to-be-segmented medical image. The method combines the statistical shape model and the deep learning model, and the computational cost of the matching operation in the statistical shape model is reduced by using the initial segmentation result of the deep learning network, thereby realizing fast and accurate segmentation of the three-dimensional medical image by the statistical shape model.

Owner:SOUTH CENTRAL UNIVERSITY FOR NATIONALITIES

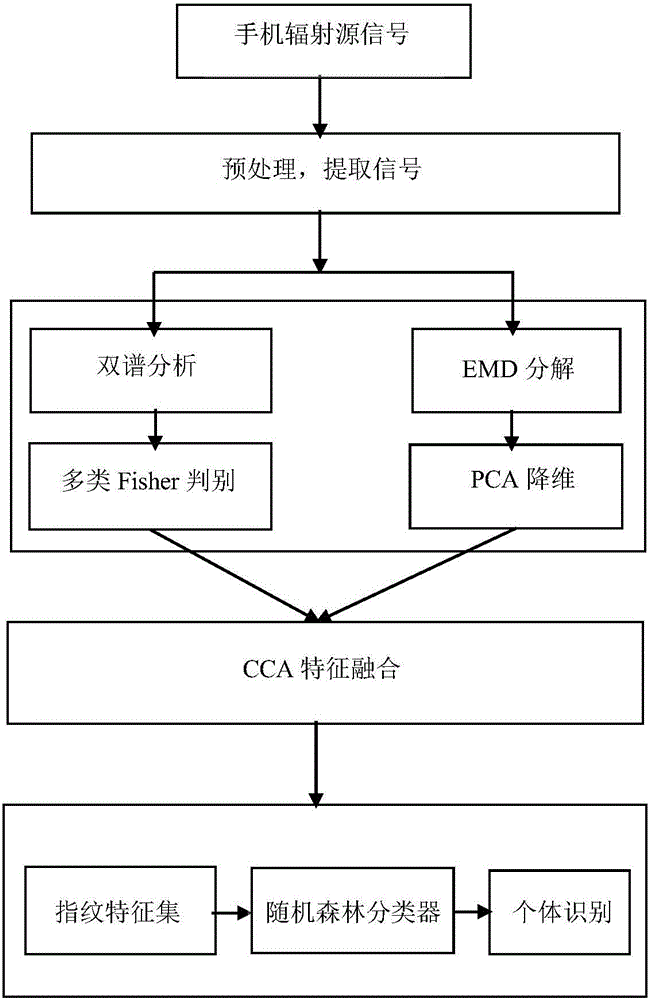

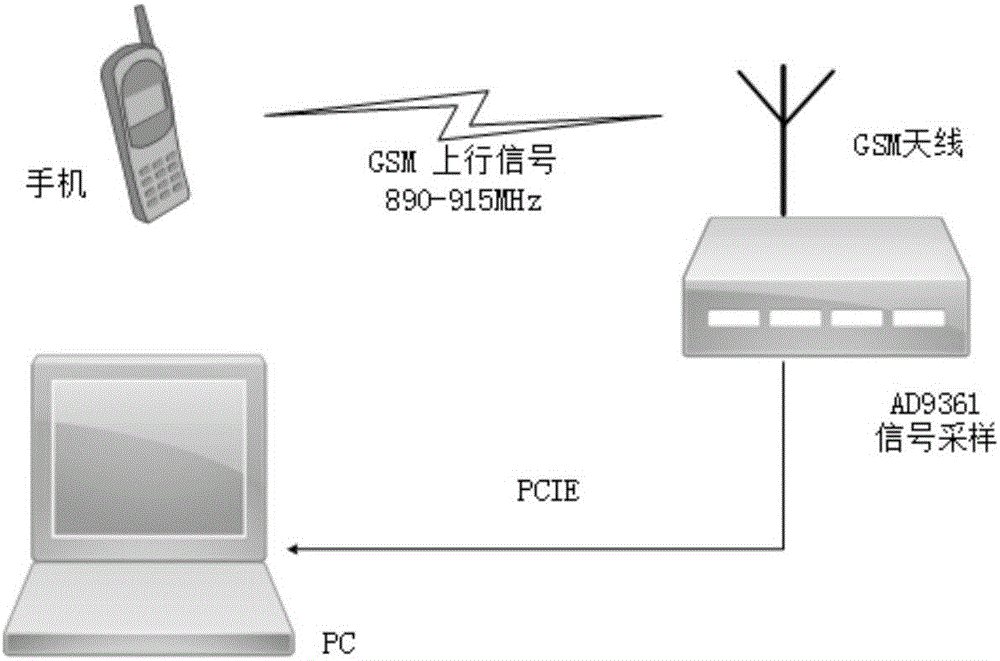

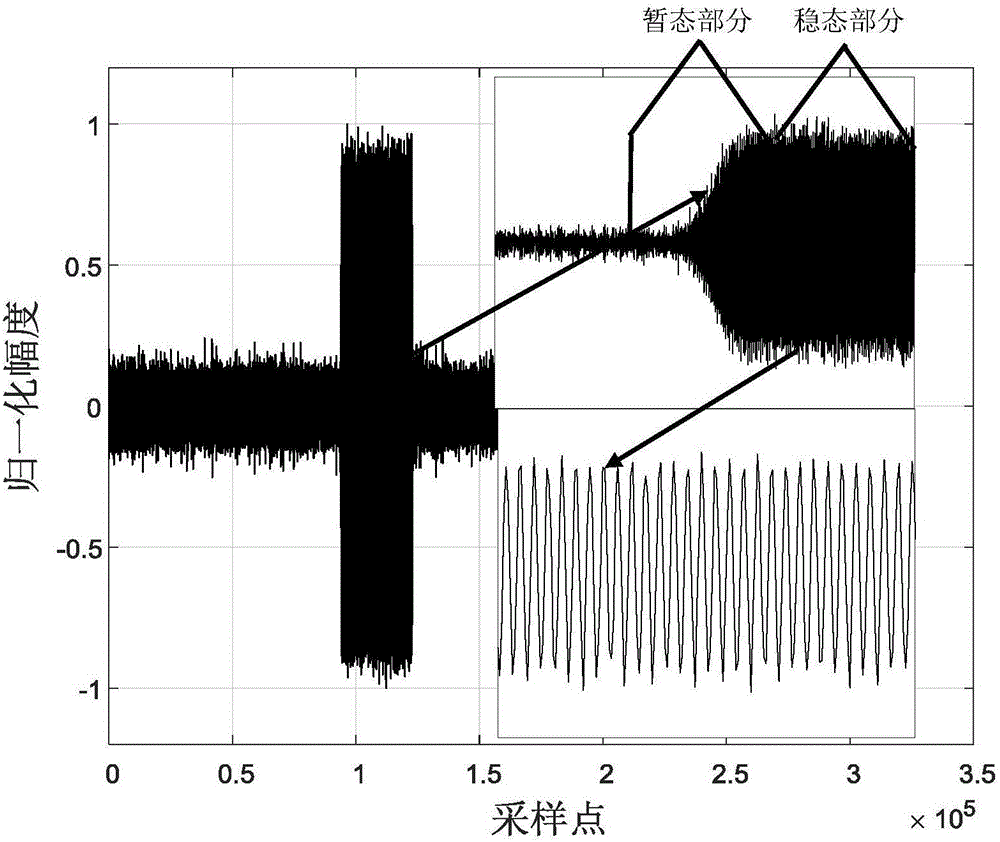

Bispectra and EMD fusion feature-based mobile phone individual identification method

Active | CN106845339A | Improve recognition rate | Improve stability | Character and pattern recognition | Transmission | Feature set | Decomposition

The invention discloses a bispectra and EMD fusion feature-based mobile phone individual identification method. The method comprises the steps of performing bispectra calculation on all samples, and performing dimension reduction by utilizing PCA to obtain a feature set X; calculating empirical mode decomposition of each sample to obtain a power spectrum of a signal stray component, and performing Fisher judgment analysis to obtain a feature set Y; performing CCA feature fusion on the feature set X and the feature set Y to obtain a fused feature set Z; and performing horizontal segmentation on Z according to a ratio of m% to n% to obtain a training set ZTrain and a test set ZTest, training a random forest classifier by using the training set ZTrain, performing classification decision on the test set ZTest by utilizing the trained classifier, and finally outputting a mobile phone individual identification result.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

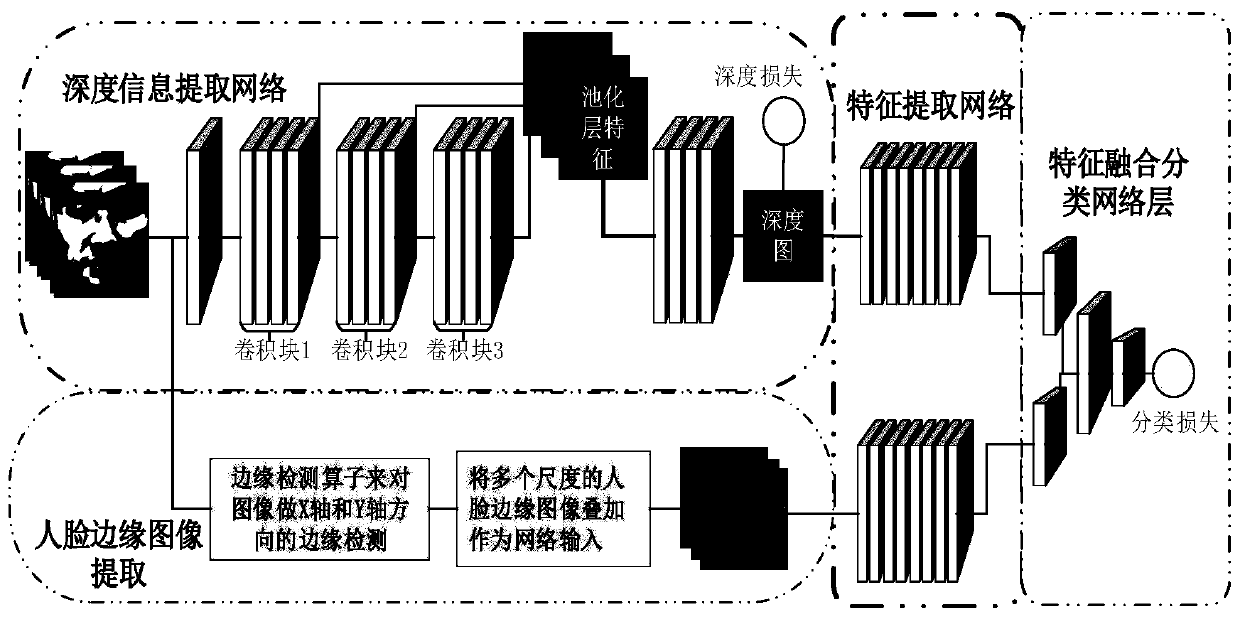

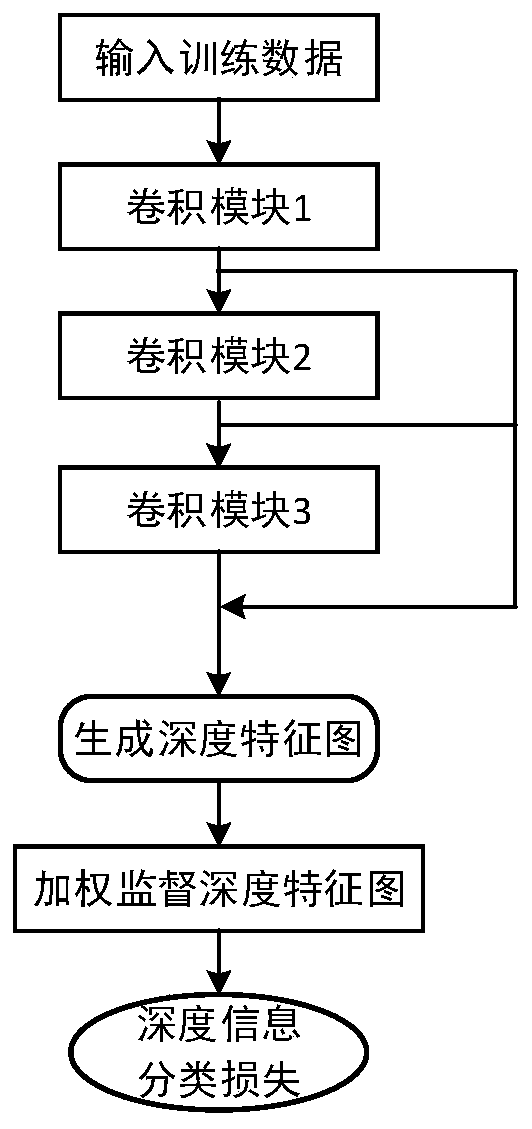

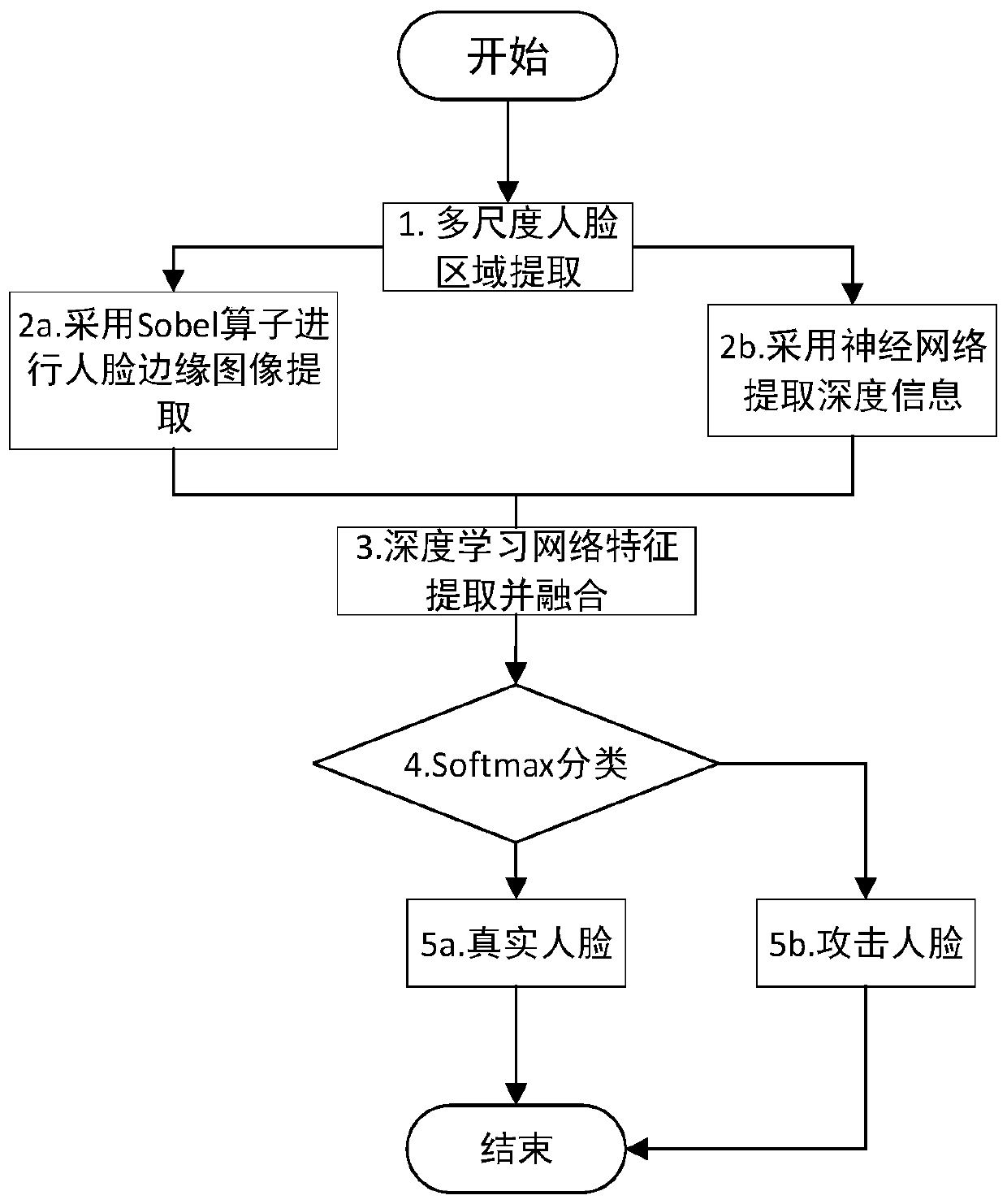

Face anti-counterfeiting method based on face depth information and edge image fusion

Active | CN110348319A | Improve interference | Improve the ability to distinguish | Image analysis | Character and pattern recognition | Pattern recognition | Imaging quality

The invention provides a face anti-counterfeiting method based on face depth information and edge image fusion. The method comprises the steps of: extracting the edge information and the depth image information of a face image respectively through a double-flow network, fusing the two types of features, and then carrying out learning and classification through a feature fusion classification network, wherein a Sobel operator is used for extracting edge information of the face image, a PRNe is used for acquiring three-dimensional structure information of the face of a preprocessed living body object, and a Z-Buffer algorithm is adopted for projection to obtain the corresponding living body face depth label. The depth information extraction branch of the double-flow network extracts differentiated depth information of living and non-living faces, and a weighting matrix and an entropy loss supervision mode are adopted to enhance the depth discrimination between the face area and the background area. Compared with the prior art, the method is only slightly affected by factors such as image quality and illumination, the problem that hardware depth information extraction is costly is solved, the characteristics of background information are expanded, and learning of redundant noise is weakened.
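The edge branch relies on the Sobel operator, which can be illustrated on a tiny grayscale image. This is a dependency-free sketch of the standard 3x3 Sobel kernels only; it does not reproduce the patent's network, and the function name, border handling (borders left at zero), and sample image are illustrative choices.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Gradient magnitude of a 2-D grayscale image given as nested lists.

    Border pixels are left at 0.0 because the 3x3 window does not fit.
    """
    height, width = len(image), len(image[0])
    edges = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = sum(SOBEL_X[j][i] * image[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * image[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            edges[y][x] = math.hypot(gx, gy)
    return edges


# A 4x4 image with a vertical step edge between columns 1 and 2.
image = [[0, 0, 10, 10] for _ in range(4)]
for row in sobel_magnitude(image):
    print(row)
```

In practice an optimized library routine would replace the nested loops; the sketch only shows what the operator computes.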

Owner:WUHAN UNIV

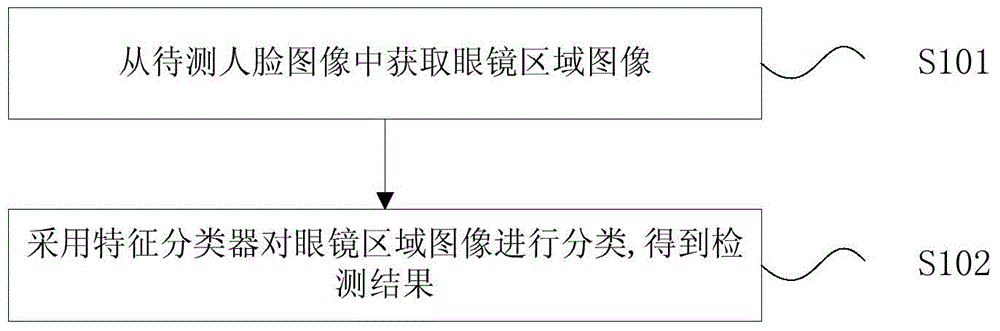

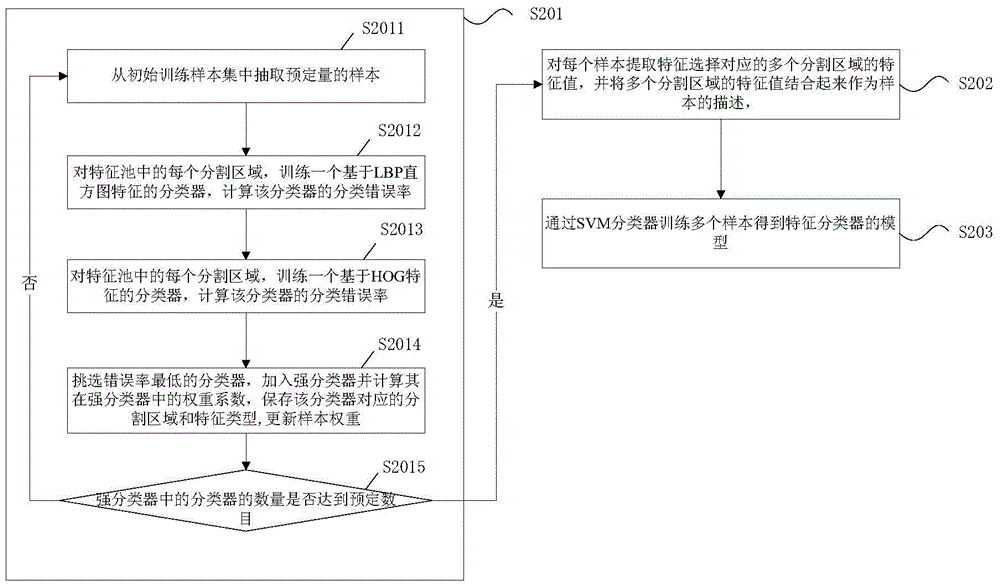

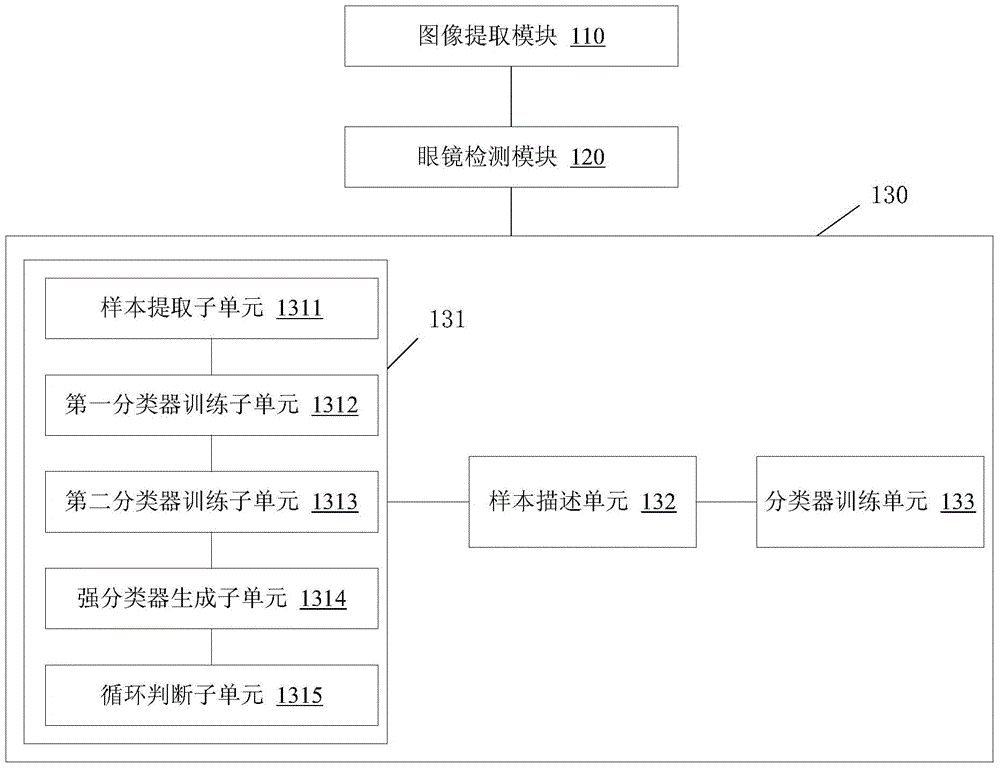

Glass detection method and system for face recognition

Active | CN104463128A | Strong distinguishing ability and stability | Reduce feature dimension | Character and pattern recognition | Support vector machine svm classifier | Imaging Feature

The invention discloses a glass detection method and system for face recognition. The glass detection method comprises the steps that glass region images are obtained from a face image to be detected; the glass region images are classified through a feature classifier, and a detection result is obtained; the feature classifier is generated in the mode that a preset number of partition regions are selected from a feature pool and used as feature selections; feature values of multiple partition regions corresponding to the feature selection of each sample are extracted, and the feature values of the multiple partition regions are combined to be used as descriptions of the samples; the multiple samples are trained through a support vector machine SVM classifier, so that a model of the feature classifier is obtained. According to the glass detection method and system for face recognition, the stability of the feature descriptions and the distinguishing capacity of the adopted feature classifier are high; besides, compared with full-image feature extraction, the classification feature difficulty is greatly reduced, the complexity of classification operation is lowered, and the operation speed and the detection speed are both increased.

Owner:智慧眼科技股份有限公司

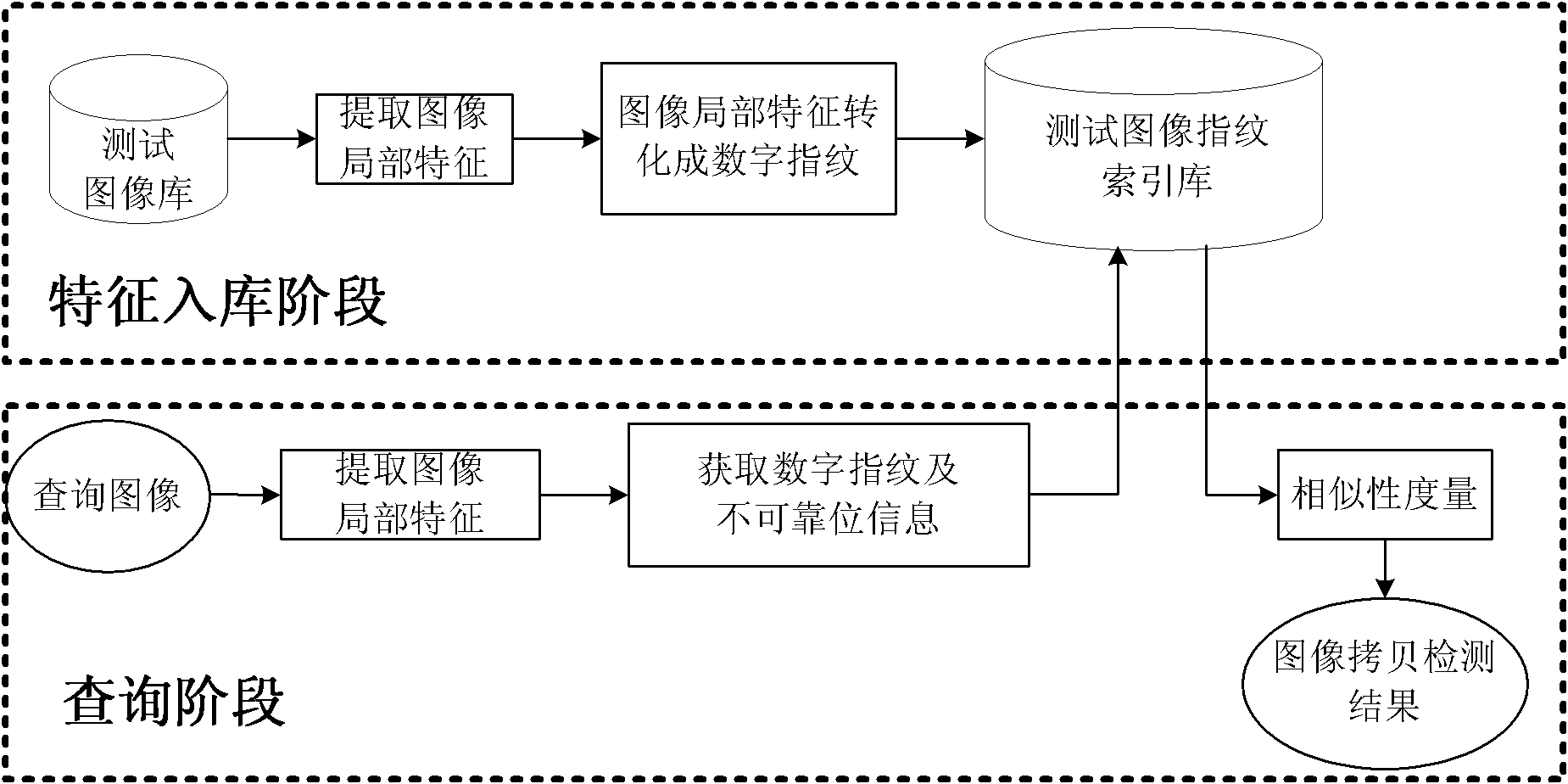

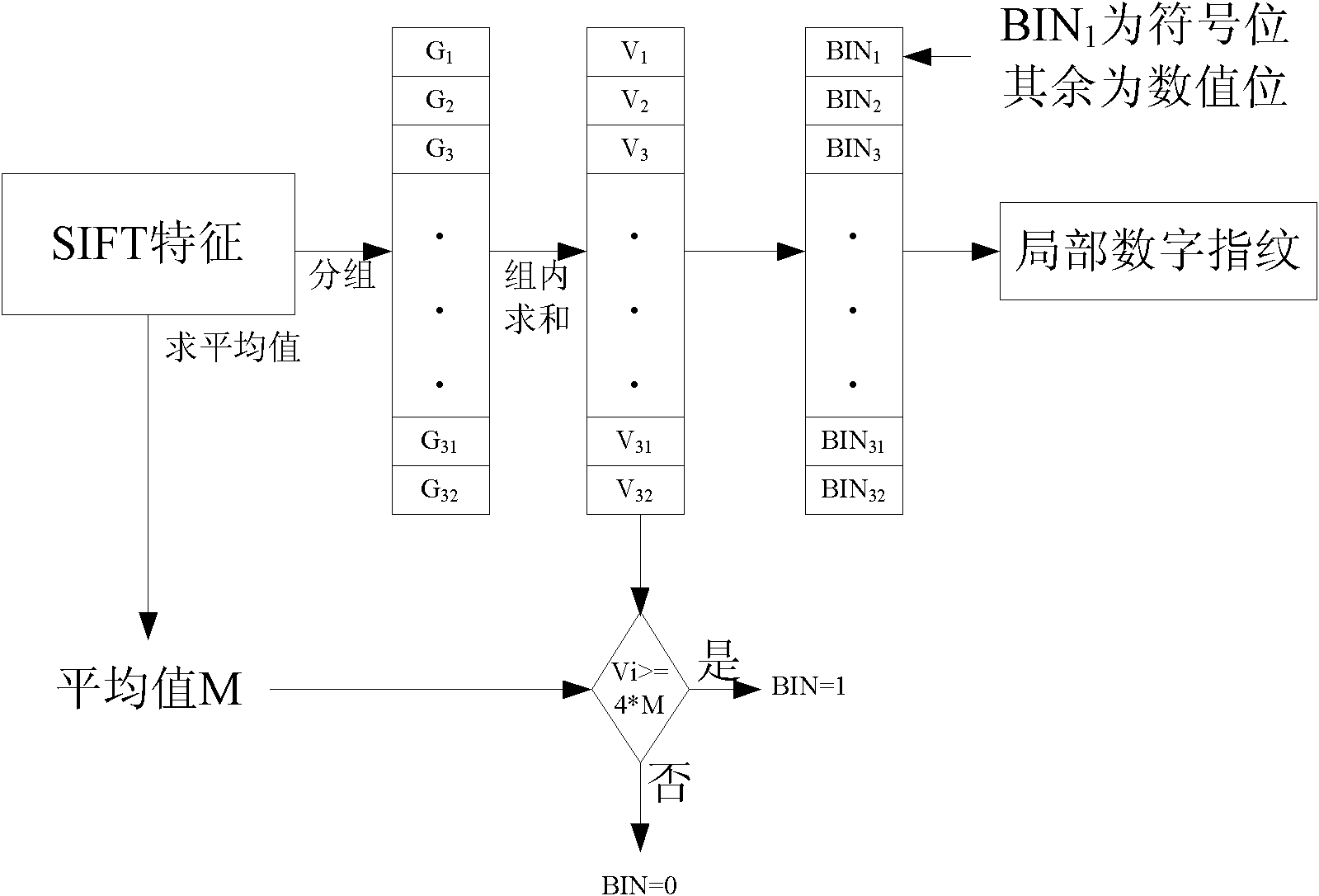

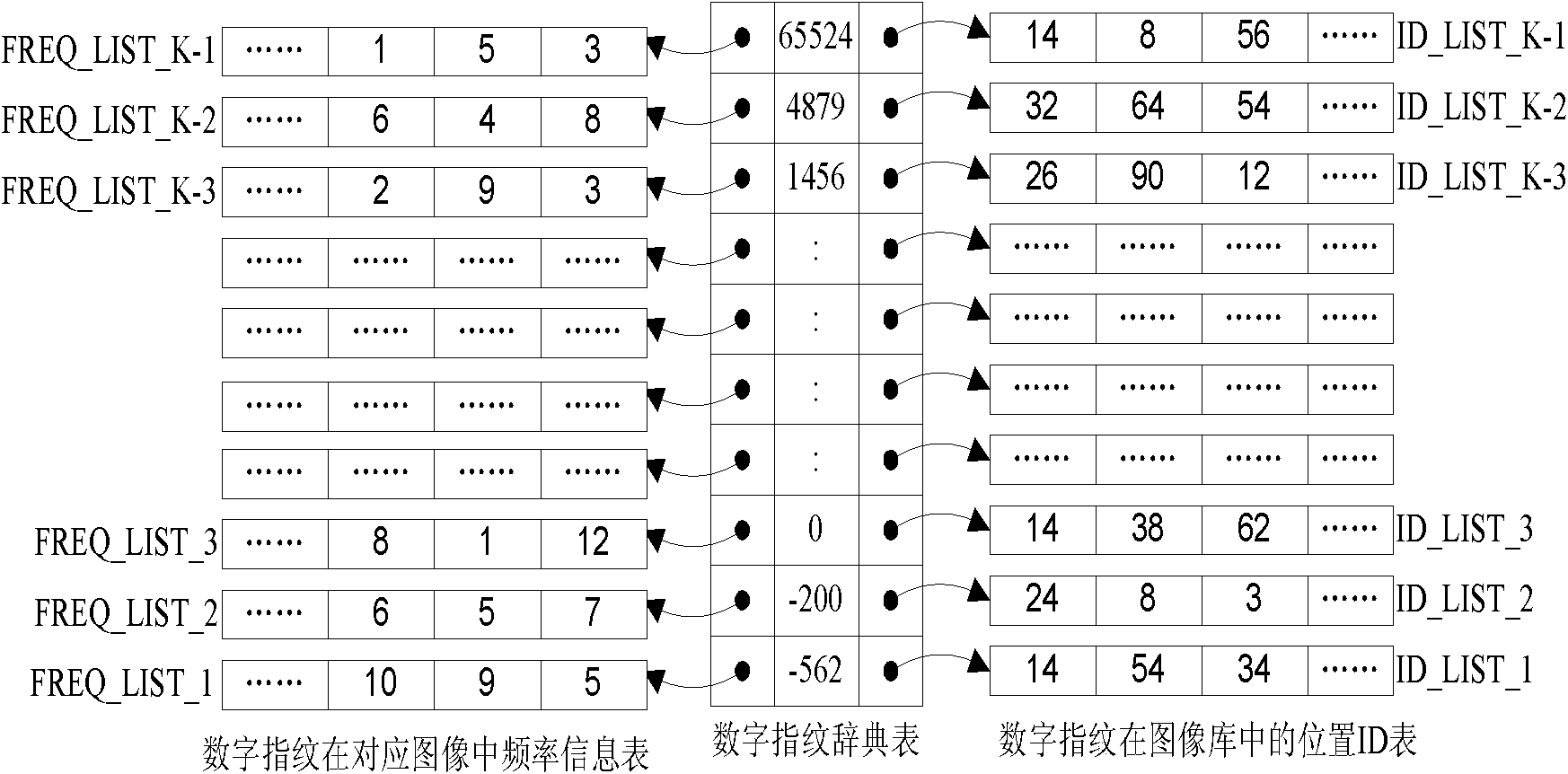

Image copying detection method based on local digital fingerprint

Inactive | CN101853486A | Improve robustness | Strong identification ability | Image data processing details | Special data processing applications | Image extraction | Feature vector

The invention discloses an image copying detection method based on local digital fingerprint, which comprises the following steps: local SIFT features are extracted from each image in a tested image library, local digital fingerprint conversion is carried out on the high-dimensional SIFT feature vectors, and the frequency of each fingerprint in each image is calculated so as to establish a digital fingerprint database; when an image is queried, SIFT features are first extracted from the query image and converted into digital fingerprints together with information on the unreliable positions arising during the conversion, and a query integrating this unreliable-position information is then carried out in an inverted index structure over the tested fingerprint library so as to quickly obtain the set of tested images associated with the local digital fingerprints of the query image, measure the similarity between the query image and the associated tested images, and judge whether the image is copied. In terms of copy-detection performance, the method has very good recall rate and precision; in terms of copy-detection efficiency, the method can also detect copying of the query image.
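The inverted-index lookup can be sketched as follows. The sketch assumes fingerprints are short bit strings and that "unreliable positions" are bit indices whose value may flip, so every flip combination is probed; both are illustrative readings of the abstract, and all names and data are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import product


def build_index(library):
    """Map each fingerprint to the set of library images containing it."""
    index = defaultdict(set)
    for image_id, fingerprints in library.items():
        for fingerprint in fingerprints:
            index[fingerprint].add(image_id)
    return index


def variants(fingerprint, unreliable):
    """Yield the fingerprint with every combination of unreliable bits."""
    bits = list(fingerprint)
    for combo in product("01", repeat=len(unreliable)):
        for position, bit in zip(unreliable, combo):
            bits[position] = bit
        yield "".join(bits)


def candidate_votes(index, query):
    """Count, per library image, how many query fingerprints match it."""
    votes = Counter()
    for fingerprint, unreliable in query:
        matched = set()
        for probe in variants(fingerprint, unreliable):
            matched |= index.get(probe, set())
        votes.update(matched)
    return votes


library = {"img_a": ["1010", "0110"], "img_b": ["1111"]}
index = build_index(library)
# Each query entry is (fingerprint, list of unreliable bit positions).
query = [("0010", [0]), ("0110", [])]
print(candidate_votes(index, query))
```

The vote counts only shortlist candidates; a separate similarity measure would still decide whether a candidate is a copy.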

Owner:HUAZHONG UNIV OF SCI & TECH

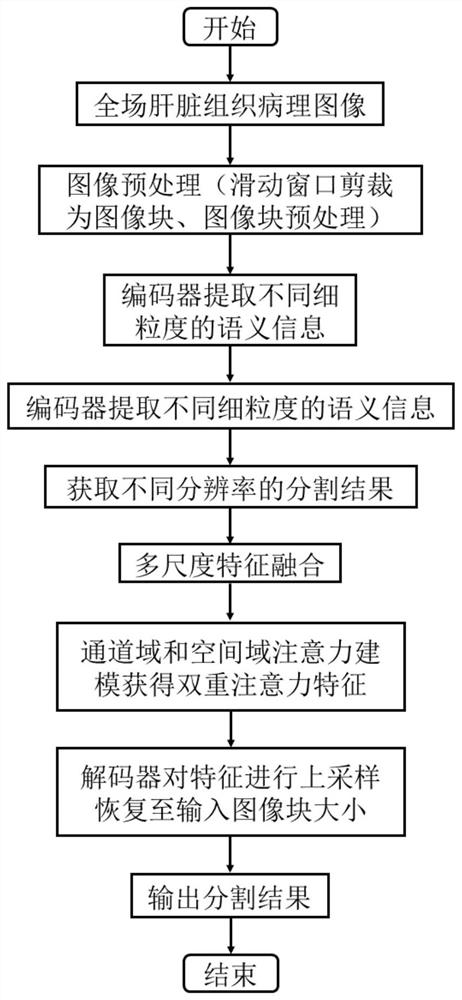

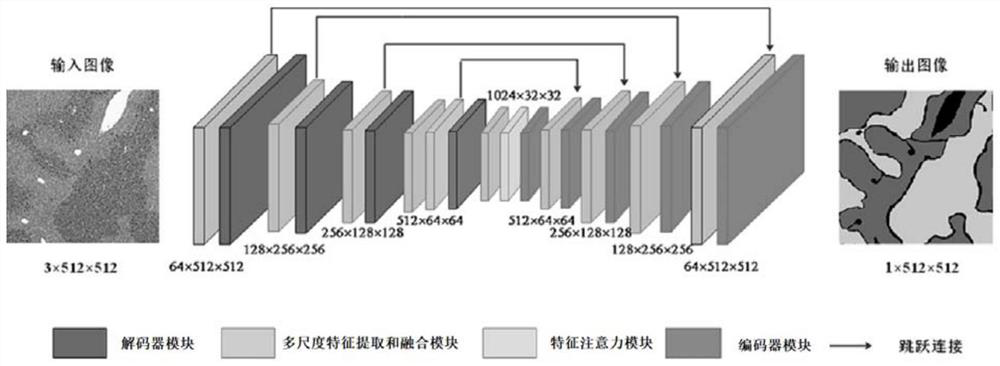

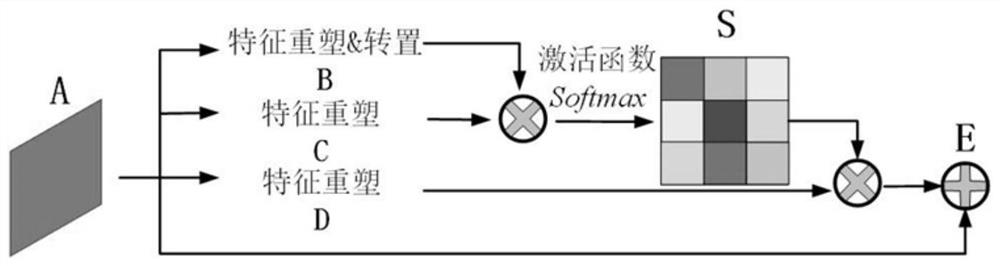

Liver pathological image segmentation model establishment and segmentation method based on attention mechanism

Active | CN112017191A | Solving the Difficult Problem of Boundaries | Mitigate the impact of learning | Image enhancement | Image analysis | Liver tissue | Radiology

The invention discloses a liver pathological image segmentation model establishment and segmentation method based on an attention mechanism. The method comprises the steps of: firstly cutting a liver tissue pathological section image and the corresponding expert labeling mask image to obtain section image blocks and mask image blocks; then constructing a liver tissue pathological image segmentation network based on multi-scale features and an attention mechanism; taking the section image blocks and the mask image blocks as inputs of the segmentation network, taking the obtained segmentation probability map as the output of the segmentation network, and training the segmentation network to obtain a trained segmentation model; and inputting the liver pathological image to be processed into the segmentation model to obtain a segmentation result. In the segmentation network, a feature attention mechanism is introduced and attention modeling is carried out on the position and the channel dimensions respectively, so that the distinguishing capacity of the model for normal tissue areas, abnormal tissue areas and background is improved, and the influence of the many cavities in liver tissue pathological images on model learning is relieved.

Owner:NORTHWEST UNIV(CN)

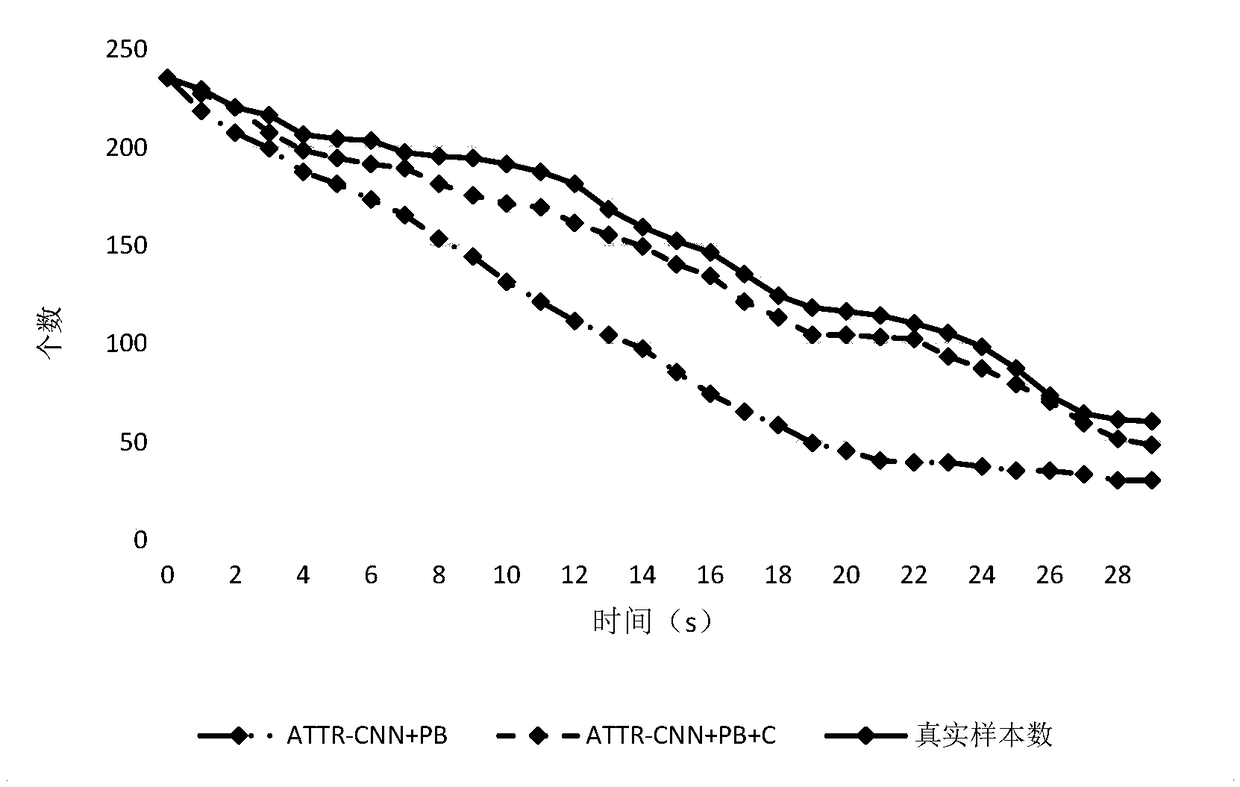

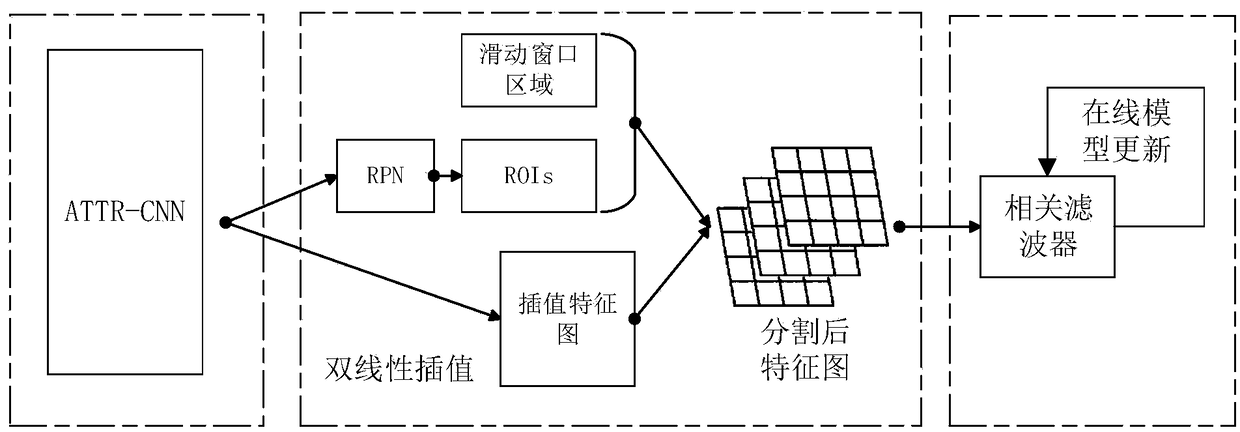

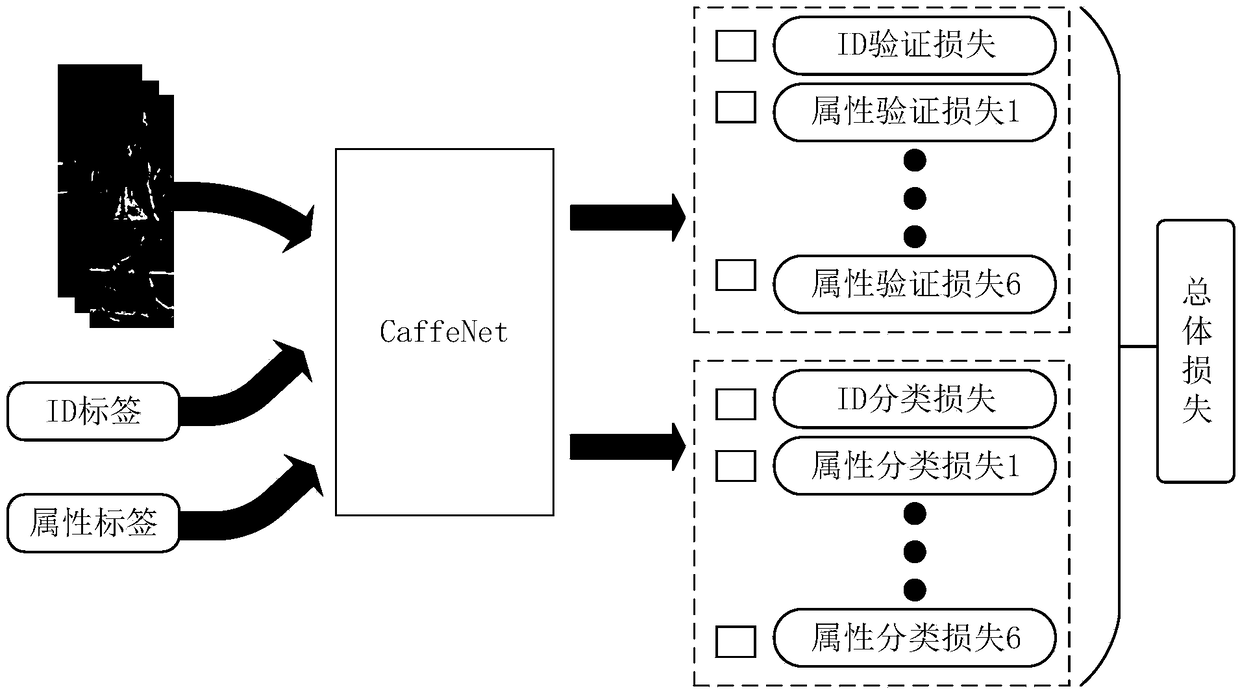

Pedestrian target tracking method based on deep learning

Active | CN109146921A | Improve accuracy | Avoid introducing | Image enhancement | Image analysis | Cosine similarity | Feature extraction

The invention discloses a pedestrian target tracking method based on deep learning, which combines deep learning and correlation filtering to track the target and effectively improves tracking accuracy while preserving real-time tracking. To handle large changes of target posture during tracking, deep convolution features based on pedestrian attributes are applied to tracking. To handle occlusion, a cosine similarity method is used to judge occlusion so as to avoid introducing dirty data caused by occlusion. To improve efficiency and solve the problem of using deep convolution features in correlation filters, a bilinear interpolation method is proposed to eliminate quantization errors and avoid repeated feature extraction, which greatly improves efficiency. To handle high-speed motion of the target, a preselection algorithm is proposed, which can not only search the global image but can also supply strong negative samples for training, so as to improve the distinguishing ability of the correlation filter.
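The occlusion check can be illustrated with a short sketch. It assumes the tracker compares a stored template feature vector with the feature vector of the current candidate and skips the model update when similarity drops below a threshold; the threshold value, function names, and vectors are hypothetical, not taken from the patent.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def is_occluded(template, candidate, threshold=0.5):
    """Flag occlusion when the candidate no longer resembles the template.

    The 0.5 threshold is an arbitrary illustrative value.
    """
    return cosine_similarity(template, candidate) < threshold


template = [1.0, 2.0, 3.0]
print(is_occluded(template, [1.0, 2.0, 3.1]))   # similar appearance
print(is_occluded(template, [3.0, -2.0, 0.1]))  # very different appearance
```

Skipping the filter update on flagged frames is what keeps occluded patches out of the training data.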

Owner:HUAZHONG UNIV OF SCI & TECH
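
The occlusion check mentioned in the abstract above can be illustrated with a short sketch. It assumes that occlusion is declared when the cosine similarity between a stored target feature and the current feature drops below a threshold; the function names and the threshold value 0.5 are hypothetical and not taken from the patent.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero feature as maximally dissimilar
    return dot / (norm_a * norm_b)


def is_occluded(template_feature, current_feature, threshold=0.5):
    """Assumed rule: low similarity means the target is probably occluded."""
    return cosine_similarity(template_feature, current_feature) < threshold


template = [1.0, 0.0, 1.0]   # feature of the tracked pedestrian
visible = [0.9, 0.1, 1.1]    # similar appearance: keep updating the filter
blocked = [0.0, 1.0, 0.0]    # very different appearance: skip the update
```

Under this assumption a tracker would skip the filter update whenever `is_occluded` returns `True`, which is one way to keep occluded frames out of the training data.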

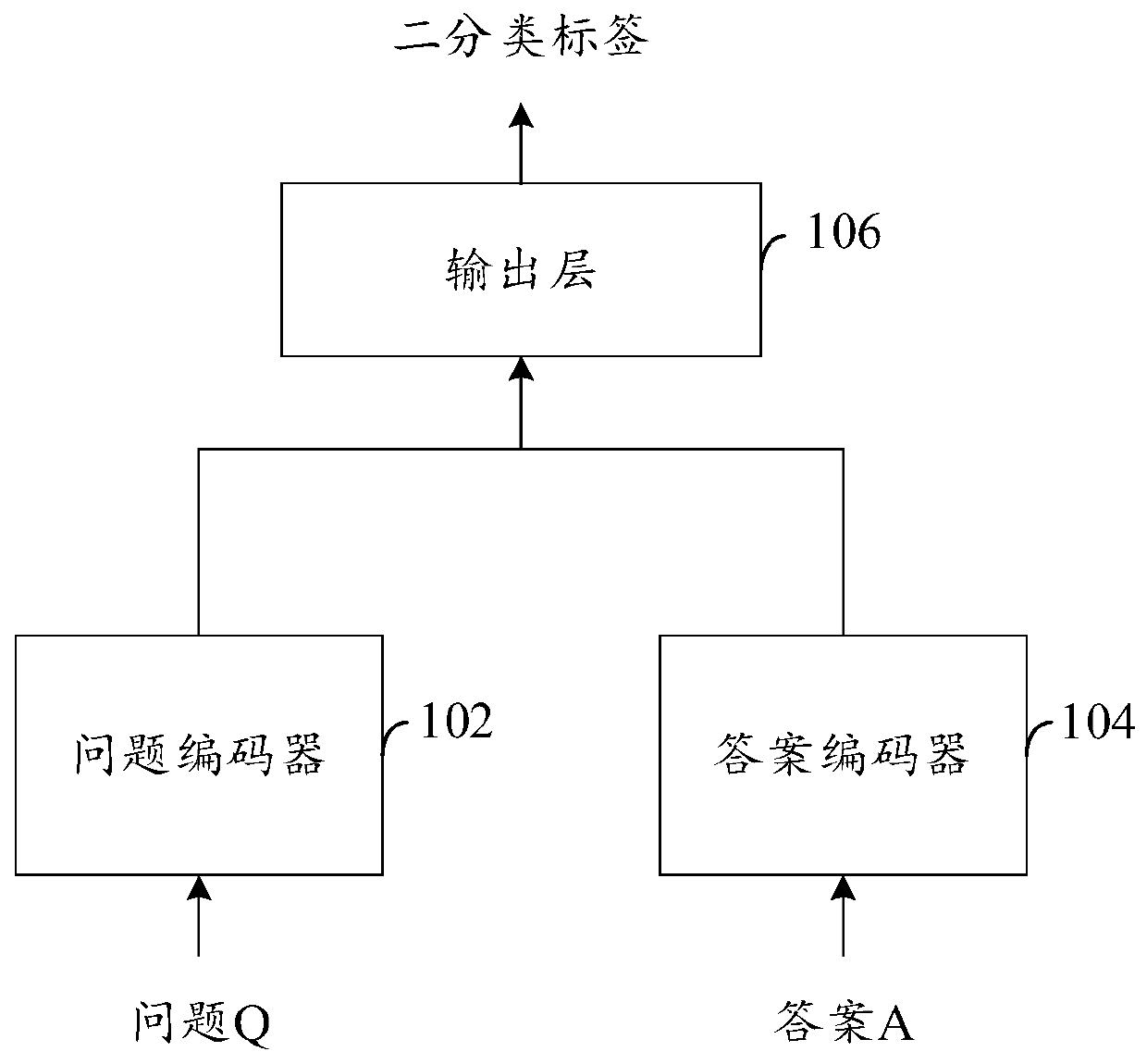

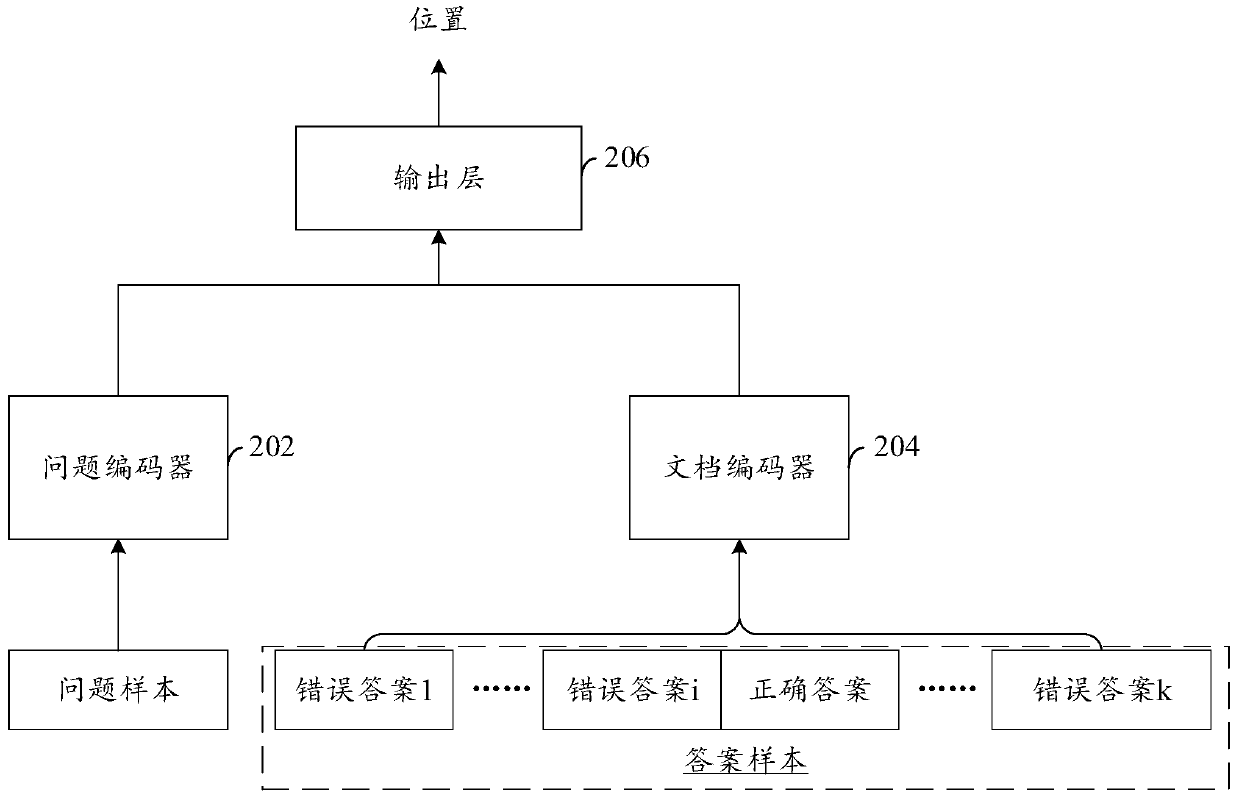

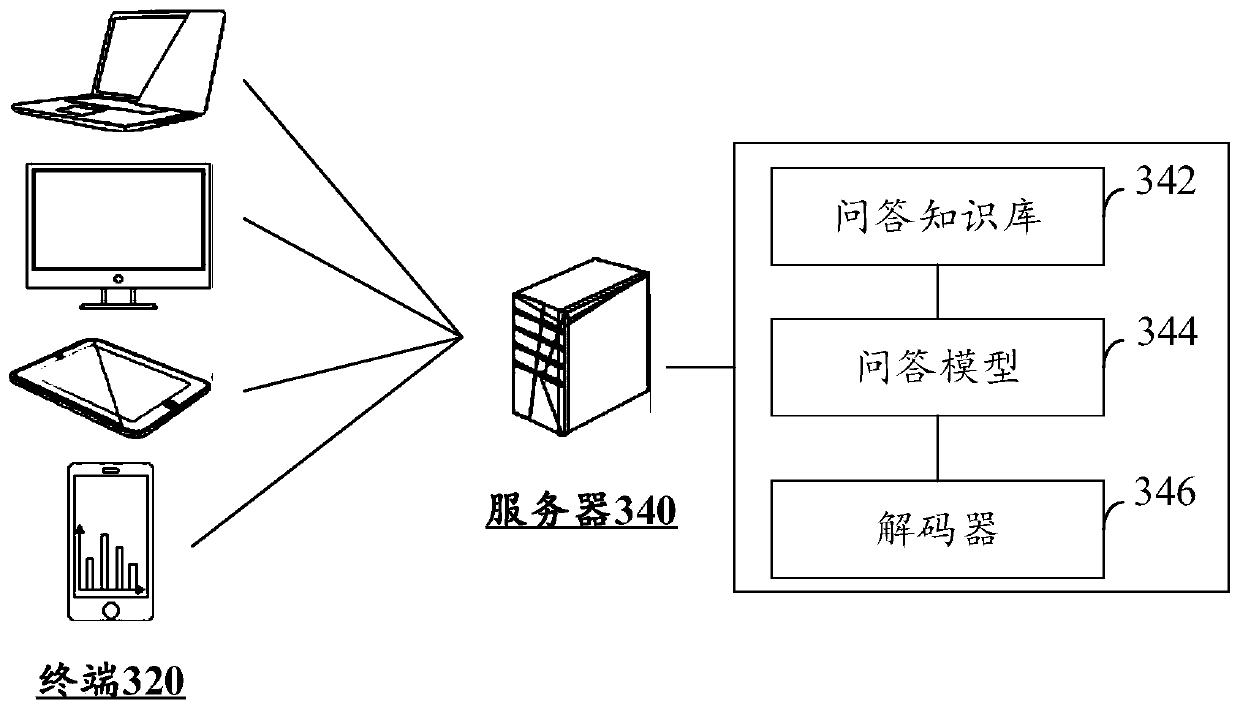

Question answering method based on machine learning and question and answer model training method and device

Active | CN110516059A | Training position prediction ability | Improve robustness | Digital data information retrieval | Machine learning | Model parameters | Questions and answers

The invention discloses a question answering method based on machine learning and a question answering model training method and device, and relates to the field of artificial intelligence. The training method comprises: acquiring training samples, each comprising a question sample, an answer sample and a calibration position, where the answer sample is an answer document formed by splicing correct answer samples and wrong answer samples together; encoding the question sample and the answer sample with the question answering model to obtain a vector sequence of the sample; predicting, with the question answering model, the position of the correct answer sample in the vector sequence, and determining the loss between the predicted position and the calibration position; and adjusting the model parameters according to the loss, thereby training the model's ability to predict the position of the correct answer sample. Because the model is trained on spliced answer samples, its reading comprehension ability is trained as well, so that it can accurately find the correct answer among multiple answers.

Owner:TENCENT TECH (SHENZHEN) CO LTD
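
How a spliced training sample and its calibration position might be built can be sketched as below. Whitespace tokenization, the shuffling of answers, the `(start, end)` token-index form of the position, and the negative log-likelihood loss are all assumptions made for illustration; the abstract does not specify them.

```python
import math
import random


def build_sample(question, correct_answer, wrong_answers, seed=0):
    """Splice correct and wrong answers into one document and record
    where the correct answer ends up (hypothetical helper)."""
    answers = [correct_answer] + list(wrong_answers)
    order = list(range(len(answers)))
    random.Random(seed).shuffle(order)
    tokens, position = [], None
    for idx in order:
        answer_tokens = answers[idx].split()
        if idx == 0:  # index 0 is the correct answer
            position = (len(tokens), len(tokens) + len(answer_tokens) - 1)
        tokens.extend(answer_tokens)
    return {"question": question, "document": tokens, "position": position}


def position_loss(start_probs, end_probs, position):
    """Assumed loss: negative log-likelihood of the true start and end tokens."""
    start, end = position
    return -math.log(start_probs[start]) - math.log(end_probs[end])


sample = build_sample(
    "What is the capital of France?",
    "Paris is the capital",
    ["Berlin is the capital", "Rome is in Italy"],
)
```

A model that outputs a probability for each token being the start or end of the correct answer could be trained by minimizing `position_loss` against `sample["position"]`.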

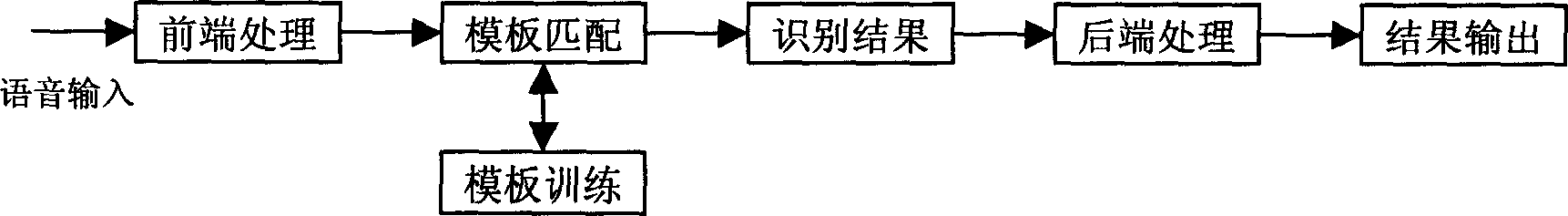

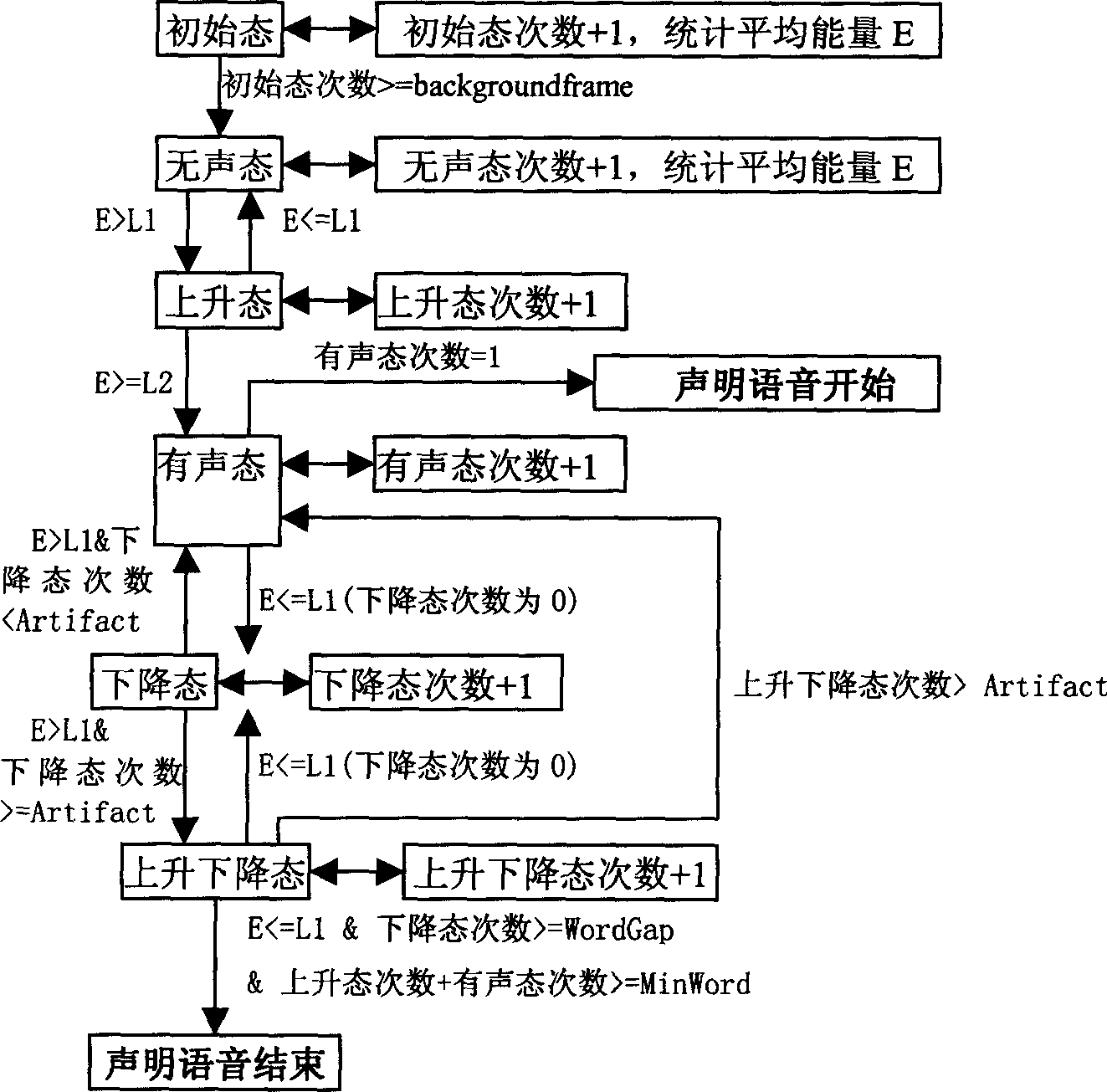

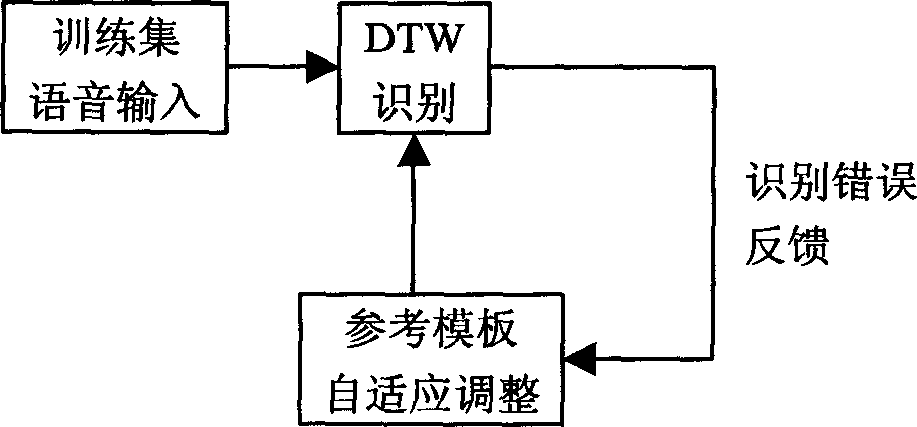

Automatic sound identifying treating method for embedded sound identifying system

Inactive | CN1588535A | Strong representative | Improve recognition rate | Speech recognition | Sound input/output | Identification rate | Support vector machine algorithm

The invention is an automatic speech recognition processing method for an embedded speech recognition system. The method comprises a front-end processing part, a real-time recognition part, a back-end processing part and a template training part. It uses adaptive endpoint detection to extract voiced segments, recognizes the input speech in a synchronous mode, and applies a support vector machine algorithm to quickly reject non-command speech, which improves recognition reliability and practicality. The speech templates are trained with a multistage vector quantization method and optimized with MCE/GPD discriminative training to improve recognition performance. The acoustic model occupies little memory and the computational load is small; the recognition rate of the system is raised to above 95%, and its rejection rate is higher than 80%.

Owner:SHANGHAI JIAO TONG UNIV
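
The adaptive endpoint detection step in the abstract above can be illustrated with a simple energy-based sketch. It assumes the threshold is adapted from the noise floor of the first few frames; the frame length, the number of noise frames and the multiplier are hypothetical values, and the patent's actual detector may differ.

```python
def frame_energies(samples, frame_len):
    """Mean energy of consecutive non-overlapping frames."""
    return [
        sum(s * s for s in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def detect_voiced_segments(samples, frame_len=4, noise_frames=2, factor=4.0):
    """Return (first_frame, last_frame) pairs whose energy exceeds a
    threshold derived from the leading frames (assumed to be noise)."""
    energies = frame_energies(samples, frame_len)
    noise_floor = sum(energies[:noise_frames]) / noise_frames
    threshold = max(noise_floor * factor, 1e-12)
    segments, start = [], None
    for i, energy in enumerate(energies):
        if energy > threshold and start is None:
            start = i                          # segment begins
        elif energy <= threshold and start is not None:
            segments.append((start, i - 1))    # segment ends
            start = None
    if start is not None:
        segments.append((start, len(energies) - 1))
    return segments


# Quiet lead-in, a loud burst, then quiet again.
signal = [0.01] * 8 + [1.0, -1.0] * 4 + [0.01] * 8
segments = detect_voiced_segments(signal)
```

Because the threshold scales with the measured noise floor, the same code adapts to quieter or noisier recordings without retuning a fixed level.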

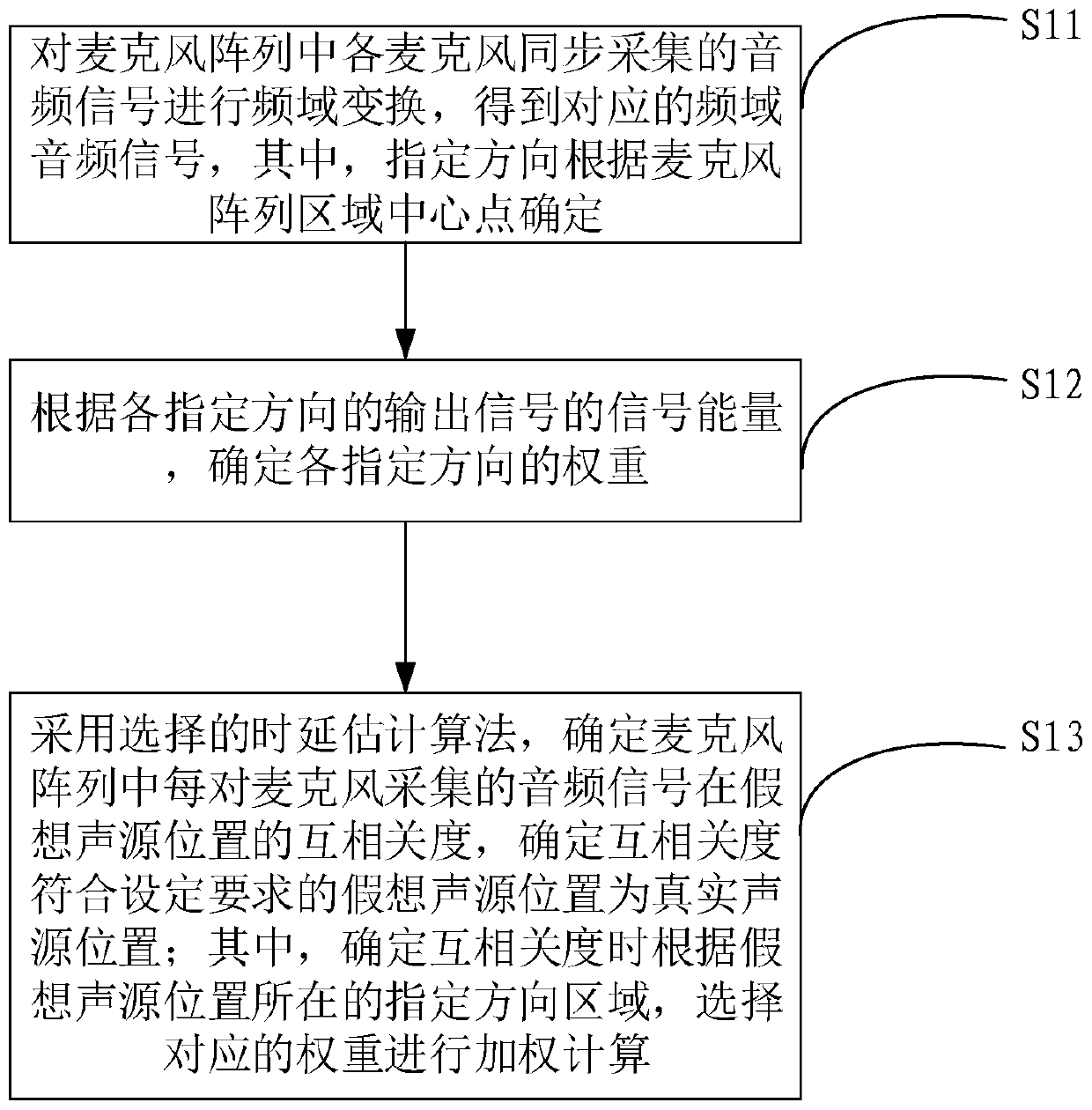

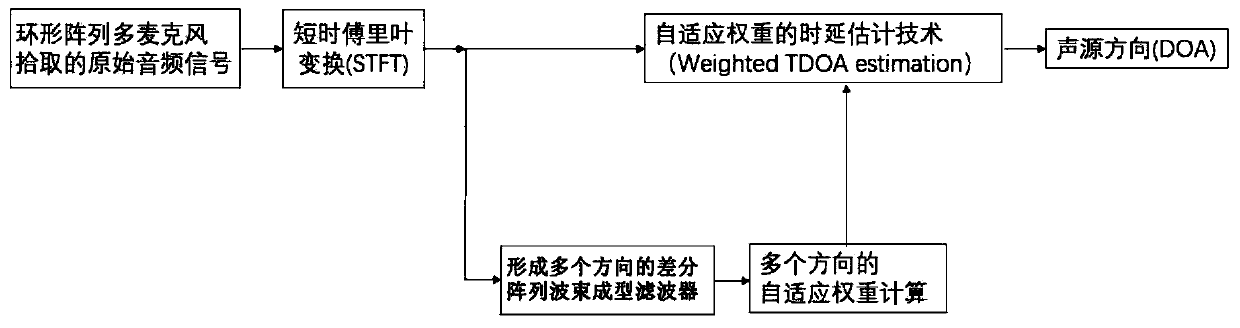

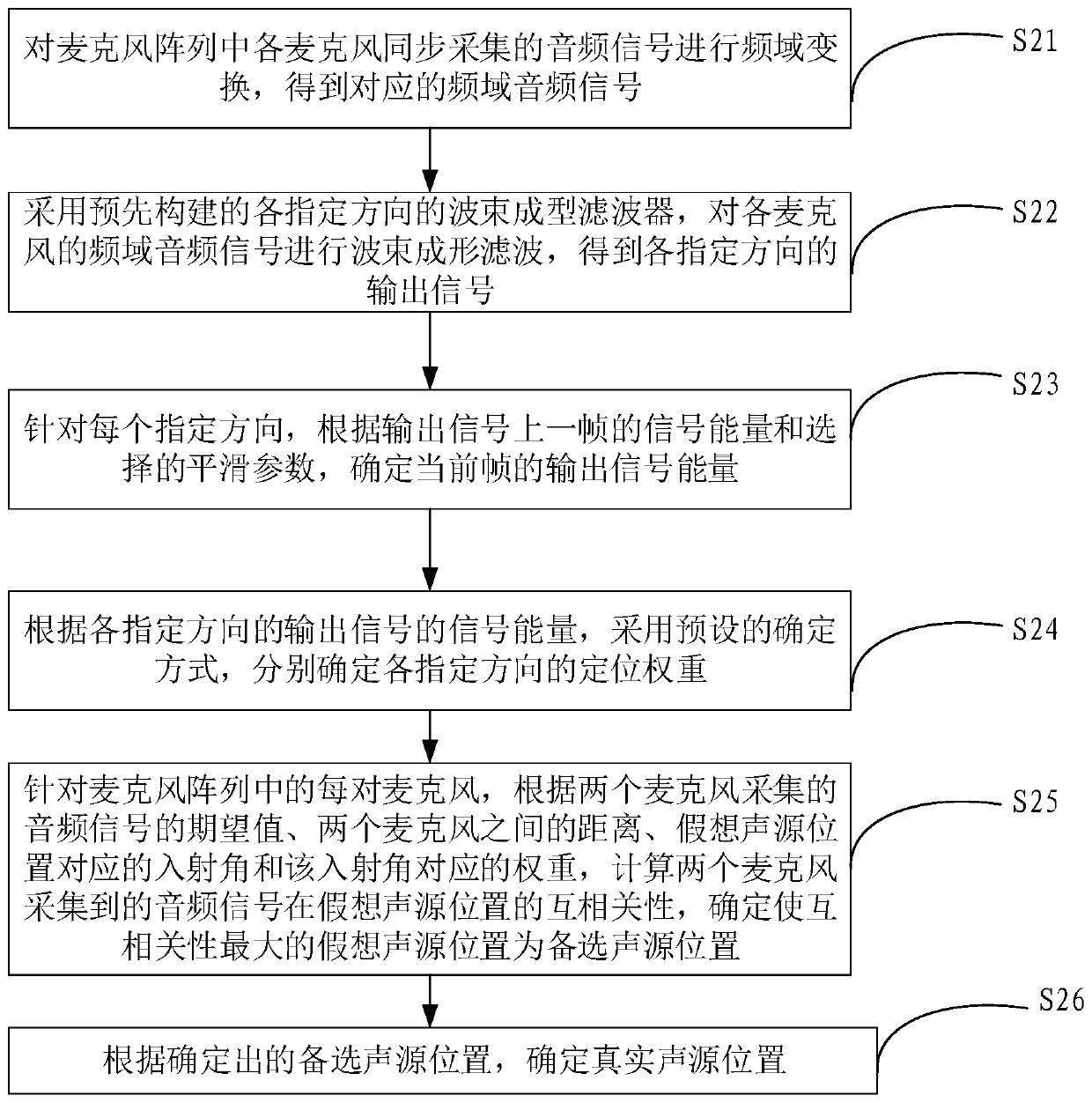

Sound source direction positioning method and device, voice equipment and voice system

Pending | CN111025233A | Improve accuracy | Improve the ability to distinguish | Position fixation | Sound source location | Sound sources

The invention discloses a sound source direction positioning method, device and equipment. The method comprises: performing beamforming filtering in each specified direction on the audio signals acquired by a microphone array to obtain an output signal for each specified direction; determining the weight of each specified direction according to the signal energy of its output signal; using a selected time delay estimation algorithm to determine, for each hypothesized sound source position, the cross-correlation degree of the audio signals collected by each pair of microphones in the array; and taking the hypothesized position whose cross-correlation degree meets a set requirement as the real sound source position. When the cross-correlation degree is determined, it is weighted by the weight of the specified-direction area in which the hypothesized position lies. The robustness of sound source positioning is improved, the positioning accuracy is high, and the anti-interference capability is strong.

Owner:ALIBABA GRP HLDG LTD
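
The energy-weighted search described in the abstract above can be sketched for a single microphone pair. This is a simplified illustration under several assumptions: plain time-domain cross-correlation stands in for the unspecified "selected time delay estimation algorithm", each hypothesized position is represented only by its inter-microphone delay in samples and a direction-region index, and the beamformer outputs are given as ready-made lists.

```python
def cross_correlation(x, y, delay):
    """Sum of x[n] * y[n + delay] over the overlapping samples."""
    total = 0.0
    for n in range(len(x)):
        m = n + delay
        if 0 <= m < len(y):
            total += x[n] * y[m]
    return total


def energy_weights(beam_outputs):
    """Normalize per-direction beamformer output energies into weights."""
    energies = [sum(s * s for s in out) for out in beam_outputs]
    total = sum(energies) or 1.0
    return [e / total for e in energies]


def locate(mic_a, mic_b, candidates, weights):
    """Pick the candidate delay with the highest weighted correlation.
    candidates: list of (delay_in_samples, region_index), both hypothetical."""
    best_delay, best_score = None, float("-inf")
    for delay, region in candidates:
        score = weights[region] * cross_correlation(mic_a, mic_b, delay)
        if score > best_score:
            best_delay, best_score = delay, score
    return best_delay


mic_a = [0, 0, 0, 1.0, 0.5, 0, 0, 0, 0, 0]
mic_b = [0, 0, 0, 0, 0, 1.0, 0.5, 0, 0, 0]        # same pulse, 2 samples later
beams = [[0.1, 0.1], [0.2, 0.1], [1.0, 0.5]]      # third direction is loudest
weights = energy_weights(beams)
estimated = locate(mic_a, mic_b, [(-2, 0), (0, 1), (2, 2)], weights)
```

With more than one microphone pair, the weighted scores of all pairs would be accumulated for each hypothesized position before taking the maximum.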

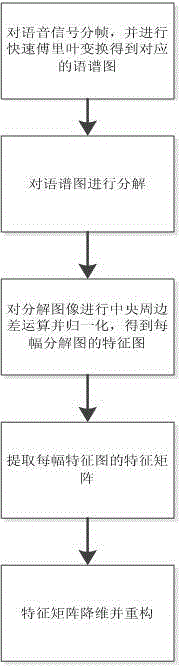

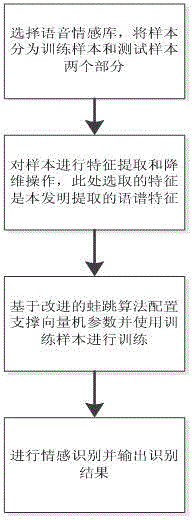

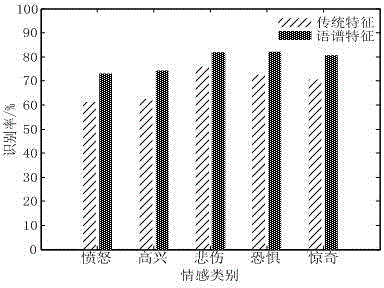

Speech spectrum characteristic extracting method facing speech emotion identification

Inactive | CN104637497A | Easy to dig | Reduce variance | Speech analysis | Fast Fourier transform | Feature extraction

The invention discloses a spectrogram feature extraction method for speech emotion recognition. The method comprises: first, framing the speech signal and applying the fast Fourier transform to obtain the corresponding spectrogram; second, decomposing the spectrogram; third, applying a center-surround difference calculation to the decomposed images and normalizing to obtain a feature map for each decomposed image; fourth, extracting the feature matrix of each feature map; and fifth, reducing the dimensionality of the feature matrices and recombining them. The method applies image processing techniques to the analysis of spectrogram features: it decomposes the spectrogram with multiscale, multichannel filters, processes the result in different feature domains, and uses PCA to better extract information useful for speech emotion recognition.

Owner:NANJING INST OF TECH
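
The first step of the abstract above, framing the signal and transforming each frame into a spectrum, can be sketched as follows. To stay self-contained the sketch uses a direct discrete Fourier transform, which produces the same values as an FFT but more slowly; the frame length, the hop size and the absence of a window function are assumptions.

```python
import cmath
import math


def frames(signal, frame_len, hop):
    """Split a signal into overlapping frames."""
    return [signal[i:i + frame_len]
            for i in range(0, len(signal) - frame_len + 1, hop)]


def dft_magnitudes(frame):
    """Magnitude of DFT bins 0..N/2 (direct evaluation, not a fast algorithm)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        mags.append(abs(acc))
    return mags


def spectrogram(signal, frame_len=8, hop=4):
    """One row of spectral magnitudes per frame."""
    return [dft_magnitudes(f) for f in frames(signal, frame_len, hop)]


# A pure tone that completes two cycles per 8-sample frame.
tone = [math.sin(2 * math.pi * 2 * t / 8) for t in range(16)]
spec = spectrogram(tone)
```

The resulting matrix (frames by frequency bins) is the "image" that the later decomposition, center-surround and PCA steps would operate on.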

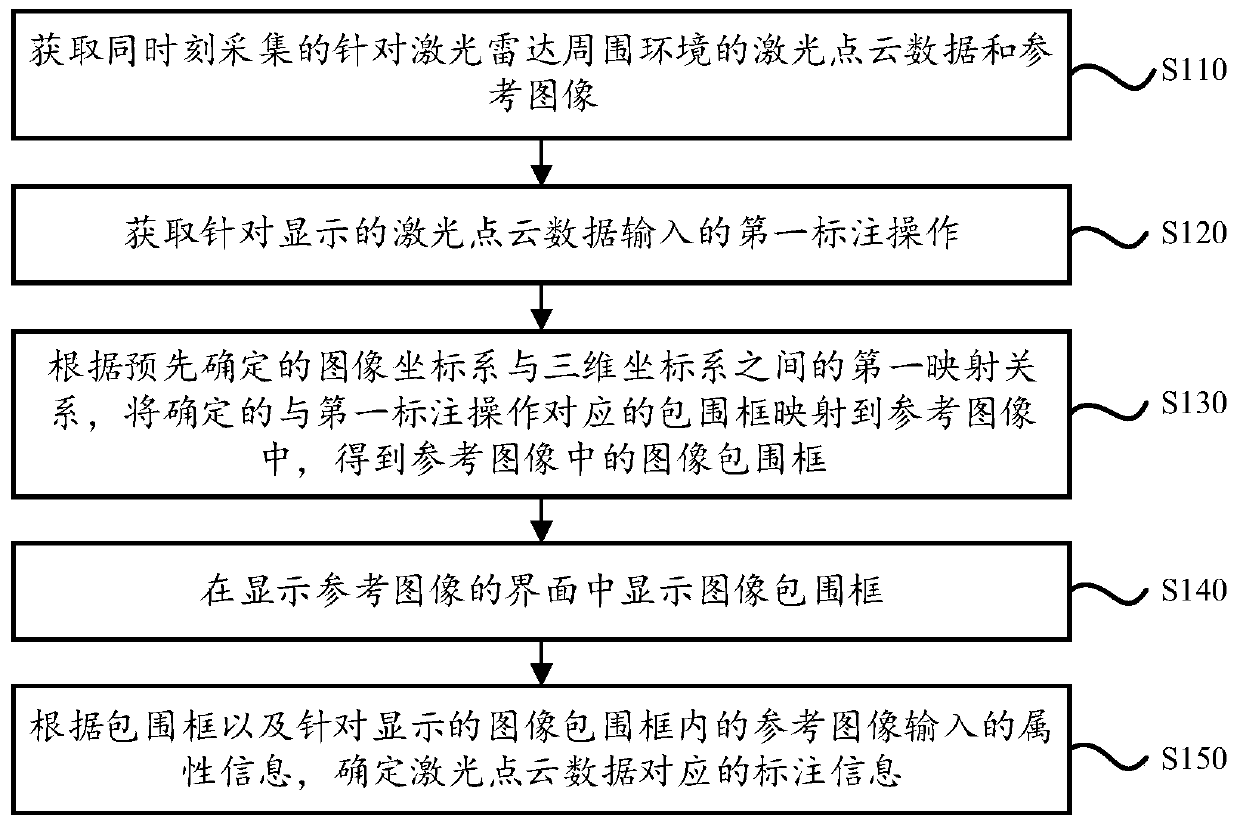

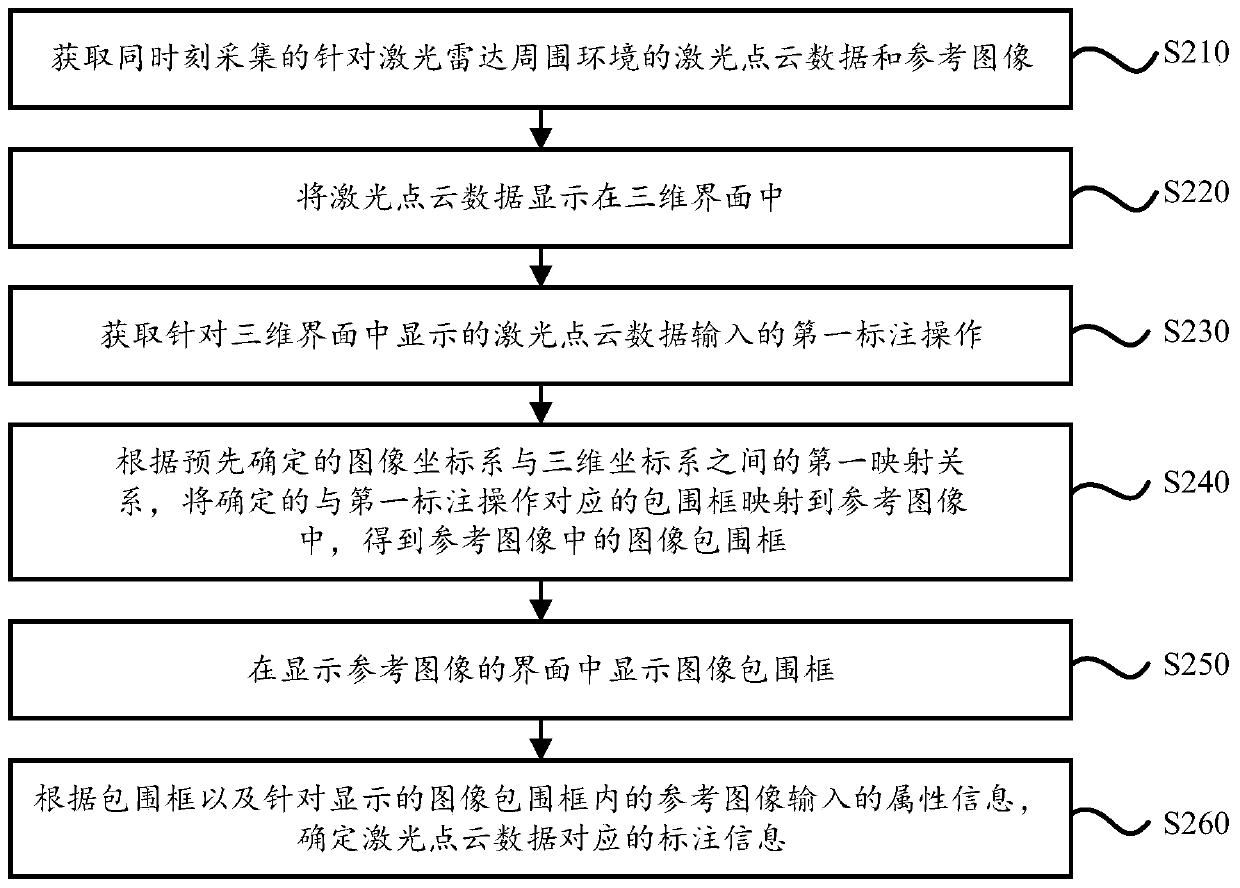

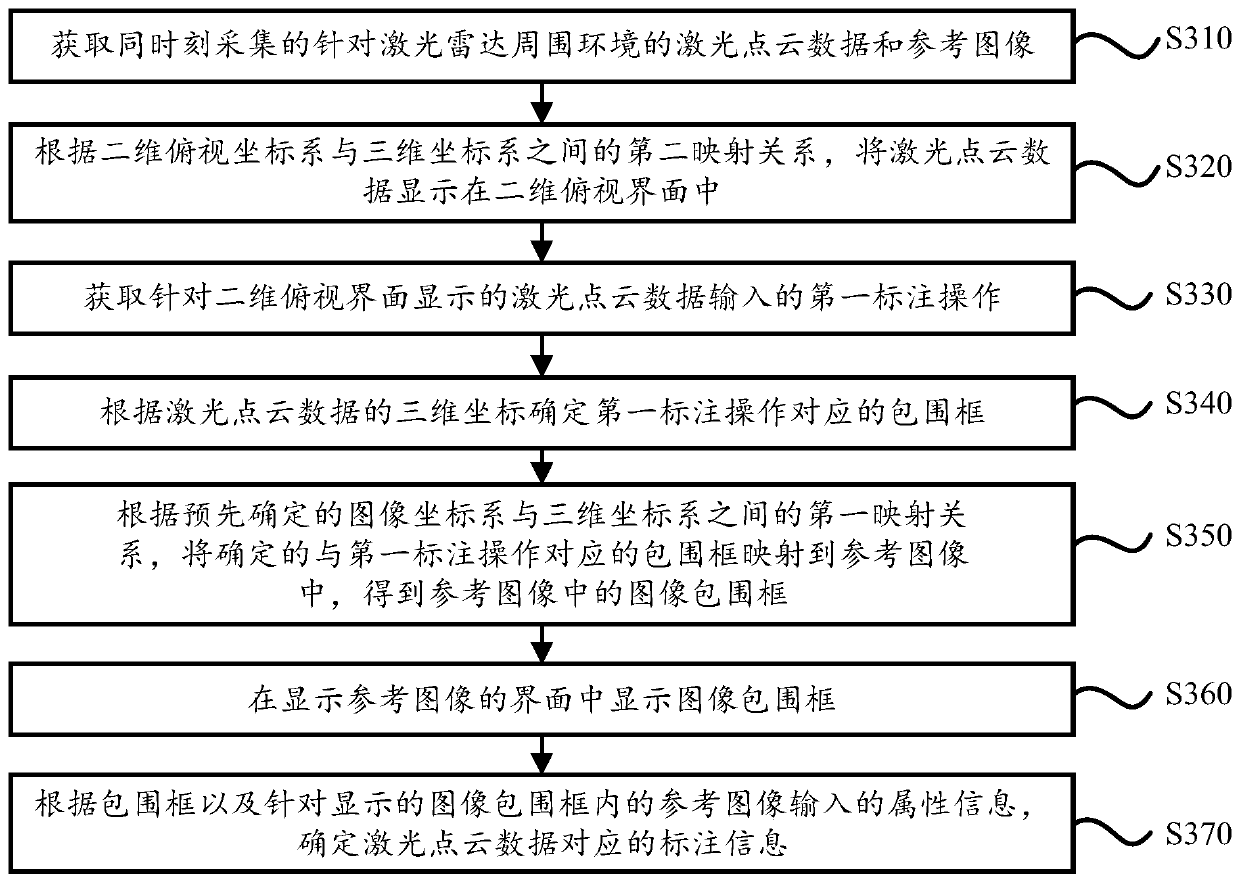

Laser point cloud data labeling method and device

The embodiment of the invention discloses a laser point cloud data labeling method and device. The method comprises: obtaining laser point cloud data and a reference image that are collected at the same time and cover the surroundings of the laser radar; obtaining a first labeling operation input for the displayed laser point cloud data; mapping the bounding box determined by the first labeling operation into the reference image, according to a predetermined first mapping relation between the image coordinate system and the three-dimensional coordinate system, to obtain an image bounding box in the reference image; displaying the image bounding box in the interface that displays the reference image; and determining the labeling information for the laser point cloud data according to the bounding box and the attribute information entered for the reference image content inside the displayed image bounding box. The scheme provided by the embodiment improves the accuracy of the information labeled on laser point cloud data.

Owner:MOMENTA SUZHOU TECH CO LTD
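
Mapping a labeled 3D bounding box into the reference image can be sketched as below. The sketch assumes that the "first mapping relation" is a 3x4 pinhole projection matrix, that the box is axis-aligned, and that all corners lie in front of the camera; the matrix values and function names are hypothetical.

```python
def project_point(P, point):
    """Project a 3D point to pixel coordinates with a 3x4 projection matrix."""
    x, y, z = point
    u = P[0][0] * x + P[0][1] * y + P[0][2] * z + P[0][3]
    v = P[1][0] * x + P[1][1] * y + P[1][2] * z + P[1][3]
    w = P[2][0] * x + P[2][1] * y + P[2][2] * z + P[2][3]
    return (u / w, v / w)


def box_corners(center, size):
    """Eight corners of an axis-aligned 3D box."""
    cx, cy, cz = center
    sx, sy, sz = size
    return [(cx + dx * sx / 2, cy + dy * sy / 2, cz + dz * sz / 2)
            for dx in (-1, 1) for dy in (-1, 1) for dz in (-1, 1)]


def image_bounding_box(P, center, size):
    """2D box (u_min, v_min, u_max, v_max) enclosing the projected corners."""
    points = [project_point(P, c) for c in box_corners(center, size)]
    us = [p[0] for p in points]
    vs = [p[1] for p in points]
    return (min(us), min(vs), max(us), max(vs))


# Assumed camera: focal length 100 px, principal point (50, 50).
P = [[100.0, 0.0, 50.0, 0.0],
     [0.0, 100.0, 50.0, 0.0],
     [0.0, 0.0, 1.0, 0.0]]
box = image_bounding_box(P, center=(0.0, 0.0, 10.0), size=(2.0, 2.0, 2.0))
```

The annotator would see `box` drawn over the reference image and could then enter attributes for the object inside it.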

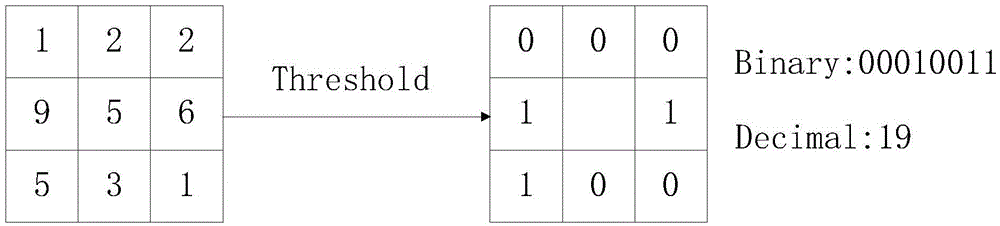

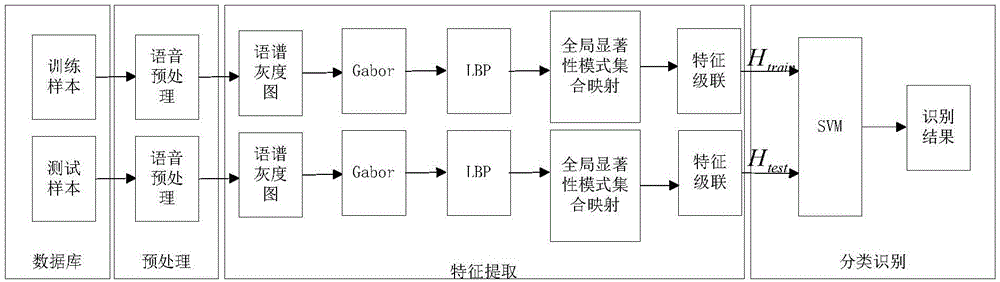

Self-learning spectrogram feature extraction method for speech emotion recognition

Active | CN105047194A | Effective classification | Easy to classify | Speech recognition | Feature extraction | Feature selection

The invention discloses a self-learning spectrogram feature extraction method for speech emotion recognition. First, speech with known emotion labels from a standard corpus is preprocessed to obtain a quantized grayscale spectrogram image. Next, Gabor spectrograms of the grayscale spectrogram image are calculated. The LBP statistical histograms extracted from them are then used to train a discriminative feature learning algorithm, which constructs a global salient pattern set for the different scales and directions. Finally, the global salient pattern set is used to perform feature selection on the LBP statistical histograms of the Gabor spectrograms of the speech at each scale and direction, and the N processed statistical histograms are concatenated to obtain speech emotion features suitable for emotion classification. The features can distinguish different types of emotion, and their recognition rate is substantially higher than that of existing acoustic features.

Owner:SOUTHEAST UNIV
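
The LBP statistical histogram that the abstract above builds on can be sketched with the basic 8-neighbor local binary pattern. The sketch works directly on a small grayscale image stored as nested lists; the Gabor filtering and the pattern-selection stage are omitted, and the neighbor ordering is an arbitrary assumption.

```python
# Clockwise neighbor offsets starting at the top-left pixel (assumed order).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, row, col):
    """8-bit code: bit i is set when neighbor i is >= the center pixel."""
    center = image[row][col]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if image[row + dr][col + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for row in range(1, len(image) - 1):
        for col in range(1, len(image[0]) - 1):
            hist[lbp_code(image, row, col)] += 1
    return hist


flat = [[5] * 4 for _ in range(4)]            # uniform texture
peak = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]      # single bright pixel
flat_hist = lbp_histogram(flat)
peak_hist = lbp_histogram(peak)
```

In the method described above, one such histogram would be computed per Gabor scale and direction, and only the bins in the learned pattern set would be kept before concatenation.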

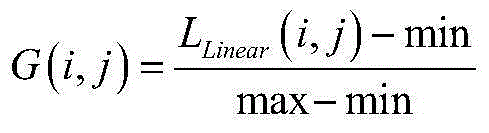

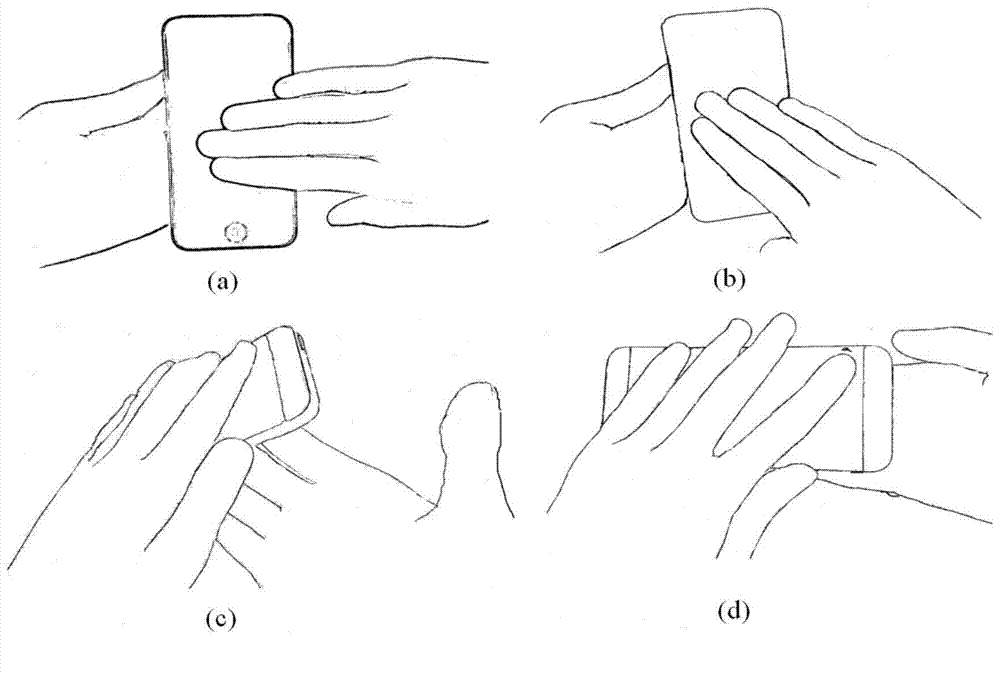

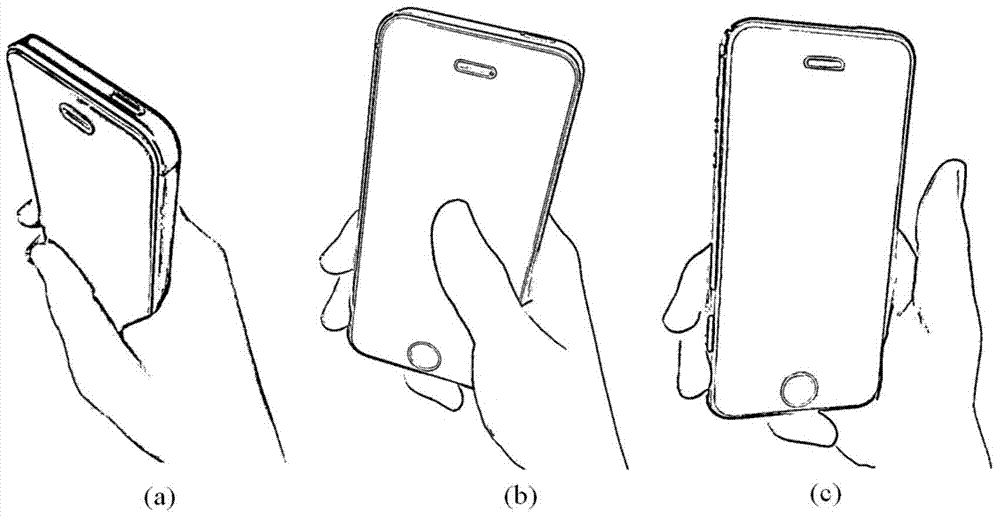

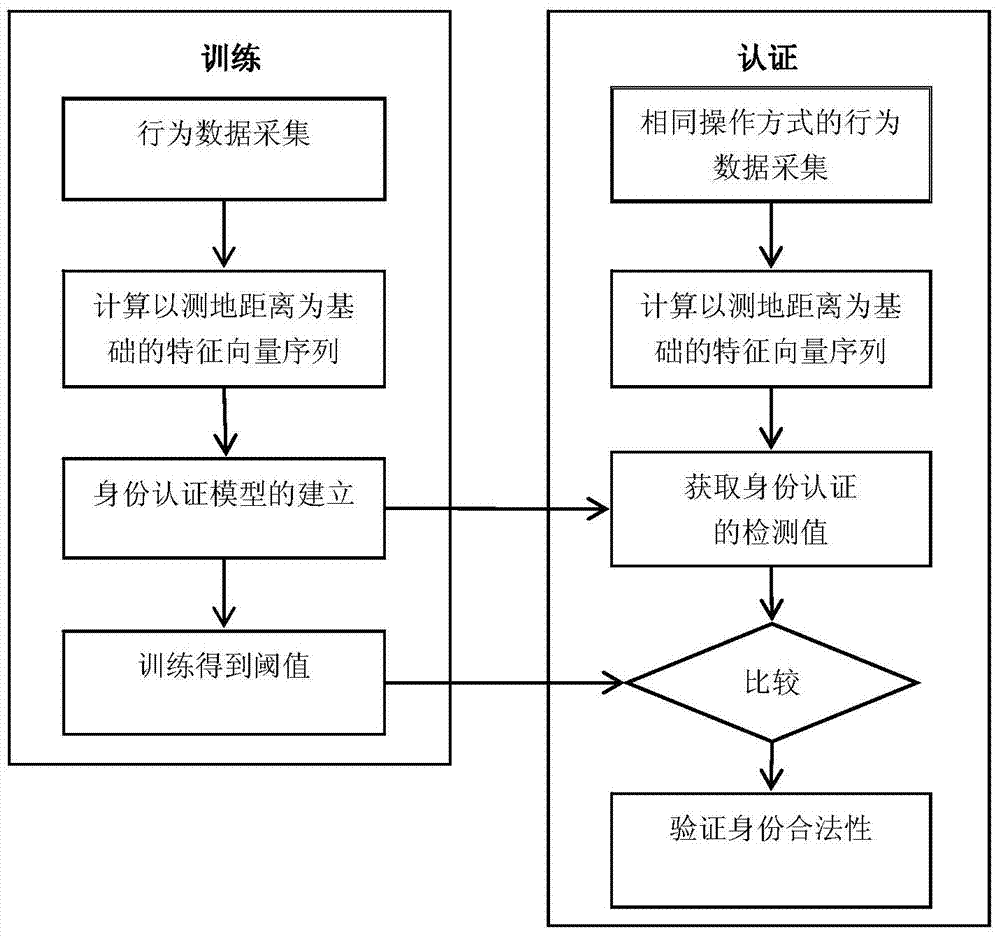

User identity authentication method for intelligent mobile terminal

Active | CN104850773A | Improve stability | Improve the ability to distinguish | Digital data authentication | Feature vector | Active movement

The invention discloses a user identity authentication method for an intelligent mobile terminal. A special movement operation of the mobile terminal is designed so that active movement of the terminal and screen touching are combined. Using the sensor behavior data and screen touching behavior data generated while the user performs the authentication operation, a feature vector sequence is established, based on the geodesic distance of the user's screen touches, that describes the mapping between the finger's touch track and the spatial pose of the mobile terminal; this sequence is used to judge the legitimacy of the user's identity. The method has two advantages: the operation is simple and requires no additional equipment, and the proposed behavior features are invariant to spatial translation and rotation, so pose changes of the mobile terminal such as rotation and translation do not affect the authentication result.

Owner:XI AN JIAOTONG UNIV
