222 results for "Speech spectrum" patented technology


Speech spectrum: the average sound spectrum of the human voice. Acoustic spectrum (sound spectrum): the distribution of energy as a function of frequency for a particular sound source.

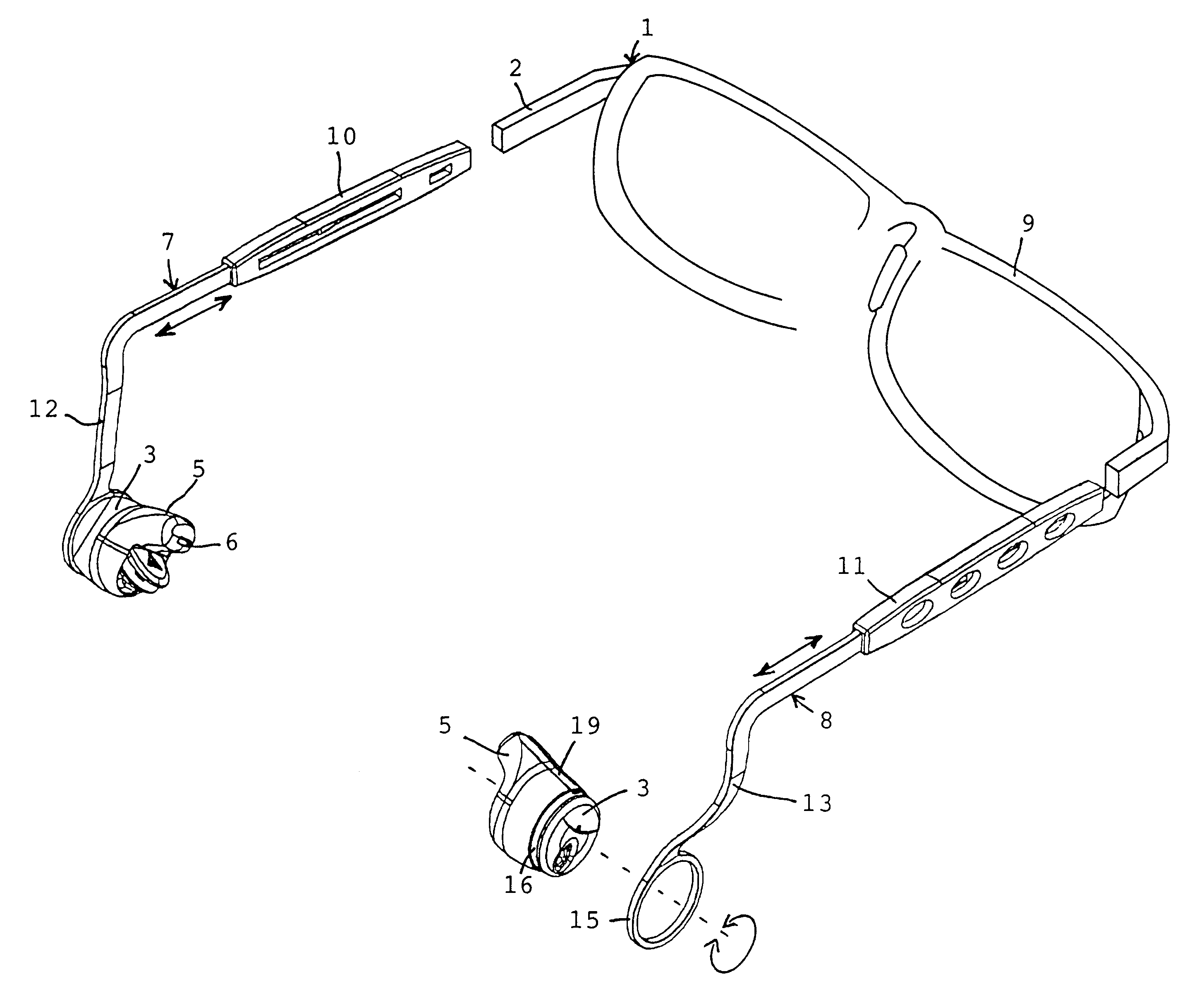

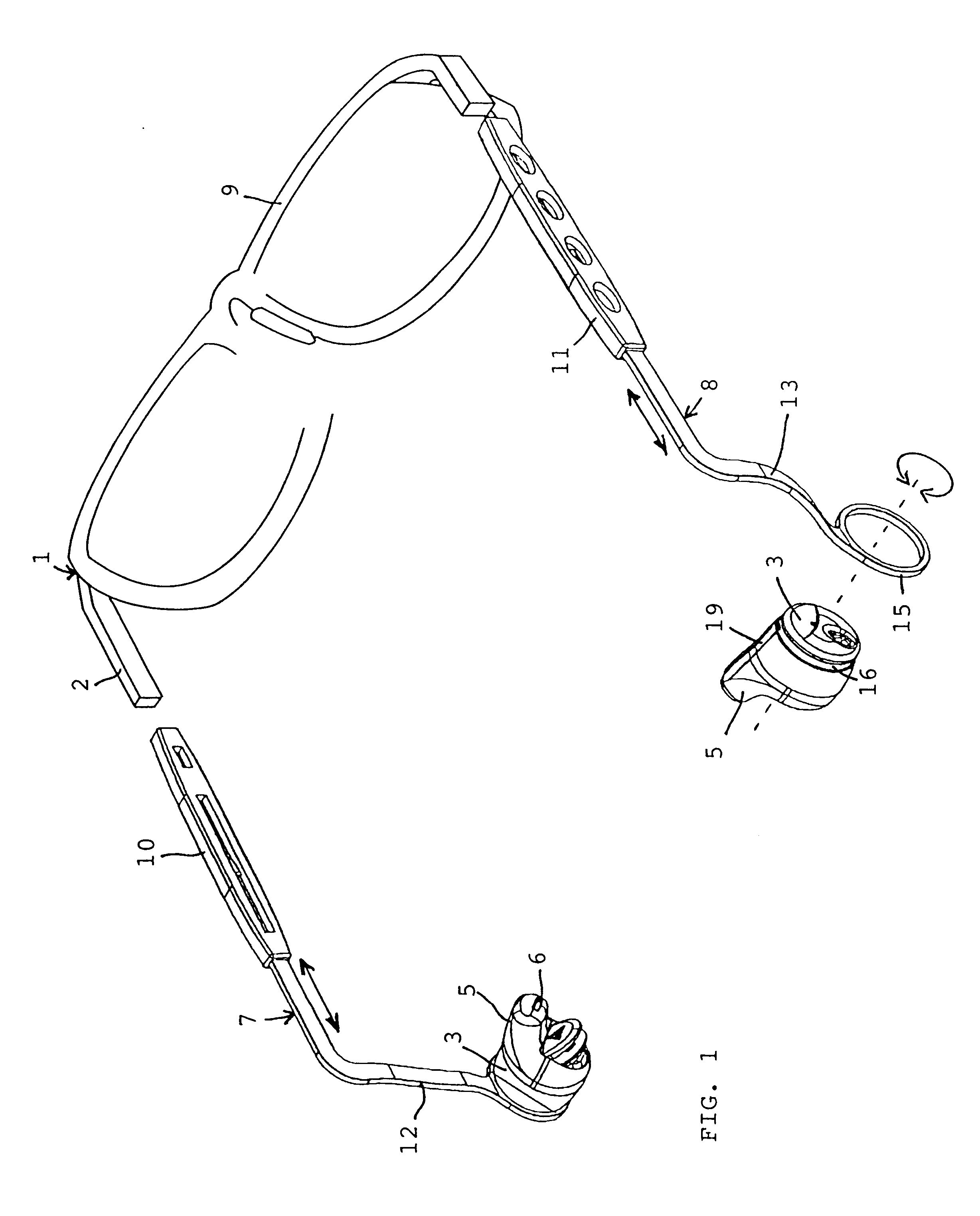

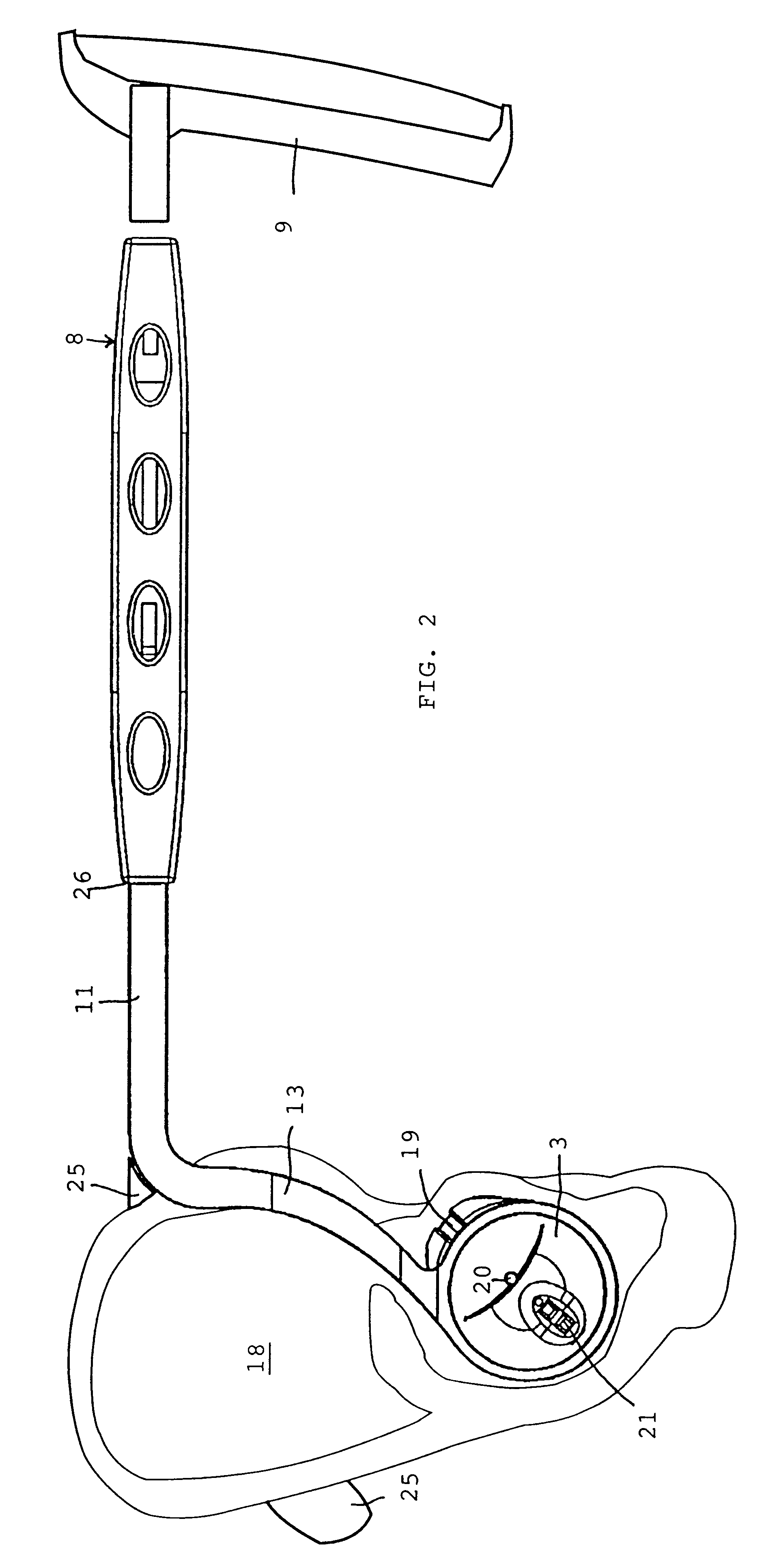

Hearing aid

The invention relates to a listening assistance device (1) comprising hearing modules (3) mounted on the ends of the arms of an eyeglass frame (2); the modules are designed to support the eyeglass frame on the user's ears. Each hearing module also has a formed part (5) that extends into the auditory canal without closing it and contains the module's sound outlet hole (6). The modules have a frequency response matched to the speech spectrum and linear dynamics, in order to improve speech intelligibility. The result is a listening assistance device that compensates for mild hearing loss, can be produced easily and economically, is more comfortable to use, and is not recognized at first sight as a hearing aid by an observer.

Owner:KOCHLER ERIKA

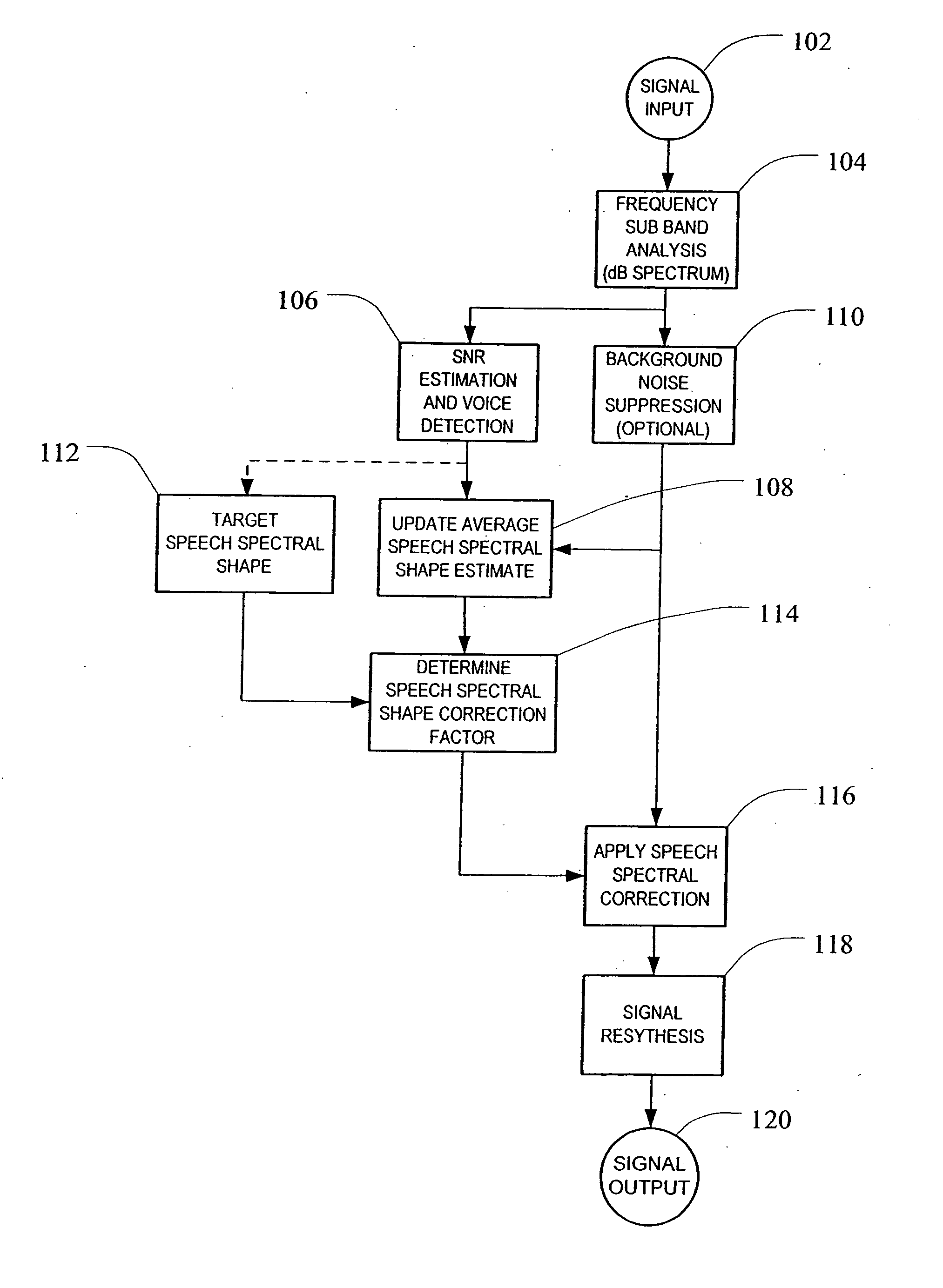

System and method for adaptive enhancement of speech signals

Active | US20060293882A1 | High frequency response, Quality improvement, Ear treatment, Speech recognition, IIR filtering, Frequency spectrum

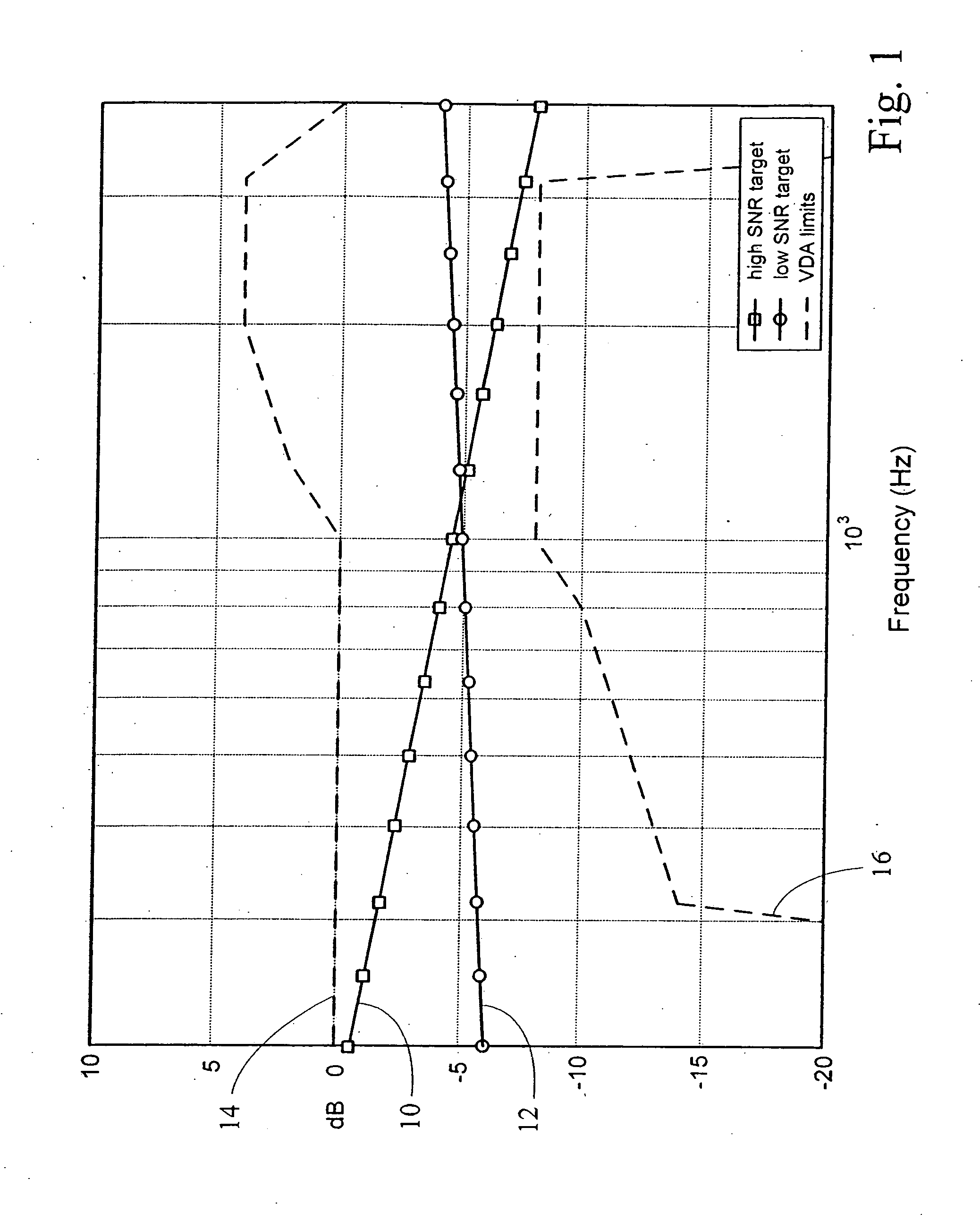

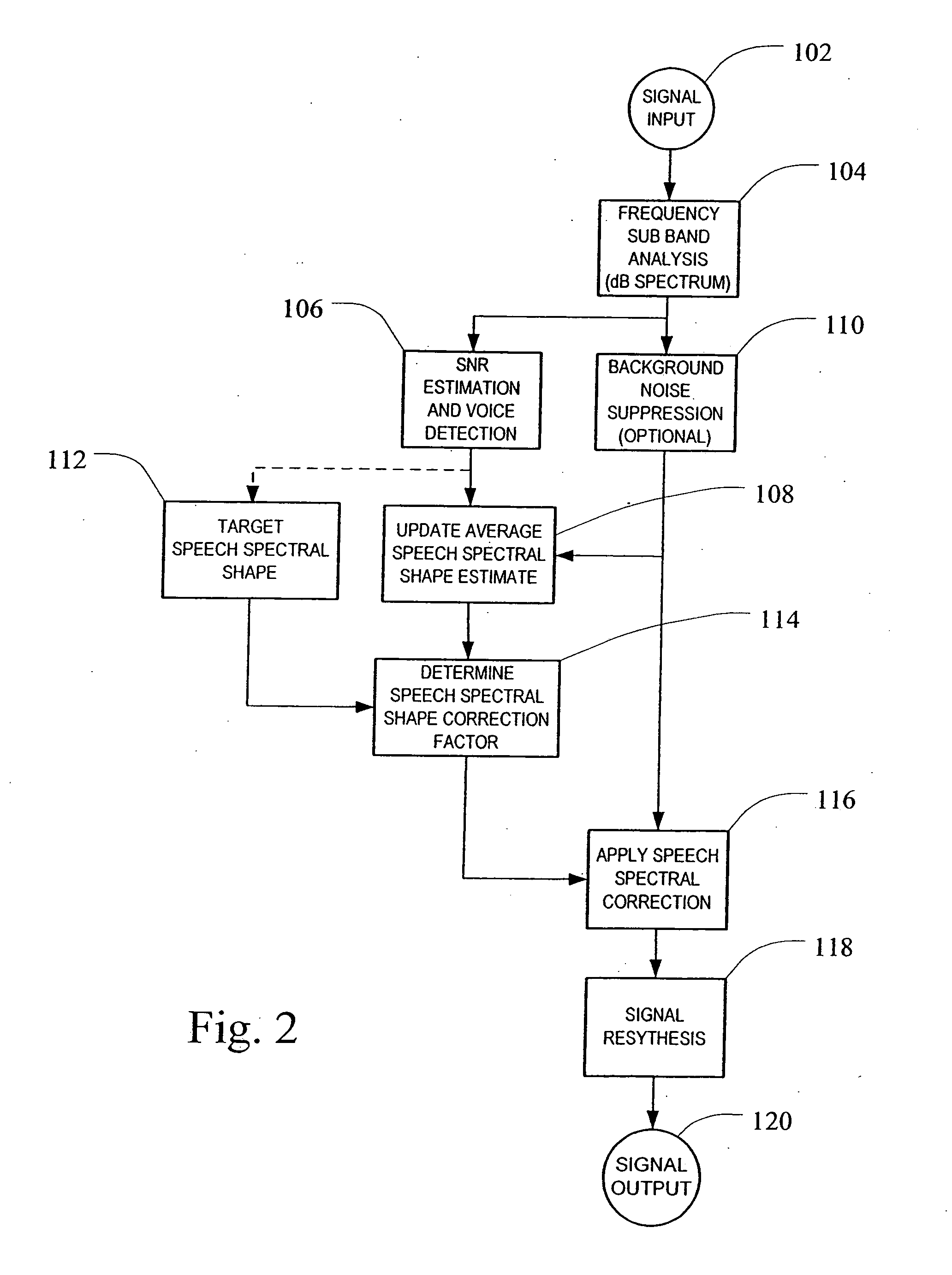

A method and system for enhancing the frequency response of speech signals are provided. An average speech spectral shape estimate is calculated over time from the input speech signal. The estimate may be calculated in the frequency domain using first-order IIR filtering ("leaky integrators"), so that it adapts over time to changes in the acoustic characteristics of the voice path and to any changes in the electrical audio path that may affect the frequency response of the system. A spectral correction factor may be determined by comparing the average speech spectral shape estimate with a desired target spectral shape. The correction factor may be added (in dB) to the spectrum of the input speech signal to adjust it toward the desired spectral shape, and an enhanced speech signal may then be re-synthesized from the corrected spectrum.

Owner:BLACKBERRY LTD
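The adaptation described above can be illustrated with a minimal NumPy sketch. It assumes per-frame magnitude spectra, a hypothetical smoothing constant `alpha`, a hypothetical flat target shape, and a ±12 dB limit on the correction that the abstract does not mention. It is not BlackBerry's implementation and omits re-synthesis and any speech/non-speech gating.

```python
import numpy as np

def update_average_shape(avg_db, frame_db, alpha=0.99):
    """First-order IIR ("leaky integrator") update of the average spectral shape, in dB."""
    return alpha * avg_db + (1.0 - alpha) * frame_db

def correction_db(avg_db, target_db, max_db=12.0):
    """Spectral correction factor: target minus average, limited to +/- max_db (assumed limit)."""
    return np.clip(target_db - avg_db, -max_db, max_db)

def enhance_frame(frame_mag, corr_db):
    """Add the correction (in dB) to one frame's magnitude spectrum."""
    return frame_mag * 10.0 ** (corr_db / 20.0)

# Usage: a voice path with a fixed spectral tilt is flattened toward a 0 dB target.
frame = np.linspace(2.0, 0.5, 8)          # tilted magnitude spectrum, same every frame
avg = np.zeros(8)
for _ in range(1000):
    avg = update_average_shape(avg, 20 * np.log10(frame))
flattened = enhance_frame(frame, correction_db(avg, np.zeros(8)))
```

Because the average is updated every frame, a change in the voice path is tracked with a time constant of roughly 1 / (1 - `alpha`) frames.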

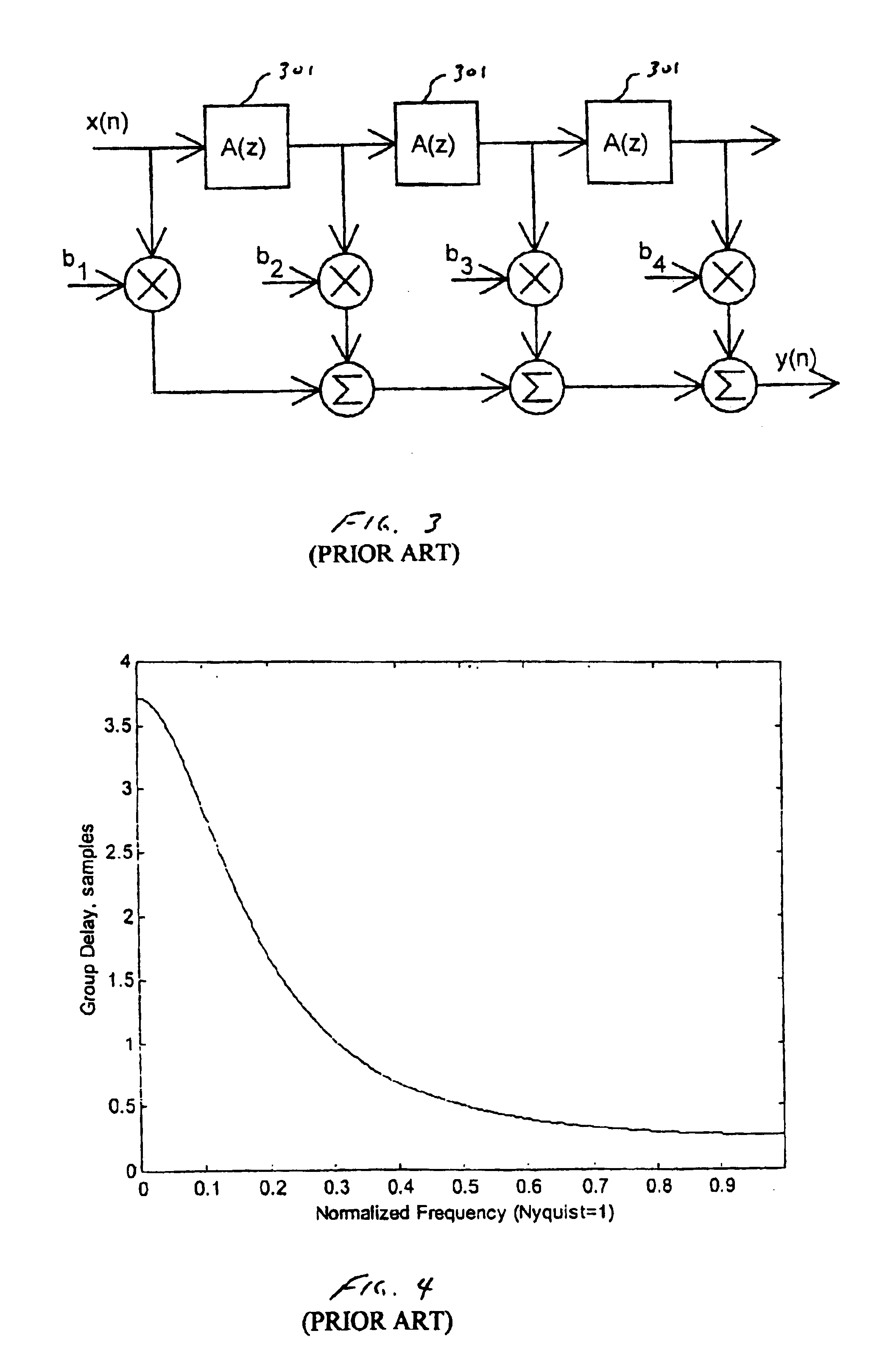

Spectral enhancement using digital frequency warping

Inactive | US6980665B2 | Improve speech intelligibility, Improve perceived speech quality, Electrophonic musical instruments, Hearing aids signal processing, Frequency spectrum, Handling system

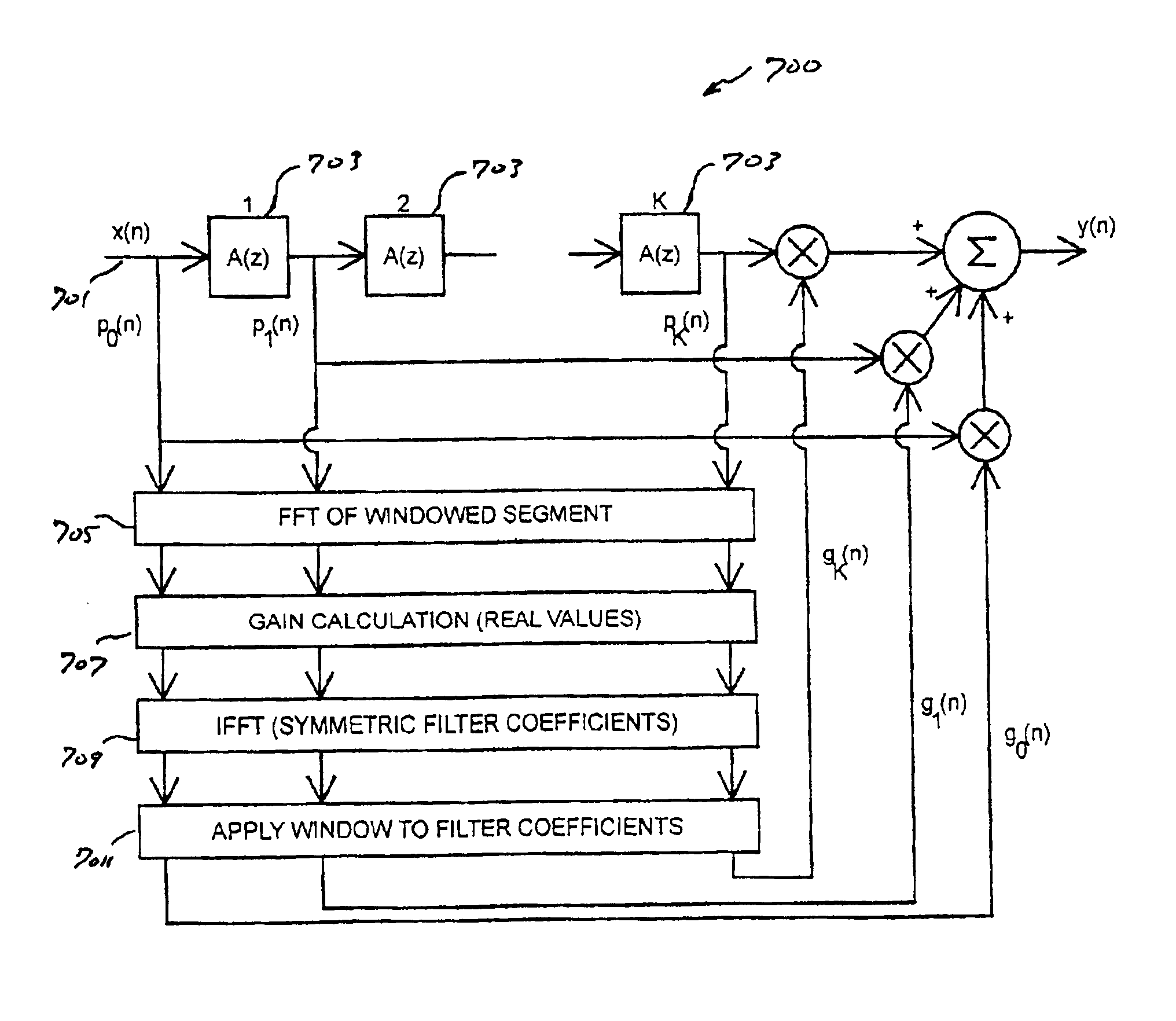

A frequency-warped processing system using either sample-by-sample or block processing is provided. Such a system can be used, for example, in a hearing aid to increase the dynamic-range contrast in the speech spectrum, improving ease of listening and possibly speech intelligibility. The processing system consists of a cascade of all-pass filters that provide the frequency warping. The power spectrum is computed from the warped sequence, and compression gains are then computed from the warped power spectrum for the auditory analysis bands. Spectral enhancement gains are also computed from the warped sequence, allowing a net compression-plus-enhancement gain function to be produced. The speech segment is convolved with the enhancement filter in the warped time domain to give the processed output signal. Processing artifacts are reduced because the frequency-warped system has no temporal aliasing.

Owner:GN HEARING AS
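The warping can be sketched with a cascade of first-order all-pass sections, each computing y[n] = -a·x[n] + x[n-1] + a·y[n-1]. The helper names and the coefficient `a` are illustrative assumptions, not GN Hearing's design. `warped_frequency` gives the frequency mapping produced by one such section, and the tap outputs of `warped_delay_line` form the warped sequence from which a power spectrum could be computed, for example by an FFT across taps.

```python
import numpy as np

def warped_frequency(omega, a):
    """Frequency mapping of a first-order all-pass with coefficient a (|a| < 1).
    For a > 0, low frequencies are stretched (finer resolution at low frequencies)."""
    return omega + 2.0 * np.arctan2(a * np.sin(omega), 1.0 - a * np.cos(omega))

def warped_delay_line(x, a, n_taps):
    """Pass x through n_taps cascaded first-order all-pass sections.
    Returns an (n_taps + 1, len(x)) array: row 0 is x, row k is the output of section k."""
    taps = np.zeros((n_taps + 1, len(x)))
    taps[0] = x
    for k in range(1, n_taps + 1):
        inp = taps[k - 1]
        x_prev, y_prev = 0.0, 0.0
        for n in range(len(x)):
            y = -a * inp[n] + x_prev + a * y_prev   # y[n] = -a x[n] + x[n-1] + a y[n-1]
            taps[k, n] = y
            x_prev, y_prev = inp[n], y
    return taps
```

With `a = 0` each section reduces to a unit delay, so the cascade becomes an ordinary tapped delay line; the all-pass sections preserve signal energy for any `|a| < 1`.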

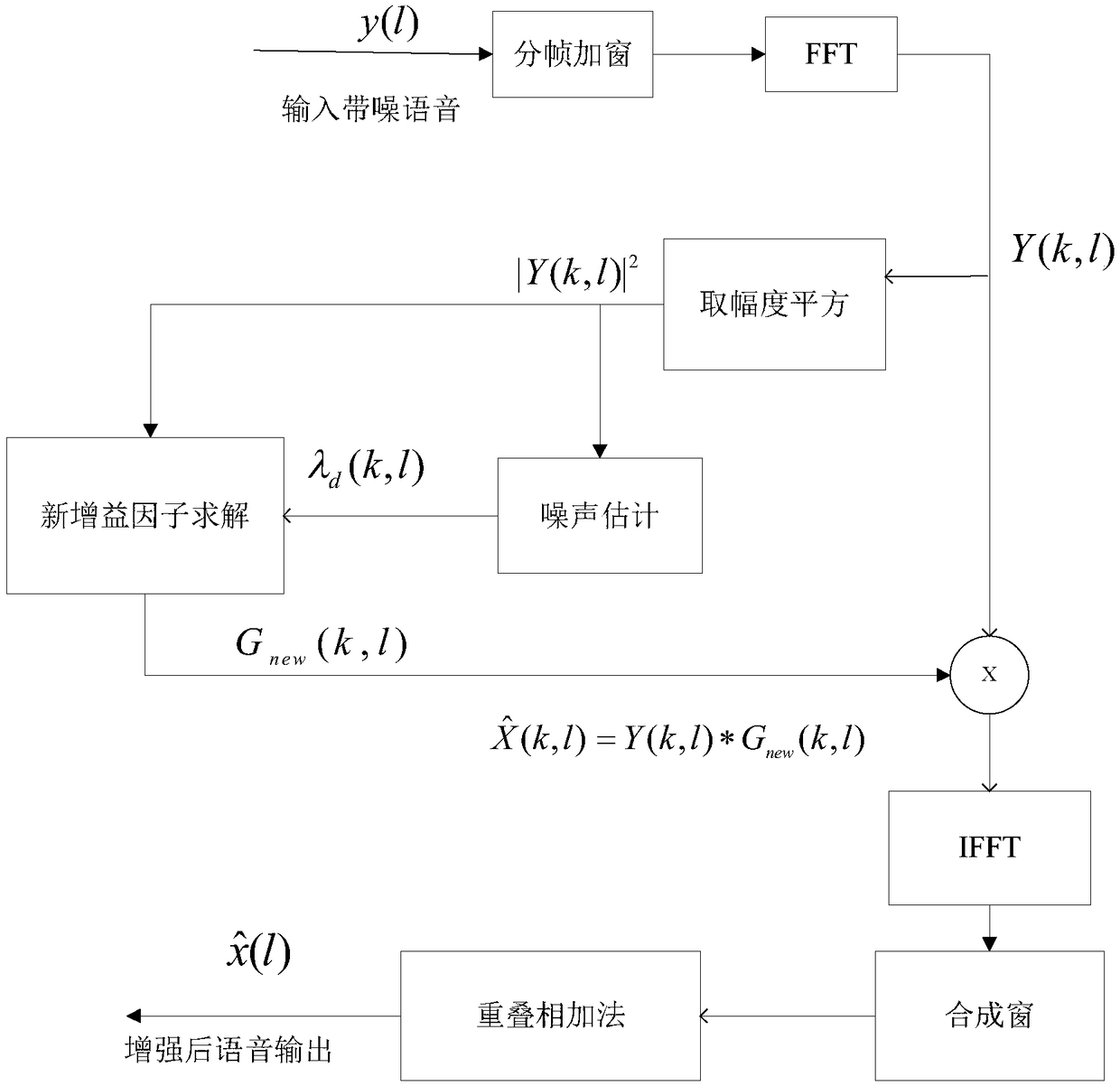

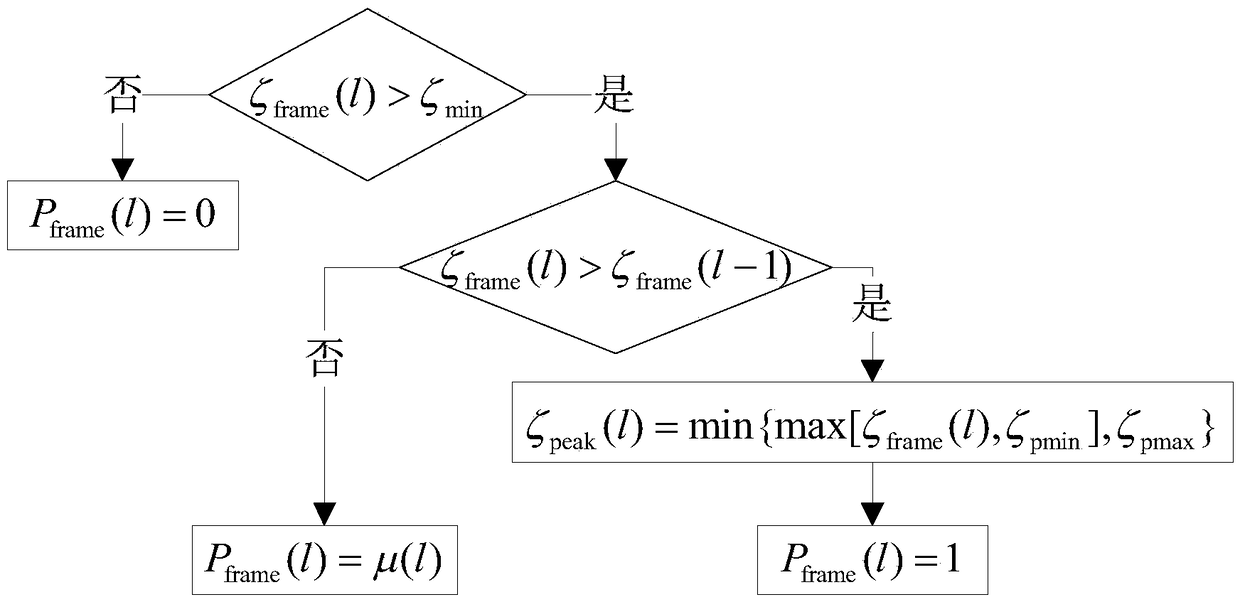

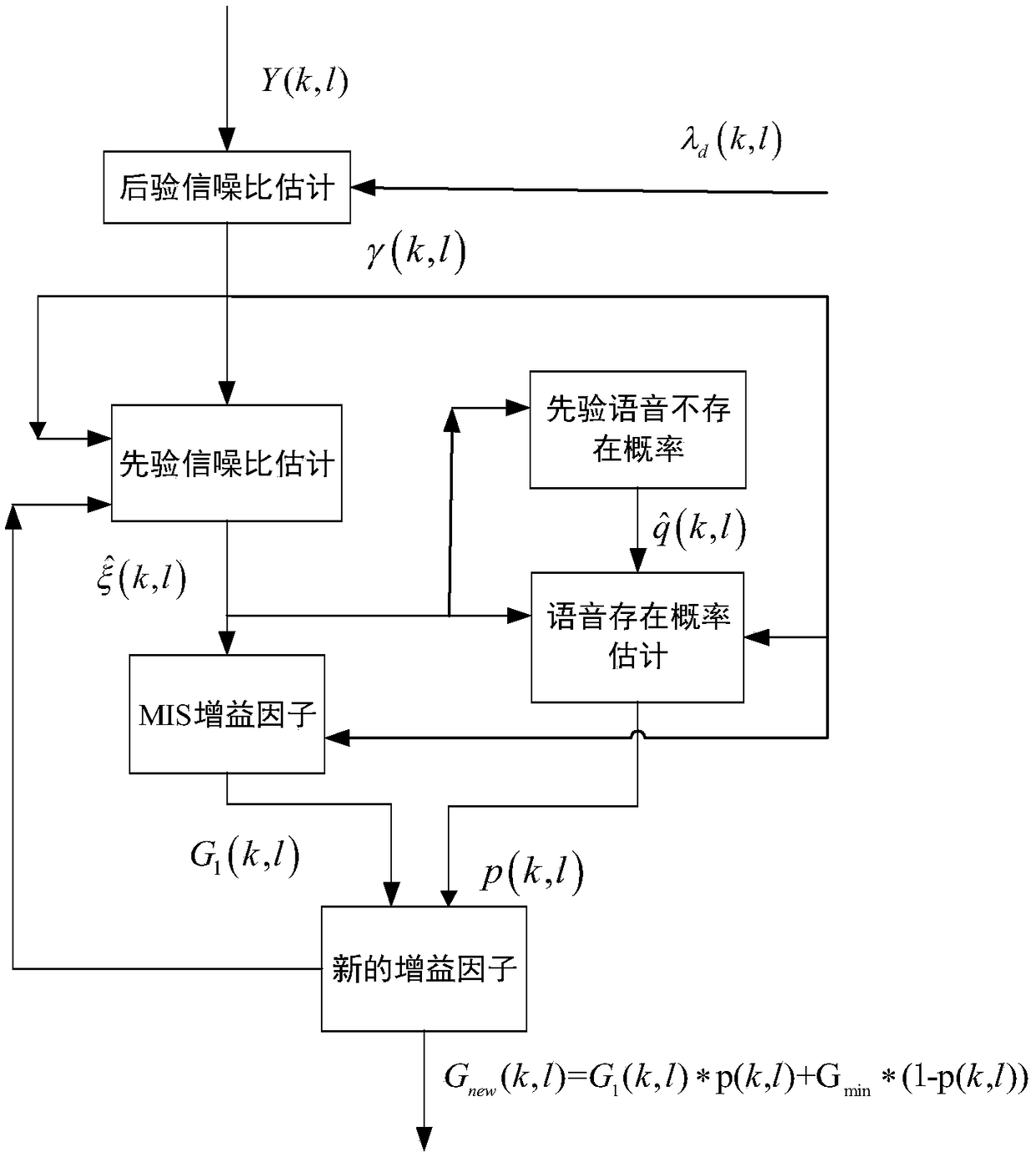

Speech enhancement method using voice existence probability

Active | CN108831499A | Improve signal-to-noise ratio, Reduce distortion, Speech analysis, Frequency spectrum, Noise power spectrum

The invention discloses a speech enhancement method that uses the speech presence probability; after processing, the speech quality is higher and the amount of noise reduction is larger. The method builds on the MIS-measure speech enhancement method. The noisy input speech is sampled, framed and windowed, and the noisy speech spectrum is obtained by fast Fourier transform (FFT). The noise in this spectrum is estimated with a non-stationary noise minimum-search algorithm based on statistical information, and inter-frame correlation is used to smooth the noise estimates of preceding and following frames, which yields an estimate of the noise power spectrum. At the same time, the a priori SNR estimates obtained in several preceding and following frames are smoothed. The speech presence probability is then combined with the MIS-measure gain factor, and the noisy speech spectrum is multiplied by the resulting new gain factor to obtain the spectrum of the enhanced speech. Finally, the inverse fast Fourier transform (IFFT) is performed to obtain the enhanced time-domain speech signal.

Owner:10TH RES INST OF CETC
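A simplified sketch of two estimation steps named in this abstract follows: a noise power spectrum from minimum tracking on a recursively smoothed periodogram, and a Wiener gain from a decision-directed (inter-frame smoothed) a priori SNR. The parameter values and the bias factor are assumptions, and the Wiener gain stands in for the patent's combined speech-presence-probability and MIS-measure gain, which the abstract does not specify.

```python
import numpy as np

def min_tracking_noise(power, alpha=0.8, window=50, bias=1.5):
    """power: (n_frames, n_bins) noisy periodogram |Y|^2.
    Noise PSD per frame = bias * minimum of the recursively smoothed power
    over the last `window` frames (bias compensates the minimum's downward bias)."""
    smoothed = np.empty_like(power)
    noise = np.empty_like(power)
    s = power[0]
    for l in range(power.shape[0]):
        s = alpha * s + (1 - alpha) * power[l]        # inter-frame smoothing
        smoothed[l] = s
        start = max(0, l - window + 1)
        noise[l] = bias * smoothed[start:l + 1].min(axis=0)
    return noise

def decision_directed_gain(power, noise, beta=0.98, xi_min=10 ** (-25 / 10)):
    """Wiener gain xi / (1 + xi), with xi the decision-directed a priori SNR."""
    gains = np.empty_like(power)
    prev_clean = np.zeros(power.shape[1])             # |S_hat|^2 of the previous frame
    for l in range(power.shape[0]):
        lam = np.maximum(noise[l], 1e-12)
        gamma = power[l] / lam                        # a posteriori SNR
        xi = beta * prev_clean / lam + (1 - beta) * np.maximum(gamma - 1, 0)
        xi = np.maximum(xi, xi_min)
        gains[l] = xi / (1 + xi)
        prev_clean = gains[l] ** 2 * power[l]
    return gains

# Usage: bin 0 carries a speech-like burst in frames 100-109; bin 1 is noise only.
power = np.ones((120, 2))
power[100:110, 0] = 100.0
noise = min_tracking_noise(power)
gains = decision_directed_gain(power, noise)
```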

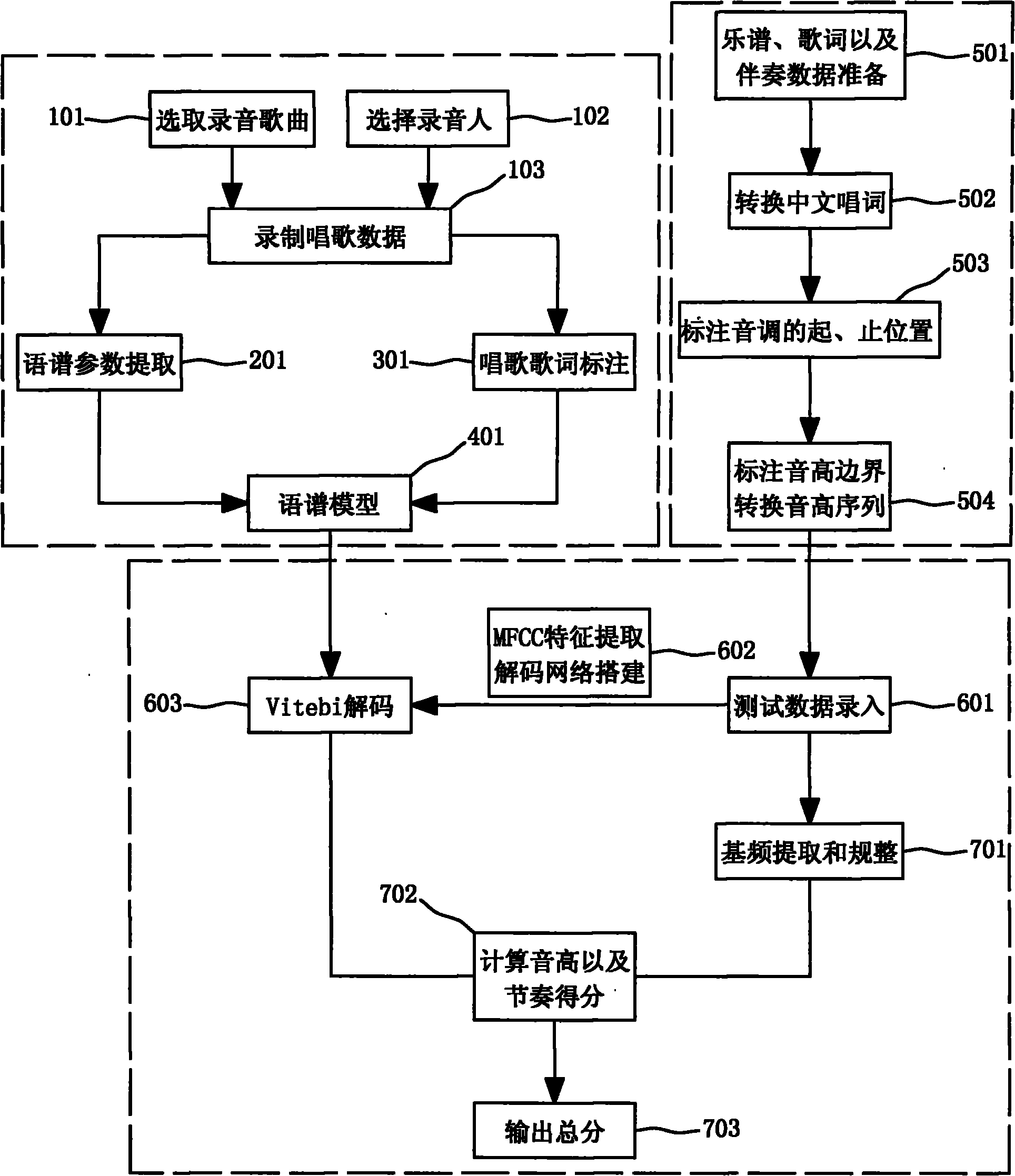

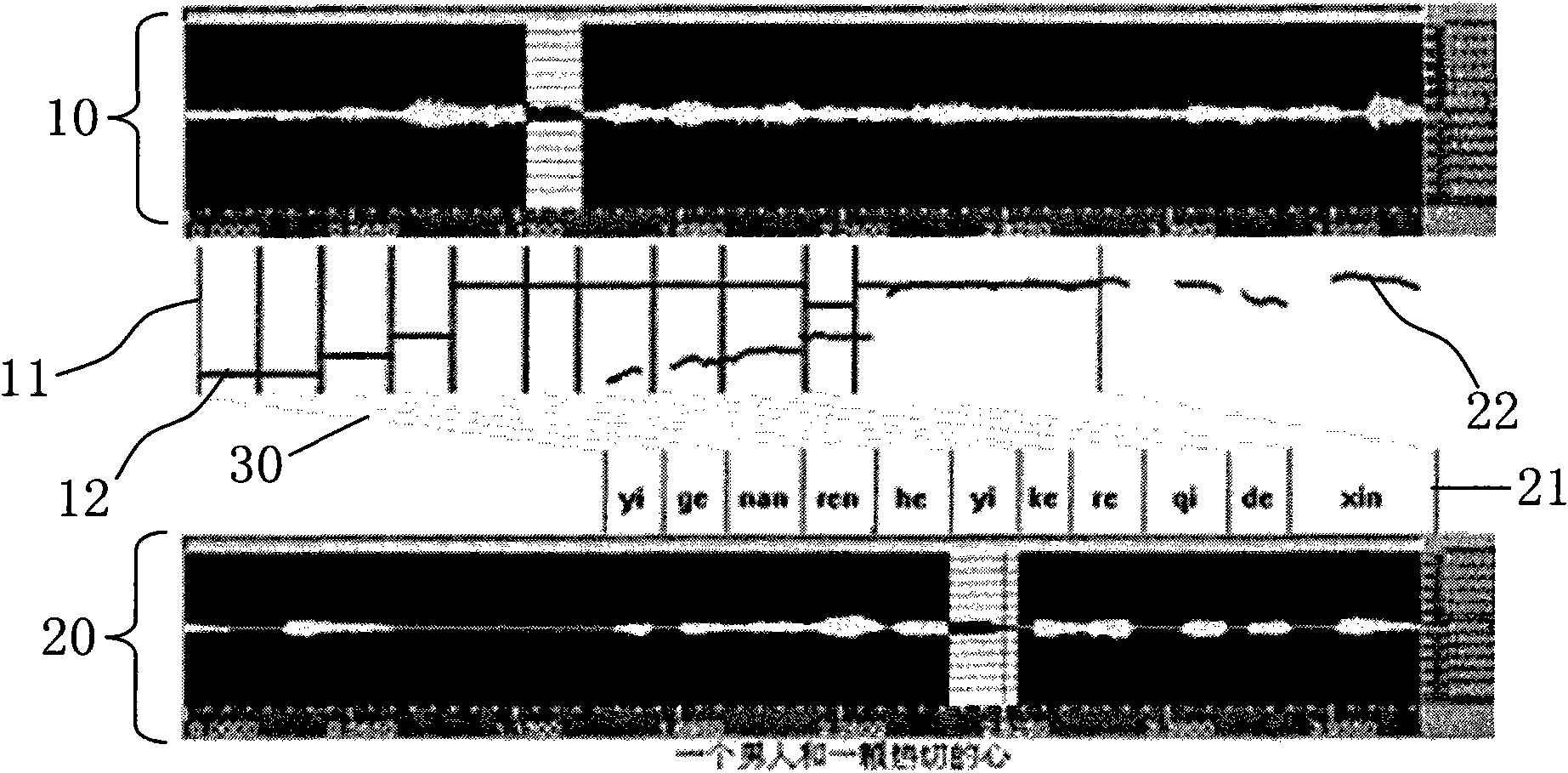

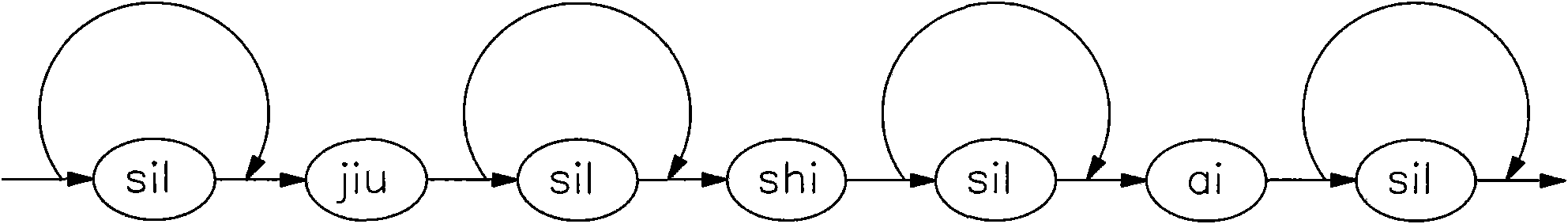

Speech spectrum segmentation based singing evaluating system

Active | CN101894552A | Improve evaluation performance, Improve accuracy, Electrophonic musical instruments, Speech recognition, Fundamental frequency, Time alignment

The invention relates to a singing evaluation system based on speech spectrum segmentation. The system first trains a speech spectrum model and builds a song resource library. Then, given the singing data to be evaluated and the corresponding lyrics, it determines the boundaries of each lyric by decoding with the speech spectrum model. Finally, it calculates pitch and rhythm scores for the data from the resulting segmentation and gives a total score. Because the speech spectrum model is trained on a complete singing database, it fully matches the style of the data to be evaluated and can accurately locate each lyric, and therefore each tone, in the data. This greatly improves the time alignment between the reference fundamental frequency and the tested fundamental frequency, and ultimately improves the performance of the singing evaluation system.

Owner:IFLYTEK CO LTD

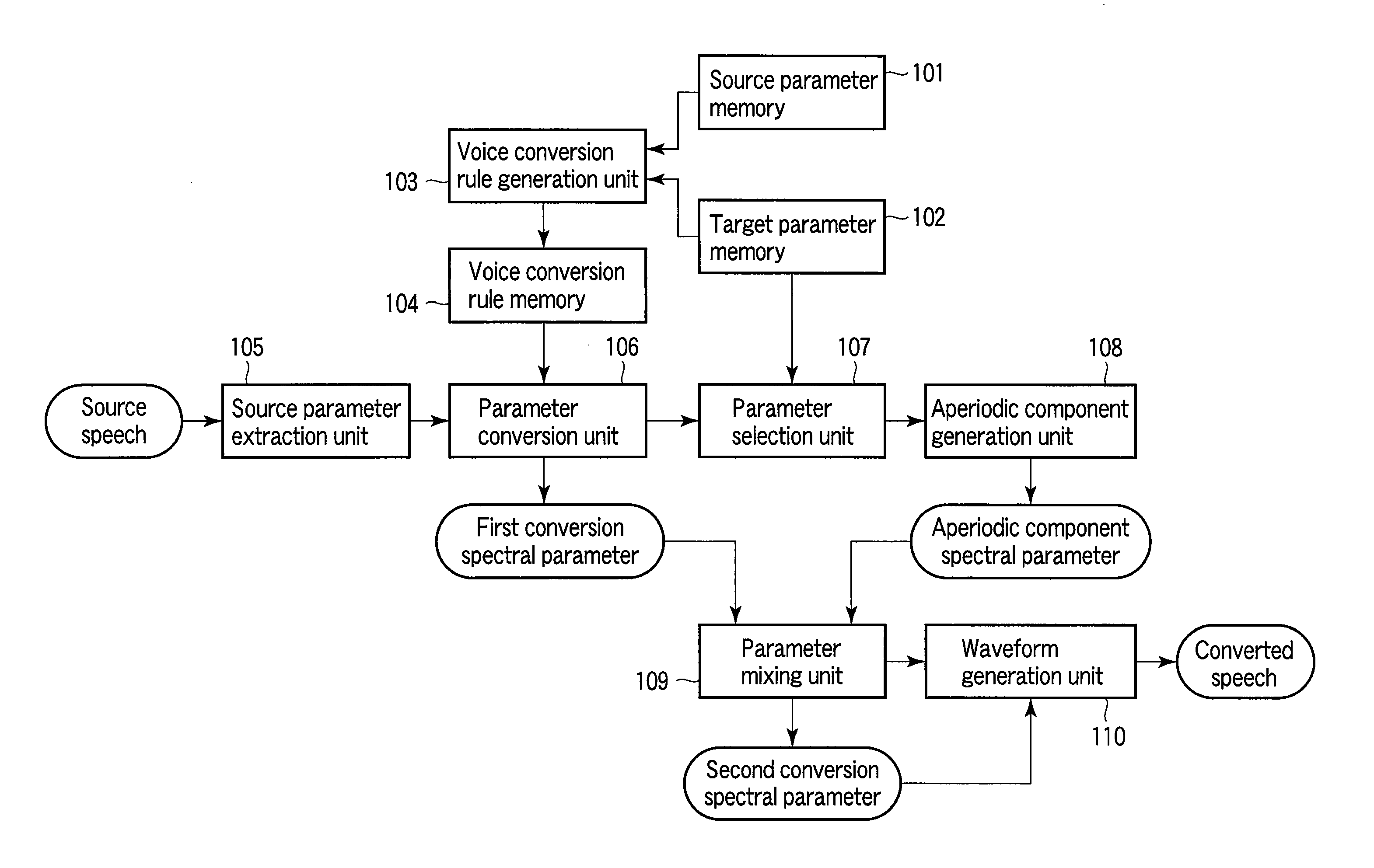

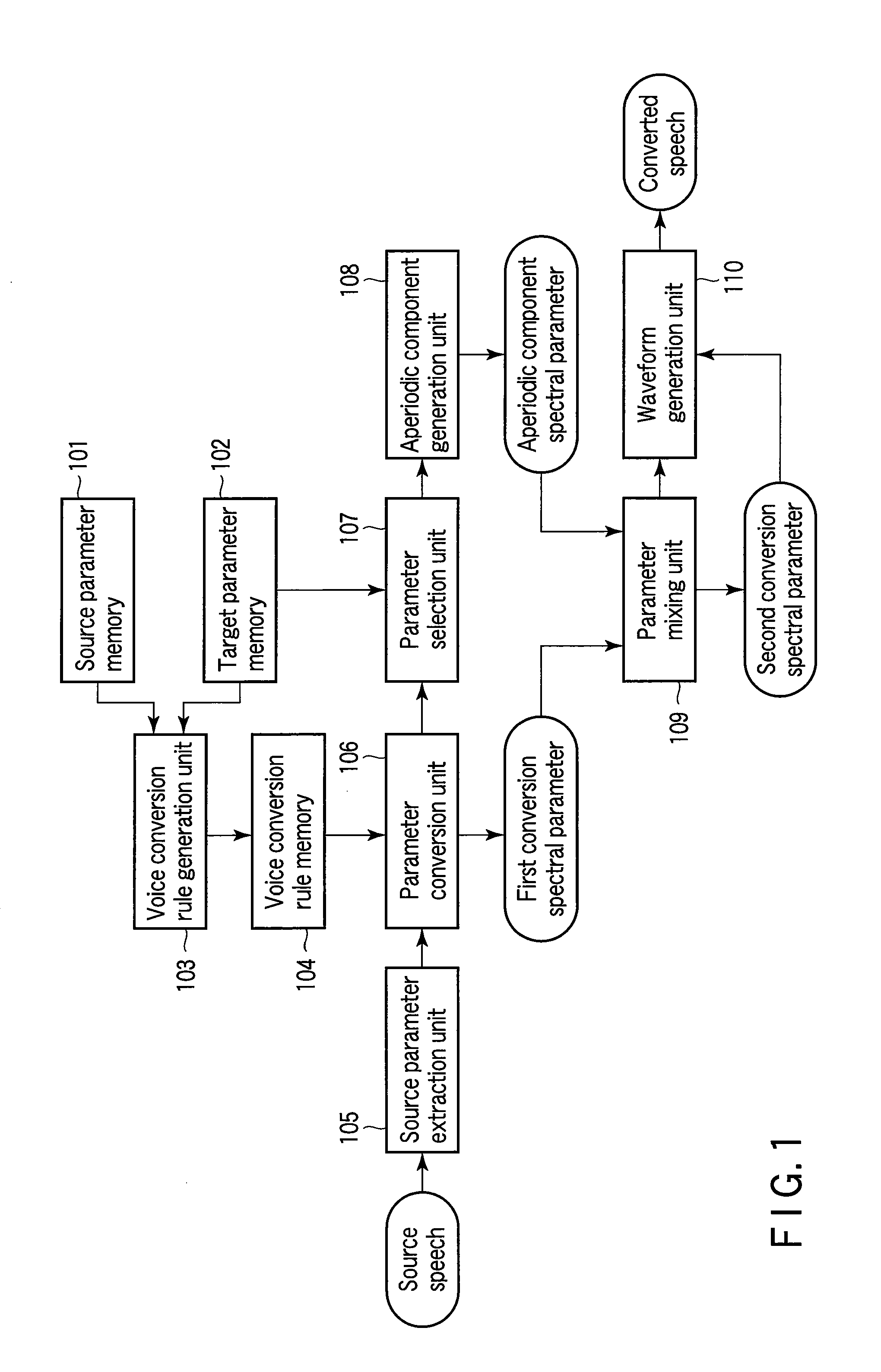

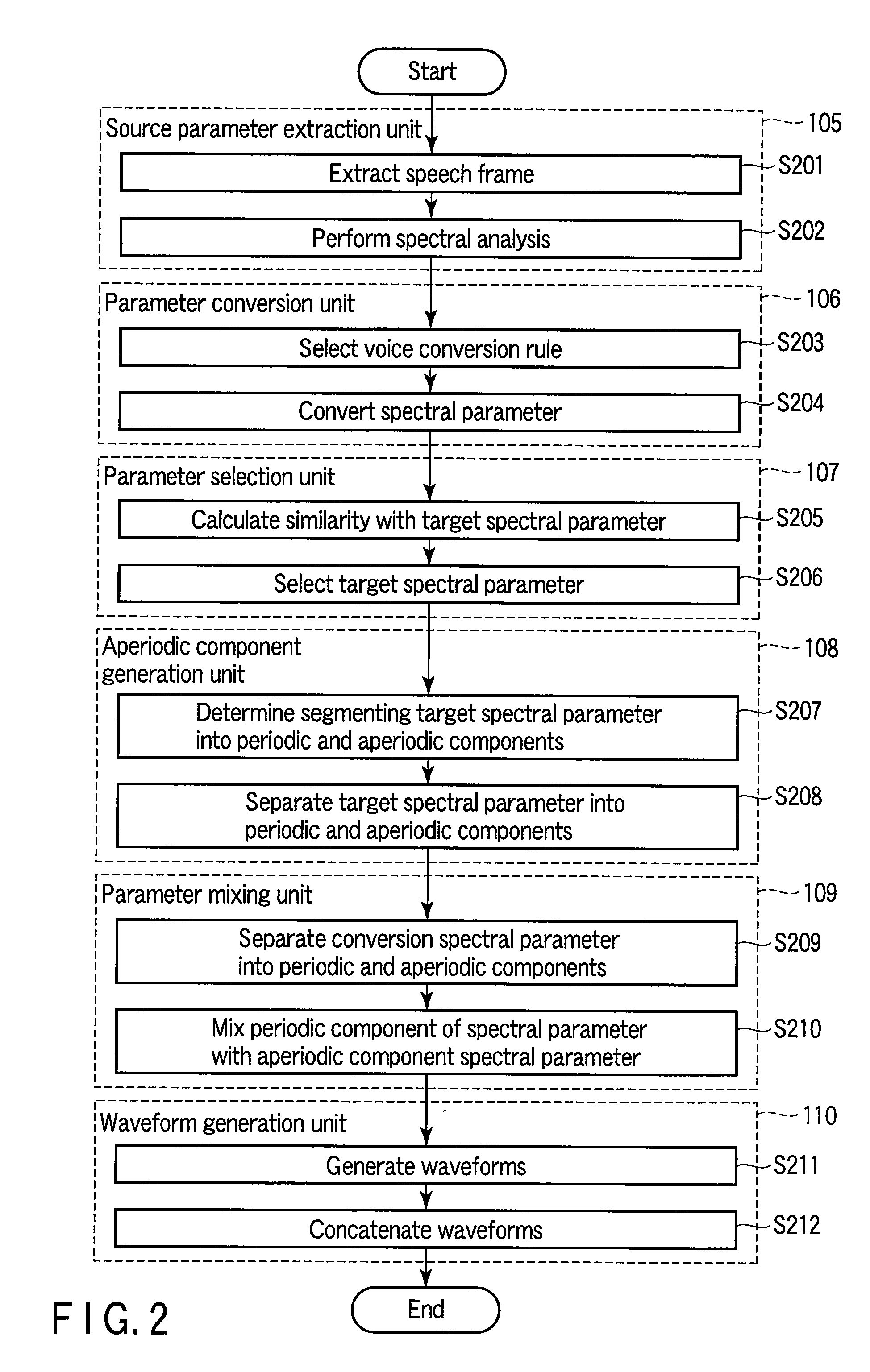

Voice conversion apparatus and method and speech synthesis apparatus and method

Owner:TOSHIBA DIGITAL SOLUTIONS CORP

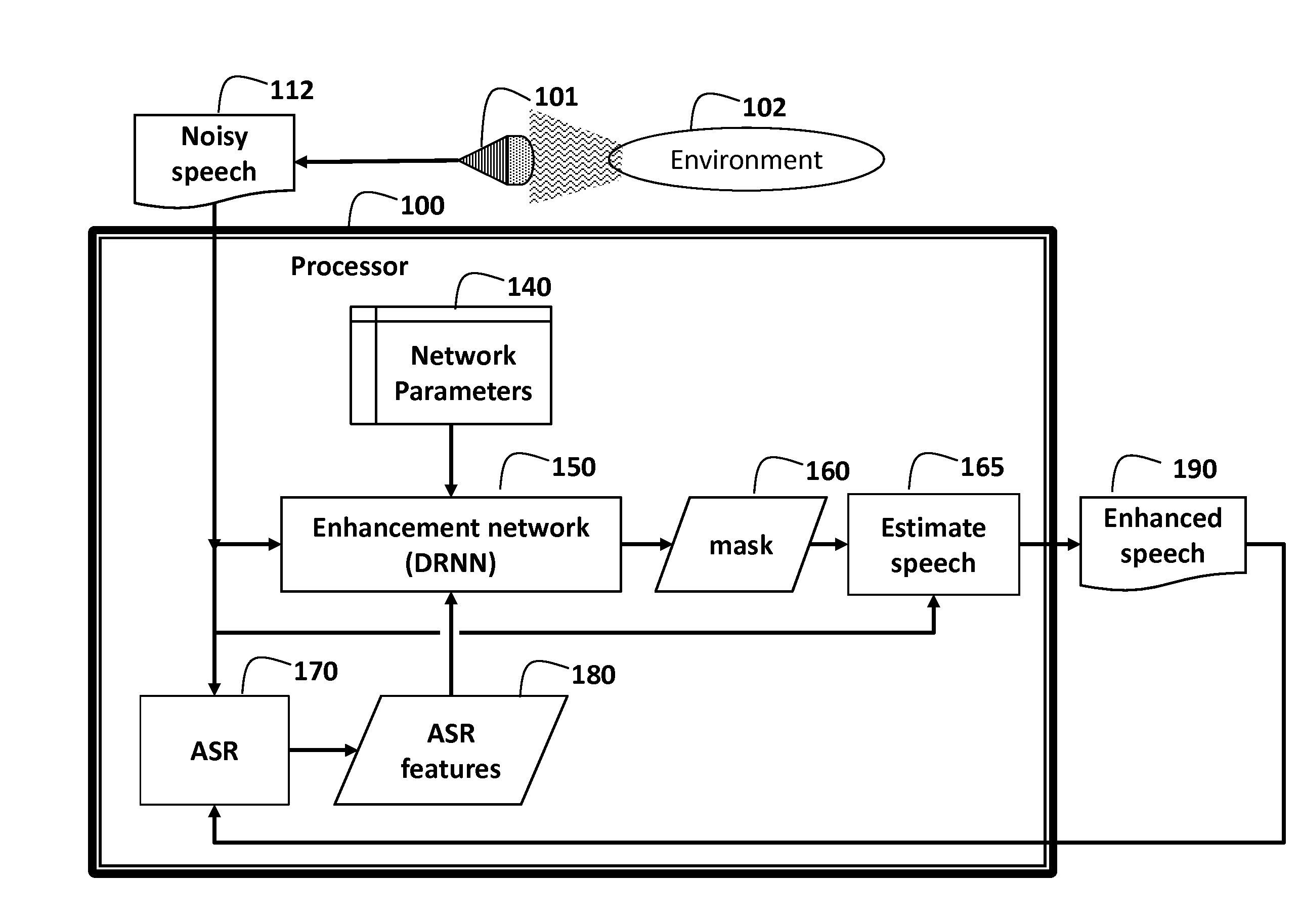

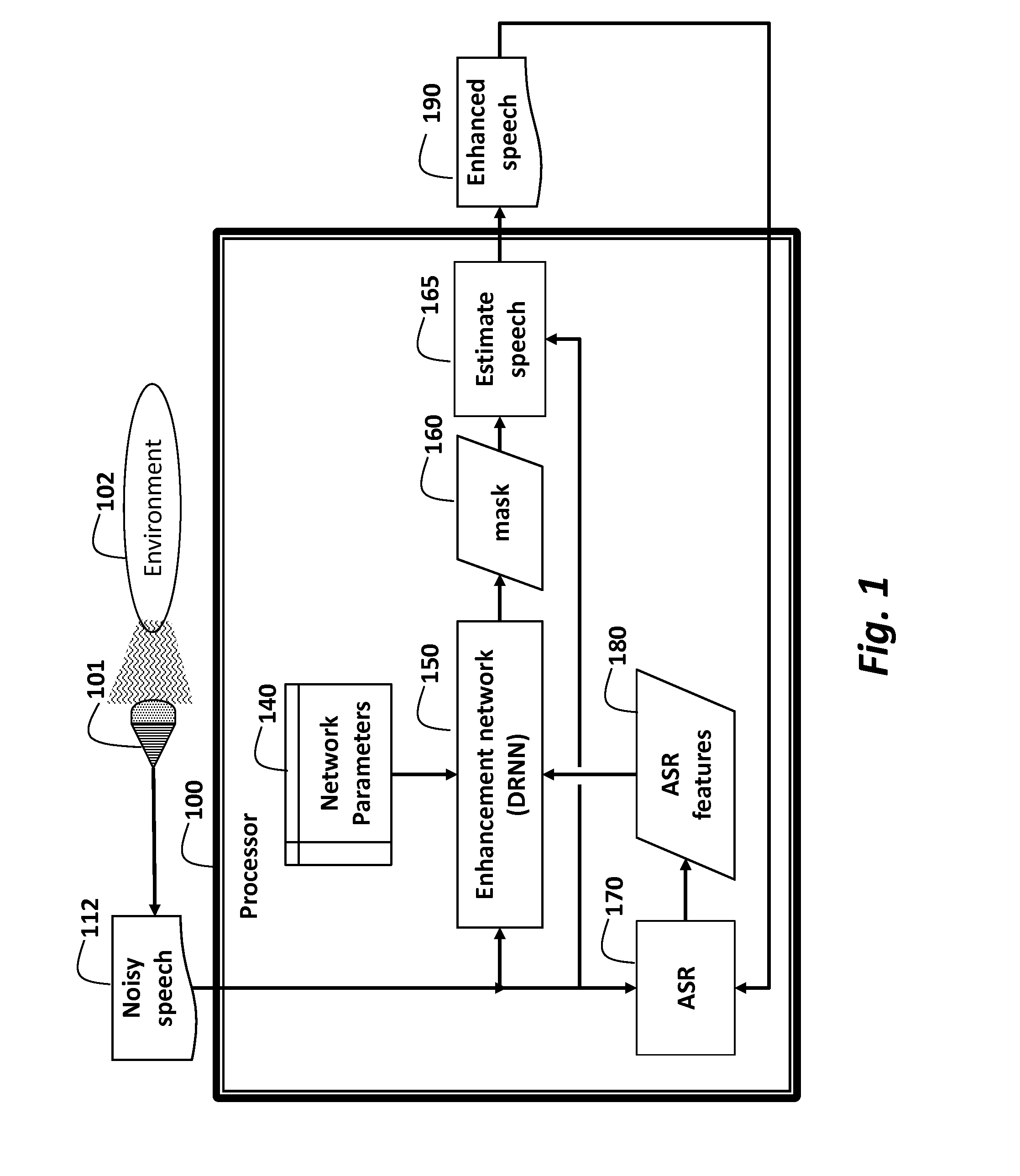

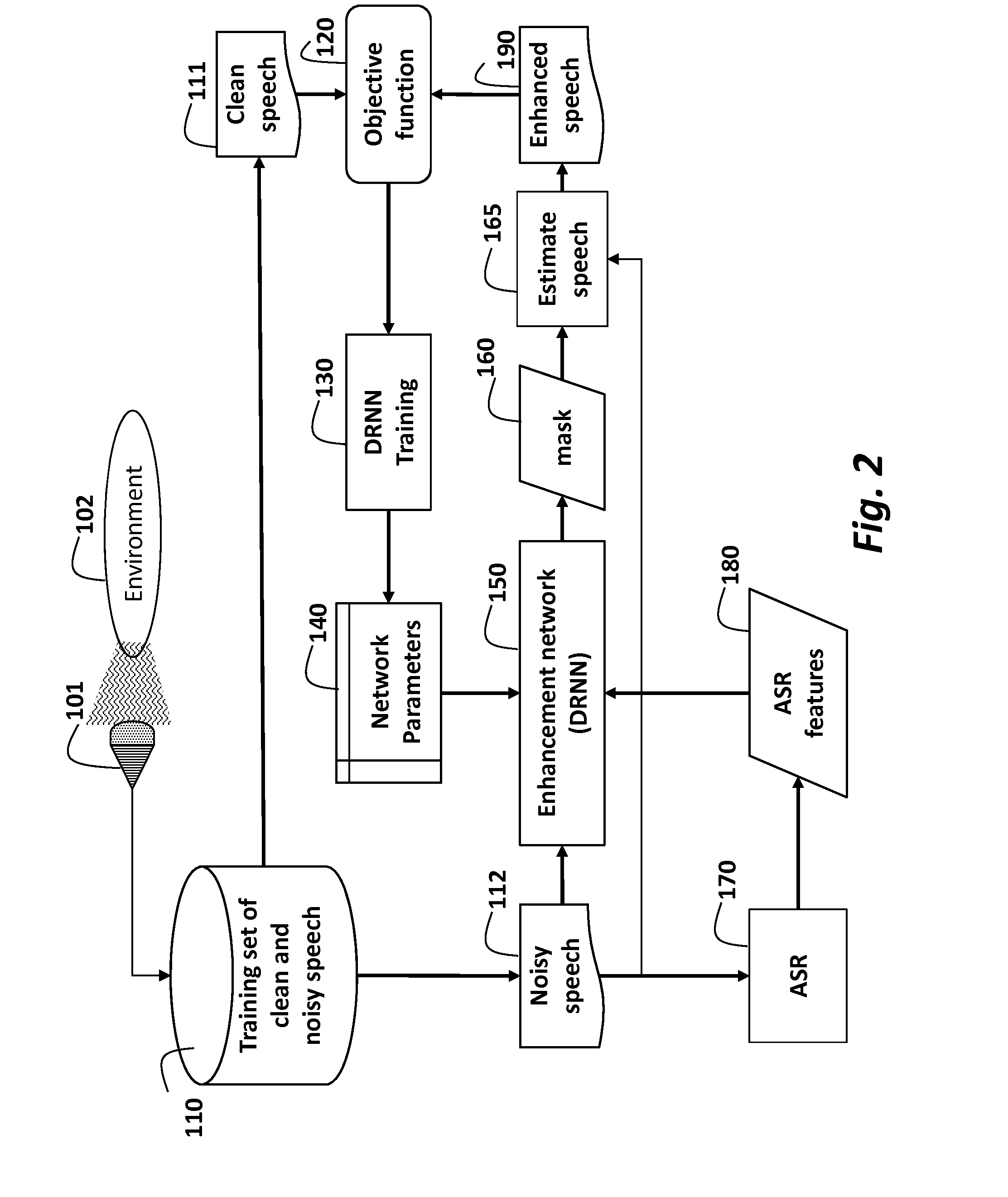

Method for Enhancing Noisy Speech using Features from an Automatic Speech Recognition System

Inactive | US20160111107A1 | Increase height, Easy to predict, Speech analysis, Frequency spectrum, Automatic speech

A method transforms a noisy speech signal into an enhanced speech signal by first acquiring the noisy speech signal from an environment. The noisy speech signal is processed by an automatic speech recognition (ASR) system to produce ASR features. The ASR features and noisy speech spectral features are processed by an enhancement network with network parameters to produce a mask. The mask is then applied to the noisy speech signal to obtain the enhanced speech signal.

Owner:MITSUBISHI ELECTRIC RES LAB INC

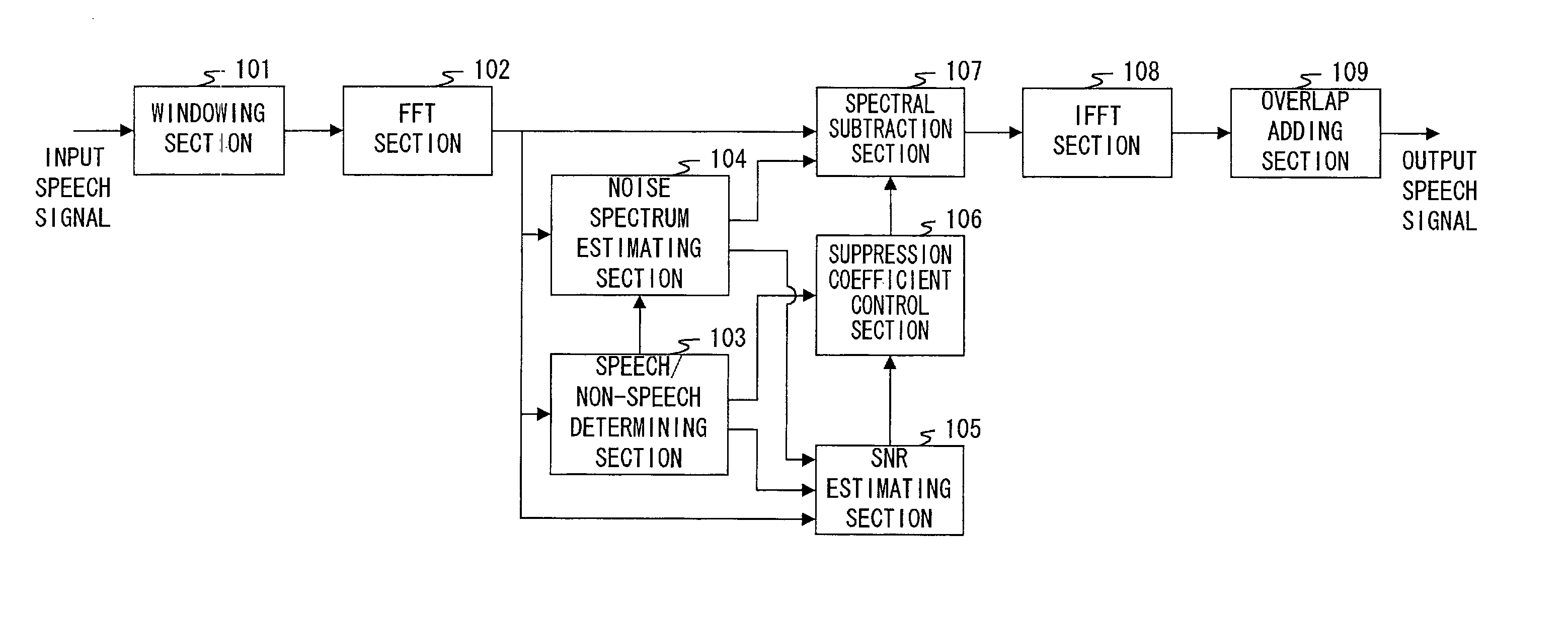

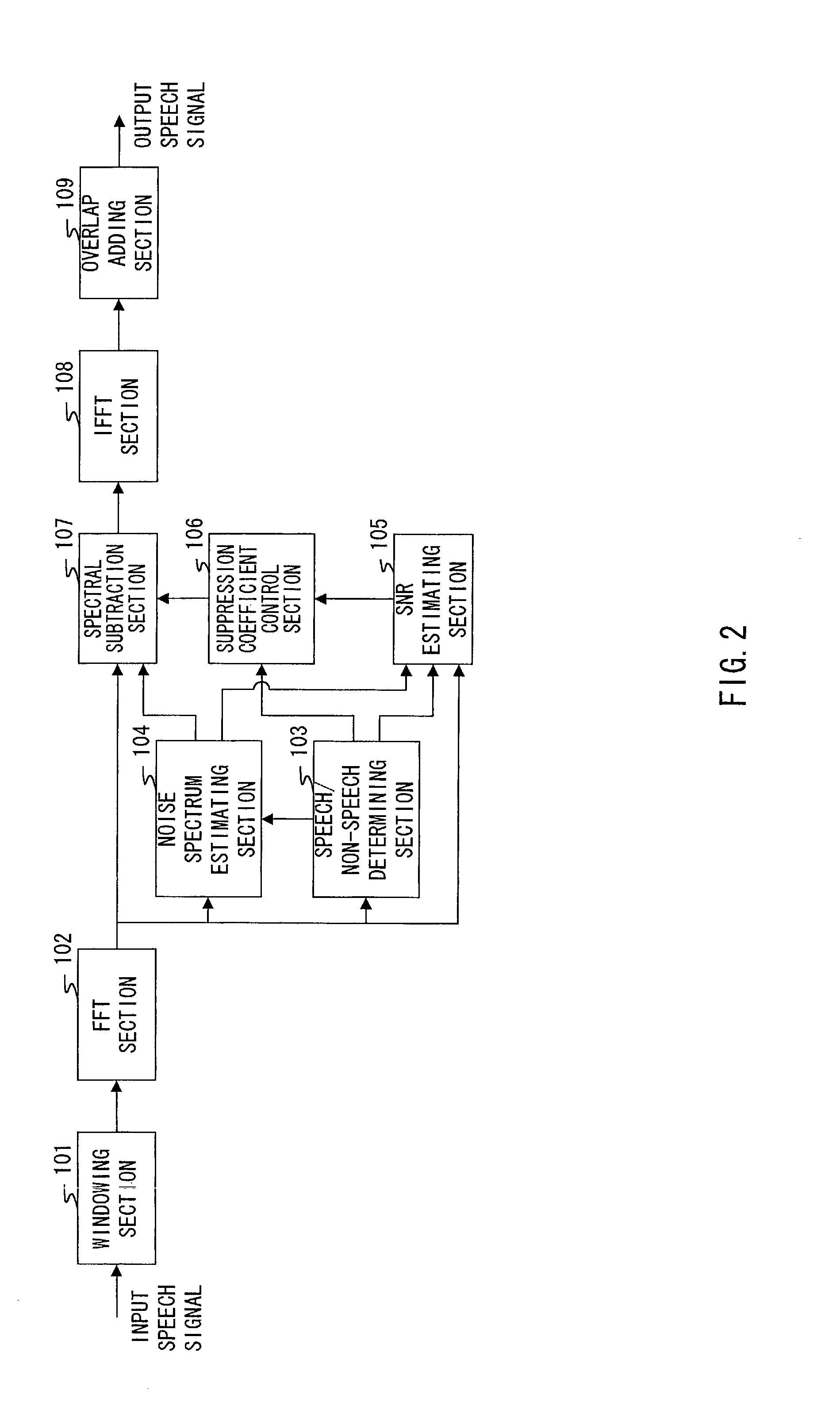

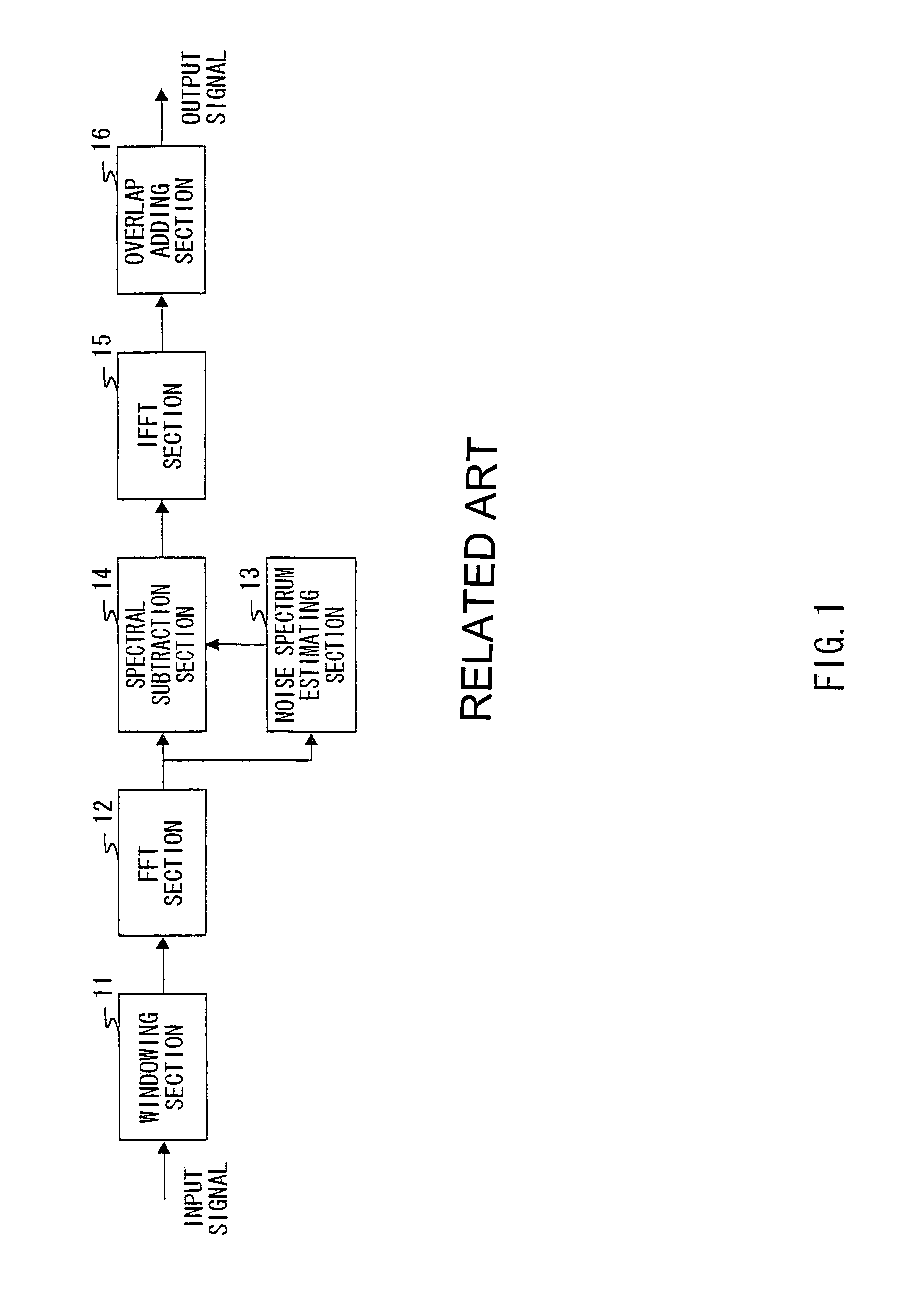

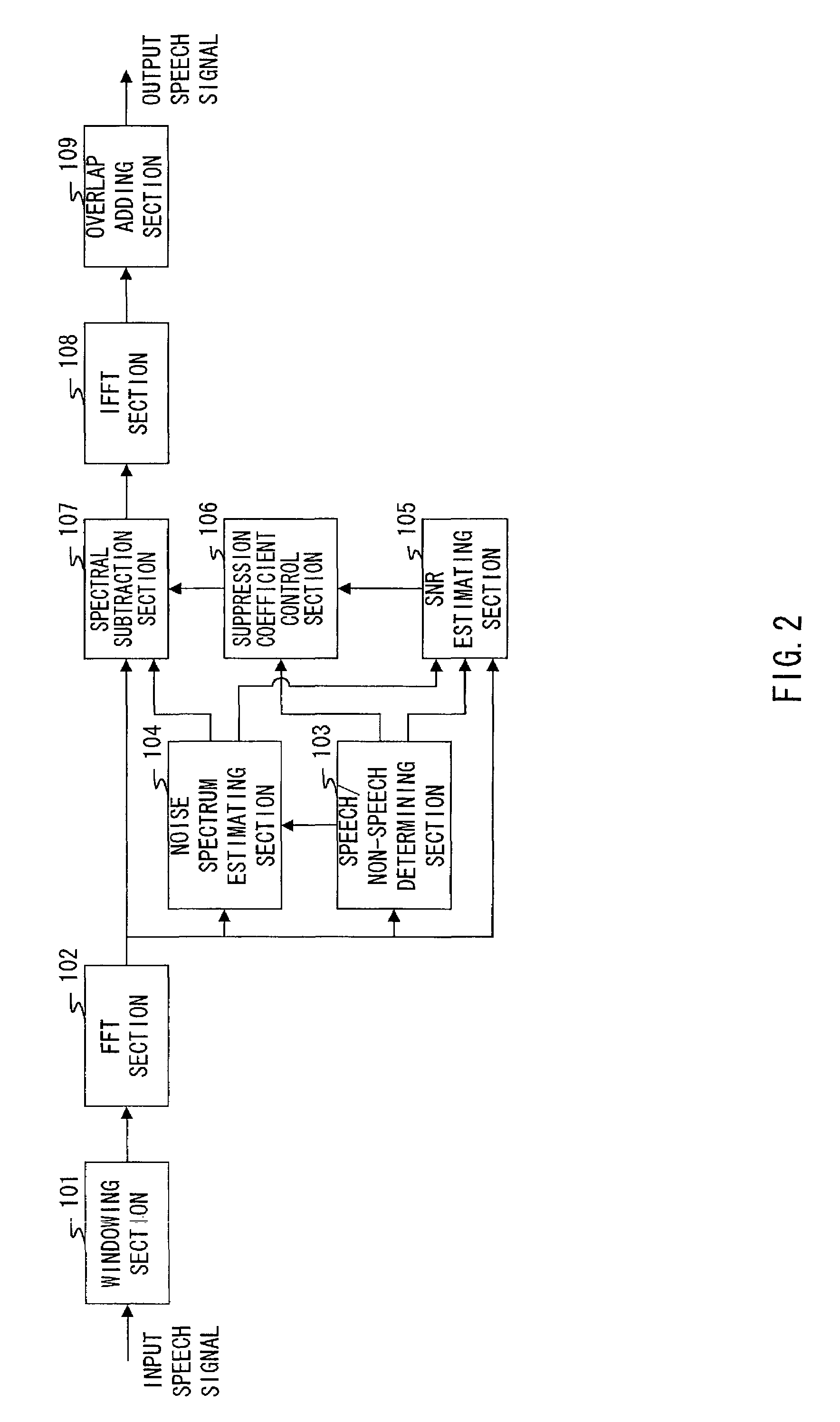

Noise suppressor and noise suppressing method

Inactive | US20020156623A1 | Improve efficiency, Reduction of suppression distortion, Substation speech amplifiers, Code conversion, Lower limit, Frequency spectrum

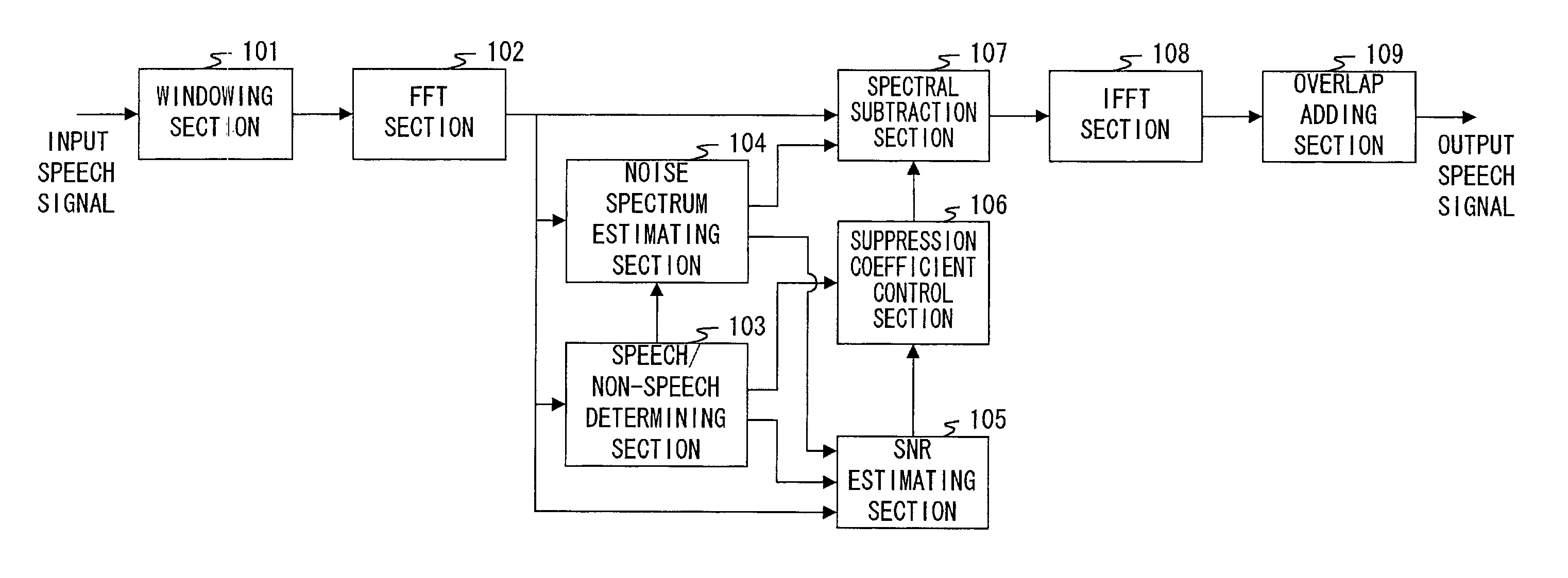

Speech/non-speech determining section 103 determines whether a speech spectrum belongs to a speech interval, which contains speech, or to a non-speech interval, which contains only noise. Noise spectrum estimating section 104 estimates a noise spectrum from the speech spectrum of the intervals determined to be non-speech. SNR estimating section 105 obtains the speech signal power from the speech intervals and the noise signal power from the non-speech intervals of the speech spectrum, and calculates the SNR as the ratio of the two. Based on the speech/non-speech determination and the SNR value, suppression coefficient control section 106 outputs a suppression lower-limit coefficient to spectral subtraction section 107. Spectral subtraction section 107 subtracts the estimated noise spectrum from the input speech spectrum and outputs a noise-suppressed speech spectrum.

Owner:PANASONIC CORP
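The control loop in this abstract can be sketched as power spectral subtraction with a lower limit. The rule in `floor_coefficient` that maps the speech/non-speech decision and the SNR to a floor value is hypothetical, chosen only for illustration; the abstract does not say how section 106 computes its coefficient.

```python
import numpy as np

def snr_db(speech_power, noise_power):
    """SNR in dB from speech-interval power and non-speech-interval power."""
    return 10.0 * np.log10(speech_power / noise_power)

def floor_coefficient(snr, is_speech, low=0.02, high=0.2, snr_lo=0.0, snr_hi=20.0):
    """Hypothetical rule: a fixed low floor in non-speech intervals; in speech
    intervals the floor rises linearly with SNR between snr_lo and snr_hi dB."""
    if not is_speech:
        return low
    t = np.clip((snr - snr_lo) / (snr_hi - snr_lo), 0.0, 1.0)
    return low + t * (high - low)

def spectral_subtract(noisy_power, noise_power, floor):
    """Subtract the estimated noise power, never going below floor * noisy power."""
    return np.maximum(noisy_power - noise_power, floor * noisy_power)
```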

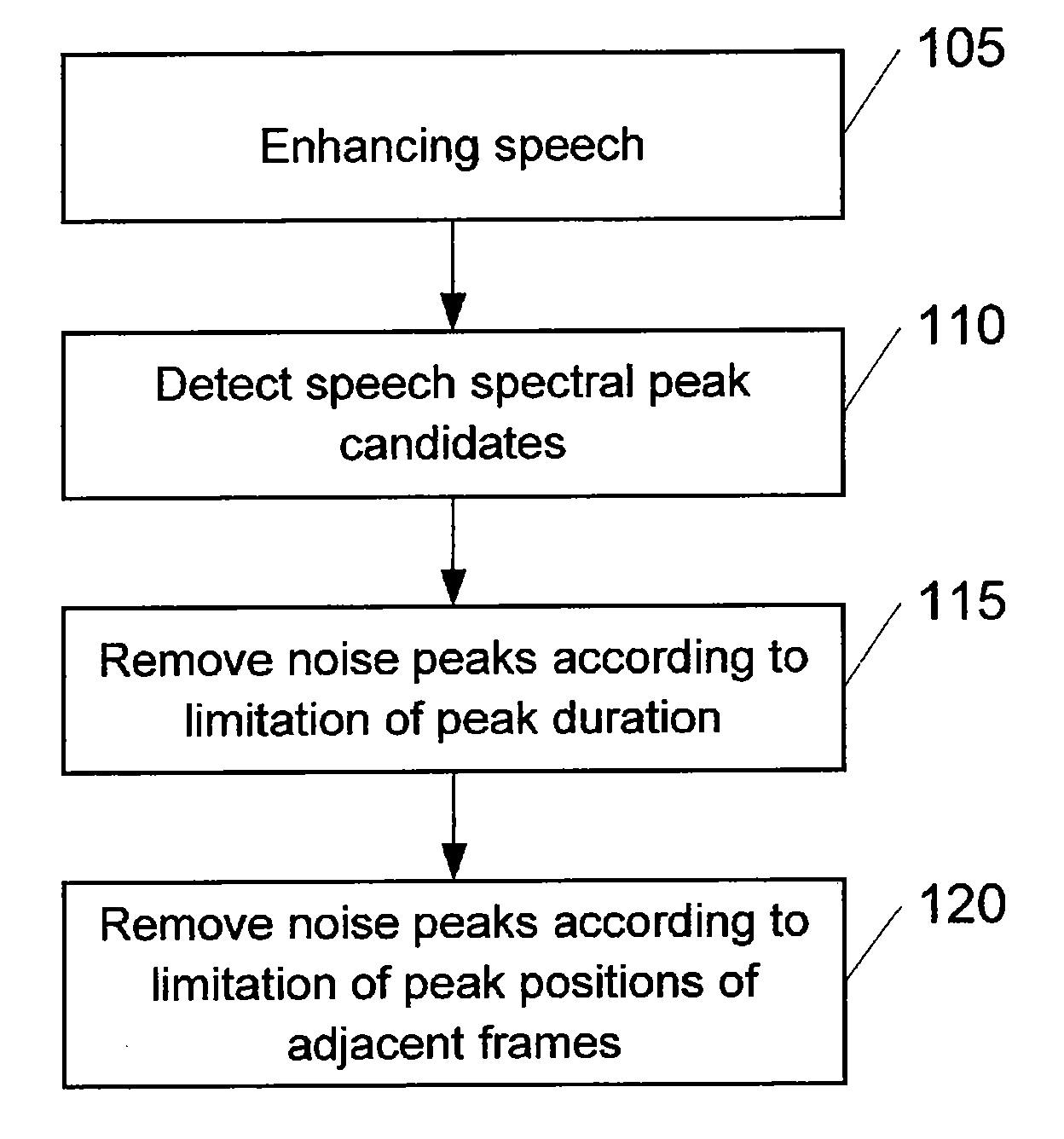

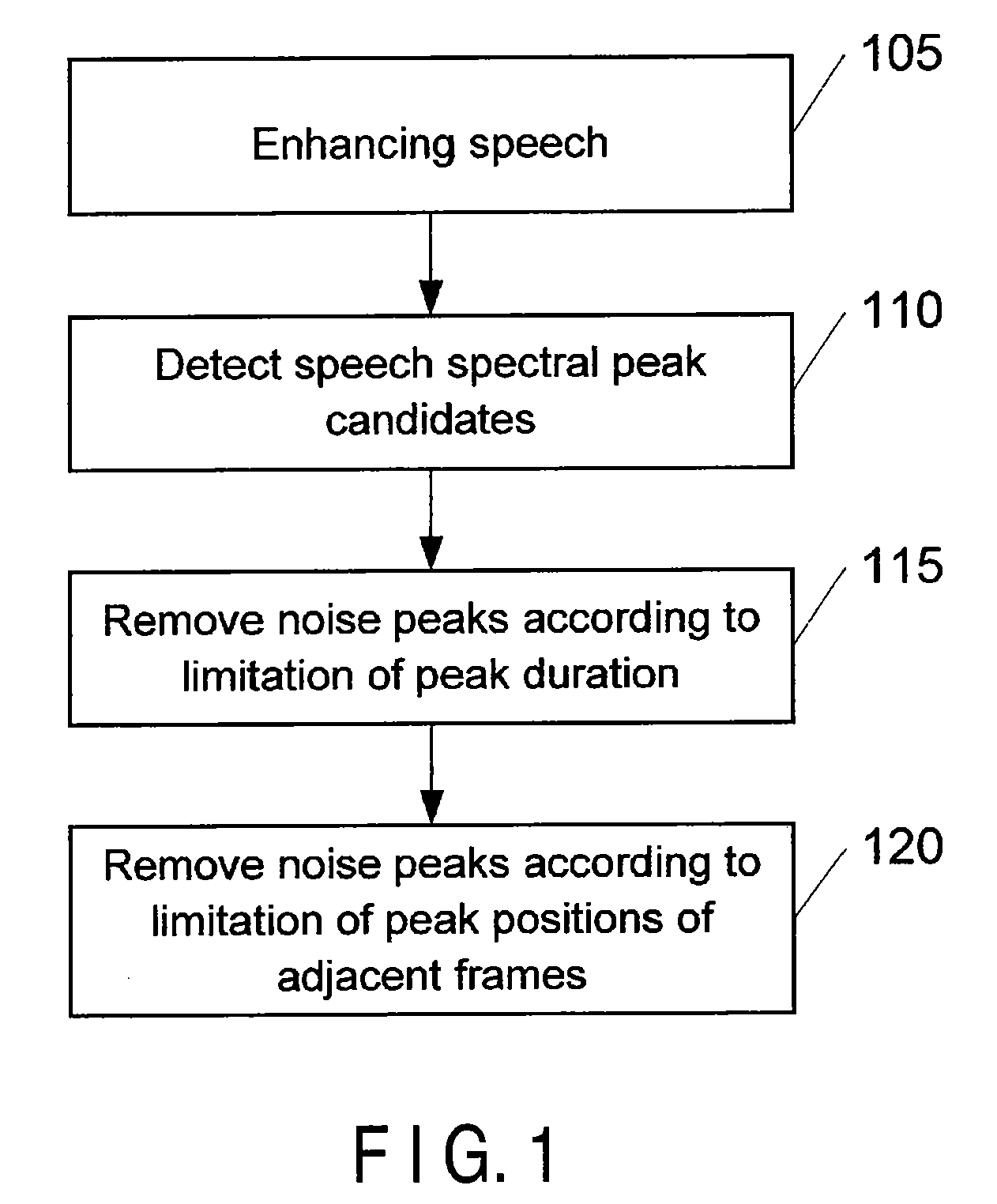

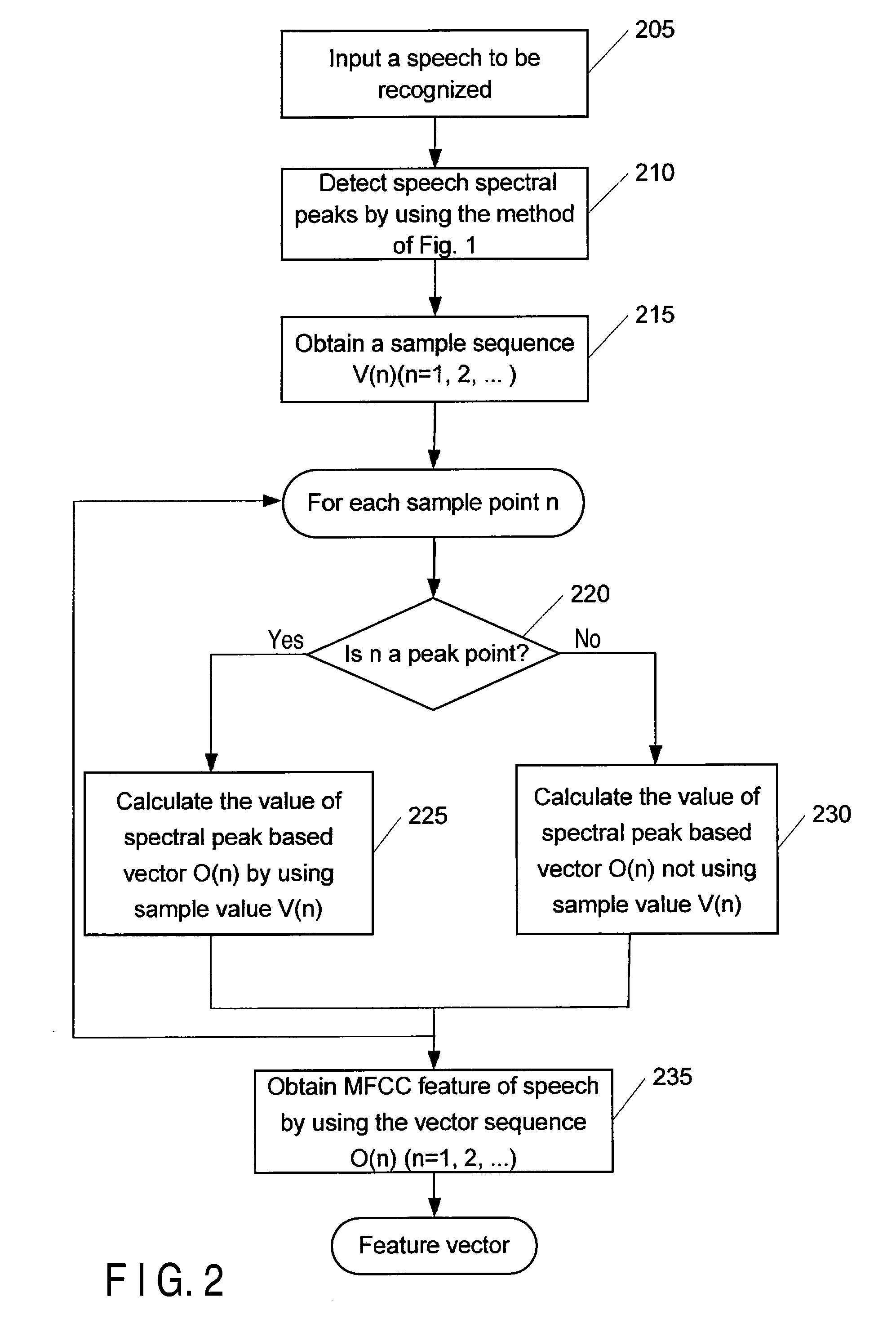

Detection of speech spectral peaks and speech recognition method and system

Inactive | US20090177466A1 | Improve noise robustness, Remove noise peaks, Speech analysis, Frequency spectrum, Feature dimension

The present invention provides a method and apparatus for detecting speech spectral peaks, and a speech recognition method and system. The detection method detects speech spectral peak candidates in the power spectrum of the speech and removes noise peaks from the candidates according to peak duration and/or the peak positions in adjacent frames. Constraining peak duration and adjacent-frame consistency in this way yields reliable speech spectral peaks. Furthermore, the MFCC features of the speech are extracted from the energy values of the speech spectral peaks rather than, as in conventional techniques, from the samples of the whole power spectrum; this improves the noise robustness of speech recognition without increasing the dimension of the speech features.

Owner:KK TOSHIBA
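The duration and adjacent-frame constraint can be sketched as follows. A candidate is any local maximum in a frame's power spectrum, and it is kept only if it belongs to a chain of peaks, each within `tol` bins of the previous frame's peak, spanning at least `min_duration` frames. Both parameters and the chaining rule are assumptions, and the MFCC extraction from peak energies is not shown.

```python
import numpy as np

def frame_peaks(power_frame):
    """Bin indices of local maxima in one frame's power spectrum (peak candidates)."""
    p = power_frame
    mask = (p[1:-1] > p[:-2]) & (p[1:-1] > p[2:])
    return {int(i) for i in np.flatnonzero(mask) + 1}

def reliable_peaks(power, min_duration=3, tol=1):
    """Keep candidates lying on a chain of peaks (within +/- tol bins frame to frame)
    that lasts at least min_duration frames; shorter chains are treated as noise peaks."""
    cands = [frame_peaks(f) for f in power]
    n = len(cands)
    fwd = [{} for _ in range(n)]   # chain length ending at (frame, bin), counted forward
    bwd = [{} for _ in range(n)]   # chain length starting at (frame, bin), counted backward
    for l in range(n):
        prev = fwd[l - 1] if l > 0 else {}
        for k in cands[l]:
            fwd[l][k] = 1 + max((prev[j] for j in range(k - tol, k + tol + 1) if j in prev), default=0)
    for l in range(n - 1, -1, -1):
        nxt = bwd[l + 1] if l < n - 1 else {}
        for k in cands[l]:
            bwd[l][k] = 1 + max((nxt[j] for j in range(k - tol, k + tol + 1) if j in nxt), default=0)
    return [sorted(k for k in cands[l] if fwd[l][k] + bwd[l][k] - 1 >= min_duration)
            for l in range(n)]
```

A sustained formant-like peak that drifts by one bin survives, while a single-frame noise spike is discarded.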

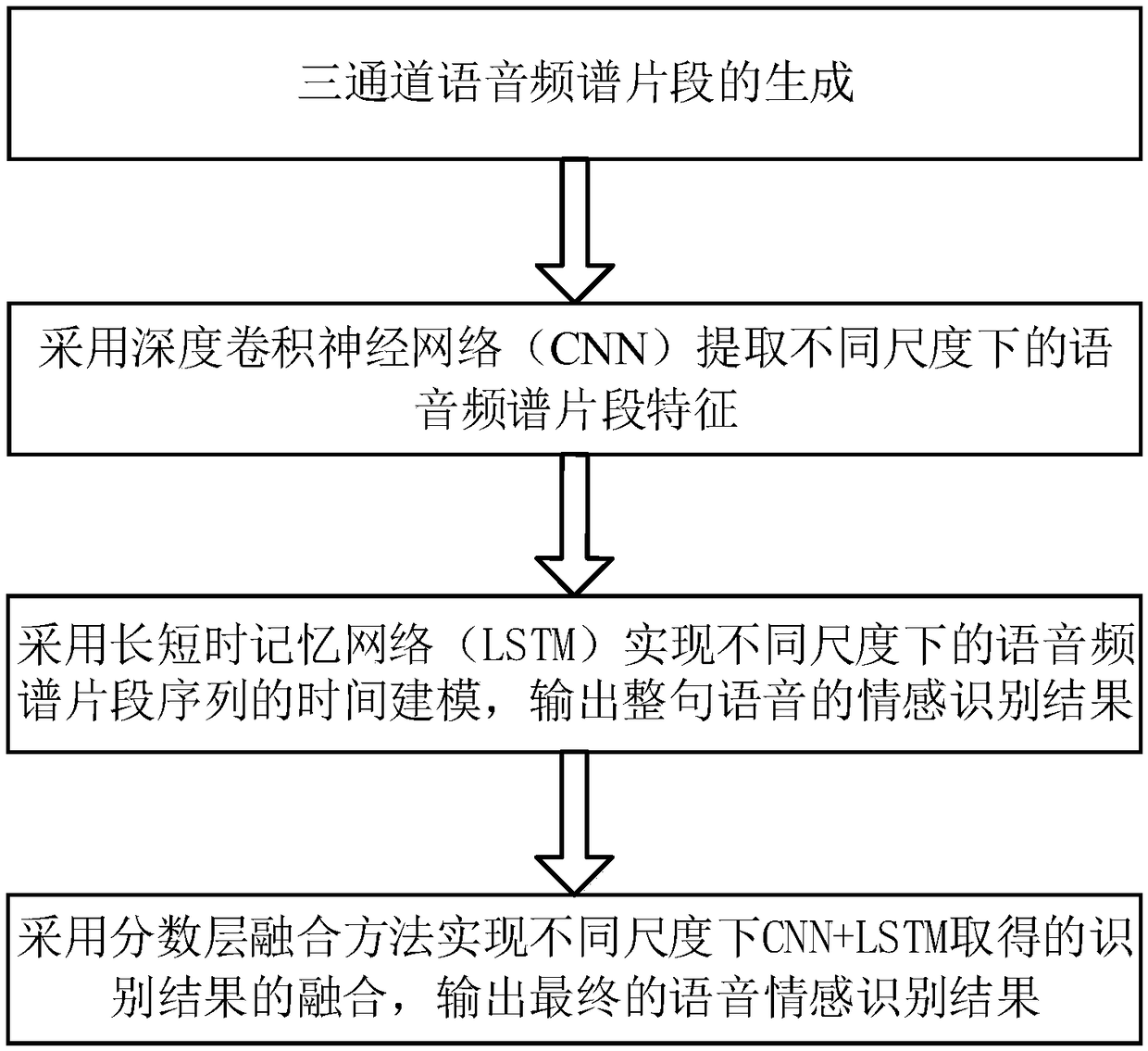

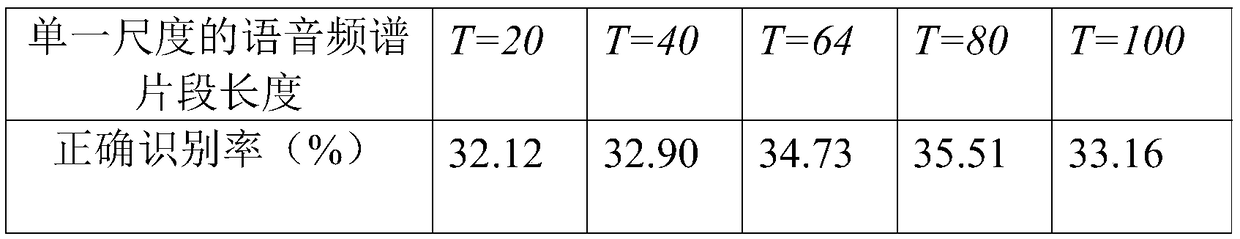

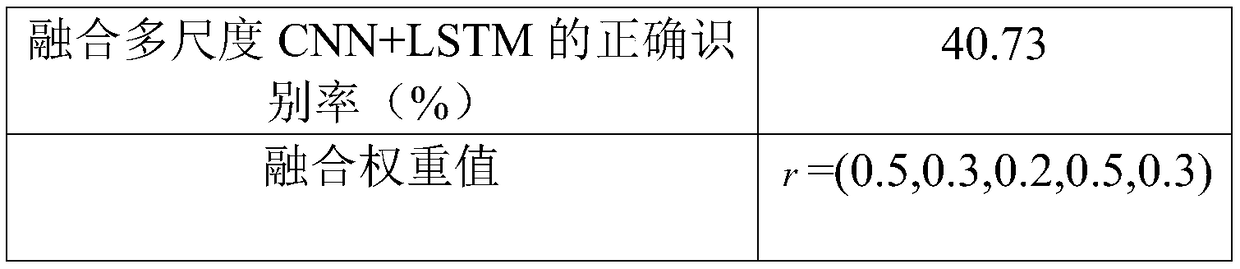

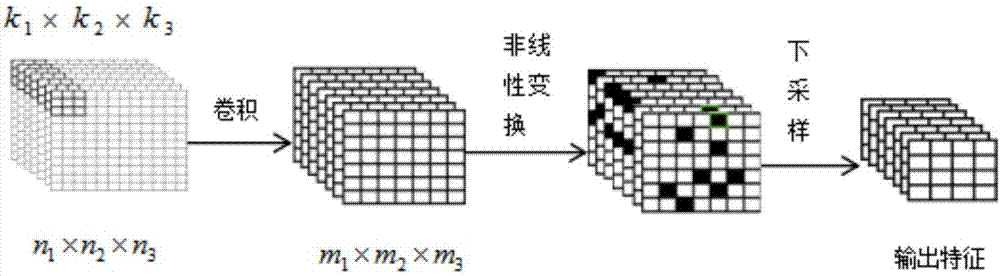

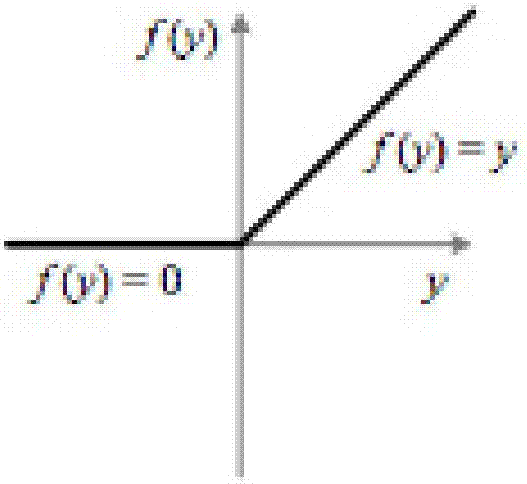

Speech emotion recognition method based on multi-scale deep convolution recurrent neural network

Active | CN108717856A | Alleviate the problem of insufficient samples, Speech analysis, Pattern recognition, Frequency spectrum

The invention discloses a speech emotion recognition method based on a multi-scale deep convolutional recurrent neural network. The method comprises the following steps: (1) generating three-channel speech spectrum segments; (2) extracting speech spectrum segment features at different scales with a convolutional neural network (CNN); (3) modeling the temporal structure of the speech spectrum segment sequence at each scale with a long short-term memory (LSTM) network and outputting the emotion recognition result for the whole utterance; (4) fusing the recognition results obtained by CNN+LSTM at the different scales with a score-level fusion method and outputting the final speech emotion recognition result. The method can effectively improve natural speech emotion recognition performance in real environments and can be applied in fields such as artificial intelligence, robotics, and natural human-computer interaction.

Owner:TAIZHOU UNIV
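Step (4), score-level fusion, can be illustrated by averaging the class posteriors produced at each scale. Softmax normalization and equal (or caller-supplied) weights are assumptions; the abstract does not specify the fusion rule.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def fuse_scores(logits_per_scale, weights=None):
    """Score-level fusion: weighted average of the per-scale class posteriors.
    Returns the fused posterior and the index of the winning emotion class."""
    probs = np.stack([softmax(np.asarray(l, dtype=float)) for l in logits_per_scale])
    w = np.ones(len(probs)) if weights is None else np.asarray(weights, dtype=float)
    fused = np.tensordot(w / w.sum(), probs, axes=1)
    return fused, int(np.argmax(fused))

# Usage: three scales, three emotion classes; two scales favour class 0.
fused, label = fuse_scores([[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [2.0, 0.0, 0.0]])
```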

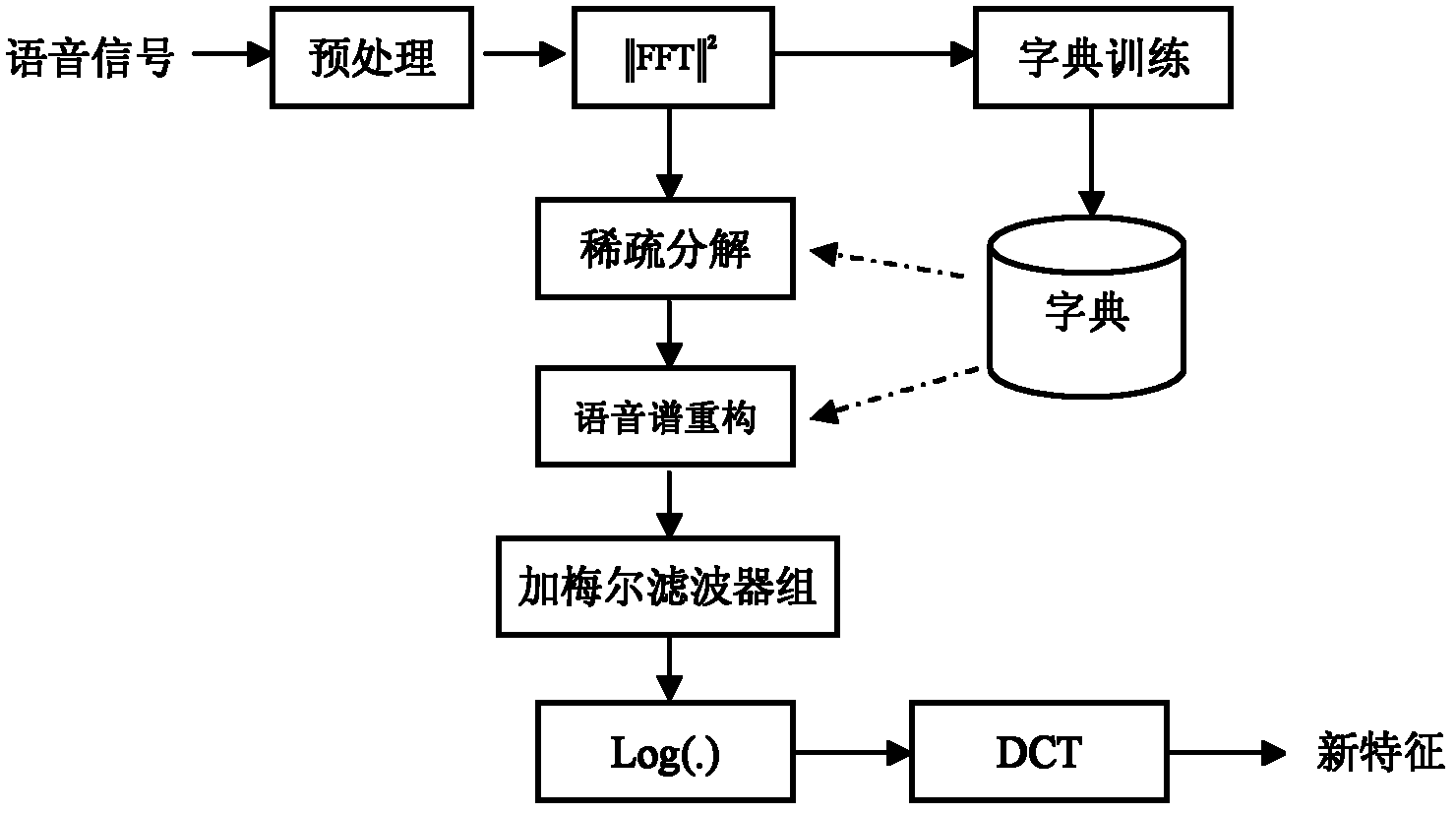

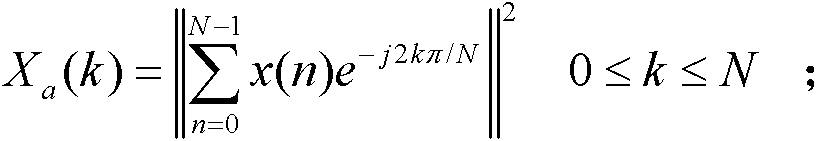

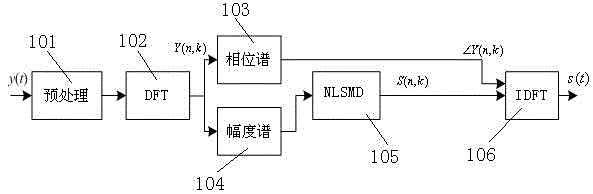

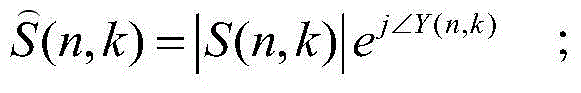

A Robust Speech Feature Extraction Method Based on Sparse Decomposition and Reconstruction

The invention discloses a robust speech feature extraction method based on sparse decomposition and reconstruction. The method addresses three problems: (1) selecting an atom dictionary has high time complexity, and it is difficult to achieve sparsity after the signal is projected onto it; (2) sparse decomposition of the signal gives little consideration to the temporal correlation of speech and noise signals; and (3) signal reconstruction ignores the prior probability of the atoms and the transitions between atoms. The method comprises the following steps: step 1, preprocessing; step 2, applying the discrete Fourier transform and computing the power spectrum; step 3, training and storing the atom dictionary; step 4, performing sparse decomposition; step 5, reconstructing the speech spectrum; step 6, applying a Mel triangular filter bank and taking the logarithm; and step 7, splicing the Mel cepstral coefficients with the sparse Mel cepstrum to form the robust feature. The method is intended for the field of multimedia information processing.

Owner:Harbin Institute of Technology High-Tech Development Corporation (哈尔滨工业大学高新技术开发总公司)
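Steps 6 and 7 follow the conventional Mel-cepstrum pipeline, sketched below on an already reconstructed power spectrum: triangular filters spaced evenly on the Mel scale, a logarithm, and an orthonormal DCT-II. The Mel formula 2595·log10(1 + f/700), the filter count, and the number of coefficients are common choices assumed here; the sparse decomposition, reconstruction, and splicing steps are not shown.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters equally spaced on the Mel scale, shape (n_filters, n_fft // 2 + 1)."""
    n_bins = n_fft // 2 + 1
    bin_freqs = np.linspace(0.0, sample_rate / 2, n_bins)
    hz_points = mel_to_hz(np.linspace(0.0, hz_to_mel(sample_rate / 2), n_filters + 2))
    fb = np.zeros((n_filters, n_bins))
    for i in range(n_filters):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (bin_freqs - left) / (center - left)
        falling = (right - bin_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def dct_ii(x, n_out):
    """Orthonormal DCT-II along the last axis, keeping the first n_out coefficients."""
    n = x.shape[-1]
    k = np.arange(n_out)[:, None]
    m = np.arange(n)[None, :]
    basis = np.cos(np.pi * k * (2 * m + 1) / (2 * n)) * np.sqrt(2.0 / n)
    basis[0] /= np.sqrt(2.0)
    return x @ basis.T

def mfcc_from_power(power_spectrum, fb, n_ceps=13, eps=1e-10):
    """Mel filtering, logarithm, then DCT: one row of cepstral coefficients per frame."""
    mel_energies = power_spectrum @ fb.T
    return dct_ii(np.log(mel_energies + eps), n_ceps)

fb = mel_filterbank(26, 512, 16000)
ceps = mfcc_from_power(np.ones((10, 257)), fb)
```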

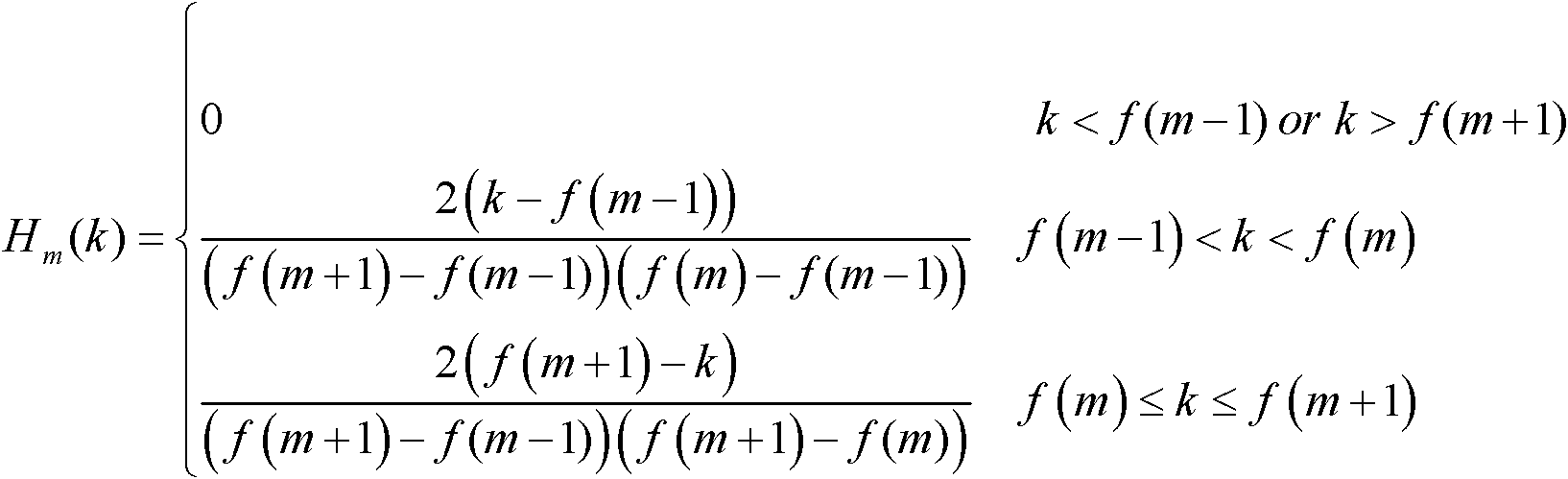

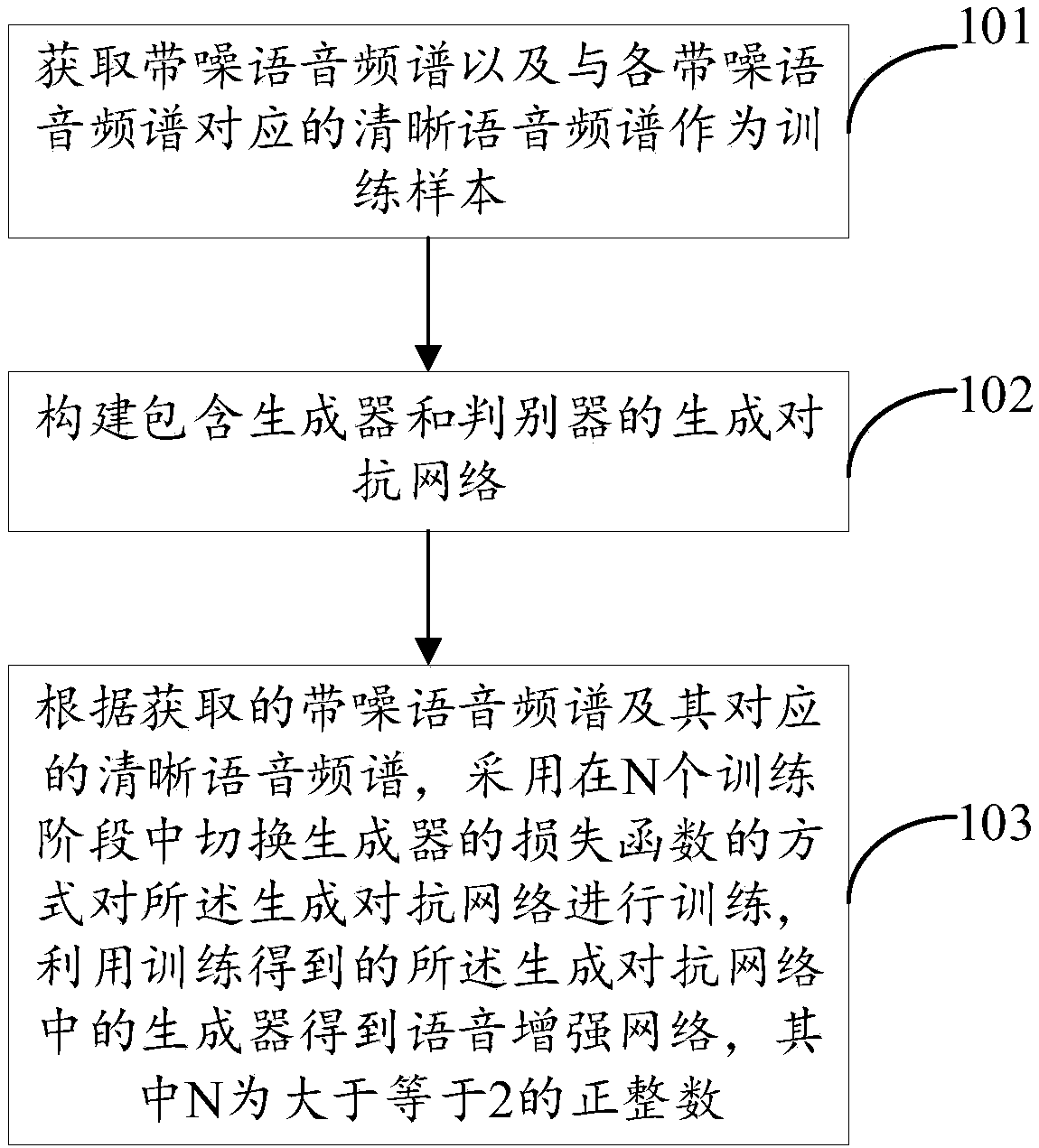

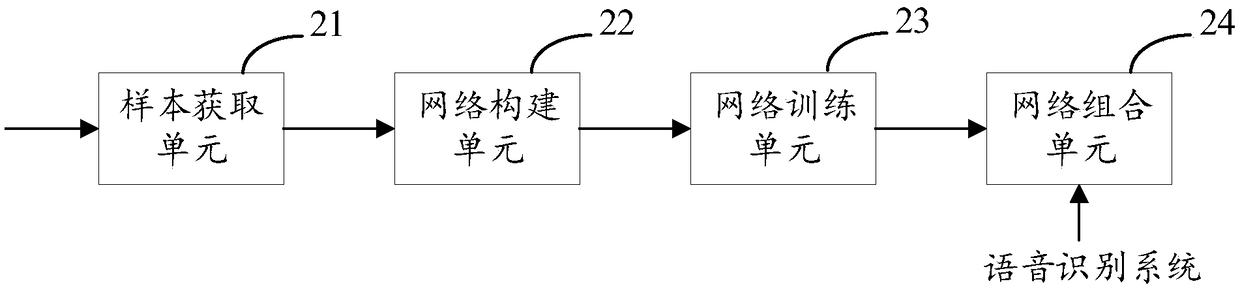

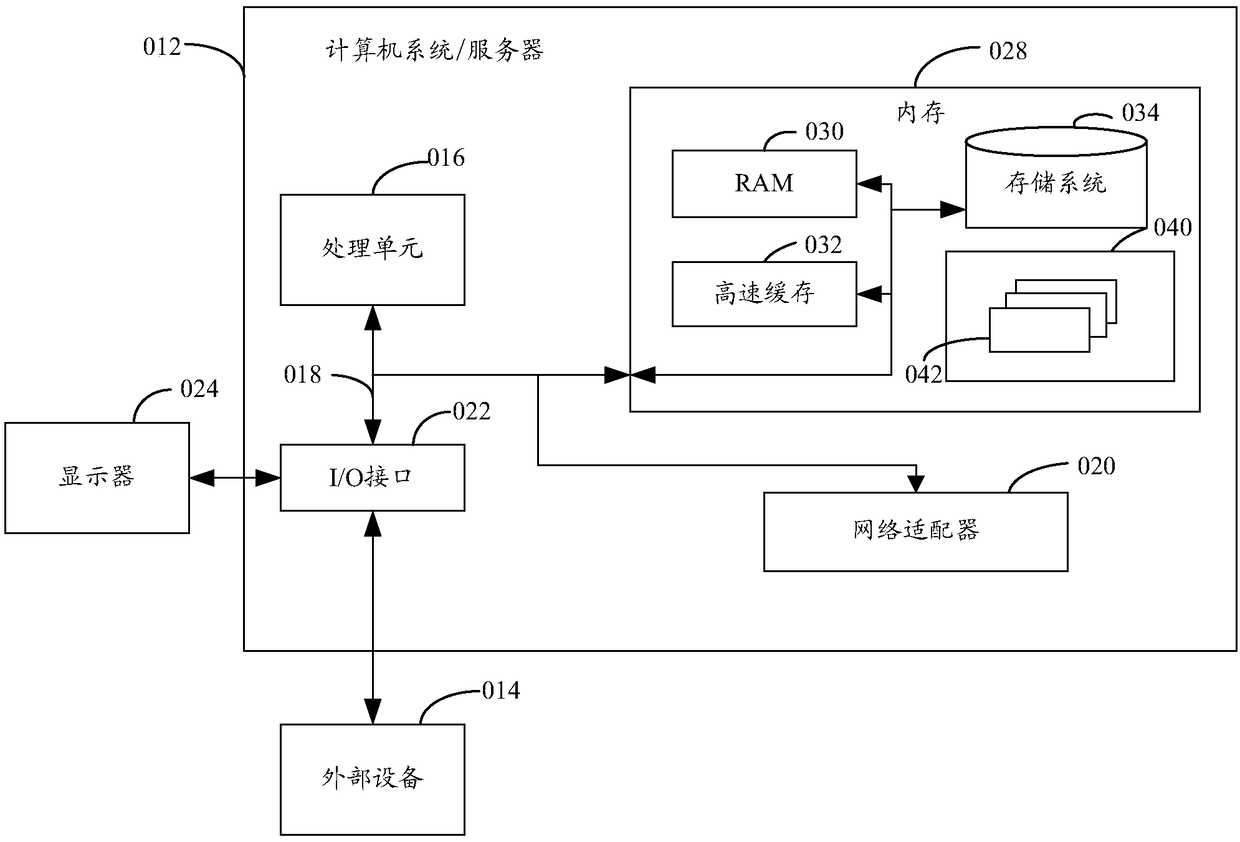

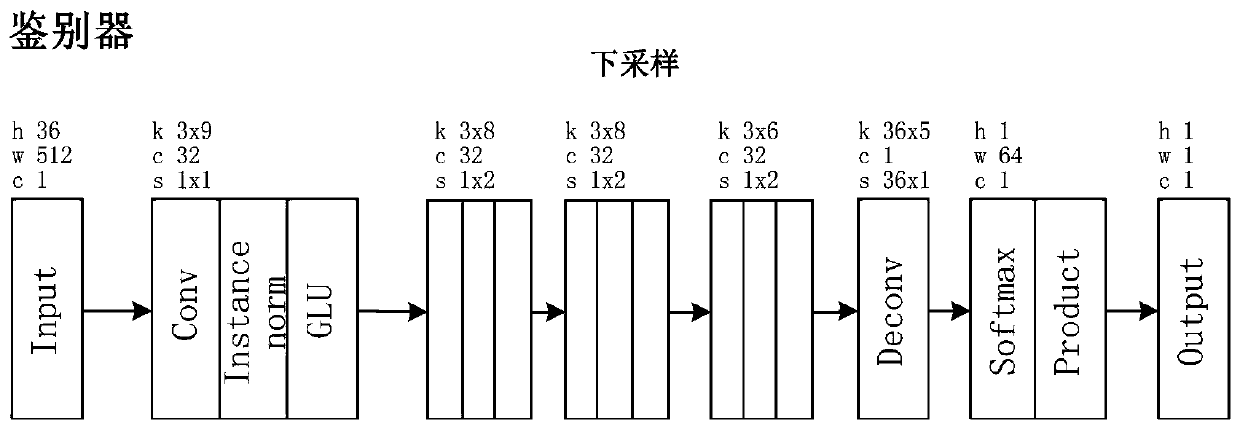

Method, apparatus and equipment for establishing voice enhancement network and computer storage medium

Active | CN109147810A | Improve performance, Improve stability, Speech analysis, Neural architectures, Discriminator, Frequency spectrum

The invention provides a method, an apparatus and equipment for establishing a speech enhancement network, and a computer storage medium. The method comprises: obtaining noisy speech spectra, and the clean speech spectrum corresponding to each noisy speech spectrum, as training samples; constructing a generative adversarial network (GAN) comprising a generator and a discriminator; training the GAN on the noisy speech spectra and the corresponding clean speech spectra while switching the generator's loss function across N training stages, where N is an integer greater than or equal to 2; and using the generator of the trained GAN as the speech enhancement network. The invention improves the stability of GAN training convergence, thereby improving the performance of the GAN-based speech enhancement network and, in turn, the accuracy of speech recognition.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

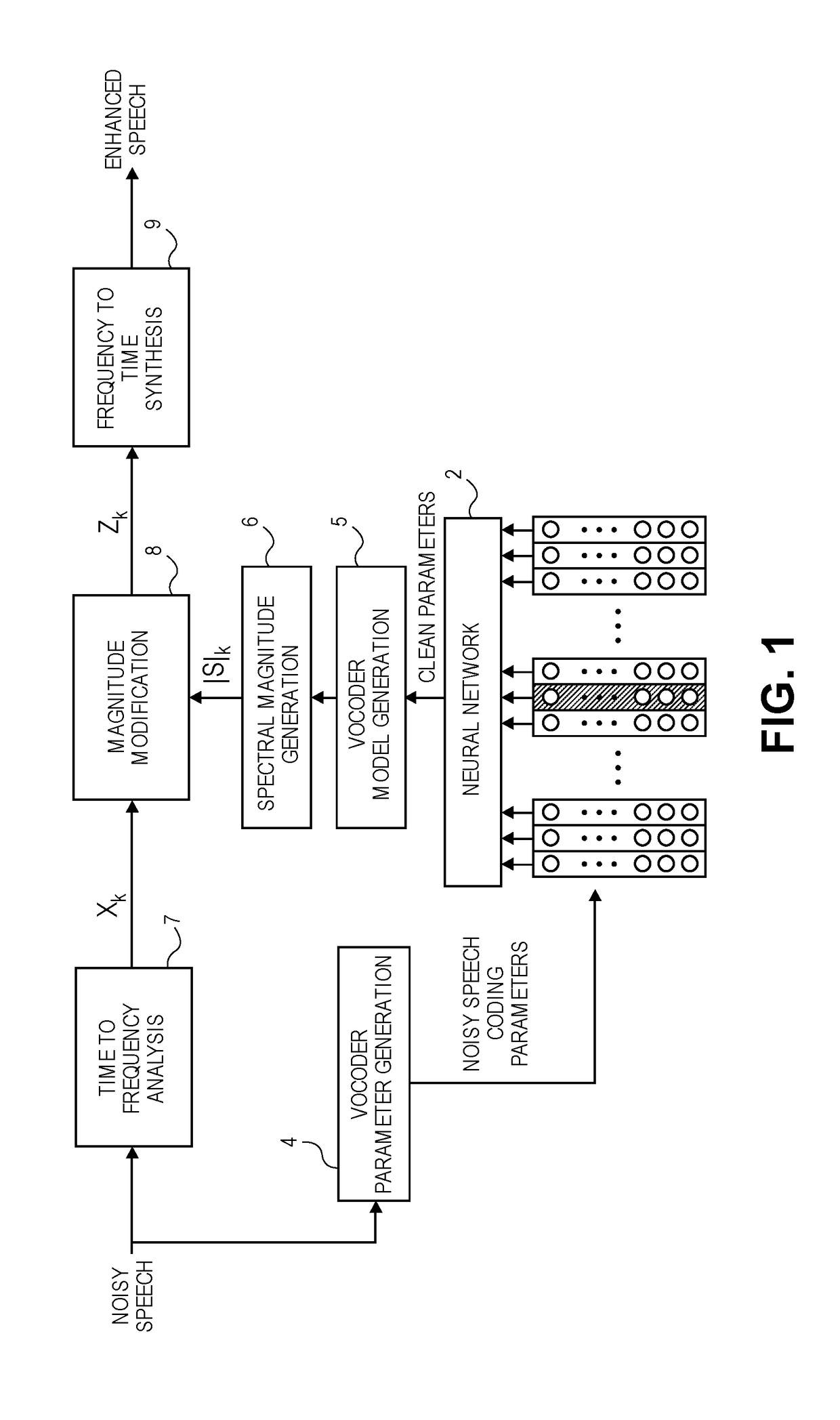

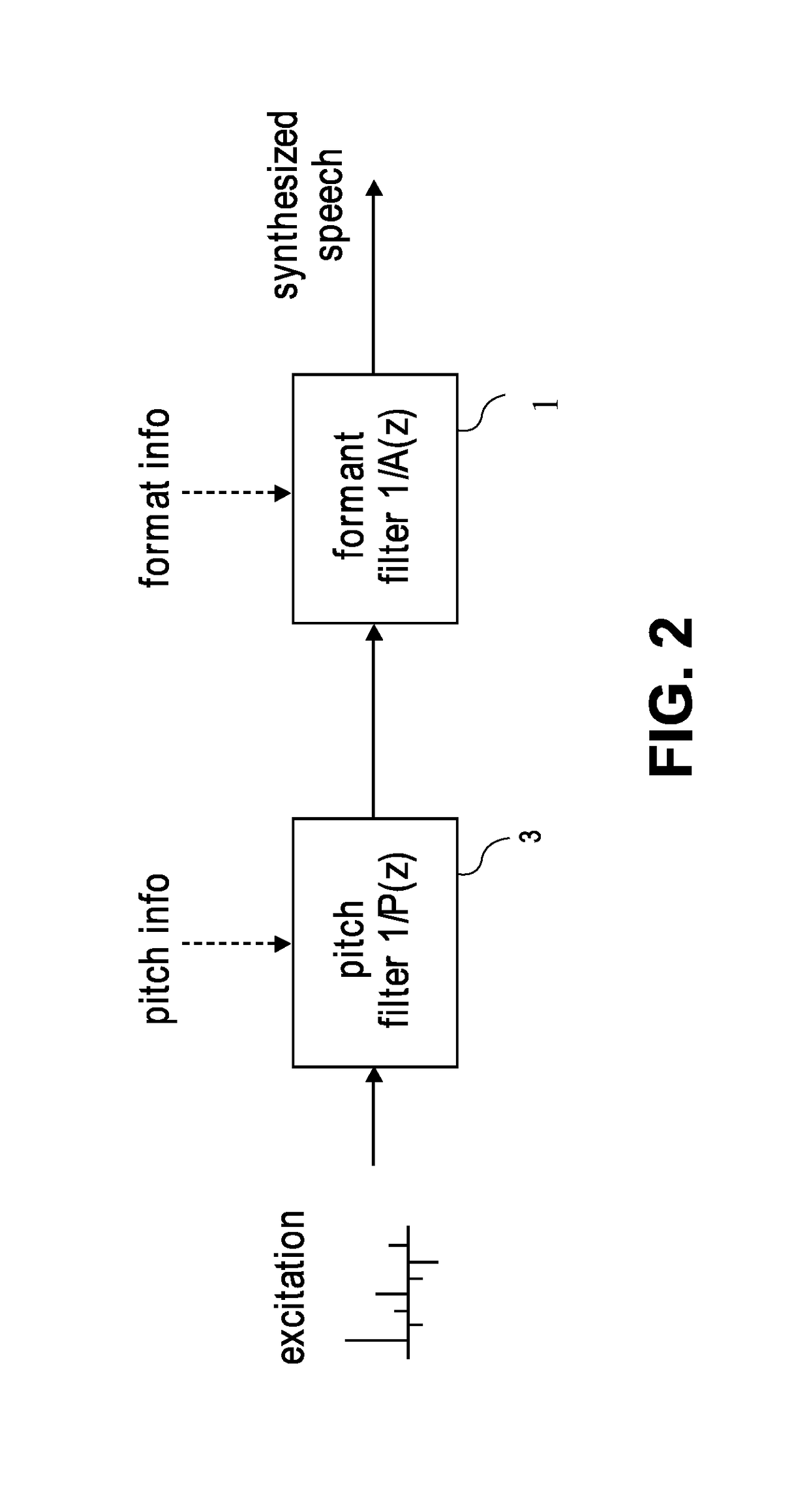

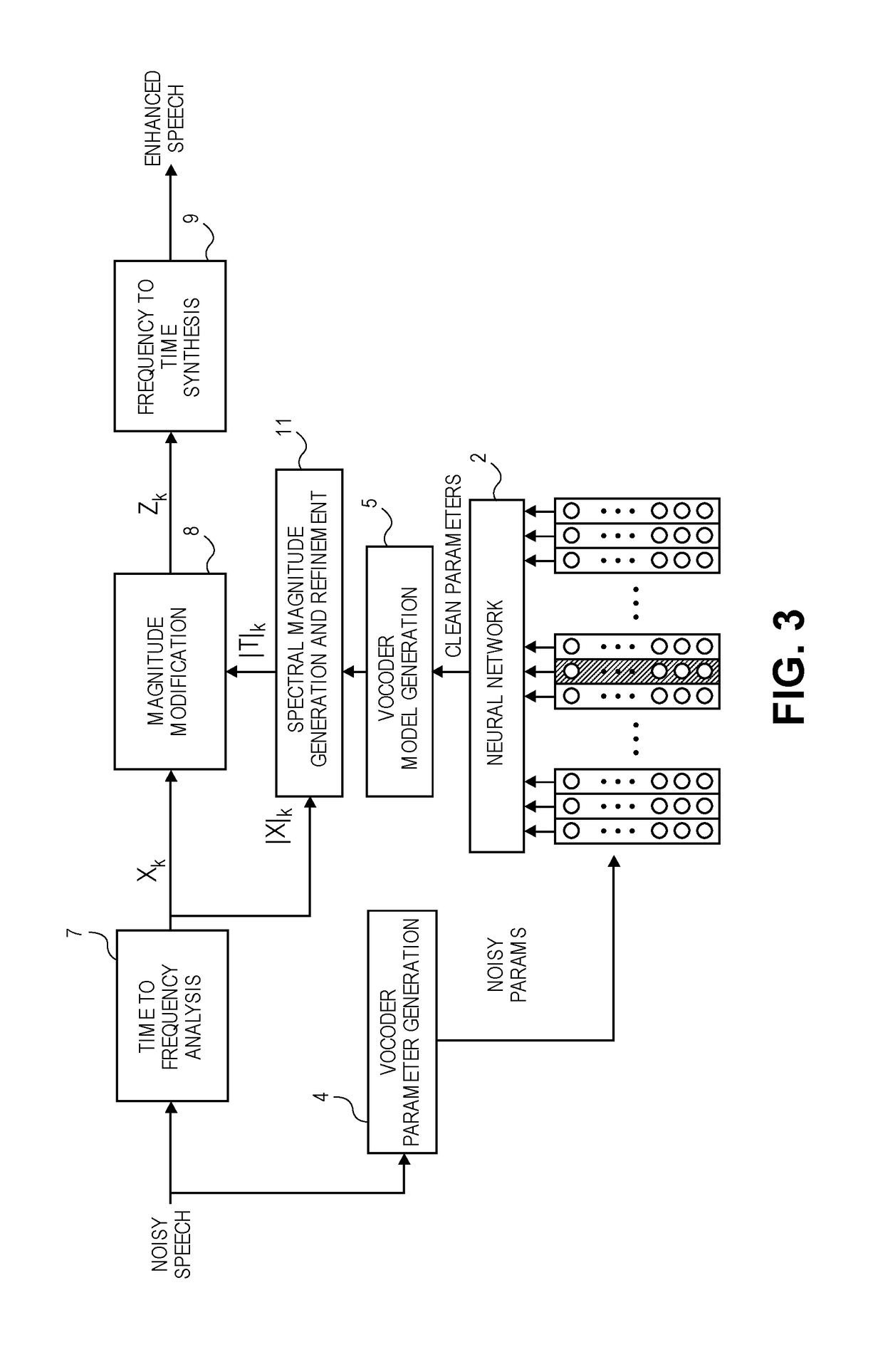

Speech Model-Based Neural Network-Assisted Signal Enhancement

Inactive | US20180366138A1 | Reduce the amount required, Reduce complexity, Speech recognition, Time domain, Frequency spectrum

Several embodiments of a digital speech signal enhancer are described that use an artificial neural network that produces clean speech coding parameters based on noisy speech coding parameters as its input features. A vocoder parameter generator produces the noisy speech coding parameters from a noisy speech signal. A vocoder model generator processes the clean speech coding parameters into estimated clean speech spectral magnitudes. In one embodiment, a magnitude modifier modifies an original frequency spectrum of the noisy speech signal using the estimated clean speech spectral magnitudes, to produce an enhanced frequency spectrum, and a synthesis block converts the enhanced frequency spectrum into time domain, as an output speech sequence. Other embodiments are also described.

Owner:APPLE INC
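The magnitude modifier in the embodiment above can be sketched for a single frame: the noisy frame's phase is kept and its magnitude is replaced by the estimated clean magnitude before returning to the time domain. `clean_mag_est` stands in for the output of the vocoder model generator, which is not reproduced; windowing and overlap-add across frames are also left out.

```python
import numpy as np

def modify_magnitude(noisy_frame, clean_mag_est, window=None):
    """Combine an estimated clean magnitude spectrum with the noisy phase and
    return the resulting time-domain frame."""
    if window is None:
        window = np.ones(len(noisy_frame))
    spec = np.fft.rfft(noisy_frame * window)
    phase = np.angle(spec)                                  # keep the noisy phase
    enhanced = clean_mag_est * np.exp(1j * phase)           # enhanced frequency spectrum
    return np.fft.irfft(enhanced, n=len(noisy_frame))       # back to the time domain
```

Keeping the noisy phase is the usual choice when only magnitudes are estimated; if the estimated magnitude equals the noisy magnitude, the frame is returned unchanged.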

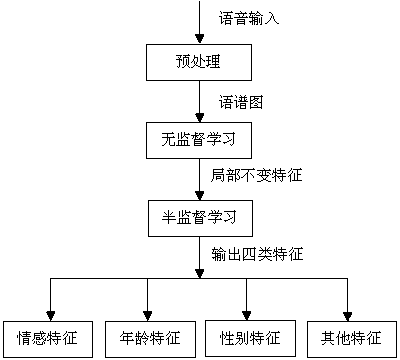

Semi-supervised speech feature variable factor decomposition method

Active | CN104021373A | Avoid the disadvantage of mutual interference, Describe well, Character and pattern recognition, Speech recognition, Feature mapping, Speech spectrum

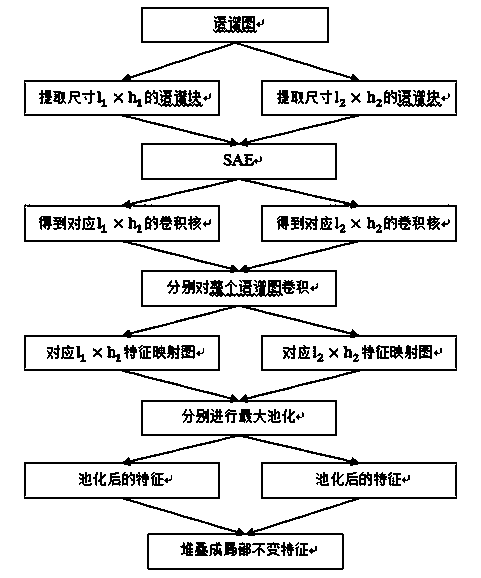

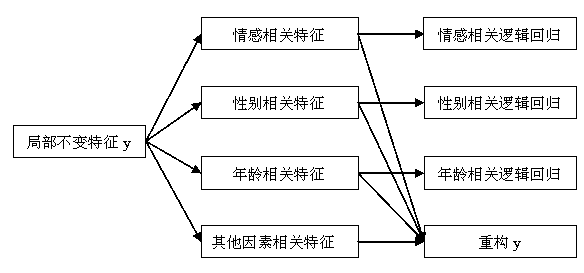

The invention discloses a semi-supervised method for decomposing the variable factors of speech features. Speech features are divided into four types: emotion-related features, gender-related features, age-related features, and features related to noise, language and other factors. First, the speech is preprocessed to obtain a spectrogram. Speech spectrum blocks of different sizes are fed to an unsupervised feature learning network (SAE), and convolution kernels of different sizes are obtained through pre-training. The kernels of each size are then convolved with the whole spectrogram to obtain a number of feature maps, max pooling is applied to the feature maps, and the pooled features are stacked to form a locally invariant feature y. The feature y is the input to a semi-supervised convolutional neural network, which decomposes y into the four types of features by minimizing four different loss terms. This addresses the low recognition accuracy caused by emotion, gender, age and other speech factors being mixed together. The method can serve different recognition tasks based on speech signals and can also be used to decompose additional factors.

Owner:JIANGSU UNIV

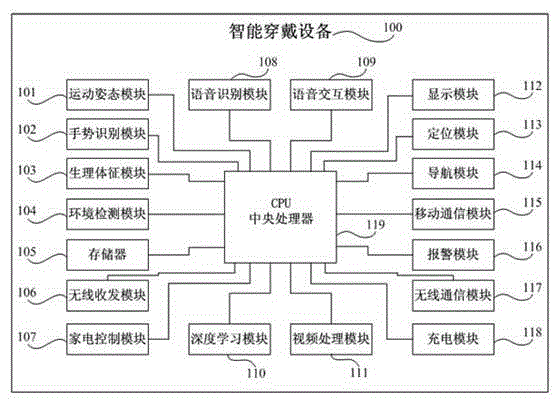

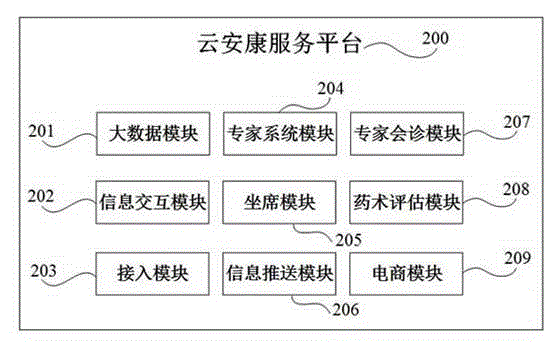

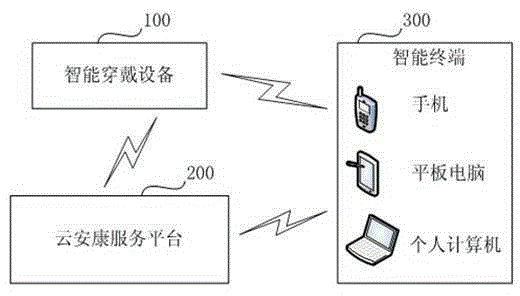

Intelligent wearing equipment for safety and health service for old people and voice recognition method

Inactive | CN104952447A | Improve speech recognition performance, Character and pattern recognition, Sensors, Frequency spectrum, Older people

The invention discloses an intelligent wearable device that provides safety and health services for elderly people, and a voice recognition method. The device comprises a motion posture module, a gesture recognition module, a physiological sign module, an environment detection module, a video processing module, a wireless communication module, a charging module, a central processing unit, a memory, a display module, a mobile communication module, an alarm module, a wireless transceiver module and a voice recognition module. The voice recognition module learns the wearer's physiological and abnormal sounds, together with frequently used control and response utterances, and stores them in a standard voice spectrum feature library; it also learns the wearer's frequently used speech and stores it in a wearer voice spectrum feature library; and it recognizes whether the wearer's speech matches the standard library or the wearer library. The device recognizes the wearer's speech effectively and uses the wearer's gestures or a cloud safety and health service platform to assist recognition, further improving recognition performance.

Owner:SHENZHEN GLOBAL LOCK SAFETY SYST ENG +1

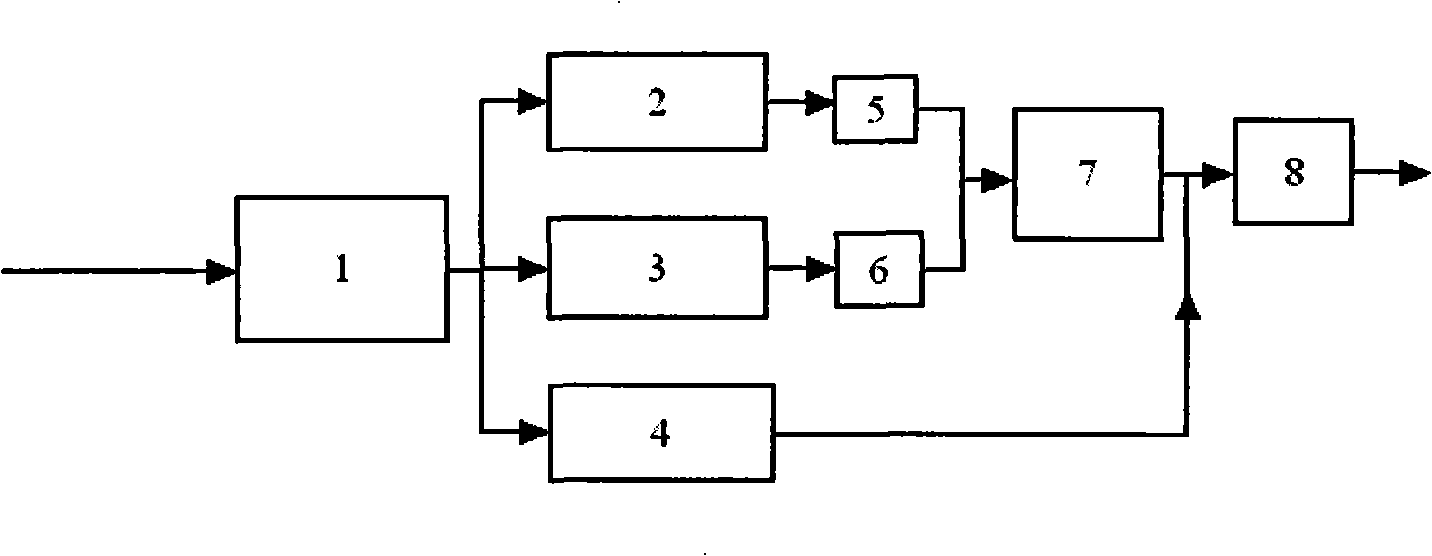

Non-air conduction speech reinforcement method based on multi-band spectrum subtraction

Inactive | CN101320566A | Broad application prospects, Broad marketing value, Speech analysis, Multi band, Frequency spectrum

The present invention discloses a non-air-conduction speech enhancement method based on multi-band spectral subtraction. Because the noise in radar-based non-air-conduction speech is always colored and does not affect the speech signal uniformly across the frequency spectrum, the method divides the speech spectrum into five non-overlapping sections and gives each section its own spectral subtraction coefficient, so that the algorithm targets the noise in each band. The embodiment shows that the method compensates for the lack of such targeting in traditional speech enhancement methods; in addition, the algorithm is simple, efficient to implement, and clearly effective, which gives the method practical value and good application prospects.

Owner:FOURTH MILITARY MEDICAL UNIVERSITY
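The per-band subtraction can be sketched as below. The band edges, the coefficient values and the spectral floor `beta` are hypothetical; the abstract states only that the spectrum is split into five non-overlapping sections, each with its own coefficient.

```python
import numpy as np

def multiband_spectral_subtraction(noisy_mag, noise_mag, band_edges, coeffs, beta=0.01):
    """Power spectral subtraction with one coefficient per non-overlapping band.
    band_edges: bin indices [e0, ..., eB]; band b covers bins e_b .. e_{b+1} - 1.
    coeffs: subtraction coefficient alpha_b for each band. beta sets a spectral floor."""
    noisy_pow = noisy_mag ** 2
    noise_pow = noise_mag ** 2
    out = np.empty_like(noisy_pow)
    for b, alpha in enumerate(coeffs):
        sl = slice(band_edges[b], band_edges[b + 1])
        diff = noisy_pow[sl] - alpha * noise_pow[sl]
        out[sl] = np.maximum(diff, beta * noisy_pow[sl])   # floor avoids negative power
    return np.sqrt(out)

# Usage: five bands over 10 bins, with heavier subtraction in the last band.
out = multiband_spectral_subtraction(np.full(10, 2.0), np.ones(10),
                                     [0, 2, 4, 6, 8, 10], [1, 1, 1, 1, 5])
```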

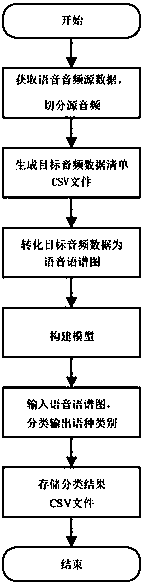

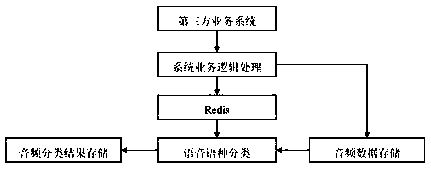

Speech language classifying method based on CNN and GRU fused deep neural network

Active | CN109523993A | Improve classification accuracy, Data augmentation, Speech recognition, Frequency spectrum, Time–frequency analysis

The invention discloses a spoken-language classification method based on a deep neural network that fuses a CNN and a GRU. The method comprises the following steps: S1, obtaining source audio data from a server, preprocessing it and cutting it into segments; S2, reading the audio file information and generating an audio data inventory CSV file; S3, applying the short-time Fourier transform to each audio file to obtain a two-dimensional speech spectrum that represents the signal jointly in the time and frequency domains; S4, building the model; S5, feeding the two-dimensional speech spectrum image data into the CNN-GRU deep neural network for language classification and outputting the classification result; S6, storing the language classification result together with the source audio file information. The method solves the problem of spoken-language classification; it is automatic, accurate, robust, low-cost and portable, and facilitates integration with third-party systems.

Owner:Shenzhen Wanglian Anrui Network Technology Co., Ltd. (深圳市网联安瑞网络科技有限公司)
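Step S3 can be sketched as a log-magnitude short-time Fourier transform. The 400-sample Hann window, 160-sample hop and 512-point FFT (25 ms and 10 ms at 16 kHz) are assumed values, not taken from the patent.

```python
import numpy as np

def spectrogram(x, frame_len=400, hop=160, n_fft=512):
    """Log-magnitude STFT (dB), shape (n_frames, n_fft // 2 + 1).
    Assumes len(x) >= frame_len; trailing samples that do not fill a frame are dropped."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop: i * hop + frame_len] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, n=n_fft, axis=1))   # zero-padded to n_fft
    return 20.0 * np.log10(mag + 1e-10)

# Usage: one second of a 1 kHz tone at 16 kHz peaks at bin 1000 / (16000 / 512) = 32.
t = np.arange(16000) / 16000.0
spec = spectrogram(np.sin(2 * np.pi * 1000.0 * t))
```

The resulting time-frequency matrix is the kind of 2-D input an image-style CNN front end consumes.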

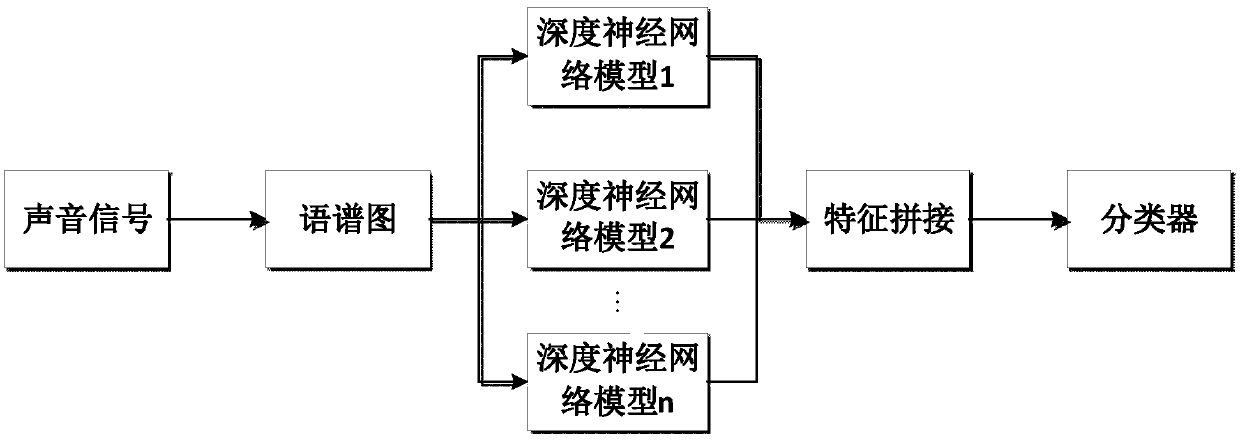

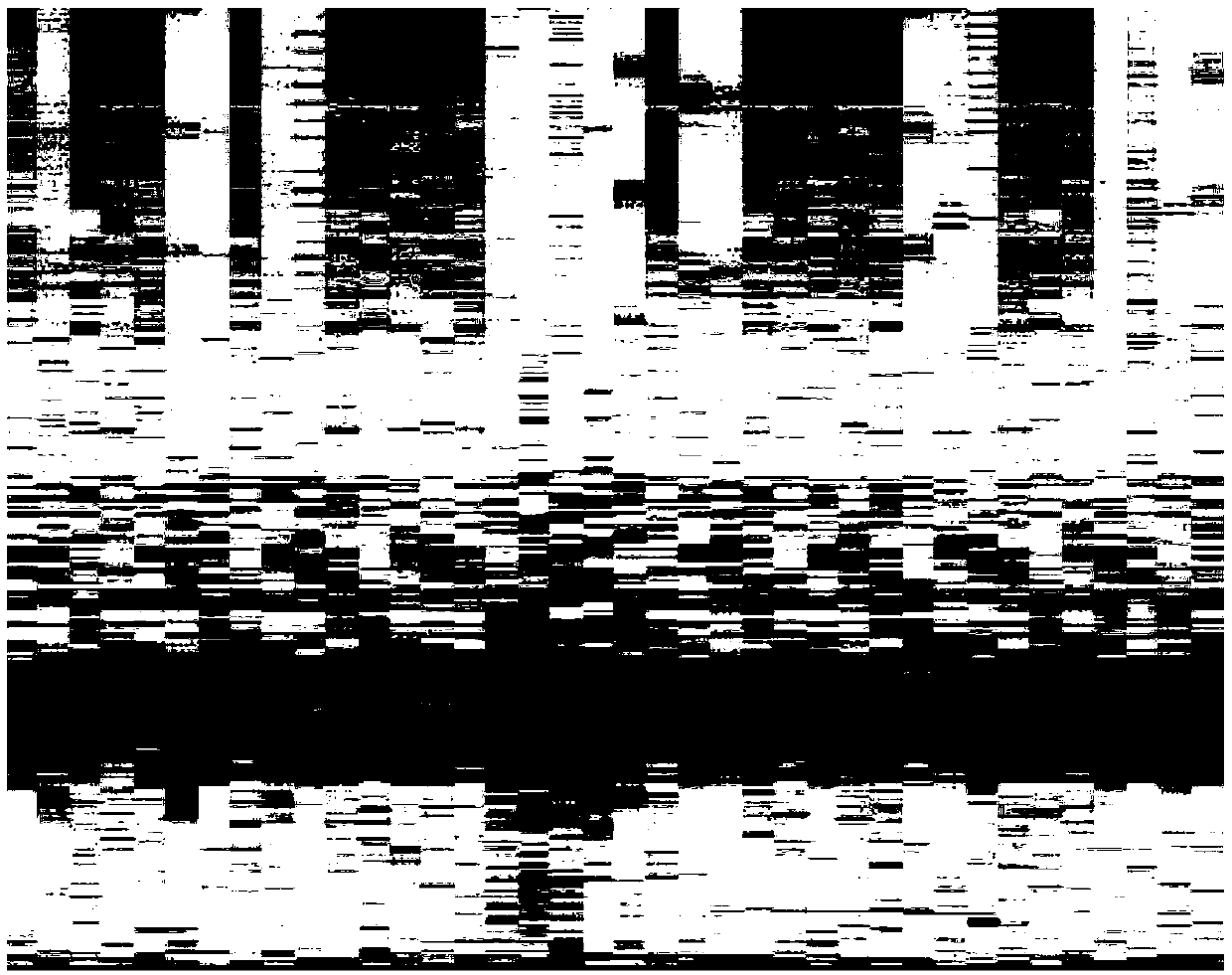

City noise identification method based on hybrid deep neural network models

The invention discloses an urban noise identification method based on hybrid deep neural network models. The method comprises the following steps: 1, collecting urban noise and building a sound sample database; 2, converting the sound signals in the database into speech spectra; 3, clipping the speech spectra and extracting features with multiple pre-trained deep neural network models; 4, splicing the features extracted by the different models; 5, using the spliced fusion feature as the final input to a classifier and training a prediction model; and 6, for an unknown sound, converting it into a speech spectrum, extracting features with the pre-trained models, splicing them, and predicting the sound type with the trained model. The method does not require a large dataset, runs faster, and needs fewer resources.

Owner:HANGZHOU DIANZI UNIV

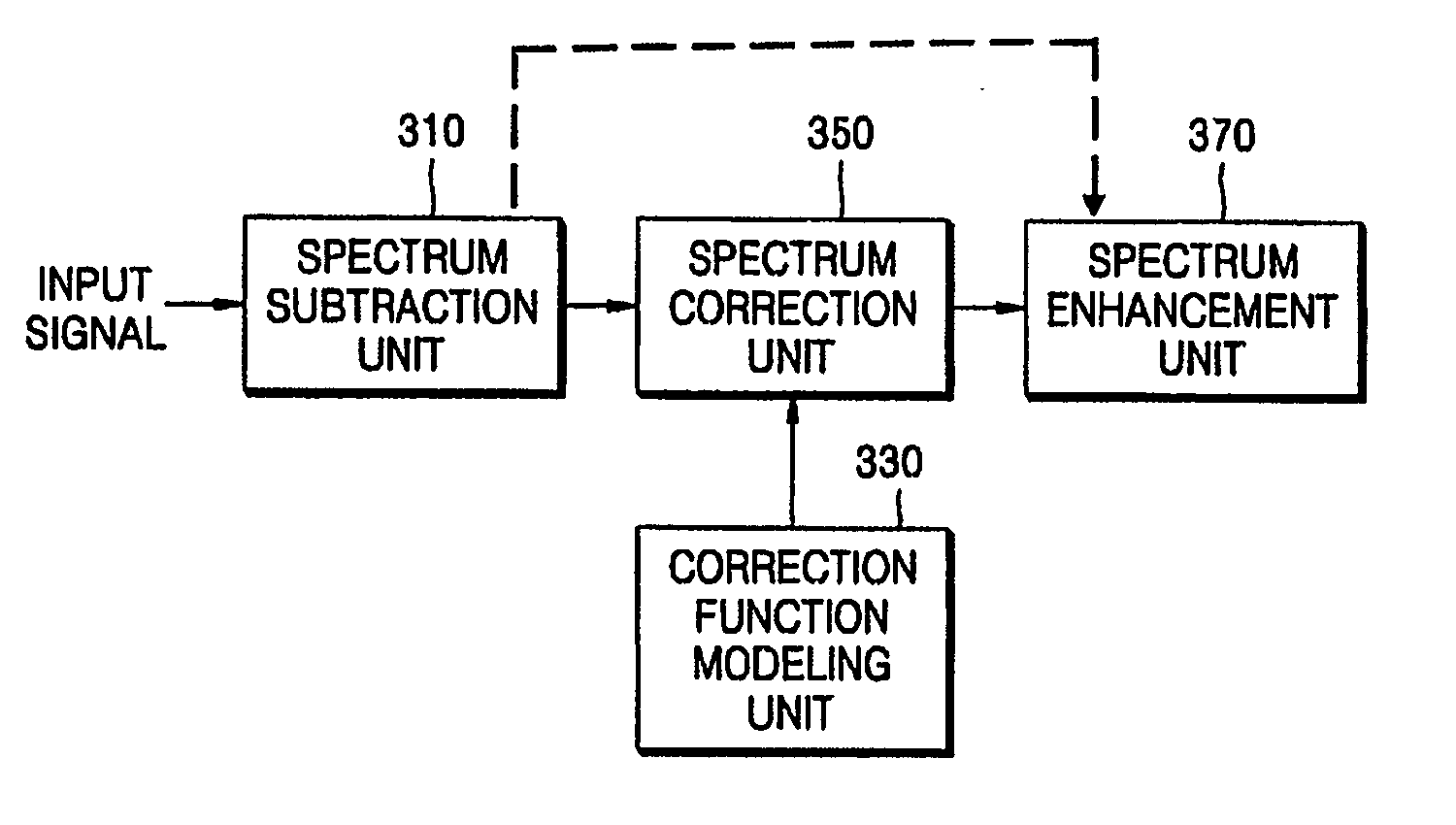

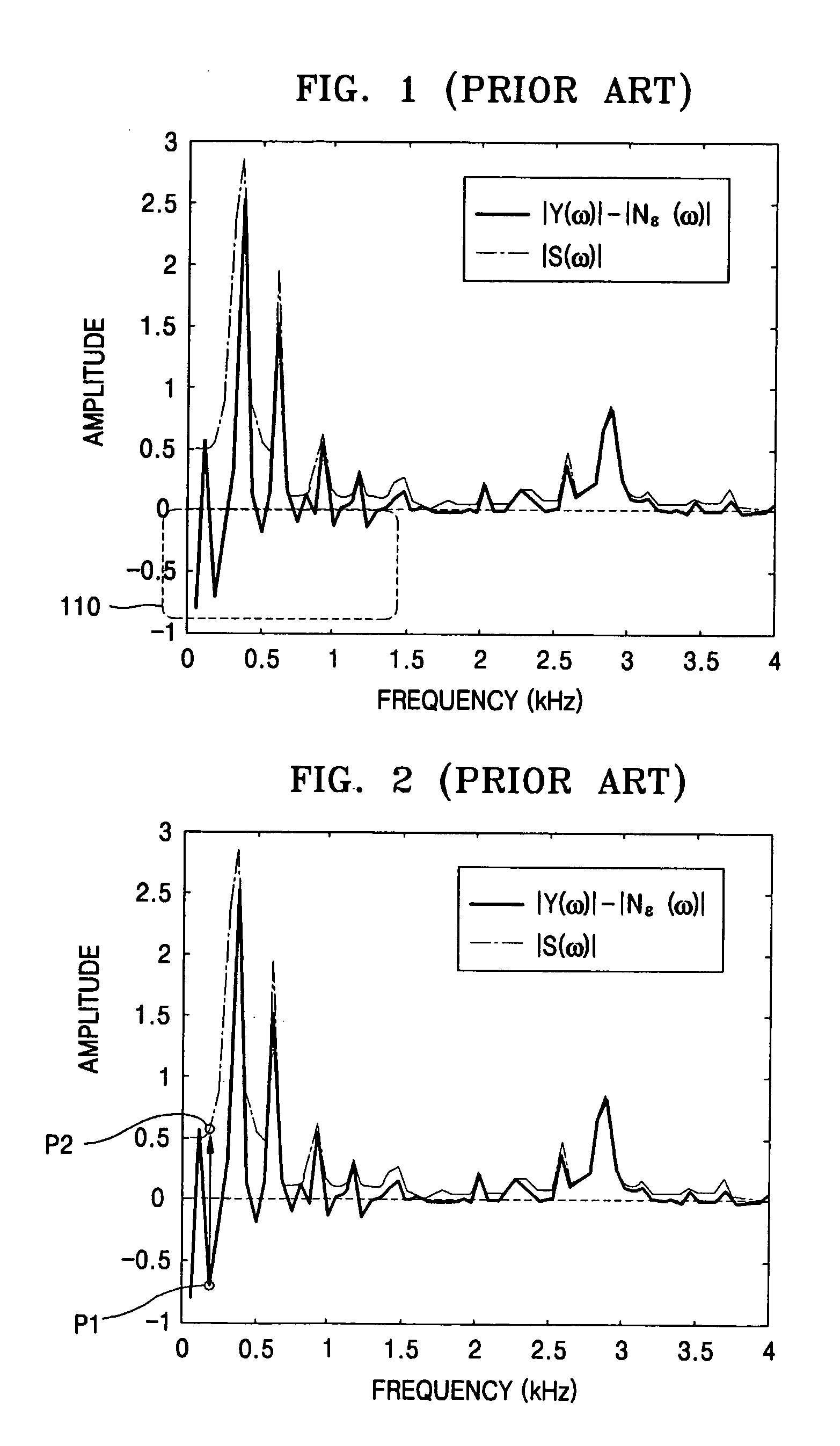

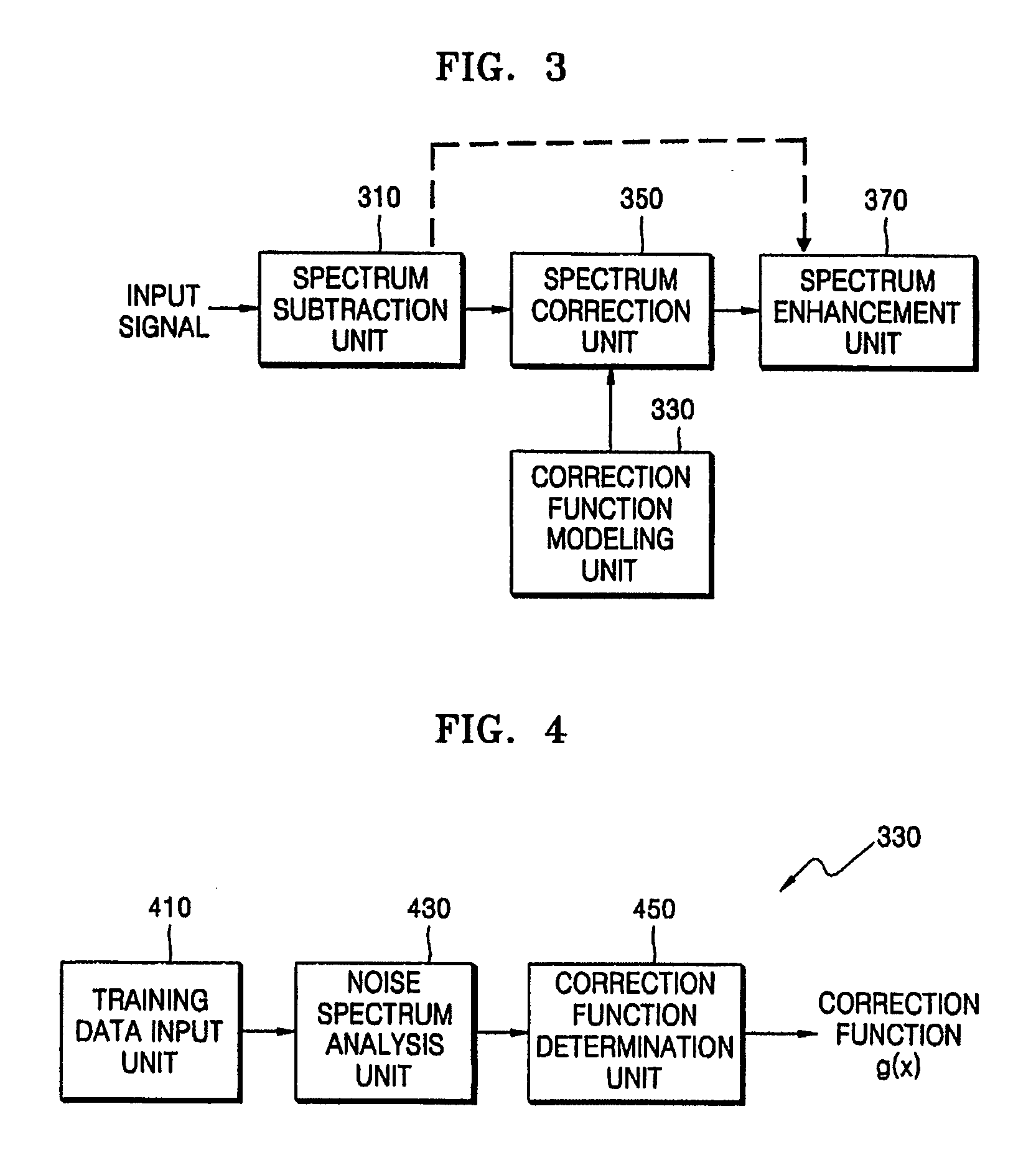

Speech enhancement apparatus and method

Inactive | US20070185711A1 | Quality improvement, Enhancing natural characteristic, Heater elements, Speech recognition, Frequency spectrum, Noise spectrum

Owner:SAMSUNG ELECTRONICS AMERICA +1

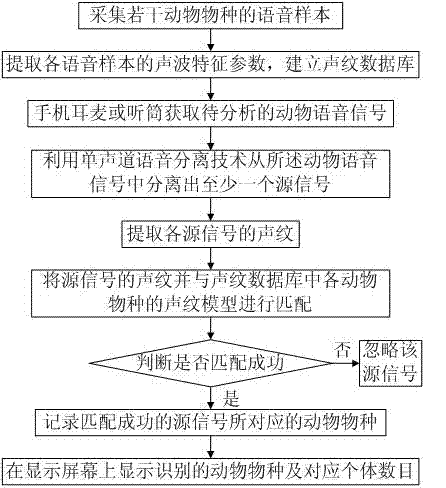

Method and device for identifying animals based on voice

Active | CN103117061A | To achieve the purpose of seeking advantages and avoiding disadvantages, Entertaining, Speech analysis, Speech spectrum, Spectral analysis

Disclosed are a method and a device for identifying animals by their sounds. The method comprises: acquiring sound samples of a plurality of animal species and extracting acoustic feature parameters from each sample to build voiceprint models and thereby a voiceprint database; acquiring the animal sound signals to be analyzed and separating at least one source signal from them by single-channel sound separation; extracting the voiceprint of each source signal and matching it against the voiceprint models of the animal species in the database; and recording the animal species corresponding to the successfully matched source signals. By using a mobile terminal such as a mobile phone to monitor animal sounds around the user and analyzing their spectra, the acoustic feature parameters are extracted and matched with the models in the database, so that the species and number of animals nearby can be identified and outdoor danger avoided. In addition, the device is interesting and fun to operate.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

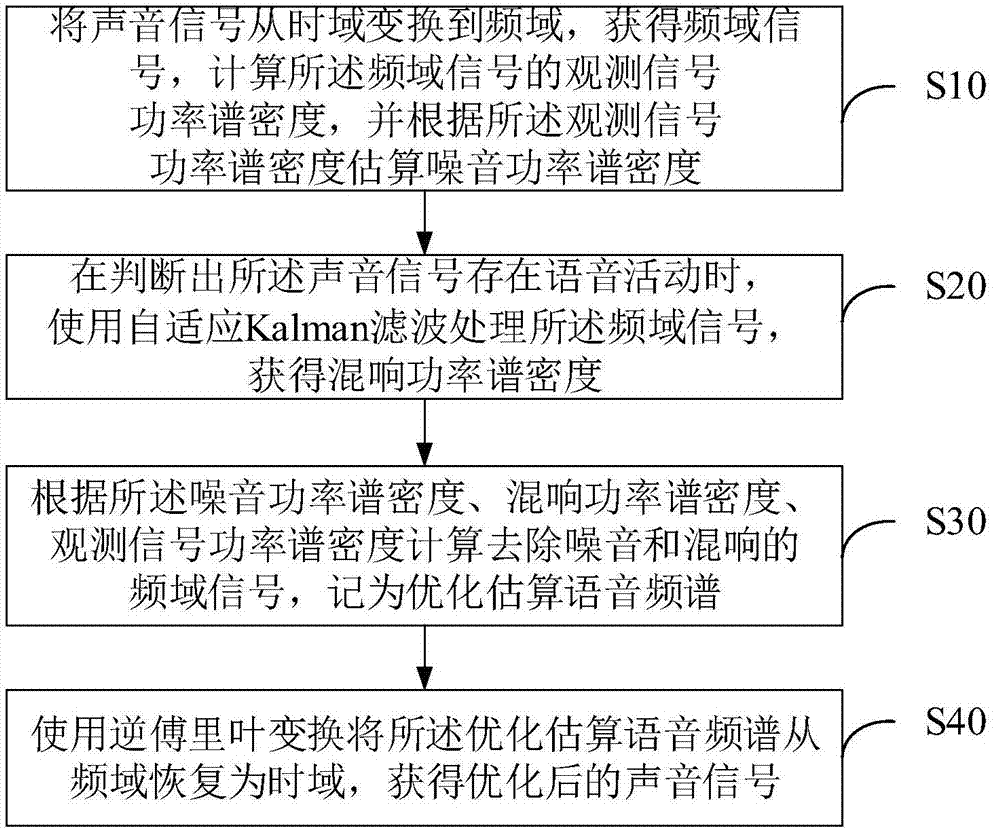

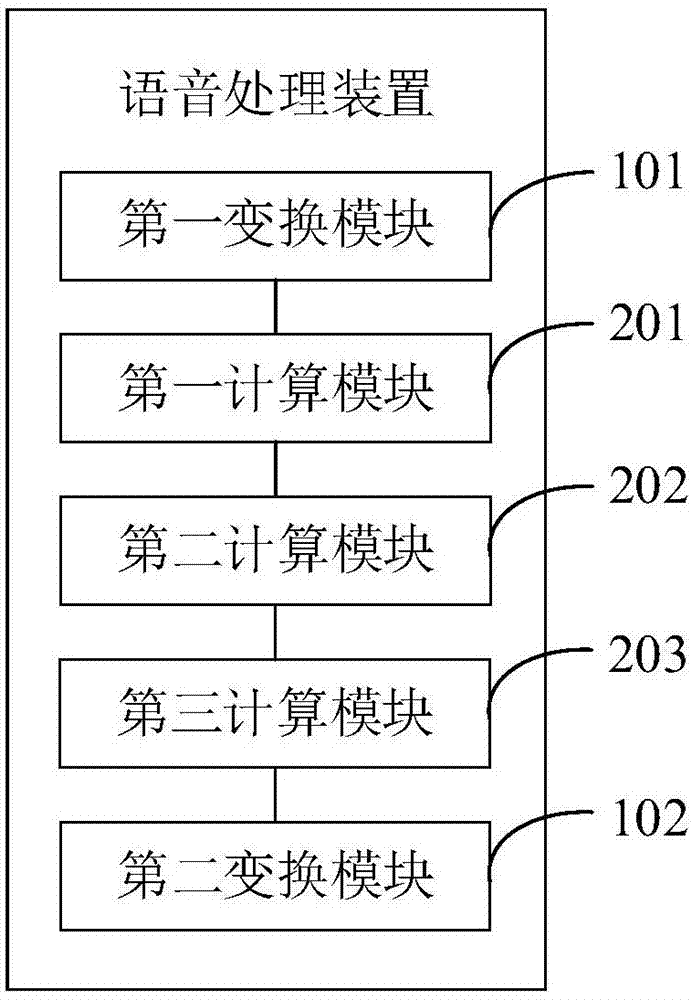

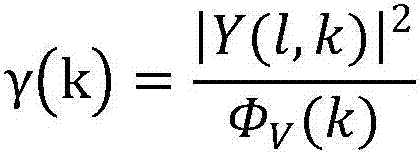

Voice processing method and apparatus thereof

The invention provides a voice processing method and apparatus. The method first converts a sound signal into a frequency-domain signal, calculates the signal-to-noise ratio of the frequency-domain signal to obtain an adaptive update step size for the noise power spectrum, and updates the noise power spectral density with this step size. It then detects whether voice activity is present in the sound signal; if it is, the frequency-domain signal is processed with adaptive Kalman filtering to obtain the reverberation power spectral density. Once the noise and reverberation power spectral densities are determined, an optimized estimate of the voice spectrum is calculated. Finally, the inverse Fourier transform is applied to this estimate to restore an optimized sound signal. The invention can effectively improve the quality of sound captured when the speaker is far from the microphone and increases the voice recognition rate.

Owner:Shenzhen Yajin Smart Technology Co., Ltd. (深圳市雅今智慧科技有限公司)
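The SNR-dependent step size in the first step can be sketched as a recursive noise PSD update whose smoothing factor rises with the a posteriori SNR, so noise-like bins adapt quickly and speech-like bins adapt slowly (the step size is 1 minus the smoothing factor). The sigmoid mapping and all of its parameters are hypothetical, and the Kalman-filter reverberation estimate is not shown.

```python
import numpy as np

def update_noise_psd(noise_psd, noisy_power, a_min=0.8, a_max=0.995, snr_mid_db=5.0, slope=0.5):
    """One recursive update of the noise PSD with an SNR-adaptive smoothing factor.
    Returns the updated PSD and the per-bin smoothing factors used."""
    snr_db = 10.0 * np.log10(np.maximum(noisy_power, 1e-12) / np.maximum(noise_psd, 1e-12))
    # Hypothetical sigmoid mapping from SNR (dB) to smoothing factor in [a_min, a_max]
    alpha = a_min + (a_max - a_min) / (1.0 + np.exp(-slope * (snr_db - snr_mid_db)))
    return alpha * noise_psd + (1.0 - alpha) * noisy_power, alpha

# Usage: bin 0 looks like noise (0 dB), bin 1 looks like speech (30 dB).
new_psd, alpha = update_noise_psd(np.ones(2), np.array([1.0, 1000.0]))
```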

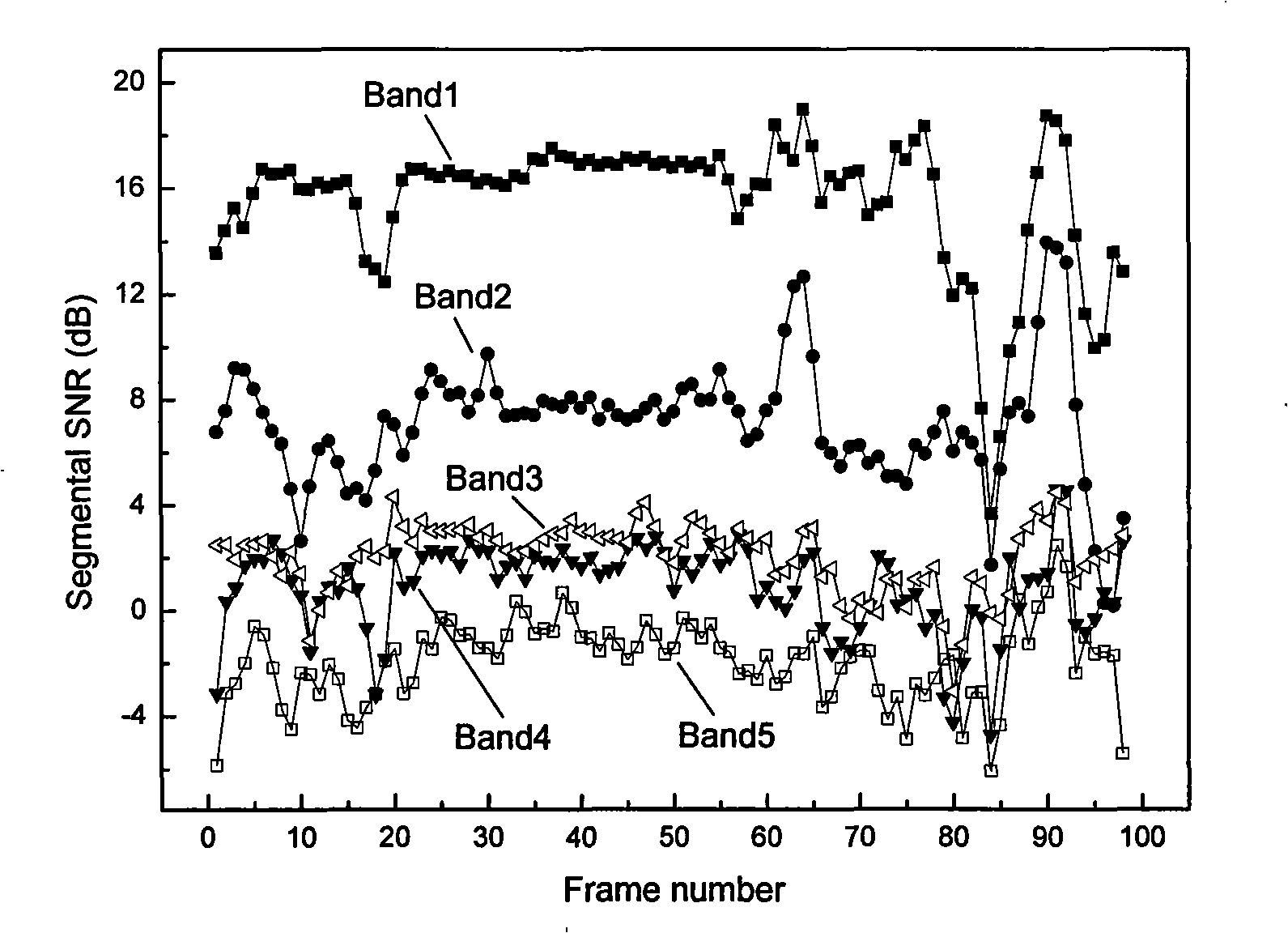

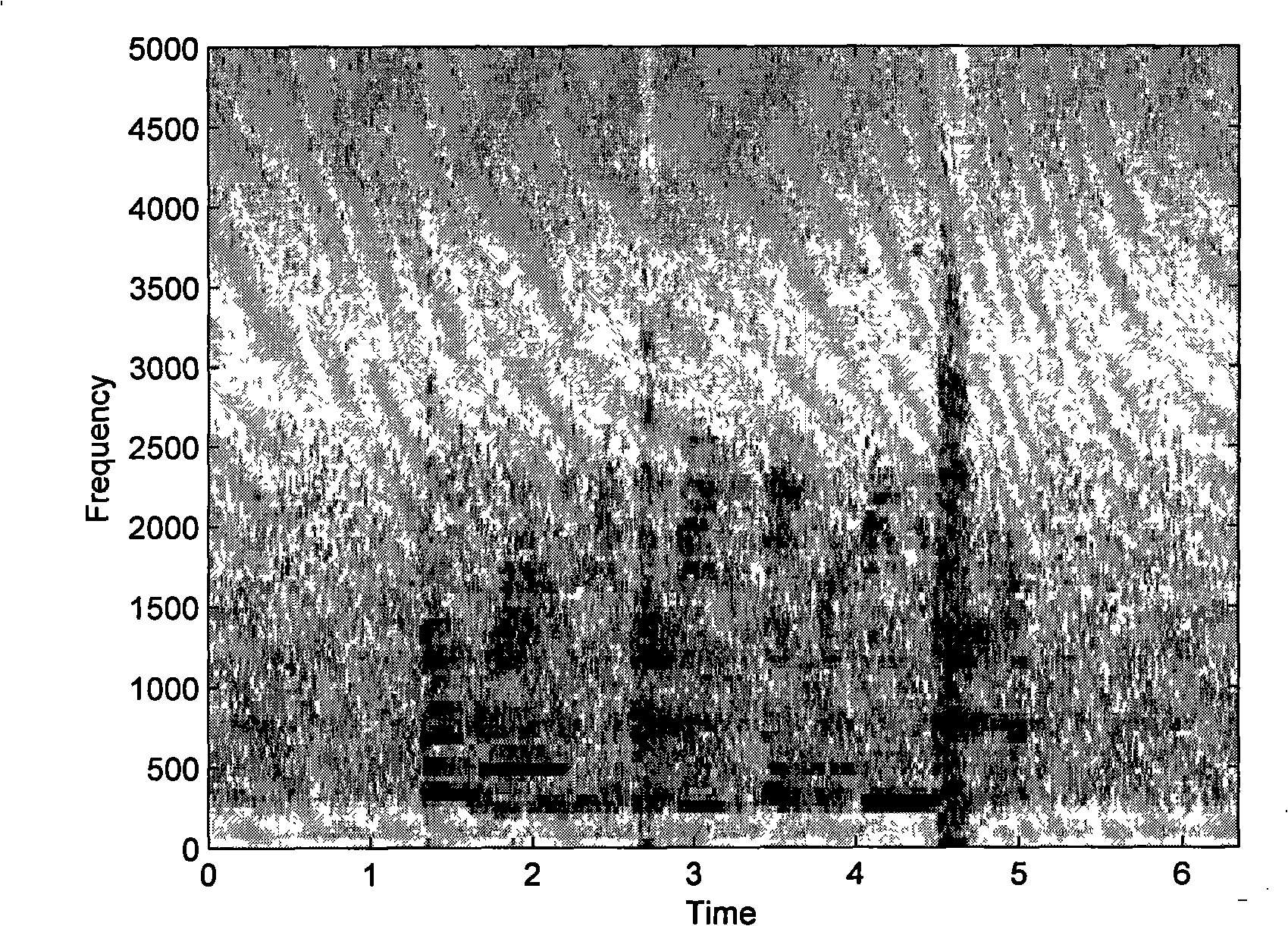

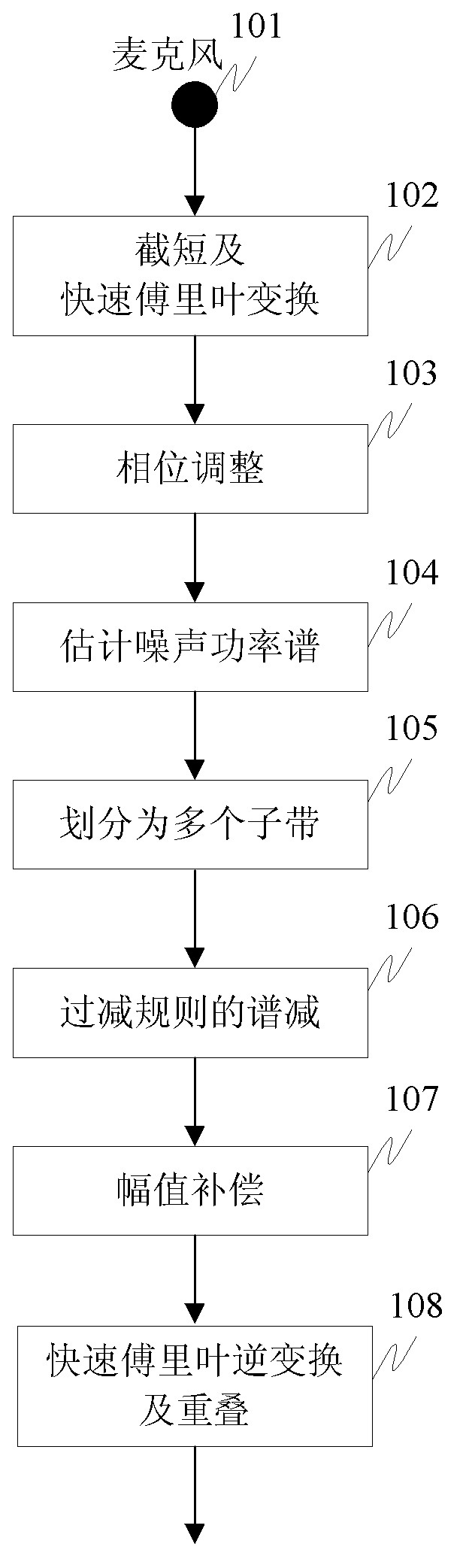

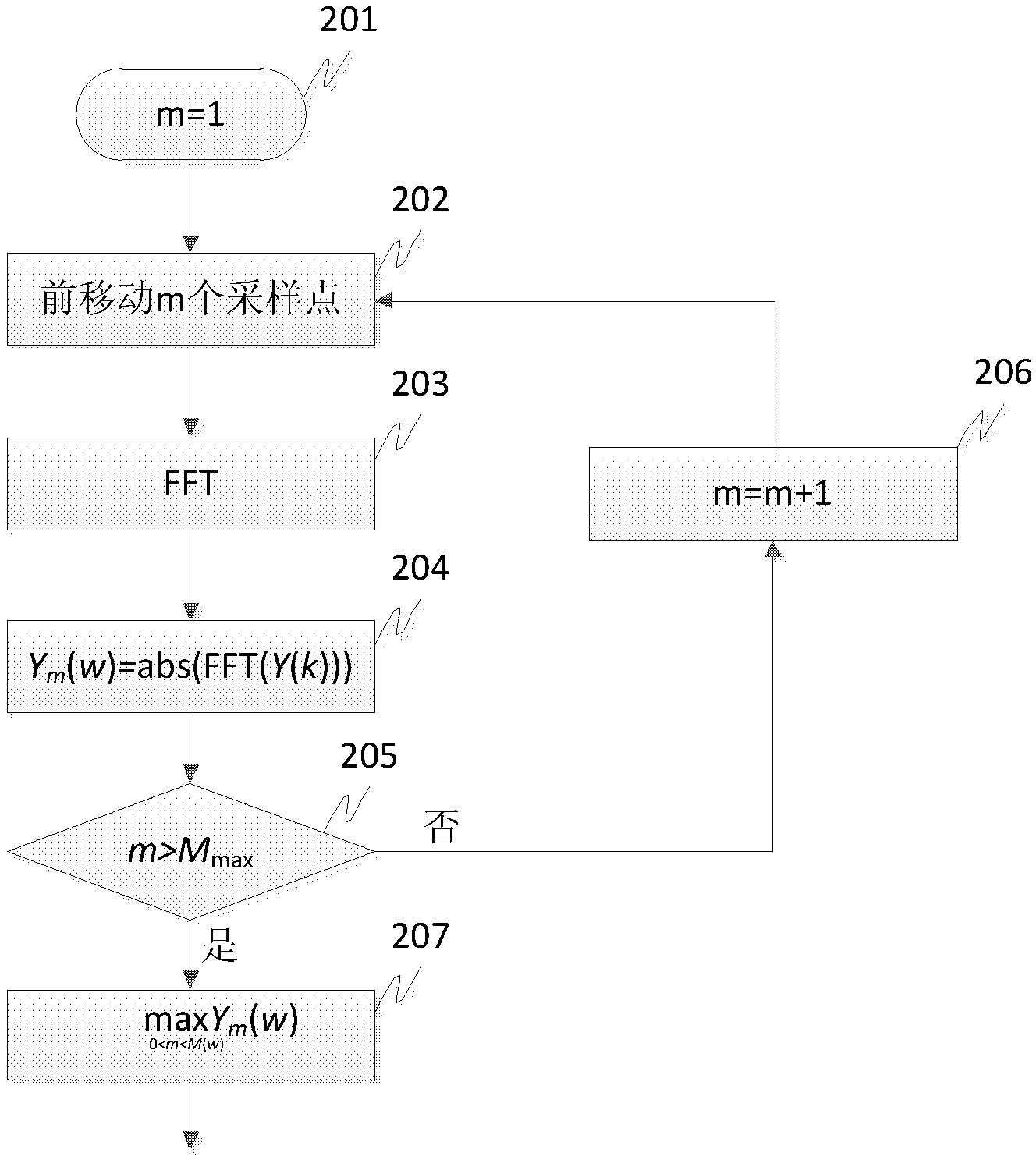

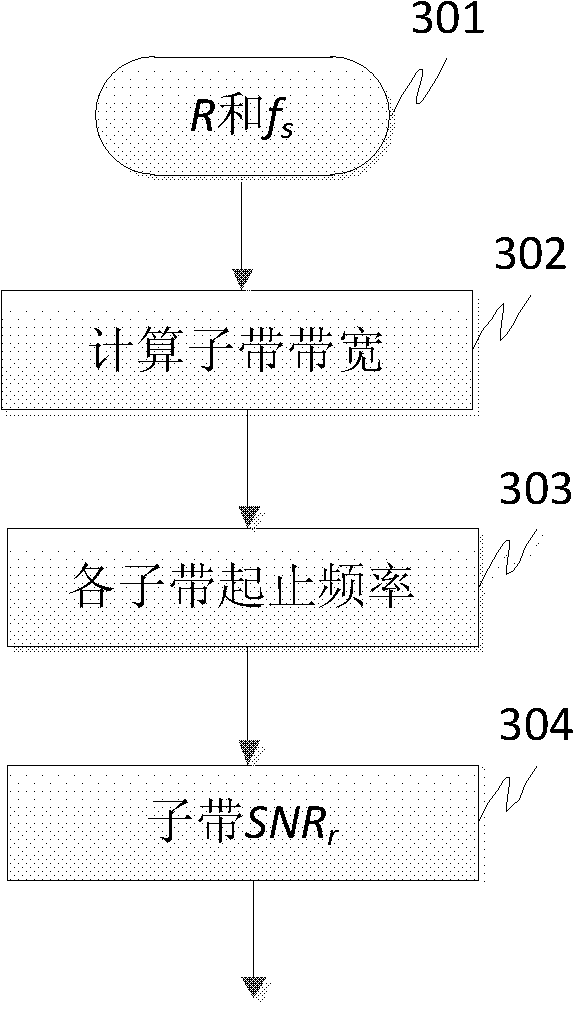

Speech enhancement method of multi-sub-band spectral subtraction based on phase adjustment and amplitude compensation

Active | CN103021420A | Eliminate isolated peaks, Suppress musical noise, Speech analysis, Fast Fourier transform, Signal-to-noise ratio (imaging)

The invention discloses a speech enhancement method using multi-sub-band spectral subtraction based on phase adjustment and amplitude compensation. The method mainly comprises: truncating the signal acquired by a microphone and performing a fast Fourier transform (FFT); performing a micro maximum search on the amplitude spectrum with a phase adjustment algorithm to obtain an adjusted amplitude spectrum of the noisy speech; estimating the amplitude spectrum of the noise; dividing the whole band into several sub-bands and calculating the signal-to-noise ratio of each sub-band; performing amplitude spectral subtraction with an over-subtraction rule in each sub-band; applying amplitude compensation to the speech spectrum after subtraction; and obtaining the time-domain waveform of the signal through the inverse FFT and overlap-add.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
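Sub-band over-subtraction can be sketched with a Berouti-style rule, in which the over-subtraction factor is 4 minus 3/20 of the band SNR in dB, with the SNR limited to [-5, 20] dB. This rule, the floor `beta` and the band layout are assumptions; the patent's phase adjustment and amplitude compensation steps are not reproduced.

```python
import numpy as np

def oversubtraction_factor(snr_db, alpha0=4.0):
    """Berouti-style rule: alpha = alpha0 - (3/20) * SNR, SNR clipped to [-5, 20] dB."""
    return alpha0 - 0.15 * np.clip(snr_db, -5.0, 20.0)

def subband_oversubtraction(noisy_mag, noise_mag, band_edges, beta=0.002):
    """Amplitude spectral subtraction with a per-sub-band over-subtraction factor."""
    out = np.empty(len(noisy_mag), dtype=float)
    for lo, hi in zip(band_edges[:-1], band_edges[1:]):
        y, d = noisy_mag[lo:hi], noise_mag[lo:hi]
        snr = 10.0 * np.log10(max(np.sum(y ** 2), 1e-12) / max(np.sum(d ** 2), 1e-12))
        alpha = oversubtraction_factor(snr)
        out[lo:hi] = np.maximum(y - alpha * d, beta * y)   # floor at beta * noisy amplitude
    return out

# Usage: a high-SNR band (20 dB) and a low-SNR band (0 dB).
noisy = np.concatenate([np.full(5, 10.0), np.ones(5)])
out = subband_oversubtraction(noisy, np.ones(10), [0, 5, 10])
```

Low-SNR bands are subtracted more aggressively, which is the usual way such rules suppress musical noise.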

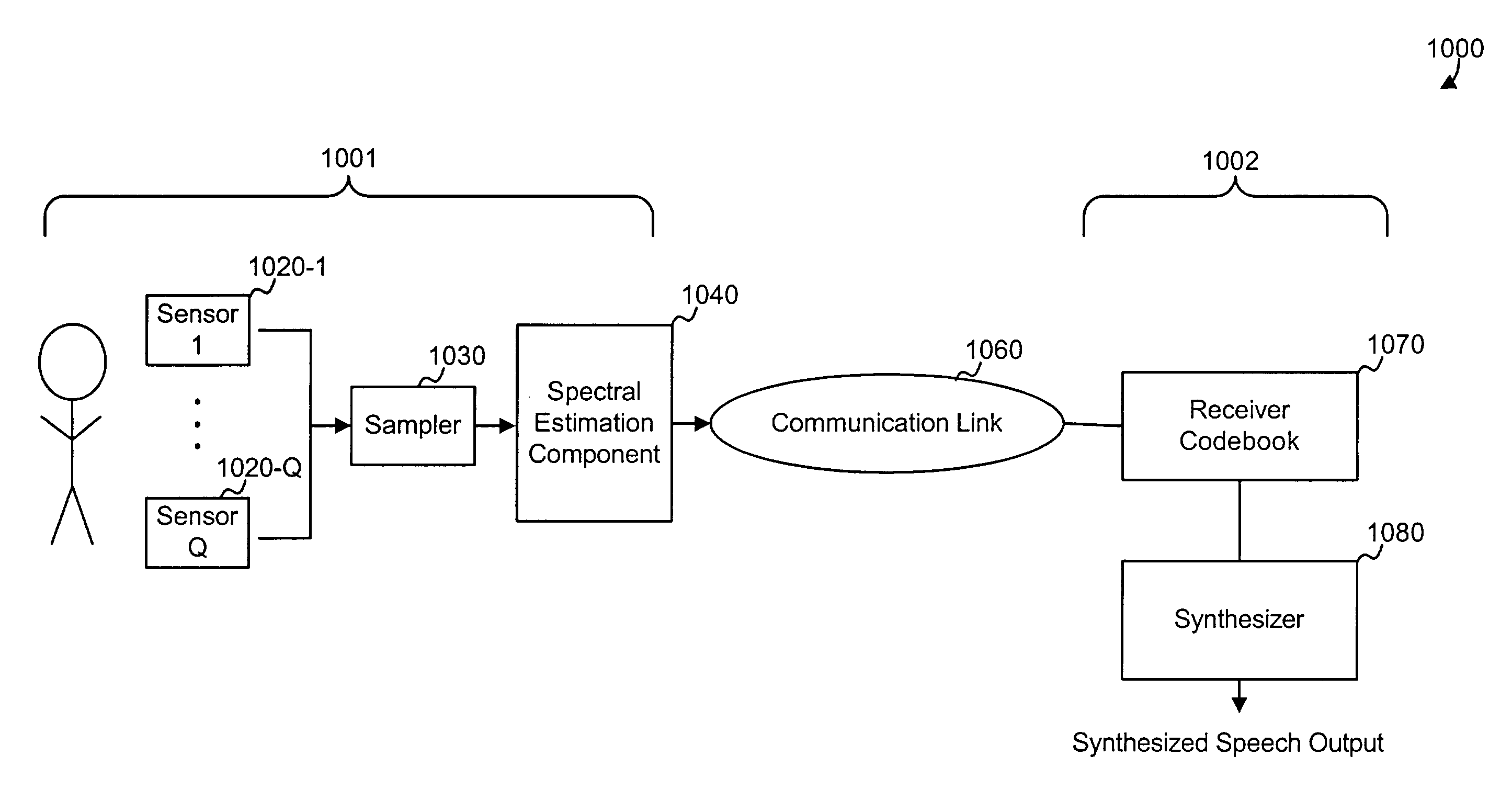

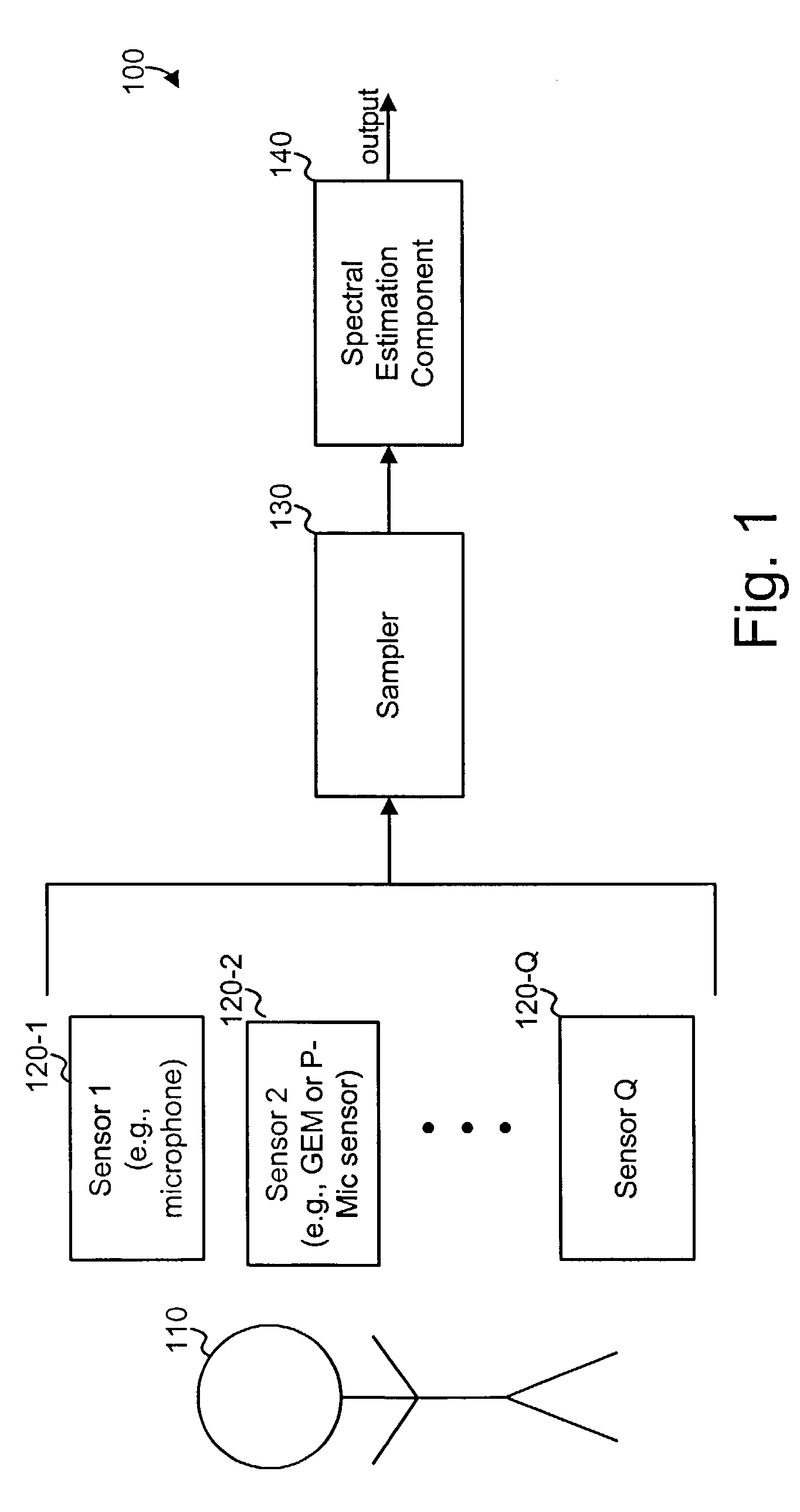

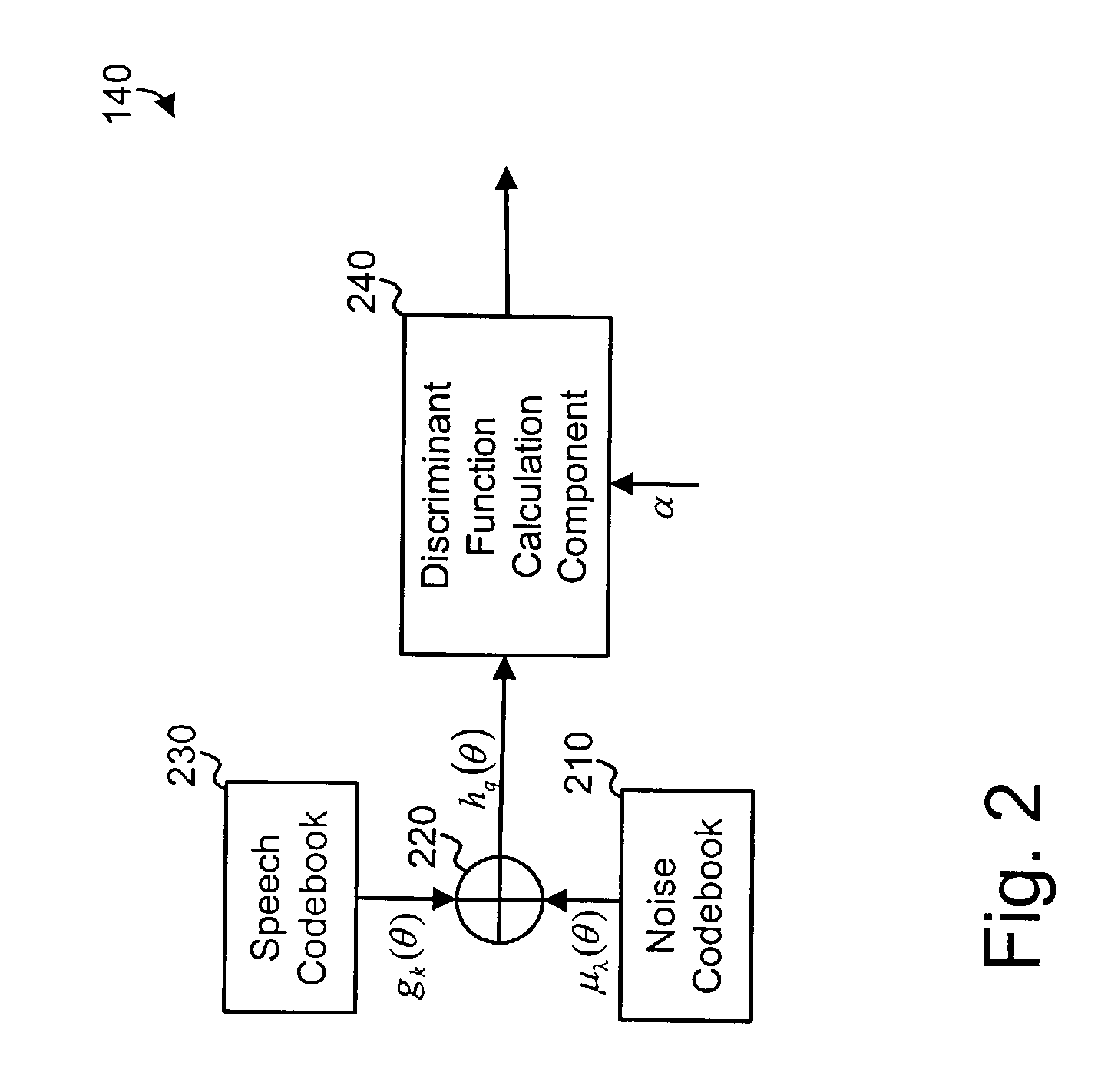

Estimation of speech spectral parameters in the presence of noise

A speech analysis system includes a codebook (230) that stores speech spectral parameters corresponding to a speech spectral hypothesis. The speech spectral hypothesis may be combined with a noise spectral hypothesis and the combined hypothesis compared to a sensed signal via a discriminant function (240). The discriminant function may be evaluated using the preconditioned conjugate gradient (PCG) process.

Owner:VERIZON PATENT & LICENSING INC +1
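The hypothesis test can be sketched as an exhaustive search over pairs of speech and noise spectral shapes, fitting a gain for each shape with least squares and keeping the pair with the smallest squared error. Squared error stands in for the discriminant function, and `np.linalg.lstsq` with clipping to non-negative gains stands in for the preconditioned conjugate gradient process; both are substitutions, not the patented method.

```python
import numpy as np

def best_codebook_entry(observed_psd, speech_codebook, noise_codebook):
    """Return (speech index, noise index, gains) of the combined hypothesis
    g_s * speech + g_n * noise that best matches the observed spectrum."""
    best = None
    for i, s in enumerate(speech_codebook):
        for j, n in enumerate(noise_codebook):
            A = np.stack([s, n], axis=1)                    # columns: speech, noise shapes
            g, *_ = np.linalg.lstsq(A, observed_psd, rcond=None)
            g = np.maximum(g, 0.0)                          # spectral gains cannot be negative
            err = np.sum((observed_psd - A @ g) ** 2)       # stand-in discriminant
            if best is None or err < best[0]:
                best = (err, i, j, g)
    return best[1], best[2], best[3]

# Usage: the observation is 2 x speech entry 1 plus 0.5 x the flat noise entry.
speech_cb = [np.array([1.0, 0.0, 0.0, 1.0]), np.array([0.0, 1.0, 1.0, 0.0])]
noise_cb = [np.ones(4)]
i, j, g = best_codebook_entry(np.array([0.5, 2.5, 2.5, 0.5]), speech_cb, noise_cb)
```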

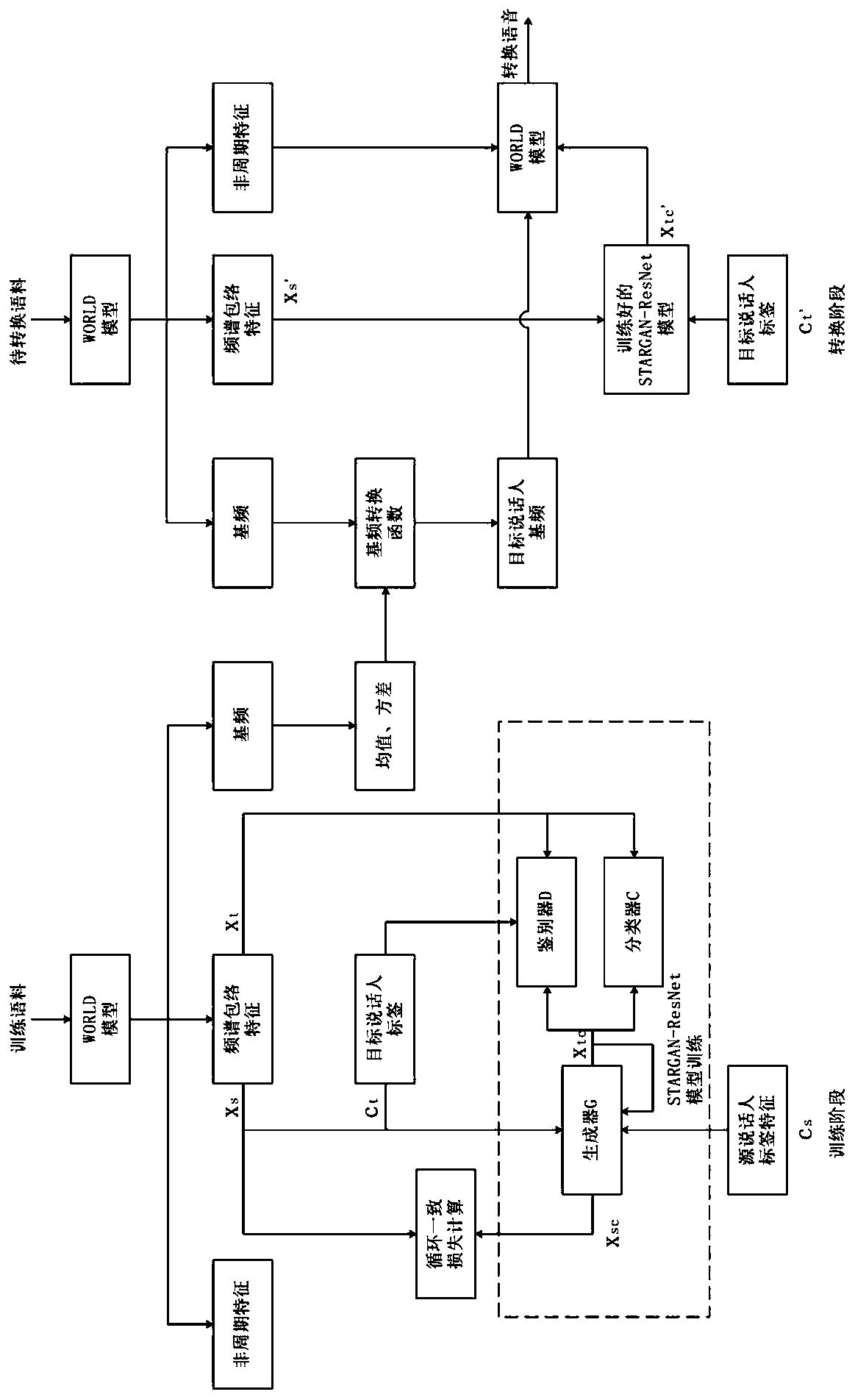

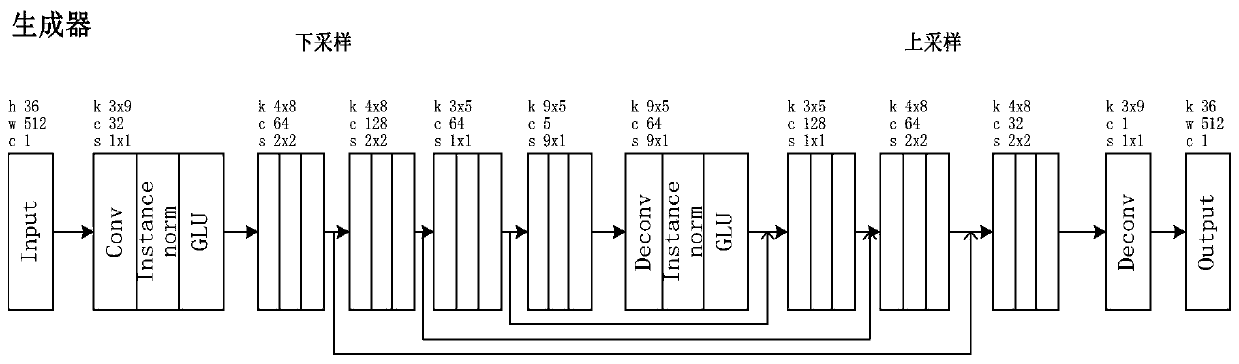

Multi-to-multi speaker conversion method based on STARGAN and ResNet

Active | CN110060690A | Improve voice quality, Improve the extraction effect, Semantic analysis, Speech analysis, Frequency spectrum, Semantics

The invention discloses a many-to-many speaker conversion method based on StarGAN and ResNet, comprising a training stage and a conversion stage. StarGAN and ResNet are combined to build a voice conversion system: the ResNet solves the network degradation problem in StarGAN and better improves the model's ability to learn semantics and to synthesize the voice spectrum, which improves the speaker similarity and voice quality of the converted speech. In addition, instance normalization is used to standardize the data, which filters out noise generated during voice conversion. This solves the poor similarity and naturalness of speech converted by StarGAN and achieves high-quality voice conversion. The method works with non-parallel text and needs no alignment during training, and it integrates conversion for multiple source-target speaker pairs into a single conversion model, that is, many-to-many speaker conversion.

Owner:NANJING UNIV OF POSTS & TELECOMM

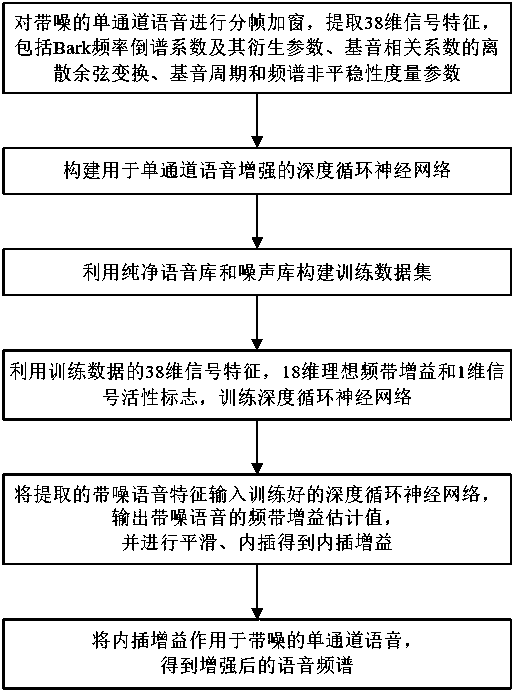

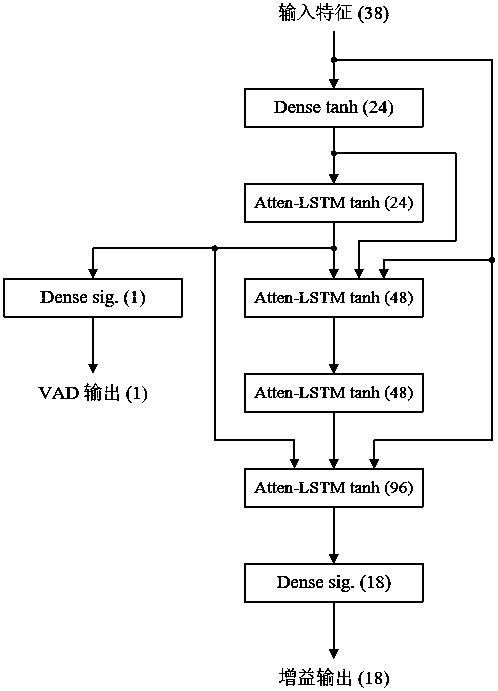

Single channel speech enhancement method based on attention-gated recurrent neural network

Active | CN110085249A | Improve learning effect, Improve generalization ability, Speech analysis, Frequency spectrum, Computation complexity

The invention discloses a single-channel speech enhancement method based on an attention-gated recurrent neural network. The method comprises: framing and windowing the noisy single-channel speech and extracting 38-dimensional signal features; constructing a deep recurrent neural network for single-channel speech enhancement; building a training set from a clean speech library and a noise library; training the network; feeding the features extracted from the noisy speech into the trained network, which outputs estimated frequency-band gains for the noisy speech, and smoothing and interpolating these to obtain interpolated gains; and applying the interpolated gains to the noisy single-channel speech to obtain the enhanced speech spectrum. The method effectively suppresses noise, including non-stationary noise, while keeping the computational complexity low enough for real-time single-channel speech enhancement. It is novel in conception and has good application prospects.

Owner:NANJING INST OF TECH
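The last two steps, smoothing the per-band gains and interpolating them to per-bin gains, can be sketched with linear interpolation between band centers. The band centers, the first-order smoothing constant and the use of linear interpolation are assumptions; the network that produces the band gains is not shown.

```python
import numpy as np

def interpolate_band_gains(band_gains, band_centers, n_bins, prev_gains=None, smooth=0.5):
    """Optionally smooth band gains over time (first-order recursion), then linearly
    interpolate them to one gain per FFT bin. Returns (bin_gains, smoothed_band_gains)."""
    g = np.asarray(band_gains, dtype=float)
    if prev_gains is not None:
        g = smooth * np.asarray(prev_gains, dtype=float) + (1.0 - smooth) * g
    # np.interp holds the end values constant outside the first and last band centers
    bin_gains = np.interp(np.arange(n_bins), band_centers, g)
    return bin_gains, g

def apply_gains(noisy_spectrum, bin_gains):
    """Apply the interpolated gains to one frame of the noisy spectrum."""
    return noisy_spectrum * bin_gains

bin_gains, g = interpolate_band_gains([0.0, 1.0, 0.5], [0, 10, 20], 21)
```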

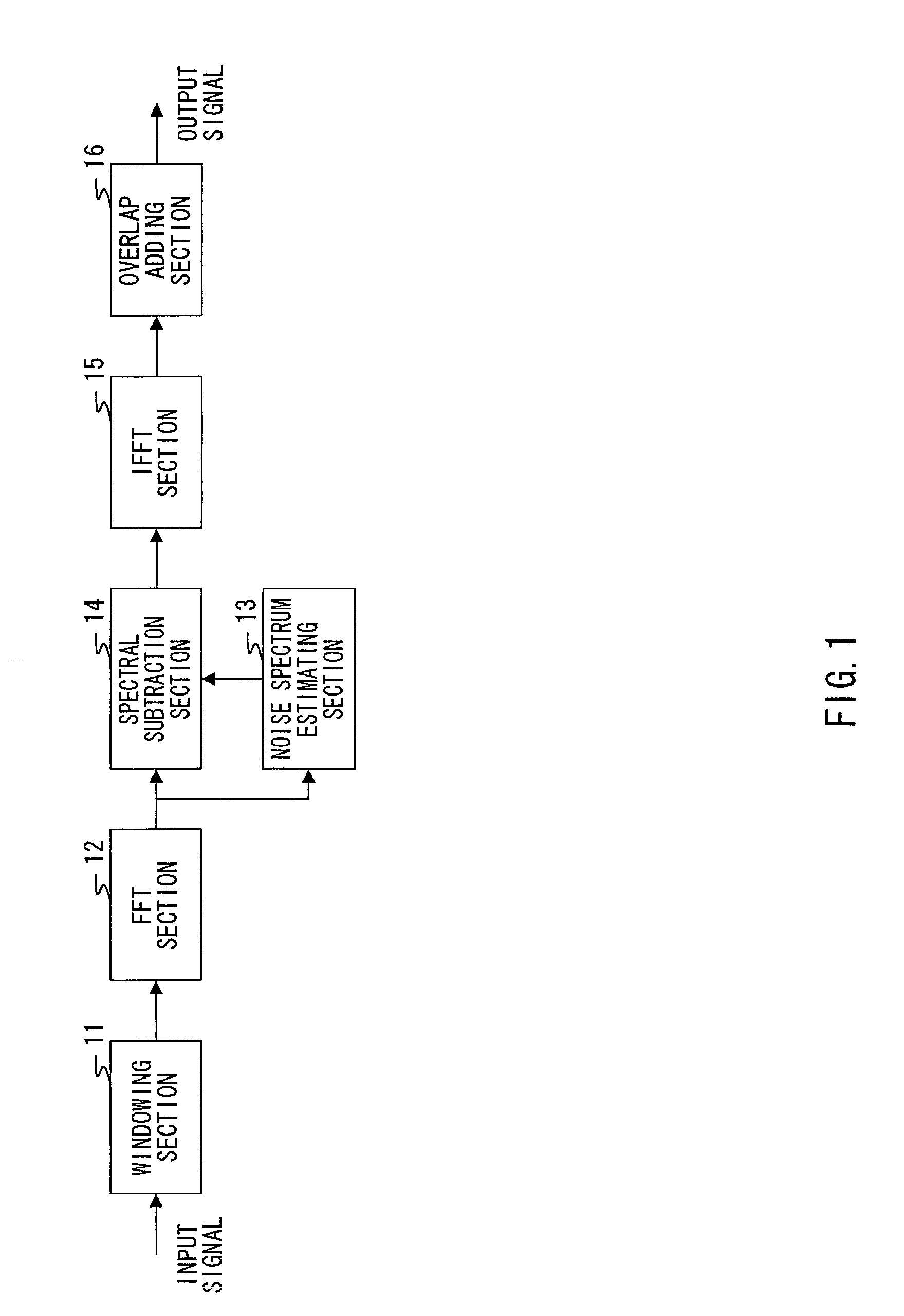

Method and apparatus for suppressing noise components contained in speech signal

Inactive | US20020128830A1 | Suppressing noise components contained in an input speech signal without impairing the spectrum of the speech signal, Speech recognition, Transmission, Time domain, Frequency spectrum

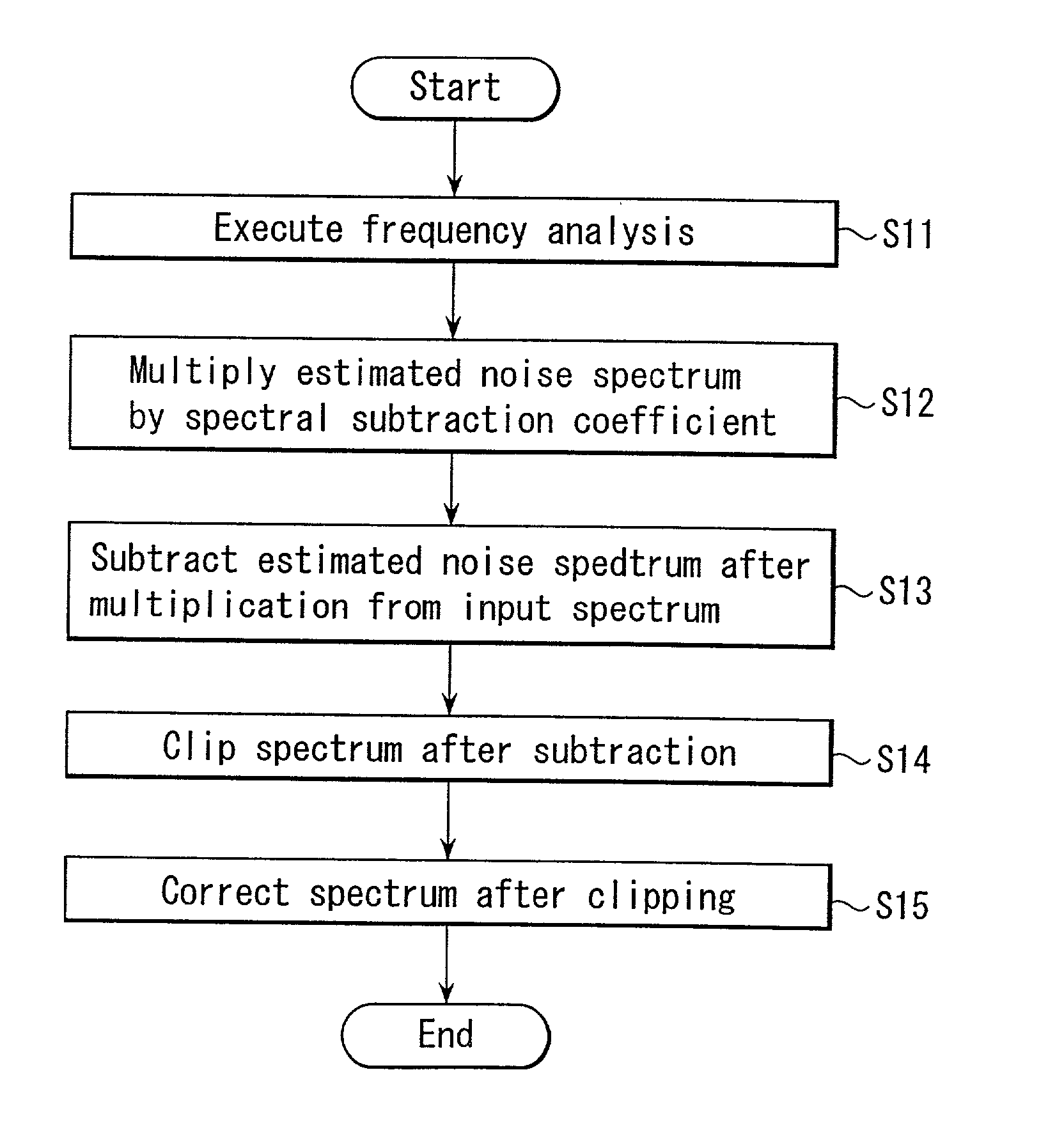

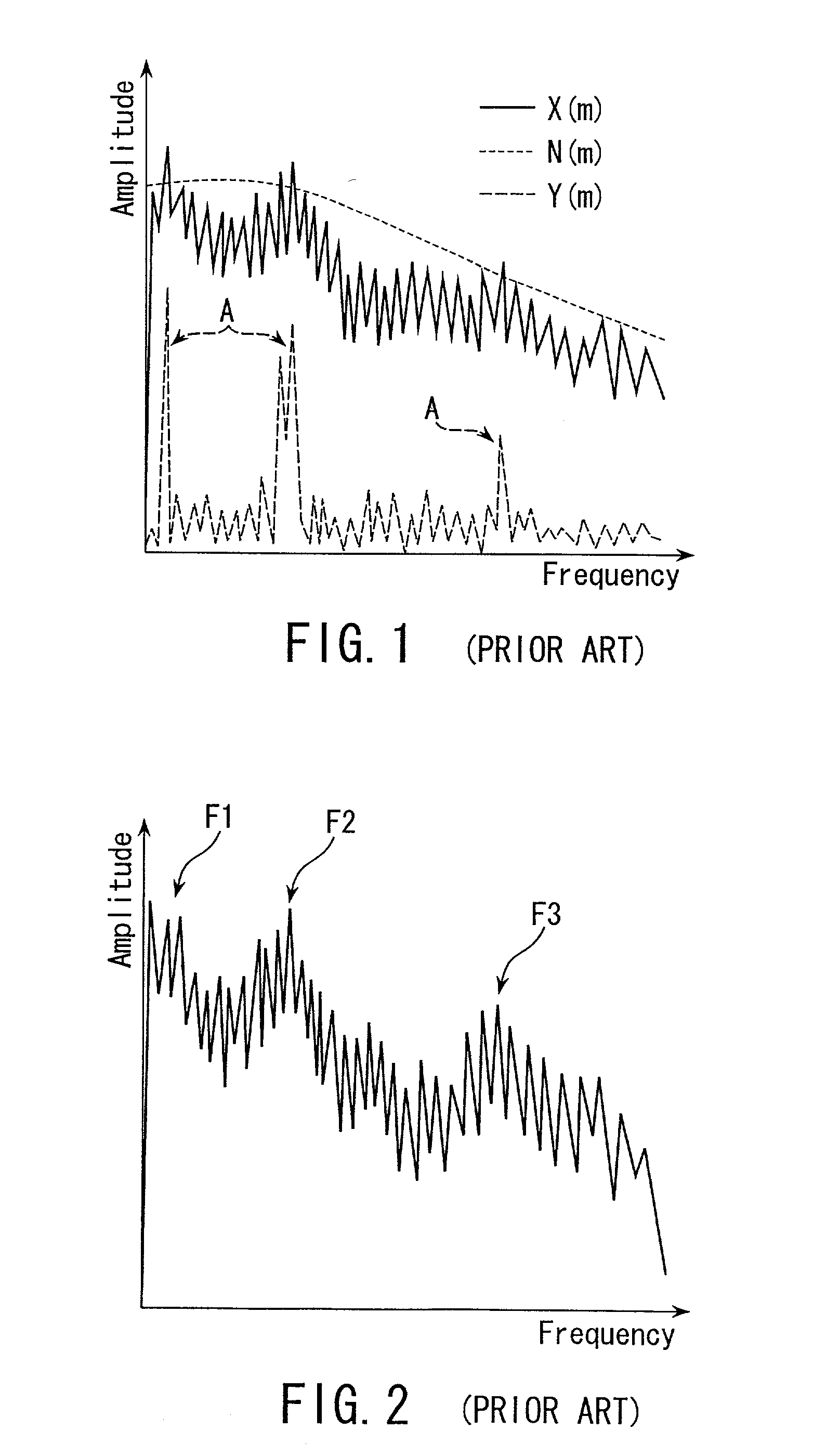

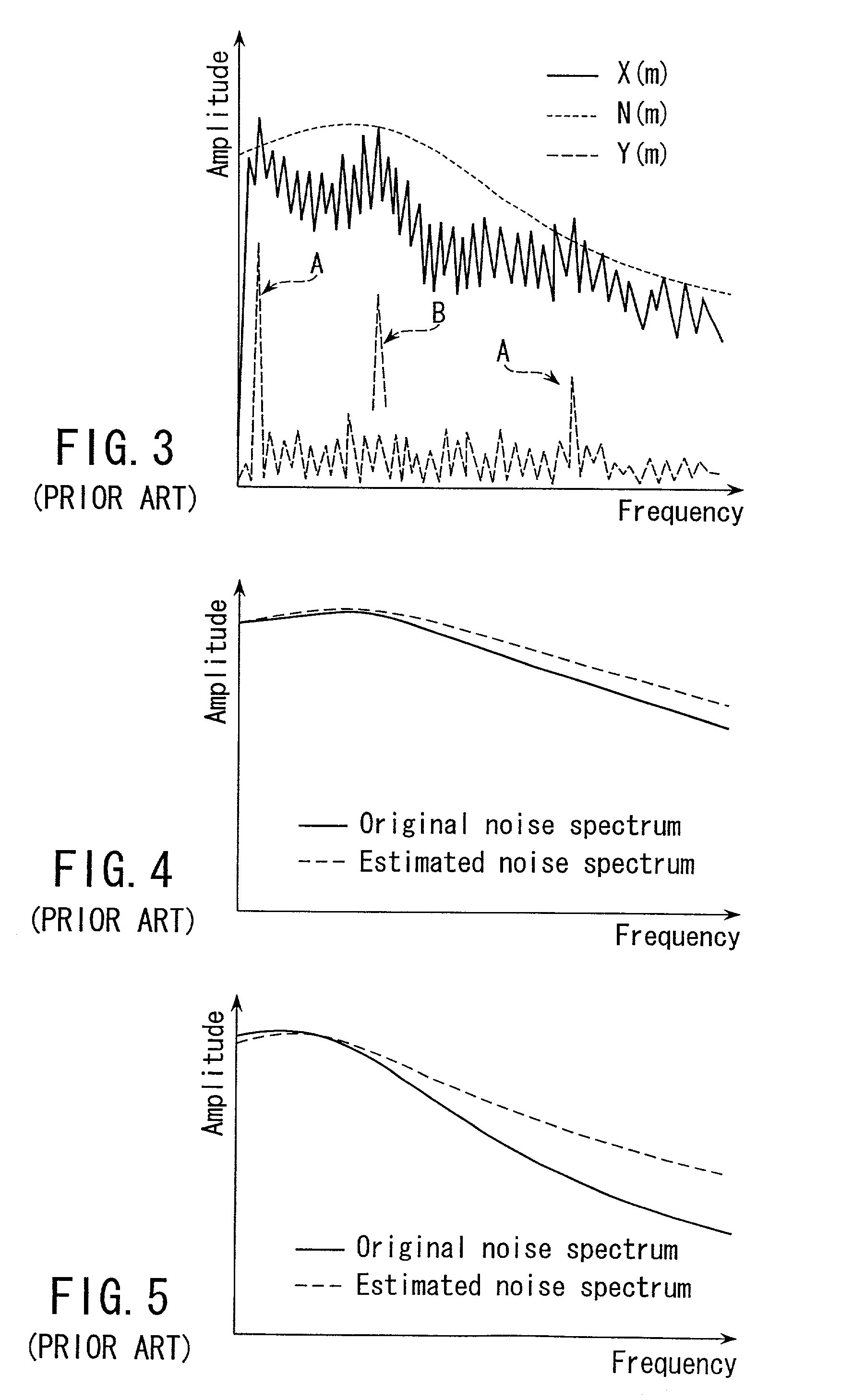

There is provided a method of suppressing noise components contained in an input speech signal. The method includes obtaining an input spectrum by frequency analysis of the input speech signal with a specific frame length; obtaining an estimated noise spectrum by estimating the spectrum of the noise components; obtaining the spectral slope of the estimated noise spectrum; multiplying the estimated noise spectrum by a spectral subtraction coefficient determined by the spectral slope; obtaining a subtraction spectrum by subtracting the weighted estimated noise spectrum from the input spectrum; and obtaining a speech spectrum by clipping the subtraction spectrum. The method may further include correcting the speech spectrum by smoothing in the frequency domain, the time domain, or both. In this way, a speech spectrum with suppressed noise components can be obtained.

Owner:KK TOSHIBA
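The Toshiba procedure maps onto a few lines of code. The sketch below assumes magnitude spectra given as lists of floats. The abstract does not state how the spectral slope determines the subtraction coefficient, so `normalized_slope` (a least-squares fit over bin index, scaled by the mean level) and `subtraction_coefficient` (flat noise gives 1.0, a downward slope toward high frequencies gives stronger subtraction, clamped to [0.5, 3.0]) are hypothetical choices. "Clipping" is taken to mean raising every value below `floor` times the input back up to that floor; the optional time/frequency smoothing step is omitted.

```python
def normalized_slope(noise_spectrum):
    """Least-squares slope of noise magnitude over bin index, made dimensionless:
    total fitted rise across the band divided by the mean level."""
    n = len(noise_spectrum)
    mean_y = sum(noise_spectrum) / n
    if n < 2 or mean_y == 0:
        return 0.0
    mean_x = (n - 1) / 2.0
    num = sum((k - mean_x) * (y - mean_y) for k, y in enumerate(noise_spectrum))
    den = sum((k - mean_x) ** 2 for k in range(n))
    return (num / den) * (n - 1) / mean_y


def subtraction_coefficient(slope, base=1.0, gain=2.0, lo=0.5, hi=3.0):
    """Hypothetical mapping: flat noise gives `base`; a downward slope
    (noise concentrated at low frequencies) raises it, clamped to [lo, hi]."""
    return min(hi, max(lo, base - gain * slope))


def suppress_noise(input_spectrum, noise_spectrum, floor=0.0):
    """Slope-controlled spectral subtraction followed by clipping."""
    alpha = subtraction_coefficient(normalized_slope(noise_spectrum))
    subtraction = [x - alpha * n for x, n in zip(input_spectrum, noise_spectrum)]
    # clipping: values below floor * input (e.g. negative ones) are raised to it
    return [max(s, floor * x) for s, x in zip(subtraction, input_spectrum)]
```

For a flat noise estimate the coefficient is 1.0 and the method reduces to plain magnitude subtraction; a noise spectrum falling toward high frequencies is subtracted more aggressively in this sketch.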

Noise suppressing apparatus and noise suppressing method

Inactive | US7054808B2 | Improve efficiency | Reduce inhibition | Substation speech amplifiers | Code conversion | Lower limit | Spectral subtraction

Speech/non-speech determining section 103 determines whether the input speech spectrum belongs to a speech interval, which contains speech, or to a non-speech interval, which contains only noise. Noise spectrum estimating section 104 estimates a noise spectrum from the spectra determined to be non-speech. SNR estimating section 105 obtains the speech signal power from the speech intervals and the noise signal power from the non-speech intervals of the speech spectrum, and calculates the SNR as the ratio of the two. Based on the speech/non-speech determination and the SNR value, suppression coefficient control section 106 outputs a suppression lower-limit coefficient to spectral subtraction section 107. Spectral subtraction section 107 subtracts the estimated noise spectrum from the input speech spectrum and outputs a noise-suppressed speech spectrum.

Owner:PANASONIC CORP
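The chain of sections 103 to 107 can be sketched as a single per-frame class operating on power spectra. Every threshold and mapping here is a hypothetical illustration value, not taken from the patent: a frame counts as speech when its power exceeds `vad_ratio` times the average noise power, the first frame is assumed to be noise only, powers and the noise spectrum are tracked with first-order recursive averages, and the lower-limit coefficient is a fixed `nonspeech_floor` in non-speech frames and otherwise falls linearly from `max_floor` to `min_floor` as the SNR rises from 0 to 30 dB.

```python
import math


class SpectralNoiseSuppressor:
    """Hypothetical per-frame model of sections 103-107 (power spectra as lists)."""

    def __init__(self, vad_ratio=2.0, smoothing=0.9,
                 nonspeech_floor=0.05, min_floor=0.02, max_floor=0.3):
        self.vad_ratio = vad_ratio
        self.smoothing = smoothing
        self.nonspeech_floor = nonspeech_floor
        self.min_floor = min_floor
        self.max_floor = max_floor
        self.noise = None          # estimated noise spectrum (section 104)
        self.noise_power = None    # average frame power in non-speech intervals
        self.speech_power = None   # average frame power in speech intervals

    def _average(self, old, new):
        # first-order recursive average; the first observation initialises it
        if old is None:
            return new
        return self.smoothing * old + (1.0 - self.smoothing) * new

    def is_speech(self, spectrum):
        # section 103: assume the signal starts with a noise-only frame
        if self.noise_power is None:
            return False
        return sum(spectrum) > self.vad_ratio * self.noise_power

    def snr_db(self):
        # section 105: ratio of speech-interval power to noise-interval power
        if self.speech_power is None or not self.noise_power:
            return None
        return 10.0 * math.log10(self.speech_power / self.noise_power)

    def lower_limit(self, speech):
        # section 106: hypothetical mapping from decision and SNR to a floor
        if not speech:
            return self.nonspeech_floor
        snr = self.snr_db()
        if snr is None:
            return self.max_floor
        t = min(max(snr / 30.0, 0.0), 1.0)
        return self.max_floor - t * (self.max_floor - self.min_floor)

    def process(self, spectrum):
        speech = self.is_speech(spectrum)
        power = sum(spectrum)
        if speech:
            self.speech_power = self._average(self.speech_power, power)
        else:
            self.noise_power = self._average(self.noise_power, power)
            if self.noise is None:
                self.noise = list(spectrum)
            else:
                self.noise = [self._average(n, x) for n, x in zip(self.noise, spectrum)]
        floor = self.lower_limit(speech)
        # section 107: subtract the noise estimate, never going below floor * input
        out = [max(x - n, floor * x) for x, n in zip(spectrum, self.noise)]
        return speech, out
```

Updating the noise estimate only in non-speech frames keeps speech energy from leaking into it, which is why the decision of section 103 drives both the noise and the SNR estimates.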

Speech enhancement method based on non-negative low-rank and sparse matrix decomposition principle

The invention discloses a speech enhancement method based on the non-negative low-rank and sparse matrix decomposition principle. In the first step, the noisy speech signal is smoothed, framed and transformed with the discrete Fourier transform to obtain the noisy speech spectra. In the second step, the noisy speech magnitude spectra of the frames are arranged in chronological order as column vectors to form a noisy speech time-frequency matrix, which is then decomposed into a non-negative low-rank matrix and a sparse matrix. In the third step, the enhanced speech spectrum is reconstructed from the sparse matrix and the noisy speech phase, and the enhanced speech is finally recovered in the time domain by the inverse Fourier transform. The method adapts well to different noises, needs neither endpoint detection nor model training, has few parameters that are easy to tune, and performs well in strong-noise environments, which gives it good application prospects.

Owner:KEY LAB OF SCI & TECH ON AVIONICS INTEGRATION TECH
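A non-negative low-rank plus sparse split of the magnitude matrix can be approximated with a simple alternating scheme, sketched below. This is one possible technique, not necessarily the decomposition used in the patent: the low-rank part L = WH is updated with Lee-Seung multiplicative (NMF) updates for the Euclidean cost, and the sparse part S is a non-negative soft threshold of the residual M - L. The rank, the threshold `lam` and the iteration count are hypothetical. Following the abstract, S is treated as the speech magnitude; `with_noisy_phase` attaches the noisy phase to one frame, after which an inverse DFT per frame (not shown) would give the time-domain signal.

```python
import cmath
import random


def matmul(A, B):
    """Plain-Python product of list-of-lists matrices."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def lowrank_sparse(M, rank=1, lam=0.1, iters=200, seed=0, eps=1e-9):
    """Split a non-negative magnitude matrix M (bins x frames) into L + S, with
    L = W H non-negative and low-rank and S non-negative and sparse."""
    rng = random.Random(seed)
    F, T = len(M), len(M[0])
    W = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(F)]
    H = [[rng.random() + 0.1 for _ in range(T)] for _ in range(rank)]
    S = [[0.0] * T for _ in range(F)]
    L = matmul(W, H)
    for _ in range(iters):
        # the low-rank part fits whatever the sparse part does not explain
        V = [[max(m - s, 0.0) for m, s in zip(mr, sr)] for mr, sr in zip(M, S)]
        # Lee-Seung multiplicative updates keep W and H non-negative
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[h * n / (d + eps) for h, n, d in zip(hr, nr, dr)]
             for hr, nr, dr in zip(H, num, den)]
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[w * n / (d + eps) for w, n, d in zip(wr, nr, dr)]
             for wr, nr, dr in zip(W, num, den)]
        L = matmul(W, H)
        # sparse part: non-negative soft threshold of the residual
        S = [[max(m - l - lam, 0.0) for m, l in zip(mr, lr)] for mr, lr in zip(M, L)]
    return L, S


def with_noisy_phase(magnitudes, noisy_bins):
    """Attach the phase of the noisy DFT bins to enhanced magnitudes (one frame)."""
    return [cmath.rect(m, cmath.phase(z)) for m, z in zip(magnitudes, noisy_bins)]
```

The usual rationale is that a stationary noise spectrum changes little from frame to frame and is therefore well described by a low-rank term, while speech energy concentrated in relatively few time-frequency cells ends up in S.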

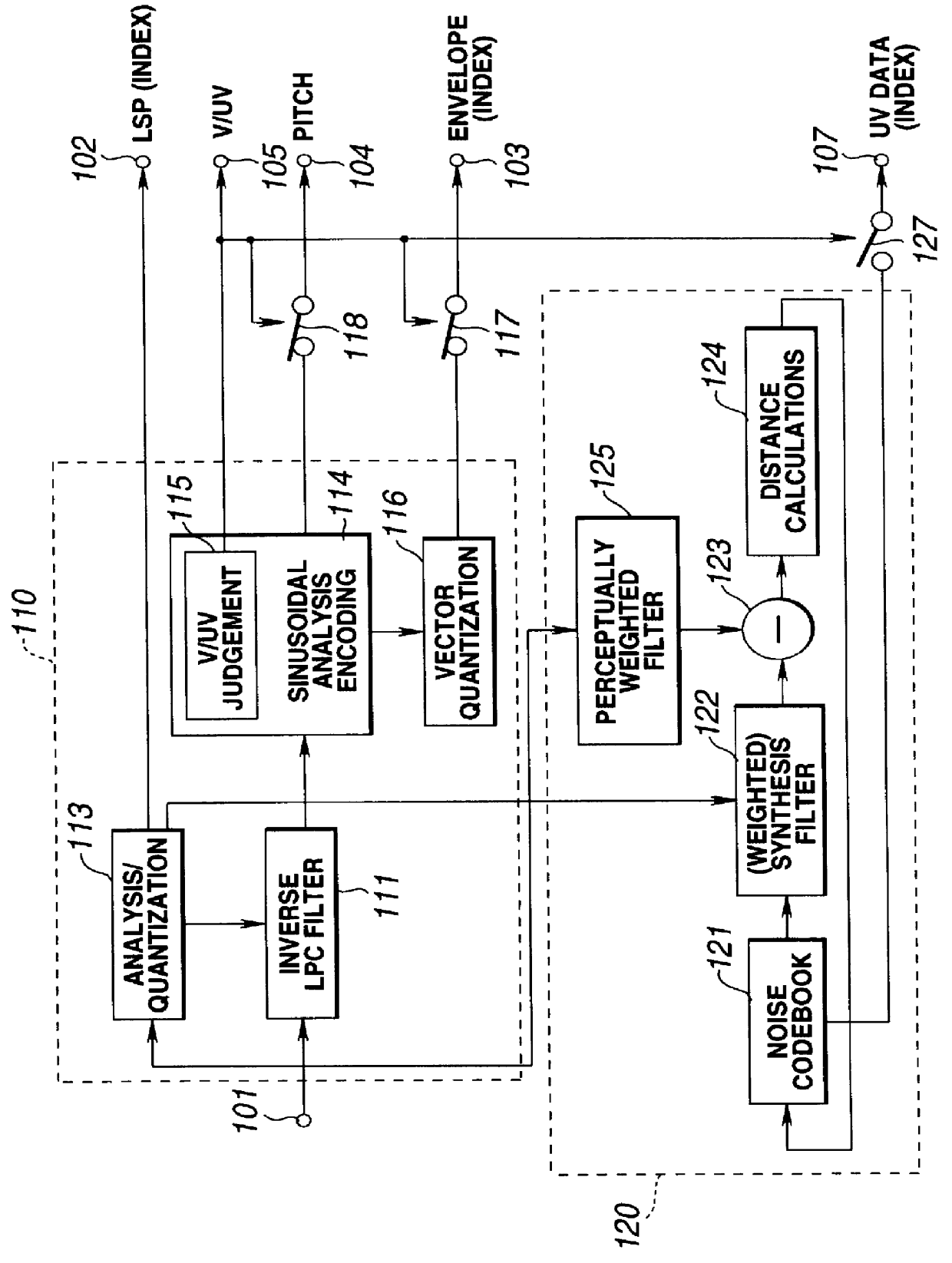

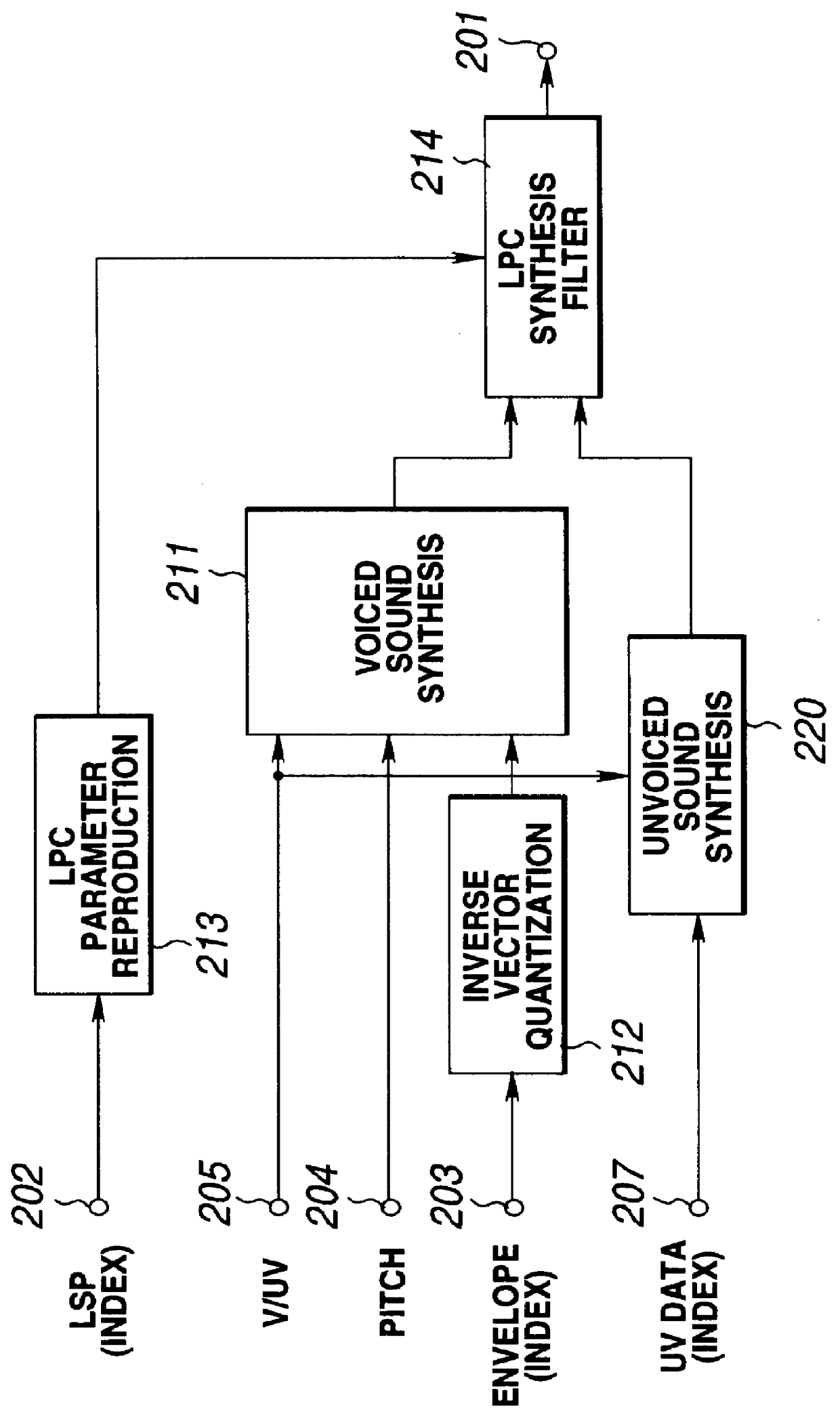

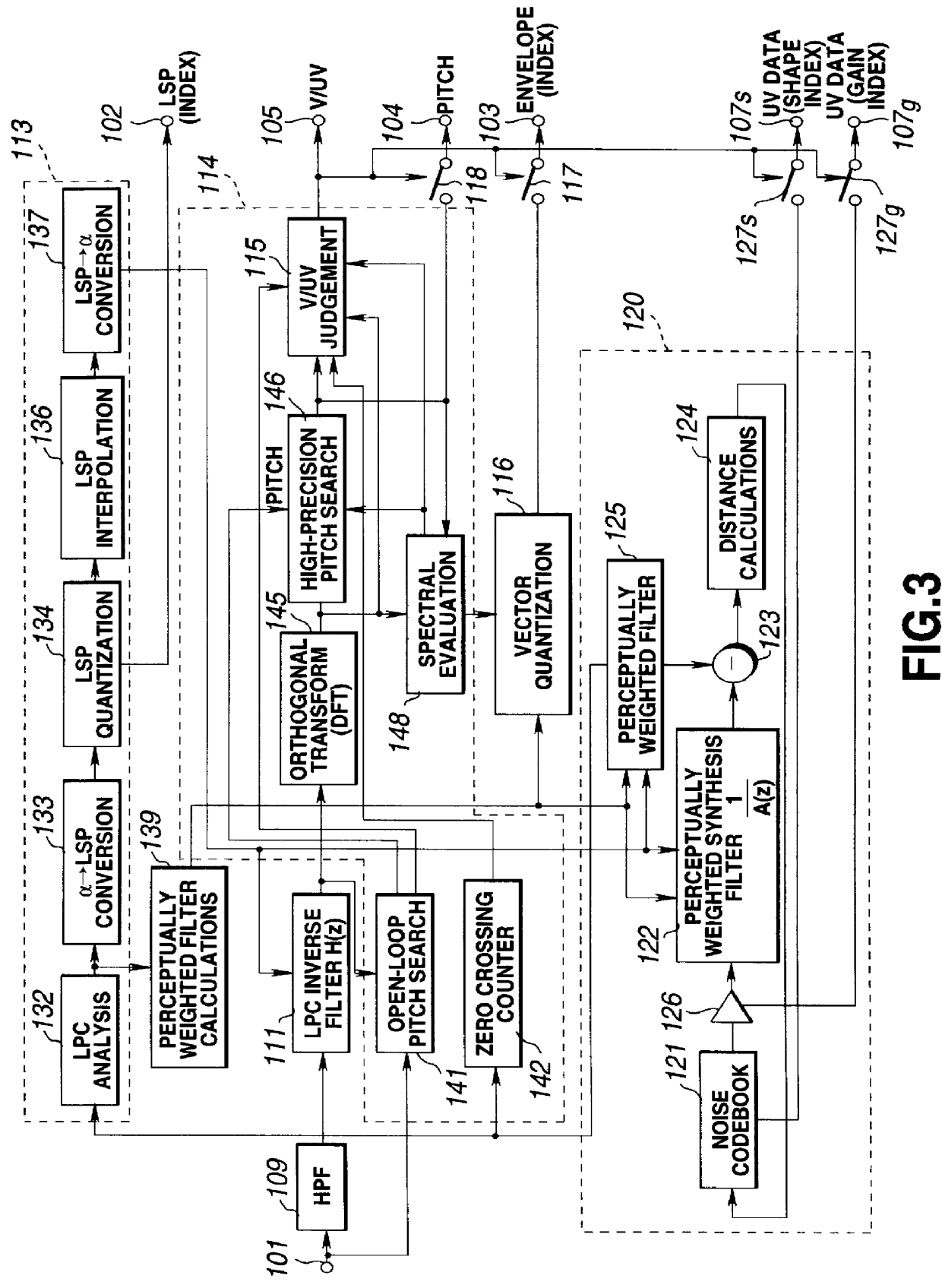

Speech analysis method and speech encoding method and apparatus

Inactive | US6108621A | High clarity playback output | Improve accuracy | Transmission | Speech synthesis | Frequency band | Speech spectrum

A speech analysis method and a speech encoding method and apparatus in which, even if the harmonics of the speech spectrum are offset from integer multiples of the fundamental, the harmonic amplitudes can be evaluated correctly to produce a high-clarity playback output. To this end, the frequency spectrum of the input speech is split along the frequency axis into several bands, and in each band the pitch search and the evaluation of the harmonic amplitudes are carried out simultaneously, using an optimum pitch derived from the spectral shape. Using the harmonic structure as the spectral shape, and starting from the rough pitch previously detected by an open-loop rough pitch search, a high-precision pitch search is carried out that consists of a first pitch search over the entire frequency spectrum and a second pitch search of higher precision than the first. The second pitch search is performed independently on the high-range side and on the low-range side of the frequency spectrum.

Owner:SONY CORP
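A two-stage harmonic-comb pitch search along the lines of this abstract could look like the following sketch. It is not Sony's encoder: the pitch is expressed in FFT bins, the magnitude spectrum and the open-loop rough pitch are assumed to be given, a candidate is scored by summing linearly interpolated magnitudes at its harmonics k * f0, and the search widths, step sizes and `split_bin` dividing the low and high ranges are hypothetical. The first search covers the whole spectrum on a coarse grid; the second, finer search runs independently on each range and also returns the harmonic amplitudes at the refined pitch, so pitch and amplitudes are evaluated together.

```python
def harmonic_samples(mag, f0, lo_bin, hi_bin):
    """Interpolated magnitudes at harmonic positions k * f0 inside [lo_bin, hi_bin)."""
    samples = []
    k = 1
    while k * f0 < hi_bin:
        pos = k * f0
        if pos >= lo_bin:
            i = int(pos)
            frac = pos - i
            right = mag[i + 1] if i + 1 < len(mag) else 0.0
            samples.append((1.0 - frac) * mag[i] + frac * right)
        k += 1
    return samples


def best_pitch(mag, centre, half_width, step, lo_bin, hi_bin):
    """Grid search for the f0 whose harmonic comb collects the most magnitude."""
    n = int(round(2 * half_width / step))
    candidates = [centre - half_width + i * step for i in range(n + 1)]
    return max(candidates, key=lambda f0: sum(harmonic_samples(mag, f0, lo_bin, hi_bin)))


def refine_pitch(mag, rough_f0, split_bin):
    n = len(mag)
    # first search: whole spectrum, coarse grid of +/-10 % around the rough pitch
    f0_full = best_pitch(mag, rough_f0, 0.1 * rough_f0, 0.05, 0, n)
    # second search: finer grid, run independently on the low and high ranges
    f0_low = best_pitch(mag, f0_full, 0.05, 0.005, 0, split_bin)
    f0_high = best_pitch(mag, f0_full, 0.05, 0.005, split_bin, n)
    # harmonic amplitudes evaluated at the refined pitch of each range
    amps_low = harmonic_samples(mag, f0_low, 0, split_bin)
    amps_high = harmonic_samples(mag, f0_high, split_bin, n)
    return f0_full, (f0_low, amps_low), (f0_high, amps_high)
```

Because the high range gets its own fine search in this sketch, a slight stretch of the upper harmonics away from exact integer multiples would be absorbed by `f0_high` rather than shifting the amplitude samples off the spectral peaks.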

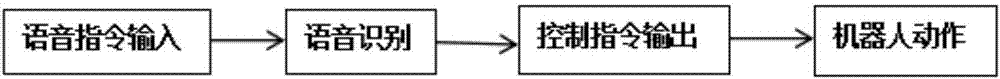

Deep learning-based intelligent industrial robot speech interaction and control method

Inactive | CN106898350A | Change the way of production | Reduce labor intensity | Speech recognition | Speech identification | Speech sound

The invention discloses a deep learning-based intelligent industrial robot speech interaction and control method. The method comprises the following steps: 1) speech is converted into a speech spectrum: the original speech is converted by FFT (Fast Fourier Transform) into an image that can be used as network input; 2) the whole spoken sentence is modeled: the speech spectrum is used as input for unsupervised training of a convolutional neural network; 3) the output sequence O of the convolutional neural network is compared with the label T, and the network is adjusted in a supervised manner with the BP (backpropagation) algorithm; and 4) the specific text information is input into the robot as a control command. By combining speech recognition technology with industrial robots, the method changes the traditional mode of production, reduces the labor intensity of workers, improves labor productivity and can promote the intelligent development of industrial technology.

Owner:SOUTH CHINA UNIV OF TECH
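Step 1 of this method, turning speech into a spectrum "image", is essentially a short-time Fourier transform. The sketch below assumes a Hann window, a hypothetical frame length and hop, and a dB log-magnitude scale; a naive DFT stands in for the FFT so the example has no dependencies (a real system would use an FFT library). Each returned column is one frame, so the list of columns forms the 2-D input a convolutional network would consume; the CNN and its BP training are not sketched.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def dft_magnitude(frame):
    """Magnitudes of the non-negative-frequency DFT bins (naive O(N^2) DFT)."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
            for k in range(n // 2 + 1)]


def spectrogram(signal, frame_len=64, hop=32, eps=1e-10):
    """Log-magnitude spectrogram as a list of columns, one per frame."""
    win = hann(frame_len)
    image = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_len], win)]
        # eps avoids log10(0) in silent bins
        image.append([20 * math.log10(m + eps) for m in dft_magnitude(frame)])
    return image
```

For a 256-sample sine at bin 8 of a 64-point frame, this produces 7 columns of 33 bins each, with the maximum of every column at bin 8.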

© 2025 PatSnap. All rights reserved.