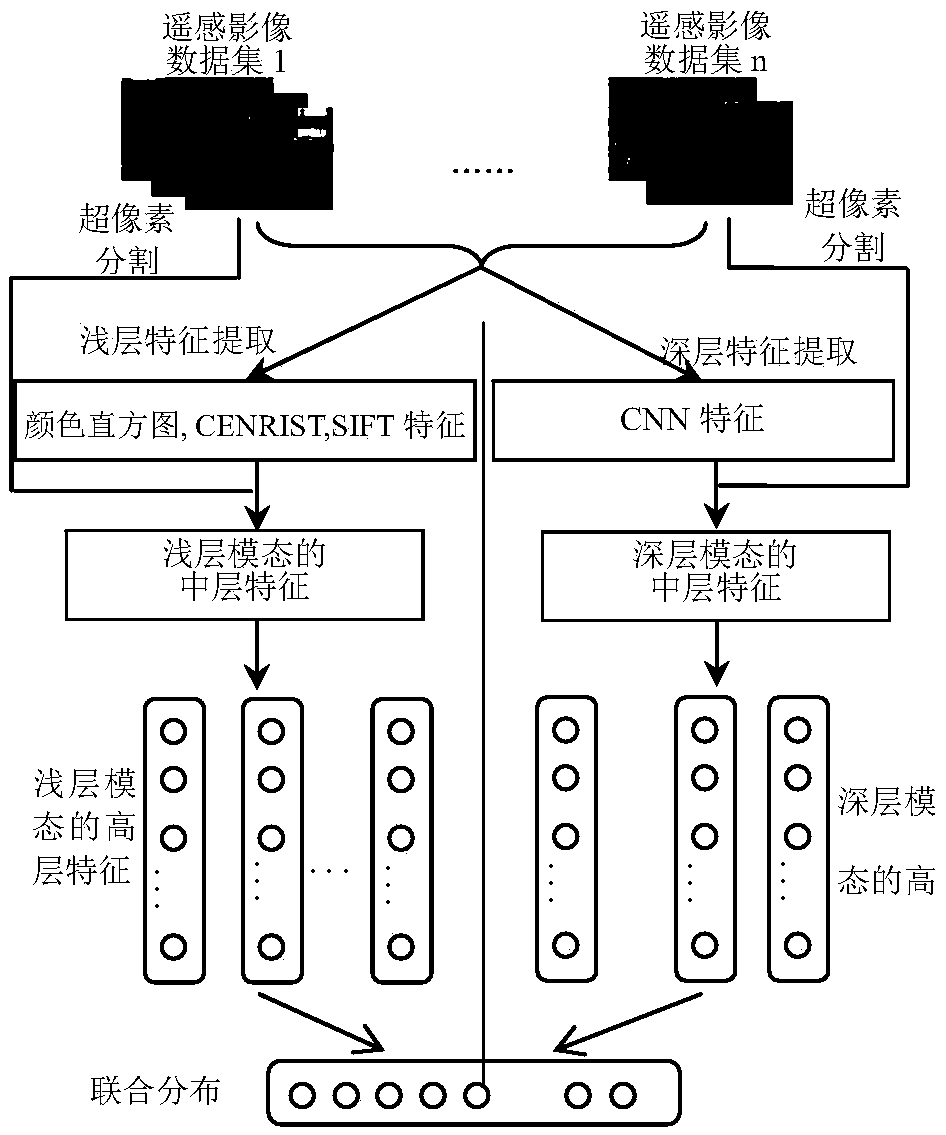

Multi-modal-feature-fusion-based remote sensing image classification method

A remote sensing image and feature fusion technology, applied in the fields of character and pattern recognition, biological neural network models, instruments, etc. It addresses problems such as the lack of information in deep features and the inability of a single feature type to fully express image information.
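The abstract does not say how the features from different modalities are combined. As a minimal sketch, assuming feature-level fusion by weighted concatenation (a common baseline, not confirmed as the patented method), each modality's feature vector (for example a deep CNN feature and a hand-crafted texture feature) is L2-normalized so that no modality dominates by scale, then concatenated into one descriptor. The function names `l2_normalize` and `fuse_features` and the per-modality `weights` are hypothetical.

```python
import math
from typing import List, Optional, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a feature vector to unit L2 norm; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        return list(vec)
    return [x / norm for x in vec]


def fuse_features(
    modalities: Sequence[Sequence[float]],
    weights: Optional[Sequence[float]] = None,
) -> List[float]:
    """Hypothetical feature-level fusion (assumption, not the patent's method):
    L2-normalize each modality's vector, scale it by its weight,
    and concatenate the results in the given order."""
    if weights is None:
        weights = [1.0] * len(modalities)
    if len(weights) != len(modalities):
        raise ValueError("one weight per modality is required")
    fused: List[float] = []
    for vec, w in zip(modalities, weights):
        fused.extend(w * x for x in l2_normalize(vec))
    return fused


# Example: a 2-D "deep" feature and a 3-D "texture" feature (toy values).
deep = [3.0, 4.0]
texture = [0.0, 0.0, 2.0]
print(fuse_features([deep, texture]))  # [0.6, 0.8, 0.0, 0.0, 1.0]
```

Normalizing before concatenation is one design choice among several; learned fusion layers or attention-based weighting are alternatives that the source neither confirms nor rules out.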


AI Technical Summary

Problems solved by technology

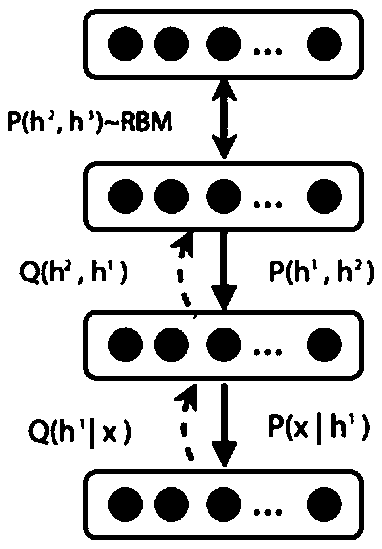


Examples

Experimental example

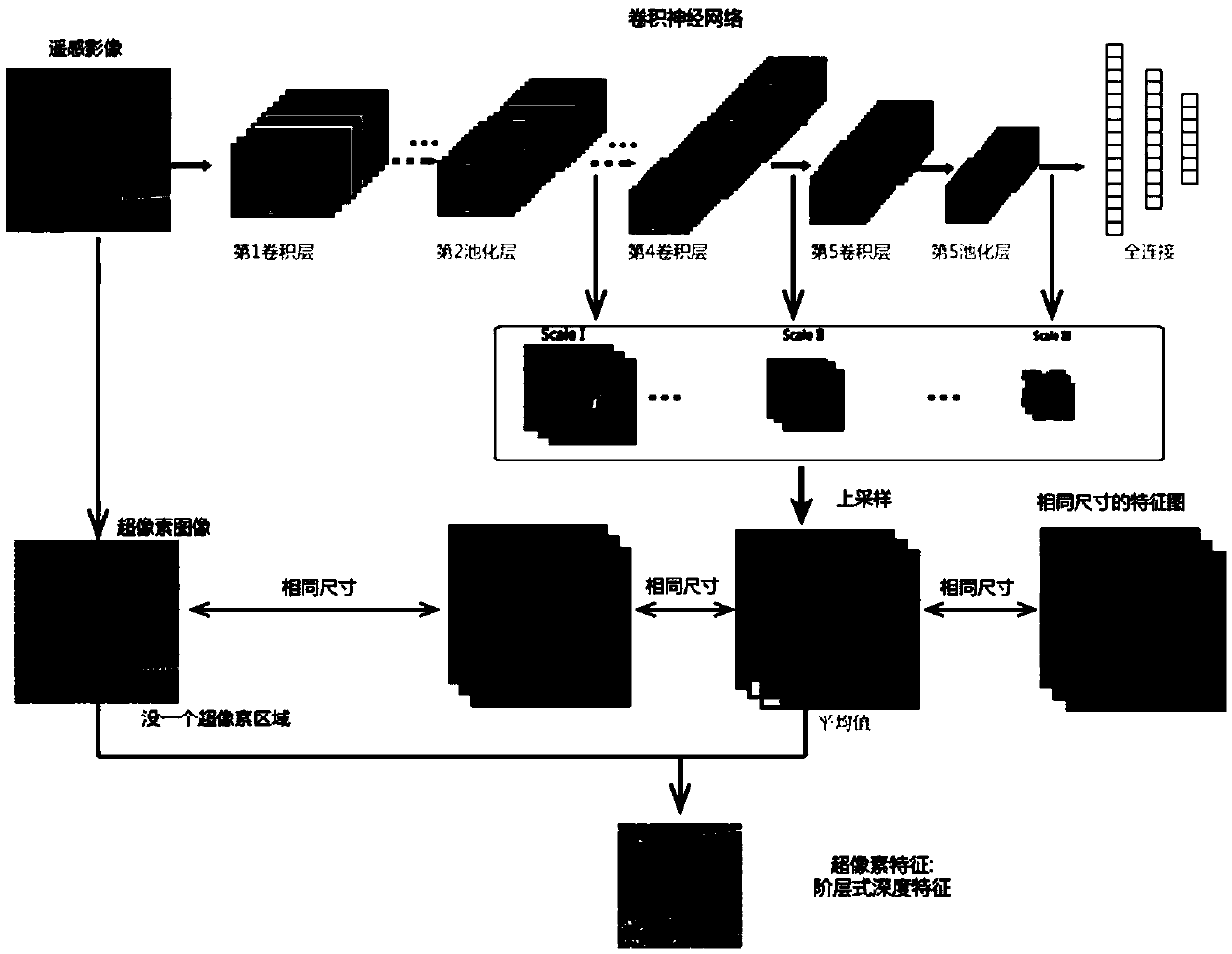

[0080] This experimental example uses 300 high-resolution remote sensing images collected from Google Maps at a resolution of 60 cm, each 600×600 pixels. The selected images cover eight semantic classes in total: Urban Intensive Residential Area (UIR), Urban Sparse Residential Area (USR), Rural Residential Area (PR), River (RV), Farm Land (FL), Waste Land (WL), Forest (FR) and Mountain (MT), as shown in Figure 4. In this experimental example, a six-category classification task is used to evaluate the performance of the classification method of the present invention. The six object categories are buildings, roads, expendable land, farm land, forests and rivers. For training the neural network model, 400 images are provided for each analog image set; these are patches ranging in size from 80×80 to 200×200 pixels, extracted from the 300 satellite images.
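The text does not say how the 400 training patches per set are distributed across the 300 source images. A minimal sketch, assuming square patches whose side length is drawn uniformly from 80 to 200 pixels and whose source image and position are chosen uniformly at random (all assumptions, as are the helper names `sample_patch_boxes` and `crop`), could generate crop boxes that always lie fully inside a 600×600 image:

```python
import random
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (image_index, top, left, side)


def sample_patch_boxes(
    n_patches: int = 400,
    n_images: int = 300,
    image_size: int = 600,
    min_side: int = 80,
    max_side: int = 200,
    seed: int = 0,
) -> List[Box]:
    """Hypothetical sampler: pick a source image and a square crop that fits
    inside an image_size x image_size image, with side in [min_side, max_side]."""
    if not 0 < min_side <= max_side <= image_size:
        raise ValueError("need 0 < min_side <= max_side <= image_size")
    rng = random.Random(seed)  # local RNG keeps the sampling reproducible
    boxes: List[Box] = []
    for _ in range(n_patches):
        idx = rng.randrange(n_images)              # source image, 0..n_images-1
        side = rng.randint(min_side, max_side)     # randint bounds are inclusive
        top = rng.randint(0, image_size - side)    # keeps top + side <= image_size
        left = rng.randint(0, image_size - side)
        boxes.append((idx, top, left, side))
    return boxes


def crop(image: Sequence[Sequence[int]], top: int, left: int, side: int) -> List[List[int]]:
    """Crop a row-major image (a list of pixel rows) to a side x side square."""
    return [list(row[left:left + side]) for row in image[top:top + side]]


boxes = sample_patch_boxes()
print(len(boxes))  # 400
```

In practice each box would be applied to the corresponding 600×600 image array (for example with NumPy slicing) and the patch possibly resized to the network's input size; the source does not state which input size the convolutional neural network expects.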

[0081] The architecture of the convolutional neural network used in this experiment e...

