Robust code summary generation method based on a self-attention mechanism

A robust code summarization technique at the intersection of software engineering and natural language processing. It addresses the problems of weak dependence on code structure and poor summary generation quality, and achieves excellent evaluation results.

Specific embodiment

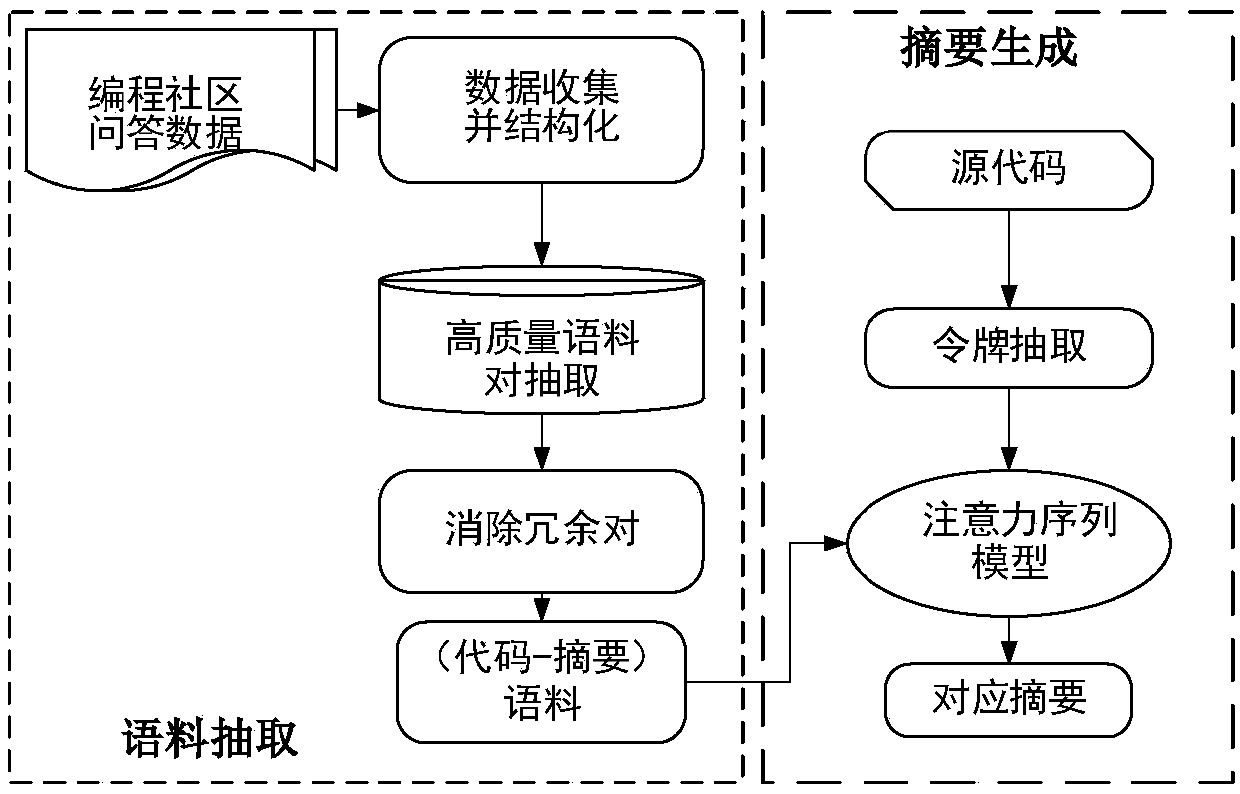

[0076] Step 1: Preprocess the initially collected noisy corpus: remove data that does not conform to the expected format, construct a feature matrix from the social-attribute feature values, fill missing values with the mean, remove noise points whose frequency is below 1%, and normalize all data.
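The cleaning operations in Step 1 can be sketched as follows. This is a minimal illustration, not the patent's implementation. It assumes that "frequency below 1%" means a sample whose category value occurs in fewer than 1% of rows, and that normalization is min-max scaling per column; the source specifies neither. The function name `preprocess` and its parameters are hypothetical.

```python
import numpy as np

def preprocess(features, categories, min_freq=0.01):
    """Mean-impute missing values, drop rare-category rows, min-max normalize.

    Assumptions (not stated in the source): rarity is measured on a
    per-row category label, and normalization is min-max per column.
    """
    X = np.asarray(features, dtype=float).copy()
    y = np.asarray(categories)

    # Mean completion: replace each NaN with the mean of its column.
    col_mean = np.nanmean(X, axis=0)
    rows, cols = np.where(np.isnan(X))
    X[rows, cols] = col_mean[cols]

    # Drop rows whose category accounts for less than min_freq of all rows.
    values, counts = np.unique(y, return_counts=True)
    frequent = values[counts / len(y) >= min_freq]
    keep = np.isin(y, frequent)
    X, y = X[keep], y[keep]

    # Min-max normalization per column; constant columns map to 0.
    lo, hi = X.min(axis=0), X.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)
    return (X - lo) / span, y
```

Imputation runs before filtering here so that the column means reflect the full corpus; the source does not fix an order beyond the sequence in which it lists the operations.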

[0077] Step 2: Use the DB-WTFF feature fusion framework to extract a high-quality (PN, NL) corpus. The wavelet transform is implemented with the PyWavelets toolkit, using a Daubechies 7 wavelet with 5-level decomposition. The extracted high-quality corpus sizes are C#: 88,000 and SQL: 46,000.
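The wavelet step can be illustrated with a short PyWavelets sketch: a 5-level decomposition with the Daubechies 7 wavelet (`'db7'`). The source does not say which signal DB-WTFF decomposes or how the coefficients are fused, so the input here is any 1-D feature sequence and the per-band energy summary is an assumption added for illustration. The function name `wavelet_band_energies` is hypothetical.

```python
import numpy as np
import pywt

def wavelet_band_energies(signal, wavelet="db7", level=5):
    """Decompose a 1-D signal and summarize each sub-band by its energy.

    pywt.wavedec returns [cA_level, cD_level, ..., cD_1], i.e. one
    approximation band followed by `level` detail bands.
    The energy summary is an illustrative choice, not the DB-WTFF method.
    """
    coeffs = pywt.wavedec(np.asarray(signal, dtype=float), wavelet, level=level)
    energies = np.array([np.sum(c ** 2) for c in coeffs])
    return coeffs, energies
```

With `level=5` the call yields six coefficient arrays. For `'db7'` (filter length 14) the input should have at least 416 samples, otherwise PyWavelets warns that the requested level is too high for the signal length.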

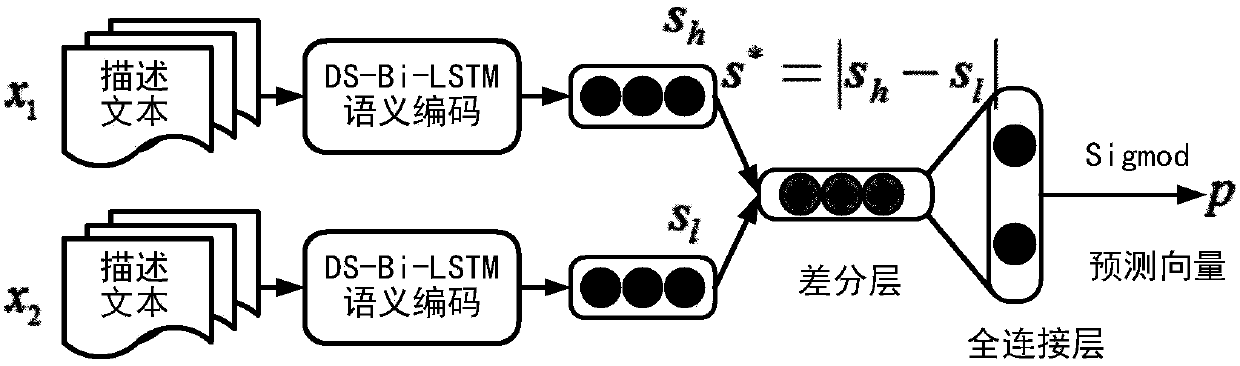

[0078] Step 3: Use the TFLearn framework to build the T-SNNC network. The text sequence length is fixed at 33, and the output fully connected layer has dimension 32. In the DS-Bi-LSTM encoding module, the word embedding dimension is 128, and the number of bidirectional LSTM hidden layer units is 128. Split the manually label...
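Fixing the sequence length at 33 implies padding short token sequences and truncating long ones before they reach the network. A minimal sketch in plain Python follows; the hyperparameter values come from the paragraph above, while the names `HPARAMS` and `pad_or_truncate`, the padding id 0, and the choice to pad and truncate at the end are assumptions.

```python
# Hyperparameters stated in Step 3; the dictionary itself is illustrative.
HPARAMS = {
    "seq_len": 33,      # unified text sequence length
    "fc_dim": 32,       # output fully connected layer dimension
    "embed_dim": 128,   # word embedding dimension (DS-Bi-LSTM)
    "lstm_units": 128,  # bidirectional LSTM hidden units
}

def pad_or_truncate(token_ids, max_len=HPARAMS["seq_len"], pad_id=0):
    """Return a list of exactly max_len ids.

    Longer sequences are cut at the end; shorter ones are right-padded
    with pad_id. Both choices are assumptions, not taken from the source.
    """
    clipped = list(token_ids[:max_len])
    return clipped + [pad_id] * (max_len - len(clipped))
```

For example, `pad_or_truncate([5, 9, 2])` returns a 33-element list that starts with `5, 9, 2` and is zero-filled afterwards.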
